121 results about how to "Improve resource usage efficiency" patented technology

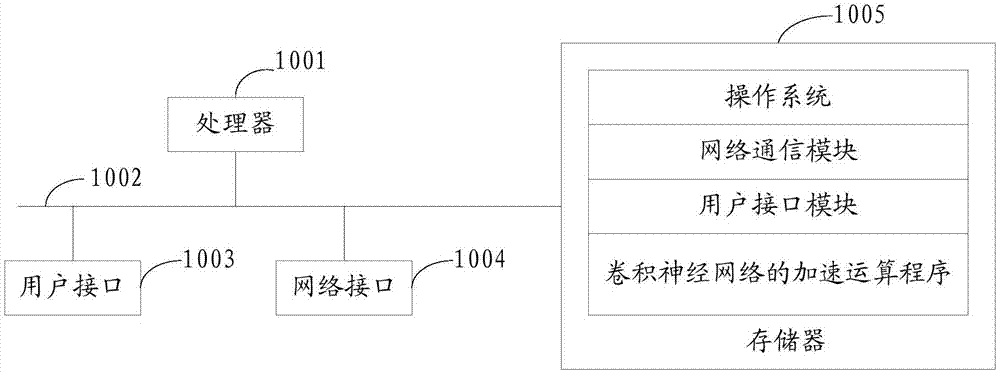

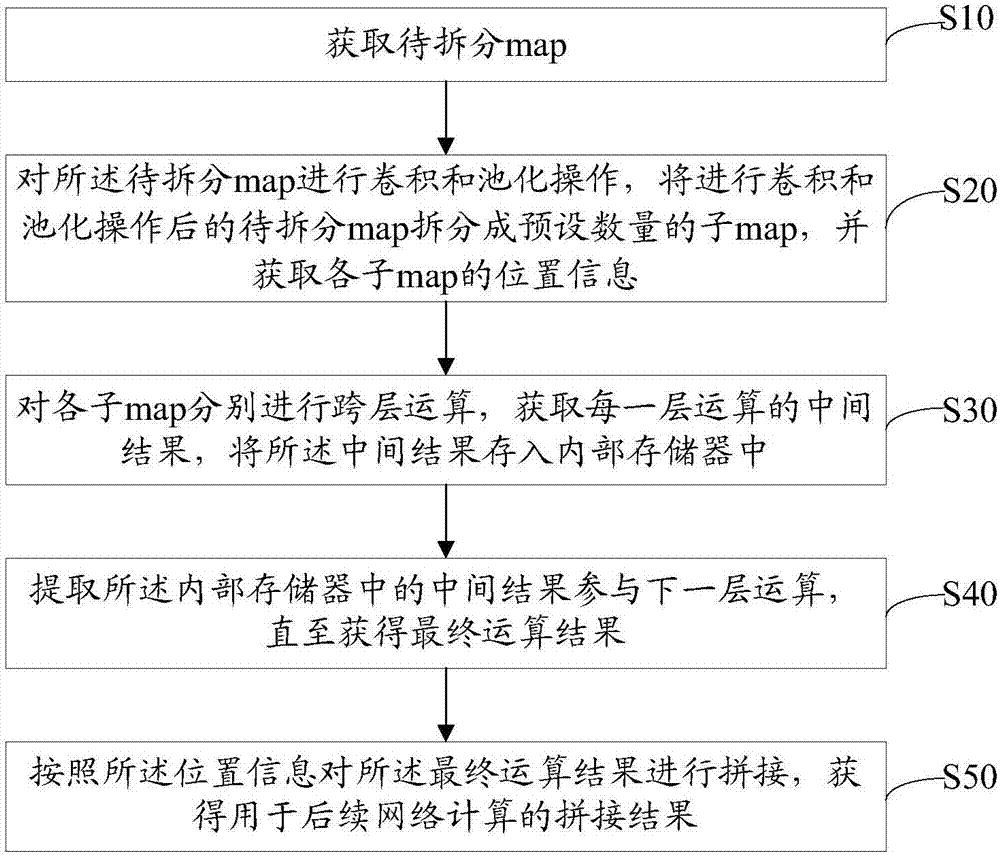

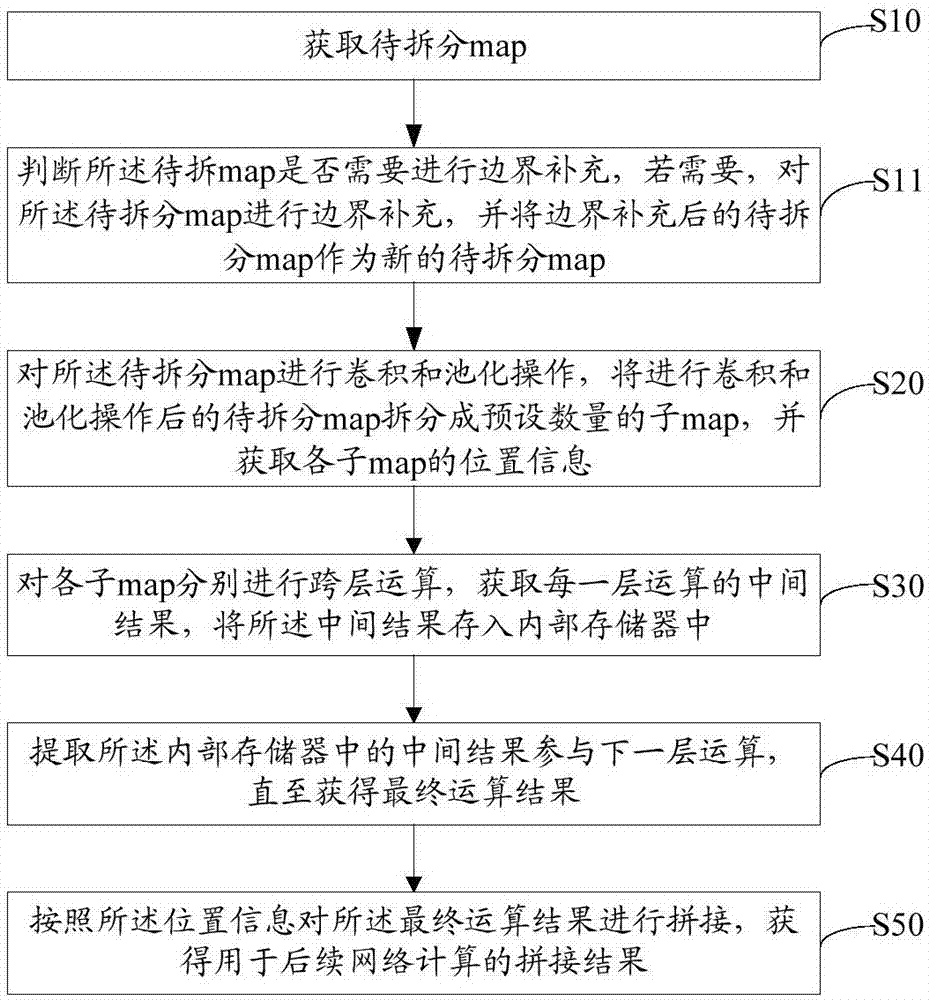

Accelerated operation method and server for convolutional neural network, and storage medium

Active | CN107451654A | Increase computing speed | Improve resource usage efficiency | Neural architectures | Interaction time | Internal memory

The invention discloses an accelerated operation method and server for a convolutional neural network (CNN), and a storage medium. A map to be split is divided into a preset number of sub-maps, and the position information of each sub-map is obtained. Each sub-map independently undergoes cross-layer operation: the intermediate result of each layer is stored in internal memory and read back from internal memory as input to the next layer. Internal memory is thus fully utilized, resources are reused, and interaction time with external memory is shortened, which greatly improves the operation speed and resource usage efficiency of the CNN and allows it to run efficiently at high speed on an embedded terminal.

Owner:深圳市自行科技有限公司
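
The sub-map idea in the abstract above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the function names (`split_map`, `run_layers_on_tile`, `tiled_forward`) are hypothetical, the layers are element-wise so that no overlap between sub-maps is needed (real convolution layers would need halo regions), and a plain Python list stands in for internal memory.

```python
from typing import Callable, List, Tuple

Matrix = List[List[float]]
Layer = Callable[[float], float]  # hypothetical element-wise layer


def split_map(fmap: Matrix, rows: int, cols: int) -> List[Tuple[Tuple[int, int], Matrix]]:
    """Split fmap into rows x cols sub-maps and record each top-left position."""
    h, w = len(fmap), len(fmap[0])
    tiles = []
    for r in range(rows):
        for c in range(cols):
            top, bottom = r * h // rows, (r + 1) * h // rows
            left, right = c * w // cols, (c + 1) * w // cols
            tiles.append(((top, left), [row[left:right] for row in fmap[top:bottom]]))
    return tiles


def run_layers_on_tile(tile: Matrix, layers: List[Layer]) -> Matrix:
    """Run all layers on one sub-map; the intermediate result never leaves `buffer`."""
    buffer = tile  # stands in for internal (on-chip) memory
    for layer in layers:
        buffer = [[layer(v) for v in row] for row in buffer]
    return buffer


def tiled_forward(fmap: Matrix, rows: int, cols: int, layers: List[Layer]) -> Matrix:
    """Process every sub-map independently and write results back by position."""
    out = [[0.0] * len(fmap[0]) for _ in fmap]
    for (top, left), tile in split_map(fmap, rows, cols):
        result = run_layers_on_tile(tile, layers)
        for i, row in enumerate(result):
            out[top + i][left:left + len(row)] = row
    return out


relu = lambda v: max(v, 0.0)
double = lambda v: 2.0 * v
fmap = [[-1.0, 2.0, 3.0, -4.0], [5.0, -6.0, 7.0, 8.0]]
tiled = tiled_forward(fmap, 1, 2, [relu, double])
```

Because each sub-map carries its position, the outputs can be written back without any coordination between sub-maps.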

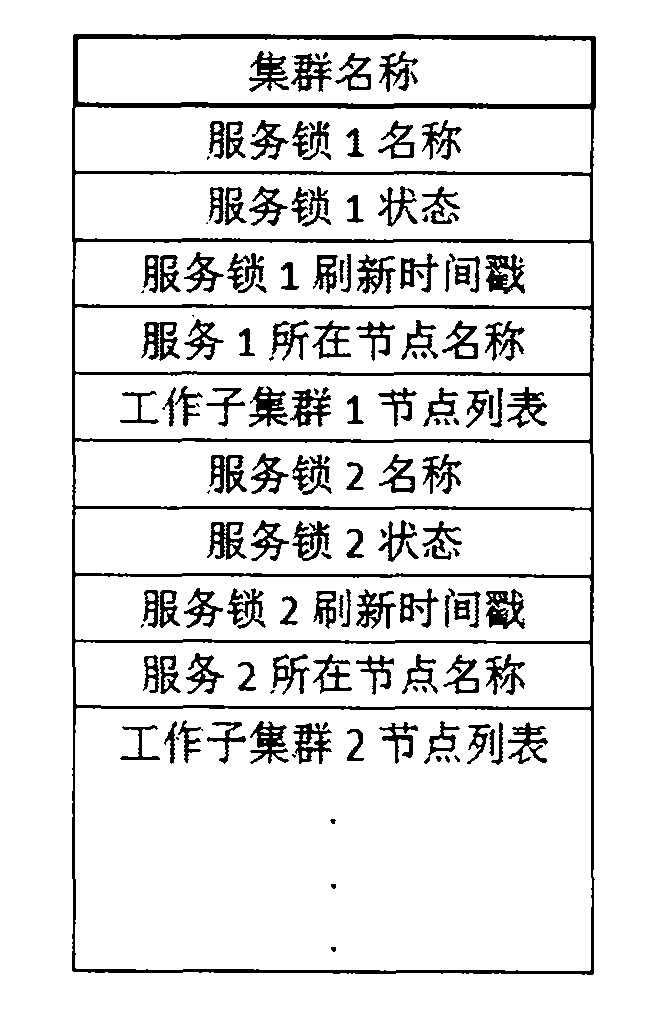

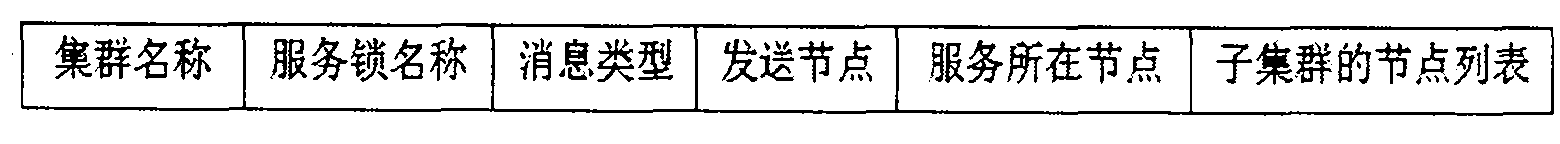

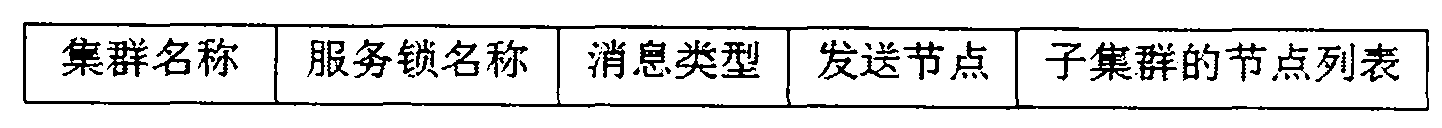

Arbitration-server-based cluster split-brain prevention method and device

Active | CN103684941A | Avoid the risk of simultaneous launches | Improve resource usage efficiency | Networks interconnection | Split-brain | Cluster Node

The invention discloses an arbitration-server-based cluster split-brain prevention method and device, belonging to high-availability cluster split-brain prevention technology in the field of computer clusters. It addresses the problem that, when the cluster heartbeat network is interrupted, the states of other nodes and the services running on them cannot be accurately determined, so that services either cannot be taken over or run on two nodes at the same time. Under the scheme, when the heartbeat network is interrupted, a cluster node that is not running a service can take it over only after acquiring the corresponding service lock from an arbitration server. After the service stops, the arbitration server reclaims the service lock and allows other cluster nodes to contend for it again. When multiple nodes contend for one service lock, only one node succeeds and may start the service, which prevents split-brain.

Owner:GUANGDONG ZHONGXING NEWSTART TECH CO LTD
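
The service-lock arbitration described in the abstract above can be illustrated with a minimal in-process sketch. It assumes a single arbiter object shared by threads that play the role of cluster nodes; the class name `ArbitrationServer` and its methods are hypothetical, and a real arbitration server would sit on the network and handle lock expiry, which is omitted here.

```python
import threading
from typing import Dict, Optional


class ArbitrationServer:
    """Hands out at most one lock per service (hypothetical sketch)."""

    def __init__(self) -> None:
        self._guard = threading.Lock()
        self._owners: Dict[str, str] = {}  # service name -> owning node

    def acquire(self, service: str, node: str) -> bool:
        """Return True only if `node` holds the lock for `service` after the call."""
        with self._guard:
            current = self._owners.setdefault(service, node)
            return current == node

    def release(self, service: str, node: str) -> bool:
        """Give the lock back once the service has stopped on `node`."""
        with self._guard:
            if self._owners.get(service) == node:
                del self._owners[service]
                return True
            return False

    def owner(self, service: str) -> Optional[str]:
        with self._guard:
            return self._owners.get(service)


arbiter = ArbitrationServer()
results: Dict[str, bool] = {}


def contend(node: str) -> None:
    # Each node starts the service only if it wins the lock.
    results[node] = arbiter.acquire("db", node)


threads = [threading.Thread(target=contend, args=(f"node-{i}",)) for i in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
winners = [node for node, ok in results.items() if ok]
```

However the threads interleave, exactly one node ends up in `winners`, which mirrors the rule that only the lock holder may start the service.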

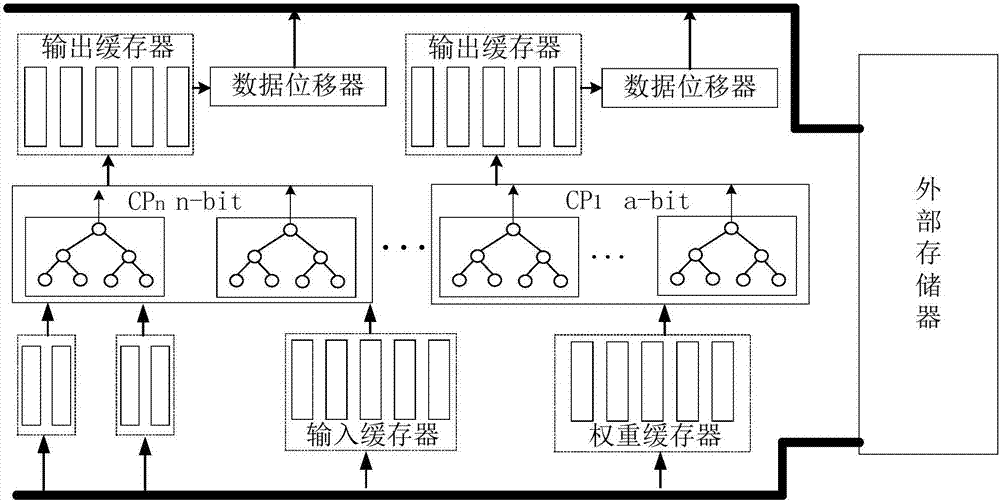

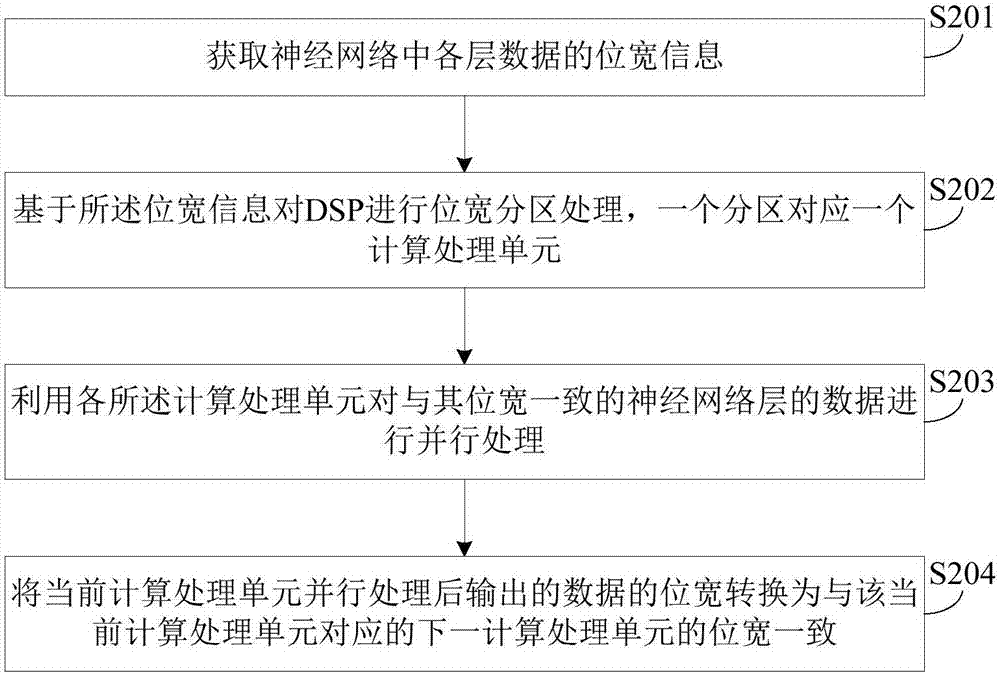

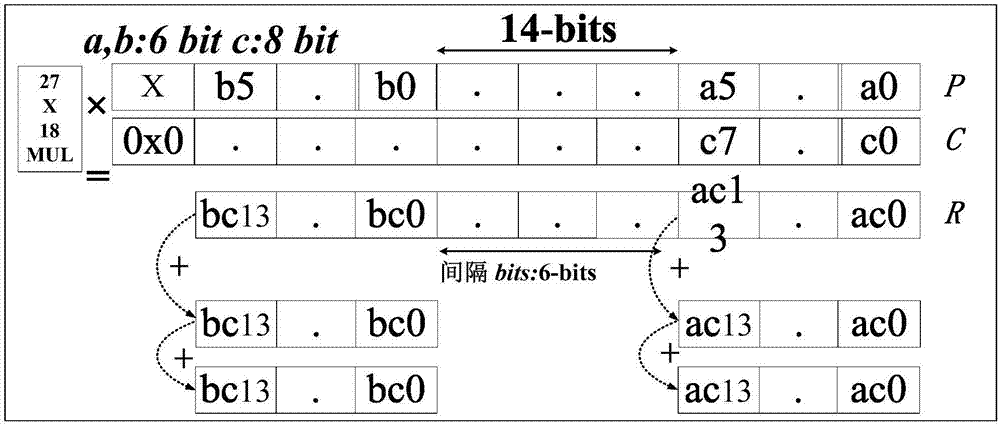

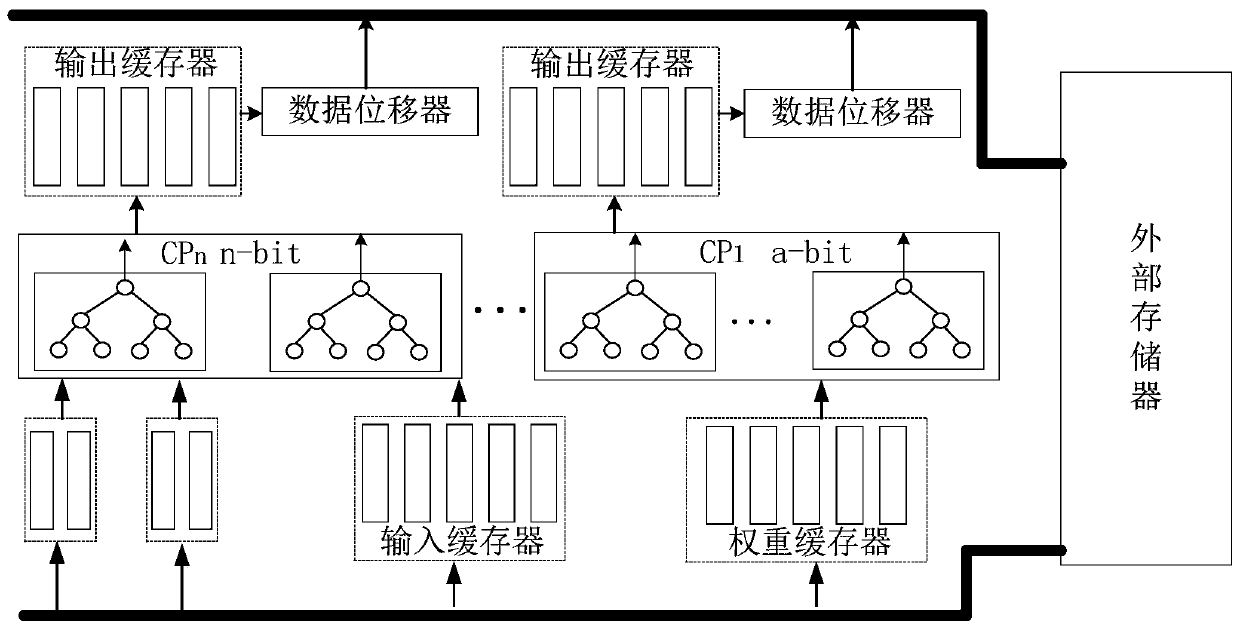

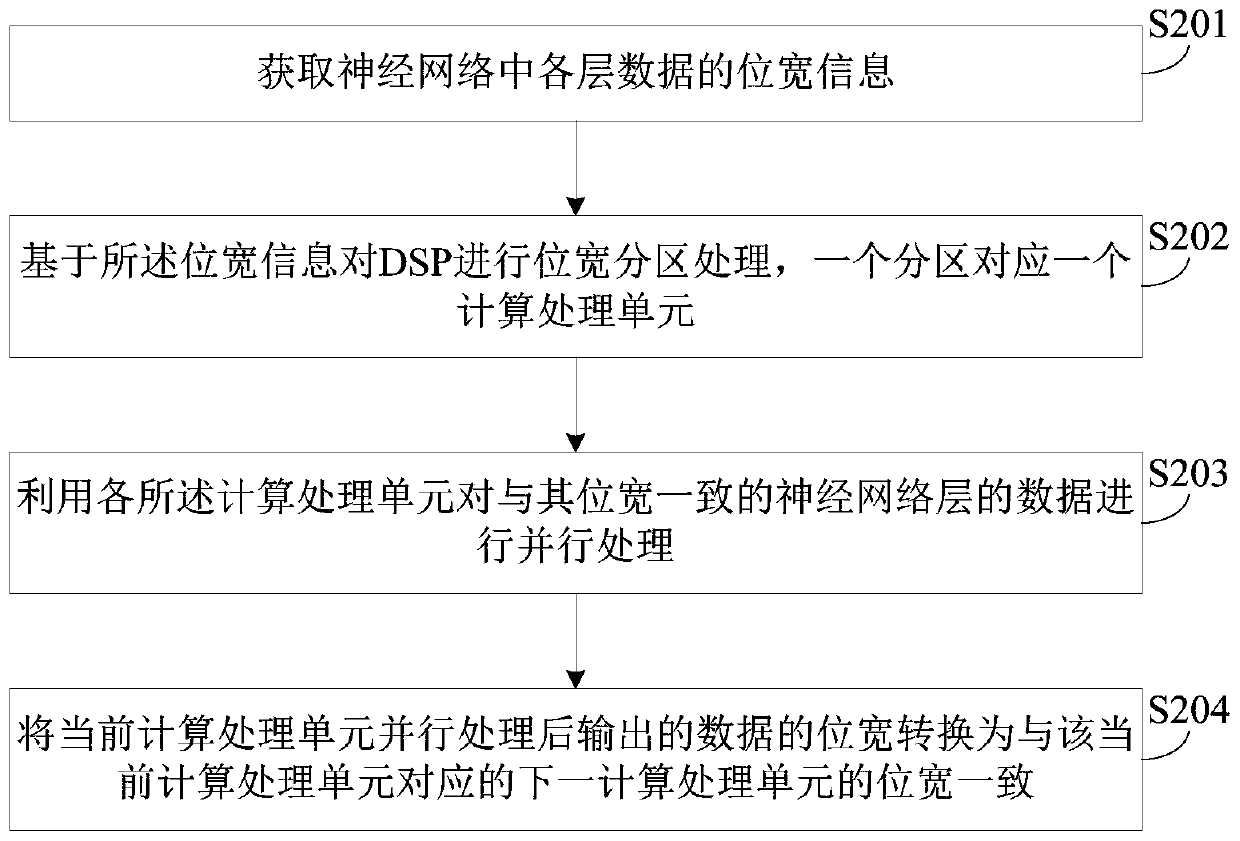

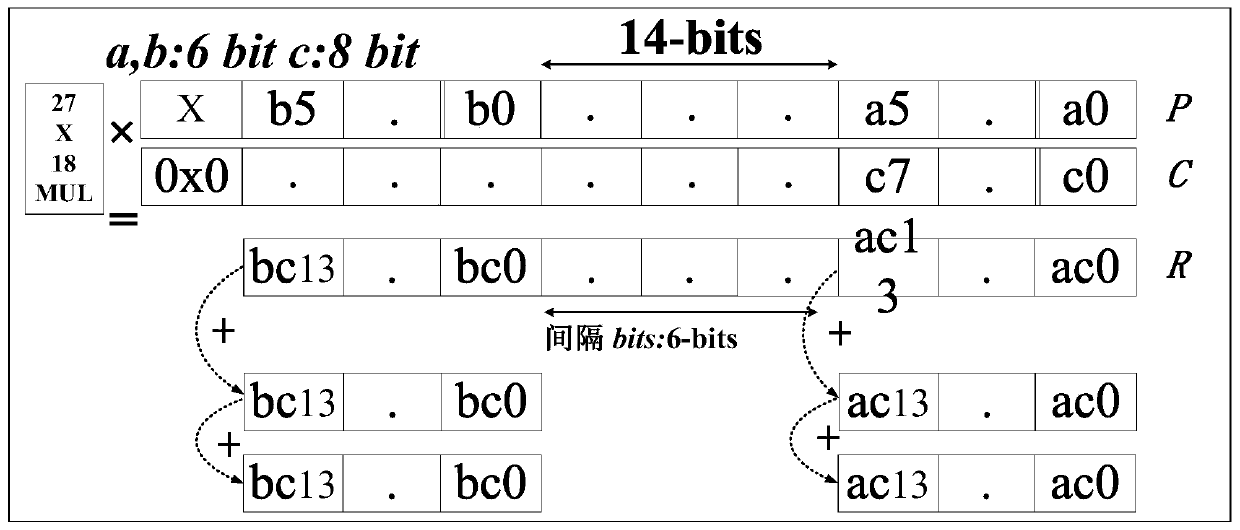

Neural network accelerator for bit width partitioning and implementation method of neural network accelerator

Active | CN107451659A | Increase profit | Improve resource usage efficiency | Physical realisation | External storage | Nerve network

The present invention provides a neural network accelerator for bit width partitioning and an implementation method thereof. The accelerator includes a plurality of computing and processing units (CPs) with different bit widths, input buffers, weight buffers, output buffers, data shifters and an off-chip memory. Each CP obtains data from its corresponding input buffer and weight buffer and processes in parallel the data of a neural network layer whose bit width matches its own. The data shifters convert the bit width of the data output by the current CP to match the bit width of the next CP that follows it. The off-chip memory stores both the data not yet processed and the data already processed by the CPs. Because multiply-accumulate operations can be performed on several short-bit-width data items, the utilization rate of the DSP is increased; and because CPs with different bit widths compute the layers of a neural network in parallel, the computing throughput of the accelerator is improved.

Owner:TSINGHUA UNIV
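
The role of the data shifters in the abstract above can be shown with a small sketch. The abstract does not give the number format, so this assumes unsigned, MSB-aligned fixed-point values; the function name `shift_bit_width` is hypothetical.

```python
def shift_bit_width(value: int, in_bits: int, out_bits: int) -> int:
    """Convert an unsigned MSB-aligned value from in_bits to out_bits by shifting.

    Assumption: narrowing drops the least significant bits (truncation);
    widening pads with zeros on the right.
    """
    if not 0 <= value < (1 << in_bits):
        raise ValueError("value does not fit in in_bits")
    if out_bits >= in_bits:
        return value << (out_bits - in_bits)
    return value >> (in_bits - out_bits)


narrowed = shift_bit_width(200, 8, 4)      # output of an 8-bit unit fed to a 4-bit unit
widened = shift_bit_width(narrowed, 4, 8)  # and back to 8 bits, with the low bits lost
```

Narrowing and then widening does not restore the original value, which is the precision cost of running a layer at a shorter bit width.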

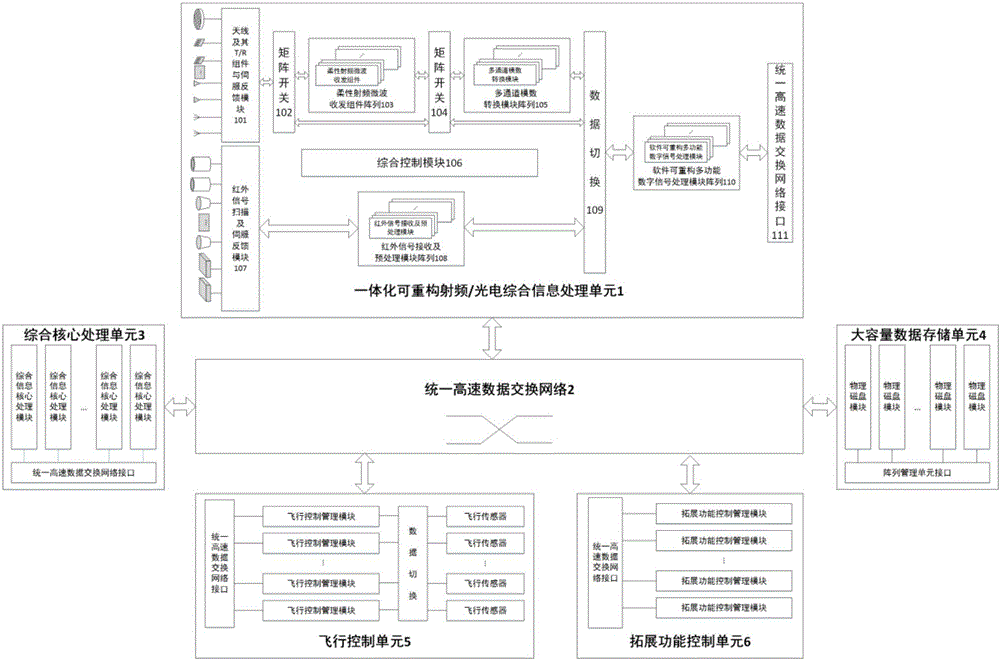

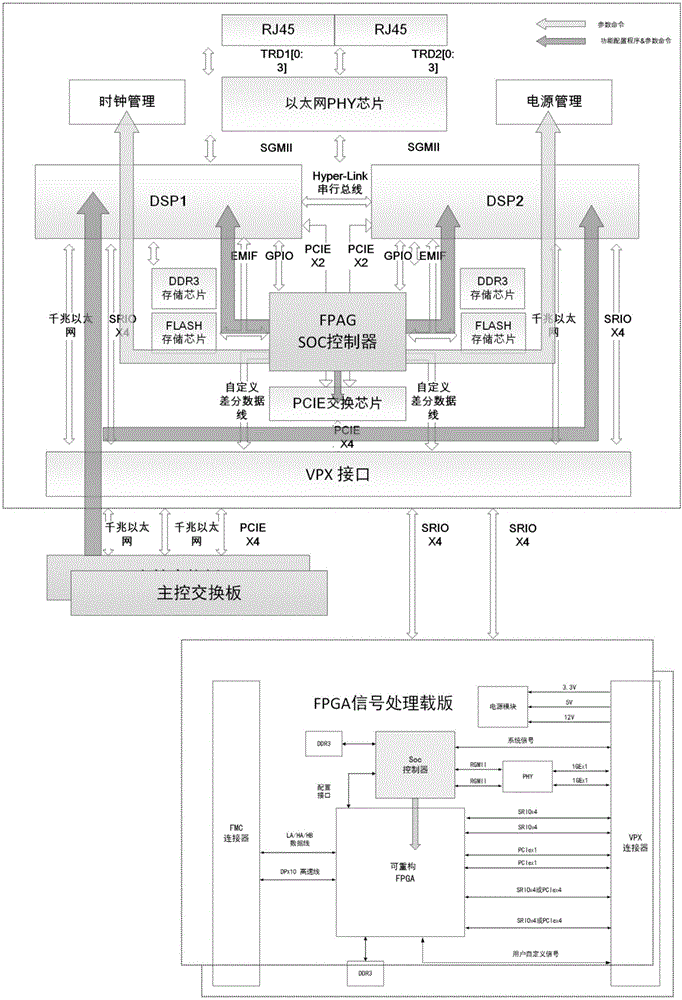

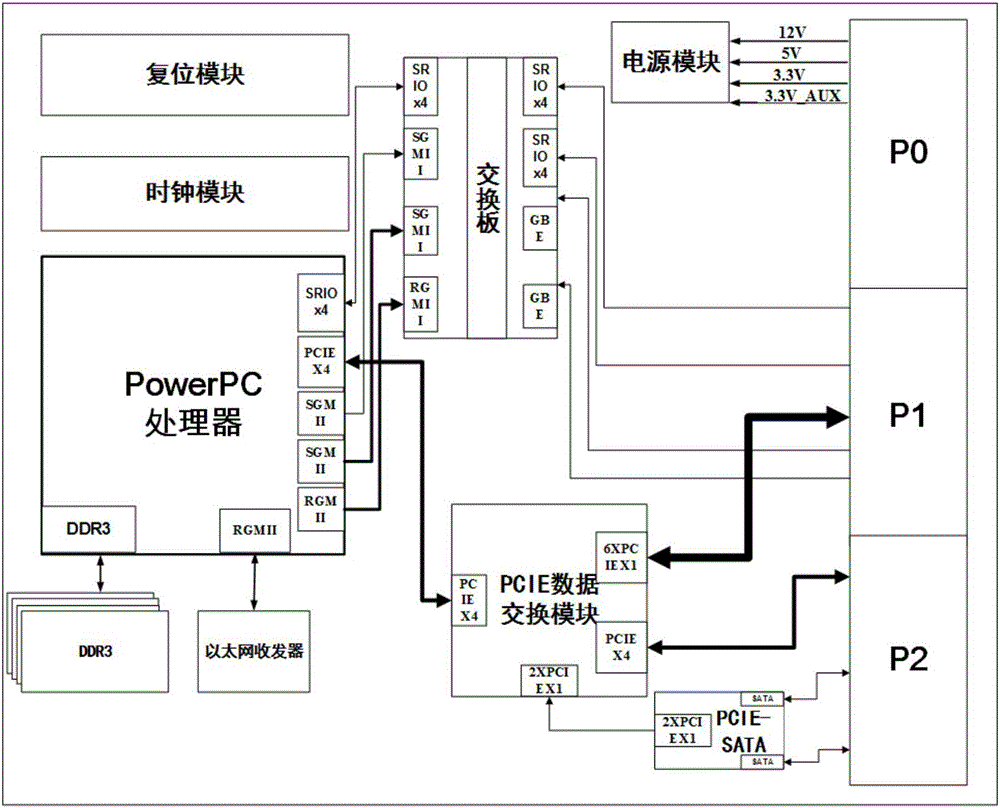

Integrated reconfigurable summarized information processing loading system

Inactive | CN105786620A | Full Feature Support | Take advantage of | Resource allocation | Information processing | Computer architecture

The invention discloses an integrated reconfigurable summarized information processing loading system. The system adopts a uniform, standard modular structure. According to actual task requirements and resource usage, the functions and parameters of all modules are reconfigured online while reliability is ensured, and the redundancy of the system is adjusted dynamically, so that comprehensive task processing, including communication, navigation, measurement and control, target detection and recognition, flight control and information support, is realized.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

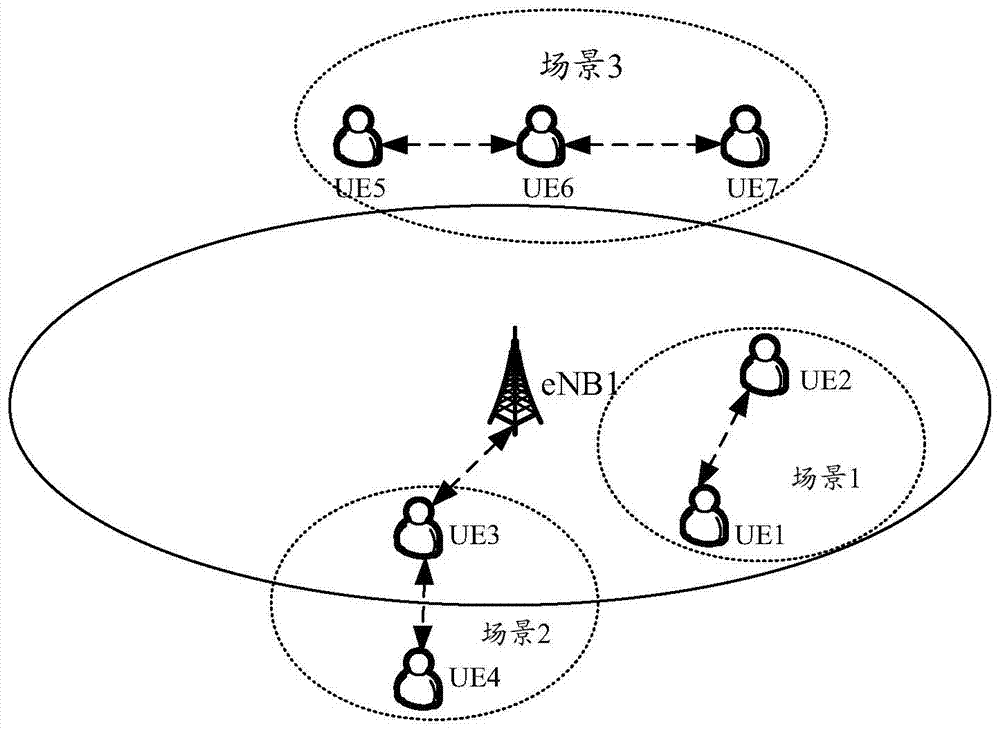

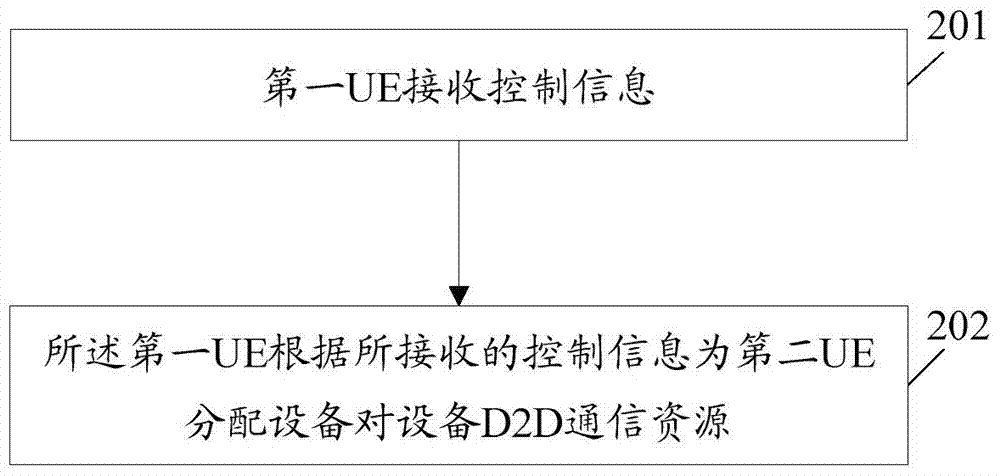

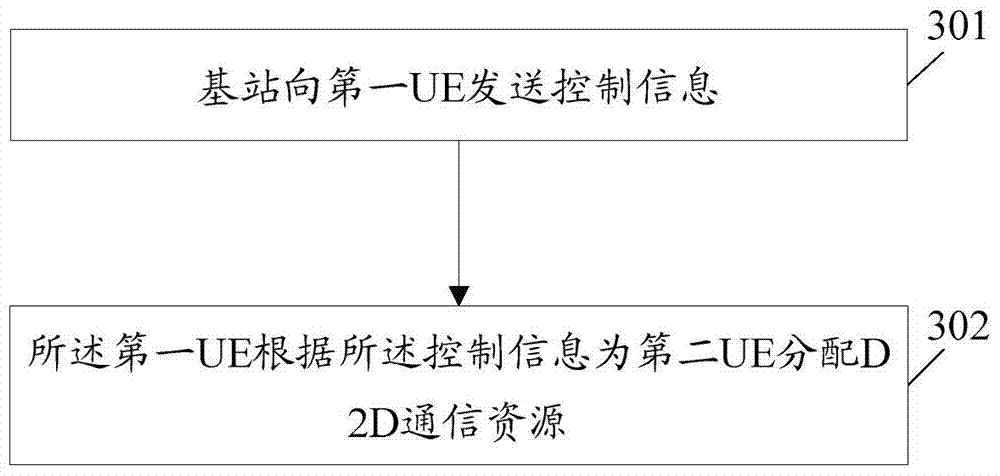

Method and system for communication resource management, and device

Active | CN104519577A | Resolve interference | Improve experience | Network planning | Resource management | User equipment

An embodiment of the present invention discloses a communication resource management method, device, system and computer storage medium. The method comprises: a first UE receives control information; and the first UE, in accordance with the received control information, allocates device-to-device (D2D) communication resources to a second UE.

Owner:ZTE CORP

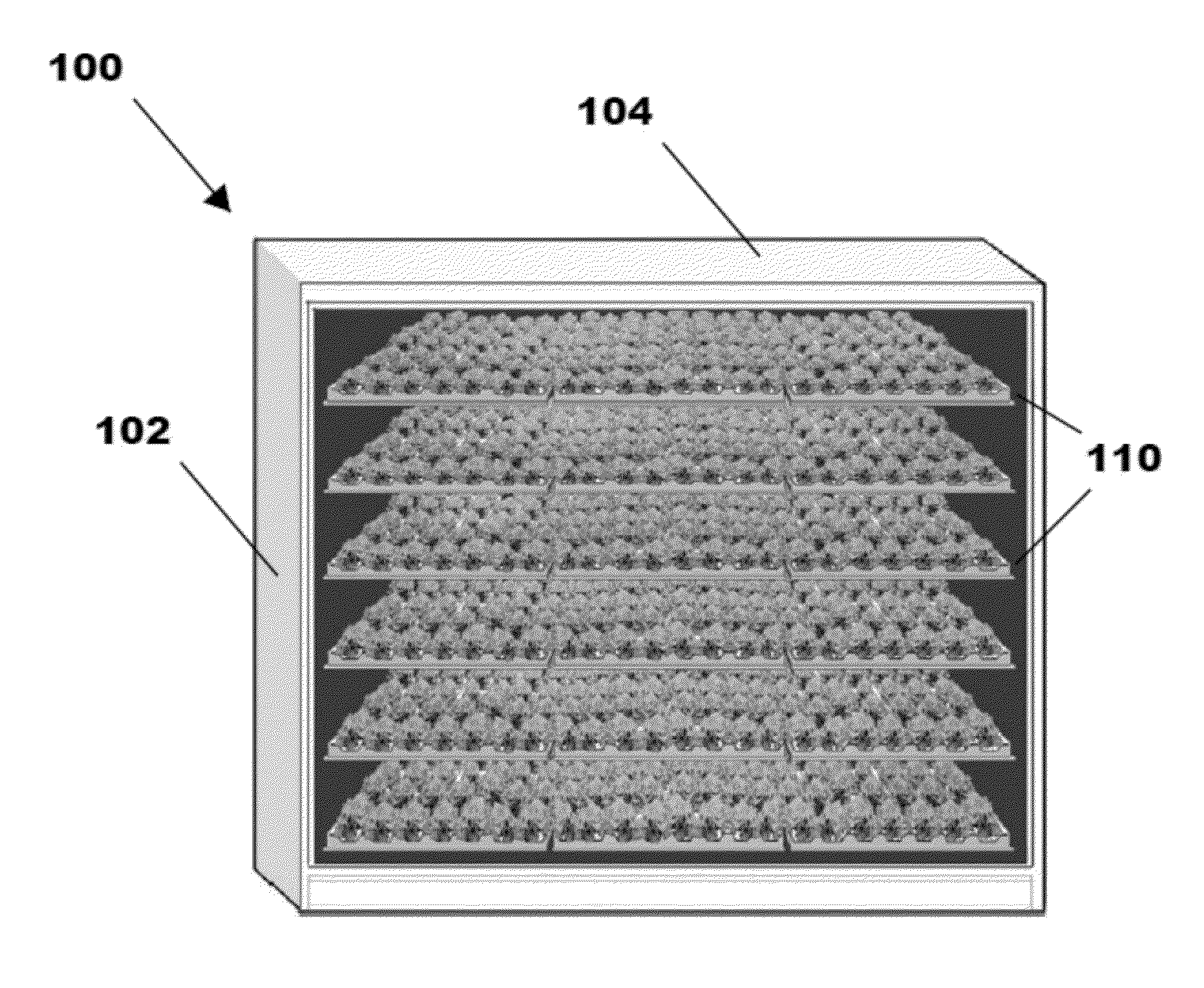

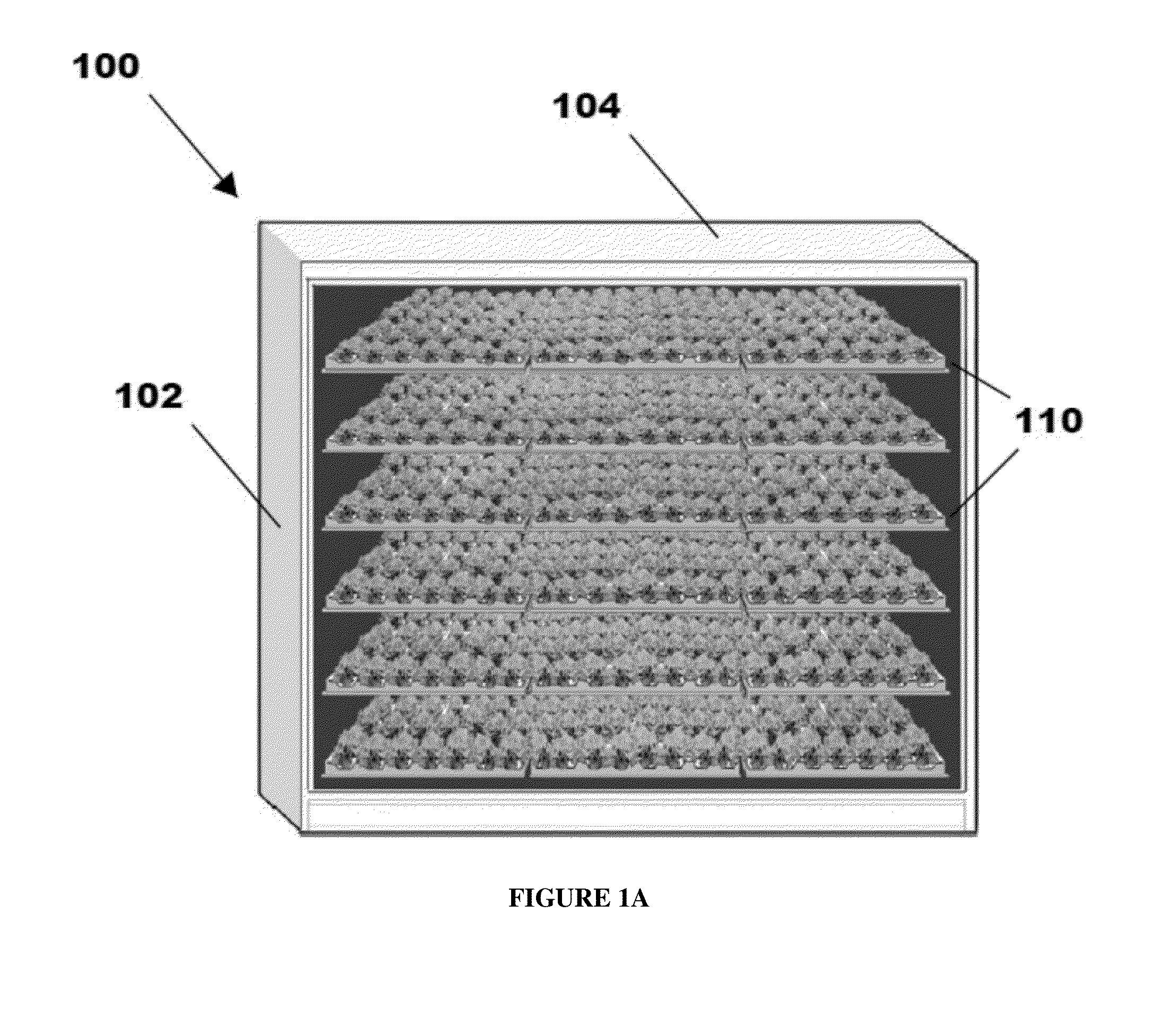

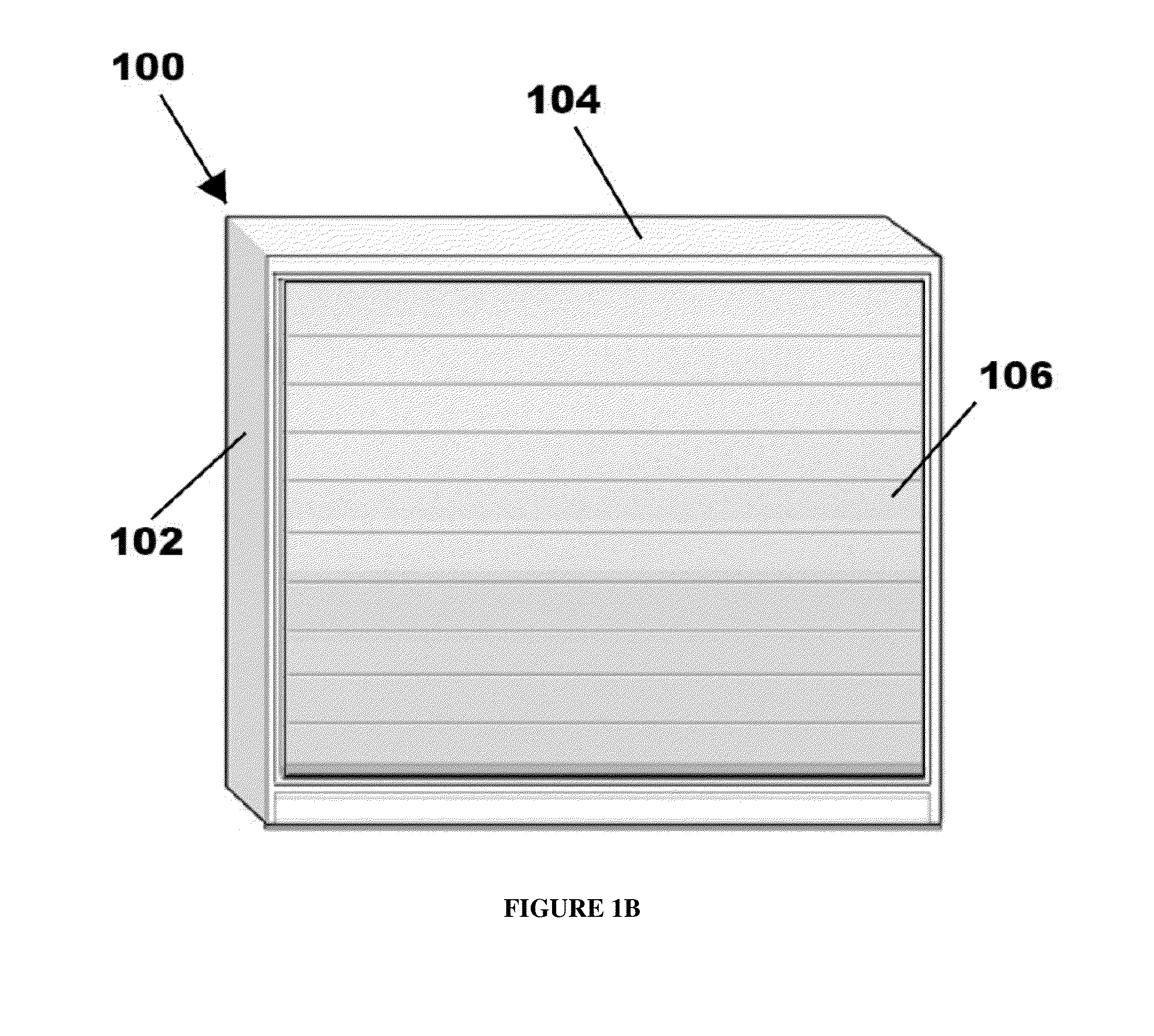

Cultivation pod

Inactive | US20140090295A1 | Lower the volume | Improve resource usage efficiency | Climate change adaptation | Agriculture gas emission reduction | Engineering | Light-emitting diode

A cultivation pod includes a platform, a nutrient supply system, and light-emitting diodes (LEDs). The platform includes a supporting tray comprising channels, each having a nutrient-film, and a plant carrier positioned on the supporting tray, where the plant carrier lies in a plane when positioned on the supporting tray and is removable in a direction that lies in the plane without removing the supporting tray from the pod. The nutrient supply system feeds nutrient media to the supporting tray and the plant carrier is removable from the pod without disconnecting the nutrient supply system from the supporting tray. The LEDs provide light to the plant carrier when the plant carrier is positioned on the supporting tray, where the LEDs lie in a planar orientation and are unevenly distributed in the substantially planar orientation.

Owner:FAMGRO

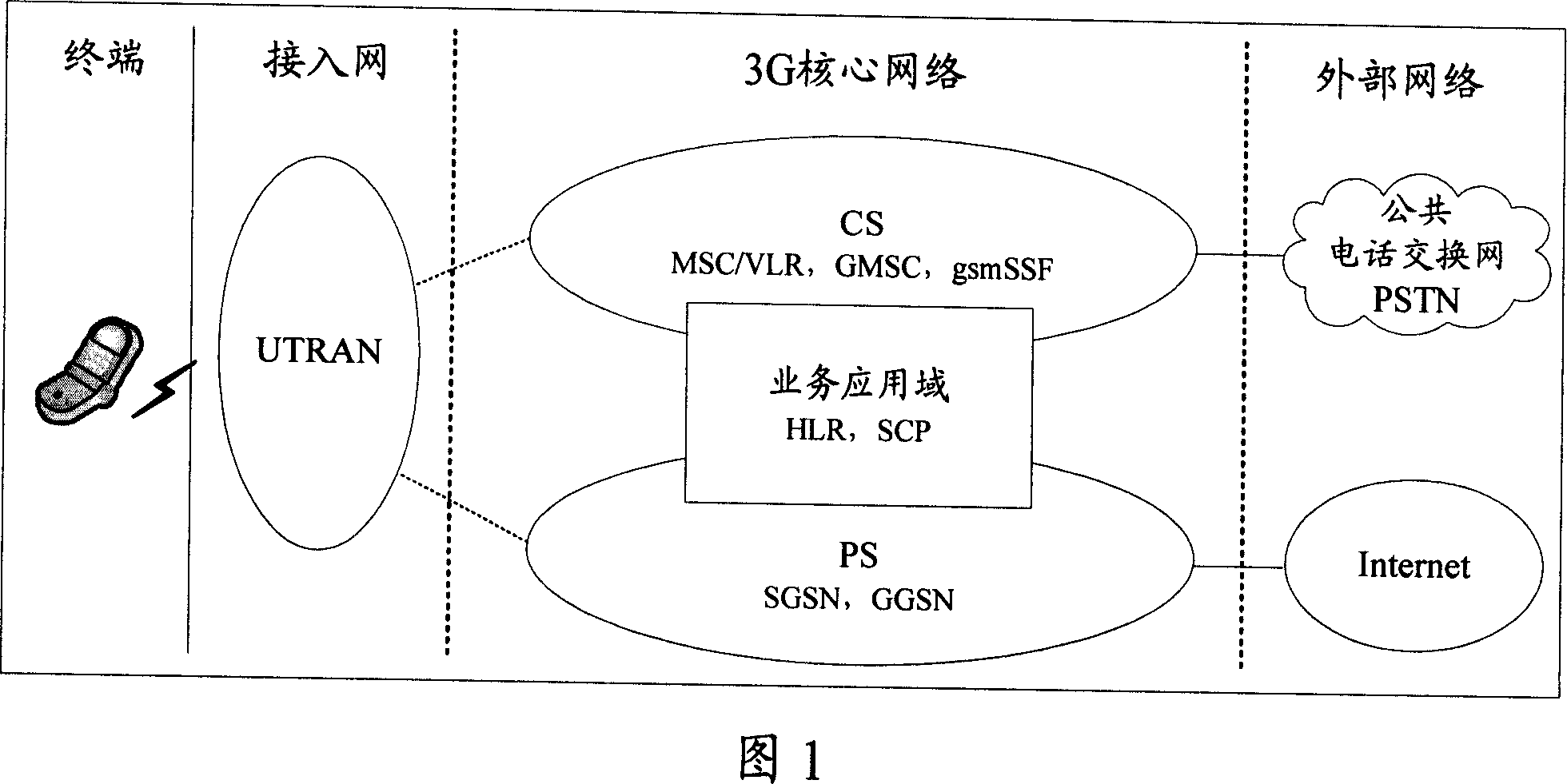

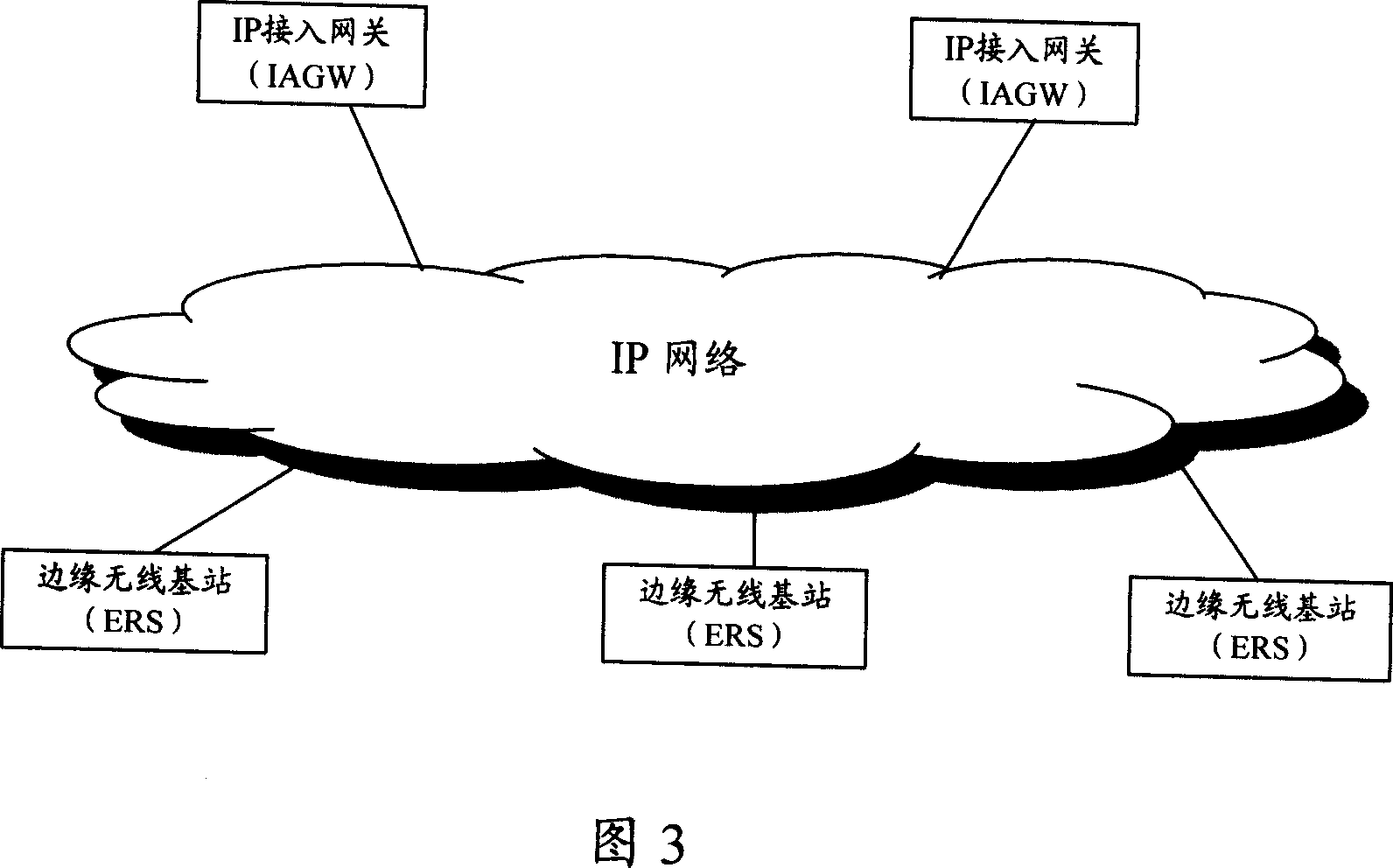

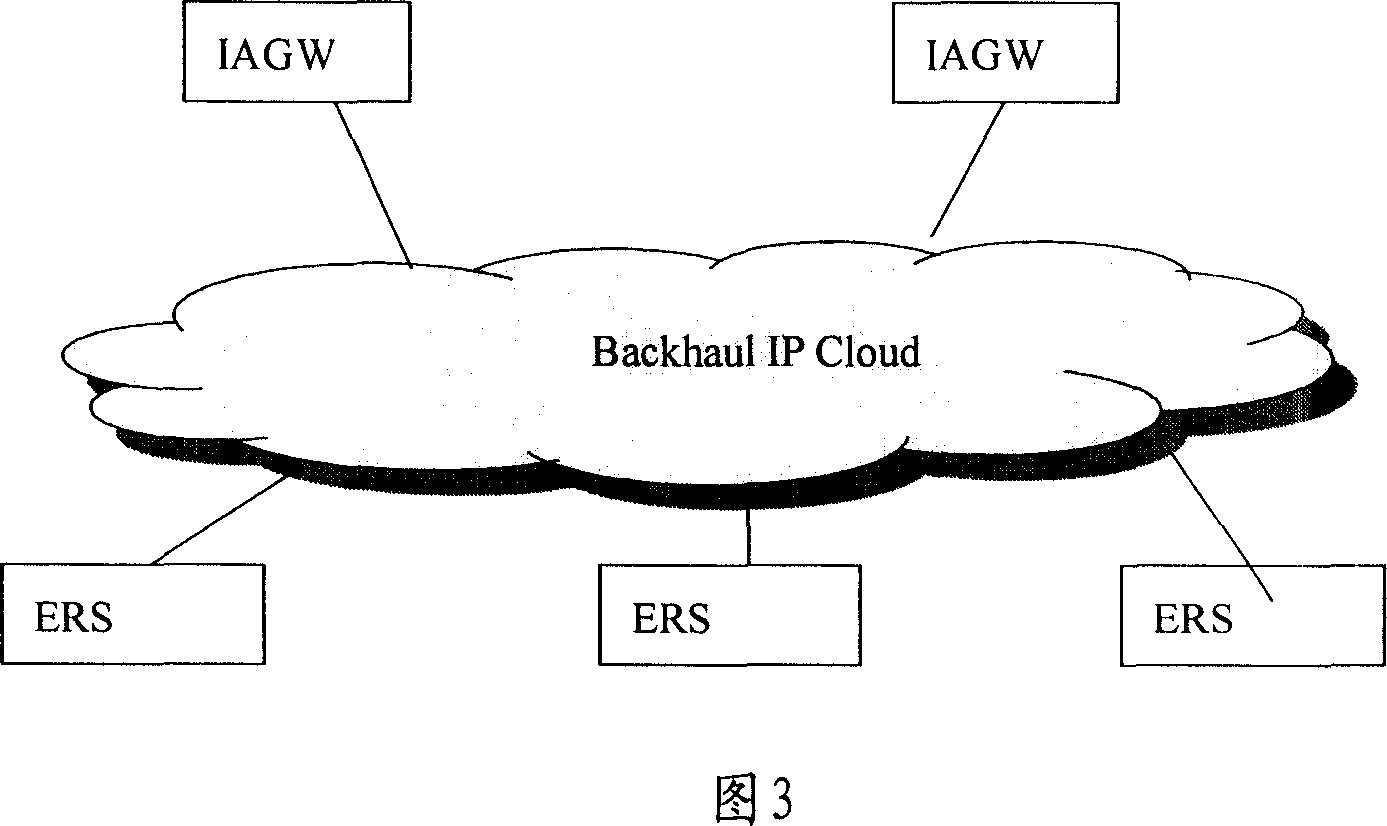

User plane protocol stack and header compression method

Active | CN1949769A | Reduce latency | Reasonable hierarchical structure | Data switching networks | Computer hardware | Air interface

The invention discloses a user plane protocol stack applied to an evolved radio network with a two-layer node structure, comprising an IP access gateway (IAGW) user plane protocol stack, an edge radio station (ERS) user plane protocol stack and a user equipment (UE) user plane protocol stack. The IAGW user plane protocol stack comprises layer L1, layer L2, an IP layer and a higher layer. On the E-I interface side facing the IAGW, the ERS user plane protocol stack comprises the peer layer L1, layer L2, IP layer and higher layer; on the radio interface side, it comprises radio layer L1, a lower layer and a header compression layer. The UE user plane protocol stack comprises the radio layer L1, lower layer and header compression layer that are peers of those on the ERS radio interface side. The invention also discloses a header compression method for the two-layer node structure, which implements header compression of air interface data packets between the ERS and the UE.

Owner:HUAWEI TECH CO LTD

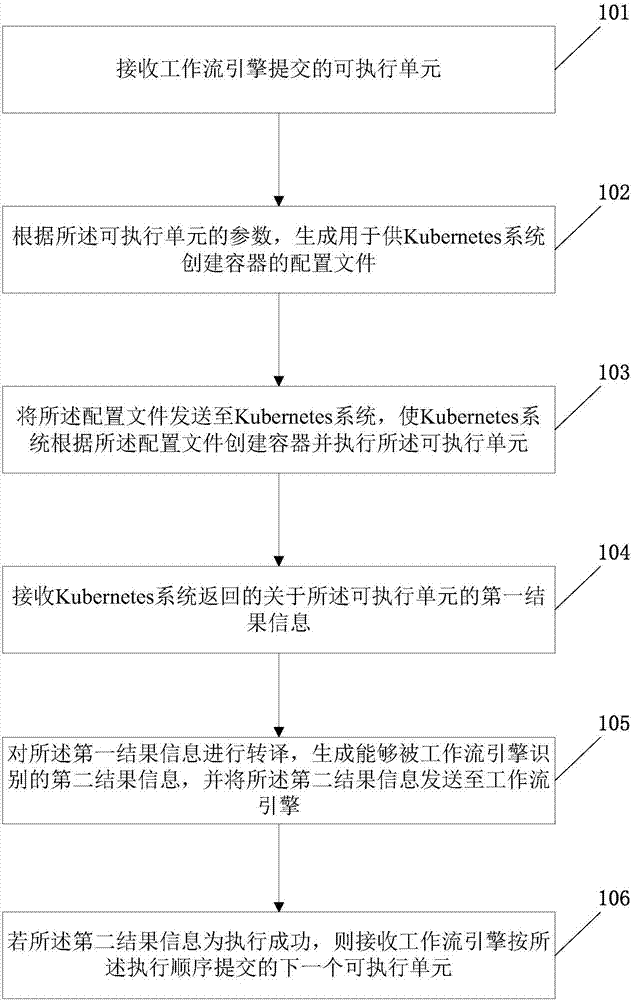

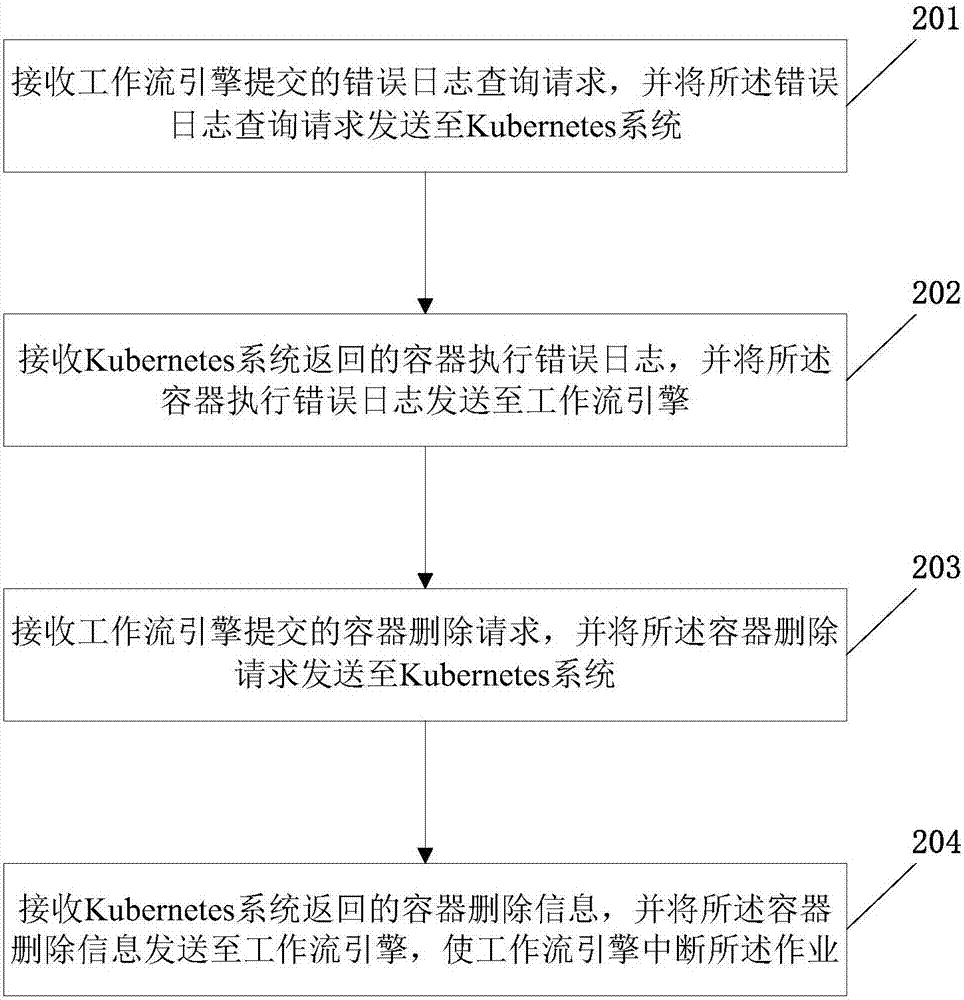

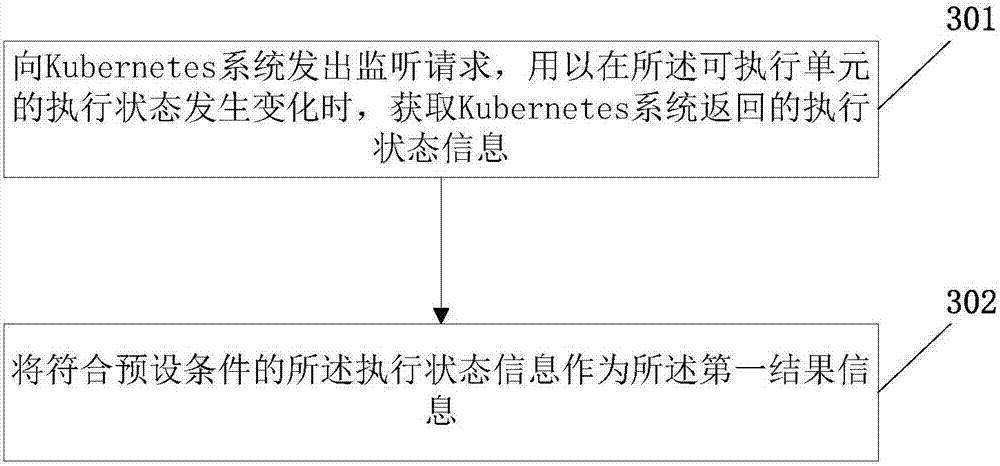

Docking workflow engine operation scheduling method and device based on Kubernetes system

Active | CN107105009A | Improve efficiency | Improve resource usage efficiency | Transmission | Operation scheduling | Resource utilization

The invention discloses a docking workflow engine operation scheduling method and device based on the Kubernetes system. The method includes: receiving an executable unit submitted by the workflow engine; generating, based on the parameters of the executable unit, a configuration file that the Kubernetes system uses to create a container; sending the configuration file to the Kubernetes system so that it creates the container according to the configuration file and executes the executable unit; receiving the first result information of the executable unit returned by the Kubernetes system; translating the first result information into second result information that the workflow engine can recognize and sending it to the workflow engine; and, if the second result information indicates success, receiving the next executable unit that the workflow engine submits according to the execution sequence. The invention enables the workflow engine and the Kubernetes system to work together and effectively improves both the efficiency of operation execution and resource utilization.

Owner:UNITED ELECTRONICS
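
The flow in the abstract above (executable unit in, container configuration out, result translated back) can be sketched as follows. This is a rough illustration, not the patented adapter: the manifest uses standard Kubernetes Pod fields and Pod phase names, but the shape of the executable unit (`name`, `image`, `command`), the workflow status strings and the `submit` callback are all assumptions, and no cluster is contacted.

```python
from typing import Any, Callable, Dict, List


def build_pod_manifest(unit: Dict[str, Any]) -> Dict[str, Any]:
    """Turn a (hypothetical) executable unit into a Pod manifest as a dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": unit["name"]},
        "spec": {
            "restartPolicy": "Never",  # run the unit once, do not restart it
            "containers": [
                {"name": unit["name"], "image": unit["image"], "command": unit["command"]}
            ],
        },
    }


# Pod phases are Kubernetes terms; the right-hand statuses are made up for the sketch.
PHASE_TO_STATUS = {
    "Pending": "RUNNING",
    "Running": "RUNNING",
    "Succeeded": "SUCCESS",
    "Failed": "FAILURE",
}


def translate_result(pod_phase: str) -> str:
    """Map the first result (a Pod phase) to a status the workflow engine understands."""
    return PHASE_TO_STATUS.get(pod_phase, "UNKNOWN")


def run_workflow(units: List[Dict[str, Any]], submit: Callable[[Dict[str, Any]], str]) -> List[str]:
    """Submit units in order; move to the next unit only after a success."""
    completed = []
    for unit in units:
        final_phase = submit(build_pod_manifest(unit))
        if translate_result(final_phase) != "SUCCESS":
            break
        completed.append(unit["name"])
    return completed


def fake_submit(manifest: Dict[str, Any]) -> str:
    # Stand-in for the cluster: any image named "bad" fails.
    image = manifest["spec"]["containers"][0]["image"]
    return "Failed" if image == "bad" else "Succeeded"


units = [
    {"name": "extract", "image": "etl:1", "command": ["run", "extract"]},
    {"name": "transform", "image": "bad", "command": ["run", "transform"]},
    {"name": "load", "image": "etl:1", "command": ["run", "load"]},
]
completed = run_workflow(units, fake_submit)
```

Keeping the translation table separate from the submission logic means the workflow engine never has to know Kubernetes vocabulary.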

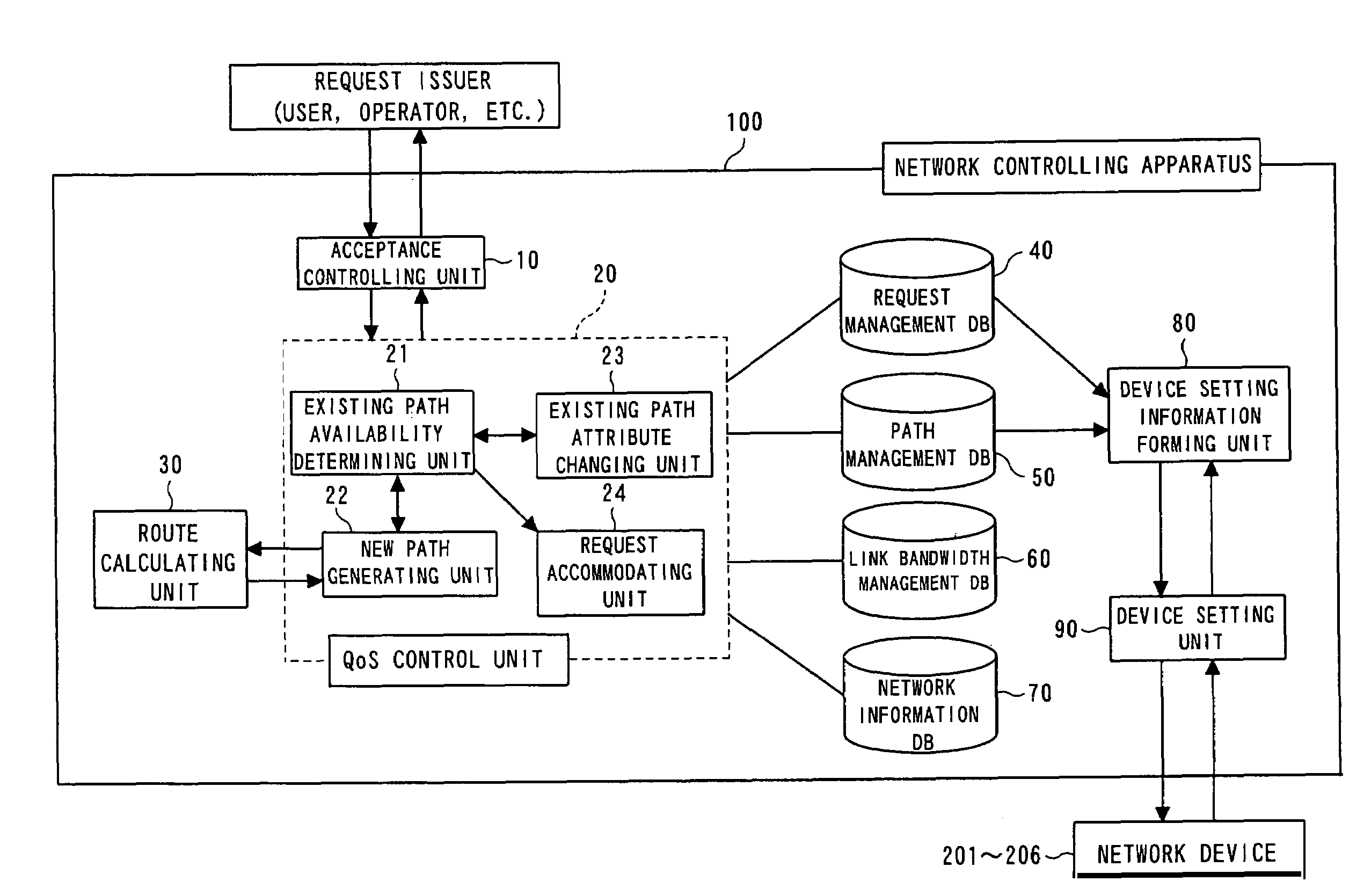

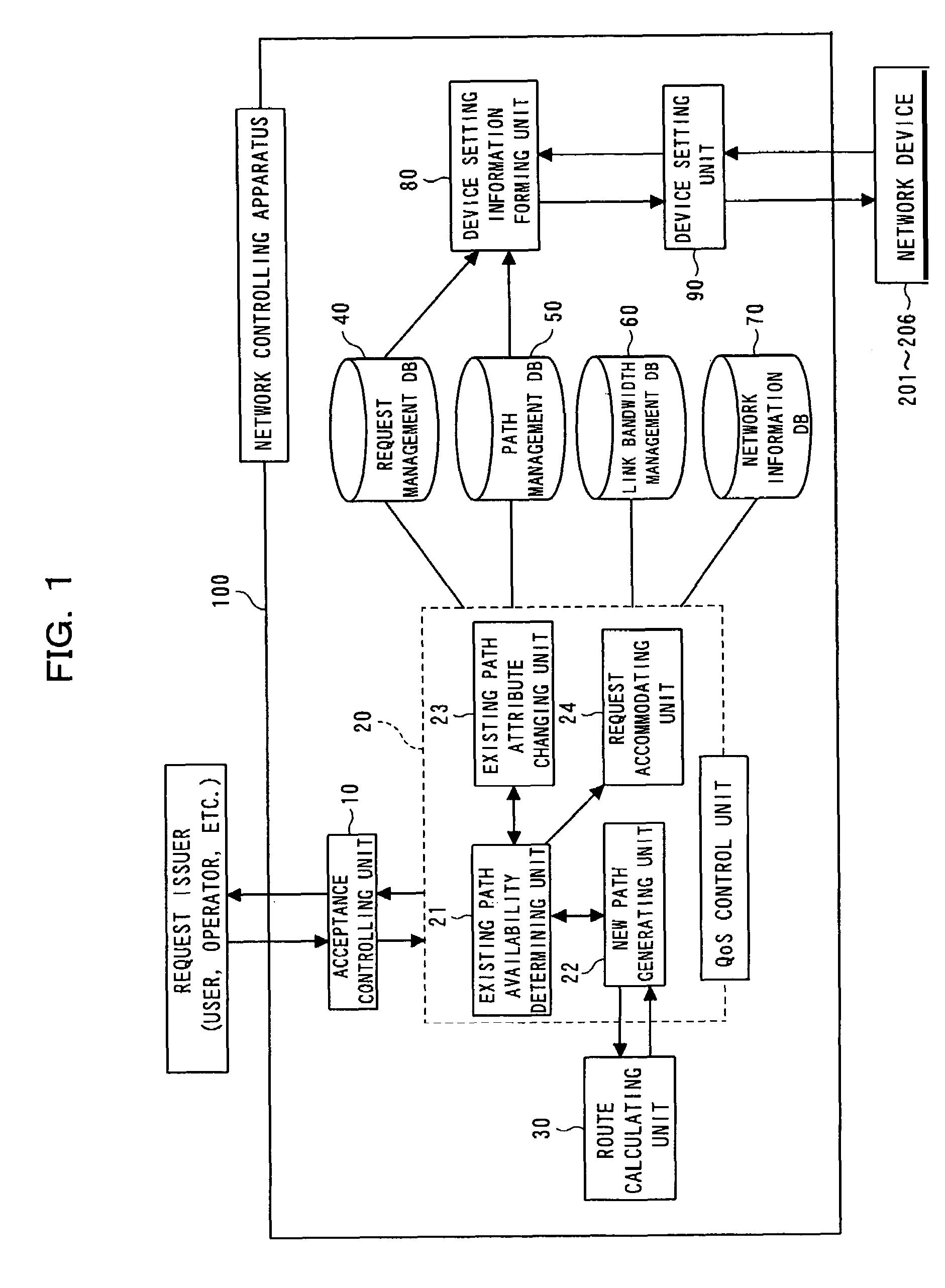

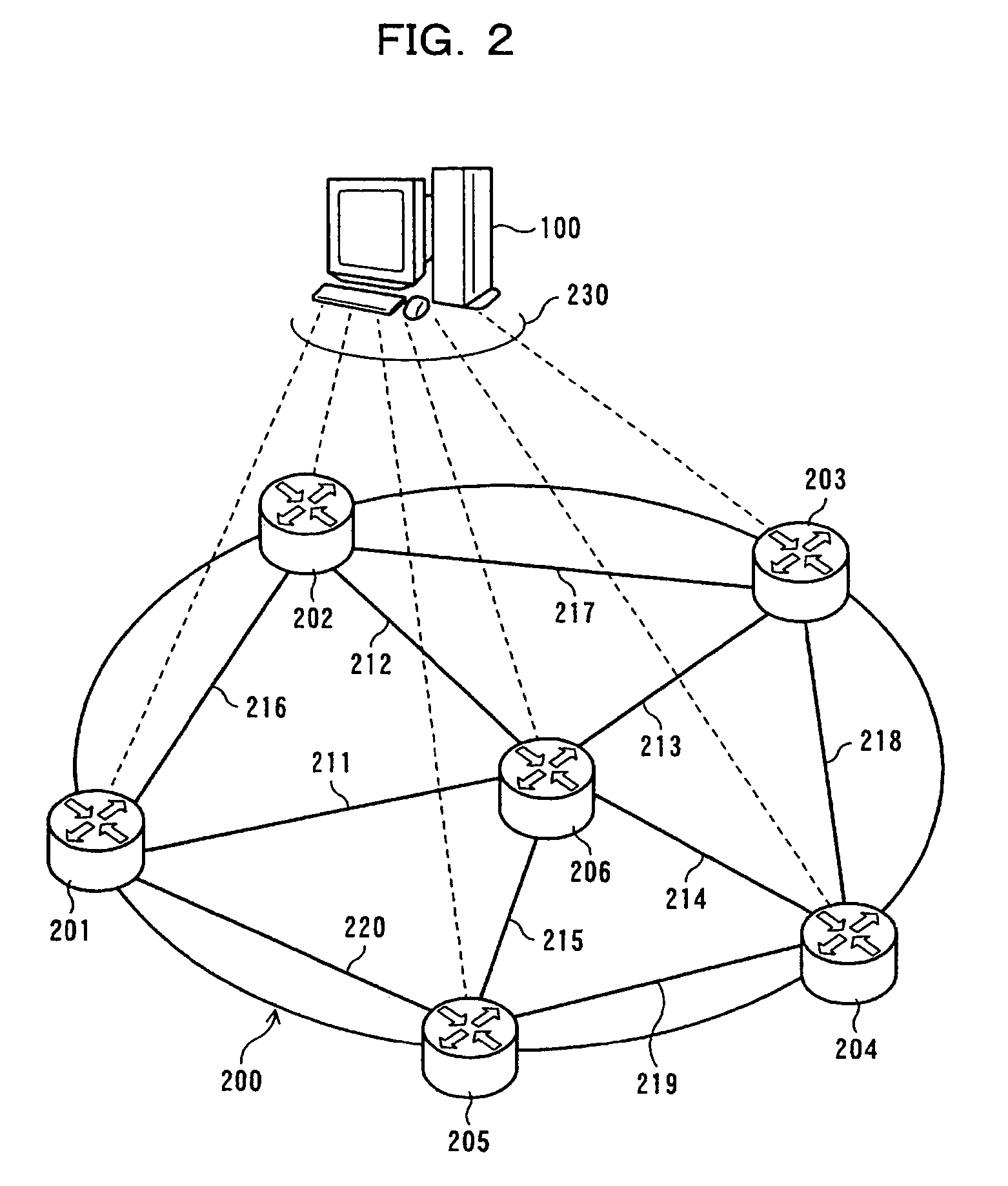

Network controlling apparatus and path controlling method therein

Inactive | US7450513B2 | Improve efficiency | Optimize usage | Error prevention | Transmission systems | Network control | Distributed computing

A network controlling apparatus appropriately arranges a generated path every hour with a change in section and bandwidth requested in each time period to flexibly generate a path according to the state of occurrence of requests. The network apparatus comprises a storing unit storing information for management of the network, and a controlling unit controlling setting of the network device relating to a connection of network devices at two edge points in the network to obtain path setting satisfying the quality guarantee request, using a path already set or scheduled to be set, or a newly generated path, by referring to the information stored in the storing unit when receiving the quality guarantee request.

Owner:FUJITSU LTD

Neural Network Accelerator for Bit Width Partitioning and Its Implementation Method

Active | CN107451659B | Increase profit | Improve resource usage efficiency | Physical realisation | Concurrent computation | External storage

The present invention provides a neural network accelerator for bit width partitioning and an implementation method thereof. The accelerator includes a plurality of computing and processing units (CPs) with different bit widths, input buffers, weight buffers, output buffers, data shifters and an off-chip memory. Each CP obtains data from its corresponding input buffer and weight buffer and processes in parallel the data of a neural network layer whose bit width matches its own. The data shifters convert the bit width of the data output by the current CP to match the bit width of the next CP that follows it. The off-chip memory stores both the data not yet processed and the data already processed by the CPs. Because multiply-accumulate operations can be performed on several short-bit-width data items, the utilization rate of the DSP is increased; and because CPs with different bit widths compute the layers of a neural network in parallel, the computing throughput of the accelerator is improved.

Owner:北京芯力技术创新中心有限公司

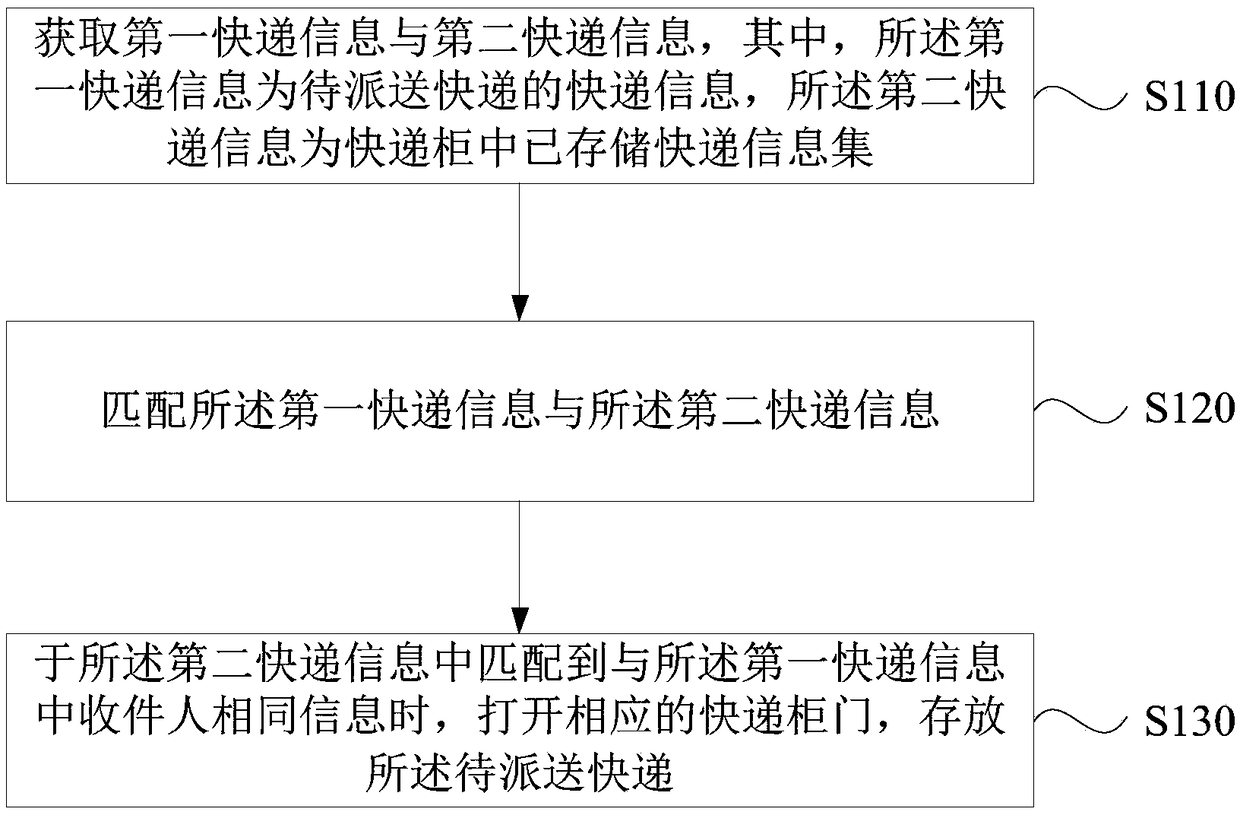

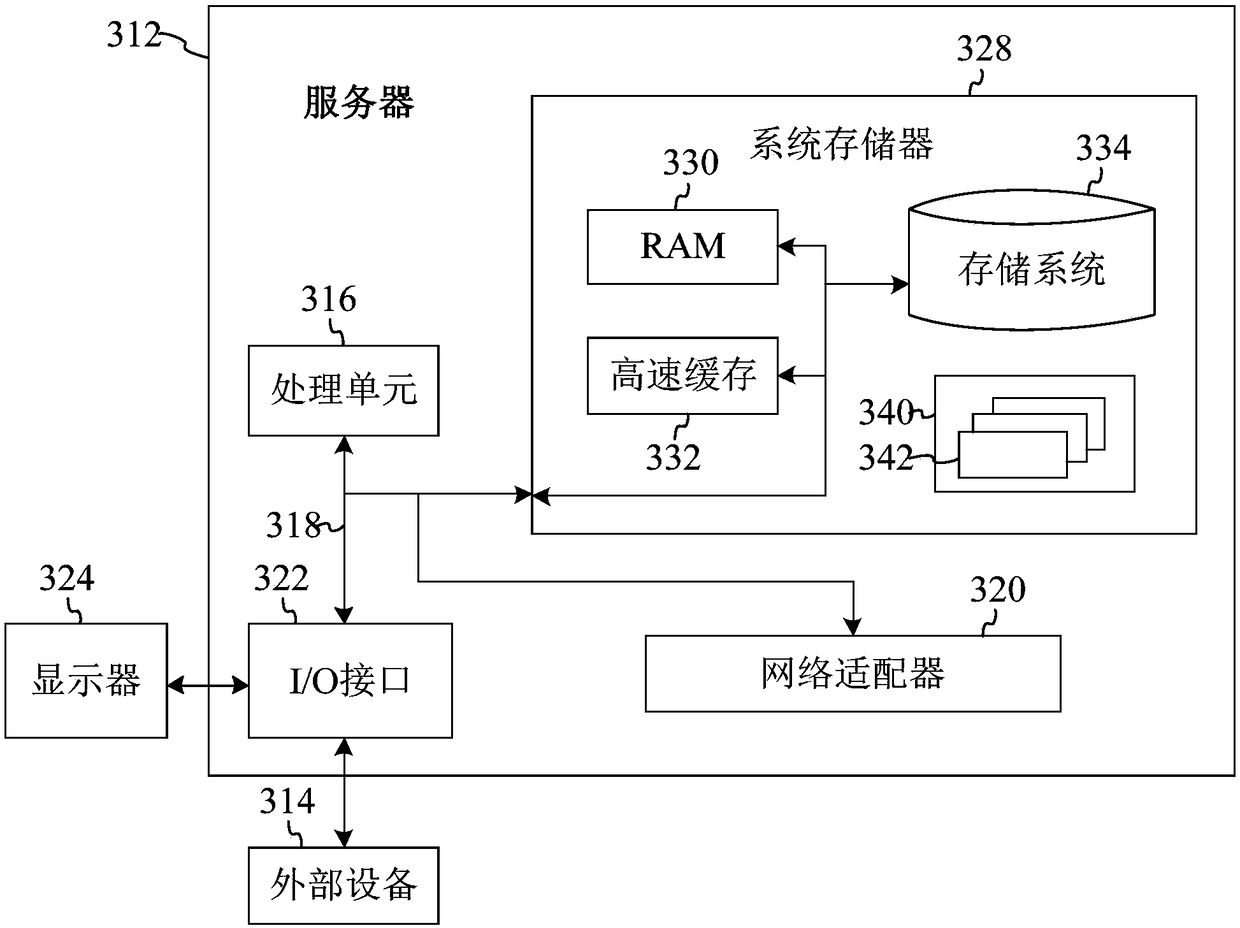

Express delivery method, apparatus, server, and storage medium

Inactive | CN109034700A | Improve resource usage efficiency | Improve efficiency | Logistics | Computer science | Resource use

The embodiment of the invention discloses an express delivery method, a device, a server and a storage medium. The method comprises the following steps: acquiring first express information and second express information, wherein the first express information is the information of the express item to be delivered and the second express information is the set of express information already stored in an express cabinet; matching the first express information against the second express information; and, when the second express information contains an entry with the same recipient as the first express information, opening the corresponding express cabinet door to store the item to be delivered. The embodiment of the invention solves the problem that express cabinet resources cannot be used reasonably when the same recipient has multiple express items, and allows multiple items for the same recipient to be stored in one intelligent express sub-cabinet, which improves the usage efficiency of express cabinet resources and also makes pick-up more convenient for the recipient.

Owner:昆山品源知识产权运营科技有限公司
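
The matching step in the abstract above amounts to looking for an occupied compartment that already belongs to the same recipient before taking an empty one. A minimal sketch follows, assuming recipients are identified by phone number and ignoring compartment capacity; `Parcel`, `Cabinet` and `store` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Parcel:
    tracking_no: str
    recipient_phone: str  # assumed recipient identifier


@dataclass
class Cabinet:
    doors: int
    compartments: Dict[int, List[Parcel]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Door numbers start at 1; every compartment starts empty.
        self.compartments = {door: [] for door in range(1, self.doors + 1)}

    def store(self, parcel: Parcel) -> Optional[int]:
        """Return the door opened for `parcel`, or None if the cabinet is full."""
        # First choice: a compartment that already holds this recipient's parcels.
        for door, parcels in self.compartments.items():
            if parcels and parcels[0].recipient_phone == parcel.recipient_phone:
                parcels.append(parcel)
                return door
        # Otherwise take the first empty compartment.
        for door, parcels in self.compartments.items():
            if not parcels:
                parcels.append(parcel)
                return door
        return None


cabinet = Cabinet(doors=2)
door_a = cabinet.store(Parcel("T1", "555-0100"))
door_b = cabinet.store(Parcel("T2", "555-0199"))
door_c = cabinet.store(Parcel("T3", "555-0100"))  # same recipient as T1
door_d = cabinet.store(Parcel("T4", "555-0177"))  # no match and no empty door
```

With two doors, three recipients cannot all be served, but the two parcels for the same recipient share one compartment instead of filling the cabinet.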

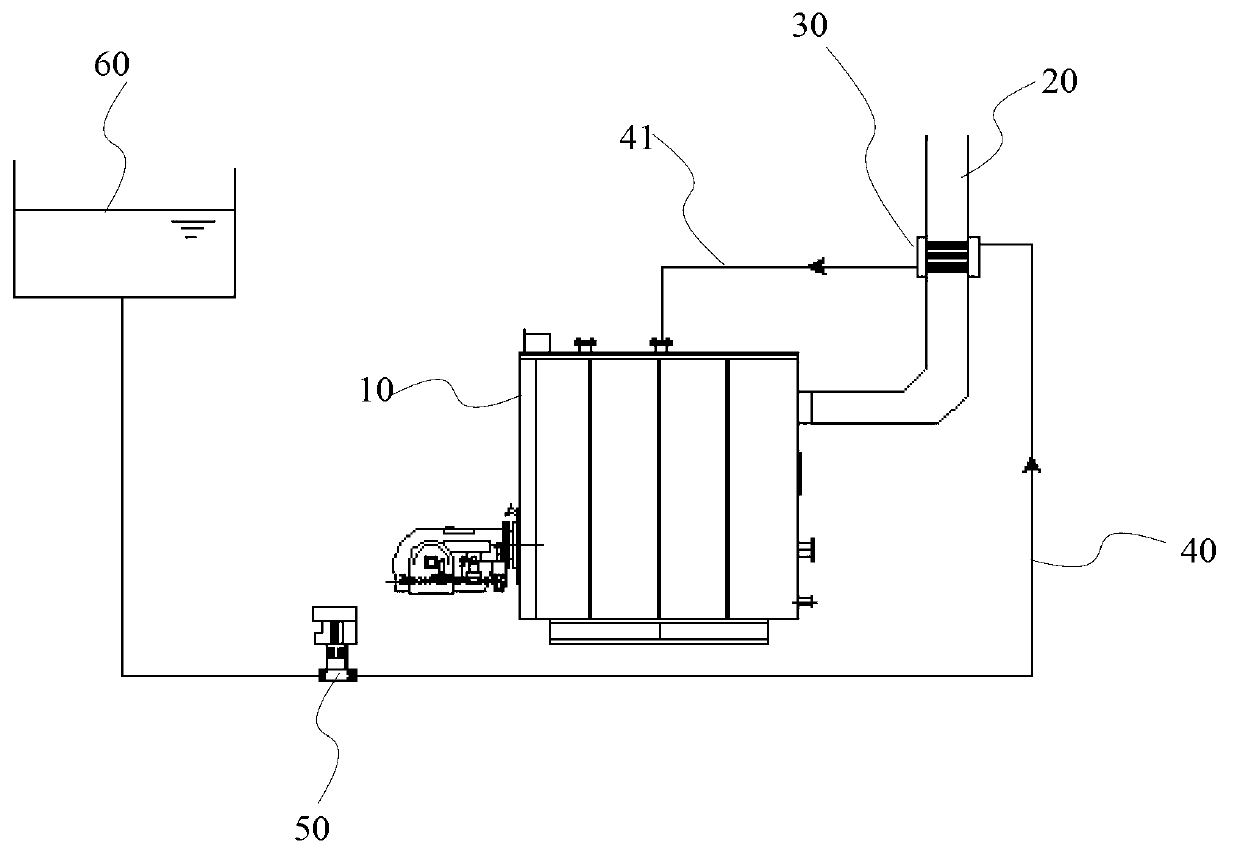

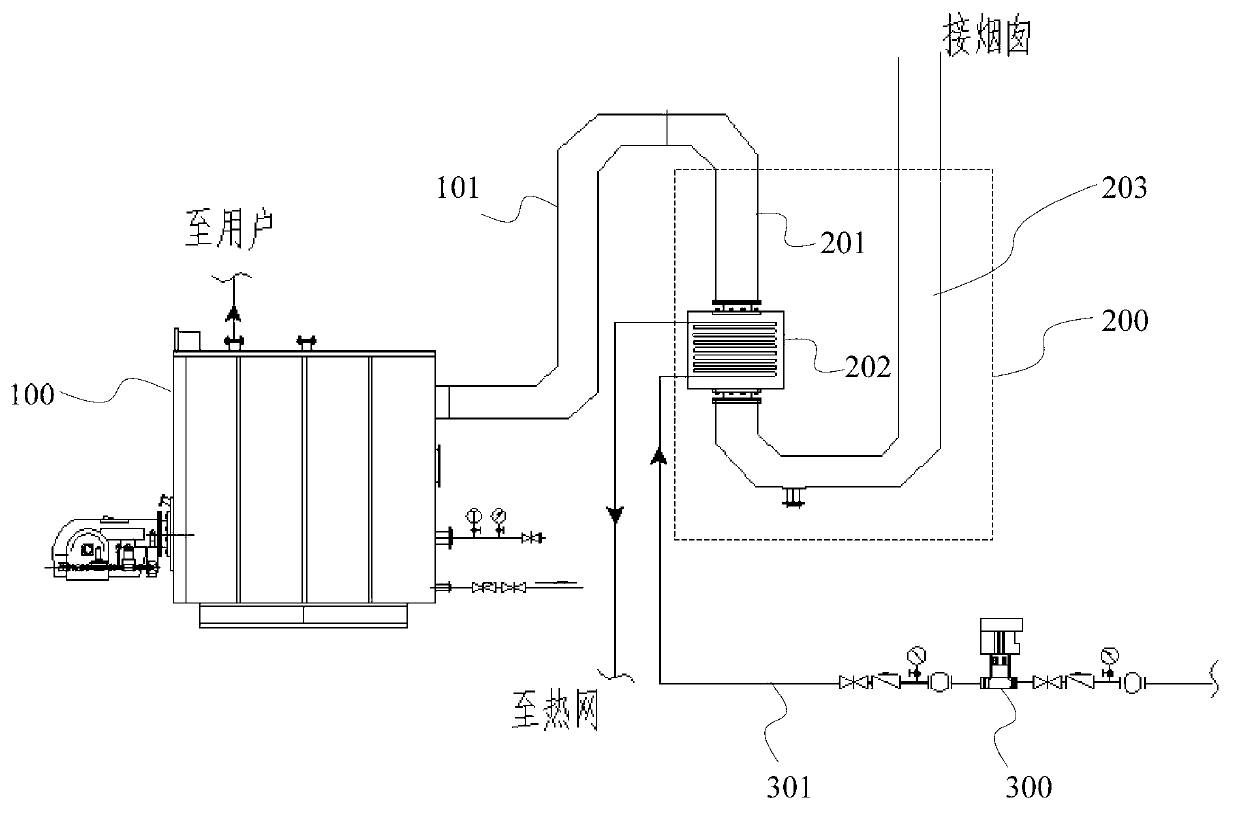

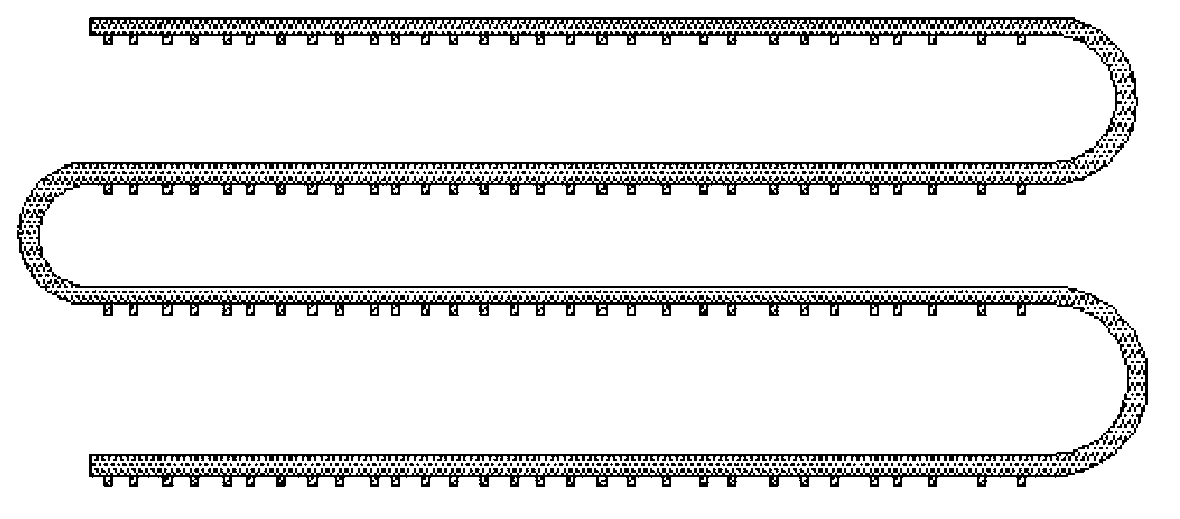

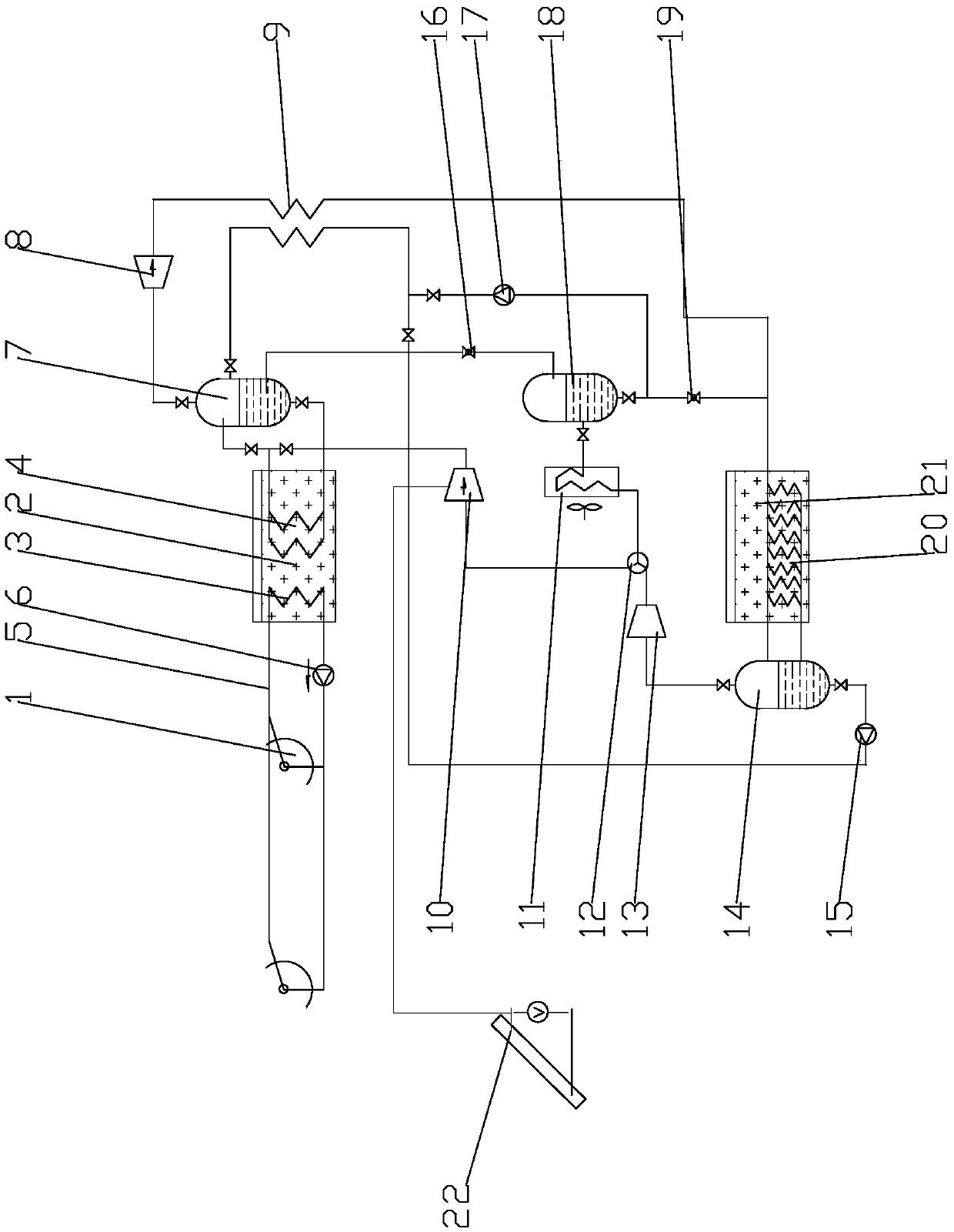

Boiler system with U-shaped flue and boiler water charging system

Active | CN103134043A | Emission reduction | Improve resource usage efficiency | Fluid heaters | Energy industry | Engineering | Water pipe

The invention discloses a boiler system with a U-shaped flue and a boiler water charging system. The boiler system comprises a boiler body, a boiler flue and a U-shaped flue device connected to the boiler body through the boiler flue. The U-shaped flue device comprises a front-end vertical flue, a heat exchanger and a rear-end J-shaped flue. The front-end vertical flue is connected to the boiler flue in a sealed manner, and the flue gas discharged by the boiler flows through it from top to bottom. The heat exchanger is connected to the front-end vertical flue in a sealed manner, and the flue gas leaving the front-end vertical flue flows over the water pipes of the heat exchanger from top to bottom. The rear-end J-shaped flue is connected to the heat exchanger in a sealed manner, and the flue gas leaving the heat exchanger flows through it from bottom to top and is then discharged. Together, the rear-end J-shaped flue, the front-end vertical flue and the heat exchanger form a U shape. The system addresses incomplete heat exchange caused by high flue gas velocity, flue corrosion, and condensate recovery, and allows deep recovery of the residual heat of the flue gas.

Owner:京能科技(易县)有限公司

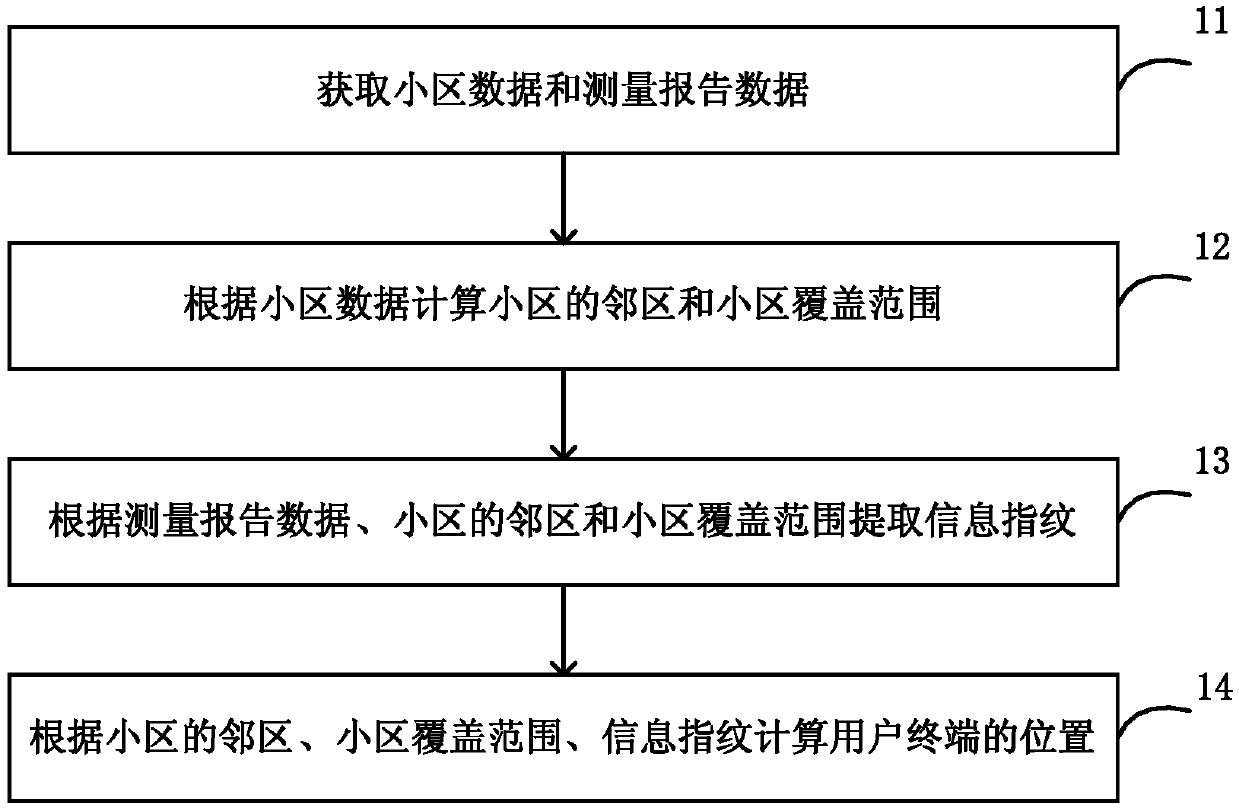

User positioning method and device

Active | CN109905892A | Improve positioning accuracy | Improve resource usage efficiency | Wireless communication | Fingerprint | Data mining

The invention discloses a user positioning method and device. The method comprises the following steps: acquiring cell data and measurement report data; calculating the neighbor cells and the cell coverage range of each cell according to the cell data; extracting an information fingerprint according to the measurement report data, the neighbor cells and the cell coverage range; and calculating the position of the user terminal according to the neighbor cells, the cell coverage range and the information fingerprint. The method greatly improves user positioning accuracy and resource usage efficiency and provides a basis for all position-related applications. It can be applied to FDD/TDD networks, which widens its range of application.

Owner:CHINA TELECOM CORP LTD

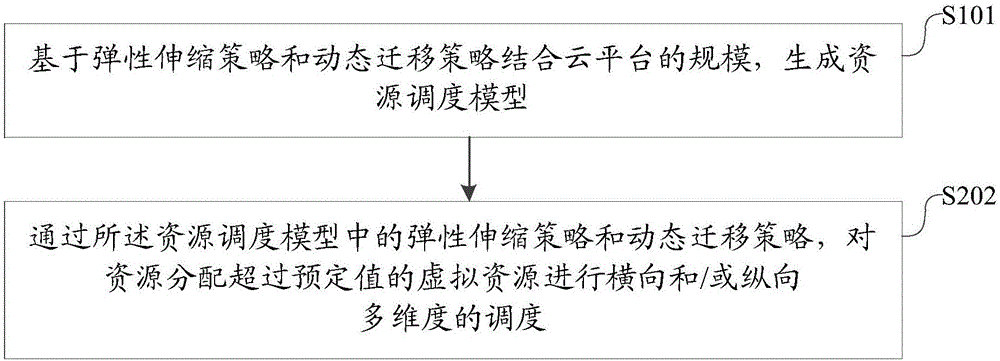

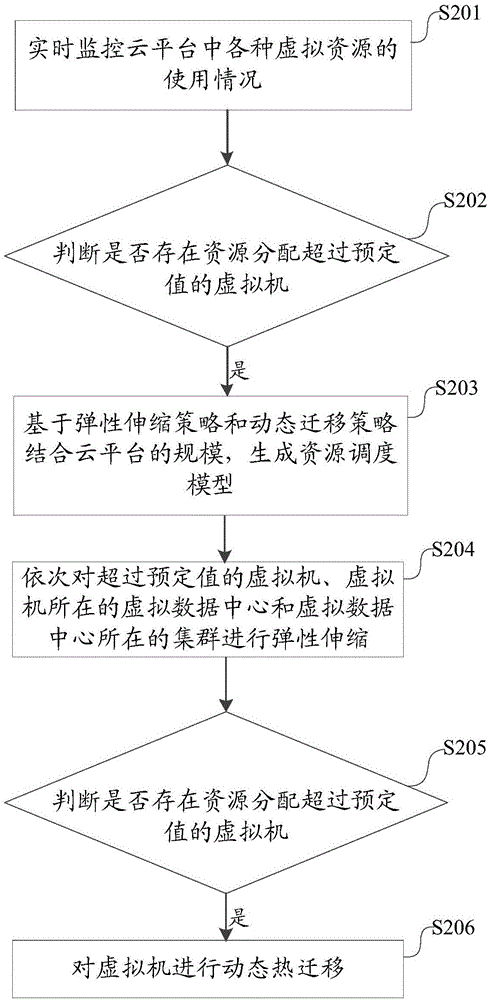

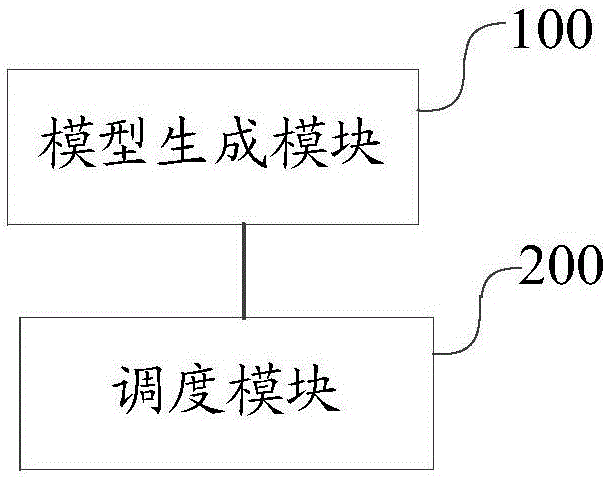

Cloud resource scheduling method and device

Inactive | CN106686136A | Improve resource usage efficiency | Improve stability | Transmission | Autoscaling | Resource utilization

The invention discloses a cloud resource scheduling method and device. The method comprises: generating a resource scheduling model based on an elastic scaling strategy and a dynamic migration strategy, taking the scale of the cloud platform into account; and performing horizontal and/or vertical multi-dimensional scheduling, through the elastic scaling strategy and the dynamic migration strategy in the model, on virtual resources whose resource allocation exceeds a preset value. Because the virtual resources in the platform are scheduled dynamically with this model, the migration and poor dynamic load problems that an instantaneous peak in resource utilization is likely to cause can be avoided. The resource usage efficiency and stability of the cloud platform are effectively improved, the platform is safer and more reliable, and user experience is improved. The invention further discloses a cloud resource scheduling device with the same advantages.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
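
One way to picture the scheduling decision in the abstract above is a rule that looks at smoothed utilization rather than a single sample, so that an instantaneous peak triggers nothing. The sketch below is an assumption-heavy illustration and not the patented model: the averaging window, the threshold, the action names and the function `choose_action` are all hypothetical, and horizontal scale-out is left out for brevity.

```python
from statistics import mean
from typing import Sequence


def choose_action(
    samples: Sequence[float],  # recent utilization samples in [0, 1]
    threshold: float,          # preset value above which the VM is considered overloaded
    host_free_cpu: int,        # spare CPU cores on the VM's current host
    needed_cpu: int,           # extra cores the VM would need
) -> str:
    """Pick 'none', 'scale_up' (vertical scaling in place) or 'migrate'."""
    if mean(samples) <= threshold:
        return "none"  # includes the case of a brief spike
    if host_free_cpu >= needed_cpu:
        return "scale_up"
    return "migrate"


spike = choose_action([0.2, 0.2, 0.95, 0.2], 0.8, host_free_cpu=2, needed_cpu=1)
sustained = choose_action([0.9, 0.85, 0.95, 0.9], 0.8, host_free_cpu=2, needed_cpu=1)
crowded = choose_action([0.9, 0.85, 0.95, 0.9], 0.8, host_free_cpu=0, needed_cpu=1)
```

Scaling in place is tried before migration here because it is the cheaper action; a real model would weigh that choice against the scale of the platform.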

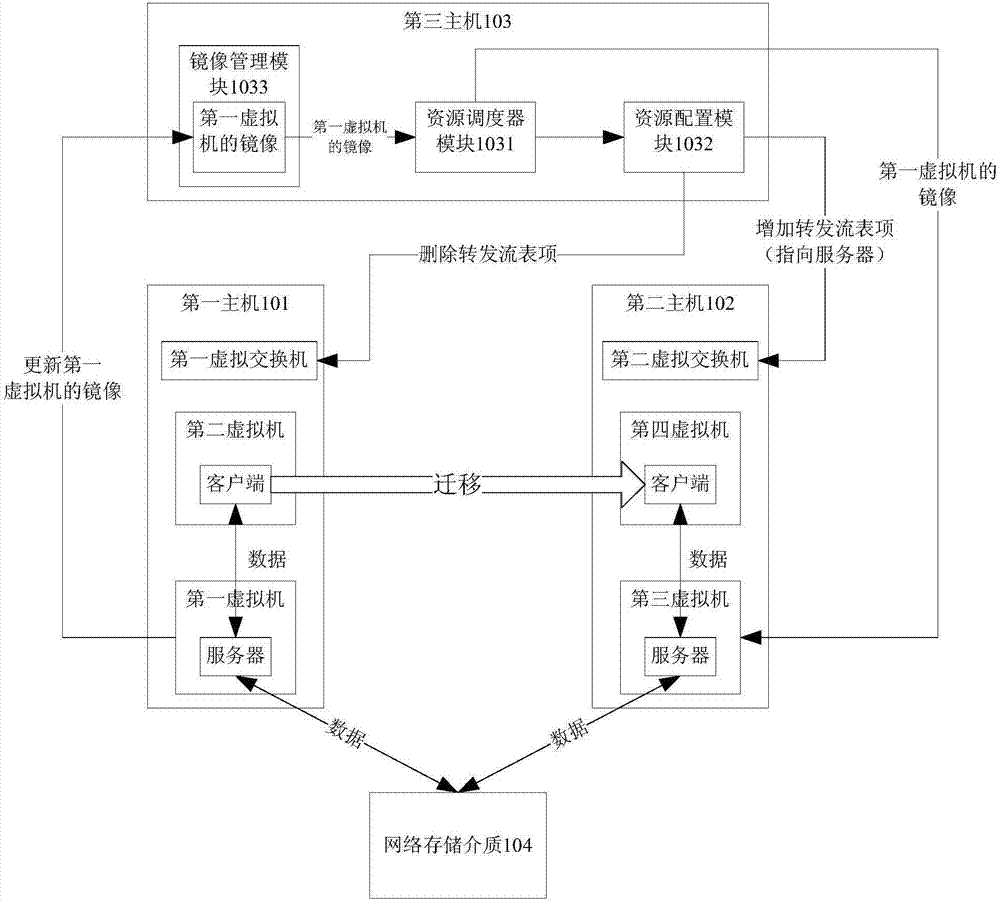

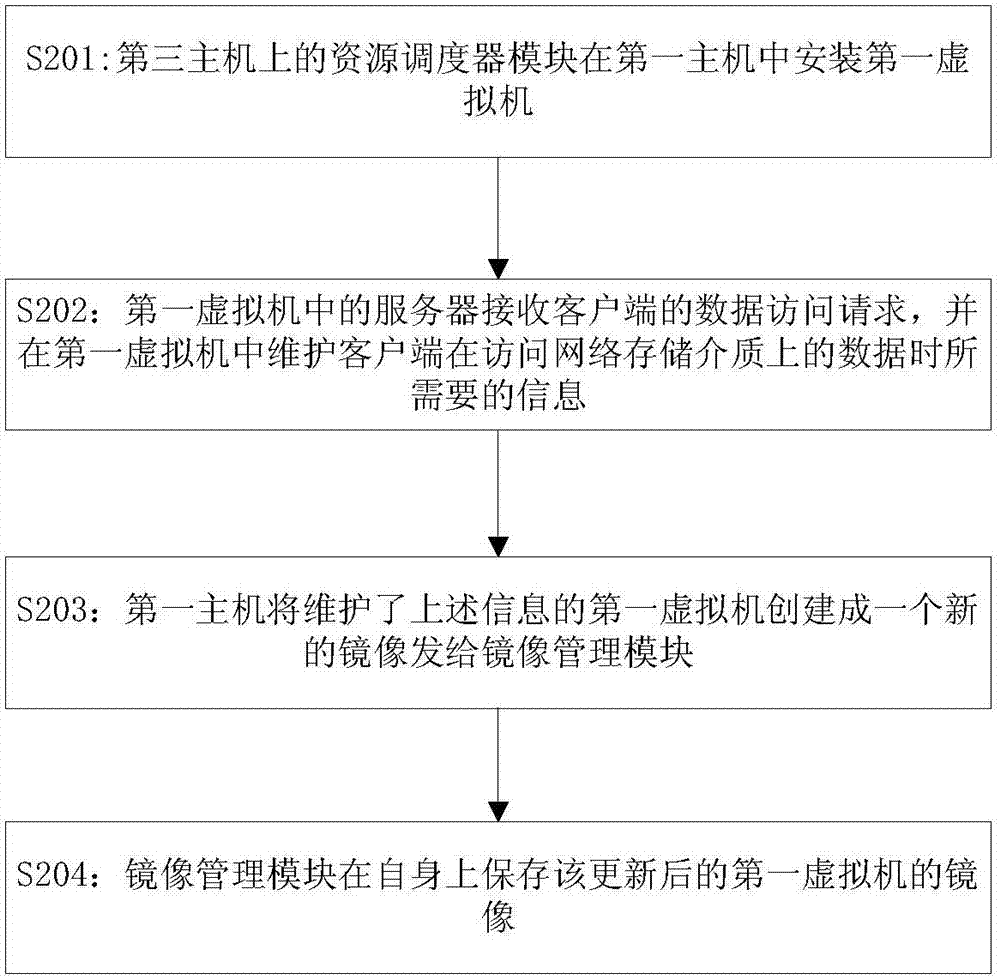

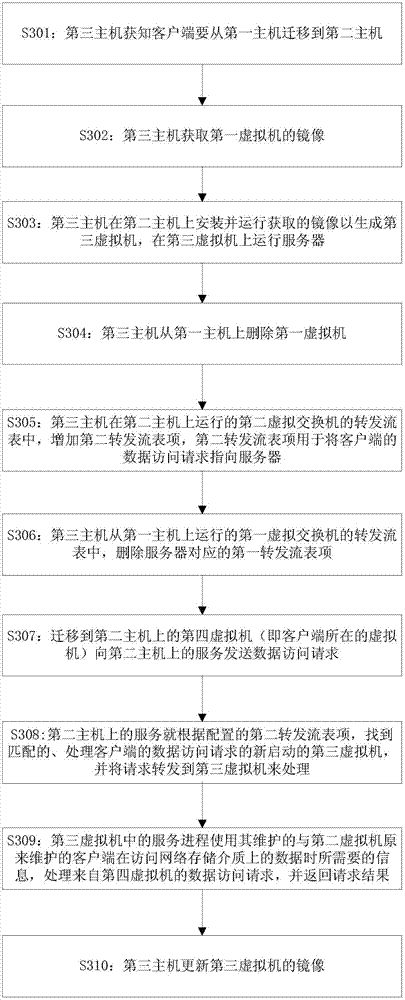

Apparatus and method for migrating virtual machine, where client is located, among different hosts

Active | CN107368358A | Improve resource usage efficiency | Small scale | Program initiation/switching | Transmission | Data access | Client-side

The invention discloses an apparatus and a method for migrating a virtual machine, where a client is located, among different hosts, and aims to ensure business continuity and shorten migration delay. The apparatus comprises a resource scheduler module and a resource configuration module, wherein the resource scheduler module is used for obtaining a mirror image of a first virtual machine located in a first host and running a server; the client runs in a second virtual machine of the first host; the obtained mirror image is installed and runs in a second host which the client is migrated to, thereby generating a third virtual machine; the server runs in the third virtual machine; and the resource configuration module is used for adding a forwarding flow entry in the second host. By installing and running the mirror image, information required for accessing data in a network storage medium by the client is migrated to the second host, and old information still can be used after the client is migrated, so that the business continuity is ensured; and by adding the forwarding flow entry, a data access request of the client is forwarded to a new virtual machine, and the client does not need to re-establish a connection with a new server, so that the migration duration is shortened.

Owner:HUAWEI TECH CO LTD

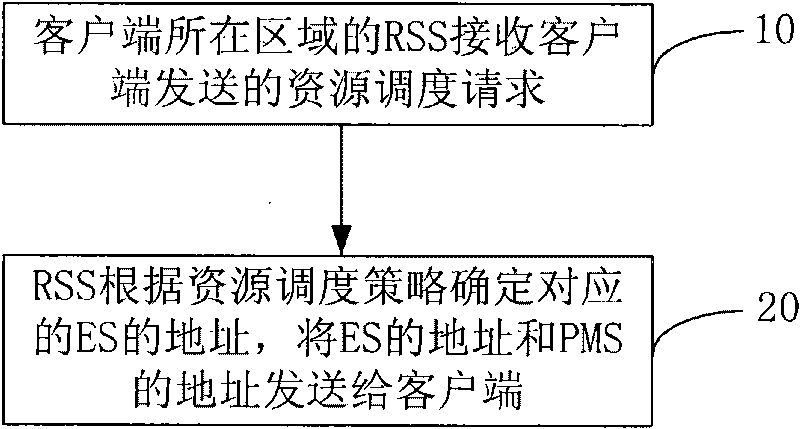

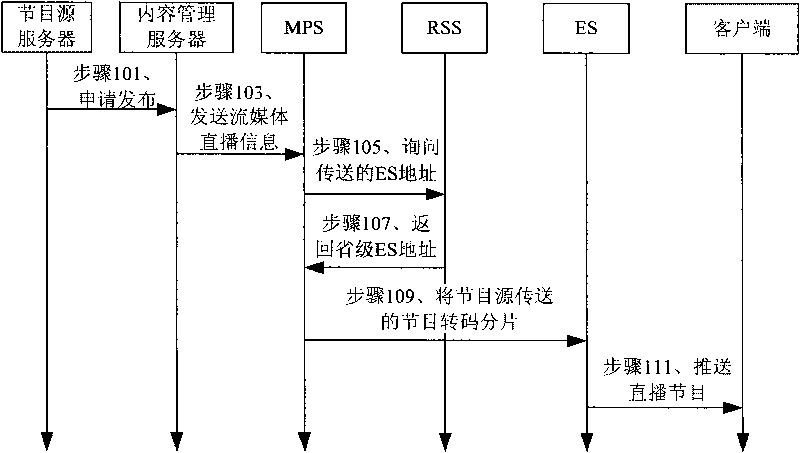

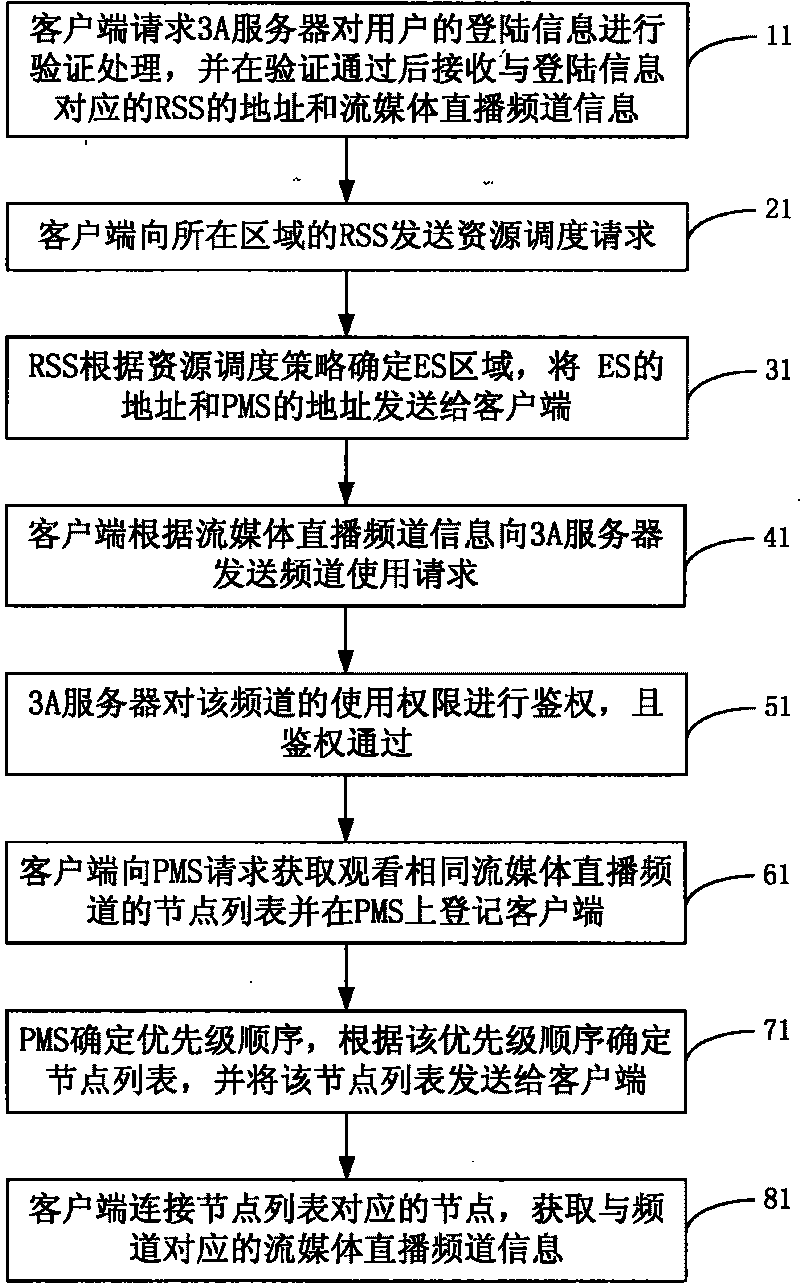

Streaming media broadcast realizing method based on peer-to-peer network

Inactive | CN101764832A | Meet the requirements of regionalization | Simple resource scheduling | Special service provision for substation | Edge server | Client-side

The invention discloses a streaming media broadcast realizing method based on a peer-to-peer network. The method comprises the following steps: a resource scheduling server in the region of a client end receives a resource scheduling request sent by the client end; the resource scheduling server determines the address of a border server corresponding to the client end which sends the resource scheduling request according to a resource scheduling strategy and sends the determined address of the border server corresponding to the client end and the address of a node managing server to the client end. As the resource scheduling server divides the region of the border server according to the resource scheduling strategy and the region is managed by the node managing server, the demand of flow regionalization of an operator is met, the streaming media broadcast scheduling based on the peer-to-peer network is easy, and the resource use efficiency is high.

Owner:中国网通集团宽带业务应用国家工程实验室有限公司

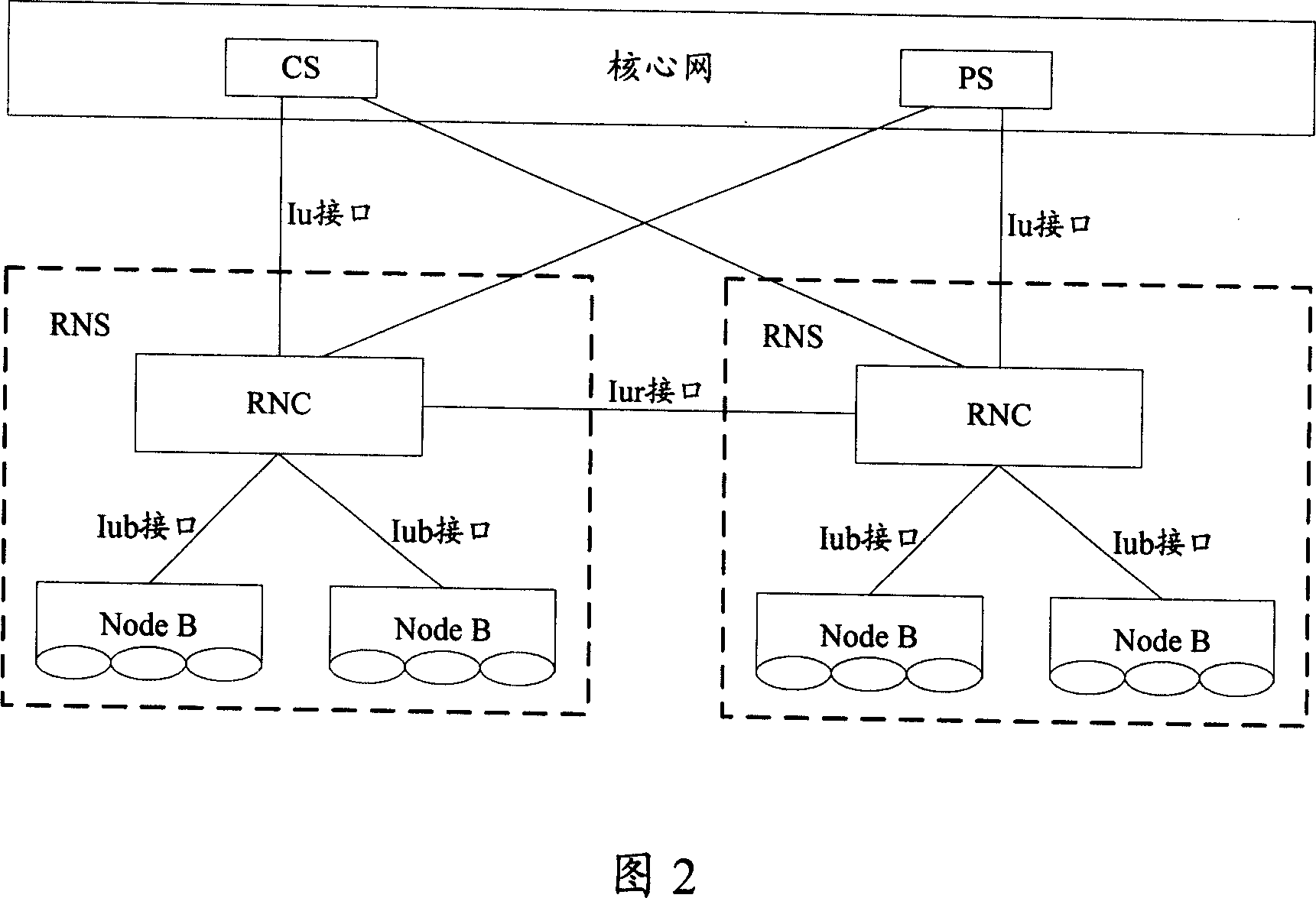

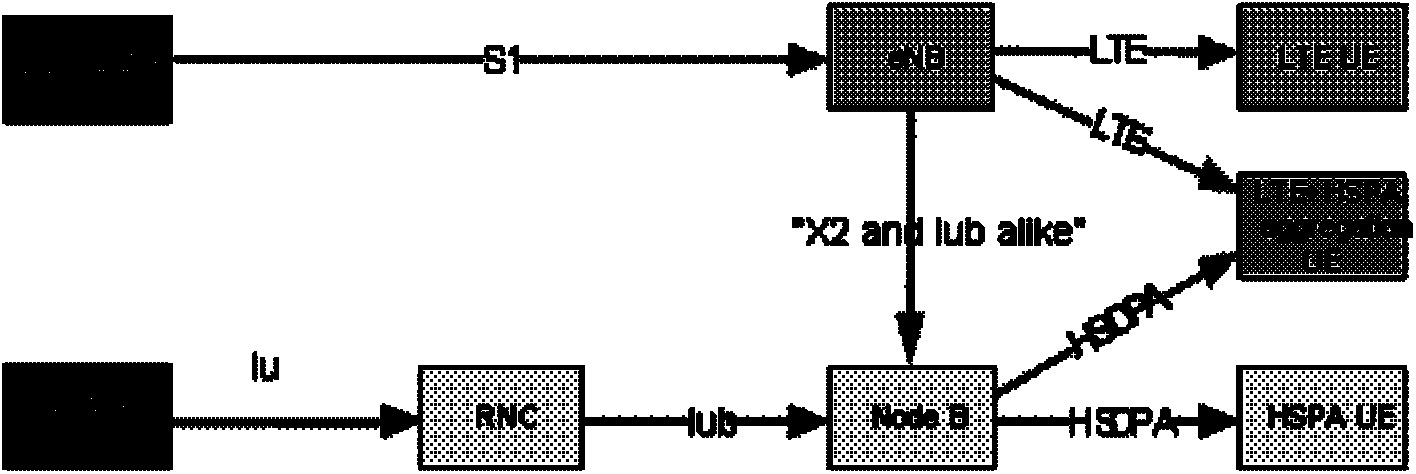

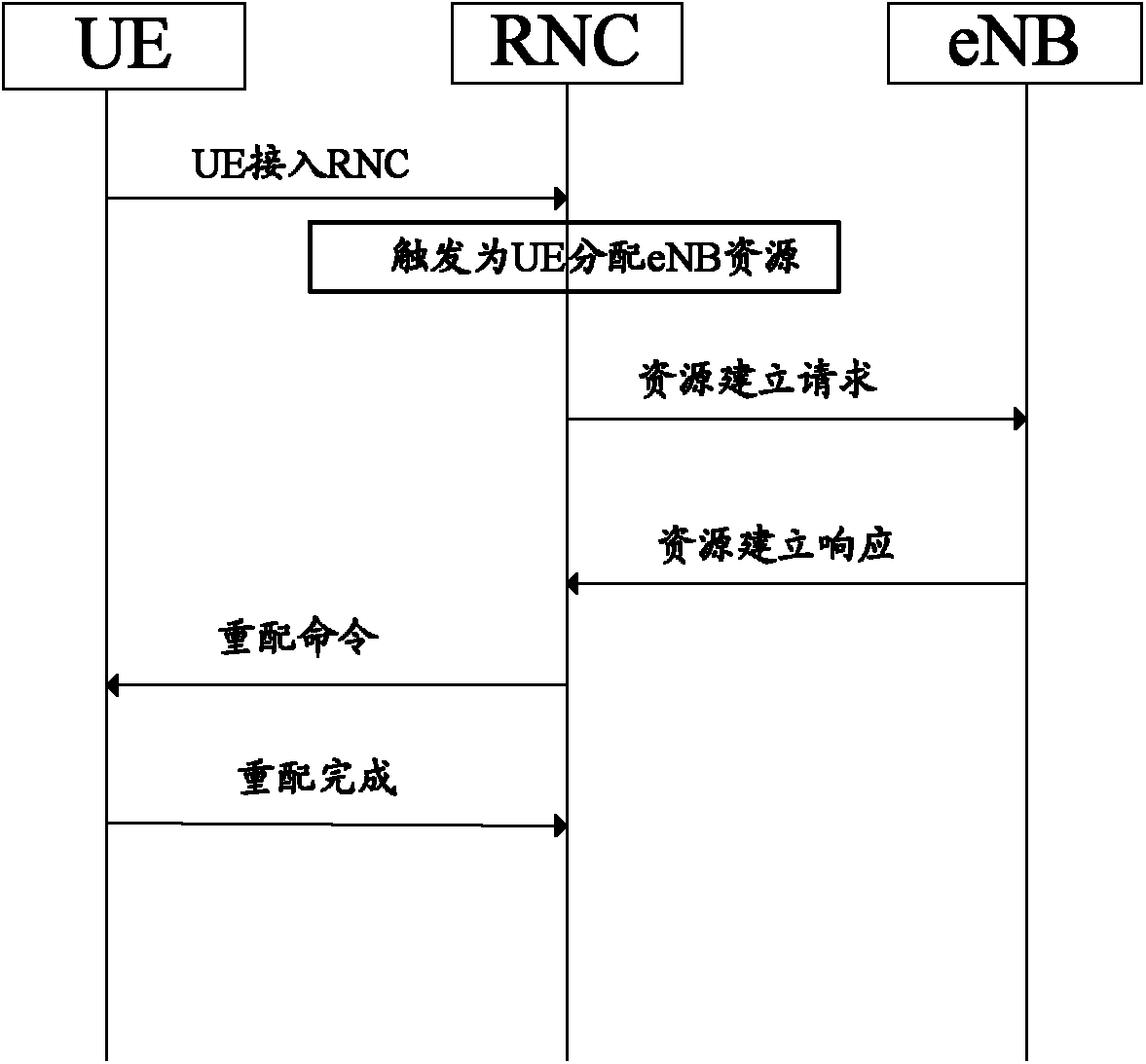

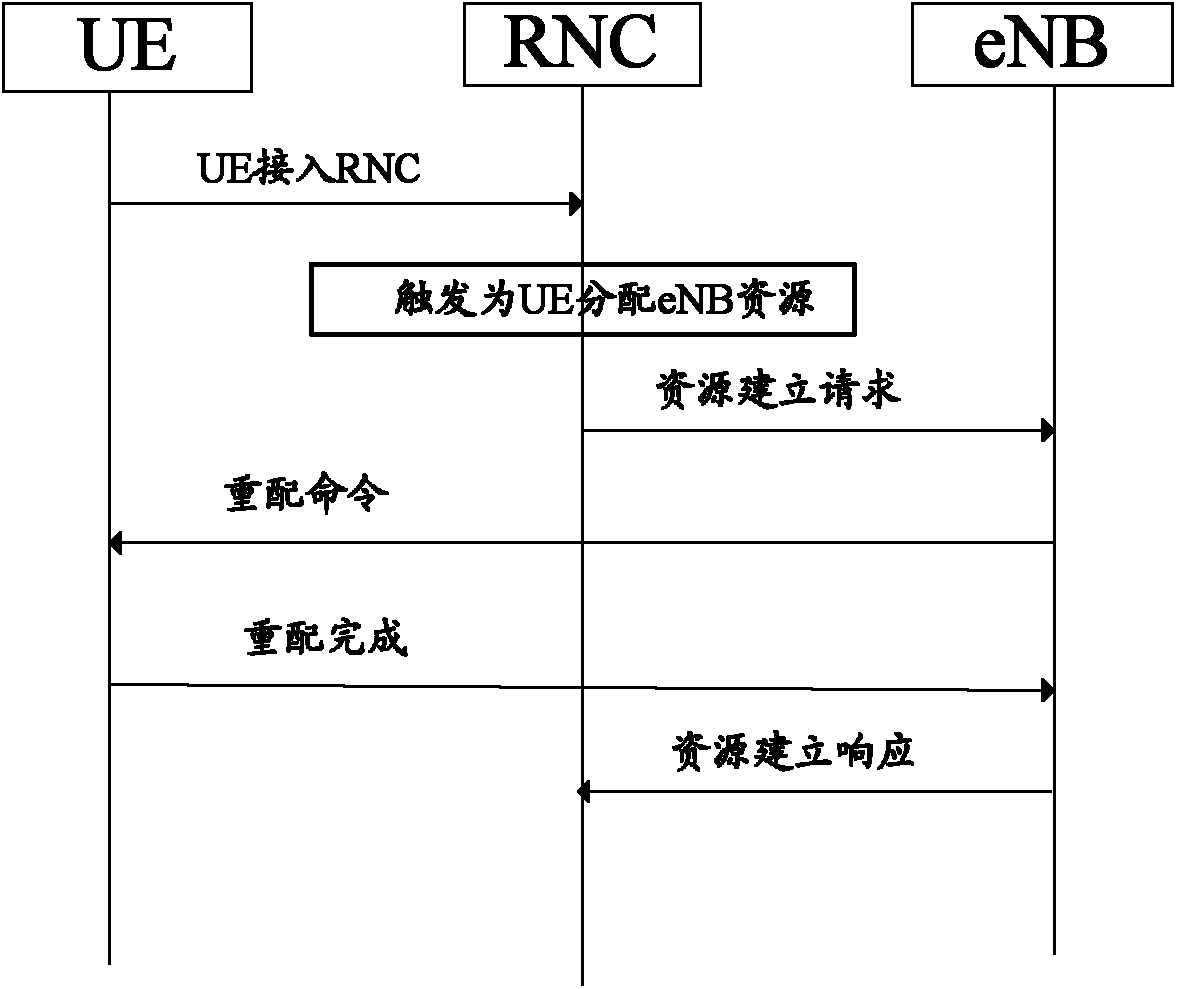

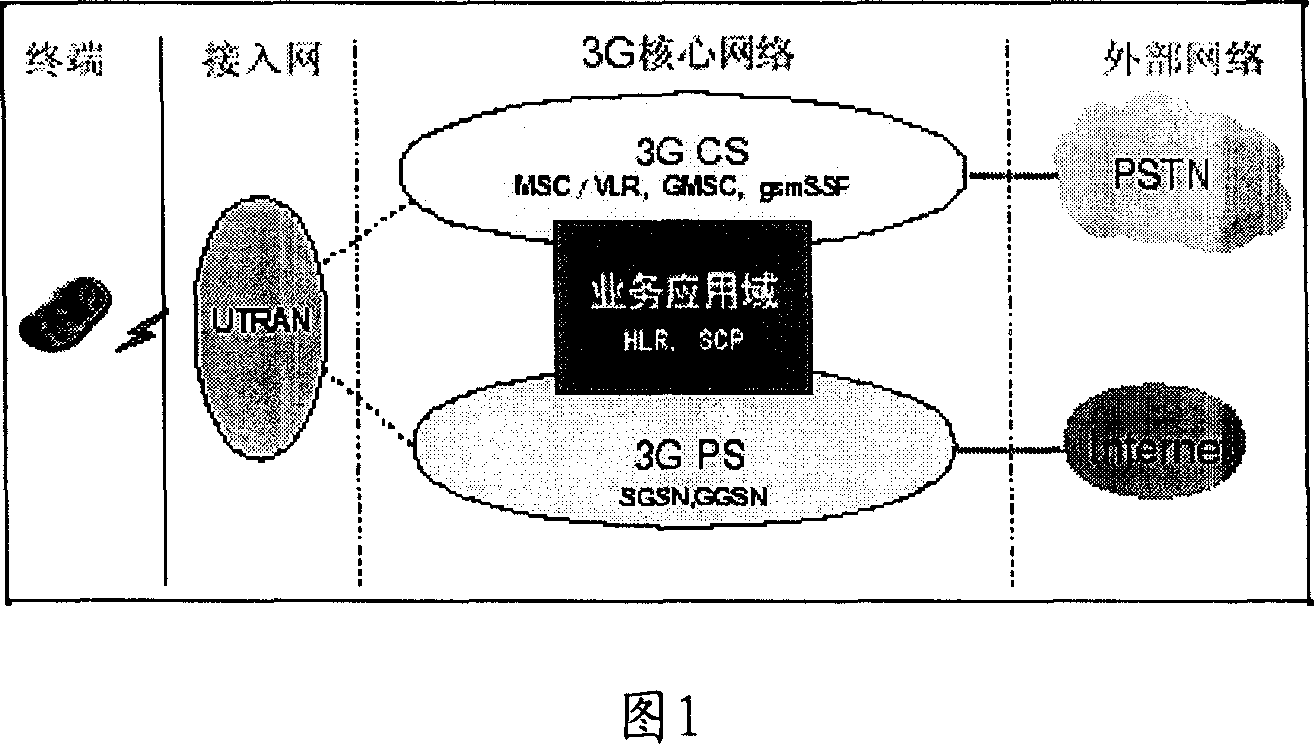

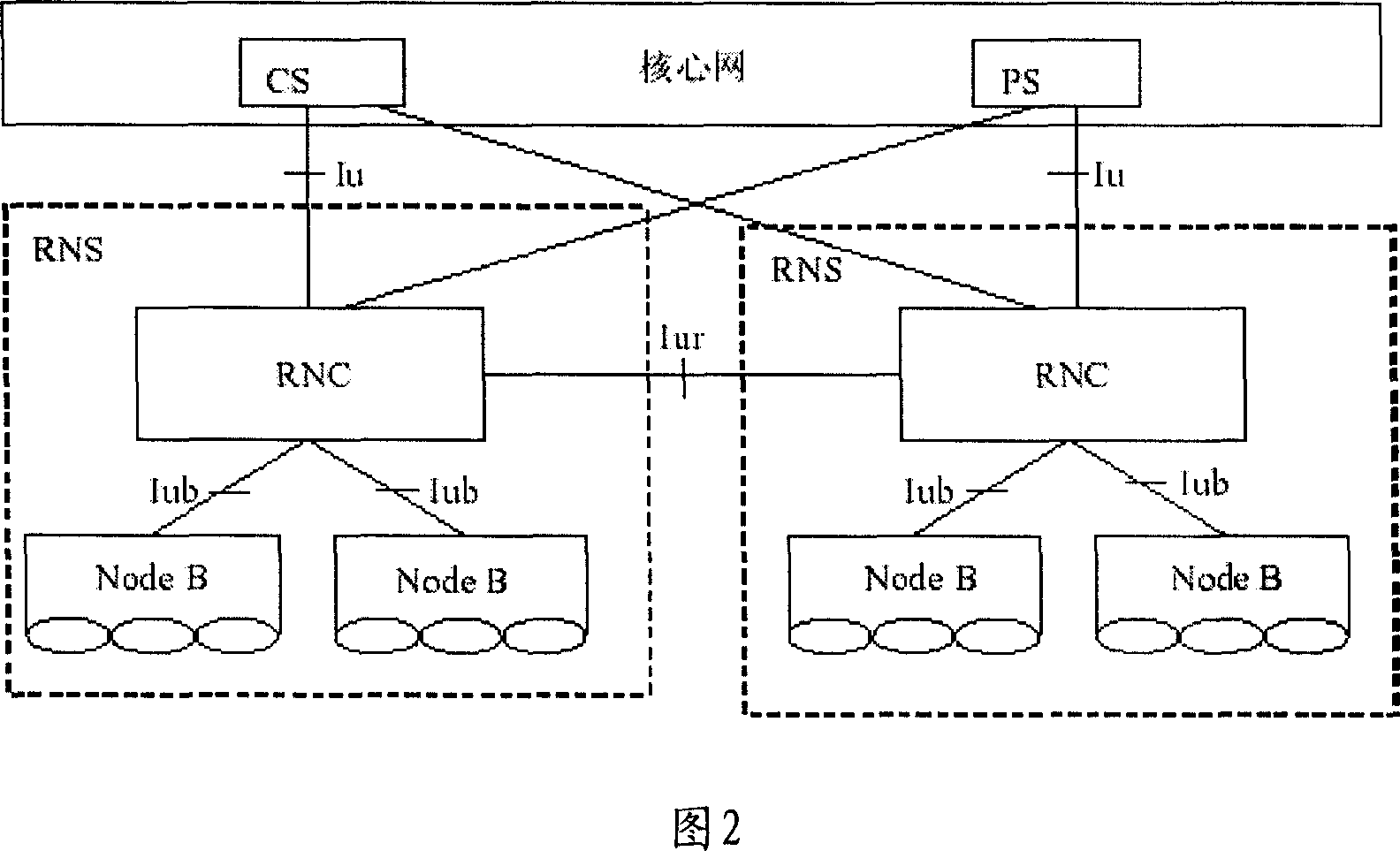

Carrier aggregation method and system for third-generation (3G) network and fourth-generation (4G) network

Inactive | CN102932923A | Realize Carrier Aggregation | Increase speed | Connection management | Resource information | Third generation

The invention discloses a carrier aggregation method for a third-generation (3G) network and a fourth-generation (4G) network. The method comprises the following steps: when determining to allocate resources of the 4G network to user equipment (UE), a main radio resource connection (RRC) control anchor point informs an auxiliary RRC control anchor point to allocate the resources of the 4G network to the UE; and the main RRC control anchor point acquires information about the resources of the 4G network that the auxiliary RRC control anchor point has allocated to the UE. The invention also discloses a carrier aggregation system for the 3G network and the 4G network, which implements the method. With the invention, the UE can use carrier resources of the 3G network and the 4G network simultaneously, carrier aggregation of the two networks is realized, and the data rate of the UE and the utilization efficiency of the resources are greatly improved.

Owner:ZTE CORP

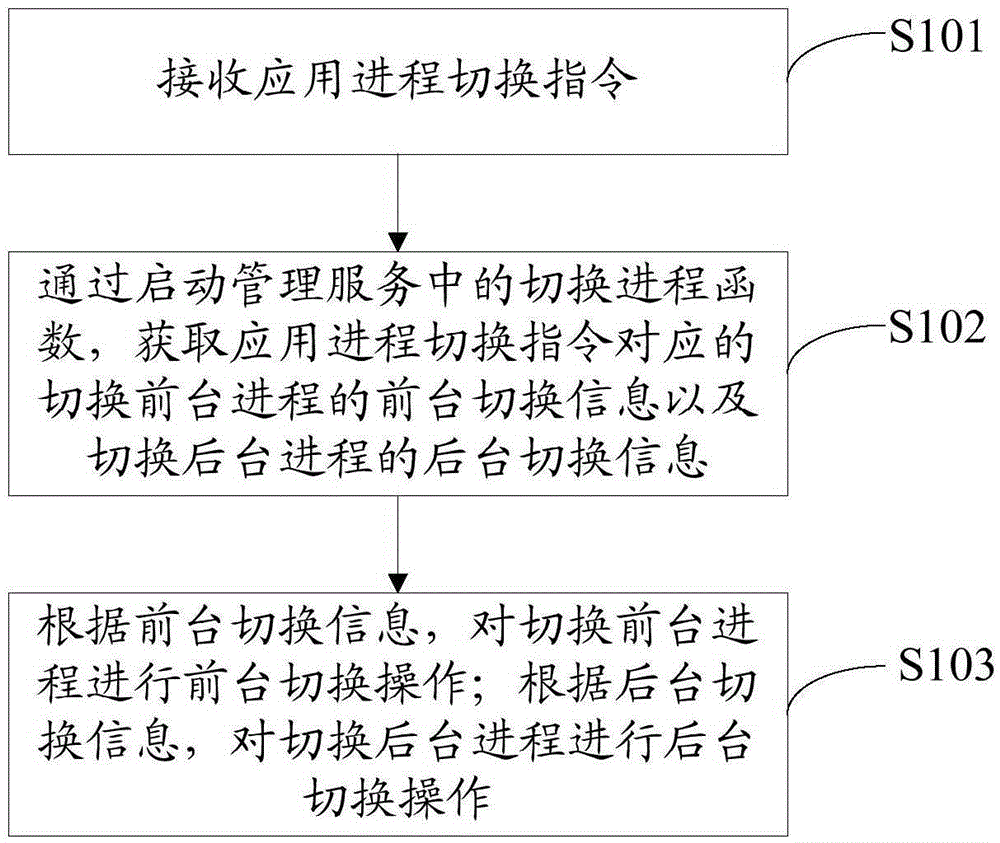

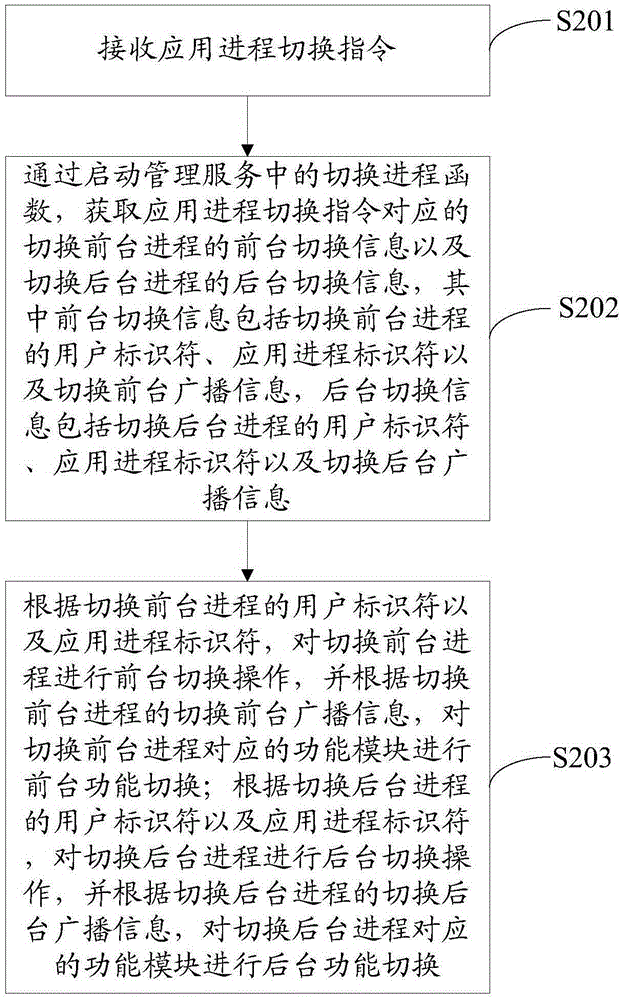

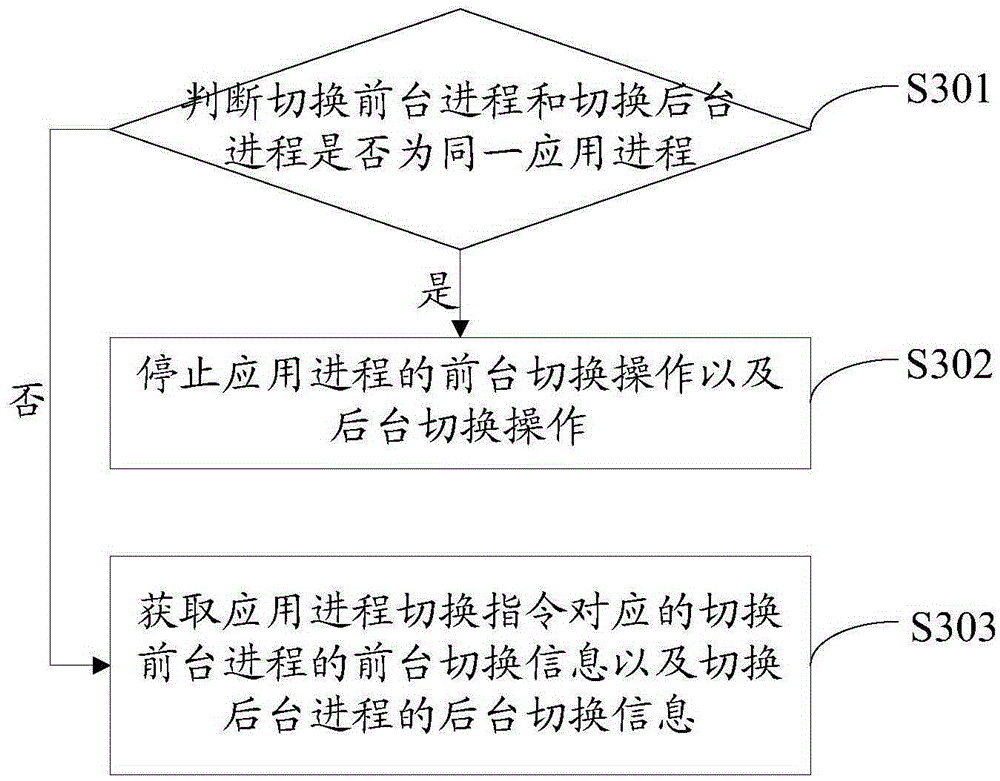

Application process management method and application process management device

Active | CN105426244A | Improve application efficiency | Improve resource usage efficiency | Program initiation/switching | Background process | Resource use

The invention provides an application process management method. The method comprises: receiving an application process switching instruction; obtaining, through a switch-process function in the startup management service, foreground switching information of the process to be switched to the foreground and background switching information of the process to be switched to the background, the switching information corresponding to the application process switching instruction; performing a foreground switching operation on the former process according to the foreground switching information; and performing a background switching operation on the latter process according to the background switching information. The invention also provides an application process management device. Because processes are switched between foreground and background according to their switching information, frequent closing and reopening of application processes is avoided, which improves both the efficiency with which users use applications and the resource usage efficiency of the system.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

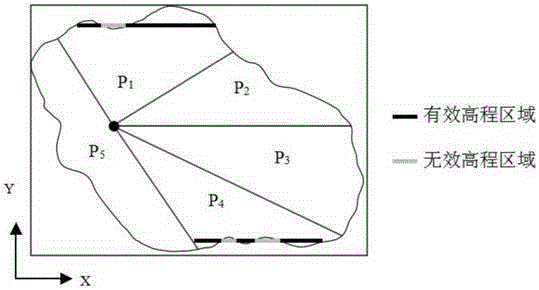

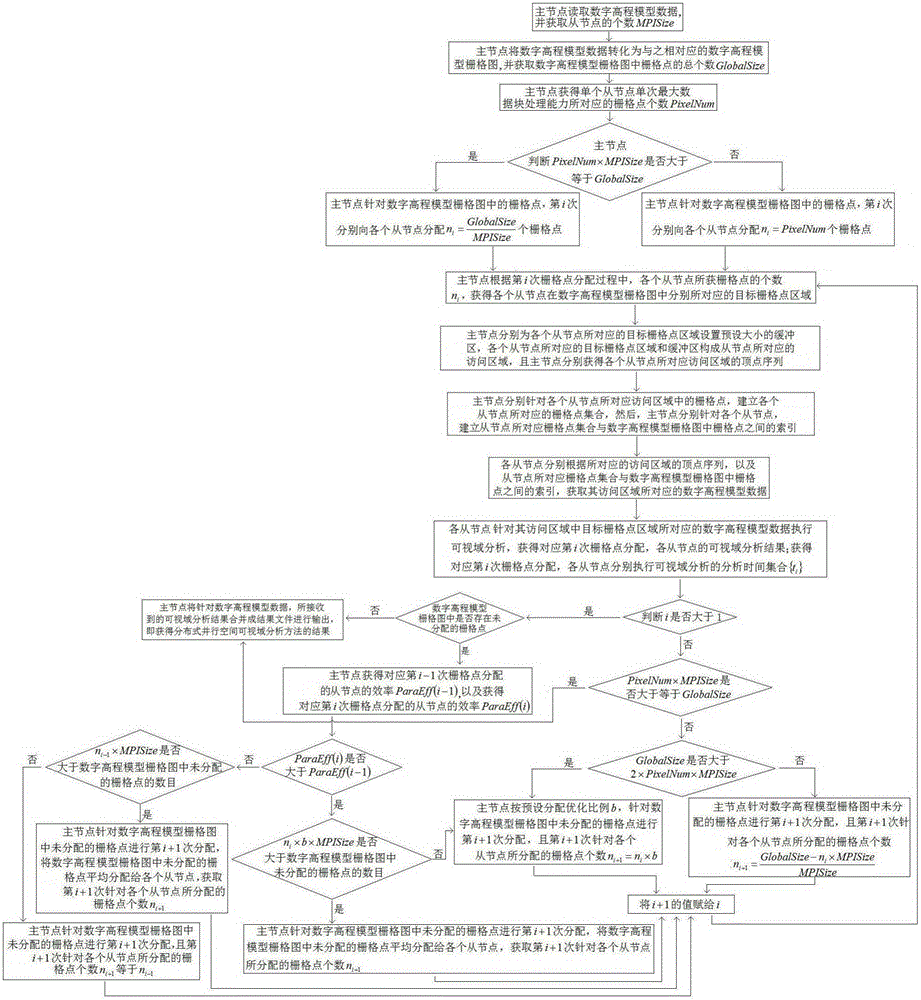

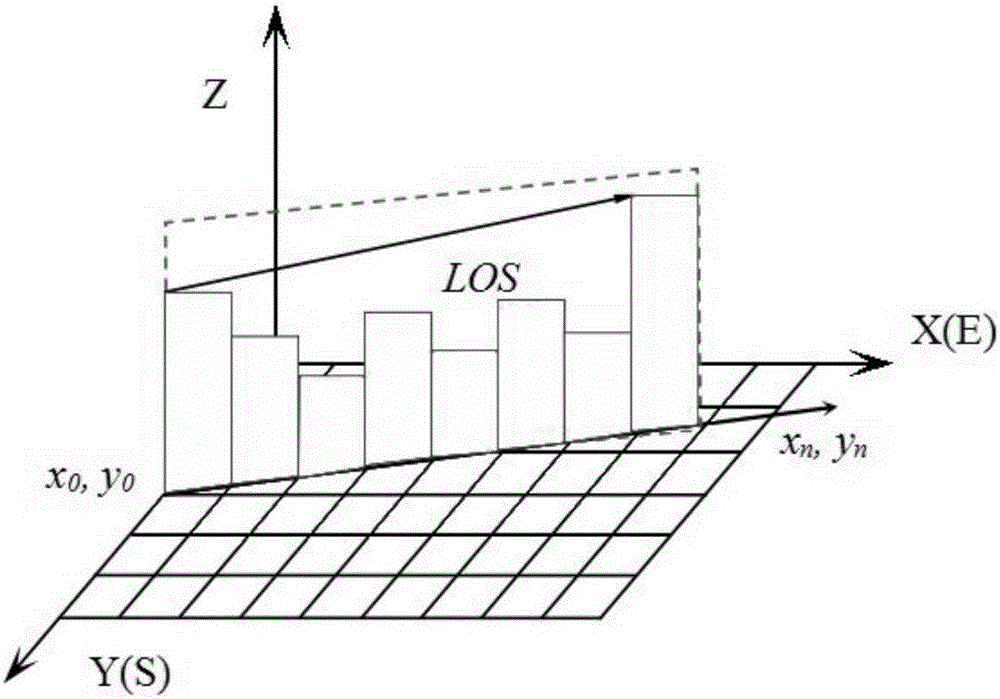

Distributed parallel spatial viewshed analysis method

Active | CN105260523A | Accurate operation | Improve resource usage efficiency | Special data processing applications | Digital elevation model | Data partitioning

The invention relates to a distributed parallel spatial viewshed analysis method. Digital elevation model (DEM) data is dynamically partitioned in parallel, so that parallelization and data scheduling of a serial algorithm are completed quickly. Irregular data partitioning and a redundancy mechanism for the line-of-sight region ensure that, in a distributed computing environment, a slave node can access the DEM data corresponding to its target grid point region; this irregular data-parallel policy offers important guidance for viewshed algorithms implemented in different ways. Combined with a buffer region mechanism, the method effectively ensures the correct operation of algorithms with different degrees of dependence and improves the resource utilization efficiency of the slave nodes, so it has broad prospects for industrial application.

Owner:INST OF SOIL SCI CHINESE ACAD OF SCI

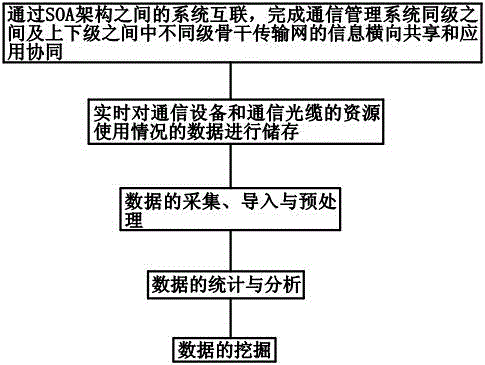

Communication network early-warning analysis system based on big data analysis

Pending | CN106850249A | Improve resource usage efficiency | Predict hidden dangers in advance | Data processing applications | Relational databases | Resource utilization | Data acquisition

The invention discloses a communication network early-warning analysis system based on big data analysis. Through adoption of SOAs, backbone transmission networks are divided into a network control and data collection layer, a platform layer and a management application layer; different levels of backbone transmission networks are managed comprehensively; through system interconnection between SOAs, information transverse sharing and application coordination of the different levels of backbone transmission networks are realized between the same levels and between upper and lower levels of a communication management system; through synthesis of the resume information, machine account information and service utilization information of the communication management system, according to level distribution of communication network resource occupation, the utilization condition of the resources is comprehensively analyzed from various aspects such as optical fiber resources, multiplexing section resources and channel resources; the resource utilization condition data of communication devices and communication optical fibers is stored in real time; through analysis of communication resource occupation of different levels, comprehensive analysis is carried out on existing massive history operation and maintenance data through utilization of a big data technology; and potential hidden dangers possibly existing in the communication network can be predicted in advance.

Owner:中国电力技术装备有限公司郑州电力设计院

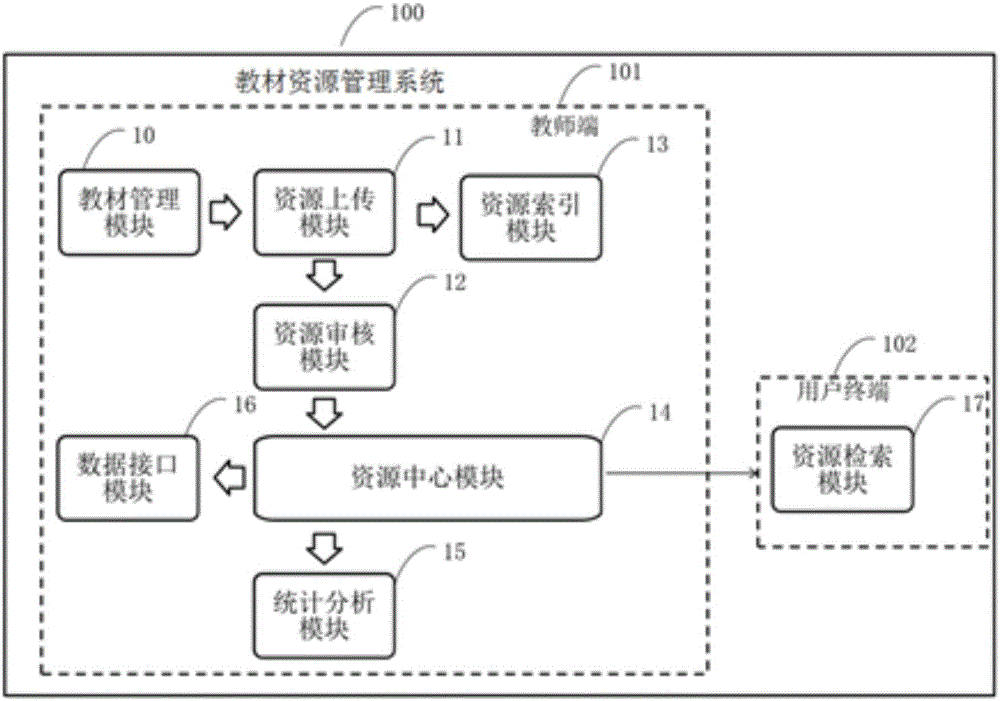

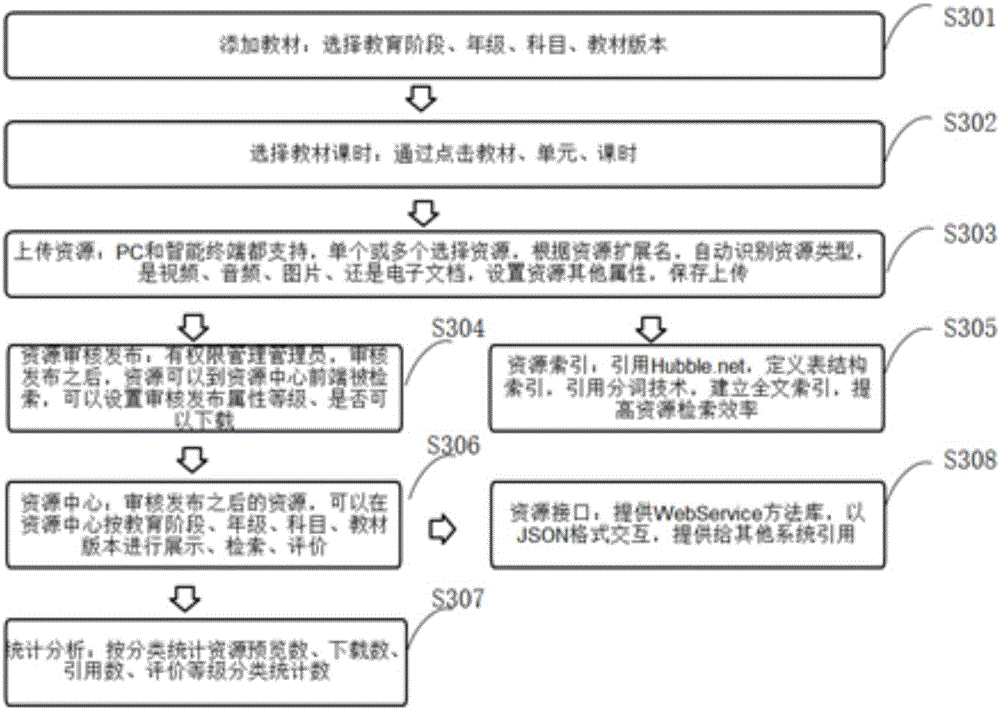

Teaching material resource management system and teaching material resource management method

Inactive | CN105931161A | Improve resource retrieval efficiency | Quick share | Data processing applications | Statistical analysis | Resource utilization

The invention relates to the technical field of network teaching, and specifically provides a teaching material resource management system and a teaching material resource management method. The system comprises a teaching material management module, a resource uploading module, a resource indexing module, a resource checking module, a resource center module, a statistical analysis module, a data interface module and a resource retrieval module. The system solves the problem in the existing technology that disordered resource storage prevents teachers from retrieving teaching material resources in a simple, foolproof and targeted manner. Effective management and circulation of school or regional resources are improved, as is teachers' resource utilization rate.

Owner:GUANGZHOU WEIDU COMP TECH CO LTD

Method for compressing communication message header

Inactive | CN1925454A | Save transmission bandwidth | Reduce transmission delay | Data switching networks | The Internet | Compression method

This invention relates to a message header compression method comprising the following steps: defining a user plane protocol stack for the radio interface; and performing header compression, based on that protocol stack, between the network and the core network interface. The invention enables effective header compression between the network and the core network interface.

Owner:HUAWEI TECH CO LTD

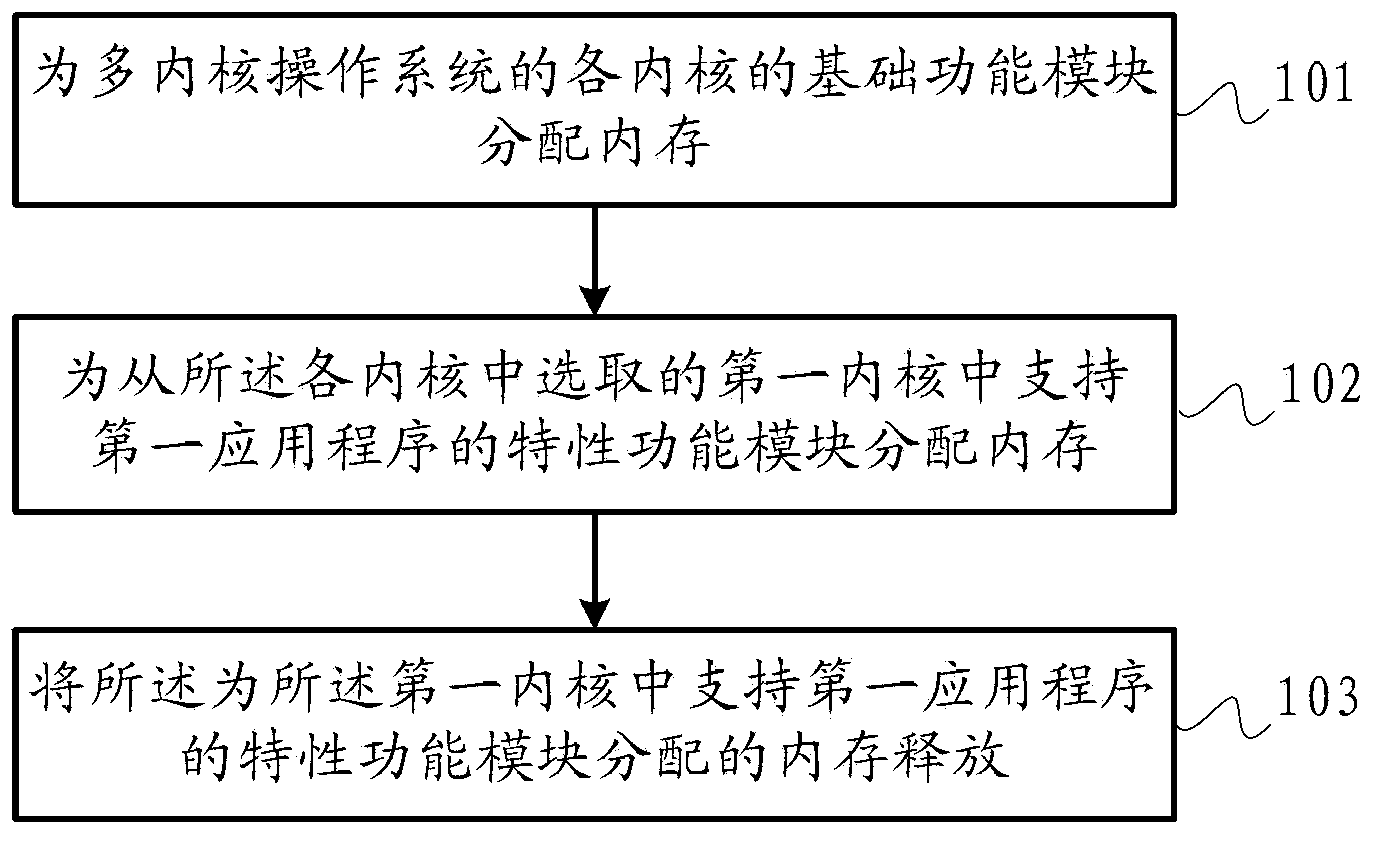

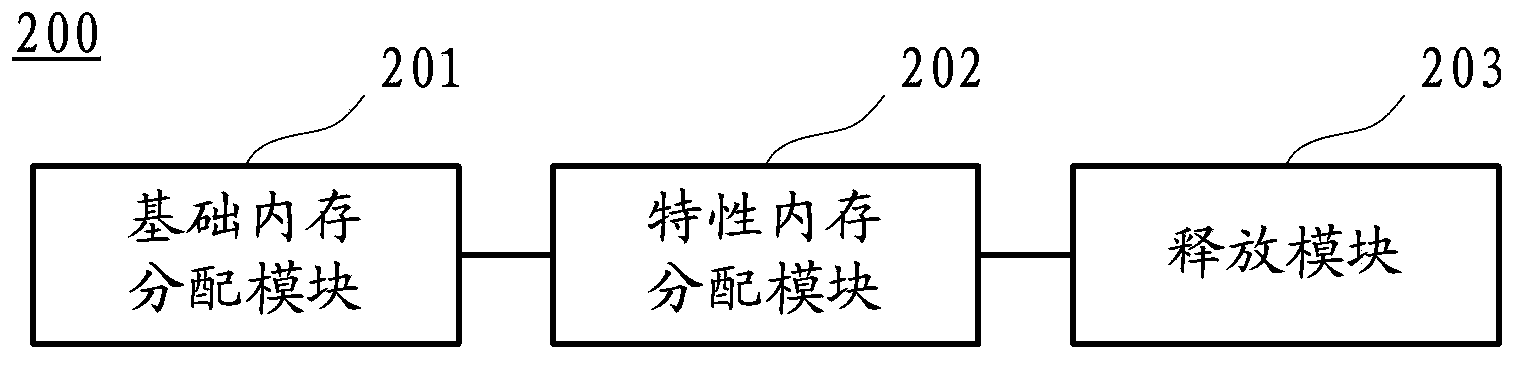

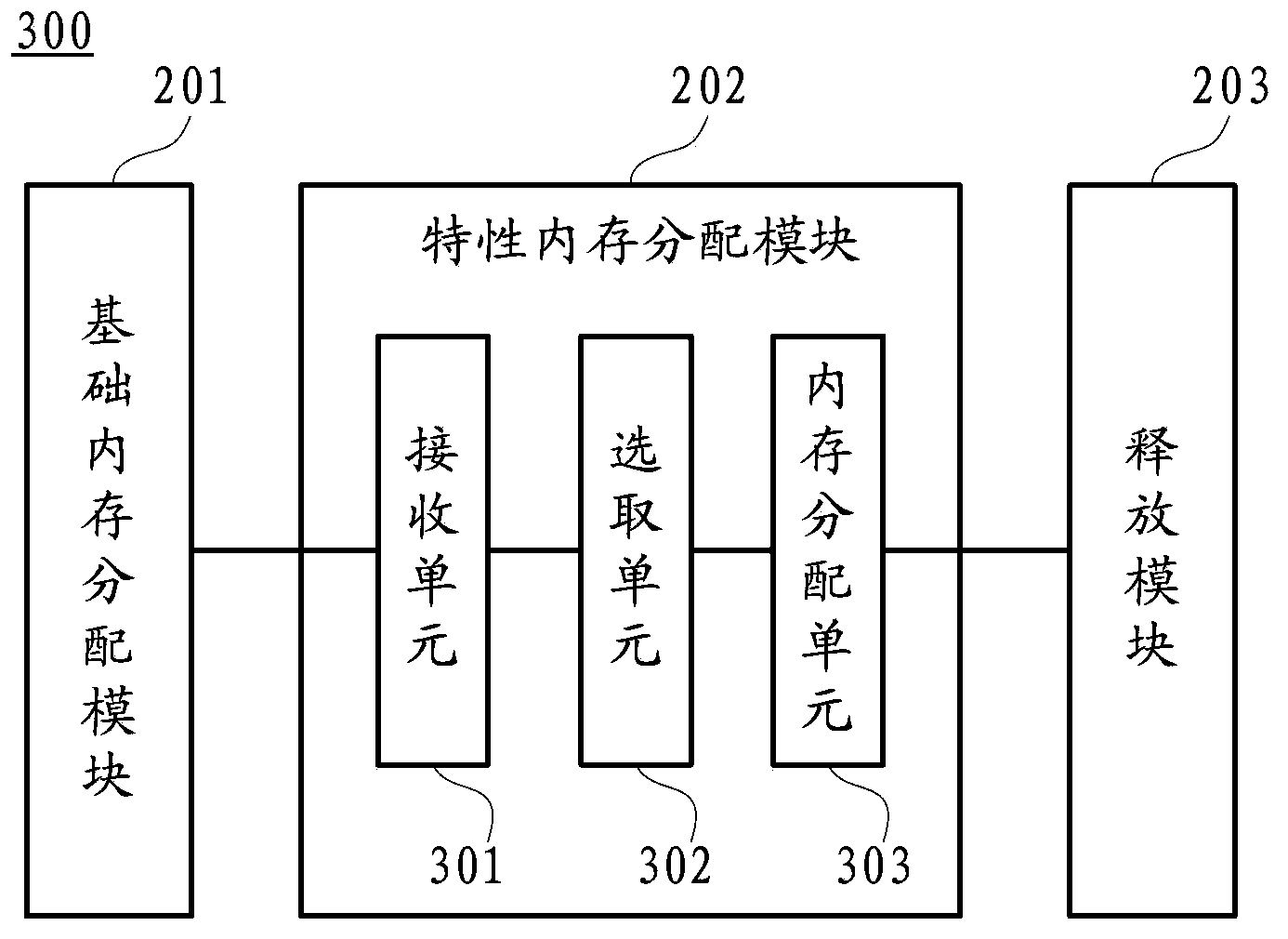

Multi-core operating system realization method, realization device and realization system

Active | CN104077266A | Improve efficiency | Improve resource usage efficiency | Digital computer details | Program control | Operational system | Systems management

The embodiments of the invention provide a multi-core operating system realization method, device and system. The method comprises: allocating memory to the basic function modules of each core of a multi-core operating system; allocating memory to a characteristic function module that supports a first application program in a first core selected from the cores; and releasing the memory allocated to that characteristic function module. Memory is thus allocated to the corresponding functions of the cores on demand, based on the characteristics of the application programs, which improves the system management efficiency and resource usage efficiency of the multi-core operating system.

Owner:HUAWEI TECH CO LTD +1

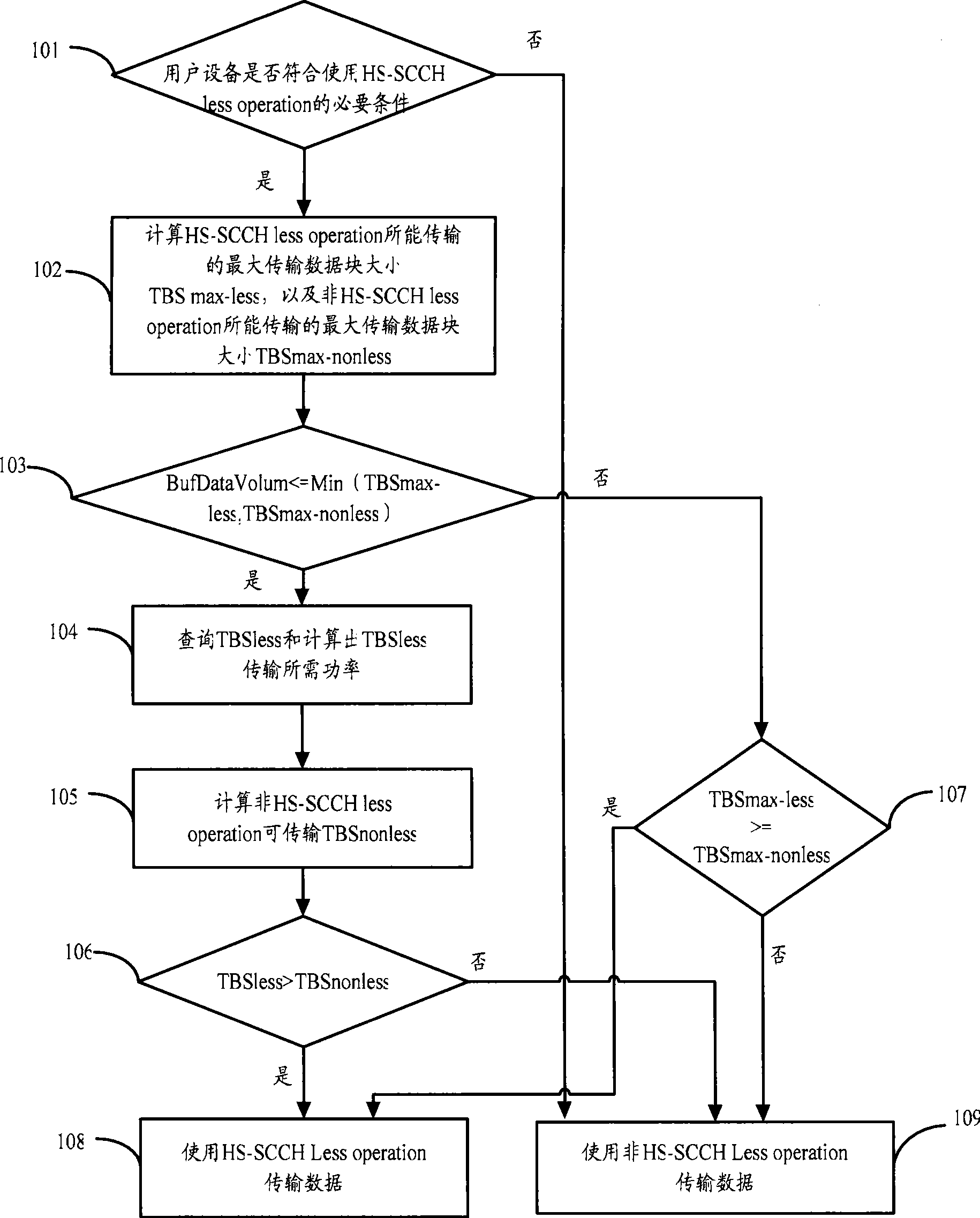

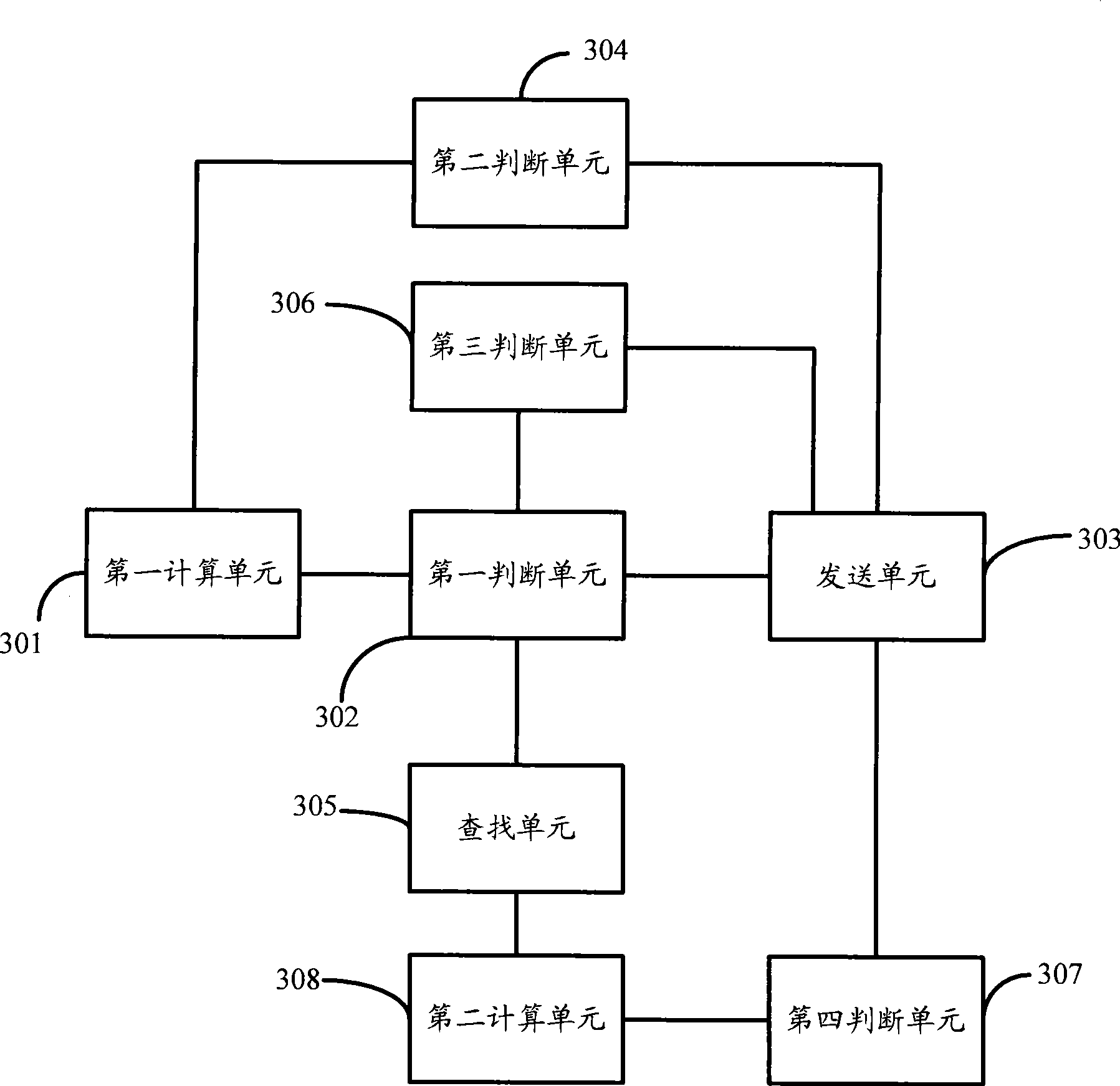

Transmission method, system and base station for High Speed Downlink Packet Access data

Active | CN101370285A | Improve throughput | Improve resource usage efficiency | Error prevention | Network traffic/resource management | Data transmission | Resource use

The embodiment of the invention discloses a data transmission method, system and base station for High Speed Downlink Packet Access (HSDPA). With HS-SCCH less operation activated, when the base station decides between HS-SCCH less operation and non-HS-SCCH less operation, it first judges whether the amount of data to be transmitted is sufficient. If it is sufficient, the base station decides whether to select HS-SCCH less operation according to the TBS sizes of HS-SCCH less and non-HS-SCCH less operation, so that it obtains greater throughput. If it is not sufficient, the base station compares the TBS sizes of HS-SCCH less and non-HS-SCCH less operation under the same resource condition to decide whether to select HS-SCCH less operation, so that it obtains greater resource usage efficiency.

Owner:SHANGHAI HUAWEI TECH CO LTD

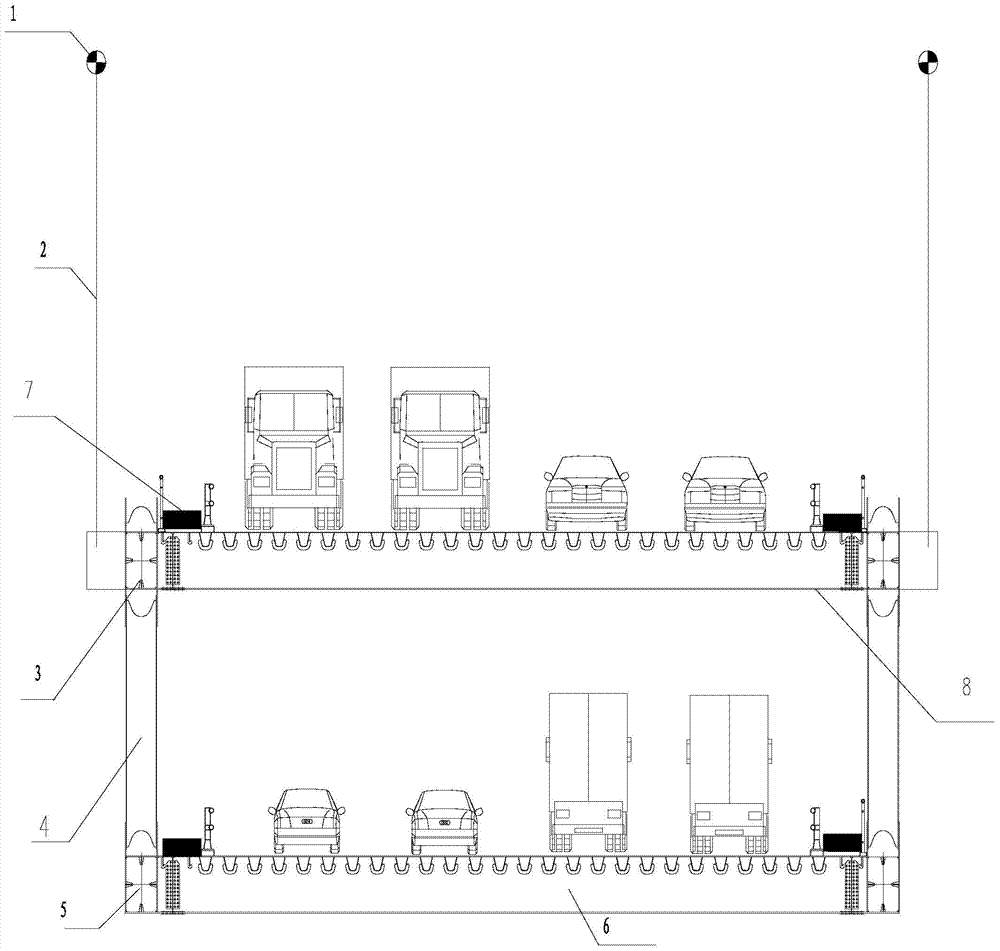

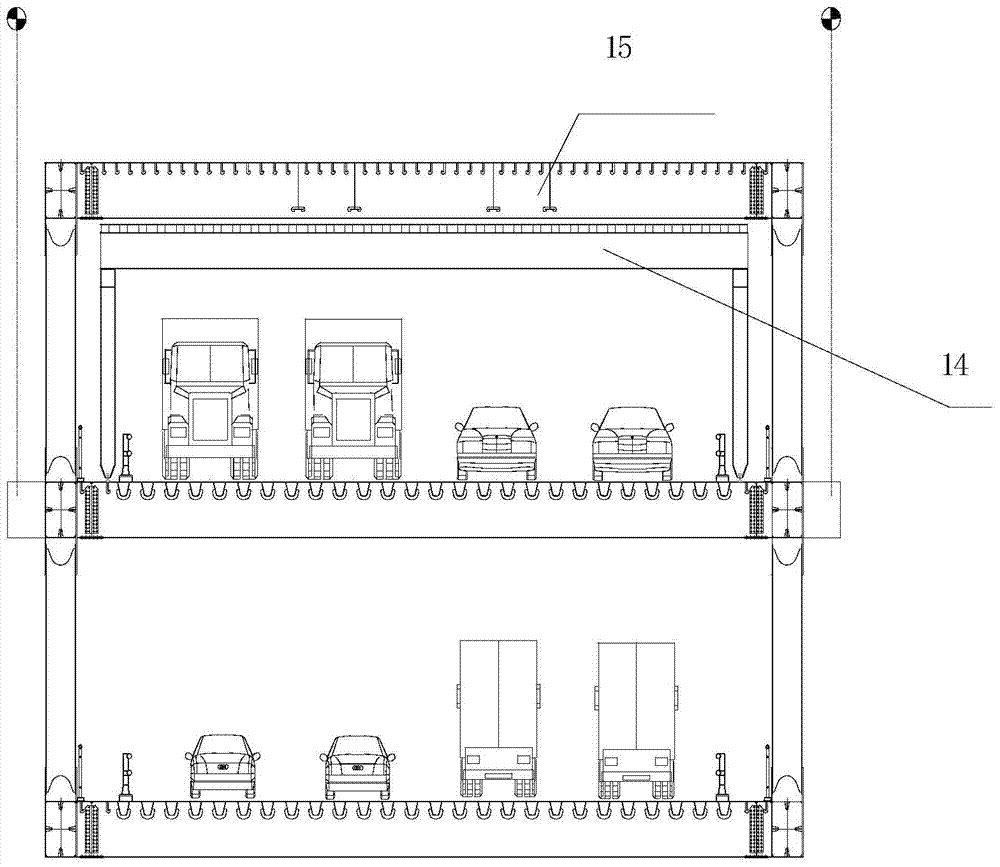

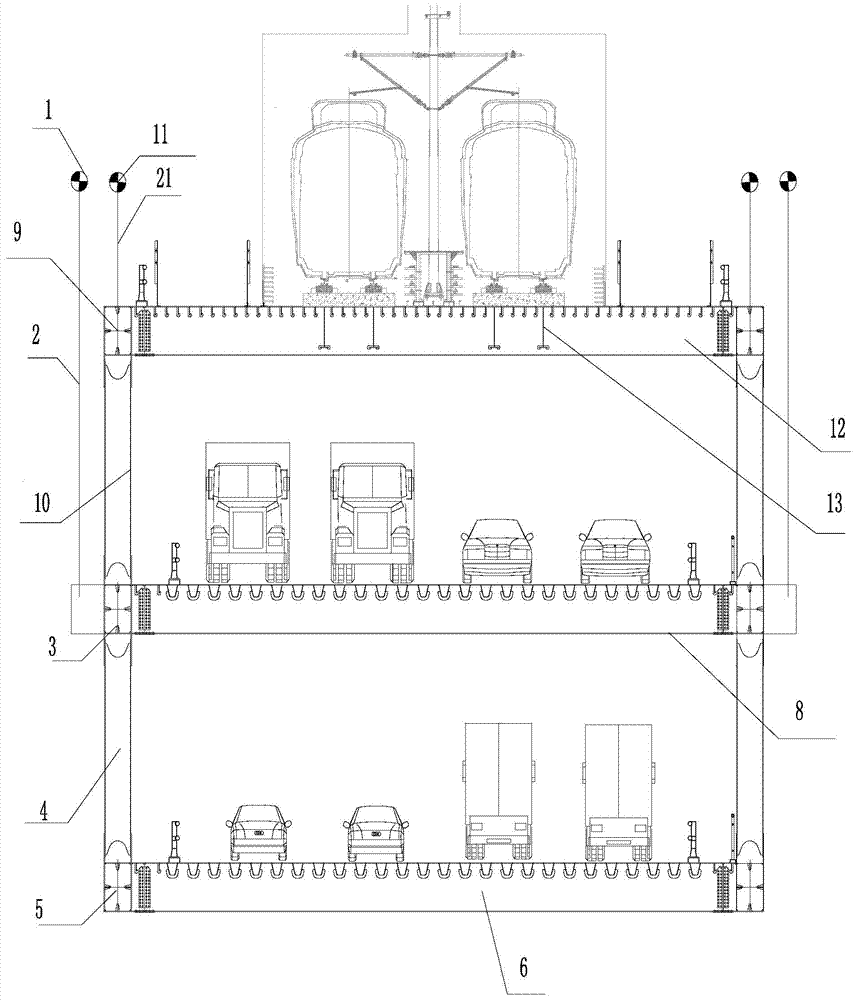

Staged implementation method of multi-bridge-floor-layer suspension bridge for highway and rail

Active | CN104195946A | Improve leaping ability | Reduce the impact | Suspension bridge | Bridge erection/assembly | Terrain | Bridge deck

The invention discloses a staged construction method for a multi-deck highway and rail suspension bridge. The suspension bridge has three decks and is built from a double-layer parallel main truss structure; rail transit runs on the uppermost deck, and highway traffic runs on the middle and lower decks. The highway decks are constructed first and the rail transit deck afterwards, so the rail transit can be built while the highway is already in service. The three-deck scheme improves structural efficiency, makes the structure more adaptable to terrain, and accommodates the staged construction of rail transit and highway traffic. Near-term construction investment is effectively reduced and idle resources are avoided, while existing traffic is not interrupted during the later construction period and the influence of construction on traffic is minimized.

Owner:林同棪国际工程咨询(中国)有限公司

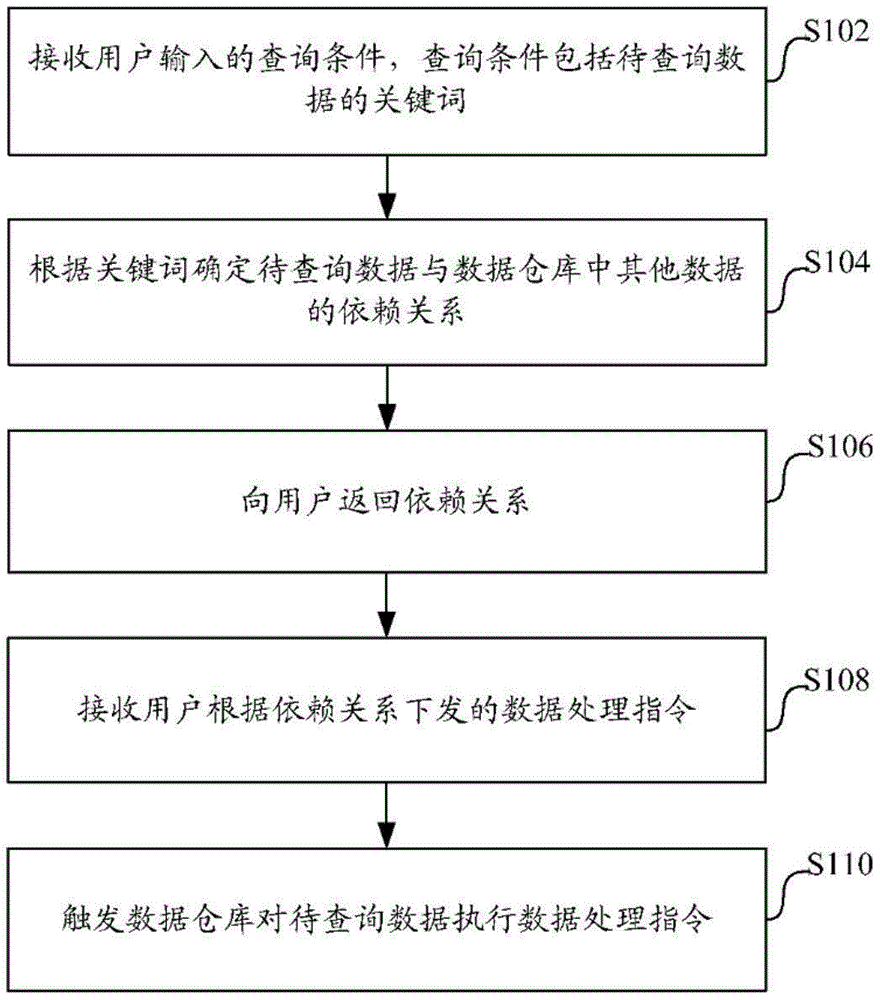

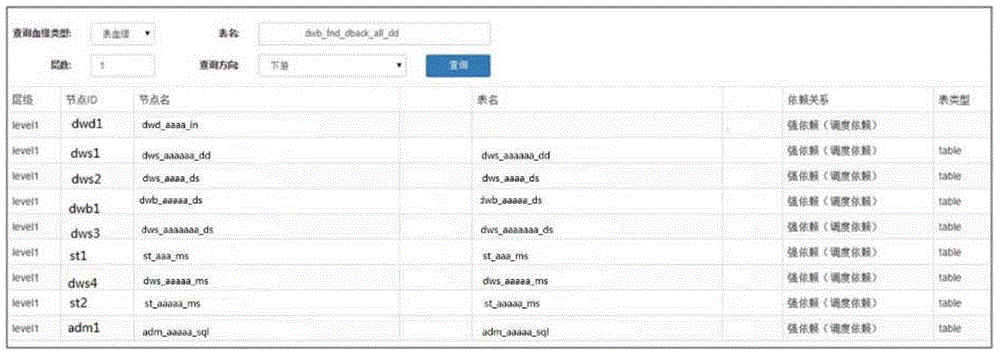

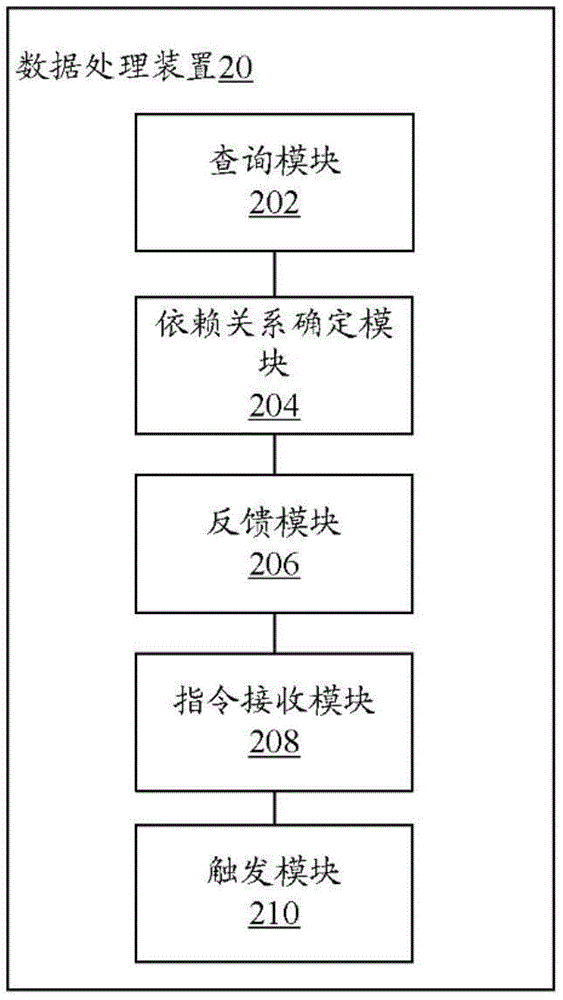

Data processing method and apparatus of data warehouse

Active | CN106294478A | Avoid wasting resources | Improve resource usage efficiency | Special data processing applications | Data processing | Resource use

Embodiments of the invention provide a data processing method and apparatus of a data warehouse. The method comprises the steps of: receiving a query condition input by a user, wherein the query condition comprises a keyword of to-be-queried data; determining, according to the keyword, a dependence relationship between the to-be-queried data and other data in the data warehouse, wherein the dependence relationship is one of no dependence, strong dependence and weak dependence; returning the dependence relationship to the user; receiving a data processing instruction sent by the user according to the dependence relationship; and triggering the data warehouse to execute the data processing instruction on the to-be-queried data. By adopting the method provided by the embodiment of the invention, the resource usage efficiency of the data warehouse can be improved.

Owner:ADVANCED NEW TECH CO LTD
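
The query flow in the data warehouse abstract above can be sketched roughly as follows. The abstract does not say how strong and weak dependence are told apart, so this sketch assumes a hypothetical metadata table in which each downstream consumer is flagged as required or optional; the table contents, `classify`, and `process_query` are illustrative names only.

```python
from enum import Enum


class Dependence(Enum):
    NONE = "no dependence"
    WEAK = "weak dependence"
    STRONG = "strong dependence"


# Hypothetical metadata: dataset keyword -> [(downstream dataset, is_required)].
DOWNSTREAM = {
    "orders_raw": [("orders_daily", True)],
    "clicks_raw": [("clicks_report", False)],
    "tmp_scratch": [],
}


def classify(keyword):
    """Determine the dependence relationship for the queried data.

    Assumption: any required consumer means strong dependence, only optional
    consumers means weak dependence, and no consumers means no dependence.
    Unknown keywords are treated as having no consumers.
    """
    links = DOWNSTREAM.get(keyword, [])
    if not links:
        return Dependence.NONE
    if any(required for _, required in links):
        return Dependence.STRONG
    return Dependence.WEAK


def process_query(keyword, decide, executed):
    """Run one round of the flow for a single keyword.

    `decide` stands in for the user: it receives the dependence relationship
    and returns a processing instruction, or None to do nothing.
    `executed` is a list standing in for the data warehouse's executor.
    """
    relation = classify(keyword)
    instruction = decide(relation)
    if instruction is not None:
        executed.append((instruction, keyword))
    return relation
```

A user policy such as "delete only data that nothing depends on" then becomes a small function passed as `decide`, which is one way unused data could be cleaned up without breaking downstream jobs.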

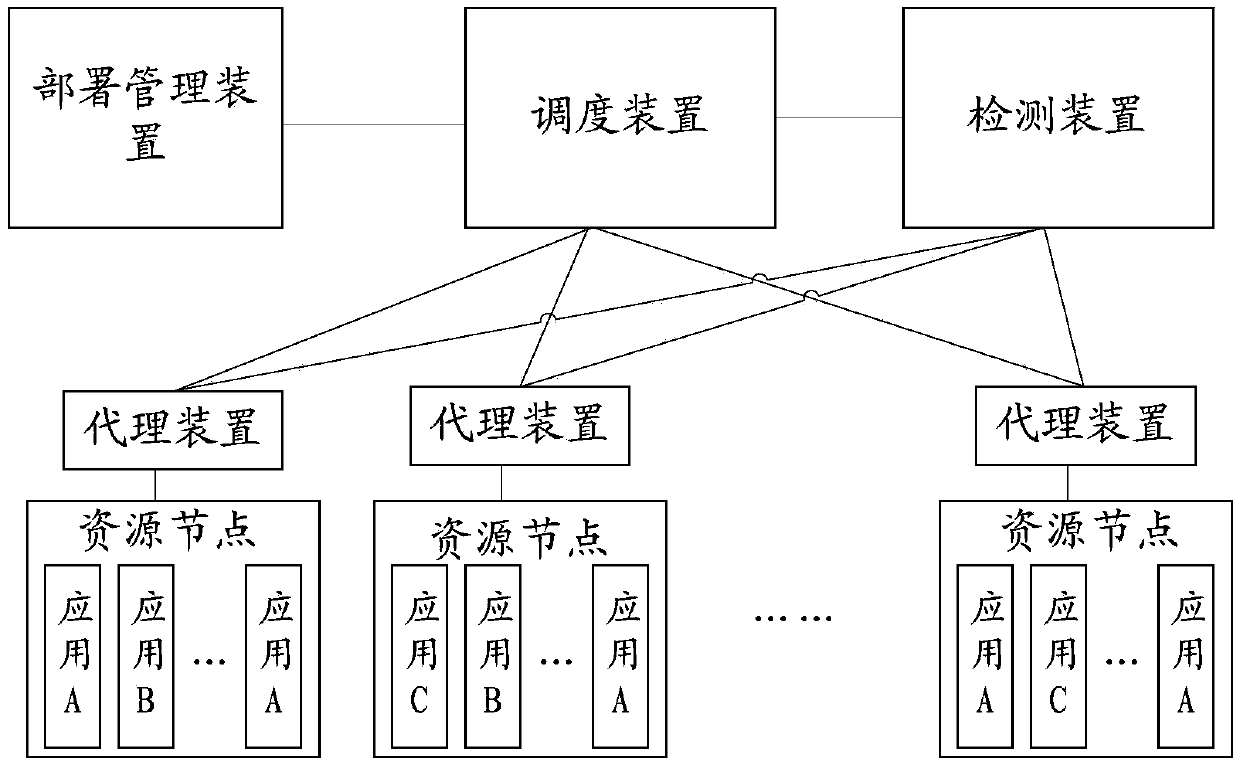

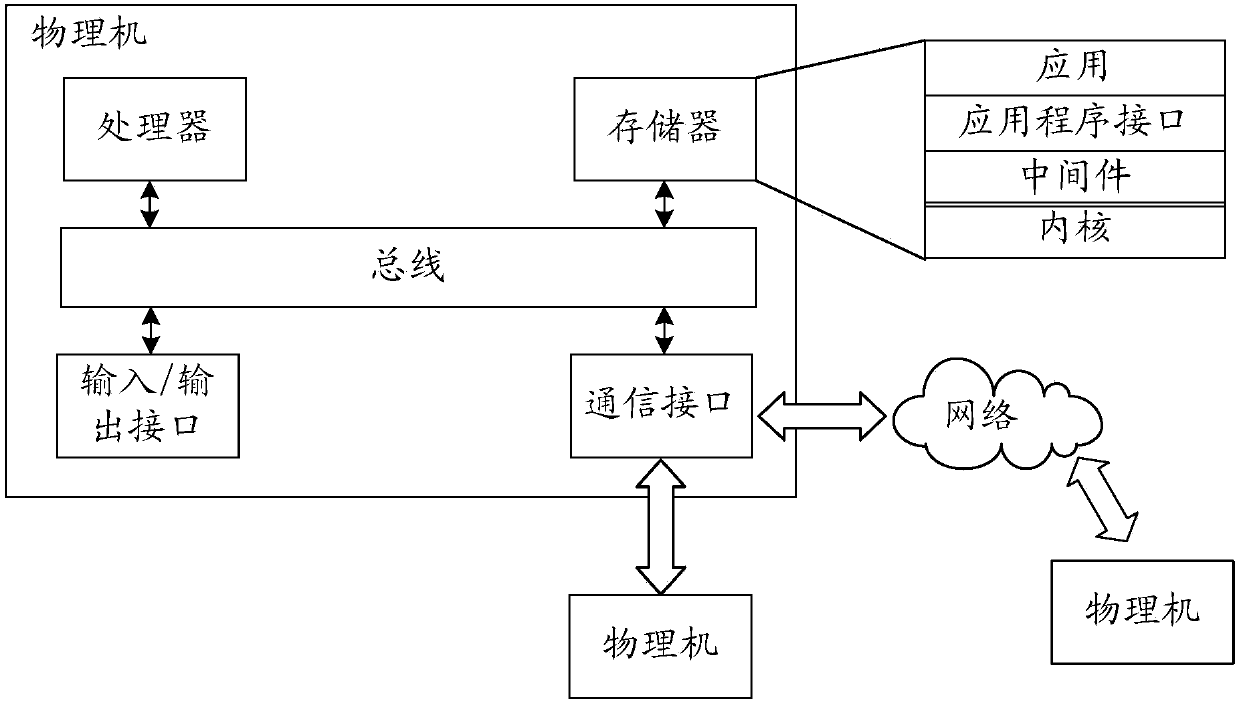

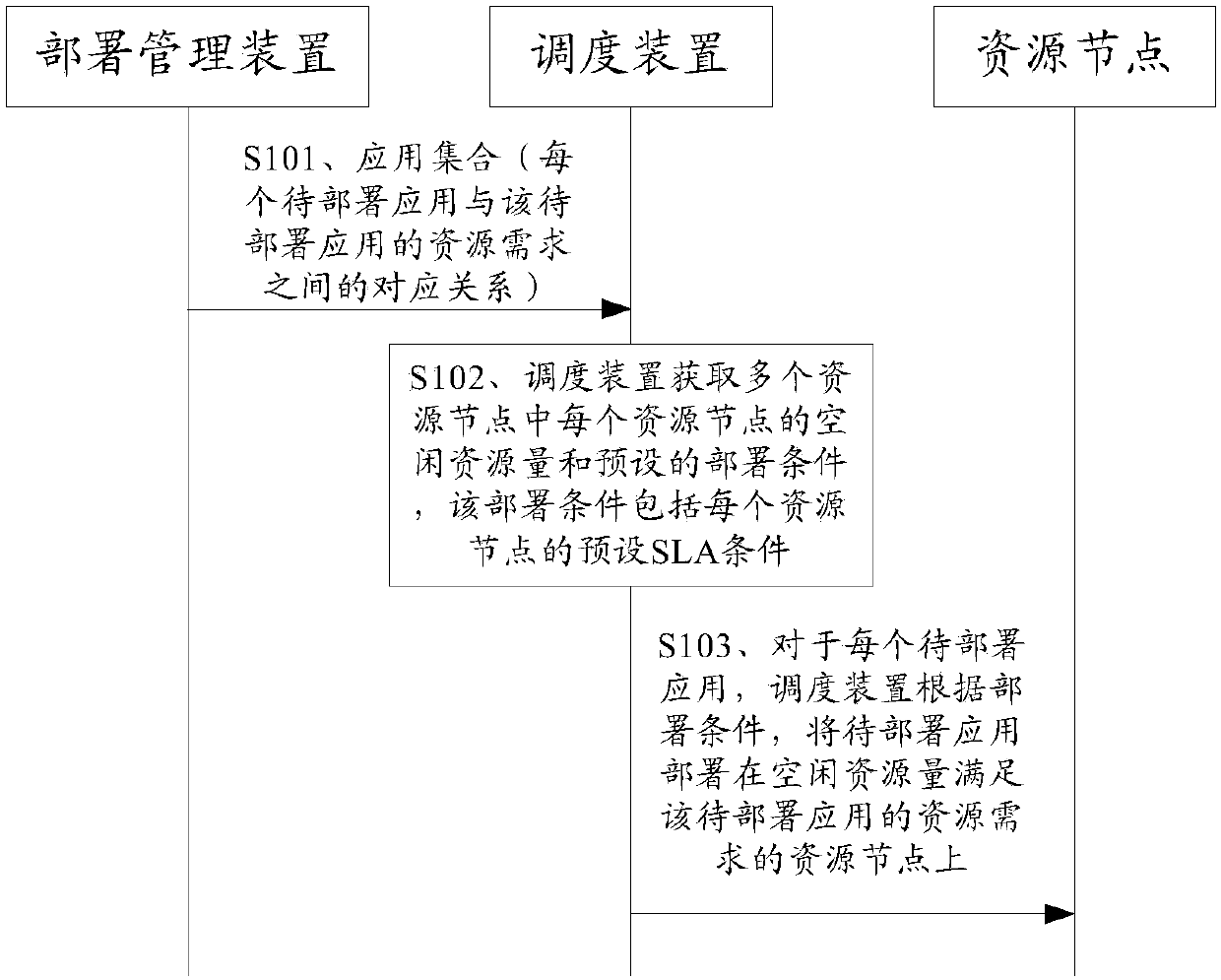

Application scheduling method and device

Inactive | CN107818013A | Improve resource usage efficiency | Efficient deployment | Resource allocation | Software deployment | Service-level agreement | Application scheduling

The embodiment of the invention provides an application scheduling method and device, and relates to the technical field of communication. The success rate of application deployment can be increased. In the method, a scheduling device receives an application set from a deployment managing device, wherein the application set comprises the correspondence between each application to be deployed and the resource requirement of that application. The scheduling device obtains the free resource quantities of multiple resource nodes and a preset deployment condition, wherein the deployment condition includes the preset service level agreement (SLA) condition of each resource node. For each application to be deployed, the scheduling device deploys the application to a resource node whose free resource quantity meets the resource requirement of the application, such that the SLA parameters of the resource node after the application is deployed meet the preset SLA condition of the resource node. The method is applied to application scheduling.

Owner:HUAWEI TECH CO LTD
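
The placement rule in the application scheduling abstract above (enough free resources, and the node's SLA condition still holds after deployment) can be shown with a short sketch. Several details are assumptions made for illustration: resources are a single integer quantity, the SLA condition is modelled as a maximum utilization per node, and nodes are tried in list order (first fit), none of which the abstract specifies.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    capacity: int           # total resource units on the node
    free: int               # currently free resource units
    max_utilization: float  # hypothetical SLA condition for this node


def sla_ok_after(node: Node, demand: int) -> bool:
    """Check the assumed SLA condition as it would be after deployment."""
    used_after = node.capacity - node.free + demand
    return used_after / node.capacity <= node.max_utilization


def schedule(apps: Dict[str, int], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Place each application on the first node that satisfies both checks.

    `apps` maps an application name to its resource requirement.
    Applications that fit nowhere are mapped to None.
    """
    placement: Dict[str, Optional[str]] = {}
    for app, demand in apps.items():
        placement[app] = None
        for node in nodes:
            if node.free >= demand and sla_ok_after(node, demand):
                node.free -= demand  # reserve the resources on this node
                placement[app] = node.name
                break
    return placement
```

Checking the SLA condition before placing an application, rather than after, is what lets the scheduler reject a node that has enough free resources but would be pushed past its limit.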

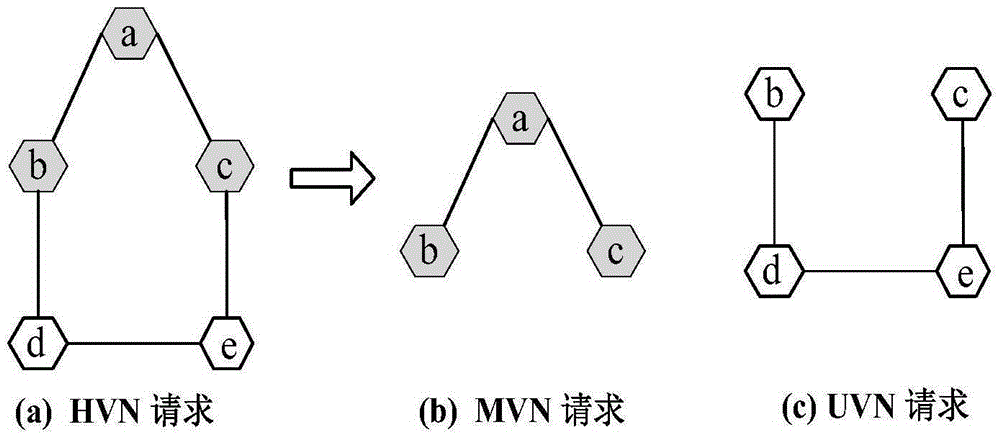

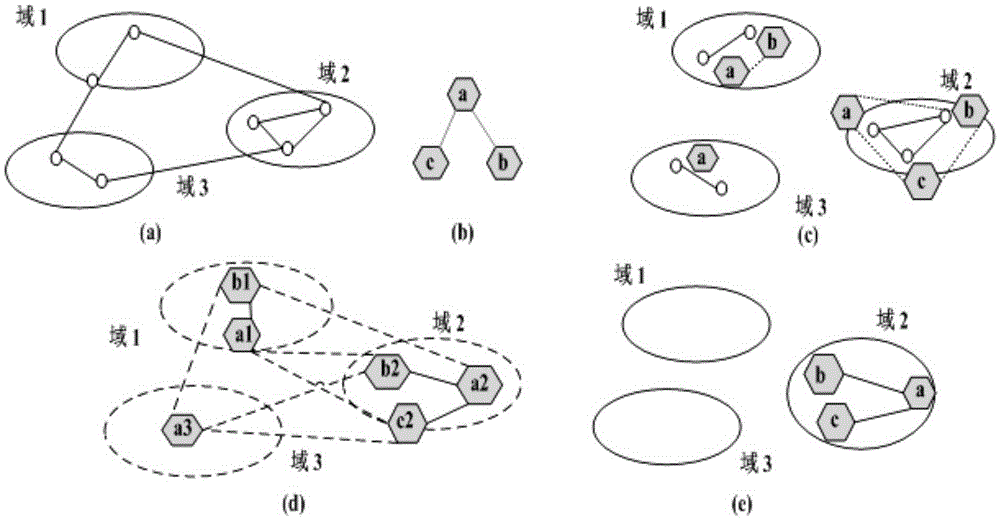

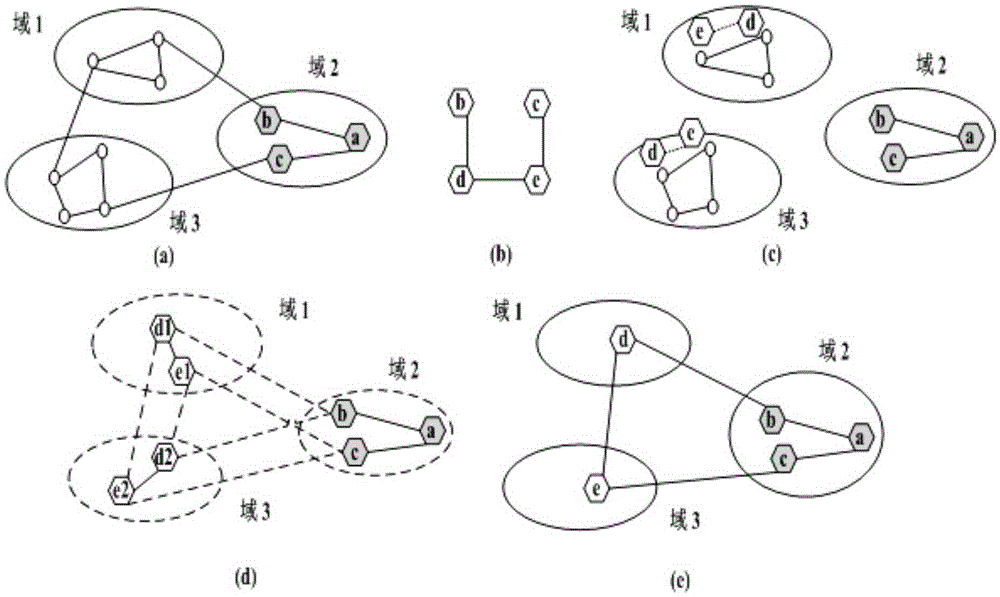

Cross-domain mapping method for hybrid virtual network (HVN)

Inactive | CN105262663A | Improve resource usage efficiency | Load balancing | Networks interconnection | Quality of service | Decomposition

The invention discloses a cross-domain mapping method for a hybrid virtual network (HVN). Given a known underlying multi-domain network and an HVN request, node computing resources and link bandwidth resources in the underlying network are allocated to the HVN request using the HVNMMD-D algorithm and a spectrogram decomposition-based mapping method, yielding a mapping scheme that both satisfies the quality of service (QoS) requirements and saves mapping cost. In addition, the specific characteristics of the multicast parts of the underlying multi-domain network and of the HVN are considered together, and common bandwidth and computing resources are optimized, so that the cross-domain mapping requirements of current HVNs can be met.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA +1

Solar thermal dynamic energy storage system

Inactive | CN105507971A | Simple structure | Improve energy efficiency | From solar energy | Piston pumps | Automotive engineering | Power grid

The invention relates to an energy storage system, in particular to a solar thermal dynamic energy storage system, belonging to the technical field of energy storage. The solar thermal dynamic energy storage system comprises a solar collecting system, a compressed energy storage system and a thermal power expansion system. A photovoltaic power generation device can generate electricity to drive a high-pressure compressor, external electric energy from the power grid of a power station can drive the high-pressure compressor and a low-pressure compressor, and an expander can expand to generate electricity and release the stored energy. The energy storage system can store the off-peak (valley) electricity of the power station grid and the surplus electricity from daytime solar photovoltaic generation, and can make full use of both solar photovoltaic generation and solar thermal stored-heat generation, thereby improving the utilization efficiency of energy, lowering the power generation cost and increasing the economic benefit.

Owner:江苏朗禾农光聚合科技有限公司

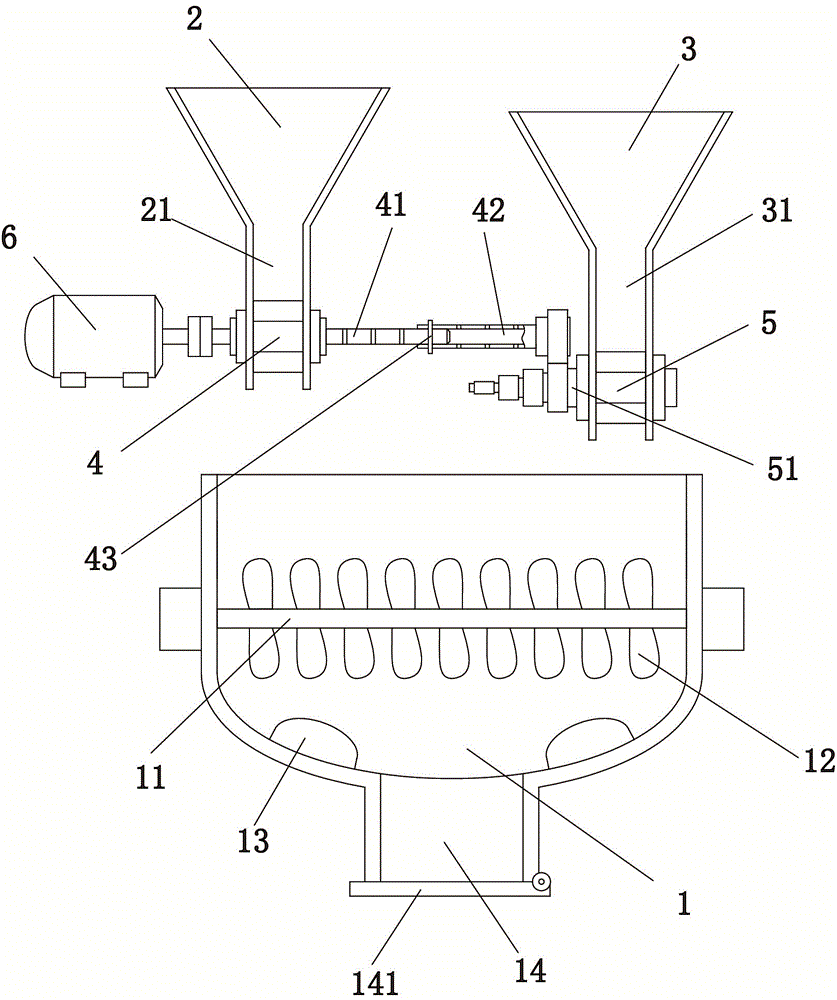

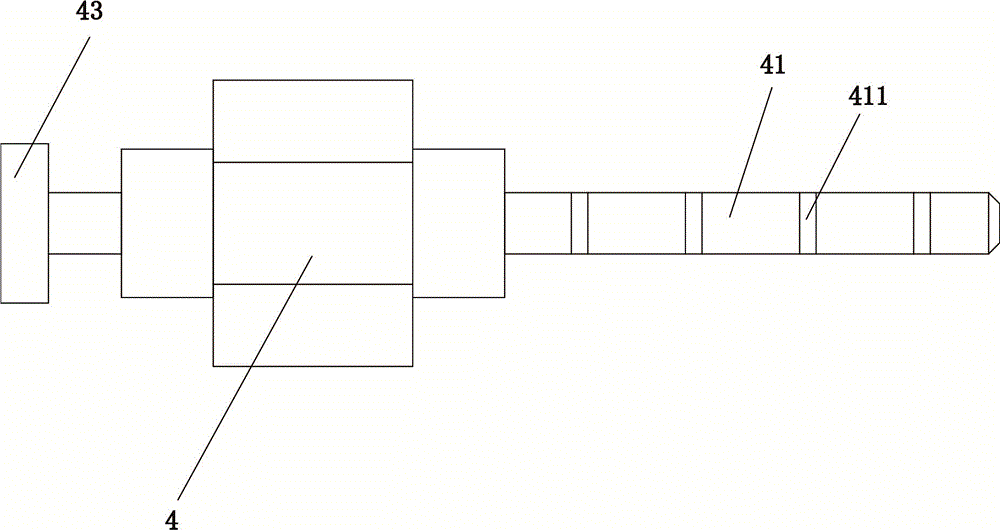

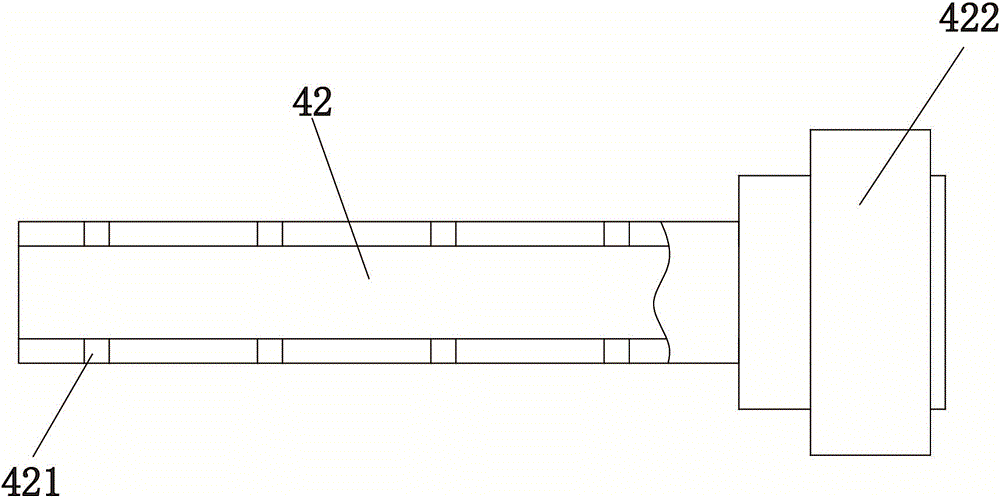

Simple and precise mixing device of injection molding machine

The invention discloses a simple and precise mixing device of an injection molding machine. The simple and precise mixing device comprises a mixing bin, wherein a fresh material hopper and an old material hopper are arranged above the mixing bin; the fresh material hopper is connected with a fresh material channel; an impeller A is rotationally arranged in the fresh material channel; one end face of the impeller A is connected with a locating rod; the locating rod is sleeved by a locating sleeve; the locating rod and the locating sleeve are connected together by a dowel pin to be capable of rotating together; a driving gear is arranged at the end part of the locating sleeve; the old material hopper is connected with an old material channel; an impeller B is rotationally arranged in the old material channel; a gear shaft is arranged at the end part of the impeller B; and the gear shaft and the driving gear are engaged and matched for transmission, so that the impeller B can rotate with the impeller A. Compared with the prior art, the device is simple in structure and low in manufacturing cost; a fixed quantity of reclaimed waste materials can be added into fresh materials; the resource usage efficiency is improved to the greatest extent; and the proportion of the fresh materials and the waste materials is adjustable.

Owner:宁波海洲机械有限公司
