Accelerated operation method and server for convolutional neural network, and storage medium

An accelerated computing technique for convolutional neural networks, applied to an accelerated operation method, a server, and a storage medium. It addresses the large amount of computation in convolutional neural networks and the difficulty of making full use of hardware resources to accelerate that computation, with the effect of reducing interaction time and improving computing speed and resource utilization efficiency.


Examples

Embodiment Construction

[0048] It should be understood that the specific embodiments described herein are only used to explain the present invention, but not to limit the present invention.

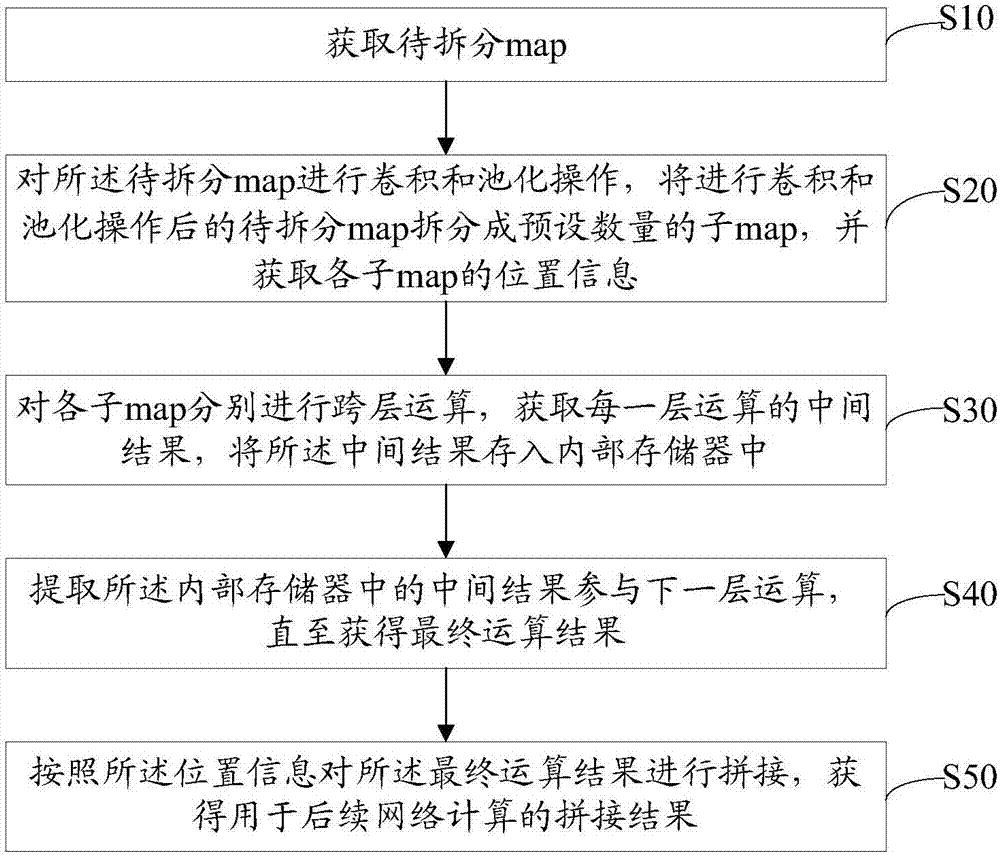

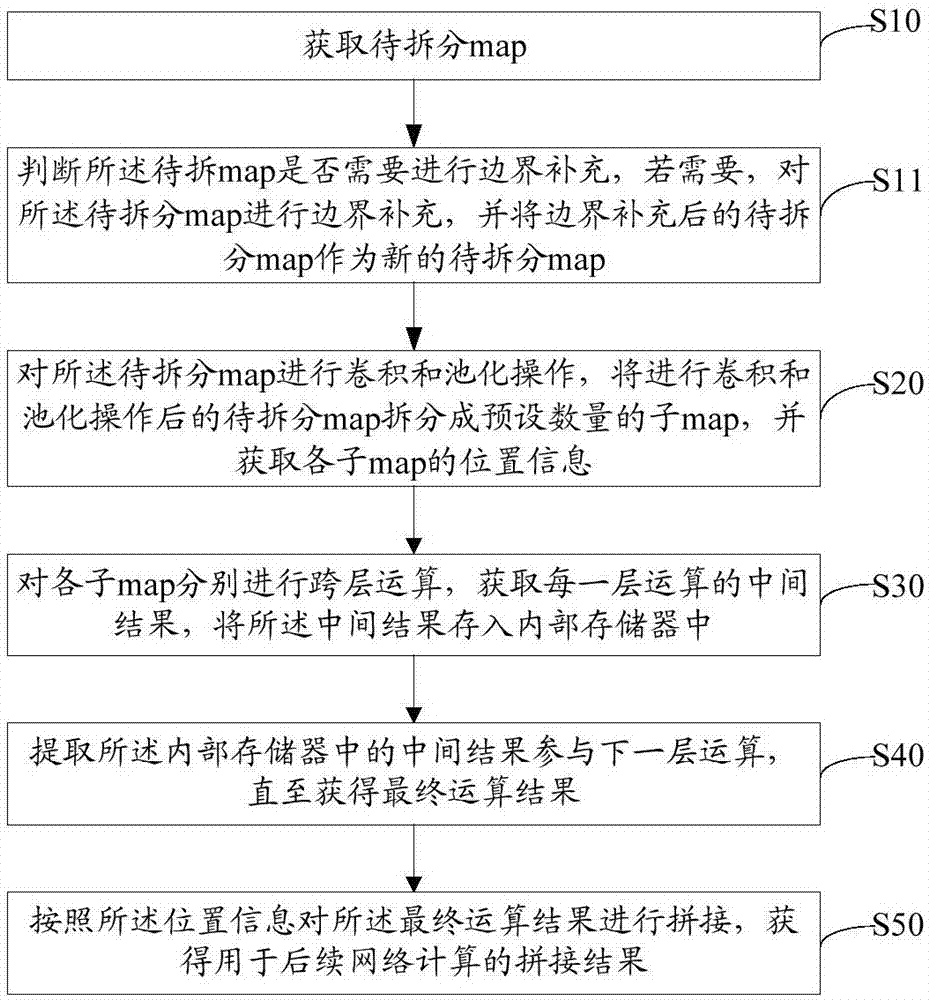

[0049] The solution of the embodiment of the present invention is mainly as follows: obtain the map to be split; perform convolution and pooling operations on it; split the map, after the convolution and pooling operations, into a preset number of sub-maps and obtain the position information of each sub-map; perform cross-layer operations on each sub-map, obtaining the intermediate result of each layer's operation, storing that intermediate result in internal memory, and extracting it from internal memory to take part in the next layer's operation until the final operation result is obtained; and splice the final operation results according to the position information to obtain a splicing result for subsequent network calculations. The technical so...
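The split, cross-layer operation, and splice steps of paragraph [0049] can be illustrated with a minimal sketch. It is not the patented implementation: the function names (`split_map`, `run_layers`, `splice`, `accelerated_forward`), the 2x2 tiling, and the two layers are hypothetical, and the input is assumed to be the map after the initial convolution and pooling. The layers are element-wise stand-ins so that each sub-map can be processed independently; convolution layers with kernels larger than 1x1 would need data from neighbouring sub-maps at tile borders, which the excerpt above does not describe.

```python
from typing import Callable, List, Tuple

Matrix = List[List[float]]
Position = Tuple[int, int]  # (row offset, column offset) of a sub-map
Layer = Callable[[Matrix], Matrix]


def split_map(fmap: Matrix, rows: int, cols: int) -> List[Tuple[Position, Matrix]]:
    """Split fmap into rows*cols sub-maps and record each one's top-left offset."""
    h, w = len(fmap), len(fmap[0])
    tile_h, tile_w = h // rows, w // cols  # assumption: h and w divide evenly
    tiles = []
    for r in range(rows):
        for c in range(cols):
            top, left = r * tile_h, c * tile_w
            tile = [row[left:left + tile_w] for row in fmap[top:top + tile_h]]
            tiles.append(((top, left), tile))
    return tiles


def run_layers(tile: Matrix, layers: List[Layer]) -> Matrix:
    """Run every layer on one sub-map, feeding each intermediate result forward."""
    buffer = tile  # stand-in for the internal memory holding intermediate results
    for layer in layers:
        buffer = layer(buffer)  # result of this layer is the input of the next
    return buffer


def splice(tiles: List[Tuple[Position, Matrix]], h: int, w: int) -> Matrix:
    """Reassemble the per-sub-map results using the recorded position information."""
    out = [[0.0] * w for _ in range(h)]
    for (top, left), tile in tiles:
        for i, row in enumerate(tile):
            out[top + i][left:left + len(row)] = row
    return out


def relu(m: Matrix) -> Matrix:
    """Hypothetical element-wise layer."""
    return [[max(0.0, v) for v in row] for row in m]


def scale2(m: Matrix) -> Matrix:
    """Hypothetical element-wise layer."""
    return [[2.0 * v for v in row] for row in m]


def accelerated_forward(fmap: Matrix, layers: List[Layer],
                        rows: int = 2, cols: int = 2) -> Matrix:
    """Split, process each sub-map through all layers, then splice."""
    h, w = len(fmap), len(fmap[0])
    results = [(pos, run_layers(tile, layers))
               for pos, tile in split_map(fmap, rows, cols)]
    return splice(results, h, w)
```

In this sketch each sub-map passes through all layers before the next sub-map is touched, so only one sub-map's intermediate result has to be held at a time. With element-wise layers, the spliced output matches what the same layers produce on the unsplit map.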

