GPU cluster environment-oriented method for avoiding GPU resource contention

This GPU cluster and resource contention technology, applied to avoiding GPU resource contention, addresses problems such as unreasonable GPU assignment, low GPU utilization, and applications that cannot execute in parallel. Its effects are avoiding resource contention, improving system throughput, and optimizing execution.

Embodiment

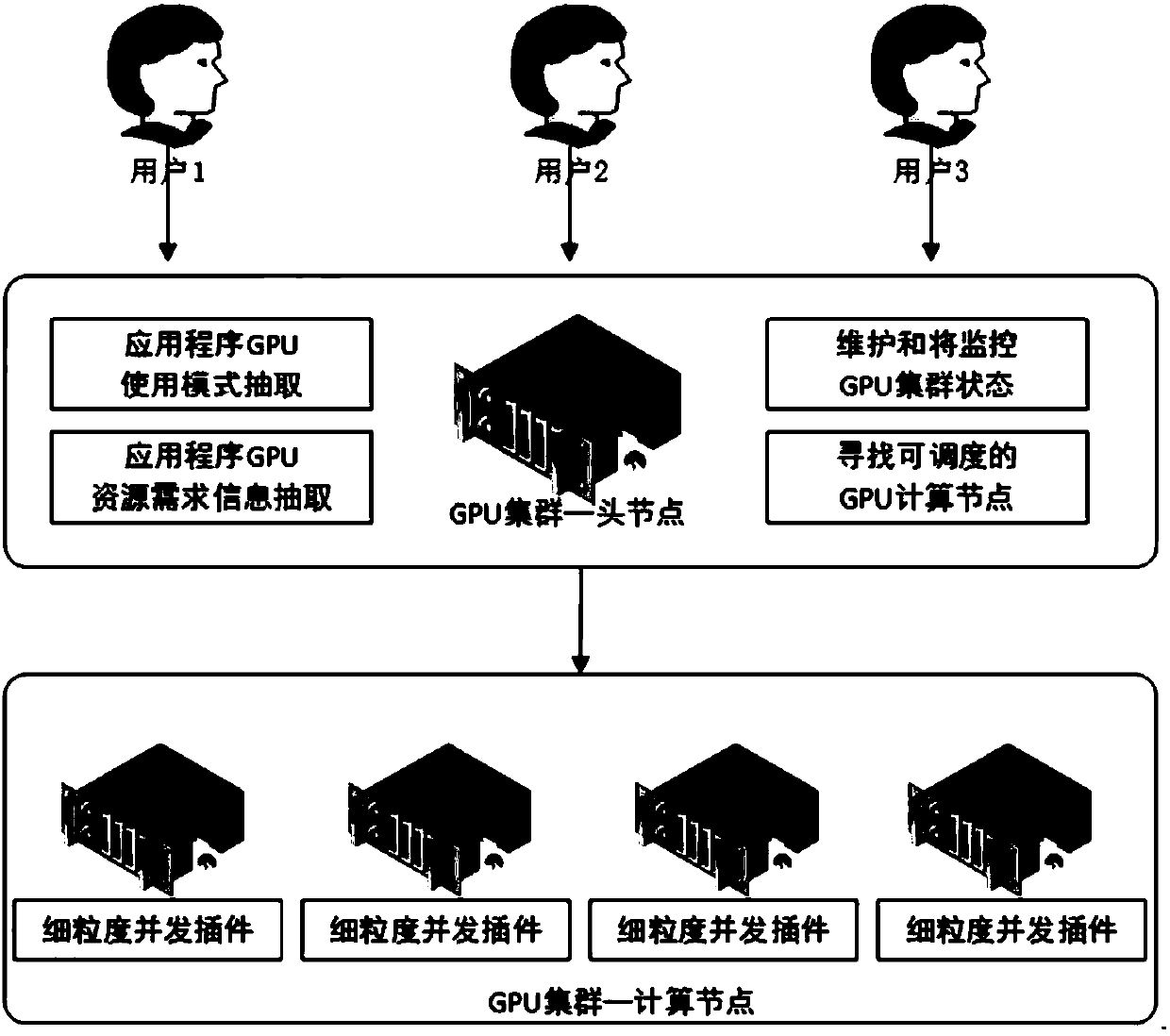

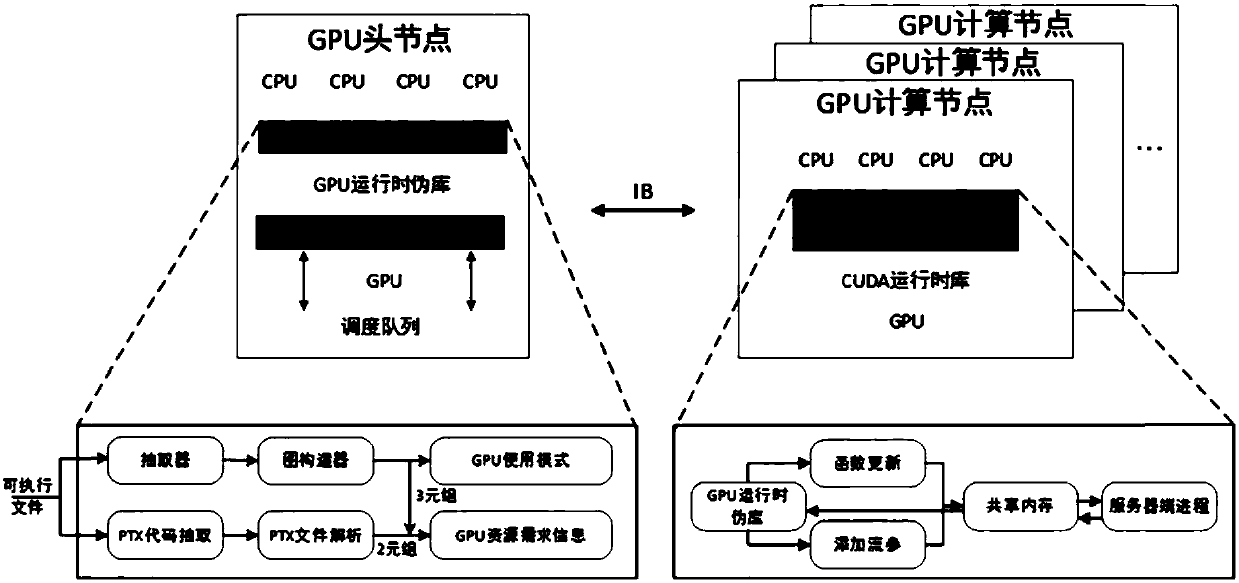

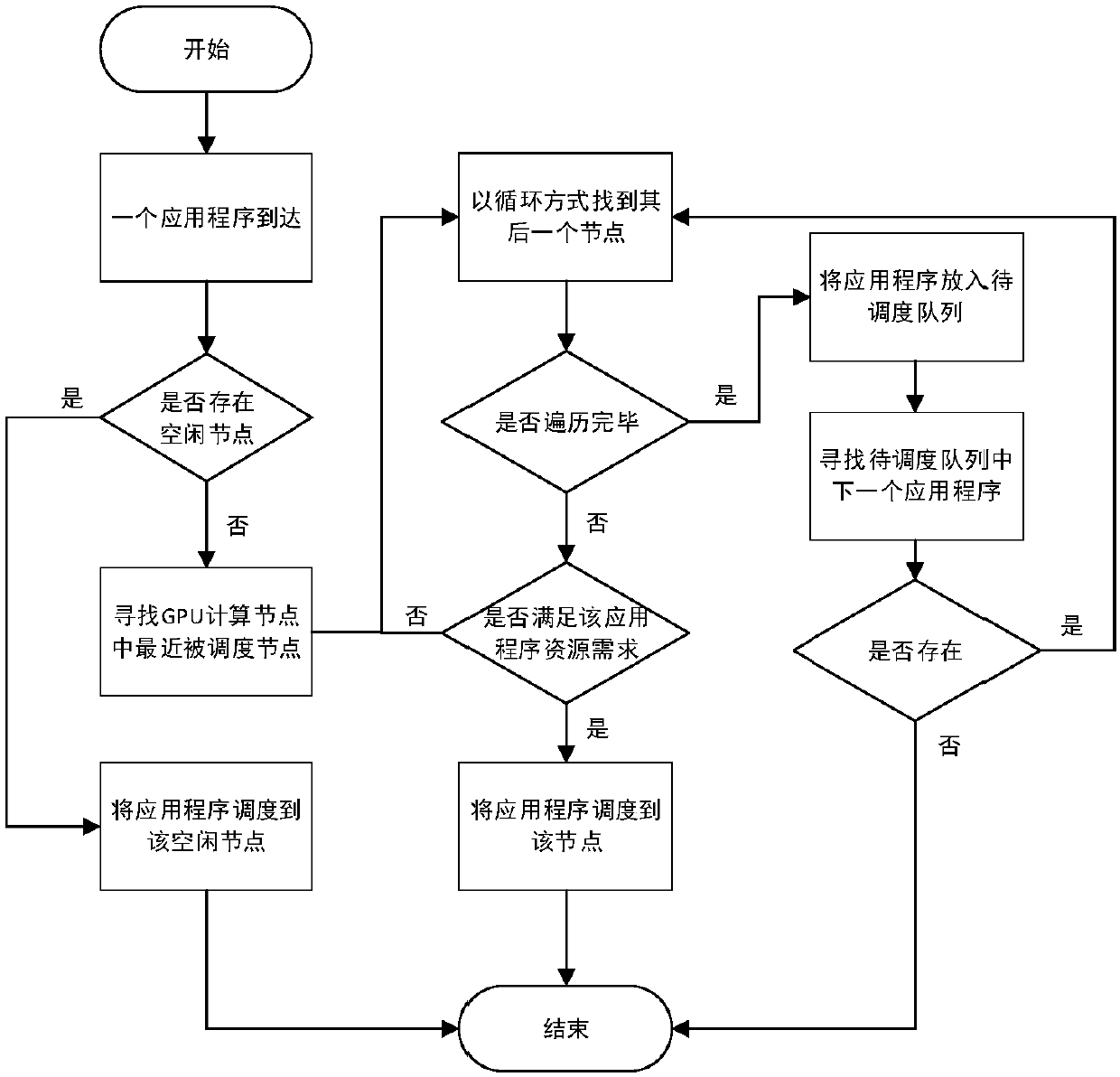

[0087] With reference to Figure 1 and Figure 2, the GPU cluster is divided into two types of nodes: a GPU head node and GPU computing nodes. There is exactly one GPU head node; all remaining nodes are GPU computing nodes. The nodes are connected through Ethernet or InfiniBand. Each node in the GPU cluster is configured with the same number and model of NVIDIA Kepler GPUs. A GPU runtime environment of CUDA 7.0 or later is installed on each GPU computing node.
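The cluster constraints in this paragraph can be expressed as a small configuration check. The following is a minimal sketch only; the `Node` record, the `check_cluster` function, and the role strings are hypothetical names introduced for illustration and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Node:
    """Hypothetical description of one cluster node (not from the patent)."""
    name: str
    role: str                      # "head" or "compute"
    gpu_model: str
    gpu_count: int
    cuda_version: Tuple[int, int]  # (major, minor)


def check_cluster(nodes: List[Node]) -> List[str]:
    """Return a list of violations of the constraints stated in [0087]."""
    problems = []

    # Exactly one GPU head node; every other node is a computing node.
    heads = [n for n in nodes if n.role == "head"]
    if len(heads) != 1:
        problems.append(f"expected exactly 1 head node, found {len(heads)}")

    # Every node carries the same number and model of GPUs.
    gpu_configs = {(n.gpu_model, n.gpu_count) for n in nodes}
    if len(gpu_configs) > 1:
        problems.append("nodes do not share one GPU model and count")

    # Computing nodes need CUDA 7.0 or later.
    for n in nodes:
        if n.role == "compute" and n.cuda_version < (7, 0):
            problems.append(f"{n.name}: CUDA older than 7.0")

    return problems
```

An empty return value means the description satisfies all three constraints; each string otherwise names one violation.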

[0088] Building on the existing functions of the GPU cluster platform, three modules are added to the platform: an application GPU behavior feature extraction module, an application scheduling module, and a multi-application fine-grained concurrency module. The specific implementation steps are described below with an example:

[0089] In the application GPU behavior feature extraction module, the extracted information includes: GPU memory allocation operations (cudaMalloc, etc.), data copy operations between the host and GPU devi...
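The two feature categories that are visible in this paragraph, memory allocation and host-device data copies, could be tallied from a call trace roughly as follows. This is a sketch under stated assumptions: the trace format (pairs of call name and byte count), the `GpuBehaviorFeatures` record, and the choice of `cudaMemcpy` as the representative copy call are illustrative and not taken from the patent, whose full feature list is truncated in the source.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# Call names grouped by feature category. cudaMalloc is named in the text;
# cudaMemcpy is an assumed example of a host-device copy call.
ALLOC_CALLS = {"cudaMalloc"}
COPY_CALLS = {"cudaMemcpy"}


@dataclass
class GpuBehaviorFeatures:
    """Hypothetical per-application summary (not the patent's own format)."""
    alloc_calls: int = 0
    alloc_bytes: int = 0
    copy_calls: int = 0
    copy_bytes: int = 0


def extract_features(trace: Iterable[Tuple[str, int]]) -> GpuBehaviorFeatures:
    """Summarize a trace of (call_name, byte_count) records."""
    features = GpuBehaviorFeatures()
    for call_name, byte_count in trace:
        if call_name in ALLOC_CALLS:
            features.alloc_calls += 1
            features.alloc_bytes += byte_count
        elif call_name in COPY_CALLS:
            features.copy_calls += 1
            features.copy_bytes += byte_count
        # Any other call is ignored by this sketch.
    return features
```

A summary of this kind gives a scheduler the memory footprint and transfer volume of an application before it is placed on a GPU; how the patent's scheduling module actually uses the features is not shown in this excerpt.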
