910 results about "Graphical processing unit" patented technology

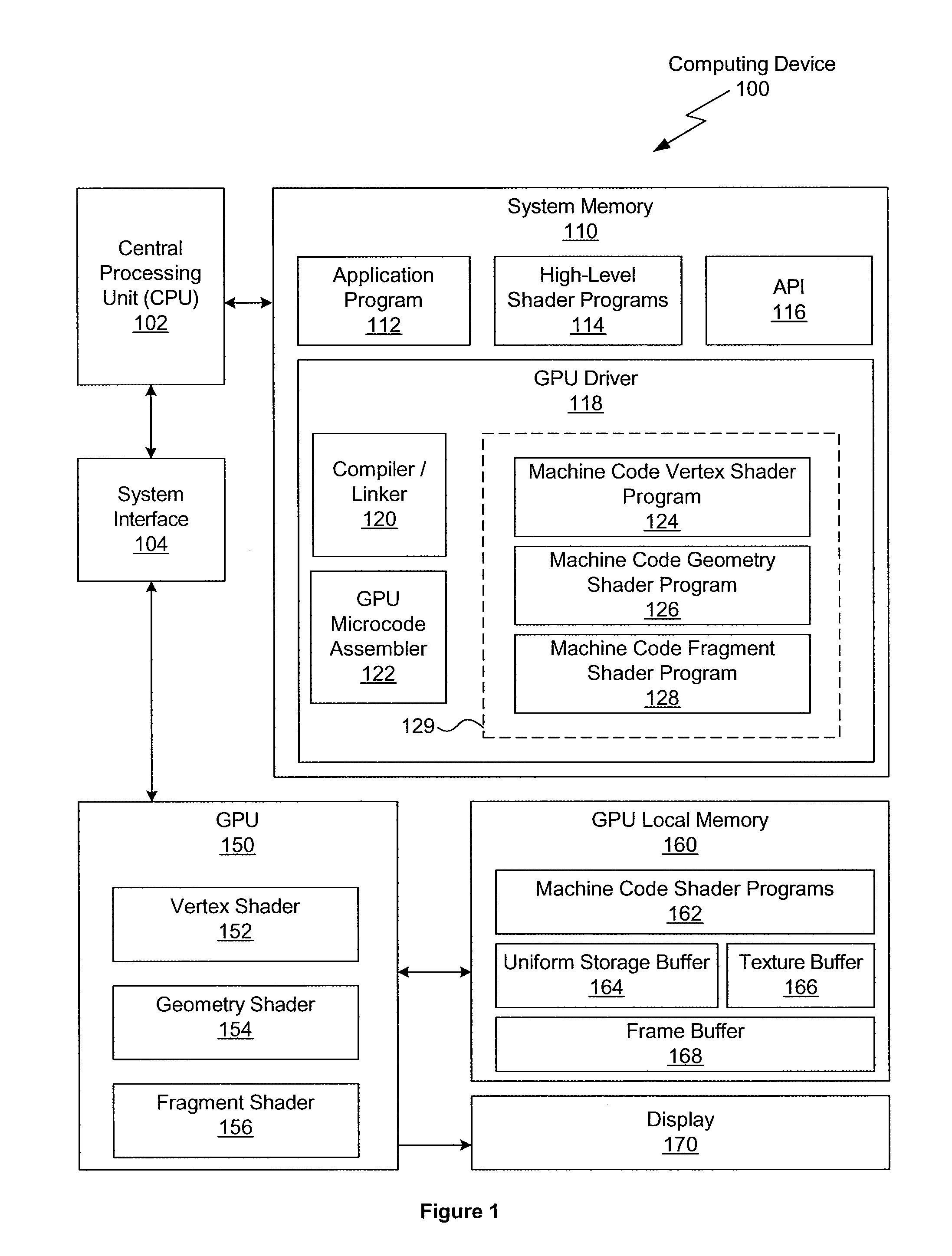

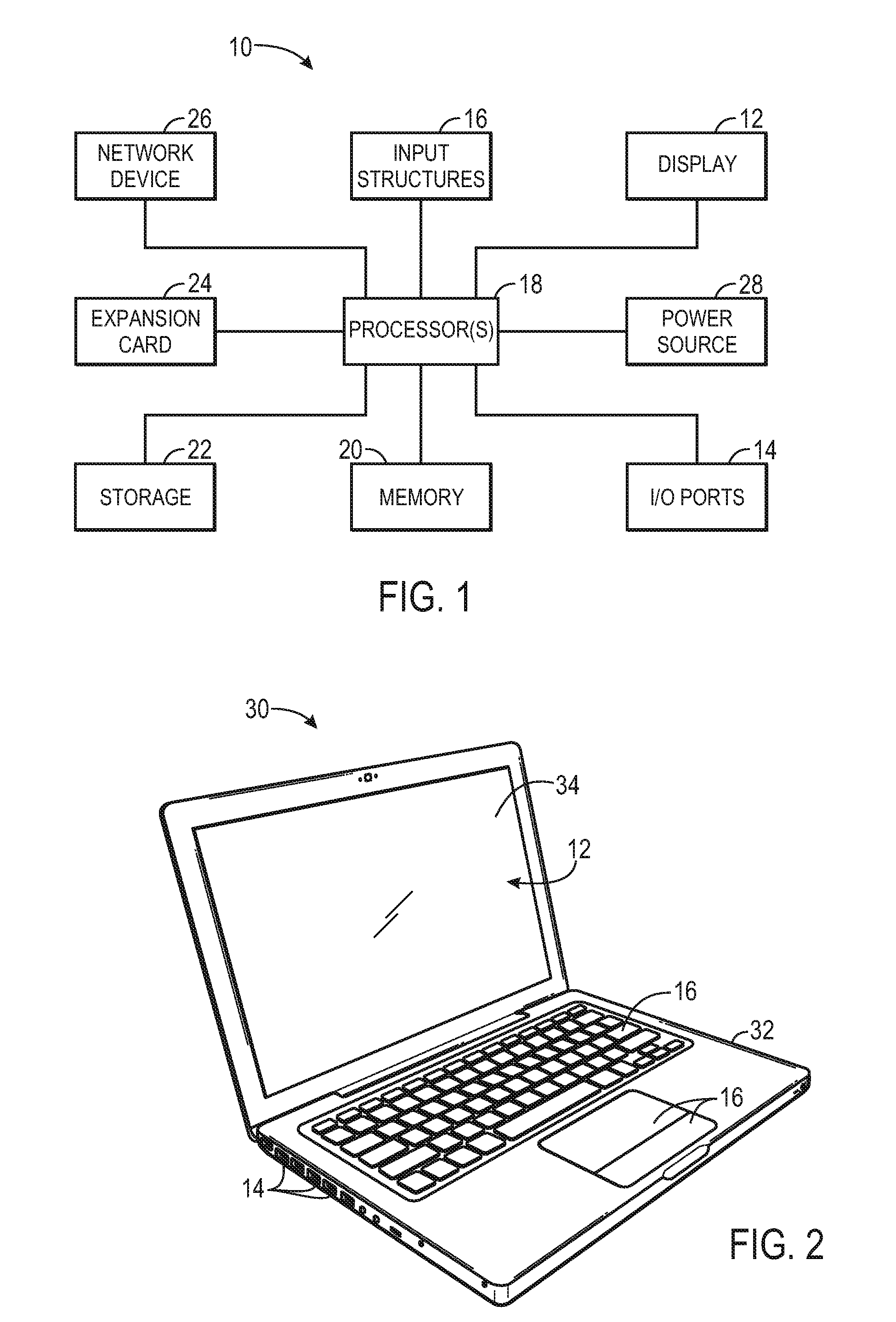

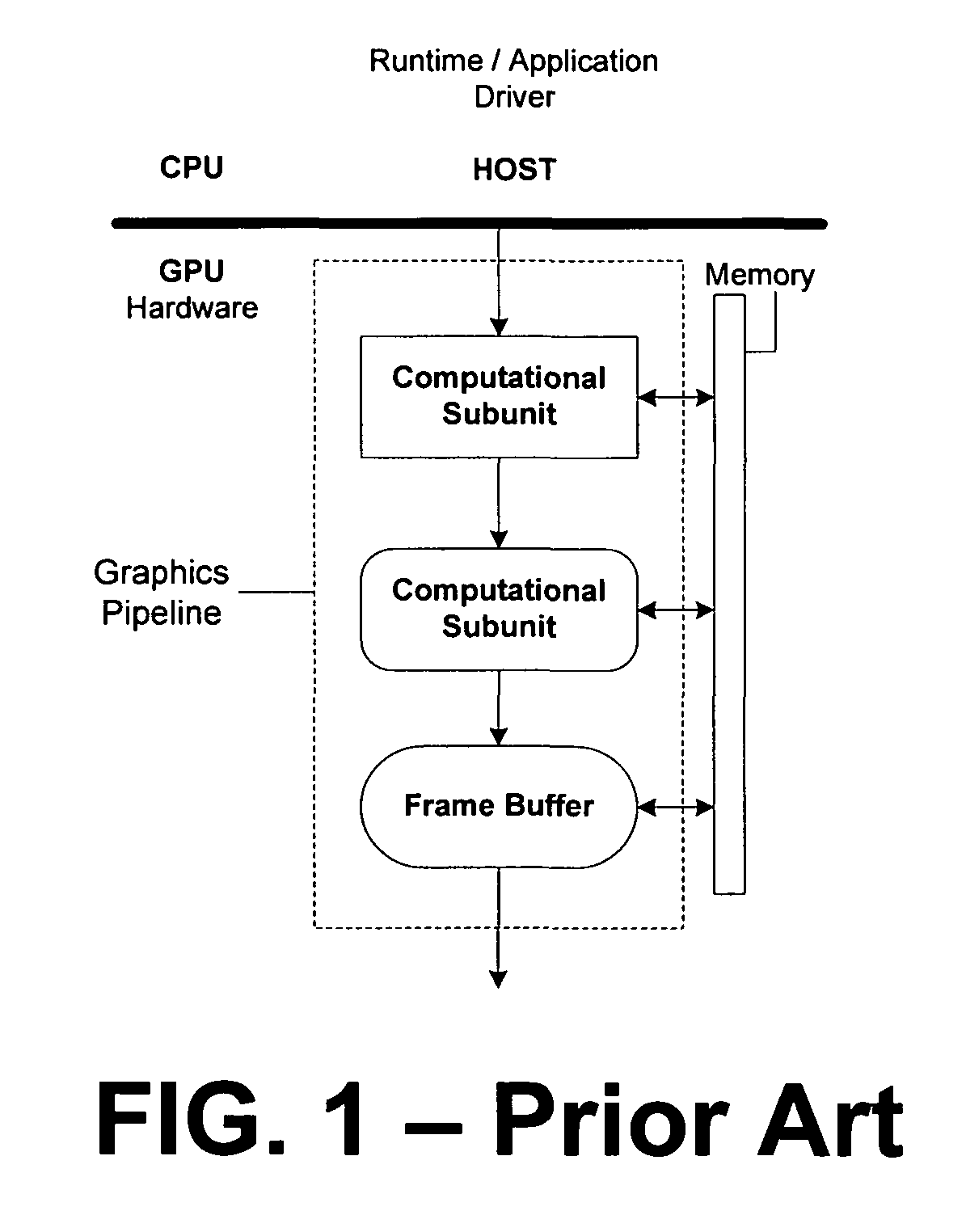

A graphics processing unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles.

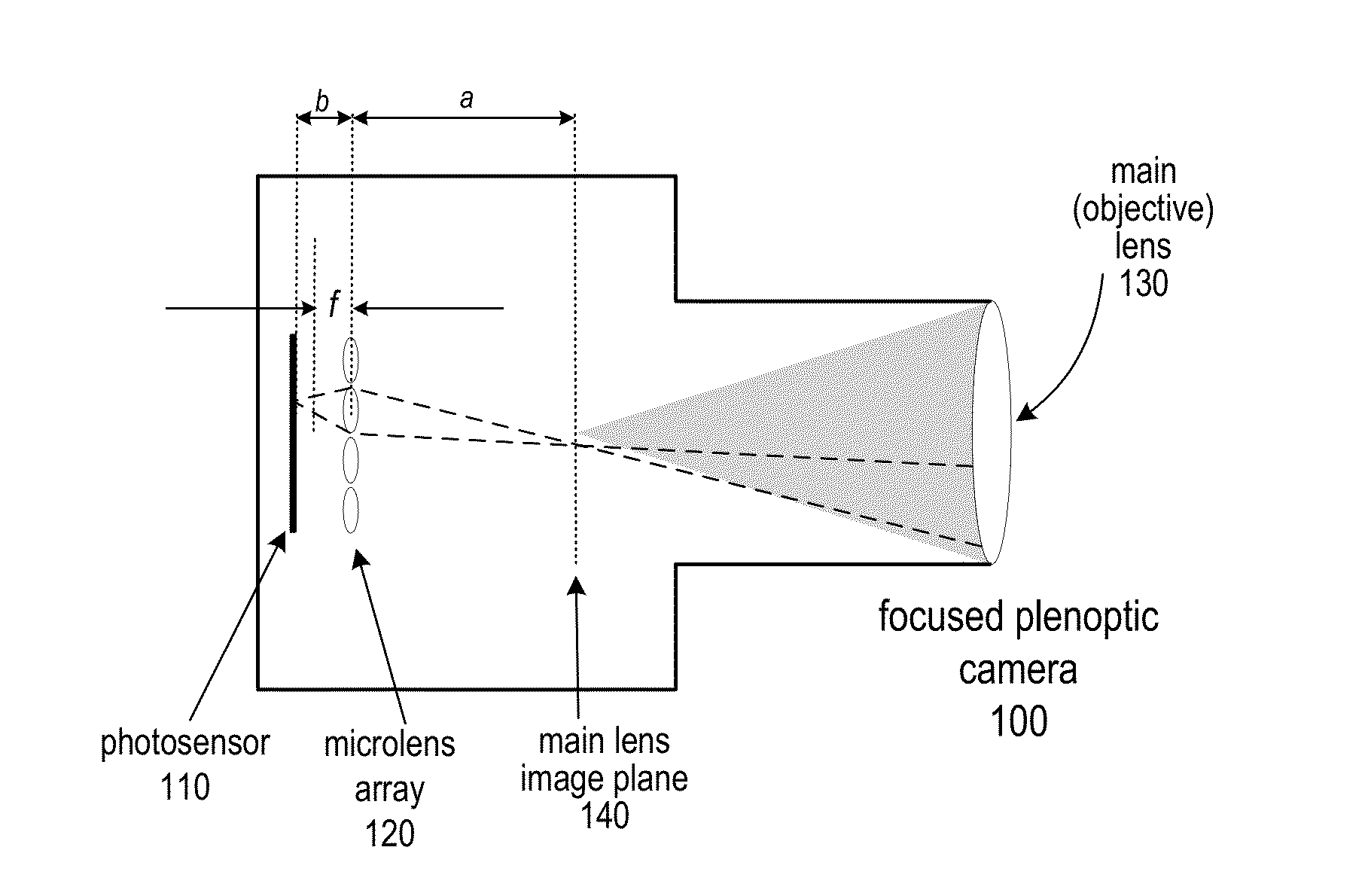

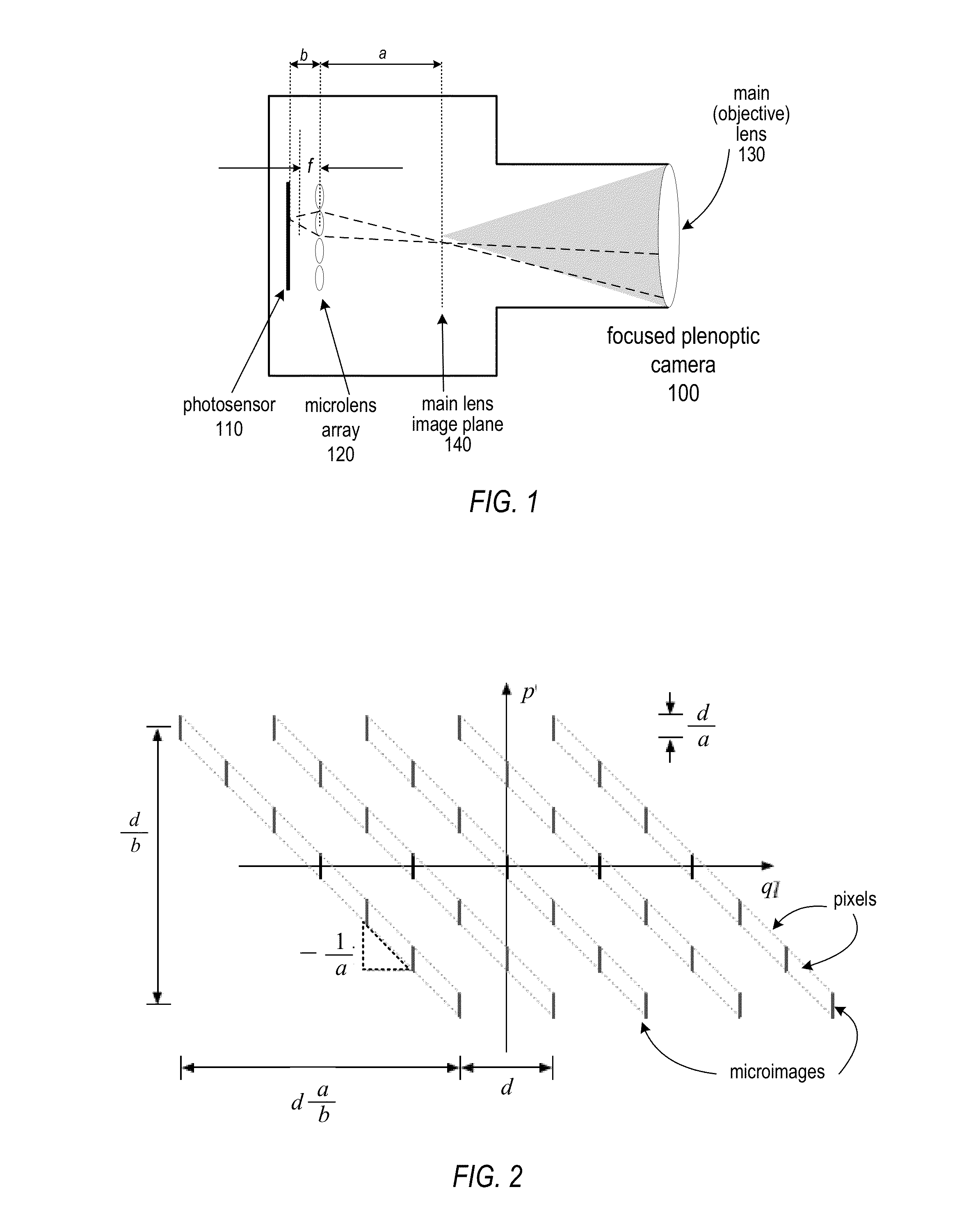

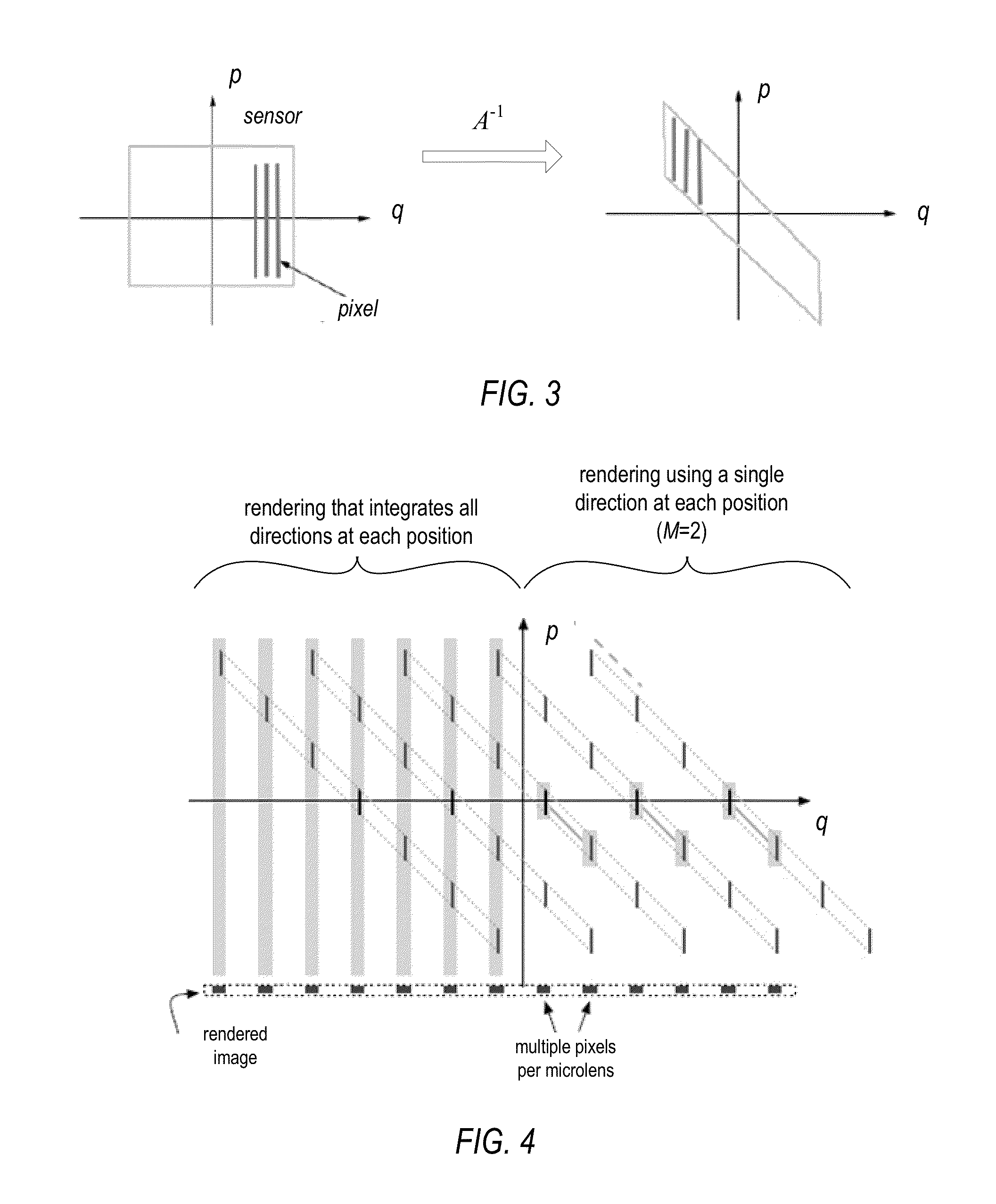

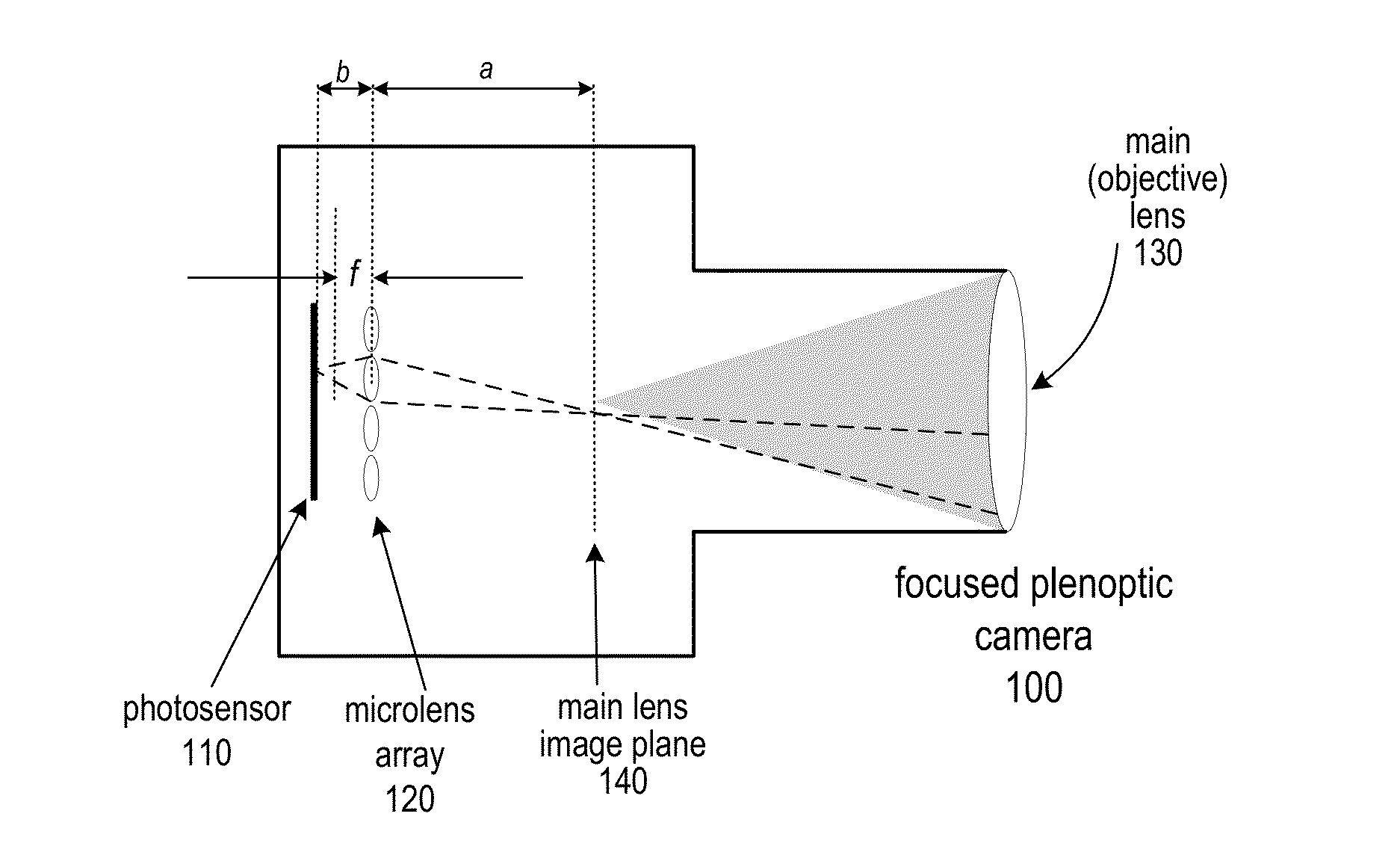

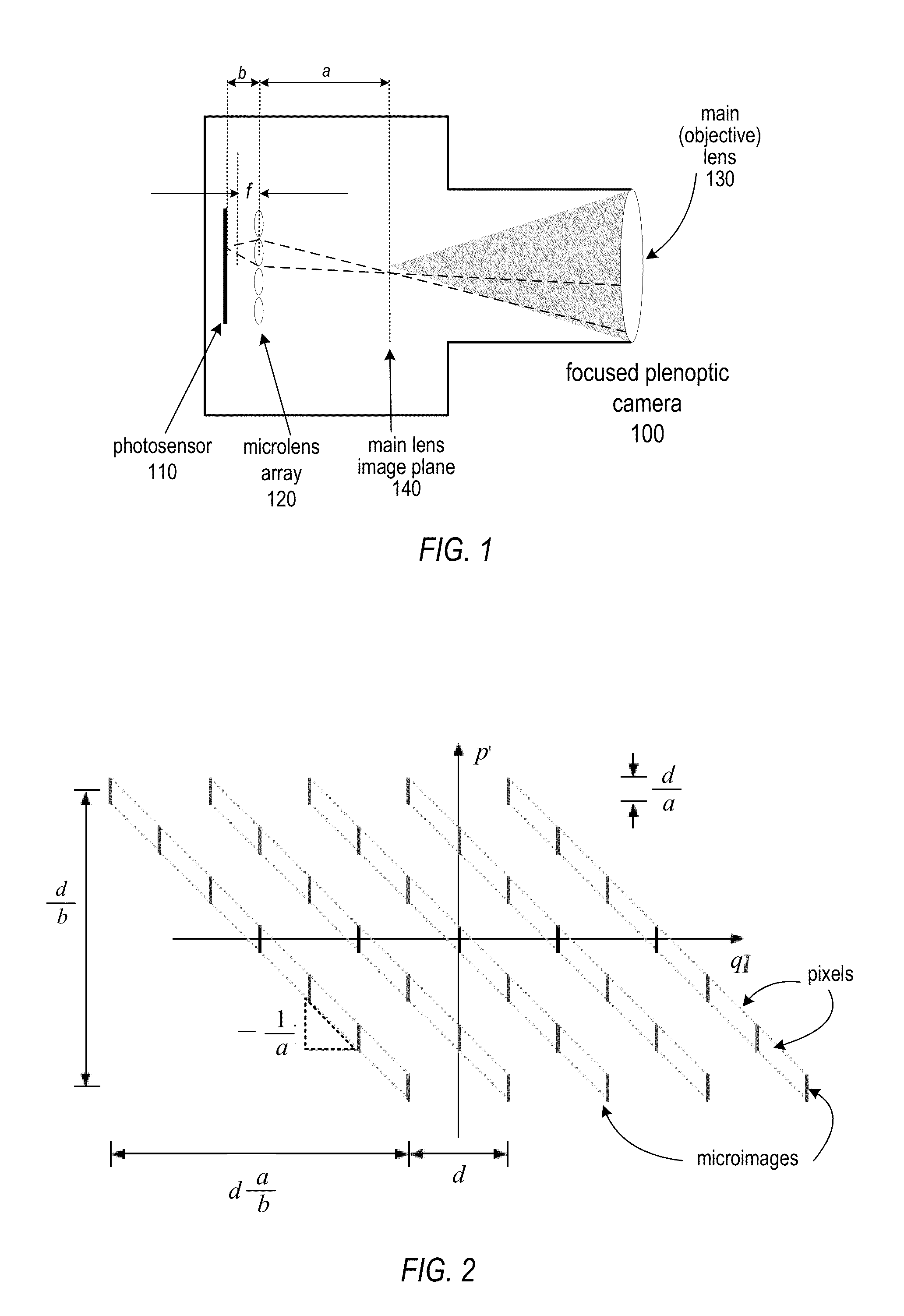

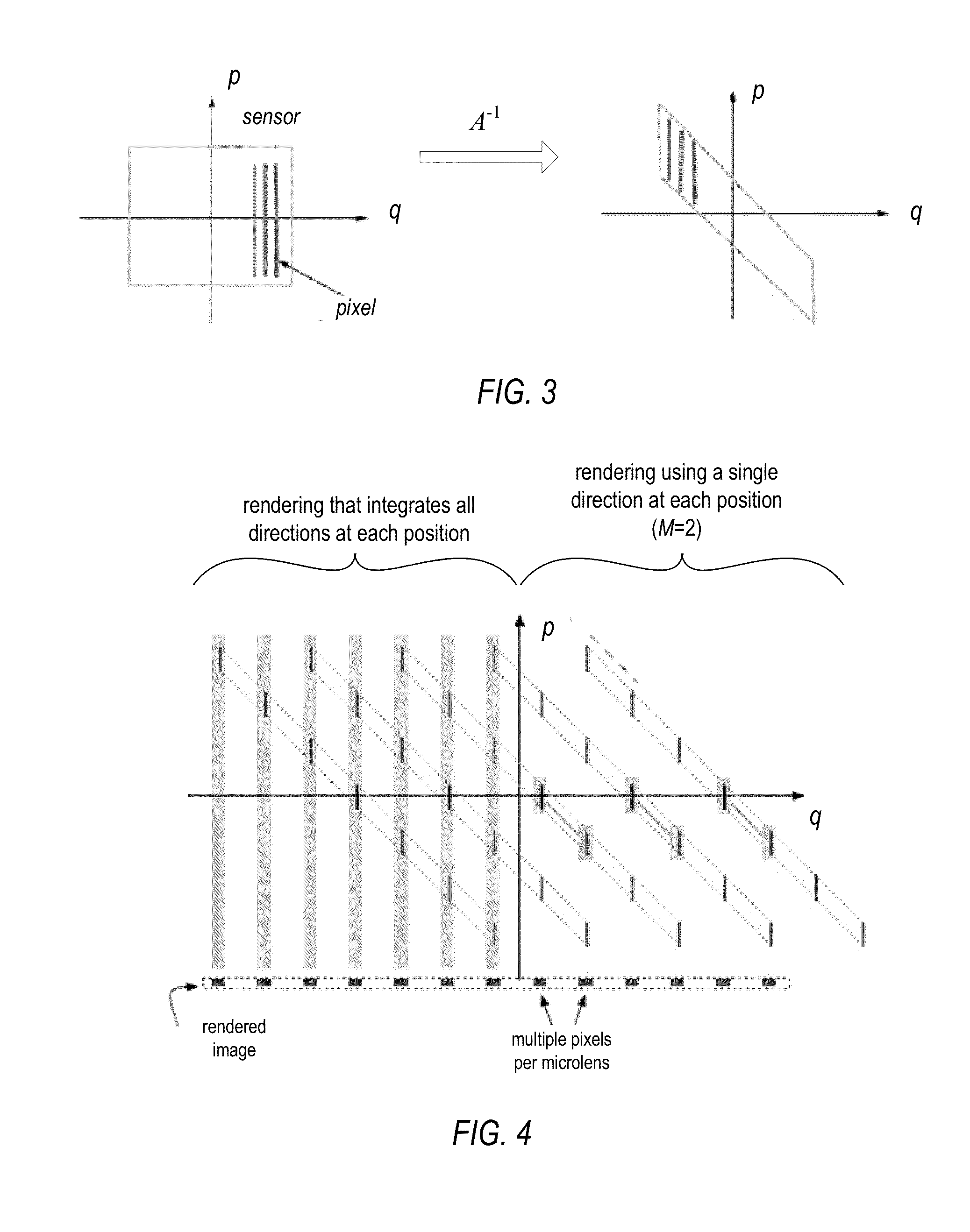

Methods, Apparatus, and Computer-Readable Storage Media for Blended Rendering of Focused Plenoptic Camera Data

Active | US20130120605A1 | High resolution | Television system details | Color television details | Graphics | Multiple point

Methods, apparatus, and computer-readable storage media for rendering focused plenoptic camera data. A rendering with blending technique is described that blends values from positions in multiple microimages and assigns the blended value to a given point in the output image. A rendering technique that combines depth-based rendering and rendering with blending is also described. Depth-based rendering estimates depth at each microimage and then applies that depth to determine a position in the input flat from which to read a value to be assigned to a given point in the output image. The techniques may be implemented according to parallel processing technology that renders multiple points of the output image in parallel. In at least some embodiments, the parallel processing technology is graphical processing unit (GPU) technology.

Owner:ADOBE SYST INC

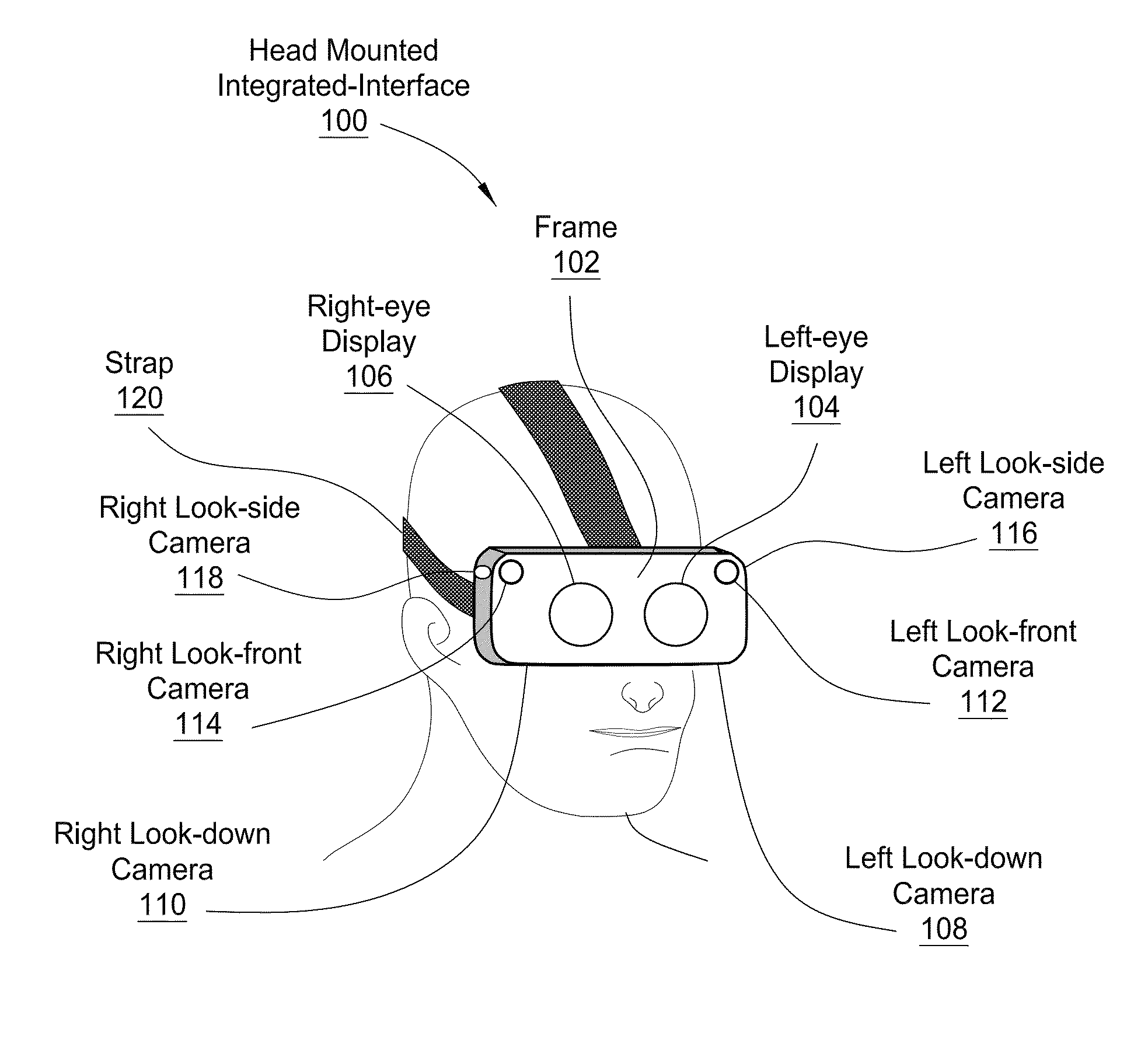

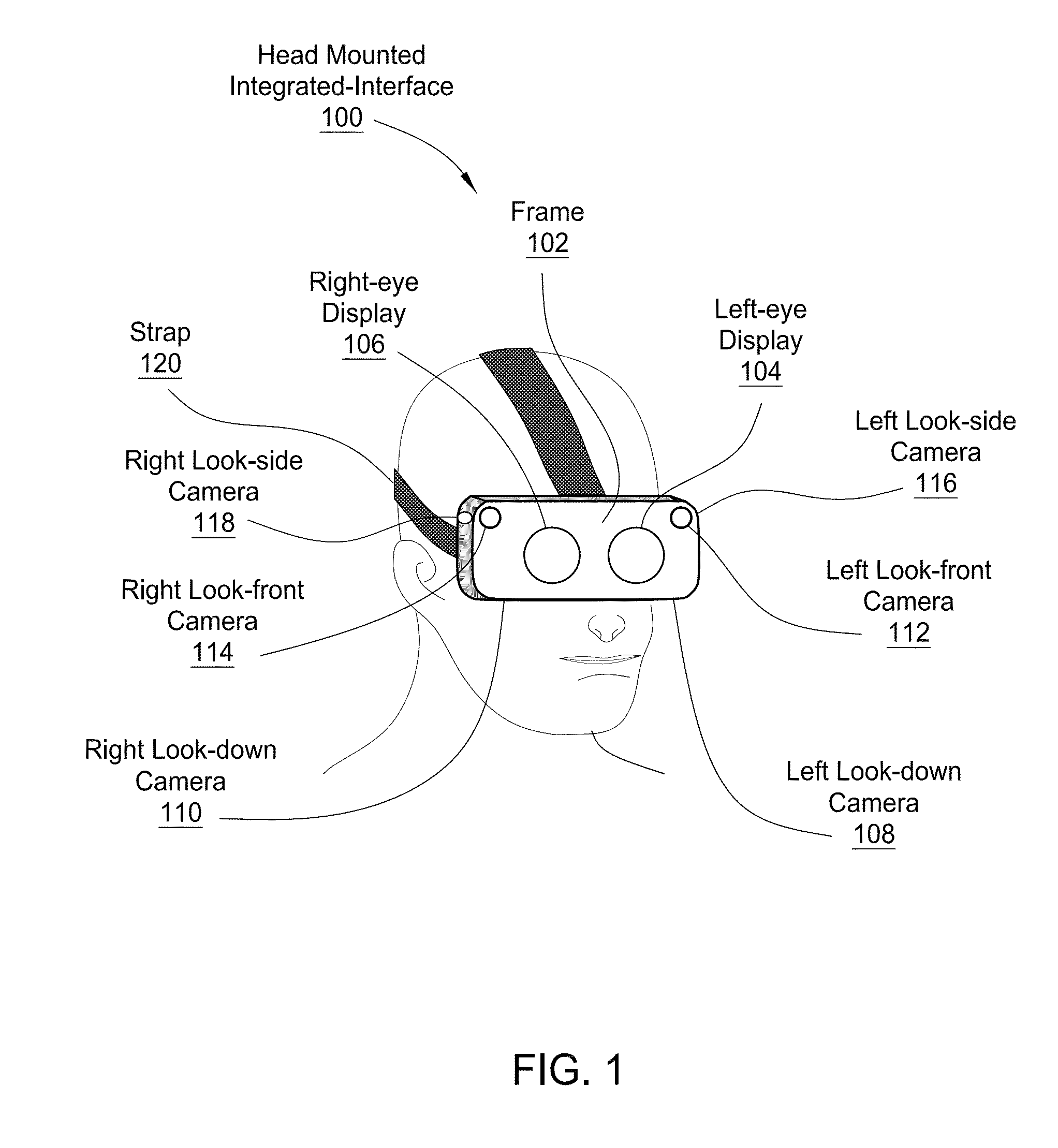

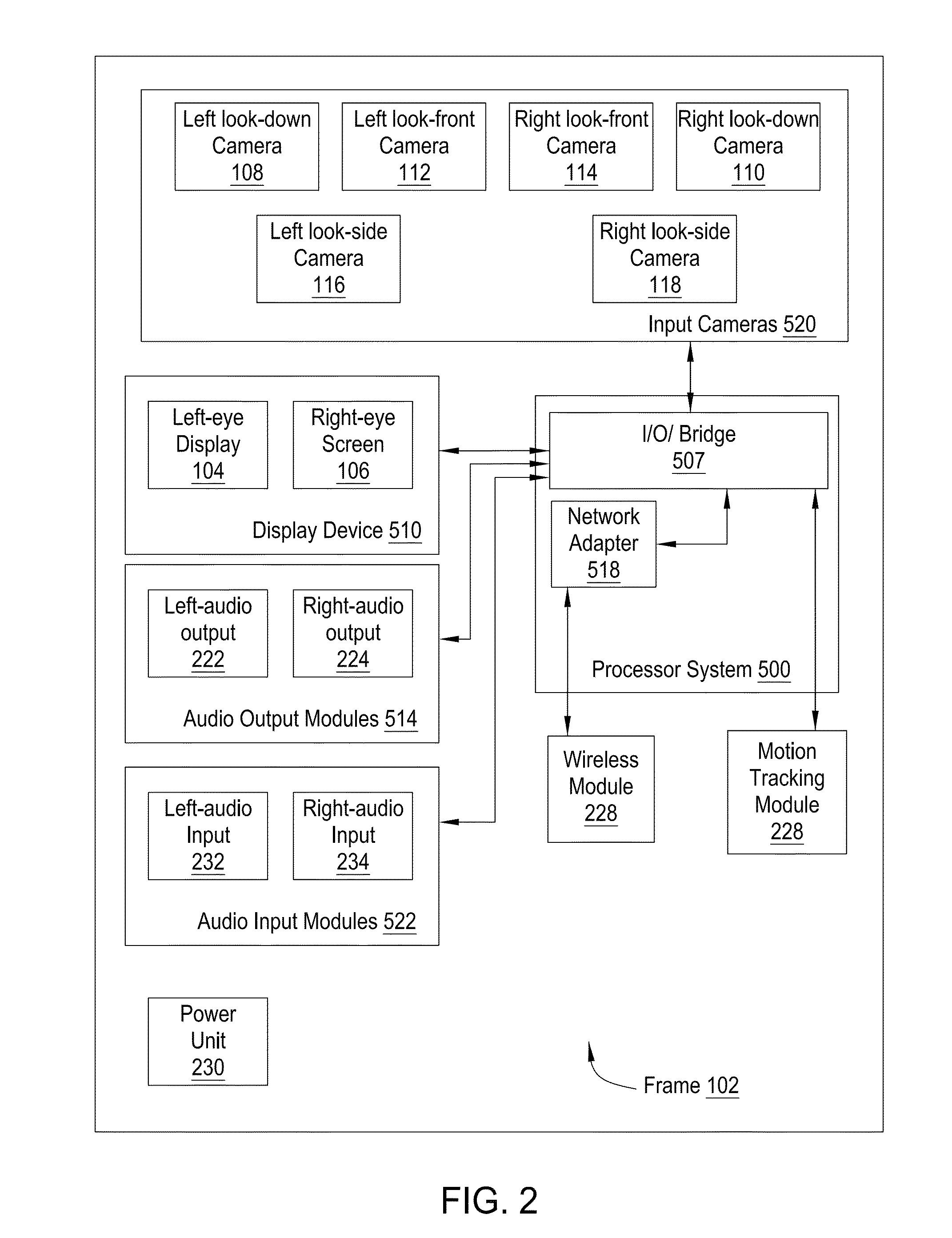

Head-mounted integrated interface

Inactive | US20150138065A1 | Improve versatility | Improve portability | Input/output for user-computer interaction | Cathode-ray tube indicators | Graphics | Large screen

A head-mounted integrated interface (HMII) is presented that may include a wearable head-mounted display unit supporting two compact high-resolution screens for outputting right-eye and left-eye images in support of stereoscopic viewing, wireless communication circuits, three-dimensional positioning and motion sensors, and a processing system capable of independent software processing and/or processing streamed output from a remote server. The HMII may also include a graphics processing unit that can also function as a general parallel processing system, and cameras positioned to track hand gestures. The HMII may function as an independent computing system or as an interface to remote computer systems, external GPU clusters, or subscription computational services. The HMII is also capable of linking and streaming to a remote display such as a large-screen monitor.

Owner:NVIDIA CORP

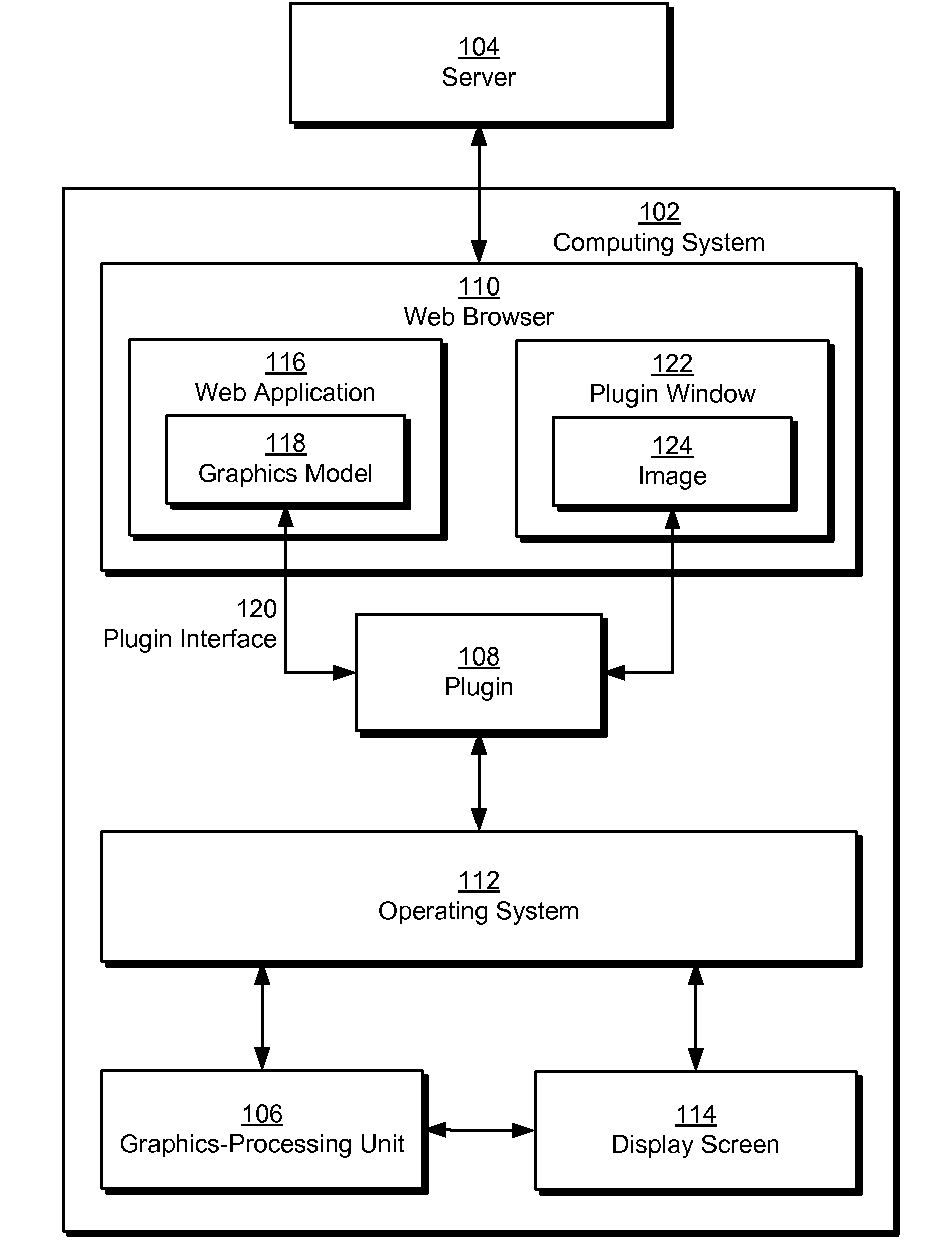

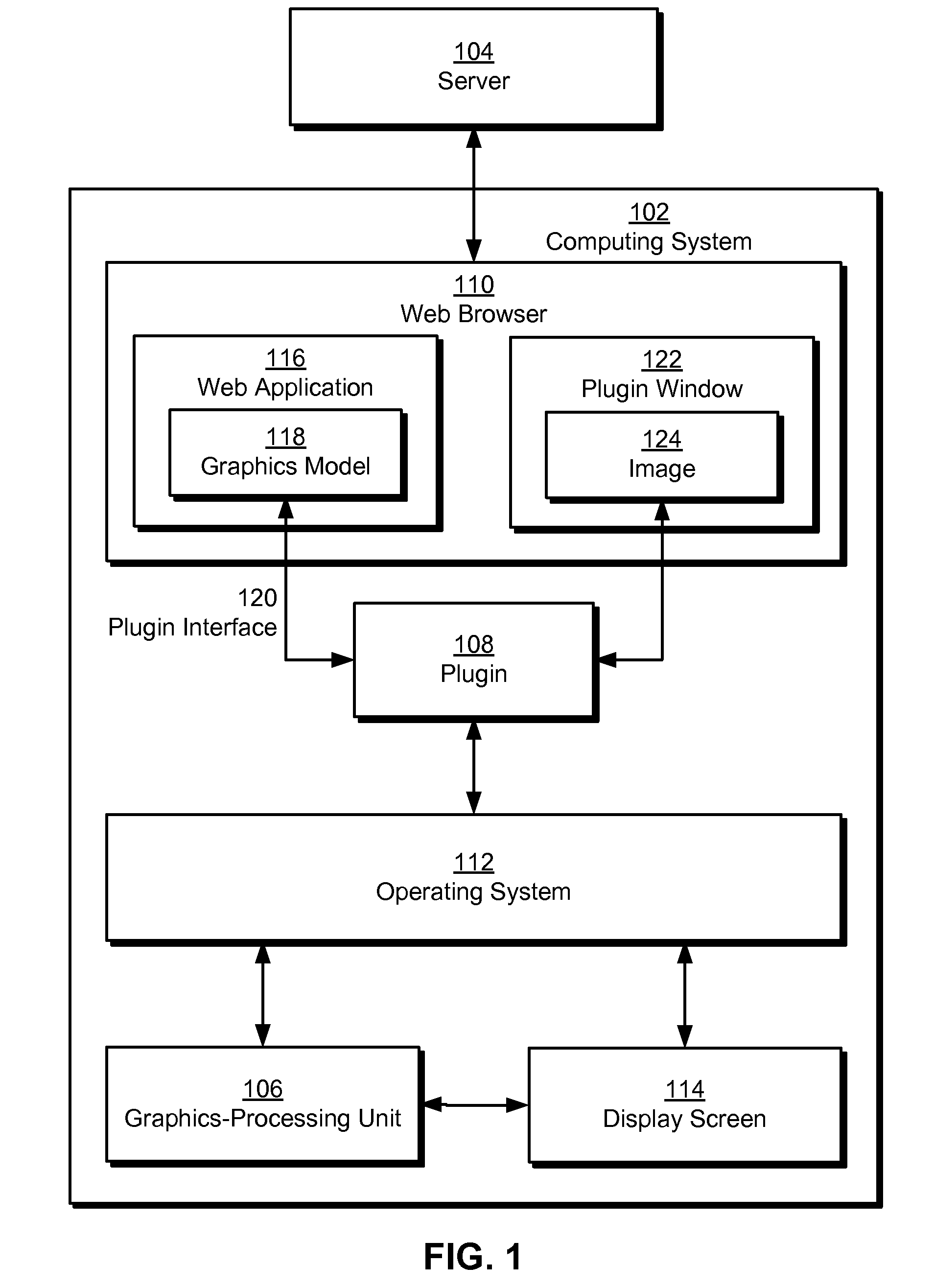

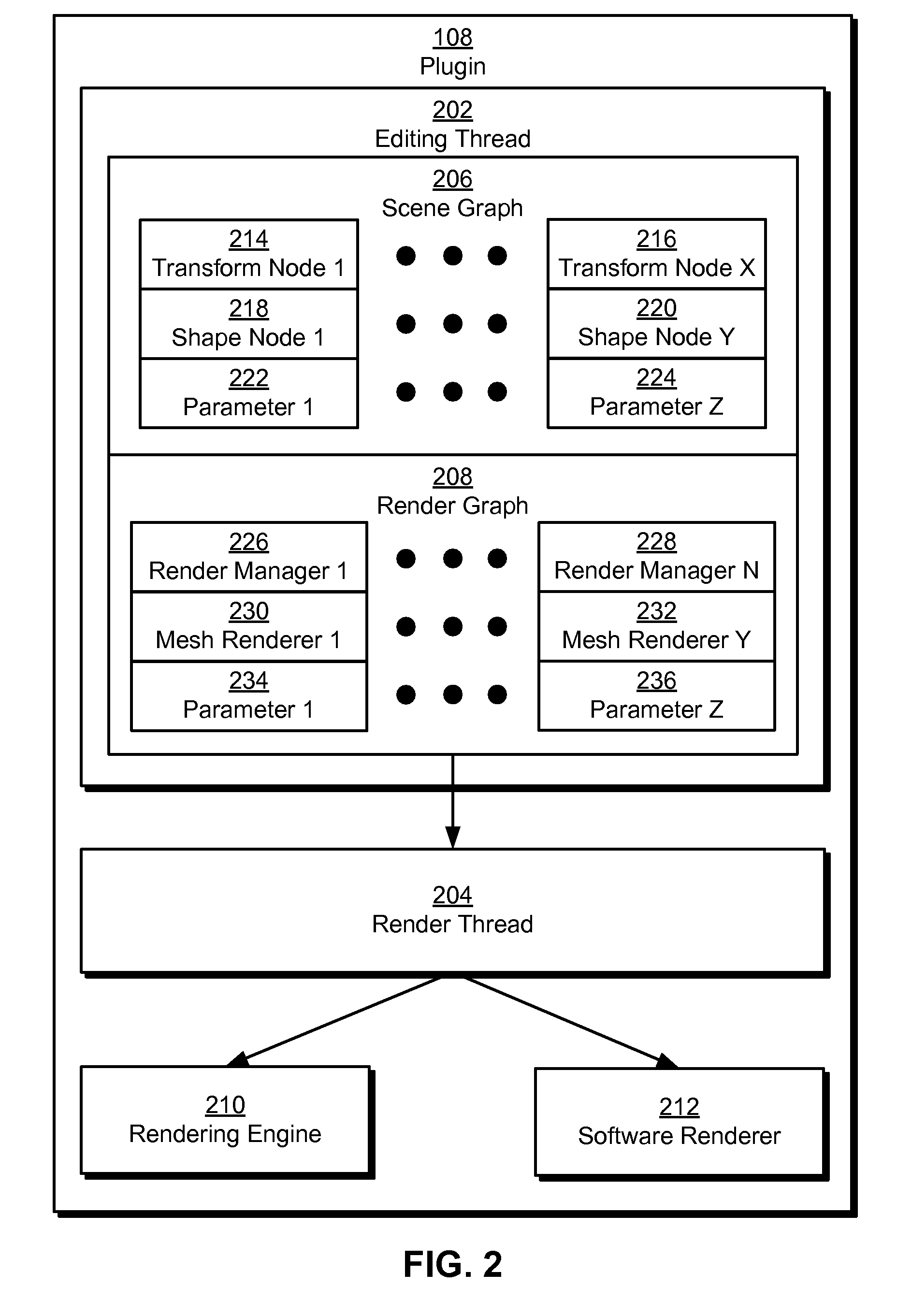

Web-based graphics rendering system

Some embodiments of the present invention provide a system that renders graphics in a computing system that includes a plugin associated with a web browser in the computing system and a web application configured to execute in the web browser. During operation, the web application specifies a graphics model and provides the graphics model to the plugin. Next, the plugin generates a graphics-processing unit (GPU) command stream from the graphics model. Finally, the plugin sends the GPU command stream to a GPU of the computing system, which renders an image corresponding to the graphics model.

Owner:GOOGLE LLC

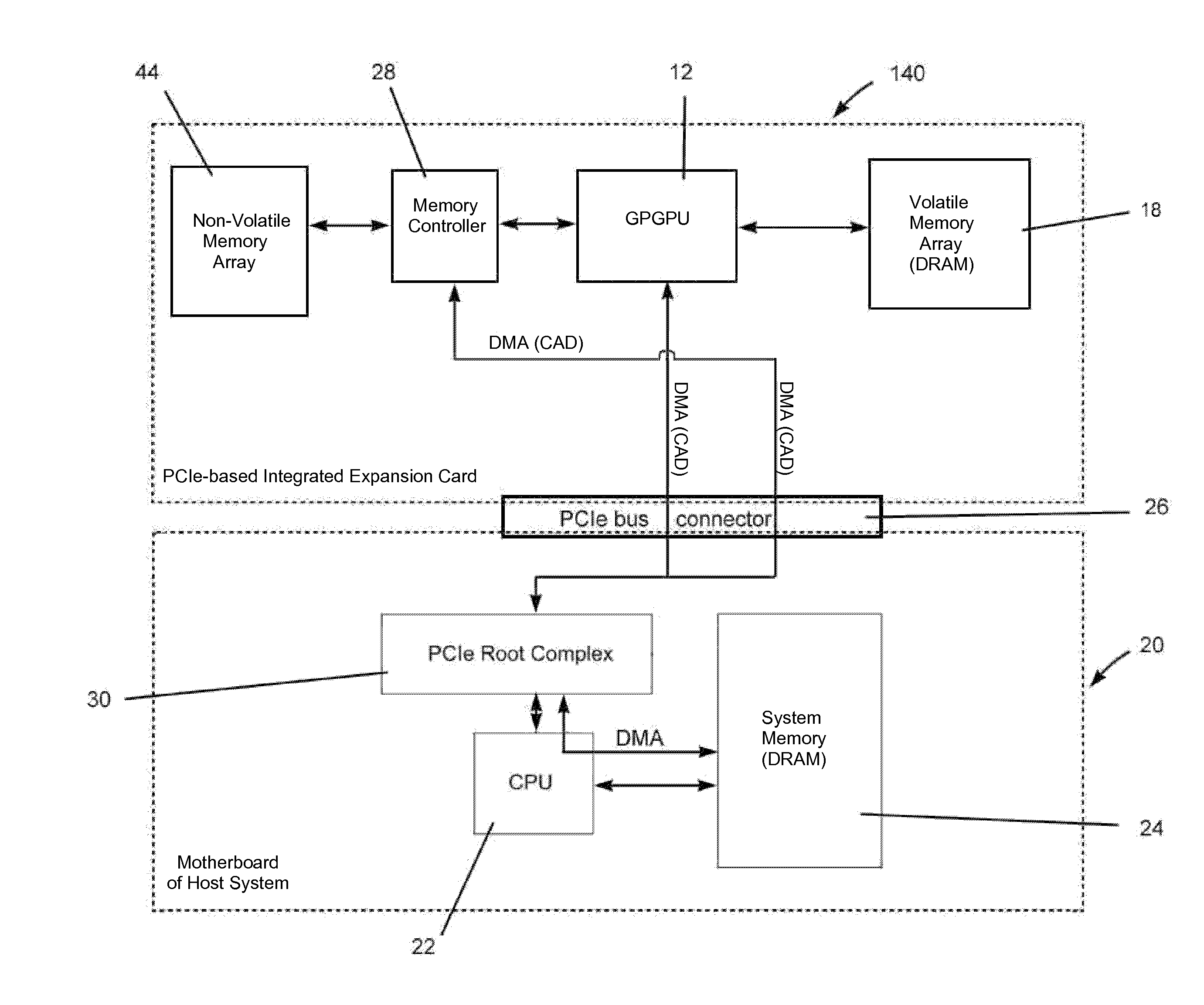

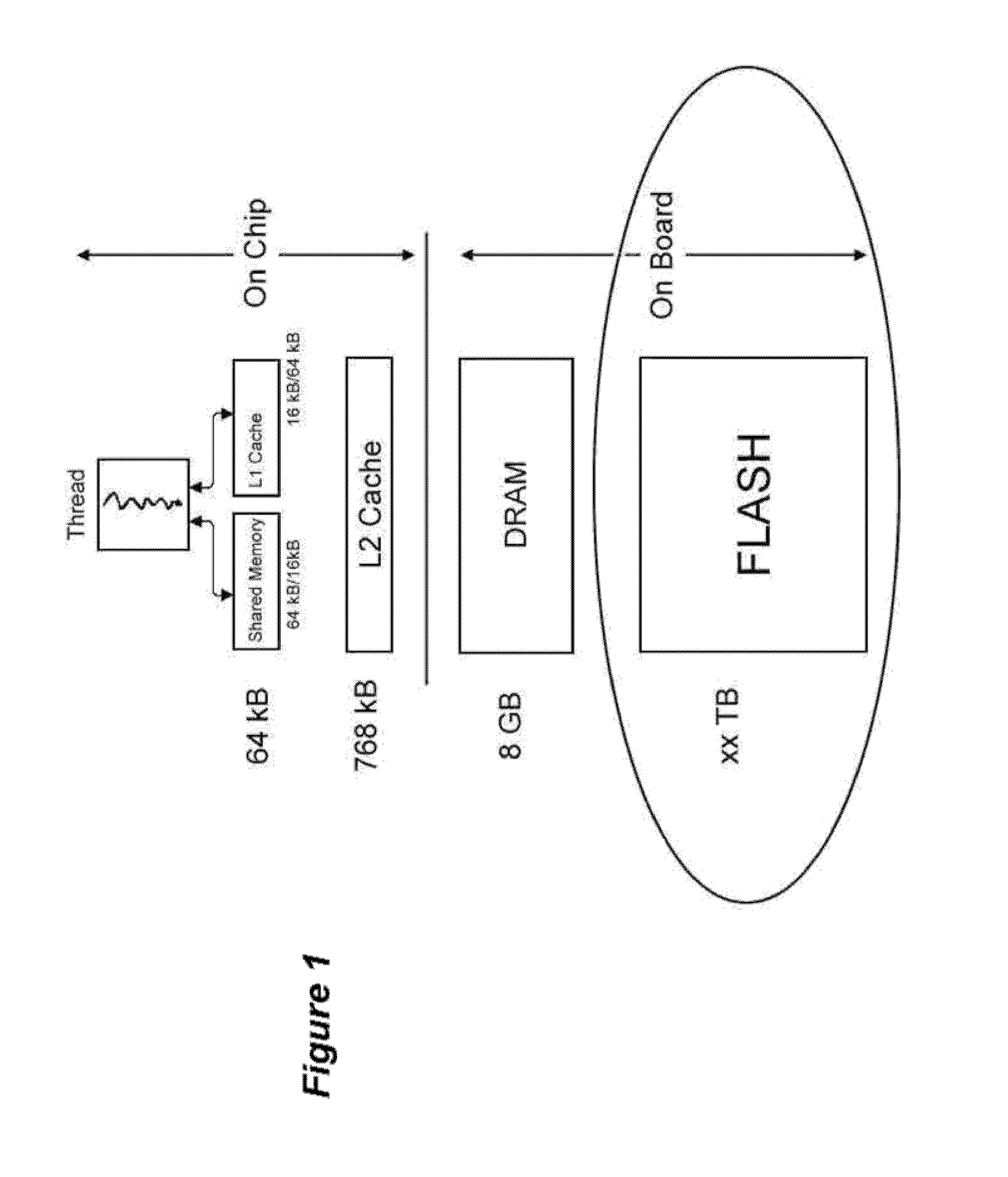

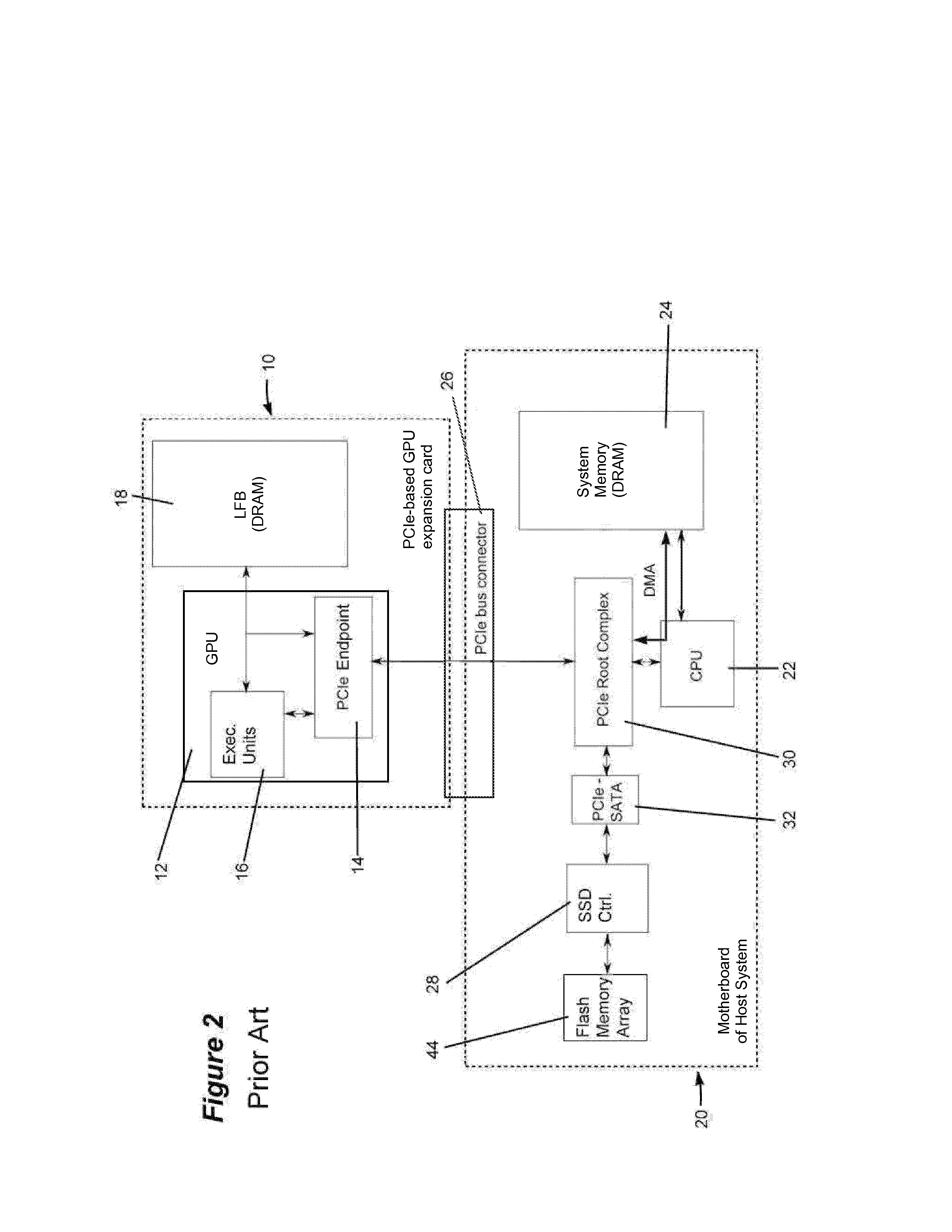

Integrated storage/processing devices, systems and methods for performing big data analytics

Active | US20140129753A1 | Faster throughput | Component plug-in assemblages | Electric digital data processing | General purpose | Graphics

Architectures and methods for performing big data analytics by providing an integrated storage/processing system containing non-volatile memory devices that form a large, non-volatile memory array and a graphics processing unit (GPU) configured for general purpose (GPGPU) computing. The non-volatile memory array is directly functionally coupled (local) with the GPU and optionally mounted on the same board (on-board) as the GPU.

Owner:KIOXIA CORP

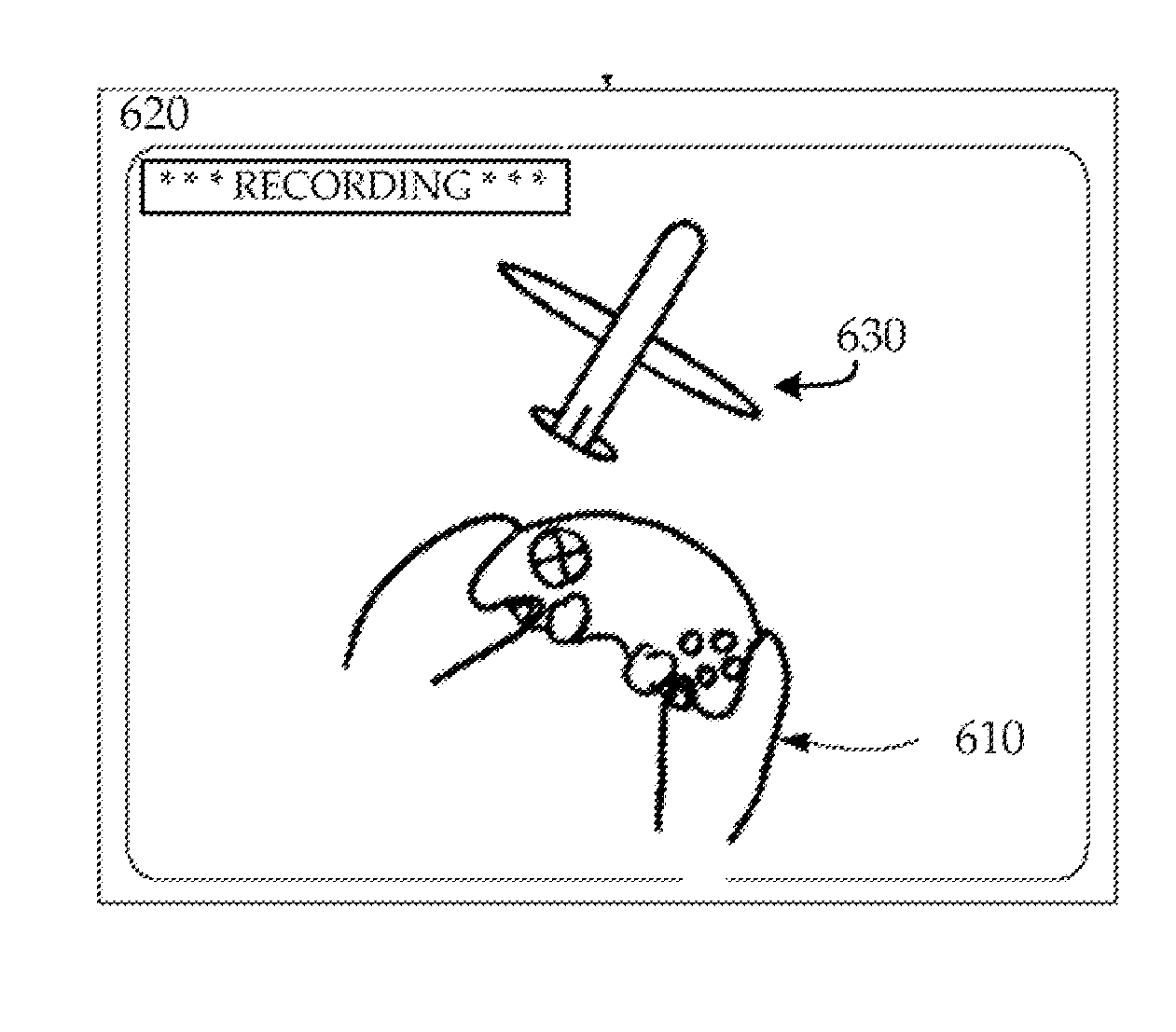

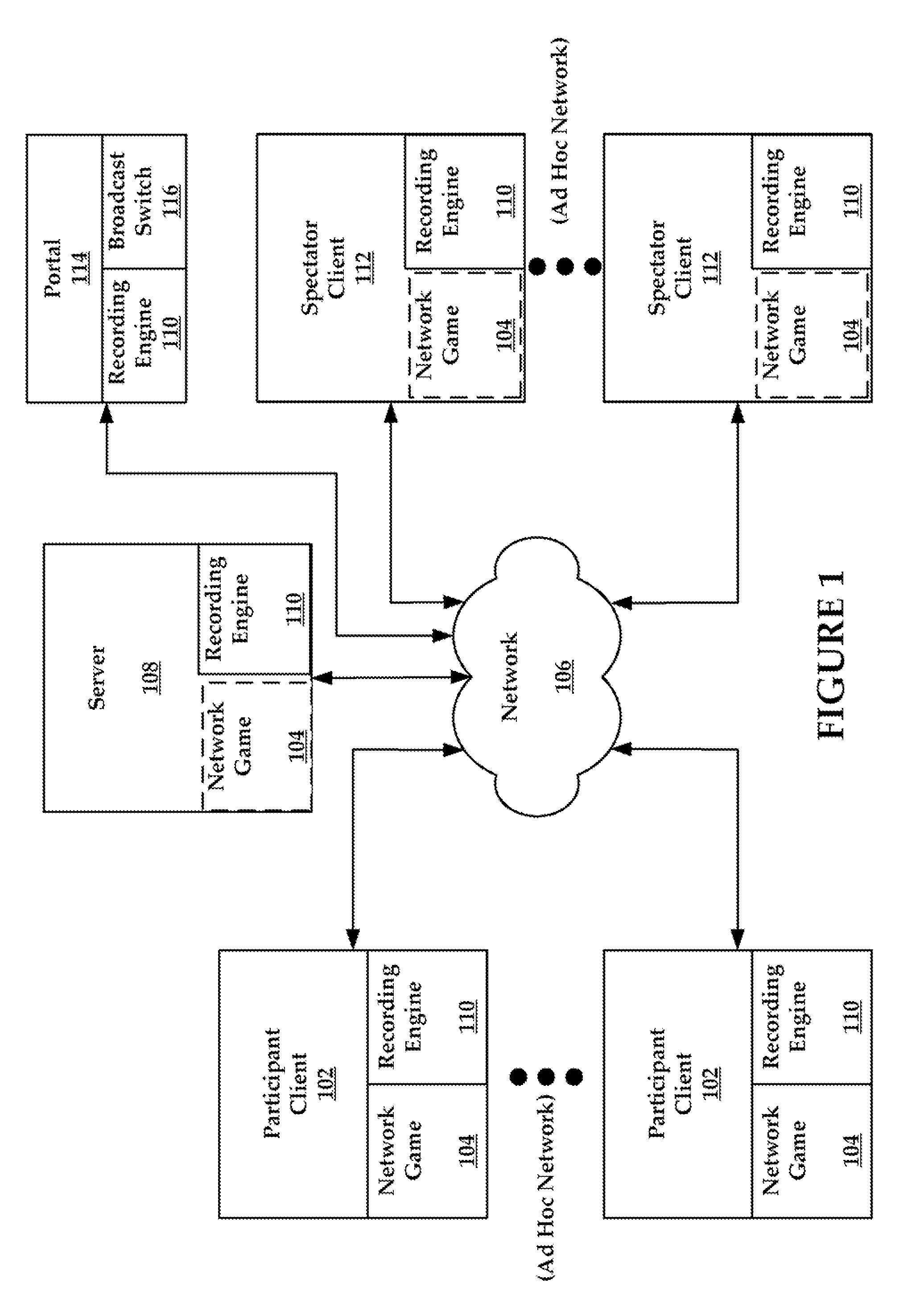

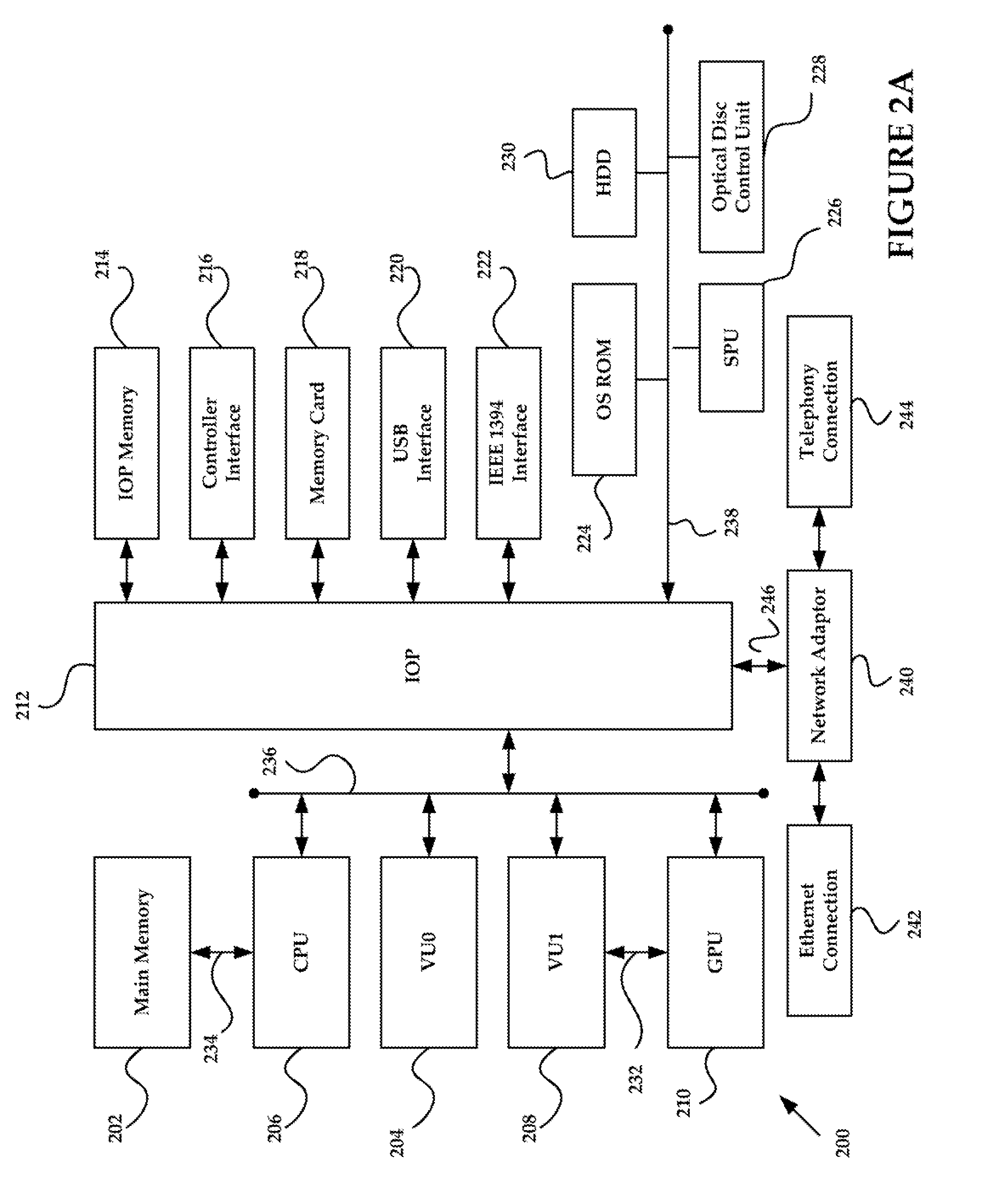

Video Game Recording and Playback with Visual Display of Game Controller Manipulation

A system and method for recording and playback of game data is provided. A recording engine may be configured to record and play back game data, including broadcast data received over a network. The recording engine may be included in a game console or in other network-enabled devices, such as a desktop computer. The recording engine may be configured to record the game data in a buffer memory and an archival memory, and to assist in the display of the game (e.g., by interacting with a graphics processing unit). Game data stored in the buffer memory may later be transferred to the archival memory. Playback commands may be operable once a portion of the game broadcast has been recorded. When a playback command is received, the recording engine may access a portion of the game data from buffer or archival memory and display a selected portion, which may be subject to certain seek functions (e.g., fast forward and rewind). Spectators may be able to independently archive and play back game data using local memory.

Owner:SONY INTERACTIVE ENTRTAINMENT LLC
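
The buffer-then-archive flow in the abstract above can be illustrated with a small sketch. This is not the patented implementation: the class `GameRecorder`, its method names, and the choice of a fixed-size ring buffer are all assumptions made for illustration, and frames are stood in for by plain integers.

```python
from collections import deque


class GameRecorder:
    """Hypothetical recorder with a bounded buffer and an unbounded archive."""

    def __init__(self, buffer_frames):
        # Buffer memory: keeps only the most recent `buffer_frames` frames.
        self.buffer = deque(maxlen=buffer_frames)
        # Archival memory: long-term storage for frames moved out of the buffer.
        self.archive = []

    def record(self, frame):
        self.buffer.append(frame)

    def archive_buffer(self):
        """Transfer everything currently buffered into archival memory."""
        self.archive.extend(self.buffer)
        self.buffer.clear()

    def playback(self, start, count):
        """Seek to `start` and return up to `count` frames, oldest first."""
        recorded = self.archive + list(self.buffer)
        return recorded[start:start + count]


recorder = GameRecorder(buffer_frames=3)
for frame in range(5):          # frames 0 and 1 fall out of the 3-frame buffer
    recorder.record(frame)
recorder.archive_buffer()       # archive now holds frames 2, 3, 4
recorder.record(5)
clip = recorder.playback(start=1, count=3)
```

Here `playback` reads across both memories, so a seek can span archived and freshly buffered data.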

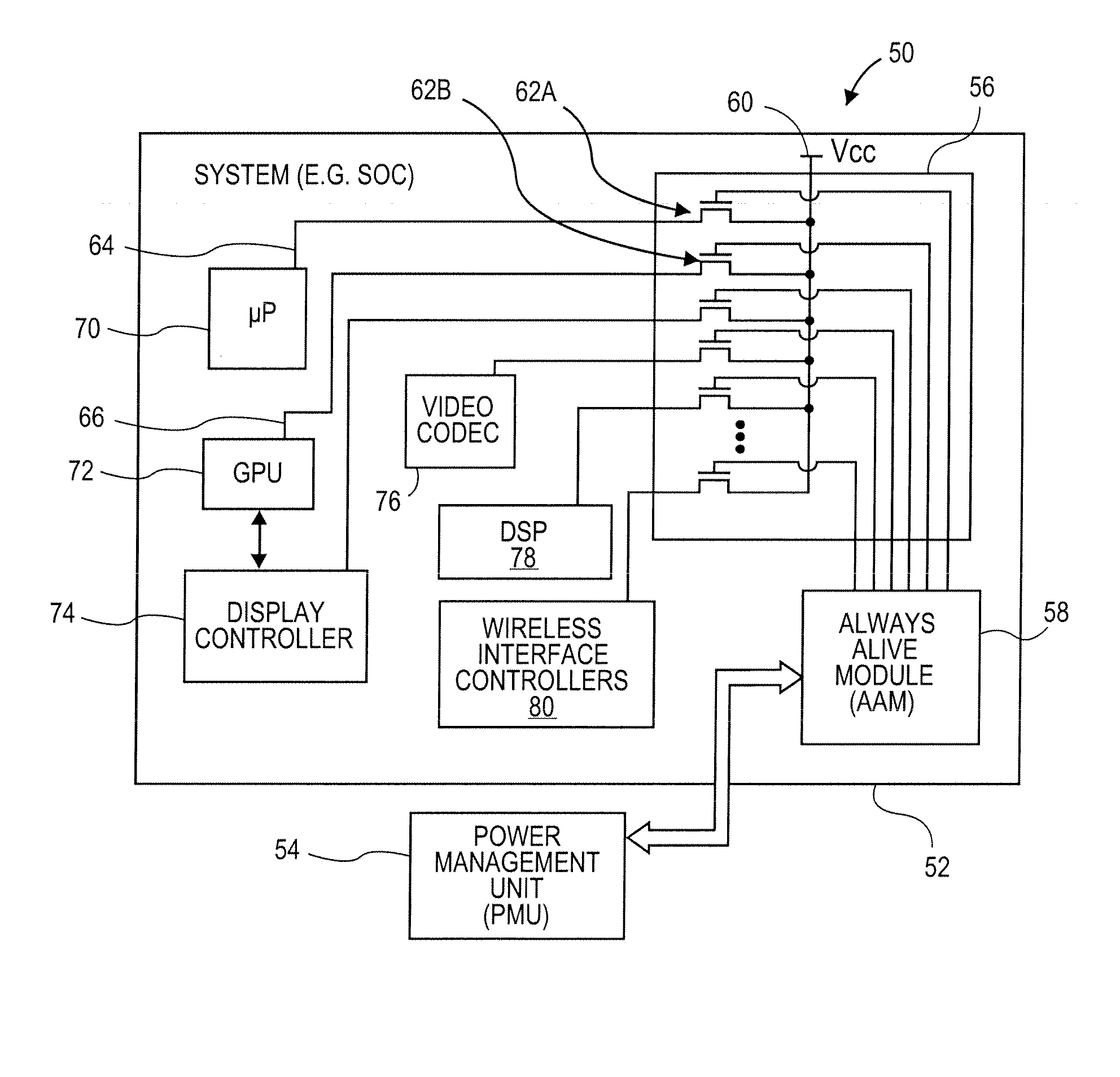

Methods and Systems for Power Management in a Data Processing System

Active | US20080168285A1 | Lower latency | Reduce total power | Energy efficient ICT | Volume/mass flow measurement | Data processing system | General purpose

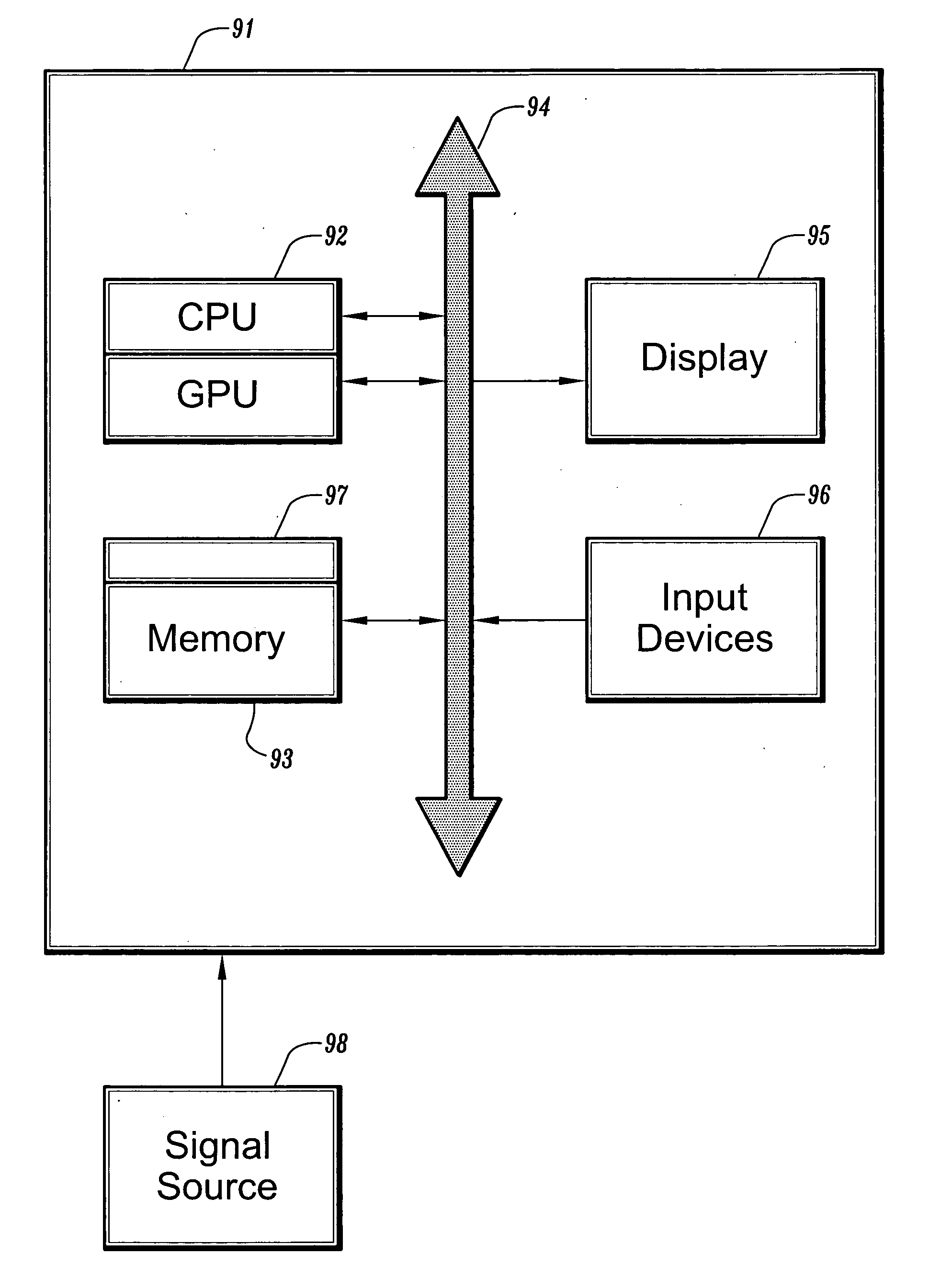

Methods and systems for managing power consumption in data processing systems are described. In one embodiment, a data processing system includes a general purpose processing unit, a graphics processing unit (GPU), at least one peripheral interface controller, at least one bus coupled to the general purpose processing unit, and a power controller coupled to at least the general purpose processing unit and the GPU. The power controller is configured to turn power off for the general purpose processing unit in response to a first state of an instruction queue of the general purpose processing unit and is configured to turn power off for the GPU in response to a second state of an instruction queue of the GPU. The first state and the second state represent an instruction queue having either no instructions or instructions for only future events or actions.

Owner:APPLE INC
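
As a rough illustration of the queue-driven power gating described above, the following sketch powers a unit off when its instruction queue is empty or holds only future work. The `ProcessingUnit` type, the `(due_time, label)` queue entries, and the decision to power a unit back on when runnable work exists are assumptions for this example, not details taken from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessingUnit:
    name: str
    # Hypothetical queue entries: (due_time, label). due_time <= now means runnable.
    queue: list = field(default_factory=list)
    powered: bool = True


def queue_is_idle(unit, now):
    """True if the queue is empty or holds only instructions for future events."""
    return all(due > now for due, _ in unit.queue)


def update_power(units, now):
    """Power each unit off when its queue is idle; keep it on otherwise."""
    for unit in units:
        unit.powered = not queue_is_idle(unit, now)
    return {unit.name: unit.powered for unit in units}


cpu = ProcessingUnit("cpu", [(5, "timer tick")])   # only future work
gpu = ProcessingUnit("gpu", [(0, "draw frame")])   # runnable work
states = update_power([cpu, gpu], now=1)
```

With these inputs the CPU is gated off and the GPU stays on, since each unit is judged only by its own queue state.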

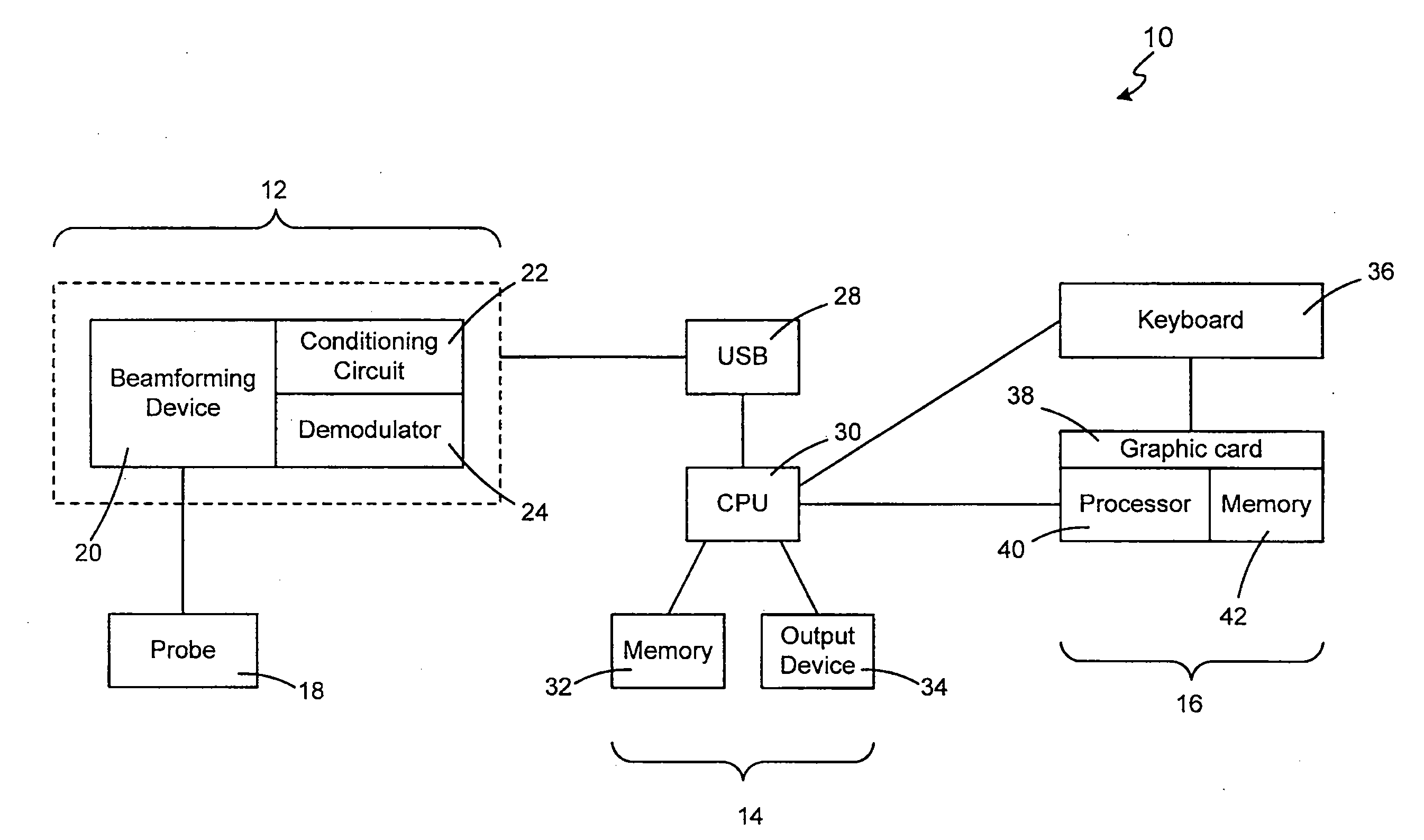

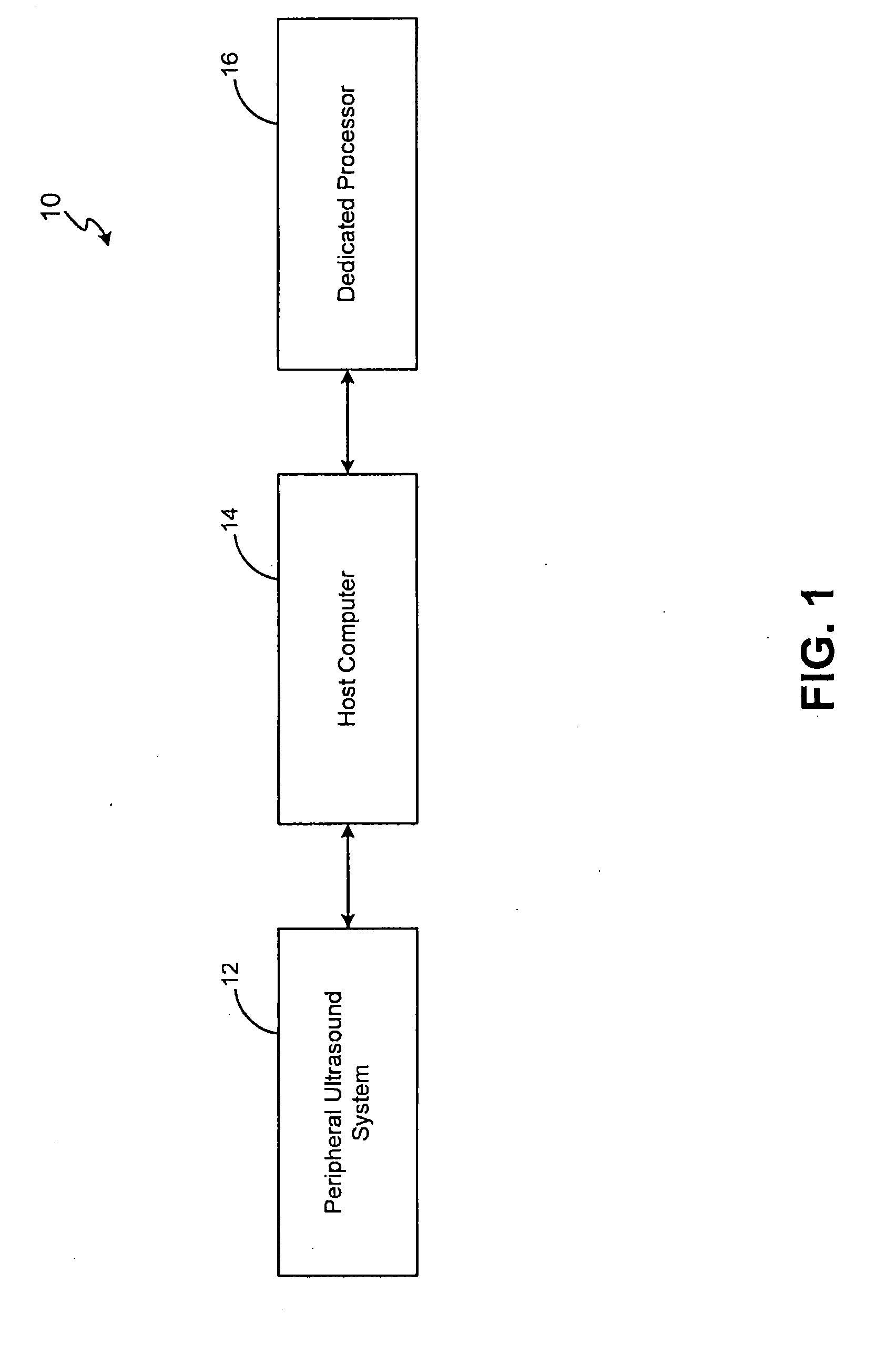

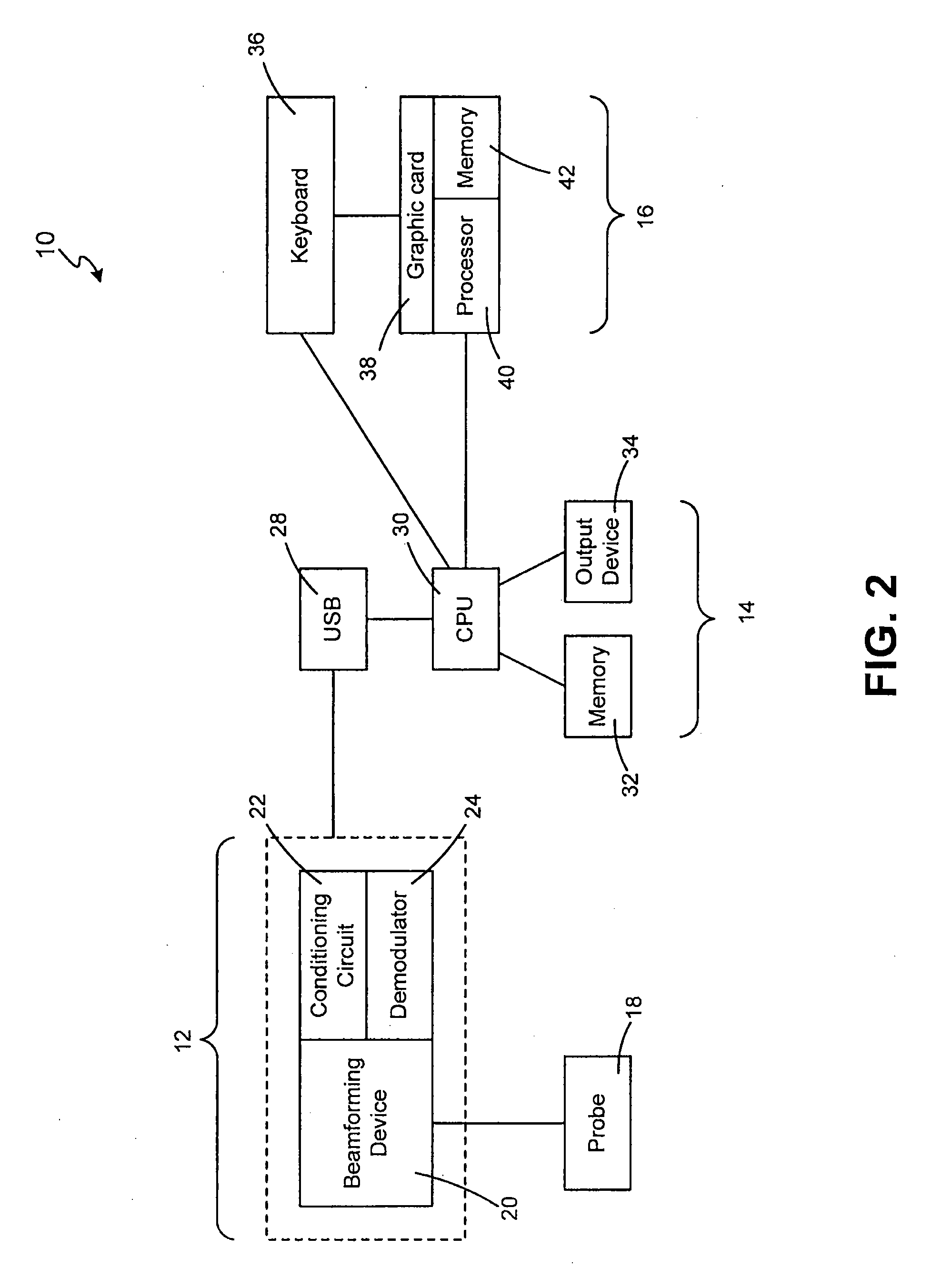

Ultrasound system and method for imaging and/or measuring displacement of moving tissue and fluid

Active | US20080086054A1 | Increase speed | Quality improvement | Blood flow measurement devices | Organ movement/changes detection | Graphic card | Sonification

A system and method for improved imaging is disclosed. An exemplary system provides a peripheral ultrasound system connected to a host computer with a plug-and-play interface such as a USB. An exemplary system utilizes a dedicated graphics processing unit such as a graphics card to analyze data obtained from a region of interest to produce an image on one or more output units for the user's viewing. Based on the image displayed on the output units, the user can determine the velocity of the moving tissue and fluid. The system of the present invention can be used to produce a Doppler color flow map or for power Doppler imaging.

Owner:GUIDED THERAPY SYSTEMS LLC

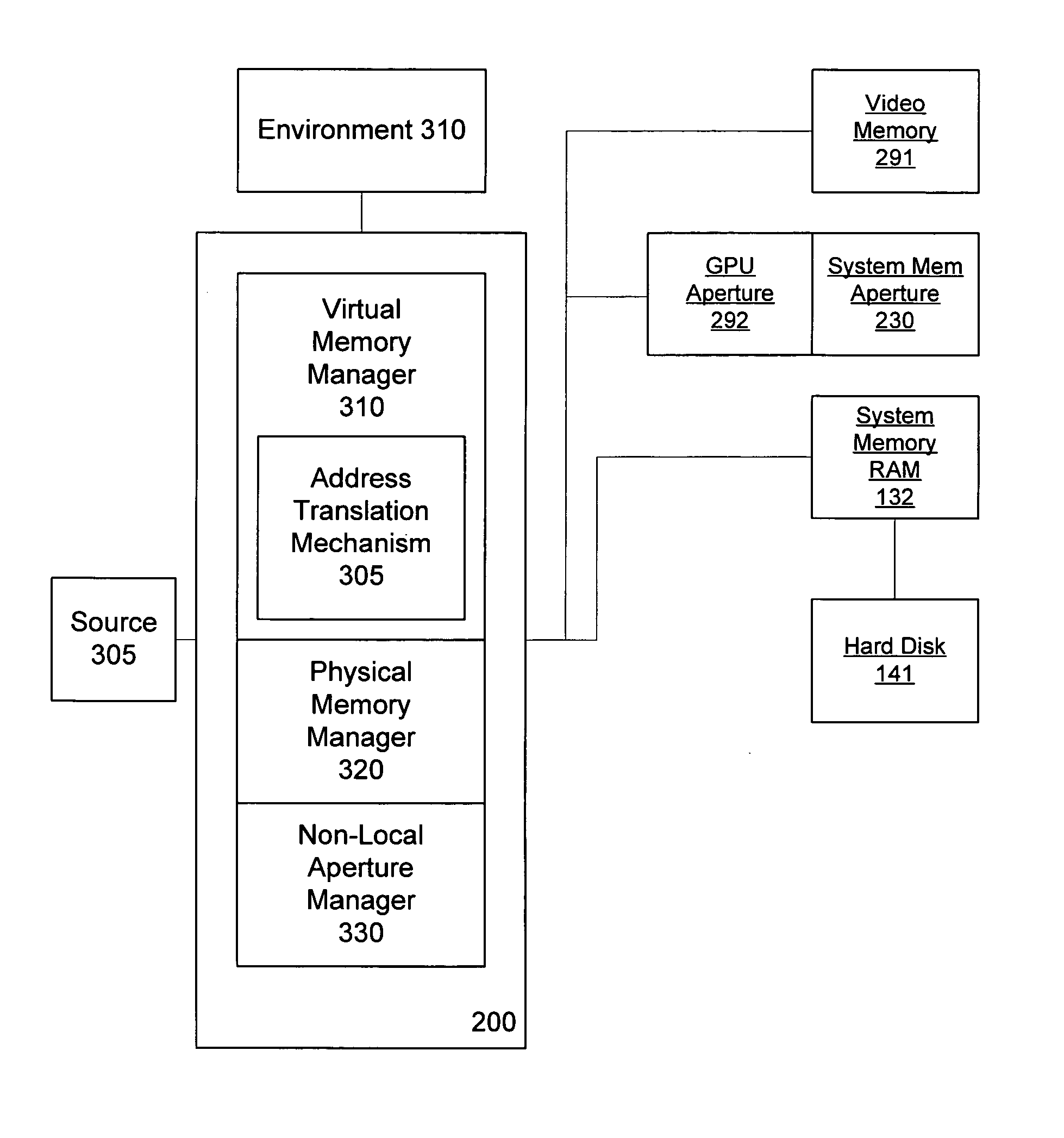

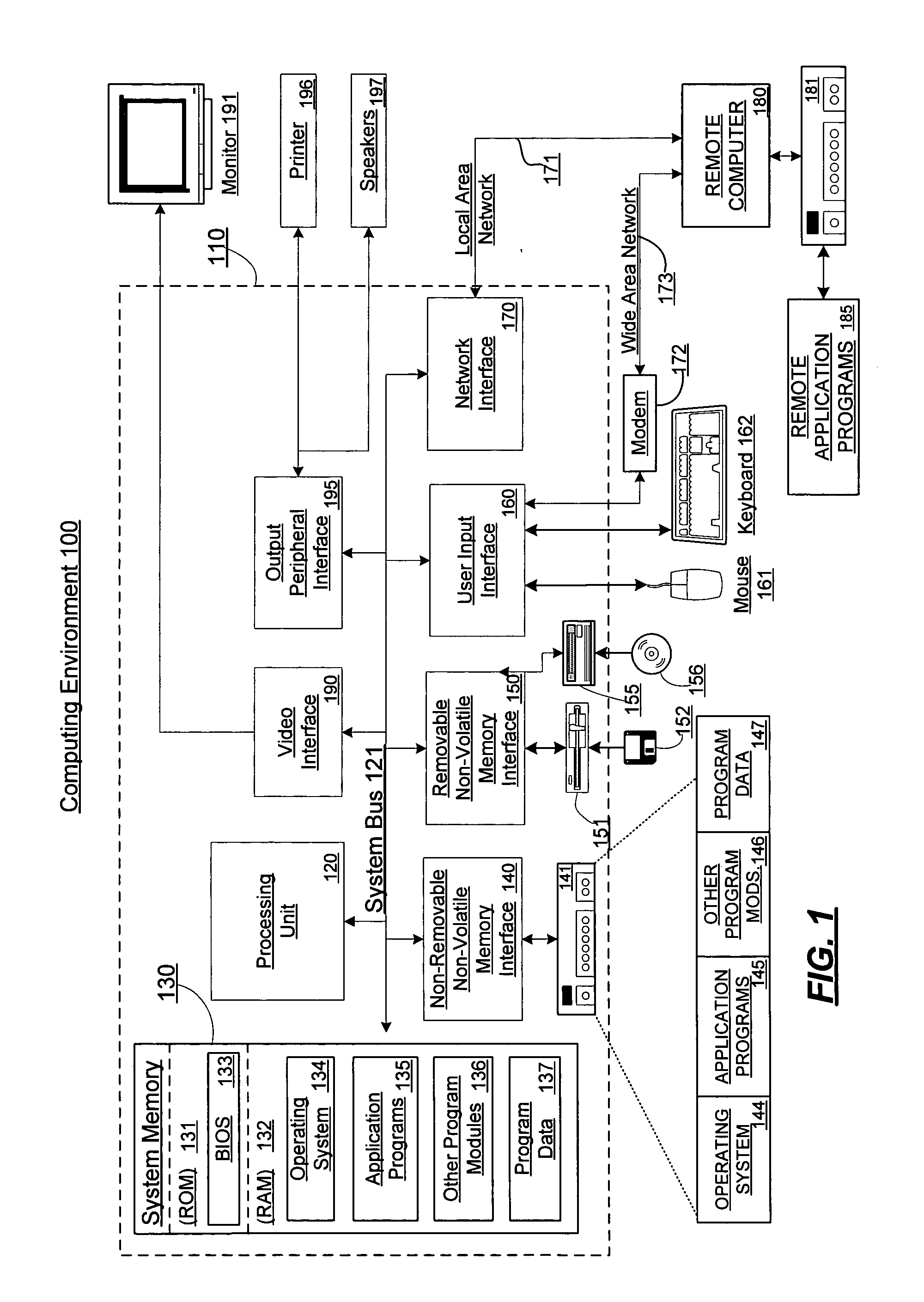

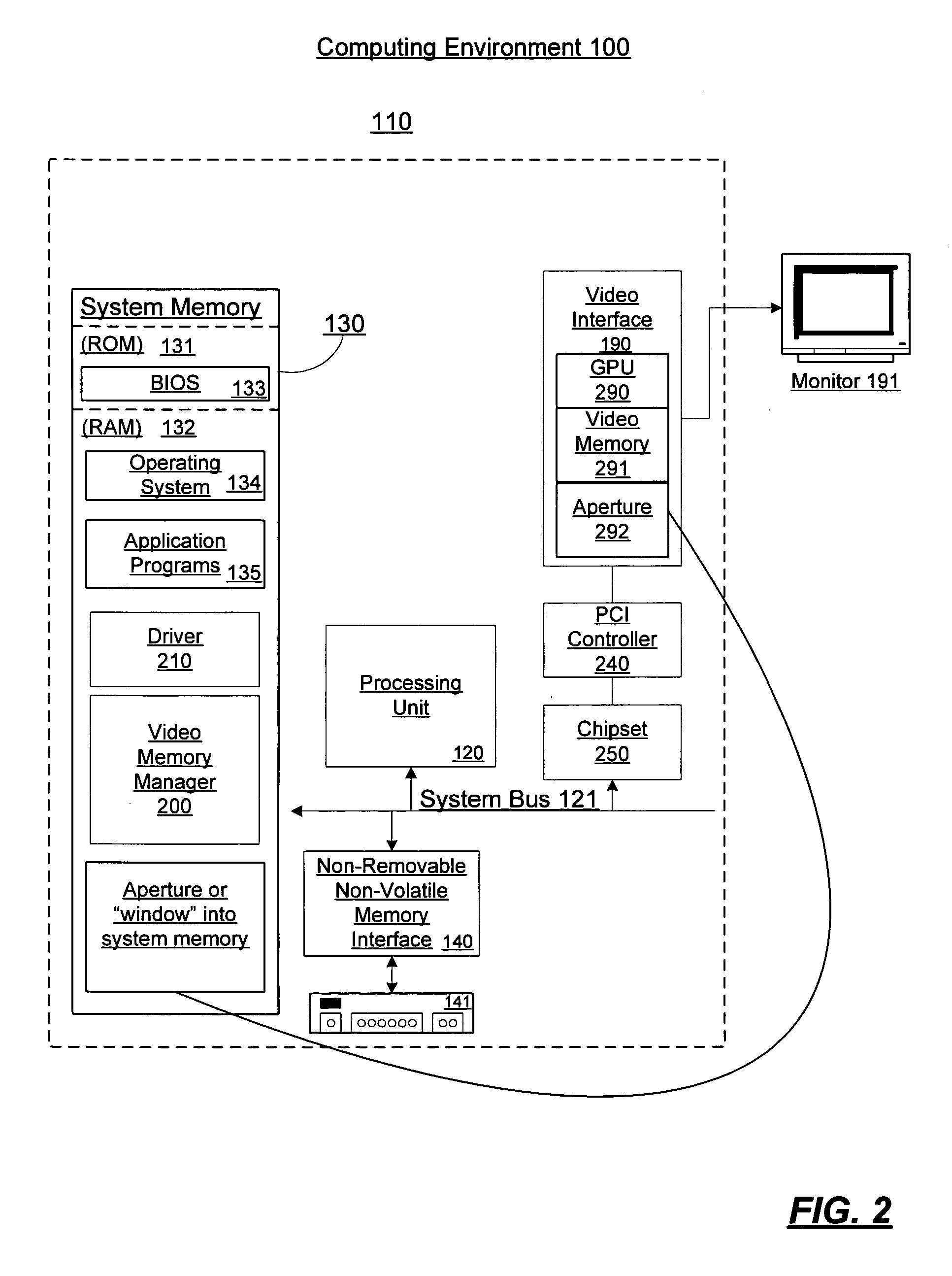

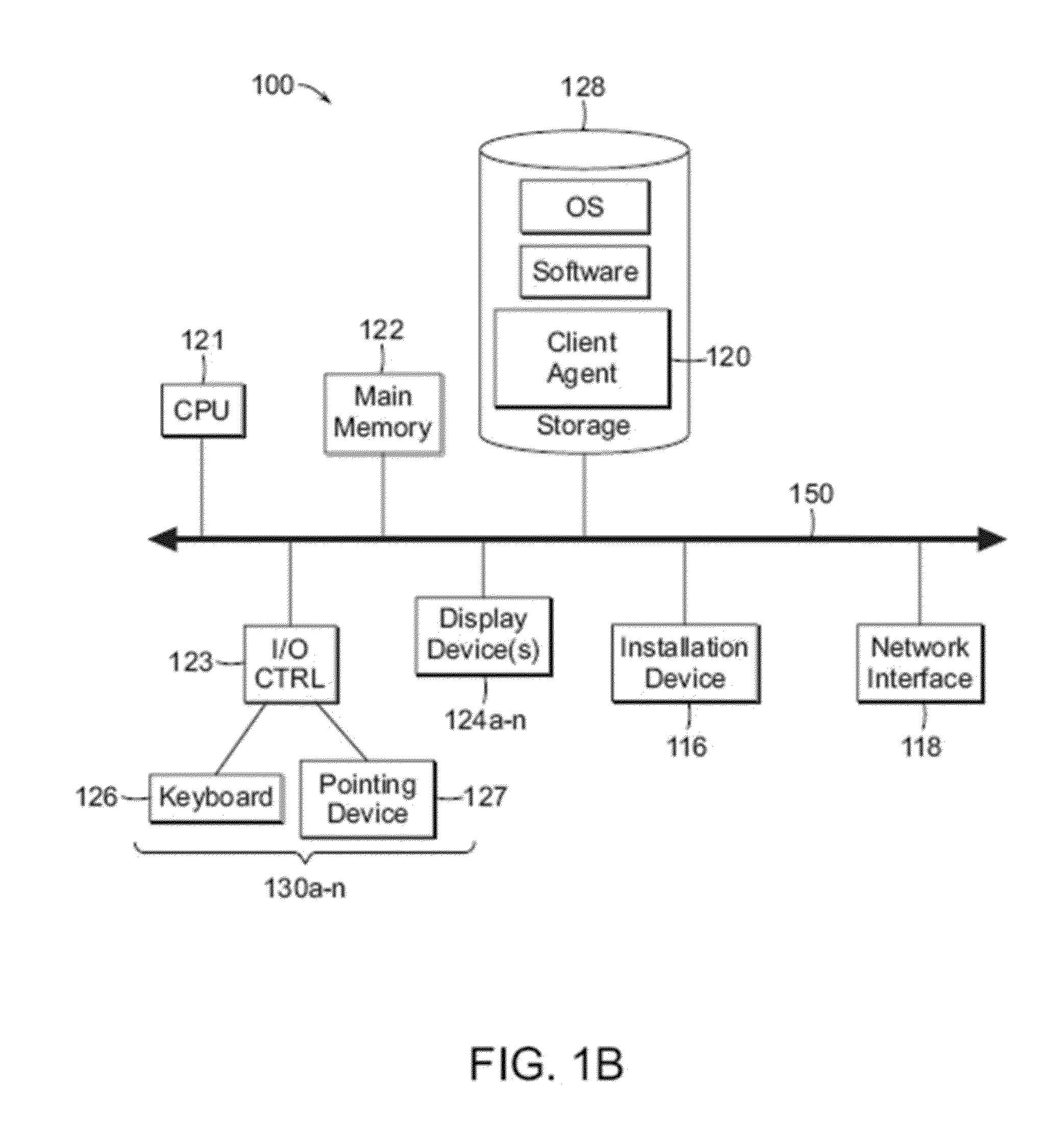

Video memory management

A video memory manager manages and virtualizes memory so that an application or multiple applications can utilize both system memory and local video memory in processing graphics. The video memory manager allocates memory in either the system memory or the local video memory as appropriate. The video memory manager may also manage the system memory accessible to the graphics processing unit via an aperture of the graphics processing unit. The video memory manager may evict memory from the local video memory as appropriate, thereby freeing a portion of local video memory for use by other applications. In this manner, a graphics processing unit and its local video memory may be more readily shared by multiple applications.

Owner:MICROSOFT TECH LICENSING LLC
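
The allocate-or-evict behaviour described above might look like the following toy model. The class name `VideoMemoryManager`, the oldest-first eviction order, and the rule that oversized requests go straight to system memory are assumptions chosen to keep the sketch short; the abstract does not specify a policy.

```python
from collections import OrderedDict


class VideoMemoryManager:
    """Toy manager that places allocations in video or system memory."""

    def __init__(self, video_capacity):
        self.video_capacity = video_capacity
        self.video = OrderedDict()   # allocation id -> size, oldest first
        self.system = {}             # allocation id -> size

    def video_used(self):
        return sum(self.video.values())

    def allocate(self, alloc_id, size):
        # Requests larger than all of video memory can only live in system memory.
        if size > self.video_capacity:
            self.system[alloc_id] = size
            return "system"
        # Evict the oldest video allocations to system memory until the request fits.
        while self.video_used() + size > self.video_capacity:
            victim, victim_size = self.video.popitem(last=False)
            self.system[victim] = victim_size
        self.video[alloc_id] = size
        return "video"


manager = VideoMemoryManager(video_capacity=100)
placements = [
    manager.allocate("app1_texture", 60),
    manager.allocate("app2_texture", 30),
    manager.allocate("app2_target", 50),   # forces app1_texture out of video memory
    manager.allocate("huge_buffer", 500),  # never fits in video memory
]
```

After these calls `app1_texture` has been moved to system memory, which is how a second application gets room in local video memory.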

Methods and apparatuses for load balancing between multiple processing units

Active | US8284205B2 | Performance | Processing | Energy efficient ICT | Digital data processing details | Graphics | Digital signal processing

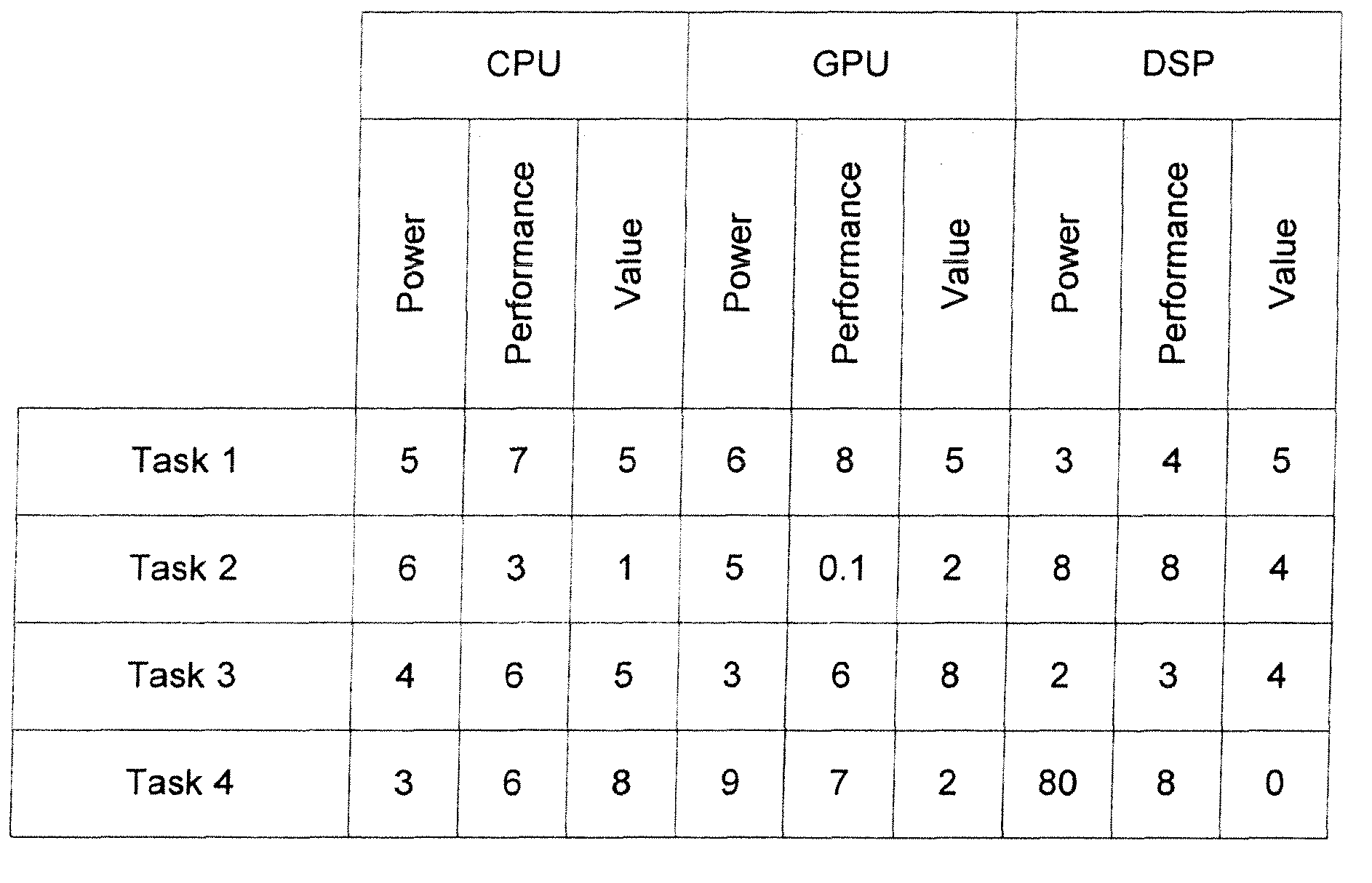

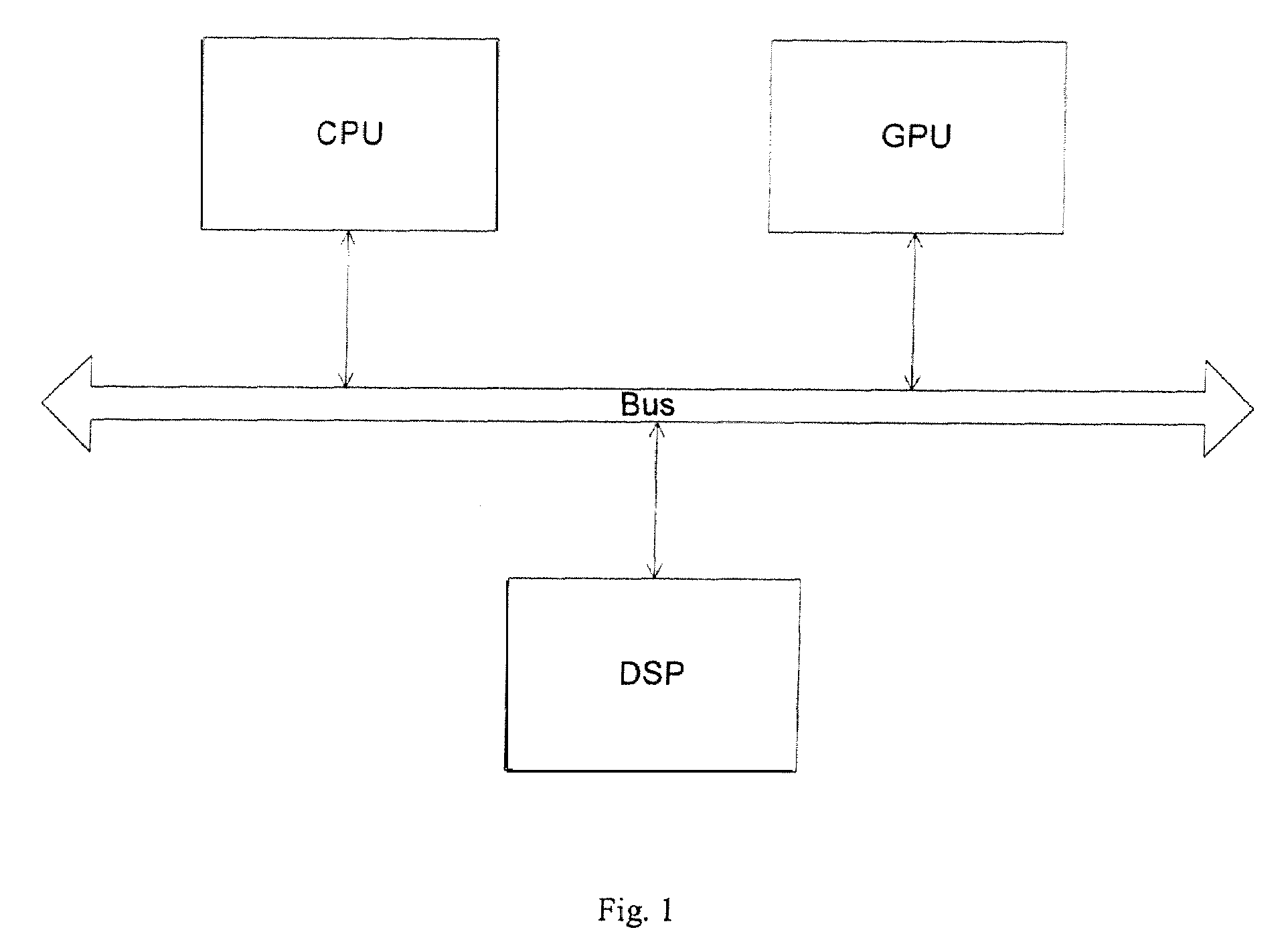

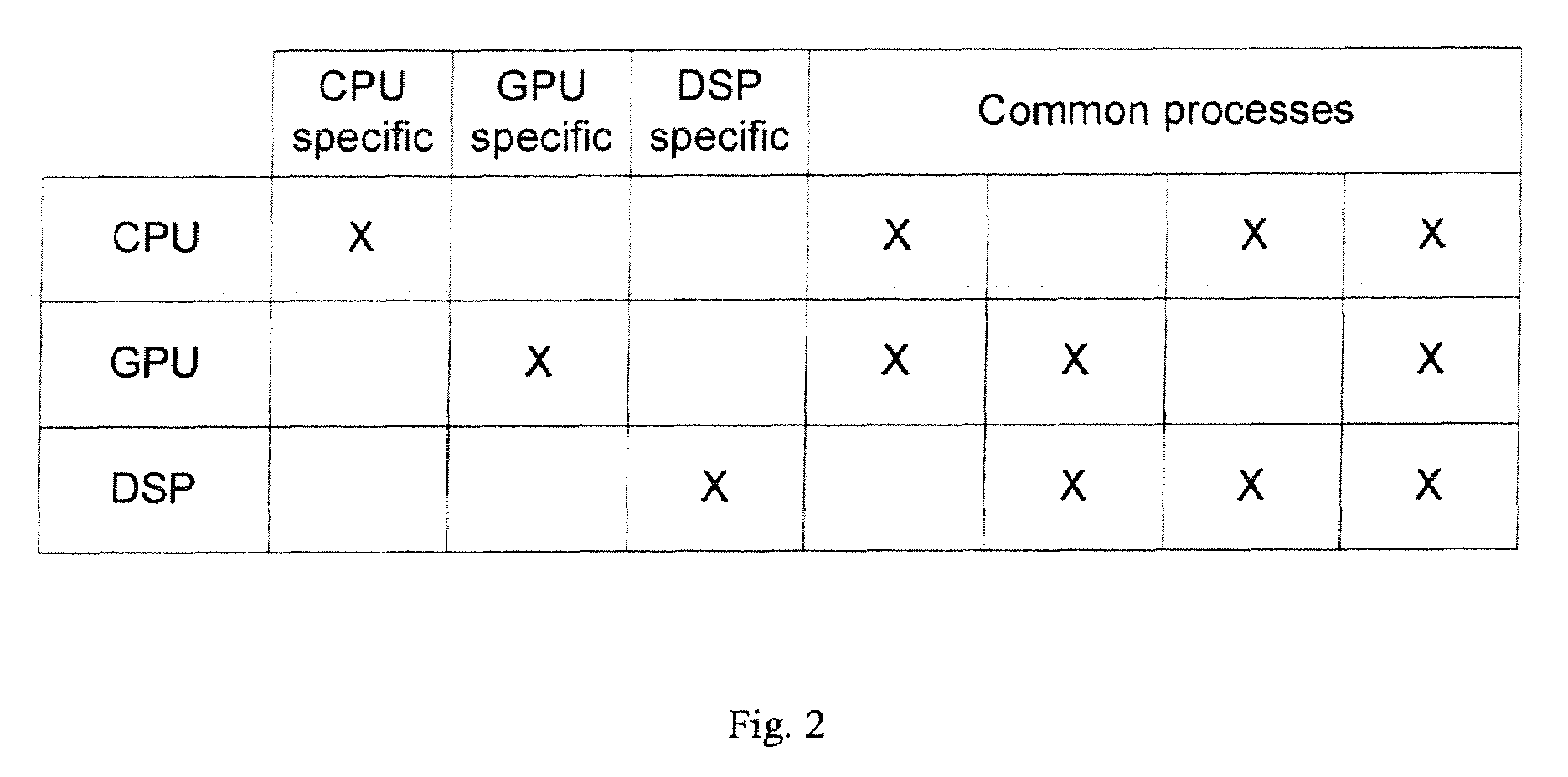

Exemplary embodiments of methods and apparatuses to dynamically redistribute computational processes in a system that includes a plurality of processing units are described. The power consumption, the performance, and the power/performance value are determined for various computational processes between a plurality of subsystems, where each of the subsystems is capable of performing the computational processes. The computational processes are, for example, a graphics rendering process, an image processing process, a signal processing process, a Bayer decoding process, or a video decoding process, which can be performed by a central processing unit, a graphics processing unit, or a digital signal processing unit. In one embodiment, the distribution of computational processes between capable subsystems is based on a power setting, a performance setting, a dynamic setting, or a value setting.

Owner:APPLE INC
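
One way to picture the setting-based distribution above is a selector over per-subsystem measurements. The function `pick_subsystem`, the profile numbers, and the interpretation of the "value" setting as performance per watt are assumptions for illustration; the "dynamic" setting from the abstract is not modelled here.

```python
def pick_subsystem(profiles, setting):
    """Choose a subsystem for a process.

    profiles maps a subsystem name to (power_watts, performance_ops_per_s),
    both hypothetical measurements for one computational process.
    """
    if setting == "power":
        # Lowest power draw wins.
        return min(profiles, key=lambda name: profiles[name][0])
    if setting == "performance":
        # Highest throughput wins.
        return max(profiles, key=lambda name: profiles[name][1])
    if setting == "value":
        # Best performance per watt wins.
        return max(profiles, key=lambda name: profiles[name][1] / profiles[name][0])
    raise ValueError(f"unknown setting: {setting}")


video_decode = {
    "cpu": (2.0, 100.0),
    "gpu": (6.0, 600.0),
    "dsp": (0.5, 40.0),
}
choices = {s: pick_subsystem(video_decode, s) for s in ("power", "performance", "value")}
```

With these made-up numbers the DSP is chosen under the power setting, while the GPU wins on both raw performance and performance per watt.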

System and method for GPU-based 3D nonrigid registration

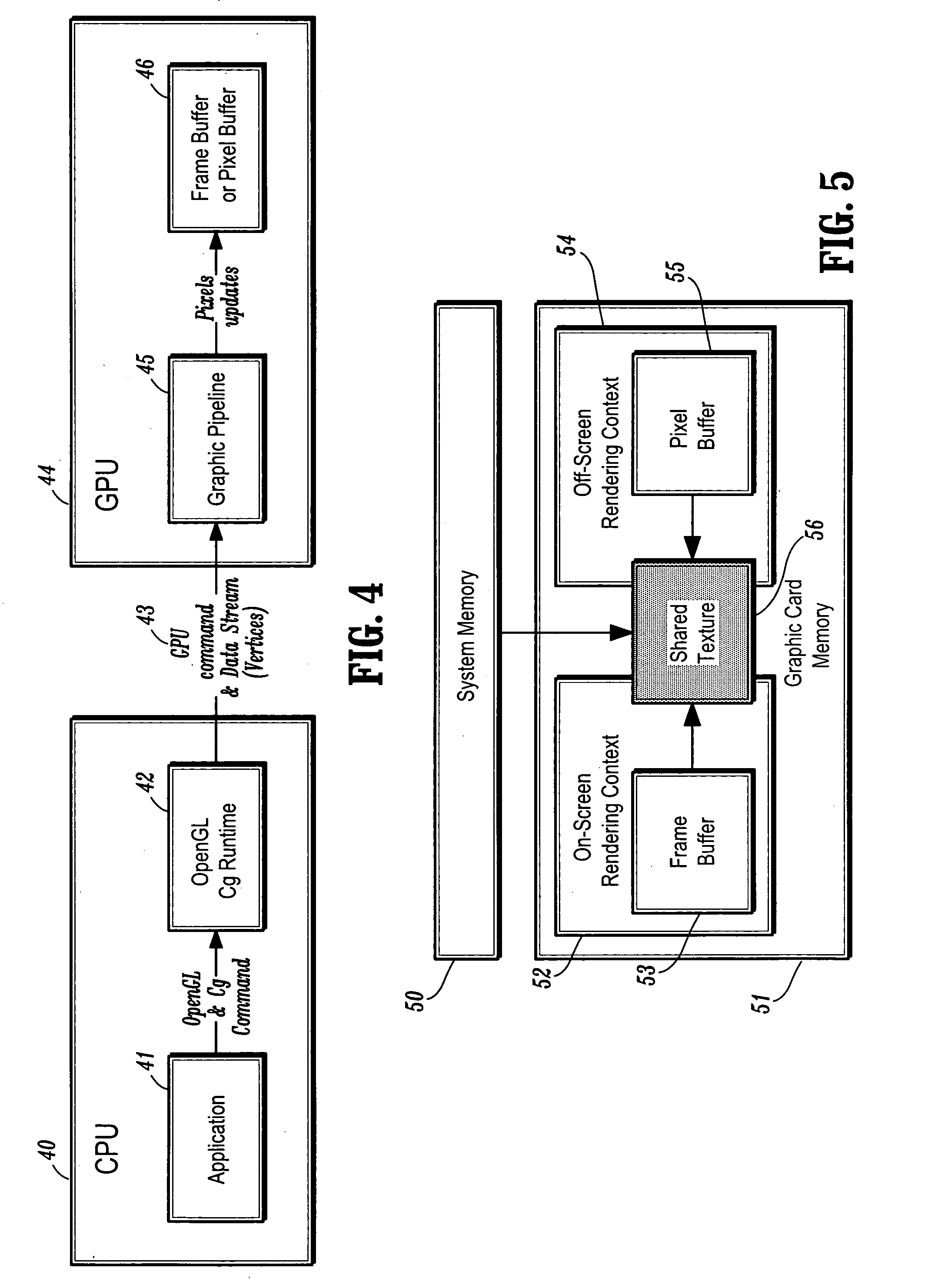

A method of registering two images using a graphics processing unit includes providing a pair of images with a first and second image, calculating a gradient of the second image, initializing a displacement field on the grid point domain of the pair of images, generating textures for the first image, the second image, the gradient, and the displacement field, and loading said textures into the graphics processing unit. A pixel buffer is created and initialized with the texture containing the displacement field. The displacement field is updated from the first image, the second image, and the gradient for one or more iterations in one or more rendering passes performed by the graphics processing unit.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

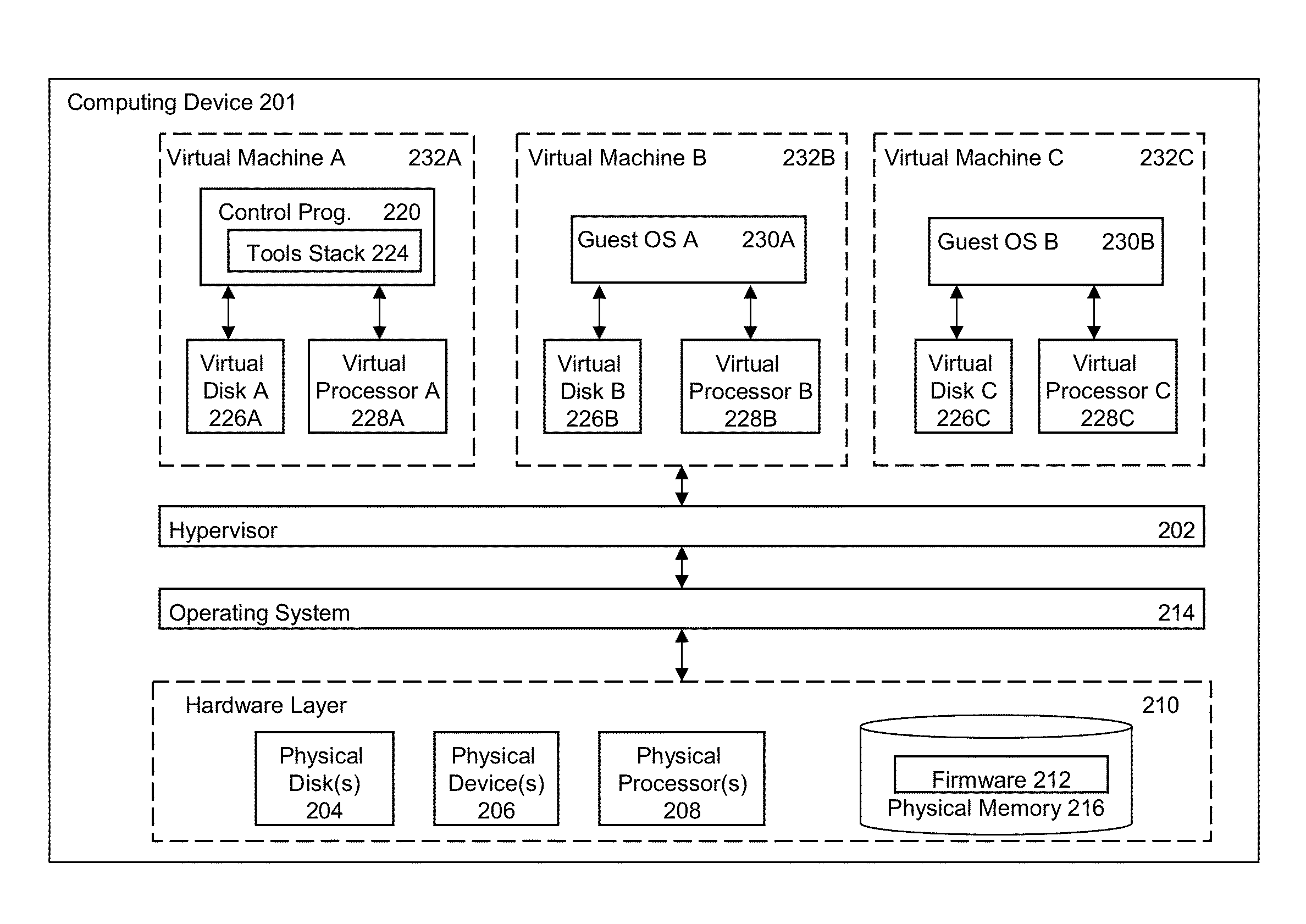

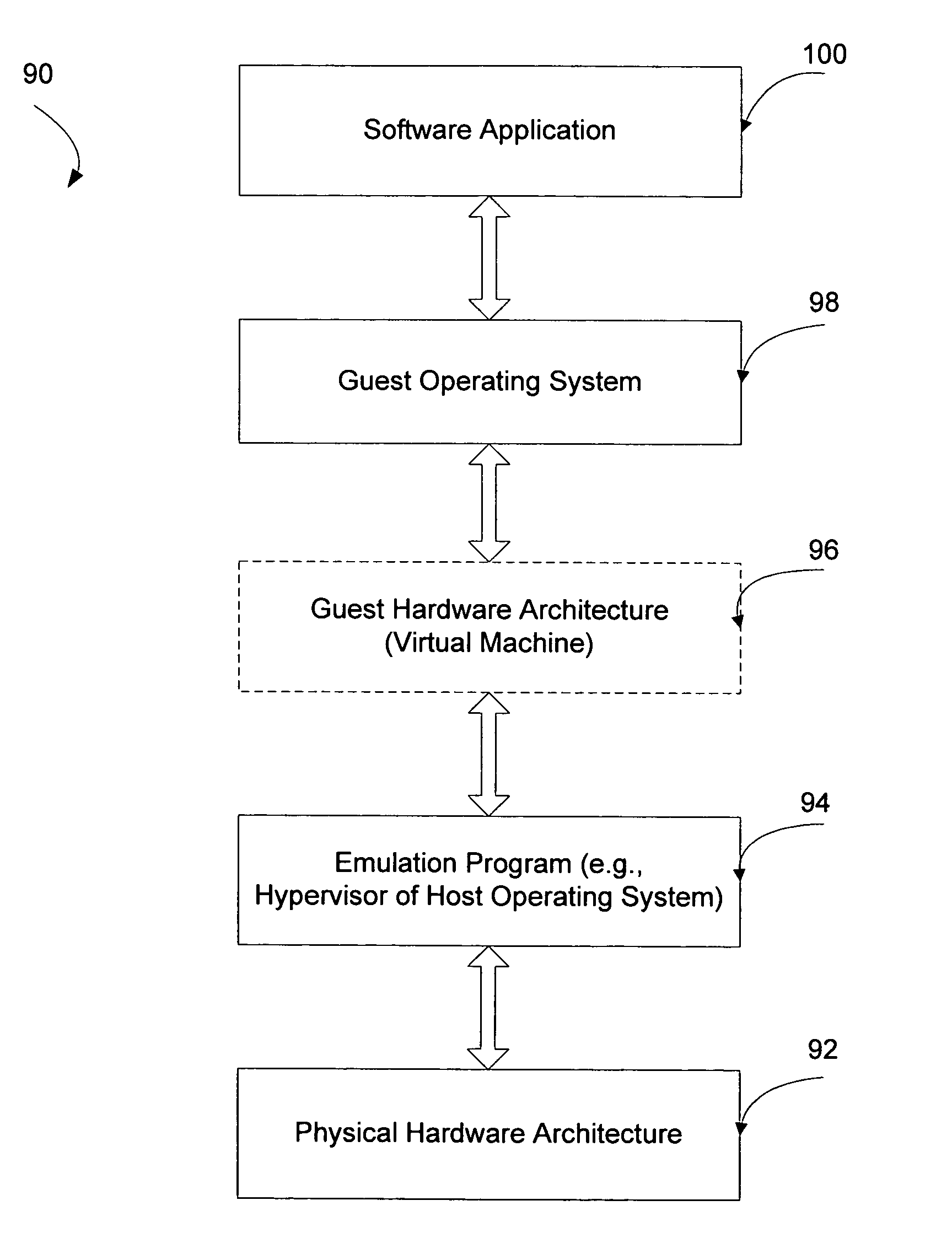

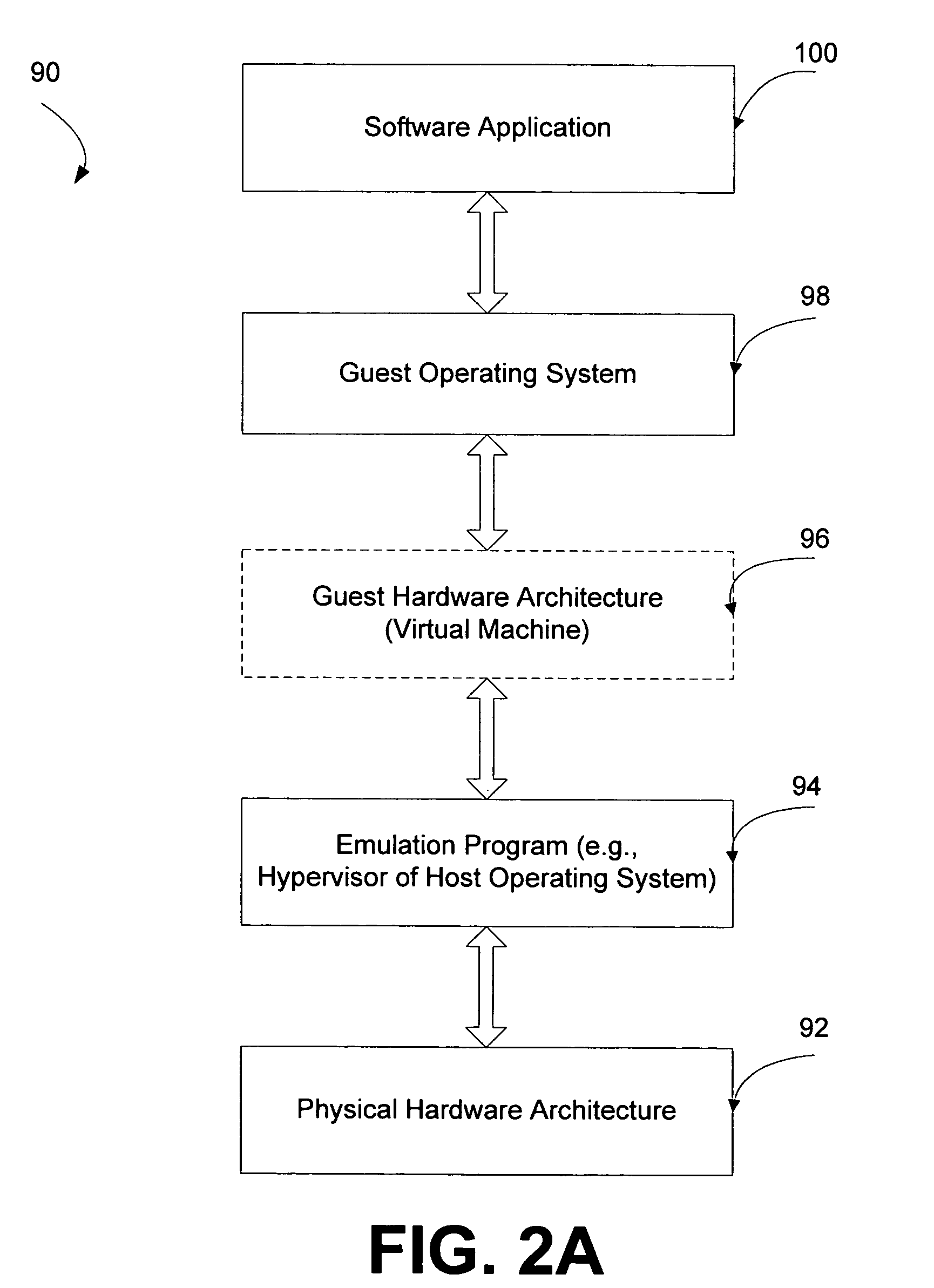

Methods and systems for maintaining state in a virtual machine when disconnected from graphics hardware

Active | US20130155083A1 | Avoiding significant degradation in user experience | Processor architectures/configuration | Software simulation/interpretation/emulation | Virtual machine | Graphics hardware

The present disclosure is directed towards methods and systems for maintaining state in a virtual machine when disconnected from graphics hardware. The virtual machine is one of a plurality of virtual machines hosted by a hypervisor executing on a computing device. A control virtual machine may be hosted by a hypervisor executing on a computing device. The control virtual machine may store state information of a graphics processing unit (GPU) of the computing device. The GPU may render an image from a first virtual machine. The control virtual machine may remove, from the first virtual machine, access to the GPU. The control virtual machine may redirect the first virtual machine to a GPU emulation program. The GPU emulation program may render the image from the first virtual machine using at least a portion of the stored state information.

Owner:CITRIX SYST INC

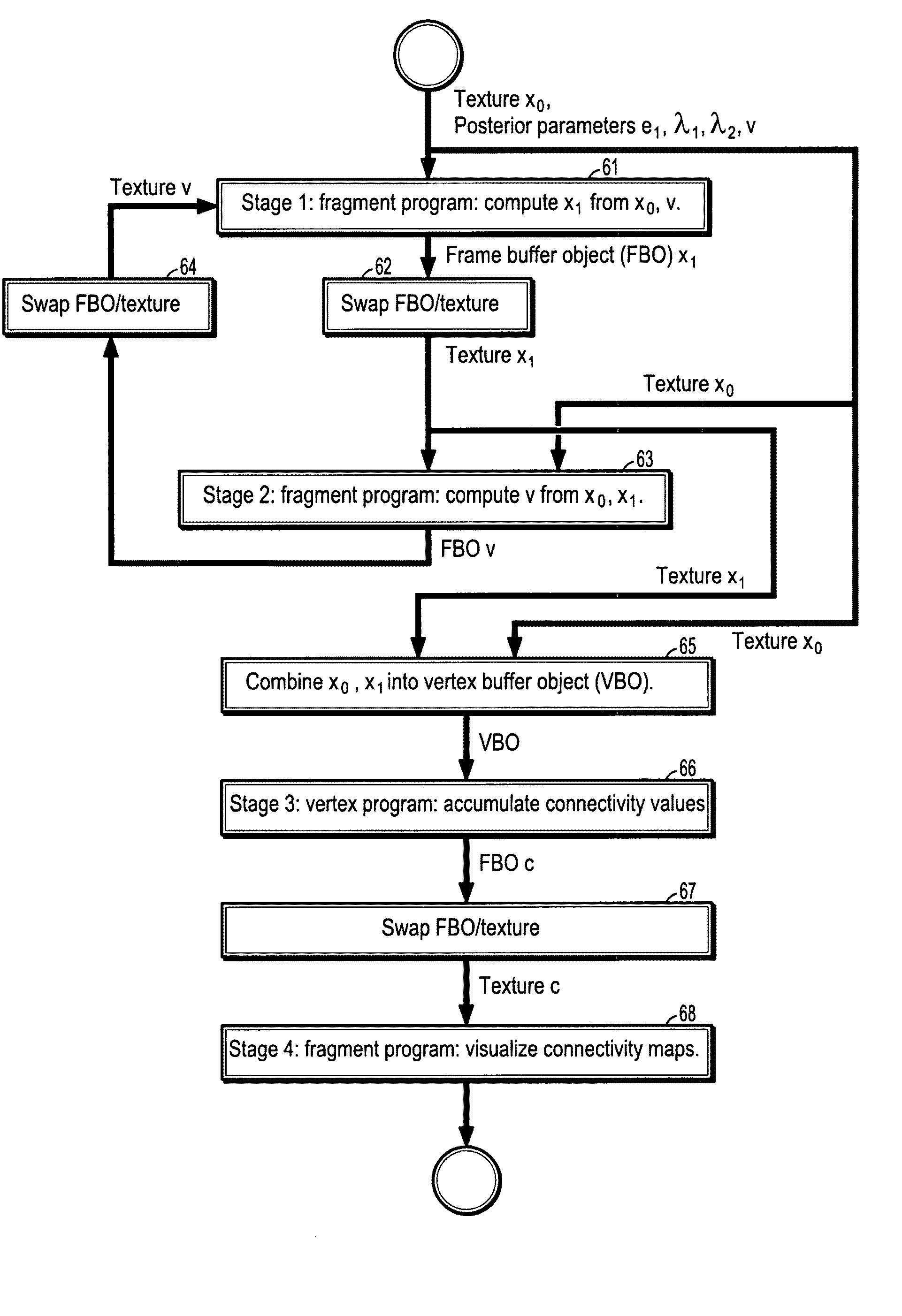

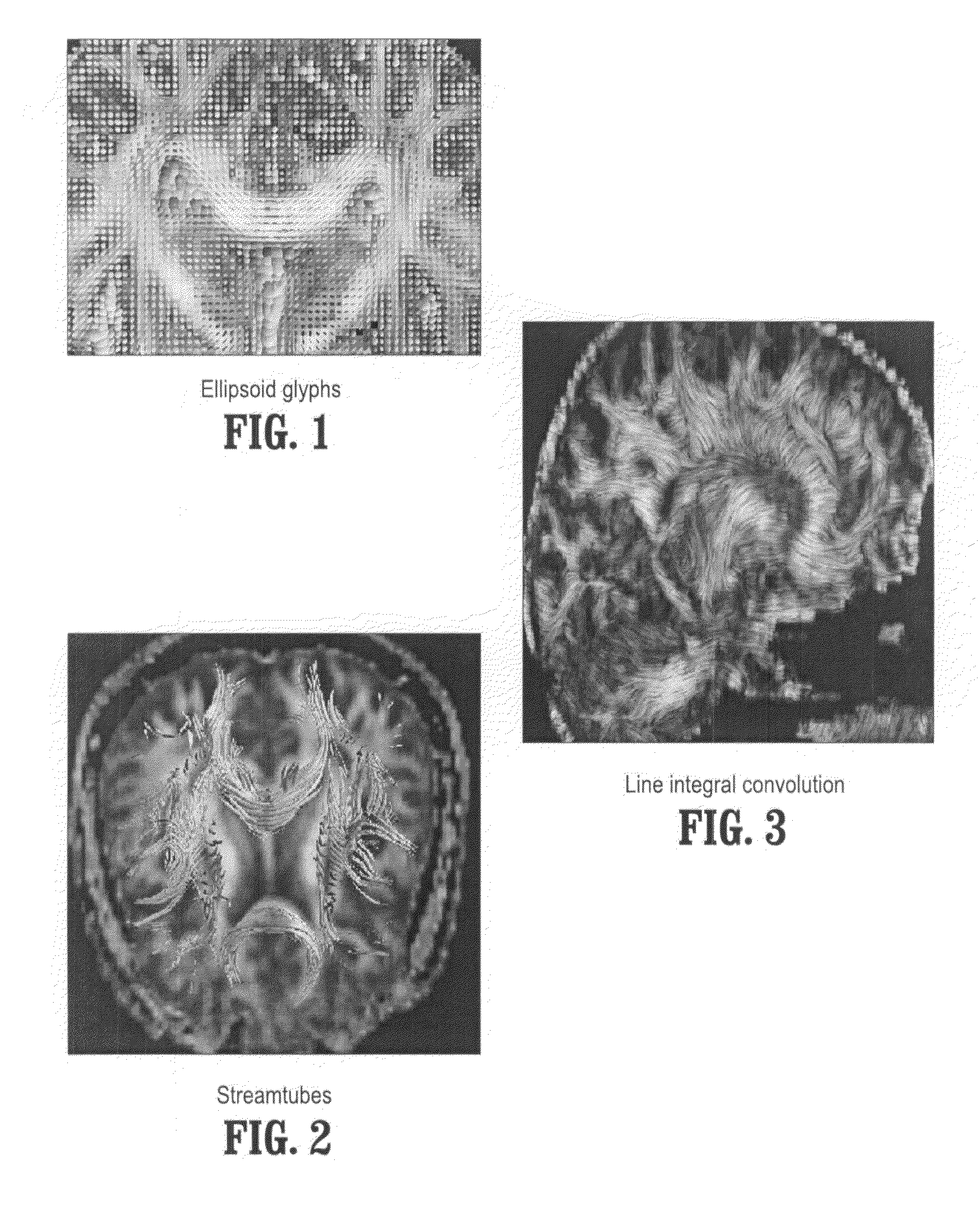

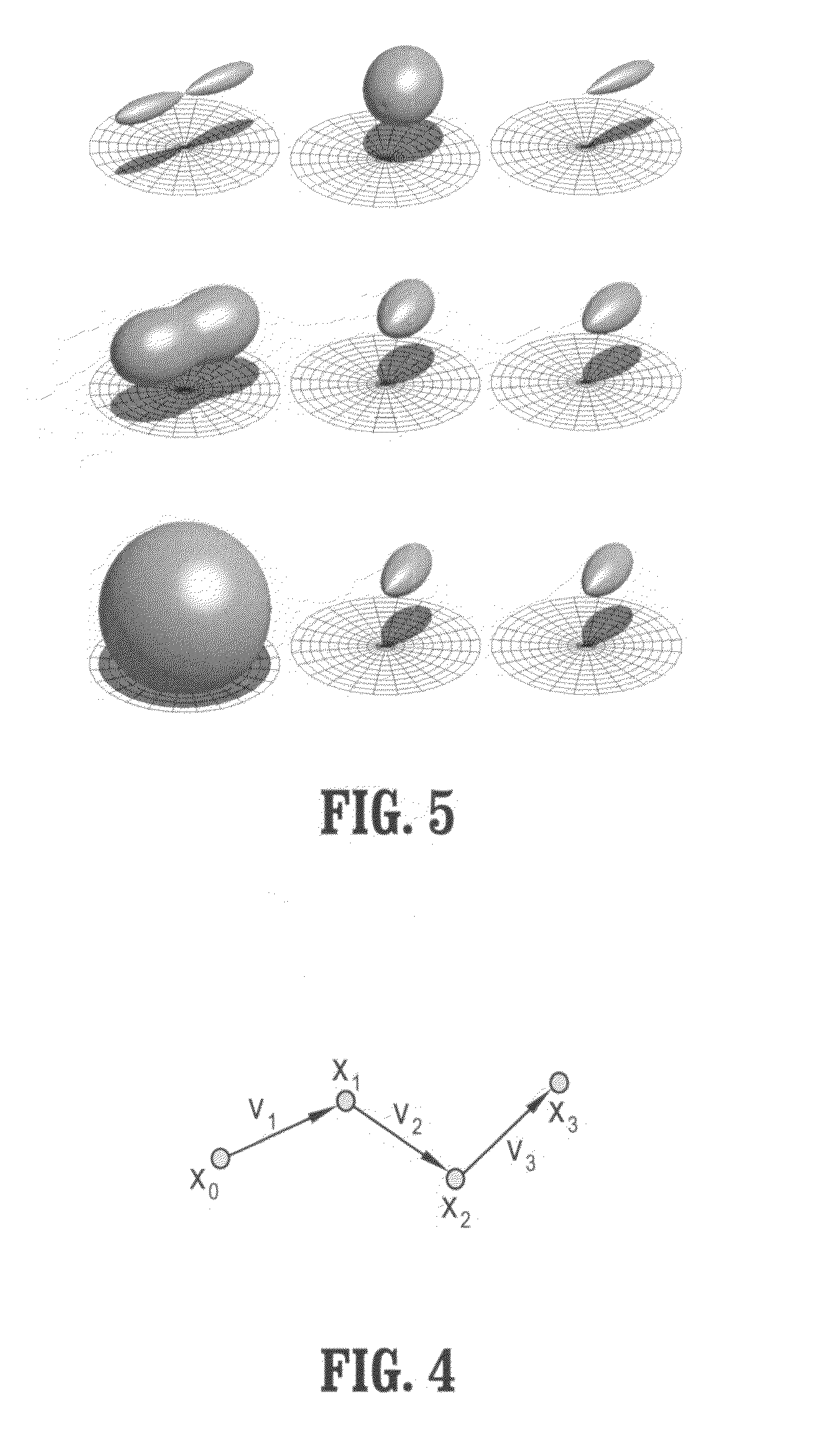

System and method for stochastic DT-MRI connectivity mapping on the GPU

Inactive | US7672790B2 | Highly parallelizable | Drawing from basic elements | Magnetic measurements | Graphics | Diffusion

A graphics processing unit implemented method for fiber tract mapping from diffusion tensor magnetic resonance imaging data includes providing a diffusion tensor magnetic resonance brain image volume, initializing a set of fiber positions in a 3D set of points, fiber displacements, and a posterior distribution for an updated fiber displacement in terms of the initial displacements and diffusion tensors, randomly sampling a set of updated fiber displacements from said posterior distribution, computing a new set of fiber positions from said initial fiber positions and said updated fiber displacements, wherein a fiber path comprises a set of fiber points connected by successive fiber displacements, accumulating connectivity values in each point of said 3D set of points by additive alpha-blending a scaled value if a fiber path has passed through a point and adding zero if not, and rendering said connectivity values.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

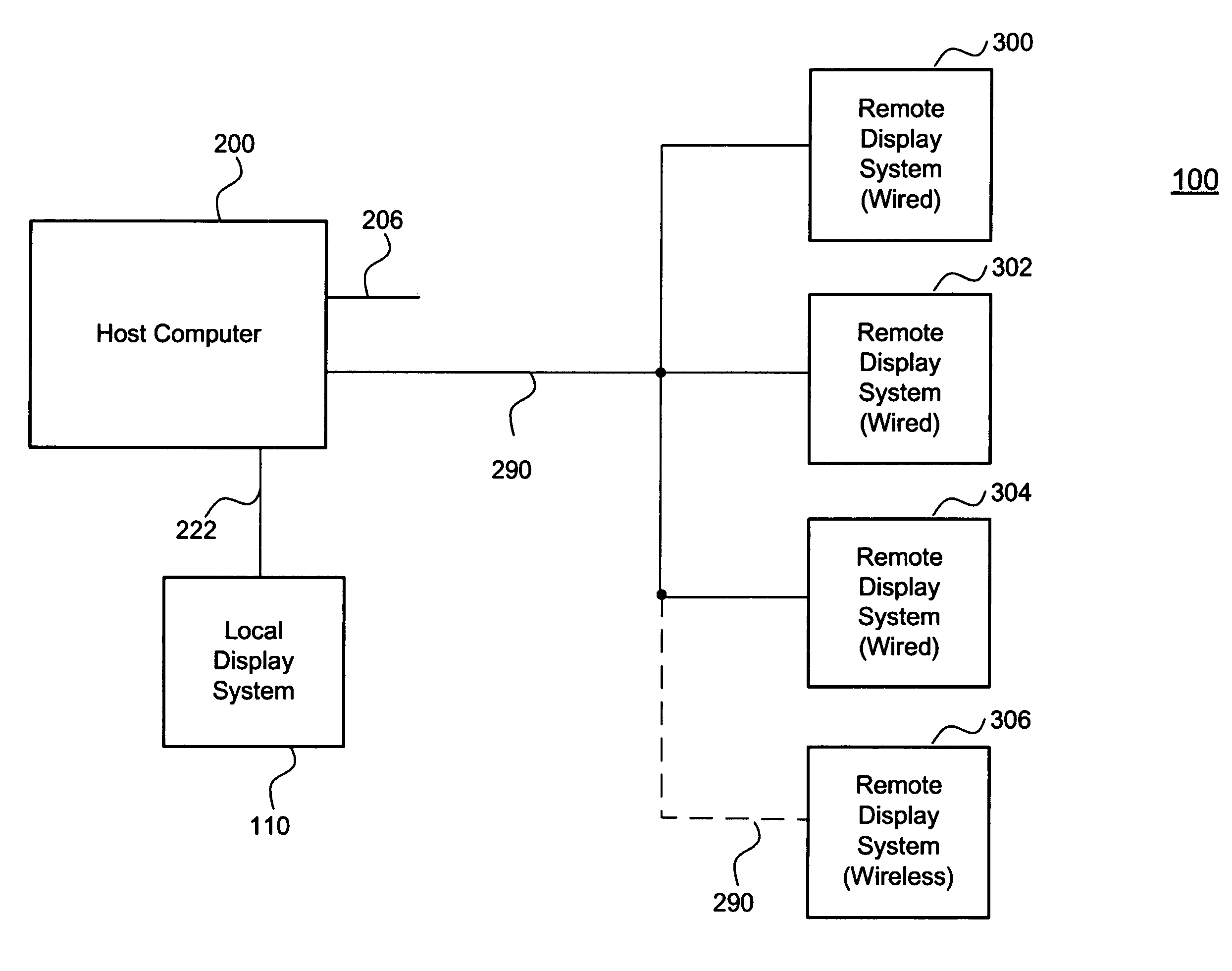

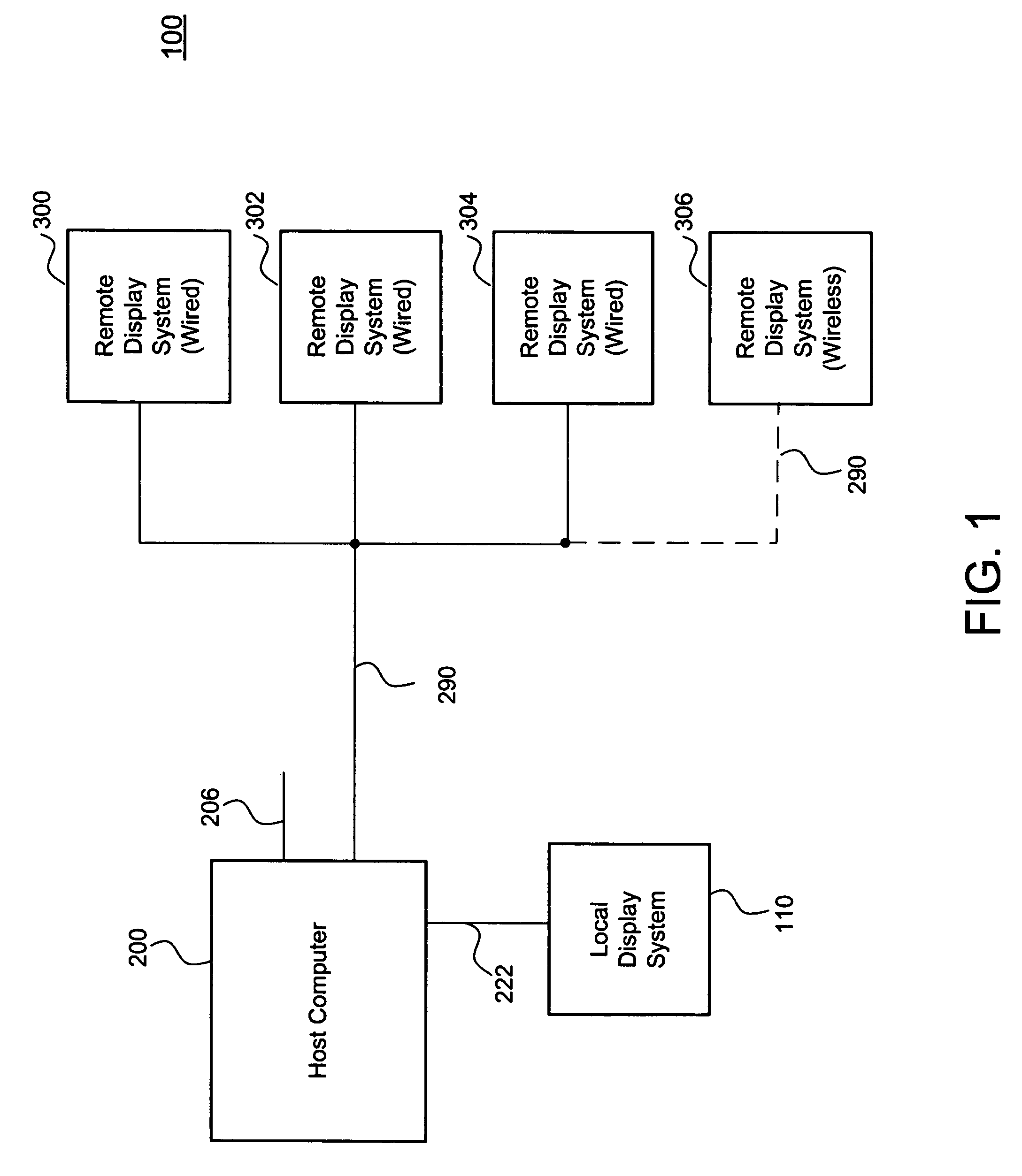

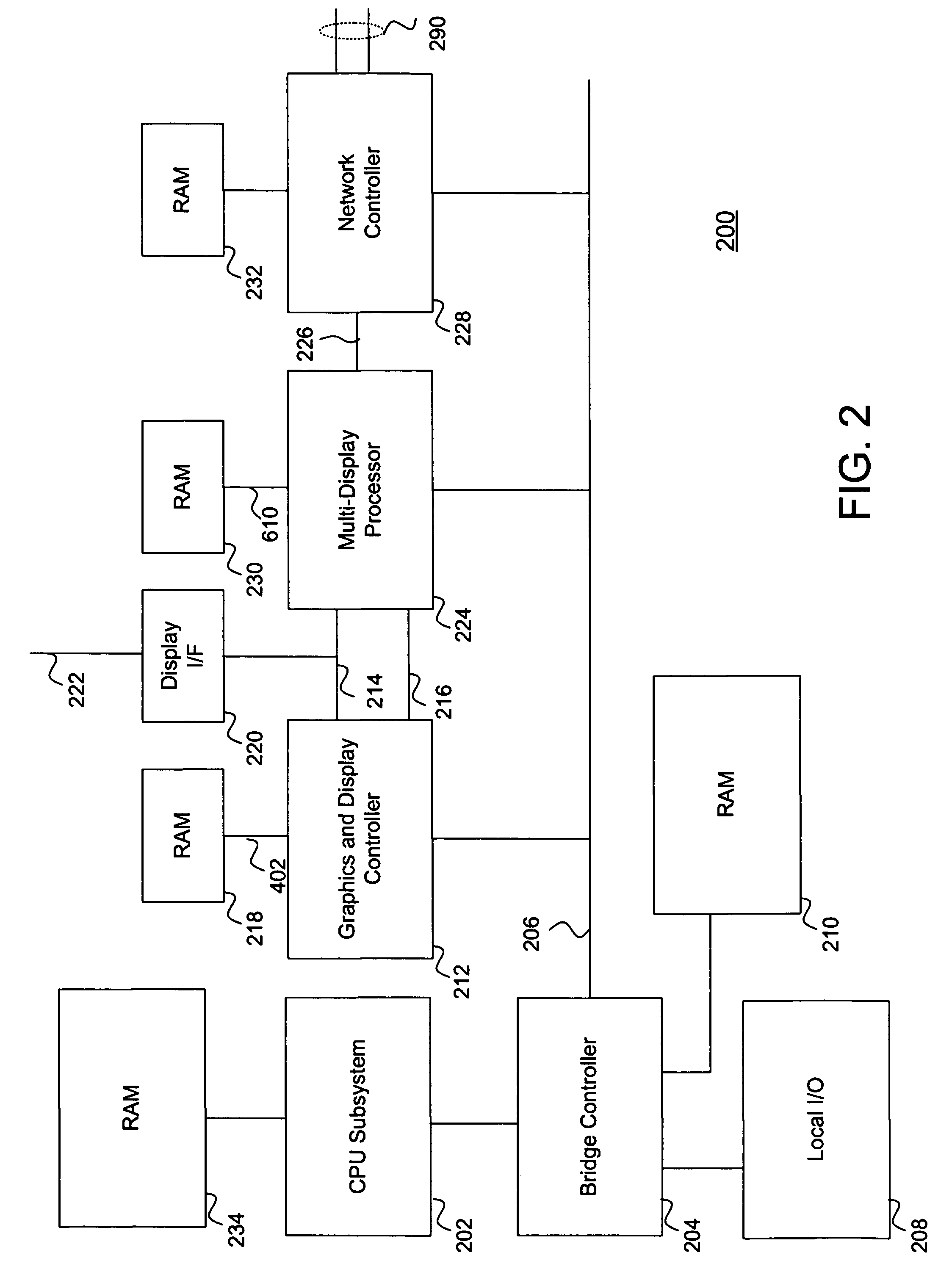

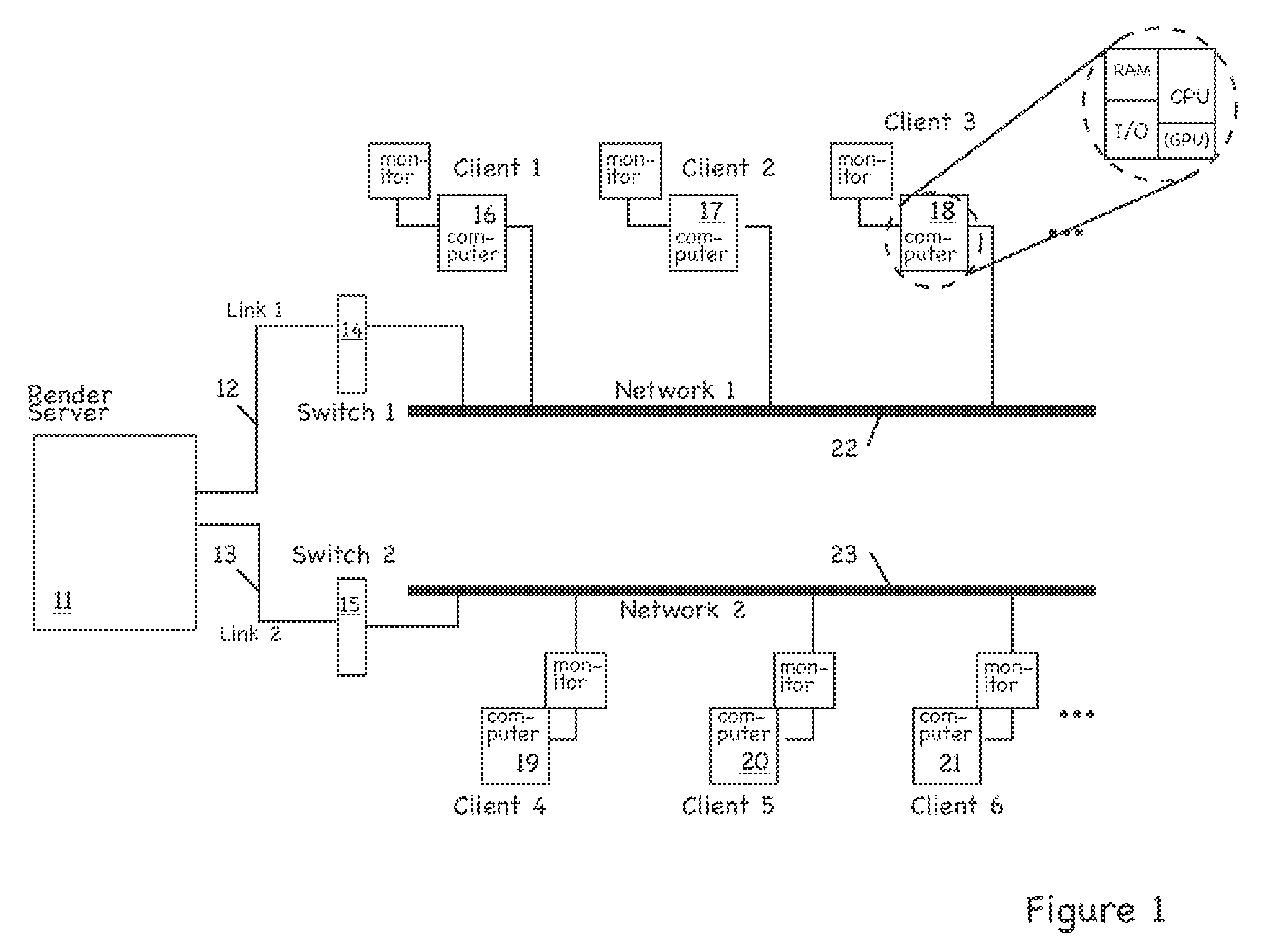

Computer system for supporting multiple remote displays

Active | US7667707B1 | Increase ratings | Lower latency | Television system details | Cathode-ray tube indicators | Traffic capacity | Graphics

A multi-display computer system comprises a host computer system that processes windowed desktop environments for multiple remote displays, multiple users, or a combination of the two. For each display and for each frame, the multi-display processor responsively manages each necessary portion of a windowed desktop environment. The necessary portions of the windowed desktop environment are further processed, encoded, and, where necessary, transmitted over the network to the remote display for each user. Embodiments integrate the multi-display processor with the graphics processing unit, network controller, main memory controller, or a combination of the three. The encoding process is optimized for network traffic, and special attention is paid to ensuring that all users have low-latency interactive capabilities.

Owner:III HLDG 1

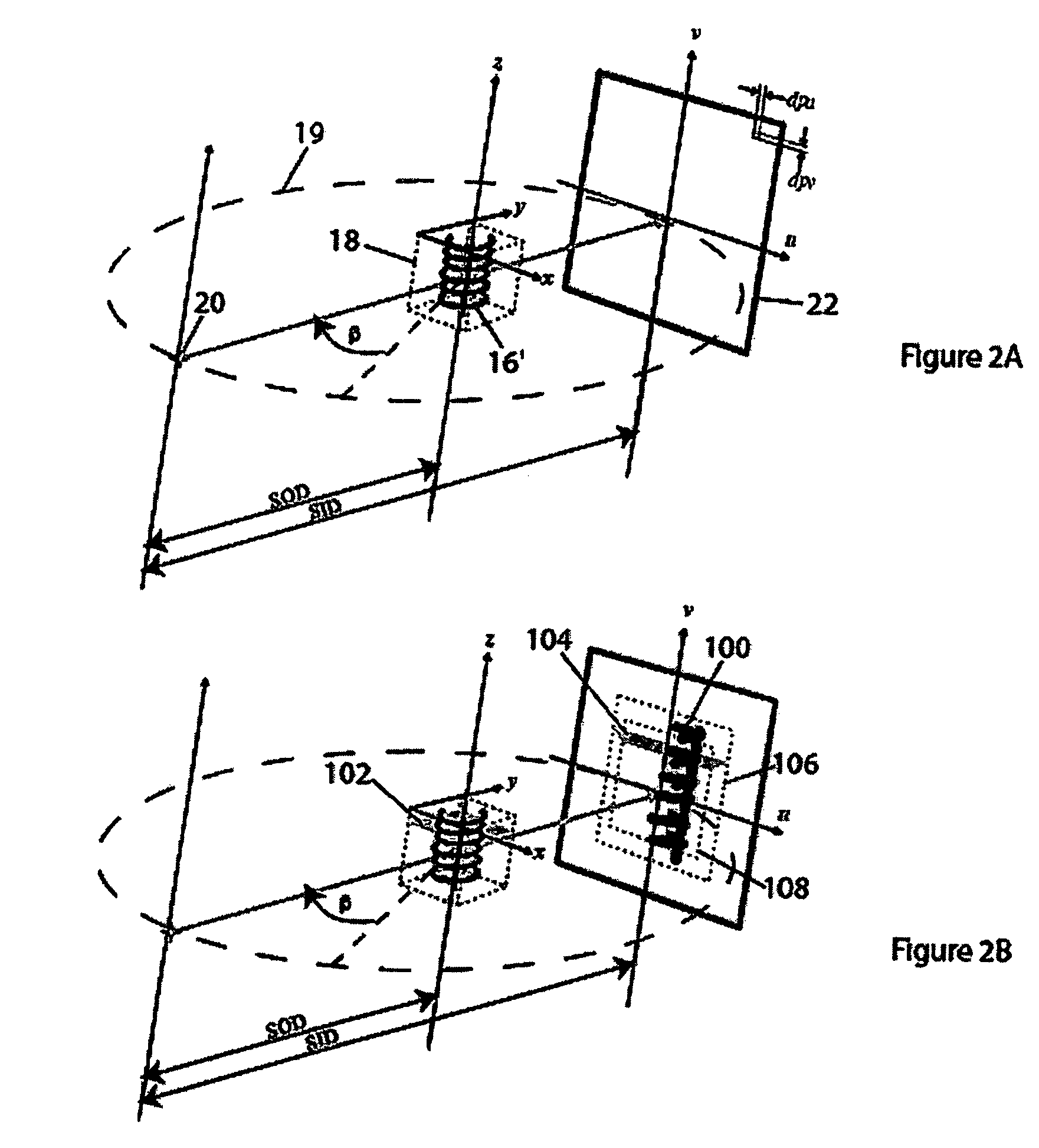

Method and apparatus for reconstruction of 3D image volumes from projection images

Active | US7693318B1 | Reconstruction from projection | Material analysis using wave/particle radiation | Graphics | Voxel

The invention provides improvements in reconstructive imaging of the type in which a volume is reconstructed from a series of measured projection images (or other two-dimensional representations) by utilizing the capabilities of graphics processing units (GPUs). In one aspect, the invention configures a GPU to reconstruct a volume by initializing an estimated density distribution of that volume to arbitrary values in a three-dimensional voxel-based matrix and, then, determining the actual density distribution iteratively by, for each of the measured projections, (a) forward-projecting the estimated volume computationally and comparing the forward-projection with the measured projection, (b) generating a correction term for each pixel in the forward-projection based on that comparison, and (c) back-projecting the correction term for each pixel in the forward-projection onto all voxels of the volume that were mapped into that pixel in the forward-projection.

Owner:PME IP
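
Steps (a) to (c) above follow the general shape of an algebraic reconstruction loop, which can be sketched on a tiny example. This is a CPU-only illustration under strong assumptions: the volume is a flat list of four voxels, each "projection" is a hypothetical list of rays given as voxel indices, and the correction is split evenly across the voxels on a ray. None of these choices comes from the patent.

```python
def reconstruct(projections, n_voxels, iterations=10):
    """Iteratively estimate voxel densities from measured projections.

    projections is a list of (rays, measured) pairs, where rays[i] lists the
    voxel indices that map into pixel i and measured[i] is that pixel's value.
    """
    estimate = [0.0] * n_voxels  # arbitrary initial density distribution
    for _ in range(iterations):
        for rays, measured in projections:
            # (a) Forward-project the current estimate for this view.
            forward = [sum(estimate[v] for v in ray) for ray in rays]
            # (b) Correction term per pixel, from measured minus forward-projected.
            corrections = [(m - f) / len(ray)
                           for m, f, ray in zip(measured, forward, rays)]
            # (c) Back-project each correction onto the voxels on that ray.
            for ray, correction in zip(rays, corrections):
                for v in ray:
                    estimate[v] += correction
    return estimate


# A 2x2 "volume" [[1, 2], [3, 4]] stored row-major, seen as row sums and column sums.
row_view = ([[0, 1], [2, 3]], [3.0, 7.0])
col_view = ([[0, 2], [1, 3]], [4.0, 6.0])
volume = reconstruct([row_view, col_view], n_voxels=4)
```

On a GPU, the per-pixel and per-voxel loops are the parts that would run in parallel; here they are plain Python loops to keep the data flow visible.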

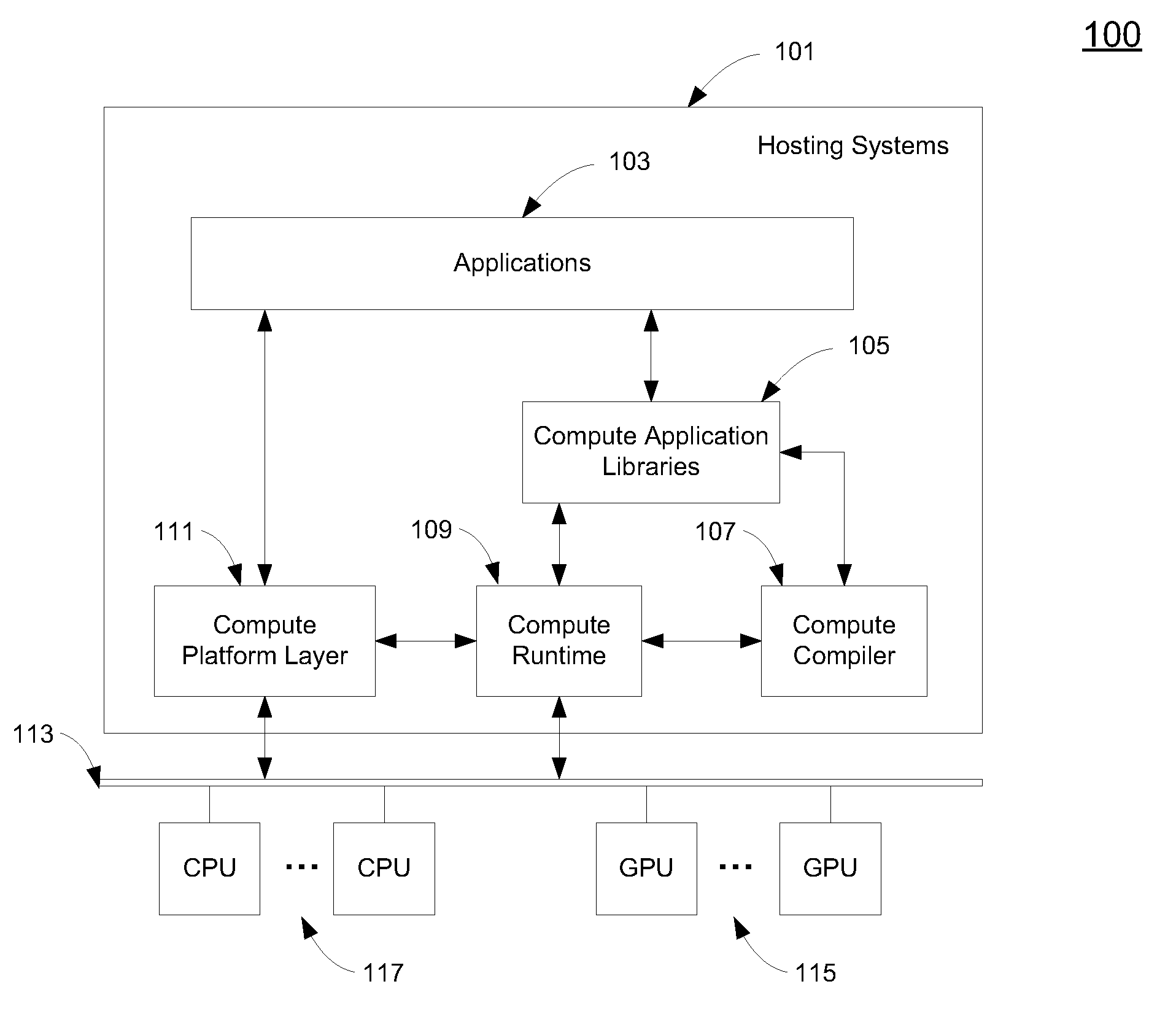

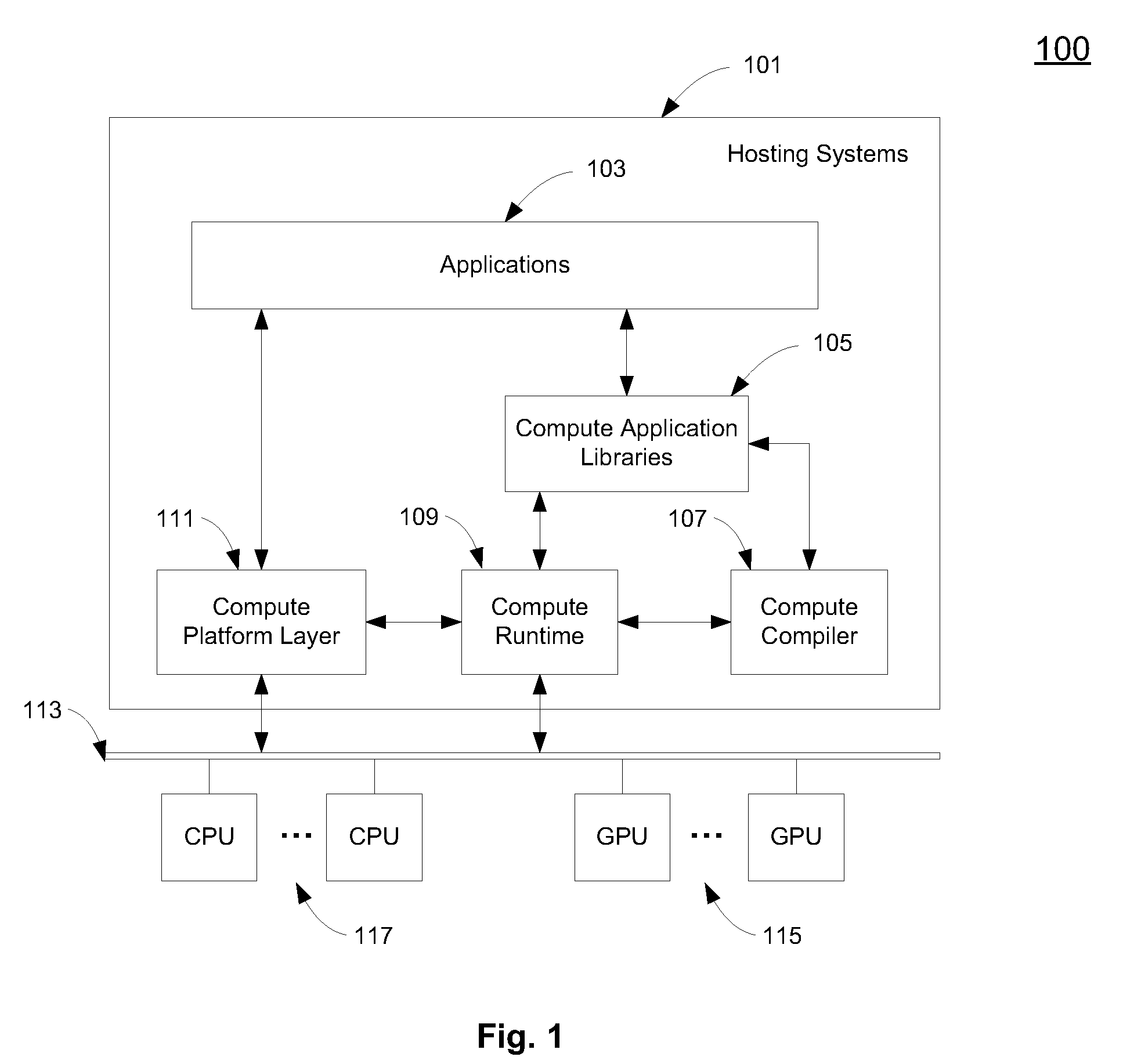

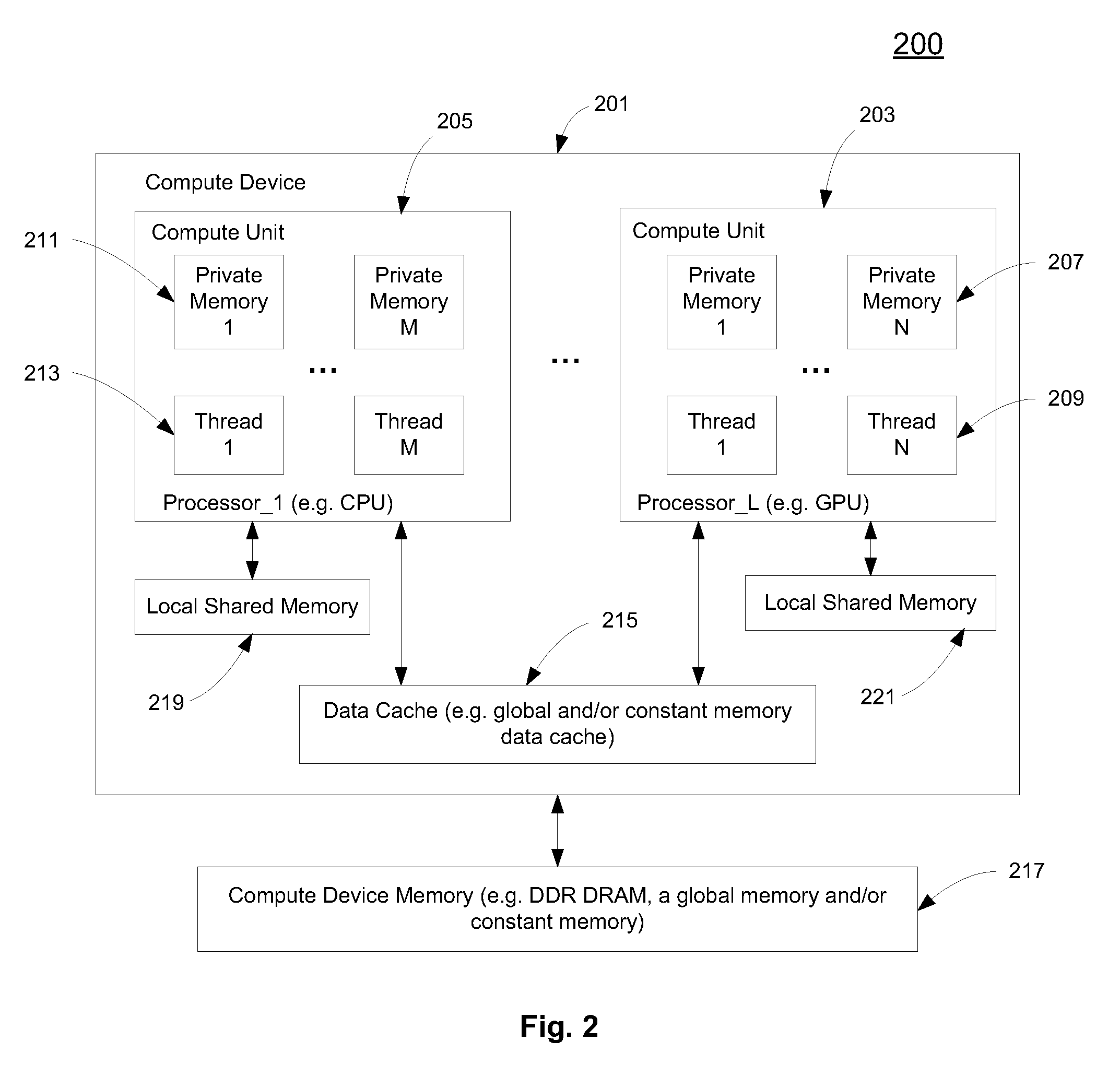

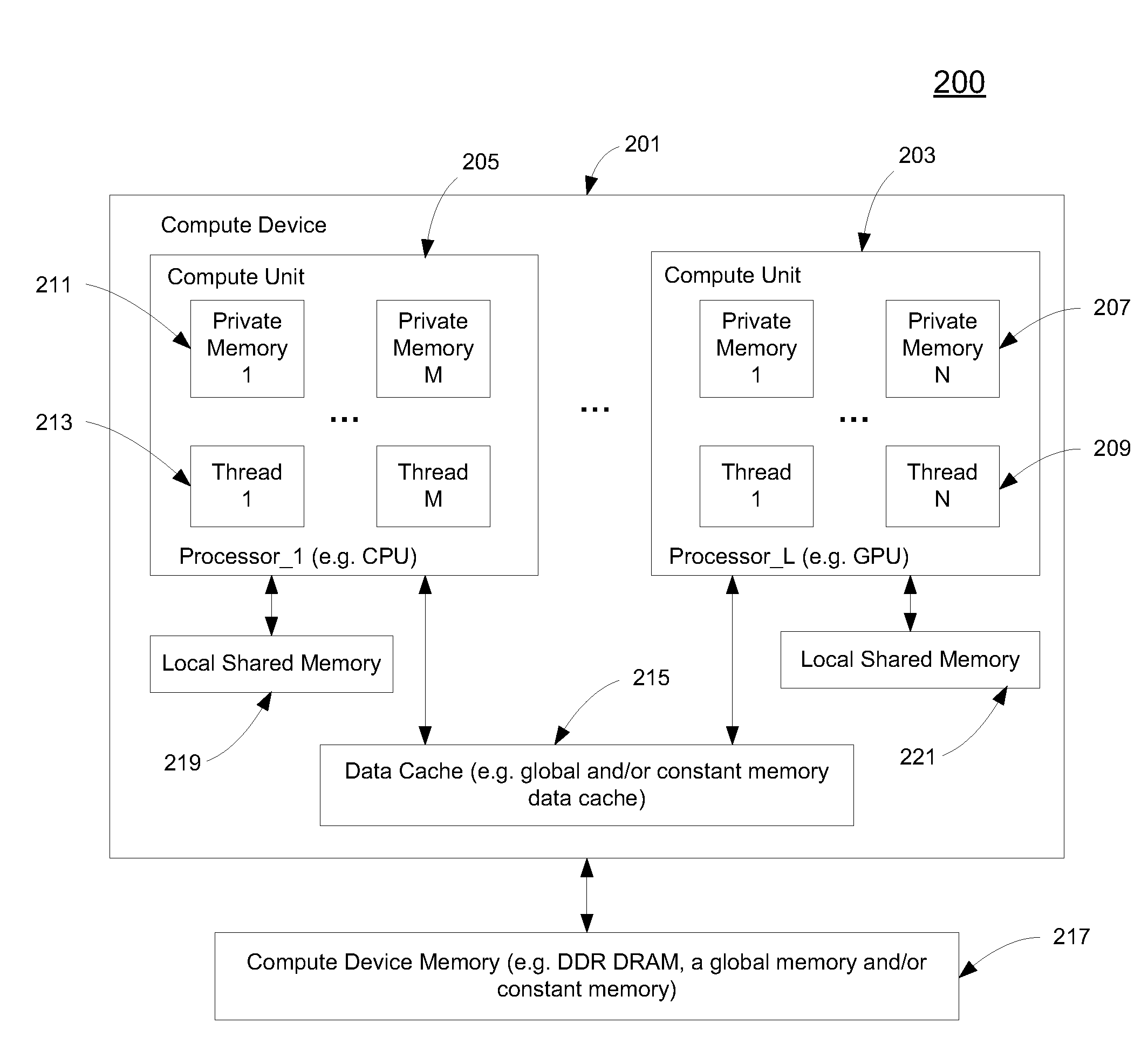

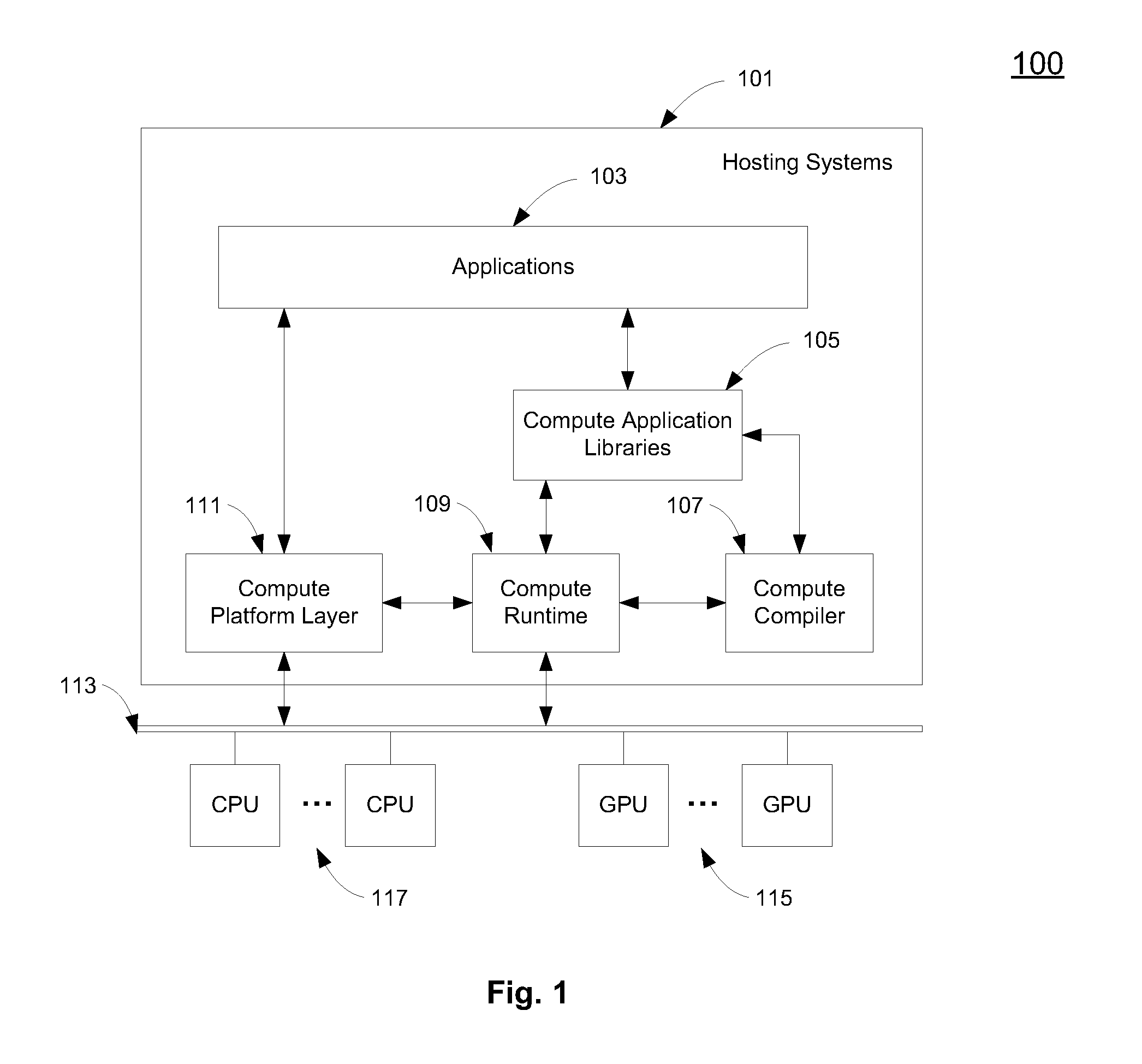

Application programming interfaces for data parallel computing on multiple processors

Active | US20090307699A1 | Interprogram communication | Concurrent instruction execution | Graphics | Application programming interface

A method and an apparatus for a parallel computing program calling APIs (application programming interfaces) in a host processor to perform a data processing task in parallel among compute units are described. The compute units are coupled to the host processor and include central processing units (CPUs) and graphics processing units (GPUs). A program object corresponding to a source code for the data processing task is generated in a memory coupled to the host processor according to the API calls. Executable codes for the compute units are generated from the program object according to the API calls to be loaded for concurrent execution among the compute units to perform the data processing task.

Owner:APPLE INC

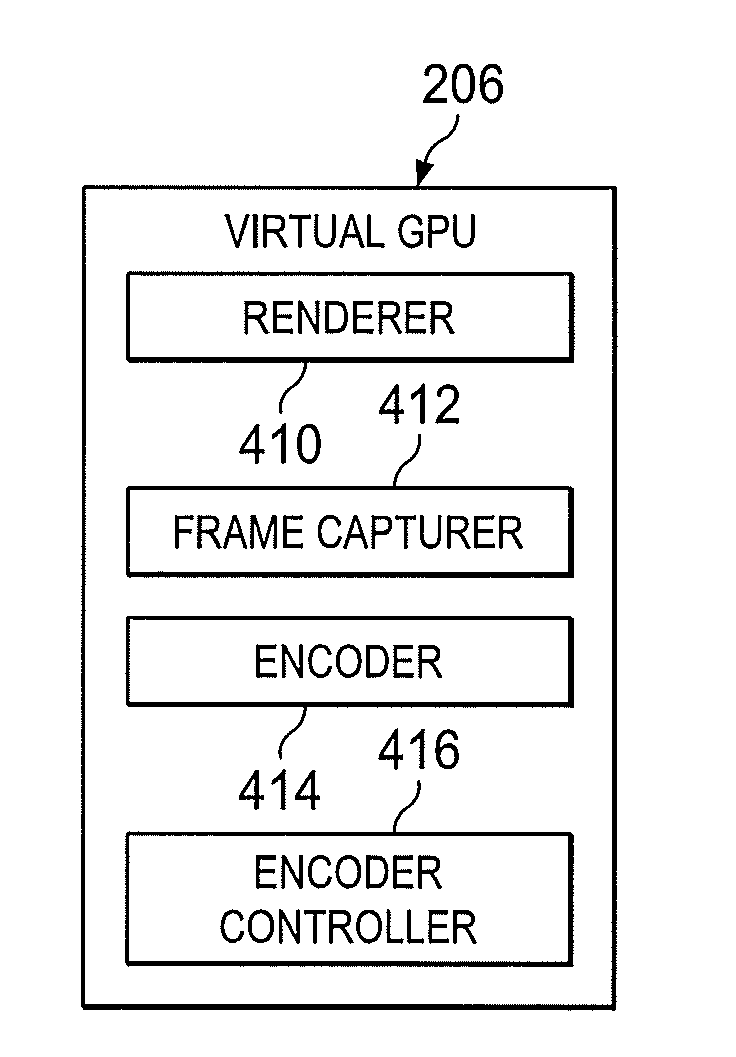

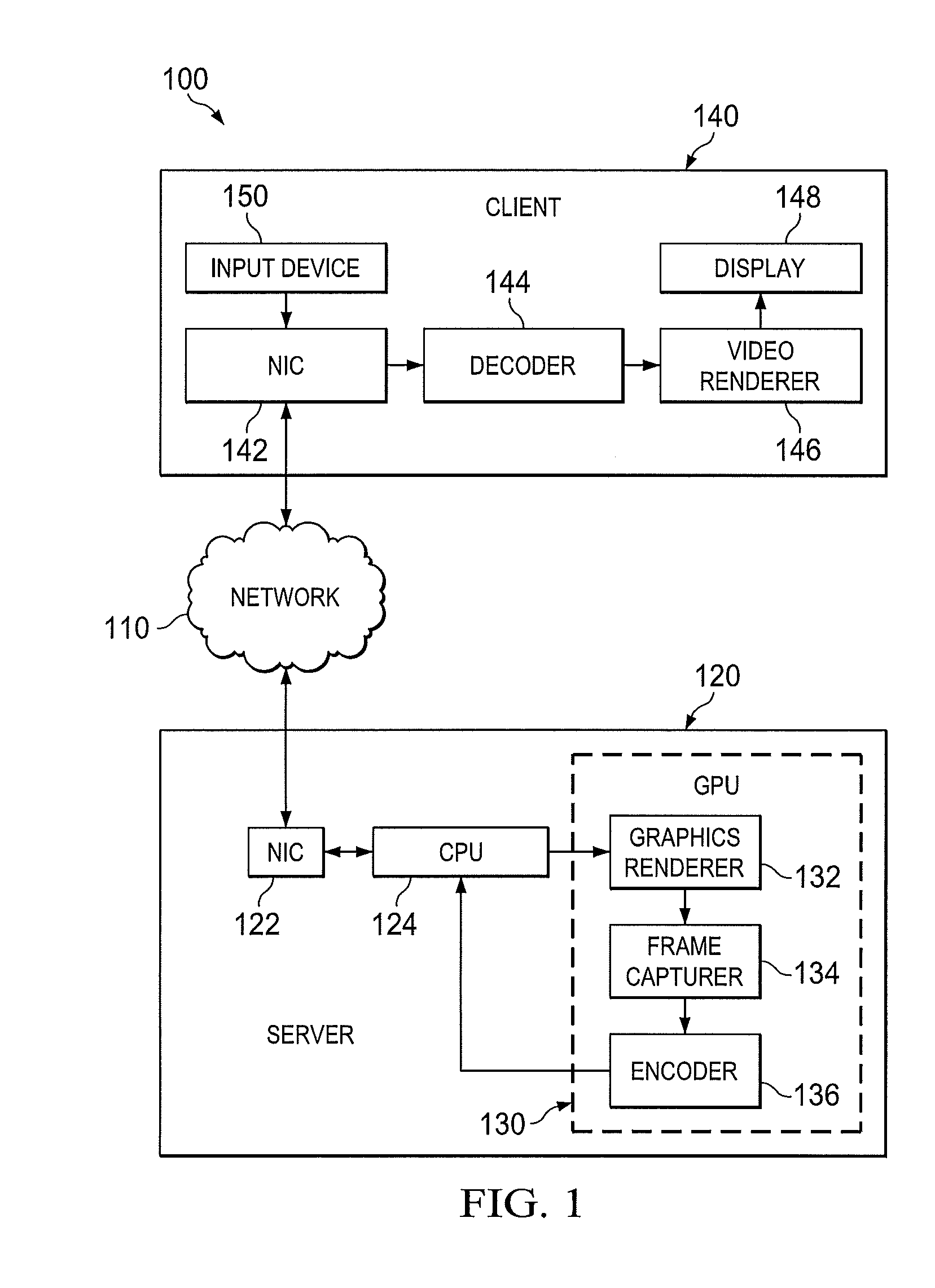

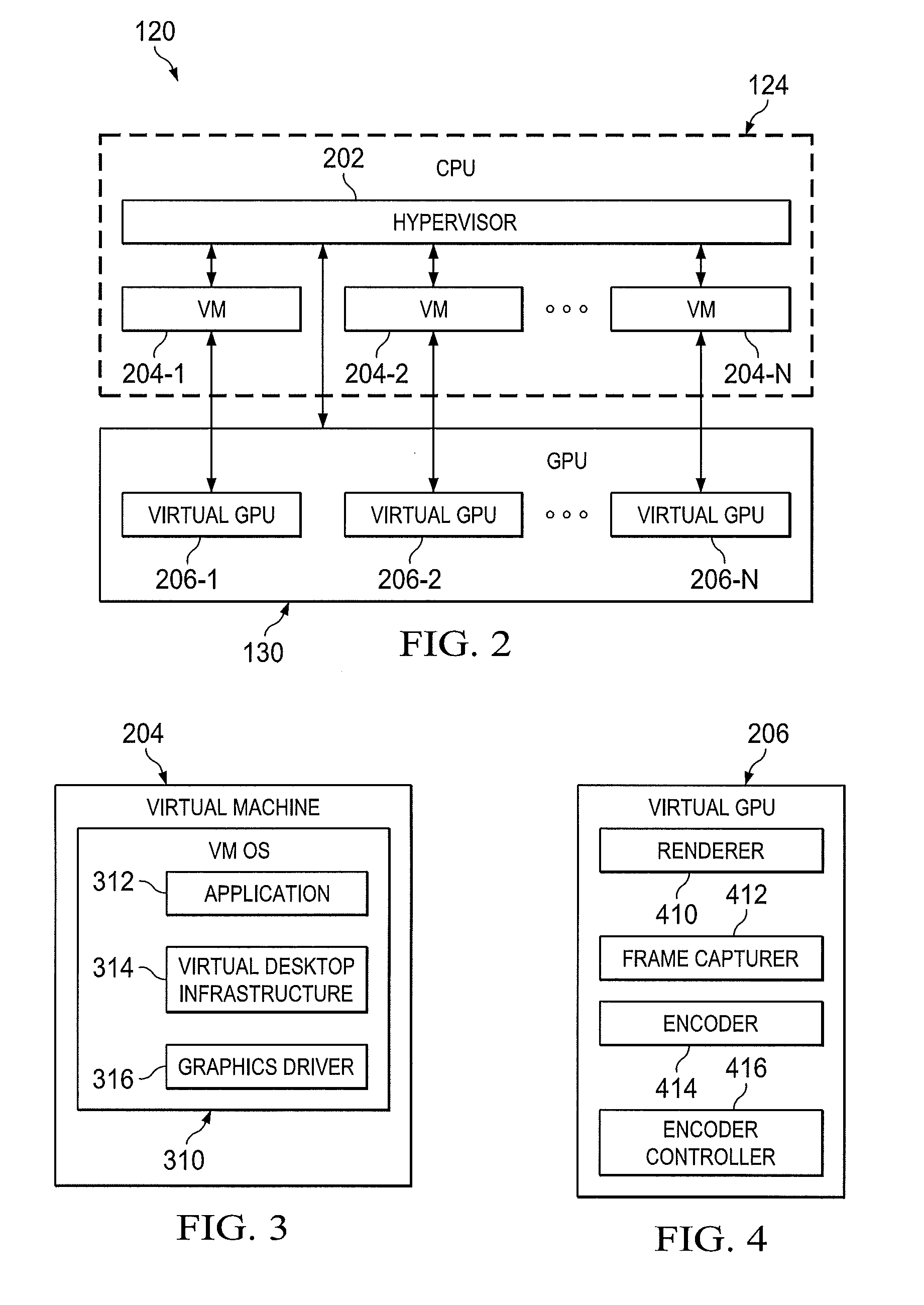

Encoder controller graphics processing unit and method of encoding rendered graphics

Inactive | US20140286390A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Graphics | Encoder

An encoder controller graphics processing unit (GPU) and a method of encoding rendered graphics. One embodiment of the encoder controller GPU includes: (1) an encoder operable to encode rendered frames of a video stream for transmission to a client, and (2) an encoder controller configured to detect a mark embedded in a rendered frame of the video stream and cause the encoder to begin encoding.

Owner:NVIDIA CORP
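
The mark-triggered encoding described above can be sketched as a simple gate over a frame sequence. Everything concrete here is assumed for illustration: the `MARK` pixel value, its position at the top-left pixel, and the choice to start encoding with the marked frame itself are not specified in the abstract.

```python
MARK = (255, 0, 255)  # hypothetical marker colour embedded by the renderer


def has_mark(frame):
    """Assumption: the mark is a single known pixel value at the top-left corner."""
    return frame[0][0] == MARK


def frames_to_encode(frames):
    """Return indices of frames passed to the encoder once the mark is detected."""
    encoding = False
    encoded = []
    for index, frame in enumerate(frames):
        if not encoding and has_mark(frame):
            encoding = True   # the controller tells the encoder to begin
        if encoding:
            encoded.append(index)
    return encoded


plain = [[(0, 0, 0), (10, 10, 10)]]      # 1x2 frame without the mark
marked = [[MARK, (10, 10, 10)]]          # 1x2 frame carrying the mark
encoded_indices = frames_to_encode([plain, plain, marked, plain])
```

Frames rendered before the mark appears are skipped, so the client only receives the stream from the marked frame onward.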

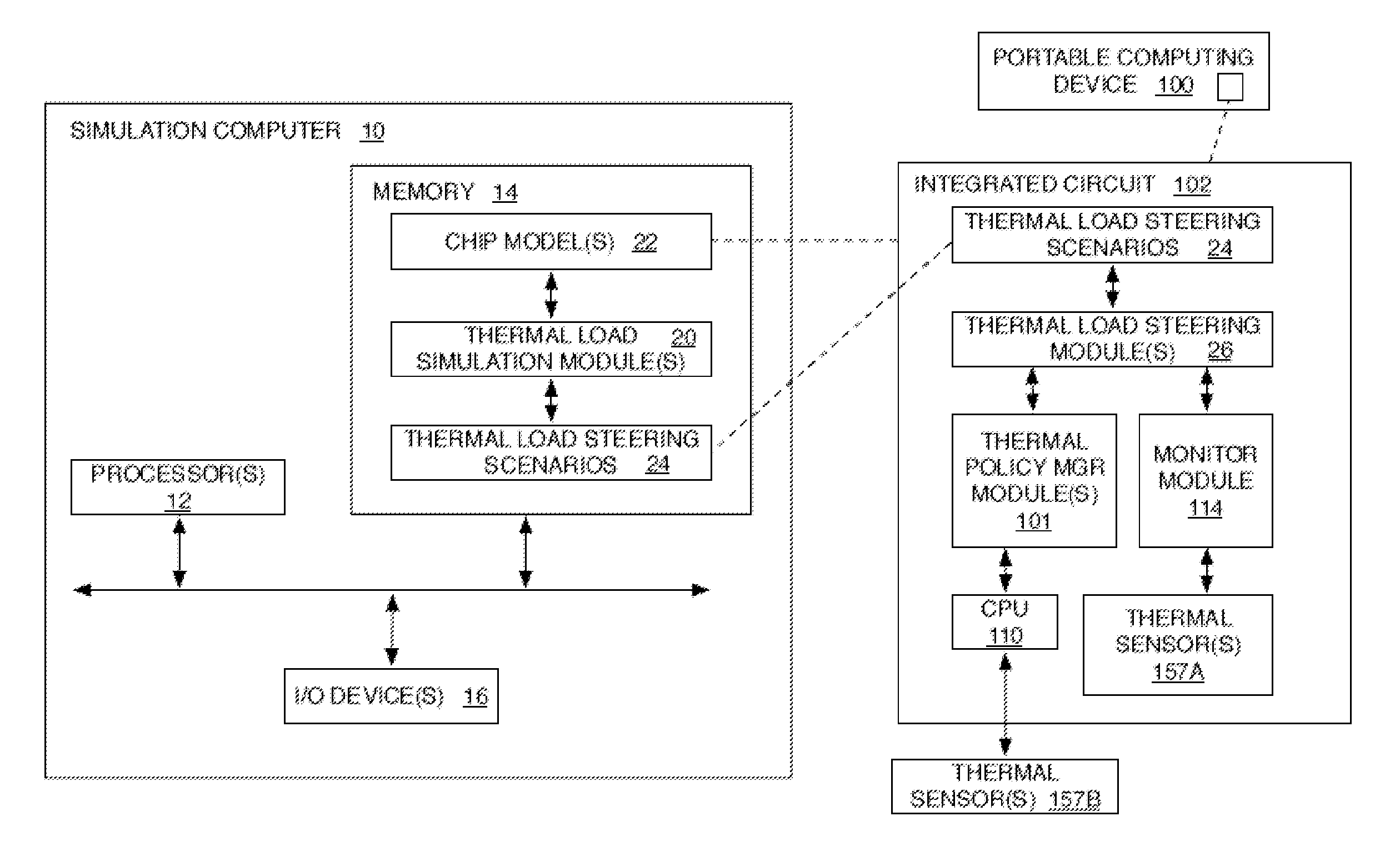

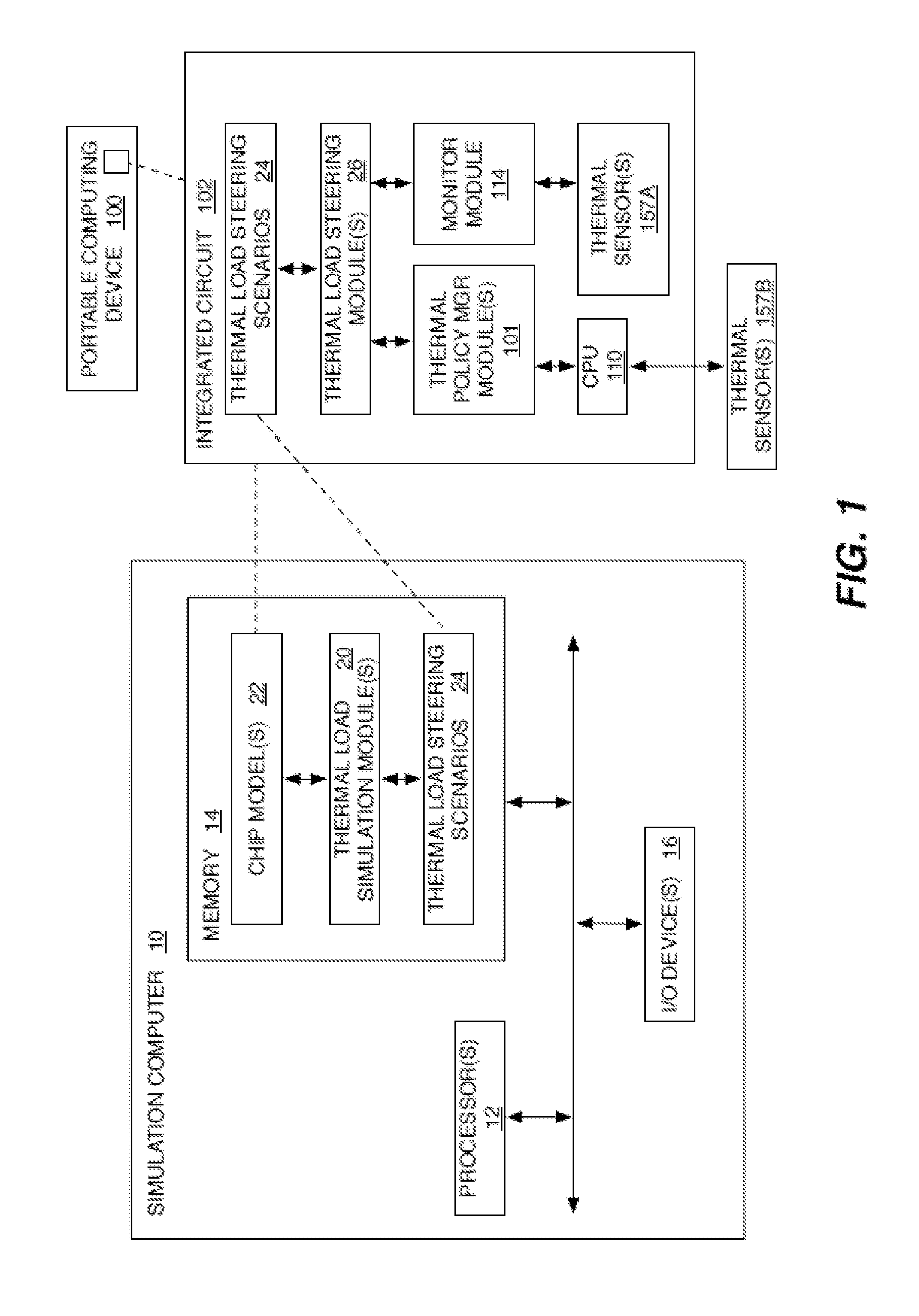

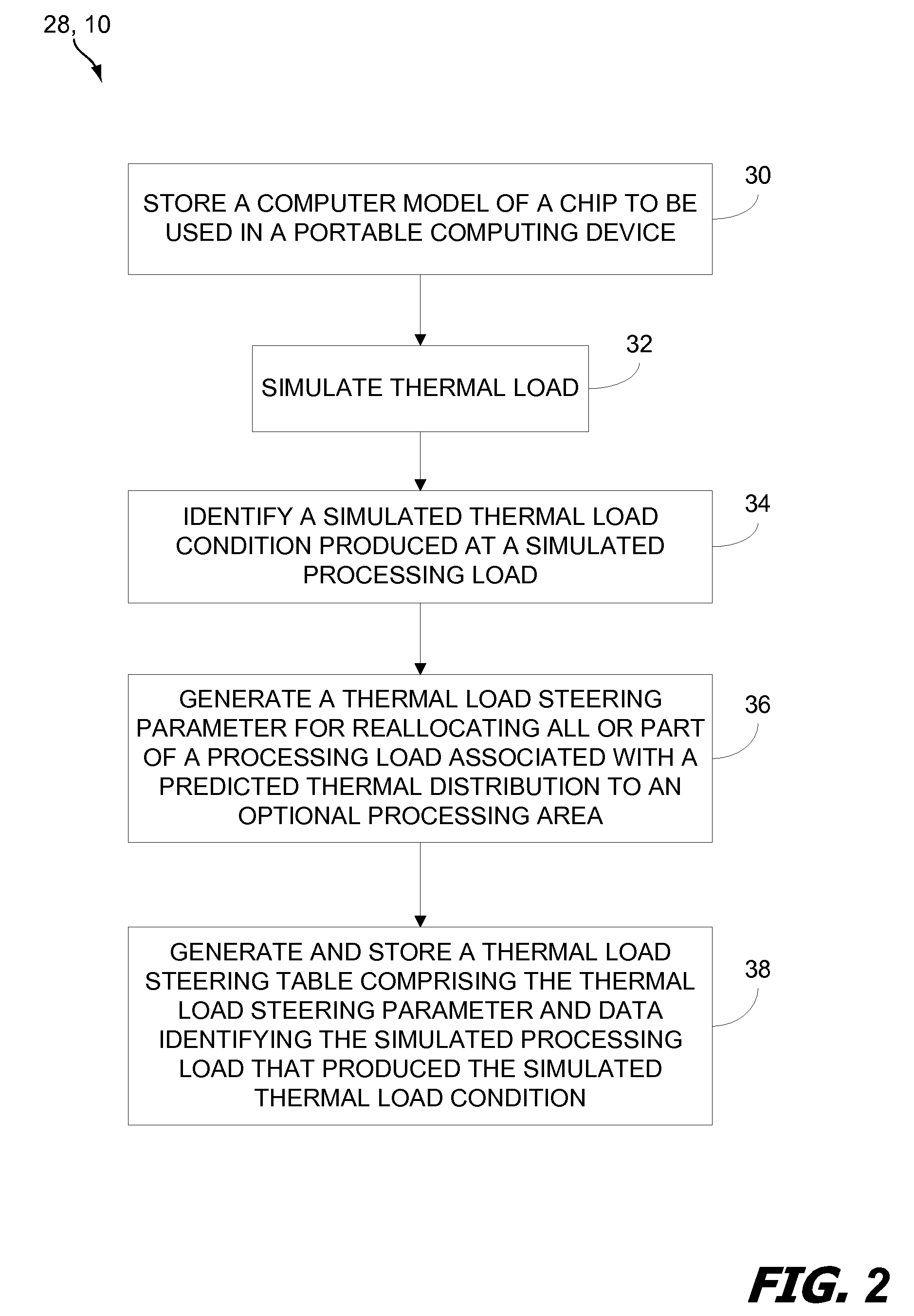

Method and system for thermal load management in a portable computing device

Active | US20120271481A1 | Reduce power density | Lower quality of service | Energy efficient ICT | Digital data processing details | Processing | Heat energy

Methods and systems for leveraging temperature sensors in a portable computing device (“PCD”) are disclosed. The sensors may be placed within the PCD near known thermal energy producing components such as a central processing unit (“CPU”) core, graphical processing unit (“GPU”) core, power management integrated circuit (“PMIC”), power amplifier, etc. The signals generated by the sensors may be monitored and used to trigger drivers running on the processing units. The drivers are operable to cause the reallocation of processing loads associated with a given component's generation of thermal energy, as measured by the sensors. In some embodiments, the processing load reallocation is mapped according to parameters associated with pre-identified thermal load scenarios. In other embodiments, the reallocation occurs in real time, or near real time, according to thermal management solutions generated by a thermal management algorithm that may consider CPU and/or GPU performance specifications along with monitored sensor data.

Owner:QUALCOMM INC
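
A sensor-triggered reallocation of the kind described above could be sketched as follows. The function `rebalance`, the single temperature threshold, the fixed step size, and the rule of moving load to the coolest core are all assumptions for this example; the patent's scenario tables and thermal management algorithm are not reproduced.

```python
def rebalance(loads, temps, threshold_c, step=10):
    """Shift load (in percent) away from cores whose sensor exceeds the threshold.

    loads and temps are dicts keyed by core name. Load is moved to the
    coolest core in `step`-sized pieces, one piece per hot core.
    """
    loads = dict(loads)                      # do not mutate the caller's data
    coolest = min(temps, key=temps.get)
    for name, temperature in temps.items():
        if temperature > threshold_c and name != coolest:
            moved = min(step, loads[name])   # never move more than the core has
            loads[name] -= moved
            loads[coolest] += moved
    return loads


loads = {"cpu0": 90, "cpu1": 20, "cpu2": 50}
temps = {"cpu0": 92.0, "cpu1": 60.0, "cpu2": 70.0}
new_loads = rebalance(loads, temps, threshold_c=85.0)
```

Only `cpu0` is above the threshold here, so ten points of its load move to `cpu1` and the total load is unchanged.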

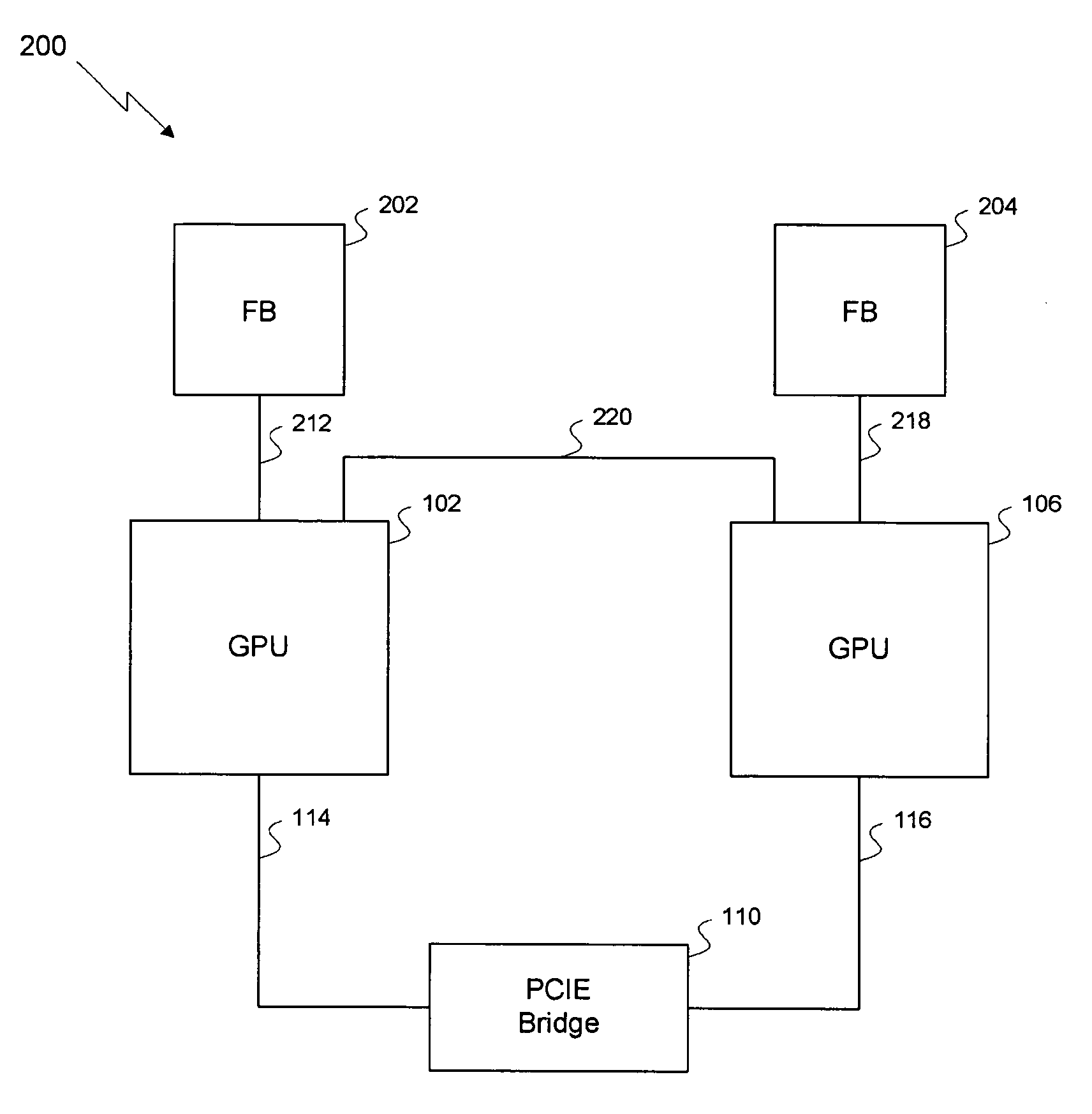

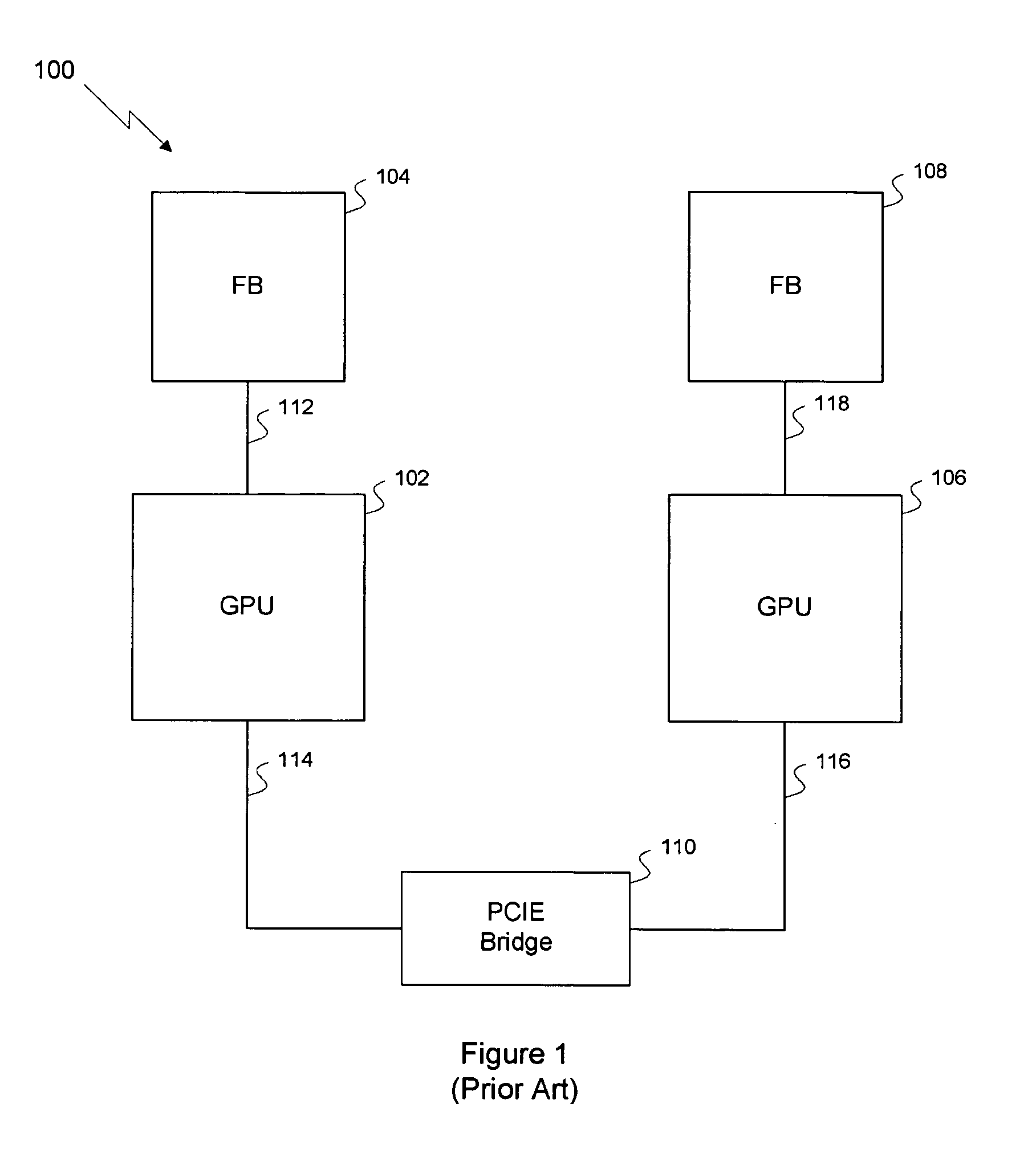

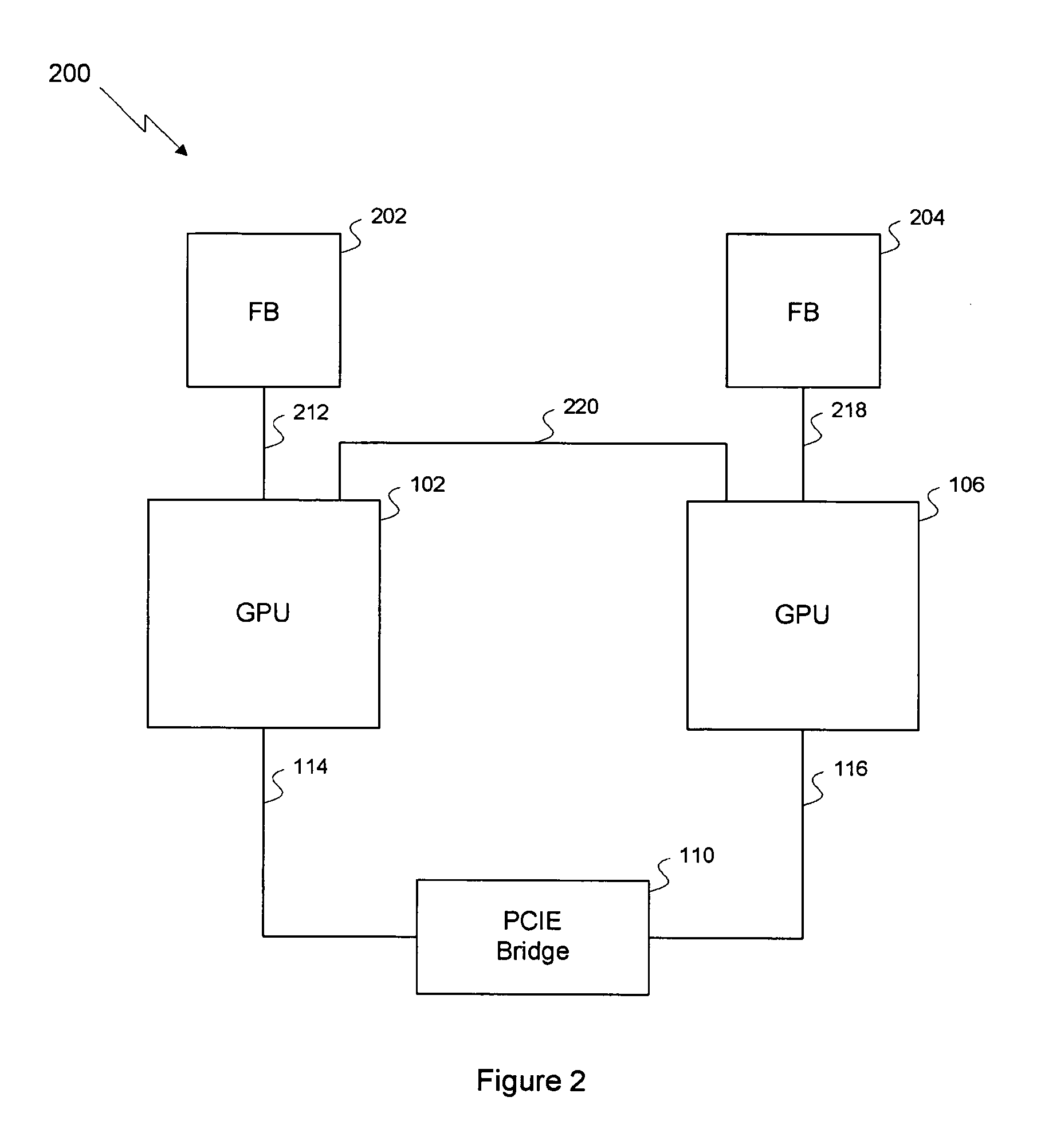

Efficient multi-chip GPU

Active | US7616206B1 | Raise the ratio | More scalable | Cathode-ray tube indicators | Multiple digital computer combinations | Data compression | Extensibility

One embodiment of the invention sets forth a technique for efficiently combining two graphics processing units (“GPUs”) to enable an improved price-performance tradeoff and better scalability relative to prior art multi-GPU designs. Each GPU's memory interface is split into a first part coupling the GPU to its respective frame buffer and a second part coupling the GPU directly to the other GPU, creating an inter-GPU private bus. The private bus enables higher bandwidth communications between the GPUs compared to conventional communications through a PCI Express™ bus. Performance and scalability are further improved through render target interleaving; render-to-texture data duplication; data compression; using variable-length packets in GPU-to-GPU transmissions; using the non-data pins of the frame buffer interfaces to transmit data signals; duplicating vertex data, geometry data and push buffer commands across both GPUs; and performing all geometry processing on each GPU.

Owner:NVIDIA CORP

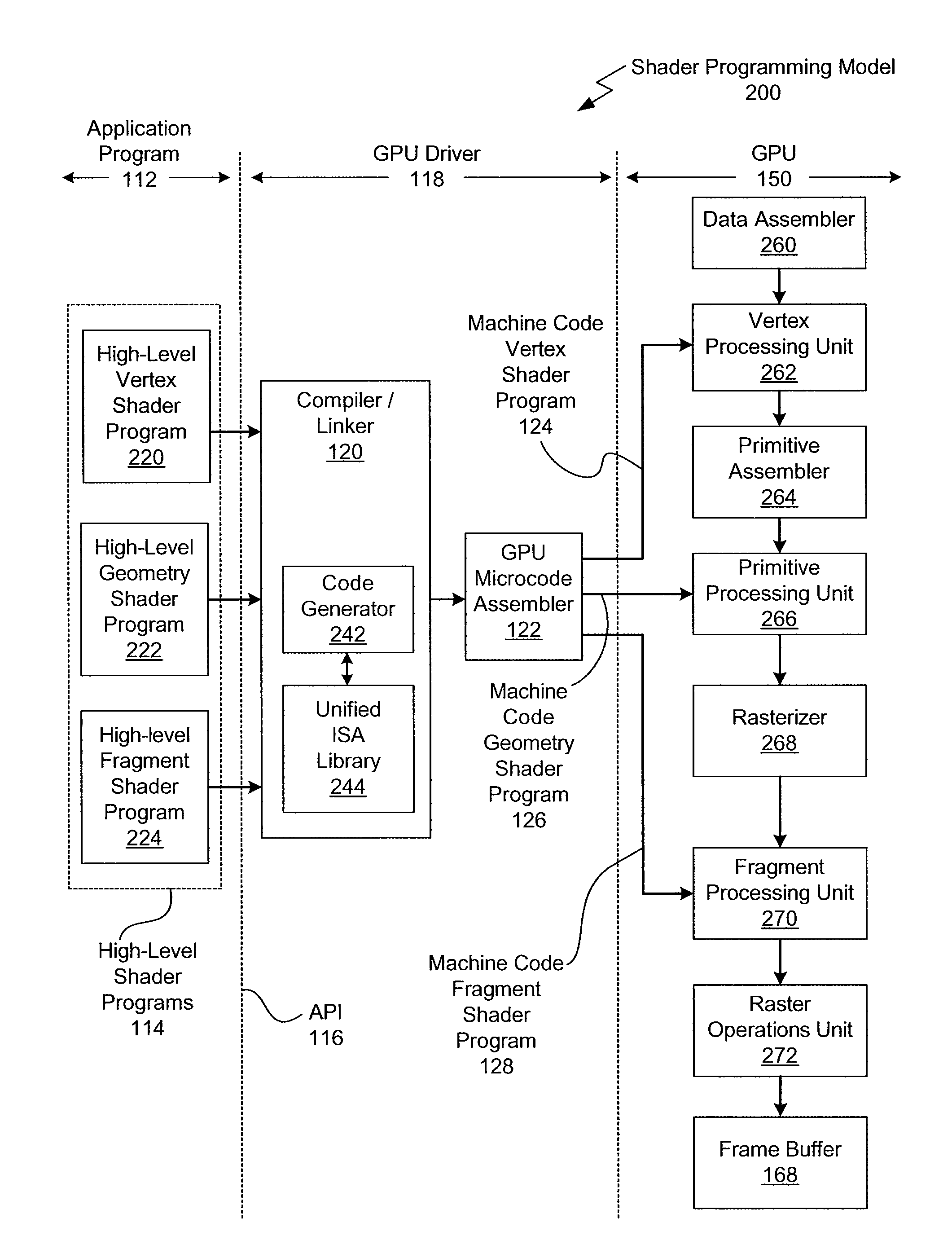

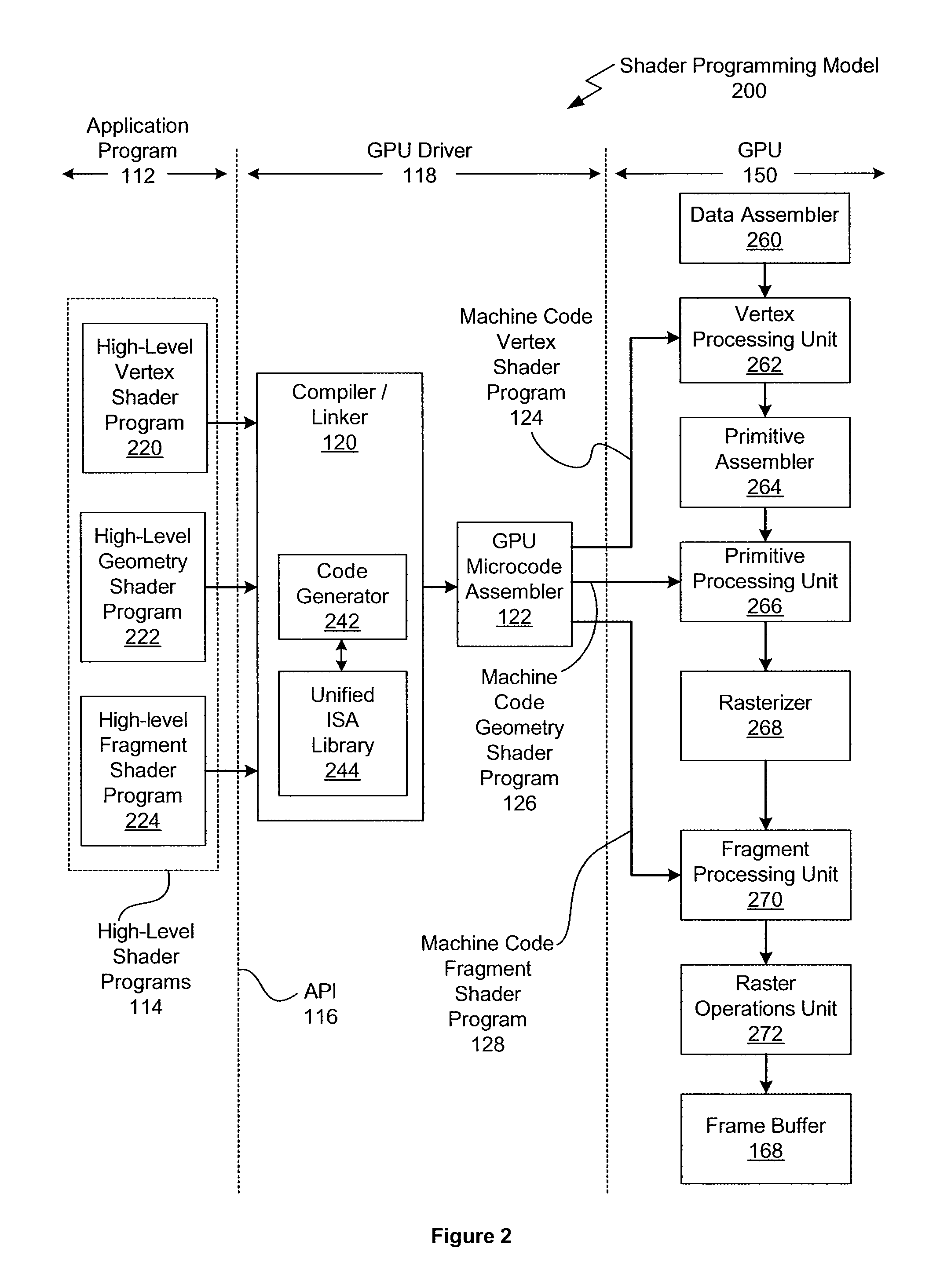

Integer-based functionality in a graphics shading language

One embodiment of the present invention sets forth a technique for improving the flexibility and programmability of a graphics pipeline by adding application programming interface (API) extensions to the OpenGL Shading Language (GLSL) that provide native support for integer data types and operations. The integer API extensions span from the API to the hardware execution units within a graphics processing unit (GPU), thereby providing native integer support throughout the graphics pipeline.

Owner:NVIDIA CORP

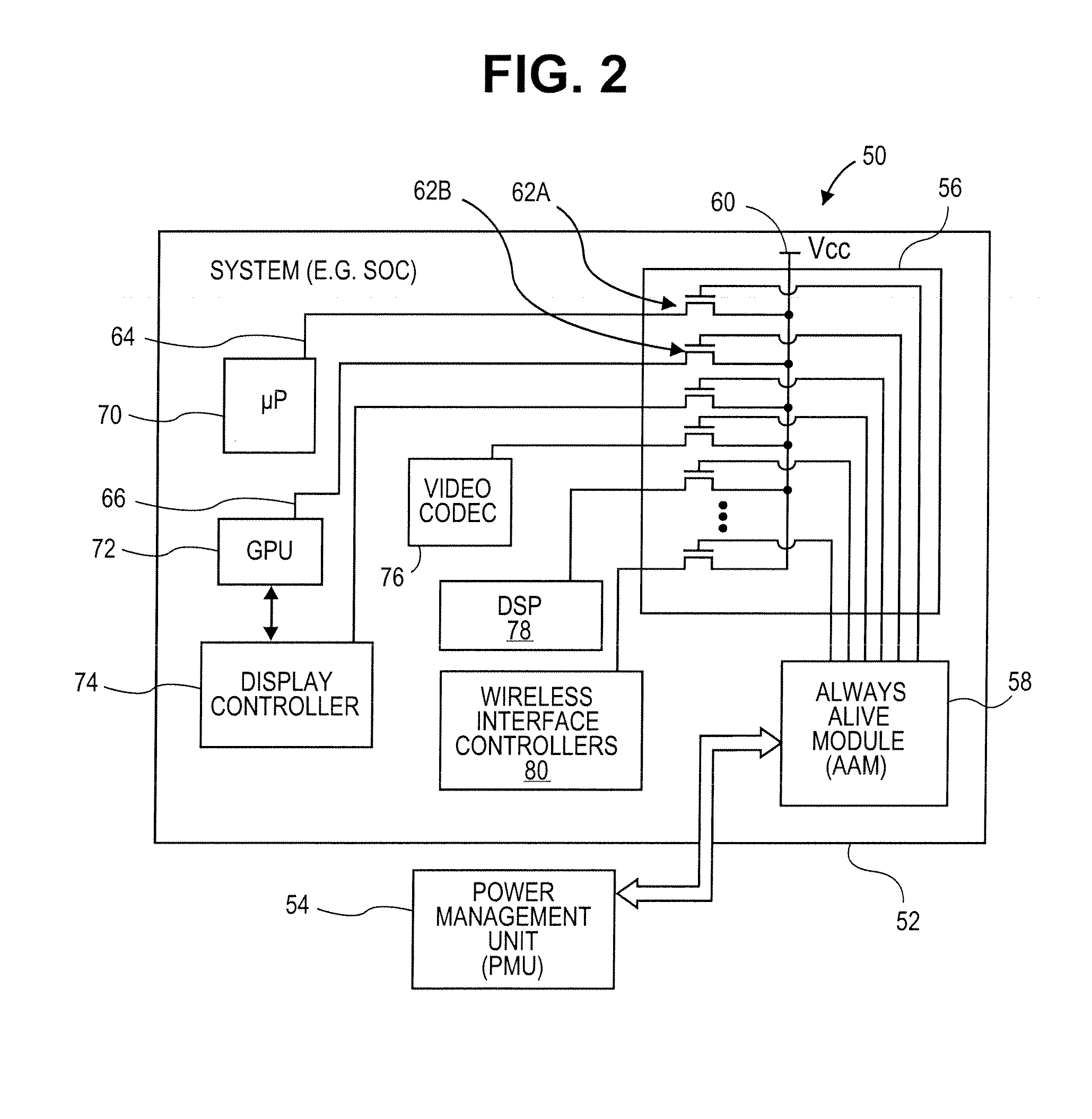

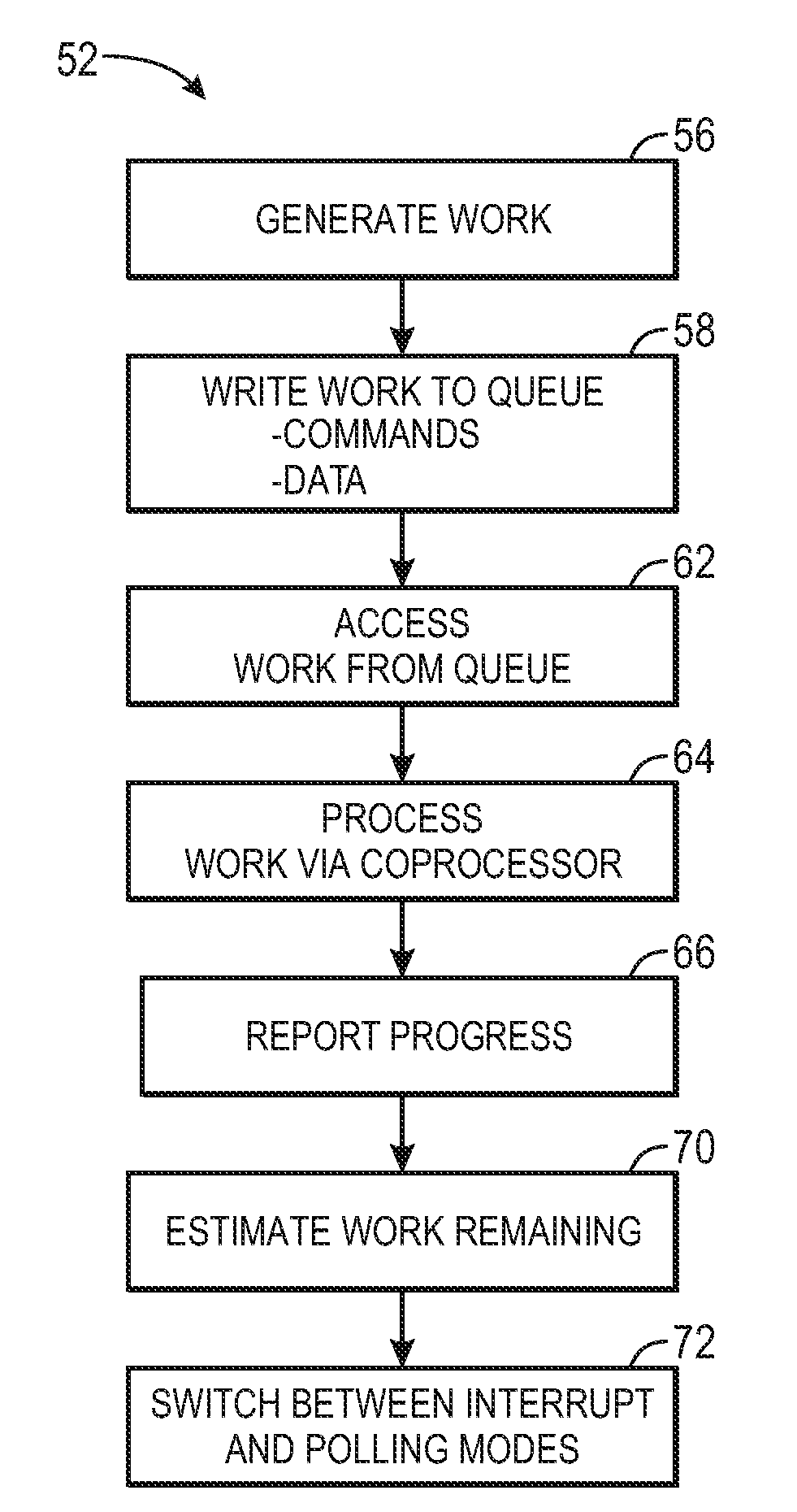

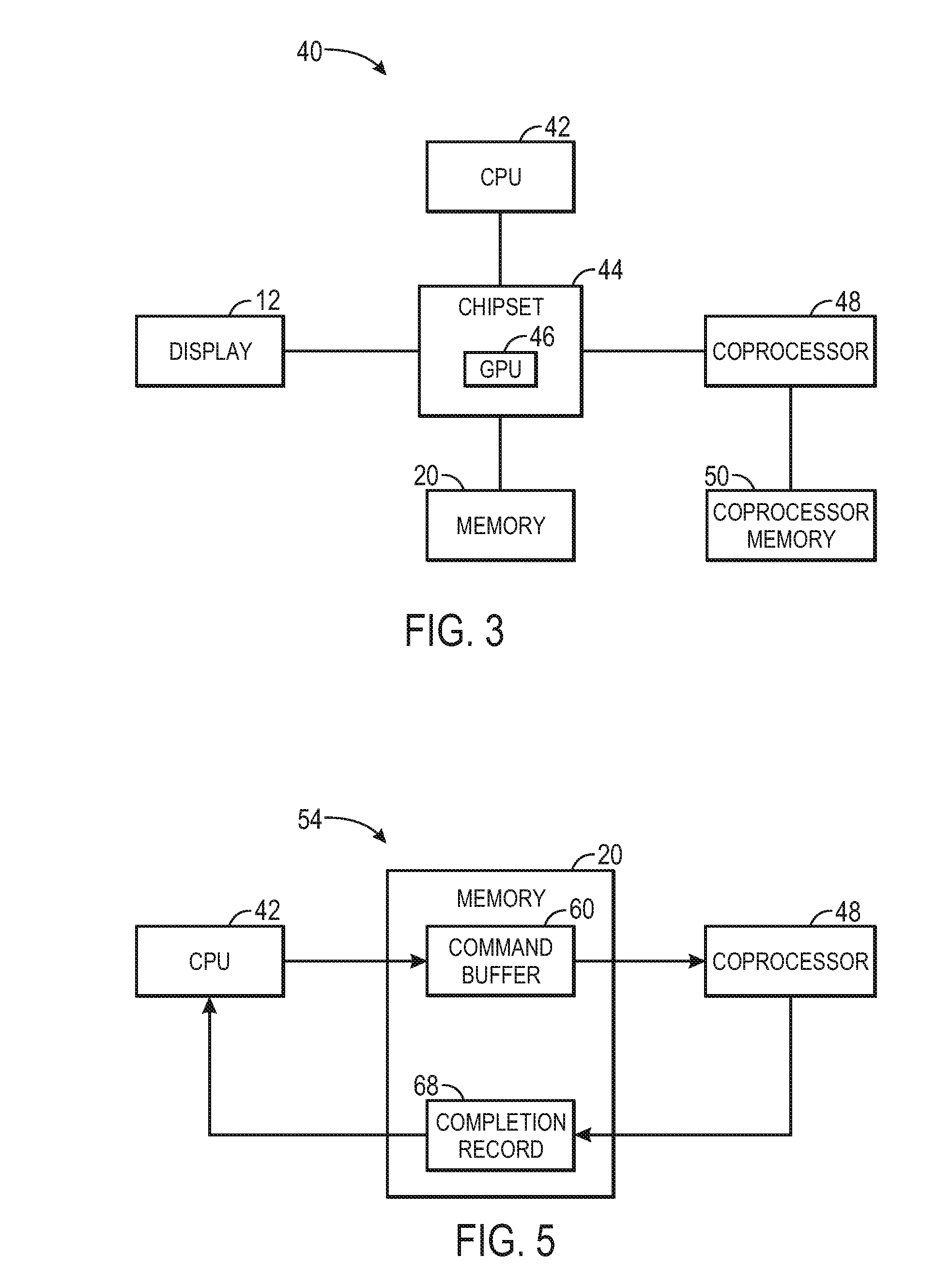

Power-efficient interaction between multiple processors

Active | US20110023040A1 | Energy efficient ICT | Program control using stored programs | Graphics | Processing Instruction

A technique for processing instructions in an electronic system is provided. In one embodiment, a processor of the electronic system may submit a unit of work to a queue accessible by a coprocessor, such as a graphics processing unit. The coprocessor may process work from the queue, and write a completion record into a memory accessible by the processor. The electronic system may be configured to switch between a polling mode and an interrupt mode based on progress made by the coprocessor in processing the work. In one embodiment, the processor may switch from an interrupt mode to a polling mode upon completion of a threshold amount of work by the coprocessor. Various additional methods, systems, and computer program products are also provided.

Owner:APPLE INC
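
The mode switch in the embodiment above, from interrupt mode to polling mode once a threshold amount of work is complete, can be shown with a very small sketch. The function name `choose_mode` and the specific threshold are hypothetical, and completion records are reduced to a simple counter.

```python
def choose_mode(completed_units, threshold_units):
    """Wait by interrupt early on, then poll once enough work has completed.

    The idea is that polling is only worth the processor's time when the
    coprocessor is expected to finish soon.
    """
    return "polling" if completed_units >= threshold_units else "interrupt"


# Simulate a coprocessor writing completion records for 10 units of work.
total_units = 10
modes = [choose_mode(done, threshold_units=8) for done in range(total_units + 1)]
```

In this run the processor stays in interrupt mode for the first eight progress checks and polls for the rest.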

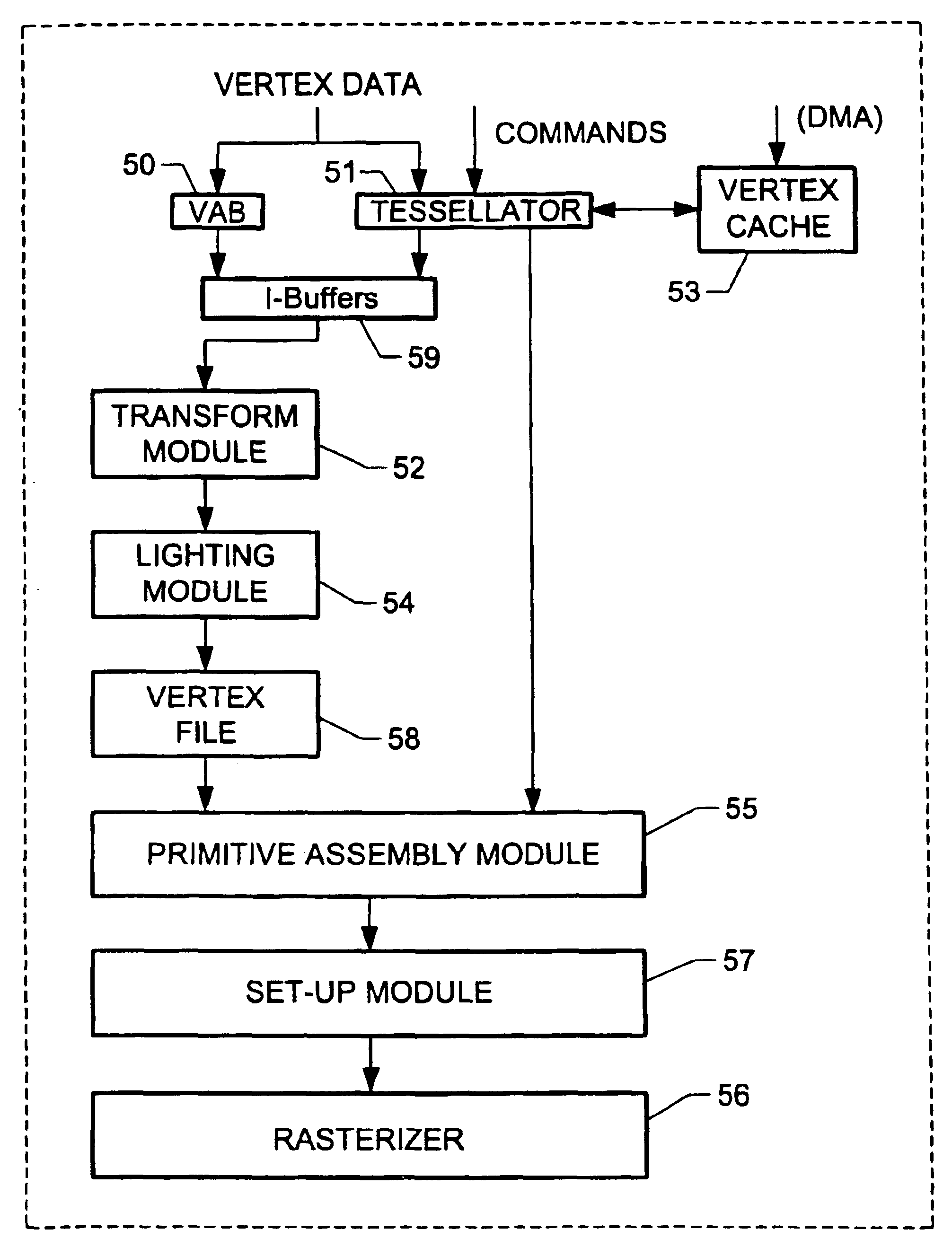

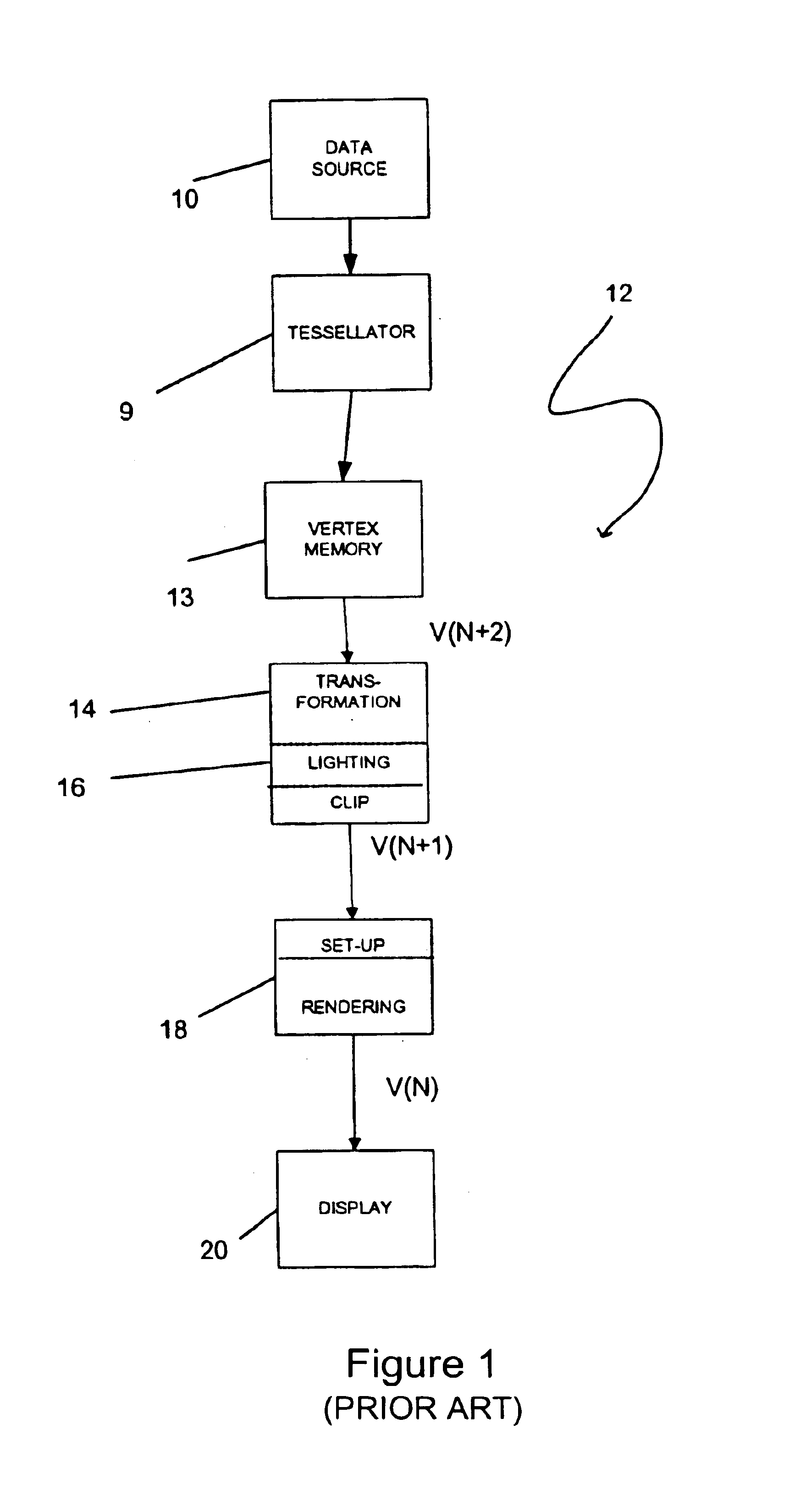

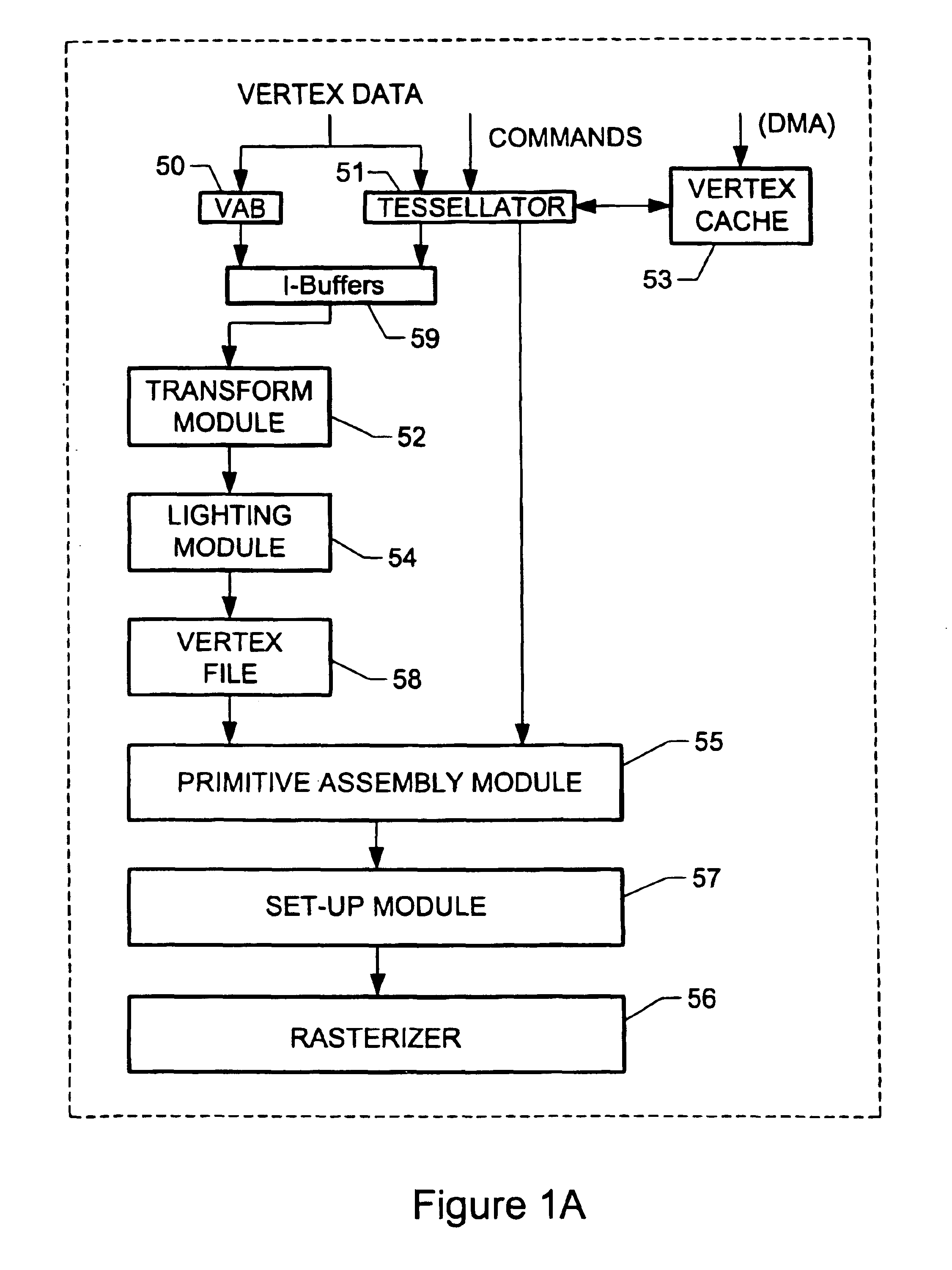

Integrated tessellator in a graphics processing unit

Inactive | US6906716B2 | Improve performance | Quality improvement | Processor architectures/configuration | Electric digital data processing | Graphics | Semiconductor

An integrated graphics pipeline system is provided for graphics processing. Such system includes a tessellation module that is positioned on a single semiconductor platform for receiving data for tessellation purposes. Tessellation refers to the process of decomposing either a complex surface such as a sphere or surface patch into simpler primitives such as triangles or quadrilaterals, or a triangle into multiple smaller triangles. Also included on the single semiconductor platform is a transform module adapted to transform the tessellated data from a first space to a second space. Coupled to the transform module is a lighting module which is positioned on the single semiconductor platform for performing lighting operations on the data received from the transform module. Also included is a rasterizer coupled to the lighting module and positioned on the single semiconductor platform for rendering the data received from the lighting module.

Owner:NVIDIA CORP
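
The abstract mentions decomposing a triangle into multiple smaller triangles. A minimal sketch of one common way to do that, midpoint subdivision into four triangles, is shown below in 2D. It illustrates the idea only and says nothing about how the hardware tessellation module in the patent works; the helper names are made up for this example.

```python
def midpoint(a, b):
    return tuple((x + y) / 2 for x, y in zip(a, b))


def subdivide(triangle):
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = triangle
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(triangle, levels):
    """Apply midpoint subdivision `levels` times."""
    triangles = [triangle]
    for _ in range(levels):
        triangles = [small for t in triangles for small in subdivide(t)]
    return triangles


def area(triangle):
    (ax, ay), (bx, by), (cx, cy) = triangle
    return abs((bx - ax) * (cy - ay) - (cx - ax) * (by - ay)) / 2


coarse = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
fine = tessellate(coarse, levels=2)
```

Each level multiplies the triangle count by four while the covered area stays the same, which is a handy sanity check for any subdivision scheme.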

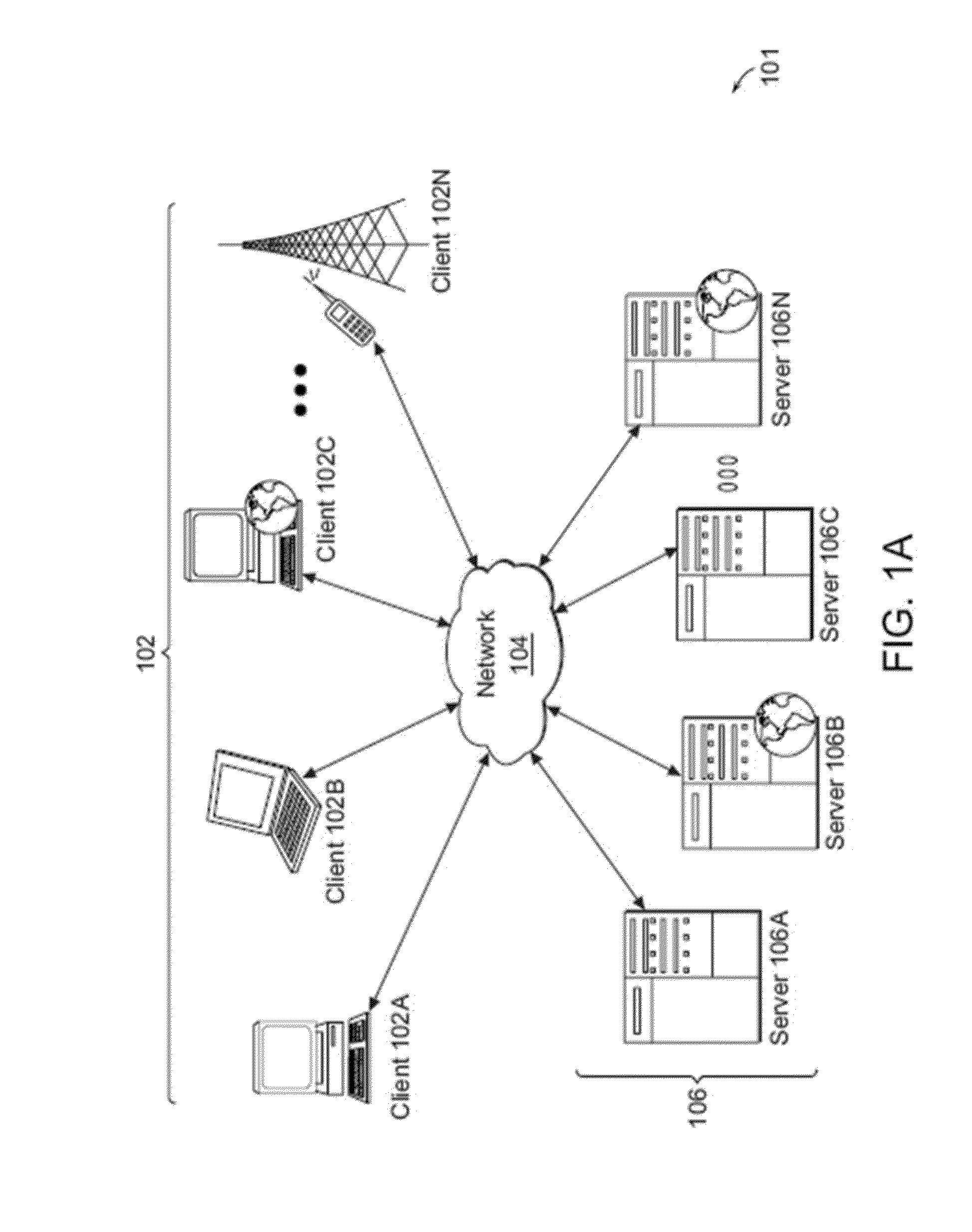

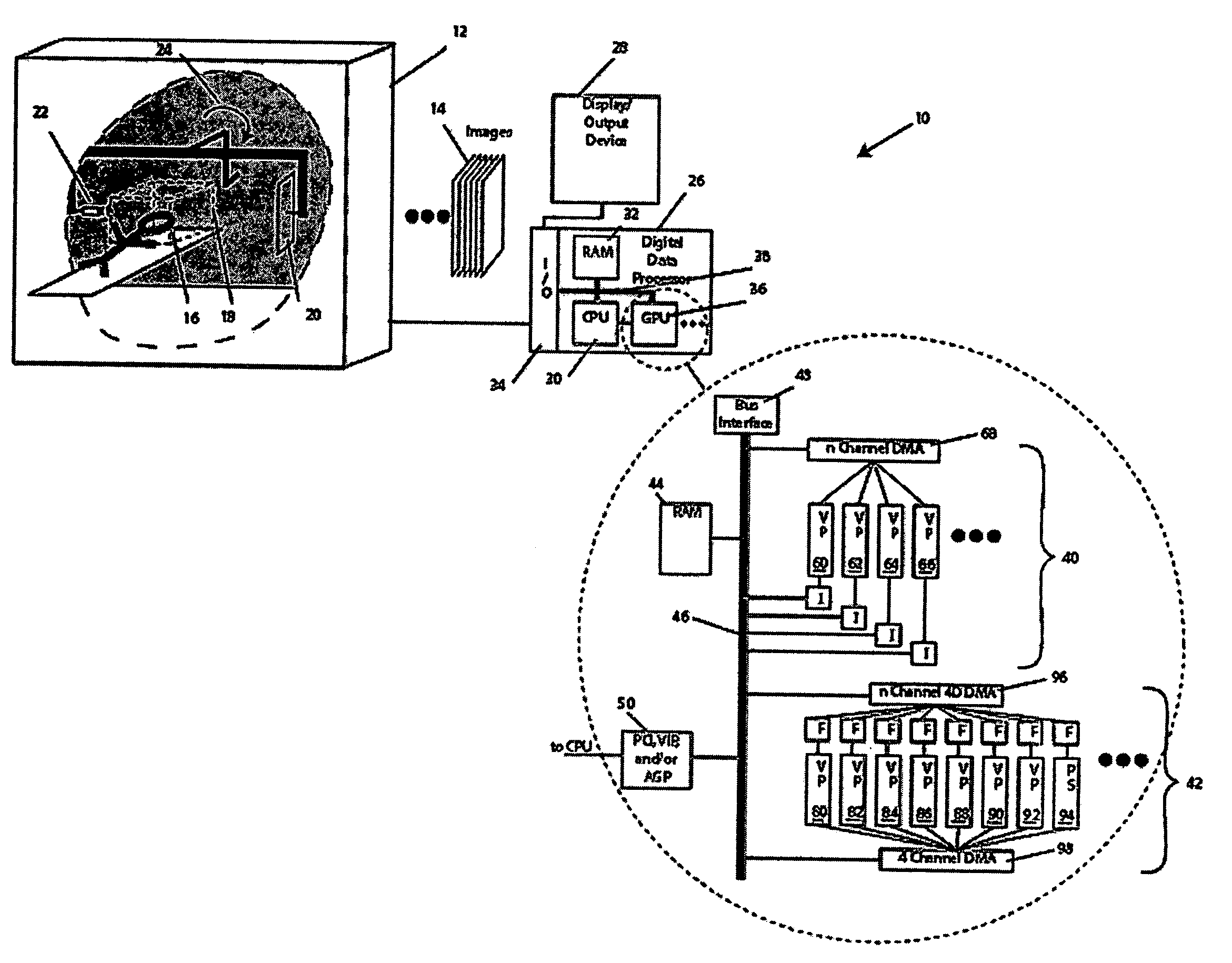

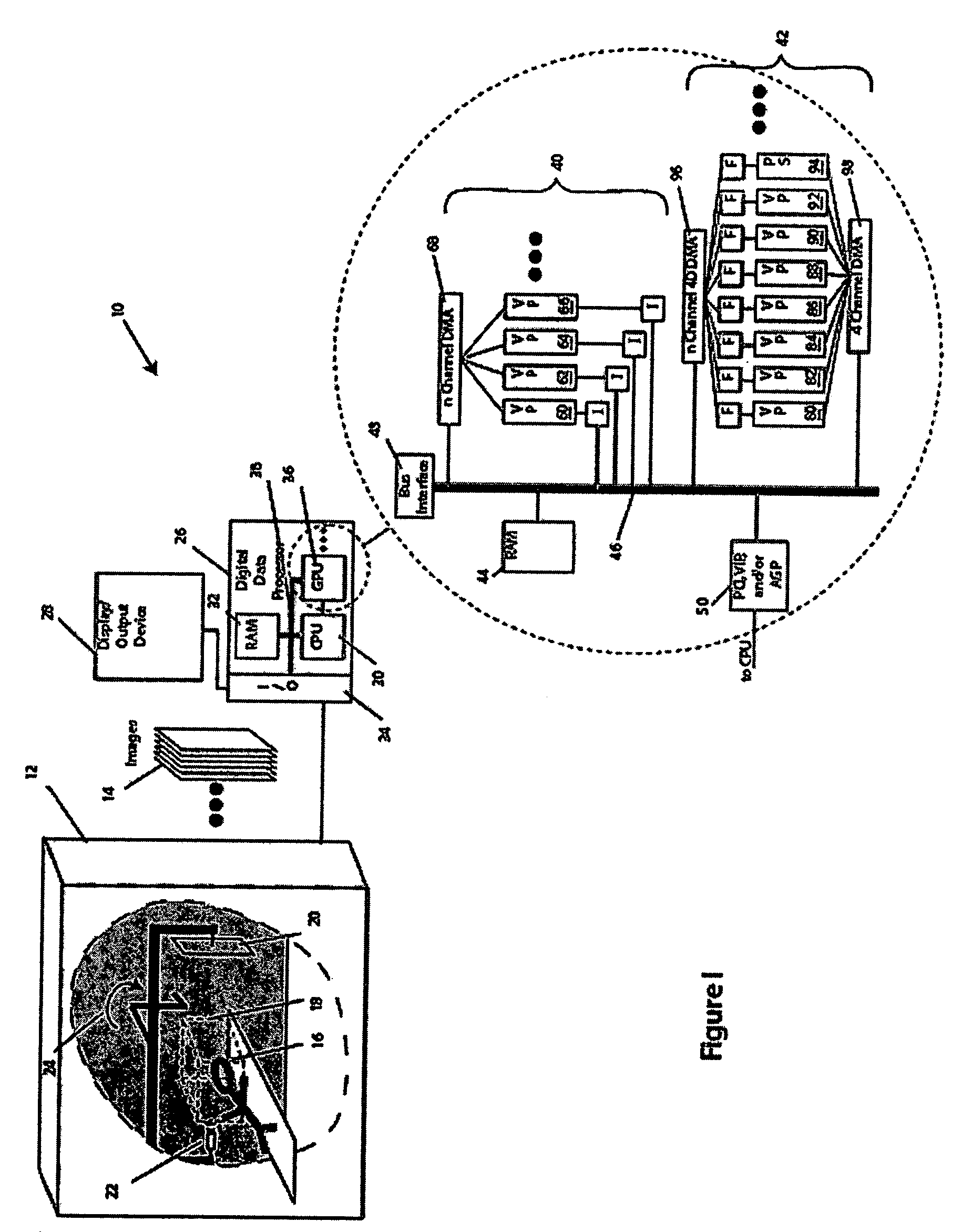

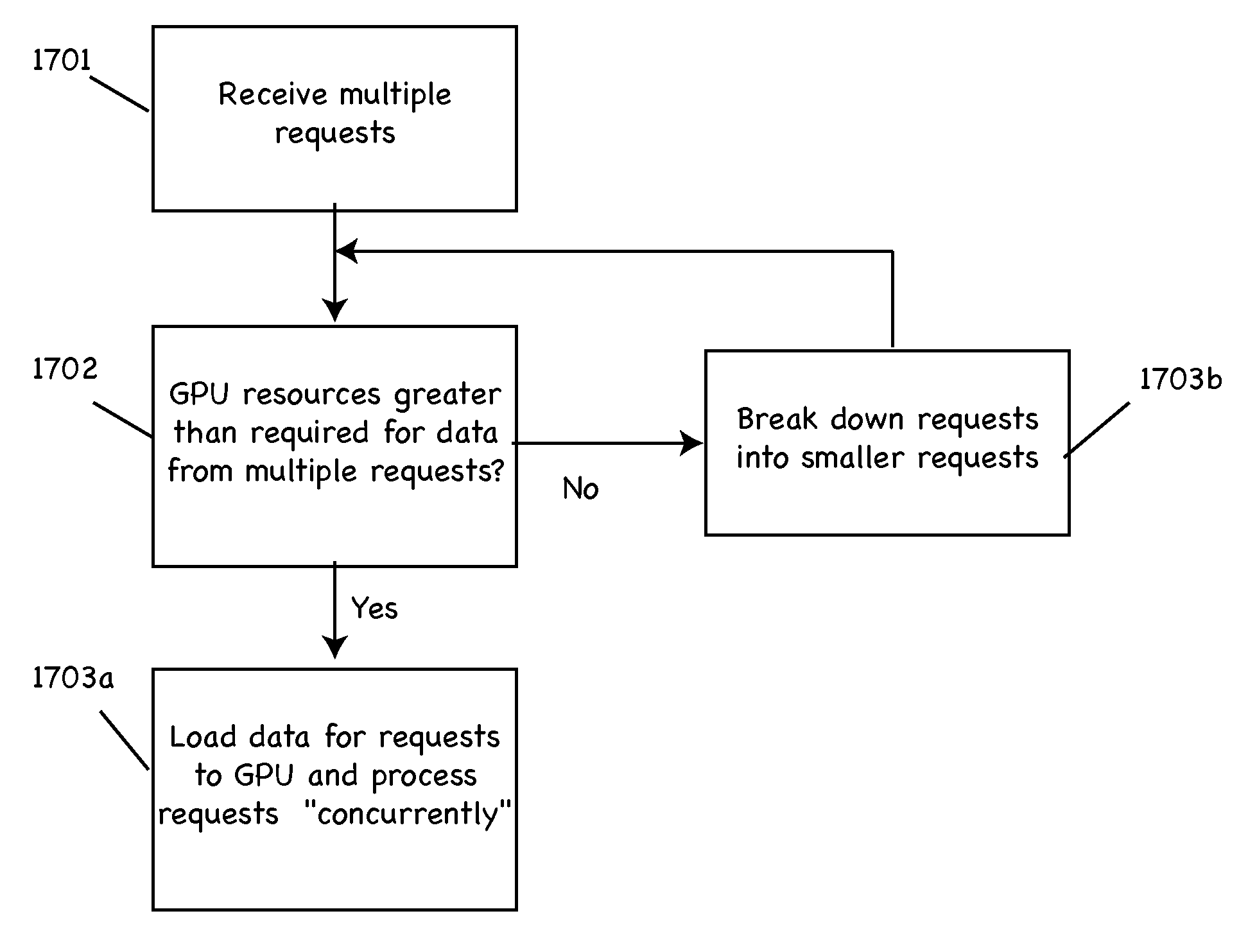

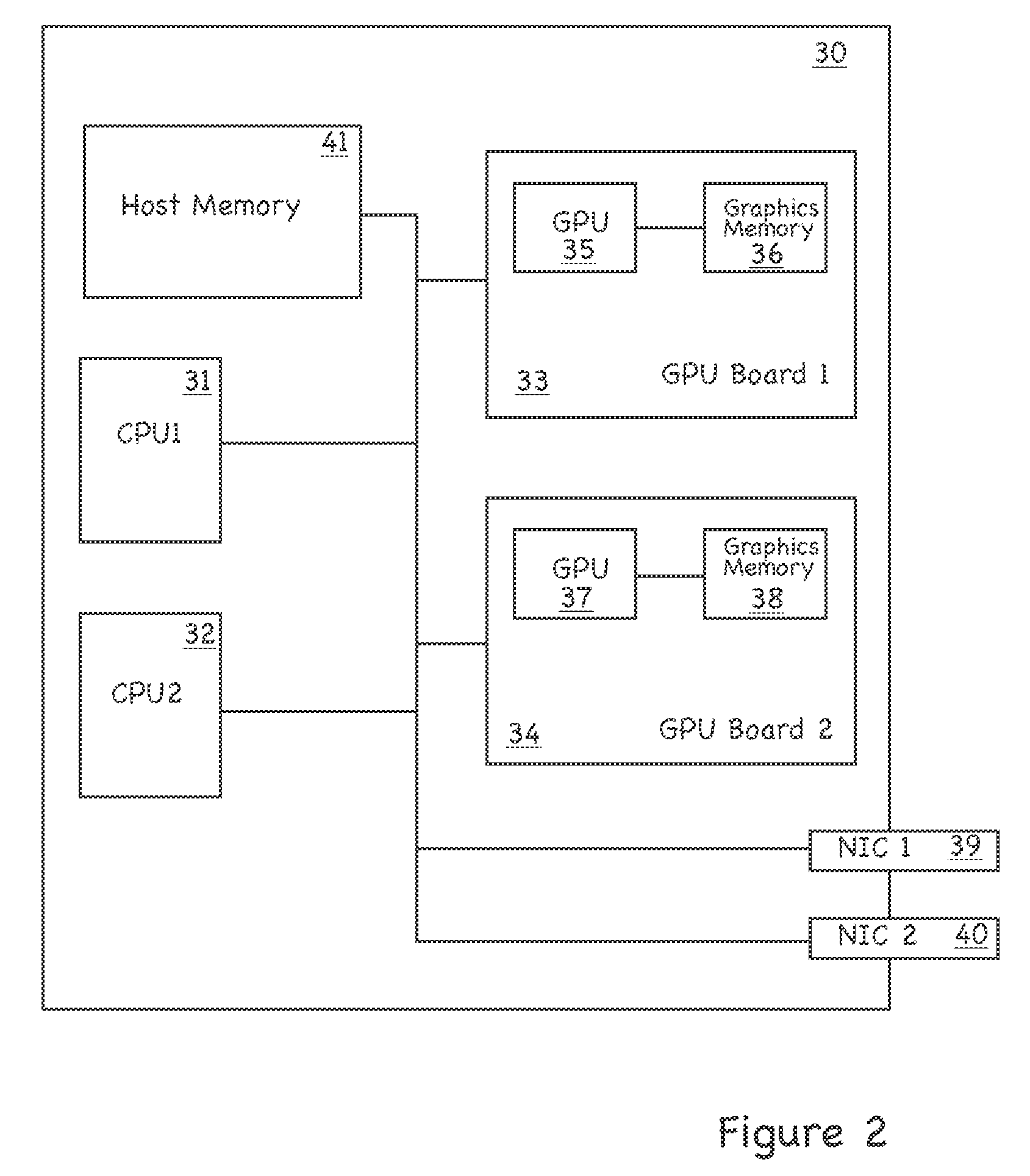

Multi-user multi-GPU render server apparatus and methods

Active | US8319781B2 | Minimize time | Less computation time | Multiprogramming arrangements | Cathode-ray tube indicators | Graphics | Digital data

The invention provides, in some aspects, a system for rendering images, the system having one or more client digital data processors and a server digital data processor in communications coupling with the one or more client digital data processors, the server digital data processor having one or more graphics processing units. The system additionally comprises a render server module executing on the server digital data processor and in communications coupling with the graphics processing units, where the render server module issues a command in response to a request from a first client digital data processor. The graphics processing units on the server digital data processor simultaneously process image data in response to interleaved commands from (i) the render server module on behalf of the first client digital data processor, and (ii) one or more requests from (a) the render server module on behalf of any of the other client digital data processors, and (b) other functionality on the server digital data processor.

Owner:PME IP

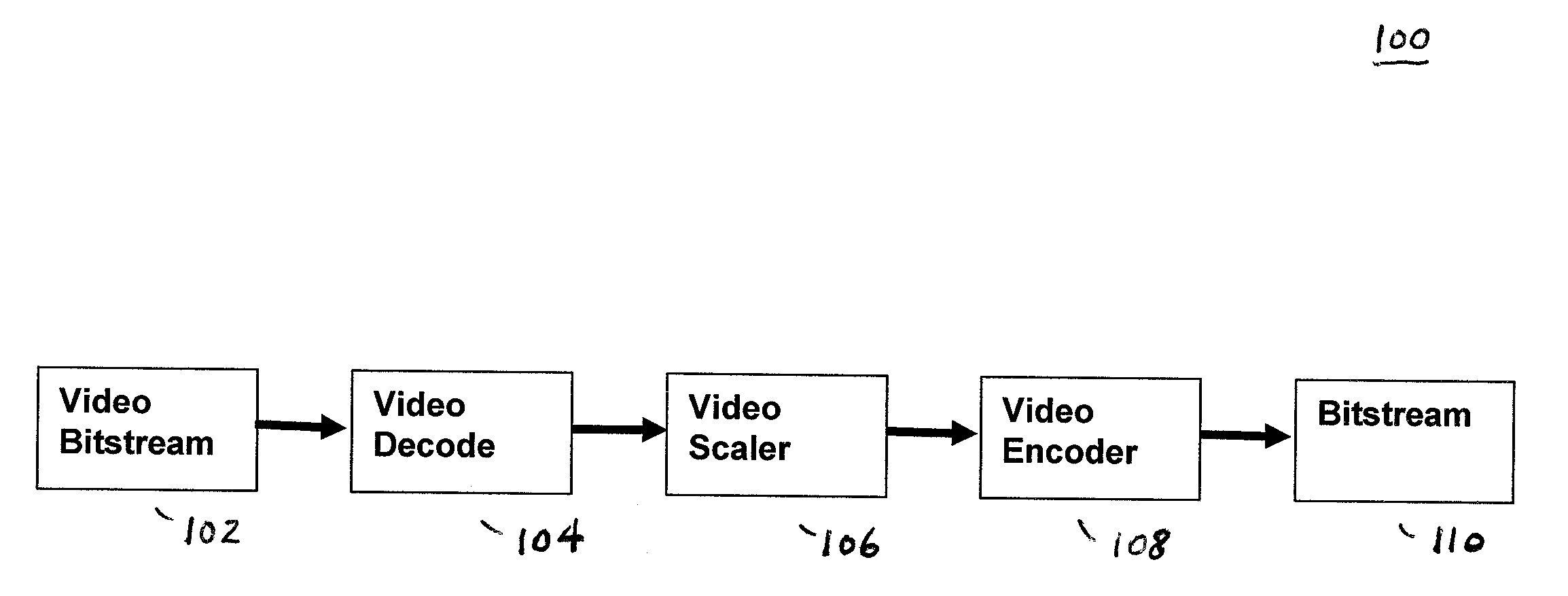

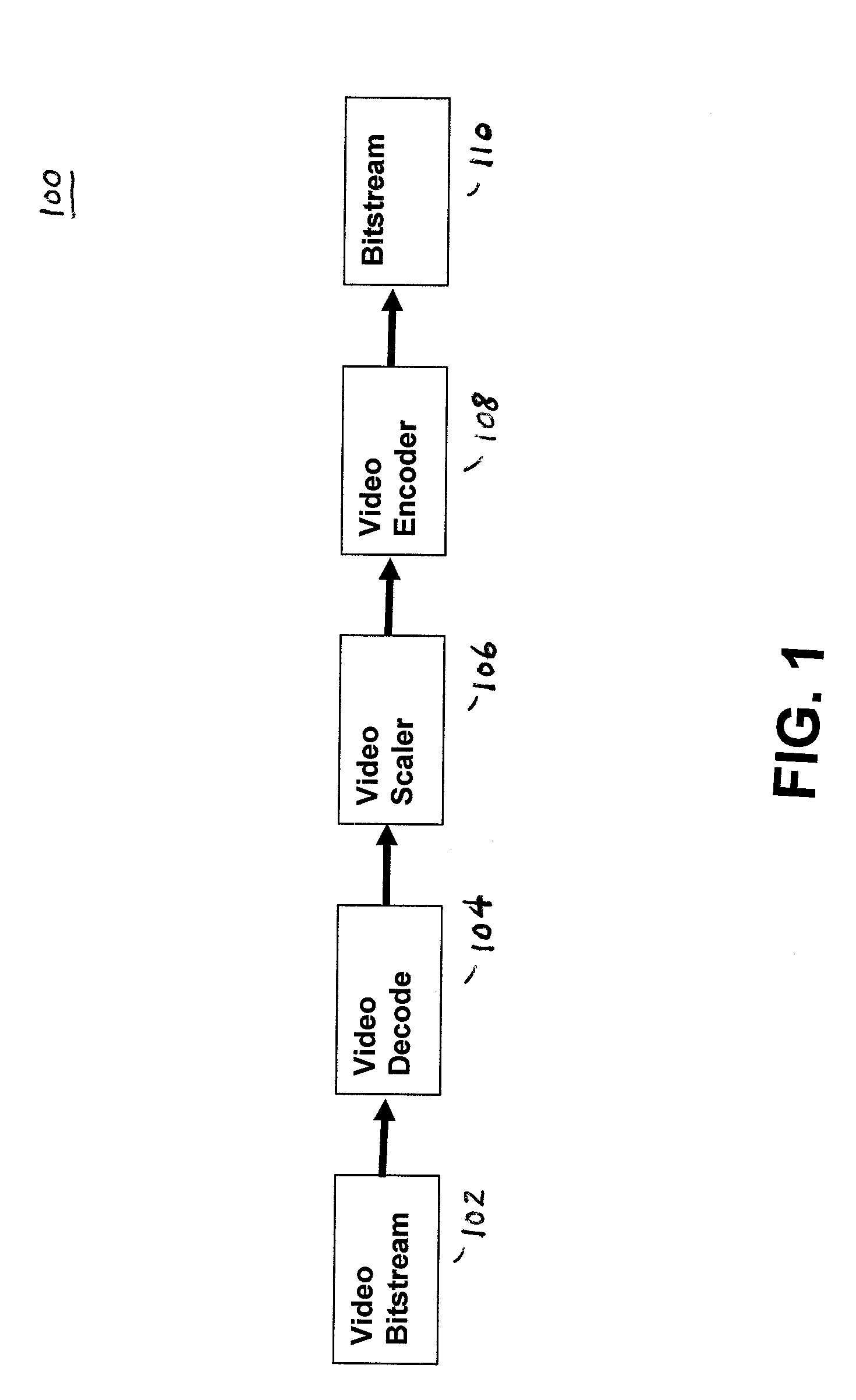

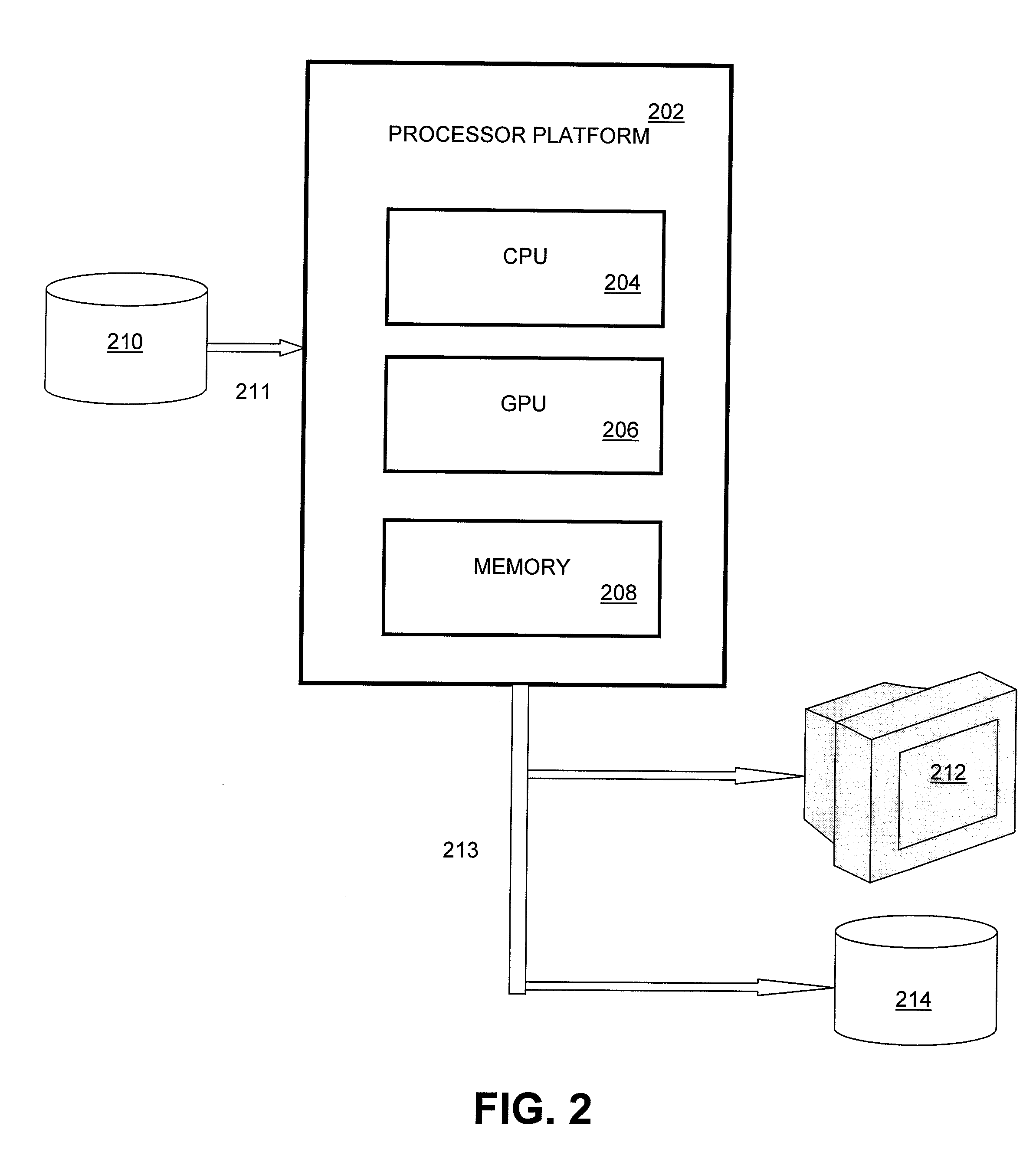

Software Video Transcoder with GPU Acceleration

Active | US20090060032A1 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Transcoding | Workload

Embodiments of the invention as described herein provide a solution to the problems of conventional methods as stated above. In the following description, various examples are given for illustration, but none are intended to be limiting. Embodiments are directed to a transcoding system that shares the workload of video transcoding through the use of multiple central processing unit (CPU) cores and/or one or more graphical processing units (GPUs), including the use of two components within the GPU: a dedicated hardcoded or programmable video decoder for the decode step and compute shaders for scaling and encoding. The system combines usage of the industry-standard Microsoft DXVA method for using the GPU to accelerate video decode with a GPU encoding scheme, along with an intermediate step of scaling the video.

Owner:ADVANCED MICRO DEVICES INC

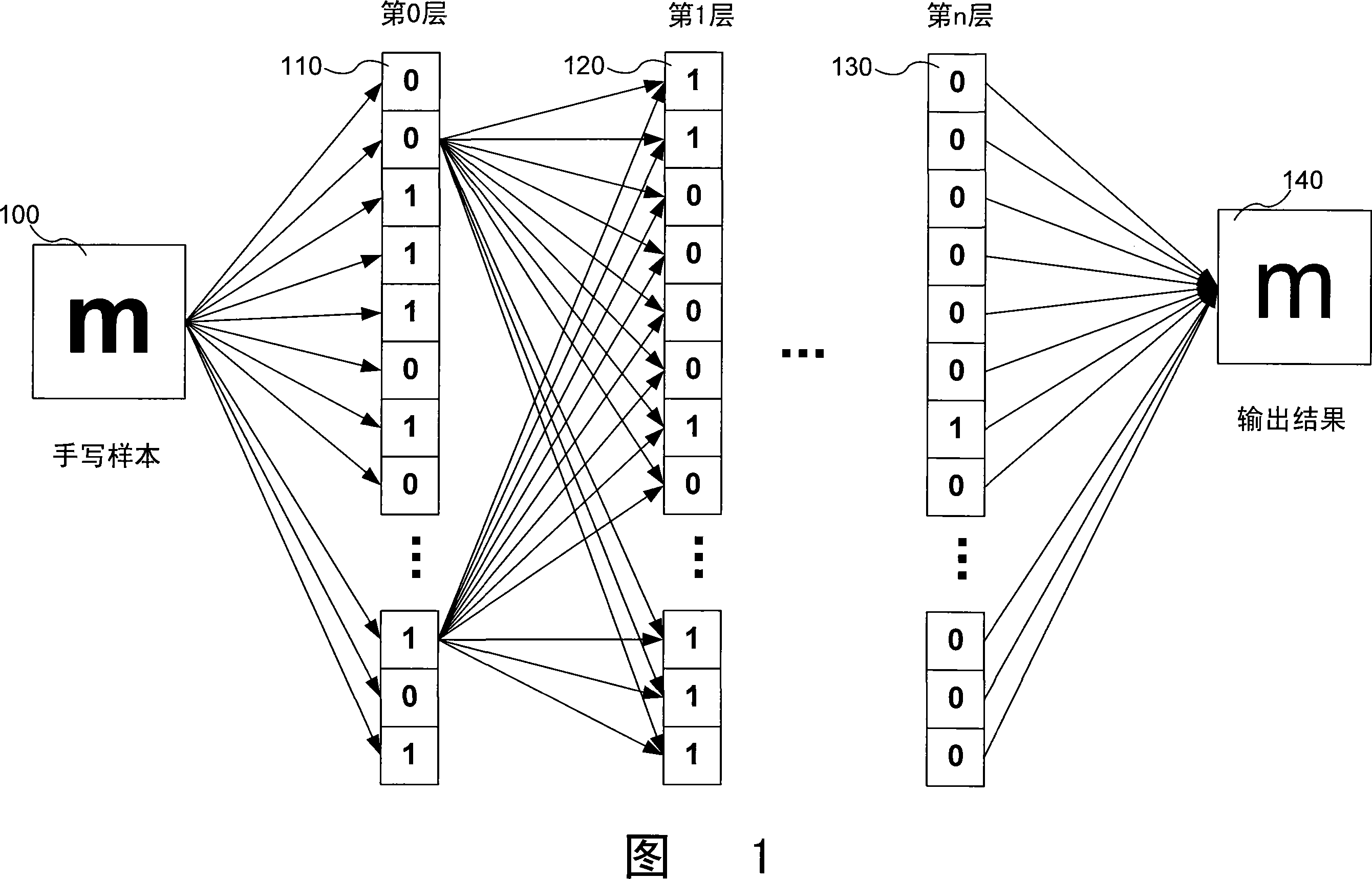

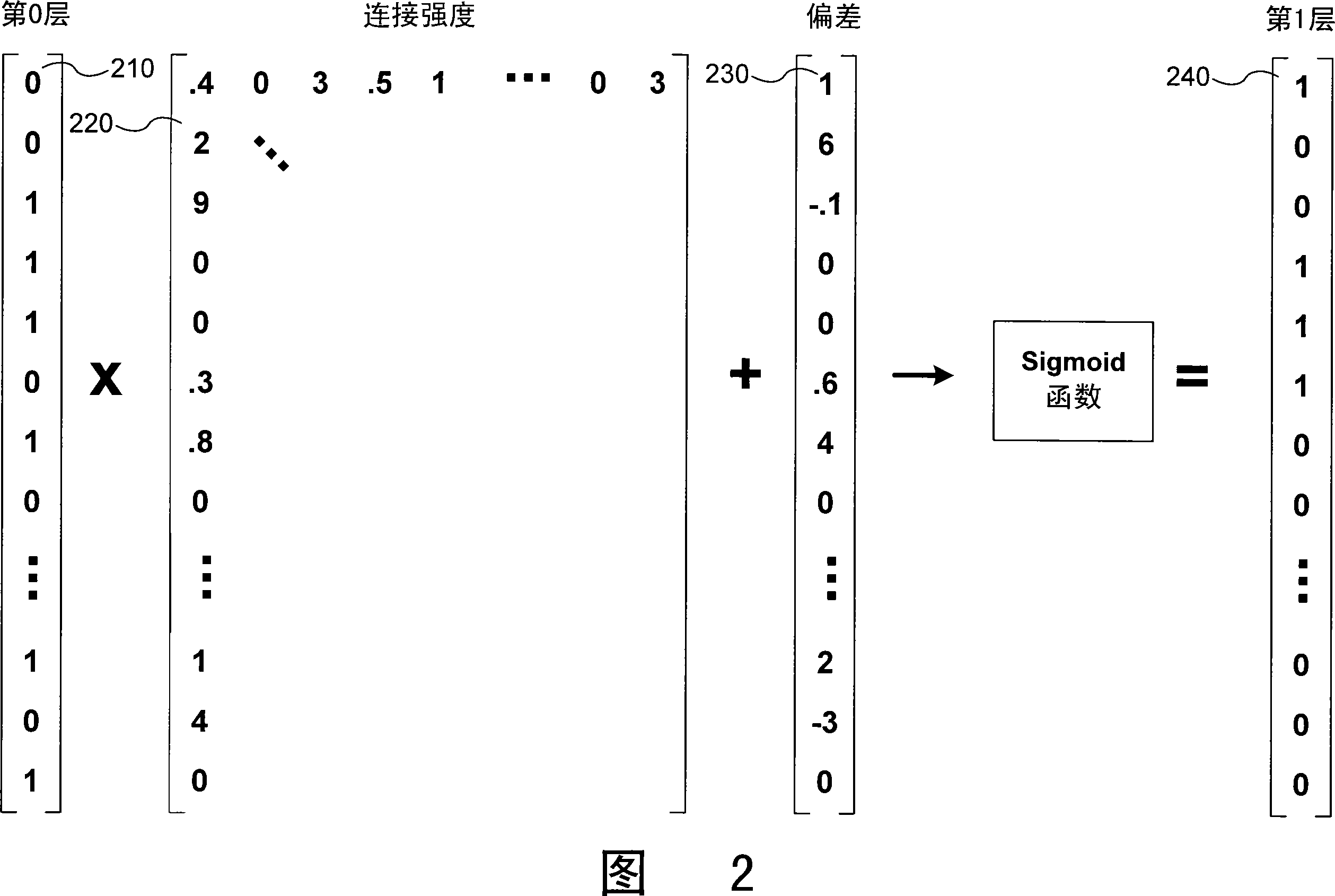

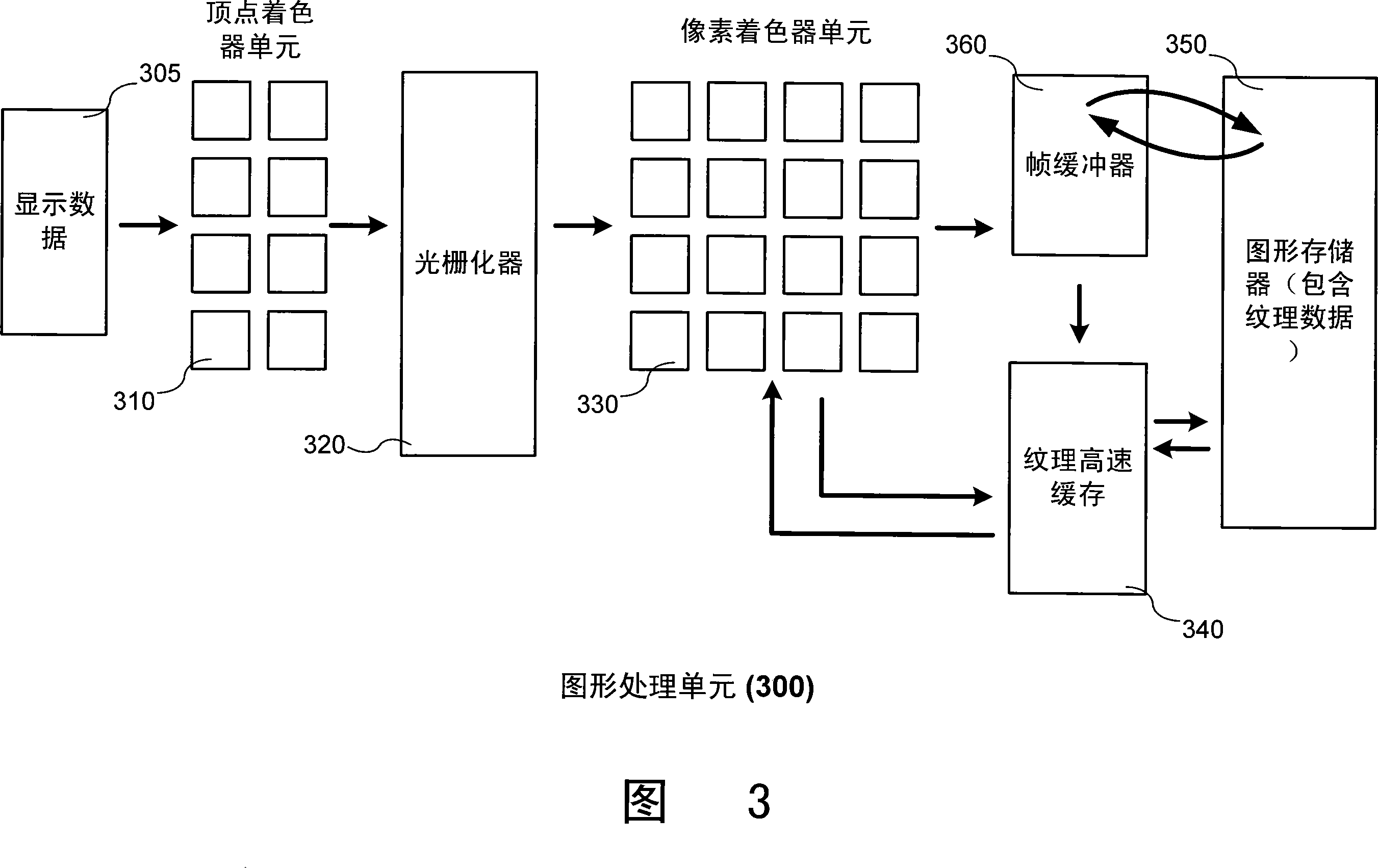

Training convolutional neural networks on graphics processing units

Inactive | CN101253493A | Digital computer details | Image data processing details | Computational science | Error function

A convolutional neural network is implemented on a graphics processing unit. The network is then trained through a series of forward and backward passes, with convolutional kernels and bias matrices modified on each backward pass according to a gradient of an error function. The implementation takes advantage of parallel processing capabilities of pixel shader units on a GPU, and utilizes a set of start-to-finish formulas to program the computations on the pixel shaders. Input to and output from the program are handled through textures, and a multi-pass summation process is used when sums are needed across pixel shader unit registers.

Owner:MICROSOFT TECH LICENSING LLC
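
The forward and backward passes described above can be shown at toy scale with a single 1D convolution kernel trained by gradient descent. This is a plain CPU sketch, not the pixel-shader implementation from the patent: the squared-error function, the learning rate, and the input data are all assumptions chosen for the example.

```python
def forward(x, kernel, bias):
    """Valid 1D convolution (cross-correlation form) plus a scalar bias."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]


def train_step(x, target, kernel, bias, lr):
    """One forward pass and one backward pass; returns updated parameters and loss."""
    y = forward(x, kernel, bias)
    err = [yi - ti for yi, ti in zip(y, target)]
    loss = 0.5 * sum(e * e for e in err)
    # Gradient of the squared-error function with respect to each kernel tap.
    grad_k = [sum(e * x[i + j] for i, e in enumerate(err))
              for j in range(len(kernel))]
    grad_b = sum(err)
    new_kernel = [w - lr * g for w, g in zip(kernel, grad_k)]
    return new_kernel, bias - lr * grad_b, loss


x = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0, 1.0]
target = forward(x, [1.0, -1.0], 0.5)   # data produced by a known kernel and bias
kernel, bias, losses = [0.0, 0.0], 0.0, []
for _ in range(200):
    kernel, bias, loss = train_step(x, target, kernel, bias, lr=0.01)
    losses.append(loss)
```

The sums inside `forward` and `grad_k` are the kind of reductions that, per the abstract, would need a multi-pass summation when spread across pixel shader units.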

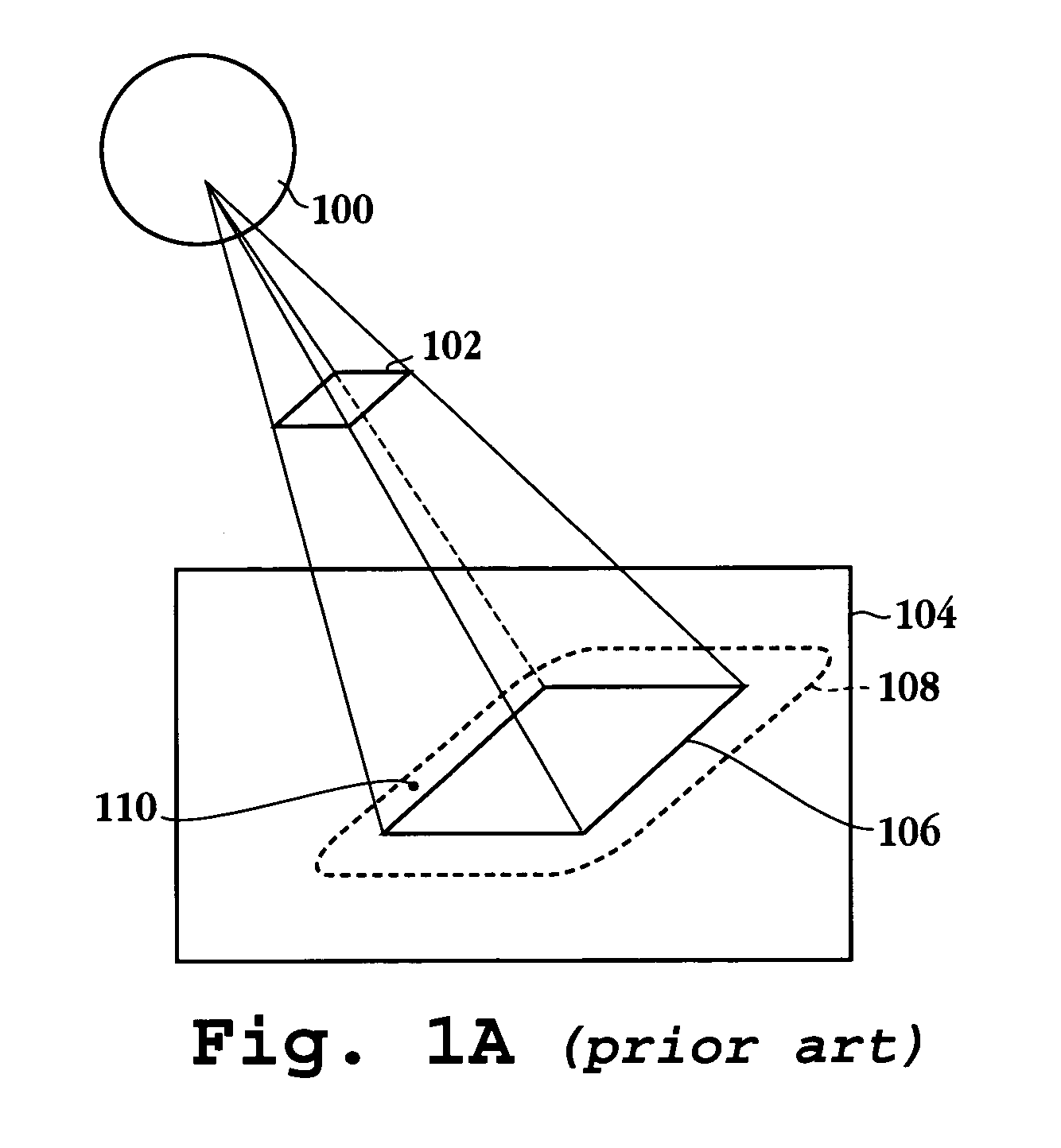

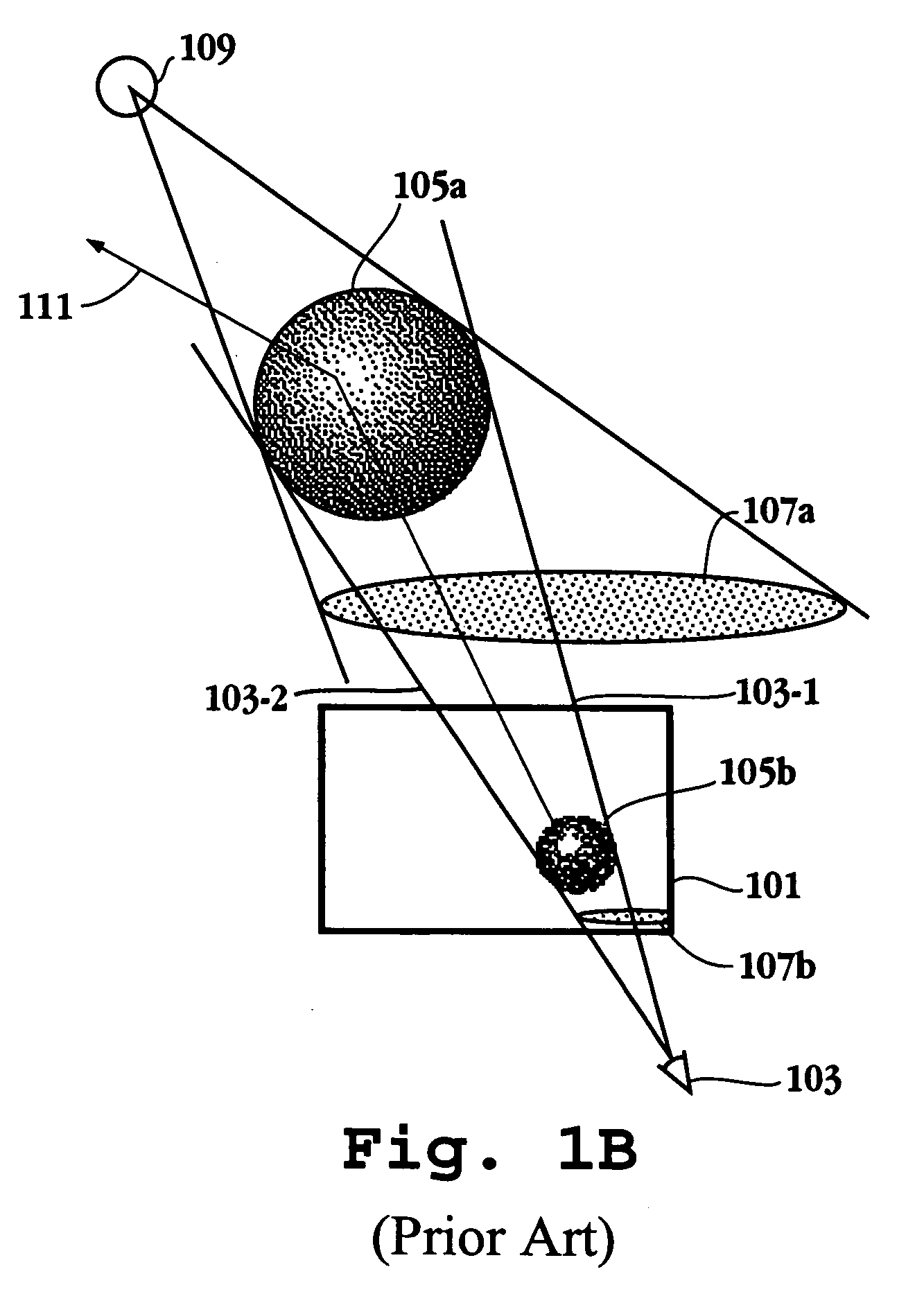

Methods, Apparatus, and Computer-Readable Storage Media for Depth-Based Rendering of Focused Plenoptic Camera Data

Active | US20130120356A1 | High resolution | 3D-image rendering | Details involving computational photography | Graphics | Multiple point

Methods, apparatus, and computer-readable storage media for rendering focused plenoptic camera data. A depth-based rendering technique is described that estimates depth at each microimage and then applies that depth to determine a position in the input flat from which to read a value to be assigned to a given point in the output image. The techniques may be implemented according to parallel processing technology that renders multiple points of the output image in parallel. In at least some embodiments, the parallel processing technology is graphical processing unit (GPU) technology.

Owner:ADOBE INC

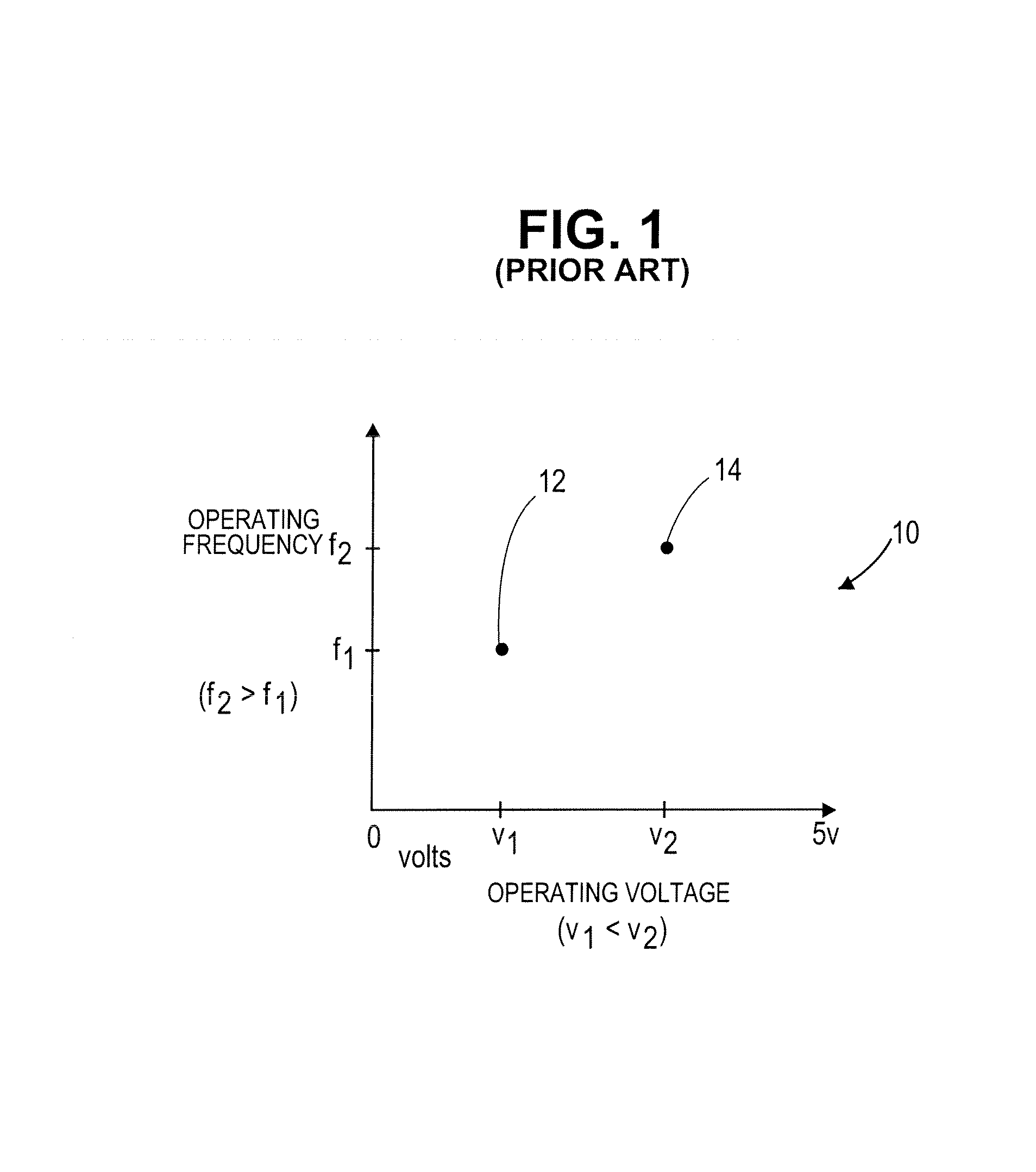

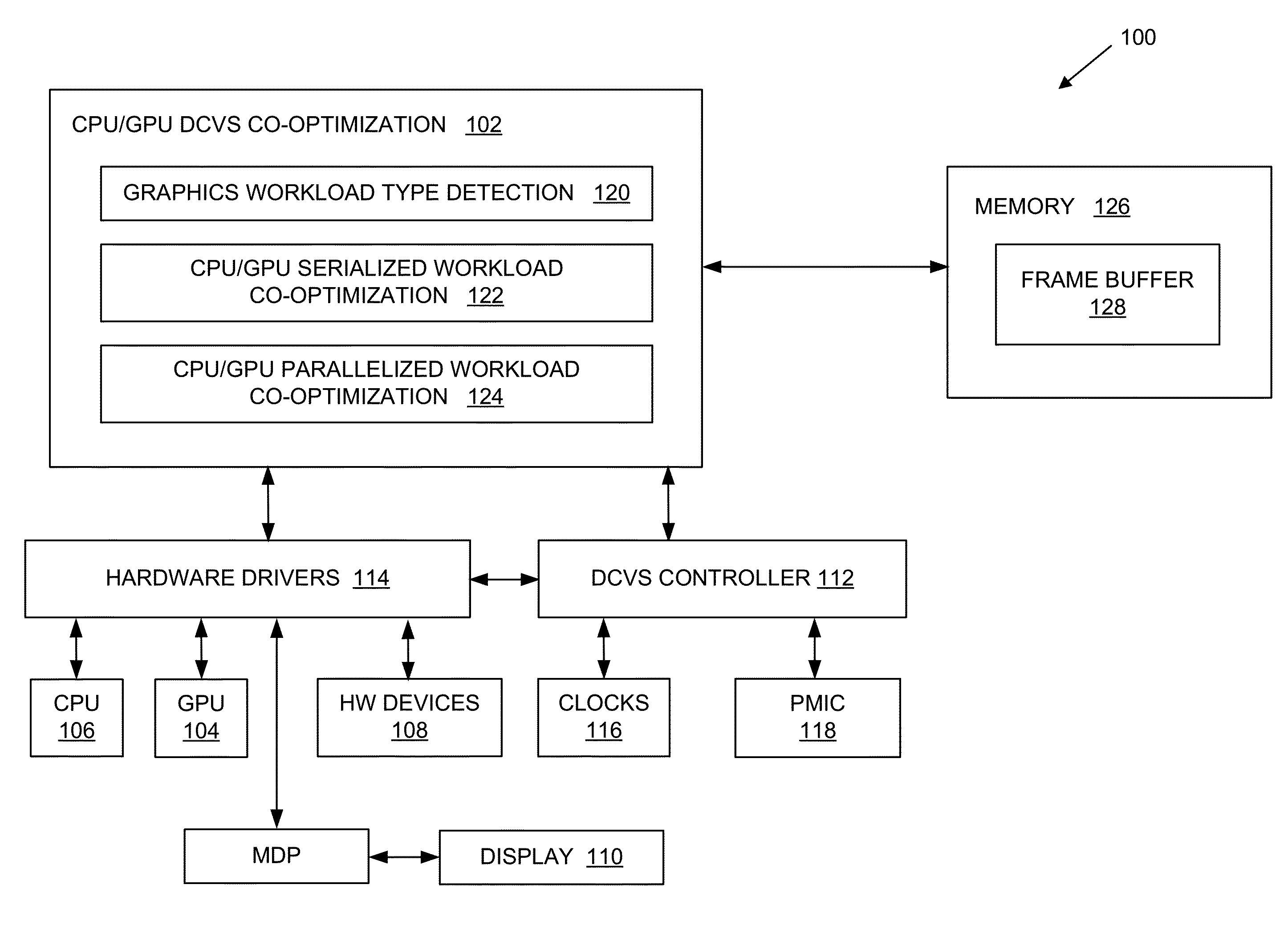

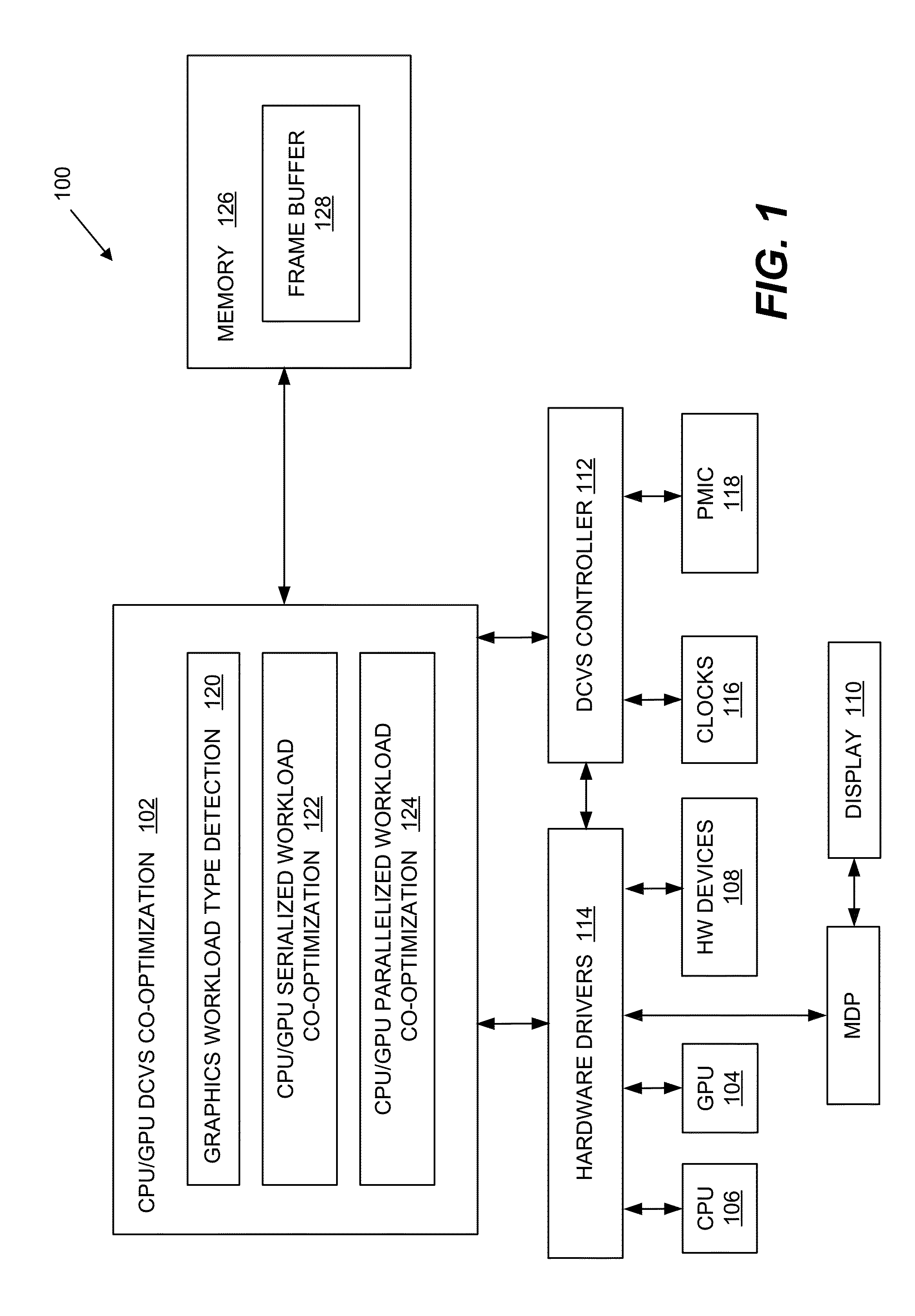

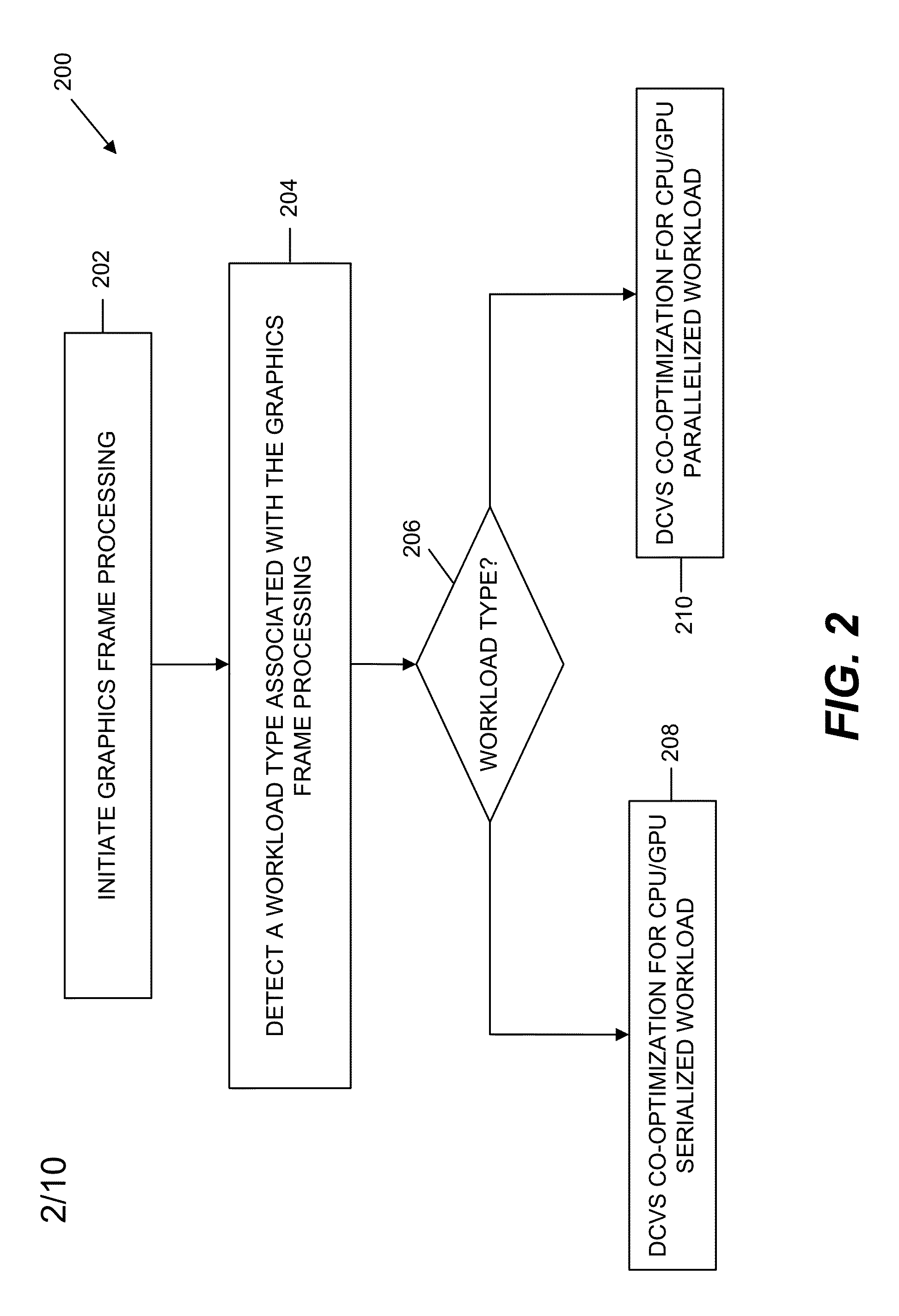

CPU/GPU DCVS co-optimization for reducing power consumption in graphics frame processing

Active | US20150317762A1 | Minimize power consumption | Energy efficient ICT | Static indicating devices | Graphics | Computational science

Systems, methods, and computer programs are disclosed for minimizing power consumption in graphics frame processing. One such method comprises: initiating graphics frame processing to be cooperatively performed by a central processing unit (CPU) and a graphics processing unit (GPU); receiving CPU activity data and GPU activity data; determining a set of available dynamic clock and voltage/frequency scaling (DCVS) levels for the GPU and the CPU; and selecting from the set of available DCVS levels an optimal combination of a GPU DCVS level and a CPU DCVS level, based on the CPU and GPU activity data, which minimizes a combined power consumption of the CPU and the GPU during the graphics frame processing.

Owner:QUALCOMM INC
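
Selecting a joint CPU/GPU DCVS combination, as described above, can be sketched as an exhaustive search over level pairs. The model here is an assumption made for illustration: each level is a hypothetical `(frequency_hz, power_watts)` pair, the activity data is reduced to cycle counts per frame, the CPU and GPU phases run back to back, and the quantity minimized is energy per frame subject to a frame deadline.

```python
from itertools import product


def select_dcvs(cpu_levels, gpu_levels, cpu_cycles, gpu_cycles, deadline_s):
    """Return the (cpu_level, gpu_level) pair with the lowest energy per frame.

    Only pairs that finish the frame within deadline_s are considered.
    Returns None when no pair meets the deadline.
    """
    best, best_energy = None, float("inf")
    for cpu, gpu in product(cpu_levels, gpu_levels):
        cpu_time = cpu_cycles / cpu[0]
        gpu_time = gpu_cycles / gpu[0]
        if cpu_time + gpu_time > deadline_s:
            continue                      # this pair would miss the frame deadline
        energy = cpu[1] * cpu_time + gpu[1] * gpu_time
        if energy < best_energy:
            best, best_energy = (cpu, gpu), energy
    return best


cpu_levels = [(1e9, 1.0), (2e9, 3.0)]     # made-up (Hz, W) operating points
gpu_levels = [(0.5e9, 1.0), (1e9, 2.5)]
choice = select_dcvs(cpu_levels, gpu_levels,
                     cpu_cycles=8e6, gpu_cycles=6e6, deadline_s=16.6e-3)
```

With these numbers the slowest pair misses the 16.6 ms deadline, and the low CPU level combined with the high GPU level turns out cheapest, which shows why the two levels are chosen jointly instead of independently.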

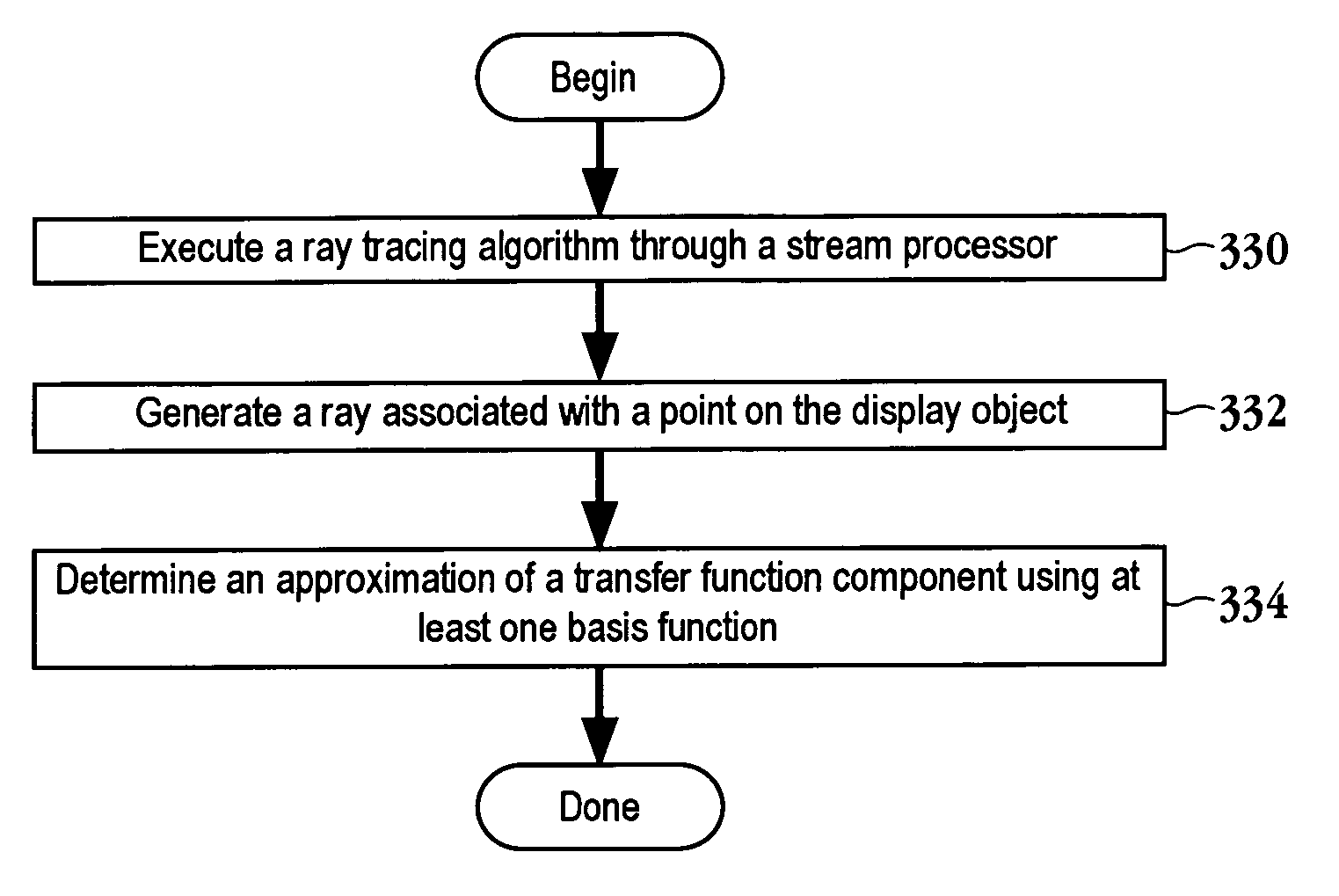

Method and apparatus for real-time global illumination incorporating stream processor based hybrid ray tracing

A method for calculating a lighting function for an object to be rendered using a basis function is provided. The method includes calculating a transfer function approximation of the lighting function through a stream processor. A method for presenting lighting characteristics associated with a display object in real-time and a method for determining secondary illumination features for an object to be displayed are also provided. A computer readable medium and a computing device having a graphics processing unit capable of determining lighting characteristics for an object in real time are also included.

Owner:SONY COMPUTER ENTERTAINMENT INC

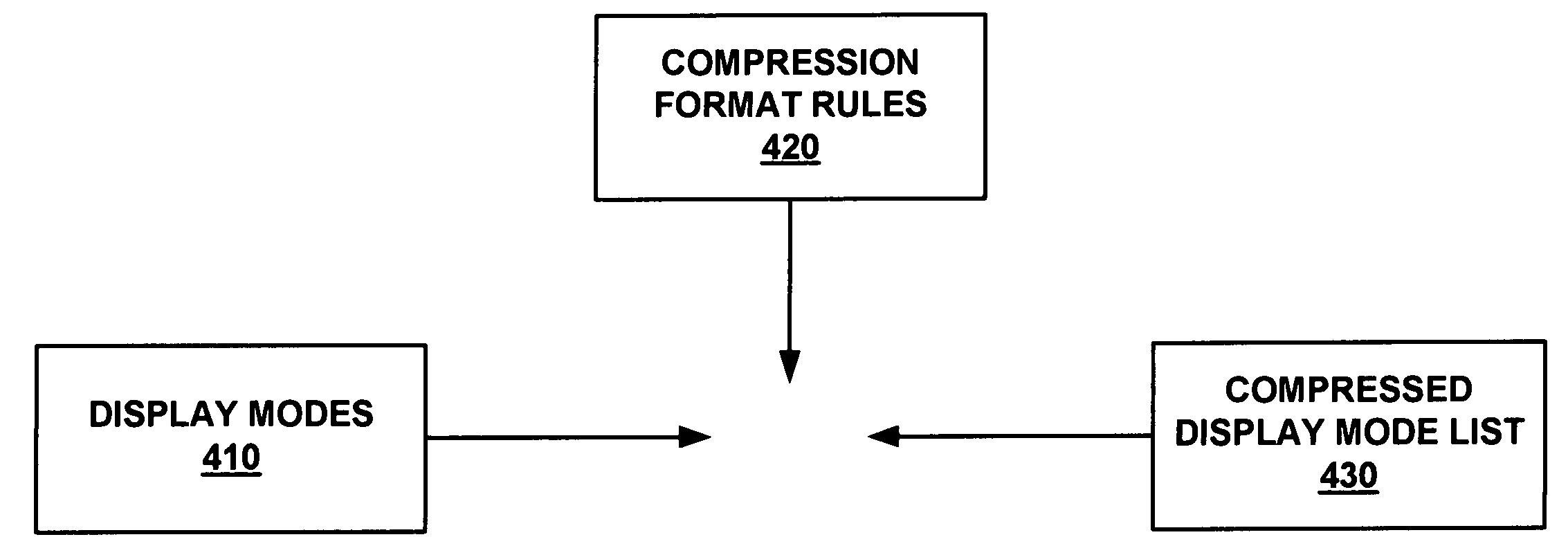

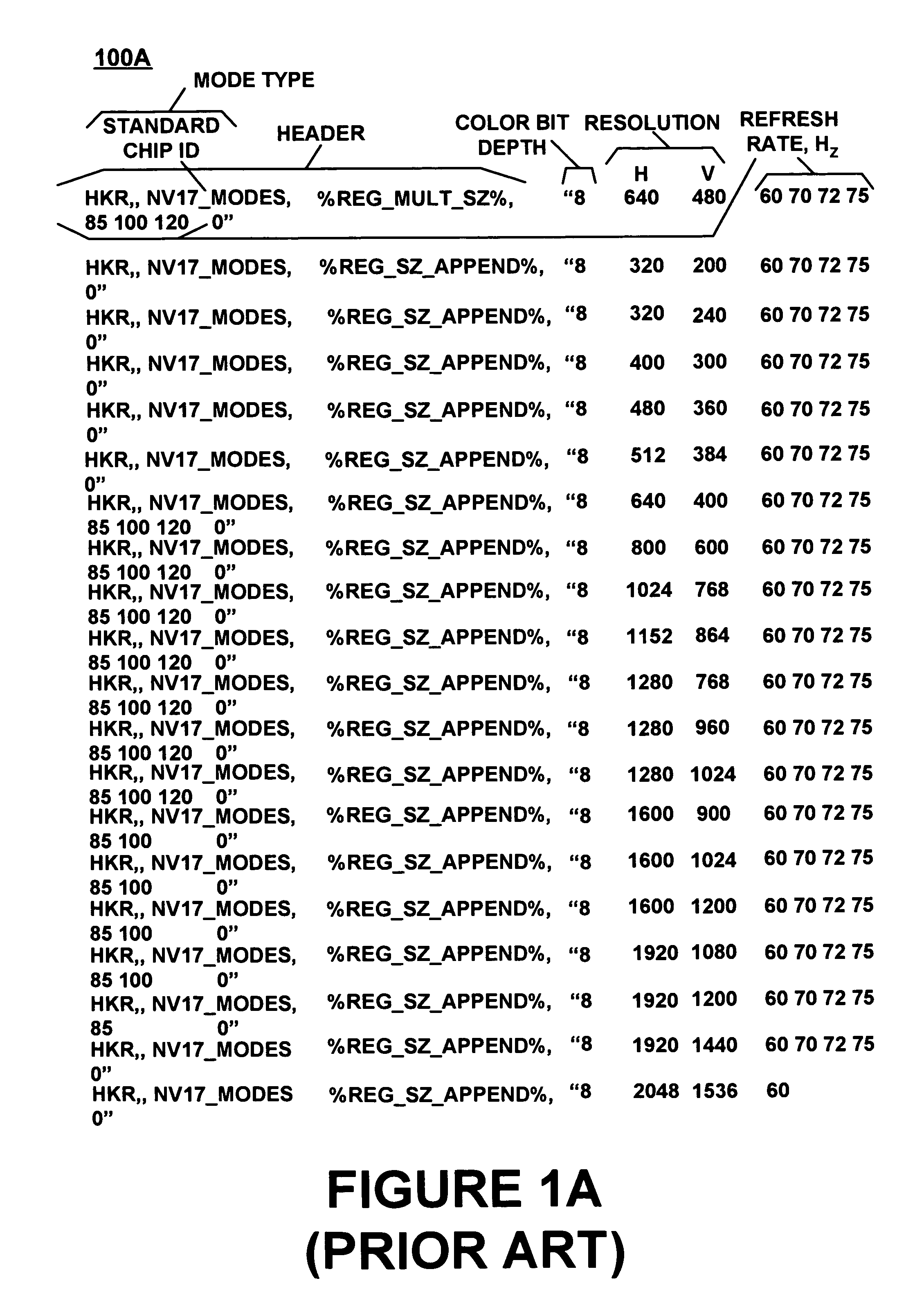

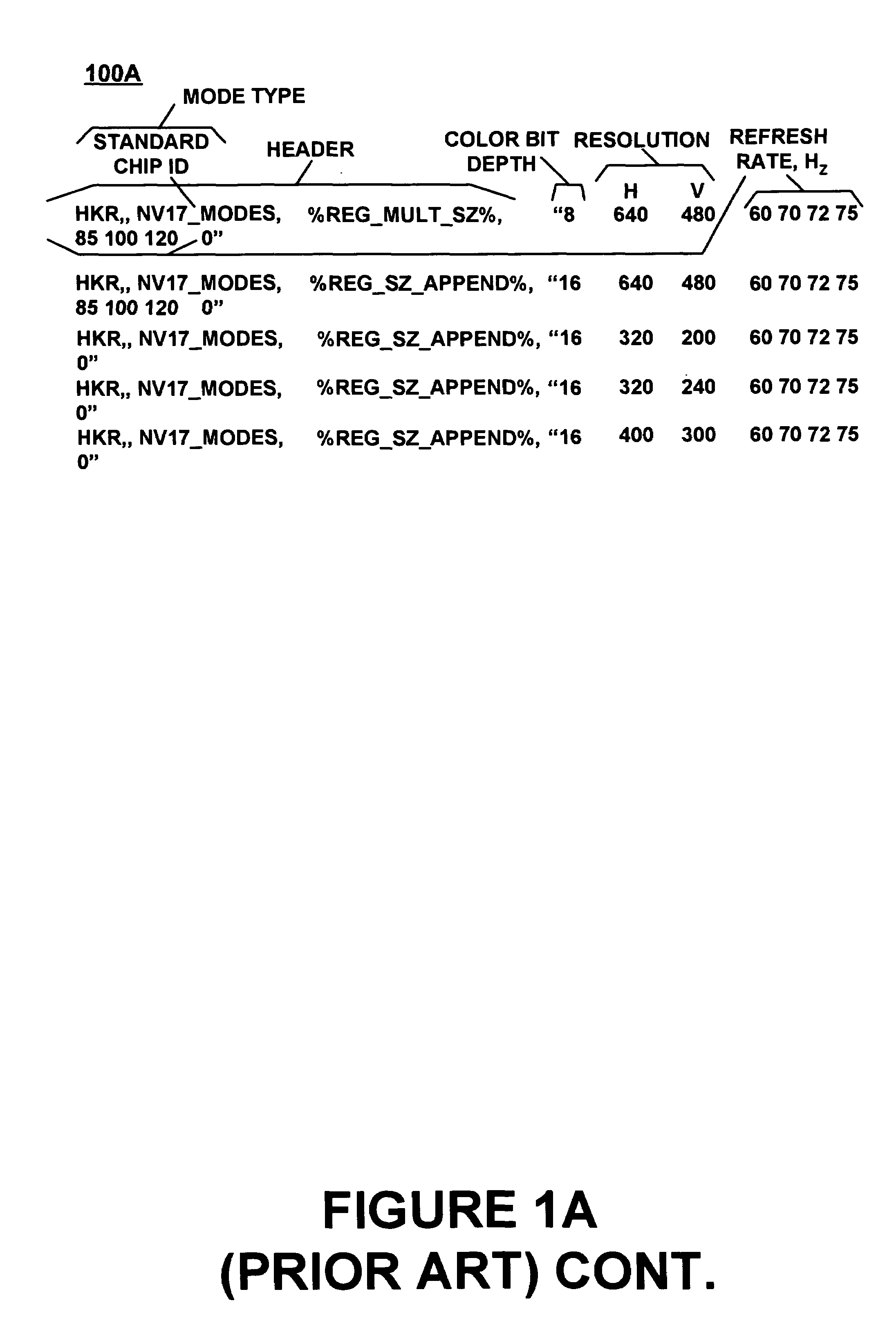

Method and system using compressed display mode list

Active | US7129909B1 | Reduce and minimize size | Cathode-ray tube indicators | Item transportation vehicles | Graphics | Display device

A method and system using a compressed display mode list is disclosed. In particular, the compressed display mode list includes a plurality of data representing the display modes. The data is formatted according to a plurality of compression format rules. The compression format rules reduce and minimize the size of the compressed display mode list. A driver controls a graphical processing unit that renders an image for displaying on a display device according to a selected display mode from the compressed display mode list. Moreover, a computer-readable medium can store the compressed display mode list.

Owner:NVIDIA CORP

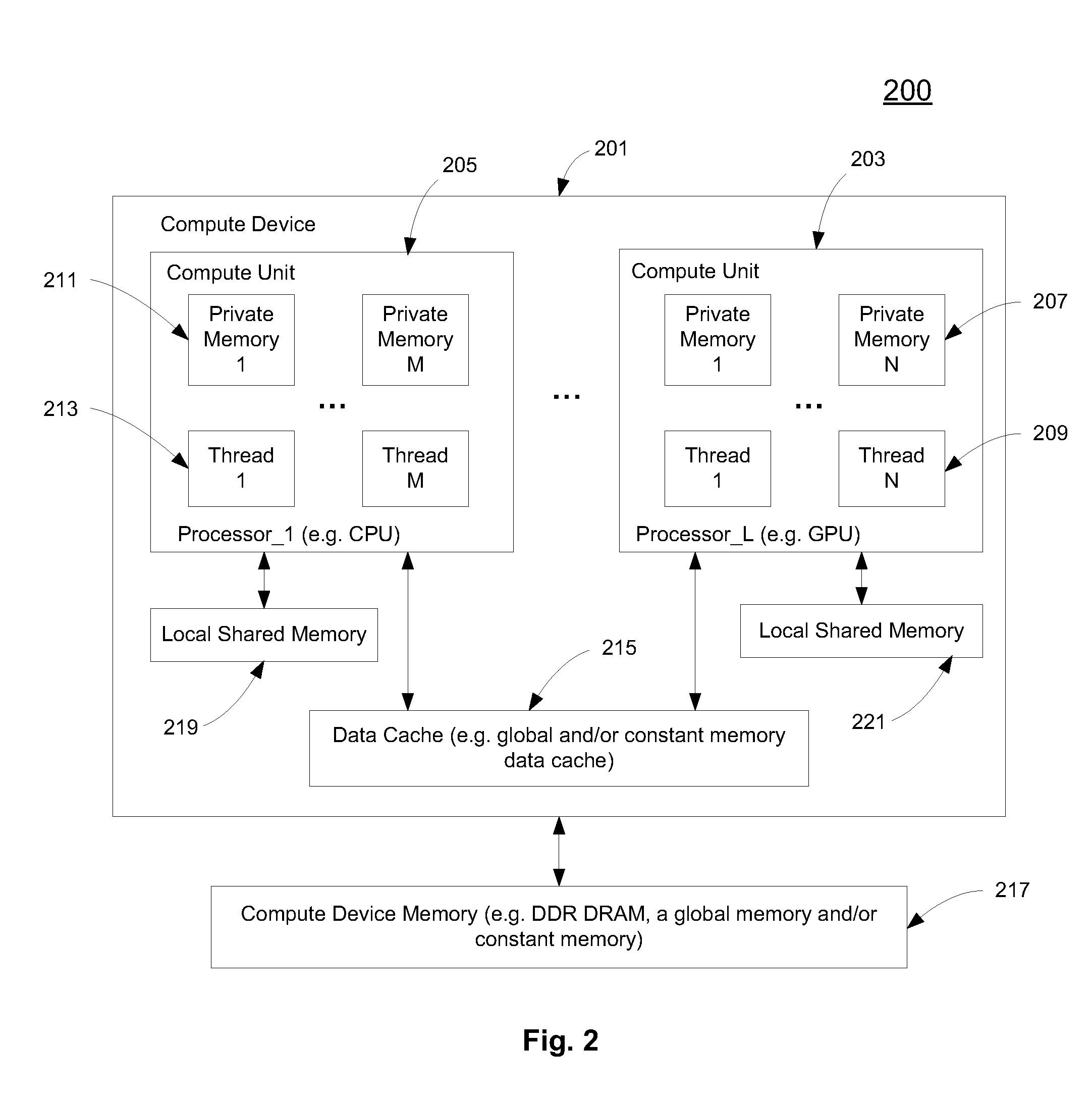

Multi-dimensional thread grouping for multiple processors

Active | US20090307704A1 | Optimize runtime resource usage | Resource allocation | Program control using stored programs | Graphics | Multi processor

A method and an apparatus that determine a total number of threads to concurrently execute executable codes compiled from a single source for target processing units in response to an API (Application Programming Interface) request from an application running in a host processing unit are described. The target processing units include GPUs (Graphics Processing Units) and CPUs (Central Processing Units). Thread group sizes for the target processing units are determined to partition the total number of threads according to a multi-dimensional global thread number included in the API request. The executable codes are loaded to be executed in thread groups with the determined thread group sizes concurrently in the target processing units.

Owner:APPLE INC
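
Partitioning a multi-dimensional global thread number into thread groups, as described above, can be illustrated with a short sketch. The rule used to pick a group size (the largest divisor of each dimension that does not exceed a per-dimension limit) and the limits themselves are assumptions for this example, not the method claimed in the patent.

```python
import math


def largest_divisor_at_most(n, limit):
    """Largest d <= limit that divides n evenly (always at least 1)."""
    for d in range(min(n, limit), 0, -1):
        if n % d == 0:
            return d


def partition(global_size, per_dim_limit):
    """Split a multi-dimensional global thread count into equal thread groups."""
    group_size = tuple(largest_divisor_at_most(n, per_dim_limit)
                       for n in global_size)
    groups = tuple(n // g for n, g in zip(global_size, group_size))
    return {
        "total_threads": math.prod(global_size),
        "group_size": group_size,
        "groups": groups,
    }


# The same request partitioned for two targets with different hypothetical limits.
gpu_plan = partition((1024, 768), per_dim_limit=16)
cpu_plan = partition((1024, 768), per_dim_limit=4)
```

Both plans cover the same total number of threads; only the group shape differs per target, which mirrors the idea of one request being executed on different kinds of processing units.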

Systems and methods for virtualizing graphics subsystems

Active | US8274518B2 | Program control using stored programs | Processor architectures/configuration | Virtualization | Graphics accelerator

Systems and methods for applying virtual machines to graphics hardware are provided. In various embodiments of the invention, while supervisory code runs on the CPU, the actual graphics work items are run directly on the graphics hardware and the supervisory code is structured as a graphics virtual machine monitor. Application compatibility is retained using virtual machine monitor (VMM) technology to run a first operating system (OS), such as an original OS version, simultaneously with a second OS, such as a new version OS, in separate virtual machines (VMs). VMM technology applied to host processors is extended to graphics processing units (GPUs) to allow hardware access to graphics accelerators, ensuring that legacy applications operate at full performance. The invention also provides methods to make the user experience cosmetically seamless while running multiple applications in different VMs. In other aspects of the invention, by employing VMM technology, the virtualized graphics architecture of the invention is extended to provide trusted services and content protection.

Owner:MICROSOFT TECH LICENSING LLC
