333 results about "Details involving computational photography" patented technology

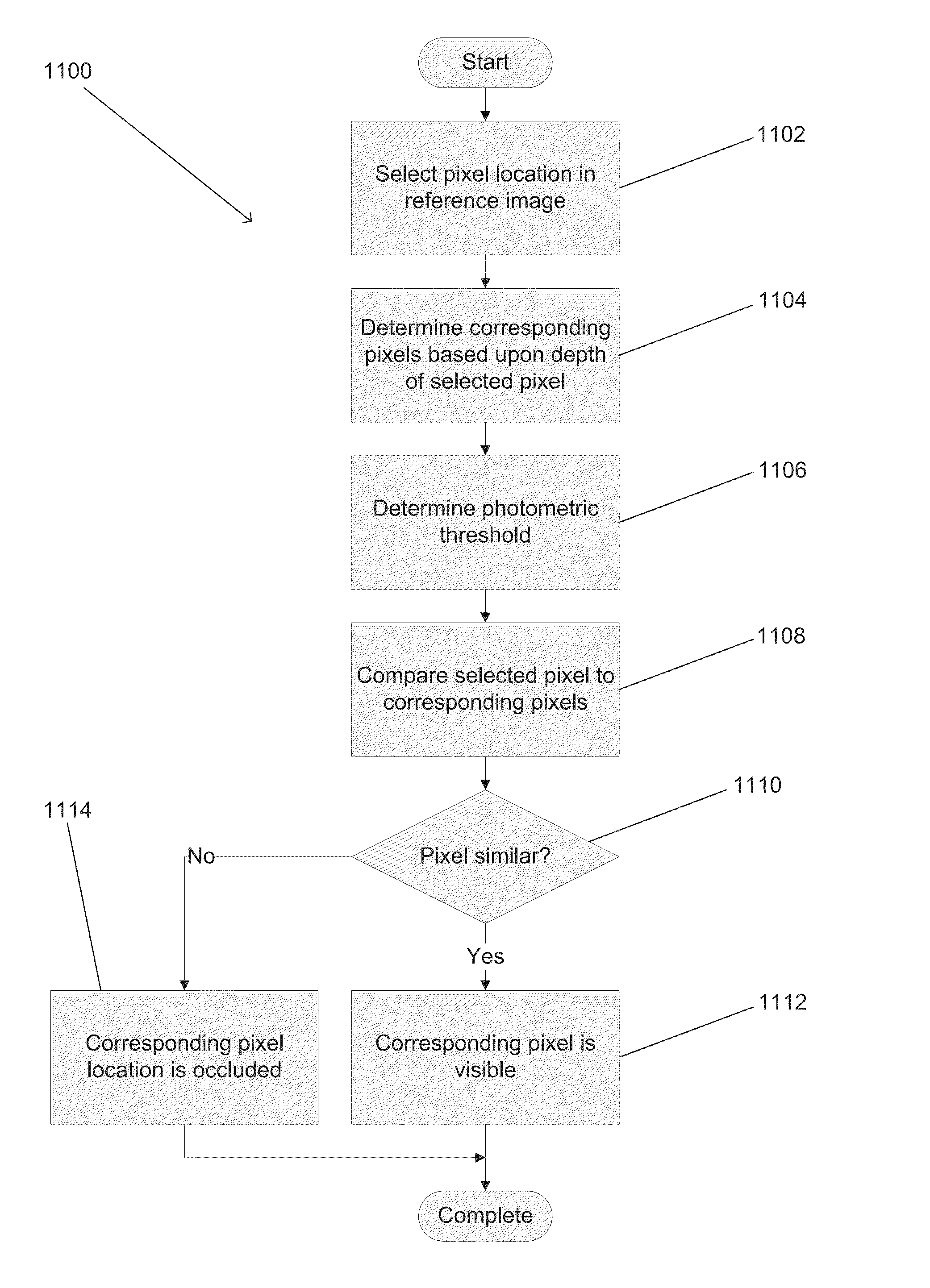

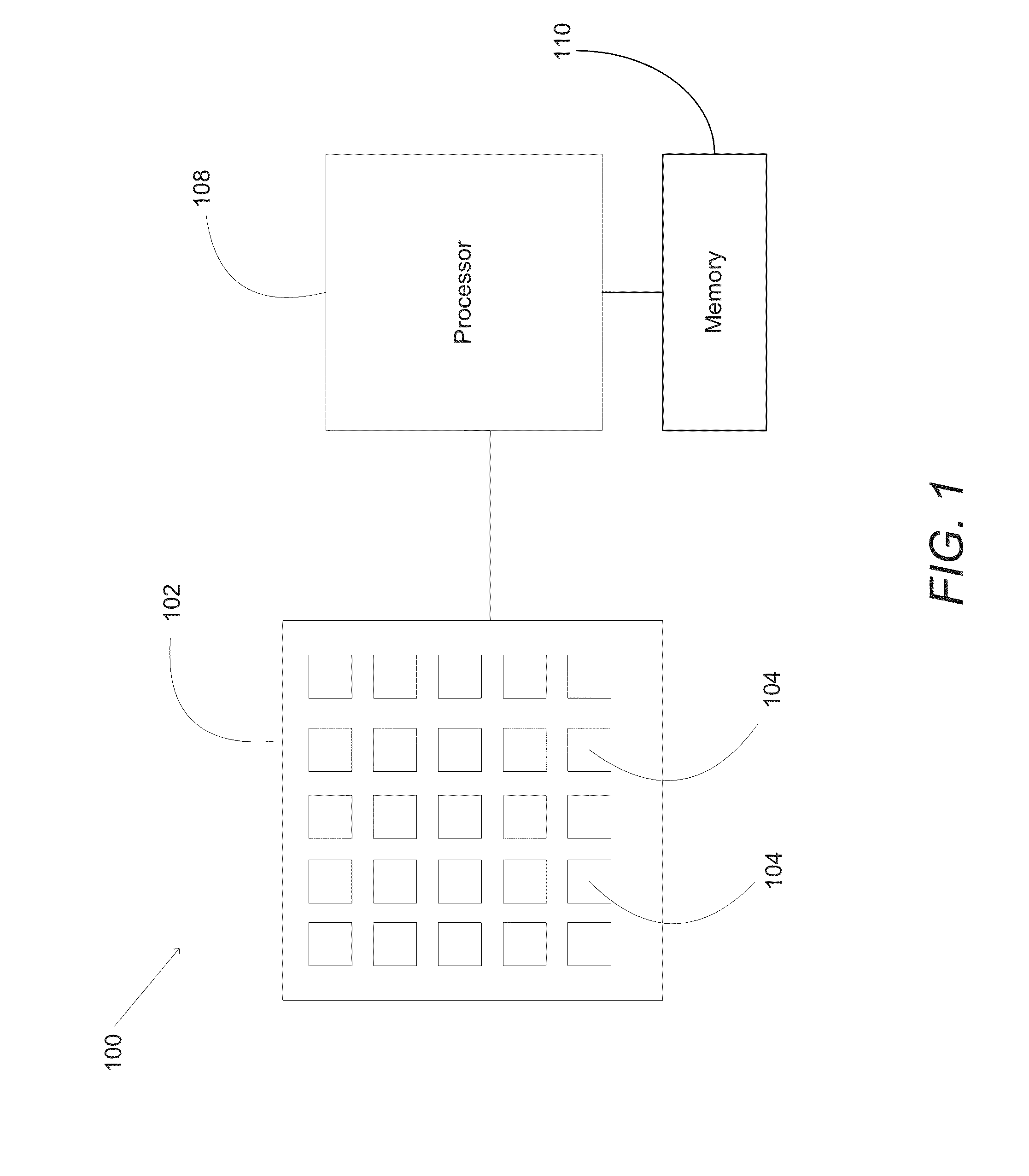

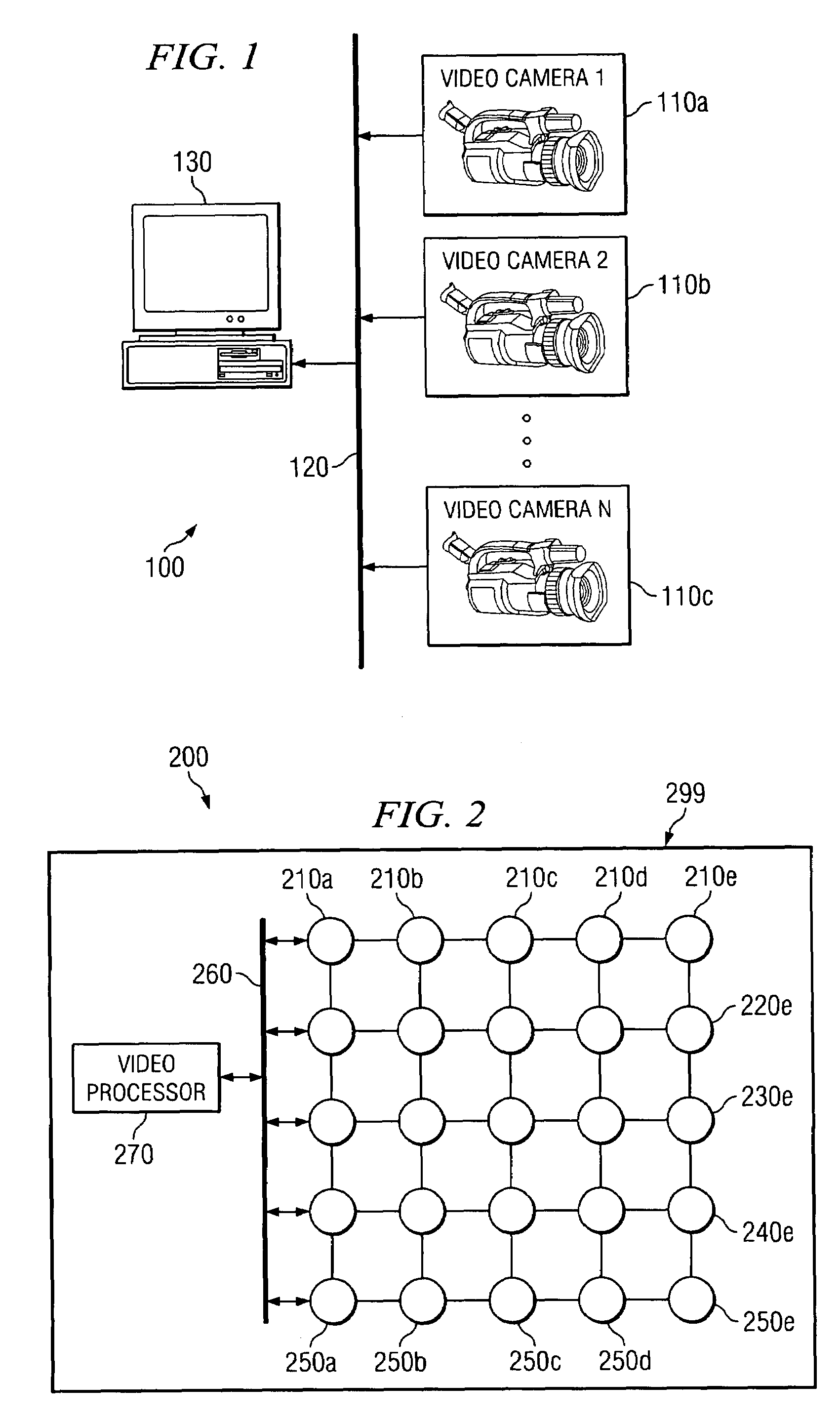

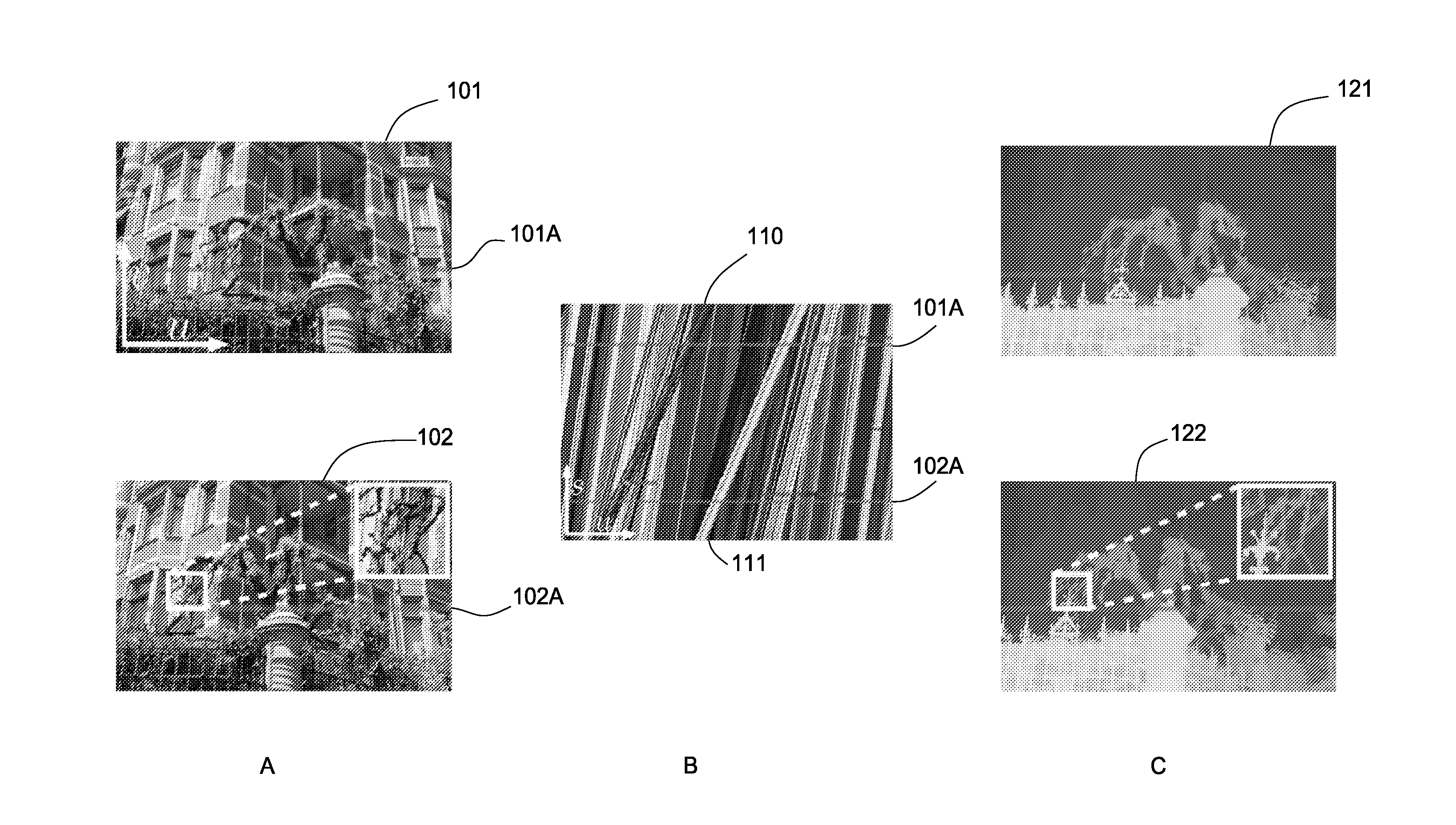

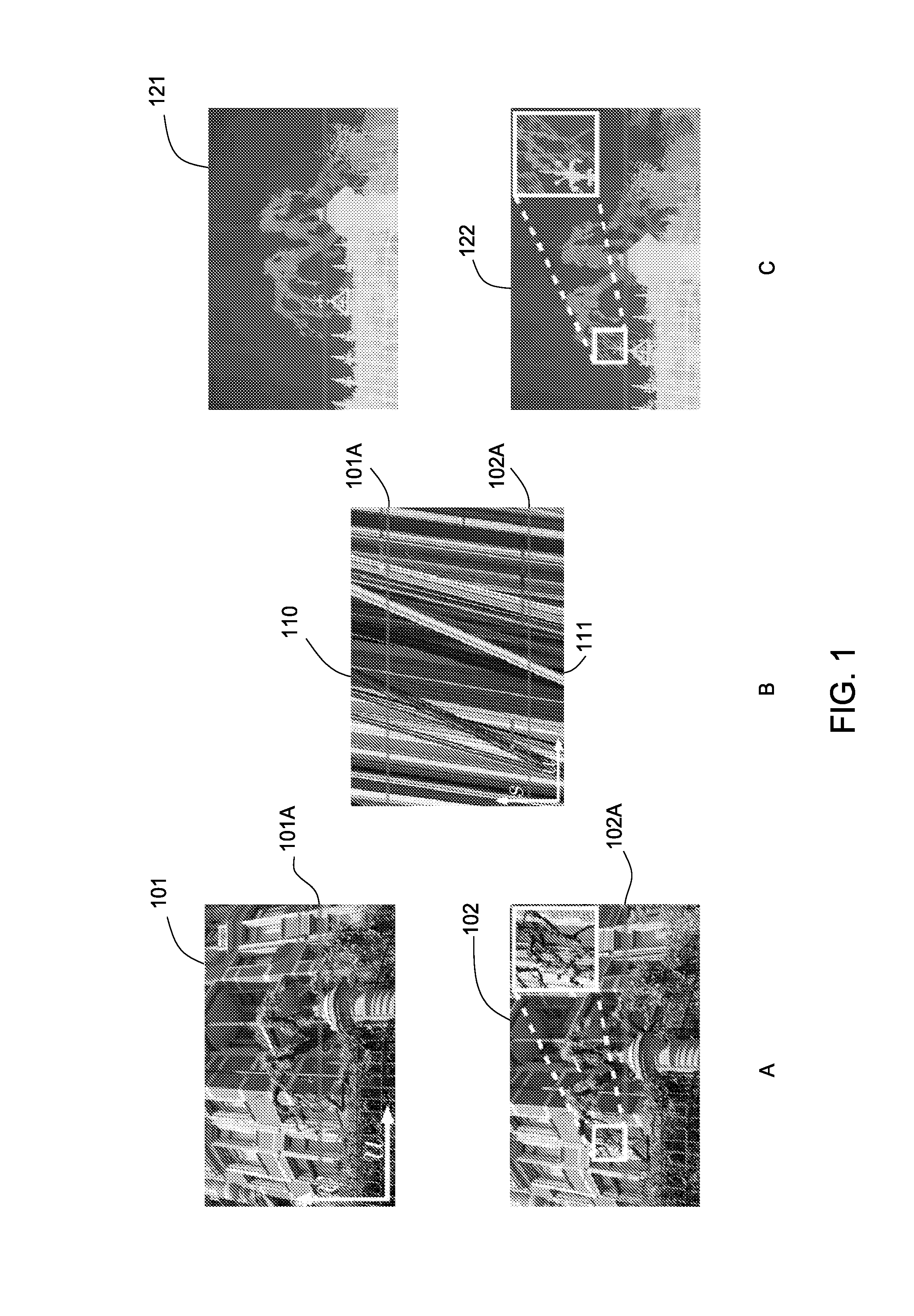

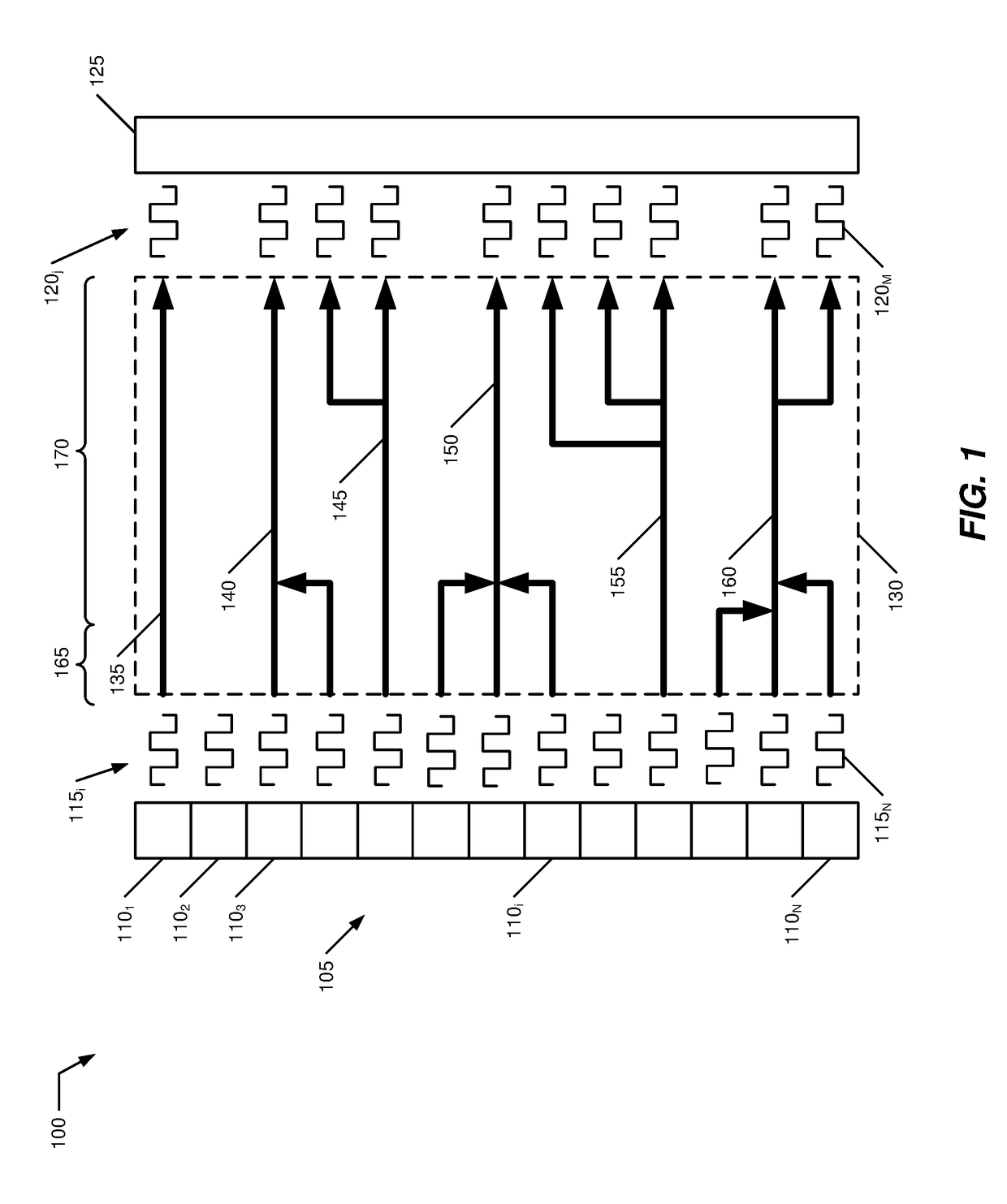

Systems and methods for parallax detection and correction in images captured using array cameras that contain occlusions using subsets of images to perform depth estimation

Active | US8619082B1 | Reduce the weighting applied | Minimal cost | Image enhancement | Television system details | Parallax | Viewpoints

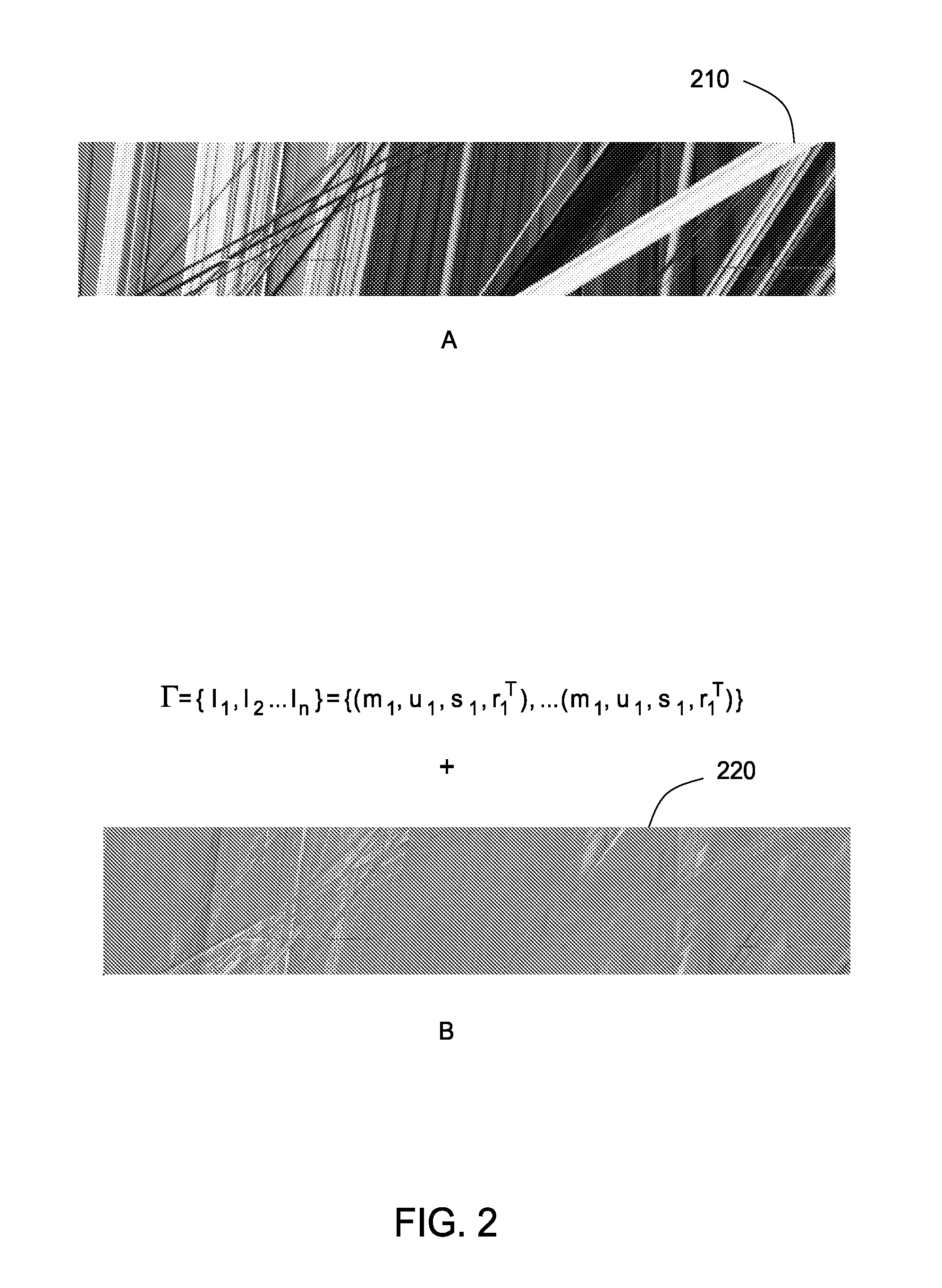

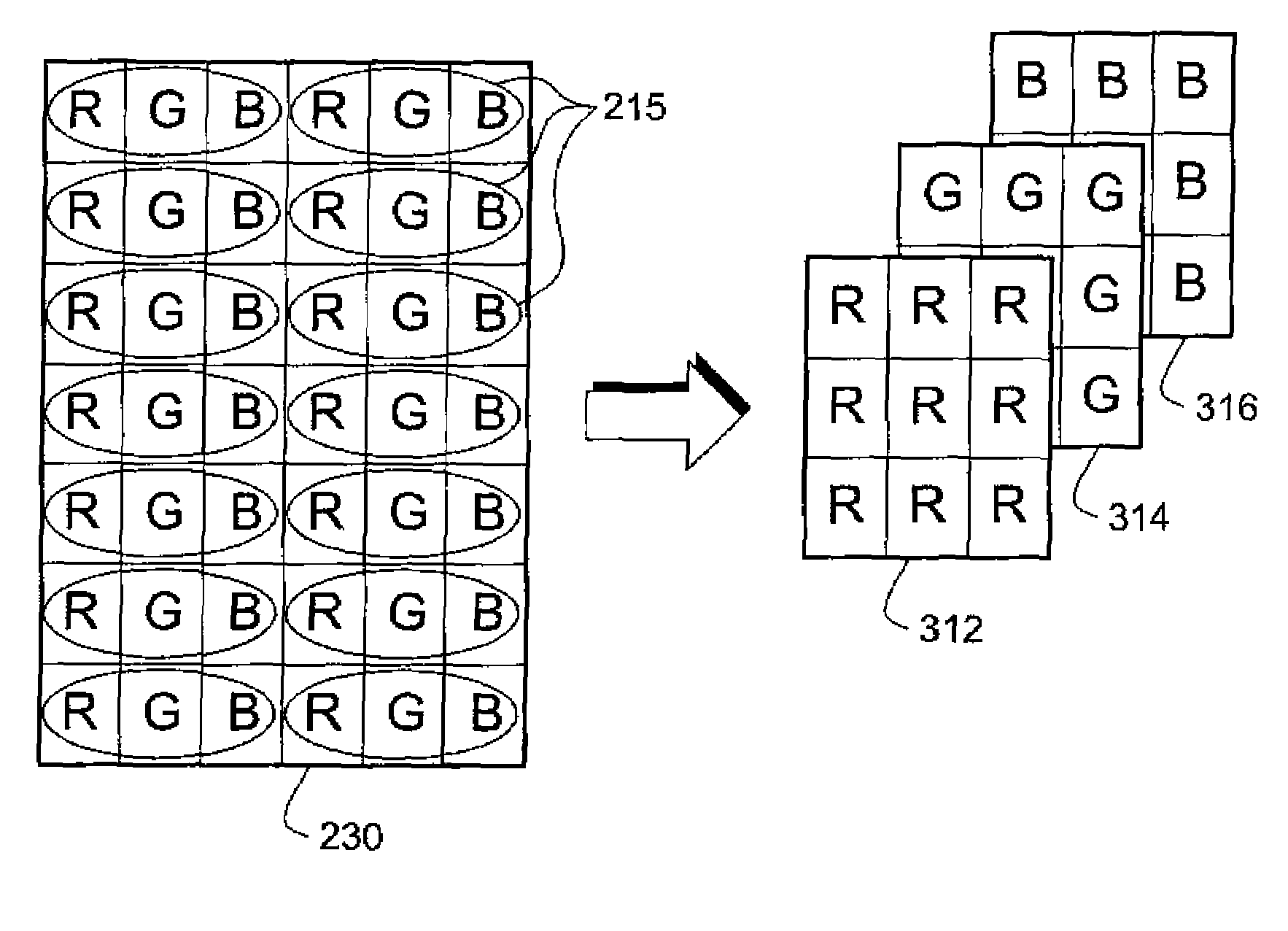

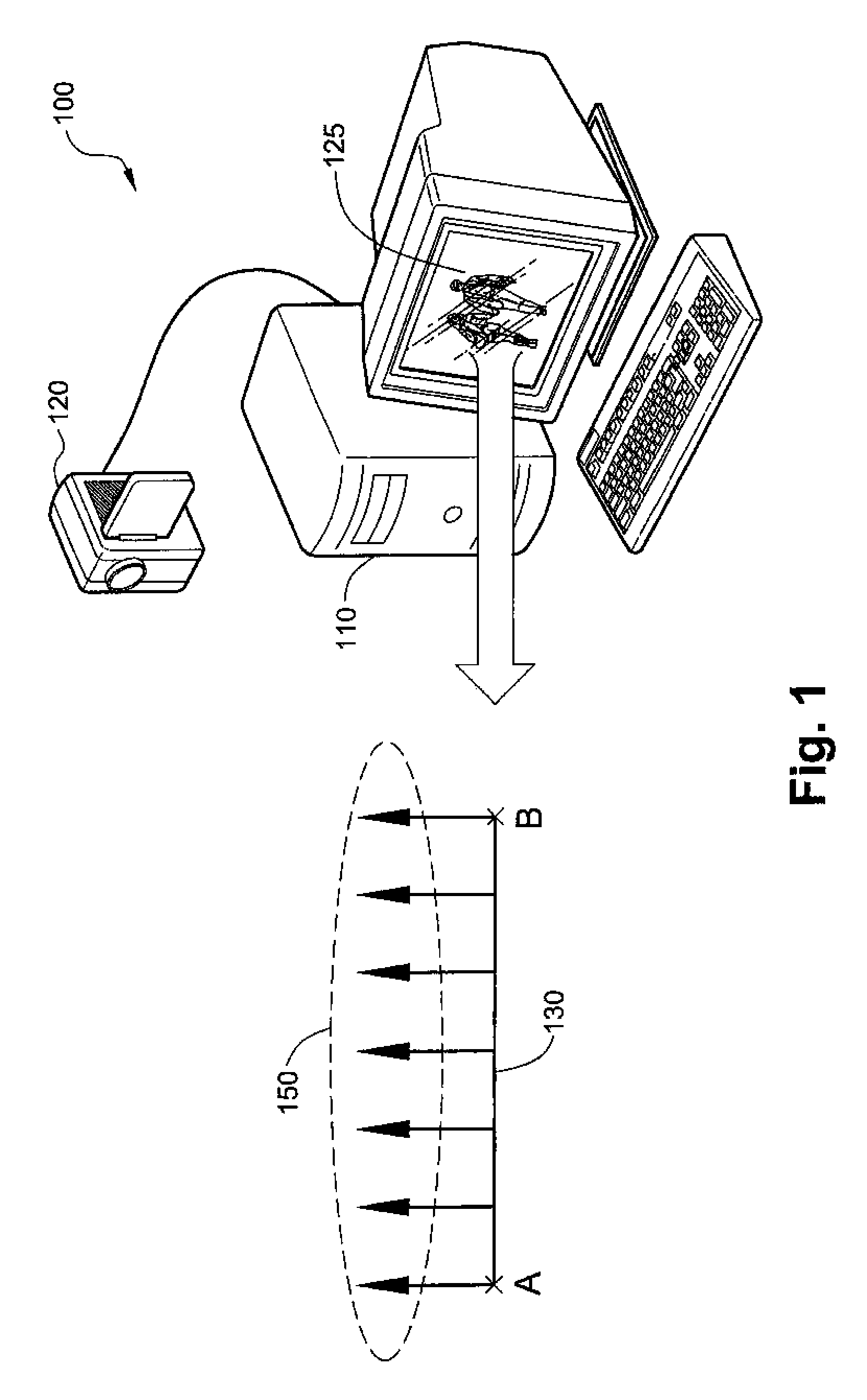

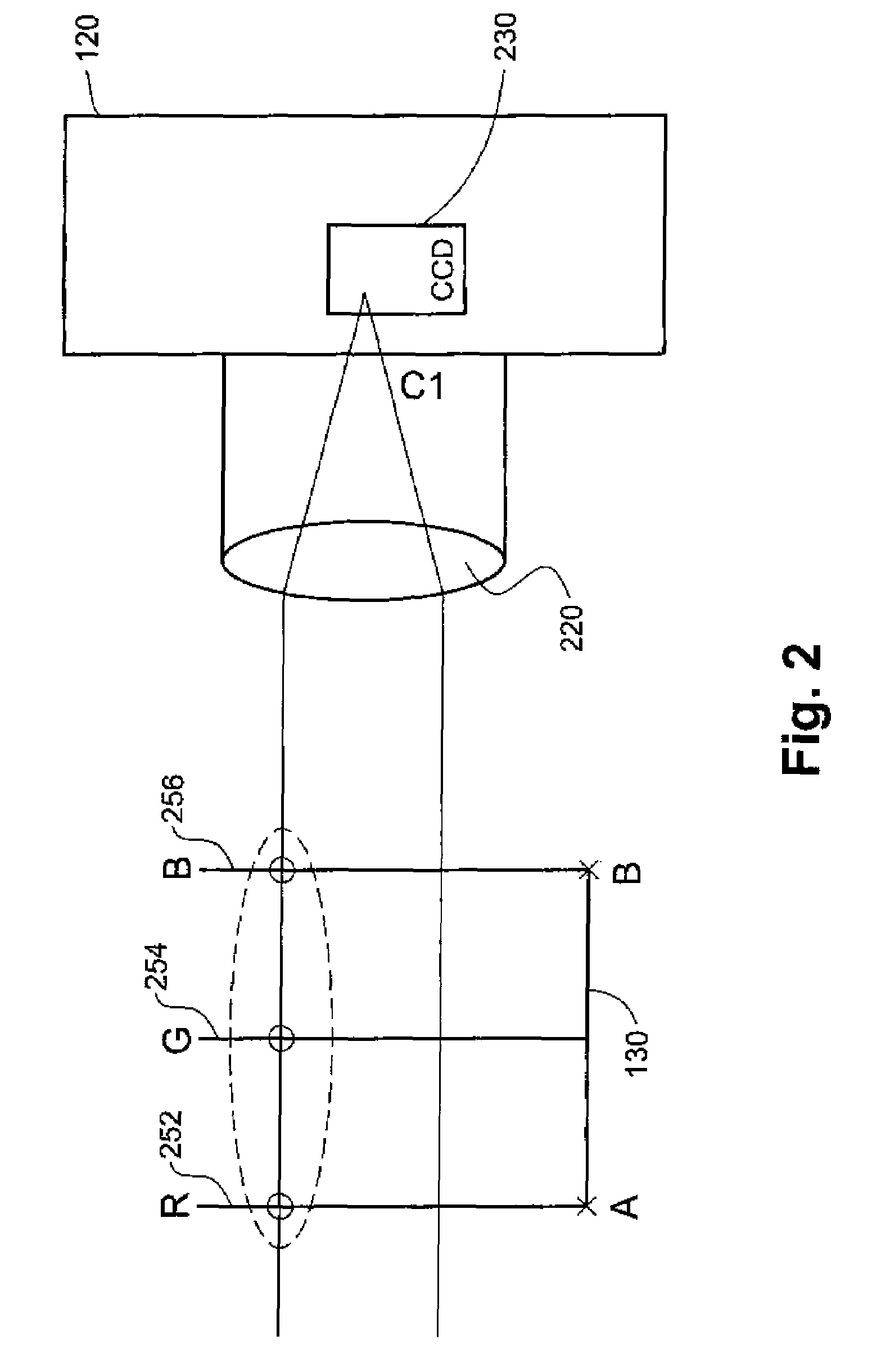

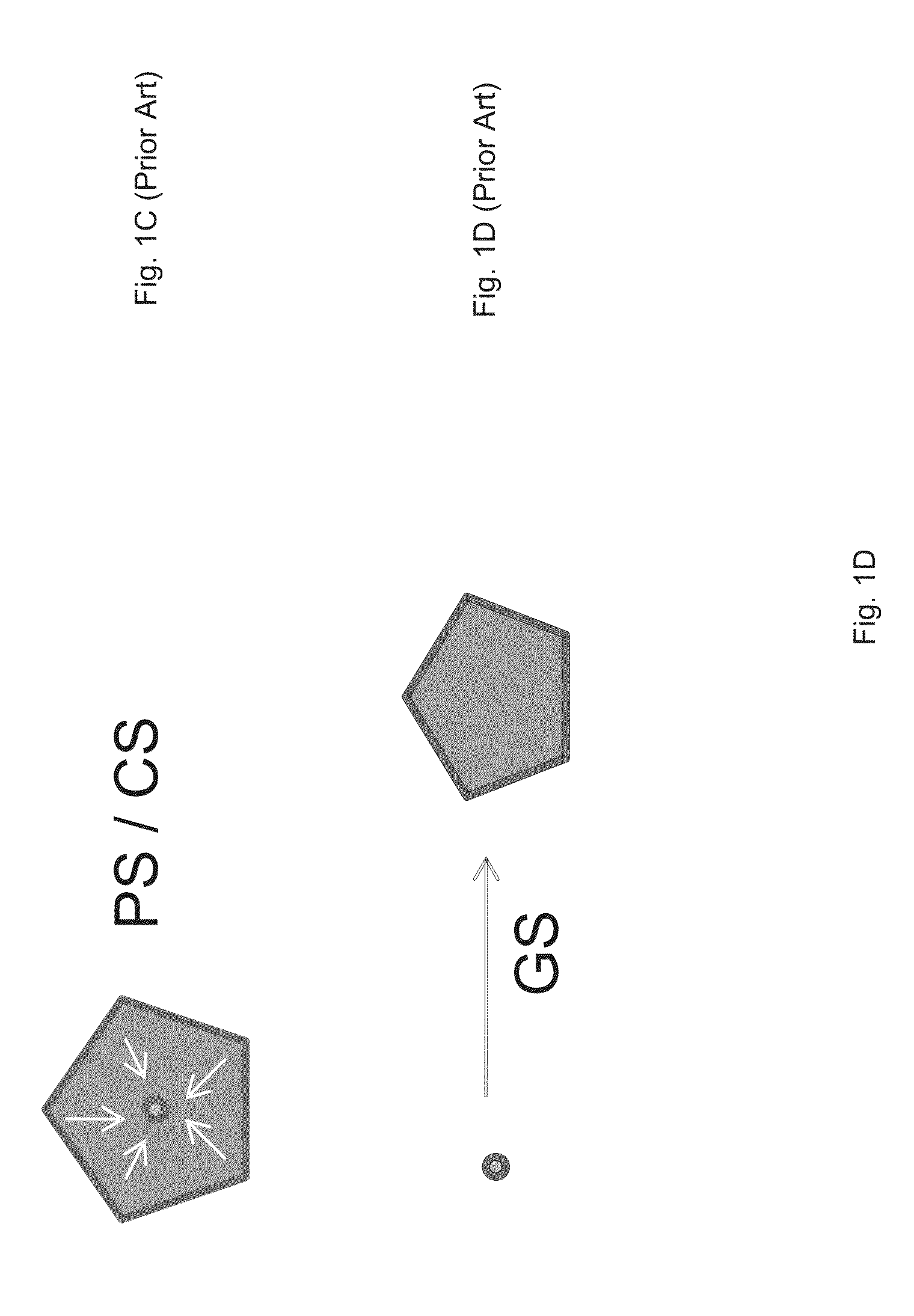

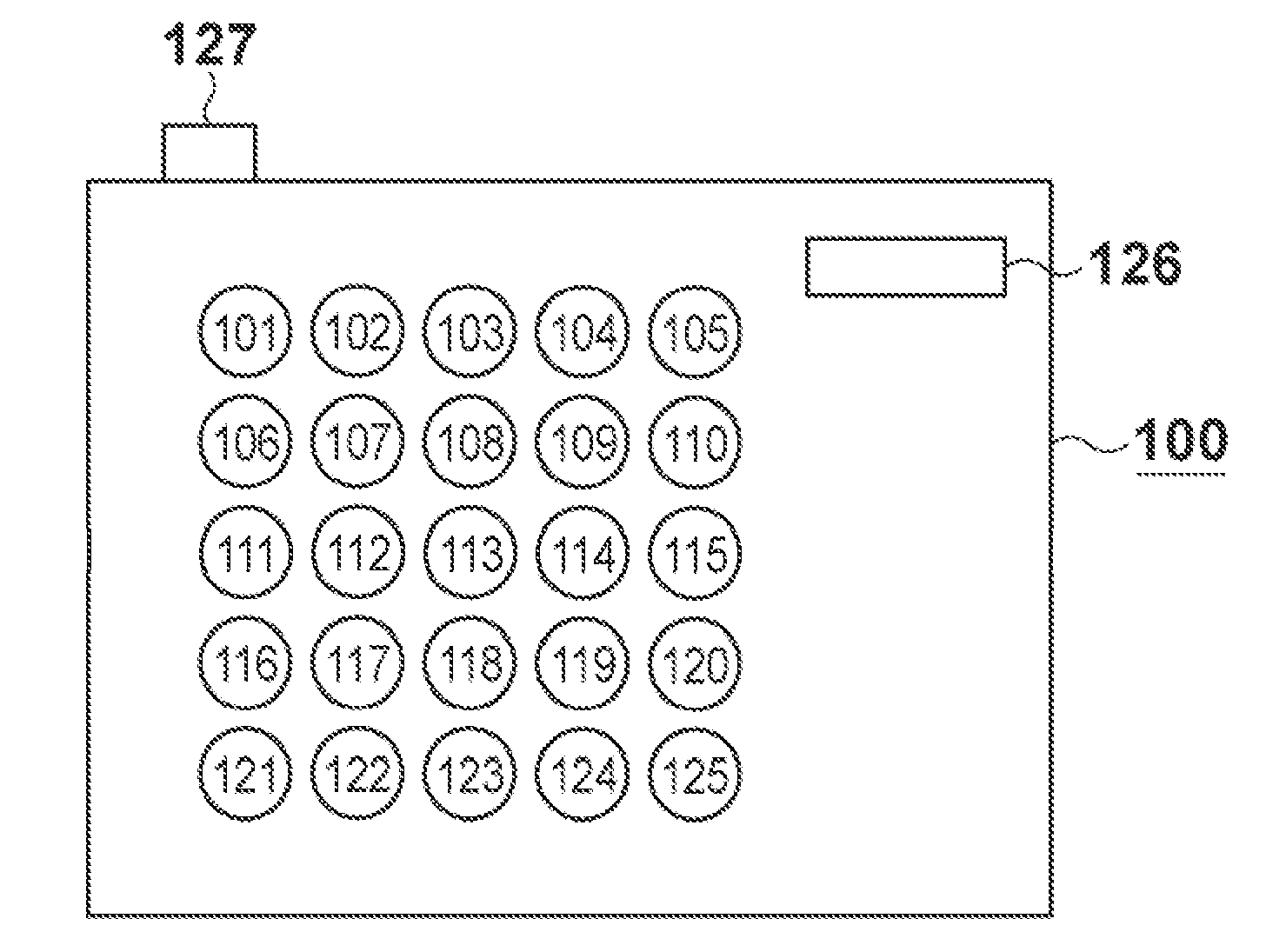

Systems in accordance with embodiments of the invention can perform parallax detection and correction in images captured using array cameras. Due to the different viewpoints of the cameras, parallax results in variations in the position of objects within the captured images of the scene. Methods in accordance with embodiments of the invention provide an accurate account of the pixel disparity due to parallax between the different cameras in the array, so that appropriate scene-dependent geometric shifts can be applied to the pixels of the captured images when performing super-resolution processing. In several embodiments, detecting parallax involves using competing subsets of images to estimate the depth of a pixel location in an image from a reference viewpoint. In a number of embodiments, generating depth estimates considers the similarity of pixels in multiple spectral channels. In certain embodiments, generating depth estimates involves generating a confidence map indicating the reliability of depth estimates.

Owner:FOTONATION LTD
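
The idea of letting competing subsets of images vote on depth can be illustrated with a small sketch. This is not the patented method: it assumes 1-D scanlines, integer disparities as a stand-in for depth, and a mean absolute difference cost. The names `estimate_disparity`, `views`, and `subsets` are hypothetical.

```python
def estimate_disparity(ref, views, x, candidates, subsets):
    """Pick the (disparity, camera subset) pair with the lowest matching cost.

    ref: reference scanline; views: dict mapping a camera baseline to its
    scanline; subsets: tuples of baselines that compete with each other.
    """
    best = None
    for d in candidates:
        for subset in subsets:
            diffs = []
            for b in subset:
                xi = x + b * d  # where pixel x should appear in camera b
                if 0 <= xi < len(views[b]):
                    diffs.append(abs(views[b][xi] - ref[x]))
            if not diffs:
                continue
            cost = sum(diffs) / len(diffs)
            if best is None or cost < best[0]:
                best = (cost, d, subset)
    return best[1], best[2]


# Toy scene (assumed data): everything sits at disparity 2, but the left
# camera's view of reference pixel 4 is blocked by an occluder (value 99).
ref = [10 * i for i in range(10)]
right = [0, 0] + ref[:8]
left = ref[2:] + [90, 90]
left[2] = 99
views = {-1: left, 1: right}

print(estimate_disparity(ref, views, 4, range(4), [(-1, 1), (-1,), (1,)]))
```

In this toy scene the right-only subset wins at the true disparity, whereas forcing all cameras into a single subset lets the occluded view pull the estimate to a wrong disparity.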

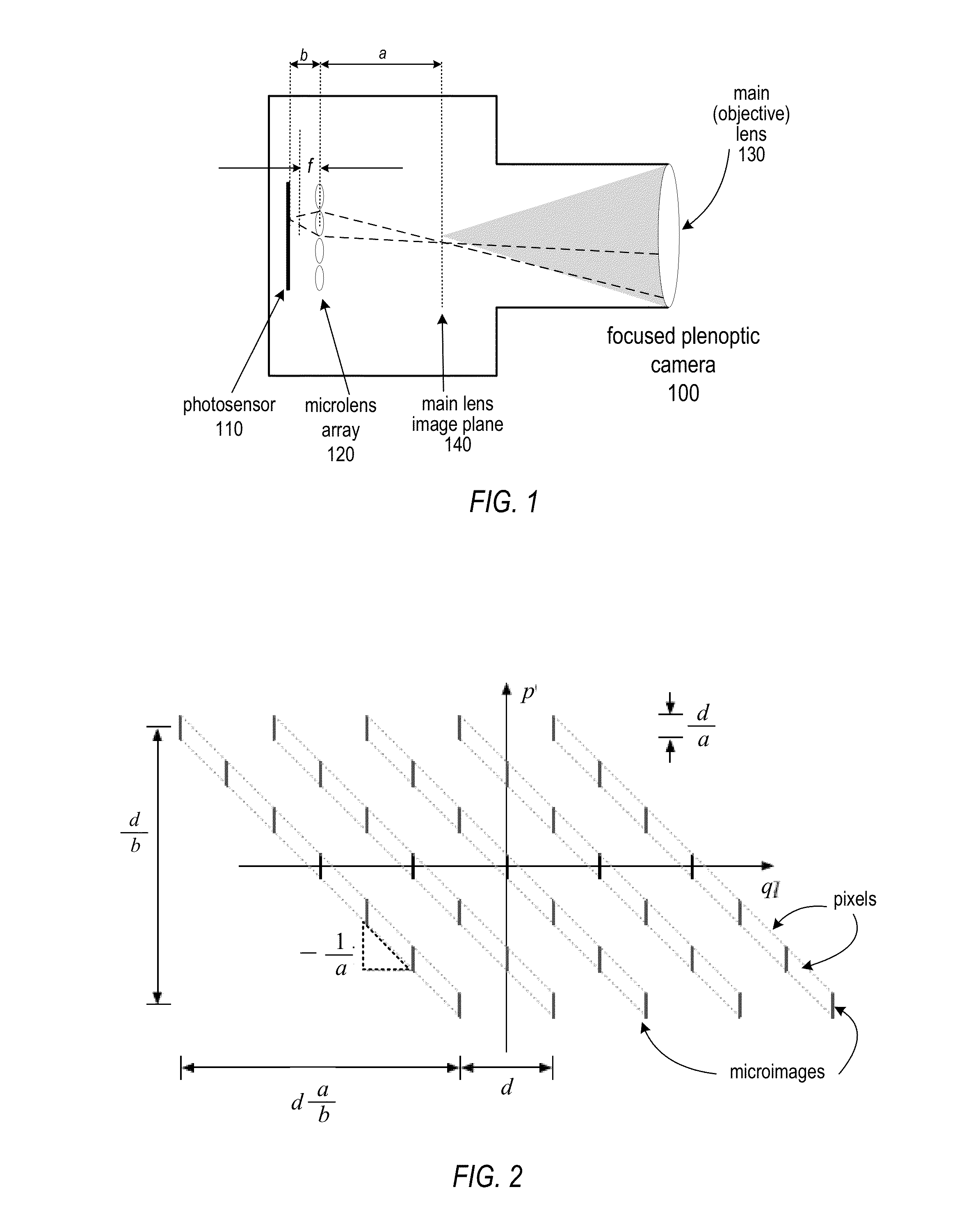

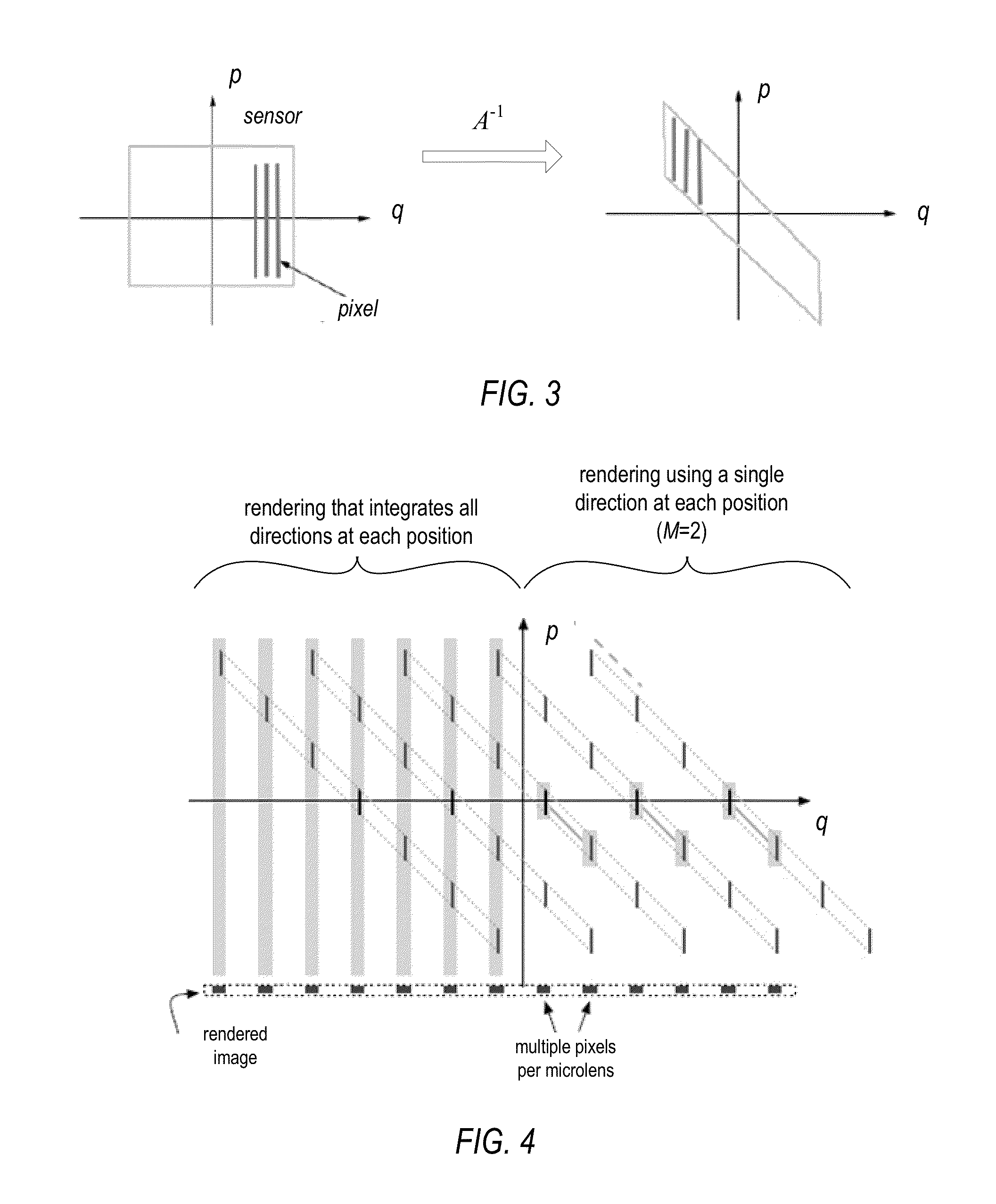

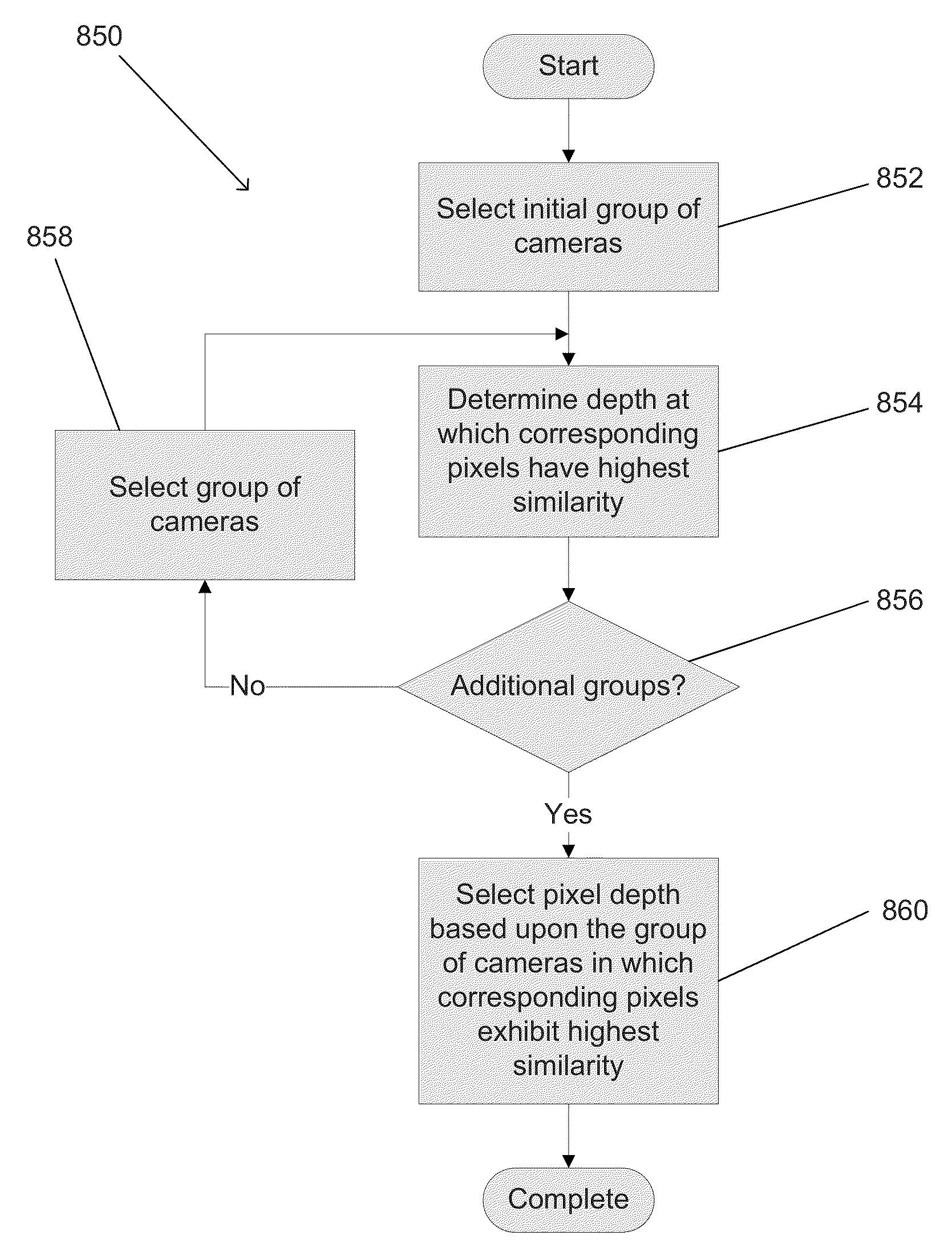

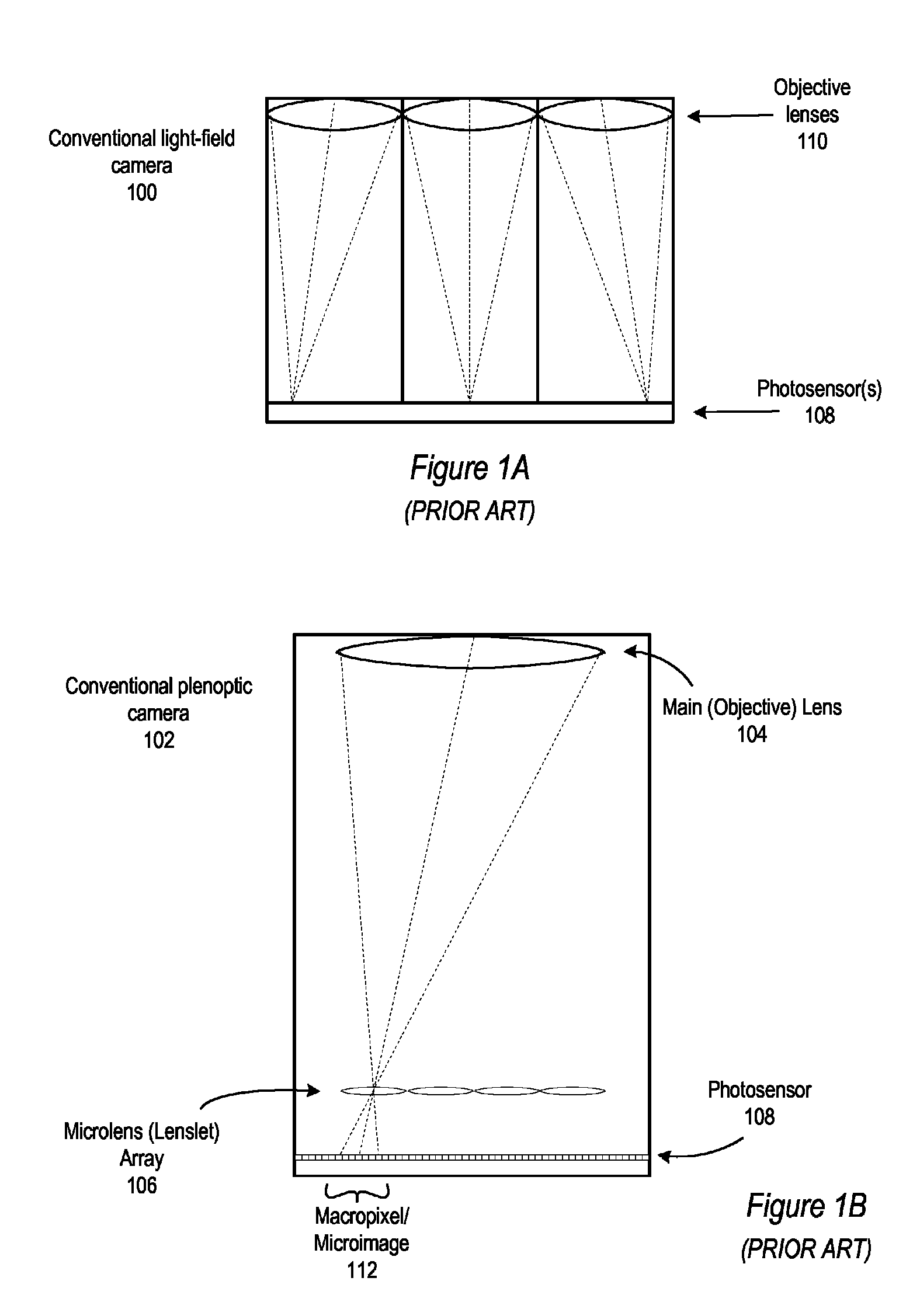

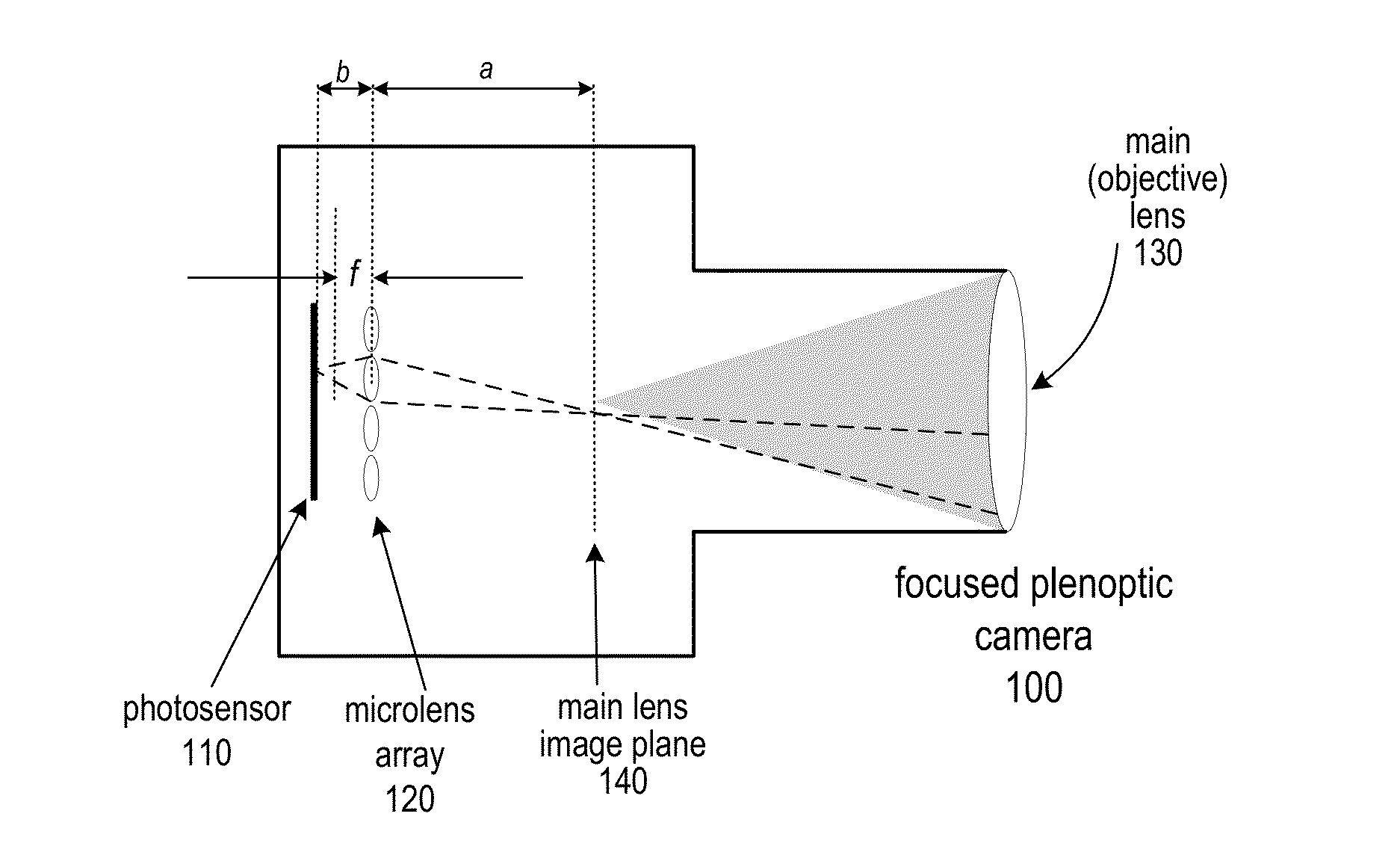

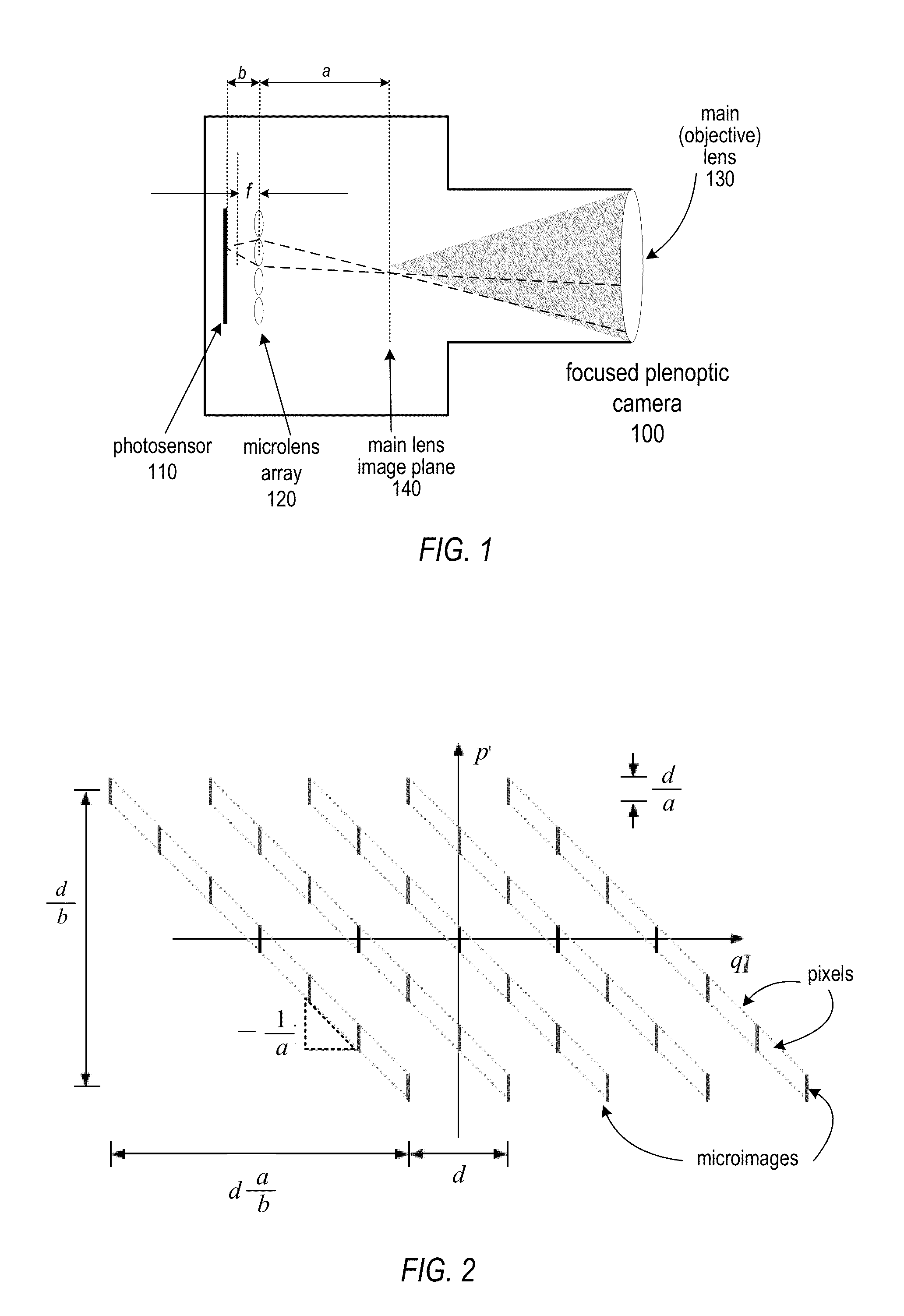

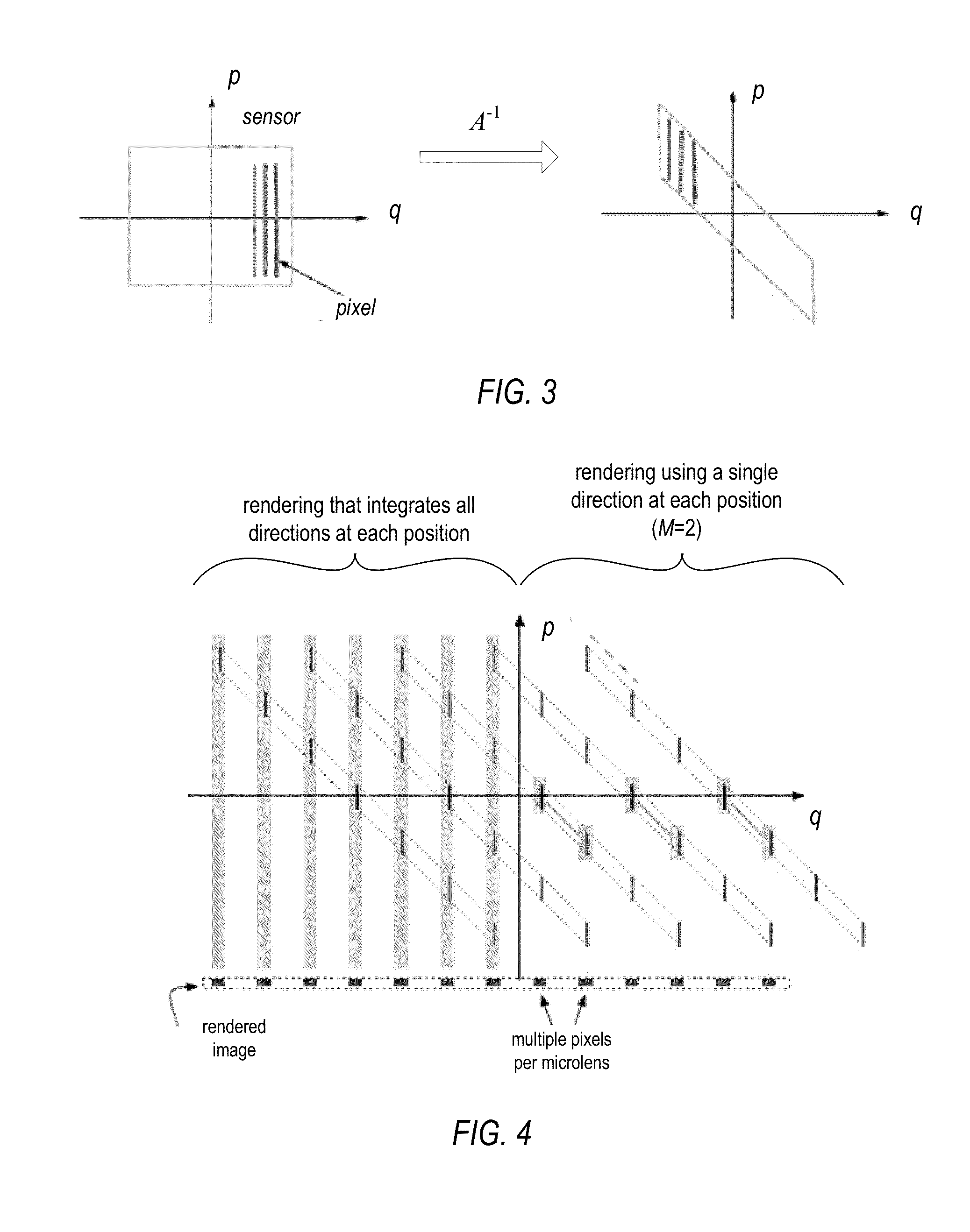

Methods, Apparatus, and Computer-Readable Storage Media for Blended Rendering of Focused Plenoptic Camera Data

Active | US20130120605A1 | High resolution | Television system details | Color television details | Graphics | Multiple point

Methods, apparatus, and computer-readable storage media for rendering focused plenoptic camera data. A rendering with blending technique is described that blends values from positions in multiple microimages and assigns the blended value to a given point in the output image. A rendering technique that combines depth-based rendering and rendering with blending is also described. Depth-based rendering estimates depth at each microimage and then applies that depth to determine a position in the input flat from which to read a value to be assigned to a given point in the output image. The techniques may be implemented according to parallel processing technology that renders multiple points of the output image in parallel. In at least some embodiments, the parallel processing technology is graphical processing unit (GPU) technology.

Owner:ADOBE SYST INC

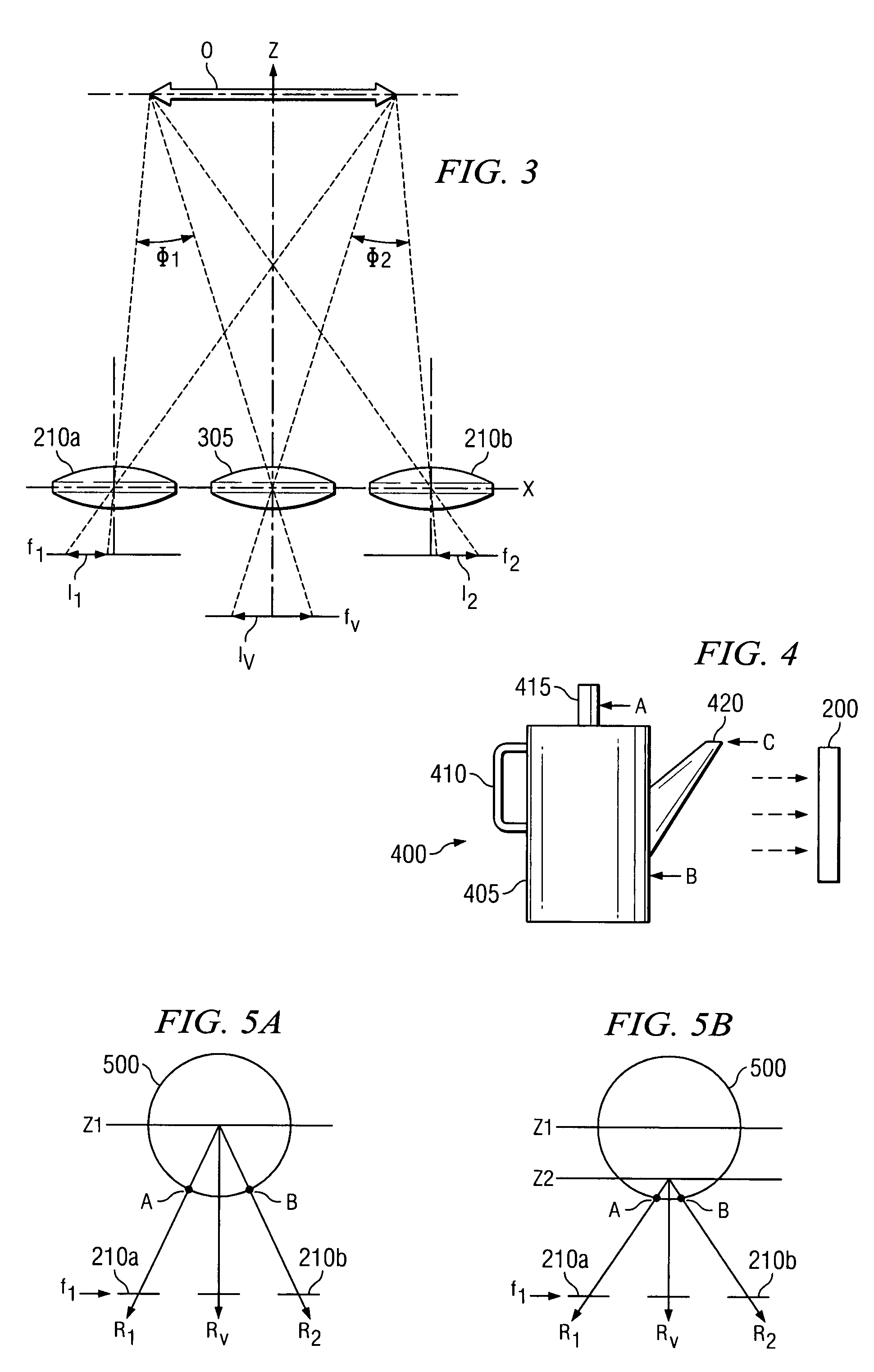

Systems and methods for performing depth estimation using image data from multiple spectral channels

Active | US8780113B1 | Reduce the weighting applied | Minimal cost | Image enhancement | Image analysis | Parallax | Viewpoints

Systems in accordance with embodiments of the invention can perform parallax detection and correction in images captured using array cameras. Due to the different viewpoints of the cameras, parallax results in variations in the position of objects within the captured images of the scene. Methods in accordance with embodiments of the invention provide an accurate account of the pixel disparity due to parallax between the different cameras in the array, so that appropriate scene-dependent geometric shifts can be applied to the pixels of the captured images when performing super-resolution processing. In a number of embodiments, generating depth estimates considers the similarity of pixels in multiple spectral channels. In certain embodiments, generating depth estimates involves generating a confidence map indicating the reliability of depth estimates.

Owner:FOTONATION LTD

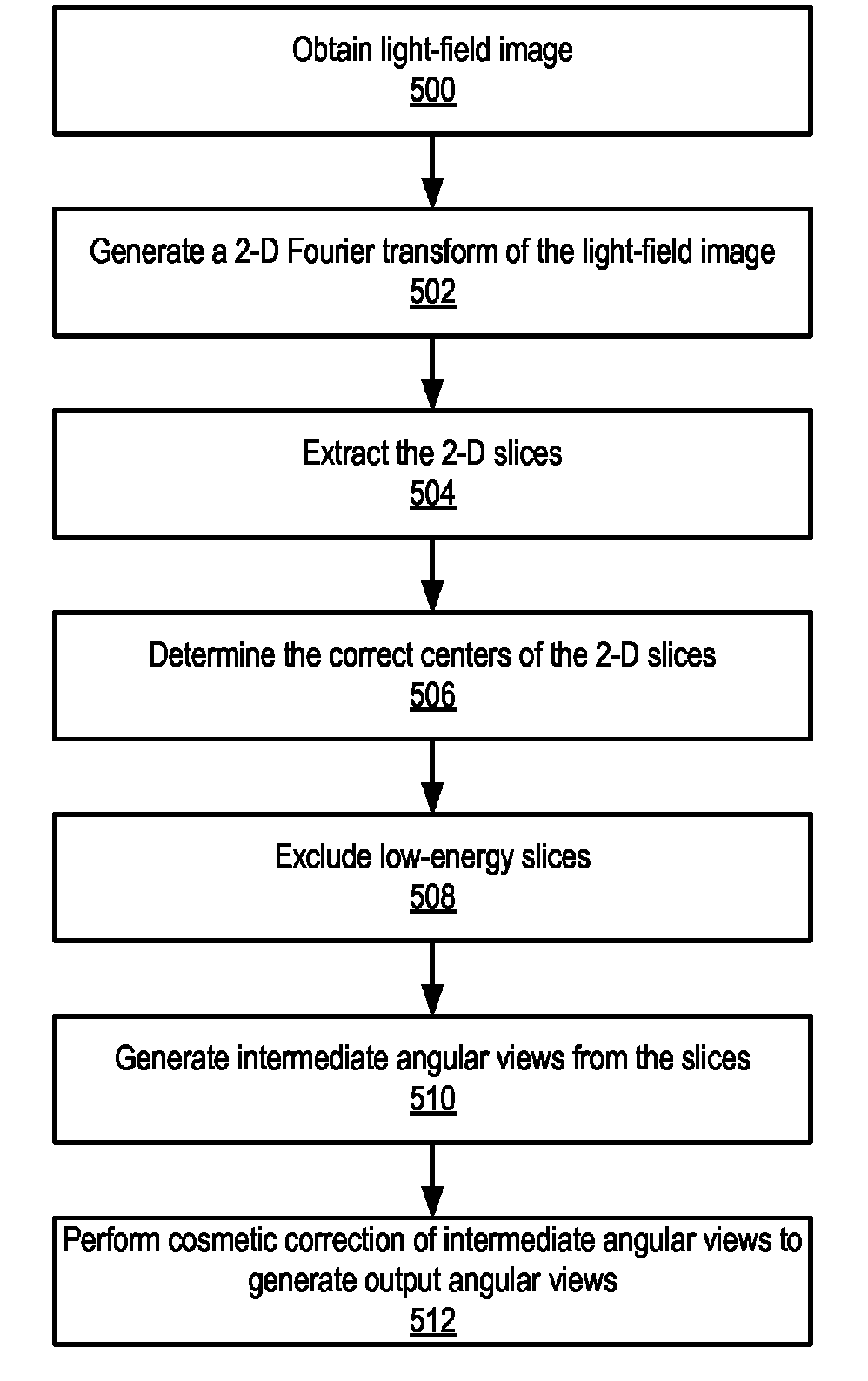

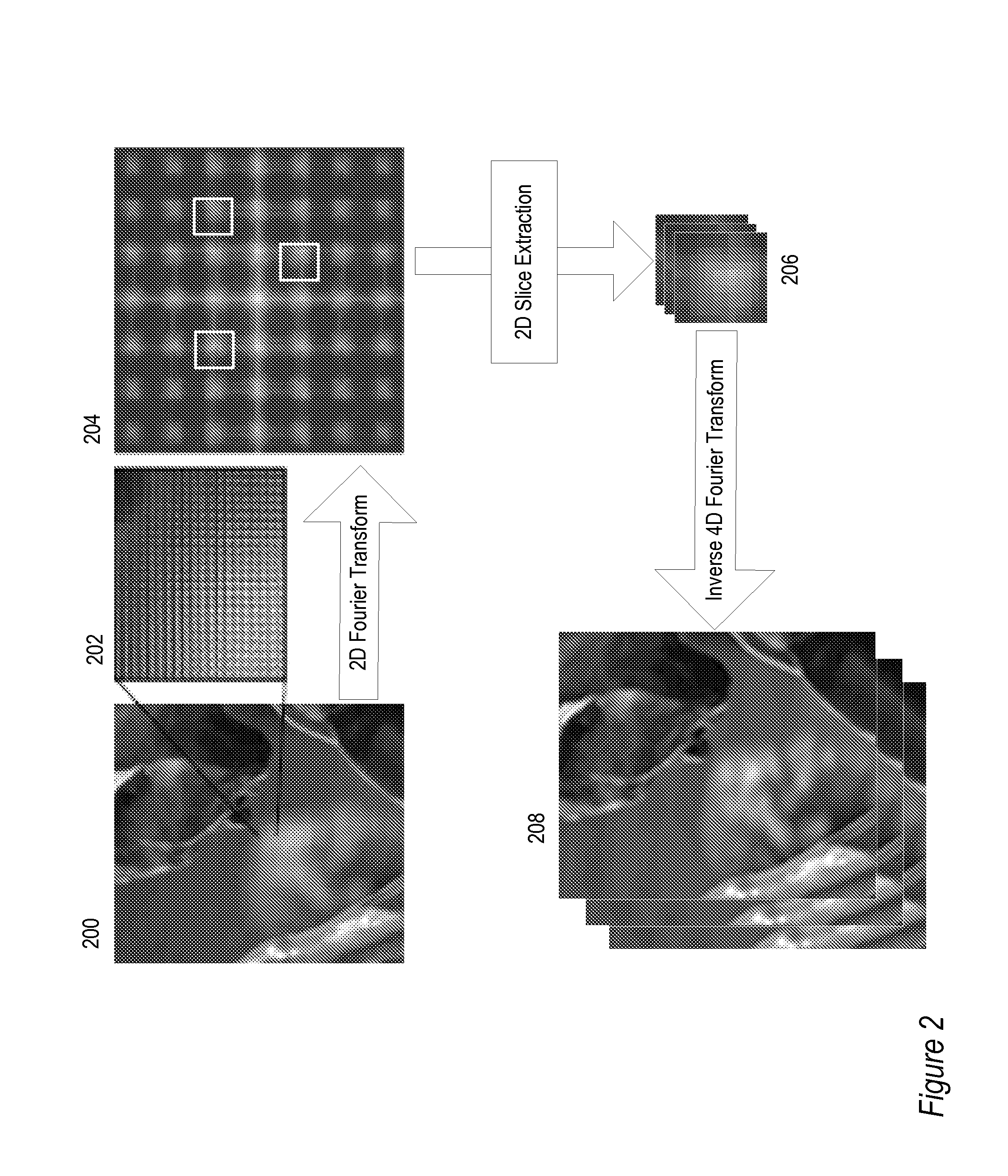

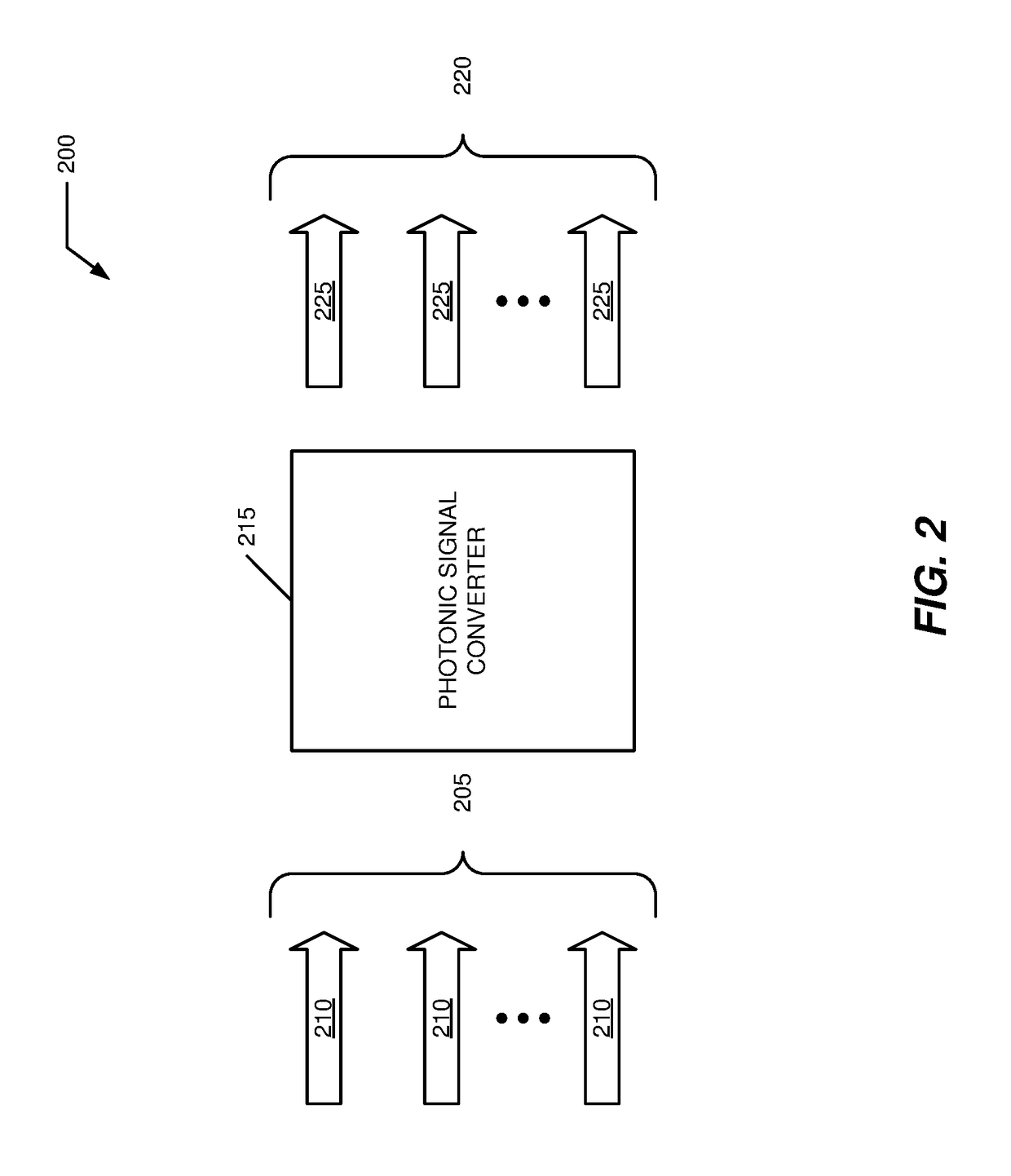

Method and apparatus for managing artifacts in frequency domain processing of light-field images

Active | US8244058B1 | Reduce and eliminate wave artifact | Reduce and eliminate artifact | Image enhancement | Image analysis | Computer science | Correction method

Various methods and apparatus for removing artifacts in frequency domain processing of light-field images are described. Methods for the reduction or removal of the artifacts are described that include methods that may be applied during frequency domain processing and a method that may be applied during post-processing of resultant angular views. The methods may be implemented in software as or in a light-field frequency domain processing module. The described methods include an oversampling method to determine the correct centers of slices, a phase multiplication method to determine the correct centers of slices, a method to exclude low-energy slices, and a cosmetic correction method.

Owner:ADOBE SYST INC

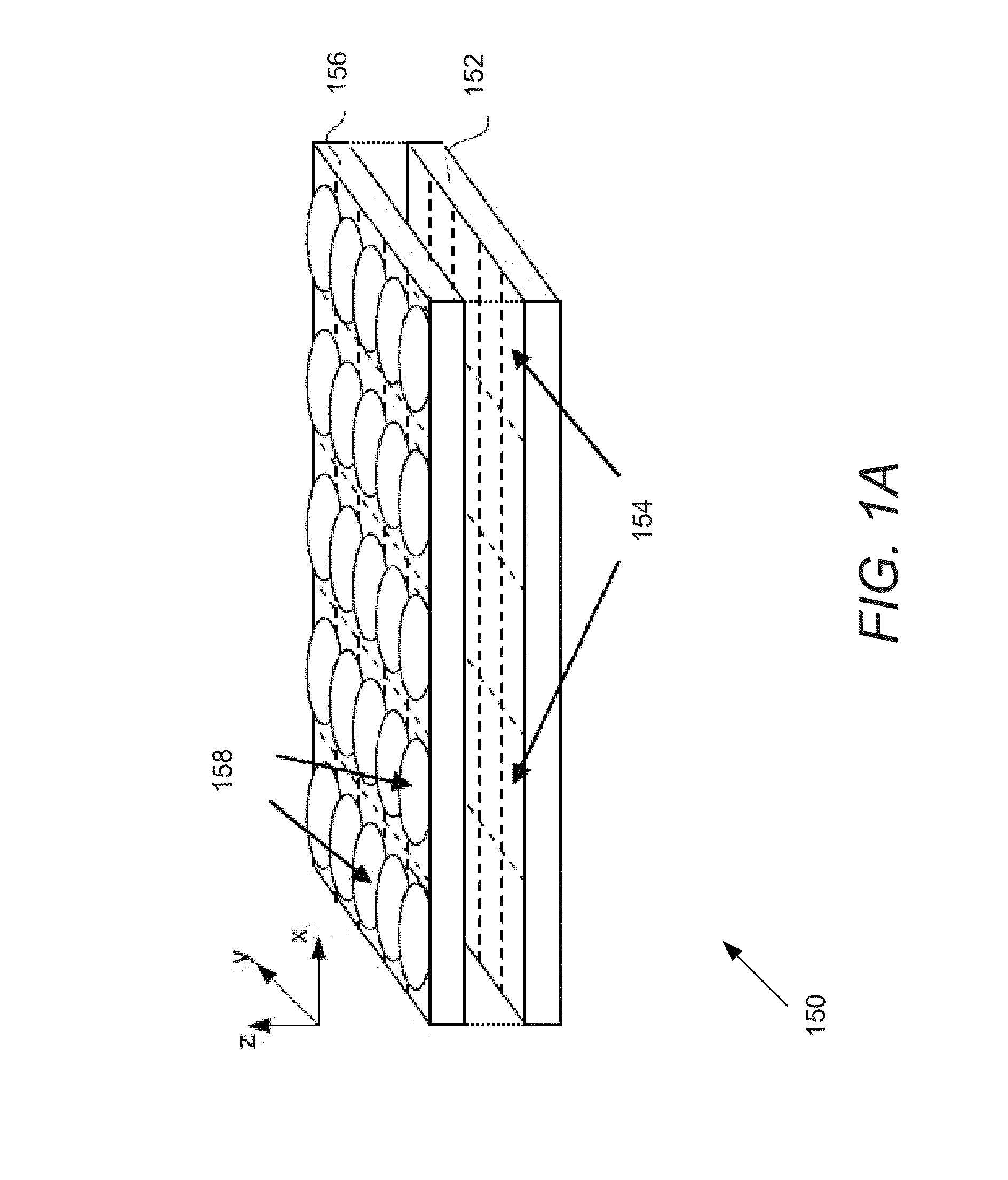

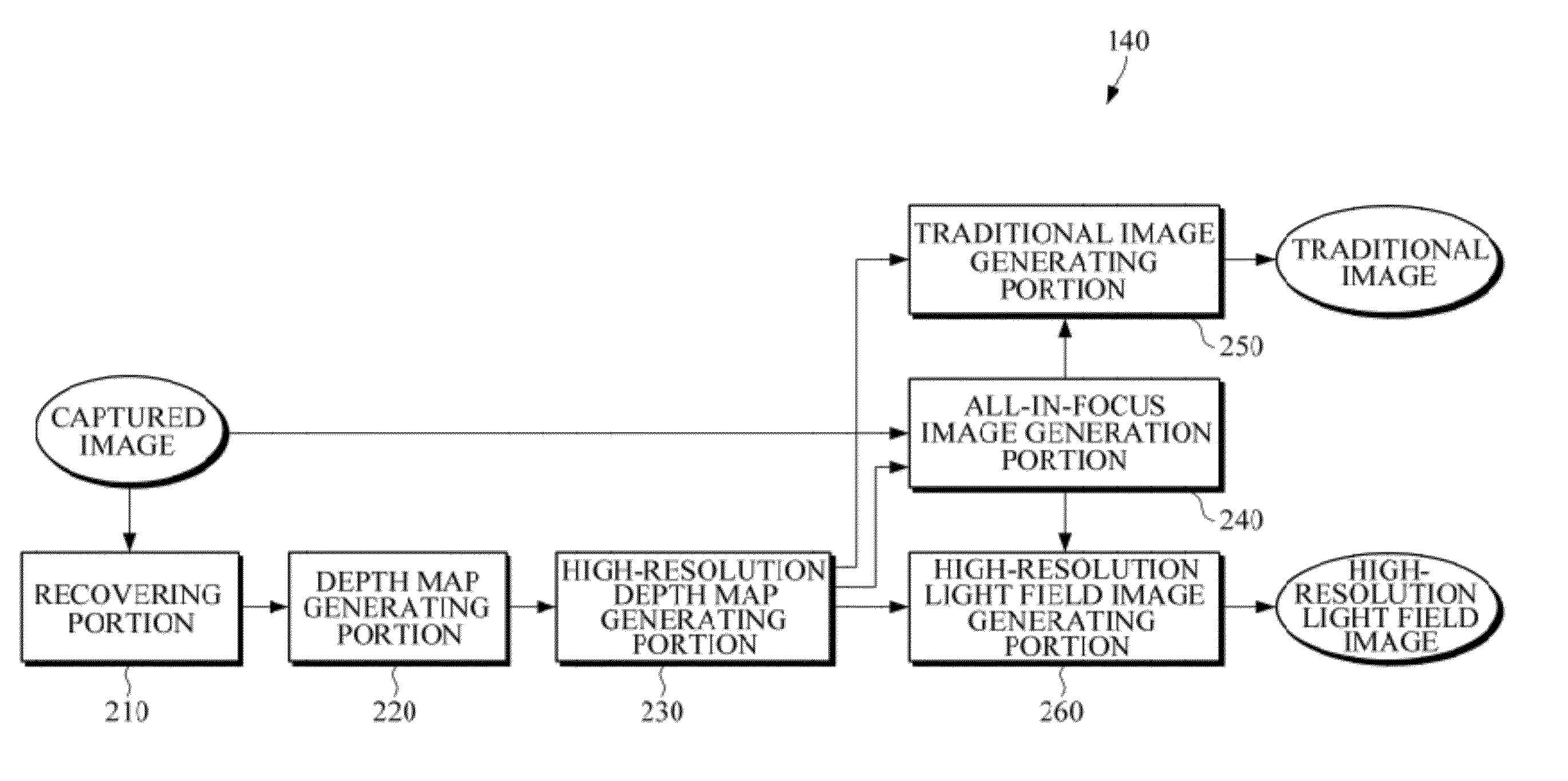

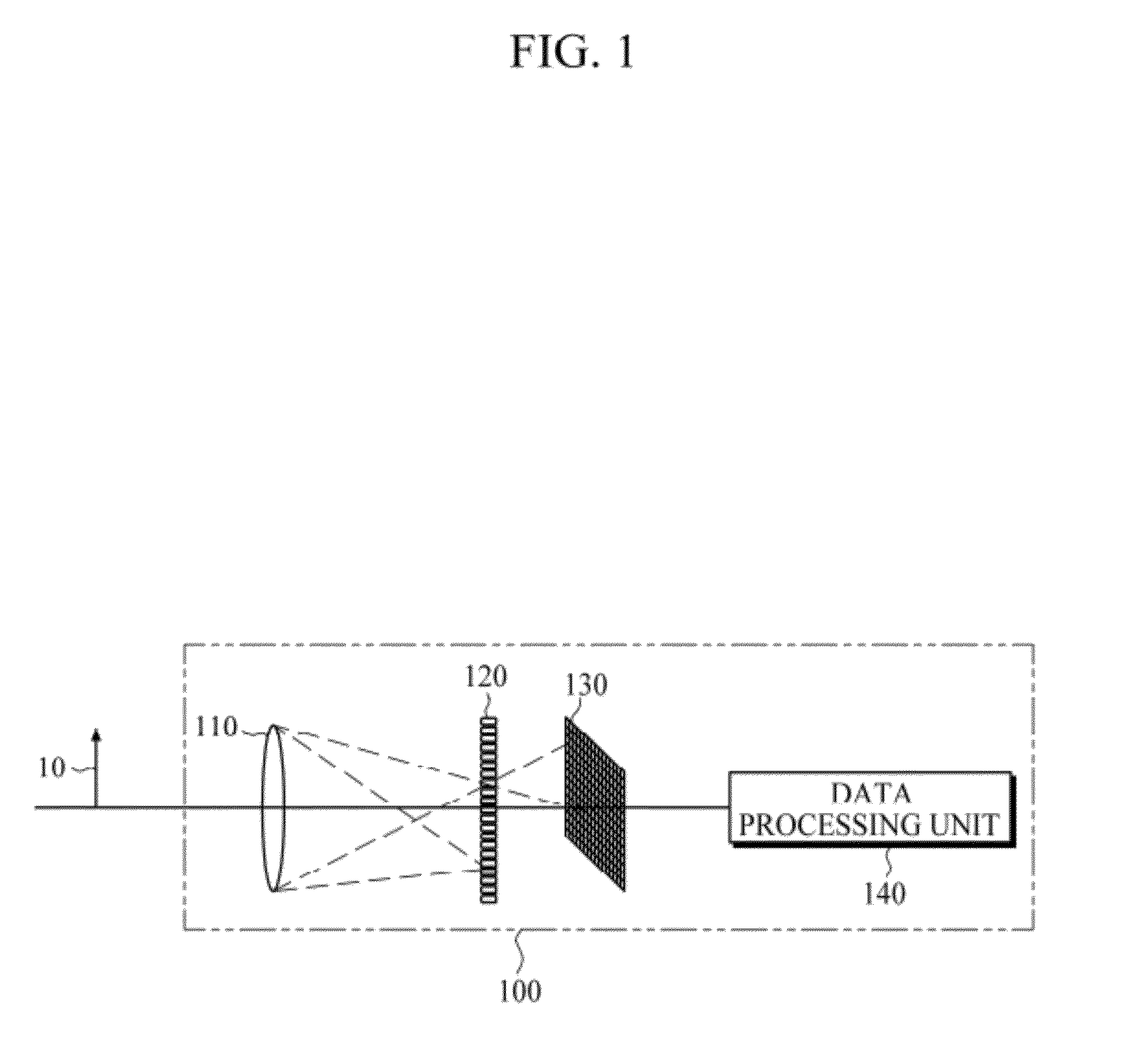

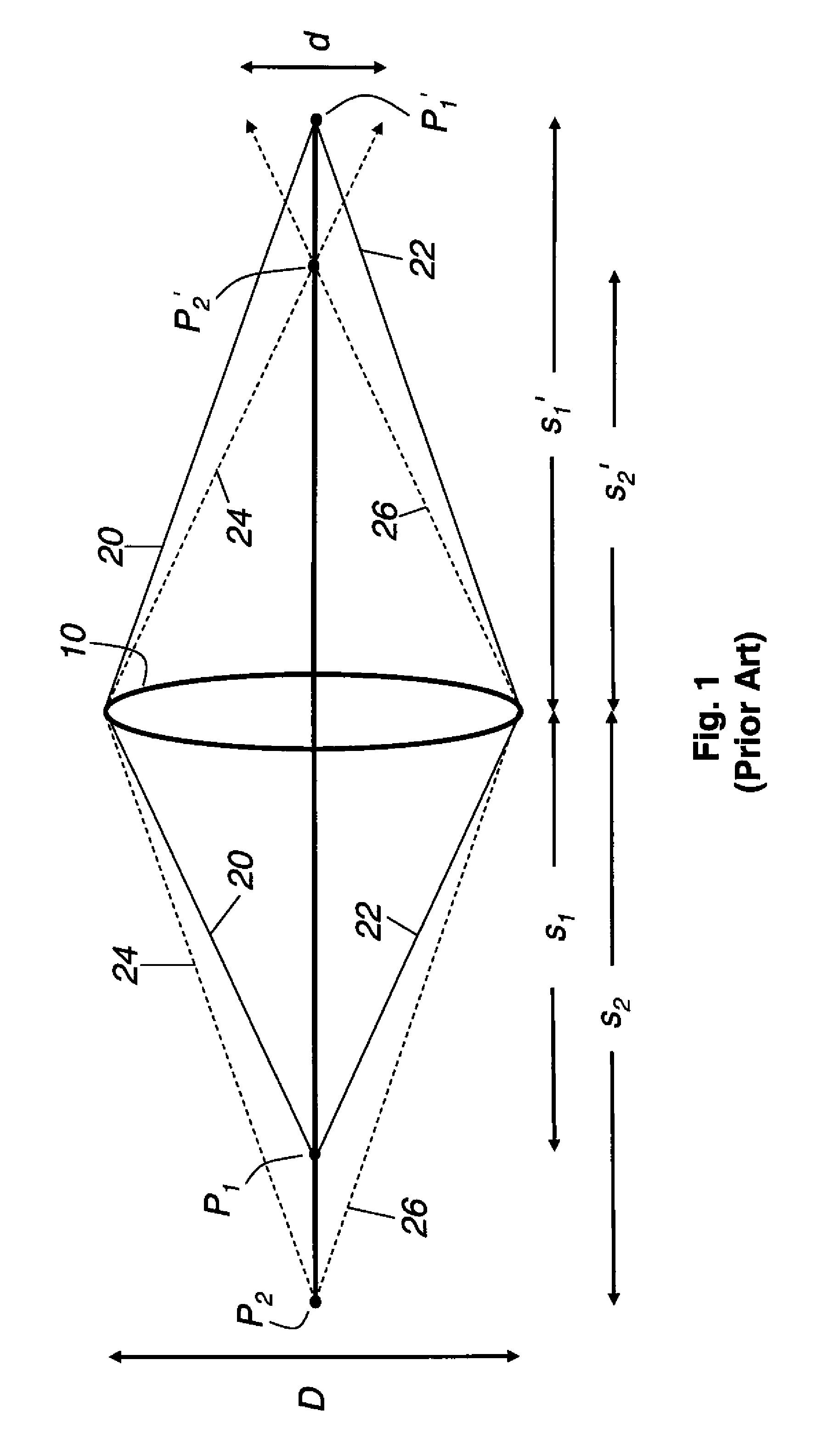

Apparatus and method for processing light field data using a mask with an attenuation pattern

Active | US20120057040A1 | Image enhancement | Television system details | Ultrasound attenuation | Processing element

Provided are an apparatus and method for processing a light field image that is acquired and processed using a mask to spatially modulate a light field. The apparatus includes a lens, a mask to spatially modulate 4D light field data of a scene passing through the lens to include wideband information on the scene, a sensor to detect a 2D image corresponding to the spatially modulated 4D light field data, and a data processing unit to recover the 4D light field data from the 2D image to generate an all-in-focus image.

Owner:SAMSUNG ELECTRONICS CO LTD +1

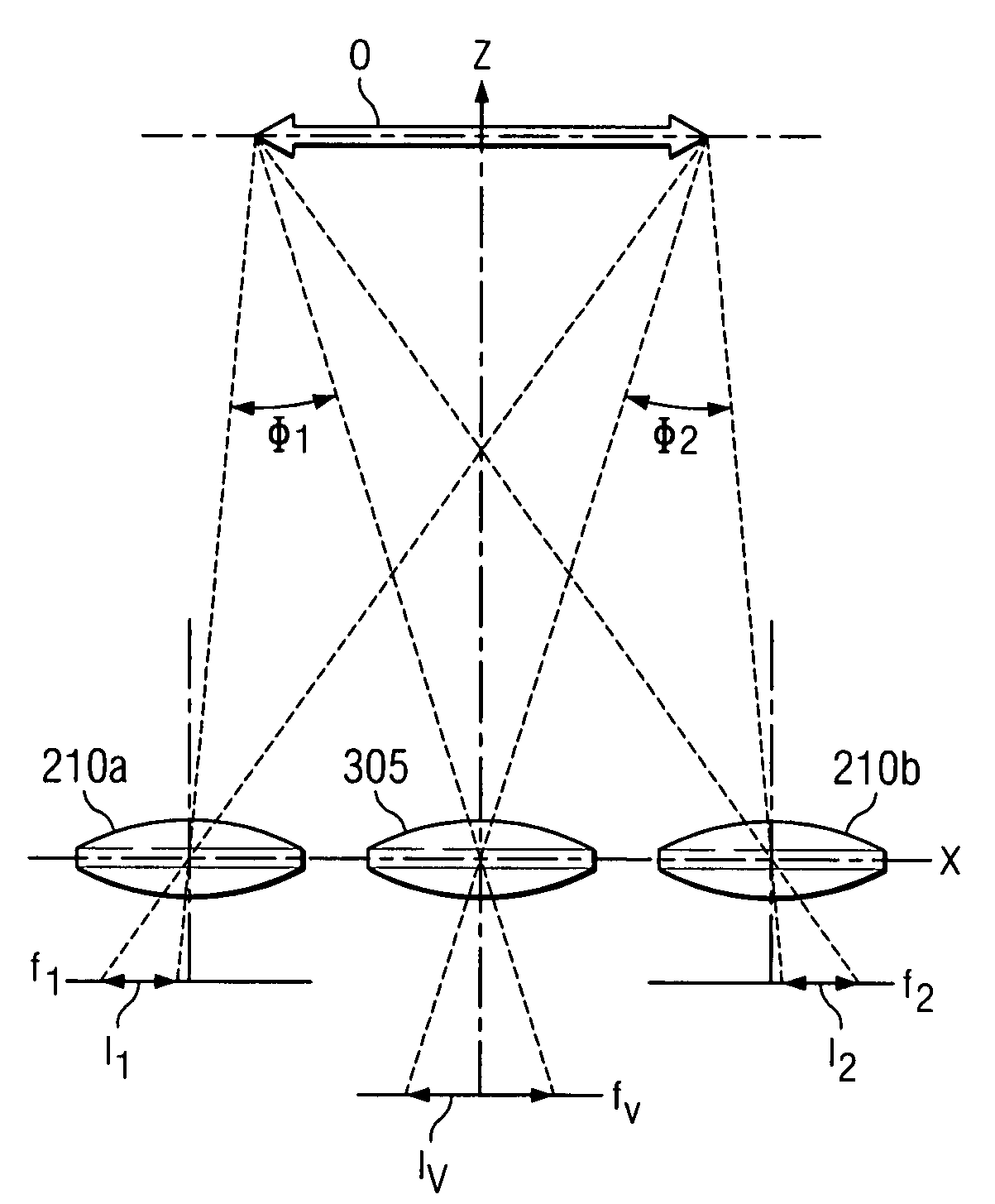

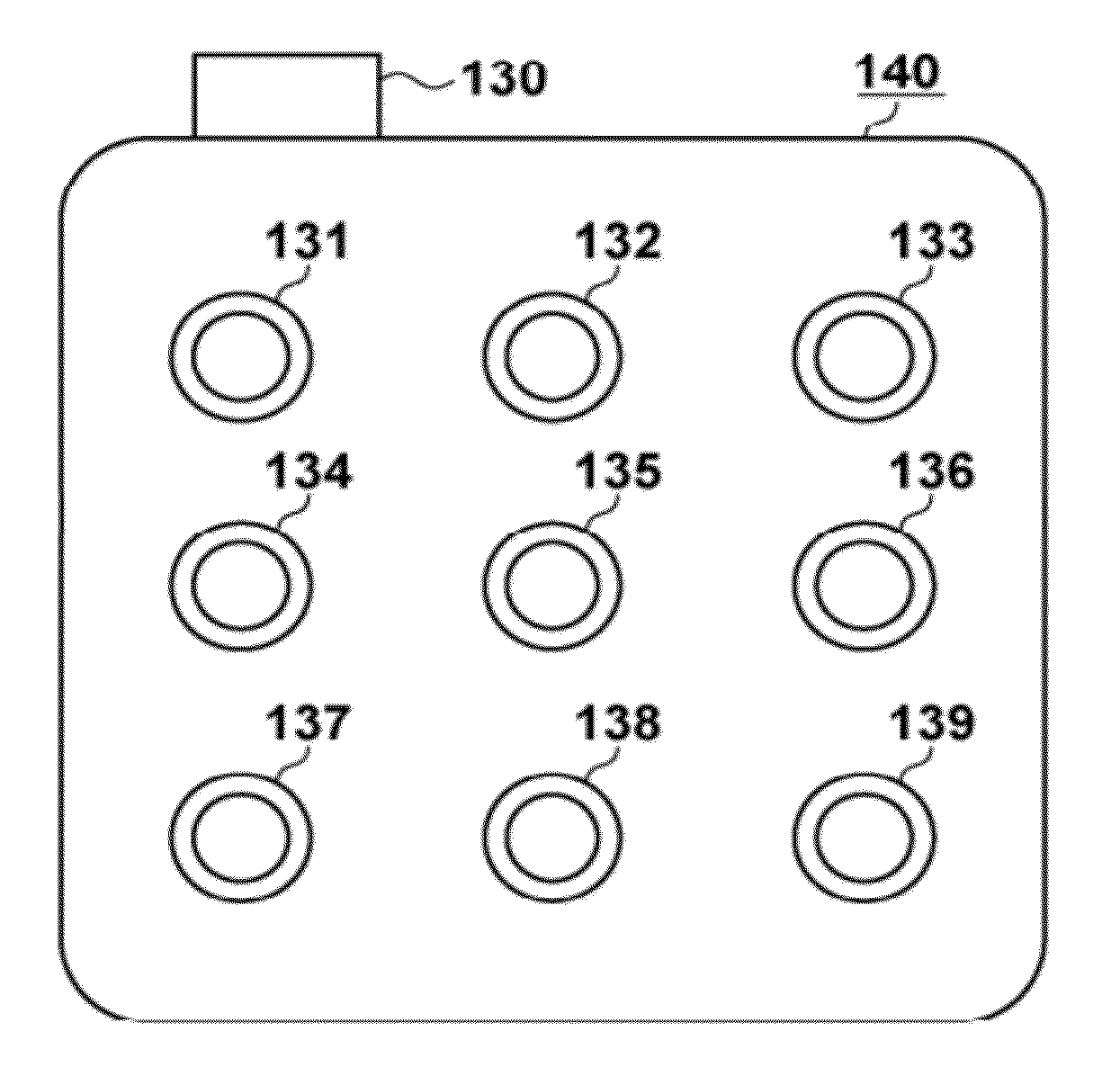

Compound camera and methods for implementing auto-focus, depth-of-field and high-resolution functions

Owner:STMICROELECTRONICS SRL

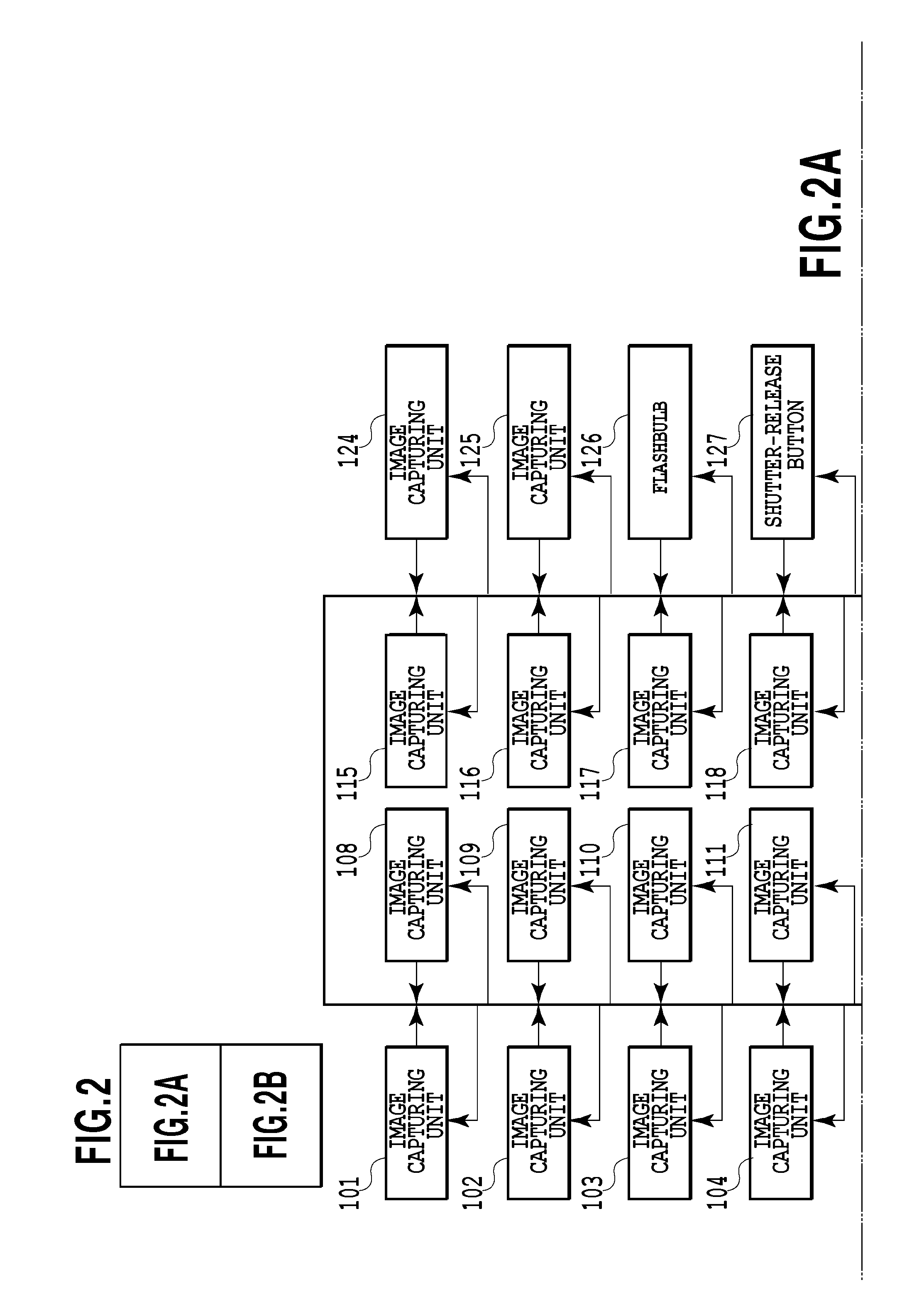

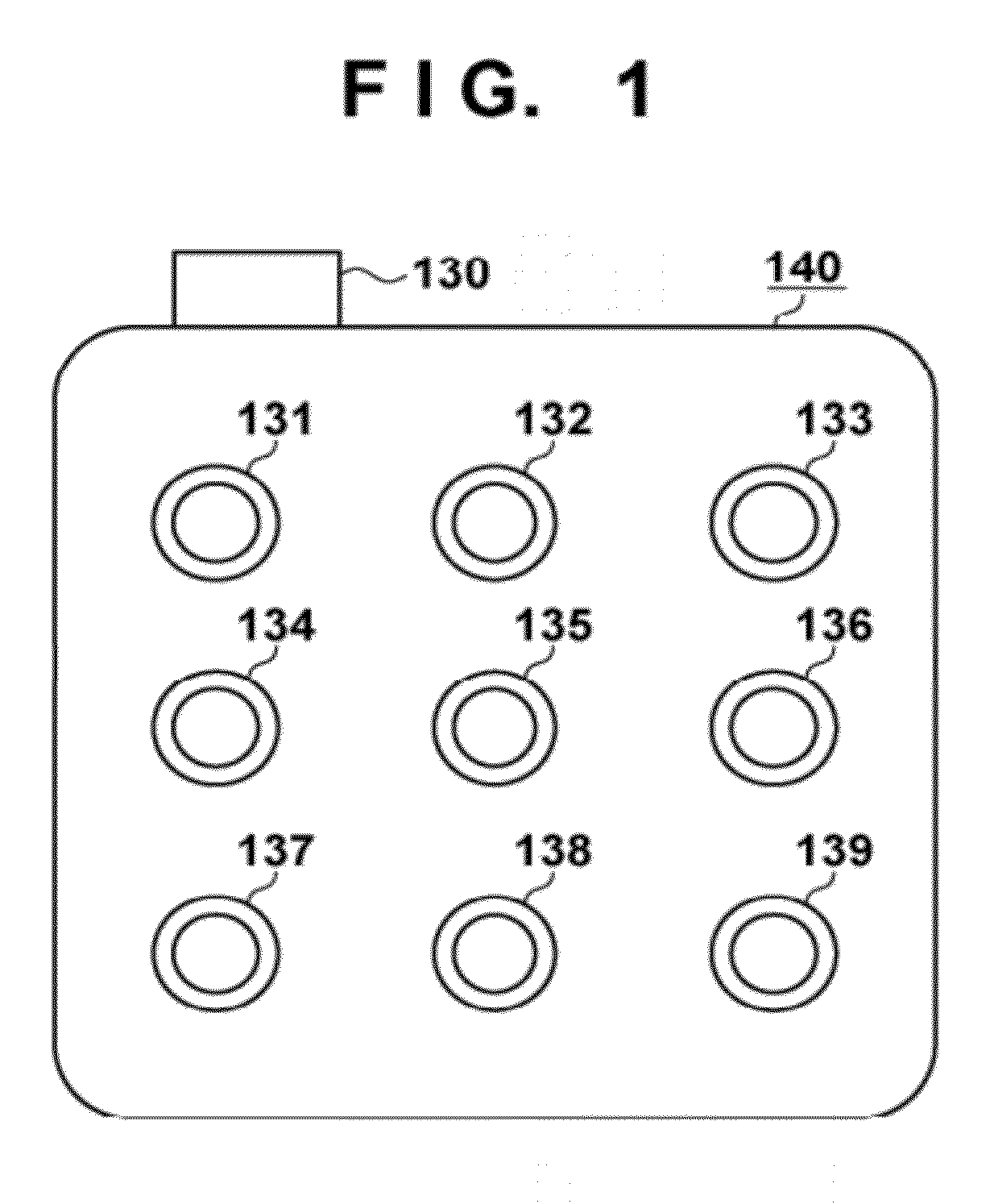

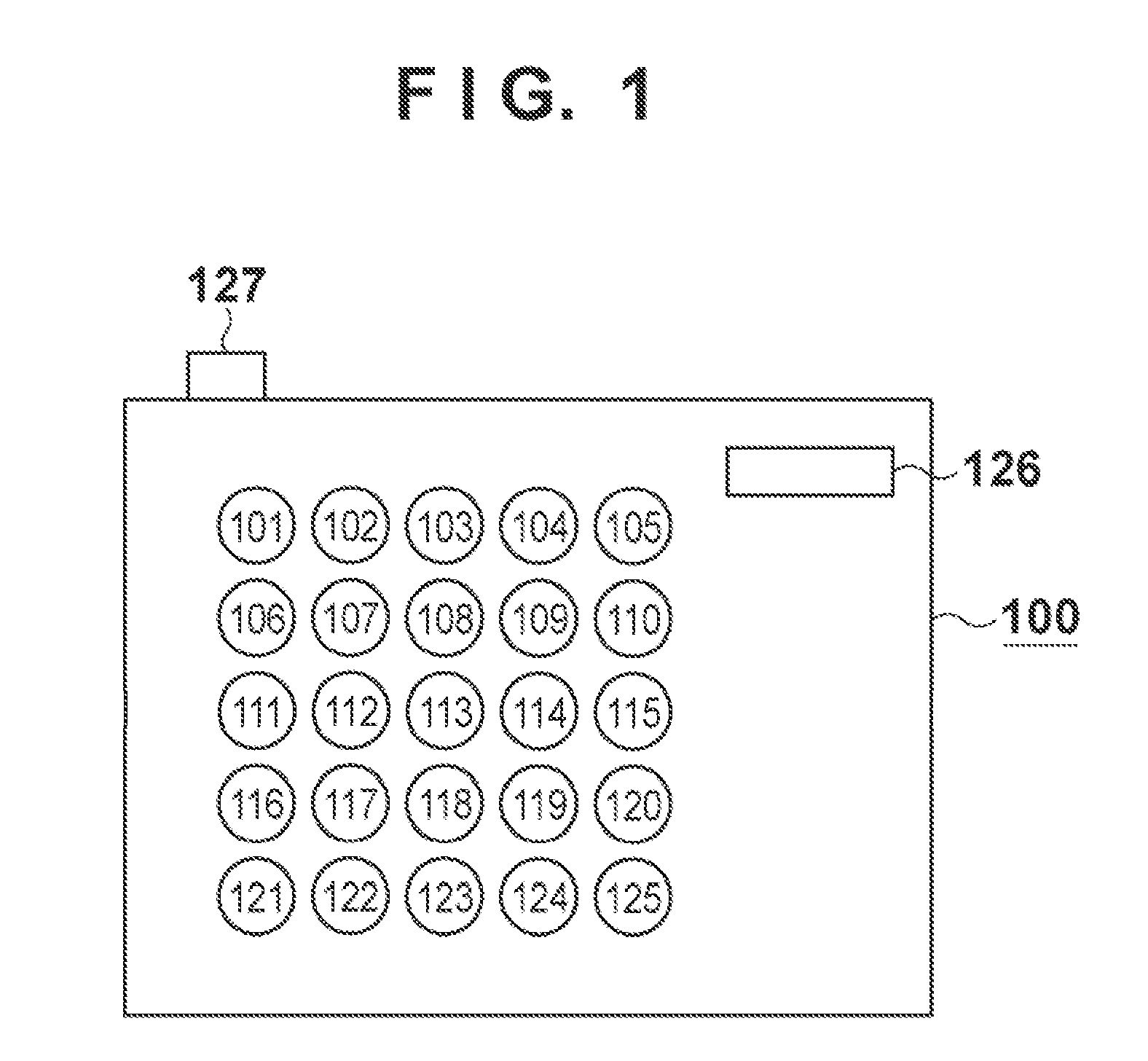

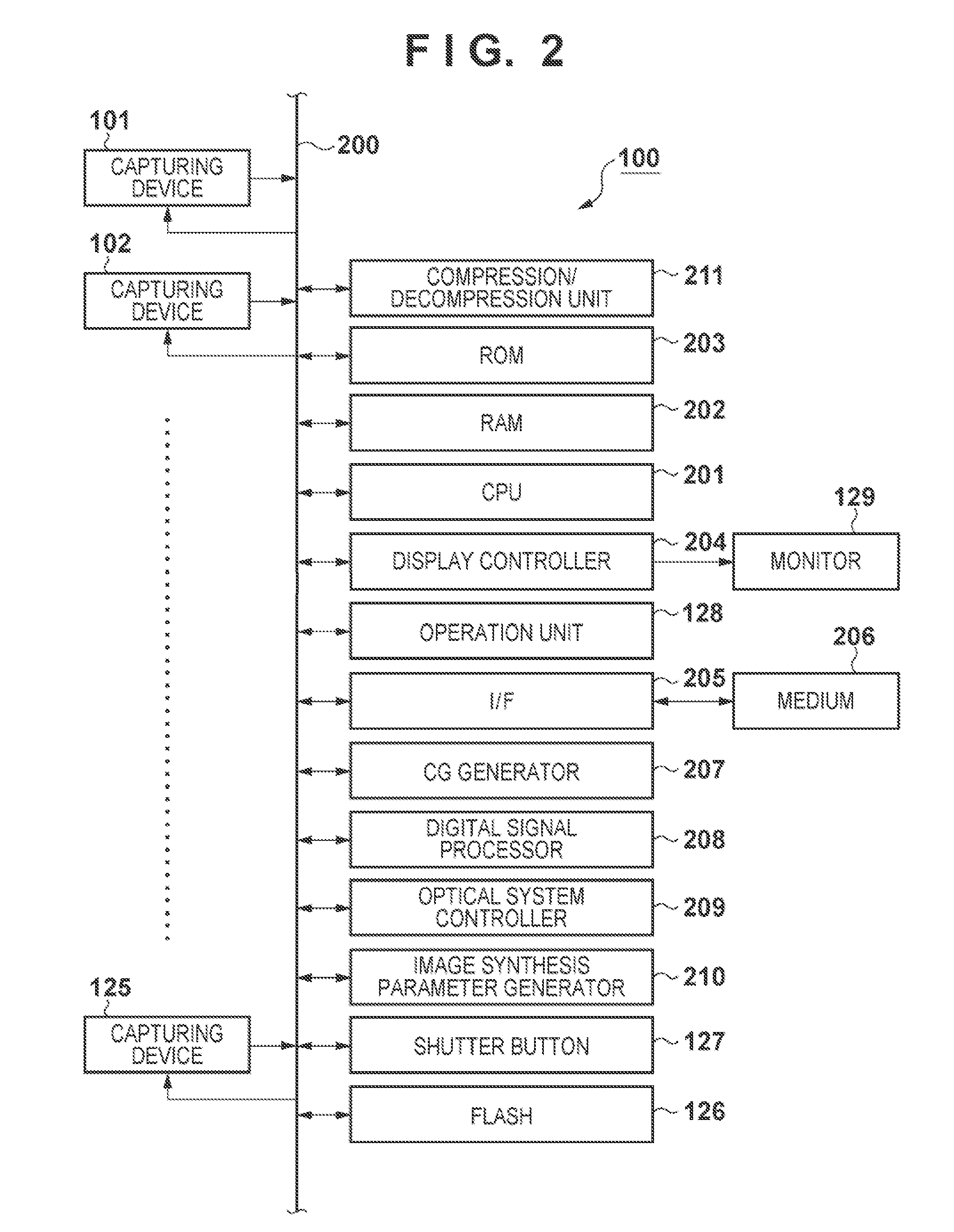

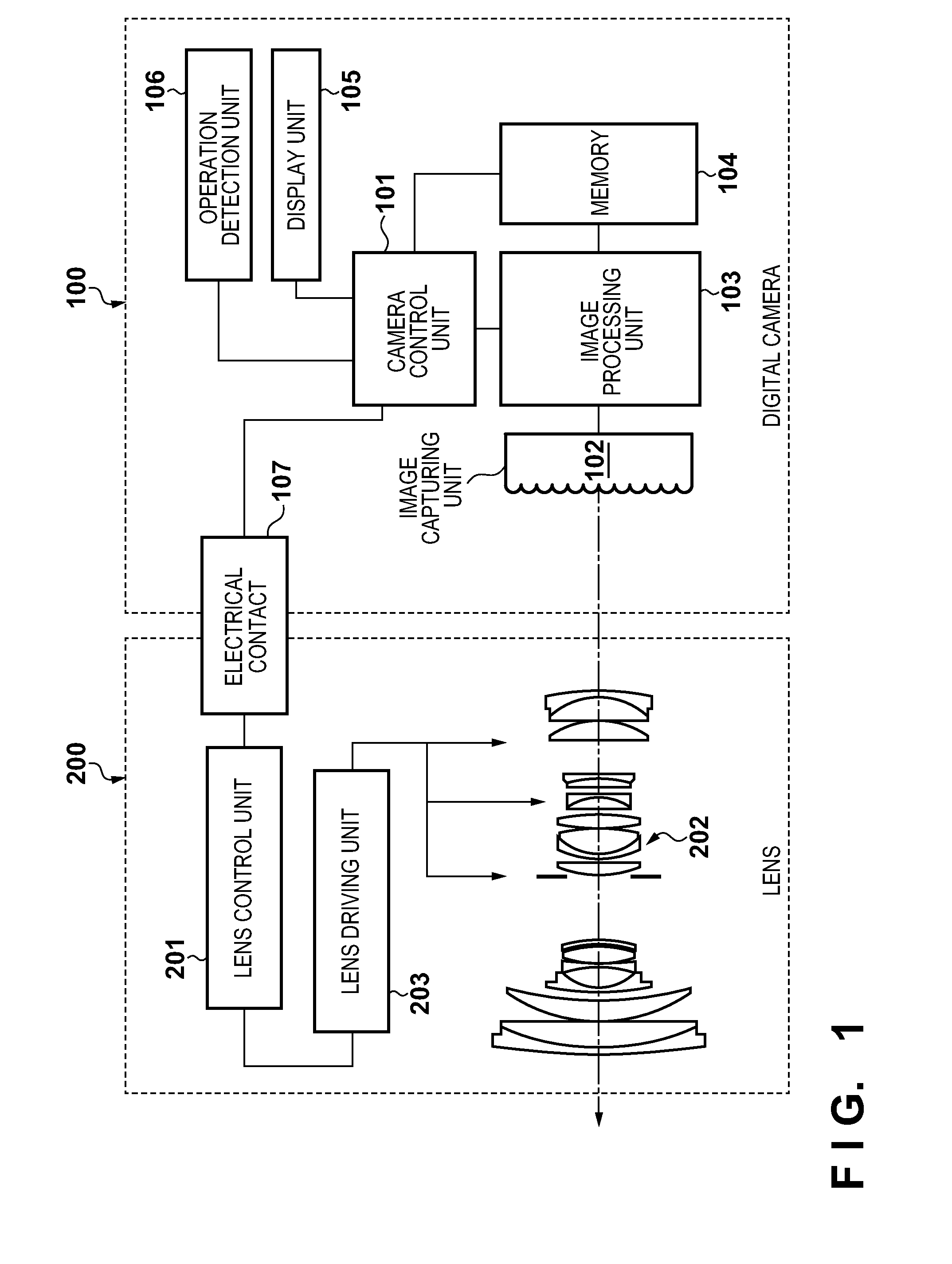

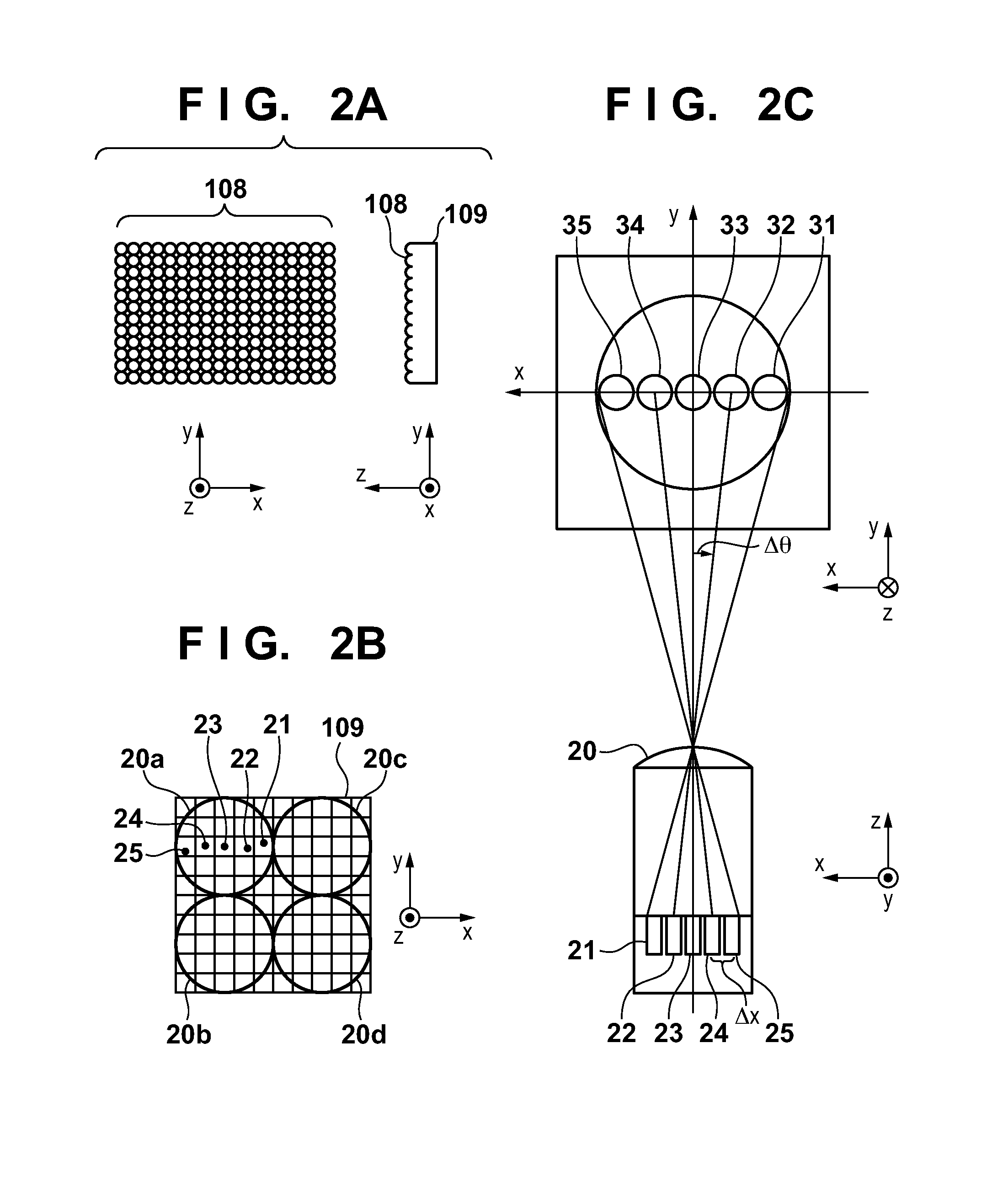

Image processing apparatus, image processing method and program

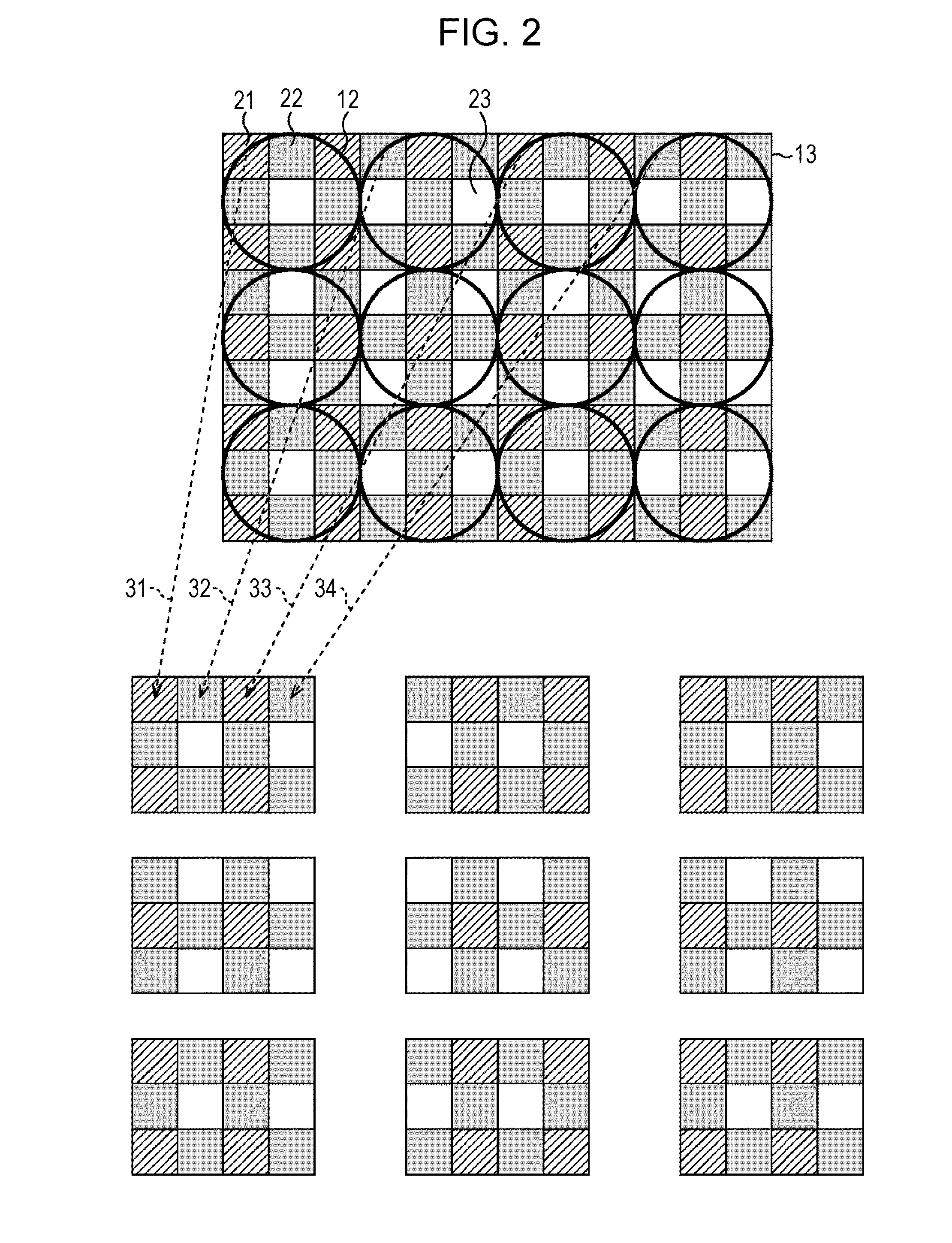

Active | US20120307099A1 | Improve image quality | High-resolution image | Television system details | Image enhancement | Imaging processing | Imaging quality

When the super-resolution processing is performed, loss of signal occurs due to the aperture effect, and adversely affects the image quality. In order to improve the image quality by suppressing the occurrence of loss of signal, a pixel aperture characteristic provided for some of image capturing units differs from a pixel aperture characteristic provided for the other image capturing units, and a plurality of digital images are captured by these image capturing units and are synthesized thereafter.

Owner:CANON KK

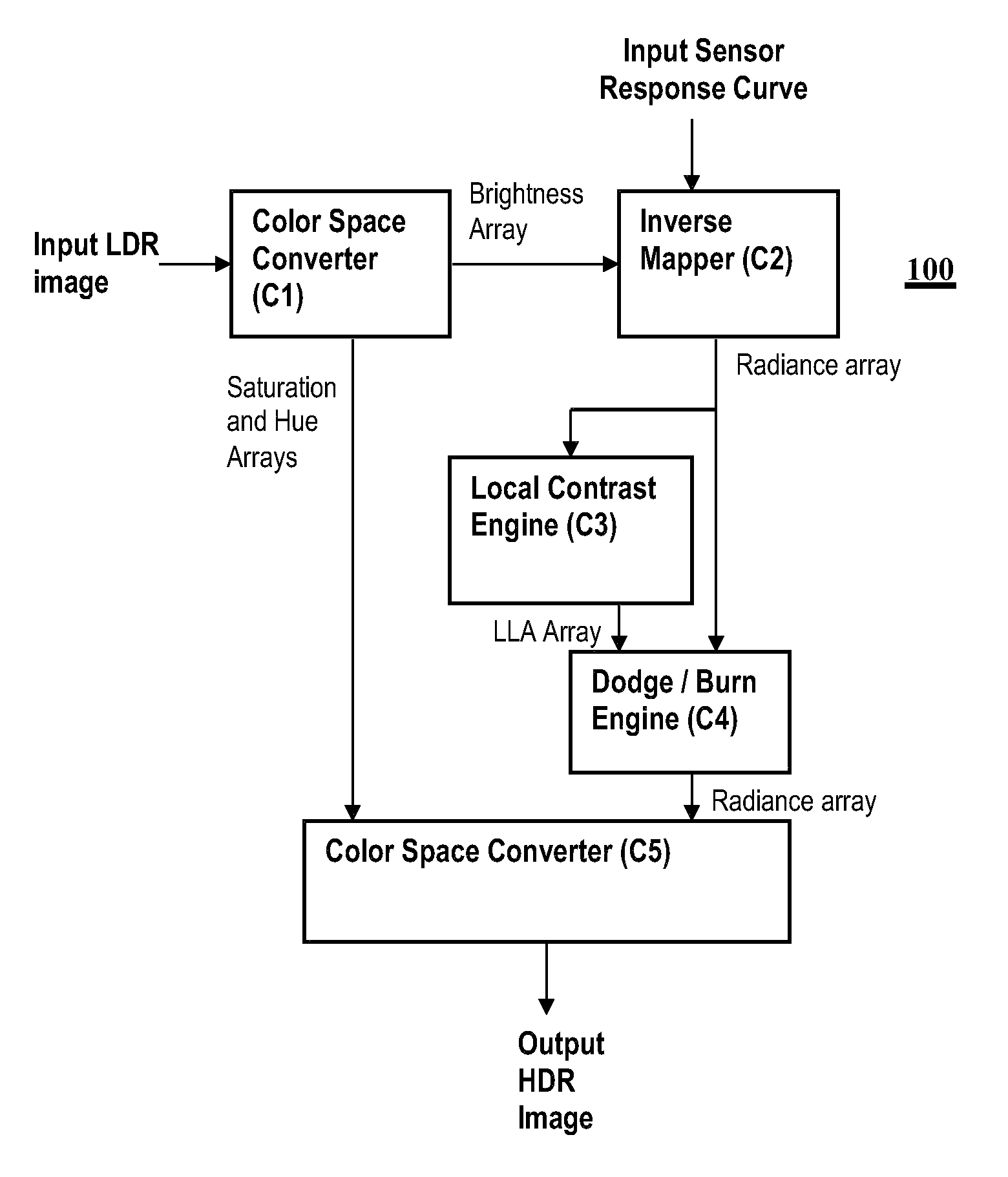

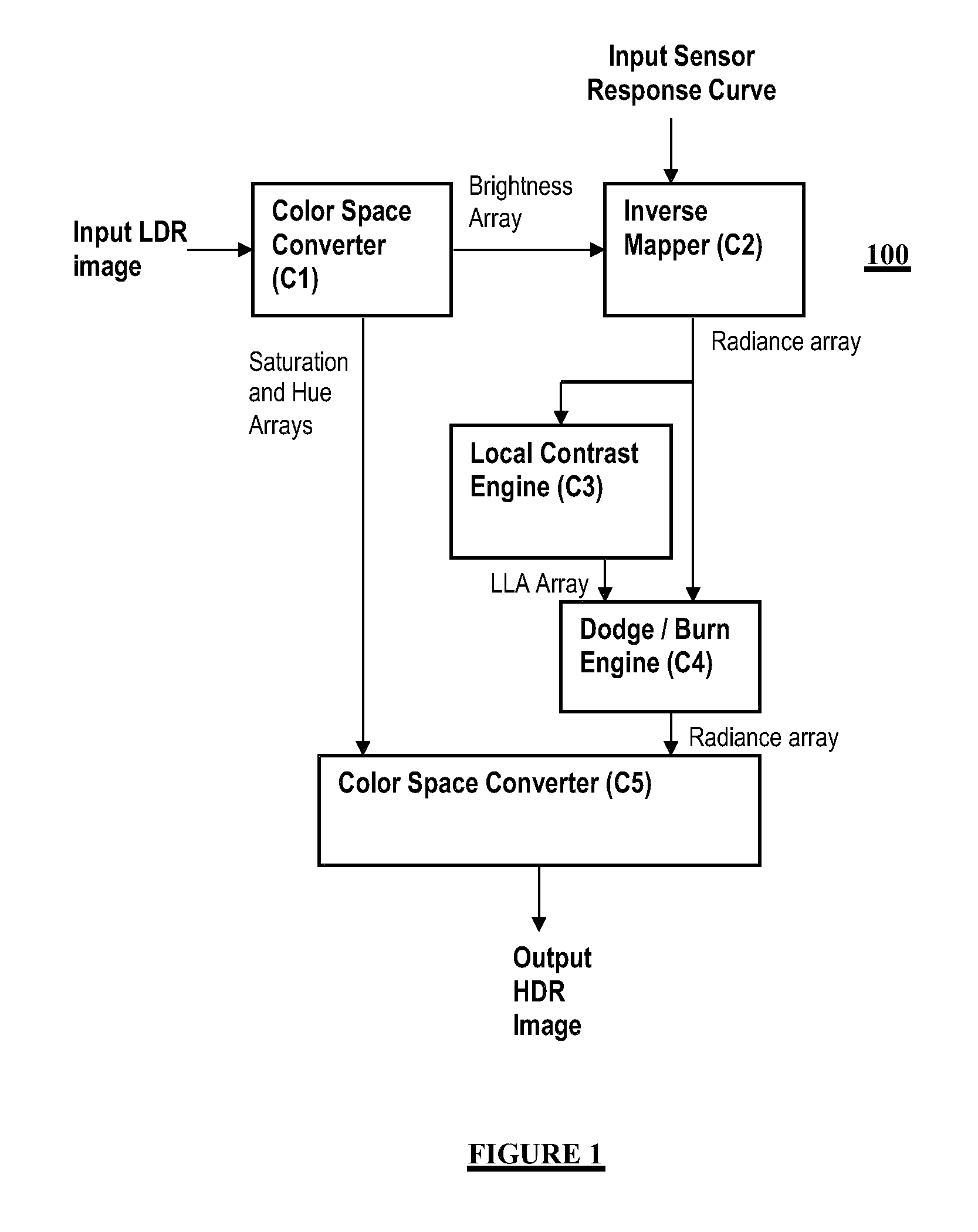

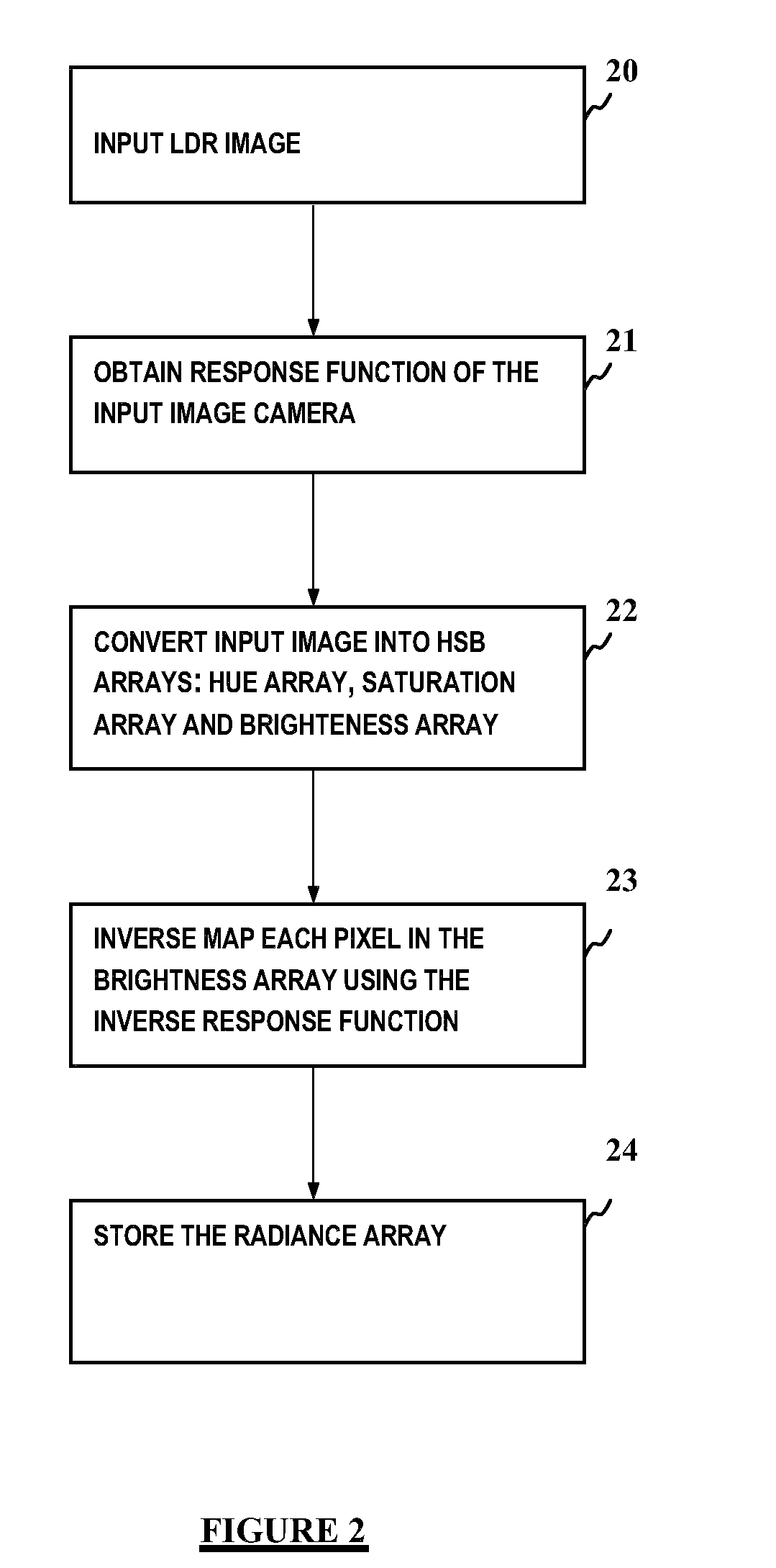

Transforming a digital image from a low dynamic range (LDR) image to a high dynamic range (HDR) image

The invention provides a method for transforming an image from a Low Dynamic Range (LDR) image obtained with a given camera to a High Dynamic Range (HDR) image, the method comprising:

- obtaining the exposure-pixel response curve (21) for said given camera;
- converting the LDR image to HSB color space arrays (22), said HSB color space arrays including a Hue array, a Saturation array and a Brightness array; and
- determining a Radiance array (23, 24) by inverse mapping each pixel in said Brightness array using the inverse of the exposure-pixel response curve (f⁻¹).

Owner:IBM CORP
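
A minimal sketch of the steps in the abstract above, under stated assumptions: the camera's exposure-pixel response curve is not given here, so a gamma curve f(E) = E^(1/2.2) is assumed purely for illustration, and HSB is treated as the HSV space provided by Python's `colorsys`. The function names are hypothetical.

```python
import colorsys


def inverse_response(brightness, gamma=2.2):
    # Assumed response curve f(E) = E ** (1 / gamma); its inverse is B ** gamma.
    # A real camera's measured curve would replace this function.
    return brightness ** gamma


def ldr_to_radiance(pixels):
    """pixels: iterable of (r, g, b) in 0..255.

    Returns (hue, saturation, radiance) tuples, with radiance obtained by
    inverse-mapping the Brightness channel.
    """
    out = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        out.append((h, s, inverse_response(v)))
    return out


result = ldr_to_radiance([(255, 255, 255), (128, 128, 128), (0, 0, 0)])
for hue, sat, radiance in result:
    print(round(radiance, 4))
```

With this assumed curve, mid-gray maps to a relative radiance well below its displayed brightness, which is the expected effect of undoing a compressive response.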

Image processing device and method, recording medium, and program

Active | US20130135448A1 | Reduce noise | High resolution | Television system details | Image enhancement | Parallax | Imaging processing

An image processing device includes a viewpoint separating unit configured to separate multi-viewpoint image data, including images of multiple viewpoints and representing intensity distribution of light and the direction of travel of light according to positions and pixel values of pixels, into a plurality of single-viewpoint image data for each of the individual viewpoints; and a parallax control unit configured to control amount of parallax between the plurality of single-viewpoint image data obtained by separation into individual viewpoints by the viewpoint separating unit.

Owner:SONY CORP
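
The viewpoint separation step can be sketched as a de-interleaving operation. The sensor layout is an assumption: the raw image is taken to consist of n x n pixel blocks, one block per microlens, with the position inside a block identifying the viewpoint. `separate_viewpoints` is a hypothetical name.

```python
def separate_viewpoints(raw, n):
    """Split a multi-viewpoint raw image into n * n single-viewpoint images.

    raw: 2-D list of pixel values; view (u, v) collects the pixel at column
    offset u and row offset v inside every n x n block.
    """
    views = {}
    for v in range(n):
        for u in range(n):
            views[(u, v)] = [row[u::n] for row in raw[v::n]]
    return views


raw = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
views = separate_viewpoints(raw, 2)
print(views[(0, 0)], views[(1, 1)])
```

A parallax control stage could then shift these single-viewpoint images relative to each other before recombining them; that part is not shown.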

Image capturing apparatus, image processing apparatus, and method thereof

An image processing apparatus and method relate to an imaging process which is applied to image data captured by an image capturing apparatus such as a multi-eye camera, multi-view camera, and the like. The image processing apparatus and method generate synthetic image data focused on a curved focus surface by compositing multi-view image data captured from multiple viewpoints.

Owner:CANON KK

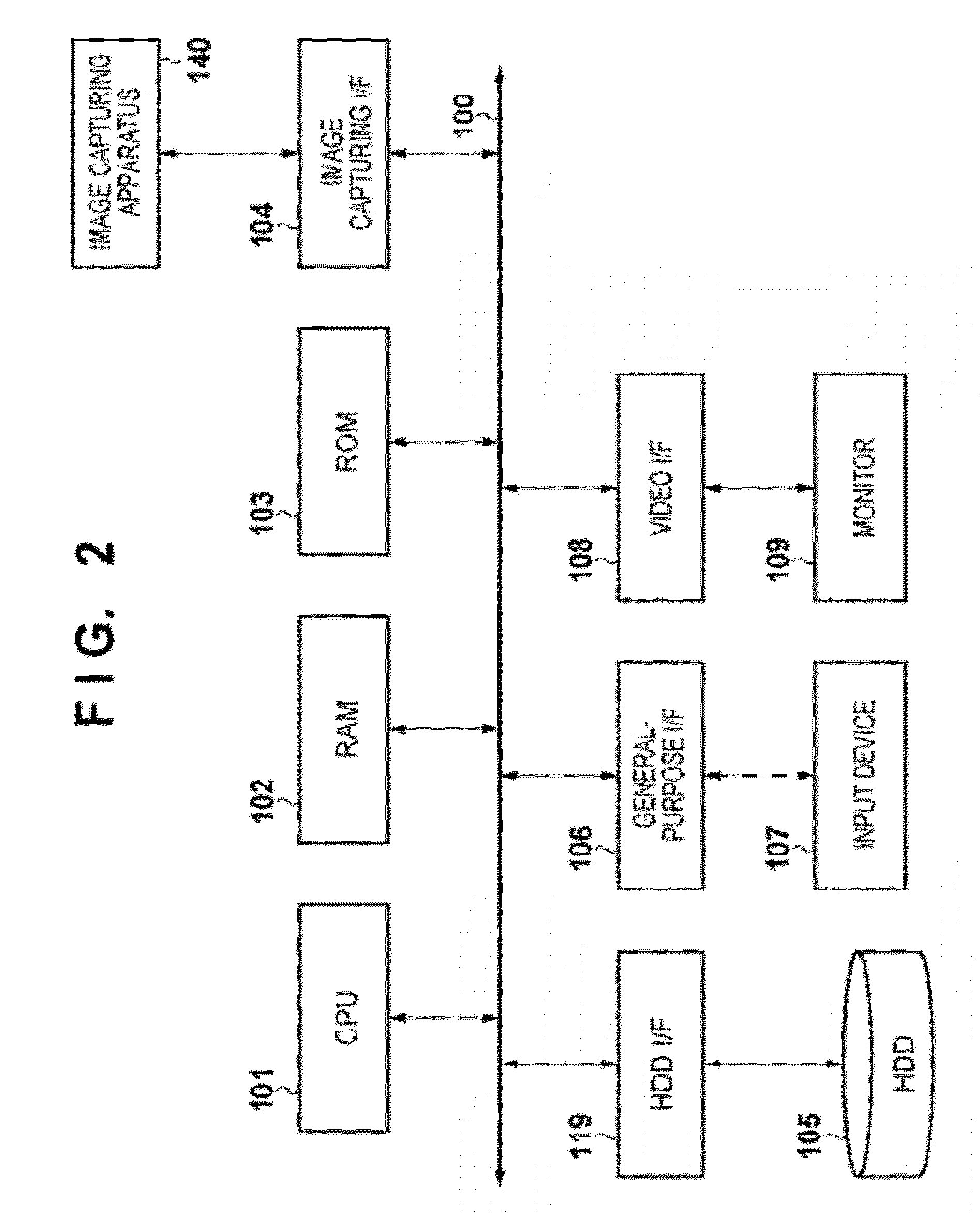

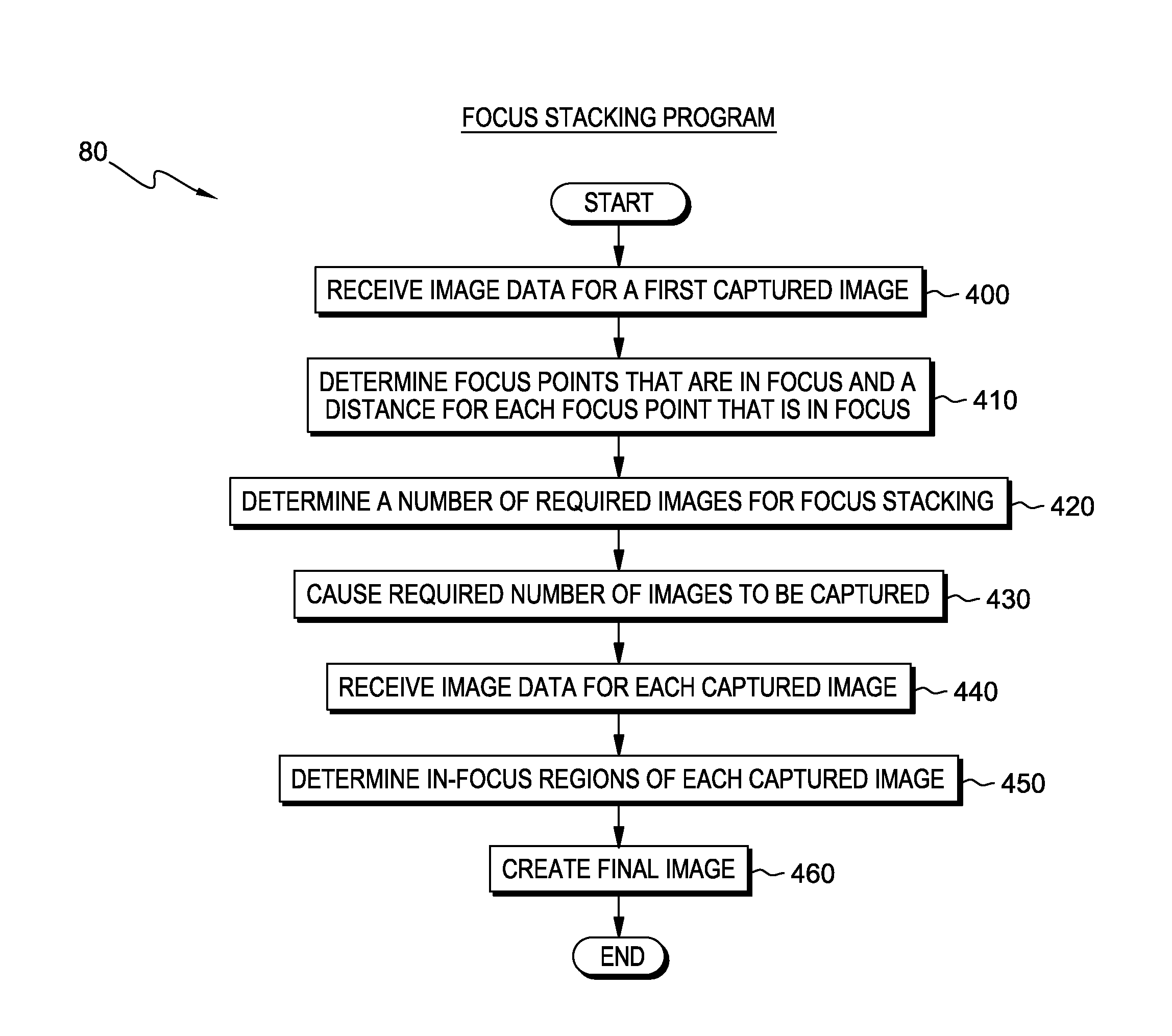

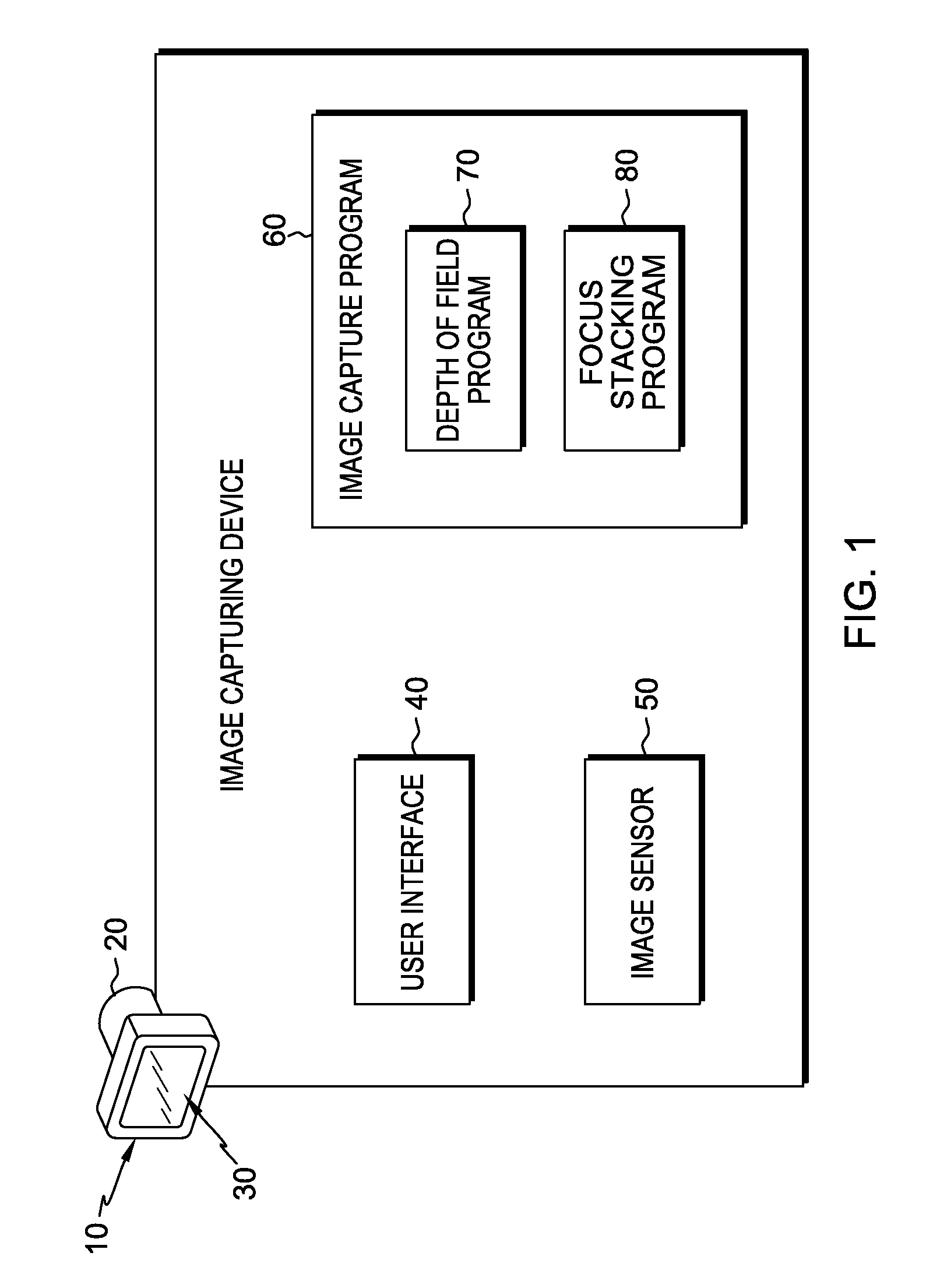

Automatic focus stacking of captured images

In a method for generating a focused image of subject matter, a first image is received, wherein the first image includes subject matter and a first set of image data. One or more processors generate a first depth map for the first image. One or more processors determine at least a second image to be captured. The second image is received, wherein the second image includes the subject matter of the first image and at least a second portion of the subject matter is in focus. One or more processors generate a composite image comprising at least the first portion of the first image and the second portion of the second image.

Owner:IBM CORP

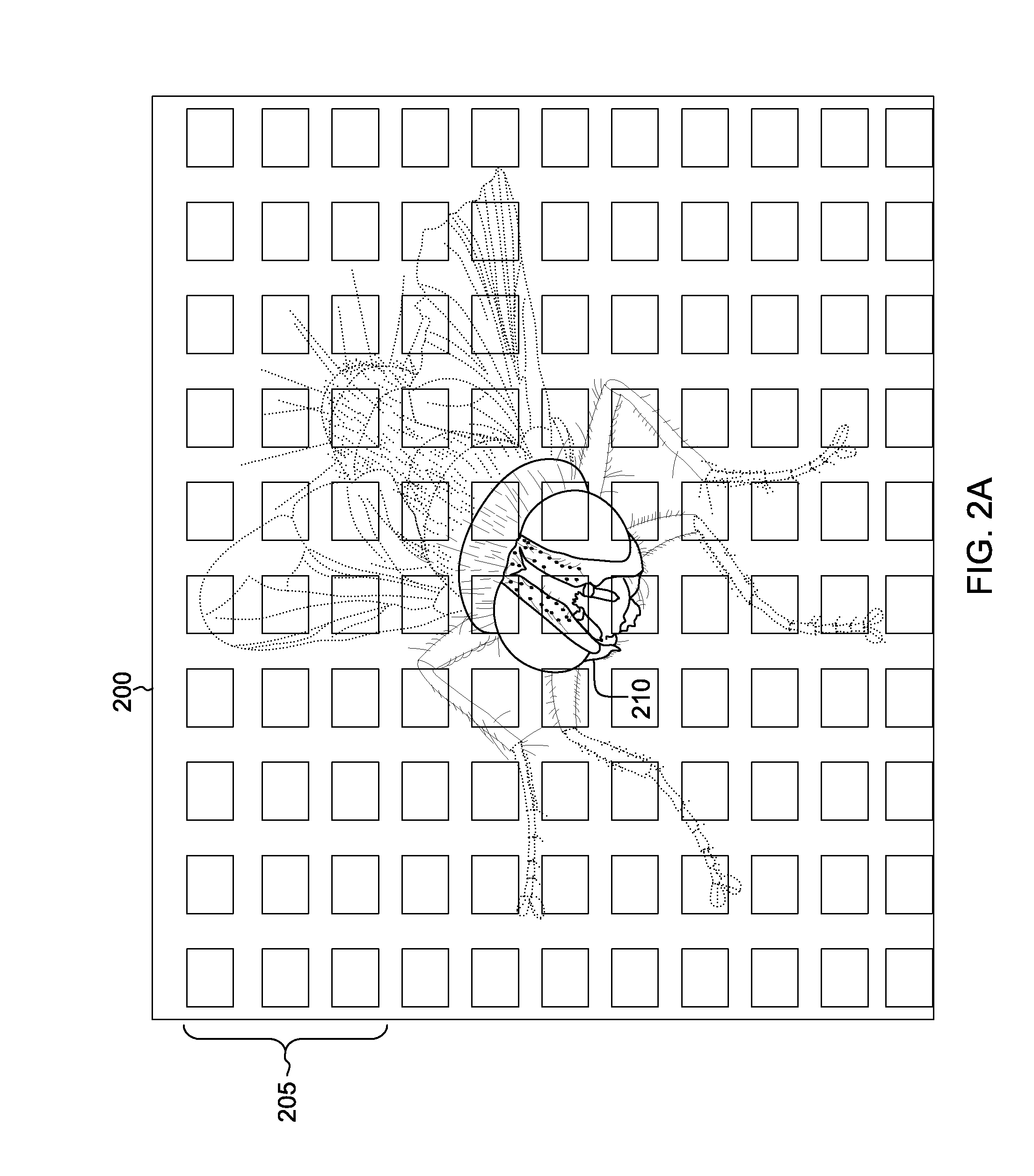

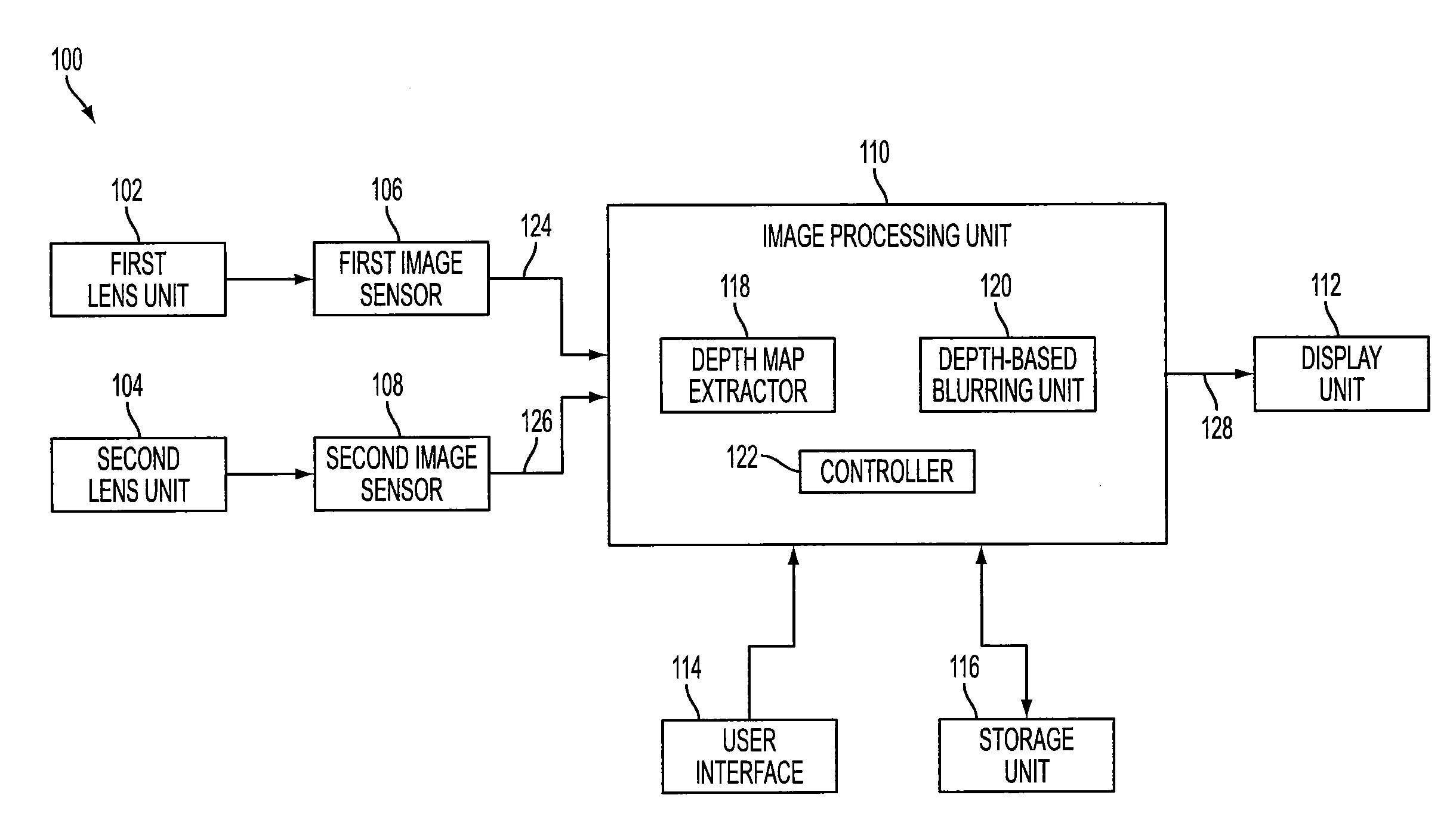

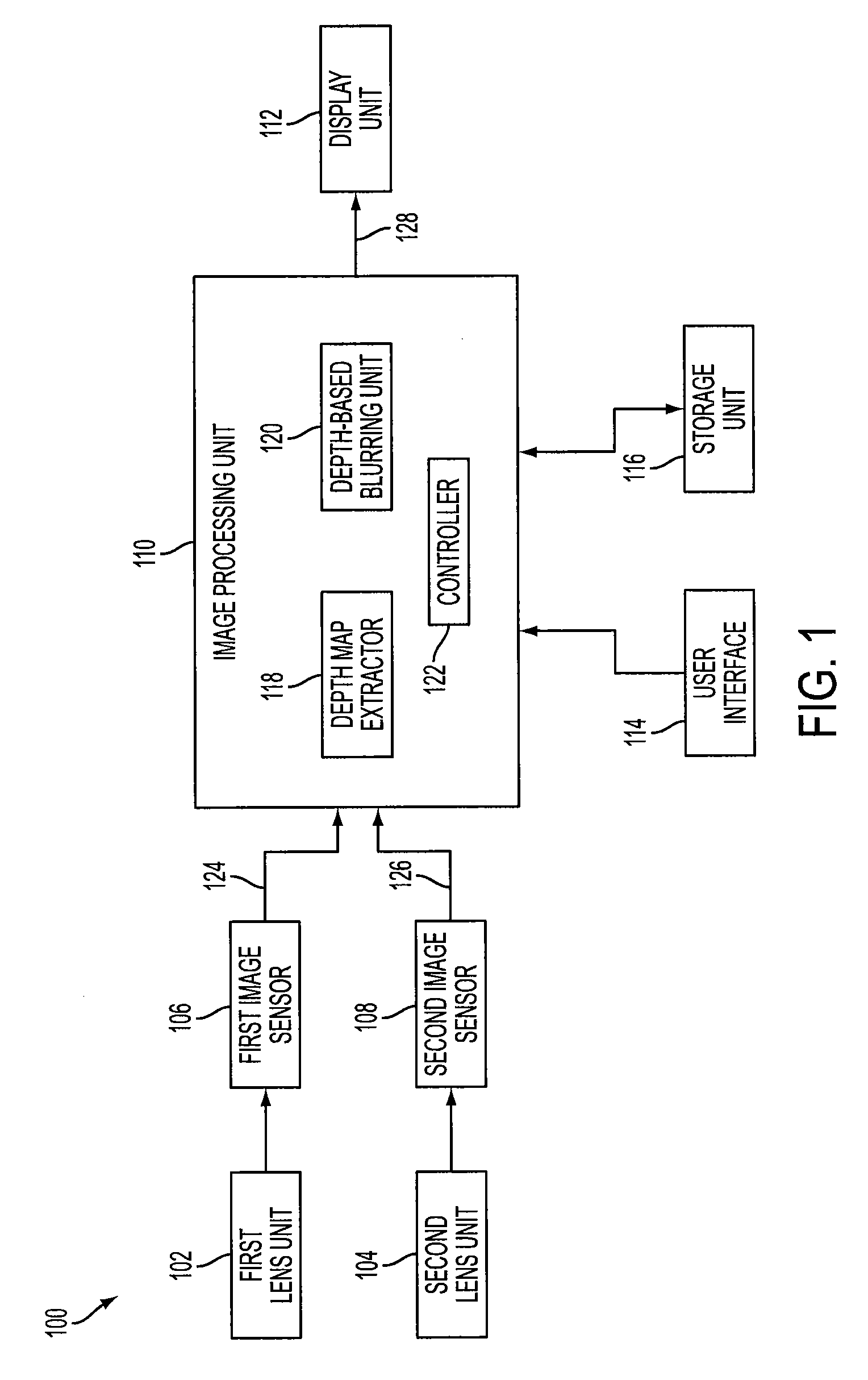

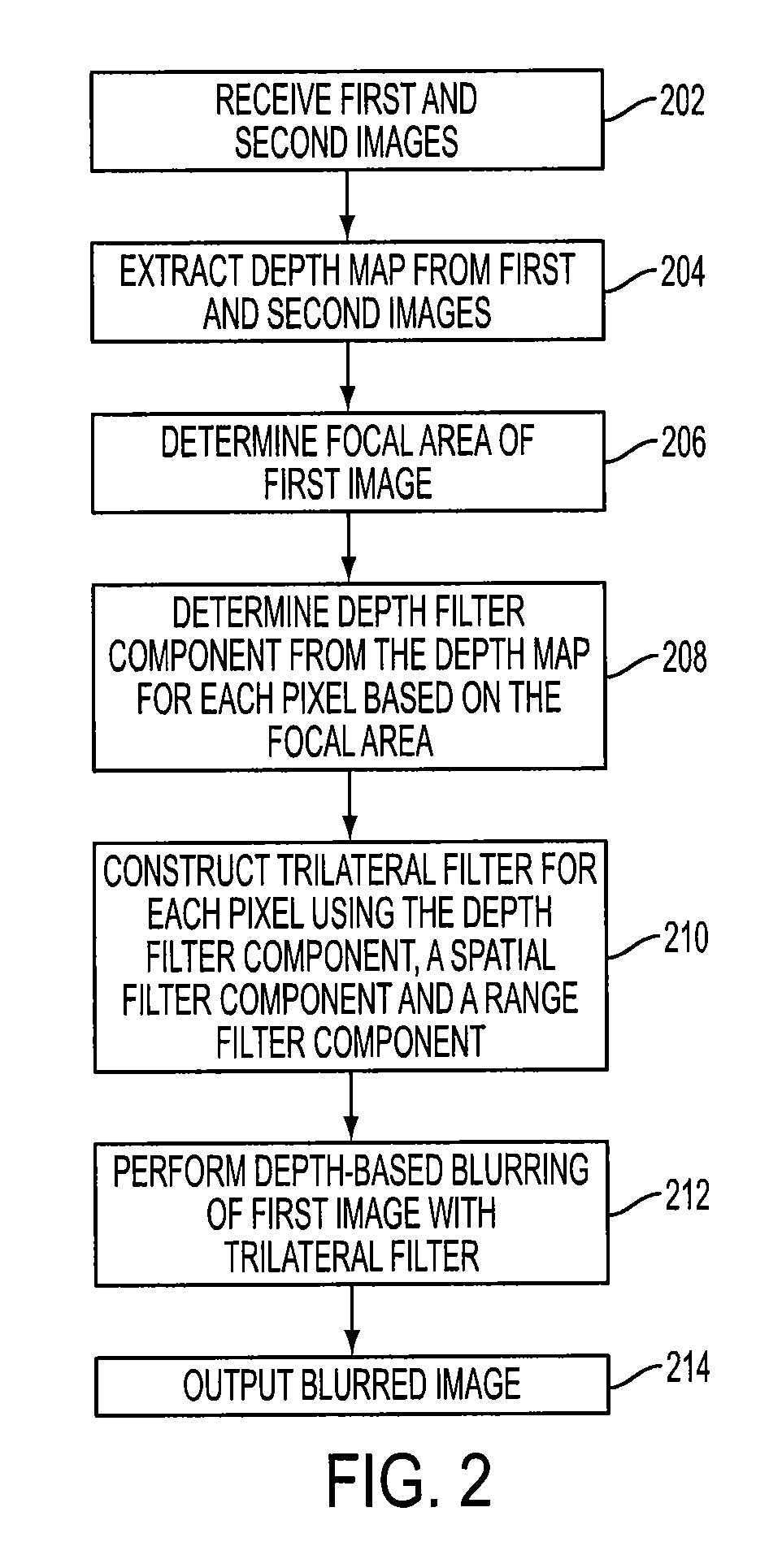

Method of depth-based imaging using an automatic trilateral filter for 3D stereo imagers

A system of stereo imagers, including image processing units and methods of blurring an image, is presented. The image is received from an image sensor. For each pixel of the image, a depth filter component is determined based on a focal area of the image and a depth map associated with the image. For each pixel of the image, a trilateral filter is generated that includes a spatial filter component, a range filter component and the depth filter component. The respective trilateral filter is applied to corresponding pixels of the image to blur the image outside of the focal area. A refocus area or position may be determined by imaging geometry or may be selected manually via a user interface.

Owner:SEMICON COMPONENTS IND LLC
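
A rough 1-D sketch of a trilateral filter with spatial, range, and depth components. The abstract does not specify how the depth component is computed, so the form used here is an assumption: a neighbor's weight is scaled by how far the center pixel lies from the focal depth and by how similar the two pixels' depths are. All names and sigma values are hypothetical.

```python
import math


def gauss(x, sigma):
    return math.exp(-(x * x) / (2.0 * sigma * sigma))


def trilateral_blur(img, depth, focal_depth, radius=2,
                    sigma_s=1.5, sigma_r=50.0, sigma_d=1.0):
    """Blur a scanline outside the focal area; in-focus pixels are unchanged."""
    out = []
    for p in range(len(img)):
        # Assumed depth component: 0 inside the focal area, up to 1 outside.
        defocus = min(1.0, abs(depth[p] - focal_depth))
        total, norm = 0.0, 0.0
        for q in range(max(0, p - radius), min(len(img), p + radius + 1)):
            if q == p:
                w = 1.0
            else:
                w = (gauss(p - q, sigma_s)              # spatial component
                     * gauss(img[p] - img[q], sigma_r)  # range component
                     * defocus * gauss(depth[p] - depth[q], sigma_d))  # depth
            total += w * img[q]
            norm += w
        out.append(total / norm)
    return out


img = [100, 100, 100, 0, 50, 0, 50, 0]
depth = [1, 1, 1, 5, 5, 5, 5, 5]
blurred = trilateral_blur(img, depth, focal_depth=1)
print([round(v, 1) for v in blurred])
```

In this example the foreground pixels at the focal depth pass through untouched, while the alternating background texture is smoothed.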

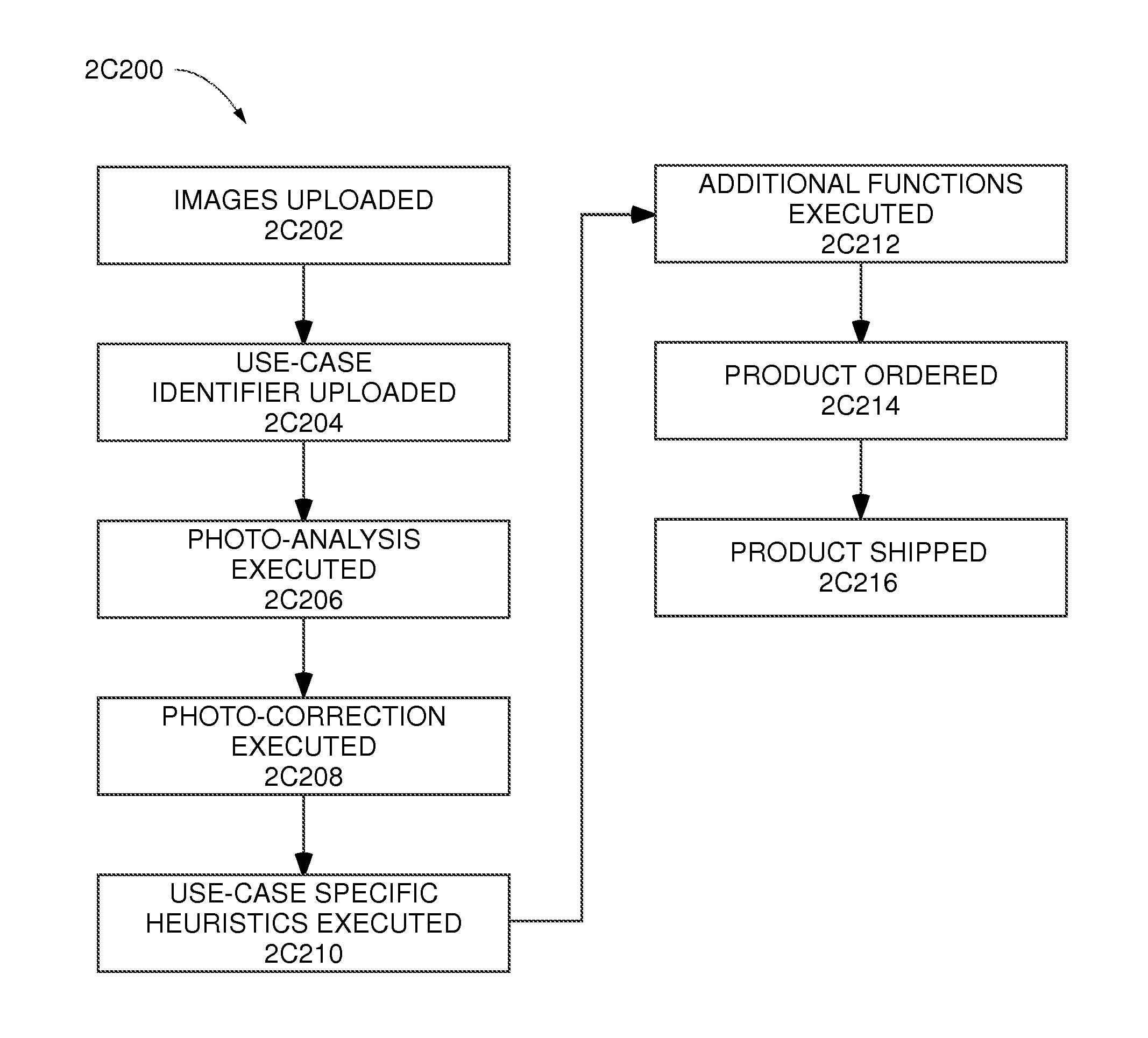

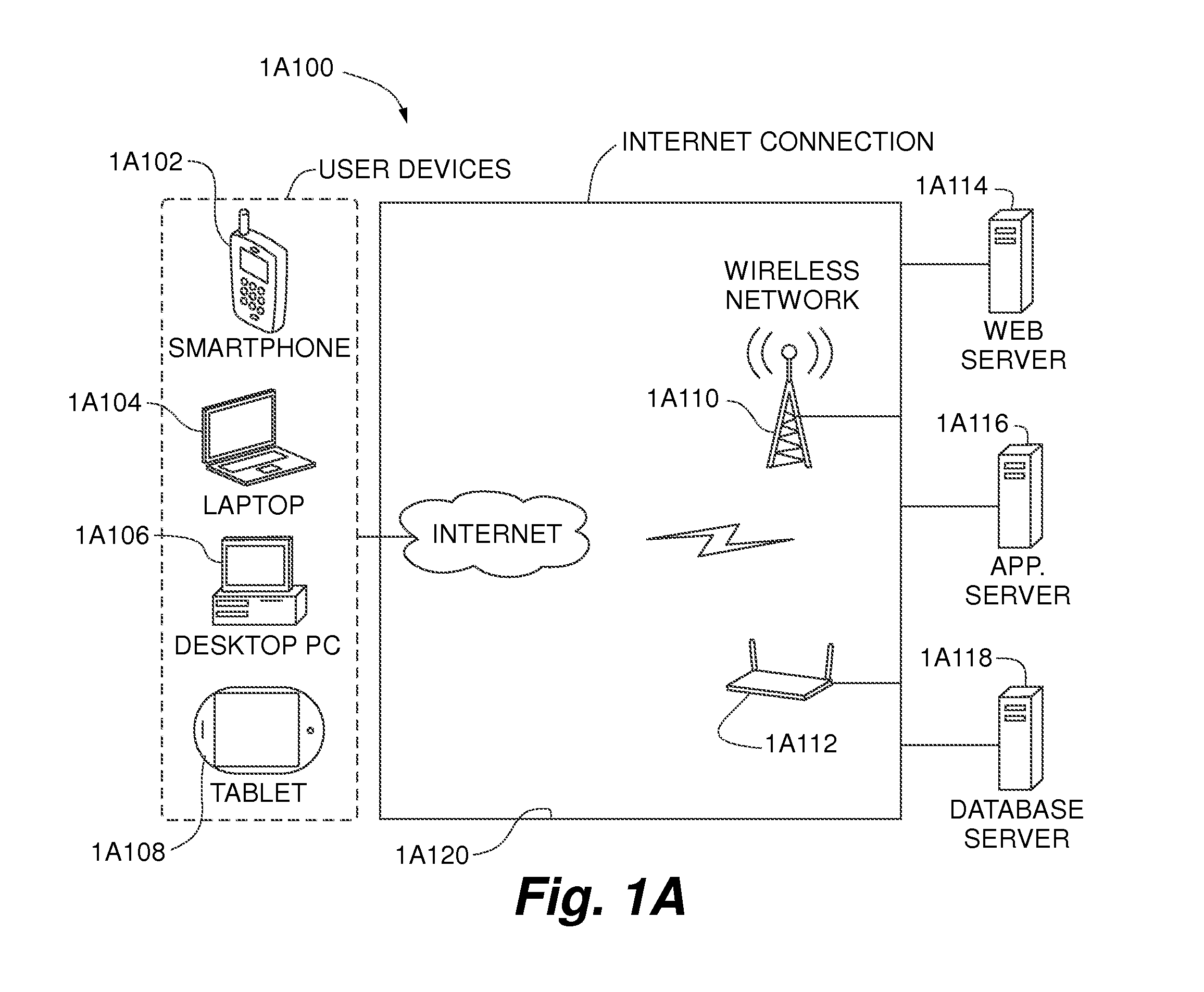

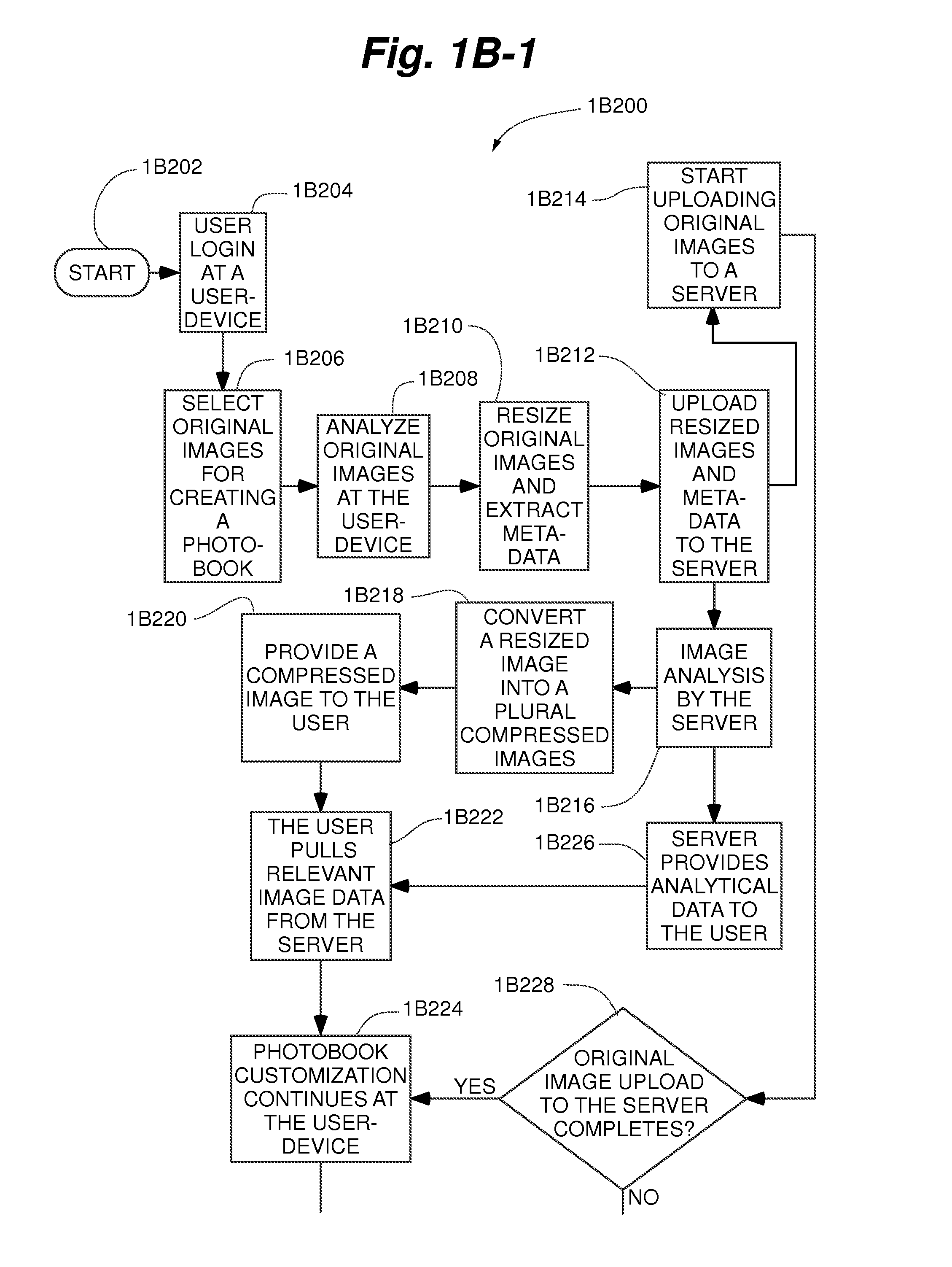

Systems and methods for automatically creating a photo-based project based on photo analysis and image metadata

Active | US8923551B1 | Closer to purchase | Digital data information retrieval | Texturing/coloring | User device | Metadata

In some embodiments, a server, system, and method for automatically creating a photo-based project based on photo analysis and image metadata is disclosed. The method includes the steps of: receiving a plurality of images from a user-device, reading embedded metadata from the plurality of images, and storing said plurality of images in a data repository; performing photo analysis on the plurality of images in the data repository to determine a visual content and relevant metadata in the images; customizing the photo-based project automatically by performing one or more automatic customization actions based on the visual content in and the relevant metadata in the plurality of images; placing the plurality of images automatically in one or more particular page layouts of the photo-based project based on the customization performed; and generating a printed product comprising the plurality of images based on the customization performed.

Owner:INTERACTIVE MEMORIES

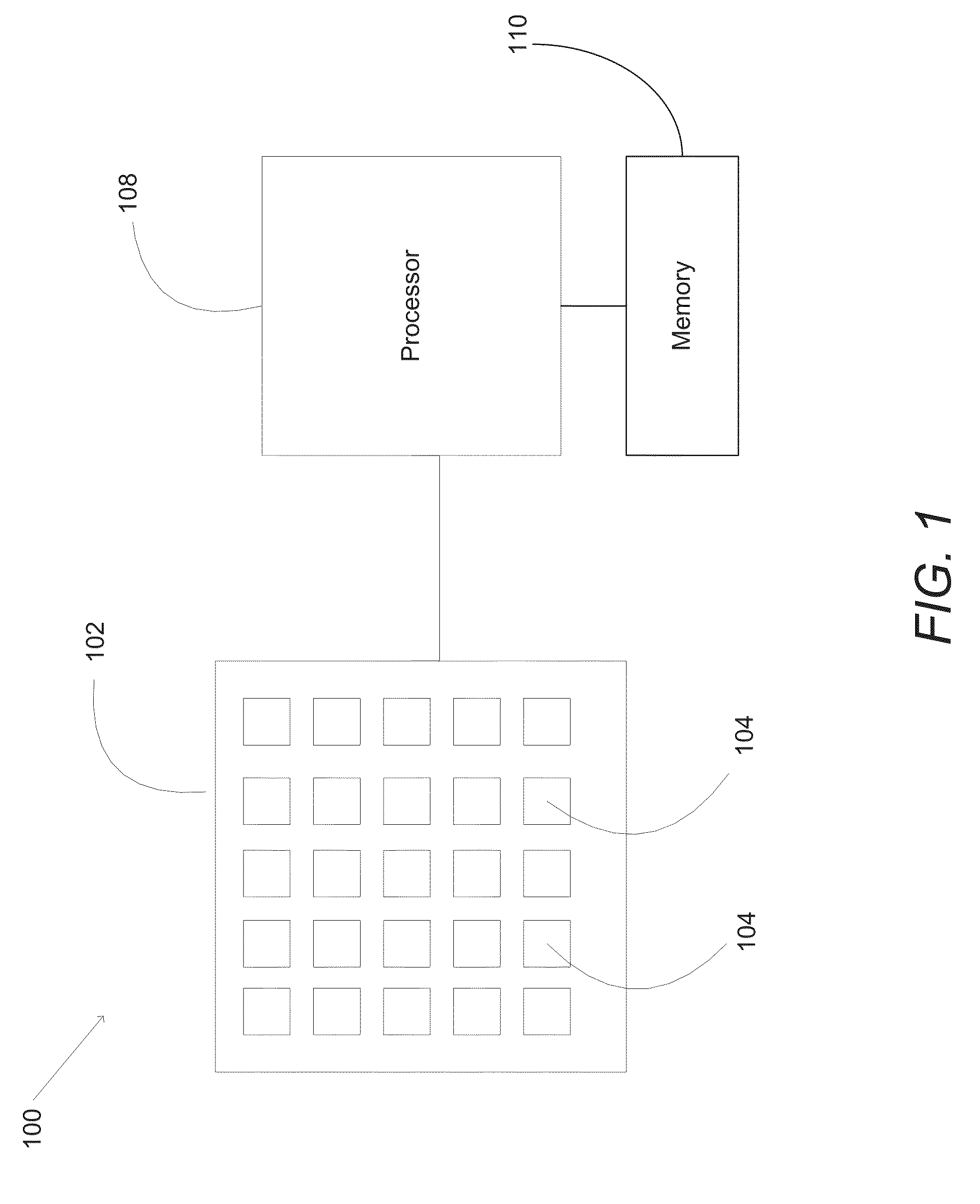

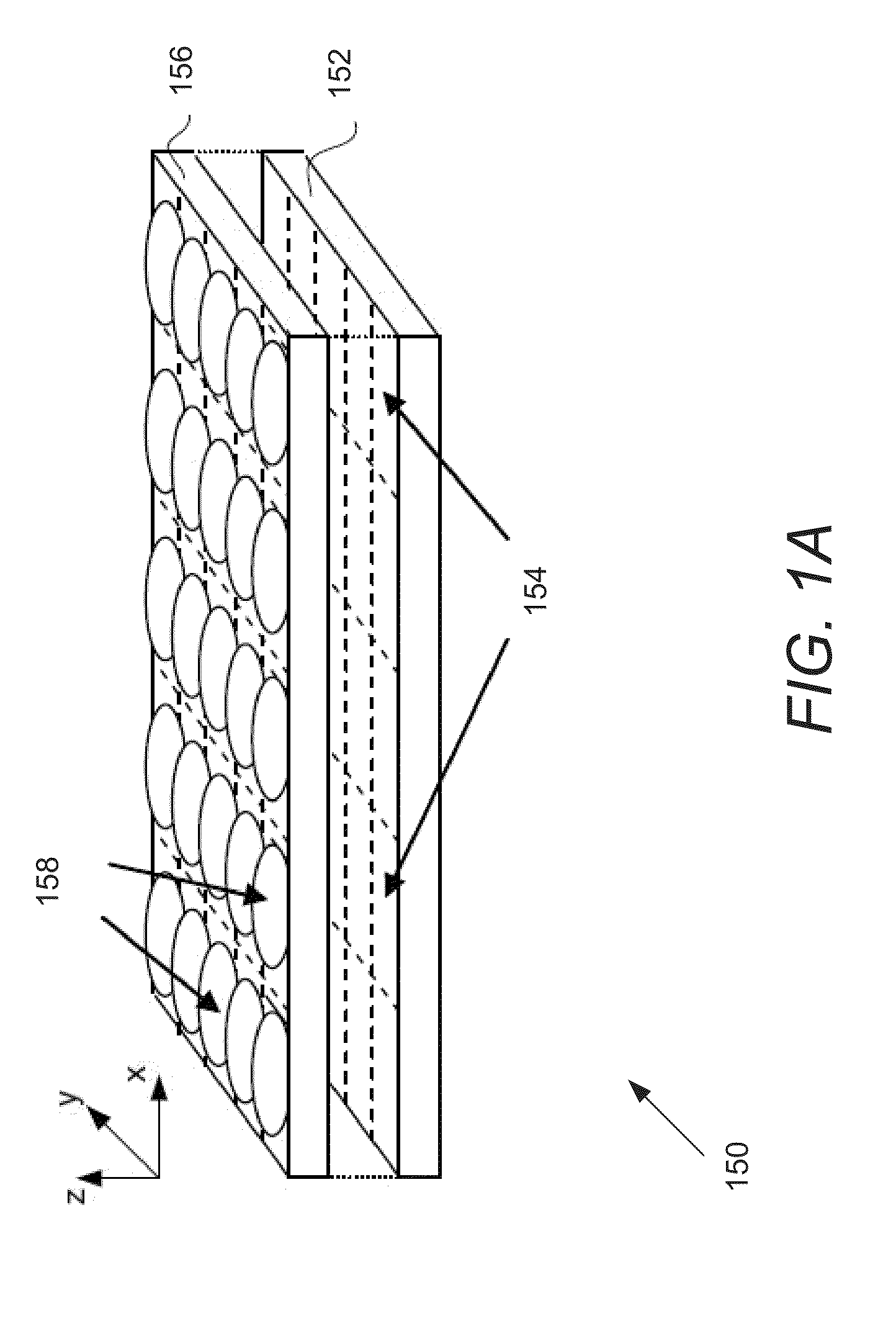

Methods, Apparatus, and Computer-Readable Storage Media for Depth-Based Rendering of Focused Plenoptic Camera Data

Active | US20130120356A1 | High resolution | 3D-image rendering | Details involving computational photography | Graphics | Multiple point

Methods, apparatus, and computer-readable storage media for rendering focused plenoptic camera data. A depth-based rendering technique is described that estimates depth at each microimage and then applies that depth to determine a position in the input flat from which to read a value to be assigned to a given point in the output image. The techniques may be implemented according to parallel processing technology that renders multiple points of the output image in parallel. In at least some embodiments, the parallel processing technology is graphical processing unit (GPU) technology.

Owner:ADOBE INC

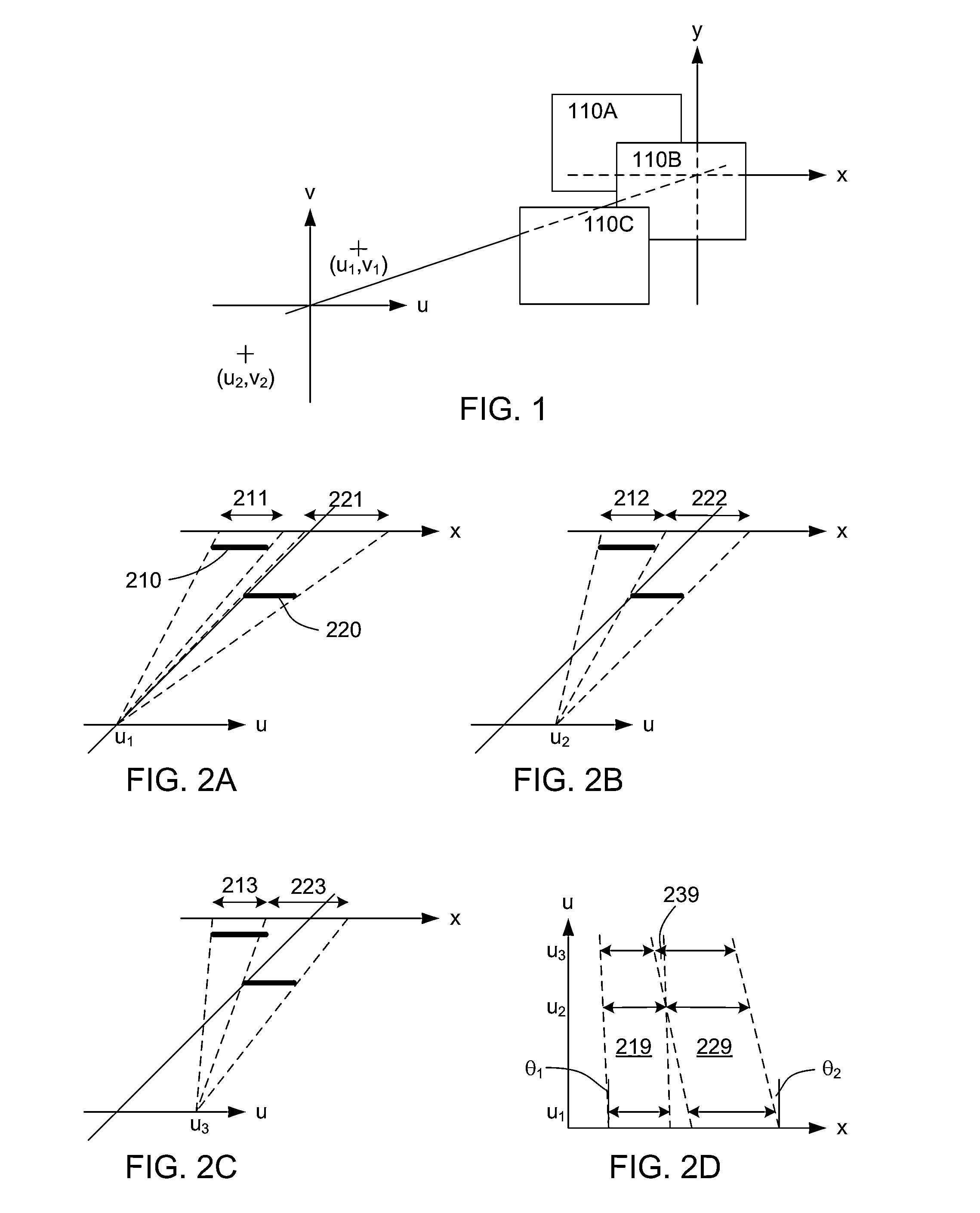

Sparse light field representation

The disclosure provides an approach for generating a sparse representation of a light field. In one configuration, a sparse representation application receives a light field constructed from multiple images, and samples and stores a set of line segments originating at various locations in epipolar-plane images (EPI), until the EPIs are entirely represented and redundancy is eliminated to the extent possible. In addition, the sparse representation application determines and stores difference EPIs that account for variations in the light field. Taken together, the line segments and the difference EPIs compactly store all relevant information that is necessary to reconstruct the full 3D light field and extract an arbitrary input image with a corresponding depth map, or a full 3D point cloud, among other things. This concept also generalizes to higher dimensions. In a 4D light field, for example, the principles of eliminating redundancy and storing a difference volume remain valid.

Owner:DISNEY ENTERPRISES INC

Image creation with software controllable depth of field

A method of controlling the depth of field of an image by a computer after the image has been taken, based on the data acquired while taking the image, including: acquiring multiple images from the same perspective with different focal points; selecting parameters for preparing a displayable image; constructing an image using the data of the acquired multiple images according to the selected parameters; and displaying the constructed image.

Owner:AVAGO TECH INT SALES PTE LTD

Method and system for rendering simulated depth-of-field visual effect

Active | US20140368494A1 | Quality improvement | High-fidelity depth-of-field visual effect | Image enhancement | Image analysis | Pattern recognition | Fast Fourier transform

Systems and methods for rendering depth-of-field visual effect on images with high computing efficiency and performance. A diffusion blurring process and a Fast Fourier Transform (FFT)-based convolution are combined to achieve high-fidelity depth-of-field visual effect with Bokeh spots in real-time applications. The brightest regions in the background of an original image are enhanced with Bokeh effect by virtue of FFT convolution with a convolution kernel. A diffusion solver can be used to blur the background of the original image. By blending the Bokeh spots with the image with gradually blurred background, a resultant image can present an enhanced depth-of-field visual effect. The FFT-based convolution can be computed with multi-threaded parallelism.

Owner:NVIDIA CORP
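
The FFT-based convolution step can be sketched in one dimension with a small radix-2 FFT. This is only an illustration of convolving bright samples with an aperture-shaped kernel; it is not the patented pipeline, and the diffusion blur and blending stages are omitted. The helper names are hypothetical.

```python
import cmath


def fft(a, invert=False):
    """Recursive radix-2 FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        tw = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out


def fft_convolve(signal, kernel):
    """Linear convolution computed as a pointwise product in the frequency domain."""
    full = len(signal) + len(kernel) - 1
    size = 1
    while size < full:
        size *= 2
    fa = fft([complex(v) for v in signal] + [0j] * (size - len(signal)))
    fb = fft([complex(v) for v in kernel] + [0j] * (size - len(kernel)))
    prod = fft([x * y for x, y in zip(fa, fb)], invert=True)
    return [v.real / size for v in prod[:full]]


# One bright background sample spread by a small aperture-like kernel.
bright = [0, 0, 8, 0, 0]
kernel = [0.25, 0.5, 0.25]
print([round(v, 6) for v in fft_convolve(bright, kernel)])
```

The single bright sample is spread into the shape of the kernel, which is the 1-D analogue of a Bokeh spot.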

Image processing apparatus and method thereof

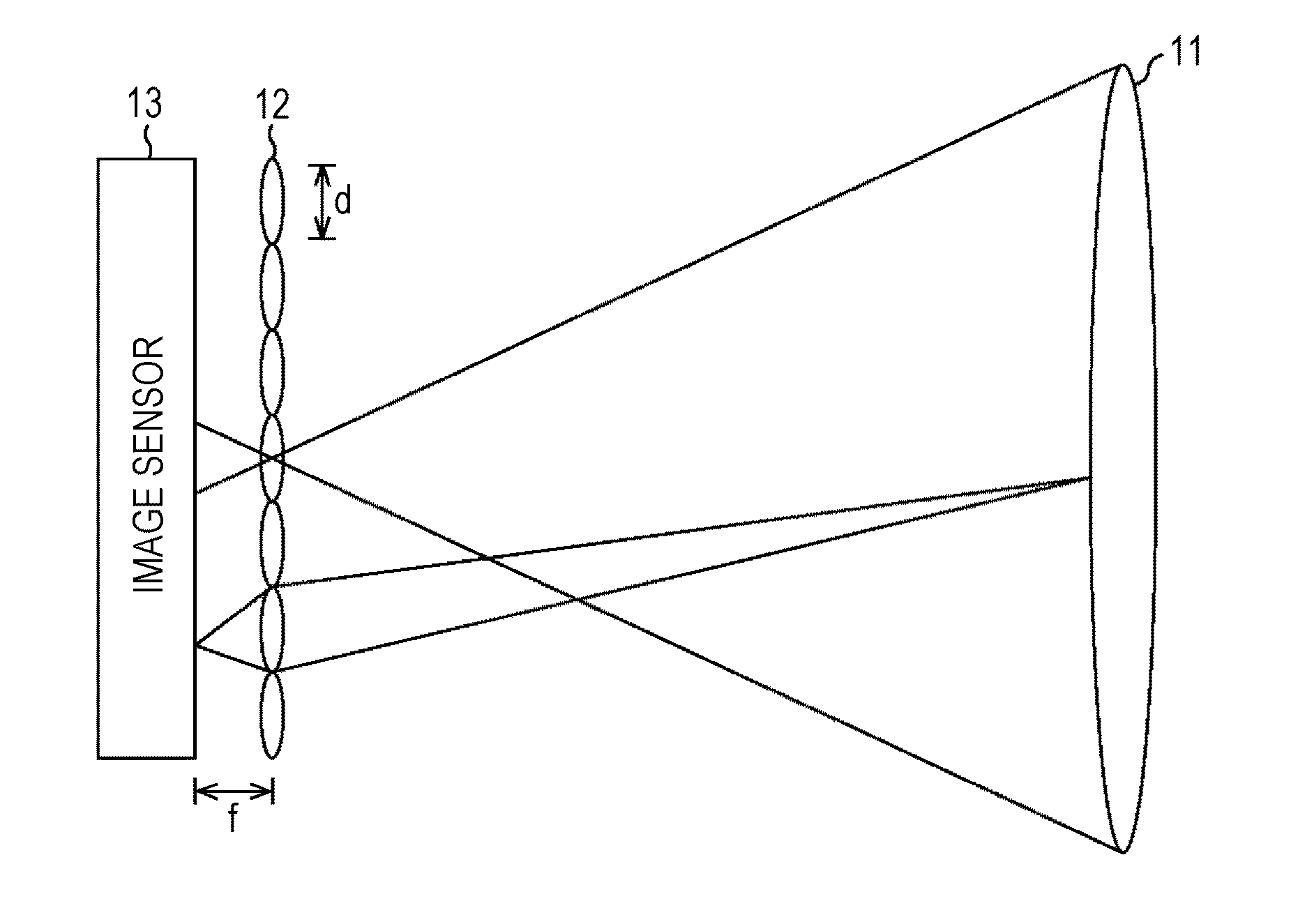

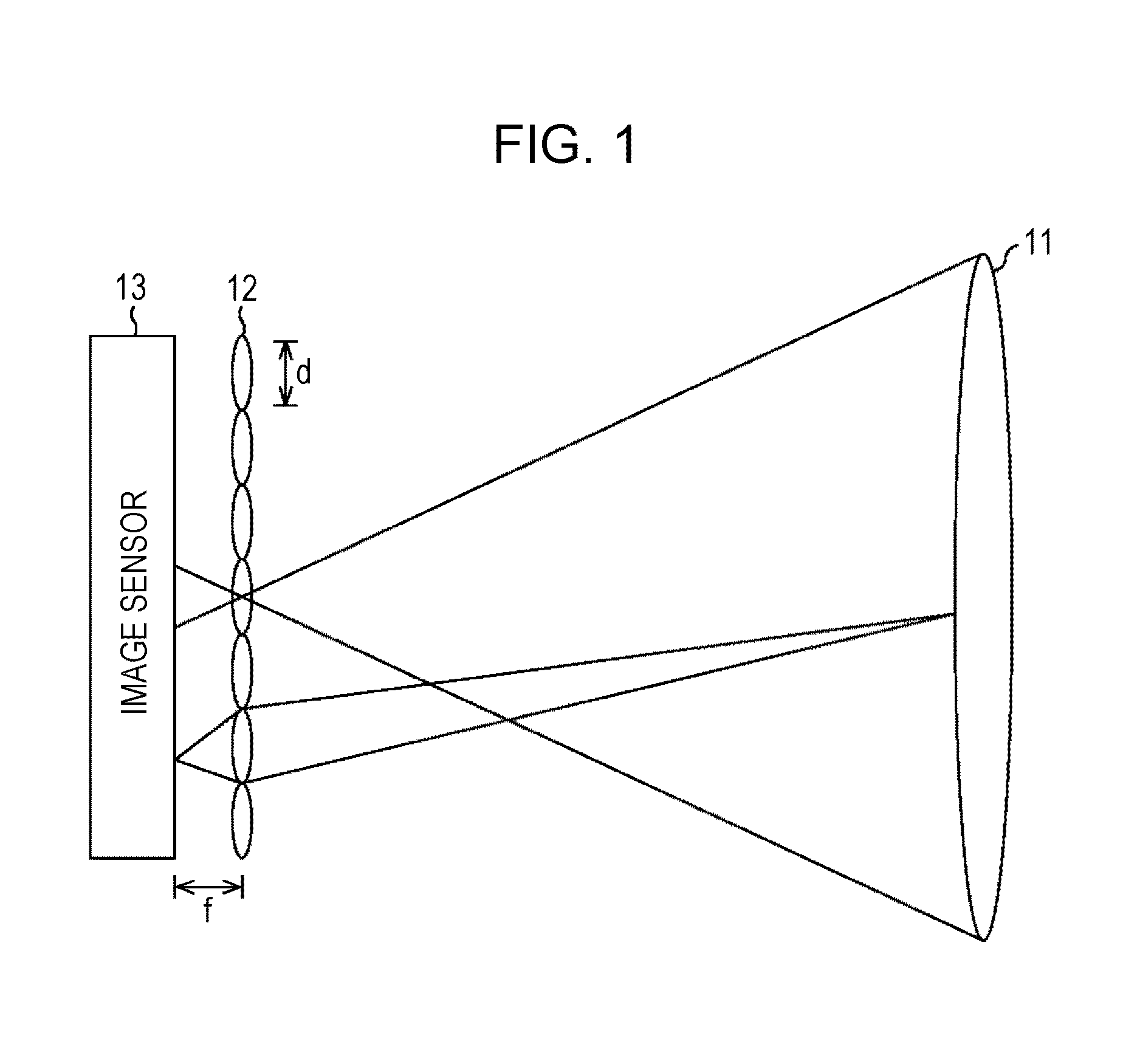

Active | US20120287329A1 | High resolution | Television system details | Image enhancement | Imaging processing | Image resolution

A plurality of first image data having a first resolution, which are obtained by capturing images from a plurality of viewpoints, and capturing information in the capturing operation are input. Based on the capturing information, a plurality of candidate values are set as a synthesis parameter required to synthesize second image data having a second resolution higher than the first resolution from the first image data. Using a candidate value selected from the plurality of candidate values as a synthesis parameter, the second image data is synthesized from the plurality of first image data.

Owner:CANON KK

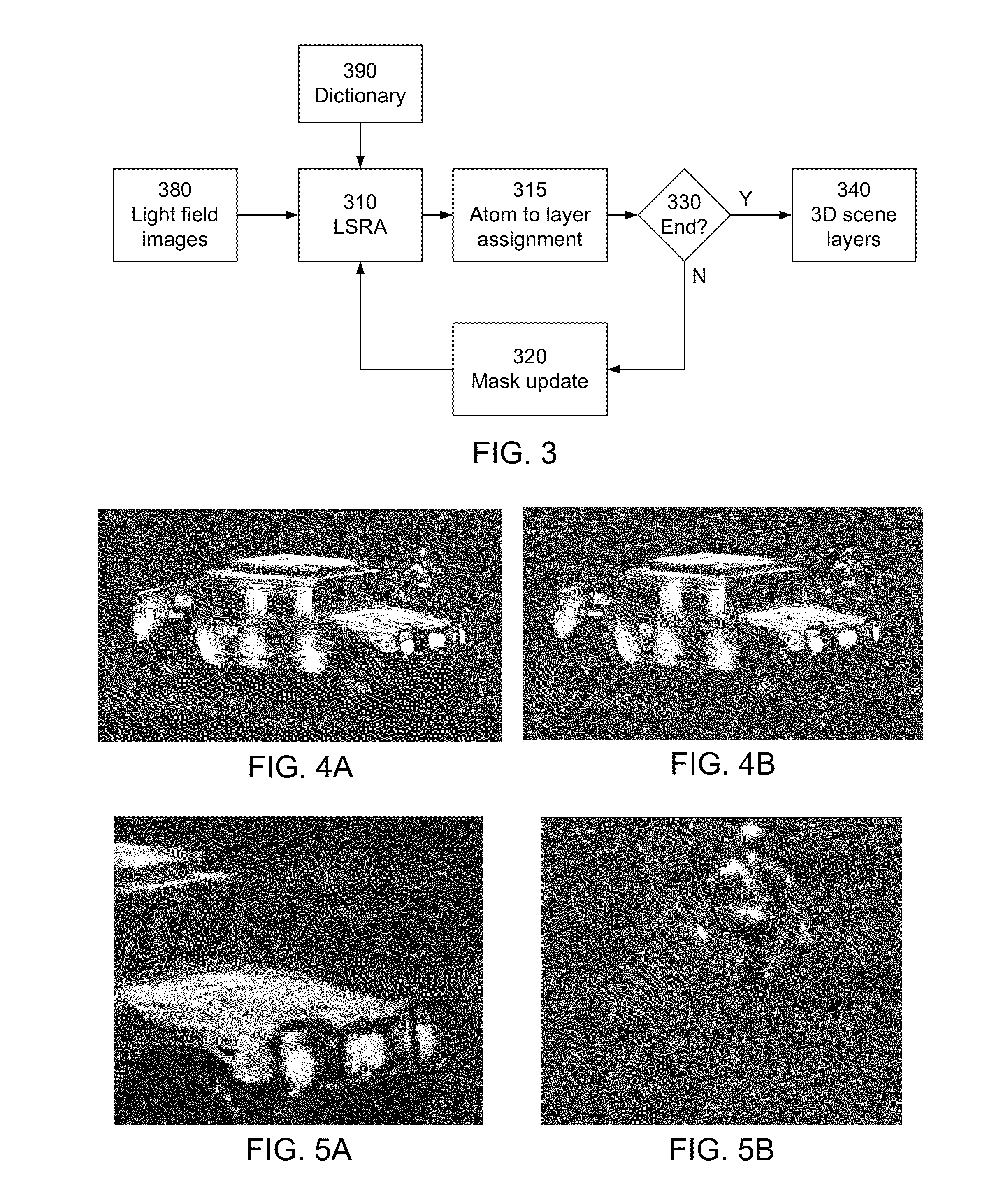

Occlusion-Aware Reconstruction of Three-Dimensional Scenes from Light Field Images

A three-dimensional scene is modeled as a set of layers representing different depths of the scene, and as masks representing occlusions of layers by other layers. The layers are represented as linear combinations of atoms selected from an overcomplete dictionary. An iterative approach is used to alternately estimate the atom coefficients for layers from a light field image of the scene, assuming values for the masks, and to estimate the masks given the estimated layers. In one approach, the atoms in the dictionary are ridgelets oriented at different angles, where there is a correspondence between depth and angle.

Owner:RICOH KK

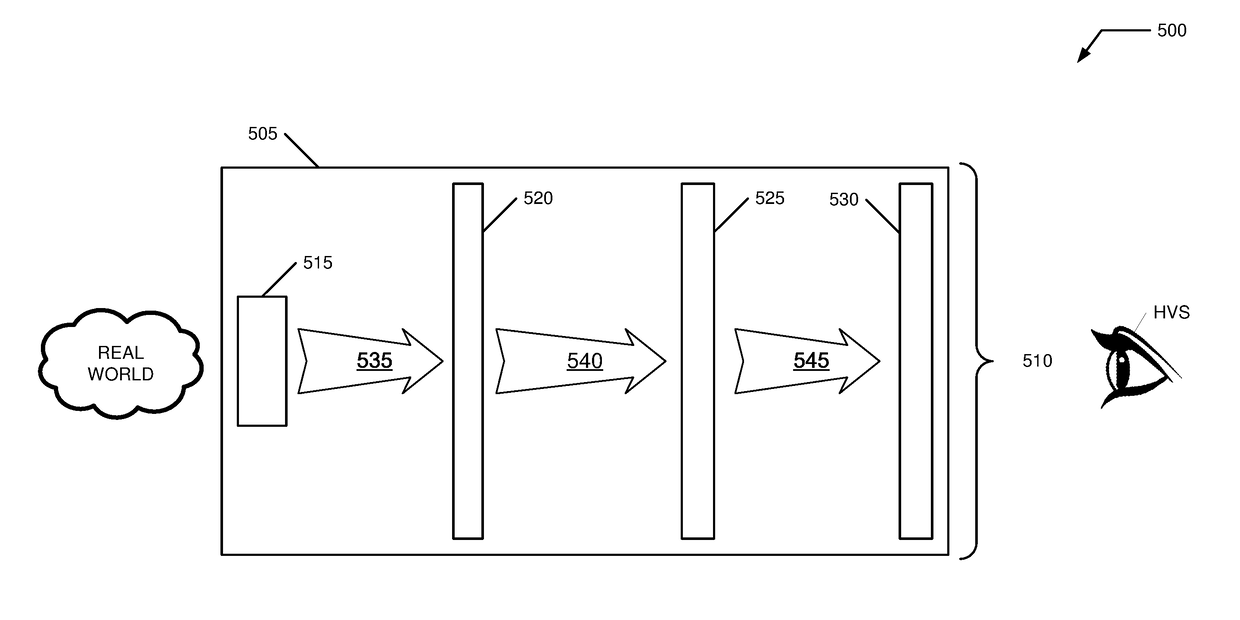

Hybrid photonic vr/ar systems

Inactive | US20180122143A1 | Need for good | Easy to handle | Image enhancement | Image analysis | Photonics | Computer vision

A VR / AR system, method, architecture includes an augmentor that concurrently receives and processes real world image constituent signals while producing synthetic world image constituent signals and then interleaves / augments these signals for further processing. In some implementations, the real world signals (pass through with possibility of processing by the augmentor) are converted to IR (using, for example, a false color map) and interleaved with the synthetic world signals (produced in IR) for continued processing including visualization (conversion to visible spectrum), amplitude / bandwidth processing, and output shaping for production of a set of display image precursors intended for a HVS.

Owner:PHOTONICA

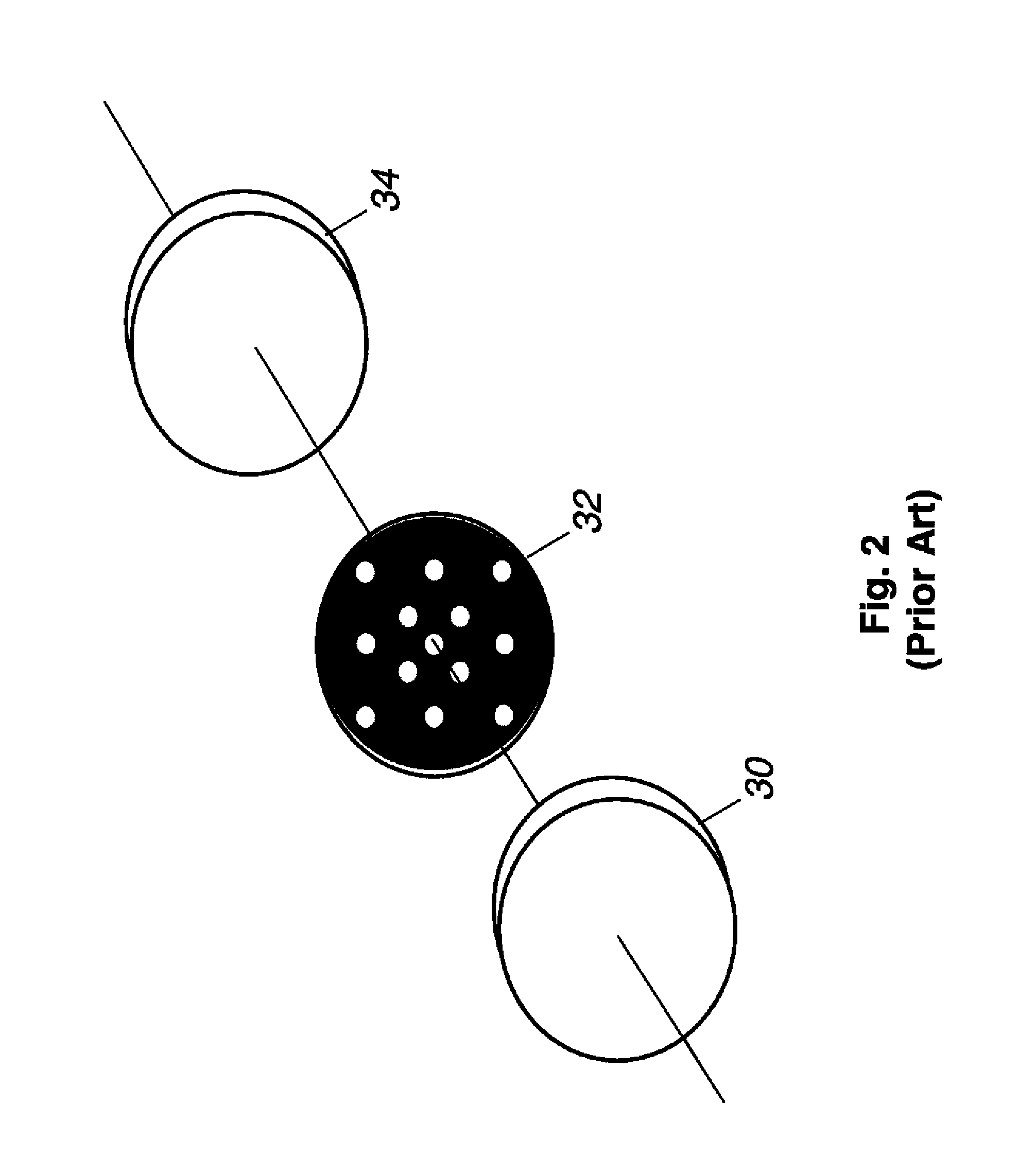

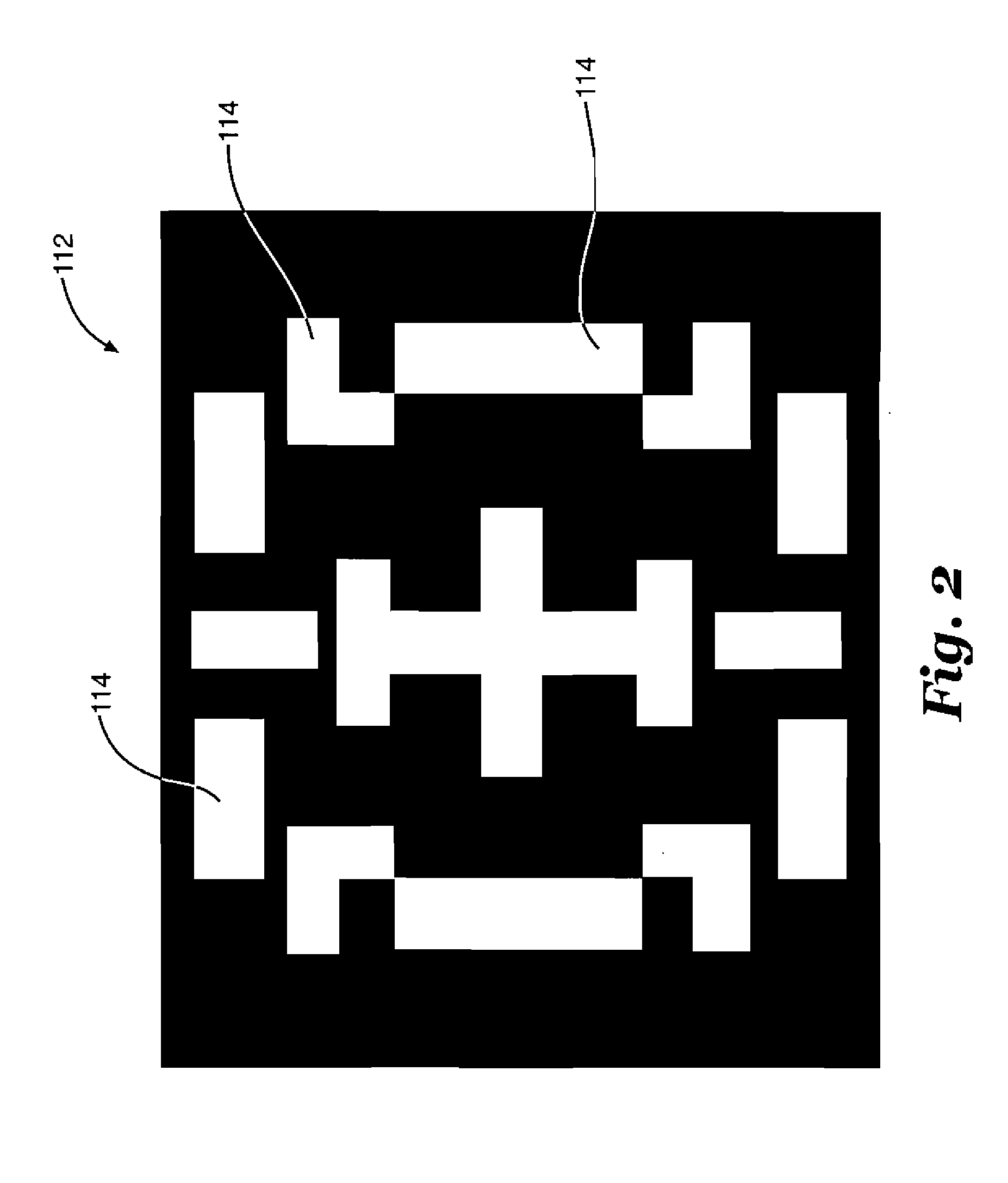

Coded aperture camera with adaptive image processing

Active | US20120076362A1 | Increase value | Reduce complexity | Image enhancement | Image analysis | Camera lens | Imaging processing

An image capture device is used to identify object range information, and includes: providing an image capture device, an image sensor, a coded aperture, and a lens; and using the image capture device to capture a digital image of the scene from light passing through the lens and the coded aperture, the scene having a plurality of objects. The method further includes: dividing the digital image into a set of blocks; assigning a point spread function (psf) value to each of the blocks; combining contiguous blocks in accordance with their psf values; producing a set of blur parameters based upon the psf values of the combined blocks and the psf values of the remaining blocks; producing a set of deblurred images based upon the captured image and each of the blur parameters; and using the set of deblurred images to determine the range information for the objects in the scene.

Owner:APPLE INC

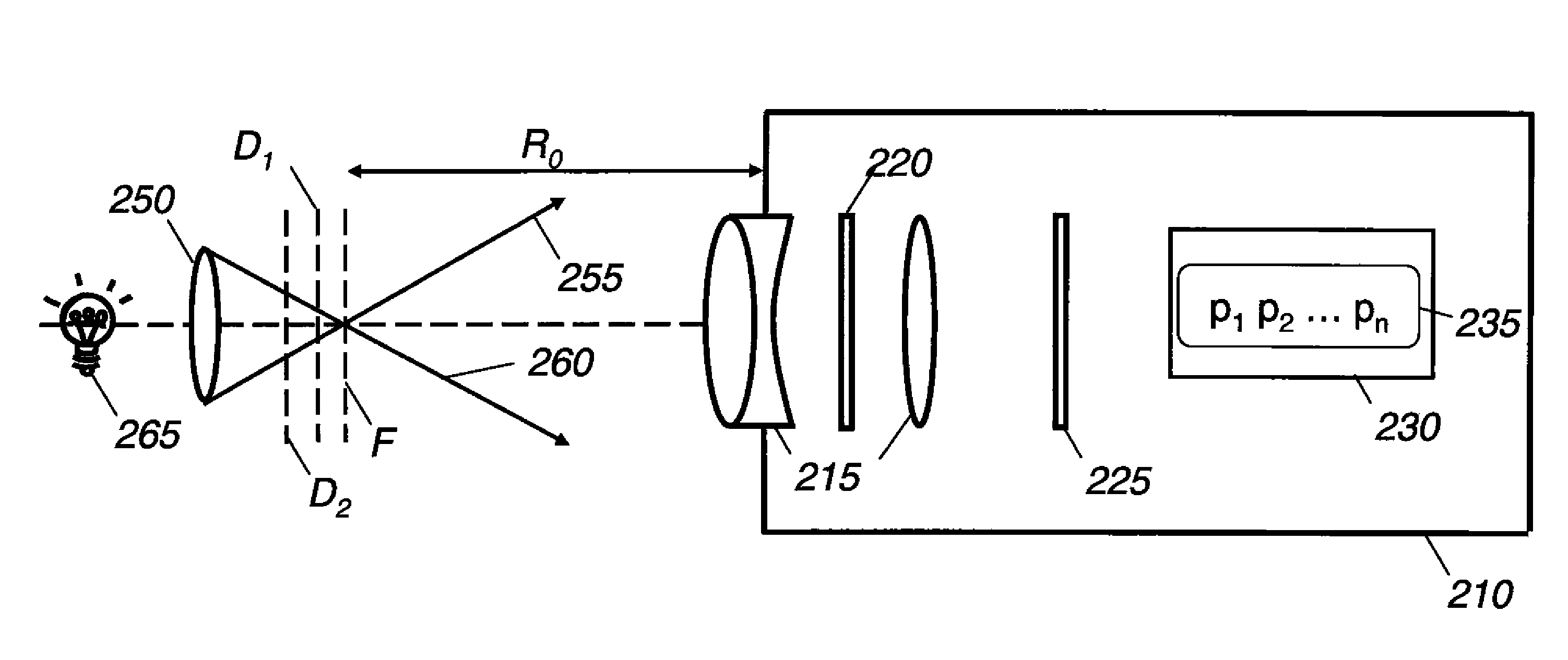

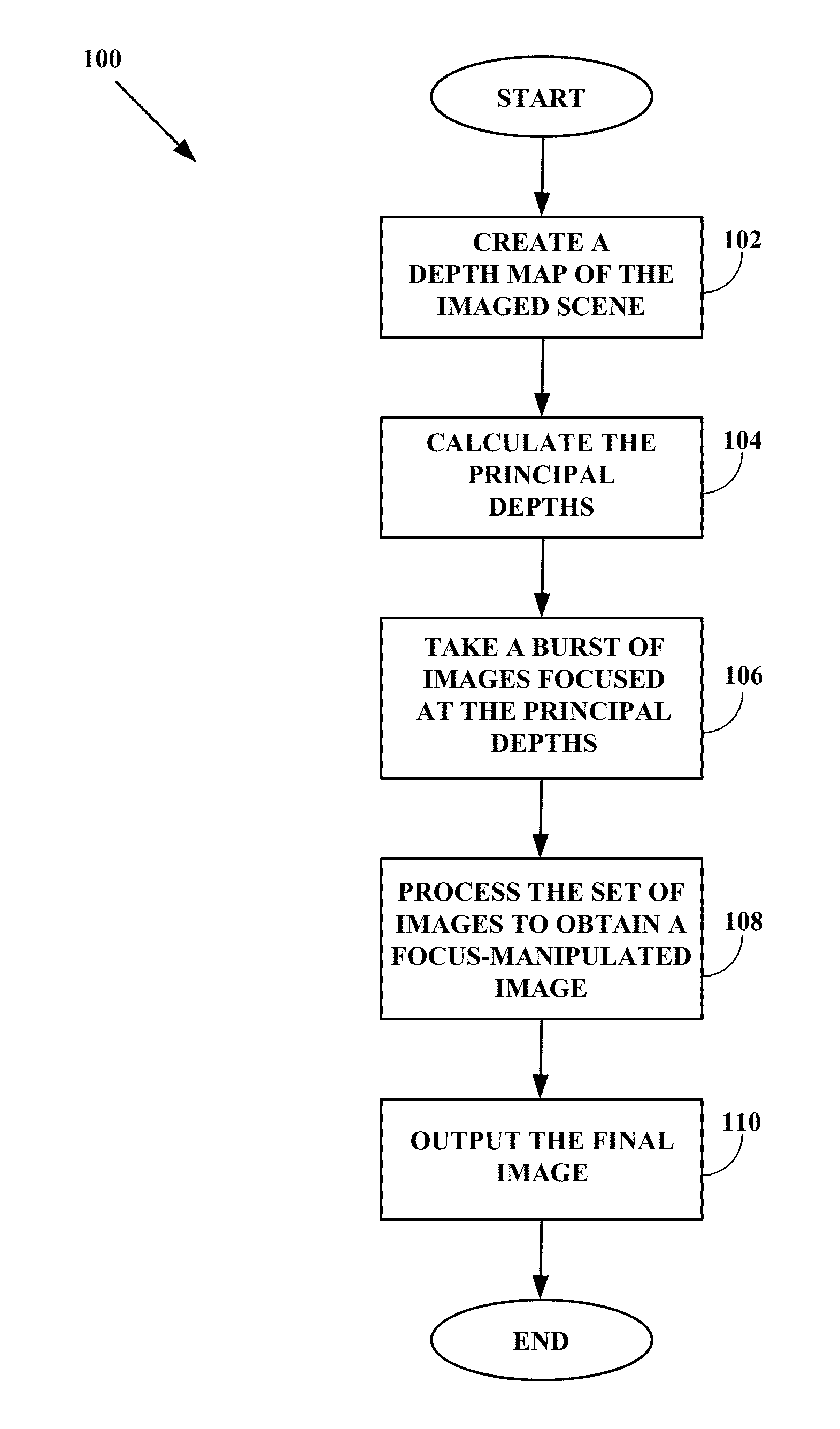

Image capture for later refocusing or focus-manipulation

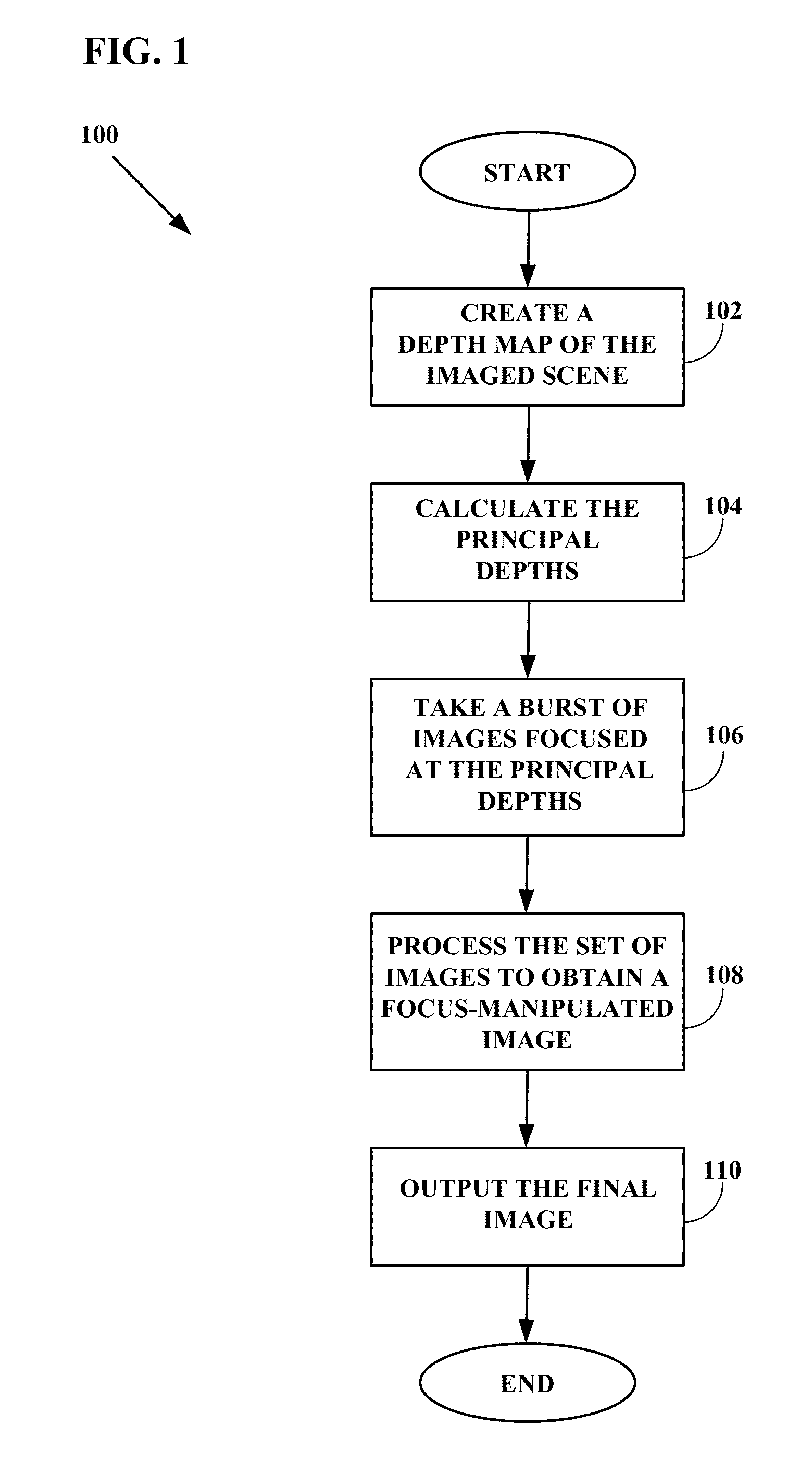

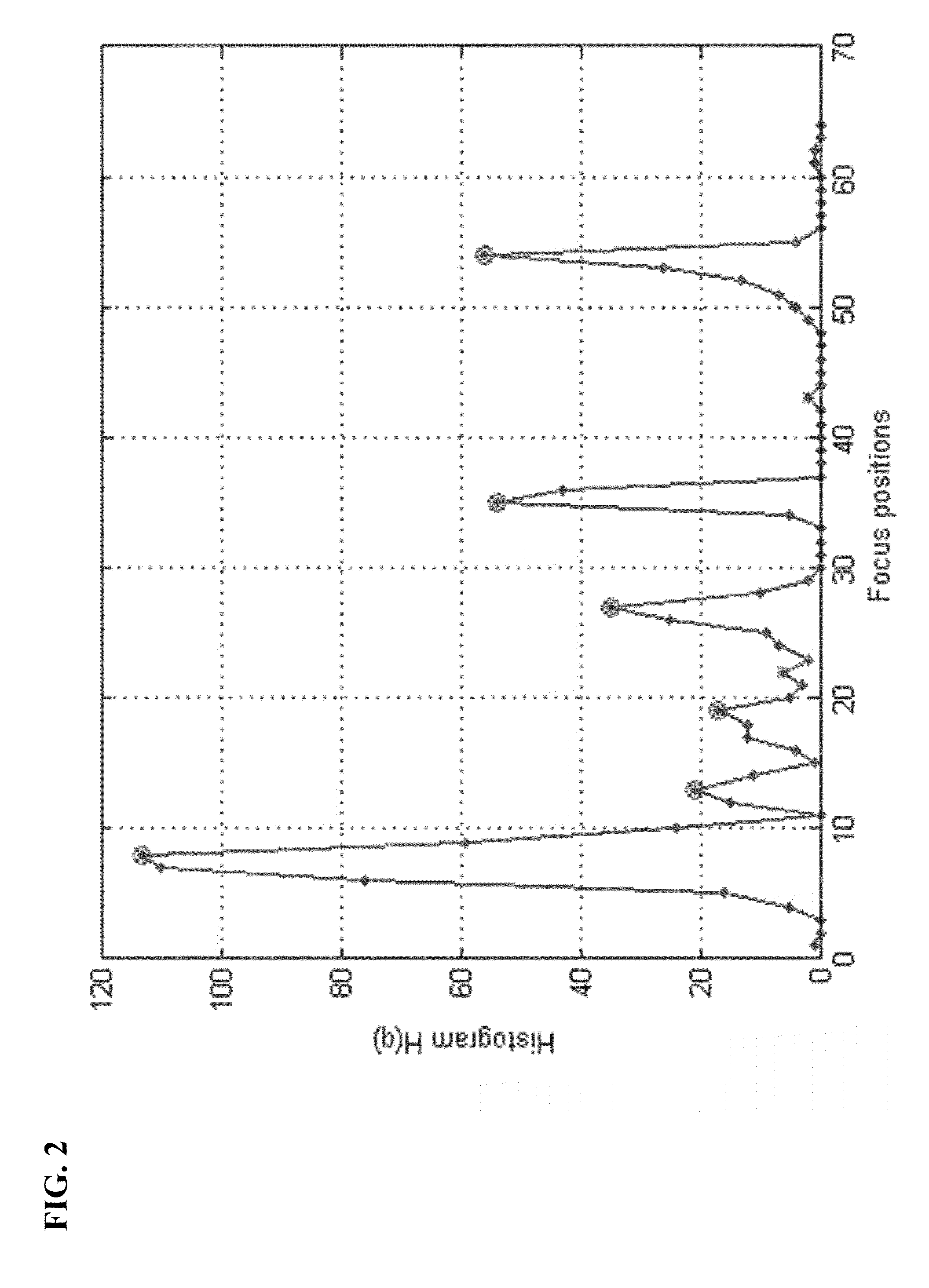

Active | US20130044254A1 | Reduce the number | Television system details | Image enhancement | User input | Combined use

A system, method, and computer program product for capturing images for later refocusing. Embodiments estimate a distance map for a scene, determine a number of principal depths, capture a set of images, with each image focused at one of the principal depths, and process captured images to produce an output image. The scene is divided into regions, and the depth map represents region depths corresponding to a particular focus step. Entries having a specific focus step value are placed into a histogram, and depths having the most entries are selected as the principal depths. Embodiments may also identify scene areas having important objects and include different important object depths in the principal depths. Captured images may be selected according to user input, aligned, and then combined using blending functions that favor only scene regions that are focused in particular captured images.

Owner:QUALCOMM INC
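
The histogram step described in the abstract above (bin region depths by focus step, then keep the most populated bins) can be sketched as follows. The input format and the tie-breaking rule (lower focus step first) are assumptions, and `principal_depths` is a hypothetical name.

```python
from collections import Counter


def principal_depths(region_focus_steps, count):
    """Return the `count` focus steps that cover the most scene regions."""
    hist = Counter(region_focus_steps)
    # Most entries first; ties broken by the lower focus step (assumed rule).
    ranked = sorted(hist.items(), key=lambda kv: (-kv[1], kv[0]))
    return sorted(step for step, _ in ranked[:count])


# Focus step of each scene region, as read from a hypothetical depth map.
regions = [3, 3, 3, 7, 7, 12, 3, 7, 12, 20]
print(principal_depths(regions, 2))
```

One image would then be captured at each returned focus step.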

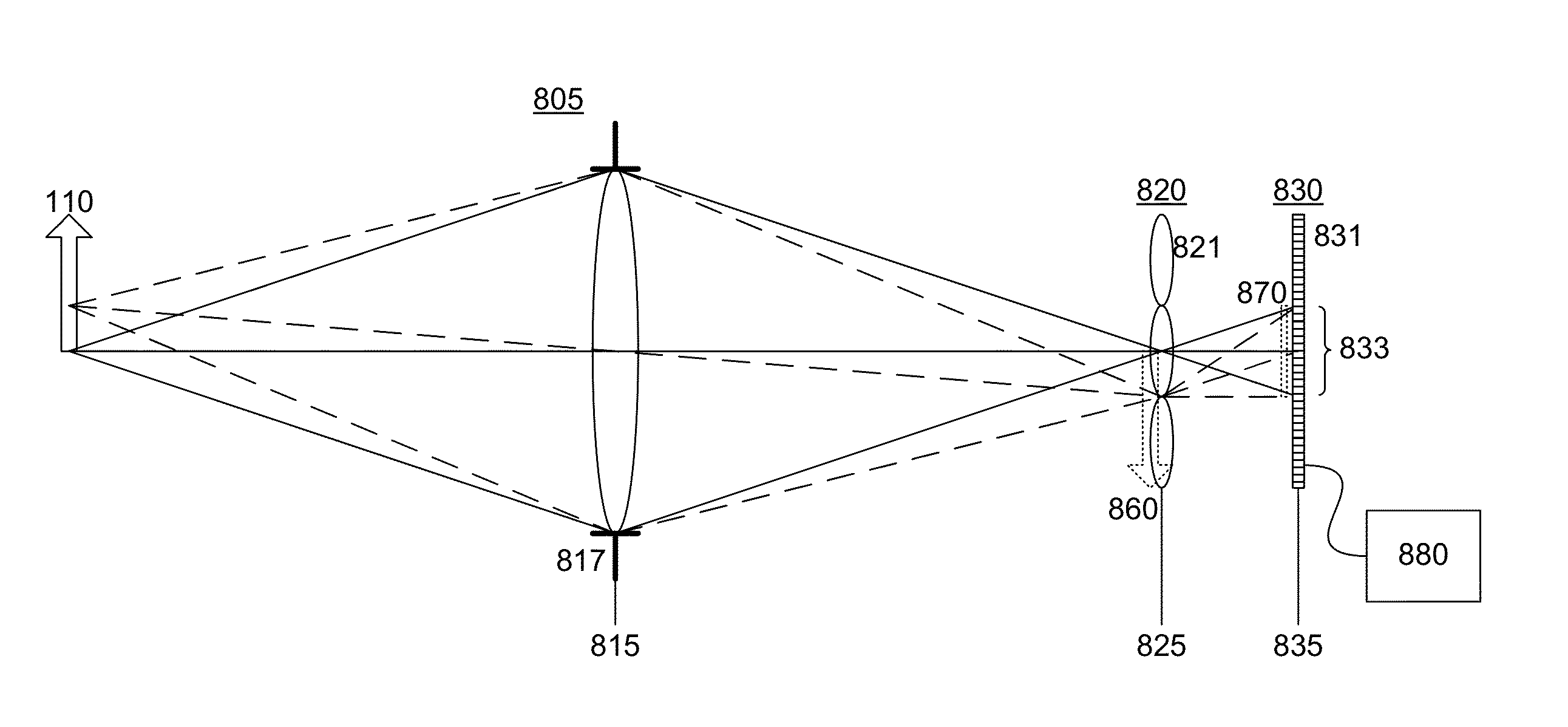

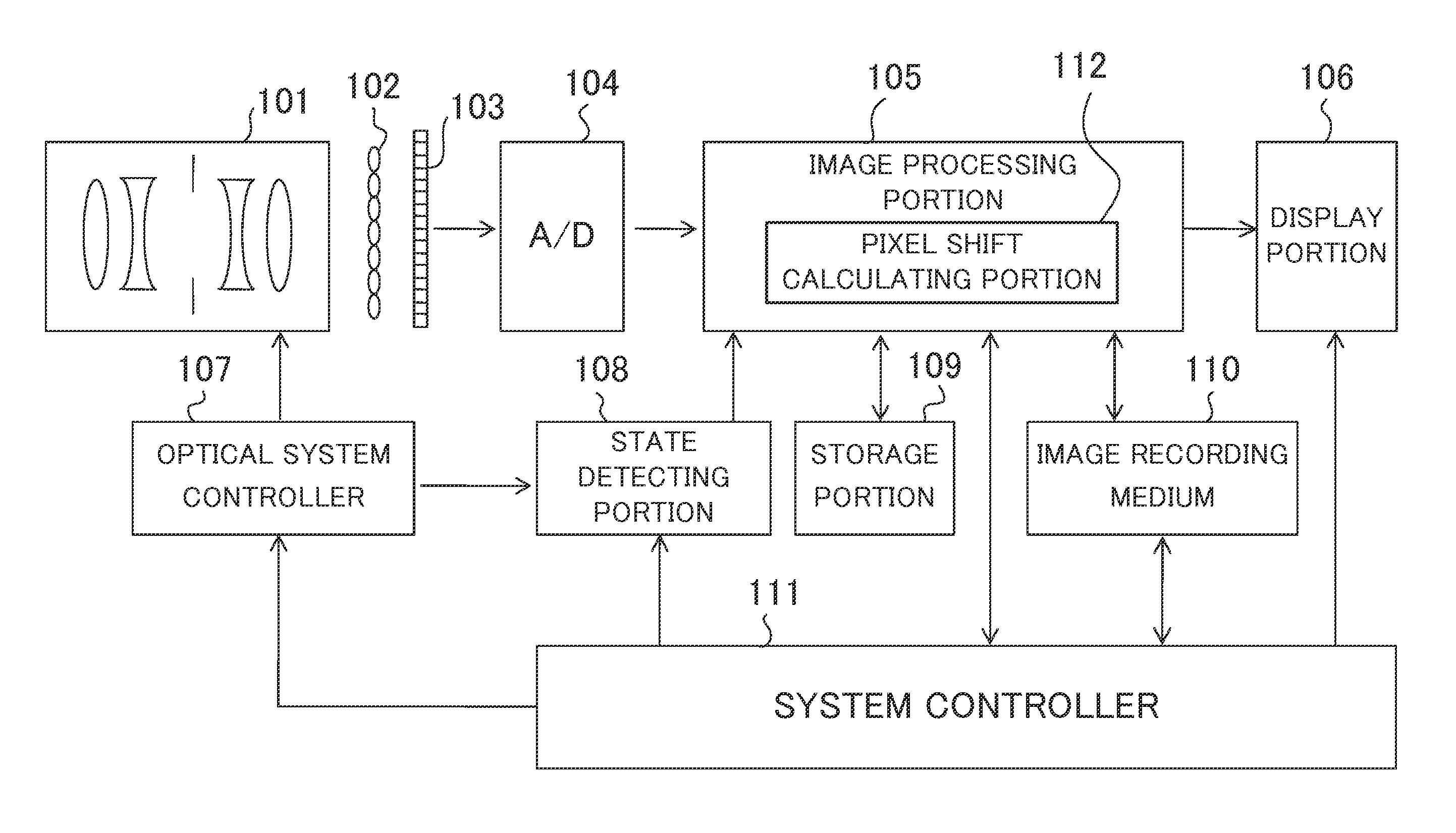

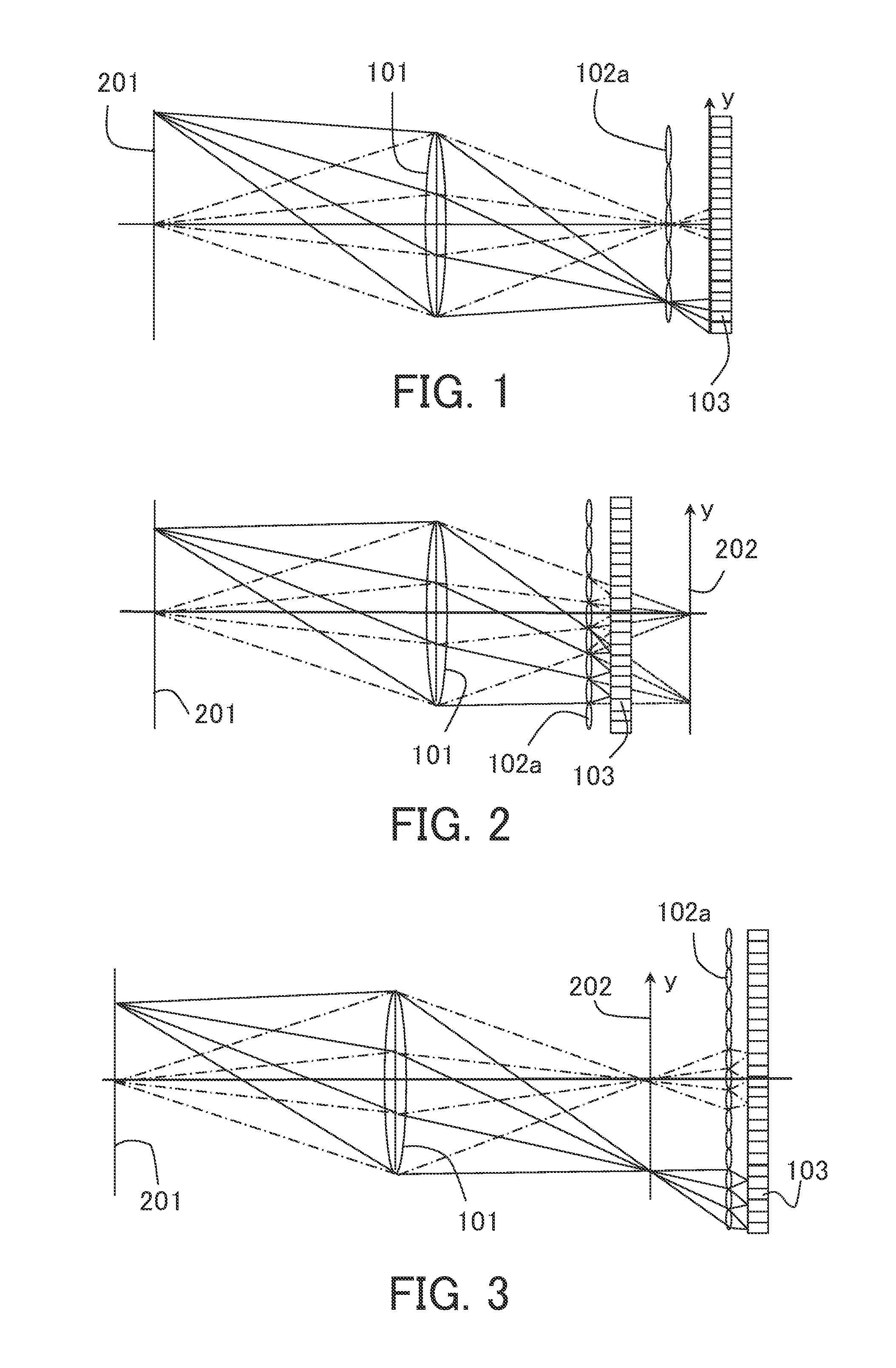

Image processing apparatus, image processing method, image pickup apparatus, method of controlling image pickup apparatus, and non-transitory computer-readable storage medium

Active | US20130329120A1 | High resolution | Television system details | Image enhancement | Imaging processing | Virtual image

An image processing method is capable of performing a reconstruction for an input image so as to generate a plurality of output images having a plurality of focus positions different from each other, and the image processing method includes the steps of obtaining the input image that is an image containing information of an object space obtained from a plurality of points of view using an image pickup apparatus having an imaging optical system and an image pickup element including a plurality of pixels, and calculating a pixel shift amount of pixels that are to be combined by the reconstruction for a plurality of virtual imaging planes corresponding to the plurality of focus positions.

Owner:CANON KK
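
Reconstruction at several focus positions is commonly illustrated with shift-and-add refocusing, where each virtual imaging plane corresponds to a different pixel shift amount per viewpoint. The sketch below assumes 1-D views indexed by an integer viewpoint offset; it is not taken from the patent, and `refocus` is a hypothetical name.

```python
def refocus(views, shift_per_view):
    """Average the views after shifting each by (viewpoint offset * shift).

    views: dict mapping a viewpoint offset u to a scanline;
    shift_per_view: pixel shift per unit offset for one virtual imaging plane.
    """
    width = len(next(iter(views.values())))
    out = []
    for x in range(width):
        samples = []
        for u, line in views.items():
            xi = x + round(u * shift_per_view)
            if 0 <= xi < width:
                samples.append(line[xi])
        out.append(sum(samples) / len(samples) if samples else 0.0)
    return out


# A single point seen from three viewpoints with one pixel of parallax each.
views = {
    -1: [0, 0, 9, 0, 0, 0, 0],
    0: [0, 0, 0, 9, 0, 0, 0],
    1: [0, 0, 0, 0, 9, 0, 0],
}
# One output image per virtual imaging plane (shift amounts 0 and 1).
stack = [refocus(views, s) for s in (0, 1)]
print(stack[0][3], stack[1][3])
```

With a shift of 1 the three views align and the point is sharp; with a shift of 0 its energy is spread over neighboring pixels.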

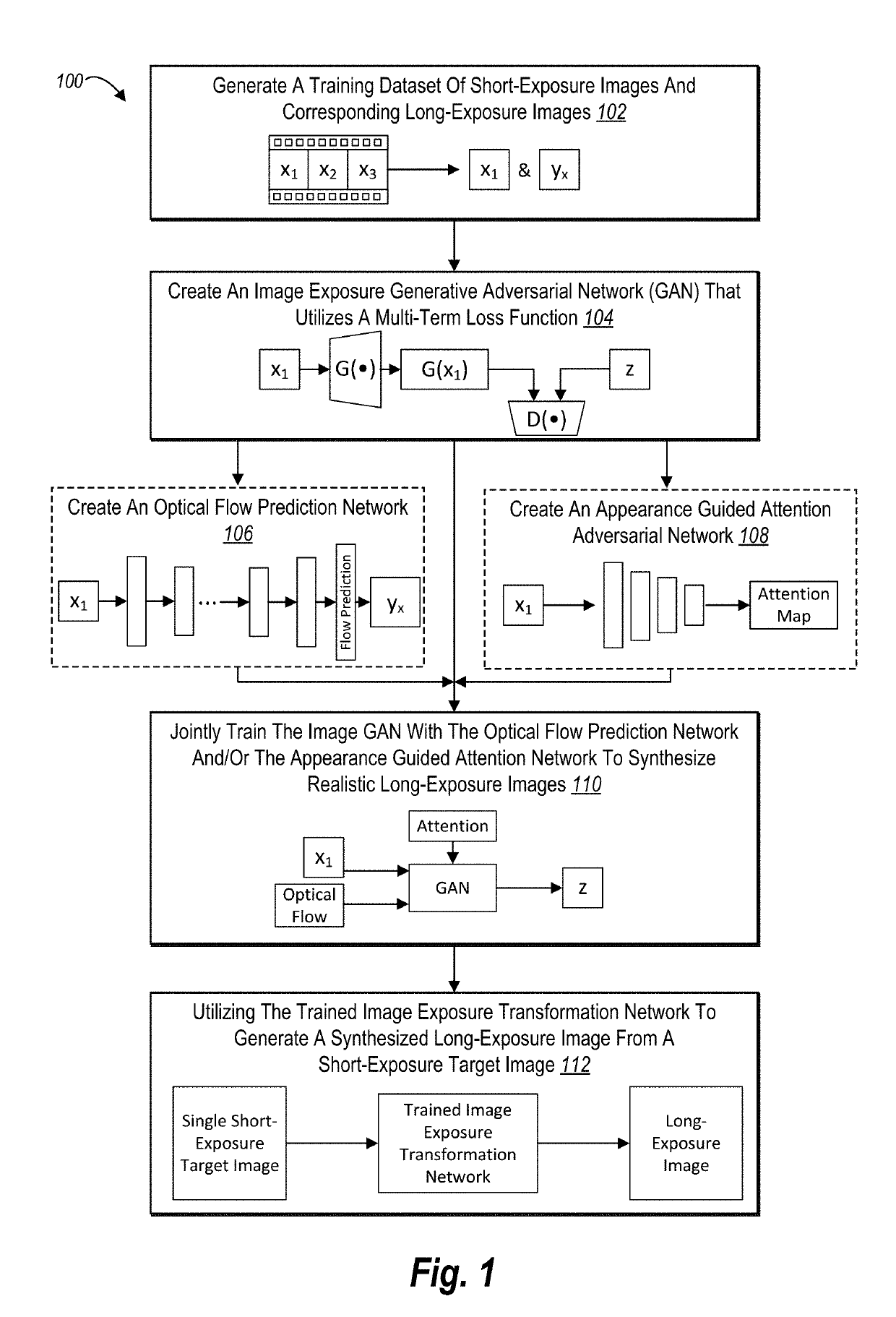

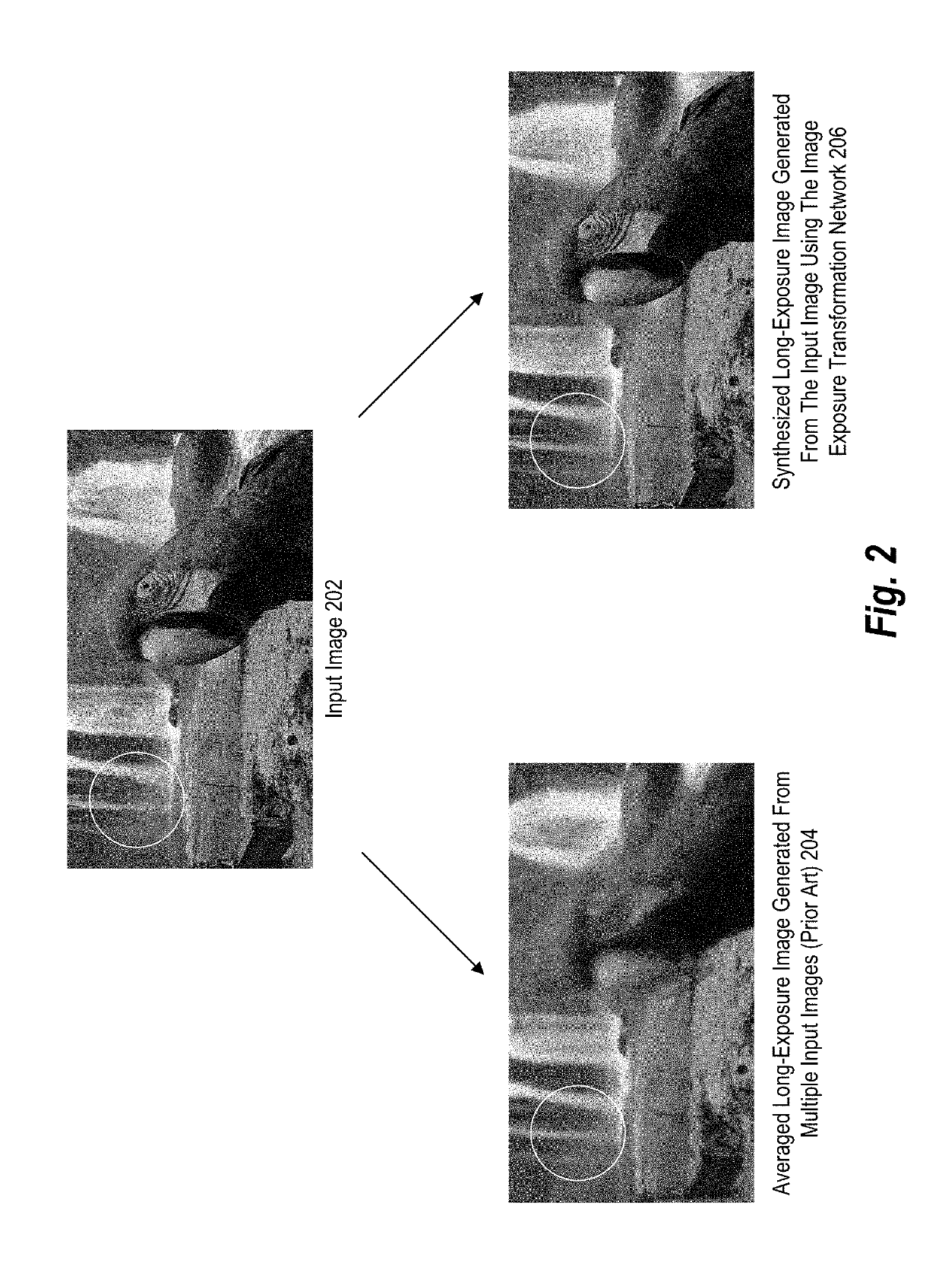

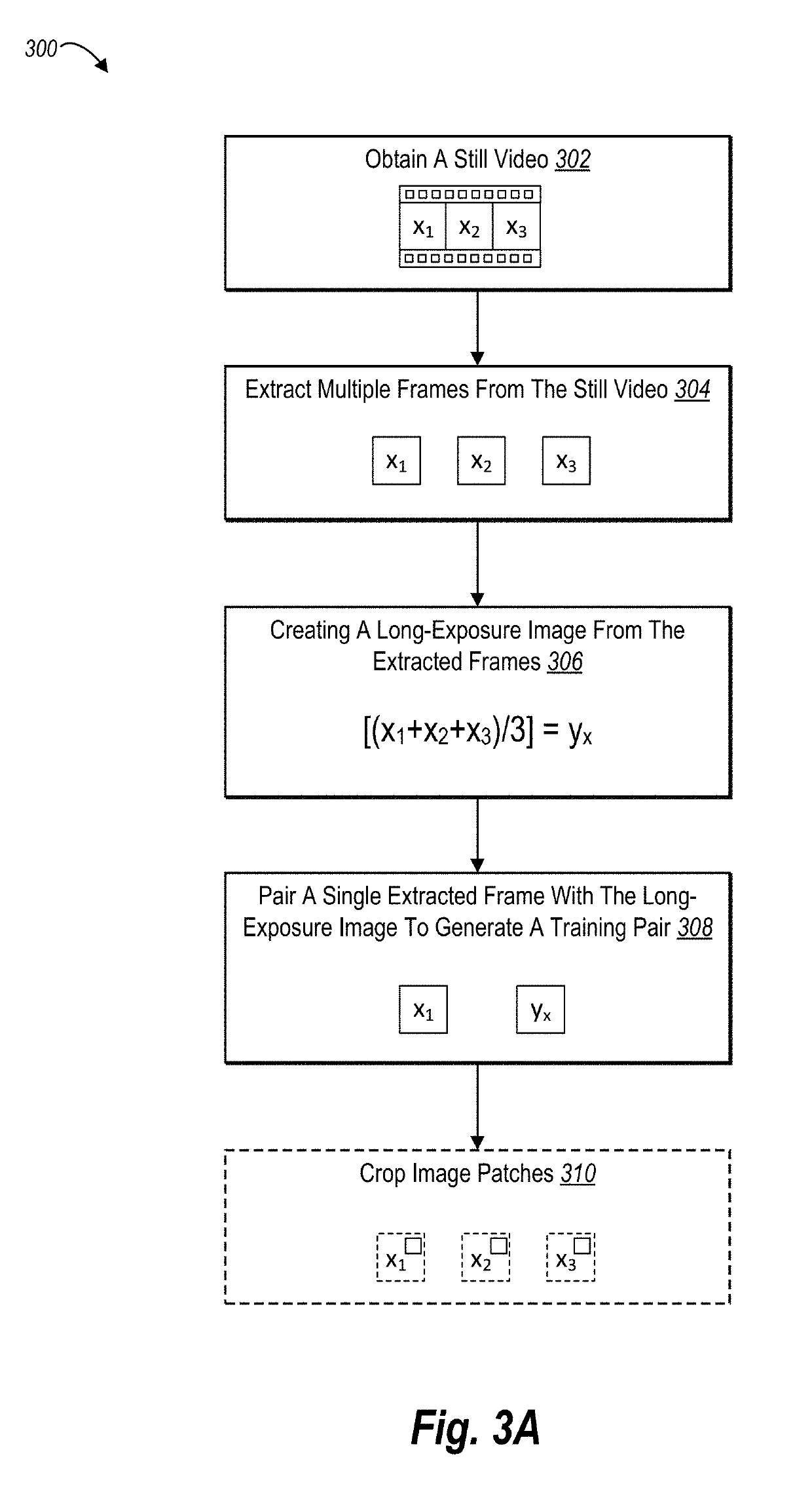

Training and utilizing an image exposure transformation neural network to generate a long-exposure image from a single short-exposure image

Active | US20190333198A1 | Accurate exposure | Realistic long-exposure | Image enhancement | Image analysis | Ground truth | Optical flow

The present disclosure relates to training and utilizing an image exposure transformation network to generate a long-exposure image from a single short-exposure image (e.g., still image). In various embodiments, the image exposure transformation network is trained using adversarial learning, long-exposure ground truth images, and a multi-term loss function. In some embodiments, the image exposure transformation network includes an optical flow prediction network and / or an appearance guided attention network. Trained embodiments of the image exposure transformation network generate realistic long-exposure images from single short-exposure images without additional information.

Owner:ADOBE INC

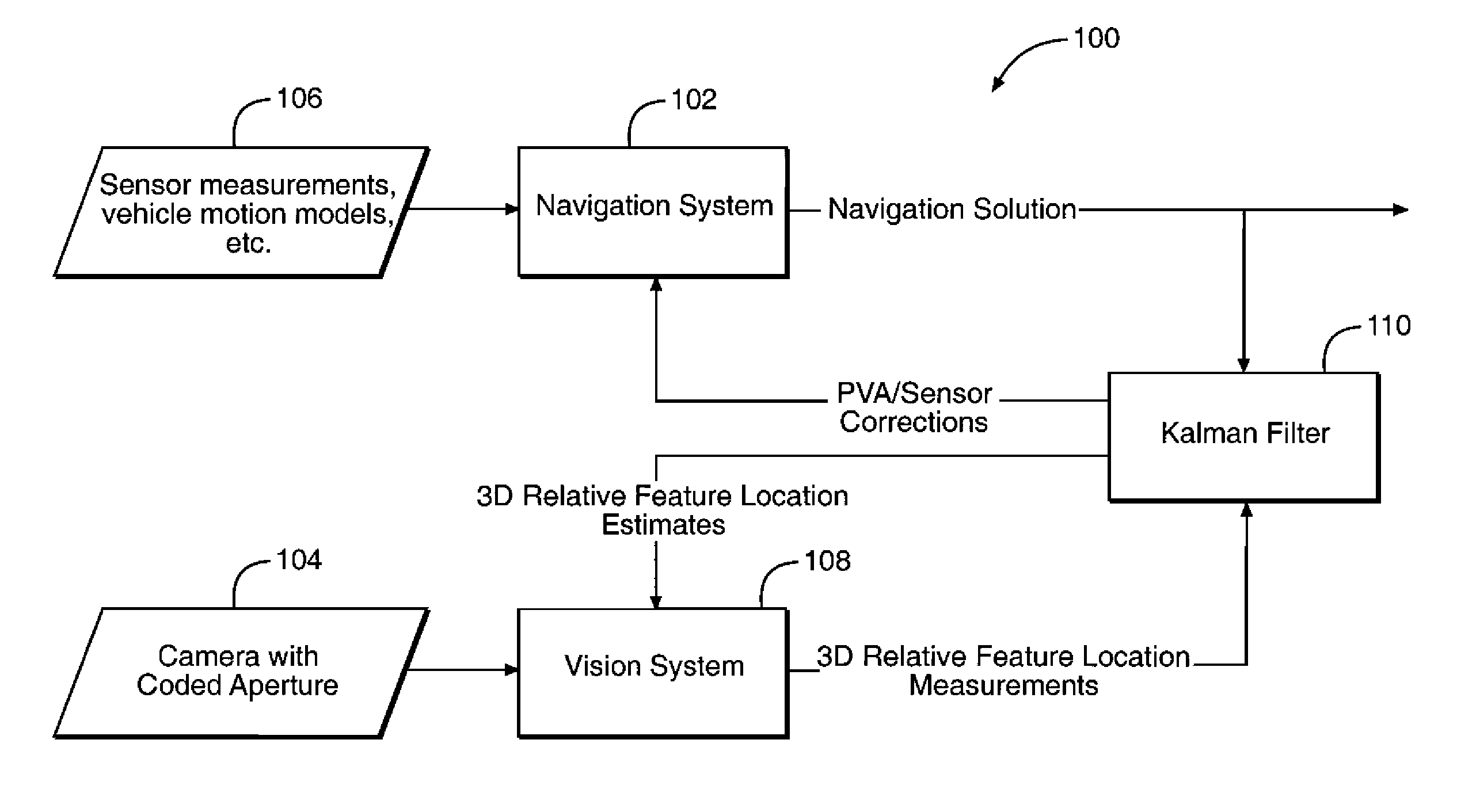

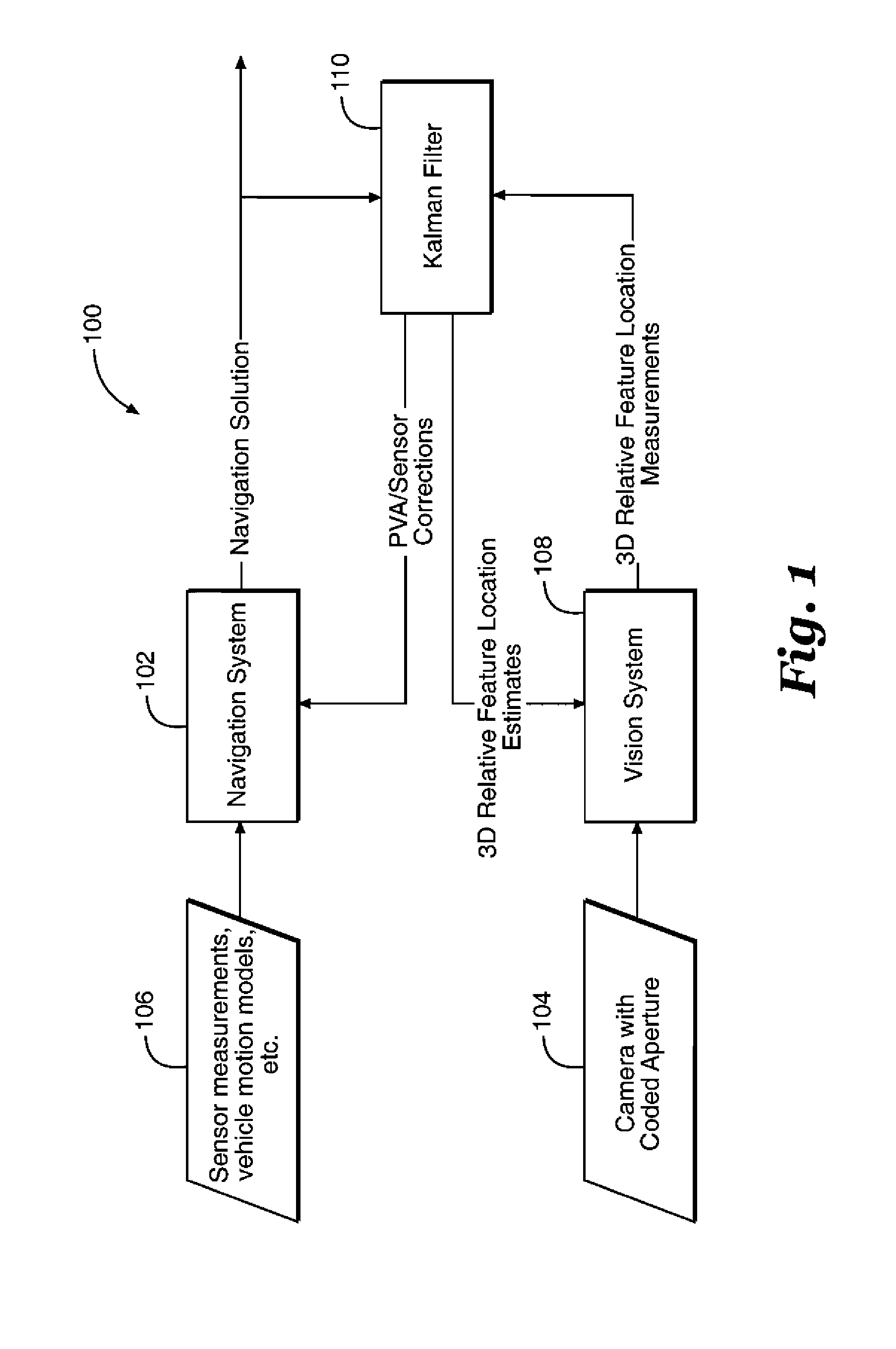

Coded aperture aided navigation and geolocation systems

Inactive | US8577539B1 | Improve performance | Reducing measurement covariance | Image enhancement | Image analysis | Micro air vehicle | Geolocation

A micro air vehicle having a navigation system with a single camera to determine position and attitude of the vehicle using changes in the direction to the observed features. The difference between the expected directions to the observed features and the measured directions to the observed features is used to correct a navigation solution.

Owner:THE GOVERNMENT OF THE US SEC THE AIR FORCE

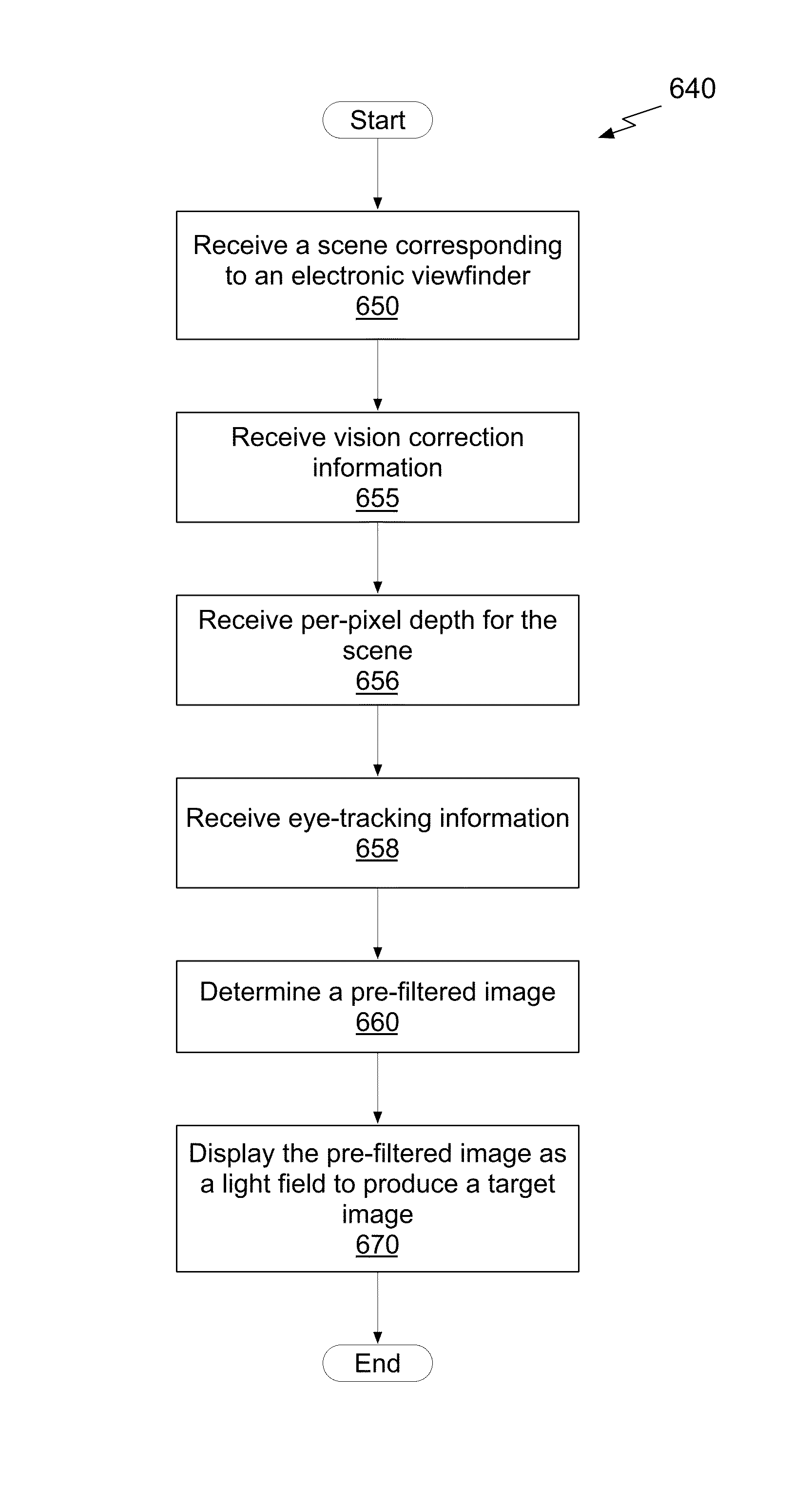

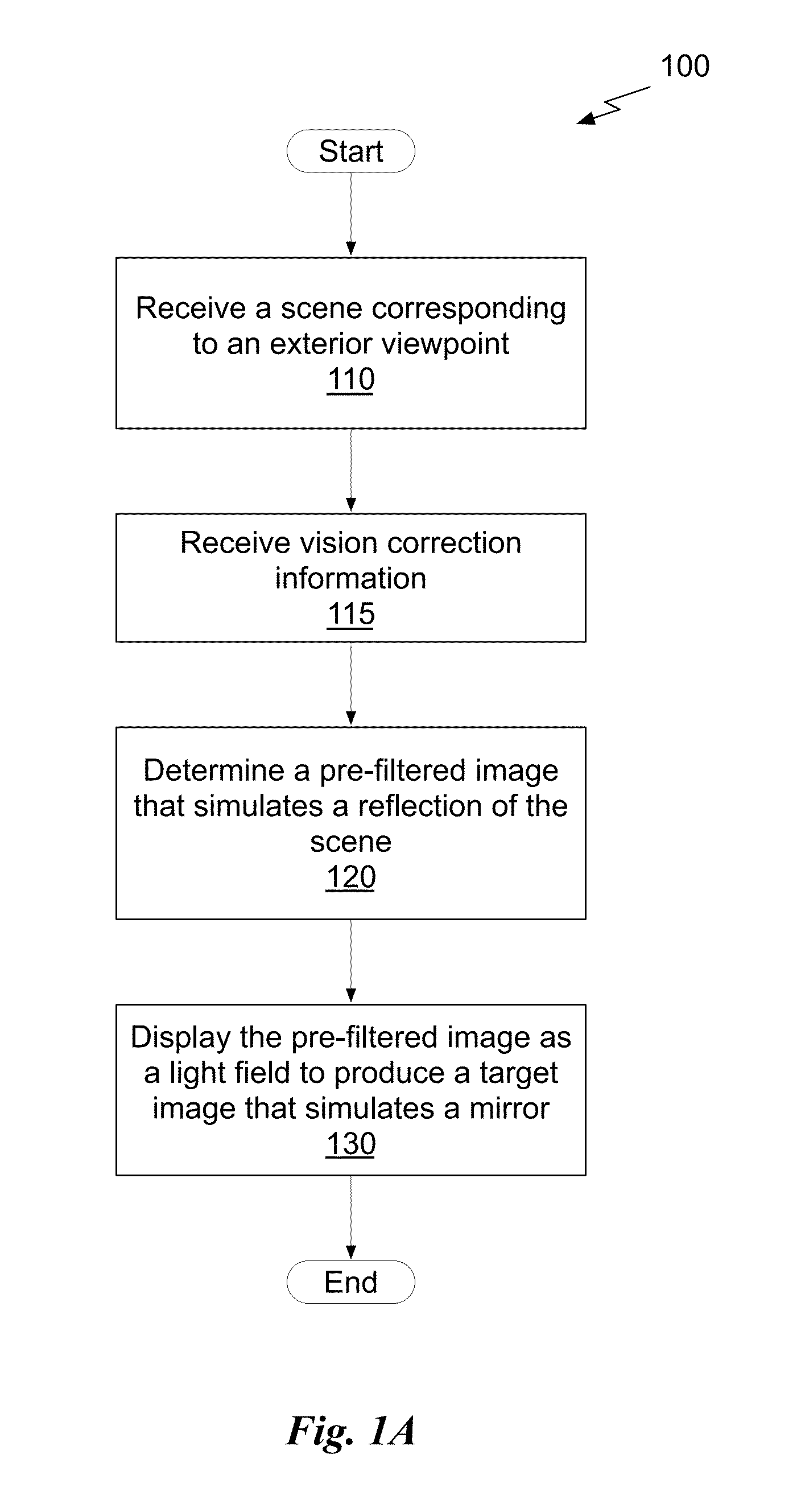

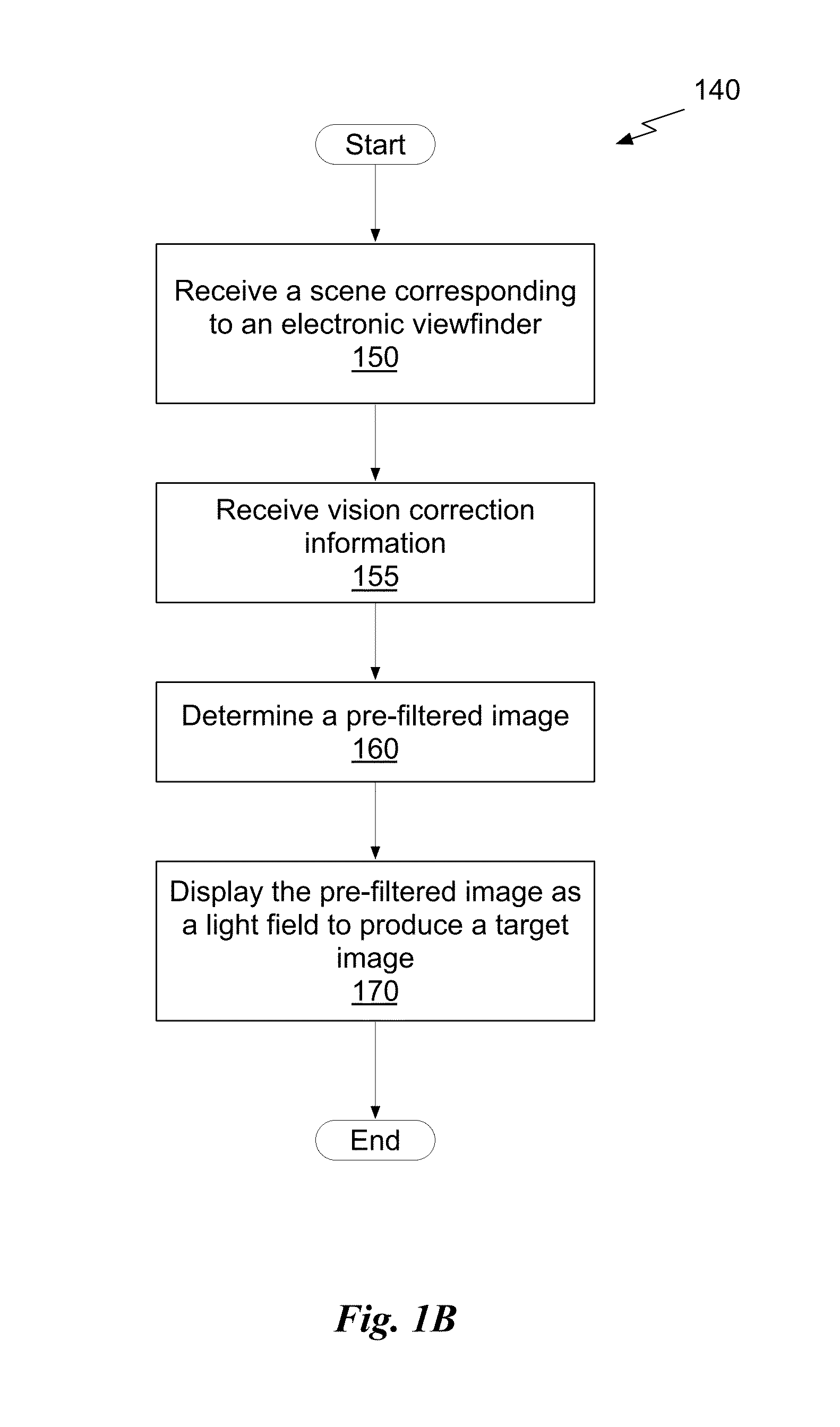

System, method, and computer program product for displaying a scene as a light field

A system, method, and computer program product that displays a light field to simulate an electronic viewfinder of an image capture device. The method includes the operations of receiving a scene corresponding to the electronic viewfinder and determining a pre-filtered image that simulates the scene, where the pre-filtered image represents a light field and corresponds to a target image. The pre-filtered image is displayed as the light field to produce the target image.

Owner:NVIDIA CORP

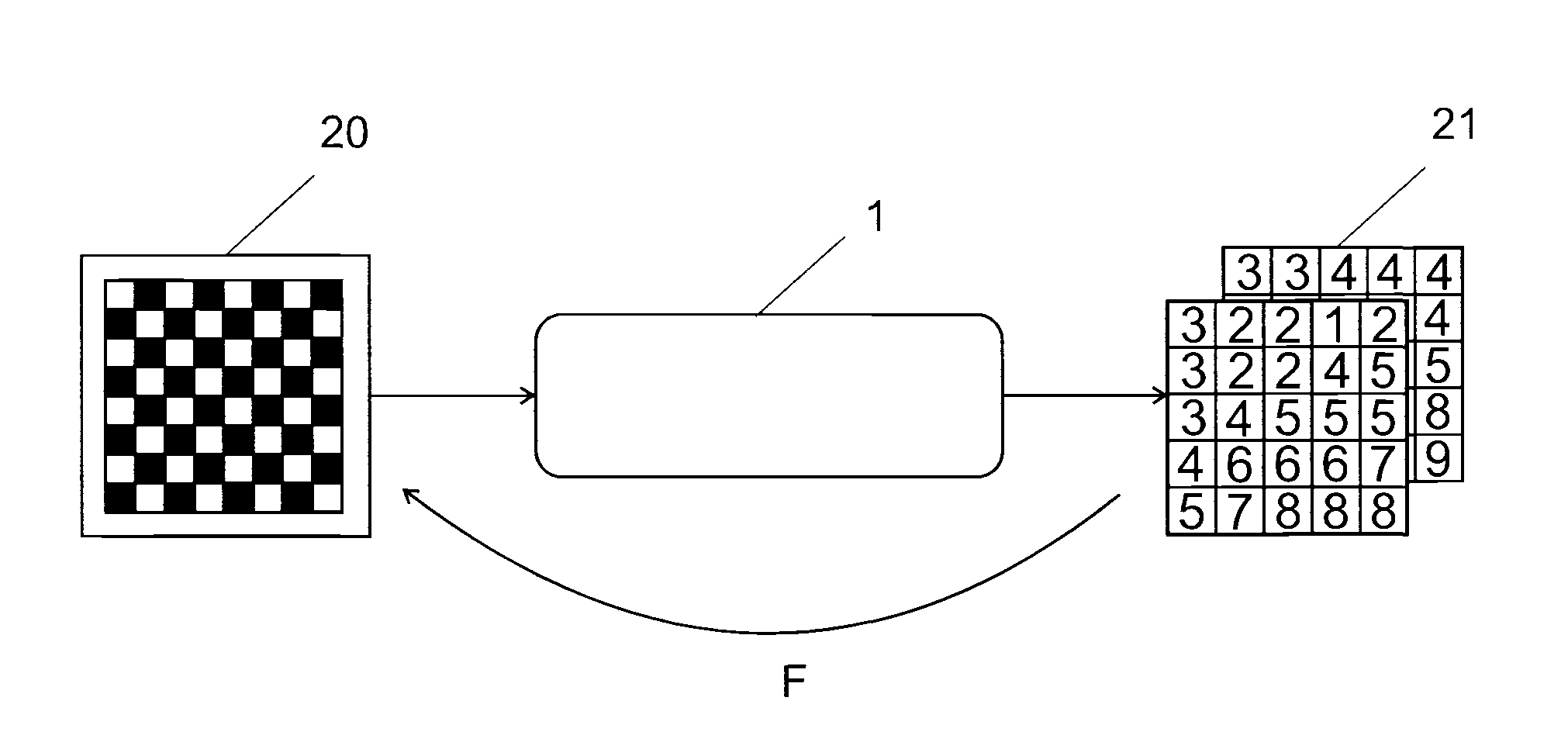

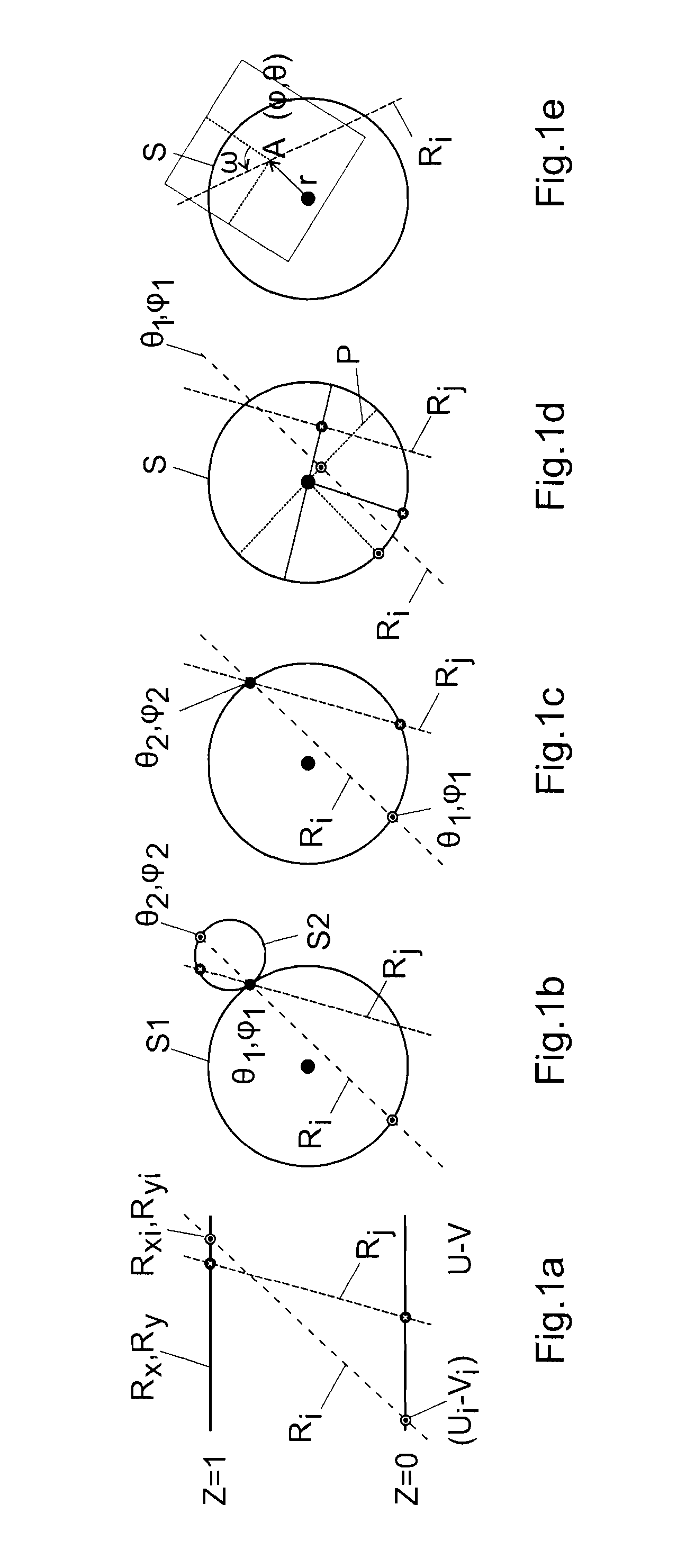

Light field processing method

A light field processing method for processing data corresponding to a light field, comprising:

- capturing with a plenoptic camera initial data representing a light field in a format dependent from said plenoptic camera;
- converting said initial data into converted data representing said light field in a camera independent format;
- processing said converted data so as to generate processed data representing a different light field.

Owner:ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE (EPFL)

Face pose rectification method and apparatus

Active | US20160086017A1 | Increased precision and robustness | Image enhancement | Image analysis | Pattern recognition | Depth map

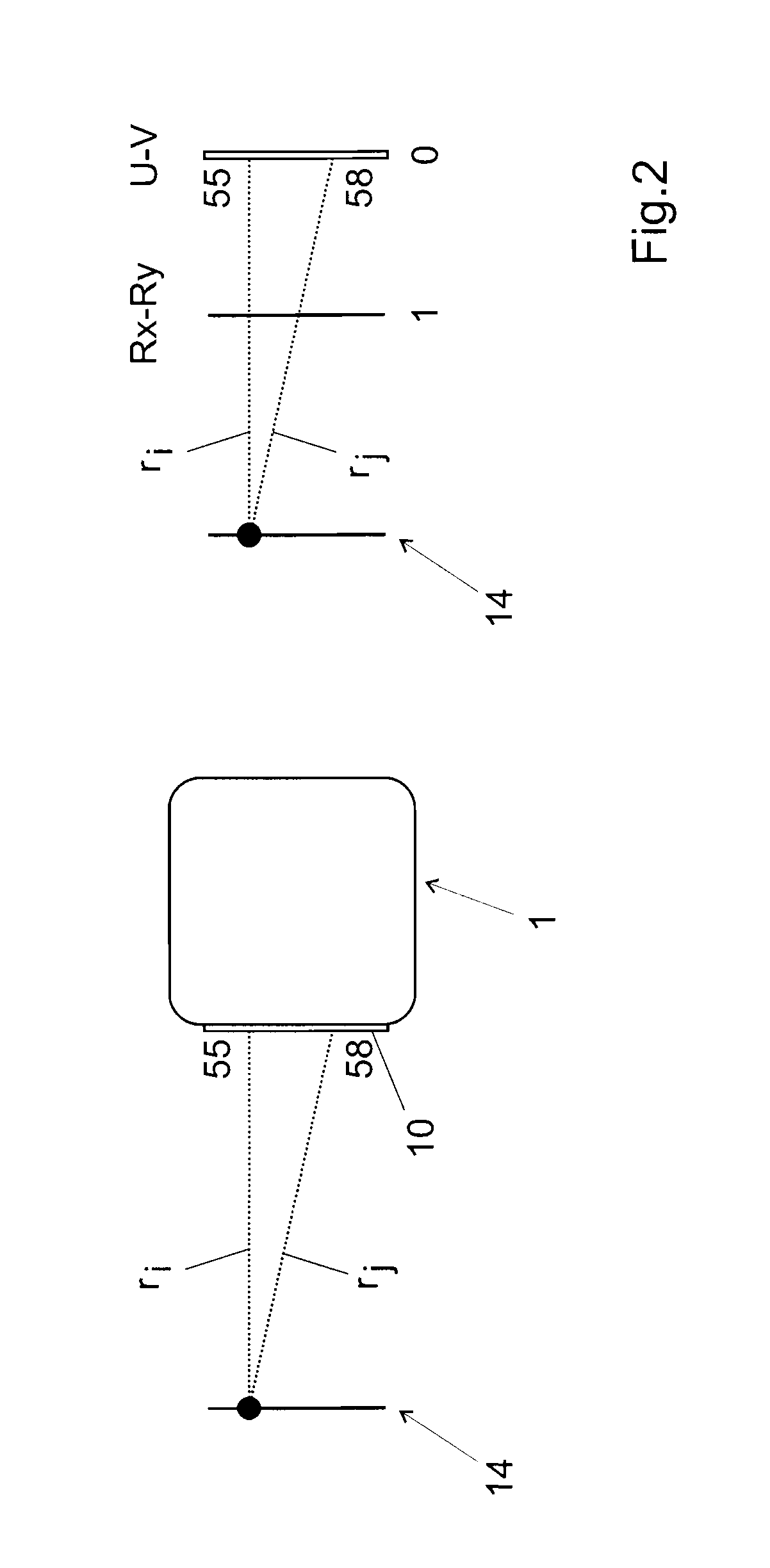

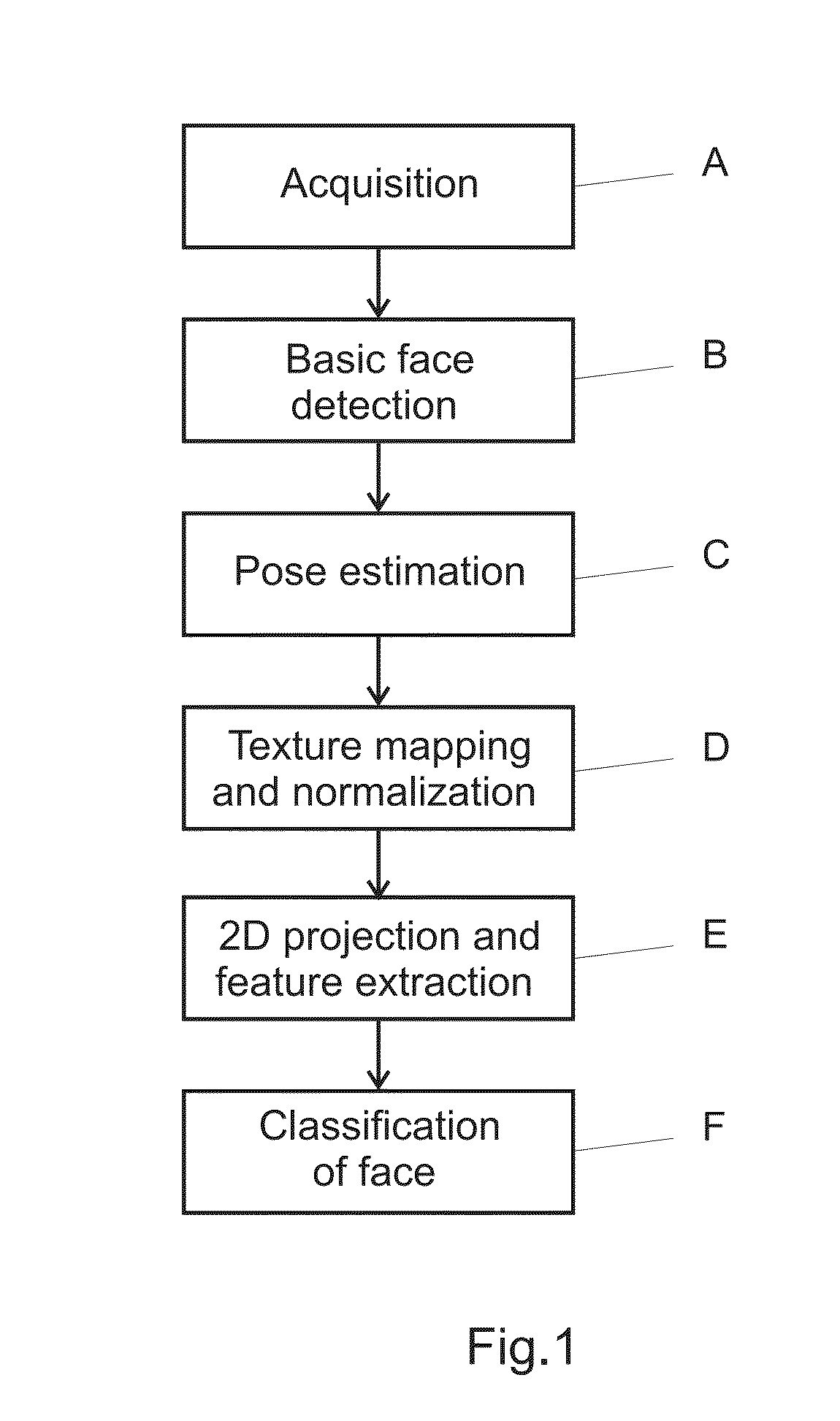

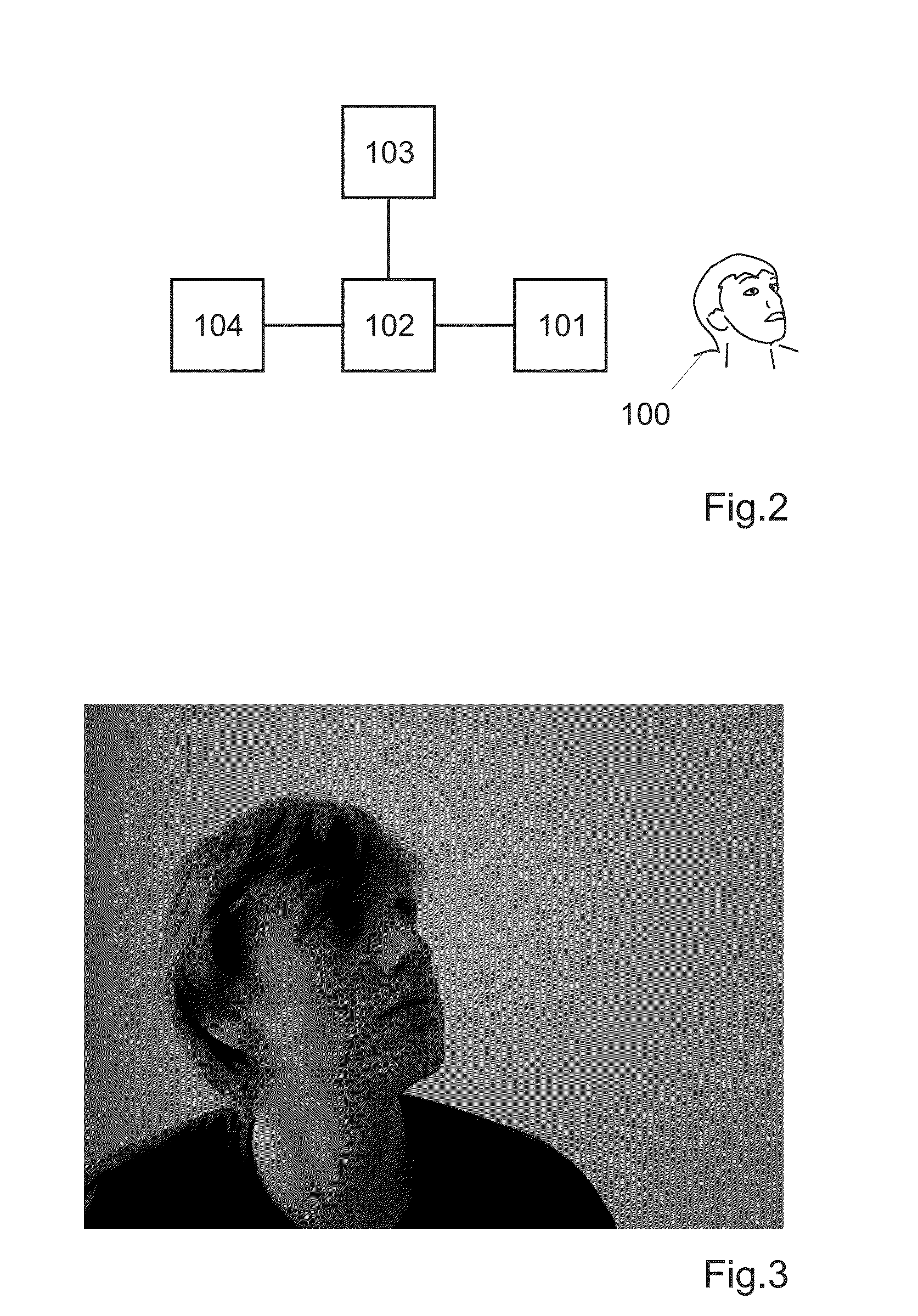

A pose rectification method for rectifying a pose in data representing face images, comprising the steps of:

- A: acquiring at least one test frame including 2D near infrared image data, 2D visible light image data, and a depth map;
- C: estimating the pose of a face in said test frame by aligning said depth map with a 3D model of a head of known orientation;
- D: mapping at least one of said 2D images on the depth map, so as to generate textured image data;
- E: projecting the textured image data in 2D so as to generate data representing a pose-rectified 2D projected image.

Owner:KEYLEMON

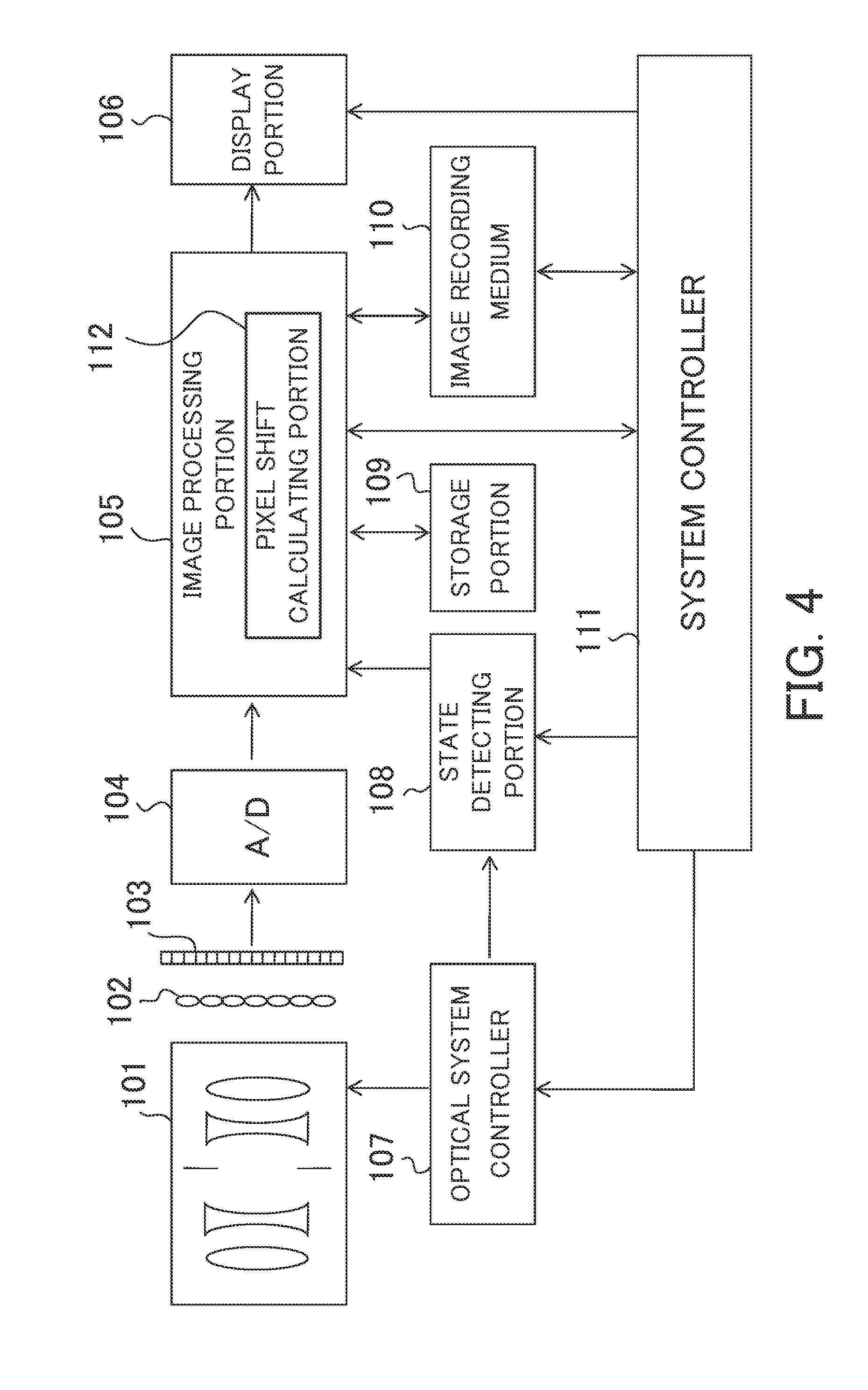

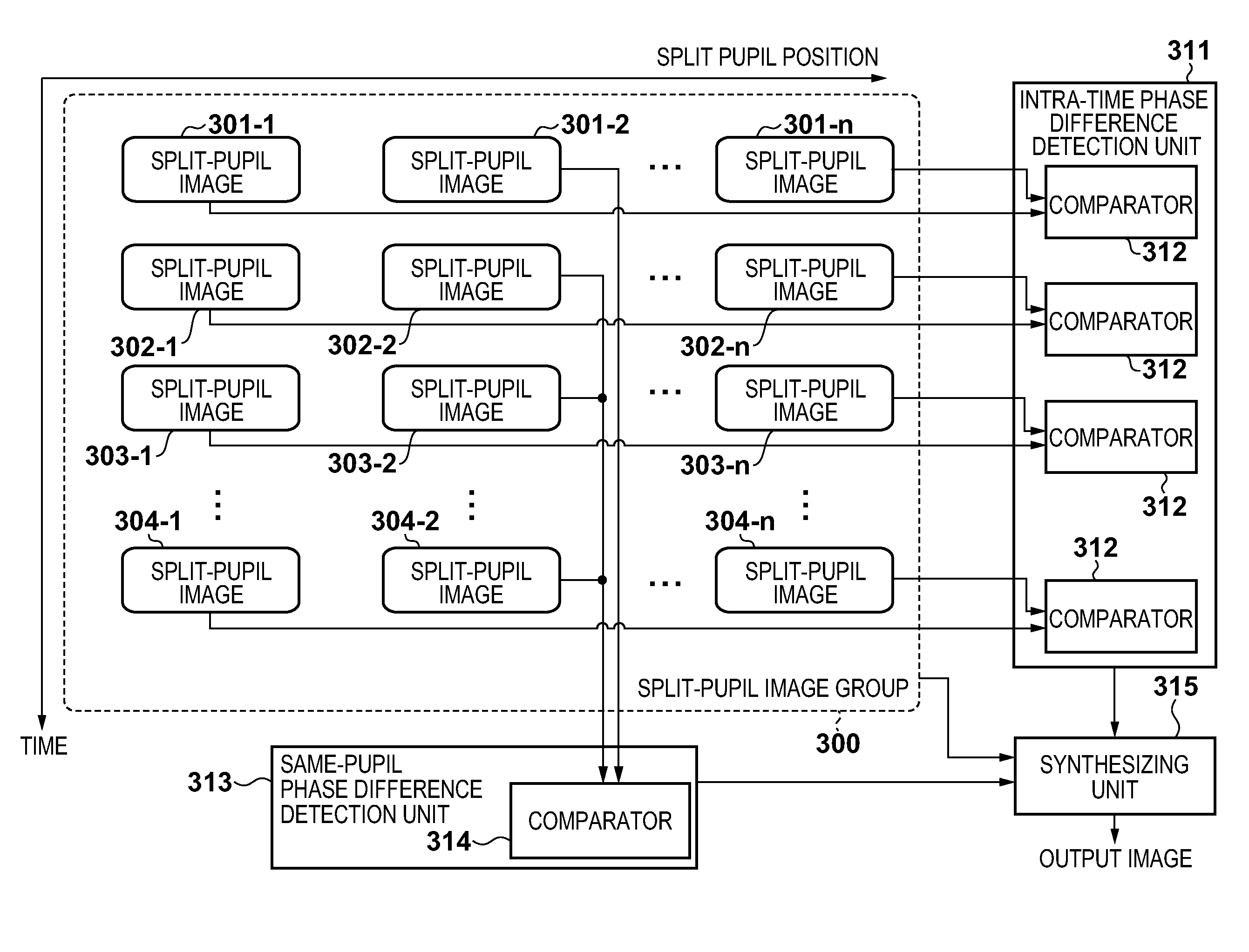

Image processing apparatus, image capturing apparatus, control method, and recording medium

Active | US20140226038A1 | Favorable | Image enhancement | Television system details | Imaging processing | Phase difference

An image processing apparatus obtains a plurality of images obtained by shooting the same subject at different positions, at each of a plurality of timings. Then, a first phase difference between images obtained at the same time, and a second phase difference between images obtained by shooting at the same position but at different timings, are detected. The image processing apparatus then selects at least some of the obtained images as images to be used in synthesis in accordance with a set generation condition, synthesizes the images so as to eliminate at least one of the first and second phase differences, and outputs the resulting image.

Owner:CANON KK

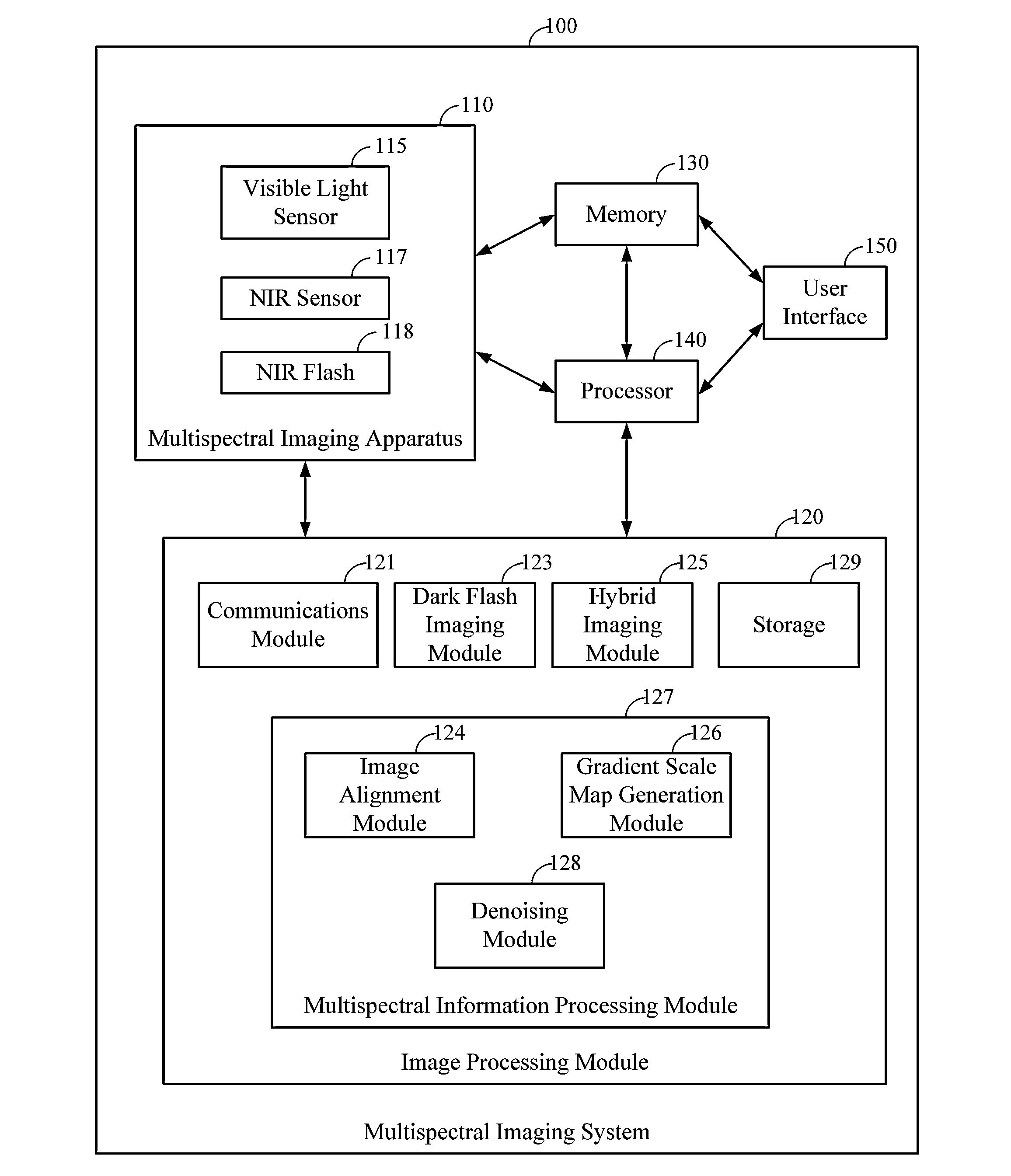

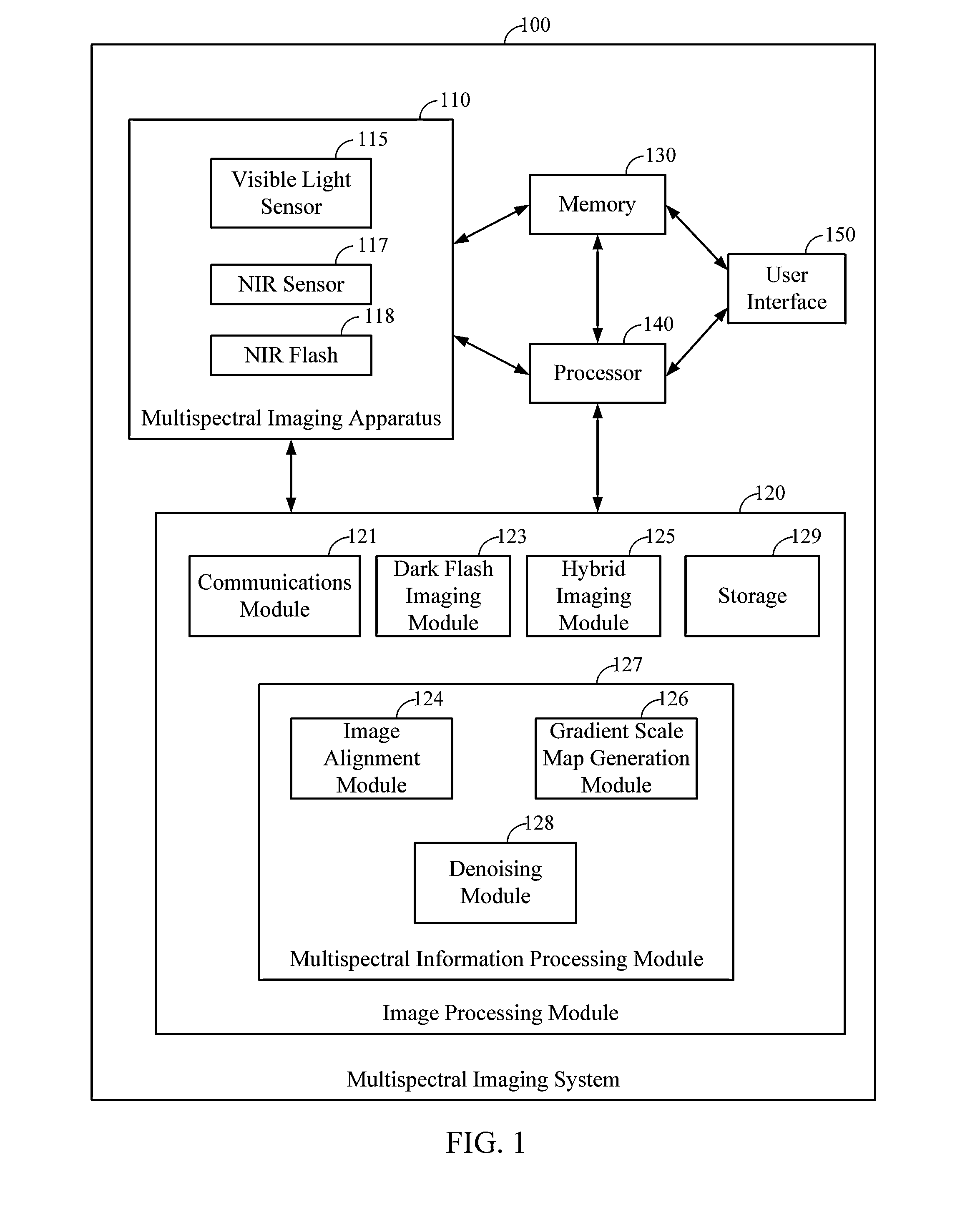

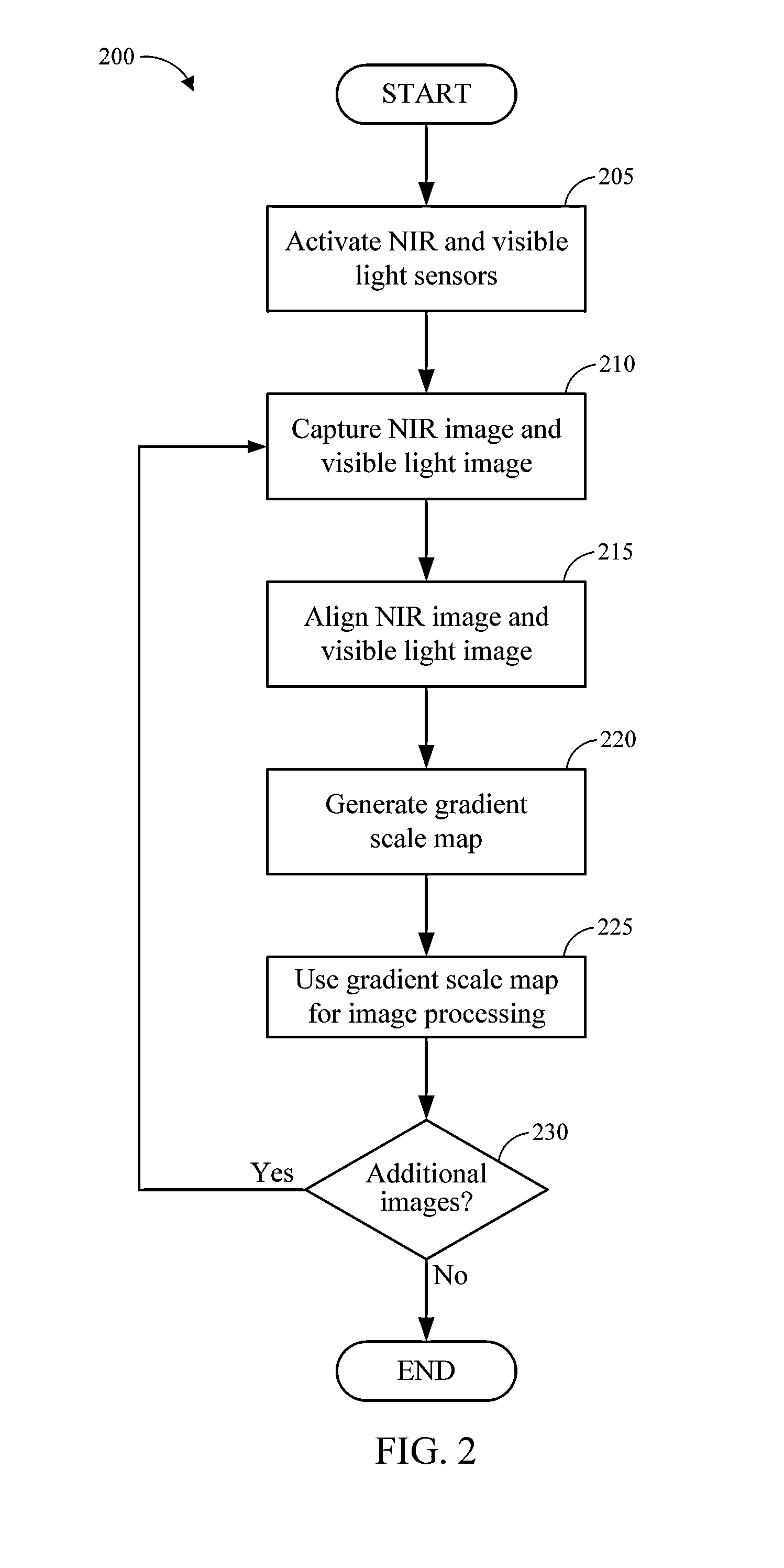

Near infrared guided image denoising

Systems and methods for multispectral imaging are disclosed. The multispectral imaging system can include a near infrared (NIR) imaging sensor and a visible imaging sensor. The disclosed systems and methods can be implemented to de-noise a visible light image using a gradient scale map generated from gradient vectors in the visible light image and a NIR image. The gradient scale map may be used to determine the amount of de-noising guidance applied from the NIR image to the visible light image on a pixel-by-pixel basis.

Owner:QUALCOMM INC


© 2025 PatSnap. All rights reserved.