267 results about "Confidence map" patented technology

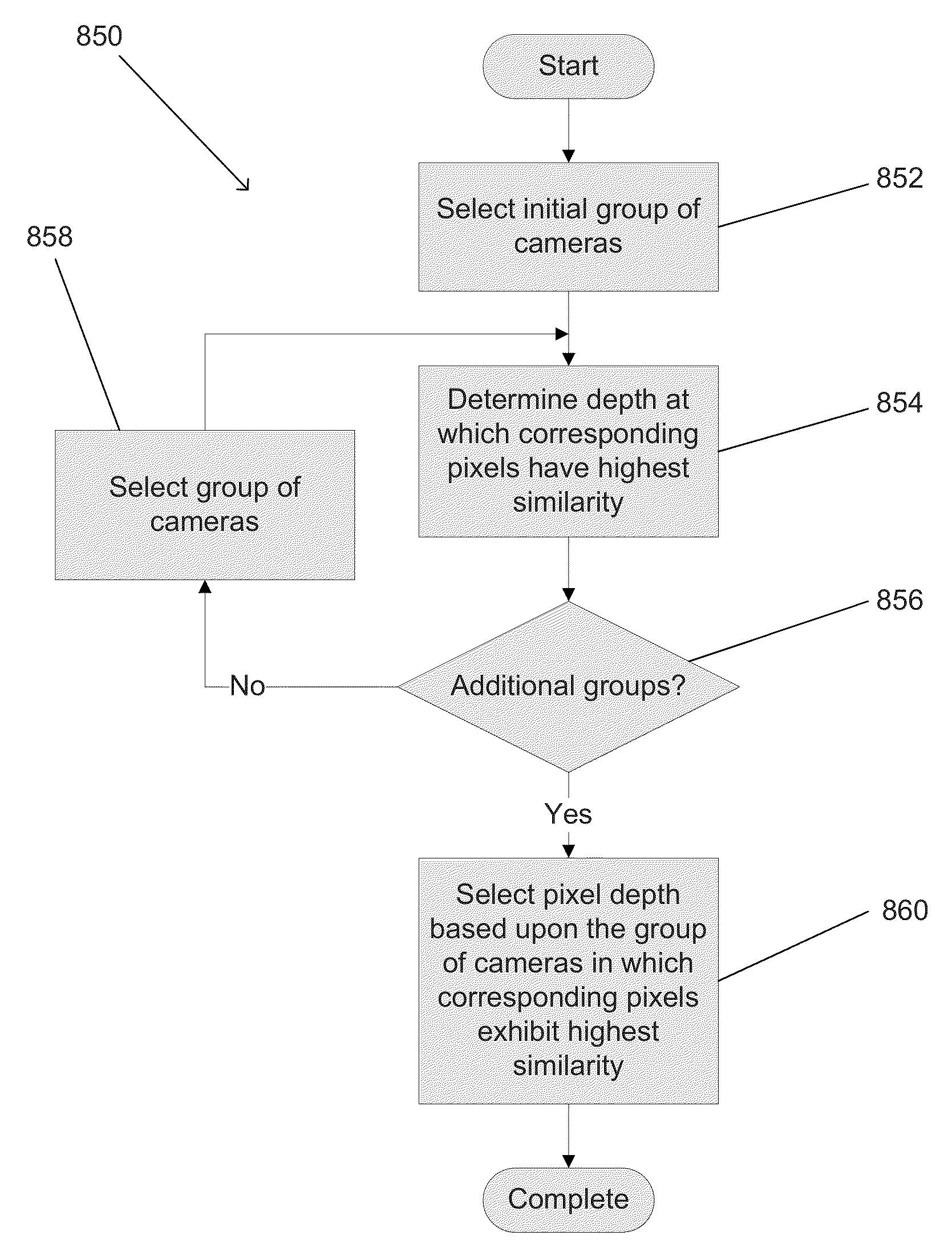

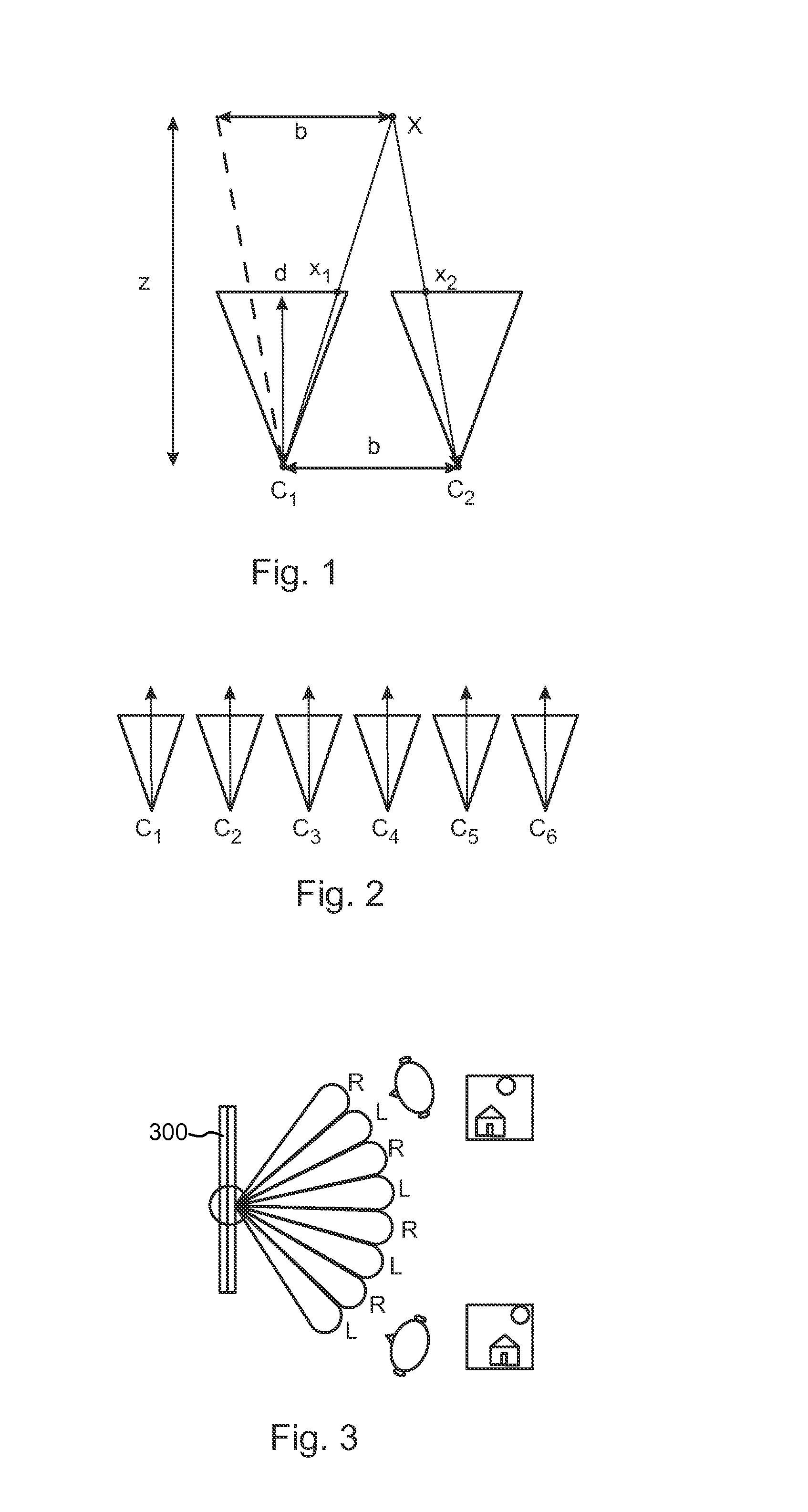

Systems and methods for parallax detection and correction in images captured using array cameras that contain occlusions using subsets of images to perform depth estimation

Active | US8619082B1 | Reduce the weighting applied | Minimal cost | Image enhancement | Television system details | Parallax | Viewpoints

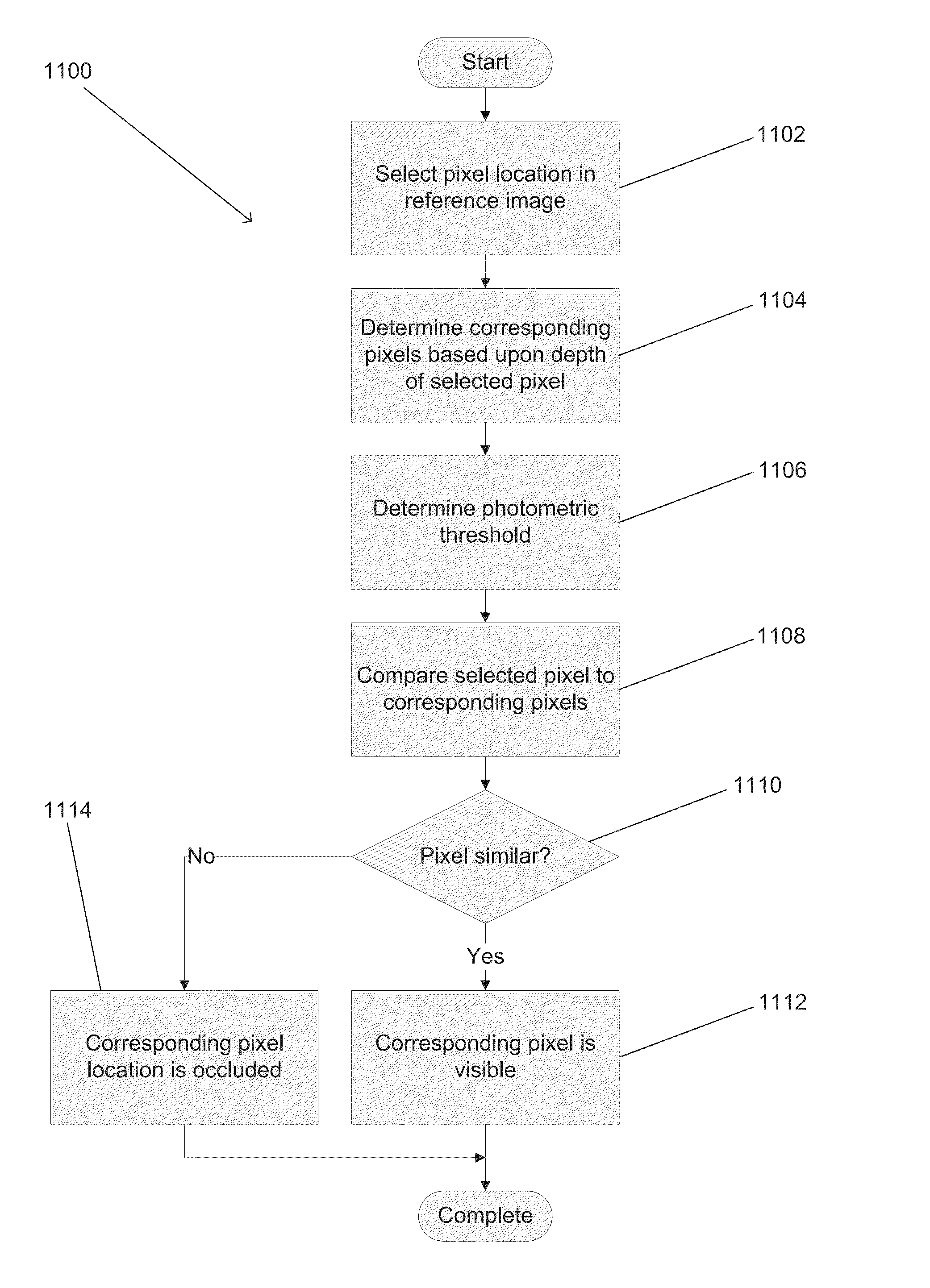

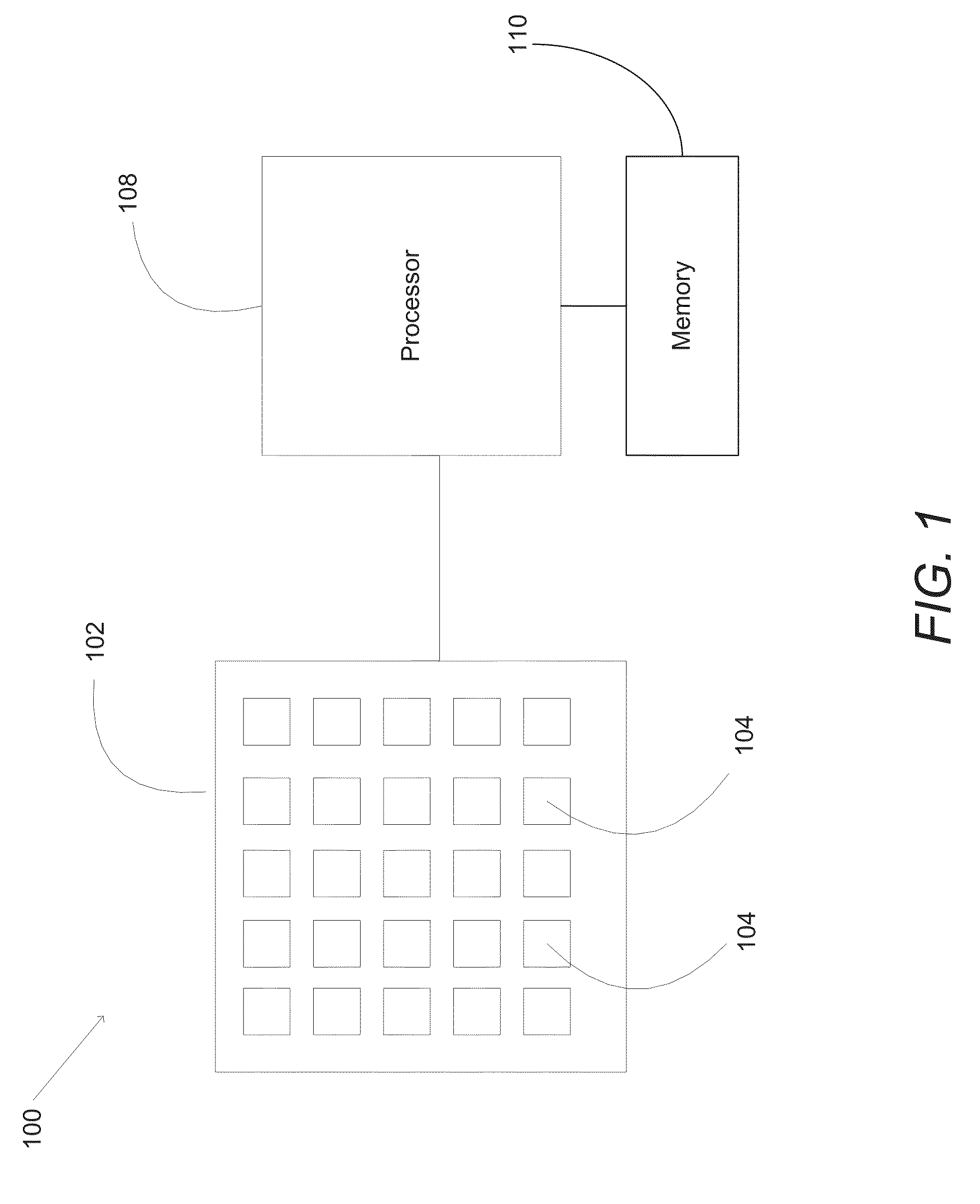

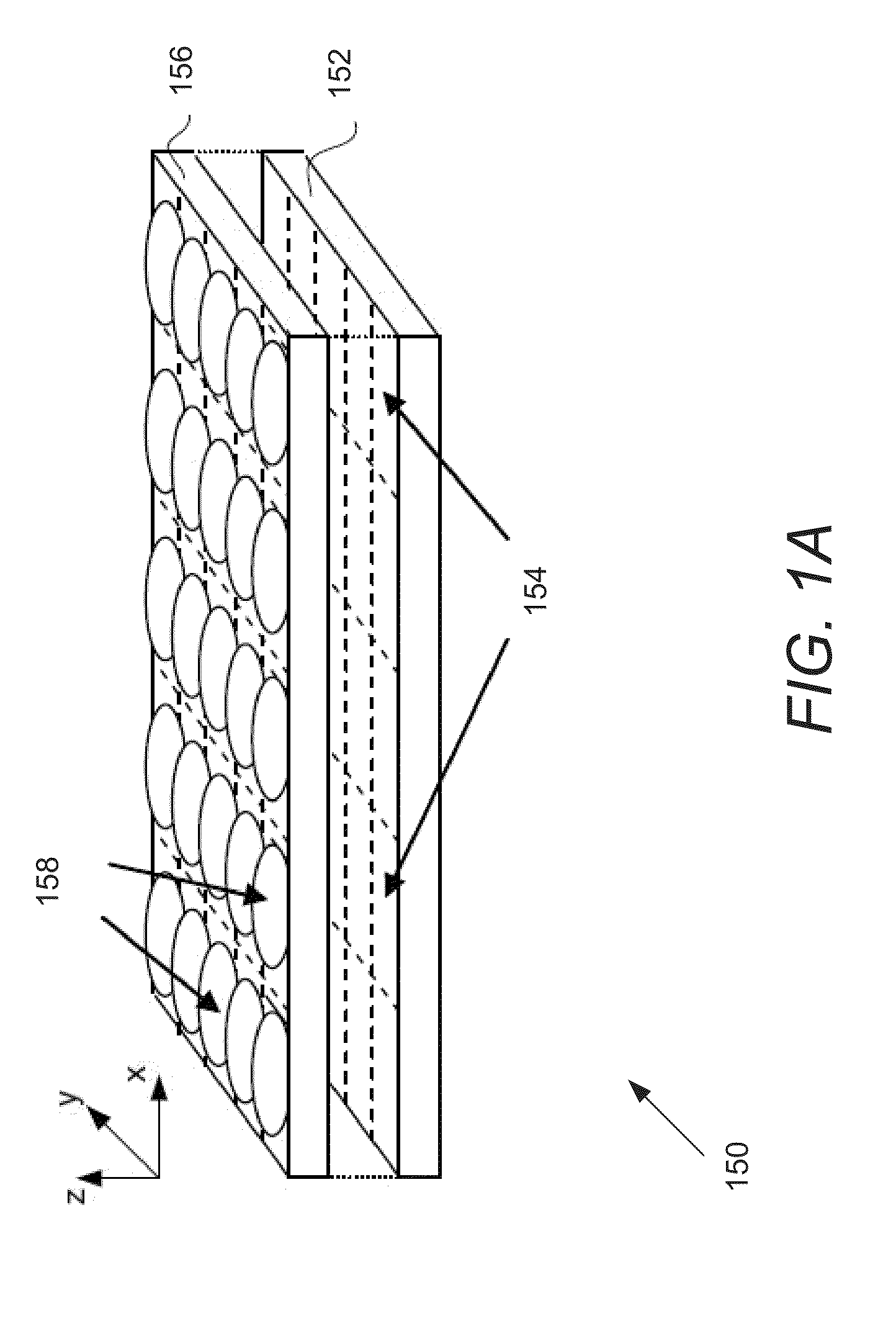

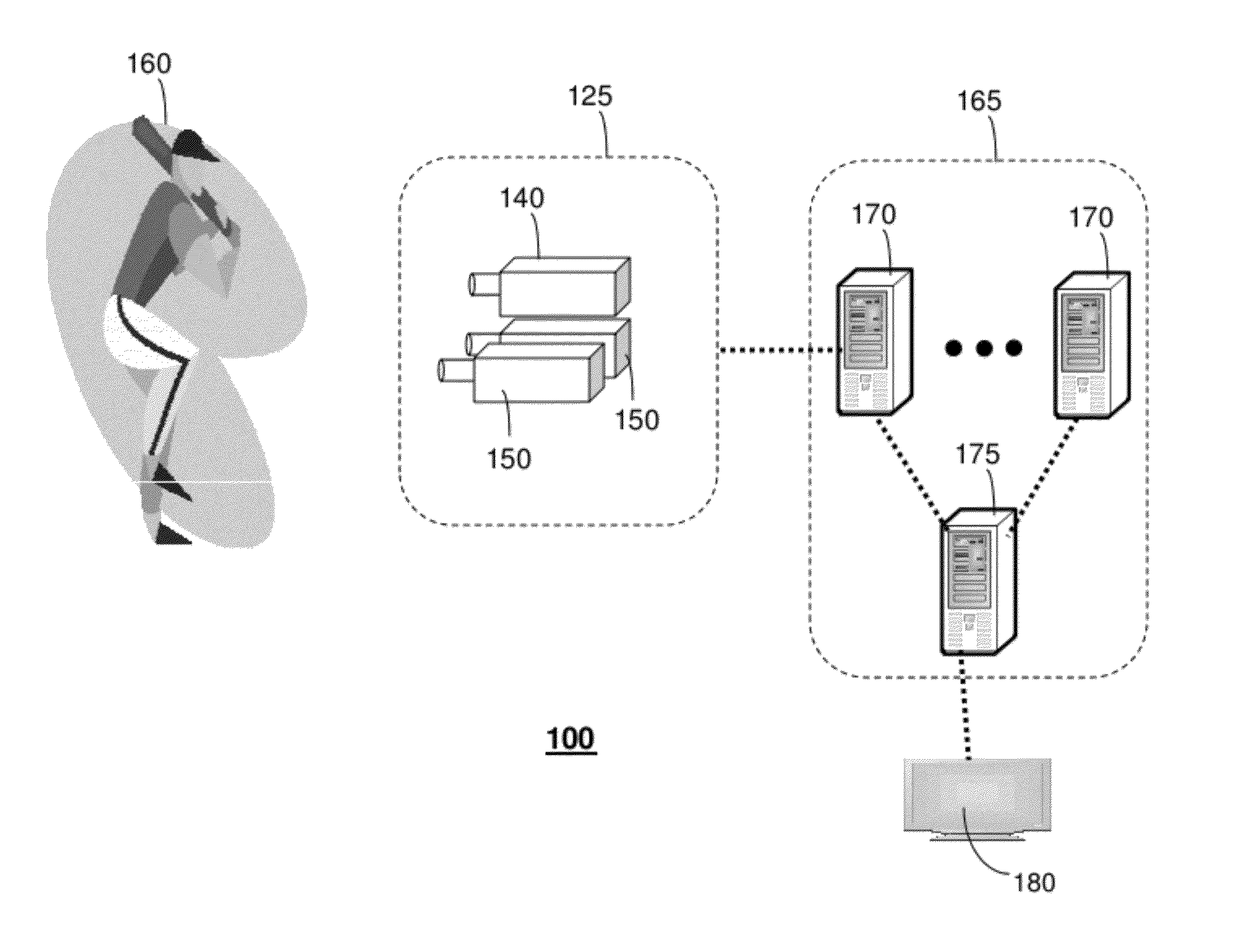

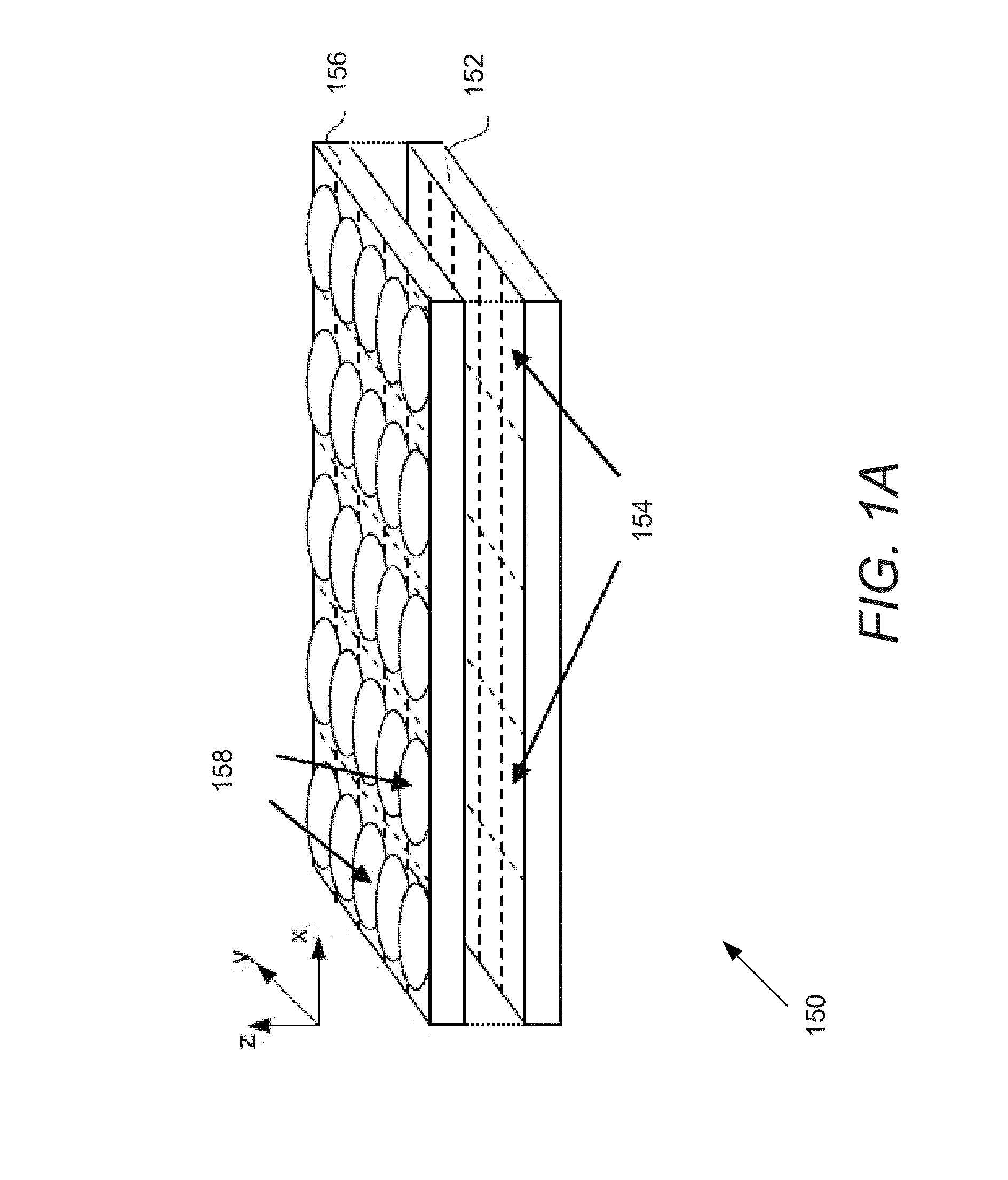

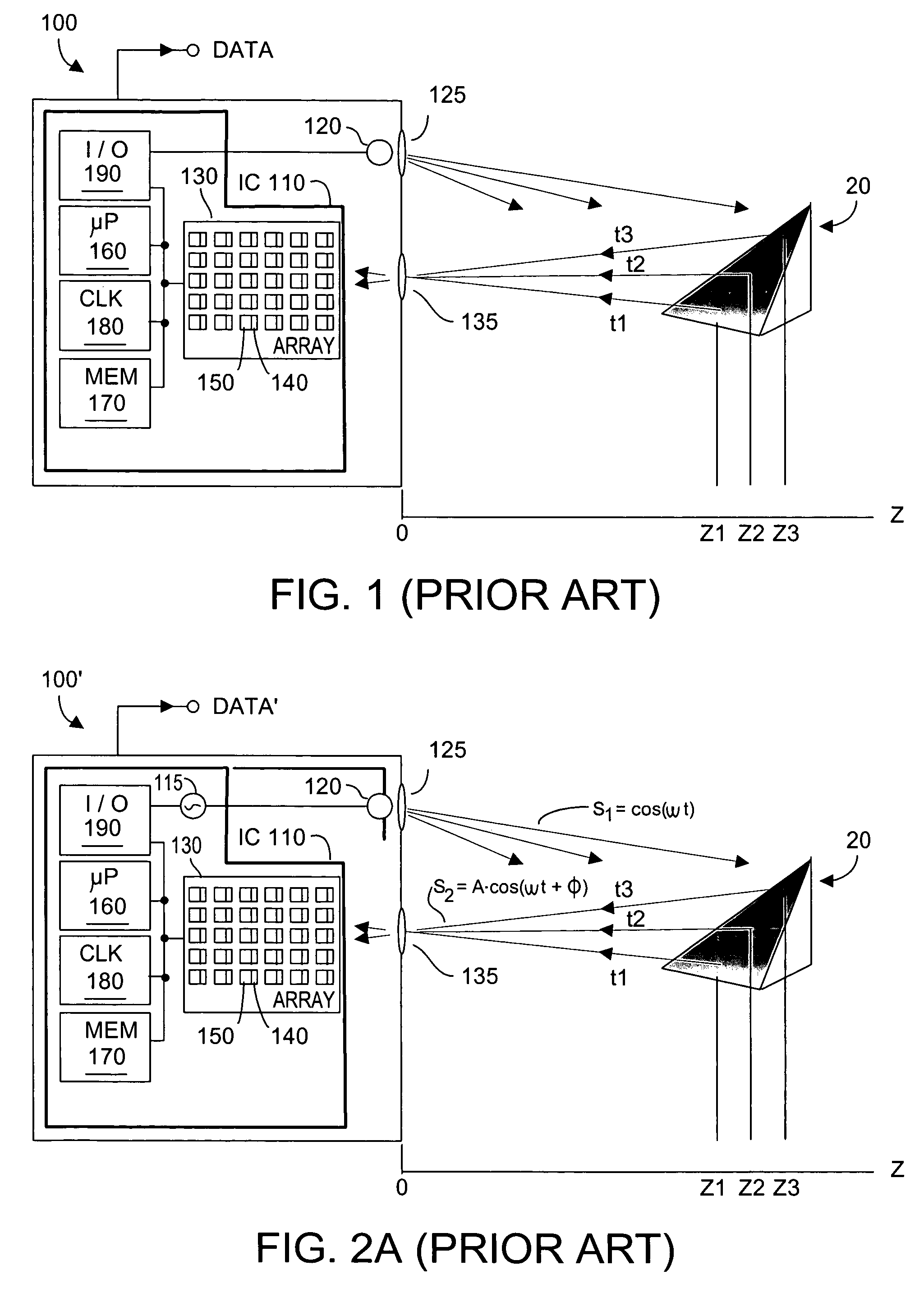

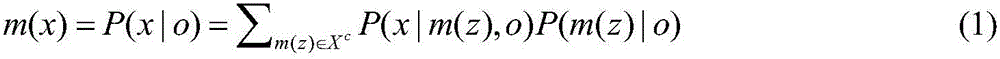

Systems in accordance with embodiments of the invention can perform parallax detection and correction in images captured using array cameras. Due to the different viewpoints of the cameras, parallax results in variations in the position of objects within the captured images of the scene. Methods in accordance with embodiments of the invention provide an accurate account of the pixel disparity due to parallax between the different cameras in the array, so that appropriate scene-dependent geometric shifts can be applied to the pixels of the captured images when performing super-resolution processing. In several embodiments, detecting parallax involves using competing subsets of images to estimate the depth of a pixel location in an image from a reference viewpoint. In a number of embodiments, generating depth estimates considers the similarity of pixels in multiple spectral channels. In certain embodiments, generating depth estimates involves generating a confidence map indicating the reliability of depth estimates.

Owner:FOTONATION LTD

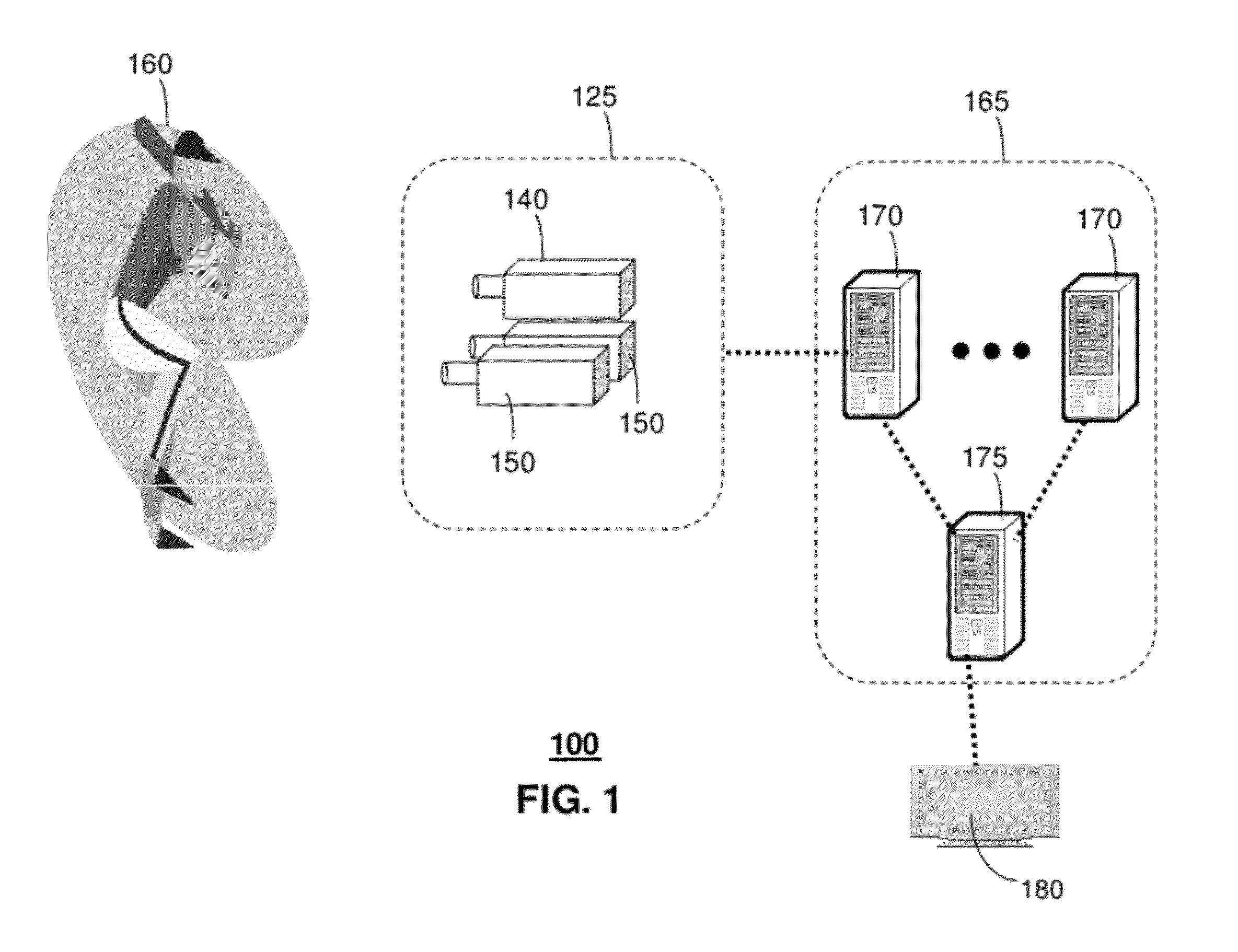

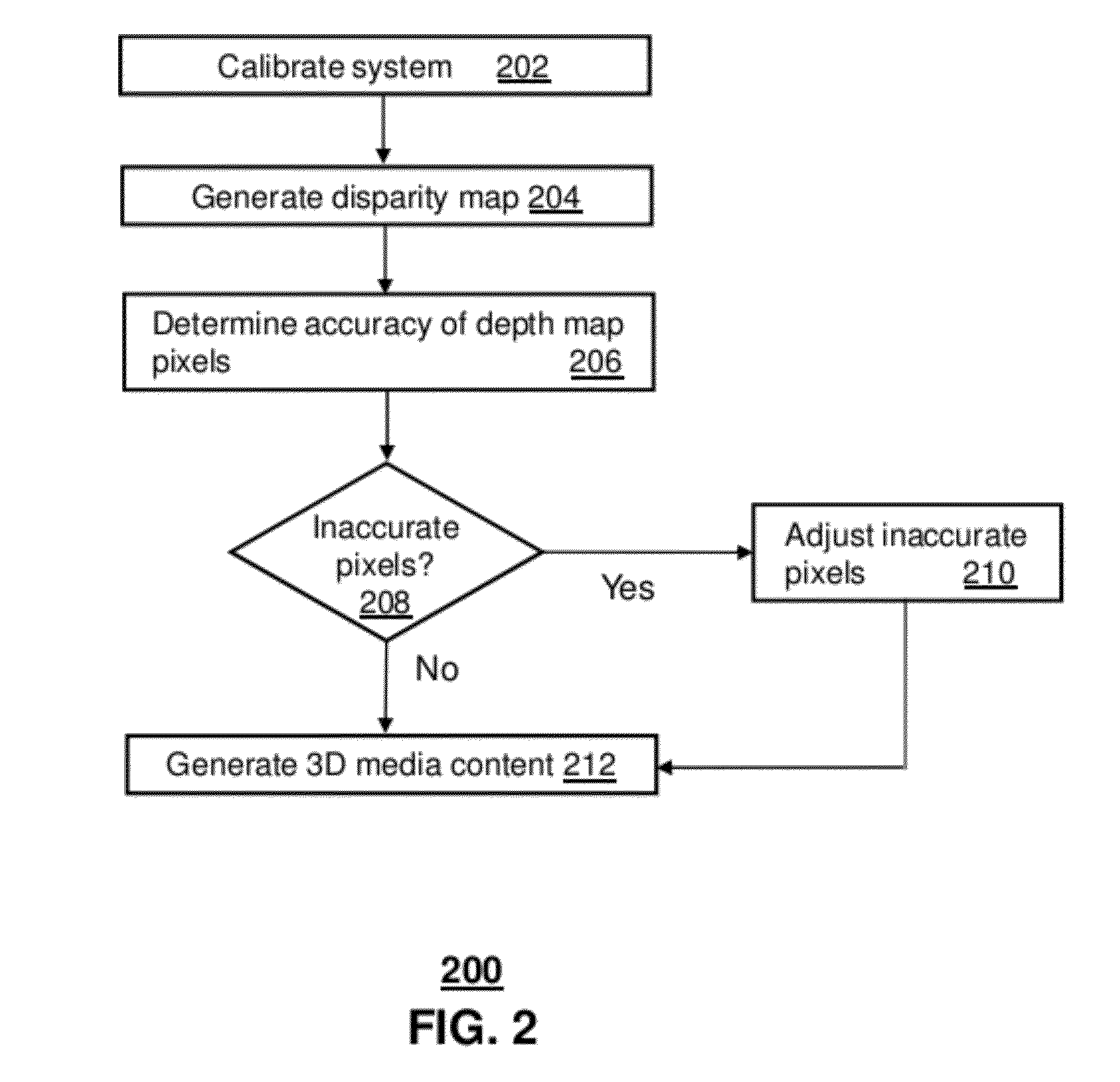

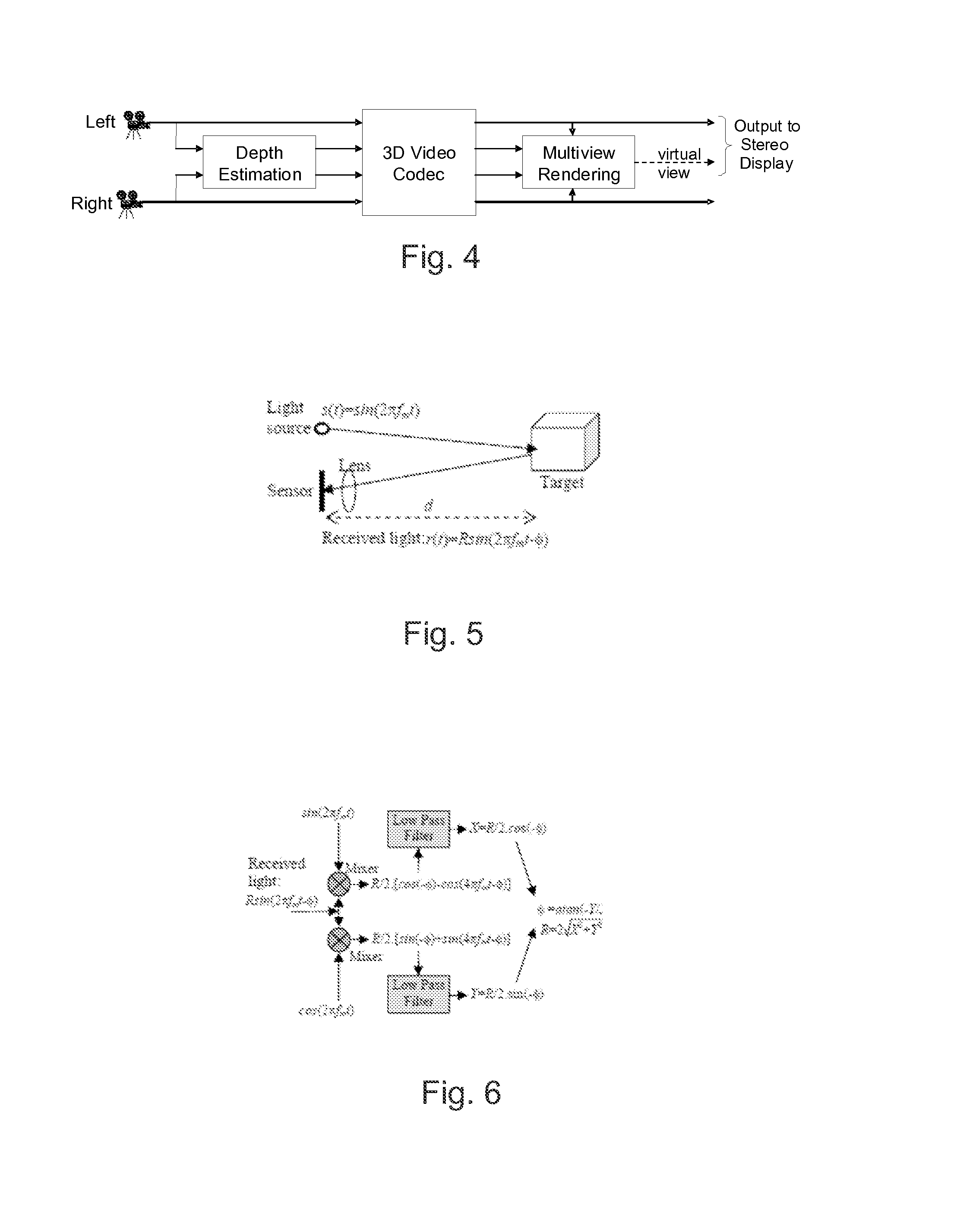

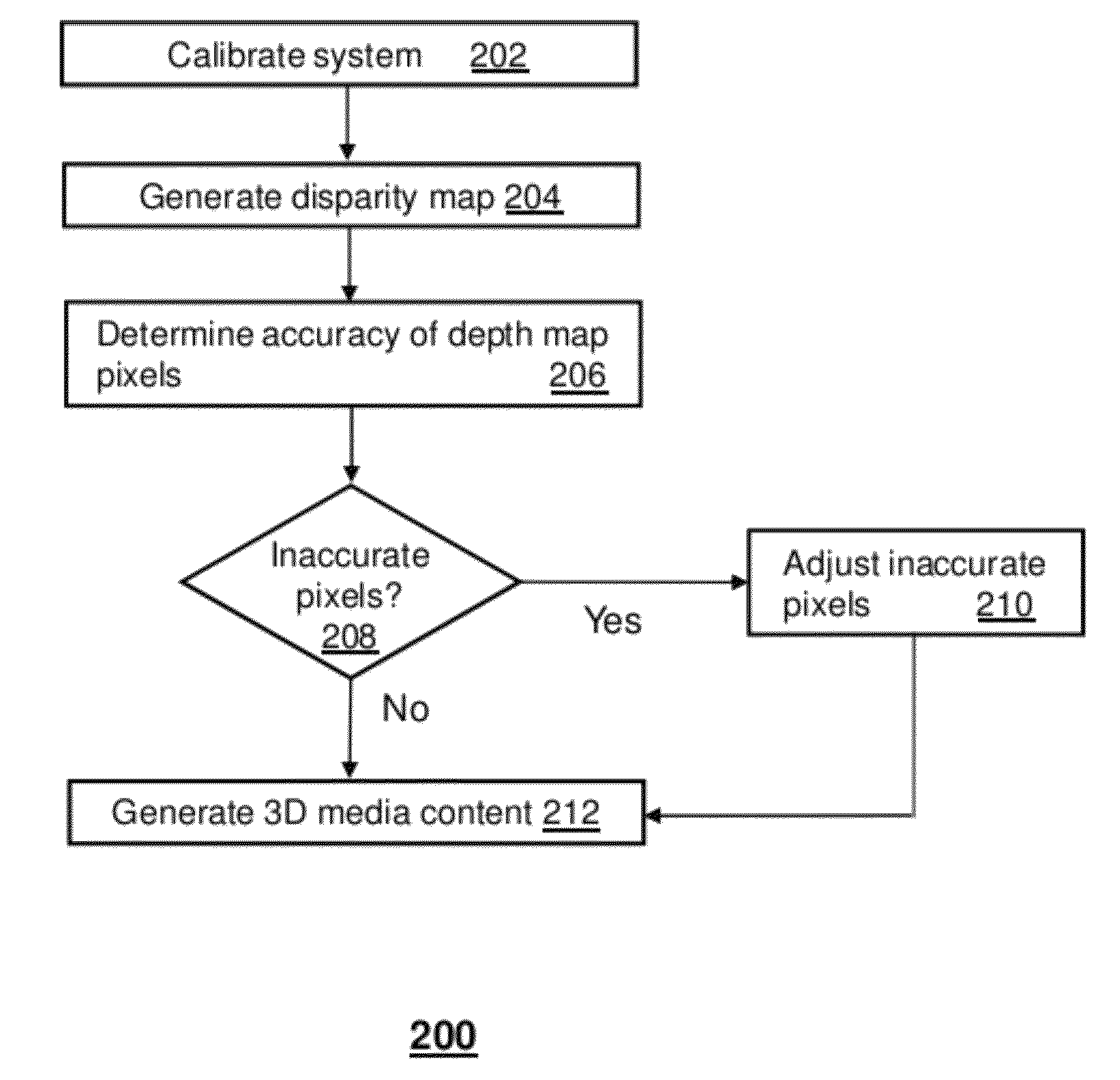

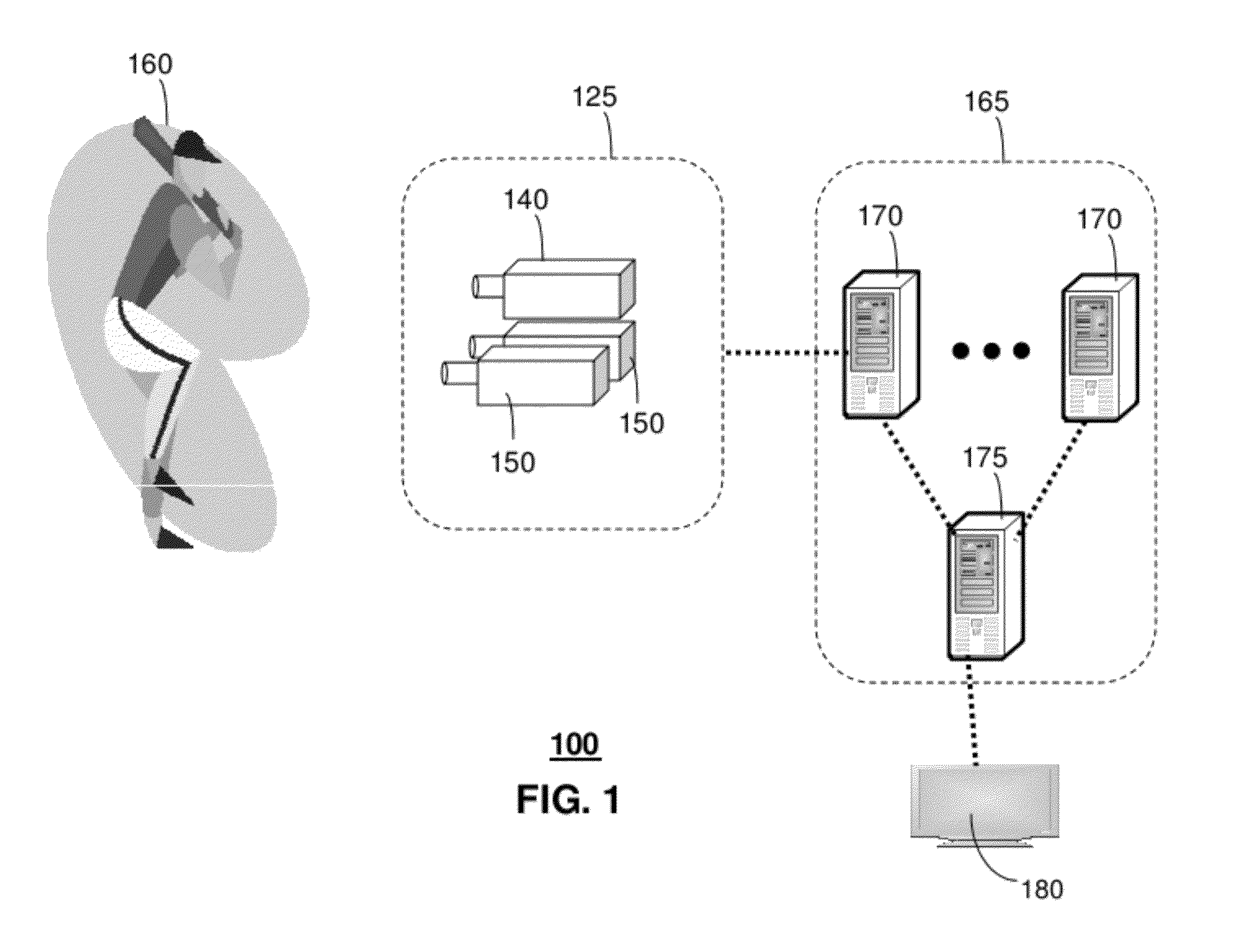

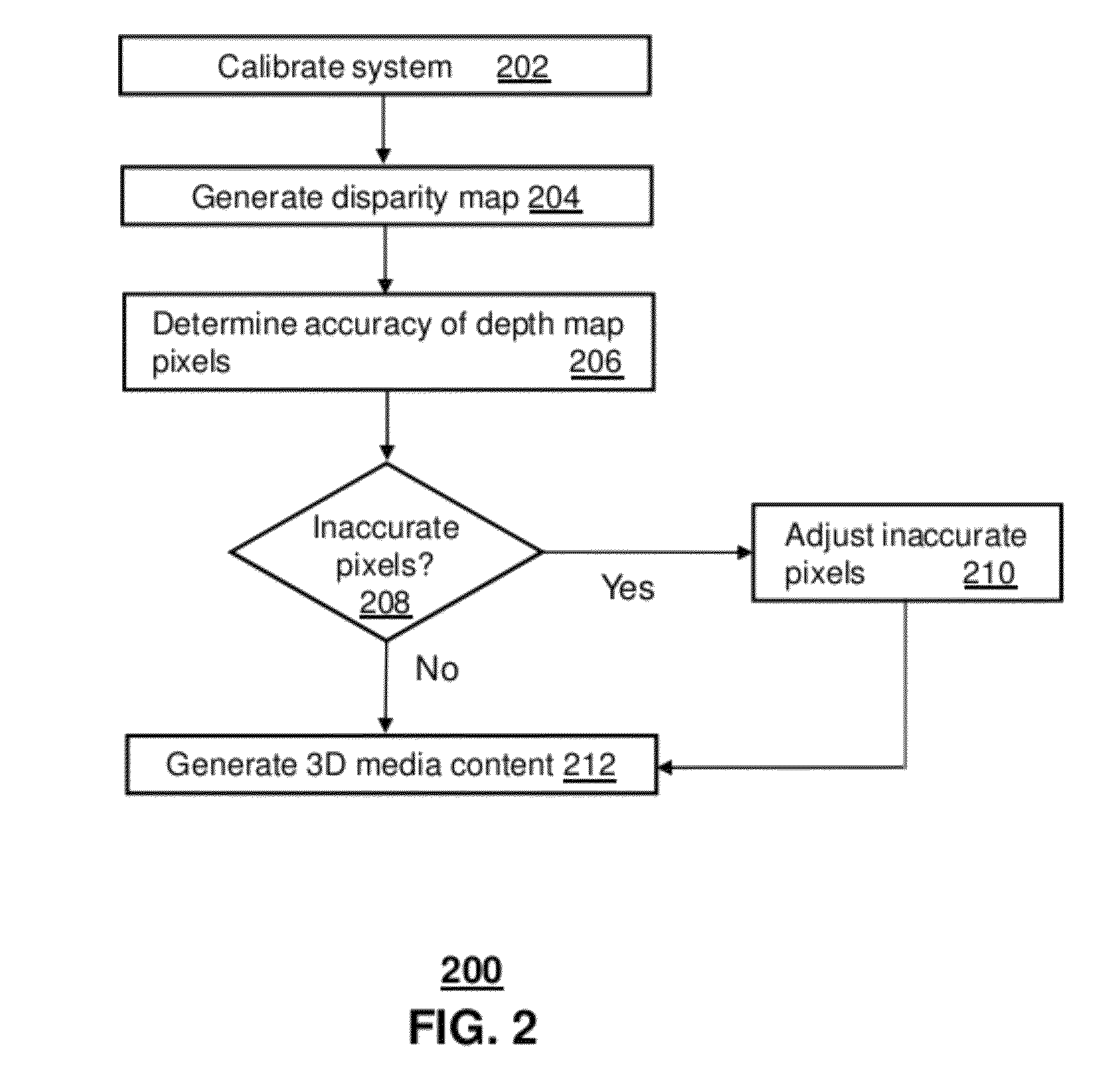

Apparatus and method for providing three dimensional media content

A system that incorporates teachings of the exemplary embodiments may include, for example, means for generating a disparity map based on a depth map, means for determining accuracy of pixels in the depth map where the determining means identifies the pixels as either accurate or inaccurate based on a confidence map and the disparity map, and means for providing an adjusted depth map where the providing means adjusts inaccurate pixels of the depth map using a cost function associated with the inaccurate pixels. Other embodiments are disclosed.

Owner:AT&T INTPROP I L P

Systems and methods for performing depth estimation using image data from multiple spectral channels

Active | US8780113B1 | Reduce the weighting applied | Minimal cost | Image enhancement | Image analysis | Parallax | Viewpoints

Systems in accordance with embodiments of the invention can perform parallax detection and correction in images captured using array cameras. Due to the different viewpoints of the cameras, parallax results in variations in the position of objects within the captured images of the scene. Methods in accordance with embodiments of the invention provide an accurate account of the pixel disparity due to parallax between the different cameras in the array, so that appropriate scene-dependent geometric shifts can be applied to the pixels of the captured images when performing super-resolution processing. In a number of embodiments, generating depth estimates considers the similarity of pixels in multiple spectral channels. In certain embodiments, generating depth estimates involves generating a confidence map indicating the reliability of depth estimates.

Owner:FOTONATION LTD

Methods and system to quantify depth data accuracy in three-dimensional sensors using single frame capture

Inactive | US7408627B2 | Improve reliability | Highly unreliable | Optical rangefinders | Electromagnetic wave reradiation | Photodetector | Confidence map

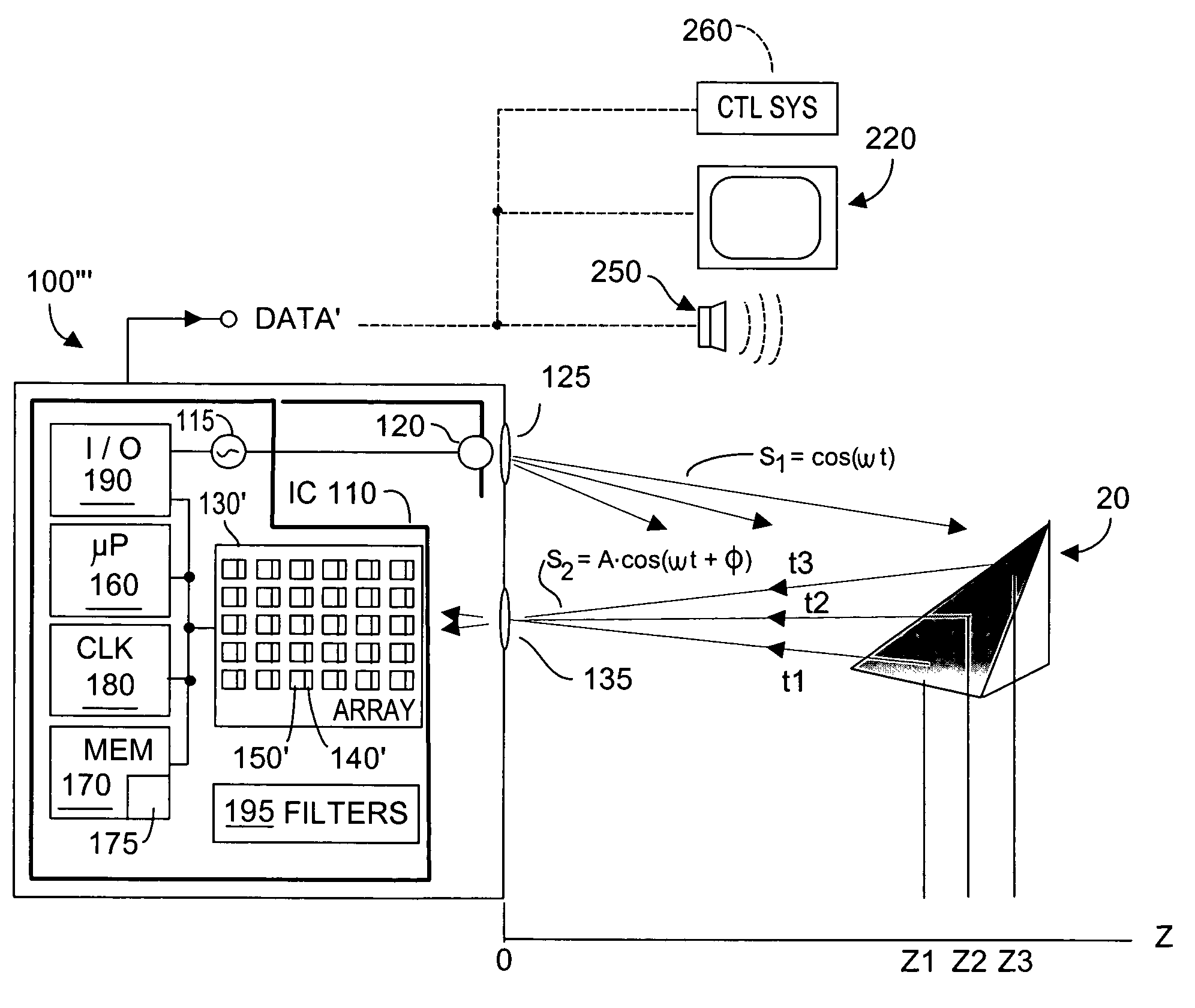

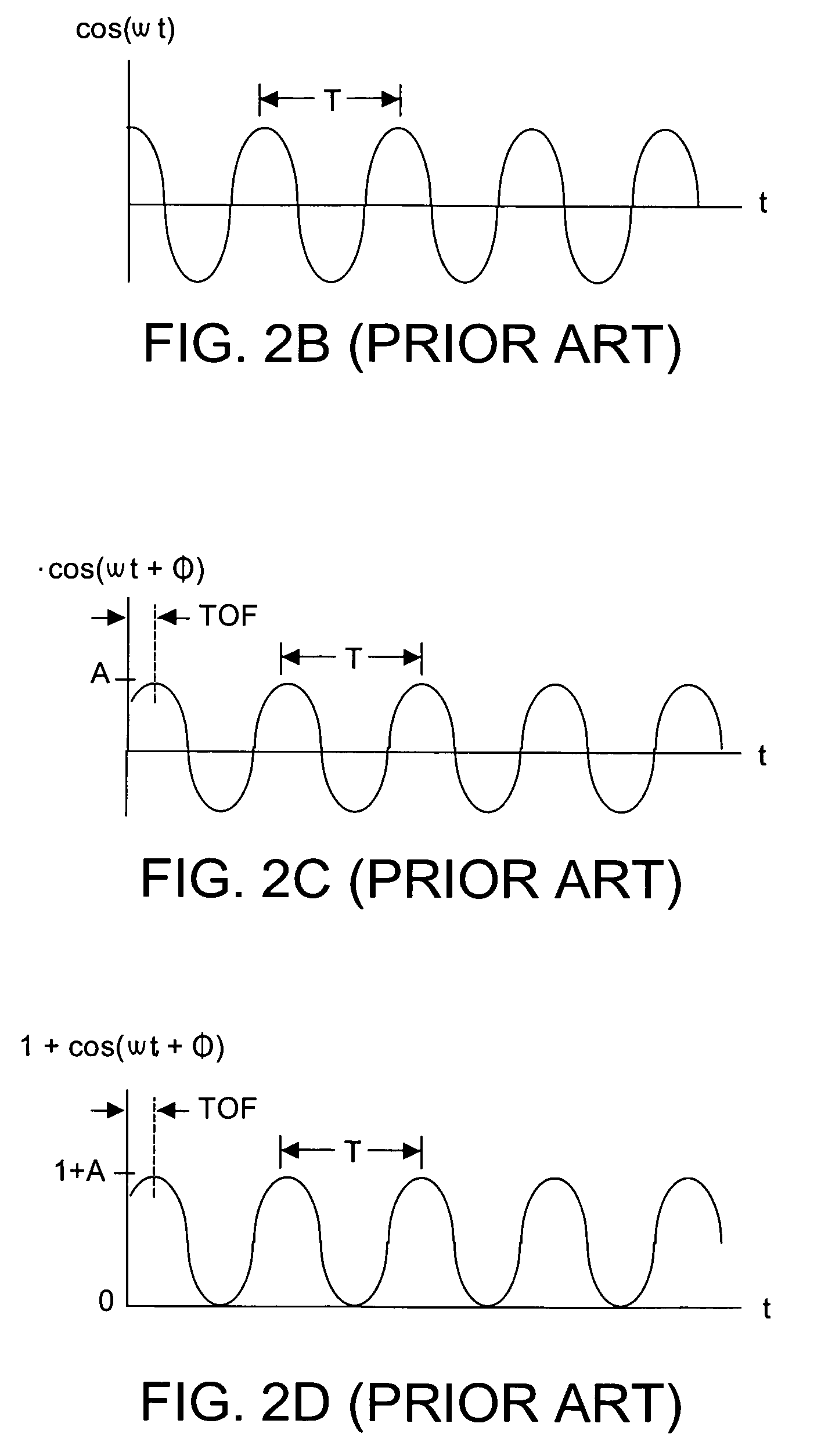

A method and system dynamically calculate confidence levels associated with the accuracy of Z depth information obtained by a phase-shift time-of-flight (TOF) system that acquires consecutive images during an image frame. The photodetector response to maximum and minimum detectable signals in active brightness and total brightness conditions is known a priori and stored. During system operation, brightness threshold filtering and comparison with the a priori data permit identifying those photodetectors whose current output signals are of questionable confidence. A confidence map is dynamically generated and used to advise a user of the system that low-confidence data is currently being generated. Parameter(s) other than brightness may also or instead be used.

Owner:MICROSOFT TECH LICENSING LLC
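The brightness threshold filtering in the time-of-flight abstract above can be illustrated with a minimal sketch. The function names, the two thresholds (`min_active`, `max_total`) and the binary 0/1 confidence encoding are assumptions made for illustration; the patent does not publish these details here.

```python
from typing import List


def brightness_confidence(active: List[List[float]],
                          total: List[List[float]],
                          min_active: float,
                          max_total: float) -> List[List[int]]:
    """Return 1 where a pixel looks trustworthy, 0 where it is questionable.

    Assumption: a pixel is questionable when its active brightness is below
    the stored minimum (too little signal) or its total brightness is above
    the stored maximum (likely saturation).
    """
    return [
        [1 if (a >= min_active and t <= max_total) else 0
         for a, t in zip(a_row, t_row)]
        for a_row, t_row in zip(active, total)
    ]


def low_confidence_fraction(conf: List[List[int]]) -> float:
    """Fraction of pixels flagged as questionable, e.g. to warn a user."""
    flat = [c for row in conf for c in row]
    return 1.0 - sum(flat) / len(flat)
```

A caller could raise a warning when `low_confidence_fraction` exceeds some application-specific limit.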

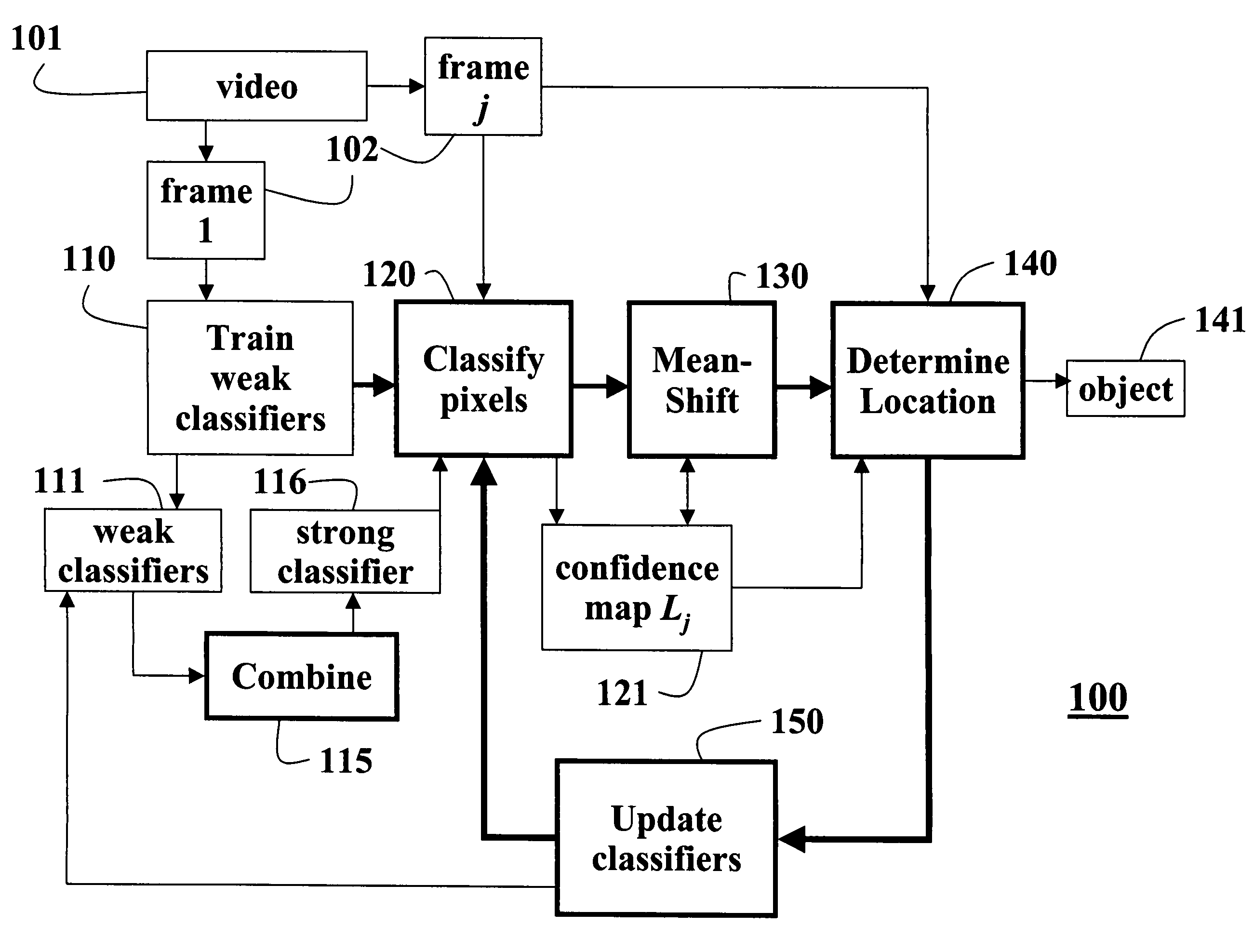

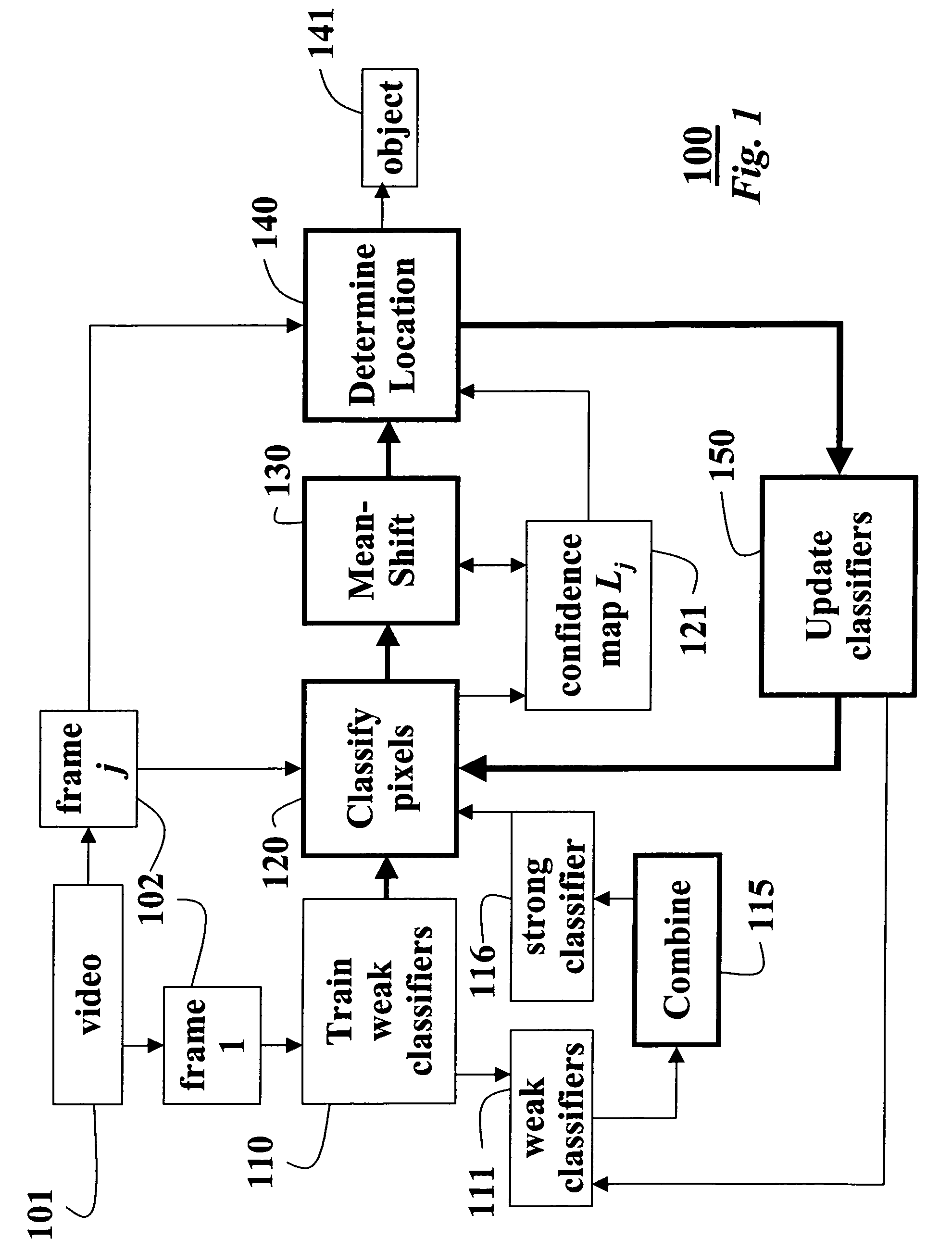

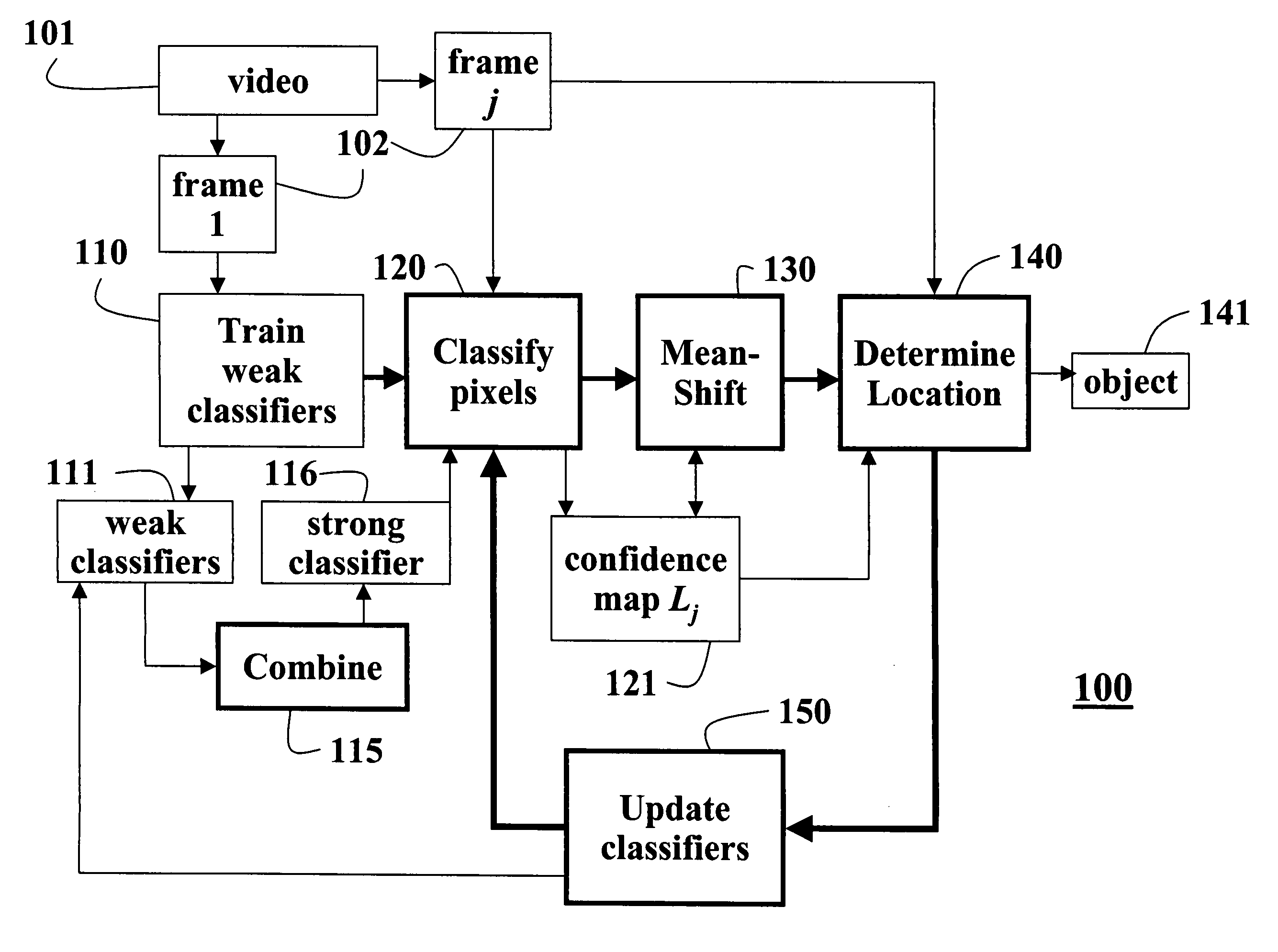

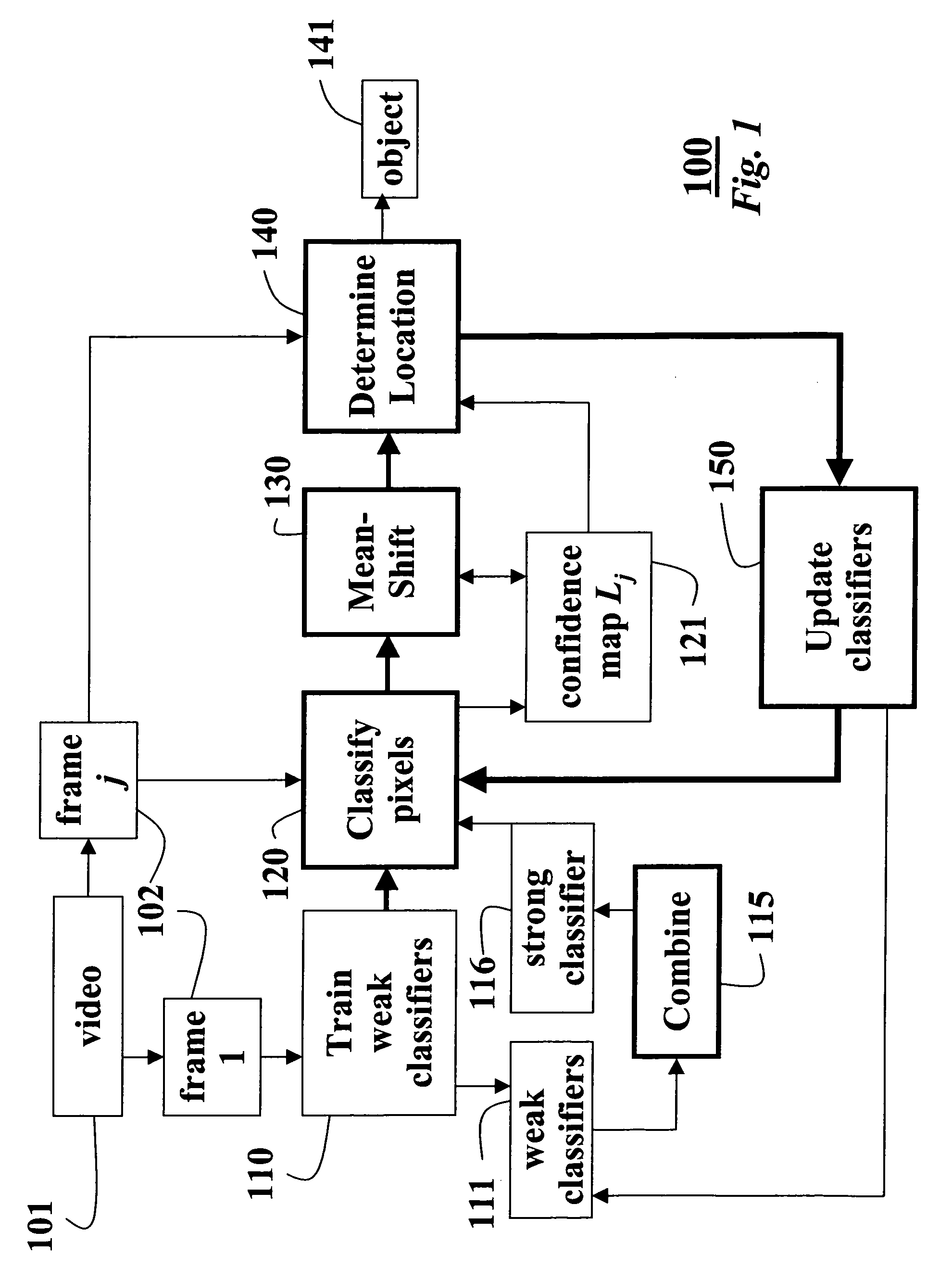

Tracking objects in videos with adaptive classifiers

Inactive | US7526101B2 | Improve performance | Simple calculation | Image analysis | Character and pattern recognition | Feature vector | Mean-shift

Owner:MITSUBISHI ELECTRIC RES LAB INC

Tracking objects in videos with adaptive classifiers

Inactive | US20060165258A1 | Simple calculation | Improve stability | Image analysis | Character and pattern recognition | Feature vector | Mean-shift

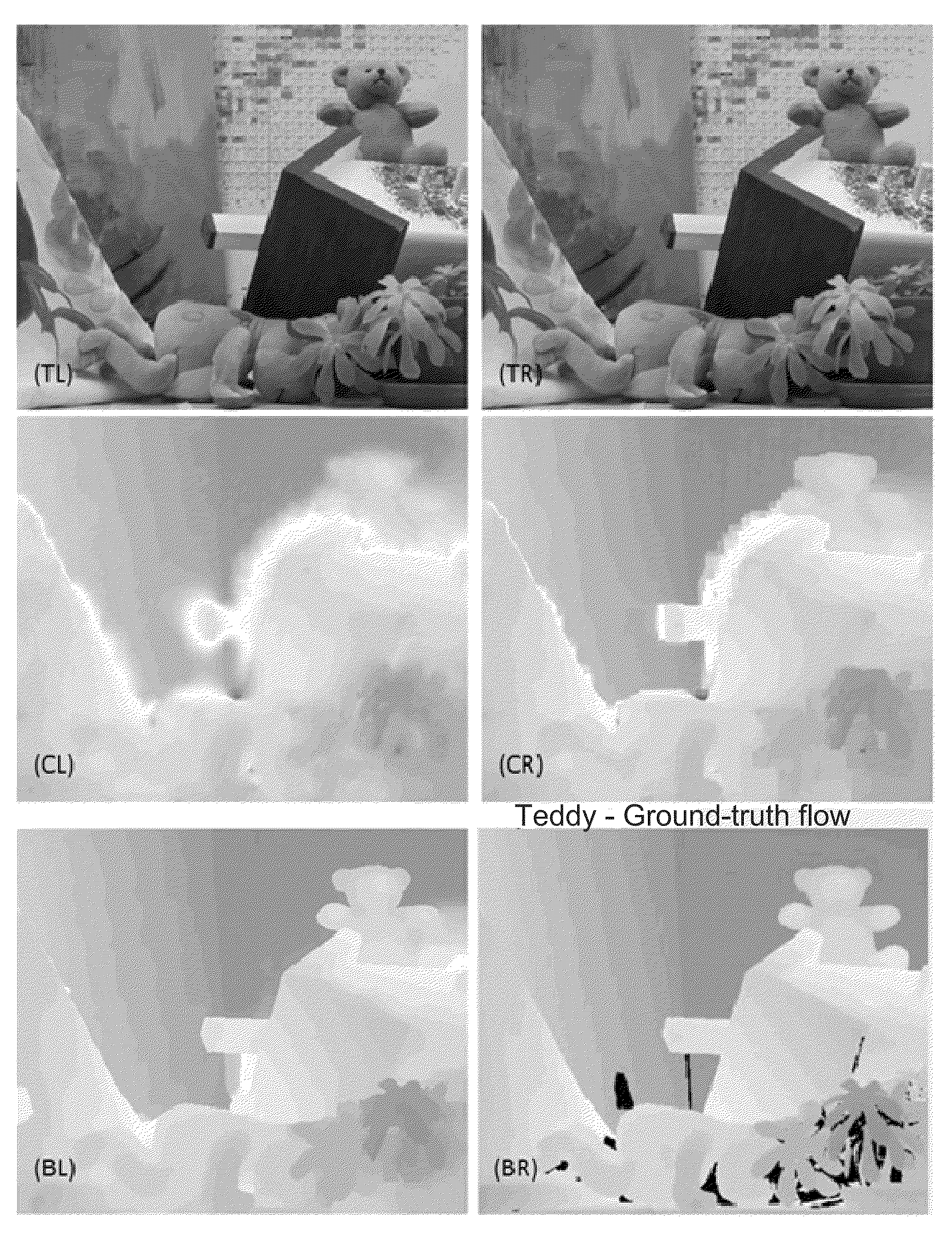

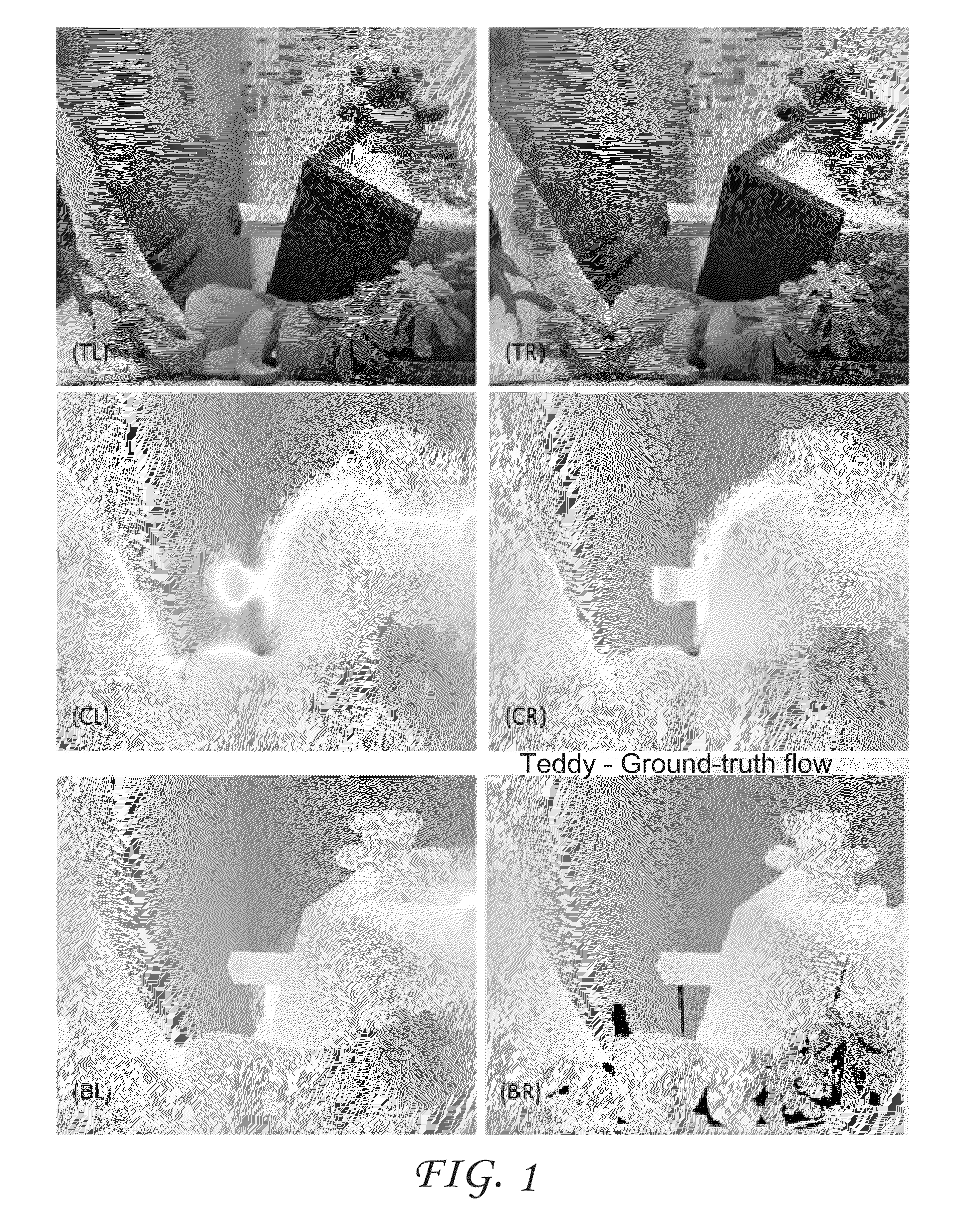

A method locates an object in a sequence of frames of a video. A feature vector is constructed for every pixel in each frame. The feature vectors are used to train weak classifiers. The weak classifiers separate pixels that are associated with the object from pixels that are associated with the background. The set of weak classifiers is combined into a strong classifier. The strong classifier labels pixels in a frame to generate a confidence map. A ‘peak’ in the confidence map is located using a mean-shift operation. The peak indicates a location of the object in the frame. That is, the confidence map distinguishes the object from the background in the video.

Owner:MITSUBISHI ELECTRIC RES LAB INC
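Locating a peak in a confidence map with a mean-shift operation, as the abstract above describes, can be sketched as follows. This is a simplified flat-kernel mean shift on an integer pixel grid; the window radius, the rounding step and the function name are illustrative assumptions, not the patented procedure.

```python
from typing import List, Tuple


def mean_shift_peak(conf: List[List[float]],
                    start: Tuple[int, int],
                    radius: int = 1,
                    max_iter: int = 50) -> Tuple[int, int]:
    """Climb from `start` towards a local mode of a 2D confidence map."""
    rows, cols = len(conf), len(conf[0])
    r, c = start
    for _ in range(max_iter):
        w_sum = r_sum = c_sum = 0.0
        # Confidence-weighted centroid of the window around (r, c).
        for i in range(max(0, r - radius), min(rows, r + radius + 1)):
            for j in range(max(0, c - radius), min(cols, c + radius + 1)):
                w = conf[i][j]
                w_sum += w
                r_sum += w * i
                c_sum += w * j
        if w_sum == 0:
            break  # no evidence inside the window; stay put
        nr, nc = round(r_sum / w_sum), round(c_sum / w_sum)
        if (nr, nc) == (r, c):
            break  # converged
        r, c = nr, nc
    return r, c
```

Because the window is small and positions are rounded to whole pixels, the sketch can stall on shallow slopes; a practical tracker would typically start from the previous frame's object location and use a window matched to the object size.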

Spatio-temporal confidence maps

Inactive | US20140153784A1 | Improving momentum in robustness | Broaden their knowledge | Image enhancement | Image analysis | Parallax | Confidence map

A method and an apparatus for generating a confidence map for a disparity map associated to a set of two or more images are described. Motion between at least two subsequent sets of two or more images is determined. Based on the determined motion information static and dynamic regions in the images of the sets of two or more images are detected and separated. A disparity change between a disparity value determined for a static region of a current image and a motion compensated disparity value of a previous image is determined. The result of the determination is taken into account for generating or refining a confidence map.

Owner:THOMSON LICENSING SA

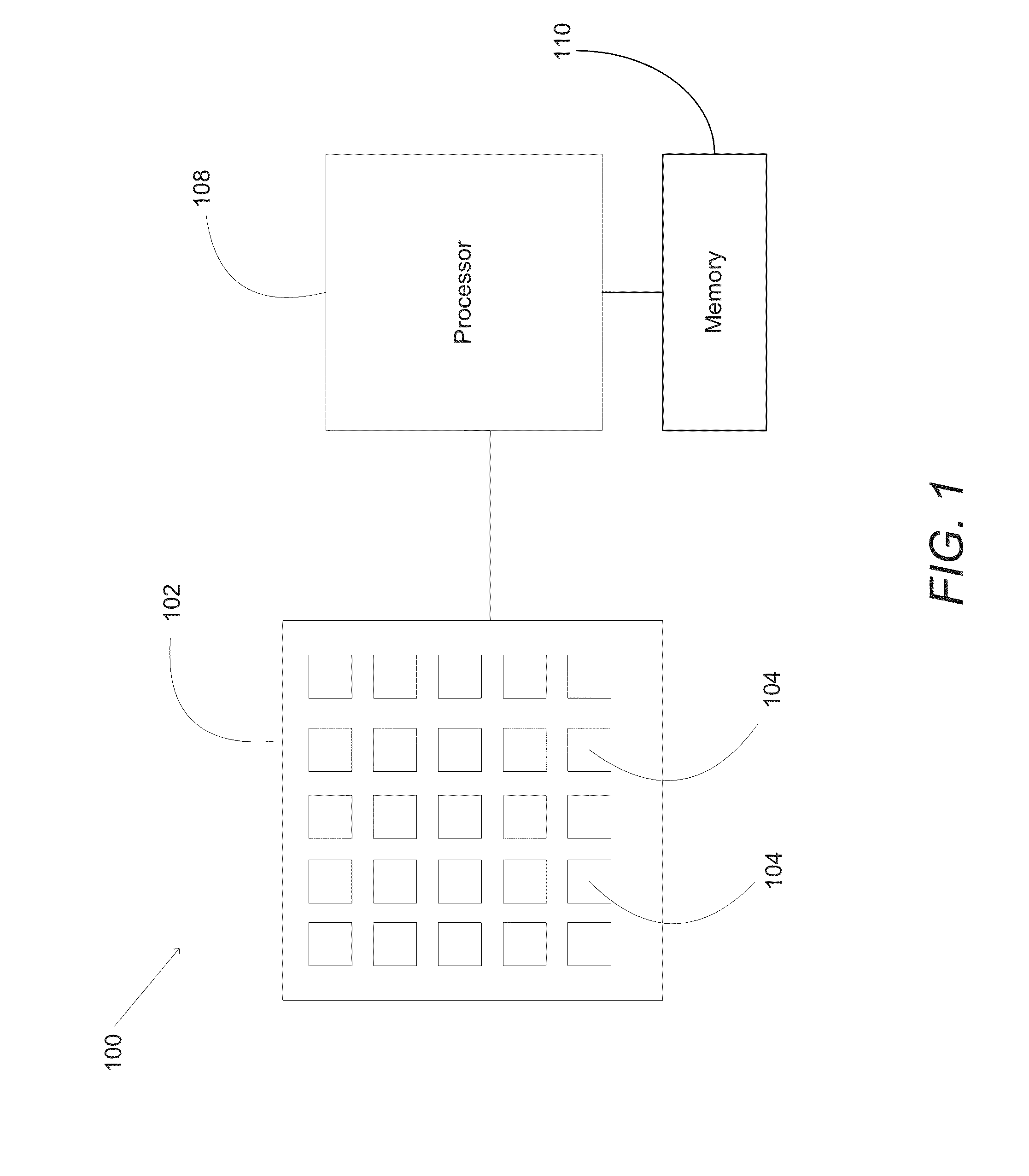

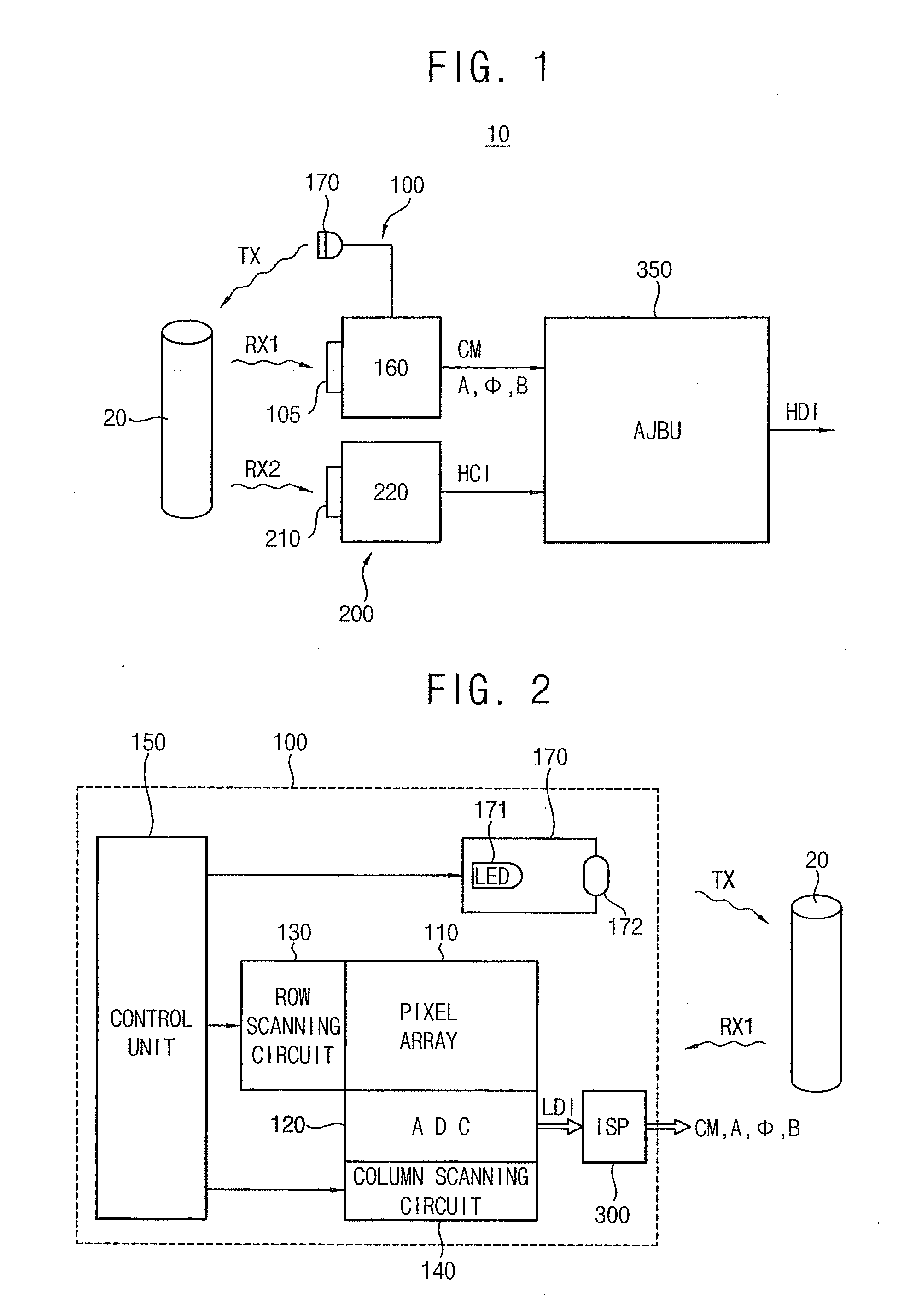

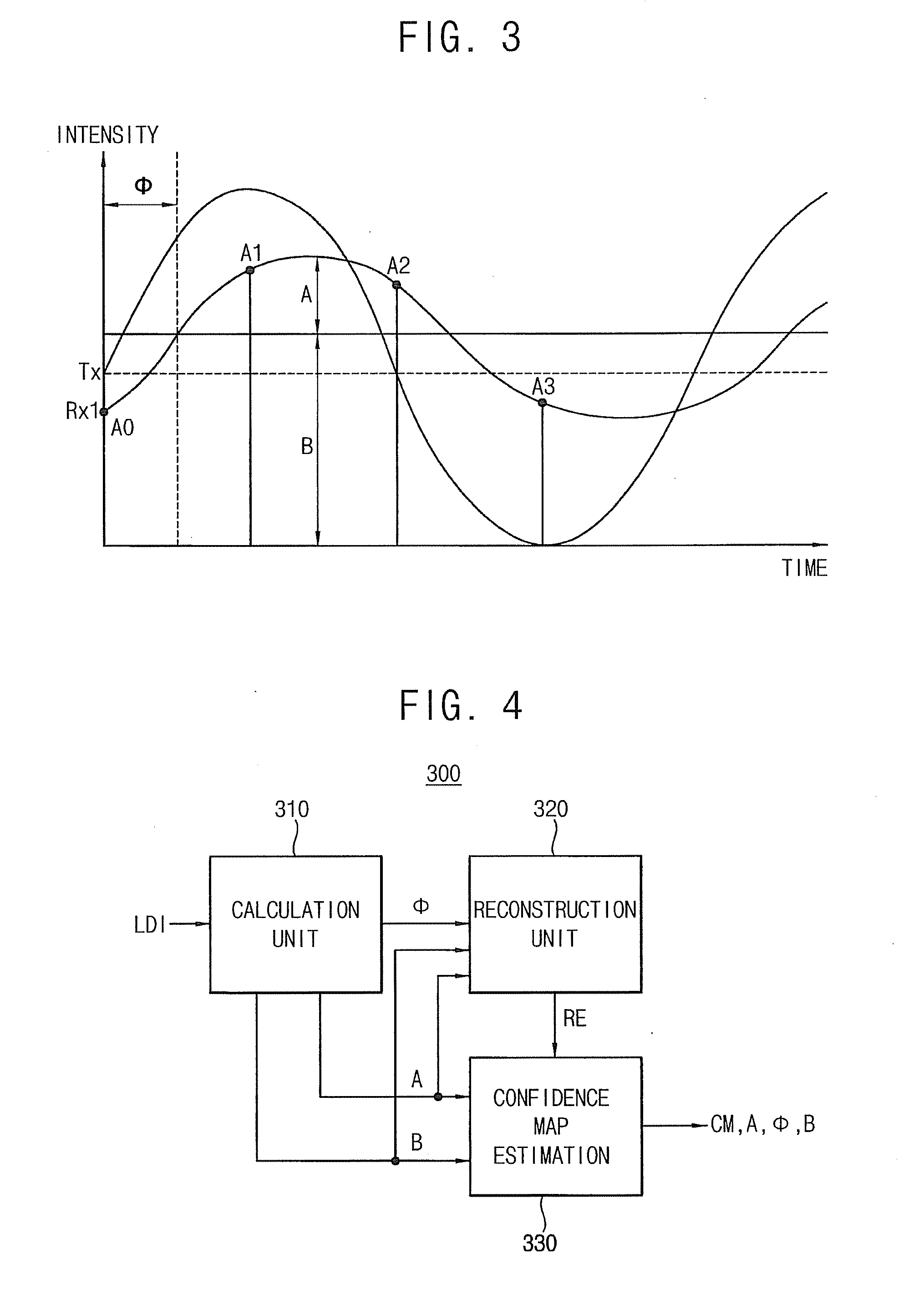

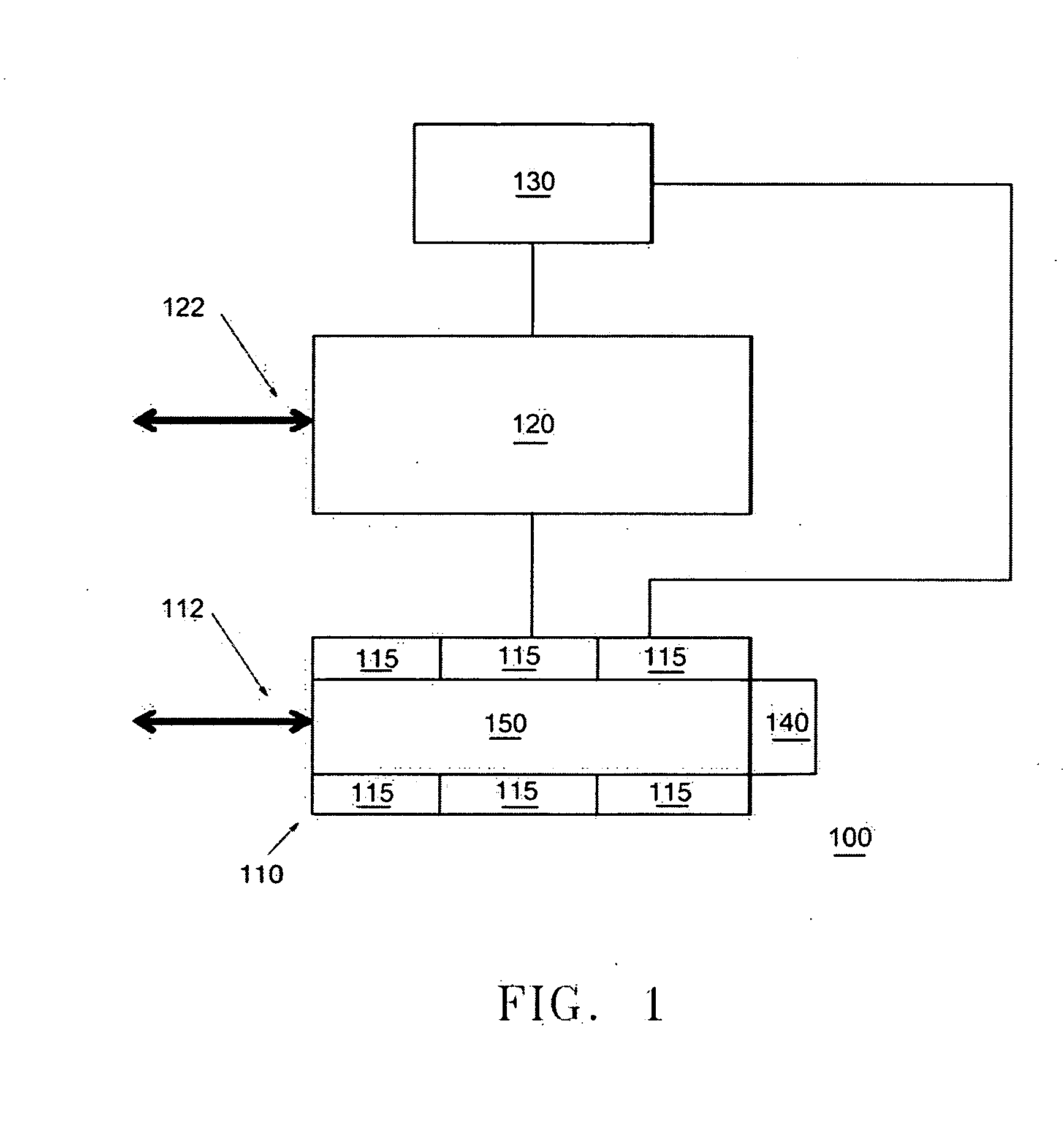

Image Processing Systems

Active | US20120169848A1 | High resolution | Television system details | Color television details | Imaging processing | Image resolution

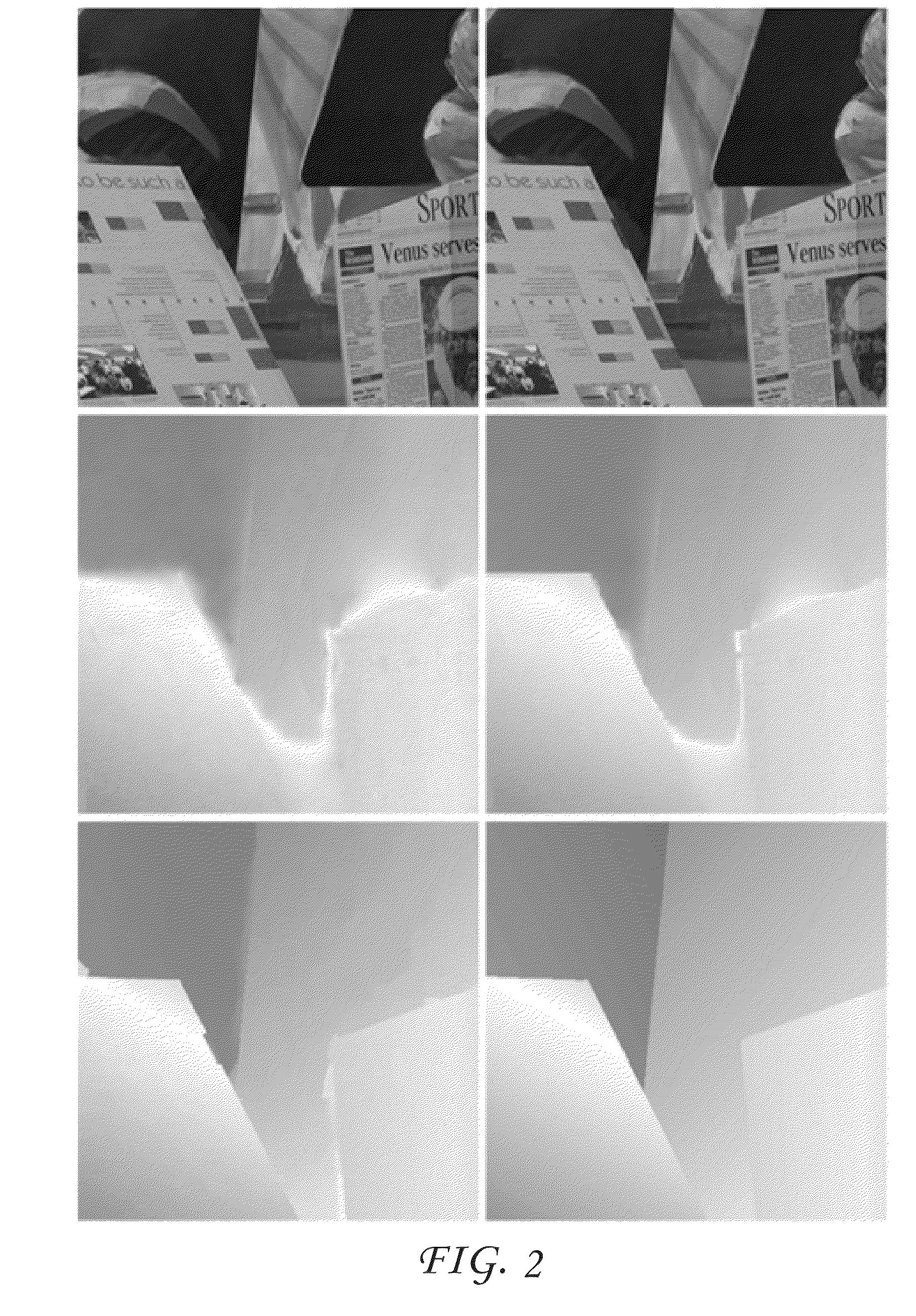

An image processing system includes a calculation unit, a reconstruction unit, a confidence map estimation unit and an up-sampling unit. The up-sampling unit is configured to perform a joint bilateral up-sampling on depth information of a first input image based on a confidence map of the first input image and a second input image with respect to an object and increase a first resolution of the first input image to a second resolution to provide an output image with the second resolution.

Owner:SAMSUNG ELECTRONICS CO LTD

Apparatus, a Method and a Computer Program for Image Processing

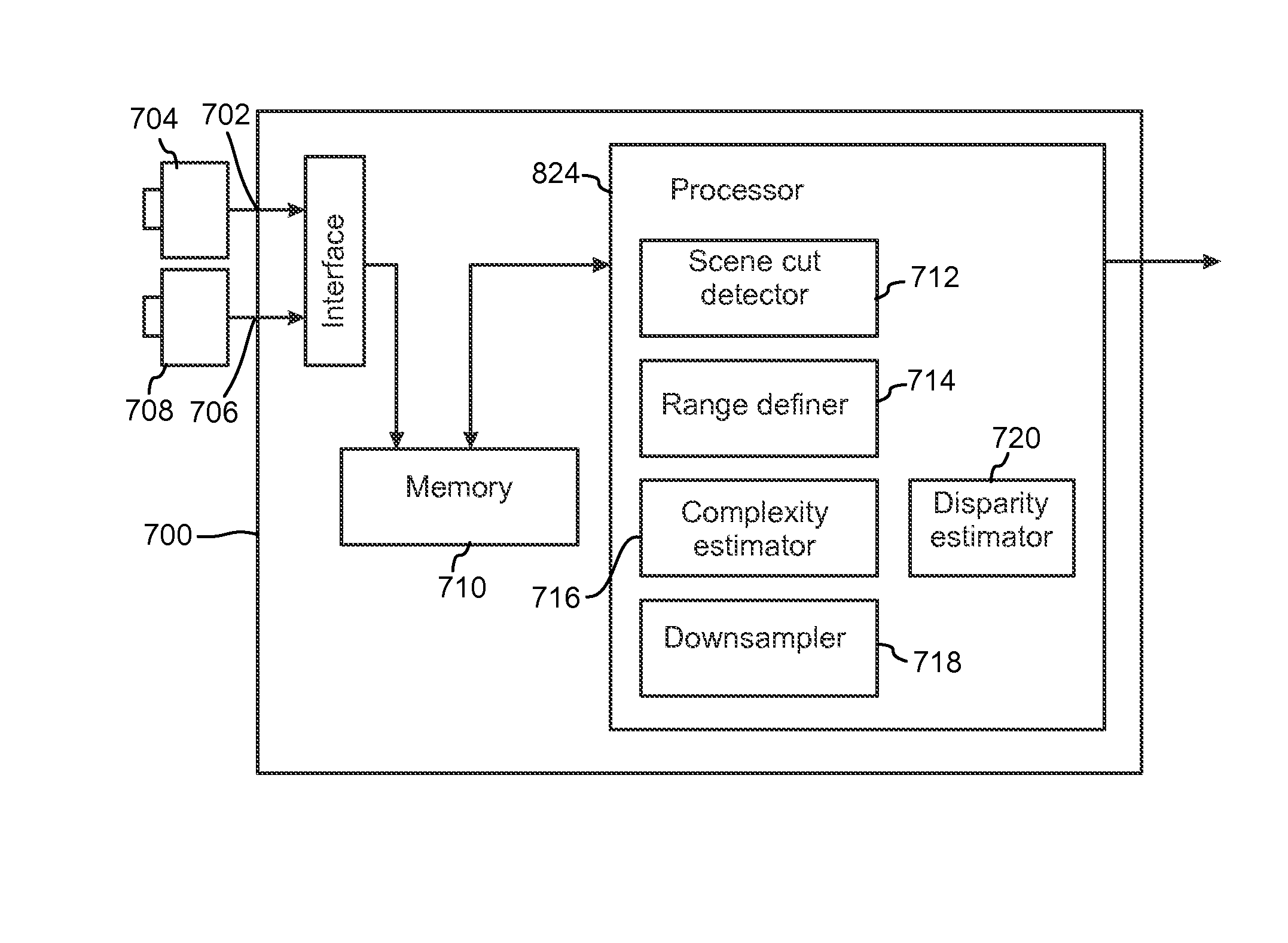

Inactive | US20140063188A1 | Adjust computational speed | Good removal effect | Image enhancement | Image analysis | Parallax | Imaging processing

There are provided methods, apparatuses and computer program products for image processing in which a pair of images may be downsampled to a lower-resolution pair of images and further processed to obtain a disparity image representing estimated disparity between at least a subset of pixels in the pair of images. A confidence of the disparity estimation may be obtained and inserted into a confidence map. The disparity image and the confidence map may be filtered jointly to obtain a filtered disparity image and a filtered confidence map by using a spatial neighborhood of the pixel location. An estimated disparity distribution of the pair of images may be obtained through the filtered disparity image and the confidence map.

Owner:WSOU INVESTMENTS LLC
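One way to picture the joint filtering of a disparity image and its confidence map over a spatial neighborhood is a confidence-weighted box filter. The sketch below is only an assumed interpretation: the weighting scheme, the `radius` parameter and the function name are not taken from the patent.

```python
from typing import List, Tuple

Grid = List[List[float]]


def joint_filter(disparity: Grid, confidence: Grid,
                 radius: int = 1) -> Tuple[Grid, Grid]:
    """Filter disparity and confidence together over a square neighborhood.

    Filtered disparity: confidence-weighted mean of neighboring disparities.
    Filtered confidence: plain mean of neighboring confidences.
    """
    rows, cols = len(disparity), len(disparity[0])
    out_d = [[0.0] * cols for _ in range(rows)]
    out_c = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            w_sum = d_sum = 0.0
            n = 0
            for i in range(max(0, r - radius), min(rows, r + radius + 1)):
                for j in range(max(0, c - radius), min(cols, c + radius + 1)):
                    w = confidence[i][j]
                    w_sum += w
                    d_sum += w * disparity[i][j]
                    n += 1
            # Fall back to the raw value when no neighbor is trusted at all.
            out_d[r][c] = d_sum / w_sum if w_sum > 0 else disparity[r][c]
            out_c[r][c] = w_sum / n
    return out_d, out_c
```

With this weighting, an unreliable outlier disparity is replaced by the value supported by its trusted neighbors, while its filtered confidence stays low.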

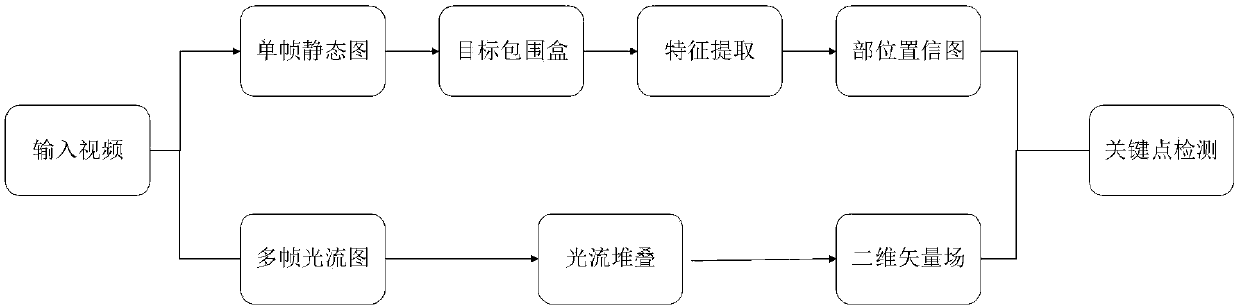

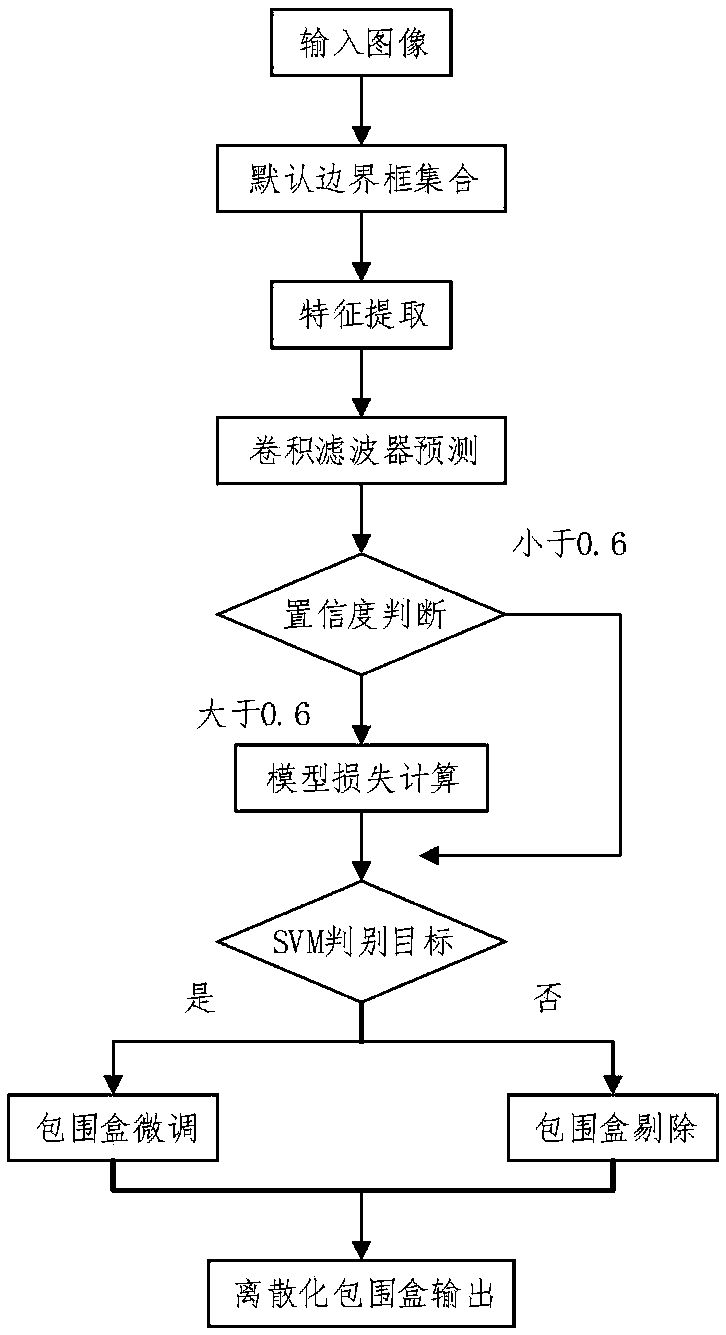

Complex scene-based human body key point detection system and method

Active | CN108710868A | Simplify complex scenes | Accurate detection | Closed circuit television systems | Biometric pattern recognition | Human body | Multiple frame

The invention discloses a complex scene-based human body key point detection system and method. The method comprises the following steps: inputting monitoring video information to obtain a single-frame static map and a multi-frame optical flow graph; extracting features of the single-frame static map through a convolution operation to obtain a feature map and, to reduce the influence of interfering targets on person detection in complex scenes, comparing the actual confidence coefficient of the feature map against a preset confidence coefficient with a person detection algorithm to obtain a discretized person bounding box; superimposing the optical flow of the multi-frame optical flow graph to form a two-dimensional vector field; and extracting features in the discretized person bounding box to obtain a feature map, obtaining key points and association degrees of parts, generating a part confidence map for each part of a human body by utilizing a predictor, and accurately detecting human body key points through the part confidence map and the two-dimensional vector field. The system and method are used for human body key point detection in complex scenes so as to realize accurate detection of person key points.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

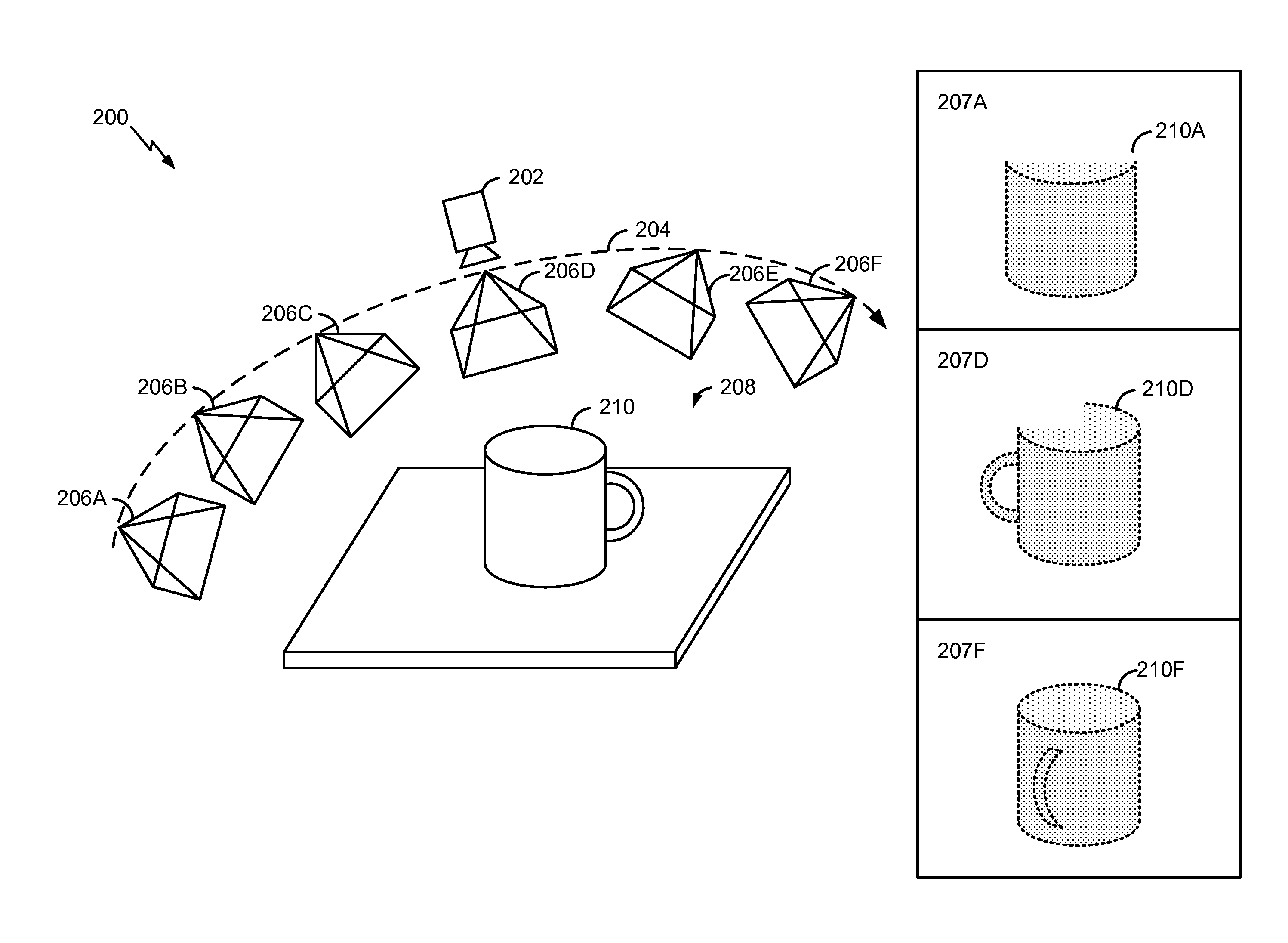

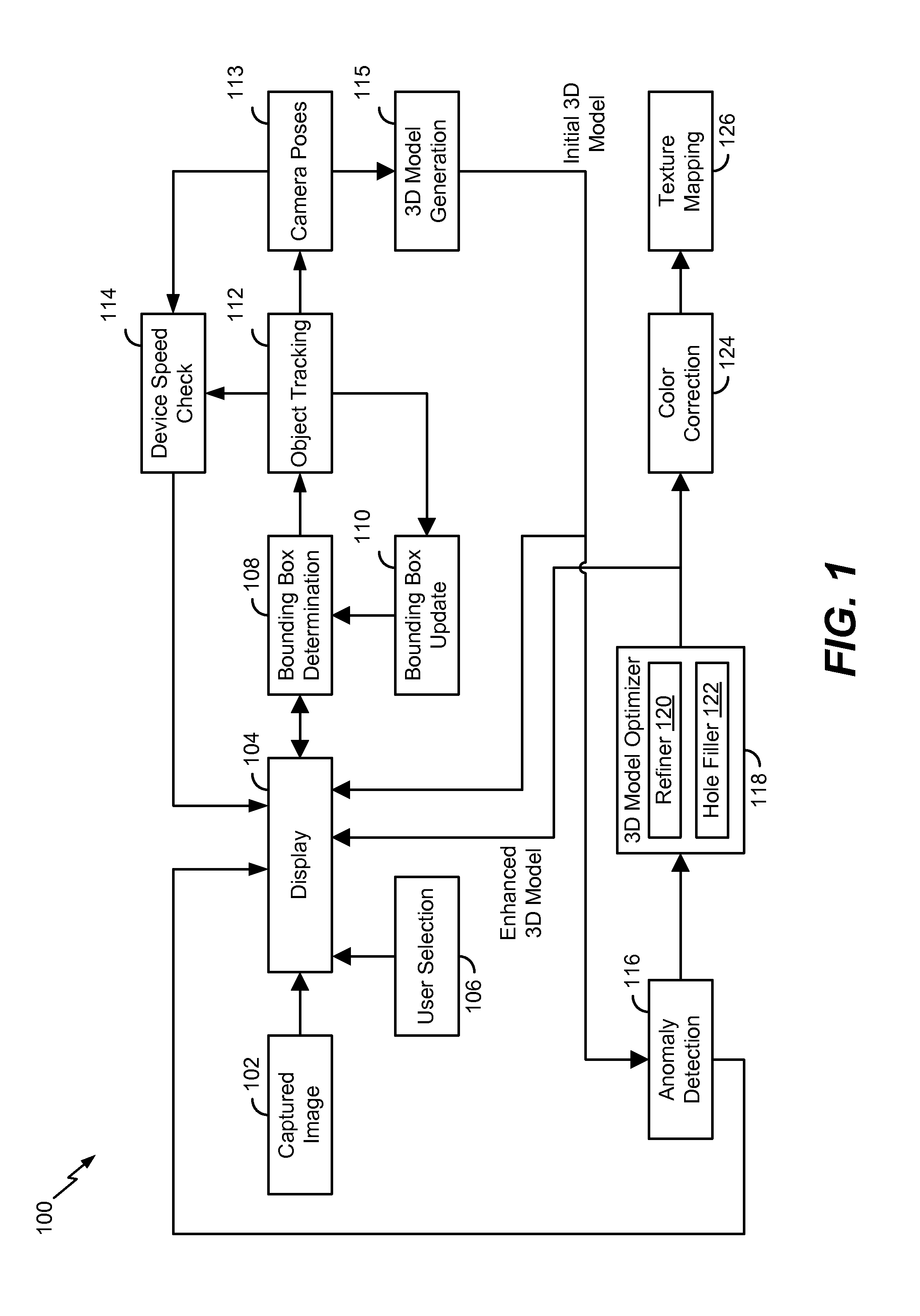

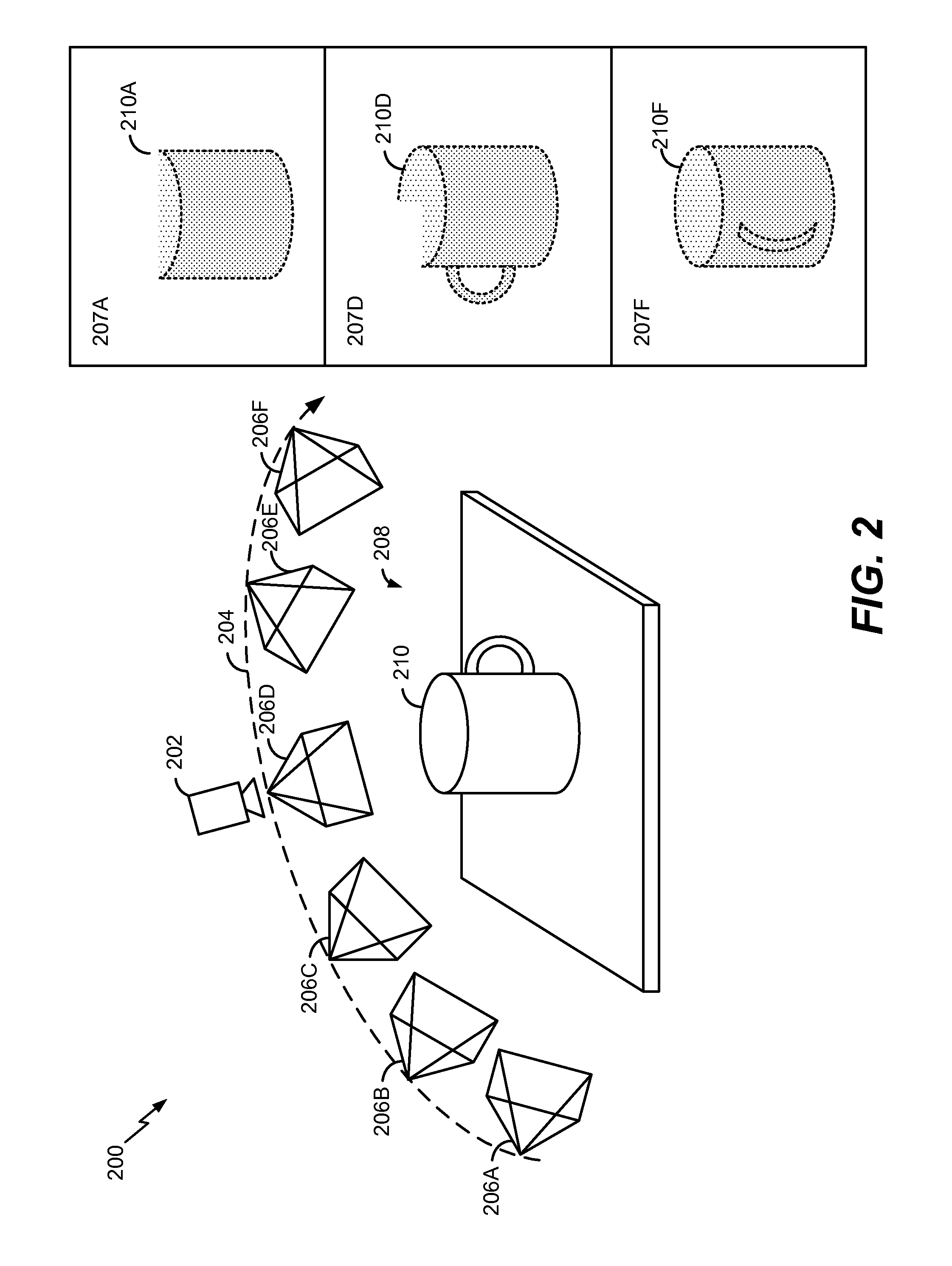

Three-dimensional model generation

A method for texture reconstruction associated with a three-dimensional scan of an object includes scanning, at a processor, a sequence of image frames captured by an image capture device at different three-dimensional viewpoints. The method also includes generating a composite confidence map based on the sequence of image frames. The composite confidence map includes pixel values for scanned pixels in the sequence of image frames. The method further includes identifying one or more holes of a three-dimensional model based on the composite confidence map.

Owner:QUALCOMM INC

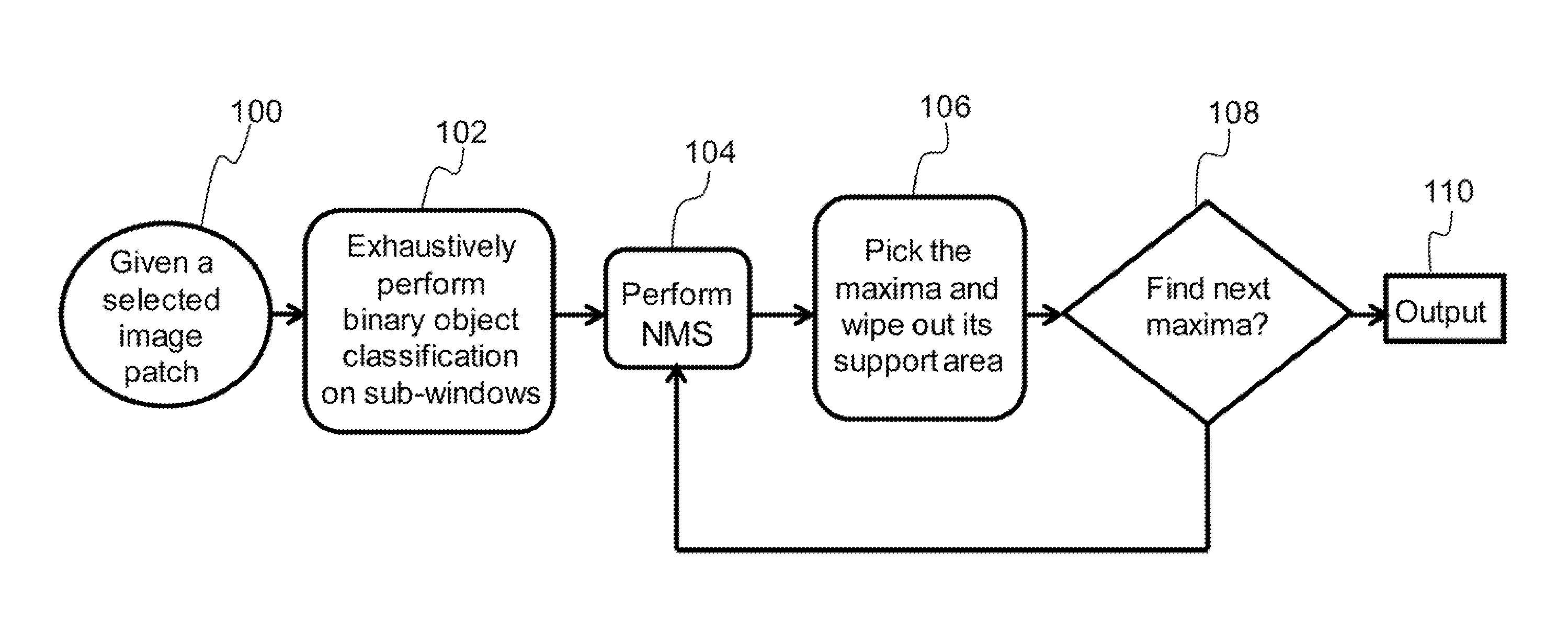

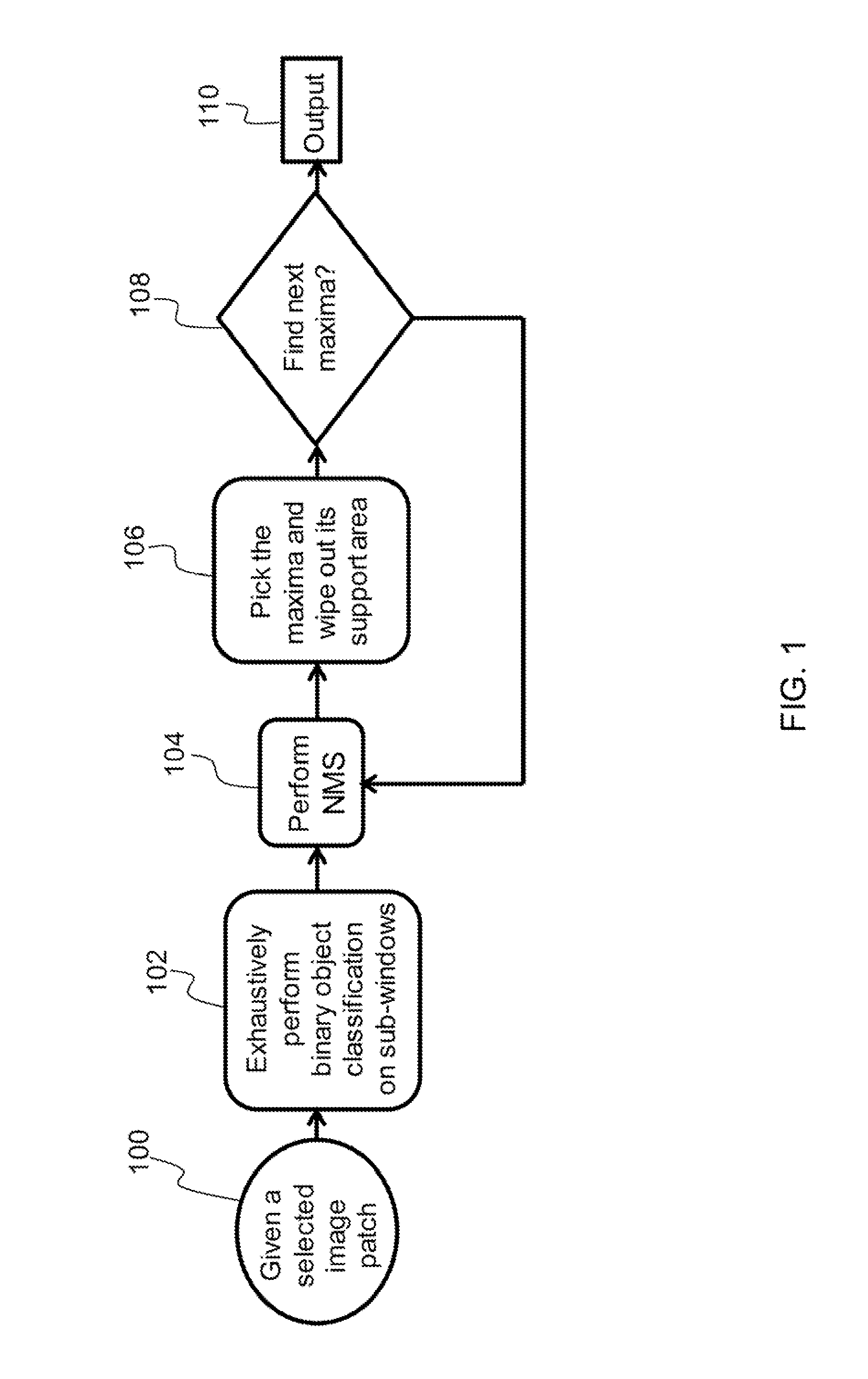

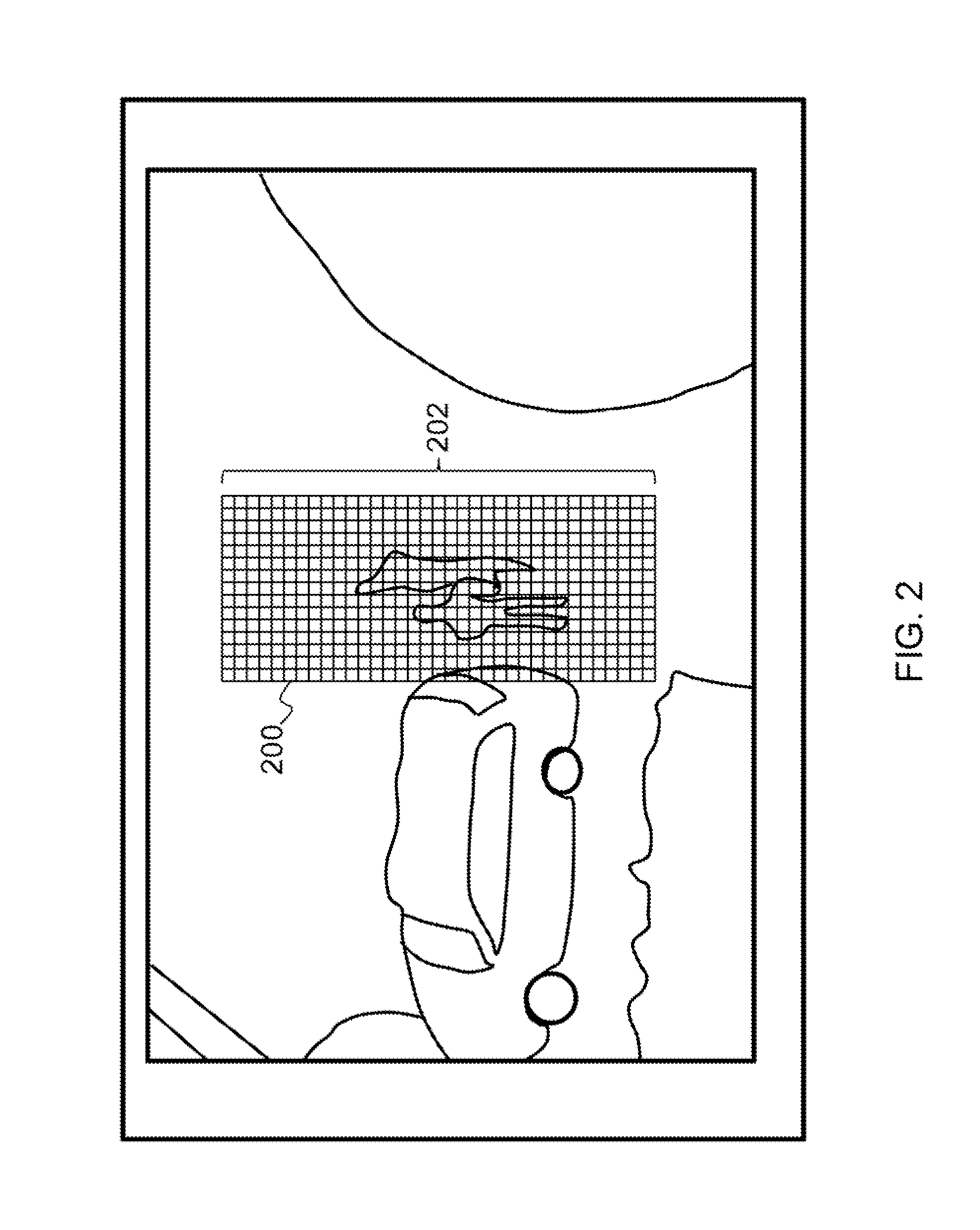

Multi-object detection and recognition using exclusive non-maximum suppression (eNMS) and classification in cluttered scenes

Active | US9165369B1 | Image analysis | Character and pattern recognition | Confidence map | Non maximum suppression

Described is a system for multi-object detection and recognition in cluttered scenes. The system receives an image patch containing multiple objects of interest as input. The system evaluates a likelihood of existence of an object of interest in each sub-window of a set of overlapping sub-windows. A confidence map having confidence values corresponding to the sub-windows is generated. A non-maxima suppression technique is applied to the confidence map to eliminate sub-windows having confidence values below a local maximum confidence value. A global maximum confidence value is determined for a sub-window corresponding to a location of an instance of an object of interest in the image patch. The sub-window corresponding to the location of the instance of the object of interest is removed from the confidence map. The system iterates until a predetermined stopping criterion is met. Finally, detection information related to multiple instances of the object of interest is output.

Owner:HRL LAB
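The iterate-and-remove loop in the abstract above (take the global maximum, record it, remove that sub-window from the confidence map, repeat until a stopping criterion is met) can be sketched in a few lines. The stopping criteria used here (`min_conf`, `max_instances`) and the square suppression neighborhood are assumptions for illustration, not the patented eNMS algorithm.

```python
from typing import List, Tuple


def detect_instances(conf: List[List[float]],
                     min_conf: float = 0.5,
                     suppress_radius: int = 1,
                     max_instances: int = 10) -> List[Tuple[int, int, float]]:
    """Greedy detection: repeatedly pick the global maximum, then suppress it."""
    grid = [row[:] for row in conf]  # work on a copy
    rows, cols = len(grid), len(grid[0])
    found: List[Tuple[int, int, float]] = []
    while len(found) < max_instances:
        best, br, bc = max(
            ((v, r, c) for r, row in enumerate(grid) for c, v in enumerate(row)),
            key=lambda t: t[0],
        )
        if best < min_conf:
            break  # assumed stopping criterion: nothing confident enough left
        found.append((br, bc, best))
        # Remove the detected sub-window and its overlapping neighbors.
        for r in range(max(0, br - suppress_radius), min(rows, br + suppress_radius + 1)):
            for c in range(max(0, bc - suppress_radius), min(cols, bc + suppress_radius + 1)):
                grid[r][c] = 0.0
    return found
```

Each returned tuple is `(row, column, confidence)` for one detected instance, in decreasing order of confidence.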

Continuous and stable tracking method of weak moving target in dynamic background

Active | CN106875415A | Robust tracking | Avoid update errors | Image enhancement | Image analysis | Context model | Confidence map

The invention discloses a continuous and stable tracking method for a weak moving target in a dynamic background. The method includes acquiring video data and processing each frame as follows: acquiring the position coordinates of the moving target to be tracked in the current frame and determining the target tracking frame from that position; establishing a spatial context model of the current frame for the area in the target tracking frame within a Bayesian framework; convolving the spatial context model of the current frame with the next frame to obtain a confidence map of the position of the moving target in the next frame, where the position with the greatest confidence is taken as the position of the moving target in the next frame; and applying the double-threshold moving target crisis determination. When the moving target is determined to be neither occluded nor lost, the moving target position in the next frame is output once tracking of the current frame is completed; otherwise, the target tracking frame is updated and re-checked. The method realizes continuous and stable tracking of the target under background interference and occlusion.

Owner:北京理工雷科电子信息技术有限公司

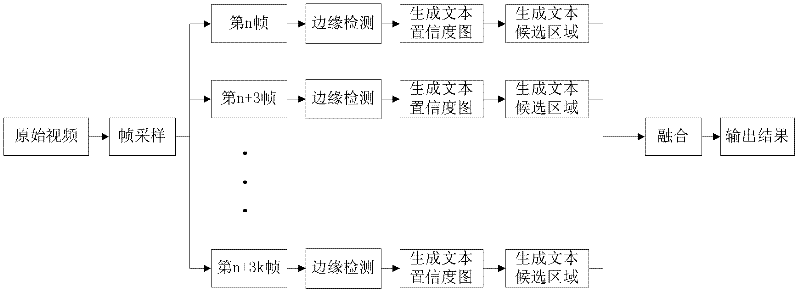

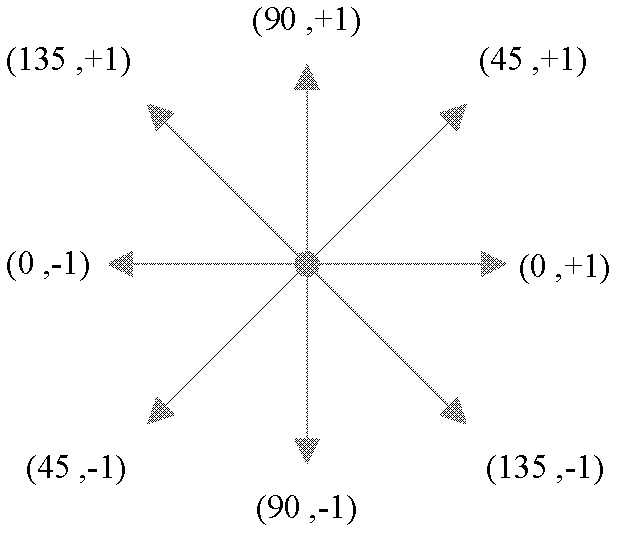

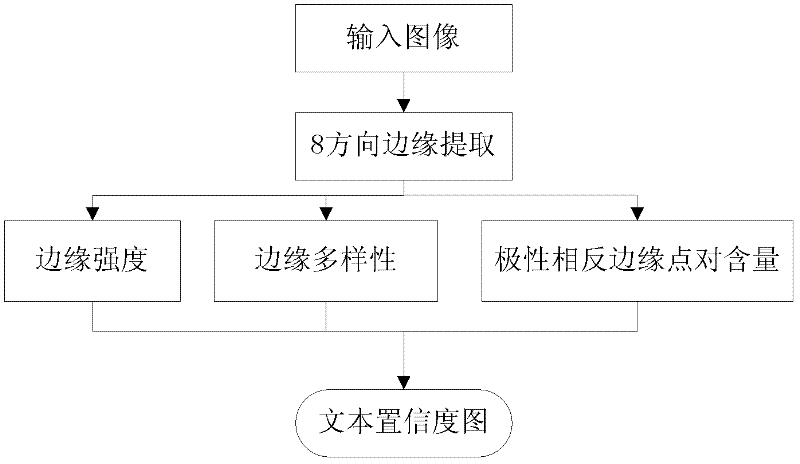

Method for detecting and positioning text area in video

Active | CN102542268A | The detection process is fast | Fast positioning | Character and pattern recognition | Computer graphics (images) | Multiple frame

The invention relates to a method for detecting and positioning a text area in a video. The method comprises the following steps: inputting the video, and sampling the input video at equal time intervals; carrying out edge detection on each sampled image; generating a text confidence map from the edge-detected image; extracting text candidate areas according to the generated text confidence map; fusing the text candidate areas that are approximately the same across multiple frames; and analyzing the images in the text area after fusing. With the adoption of the method, multi-language texts appearing in the video can be accurately positioned in real time. The method is applicable to various functions such as video content editing, indexing and retrieving.

Owner:北京中科阅深科技有限公司

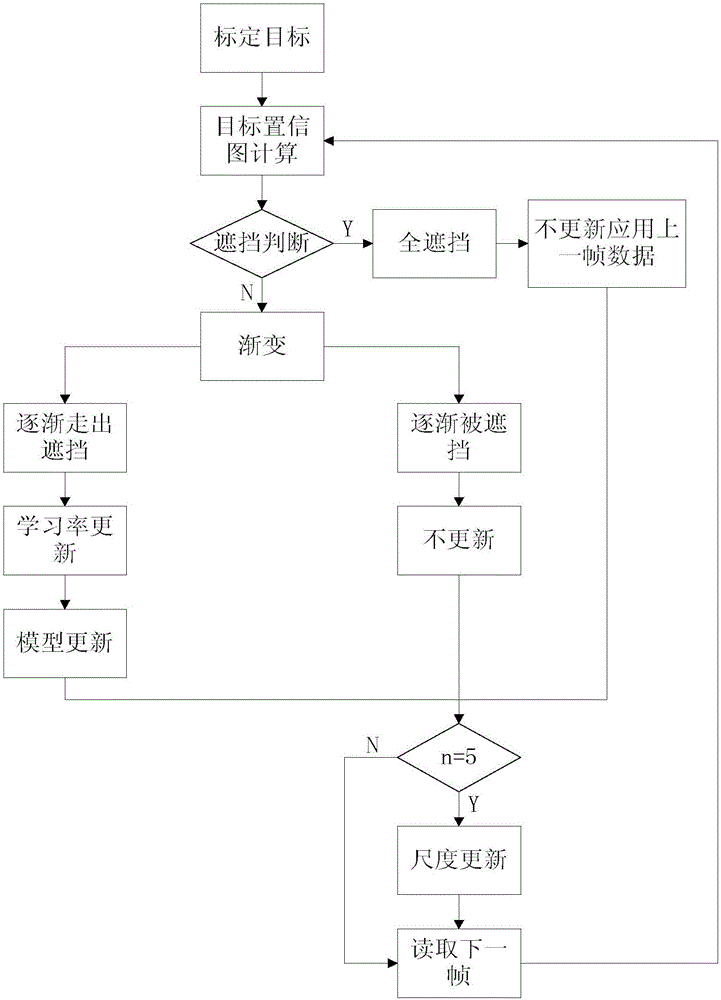

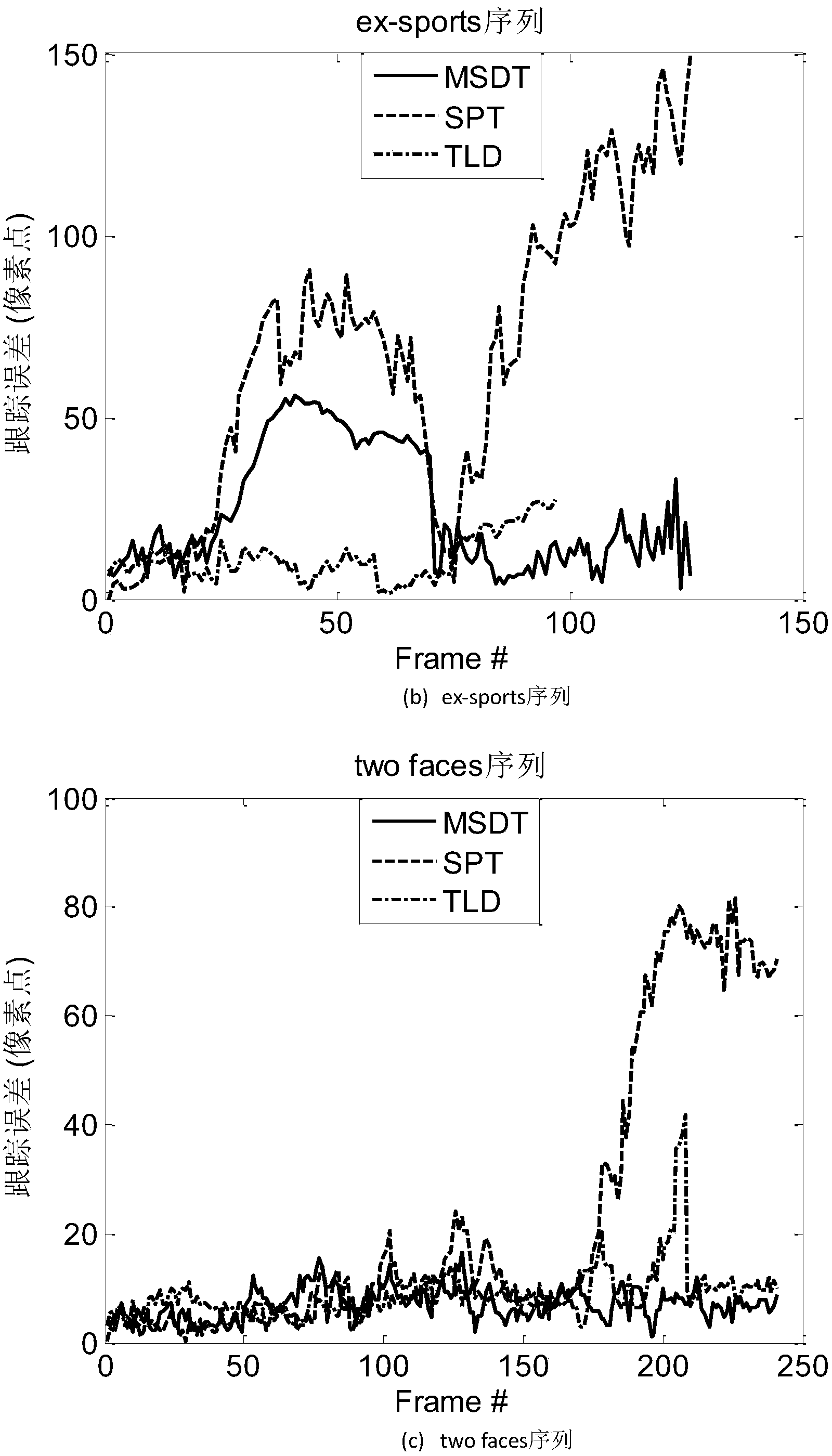

Target tracking method for video sequence

Active | CN106485732A | Improve robustness | Reduce error accumulation | Image enhancement | Image analysis | Confidence map | Video sequence

The invention discloses a target tracking method for a video sequence. The target tracking method comprises the steps of first performing normalization processing on a target image; then extracting space-time contextual information of the target according to the target position in an initial frame, constructing a space-time contextual model, and establishing a relation between the space-time contextual model and a target position confidence map so as to perform target tracking; then determining whether the learning rate of the space-time contextual model should be updated by adopting an occlusion-handling mechanism, and updating the space-time contextual model with the updated learning rate; and finally, establishing a scale updating process according to the space-time significance between preceding and following frames in the target tracking process. Because the target tracking is based on space-time information combined with the occlusion-handling mechanism and the space-time significance, the robustness and real-time performance of target tracking can be effectively improved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

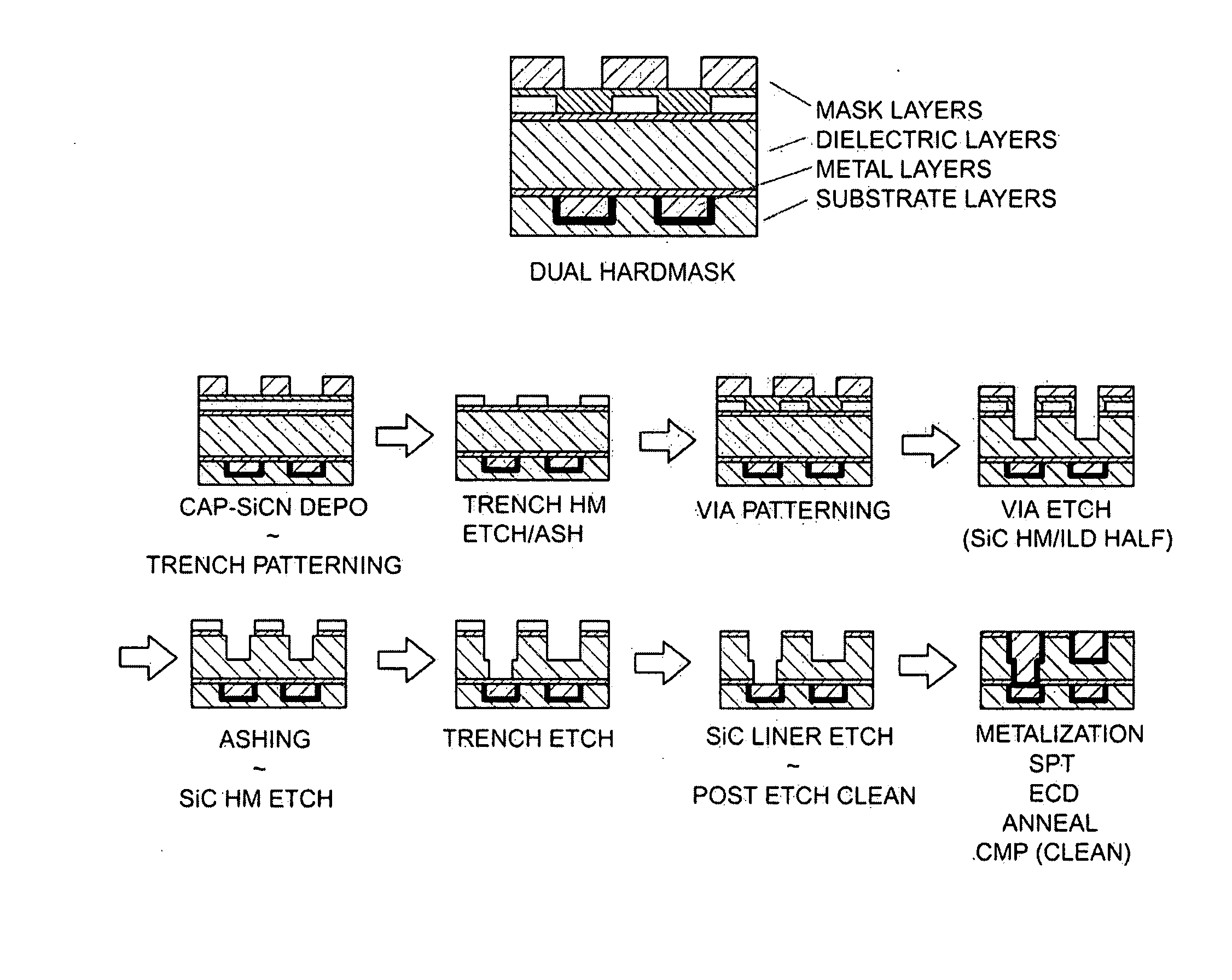

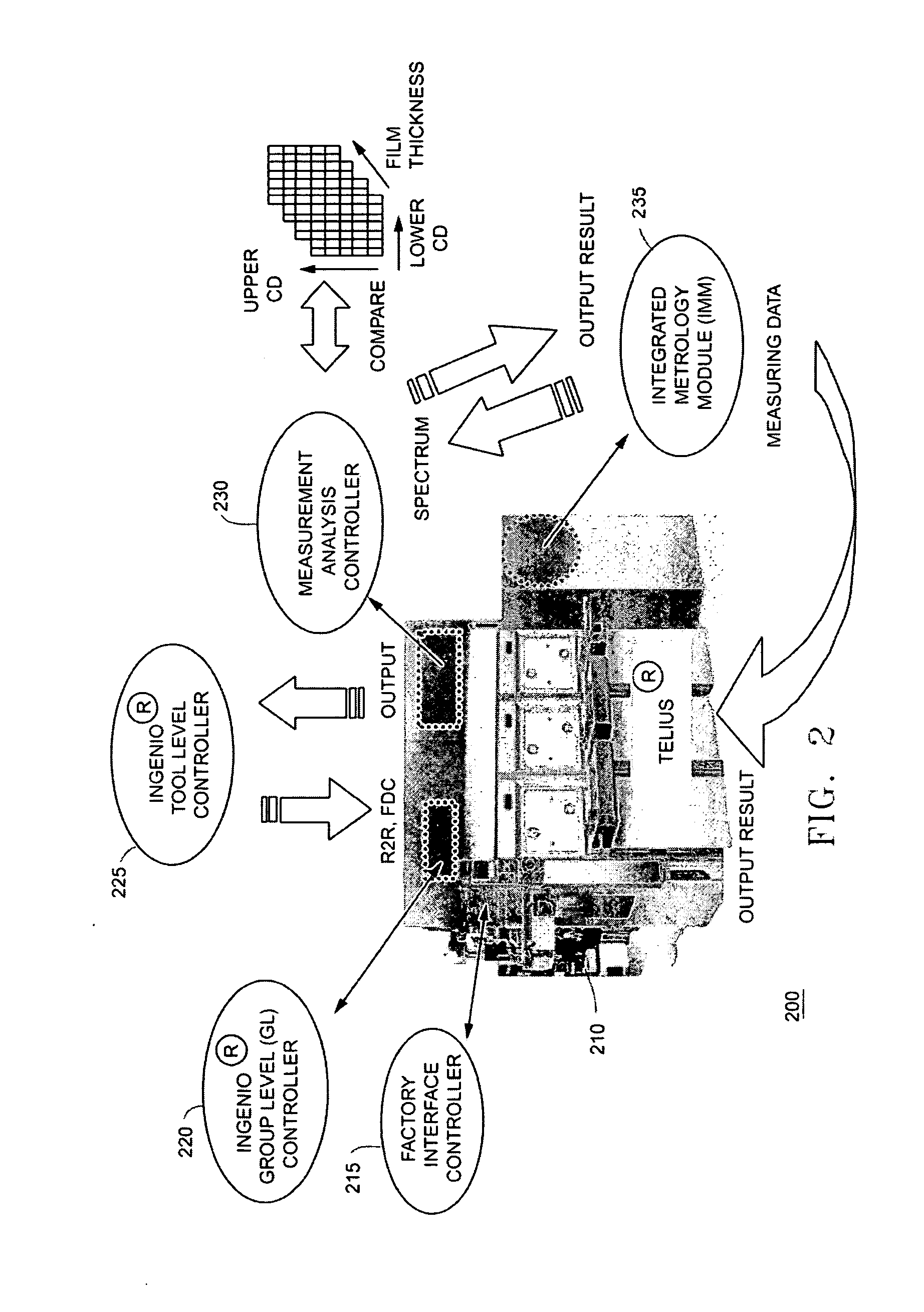

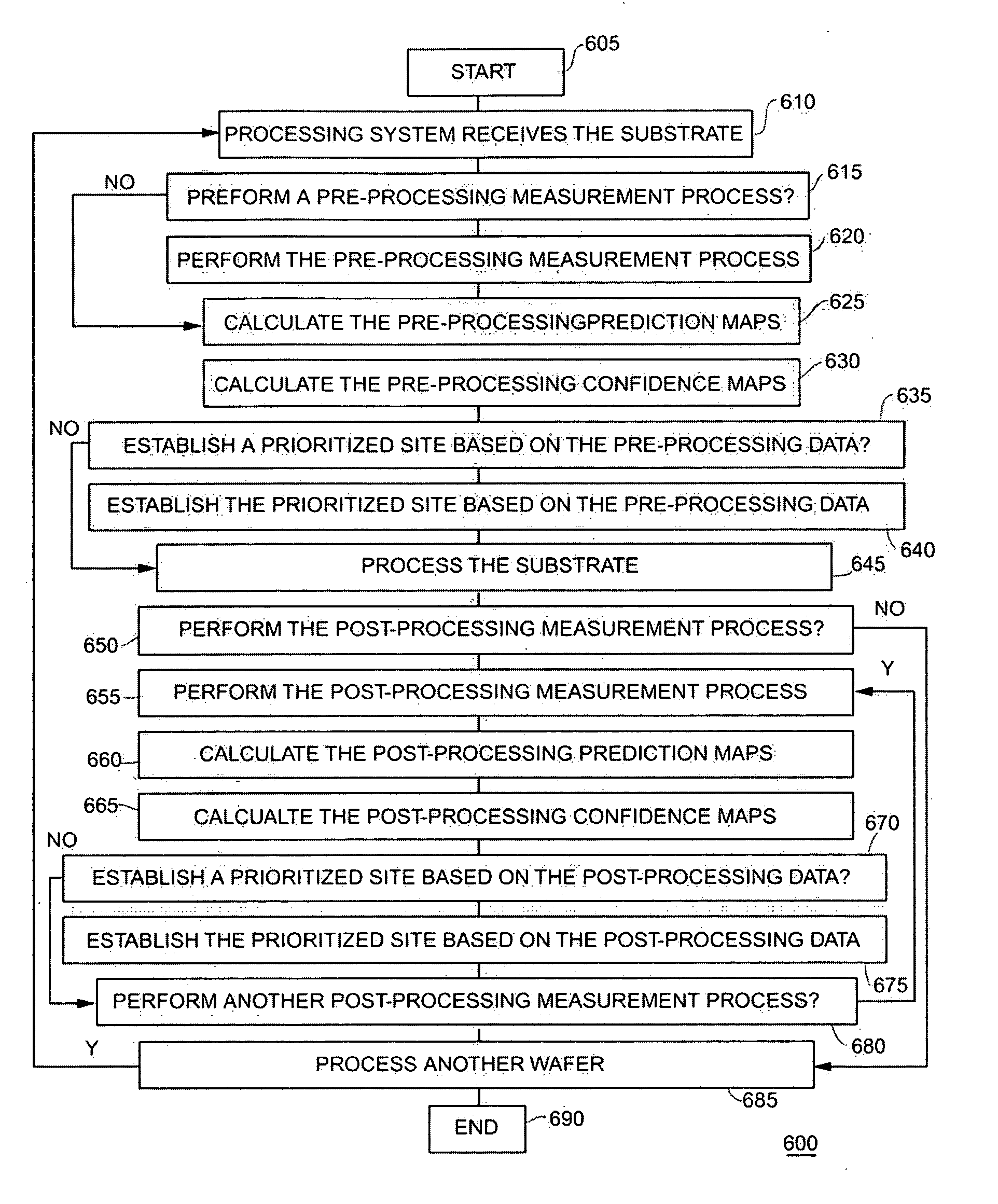

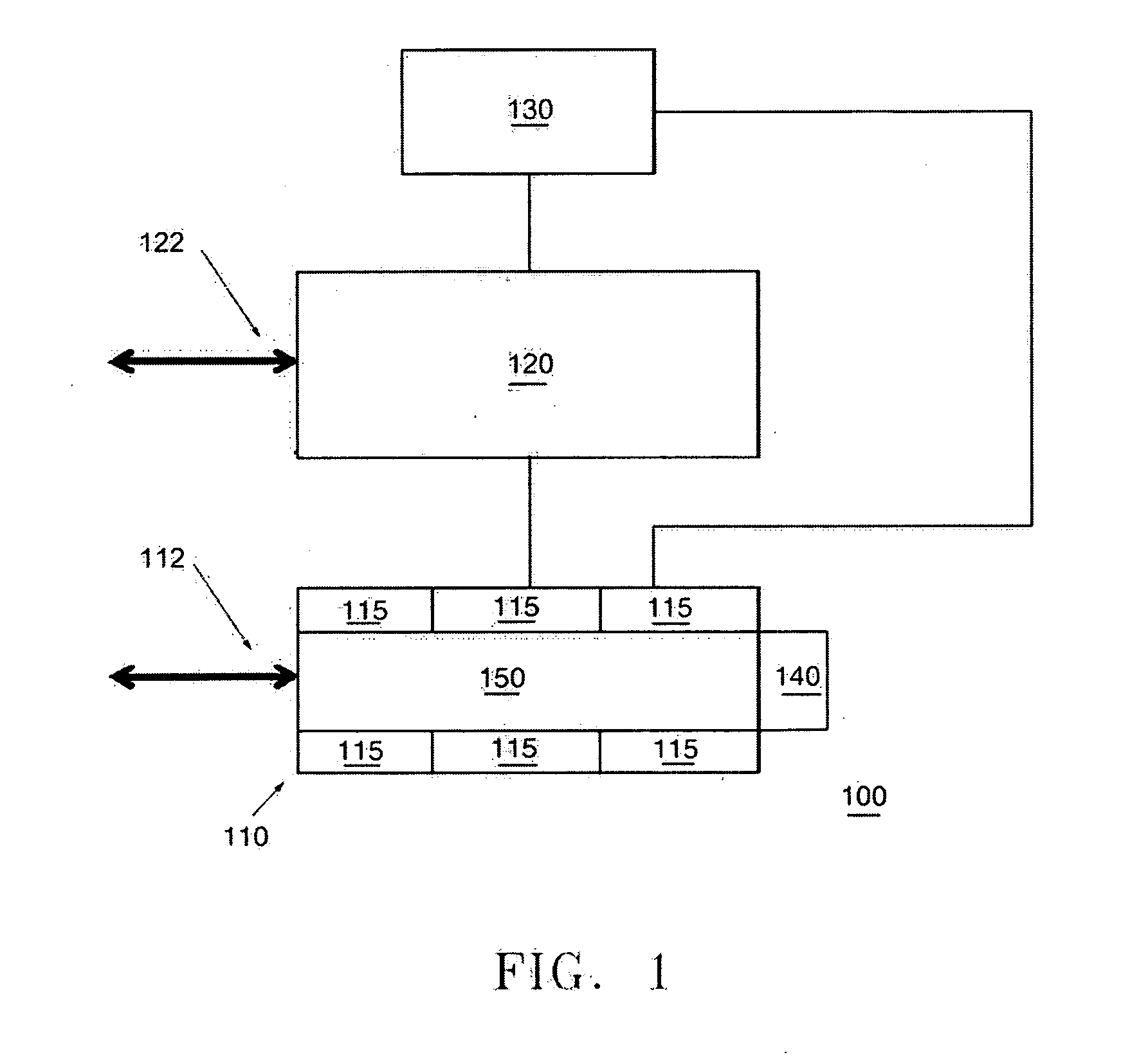

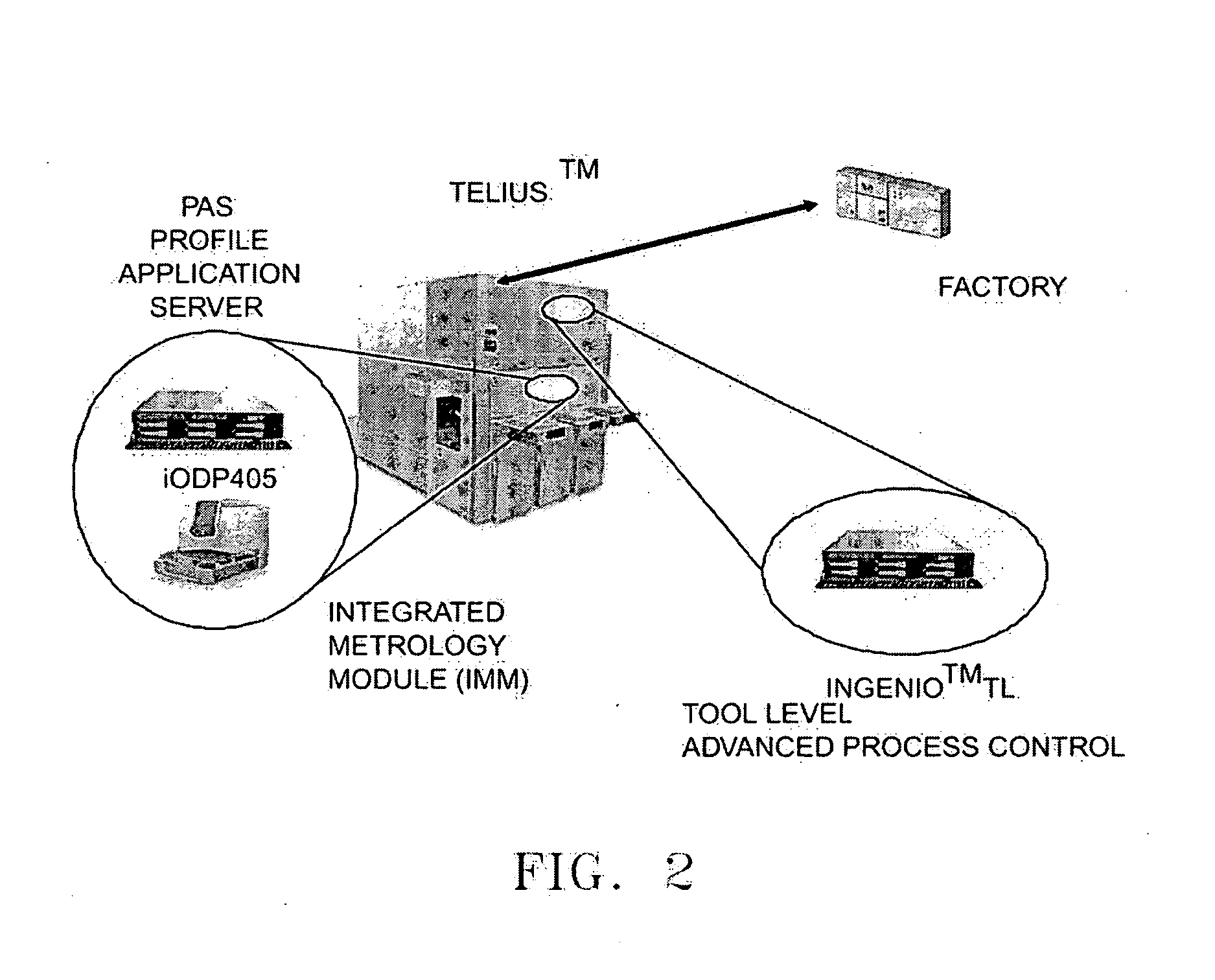

Dynamic metrology sampling for a dual damascene process

Inactive | US20070231930A1 | Reduce in quantity | Increase the number of | Semiconductor/solid-state device testing/measurement | Nuclear monitoring | Metrology | Confidence map

A method of monitoring a dual damascene procedure that includes calculating a pre-processing confidence map for a damascene process, the pre-processing confidence map including confidence data for a first set of dies on the wafer. An expanded pre-processing measurement recipe is established for the damascene process when one or more values in the pre-processing confidence map are not within confidence limits established for the damascene process. A reduced pre-processing measurement recipe for the first damascene process is established when one or more values in the pre-processing confidence map are within confidence limits established for the damascene process.

Owner:IBM CORP +1
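The recipe selection rule in the dual damascene abstract above (expand the measurement recipe when any confidence value falls outside the confidence limits, otherwise reduce it) can be written as a small decision function. The die identifiers, the numeric limits and the returned labels are hypothetical and serve only to illustrate the rule.

```python
from typing import Dict, List, Tuple


def choose_measurement_recipe(confidence_by_die: Dict[str, float],
                              lower: float,
                              upper: float) -> Tuple[str, List[str]]:
    """Pick an 'expanded' or 'reduced' recipe from a per-die confidence map.

    Returns the recipe label and the dies whose confidence is outside the
    limits; those dies are natural candidates for prioritized measurement.
    """
    out_of_limits = sorted(
        die for die, value in confidence_by_die.items()
        if not lower <= value <= upper
    )
    recipe = "expanded" if out_of_limits else "reduced"
    return recipe, out_of_limits
```

For example, a wafer whose confidence map has one die below the lower limit would get the expanded recipe with that die listed for additional measurement.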

Dynamic metrology sampling with wafer uniformity control

Inactive | US20070237383A1 | Photomechanical apparatus | Character and pattern recognition | Metrology | Confidence map

A method of processing a wafer is presented that includes creating a pre-processing measurement map using measured metrology data for the wafer including metrology data for at least one isolated structure on the wafer, metrology data for at least one nested structure on the wafer, or mask data. At least one pre-processing prediction map is calculated for the wafer. A pre-processing confidence map is calculated for the wafer. The pre-processing confidence map includes a set of confidence data for the plurality of dies on the wafer. A prioritized measurement site is determined when the confidence data for one or more dies is not within the confidence limits. A new measurement recipe that includes the prioritized measurement site is then created.

Owner:IBM CORP +1

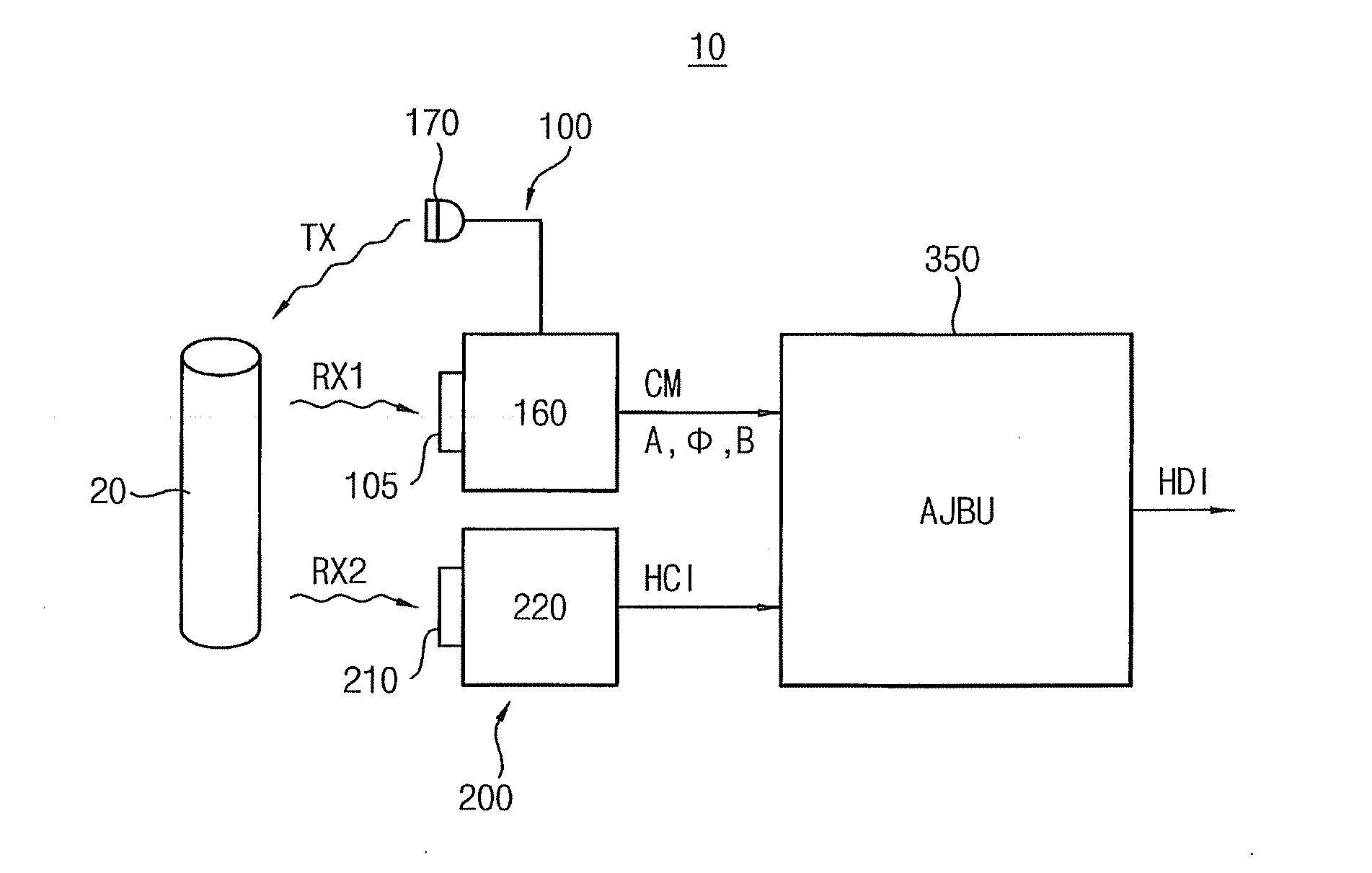

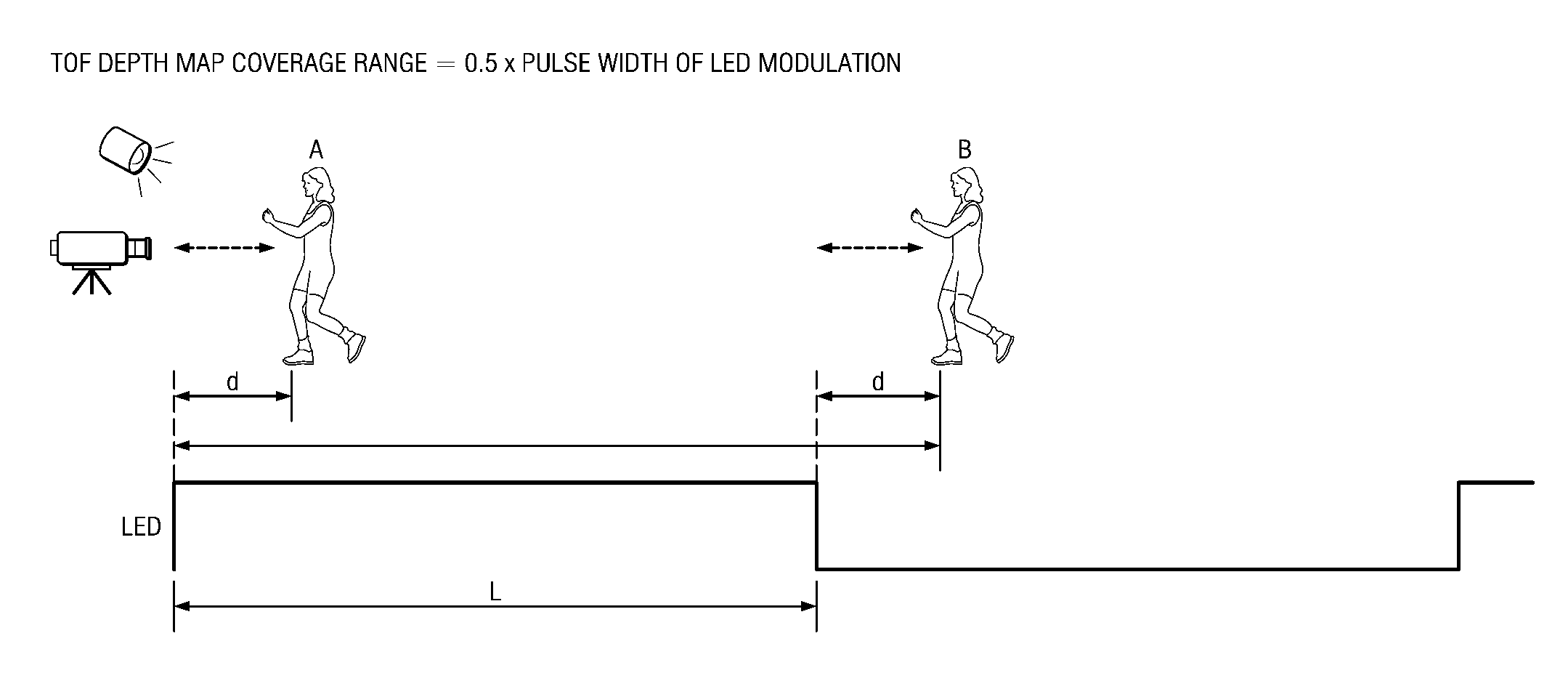

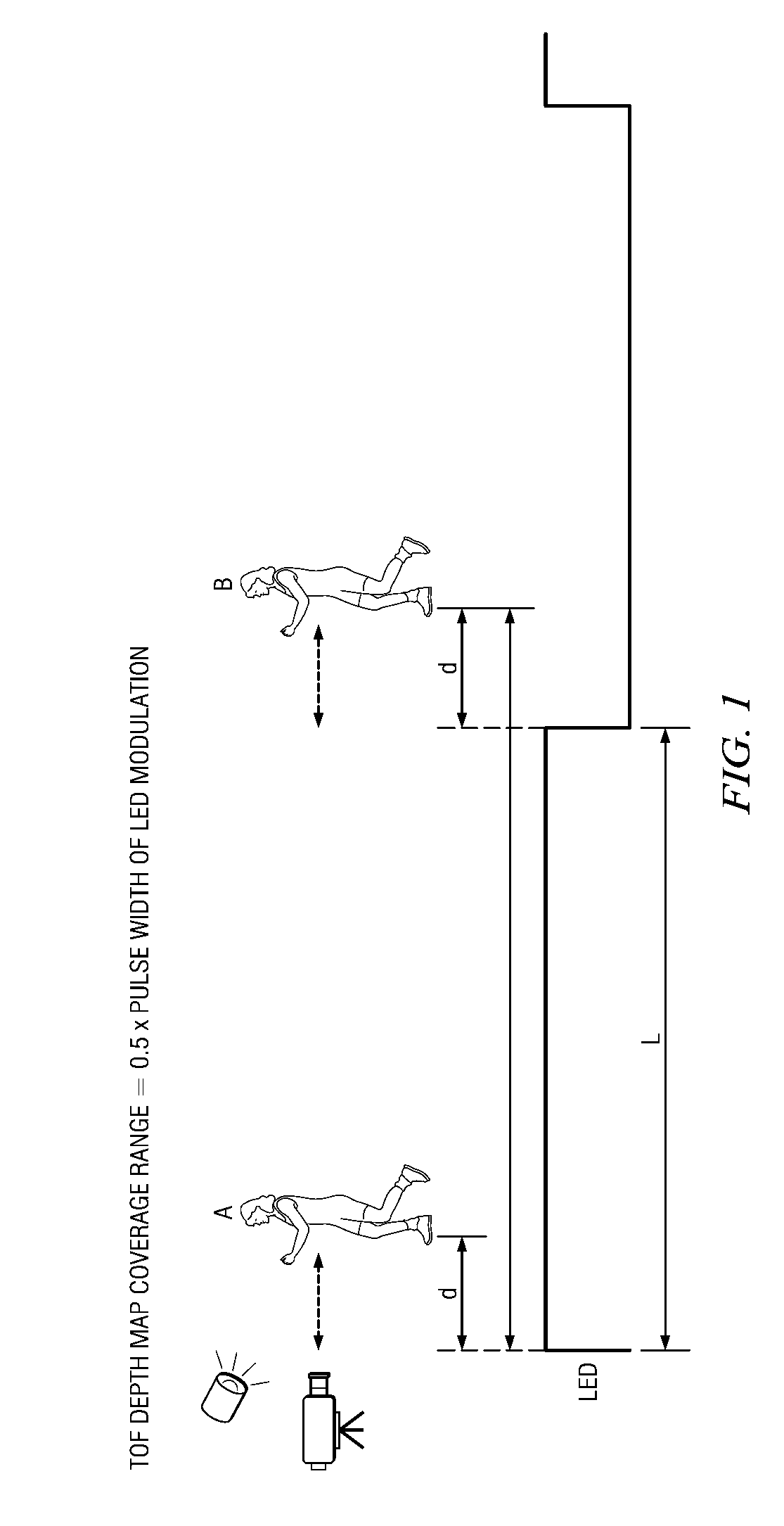

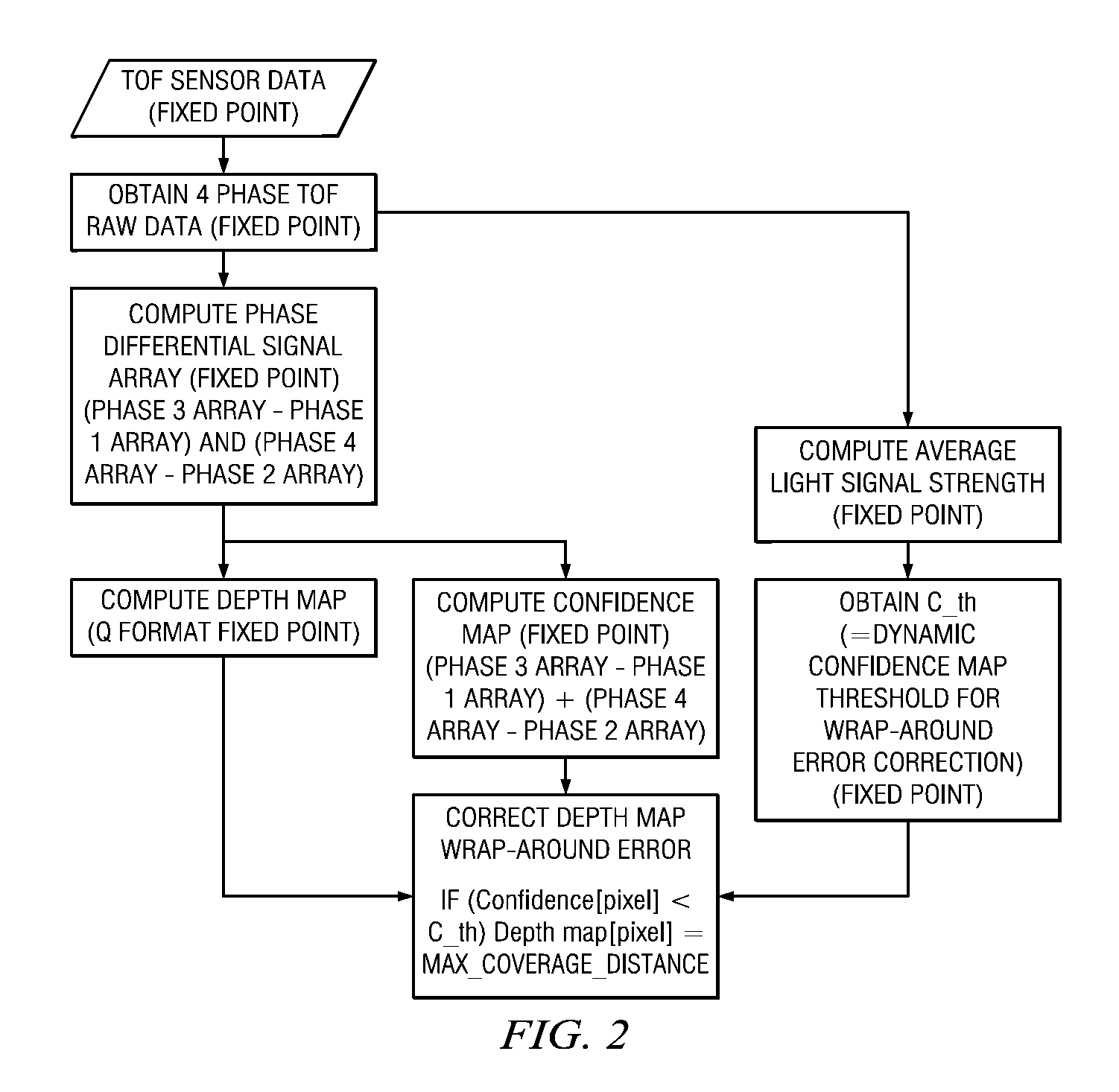

Method and apparatus for controlling time of flight confidence map based depth noise and depth coverage range

Active | US20120123718A1 | Fix bugs | Optical rangefinders | Electrical measurements | Time of flight sensor | Confidence map

A method and apparatus for fixing a depth map wrap-around error and depth map coverage by means of a confidence map. The method includes dynamically determining a threshold for the confidence map based on the ambient light environment, wherein the threshold is reconfigurable depending on the LED light strength distribution in the TOF sensor; extracting, via the digital processor, four phases of Time Of Flight raw data from the Time Of Flight sensor data; computing a phase differential signal array for fixed point utilizing the four phases of the Time Of Flight raw data; computing the depth map, the confidence map and the average light signal strength utilizing the phase differential signal array; obtaining the dynamic confidence map threshold for wrap-around error correction utilizing the average light signal strength; and correcting the depth map wrap-around error utilizing the depth map, the confidence map and the dynamic confidence map threshold.

Owner:TEXAS INSTR INC
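The four-phase pipeline in the abstract above can be illustrated with the textbook continuous-wave TOF equations. This is a sketch under stated assumptions: the phase sign convention, the use of signal amplitude as the confidence value, and the rule "threshold = `scale` times the average amplitude" are all illustrative choices and not the patented fixed-point method.

```python
import math
from typing import List, Optional, Sequence, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_depth_and_confidence(q0: float, q1: float, q2: float, q3: float,
                             mod_freq_hz: float) -> Tuple[float, float]:
    """Depth (m) and amplitude-based confidence from four phase samples."""
    i = q0 - q2                      # in-phase differential signal
    q = q3 - q1                      # quadrature differential signal
    phase = math.atan2(q, i) % (2 * math.pi)
    depth = SPEED_OF_LIGHT * phase / (4 * math.pi * mod_freq_hz)
    amplitude = 0.5 * math.hypot(i, q)
    return depth, amplitude


def filter_depths(samples: Sequence[Tuple[float, float, float, float]],
                  mod_freq_hz: float,
                  scale: float = 0.5) -> List[Optional[float]]:
    """Invalidate pixels whose confidence is below a dynamic threshold.

    Assumption: the threshold tracks the average signal strength of the frame.
    """
    results = [tof_depth_and_confidence(*s, mod_freq_hz) for s in samples]
    mean_amp = sum(a for _, a in results) / len(results)
    threshold = scale * mean_amp
    return [d if a >= threshold else None for d, a in results]
```

Pixels returned as `None` are those the sketch treats as unreliable, which is where wrapped-around returns from beyond the unambiguous range would tend to fall because of their weak signal.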

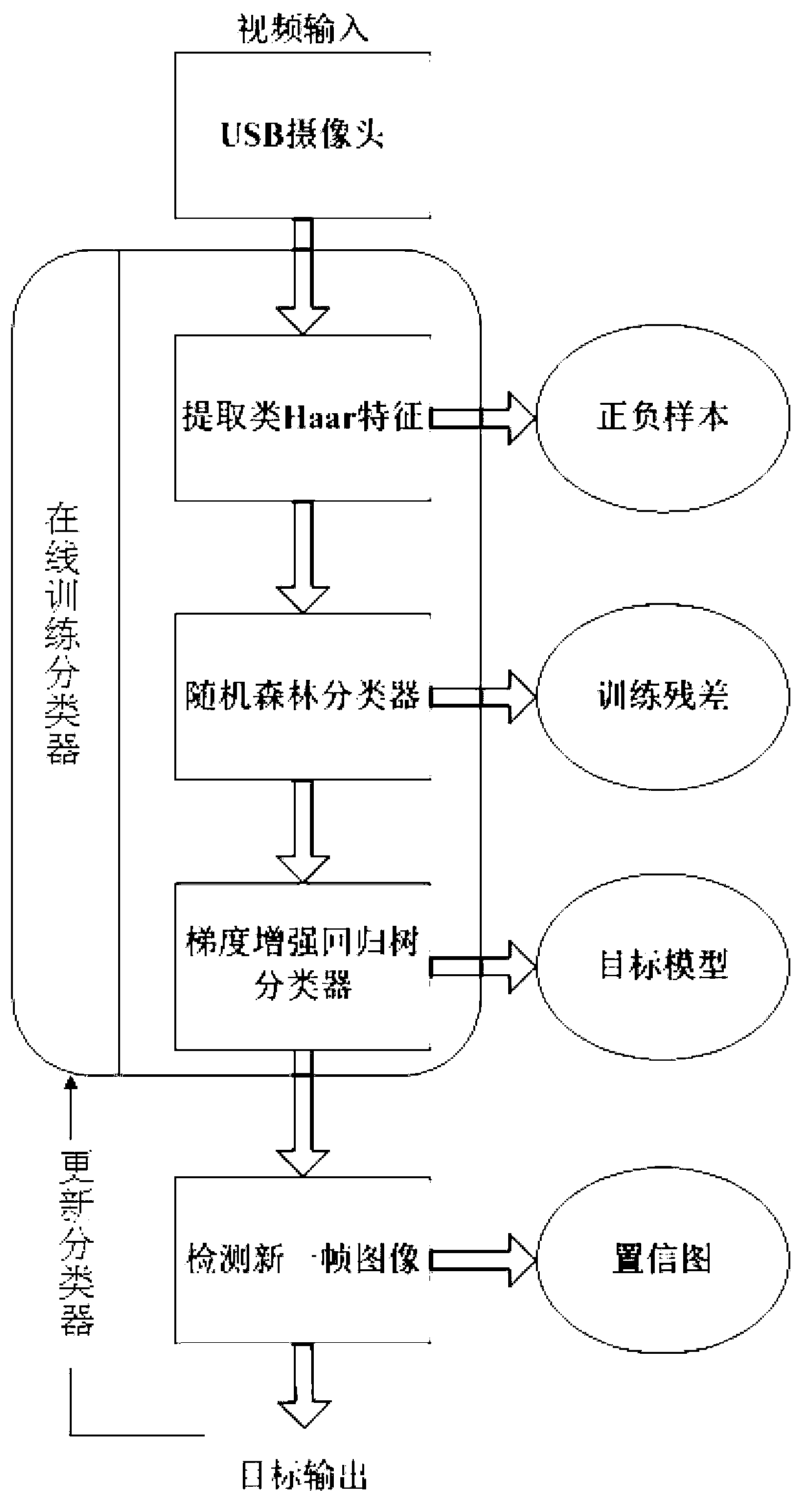

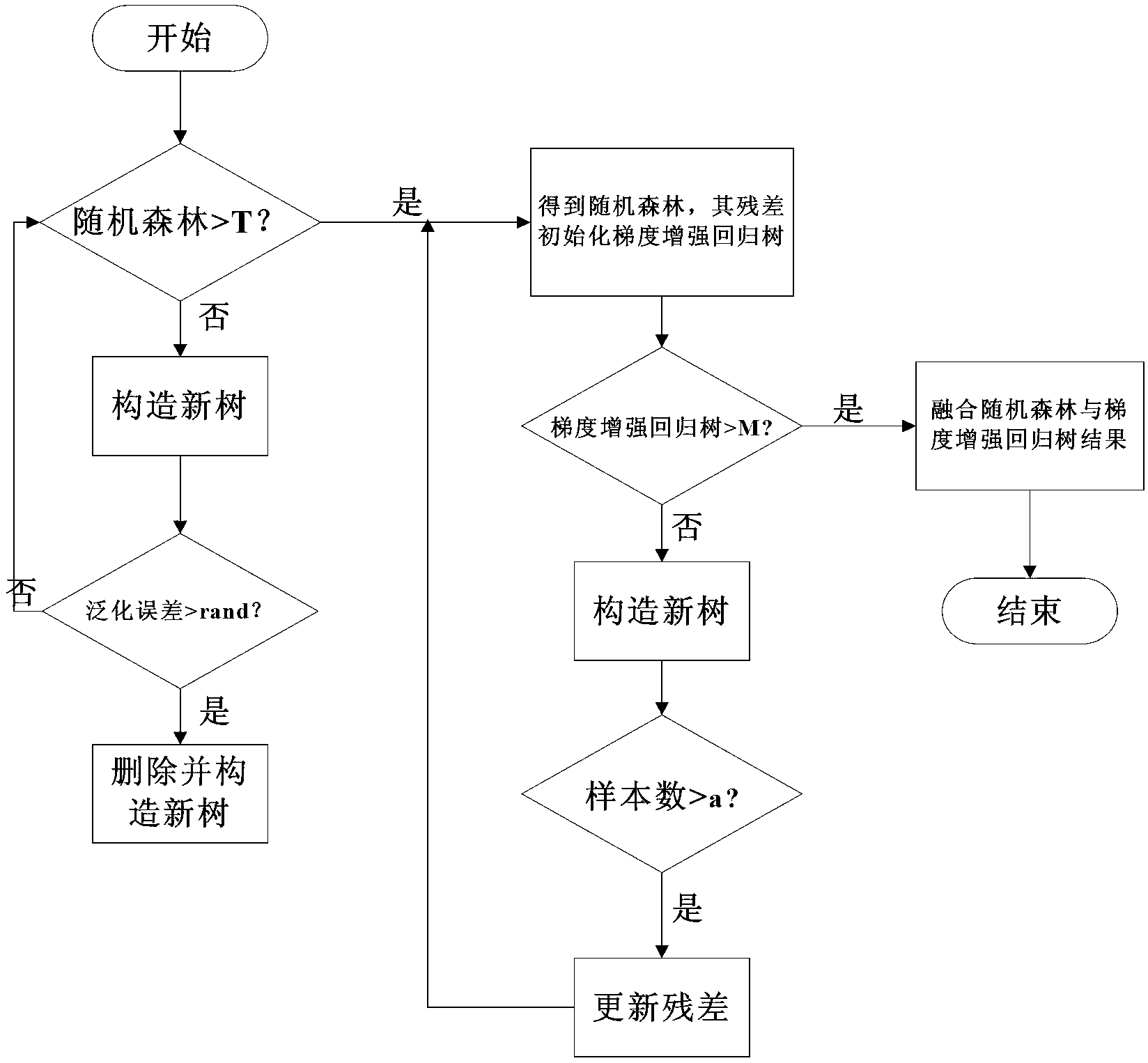

Target tracking method and system based on on-line initialization gradient enhancement regression tree

Active | CN103226835A | Improve object tracking performance | Solve the appearance | Image analysis | Margin classifier | Confidence map

The invention relates to a target tracking method and a system based on an on-line initialization gradient enhancement regression tree. In the system consisting of a video input end, a tracking target output end and an on-line training classifier, the method comprises the steps of 1) selecting a tracking target from a video series and extracting positive and negative samples of a Haar-like feature, 2) randomly establishing the on-line classifier according to the positive and negative samples to obtain a training residual error, 3) conducting training amendment by taking the training residual error as a training sample of the on-line classifier and establishing a target model, and 4) acquiring an image confidence map from a next frame of video image, determining a maximum position of a confidence value in a target window, and accomplishing tracking. According to the method and the system, the tree can be converged to an optimum point quickly to ensure that the random forest detection optimization is accomplished, the classifier is updated through on-line study, and the problems of appearance variation, rapid movement and shielding of a target are solved well.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

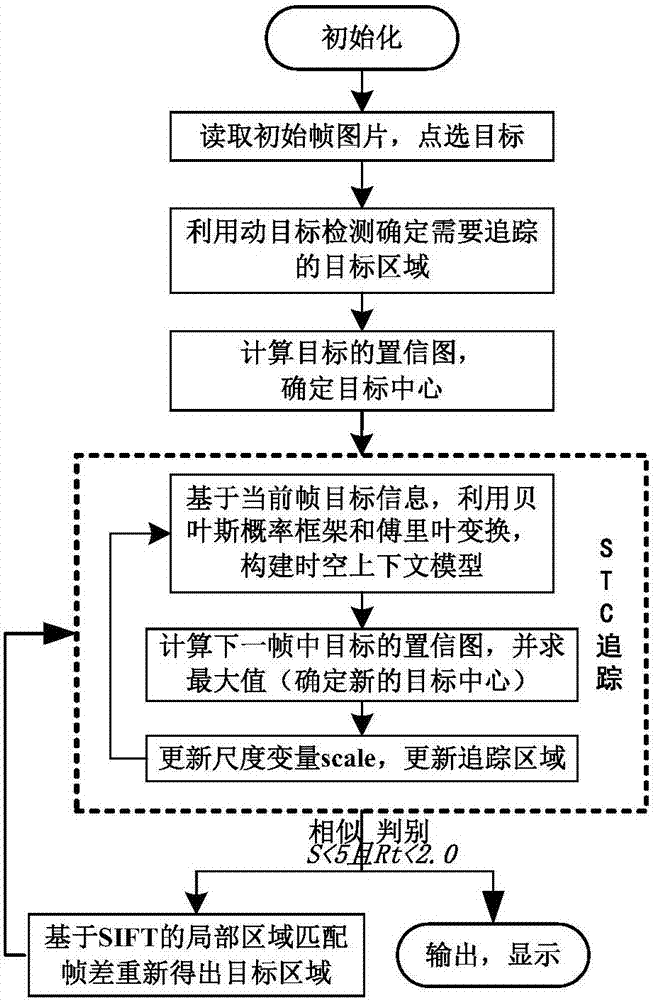

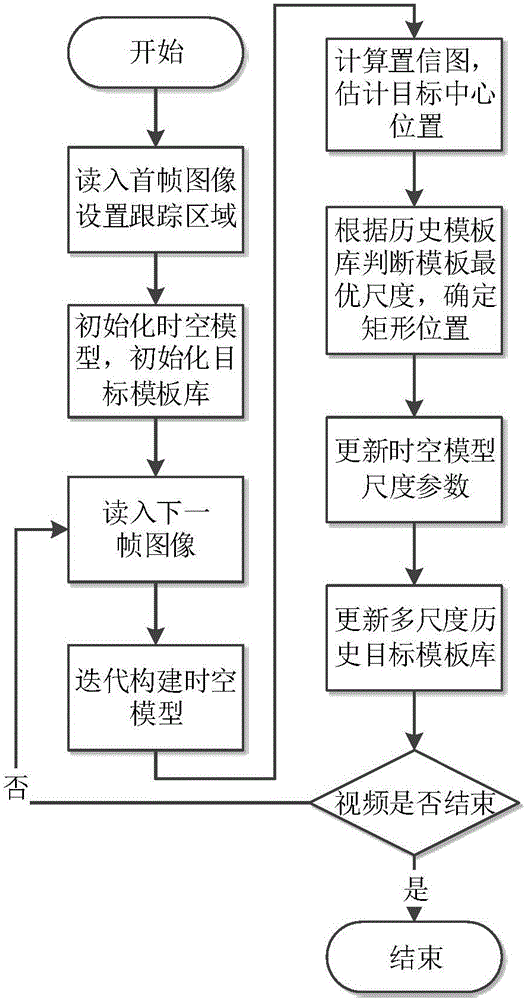

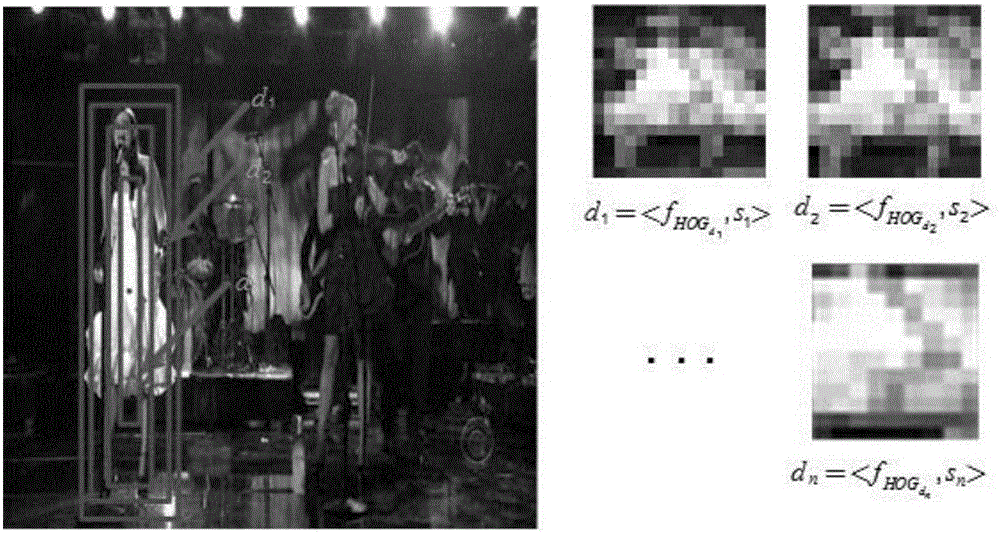

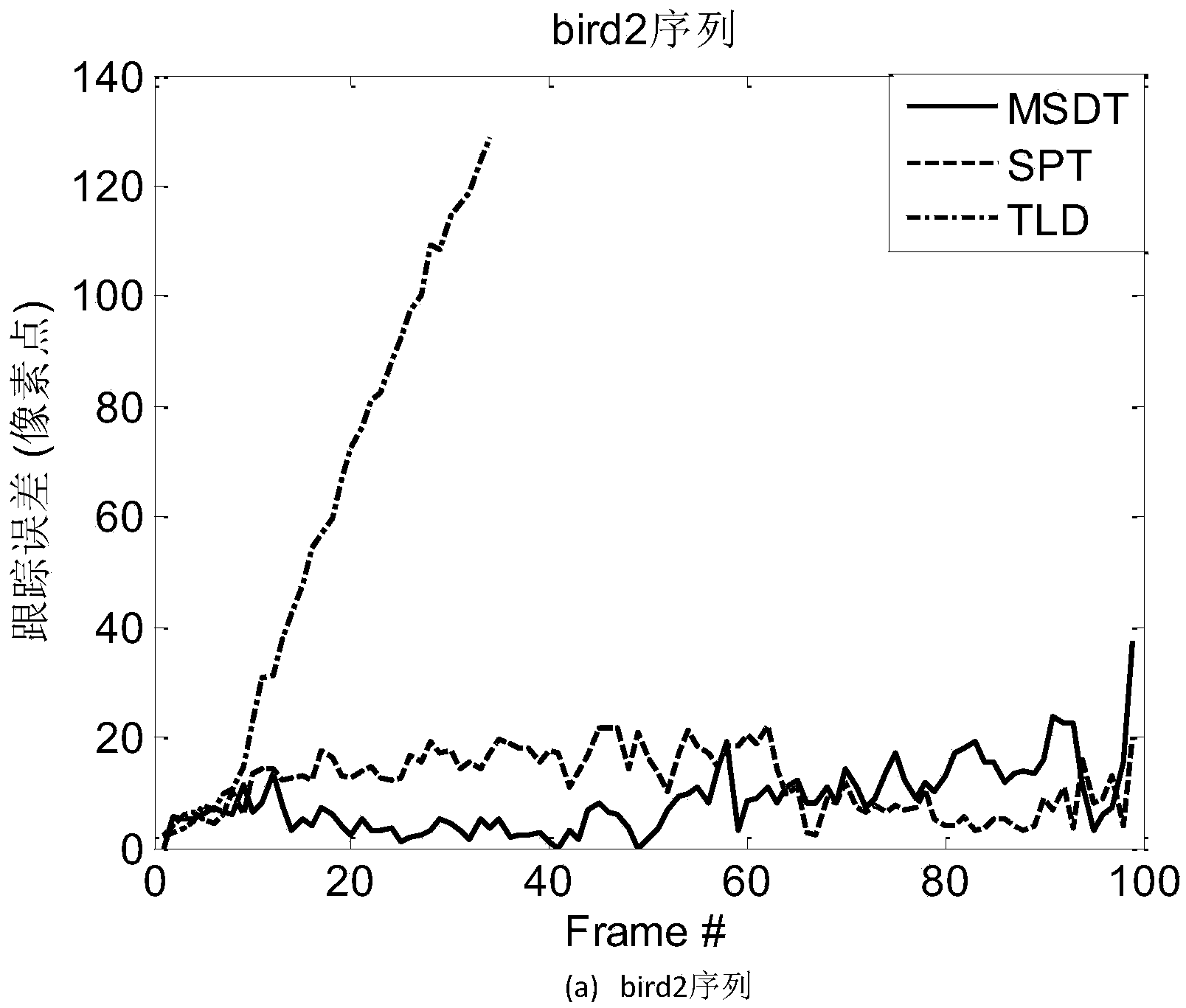

Object scale self-adaption tracking method based on spatial-temporal model

Active | CN105117720A | Enables real-time target tracking | Improve accuracy | Character and pattern recognition | Pattern recognition | Confidence map

The invention discloses an object scale self-adaption tracking method based on a spatial-temporal model, and the method comprises the following steps: beginning a video, reading in a first frame of image, manually assigning the rectangle position of a tracked object; then based on context airspace, initializing the spatial-temporal model and a multiscale history object template library; next reading in a next frame of image, building the spatial-temporal model by iteration, calculating a confidence map, and estimating an object center position; then according to the history object template library, judging a template optimal scale, determining the rectangle position of the object, completing tracking of the current frame of object, and updating scale parameters of the spatial-temporal model and the multiscale history object template library; finally detecting whether the video is finished or not, continuously reading in a next frame if the video is not finished, or completing tracking. By adopting the object scale self-adaption tracking method, the object appearance scale change is effectively coped with under the conditions of illumination change, partial blocking and swift moving, and the robust tracking is realized.

Owner:JIANGNAN UNIV

Remote sensing type urban image extracting method

The invention provides a remote sensing type urban image extracting method. The method comprises the following steps: S1, performing feature extraction on altitude data of an ASTER VNIR satellite remote sensing image and derived products, and on a sample of a PALSAR HH / HV satellite remote sensing image; S2, extracting obvious urban and non-urban points based on the spectral characteristics of the urban and non-urban parts; S3, performing confidence spreading by LLGC, using the obvious urban and non-urban points as the initial information and based on the feature distribution of the data to be classified, so as to obtain an urban confidence map; S4, obtaining the confidence of the whole remote sensing image, then weighting and randomly sampling to obtain a training sample; S5, classifying urban areas with an SVM, namely, classifying by the SVM method on the basis of the feature vectors extracted in step S1 and the sample data extracted in step S4, and then obtaining a binarized urban map according to the classification labels. With the adoption of the method, the problems of high cost and high time consumption caused by manual sampling in the prior art can be solved.

Owner:INST OF AGRI RESOURCES & REGIONAL PLANNING CHINESE ACADEMY OF AGRI SCI

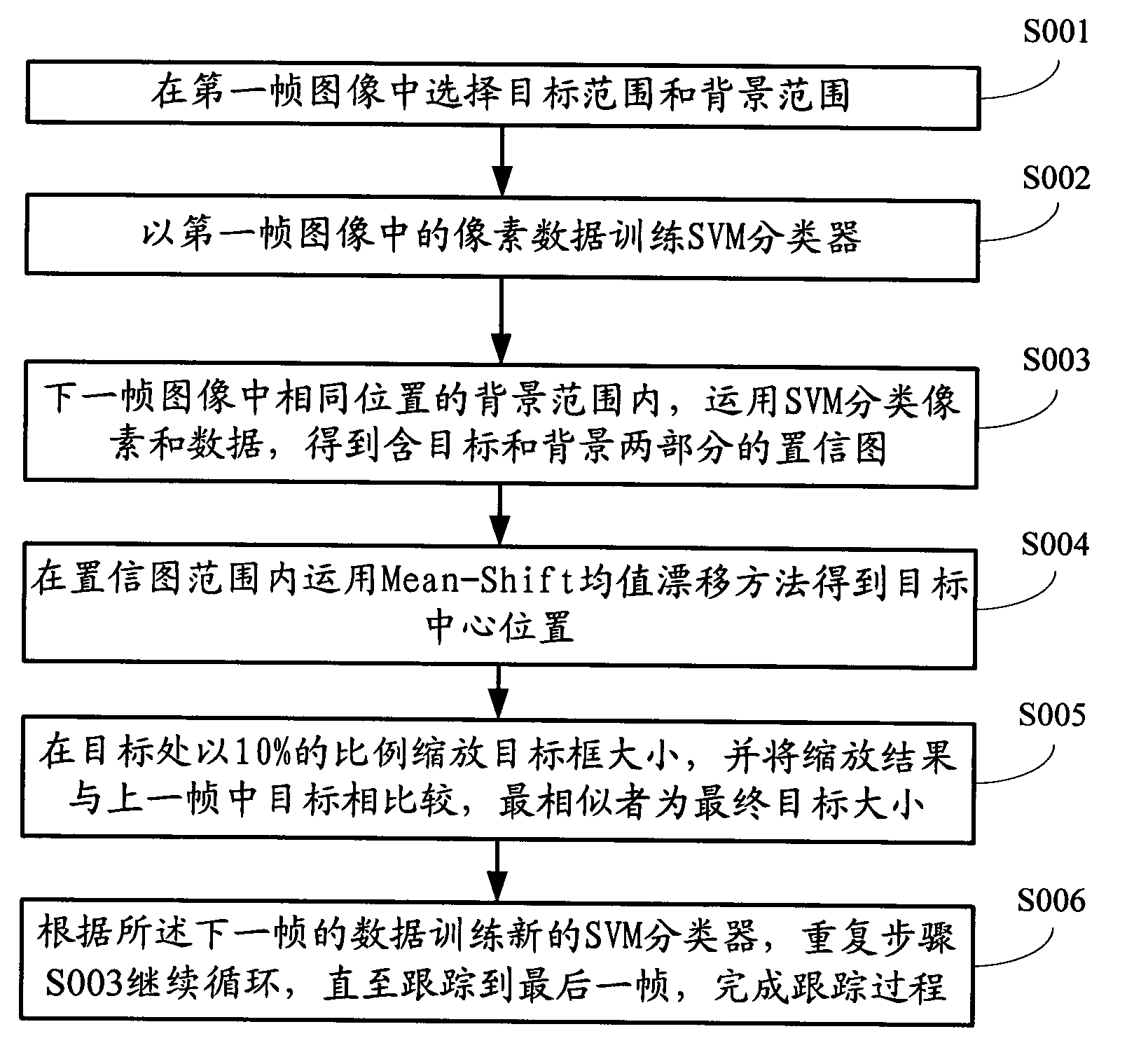

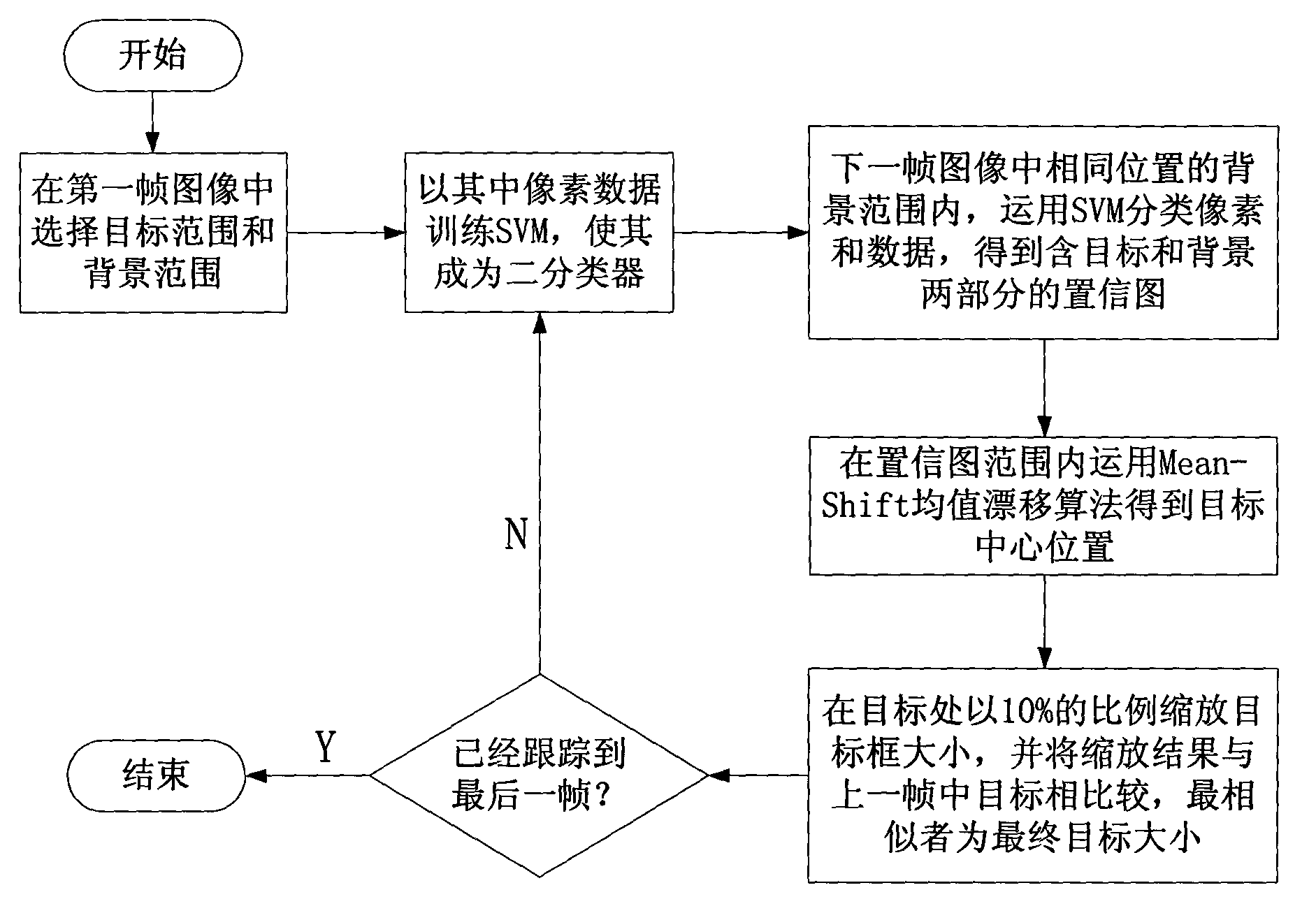

Video target tracking method based on SVM and Mean-Shift

Inactive | CN103886322A | Improve real-time performance | Character and pattern recognition | Mean-shift | Svm classifier

The invention discloses a video target tracking method based on SVM and Mean-Shift. The method comprises the following steps: selecting the target scope and background scope in a first frame image; training an SVM classifier based on pixel data in the first frame image; obtaining a confidence map comprising target and background parts by applying the SVM classifier to the pixel data in the background scope at the same position in the next frame image; obtaining a target center position within the confidence map scope by utilizing the Mean-Shift method; zooming the size of the target frame by a 10% proportion at the target position and comparing the zooming results with the target in the previous frame, the most similar one being taken as the final target size; and training a new SVM classifier based on the data of the next frame, repeating from step 3 until the last frame has been tracked, at which point the tracking process is finished. The video target tracking method is based on the SVM training classifier and the Mean-Shift method, so that it has good real-time performance, accuracy and robustness, and is appropriate for dynamic backgrounds and the tracking of nonrigid targets.

Owner:RES INST OF SUN YAT SEN UNIV & SHENZHEN

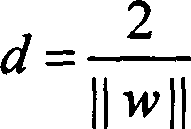

Multi-scale superpixel-fused target tracking method

The invention discloses a multi-scale superpixel-fused target tracking method. Through the method, a more accurate target confidence map can be obtained by constructing a superpixel-based discriminant appearance model, and consequently the target tracking accuracy degree and robustness are effectively improved. Specifically, the method includes the first step of adopting superpixel classification results with different scales to vote for properties of one pixel point and then obtaining the more accurate target confidence map, and the second step of updating the appearance model by continuously updating a classifier so that the appearance model can continuously adapt to scenes such as illumination changes and complicated backgrounds. Consequently, more accurate and robust tracking can be achieved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

An apparatus, a method and a computer program for image processing

Active | CN104662896A | Change size | Computational complexity has no effect | Image enhancement | Image analysis | Parallax | Imaging processing

There are provided methods, apparatuses and computer program products for image processing in which a pair of images may be downsampled to a lower-resolution pair of images and further processed to obtain a disparity image representing estimated disparity between at least a subset of pixels in the pair of images. A confidence of the disparity estimation may be obtained and inserted into a confidence map. The disparity image and the confidence map may be filtered jointly to obtain a filtered disparity image and a filtered confidence map by using a spatial neighborhood of the pixel location. An estimated disparity distribution of the pair of images may be obtained through the filtered disparity image and the confidence map.

Owner:WSOU INVESTMENTS LLC

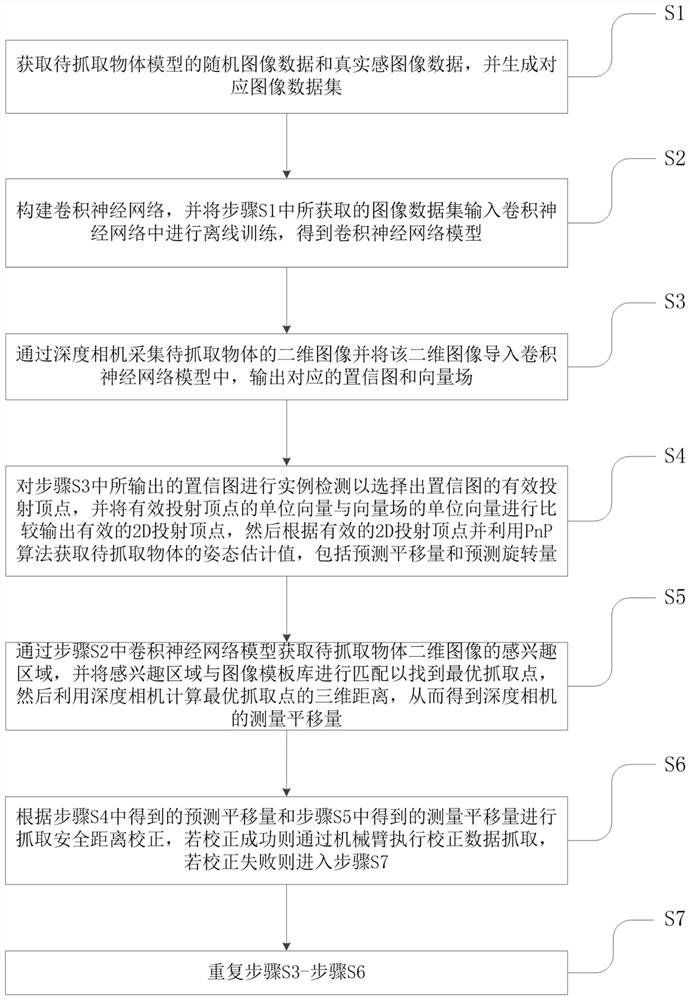

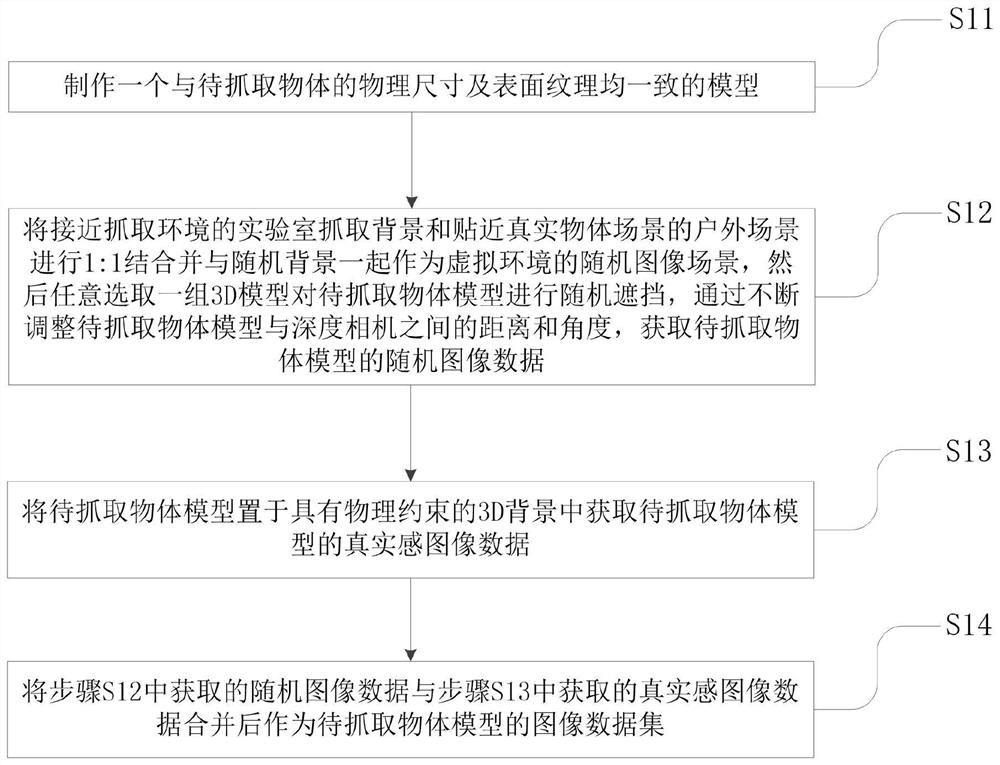

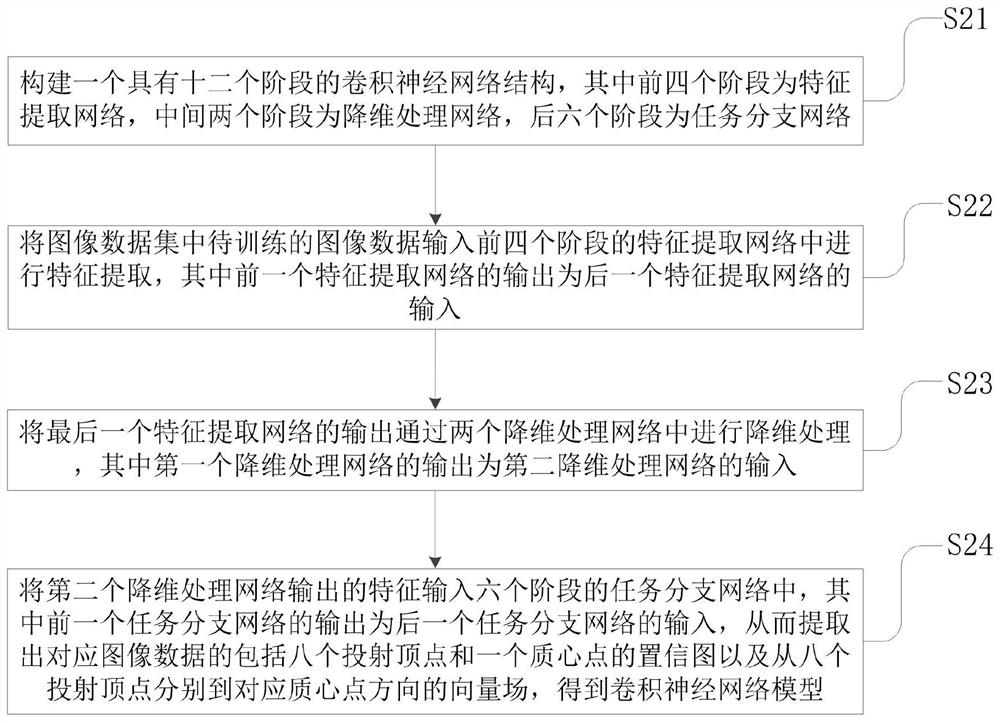

Single-image robot disordered target grabbing method based on pose estimation and correction

Active | CN111738261A | Robust | Improve robustness | Programme-controlled manipulator | Image enhancement | Data scraping | Data set

The invention particularly discloses a single-image robot disordered target grabbing method based on pose estimation and correction. The method comprises the steps: S1, generating an image data set of a to-be-grabbed object model; S2, constructing a convolutional neural network model according to the image data set in the step S1; S3, importing the two-dimensional image of the to-be-grabbed object into the trained convolutional neural network model to extract a corresponding confidence map and a vector field; S4, obtaining a predicted translation amount and a predicted rotation amount of the to-be-grabbed object; S5, finding the optimal grabbing point of the object to be grabbed and calculating the measurement translation amount of the depth camera; S6, performing grabbing safety distance correction according to the predicted translation amount of the object to be grabbed and the measured translation amount of the depth camera, executing correction data grabbing if the correction succeeds, and entering S7 if the correction fails; and S7, repeating the steps S3-S6. The disordered target grabbing method provided by the invention has the characteristics of high reliability, strong robustness and good real-time performance, can meet the existing industrial production requirements, and has a relatively high application value.

Owner:张辉

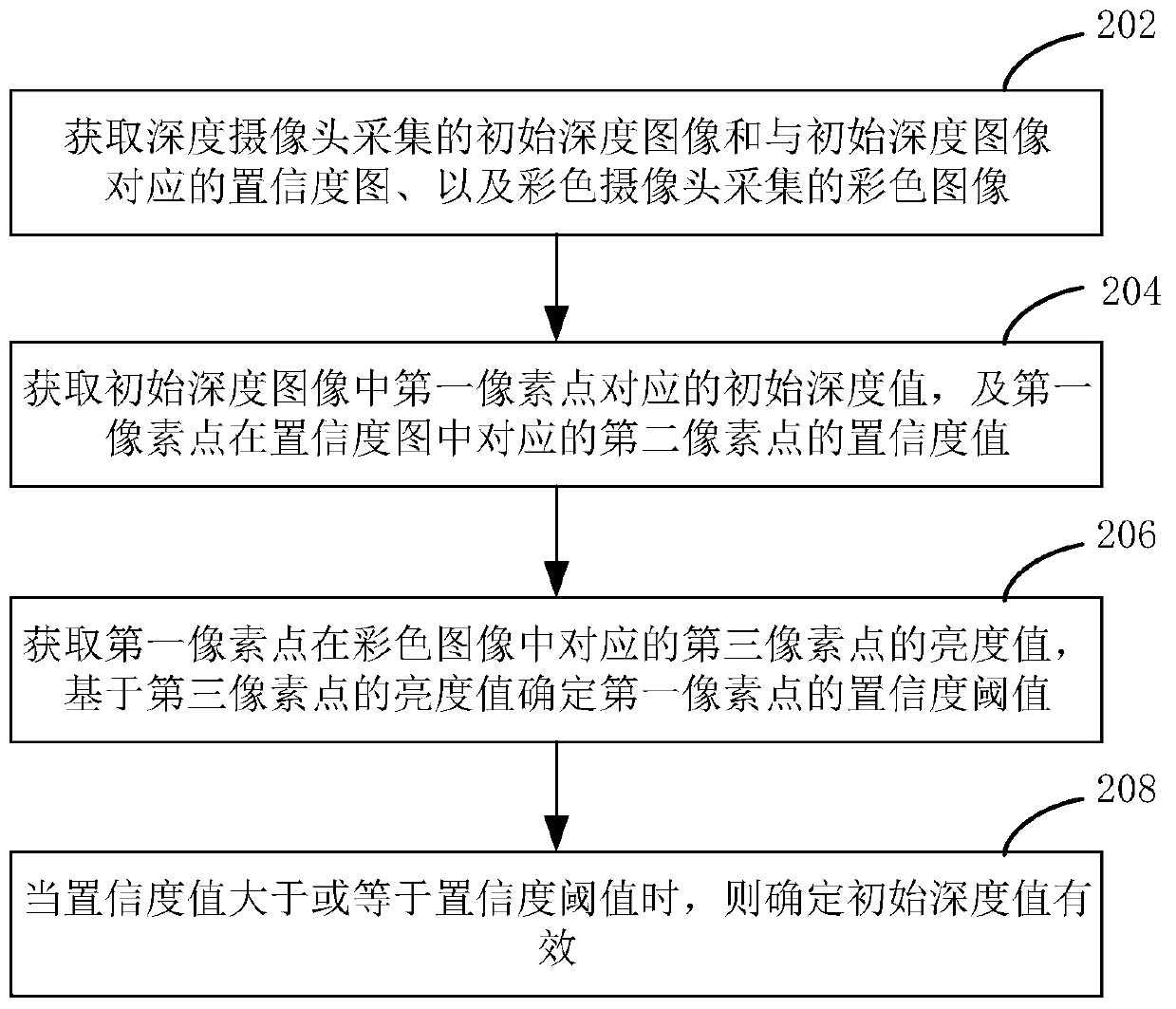

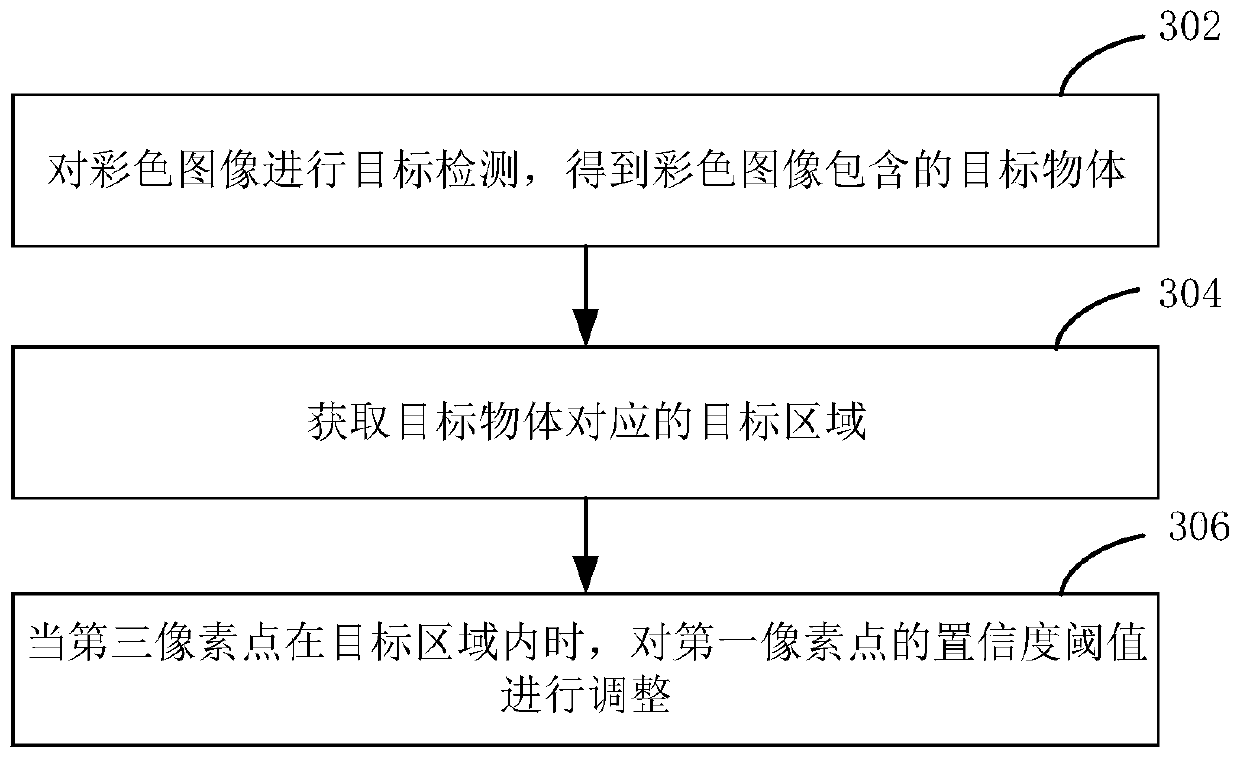

Image processing method and device, electronic equipment and computer readable storage medium

The invention relates to an image processing method and device, electronic equipment and a computer readable storage medium. The method comprises the following steps of: obtaining a sample; obtaining an initial depth image collected by a depth camera and a confidence map corresponding to the initial depth image; acquiring a color image by the color camera; obtaining an initial depth value corresponding to a first pixel point in the initial depth image, obtaining a confidence coefficient map of a first pixel point and a confidence coefficient value of a second pixel point corresponding to the first pixel point in the confidence coefficient map, obtaining a brightness value of a third pixel point corresponding to the first pixel point in the color image, determining a confidence coefficient threshold of the first pixel point based on the brightness value, and determining that an initial depth value is effective when the confidence coefficient value is greater than or equal to the confidence coefficient threshold. The confidence coefficient threshold value can be determined according to the brightness of the corresponding pixel point in the color image, and whether the depth information of the corresponding pixel point in the depth image is effective or not is determined according to the confidence coefficient threshold value, so that the accuracy of the depth information can be improved.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
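The per-pixel validity test in the abstract above (derive a confidence threshold from the brightness of the matching color pixel, then keep the depth value only if its confidence meets that threshold) can be sketched as below. The linear brightness-to-threshold mapping and the `low_thr`/`high_thr` defaults are assumptions; the abstract does not state how the threshold depends on brightness.

```python
from typing import List, Optional


def confidence_threshold(brightness: float,
                         low_thr: float = 20.0,
                         high_thr: float = 60.0) -> float:
    """Map an 8-bit brightness value to a confidence threshold (assumed linear)."""
    b = min(max(brightness, 0.0), 255.0) / 255.0
    return low_thr + (high_thr - low_thr) * b


def filter_depth(depth: List[List[float]],
                 confidence: List[List[float]],
                 luma: List[List[float]]) -> List[List[Optional[float]]]:
    """Keep a depth value only when its confidence meets the pixel's threshold."""
    return [
        [d if c >= confidence_threshold(l) else None
         for d, c, l in zip(d_row, c_row, l_row)]
        for d_row, c_row, l_row in zip(depth, confidence, luma)
    ]
```

The sketch assumes the depth image, confidence map and color image are already registered so that the same index addresses corresponding pixels in all three.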

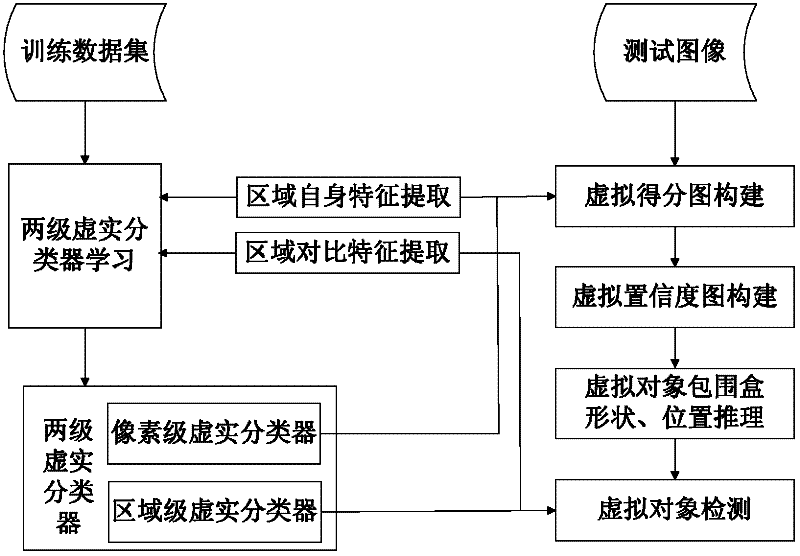

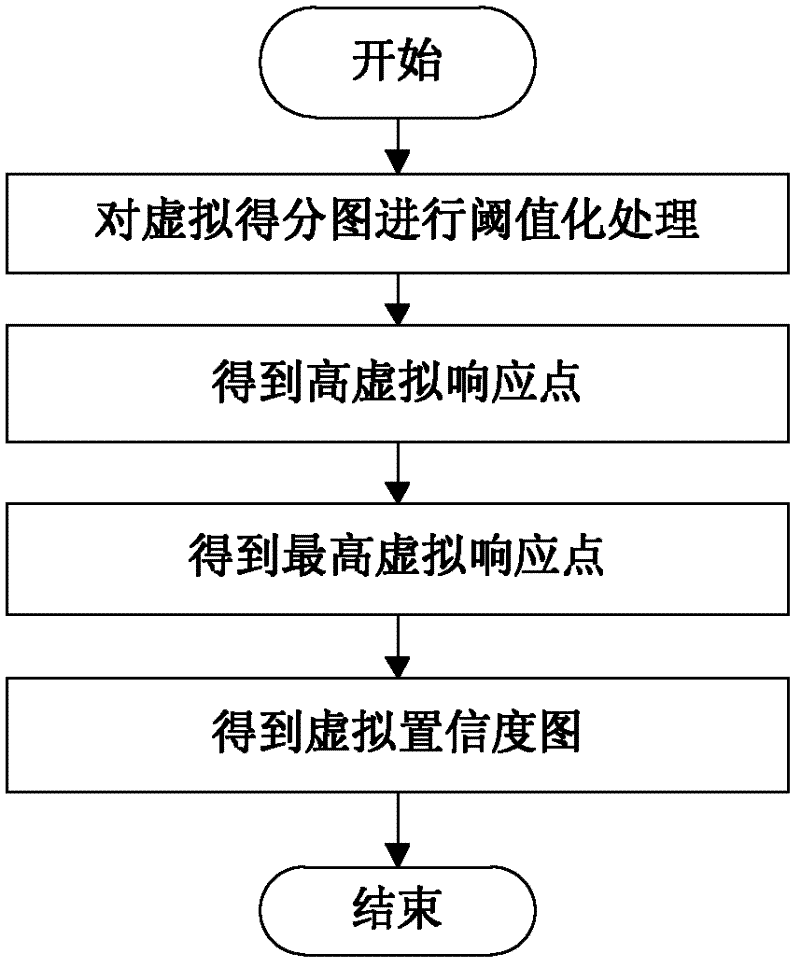

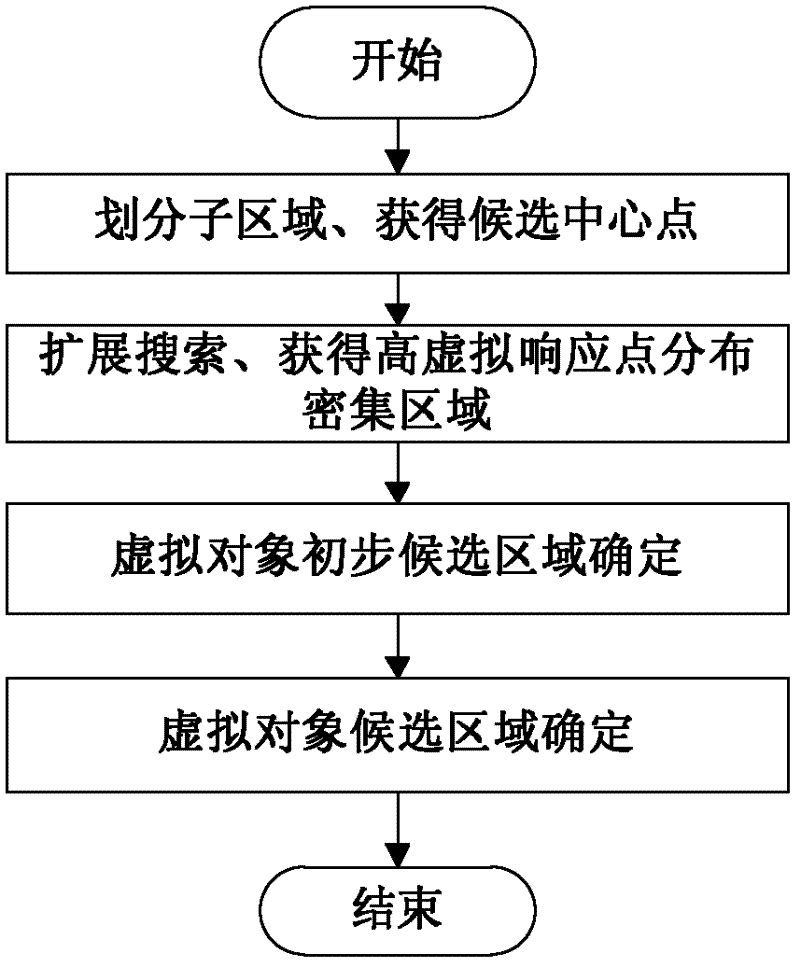

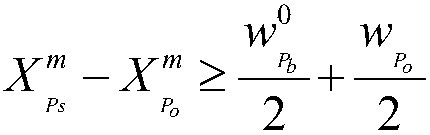

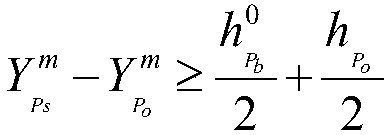

Confidence map-based method for distinguishing and detecting virtual object of augmented reality scene

Inactive | CN102509104A | Meet the needs of final inspection | Improve position | Character and pattern recognition | Score plot | Confidence map

The invention relates to a confidence map-based method for distinguishing and detecting a virtual object in an augmented reality scene. The method comprises the following steps: selecting virtual-real classification features; constructing a pixel-level virtual-real classifier by means of the virtual-real classification features; extracting regional comparison features of the augmented reality scene and a real scene respectively by means of the virtual-real classification features, and constructing a region-level virtual-real classifier; given a test augmented reality scene, detecting by means of the pixel-level virtual-real classifier and a small-size detection window to acquire a virtual score plot which reflects the virtual-real classification result of each pixel; defining a virtual confidence map, and acquiring the virtual confidence map of the test augmented reality scene by thresholding; acquiring the rough shape and the position of a virtual object bounding box according to the distribution of high virtual response points in the virtual confidence map; and detecting by means of the region-level virtual-real classifier and a large-size detection window in the test augmented reality scene to acquire a final detection result of the virtual object. The method can be applied to fields such as film and television production, digital entertainment, and education and training.

Owner:BEIHANG UNIV
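
The thresholding and bounding-box steps of this abstract can be illustrated with a short sketch. It assumes the score plot is already available as a 2D list of per-pixel "virtual" scores; the classifier that produces it is not shown. The function names and the threshold value are hypothetical, and the box here is simply the tightest axis-aligned rectangle around the high-response points, which is one plausible reading of "rough shape and position".

```python
def virtual_confidence_map(score_plot, threshold):
    """Binarize a per-pixel virtual score plot: 1 marks a high virtual response."""
    return [[1 if s >= threshold else 0 for s in row] for row in score_plot]


def rough_bounding_box(conf_map):
    """Return (top, left, bottom, right) enclosing all high-response points.

    Returns None when no pixel exceeds the threshold.
    """
    points = [
        (r, c)
        for r, row in enumerate(conf_map)
        for c, v in enumerate(row)
        if v
    ]
    if not points:
        return None
    rows = [p[0] for p in points]
    cols = [p[1] for p in points]
    return (min(rows), min(cols), max(rows), max(cols))


if __name__ == "__main__":
    scores = [
        [0.1, 0.2, 0.1],
        [0.1, 0.9, 0.8],
        [0.0, 0.7, 0.2],
    ]
    cmap = virtual_confidence_map(scores, threshold=0.5)
    print(cmap)
    print(rough_bounding_box(cmap))
```

In the method as described, a box like this would only narrow the search; the region-level classifier with a large detection window still produces the final detection.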

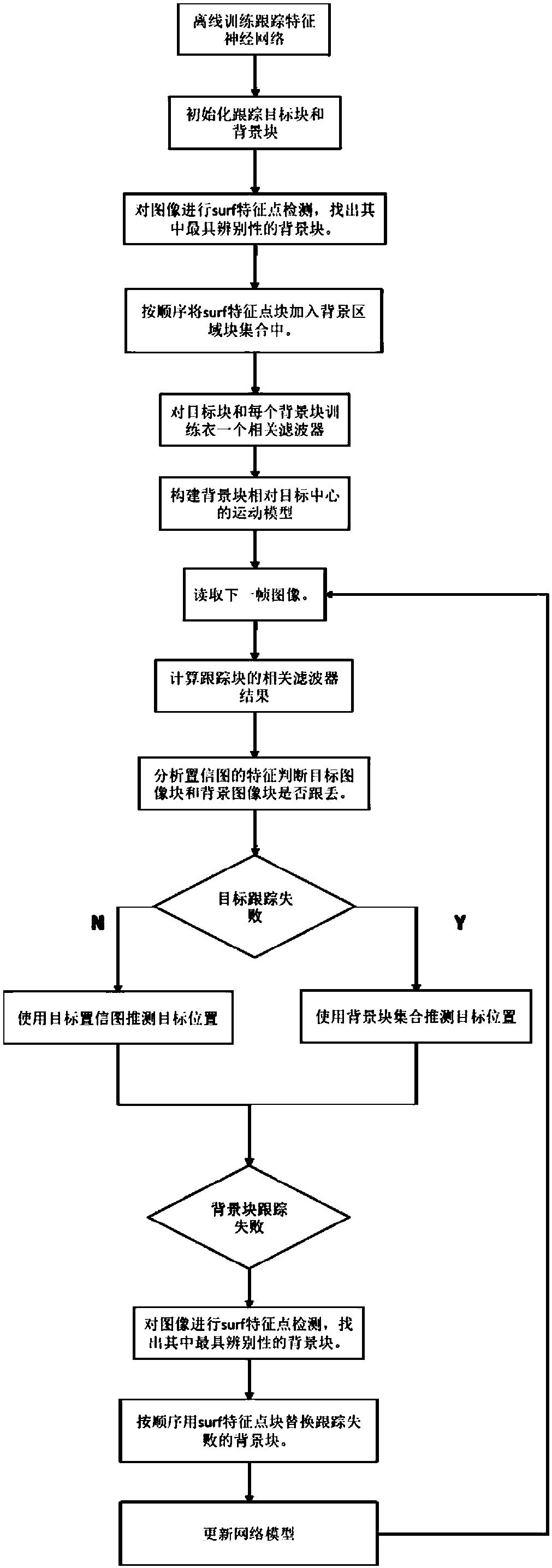

Target tracking method fusing convolutional network features and discriminant correlation filter

Active · CN108470355A · Optimize underlying features · Image enhancement · Image analysis · Discriminant · Correlation filter

The invention discloses a target tracking method fusing convolutional network features and a discriminant correlation filter. An end-to-end lightweight network architecture is established, and the convolutional features are trained by learning the rich stream information in consecutive frames, which improves feature representation and tracking precision. A correlation filter tracking component is constructed as a special layer in the network to track a single image block. During tracking, a target block and multiple background blocks are tracked at the same time; by perceiving the structural relationship between the target and the surrounding background blocks, a model is built for the parts that discriminate most strongly between the target and its surroundings. Tracking quality is measured through the relationship between the peak-to-sidelobe ratio and the peak value of a confidence map. Under difficult tracking conditions such as large-area occlusion, extreme deformation of the target shape, and drastic illumination change, the target is located by automatically using the discriminated background parts.

Owner:SUN YAT SEN UNIV
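
As a rough illustration of the quality measure mentioned in this abstract, the sketch below computes the peak value and the peak-to-sidelobe ratio (PSR) of a response (confidence) map using the commonly used definition, PSR = (peak − mean of sidelobe) / standard deviation of sidelobe. The size of the window excluded around the peak (`exclude`) and the function name are assumptions, and the abstract does not specify how the PSR and the peak are combined, so that step is not shown.

```python
import statistics


def peak_and_psr(response, exclude=1):
    """Return (peak, PSR) for a 2D response map given as a list of lists.

    The sidelobe is every cell outside a (2*exclude+1)-wide square window
    centered on the peak. The window size is an assumption of this sketch.
    """
    peak, pr, pc = max(
        (v, r, c) for r, row in enumerate(response) for c, v in enumerate(row)
    )
    sidelobe = [
        v
        for r, row in enumerate(response)
        for c, v in enumerate(row)
        if abs(r - pr) > exclude or abs(c - pc) > exclude
    ]
    if len(sidelobe) < 2:
        raise ValueError("response map too small for the chosen exclusion window")
    mean = statistics.fmean(sidelobe)
    std = statistics.pstdev(sidelobe)
    psr = (peak - mean) / std if std > 0 else float("inf")
    return peak, psr


if __name__ == "__main__":
    response = [
        [0.0, 0.1, 0.0, 0.1, 0.0],
        [0.1, 0.3, 0.4, 0.3, 0.1],
        [0.0, 0.4, 1.0, 0.4, 0.0],
        [0.1, 0.3, 0.4, 0.3, 0.1],
        [0.0, 0.1, 0.0, 0.1, 0.0],
    ]
    print(peak_and_psr(response))
```

A sharp, isolated peak gives a high PSR; a flat or multi-peaked map, as might occur under occlusion, gives a low one.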

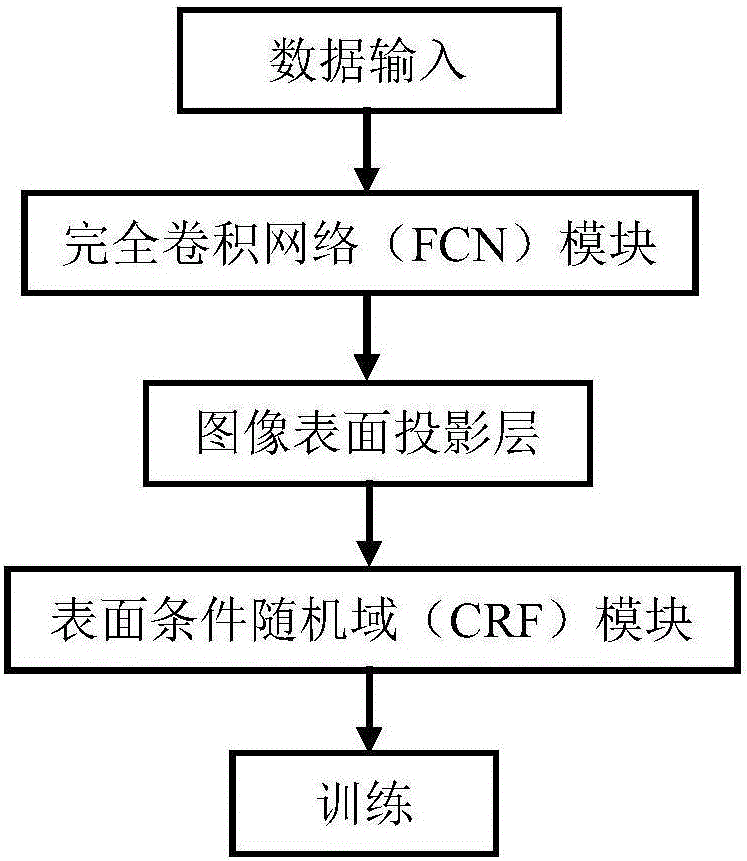

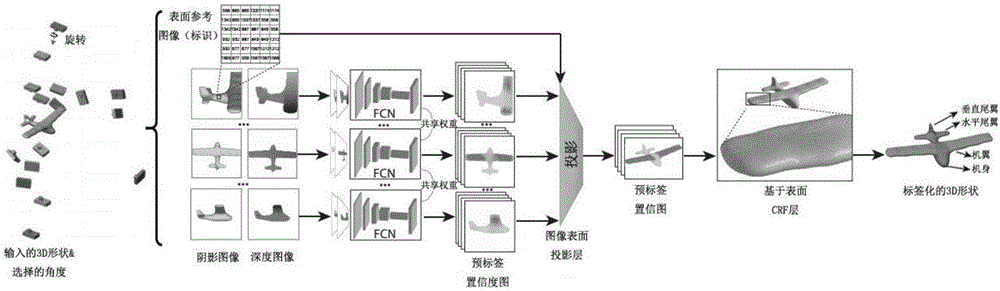

Three-dimensional shape segmentation and semantic marking method based on projection convolutional network

The invention proposes a three-dimensional shape segmentation and semantic marking method based on a projection convolutional network. The input is a three-dimensional shape represented as a polygon mesh. Viewpoints are chosen so that they cover the shape surface to a large extent, and the shape is rendered from each one as a shaded image and a depth image, producing a two-channel image. Each image passes through the same fully convolutional network (FCN) module, which outputs a confidence map for each functional part of each input image. An image-to-surface projection layer aggregates the confidence maps of the multiple views and, combined with boundary cues, a surface conditional random field (CRF) propagates the result over the surface. The modules are trained for the task, and a final segmentation and semantic marking result is obtained. The method does not require any manually tuned geometric descriptors, reduces occlusion while covering the shape surface, does not lose significant part labels, associates information effectively, marks occluded parts as well, and ensures the integrity and coherence of the segmentation; the abstract describes it as remarkably superior to previous methods.

Owner:SHENZHEN WEITESHI TECH
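
The multi-view aggregation step in this abstract can be sketched in a few lines. This is an illustration under stated assumptions, not the patented method: the abstract only says the projection layer "aggregates" confidence maps, so the per-label maximum over views used here is one plausible choice; the data layout (a flat list of pixels per view, each with the index of the mesh face it sees) and the function names are hypothetical; and the CRF step is omitted entirely.

```python
def aggregate_views(views, num_faces, num_labels):
    """Project per-pixel label confidences from several views onto mesh faces.

    `views` is a list of (face_ids, confidences) pairs. face_ids[i] is the
    index of the face visible at pixel i (or -1 for background), and
    confidences[i] is a list with one confidence per label.
    Assumption: aggregation is a per-label maximum over all pixels and views.
    """
    surface = [[0.0] * num_labels for _ in range(num_faces)]
    for face_ids, confidences in views:
        for face, conf in zip(face_ids, confidences):
            if face < 0:
                continue  # background pixel, no face to update
            for label, value in enumerate(conf):
                if value > surface[face][label]:
                    surface[face][label] = value
    return surface


def label_faces(surface):
    """Pick the highest-confidence label for each face (no CRF smoothing)."""
    return [max(range(len(row)), key=row.__getitem__) for row in surface]


if __name__ == "__main__":
    view_a = ([0, 1, -1], [[0.9, 0.1], [0.4, 0.6], [0.5, 0.5]])
    view_b = ([1, 0], [[0.2, 0.8], [0.3, 0.7]])
    surface = aggregate_views([view_a, view_b], num_faces=2, num_labels=2)
    print(surface)
    print(label_faces(surface))
```

Because every face takes evidence from whichever view sees it best, a part occluded in one view can still receive a label from another, which is the benefit the abstract attributes to multi-view coverage.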

© 2025 PatSnap. All rights reserved.