Object scale self-adaption tracking method based on spatial-temporal model

An adaptive tracking technology based on a spatio-temporal model, applied in character and pattern recognition, instruments, computer parts, etc. It addresses problems such as declining tracking accuracy and achieves robust tracking and improved accuracy, with wide application prospects.

Embodiment Construction

[0033] To better illustrate the purpose, specific steps, and features of the present invention, it is described in further detail below with reference to the accompanying drawings:

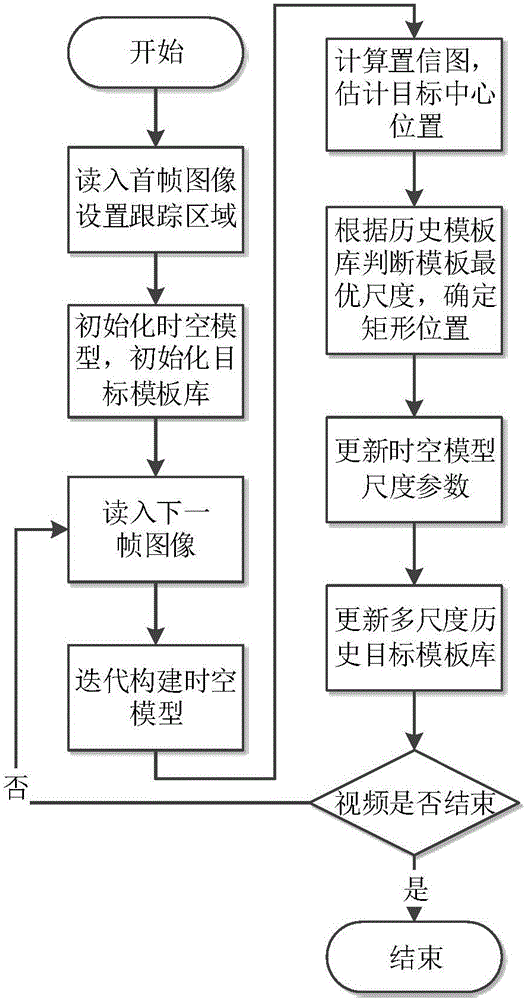

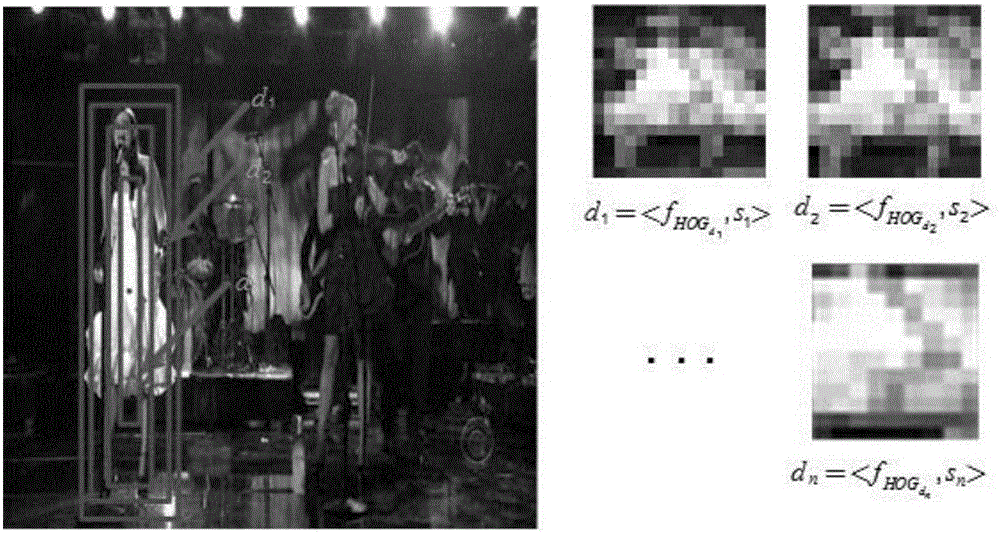

[0034] Referring to Figure 1, the target scale-adaptive tracking method based on a spatio-temporal model proposed by the present invention mainly comprises the following steps:

[0035] Step 1. Read in the first frame image $\mathit{Image}_1$ and manually specify the position of the target tracking rectangle Ζ;

[0036] Step 2. Based on the context space $\Omega_c$, initialize the spatio-temporal model by letting

[0037] $H_1^{stc}(X) = h_1^{sc}(X) = F^{-}\ldots$
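
Steps 1 and 2 can be sketched as follows. This is a minimal illustration, not the patented method: the equation in [0037] is truncated in this text, so the sketch assumes the commonly used spatio-temporal context (STC) formulation, in which the spatial context model is obtained by Fourier-domain deconvolution of a target confidence map by a Gaussian-weighted context prior. The function names, the context scale of 2, the parameters `alpha`, `beta` and `sigma`, and the regulariser `eps` are all assumptions introduced for illustration, and the frame is assumed to be a 2-D grayscale array.

```python
import numpy as np


def context_region(rect, frame_shape, scale=2.0):
    """Context window (x0, y0, w, h) centred on the target rectangle.

    rect is (x, y, w, h); scale=2.0 is an assumed value, not from the source.
    """
    x, y, w, h = rect
    cw, ch = int(round(w * scale)), int(round(h * scale))
    x0 = int(round(x + w / 2.0 - cw / 2.0))
    y0 = int(round(y + h / 2.0 - ch / 2.0))
    # Shift the window back inside the frame if it overlaps a border.
    x0 = max(0, min(x0, frame_shape[1] - cw))
    y0 = max(0, min(y0, frame_shape[0] - ch))
    return x0, y0, cw, ch


def distance_grid(shape, center):
    """Euclidean distance of every pixel in the window to center=(cx, cy)."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    return np.hypot(xs - center[0], ys - center[1])


def context_prior(patch, dist, sigma):
    """Intensity weighted by a Gaussian that favours pixels near the target."""
    weight = np.exp(-(dist ** 2) / (sigma ** 2))
    return patch * (weight / weight.sum())


def confidence_map(dist, alpha=2.25, beta=1.0):
    """Target confidence that peaks at the centre and decays with distance."""
    conf = np.exp(-((dist / alpha) ** beta))
    return conf / conf.sum()


def learn_spatial_context(conf, prior, eps=1e-6):
    """Assumed STC form: h = F^-1(F(conf) / F(prior)), with eps for stability."""
    h = np.fft.ifft2(np.fft.fft2(conf) / (np.fft.fft2(prior) + eps))
    return np.real(h)


def init_model(frame, rect, scale=2.0):
    """Steps 1-2: crop the context around rect and return H_1^stc = h_1^sc."""
    x0, y0, cw, ch = context_region(rect, frame.shape, scale)
    patch = frame[y0:y0 + ch, x0:x0 + cw].astype(np.float64)
    # Target centre expressed in the coordinates of the cropped window.
    center = (rect[0] + rect[2] / 2.0 - x0, rect[1] + rect[3] / 2.0 - y0)
    dist = distance_grid(patch.shape, center)
    sigma = 0.5 * (rect[2] + rect[3])  # assumed: prior width tied to target size
    prior = context_prior(patch, dist, sigma)
    # On the first frame the spatio-temporal model equals the spatial model.
    return learn_spatial_context(confidence_map(dist), prior)
```

For a grayscale `frame` and a manually chosen rectangle `rect = (x, y, w, h)`, `init_model(frame, rect)` returns an array the size of the context window. Splitting the prior, confidence map, and deconvolution into separate functions keeps each assumed parameter visible and easy to replace with the values the full patent text specifies.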
