231 results for "Time of flight sensor" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

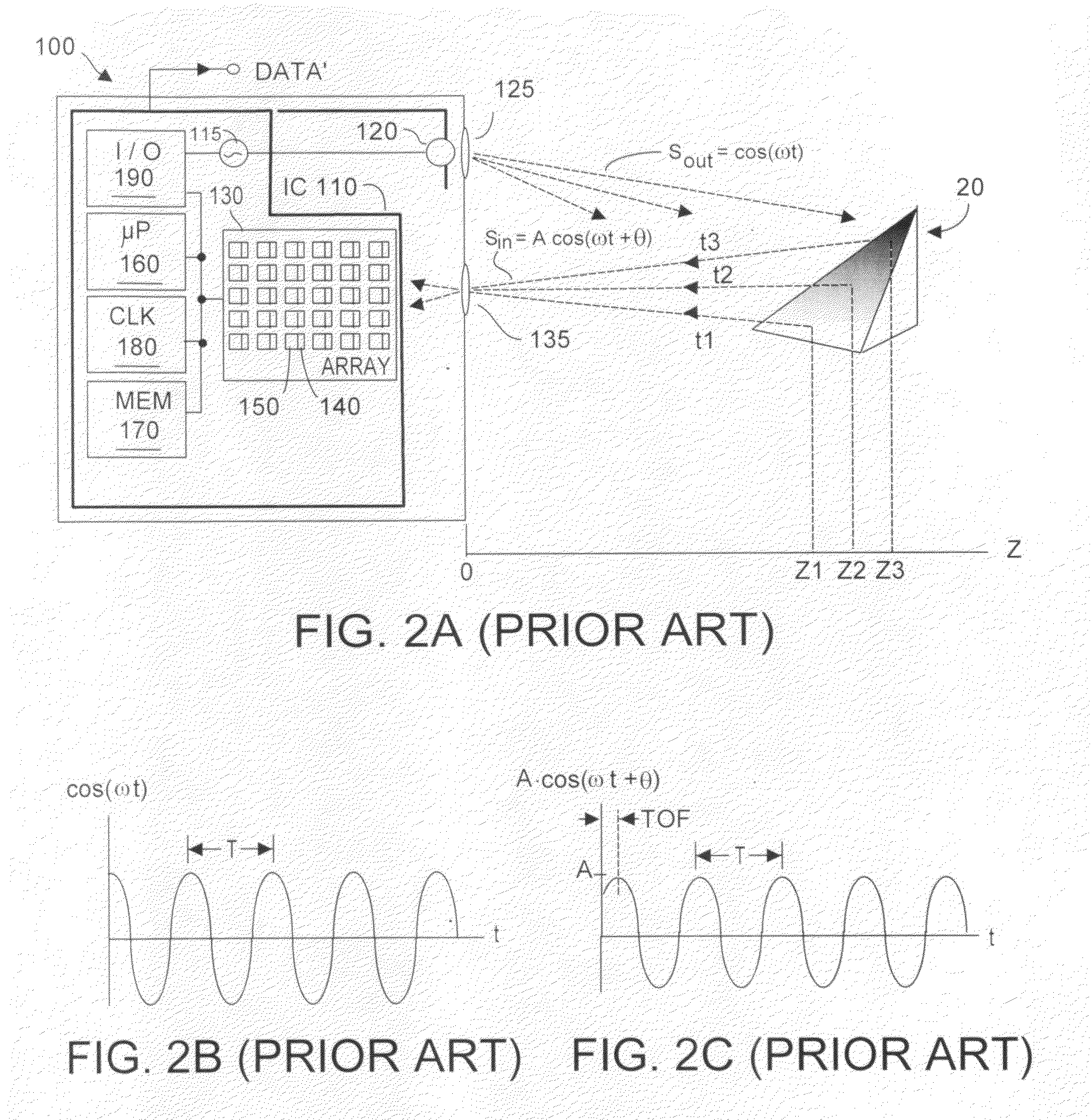

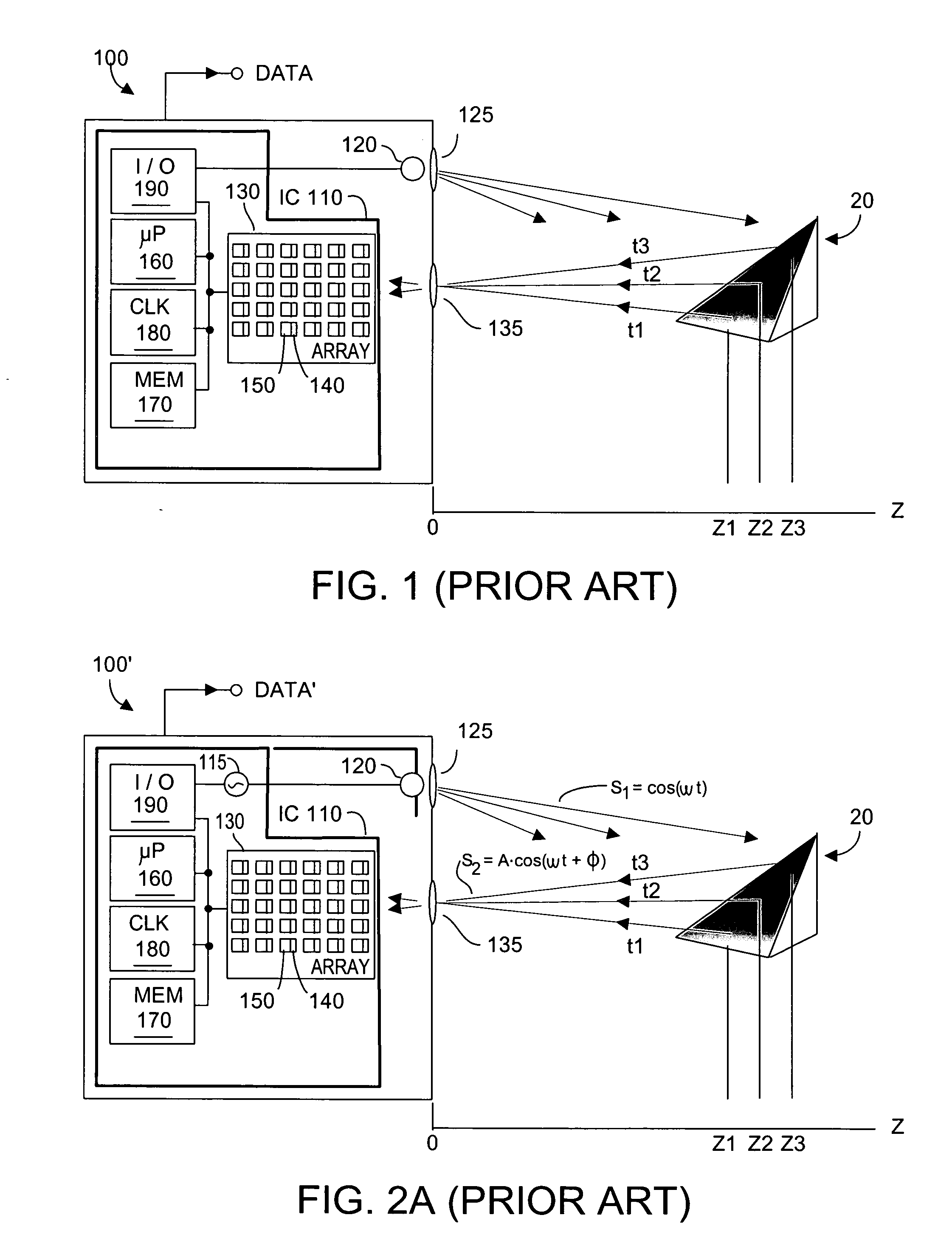

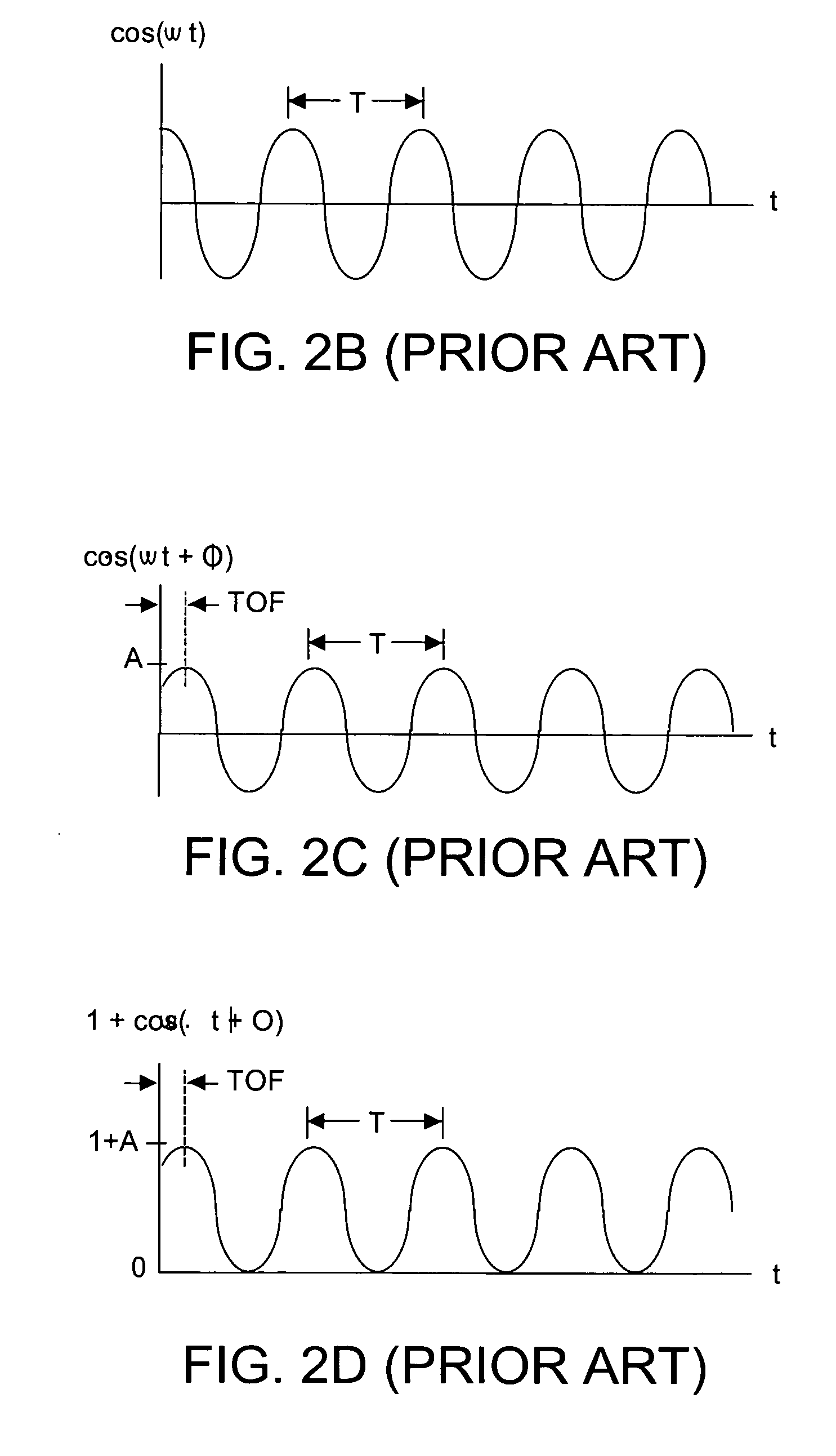

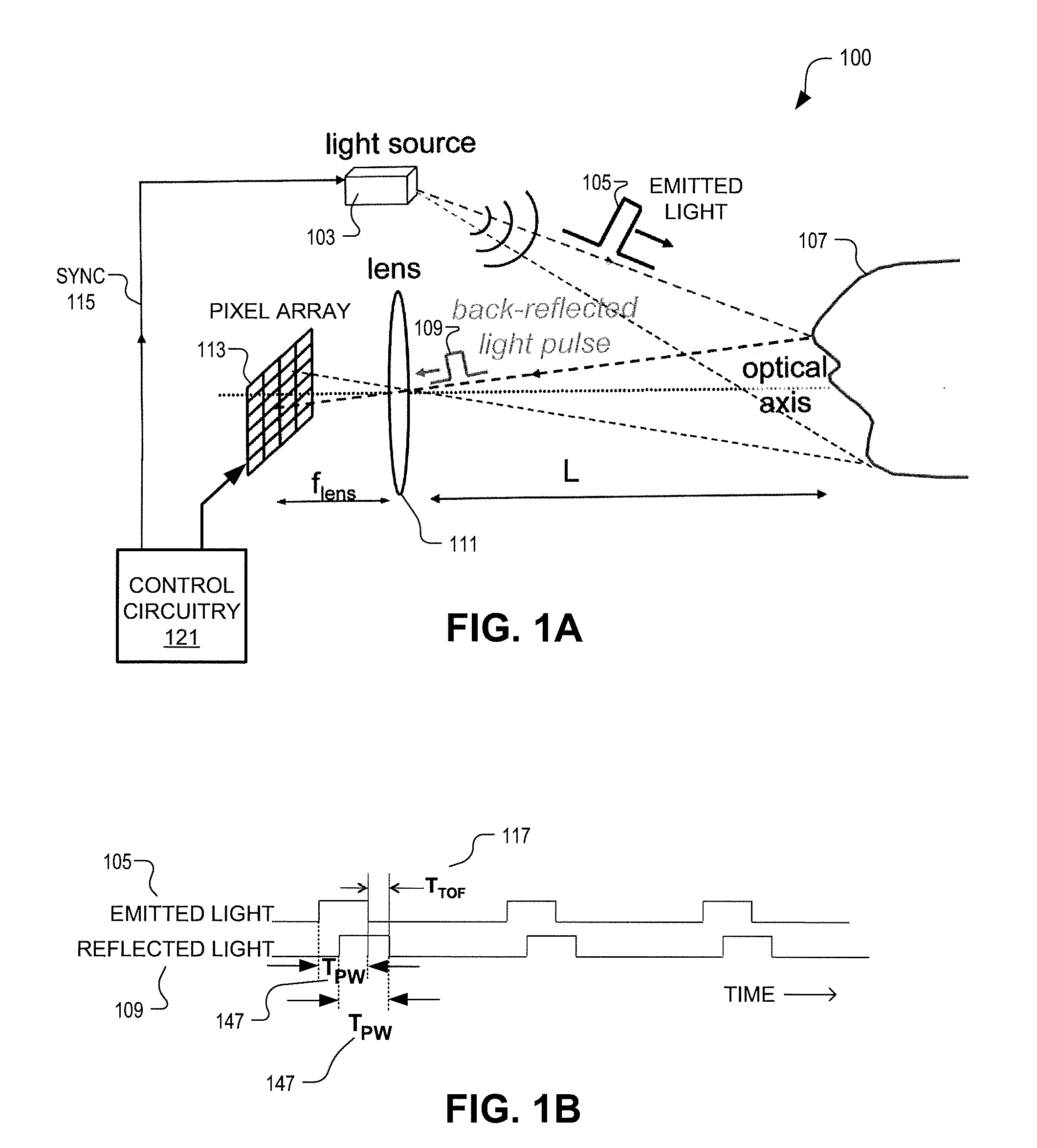

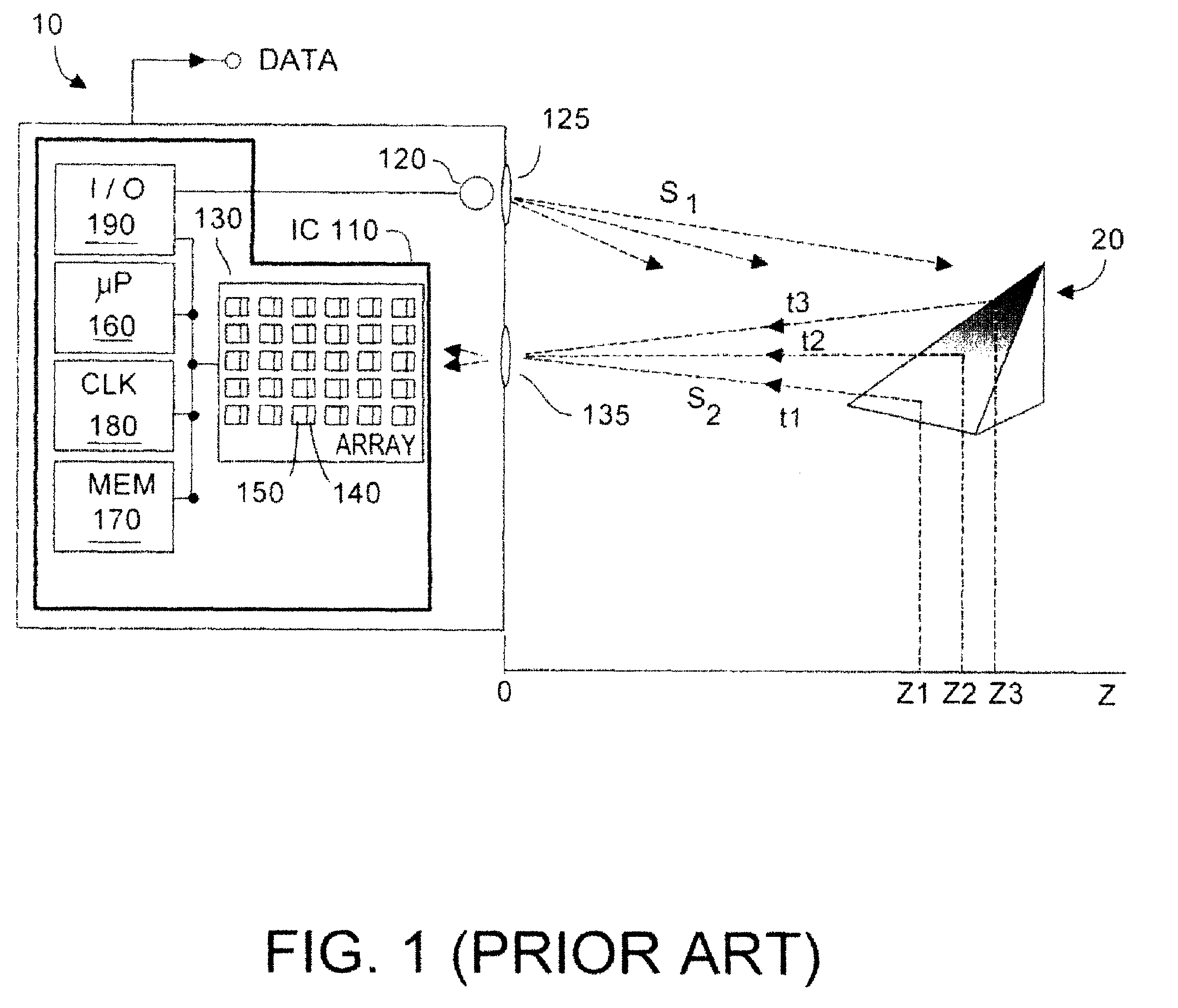

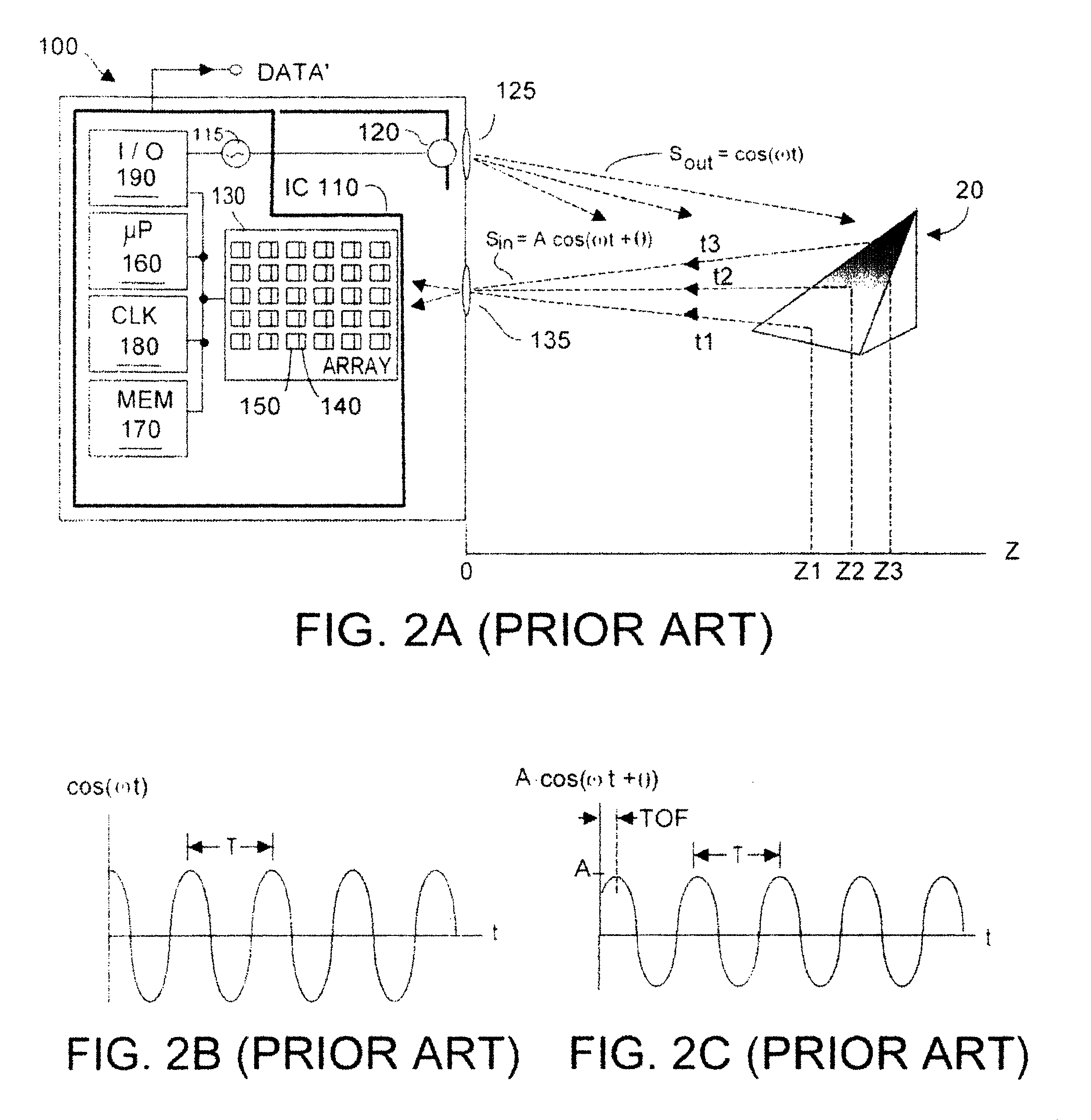

Flash Lidar Time of Flight Sensor Technology Principle

Time-of-Flight (ToF) is a method for measuring the distance between a sensor and an object based on the time difference between the emission of a signal and its return to the sensor after reflection from the object.
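
As a worked example of this principle: because the signal travels to the object and back, the one-way distance is d = c·Δt/2. A minimal sketch, assuming an ideal direct time measurement in vacuum (no timing offsets, no medium correction); the function name `tof_distance` is illustrative only.

```python
# Speed of light in vacuum (m/s); in air it is about 0.03% lower.
SPEED_OF_LIGHT = 299_792_458.0


def tof_distance(round_trip_time_s: float) -> float:
    """Distance to the reflecting object from a measured round-trip time.

    The signal covers the sensor-object distance twice, so the one-way
    distance is half of (speed of light x elapsed time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


# A 10 ns round trip corresponds to roughly 1.5 m.
print(f"{tof_distance(10e-9):.3f} m")  # prints 1.499 m
```

The same relation shows why ToF timing electronics must be fast: 1 mm of range corresponds to only about 6.7 ps of round-trip time.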

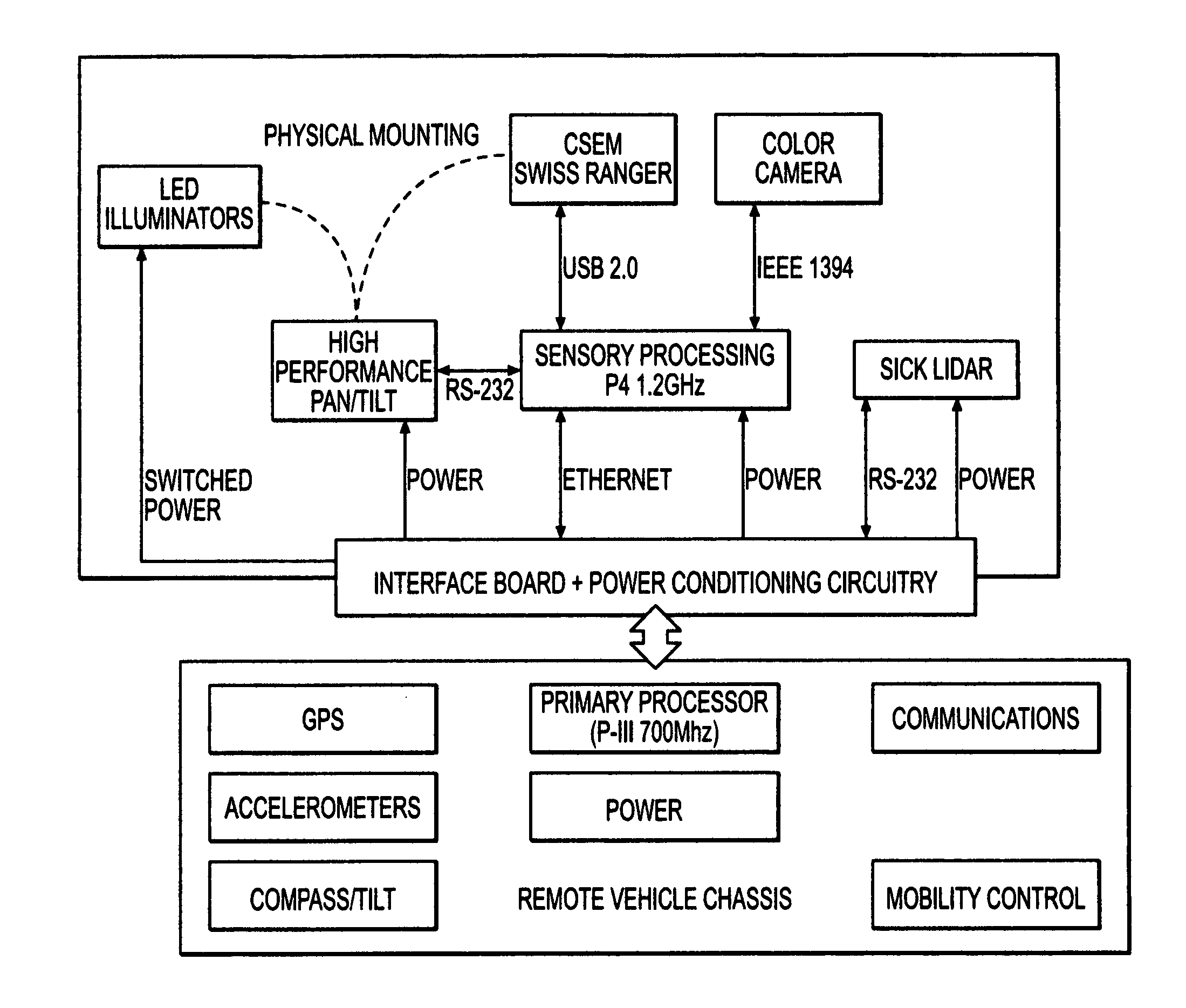

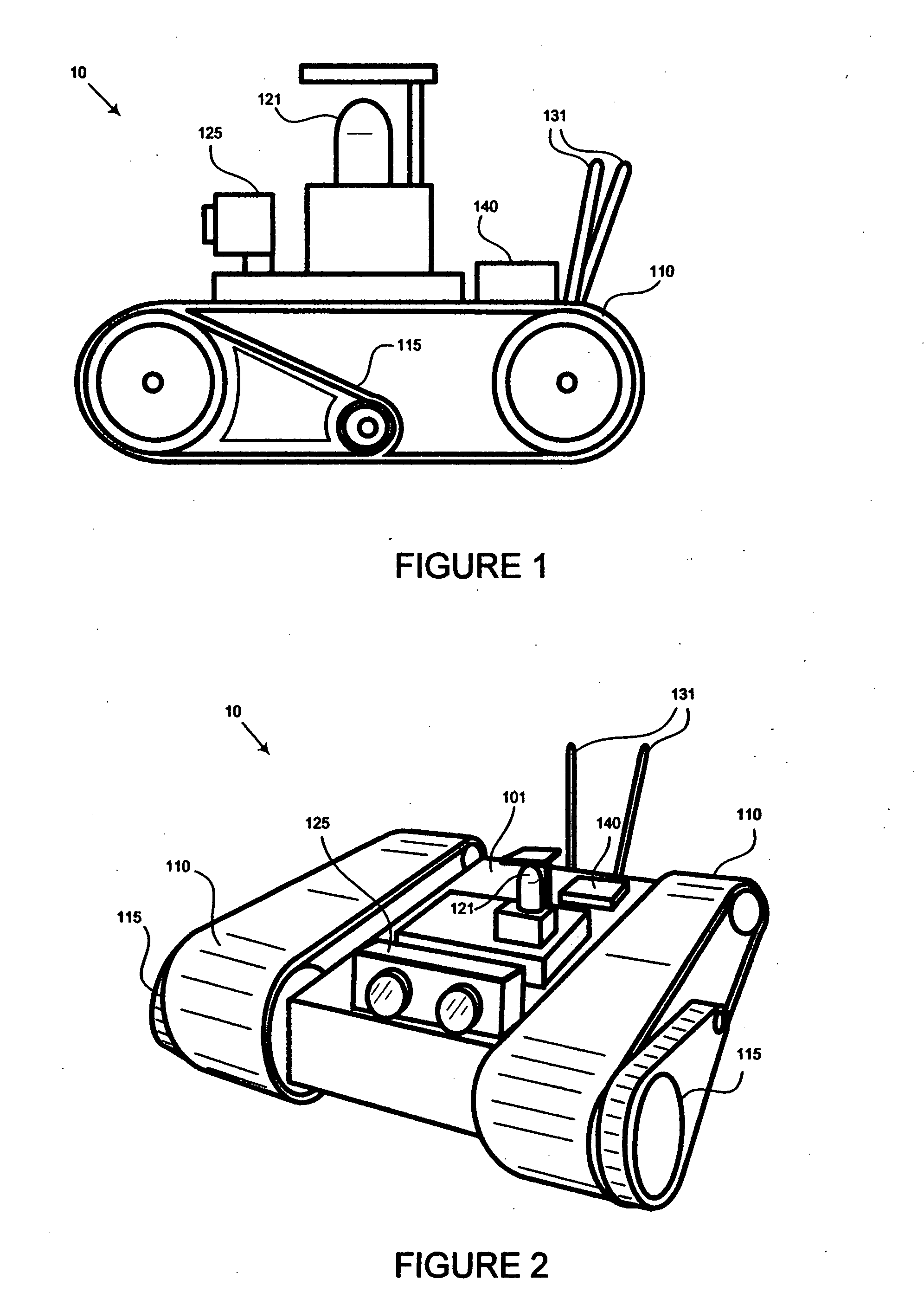

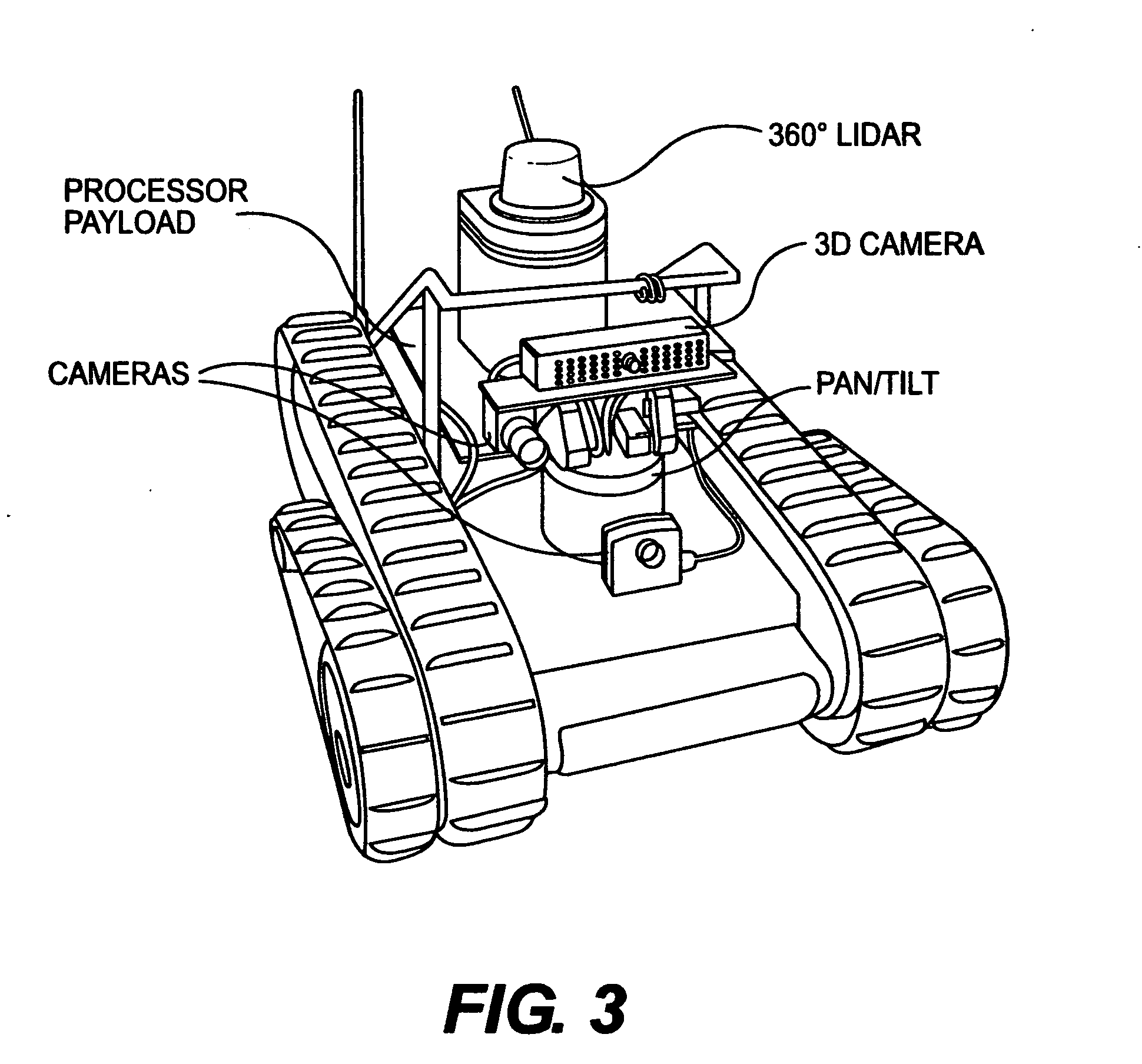

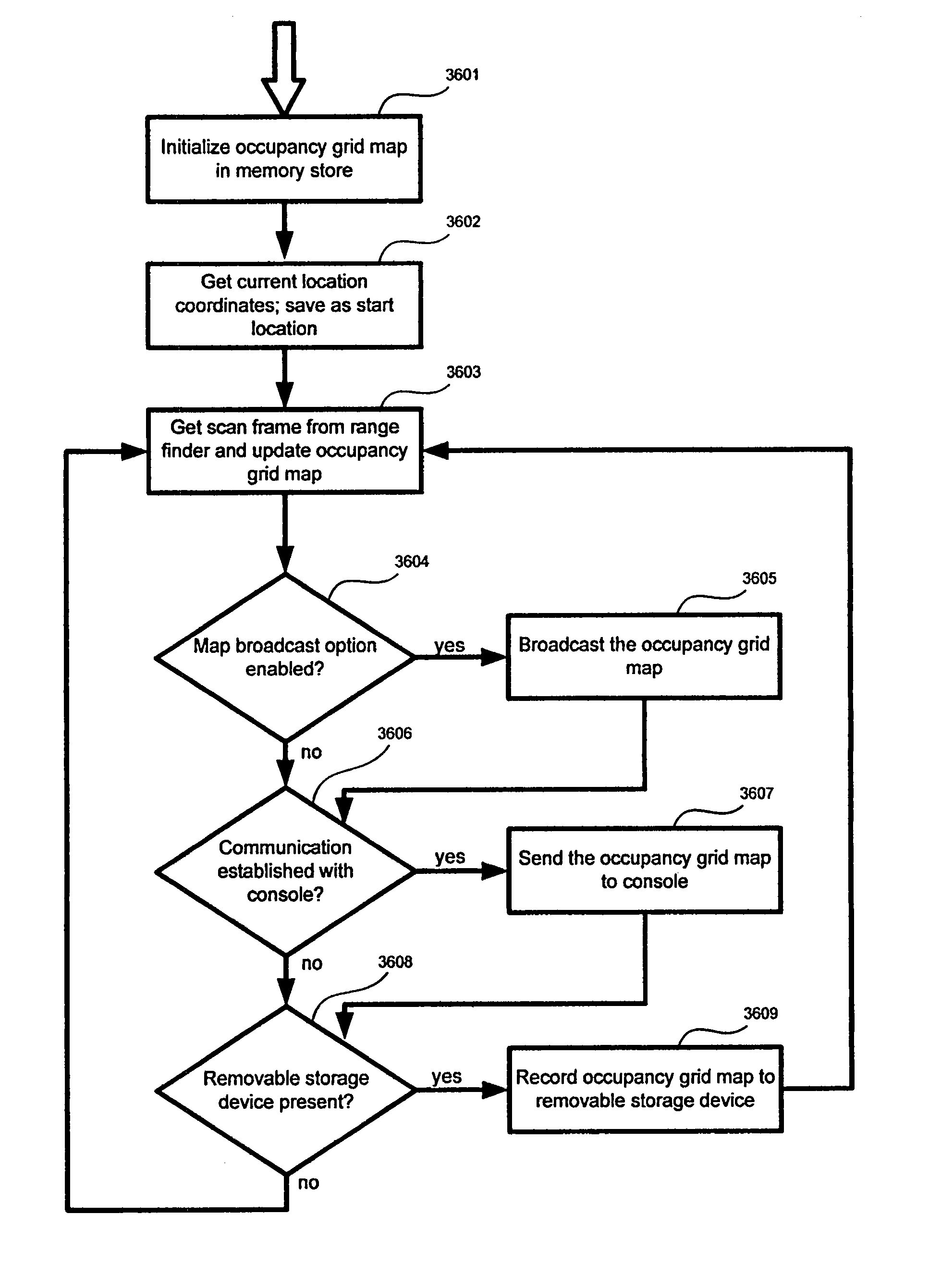

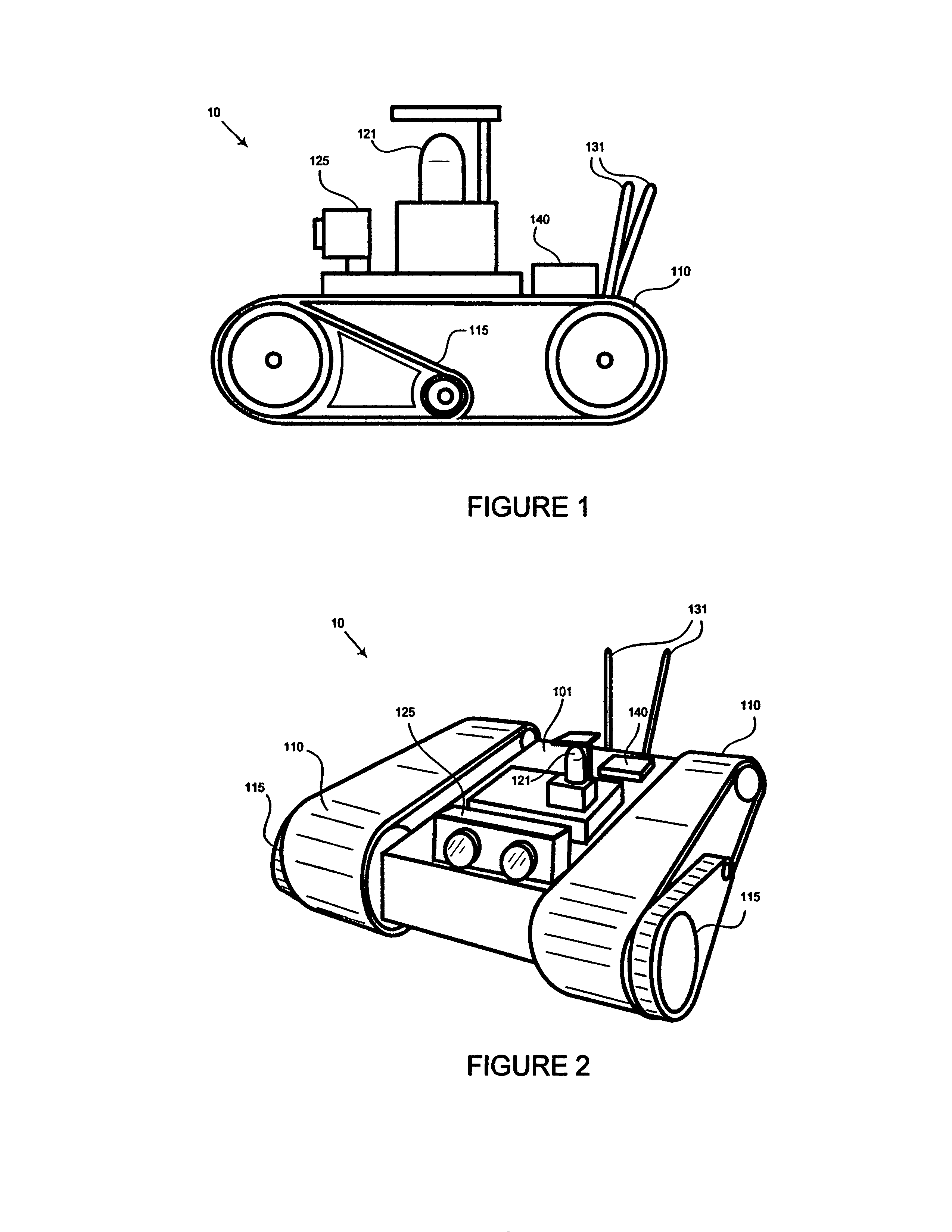

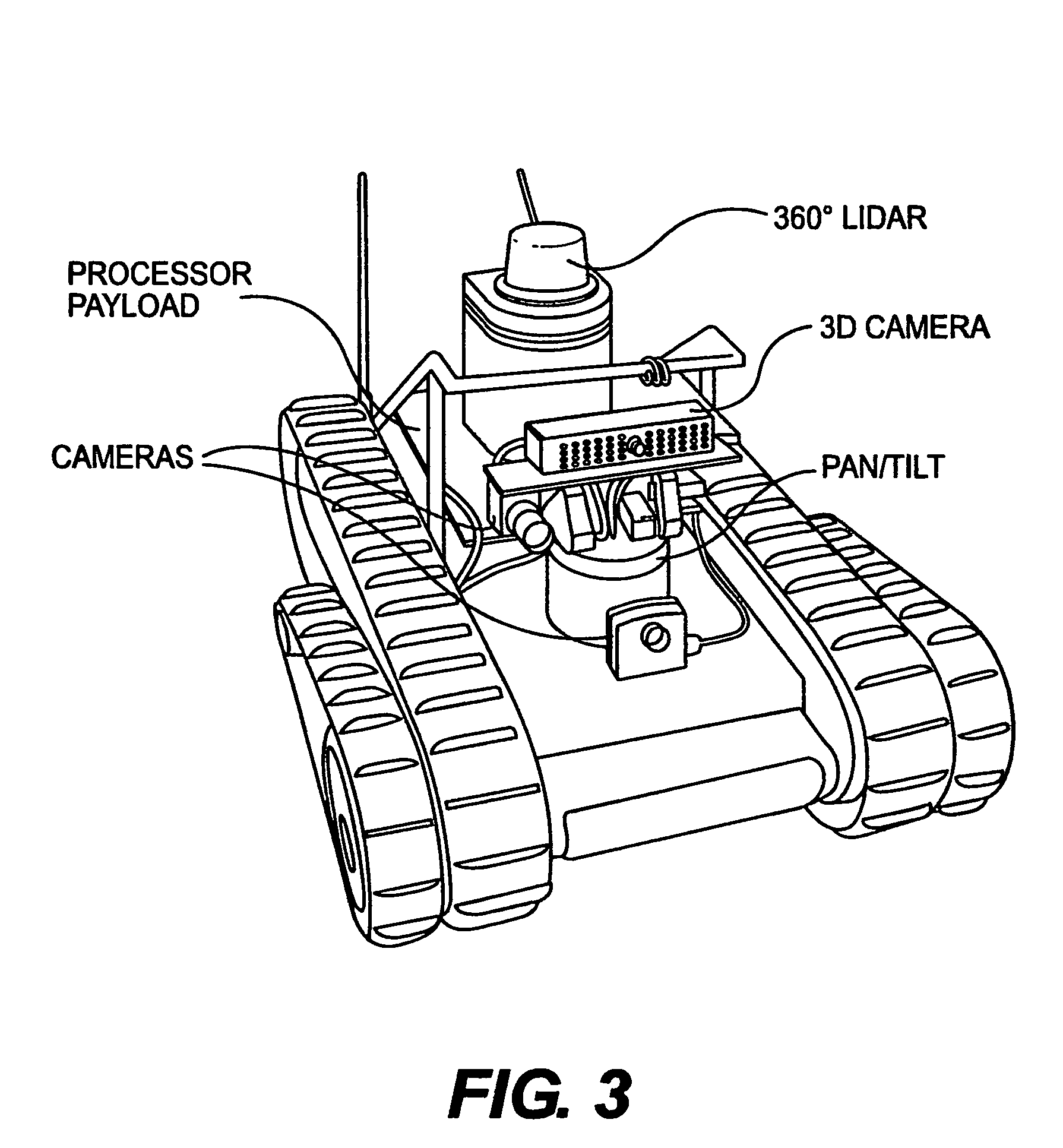

Method and system for controlling a remote vehicle

Status: Active | Publication: US20080027591A1 | Tags: Autonomous decision making process; Digital data processing details; Autonomous behavior; Time of flight sensor

A system for controlling more than one remote vehicle. The system comprises an operator control unit that allows an operator to receive information from the remote vehicles and send commands to them via a touch-screen interface; the remote vehicles are capable of performing autonomous behaviors using information received from at least one sensor on each vehicle. The operator control unit sends commands to the remote vehicles to perform autonomous behaviors in a cooperative effort, such that high-level mission commands entered by the operator cause the remote vehicles to perform more than one autonomous behavior sequentially or concurrently. The system may perform a method for generating obstacle detection information from image data received from either a time-of-flight sensor or a stereo vision camera sensor.

Owner:IROBOT CORP
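
The obstacle-detection step mentioned in this abstract can be illustrated on a ToF depth image. The sketch below is a deliberate simplification, not the patented method: it assumes the sensor output is a grid of depths in metres with 0 meaning "no return", and the function `detect_obstacle` with its `max_range_m` and `min_pixels` parameters is hypothetical.

```python
from typing import Sequence


def detect_obstacle(depth_m: Sequence[Sequence[float]],
                    max_range_m: float = 1.0,
                    min_pixels: int = 3) -> bool:
    """Return True if enough valid pixels lie closer than max_range_m.

    Zero or negative depths are treated as invalid (no return signal).
    Requiring several near pixels suppresses isolated noisy readings.
    """
    near = sum(1 for row in depth_m for d in row if 0.0 < d < max_range_m)
    return near >= min_pixels


frame = [
    [2.5, 2.4, 0.0],   # 0.0 = no return, ignored
    [0.8, 0.7, 2.3],
    [0.75, 2.2, 2.1],
]
print(detect_obstacle(frame))  # three pixels closer than 1 m
```

A real robot would also transform pixels into the vehicle frame and reject floor points; the pixel-count threshold here only stands in for that filtering.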

Method and system for controlling a remote vehicle

Status: Active | Publication: US8577538B2 | Tags: Autonomous decision making process; Digital data processing details; Time of flight sensor; Autonomous behavior

A system for controlling more than one remote vehicle. The system comprises an operator control unit that allows an operator to receive information from the remote vehicles and send commands to them via a touch-screen interface; the remote vehicles are capable of performing autonomous behaviors using information received from at least one sensor on each vehicle. The operator control unit sends commands to the remote vehicles to perform autonomous behaviors in a cooperative effort, such that high-level mission commands entered by the operator cause the remote vehicles to perform more than one autonomous behavior sequentially or concurrently. The system may perform a method for generating obstacle detection information from image data received from either a time-of-flight sensor or a stereo vision camera sensor.

Owner:IROBOT CORP

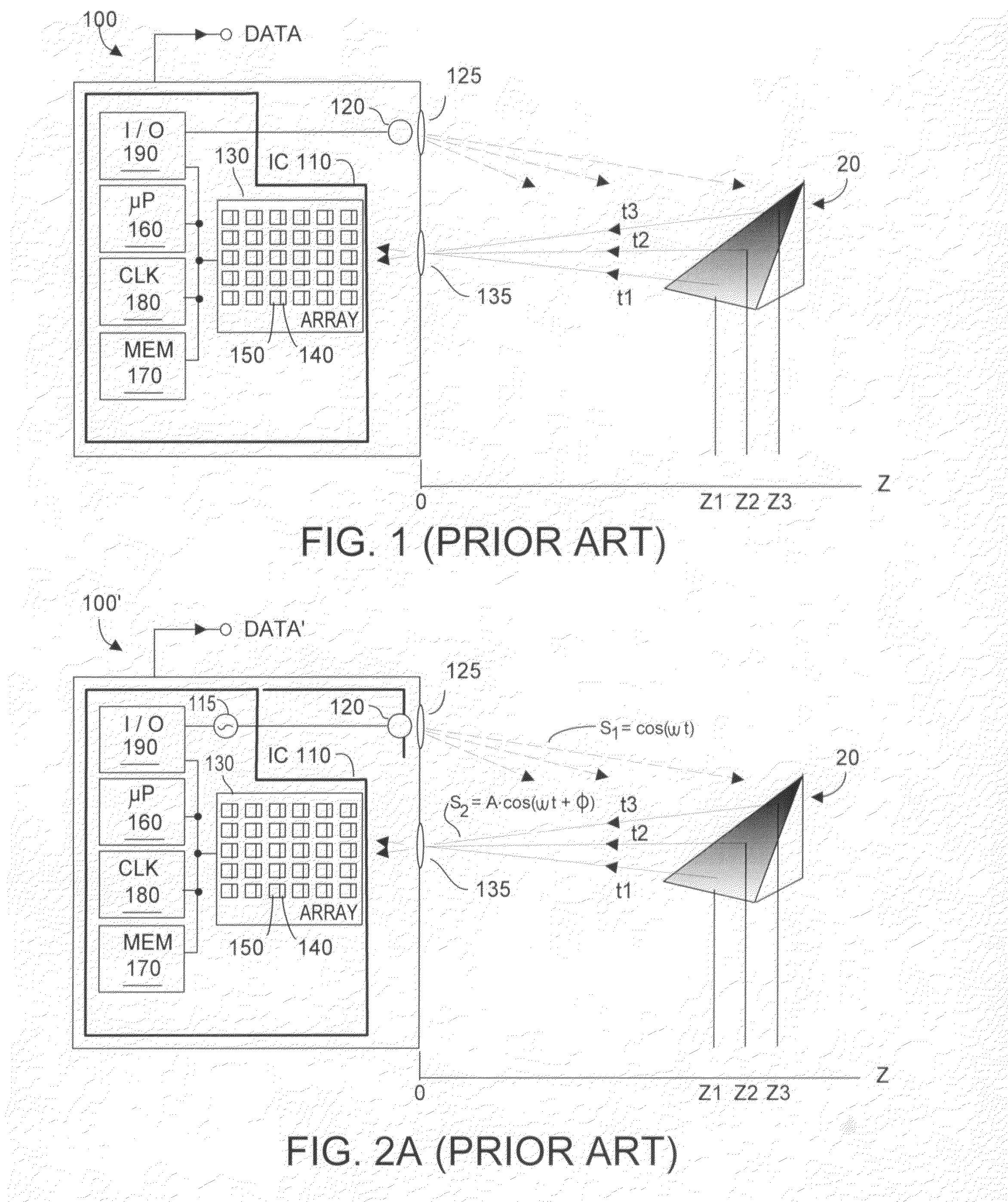

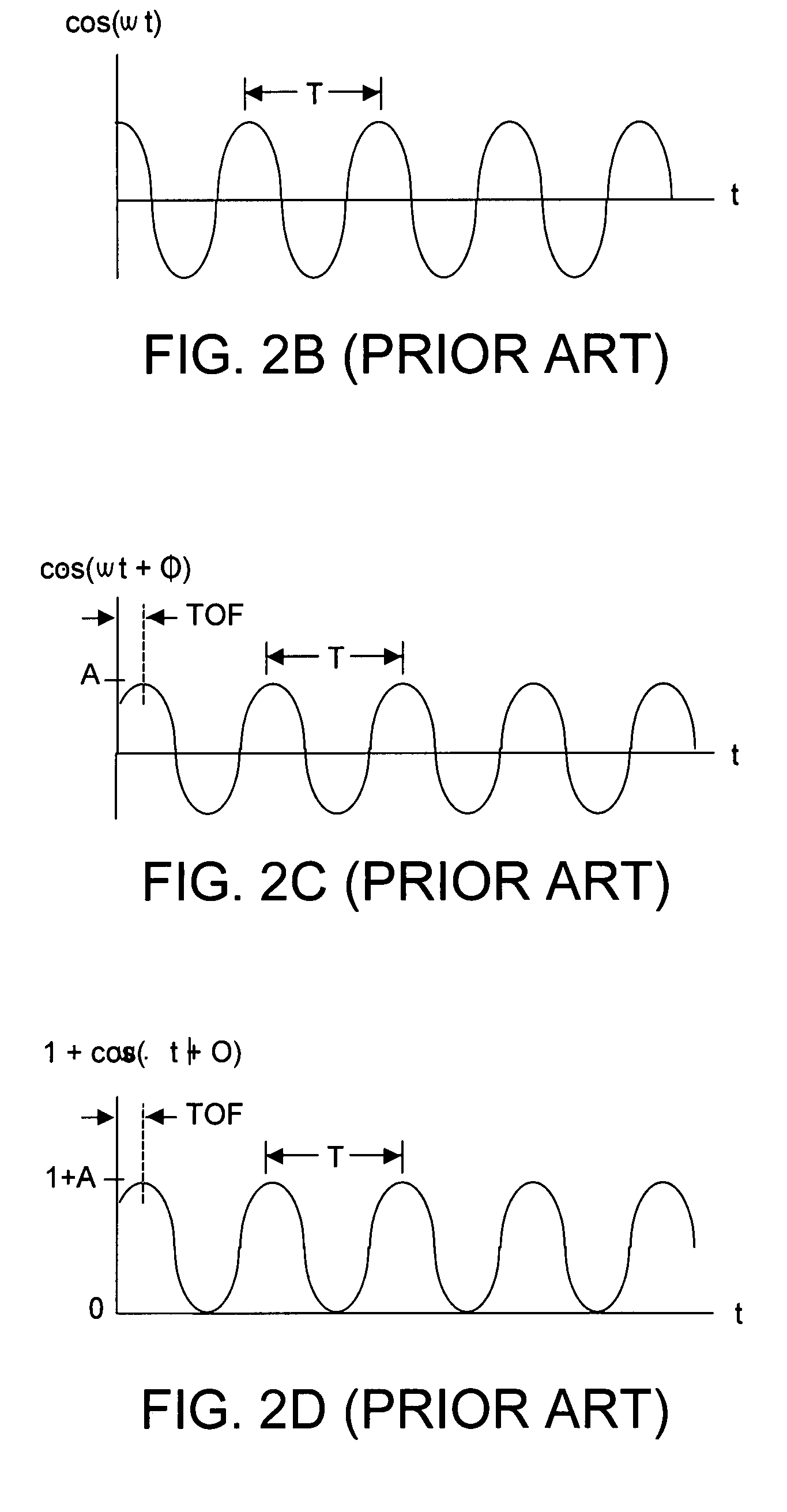

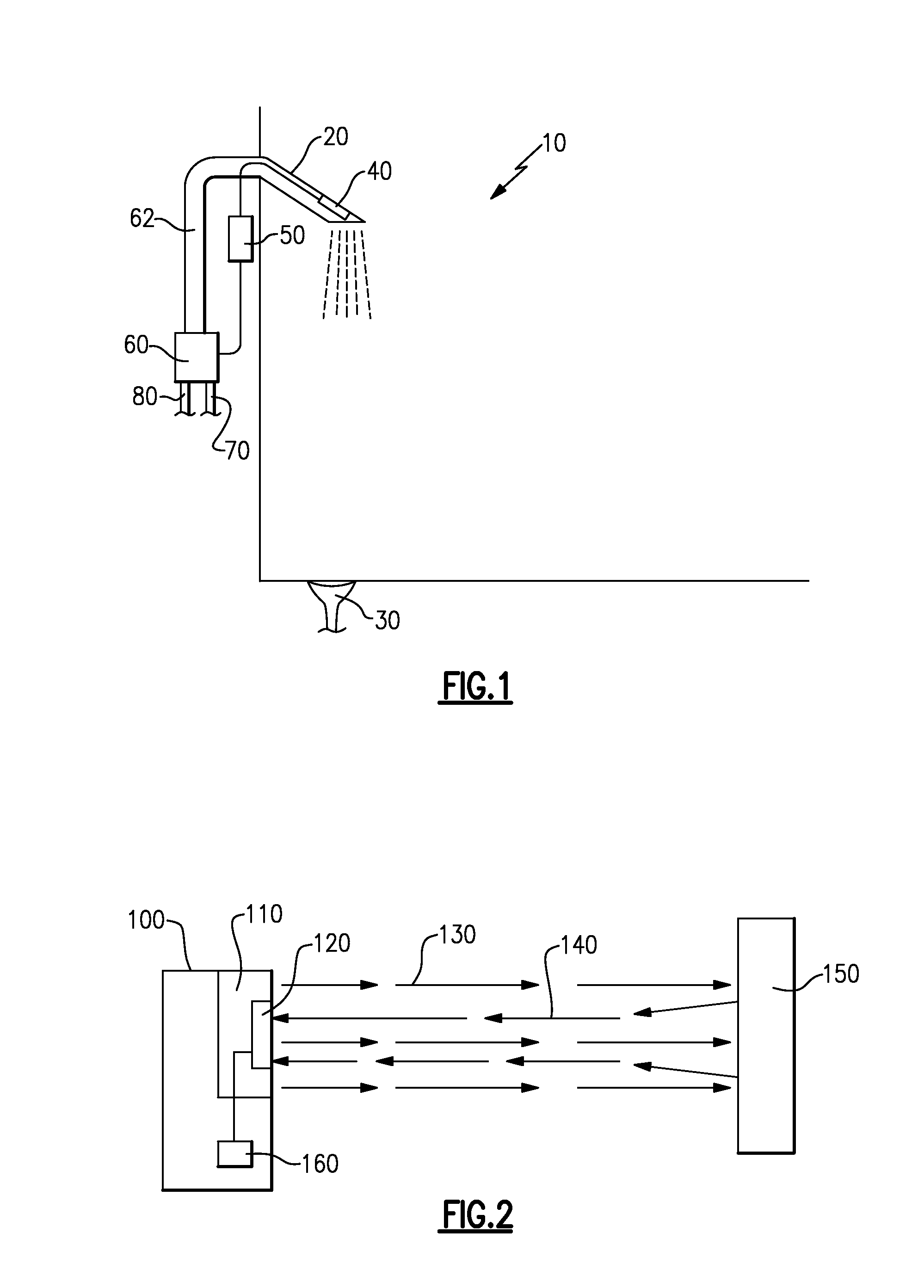

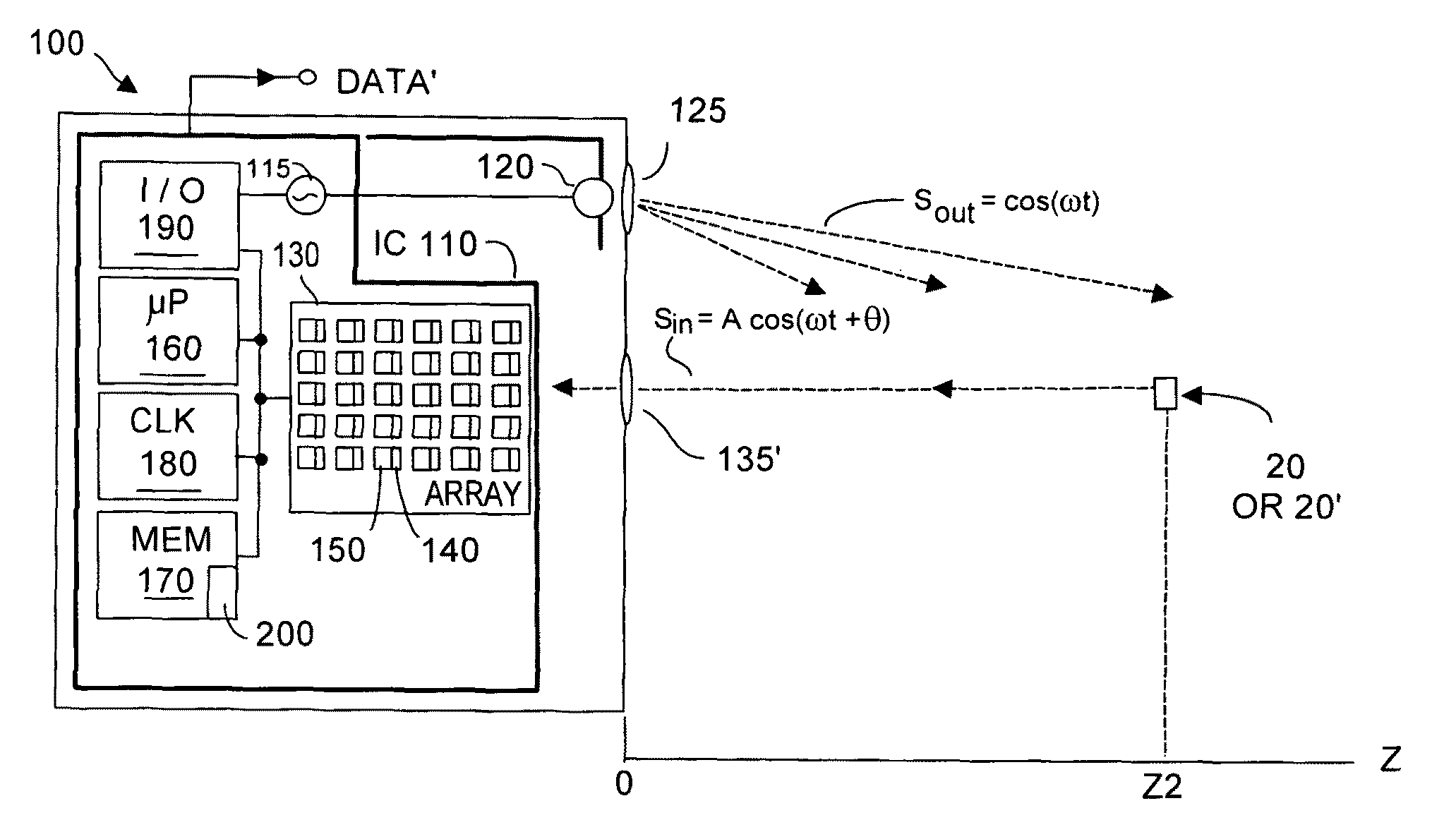

Method and system to correct motion blur and reduce signal transients in time-of-flight sensor systems

Status: Active | Publication: US7283213B2 | Tags: Reduces signal integrity problem; Reduce transfer; Optical rangefinders; Electromagnetic wave reradiation; Time of flight sensor; Shutter

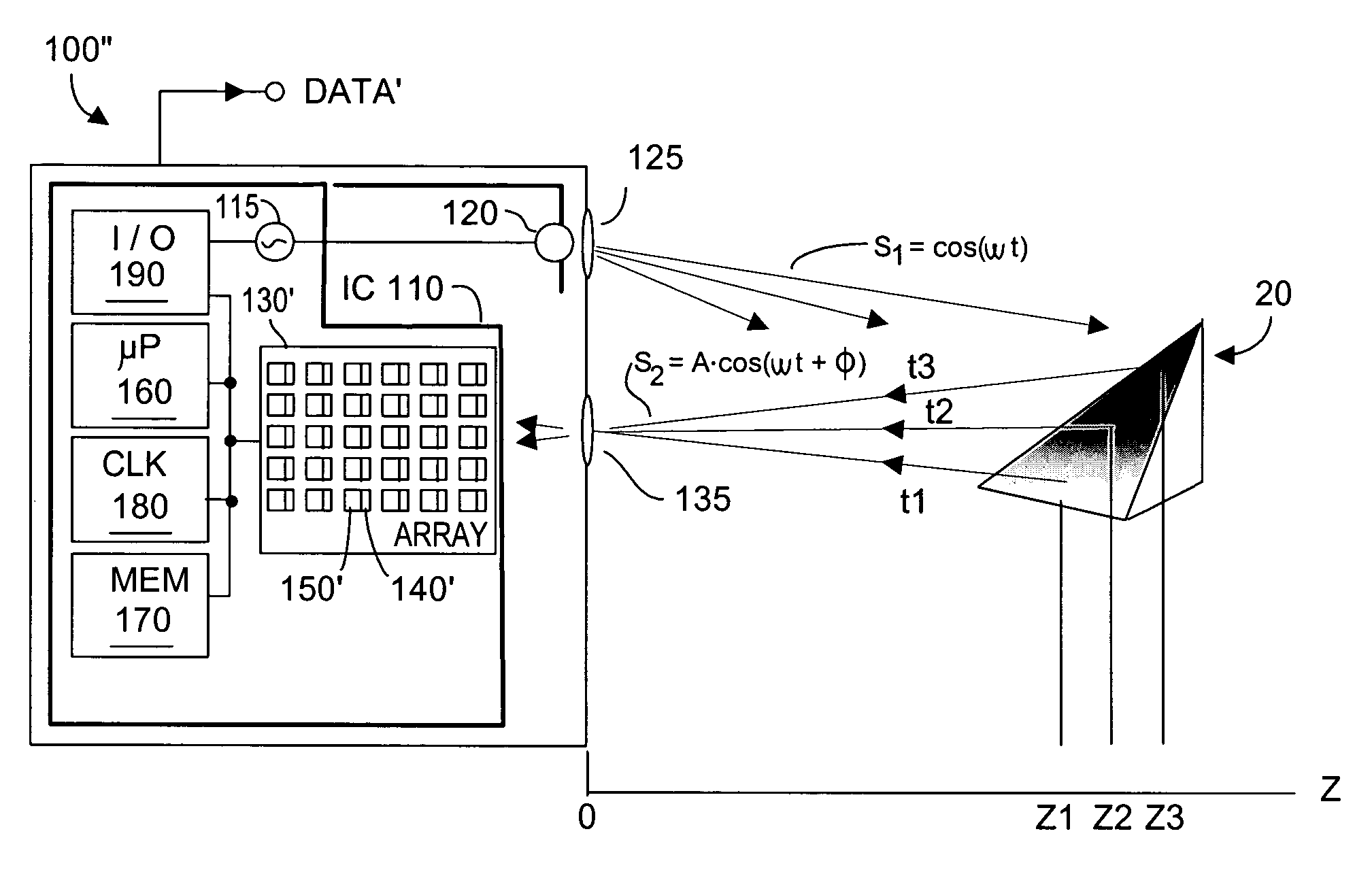

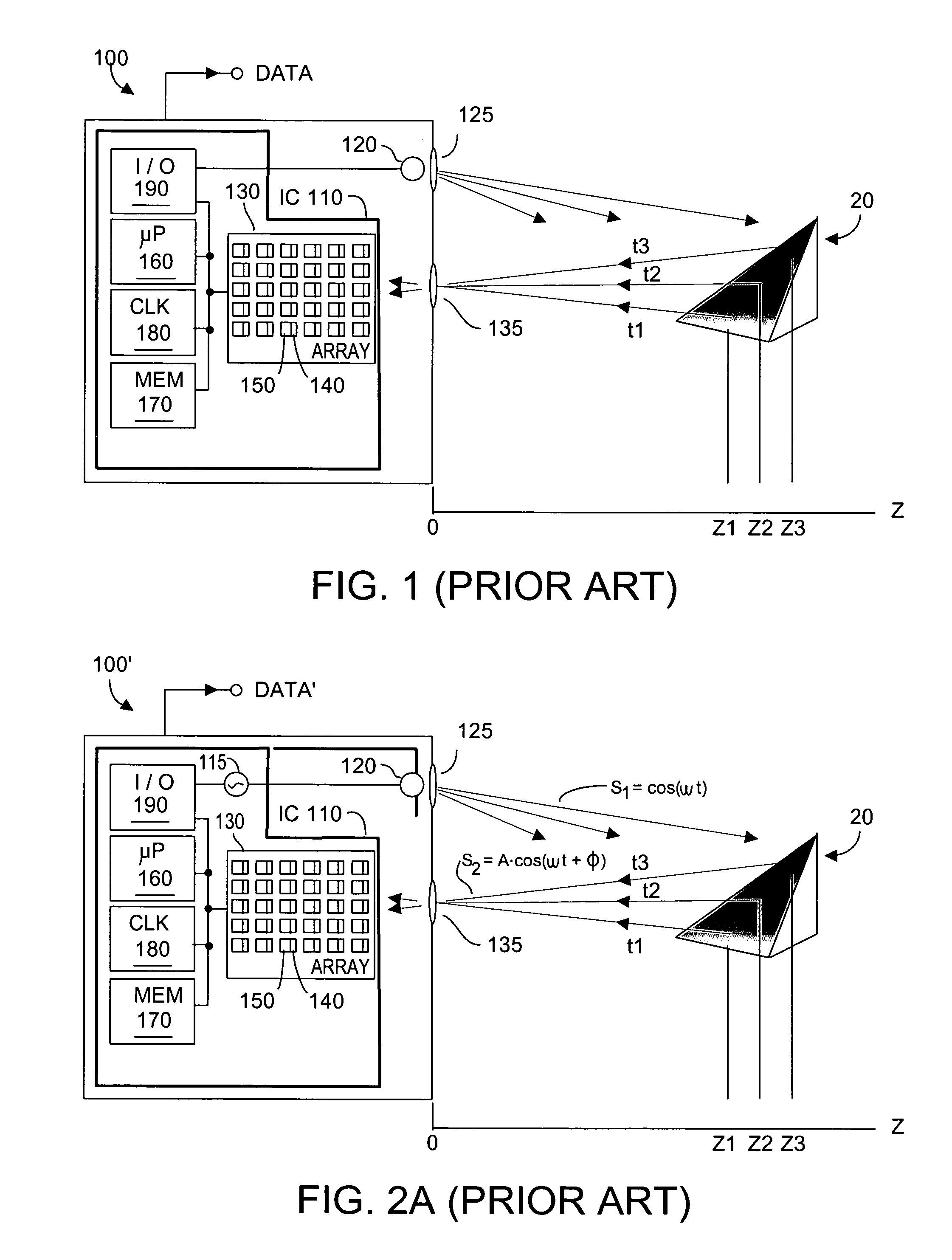

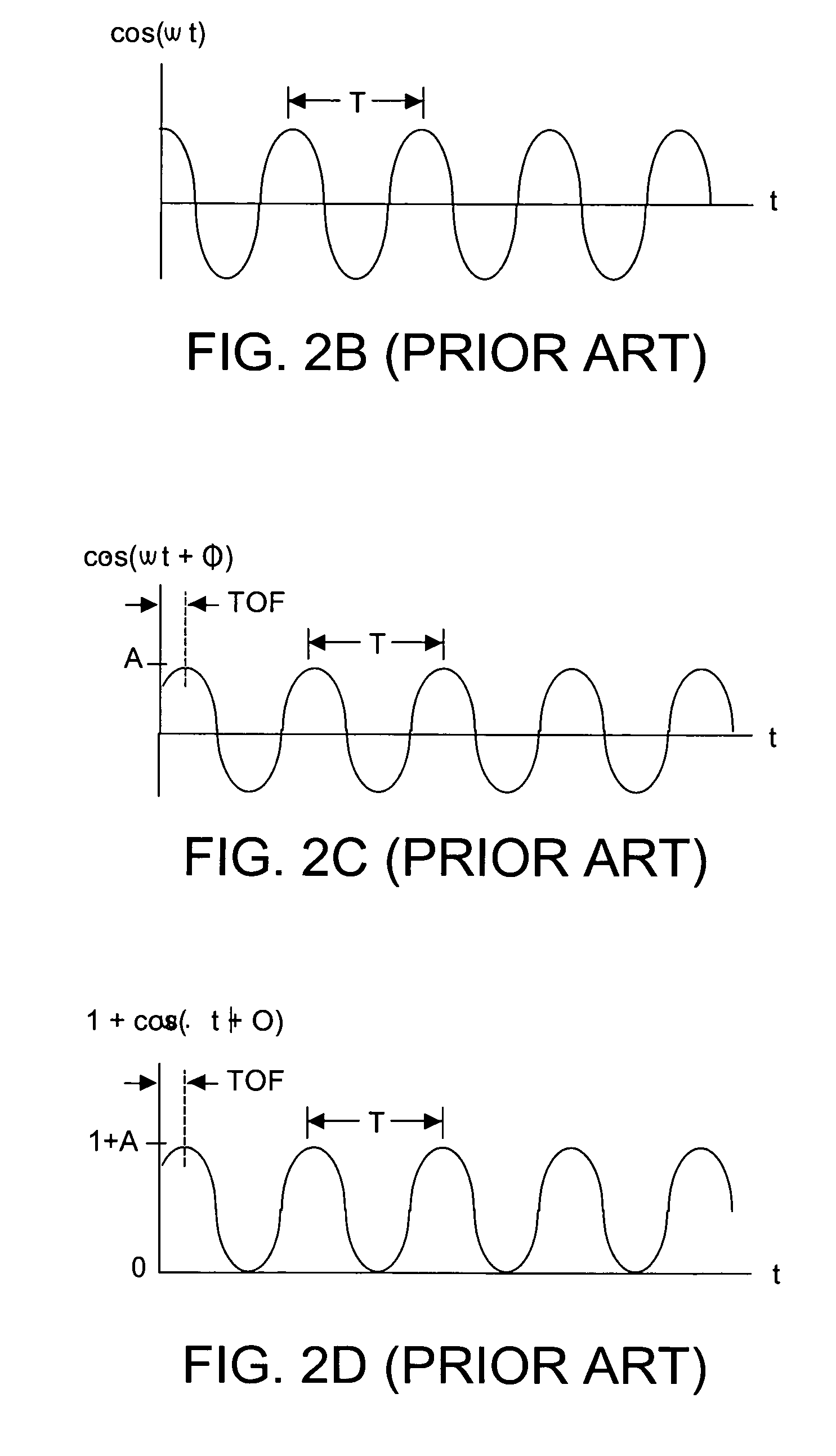

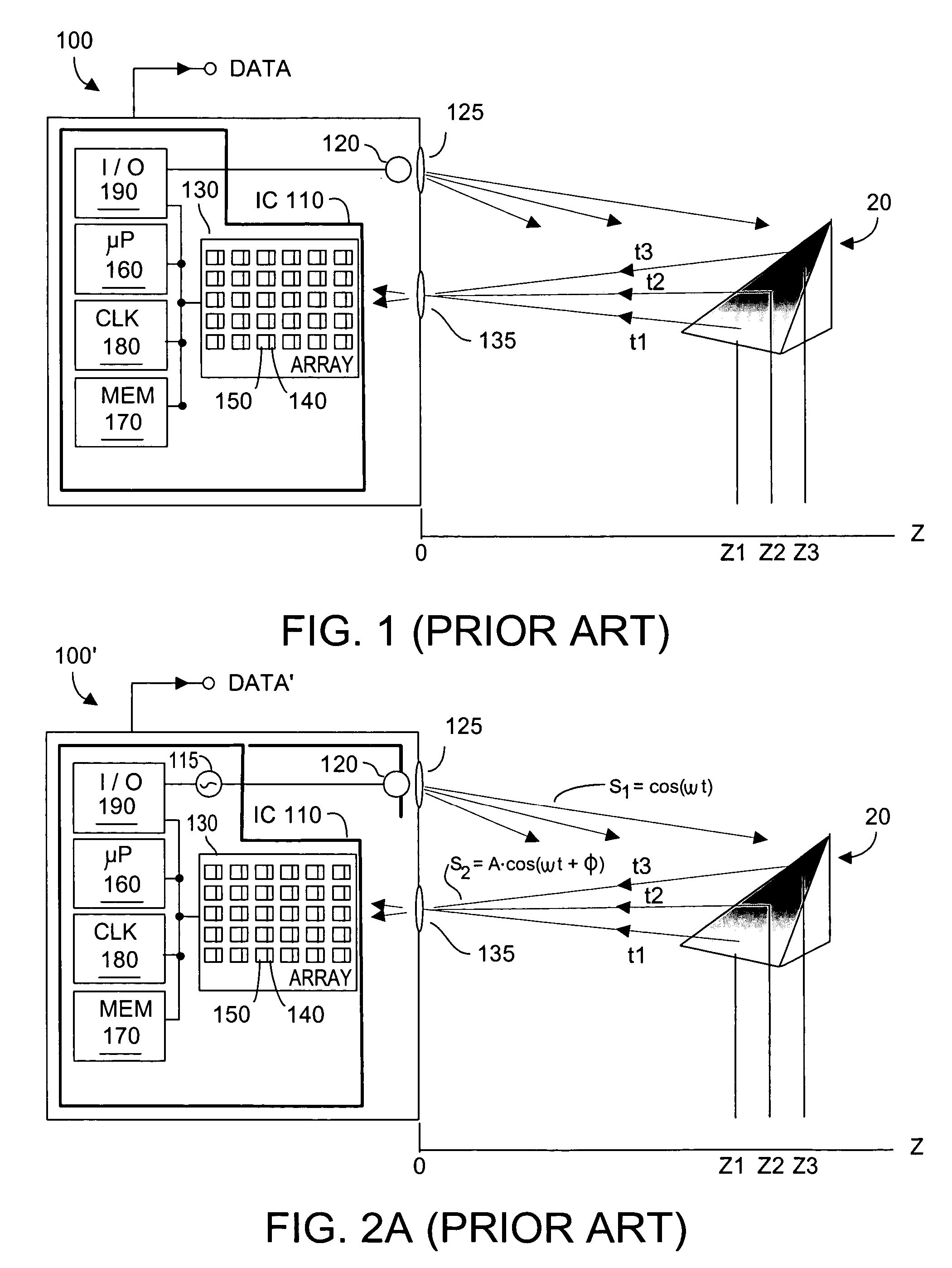

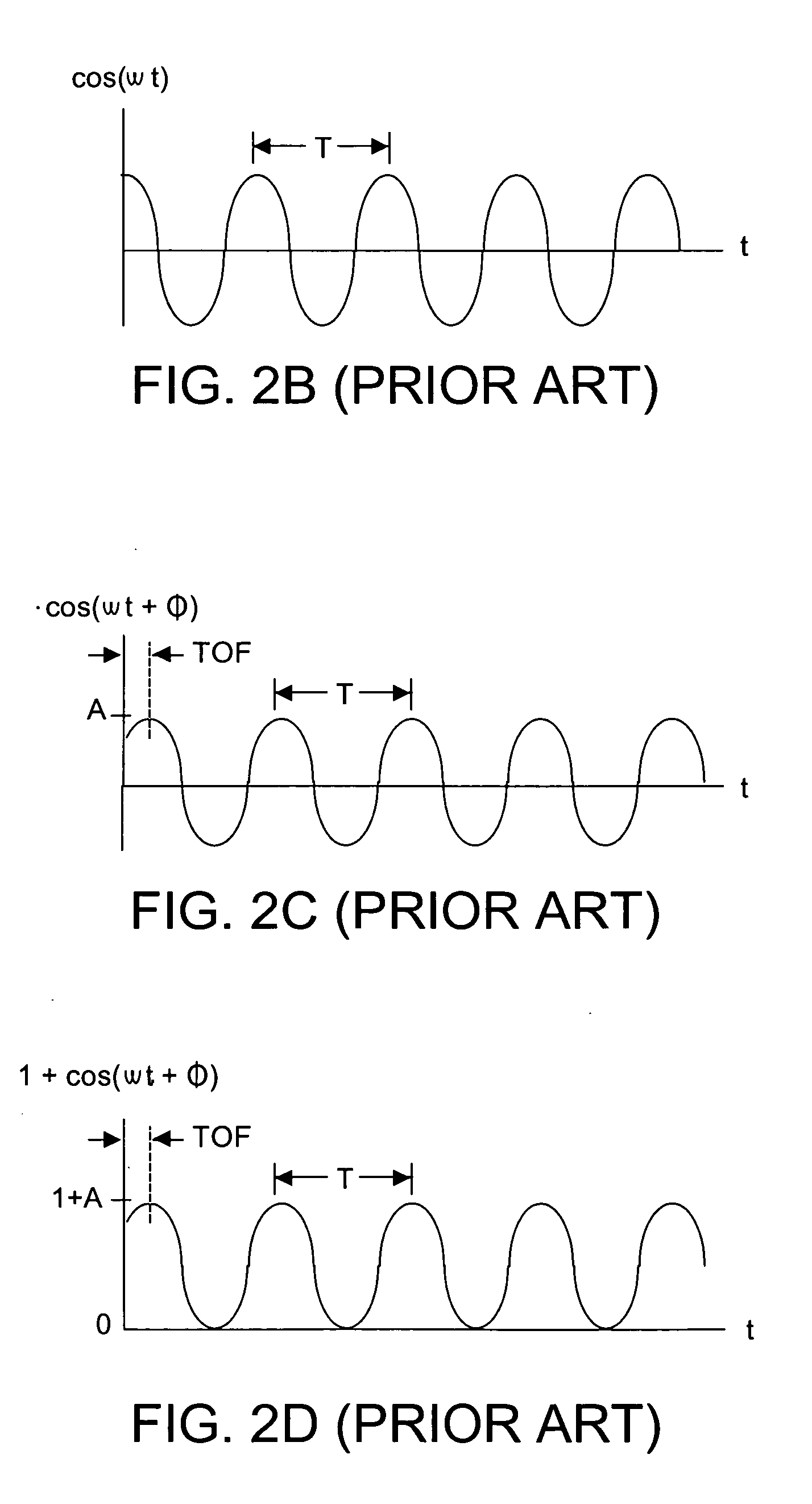

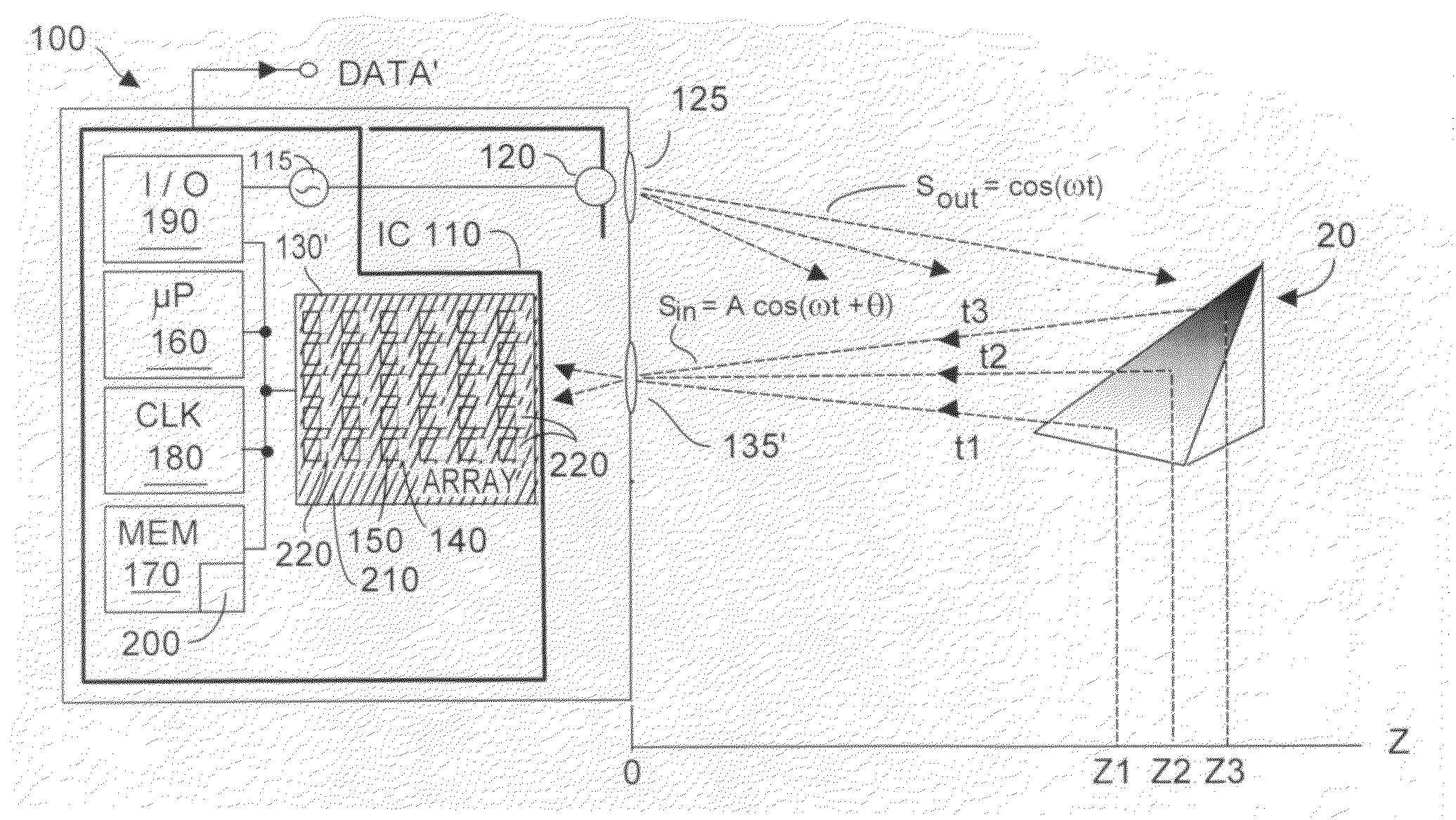

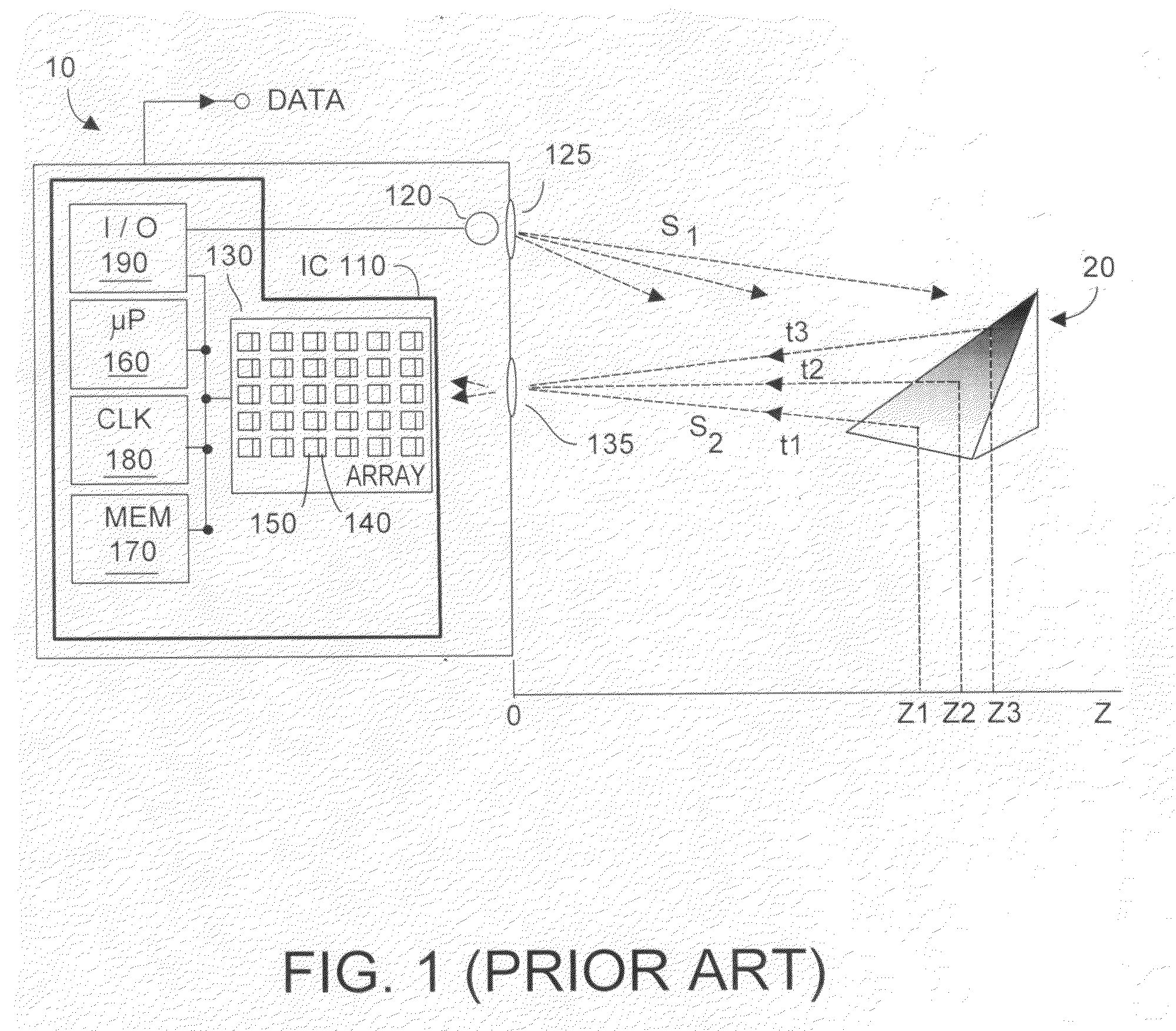

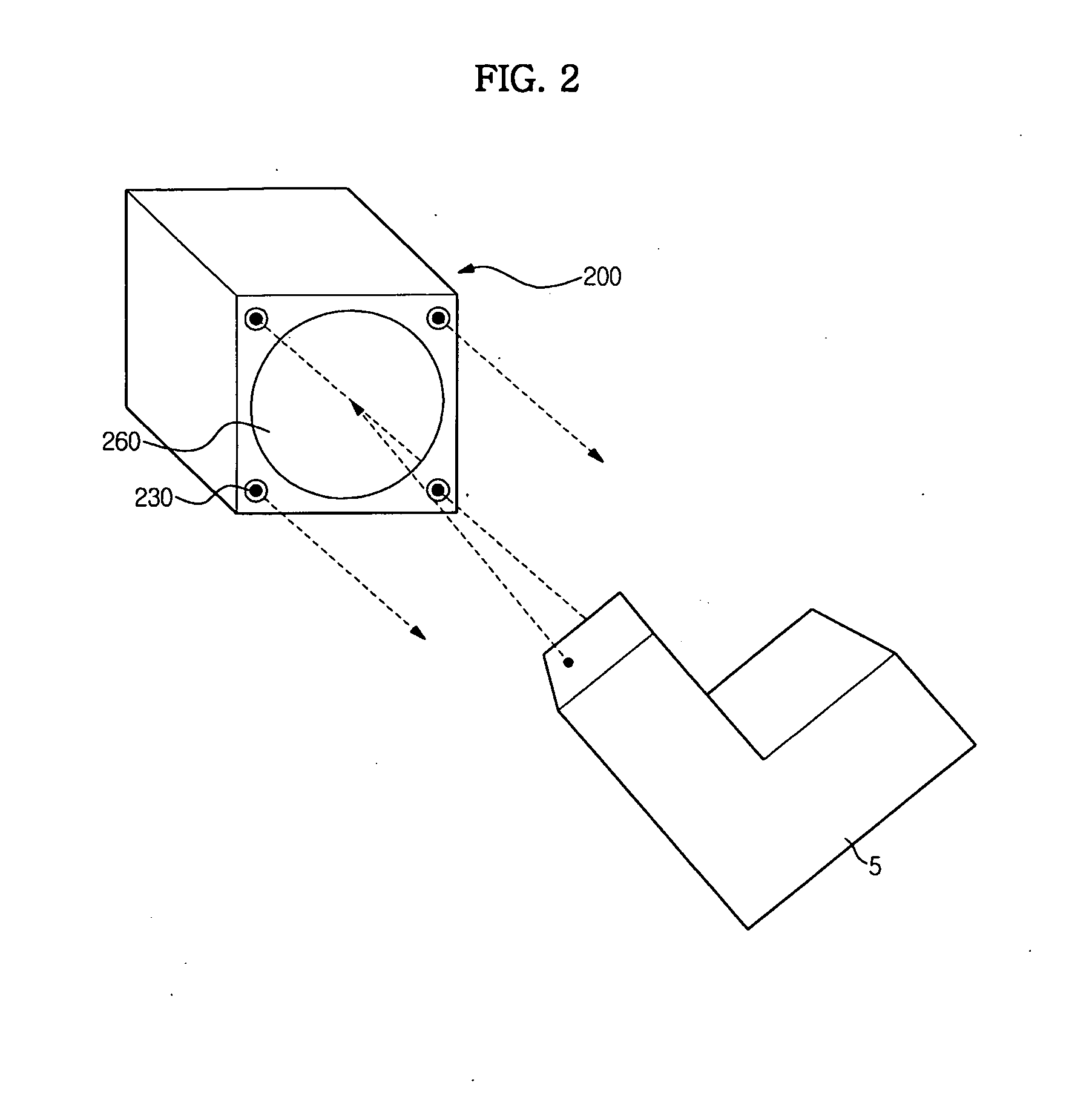

The shutter time needed to acquire image data in a time-of-flight (TOF) system that acquires consecutive images is reduced, which shortens the interval during which relative motion can occur. In one embodiment, pixel detectors are clocked with multi-phase signals, and the four signals are integrated simultaneously to yield four phase measurements from four pixel detectors within a single shutter time unit. In another embodiment, phase measurement time is reduced to 1/k of its original value by providing super pixels whose collection region is k times larger than that of a normal pixel detector. Each super pixel is coupled to k storage units and four-phase sequential signals. Alternatively, each pixel detector can have k collector regions and k storage units, and can share common clock circuitry that generates four-phase signals. Various embodiments can reduce the adverse effects of clock signal transients on the signals and can be dynamically reconfigured as required.

Owner:MICROSOFT TECH LICENSING LLC
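
For context on the "four phase measurements" above: in continuous-wave ToF, four correlation samples taken at 0°, 90°, 180° and 270° are commonly combined into a phase, and the phase into a distance. The sketch below assumes the sample model a_k = B + A·cos(φ + θ_k); real sensors may use a different sign convention, and `four_phase_depth` is a hypothetical name. It does not model the patent's simultaneous-integration hardware, only the arithmetic that consumes its output.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0


def four_phase_depth(a0, a90, a180, a270, mod_freq_hz):
    """Estimate distance (m) and amplitude from four phase-stepped samples.

    Assumes a_k = B + A*cos(phi + theta_k) for theta_k = 0, 90, 180, 270 deg.
    Differencing opposite samples cancels the ambient/offset term B.
    """
    i = a0 - a180                 # = 2*A*cos(phi)
    q = a270 - a90                # = 2*A*sin(phi)
    phi = math.atan2(q, i) % (2 * math.pi)   # wrap into [0, 2*pi)
    amplitude = math.hypot(i, q) / 2
    # Round-trip phase phi = 4*pi*f*d/c, so d = c*phi / (4*pi*f).
    distance = SPEED_OF_LIGHT * phi / (4 * math.pi * mod_freq_hz)
    return distance, amplitude
```

Because φ wraps at 2π, the result is only unambiguous up to c/(2f), about 7.5 m at 20 MHz. If the four samples are taken one after another, any motion between them corrupts φ, which is the motion-blur problem this patent targets.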

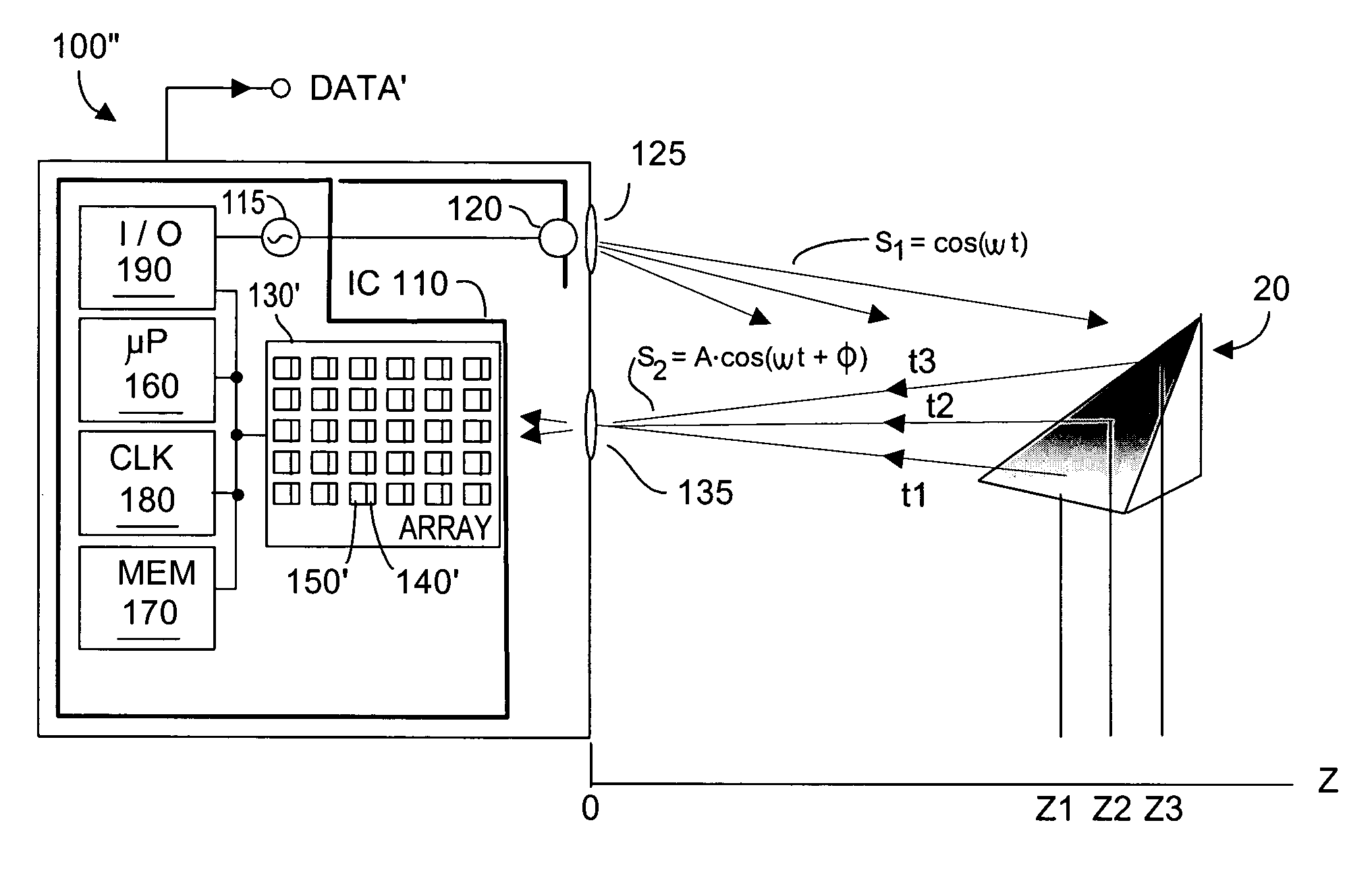

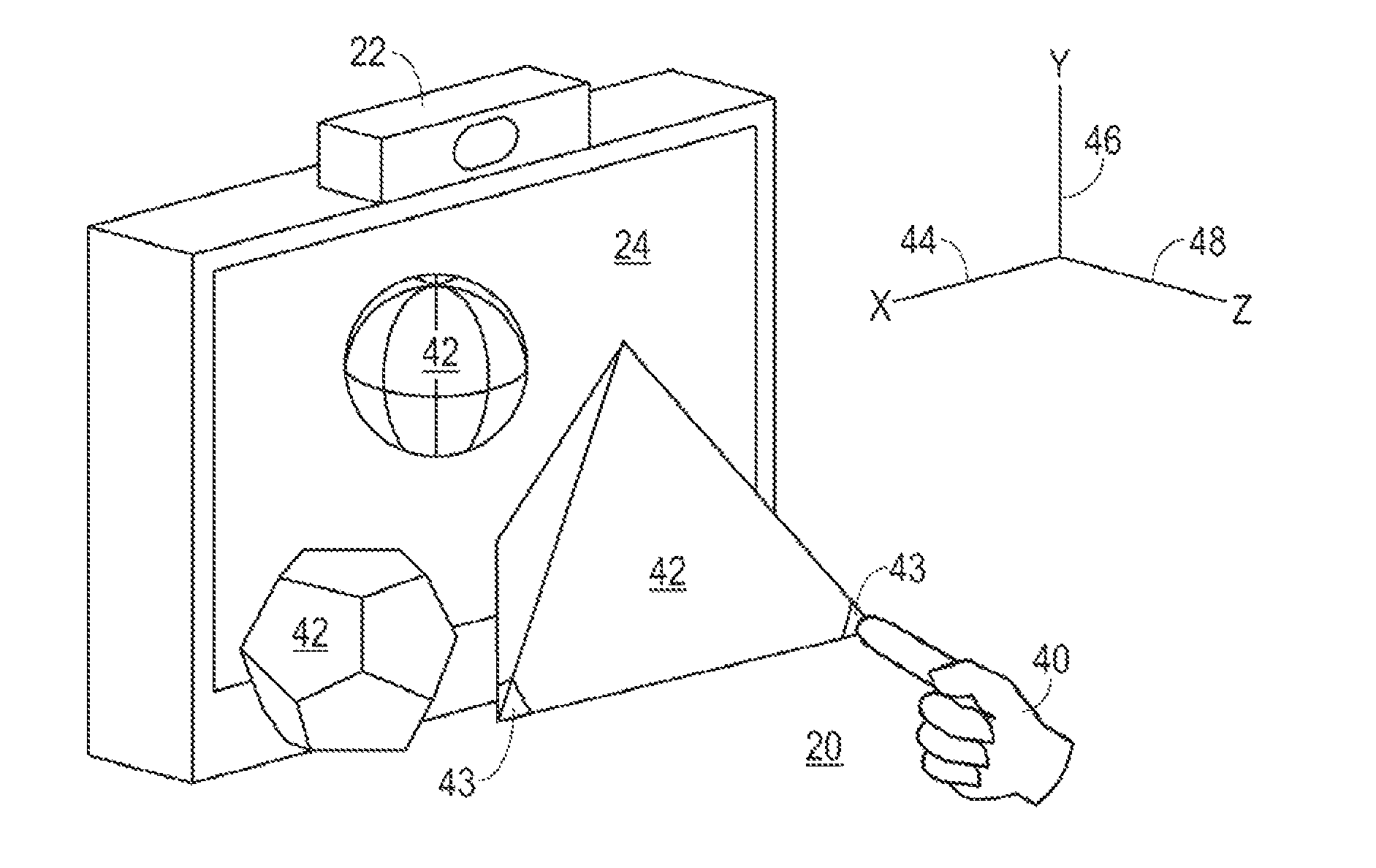

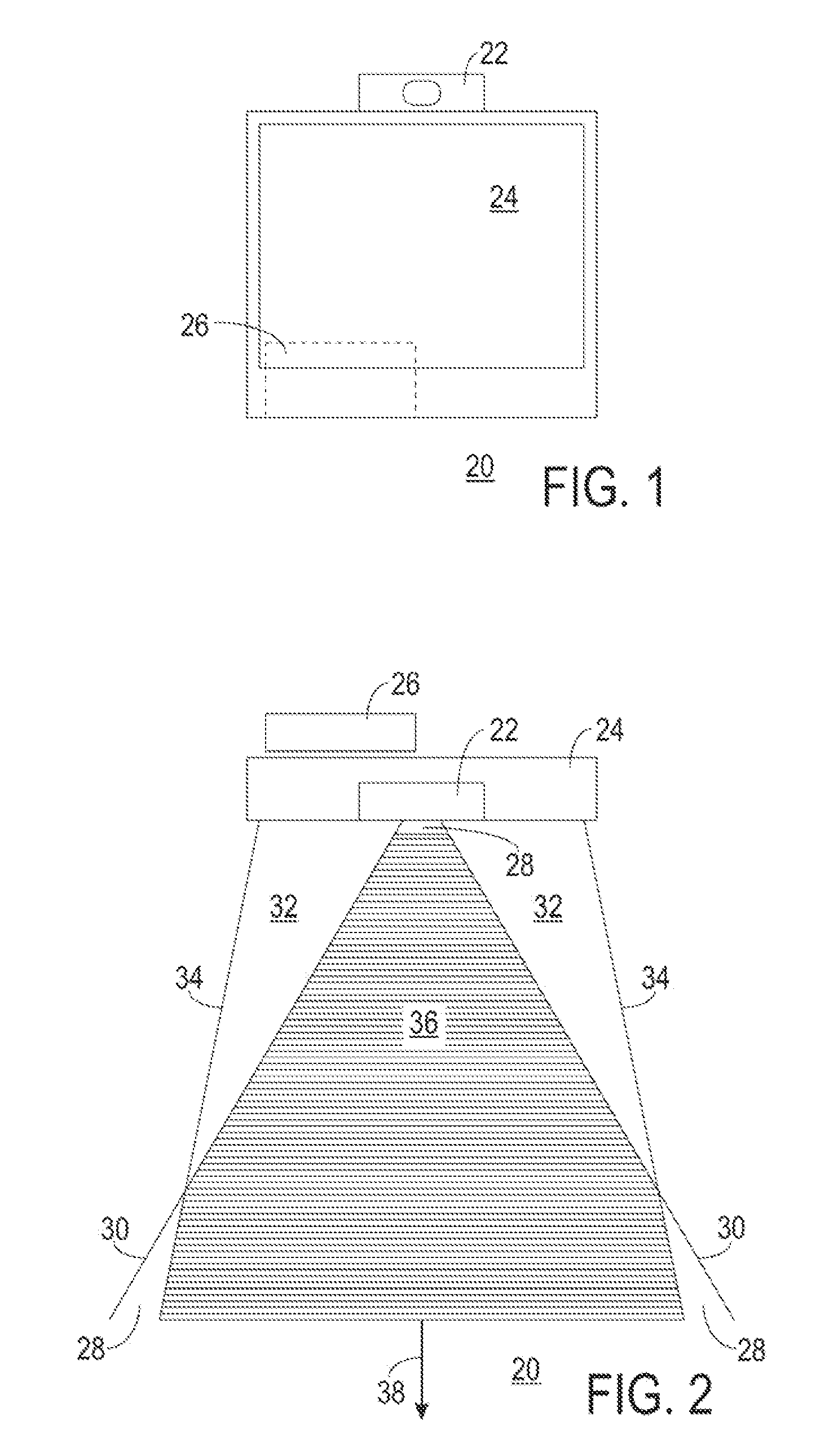

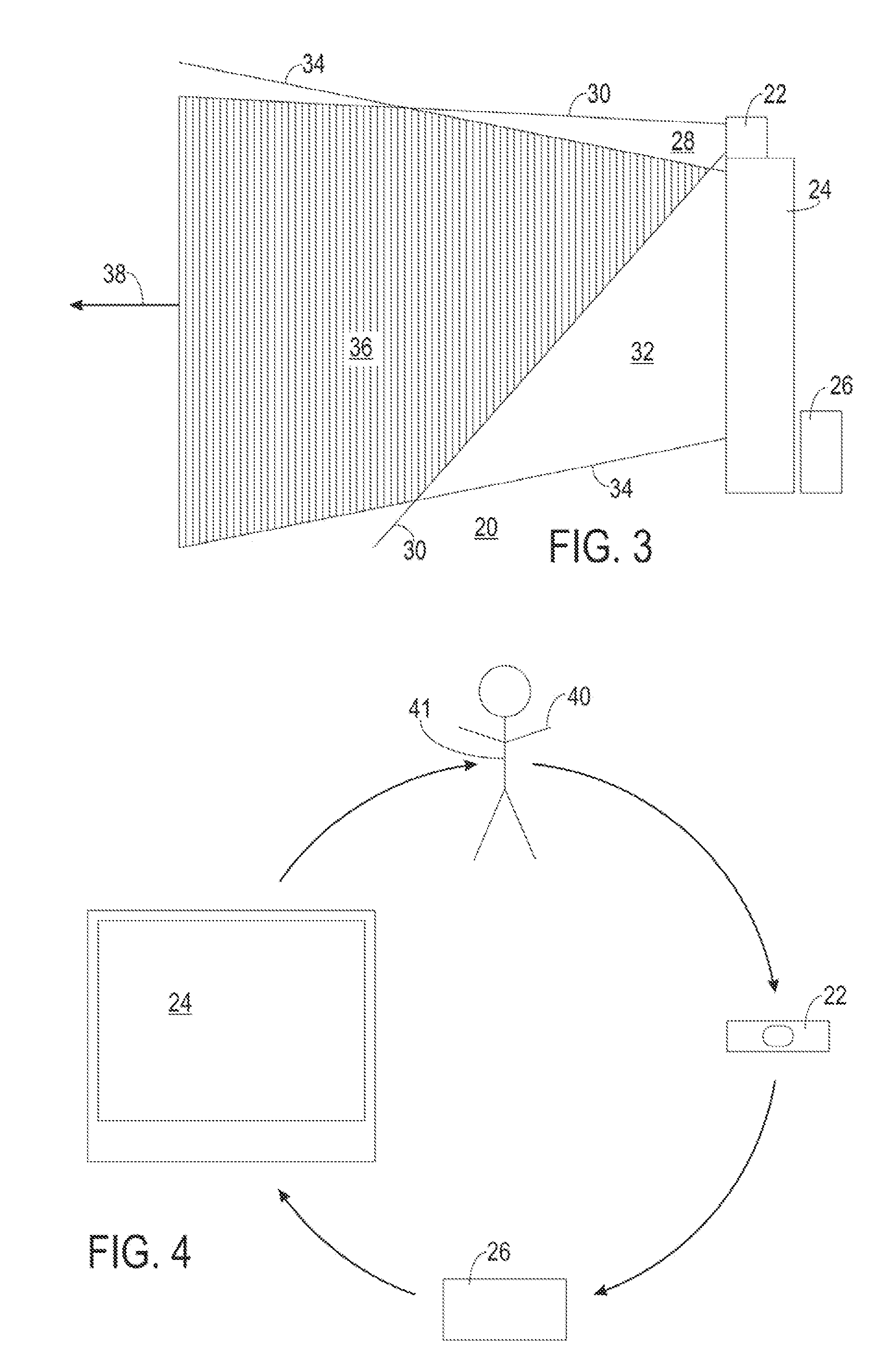

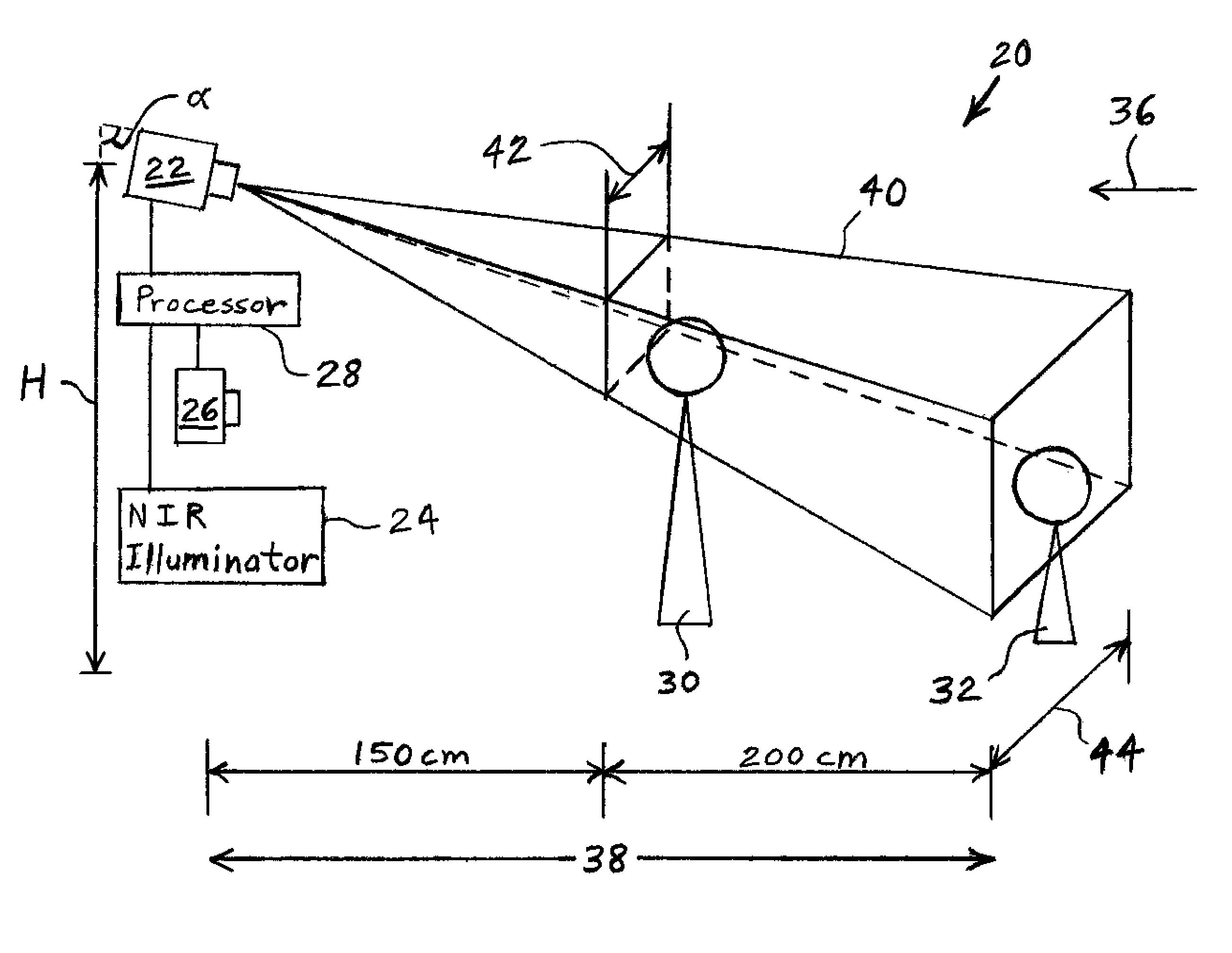

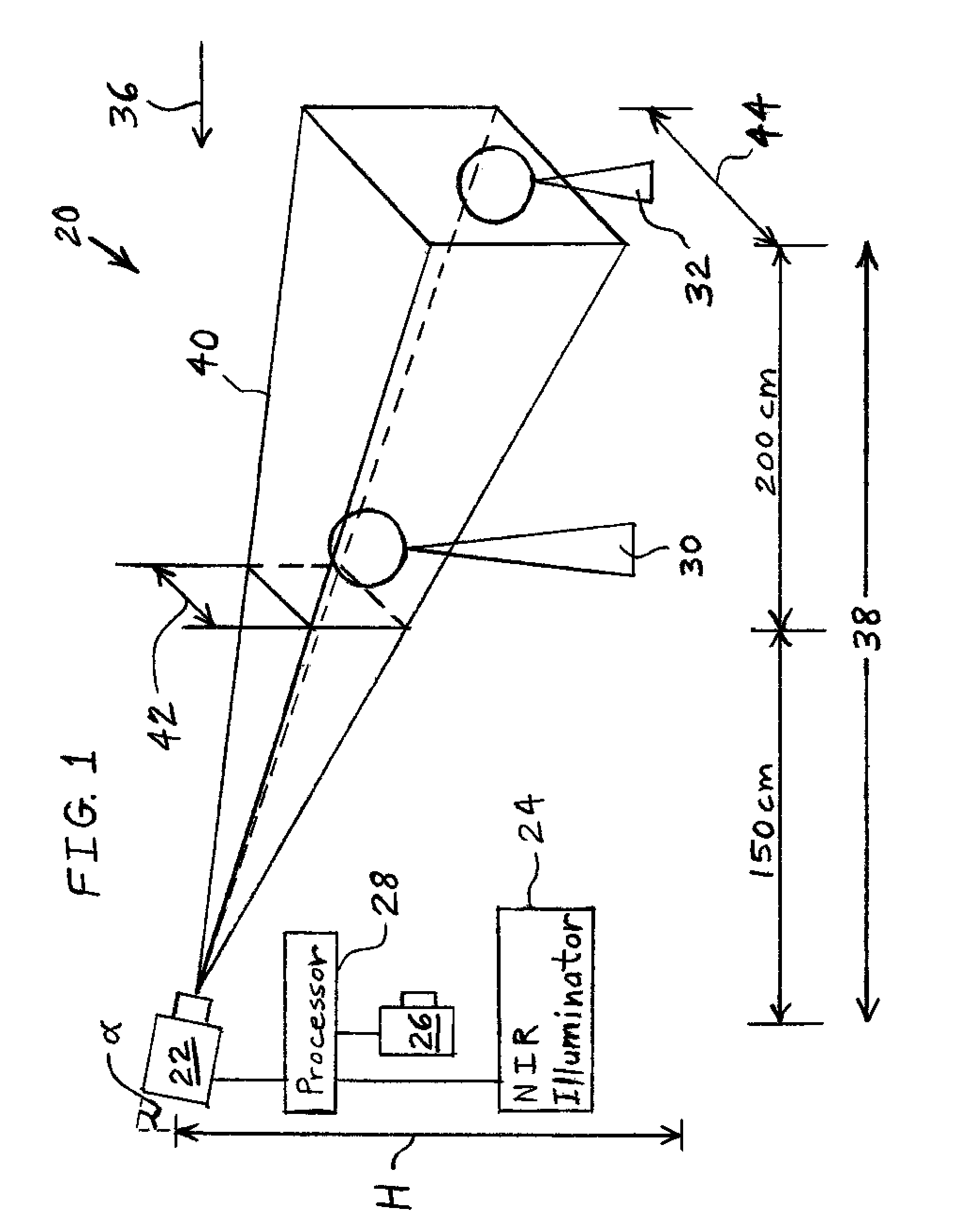

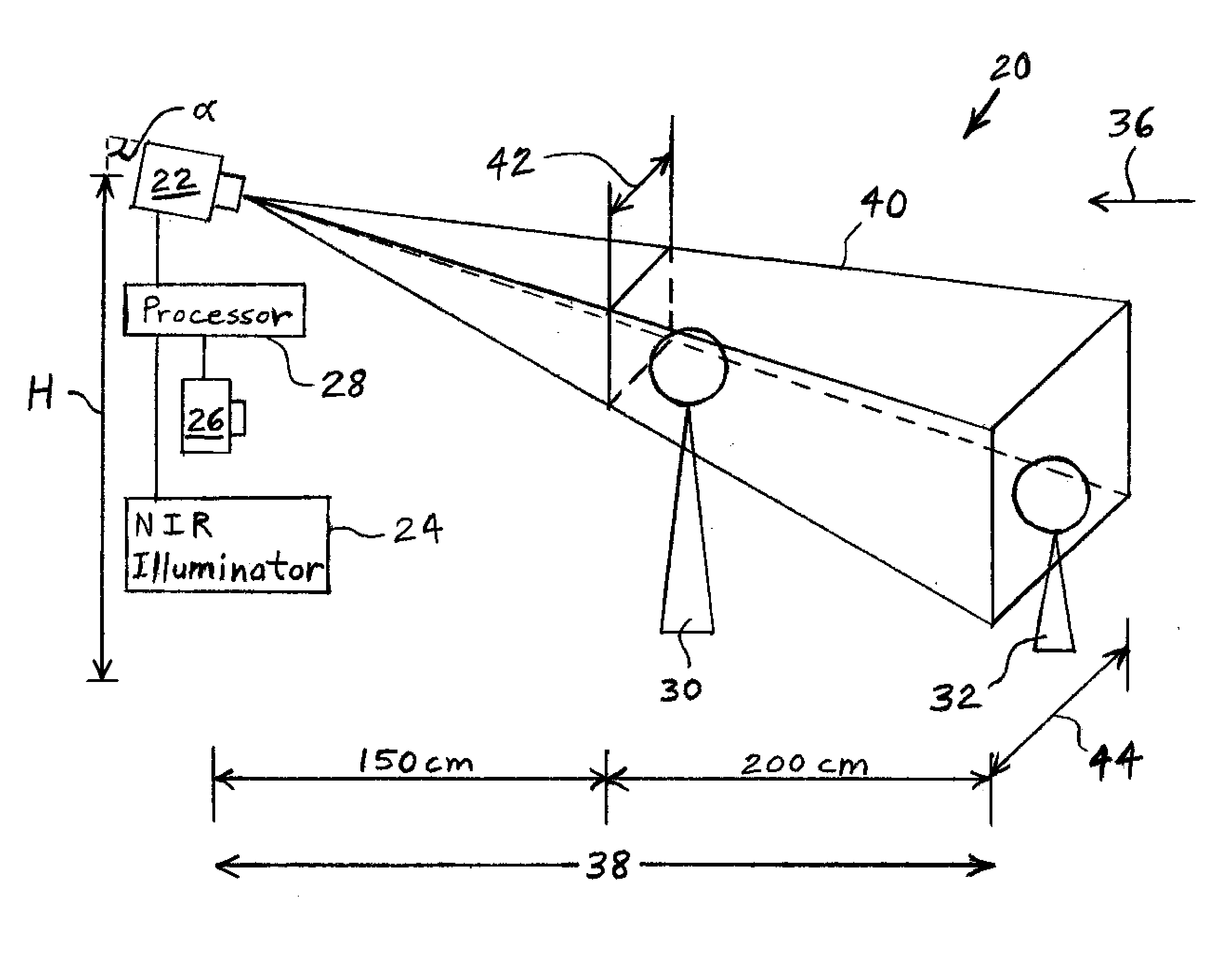

Three-Dimensional Virtual-Touch Human-Machine Interface System and Method Therefor

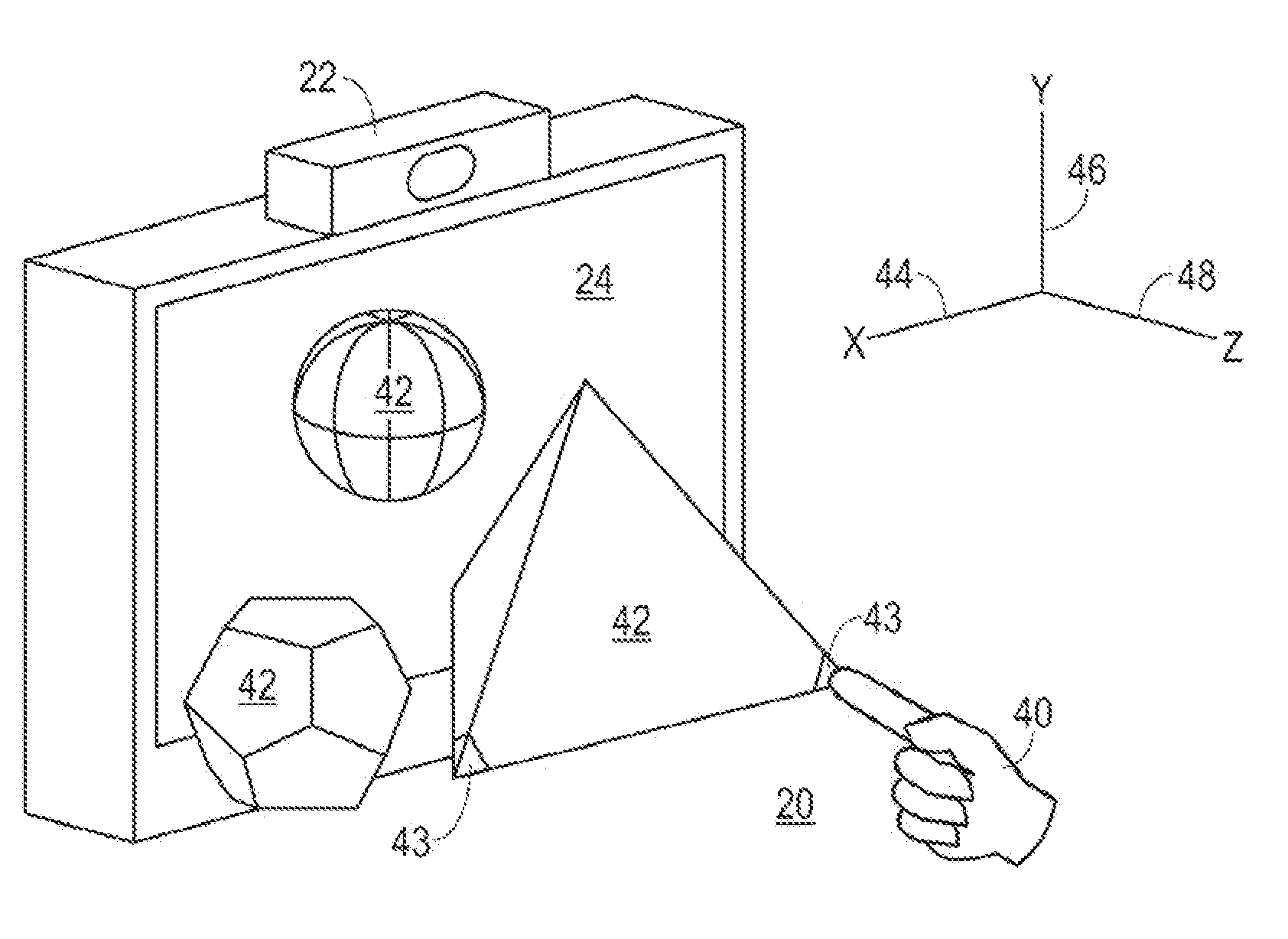

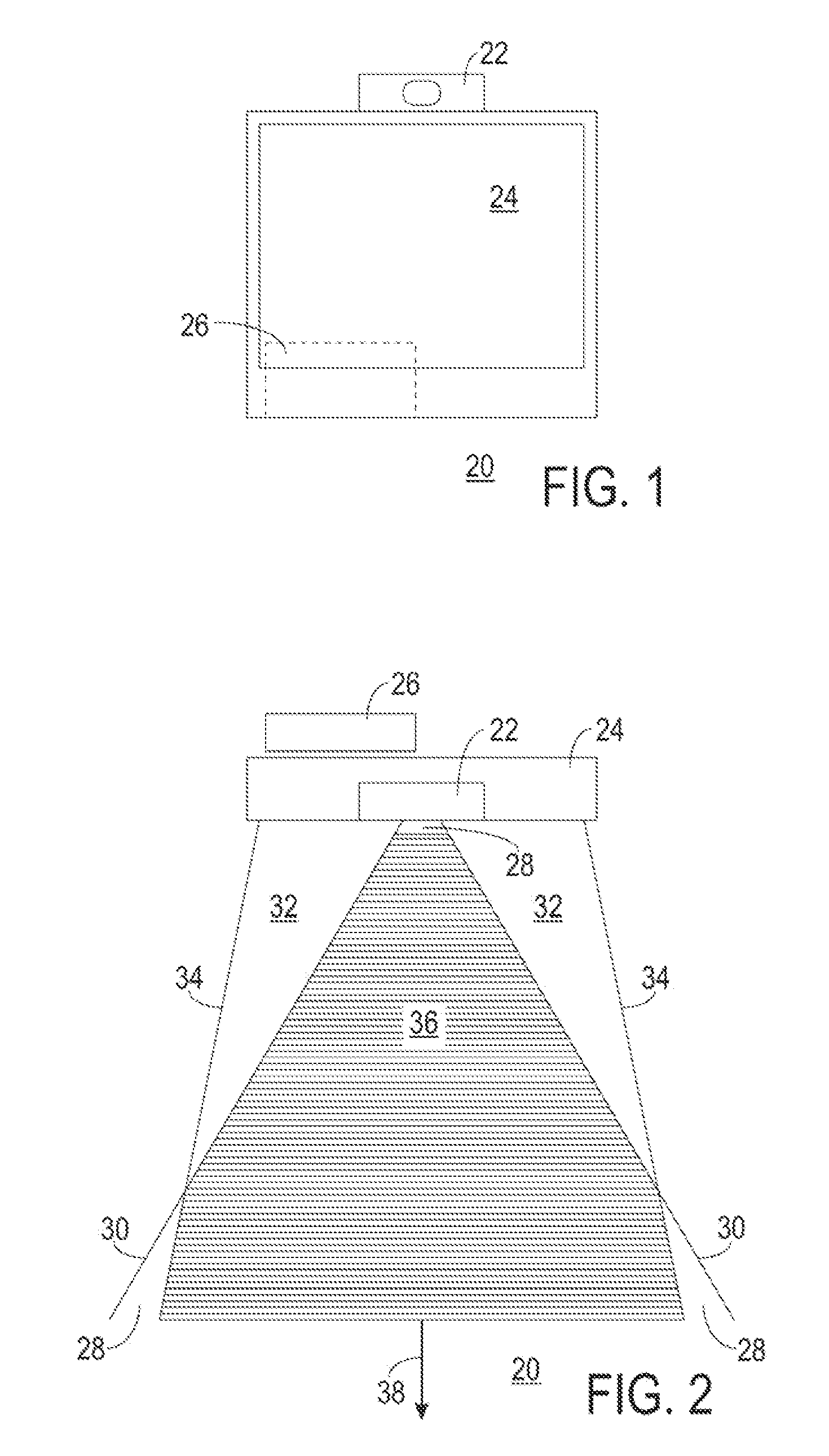

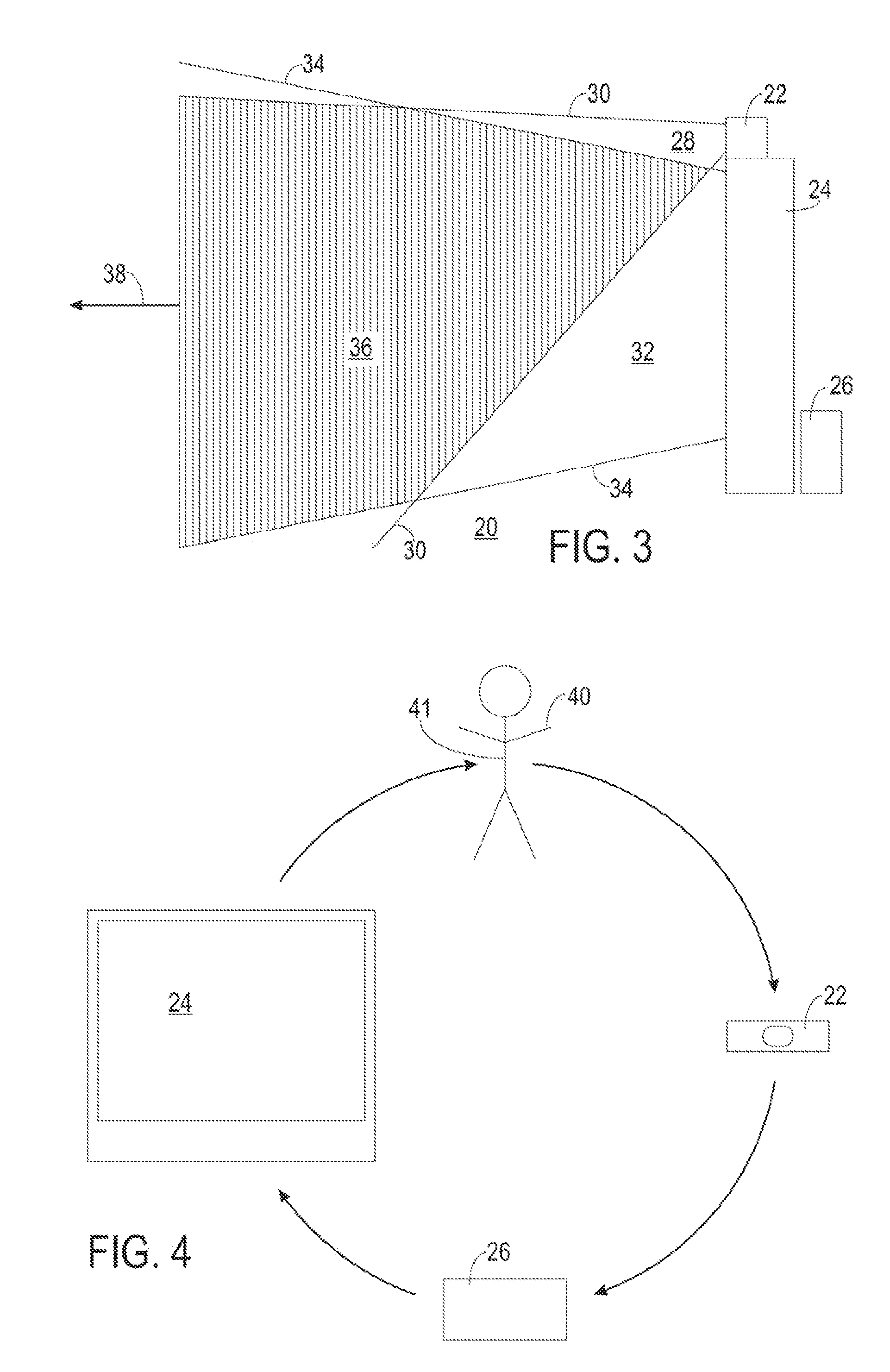

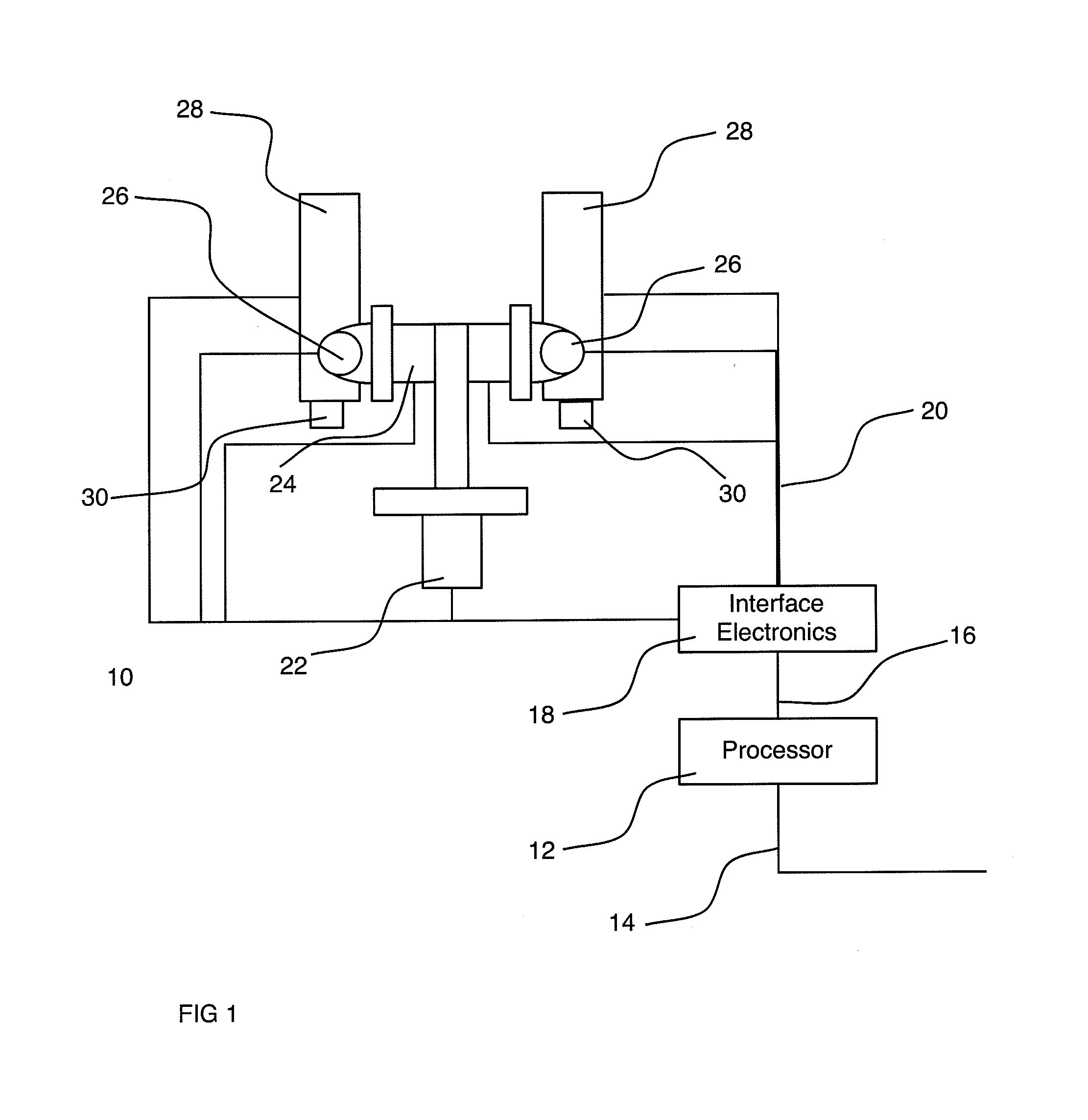

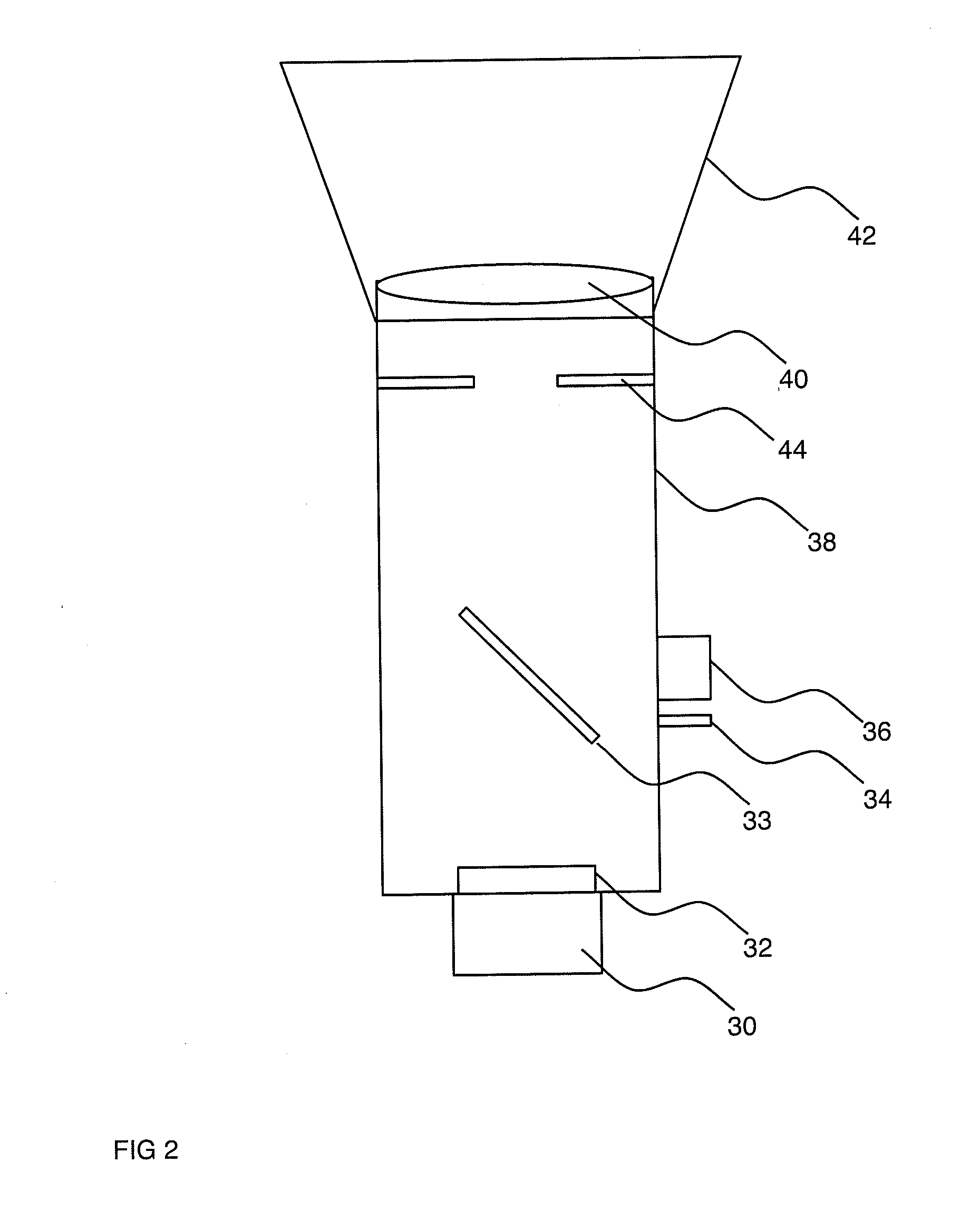

Status: Active | Publication: US20070132721A1 | Tags: Cathode-ray tube indicators; Video games; Human–machine interface; Time of flight sensor

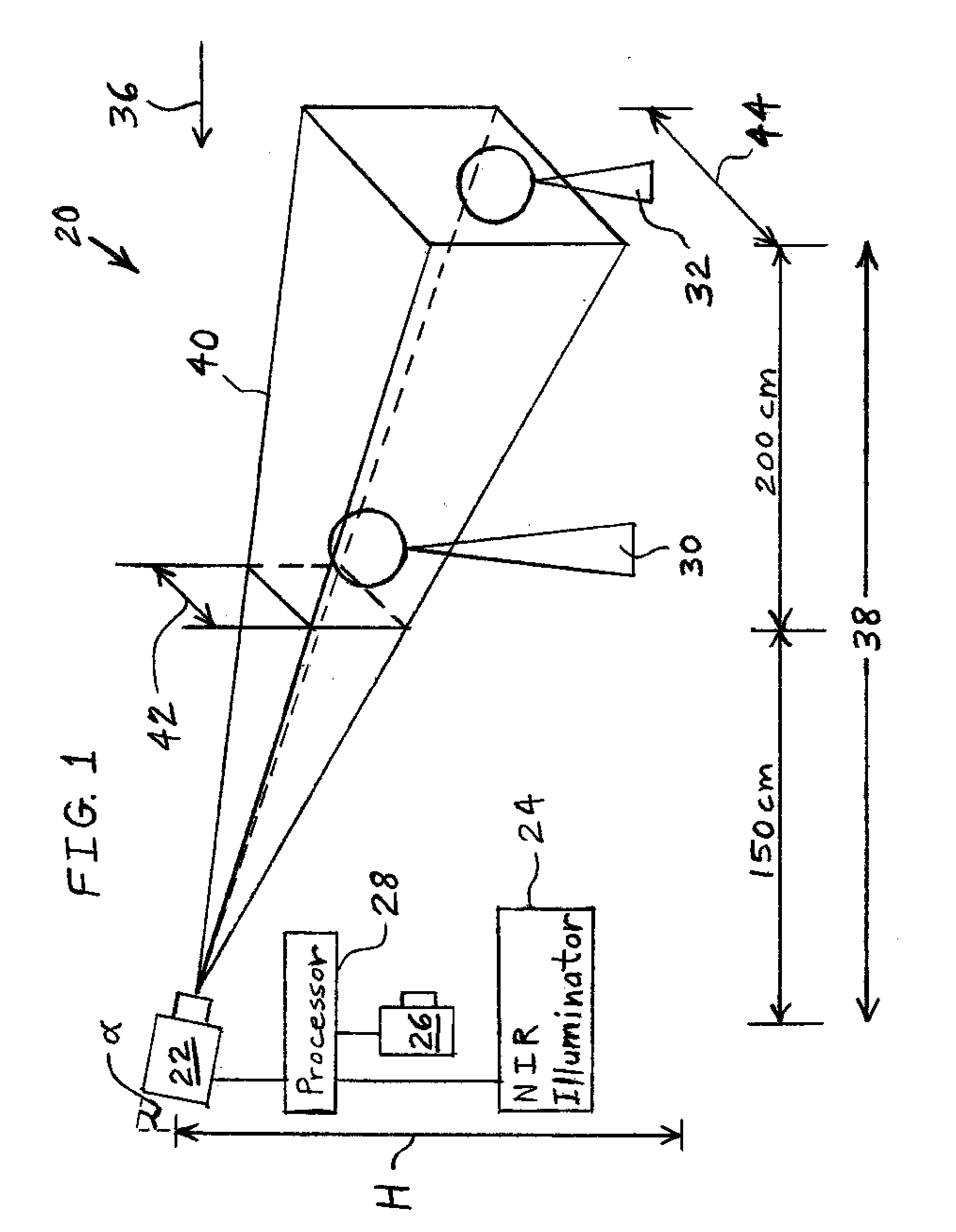

A three-dimensional virtual-touch human-machine interface system (20) and a method (100) of operating the system (20) are presented. The system (20) incorporates a three-dimensional time-of-flight sensor (22), a three-dimensional autostereoscopic display (24), and a computer (26) coupled to the sensor (22) and the display (24). The sensor (22) detects a user object (40) within a three-dimensional sensor space (28). The display (24) displays an image (42) within a three-dimensional display space (32). The computer (26) maps the position of the user object (40) within an interactive volumetric field (36) that lies within both the sensor space (28) and the display space (32), and determines when the positions of the user object (40) and the image (42) substantially coincide. When coincidence is detected, the computer (26) executes a function programmed for the image (42).

Owner:MICROSOFT TECH LICENSING LLC
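
The coincidence test described above can be sketched as a point-in-volume check plus a distance tolerance. This assumes the sensor and display coordinates have already been mapped into one common frame and that the interactive volumetric field is an axis-aligned box; `Box`, `is_virtual_touch` and the `tolerance` value are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box standing in for the interactive volumetric field."""
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


def is_virtual_touch(user_pos: Point, image_pos: Point, field: Box,
                     tolerance: float = 0.01) -> bool:
    """True when the tracked object is inside the field and within
    `tolerance` (same units as the coordinates) of the displayed image."""
    return field.contains(user_pos) and math.dist(user_pos, image_pos) <= tolerance


field = Box((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
print(is_virtual_touch((0.5, 0.5, 0.5), (0.505, 0.5, 0.5), field))
```

A tolerance rather than exact equality reflects the abstract's "substantially coincident": ToF positions are noisy, so exact matches would almost never occur.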

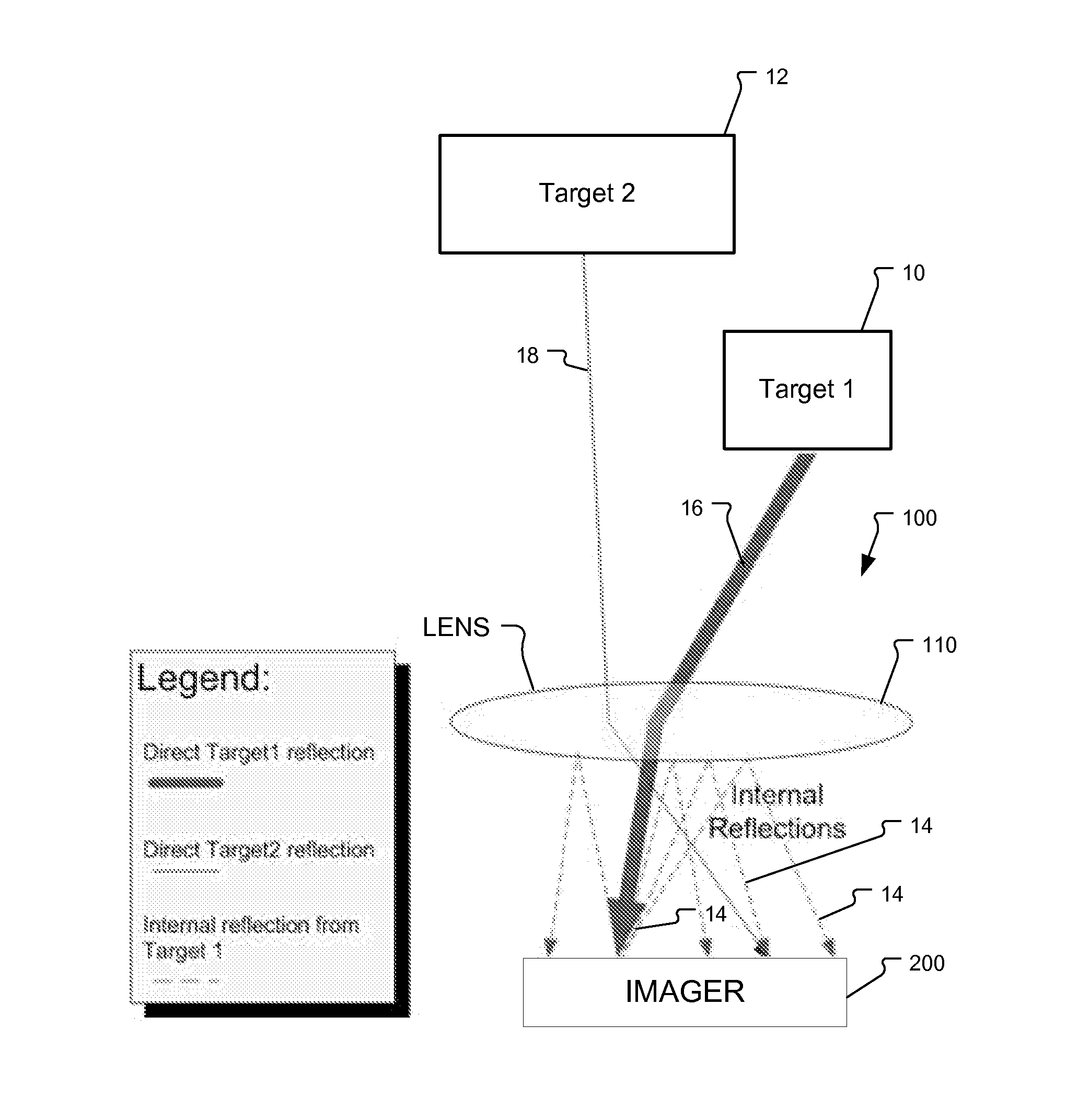

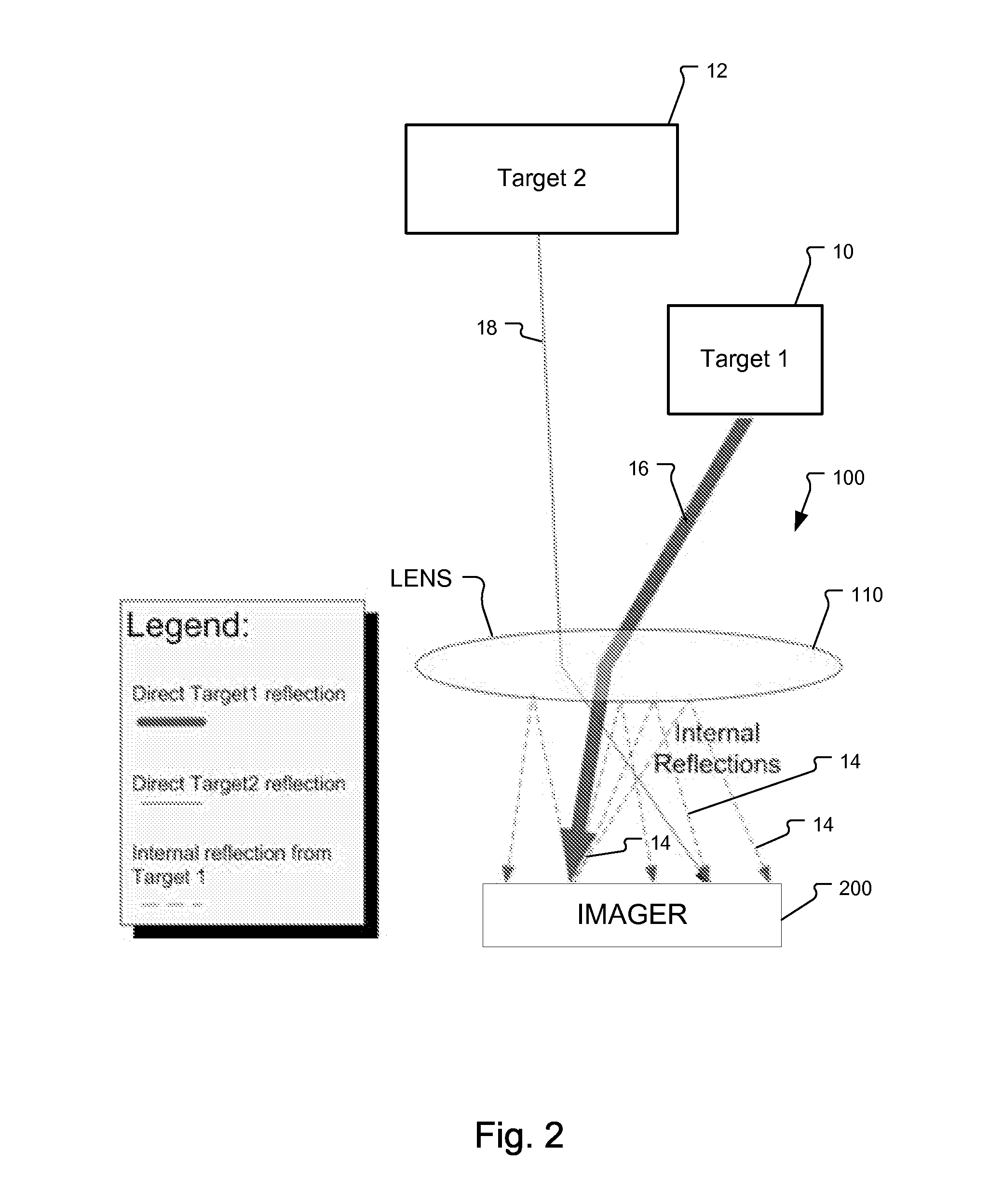

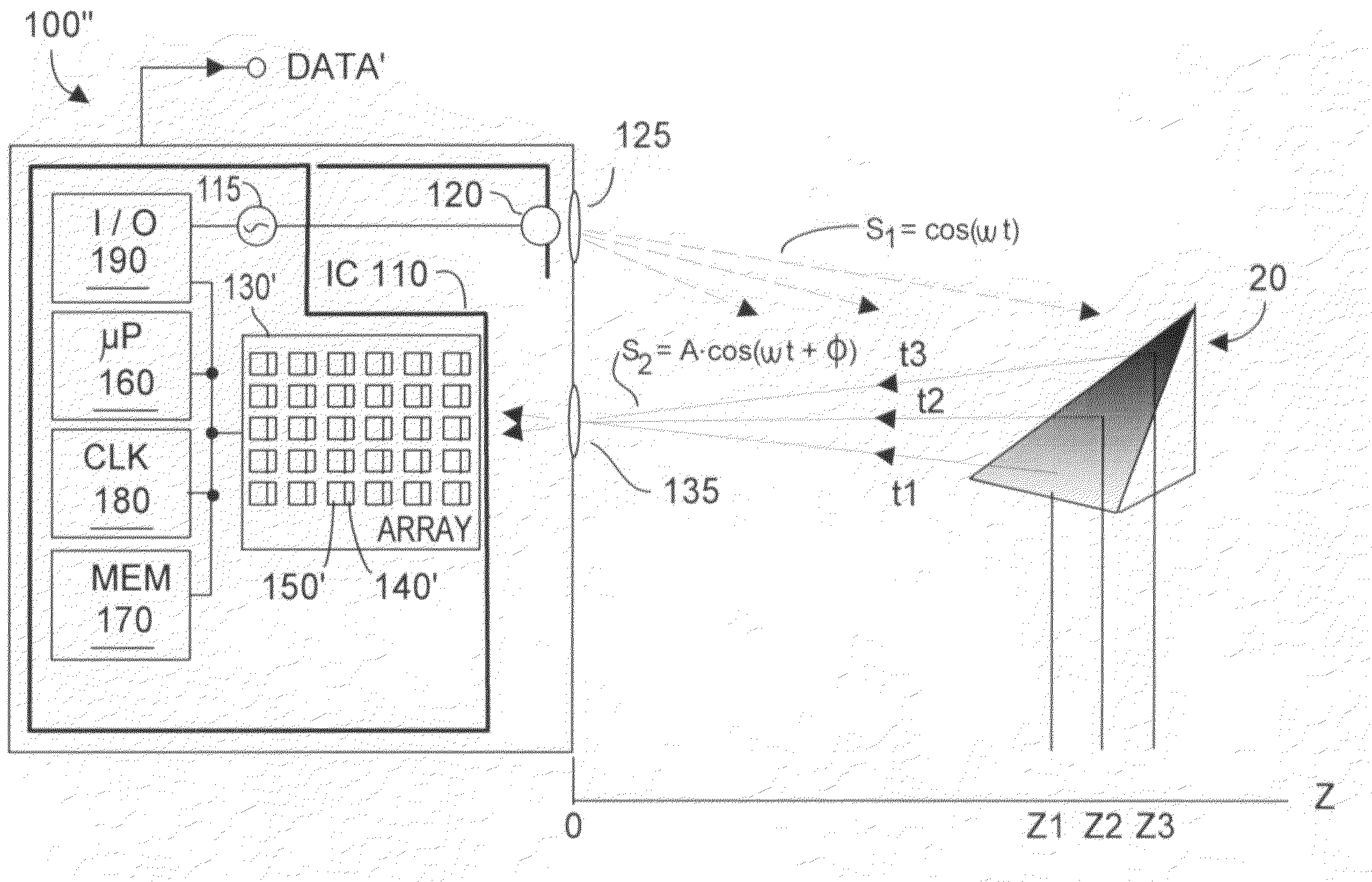

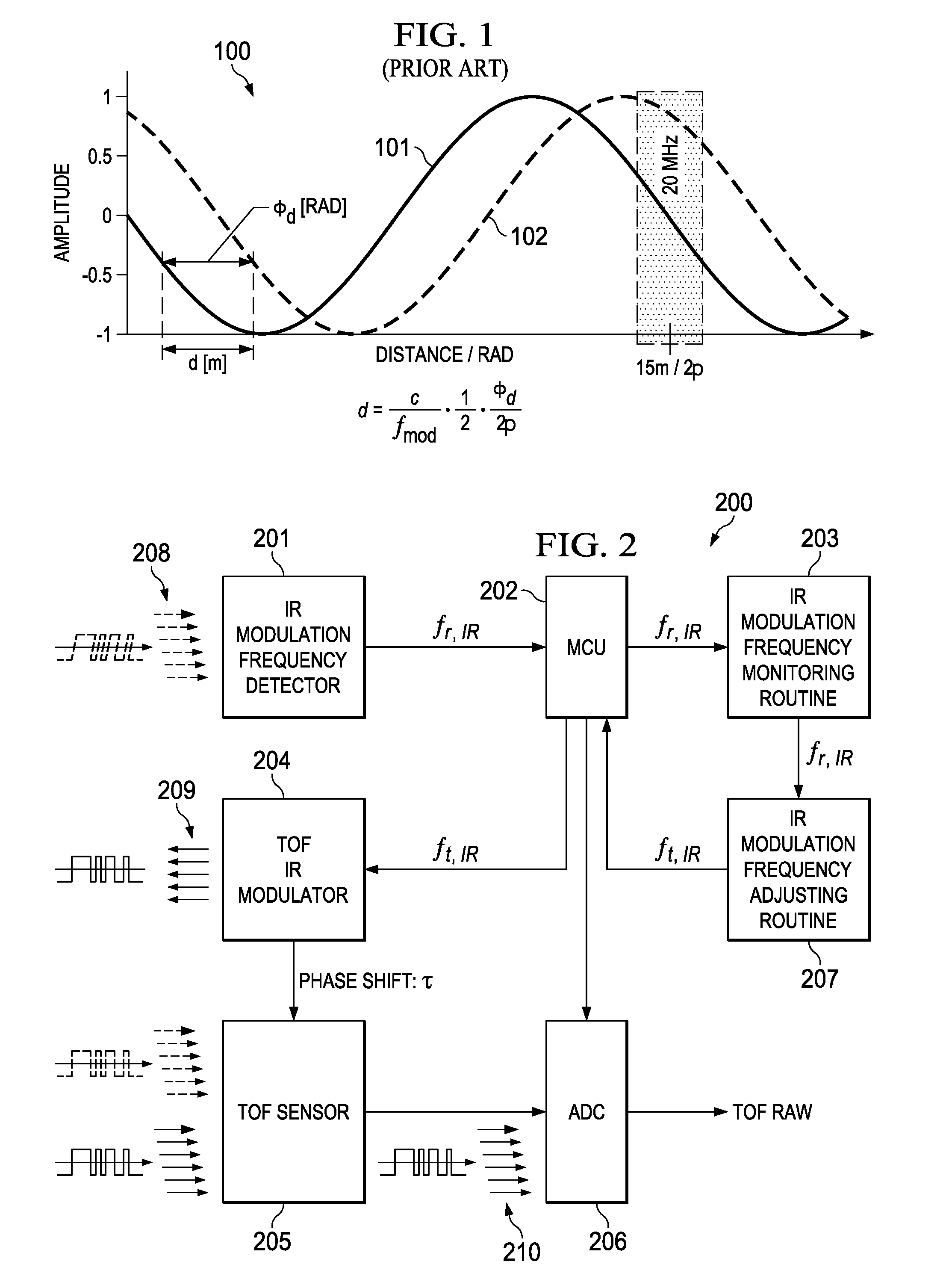

Multi-Path Compensation Using Multiple Modulation Frequencies in Time of Flight Sensor

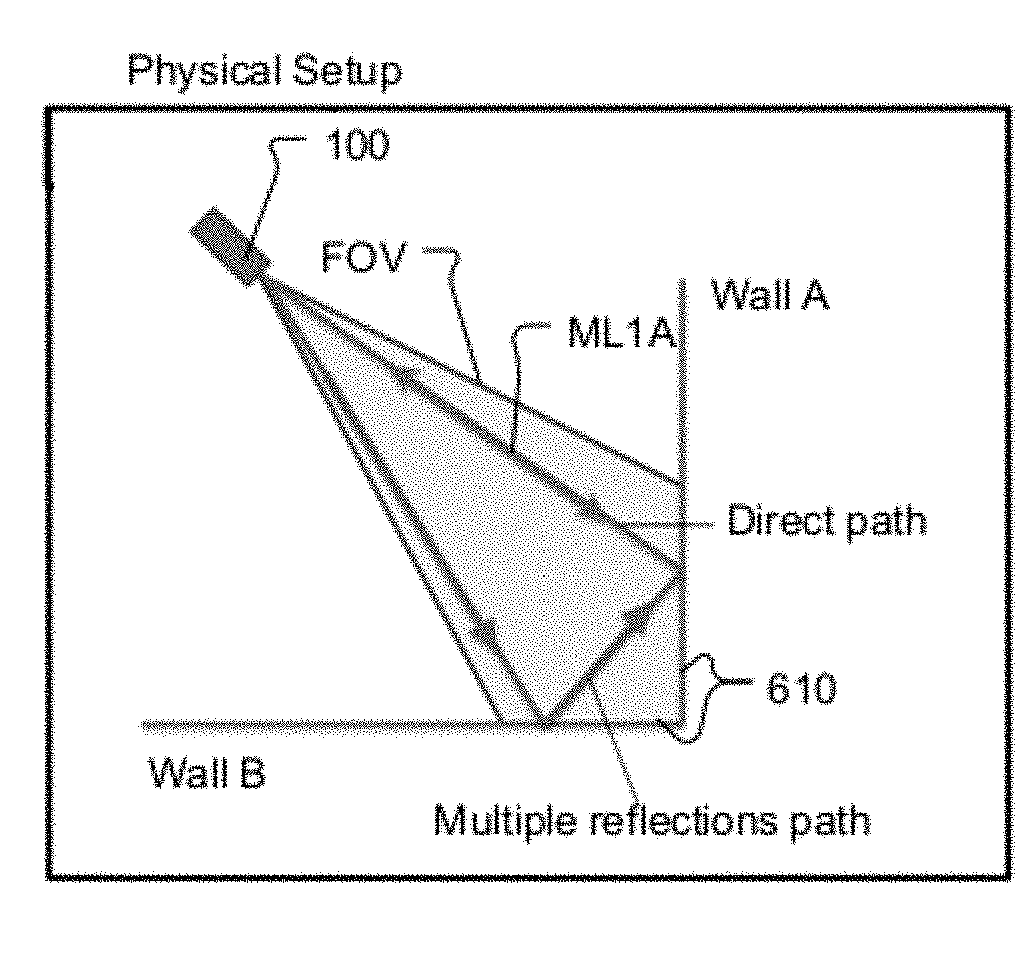

Status: Active | Publication: US20120033045A1 | Tags: Electromagnetic wave reradiation; Stereoscopic systems; Time of flight sensor; Multi path

A method to compensate for multi-path in time-of-flight (TOF) three-dimensional (3D) cameras applies different modulation frequencies in order to estimate the error vector. Multi-path in 3D TOF cameras can be caused by either of two sources: stray light artifacts in the TOF camera system and multiple reflections in the scene. The proposed method compensates for the errors caused by both sources by using multiple modulation frequencies.

Owner:AMS SENSORS SINGAPORE PTE LTD
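
Why multiple modulation frequencies help can be shown with a small simulation: a pixel sees the complex sum of the direct return and any indirect return, and the resulting distance error depends on the modulation frequency. With a single path, all frequencies agree; with multi-path, they disagree. The sketch below only demonstrates that symptom under an idealised two-path model; it is not the patented compensation method, and all function names and path values are illustrative.

```python
import cmath
import math

SPEED_OF_LIGHT = 299_792_458.0


def measured_phasor(paths, mod_freq_hz):
    """Sum several optical returns into the single phasor a pixel sees.

    `paths` is a list of (one_way_distance_m, relative_amplitude).
    """
    return sum(a * cmath.exp(-1j * 4 * math.pi * mod_freq_hz * d / SPEED_OF_LIGHT)
               for d, a in paths)


def phasor_to_distance(z, mod_freq_hz):
    """Convert a phasor back to distance (valid within the unambiguous range)."""
    phase = (-cmath.phase(z)) % (2 * math.pi)
    return SPEED_OF_LIGHT * phase / (4 * math.pi * mod_freq_hz)


def apparent_distances(paths, freqs):
    return [phasor_to_distance(measured_phasor(paths, f), f) for f in freqs]


freqs = [20e6, 50e6]
print(apparent_distances([(2.0, 1.0)], freqs))              # both ~2.0 m
print(apparent_distances([(2.0, 1.0), (3.0, 0.3)], freqs))  # estimates disagree
```

The disagreement between frequencies carries information about the indirect component, which is what a multi-frequency compensation scheme can exploit to estimate the error vector.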

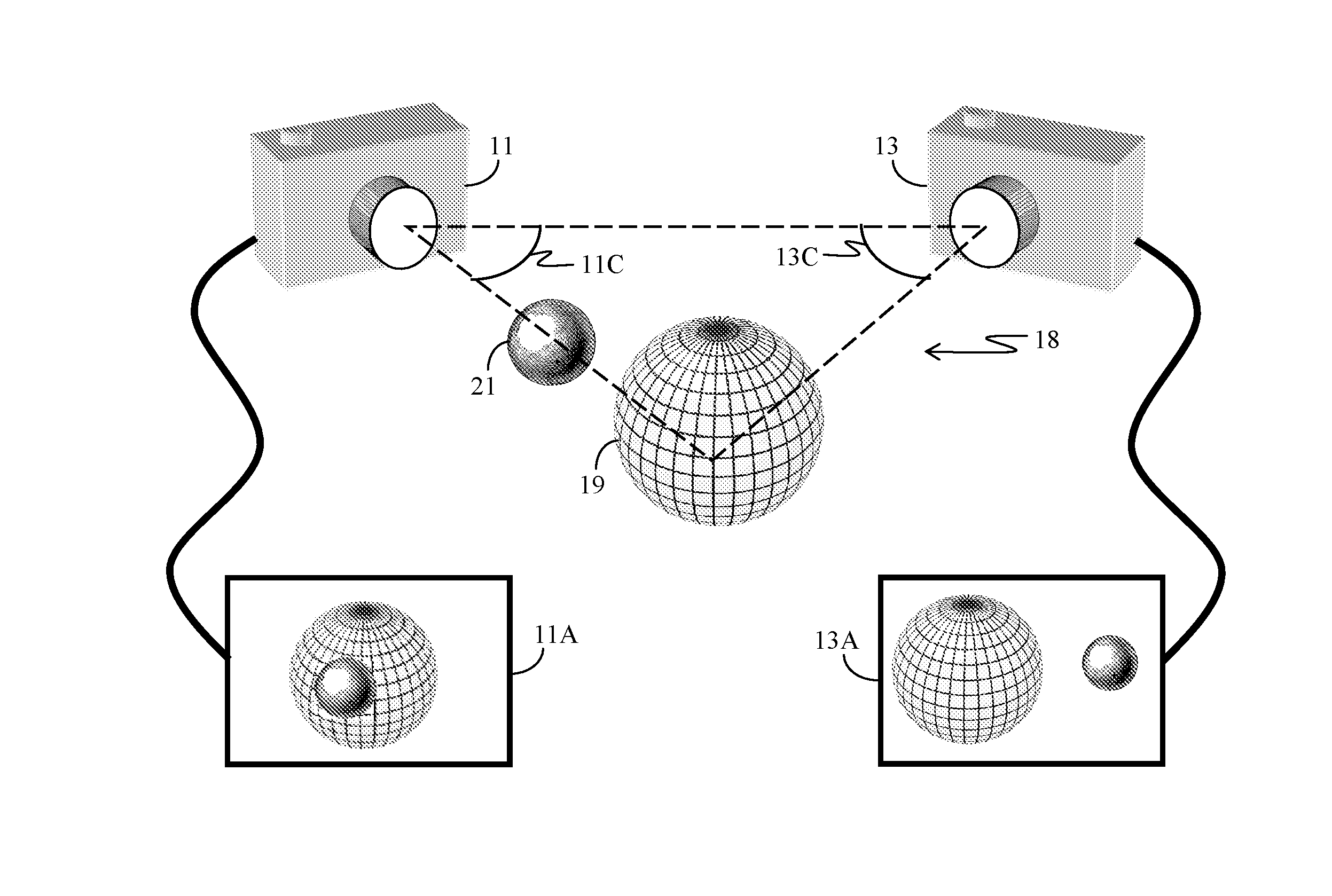

Real Time Sensor and Method for Synchronizing Real Time Sensor Data Streams

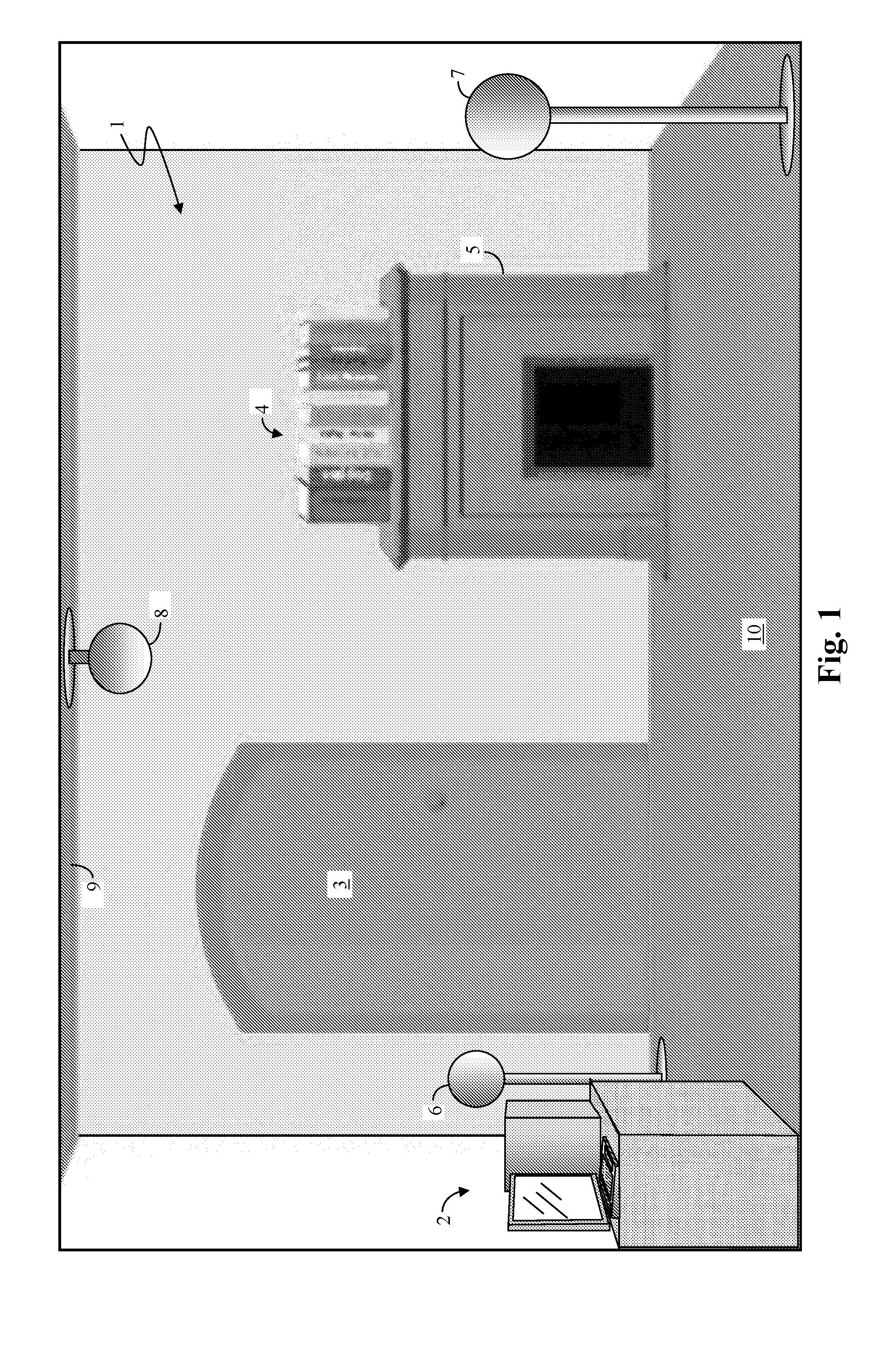

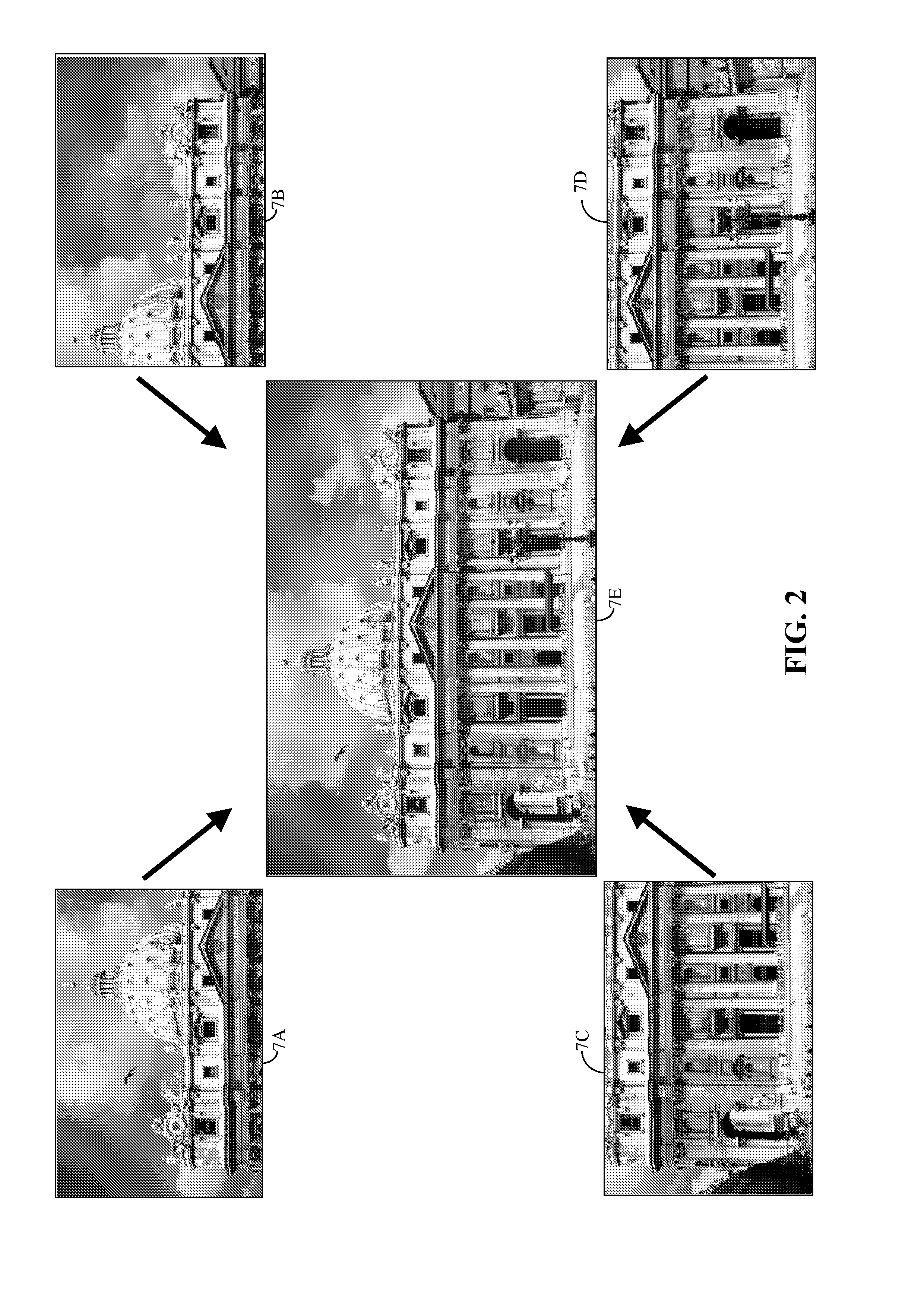

A Holocam Orb system uses multiple Holocam Orbs (Orbs) within a real-life environment to generate an artificial reality representation of that environment in real time. Each Orb is an electronic and software unit that includes a local logic module, a local CPU, and multiple synchronous and asynchronous sensors, including stereo cameras, time-of-flight sensors, inertial measurement units, and a microphone array. Each Orb synchronizes itself to a common master clock and packages its asynchronous data into data bundles whose timing is matched to the frame timing of the synchronous sensors; all gathered data bundles and data frames are time-stamped using a reference clock common to all Orbs. The overlapping sensor data from all the Orbs is combined to create the artificial reality representation.

Owner:SEIKO EPSON CORP
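
Packaging asynchronous samples into bundles aligned with synchronous frame timing can be sketched with a binary search over frame start times. This assumes all timestamps are already on the shared reference clock and that `frame_times` is sorted; the function name and the "latest frame at or before the sample" assignment rule are assumptions, not details from the abstract.

```python
from bisect import bisect_right


def bundle_async_samples(frame_times, samples):
    """Group asynchronous (timestamp, value) samples by synchronous frame.

    Each sample goes to the bundle of the latest frame whose start time is
    <= the sample timestamp. Samples earlier than the first frame are
    dropped. `frame_times` must be sorted ascending.
    """
    bundles = {t: [] for t in frame_times}
    for ts, value in samples:
        idx = bisect_right(frame_times, ts) - 1
        if idx >= 0:
            bundles[frame_times[idx]].append(value)
    return bundles


frames = [0.0, 0.033, 0.066]   # e.g. ~30 fps camera frames (seconds)
imu = [(0.001, "a"), (0.020, "b"), (0.040, "c"), (0.070, "d")]
print(bundle_async_samples(frames, imu))
```

Using `bisect_right` keeps the assignment O(log n) per sample, which matters for high-rate sensors such as IMUs.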

Multi-path compensation using multiple modulation frequencies in time of flight sensor

Status: Active | Publication: US9753128B2 | Tags: Optical rangefinders; Electromagnetic wave reradiation; Time of flight sensor; Computer science

A method to compensate for multi-path in time-of-flight (TOF) three-dimensional (3D) cameras applies different modulation frequencies in order to estimate the error vector. Multi-path in 3D TOF cameras can be caused by either of two sources: stray light artifacts in the TOF camera system and multiple reflections in the scene. The proposed method compensates for the errors caused by both sources by using multiple modulation frequencies.

Owner:AMS SENSORS SINGAPORE PTE LTD

Method and system to correct motion blur and reduce signal transients in time-of-flight sensor systems

Status: Active | Publication: US7450220B2 | Tags: Reduces signal integrity problem; Reduce transfer; Optical rangefinders; Time of flight sensor; Shutter

Owner:MICROSOFT TECH LICENSING LLC

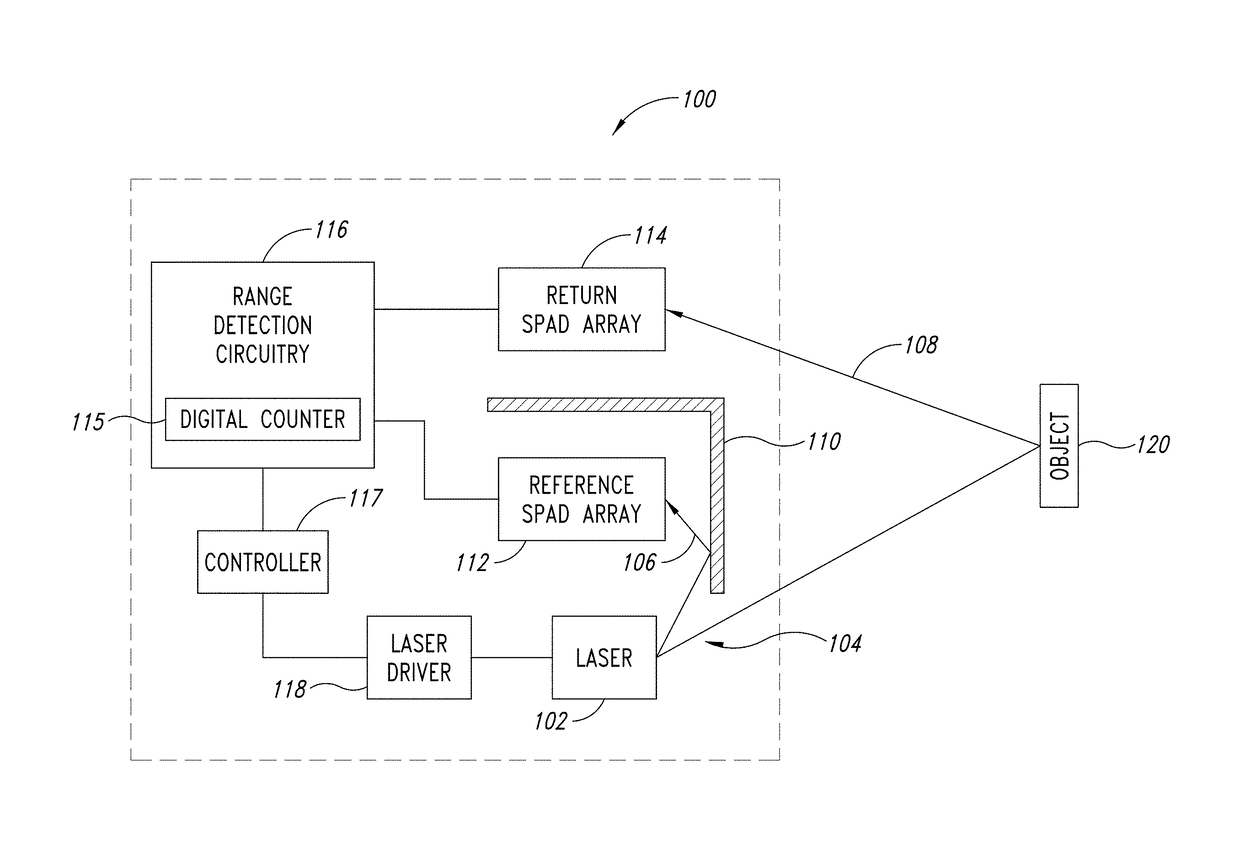

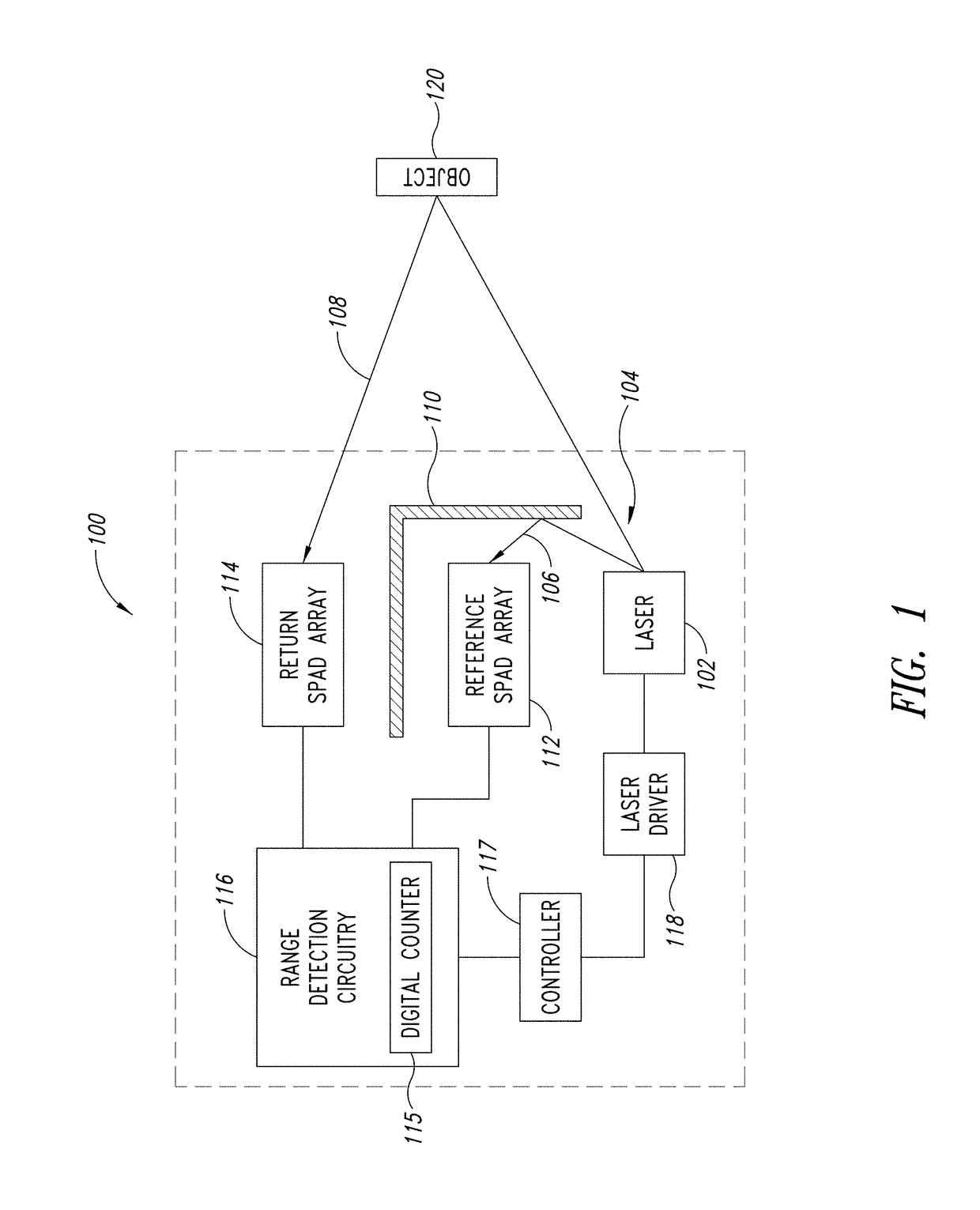

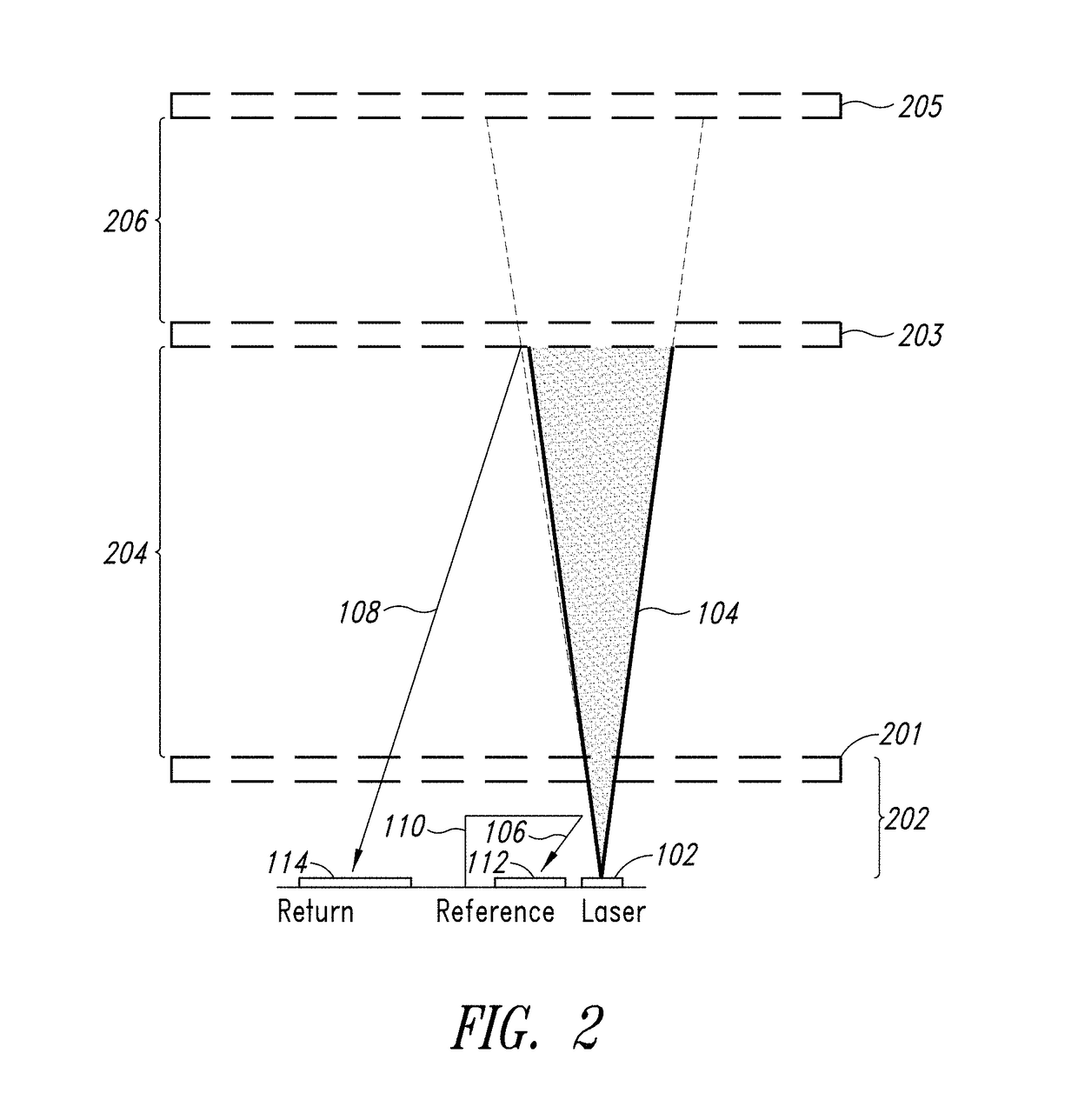

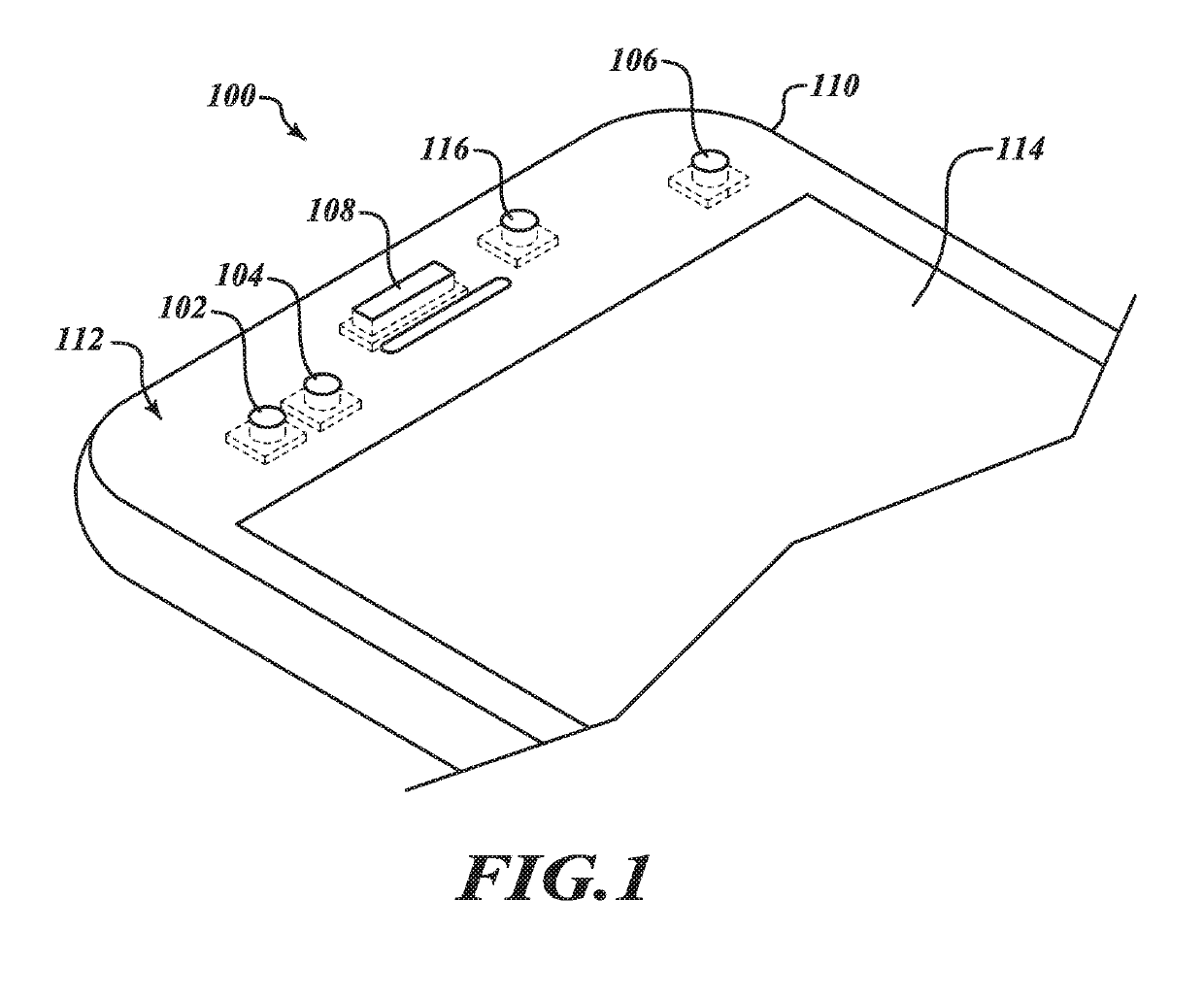

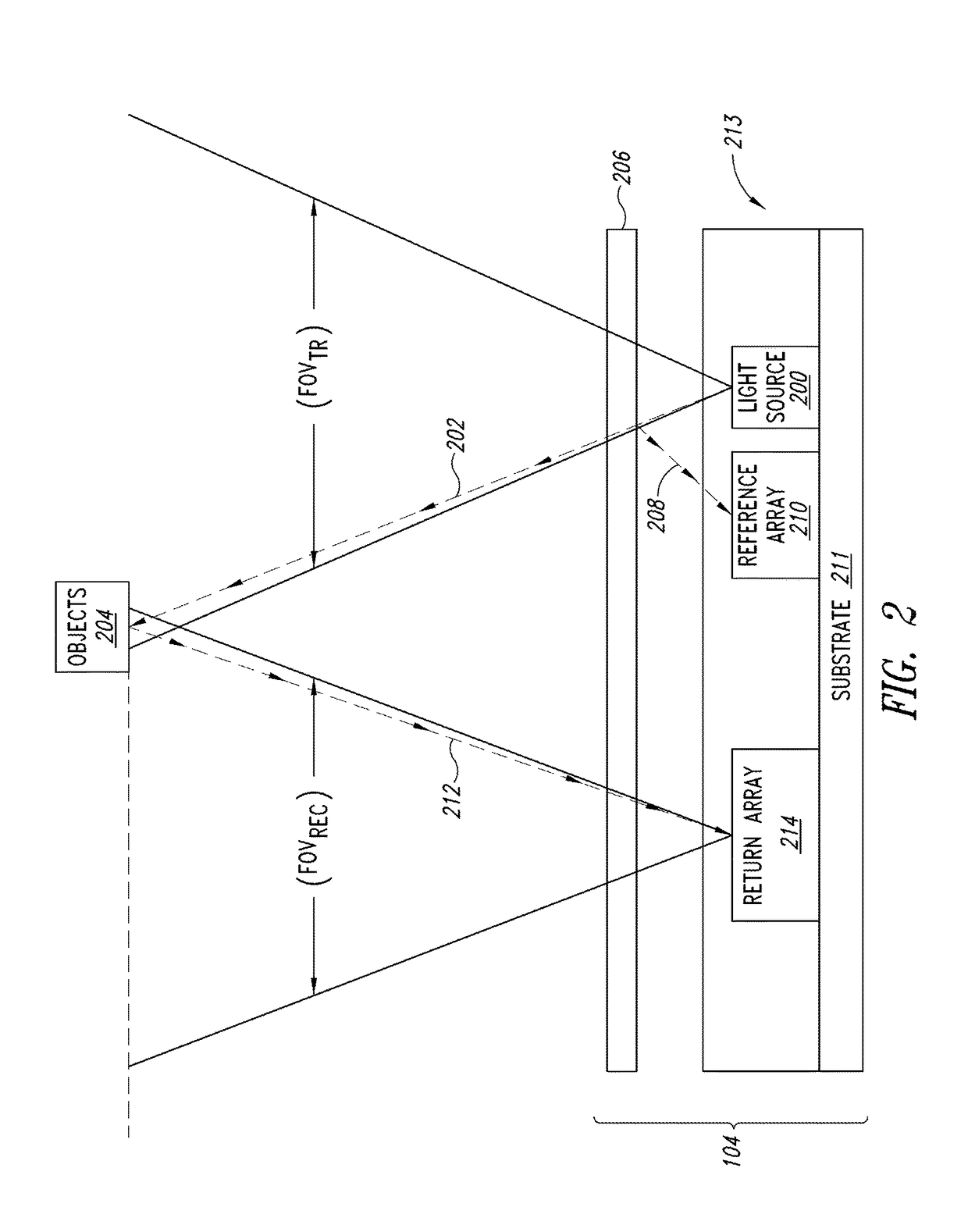

Adaptive laser power and ranging limit for time of flight sensor

Status: Active | Publication: US20170356981A1 | Tags: Increase range; Improve accuracy; Electromagnetic wave reradiation; Driver circuit; Single-photon avalanche diode

A time of flight range detection device includes a laser configured to transmit an optical pulse into an image scene, a return single-photon avalanche diode (SPAD) array, a reference SPAD array, a range detection circuit coupled to the return SPAD array and the reference SPAD array, and a laser driver circuit. The range detection circuit in operation determines a distance to an object based on signals from the return SPAD array and the reference SPAD array. The laser driver circuit in operation varies an output power level of the laser in response to the determined distance to the object.

Owner:STMICROELECTRONICS SRL
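
One simple way to vary laser output with the determined distance is to scale power with the square of the range and clamp it to the driver's limits. The inverse-square assumption (returned signal from an extended target falling roughly as 1/d²) and every parameter value below are illustrative; the abstract does not state the control law, and `laser_power_for_distance` is hypothetical.

```python
def laser_power_for_distance(distance_m, ref_distance_m=1.0, ref_power_mw=1.0,
                             min_power_mw=0.1, max_power_mw=10.0):
    """Scale laser power with the square of the last measured distance.

    Assumption: the return from an extended target falls roughly as 1/d^2,
    so keeping the return level constant needs power ~ d^2. The result is
    clamped to the driver's allowed range (the upper clamp also acts as a
    safety and ranging limit).
    """
    power = ref_power_mw * (distance_m / ref_distance_m) ** 2
    return max(min_power_mw, min(max_power_mw, power))


for d in (0.1, 2.0, 5.0):
    print(d, laser_power_for_distance(d))
```

Lowering power for near targets saves energy and avoids saturating the SPAD array, while the upper clamp effectively sets the maximum ranging distance.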

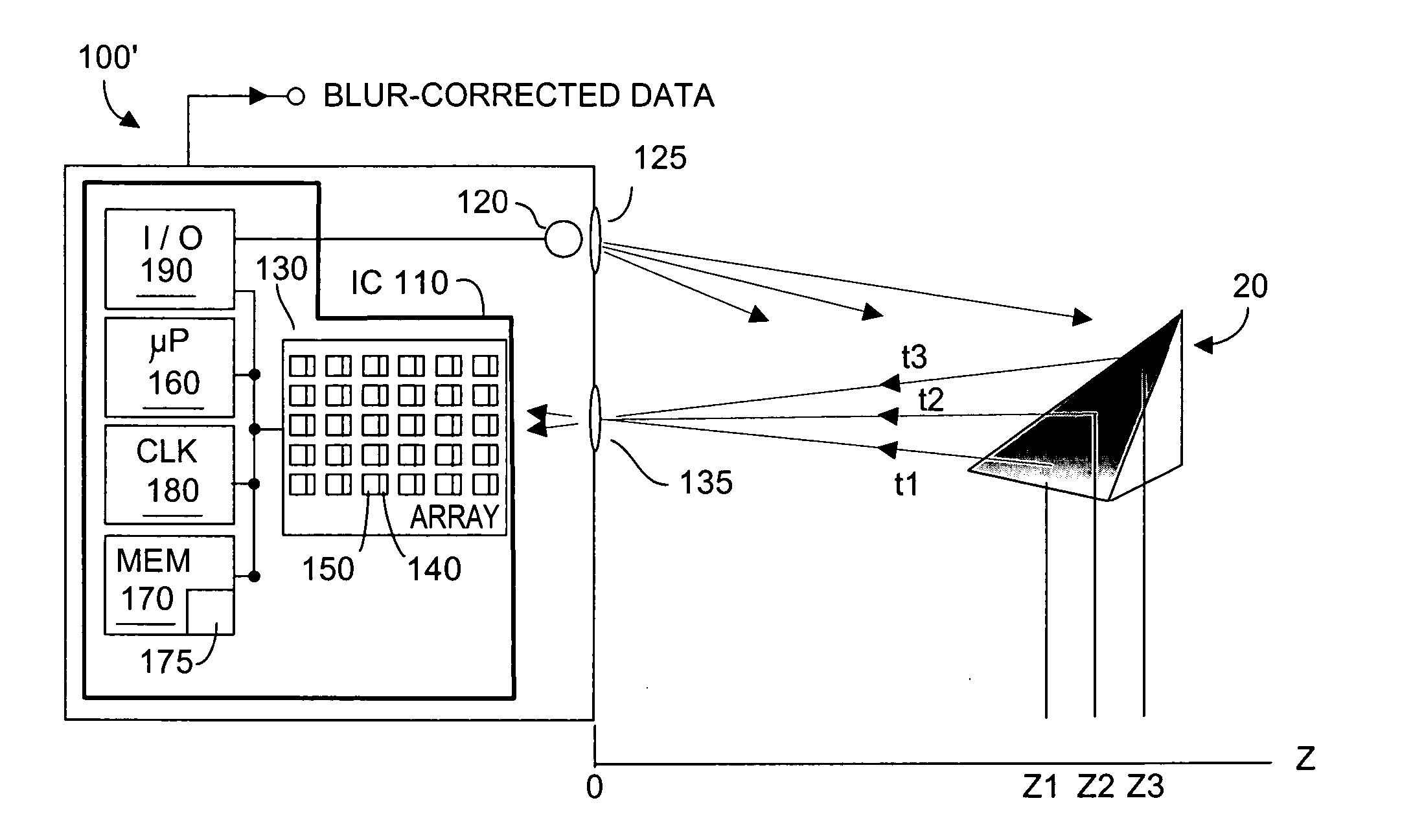

Method and system to correct motion blur in time-of-flight sensor systems

A method and system correct motion blur in time-of-flight (TOF) image data in which consecutively acquired images may show relative motion between the TOF system and the imaged object or scene. Motion is deemed global if it is associated with movement of the TOF sensor system, and local if it is associated with movement of the target or scene being imaged. Acquired images are subjected first to global and then to local normalization, after which coarse motion detection is applied. Any detected global motion is corrected first, followed by any detected local motion. The resulting compensation yields distance measurements that are substantially free of motion-blur error.

Owner:CANESTA
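
The global/local distinction above can be illustrated with a coarse frame-difference classifier: if most pixels changed, the sensor itself probably moved; if only some did, something in the scene moved. This sketch skips the normalization steps the abstract describes, and `classify_motion` with its thresholds is hypothetical.

```python
def classify_motion(prev, curr, pixel_threshold=0.05, global_fraction=0.5):
    """Coarse motion label from two depth frames (lists of rows, metres).

    A pixel counts as moved if its depth changed by more than
    pixel_threshold. If at least global_fraction of pixels moved, the
    motion is labelled global (sensor moved); otherwise local (scene moved).
    """
    total = moved = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            moved += abs(a - b) > pixel_threshold
    if total == 0 or moved == 0:
        return "none"
    return "global" if moved / total >= global_fraction else "local"


still = [[1.0, 1.0], [1.0, 1.0]]
print(classify_motion(still, [[1.2, 1.2], [1.2, 1.2]]))  # whole frame shifted
print(classify_motion(still, [[1.0, 1.0], [1.0, 1.3]]))  # one pixel changed
```

Classifying first lets a correction stage apply one cheap whole-frame compensation for global motion before handling local regions individually, mirroring the global-then-local order in the abstract.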

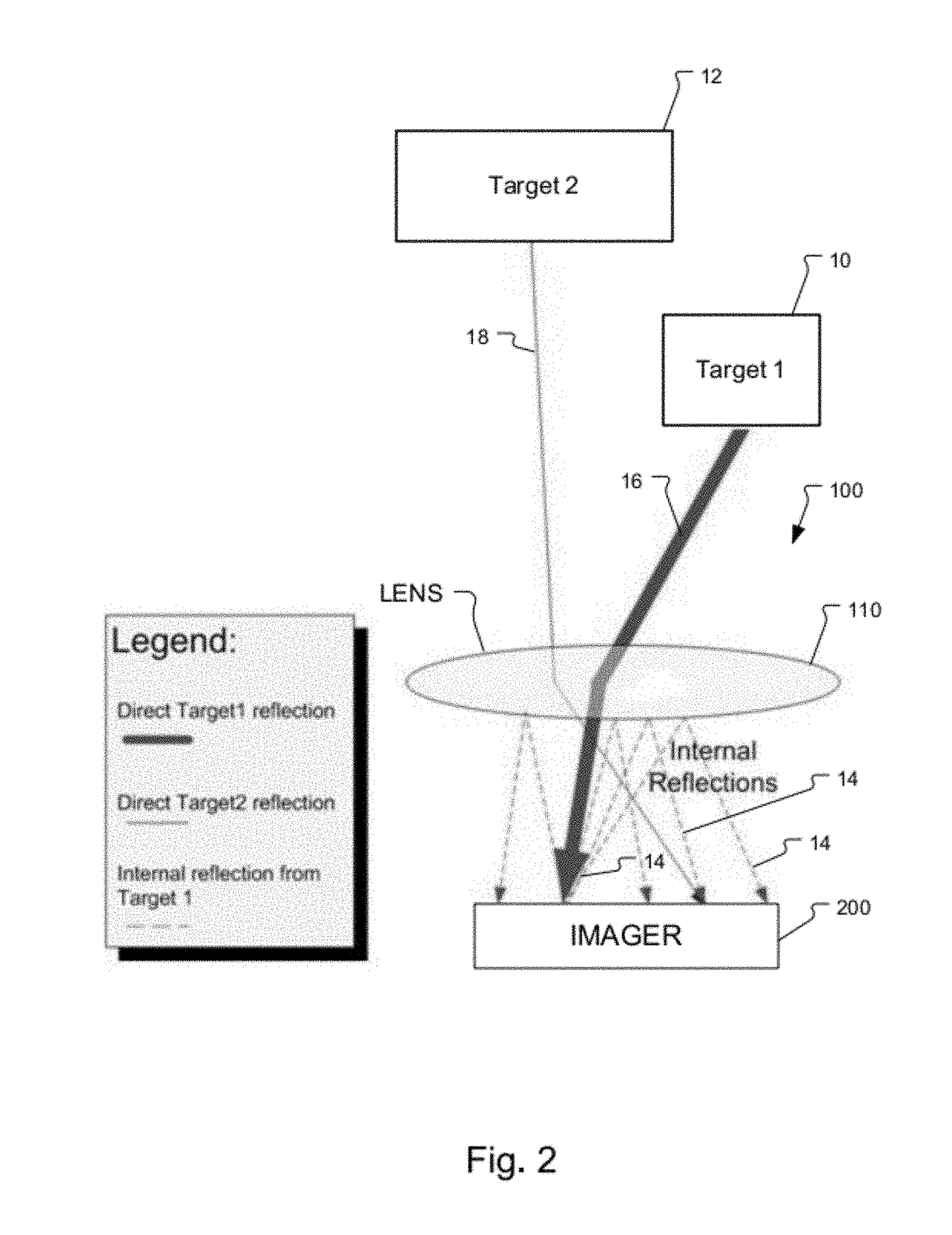

Method and system to reduce stray light reflection error in time-of-flight sensor arrays

Status: Active | Publication: US20120008128A1 | Tags: Weakening range; Reduce surface reflectivity; Optical rangefinders; Electromagnetic wave reradiation; Sensor array; Type error

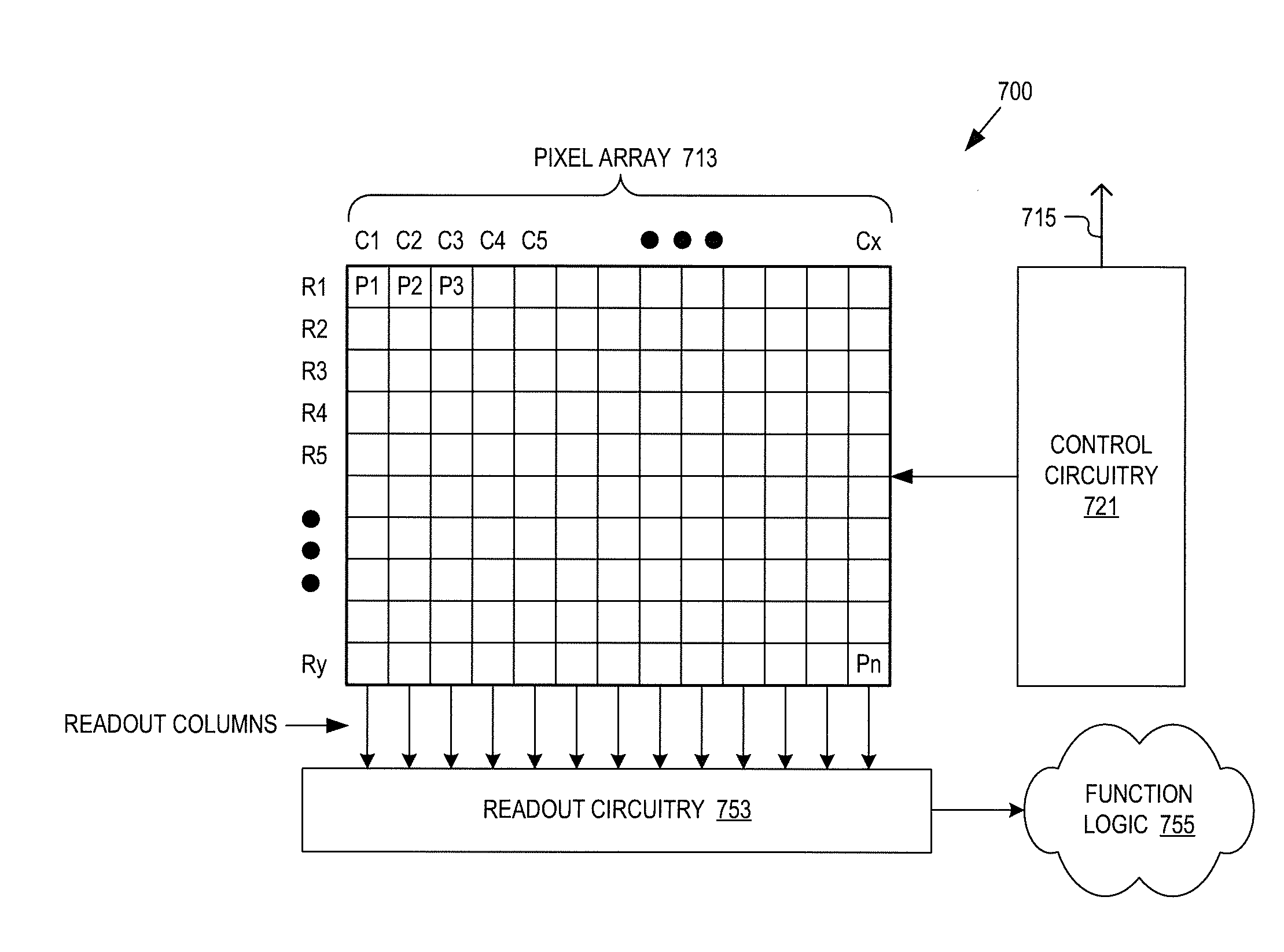

Haze-type phase shift error due to stray light reflections in a phase-type TOF system is reduced by providing a windowed opaque coating on the sensor array surface, with the windows permitting optical energy to reach the light-sensitive regions of the pixels, and by reducing stray reflections along the optical path. Further haze-type error reduction is obtained by acquiring values for a plurality (but not necessarily all) of the pixel sensors in the TOF system pixel sensor array. Next, a correction term for the value (differential or other) acquired for each pixel in the plurality of pixel sensors is computed and stored. The modeled response may depend on the pixel's (row, column) location within the sensor array. During actual TOF system runtime operation, the detection data for each pixel, or for pixel groups (super pixels), is corrected using the stored data. Good optical system design accounts for correction, enabling a simple correction model.

Owner:MICROSOFT TECH LICENSING LLC
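
The calibrate-then-correct flow above (compute and store a per-pixel correction term, then apply it at runtime) can be sketched with an additive error model. The additive model, the use of a known reference scene, and both function names are assumptions for illustration; the patent's correction model may be parametric and depend on (row, column) position.

```python
def build_correction_map(measured_ref, expected_ref):
    """Calibration: per-pixel error = measured - expected on a known scene."""
    return [[m - e for m, e in zip(mrow, erow)]
            for mrow, erow in zip(measured_ref, expected_ref)]


def apply_correction(frame, correction):
    """Runtime: subtract the stored per-pixel correction term."""
    return [[v - c for v, c in zip(frow, crow)]
            for frow, crow in zip(frame, correction)]


corr = build_correction_map([[1.0, 2.0]], [[0.9, 2.0]])
print(apply_correction([[1.5, 3.0]], corr))  # approximately [[1.4, 3.0]]
```

Storing corrections only for a subset of pixels, as the abstract allows, would mean interpolating this map for the remaining pixels.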

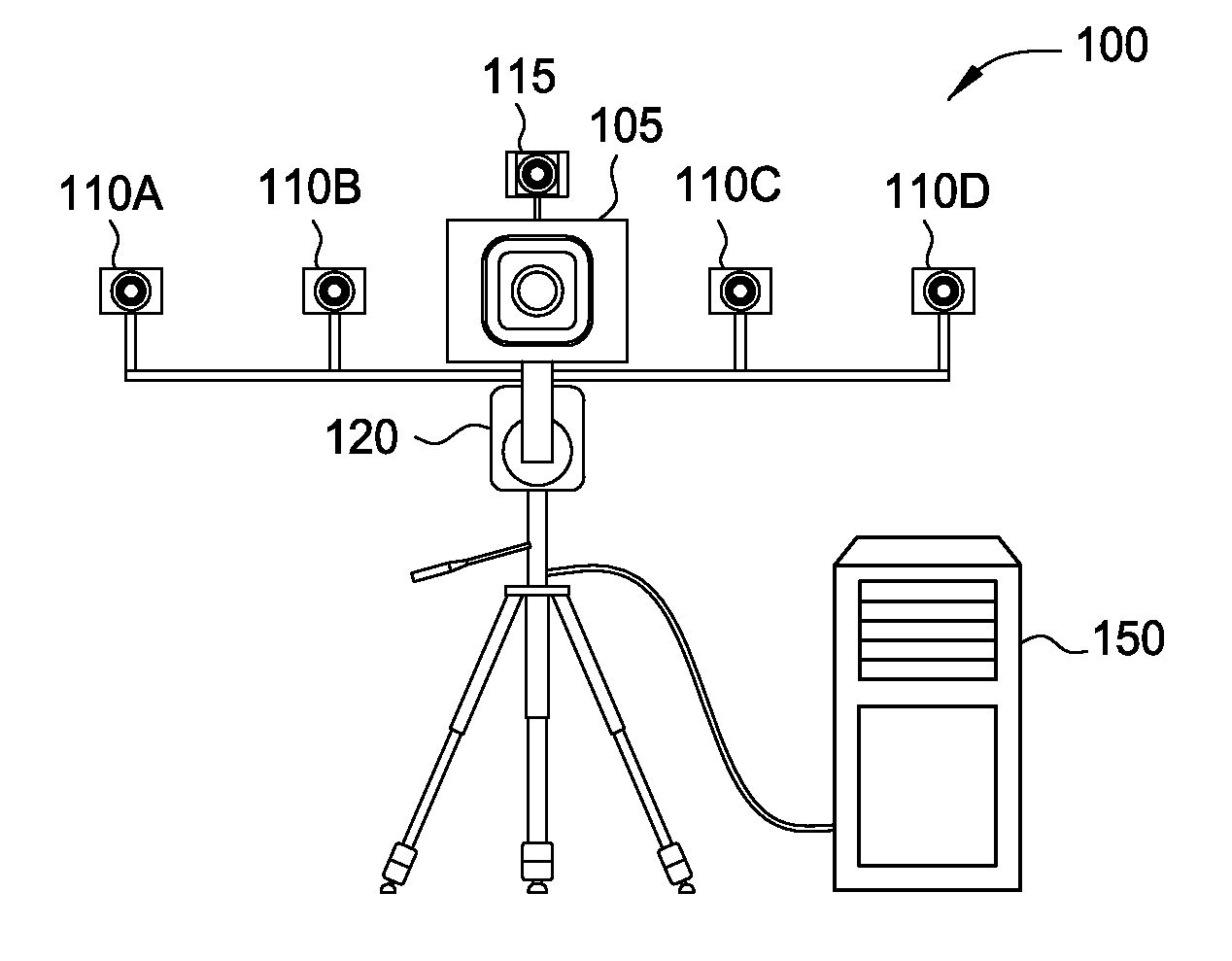

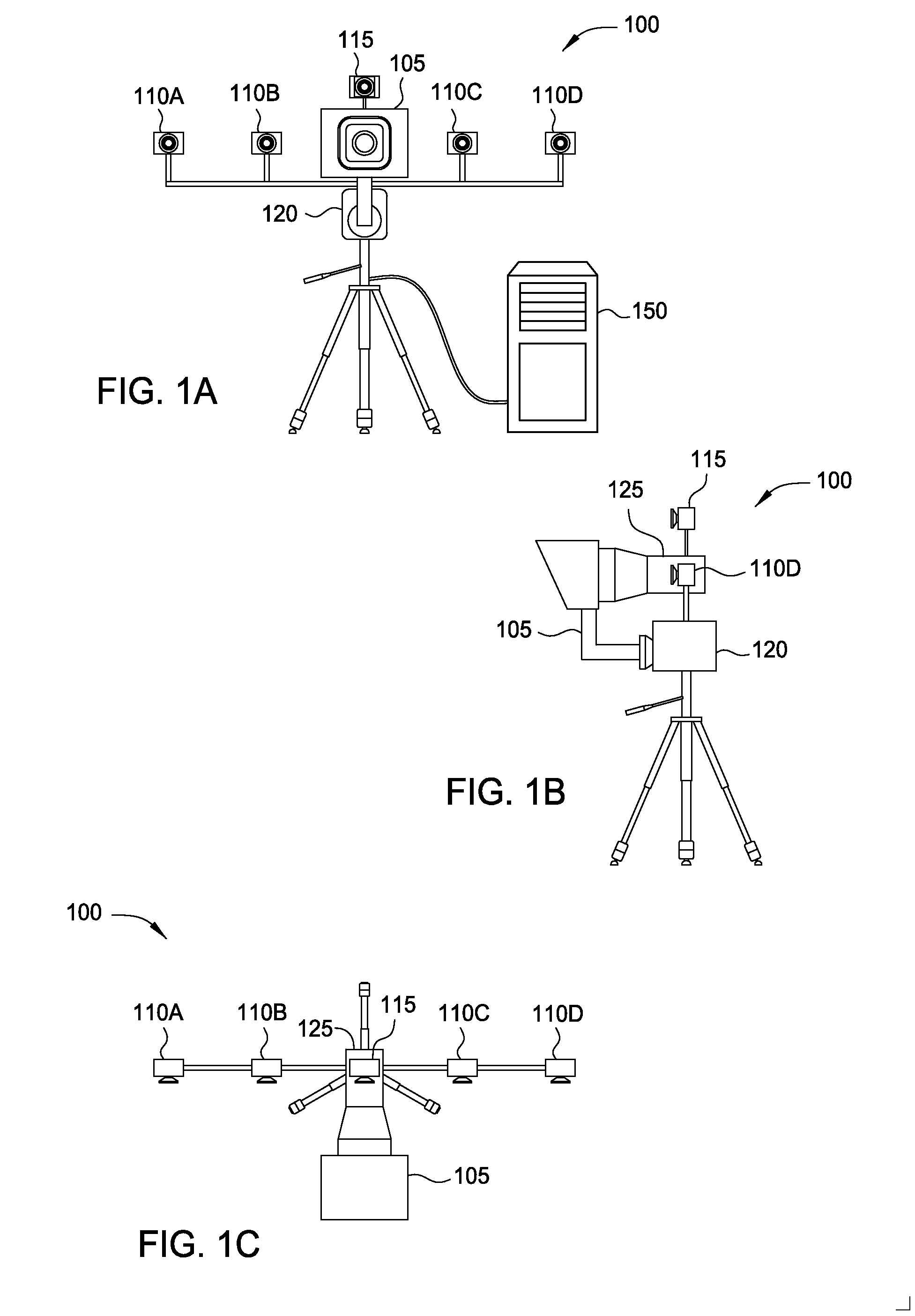

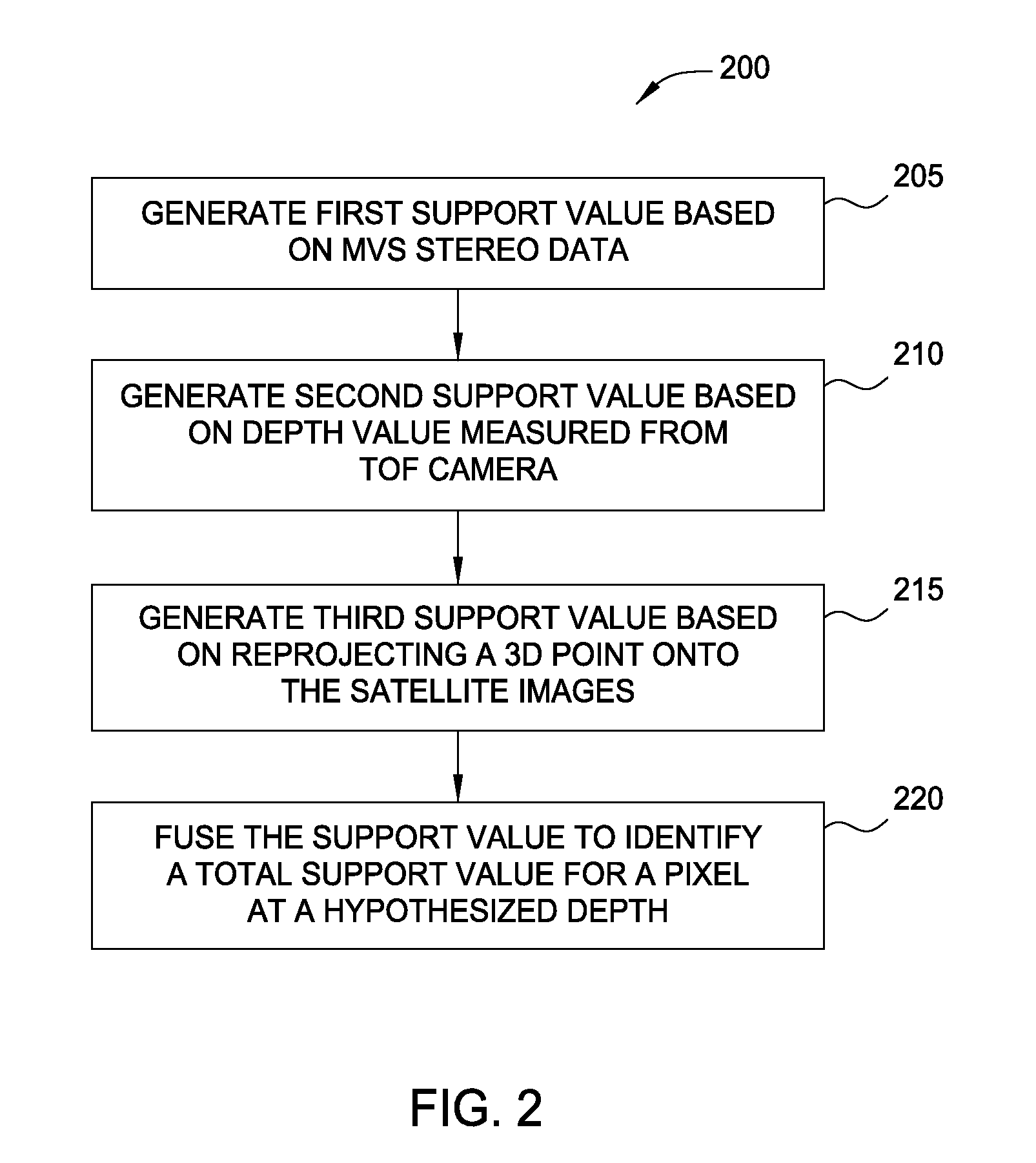

Sensor fusion for depth estimation

To generate a pixel-accurate depth map, data from a range-estimation sensor (e.g., a time-of-flight sensor) is combined with data from multiple cameras to produce a high-quality depth measurement for each pixel in an image. To do so, a depth measurement system may use a plurality of cameras mounted on a support structure to perform a depth hypothesis technique that generates a first depth-support value. The apparatus may also include a range-estimation sensor that generates a second depth-support value. In addition, the system may project a 3D point onto the auxiliary cameras and compare the color of the associated pixel in each auxiliary camera with the color of the pixel in the reference camera to generate a third depth-support value. The system may combine these support values for each pixel to determine its depth value, and from these values generate a depth map for the image.

Owner:DISNEY ENTERPRISES INC
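
Combining the three depth-support values per pixel can be sketched as a weighted score over candidate depth hypotheses, keeping the best-scoring hypothesis. The weighted-sum combination, the equal default weights, and `fuse_depth` itself are assumptions; the abstract only says the support values are combined.

```python
def fuse_depth(hypotheses, supports, weights=(1.0, 1.0, 1.0)):
    """Pick the depth hypothesis with the largest weighted support.

    hypotheses: candidate depths for one pixel.
    supports: per hypothesis, a tuple of three support values
        (multi-camera, range sensor, colour consistency); higher is better.
    """
    def score(i):
        return sum(w * s for w, s in zip(weights, supports[i]))

    best = max(range(len(hypotheses)), key=score)
    return hypotheses[best]


depths = [1.0, 1.5, 2.0]
support = [(0.2, 0.1, 0.3), (0.6, 0.9, 0.5), (0.7, 0.0, 0.4)]
print(fuse_depth(depths, support))  # 1.5 has the highest combined support
```

The ToF term lets the fusion break ties where stereo matching is weak (e.g. textureless surfaces), while the camera terms add the per-pixel resolution a low-resolution ToF sensor lacks.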

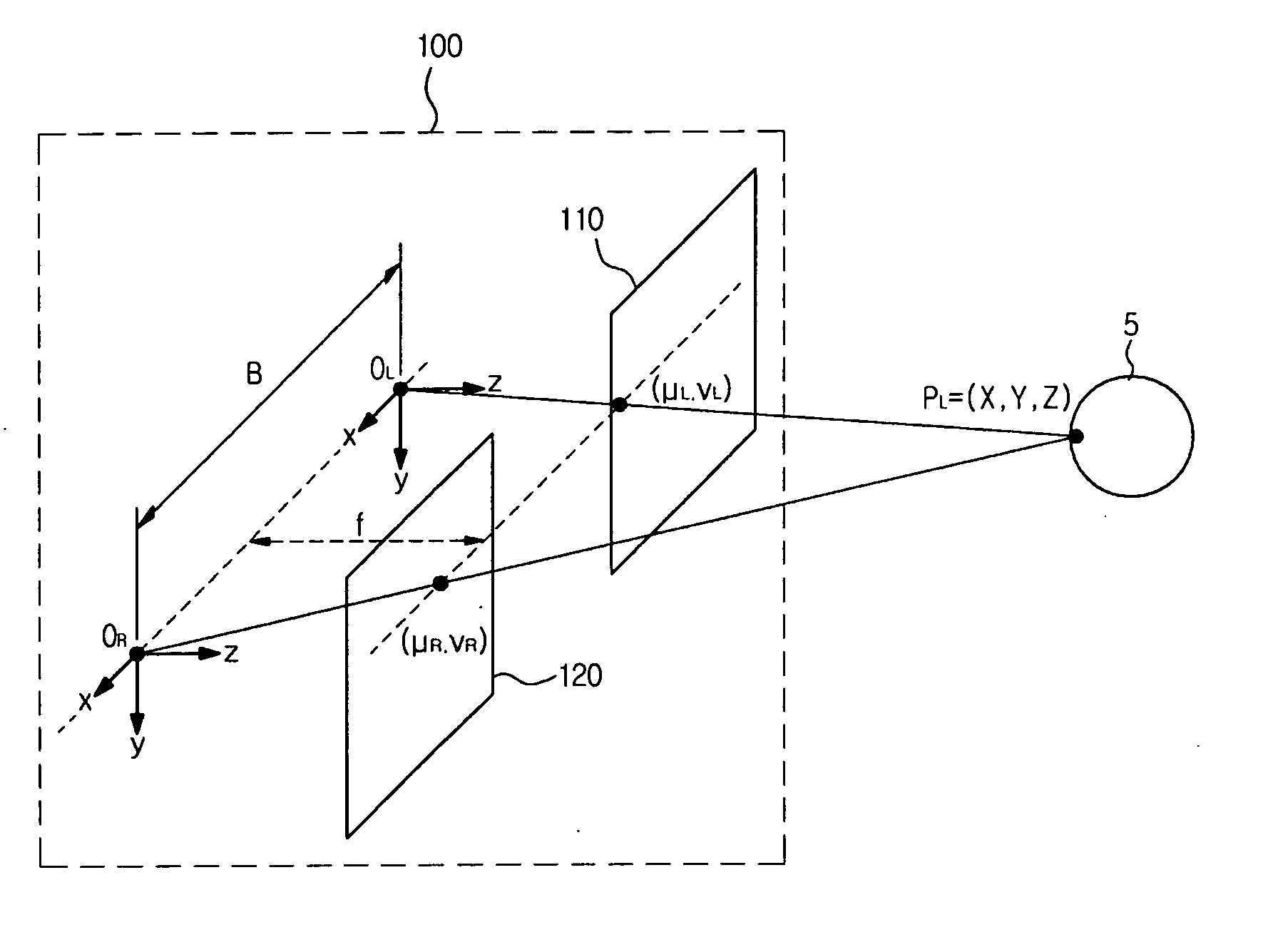

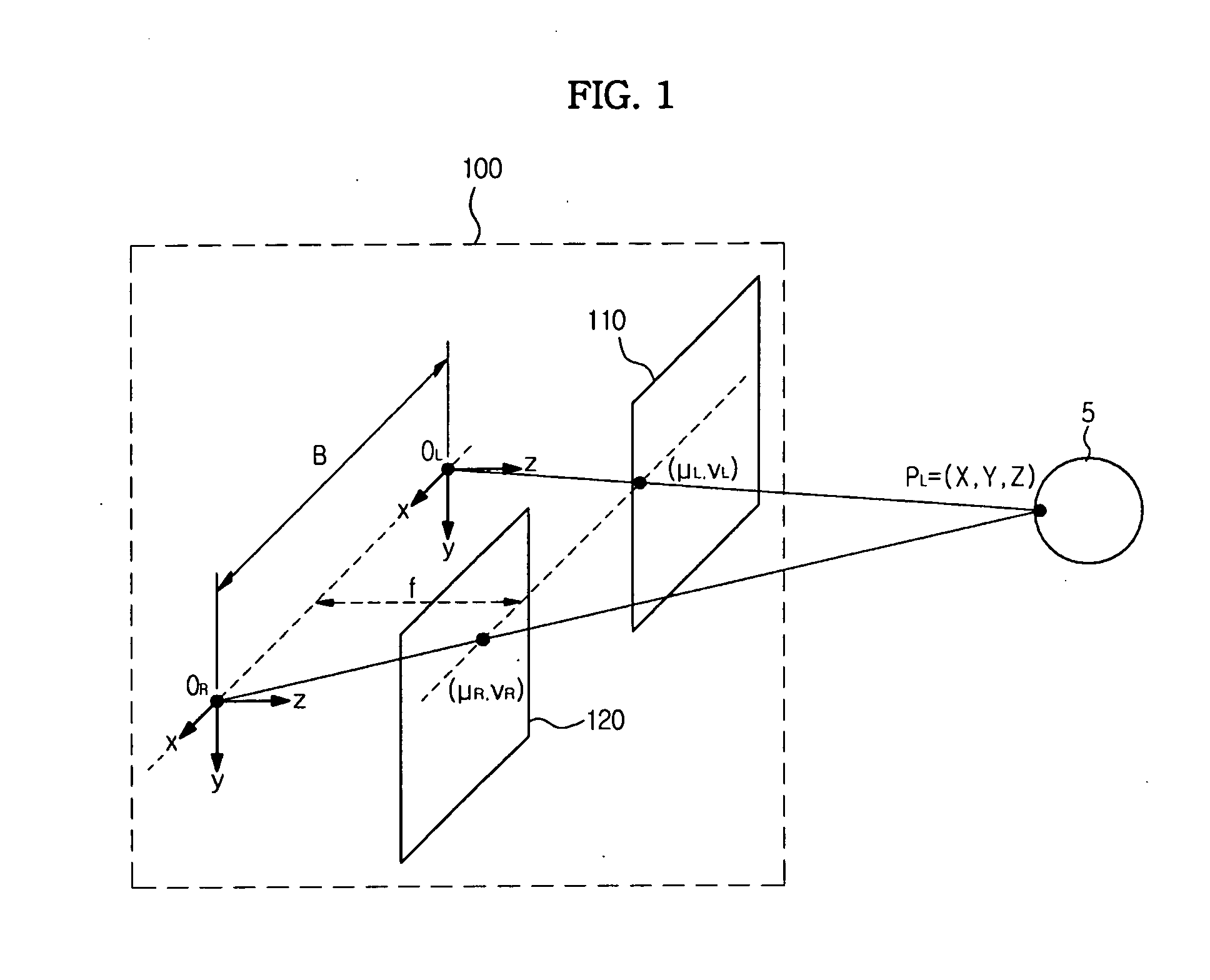

System and method for extracting three-dimensional coordinates

A system and method for extracting 3D coordinates. The method includes: obtaining, by a stereoscopic image photographing unit, two images of a target object and obtaining 3D coordinates of the object from the coordinates of each pixel of the two images; measuring, by a Time of Flight (TOF) sensor unit, the distance to the object and obtaining 3D coordinates of the object from the measured distance; mapping the pixel coordinates of each image to the 3D coordinates obtained through the TOF sensor unit and calibrating the mapped result; determining whether each set of pixel coordinates and the distance to the object measured through the TOF sensor unit are present; calculating a disparity value from the distance value or the pixel coordinates; and calculating 3D coordinates of the object from the calculated disparity value.

Owner:SAMSUNG ELECTRONICS CO LTD
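
The disparity step above relies on the standard rectified-stereo relation disparity = f·B/Z, and back-projection X = (u − c_x)·Z/f, Y = (v − c_y)·Z/f. A minimal sketch, assuming ideal rectified pinhole cameras with equal focal lengths in x and y, and a TOF distance already converted to depth along the optical axis; the function names and example numbers are illustrative.

```python
def disparity_from_depth(depth_m, focal_px, baseline_m):
    """Rectified stereo: disparity (pixels) = focal * baseline / depth."""
    return focal_px * baseline_m / depth_m


def point_from_disparity(u, v, disparity_px, focal_px, baseline_m, cx, cy):
    """Back-project pixel (u, v) with a given disparity to camera XYZ (m)."""
    z = focal_px * baseline_m / disparity_px
    return ((u - cx) * z / focal_px, (v - cy) * z / focal_px, z)


d = disparity_from_depth(2.0, focal_px=700.0, baseline_m=0.1)   # 35 px
print(point_from_disparity(420, 240, d, 700.0, 0.1, cx=320, cy=240))
```

A ToF sensor reports radial distance along each ray, so it must be converted to depth Z (and registered to the stereo camera) before this relation applies; that is the role of the mapping and calibration steps in the abstract.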

Method and system to correct motion blur and reduce signal transients in time-of-flight sensor systems

Status: Active | Publication: US20060176469A1 | Tags: Reduces signal integrity problem; Reduce transfer; Optical rangefinders; Electromagnetic wave reradiation; Time of flight sensor; Partition of unity

The shutter time needed to acquire image data in a time-of-flight (TOF) system that acquires consecutive images is reduced, which shortens the interval during which relative motion can occur. In one embodiment, pixel detectors are clocked with multi-phase signals, and the four signals are integrated simultaneously to yield four phase measurements from four pixel detectors within a single shutter time unit. In another embodiment, phase measurement time is reduced to 1/k of its original value by providing super pixels whose collection region is k times larger than that of a normal pixel detector. Each super pixel is coupled to k storage units and four-phase sequential signals. Alternatively, each pixel detector can have k collector regions and k storage units, and can share common clock circuitry that generates four-phase signals. Various embodiments can reduce the adverse effects of clock signal transients on the signals and can be dynamically reconfigured as required.

Owner:MICROSOFT TECH LICENSING LLC

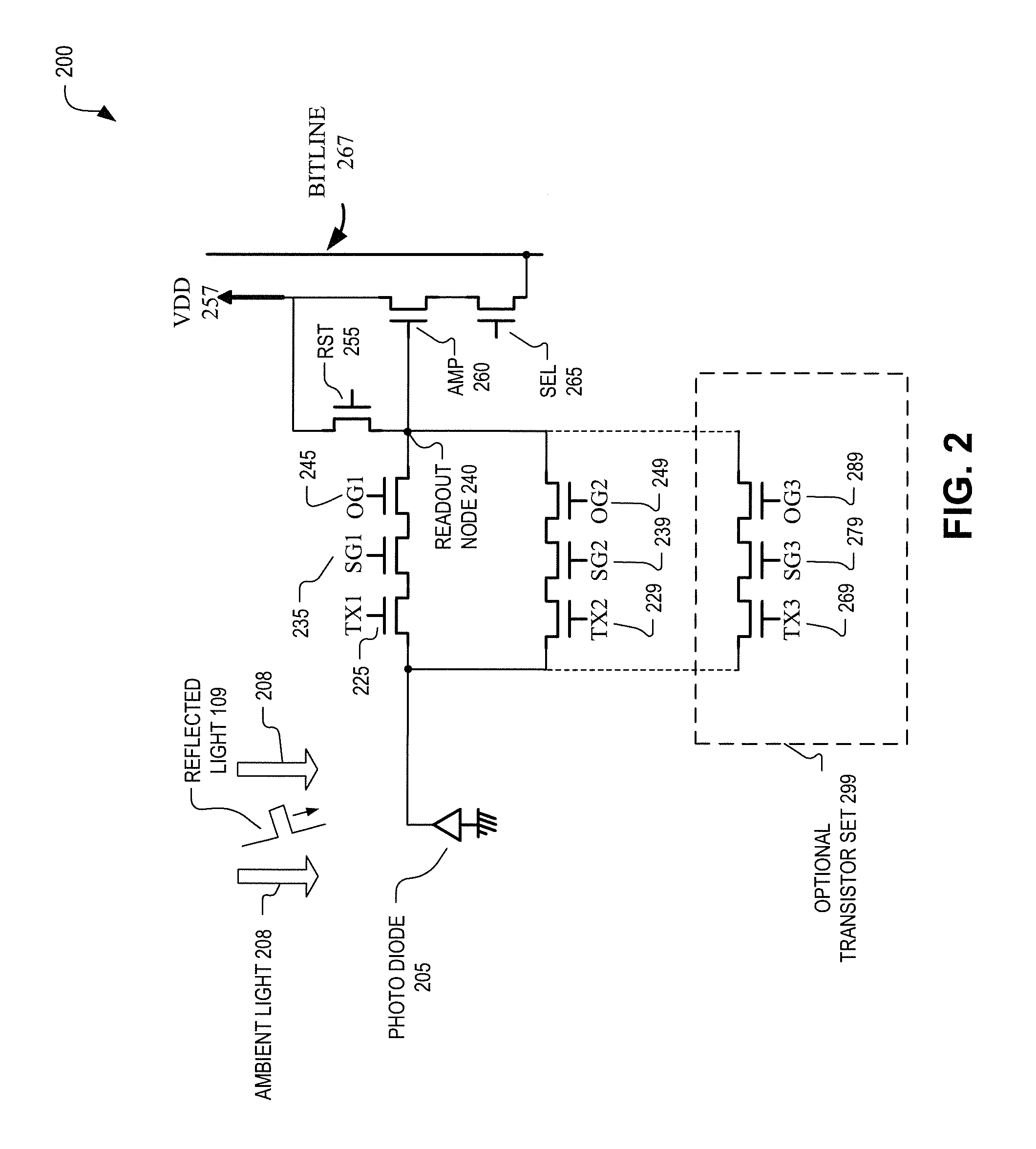

Circuit configuration and method for time of flight sensor

An apparatus includes a photodiode, a first and second storage transistor, a first and second transfer transistor, and a first and second output transistor. The first transfer transistor selectively transfers a first portion of the image charge from the photodiode to the first storage transistor for storing over multiple accumulation periods. The first output transistor selectively transfers a first sum of the first portion of the image charge to a readout node. The second transfer transistor selectively transfers a second portion of the image charge from the photodiode to the second storage transistor for storing over the multiple accumulation periods. The second output transistor selectively transfers a second sum of the second portion of the image charge to the readout node.

Owner:OMNIVISION TECH INC
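
A two-storage-node pixel like the one described is often used for pulsed indirect ToF: charge is split between the two taps over many accumulation periods, and the ratio of the summed charges encodes the echo delay. The timing scheme assumed below (tap 1 open during the emitted pulse, tap 2 open immediately after, delay shorter than the pulse width) is a common arrangement but is not stated in the abstract; the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0


def accumulate(per_period_charges):
    """Sum the charge collected over many accumulation periods (improves SNR)."""
    return sum(per_period_charges)


def two_tap_distance(q1, q2, pulse_width_s):
    """Distance from the summed charges of two storage nodes.

    Assumption: tap 1 integrates during the emitted pulse and tap 2 in the
    window right after it, so q2 / (q1 + q2) = delay / pulse_width
    (valid for delays shorter than the pulse width).
    """
    total = q1 + q2
    if total <= 0:
        raise ValueError("no signal charge")
    delay = pulse_width_s * q2 / total
    return SPEED_OF_LIGHT * delay / 2


q1 = accumulate([0.75] * 100)   # first storage transistor sum
q2 = accumulate([0.25] * 100)   # second storage transistor sum
print(two_tap_distance(q1, q2, pulse_width_s=30e-9))  # about 1.12 m
```

Accumulating over many periods before readout, as the abstract describes, lets each weak per-pulse charge packet add up before the single read noise contribution is incurred.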

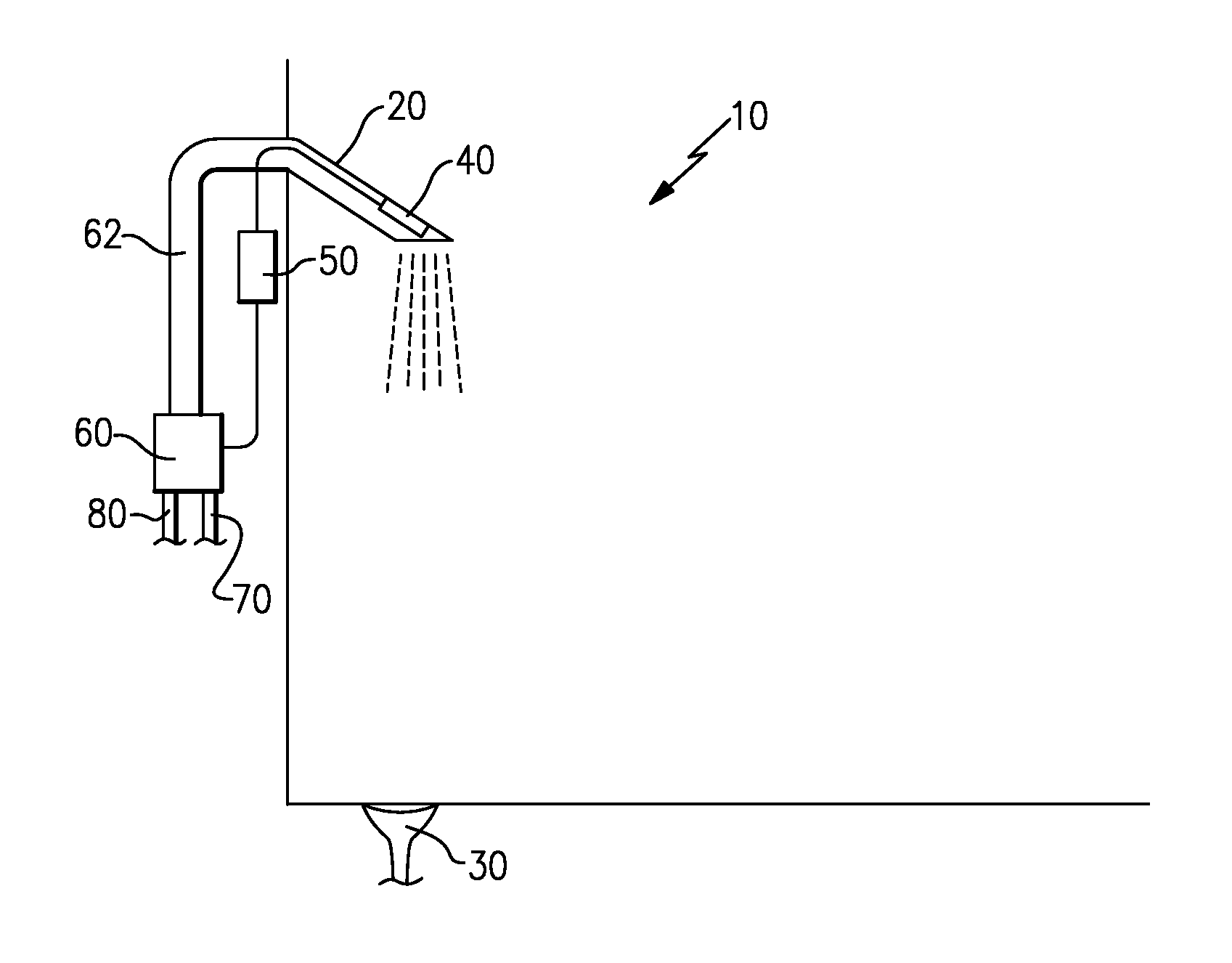

Time of flight proximity sensor

Status: Inactive | Publication: US20150268342A1 | Tags: Opening closed containers; Bottle/container closure; Proximity sensor; Time of flight sensor

An automated dispensing fixture includes a controller controllably coupled to at least one valve. The valve is operable to control fluid flow through a dispensing fixture. The automated dispensing fixture also includes at least one time of flight sensor communicatively coupled to the controller, such that the controller is operable to detect a position of an object relative to the dispensing fixture.

Owner:MASCO CANADA
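
The controller-valve loop described above can be sketched as distance-based valve control with hysteresis, so readings hovering near one threshold do not make the valve chatter. The hysteresis scheme, the `DispenserController` class, and both range values are hypothetical illustrations, not details from the abstract.

```python
class DispenserController:
    """Valve control driven by ToF distance readings, with hysteresis.

    The valve opens when an object comes within on_range_m and closes only
    once the object is beyond off_range_m (or no target is detected).
    """

    def __init__(self, on_range_m=0.15, off_range_m=0.25):
        if off_range_m <= on_range_m:
            raise ValueError("off_range_m must exceed on_range_m")
        self.on_range_m = on_range_m
        self.off_range_m = off_range_m
        self.valve_open = False

    def update(self, distance_m):
        """Feed one distance reading (None = no target); return valve state."""
        if distance_m is not None and distance_m <= self.on_range_m:
            self.valve_open = True
        elif distance_m is None or distance_m > self.off_range_m:
            self.valve_open = False
        return self.valve_open


ctrl = DispenserController()
print([ctrl.update(d) for d in (0.30, 0.10, 0.20, 0.30, None)])
```

Readings between the two ranges leave the valve in its previous state, which is what suppresses rapid on/off switching.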

Three-dimensional virtual-touch human-machine interface system and method therefor

A three-dimensional virtual-touch human-machine interface system (20) and a method (100) of operating the system (20) are presented. The system (20) incorporates a three-dimensional time-of-flight sensor (22), a three-dimensional autostereoscopic display (24), and a computer (26) coupled to the sensor (22) and the display (24). The sensor (22) detects a user object (40) within a three-dimensional sensor space (28). The display (24) displays an image (42) within a three-dimensional display space (32). The computer (26) maps the position of the user object (40) within an interactive volumetric field (36) that lies within both the sensor space (28) and the display space (32), and determines when the positions of the user object (40) and the image (42) substantially coincide. When coincidence is detected, the computer (26) executes a function programmed for the image (42).

Owner:MICROSOFT TECH LICENSING LLC

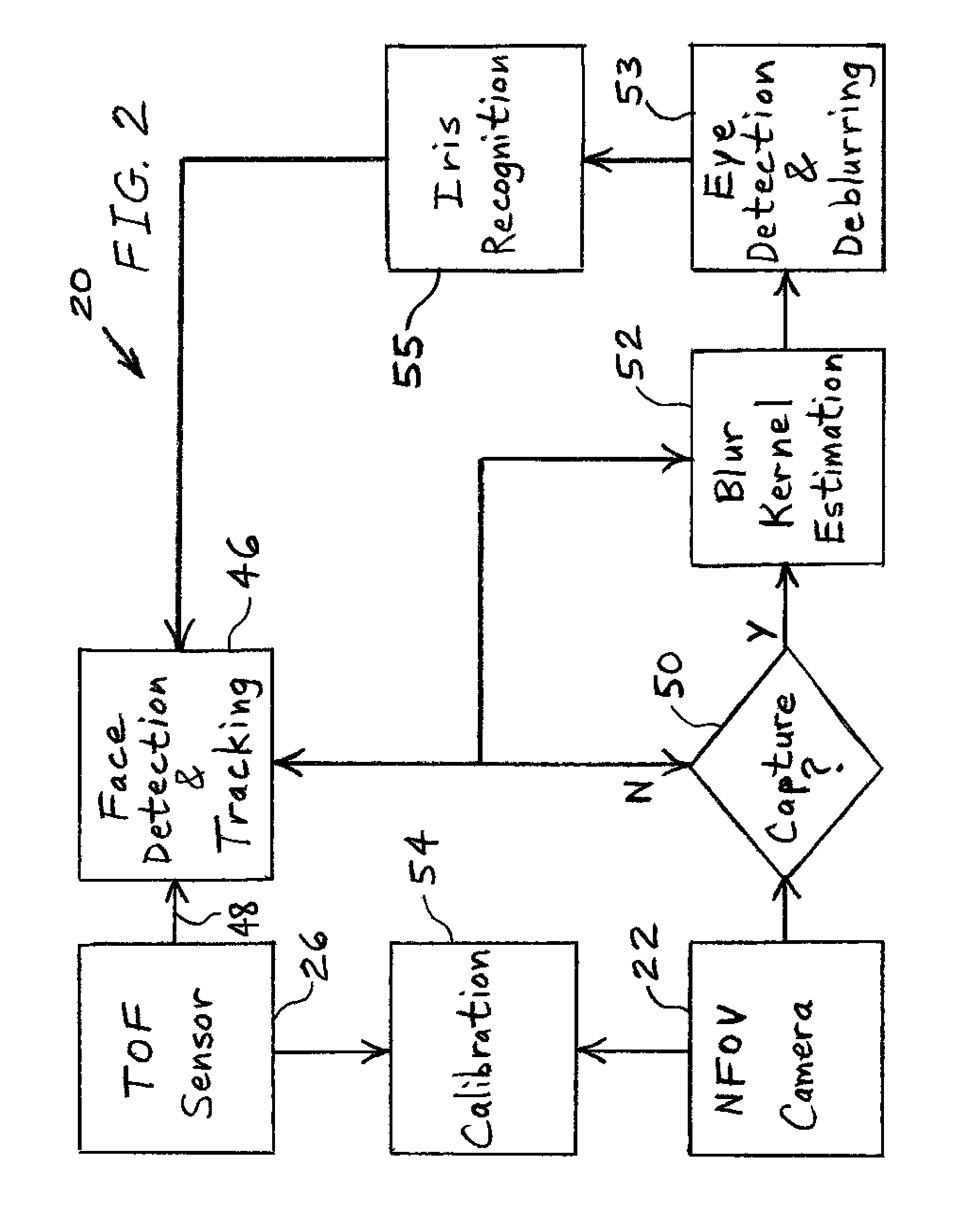

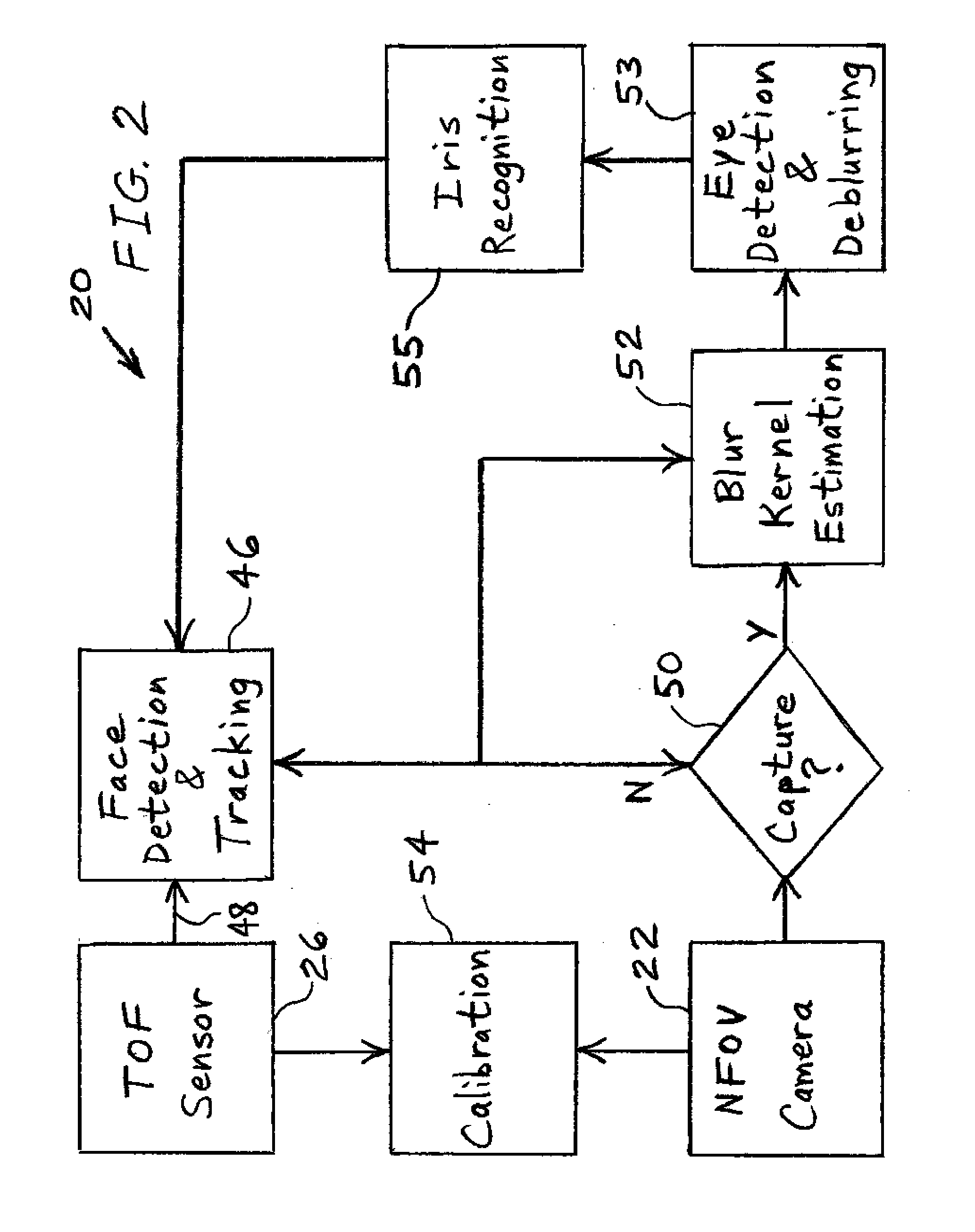

Time-of-flight sensor-assisted iris capture system and method

Status: Active | Publication: US7912252B2 | Tags: Add depth; Increase volume; Television system details; Acquiring/recognising eyes; Time of flight sensor; Computer vision

Owner:ROBERT BOSCH GMBH

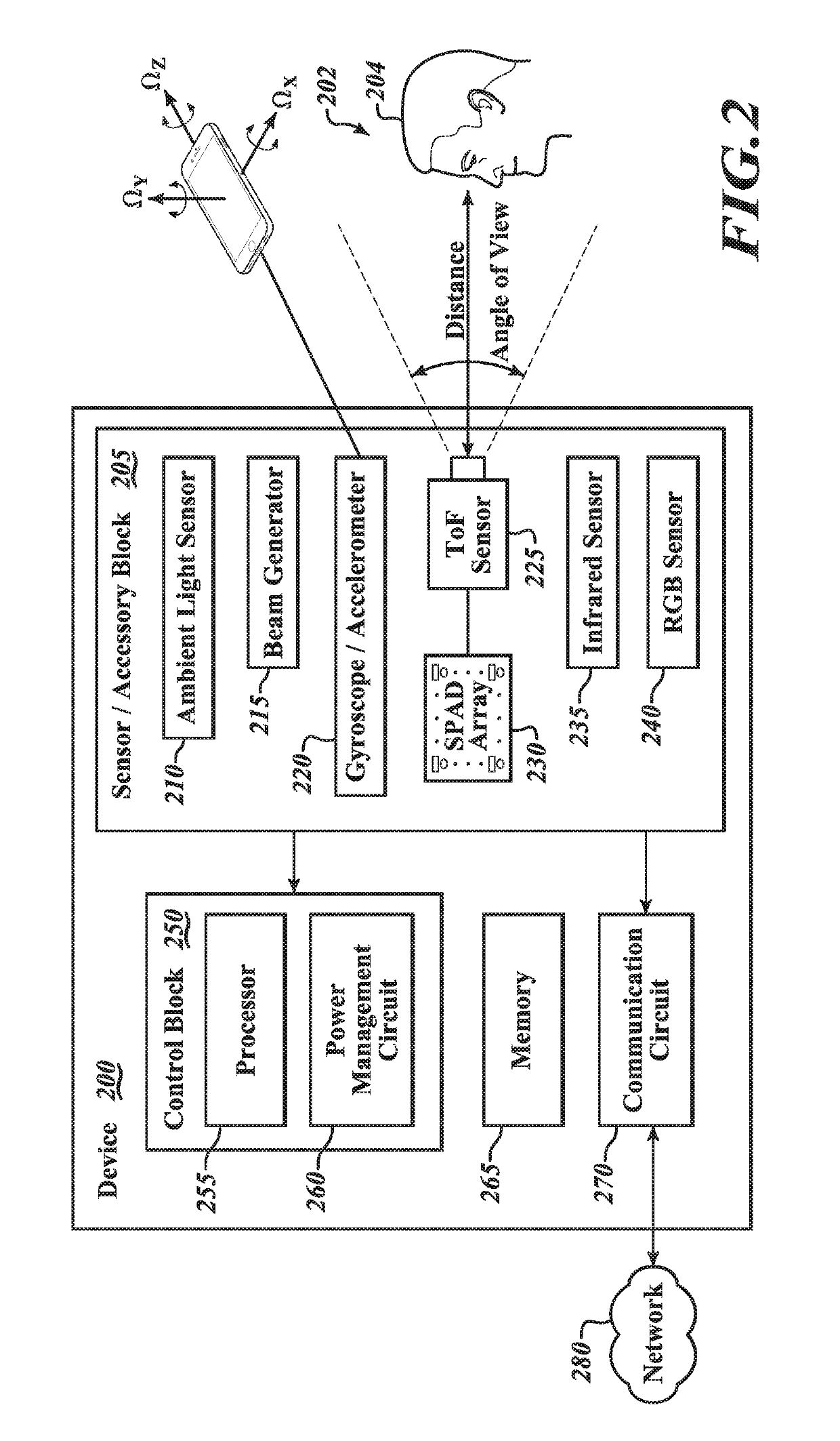

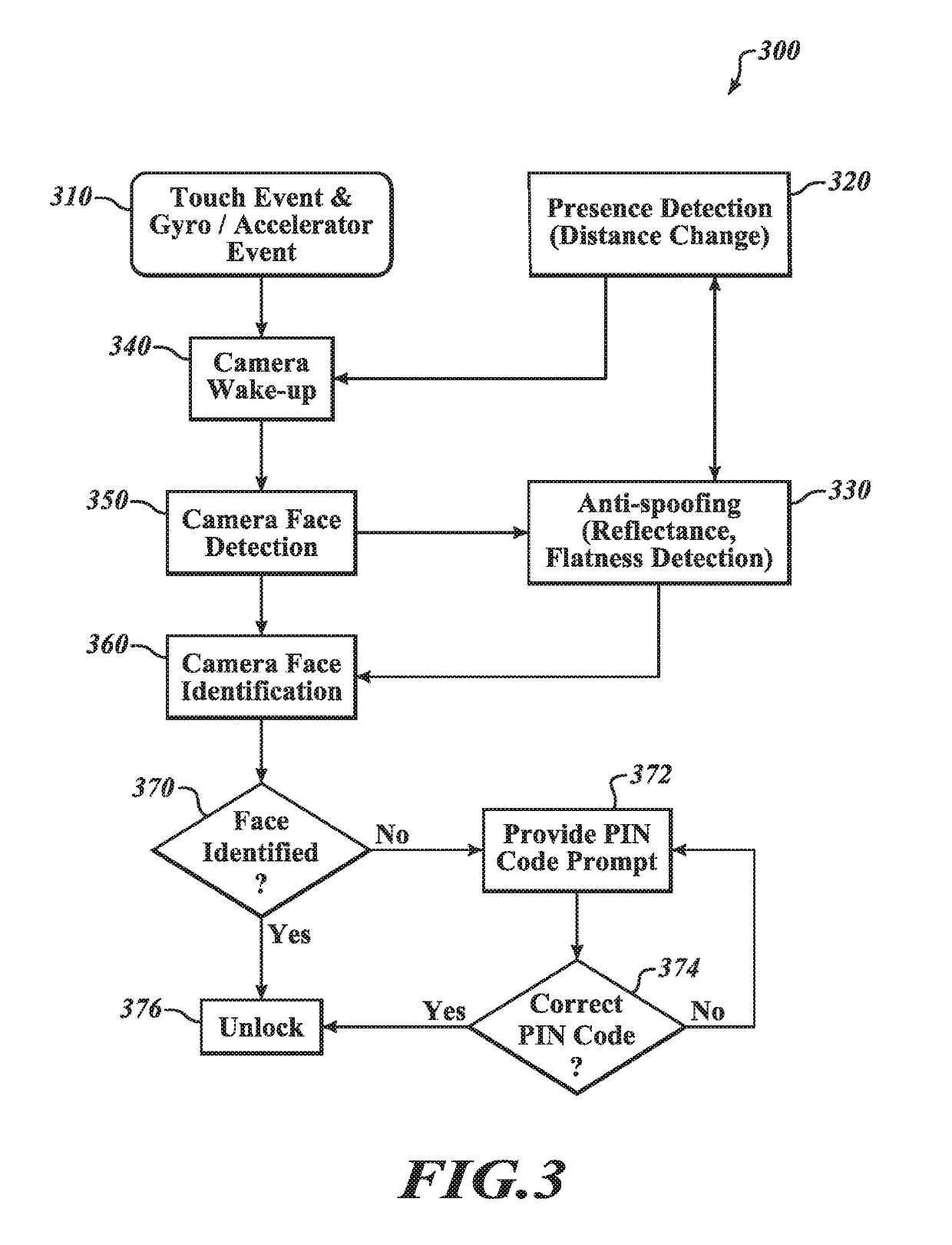

Facial authentication systems and methods utilizing time of flight sensing

Status: Active | Publication: US20190213309A1 | Tags: Improve accuracy; Improve security; Image enhancement; Image analysis; Time of flight sensor; Authentication system

The present disclosure is directed to a system and method of authenticating a user's face with a ranging sensor. The ranging sensor includes a time of flight sensor and a reflectance sensor. The ranging sensor transmits a signal that is reflected off the user and received back at the ranging sensor. The received signal can be used to determine the distance between the user and the sensor, as well as the user's reflectance value. Based on the distance and the reflectivity, a processor can activate a facial recognition process. A device incorporating the ranging sensor according to the present disclosure may reduce the overall power consumed during the facial authentication process.

Owner:STMICROELECTRONICS SRL
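
The power saving comes from running the expensive recognition pipeline only when the cheap ranging result looks plausible. A minimal gating sketch; both windows are placeholder values, not figures from the disclosure, and `should_start_face_recognition` is a hypothetical name.

```python
def should_start_face_recognition(distance_m, reflectance,
                                  distance_window=(0.2, 0.7),
                                  reflectance_window=(0.05, 0.6)):
    """Return True only when the ranging result looks like a face in range.

    Both windows are placeholders; a real product would calibrate them.
    Keeping the camera and recognition pipeline off otherwise saves power.
    """
    d_lo, d_hi = distance_window
    r_lo, r_hi = reflectance_window
    return d_lo <= distance_m <= d_hi and r_lo <= reflectance <= r_hi


print(should_start_face_recognition(0.4, 0.3))   # plausible face distance
print(should_start_face_recognition(1.5, 0.3))   # too far away
```

The reflectance check helps reject targets at the right distance that are clearly not skin, such as a highly reflective surface.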

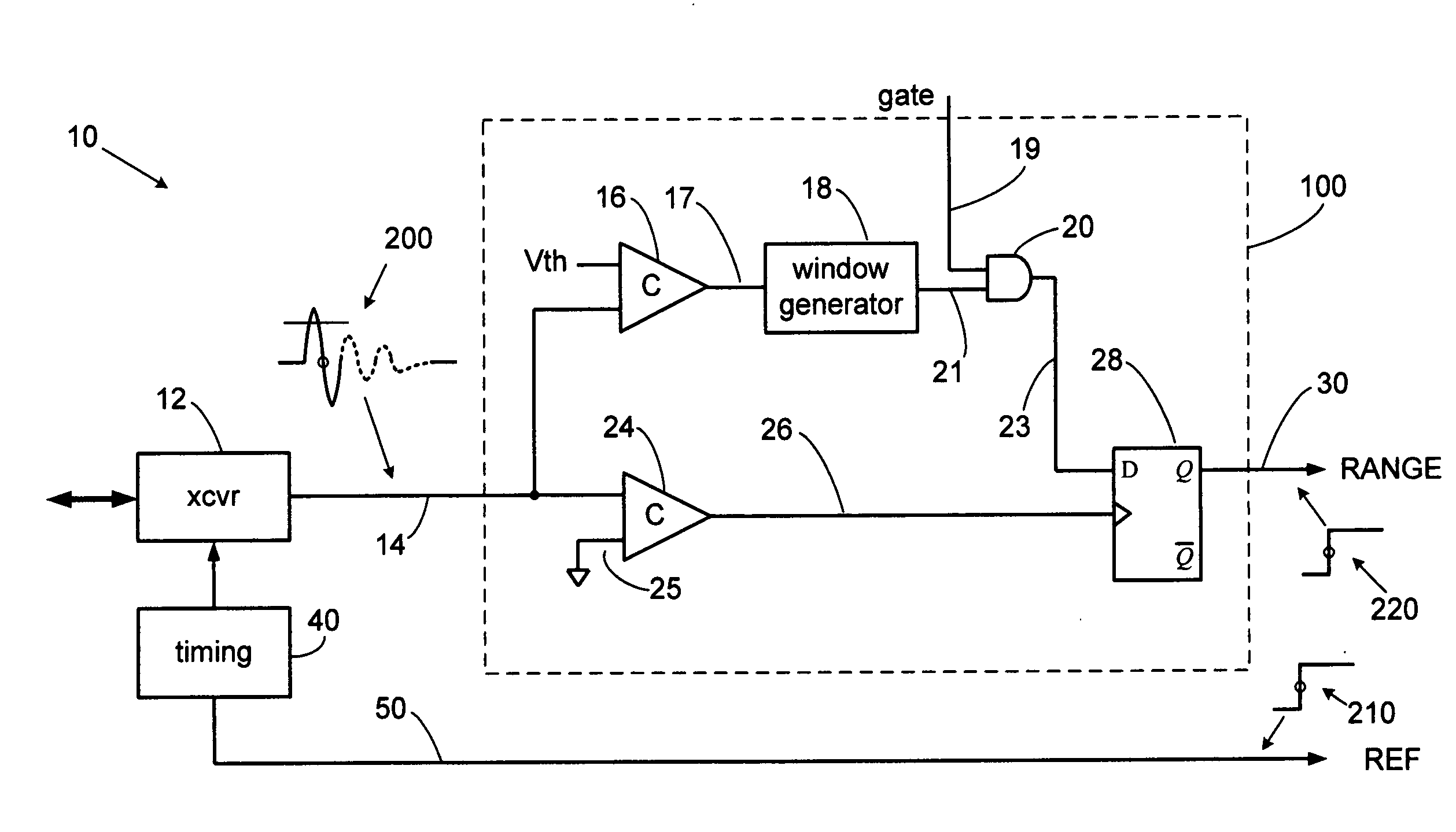

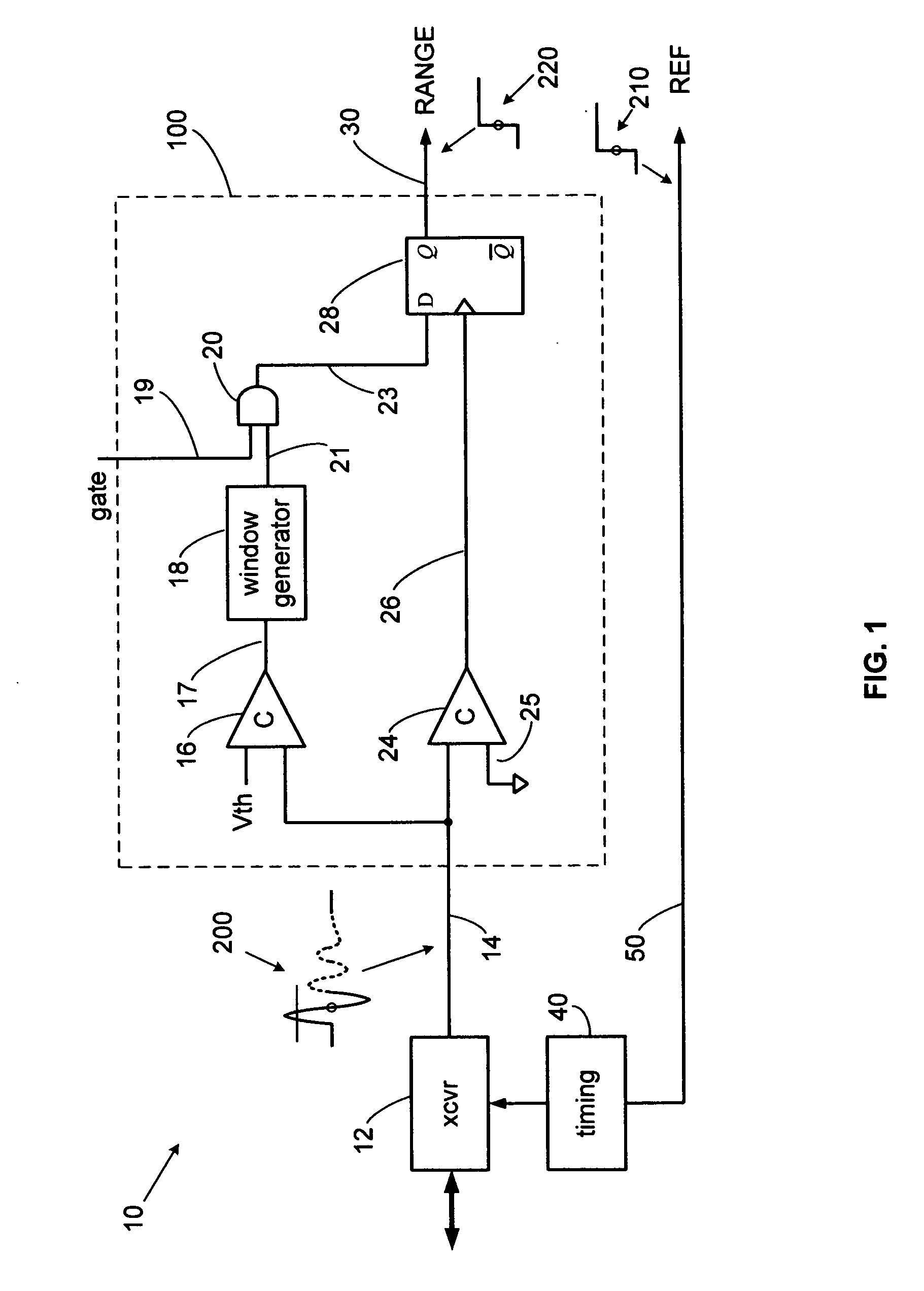

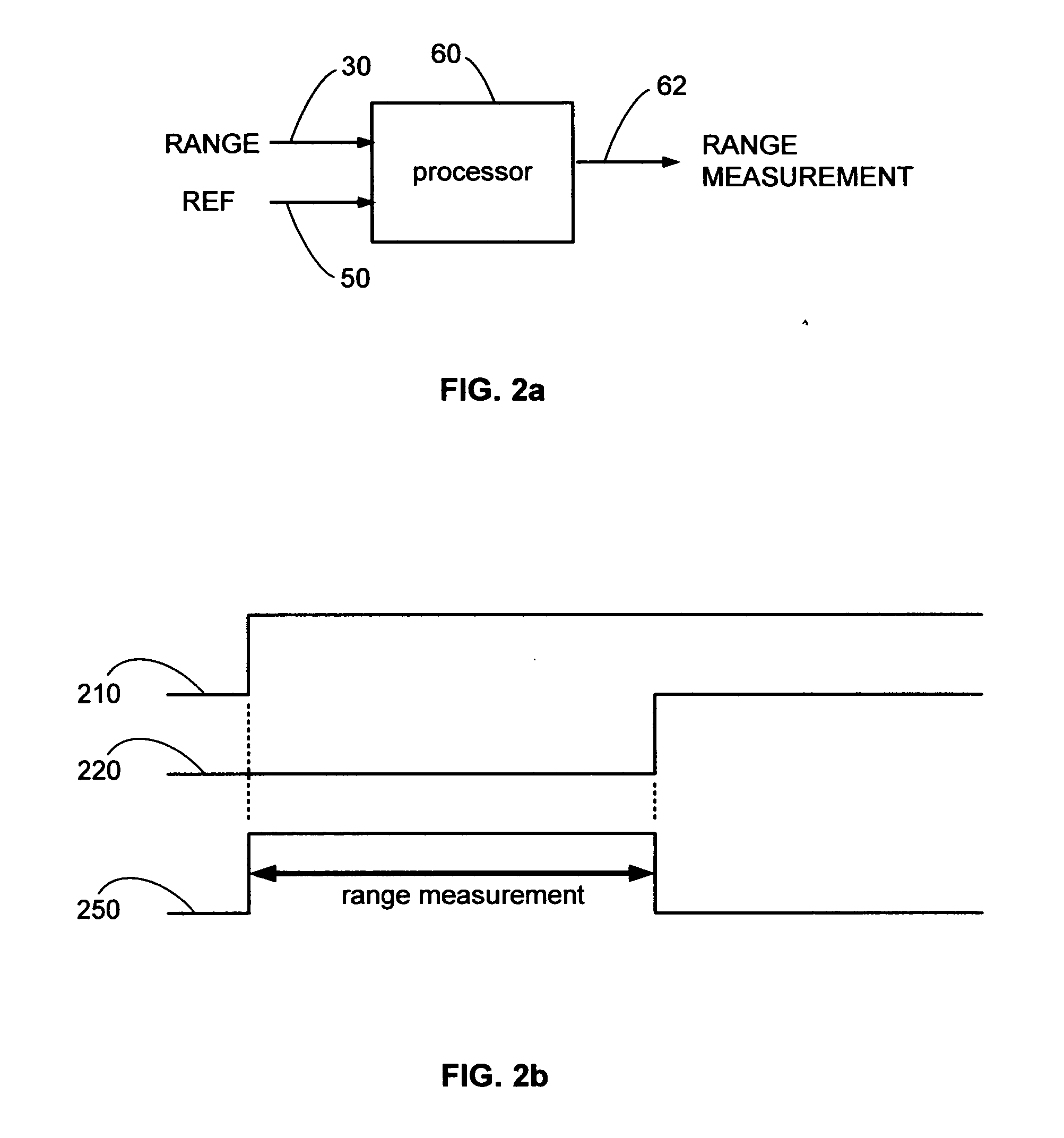

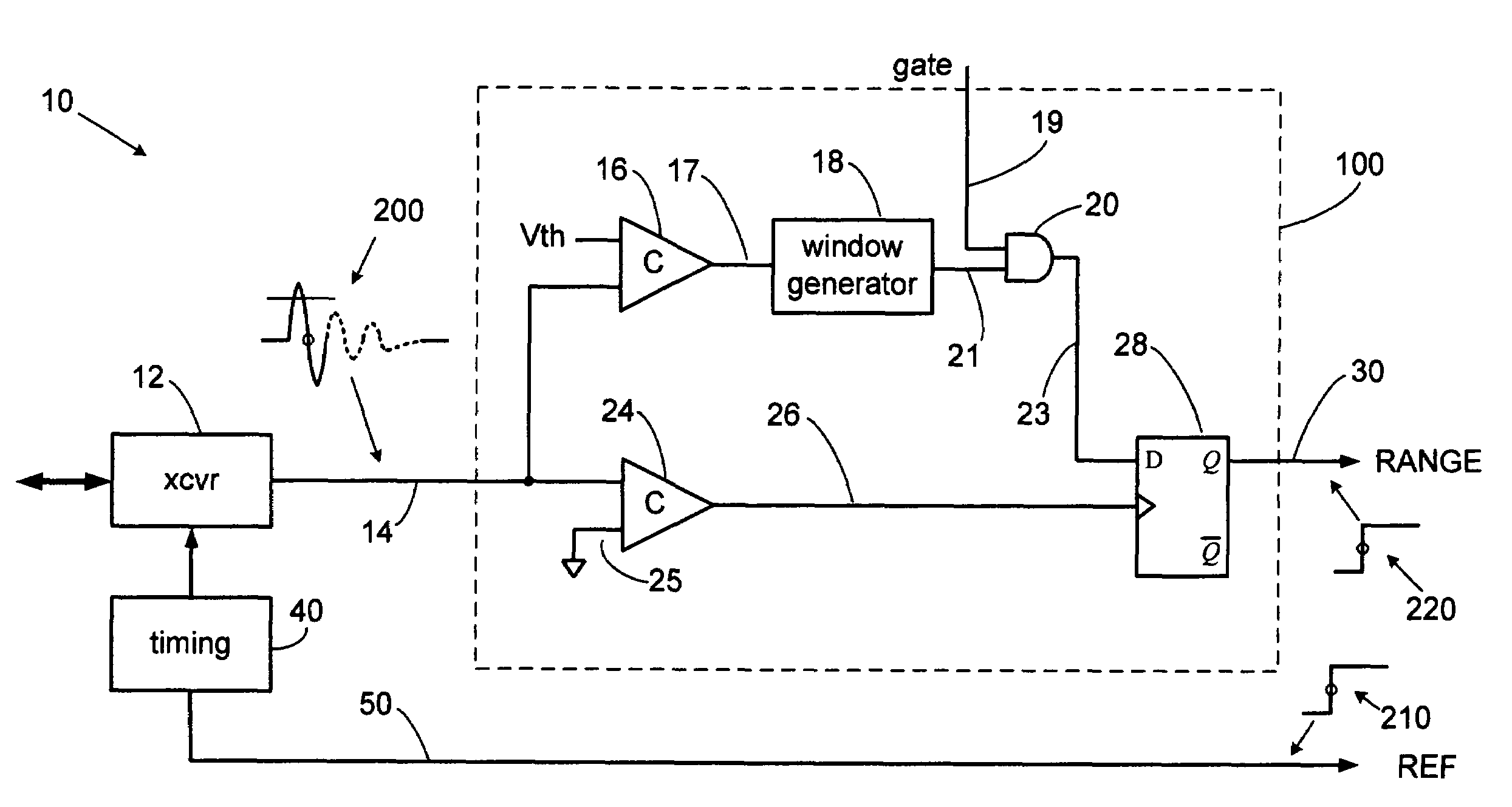

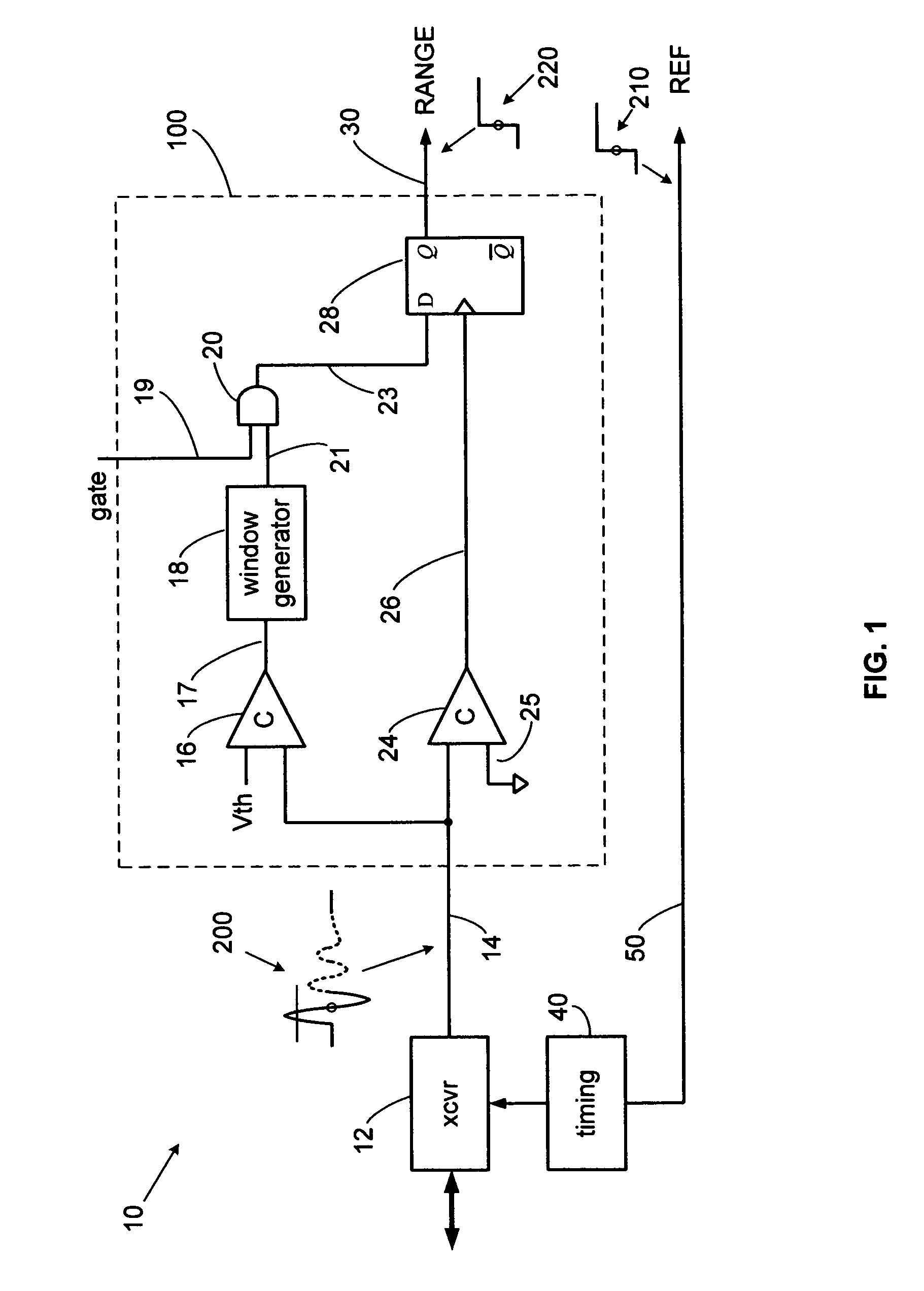

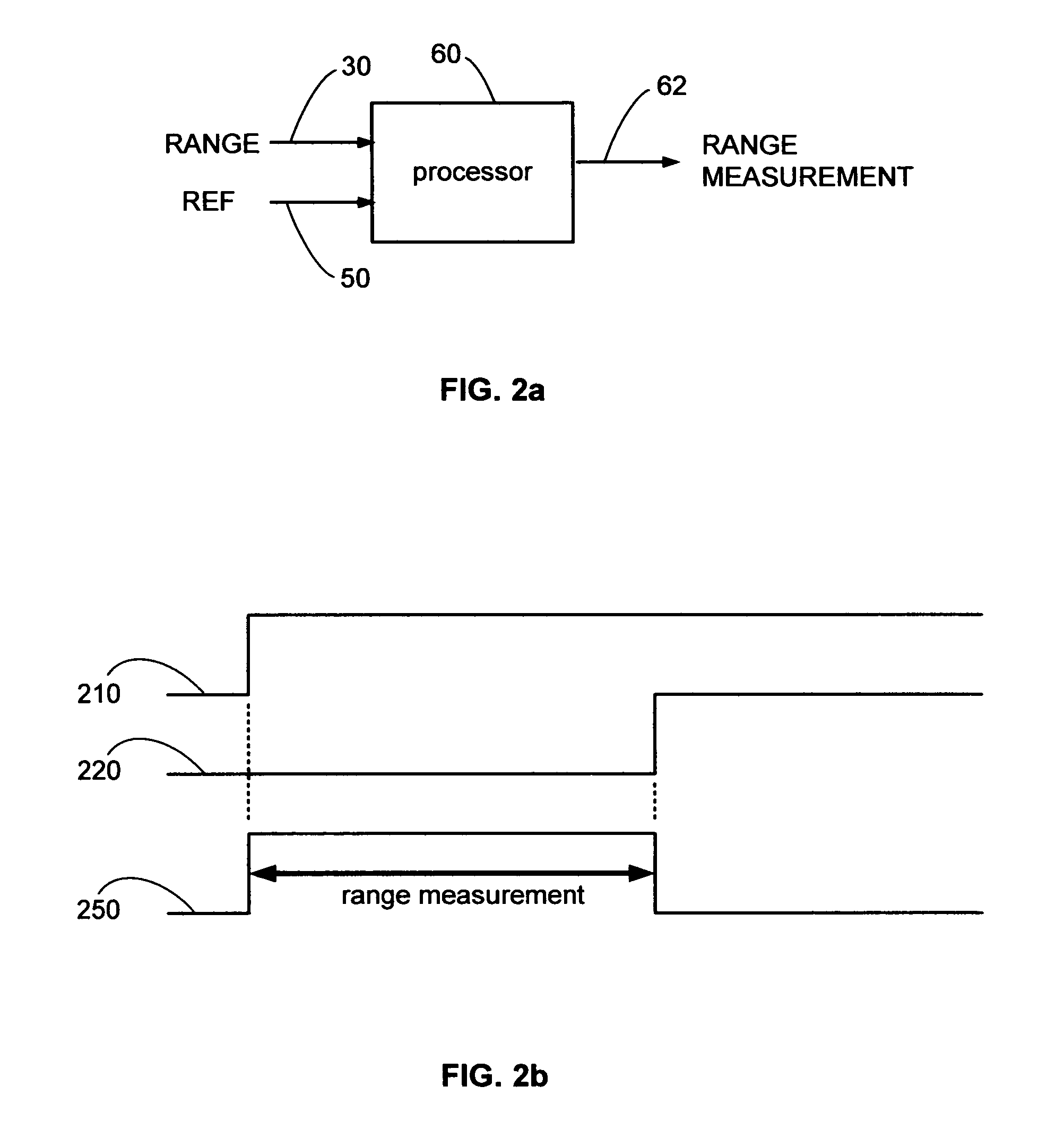

Precision pulse detection system for radar sensors

Status: Active | Publication: US20080048905A1 | Tags: Improve accuracy; Good anti-noise performance; Amplitude demodulation by homodyne/synchrodyne circuits; Resistance/reactance/impedance; Handwriting; Phase detector

A precision pulse detection system for time-of-flight sensors detects the zero-axis crossing of a pulse after the pulse rises above and then falls below a threshold. Transmit and receive pulses flow through a common expanded-time receiver path to precision transmit and receive pulse detectors in a differential configuration. The detectors trigger on zero-axis crossings that occur immediately after pulse lobes exceed and then drop below a threshold. Range errors caused by receiver variations cancel because transmit and receive pulses are affected equally. The system exhibits range measurement accuracies on the order of 1 picosecond without calibration, even with transmitted pulse widths on the order of 500 picoseconds. The system can provide sub-millimeter-accurate TDR (time-domain reflectometry), laser, and radar sensors for measuring tank fill levels, or for precision radiolocation systems including digital handwriting capture.

Owner:MCEWAN TECH
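
The arm-then-trigger logic described above (wait for a lobe to exceed the threshold, wait for it to drop back below, then report the next zero crossing) can be sketched on sampled data as a small state machine. Linear interpolation between samples for sub-sample timing is an assumption of this sketch, and `zero_crossing_after_threshold` is hypothetical; the patent implements the detection in analog/expanded-time hardware.

```python
def zero_crossing_after_threshold(samples, threshold, dt=1.0):
    """Time of the first falling zero crossing that follows a lobe rising
    above `threshold` and then dropping back below it; None if absent.

    The crossing time is linearly interpolated between samples.
    """
    state = "wait_above"
    for i in range(1, len(samples)):
        prev, cur = samples[i - 1], samples[i]
        if state == "wait_above" and cur > threshold:
            state = "wait_below"
        elif state == "wait_below" and cur < threshold:
            state = "wait_zero"
        if state == "wait_zero" and prev > 0 >= cur:
            return (i - 1 + prev / (prev - cur)) * dt
    return None


pulse = [0.0, 0.5, 1.0, 0.6, 0.2, -0.2, -0.5]
print(zero_crossing_after_threshold(pulse, threshold=0.8))  # 4.5 (samples)
```

Triggering on the zero crossing rather than on the threshold itself makes the timing largely independent of pulse amplitude, while the threshold arming rejects noise crossings near zero.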

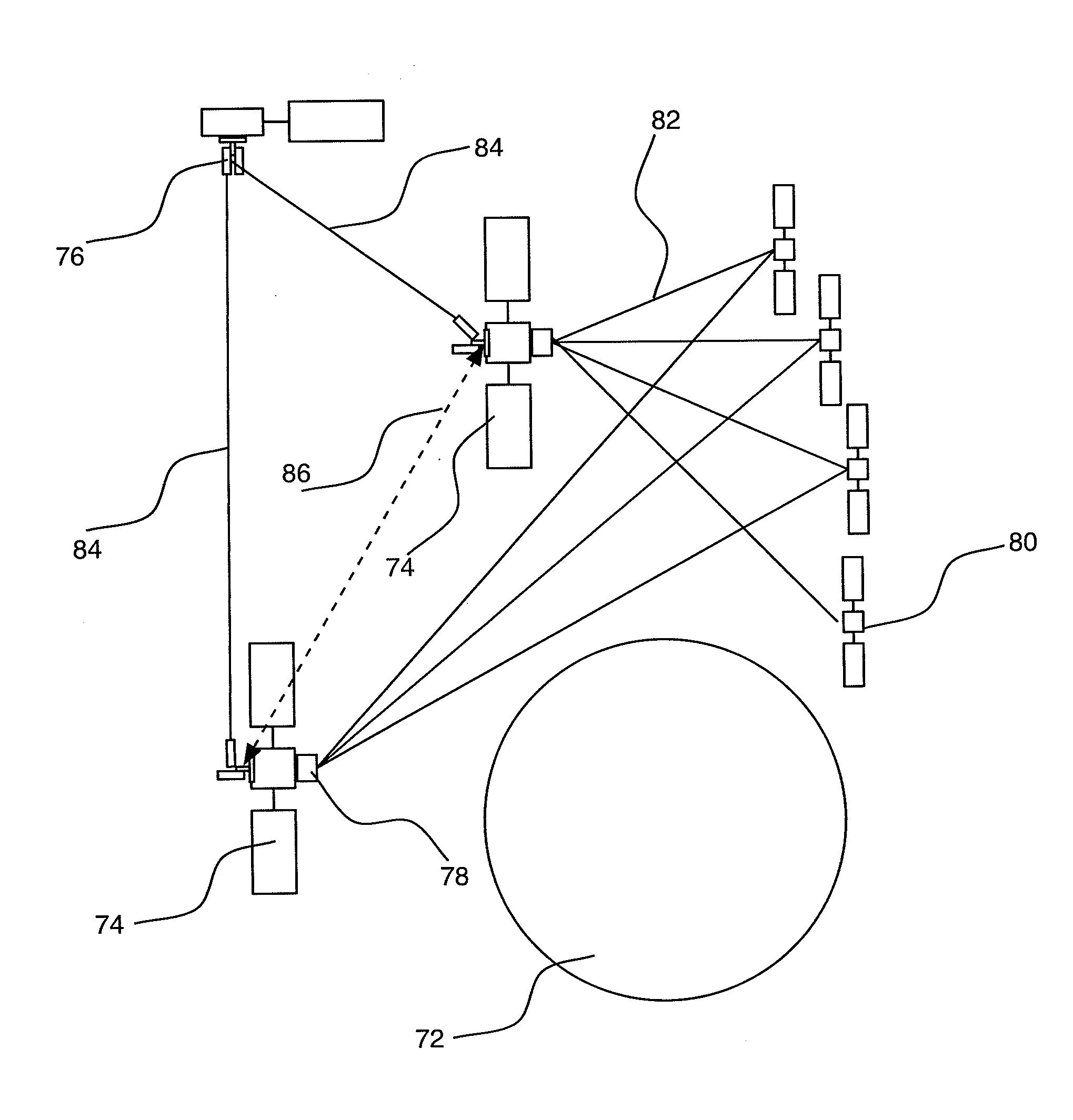

Optical navigation attitude determination and communications system for space vehicles

The invention is a sensor for use in spacecraft navigation and communication. The system has two articulated telescopes that provide navigation and orientation information as well as communications capability. Each telescope contains a laser and compatible sensor for optical communications and ranging, and an imaging chip for imaging the star field and planets. The three optical functions share a common optical path. A frequency-selective prism or mirror directs incoming laser light to the communications and ranging sensor. The Doppler shift or time of flight of laser light reflected from the target can be measured. The sensor can use the range and range rate measured from the incoming laser, together with measurements from the imaging chip, to determine the location and velocity of the spacecraft. The laser and laser receiver provide the communications capability.

Owner:PRINCETON SATELLITE SYST
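
Range and range rate from a reflected laser follow from two textbook relations: range = c·Δt/2 from the time of flight, and, for a two-way reflection at speeds far below c, f_rx ≈ f_tx·(1 − 2v/c), so v = −c·(f_rx − f_tx)/(2·f_tx). A minimal sketch under those assumptions (two-way path, non-relativistic, positive rate meaning the target recedes); the function name and the example laser frequency are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0


def range_and_range_rate(round_trip_s, transmit_hz, received_hz):
    """Two-way laser ranging: range from time of flight, rate from Doppler.

    Uses the non-relativistic two-way approximation
    f_rx ~= f_tx * (1 - 2*v/c), so v = -c * (f_rx - f_tx) / (2 * f_tx).
    A positive range rate means the target is receding.
    """
    rng = SPEED_OF_LIGHT * round_trip_s / 2
    rate = -SPEED_OF_LIGHT * (received_hz - transmit_hz) / (2 * transmit_hz)
    return rng, rate


f_tx = 2.82e14                                   # ~1064 nm laser light
f_rx = f_tx * (1 - 2 * 100.0 / SPEED_OF_LIGHT)   # target receding at 100 m/s
print(range_and_range_rate(2e-3, f_tx, f_rx))    # ~(299792.458 m, 100 m/s)
```

Measuring an optical Doppler shift directly is impractical at ~10¹⁴ Hz, so real systems typically use heterodyne detection or modulation sidebands; the formula above is unchanged by that choice.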

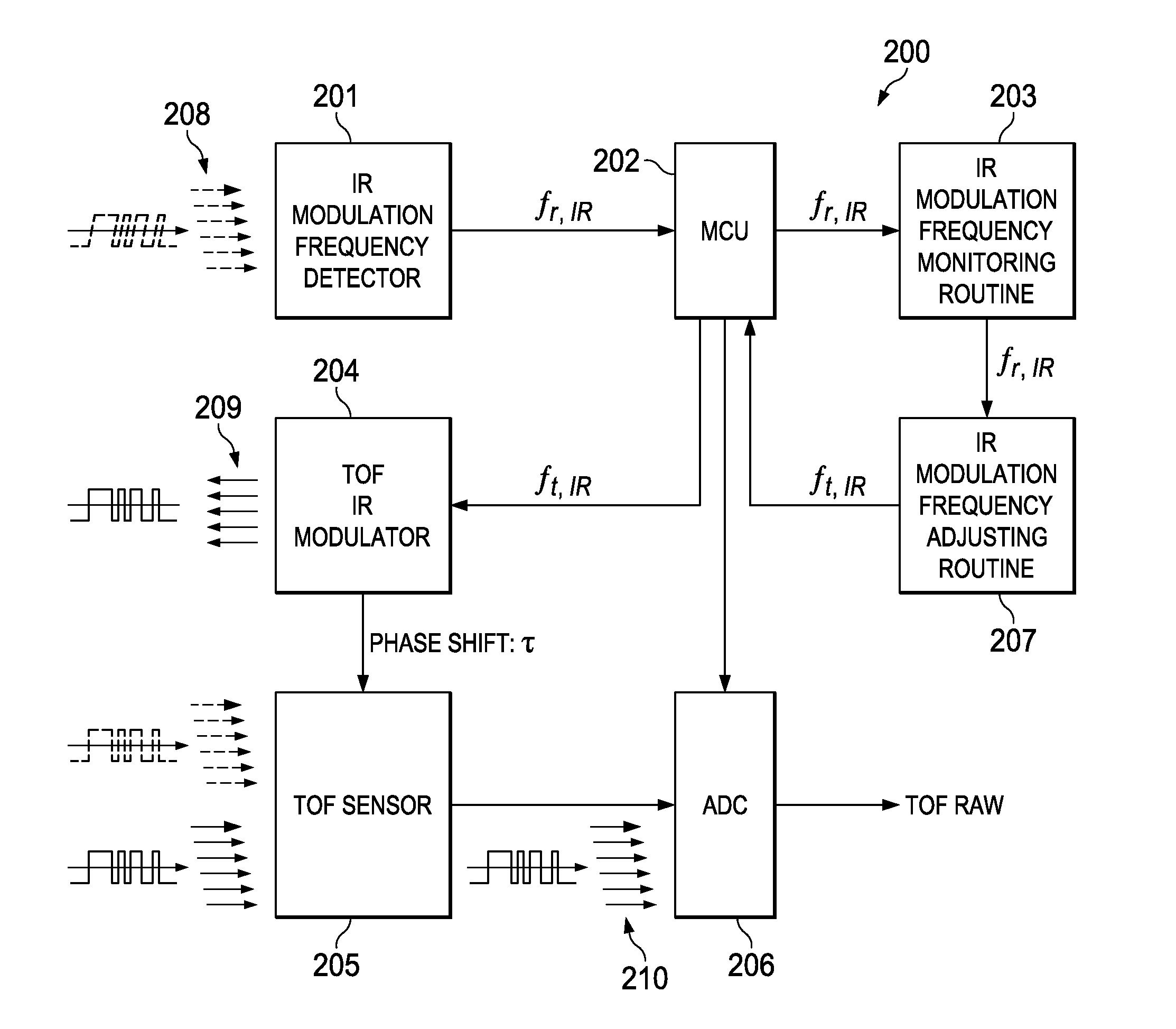

Method for time of flight modulation frequency detection and illumination modulation frequency adjustment

Status: Active | Publication: US20140152974A1 | Tags: Cross-interference of modulated illumination among multiple TOF sensors can be avoided; Optical rangefinders; Photogrammetry/videogrammetry; Time of flight sensor; Frequency detection

A method removes adjacent-frequency interference from a Time Of Flight (TOF) sensor system by adaptively adjusting the infrared illumination frequency of the TOF sensor: it measures the interfering infrared illumination frequencies and dynamically adjusts the TOF sensor's own illumination frequency to eliminate the interference.

Owner:TEXAS INSTR INC
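
The adjustment step can be sketched as choosing, from a set of supported modulation frequencies, the one whose nearest detected interferer is farthest away. The "maximise the minimum separation" rule and `pick_modulation_frequency` are illustrative assumptions; the abstract does not specify the selection criterion.

```python
def pick_modulation_frequency(candidates_hz, interferers_hz):
    """Choose the candidate frequency farthest from any detected interferer.

    Falls back to the first candidate when no interference was measured.
    """
    if not interferers_hz:
        return candidates_hz[0]
    return max(candidates_hz,
               key=lambda f: min(abs(f - i) for i in interferers_hz))


print(pick_modulation_frequency([20e6, 24e6, 30e6], [20.5e6, 29e6]))  # 24 MHz
```

Re-running the selection whenever a new interferer is detected gives the dynamic behavior the abstract describes, for example when a second ToF camera enters the scene.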

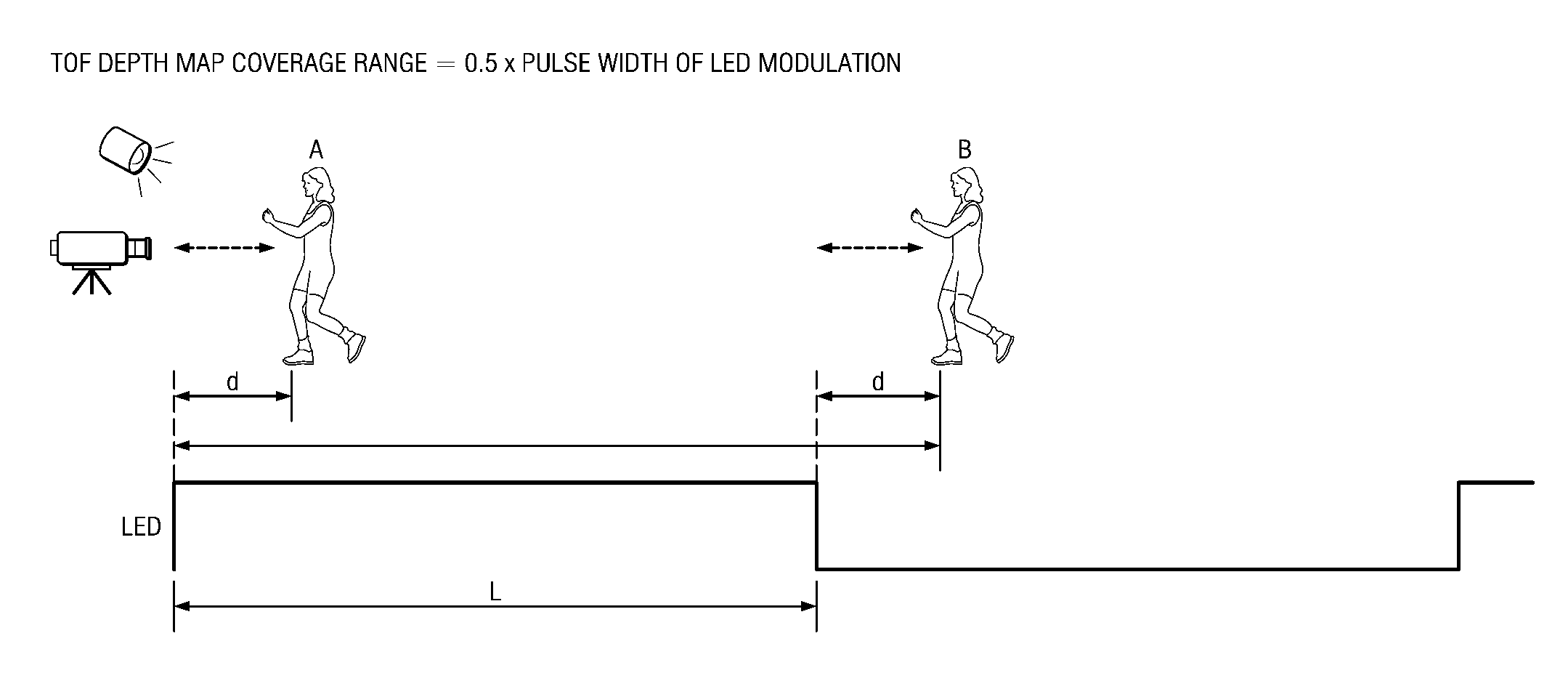

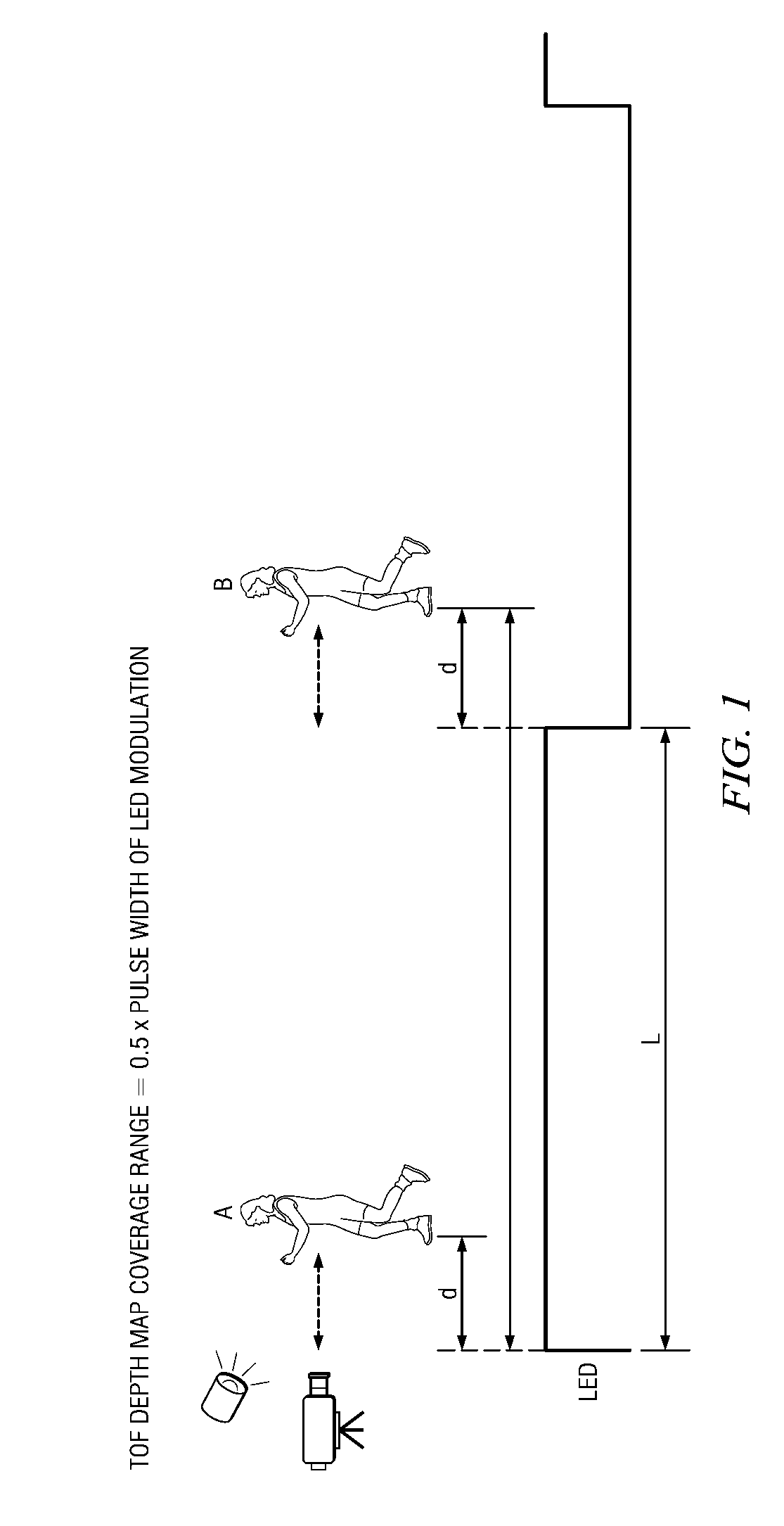

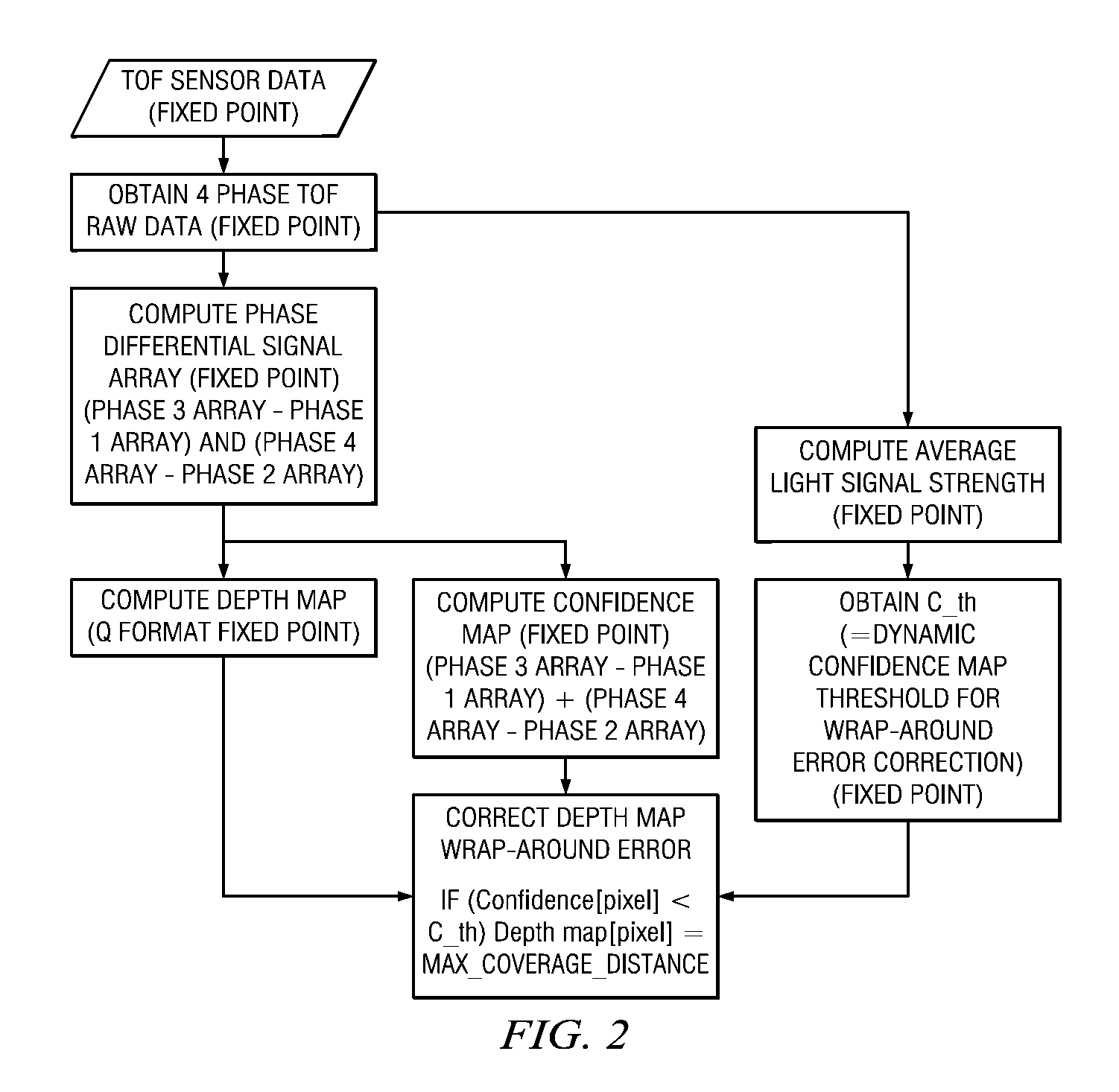

Method and apparatus for controlling time of flight confidence map based depth noise and depth coverage range

Status: Active | Publication: US20120123718A1 | Tags: Fix bugs; Optical rangefinders; Electrical measurements; Time of flight sensor; Confidence map

A method and apparatus for fixing depth map wrap-around error and depth map coverage using a confidence map. The method includes: dynamically determining a confidence map threshold based on the ambient light environment, where the threshold is reconfigurable depending on the LED light strength distribution in the TOF sensor; extracting, via the digital processor, four phases of Time Of Flight raw data from the Time Of Flight sensor data; computing a fixed-point phase differential signal array from the four phases of raw data; computing the depth map, the confidence map, and the average light signal strength from the phase differential signal array; obtaining the dynamic confidence map threshold for wrap-around error correction from the average light signal strength; and correcting the depth map wrap-around error using the depth map, the confidence map, and the dynamic confidence map threshold.

Owner:TEXAS INSTR INC
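
The idea of a confidence threshold that tracks the average signal strength can be sketched as follows: objects beyond the unambiguous range wrap around to a small apparent depth but usually return a weak signal, so pixels whose confidence falls below a fraction of the frame's mean are invalidated. The scaling factor `k`, the "invalidate rather than unwrap" choice, and `mask_wraparound` are assumptions; the patent's actual correction may differ.

```python
def mask_wraparound(depth, confidence, k=0.25):
    """Invalidate low-confidence pixels using a threshold that scales with
    the frame's average signal strength (a dynamic threshold).

    depth, confidence: flat per-pixel lists of equal length.
    Returns the depth list with suspected wrapped pixels set to None.
    """
    mean_conf = sum(confidence) / len(confidence)
    threshold = k * mean_conf
    return [d if c >= threshold else None for d, c in zip(depth, confidence)]


# The middle pixel reads 0.3 m but with a weak return: likely a far,
# wrapped object rather than a near one.
print(mask_wraparound([1.0, 0.3, 2.0], [100.0, 10.0, 90.0]))
```

Tying the threshold to average signal strength keeps the mask sensible under changing ambient light or LED power, where a fixed threshold would either over- or under-reject.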

Time-of-flight sensor-assisted iris capture system and method

Status: Active | Publication: US20100202666A1 | Tags: Add depth; Enlarged capture volume; Television system details; Acquiring/recognising eyes; Time of flight sensor; Computer vision

Owner:ROBERT BOSCH GMBH

Precision pulse detection system for radar sensors

Status: Active | Publication: US7446695B2 | Tags: Improve accuracy; Good anti-noise performance; Amplitude demodulation by homodyne/synchrodyne circuits; Resistance/reactance/impedance; Handwriting; Phase detector

A precision pulse detection system for time-of-flight sensors detects the zero-axis crossing of a pulse after the pulse rises above and then falls below a threshold. Transmit and receive pulses flow through a common expanded-time receiver path to precision transmit and receive pulse detectors in a differential configuration. The detectors trigger on zero-axis crossings that occur immediately after pulse lobes exceed and then drop below a threshold. Range errors caused by receiver variations cancel because transmit and receive pulses are affected equally. The system exhibits range measurement accuracies on the order of 1 picosecond without calibration, even with transmitted pulse widths on the order of 500 picoseconds. The system can provide sub-millimeter-accurate TDR (time-domain reflectometry), laser, and radar sensors for measuring tank fill levels, or for precision radiolocation systems including digital handwriting capture.

Owner:MCEWAN TECH

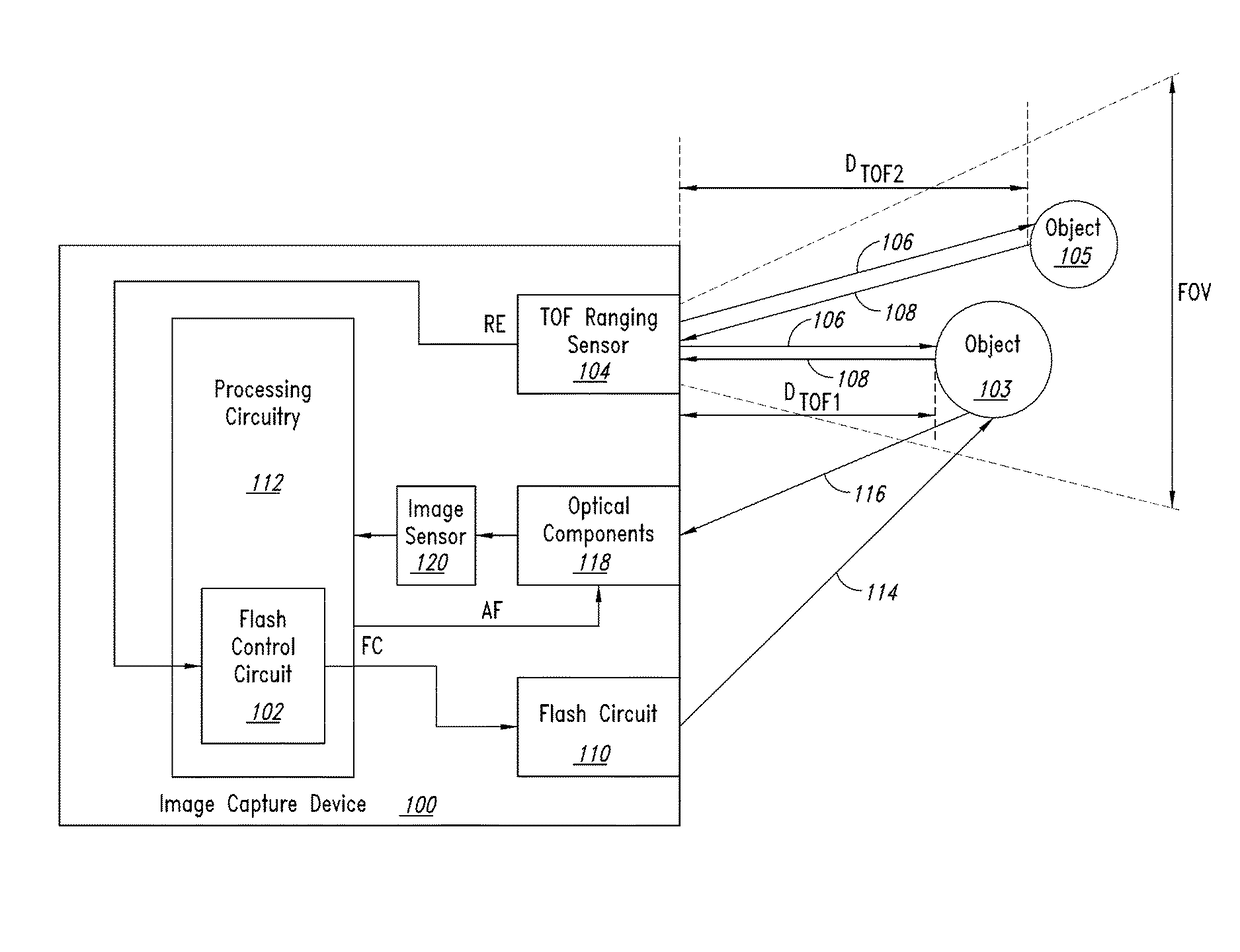

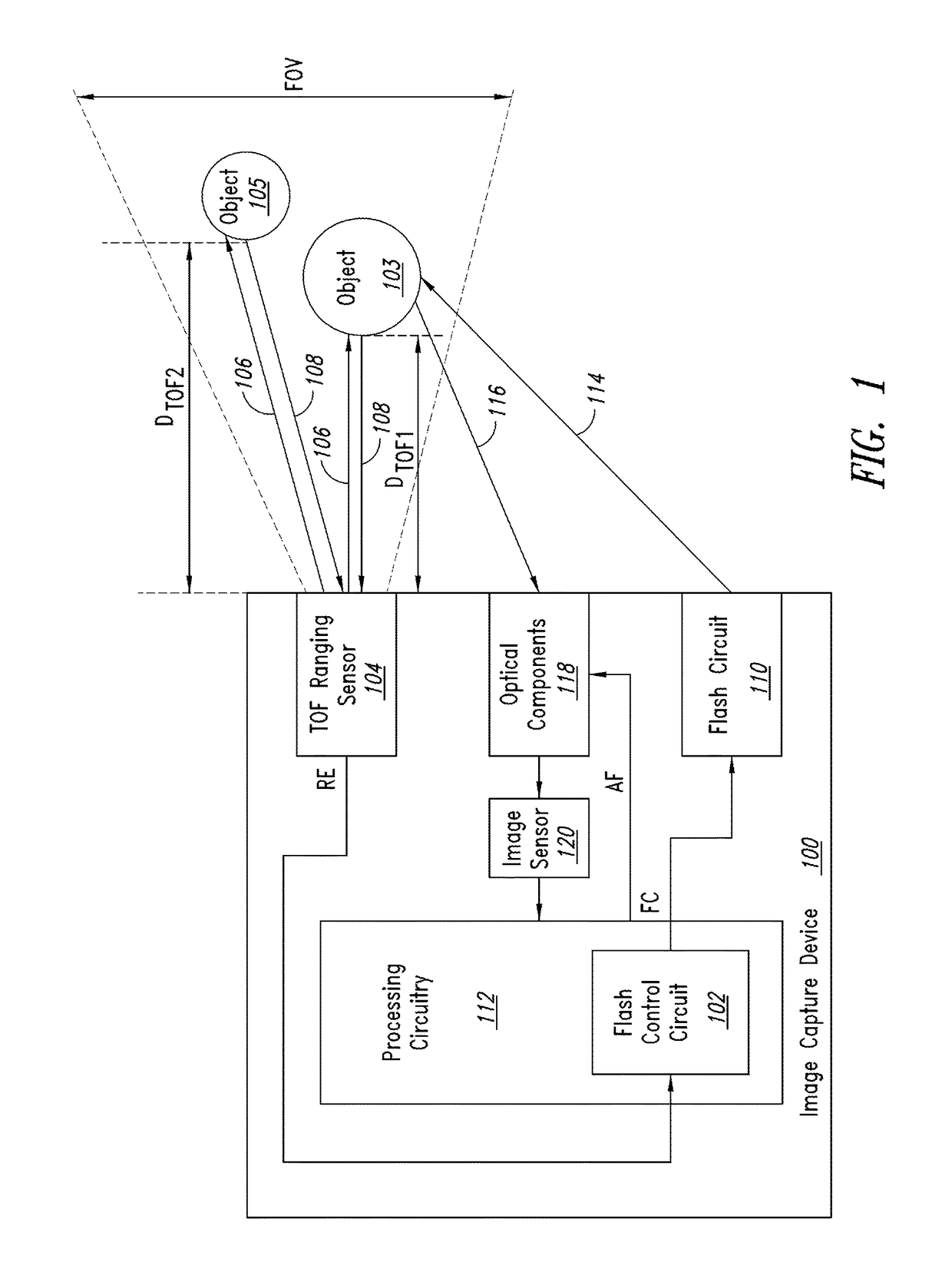

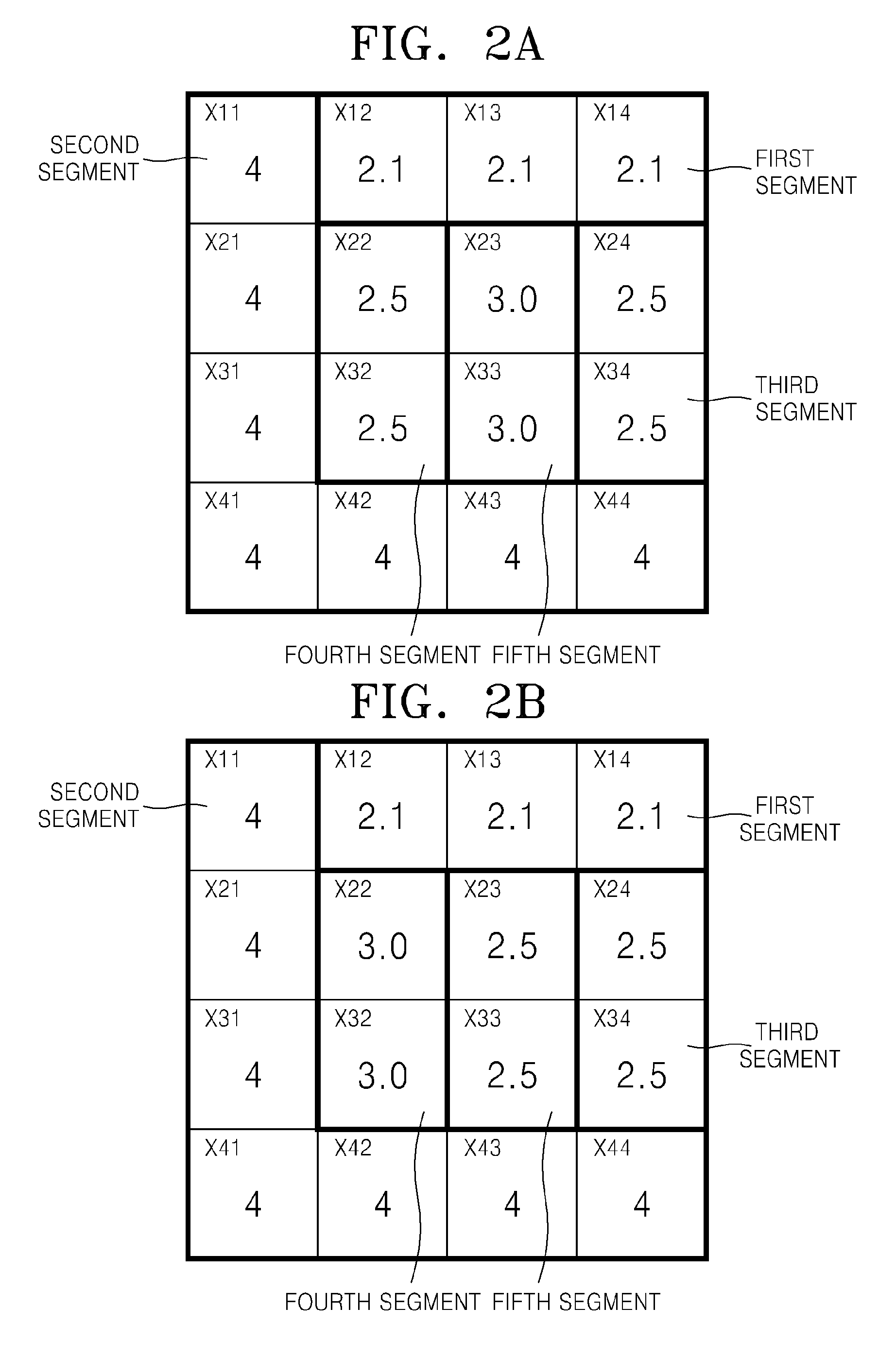

Time of flight ranging for flash control in image capture devices

Status: Inactive | Publication: US20170353649A1 | Tags: Television system details; Color television details; Time of flight sensor; Control signal

A flash control circuit of an image capture device includes a time-of-flight ranging sensor configured to sense distances to a plurality of objects within its overall field of view. The time-of-flight sensor is configured to generate a range estimation signal that includes the sensed distances to the plurality of objects. Flash control circuitry is coupled to the time-of-flight ranging sensor to receive the range estimation signal and is configured to generate a flash control signal that controls the power of the flash illumination based on the sensed distances. The time-of-flight sensor may also generate a signal amplitude for each of the sensed objects, in which case the flash control circuitry generates the flash control signal to control the power of the flash illumination based on both the sensed distances and the signal amplitudes.

Owner:STMICROELECTRONICS (RES & DEV) LTD +1

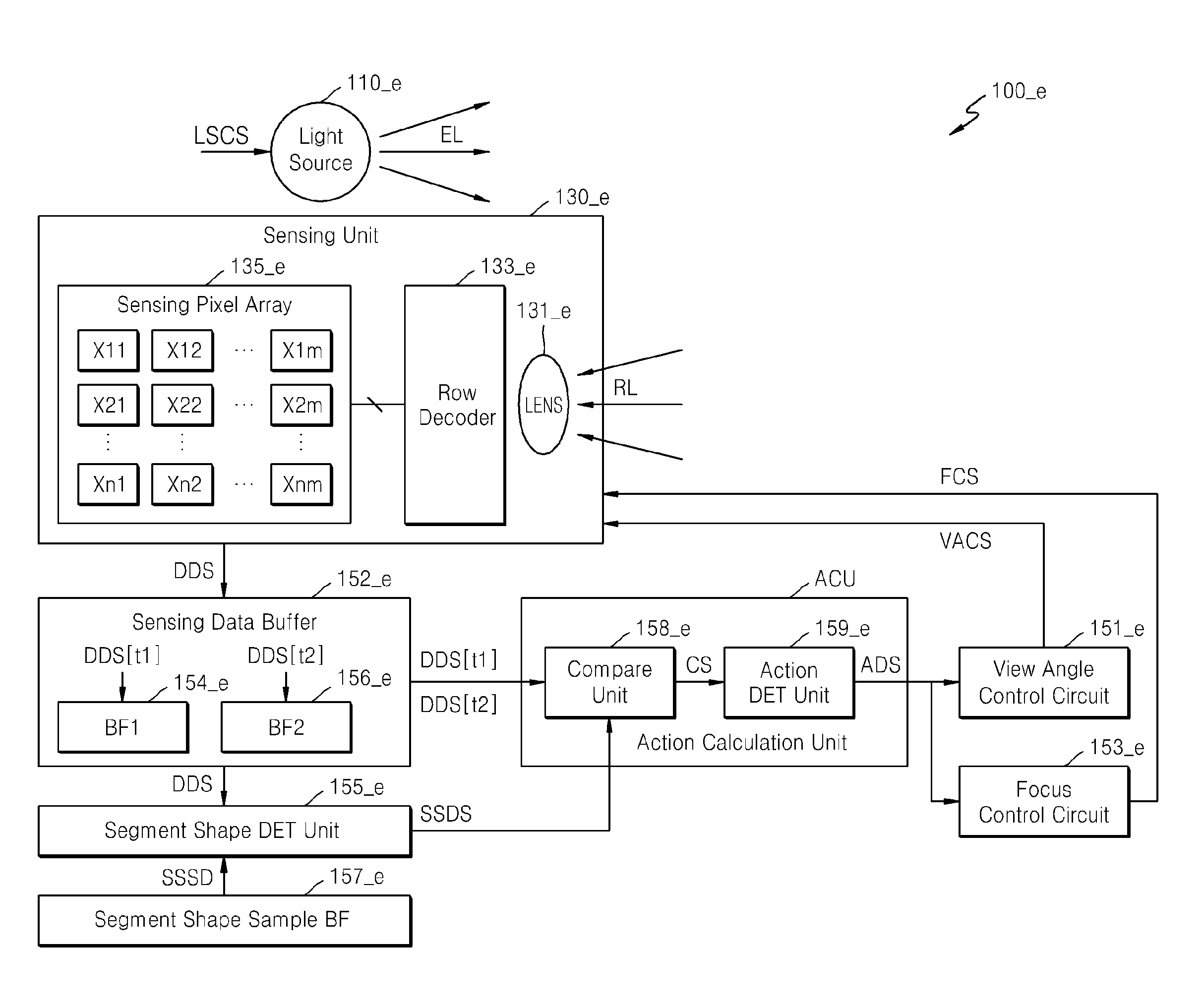

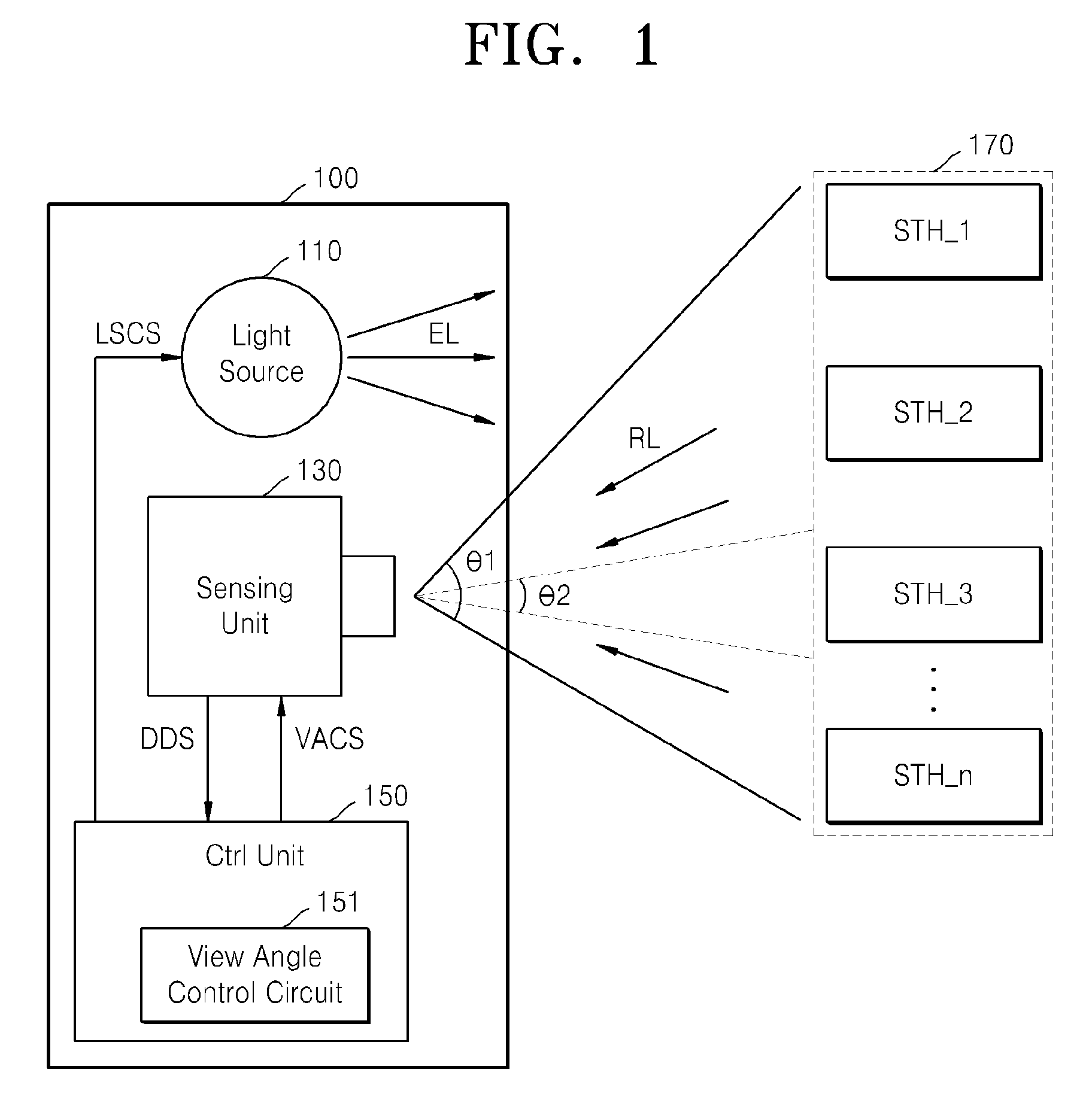

Time of flight sensor, camera using time of flight sensor, and related method of operation

Status: Inactive | Publication: US20130235364A1 | Tags: Increase the number of; Improve overall sense; Optical rangefinders; Electromagnetic wave reradiation; Time of flight sensor; Data signal

A time of flight (ToF) sensor comprises a light source configured to irradiate light on a subject, a sensing unit comprising a sensing pixel array configured to sense light reflected from the subject and to generate a distance data signal, and a control unit comprising a view angle control circuit. The view angle control circuit is configured to detect movement of the subject based on the distance data signal received from the sensing unit and to control a view angle of the sensing unit to increase a number of sensing pixels in the sensing pixel array that are used to sense a region in which the detected movement occurs.

Owner:SAMSUNG ELECTRONICS CO LTD
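
The view-angle control above can be sketched in two steps: find the bounding box of pixels whose distance changed between frames, then compute how far the view angle could be narrowed so that region fills more of the pixel array. The change threshold, the zoom cap, and `motion_zoom` are hypothetical; how the sensor physically narrows its view angle is not modeled.

```python
def motion_zoom(prev, curr, threshold=0.05, max_zoom=4.0):
    """Return (bounding_box, zoom) for the region where distance changed.

    bounding_box is (top, left, bottom, right), inclusive, or None if no
    pixel changed by more than `threshold`. zoom is the factor by which the
    view angle could shrink so the region fills the array (capped).
    """
    moved = [(r, c)
             for r, (row_p, row_c) in enumerate(zip(prev, curr))
             for c, (a, b) in enumerate(zip(row_p, row_c))
             if abs(a - b) > threshold]
    if not moved:
        return None, 1.0
    rows = [r for r, _ in moved]
    cols = [c for _, c in moved]
    top, bottom, left, right = min(rows), max(rows), min(cols), max(cols)
    height, width = len(curr), len(curr[0])
    zoom = min(height / (bottom - top + 1), width / (right - left + 1), max_zoom)
    return (top, left, bottom, right), zoom


prev = [[2.0] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[1][1] = curr[1][2] = 1.5   # a hand moves in two pixels
print(motion_zoom(prev, curr))  # ((1, 1, 1, 2), 2.0)
```

Taking the minimum over both axes keeps the whole moving region inside the narrowed view, so more sensing pixels cover it without cropping any of it.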

Method and system to reduce stray light reflection error in time-of-flight sensor arrays

Status: Active | Publication: US8194233B2 | Tags: Weakening range; Reduce surface reflectivity; Optical rangefinders; Electromagnetic wave reradiation; Sensor array; Type error

Haze-type phase shift error due to stray light reflections in a phase-type TOF system is reduced by providing a windowed opaque coating on the sensor array surface, with the windows permitting optical energy to reach the light-sensitive regions of the pixels, and by reducing stray reflections along the optical path. Further haze-type error reduction is obtained by acquiring values for a plurality (but not necessarily all) of the pixel sensors in the TOF system pixel sensor array. Next, a correction term for the value (differential or other) acquired for each pixel in the plurality of pixel sensors is computed and stored. The modeled response may depend on the pixel's (row, column) location within the sensor array. During actual TOF system runtime operation, the detection data for each pixel, or for pixel groups (super pixels), is corrected using the stored data. Good optical system design accounts for correction, enabling a simple correction model.

Owner:MICROSOFT TECH LICENSING LLC

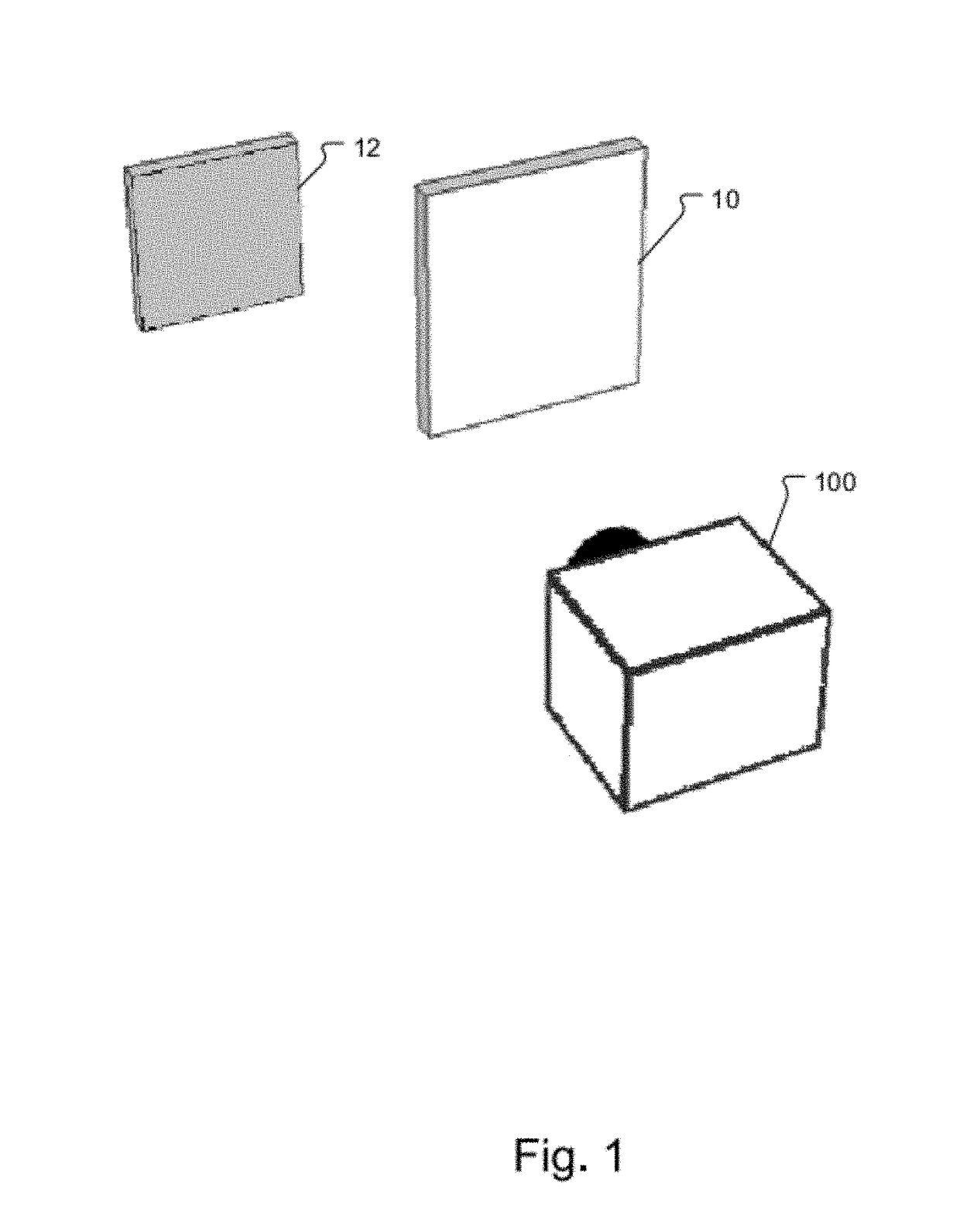

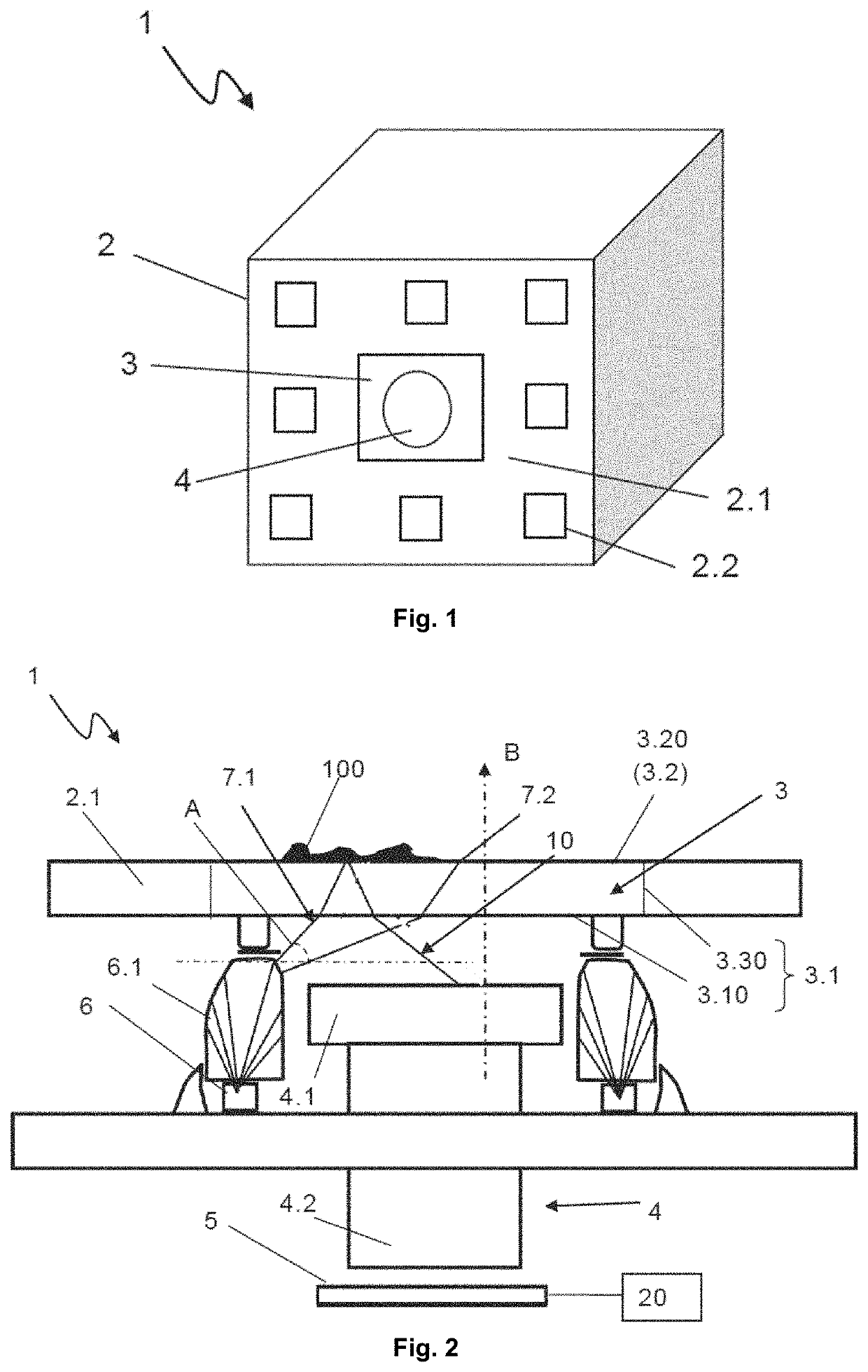

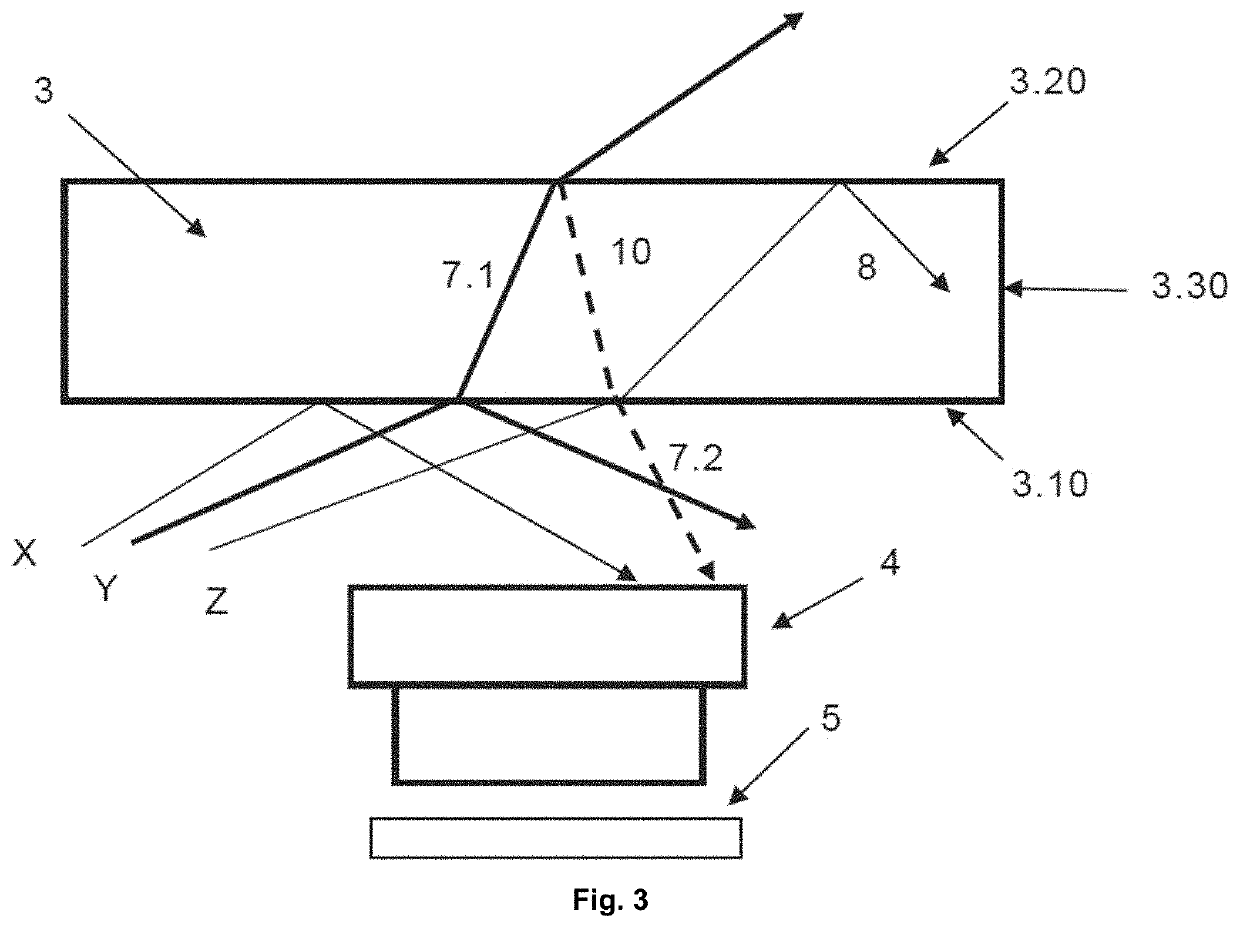

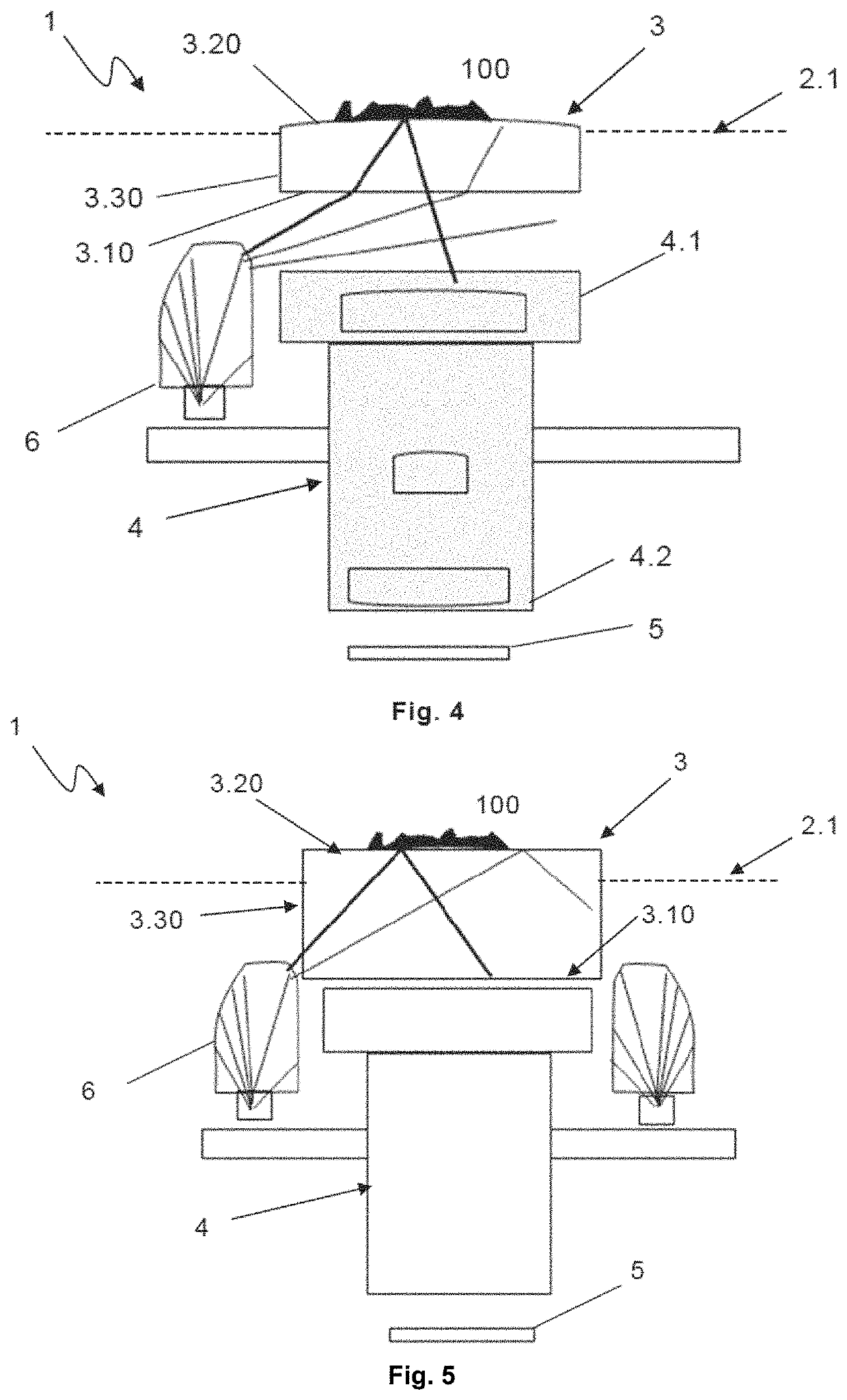

A 3D-camera device including a dirt detection unit

Status: Active | Publication: US20190377072A1 | Tags: Short exposure time; Improve accuracy; Television systems; Alarms; Time of flight sensor; Light guide

A 3D-camera device (1) comprises a Time-Of-Flight sensor (5) and a light guide (4) with a light-transparent cover (3). A light source (6) is arranged to emit light (7) into the transparent cover (3), whereby at least a portion (10) of the emitted light (7) is reflected out through a second portion (3.1) of the transparent cover (3) by dirt (100) present on the outer surface (3.20) of the transparent cover (3). The Time-Of-Flight sensor (5) is arranged to receive light (10) reflected by dirt (100) on the outer surface (3.20) of the transparent cover (3) and to output a signal (L) relative to the amount of received reflected light (10). A control unit (20) is configured to determine the presence of dirt (100) on the outer surface (3.20) of the transparent cover (3) from the magnitude of the signal (L) from the Time-Of-Flight sensor (5).

Owner:VEONEER SWEDEN AB
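
The control unit's decision (dirt present when the magnitude of signal L is large) can be sketched as comparing an averaged signal against a clean-cover baseline. The averaging, the baseline, the 20% margin, and `dirt_detected` are hypothetical choices for illustration.

```python
def dirt_detected(signal_samples, clean_baseline, margin=0.2):
    """Flag dirt when the mean reflected-light signal exceeds the clean-cover
    baseline by more than `margin` (as a fraction of the baseline).

    Averaging several samples reduces false alarms from single noisy readings.
    """
    if not signal_samples:
        raise ValueError("need at least one sample")
    mean = sum(signal_samples) / len(signal_samples)
    return mean > clean_baseline * (1 + margin)


print(dirt_detected([10, 11, 9], clean_baseline=10))   # clean cover
print(dirt_detected([13, 12, 14], clean_baseline=10))  # extra light scattered by dirt
```

A baseline measured on a known-clean cover absorbs the fixed internal reflections of the light guide, so only the extra light scattered by dirt raises the signal above it.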

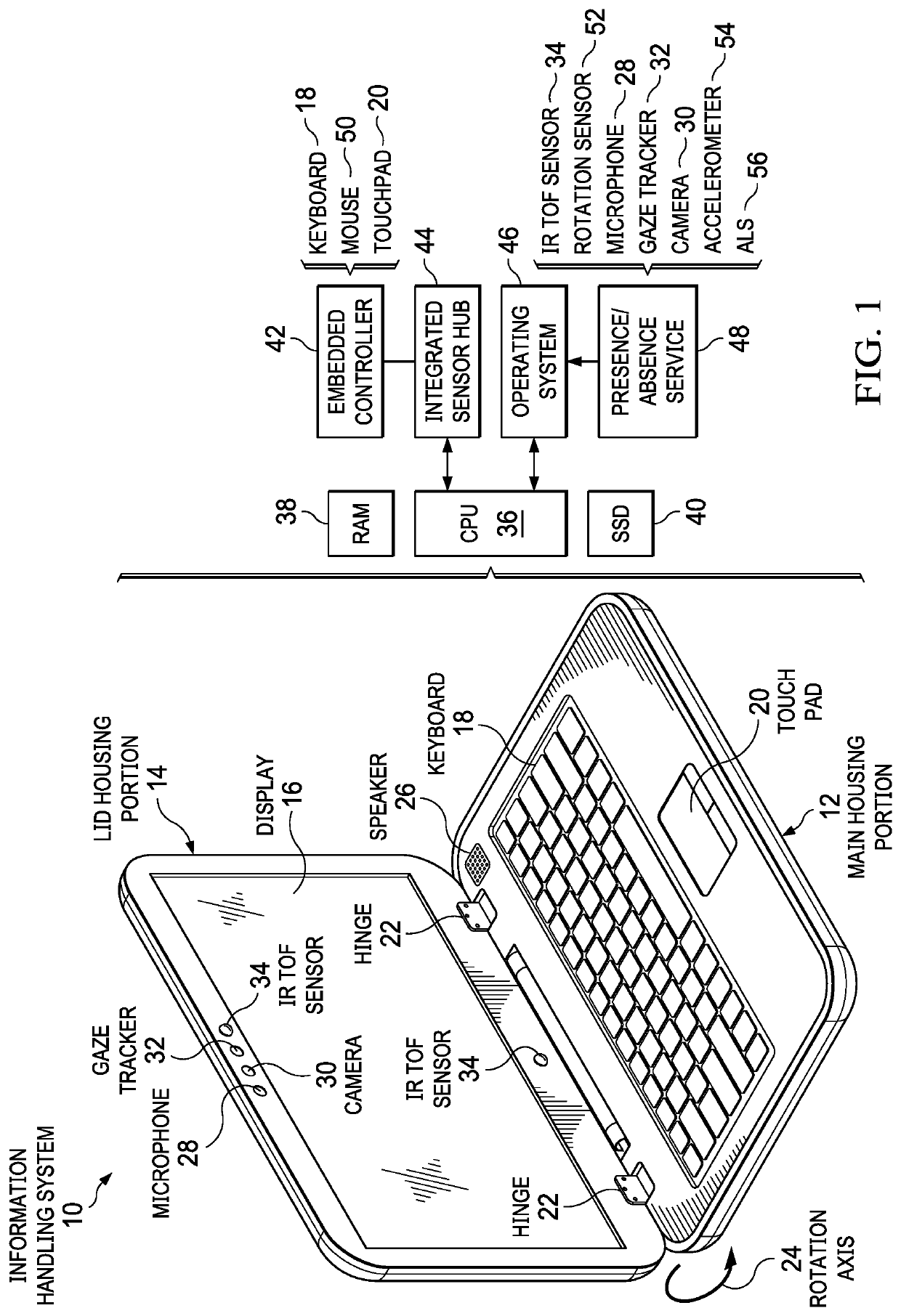

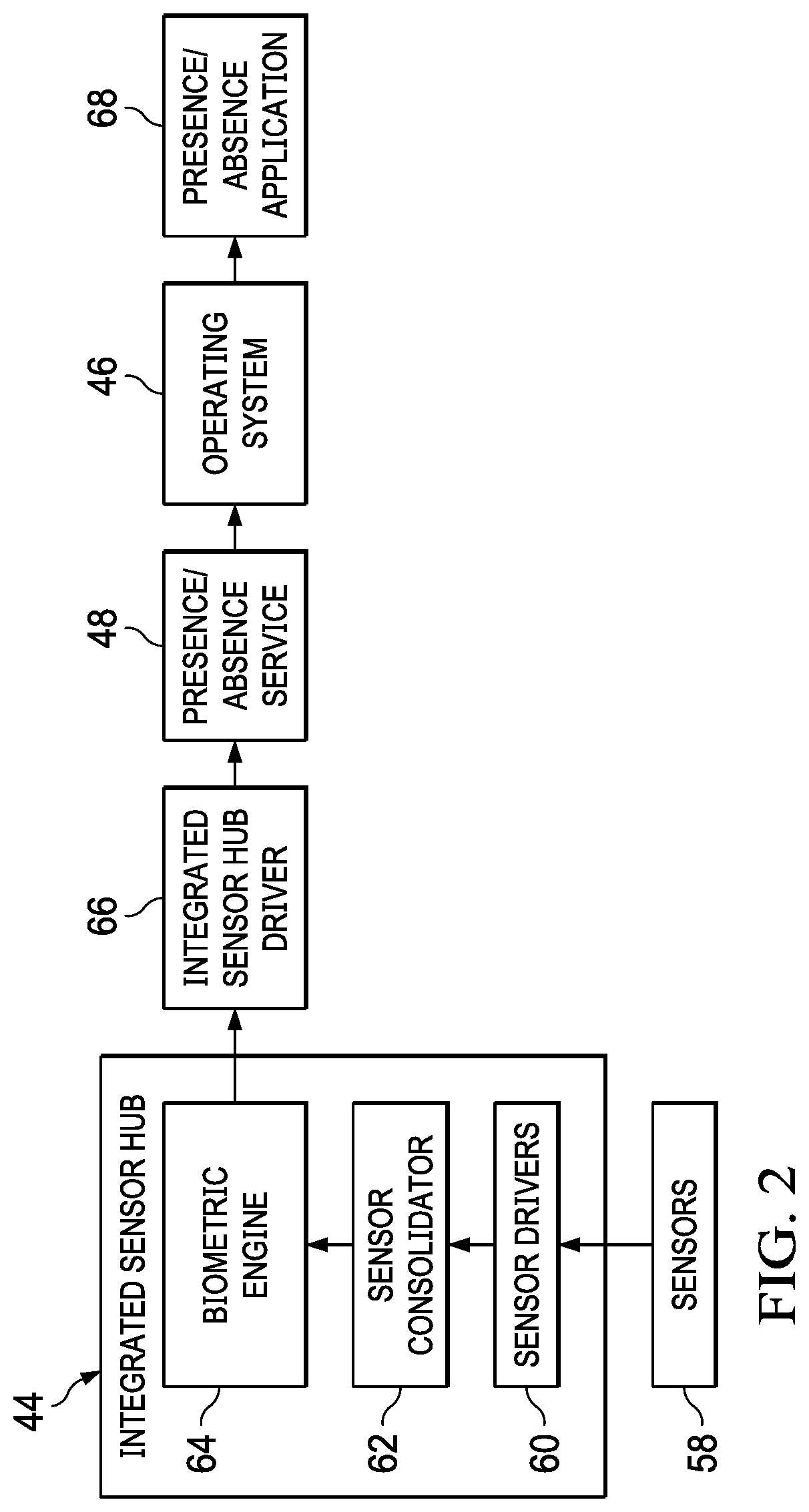

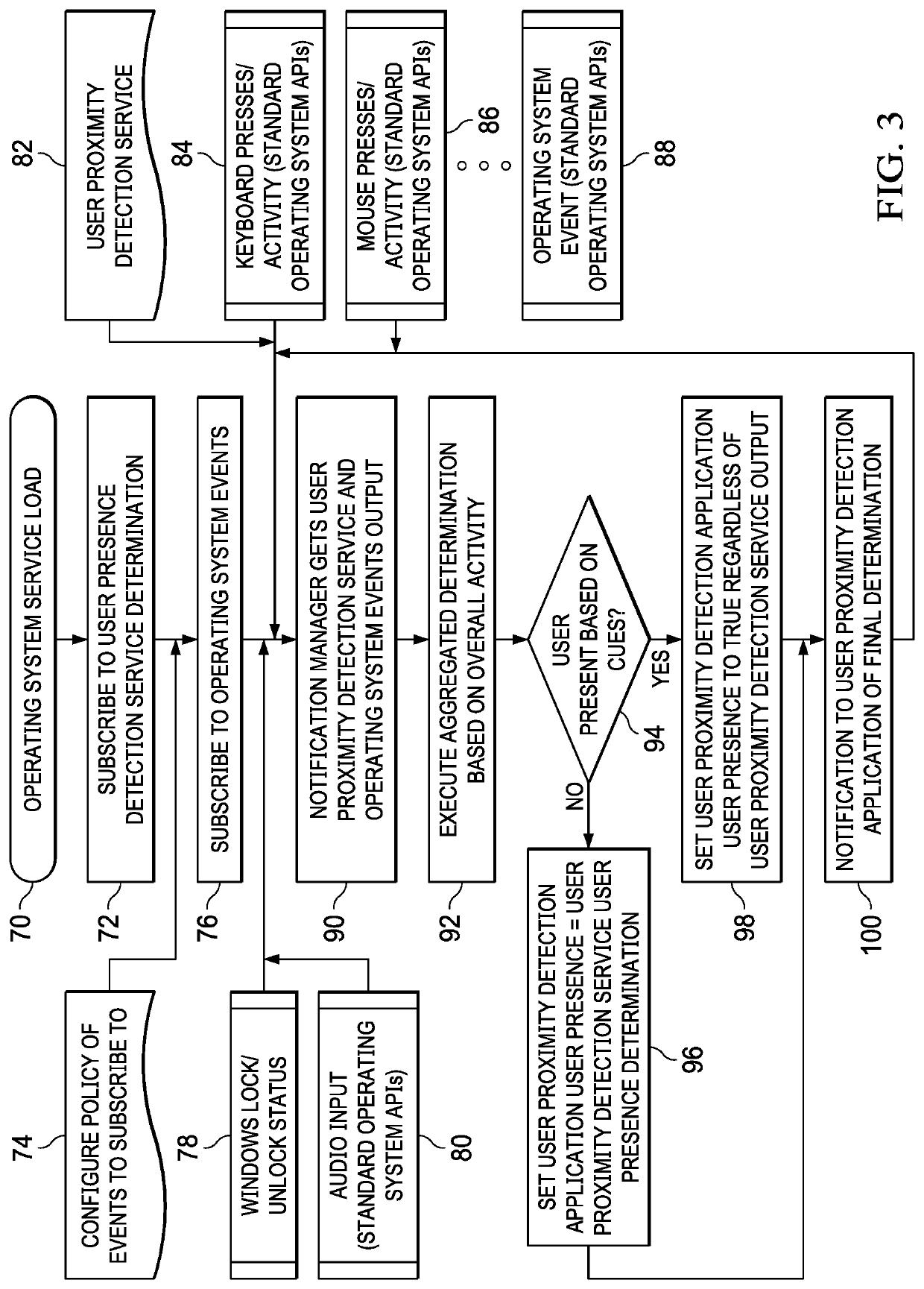

Augmented information handling system user presence detection

Status: Active | Publication: US10819920B1 | Tags: Reduce disadvantages; Reduce problems; Input/output for user-computer interaction; Television system details; Time of flight sensor; Operational system

An information handling system transitions security settings and / or visual image presentations based upon a user absence or user presence state detected by an infrared time of flight sensor. An operating system service monitors the state detected by the infrared time of flight sensor and other context at the information handling system to selectively apply the user absence or user presence state at the information handling system.

Owner:DELL PROD LP
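
A service that turns raw infrared ToF readings into a presence/absence state typically debounces the transition to "absent" so a user who briefly leans back does not trigger a screen lock. The debounce scheme, the range, the count, and `PresenceDetector` are hypothetical; the abstract only says the operating system service monitors the detected state together with other context.

```python
class PresenceDetector:
    """Debounced user-presence state from ToF distance readings.

    The state becomes 'present' as soon as a reading falls within
    present_range_m, but becomes 'absent' only after absent_count
    consecutive readings with no target in range.
    """

    def __init__(self, present_range_m=1.2, absent_count=5):
        self.present_range_m = present_range_m
        self.absent_count = absent_count
        self._misses = 0
        self.present = True

    def update(self, distance_m):
        """Feed one reading (None = no target); return the current state."""
        if distance_m is not None and distance_m <= self.present_range_m:
            self._misses = 0
            self.present = True
        else:
            self._misses += 1
            if self._misses >= self.absent_count:
                self.present = False
        return self.present


det = PresenceDetector(absent_count=3)
print([det.update(d) for d in (0.8, None, None, None, 0.5)])
```

The asymmetric design (fast to detect presence, slow to declare absence) favors user convenience; a stricter security policy could shorten `absent_count`.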

© 2025 PatSnap. All rights reserved.