Method for detecting and positioning text area in video

A technology in the field of video text area detection, applied to instruments, character and pattern recognition, computer components, etc., which achieves fast detection and positioning of text.



Detailed Description of Embodiments

[0017] In order to make the objectives, technical solutions and advantages of the present invention clearer, the present invention will be further described in detail below with reference to specific embodiments and accompanying drawings.

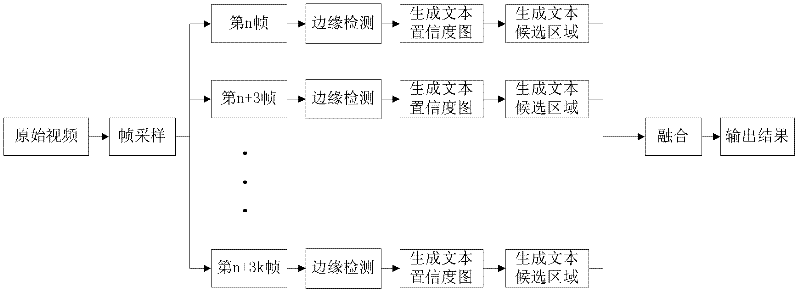

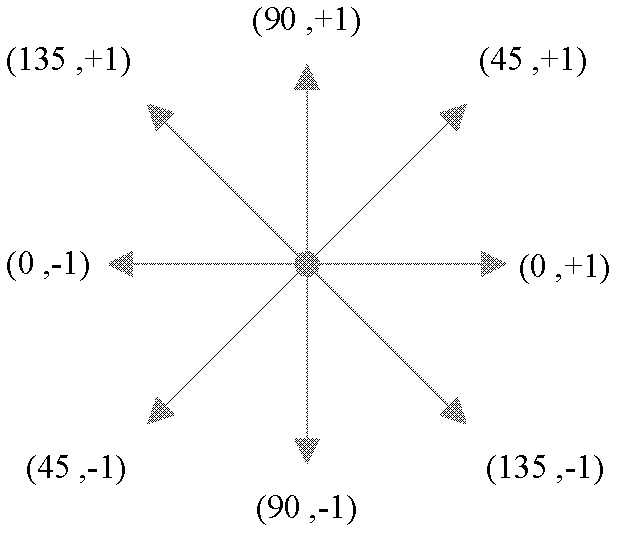

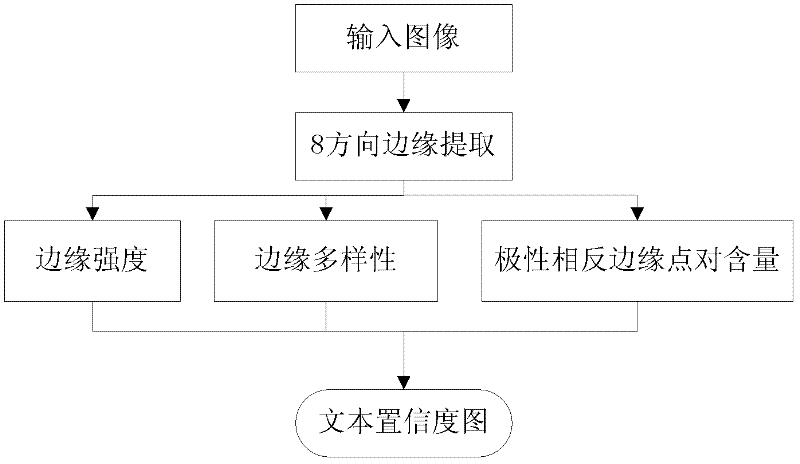

[0018] The principle of the method of the present invention for detecting and locating text in video is mainly as follows: the input video is sampled; edge detection is performed on each sampled video image; the resulting edge image is used to generate a text confidence map; text candidate regions are extracted from the text confidence map; the text candidate regions of multiple frames whose candidate regions are approximately the same are fused to obtain the final text region; and the text region is divided into lines according to its horizontal and vertical projections.
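The pipeline in [0018] can be sketched end to end as follows. This excerpt does not specify the edge operator, the confidence measure, the fusion rule, or any thresholds, so the sketch fills those gaps with simple stand-ins that are assumptions, not the patented method: forward-difference gradient magnitude for edge detection, mean edge strength in a small window as the text confidence, pixel-wise majority voting for multi-frame fusion (it assumes the frames already share approximately the same candidate regions and does not test that similarity), and run detection on row and column sums for the projection-based line split. All function names, the parameters `step`, `radius` and `threshold`, and the synthetic frame from `make_demo_frame` are hypothetical.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]          # grayscale frame, 0..255 (hypothetical representation)
Mask = List[List[int]]           # binary text mask, 0 or 1
Box = Tuple[int, int, int, int]  # (top, bottom, left, right), half-open


def edge_map(img: Image) -> List[List[int]]:
    """|dx| + |dy| from forward differences (assumed stand-in edge detector)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0
            out[y][x] = abs(dx) + abs(dy)
    return out


def confidence_map(edges: List[List[int]], radius: int) -> List[List[float]]:
    """Assumed confidence: mean edge strength in a (2r+1)x(2r+1) window / peak edge."""
    h, w = len(edges), len(edges[0])
    peak = max(max(row) for row in edges) or 1
    conf = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [edges[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            conf[y][x] = sum(vals) / (len(vals) * peak)
    return conf


def fuse_masks(masks: List[Mask]) -> Mask:
    """Keep a pixel if at least half of the frames mark it as text (assumed voting rule)."""
    n, h, w = len(masks), len(masks[0]), len(masks[0][0])
    return [[1 if 2 * sum(m[y][x] for m in masks) >= n else 0 for x in range(w)]
            for y in range(h)]


def runs(profile: List[int]) -> List[Tuple[int, int]]:
    """Half-open [start, end) ranges where a projection profile is nonzero."""
    spans: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, v in enumerate(profile):
        if v and start is None:
            start = i
        elif not v and start is not None:
            spans.append((start, i))
            start = None
    if start is not None:
        spans.append((start, len(profile)))
    return spans


def split_lines(mask: Mask) -> List[Box]:
    """Row sums (horizontal projection) find line bands; column sums bound each band."""
    boxes = []
    for top, bottom in runs([sum(row) for row in mask]):
        cols = [sum(mask[y][x] for y in range(top, bottom)) for x in range(len(mask[0]))]
        spans = runs(cols)
        if spans:
            boxes.append((top, bottom, spans[0][0], spans[-1][1]))
    return boxes


def detect_text_lines(frames: List[Image], step: int = 1,
                      radius: int = 1, threshold: float = 0.1) -> List[Box]:
    """Sample -> edges -> confidence map -> candidate masks -> fusion -> line split."""
    masks = []
    for frame in frames[::step]:  # keep every `step`-th frame
        conf = confidence_map(edge_map(frame), radius)
        masks.append([[1 if c >= threshold else 0 for c in row] for row in conf])
    return split_lines(fuse_masks(masks))


def make_demo_frame() -> Image:
    """Hypothetical 12x20 frame with two striped 'text lines' (rows 2-4 and 9-10)."""
    img = [[0] * 20 for _ in range(12)]
    for y in range(2, 5):
        for x in range(3, 15, 2):
            img[y][x] = 255
    for y in range(9, 11):
        for x in range(5, 13, 2):
            img[y][x] = 255
    return img


if __name__ == "__main__":
    print(detect_text_lines([make_demo_frame()] * 3))
```

On three copies of the synthetic frame the sketch returns one box per striped line. Because the confidence is averaged over a window, each box comes out slightly taller than the striped area it covers; a real implementation would likely refine box boundaries after the projection step.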

[0019] Figure 1 is a flow chart of the method for detecting and locating t...

