Distributed training method based on hybrid parallelism

A distributed training method applied in the field of deep learning. It addresses problems such as long training times and models that cannot be trained, and improves training speed.

Embodiment Construction

[0041] The technical scheme of the present invention is described in further detail below with reference to the accompanying drawings:

[0042] The invention is described in further detail with reference to the accompanying drawings, including a specific description of at least one preferred embodiment. The description is specific enough that those skilled in the art can reproduce the invention from it without creative effort, that is, without exploratory research or experimentation.

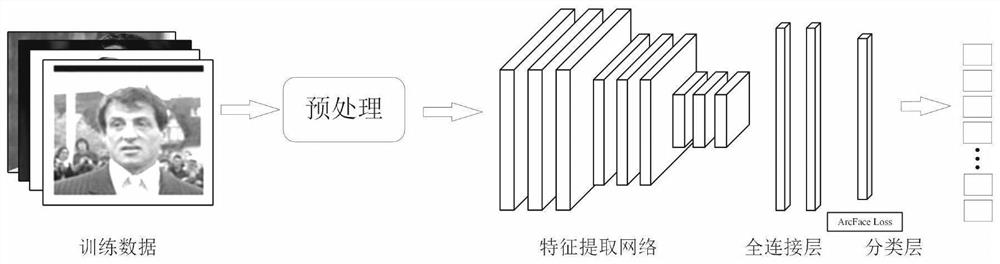

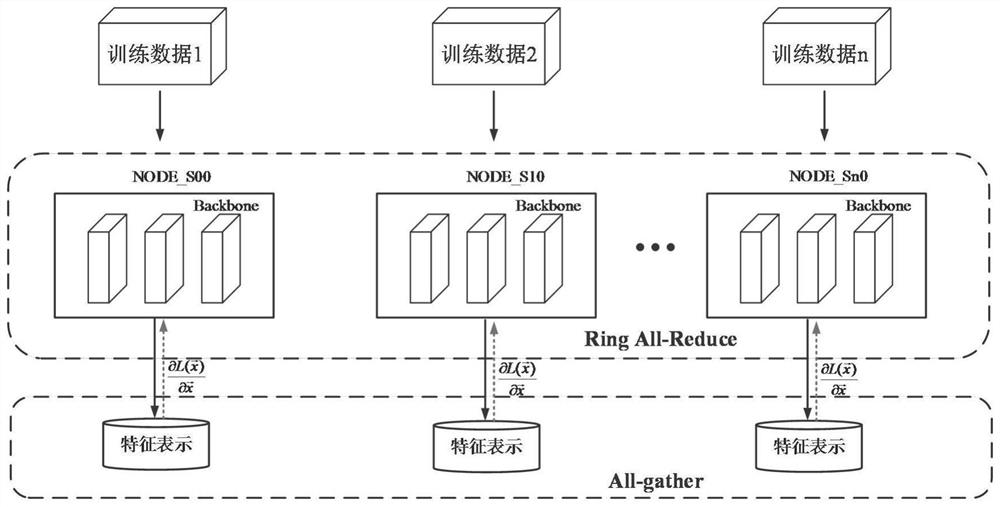

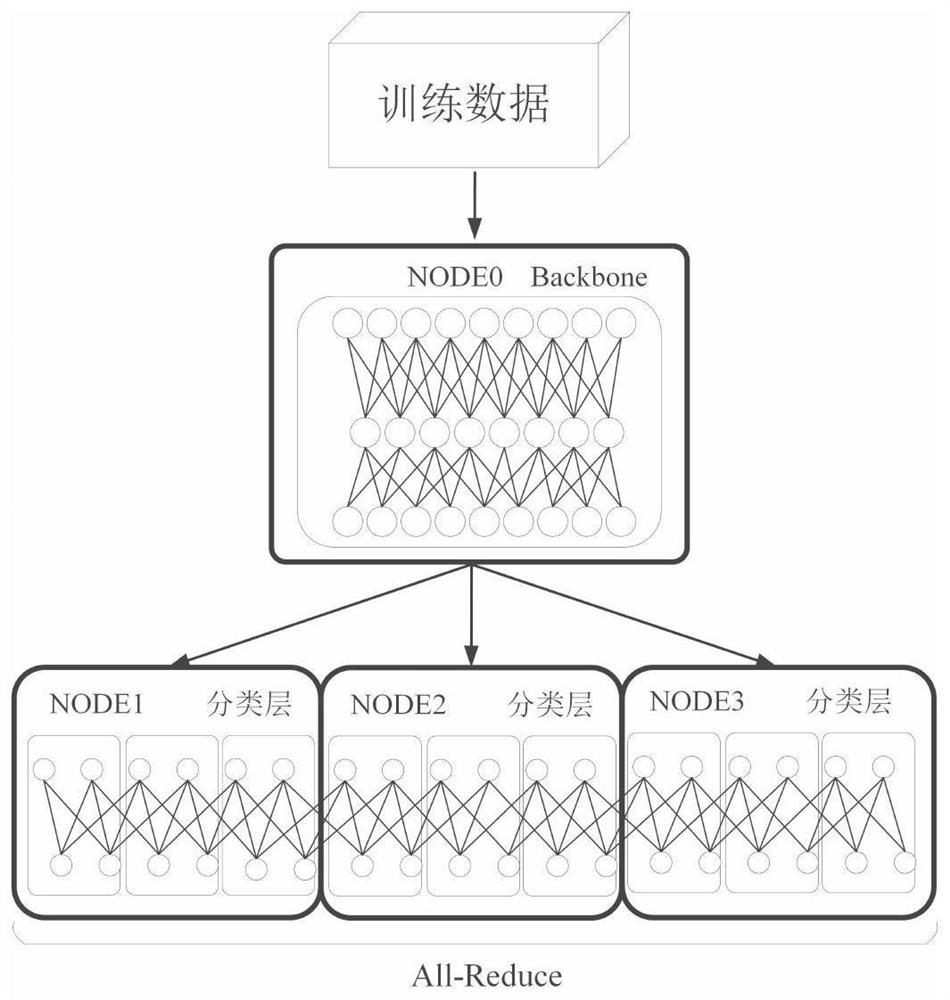

[0043] A distributed model training method based on hybrid parallelism is described in detail here, using a face recognition algorithm for a large number of identities (IDs) as the example. The method comprises the following steps:

[0044] Step 1. Build a face recognition network model. The feature extraction network (backbone) can be a commonly used

[0045] ResNet50 model; the loss function u...
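The text is truncated at this point, so the remaining steps are not available here. The sketch below is therefore only an illustration of one common way hybrid parallelism is applied to classification over very many IDs, not the method claimed in this patent. It assumes that the classification layer is split across workers by class (model parallelism) while the backbone is replicated (data parallelism). The names `shard_rows` and `sharded_softmax` are hypothetical, the workers are simulated in a single process, and the backbone output is replaced by a random feature vector.

```python
import math
import random


def logits(features, weights):
    """Dot product of one feature vector with each class weight row."""
    return [sum(f * w for f, w in zip(features, row)) for row in weights]


def softmax(values):
    """Numerically stable softmax over a full list of logits."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def shard_rows(weights, num_workers):
    """Split class rows into contiguous shards, one per simulated worker."""
    per = math.ceil(len(weights) / num_workers)
    return [weights[i:i + per] for i in range(0, len(weights), per)]


def sharded_softmax(features, shards):
    """Softmax over all classes when each worker holds only some class rows."""
    # Each simulated worker computes logits only for its own classes.
    local_logits = [logits(features, shard) for shard in shards]
    # Stand-in for an all-reduce (max) across workers.
    global_max = max(max(part) for part in local_logits)
    # Each worker exponentiates locally; stand-in for an all-reduce (sum).
    local_exps = [[math.exp(v - global_max) for v in part] for part in local_logits]
    global_sum = sum(sum(part) for part in local_exps)
    # Concatenate per-worker probabilities in class order.
    return [e / global_sum for part in local_exps for e in part]


random.seed(0)
num_ids, dim, num_workers = 10, 4, 3  # toy sizes, assumed for illustration
weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_ids)]
features = [random.uniform(-1, 1) for _ in range(dim)]  # stands in for backbone output

full = softmax(logits(features, weights))
sharded = sharded_softmax(features, shard_rows(weights, num_workers))
```

In this toy setup `sharded` agrees with `full` up to floating-point rounding, which shows that no single worker needs the whole class weight matrix in memory. Only two scalars per sample (the maximum and the sum) have to be exchanged between workers.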
