Feature pyramid-based remote-sensing image time-sensitive target recognition system and method

A feature-pyramid technique applied in the field of remote sensing image processing. It addresses the problems of poor multi-scale target detection, high demand for computing resources, and large models with many parameters, with the effect of reducing the number of parameters.



Examples

Embodiment 1

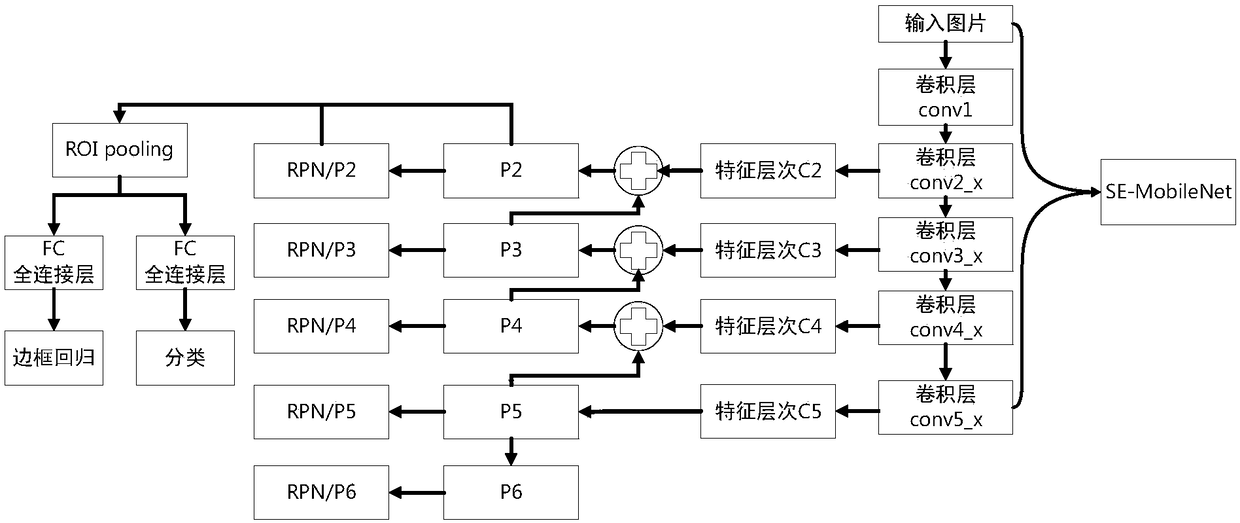

[0047] The present invention provides a remote sensing image time-sensitive target recognition system based on a feature pyramid. The image recognition system includes a target feature extraction sub-network, a feature layer sub-network, a candidate region generation sub-network, and a classification regression sub-network. The target feature extraction sub-network has multiple hierarchical output terminals. The classification regression sub-network has multiple hierarchical input terminals and RPN input terminals. The feature layer sub-network and the candidate region generation sub-network each have multiple hierarchical input terminals and multiple hierarchical output terminals. One hierarchical output terminal of the target feature extraction sub-network is connected to one hierarchical input terminal of the feature layer sub-network, and one hierarchical output terminal of the feature layer sub-network is connected to one hierarchical input terminal of the candidate region generati...

Embodiment 2

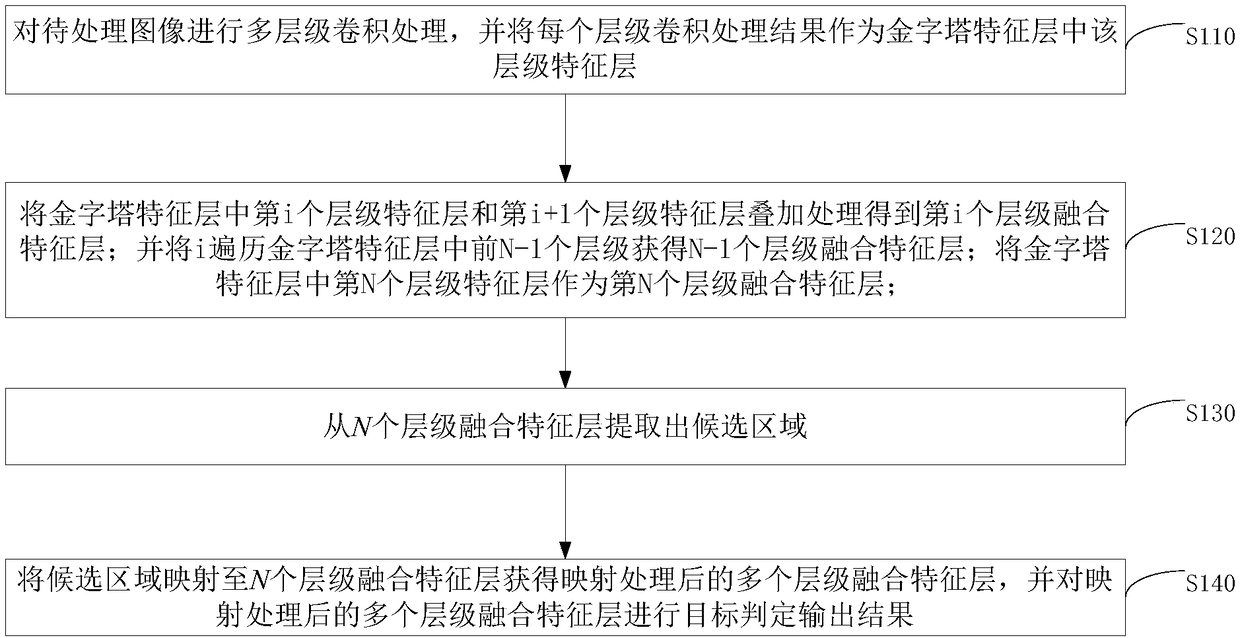

[0050] On the basis of Embodiment 1, the feature layer sub-network includes a plurality of feature layer sub-modules, denoted as the first feature layer sub-module, the second feature layer sub-module, ..., the i-th feature layer sub-module, ..., the Nth feature layer sub-module. For 1≤i≤N-1, one input end of the i-th feature layer sub-module serves as a hierarchical input end of the feature layer sub-network, and the other input end of the i-th feature layer sub-module is connected to the output end of the (i+1)-th feature layer sub-module. The input end of the Nth feature layer sub-module serves as a hierarchical input end of the feature layer sub-network. The first N-1 feature layer sub-modules superimpose the upper feature layer on the current feature layer to obtain the current fused feature layer; the Nth feature layer sub-module outputs the current feature layer as the current fused feature layer, and the previous feature layer is ...
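The chaining of the N sub-modules described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the function name `run_feature_layer_subnetwork`, the `fuse` callable, and the toy one-dimensional "feature layers" are all hypothetical, and the toy fusion (repeat each value twice, then add) merely stands in for whatever superposition the sub-modules actually perform.

```python
def run_feature_layer_subnetwork(level_inputs, fuse):
    """Chain N feature layer sub-modules as described in the text.

    level_inputs[i - 1] is the hierarchical input of the i-th sub-module.
    fuse(upper, current) is a hypothetical callable that superimposes the
    output of sub-module i+1 on the input of sub-module i.
    """
    n = len(level_inputs)
    fused = [None] * n
    # The Nth sub-module outputs its current feature layer unchanged.
    fused[n - 1] = level_inputs[n - 1]
    # Sub-modules N-1 down to 1 each take the output of the next sub-module.
    for i in range(n - 2, -1, -1):
        fused[i] = fuse(fused[i + 1], level_inputs[i])
    return fused


def toy_fuse(upper, current):
    """Toy stand-in for a sub-module: 2x repeat of `upper`, then addition."""
    upsampled = [value for value in upper for _ in range(2)]
    return [u + c for u, c in zip(upsampled, current)]


# Three toy levels whose length halves at each step (an assumption).
levels = [[1, 1, 1, 1], [10, 10], [100]]
print(run_feature_layer_subnetwork(levels, toy_fuse))
# [[111, 111, 111, 111], [110, 110], [100]]
```

Passing the fusion step in as a callable keeps the wiring between sub-modules separate from the details of how two layers are combined.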

Embodiment 3

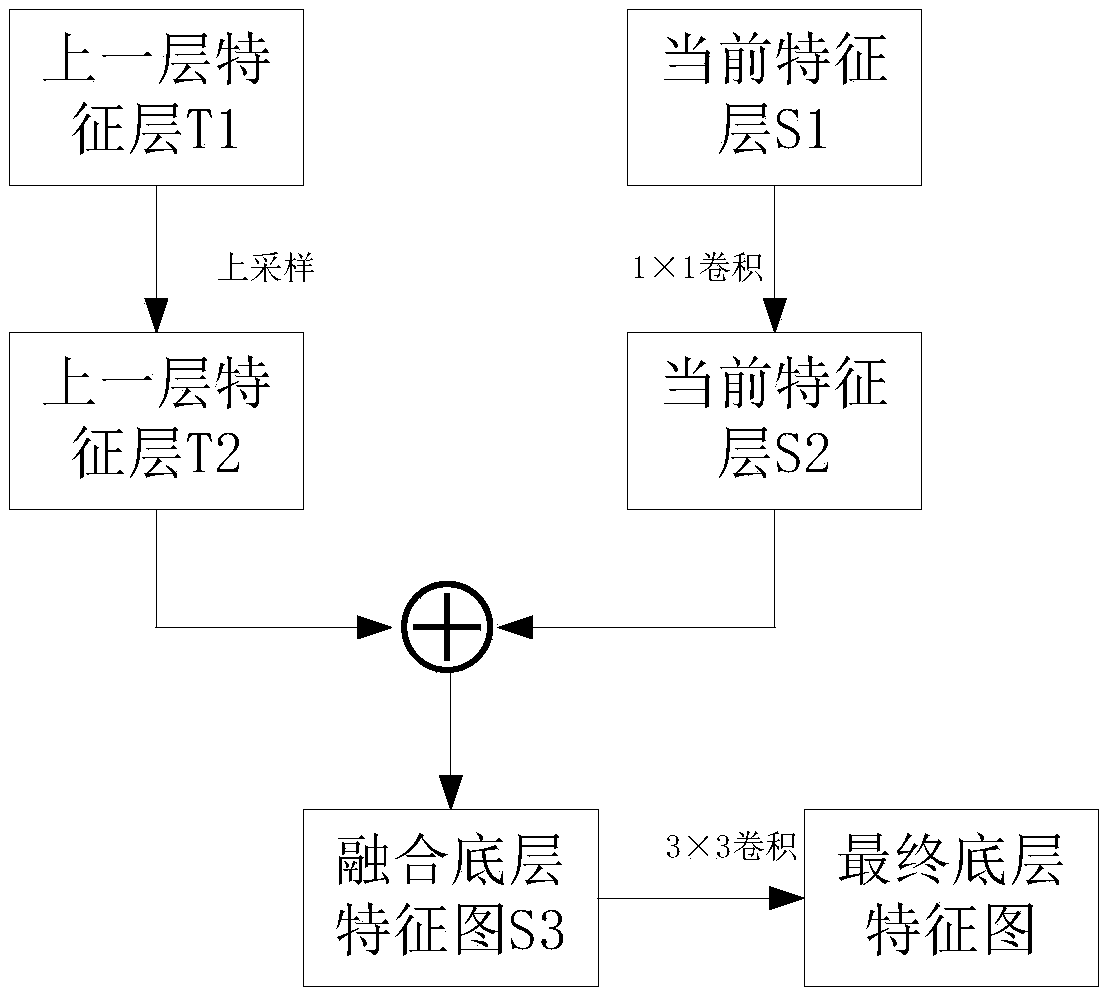

[0052] As shown in Figure 2, on the basis of Embodiment 2, each of the first N-1 feature layer sub-modules includes an upper layer processing subunit, a current processing subunit, and a superposition unit. The output terminal of the upper layer processing subunit is connected to the first input terminal of the superposition unit, and the output terminal of the current processing subunit is connected to the second input terminal of the superposition unit. The upper layer processing subunit upsamples the upper feature layer and outputs the processed upper feature layer T2. The current processing subunit applies a 1×1 convolution to the current feature layer and outputs the processed current feature layer S2. The superposition unit superimposes the processed upper feature layer and the processed current feature layer,...
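A single sub-module of this kind might look roughly like the following pure-Python sketch. Several details are assumptions rather than statements from the source: the upsampling is taken to be nearest-neighbour with a factor of 2, the superposition is taken to be element-wise addition, the 1×1 convolution has no bias, and feature layers are represented as nested lists indexed `[channel][row][column]`. All function names and the `weights` values are hypothetical.

```python
def upsample_nearest_2x(fmap):
    """Upper layer processing subunit (assumed: nearest-neighbour, factor 2)."""
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [value for value in row for _ in range(2)]
            rows.append(wide)
            rows.append(list(wide))
        out.append(rows)
    return out


def conv1x1(fmap, weights):
    """Current processing subunit: weights[o][c] mixes channels at each pixel."""
    height, width = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(w * fmap[c][y][x] for c, w in enumerate(row_w))
          for x in range(width)]
         for y in range(height)]
        for row_w in weights
    ]


def superpose(a, b):
    """Superposition unit (assumed: element-wise addition of equal shapes)."""
    return [[[p + q for p, q in zip(row_a, row_b)]
             for row_a, row_b in zip(ch_a, ch_b)]
            for ch_a, ch_b in zip(a, b)]


def feature_layer_submodule(upper, current, weights):
    t2 = upsample_nearest_2x(upper)   # processed upper feature layer T2
    s2 = conv1x1(current, weights)    # processed current feature layer S2
    return superpose(t2, s2)


upper = [[[1.0]]]                                # 1 channel, 1x1
current = [[[1.0, 2.0], [3.0, 4.0]],
           [[10.0, 20.0], [30.0, 40.0]]]         # 2 channels, 2x2
weights = [[1.0, 0.5]]                           # 2 channels in, 1 out
print(feature_layer_submodule(upper, current, weights))
# [[[7.0, 13.0], [19.0, 25.0]]]
```

The 1×1 convolution is what lets the two inputs be added: it brings the current feature layer to the same channel count as the upsampled upper layer without changing its spatial size.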
