38 results about "Pipeline scheduling" patented technology

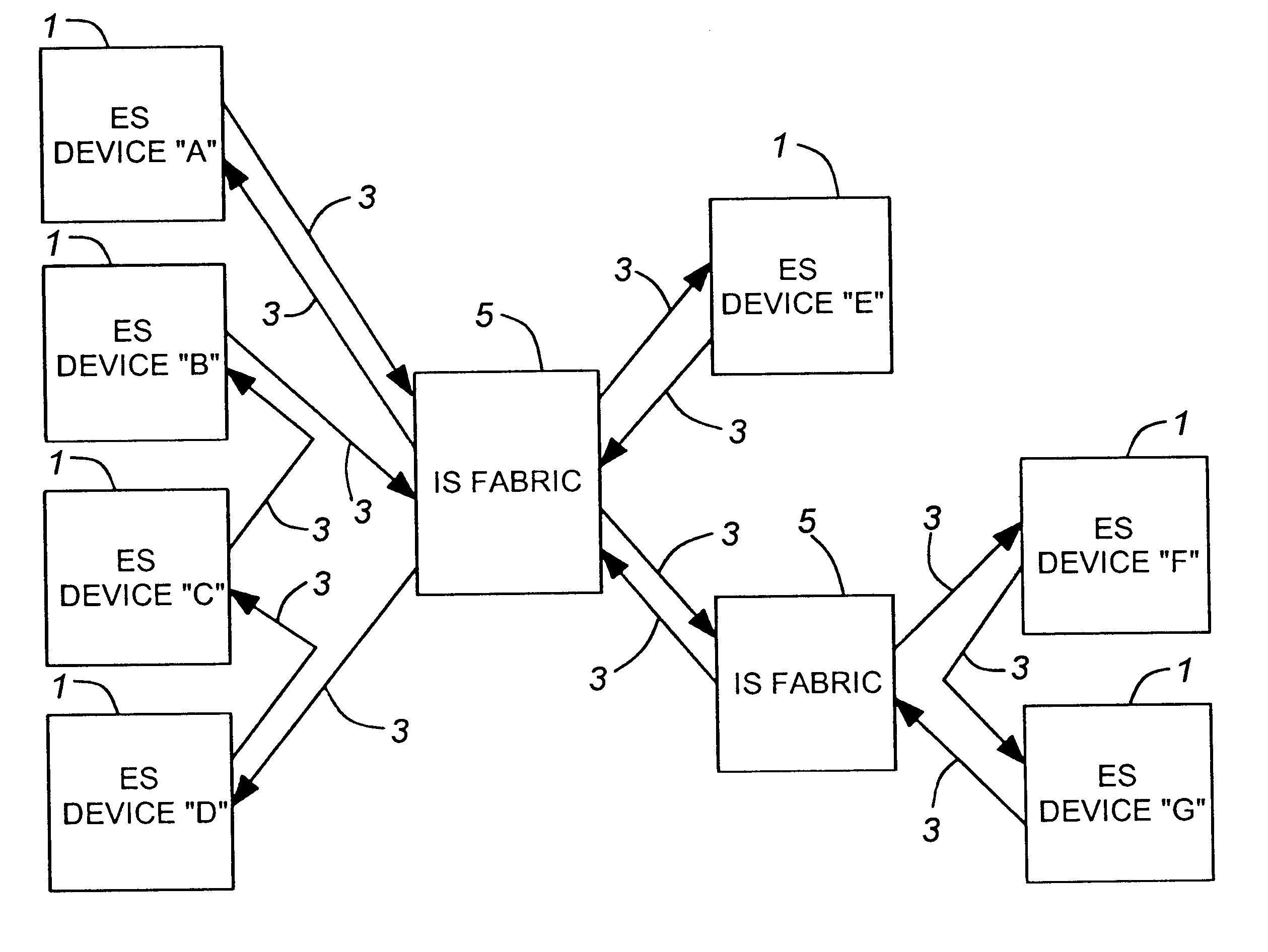

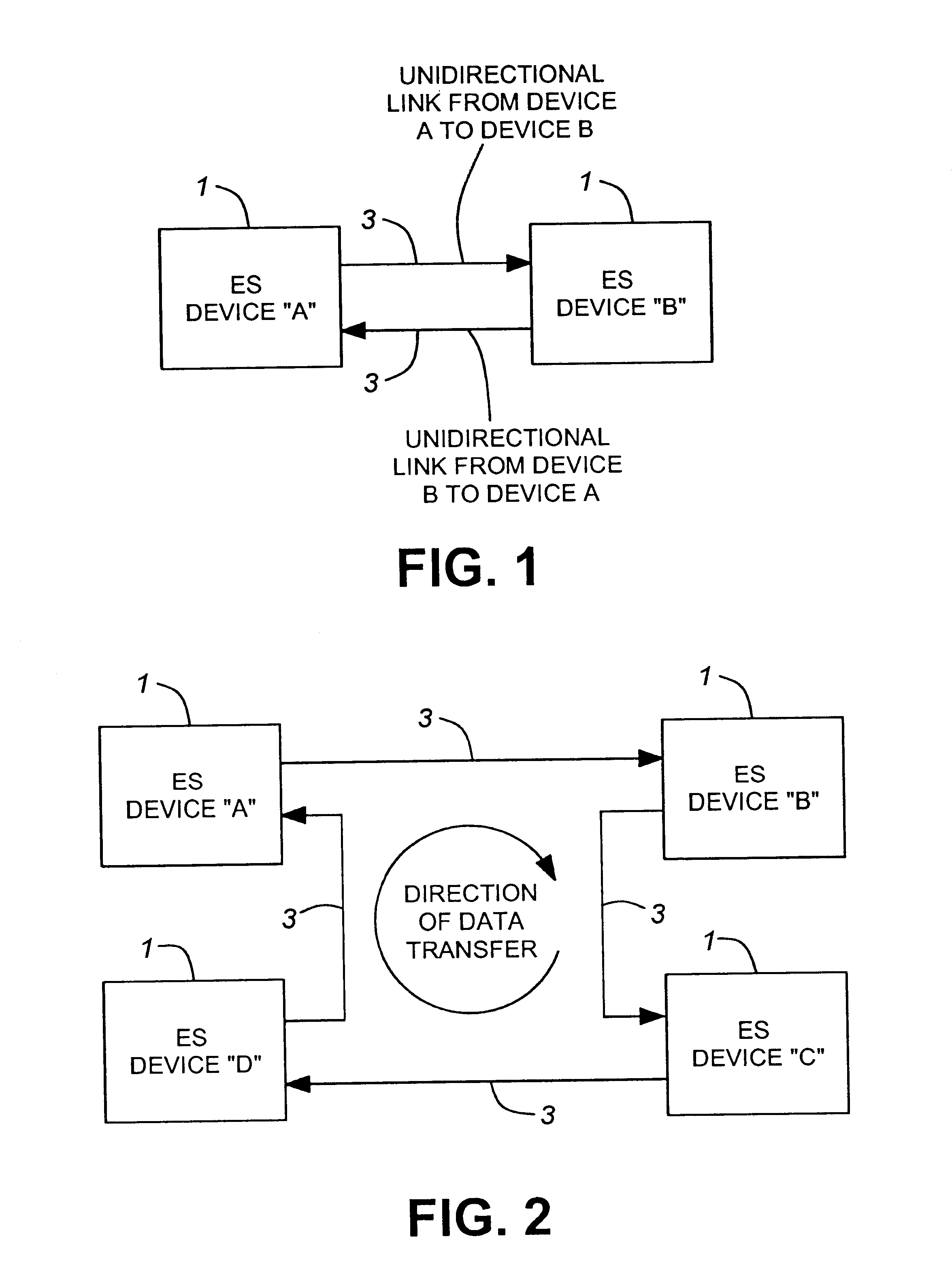

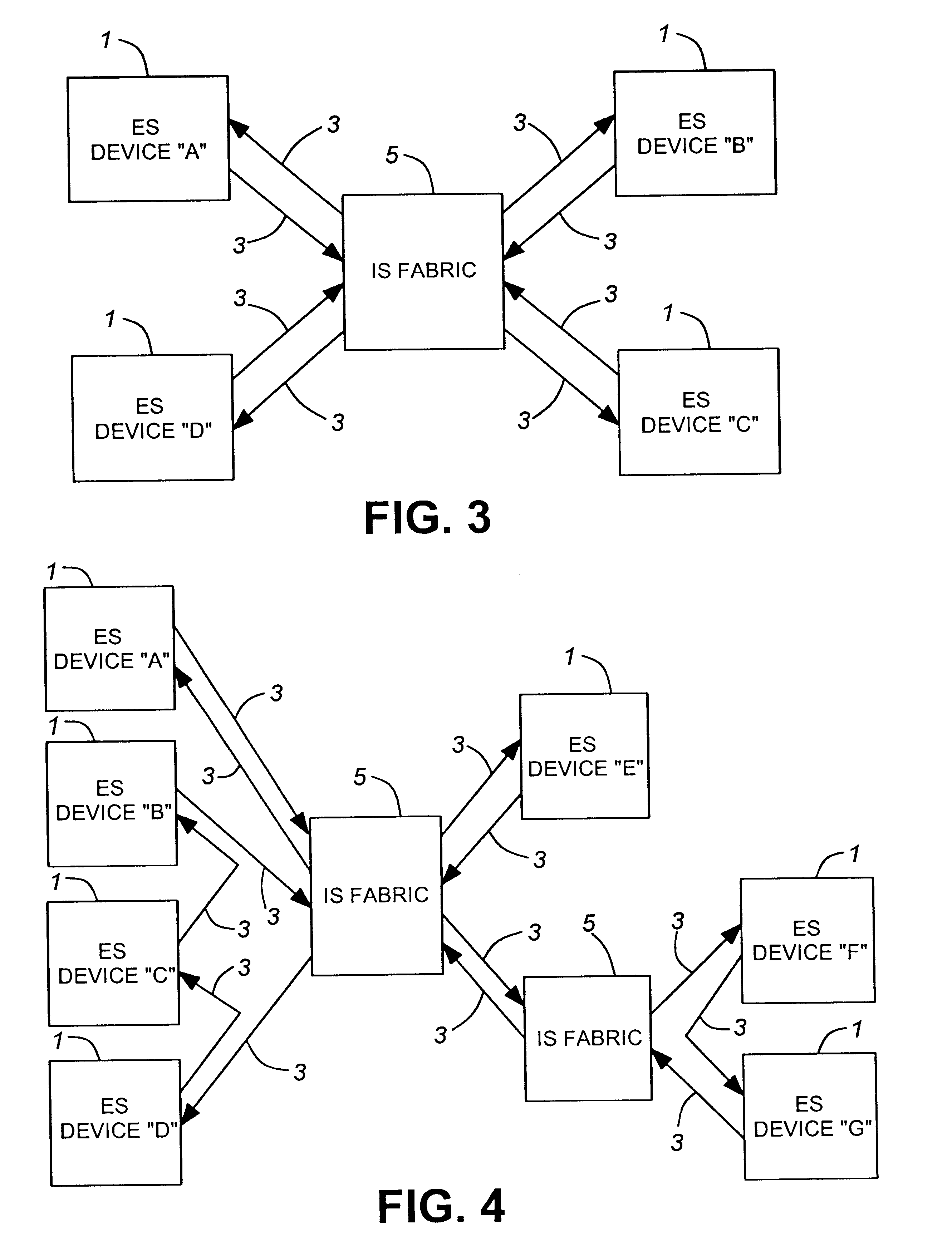

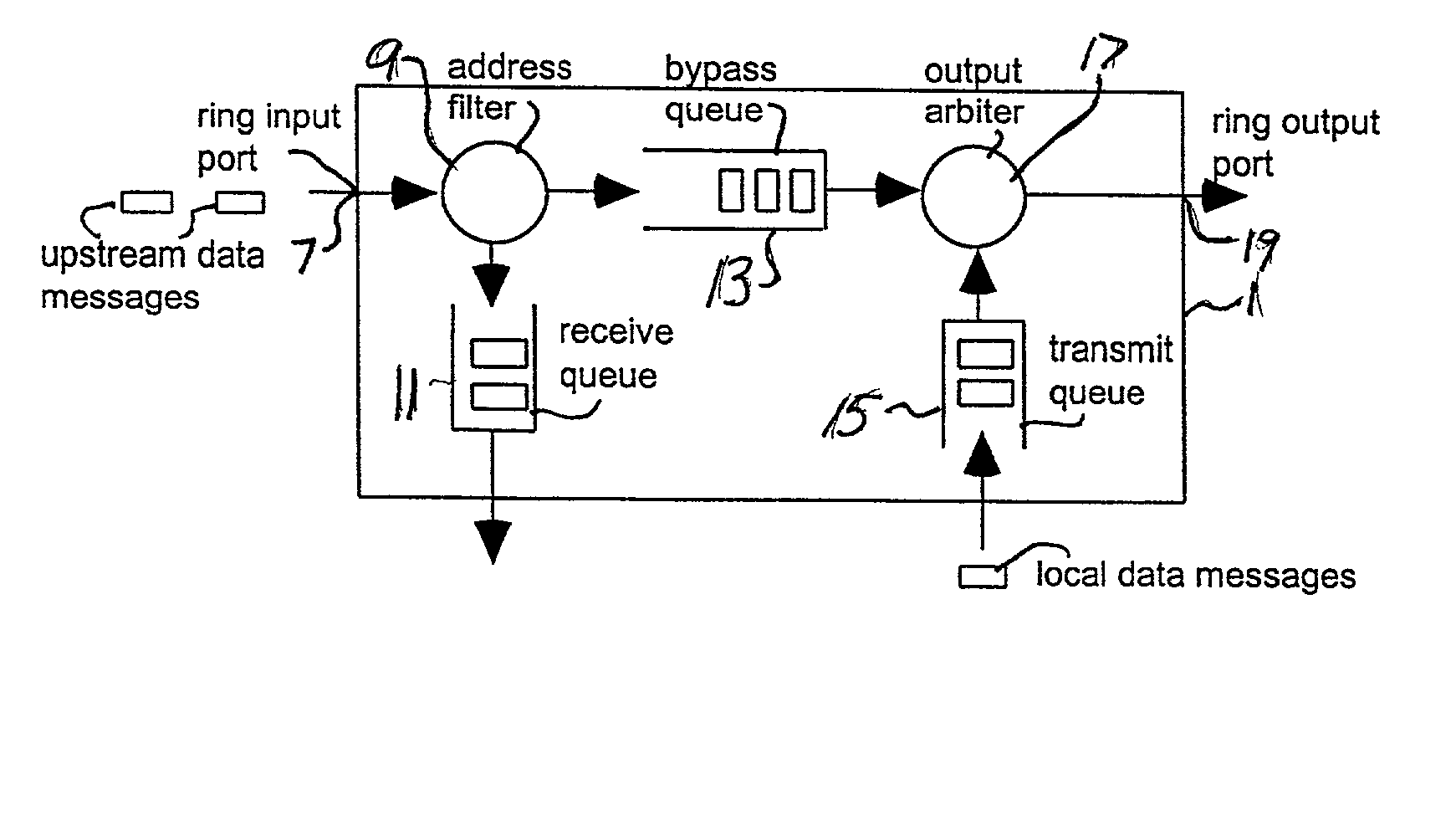

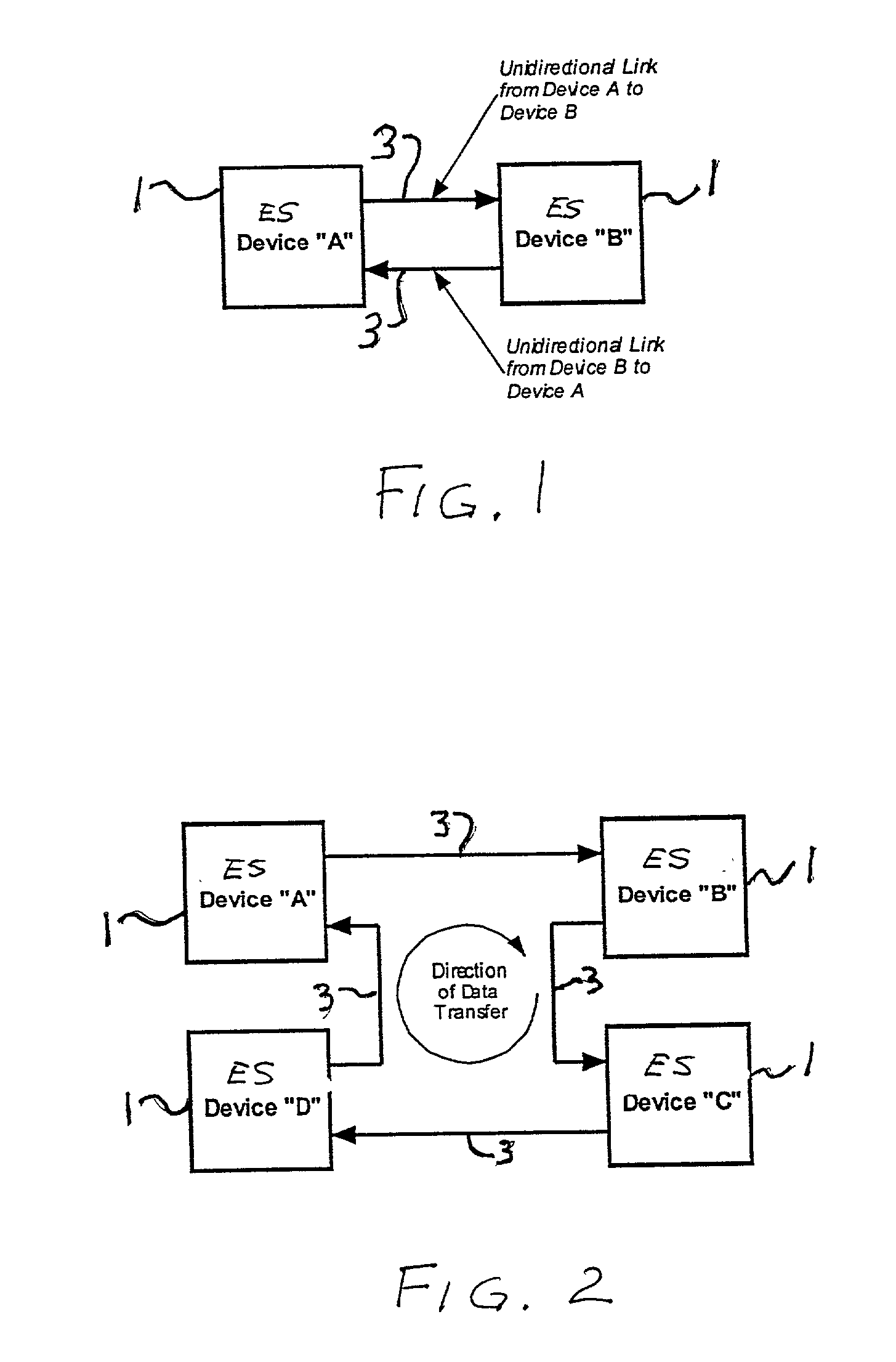

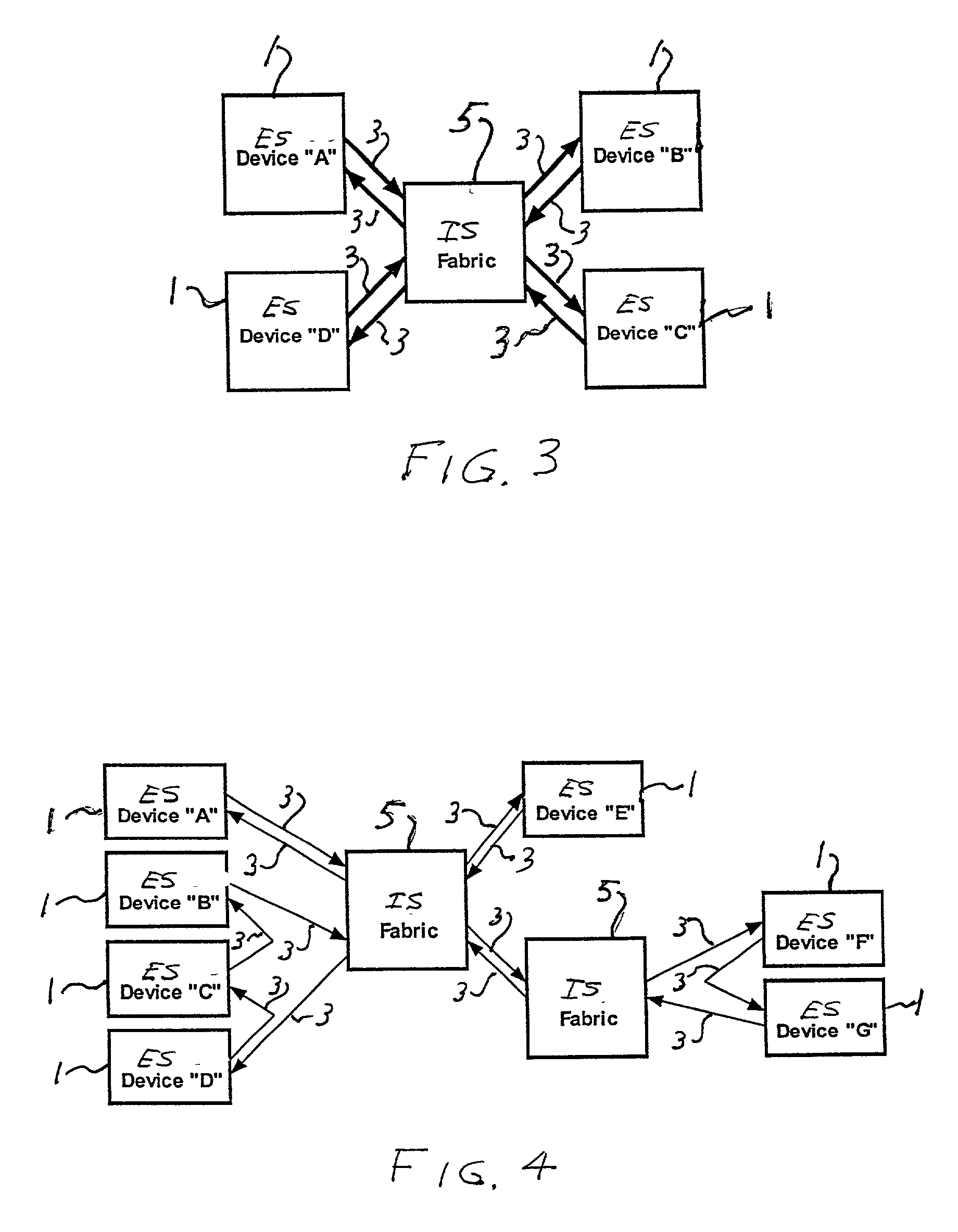

Communication method for packet switching systems

A method of communicating data frames between nodes in a network comprising one or more end system nodes, where each end system node has a unidirectional ingress port and a unidirectional egress port. The method comprises transmitting a data frame from the egress port of one end system node to the ingress port of another end system node, the receiving end system node then determining whether it is the final destination of the data frame. If it is, the receiving end system node absorbs the frame. If not, it buffers the data frame and then retransmits it through its own egress port. The method provides scalability, low cost, distributed pipeline scheduling, minimal complexity of the network fabric, and maximum speed.

Owner:PMC-SIERRA
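
The absorb-or-retransmit rule in the abstract above can be sketched as a small simulation. This is only an illustration under assumptions not stated in the listing: the nodes are taken to form a single unidirectional ring, and the names `Frame` and `deliver` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    dest: int      # id of the final destination node
    payload: str


def deliver(frame, source, num_nodes):
    """Forward a frame around a unidirectional ring until its destination absorbs it.

    Assumption: node i's egress port is wired to the ingress port of node (i + 1) % num_nodes.
    Returns the list of node ids the frame visits after leaving `source`.
    """
    if not 0 <= frame.dest < num_nodes:
        raise ValueError("destination is not on the ring")
    path = []
    node = (source + 1) % num_nodes        # first receiving node
    while True:
        path.append(node)
        if node == frame.dest:             # final destination: absorb the frame
            return path
        node = (node + 1) % num_nodes      # otherwise buffer, then retransmit downstream


# Usage: a frame sent by node 1 to node 3 on a 5-node ring passes through node 2.
route = deliver(Frame(dest=3, payload="hello"), source=1, num_nodes=5)
```

Each node only needs to compare the frame's destination with its own id, which is one way to read the abstract's claim that scheduling is distributed across the nodes.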

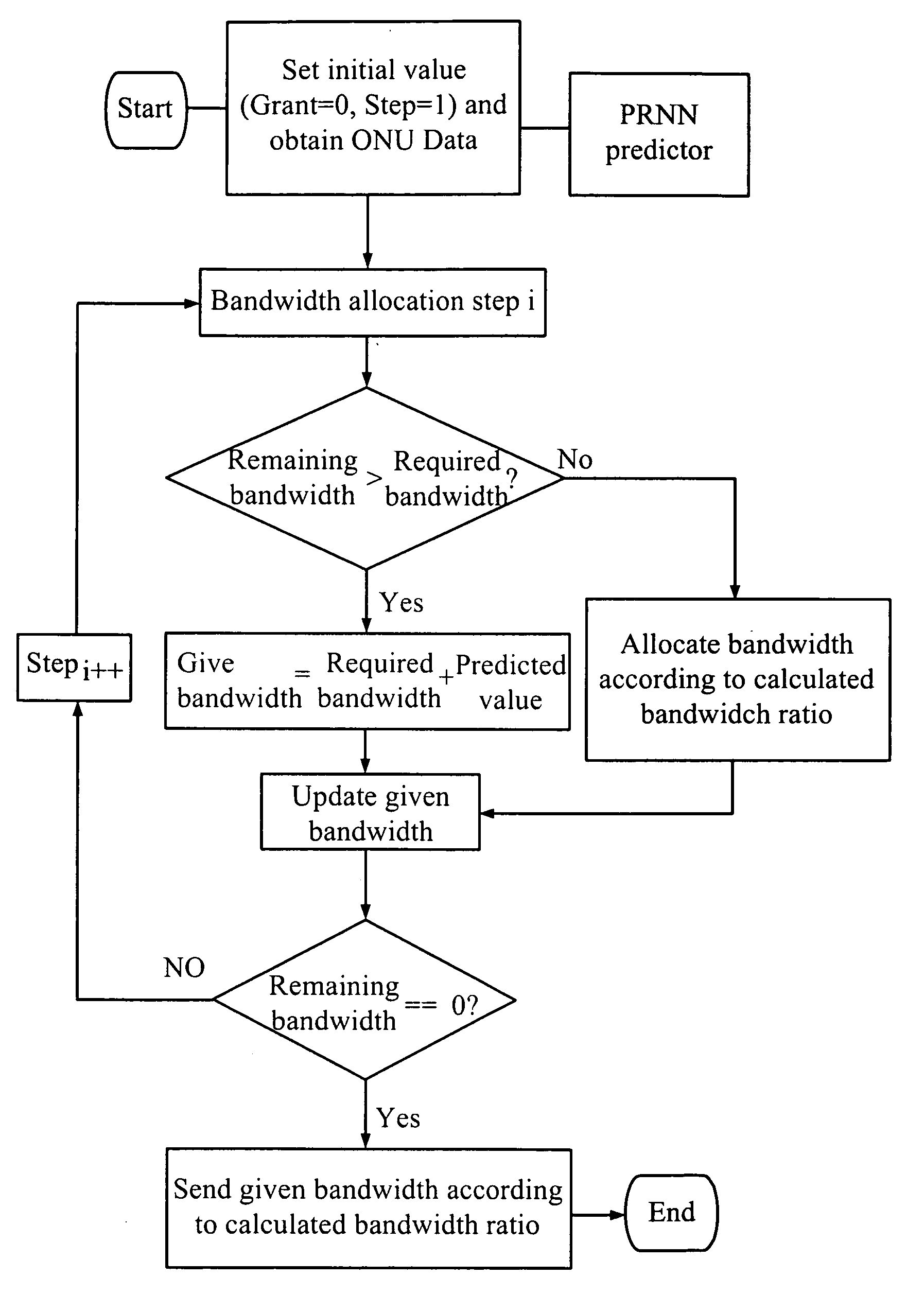

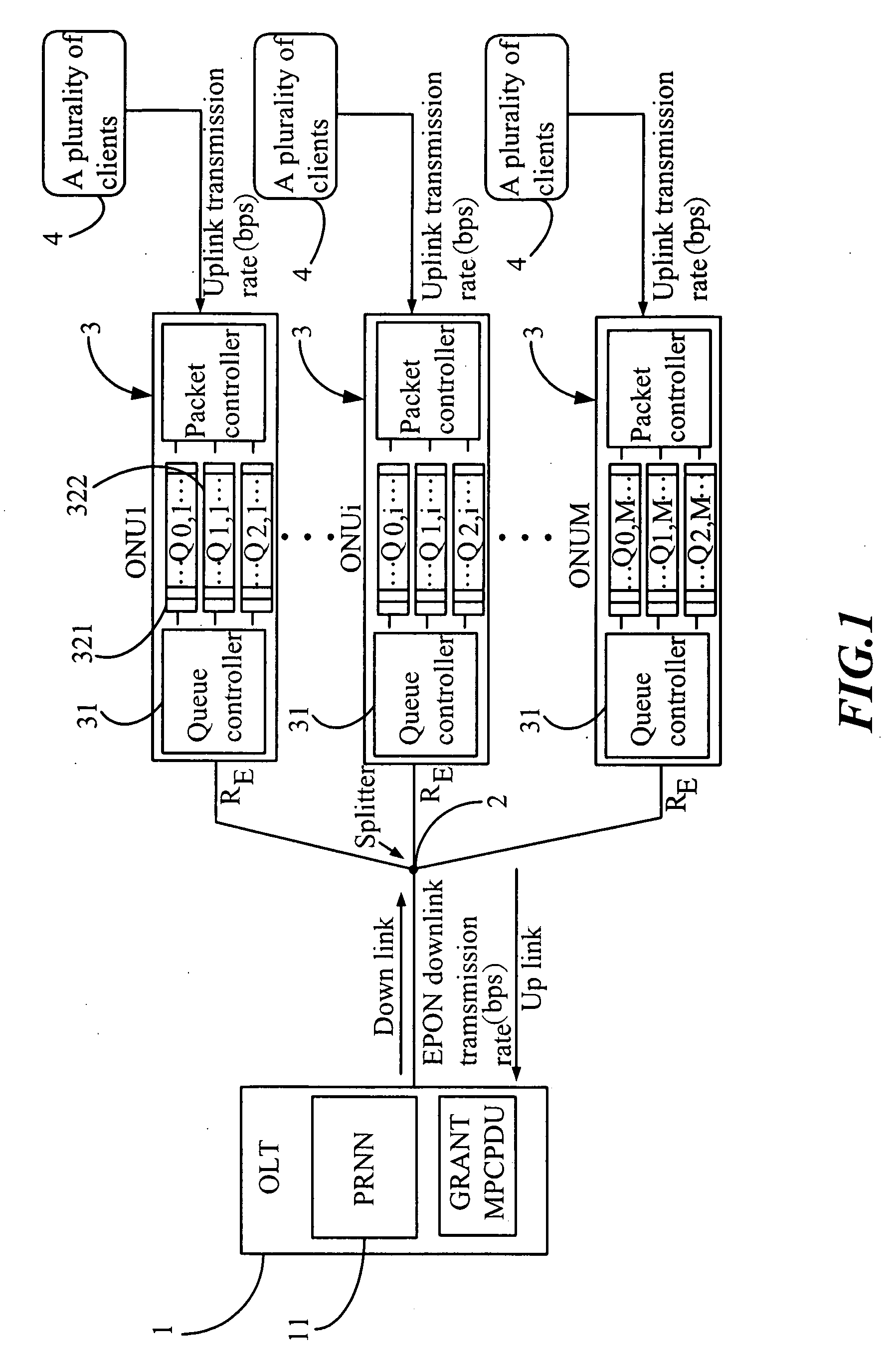

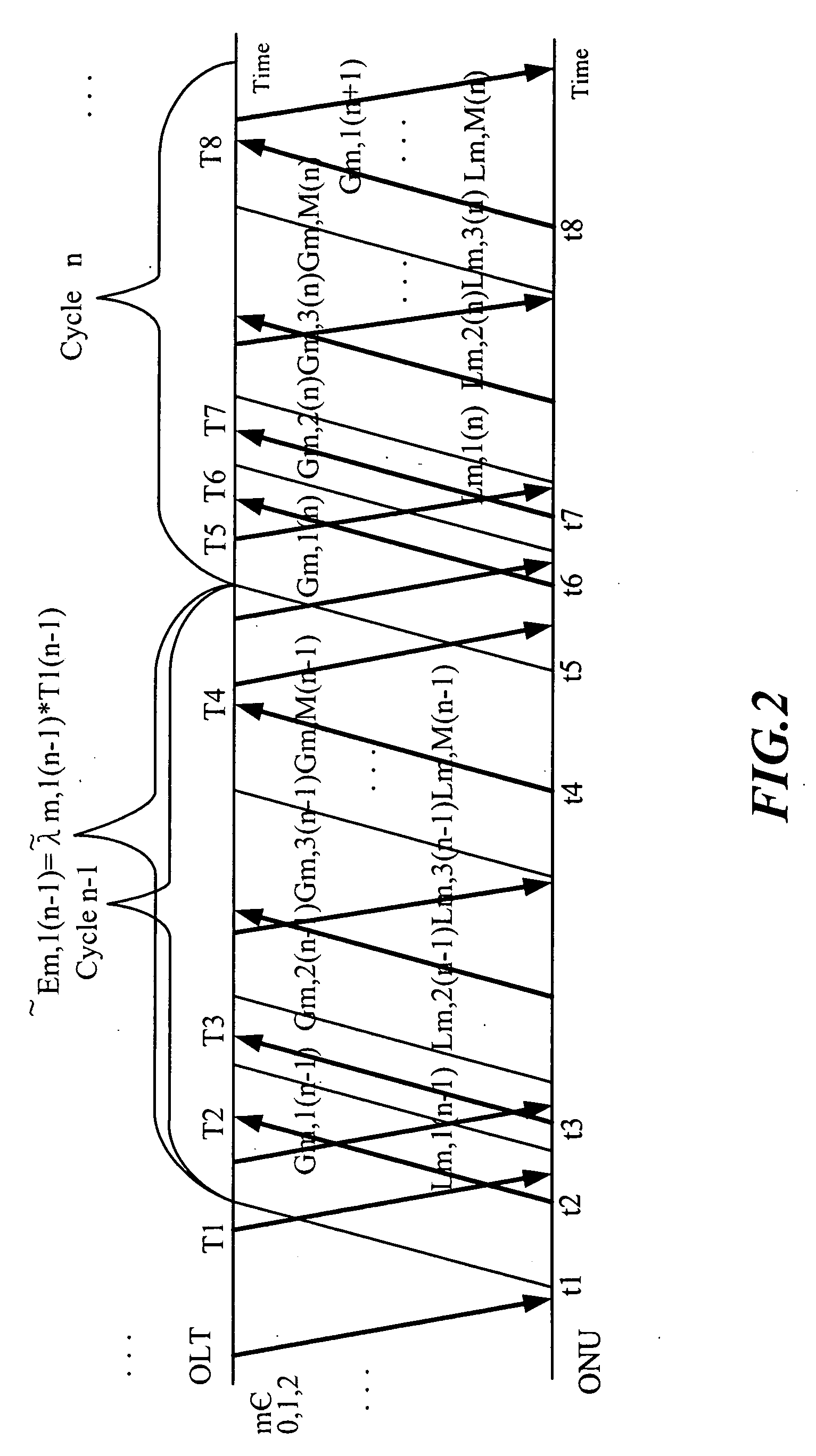

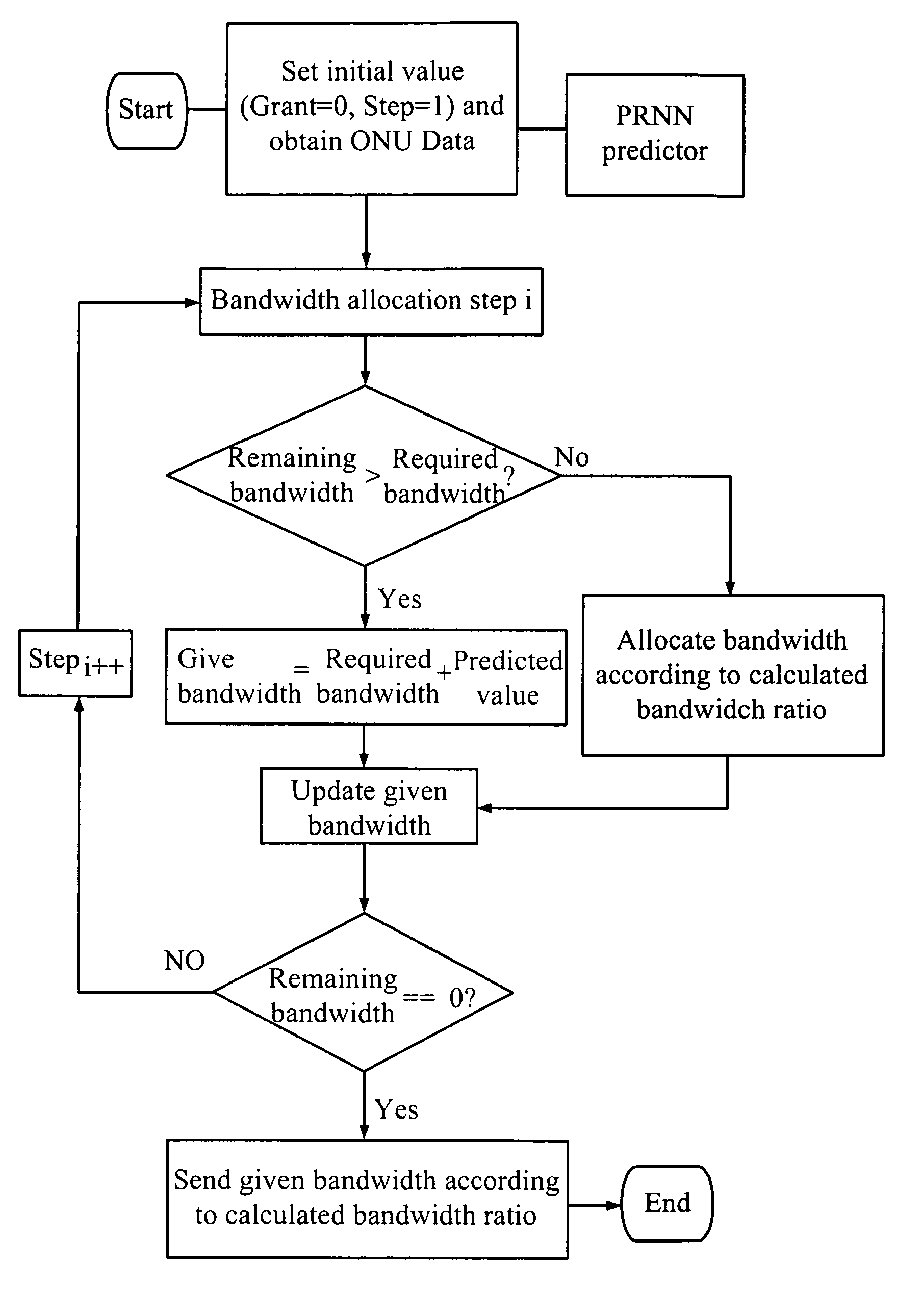

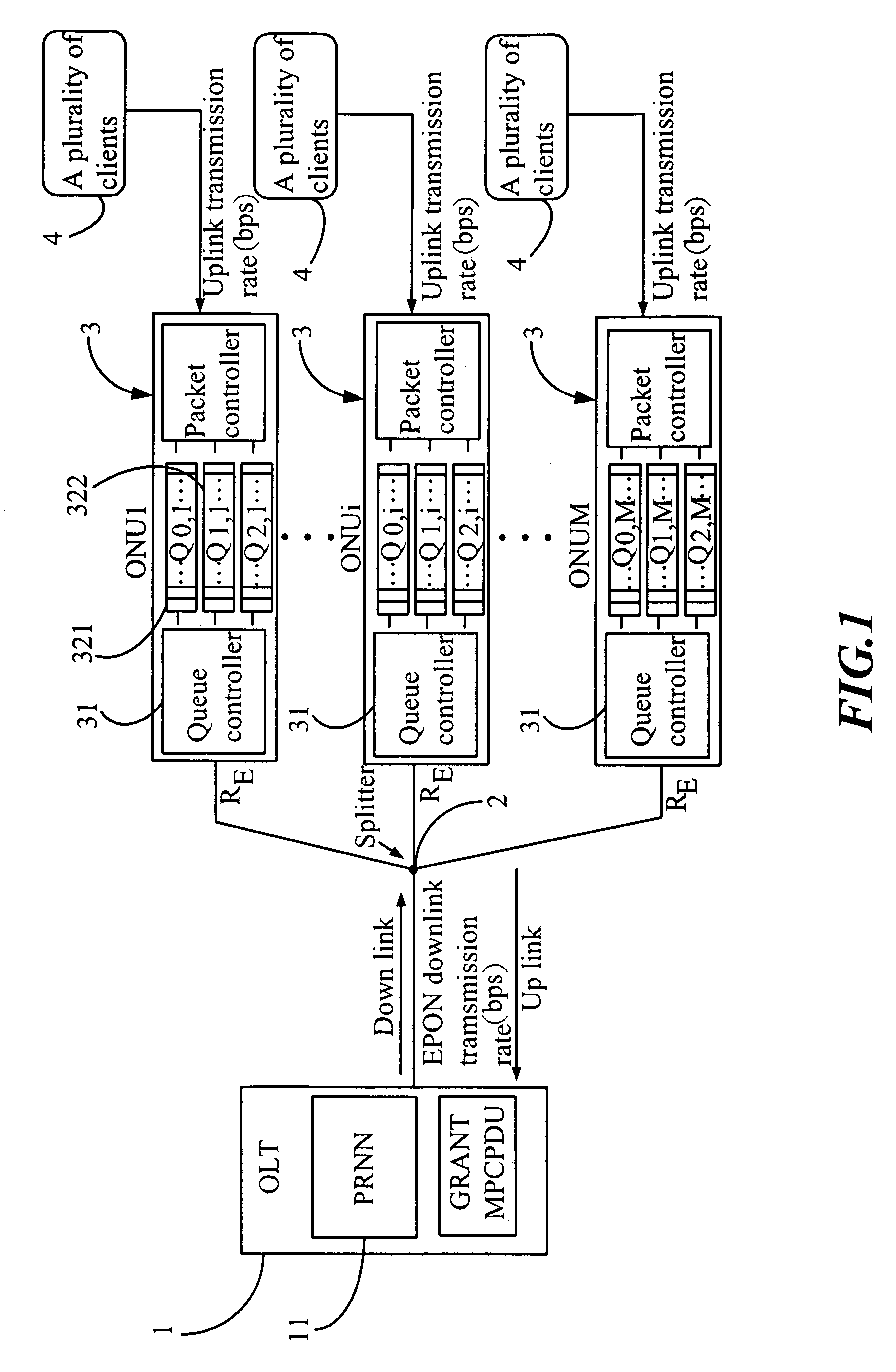

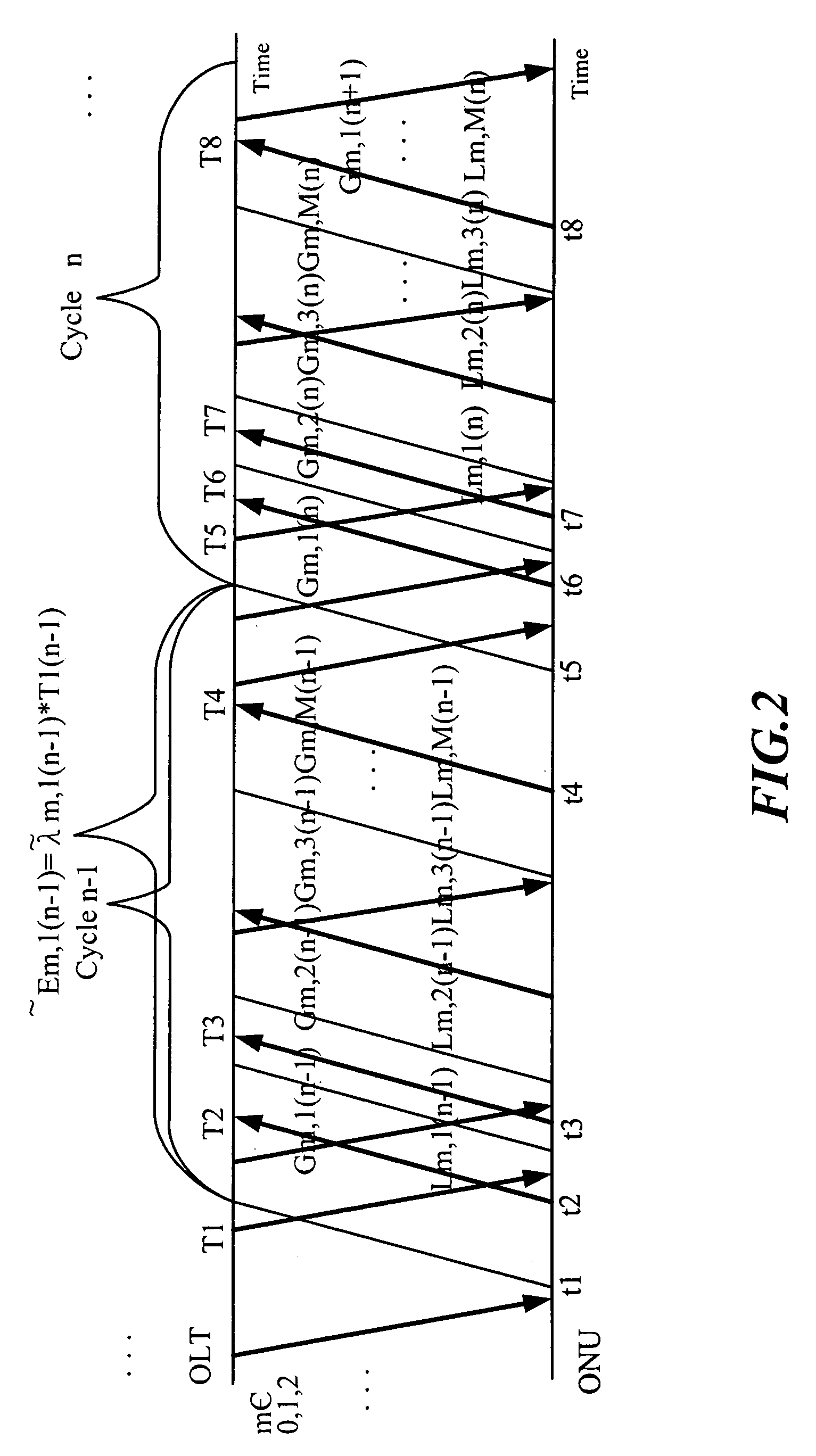

Dynamic Bandwidth Allocation Method of Ethernet Passive Optical Network

Inactive · US20100254707A1 · Fast convergence · Accurate prediction · Multiplex system selection arrangements · Time-division optical multiplex systems · Recurrent neural nets · Pipeline scheduling

A dynamic bandwidth allocation method for an Ethernet passive optical network comprises a predictor and a QoS-promoted dynamic bandwidth allocation (PQ-DBA) rule. The predictor predicts client behavior and the numbers of the various kinds of packets by using a pipeline scheduling predictor consisting of a pipelined recurrent neural network (PRNN) and an extended recursive least squares (ERLS) learning rule. The invention establishes better QoS traffic management for the OLT-allocated ONU bandwidth and for client packets sent by priority.

Owner:CHUNGHWA TELECOM CO LTD

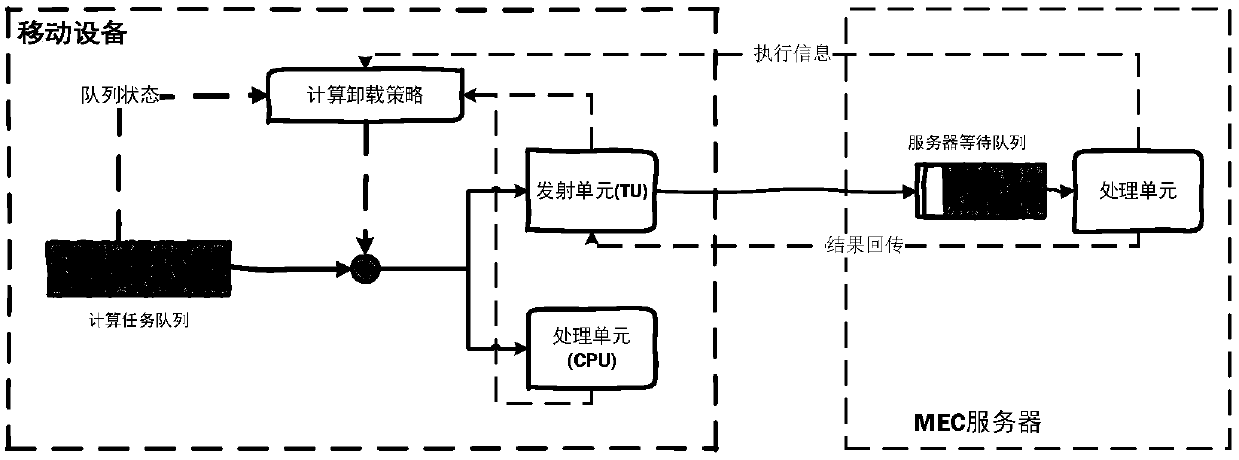

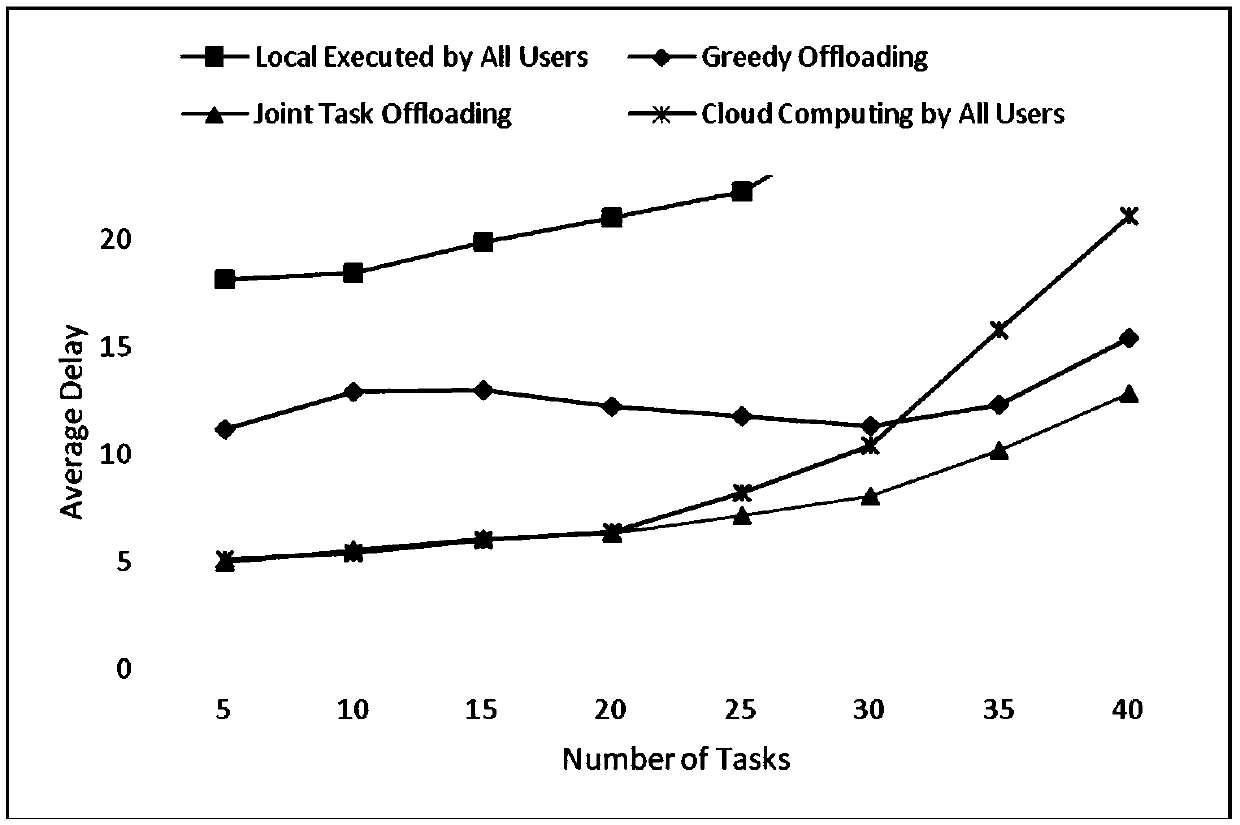

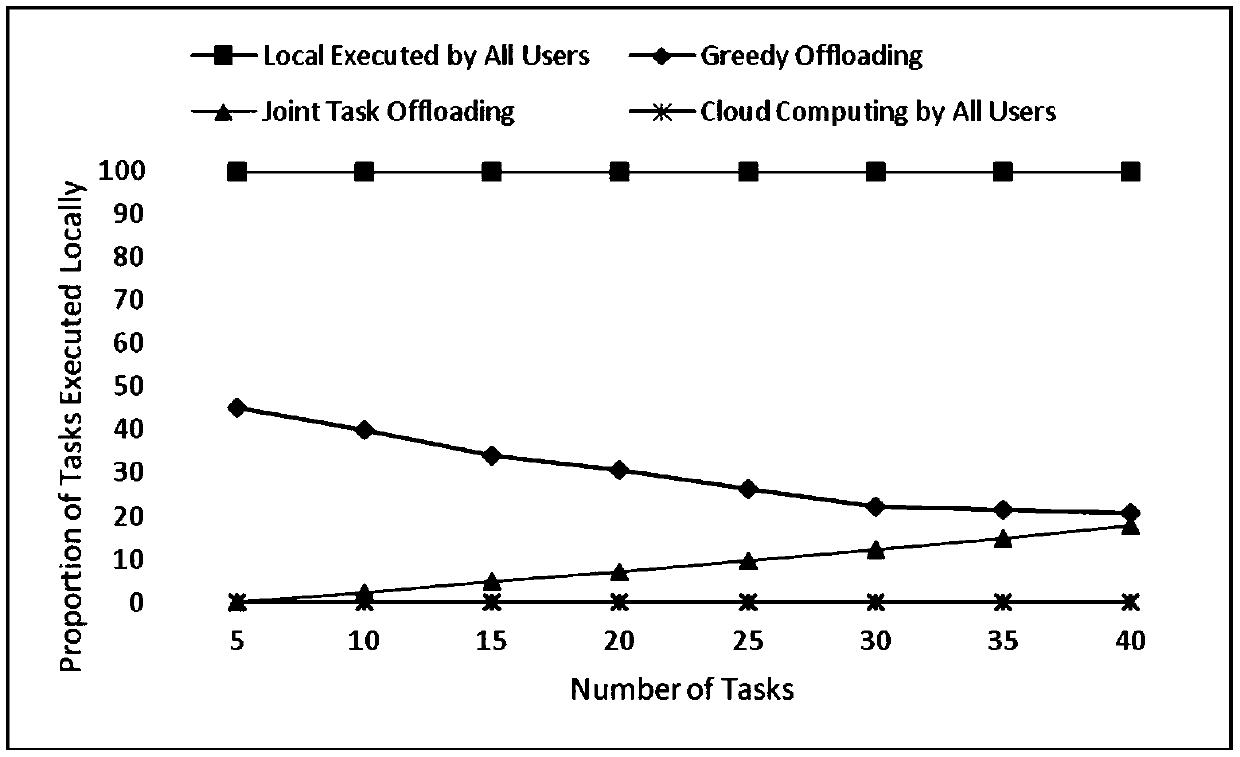

Mobile edge computing task unloading method in single-user scene

Active · CN108920280A · Reduce latency · Reduce energy consumption · Program initiation/switching · Resource allocation · Optimal scheduling · Mobile edge computing

The invention discloses a mobile edge computing task unloading (offloading) method in a single-user scene and relates to the field of mobile computing systems. The invention aims to reduce the response delay and energy consumption of mobile equipment. Construction of the single-user task unloading model comprises construction of an overall system model and of each partial model: a task queue model, a local computing model, a cloud computing model and a computing task loading model. The task unloading strategy gives a task unloading scheme that targets minimizing the overall system load K: all tasks are executed on the local CPU or on an MEC server as decided by binary particle swarm optimization; a load-optimal scheduling strategy is executed locally, and the MEC server executes a load-optimal scheduling strategy based on pipeline scheduling. Verification shows that the proposed single-user task unloading method reduces the response delay and energy consumption of the mobile equipment.

Owner:HARBIN INST OF TECH

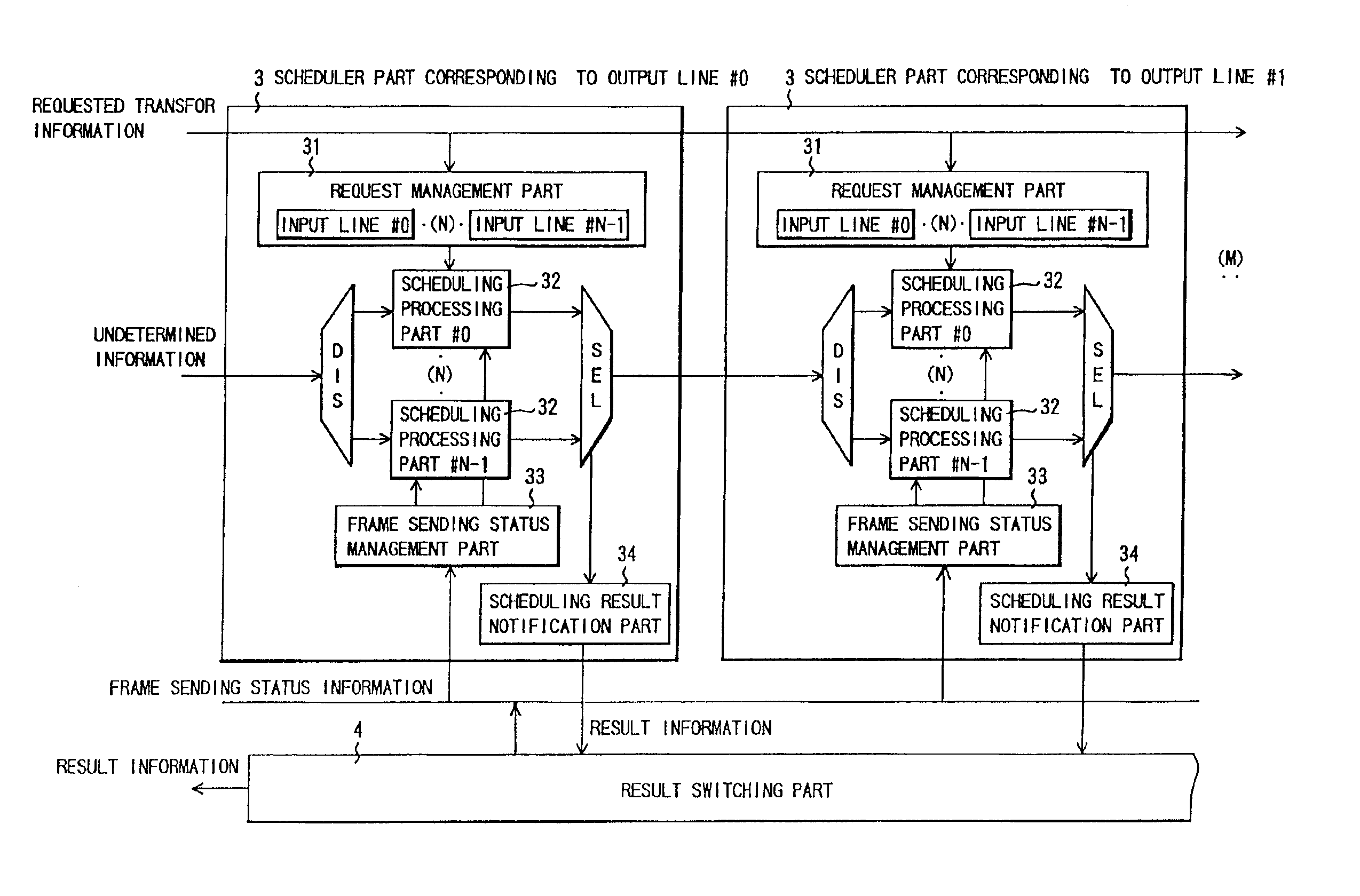

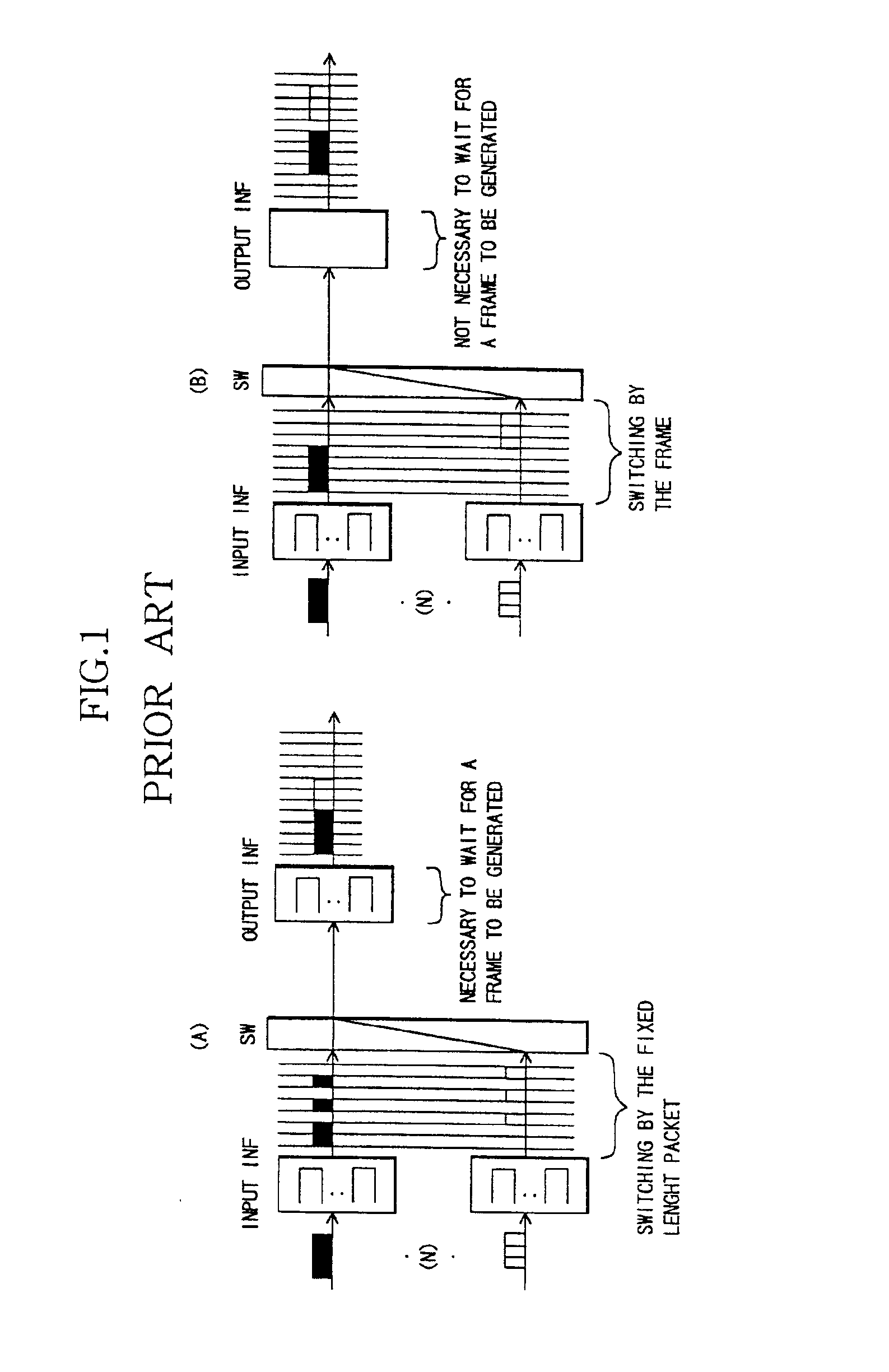

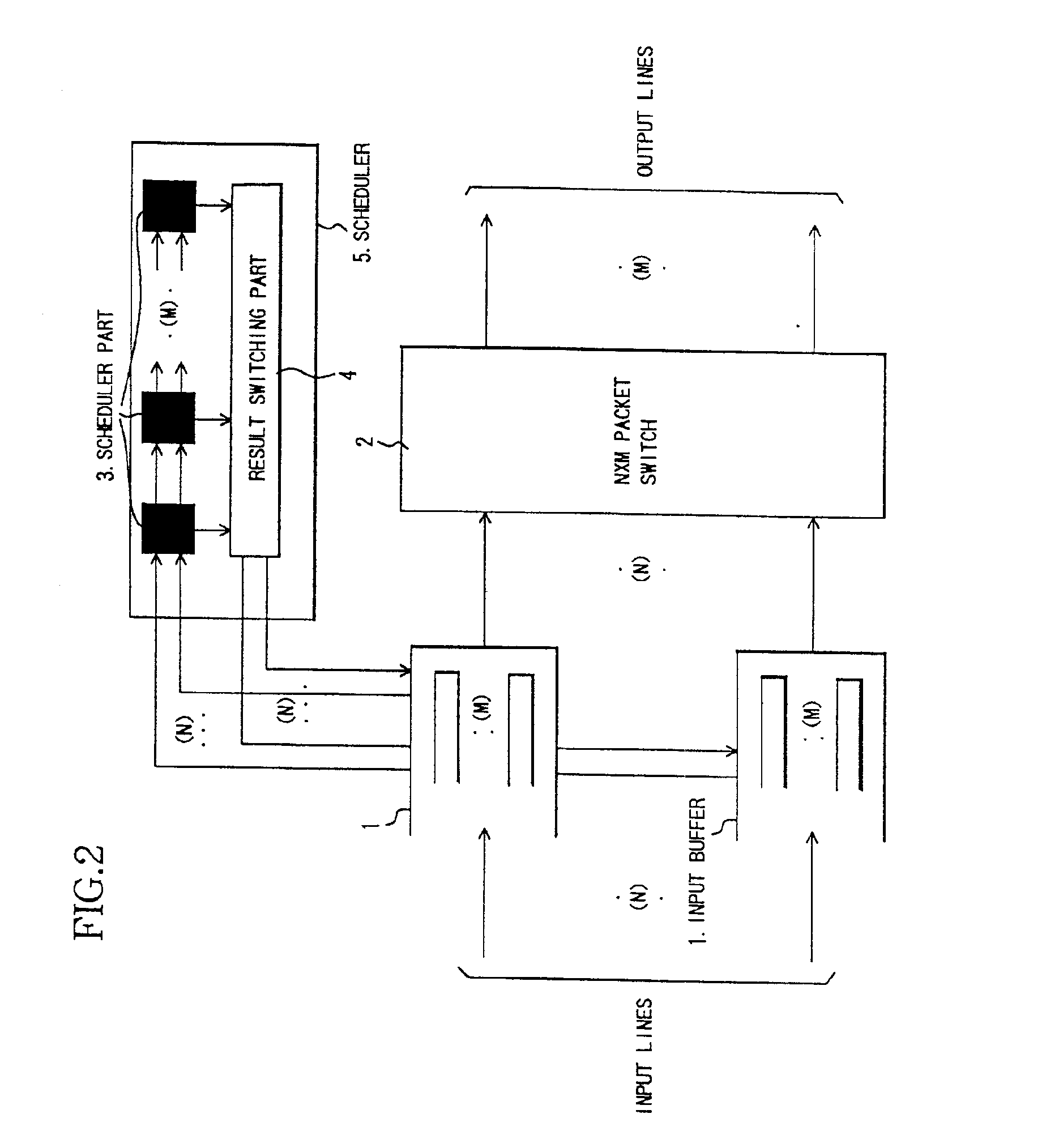

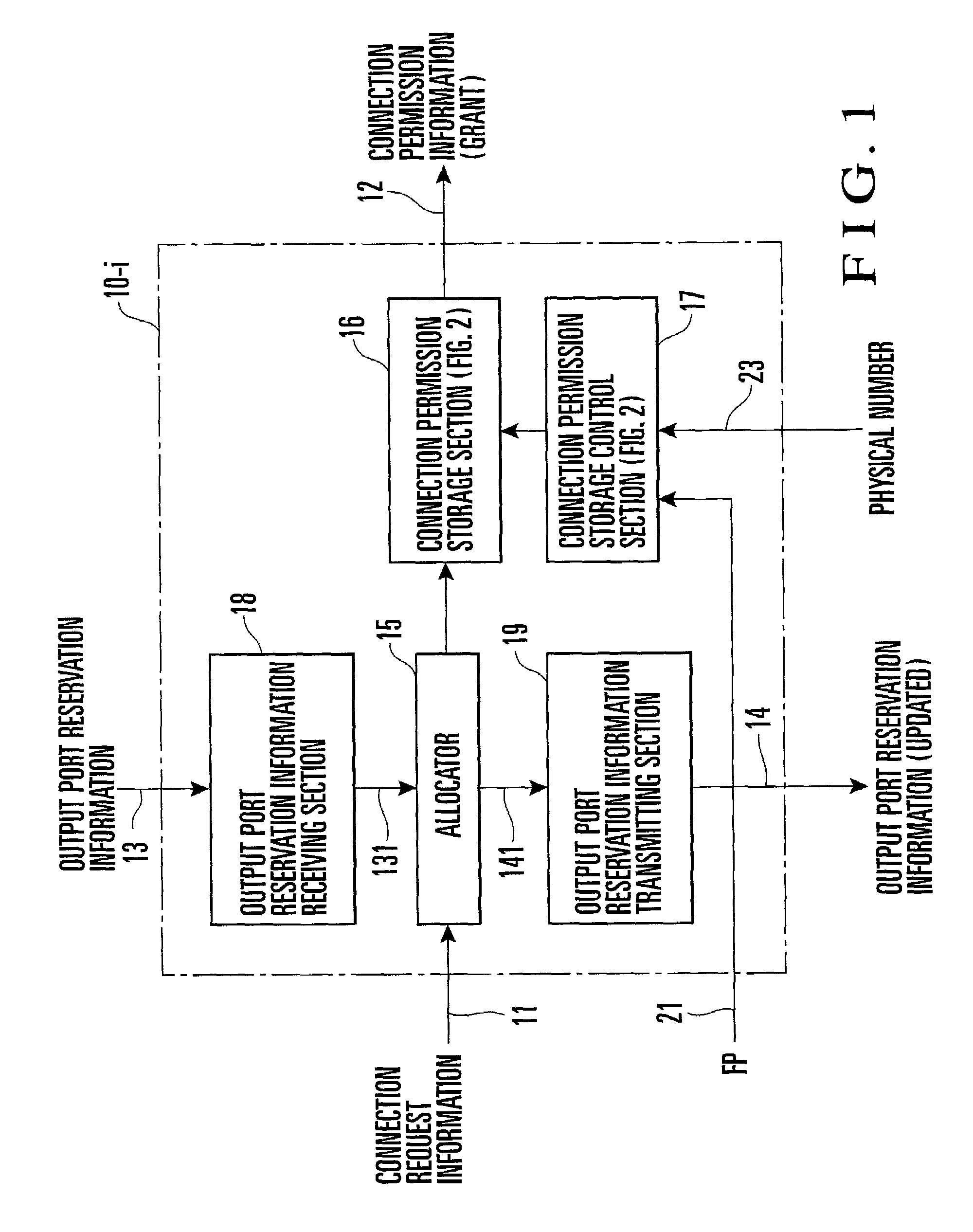

Packet switch device and scheduling control method

Inactive · US6920145B2 · Reduction effect in processing speed · Efficient reception · Data switching by path configuration · Process module · Parallel processing

A packet switch device having a plurality of input buffers; a packet switch; a plurality of schedulers, having a pipeline scheduling process module wherein a plurality of time units corresponding to the number of output lines is spent in scheduled sending process of the fixed length packets from the input buffer, and wherein the scheduled sending process is executed in a number of processes, in parallel, the number of processes corresponding to the number of the input lines, having a sending status management module wherein sending status of the fixed length packets which constitute one frame is managed for each of the input lines, and provided corresponding to any of the output lines; and at least one result notification module for notifying the input buffer of result information from the scheduled sending process performed by each of the plurality of schedulers. Further, in the scheduled sending process executed in a number of processes, in parallel, the device does not select the input line sending the fixed length packets corresponding to the same frame, and, after determining a selection, the device maintains the selection of the same input line until the completion of sending the fixed length packets corresponding to the same frame.

Owner:FUJITSU LTD

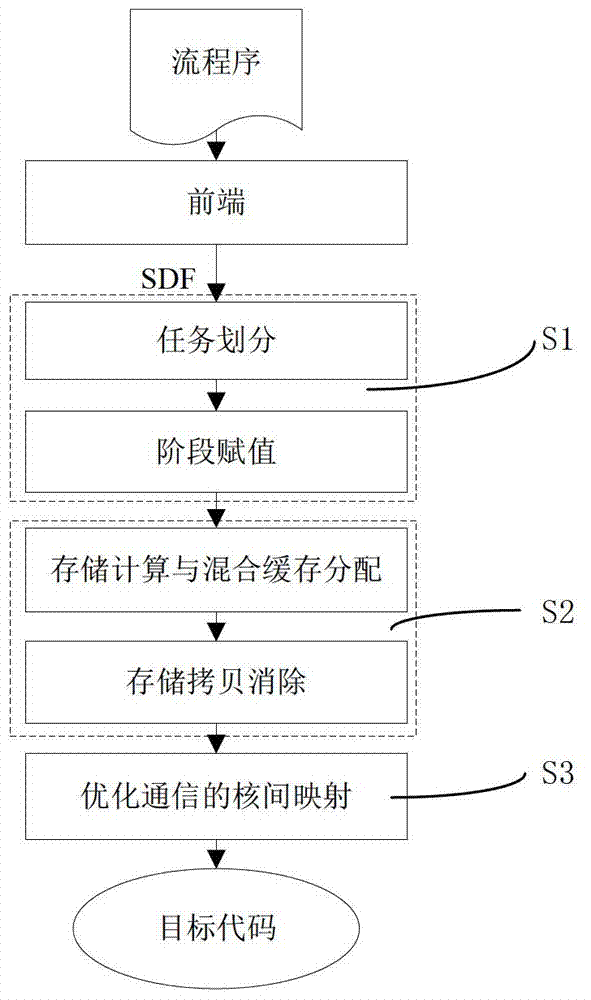

Data flow compilation optimization method oriented to multi-core cluster

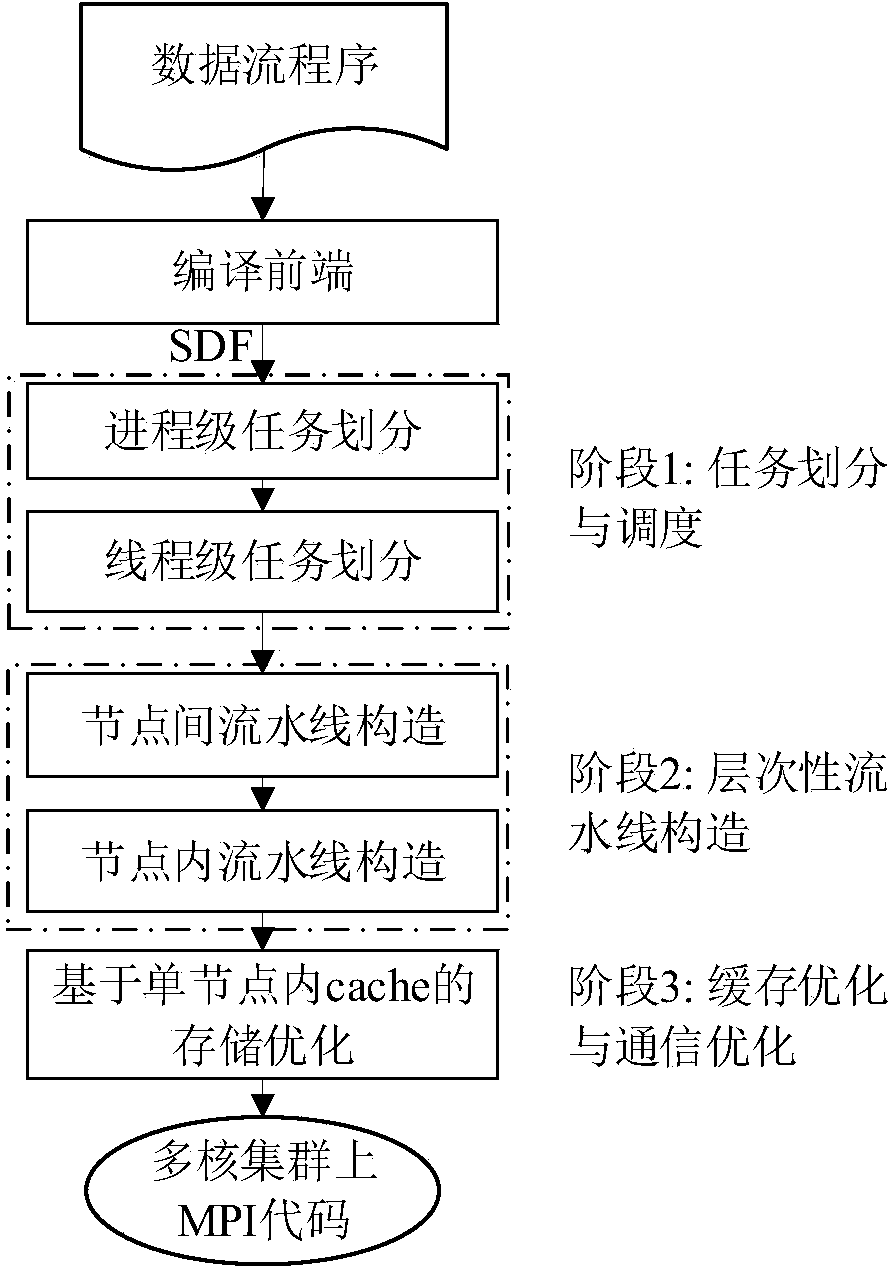

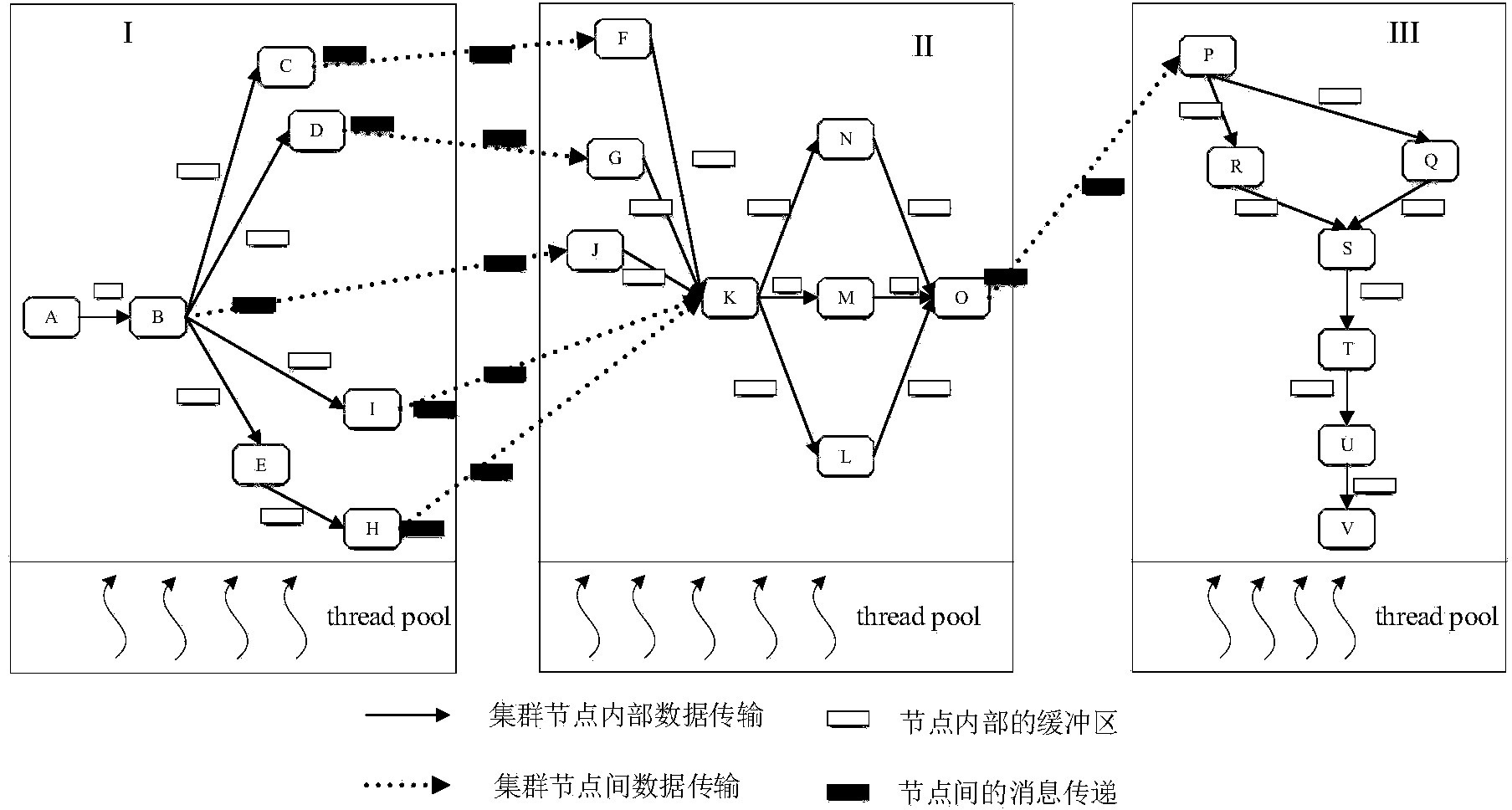

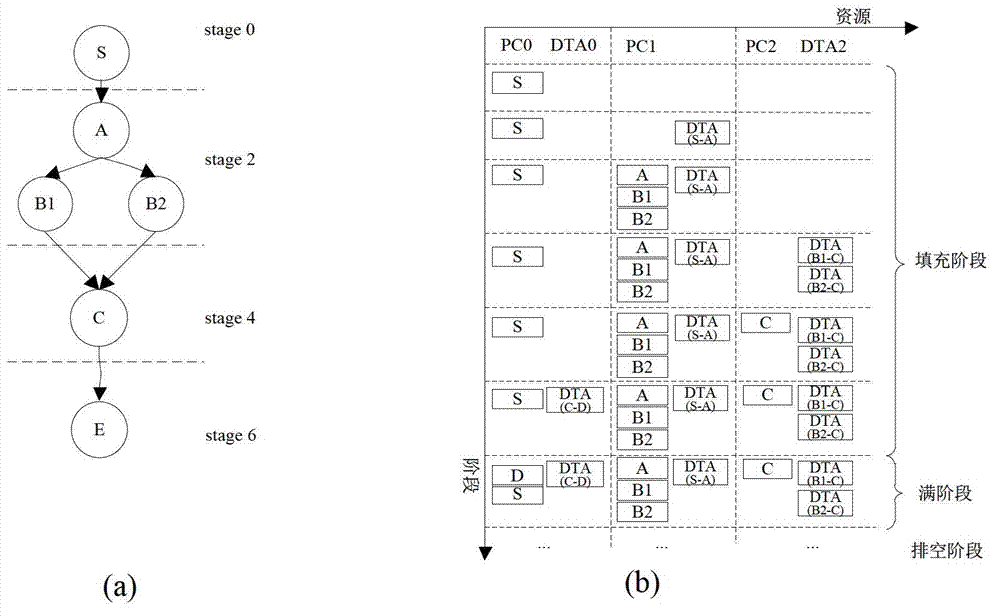

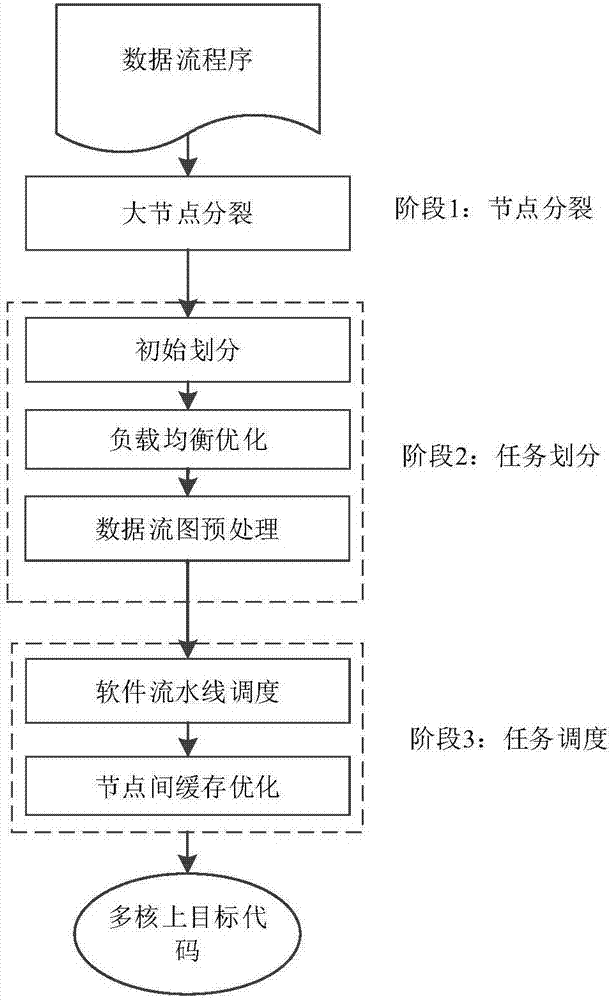

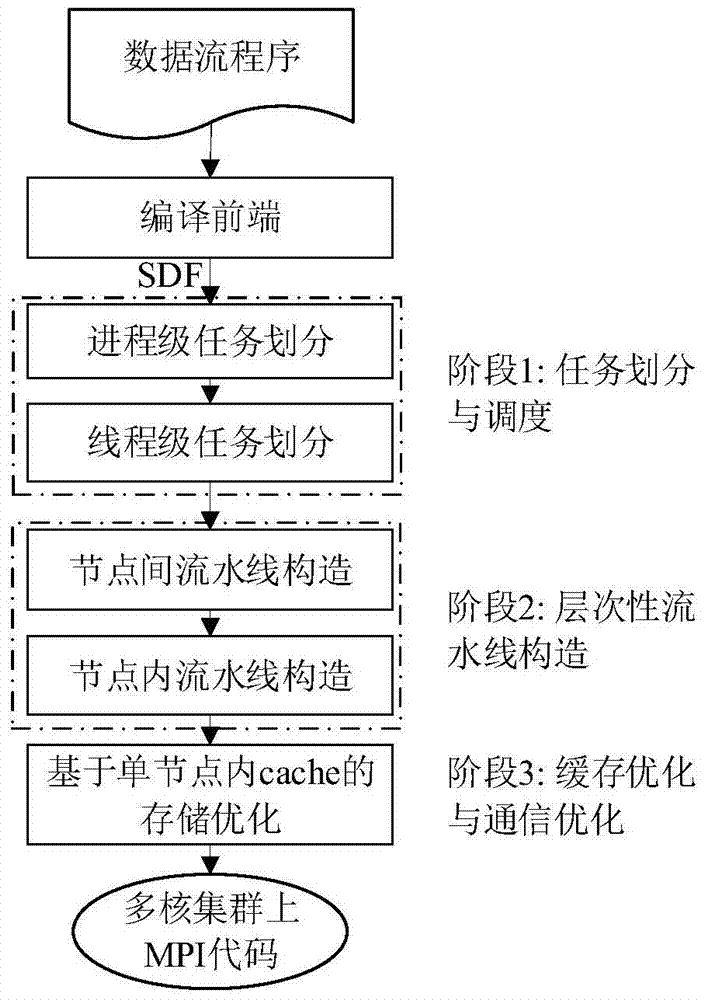

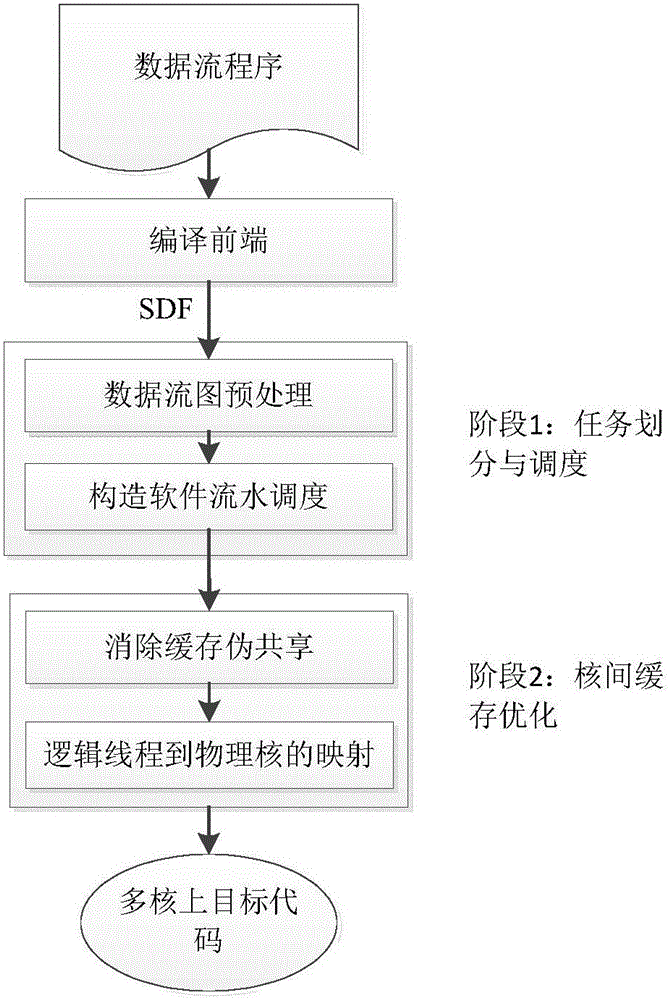

Active · CN103970580A · Implementing a three-level optimization process · Improve execution performance · Resource allocation · Memory systems · Cache optimization · Data stream

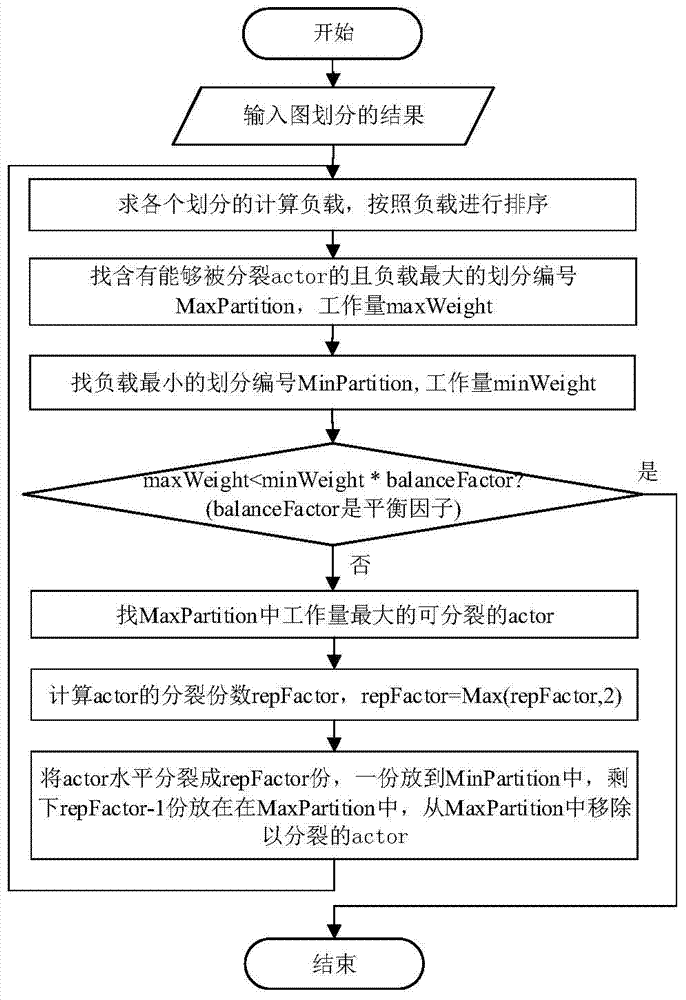

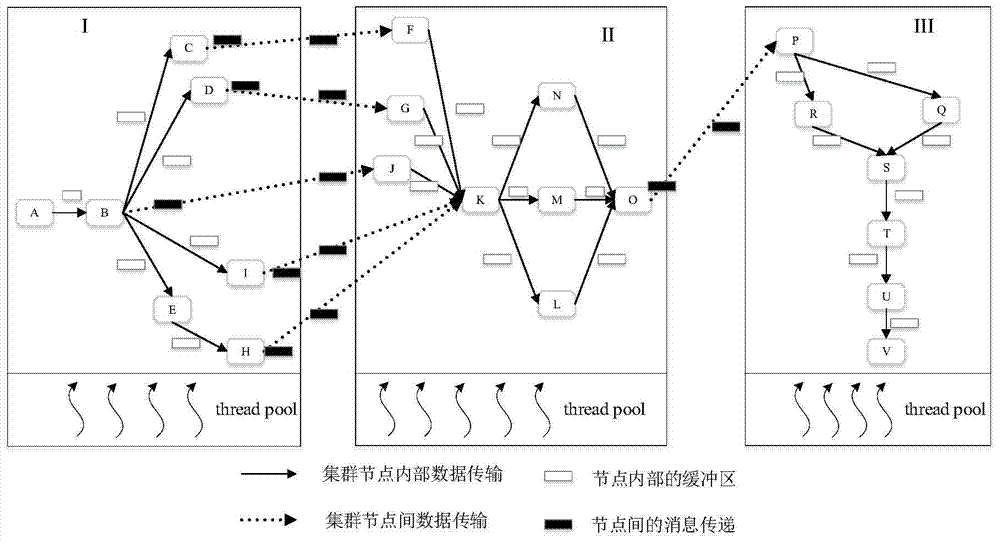

The invention discloses a data flow compilation optimization method oriented to a multi-core cluster system. The method comprises the following steps: task partitioning and scheduling, which determines the mapping from calculation tasks to processing cores; hierarchical pipeline scheduling, which constructs pipeline scheduling tables among cluster nodes and among the cores within each cluster node according to the task partitioning and scheduling results; and cache optimization, conducted according to the structural characteristics of the multi-core processor, the communication among cluster nodes, and the execution of the data flow program on the multi-core processor. The method combines the data flow program with optimization techniques related to the system structure, fully exploits the load balance and parallelism of mixed synchronous and asynchronous pipelined code on a multi-core cluster, and optimizes the program's cache access and communication transmission according to the cache and communication modes of the cluster, thereby improving execution performance and shortening execution time.

Owner:HUAZHONG UNIV OF SCI & TECH

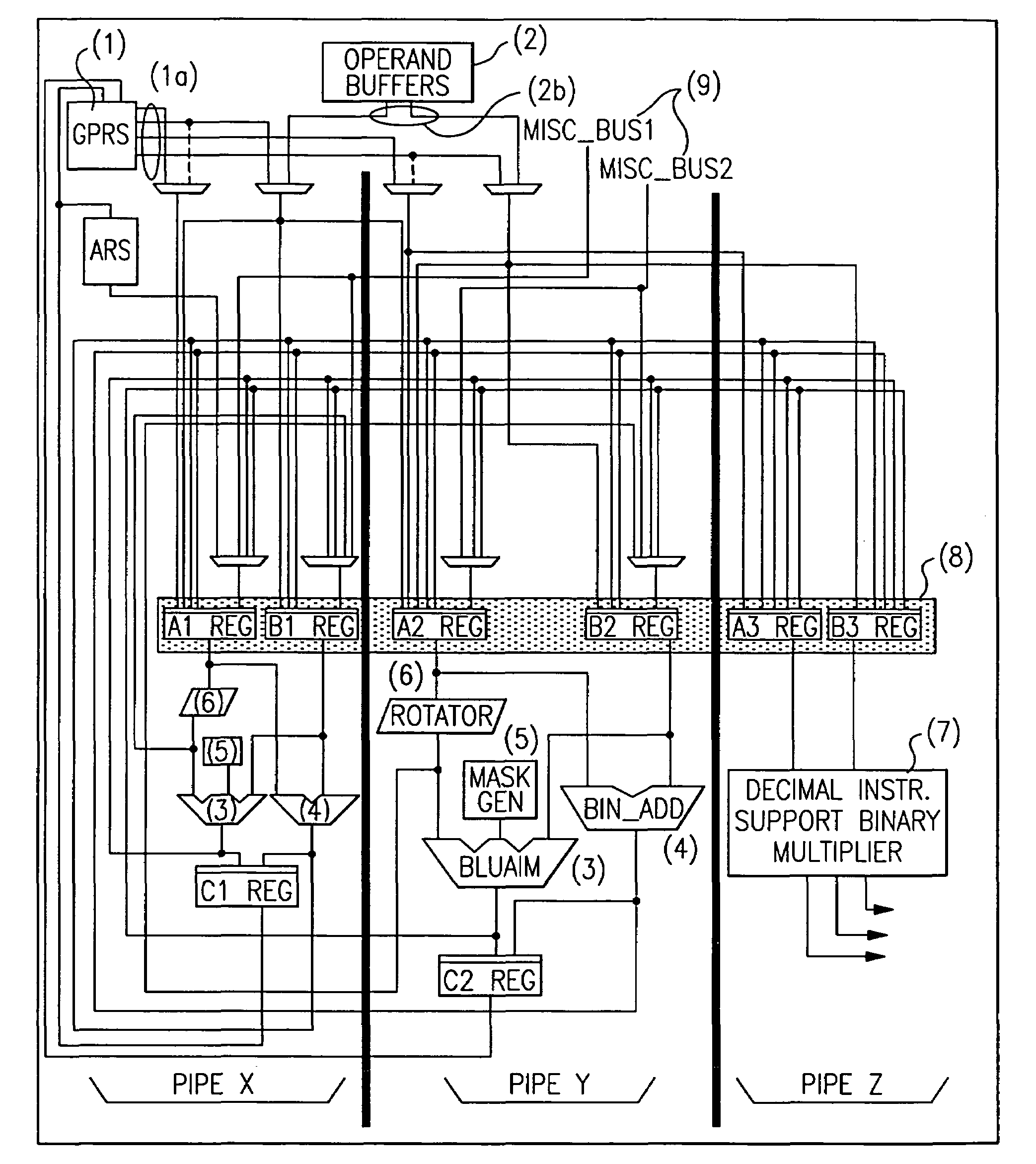

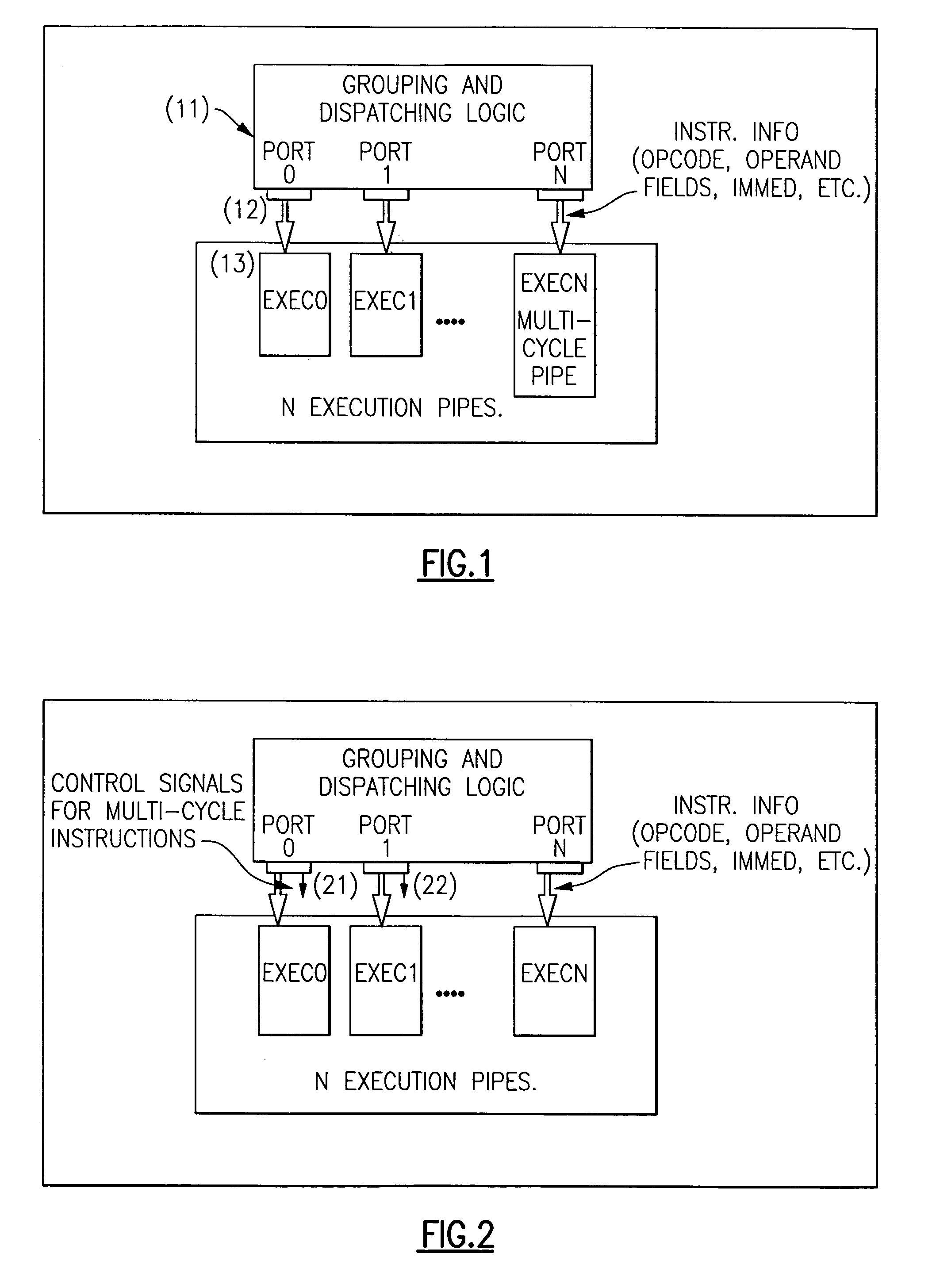

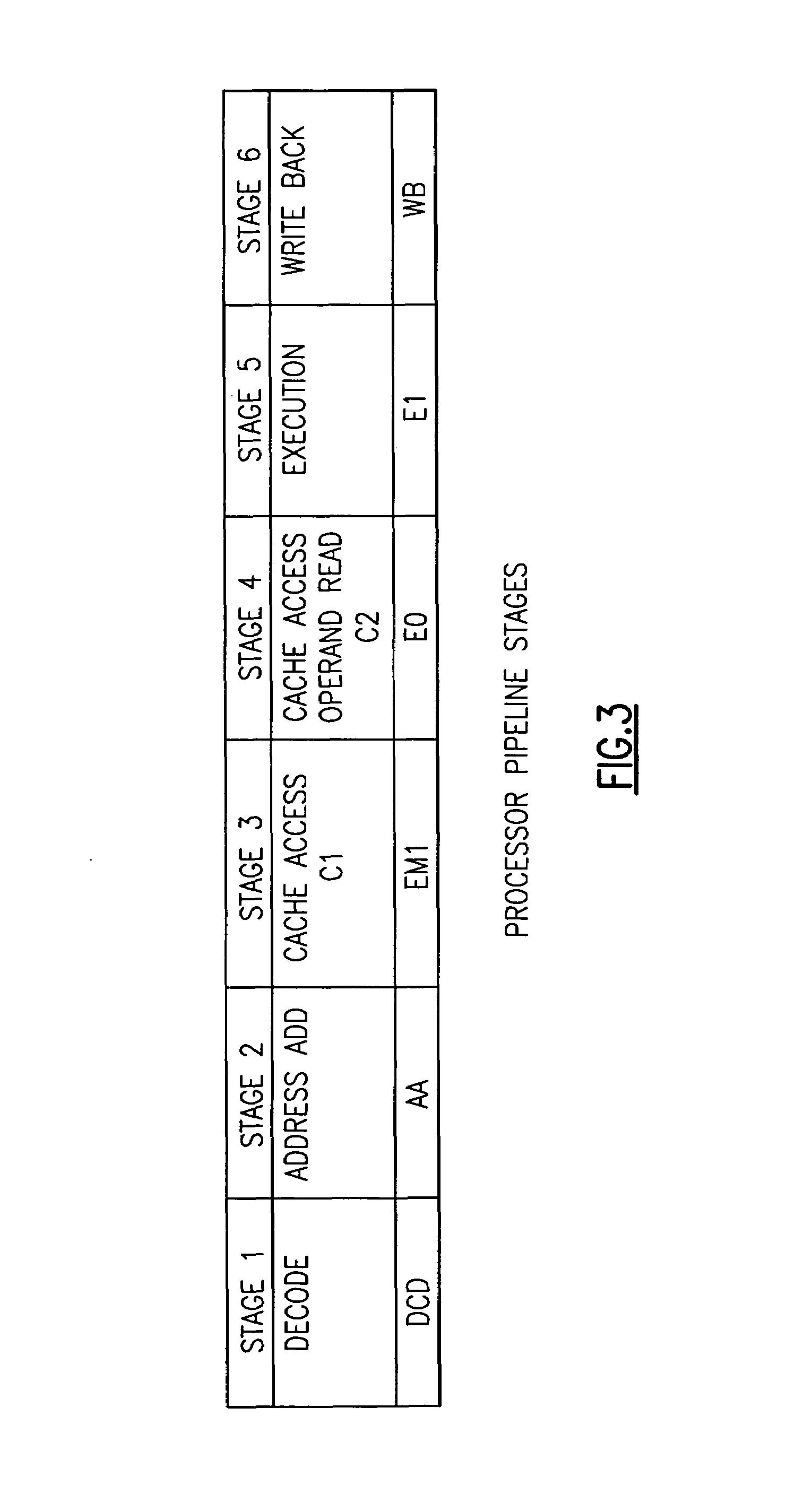

Multi-pipe dispatch and execution of complex instructions in a superscalar processor

Active · US7085917B2 · Performance maximization · Improve performance · Cooking-vessel materials · Runtime instruction translation · Scalar processor · Control signal

In a computer system, a method and apparatus for dispatching and executing multi-cycle and complex instructions. The method results in maximum performance for such instructions without impacting other areas of the processor such as the decode, grouping or dispatch units. The invention allows multi-cycle and complex instructions to be dispatched to one port but executed in multiple execution pipes, without cracking the instruction and without limiting it to a single execution pipe. Some control signals are generated in the dispatch unit and dispatched with the instruction to the Fixed Point Unit (FXU). The FXU logic then executes these instructions on the available FXU pipes. This method results in optimum performance with little or no other complications. The presented technique places the flexibility of how these instructions will be executed in the FXU, where the actual execution takes place, instead of in the instruction decode or dispatch units or in cracking by the compiler.

Owner:IBM CORP

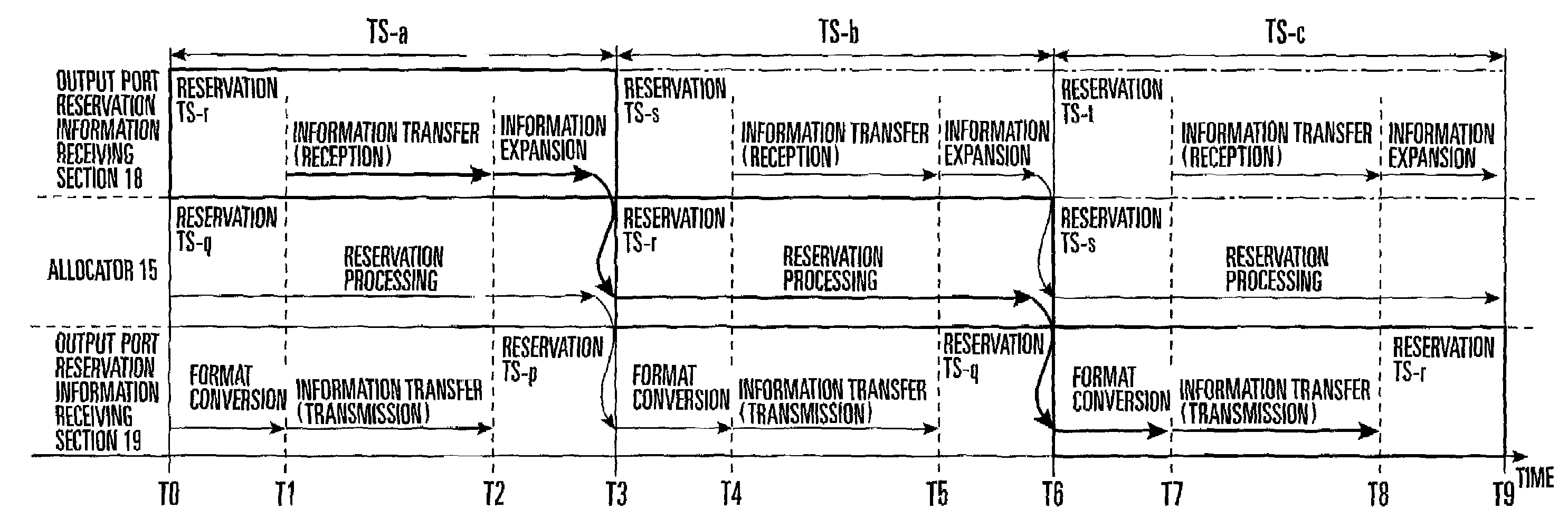

Distributed pipeline scheduling method and system

Inactive · US7142546B2 · Time-division multiplex · Data switching by path configuration · Information processing · Control data

In a distributed pipeline scheduling method for a system which includes a plurality of input ports for inputting data, a plurality of output ports for outputting data, a data switch element for switching the data input from the input ports and transferring the data to the output ports, and a scheduler that has a distributed scheduling architecture, controls the data switch element, and determines connection reservations between the input ports and the output ports, the scheduler independently assigns time slots to information transfer processing and reservation processing. Information transfer processing and reservation processing are performed in the assigned time slots in a pipeline fashion. A distributed pipeline scheduling system and distributed scheduler are also disclosed.

Owner:NEC CORP
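
One way to picture reservation processing and information transfer running in a pipeline is the toy model below. It is a sketch under assumptions that are not in the abstract: one scheduler module per input port, module k always acting as pipeline stage k, and each module greedily reserving the lowest-numbered free output it has requested. The function name `run_pipeline` is hypothetical.

```python
def run_pipeline(requests_per_slot):
    """Simulate a chain of per-input scheduler modules working in pipeline fashion.

    requests_per_slot[T][i] is the set of output ports that input i requests for slot T.
    At every tick, module k works on the schedule of slot (tick - k), so several
    slots' schedules are in flight at once.
    Returns (schedules, timeline), where schedules[T] maps input -> reserved output.
    """
    num_slots = len(requests_per_slot)
    n = len(requests_per_slot[0])
    reservations = [dict() for _ in range(num_slots)]    # per slot: output -> input
    timeline = []                                        # (tick, module, slot) log
    for tick in range(num_slots + n - 1):
        for module in range(n):
            slot = tick - module
            if not 0 <= slot < num_slots:
                continue
            # State handed over by module k-1 in the previous tick (information transfer).
            taken = reservations[slot]
            # Reservation processing: take the first requested output that is still free.
            for out in sorted(requests_per_slot[slot][module]):
                if out not in taken:
                    taken[out] = module
                    break
            timeline.append((tick, module, slot))
    schedules = [{i: out for out, i in r.items()} for r in reservations]
    return schedules, timeline


# Usage: three inputs, two time slots to schedule.
schedules, timeline = run_pipeline([
    [{0, 1}, {0}, {1}],
    [{2}, {2}, {0}],
])
```

In tick 1 of this run, module 0 is already reserving for slot 1 while module 1 is still working on slot 0. The fixed module order favors input 0; a real design would presumably rotate priority, which this sketch leaves out.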

Flow compilation optimization method oriented to chip multi-core processor

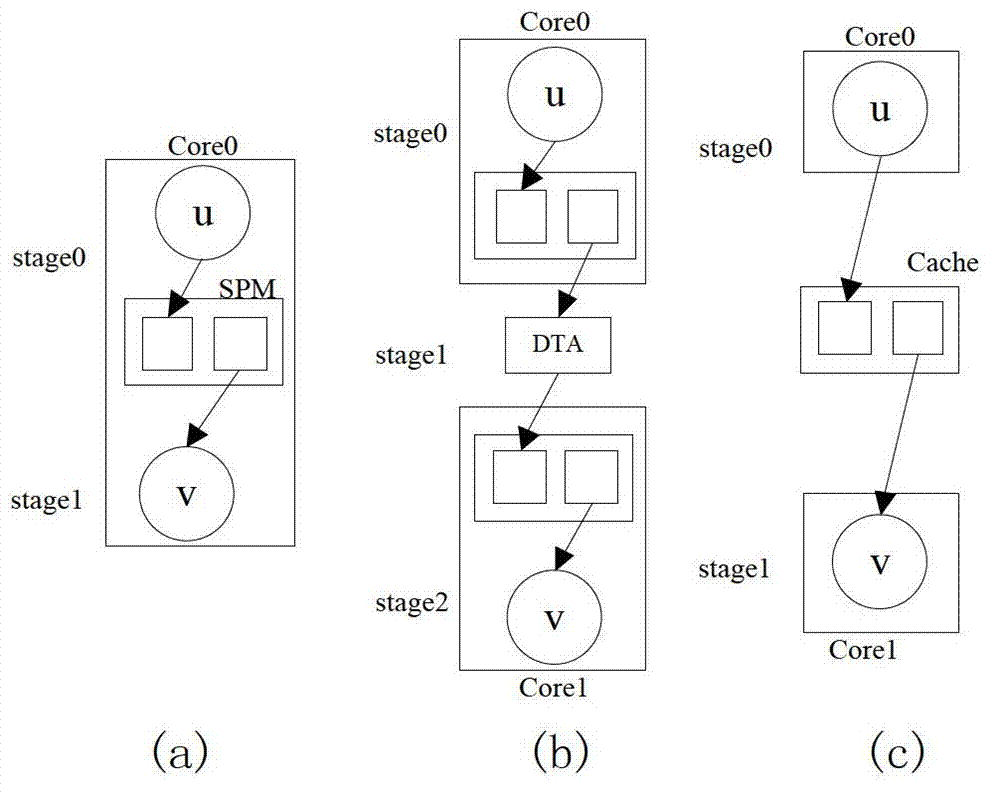

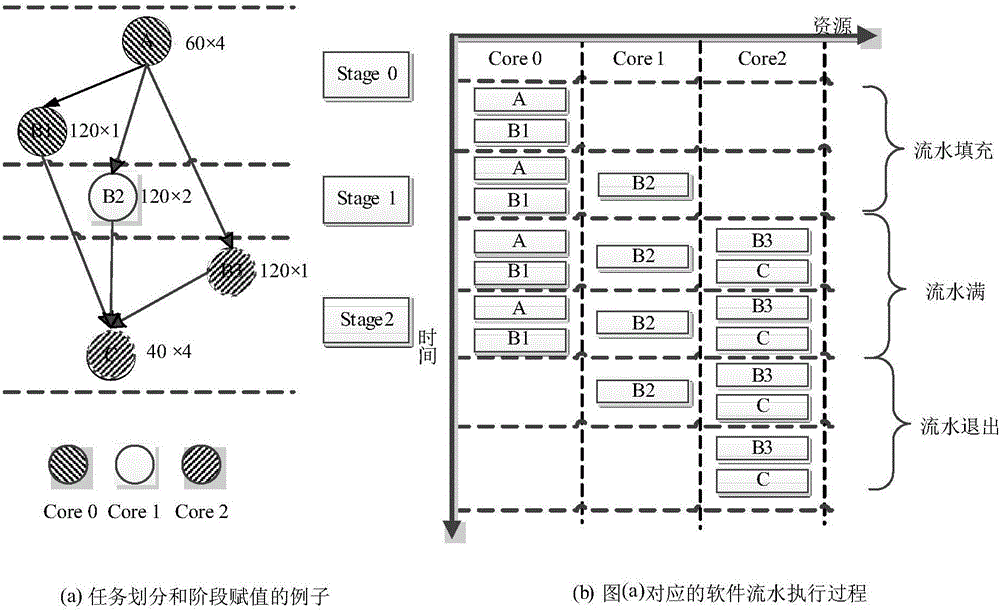

Active · CN102855153A · Improve parallelism · Load balancing · Concurrent instruction execution · Memory systems · Fully developed · Processing core

The invention discloses a flow compilation optimization method oriented to a chip multi-core processor. The method includes a software pipeline scheduling step, a storage access optimization step and a communication optimization step. The software pipeline scheduling step generates a software pipeline scheduling table. The storage access optimization step caches and distributes the data required by each computing task between the on-chip scratch pad memory (SPM) and the main memory of the chip multi-core processor according to the software pipeline scheduling table. The communication optimization step determines the mapping mode with the lowest communication traffic according to the on-chip network topology of the chip multi-core processor, and maps each virtual processing core in the software pipeline scheduling table to an actual physical core according to that mapping mode. The method combines the flow program with optimization techniques related to the system structure, fully exploits the load balance and parallelism of software pipeline code on the multi-core processor, and optimizes the program's storage access and communication transmission for the hierarchical storage and communication modes of the chip multi-core processor, further improving execution performance and shortening execution time.

Owner:HUAZHONG UNIV OF SCI & TECH
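
The abstract above does not say how the software pipeline scheduling table is built. A common approach for stream graphs, shown here only as an assumed sketch, gives every task a stage number so that a producer and a consumer placed on different cores are at least one stage apart; the table then groups tasks by (stage, core). The names `assign_stages` and `schedule_table` and the example graph are hypothetical.

```python
from collections import defaultdict


def assign_stages(tasks, edges, core_of):
    """Assign a software pipeline stage to each task.

    tasks   : task names in topological order
    edges   : (producer, consumer) pairs of the stream graph
    core_of : task name -> (virtual) core id
    A consumer shares its producer's stage when both run on the same core,
    and is pushed one stage later when data must cross cores.
    """
    preds = defaultdict(list)
    for producer, consumer in edges:
        preds[consumer].append(producer)
    stage = {}
    for task in tasks:
        s = 0
        for p in preds[task]:
            gap = 0 if core_of[p] == core_of[task] else 1
            s = max(s, stage[p] + gap)
        stage[task] = s
    return stage


def schedule_table(tasks, stage, core_of):
    """Group tasks by (stage, core); in steady state a stage-s task handles item t - s at step t."""
    table = defaultdict(list)
    for task in tasks:
        table[(stage[task], core_of[task])].append(task)
    return dict(table)


# Usage: a diamond-shaped stream graph split over two virtual cores.
tasks = ["src", "f1", "f2", "sink"]
edges = [("src", "f1"), ("src", "f2"), ("f1", "sink"), ("f2", "sink")]
core_of = {"src": 0, "f1": 0, "f2": 1, "sink": 1}
stage = assign_stages(tasks, edges, core_of)
table = schedule_table(tasks, stage, core_of)
```

The stage gap gives cross-core data a full pipeline step to arrive, which is what lets the cores run different iterations concurrently.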

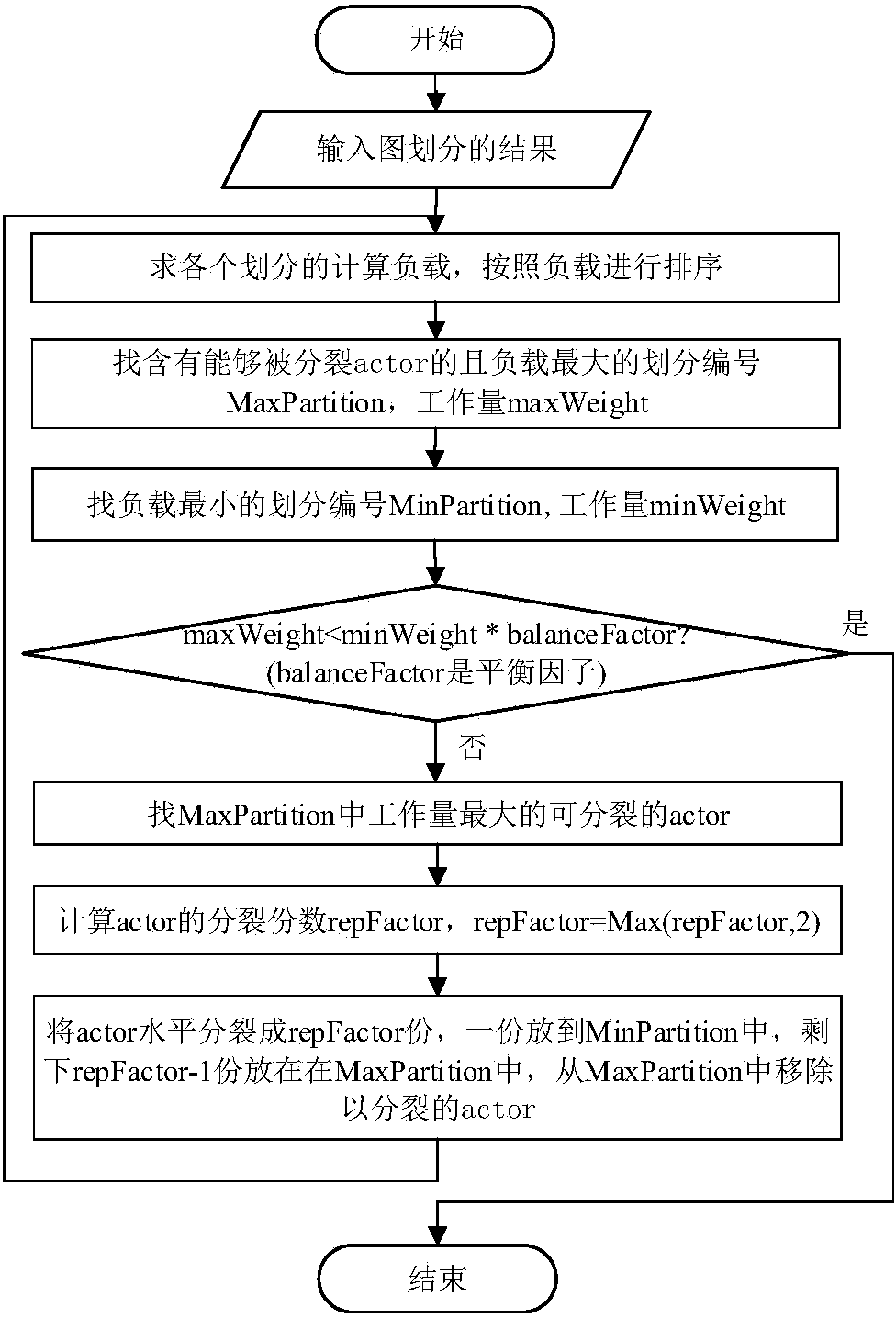

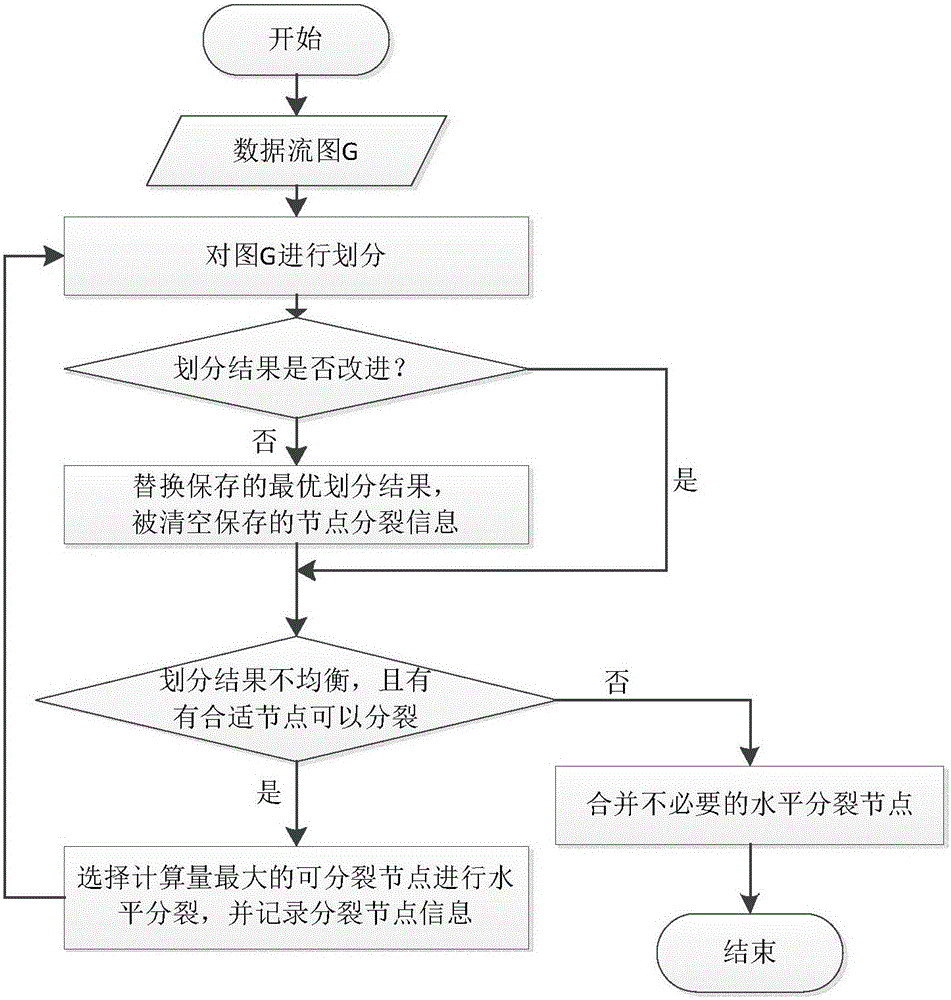

Data flow program task partitioning and scheduling method for multi-core system

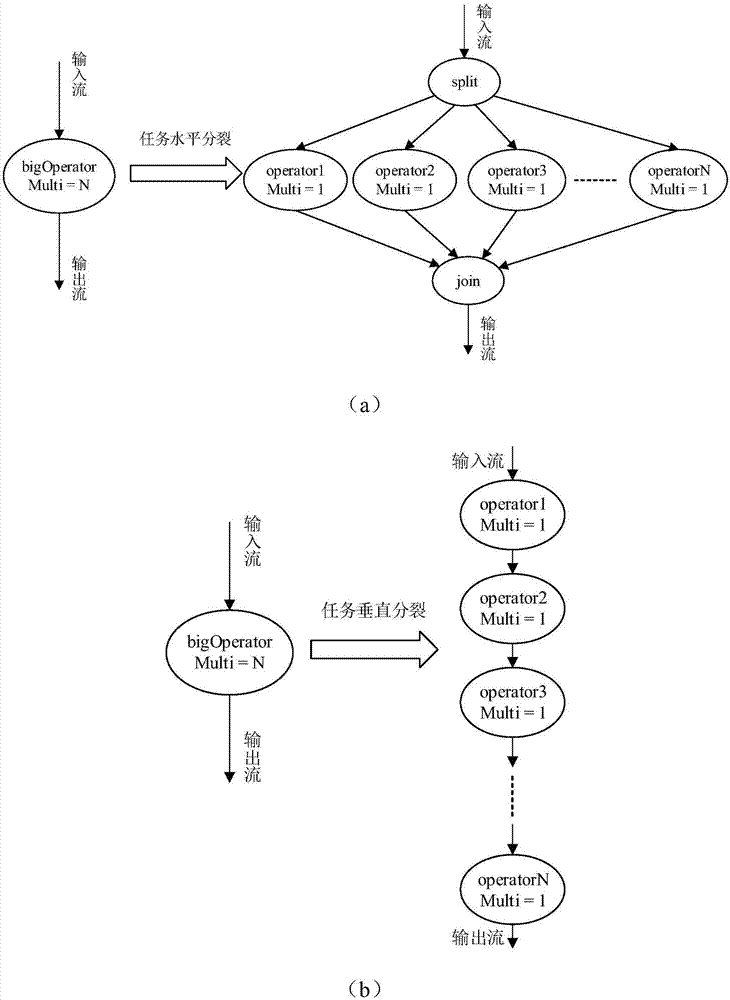

Active · CN107247628A · Small granularity · Improve parallelism · Resource allocation · Interprogram communication · Data flow programming · Multicore architecture

The invention discloses a data flow program task partitioning and scheduling method for a multi-core system. The method mainly comprises the steps of the splitting algorithm of data flow graph nodes, the GAP task partitioning algorithm, a software pipeline scheduling model and the double-buffer mechanism of the data flow graph nodes. According to the method, the data parallelism, the task parallelism and the software pipeline parallelism which are contained in the data flow programming model are utilized to maximize the program parallelism, according to the characteristics of a multi-core framework, a data flow program is scheduled, and the performance of a multi-core processor is brought into full play.

Owner:HUAZHONG UNIV OF SCI & TECH

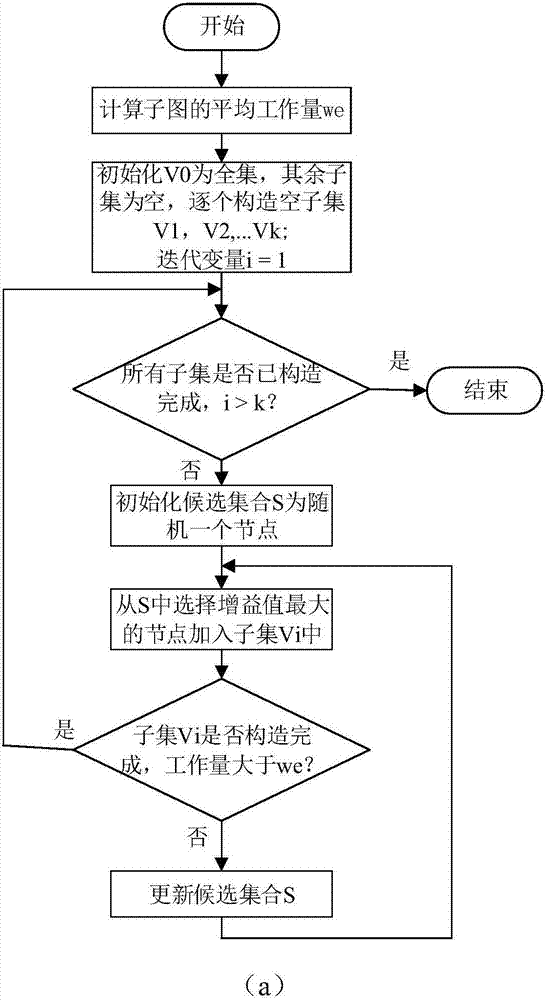

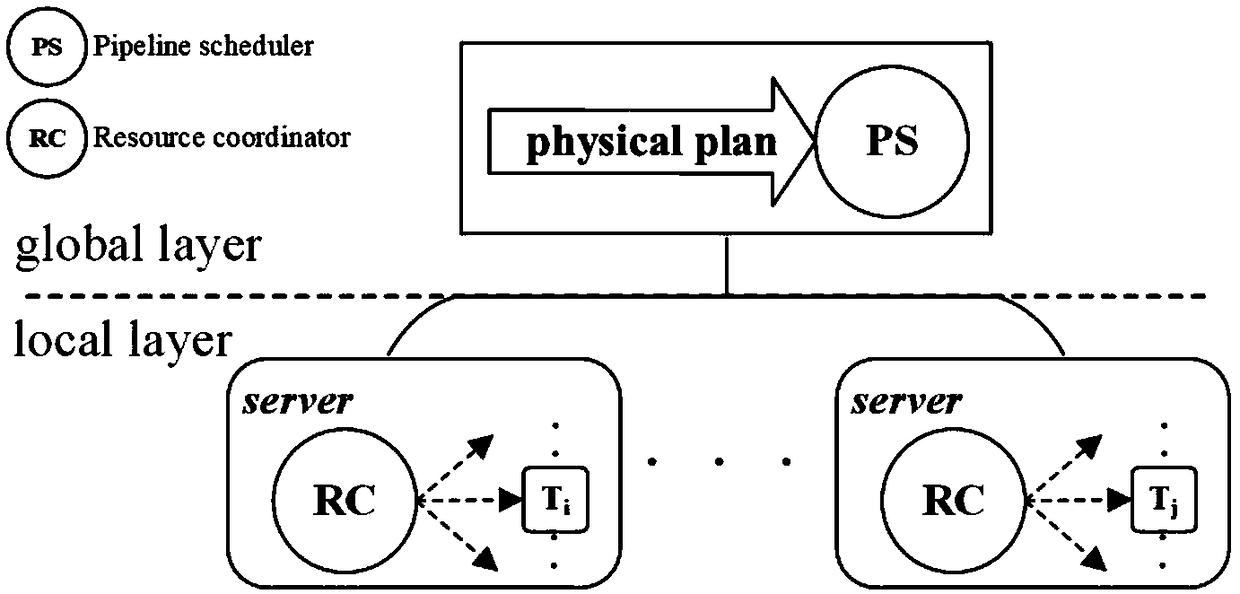

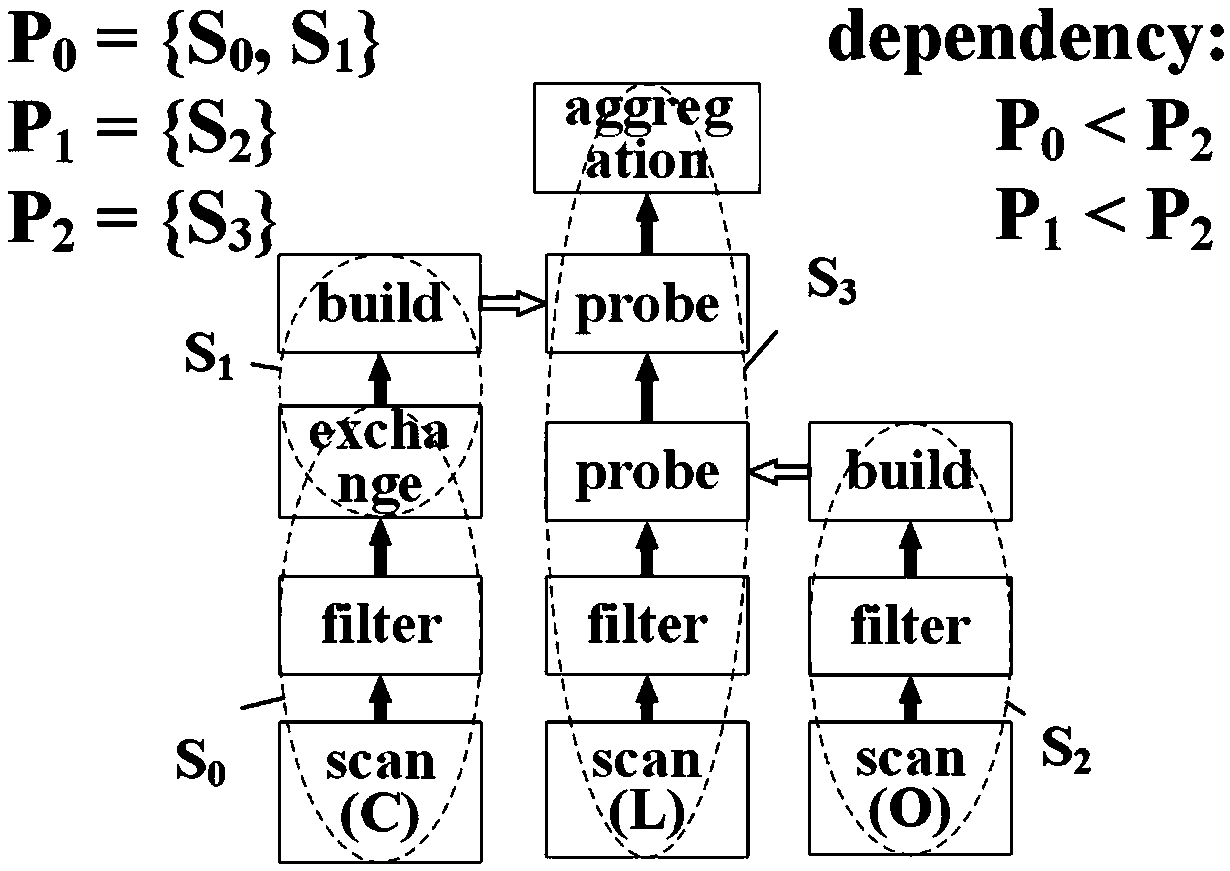

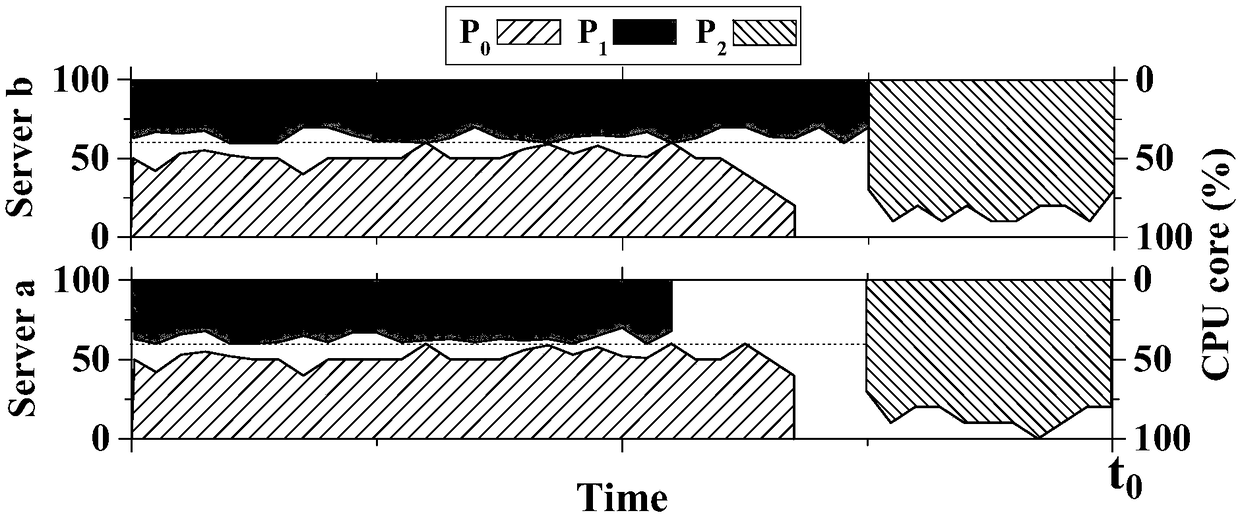

Multi-pipeline scheduling method for distributed memory database

Inactive · CN109086407A · Fast response time · Reduced execution time · Special data processing applications · Distributed memory · Resource utilization

An object of the present invention is to provide a multi-pipeline scheduling method for a distributed memory database. The invention dynamically schedules the highest-priority pipeline among the high-priority SQL queries according to the available resources in the cluster; in addition, some pipelines of the SQL statements are scheduled to fill idle resources. This improves the resource utilization of the whole cluster, reduces execution time, and improves the response time of high-priority SQL queries in the distributed memory database. The invention is easy to implement and can achieve considerable practical effects.

Owner:EAST CHINA NORMAL UNIV
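
Read loosely, the abstract above describes a priority-first selection followed by backfilling of idle resources. The sketch below shows that idea under assumptions of our own: resources are counted as free cores, each pipeline declares how many cores it needs, and a larger number means higher priority. The function `pick_pipelines` and the pipeline names are hypothetical.

```python
def pick_pipelines(pipelines, free_cores):
    """Choose which query pipelines to start now.

    pipelines  : list of (name, priority, cores_needed); larger priority runs first
    free_cores : number of currently idle cores in the cluster
    Pipelines are visited in priority order; any pipeline that fits is started,
    so lower-priority pipelines fill whatever the high-priority ones leave idle.
    Returns (names started, cores still idle).
    """
    chosen = []
    for name, _priority, need in sorted(pipelines, key=lambda p: -p[1]):
        if need <= free_cores:
            chosen.append(name)
            free_cores -= need
    return chosen, free_cores


# Usage: q1 is the high-priority query; q2 and q3 compete for leftovers.
started, idle = pick_pipelines(
    [("q1.scan", 9, 4), ("q1.join", 8, 6), ("q2.scan", 3, 2), ("q3.agg", 1, 3)],
    free_cores=8,
)
```

Here `q1.join` does not fit after `q1.scan` starts, so the two-core `q2.scan` is used to fill part of the gap instead of leaving four cores idle.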

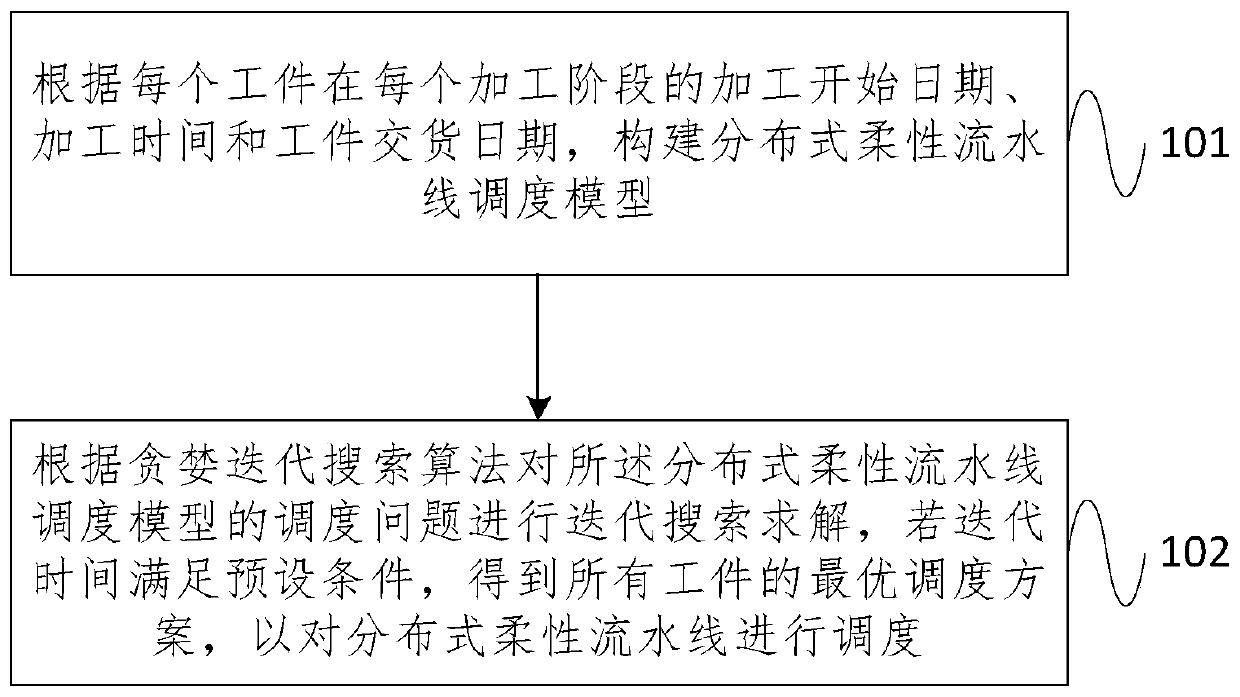

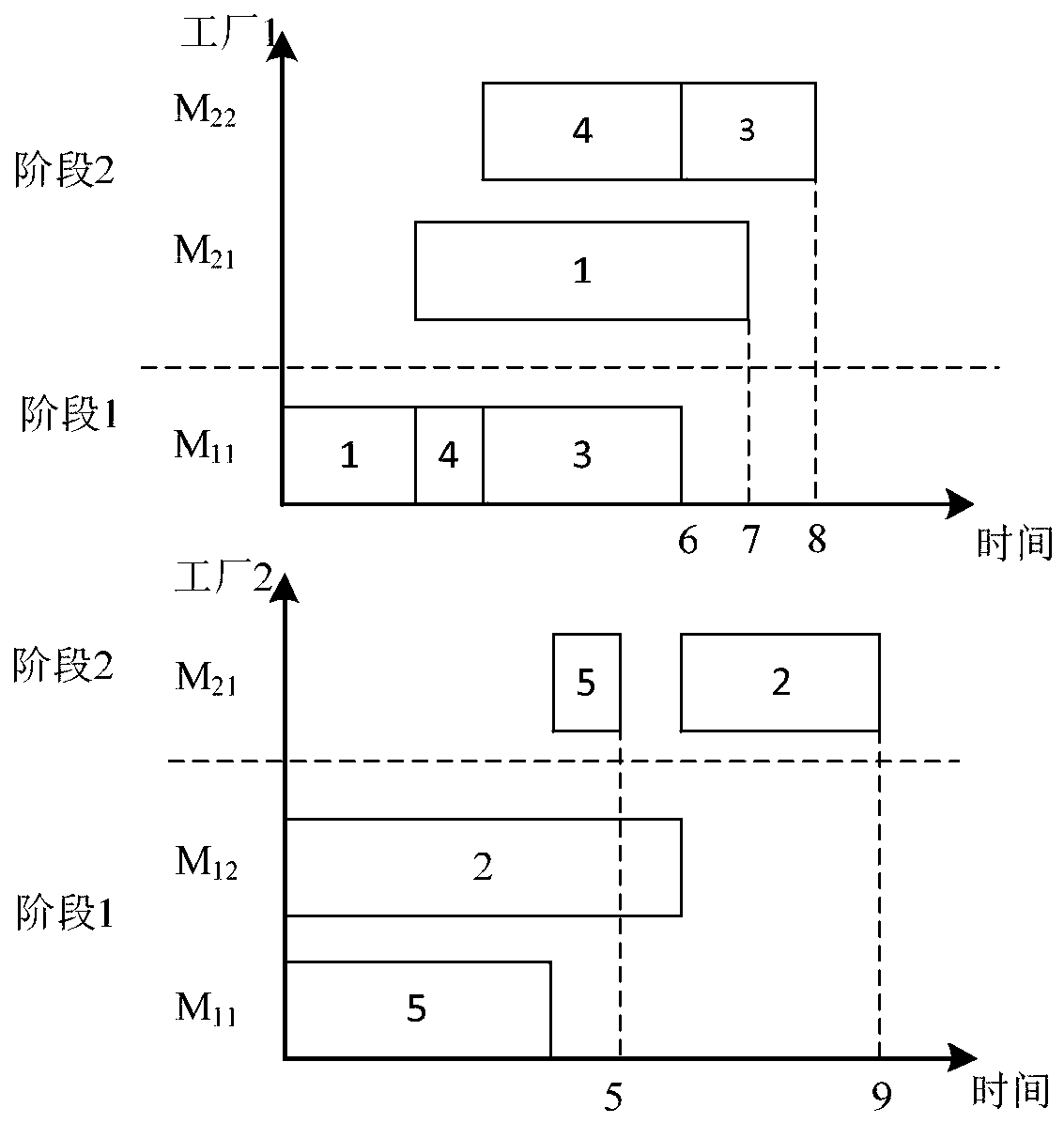

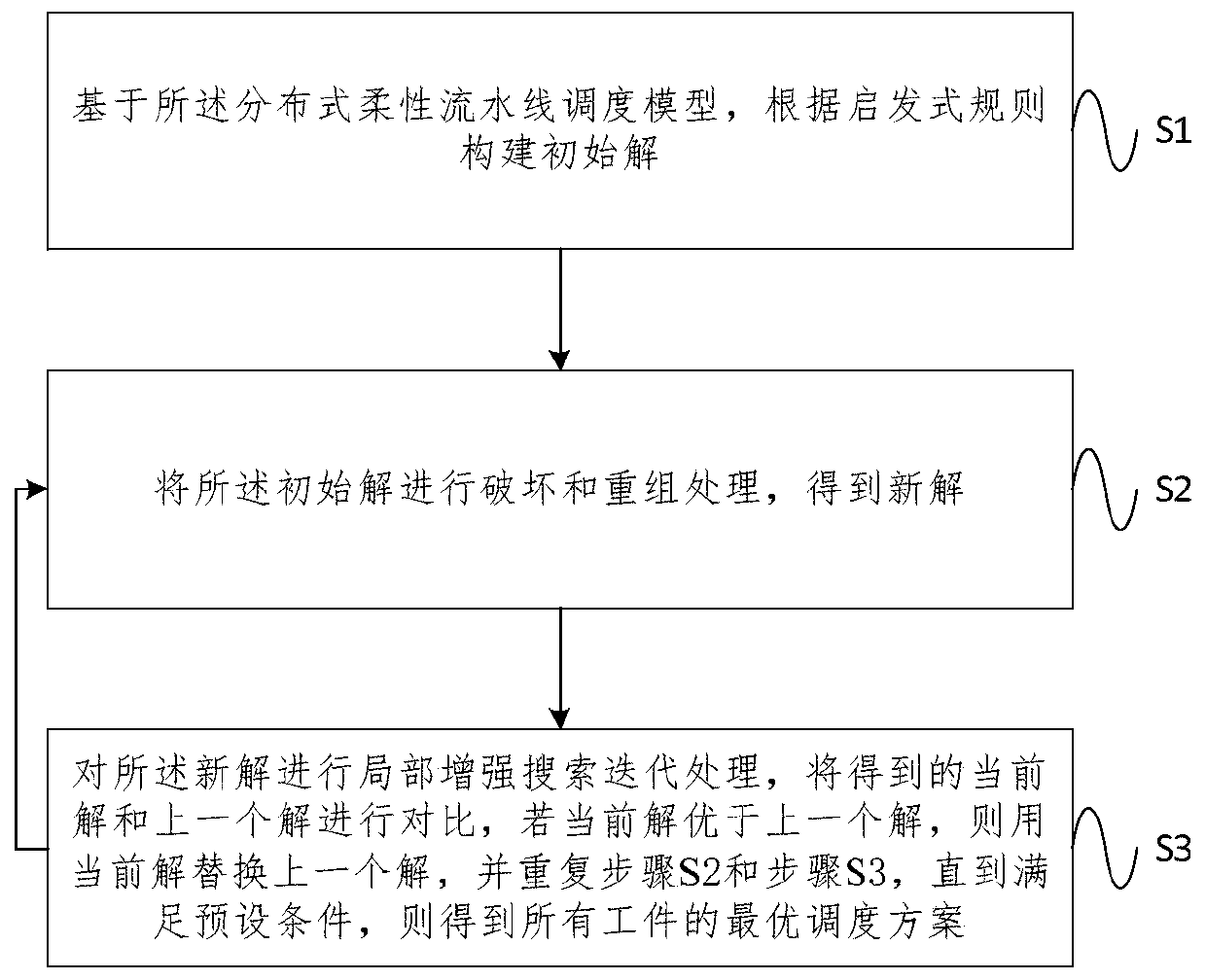

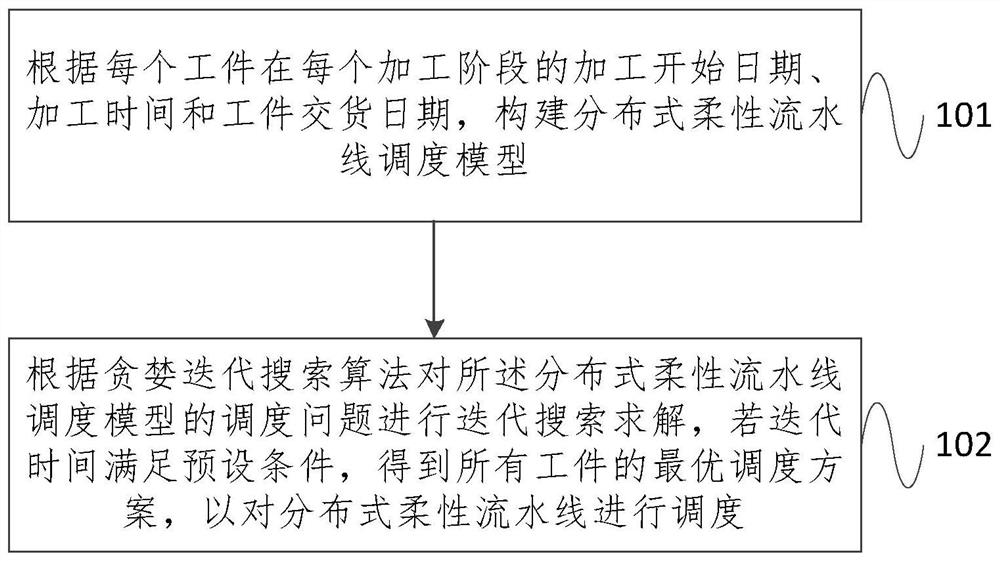

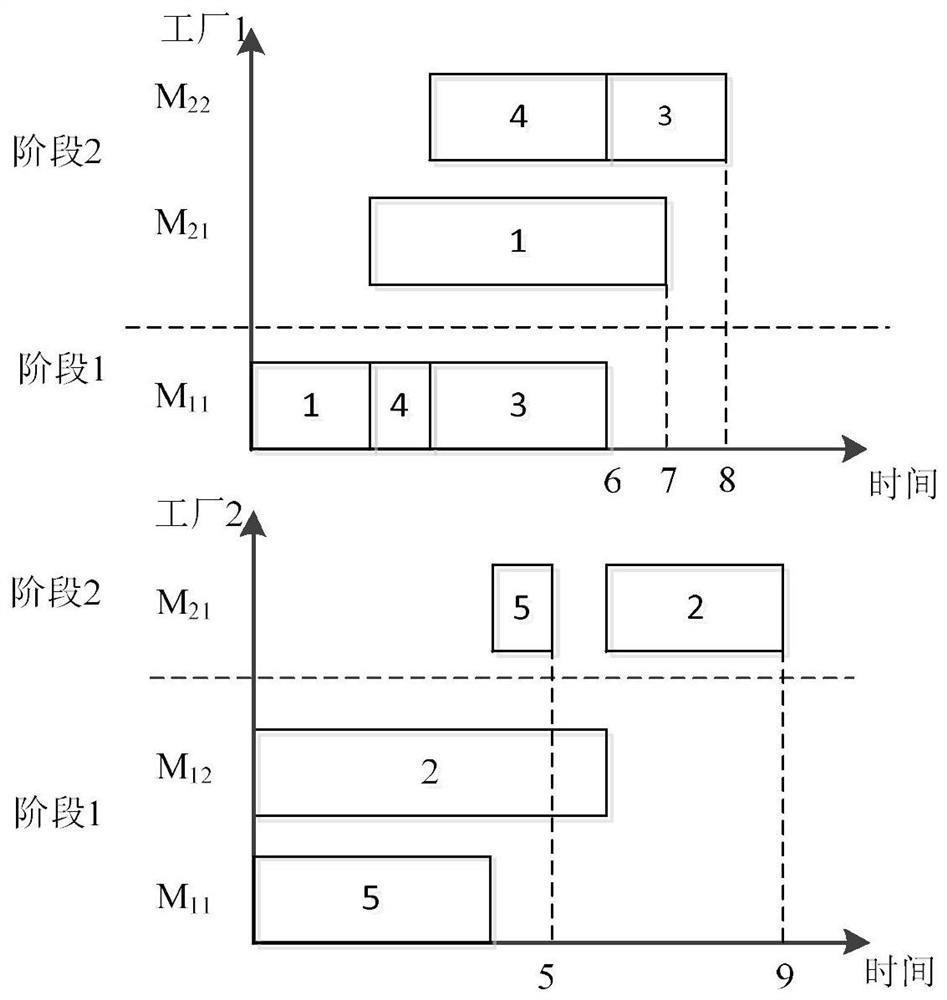

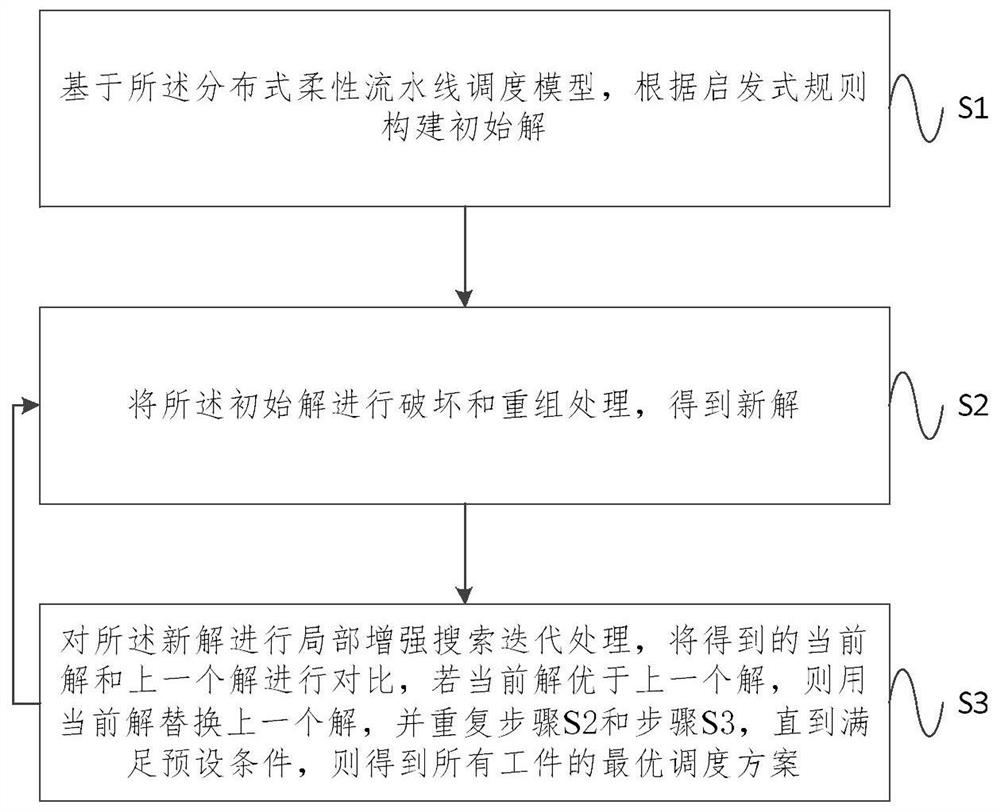

Distributed flexible pipeline scheduling method

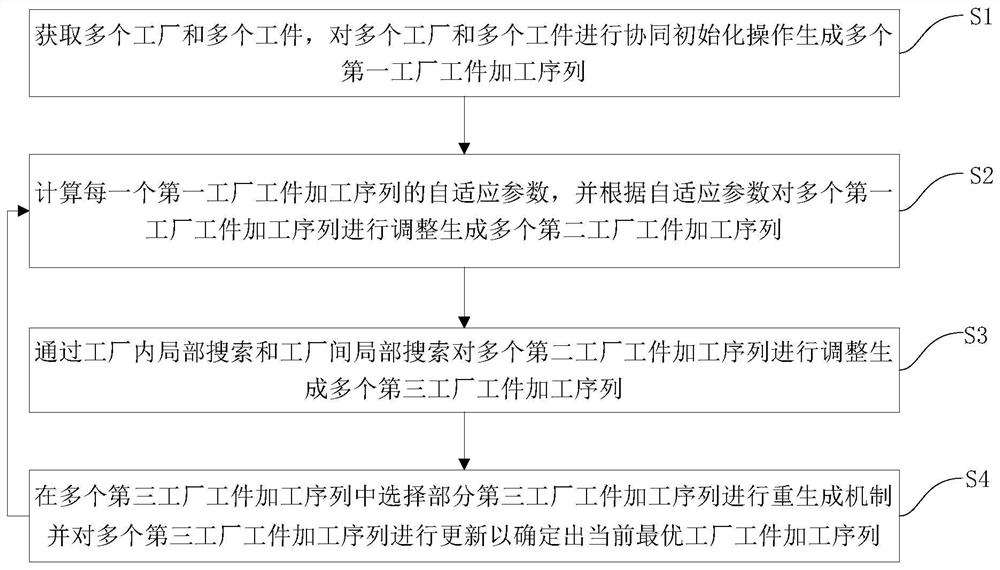

Active · CN110288185A · Solve scheduling problems · Quick calculation · Resources · Manufacturing computing systems · Iterative search · Optimal scheduling

The embodiment of the invention provides a distributed flexible assembly line scheduling method, which comprises the following steps of: constructing a distributed flexible assembly line scheduling model according to the processing start date, the processing time and the workpiece delivery date of each workpiece in each processing stage; and performing iterative search solving on the scheduling problem of the distributed flexible assembly line scheduling model according to a greedy iterative search algorithm, and if the iteration time meets a preset condition, obtaining an optimal scheduling scheme of all workpieces so as to schedule the distributed flexible assembly line. According to the embodiment of the invention, a distributed flexible pipeline scheduling model is established; according to a greedy iterative search algorithm, the search efficiency is effectively improved, the rapid calculation of the optimization target of the distributed flexible pipeline scheduling model is realized, so that the algorithm can obtain a better scheduling scheme in a shorter time, and the problem of large-scale distributed flexible pipeline scheduling can be effectively and efficiently solved.

Owner:TSINGHUA UNIV
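
The greedy iterative search named in the abstract above can be illustrated on a much simpler problem. The sketch below is not the patented model: it assumes a single-factory permutation flow shop, minimizes makespan rather than a due-date objective, and uses the textbook iterated greedy loop (remove a few jobs, reinsert each at its best position). All function names and the example data are hypothetical.

```python
import random


def makespan(seq, proc):
    """Completion time of the last job; proc[j][m] is job j's time on machine m."""
    machines = len(proc[0])
    done = [0] * machines                  # completion time of the latest job per machine
    for j in seq:
        done[0] += proc[j][0]
        for m in range(1, machines):
            done[m] = max(done[m], done[m - 1]) + proc[j][m]
    return done[-1]


def best_insertion(seq, job, proc):
    """Insert `job` at the position of `seq` that gives the smallest makespan."""
    candidates = [seq[:i] + [job] + seq[i:] for i in range(len(seq) + 1)]
    return min(candidates, key=lambda s: makespan(s, proc))


def iterated_greedy(proc, iterations=200, d=2, seed=0):
    rng = random.Random(seed)
    current = []
    # Construction: insert jobs in order of decreasing total processing time.
    for j in sorted(range(len(proc)), key=lambda j: -sum(proc[j])):
        current = best_insertion(current, j, proc)
    best = current
    for _ in range(iterations):
        removed = rng.sample(current, min(d, len(current)))    # destruction
        partial = [j for j in current if j not in removed]
        for j in removed:                                      # greedy reconstruction
            partial = best_insertion(partial, j, proc)
        if makespan(partial, proc) <= makespan(current, proc):
            current = partial
        if makespan(current, proc) < makespan(best, proc):
            best = current
    return best, makespan(best, proc)


# Usage: three jobs, two processing stages.
proc = [[3, 2], [1, 4], [2, 1]]
order, span = iterated_greedy(proc)
```

The iteration count plays the role of the preset stopping condition mentioned in the abstract; a time limit would work the same way.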

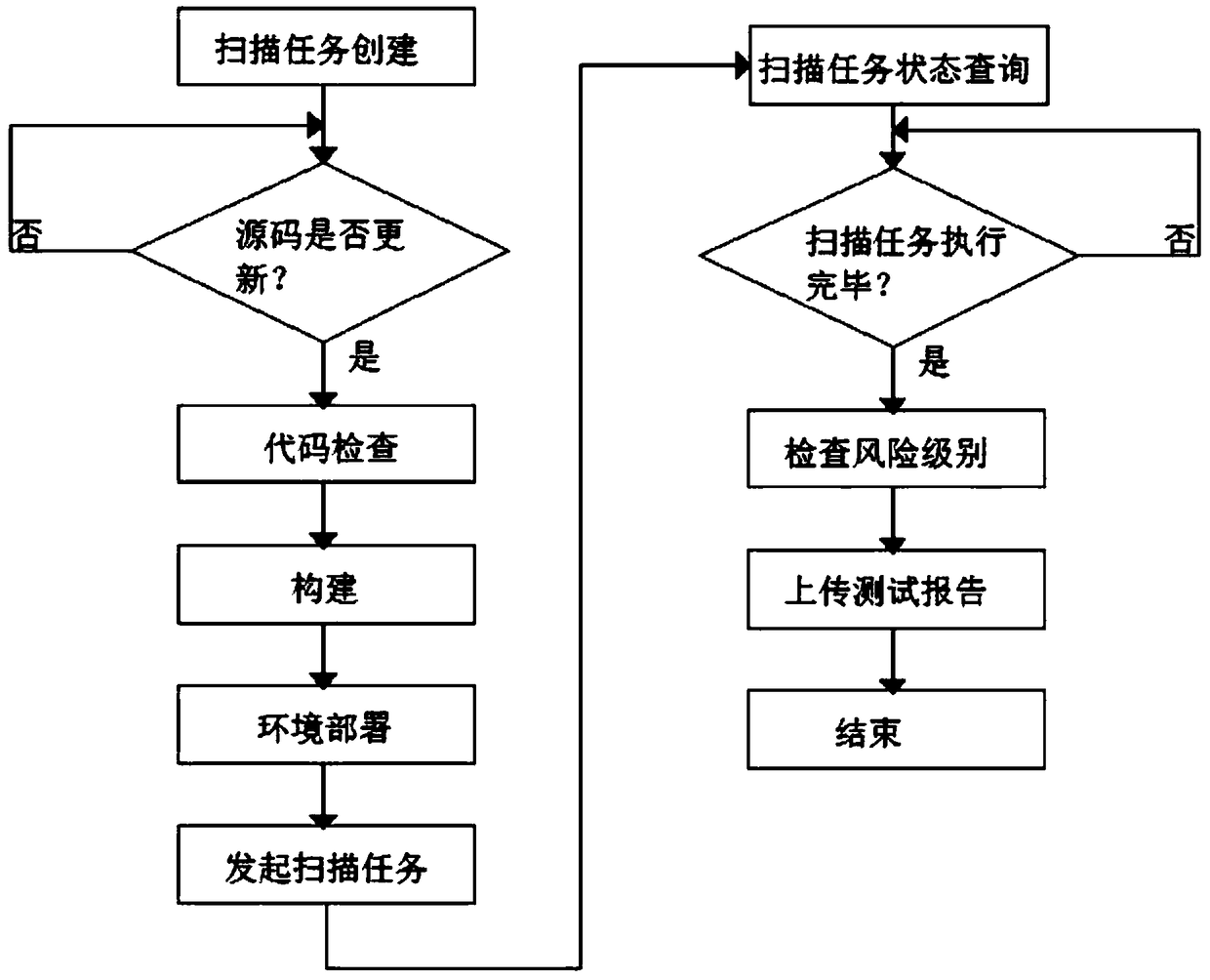

A continuous integration method based on vulnerability scanning platform

Inactive · CN109241735A · Efficient iteration · Deal effectively with iteration · Platform integrity maintenance · Transmission · Continuous integration · Automatic testing

The invention discloses a continuous integration method based on a vulnerability scanning platform, involving a continuous integration platform, the vulnerability scanning platform and a Jenkins server. Step S100: the continuous integration platform calls the continuous integration interface of the vulnerability scanning platform to establish a vulnerability scanning rule and a scanning task, and calls the Jenkins interface of the Jenkins server to create a project, build the project and obtain the build result. Step S200: the Jenkins server completes the automatic test of the vulnerability scanning task on the continuous integration platform side through Pipeline scheduling. By calling the continuous integration interface of the vulnerability scanning platform, the invention creates a vulnerability scanning rule and a scanning task, initiates the scanning task, queries its status and obtains the scanning report. This can effectively cope with rapid software iteration, ensure software security quality, save labor cost and shorten the development cycle through automated testing.

Owner:SICHUAN PANOVASIC TECH

Dynamic bandwidth allocation method of Ethernet passive optical network

Inactive · US8068731B2 · Improve bandwidth utilization · Fast convergence · Multiplex system selection arrangements · Time-division optical multiplex systems · Traffic capacity · Dynamic bandwidth allocation

A dynamic bandwidth allocation method for an Ethernet passive optical network comprises a predictor and a QoS-promoted dynamic bandwidth allocation (PQ-DBA) rule. The predictor predicts client behavior and the numbers of the various kinds of packets by using a pipeline scheduling predictor consisting of a pipelined recurrent neural network (PRNN) and an extended recursive least squares (ERLS) learning rule. The invention establishes better QoS traffic management for the OLT-allocated ONU bandwidth and for client packets sent by priority.

Owner:CHUNGHWA TELECOM CO LTD

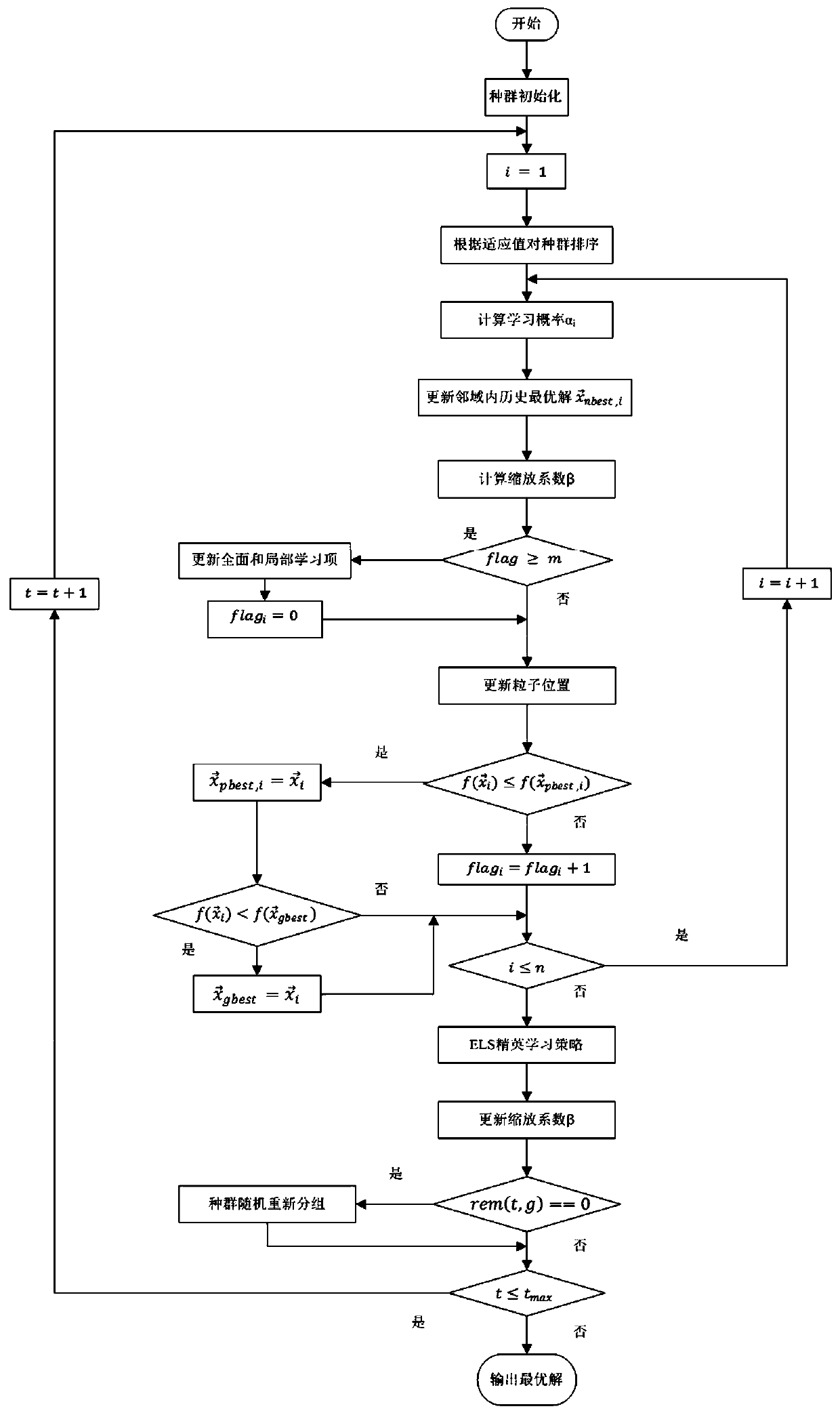

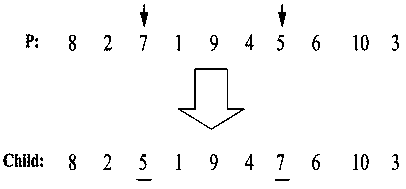

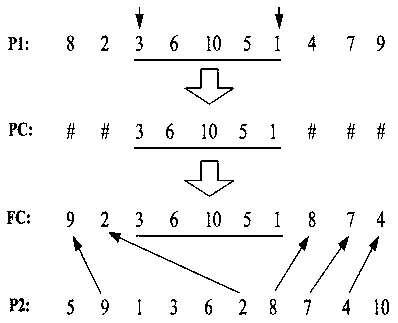

Improved unified particle swarm algorithm-based mechanical part machining pipeline scheduling method

Inactive · CN110471274A · Optimal Scheduling Scheme · Easy to operate · Adaptive control · Learning based · Local optimum

The invention discloses a mechanical part machining pipeline scheduling method that employs a discrete unified particle swarm algorithm based on dynamic neighborhoods and comprehensive learning. The method comprises the following steps: reading the operation times of mechanical part machining; initializing the population; calculating the fitness value of each particle and sorting the particles; updating the optimal position in each particle neighborhood, the individual optimal position, the global optimal position and the learning item; carrying out global search with the dynamic neighborhood and comprehensive learning-based discrete unified particle swarm optimization; carrying out local search based on an elite learning strategy; regrouping the populations after a certain number of iterations; and recombining the learning items when updating of the global optimal solution stalls. The method addresses the limitations of unified particle swarm optimization in the field of production scheduling and overcomes the defects that standard particle swarm optimization depends too much on parameters and easily falls into local optima. It has the characteristics of high search accuracy and a high convergence rate, has a relatively wide application range, and can be extended to the manufacturing and process industries.

Owner:余姚市浙江大学机器人研究中心 +1

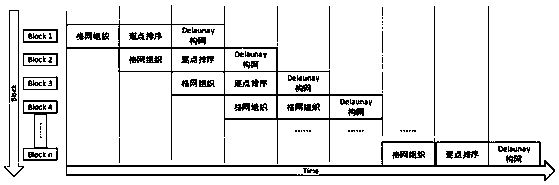

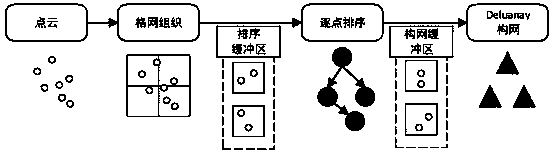

Mass point cloud Delaunay triangulation network construction method based on double spatial data organization

The invention discloses a mass point cloud Delaunay triangulation network construction method based on double spatial data organization. The method comprises four steps: point cloud grid organization, point cloud pointwise organization, point cloud Delaunay network construction and pipeline scheduling. The algorithm avoids the disadvantages of conventional single spatial data organization methods, reduces the memory requirements of network construction, improves adaptability to different point cloud distribution types and improves construction efficiency.

Owner:NANJING NORMAL UNIVERSITY

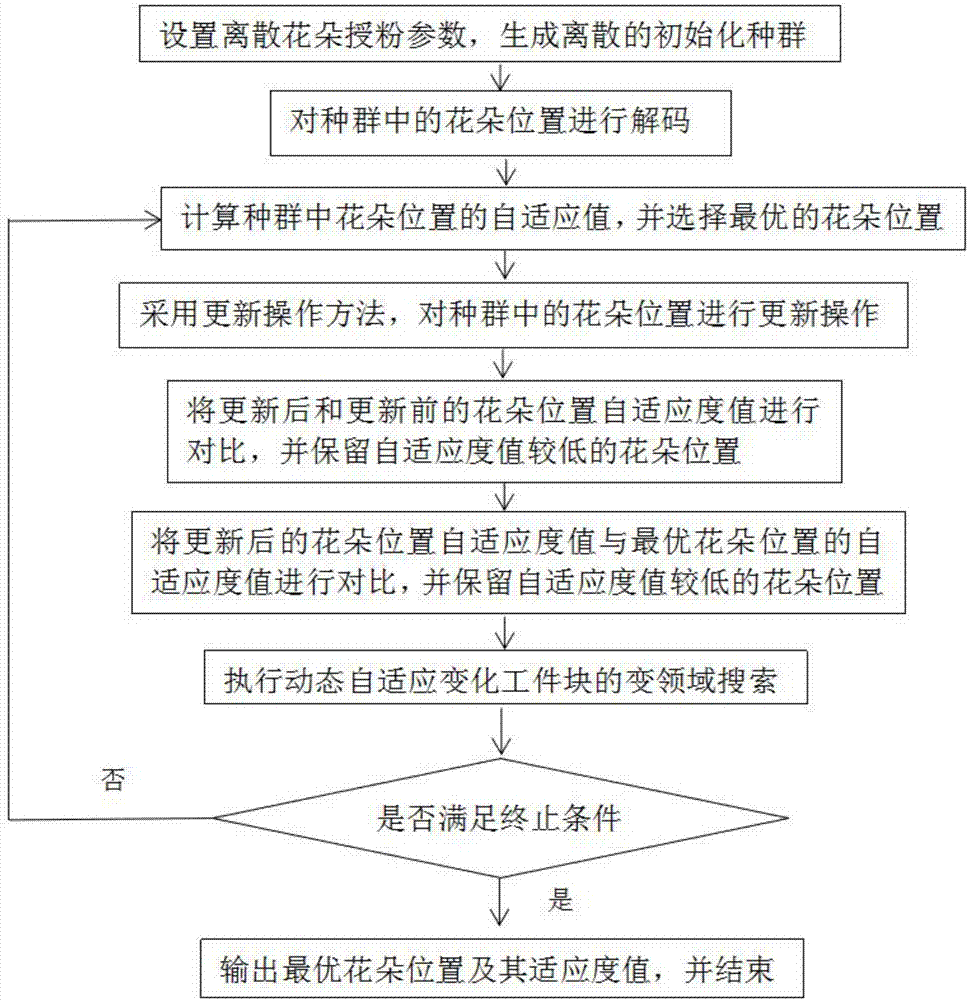

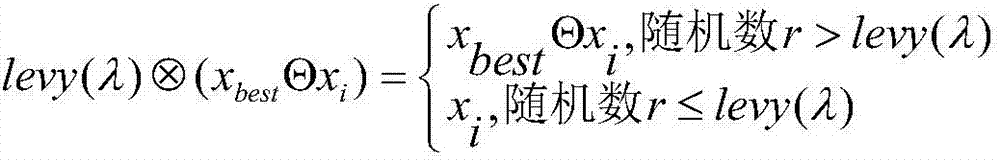

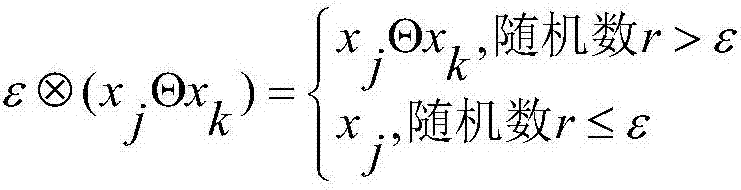

Method of solving hybrid flow-shop scheduling problem based on discrete flower pollination algorithm

Active · CN107357267A · High precision · Various methods · Programme total factory control · Manufacturing technology · Algorithm

The invention belongs to the technical field of production and manufacture, and discloses a method of solving the hybrid flow-shop scheduling problem based on a discrete flower pollination algorithm. The method includes the steps of S1: setting a target parameter and generating an initialized population; S2: decoding a flower position of the population; S3: calculating a fitness value of the flower position, and selecting an optimal flower position; S4: updating the flower position; S5: comparing the fitness values of the updated and pre-update flower positions, and retaining the flower position with a lower fitness value; S6: comparing the fitness values of the updated and optimal flower positions, and retaining the flower position with a lower fitness value; S7: performing variable neighborhood search of a dynamically adaptive changing workpiece block; and S8: judging whether an end condition is satisfied or not, and if so, outputting the optimal flower position and the fitness value thereof and ending the process; and if not, returning to the step S3. The method is feasible and effective, and methods for solving the problem of hybrid pipeline scheduling are enriched.

Owner:BAISE UNIV

Method for optimizing memory access distance on many-core processor

The invention discloses a method for optimizing memory access distance on a many-core processor. The method comprises generating an inter-kernel pipeline scheduling table, and performing memory access distance optimization and communication optimization on the many-core processor according to that table. Generating the inter-kernel pipeline scheduling table comprises: first, performing process-level task division on a synchronous data flow graph and determining the cluster node to which each calculation task is allocated; second, performing thread-level task division on the tasks of the synchronous data flow graph within each cluster node and determining the processing core, within the corresponding cluster node, to which each calculation task is allocated. The method optimizes the use of caches in the many-core processor, improves the locality of data access and the cache utilization rate, and improves the running efficiency of programs.

Owner:BEIJING INSTITUTE OF TECHNOLOGY +1

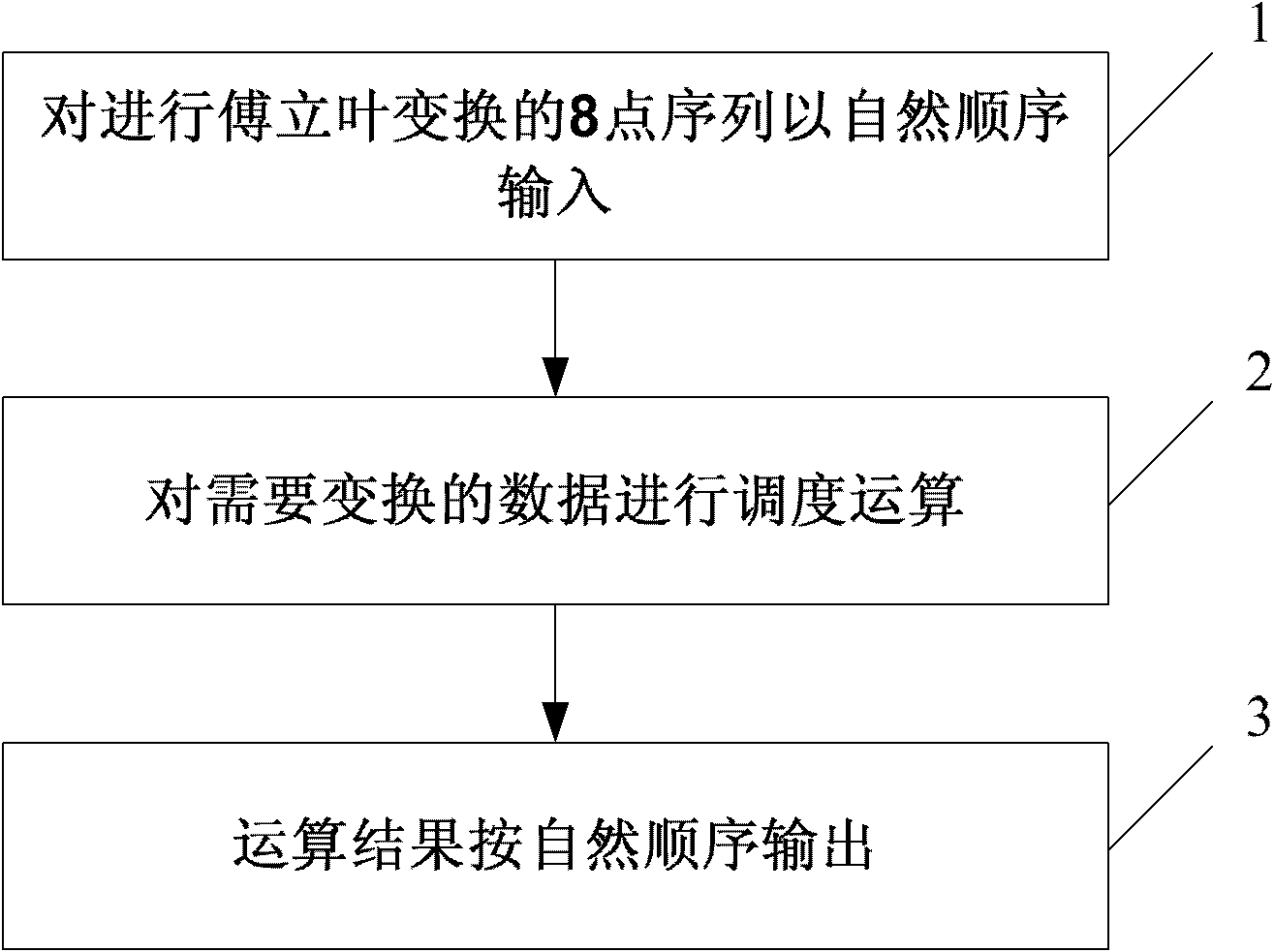

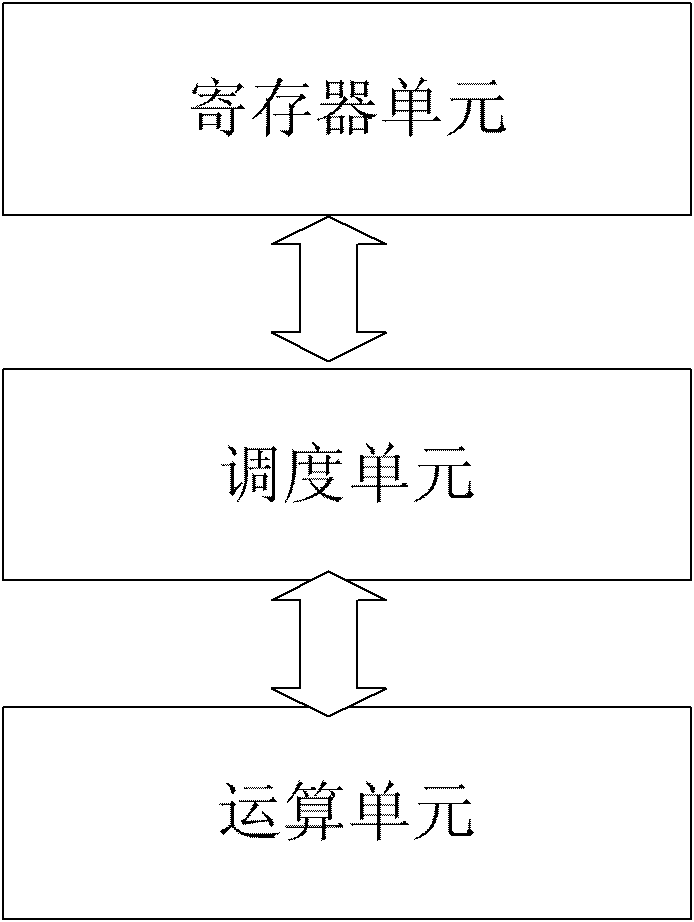

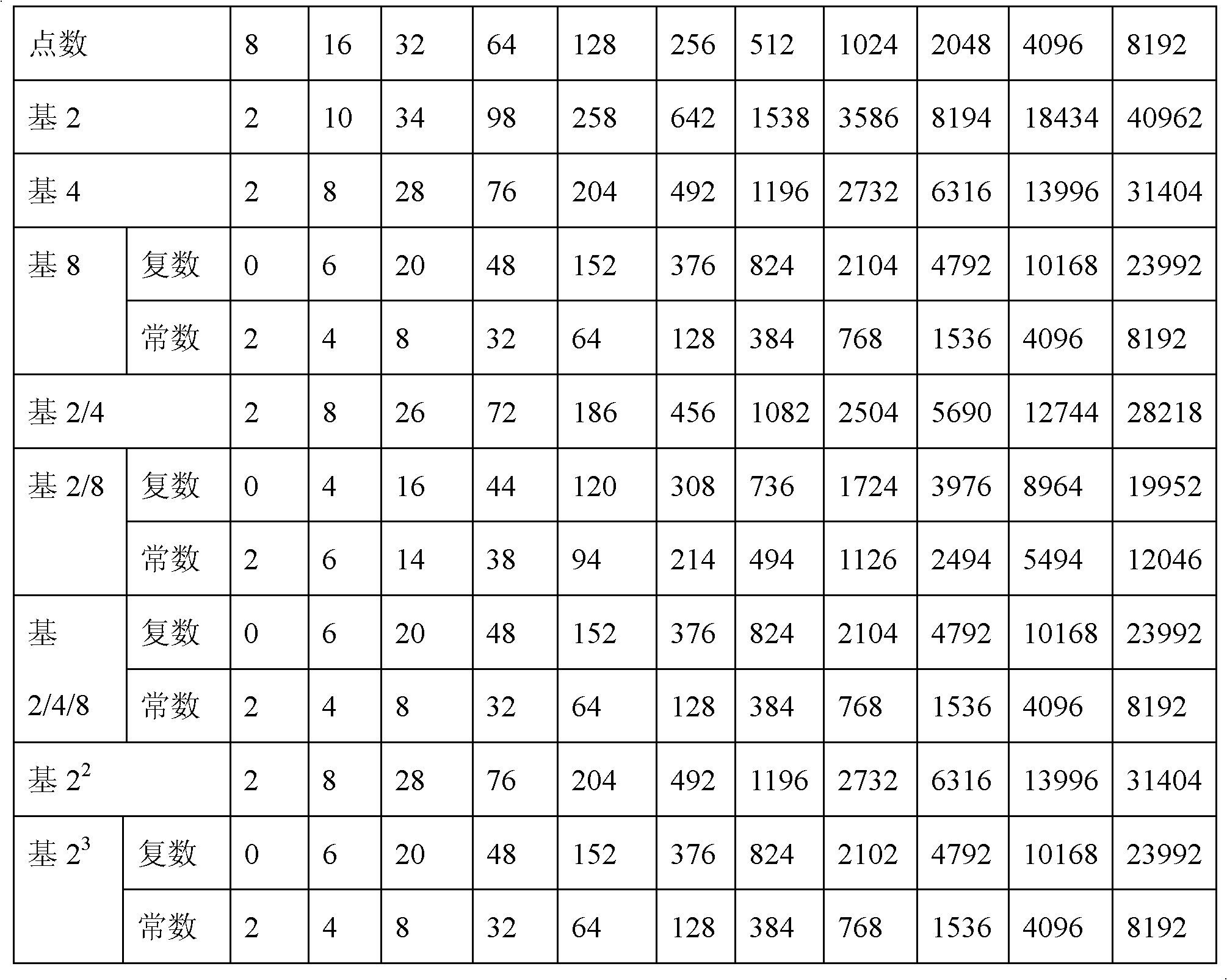

8-based fast Fourier transform realization system and method

Inactive · CN102339271A · Reduce hardware overhead · Reduce the number · Complex mathematical operations · Processor register · Fourier transform on finite groups

The invention relates to an 8-based (radix-8) fast Fourier transform realization system and method, belonging to the field of communication and integrated circuit design. The method realizes the 8-based fast Fourier transform by carrying out pipeline scheduling on a register unit. The system comprises an arithmetic element, a scheduling unit and the register unit, wherein the scheduling unit realizes the 8-based fast Fourier transform by carrying out pipeline scheduling on the register unit.

Owner:INST OF MICROELECTRONICS CHINESE ACAD OF SCI
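
For readers unfamiliar with the 8-based decomposition itself, the sketch below shows a recursive decimation-in-time version in software. It illustrates only the arithmetic and assumes the input length is a power of 8. The register-unit pipeline scheduling that the abstract is actually about is a hardware concern and is not modeled, and the function name `fft_radix8` is hypothetical.

```python
import cmath


def fft_radix8(x):
    """Radix-8 decimation-in-time FFT for sequences whose length is a power of 8.

    The input is split into 8 interleaved sub-sequences x[r::8]; each is
    transformed recursively, and the results are combined with twiddle factors:
        X[k] = sum over r of W_N^(r*k) * F_r[k mod (N/8)],  W_N = exp(-2*pi*i/N)
    """
    n = len(x)
    if n == 1:
        return list(x)
    if n % 8 != 0:
        raise ValueError("length must be a power of 8")
    m = n // 8
    subs = [fft_radix8(x[r::8]) for r in range(8)]
    out = []
    for k in range(n):
        acc = 0j
        for r in range(8):
            acc += cmath.exp(-2j * cmath.pi * r * k / n) * subs[r][k % m]
        out.append(acc)
    return out


# Usage: transform a 64-point test signal.
signal = [complex(i % 5, -i % 3) for i in range(64)]
spectrum = fft_radix8(signal)
```

A 64-point transform needs only two levels of recursion here, compared with six for radix-2, which hints at why hardware designs trade a larger butterfly for fewer stages.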

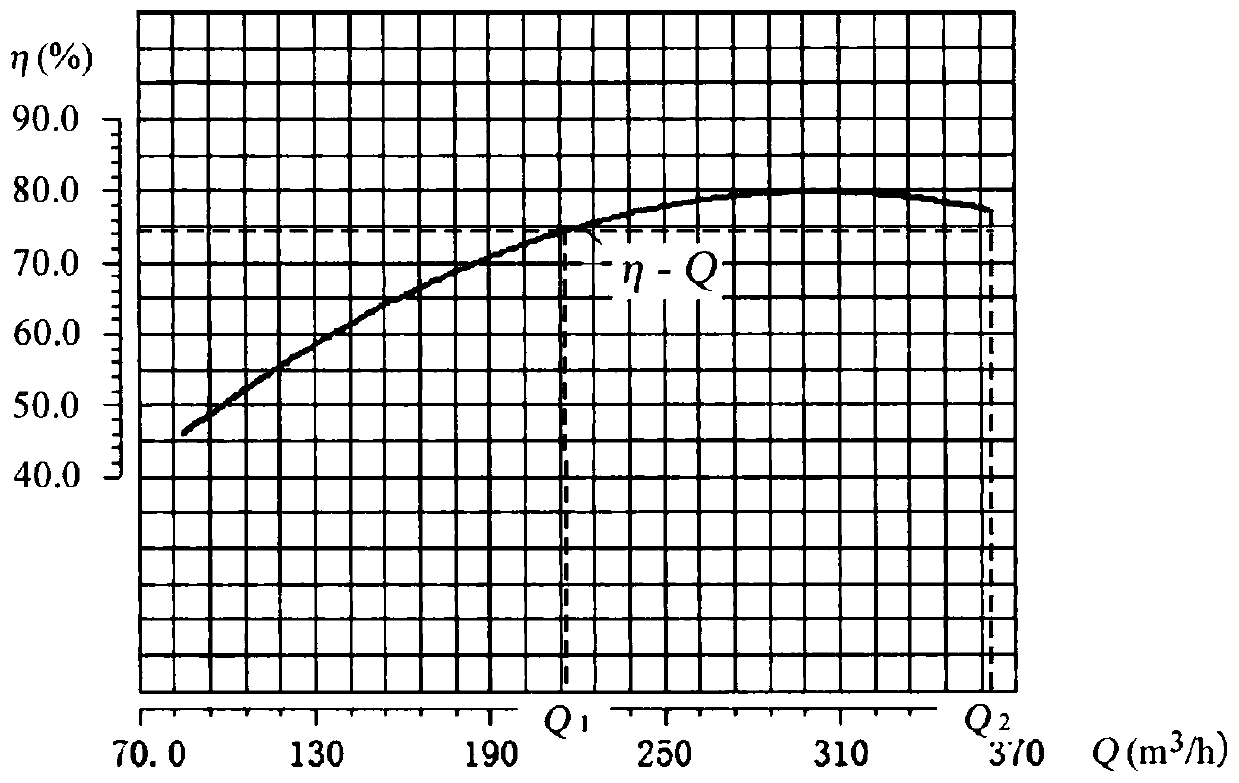

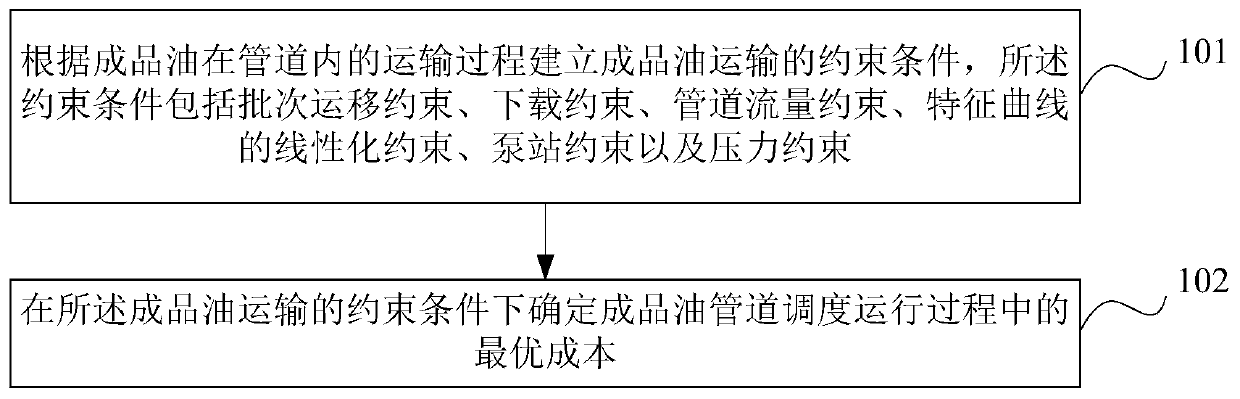

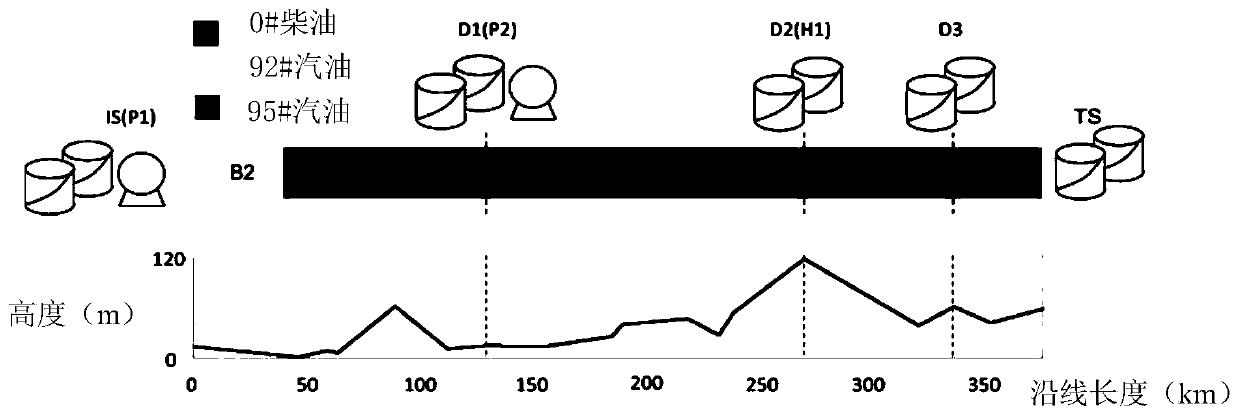

A product oil scheduling method and device based on pressure control

The invention provides a product oil scheduling method and device based on pressure control and relates to the technical field of product oil conveying. The method comprises: establishing constraint conditions for product oil transportation according to the transportation process of product oil in a pipeline, the constraint conditions comprising a batch transportation constraint, a downloading constraint, a pipeline flow constraint, a characteristic curve linearization constraint, a pump station constraint and a pressure constraint; and determining the optimal cost of the pipeline scheduling operation for the product oil under those constraint conditions. The invention overcomes the inability of prior-art research to provide a reasonable, optimized product oil scheduling scheme when scheduling faces complex environments and conditions.

Owner:CHINA UNIV OF PETROLEUM (BEIJING)

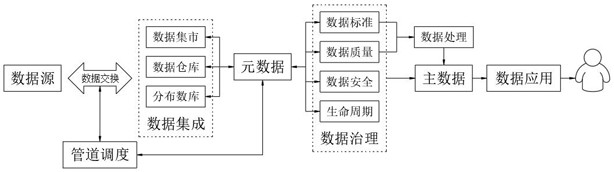

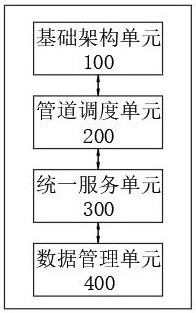

Distributed data management system of novel scheduling algorithm based on pipelines

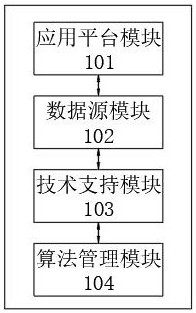

Pending · CN113553381A · Data Governance Implementation · Easy to integrate · Program initiation/switching · Resource allocation · Supporting system · Management support systems

The invention relates to the technical field of data management, in particular to a distributed data management system using a novel pipeline-based scheduling algorithm. The system comprises an infrastructure unit, a pipeline scheduling unit, a unified service unit and a data management unit. The infrastructure unit manages the network architecture that supports system operation; the pipeline scheduling unit manages the scheduling of inter-process communication pipelines; the unified service unit performs unified, standardized management of the data; and the data management unit manages the data comprehensively. The design realizes distributed data management, improves the degree of integration of the system and enhances its extensibility. It can improve transmission during data exchange and improve the integrity and security of the data. Through centralized scheduling management of the data, the architecture facilitates node expansion, supports high-concurrency access by large numbers of users, enables visual management of the full data life cycle, shortens the data management cycle and reduces wasted cost.

Owner:中建材信息技术股份有限公司 +1

Pipeline scheduling method and device

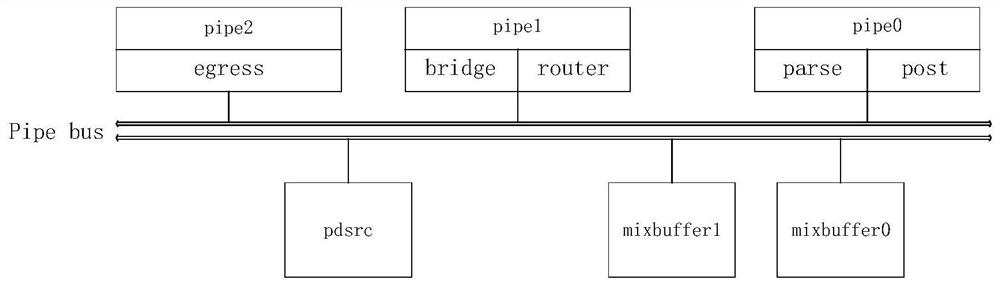

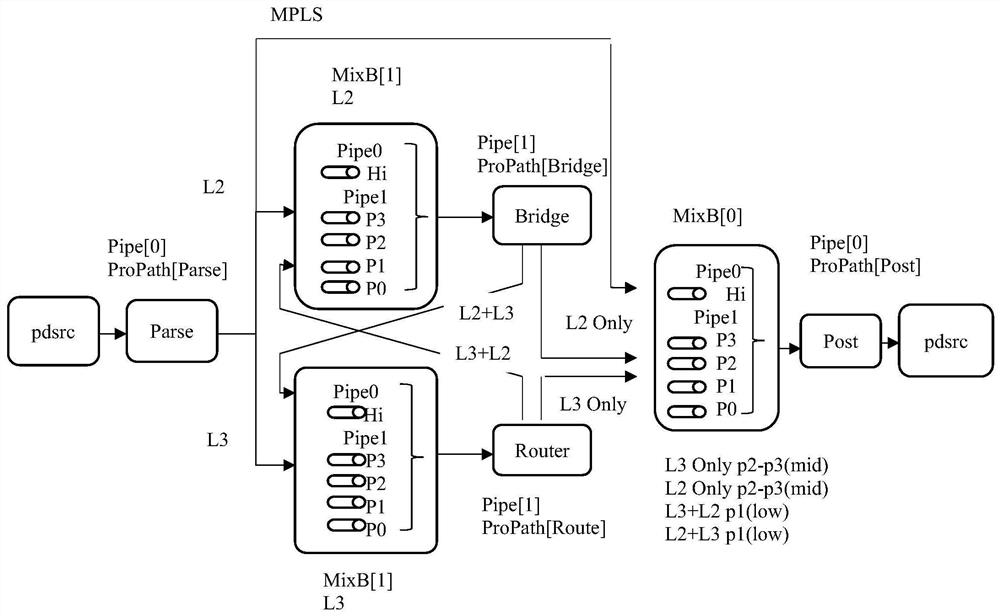

Active · CN111884948A · Reduce latency · Optimize transmission delay · Program initiation/switching · Interprogram communication · Parallel computing · Assembly line

The invention discloses a pipeline scheduling device comprising three physical pipelines, two mix buffers and a pipe bus. The three physical pipelines are logically divided into five pipelines, which correspond to the five stages of message processing in a chip: a parse stage, a bridge stage, a router stage, a post stage and an egress stage. The two mix buffers schedule the different services and then send them to the corresponding logical pipelines. Each pipeline unit (pipe), the mix buffers and the pdsrc are attached to the pipe bus, which handles the interaction among the pipeline units. The invention optimizes the pipeline processing length for the various services, so that different services can have different processing lengths and unnecessary processing is reduced. The pipe bus replaces the original fixed connections among the pipeline members, so the members are connected more flexibly, expandability is very high, and future requirements can be met. The invention further provides a corresponding pipeline scheduling method.

Owner:FENGHUO COMM SCI & TECH CO LTD +1

A data flow compilation optimization method for multi-core clusters

Active · CN103970580B · Improve parallelism · Load balancing · Resource allocation · Memory systems · Data stream · Structure of Management Information

The invention discloses a data flow compilation optimization method for a multi-core cluster system, comprising: a task partitioning and scheduling step that determines the mapping between calculation tasks and processing cores; a hierarchical pipeline scheduling step that constructs pipeline scheduling tables among cluster nodes and among the cores within each cluster node according to the task partitioning and scheduling results; and a cache optimization step performed according to the structural characteristics of the multi-core processor, the communication between cluster nodes, and the execution of the data flow program on the multi-core processor. The method combines the data flow program with optimization techniques related to the system structure, fully exploits the load balance and parallelism of mixed synchronous and asynchronous pipeline code on the multi-core cluster, and optimizes the program's cache access and communication transmission for the cache and communication modes of the cluster, which further improves execution performance and shortens execution time.

Owner:HUAZHONG UNIV OF SCI & TECH

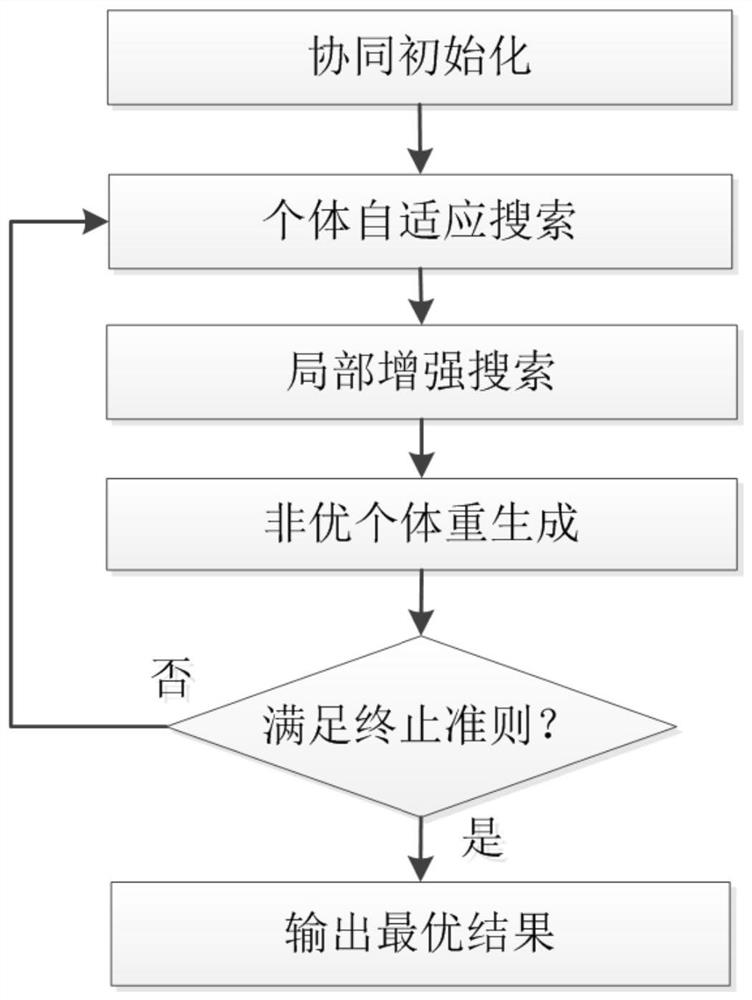

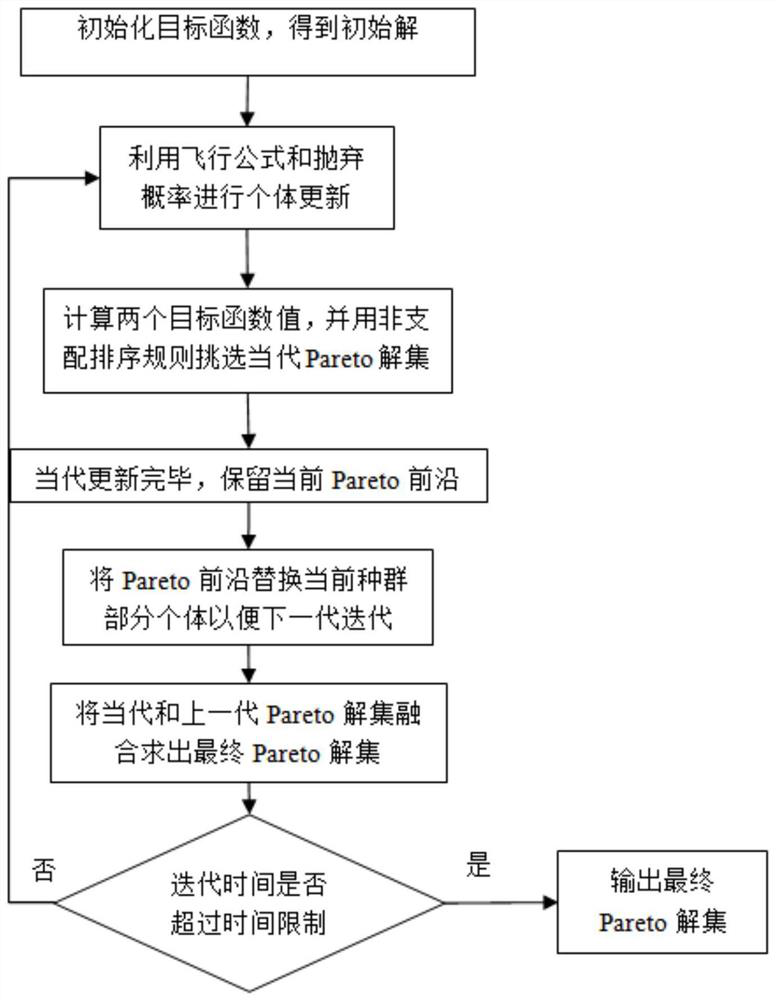

A Mixed Swarm Intelligent Optimization Method for Distributed Blocking Pipeline Scheduling

Active · CN110458326B · Forecasting · Manufacturing computing systems · Local search (optimization) · Self adaptive

Owner:TSINGHUA UNIV

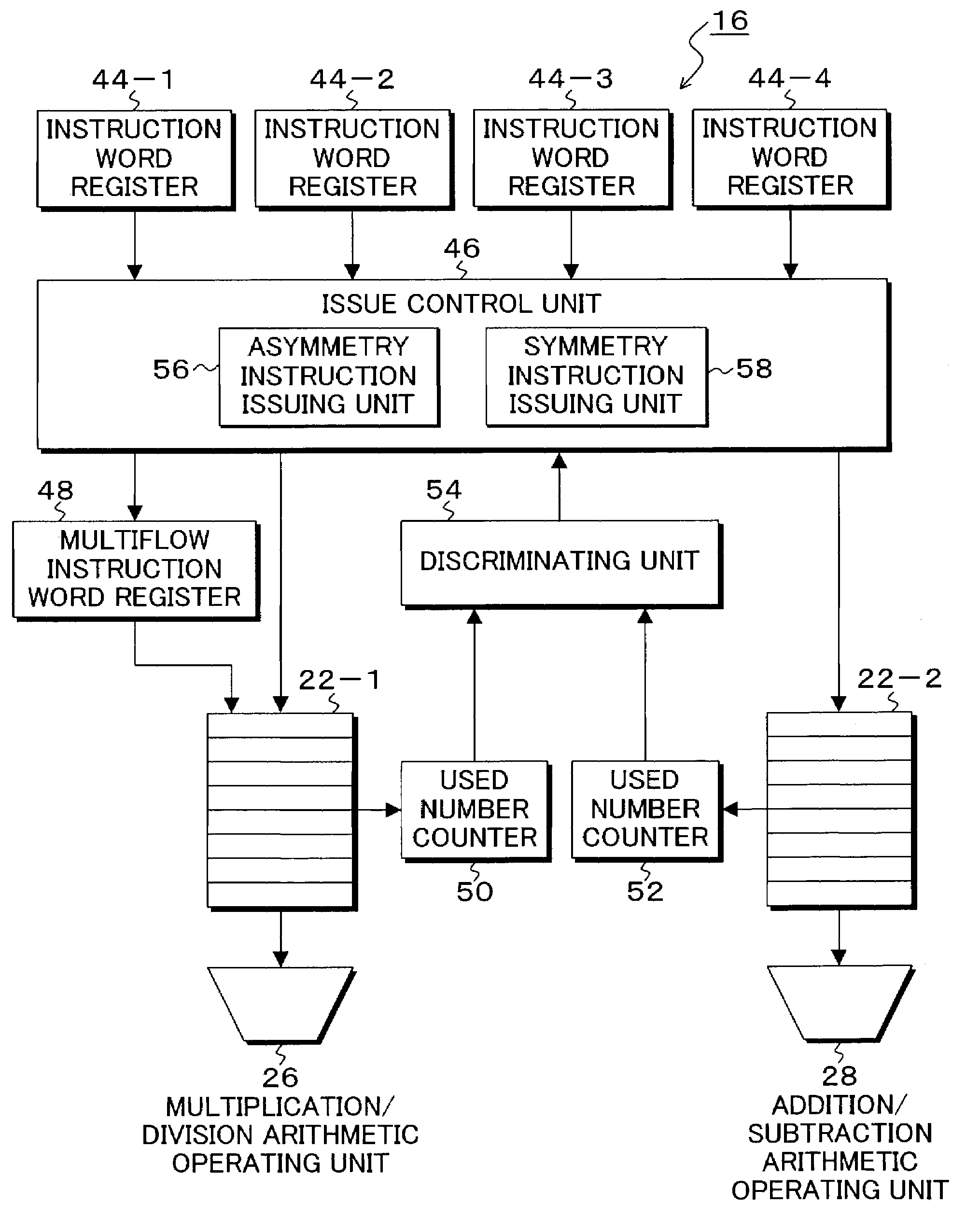

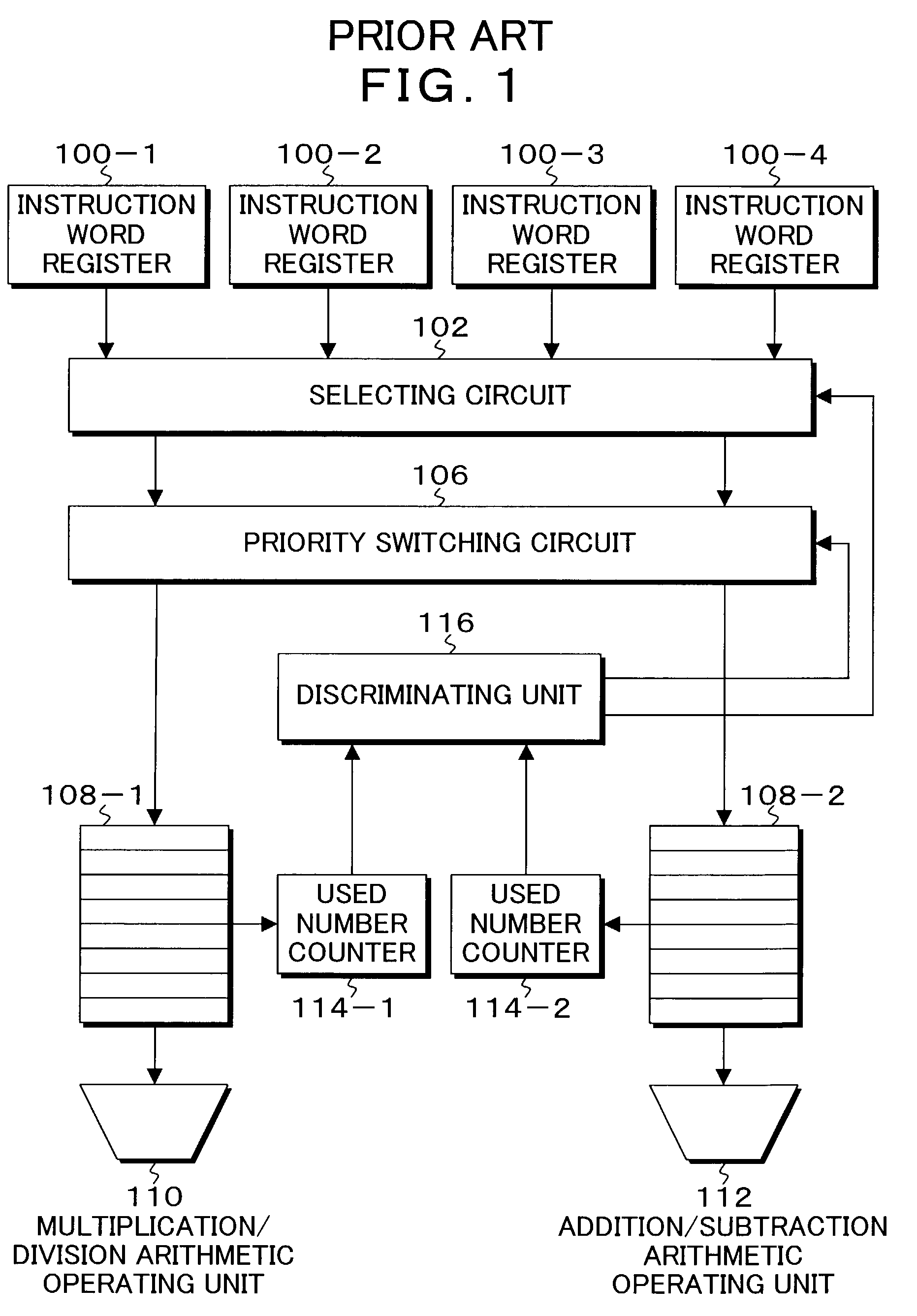

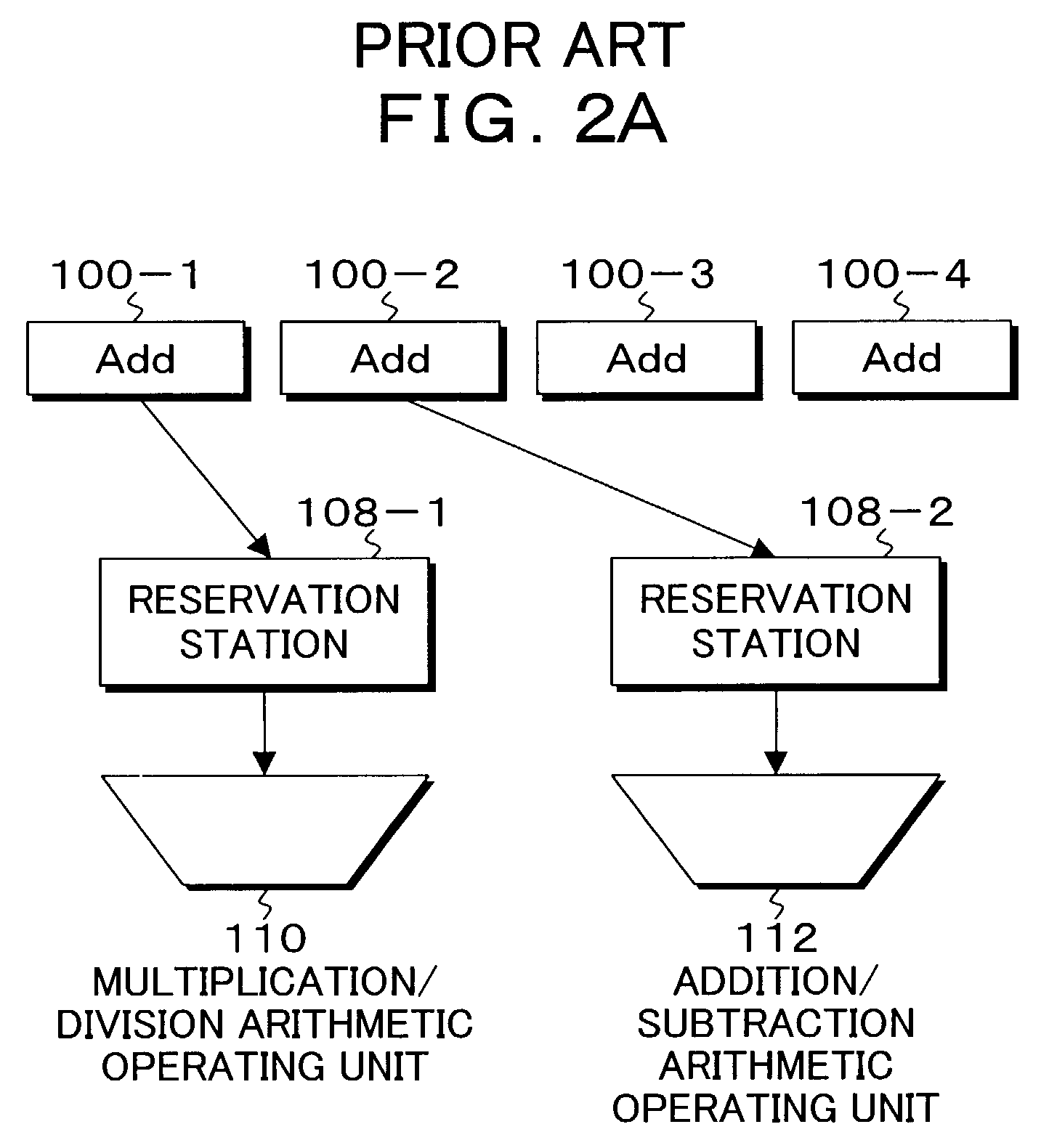

Processor for executing instruction control in accordance with dynamic pipeline scheduling and a method thereof

Active · US7337304B2 · Easy to control · Digital computer details · Concurrent instruction execution · Reservation station · Dynamic priority scheduling

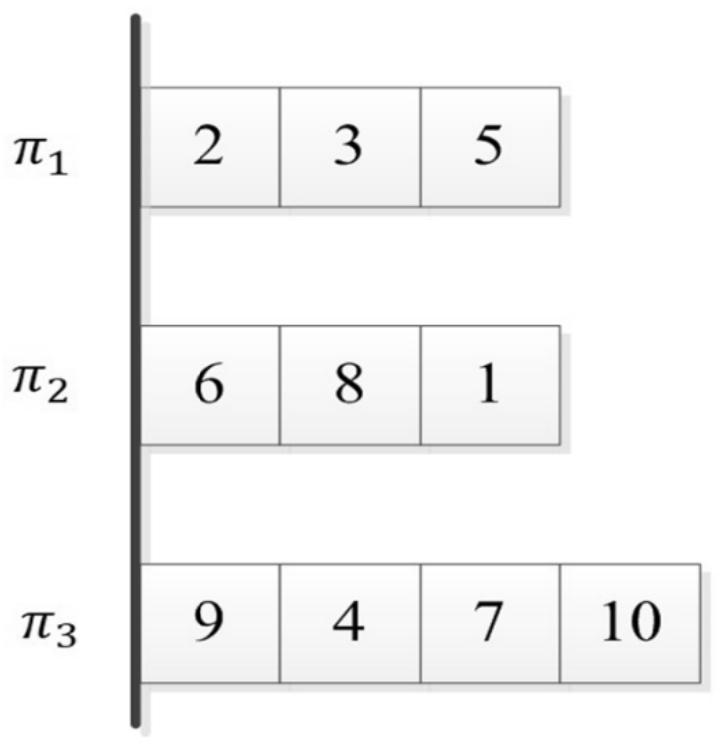

When all of a plurality of instructions are symmetry instructions, a symmetry instruction issuing unit issues the symmetry instructions to a plurality of reservation stations provided for every different arithmetic operating units until they become full. If it is determined that there is an asymmetry instruction among the plurality of instructions and the residual instructions are the symmetry instructions, an asymmetry instruction issuing unit 56 develops the asymmetry instruction into a multiflow of a previous flow and a following flow, issues the asymmetry instruction to the reservation station provided in correspondence to the specific arithmetic operating unit, and issues the residual symmetry instructions to the plurality of reservation stations provided for every different arithmetic operating units in an issuing cycle different from that of the asymmetry instruction until they become full.

Owner:FUJITSU LTD

A Data Stream Program Scheduling Method for x86 Multi-Core Processors

ActiveCN103970602BReduce overheadIncrease profitProgram initiation/switchingResource allocationData streamCache optimization

The invention discloses a data flow program scheduling method oriented to a multi-core system. The method comprises the following steps that task partitioning of mapping from calculation tasks to processor cores is determined, and software pipeline scheduling is constructed; according to structural characteristics of a multi-core processor and execution situations of a data flow program on the multi-core processor, cache optimization among the cores is conducted. According to the method, data flow parallel scheduling and optimization related to the cache structure of a multi-core framework are combined, and high parallelism of the multi-core processor is brought into full play; according to the hierarchical cache structure and the cache principle of the multi-core system, access of the calculation tasks to a communication cache region is optimized, and the throughput rate of a target program is further increased.

Owner:HUAZHONG UNIV OF SCI & TECH

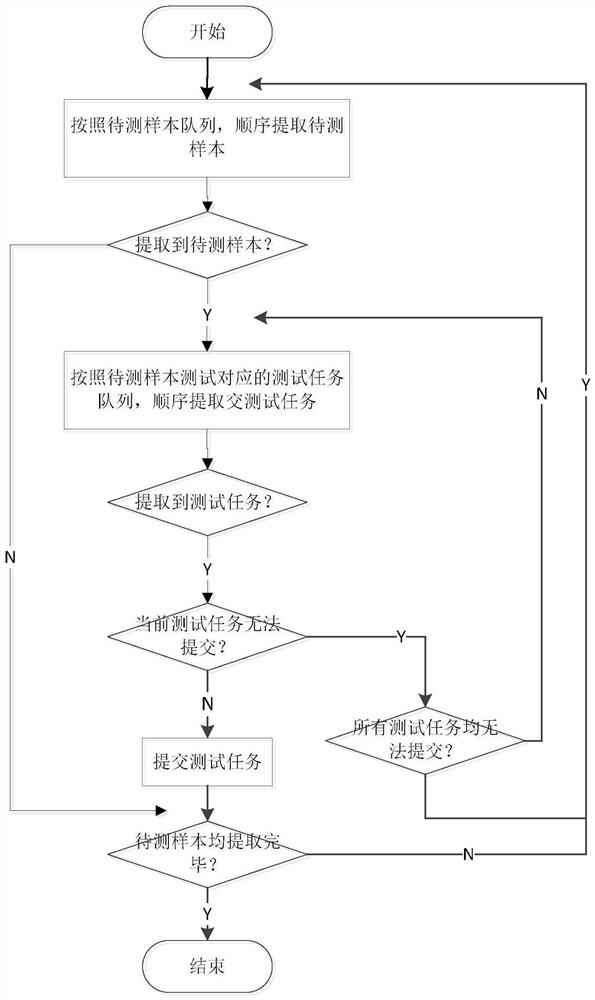

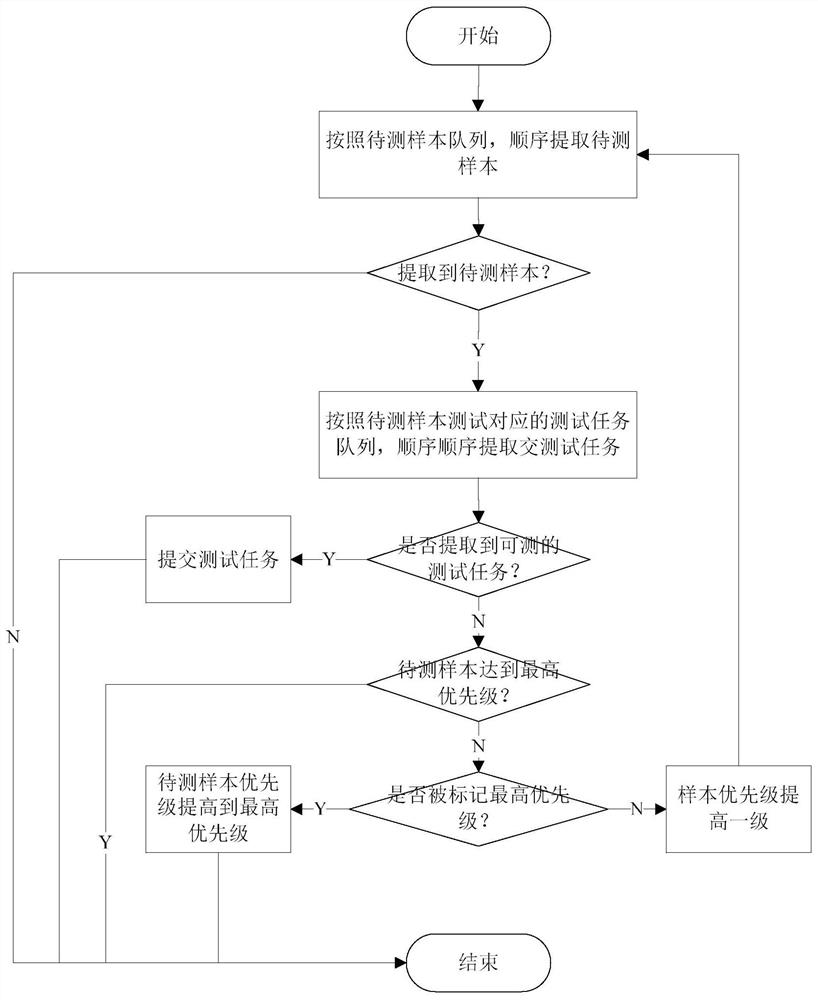

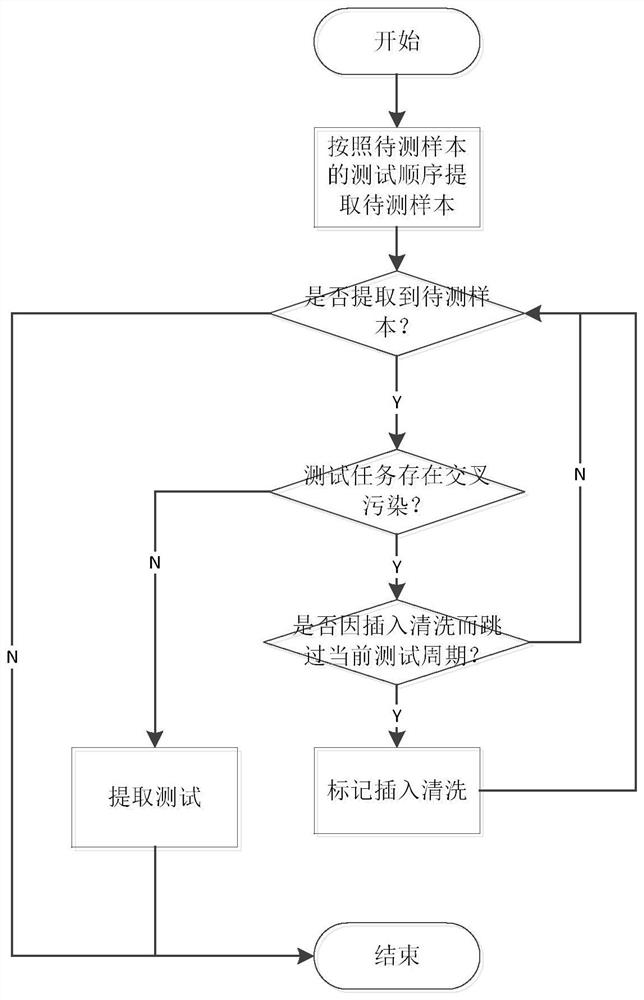

Sample test pipeline task scheduling method, system, and computer storage medium

ActiveCN111176819BAvoid cross contaminationImprove pipeline efficiencyProgram initiation/switchingResourcesEngineeringTest order

This application relates to a sample test pipeline task scheduling method, system, and computer storage medium. It comprises an out-of-order submission mechanism used when pipeline tasks submitted in test order conflict, a priority mechanism designed to keep out-of-order submission from disrupting the orderly progress of the overall experiment, and a cross-contamination avoidance mechanism built on that basis. It addresses the problem that existing sample test pipeline scheduling methods reduce instrument test throughput and pipeline efficiency.

Owner:SUZHOU HYBIOME BIOMEDICAL ENG CO LTD

A Distributed Flexible Pipeline Scheduling Method

ActiveCN110288185BSolve scheduling problemsQuick calculationResourcesManufacturing computing systemsIterative searchOptimal scheduling

An embodiment of the present invention provides a distributed flexible pipeline scheduling method, including: constructing a distributed flexible pipeline scheduling model from the processing start date, processing time, and delivery date of each workpiece at each processing stage; and running a greedy iterative search algorithm to solve the scheduling problem of that model. Once the iteration time meets the preset condition, the optimal scheduling scheme for all workpieces is obtained and used to schedule the distributed flexible pipeline. The greedy iterative search improves search efficiency and computes the model's optimization objective quickly, so the algorithm obtains a better scheduling scheme in a shorter time and can solve large-scale distributed flexible pipeline scheduling problems efficiently.

Owner:TSINGHUA UNIV
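To make the greedy iterative search idea concrete, here is a minimal sketch of a generic iterated greedy loop (remove a few jobs, then reinsert each at its best position). It is deliberately simplified and is not the patented algorithm: it assumes a single factory with one machine per stage, all jobs available at time 0, total tardiness as the objective, and a fixed iteration count in place of the time-based stopping condition. All function and parameter names are hypothetical.

```python
import random


def total_tardiness(seq, proc, due):
    """Total tardiness of a job sequence in a flow shop.

    proc[j][s] is the processing time of job j at stage s;
    due[j] is the delivery date of job j.
    """
    finish = [0] * len(proc[0])  # completion time of the last job per stage
    tardiness = 0
    for j in seq:
        t = 0
        for s in range(len(finish)):
            # A job starts a stage once it has left the previous stage
            # and the stage has finished the previous job.
            t = max(t, finish[s]) + proc[j][s]
            finish[s] = t
        tardiness += max(0, t - due[j])
    return tardiness


def best_insertion(seq, job, proc, due):
    """Try the job at every position and keep the cheapest sequence."""
    candidates = (seq[:i] + [job] + seq[i:] for i in range(len(seq) + 1))
    return min(candidates, key=lambda s: total_tardiness(s, proc, due))


def iterated_greedy(proc, due, d=2, iterations=200, seed=0):
    rng = random.Random(seed)
    # Initial solution: earliest delivery date first.
    current = sorted(range(len(proc)), key=lambda j: due[j])
    best = current[:]
    for _ in range(iterations):
        # Destruction: remove d randomly chosen jobs.
        removed = rng.sample(current, min(d, len(current)))
        partial = [j for j in current if j not in removed]
        # Construction: greedily reinsert each removed job.
        for job in removed:
            partial = best_insertion(partial, job, proc, due)
        if total_tardiness(partial, proc, due) <= total_tardiness(current, proc, due):
            current = partial
            if total_tardiness(current, proc, due) < total_tardiness(best, proc, due):
                best = current[:]
    return best, total_tardiness(best, proc, due)


proc = [[2, 3], [1, 2], [4, 1], [3, 3]]
due = [6, 4, 12, 9]
print(iterated_greedy(proc, due))
```

Extending the sketch to the distributed flexible case would also require assigning jobs to factories and to parallel machines within each stage, which the abstract mentions only at the level of the model.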

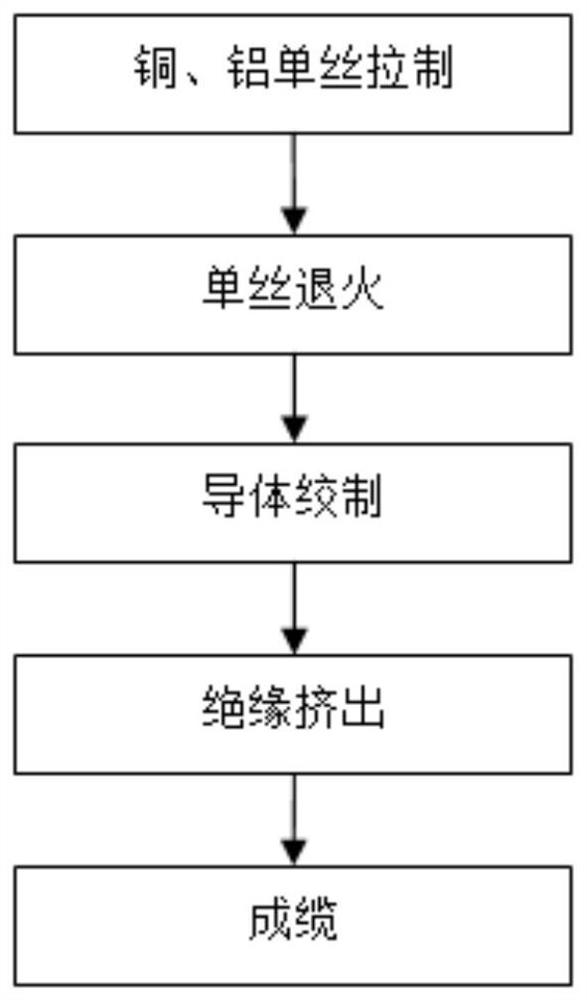

An Optimal Scheduling Method for Multi-type Cable Processing

ActiveCN108876654BIncrease diversityHigh speedArtificial lifeResourcesCompletion timeOptimal scheduling

The invention relates to an optimal scheduling method for multi-type cable processing and belongs to the technical field of intelligent optimal scheduling of production workshops. A scheduling model is established by determining the processing sequence of the various cables, and the cuckoo algorithm performs multi-objective optimization of the model. The scheduling model is a permutation flow shop scheduling model. The cuckoo algorithm finds the optimal production sequence of the cable types while optimizing both the maximum completion time and the carbon emissions of cable production, which makes this a green dual-objective pipeline scheduling problem. The invention solves the scheduling model with an improved cuckoo algorithm whose objective functions are maximum completion time and carbon emissions. Through iterative updates of the cuckoo algorithm, a good solution for the production and processing of multiple cable types is obtained in a relatively short time.

Owner:KUNMING UNIV OF SCI & TECH
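The two objectives named in the abstract can be illustrated with a small evaluation routine. The sketch below scores a production sequence by maximum completion time and by carbon emissions, then keeps only the non-dominated candidates. The emission model is an assumption made for illustration (a per-machine emission rate while running and a lower rate while idle until the last job finishes); the abstract does not give the patent's actual formula, and the cuckoo search itself is not shown. All names are hypothetical.

```python
def evaluate(seq, proc, run_rate, idle_rate):
    """Return (makespan, emissions) for a production sequence.

    proc[j][m]   processing time of cable type j on machine m
    run_rate[m]  emissions per time unit while machine m is processing
    idle_rate[m] emissions per time unit while machine m is idle
    (the emission model is a hypothetical one, not taken from the patent)
    """
    n_machines = len(proc[0])
    finish = [0] * n_machines  # completion time of the last job per machine
    busy = [0] * n_machines    # total processing time per machine
    for j in seq:
        t = 0
        for m in range(n_machines):
            t = max(t, finish[m]) + proc[j][m]
            finish[m] = t
            busy[m] += proc[j][m]
    makespan = finish[-1]
    emissions = sum(
        run_rate[m] * busy[m] + idle_rate[m] * (makespan - busy[m])
        for m in range(n_machines)
    )
    return makespan, emissions


def dominates(a, b):
    """True if a is no worse than b in both objectives and differs from b."""
    return all(x <= y for x, y in zip(a, b)) and a != b


def pareto_front(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


proc = [[2, 3], [1, 2]]
scores = [evaluate(s, proc, [2, 2], [1, 1]) for s in ([0, 1], [1, 0])]
print(pareto_front(scores))
```

A multi-objective search would call `evaluate` on each candidate sequence it generates and use `pareto_front` to maintain the set of trade-off solutions.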

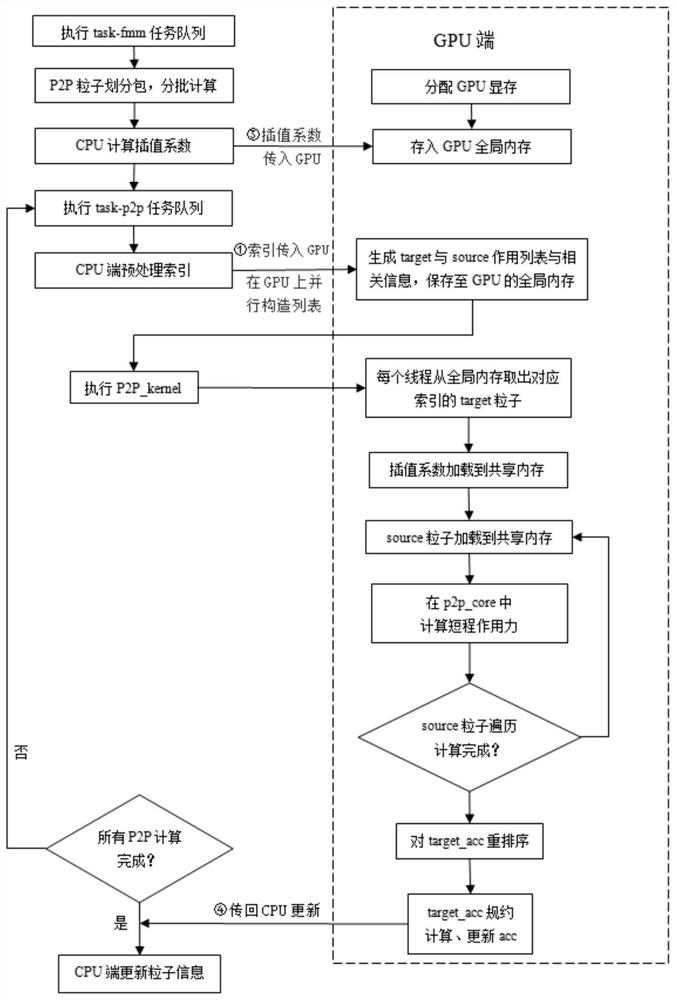

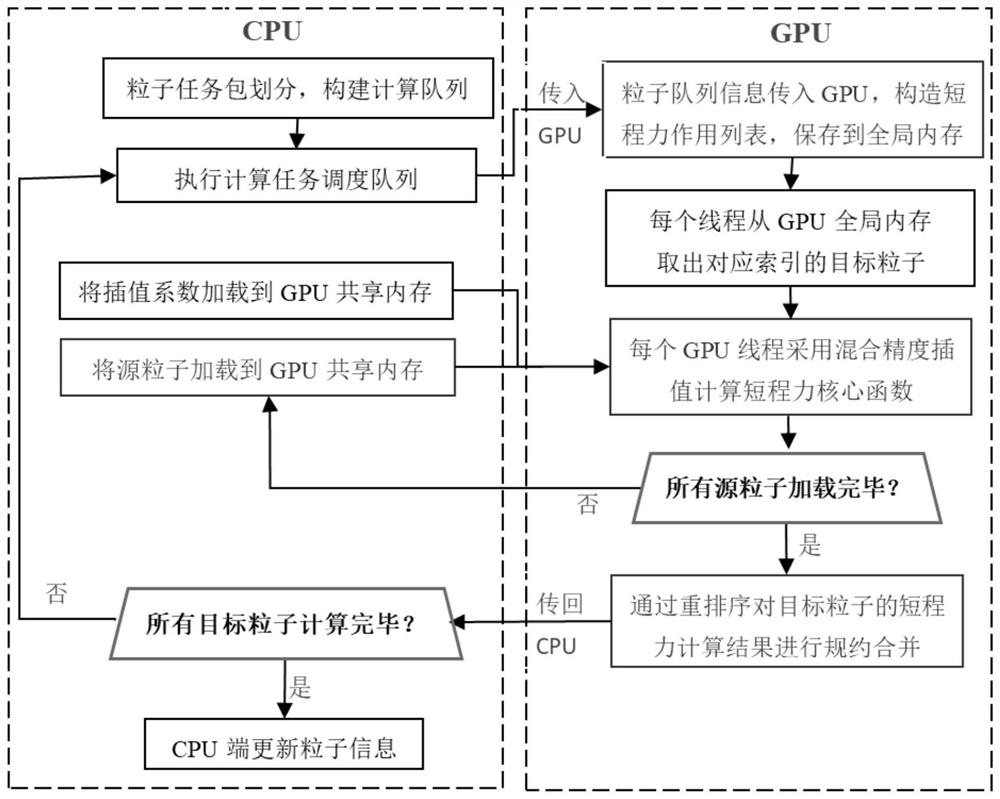

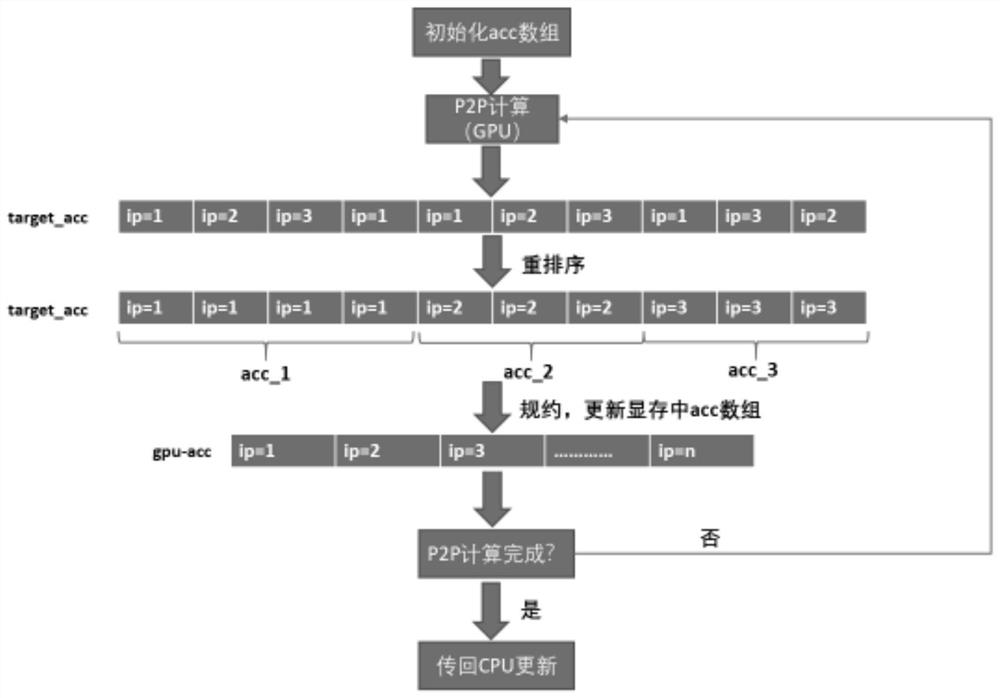

GPU-based N-body simulation program performance optimization method

ActiveCN112765870AShorten the timeReduce data transferDesign optimisation/simulationComputational scienceVideo memory

The invention relates to a GPU (Graphics Processing Unit)-based N-body simulation program performance optimization method, which comprises the following steps: transmitting the relevant index information to the GPU so that construction of the short-range force list is moved to the GPU and parallelized; changing the thread block scheduling mode and loading particle information into the GPU's shared memory in turn through pipeline scheduling on the GPU; calculating the short-range force in a GPU kernel function using an interpolation polynomial and mixed precision, where the interpolation constants are computed on the CPU, transmitted to the GPU, and stored in the GPU's shared memory; and reordering the short-range force results of all particles on the GPU, merging them by reduction in GPU global memory, and, after the calculation for all particles is complete, transmitting the final result back to the CPU. The method reduces data transfer from CPU memory to GPU video memory, reduces the latency of repeated memory accesses, improves data access efficiency while the GPU calculates the short-range force, reduces data transfer from GPU video memory to CPU memory, and shortens the time needed to update the information on the CPU side.

Owner:COMP NETWORK INFORMATION CENT CHINESE ACADEMY OF SCI


© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com