A distributed deep learning method and system based on a data parallel strategy

This invention relates to deep learning and distributed computing and applies to the field of deep learning training systems. It addresses shortcomings of existing distributed training: poor scalability and flexibility, the inability to effectively reduce network communication overhead, and the lack of cluster resource management functions. Its stated effects are strong scalability, high training efficiency, and a simple, flexible interface.

Detailed Description of the Embodiments

[0038] In order to make the objectives, technical solutions and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with the embodiments and the accompanying drawings.

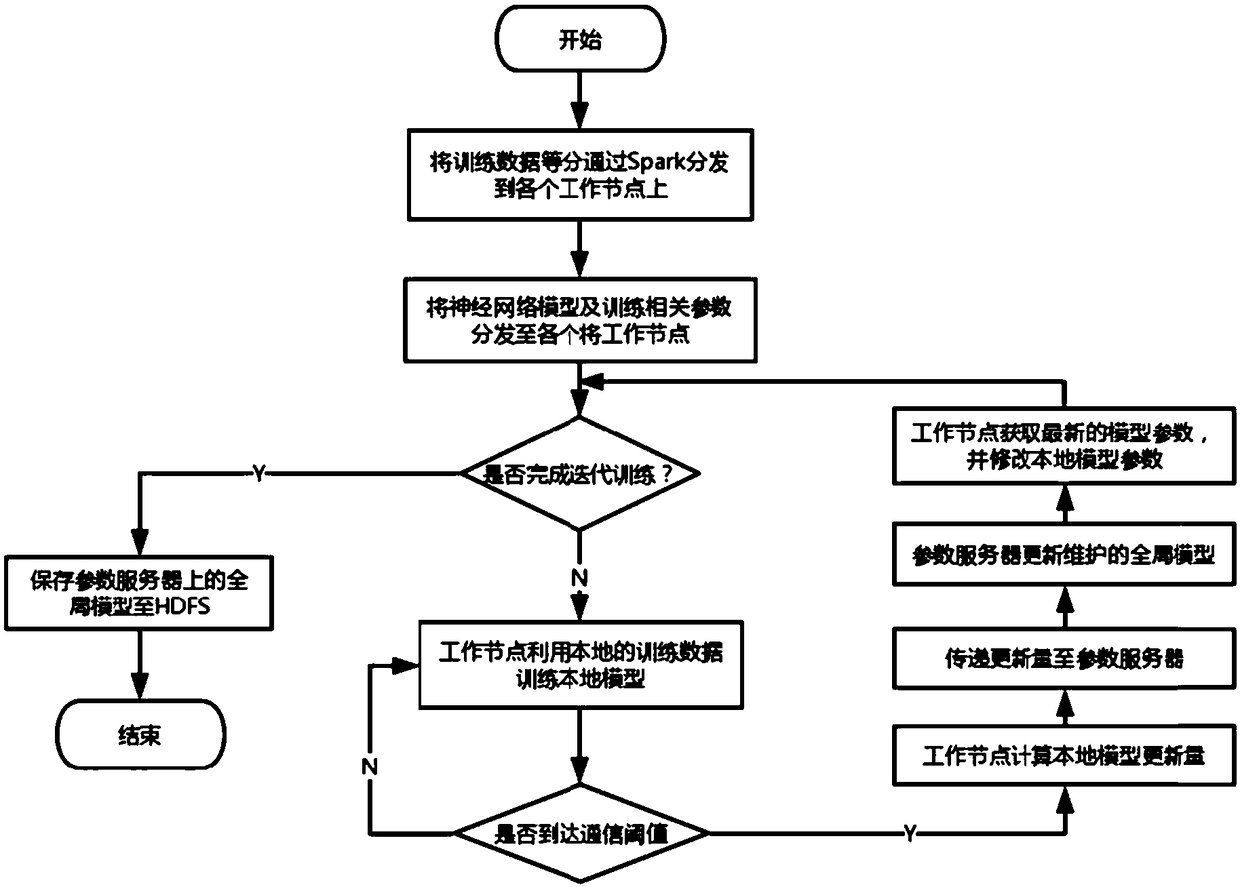

[0039] For training on large-scale data, the present invention mainly adopts a data parallel strategy combined with a parameter server to realize distributed deep learning. Each selected worker node trains on its own partition of the data and maintains a local copy of the neural network model. The parameter server receives model updates from the worker nodes and uses the corresponding update algorithms to update and maintain the global neural network model.
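The worker/parameter-server split described in [0039] can be illustrated with a minimal, single-process sketch. It is not the patented implementation. The linear model `y = w*x + b`, the squared-error loss, synchronous rounds, workers sending gradients (rather than parameters), and plain gradient averaging on the server are all assumptions made for illustration. The class and function names (`ParameterServer`, `Worker`, `partition`, `train`) are hypothetical.

```python
"""Hypothetical sketch of data-parallel training with a parameter server.

Assumptions (not from the source): linear model y = w*x + b, mean squared
error, synchronous rounds, workers push gradients, server averages them.
"""


class ParameterServer:
    """Holds the global model and applies averaged worker gradients."""

    def __init__(self, lr: float = 0.3):
        self.params = {"w": 0.0, "b": 0.0}  # global model parameters
        self.lr = lr

    def pull(self) -> dict:
        # Return a copy so a worker's local model cannot mutate the global one.
        return dict(self.params)

    def push(self, grads_list: list) -> None:
        # Average the gradients from all workers, then take one SGD step.
        n = len(grads_list)
        for key in self.params:
            avg = sum(g[key] for g in grads_list) / n
            self.params[key] -= self.lr * avg


class Worker:
    """Trains on its own data partition using a local copy of the model."""

    def __init__(self, shard):
        self.shard = shard  # list of (x, y) pairs assigned to this worker

    def compute_gradients(self, params: dict) -> dict:
        w, b = params["w"], params["b"]
        gw = gb = 0.0
        for x, y in self.shard:
            err = (w * x + b) - y  # prediction error on one sample
            gw += 2 * err * x      # d(err^2)/dw
            gb += 2 * err          # d(err^2)/db
        m = len(self.shard)
        return {"w": gw / m, "b": gb / m}  # mean gradient over the shard


def partition(data, num_workers):
    """Split the dataset into roughly equal shards, one per worker."""
    return [data[i::num_workers] for i in range(num_workers)]


def train(data, num_workers=4, epochs=500, lr=0.3):
    server = ParameterServer(lr)
    workers = [Worker(s) for s in partition(data, num_workers)]
    for _ in range(epochs):
        local = server.pull()  # every worker starts the round from the global model
        grads = [wk.compute_gradients(local) for wk in workers]
        server.push(grads)     # server aggregates and updates the global model
    return server.params


if __name__ == "__main__":
    data = [(i / 100, 3 * (i / 100) + 1) for i in range(100)]
    print(train(data))  # should approach w = 3, b = 1
```

With equal-sized shards, averaging the per-shard mean gradients equals the full-batch gradient, so this sketch behaves like ordinary gradient descent while spreading the per-sample work across workers. In a real cluster the workers would run on separate machines and exchange updates over the network.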

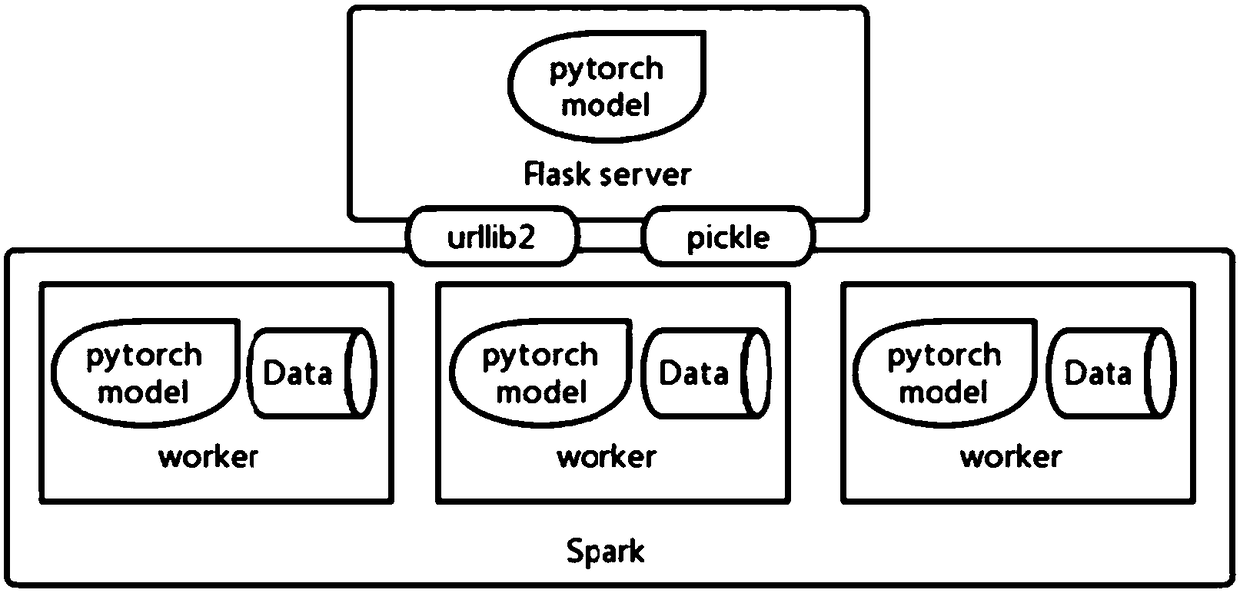

[0040] Referring to Figure 1, the deep learning system of the present invention mainly comprises the big data distributed processing engine Spark, the PyTorch deep learning training framework, the lightweight web application framework Flask, the urllib2 module, the pickle module, a parameter setting module, and a data conversi...
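Paragraph [0040] names a web framework, an HTTP client module and pickle, which suggests that model parameters are serialized and exchanged over HTTP. The following is a hedged sketch of such an exchange, not the system's actual protocol. To stay dependency-free it uses the standard-library `http.server` in place of Flask, and `urllib.request` (Python 3's successor to `urllib2`). The `/pull` and `/push` endpoint paths, the two-parameter model, the learning rate, and all function names are hypothetical.

```python
"""Hypothetical sketch: pickle-serialized parameter exchange over HTTP.

Stands in for the Flask + urllib2 + pickle combination named in the source;
uses http.server instead of Flask. Endpoint paths are illustrative only.
"""
import pickle
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

GLOBAL_PARAMS = {"w": 0.0, "b": 0.0}  # global model held by the server
LOCK = threading.Lock()               # guards concurrent pushes from workers
LR = 0.1                              # assumed learning rate


class ParamHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/pull":
            self.send_error(404)
            return
        with LOCK:
            body = pickle.dumps(GLOBAL_PARAMS)  # serialize the global model
        self.send_response(200)
        self.send_header("Content-Type", "application/octet-stream")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self):
        if self.path != "/push":
            self.send_error(404)
            return
        length = int(self.headers["Content-Length"])
        # pickle is unsafe for untrusted input; only trusted workers may push.
        grads = pickle.loads(self.rfile.read(length))
        with LOCK:
            for key, g in grads.items():
                GLOBAL_PARAMS[key] -= LR * g  # SGD step on the global model
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, *args):
        pass  # silence per-request logging


def pull(base_url):
    """Worker side: fetch and unpickle the current global parameters."""
    with urllib.request.urlopen(base_url + "/pull") as resp:
        return pickle.loads(resp.read())


def push(base_url, grads):
    """Worker side: send pickled gradients to the parameter server."""
    req = urllib.request.Request(
        base_url + "/push",
        data=pickle.dumps(grads),
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status


def start_server(port=0):
    """Start the parameter server in a background thread (port 0 = any free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), ParamHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, bound_port = server.server_address[:2]
    return server, f"http://{host}:{bound_port}"
```

A worker would call `pull`, compute gradients on its data partition, and call `push`. Serializing with pickle keeps the client code short, but because unpickling can execute arbitrary code, this pattern is only reasonable inside a trusted cluster.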
