53 results about "Systolic arrays" patented technology

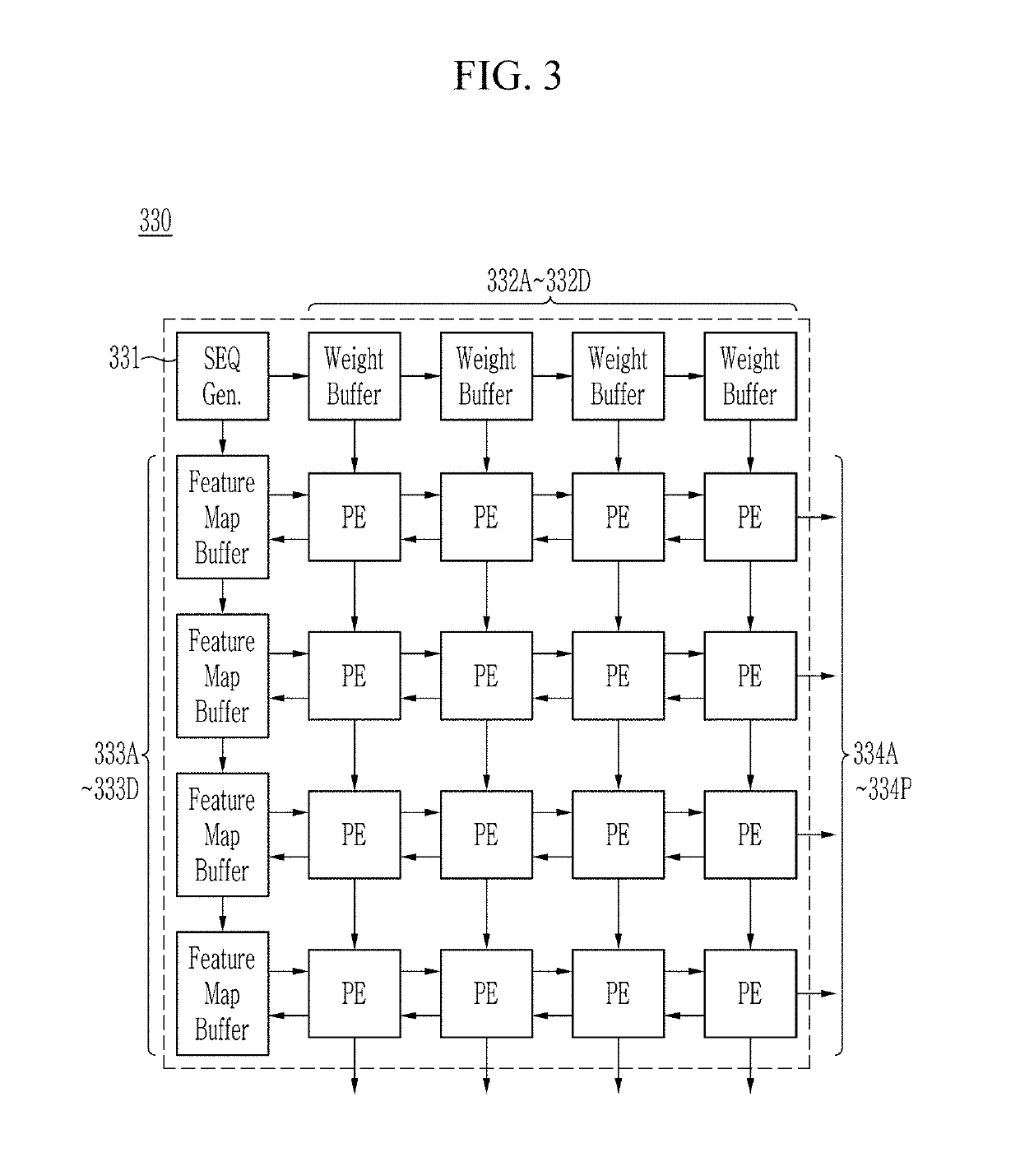

Systems And Methods For Systolic Array Design From A High-Level Program

Active | US20180314671A1 | Improve design throughput | Single instruction multiple data multiprocessors | Systolic arrays | Parallel computing | Processing element

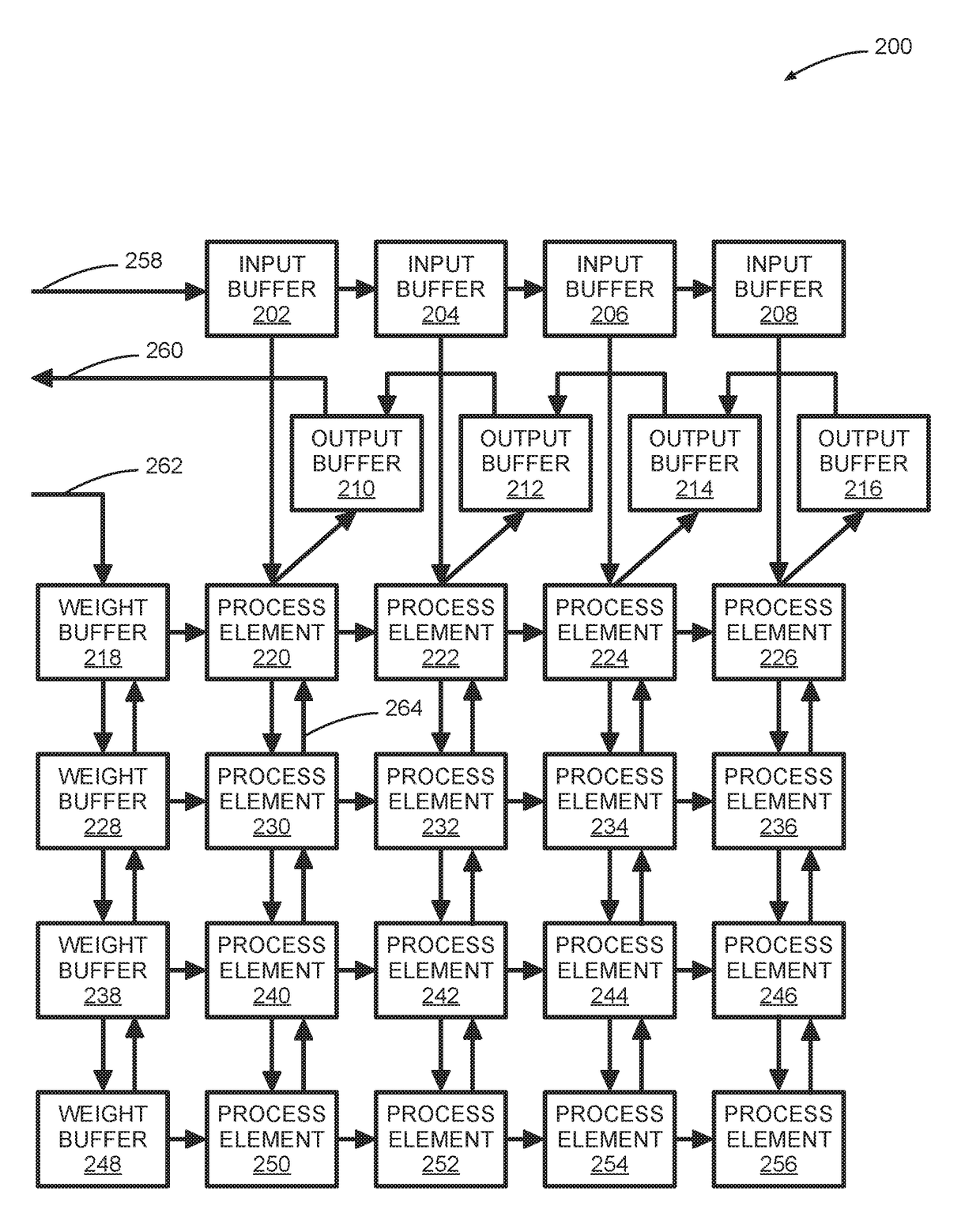

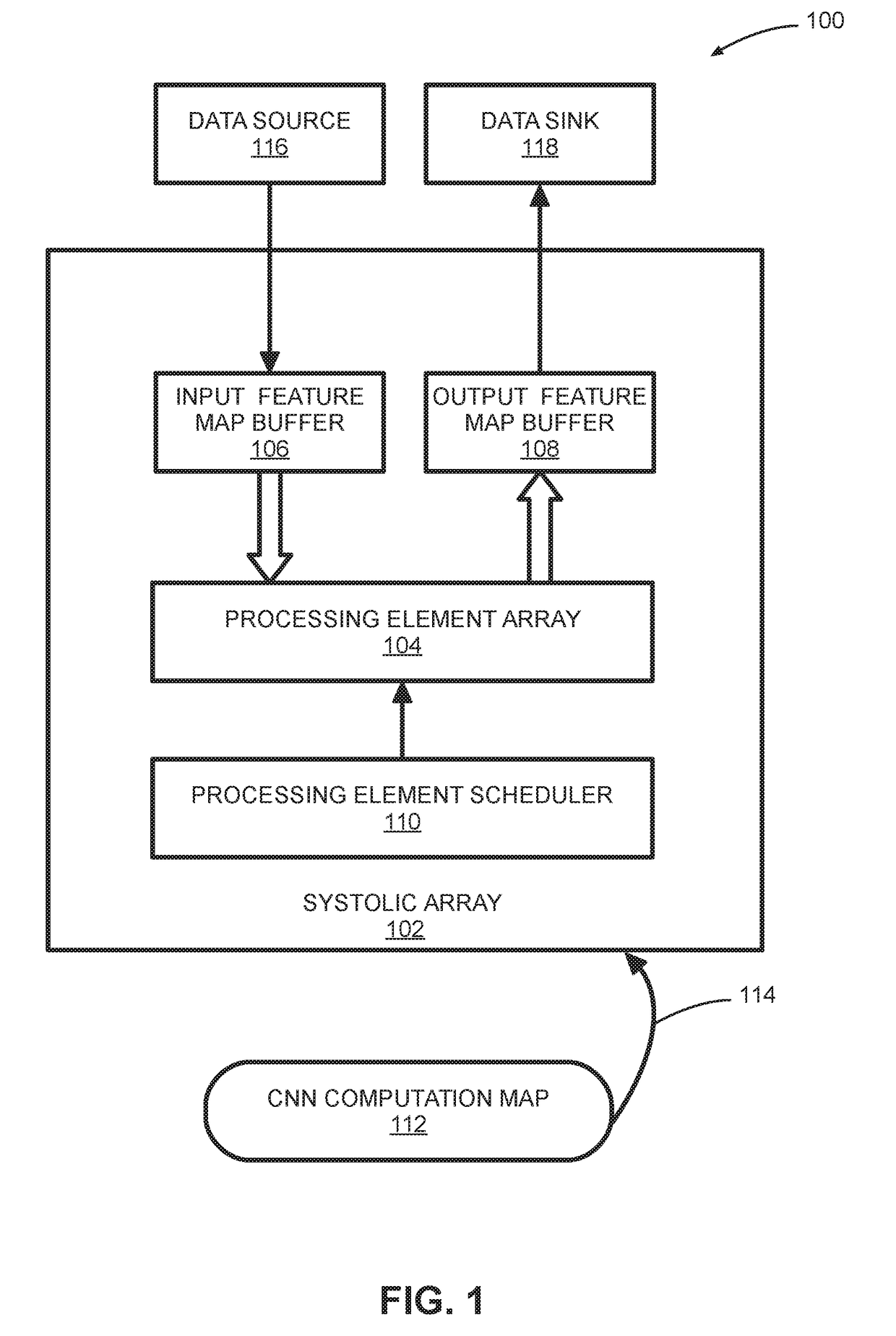

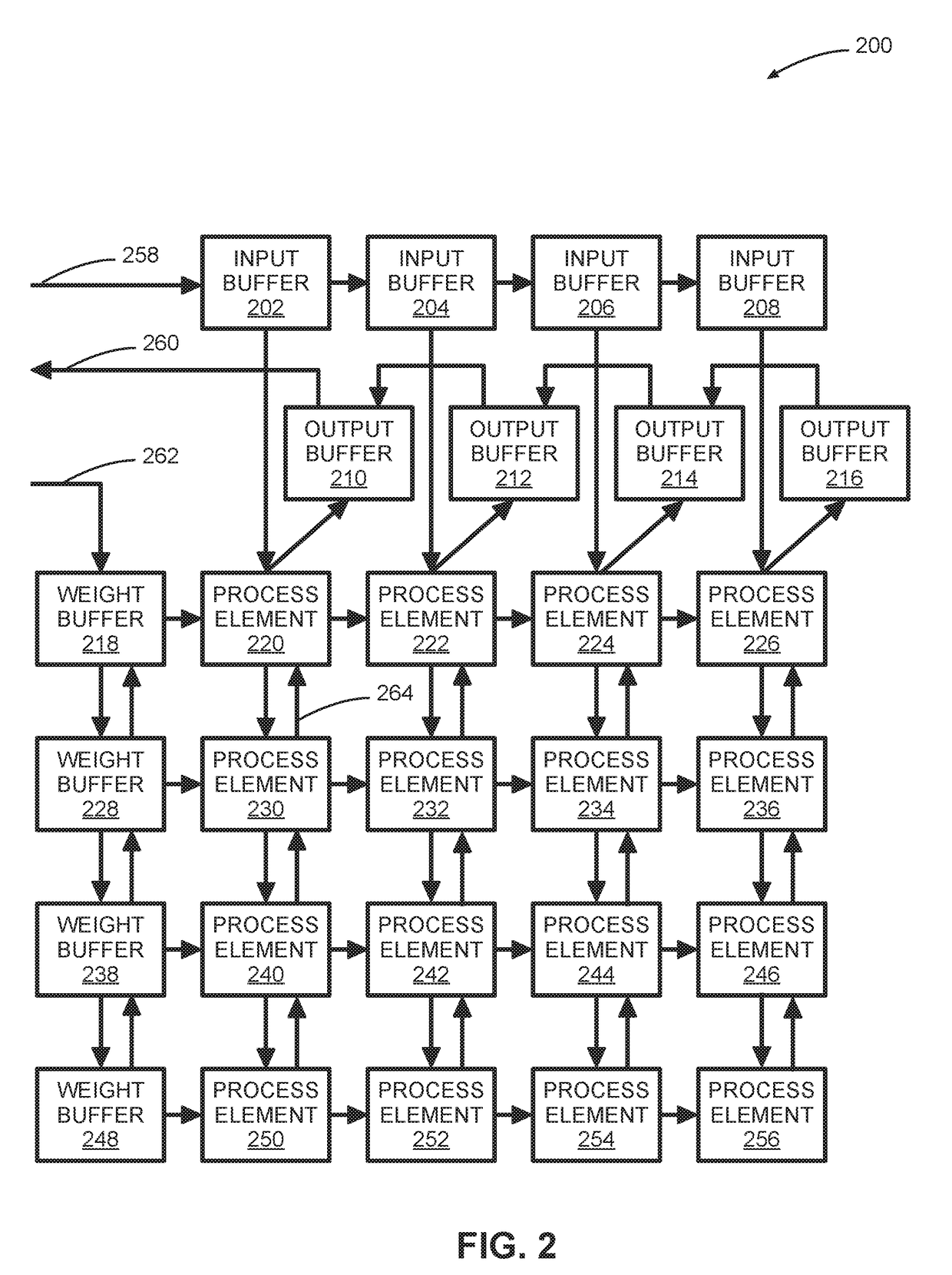

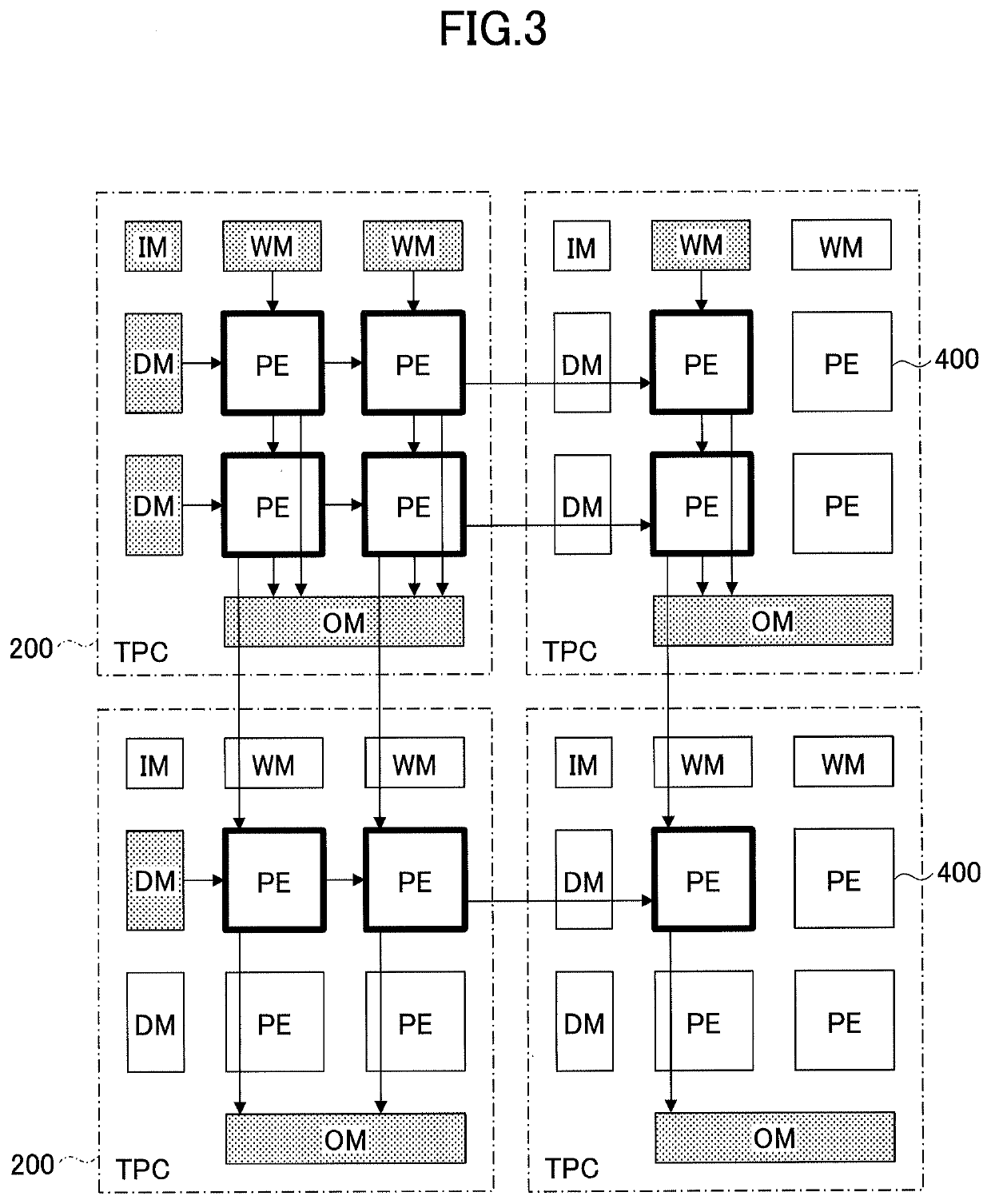

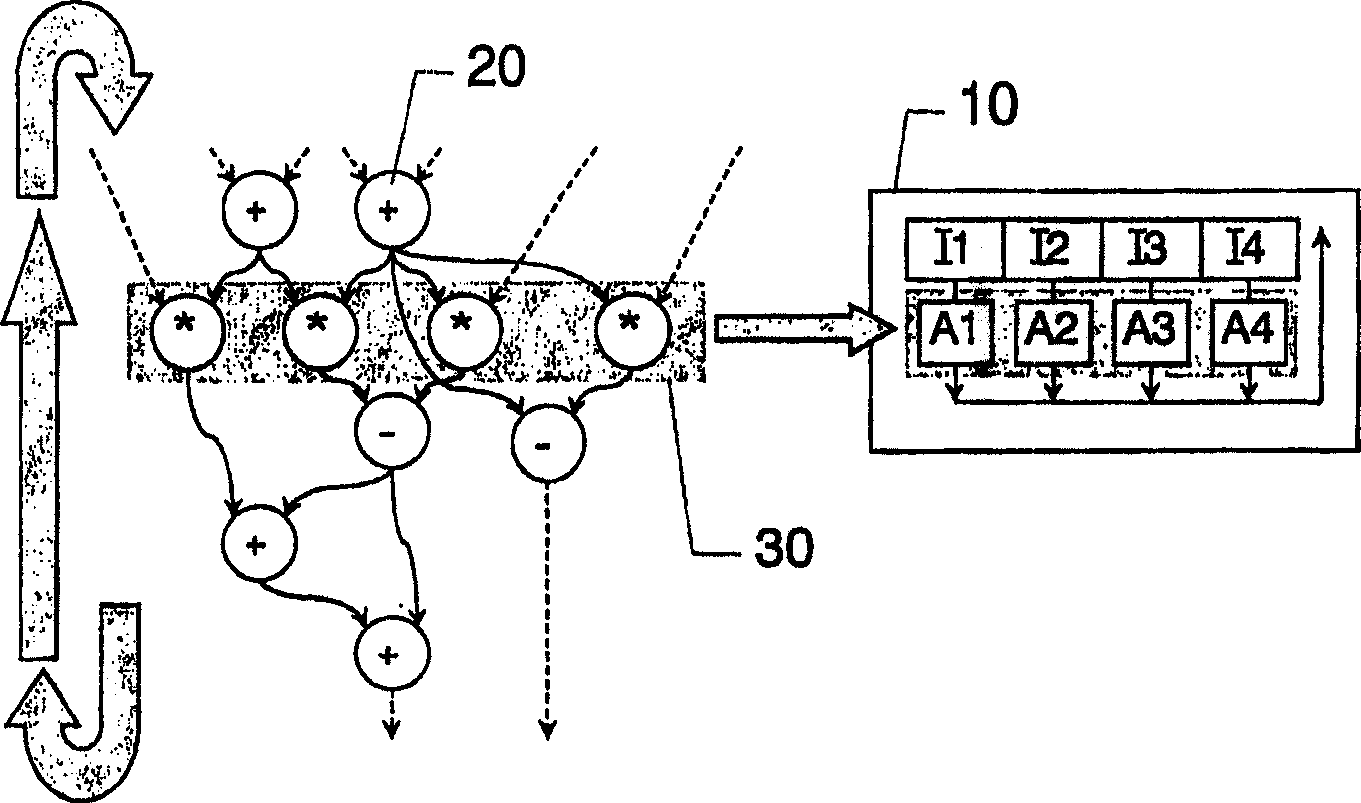

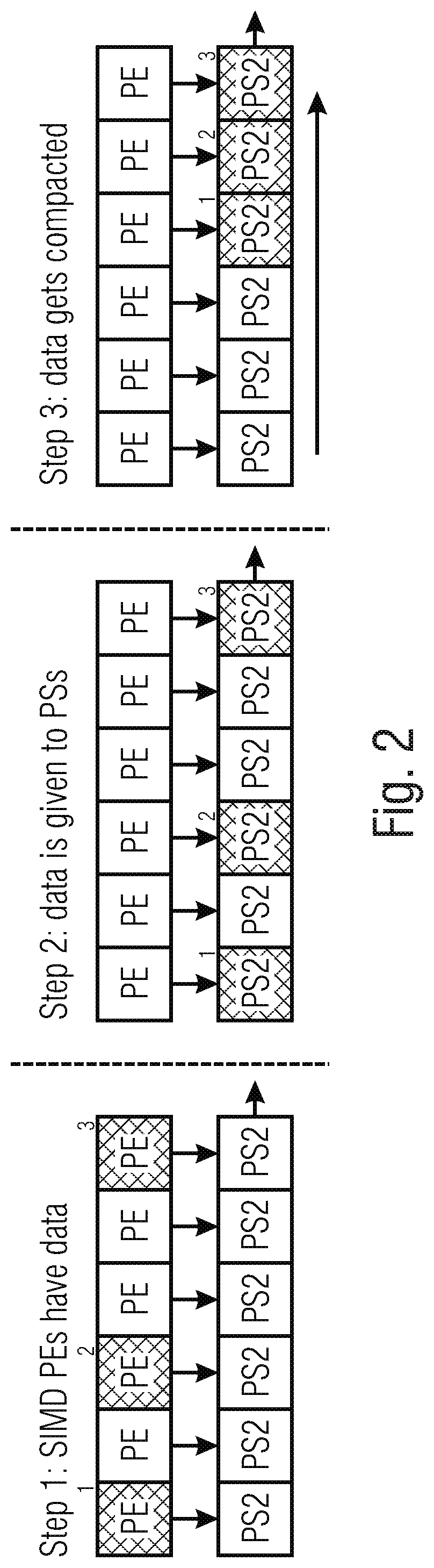

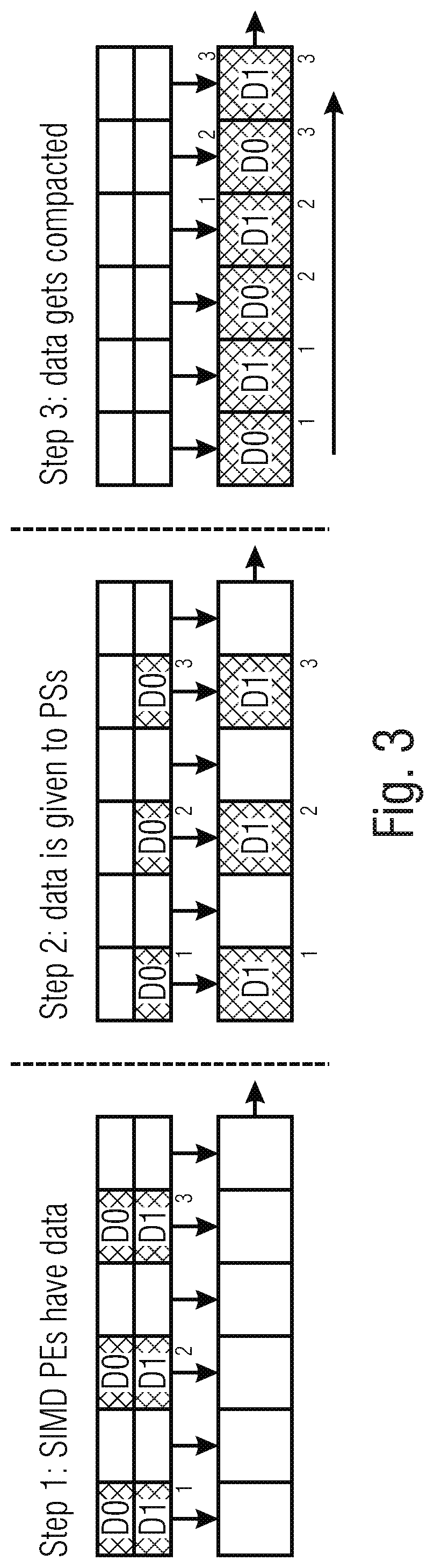

Systems and methods for automated systolic array design from a high-level program are disclosed. One implementation of a systolic array design supporting a convolutional neural network includes a two-dimensional array of reconfigurable processing elements arranged in rows and columns. Each processing element has an associated SIMD vector and is connected through a local connection to at least one other processing element. An input feature map buffer having a double buffer is configured to store input feature maps, and an interconnect system is configured to pass data to neighboring processing elements in accordance with a processing element scheduler. A CNN computation is mapped onto the two-dimensional array of reconfigurable processing elements using an automated system configured to determine suitable reconfigurable processing element parameters.

Owner:XILINX INC
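The two-dimensional array of processing elements with neighbor-to-neighbor data passing described in the abstract above can be illustrated with a small software model. The following is a minimal sketch, not the patented design: it assumes an output-stationary dataflow, scalar multiply-accumulate per element (rather than a SIMD vector), and a hypothetical function name `systolic_matmul`.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    PE (i, j) accumulates c[i][j]. Rows of `a` enter from the left edge and
    columns of `b` enter from the top edge, each skewed by one cycle per
    row/column. Every cycle, operands hop to the right/lower neighbor.
    """
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]    # accumulator held in each PE
    a_reg = [[0] * m for _ in range(n)]  # operand moving left to right
    b_reg = [[0] * m for _ in range(n)]  # operand moving top to bottom

    for t in range(n + m + k - 2):       # cycles until the last operand arrives
        # Visit PEs from the far corner so each one reads its neighbor's
        # value from the previous cycle before that neighbor is updated.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:
                    s = t - i            # skewed feed at the left edge
                    a_in = a[i][s] if 0 <= s < k else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    s = t - j            # skewed feed at the top edge
                    b_in = b[s][j] if 0 <= s < k else 0
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j], b_reg[i][j] = a_in, b_in
                acc[i][j] += a_in * b_in
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Each element only ever reads from its left and upper neighbors, which is the local-connection property the abstract emphasizes; the array dimensions and scheduling here are fixed by hand rather than chosen by an automated tool.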

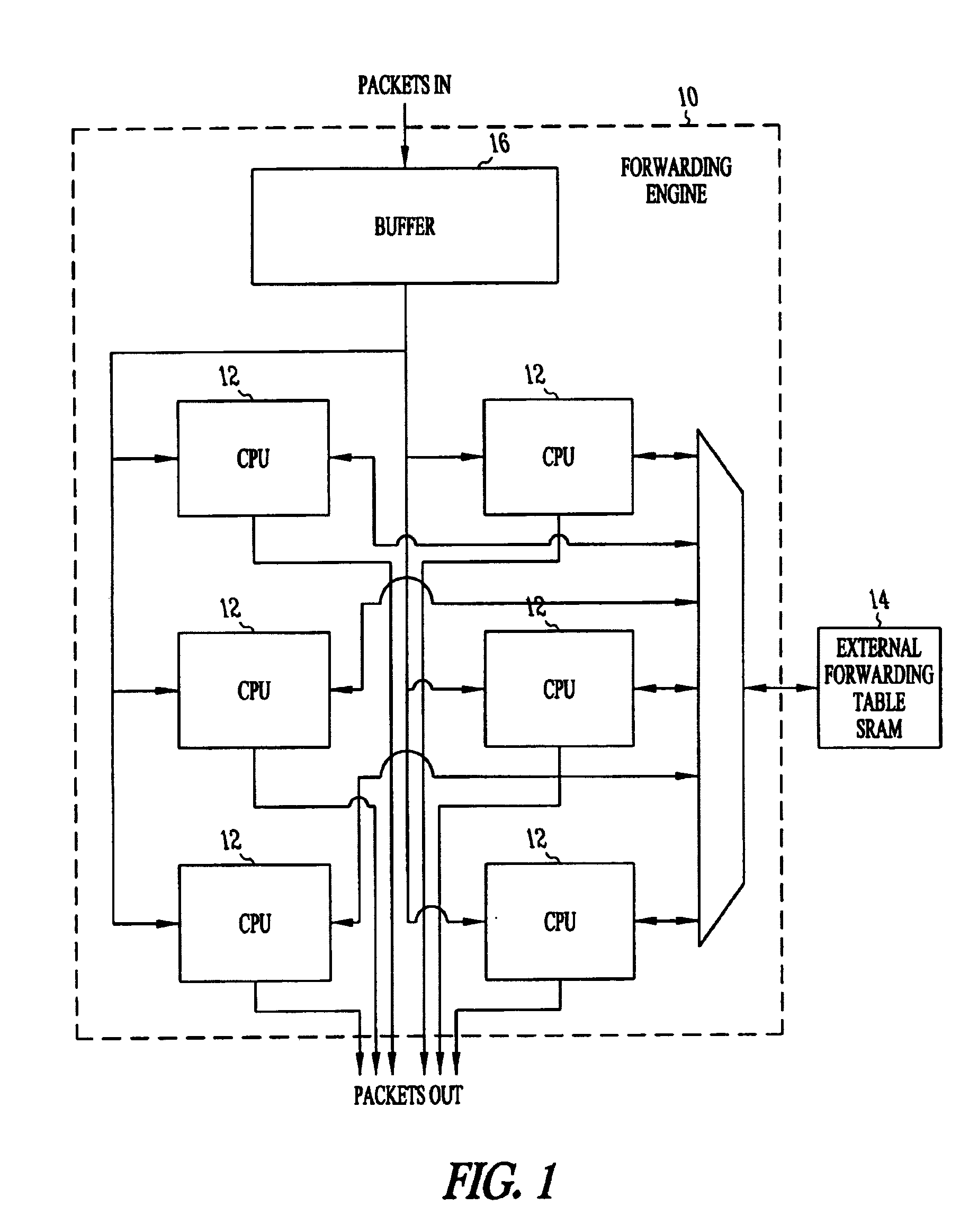

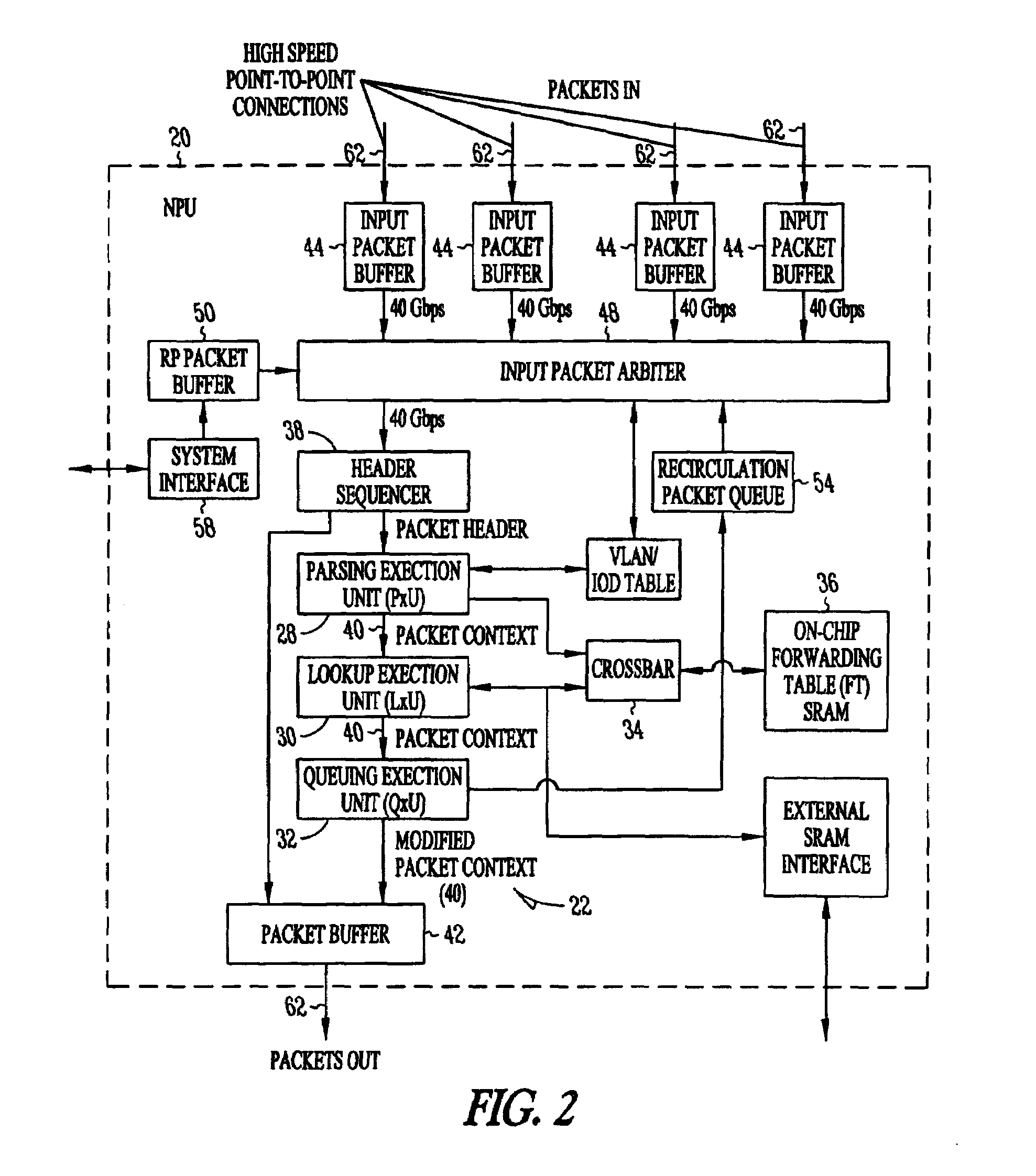

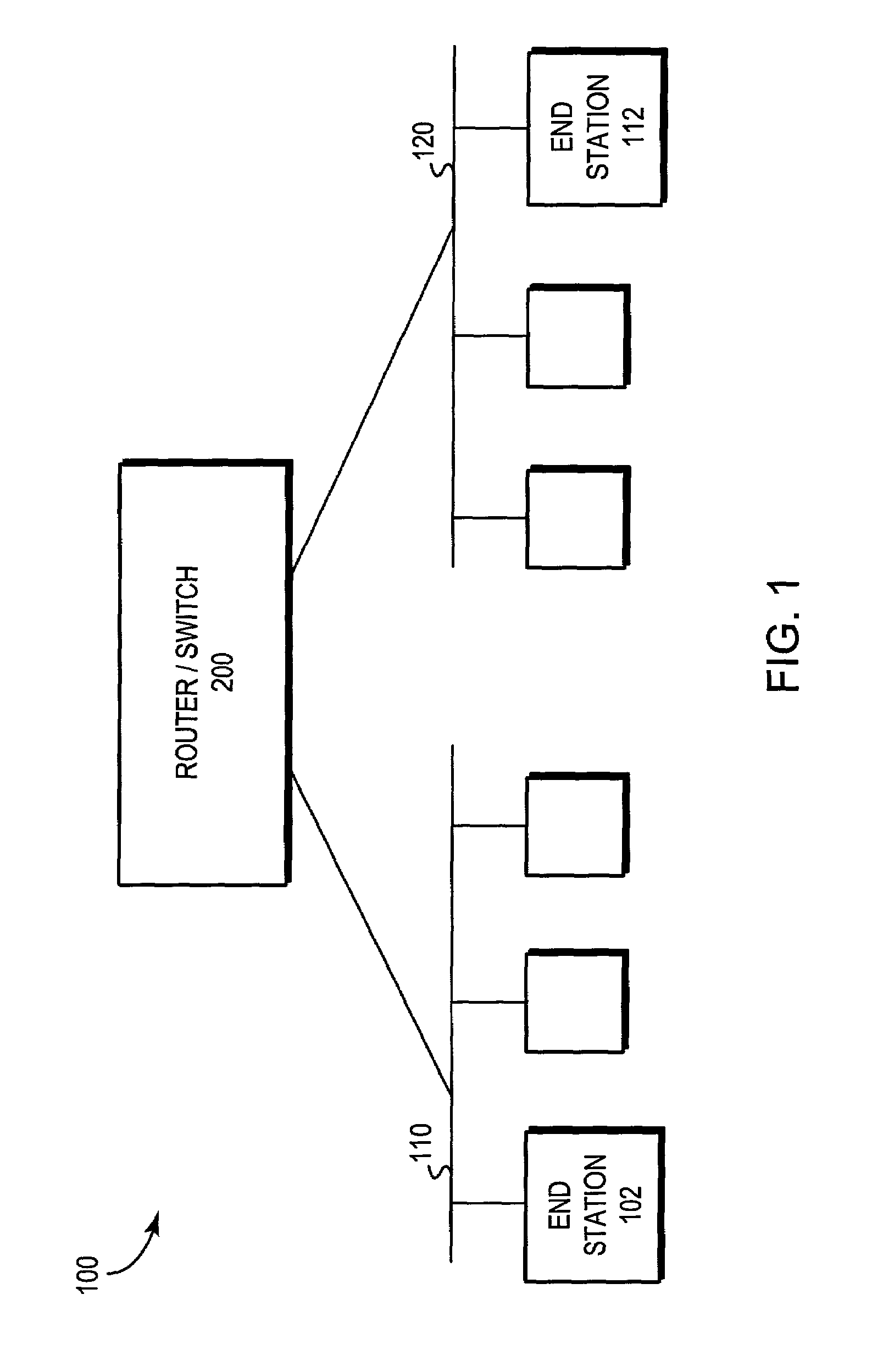

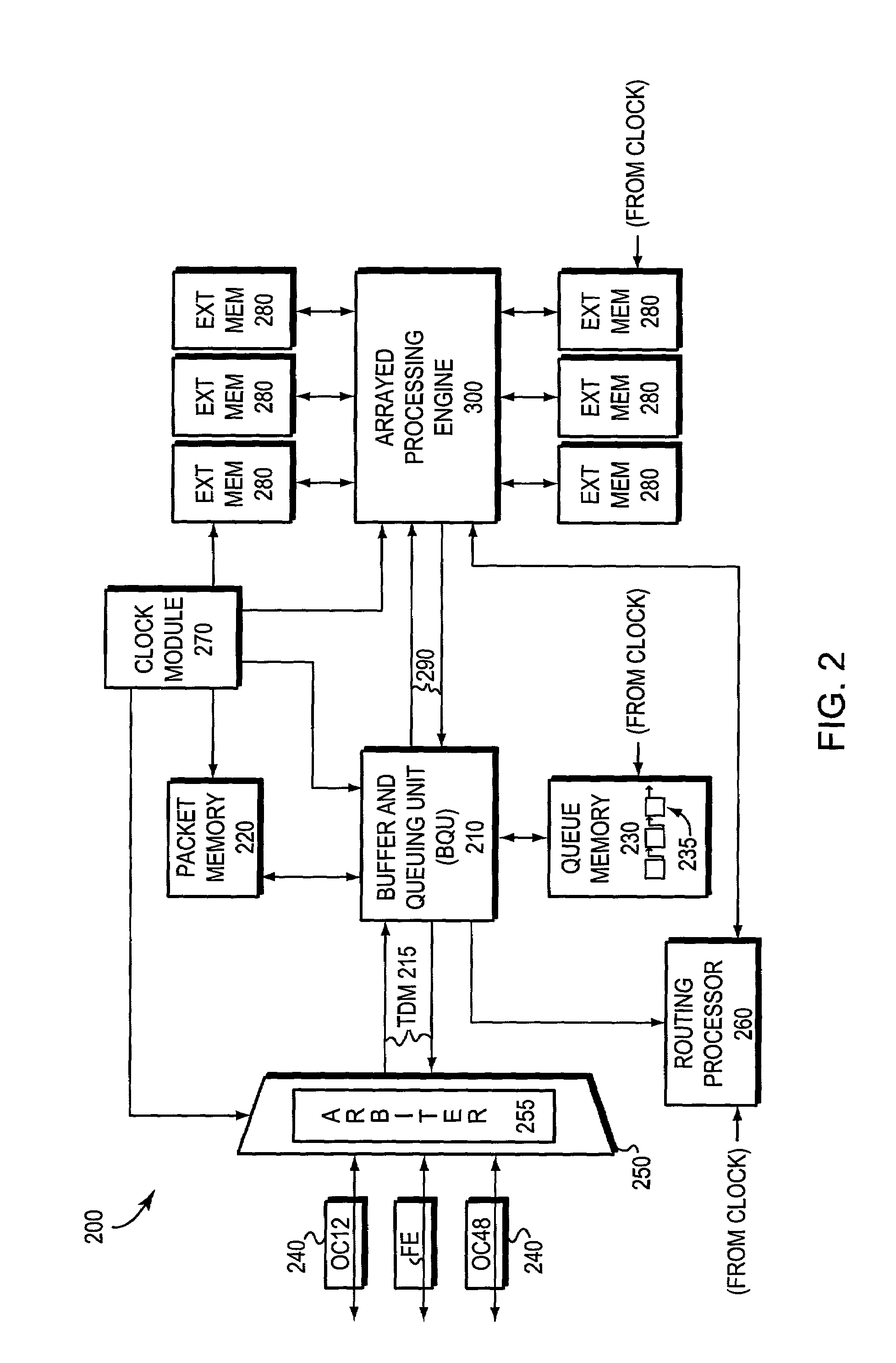

Processor having systolic array pipeline for processing data packets

Inactive | US7069372B1 | Input is huge | Eliminate need | Systolic arrays | Digital data processing details | Single stage | Processor register

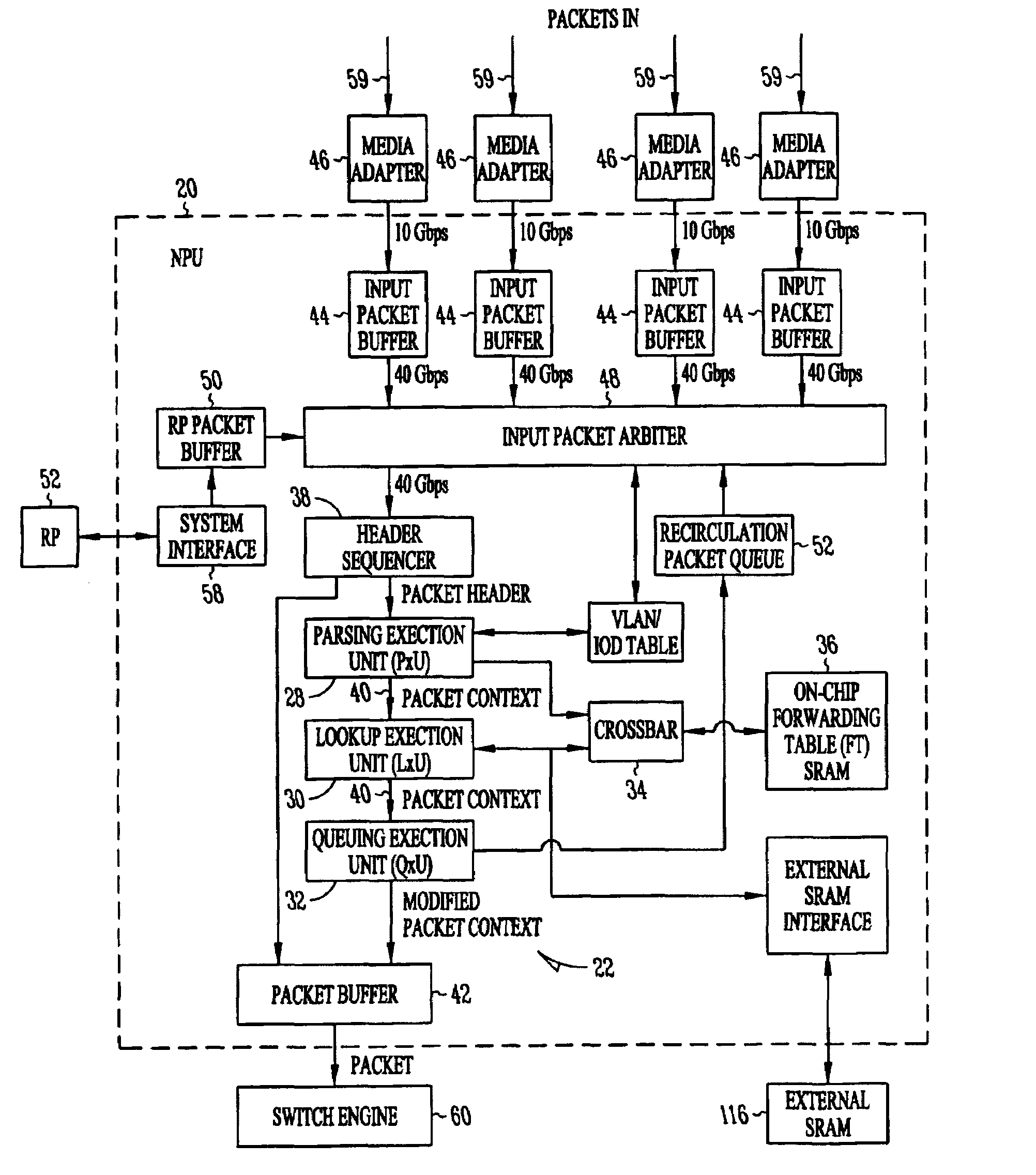

A processor for use in a router has a systolic array pipeline for processing data packets to determine the output port of the router to which each data packet should be routed. In one embodiment, the systolic array pipeline includes a plurality of programmable functional units and register files arranged sequentially as stages, for processing packet contexts (which contain the packet's destination address) to perform operations, under programmatic control, to determine the destination port of the router for the packet. A single stage of the systolic array may contain a register file and one or more functional units such as adders, shifters, and logical units, for performing, in one example, very long instruction word (VLIW) operations. The processor may also include an on-chip forwarding table memory for storing routing information, and a crossbar selectively connecting the stages of the systolic array with the forwarding table memory.

Owner:CISCO TECH INC

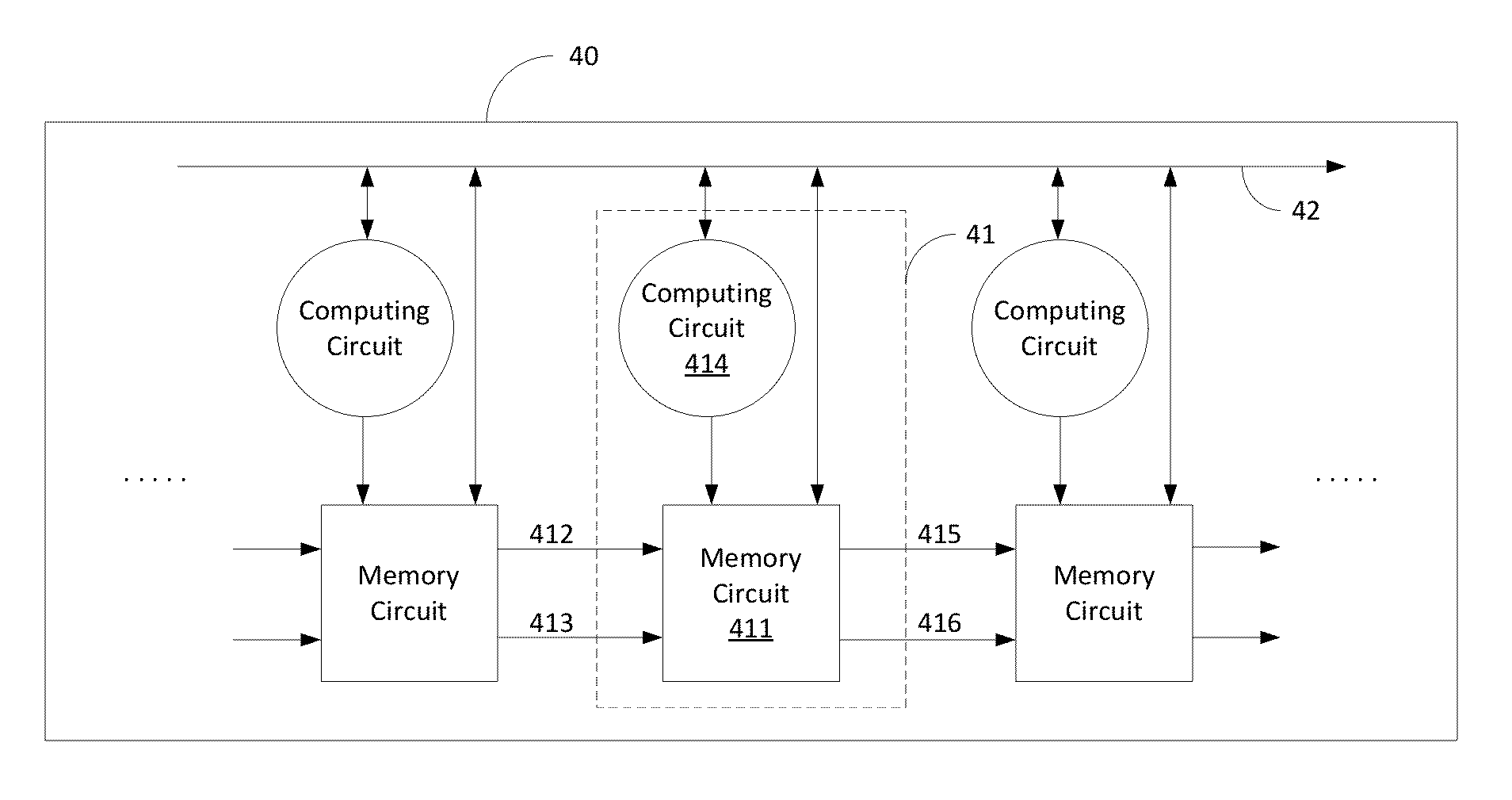

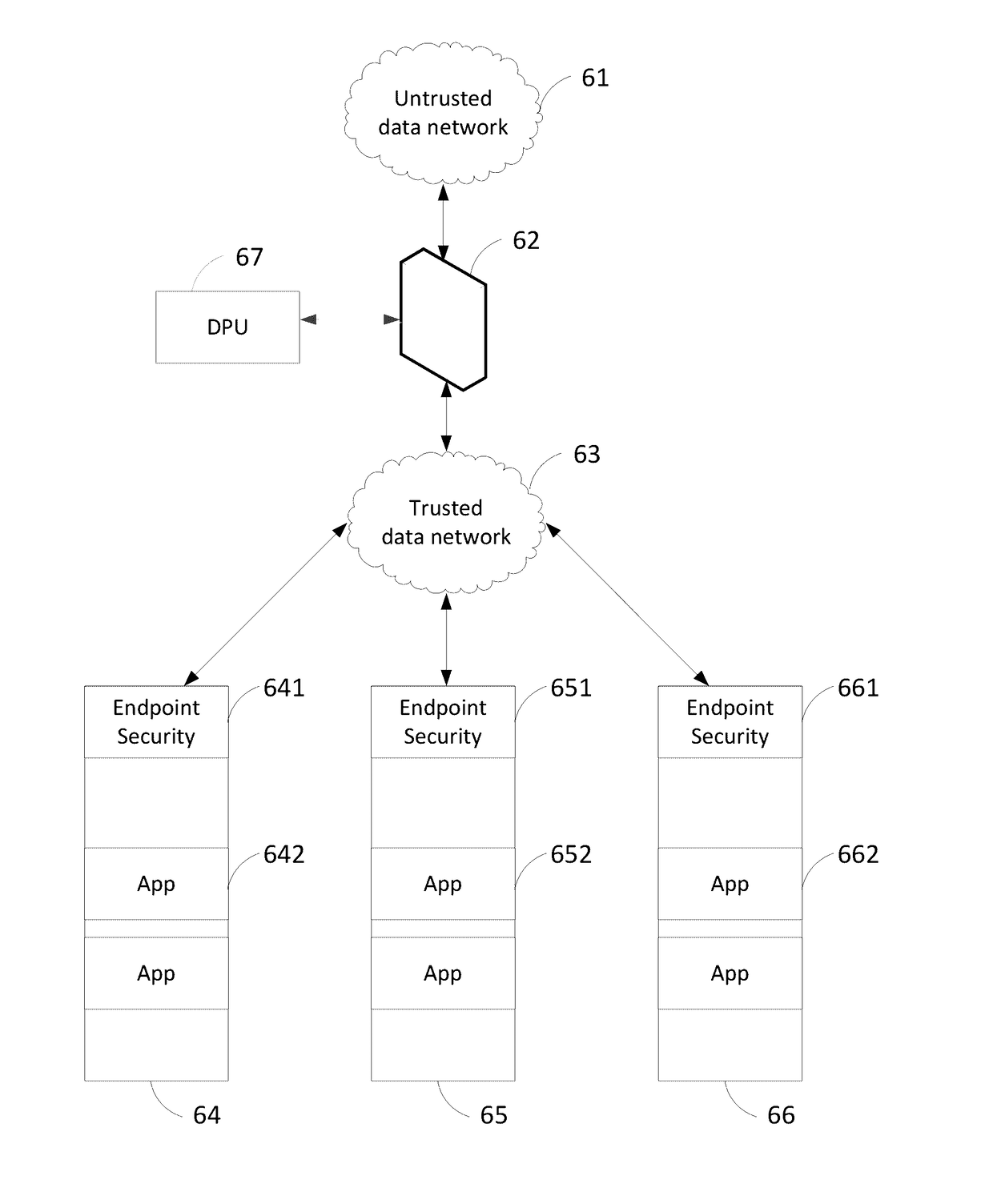

Computing Architecture for Operating on Sequential Data

Active | US20150127925A1 | Increase speed | Large scale | Systolic arrays | Electric digital data processing | Data stream processing | Parallel computing

A data stream processing unit (DPU) and method for use are provided. A DPU includes a number of processing elements arranged in a sequence, and each datum in the data stream visits each processing element in sequence. Each processing element has a memory circuit, data and metadata input and output channels, and a computing circuit. The metadata input represents a partial computational state that is associated with each datum as it passes through the DPU. The computing circuit for each processing element operates on the data and metadata inputs as a function of its position in the sequence, producing an altered partial computational state that accompanies the datum. Each computing circuit may be modeled, for example, as a finite state machine, and the collection of processing elements cooperate to perform the computation. The computing circuits may be collectively programmed to perform any desired computation.

Owner:LEWIS RHODES LABS
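The idea of a datum visiting every processing element in order while carrying a partial computational state as metadata can be sketched as follows. This is an illustrative toy under stated assumptions, not the DPU from the abstract: the class `ProcessingElement`, the function `run_dpu`, and the bucketing task (each element holds one threshold in its local memory) are all hypothetical, and the loop runs sequentially rather than as a hardware pipeline.

```python
from dataclasses import dataclass


@dataclass
class ProcessingElement:
    position: int   # index of this PE in the sequence
    threshold: int  # value held in the PE's local memory

    def step(self, datum, meta):
        """Pass the datum through unchanged and update its partial state."""
        if datum >= self.threshold:
            meta = self.position + 1  # datum belongs to a later bucket
        return datum, meta


def run_dpu(stream, thresholds):
    """Send every datum through all PEs in order; return (datum, bucket) pairs."""
    pes = [ProcessingElement(i, t) for i, t in enumerate(thresholds)]
    results = []
    for datum in stream:
        meta = 0                      # initial partial state
        for pe in pes:                # each datum visits each PE in sequence
            datum, meta = pe.step(datum, meta)
        results.append((datum, meta))
    return results


print(run_dpu([5, 10, 25, 99], [10, 20, 30]))
# [(5, 0), (10, 1), (25, 2), (99, 3)]
```

No single element knows the final answer; the bucket index emerges only after the metadata has passed through the whole chain, which mirrors the cooperative computation the abstract describes.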

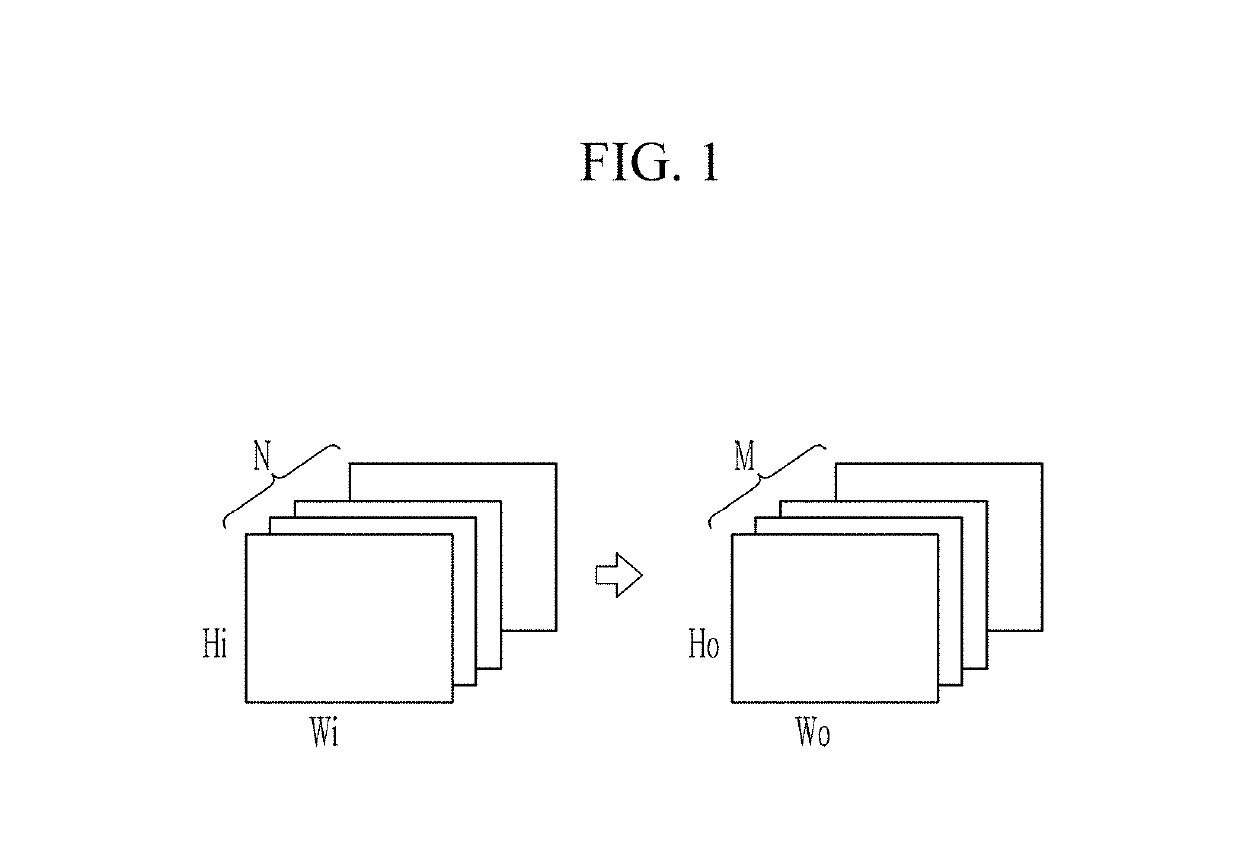

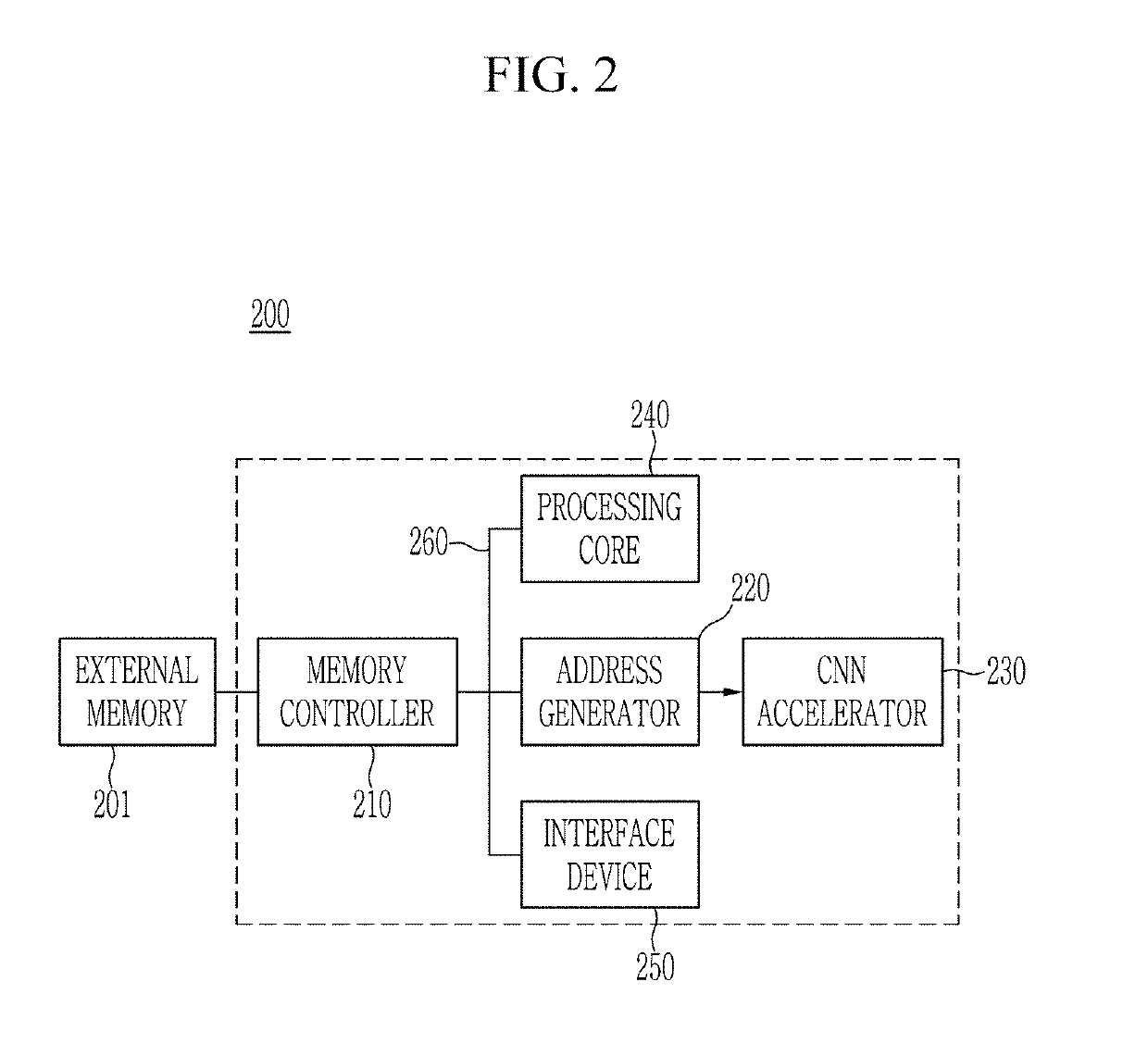

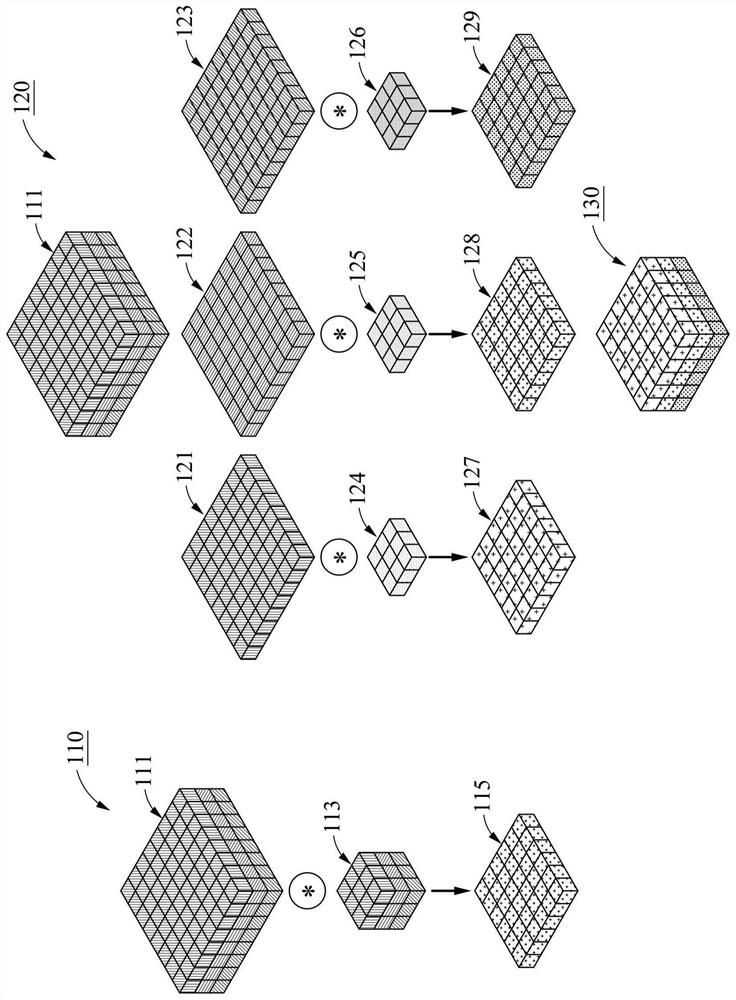

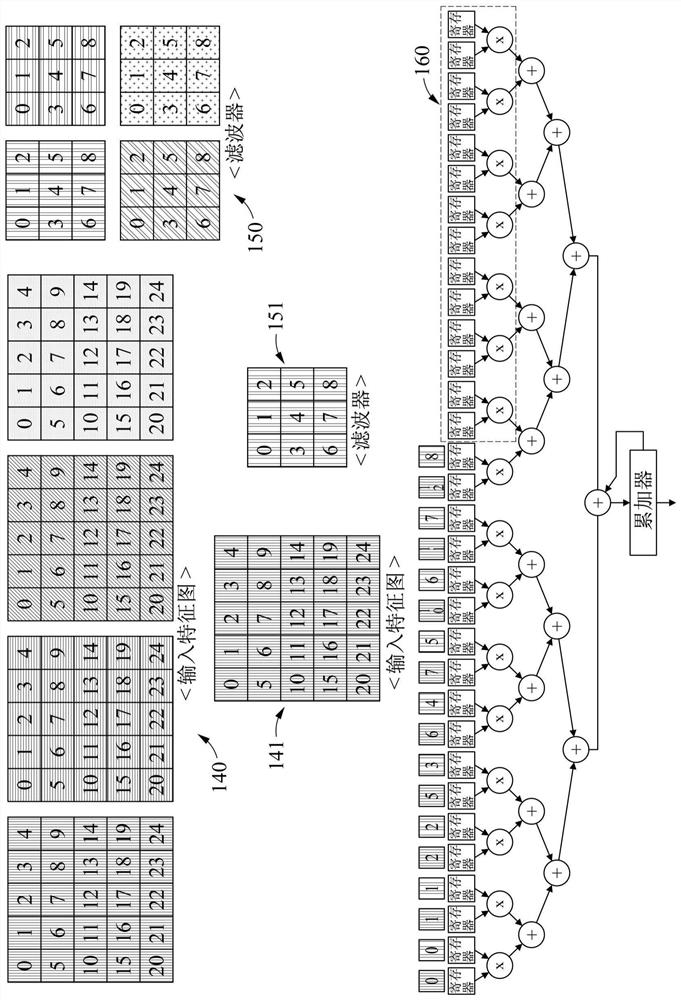

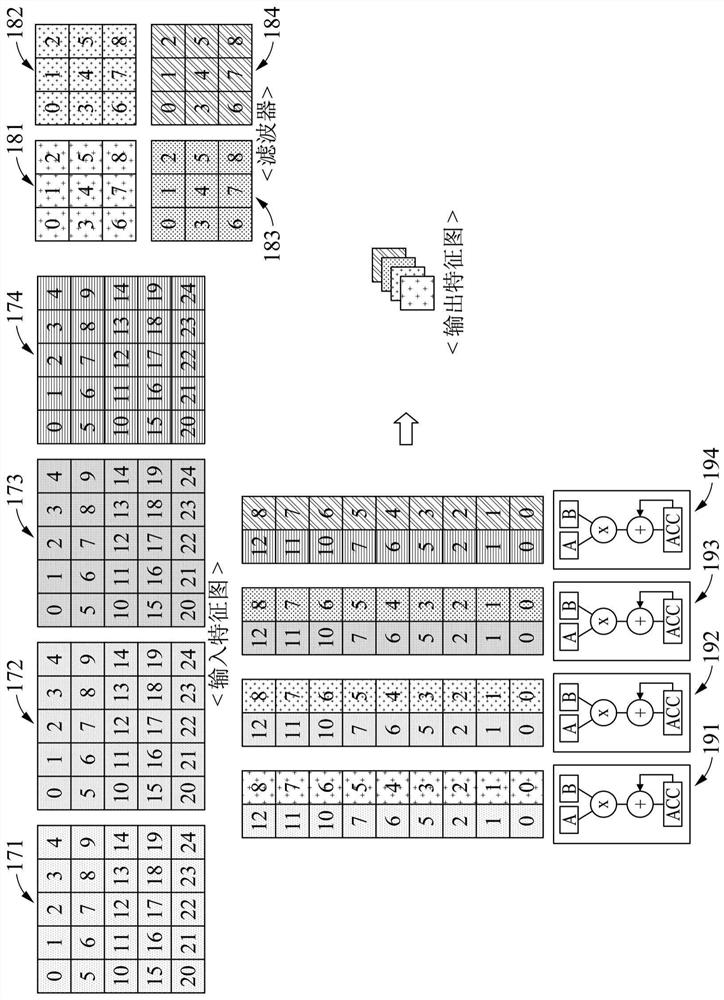

Apparatus for processing convolutional neural network using systolic array and method thereof

Inactive | US20190164037A1 | Efficient storage | Easy to use | Systolic arrays | Neural architectures | Address generator | Feature mapping

The invention provides an apparatus for processing a convolutional neural network (CNN) that uses memory space efficiently. The apparatus includes a weight memory configured to store a first weight group of a first layer; a feature map memory configured to store an input feature map to which the first weight group is to be applied; an address generator configured to determine a second position spaced from a first position of a first input pixel of the input feature map based on a size of the first weight group, and to determine a plurality of adjacent pixels adjacent to the second position; and a processor configured to apply the first weight group to the plurality of adjacent pixels to obtain a first output pixel corresponding to the first position.

Owner:ELECTRONICS & TELECOMM RES INST

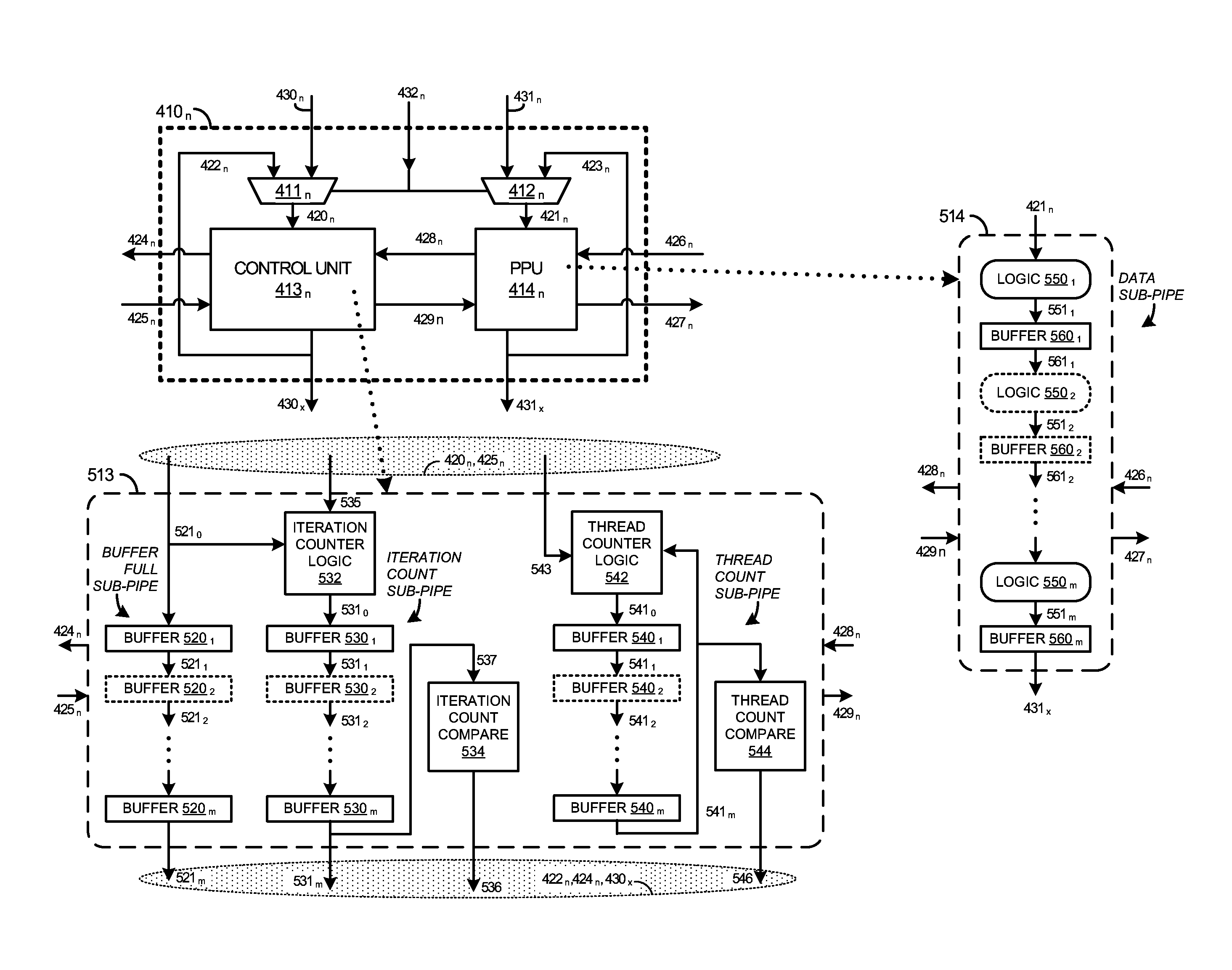

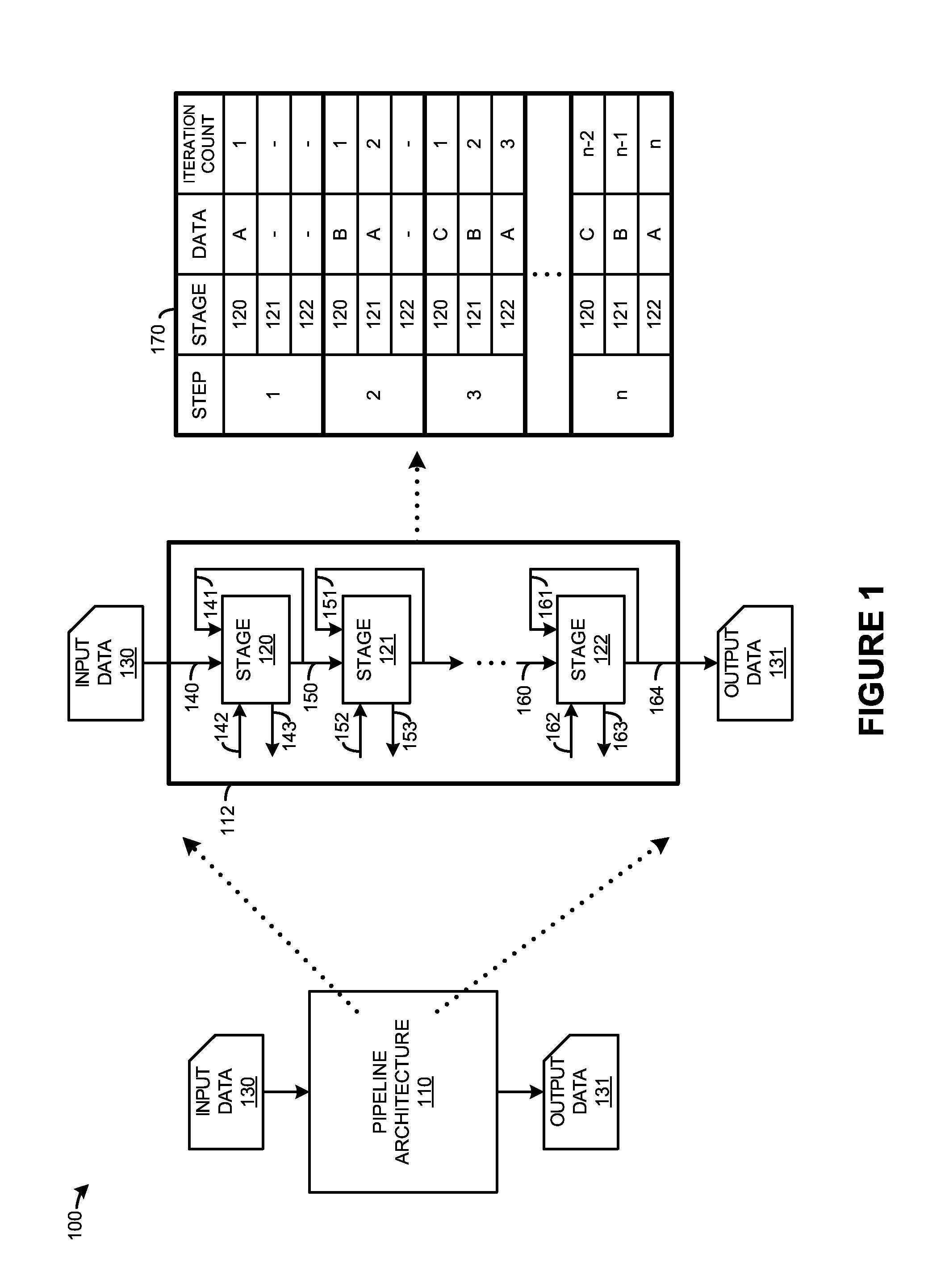

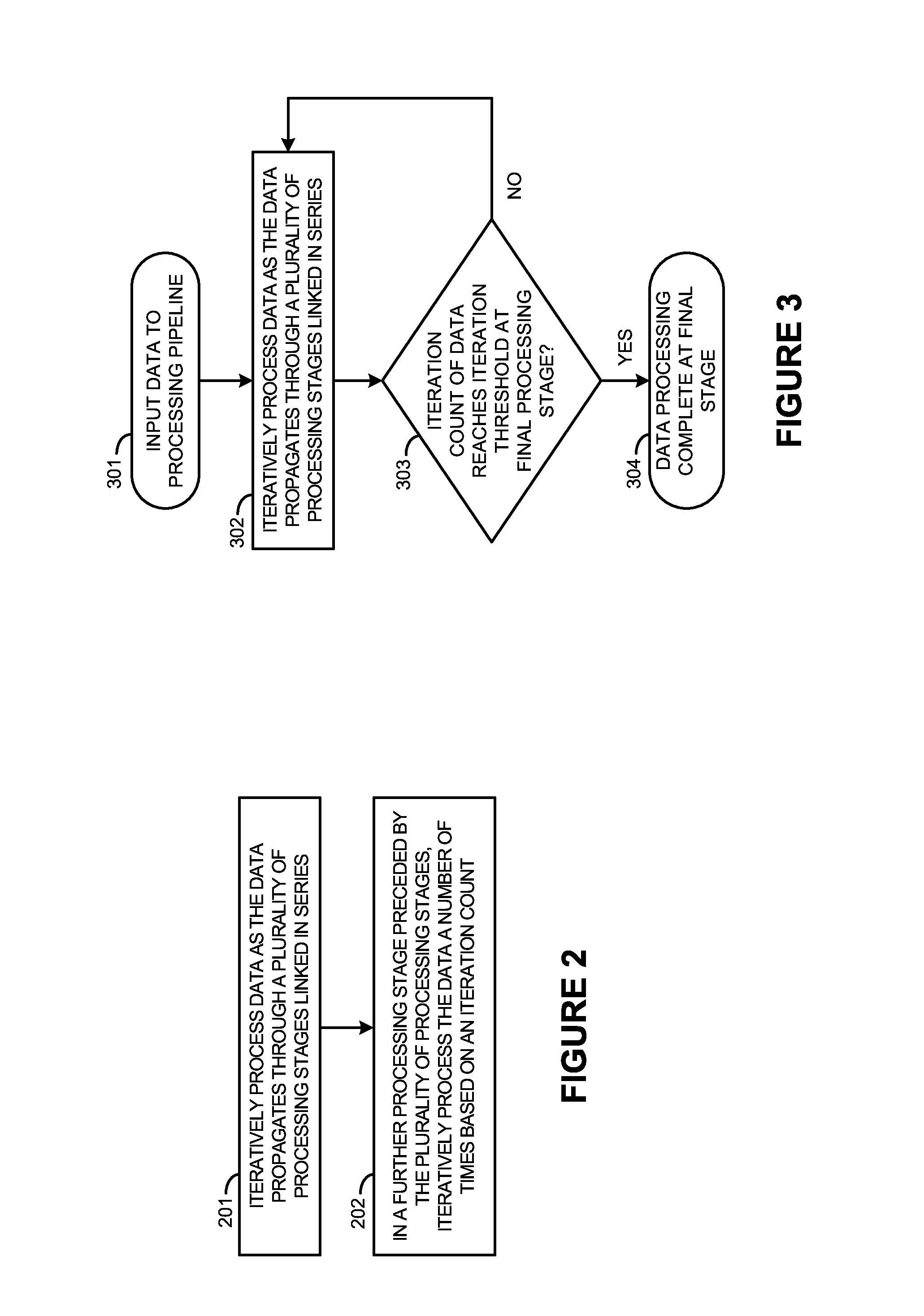

Dynamically controlled pipeline processing

Active | US20130212352A1 | Systolic arrays | Concurrent instruction execution | Computer hardware | Computer architecture

Systems, apparatuses, methods, and software for processing data in pipeline architectures are provided herein. In one example, a pipeline architecture is presented. The pipeline architecture includes a plurality of processing stages, linked in series, that iteratively process data as the data propagates through the plurality of processing stages. The pipeline architecture includes at least one other processing stage linked in series with and preceded by the plurality of processing stages and configured to iteratively process the data a number of times based at least on an iteration count comprising how many times the data was iteratively processed as the data propagated through the plurality of processing stages.

Owner:ANDERSON WILLIAM ERIK

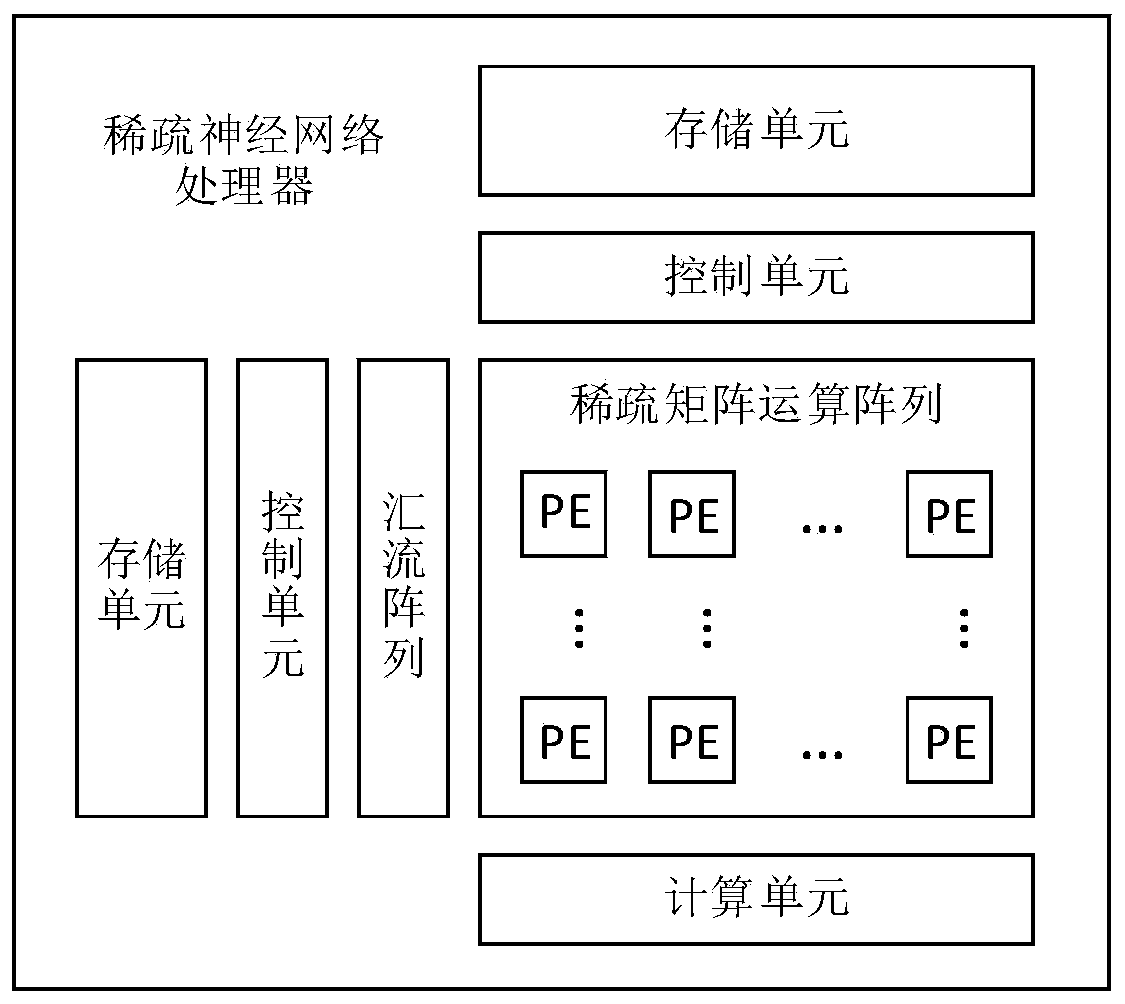

Sparse neural network processor based on systolic array

Active | CN110705703A | Eliminate data redundancy | High concurrency | Systolic arrays | Neural architectures | Activation function | Data transformation

The invention provides a sparse neural network processor based on a systolic array. The sparse neural network processor comprises a storage unit, a control unit, a sparse matrix operation array, a calculation unit and a confluence array. The storage unit stores weights, gradients, features and the instruction sequences used for scheduling data streams. The control unit fetches the data required for training and inference from the storage unit under the control of the instruction sequence, converts the data into a sparse matrix operation format and sends it to the sparse matrix operation array. The sparse matrix operation array comprises a plurality of processing units connected as a systolic array and performs the sparse matrix operations. The calculation unit performs element-by-element operations such as nonlinear activation functions. The confluence array delivers the same data segment to different rows of the systolic array through internal data transfer to reduce storage overhead. The processor makes full use of the sparsity of the weights and features, improves speed and power efficiency in neural network training and inference, and offers advantages such as high concurrency and low bandwidth requirements.

Owner:BEIHANG UNIV

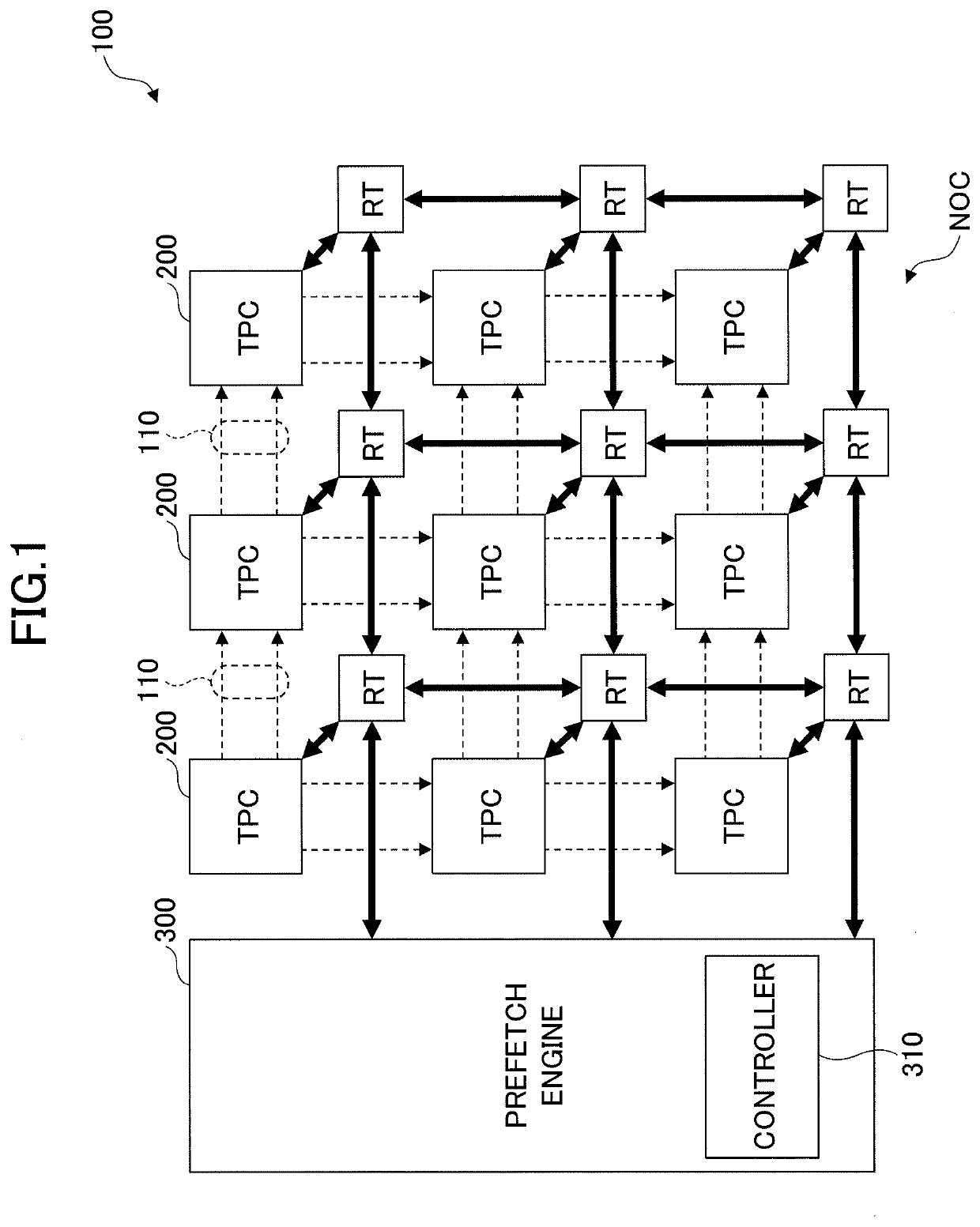

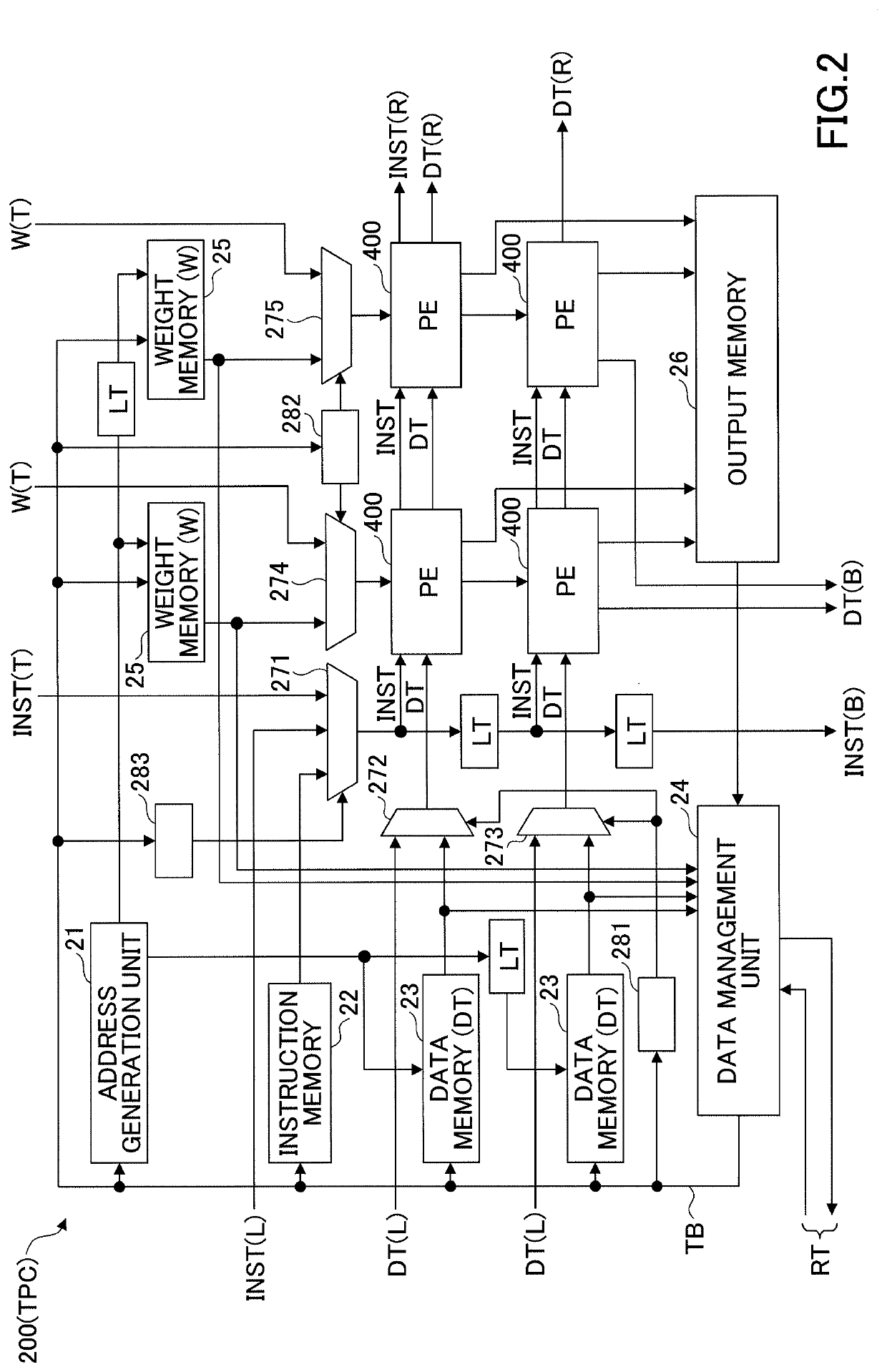

Processor and control method for processor

Active | US20200150958A1 | Efficiently perform operation | Guaranteed uptime | Systolic arrays | Instruction analysis | Multiplexing | Computer architecture

A processor having a systolic array that can perform operations efficiently is provided. The processor includes multiple processing cores aligned in a matrix, and each of the processing cores includes an arithmetic unit array including multiple arithmetic units that can form a systolic array. Each of the processing cores includes a first memory that stores first data, a second memory that stores second data, a first multiplexer that connects a first input for receiving the first data at the arithmetic unit array to an output of the first memory in the processing core or an output of the arithmetic unit array in an adjacent processing core, and a second multiplexer that connects a second input for receiving the second data at the arithmetic unit array to an output of the second memory in the processing core or an output of the arithmetic unit array in an adjacent processing core.

Owner:PREFERRED NETWORKS INC

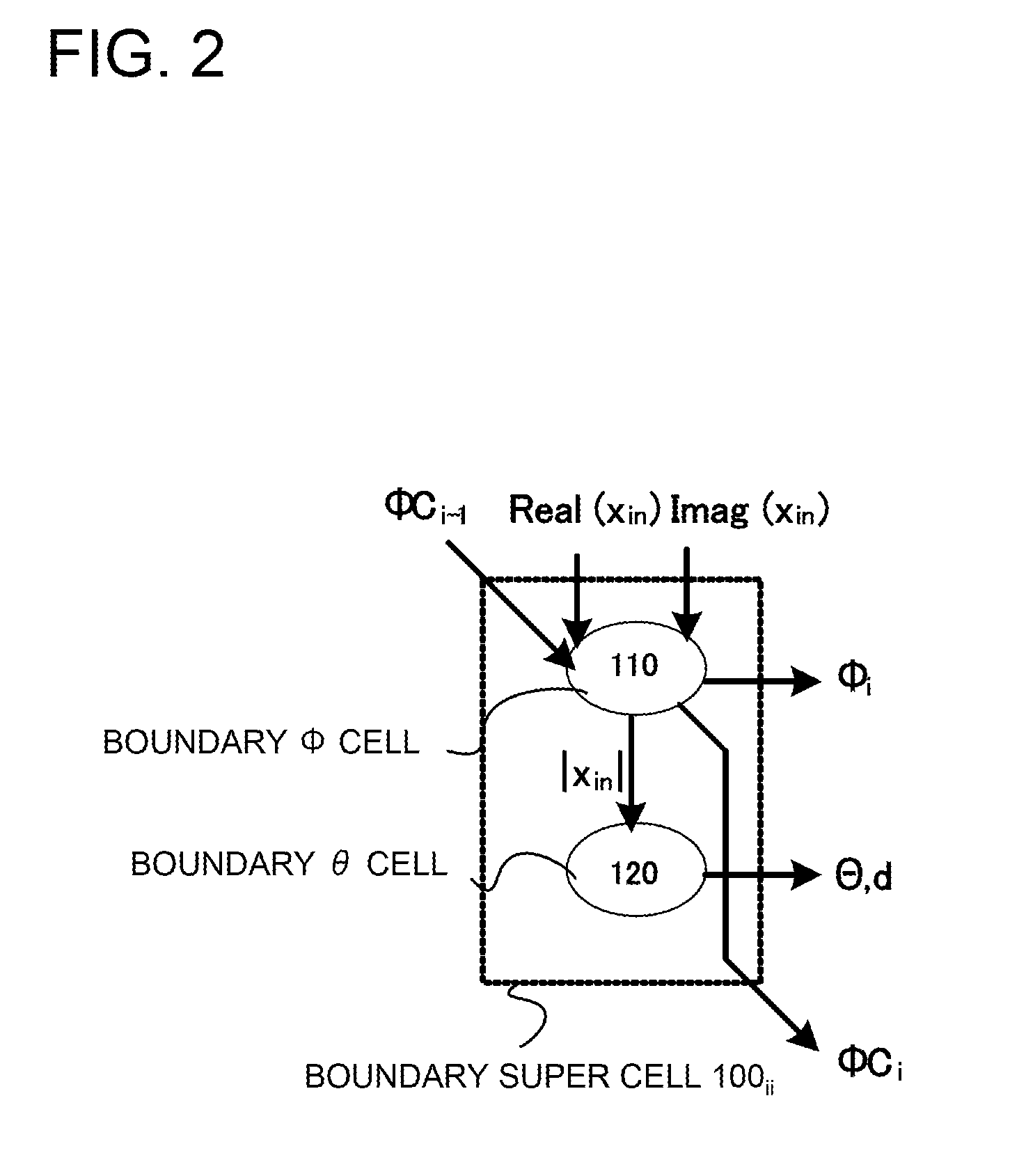

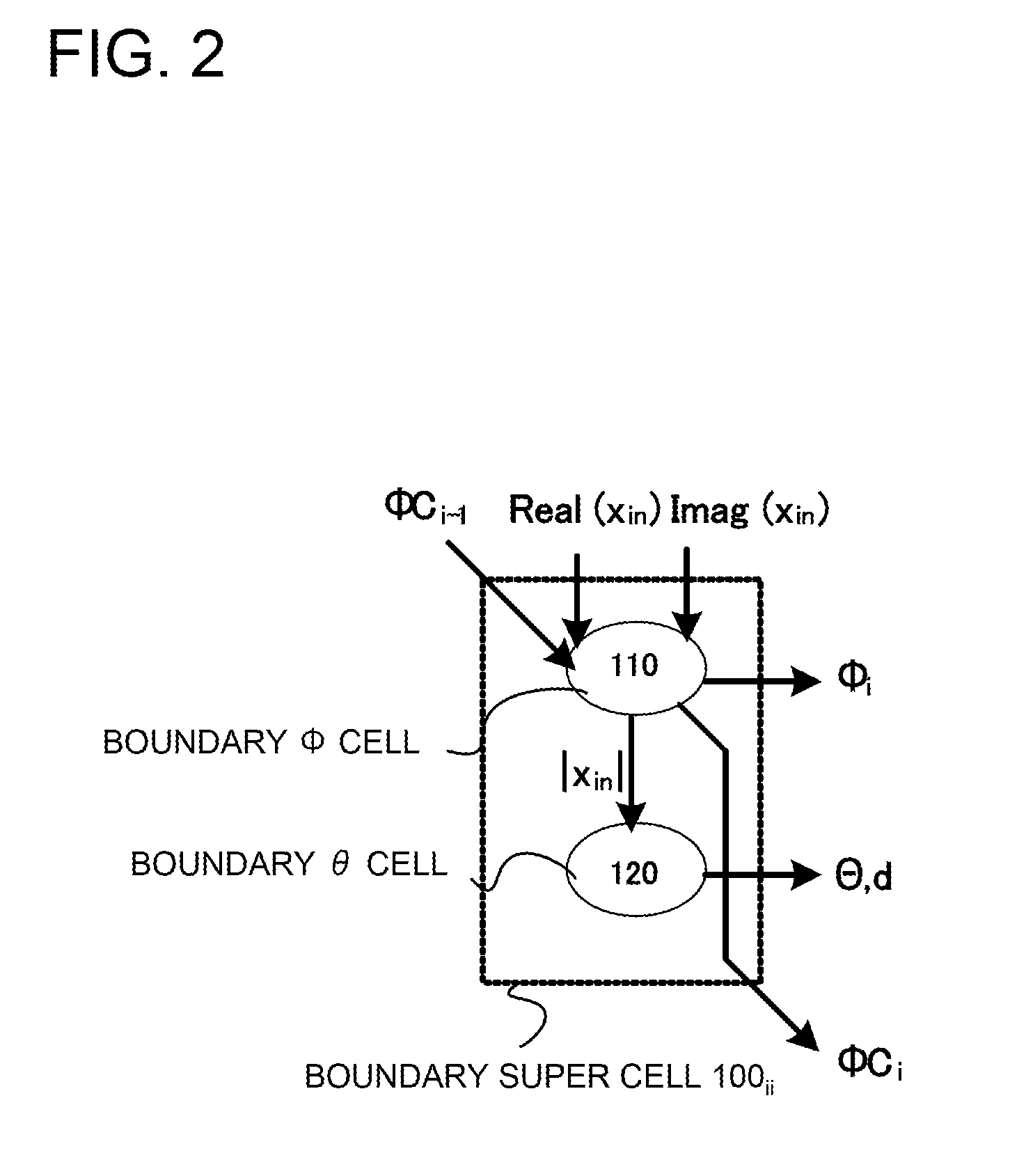

Boundary synchronization mechanism for a processor of a systolic array

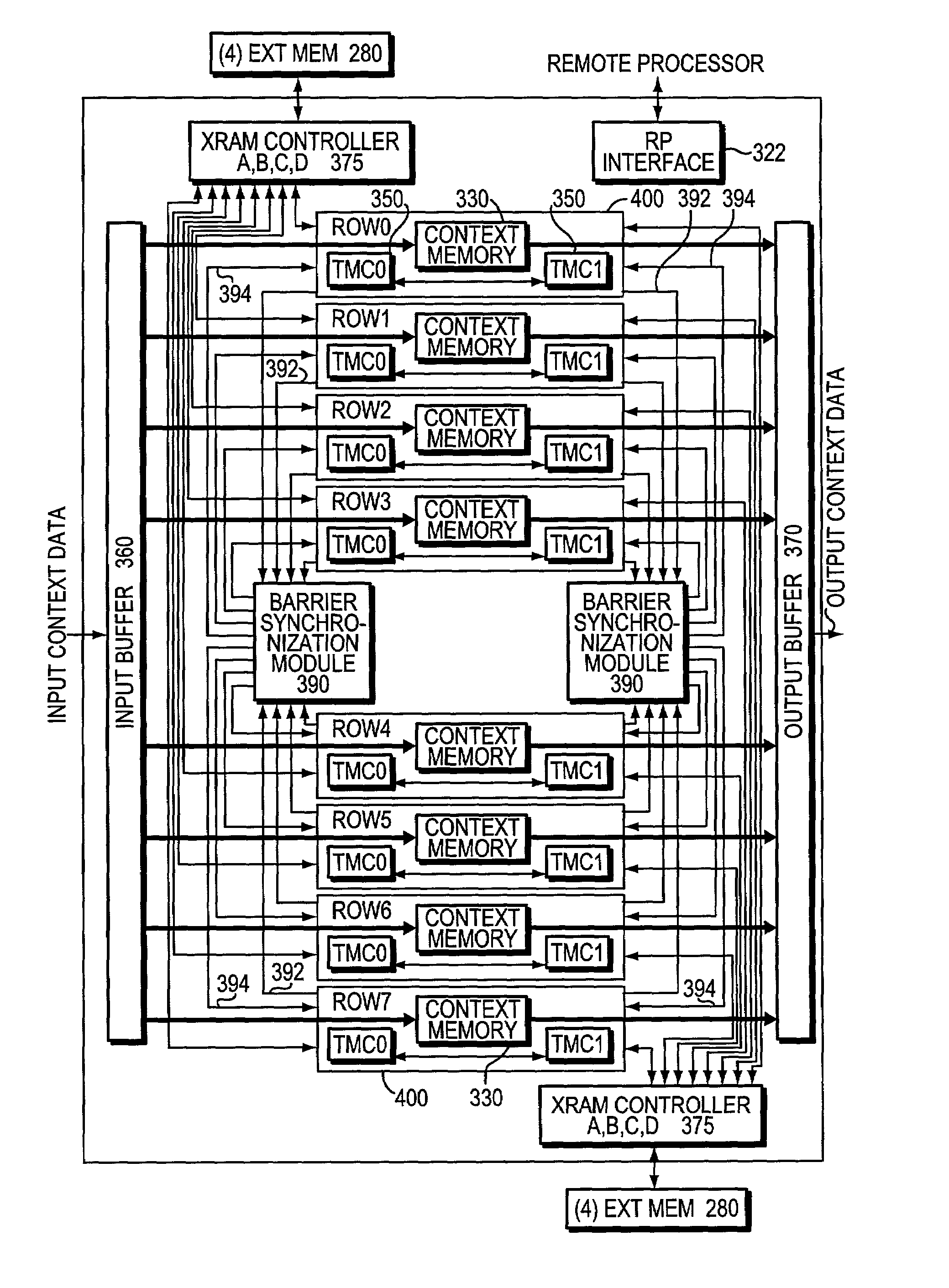

Inactive | US6986022B1 | Without consuming substantial memory resource | Improve latency | Systolic arrays | Electric digital data processing | Micro-operation | Systolic array

A mechanism synchronizes instruction code executing on a processor of a processing engine in an intermediate network station. The processing engine is configured as a systolic array having a plurality of processors arrayed as rows and columns. The mechanism comprises a boundary (temporal) synchronization mechanism for cycle-based synchronization within a processor of the array. The synchronization mechanism is generally implemented using specialized synchronization micro operation codes (“opcodes”).

Owner:CISCO TECH INC

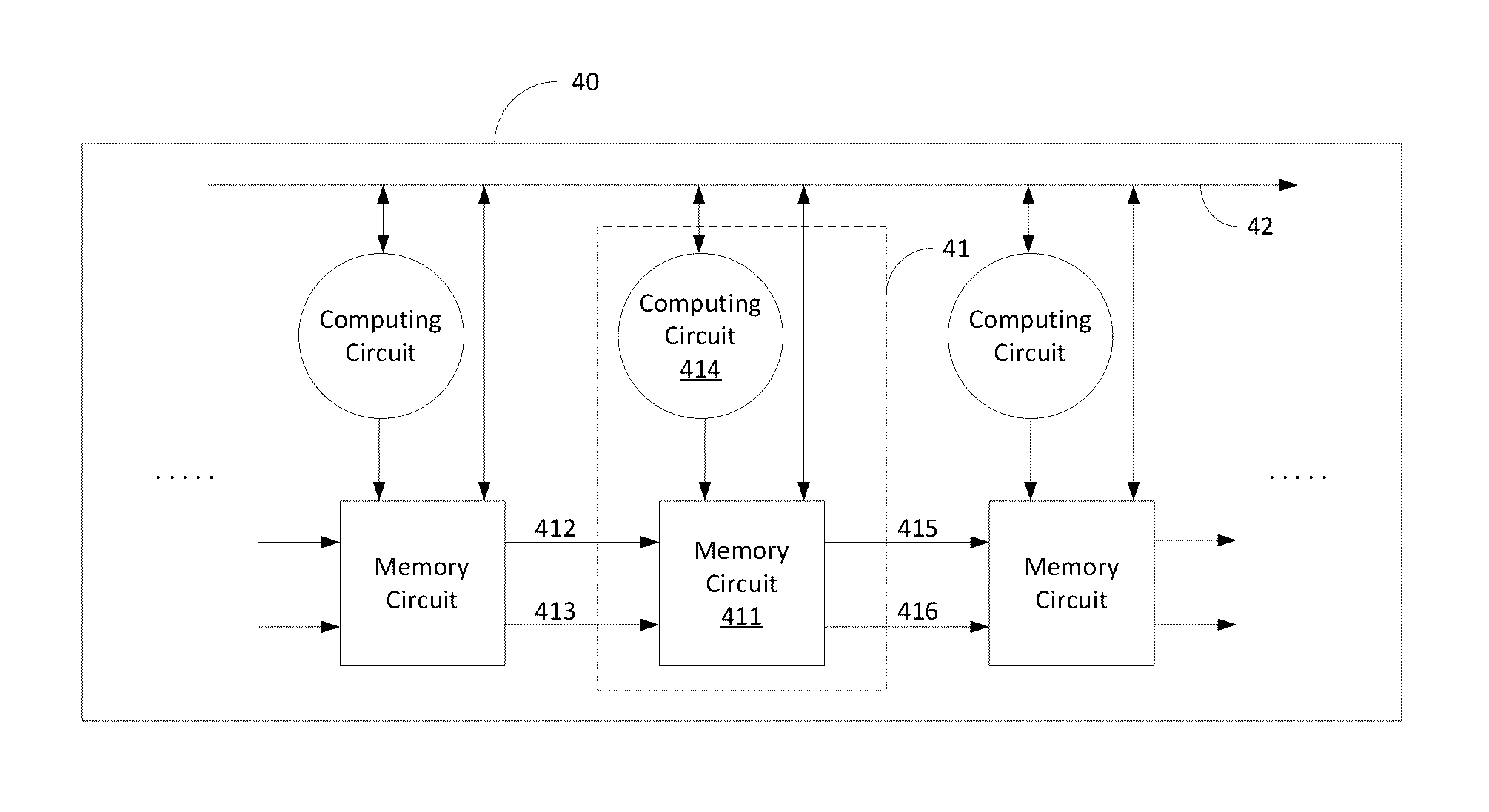

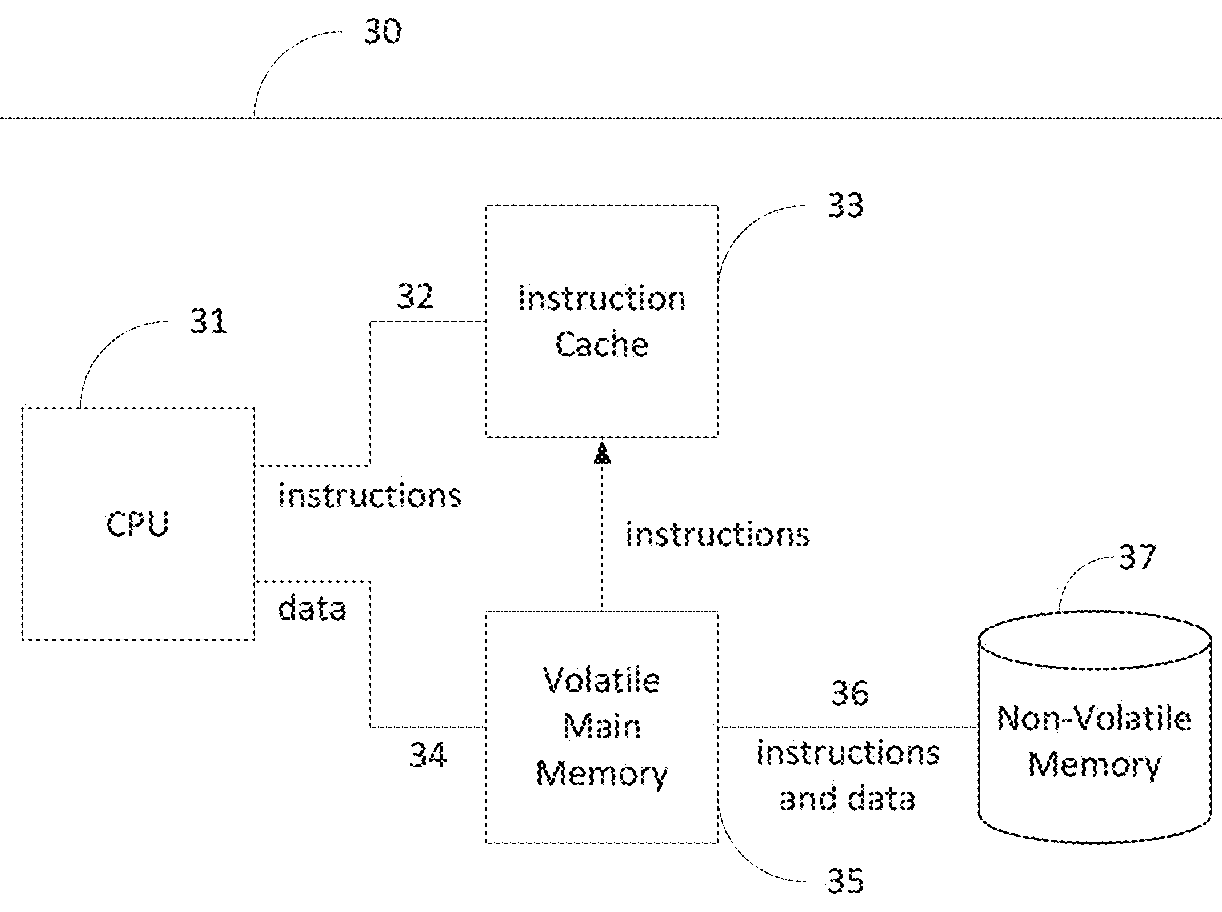

Computing architecture for operating on sequential data

Active | US9563599B2 | Increase speed | Large scale | Systolic arrays | Program control | Data stream processing | Parallel computing

A data stream processing unit (DPU) and method for use are provided. A DPU includes a number of processing elements arranged in a sequence, and each datum in the data stream visits each processing element in sequence. Each processing element has a memory circuit, data and metadata input and output channels, and a computing circuit. The metadata input represents a partial computational state that is associated with each datum as it passes through the DPU. The computing circuit for each processing element operates on the data and metadata inputs as a function of its position in the sequence, producing an altered partial computational state that accompanies the datum. Each computing circuit may be modeled, for example, as a finite state machine, and the collection of processing elements cooperate to perform the computation. The computing circuits may be collectively programmed to perform any desired computation.

Owner:LEWIS RHODES LABS

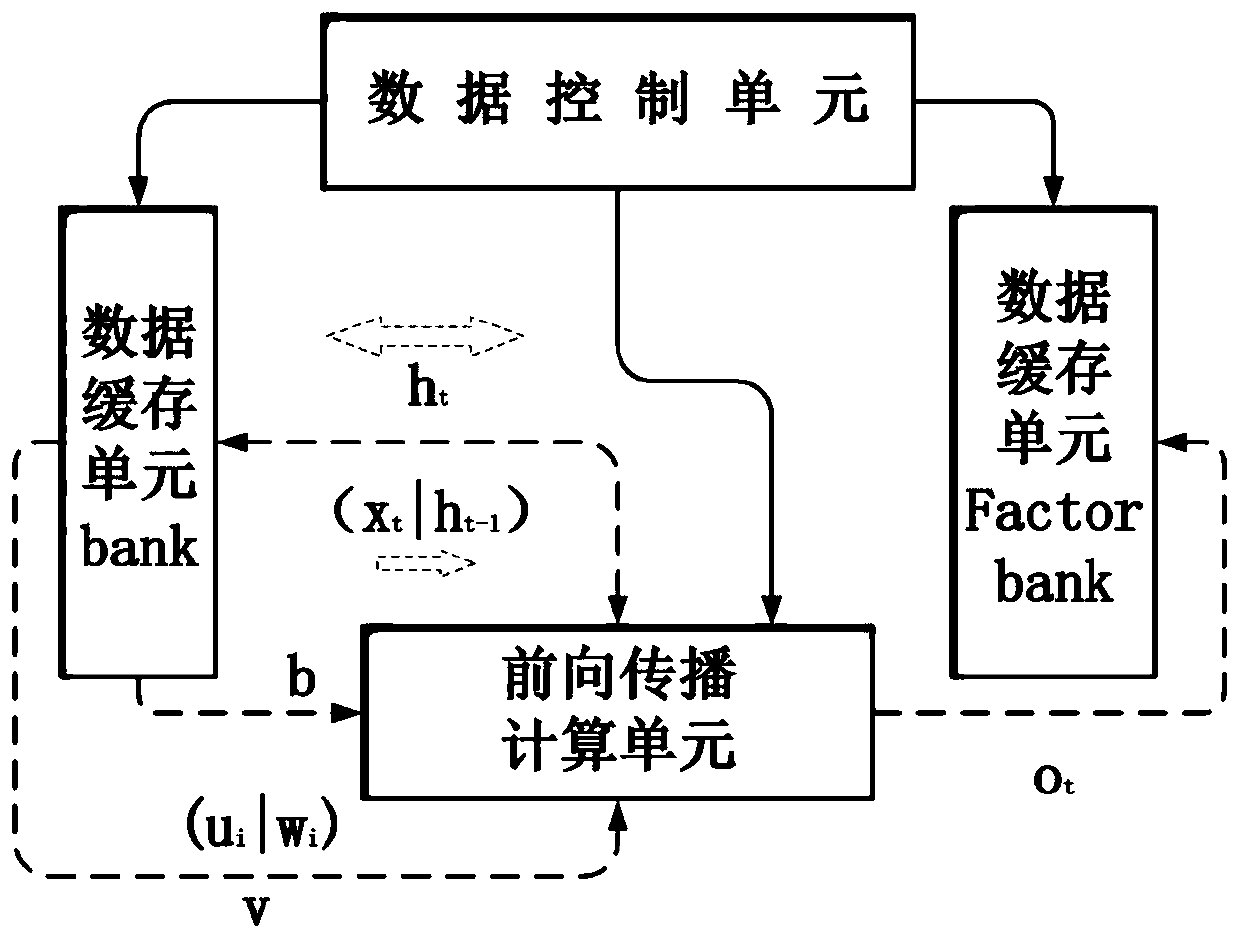

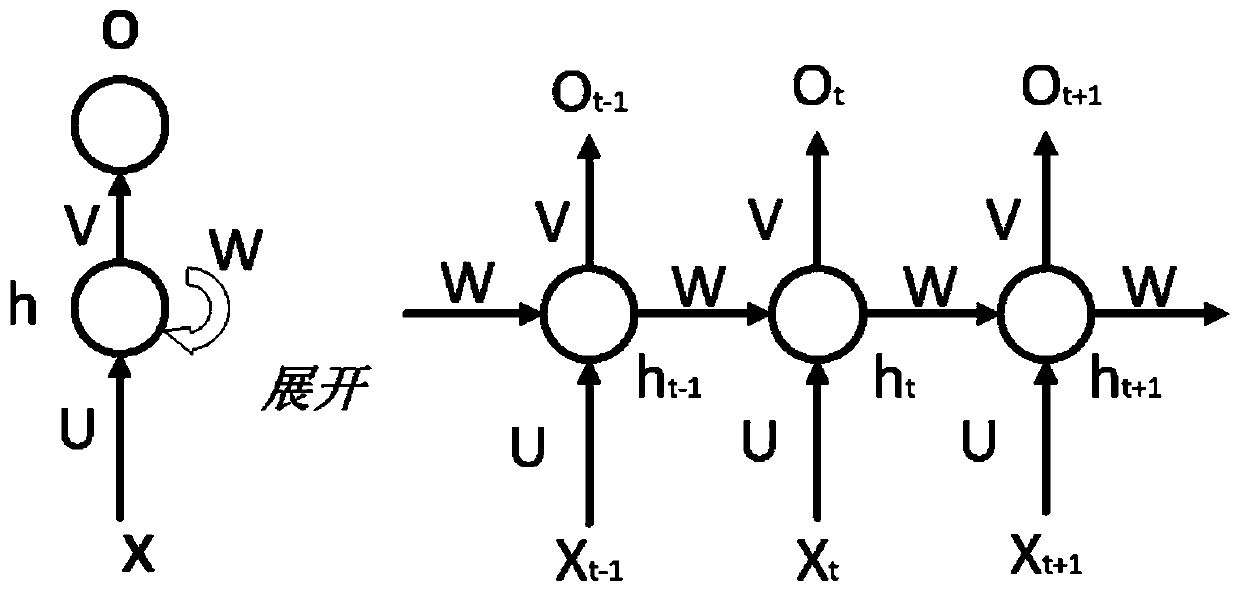

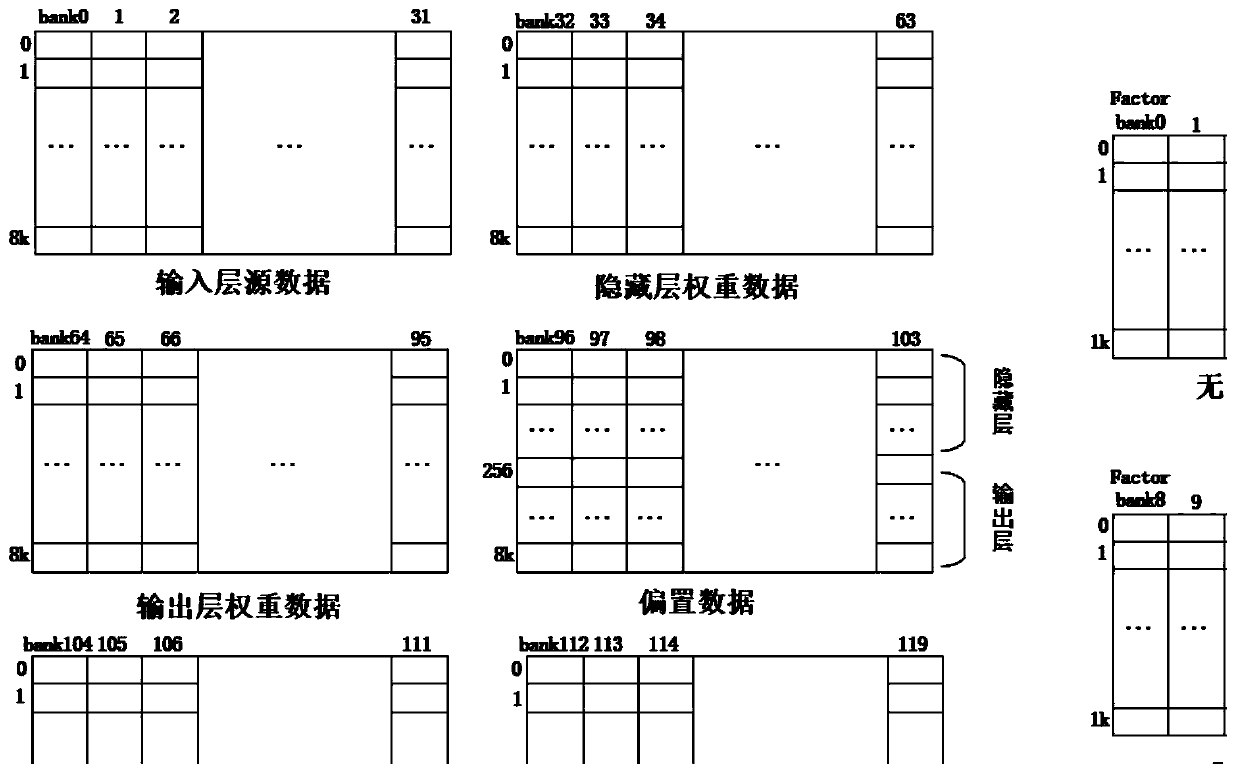

Hardware acceleration implementation system and method for RNN forward propagation model based on transverse pulsation array

Active | CN110826710A | Improve utilization efficiency | Improve scalability | Systolic arrays | Neural architectures | Hidden layer | Activation function

The invention discloses a hardware acceleration system and method for an RNN forward propagation model based on a lateral systolic array (rendered in the title as "transverse pulsation array"). The method first configures the network parameters and initializes the data and the lateral systolic array, with the weights used in the calculation partitioned into blocks: the weight matrix used to compute the hidden layer is partitioned by rows. Matrix-vector multiplication, vector summation and activation function operations are then performed to compute the hidden layer neurons. From the resulting hidden layer neurons, matrix-vector multiplication, vector summation and activation function operations are performed again to generate the RNN output layer result. Finally, the output required by the RNN is generated according to the configured time sequence length. The hidden layer and the output layer are computed in parallel along multiple dimensions, which improves the pipelining of the calculation. The design also exploits the weight matrix parameter sharing of the RNN, and the block partitioning further increases the degree of parallelism. The stated benefits are high flexibility, scalability, storage resource utilization and speedup, together with a large reduction in calculation.

Owner:NANJING UNIV

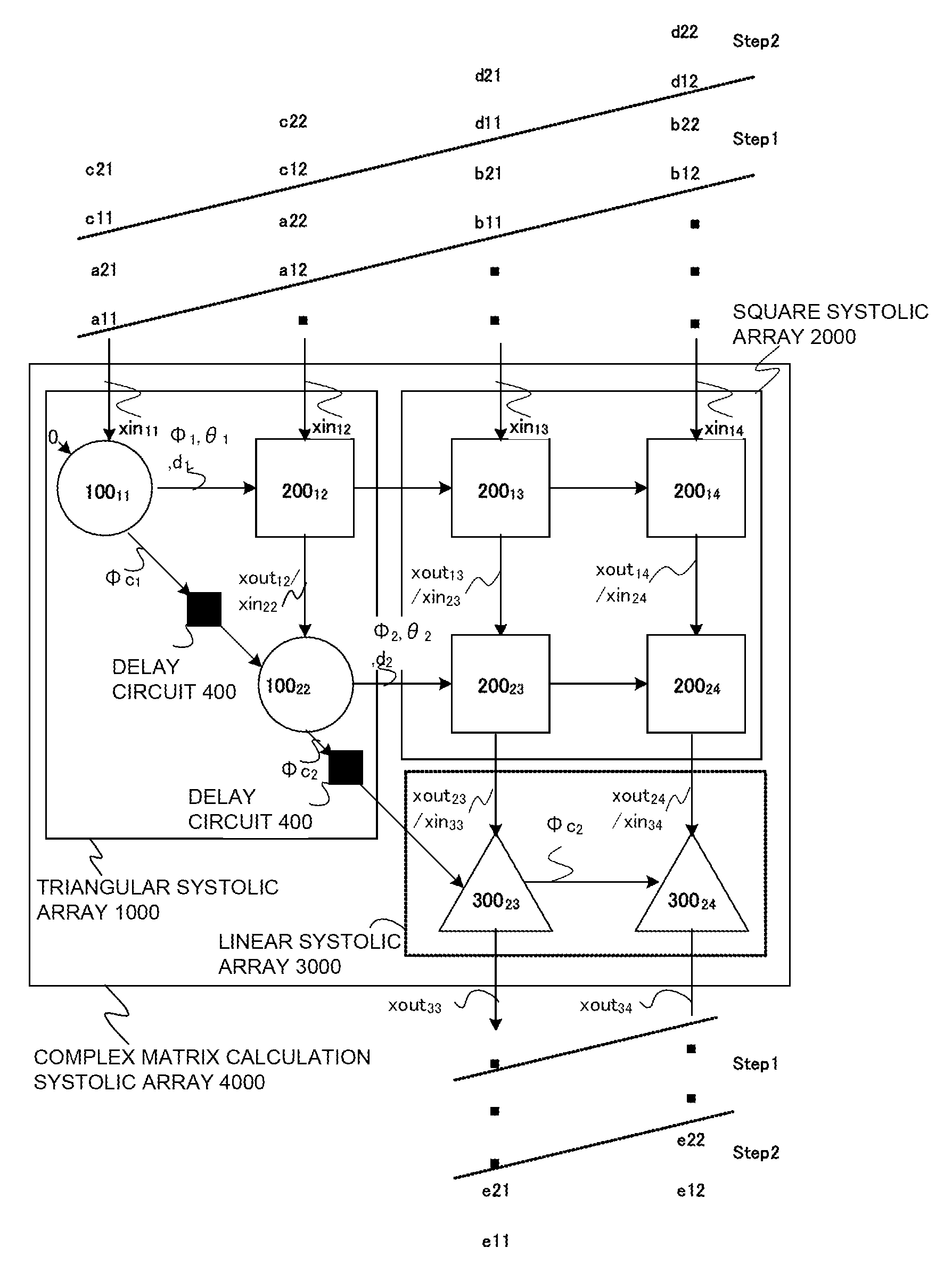

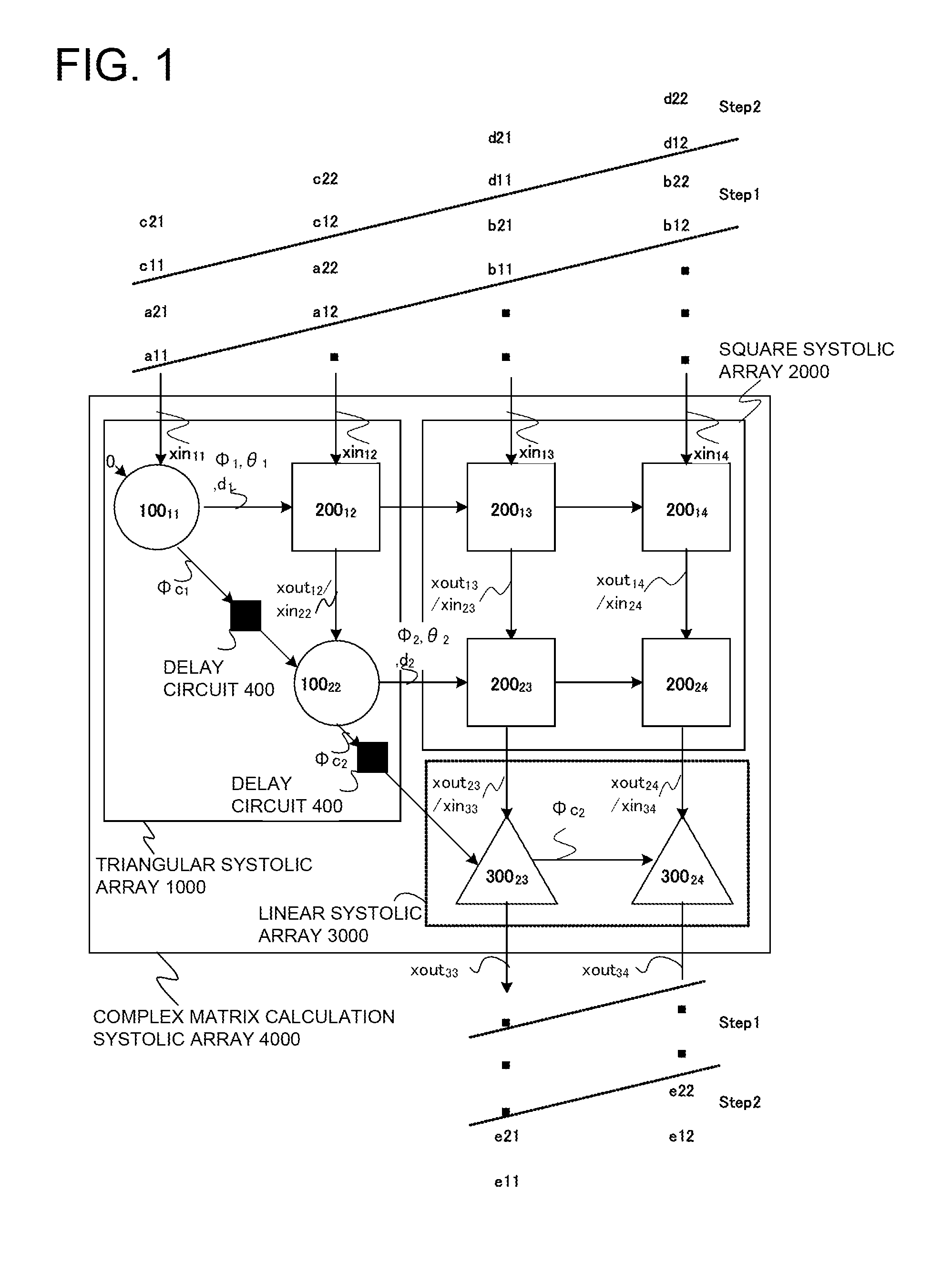

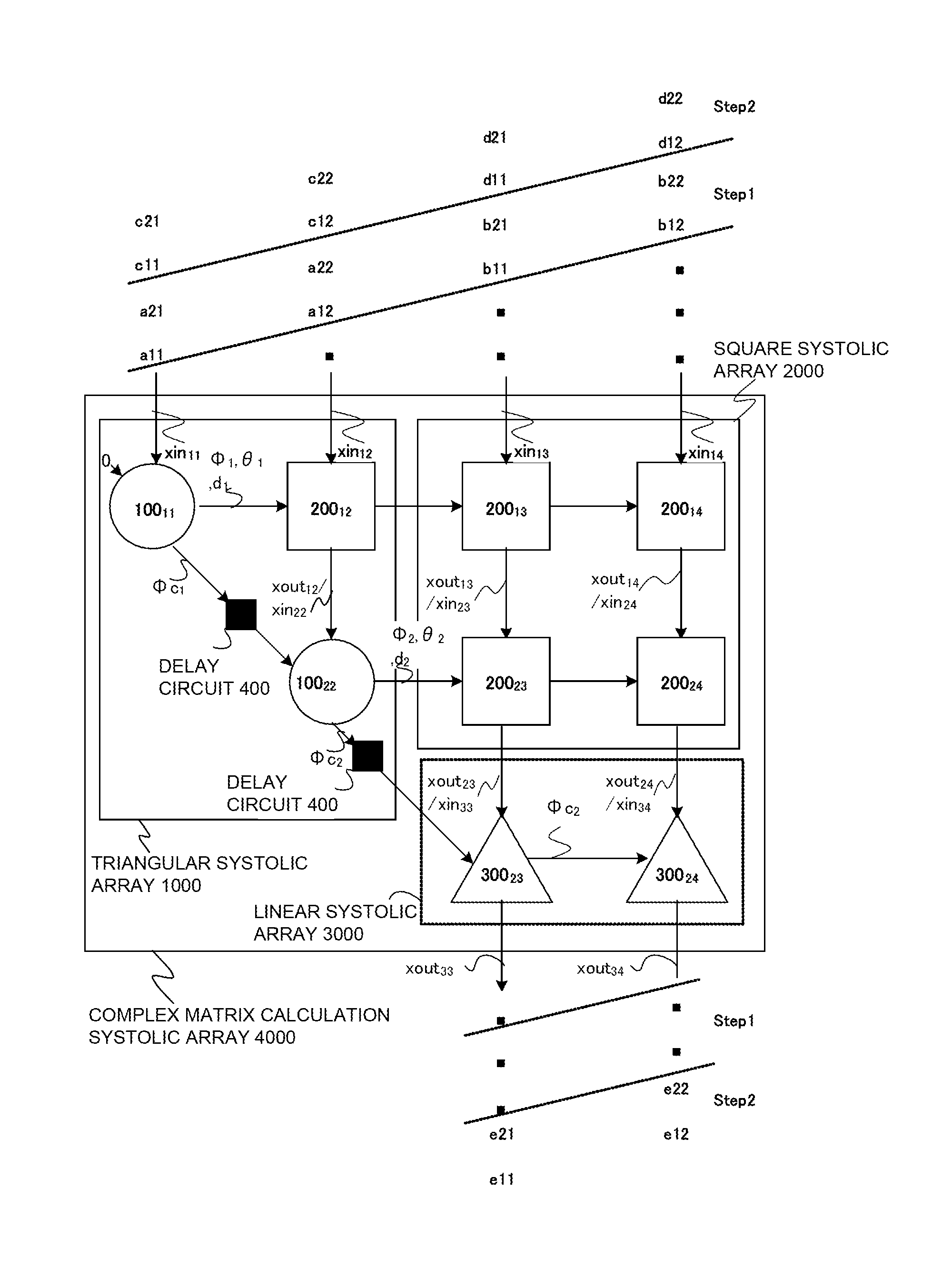

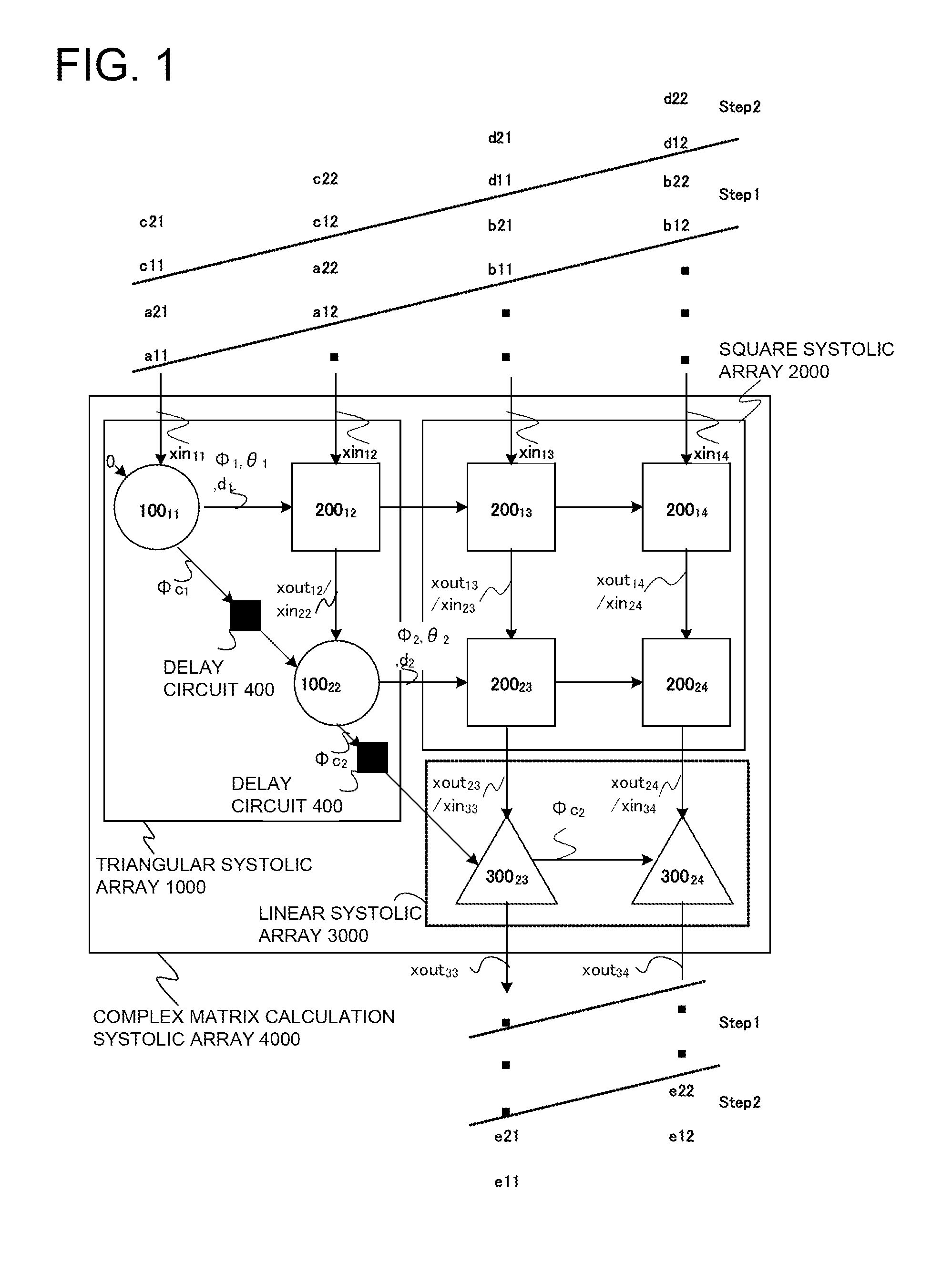

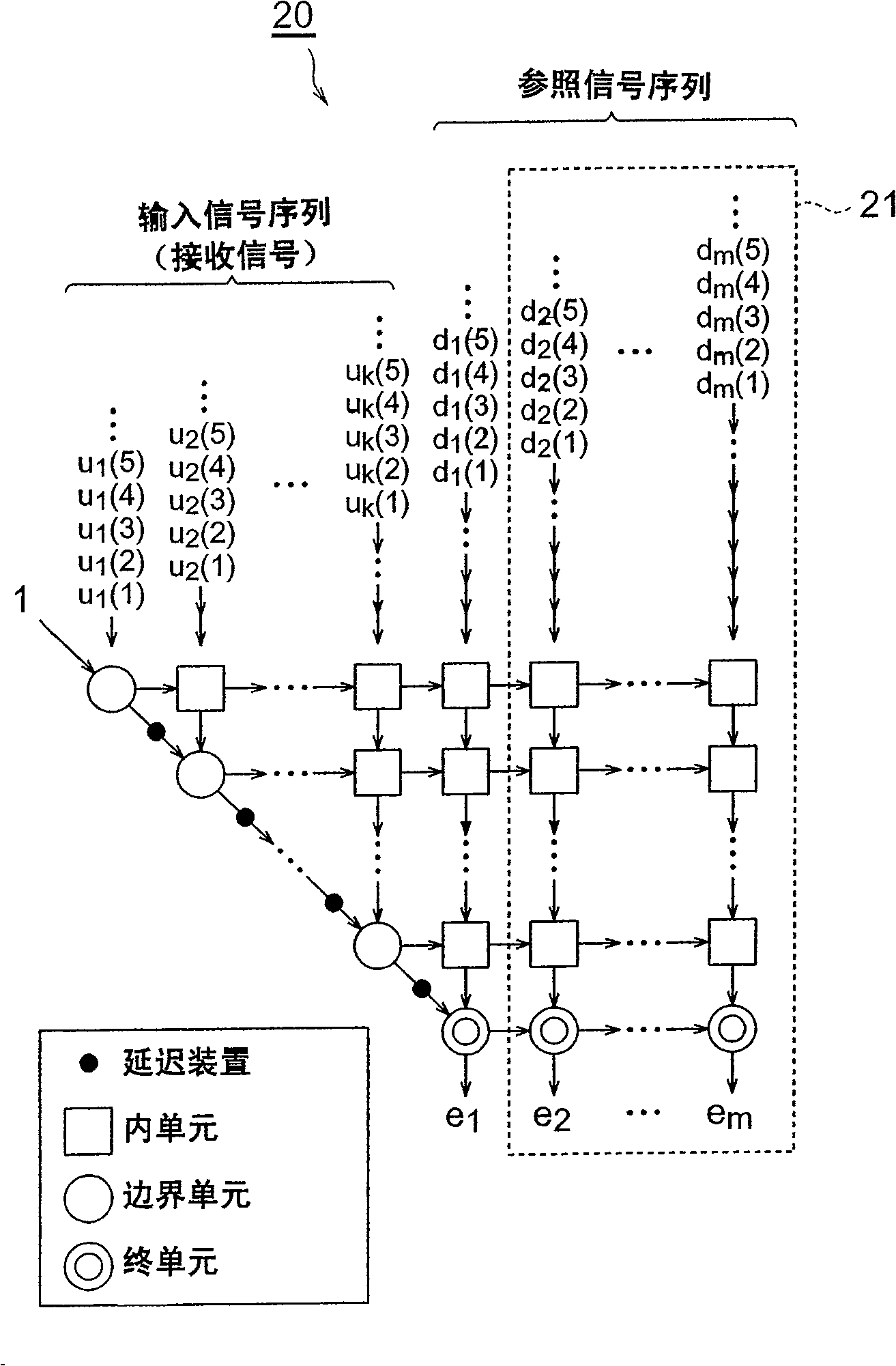

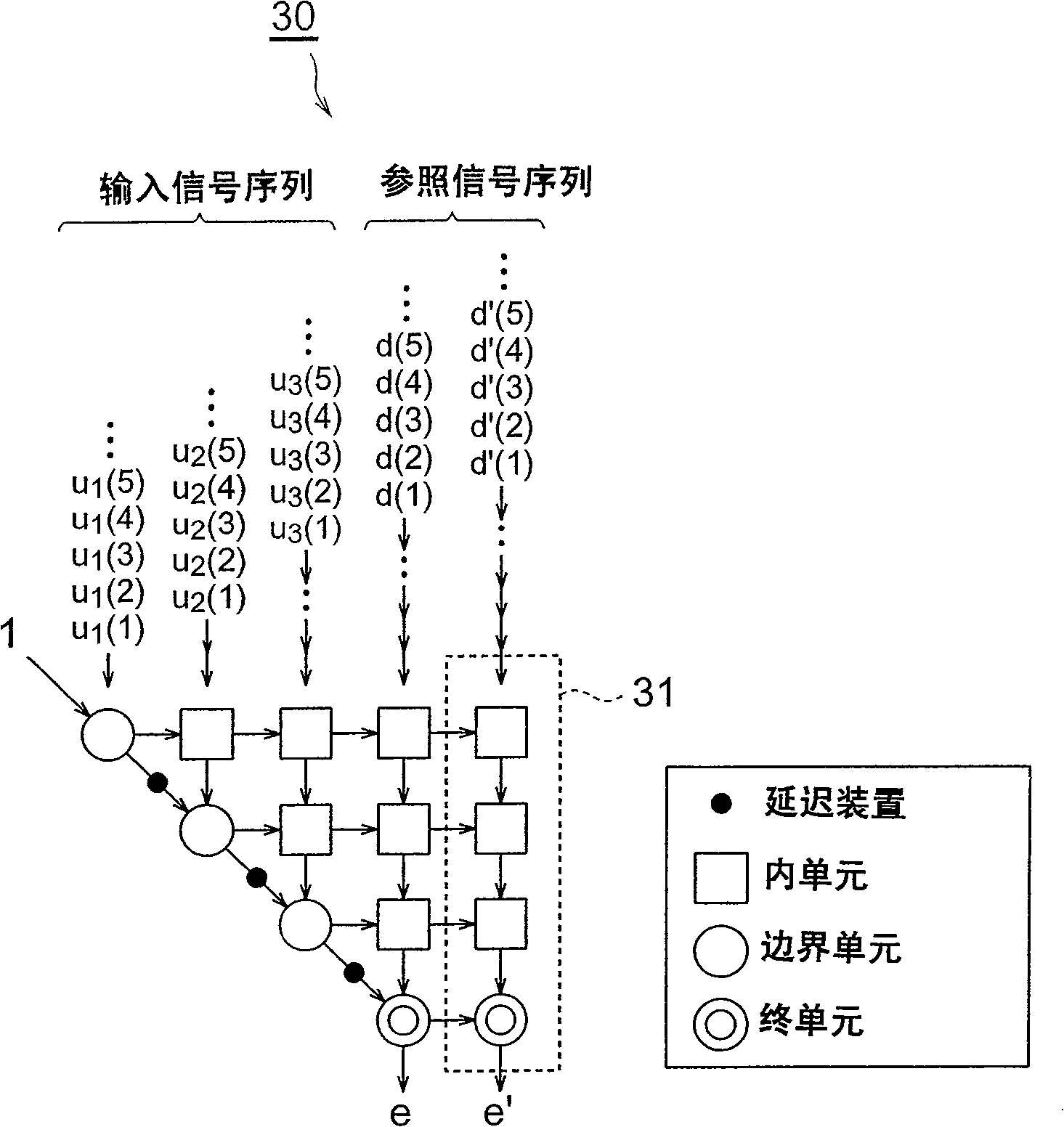

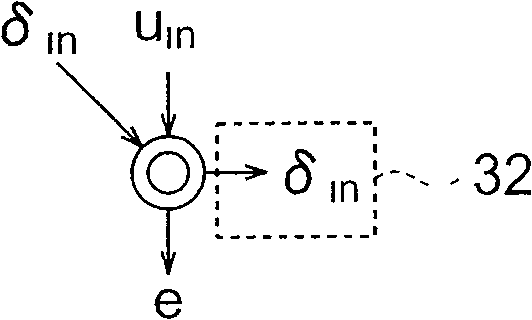

Systolic array and calculation method

Active | US20100250640A1 | Improve operating rate | Improve efficiency | Systolic arrays | Program control | Phase shifted | Computer science

A linear systolic array is added to the lower side of a trapezoid systolic array created by combining a triangular systolic array and a square systolic array. In order to make the connection among the cells fixed, the intermediate result output from each row of the trapezoid systolic array to a lower row is shifted in phase with respect to the intermediate result of the complex MFA algorithm, the phase shift is absorbed by the next row, and the phase shift in the intermediate result output from the last row of the trapezoid systolic array is corrected by the linear systolic array. Each cell is implemented by a CORDIC circuit that processes vector angle computation, vector rotation, division, and multiply-and-accumulate with a constant delay.

Owner:NEC CORP

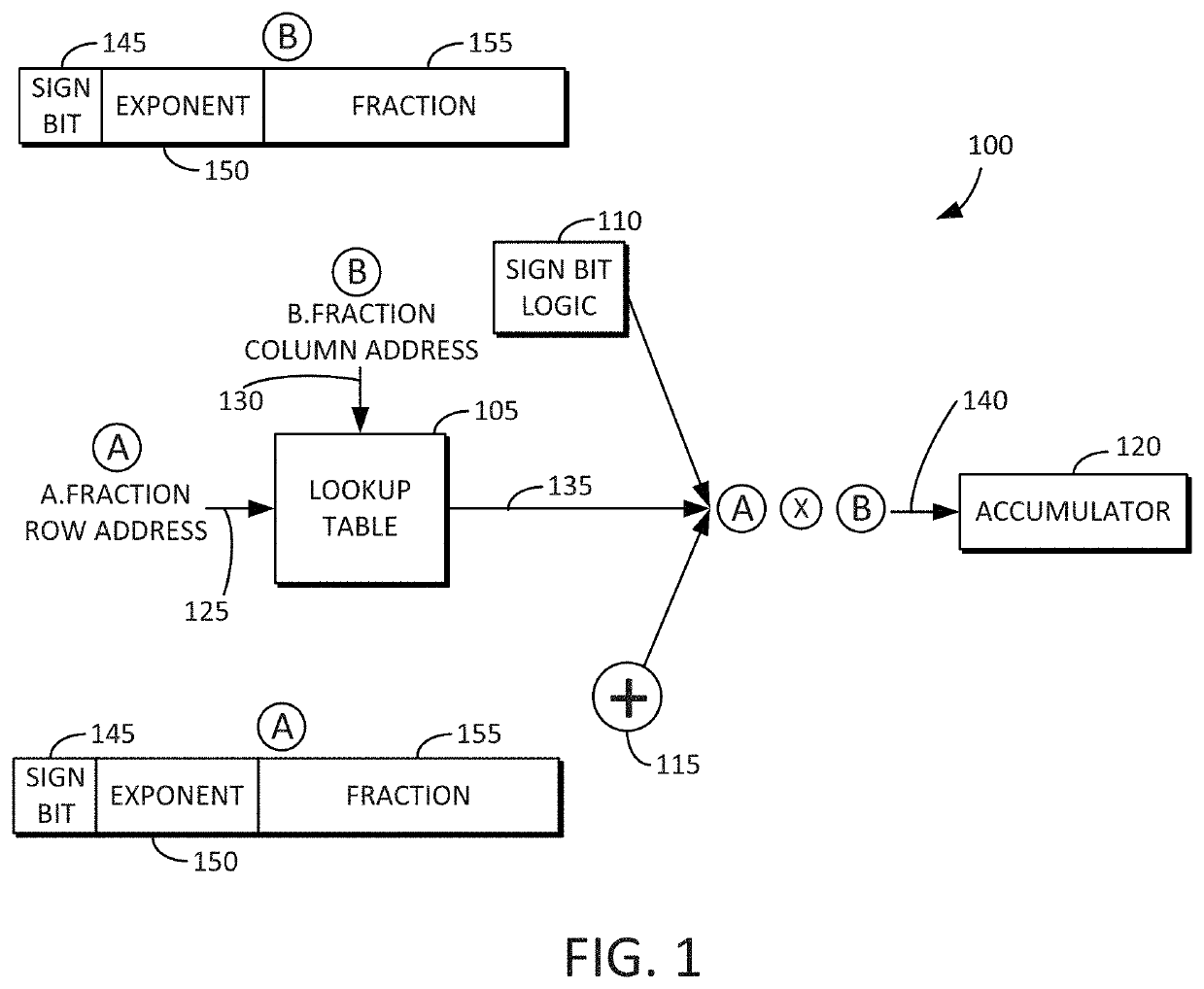

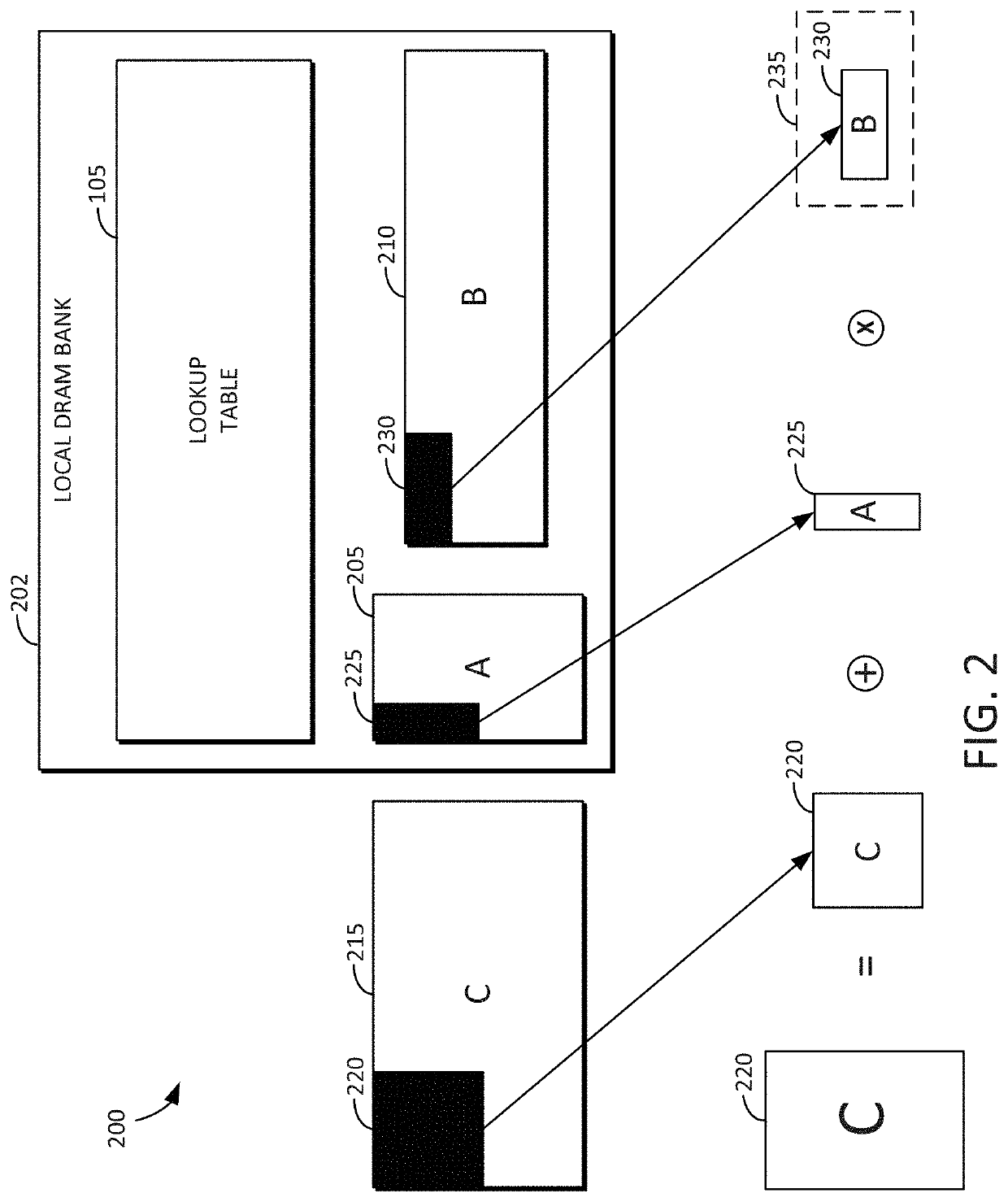

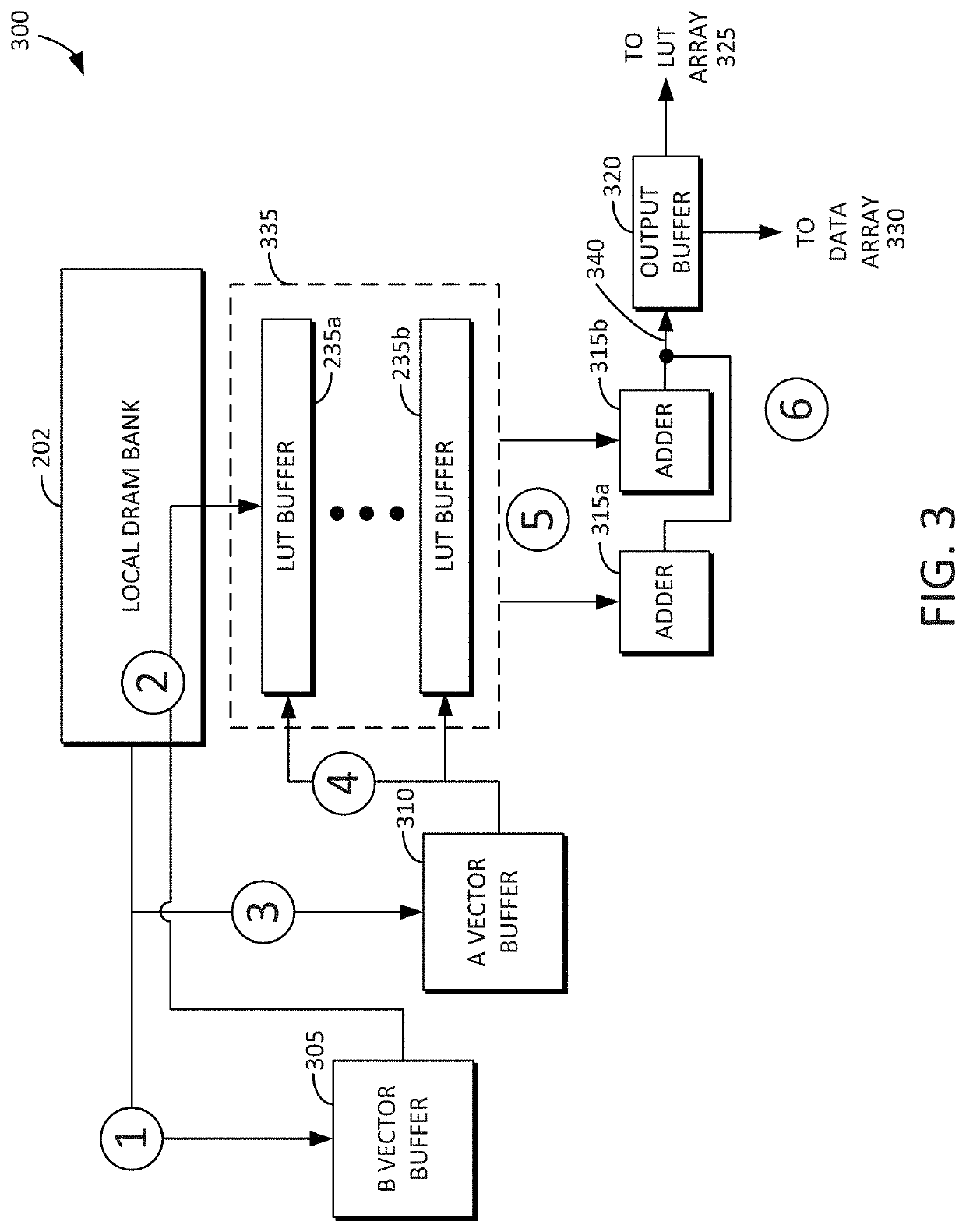

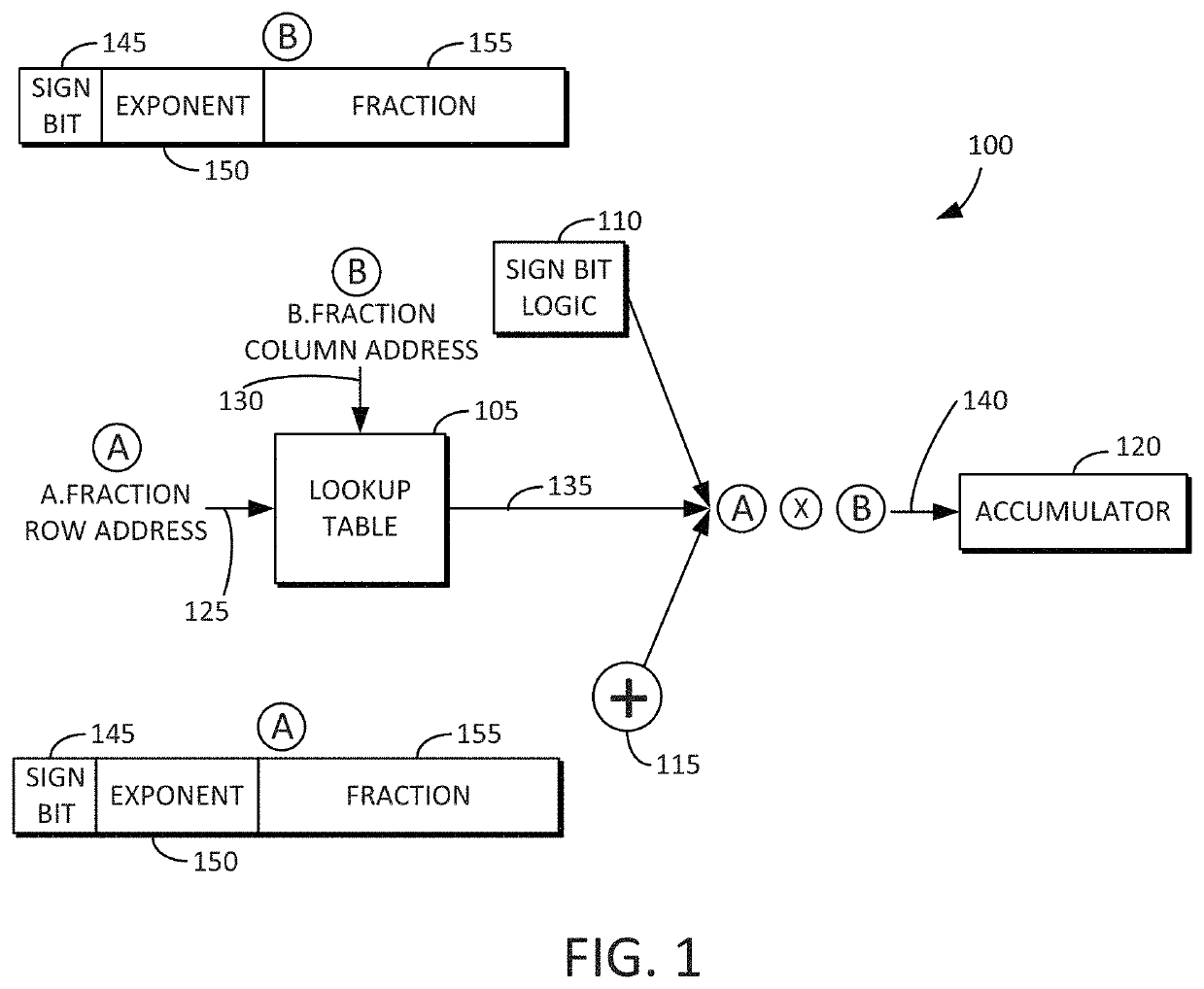

Dataflow accelerator architecture for general matrix-matrix multiplication and tensor computation in deep learning

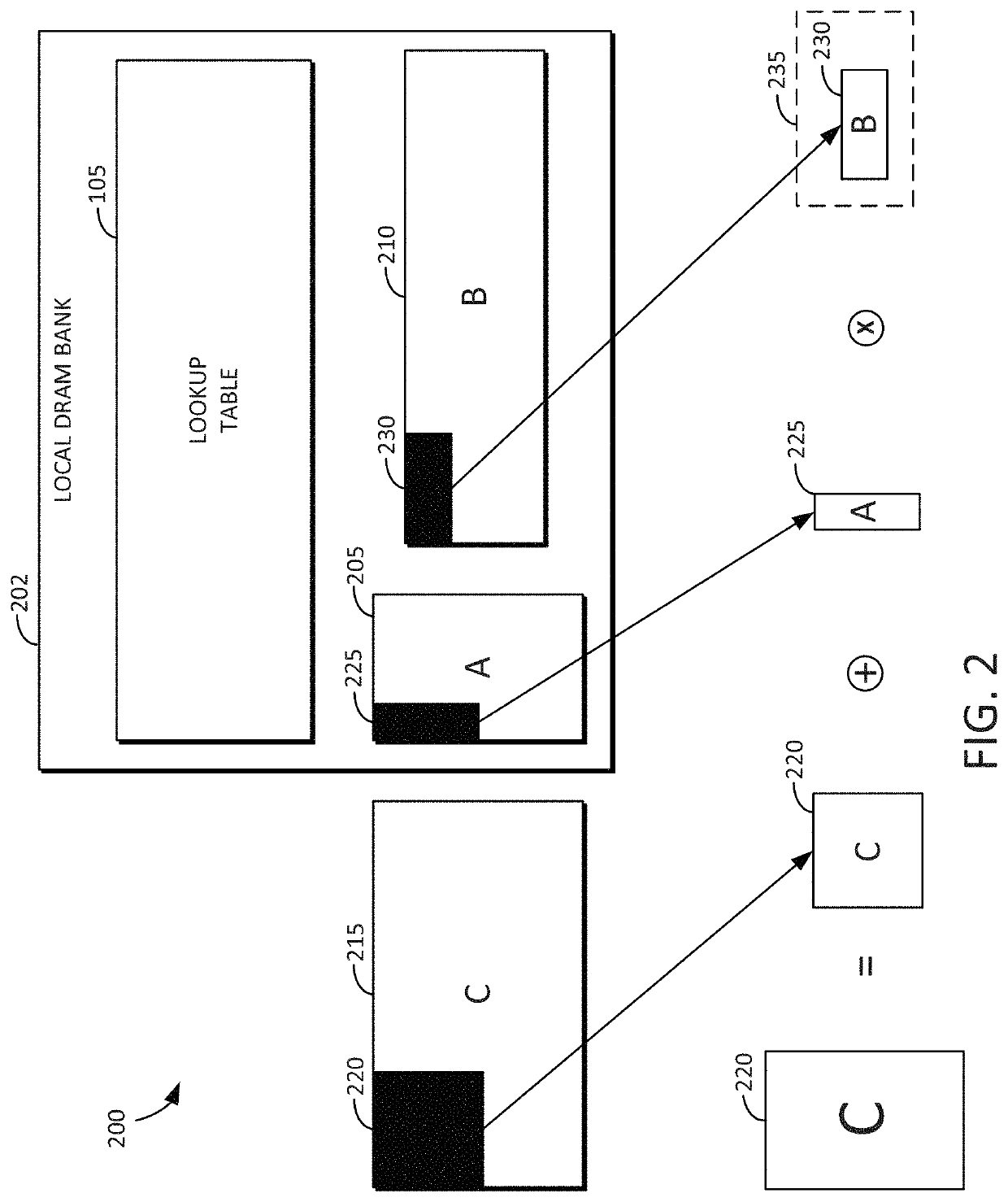

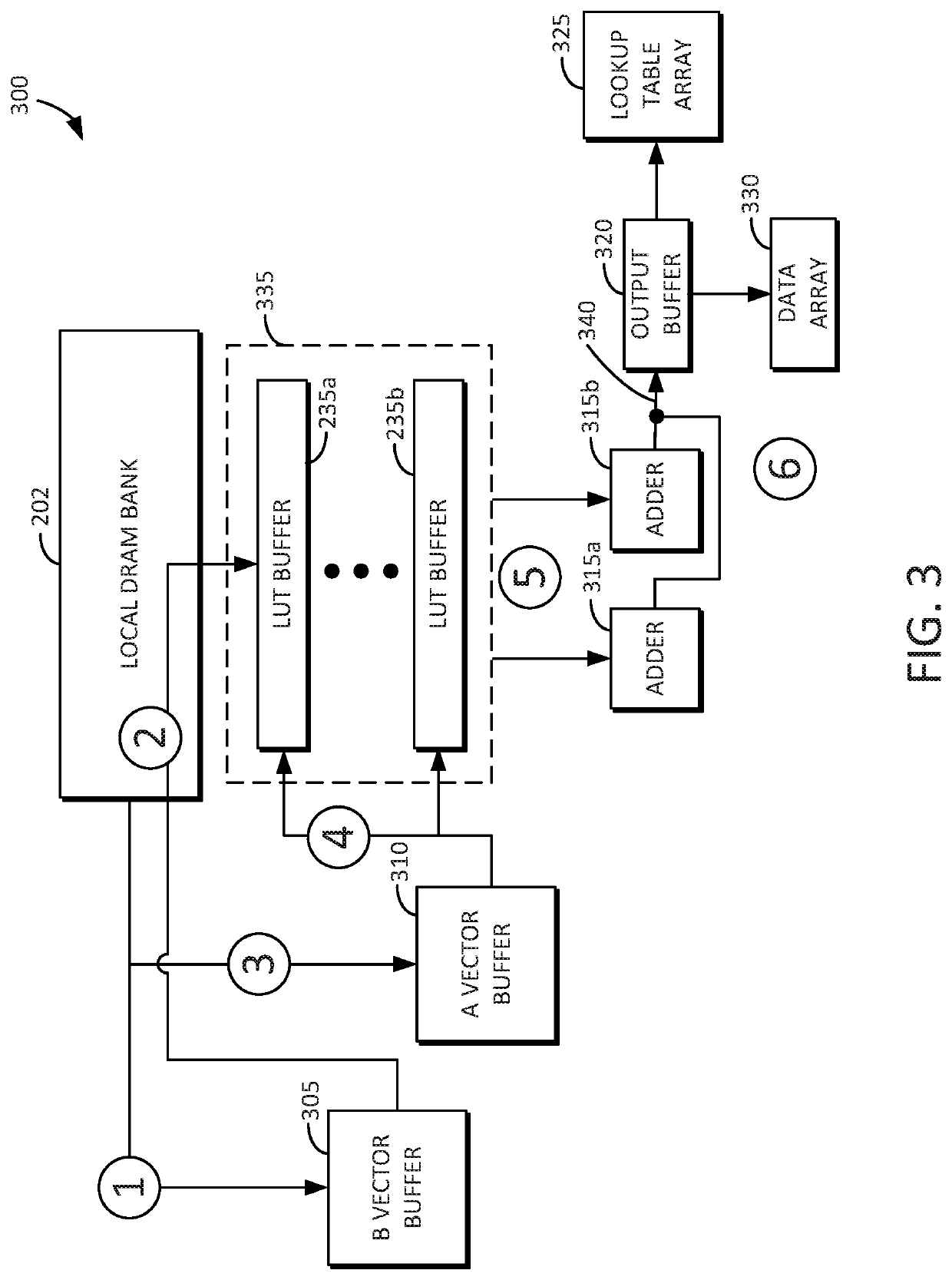

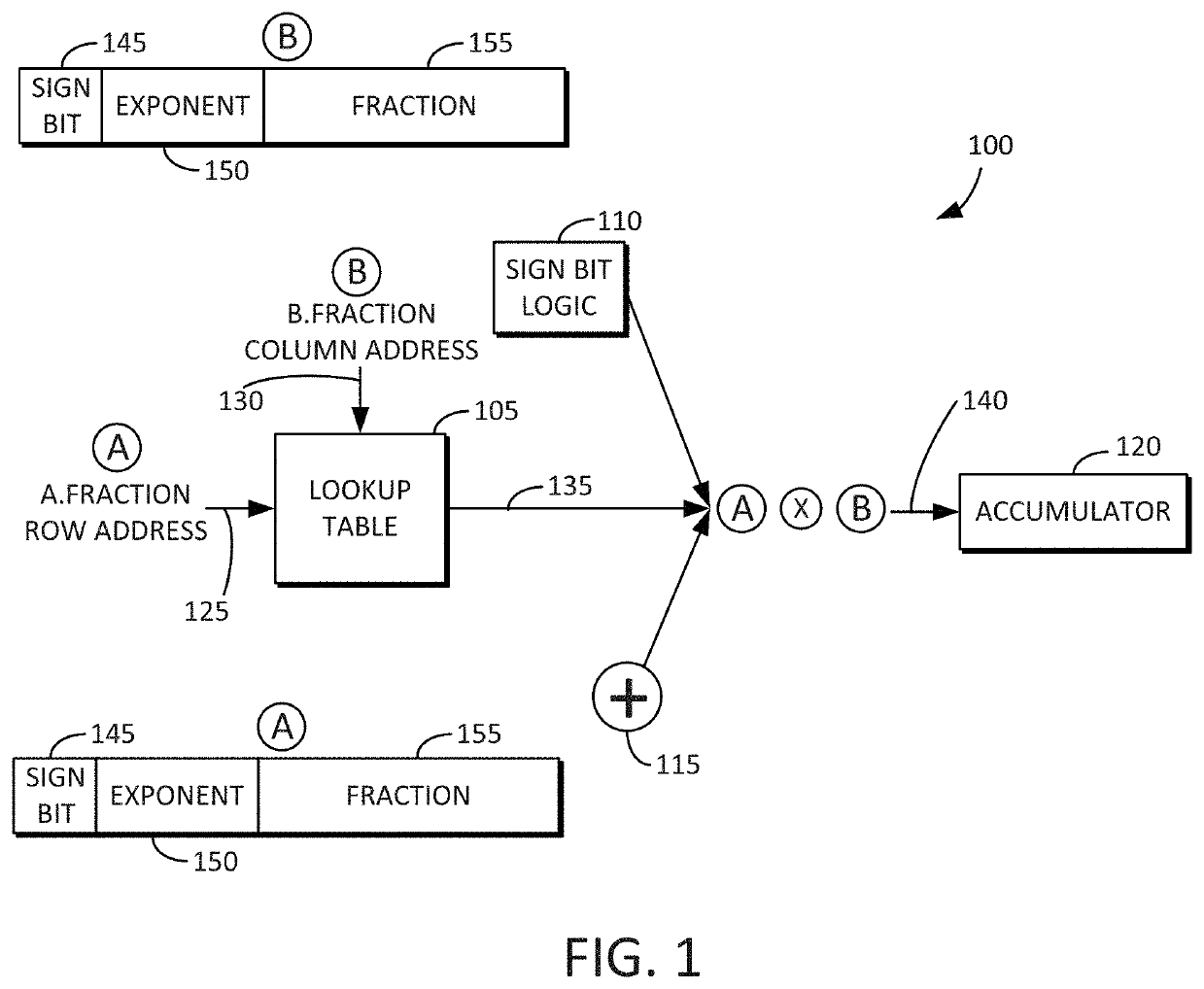

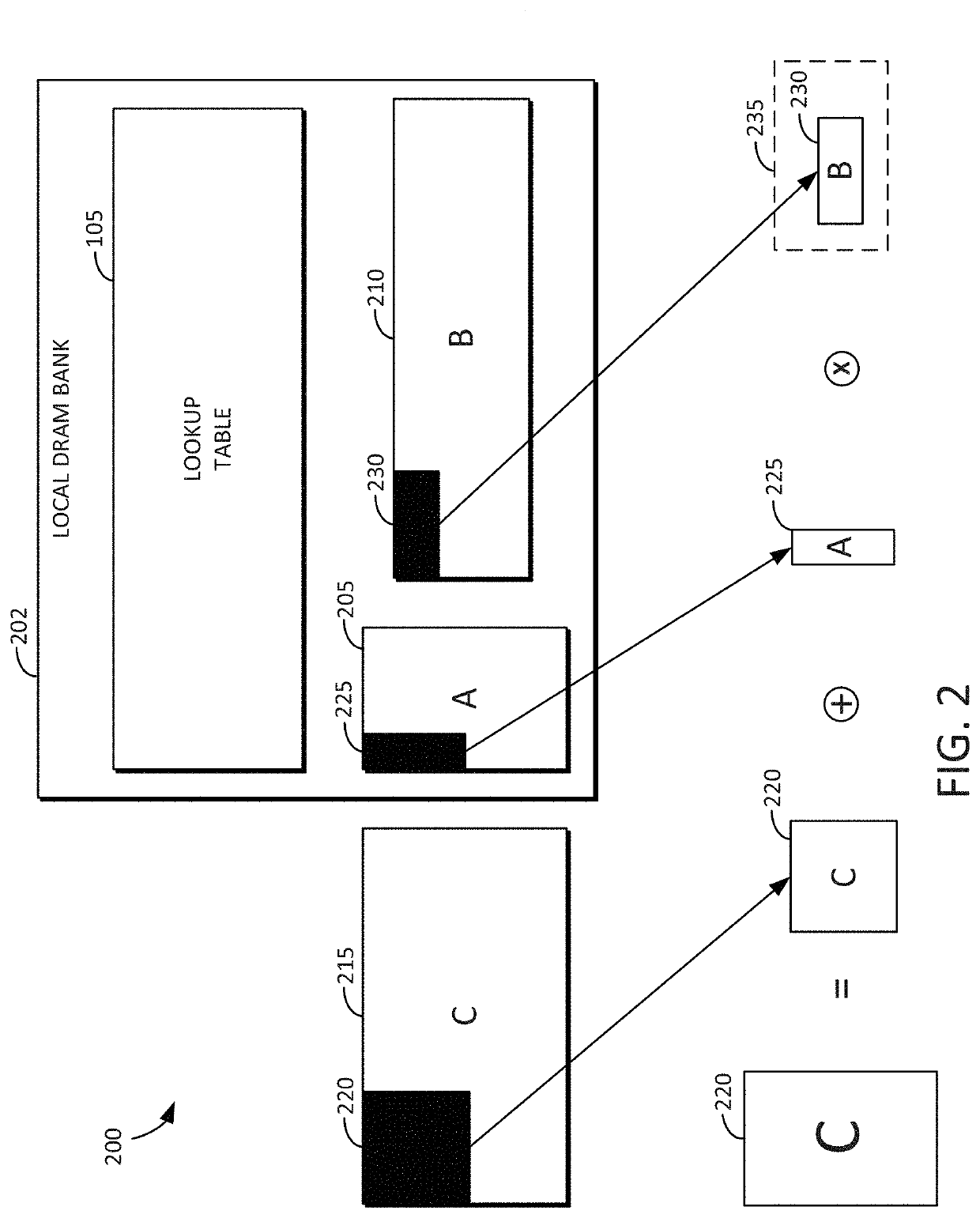

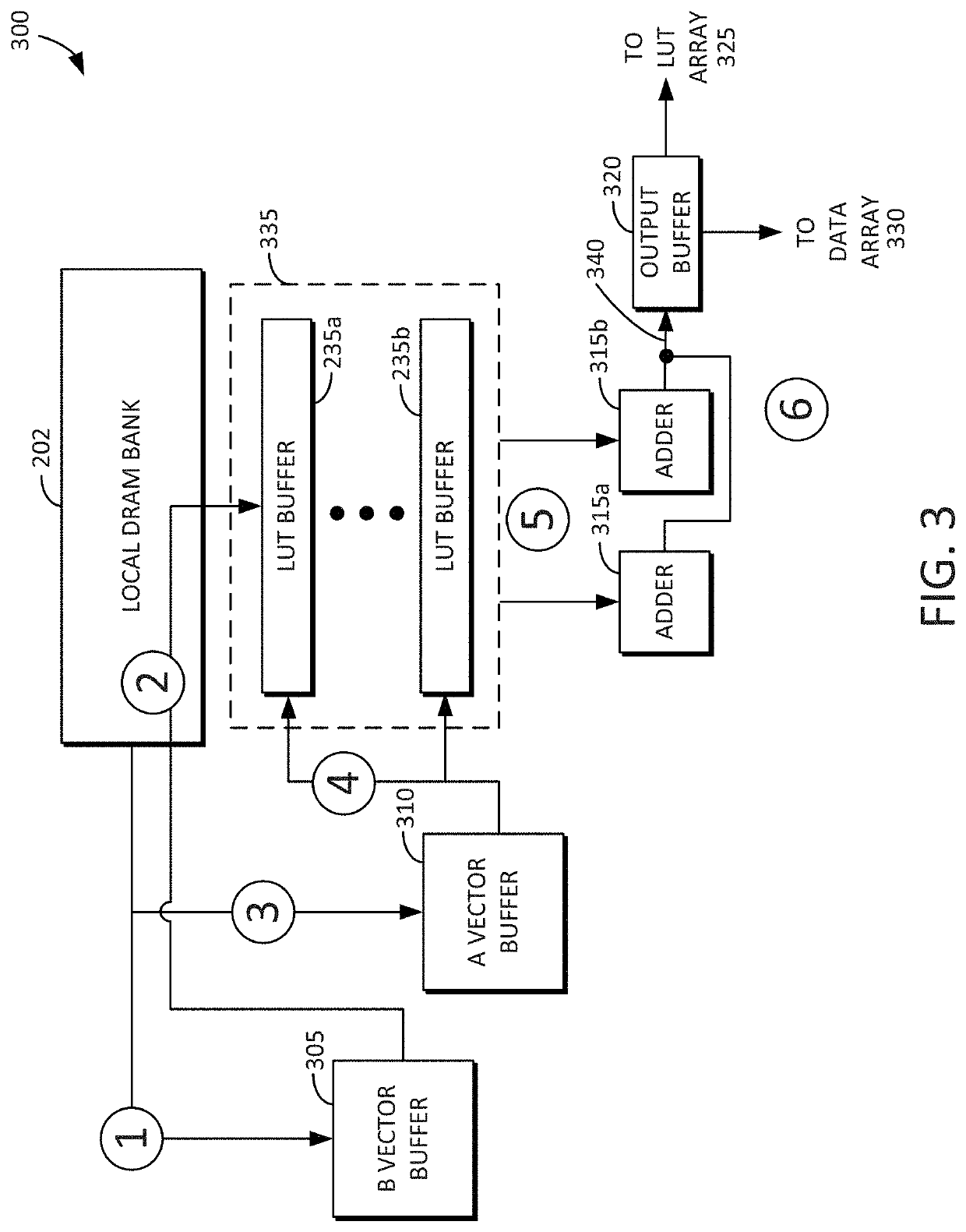

Active | US20200184001A1 | Lower latency | Memory architecture accessing/allocation | Systolic arrays | General matrix | Data stream

A general matrix-matrix multiplication (GEMM) dataflow accelerator circuit is disclosed that includes a smart 3D stacking DRAM architecture. The accelerator circuit includes a memory bank, a peripheral lookup table stored in the memory bank, and a first vector buffer to store a first vector that is used as a row address into the lookup table. The circuit includes a second vector buffer to store a second vector that is used as a column address into the lookup table, and lookup table buffers to receive and store lookup table entries from the lookup table. The circuit further includes adders to sum the first product and a second product, and an output buffer to store the sum. The lookup table buffers determine a product of the first vector and the second vector without performing a multiply operation. The embodiments include a hierarchical lookup architecture to reduce latency. Accumulation results are propagated in a systolic manner.

Owner:SAMSUNG ELECTRONICS CO LTD
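The lookup-based product described in the abstract above (one operand used as a row address, the other as a column address, with adders summing the looked-up products) can be sketched in software. This is only an illustration under assumptions not stated in the source: 4-bit unsigned operands, a table built by repeated addition, and hypothetical names `build_product_table` and `lut_dot`.

```python
BITS = 4             # assumed operand width for this sketch
SIZE = 1 << BITS     # number of rows and columns in the table


def build_product_table():
    """Precompute table[r][c] == r * c using repeated addition only."""
    table = []
    for r in range(SIZE):
        row, acc = [], 0
        for _ in range(SIZE):
            row.append(acc)
            acc += r
        table.append(row)
    return table


TABLE = build_product_table()


def lut_dot(xs, ys):
    """Dot product of two vectors using table lookups and additions."""
    total = 0
    for x, y in zip(xs, ys):
        if not (0 <= x < SIZE and 0 <= y < SIZE):
            raise ValueError("operands must fit in %d bits" % BITS)
        total += TABLE[x][y]  # x is the row address, y the column address
    return total


print(lut_dot([1, 2, 3], [4, 5, 6]))  # 32
```

The accumulation loop performs no multiply operation at run time; the cost is moved into table storage, which grows with the square of the operand range.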

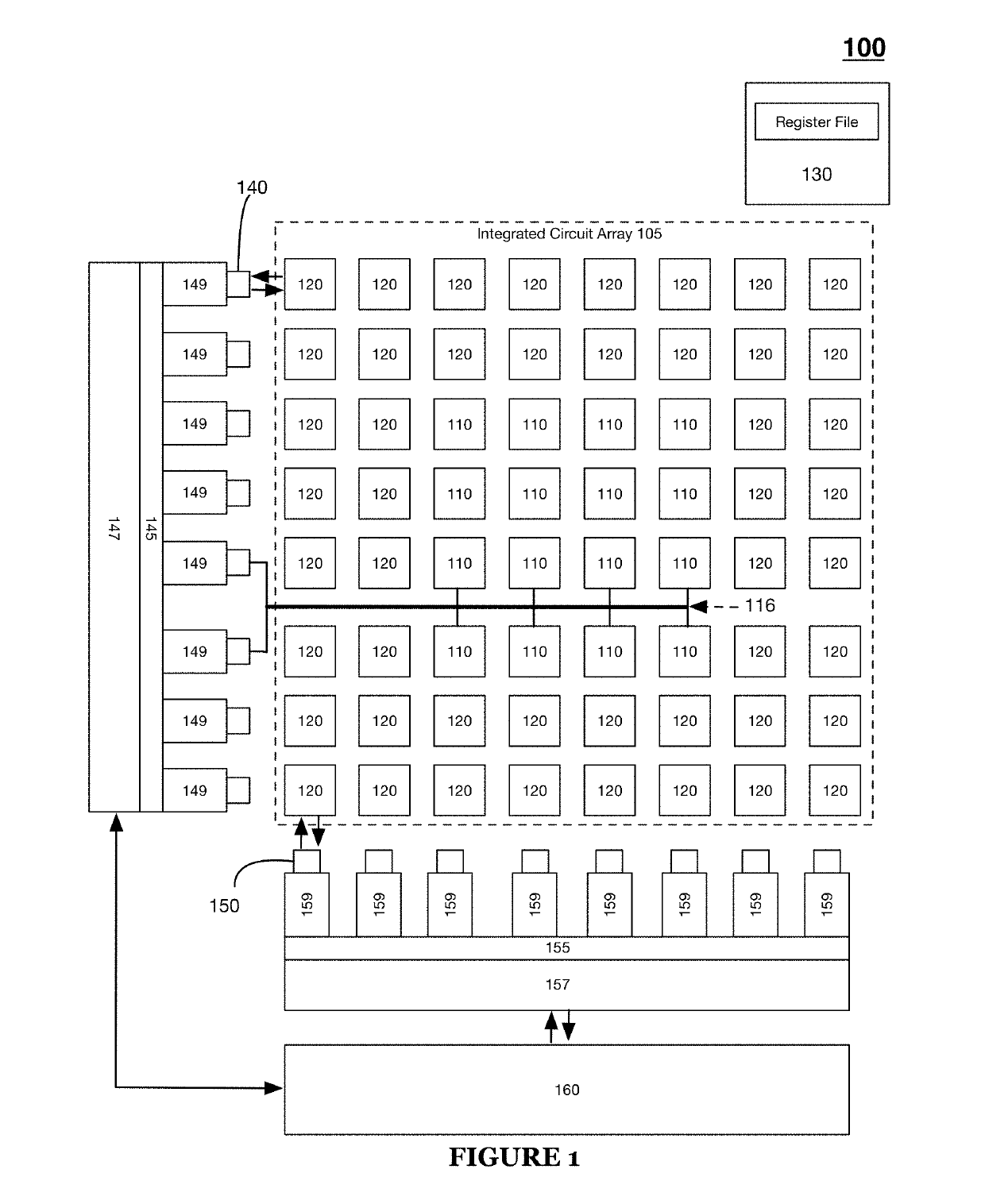

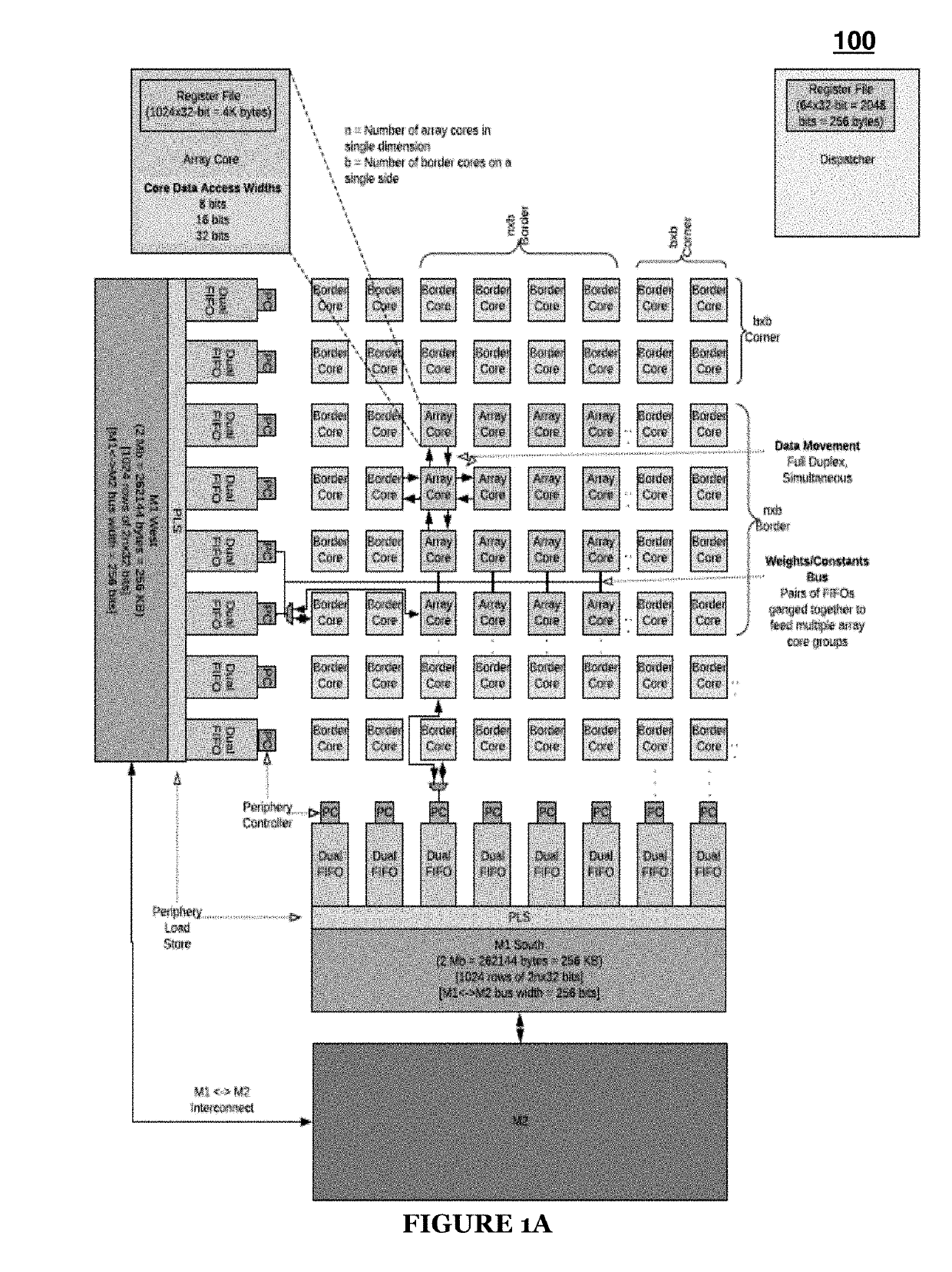

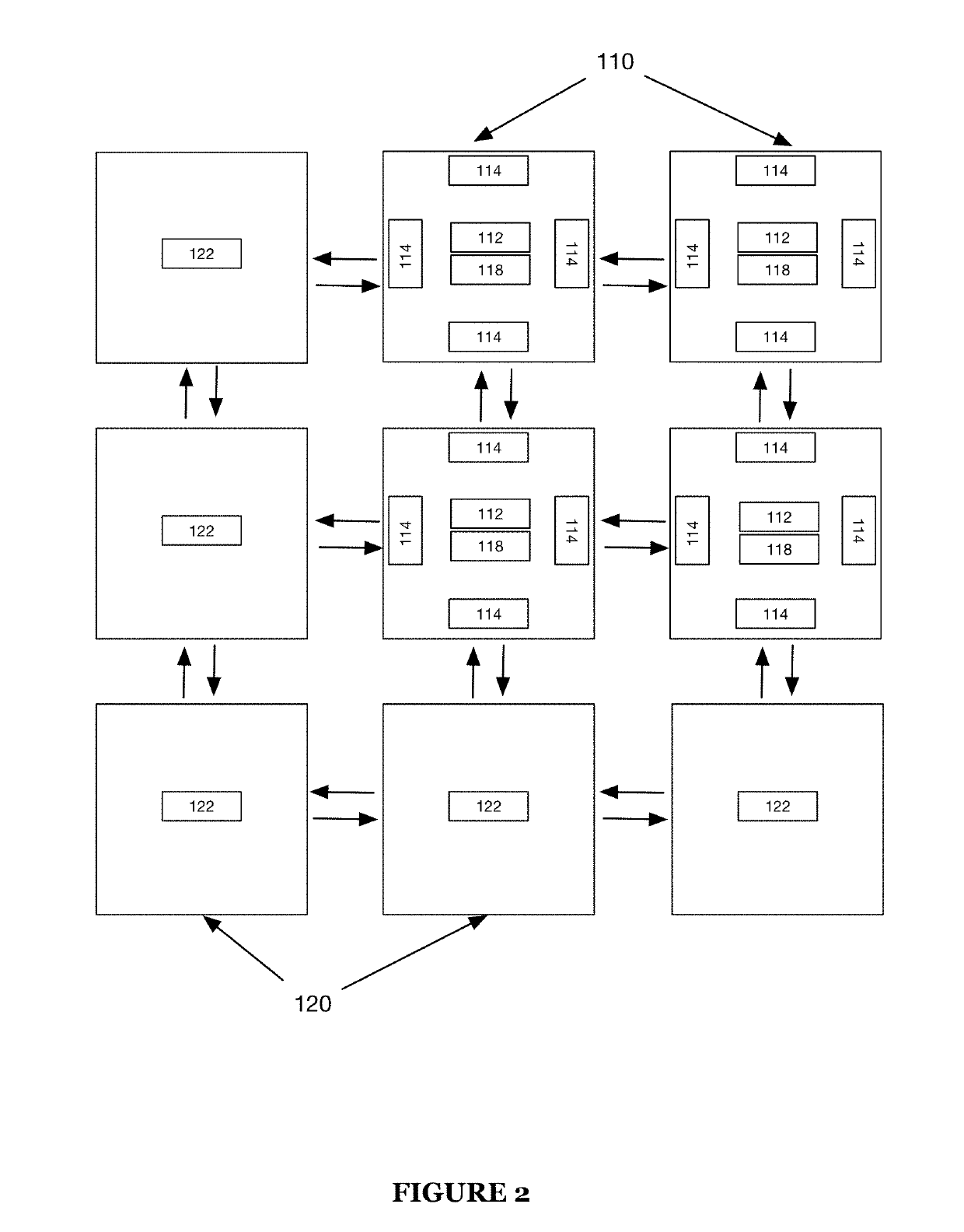

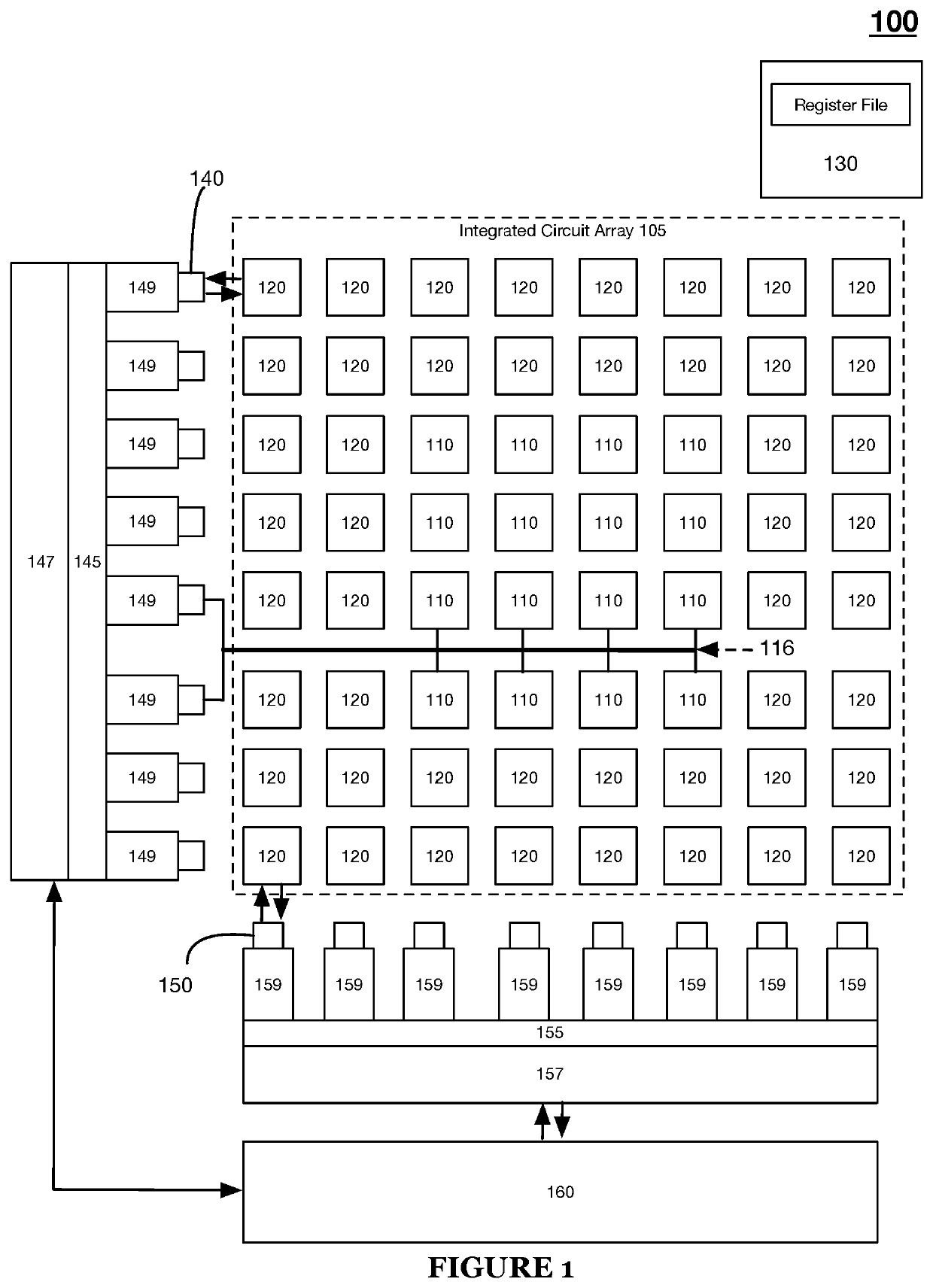

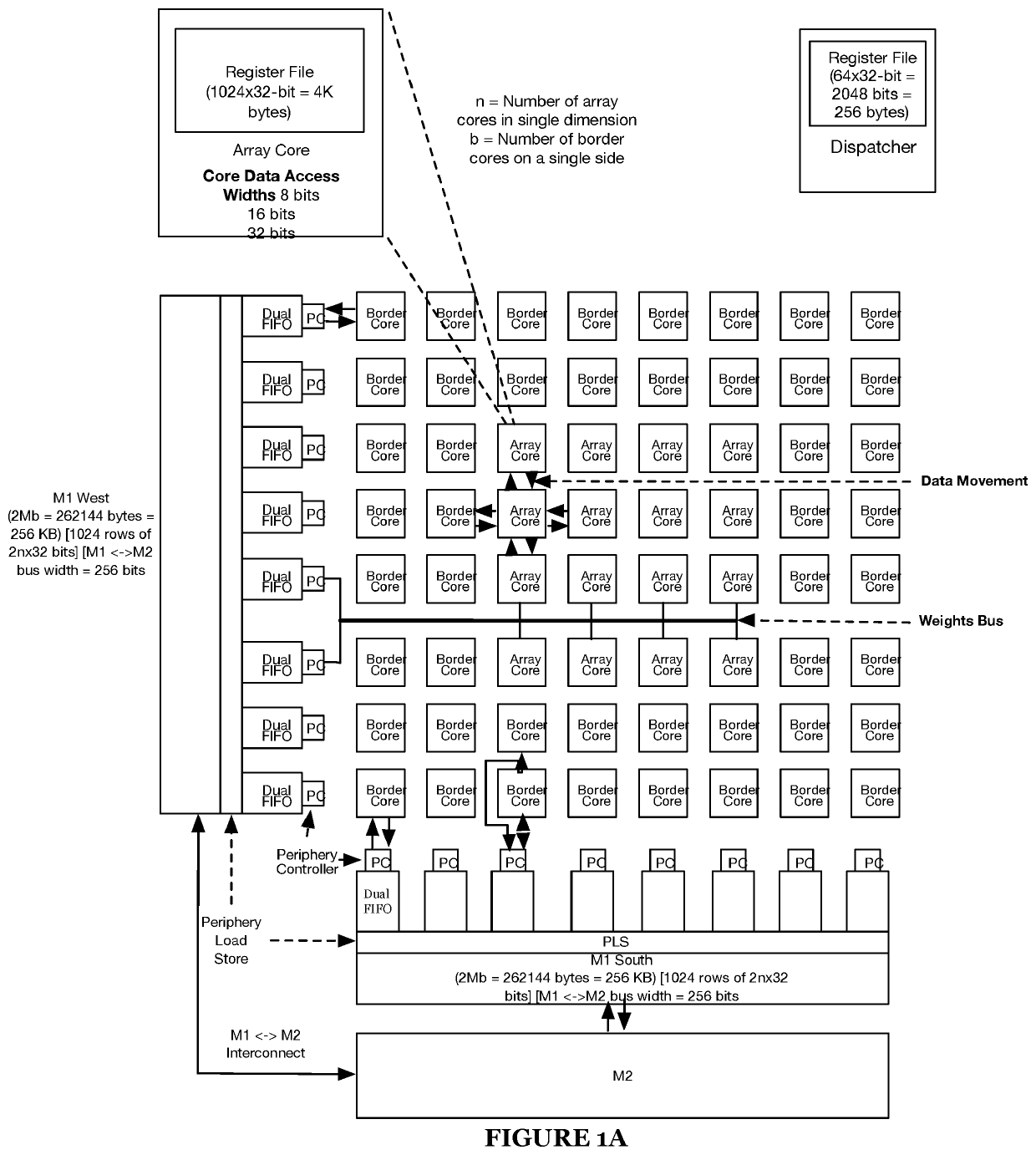

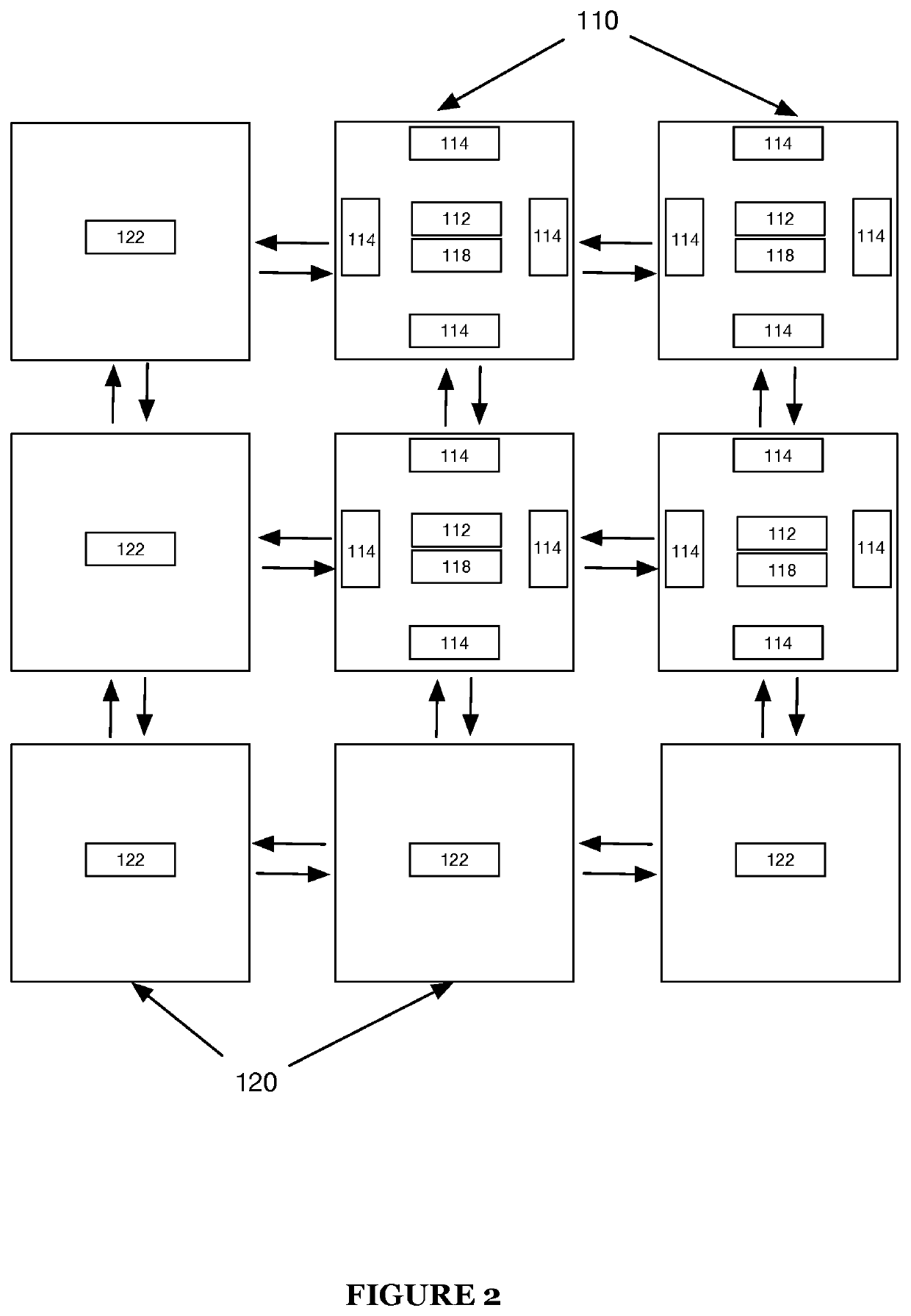

Systems and methods for implementing a machine perception and dense algorithm integrated circuit and enabling a flowing propagation of data within the integrated circuit

Systems and methods of propagating data within an integrated circuit includes: identifying a coarse data propagation path for distinct subsets of data of an input dataset that includes: setting inter-core data movements for the distinct subsets of data, the inter-core data movements defining a predetermined propagation of a given subset of data between two or more of a plurality of cores of an integrated circuit array of the integrated circuit; identifying a granular data propagation path for each distinct subset of data that includes: setting intra-core data movements for each distinct subset of data, the intra-core data movements defining a predetermined propagation of the given subset of data within one or more of the plurality of cores of the integrated circuit array of the integrated circuit; enabling a flow of the input dataset within the integrated circuit based on the coarse data propagation path and the granular propagation path.

Owner:QUADRIC IO INC

Dataflow accelerator architecture for general matrix-matrix multiplication and tensor computation in deep learning

Active | US11100193B2 | Lower latency | Memory architecture accessing/allocation | Systolic arrays | General matrix | Data stream

A general matrix-matrix multiplication (GEMM) dataflow accelerator circuit is disclosed that includes a smart 3D stacking DRAM architecture. The accelerator circuit includes a memory bank, a peripheral lookup table stored in the memory bank, and a first vector buffer to store a first vector that is used as a row address into the lookup table. The circuit includes a second vector buffer to store a second vector that is used as a column address into the lookup table, and lookup table buffers to receive and store lookup table entries from the lookup table. The circuit further includes adders to sum the first product and a second product, and an output buffer to store the sum. The lookup table buffers determine a product of the first vector and the second vector without performing a multiply operation. The embodiments include a hierarchical lookup architecture to reduce latency. Accumulation results are propagated in a systolic manner.

Owner:SAMSUNG ELECTRONICS CO LTD

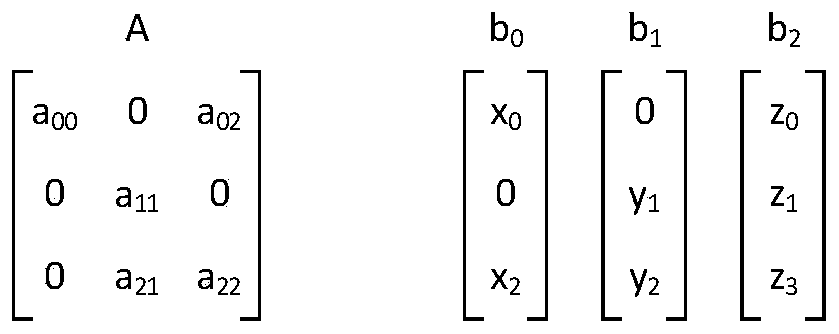

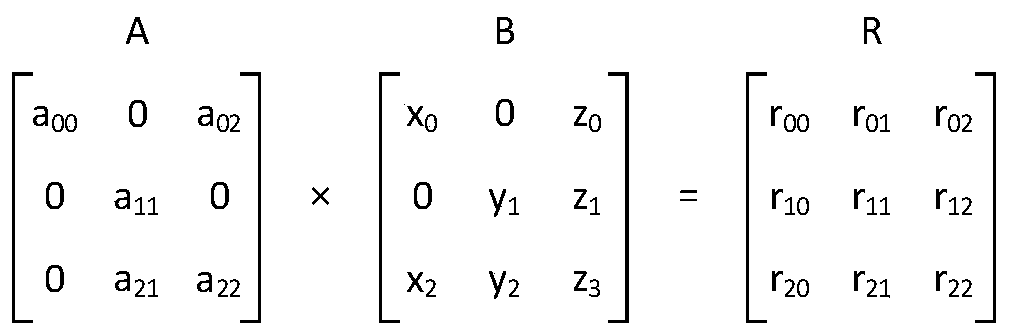

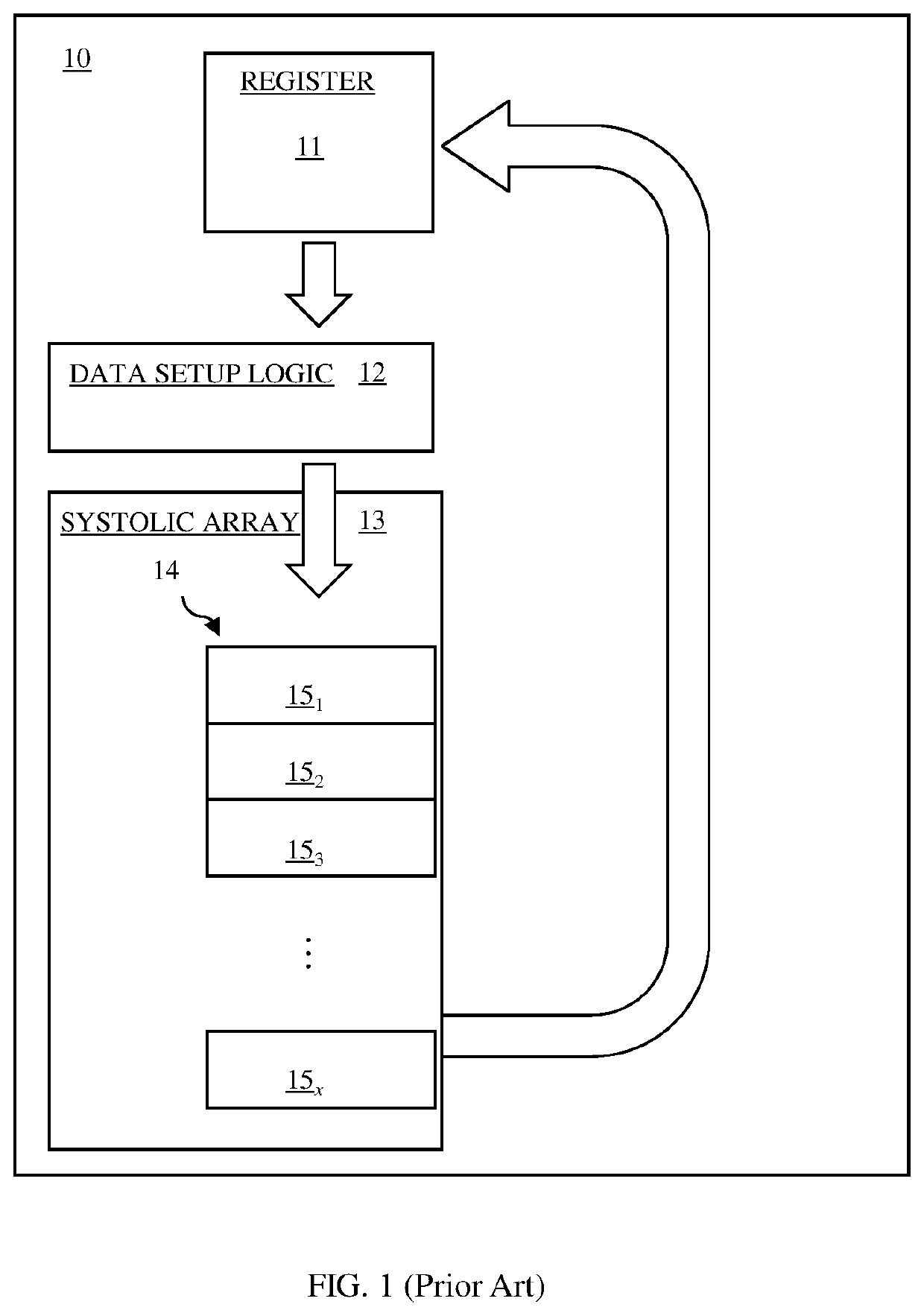

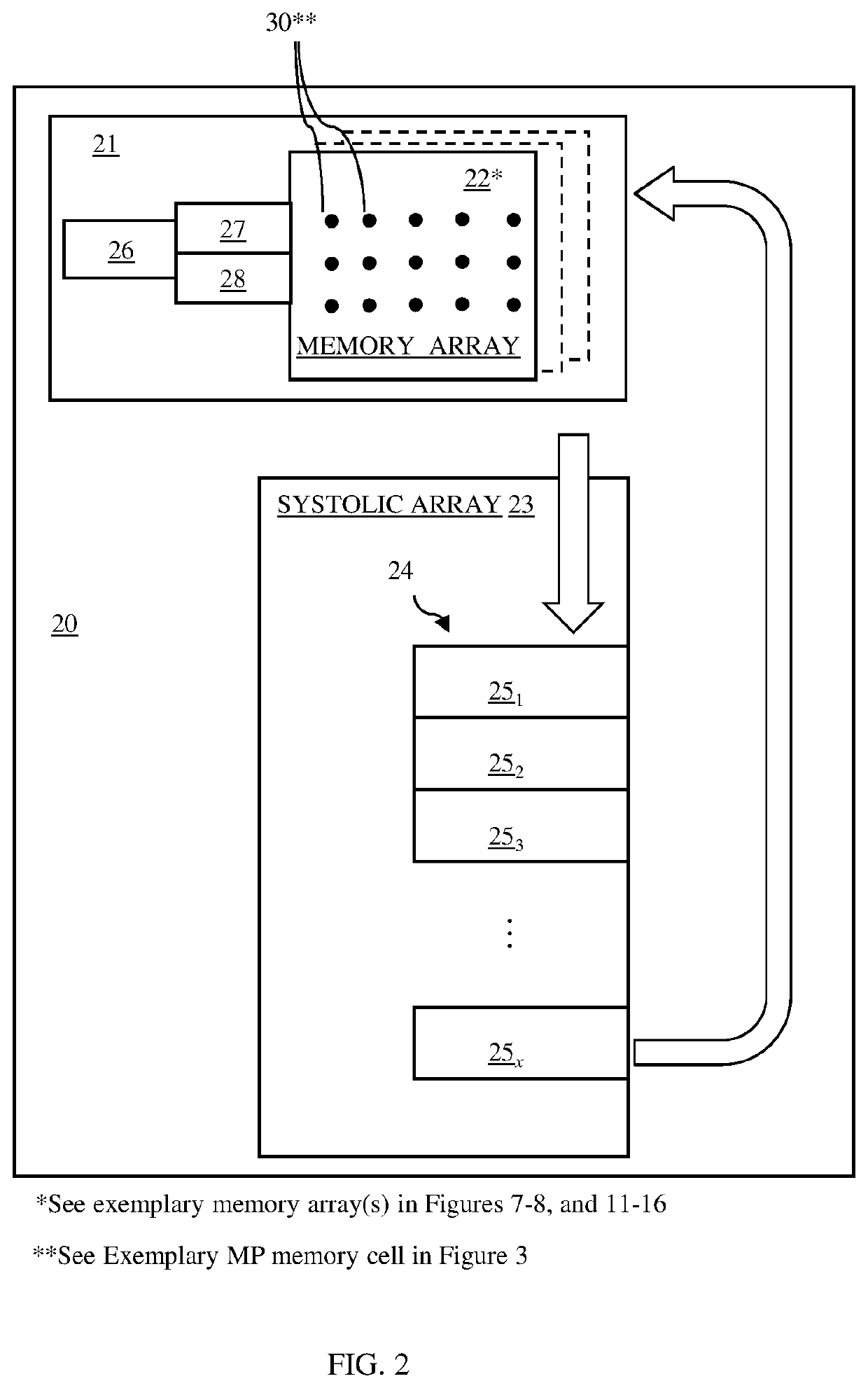

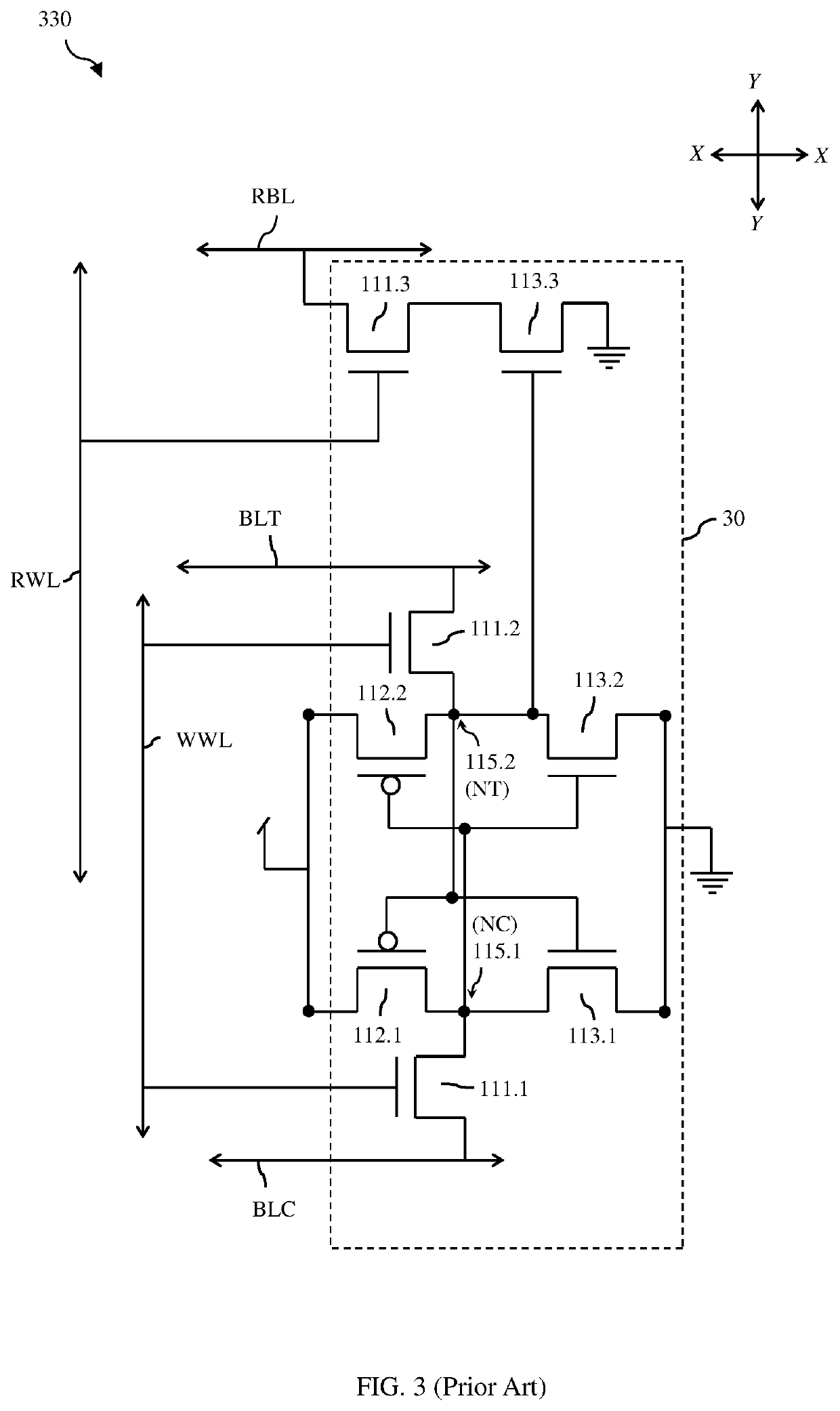

Multi-port memory architecture for a systolic array

A memory architecture and a processing unit that incorporates the memory architecture and a systolic array. The memory architecture includes: memory array(s) with multi-port (MP) memory cells; first wordlines connected to the cells in each row; and, depending upon the embodiment, second wordlines connected to diagonals of cells or diagonals of sets of cells. Data from a data input matrix is written to the memory cells during first port write operations using the first wordlines and read out from the memory cells during second port read operations using the second wordlines. Due to the diagonal orientation of the second wordlines and due to additional features (e.g., additional rows of memory cells that store static zero data values or read data mask generators that generate read data masks), data read from the memory architecture and input directly into a systolic array is in the proper order, as specified by a data setup matrix.

Owner:MARVELL ASIA PTE LTD
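The "proper order" that a systolic array expects at its inputs is typically a skewed layout in which each input lane is delayed by one more cycle than its neighbor, with zeros filling the gaps. The sketch below shows how reading a data input matrix along diagonals yields such a layout. It is a software illustration only: the function name `data_setup_matrix`, the choice of rows as lanes, and the zero padding convention are assumptions, not details taken from the abstract.

```python
def data_setup_matrix(x):
    """Return the per-cycle input vectors for a systolic array.

    Row i of `x` feeds input lane i and is delayed by i cycles, so cycle t
    delivers the diagonal x[0][t], x[1][t-1], x[2][t-2], ... with zeros
    wherever the index falls outside the matrix.
    """
    rows, cols = len(x), len(x[0])
    setup = []
    for t in range(rows + cols - 1):
        setup.append(
            [x[i][t - i] if 0 <= t - i < cols else 0 for i in range(rows)]
        )
    return setup


for cycle in data_setup_matrix([[1, 2, 3], [4, 5, 6]]):
    print(cycle)
# [1, 0]
# [2, 4]
# [3, 5]
# [0, 6]
```

In software this skew costs a pass over the data; the abstract's point is that diagonal wordlines let the memory deliver this order directly on read.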

Systems and methods for implementing a machine perception and dense algorithm integrated circuit and enabling a flowing propagation of data within the integrated circuit

Systems and methods of propagating data within an integrated circuit includes: identifying a coarse data propagation path for distinct subsets of data of an input dataset that includes: setting inter-core data movements for the distinct subsets of data, the inter-core data movements defining a predetermined propagation of a given subset of data between two or more of a plurality of cores of an integrated circuit array of the integrated circuit; identifying a granular data propagation path for each distinct subset of data that includes: setting intra-core data movements for each distinct subset of data, the intra-core data movements defining a predetermined propagation of the given subset of data within one or more of the plurality of cores of the integrated circuit array of the integrated circuit; enabling a flow of the input dataset within the integrated circuit based on the coarse data propagation path and the granular propagation path.

Owner:QUADRIC IO INC

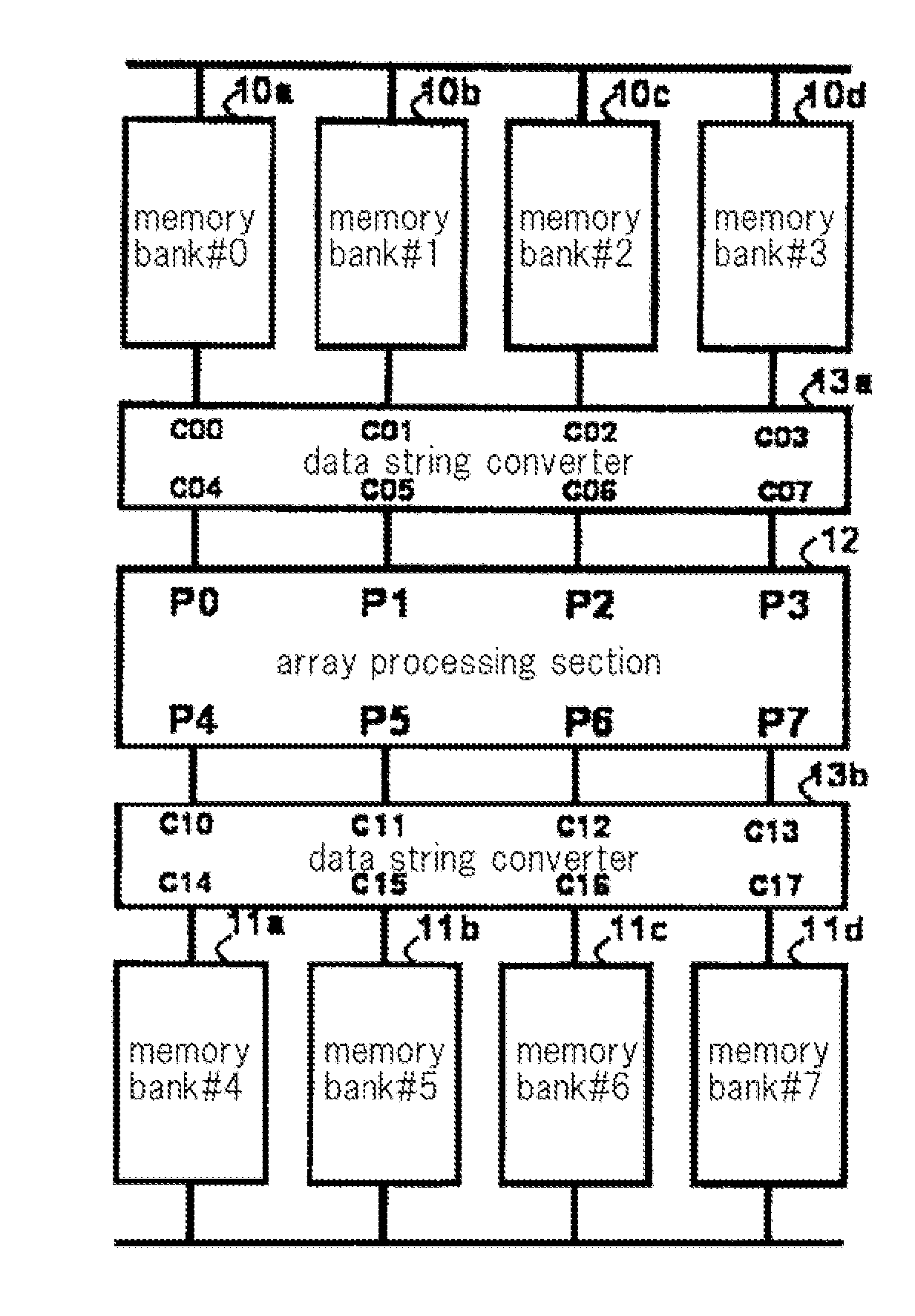

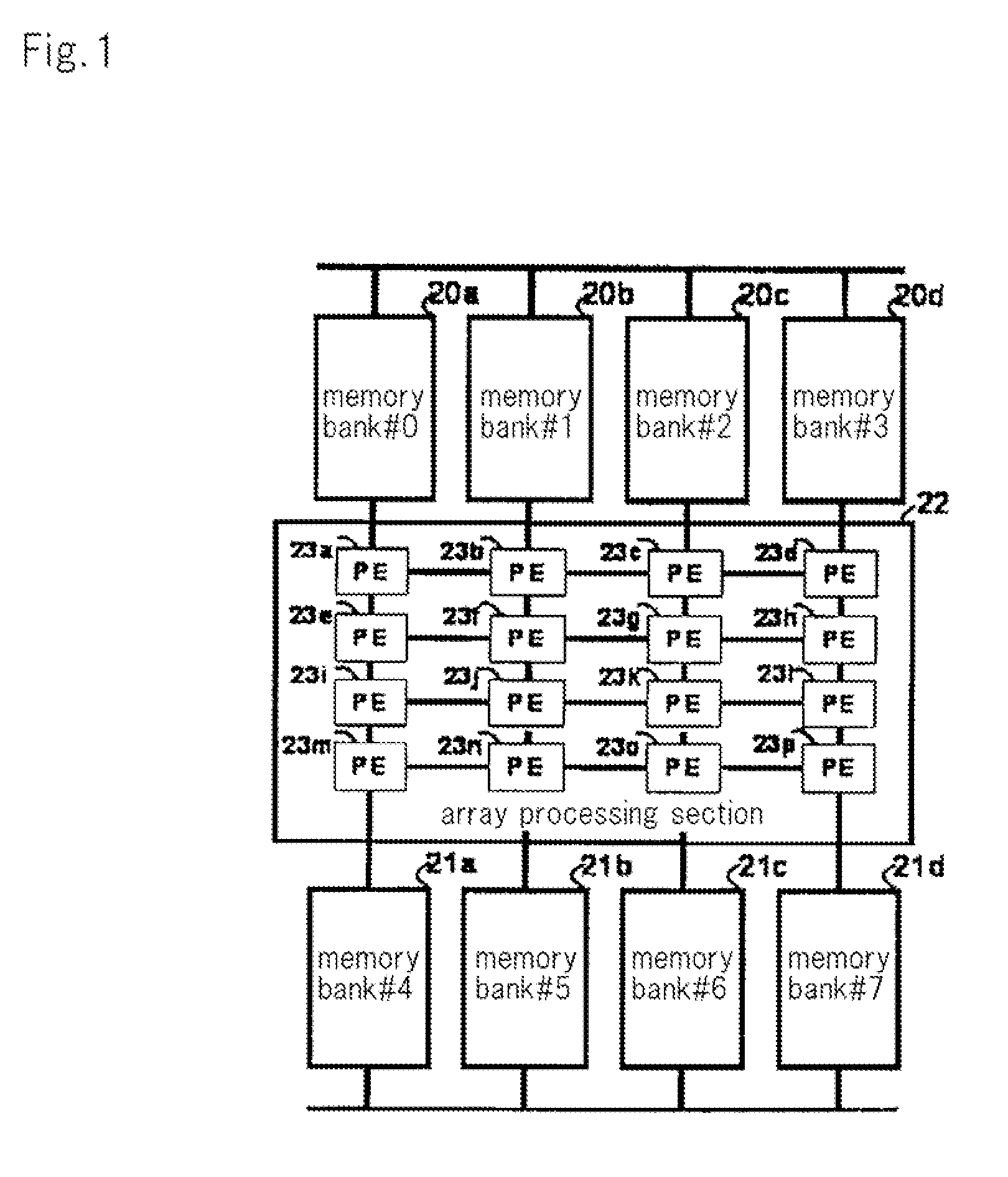

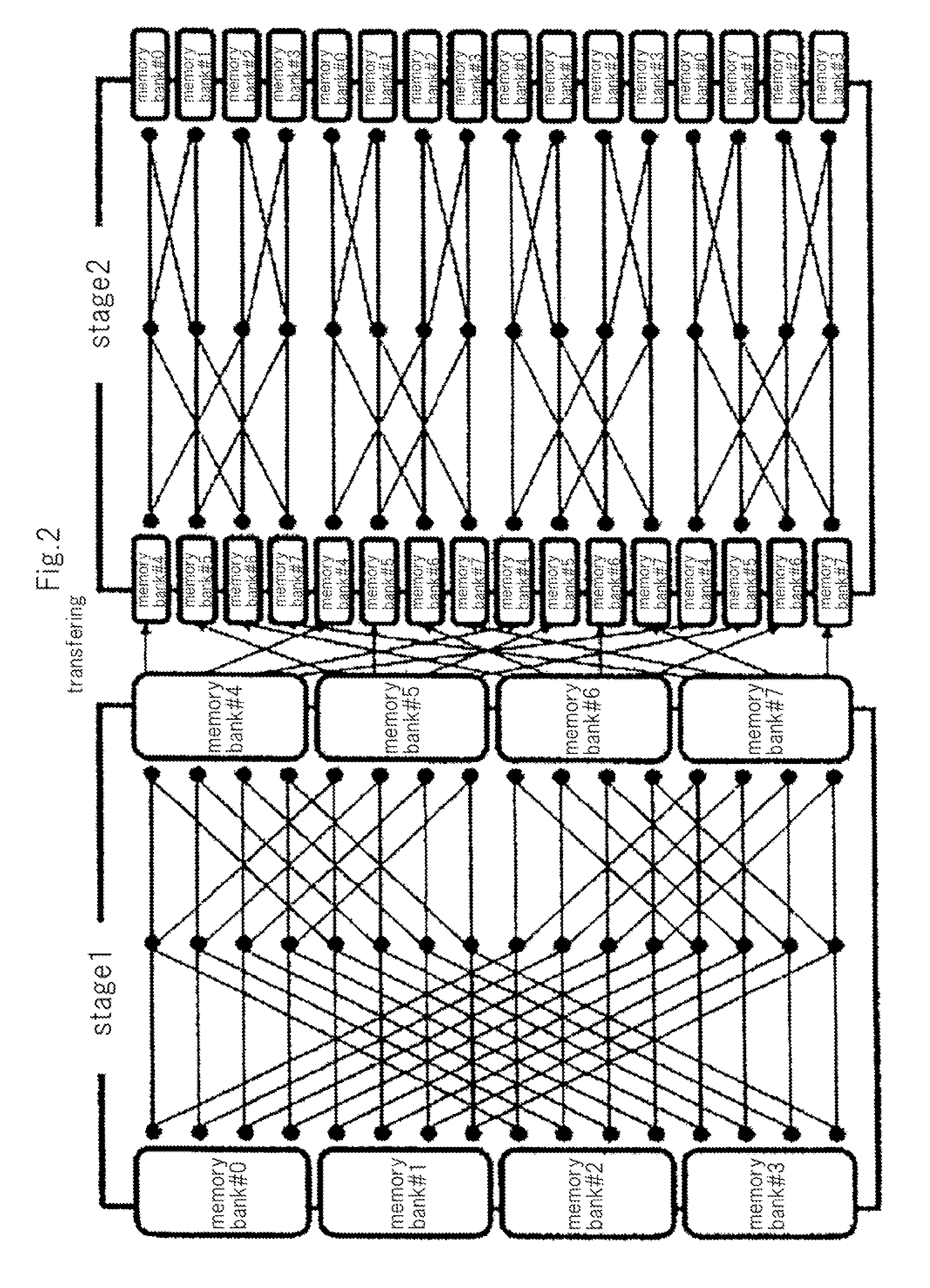

Array processor type data processing apparatus

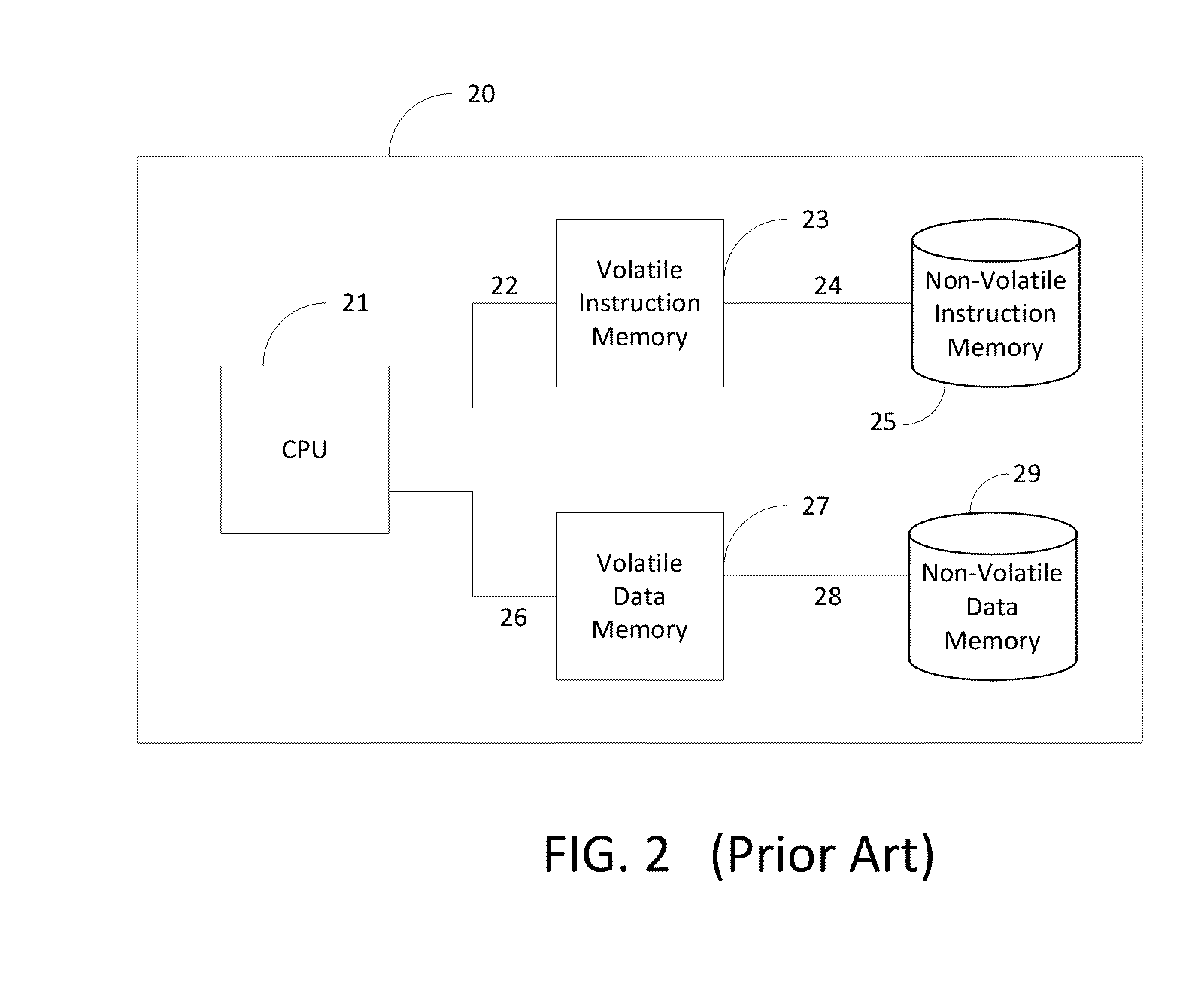

Active | US20100131738A1 | Improve processing efficiency | Out of operation | Systolic arrays | Program control using wired connections | Processor element | Data memory

In an array processing section, using data strings entered from input ports, a plurality of data processor elements execute predetermined operations while transferring data to each other, and output data strings of results of the operations from a plurality of output ports. A first data string converter converts data strings stored in a plurality of data storages of a data storage group into a placement suitable for the operations in the array processing section, and enters the converted data strings into the input ports of the array processing section. A second data string converter converts the data strings output from output ports of the array processing section into a placement to be stored in the plurality of data storages of the data storage group.

Owner:NEC CORP

Calculation circuit and deep learning system including the same

Pending | US20210256360A1 | High operating requirements | High hourly throughput | Systolic arrays | Computation using non-contact making devices | Value set | Algorithm

A calculation circuit may include a plurality of calculator groups constituting a systolic array composed of a plurality of rows and columns, wherein calculator groups included in each of the rows propagate a data value set through a single data path corresponding to the row in a data propagation direction, and propagate a plurality of drain value sets through a plurality of drain paths corresponding to the row in a drain propagation direction, and wherein a calculator group of the calculator groups included in each of the rows comprises a plurality of MAC (Multiplier-Accumulator) circuits, and the MAC circuits generate drain values respectively included in the drain value sets at the same time. The calculator groups included in each column may further propagate a weight value set corresponding to the column through a plurality of weight data paths corresponding to the column.

Owner:SK HYNIX INC

Context switching for computing architecture operating on sequential data

Active | US9996387B2 | Increase speed | Large scale | Input/output to record carriers | Systolic arrays | Data stream processing | Parallel computing

A data stream processing unit (DPU) and methods for its use and programming are disclosed. A DPU includes a number of processing elements (PEs) arranged in a physical sequence. Each datum in the data stream visits each PE in sequence. Each PE has a memory circuit, data and metadata input and output channels, and a computing circuit. The metadata input represents a partial computational state that is associated with each datum as it passes through the DPU. Each computing circuit implements a finite state machine that operates on the data and metadata inputs as a function of its position in the sequence and a data context, producing an altered partial computational state that accompanies the datum. When the data context changes, the current state of the finite state machine is stored, and a new state is loaded. The processing elements may be collectively programmed to perform any desired computation.

Owner:LEWIS RHODES LABS
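The context switch described above (store the current finite state machine state when the data context changes, then load the state for the new context) can be modeled in a few lines. This is a hedged sketch of the general idea for a single processing element: the class `ContextSwitchingPE`, its dictionary of saved states, and the modulo-256 running sum used as the transition function are all hypothetical choices for illustration.

```python
class ContextSwitchingPE:
    """One processing element whose FSM state is saved per data context."""

    def __init__(self, transition, initial_state):
        self.transition = transition      # function (state, datum) -> state
        self.initial_state = initial_state
        self.saved = {}                   # context id -> stored FSM state
        self.context = None
        self.state = initial_state

    def step(self, context, datum):
        if context != self.context:
            if self.context is not None:
                self.saved[self.context] = self.state  # store current state
            # Load the state for the new context, or start fresh.
            self.state = self.saved.get(context, self.initial_state)
            self.context = context
        self.state = self.transition(self.state, datum)
        return self.state


# Two interleaved streams, "a" and "b", each keeping its own running sum.
pe = ContextSwitchingPE(lambda s, d: (s + d) % 256, 0)
outputs = [pe.step(c, d) for c, d in [("a", 1), ("b", 10), ("a", 2), ("b", 5)]]
print(outputs)  # [1, 10, 3, 15]
```

Because each context resumes from its own saved state, interleaving the two streams gives the same per-stream results as processing them separately.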

Context Switching for Computing Architecture Operating on Sequential Data

Active | US20170147391A1 | Increase speed | Large scale | Input/output to record carriers | Systolic arrays | Theoretical computer science | Finite-state machine

A data stream processing unit (DPU) and methods for its use and programming are disclosed. A DPU includes a number of processing elements (PEs) arranged in a physical sequence. Each datum in the data stream visits each PE in sequence. Each PE has a memory circuit, data and metadata input and output channels, and a computing circuit. The metadata input represents a partial computational state that is associated with each datum as it passes through the DPU. Each computing circuit implements a finite state machine that operates on the data and metadata inputs as a function of its position in the sequence and a data context, producing an altered partial computational state that accompanies the datum. When the data context changes, the current state of the finite state machine is stored, and a new state is loaded. The processing elements may be collectively programmed to perform any desired computation.

Owner:LEWIS RHODES LABS

Dataflow accelerator architecture for general matrix-matrix multiplication and tensor computation in deep learning

Pending | US20200183837A1 | Memory architecture accessing/allocation | Systolic arrays | Calculated data | Hemt circuits

A tensor computation dataflow accelerator semiconductor circuit is disclosed. The data flow accelerator includes a DRAM bank and a peripheral array of multiply-and-add units disposed adjacent to the DRAM bank. The peripheral array of multiply-and-add units are configured to form a pipelined dataflow chain in which partial output data from one multiply-and-add unit from among the array of multiply-and-add units is fed into another multiply-and-add unit from among the array of multiply-and-add units for data accumulation. Near-DRAM-processing dataflow (NDP-DF) accelerator unit dies may be stacked atop a base die. The base die may be disposed on a passive silicon interposer adjacent to a processor or a controller. The NDP-DF accelerator units may process partial matrix output data in parallel. The partial matrix output data may be propagated in a forward or backward direction. The tensor computation dataflow accelerator may perform a partial matrix transposition.

Owner:SAMSUNG ELECTRONICS CO LTD

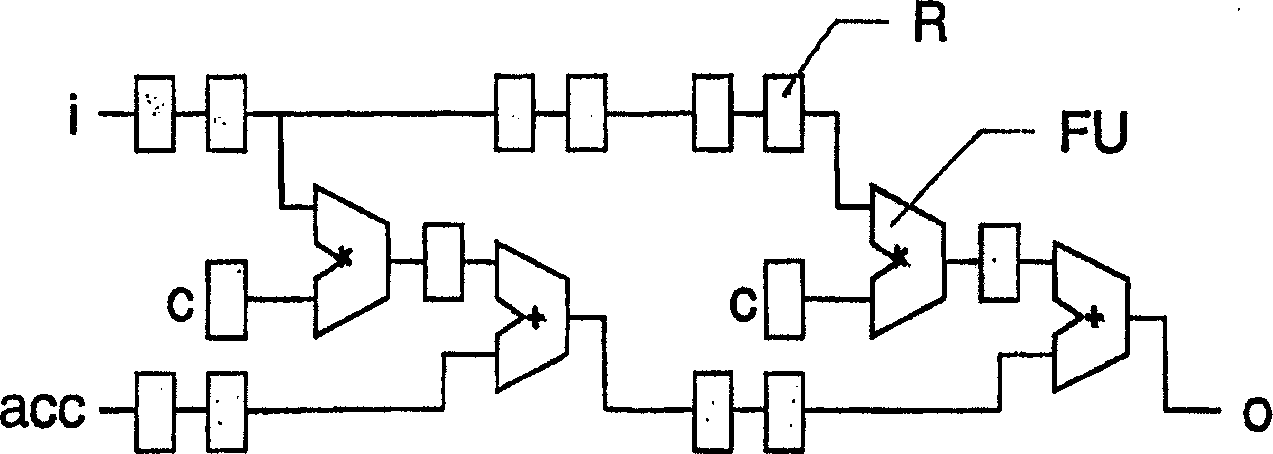

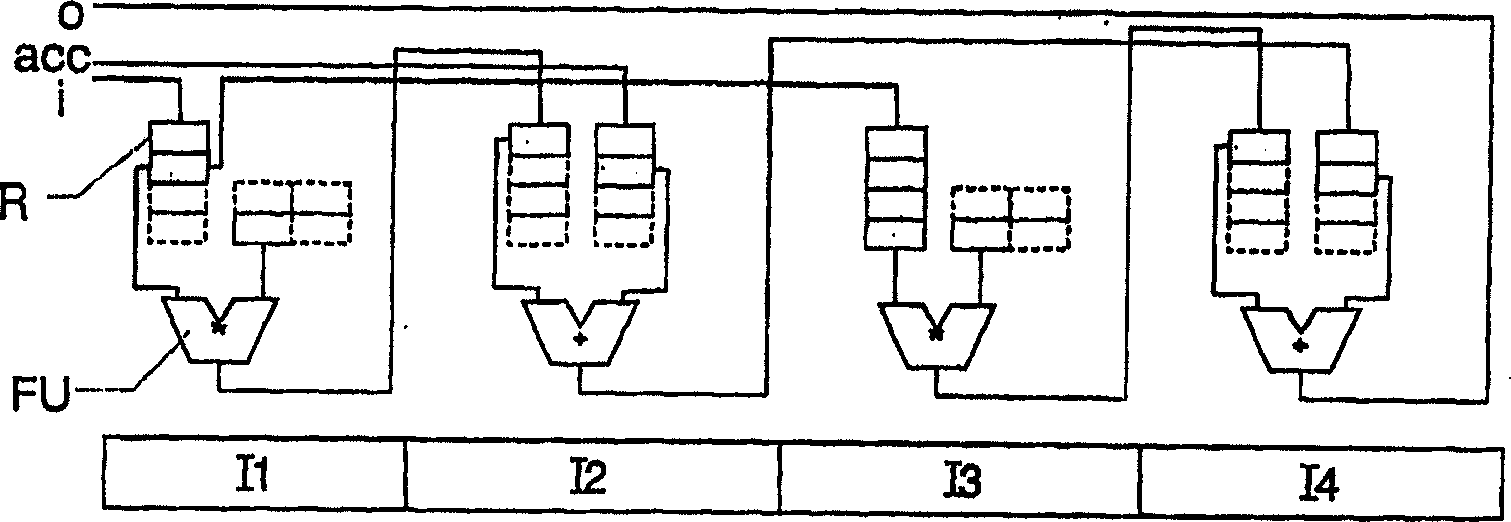

Processing method and apparatus for implementing systolic arrays

Inactive | CN1647064A | Stay flexible | Compilation Technology Extension | Systolic arrays | Digital data processing details | Processor register | Control signal

The present invention relates to a processing method and apparatus for implementing a systolic-array-like structure. Input data are stored in a depth-configurable register means (DCF) in a predetermined sequence, and are supplied to a processing means (FU) for processing said input data based on control signals generated from instruction data, wherein the depth of the register means (DCF) is controlled in accordance with the instruction data. Thereby, systolic arrays can be mapped onto a programmable processor, e.g. a VLIW processor, without the need for explicitly issuing operations to implement the register moves that constitute the delay lines of the array.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV
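A depth-configurable register that acts as a delay line can be modeled as a fixed-length FIFO: a value written now reappears at the output a configured number of steps later, with no explicit register-move operations in between. The sketch below is a loose software analogy rather than the patented hardware; the class name `DelayLine`, the zero initial contents, and setting the depth once at construction are assumptions.

```python
from collections import deque


class DelayLine:
    """FIFO register whose depth sets how many steps a value is delayed."""

    def __init__(self, depth):
        if depth < 0:
            raise ValueError("depth must be non-negative")
        self.fifo = deque([0] * depth)  # assumed to start filled with zeros

    def push(self, value):
        """Insert a value and return the one written `depth` steps earlier."""
        self.fifo.append(value)
        return self.fifo.popleft()


line = DelayLine(depth=2)
print([line.push(v) for v in [1, 2, 3, 4]])  # [0, 0, 1, 2]
```

With a depth of zero the value passes straight through, so the same structure covers both direct connections and multi-cycle delays between functional units.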

Systolic array and calculation method

Owner:NEC CORP

Sequential grating array device

Owner:NTT DOCOMO INC

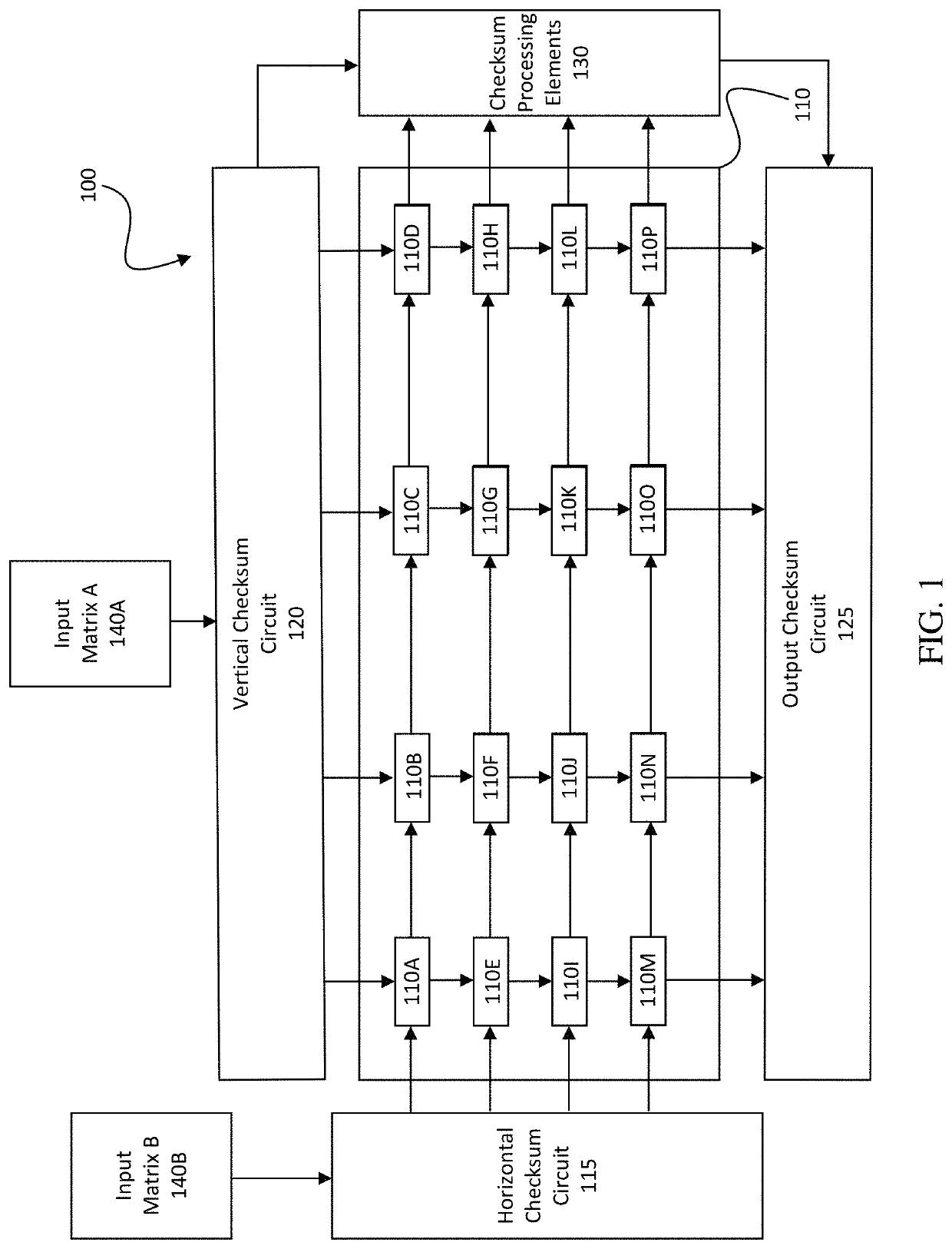

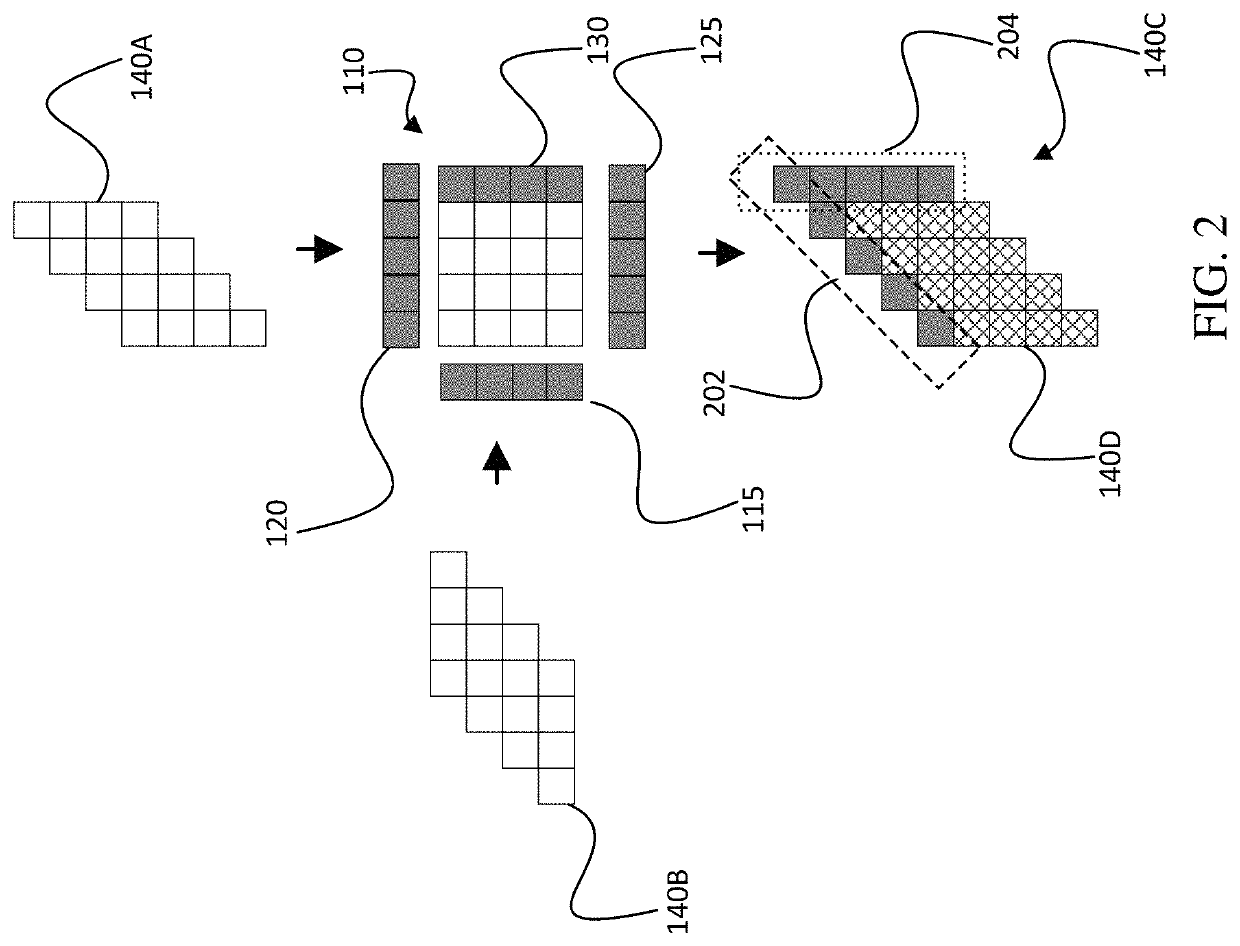

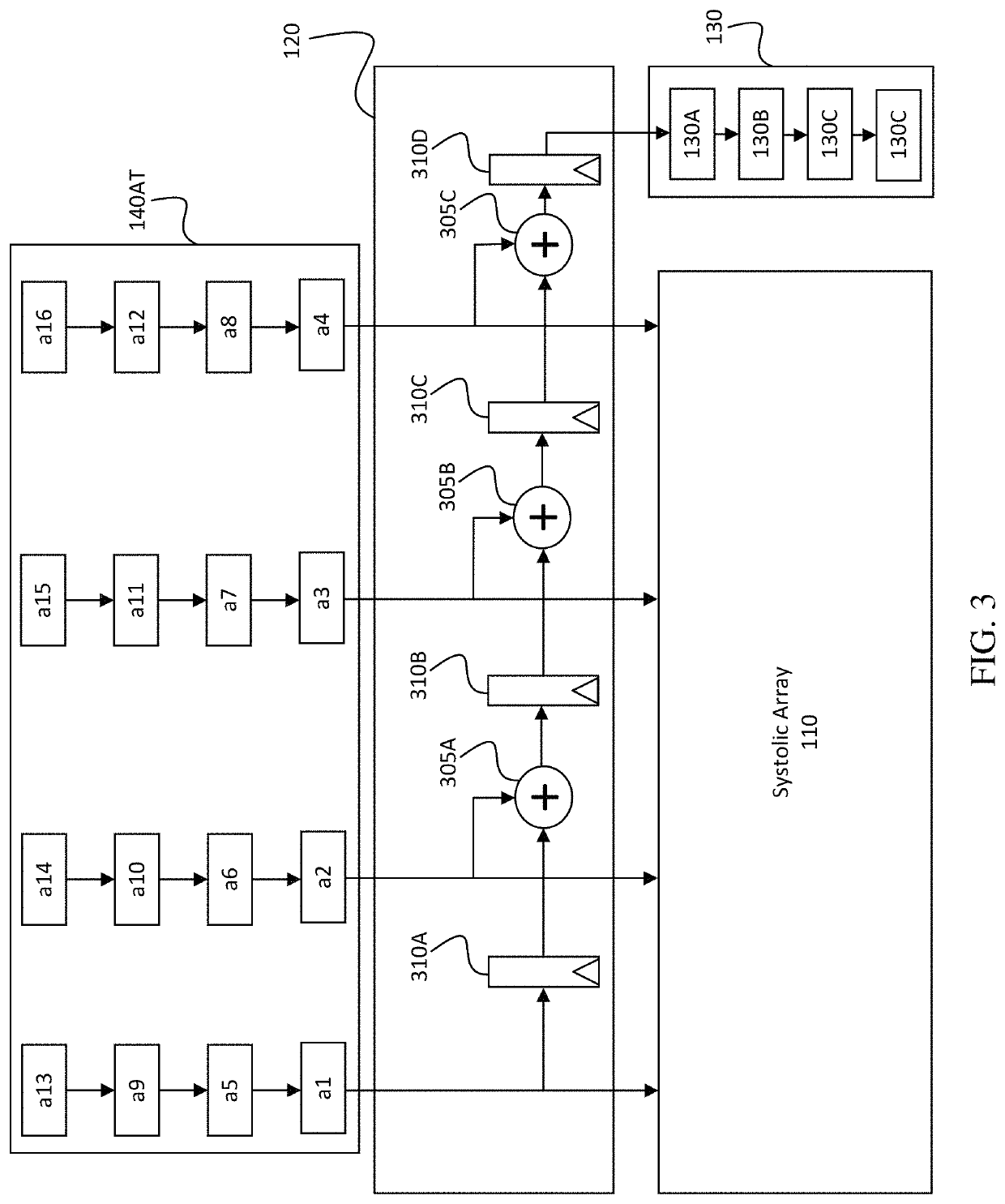

Error checking for systolic array computation

Active | US11507452B1 | Quick test | Improve energy efficiency | Systolic arrays | Redundant data error correction | Error check | Algorithm

Aspects of the disclosure are directed to a computation unit implementing a systolic array and configured for detecting errors while processing data on the systolic array. A checksum circuit in communication with the systolic array is configured to compute checksums and perform error detection while the systolic array processes input data. Instead of pre-generating checksums in input matrices, input matrices can be directly fed into the systolic array through the checksum circuit. On the output side, the checksum circuit can generate and compare checksums with checksums in an output matrix generated by the systolic array. Error checking the operations to generate the output matrix can be performed without delaying the operations of the systolic array, and without preprocessing the input matrices.

Owner:GOOGLE LLC
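One well-known way to check a matrix product with checksums is to compare the column sums of the output against the product of the input's column sums with the second matrix. The sketch below uses that classic identity as a stand-in; the abstract does not say which checksum scheme the circuit uses, so the scheme, the function names, and the integer-only comparison are assumptions.

```python
def matmul(a, b):
    """Plain matrix product, standing in for the systolic array."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def column_sums(m):
    return [sum(col) for col in zip(*m)]


def check_product(a, b, c):
    """Return True if c is consistent with a @ b under a column checksum.

    Uses the identity colsum(a @ b) == colsum(a) @ b, so the check costs one
    vector-matrix product instead of recomputing the full result.
    """
    expected = matmul([column_sums(a)], b)[0]
    return expected == column_sums(c)


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = matmul(a, b)
print(check_product(a, b, c))   # True
c[0][1] += 1                    # inject a single-element error
print(check_product(a, b, c))   # False
```

The comparison is exact because the example uses integers; floating-point data would need a tolerance, and a single checksum can miss errors that cancel within a column.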

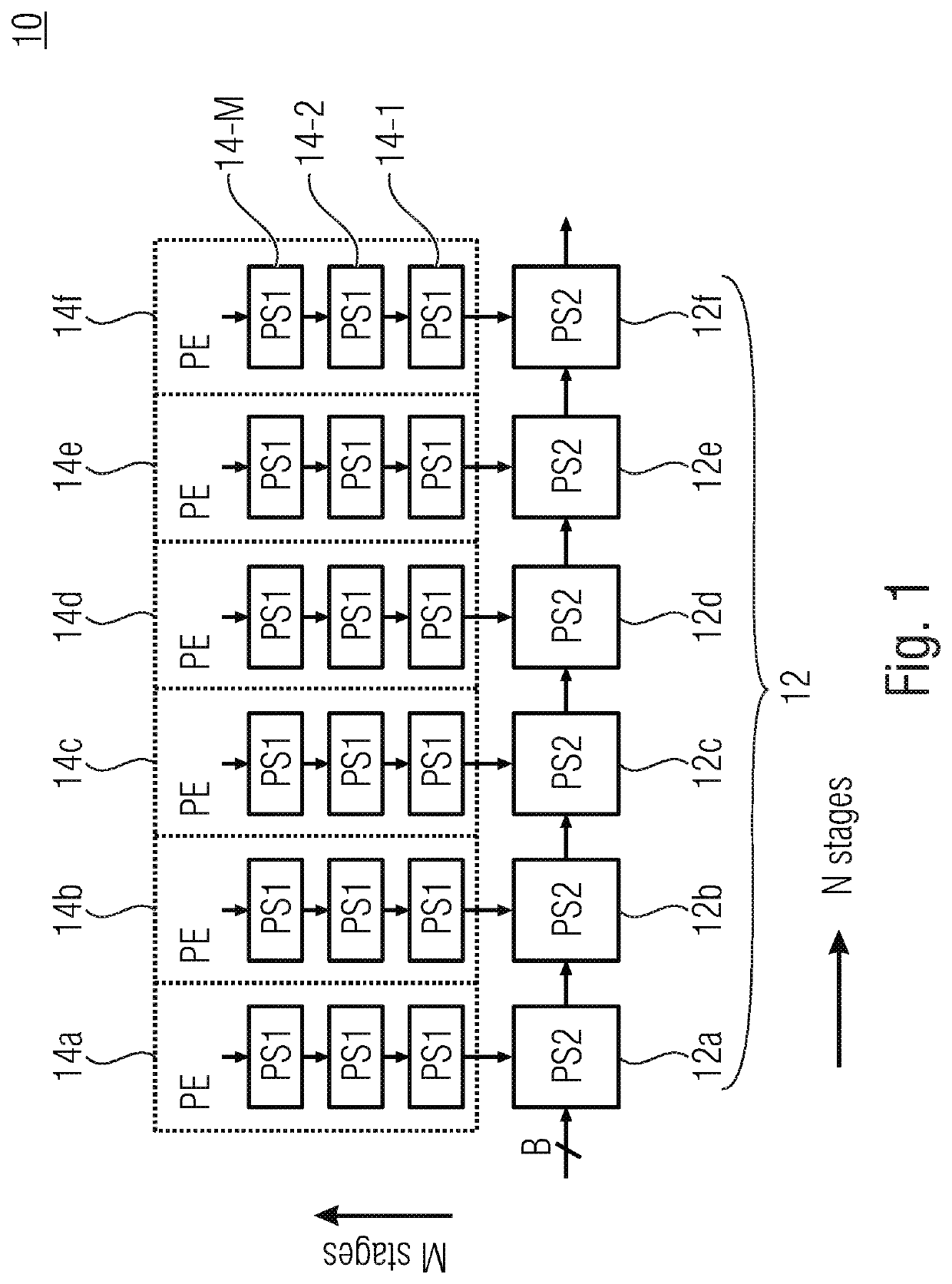

Data Bus With Multi-Input Pipeline

Active | US20200348942A1 | Advanced principle | Increase speed | Single instruction multiple data multiprocessors | Systolic arrays | Multi input | Parallel computing

A data bus includes process elements and a linear main pipeline. Each process element is coupled to a linear pipeline having M stages arranged in series, each of the M stages including a buffer element configured to buffer a data bit sequence and to forward the buffered data bit sequence from a first of the buffer elements to a last of the buffer elements. The linear main pipeline includes N pipeline stage elements arranged in series. Each pipeline stage element is connected to the last buffer element of a respective linear pipeline and configured to read out one or more of the buffered data bit sequences and to forward the read-out data bit sequences from one of the N pipeline stage elements to a next of the N pipeline stage elements.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

Systems And Methods For Systolic Array Design From A High-Level Program

Active | US20210081354A1 | Single instruction multiple data multiprocessors | Systolic arrays | Computer architecture | Engineering

Systems and methods for automated systolic array design from a high-level program are disclosed. One implementation of a systolic array design supporting a convolutional neural network includes a two-dimensional array of reconfigurable processing elements arranged in rows and columns. Each processing element has an associated SIMD vector and is connected through a local connection to at least one other processing element. An input feature map buffer having a double buffer is configured to store input feature maps, and an interconnect system is configured to pass data to neighboring processing elements in accordance with a processing element scheduler. A CNN computation is mapped onto the two-dimensional array of reconfigurable processing elements using an automated system configured to determine suitable reconfigurable processing element parameters.

Owner:XILINX INC

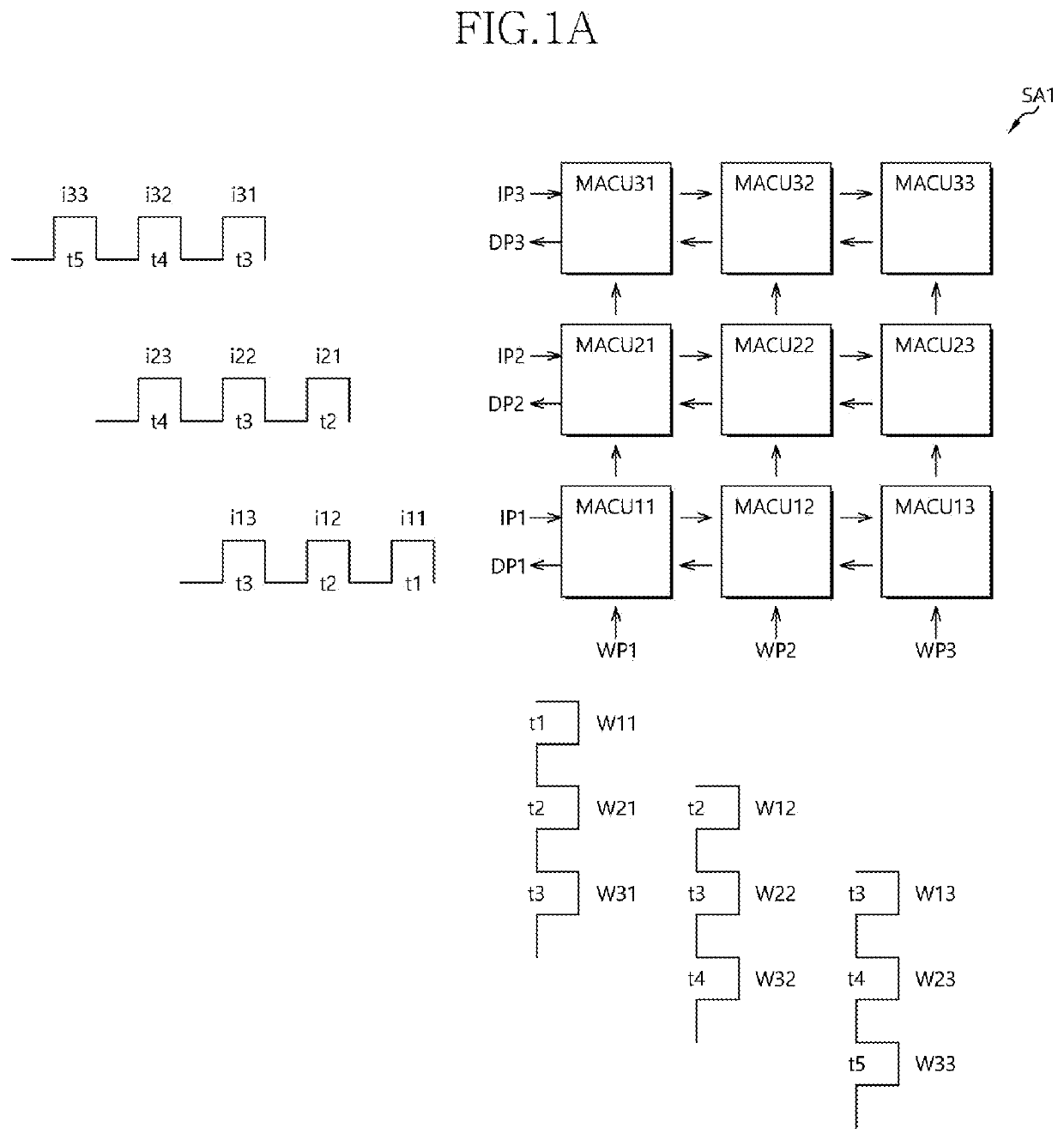

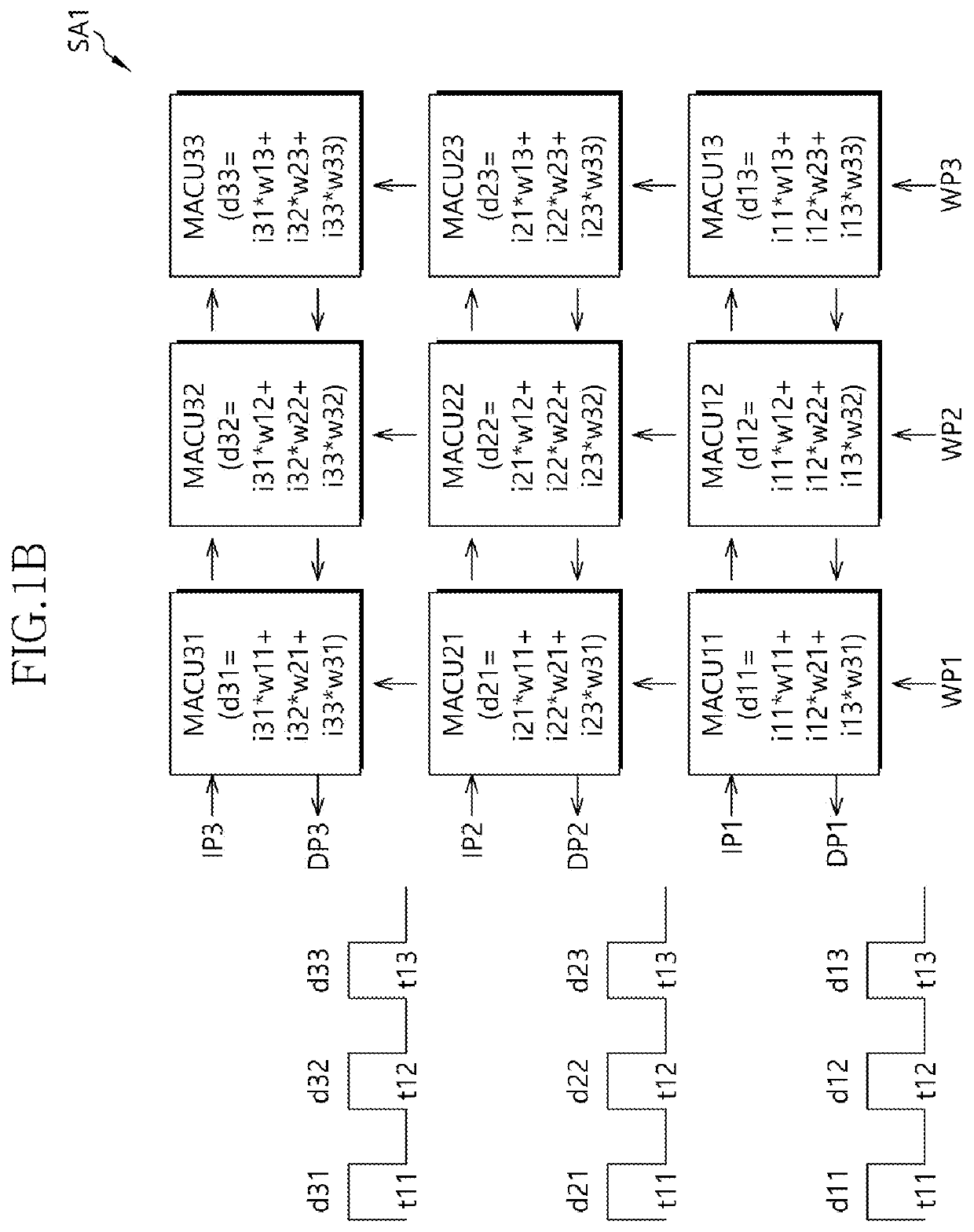

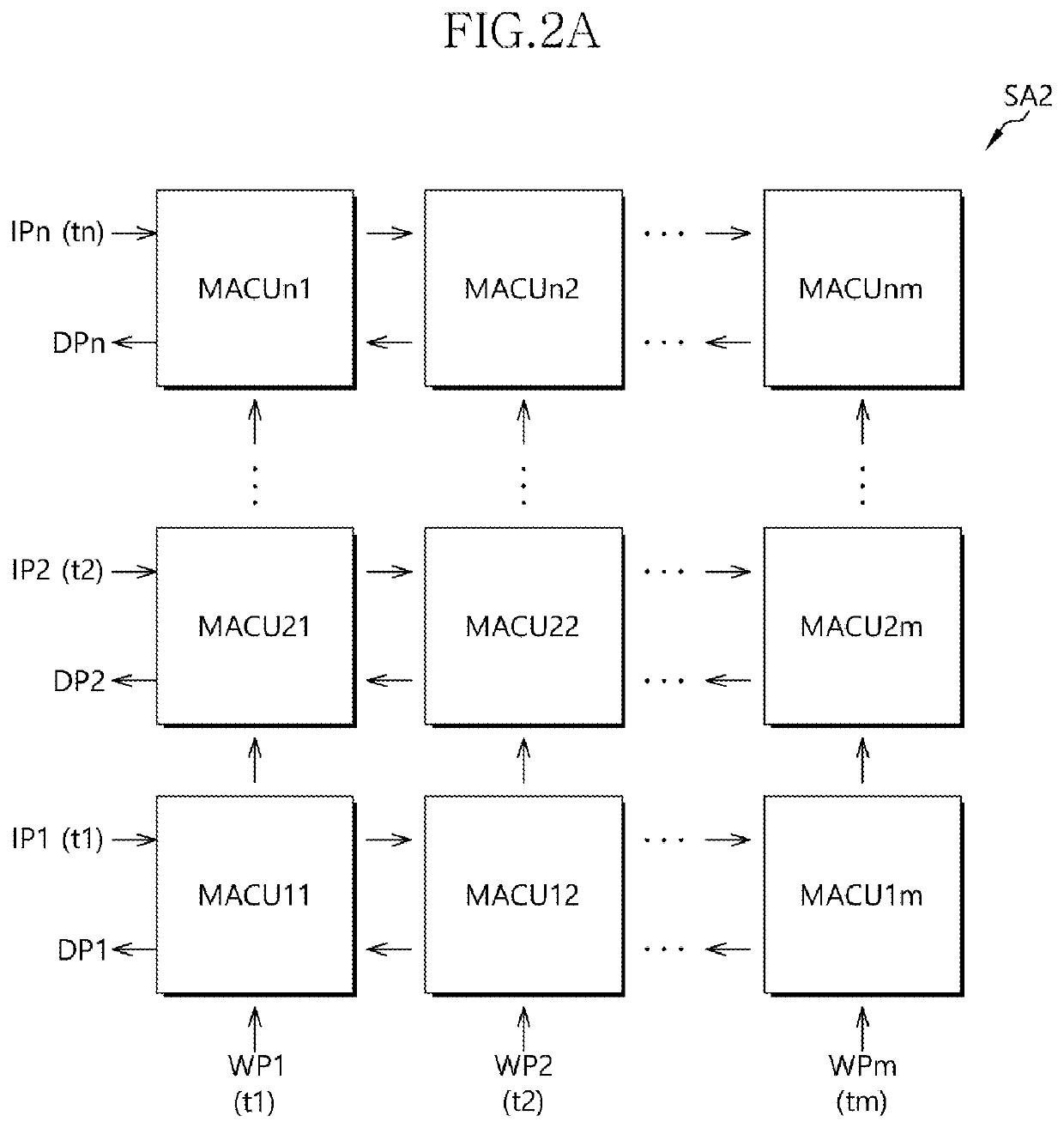

Method and apparatus for deep learning operations

Disclosed are a method and an apparatus for deep learning operations. The apparatus includes a processor configured to support a plurality of different operation modes. The processor includes a systolic array having a plurality of multiplier accumulator (MAC) units, and a control circuit configured to control, for each of the plurality of different operation modes, select operations of the plurality of MAC units and data movements among the plurality of MAC units.

Owner:SAMSUNG ELECTRONICS CO LTD

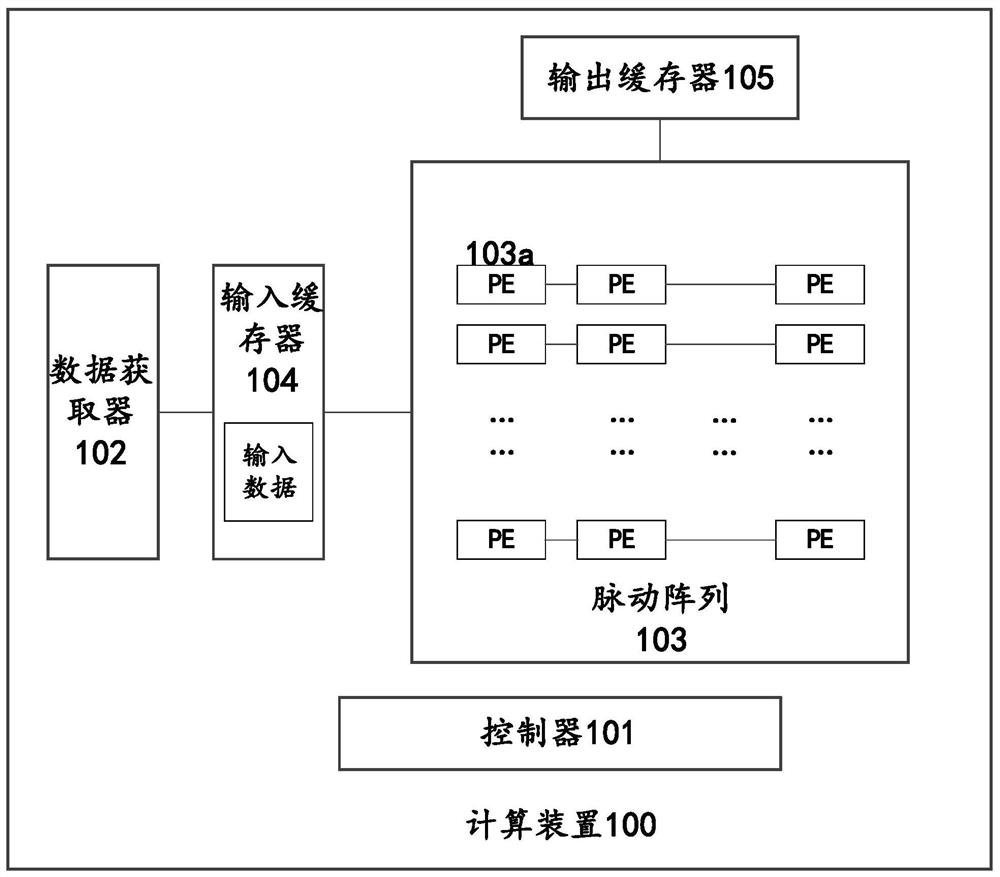

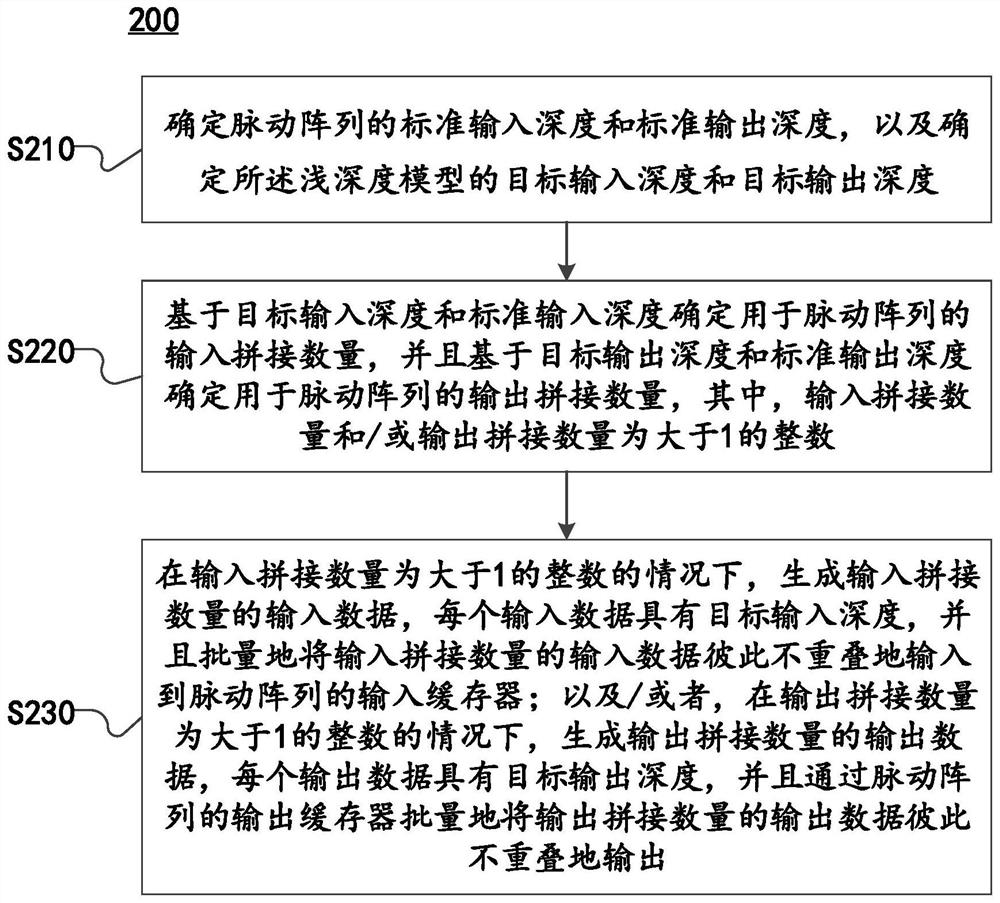

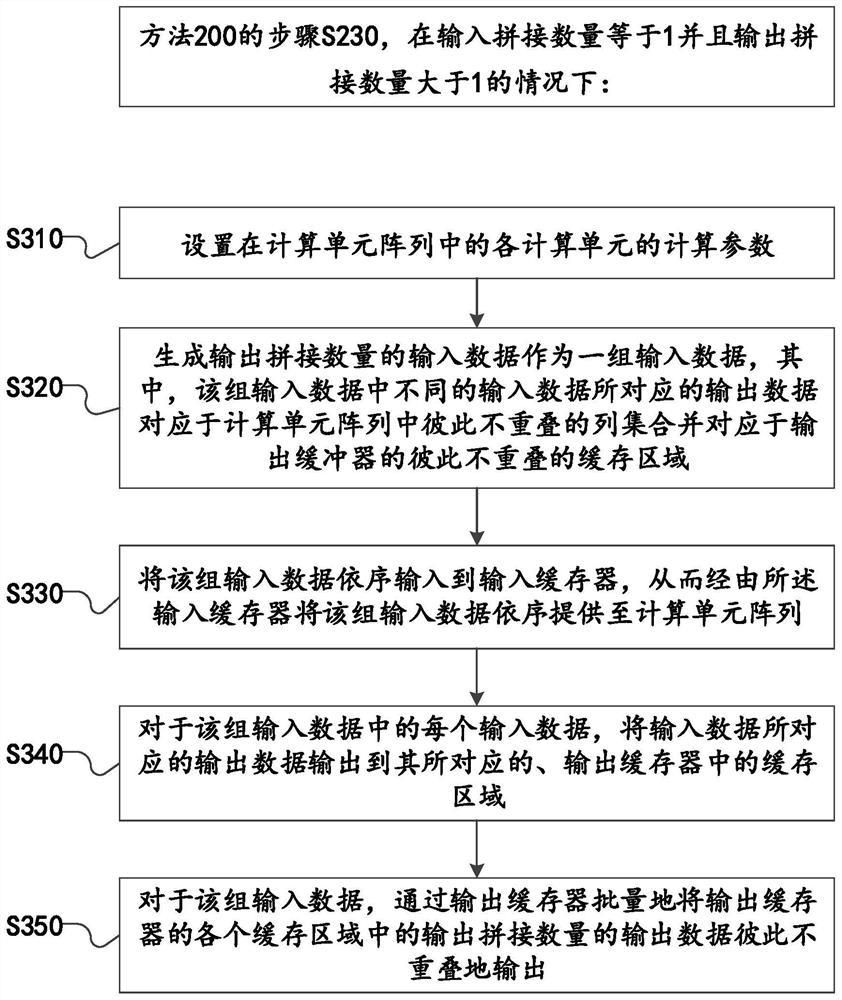

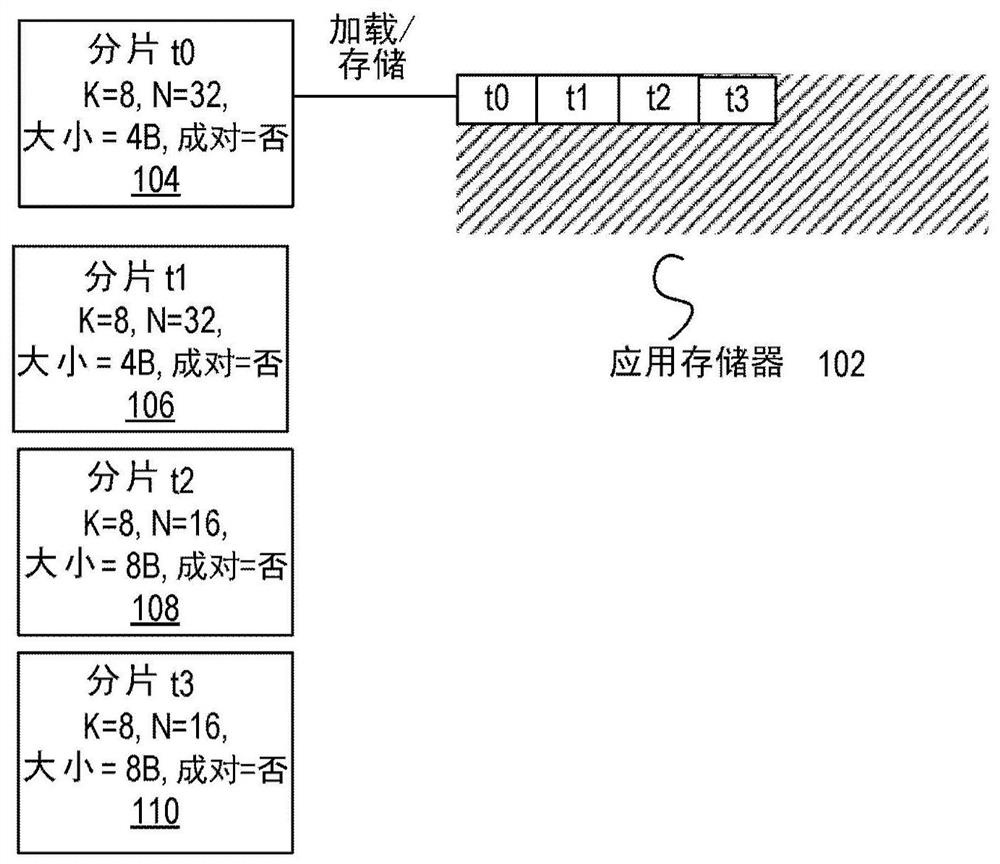

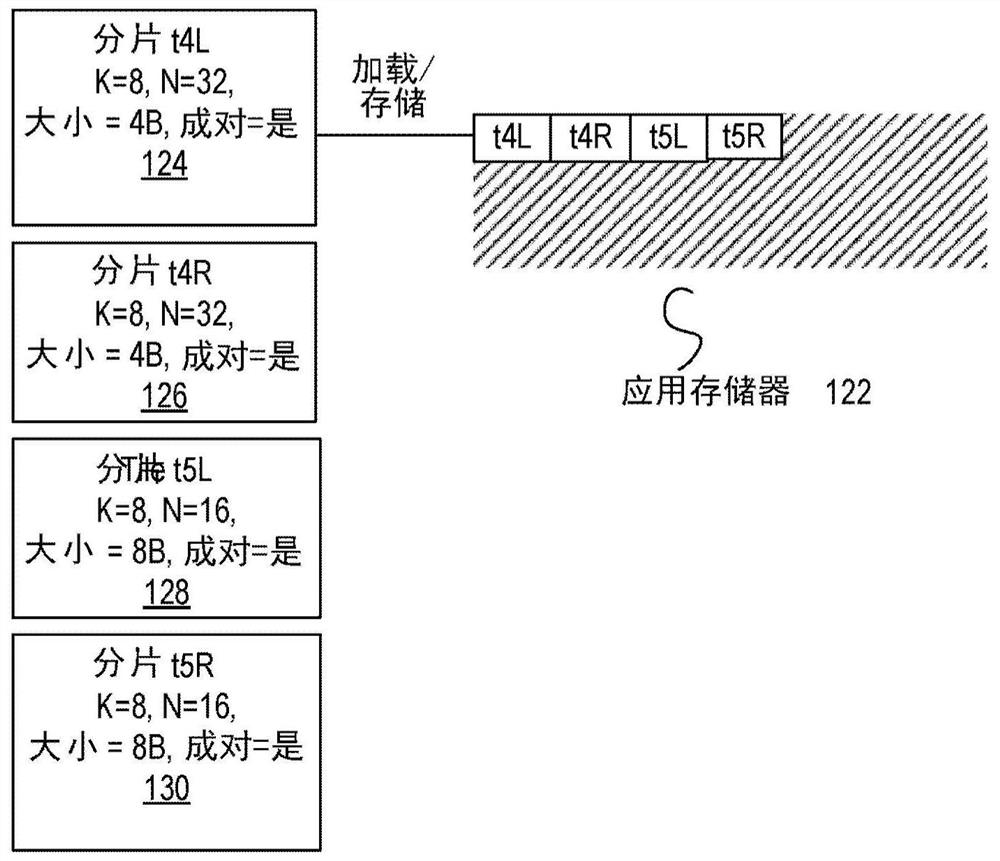

Calculation optimization method and device of shallow depth model based on systolic array

Pending | CN113705069A | Improve utilization efficiency | Improve moving efficiency | Systolic arrays | Design optimisation/simulation | Algorithm | Systolic array

The invention provides a calculation optimization method and device for a shallow depth model based on a systolic array. An input splicing number and an output splicing number are determined from the standard input depth and standard output depth of the systolic array and from the input depth and output depth of the shallow depth model. According to these splicing numbers, the input data and/or the corresponding output data are spliced: the input data are obtained in batches and provided to the systolic array in parallel, and/or a plurality of output data corresponding to the input data are cached in an output buffer and then output in batches. This improves the migration efficiency of the input data and/or the output data, as well as the utilization efficiency of the systolic array.

Owner:TENCENT TECH (SHENZHEN) CO LTD

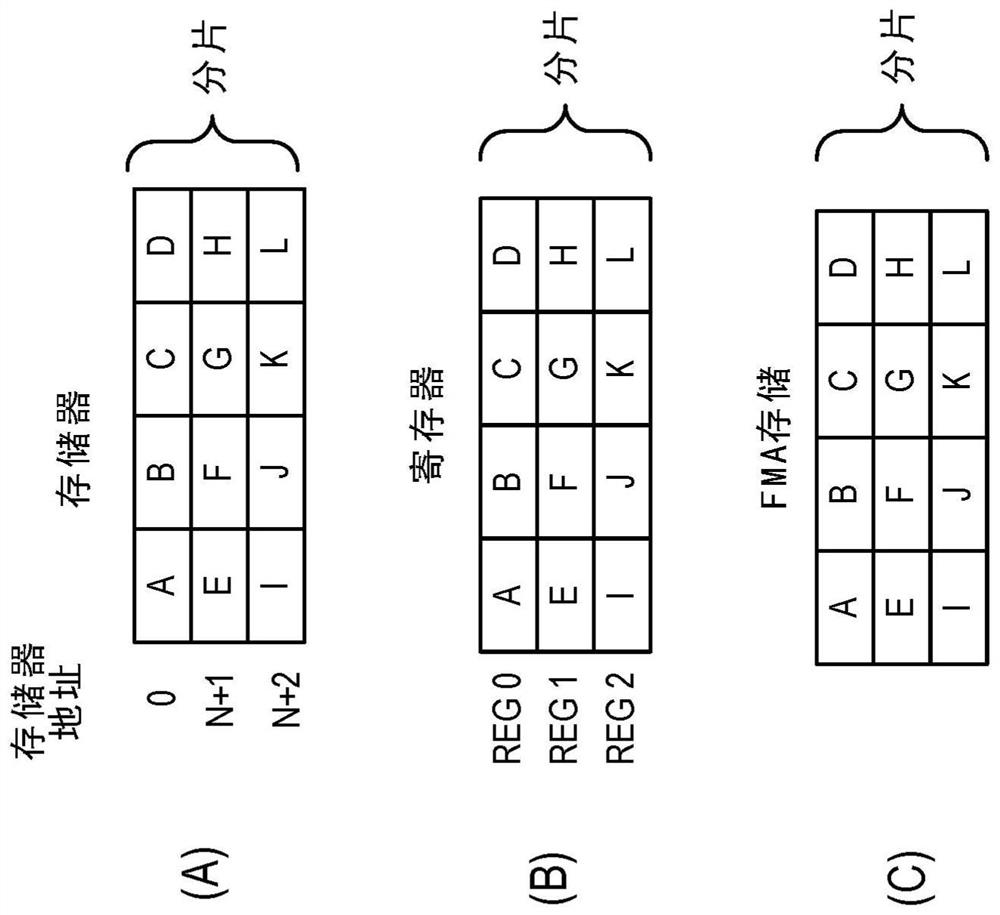

Deep learning implementations using systolic arrays and fused operations

Disclosed embodiments relate to deep learning implementations using systolic arrays and fused operations. In one example, a processor includes fetch and decode circuitry to fetch and decode an instruction having fields to specify an opcode and locations of a destination and N source matrices, the opcode indicating the processor is to load the N source matrices from memory, perform N convolutions on the N source matrices to generate N feature maps, and store results of the N convolutions in registers to be passed to an activation layer, wherein the processor is to perform the N convolutions and the activation layer with at most one memory load of each of the N source matrices. The processor further includes scheduling circuitry to schedule execution of the instruction and execution circuitry to execute the instruction as per the opcode.

Owner:INTEL CORP

© 2025 PatSnap. All rights reserved.