Sparse neural network processor based on systolic array

A neural network processor technology applied in the field of computer architecture for sparse neural network computing. It addresses problems such as the irregularity of sparse patterns and the difficulty of effectively exploiting the sparsity of neural networks, with the effects of reducing required memory capacity, reducing space overhead, and removing data redundancy.

Embodiment Construction

[0036] To make the purpose, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to specific embodiments and the accompanying drawings.

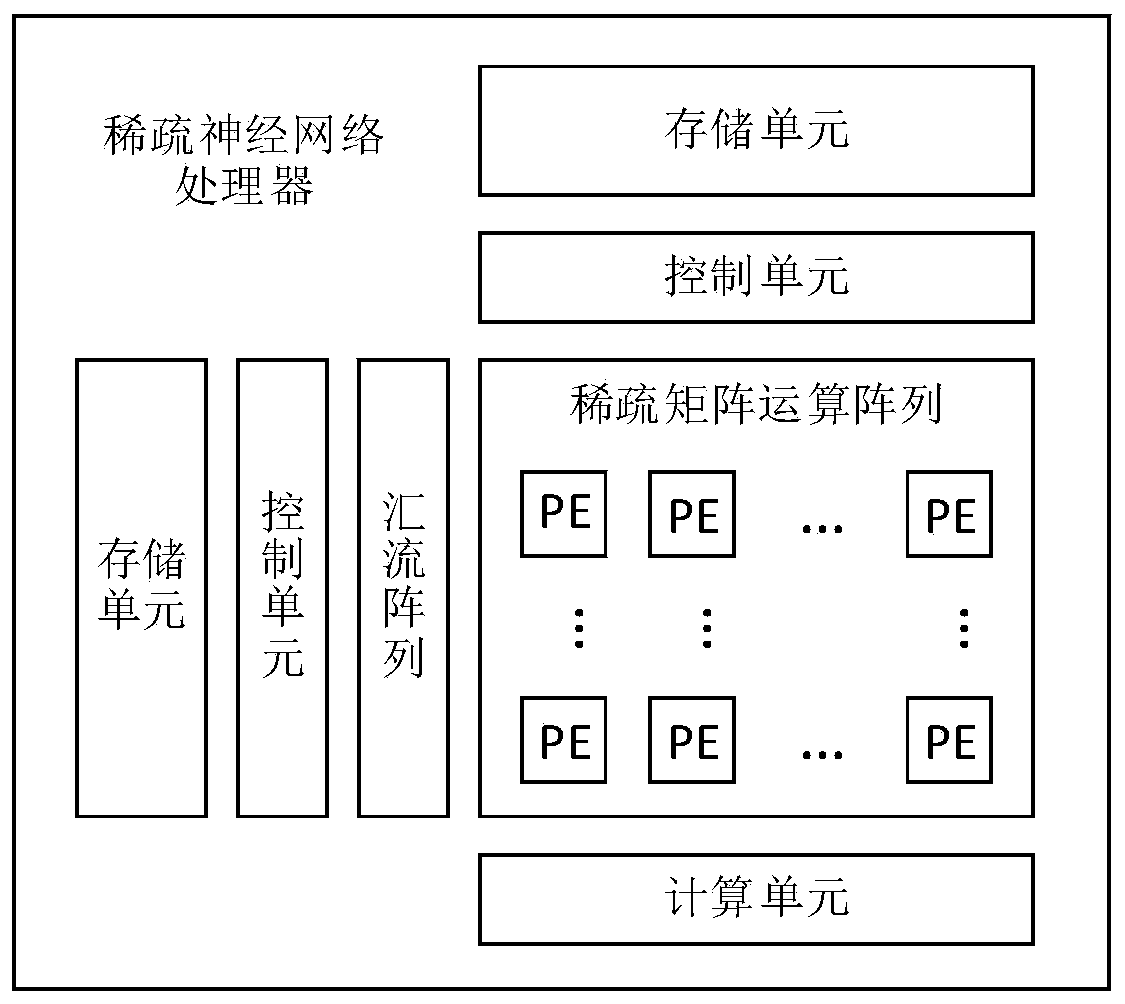

[0037] Figure 1 is a schematic diagram of the architecture of a sparse neural network processor according to an embodiment of the present invention. The processor includes a storage unit, a control unit, a bus array, a sparse matrix operation array, and a calculation unit. The storage unit stores neural network weights, gradients, features, and the instruction sequences used for data flow scheduling. The control unit is connected to the storage unit; as scheduled by the instruction sequence, it fetches the required data from the storage unit and reorganizes the data into matrix-operation form. The data is then bridged by the bus array and sent to the sparse matrix operation array, which completes the corresponding calculations. The calculation unit rec...
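The data flow in paragraph [0037] can be illustrated with a small software sketch. This is not the patented hardware design: the names `compress_row`, `sparse_matvec`, and `run_layer`, the dictionary standing in for the storage unit, and the (column index, value) compression format are all assumptions made for illustration. The bus array and the calculation unit are not modeled.

```python
# Hypothetical software analogy of the storage -> control -> sparse-array flow.
# All names and the compression format are illustrative assumptions,
# not taken from the patent text.

def compress_row(row):
    """Keep only nonzero values, each paired with its column index."""
    return [(j, v) for j, v in enumerate(row) if v != 0]

def sparse_matvec(compressed_rows, x):
    """Multiply a row-compressed sparse matrix by a dense vector.

    Multiply-accumulates are performed only for stored nonzeros,
    which is the basic way sparsity saves work and storage.
    """
    return [sum(v * x[j] for j, v in row) for row in compressed_rows]

def run_layer(storage, instruction):
    """Stand-in for the control unit: fetch the operands named by the
    instruction, reorganize them into matrix form, and dispatch them
    to the sparse matrix operation."""
    weights = storage[instruction["weights"]]
    features = storage[instruction["features"]]
    compressed = [compress_row(r) for r in weights]
    return sparse_matvec(compressed, features)

# A dictionary plays the role of the storage unit (assumption).
storage = {
    "w0": [[0, 2, 0], [1, 0, 3]],  # sparse weight matrix
    "x0": [4, 5, 6],               # dense feature vector
}
result = run_layer(storage, {"weights": "w0", "features": "x0"})
print(result)  # [10, 22]
```

Storing only the nonzero weights with their indices is one common way to cut memory use in sparse workloads; whether the processor described here uses this particular format is not stated in the excerpt.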
