Bus arrays for reduced storage overhead

A bus array technology for reducing storage overhead, applicable to instruments, biological neural network models, computation and similar fields. It addresses problems such as wasted area, cycles, timing and capacity, with the effect of reducing memory capacity, lowering storage power consumption and removing data redundancy.

Embodiment Construction

[0032] To make the purpose, technical solution and advantages of the present invention clearer, the invention is described in further detail below with reference to specific embodiments and the accompanying drawings.

[0033] To address the extra storage overhead caused by saving the same data in multiple independent memories, the present invention proposes a bus array for reducing storage overhead.

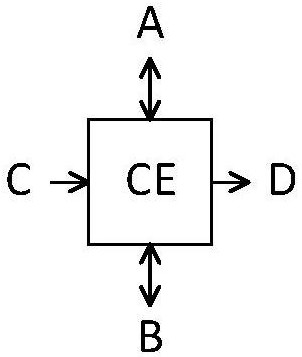

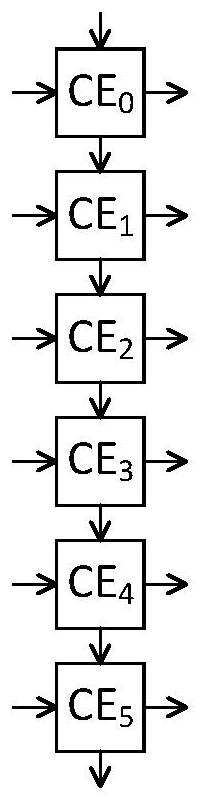

[0034] Figure 1 shows a schematic of a CE according to one embodiment of the invention. Each CE receives data input from outside the array through its C port, exchanges data with adjacent CEs through its A and B ports, and outputs data to the outside of the array through its D port. Figure 2 shows a schematic diagram of a CE array according to one embodiment of the present invention.
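The port roles described for the CE (C for external input, A and B for exchange with adjacent CEs, D for external output) can be illustrated with a small software model. This is only a sketch under stated assumptions, not the circuit of the invention: the 1-D chain topology, the `wanted_source` routing rule, and the names `CE`, `exchange` and `run` are all hypothetical, and the text does not say which of A or B faces which neighbour.

```python
from dataclasses import dataclass, field


@dataclass
class CE:
    """One array element (hypothetical software model, not the patent's circuit)."""
    index: int
    wanted_source: int  # assumption: index of the C-port input this CE emits on D
    seen: dict = field(default_factory=dict)  # data visible at this CE, keyed by source index

    def receive_c(self, value):
        # C port: data entering from outside the array.
        self.seen[self.index] = value

    def output_d(self):
        # D port: data leaving the array (None if the wanted datum has not arrived).
        return self.seen.get(self.wanted_source)


def exchange(ces):
    """One step of neighbour exchange over the A and B ports (assumed 1-D chain)."""
    # Snapshot first so every CE sees its neighbours' state from before this step.
    snapshots = [dict(ce.seen) for ce in ces]
    for i, ce in enumerate(ces):
        if i > 0:
            ce.seen.update(snapshots[i - 1])  # data from the previous CE
        if i + 1 < len(ces):
            ce.seen.update(snapshots[i + 1])  # data from the next CE


def run(inputs, wanted):
    """Feed one datum per CE on C, exchange until all data has spread, read D."""
    ces = [CE(i, w) for i, w in enumerate(wanted)]
    for ce, value in zip(ces, inputs):
        ce.receive_c(value)
    for _ in range(len(ces) - 1):  # enough steps to cross the whole chain
        exchange(ces)
    return [ce.output_d() for ce in ces]
```

In this model, `run(["x0", "x1", "x2", "x3"], [0, 0, 2, 0])` returns `["x0", "x0", "x2", "x0"]`: the single copy of `x0` that entered on one C port reaches three D ports, so no consumer needs its own stored duplicate.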

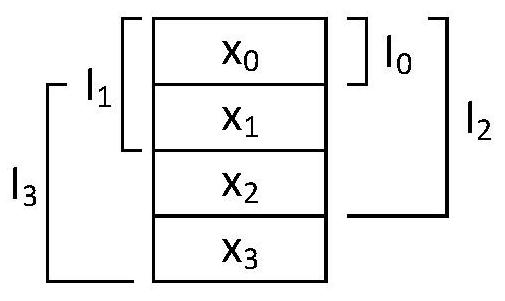

[0035] Figure 3 is a schematic diagram of a typical data transmission requirement handled by the present invention, where x_0, x_1, x_2 and x_3 represent fo...
