267 results about how to achieve "Guaranteed bandwidth" in patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

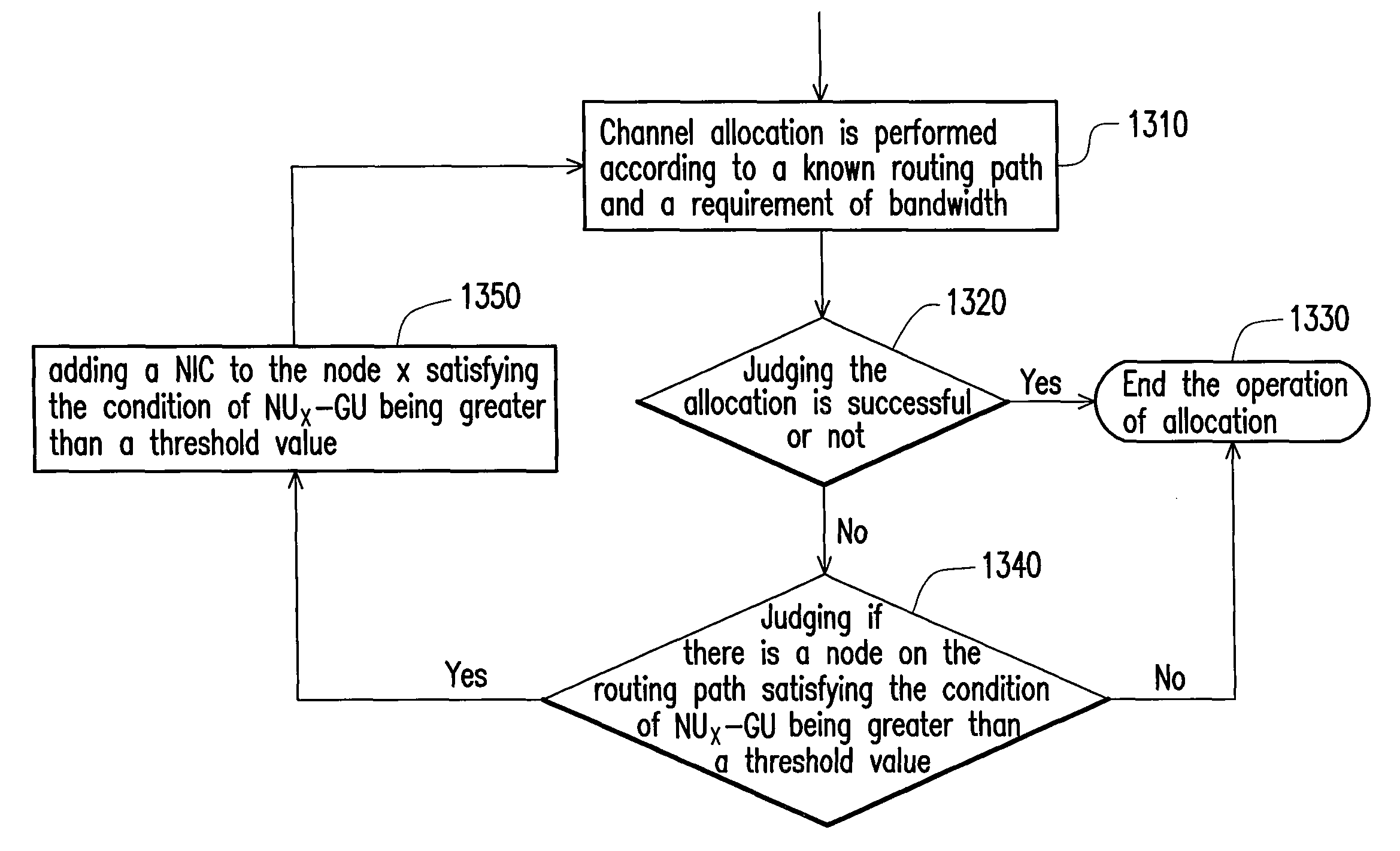

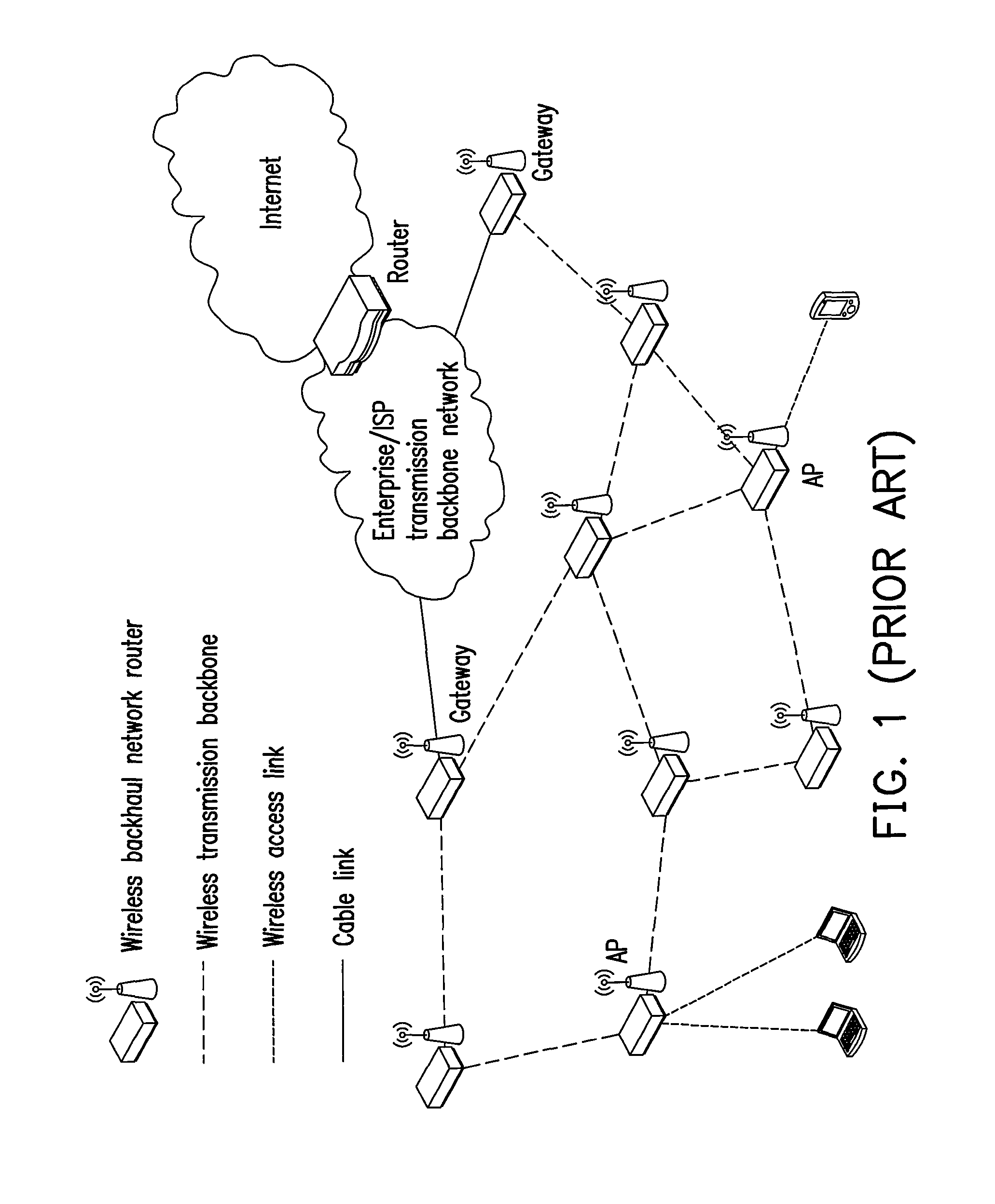

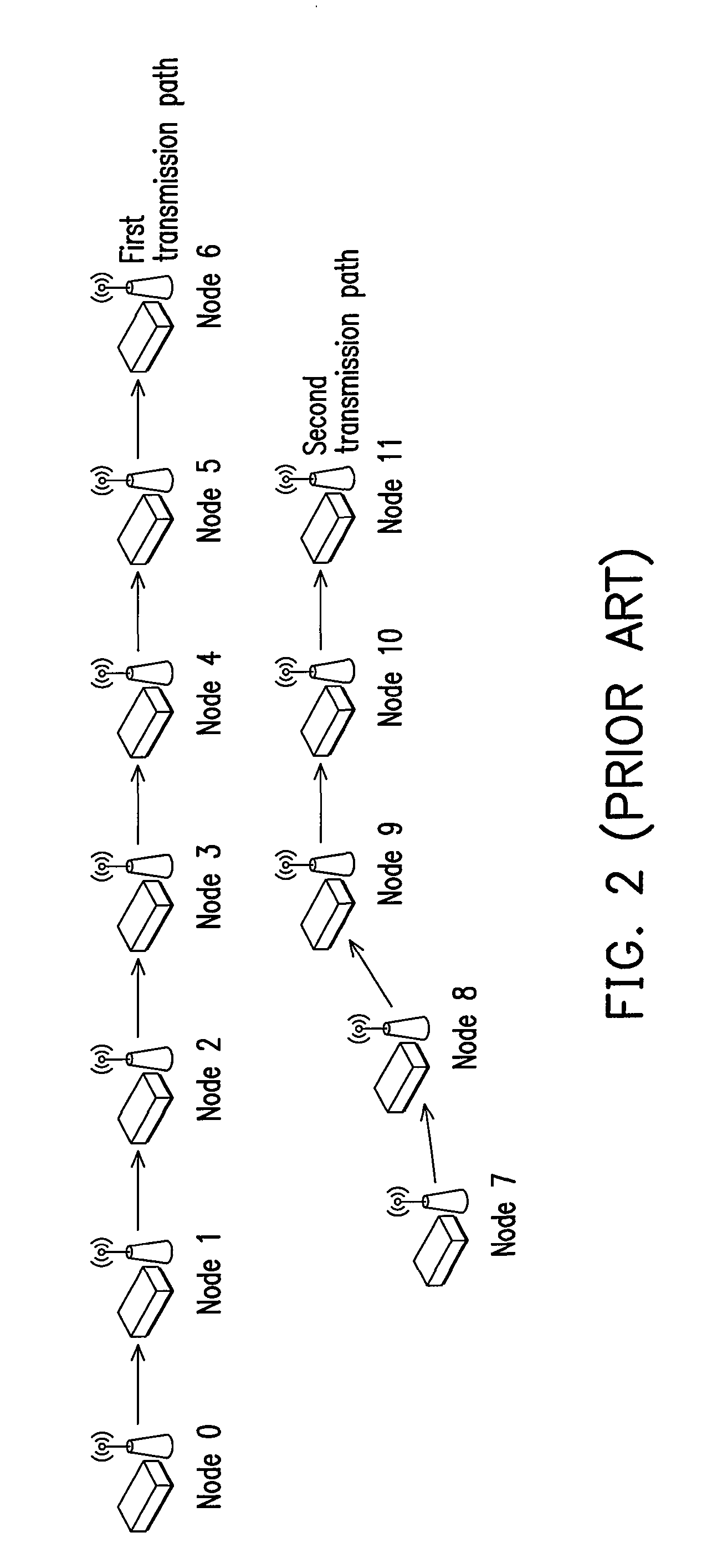

Distributed channel allocation method and wireless mesh network therewith

Inactive · US8059593B2 · Avoid interference, Guaranteed bandwidth, Radio transmission, Wireless communication services, Wireless mesh network, Distributed computing

Owner: IND TECH RES INST

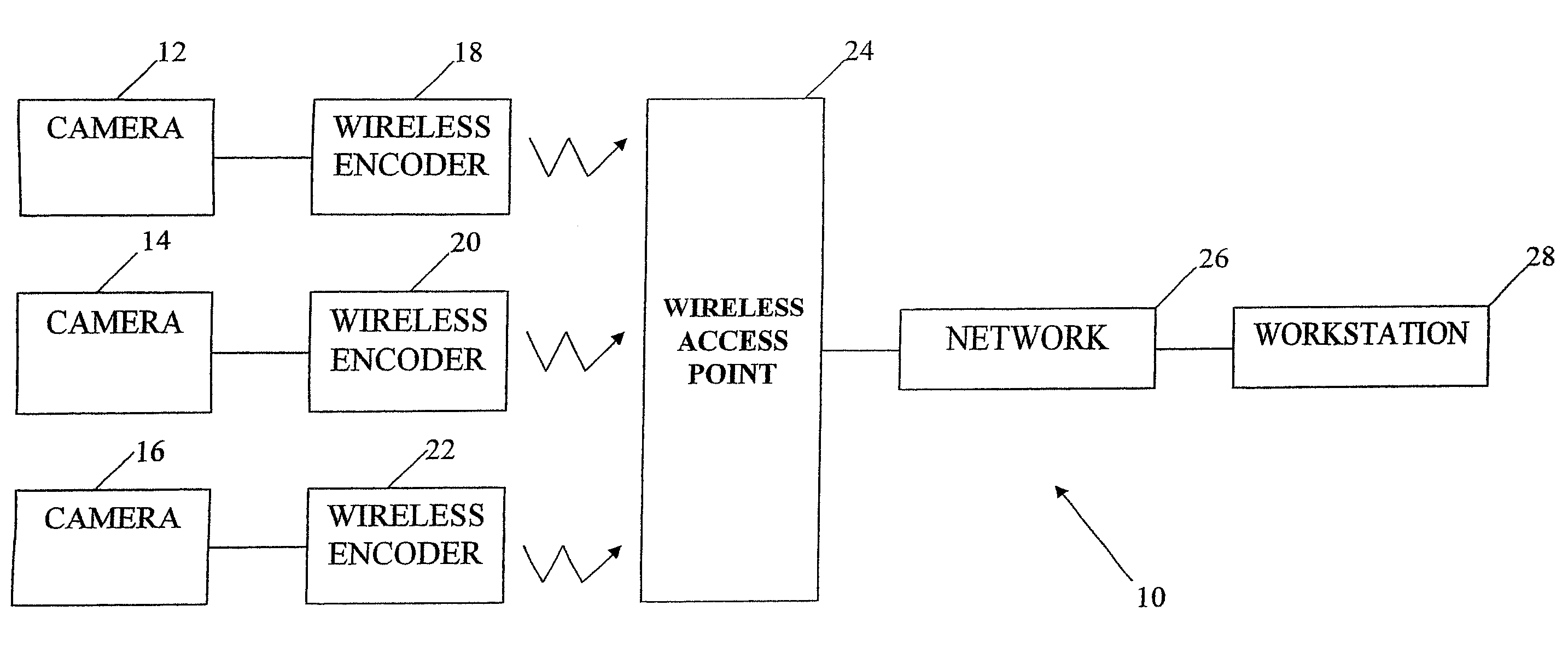

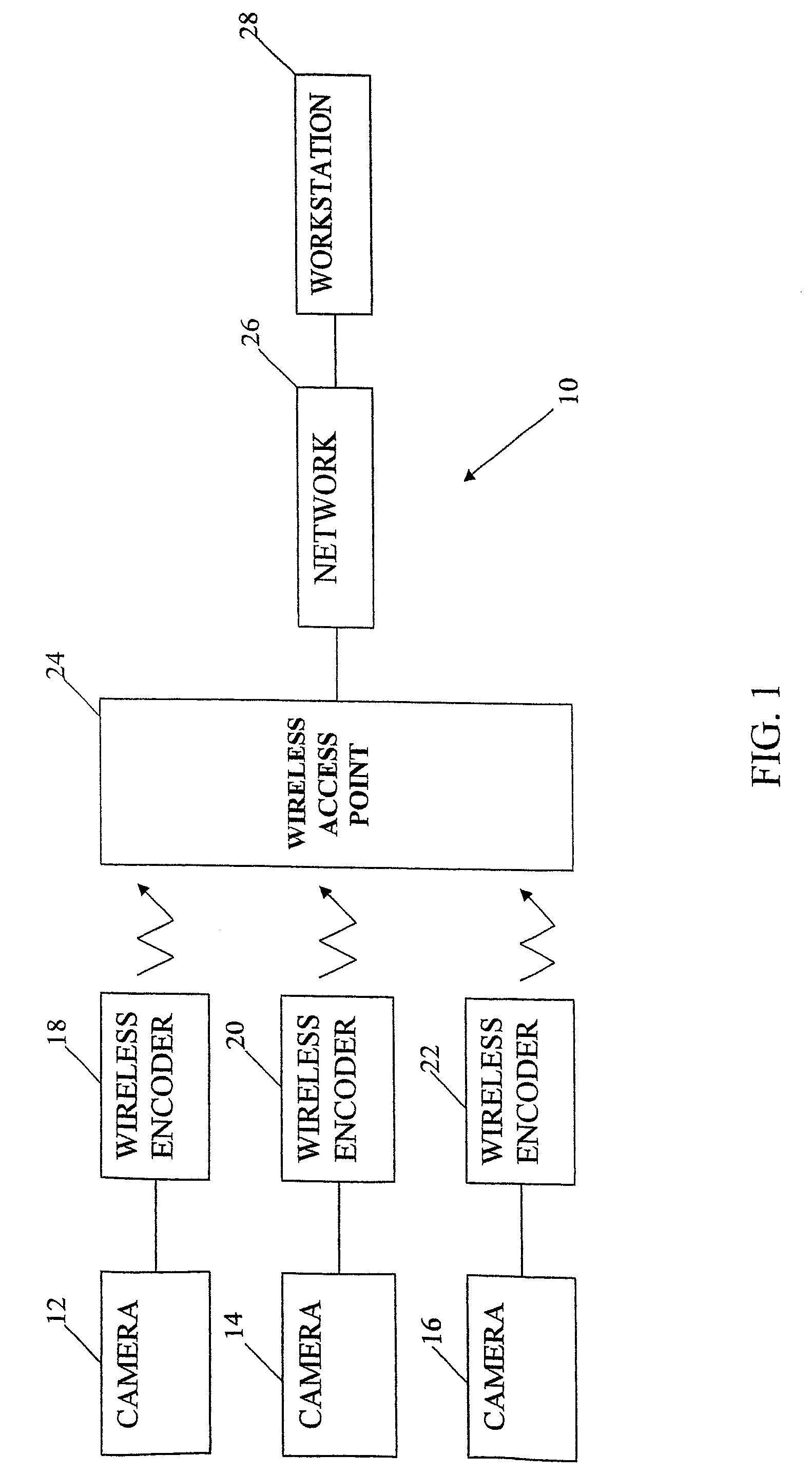

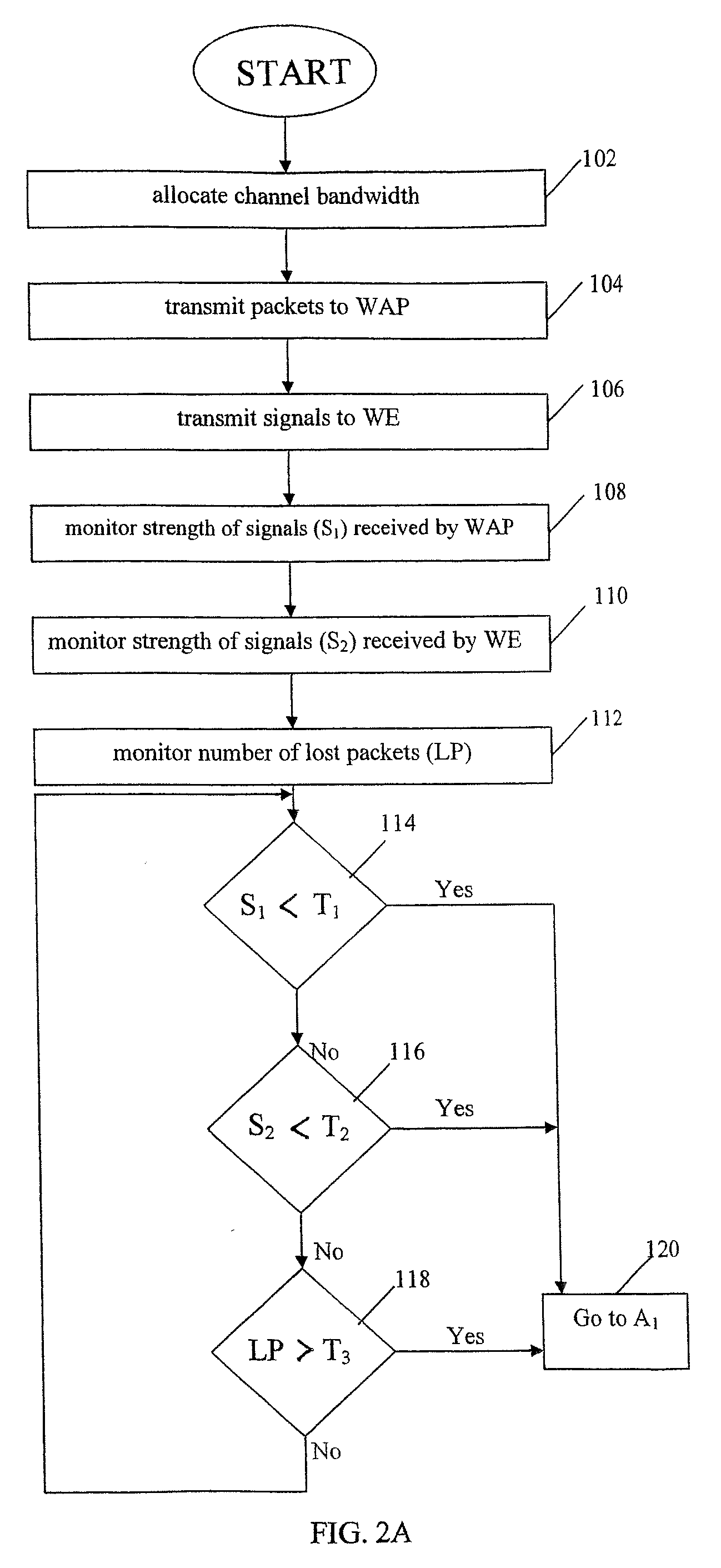

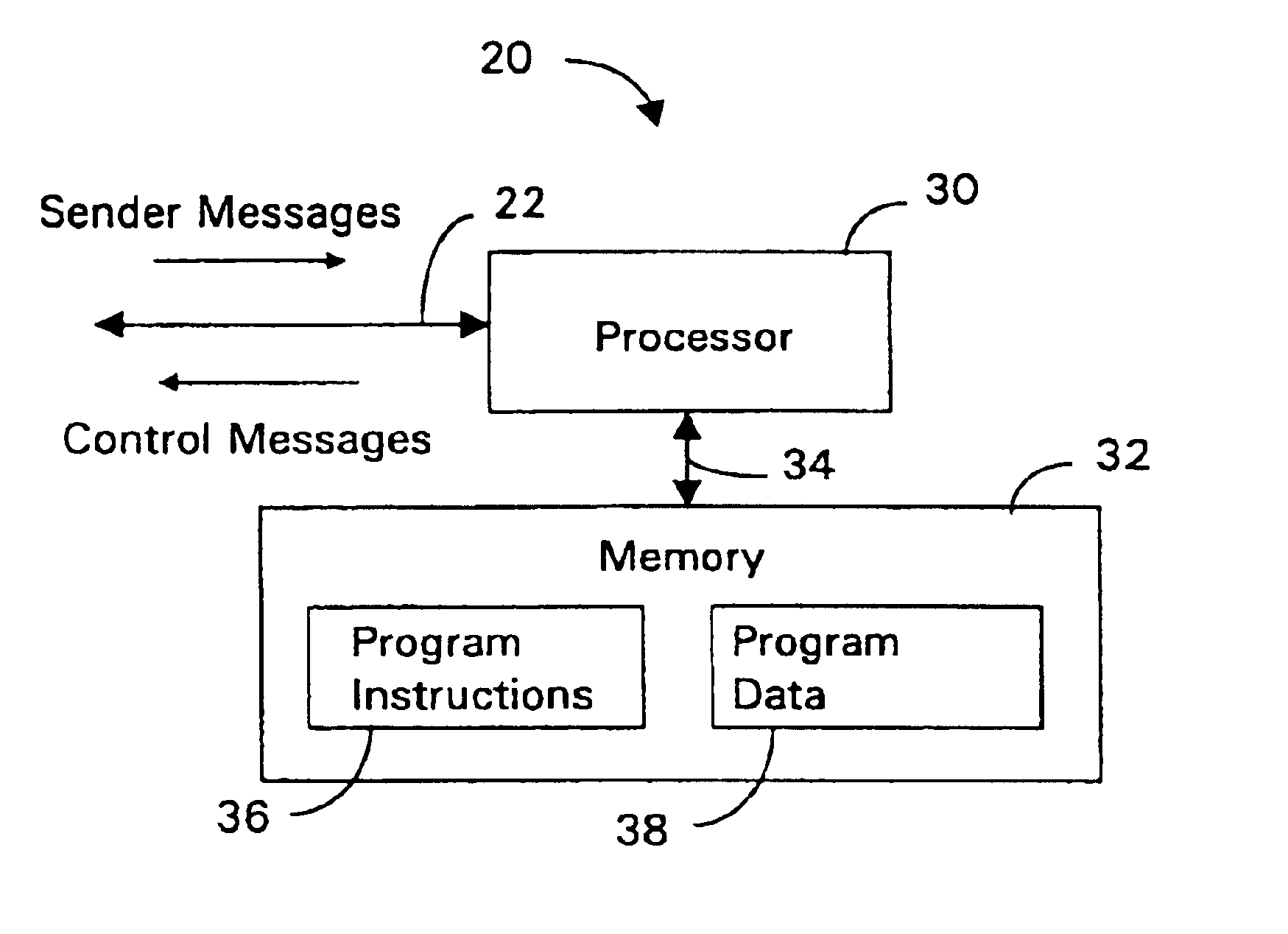

Method and apparatus for improving video performance in a wireless surveillance system

Active · US20080239075A1 · Improve video performance, Guaranteed bandwidth, Color television details, Closed circuit television systems, Telecommunications, Transmission rate

A method of improving video performance in a video surveillance system having a wireless encoder connected to a video surveillance network by a wireless access point device comprises the steps of: allocating channel bandwidth to the wireless encoder from the wireless access point device; transmitting packets of video data from the wireless encoder to the wireless access point device; transmitting signals from the wireless access point device to the wireless encoder; monitoring the strength of the signals received by the wireless access point device, the strength of the signals received by the wireless encoder, and the number of lost packets of video data transmitted from the wireless encoder to the wireless access point device; sending a request from the wireless encoder to the wireless access point device to change the bit transmission rate of the wireless encoder if the strength of the signals received by the wireless access point device is less than a first threshold, if the strength of the signals received by the wireless encoder is less than a second threshold, or if the number of lost packets of video data is greater than a third threshold; and changing the bit transmission rate of the wireless encoder if the wireless access point device approves the change.

Owner: PELCO INC
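
The trigger logic in this claim can be sketched in Python. It checks three thresholds and then changes the rate only if the access point approves. This is a minimal illustration under stated assumptions, not Pelco's implementation. `LinkStats`, `should_request_rate_change`, `next_bitrate_kbps`, the threshold defaults, and the policy of stepping the bitrate down by 25% are all hypothetical, because the abstract only says the rate is "changed".

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    ap_rssi_dbm: float       # signal strength measured at the access point
    encoder_rssi_dbm: float  # signal strength measured at the wireless encoder
    lost_packets: int        # video packets lost in the last monitoring window


def should_request_rate_change(stats: LinkStats,
                               ap_threshold_dbm: float = -75.0,      # hypothetical
                               encoder_threshold_dbm: float = -75.0,  # hypothetical
                               loss_threshold: int = 10) -> bool:     # hypothetical
    """True if any of the three trigger conditions from the abstract holds."""
    return (stats.ap_rssi_dbm < ap_threshold_dbm
            or stats.encoder_rssi_dbm < encoder_threshold_dbm
            or stats.lost_packets > loss_threshold)


def next_bitrate_kbps(current_kbps: int, stats: LinkStats, ap_approves: bool,
                      step: float = 0.75, floor_kbps: int = 256) -> int:
    """Lower the encoder bitrate only if a change is triggered AND approved.

    Stepping down by a fixed factor is an assumption; the abstract does not
    say how the new rate is chosen.
    """
    if should_request_rate_change(stats) and ap_approves:
        return max(floor_kbps, int(current_kbps * step))
    return current_kbps
```

A real encoder would presumably also raise the rate again once conditions recover. The abstract does not describe that direction, so the sketch omits it.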

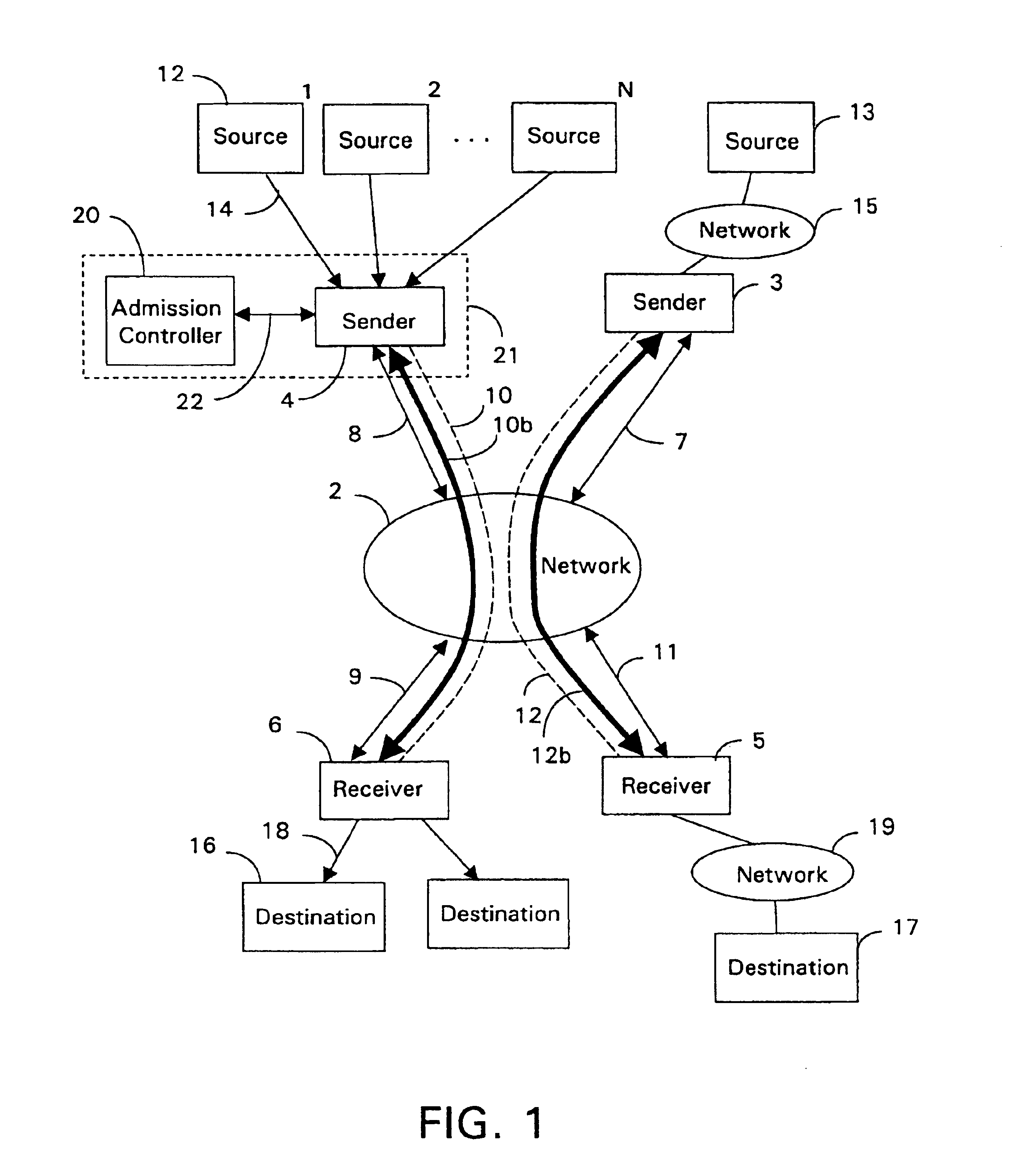

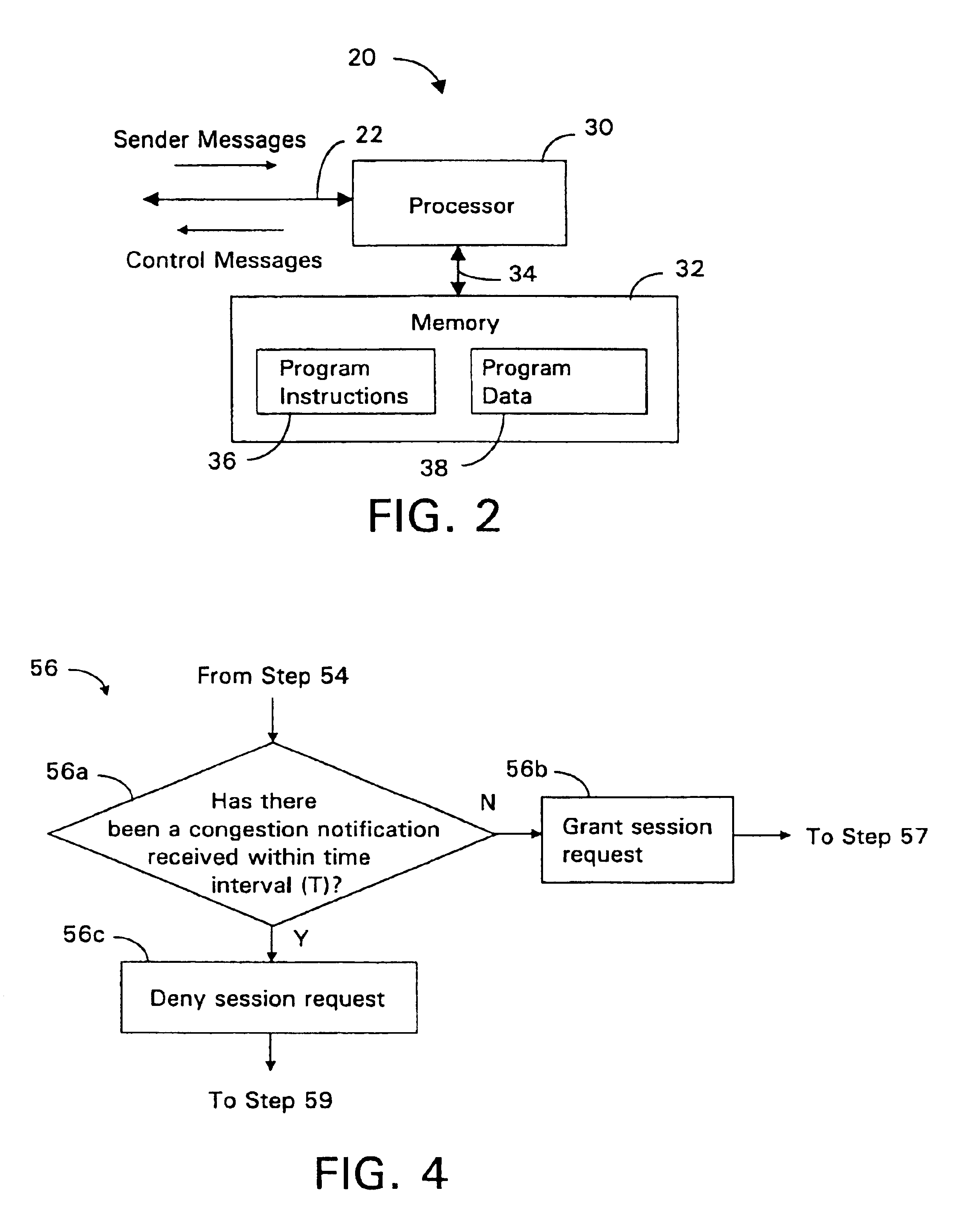

Admission control for aggregate data flows based on a threshold adjusted according to the frequency of traffic congestion notification

Inactive · US6839767B1 · Guaranteed bandwidth, Exacerbating congestion condition is avoided, Error prevention, Transmission systems, Traffic capacity, Data stream

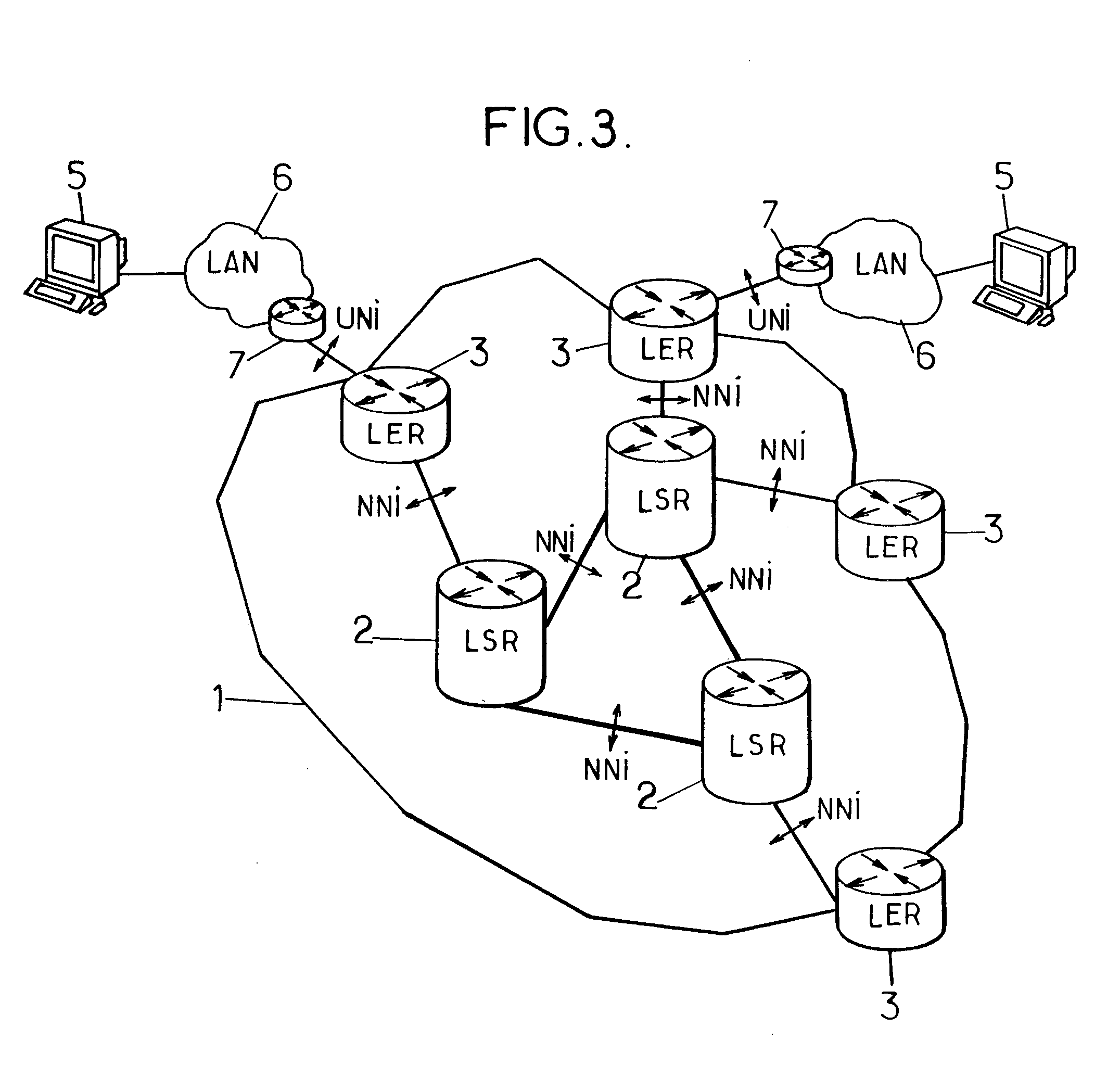

An admission controller and its method of operation for controlling admission of data flows into an aggregate data flow are described. Admitted data flows are aggregated into an aggregate data flow for transmission by a router, for example, over a data network. The aggregate data flow typically follows a pre-established path through the network, for example, a multiprotocol label switching (MPLS) path. The path has minimum and maximum bandwidth limits assigned to it. The admission controller controls admission of new data flows into the aggregate data flow by granting or denying new session requests for the new data flows. Congestion notifications received from the network and bandwidth limits of the path are considered in determining whether to grant or deny a new session request. In this way, the admission controller provides elastic sharing of network bandwidth among data flows without exacerbating network congestion and while remaining within the path's bandwidth limits. The data flows may include transaction-oriented traffic.

Owner: RPX CLEARINGHOUSE
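
The title says the admission threshold is adjusted according to how often congestion notifications arrive. The abstract says admission must stay within the path's minimum and maximum bandwidth. The hypothetical Python class here shows one way to realize both under stated assumptions:

- Every notification lowers the threshold by a fixed step, but never below the path minimum.
- A quiet period with no notifications raises it again, but never above the path maximum.

As a result, frequent notifications hold the threshold down. The class name, `step`, and `quiet_period` are assumptions, not details from the patent.

```python
class AggregateAdmissionController:
    """Admit new flows while a congestion-driven threshold caps the aggregate."""

    def __init__(self, min_bw: float, max_bw: float, step: float, quiet_period: float):
        self.min_bw = min_bw              # path minimum: threshold never drops below it
        self.max_bw = max_bw              # path maximum: threshold never rises above it
        self.step = step                  # hypothetical per-event adjustment
        self.quiet_period = quiet_period  # hypothetical: silence needed before relaxing
        self.threshold = max_bw
        self.admitted_bw = 0.0
        self.last_notification = None

    def on_congestion_notification(self, now: float) -> None:
        # Each notification lowers the threshold, so frequent notifications
        # drive it toward min_bw quickly.
        self.threshold = max(self.min_bw, self.threshold - self.step)
        self.last_notification = now

    def on_timer(self, now: float) -> None:
        # With no recent notification, raise the threshold back toward max_bw.
        if (self.last_notification is None
                or now - self.last_notification >= self.quiet_period):
            self.threshold = min(self.max_bw, self.threshold + self.step)

    def request_session(self, rate: float) -> bool:
        # Grant only if the aggregate stays within the current threshold.
        if self.admitted_bw + rate <= self.threshold:
            self.admitted_bw += rate
            return True
        return False

    def end_session(self, rate: float) -> None:
        self.admitted_bw = max(0.0, self.admitted_bw - rate)
```

Existing sessions are never torn down when the threshold falls. Only new session requests are refused, which matches the abstract's description of granting or denying new session requests.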

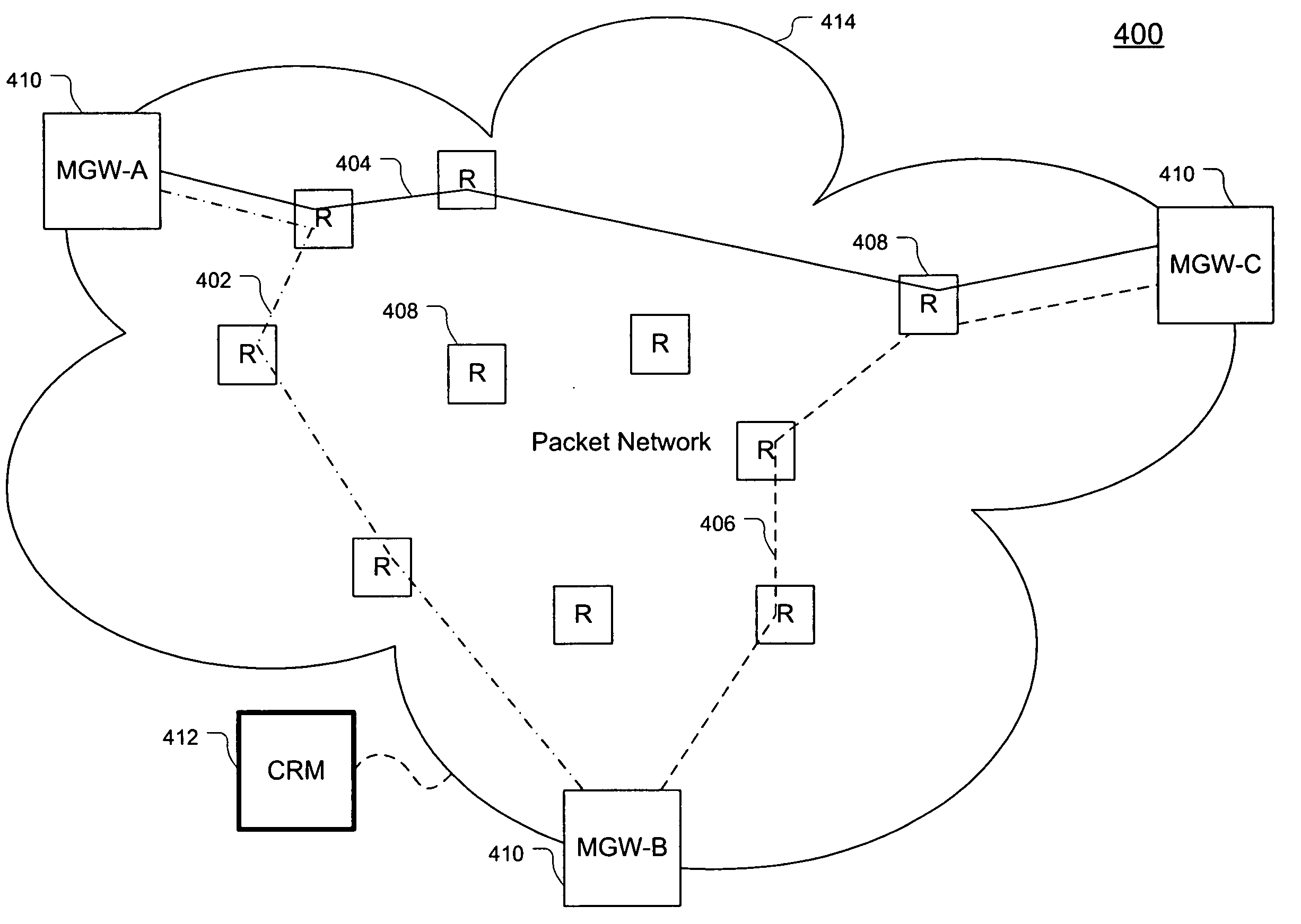

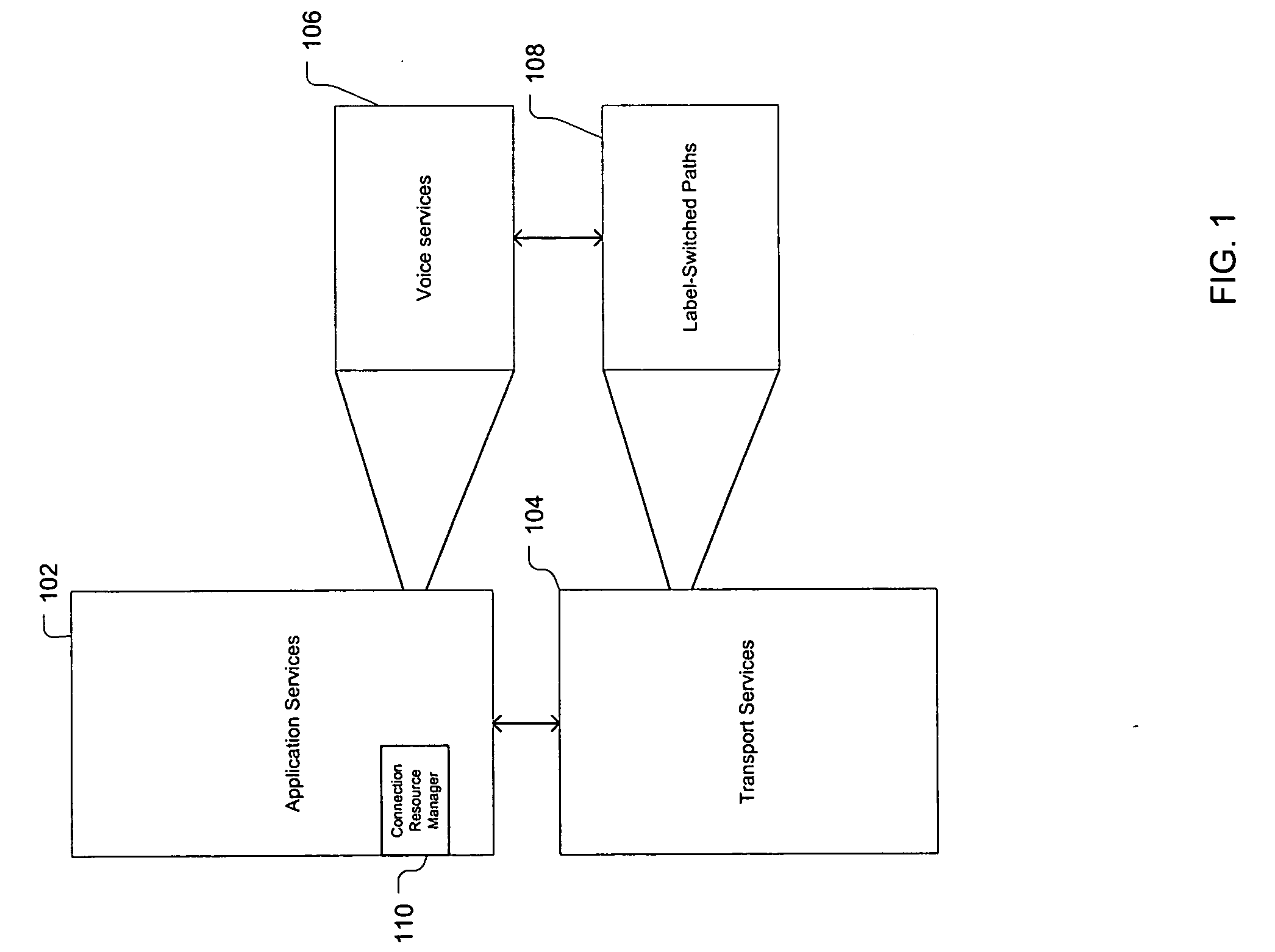

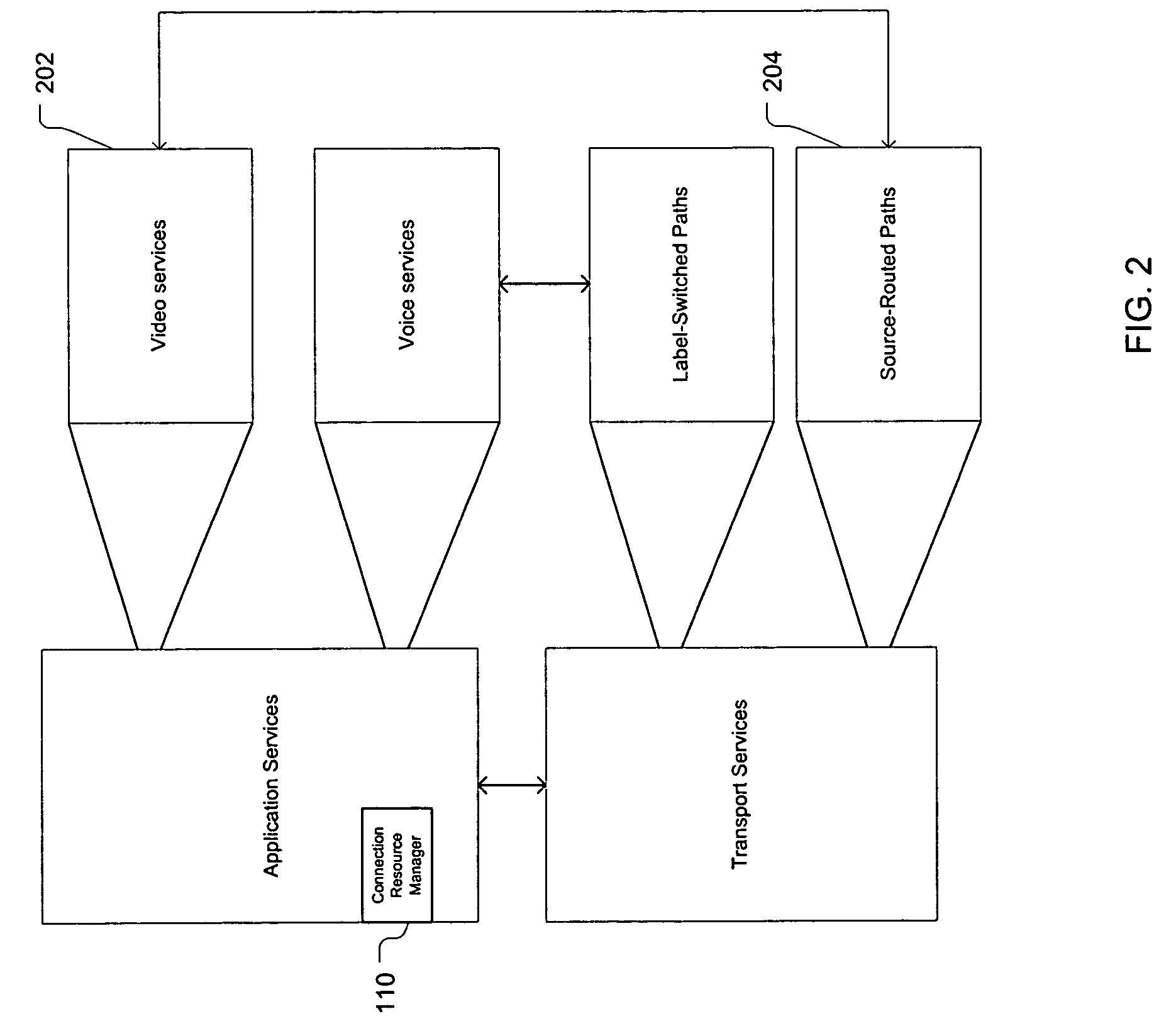

Network quality of service management

Inactive · US20050195741A1 · Fast response time, Efficient and reliable, Error prevention, Transmission systems, Quality of service, Resource management

An architecture for quality-of-service (QoS) management creates a logical circuit-switched network within a packet network to support QoS-sensitive demands levied on the network. This QoS-managed network can serve to interwork, e.g., a PSTN with VoIP networks. The architecture can include a connection resource manager (CRM), which oversees bandwidth availability and demand admission/rejection on dynamically provisioned virtual trunk groups (VTGs) within the packet network, and a transport bandwidth controller (TBC). The VTGs serve to transport QoS-sensitive demands across the packet network. The TBC serves the CRM by providing an interface to routers and/or OAM systems of the packet network to size VTGs to meet QoS requirements. Media switches located at the packet network borders serve to mux/demux the demands into/from VTGs. CRMs and TBCs can be implemented as centralized, distributed, or hierarchical, and flat and aggregated variants of the architecture are supported. VTGs can be implemented using MPLS LSPs, VPNs, or source-based routing.

Owner: WSOU INVESTMENTS LLC

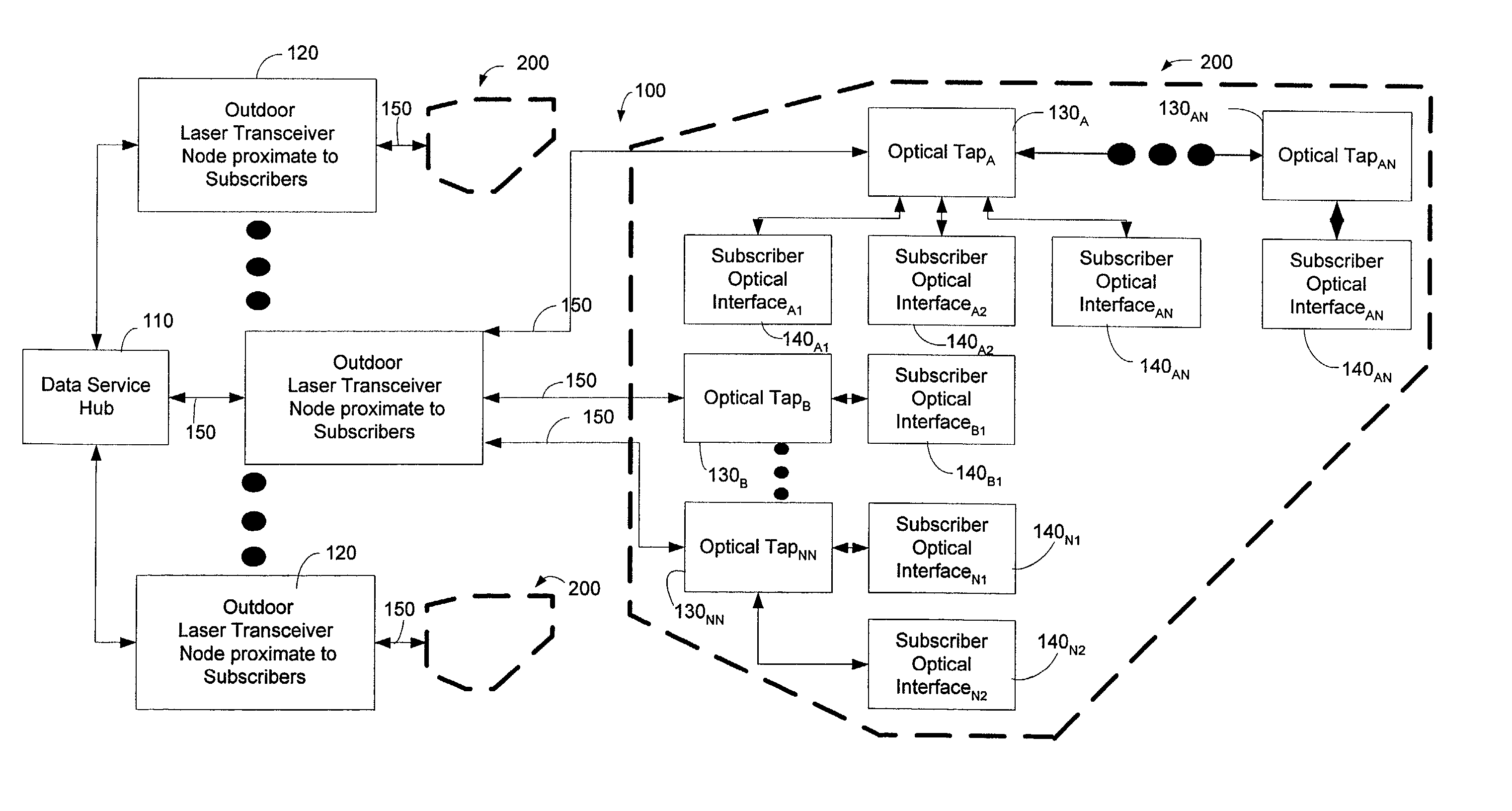

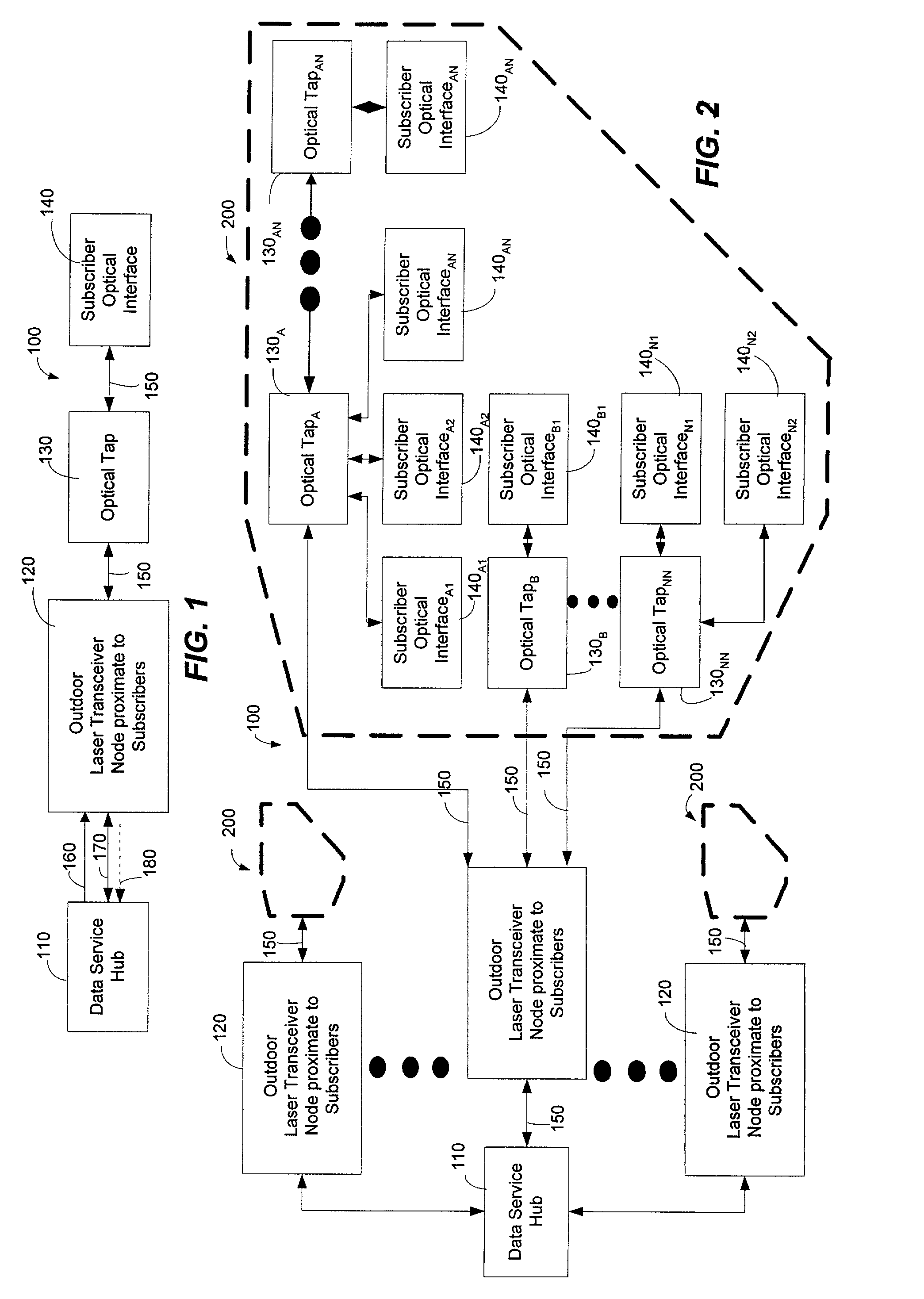

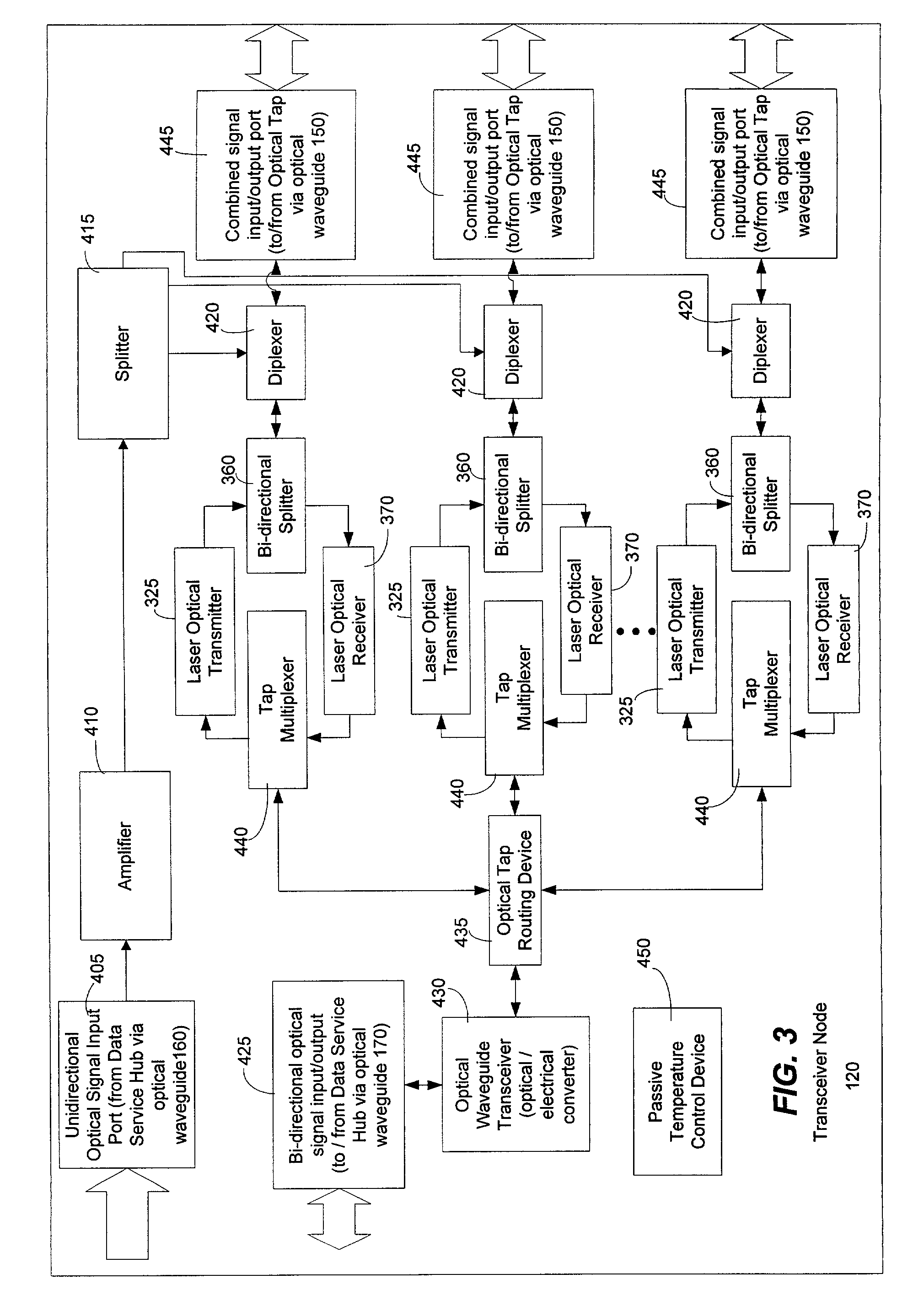

Method and system for processing upstream packets of an optical network

Inactive · US7085281B2 · Avoid collision, Reduce performance, Multiplex system selection arrangements, Time-division optical multiplex systems, General purpose, Transceiver

A protocol for an optical network can control the time at which subscriber optical interfaces of an optical network are permitted to transmit data to a transceiver node. The protocol can prevent collisions of upstream transmissions between the subscriber optical interfaces of a particular subscriber group. With the protocol, a transceiver node close to the subscriber can allocate additional or reduced upstream bandwidth based upon the demand of one or more subscribers. That is, a transceiver node close to a subscriber can monitor (or police) and adjust a subscriber's upstream bandwidth on a subscription basis or on an as-needed basis. The protocol can account for aggregates of packets rather than individual packets. By performing calculations on aggregates of packets, the algorithm can execute less frequently, which in turn permits its implementation in lower-performance, lower-cost devices, such as software executing on a general-purpose microprocessor.

Owner: ARRIS ENTERPRISES LLC
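
The abstract does not give the allocation rule, so the Python function here is only one plausible policy, stated as an assumption. Each subscriber first receives its demand, up to its subscribed share. Any spare capacity is then split in proportion to unmet demand.

Demand is an aggregate byte count per interval rather than a per-packet quantity. This reflects the abstract's point that working on aggregates lets the algorithm run infrequently. The name `allocate_upstream` and its parameters are hypothetical.

```python
def allocate_upstream(capacity: float,
                      demand: dict[str, float],
                      subscribed: dict[str, float]) -> dict[str, float]:
    """Split one cycle's upstream capacity among subscribers (hypothetical policy).

    demand: bytes each subscriber requested over the last interval (an aggregate).
    subscribed: each subscriber's subscribed share per interval.
    """
    # 1. Everyone gets their demand, capped at their subscription.
    base = {s: min(d, subscribed.get(s, 0.0)) for s, d in demand.items()}
    total_base = sum(base.values())
    if total_base >= capacity:
        # Oversubscribed: scale the subscribed portions down proportionally.
        scale = capacity / total_base if total_base else 0.0
        return {s: b * scale for s, b in base.items()}
    # 2. Give spare capacity to subscribers with unmet demand, pro rata.
    spare = capacity - total_base
    unmet = {s: demand[s] - base[s] for s in demand}
    total_unmet = sum(unmet.values())
    if total_unmet == 0:
        return base
    share = min(1.0, spare / total_unmet)
    return {s: base[s] + unmet[s] * share for s in demand}
```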

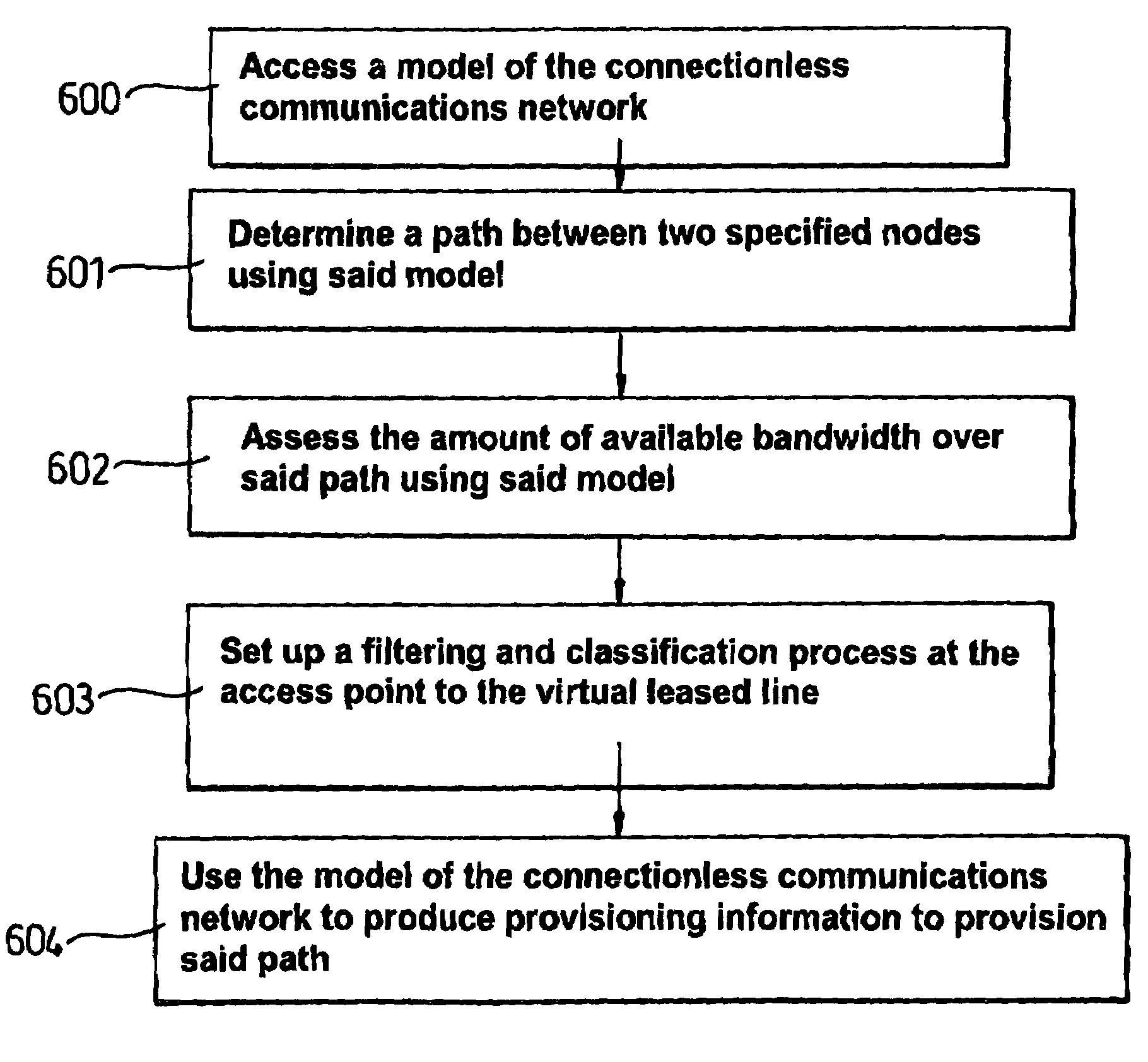

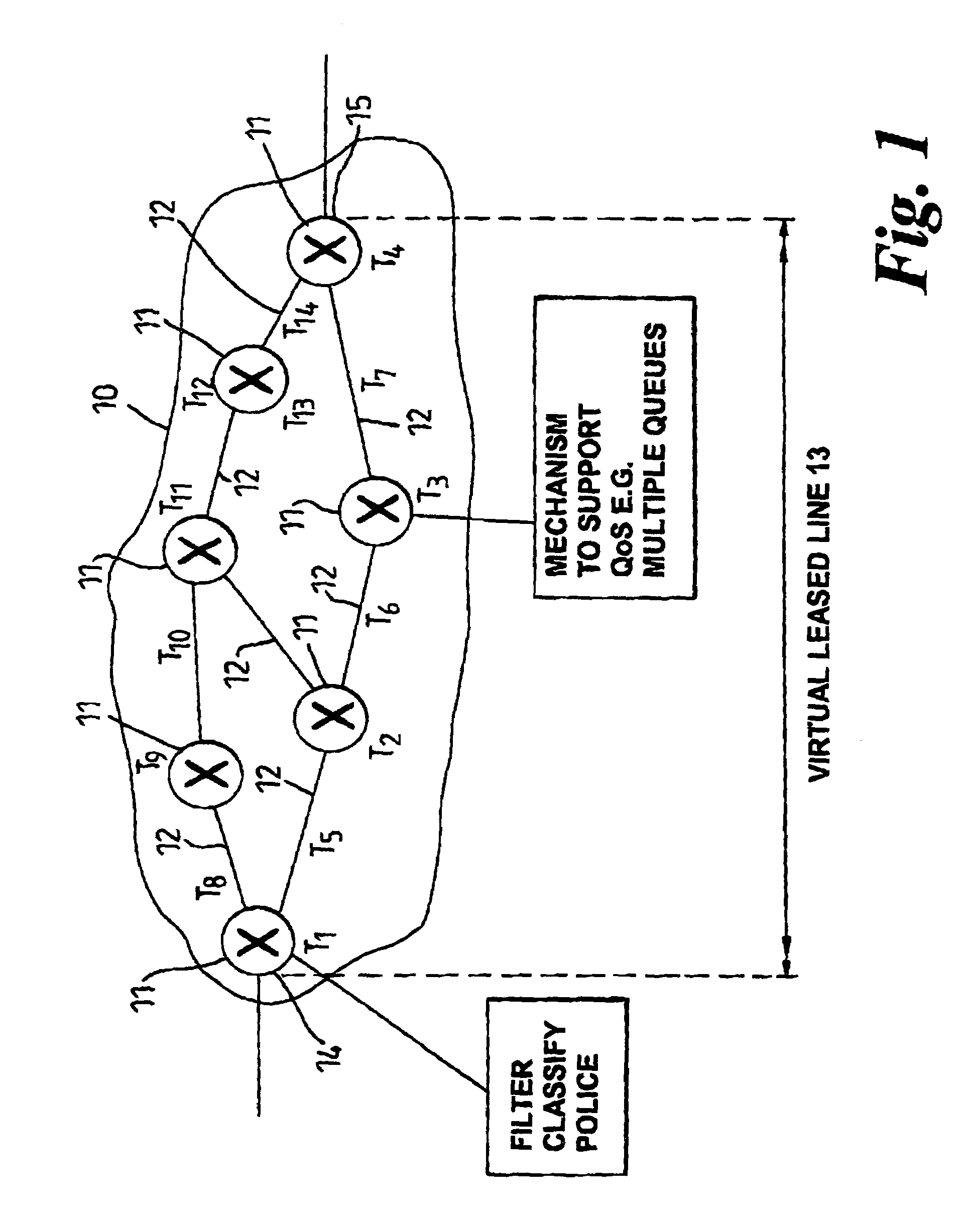

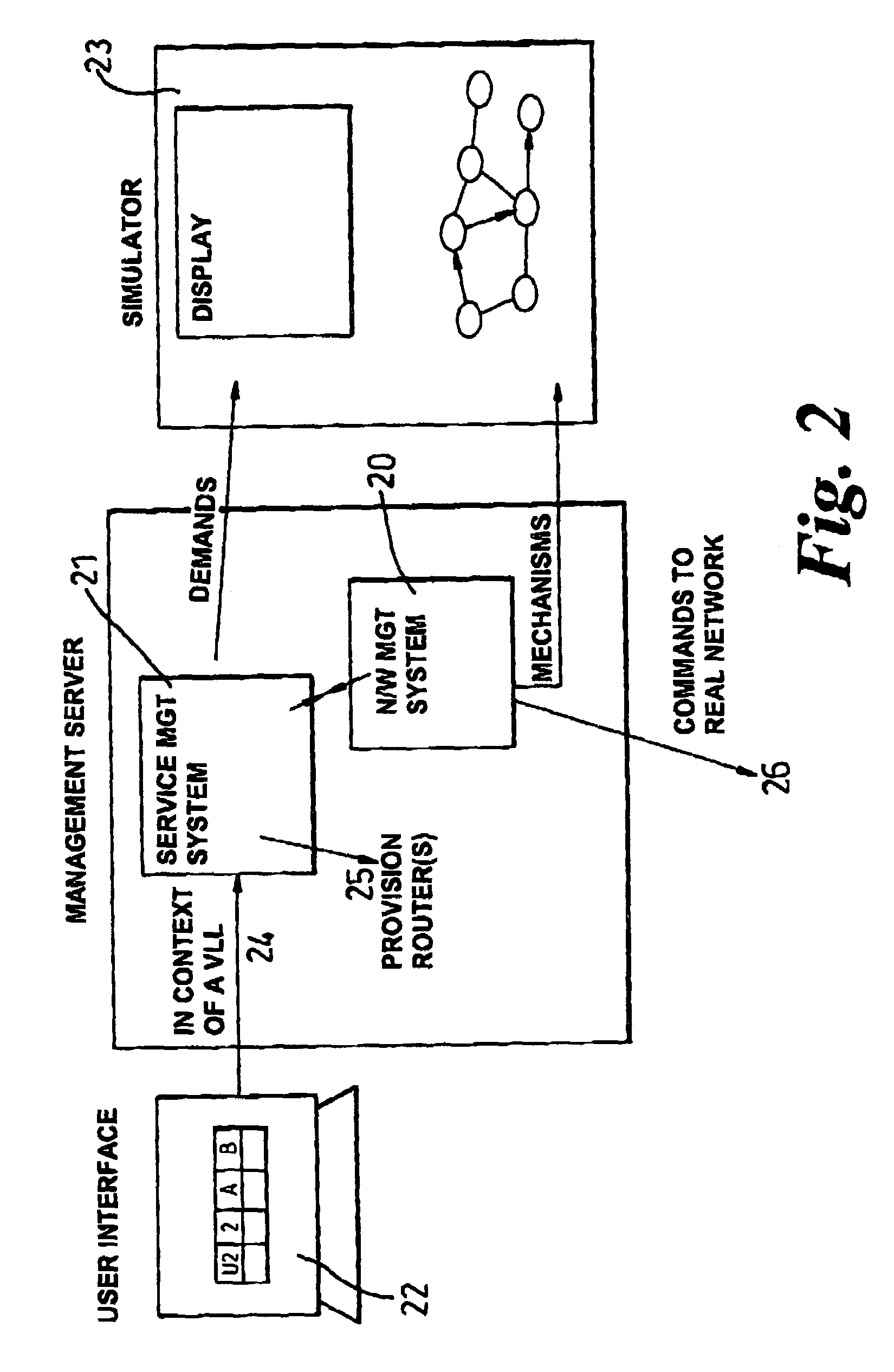

Method of provisioning a route in a connectionless communications network such that a guaranteed quality of service is provided

Inactive · US6959335B1 · Guarantee bandwidth and quality of service, Easily manage, Multiple digital computer combinations, Store-and-forward switching systems, Traffic volume, Differentiated service

Leased lines are provisioned over an Internet Protocol communications network by maintaining bandwidth tallies at each node and link in the network. Traffic to be sent over the leased line is labelled as high priority at the entry point to the leased line. Differentiated services mechanisms are set up at each node in the route so that high-priority traffic on the leased line is processed before other traffic. A customer requests a leased line between two points with a specified bandwidth and quality of service. Bandwidth tallies are checked along the chosen path to ensure that the requested bandwidth is available. In addition, checks are made to ensure that no more than a threshold level of high-priority traffic will be present at any one node or link. Once the network is configured so that sufficient bandwidth is available and high-priority traffic levels will not exceed the threshold level, the leased line is available for use.

Owner: CIENA
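
The provisioning check in this abstract has two conditions: enough tallied bandwidth on every link, and high-priority load kept under a threshold. Both can be sketched in Python. This hypothetical `provision_leased_line` tracks only per-link tallies keyed by directed `(from, to)` pairs. Node tallies, which the abstract also mentions, would be checked the same way. Tallies are updated only after every link passes, so a rejected request leaves the network state unchanged.

```python
Link = tuple[str, str]  # directed link (from_node, to_node)


def provision_leased_line(path: list[str], bandwidth: float,
                          free_bw: dict[Link, float],
                          hp_load: dict[Link, float],
                          hp_limit: dict[Link, float]) -> bool:
    """Reserve `bandwidth` of high-priority capacity along `path`, or refuse."""
    links = list(zip(path, path[1:]))
    # First pass: verify every link before touching any tally.
    for link in links:
        if free_bw[link] < bandwidth:
            return False                      # not enough unreserved bandwidth
        if hp_load[link] + bandwidth > hp_limit[link]:
            return False                      # would exceed high-priority threshold
    # Second pass: commit the reservation on every link.
    for link in links:
        free_bw[link] -= bandwidth
        hp_load[link] += bandwidth
    return True
```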

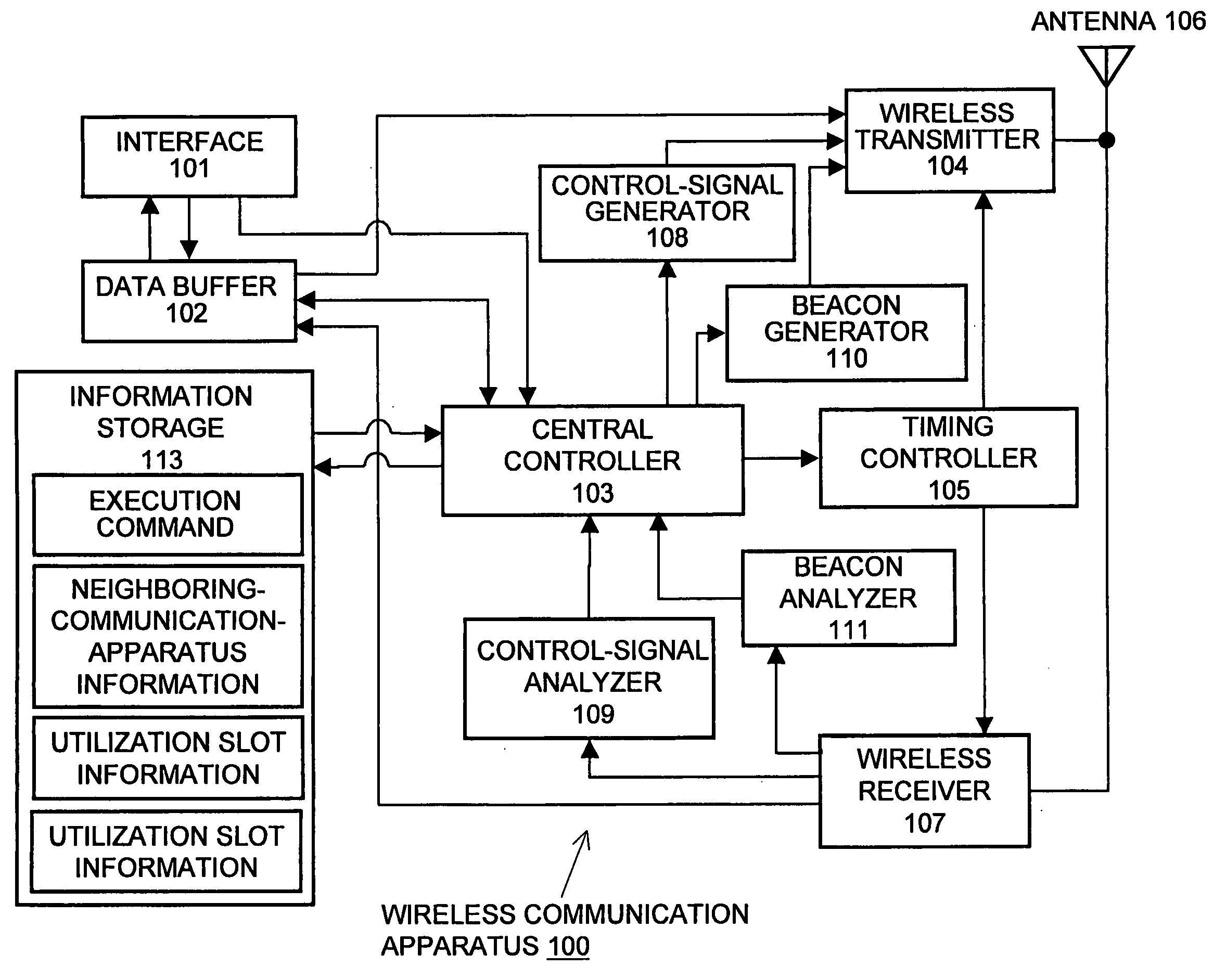

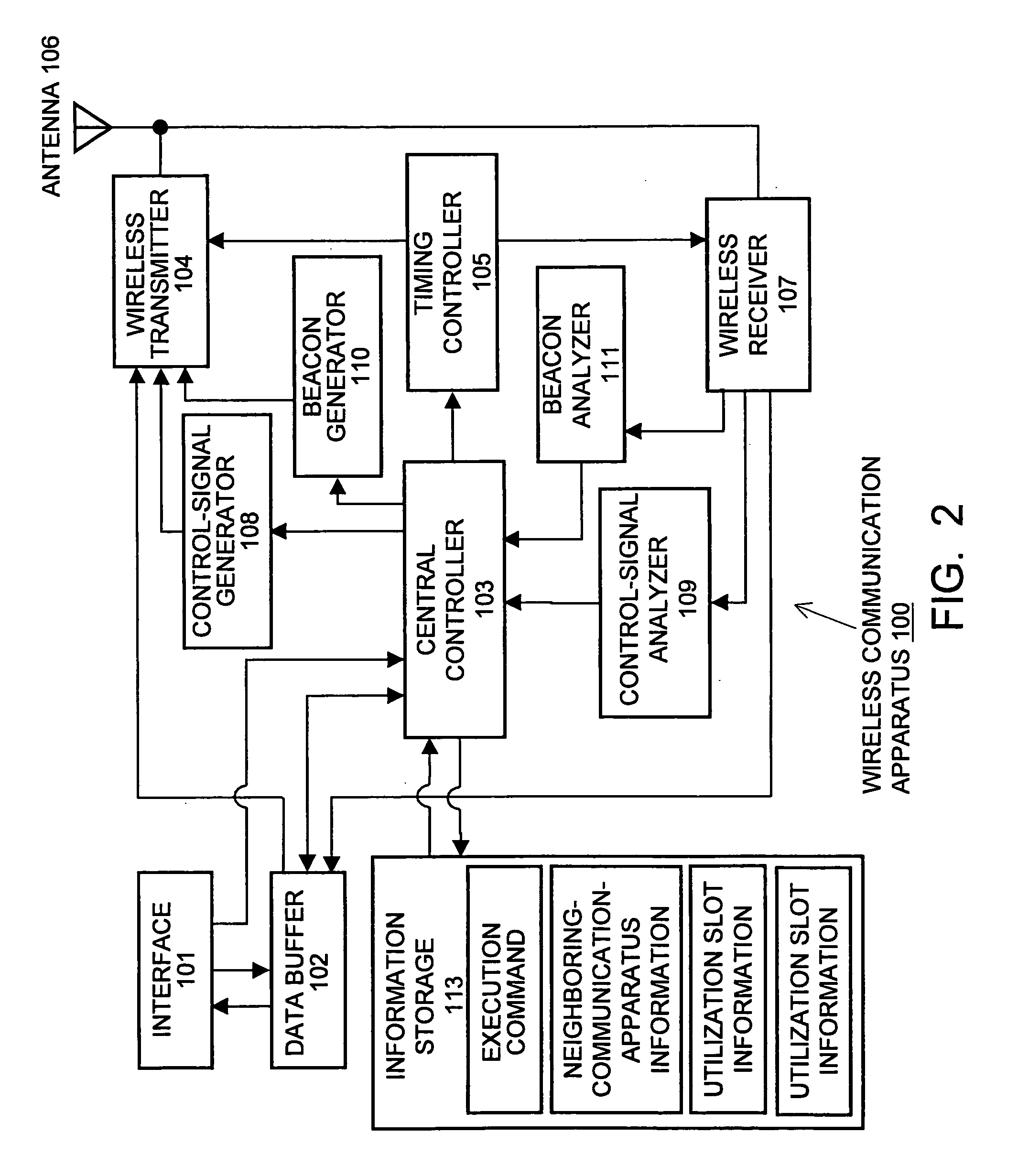

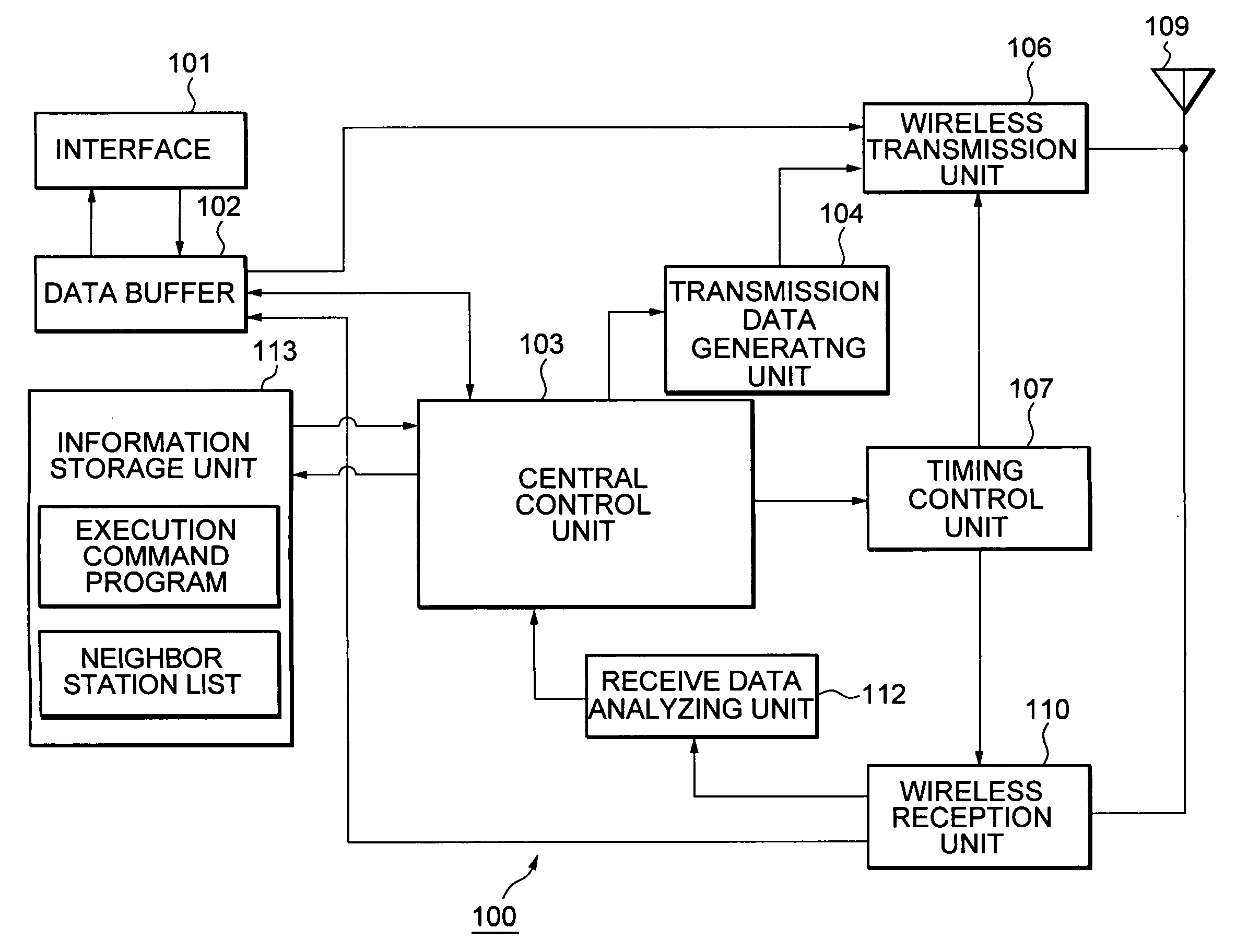

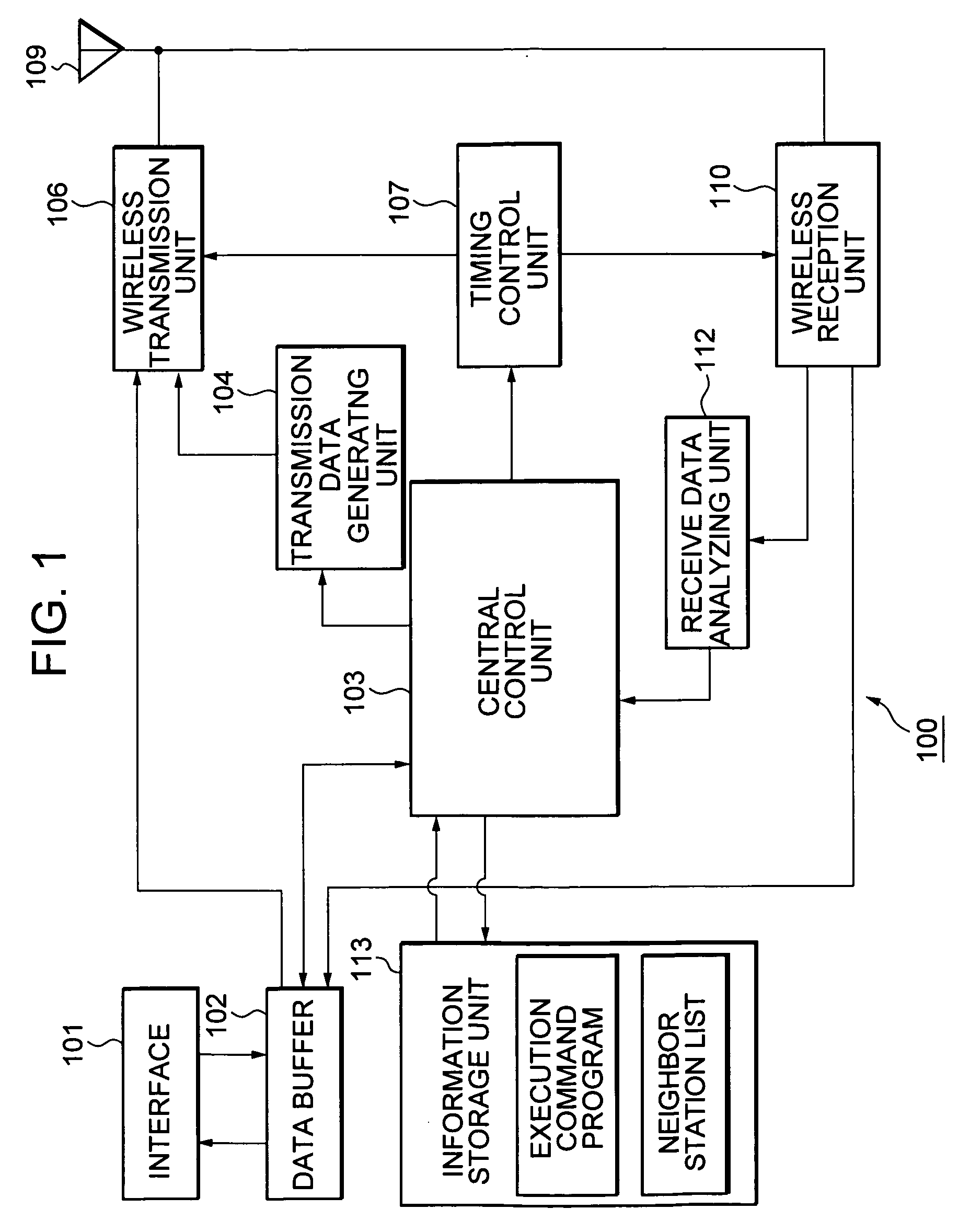

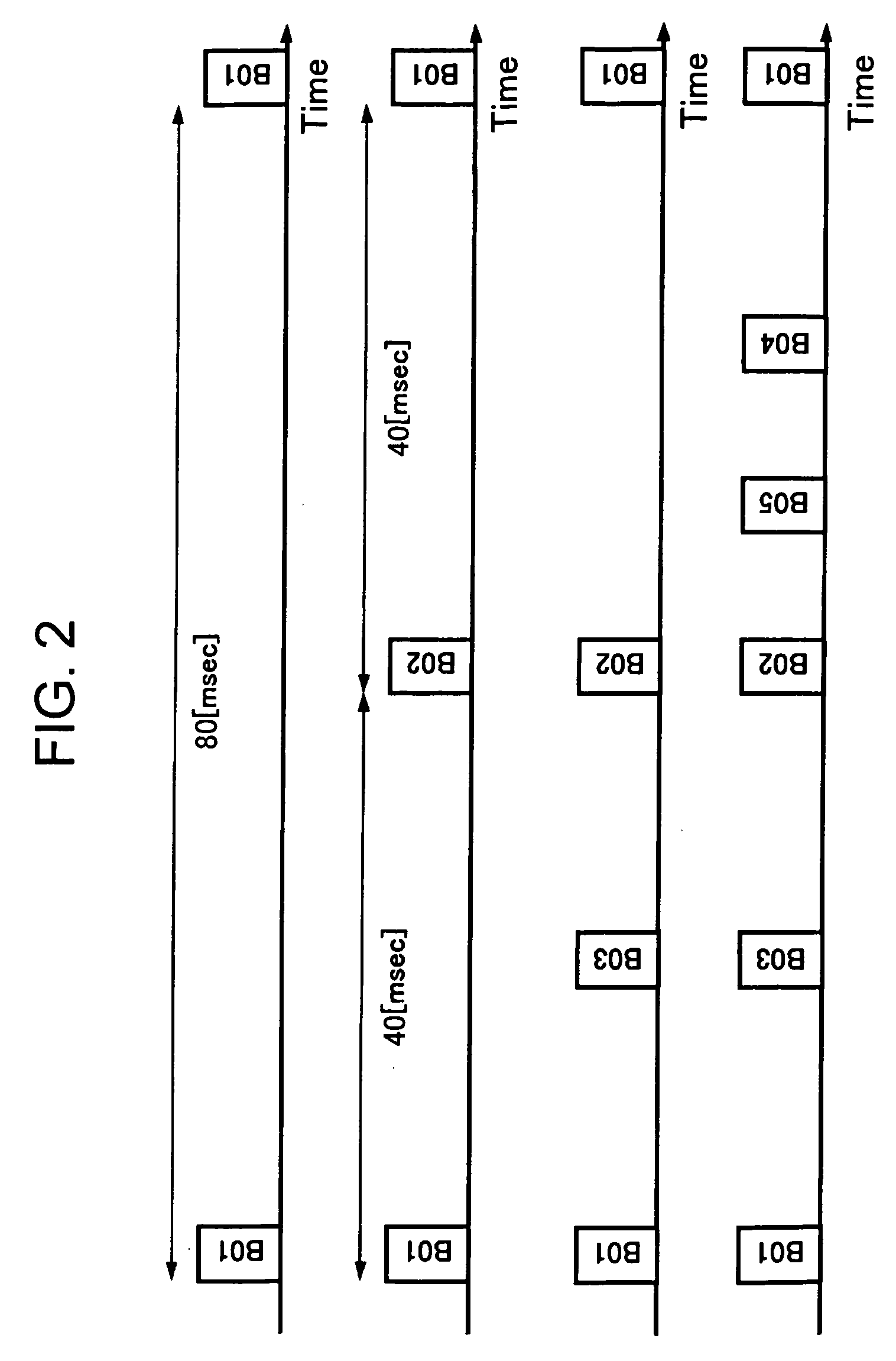

Radio communication system, radio communication device, radio communication method, and computer program

Inactive · US20050096031A1 · Guaranteed normal transmission, Guaranteed bandwidth, Synchronisation arrangement, Broadband local area networks, Communications system, Computer science

A wireless communication network that forms an ad hoc network without a controlling station sets a period that a communication apparatus can use with priority, and performs isochronous communication in that period as required. If no isochronous communication takes place in the priority utilization period, or once it has finished, other communication apparatuses may perform arbitrary communication. If another communication is under way during an apparatus's own priority utilization period, the start of its isochronous communication is temporarily delayed. In an ad hoc communication environment, real-time data such as AV content can thus be transmitted efficiently through isochronous communication.

Owner: SONY CORP

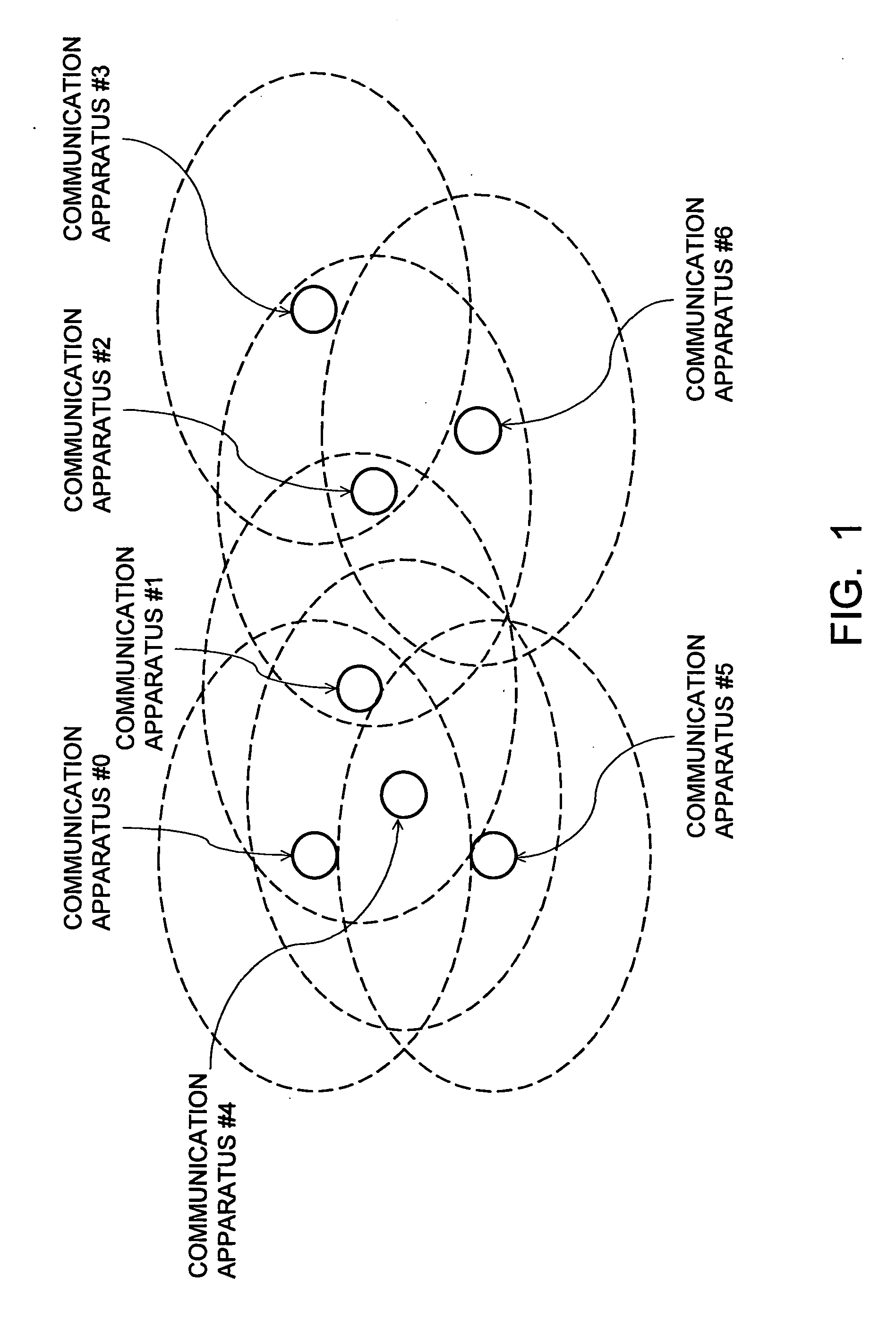

Wireless communication system, wireless communication apparatus, wireless communication method and computer program

Active · US20050243782A1 · Avoid mutual interference, Guaranteed bandwidth, Network traffic/resource management, Access restriction, Communications system, Time zone

The present invention eliminates unnecessary transmission-waiting operations while accommodating prioritized traffic. Under CSMA-based medium access control, a communication station enters a transmission-disallowed state when it detects a signal addressed to another station. However, if during this wait it receives a frame transmitted with priority to the local station, it cancels the transmission-disallowed state and sends back a frame responding to the prioritized frame, thereby making effective use of a transmission prioritized period. If the communication station judges that a problem may occur in a time zone in which prioritized reception is possible, it starts a search procedure to avoid duplication of transmission prioritized periods.

Owner: REDWOOD TECHNOLOGIES LLC

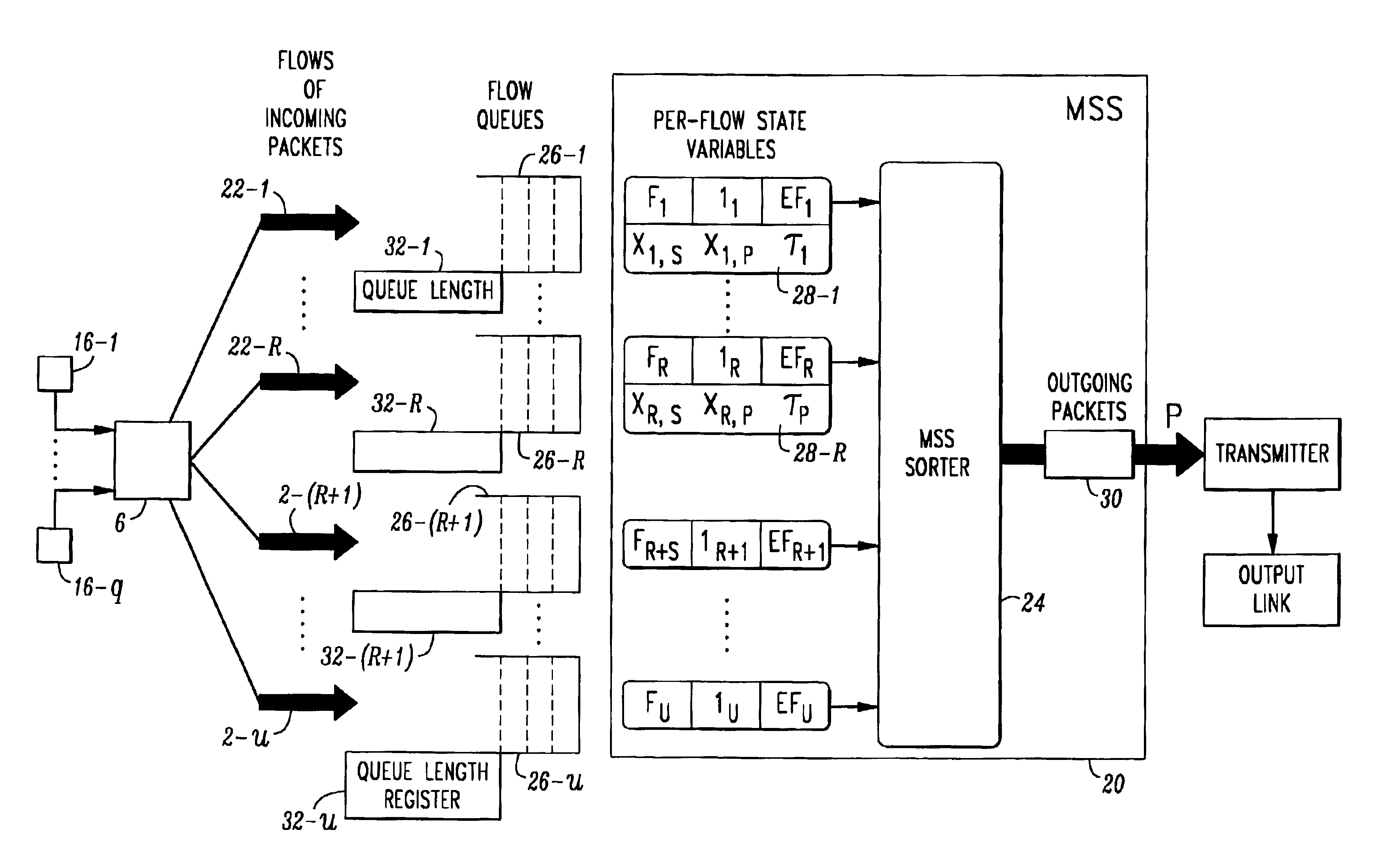

Method and apparatus for guaranteeing data transfer rates and enforcing conformance with traffic profiles in a packet network

Inactive · US6937561B2 · Improve fairness, Improve latency, Error prevention, Transmission systems, Data transmission, Traffic profile

A monolithic shaper-scheduler is used for the efficient integration of scheduling and dual-leaky-bucket shaping in a single structure. By making the evolution of the timestamps of the backlogged flows independent of their shaping parameters, the performance drawbacks of prior-art shaping architectures are overcome. The monolithic shaper-scheduler tests each packet flow as being either “virtually compliant” or “virtually incompliant” when a new packet arrives at the head of its queue. The test for “virtual compliance” is based on traffic profiles associated with the flows. The result of the test is used in conjunction with the timestamp and eligibility flag of each packet flow to efficiently schedule the transmission of packets.

Owner: INTEL CORP
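
The abstract does not spell out the "virtual compliance" test. The Python sketch here therefore shows only the conventional dual-leaky-bucket conformance check that such shaping builds on. A packet conforms if it fits both a peak-rate bucket and a sustained-rate bucket with a larger burst depth. This is a generic token-bucket formulation with hypothetical names (`DualTokenBucket`, `conforms`). It is not the patent's monolithic shaper-scheduler or its timestamp logic.

```python
class DualTokenBucket:
    """Conventional dual-bucket conformance test (peak + sustained rate)."""

    def __init__(self, peak_rate: float, peak_depth: float,
                 sustained_rate: float, burst_depth: float):
        self.rates = (peak_rate, sustained_rate)  # bytes per second
        self.depths = (peak_depth, burst_depth)   # bucket capacities in bytes
        self.tokens = [peak_depth, burst_depth]   # both buckets start full
        self.last = 0.0                           # time of the last refill

    def _refill(self, now: float) -> None:
        elapsed = now - self.last
        self.last = now
        for i in range(2):
            self.tokens[i] = min(self.depths[i], self.tokens[i] + self.rates[i] * elapsed)

    def conforms(self, now: float, size: float) -> bool:
        """True (and debit both buckets) if a `size`-byte packet fits both profiles."""
        self._refill(now)
        if all(t >= size for t in self.tokens):
            for i in range(2):
                self.tokens[i] -= size
            return True
        return False
```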

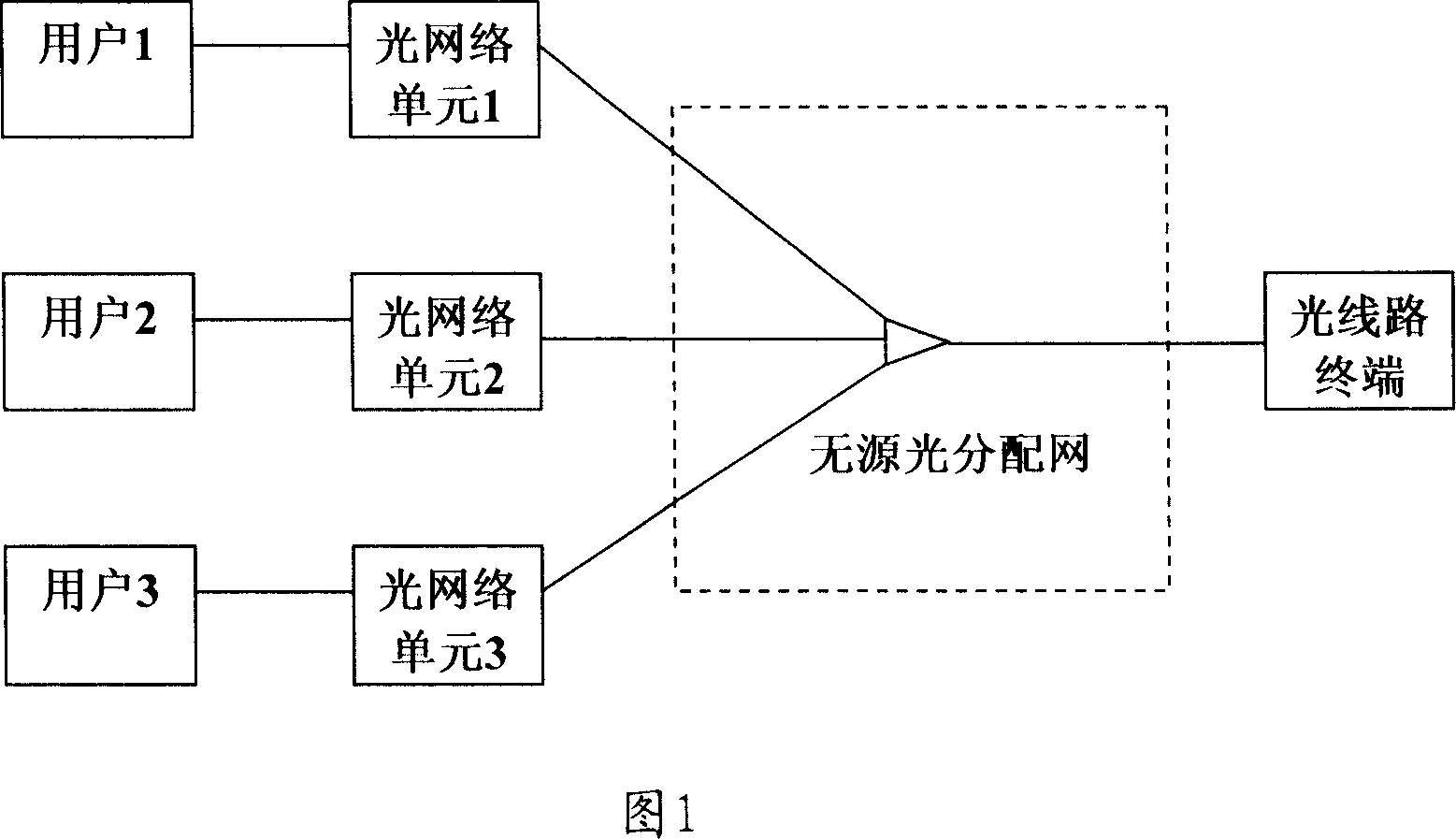

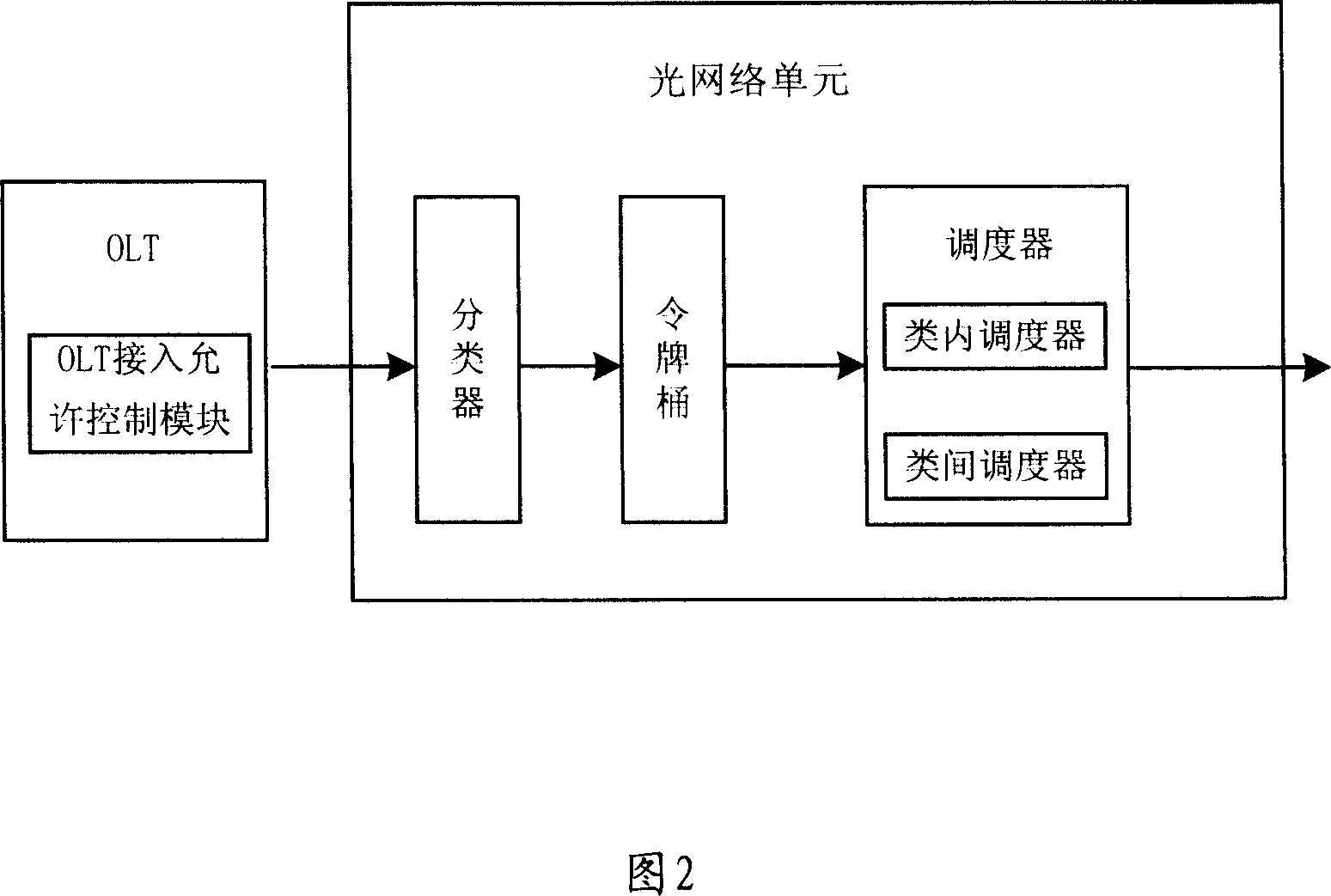

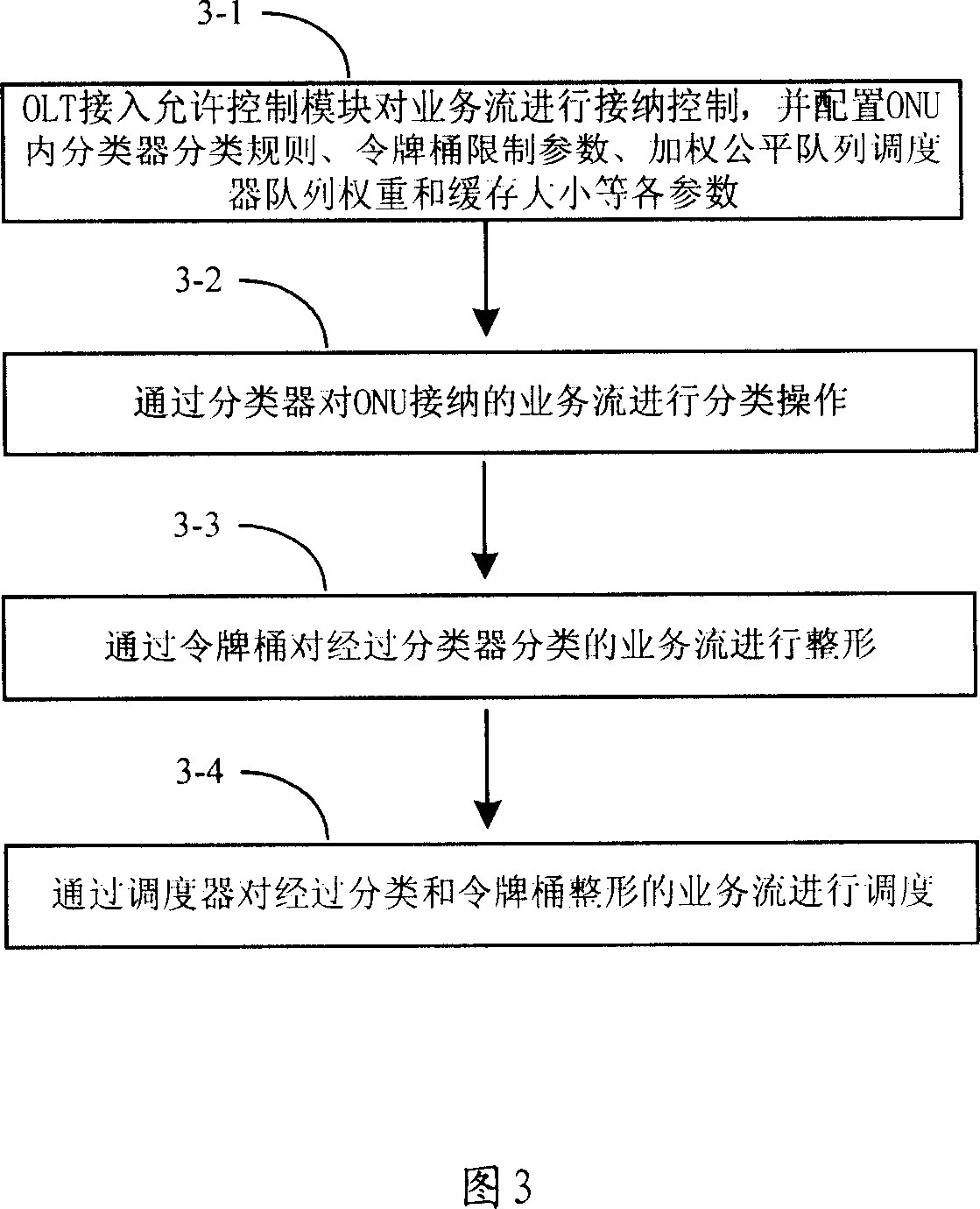

System and method for bandwidth allocation in the remote device of the passive optical network

Inactive · CN101035389A · Guaranteed bandwidth, Guaranteed demand, Multiplex system selection arrangements, Store-and-forward switching systems, Dynamic bandwidth allocation, Computer network

The invention provides a system and method for bandwidth allocation in remote equipment of a passive optical network (PON). The system mainly comprises two parts. The first is an OLT (optical line terminal), which performs admission control on the service streams requested by remote equipment in the PON and uses signaling to configure the classification, shaping, and scheduling parameters for those streams in the remote equipment. The second is the remote equipment, which classifies, shapes, and schedules the received service streams according to the OLT-configured parameters, allocates the corresponding bandwidth to each stream, and outputs the streams in scheduling order. The invention can thus guarantee the bandwidth and delay requirements of each individual service stream in PON remote equipment. It also eliminates mutual interference between packet service streams of the same or different service grades.

Owner: SHANGHAI JIAO TONG UNIV +1

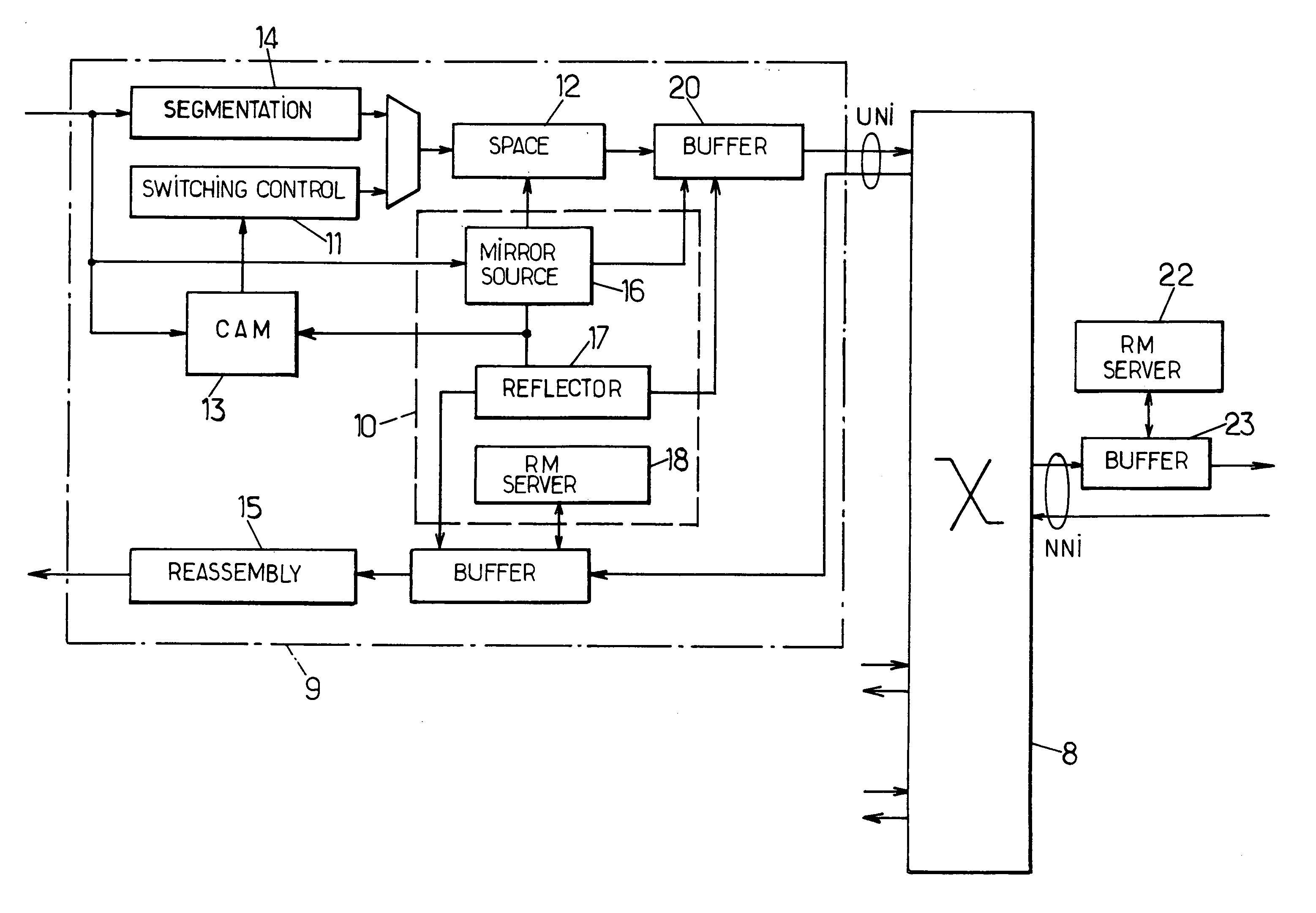

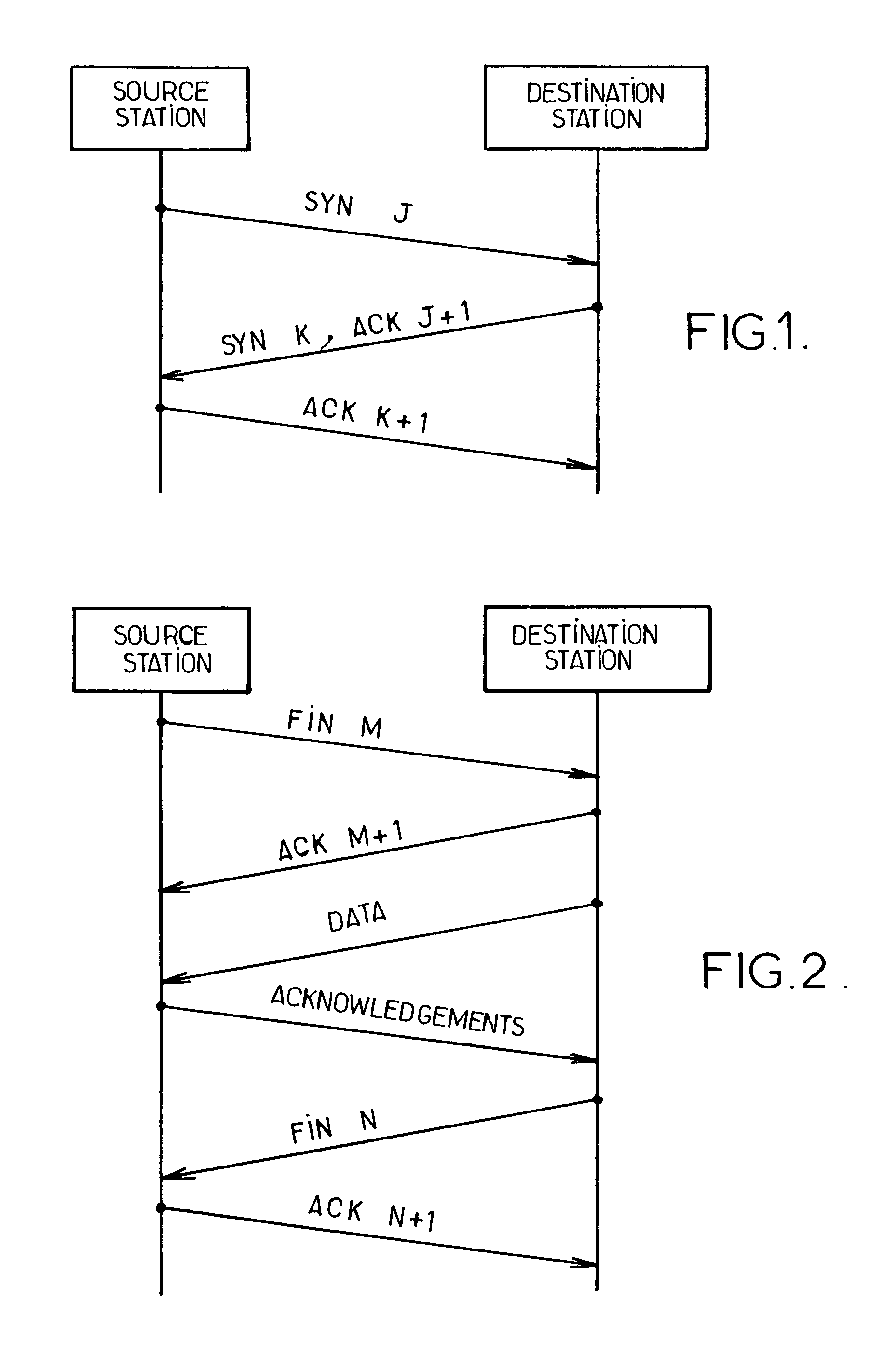

Method of transmitting data flows over an ATM network and device for implementing the method

Inactive · US6967927B1 · Quality assurance, Guaranteed bandwidth, Error prevention, Transmission systems, Data stream, Resource management

Data flows generated in accordance with a connected mode protocol and formatted in packets in accordance with a non-connected mode protocol are transmitted via an ATM network. A set of virtual circuits of the ATM network is assigned in advance to each pair of access points of the ATM network, without allocating transmission rate resources to them. When an access point receives a request, formulated in accordance with the connected mode protocol, to establish a connection between a source address and a destination address accessible via another access point of the ATM network, an available virtual circuit is selected from the set assigned to this pair of access points and a resource management cell containing a request to activate the virtual circuit is transmitted on the selected virtual circuit. When this cell is received by a node of the ATM network located on the selected virtual circuit, a transmission rate resource is allocated, if it is available, to the selected virtual circuit.

Owner: FRANCE TELECOM SA

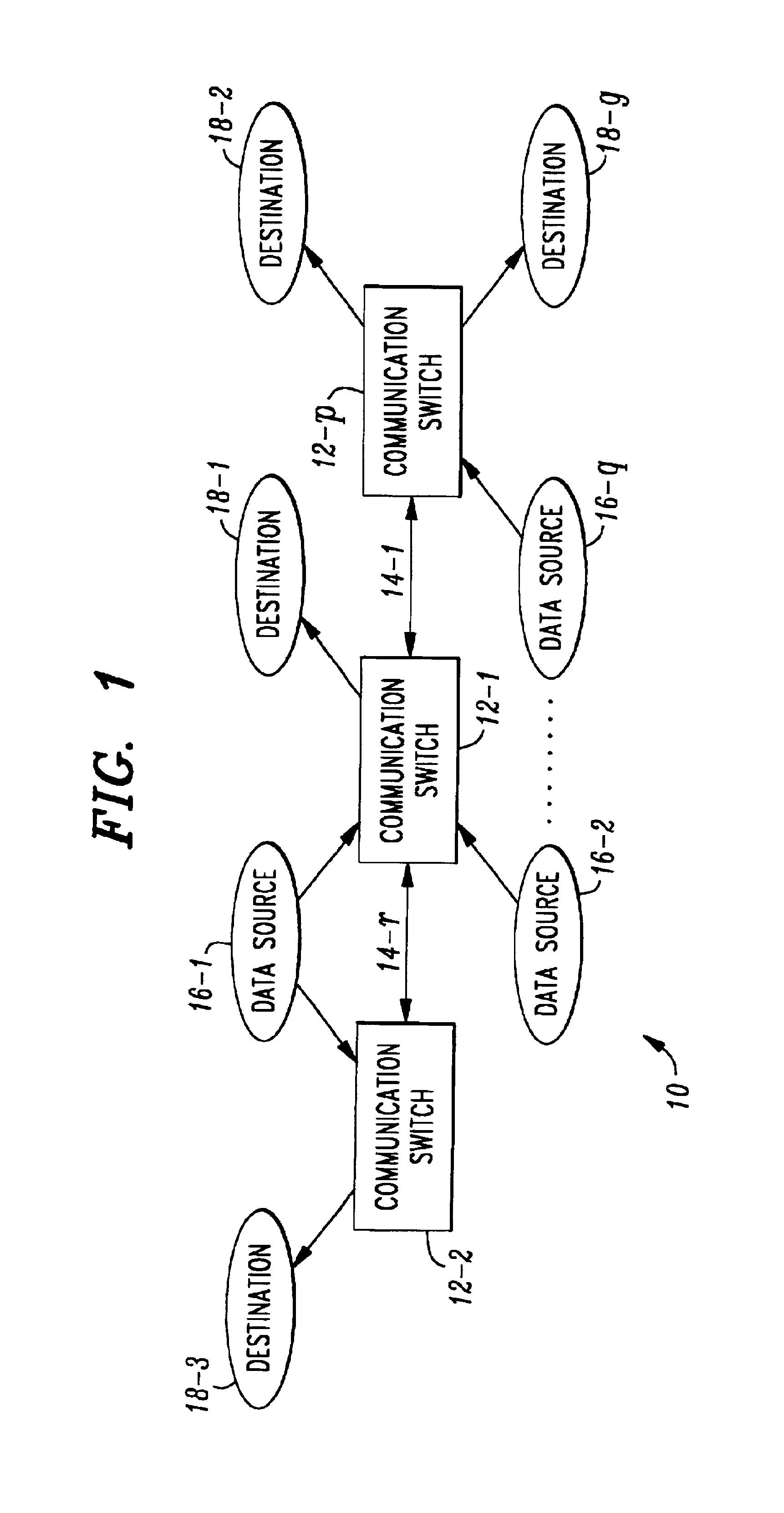

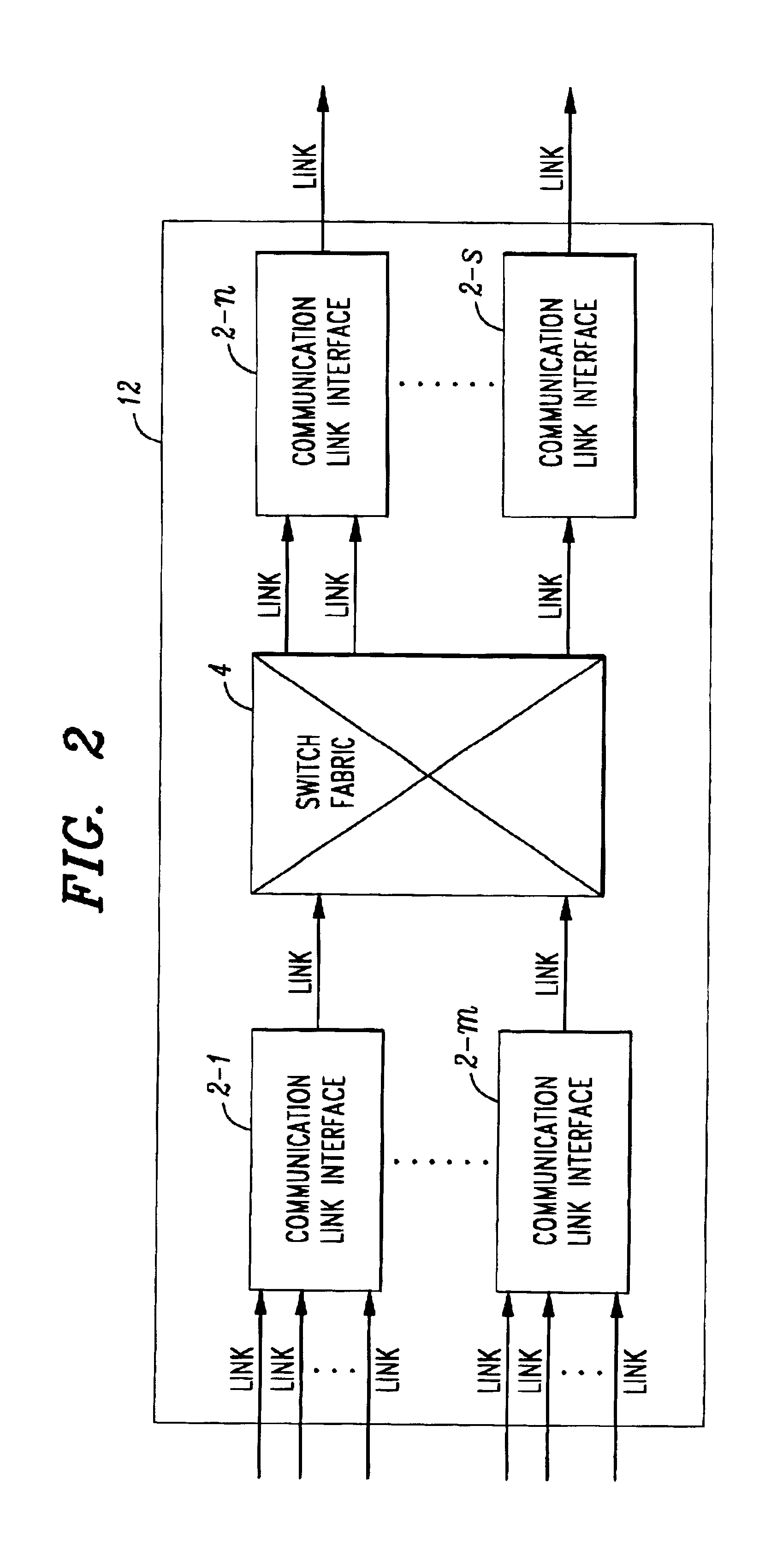

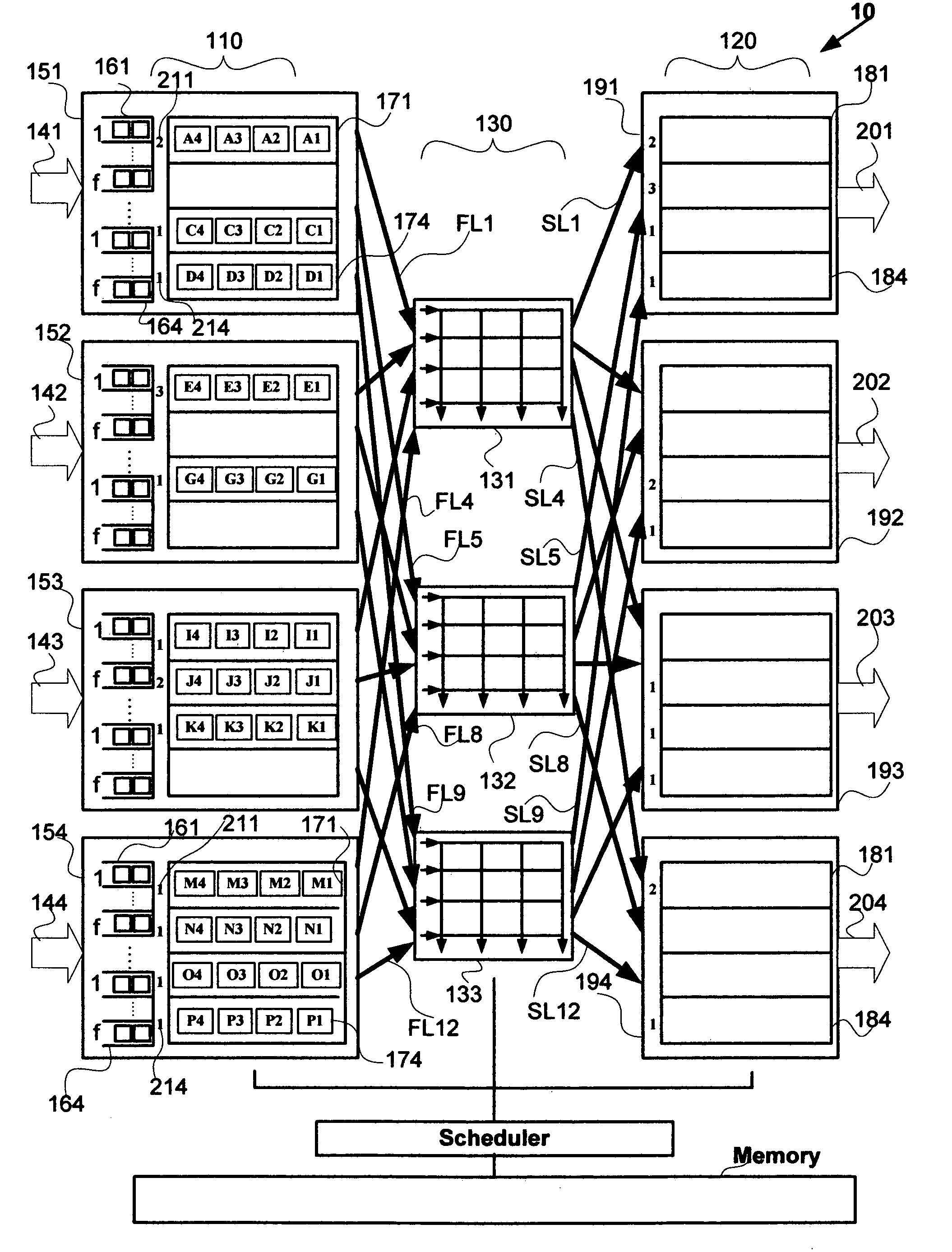

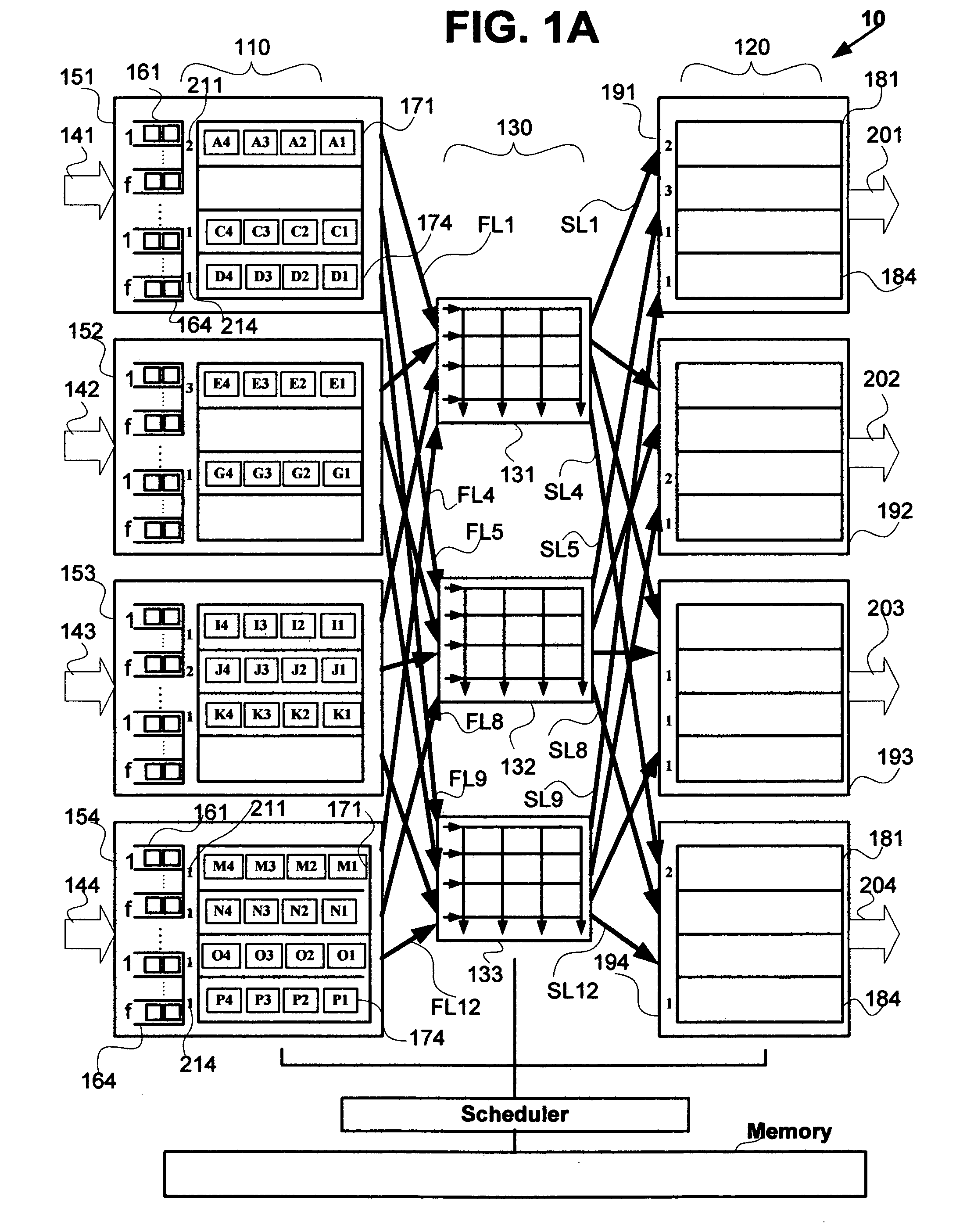

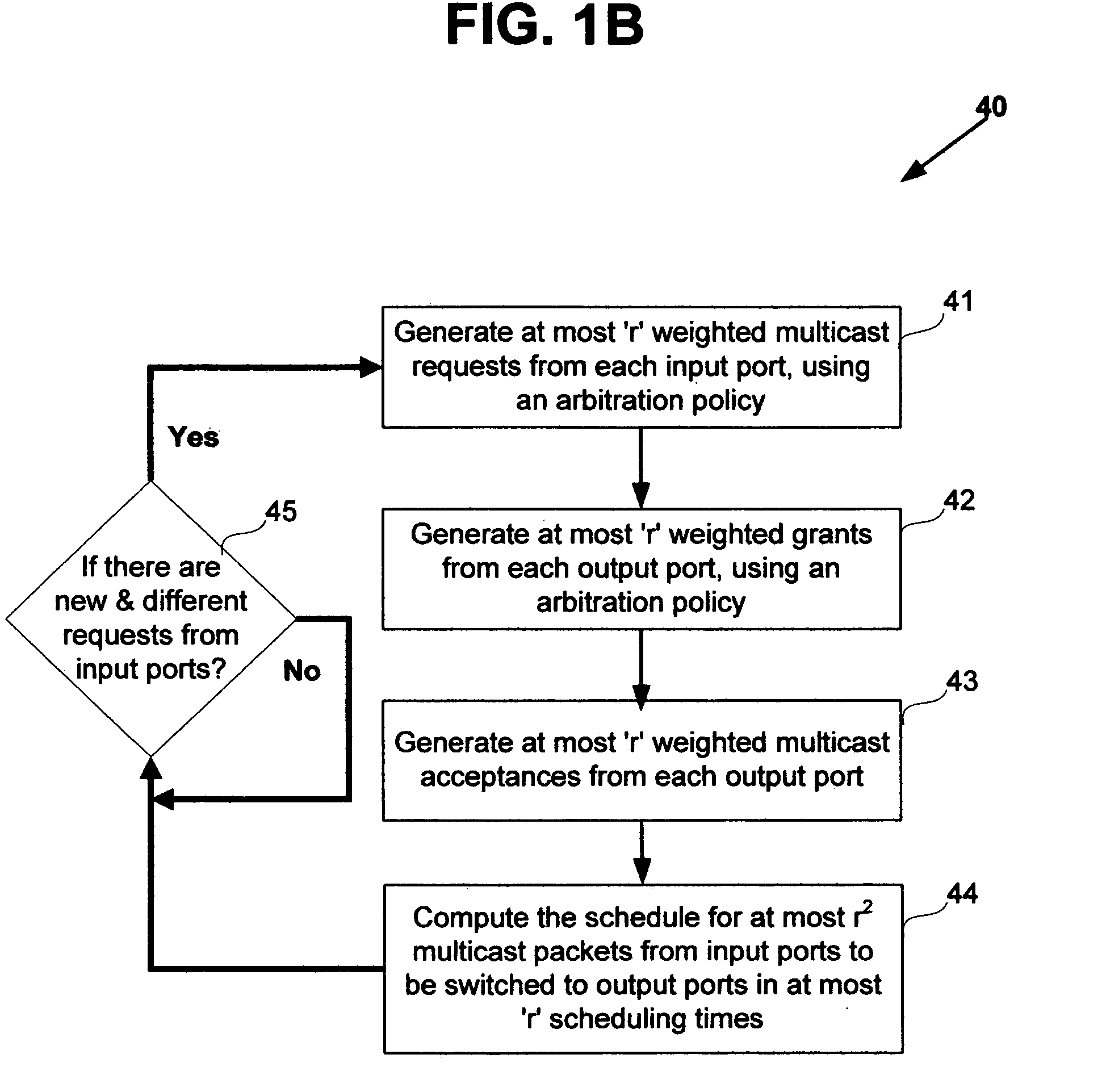

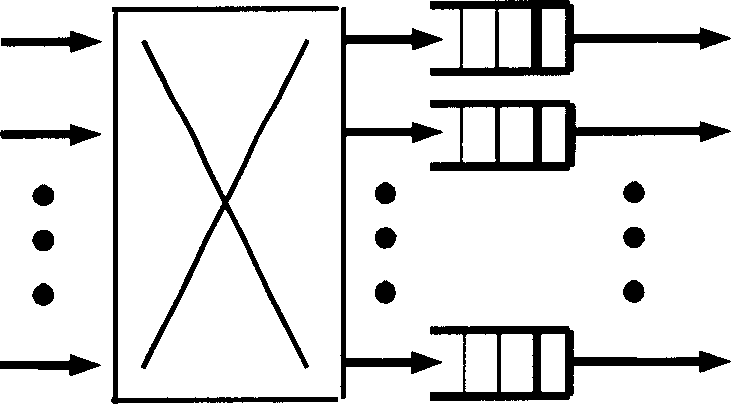

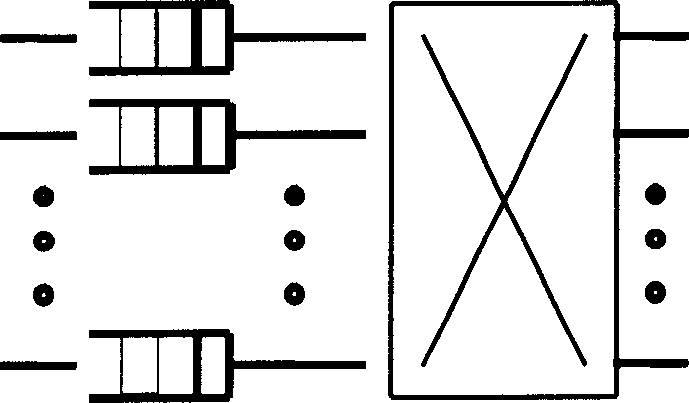

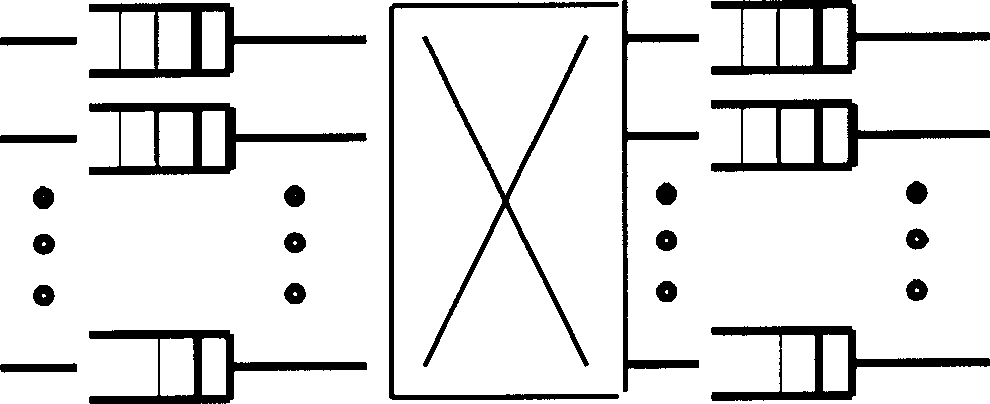

Nonblocking and deterministic multirate multicast packet scheduling

Inactive · US20070053356A1 · Guaranteed bandwidth, Guaranteed latency, Data switching by path configuration, Switching time, Multi rate

A system schedules multirate multicast packets through an interconnection network having a plurality of input ports, a plurality of output ports, and a plurality of input queues at each input port holding multirate multicast packets with rate weights. In accordance with the invention, the system is operated in a nonblocking manner by scheduling, according to each packet's rate weight, at most as many packets from each input port to each output port as there are input queues. The scheduling is performed so that each multicast packet is fan-out split through no more than two interconnection networks and in no more than two switching times. The system operates at 100% throughput and is work-conserving and fair, yet deterministic, so it never congests the output ports. The system performs arbitration in only one iteration, with the mathematically minimum speedup in the interconnection network. It has no packet reordering issues and no internal buffering of packets in the interconnection network, and hence operates in a truly cut-through and distributed manner. In another embodiment, each output port also comprises a plurality of output queues. Each packet is transferred, according to its rate weight, to an output queue in the destined output port in a deterministic manner. No segmentation and reassembly is required, even when packets are of variable size. In one embodiment, the scheduling is performed in a strictly nonblocking manner with a speedup of at least three in the interconnection network. In another, it is performed in a rearrangeably nonblocking manner with a speedup of at least two. The system also offers end-to-end guaranteed bandwidth and latency for multirate multicast packets from input ports to output ports.
In all the embodiments, the interconnection network may be a crossbar network, shared memory network, Clos network, hypercube network, or any internally nonblocking interconnection network or network of networks.

Owner: TEAK TECH

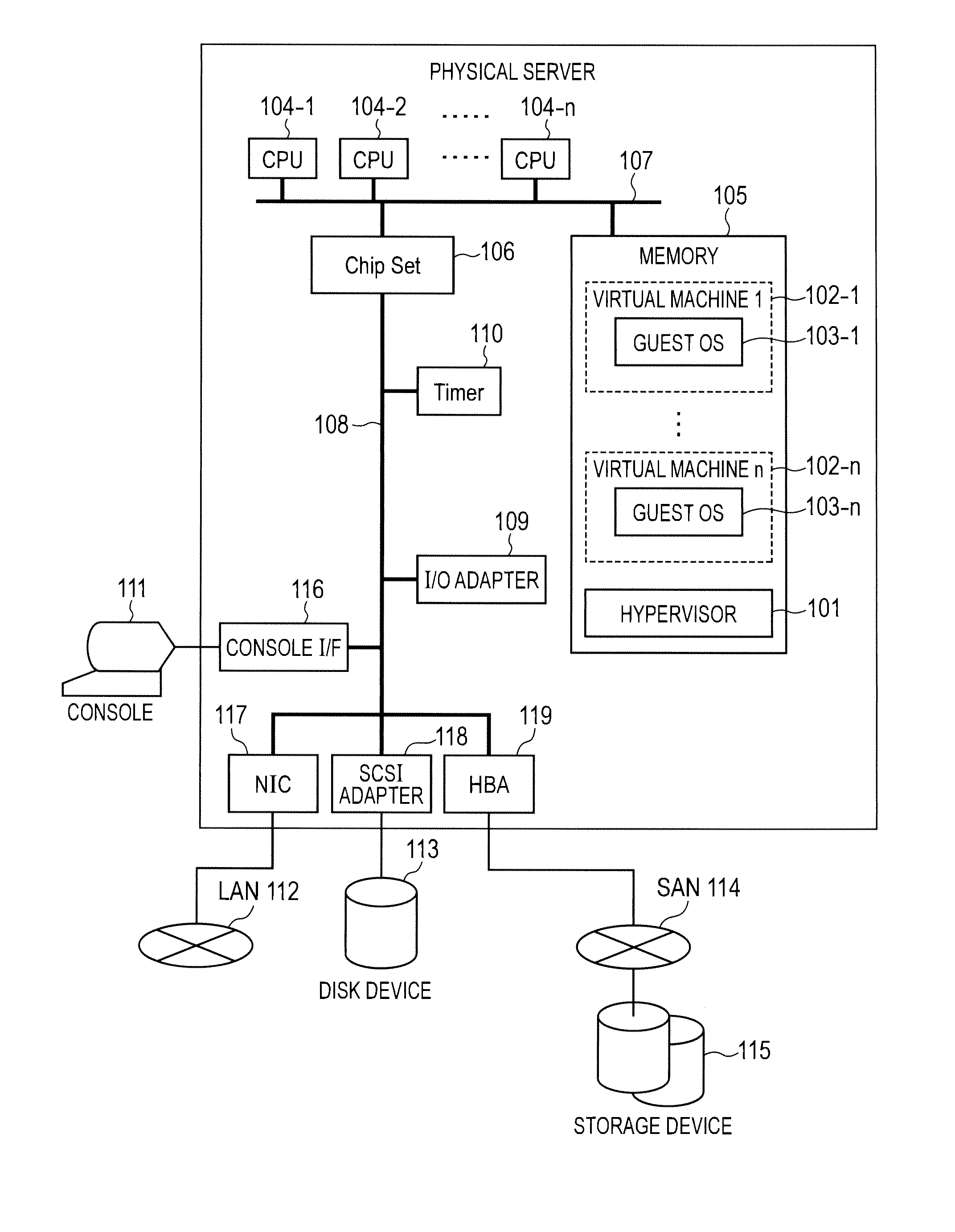

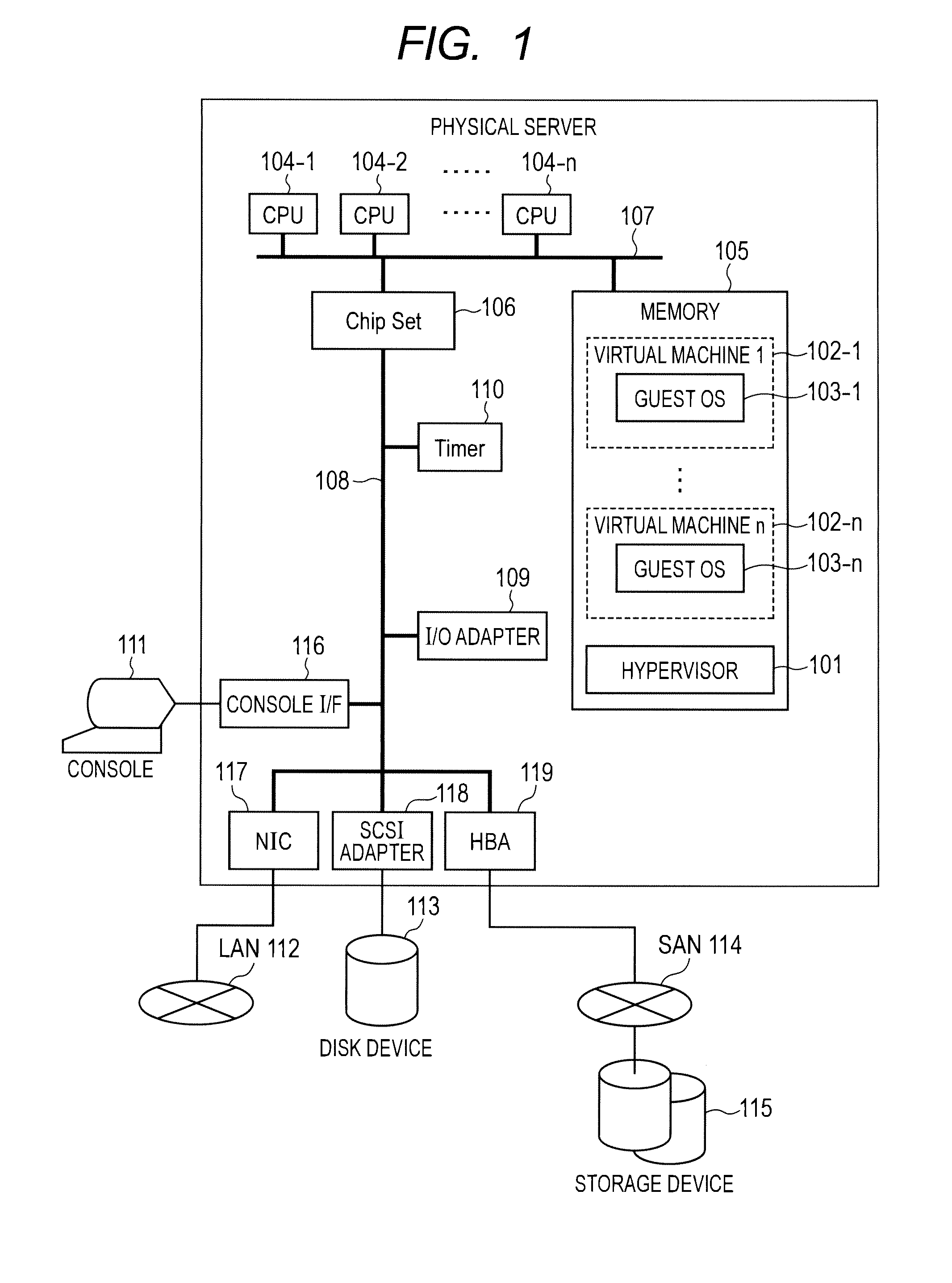

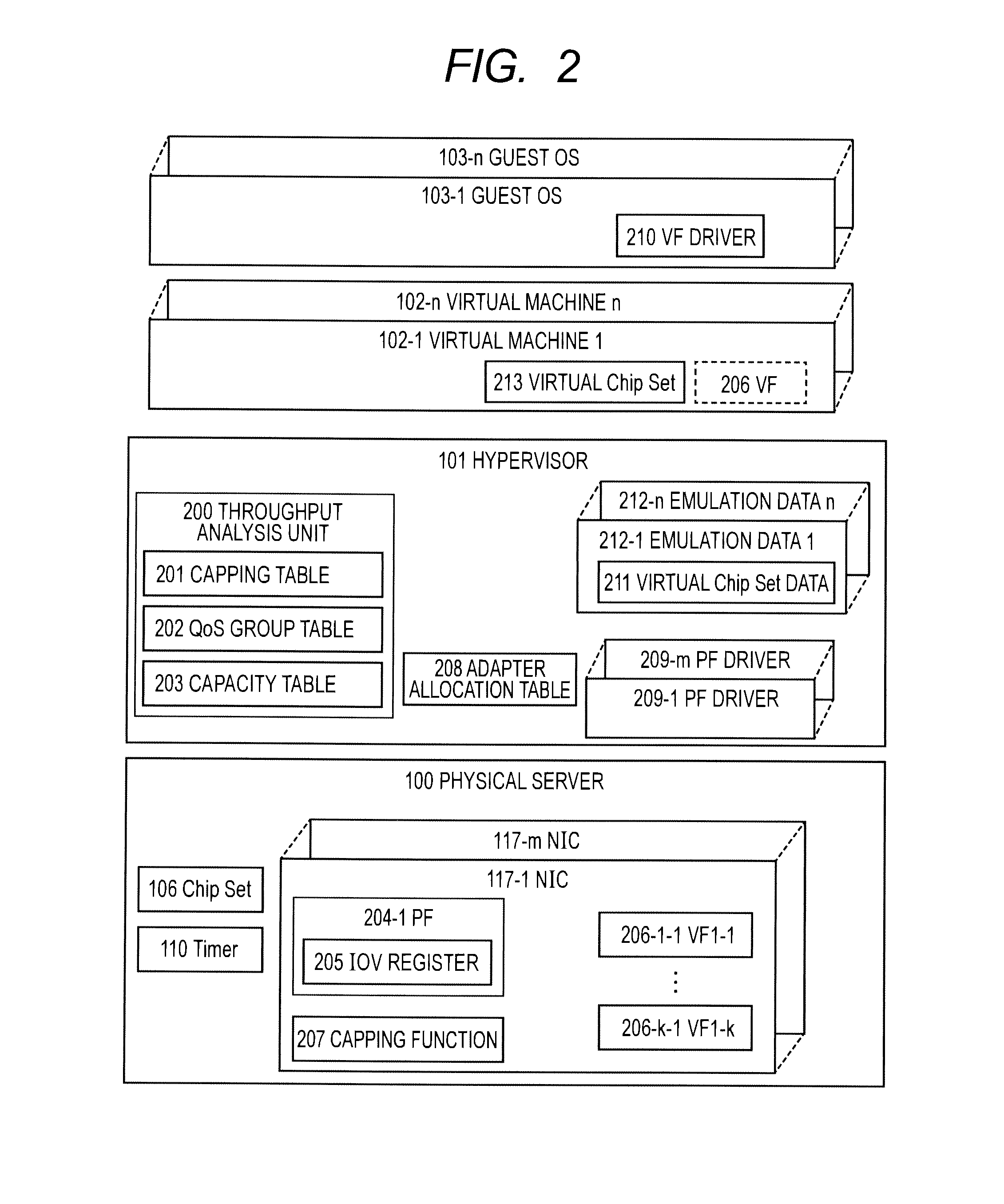

Computer and bandwidth control method

Inactive · US20130254767A1 · Guaranteed bandwidth, Bandwidth usage, Transmission, Software simulation/interpretation/emulation, Virtualization, Management unit

A computer with a processor, memory, and one or more network interfaces has a virtualization management unit that manages virtual computers. It also has a bandwidth control unit that controls the bandwidth in use by virtual computer groups, each comprising one or more virtual computers. The virtualization management unit contains an analysis unit that manages the bandwidth in use on the virtual network interfaces allocated to the virtual computers. The analysis unit measures the bandwidth in use by each virtual computer and determines whether there is a first virtual computer group whose bandwidth in use is smaller than its guaranteed bandwidth. It then issues a command to control the bandwidth of a second virtual computer group whose bandwidth in use is larger than its guaranteed bandwidth. The bandwidth control unit secures free bandwidth exactly equal to the shortfall in the first group's guaranteed bandwidth.

Owner: HITACHI LTD
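
The shortfall arithmetic in this abstract can be sketched as a small Python function. Two assumptions are labeled as such: bandwidth is reclaimed from the over-guarantee groups in proportion to how far each exceeds its guarantee, and the returned numbers are new per-group limits. The function name and data layout are hypothetical.

```python
def reclaim_for_guarantees(usage: dict[str, float],
                           guaranteed: dict[str, float]) -> dict[str, float]:
    """Return new bandwidth limits for groups that exceed their guarantee.

    The total freed equals the combined shortfall of under-guarantee groups,
    or all available excess if that is smaller.
    """
    shortage = sum(max(0.0, guaranteed[g] - usage[g]) for g in usage)
    excess = {g: usage[g] - guaranteed[g] for g in usage if usage[g] > guaranteed[g]}
    total_excess = sum(excess.values())
    if shortage == 0 or total_excess == 0:
        return {}
    to_free = min(shortage, total_excess)
    # Assumption: take from each over-guarantee group in proportion to its excess.
    return {g: usage[g] - to_free * e / total_excess for g, e in excess.items()}
```

Consider groups `a`, `b`, and `c` using 200, 600, and 200 Mbit/s against guarantees of 300, 300, and 100. Group `a` is 100 short. The function caps `b` at 525 and `c` at 175, which frees exactly 100.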

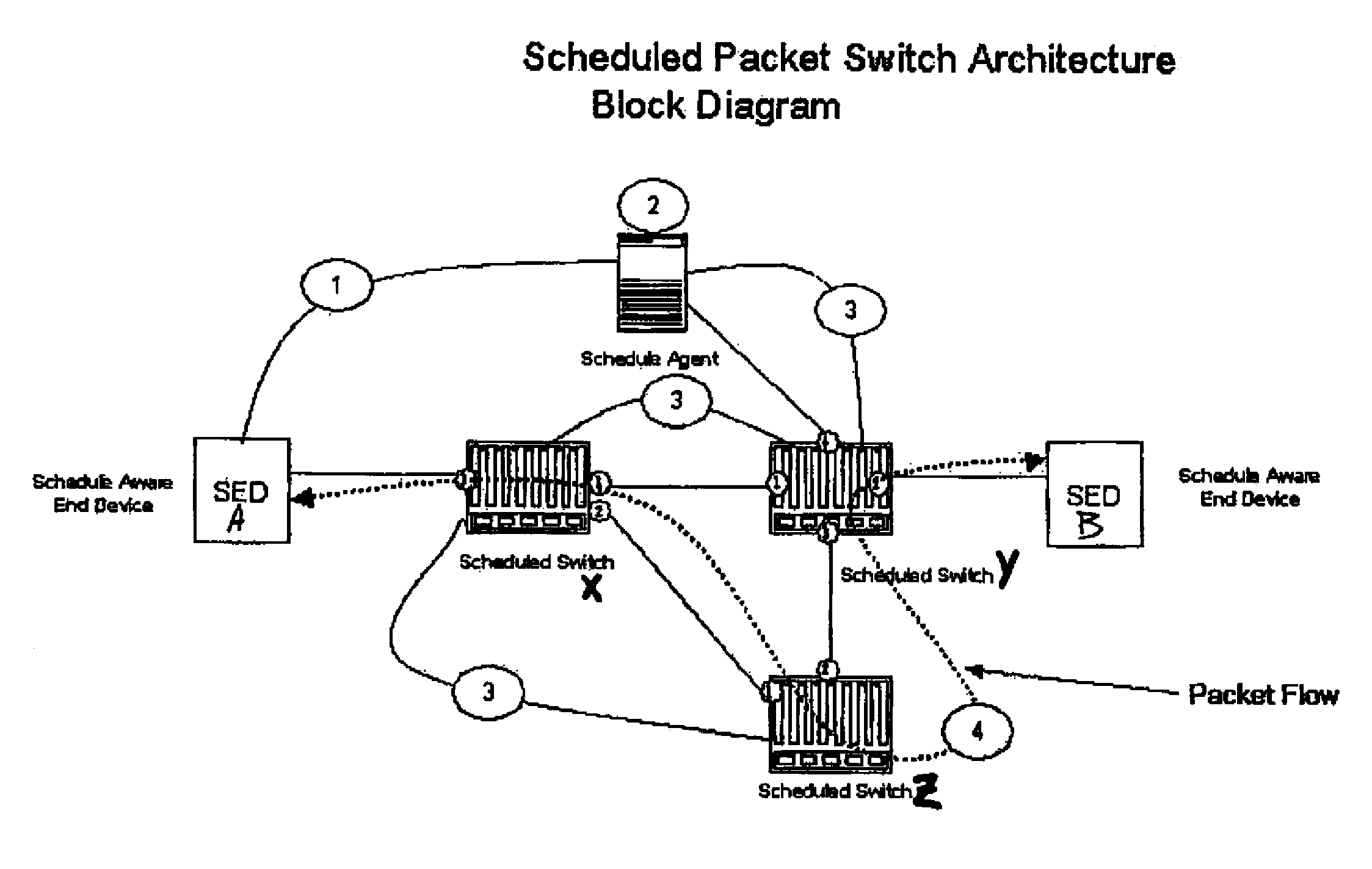

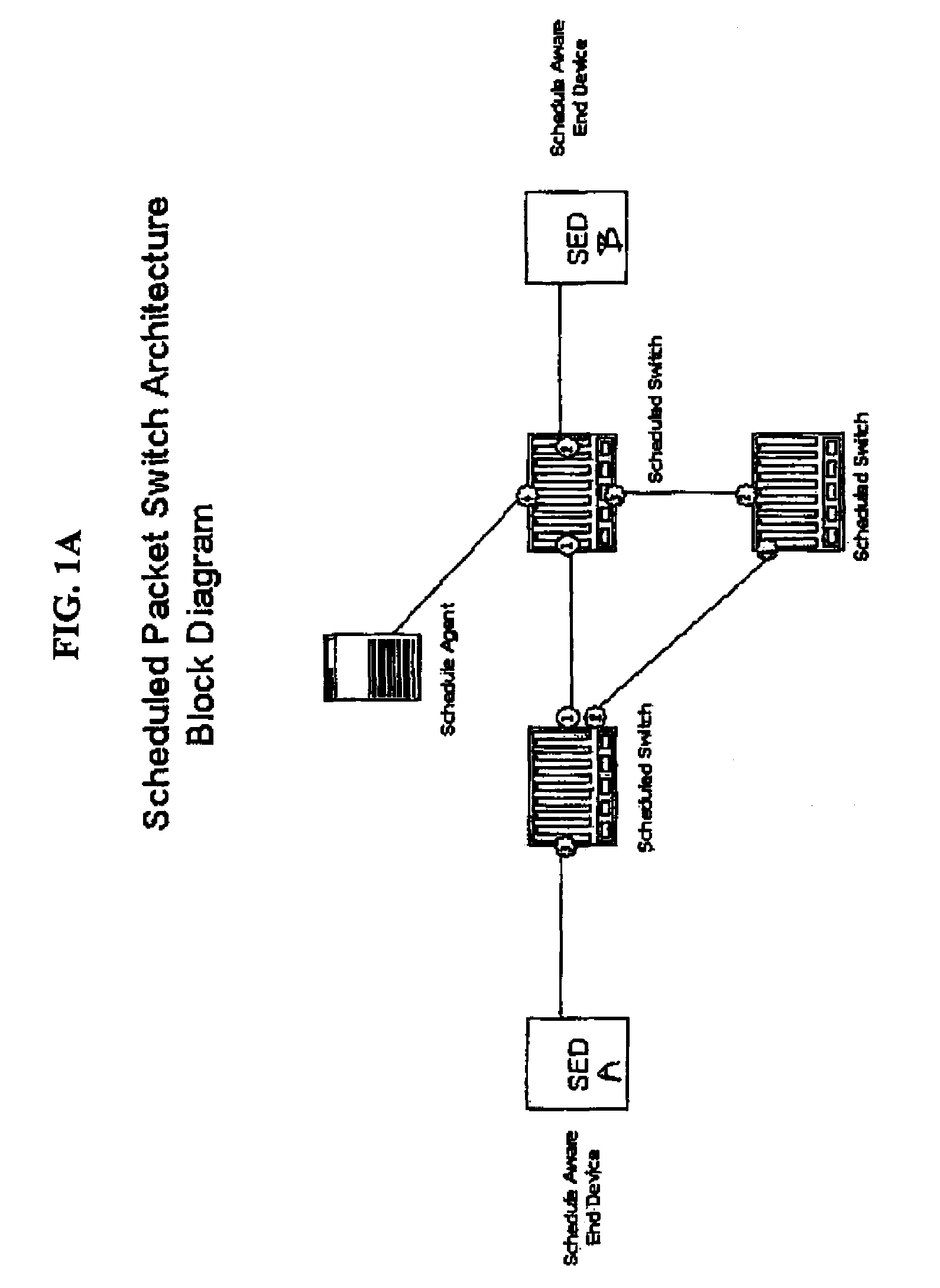

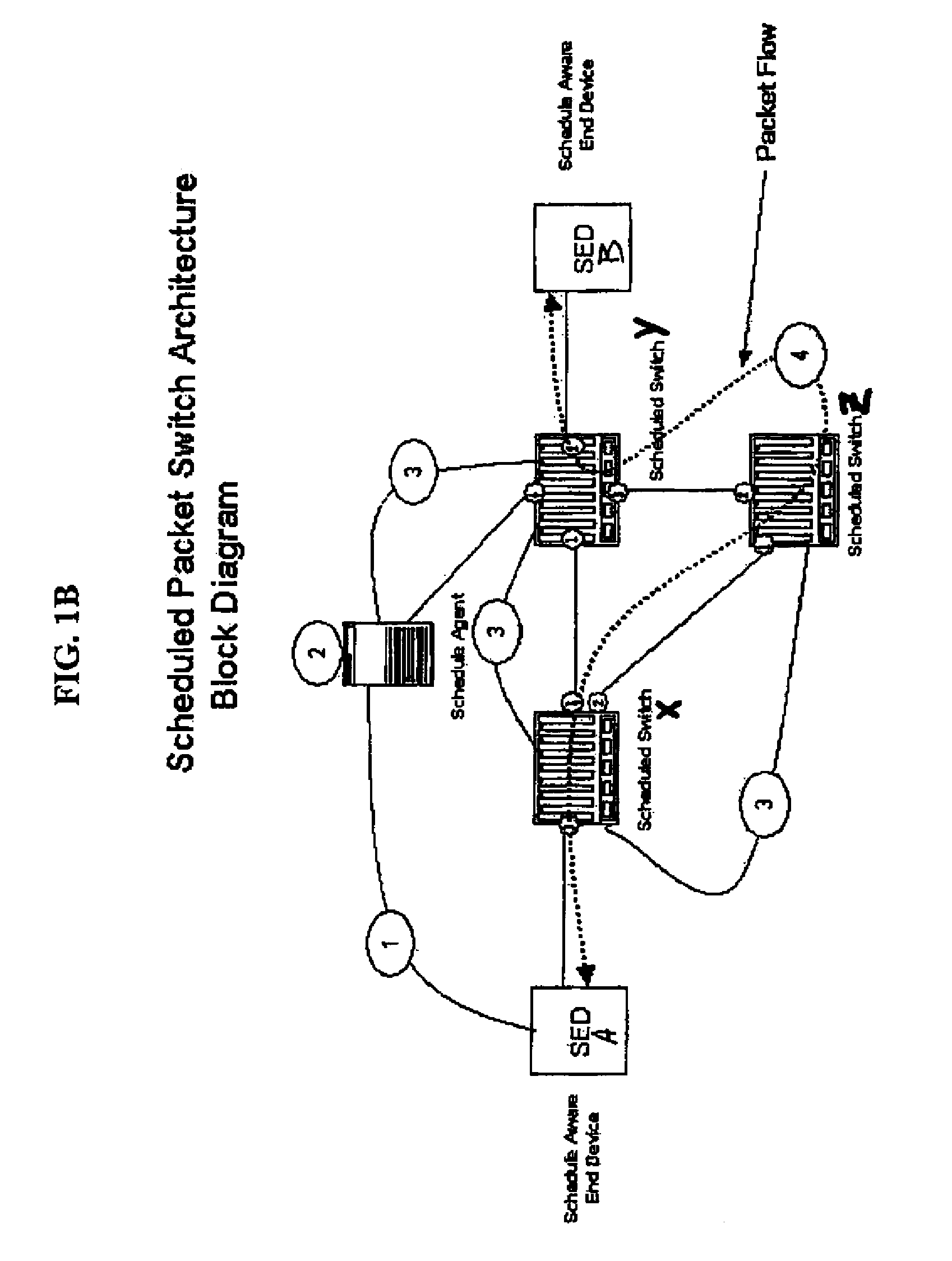

Method for achieving high-availability of itineraries in a real-time network scheduled packet routing system

Active · US8477616B1 · Reduce need, Guaranteed bandwidth, Error prevention, Transmission systems, Appointment time, Topology information

A system using scheduled times for transmission at each link guarantees bandwidth for transmitting data across a packet network. A scheduling agent determines availability of data paths across a network according to pre-selected criteria and real-time network topology information. Precise schedules are determined for transmission and reception appointments for data packets to traverse each link and switch in the network including compensation for transmission delays and switch latencies, resulting in a fixed packet flow itinerary for each connection. Itineraries are communicated to schedule-aware switches and endpoints and appointment times are reserved for transmission of the scheduled data packets. Scheduled packets arriving at each switch are forwarded according to their predetermined arrival and departure schedules, rather than their headers or contents, relieving the switches from making real-time routing decisions. Any unscheduled transmission times remain available for routing of unscheduled packets according to their IP headers. Real-time transmission of data can be guaranteed in each scheduled path, and schedule selection criteria may be adjusted according to network utilization and tolerable setup delay and end-to-end delay.

Owner: AVAYA INC
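
The itinerary computation can be sketched in Python: it assigns fixed appointment times per link and compensates for transmission delay and switch latency. `Hop`, `build_itinerary`, and the microsecond units are assumptions for illustration. A real schedule-aware network would also repeat schedules over a fixed period, which this sketch ignores. A request is rejected without reserving anything if any of its transmit windows overlaps an existing appointment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    link: str
    tx_us: int      # time to serialize the packet onto this link
    prop_us: int    # propagation delay of the link
    switch_us: int  # latency of the switch at the far end of the link


def build_itinerary(start_us: int, hops: list[Hop],
                    reserved: dict[str, list[tuple[int, int]]]):
    """Return [(link, send_us, arrive_us), ...] and reserve the transmit
    windows, or return None (reserving nothing) if any window is taken."""
    plan = []
    t = start_us
    for hop in hops:
        end_tx = t + hop.tx_us
        # Reject if this transmit window overlaps an existing appointment.
        if any(s < end_tx and t < e for s, e in reserved.get(hop.link, [])):
            return None
        arrive = end_tx + hop.prop_us
        plan.append((hop.link, t, arrive))
        t = arrive + hop.switch_us  # next hop departs after the switch latency
    for hop, (link, send, _) in zip(hops, plan):
        reserved.setdefault(link, []).append((send, send + hop.tx_us))
    return plan
```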

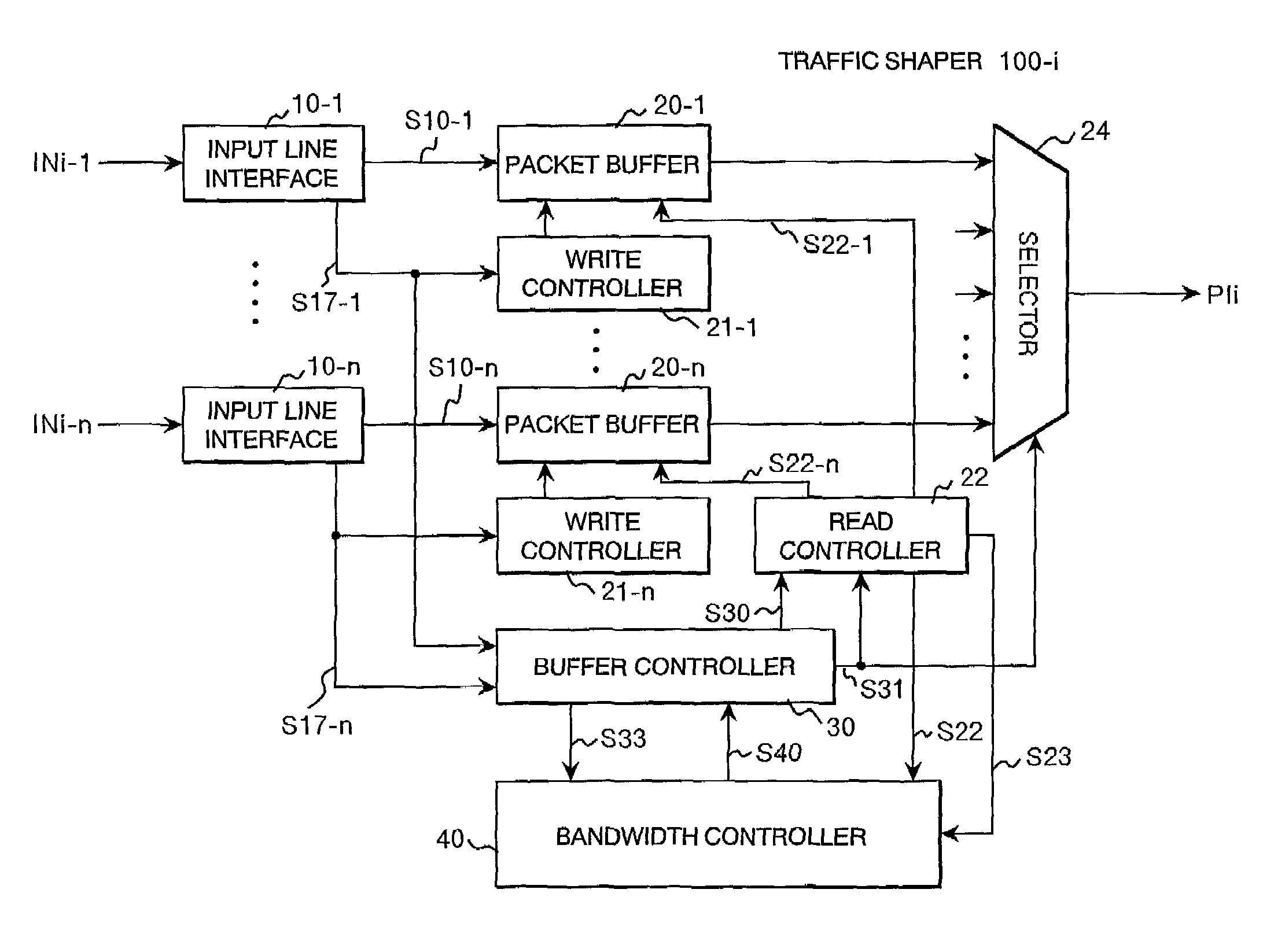

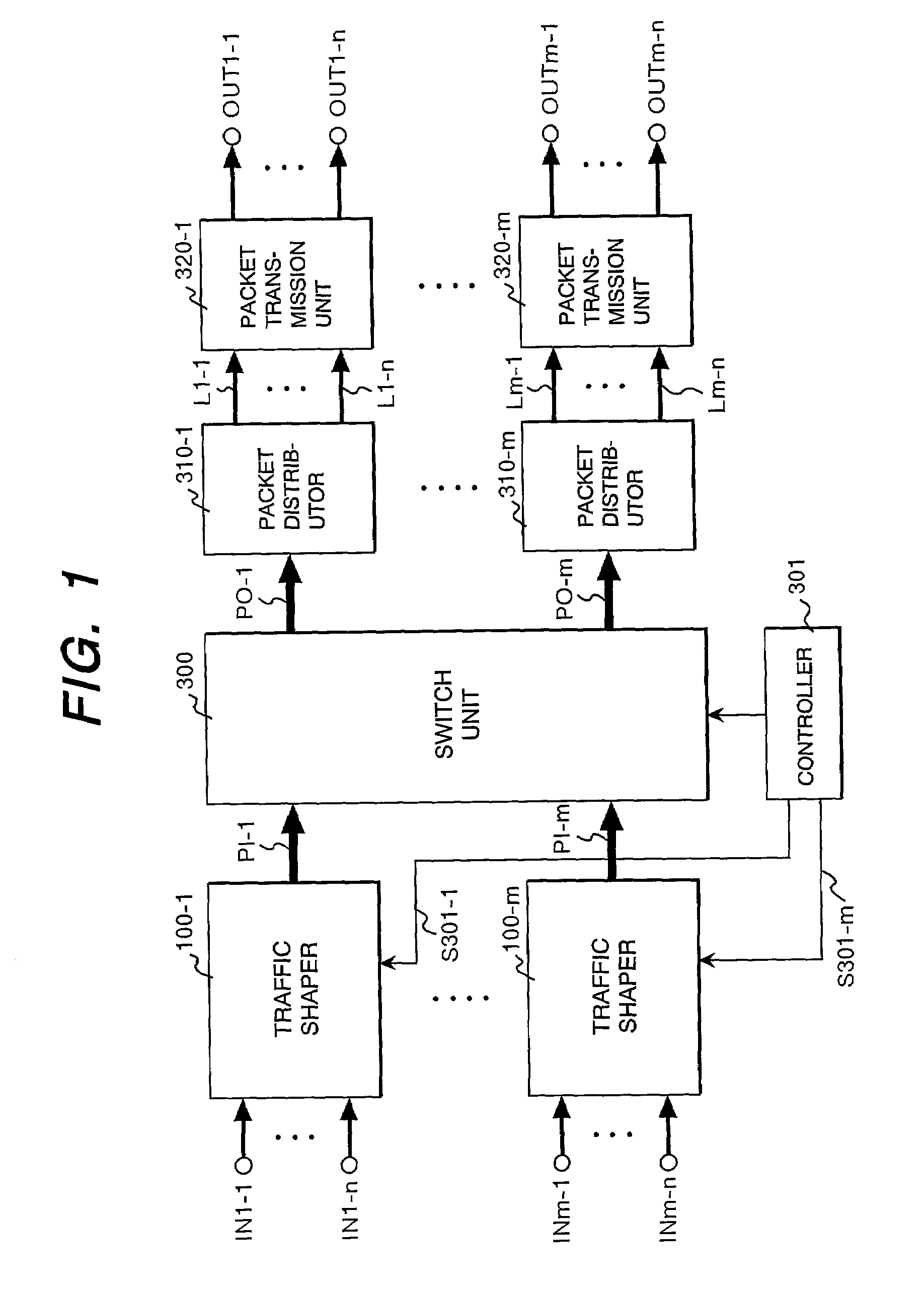

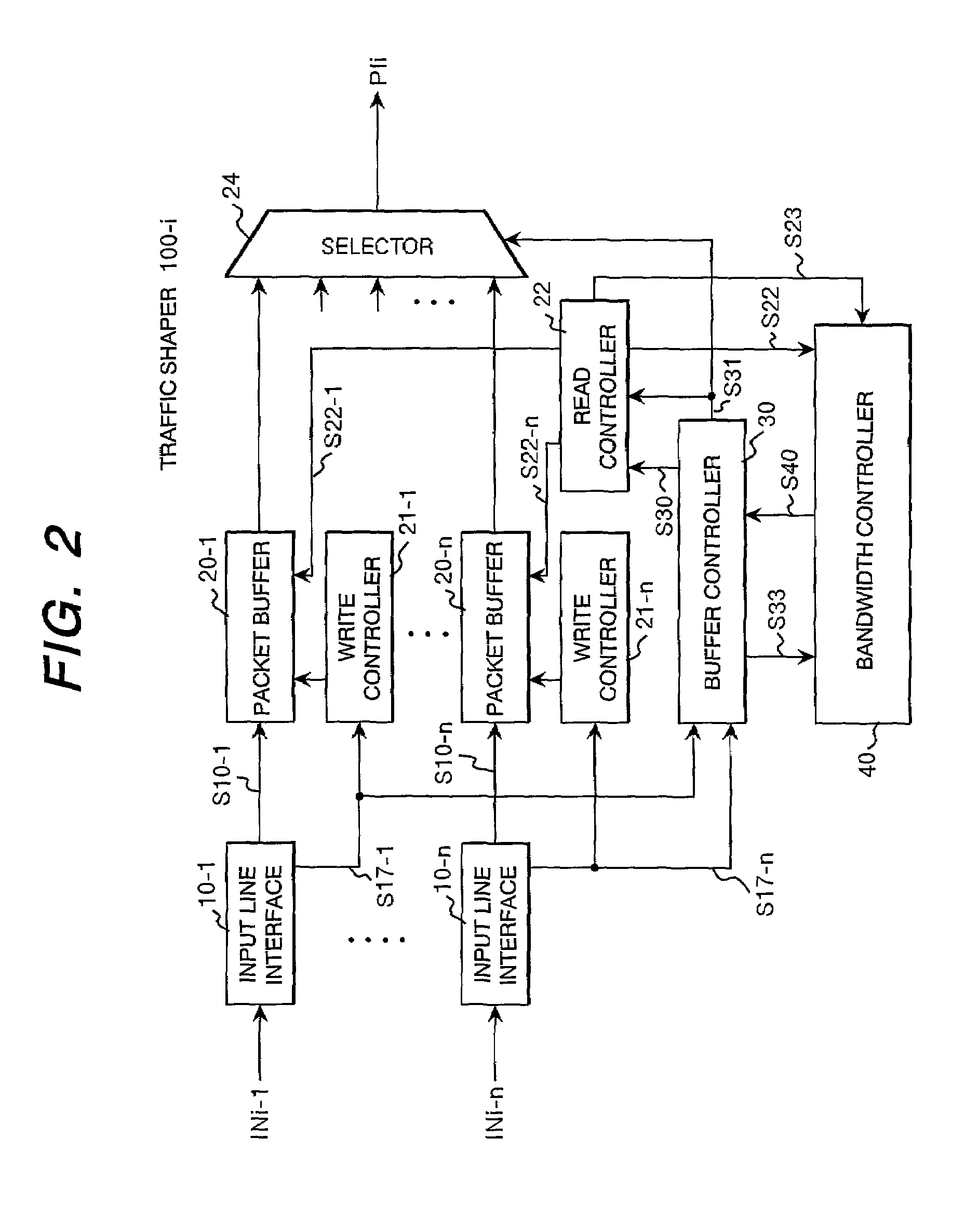

Leaky bucket type traffic shaper and bandwidth controller

Inactive · US7023799B2 · Easy to use, Guaranteed bandwidth, Error prevention, Frequency-division multiplex details, Traffic shaping, Band counts

A traffic shaper comprises a bandwidth controller 40 and an output queue designation unit 43. The bandwidth controller 40 has a plurality of leaky bucket units 41-1 to 41-n prepared in correspondence with buffer memories 20-1 to 20-n. The output queue designation unit 43 specifies the buffer memory from which a packet is to be read out. Each of the leaky bucket units 41 has a level counter 416, which decrements its count value at a predetermined rate, and a level increaser 411 to 417. The level increaser increases the count value of the level counter by a value proportional to the product of the length of a transmitted packet and a unit increment value, which varies depending on the current count value of the level counter.

Owner: HITACHI LTD
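
The level-counter behavior in this abstract can be modeled in Python. The abstract says only that the unit increment varies with the current count. The two-level rule used here, a larger increment once the level passes a hypothetical `knee`, is an assumption, as are the class and parameter names. Charging more per byte when the bucket is already high penalizes sustained bursts.

```python
class VariableIncrementLeakyBucket:
    """Leaky bucket whose per-byte increment depends on the current level."""

    def __init__(self, drain_per_tick: int, limit: int, knee: int,
                 low_increment: int, high_increment: int):
        self.drain_per_tick = drain_per_tick  # constant-rate decrement
        self.limit = limit                    # no reads while level >= limit
        self.knee = knee                      # hypothetical switch-over level
        self.low_increment = low_increment
        self.high_increment = high_increment
        self.level = 0

    def tick(self) -> None:
        # The level counter leaks at a predetermined rate.
        self.level = max(0, self.level - self.drain_per_tick)

    def can_send(self) -> bool:
        return self.level < self.limit

    def on_transmit(self, length: int) -> None:
        # Increase by length * unit increment, where the unit increment is
        # chosen from the level before this packet is counted.
        unit = self.low_increment if self.level < self.knee else self.high_increment
        self.level += length * unit
```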

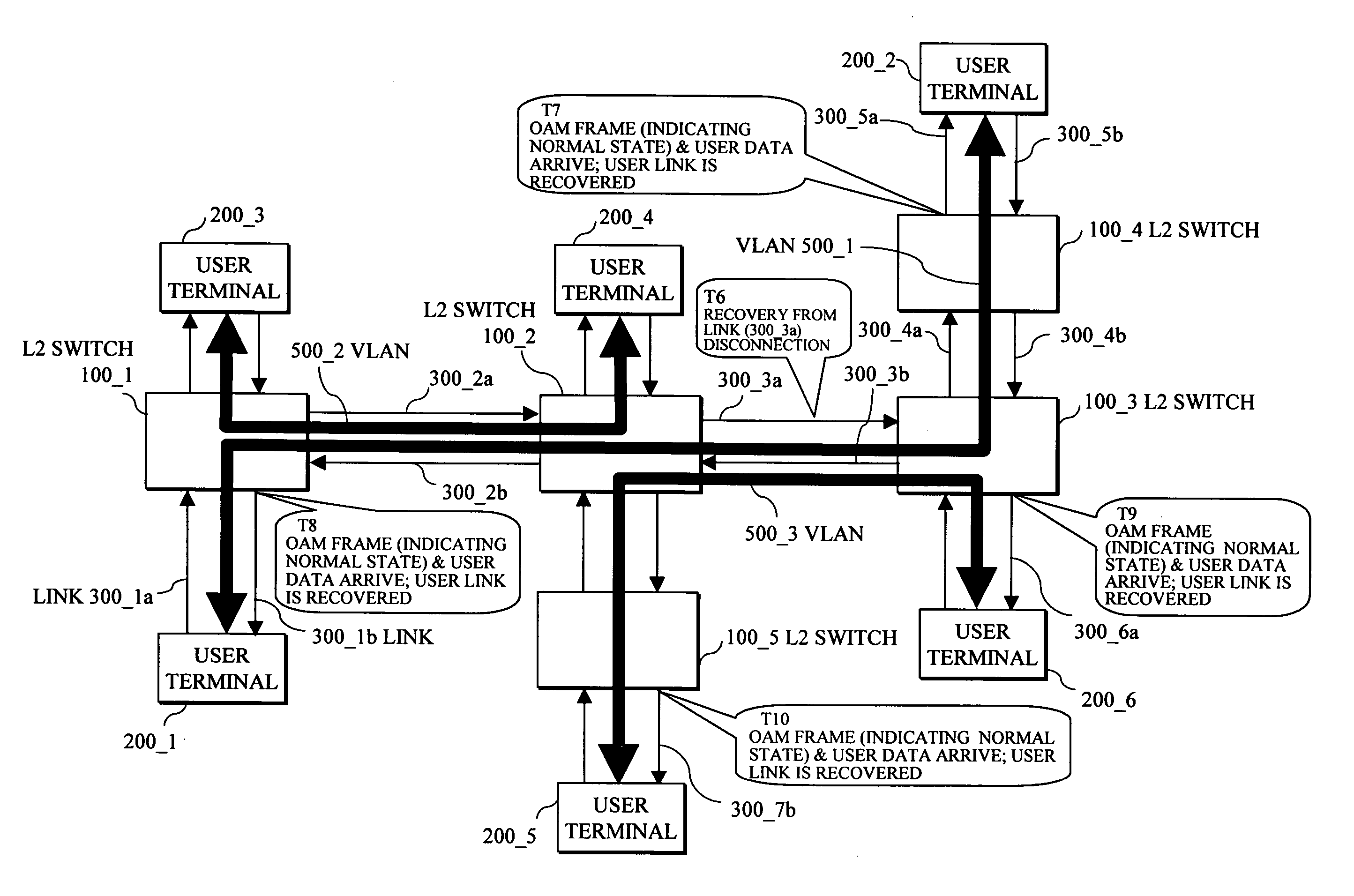

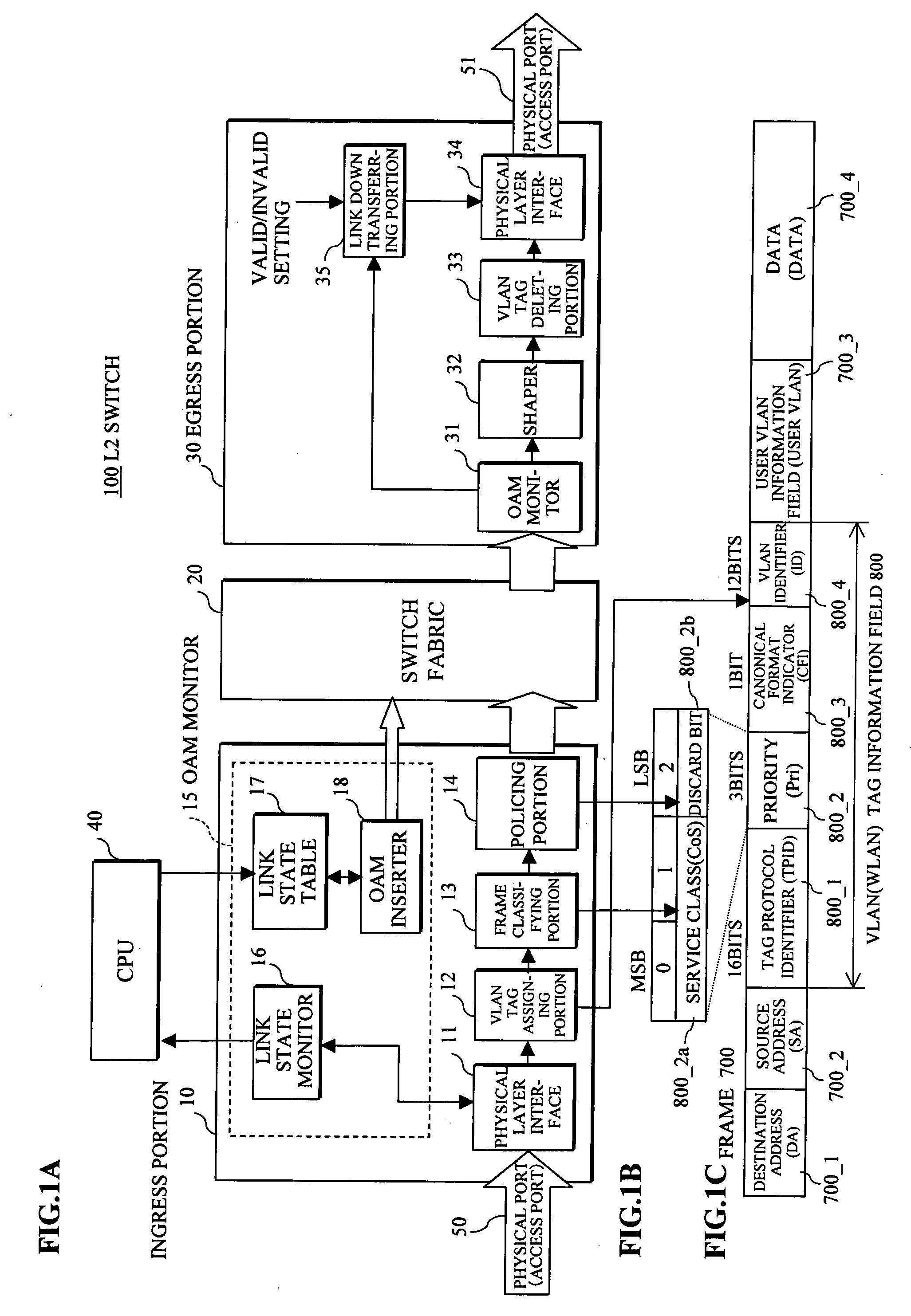

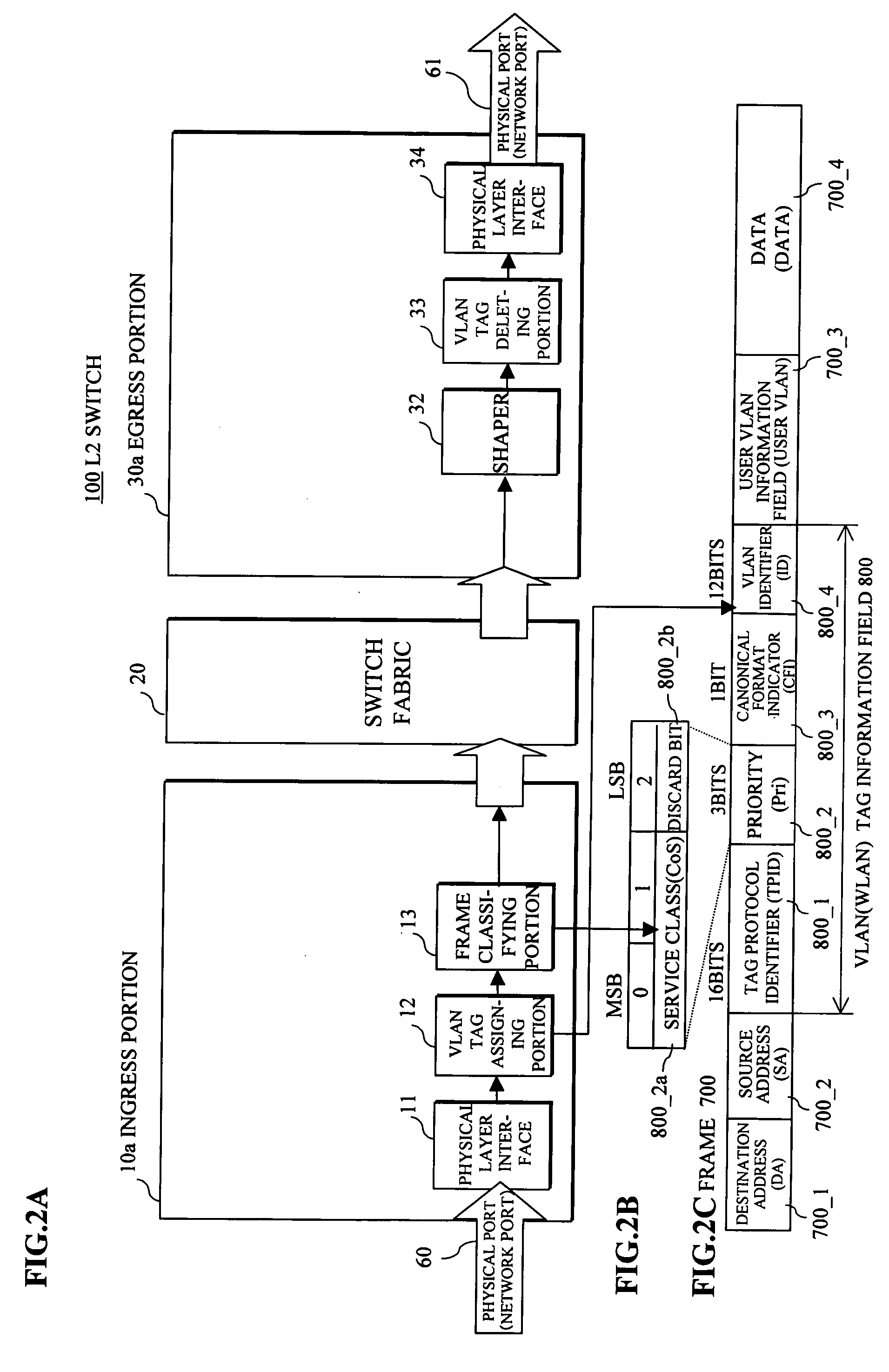

L2 switch

Inactive · US20050163132A1 · Bandwidth-guaranteed and inexpensive and highly reliable, Guaranteed bandwidth, Error prevention, Frequency-division multiplex details, Computer hardware

Owner: FUJITSU LTD

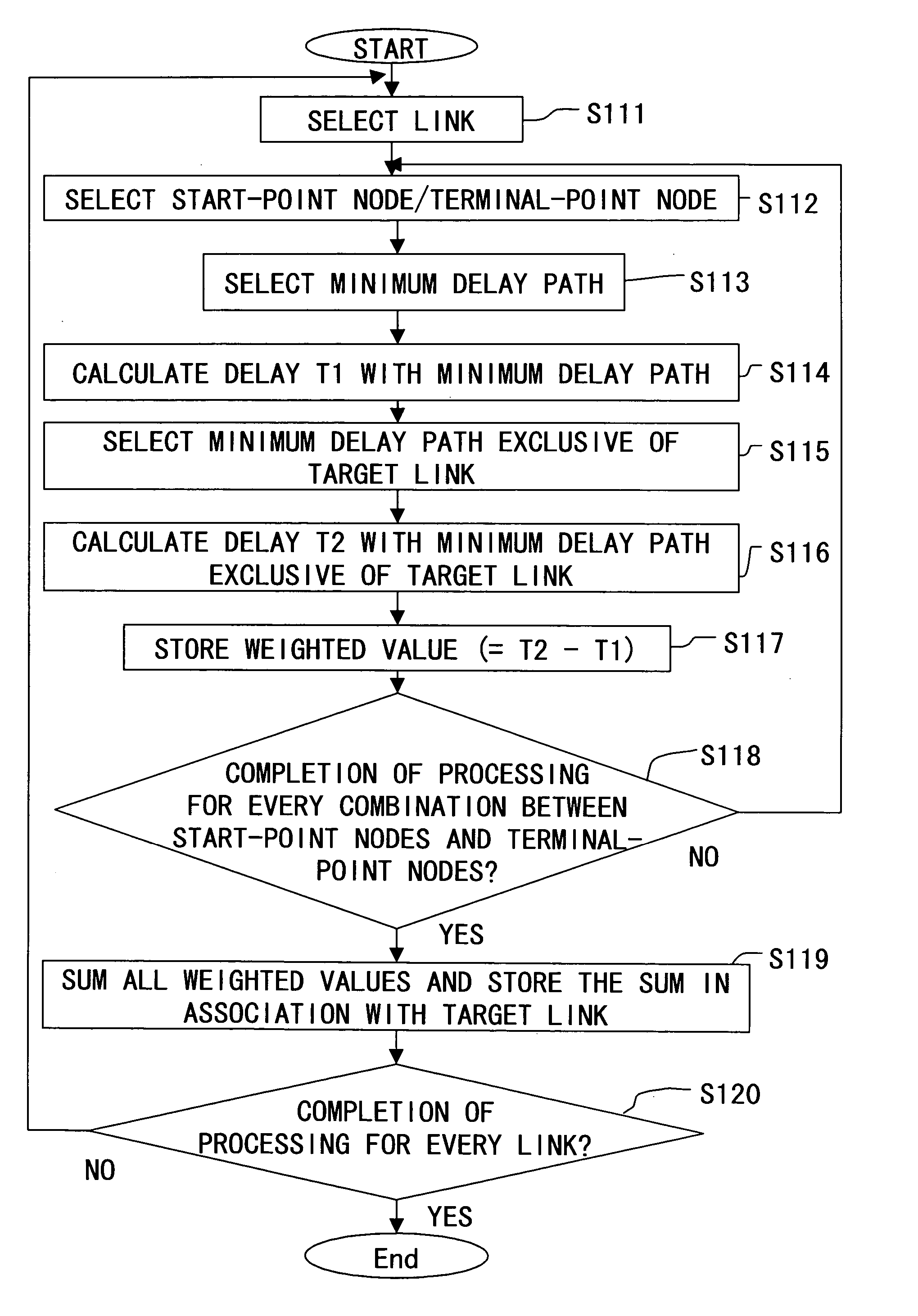

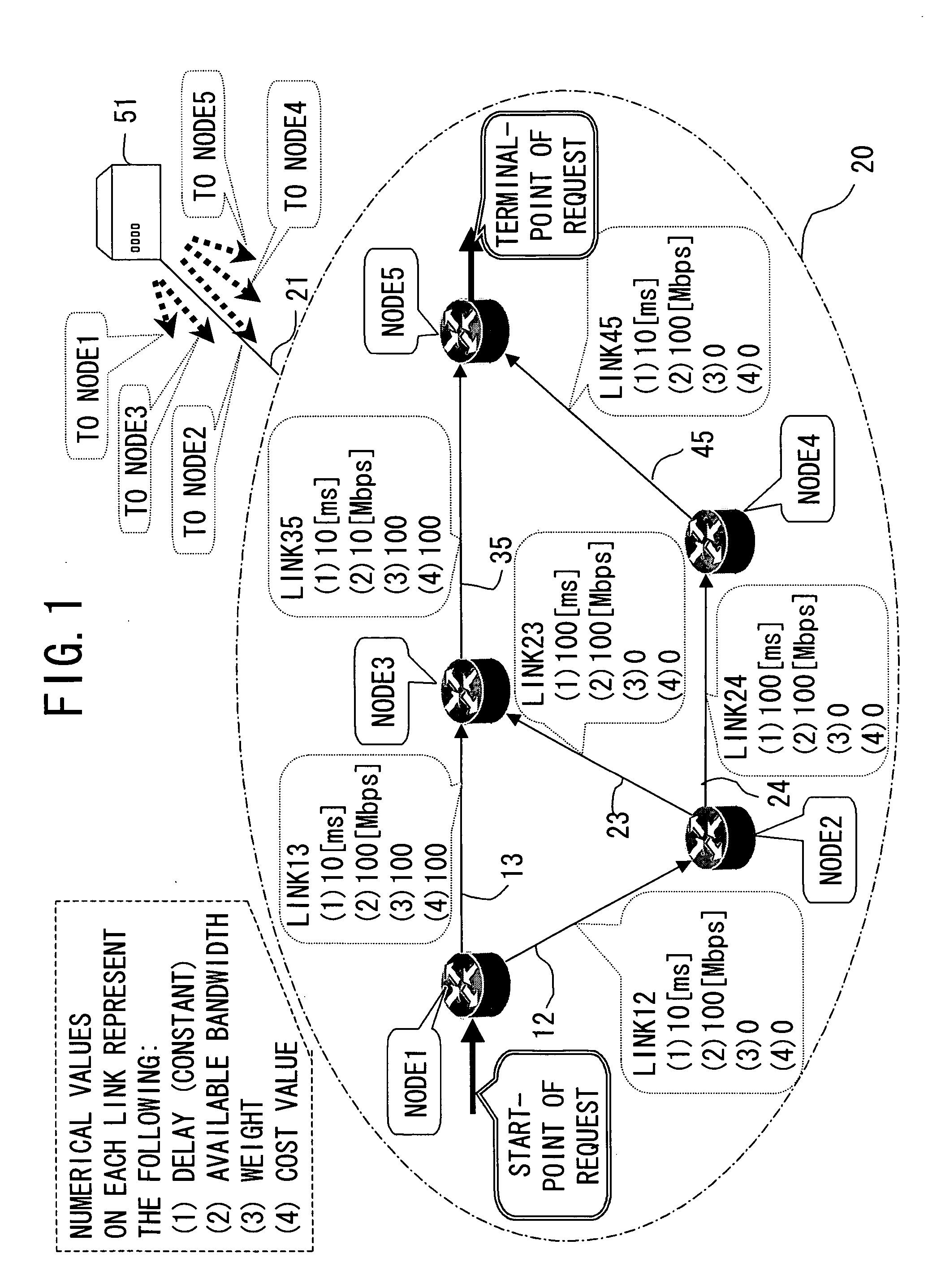

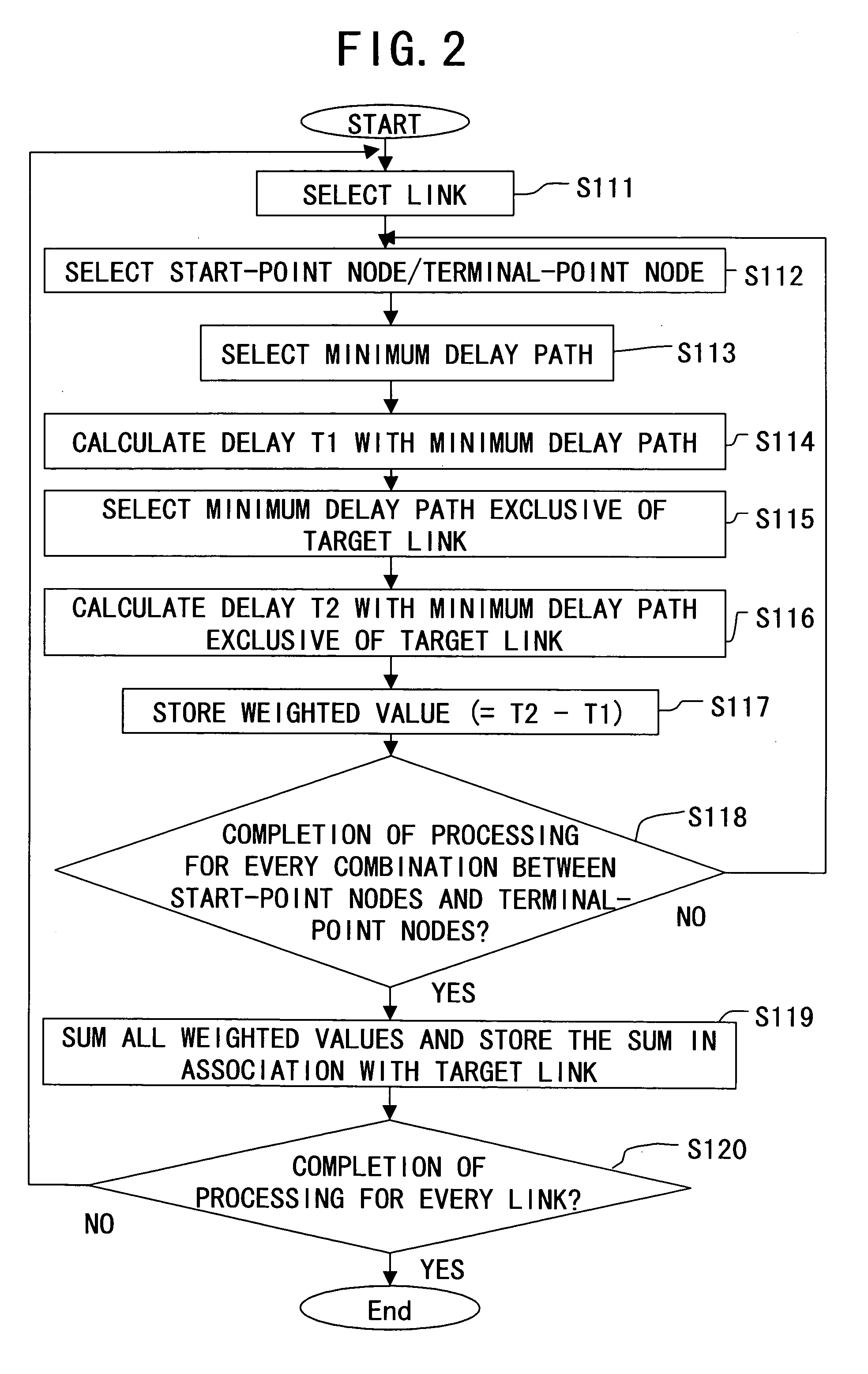

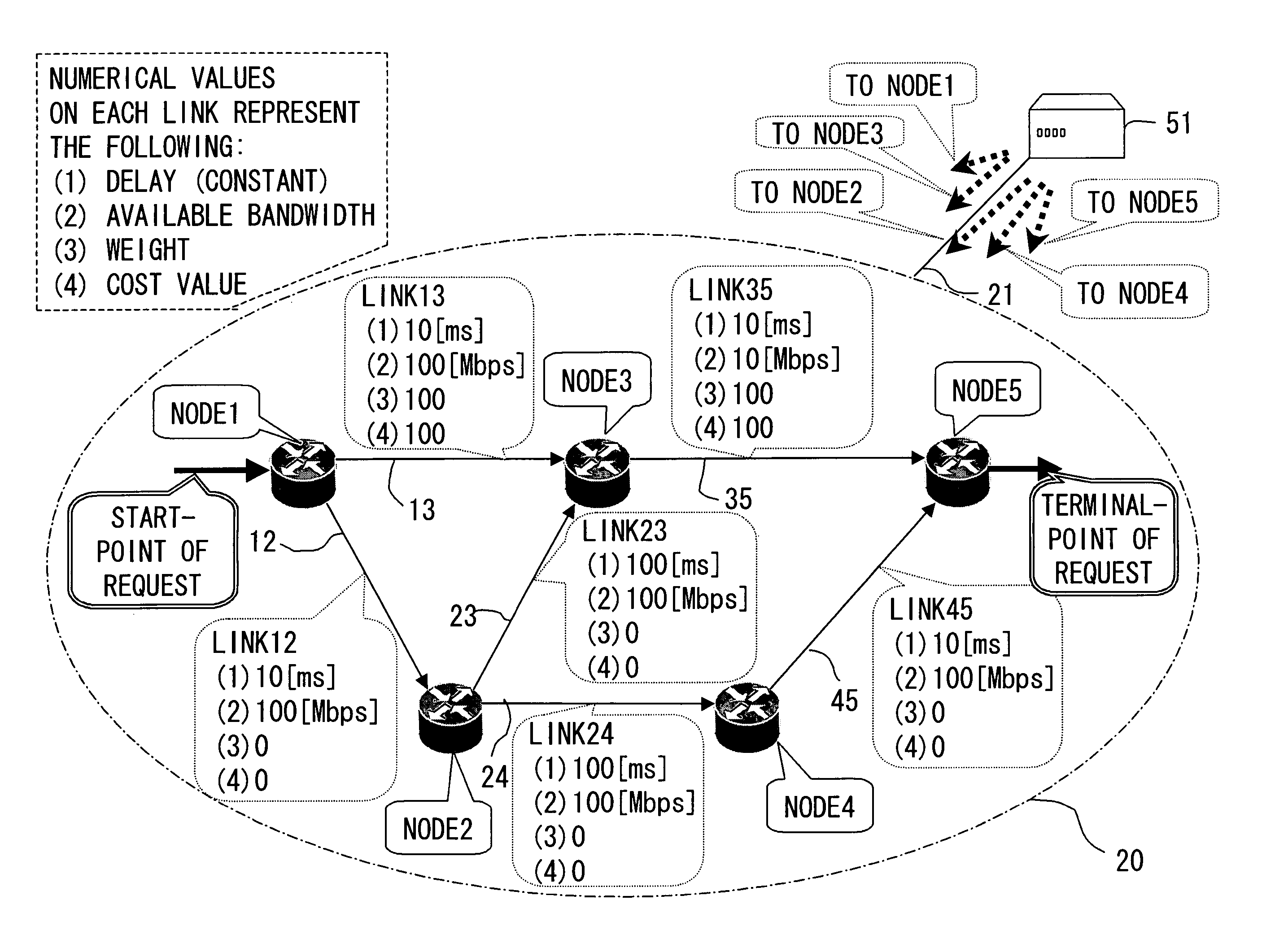

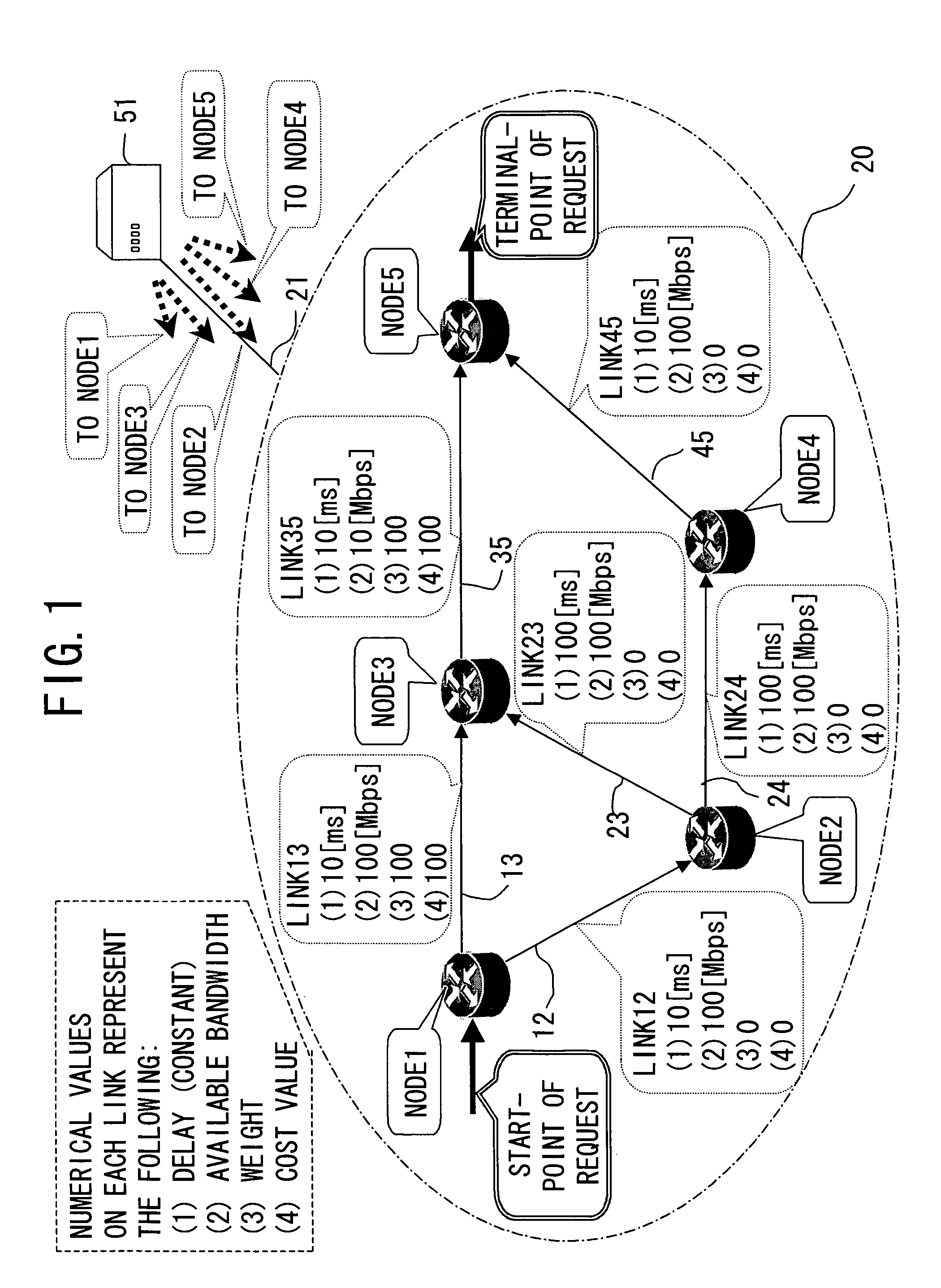

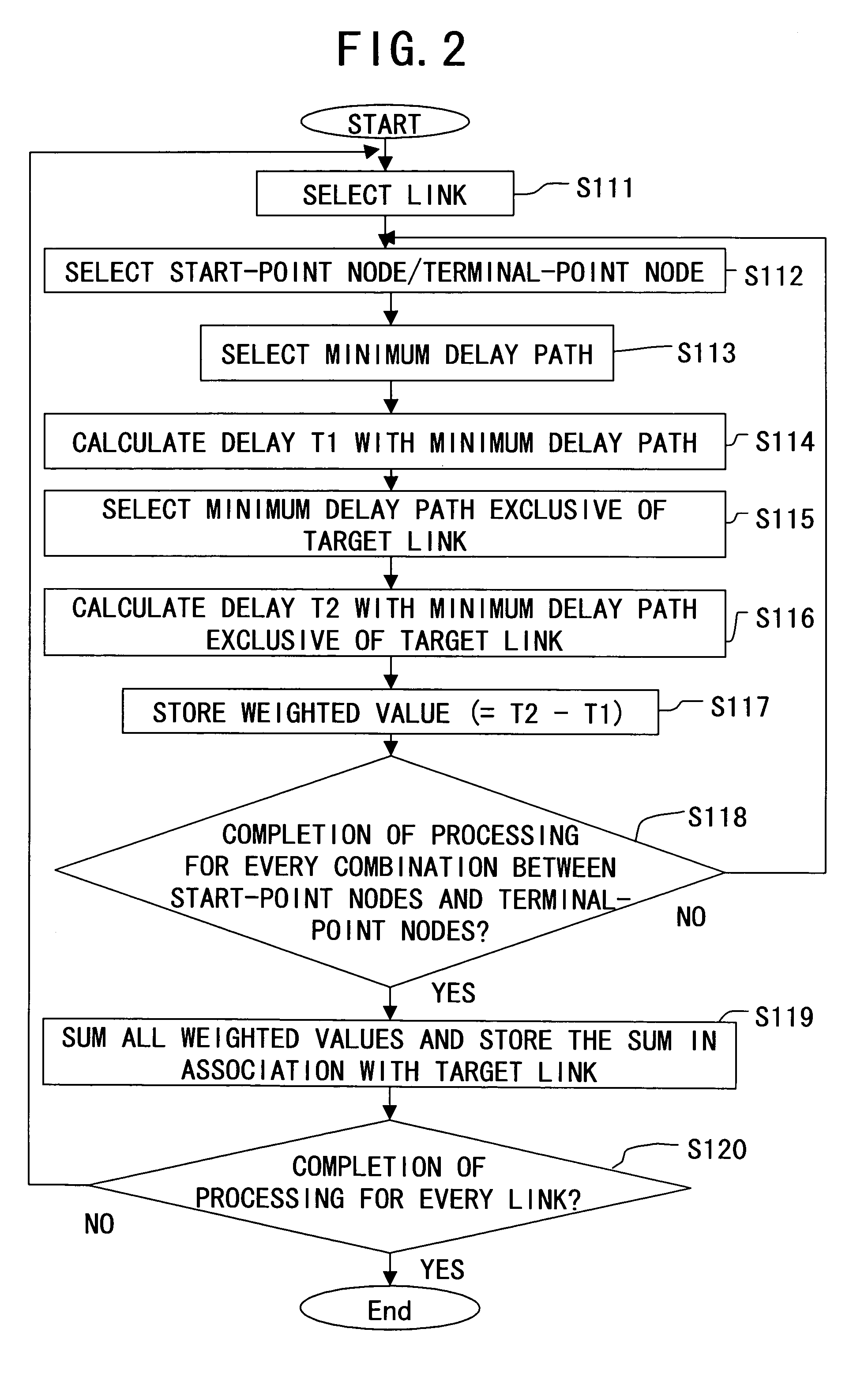

Delay guarantee path setting system

Inactive · US20060050635A1 · Reduce call loss rate, Set with ease, Error prevention, Frequency-division multiplex details, Network management, Distributed computing

A delay guarantee path setting system sets a traffic transfer path in a network based on a delay guarantee message, which requests a path that guarantees both bandwidth and delay. In the system, the network management node assigns each link between nodes a weighted value according to its ability to comply with the bandwidth and delay requested in the delay guarantee message. Upon receiving a delay guarantee message, the node selects a path that complies with the message's requests and whose link weighted values meet a predetermined condition.

Owner: FUJITSU LTD
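
The abstract leaves the link weights unspecified. This Python sketch assumes one simple choice. Links that cannot carry the requested bandwidth are pruned, and the remaining links are weighted by their delay. The lowest-delay path is accepted only if its total delay meets the request. `select_path` and the `(available_bw, delay)` link table are hypothetical.

```python
import heapq


def select_path(links: dict[tuple[str, str], tuple[float, float]],
                src: str, dst: str, req_bw: float, req_delay: float):
    """Return the lowest-delay path whose links all have >= req_bw available,
    or None if no such path meets req_delay.

    links maps a directed (u, v) pair to (available_bw, delay).
    """
    # Keep only links that can carry the requested bandwidth.
    adj: dict[str, list[tuple[str, float]]] = {}
    for (u, v), (bw, delay) in links.items():
        if bw >= req_bw:
            adj.setdefault(u, []).append((v, delay))
    # Dijkstra, using link delay as the (assumed) link weight.
    dist = {src: 0.0}
    prev: dict[str, str] = {}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            break
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in adj.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if dst not in dist or dist[dst] > req_delay:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]
```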

Delay guarantee path setting system

Inactive · US7496039B2 · Improve usability, Guaranteed bandwidth, Error prevention, Transmission systems, Network management, Distributed computing

A delay guarantee path setting system sets a traffic transfer path in a network based on a delay guarantee message, which requests a path that guarantees both bandwidth and delay. In the system, the network management node assigns each link between nodes a weighted value according to its ability to comply with the bandwidth and delay requested in the delay guarantee message. Upon receiving a delay guarantee message, the node selects a path that complies with the message's requests and whose link weighted values meet a predetermined condition.

Owner: FUJITSU LTD

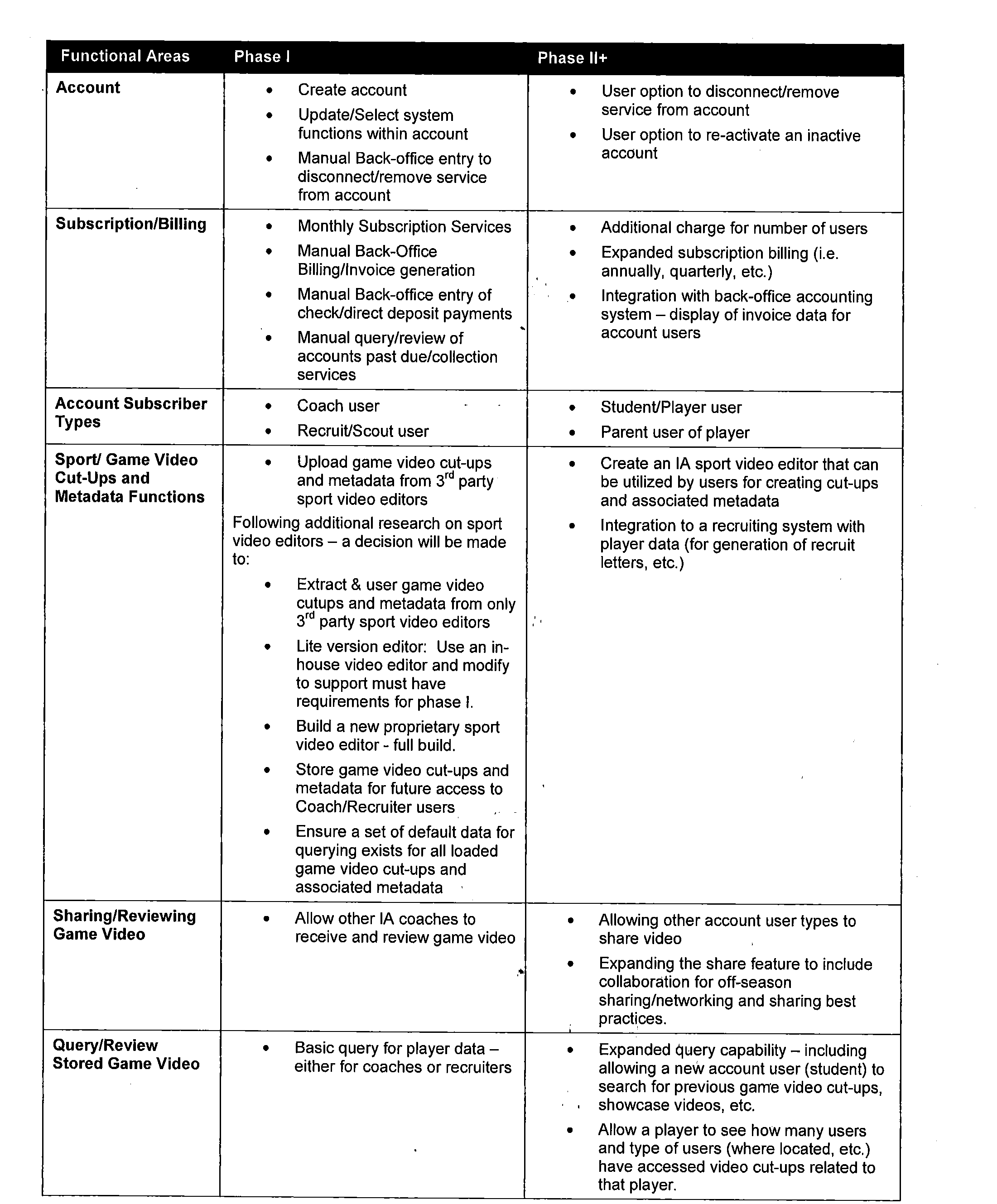

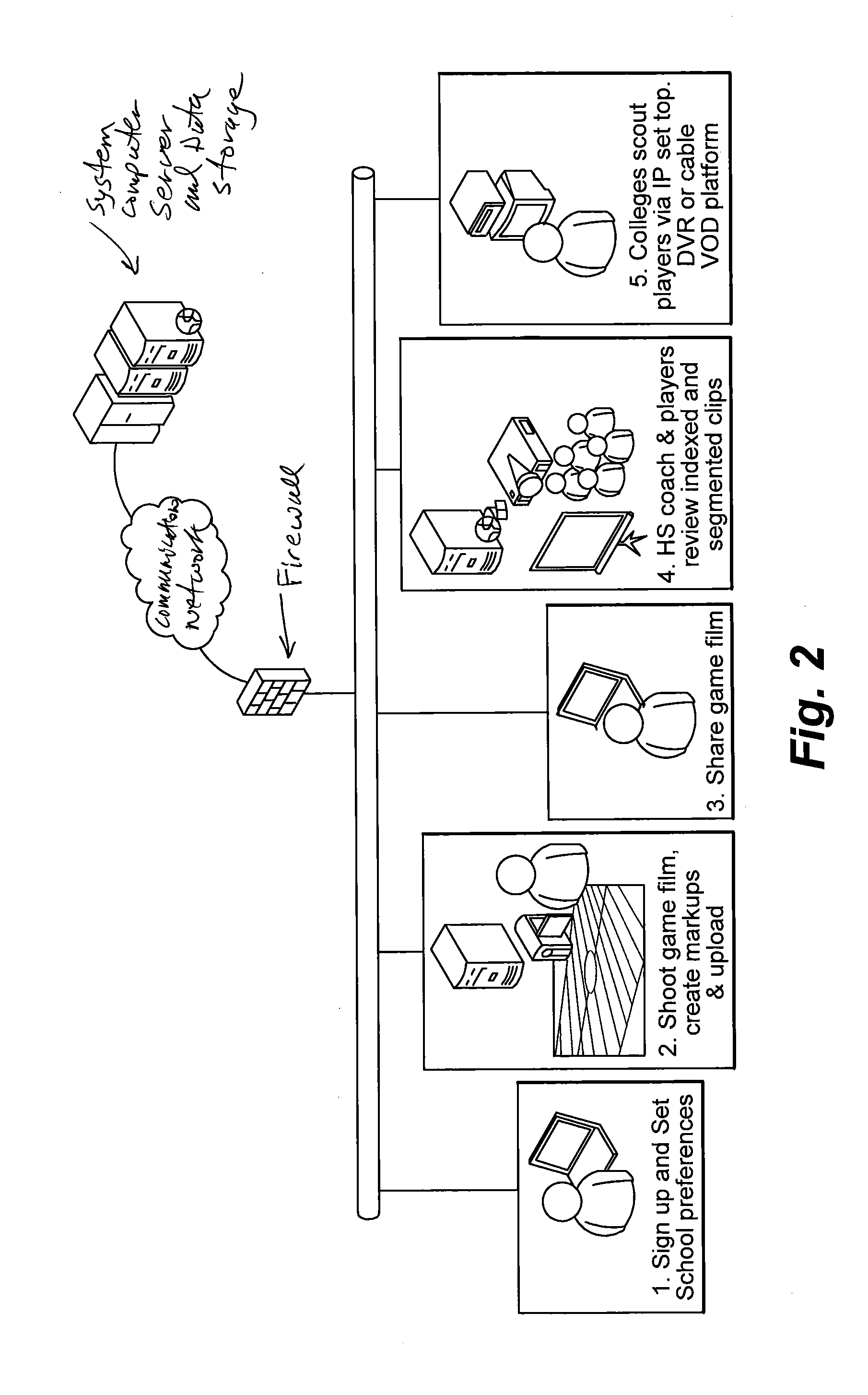

Sport video hosting system and method

Inactive · US20090044237A1 · Improve broadcast quality, Providing service, Data processing applications, Two-way working systems, The Internet, Web site

A hosting system and method are provided for sharing, analyzing, and reviewing videos, including sports videos, and data concerning athletes and teams. The system can be used for many purposes, including athlete education, player recruiting, team scouting, and coaching analysis. The system is web-based: a central server or computer is linked to a website that authorized users can access over a communications network such as the Internet. Remote users upload data to the central server, and the data is selectively edited and shared among the authorized users.

Owner: KEITER ZACHARY RYAN

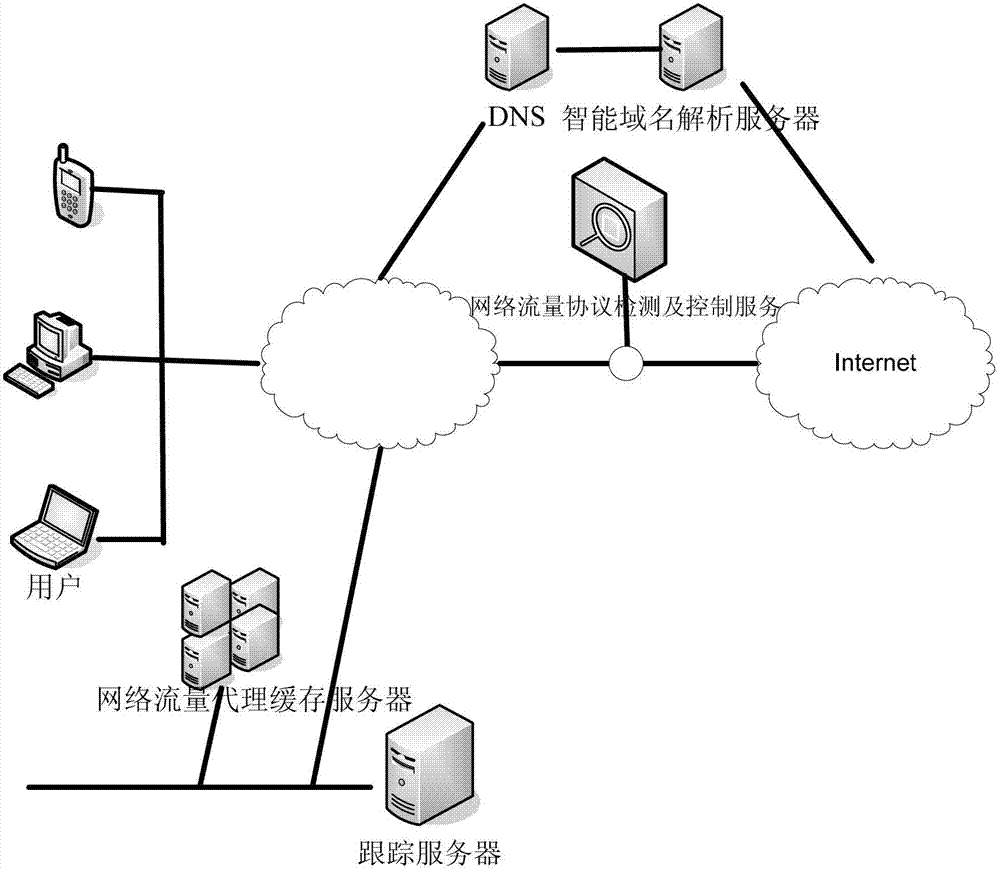

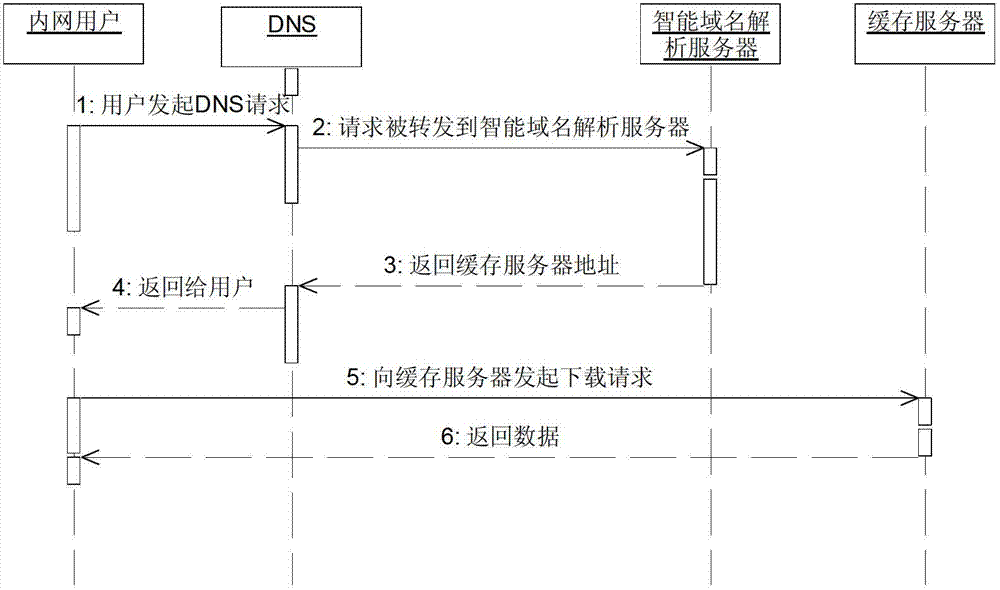

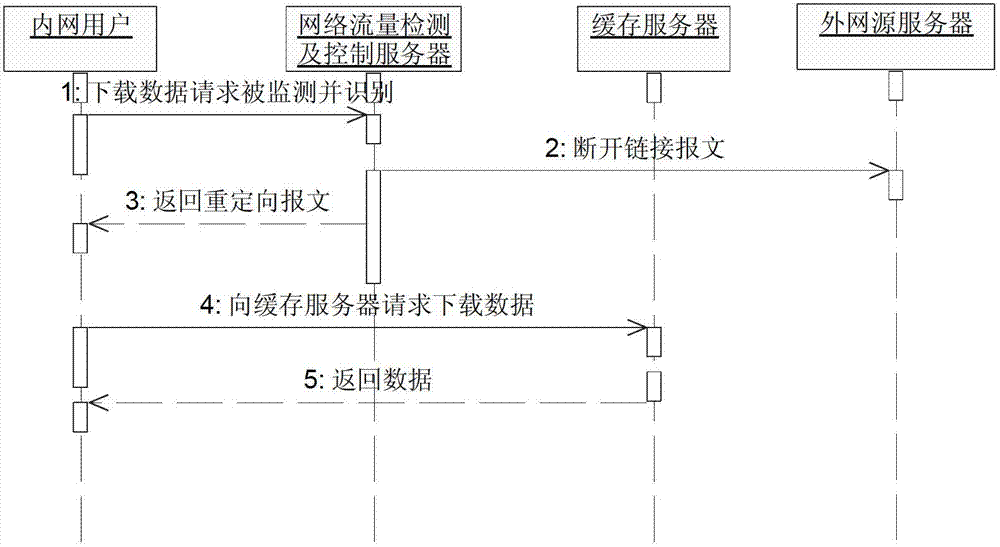

Network flow cache method and system based on active guidance

Active · CN102891807A · Reduce flow pressure, Reduce operating costs, Data switching networks, Traffic capacity, Domain name

The invention relates to a network flow cache method and system based on active guidance. The method comprises the following steps. 101) Analyze the protocols in network traffic, and cache traffic that meets the requirements on a local cache server. 102) Actively guide the user to the local cache server and cut off the connection between the internal network user and the external network server. The active guidance mode is chosen according to the network protocol of the service the user requests:

- In the first mode, the user is guided to the local cache server by a redirection mechanism.
- In the second mode, the user is guided by an intelligent domain name server (DNS) guidance method.
- In the third mode, the user is guided by a false-message guidance method.
- In the fourth mode, the user is guided by a distorted-message guidance method.

The system supports multiple active guidance modes, and by combining them, network traffic can be steered to the cache system to the greatest possible extent.

Owner: BEIJING NETEAST TECH

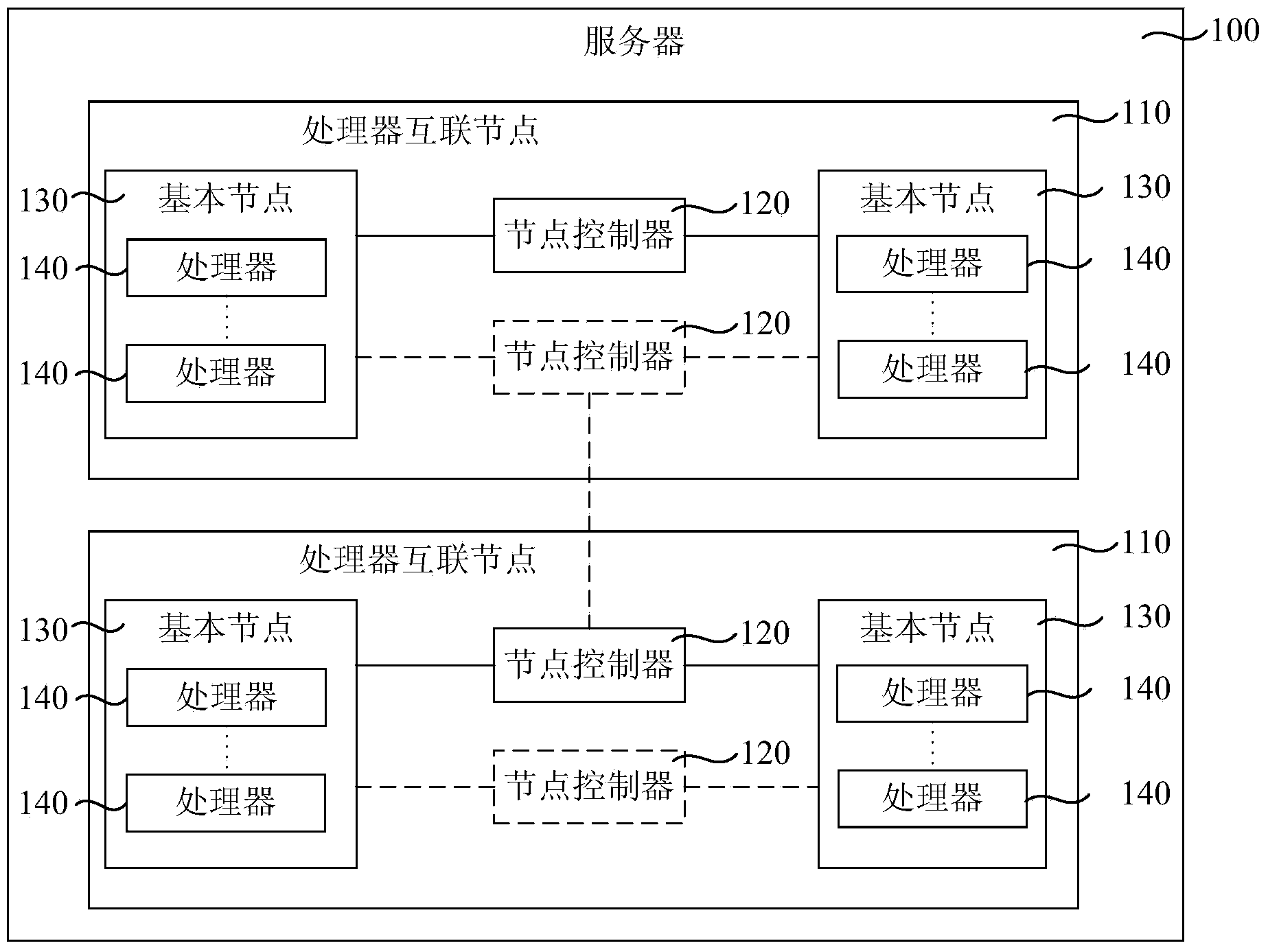

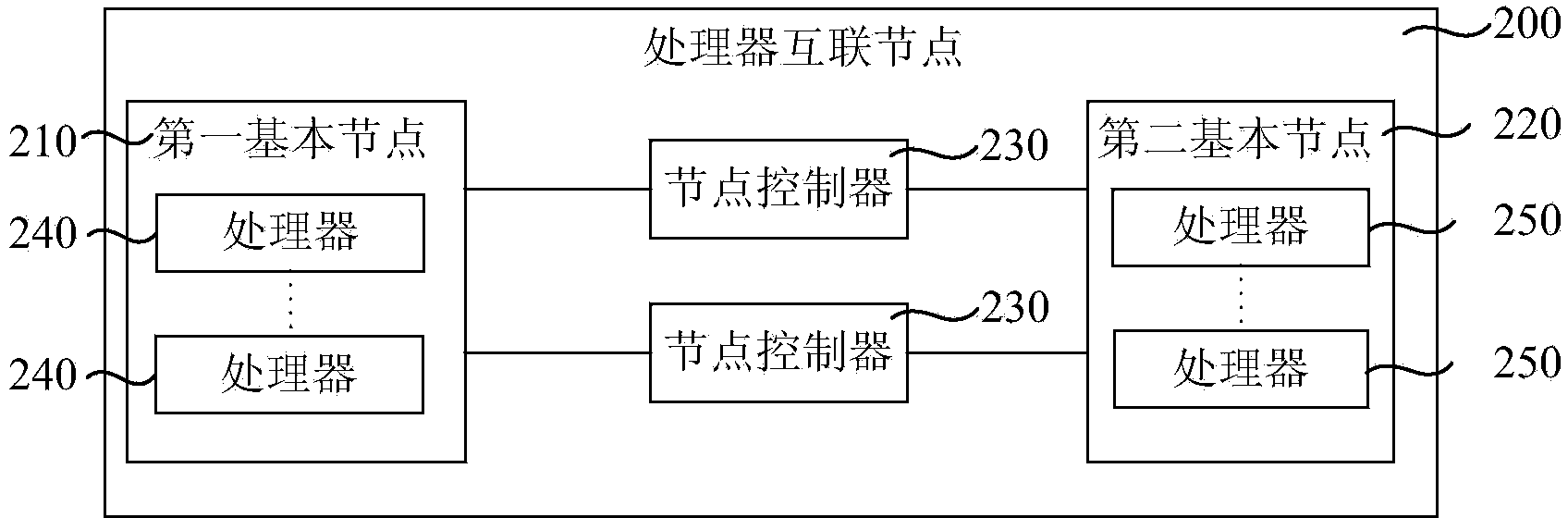

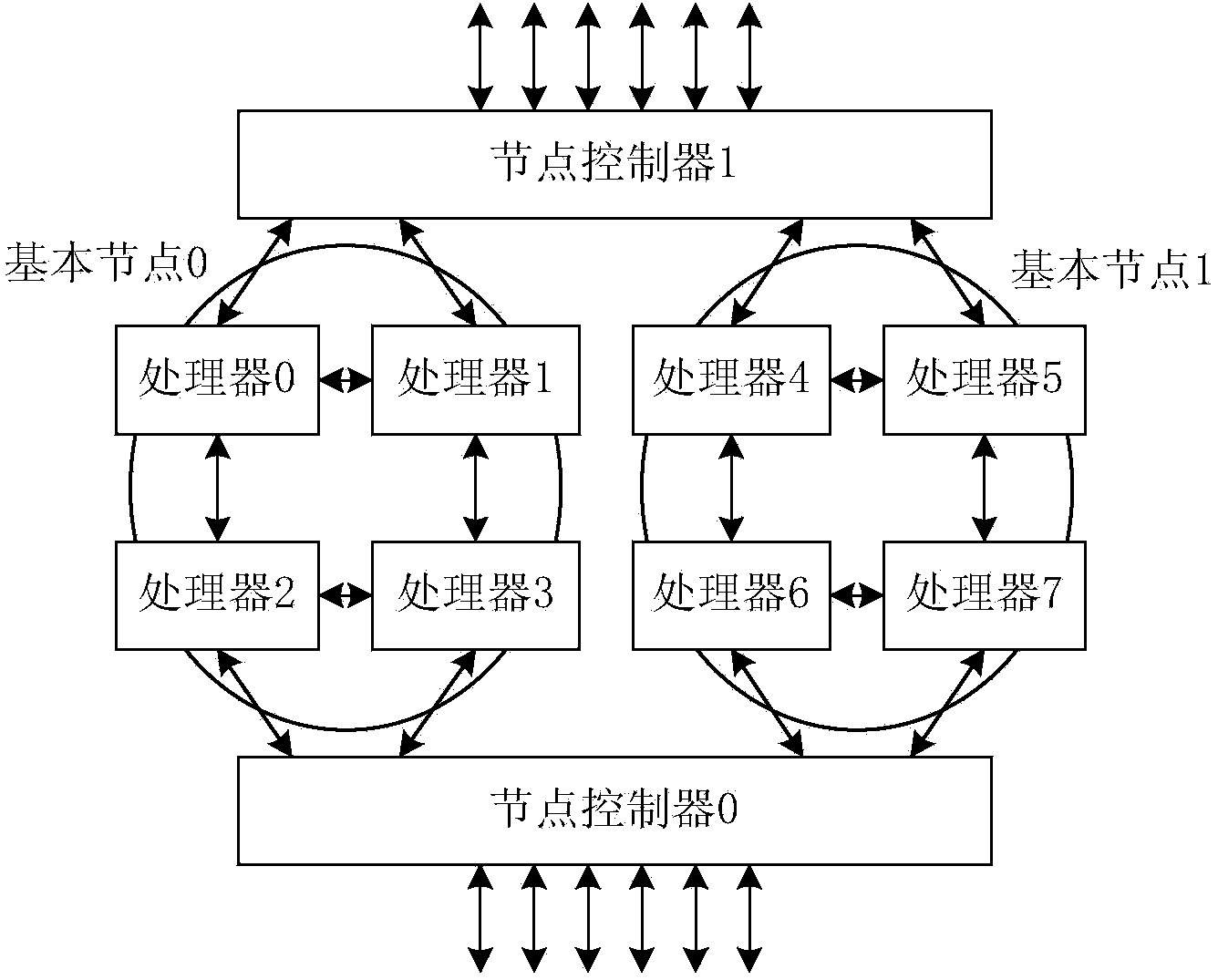

Server and data access method

Active · CN103870435A · Reduce server latency, Guaranteed bandwidth, Digital computer details, Electric digital data processing, Address space, Access method

The invention relates to a server and a data access method. The server comprises processor interconnection nodes and node controllers (NCs). Each processor interconnection node comprises at least one node controller and at least two base nodes, and each base node comprises at least four processors. The node controllers are connected to the base nodes. They manage processor transactions according to the processors' address space, receive access requests together with the identifiers of the source processors, and forward the access requests and node controller identifiers to target processors according to the target addresses carried in the requests. At least one NC guarantees the bandwidth of the server. Processors in the same base node are directly interconnected and can access one another. When processors in different base nodes of the same processor interconnection node access data, they do not need to traverse NC links, which reduces server latency.

Owner: HUAWEI TECH CO LTD

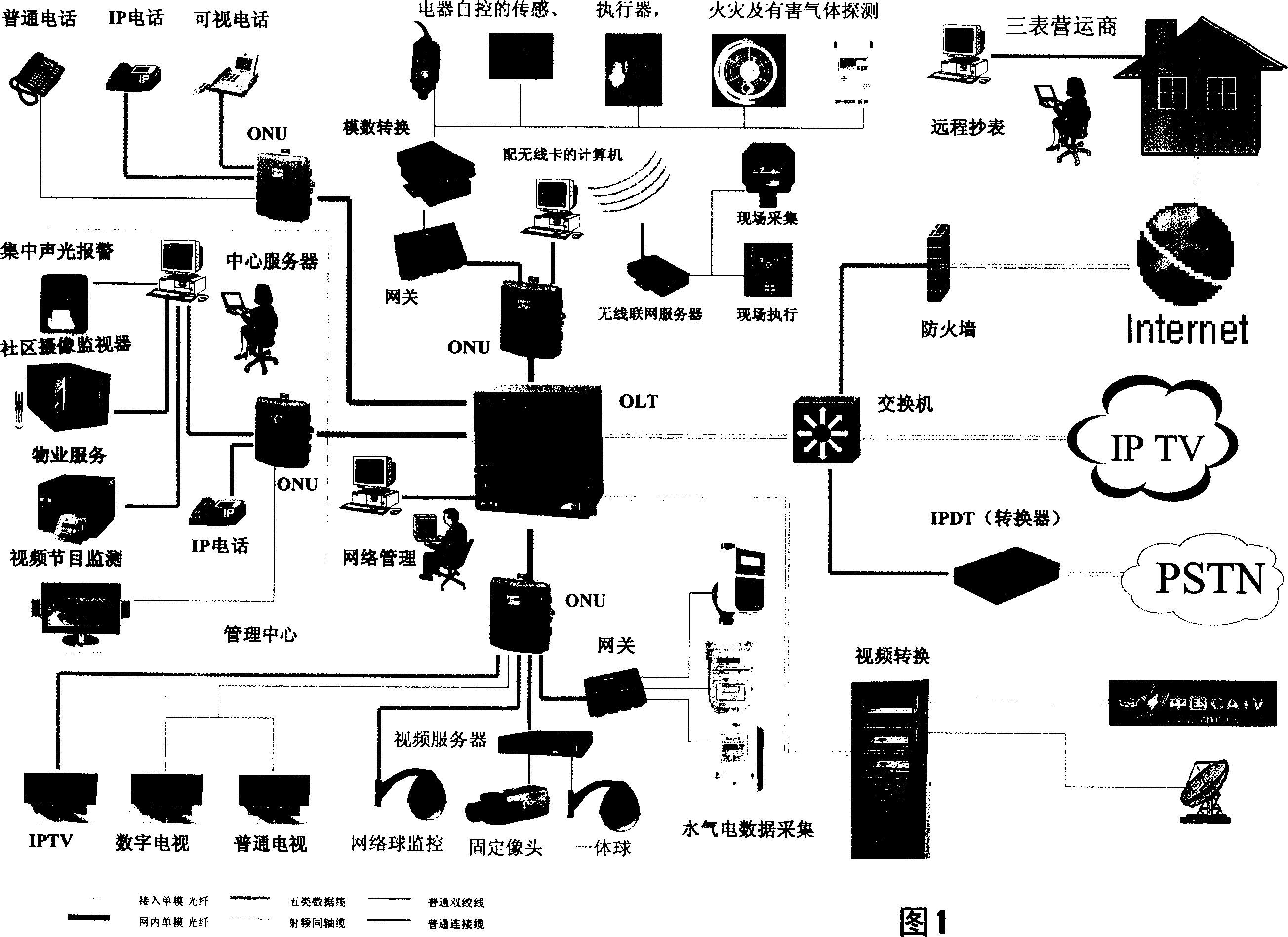

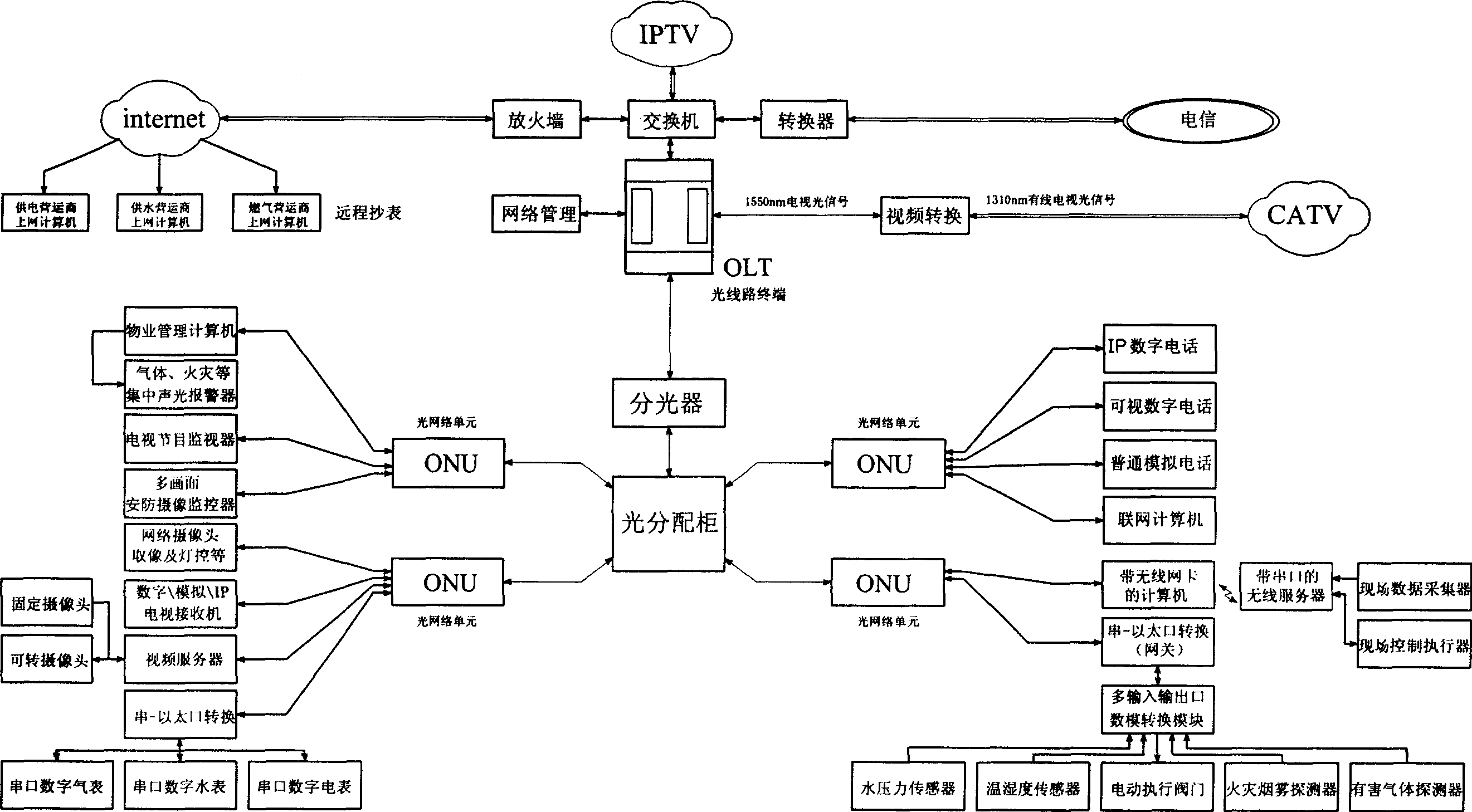

FTTX information transmission network system merged by multi-network

Active · CN1731750A · Guaranteed bandwidth, Guaranteed priority, Data switching by path configuration, Information transmission, Automatic control

This invention relates to an information transmission network system that implements FTTX multi-network convergence, integrating multiple kinds of information, multiple systems, and multiple services. It comprises a data network, a voice communication network, a video network, a protection control system network, and a central automatic control network. It is characterized as follows:

- The data network, the voice communication network, and the video network connect through switching and conversion devices, via optical fiber, to an optical line terminal (OLT).
- The OLT, under network management, connects via optical fiber to optical network units (ONUs).
- Each ONU connects through its interfaces to the user's voice, video, data, and control devices.

The invention builds an efficient network with unified access and application interfaces and avoids duplicated construction.

Owner: WUHAN YANGTZE OPTICAL TECH

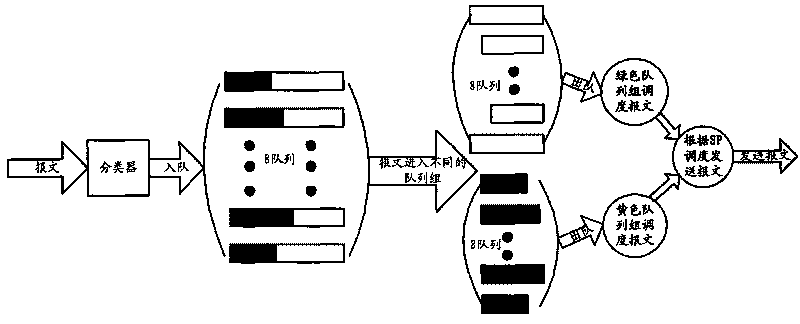

Information switching realizing system and method and scheduling algorithm

Inactive · CN1866927A · Improve the isolation effect, Guaranteed bandwidth, Data switching by path configuration, Selection arrangements, Data interchange, Embedded system

The invention relates to a system and method for implementing information switching, and a related scheduling algorithm. The system comprises an input port and a switching unit. The input port receives data packets, and the switching unit switches data between the input port and the output port. The input port has delay queues; it partitions its delay queues and selects data packets to transfer to the switching unit. The switching unit stores the packets and selects packets to transfer to the output port. The invention can partition service data and increase the level of isolation according to service requirements.

Owner: CHINA NAT DIGITAL SWITCHING SYST ENG & TECH R&D CENT

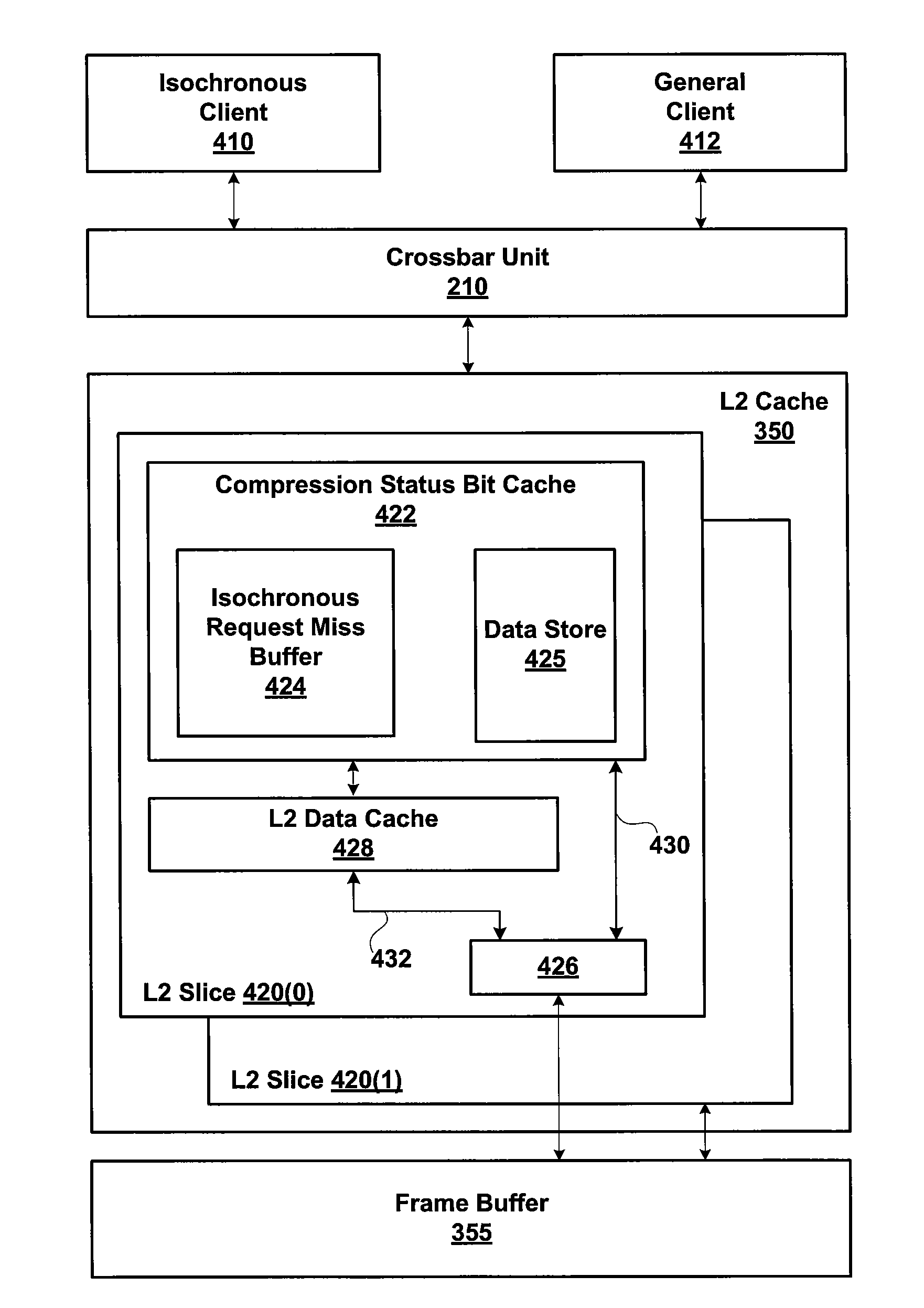

Compression status bit cache with deterministic isochronous latency

Active · US8595437B1 · Inexpensively optimized, Guaranteed bandwidth, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Parallel computing, Client-side

One embodiment of the present invention sets forth a compression status bit cache with deterministic latency for isochronous memory clients of compressed memory. The compression status bit cache improves overall memory system performance by providing on-chip availability of compression status bits that are used to size and interpret a memory access request to compressed memory. To avoid non-deterministic latency when an isochronous memory client accesses the compression status bit cache, two design features are employed. The first design feature involves bypassing any intermediate cache when the compression status bit cache reads a new cache line in response to a cache read miss, thereby eliminating additional, potentially non-deterministic latencies outside the scope of the compression status bit cache. The second design feature involves maintaining a minimum pool of clean cache lines by opportunistically writing back dirty cache lines and, optionally, temporarily blocking non-critical requests that would dirty already clean cache lines. With clean cache lines available to be overwritten quickly, the compression status bit cache avoids incurring additional miss write back latencies.

Owner: NVIDIA CORP

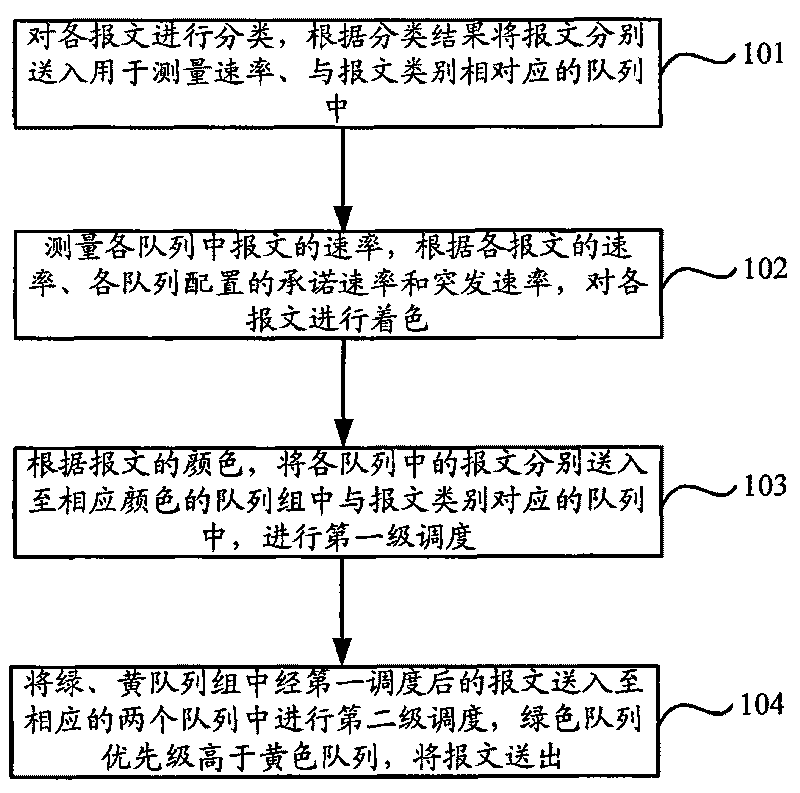

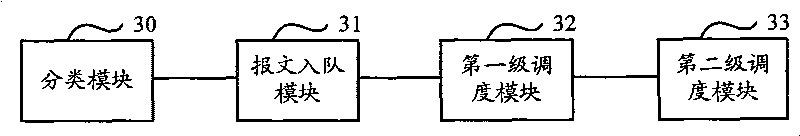

Method and system for queue scheduling

Active · CN101692648A · Easy to control, Guaranteed bandwidth, Data switching by path configuration, Quality of service, Distributed computing

The invention discloses a queue scheduling method and system. They address the poor quality of service caused by the inability of existing queue scheduling to guarantee the committed rate first. The method comprises the following steps:

- Send messages whose rate is below a first threshold, set for messages of the same type, to the queues corresponding to their message types in a first queue group.
- Send messages whose rate is above the first threshold but below a second threshold to the queues corresponding to their message types in a second queue group.
- According to a scheduling policy determined by the operating state of the network, schedule the messages in the first queue group's queues into a first scheduling queue. Schedule the messages in the second queue group's queues into a second scheduling queue, whose priority is lower than that of the first.
- Schedule the messages in the first scheduling queue first.

With this technical solution, the bandwidth up to each queue's first threshold is guaranteed first, which improves quality of service.

Owner: ZTE CORP
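
The two-threshold classification and strict-priority service described in this abstract can be sketched in Python. The hypothetical `TwoGroupScheduler` keeps one queue per message type in each group. It serves group 0 before group 1 and rotates through message types within a group. Two points are assumptions rather than details from the abstract: messages above the second threshold are dropped, and the network-state-dependent scheduling policy is reduced to plain round-robin.

```python
from collections import deque


class TwoGroupScheduler:
    """Two queue groups with one queue per message type; group 0 has strict priority."""

    def __init__(self, thresholds: dict[str, tuple[float, float]]):
        self.thresholds = thresholds  # message type -> (first, second) threshold
        self.groups = ({}, {})        # each group: message type -> deque of messages

    def enqueue(self, msg_type: str, msg, rate: float) -> bool:
        first, second = self.thresholds[msg_type]
        if rate < first:
            group = self.groups[0]
        elif rate < second:
            group = self.groups[1]
        else:
            return False  # assumption: traffic above the second threshold is dropped
        group.setdefault(msg_type, deque()).append(msg)
        return True

    def dequeue(self):
        for group in self.groups:         # strict priority: group 0 first
            for msg_type in list(group):  # round-robin over types within a group
                queue = group[msg_type]
                if queue:
                    msg = queue.popleft()
                    del group[msg_type]   # move this type to the back of the rotation
                    group[msg_type] = queue
                    return msg
        return None
```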

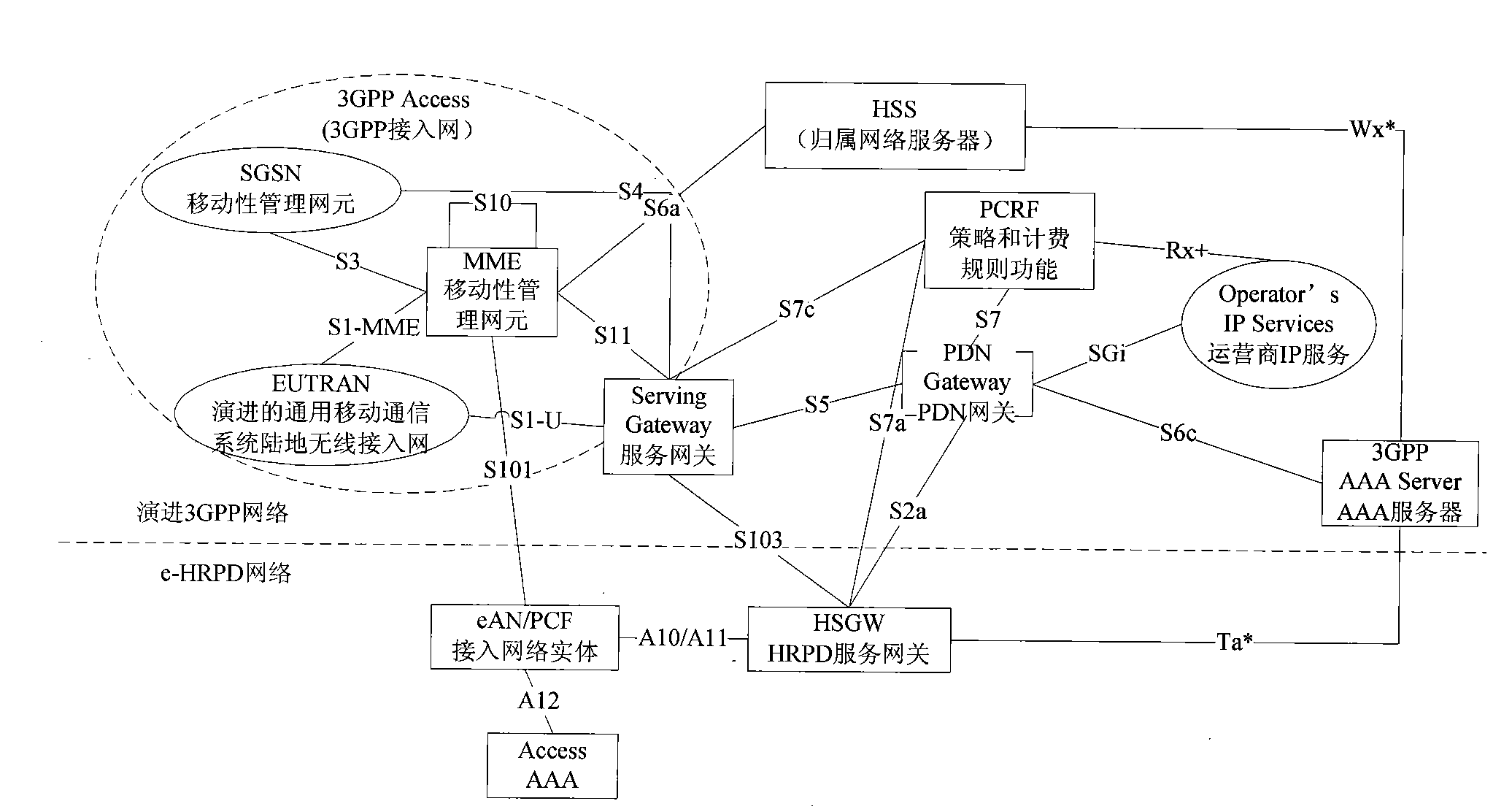

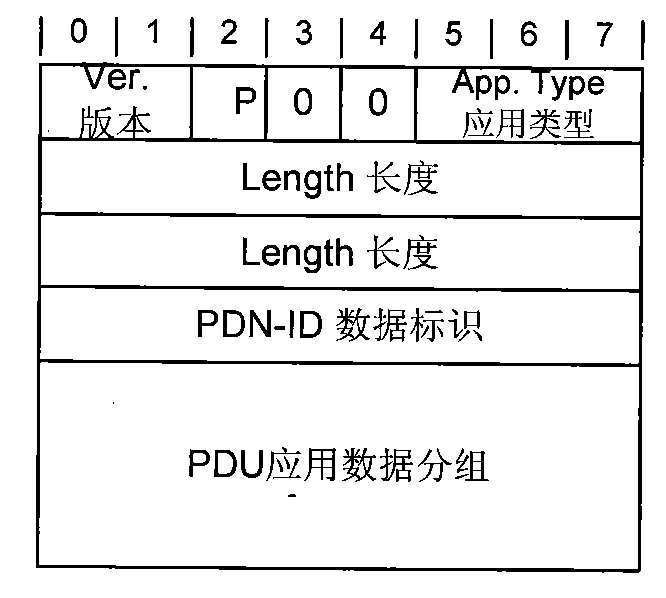

Method, system and equipment for transporting signaling

Inactive · CN101599896A · Achieve separation, Easy to handle, Messaging/mailboxes/announcements, Data switching networks, Network packet, Third generation

The invention discloses a method, system, and equipment for transporting signaling, in the field of communications. The method works in either direction. In one direction, an evolved access network/packet control function (eAN/PCF) receives signaling information from a terminal over a first default route and sends it to an HRPD serving gateway (HSGW) over a second default route. In the other, the eAN/PCF receives signaling information from the HSGW over the second default route and sends it to the terminal over the first default route. Carrying signaling over the first and second default routes separates the bearers for signaling and data. The system for transporting signaling comprises user equipment (UE), the eAN/PCF, and the HSGW. Using out-of-band signaling avoids having to sort in-band signaling from user-plane data packets. This improves system performance and increases both the processing capacity of the HSGW and the number of terminals it can serve. It also guarantees signaling-plane bandwidth, so signaling is not lost under high system load, and improves stability and reliability. Because the signaling plane and the user plane are logically separated, the system's structure conforms more closely to the requirements of the 3rd Generation Partnership Project (3GPP).

Owner:HUAWEI TECH CO LTD
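
The forwarding rule in the Huawei abstract can be illustrated with a toy relay. This is a Python sketch under simplifying assumptions, not Huawei's design. The `EanPcfRelay` class, the route names, and the `is_signaling` flag on each packet are hypothetical. In the real system, the route a packet arrives on would identify it as signaling. The sketch only shows that signaling entering on one default route leaves on the other and never shares the user-plane bearer.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    direction: str      # "uplink" (terminal -> HSGW) or "downlink" (HSGW -> terminal)
    is_signaling: bool  # True for control-plane signaling, False for user-plane data
    payload: bytes


class EanPcfRelay:
    """Toy eAN/PCF that relays signaling between two dedicated default routes,
    keeping it apart from the user-plane data bearer."""

    def __init__(self):
        # Hypothetical route names; the abstract only names a "first" and a "second" default route
        self.routes = {"first_default": [], "second_default": [], "data": []}

    def forward(self, pkt):
        """Return the name of the route the packet is sent out on."""
        if not pkt.is_signaling:
            out = "data"  # user-plane packets never share the signaling routes
        elif pkt.direction == "uplink":
            out = "second_default"  # received on the first default route, sent towards the HSGW
        else:
            out = "first_default"   # received on the second default route, sent towards the terminal
        self.routes[out].append(pkt)
        return out
```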

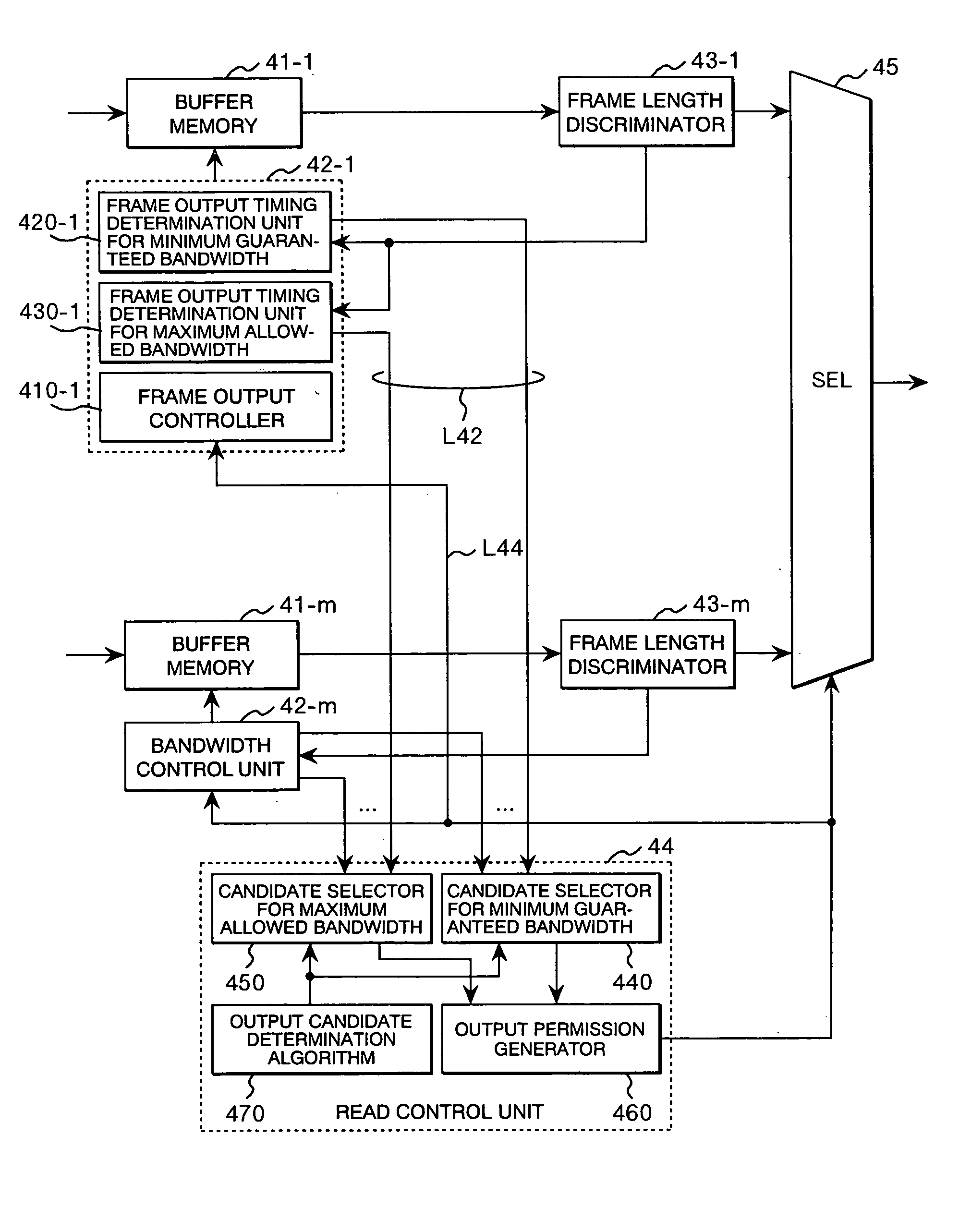

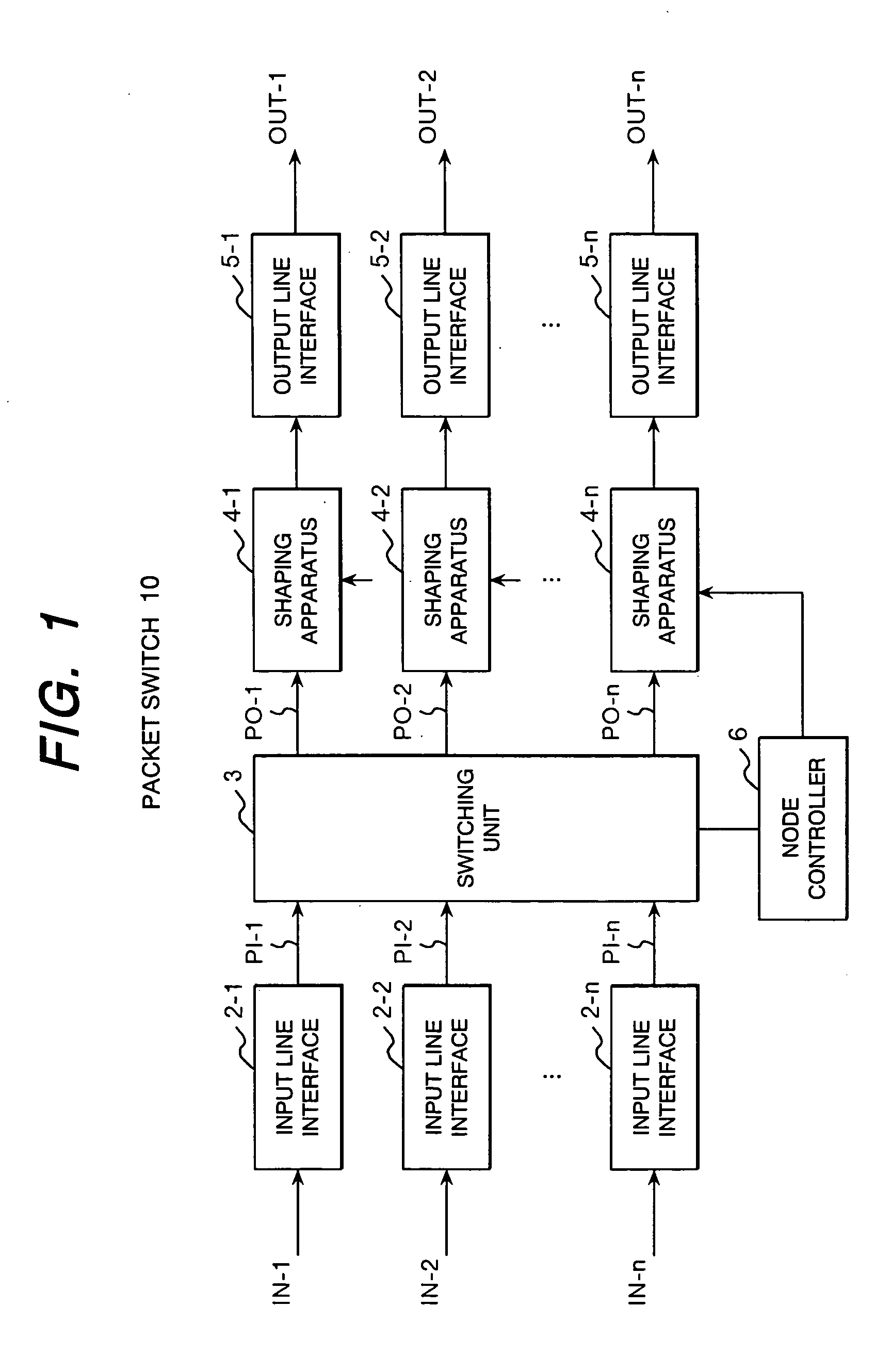

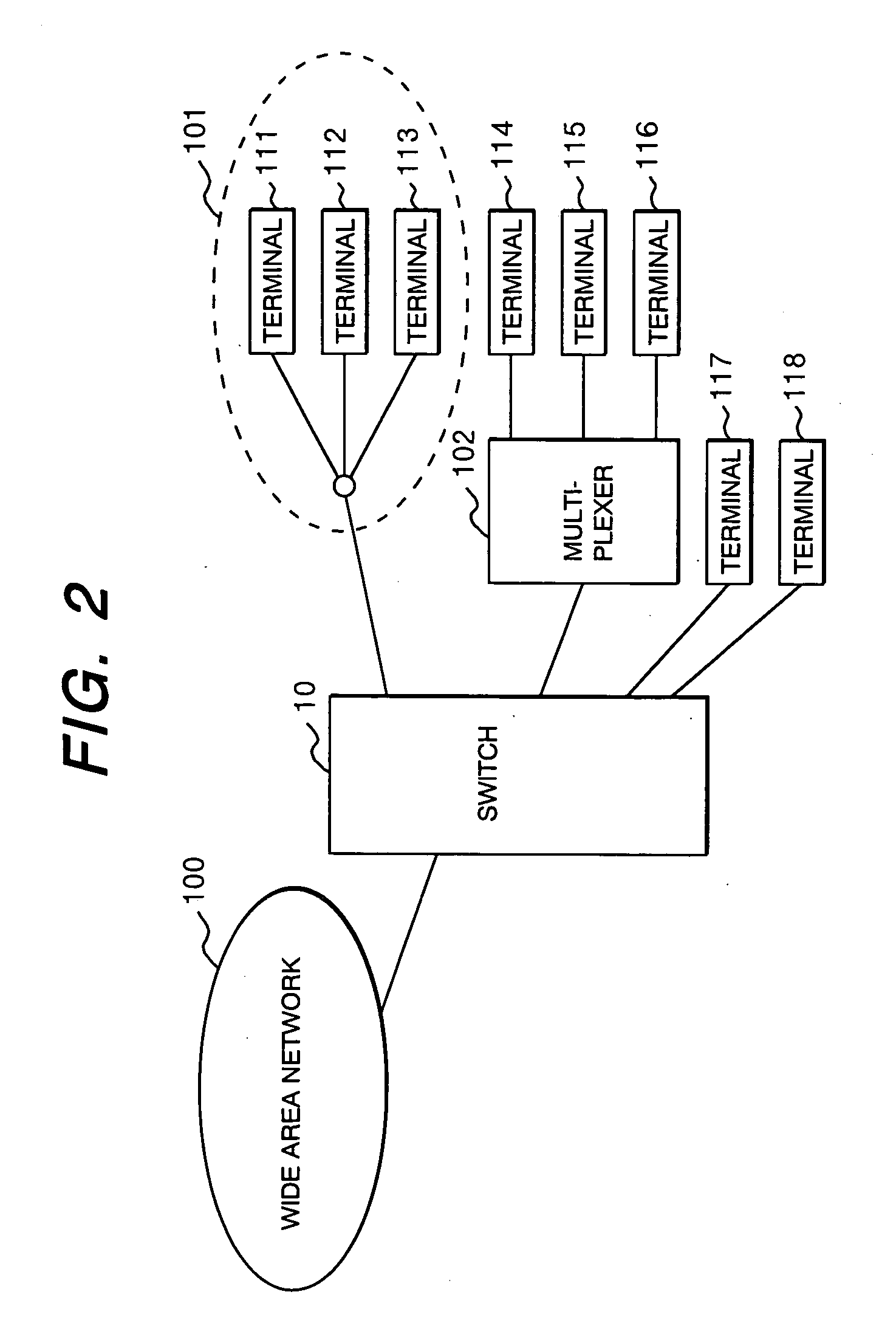

Shaping apparatus, communication node and flow control method for controlling bandwidth of variable length frames

Inactive | US20050259578A1 | Prevent excess of maximum allowed bandwidth | Easy to use | Error prevention | Transmission systems | Variable-length code | Variable length

A shaping apparatus comprising a plurality of buffer memories allocated for different flows, bandwidth control units associated with the buffer memories, and a read control unit, wherein each of the bandwidth control units issues a first frame output request based on a maximum allowed bandwidth and a second frame output request based on a minimum guaranteed bandwidth, and the read control unit selects a bandwidth control unit to be permitted for frame output execution out of bandwidth control units that are issuing the first or second frame output request, giving priority to the second frame output request.

Owner:HITACHI LTD
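
The Hitachi shaper's two kinds of output request can be modelled with two token buckets per flow. This is a Python sketch built on assumptions, not Hitachi's circuit. Byte-based token buckets, the `burst` cap, round-robin among equal requests, and the rule that only guaranteed-rate output consumes minimum-bucket tokens are all choices made here for illustration. The class names are hypothetical.

```python
from collections import deque


class BandwidthControlUnit:
    """One flow's buffer plus two byte-based token buckets:
    minimum guaranteed bandwidth and maximum allowed bandwidth."""

    def __init__(self, min_rate, max_rate, burst):
        self.min_rate, self.max_rate, self.burst = min_rate, max_rate, burst  # bytes/s, bytes/s, bytes
        self.min_tokens = self.max_tokens = burst
        self.buffer = deque()  # variable-length frames (bytes objects)

    def refill(self, dt):
        """Add tokens for dt seconds, capped at the burst size."""
        self.min_tokens = min(self.burst, self.min_tokens + self.min_rate * dt)
        self.max_tokens = min(self.burst, self.max_tokens + self.max_rate * dt)

    def requests(self):
        """Return (second_request, first_request) for the head-of-line frame."""
        if not self.buffer:
            return False, False
        size = len(self.buffer[0])
        within_max = self.max_tokens >= size
        # A minimum-guaranteed request must still respect the maximum allowed bandwidth
        return within_max and self.min_tokens >= size, within_max


class ReadControlUnit:
    """Picks which flow may output a frame, favouring second (minimum-guaranteed) requests."""

    def __init__(self, units):
        self.units = units
        self.next = 0  # round-robin pointer for fairness among equal requests

    def output(self):
        n = len(self.units)
        order = [(self.next + i) % n for i in range(n)]
        reqs = {i: self.units[i].requests() for i in order}
        for use_min in (True, False):  # pass 1: second requests, pass 2: first requests
            for i in order:
                if reqs[i][0 if use_min else 1]:
                    unit = self.units[i]
                    frame = unit.buffer.popleft()
                    unit.max_tokens -= len(frame)
                    if use_min:  # only guaranteed-rate output consumes minimum-bucket tokens
                        unit.min_tokens -= len(frame)
                    self.next = (i + 1) % n
                    return i, frame
        return None
```

Because token counts are in bytes and deducted per frame length, variable-length frames are charged exactly for their size. That is the property the title emphasises.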

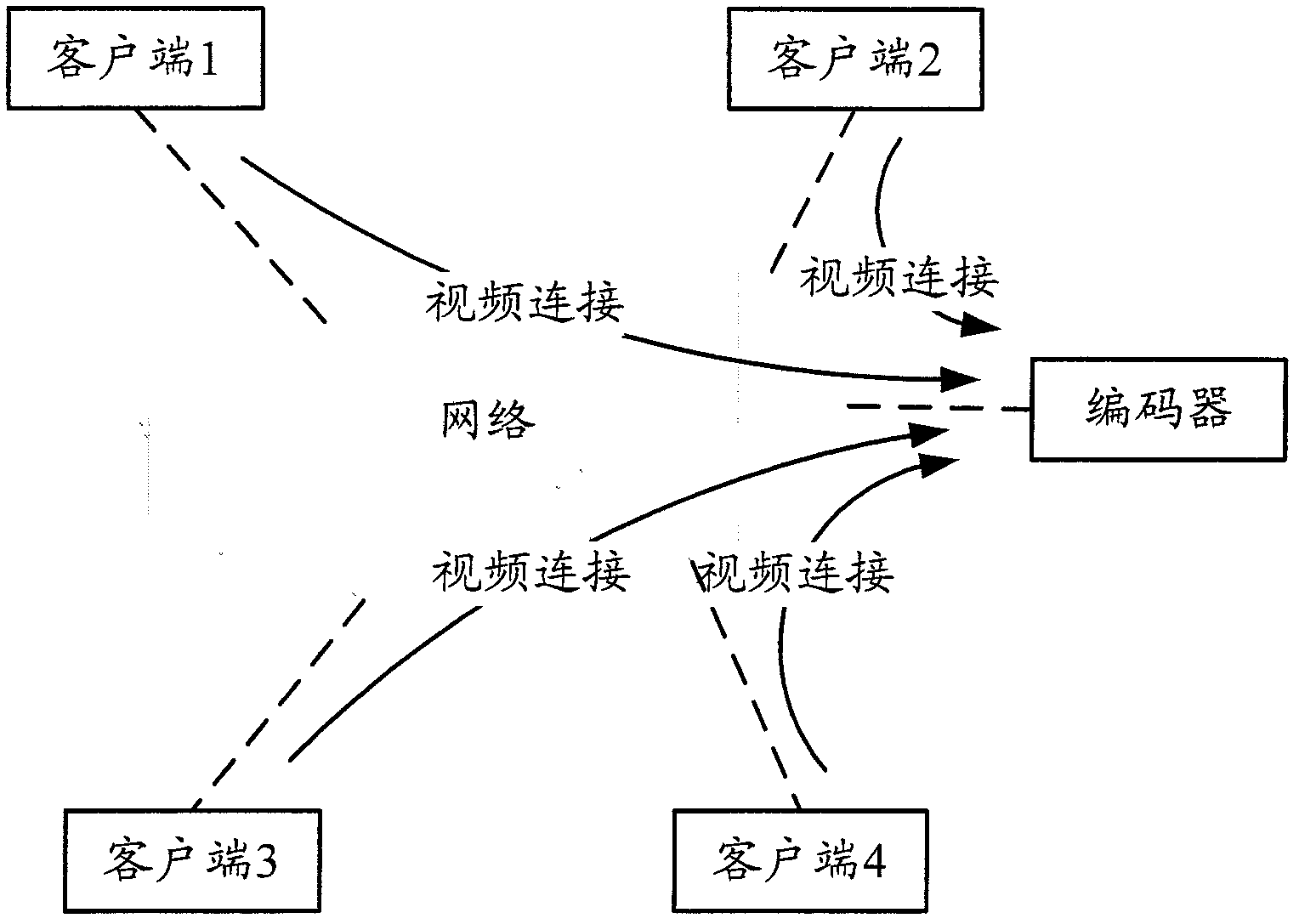

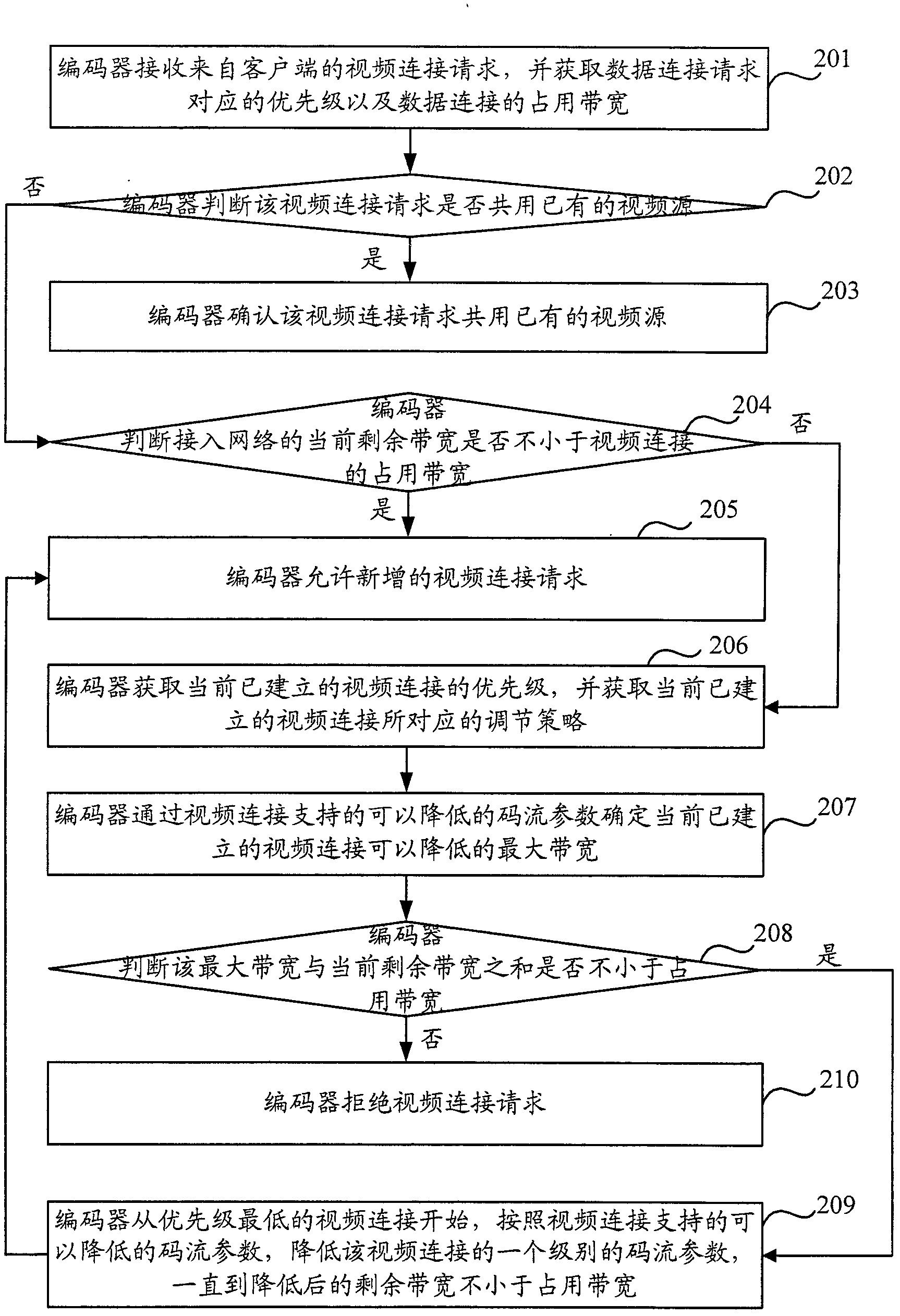

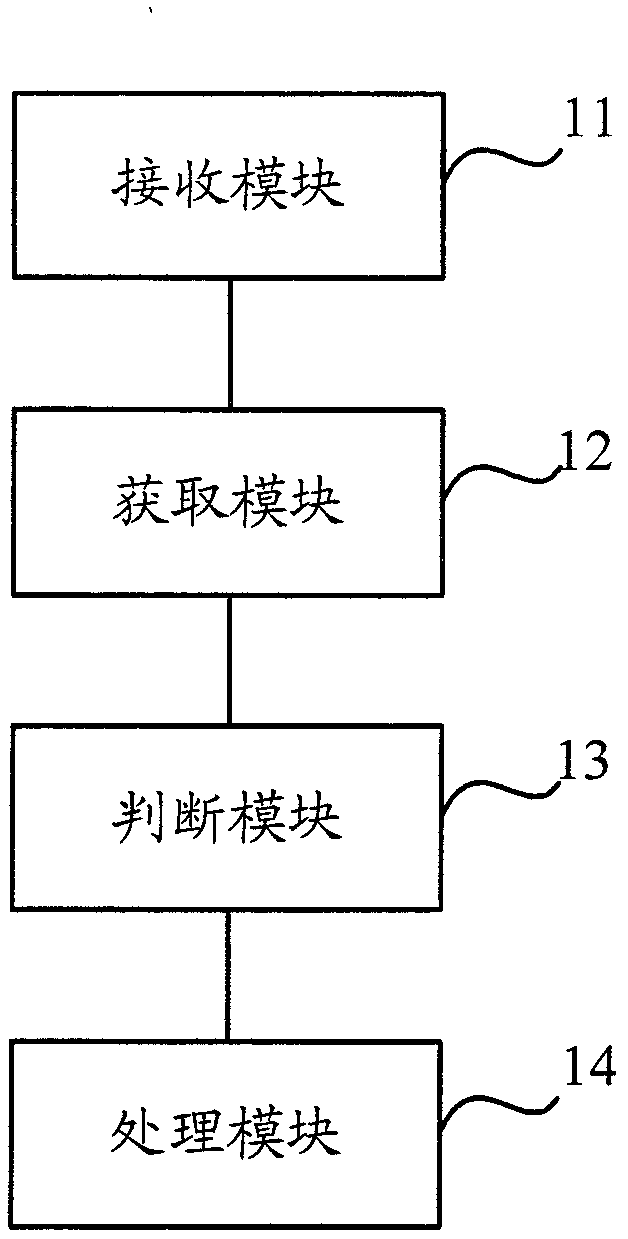

Video connection control method and equipment

Active | CN102307300A | Improve experience | Guaranteed bandwidth | Pulse modulation television signal transmission | Connection control | Lower priority

The invention discloses a video connection control method and equipment. The method comprises:

- receiving a video connection request and obtaining the priority of the requested connection and the bandwidth it will occupy;
- if the residual bandwidth currently available to the encoding equipment's network access is lower than that bandwidth, determining the maximum bandwidth by which the currently established video connections can be reduced, and checking whether this maximum plus the current residual bandwidth is at least the required bandwidth;
- if it is, lowering the stream parameters of the established video connections, starting with the lowest-priority connection and using only the reduced stream parameters each connection supports, until the residual bandwidth is no lower than the required bandwidth;
- otherwise, refusing to establish the video connection.

The method and equipment ensure that high-priority users get a better experience.

Owner:XINHUASAN INFORMATION TECH CO LTD
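
The admission check described in this abstract can be sketched as follows. This is a Python sketch under stated assumptions, not the patented equipment. It models each connection's supported stream parameters as a hypothetical list of bitrates (`levels`, highest first) and treats a larger `priority` value as higher priority. The abstract does not say how the new request's own priority is compared with existing ones, so the sketch does not use it.

```python
from dataclasses import dataclass


@dataclass
class VideoConnection:
    name: str
    priority: int   # larger value = higher priority (assumption)
    levels: list    # bitrates (Mbit/s) the stream supports, highest first (hypothetical)
    level: int = 0  # index of the bitrate currently in use

    @property
    def bandwidth(self):
        return self.levels[self.level]


def admit(connections, capacity, required):
    """Admission check for a new video connection needing `required` Mbit/s.

    Returns True (after lowering existing streams if necessary) or False to deny."""
    residual = capacity - sum(c.bandwidth for c in connections)
    if residual >= required:
        return True
    # Maximum bandwidth the established connections could give up
    reducible = sum(c.bandwidth - c.levels[-1] for c in connections)
    if residual + reducible < required:
        return False
    # Step streams down one supported level at a time, lowest priority first
    for conn in sorted(connections, key=lambda c: c.priority):
        while residual < required and conn.level < len(conn.levels) - 1:
            residual += conn.levels[conn.level] - conn.levels[conn.level + 1]
            conn.level += 1
        if residual >= required:
            break
    return True
```

The feasibility check runs before any stream is touched. A request that would be refused anyway therefore never degrades existing connections.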

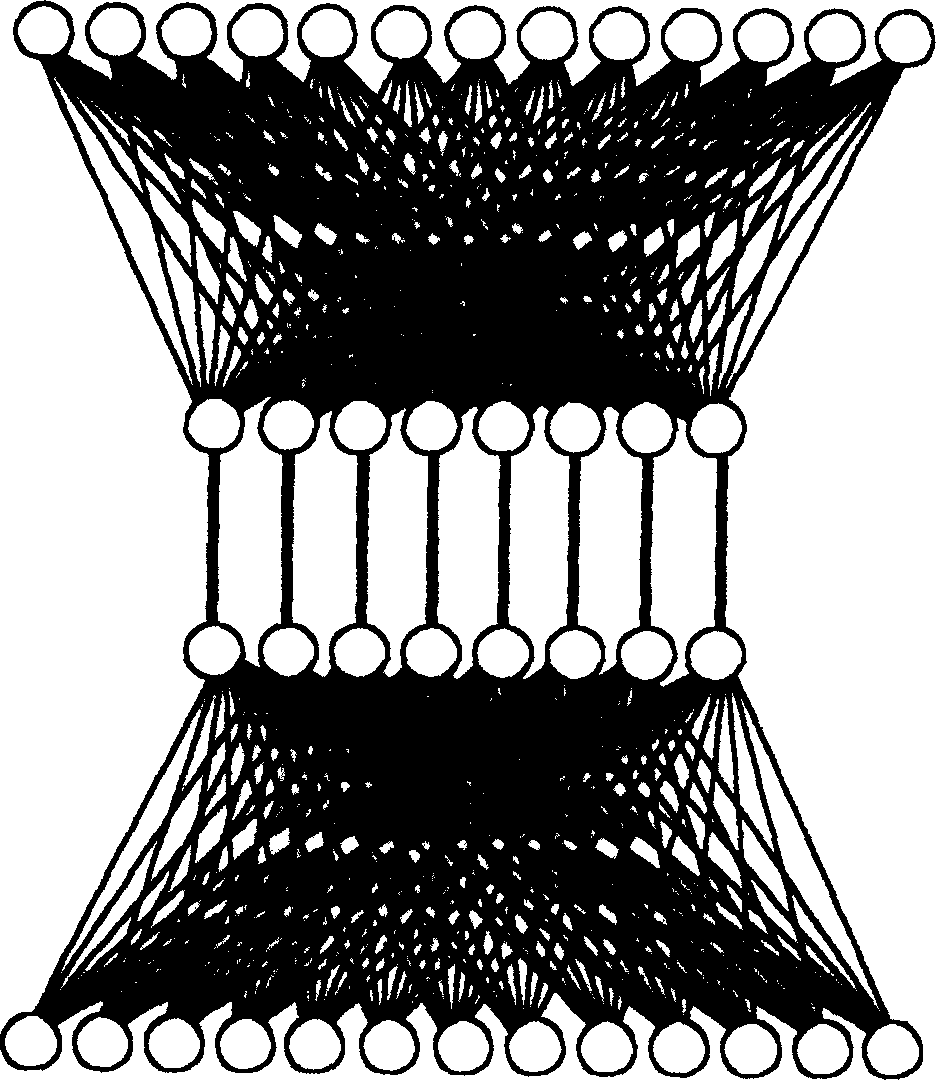

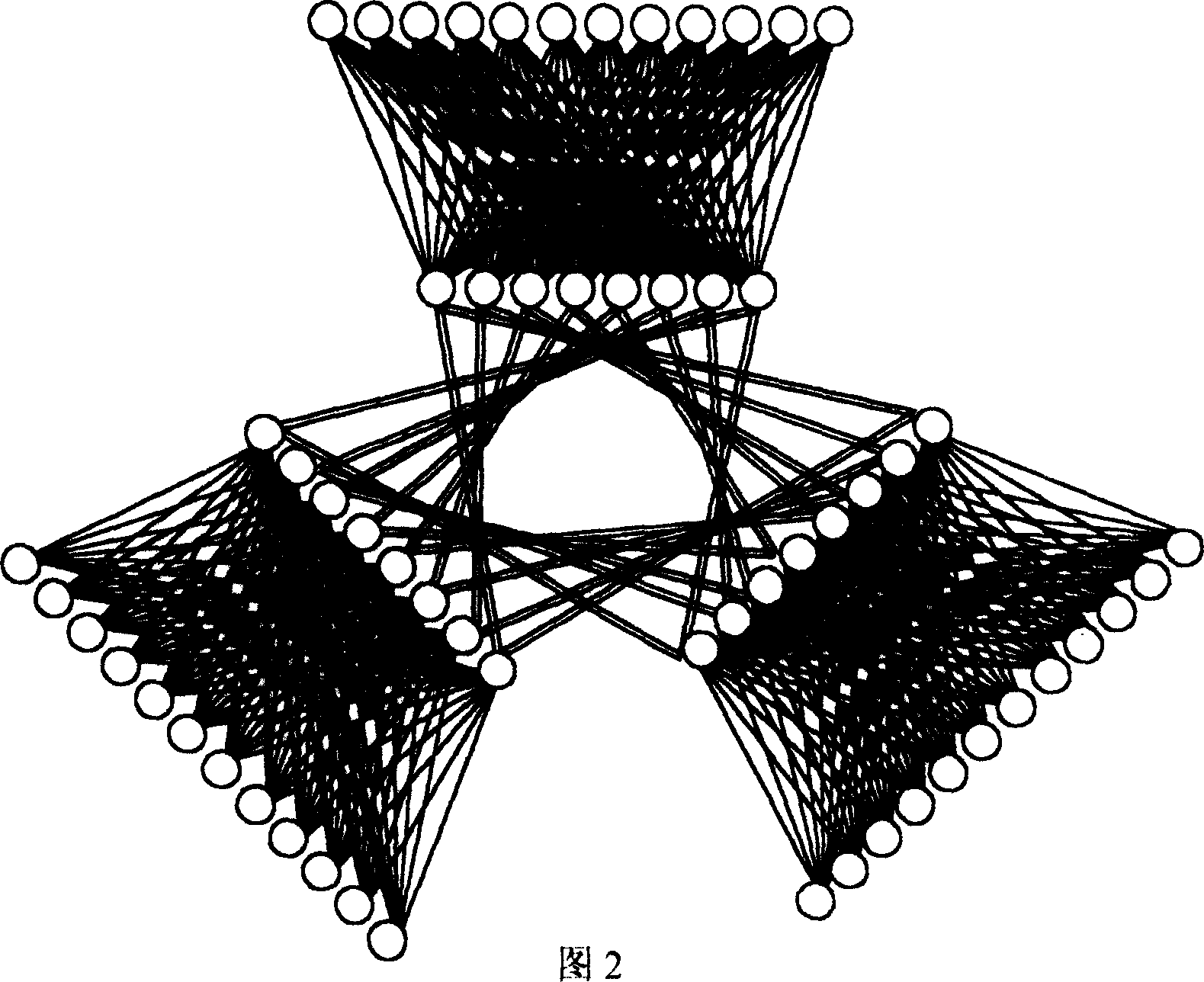

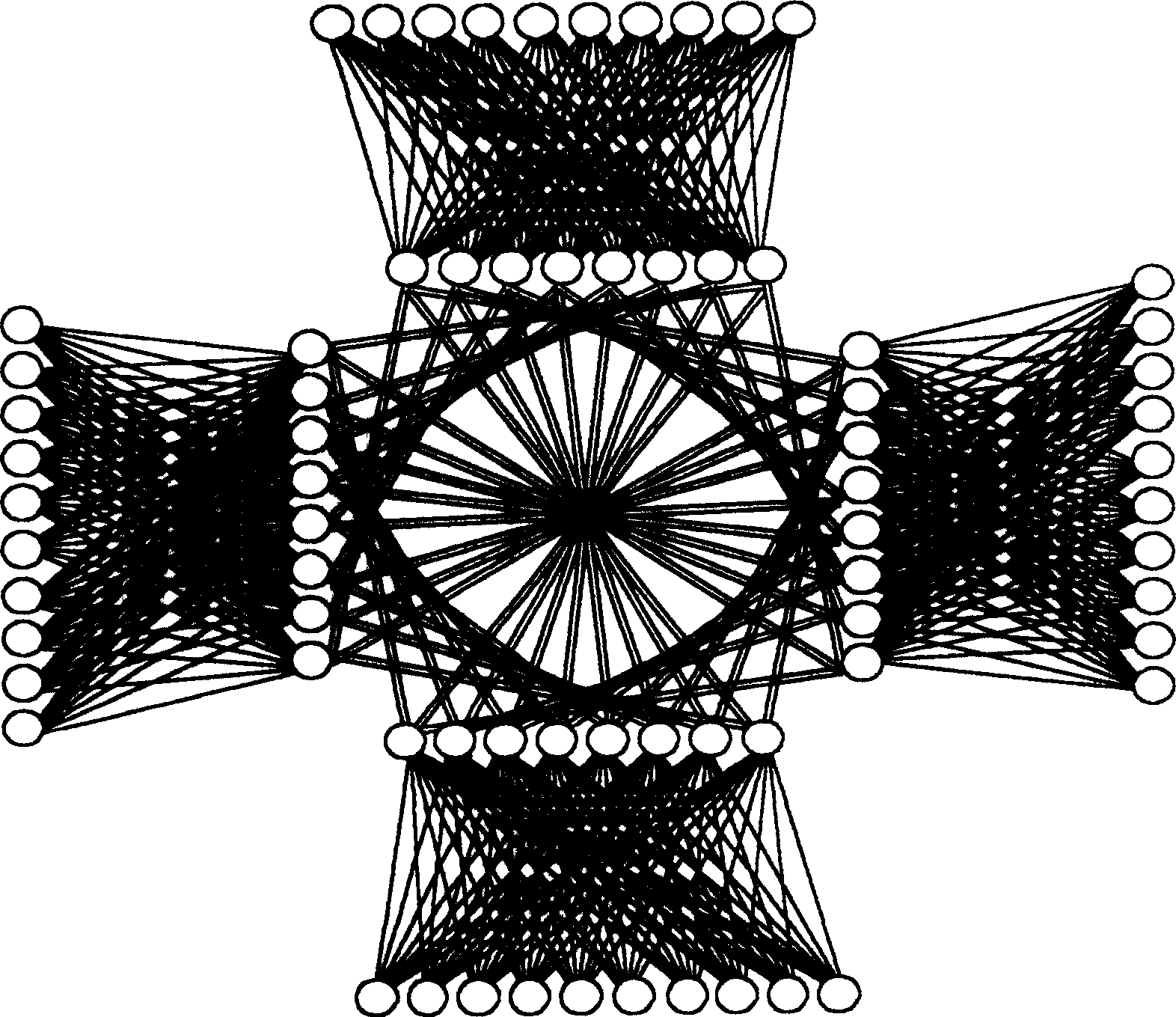

High-speed, high performance/price ratio multi-branch fat-tree network topology

Inactive | CN1514591A | Guaranteed bandwidth | Communication without blocking | Data switching by path configuration | Selection arrangements | High rate | Price ratio

When the number of nodes in a network grows beyond a certain scale, a topology for interconnecting the switches is needed. The invention combines fat-tree and full-mesh topologies: the switches within each branch are interconnected as a fat tree, and the branches are interconnected as a full mesh. Depending on the number of branches, the variants are called two-branch, three-branch and four-branch fat trees. Compared with a non-blocking fat-tree topology using the same number of switches, the invented structure supports a larger network scale, lower communication delay, and a higher performance/cost ratio.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
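
The combination of per-branch fat trees with a full mesh between branches can be made concrete with a toy graph. This is a Python sketch under heavy assumptions, not the patented layout. The abstract does not say which switches link the branches. Here each branch is a two-level fat tree in which every leaf connects to every spine, and each pair of branches is joined by linking same-index spine switches. The function names and the `b{n}-leaf{m}` naming scheme are hypothetical.

```python
from collections import defaultdict, deque
from itertools import combinations


def multi_branch_fat_tree(branches, leaves, spines):
    """Edge set of a toy multi-branch topology: a two-level fat tree inside each
    branch, plus a full mesh between branches."""
    edges = set()
    for b in range(branches):
        for l in range(leaves):
            for s in range(spines):
                edges.add((f"b{b}-leaf{l}", f"b{b}-spine{s}"))
    # Assumption: a branch pair is meshed by linking their same-index spine switches
    for a, b in combinations(range(branches), 2):
        for s in range(spines):
            edges.add((f"b{a}-spine{s}", f"b{b}-spine{s}"))
    return edges


def max_leaf_hops(edges):
    """Largest number of links on a shortest path between any two leaf switches (BFS)."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    leaves = [n for n in adj if "-leaf" in n]
    worst = 0
    for src in leaves:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        worst = max(worst, max(dist[d] for d in leaves))
    return worst
```

Under these assumptions, a three-branch network with 4 leaves and 2 spines per branch has 3 × 4 × 2 + 3 × 2 = 30 links. Any two leaf switches are at most three links apart (leaf, spine, spine, leaf).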

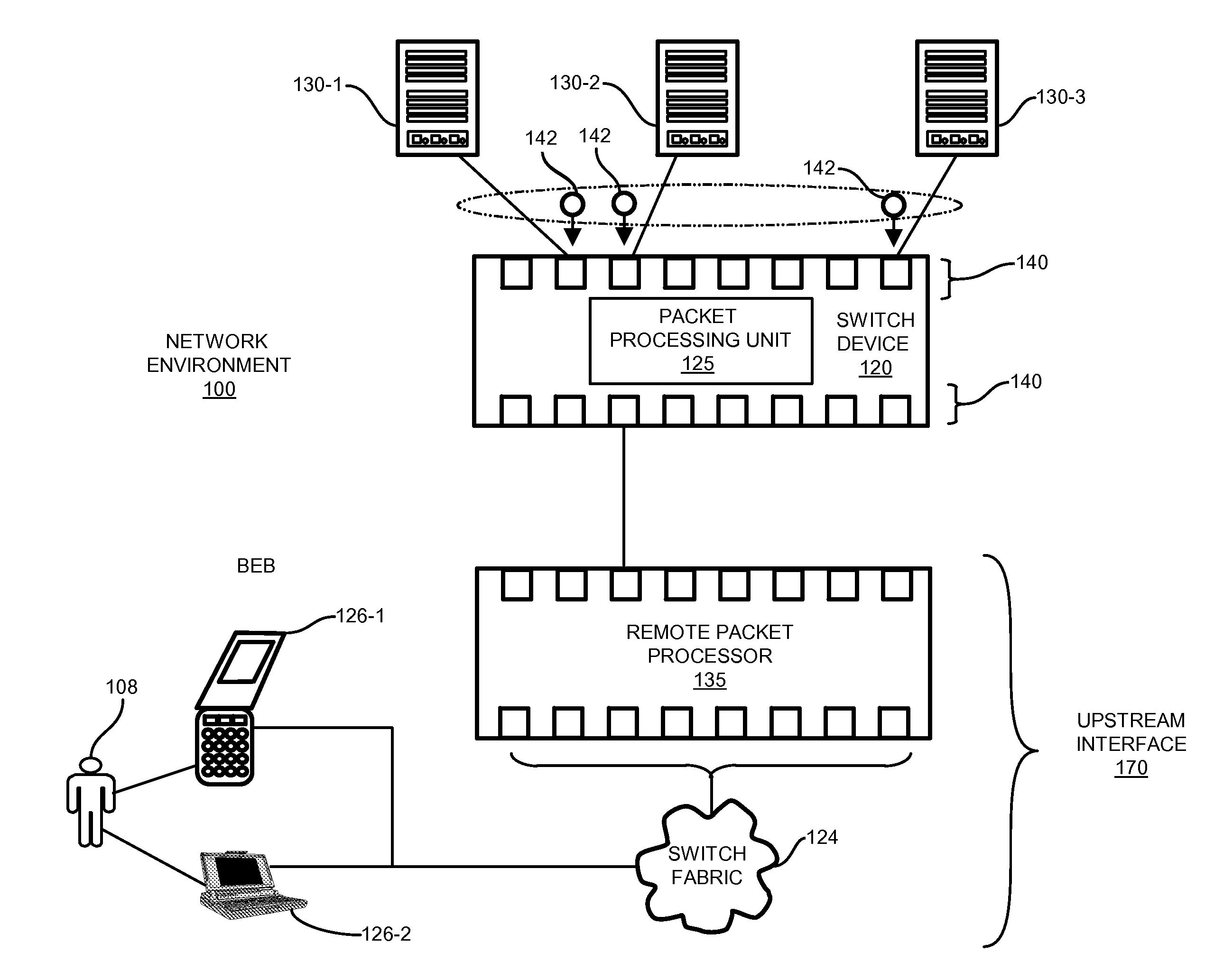

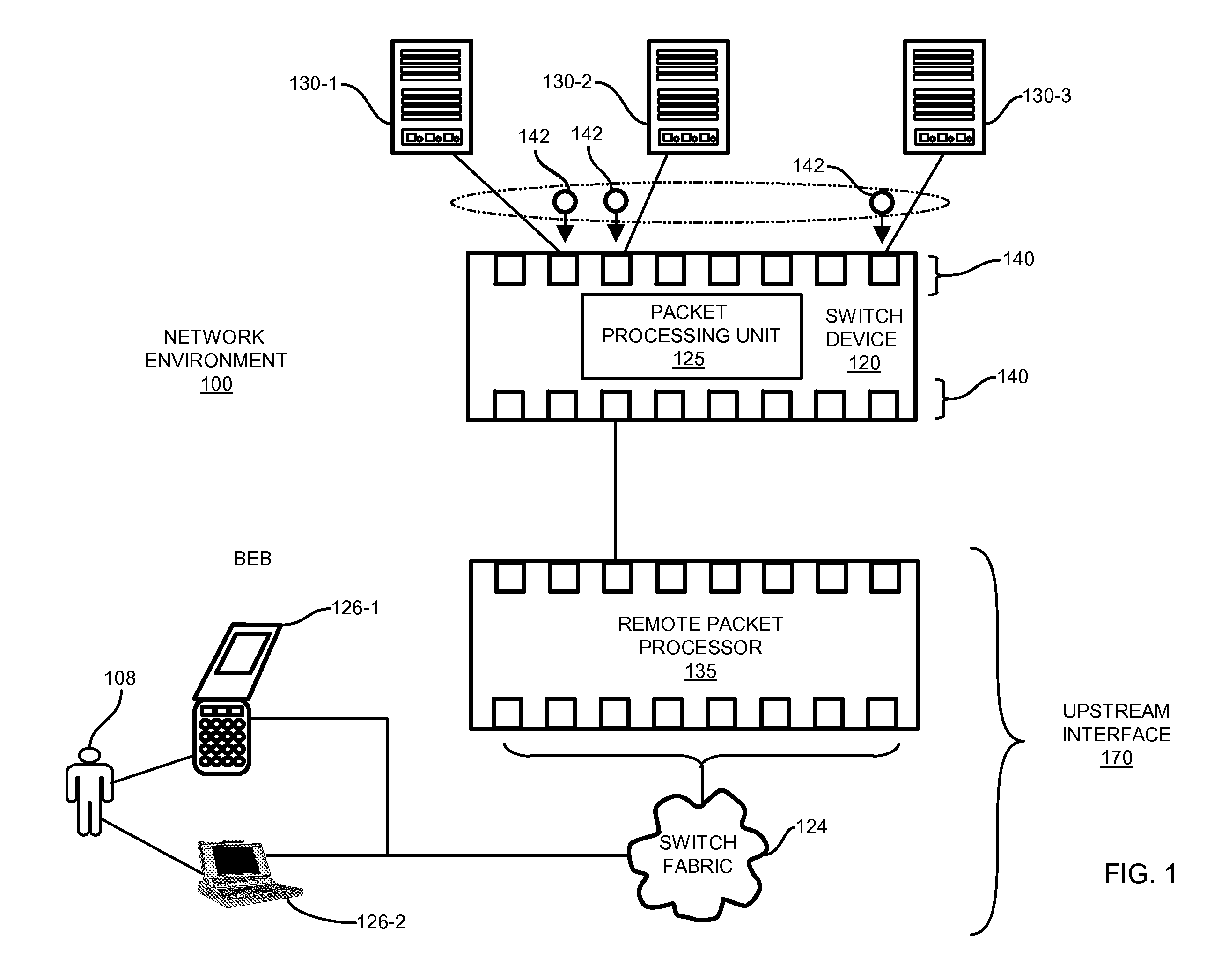

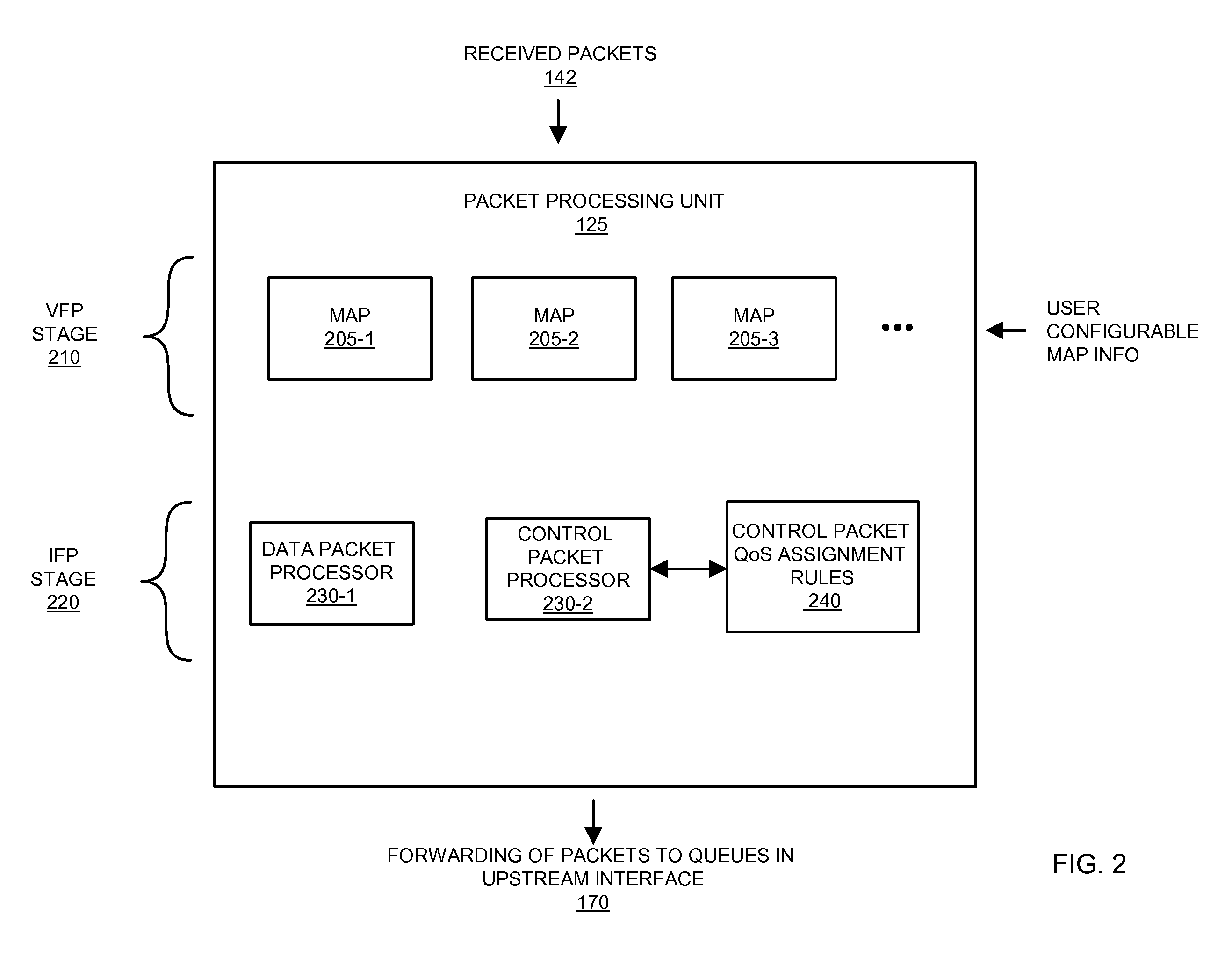

IMPLEMENTATION OF A QoS PROCESSING FILTER TO MANAGE UPSTREAM OVER-SUBSCRIPTION

Active | US20120218894A1 | Guaranteed bandwidth | Error prevention | Transmission systems | Embedded system | Packet processing

A switch device can be configured to operate in a manner that was not originally intended. For example, a switch device can be a Broadcom XGS type of device with a packet-processing unit that performs line-speed lookups according to a default configuration. The default configuration can include classifying received packets and forwarding them to an upstream interface based on VLAN information. This default configuration can be overwritten so that the switch device operates differently. For example, the switch device can be reconfigured with mapping rules that assign different QoS data to different types of received packets. After using these maps to identify QoS information for received packets, the reconfigured switch device uses that information to forward the packets to queues in an upstream interface.

Owner:EXTREME NETWORKS INC
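
The reconfigured behaviour can be modelled in plain Python: mapping rules assign a QoS class, and the class selects an upstream queue. This is an illustrative sketch only. It does not use or imitate any Broadcom SDK API. The rule set (ARP to class 6, DSCP EF to class 5), the eight-queue upstream interface, and strict-priority draining are all hypothetical choices.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Packet:
    vlan: int       # the default configuration would classify on this field alone
    ethertype: int
    dscp: int = 0


# Hypothetical reconfigured mapping rules: (match predicate, QoS class), first match wins
QOS_MAP = [
    (lambda p: p.ethertype == 0x0806, 6),                   # ARP control traffic
    (lambda p: p.ethertype == 0x0800 and p.dscp == 46, 5),  # IPv4 marked DSCP EF, e.g. voice
]
DEFAULT_QOS = 0  # everything else is best effort


def classify(pkt, rules=QOS_MAP, default=DEFAULT_QOS):
    """Assign a QoS class using the mapping rules instead of VLAN-only classification."""
    for match, qos in rules:
        if match(pkt):
            return qos
    return default


class UpstreamInterface:
    def __init__(self, num_queues=8):
        self.queues = [deque() for _ in range(num_queues)]

    def enqueue(self, pkt, qos):
        self.queues[qos].append(pkt)

    def dequeue(self):
        """Strict priority (assumption): the highest-numbered non-empty queue is served first."""
        for queue in reversed(self.queues):
            if queue:
                return queue.popleft()
        return None
```

When the upstream link is over-subscribed, separating traffic into per-class queues lets high-priority classes keep their share instead of competing in a single VLAN-based queue.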

© 2025 PatSnap. All rights reserved.