366 results about how to "Reduce access latency" in patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

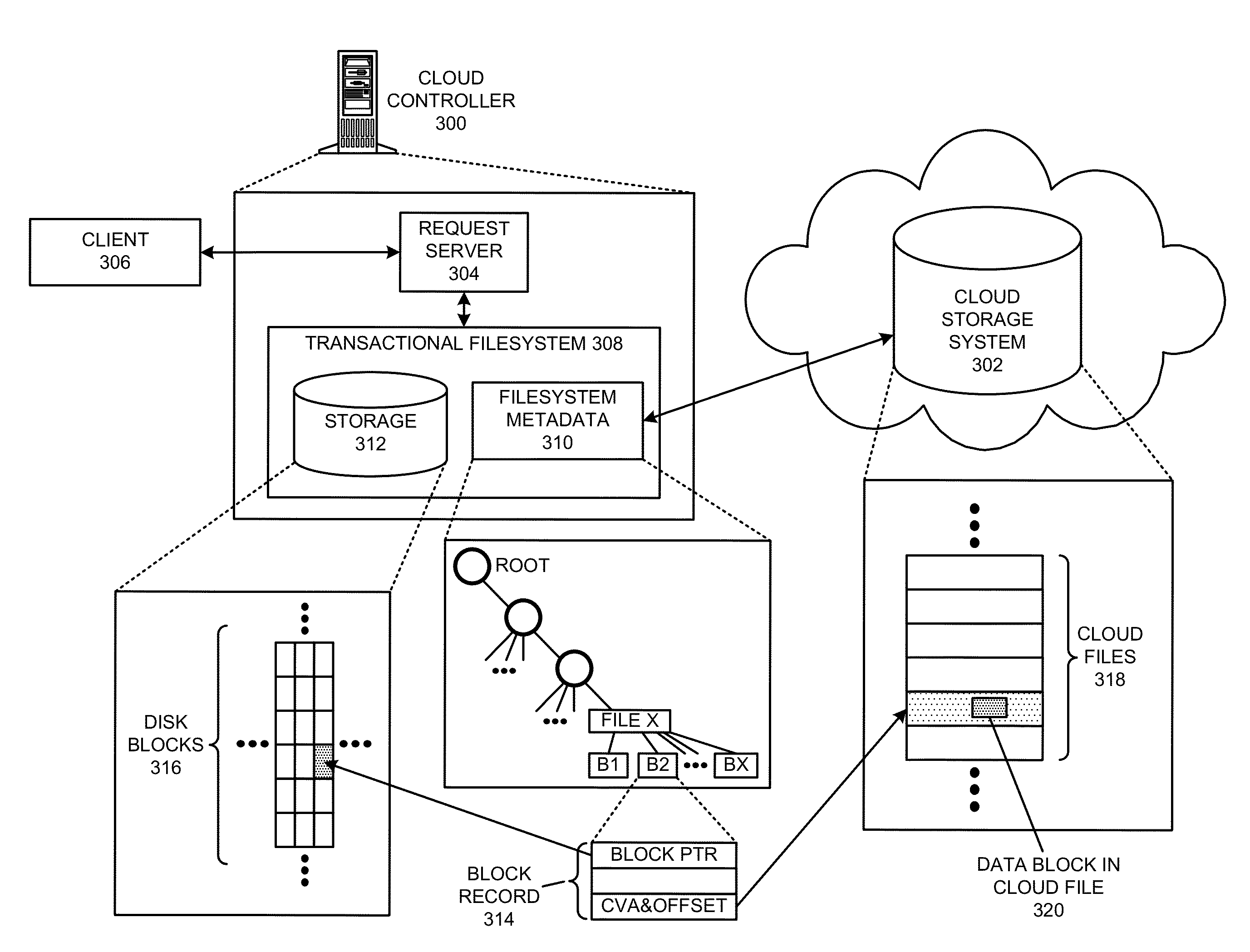

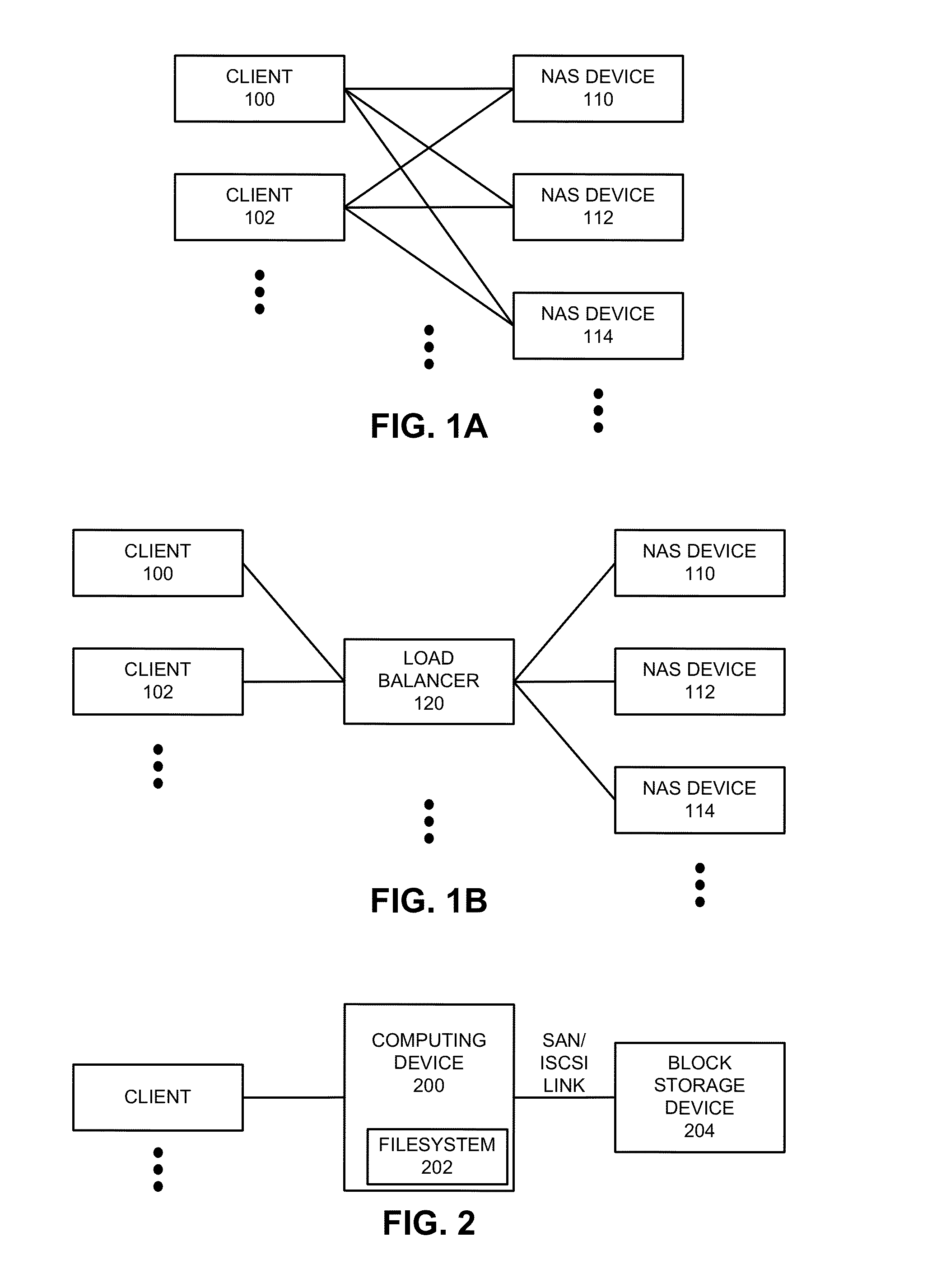

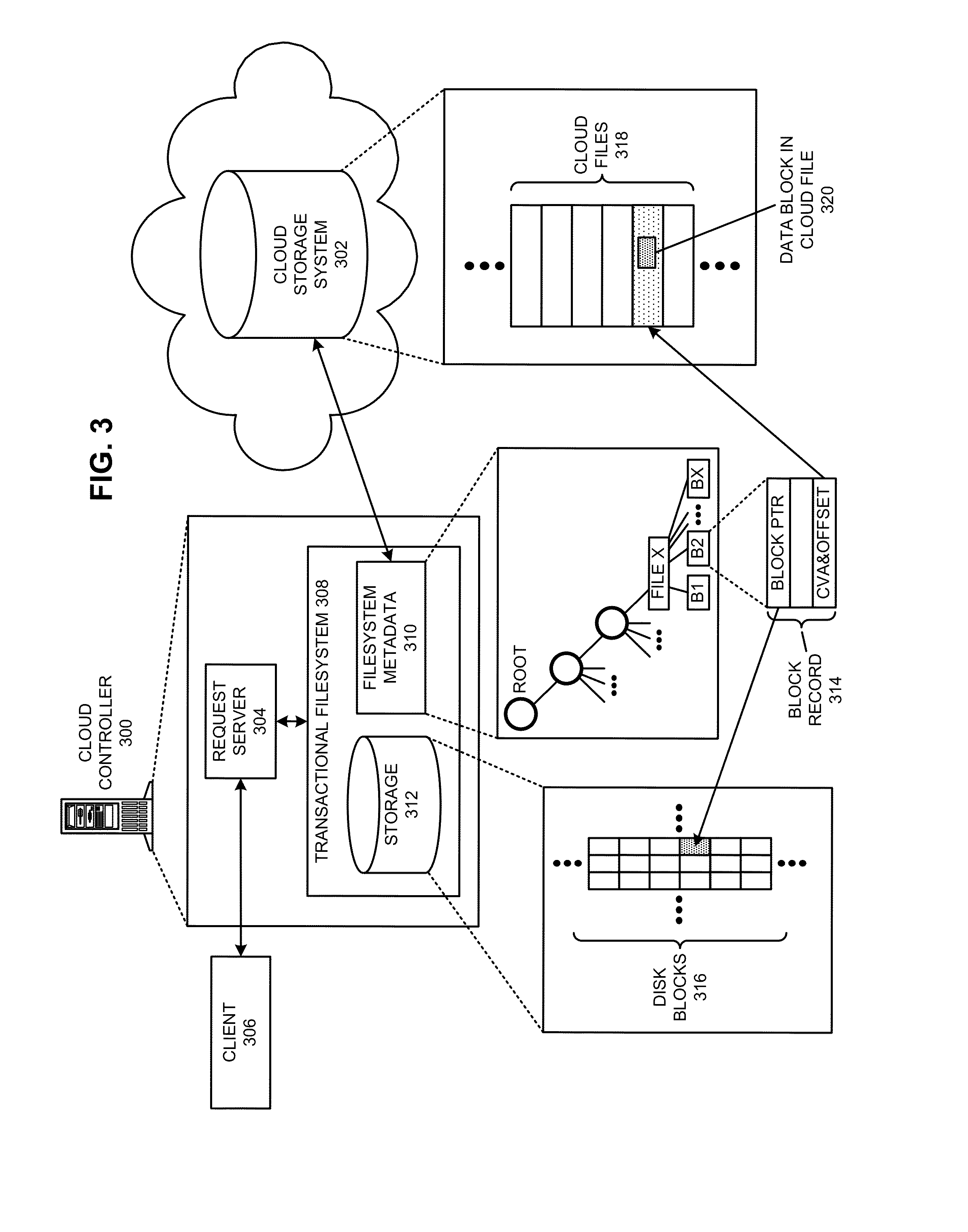

Avoiding client timeouts in a distributed filesystem

Active | US9852150B2 | Reduce access latency; Memory architecture accessing/allocation; Input/output to record carriers; File system; Cloud storage system

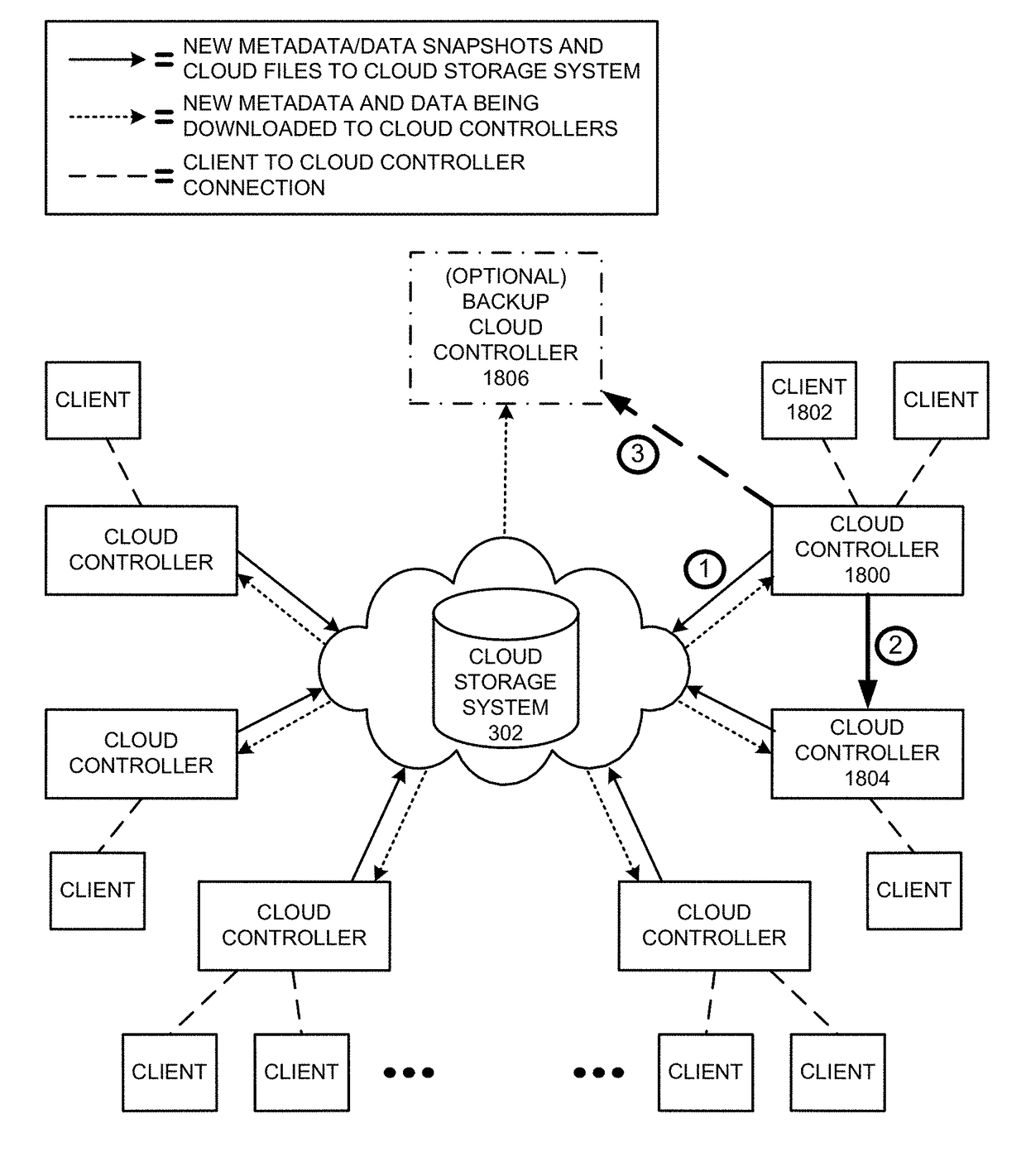

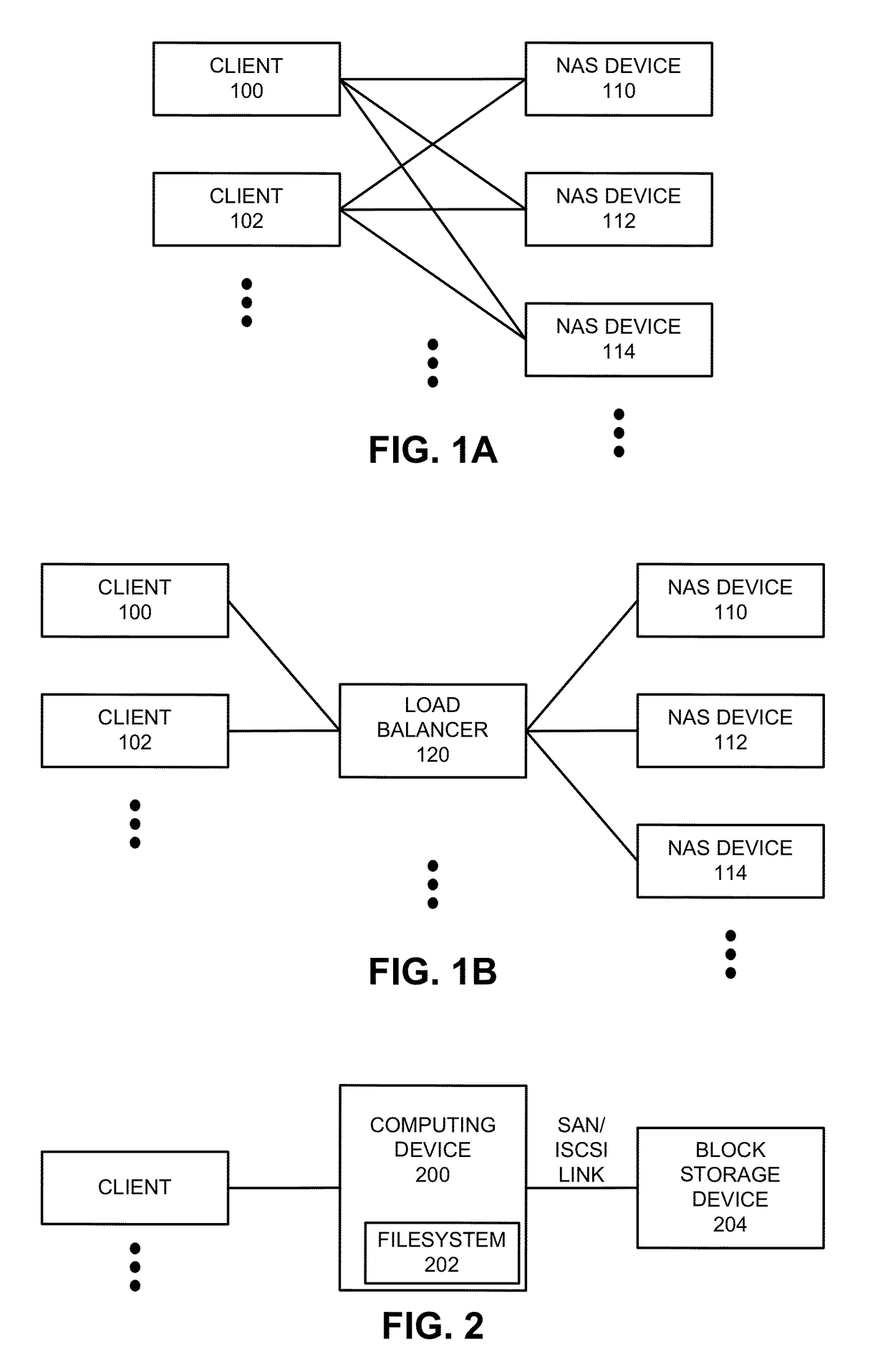

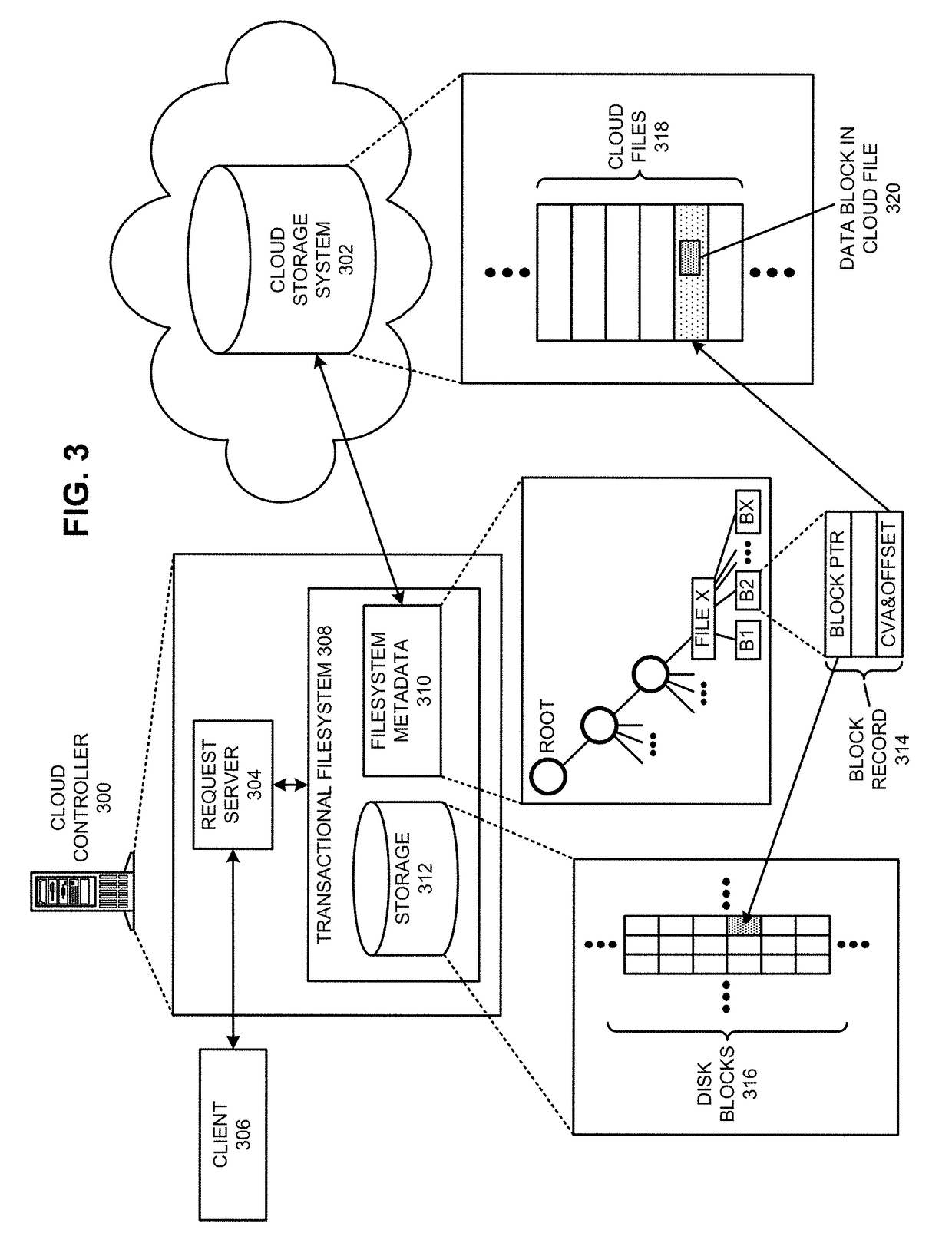

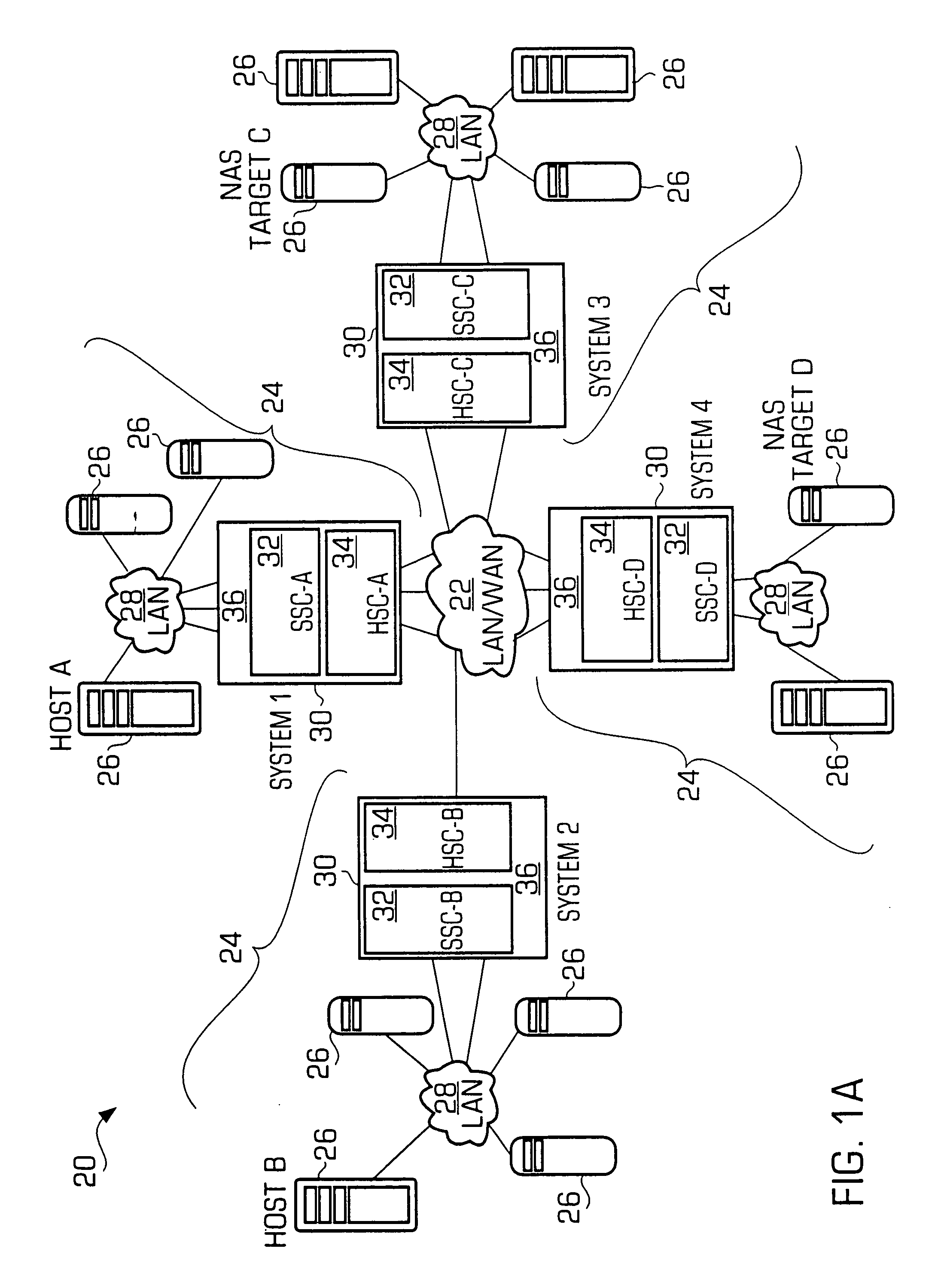

The disclosed embodiments describe techniques that facilitate avoiding client timeouts in a distributed filesystem. Multiple cloud controllers collectively manage distributed filesystem data that is stored in one or more cloud storage systems; the cloud controllers ensure data consistency for the stored data, and each cloud controller caches portions of the distributed filesystem in a local storage pool. During operation, a cloud controller receives from a client system a request for a data block in a target file that is stored in the distributed filesystem. Although the cloud controller is already caching the requested data block, it delays transmission of the cached data block; this additional delay gives the cloud controller more time to retrieve the target file's uncached data blocks from a cloud storage system, ensuring that subsequent requests for those data blocks do not exceed a timeout interval on the client system.

Owner: PANZURA LLC
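The delay trade-off in this abstract can be sketched numerically. This is a minimal sketch under hypothetical assumptions: the client issues block requests one at a time, transfer time for cached blocks is negligible, and the cloud fetch for the first uncached block starts when the first request arrives. `per_block_delay`, `wait_for_uncached`, and their parameters are illustrative names, not Panzura's implementation.

```python
def per_block_delay(fetch_time_s: float, client_timeout_s: float,
                    cached_blocks_ahead: int, margin_s: float = 0.0) -> float:
    """Hypothetical pacing rule: delay added to each cached block so that the
    client's wait for the first uncached block stays within its timeout."""
    # How long the client would wait beyond its (margin-adjusted) timeout
    # if every cached block were returned immediately.
    excess = fetch_time_s - (client_timeout_s - margin_s)
    if excess <= 0:
        return 0.0  # the cloud fetch finishes in time; no pacing needed
    if cached_blocks_ahead <= 0:
        raise ValueError("no cached blocks available to absorb the fetch latency")
    # Spread the excess evenly over the cached blocks served before the gap.
    return excess / cached_blocks_ahead


def wait_for_uncached(fetch_time_s: float, cached_blocks_ahead: int,
                      delay_s: float) -> float:
    """Time the client waits on the uncached block after requesting it
    (assumes serial requests and a fetch that started at time zero)."""
    return max(0.0, fetch_time_s - cached_blocks_ahead * delay_s)
```

For example, with a 30 s cloud fetch, a 20 s client timeout, and five cached blocks ahead of the gap, `per_block_delay(30.0, 20.0, 5)` holds each cached block for 2 s, and the client then waits 20 s on the uncached block instead of 30 s.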

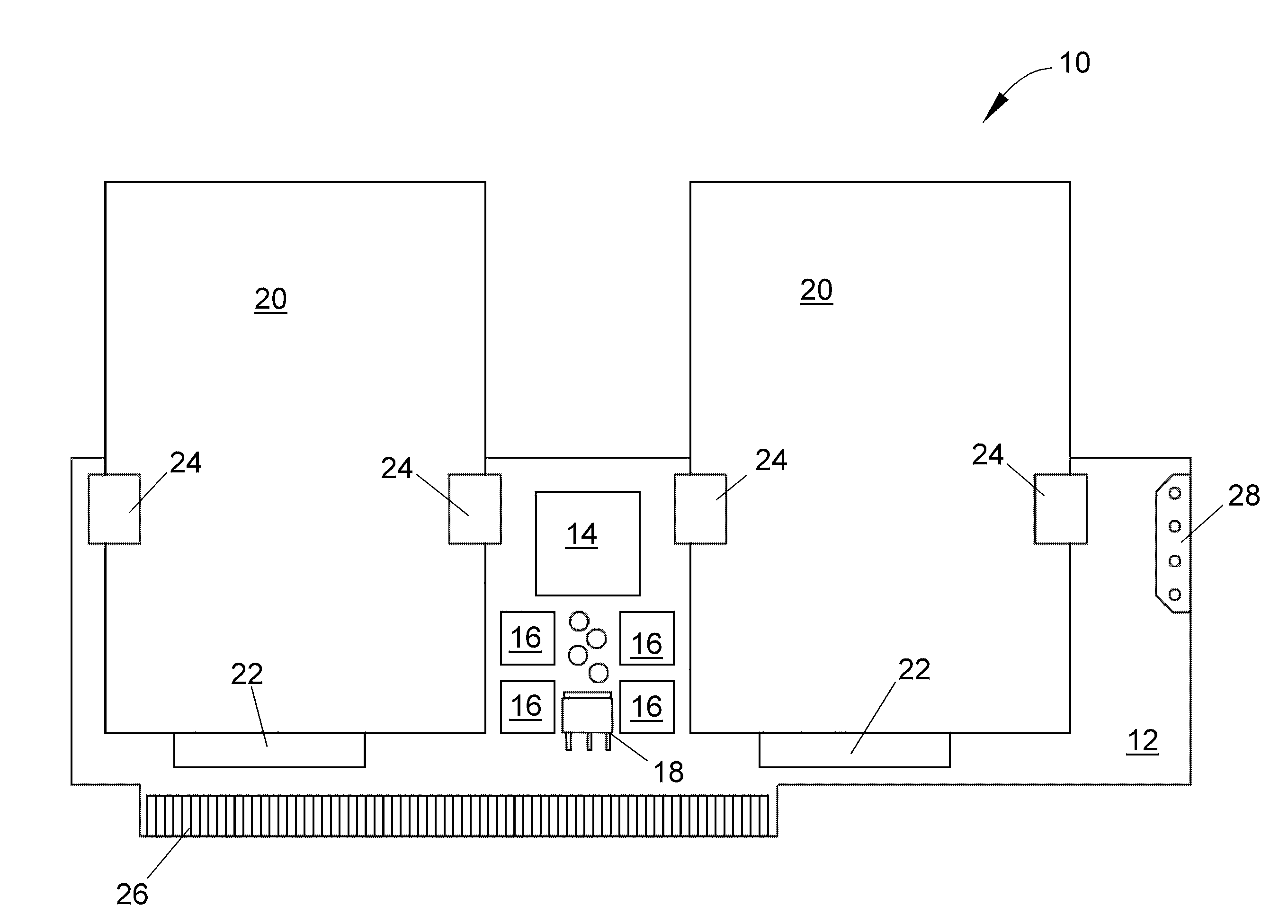

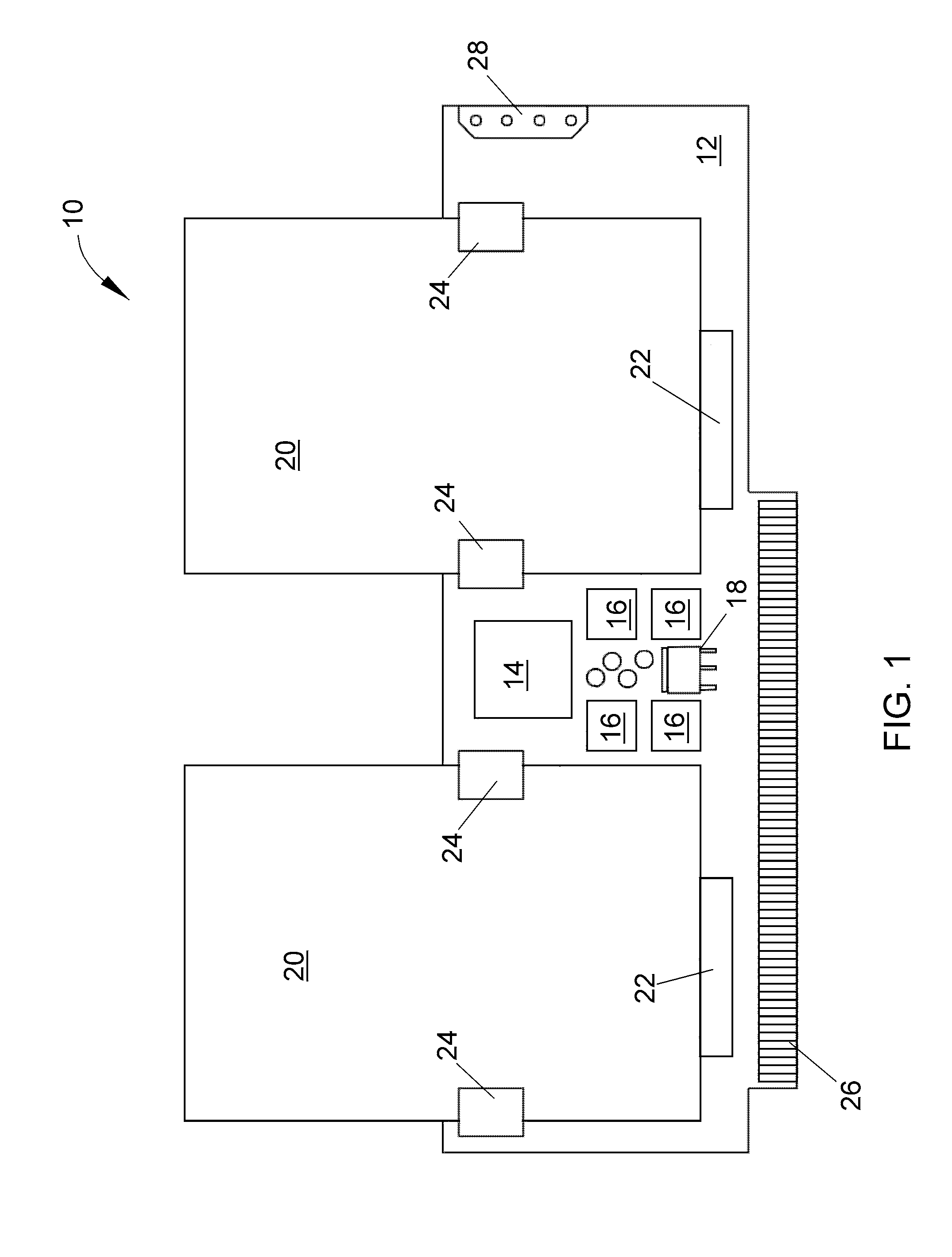

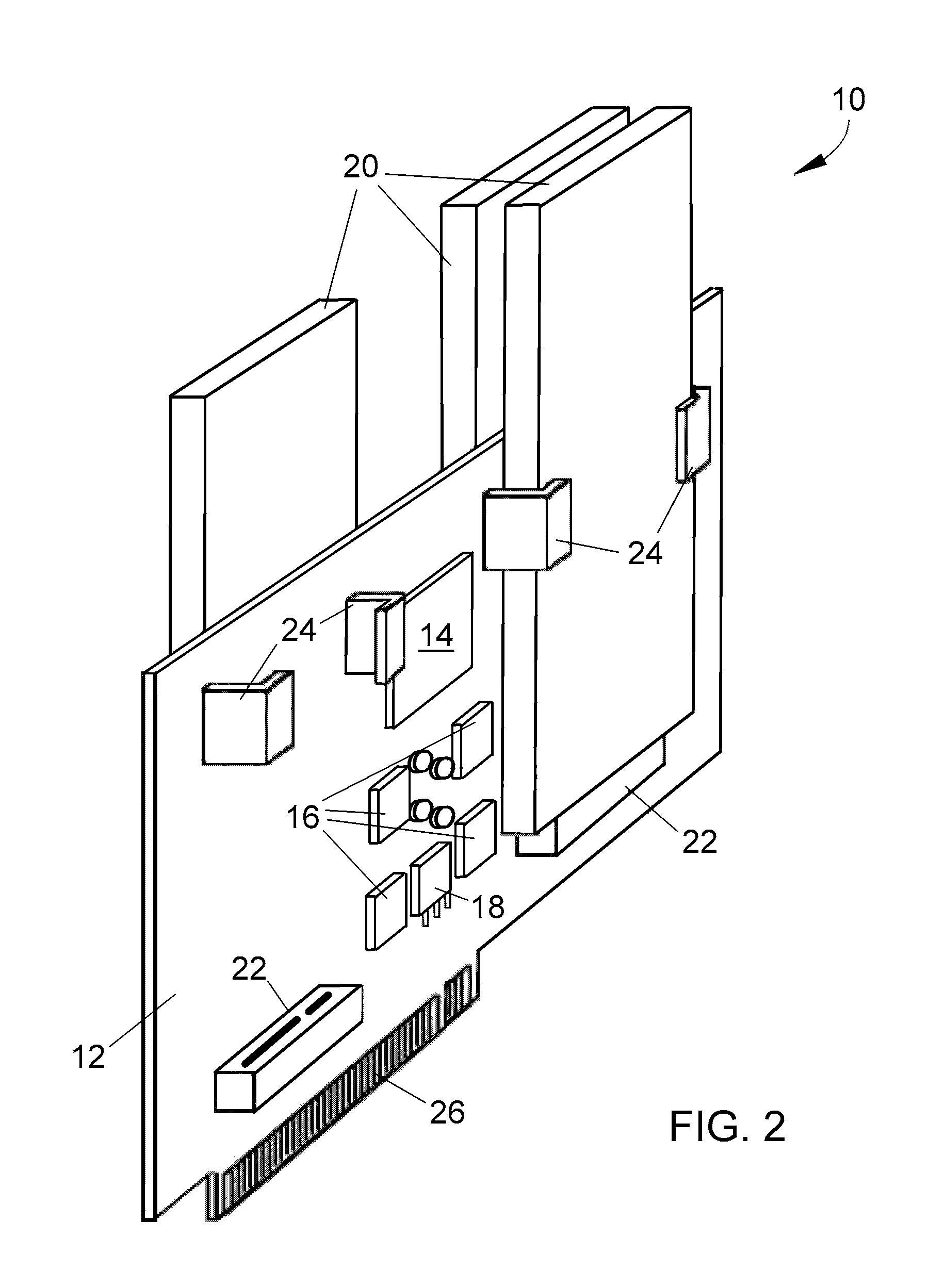

Mass storage system and method using hard disk and solid-state media

Active | US20110320690A1 | Minimal degradation; Reduce access latency; Memory architecture accessing/allocation; Error detection/correction; Printed circuit board; Solid state memory

Methods and systems for mass storage of data over two or more tiers of mass storage media that include nonvolatile solid-state memory devices, hard disk devices, and optionally volatile memory devices or nonvolatile MRAM in an SDRAM configuration. The mass storage media interface with a host through one or more PCIe lanes on a single printed circuit board.

Owner: KIOXIA CORP

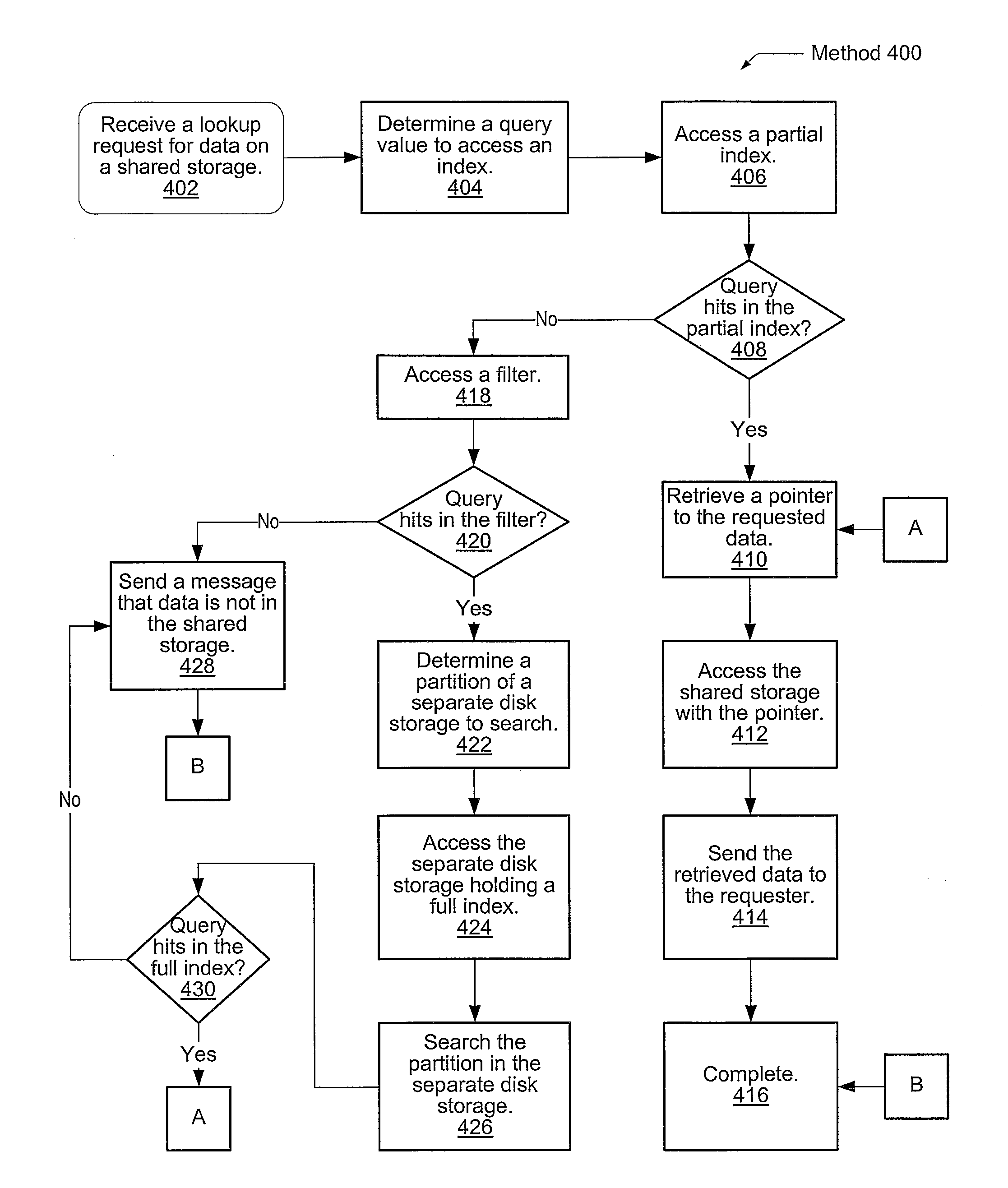

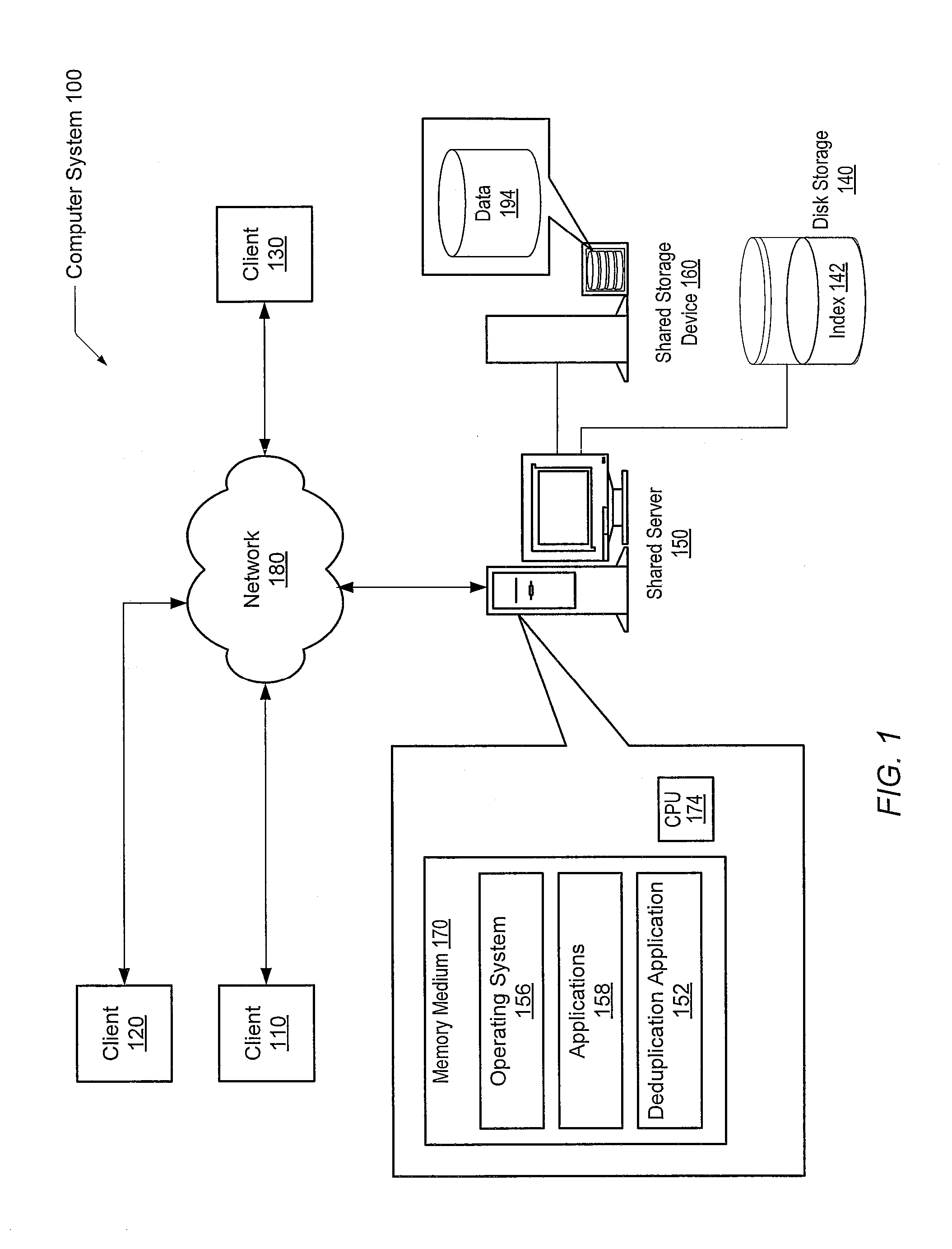

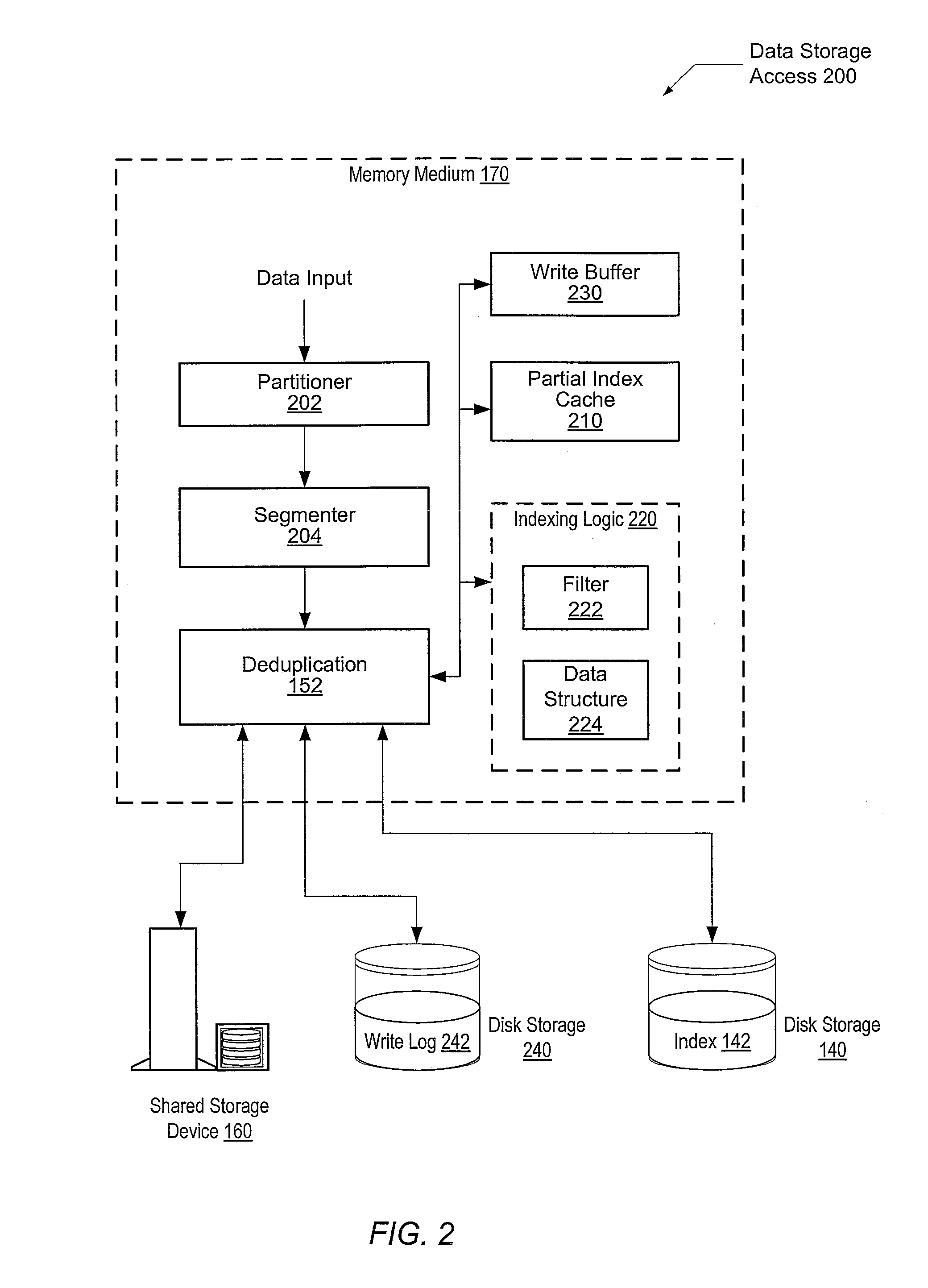

System and method for high performance deduplication indexing

Active | US8370315B1 | Reduce access latency; Prevent duplicate data; Digital data information retrieval; Digital data processing details; Fingerprint; Solid-state

A system and method for efficiently reducing the latency of accessing an index for a data segment stored on a server. A file server both removes duplicate data and prevents duplicate data from being stored in a shared data storage. The file server is coupled to an index storage subsystem holding fingerprint and pointer value pairs, each corresponding to a data segment stored in the shared data storage. The pairs are stored in a predetermined order. The file server uses an ordered binary search tree to identify, among multiple blocks within the index storage subsystem, the particular block corresponding to a received memory access request. The index storage subsystem determines whether an entry corresponding to the memory access request is located within the identified block. Based on at least this determination, the file server processes the memory access request accordingly. In one embodiment, the index storage subsystem is a solid-state disk (SSD).

Owner: VERITAS TECH
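The two-level lookup (first locate the block, then search inside it) can be sketched in Python. This sketch makes several assumptions: fingerprints are comparable integers with no duplicates, the index fits in memory, and a sorted list of each block's first fingerprint, searched with `bisect`, stands in for the patent's ordered binary search tree. `BlockedIndex` and `block_size` are hypothetical names.

```python
from bisect import bisect_left, bisect_right
from typing import Iterable, List, Optional, Tuple


class BlockedIndex:
    """Hypothetical in-memory stand-in for a blocked fingerprint index."""

    def __init__(self, pairs: Iterable[Tuple[int, str]], block_size: int = 4) -> None:
        if block_size < 1:
            raise ValueError("block_size must be positive")
        items = sorted(pairs)  # the "predetermined order": ascending fingerprint
        self.blocks: List[List[Tuple[int, str]]] = [
            items[i:i + block_size] for i in range(0, len(items), block_size)
        ]
        # Per-block fingerprint lists, used for the in-block search.
        self.block_keys = [[fp for fp, _ in block] for block in self.blocks]
        # First fingerprint of each block, used to pick the candidate block
        # (a sorted-array substitute for the ordered binary search tree).
        self.first_keys = [keys[0] for keys in self.block_keys]

    def lookup(self, fingerprint: int) -> Optional[str]:
        # Step 1: find the last block whose first fingerprint <= the query.
        b = bisect_right(self.first_keys, fingerprint) - 1
        if b < 0:
            return None  # smaller than every stored fingerprint
        # Step 2: binary-search inside that block for an exact match.
        keys = self.block_keys[b]
        j = bisect_left(keys, fingerprint)
        if j < len(keys) and keys[j] == fingerprint:
            return self.blocks[b][j][1]  # pointer to the stored segment
        return None  # not present: the segment is new, not a duplicate
```

Only one block is searched per lookup, which mirrors the abstract's point that identifying the block first narrows the read to a single region of the index device.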

Avoiding client timeouts in a distributed filesystem

Active | US20130339407A1 | Reduce access latency; Memory architecture accessing/allocation; Digital data information retrieval; Distributed File System; File system

The disclosed embodiments describe techniques that facilitate avoiding client timeouts in a distributed filesystem. Multiple cloud controllers collectively manage distributed filesystem data that is stored in one or more cloud storage systems; the cloud controllers ensure data consistency for the stored data, and each cloud controller caches portions of the distributed filesystem in a local storage pool. During operation, a cloud controller receives from a client system a request for a data block in a target file that is stored in the distributed filesystem. Although the cloud controller is already caching the requested data block, it delays transmission of the cached data block; this additional delay gives the cloud controller more time to retrieve the target file's uncached data blocks from a cloud storage system, ensuring that subsequent requests for those data blocks do not exceed a timeout interval on the client system.

Owner: PANZURA LLC

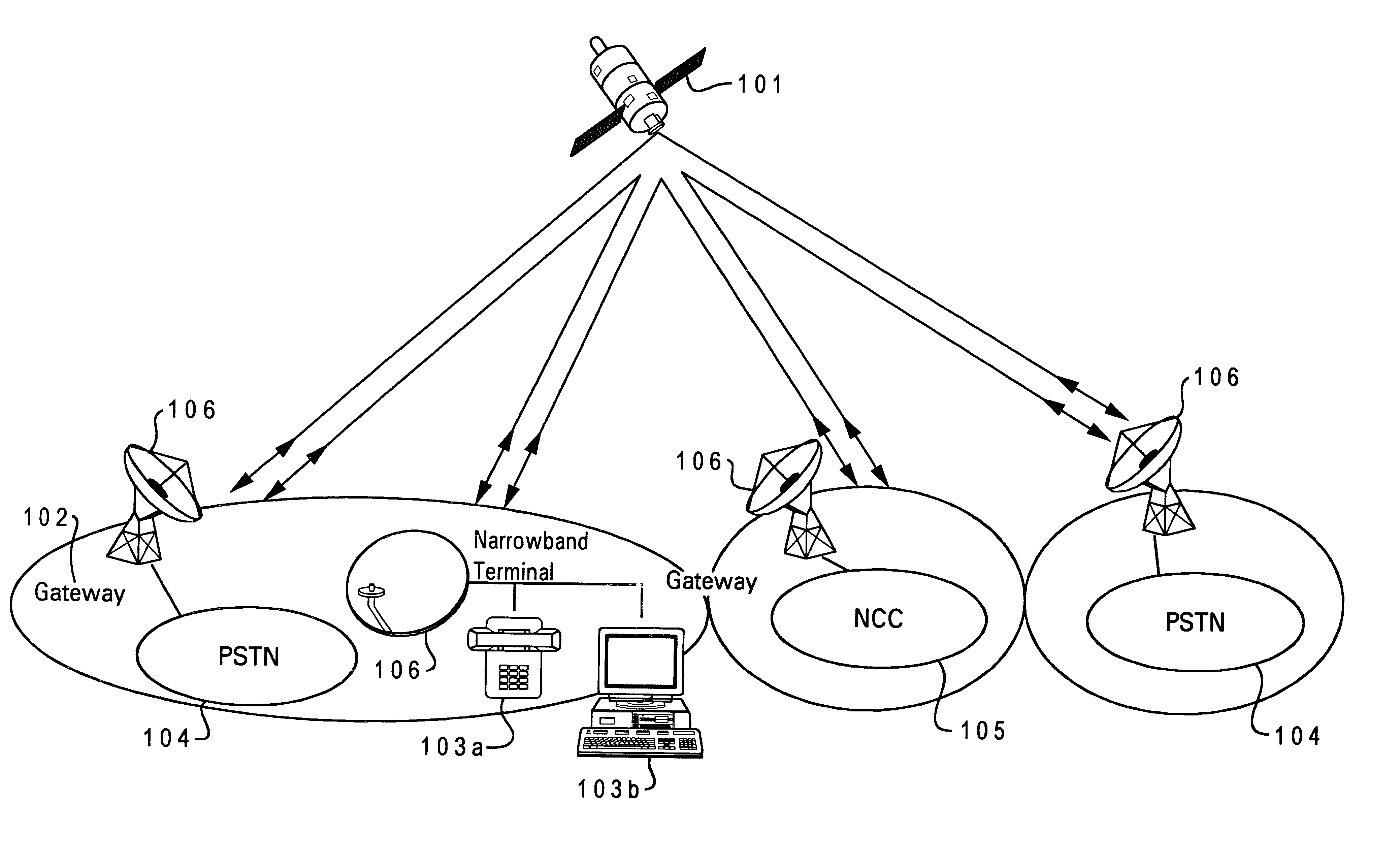

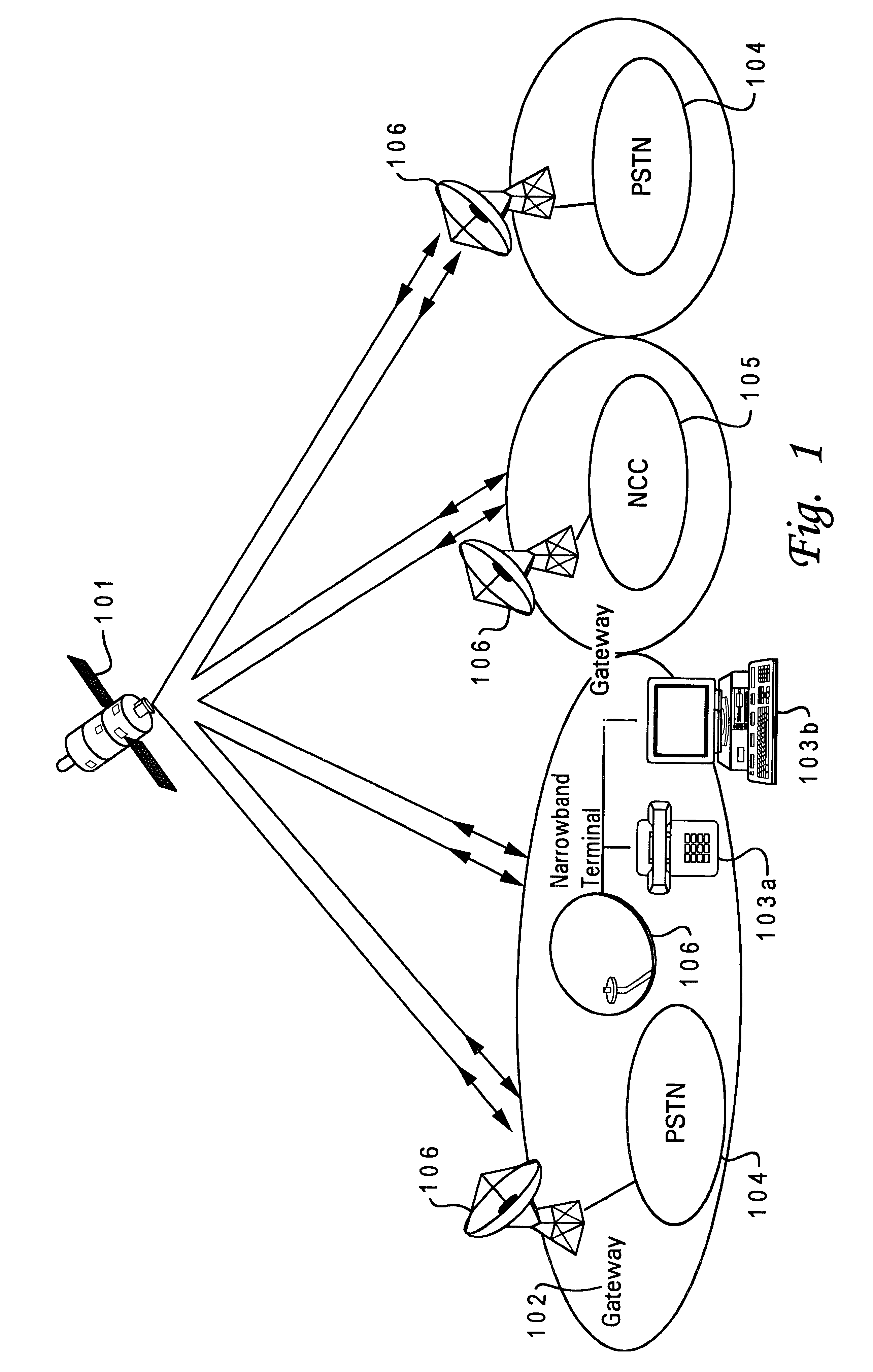

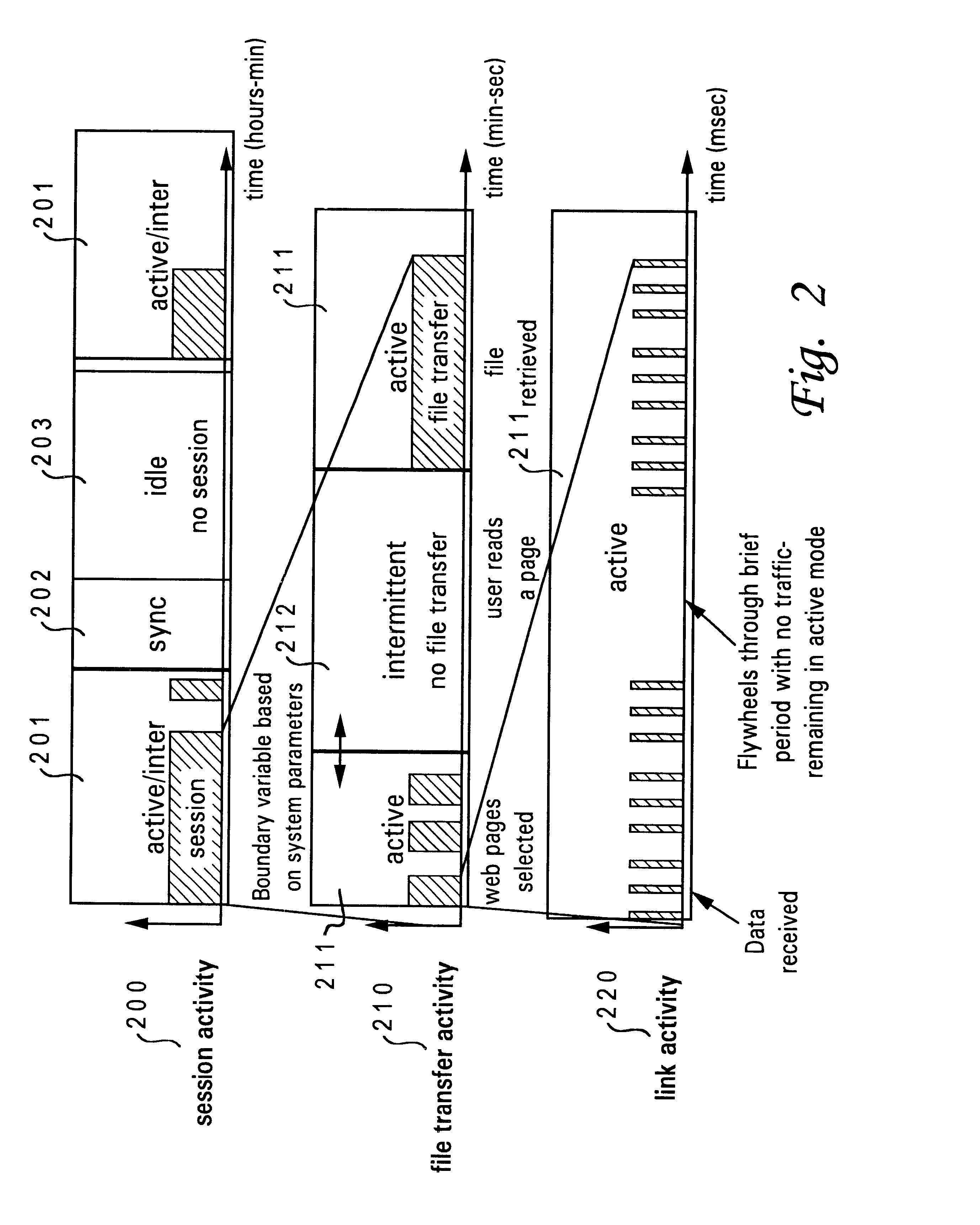

Activity based resource assignment medium access control protocol

Inactive | US6487183B1 | Improve throughput efficiency; Reduce access latency; Time-division multiplex; Radio/inductive link selection arrangements; Communications system; Resource utilization

A method for implementing an activity-based resource assignment medium access control (MAC) protocol to allocate resources to subscribers in an interactive, multimedia, broadband satellite communications system. A plurality of channels is provided, with at least one of the channels assigned to each of a plurality of terminals. The terminals' utilization of the channel resources is monitored, and system needs are evaluated based on the terminals' levels of activity and resource utilization. The terminals communicate requests for system resources to a resource controller, which calculates the system needs. The resource controller adjusts the channel resources based on the terminals' levels of activity and resource utilization. The adjustment gives active terminals as many resources as they require for the duration of their activity, subject to resource availability.

Owner: MICROSOFT TECH LICENSING LLC

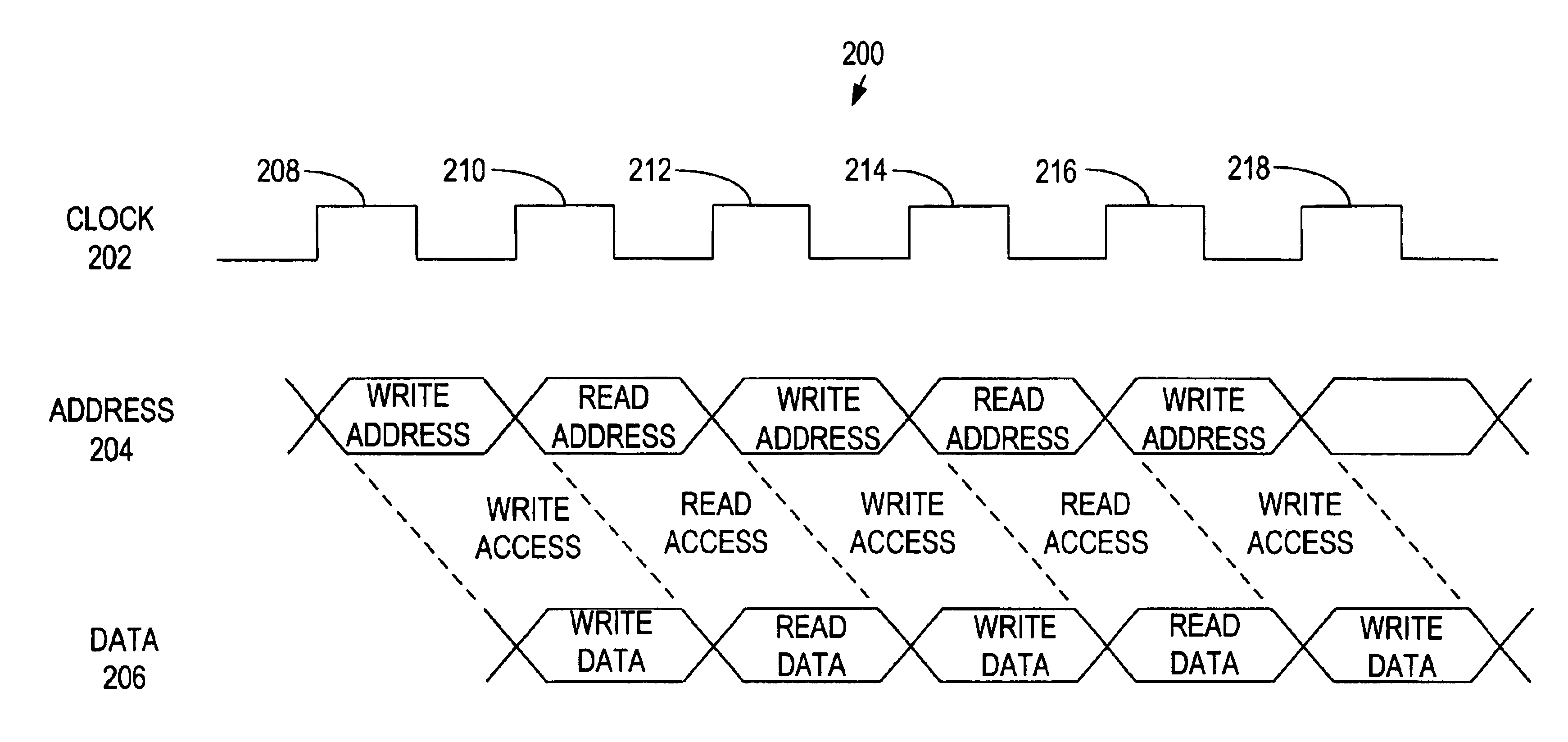

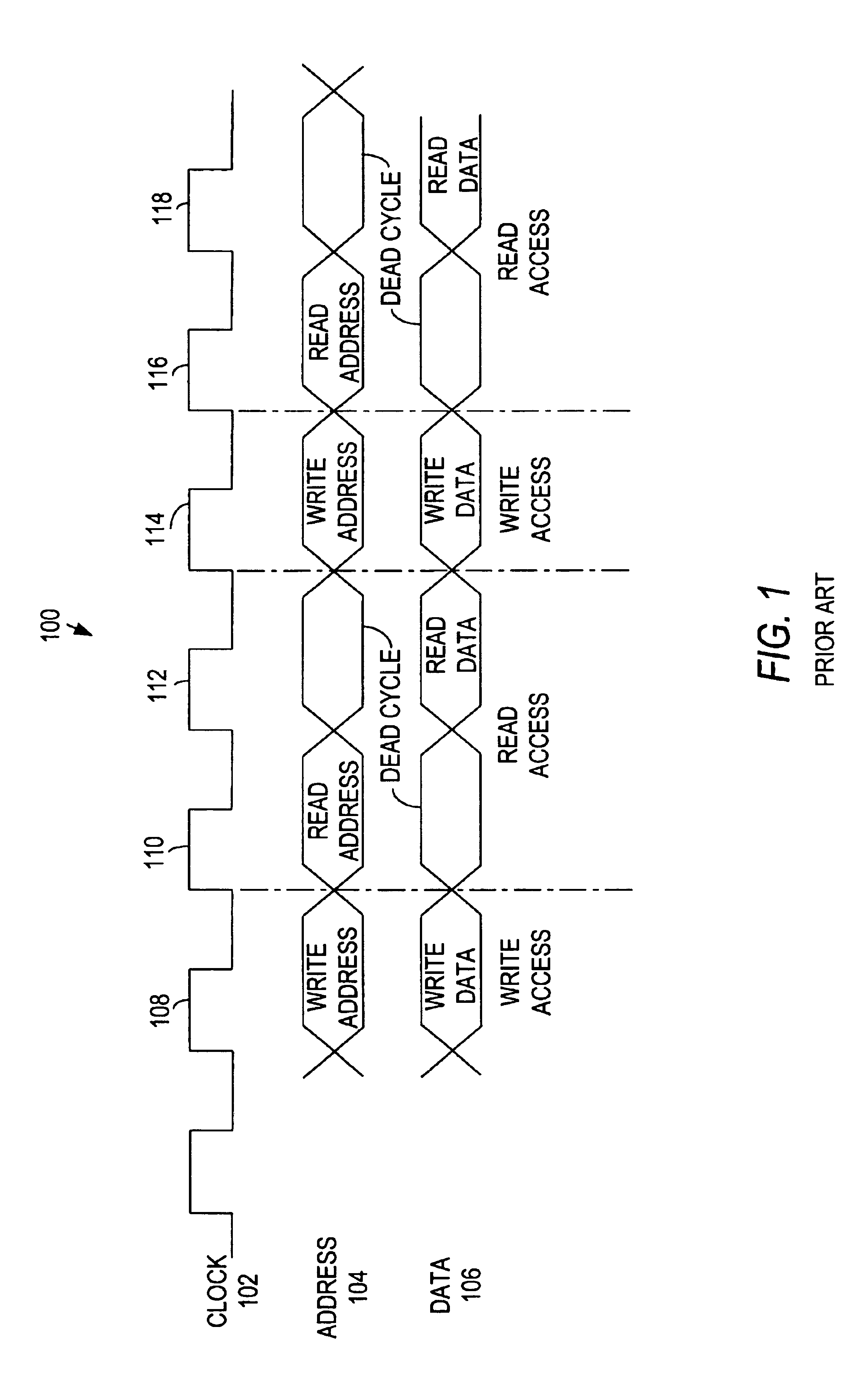

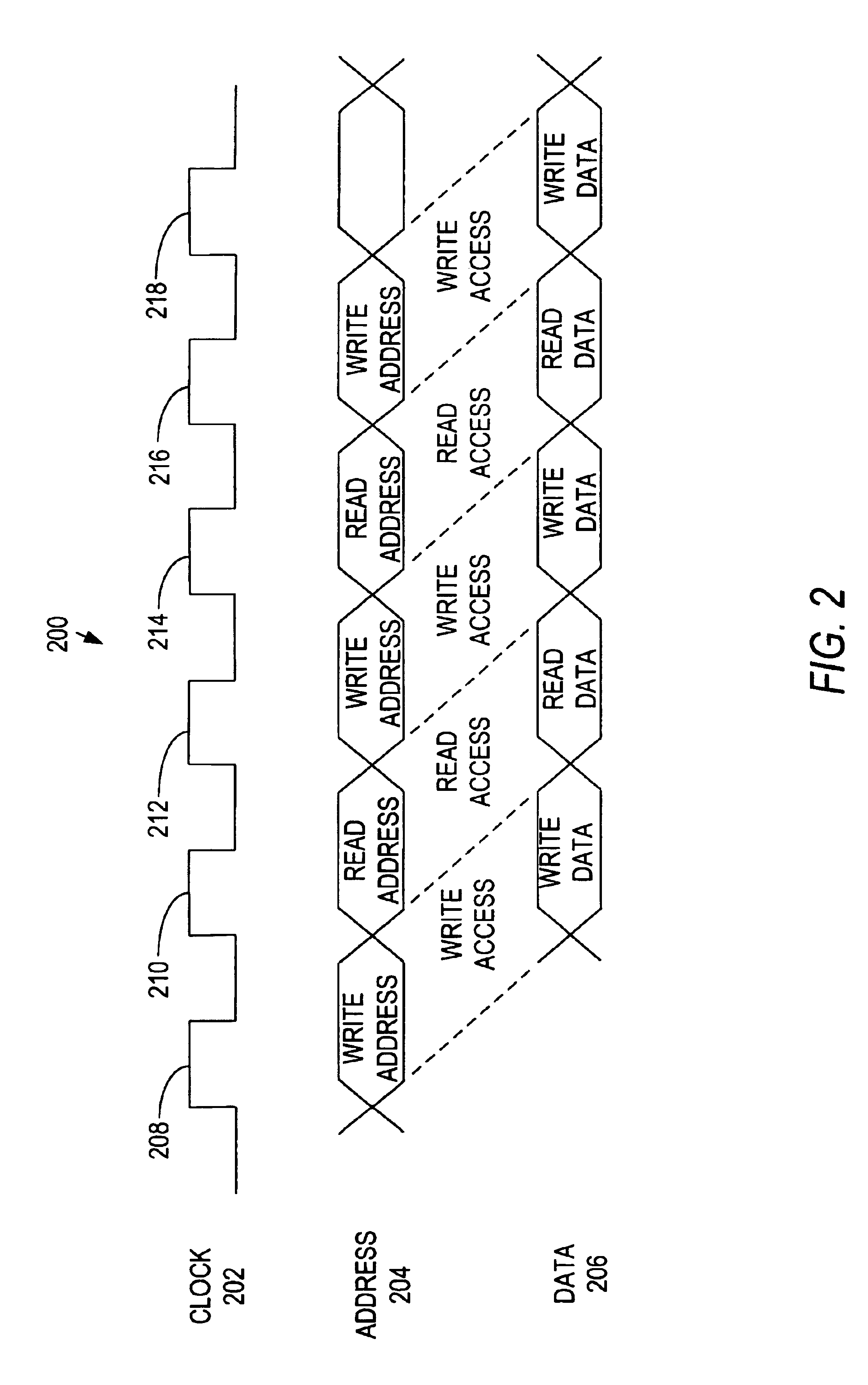

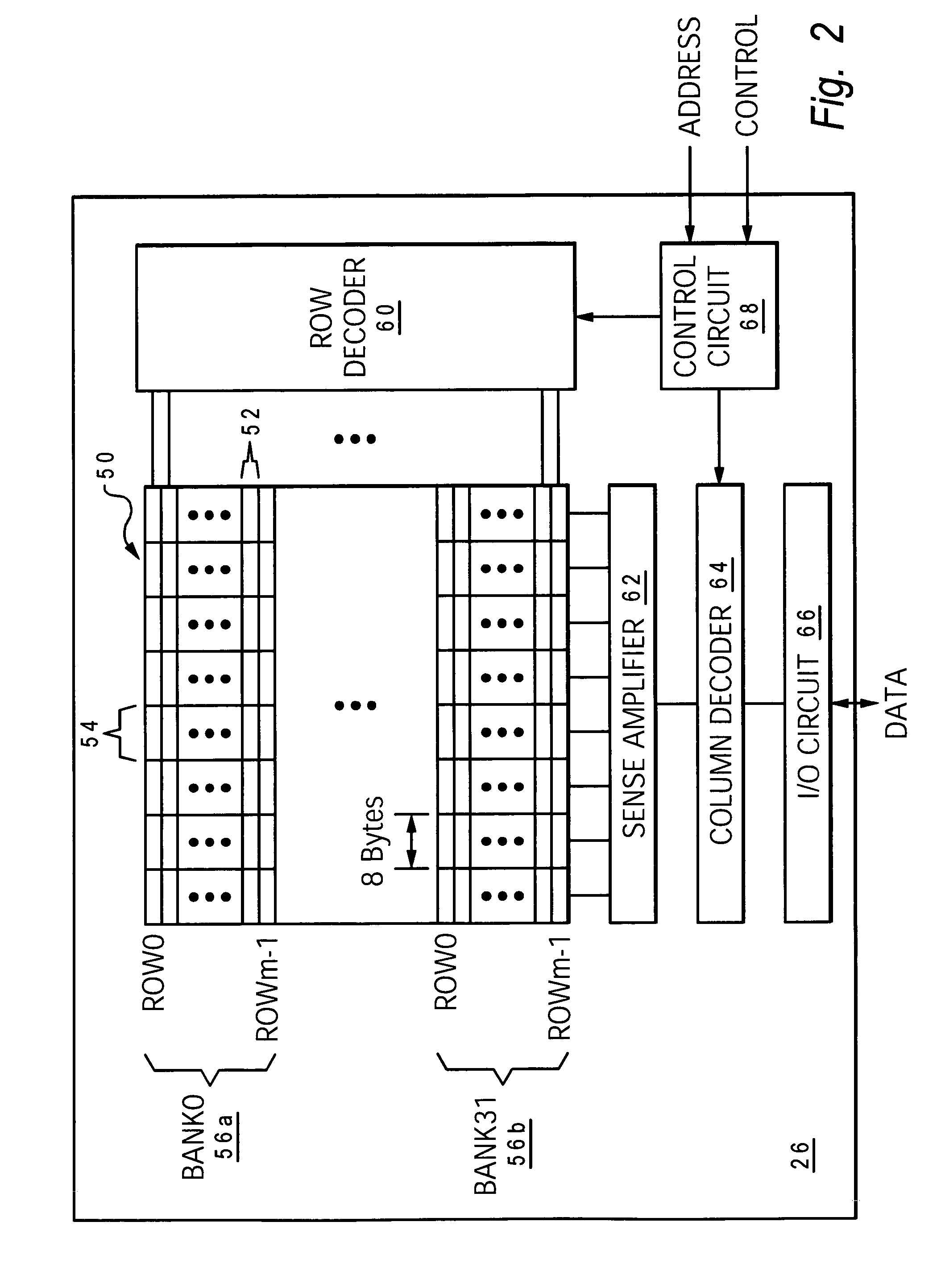

Multi-bank memory accesses using posted writes

Inactive | US6938142B2 | Reduce read-write access delay; Reduce read-write access delay and write-read access delay; Memory addressing/allocation/relocation; Digital storage; Operating system; Posted write

Systems and methods for reducing delays between successive write and read accesses in multi-bank memory devices are provided. Computer circuits modify the relative timing between addresses and data of write accesses, reducing delays between successive write and read accesses. Memory devices that interface with these computer circuits use posted write accesses to effectively return the modified relative timing to its original timing before processing the write access.

Owner: ROUND ROCK RES LLC

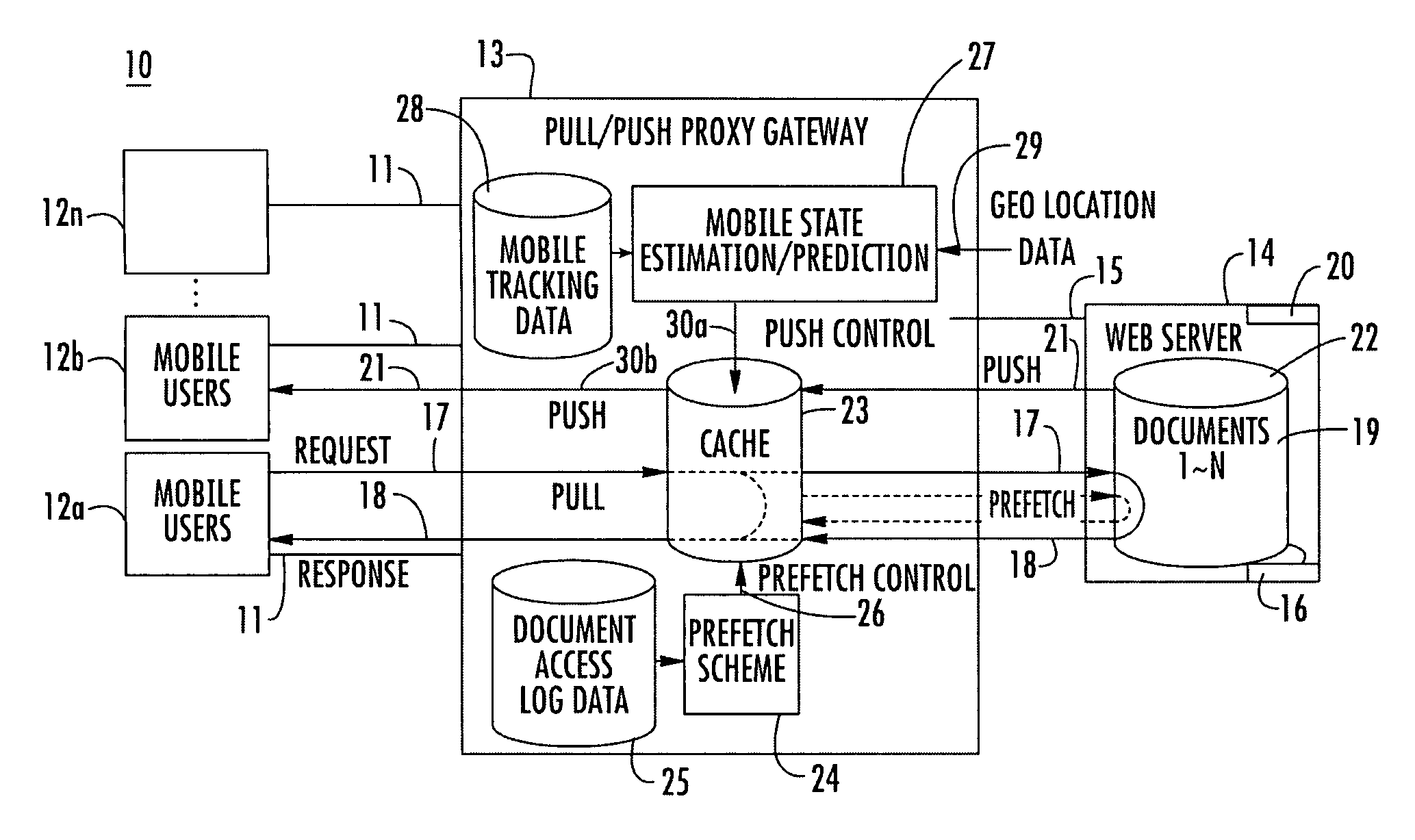

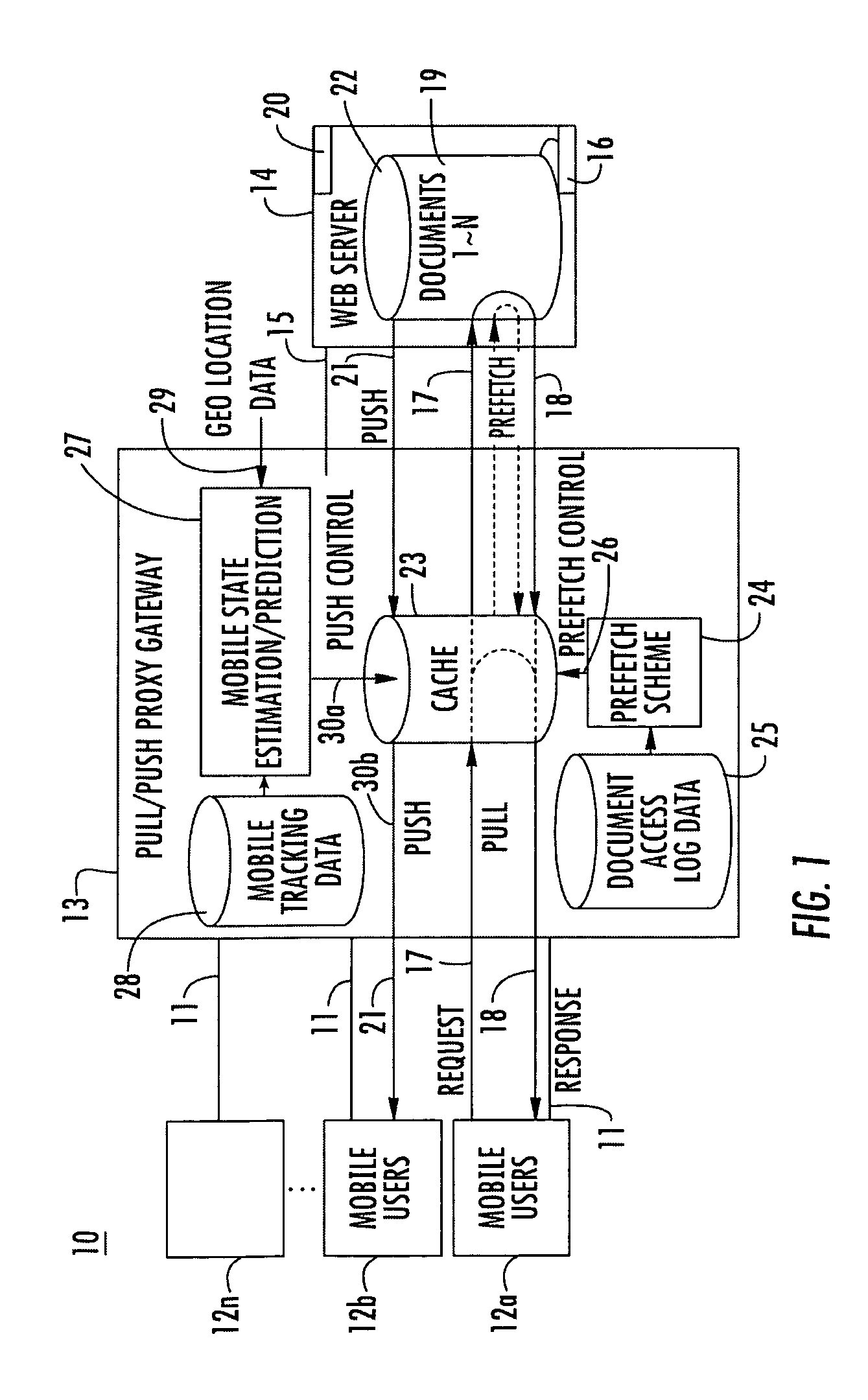

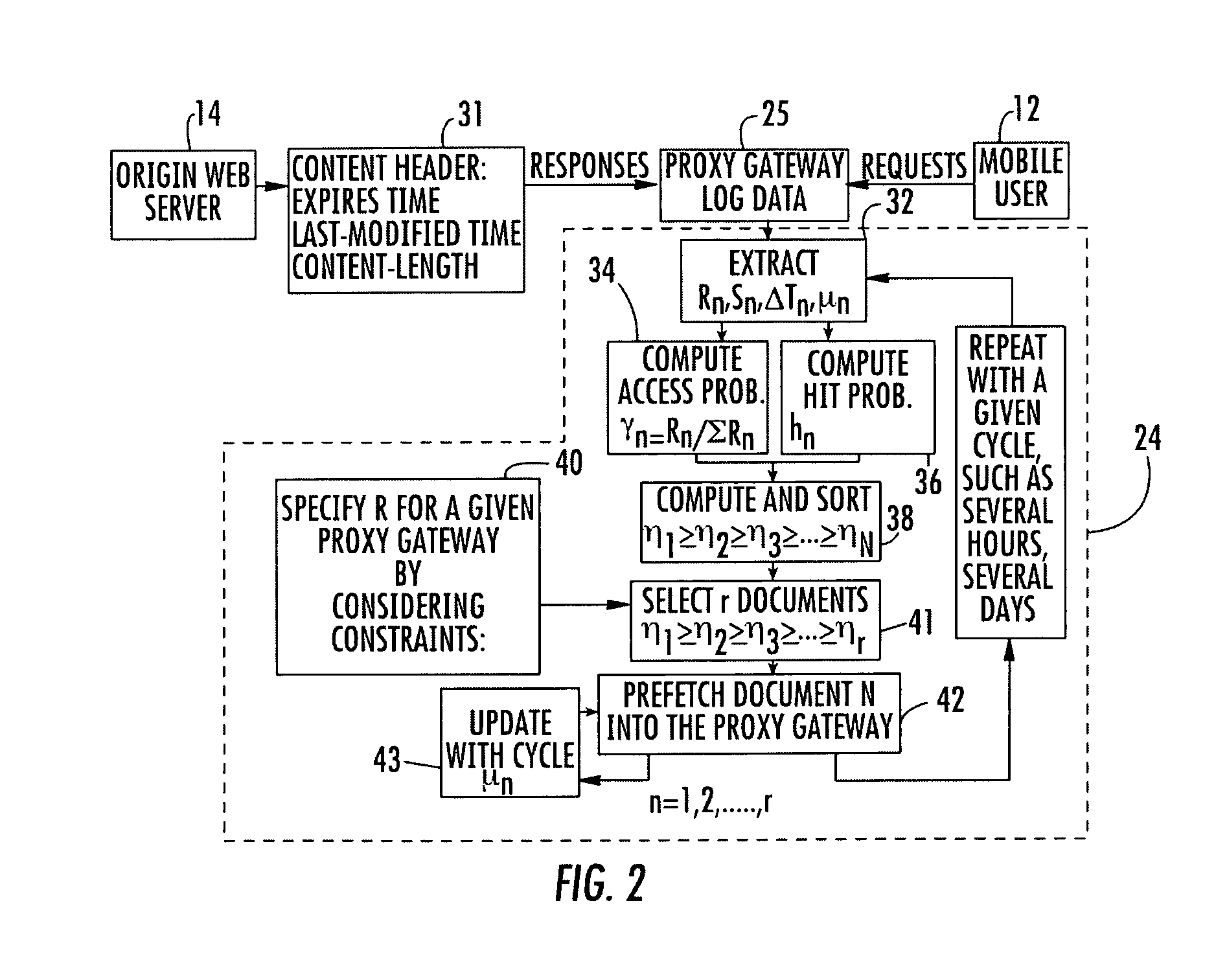

System for wireless push and pull based services

Inactive | US7058691B1 | Improve performance; Reduce access latency; Multiple digital computer combinations; Program control; Push and pull; Response delay

The present invention relates to a method and system for providing Web content from pull-based and push-based services running on Web content providers to mobile users. A proxy gateway connects the mobile users to the Web content providers. A prefetching module at the proxy gateway optimizes the performance of the pull services by reducing average access latency. The average access latency can be reduced by using at least three factors: first, the frequency of access to the pull content; second, the update cycle of the pull content as determined by the Web content providers; and third, the response delay for fetching pull content from the content provider to the proxy gateway. Pull content, such as documents, is sorted by average access latency, and a predetermined number of the documents with the greatest average access latency are prefetched into the cache. Push services are optimized by iteratively estimating the state of each mobile user to determine the relevant push content to forward to that user.

Owner: THE TRUSTEES FOR PRINCETON UNIV
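One way to combine the three factors into a ranking is sketched below. The cost model is an assumption of this sketch, not the patent's formula. If the proxy caches on demand and a cached copy stays valid until the provider's next update, a document misses the cache at a rate of min(access rate, 1 / update cycle), and each miss costs one response delay. The `PullDoc` fields and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PullDoc:
    url: str
    access_rate: float     # client requests per second (assumed measurable at the proxy)
    update_cycle: float    # seconds between provider updates
    response_delay: float  # seconds to fetch from the provider to the proxy


def latency_cost(doc: PullDoc) -> float:
    """Assumed model: expected fetch delay per second if the doc is NOT prefetched."""
    # With on-demand caching there is at most one miss per update cycle,
    # and never more misses than there are requests.
    misses_per_second = min(doc.access_rate, 1.0 / doc.update_cycle)
    return misses_per_second * doc.response_delay


def choose_prefetch(docs: List[PullDoc], n: int) -> List[str]:
    """Sort by latency cost (highest first) and keep the top n URLs."""
    ranked = sorted(docs, key=latency_cost, reverse=True)
    return [doc.url for doc in ranked[:n]]
```

Under this model, a very popular page that changes only hourly can rank below a moderately popular page that changes every second, because the hourly page misses the cache only once per update.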

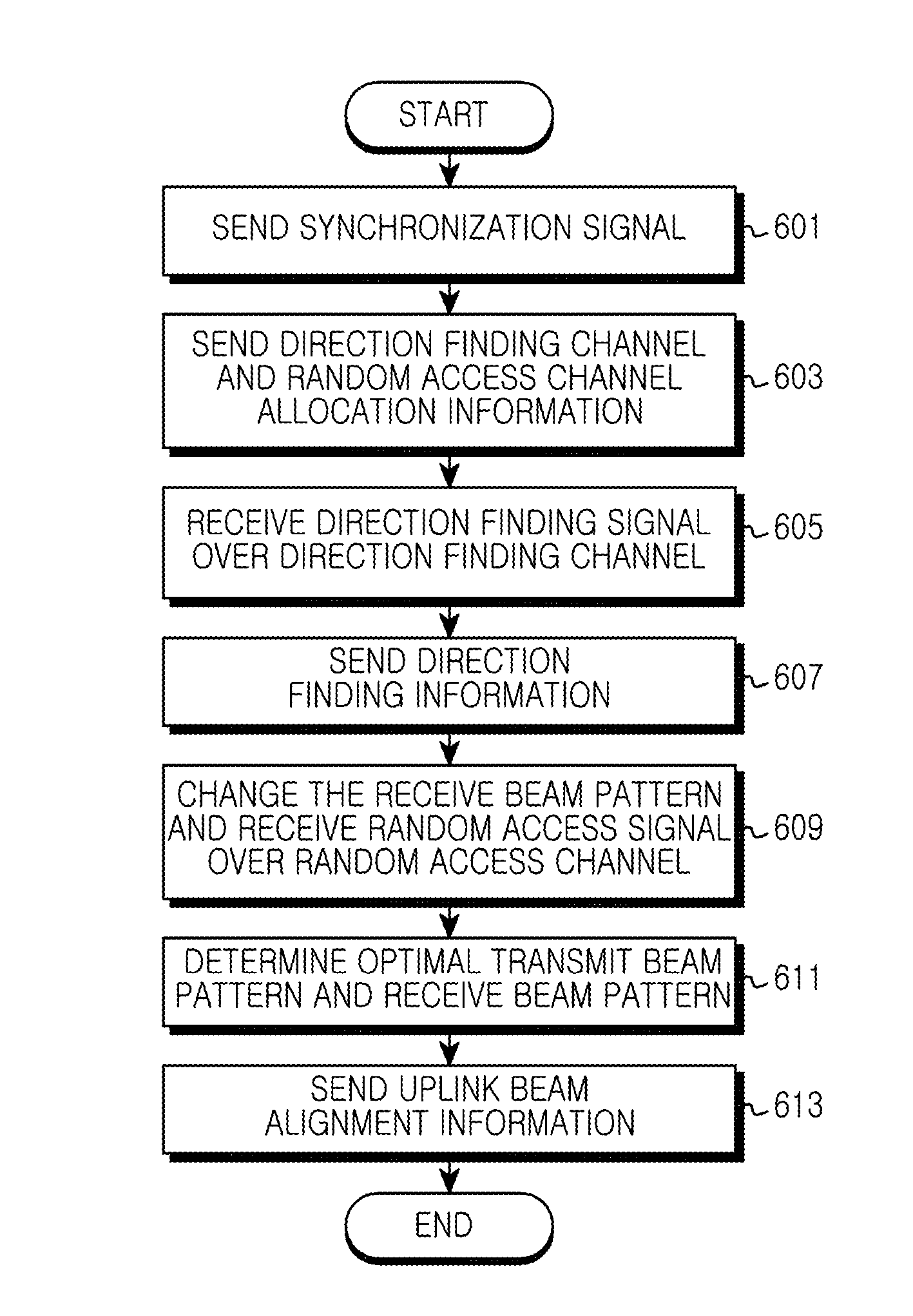

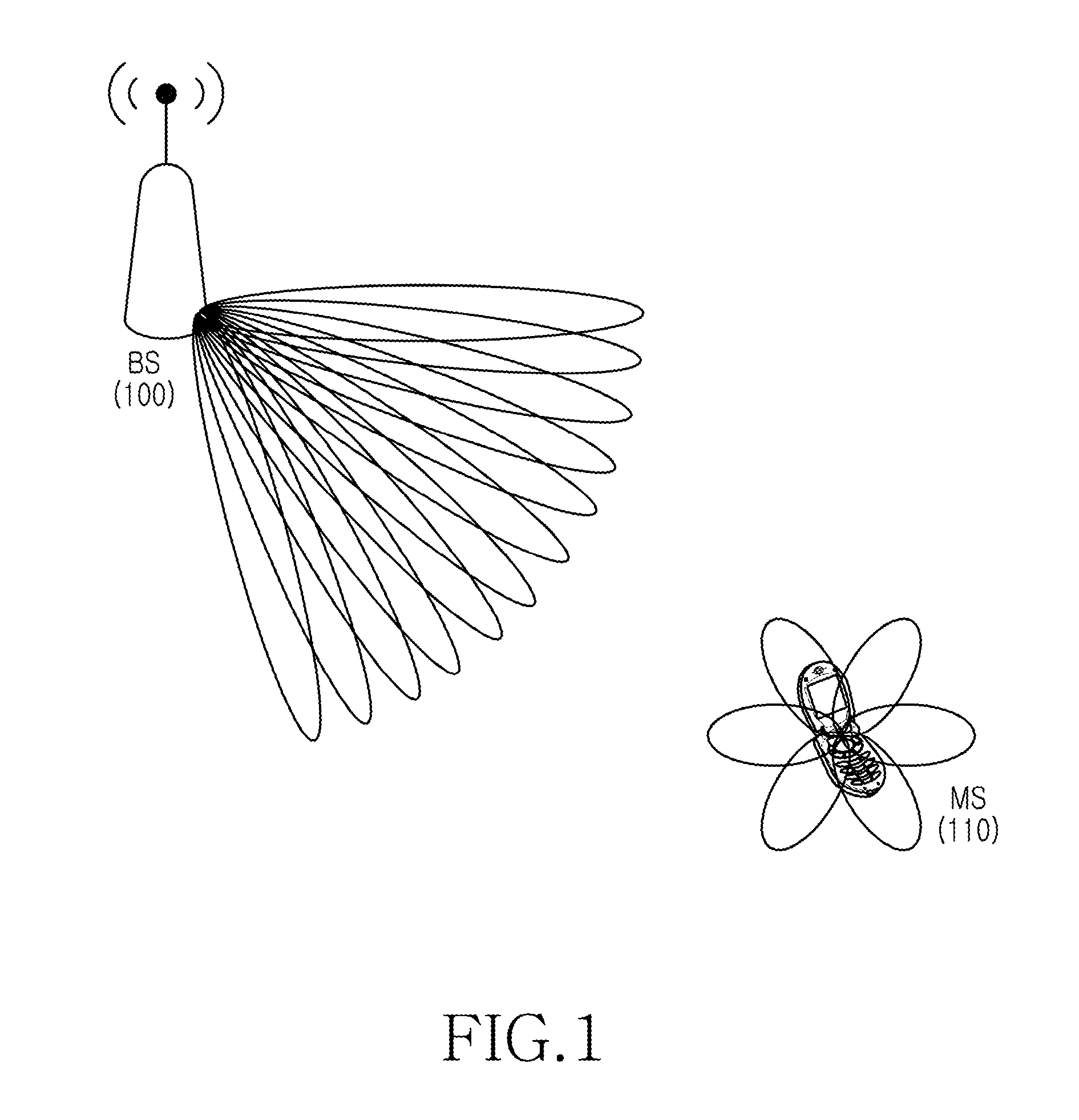

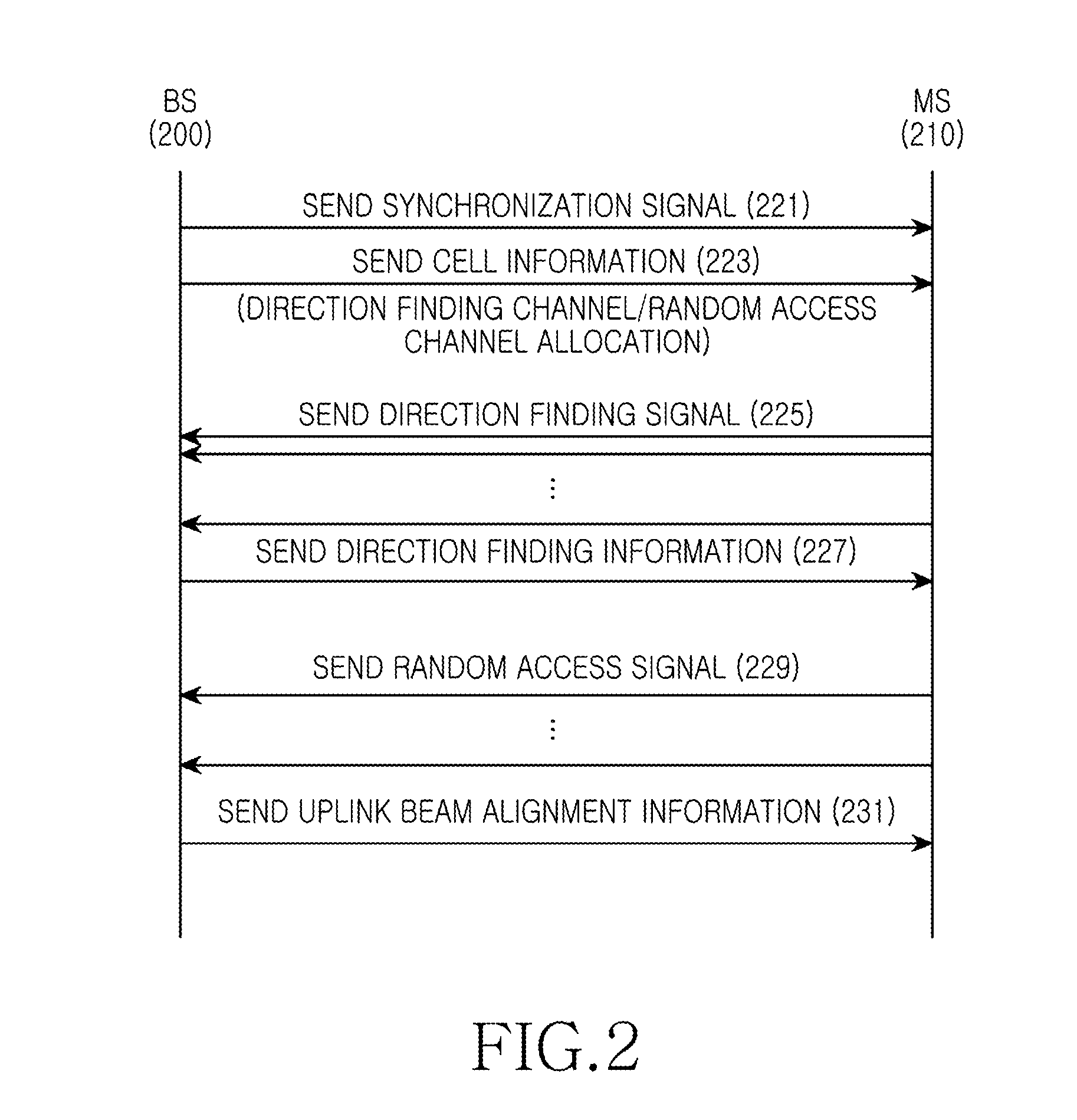

Apparatus and method for beam selecting in beamformed wireless communication system

Active | US20130072244A1 | Reduce delays; Reduce access latency; Radio transmission; Wireless communication; Communications system; Beam pattern

An apparatus and a method for reducing delay during beam alignment in a beamformed wireless communication system are provided. A method for selecting a beam pattern in a Base Station (BS) includes determining whether a direction finding signal is received through each time interval of a direction finding channel, transmitting information of whether the direction finding signal is received with respect to each time interval of the direction finding channel, changing a receive beam pattern and receiving a random access signal from a Mobile Station (MS) with at least one transmit beam pattern over a random access channel, and selecting one of transmit beam patterns of the MS and one of receive beam patterns of the BS according to the random access signal.

Owner: SAMSUNG ELECTRONICS CO LTD

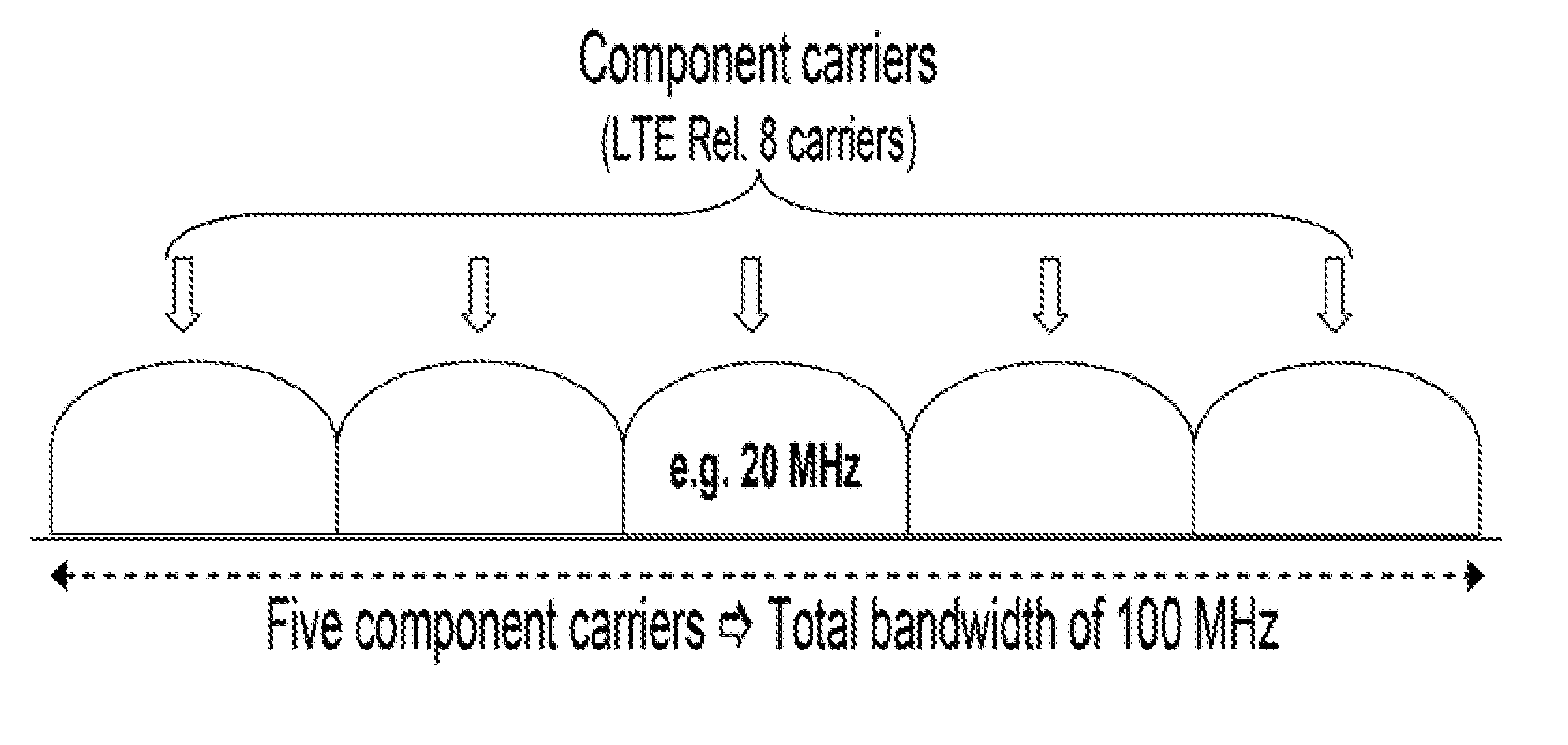

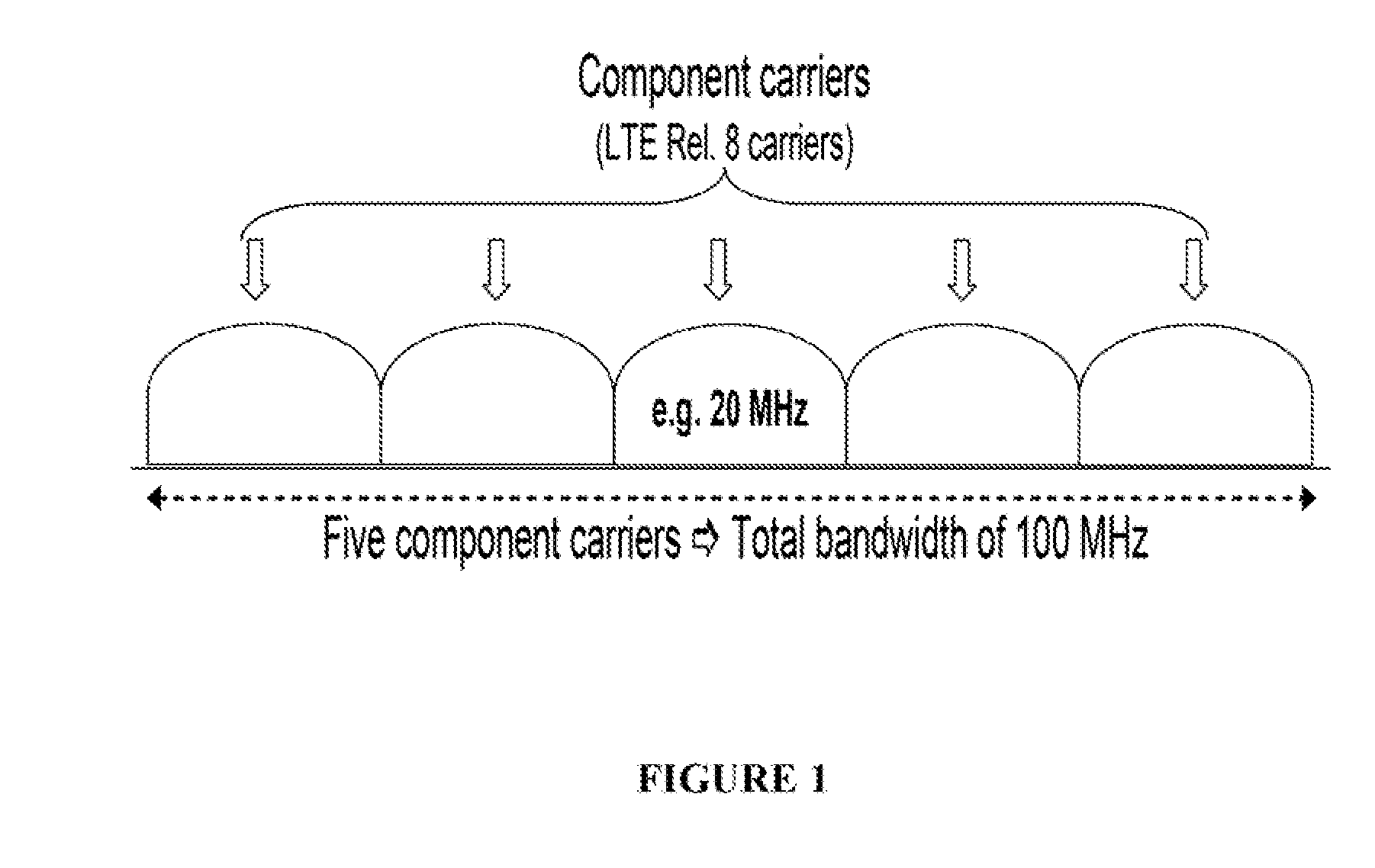

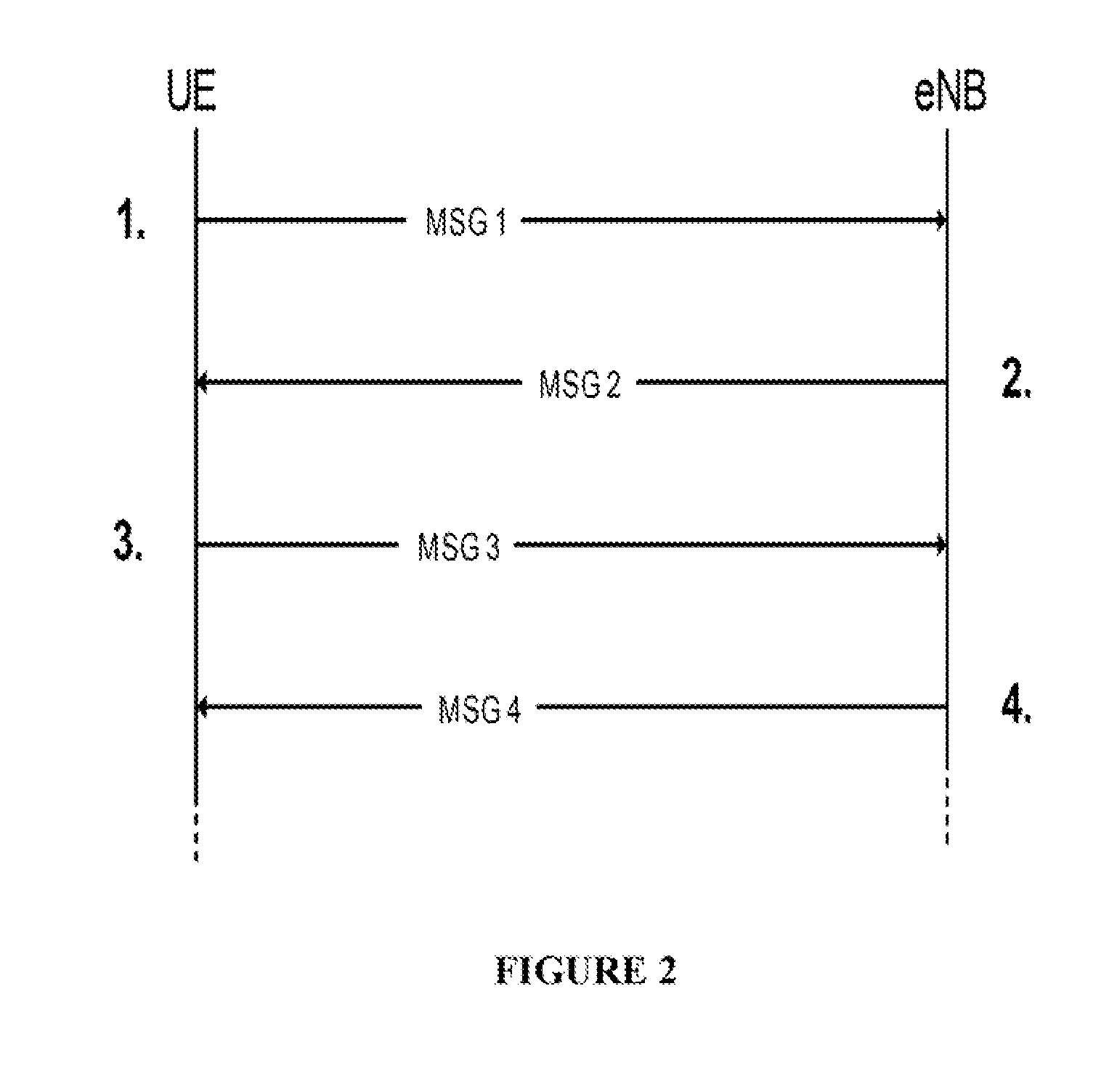

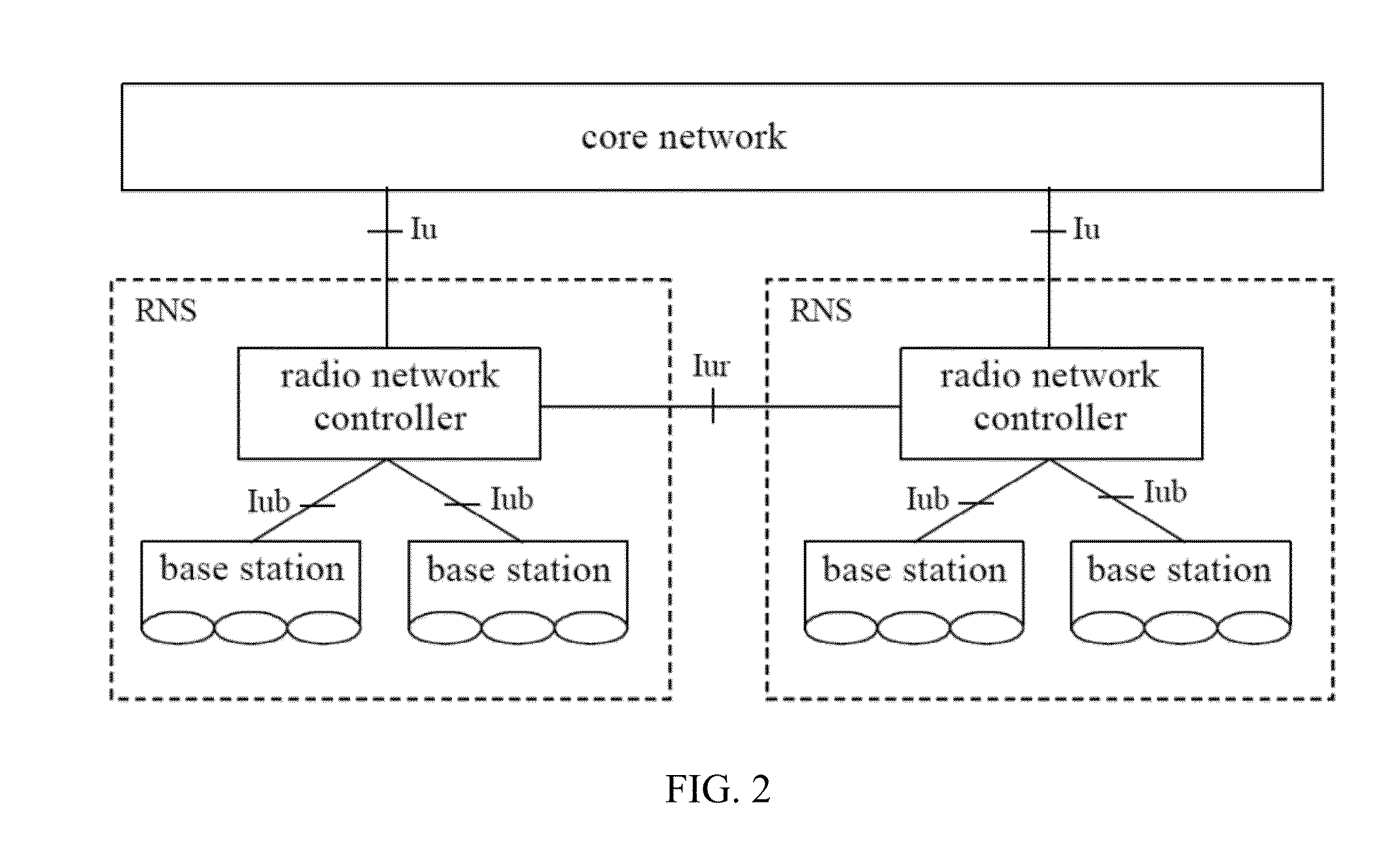

Methods and apparatus for resource management in a multi-carrier telecommunications system

Active | US20100285809A1 | Reduce handover latency; Reduce delay; Transmission path division; Access restriction; Carrier signal; Resource management

The embodiments of the present invention relate to apparatuses and methods for resource management in a multi-carrier system in which a plurality of component carriers (CCs) is defined per cell. According to a method in an apparatus corresponding to a radio base station, a message is assembled comprising information on the structure of the cell served by the radio base station, the information including one or more CCs used in the cell that are available for a user equipment to perform initial access in the cell. The method also comprises transmitting the assembled message to the user equipment and indicating to the user equipment which resources to use for random access in the cell. The exemplary embodiments of the present invention also relate to a method in the user equipment, to a radio base station, and to a user equipment.

Owner: TELEFON AB LM ERICSSON (PUBL)

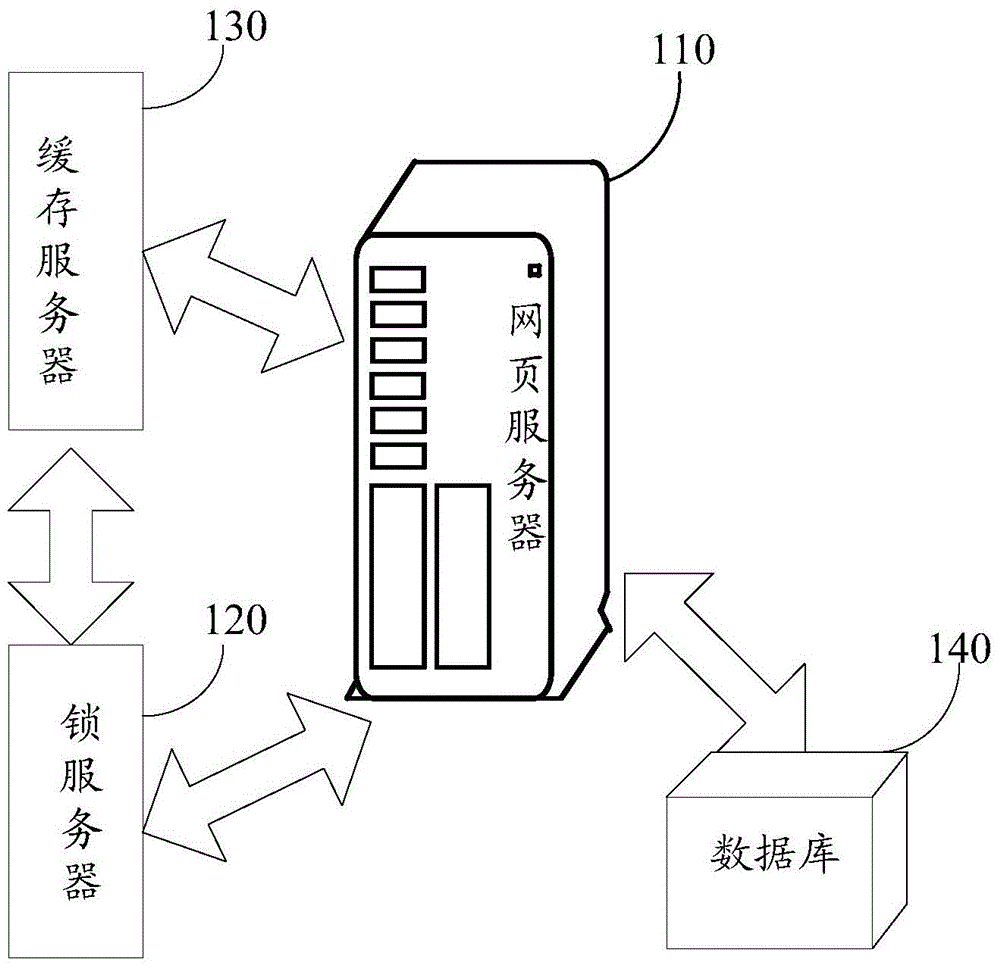

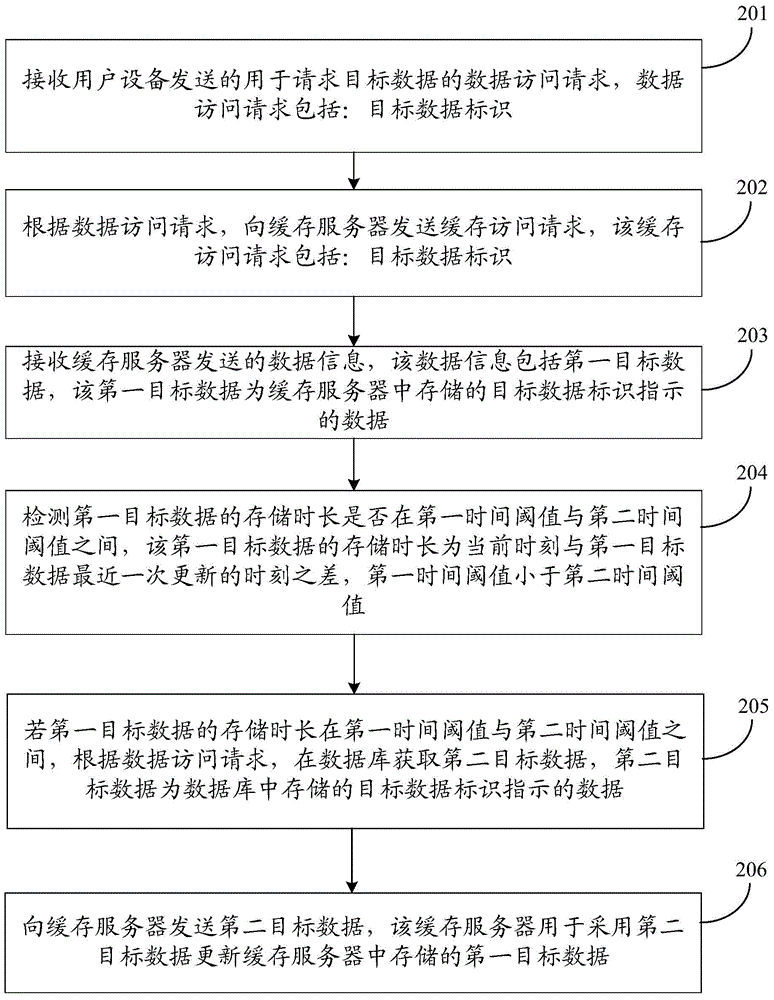

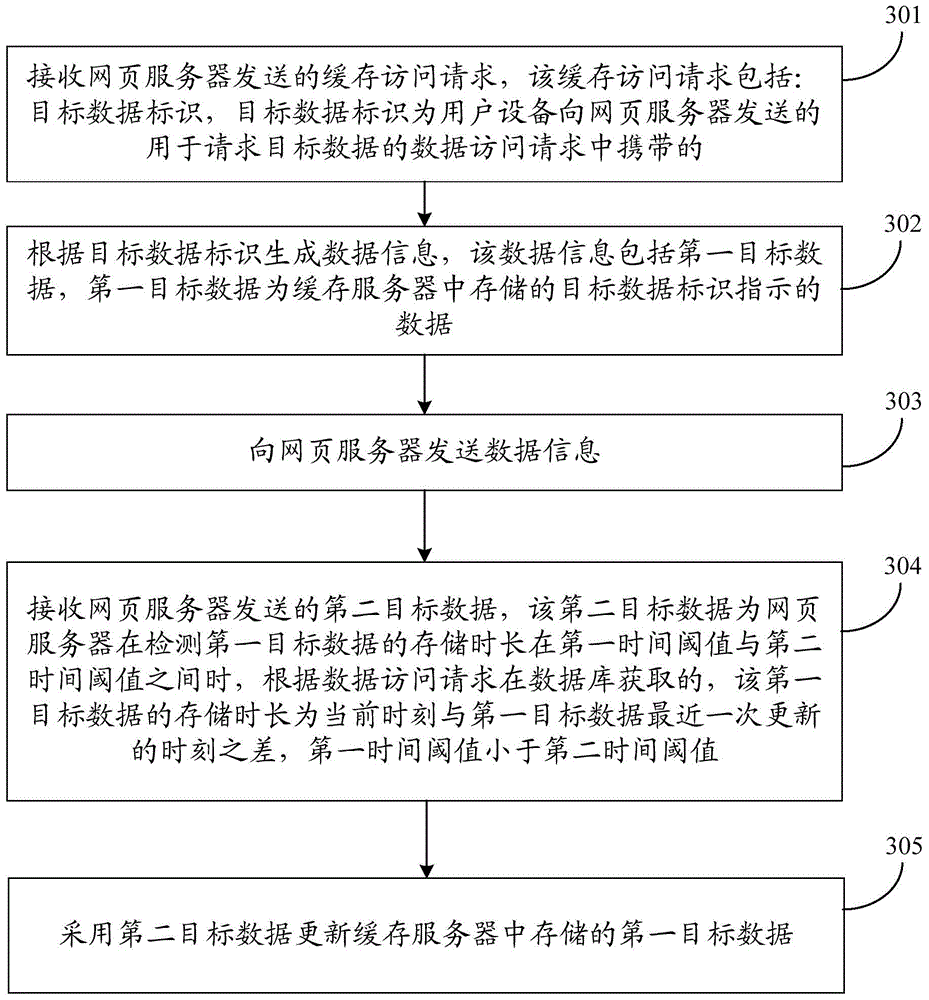

Data access method, apparatus and system

Active | CN105138587A | Improve data access efficiency; Reduce access latency; Special data processing applications; Data information; Web page

The disclosure relates to a data access method, apparatus and system in the field of communications. The data access method comprises: receiving a data access request sent by a user device; sending a cache access request to a cache server according to the data access request; receiving data information sent by the cache server, the data information comprising first target data; detecting whether the storage time of the first target data is between a first time threshold and a second time threshold; if it is, acquiring second target data from a database according to the data access request; and sending the second target data to the cache server, which uses the second target data to update the first target data it stores. The data access method addresses the relatively low efficiency and relatively long delay of data access, improving data access efficiency and reducing access delay, and is used for accessing page data.

Owner: XIAOMI INC
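The two-threshold check resembles a refresh-ahead cache. This minimal sketch assumes, hypothetically, that entries younger than the first threshold are served as-is, that entries between the thresholds are served from cache while the cache is updated from the database, and that entries older than the second threshold are treated as misses; the abstract does not say what happens beyond the second threshold. `RefreshAheadCache`, `load`, `t1`, and `t2` are illustrative names, and the refresh runs synchronously for simplicity.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class RefreshAheadCache:
    """Hypothetical refresh-ahead cache keyed on storage age."""

    def __init__(self, load: Callable[[Hashable], Any], t1: float, t2: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if not 0 <= t1 <= t2:
            raise ValueError("expected 0 <= t1 <= t2")
        self.load, self.t1, self.t2, self.clock = load, t1, t2, clock
        self.store: Dict[Hashable, Tuple[Any, float]] = {}  # key -> (value, stored_at)

    def get(self, key: Hashable) -> Any:
        now = self.clock()
        entry = self.store.get(key)
        if entry is not None:
            value, stored_at = entry
            age = now - stored_at
            if age < self.t1:
                return value  # fresh enough: serve from cache
            if age <= self.t2:
                # Between the thresholds: update the cache from the database...
                self.store[key] = (self.load(key), now)
                return value  # ...but answer this request with the cached copy
        # Miss, or older than t2 (assumed expired): load, cache, and return.
        value = self.load(key)
        self.store[key] = (value, now)
        return value
```

The injectable `clock` makes the thresholds testable without sleeping. In a real deployment the middle-window refresh would more likely run in the background so the requesting user never waits on the database.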

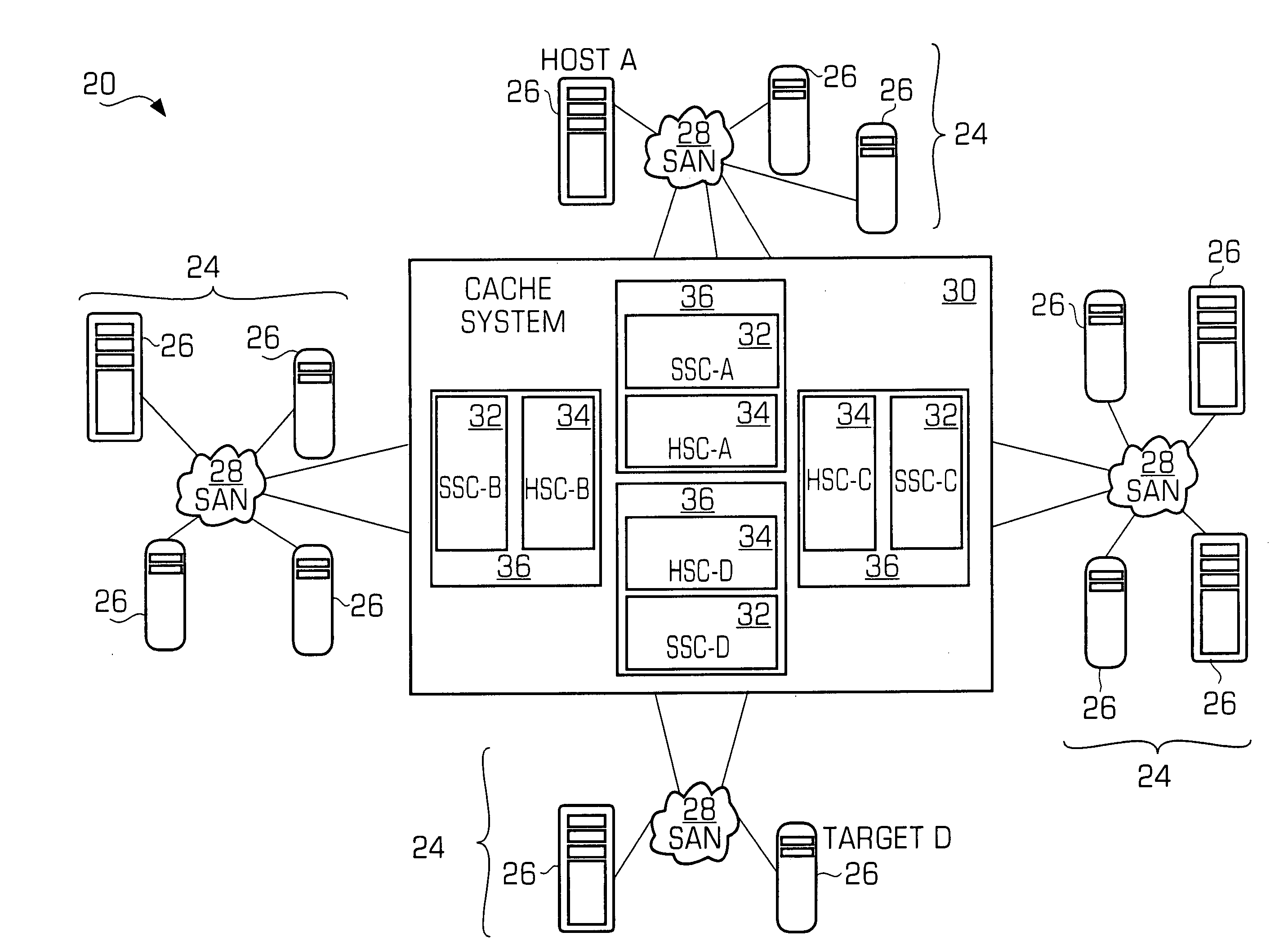

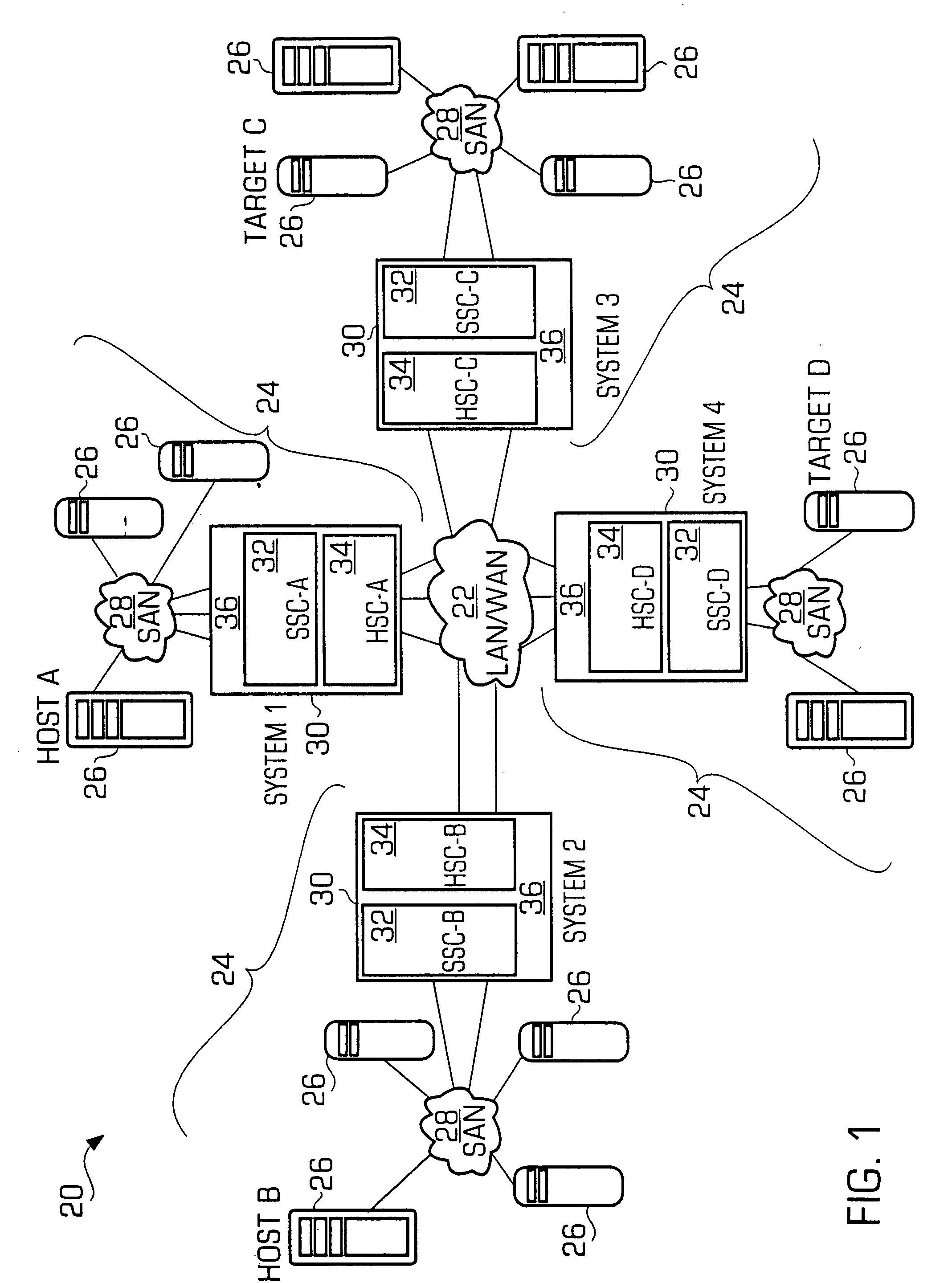

Caching system and method for a network storage system

Inactive | US20050027798A1 | Eliminating traffic; Efficient update; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data traffic; Operating system

A cache system and method in accordance with the invention include a cache near the target devices and another cache on the requesting host side, so that data traffic across the computer network is reduced. Methods for cache updating and invalidation are also described.

Owner: CHIOU LIH SHENG +4

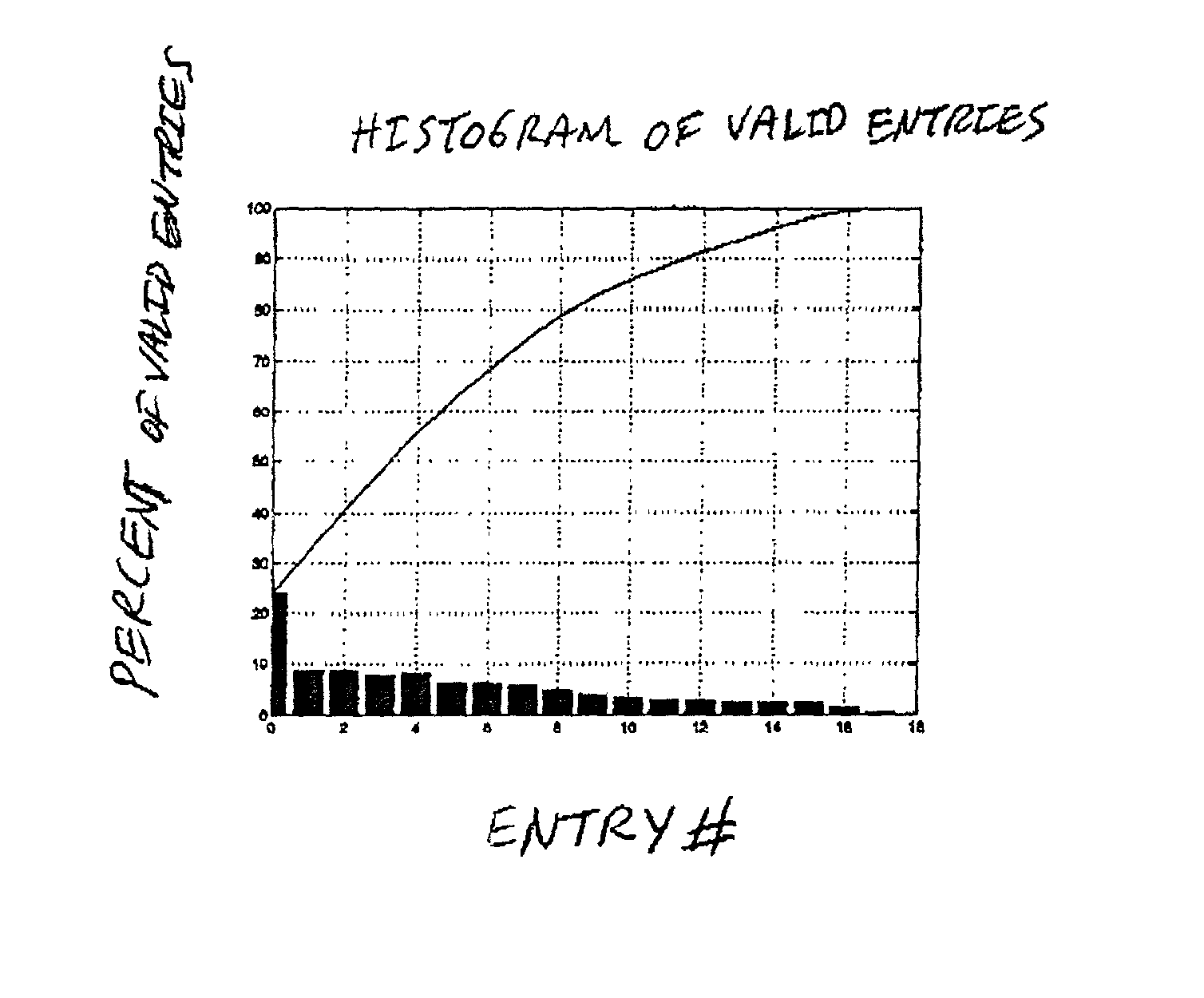

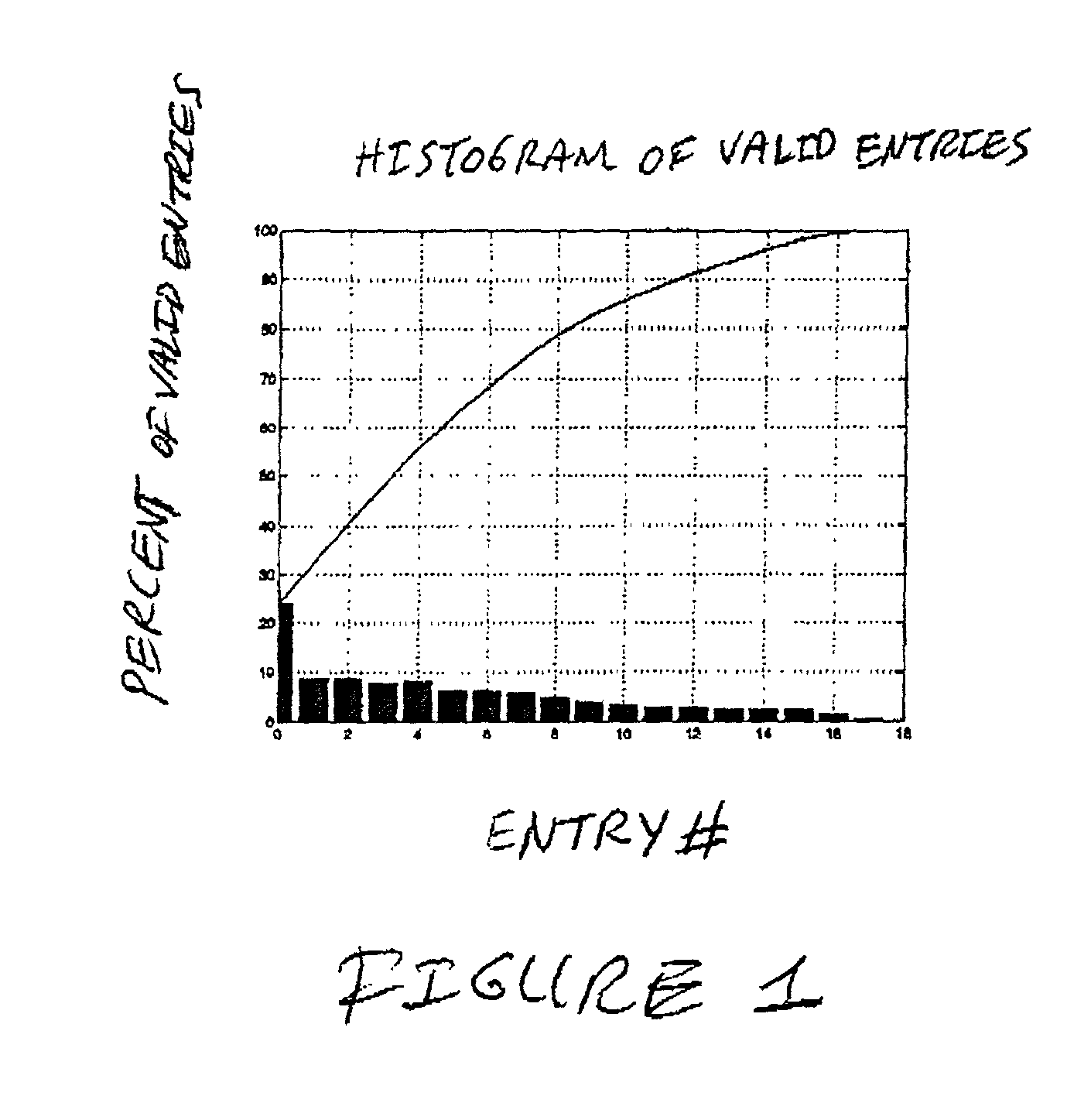

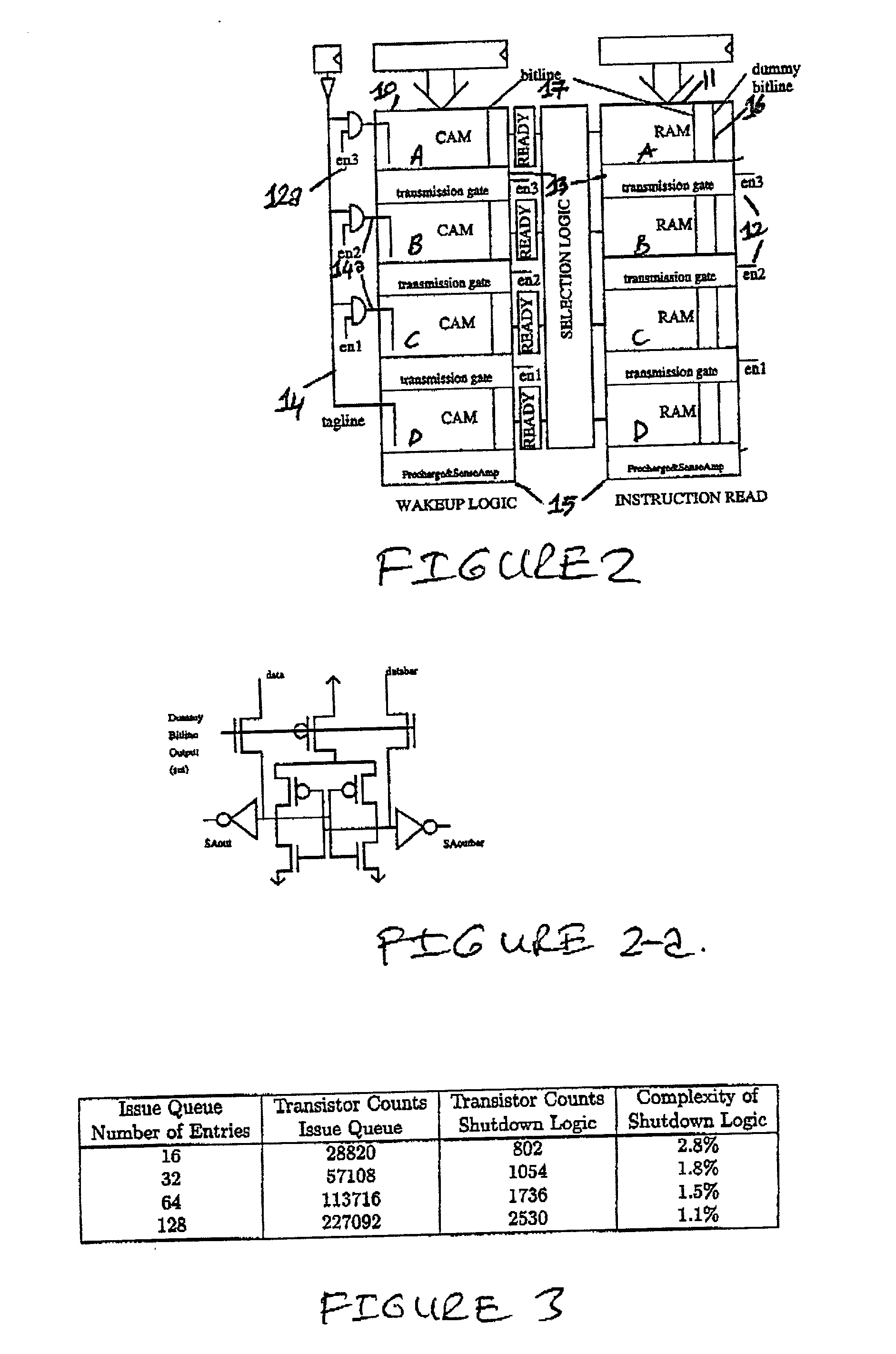

Adaptive issue queue for reduced power at high performance

Active | US20020053038A1 | Prevent degradation; Limit IPC loss; Energy efficient ICT; Volume/mass flow measurement; Parallel computing; Microprocessor

A method and structure for reducing power consumption in a microprocessor include at least one storage structure whose activity is dynamically measured and whose size is controlled based on that activity. The storage structure includes a plurality of blocks, and its size is controlled in units of blocks, based on the activity measured in the blocks. An exemplary embodiment is an adaptive out-of-order queue.

Owner: IBM CORP +1

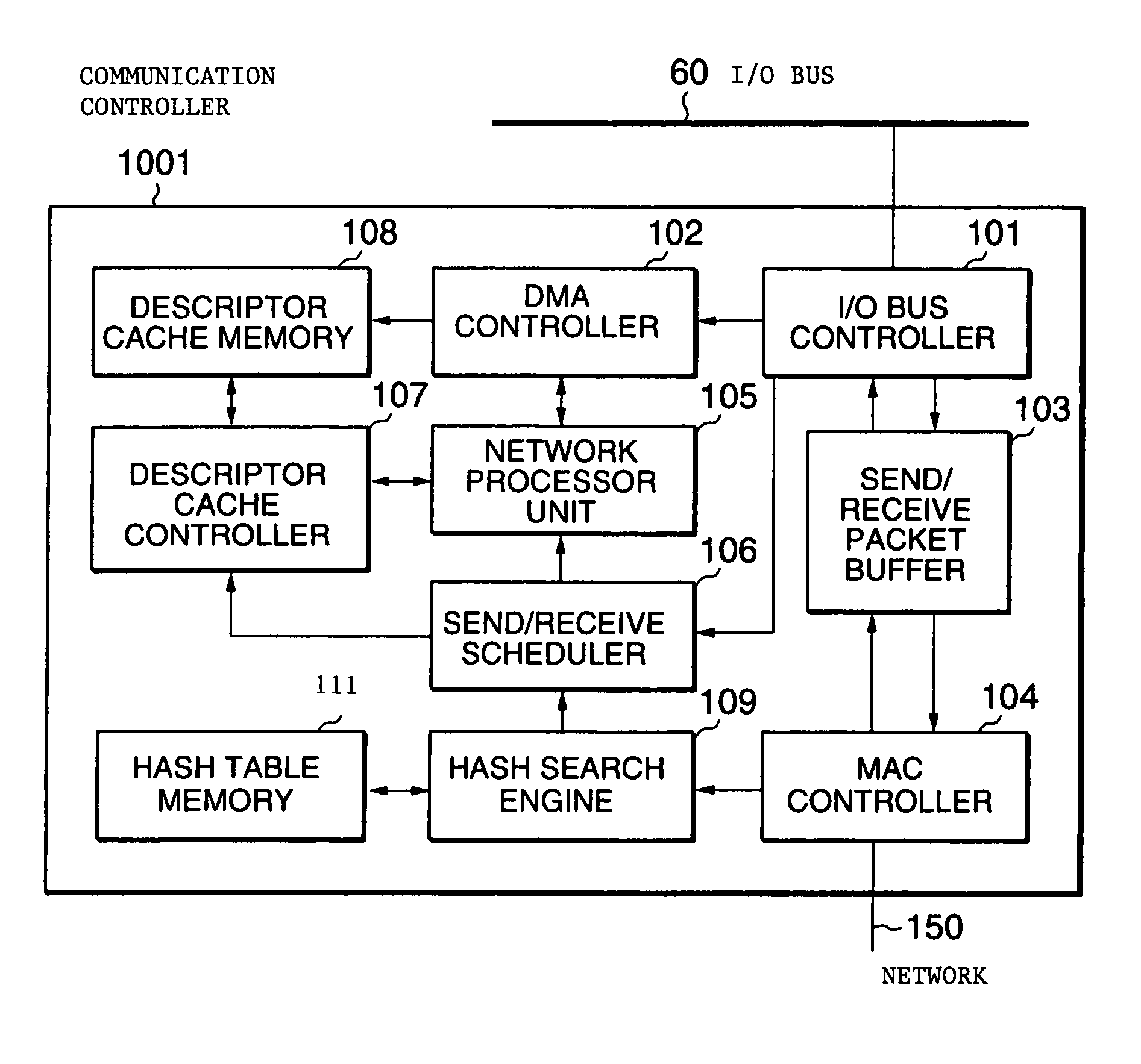

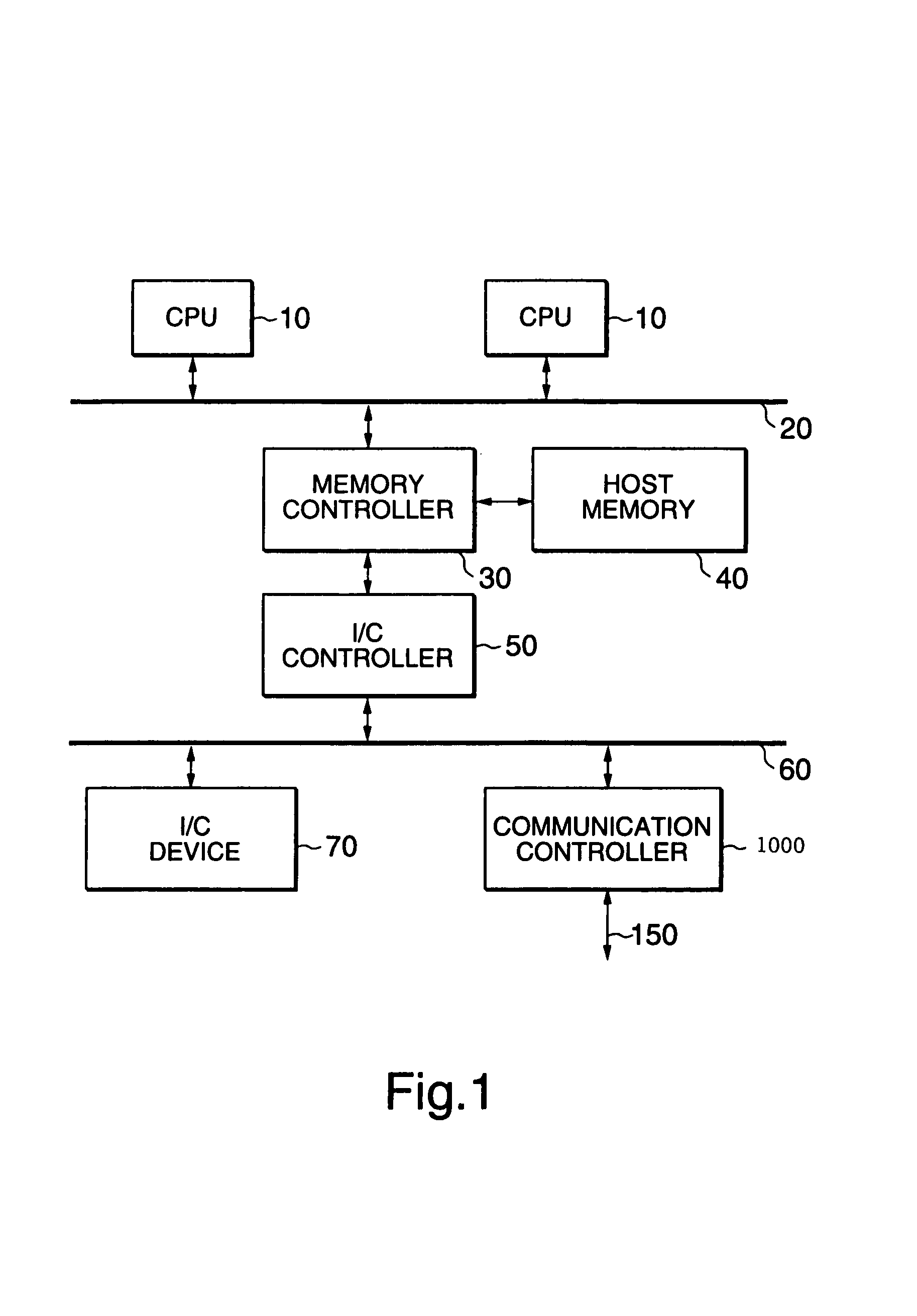

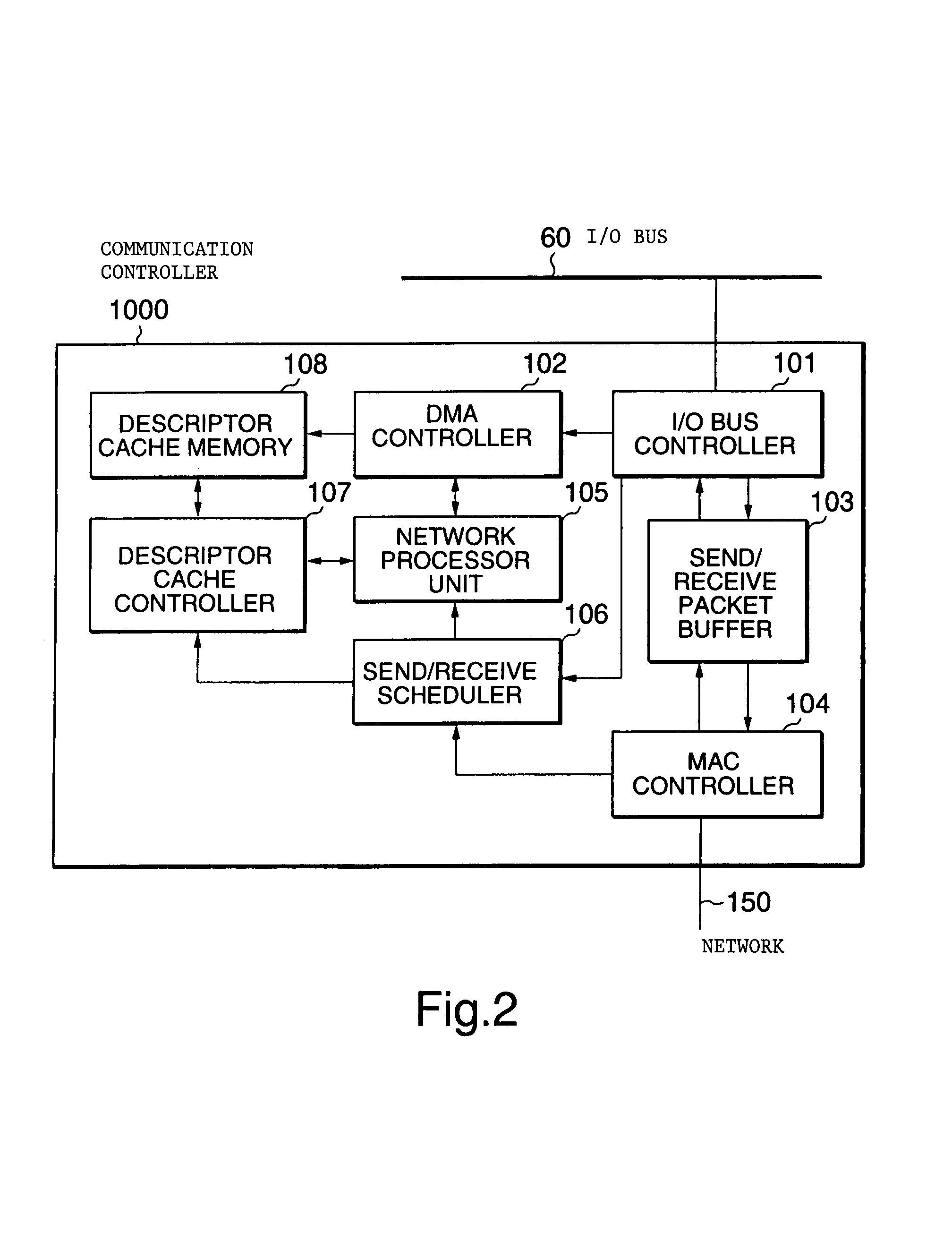

Communication control apparatus which has descriptor cache controller that builds list of descriptors

Inactive | US7472205B2 | Reduce descriptor control overhead; Reduce access latency; Character and pattern recognition; Multiple digital computer combinations; Communication unit; Parallel computing

A communication controller of the present invention includes a descriptor cache mechanism that builds a virtual descriptor gather list from the descriptors indicated by a host and allows a processor to refer to a portion of the virtual descriptor gather list through a descriptor cache window. Another communication controller of the present invention includes a second processor that allocates communication processes related to a first communication unit to the first of a plurality of first processors, and communication processes related to a second communication unit to the second of the first processors. Yet another communication controller includes a first memory that stores control information. The first memory includes a first area, accessed by the associated processor to refer to the control information, and a second area that stores the control information during the access.

Owner: NEC CORP

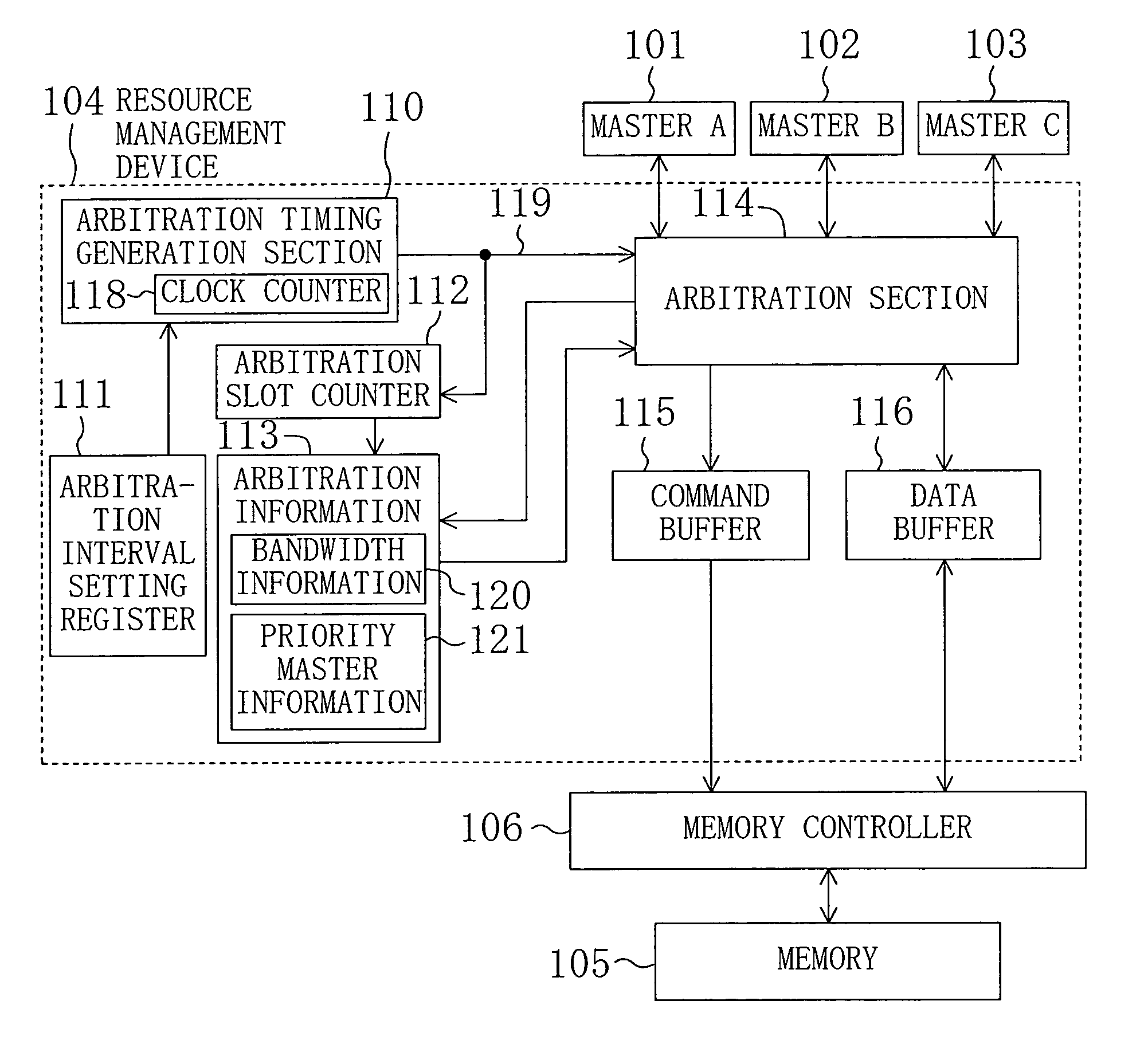

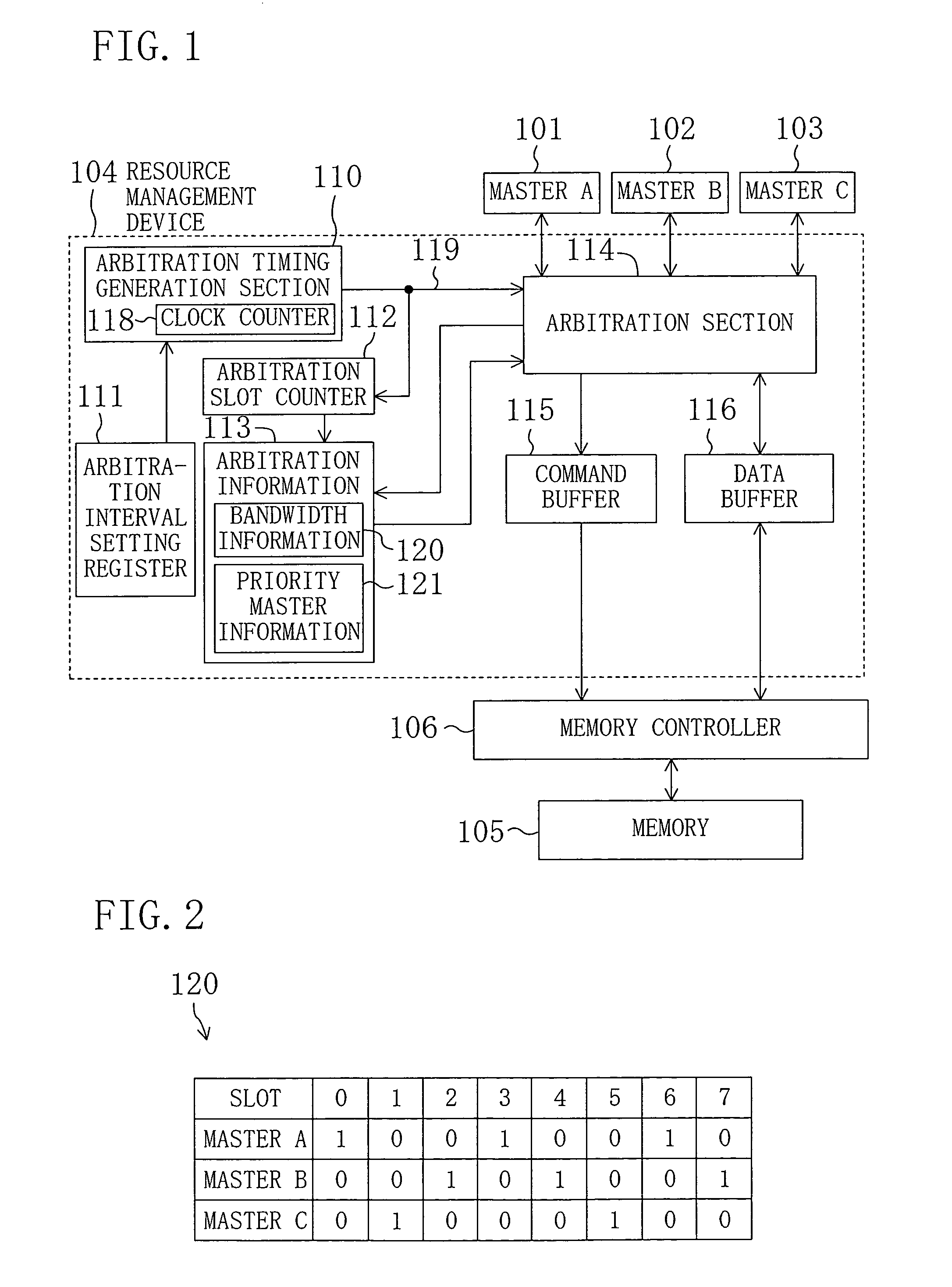

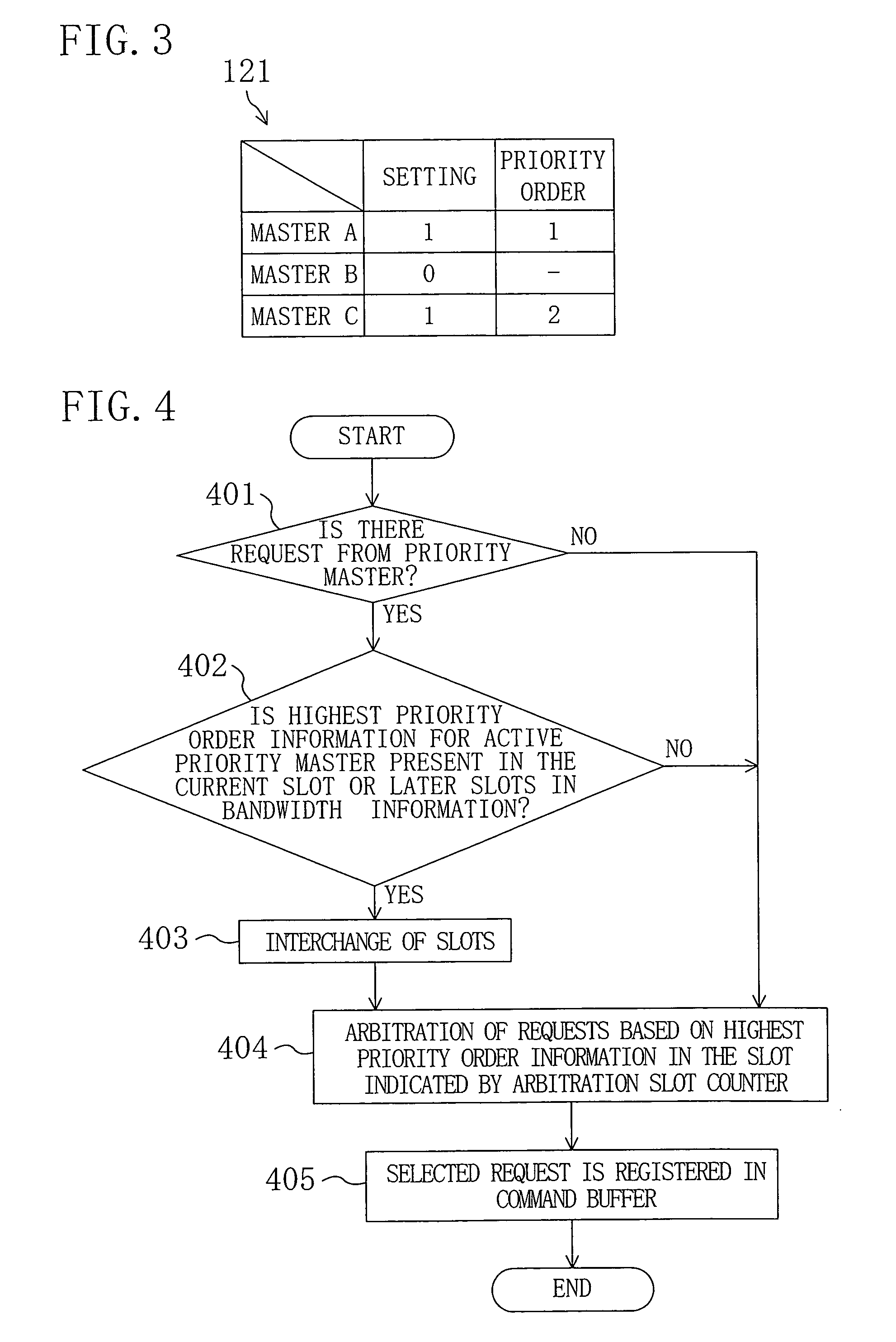

Resource management device

Active | US20050204085A1 | Reduce access latency; Program synchronisation; Digital computer details; Resource management; Shared resource

The arbitration information includes bandwidth information and priority master information. The bandwidth information consists of a plurality of slots, each holding highest-priority-order information for arbitrating access conflicts. The priority master information specifies, as priority masters, one or more of a plurality of masters whose latency in accessing a memory that serves as a shared resource should be reduced. An arbitration section arbitrates access conflicts while switching among the slots of the bandwidth information at each predetermined arbitration timing; if an access request arrives from a priority master specified in the priority master information, the arbitration section changes the order of the slots in the bandwidth information so that the priority master can access the memory with priority.

Owner: BEIJING ESWIN COMPUTING TECH CO LTD
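The slot-reordering rule can be sketched as a rotating slot list. The sketch makes three hypothetical assumptions: each slot names the master with highest priority in that slot, an idle slot owner yields to the next requesting master in slot order, and the arbiter advances one slot per arbitration timing. `SlotArbiter` and `grant` are illustrative names, not the patent's circuit.

```python
from collections import deque
from typing import Iterable, Optional


class SlotArbiter:
    """Hypothetical software model of slot-based arbitration with priority masters."""

    def __init__(self, slots: Iterable[str], priority_masters: Iterable[str]) -> None:
        self.slots = deque(slots)  # slot order; slots[0] is the current slot
        self.priority = set(priority_masters)

    def grant(self, requesters: Iterable[str]) -> Optional[str]:
        req = set(requesters)
        # If a priority master is requesting, move its slot to the front.
        idx = next((i for i, owner in enumerate(self.slots)
                    if owner in self.priority and owner in req), None)
        if idx is not None:
            slot = self.slots[idx]
            del self.slots[idx]
            self.slots.appendleft(slot)
        # Grant the first requesting master in (possibly reordered) slot order.
        winner = next((owner for owner in self.slots if owner in req), None)
        self.slots.rotate(-1)  # advance to the next slot for the next timing
        return winner
```

For instance, with slots `A, B, C, D` and `D` as the priority master, a simultaneous request from `A` and `D` is granted to `D` even though slot `A` is current.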

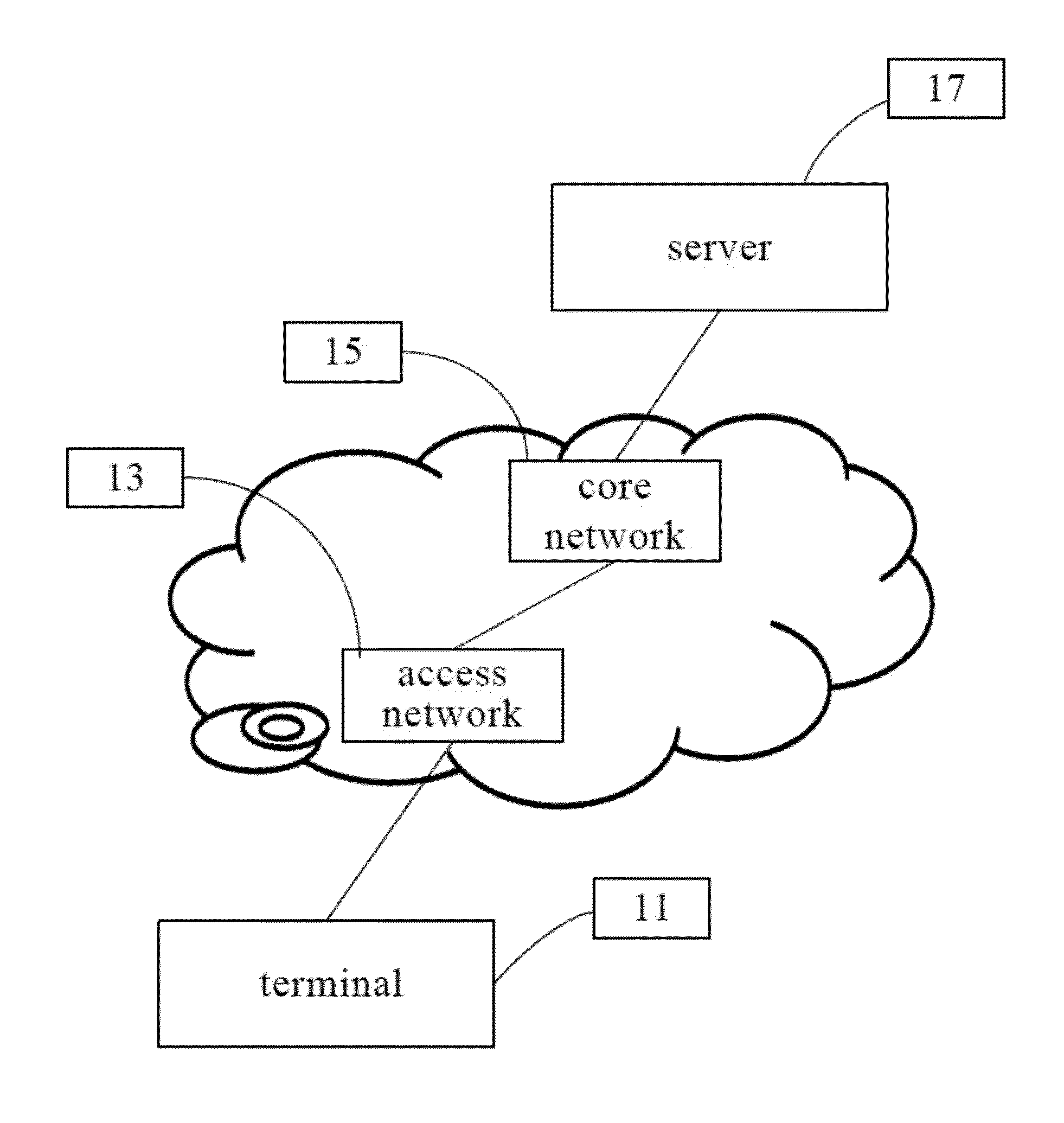

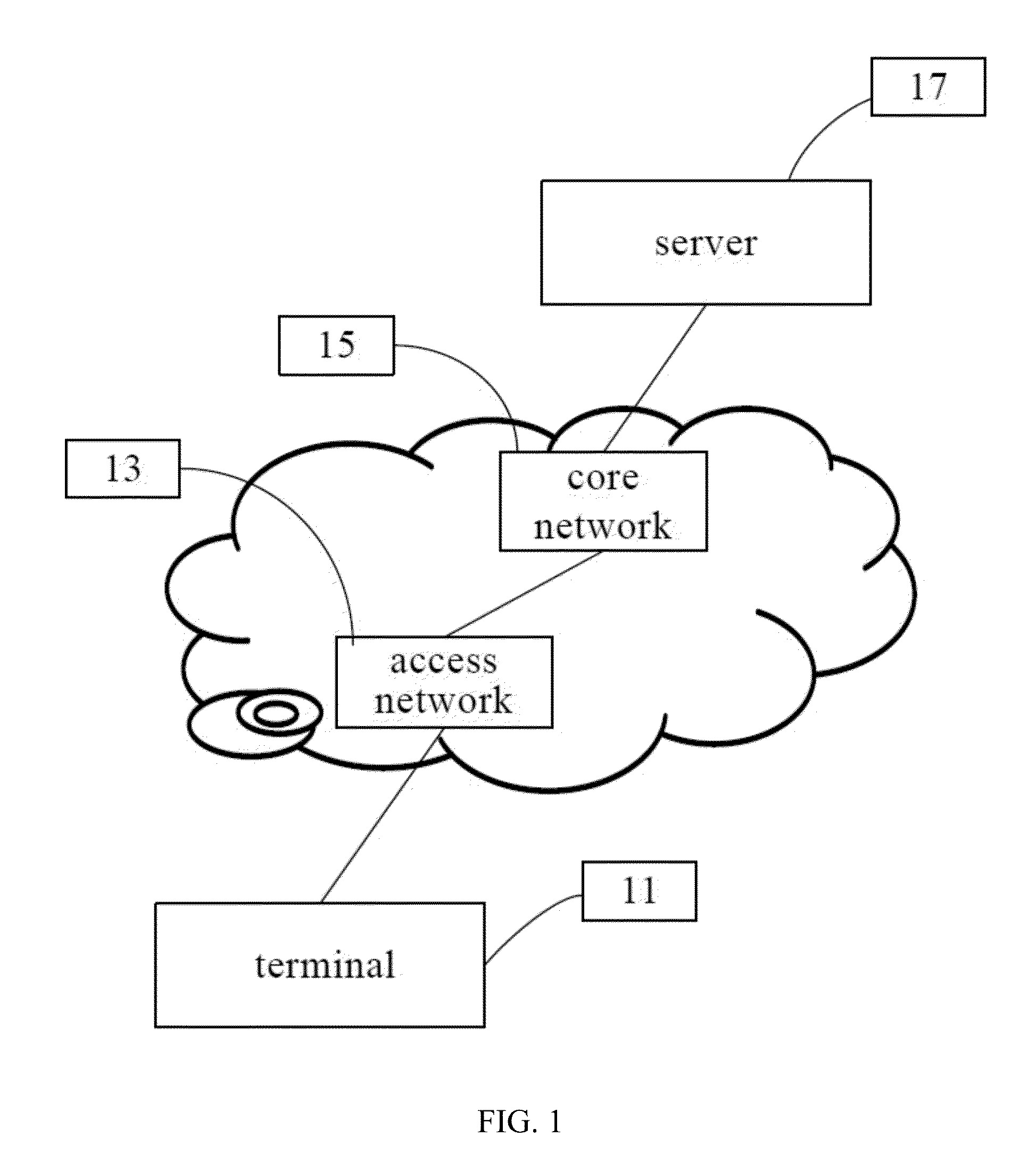

Method and system for accessing network

Active | US20130155894A1 | Reduce signaling overhead; Reduce access latency; Error prevention; Transmission systems; Access network; Computer terminal

A method and system for accessing a network are disclosed in the present invention. In the method, terminals in the same group perform random number synchronization. When any first terminal in the group initiates network access, the other terminals in the group monitor the first terminal's access according to the synchronized random number. After detecting that the first terminal has accessed the network successfully, the other terminals in the group initiate network access using Radio Resource Control (RRC) connection uplink resources that the network has allocated to the group. The technical solution of the present invention can reduce the signaling overhead of establishing signaling connections and data bearers.

Owner: HUAWEI TECH CO LTD

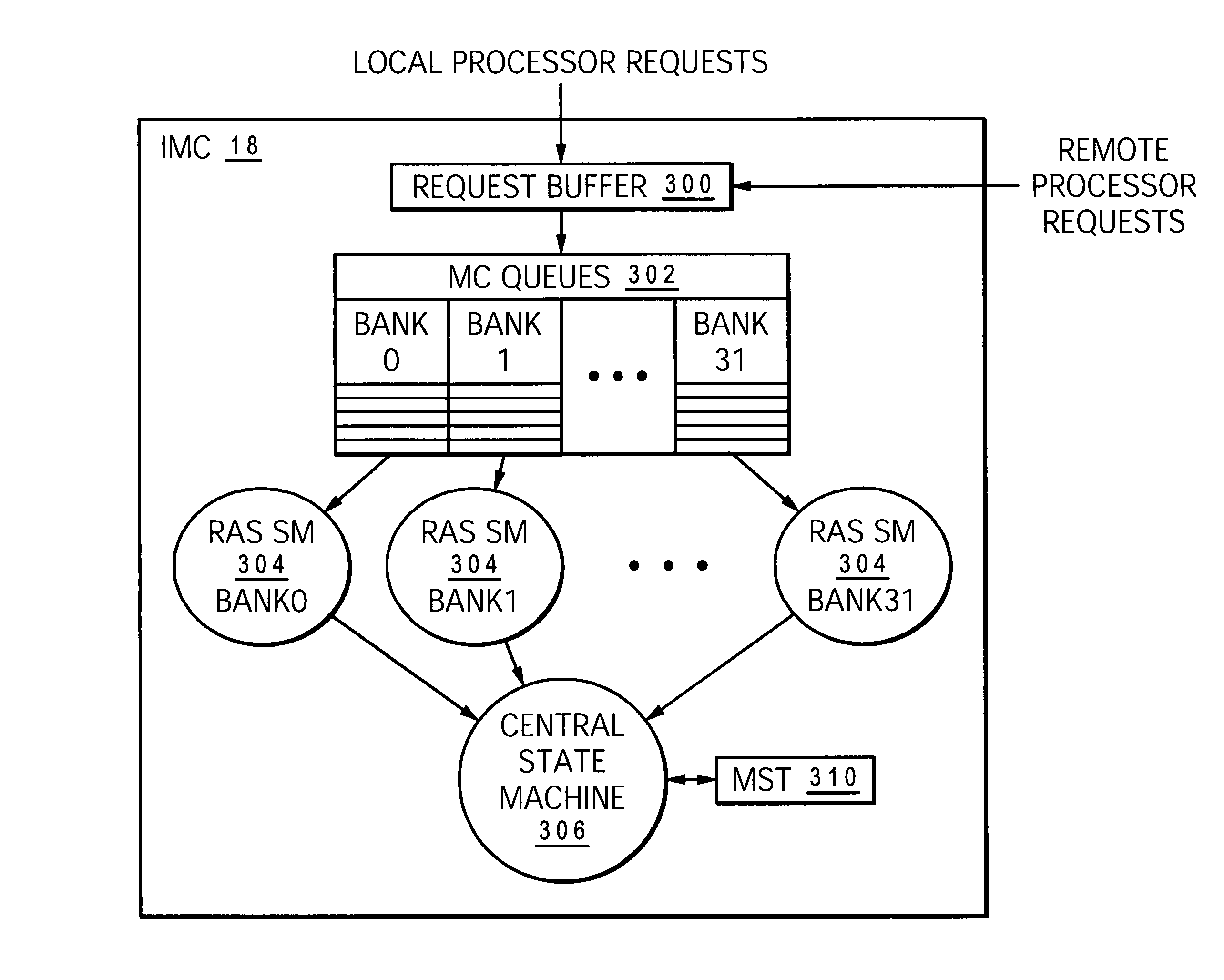

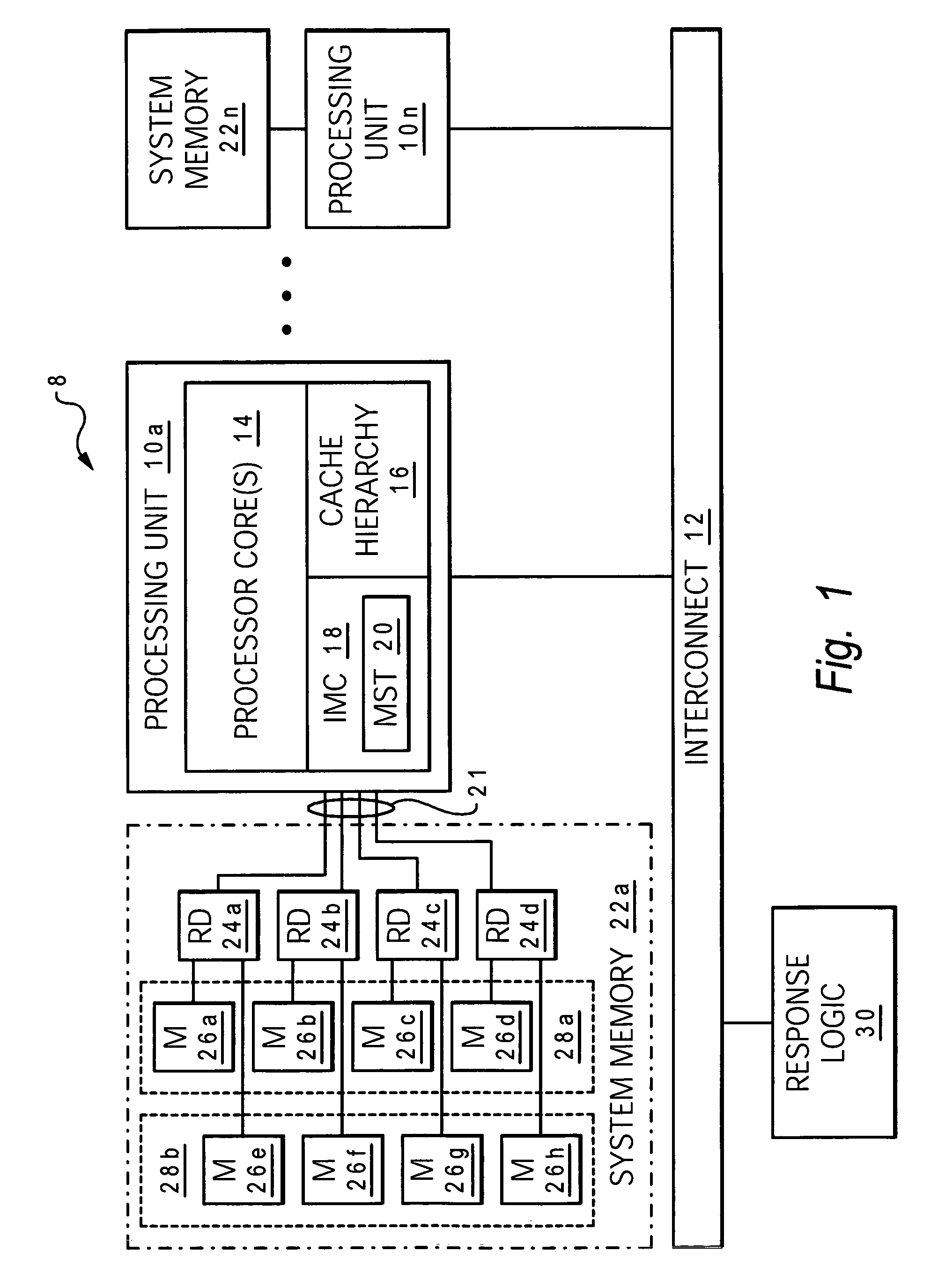

Method and system for supplier-based memory speculation in a memory subsystem of a data processing system

Inactive | US7130967B2 | Improvement in average memory access latency; Significant comprehensive benefits; Memory addressing/allocation/relocation; Concurrent instruction execution; Data processing system; Processing core

A data processing system includes one or more processing cores, a system memory having multiple rows of data storage, and a memory controller that controls access to the system memory and performs supplier-based memory speculation. The memory controller includes a memory speculation table that stores historical information regarding prior memory accesses. In response to a memory access request, the memory controller directs an access to a selected row in the system memory to service the request. Based on the historical information in the memory speculation table, the memory controller speculatively directs that the selected row remain energized following the access, so that the access latency of an immediately subsequent memory access is reduced.

Owner: INT BUSINESS MASCH CORP
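A minimal sketch of history-based open-row speculation follows. It assumes, hypothetically, that the speculation table holds a 2-bit saturating counter per (bank, row) that learns whether the next access to a bank reuses the same row. The patent does not specify this encoding, and `RowSpeculator` is an illustrative name.

```python
from typing import Dict, Tuple


class RowSpeculator:
    """Hypothetical speculation table: 2-bit saturating counter per (bank, row)."""

    def __init__(self) -> None:
        self.counters: Dict[Tuple[int, int], int] = {}  # (bank, row) -> 0..3
        self.last_row: Dict[int, int] = {}              # bank -> last row accessed

    def access(self, bank: int, row: int) -> bool:
        """Record an access; return True if the row should be kept open afterwards."""
        prev = self.last_row.get(bank)
        if prev is not None:
            key = (bank, prev)
            c = self.counters.get(key, 1)  # untrained rows start weakly "close"
            # Train the previous row's counter on whether this access reused it.
            self.counters[key] = min(c + 1, 3) if row == prev else max(c - 1, 0)
        self.last_row[bank] = row
        # Counter values 2 and 3 predict another hit, so keep the row energized.
        return self.counters.get((bank, row), 1) >= 2
```

The saturating counter tolerates an occasional row switch without immediately abandoning a row that is usually reused, which is a common choice for this kind of history-based predictor.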

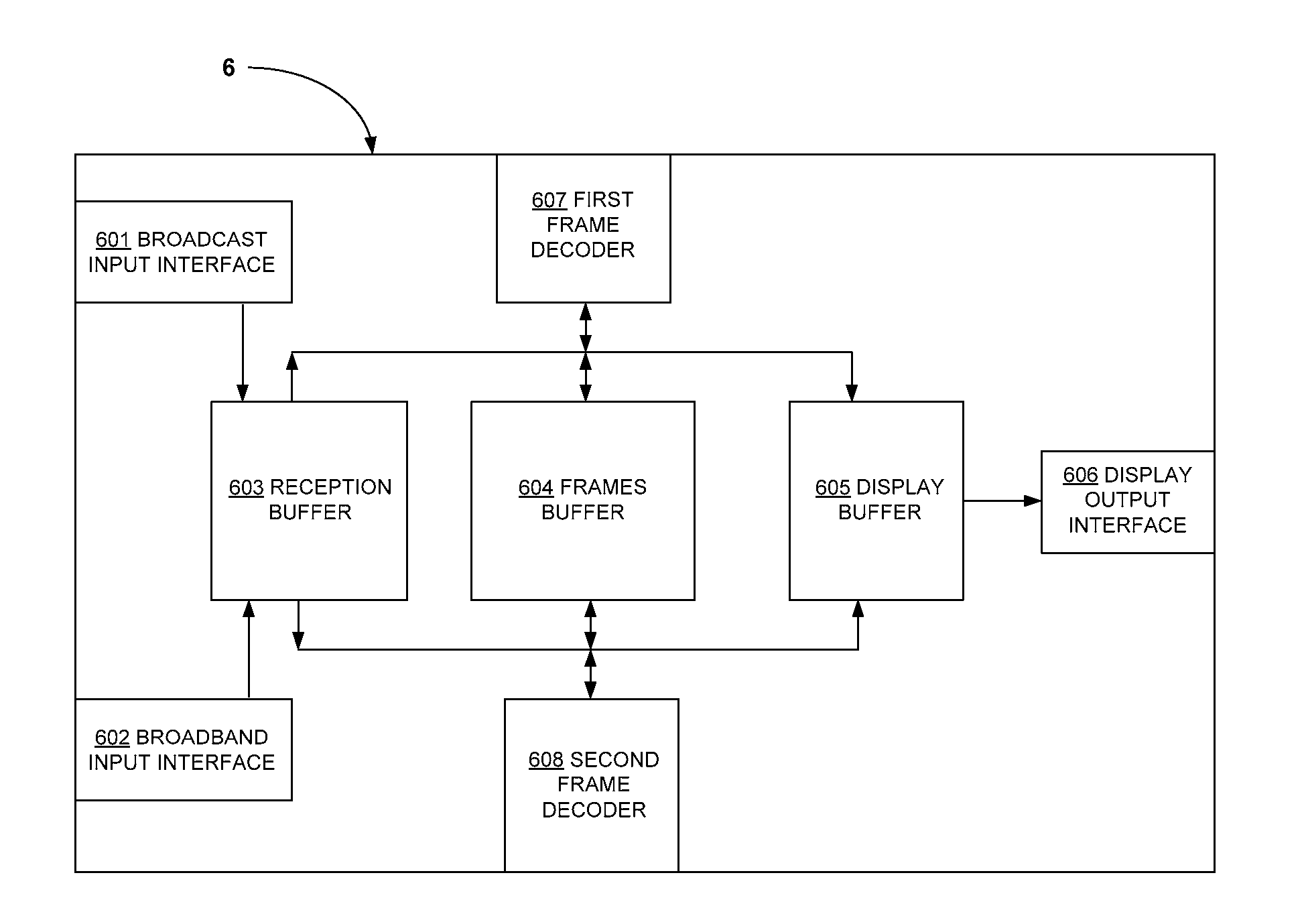

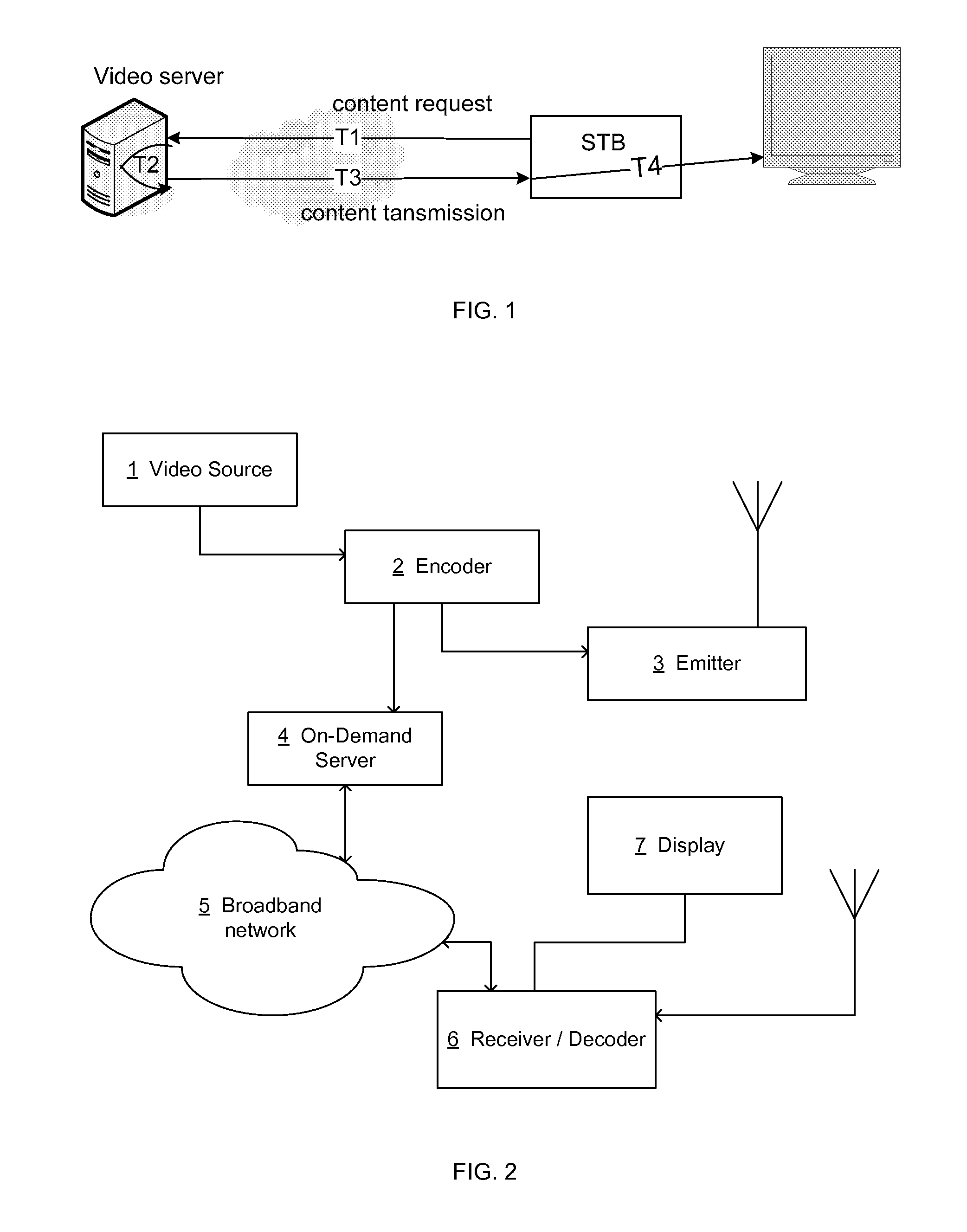

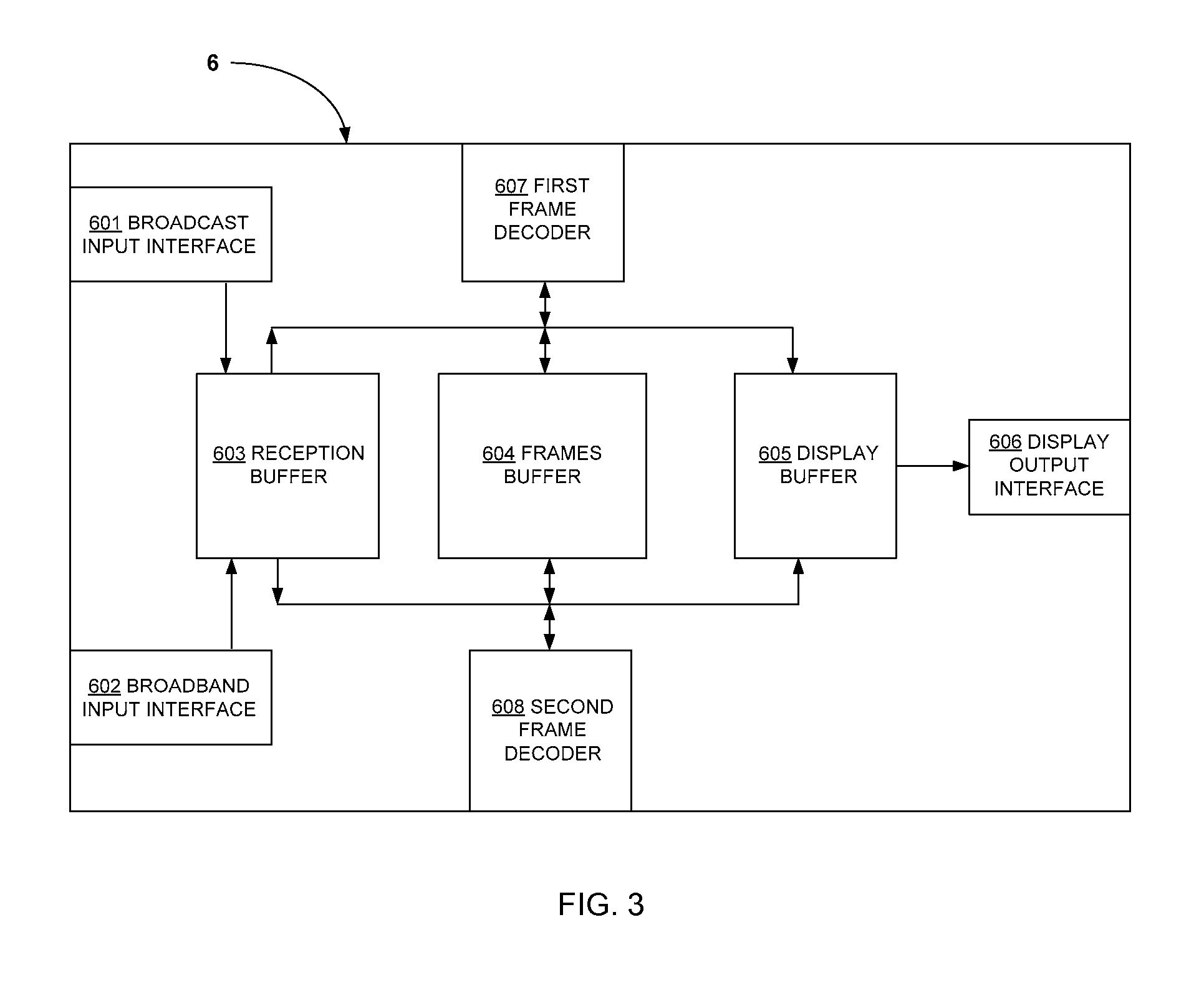

Decoder and method at the decoder for synchronizing the rendering of contents received through different networks

Active | US20120230389A1 | Optimization of delay; Overcome disadvantages; Color television with pulse code modulation; Color television with bandwidth reduction; Computer network; Group of pictures

A method of decoding audio/video content transmitted over a broadband network. The method quickly decodes the first frames of a group of pictures, without rendering them, if the group of pictures arrives too late to be rendered synchronously with other audio/video content received through a broadcast network. This allows contents received over the broadcast and broadband networks to be rendered in sync as soon as possible for the viewer.

Owner: INTERDIGITAL MADISON PATENT HLDG

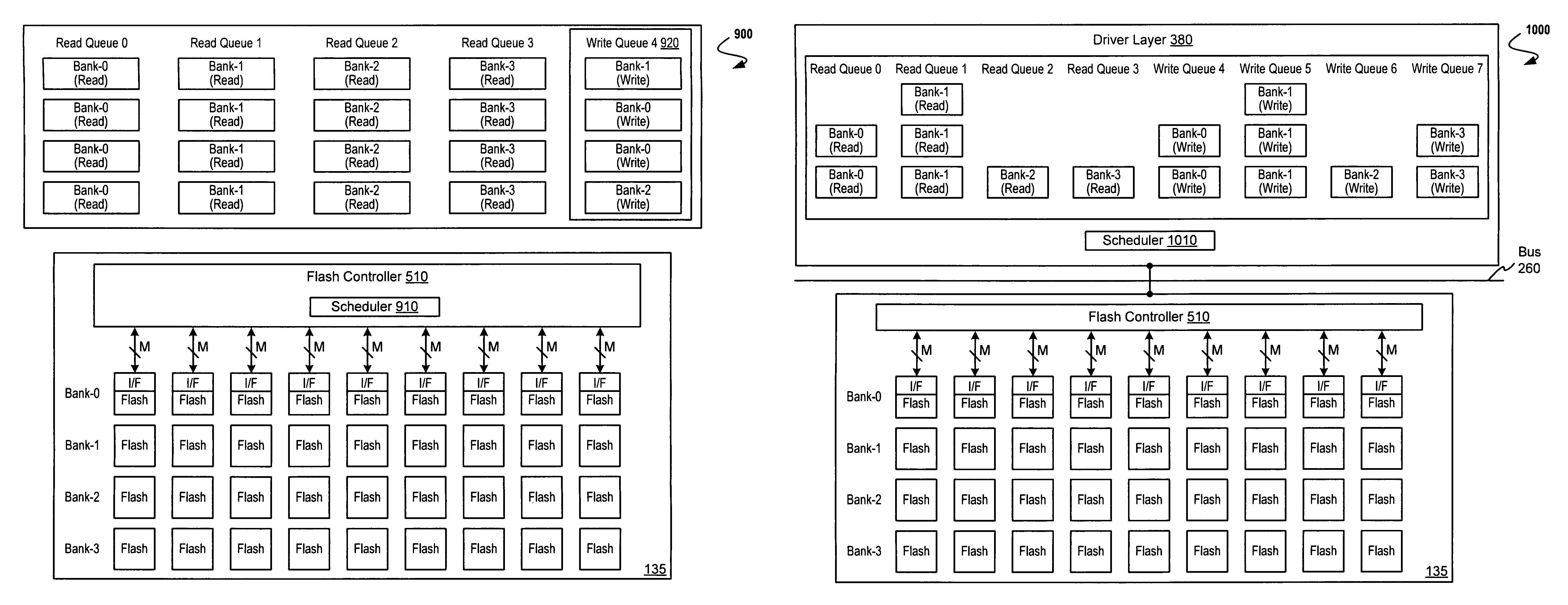

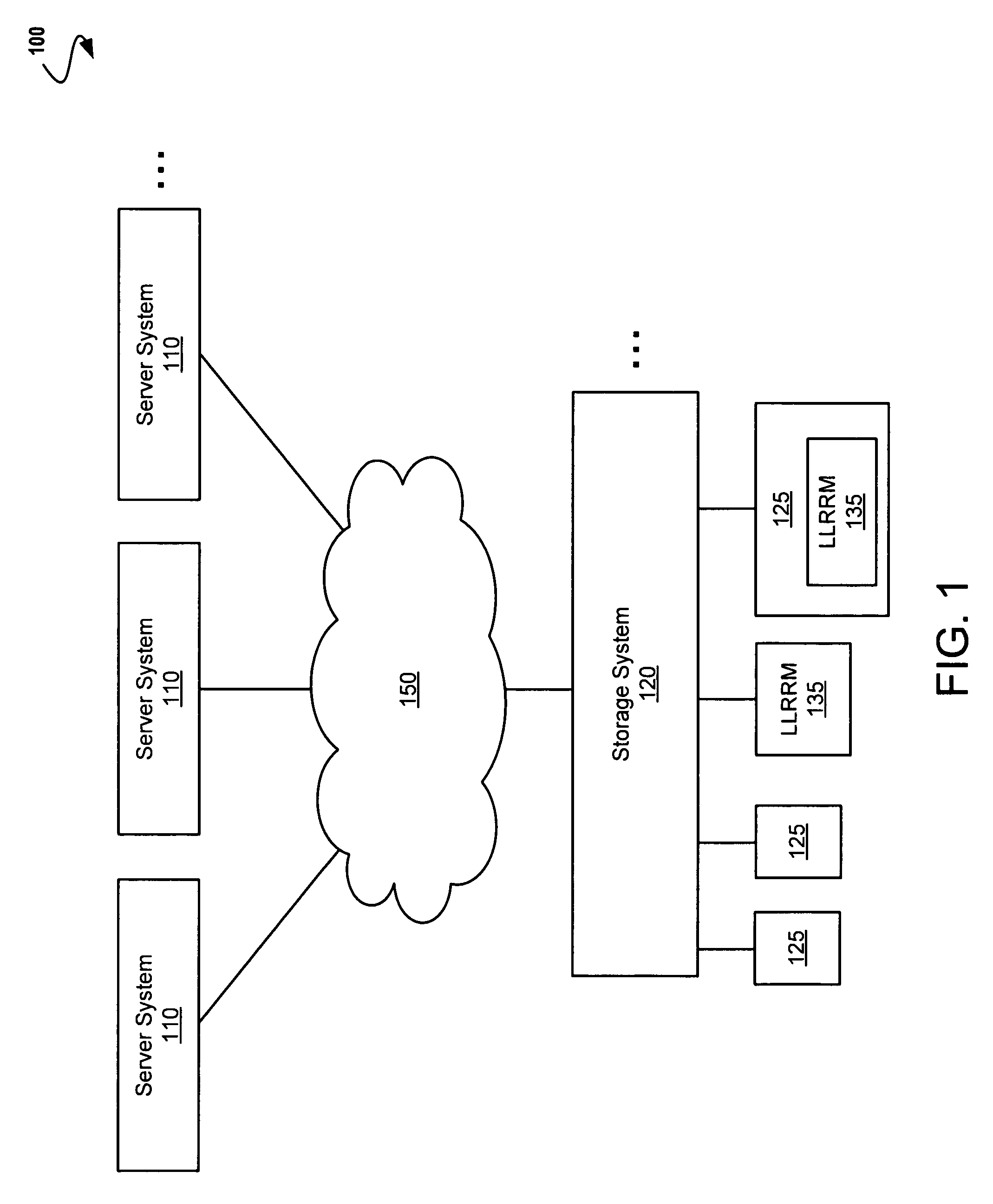

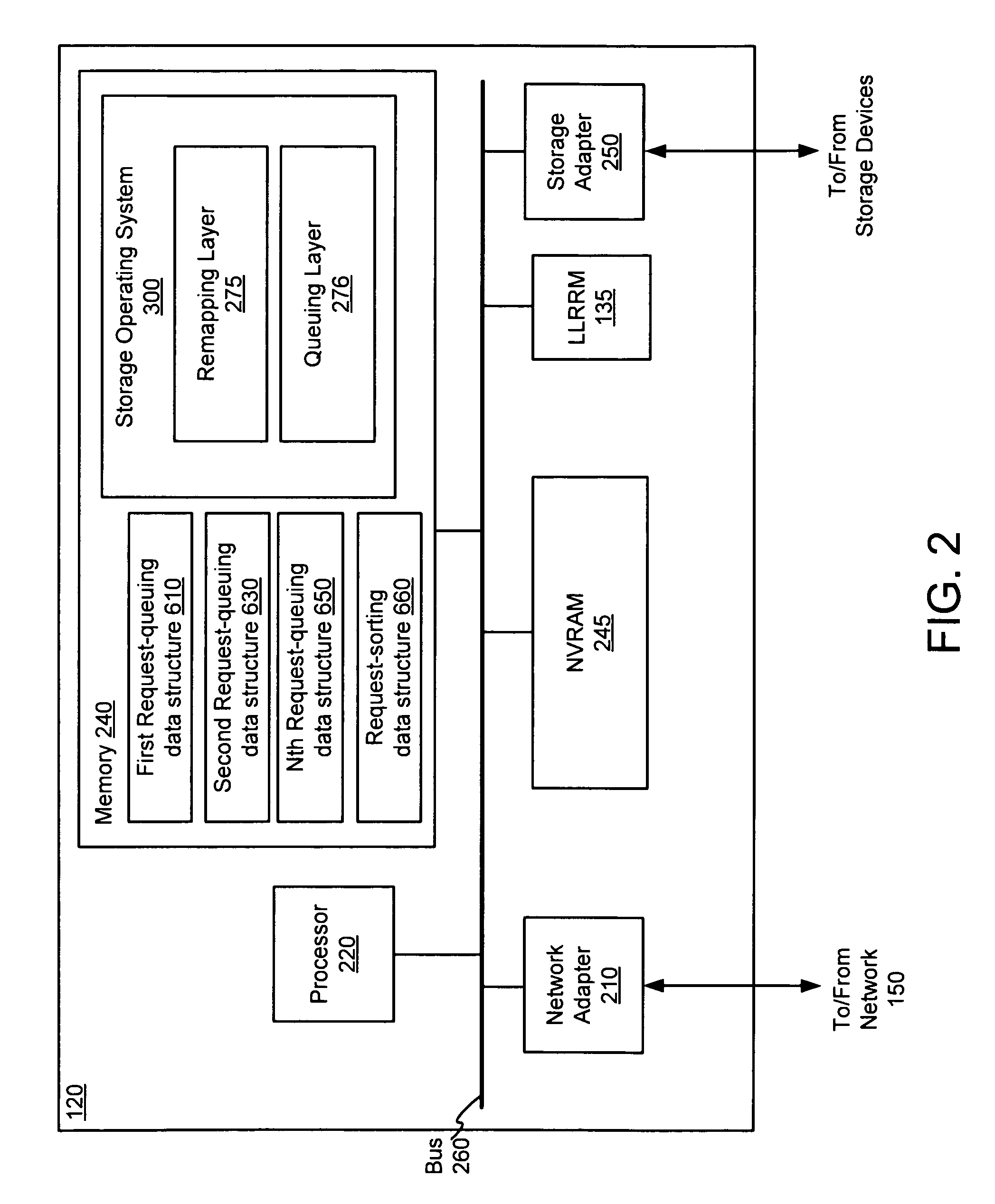

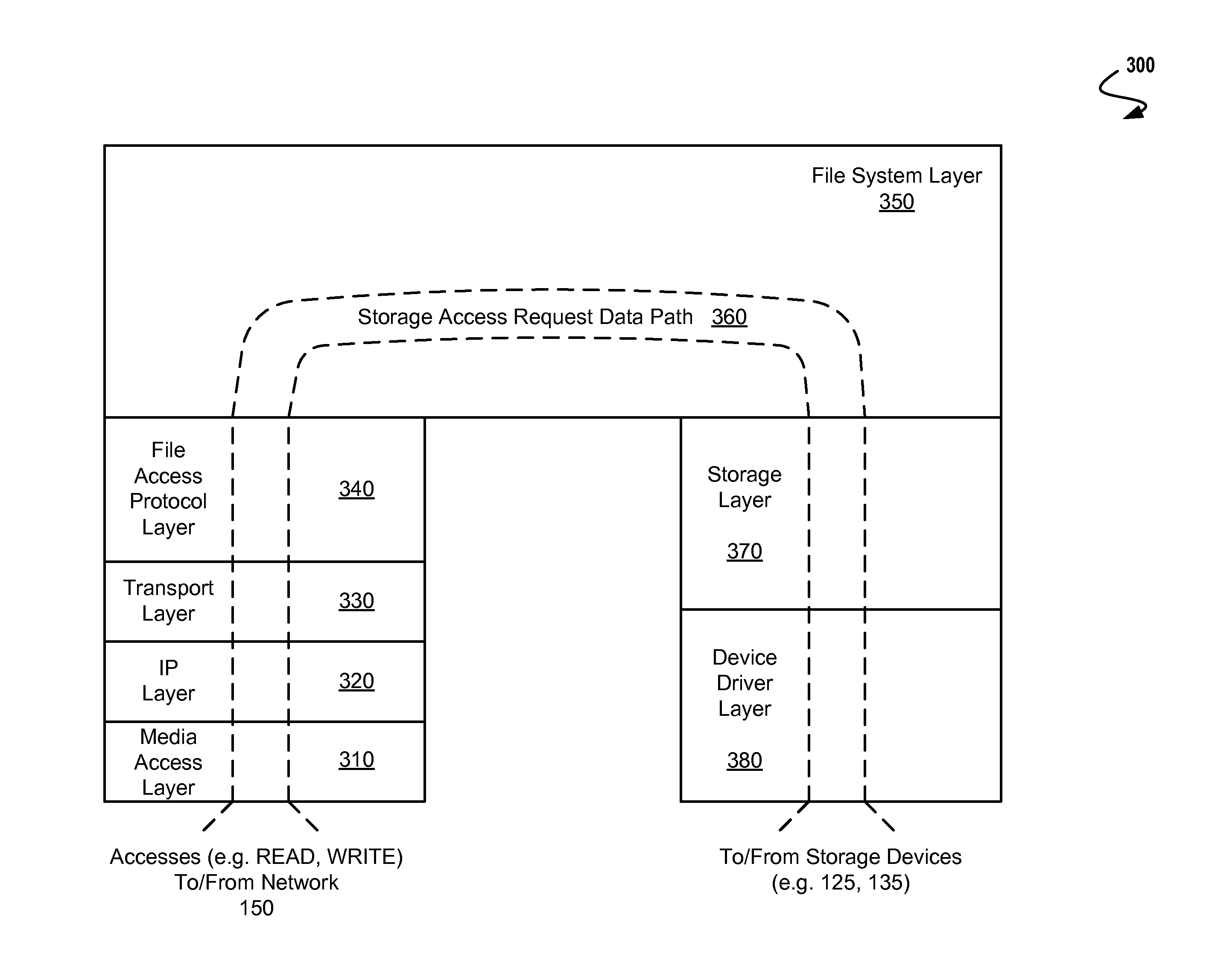

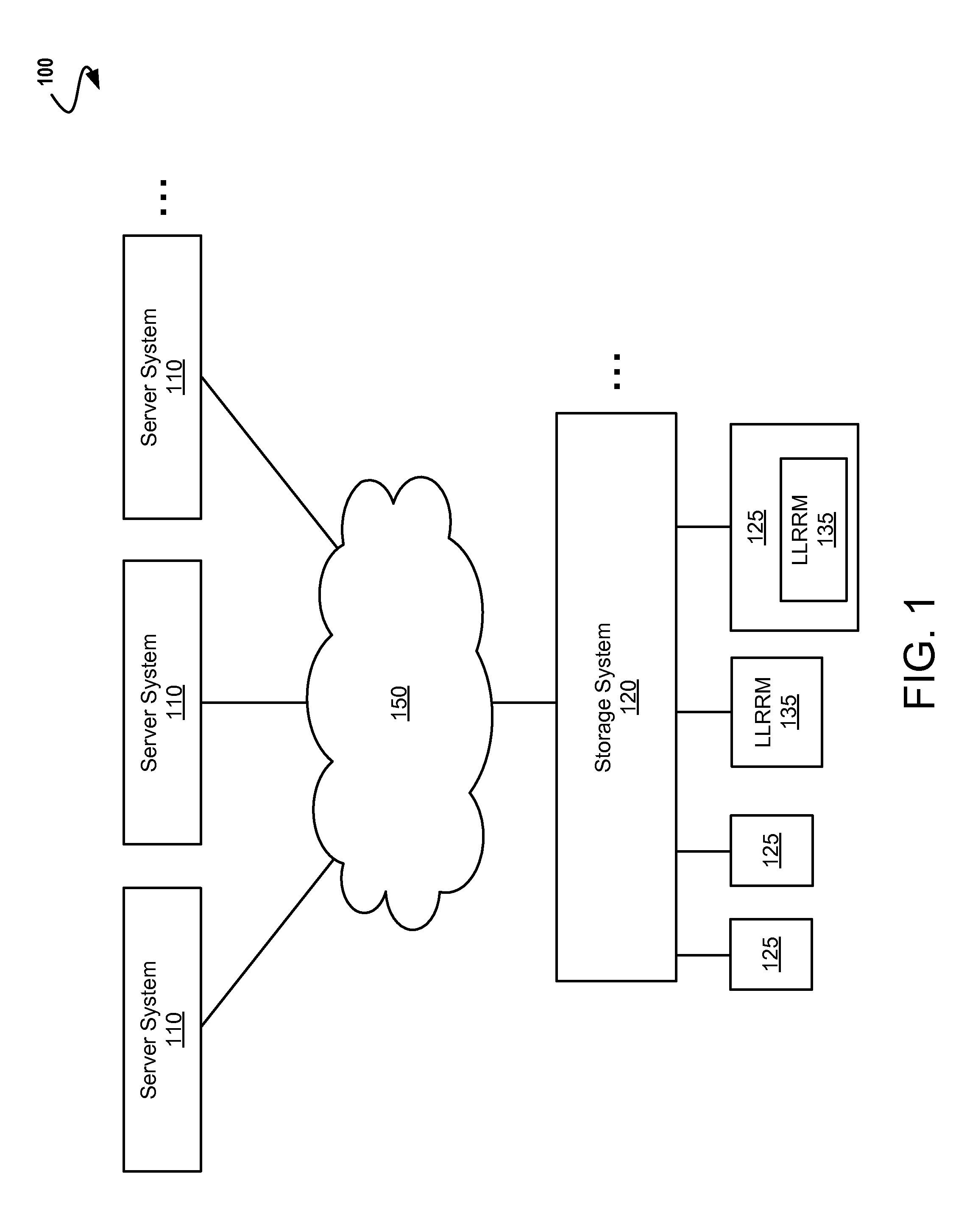

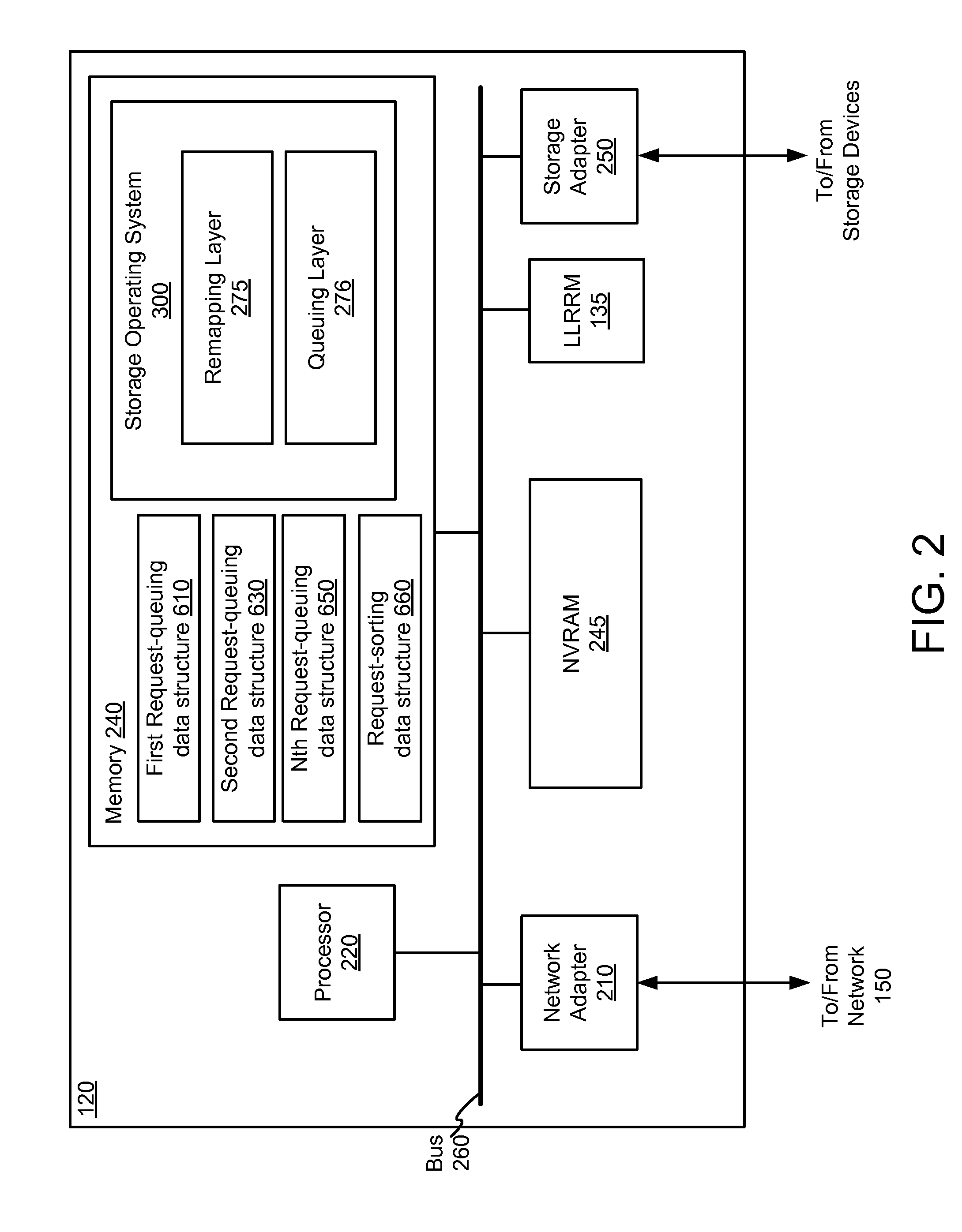

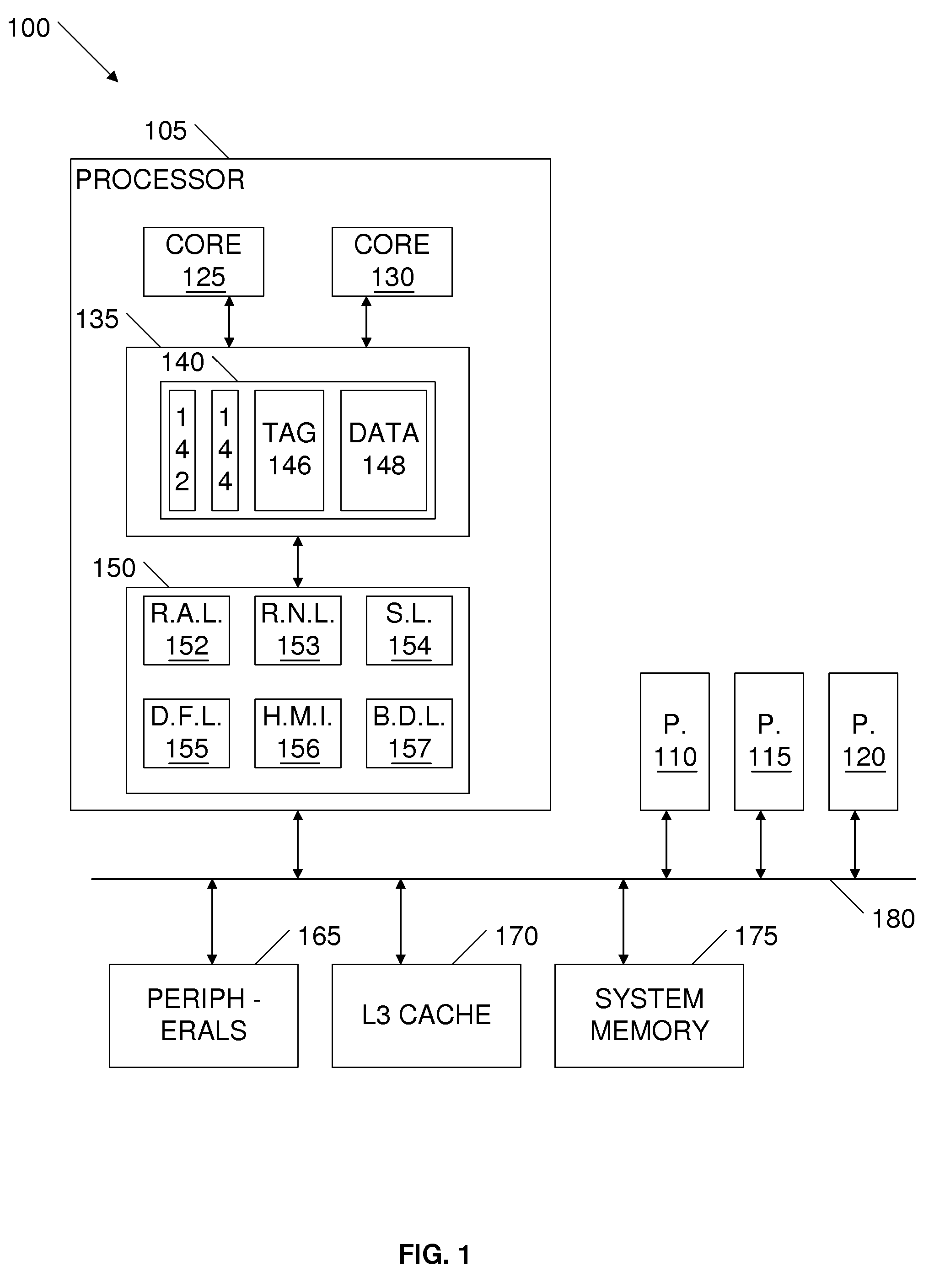

Scheduling access requests for a multi-bank low-latency random read memory device

Active | US8510496B1 | Reduce idle time; Reduce access latency; Memory addressing/allocation/relocation; Input/output processes for data processing; Memory bank; Latency (engineering)

Method and apparatus for scheduling access requests for a multi-bank low-latency random read memory (LLRRM) device within a storage system. The LLRRM device comprises a plurality of memory banks, each of which is simultaneously and independently accessible. A queuing layer residing in the storage system may allocate a plurality of request-queuing data structures (“queues”), each assigned to a memory bank. The queuing layer may receive access requests for memory banks in the LLRRM device and store each received access request in the queue assigned to the requested memory bank. The queuing layer may then send an access request from each request-queuing data structure, in successive order, to the LLRRM device for processing. As a result, the requests sent to the LLRRM device are also applied to the memory banks in successive order, reducing the access latencies of the LLRRM device.

Owner: NETWORK APPLIANCE INC
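The per-bank queuing and successive dispatch described above can be sketched as follows. Requests are plain strings here, and `BankScheduler`, `submit`, and `dispatch_round` are hypothetical names. Each round takes at most one request per bank, in bank order, so consecutive requests reaching the device target different banks.

```python
from collections import deque
from typing import Deque, List


class BankScheduler:
    """Hypothetical queuing layer: one FIFO per memory bank."""

    def __init__(self, num_banks: int) -> None:
        # One request-queuing data structure per memory bank.
        self.queues: List[Deque[str]] = [deque() for _ in range(num_banks)]

    def submit(self, bank: int, request: str) -> None:
        """Store a request in the queue assigned to its target bank."""
        self.queues[bank].append(request)

    def dispatch_round(self) -> List[str]:
        """Take one pending request from each non-empty bank queue, in bank order."""
        return [q.popleft() for q in self.queues if q]
```

Because a round never sends two requests to the same bank back to back, one bank's busy time can overlap with work on the others instead of stalling the request stream.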

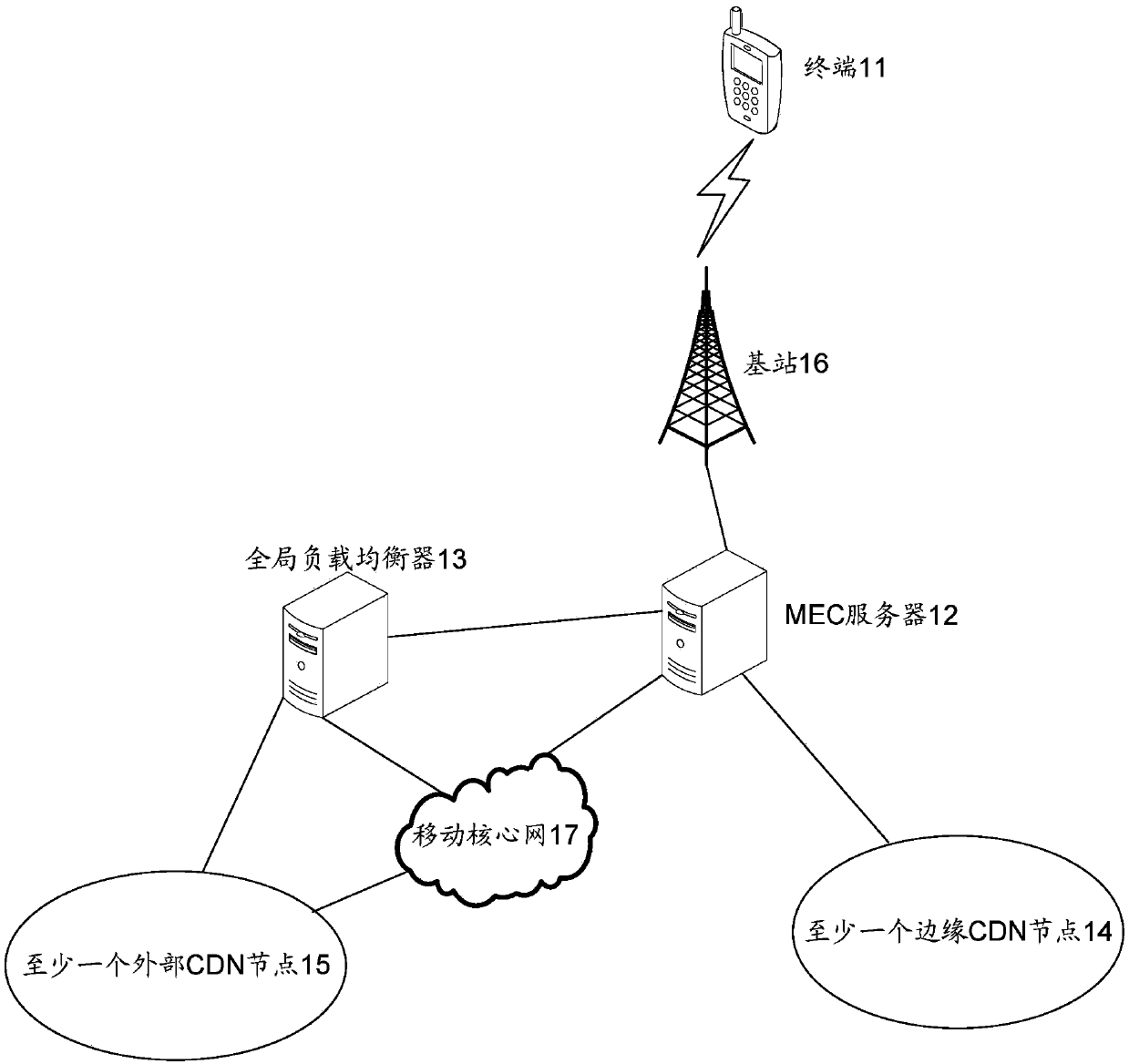

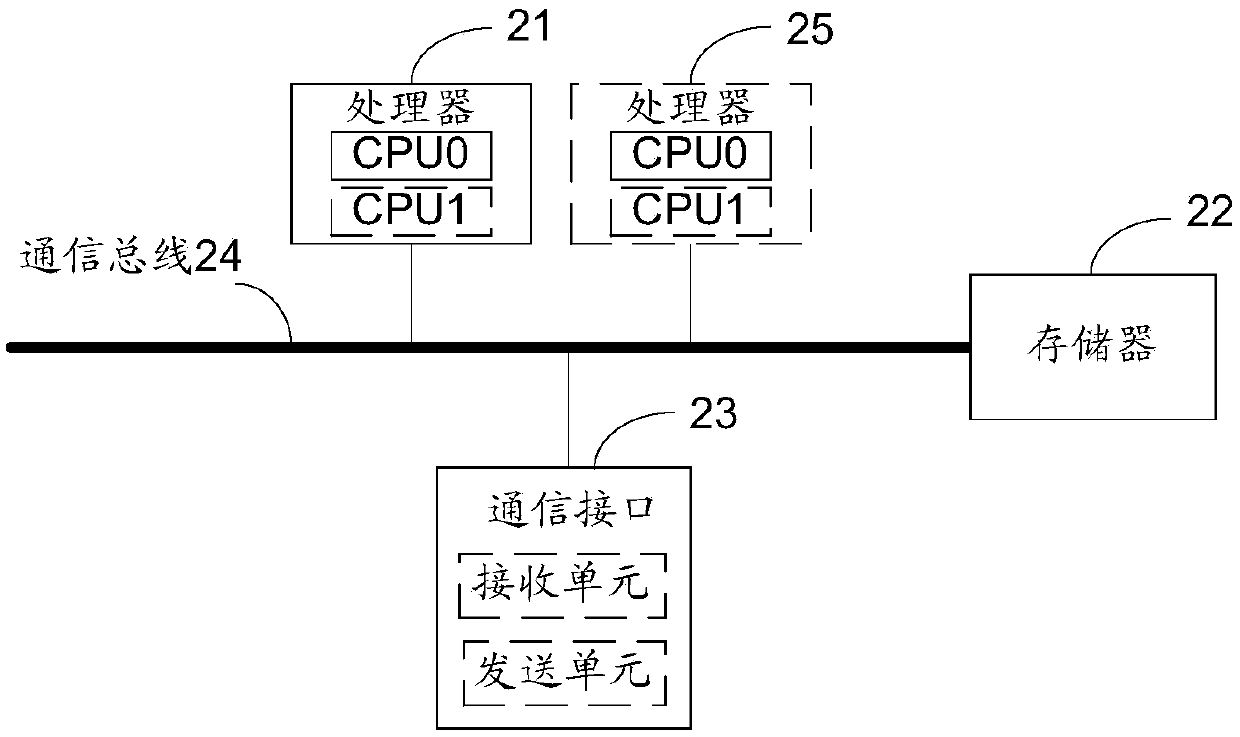

Method and equipment for selecting CDN node

An embodiment of the invention discloses a method and equipment for selecting a CDN node, in the field of communications, which select an edge CDN node for high-priority users and thereby reduce service access delay. Specifically, a global load balancer receives a DNS request and a terminal identifier sent by an MEC server. It then determines a target external CDN node according to a preset rule and the DNS request, and sends the IP address of the target external CDN node to the MEC server. When it determines that a pre-stored set of high-priority identifiers includes the terminal's identifier, it sends the MEC server an application message requesting use of the edge CDN node, and it receives the MEC server's response message for using the edge CDN node. The method and equipment are used when selecting a CDN node for a terminal.

Owner: CHINA UNITED NETWORK COMM GRP CO LTD

MEC-based task cache allocation strategy applied in mobile edge computing network

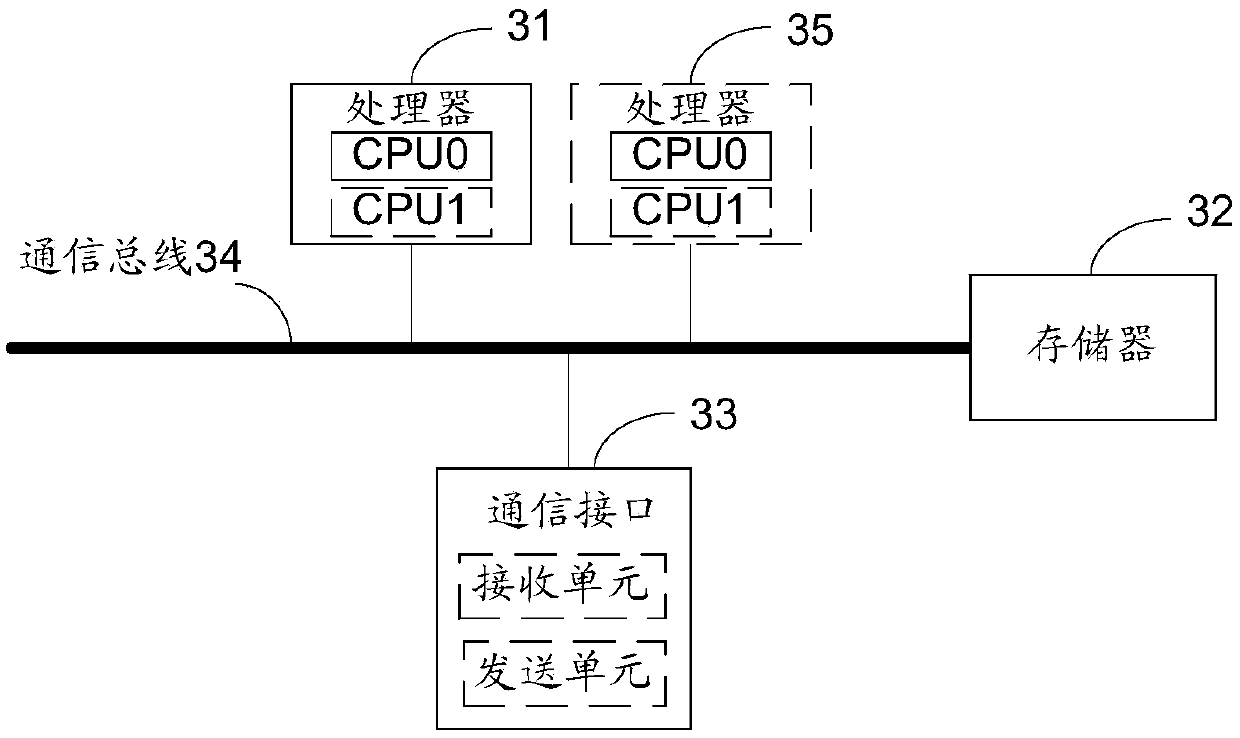

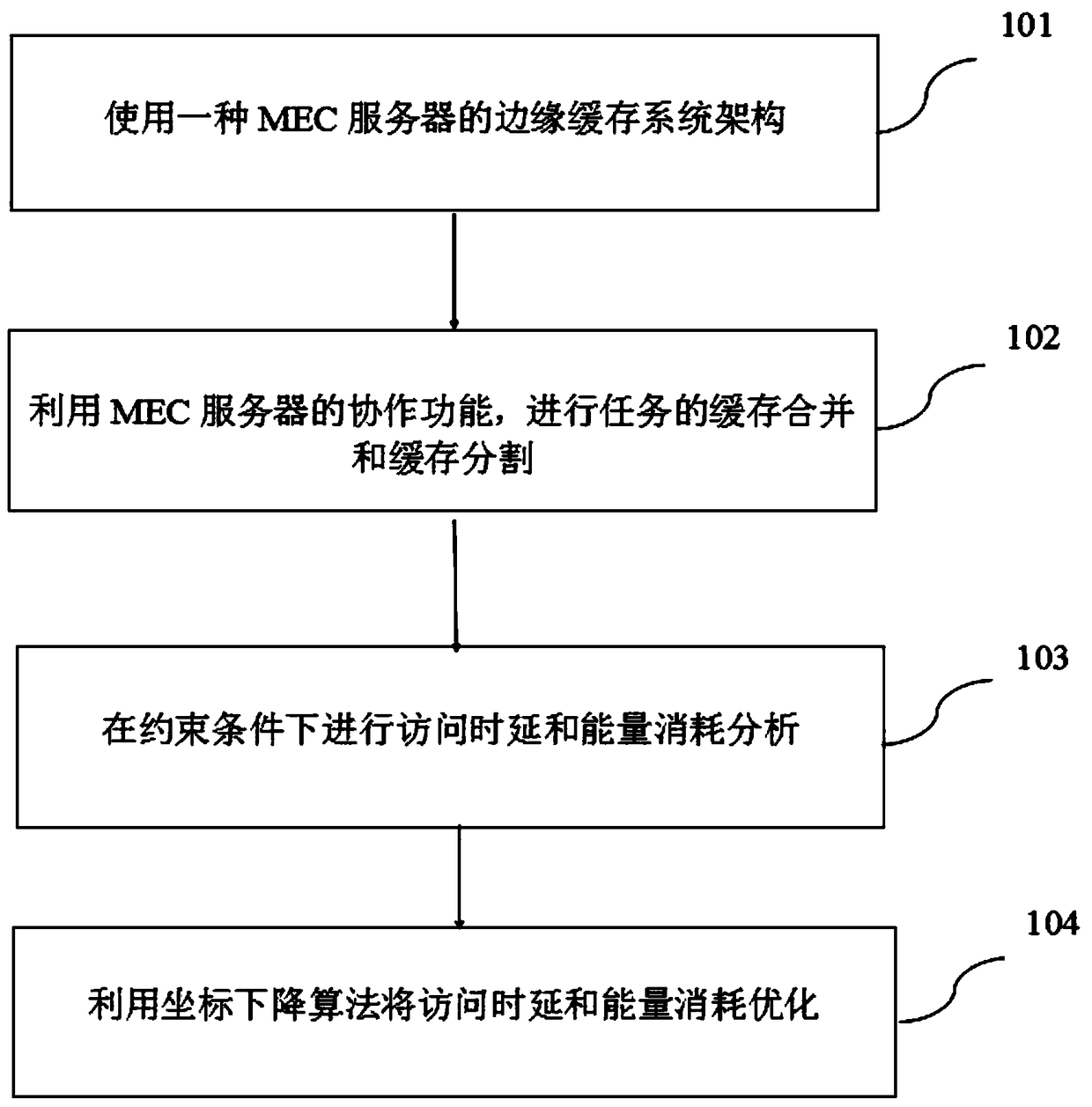

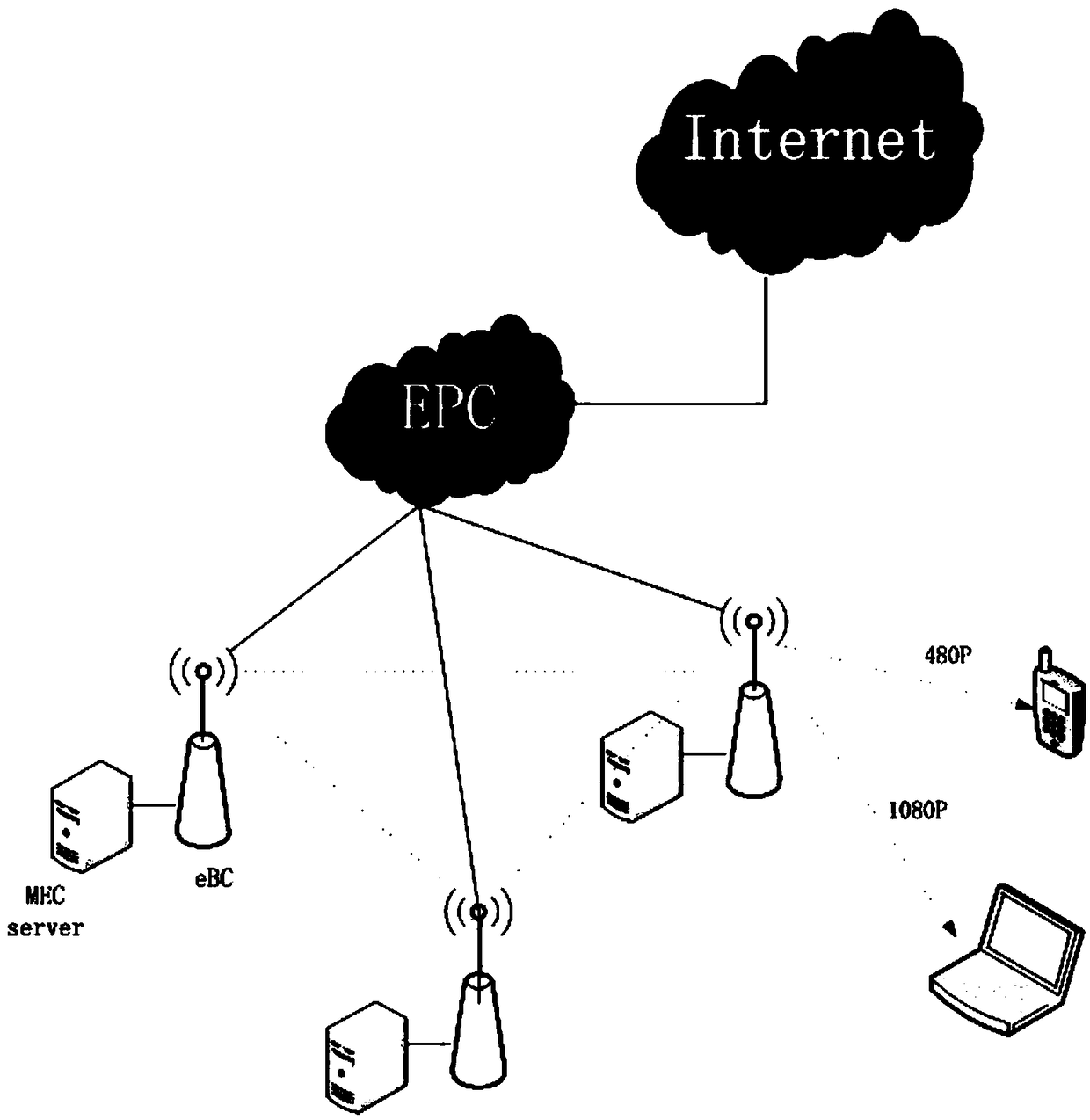

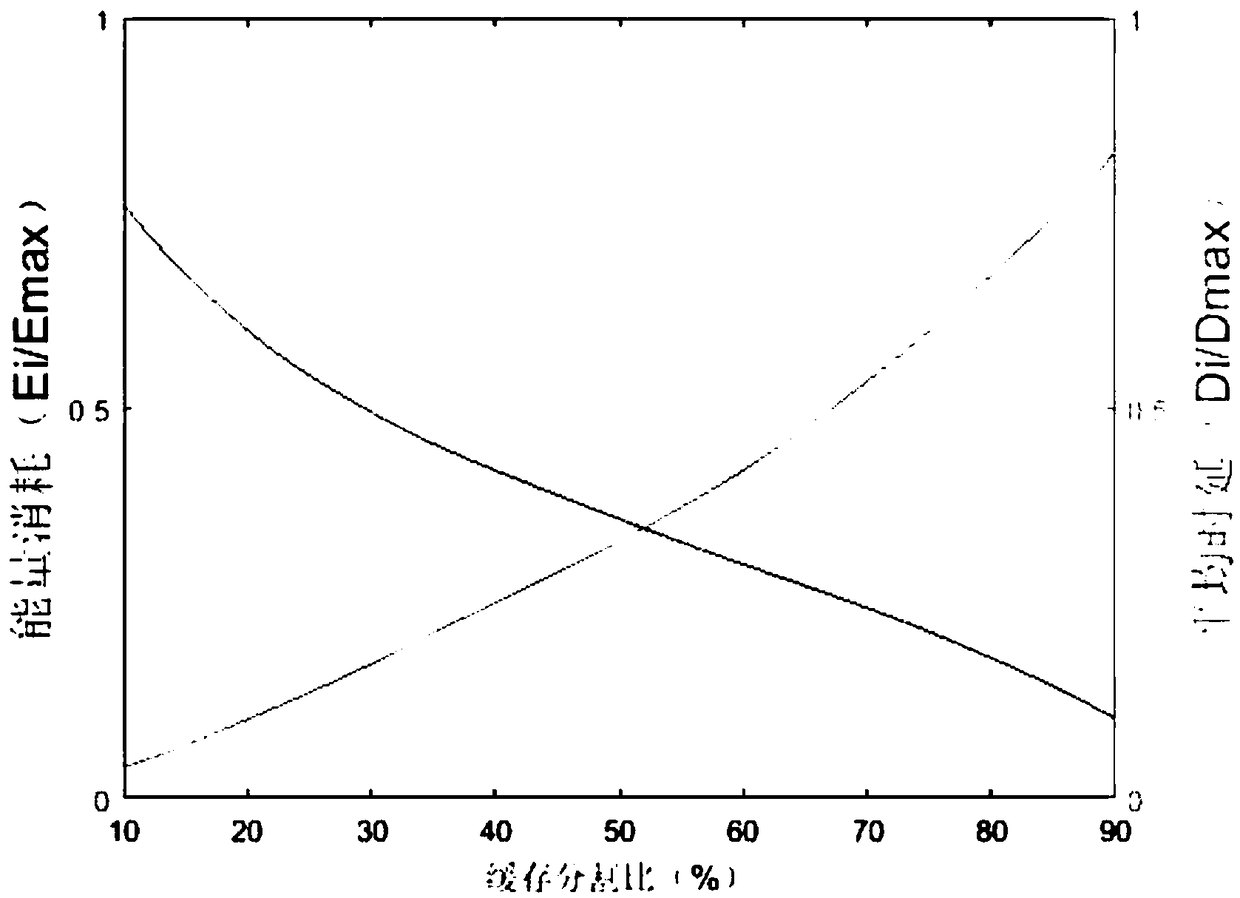

Inactive | CN109362064A | Reduce in quantity; Reduce access latency; Service provisioning; Network traffic/resource management; Descent algorithm; Parallel computing

The invention discloses an MEC-based task cache allocation strategy applied in a mobile edge computing network. The strategy comprises the following steps: an edge cache system architecture of MEC servers is used; tasks are cache-merged and cache-segmented through the collaboration function of the MEC servers; access delay and energy consumption are analyzed under constraint conditions; and access delay and energy consumption are optimized by means of a coordinate descent algorithm. As a result, more videos can be cached effectively on the MEC servers, access delay is greatly shortened, and energy consumption is greatly reduced.

Owner: CHONGQING UNIV OF POSTS & TELECOMM

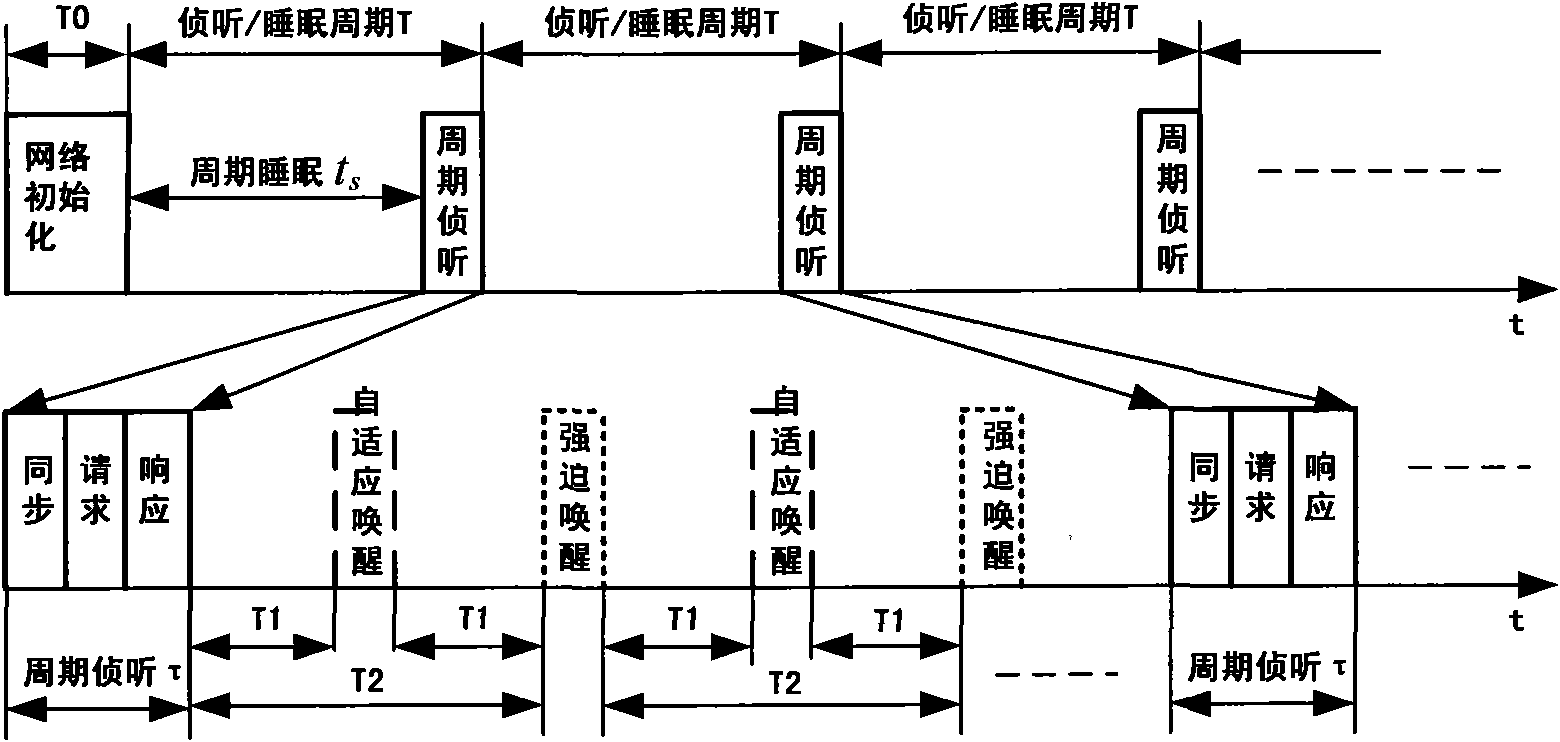

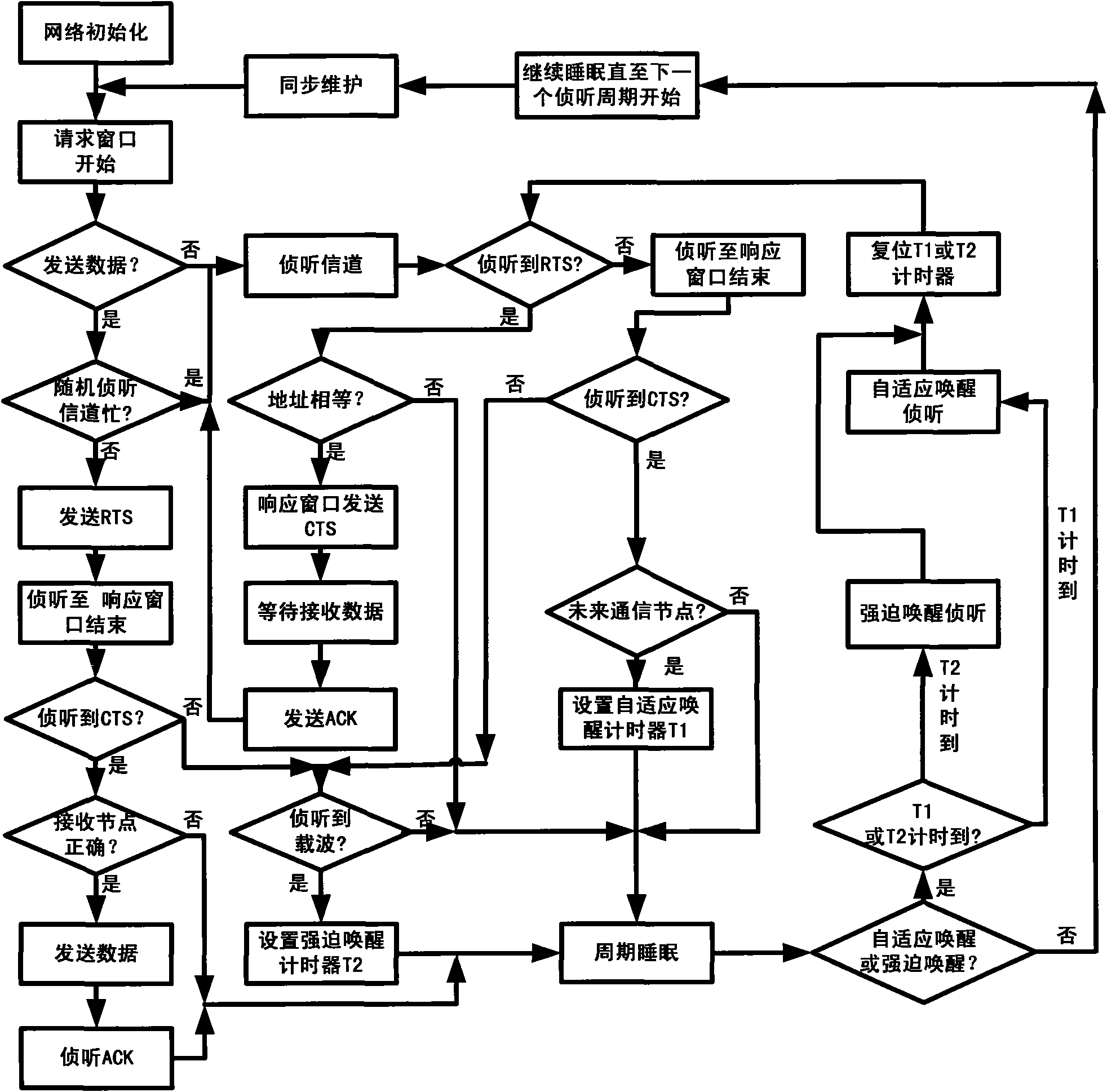

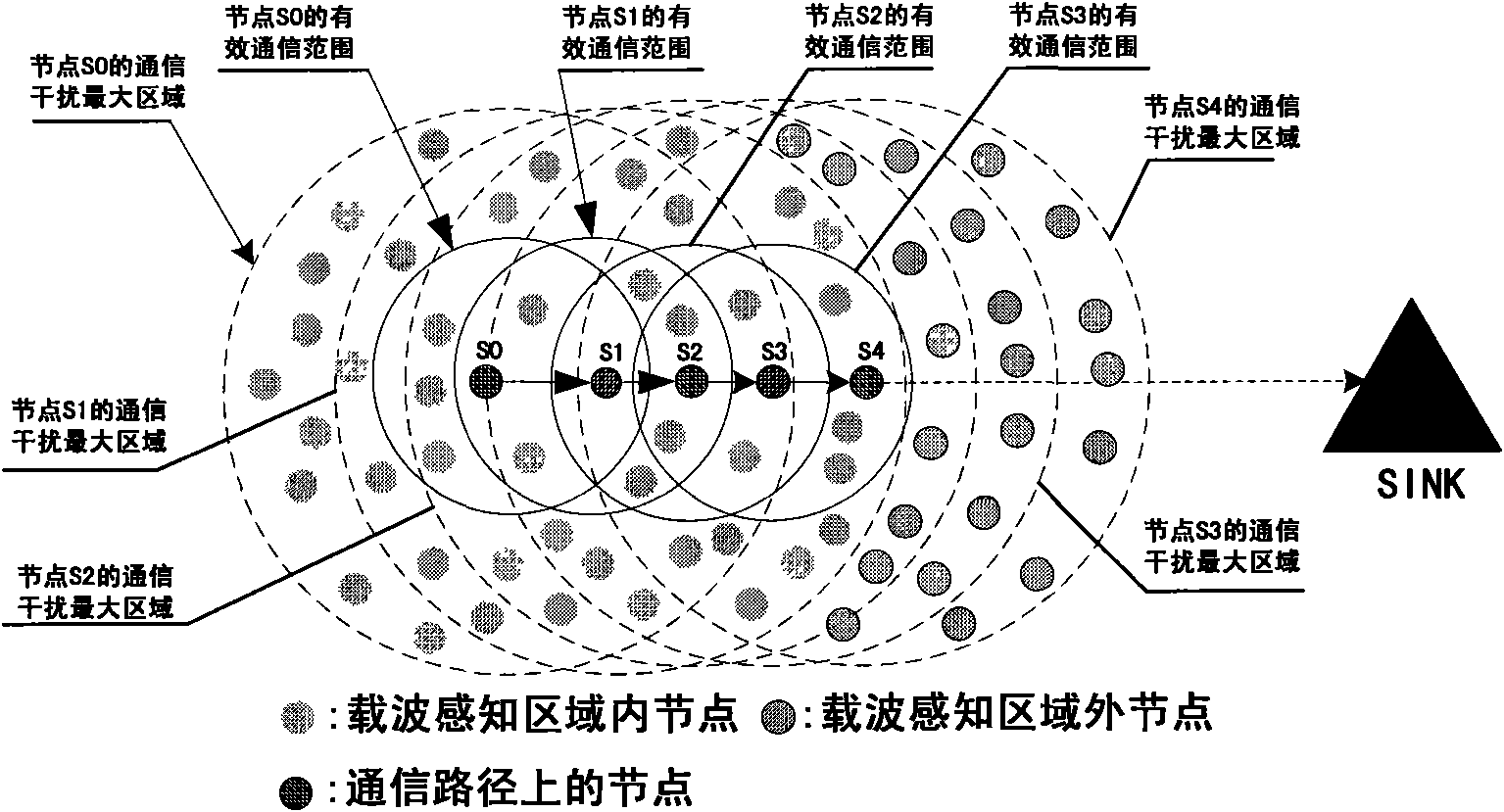

Method for realizing cross-layer wireless sensor network medium access control protocol

Inactive | CN101557637A | Reduce collision; Realize multi-hop transmission; Power management; Network topologies; Time delays; Media access control

The invention relates to a method for realizing a cross-layer medium access control protocol for wireless sensor networks. After the wireless sensor network nodes enter their periodic sleep time, the method designates a number of nodes as forcibly awakened nodes in order to find, among them, a node on the future communication path, thereby realizing multi-hop transmission of data within one listen/sleep period. The method mainly addresses the problem that, in wireless sensor networks that are sensitive to time delay and carry little traffic, the waiting delay for channel access cannot meet requirements.

Owner: HENAN UNIV OF SCI & TECH

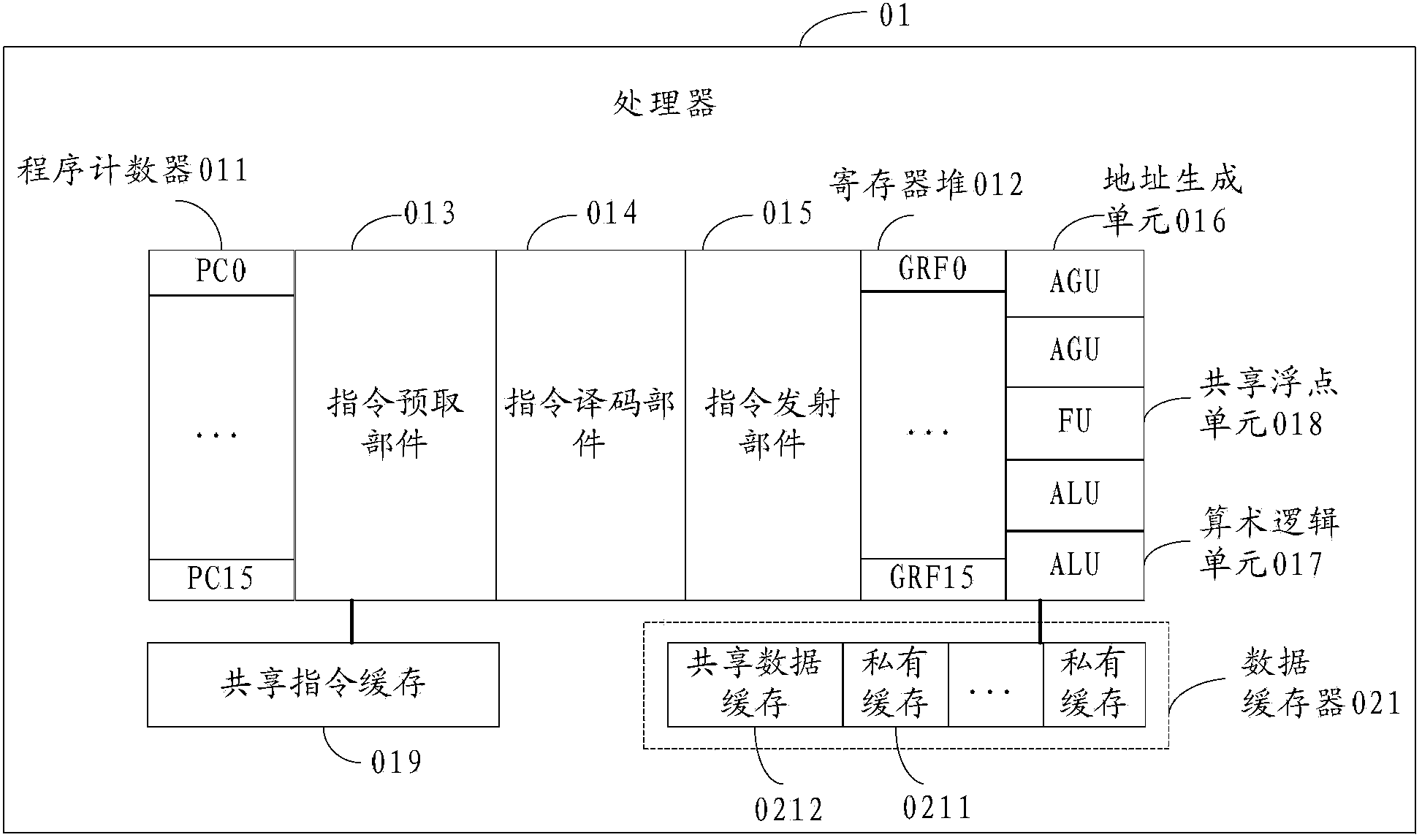

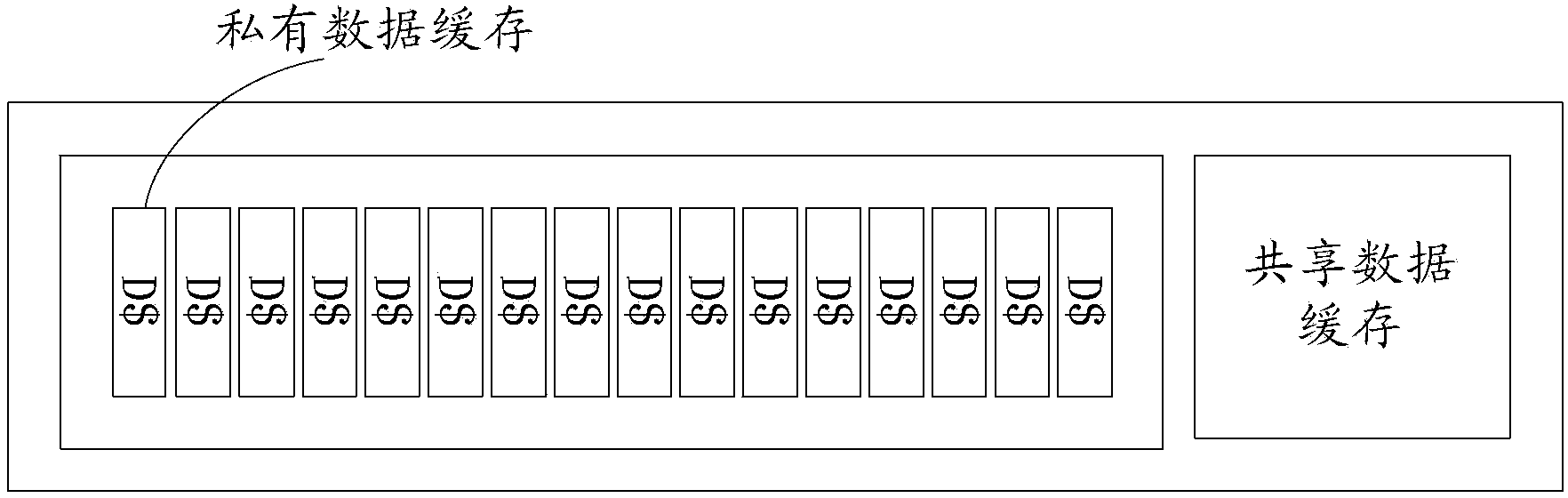

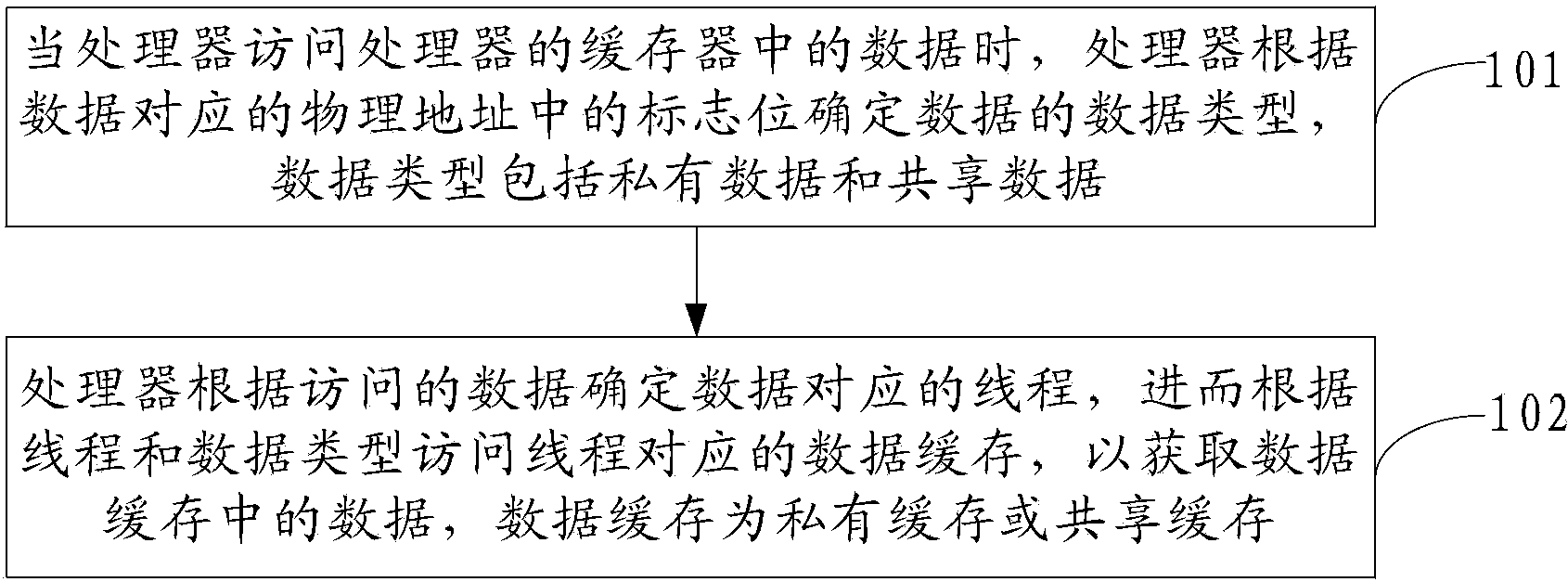

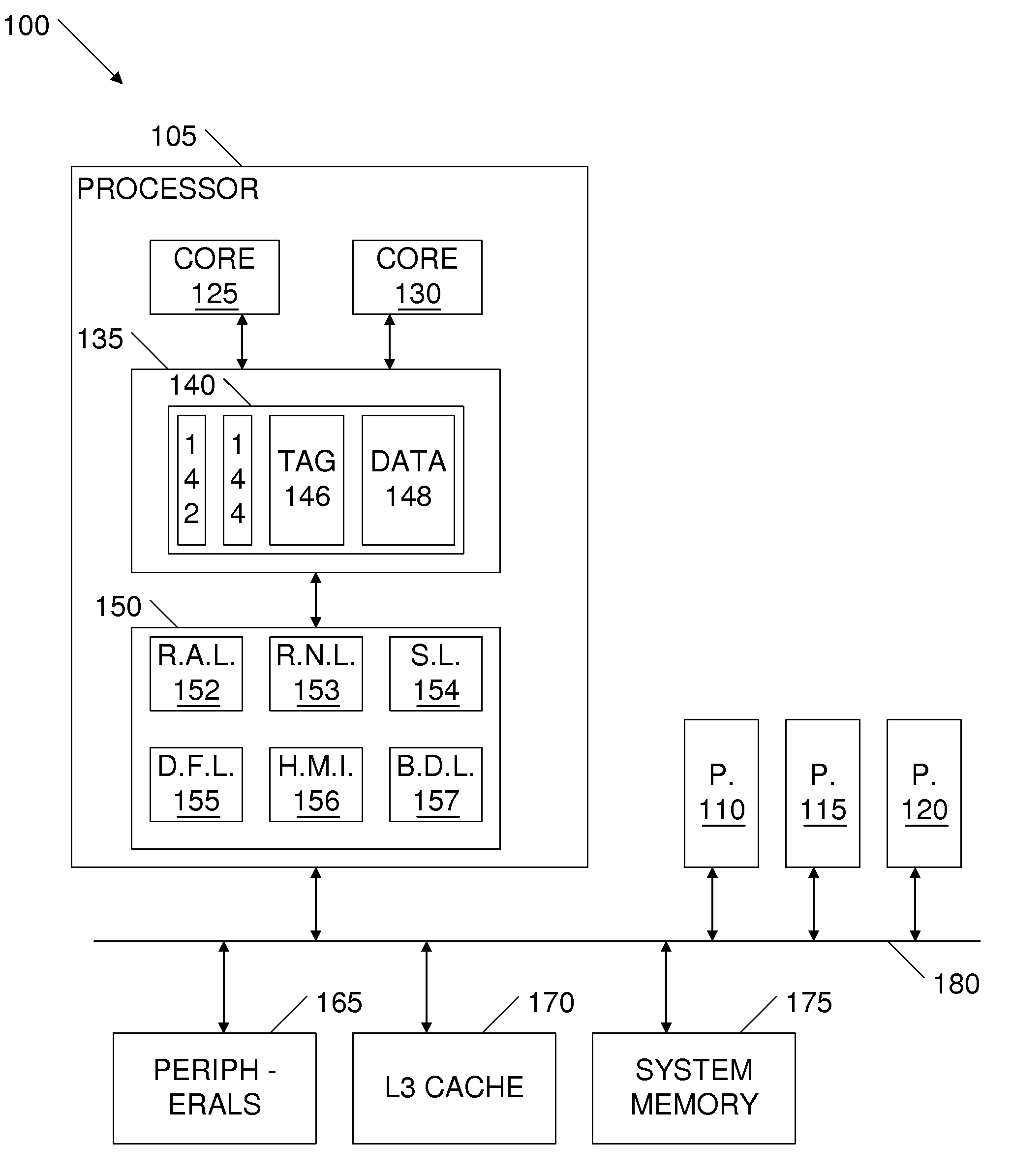

Method for accessing data cache and processor

Active | CN104252392A | Reduce search range; Reduce access latency; Resource allocation; Memory addressing/allocation/relocation; Parallel computing; Physical address

The embodiment of the invention provides a method for accessing a data cache and a processor, in the field of computers. The method and processor narrow the range of the data search, reduce access delay, and improve system performance. The data register of the processor is a first-level cache comprising a private data cache and a shared data cache. The private data cache comprises a plurality of private caches and stores the private data of threads, while the shared data cache stores data shared among the threads. When data in the processor's data register are accessed, the data type (private or shared) is determined from additional flags corresponding to the data in their physical addresses. The thread corresponding to the accessed data is then determined, and the data cache corresponding to that thread and data type is accessed to obtain the data. The embodiment of the invention is used to distinguish between data caches and to access them.

Owner: HUAWEI TECH CO LTD +1

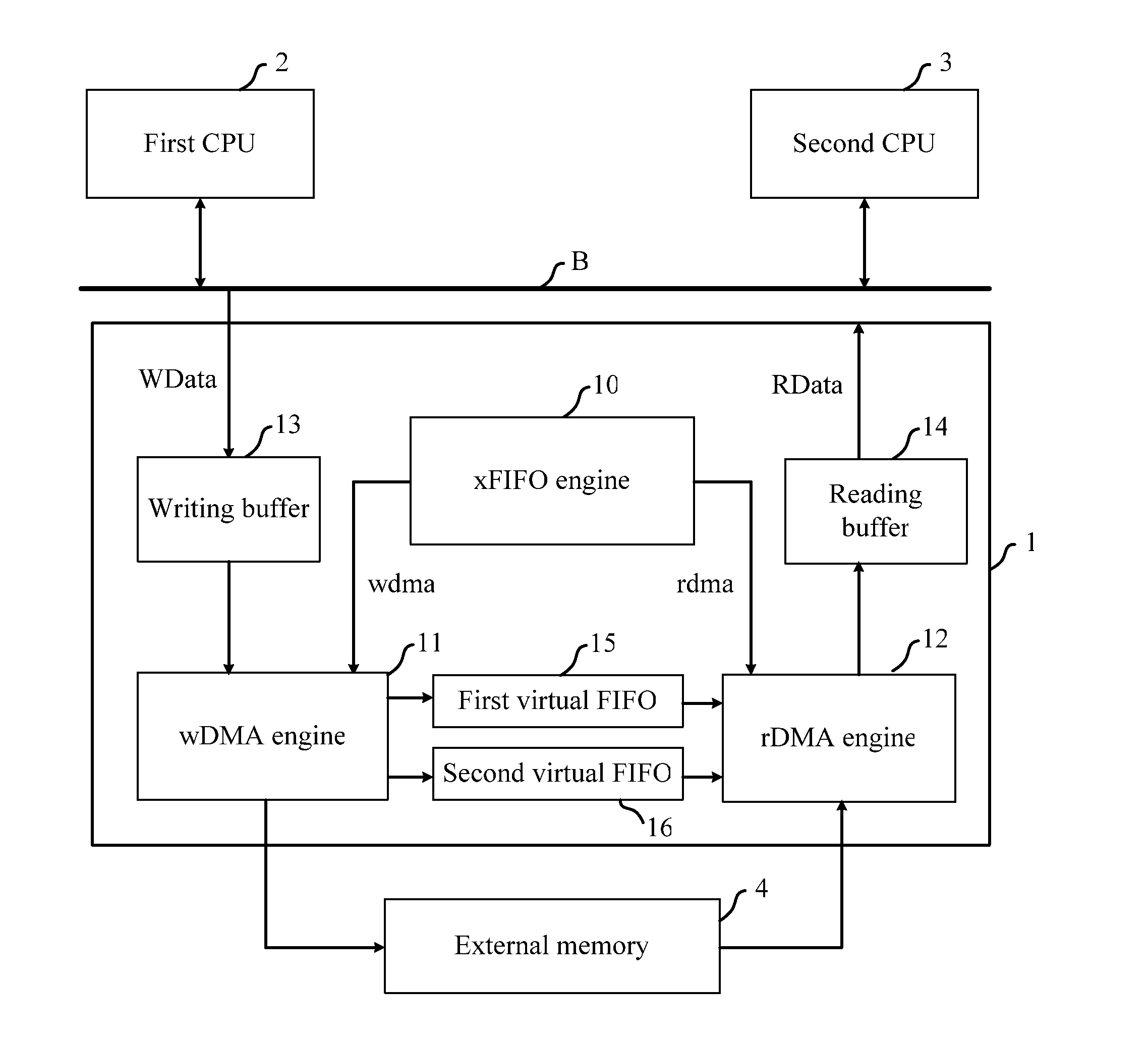

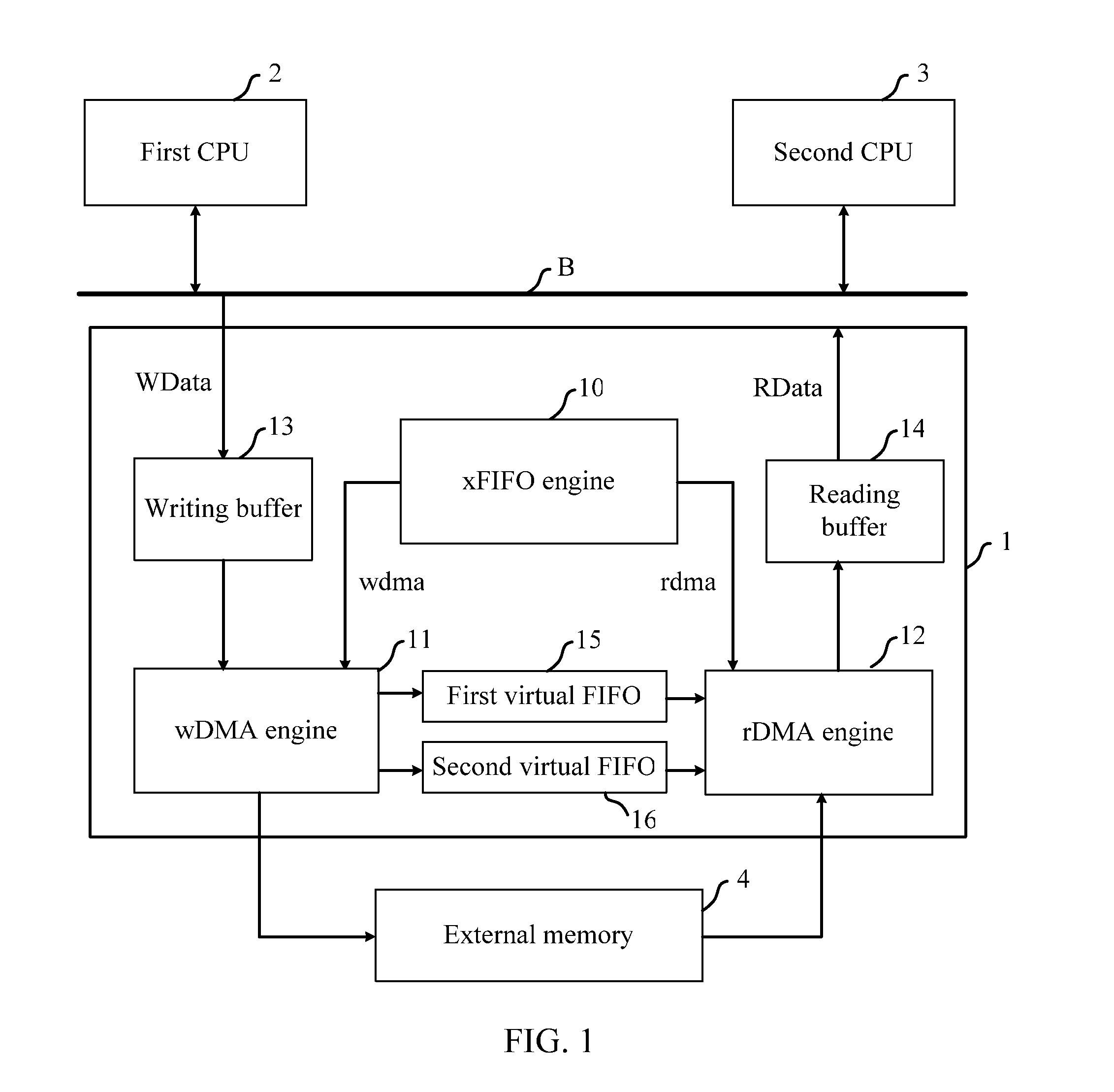

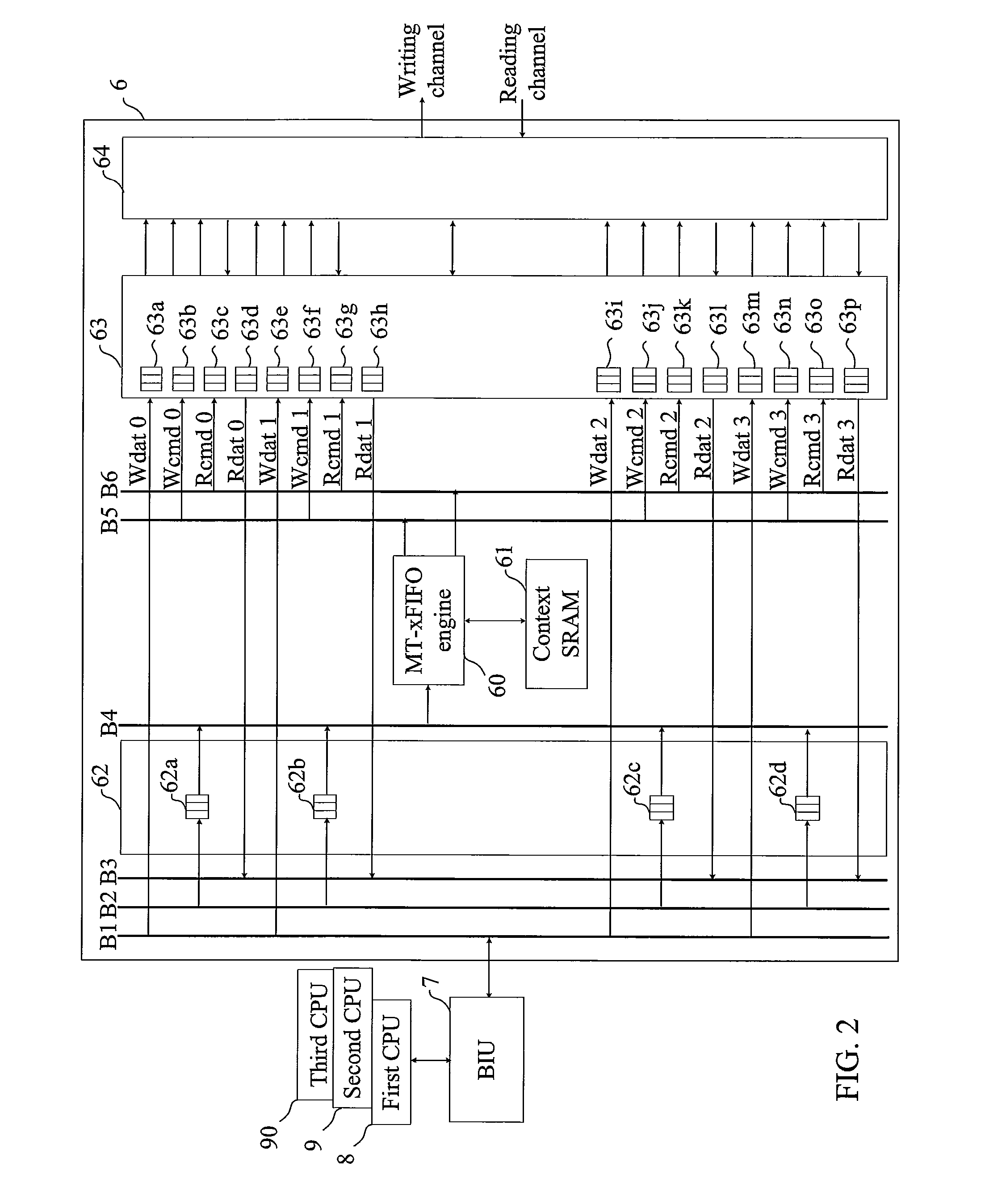

Hardware assisted inter-processor communication

Inactive | US20100325334A1 | Reduce access latency; Cheap off-chip memory; Multiple digital computer combinations; Electric digital data processing; Processor register; Interprocessor communication

An external memory based FIFO (xFIFO) apparatus coupled to an external memory and a register bus is disclosed. The xFIFO apparatus includes an xFIFO engine, a wDMA engine, an rDMA engine, a first virtual FIFO, and a second virtual FIFO. The xFIFO engine receives a FIFO command from the register bus and generates a writing DMA command and a reading DMA command. The wDMA engine receives the writing DMA command from the xFIFO engine and forwards incoming data to the external memory. The rDMA engine receives the reading DMA command from the xFIFO engine and prefetches FIFO data from the external memory. The wDMA engine and the rDMA engine synchronize with each other via the first virtual FIFO and the second virtual FIFO.

Owner: ABLAZE WIRELESS

Scheduling access requests for a multi-bank low-latency random read memory device

Active | US20130304988A1 | Reduce idle time; Reduce access latency; Input/output to record carriers; Memory addressing/allocation/relocation; Memory bank; Latency (engineering)

Described herein are a method and apparatus for scheduling access requests for a multi-bank low-latency random read memory (LLRRM) device within a storage system. The LLRRM device comprises a plurality of memory banks, each of which is simultaneously and independently accessible. A queuing layer residing in the storage system may allocate a plurality of request-queuing data structures (“queues”), each assigned to a memory bank. The queuing layer may receive access requests for memory banks in the LLRRM device and store each received access request in the queue assigned to the requested memory bank. The queuing layer may then send an access request from each request-queuing data structure, in successive order, to the LLRRM device for processing. As a result, the requests sent to the LLRRM device are also applied to the memory banks in successive order, reducing the access latencies of the LLRRM device.

Owner: NETWORK APPLIANCE INC

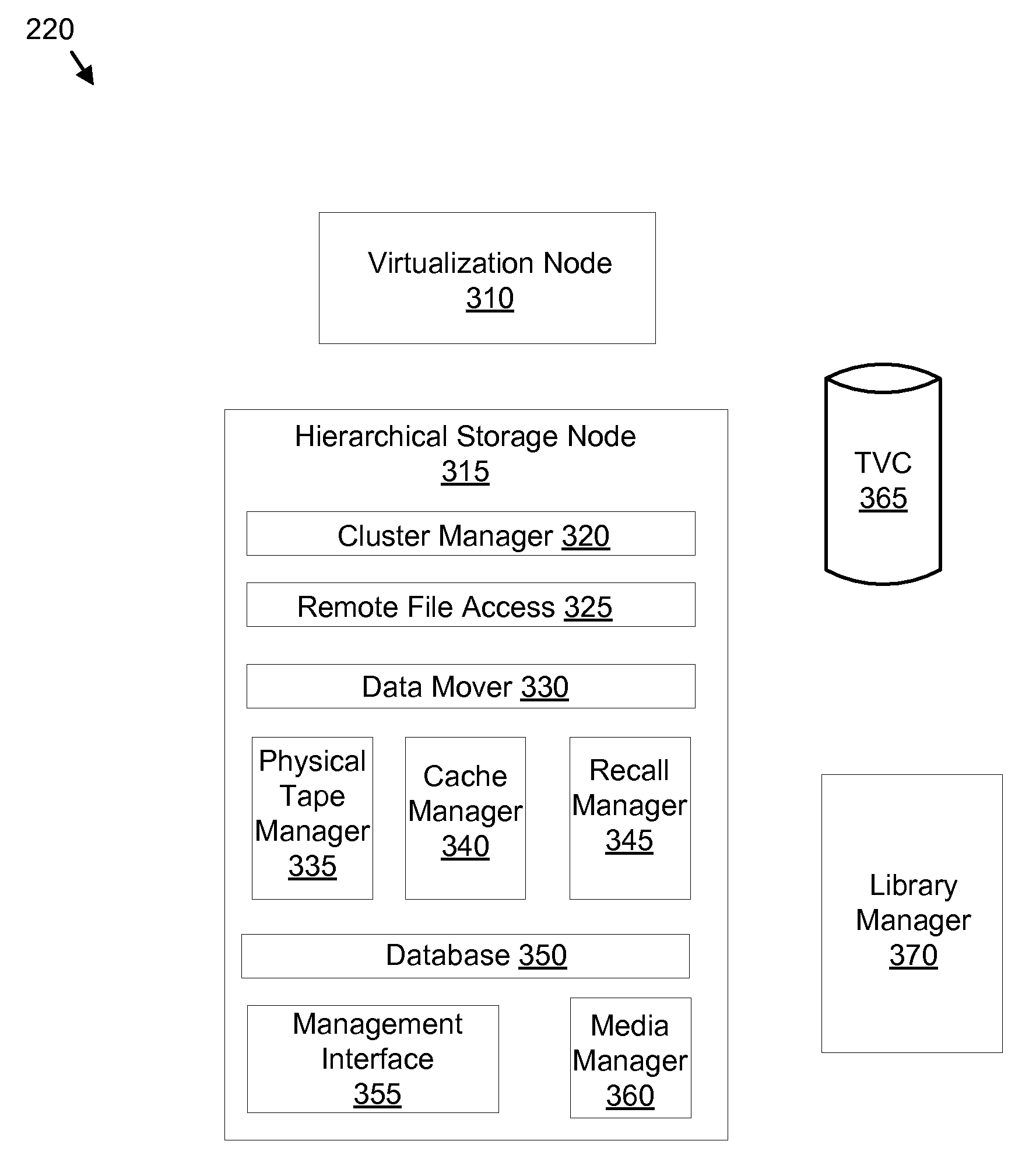

Apparatus, system, and method for selecting a cluster

Inactive | US20090006734A1 | Lower latency; Increase weight; Memory architecture accessing/allocation; Input/output to record carriers; Magnetic tape; Data mining

An apparatus, system, and method are disclosed for selecting a source cluster in a distributed storage configuration. A measurement module measures system factors for a plurality of clusters over a plurality of instances. The clusters are in communication over a network and each cluster comprises at least one tape volume cache. A smoothing module applies a smoothing function to the system factors, wherein recent instances have higher weights. A lifespan module calculates a mount-to-dismount lifespan for each cluster from the smoothed system factors. A selection module selects a source cluster for accessing an instance of a specified volume in response to the mount-to-dismount lifespans and a user policy.

Owner: IBM CORP
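The smoothing and selection steps can be sketched with exponential smoothing, which weights recent instances more heavily. This sketch rests on several assumptions: each factor is a list of samples ordered oldest first, a cluster's mount-to-dismount lifespan is approximated (hypothetically) as the sum of its smoothed factors, and the user policy is reduced to a set of allowed clusters. `smooth`, `select_source_cluster`, and `alpha` are illustrative names.

```python
from typing import Dict, List, Optional, Set


def smooth(samples: List[float], alpha: float = 0.5) -> float:
    """Exponential smoothing over samples ordered oldest to newest."""
    if not samples:
        raise ValueError("need at least one sample")
    value = samples[0]
    for x in samples[1:]:
        value = alpha * x + (1 - alpha) * value  # newer samples weigh more
    return value


def select_source_cluster(history: Dict[str, Dict[str, List[float]]],
                          allowed: Set[str], alpha: float = 0.5) -> Optional[str]:
    """Pick the allowed cluster with the shortest estimated lifespan.

    history maps cluster -> factor name -> samples; the lifespan estimate
    (sum of smoothed factors) is a hypothetical stand-in for the patent's.
    """
    best, best_lifespan = None, float("inf")
    for cluster, factors in history.items():
        if cluster not in allowed:
            continue  # excluded by the (simplified) user policy
        lifespan = sum(smooth(samples, alpha) for samples in factors.values())
        if lifespan < best_lifespan:
            best, best_lifespan = cluster, lifespan
    return best
```

A larger `alpha` makes the estimate react faster to recent mounts at the cost of more noise. Real factors (queue depth, recall time, network latency) would also need consistent units before they are summed.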

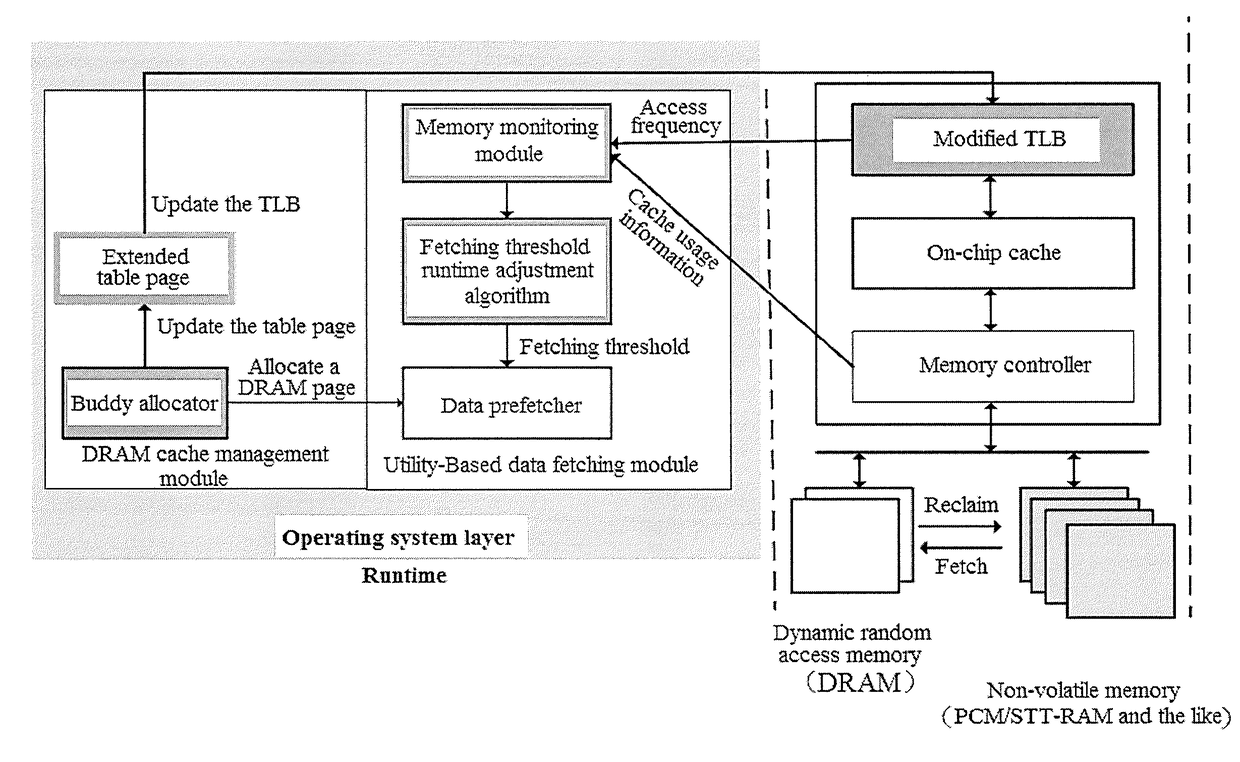

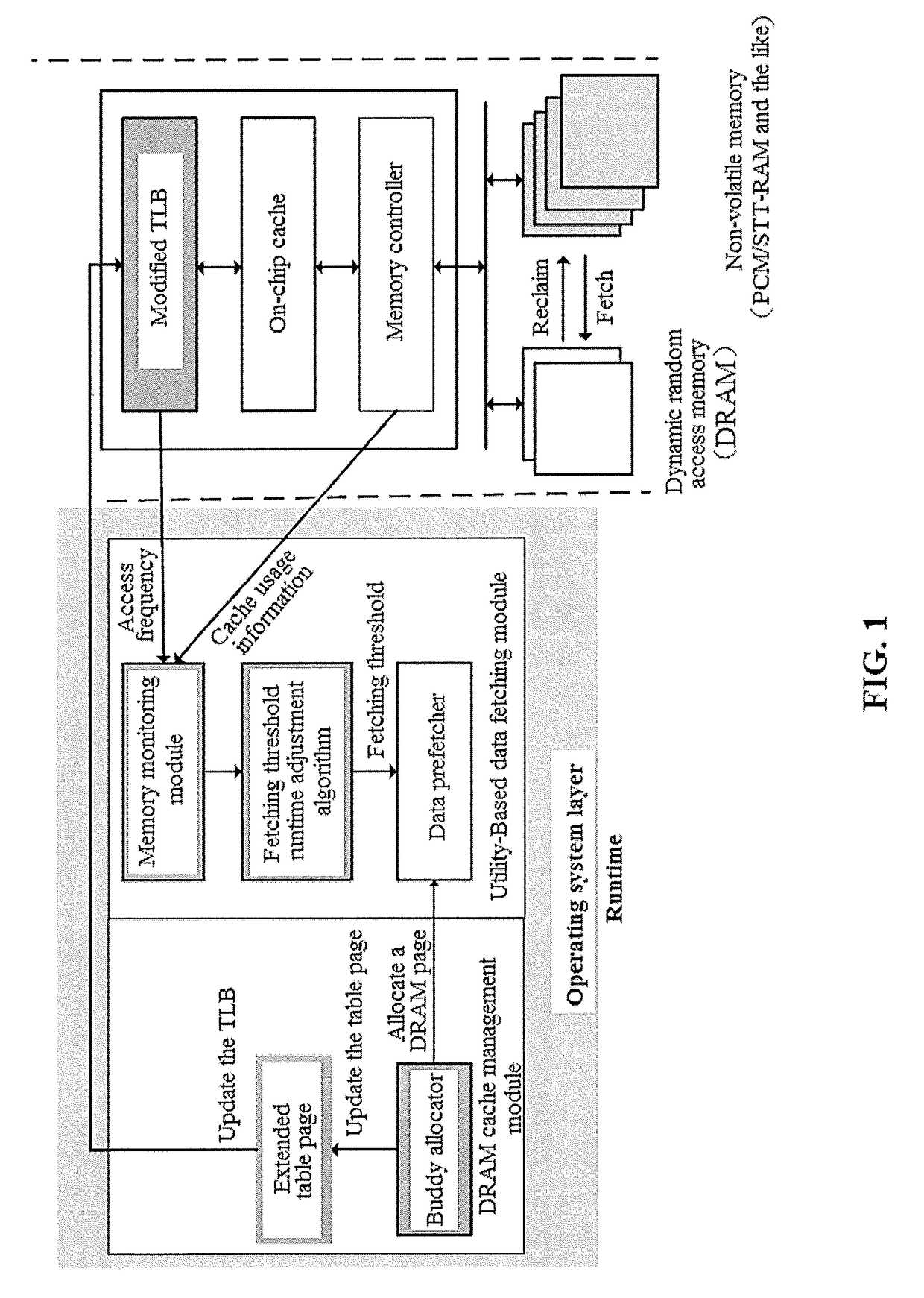

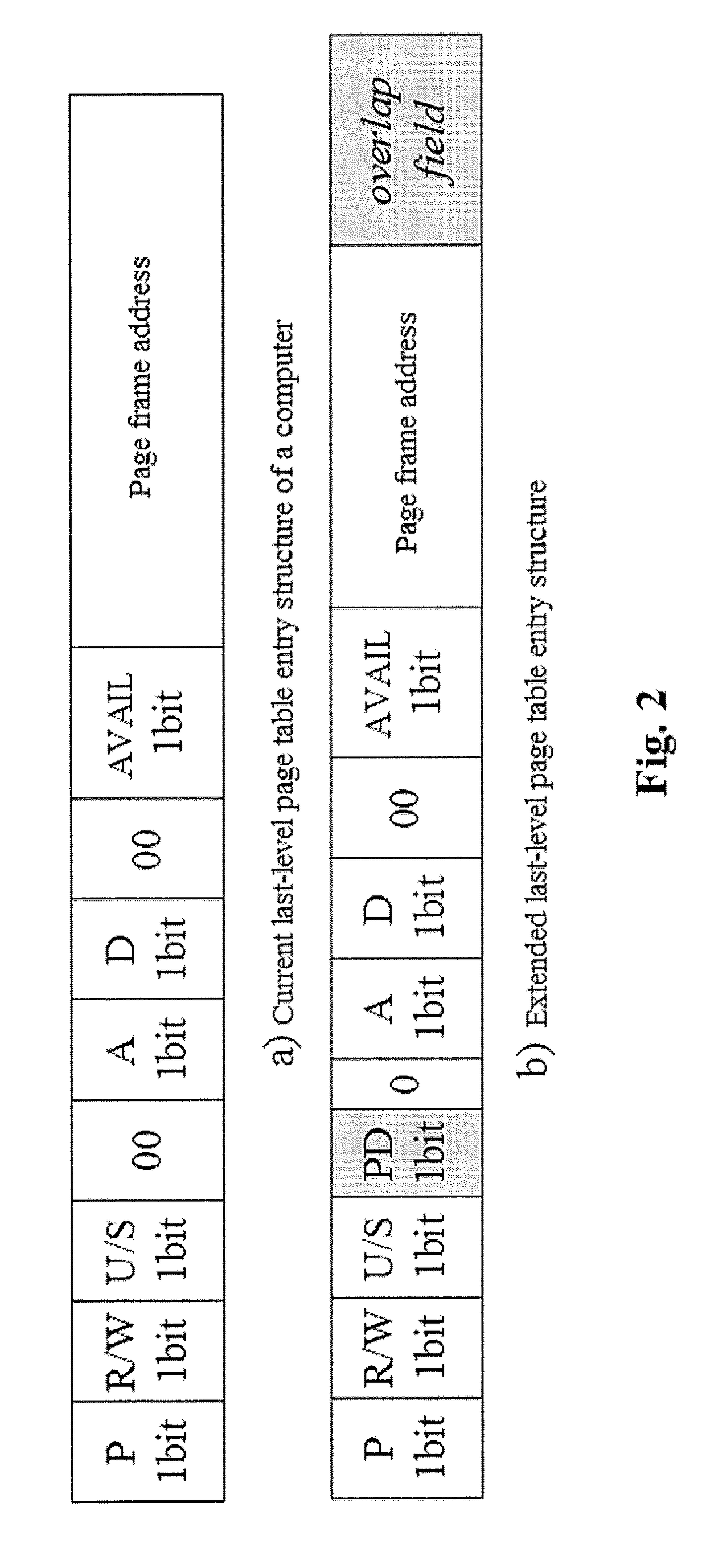

DRAM/NVM hierarchical heterogeneous memory access method and system with software-hardware cooperative management

Active | US20170277640A1 | Eliminates hardware; Reducing memory access delay; Memory architecture accessing/allocation; Memory systems; Term memory; Page table

The present invention provides a DRAM/NVM hierarchical heterogeneous memory system with software-hardware cooperative management schemes. In the system, NVM serves as large-capacity main memory and DRAM serves as a cache for the NVM. Some reserved bits in the data structures of the TLB and the last-level page table are used to eliminate the hardware costs of the conventional hardware-managed hierarchical memory architecture, pushing cache management in the heterogeneous memory system to the software level. Moreover, the invention can reduce memory access latency on last-level cache misses. Because many applications in big data environments have relatively poor data locality, the conventional demand-based data fetching policy for a DRAM cache can aggravate cache pollution. The present invention therefore adopts a utility-based data fetching mechanism in the DRAM/NVM hierarchical memory system, which determines whether data in the NVM should be cached in the DRAM according to current DRAM memory utilization and application memory access patterns. This improves the efficiency of the DRAM cache and the use of bandwidth between the NVM main memory and the DRAM cache.

Owner: HUAZHONG UNIV OF SCI & TECH

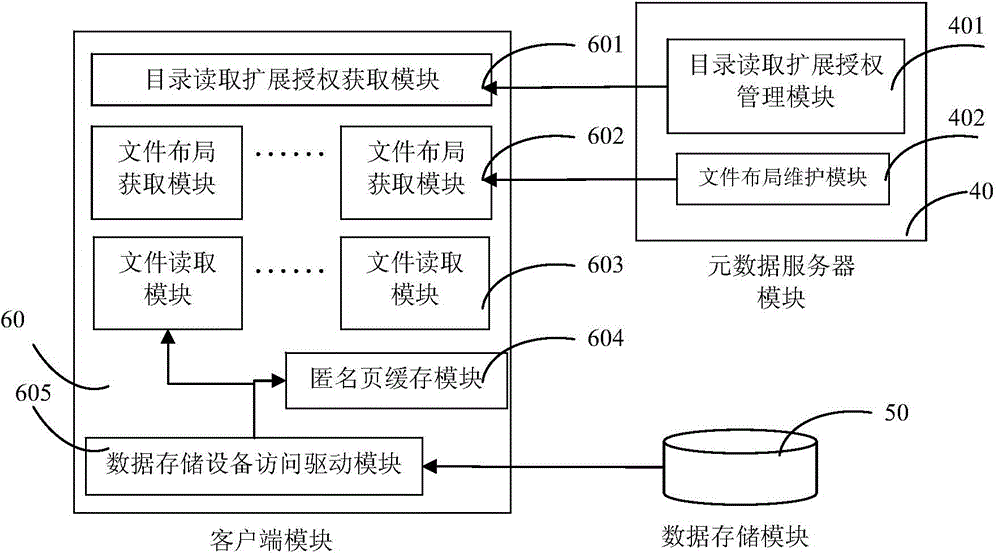

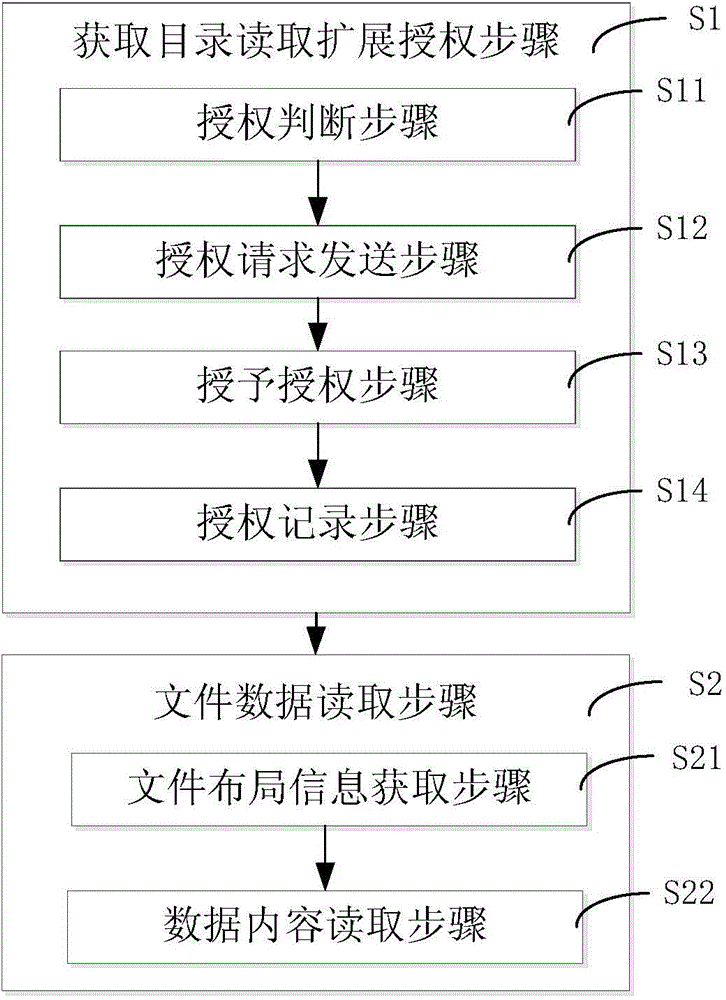

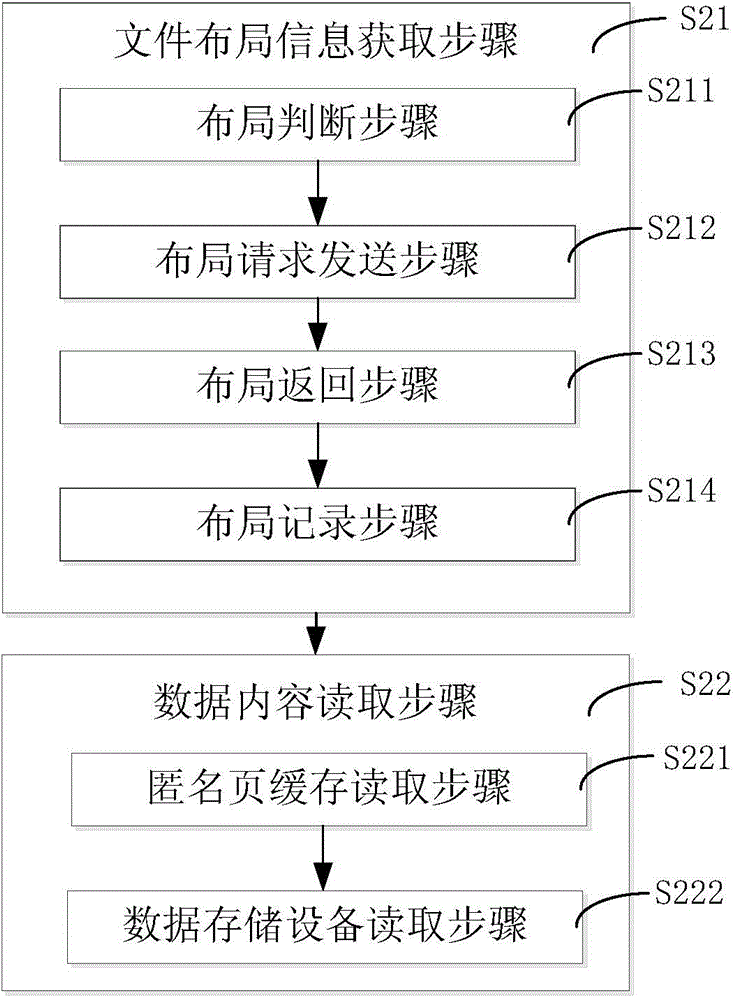

Data pre-reading device based on distributed file system and method thereof

Inactive | CN103916465A | Reduce data access latency; Reduce access latency; Transmission; Special data processing applications; Client-side; Distributed File System

The invention discloses a data pre-reading device based on a distributed file system. The device comprises a client-side module, a metadata server module, and a data memory module. The client-side module obtains a directory read-extension authorization and a small-file layout by accessing the metadata server module. According to the small-file layout, the small-file data, together with large-granularity data that is spatially contiguous with it, are pre-read at the same time from the data memory module into the client-side module's cache. The invention further discloses a data pre-reading method based on the distributed file system.

Owner: INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

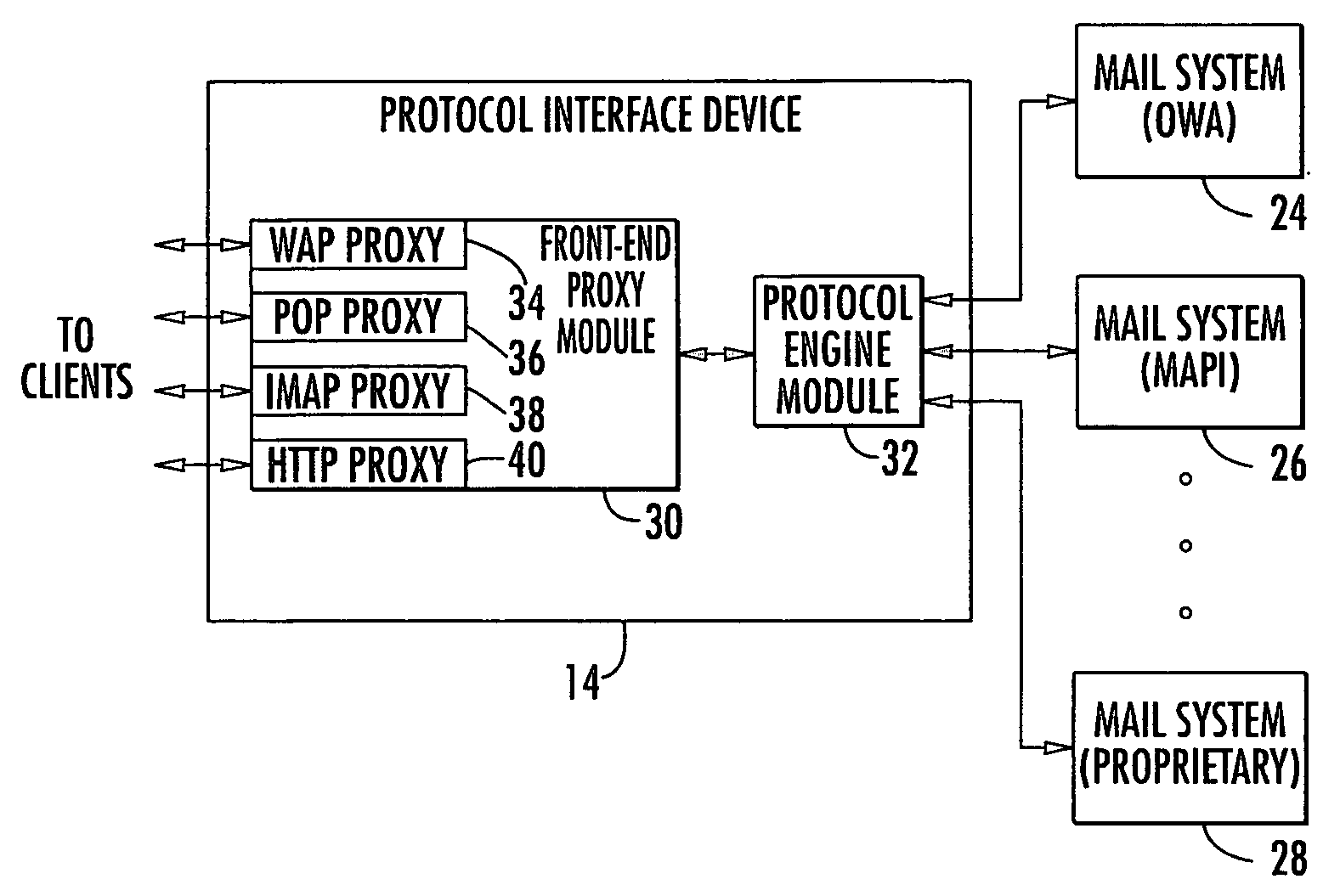

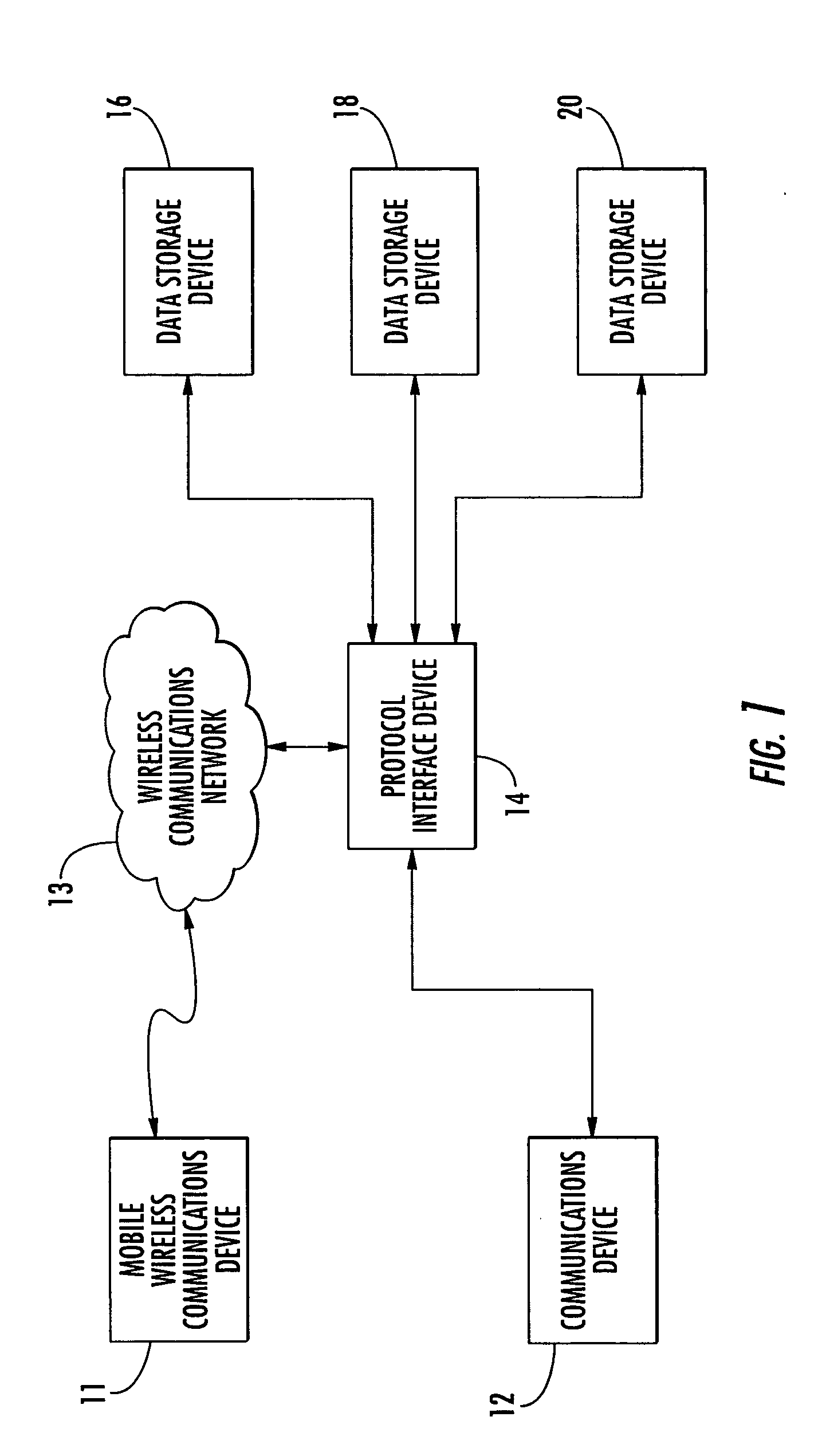

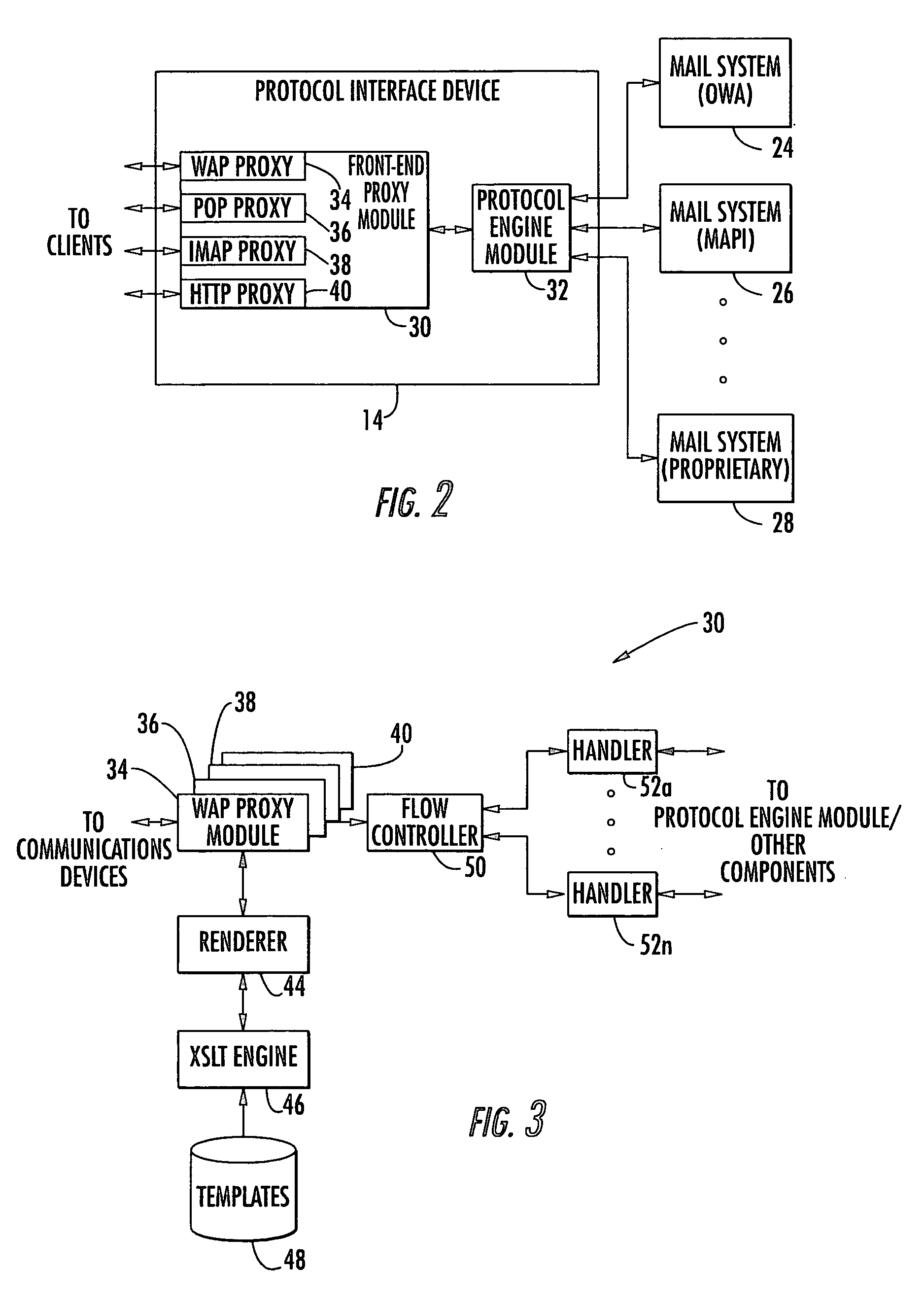

Communications system providing reduced access latency and related methods

Active | US20050033847A1 | Enhanced operating protocol conversion feature; Reduced access latency and related method; Multiple digital computer combinations; Substation equipment; Mobile wireless; Data file

A communications system may include data storage devices for storing data files, and mobile wireless communications devices (MWCDs) generating access requests for the data files. The data storage devices and MWCDs may each use one or more different operating protocols. Each data file may be associated with a respective MWCD and have a unique identification (UID) associated therewith. The system may also include a protocol interface device including a protocol converter module for communicating with the MWCDs using respective operating protocols thereof, and a protocol engine module for communicating with the data storage devices using respective operating protocols thereof. The protocol engine module may also poll the data storage devices for UIDs of data files stored thereon, and cooperate with the protocol converter module to provide UIDs for respective data files to the MWCDs upon receiving access requests therefrom.

Owner: BLACKBERRY LTD

Data reorganization in non-uniform cache access caches

Inactive | US20100274973A1 | Reduce access latency; Memory addressing/allocation/relocation; Data reorganization; Waiting time

Embodiments that dynamically reorganize data of cache lines in non-uniform cache access (NUCA) caches are contemplated. Various embodiments comprise a computing device having one or more processors coupled with one or more NUCA cache elements. The NUCA cache elements may comprise one or more banks of cache memory, with the ways of the cache distributed horizontally across multiple banks. To reduce the processors' access latency for the data, the computing devices may dynamically propagate cache lines into banks closer to the processors that use those cache lines. To accomplish such dynamic reorganization, embodiments may maintain “direction” bits for cache lines. The direction bits may indicate toward which processor the data should be moved. Further, embodiments may use the direction bits to make cache line movement decisions.

Owner: IBM CORP

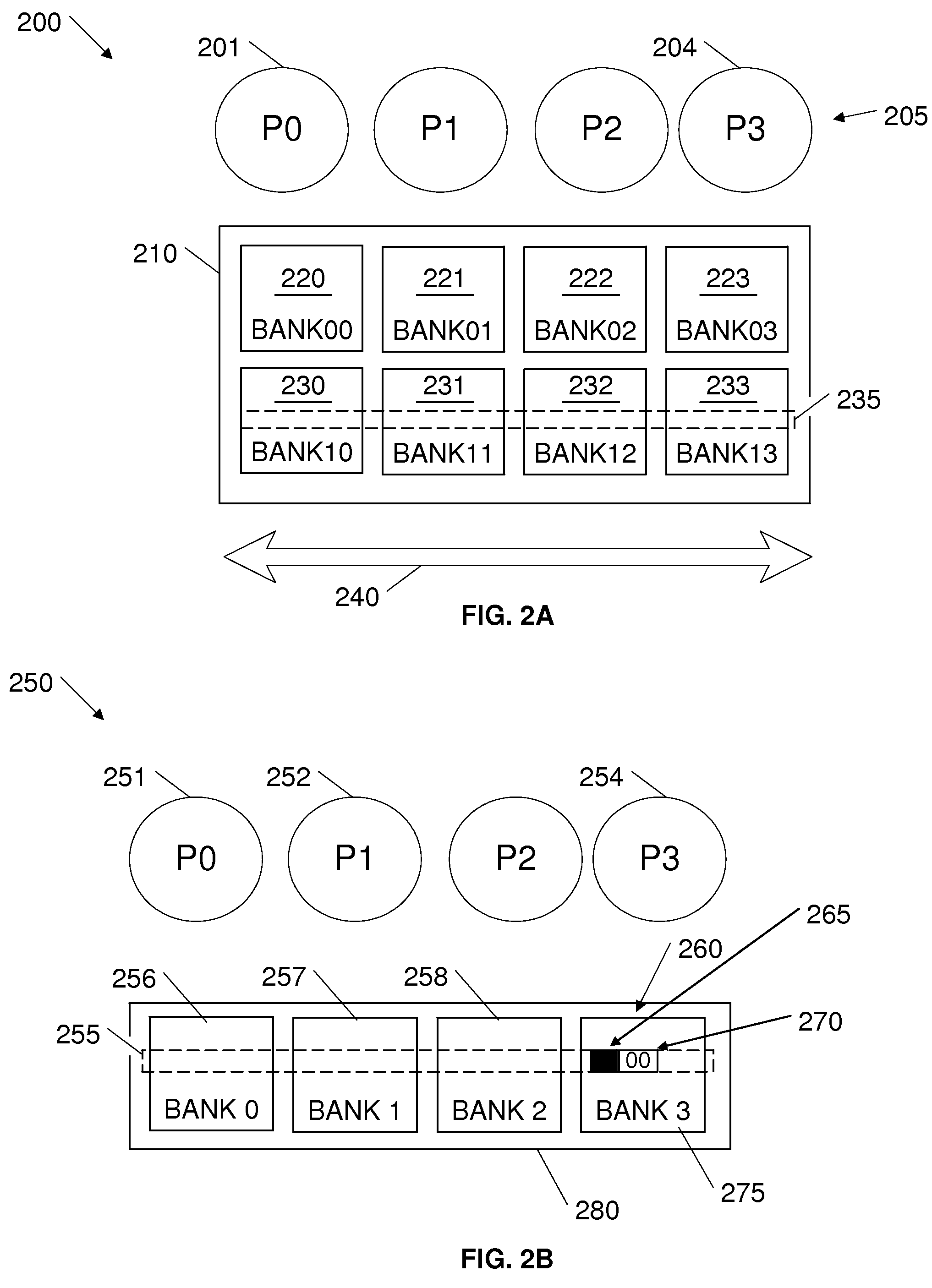

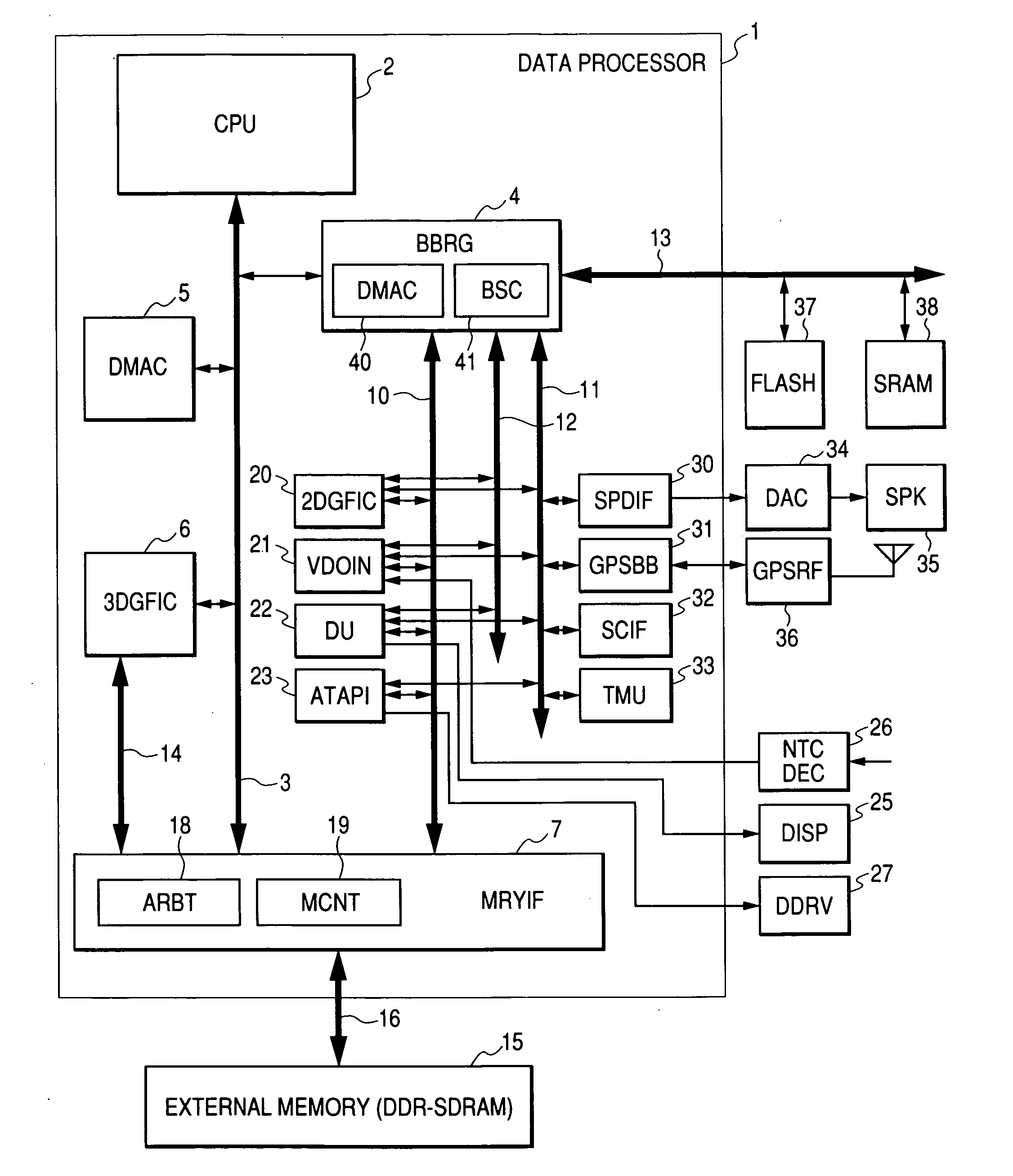

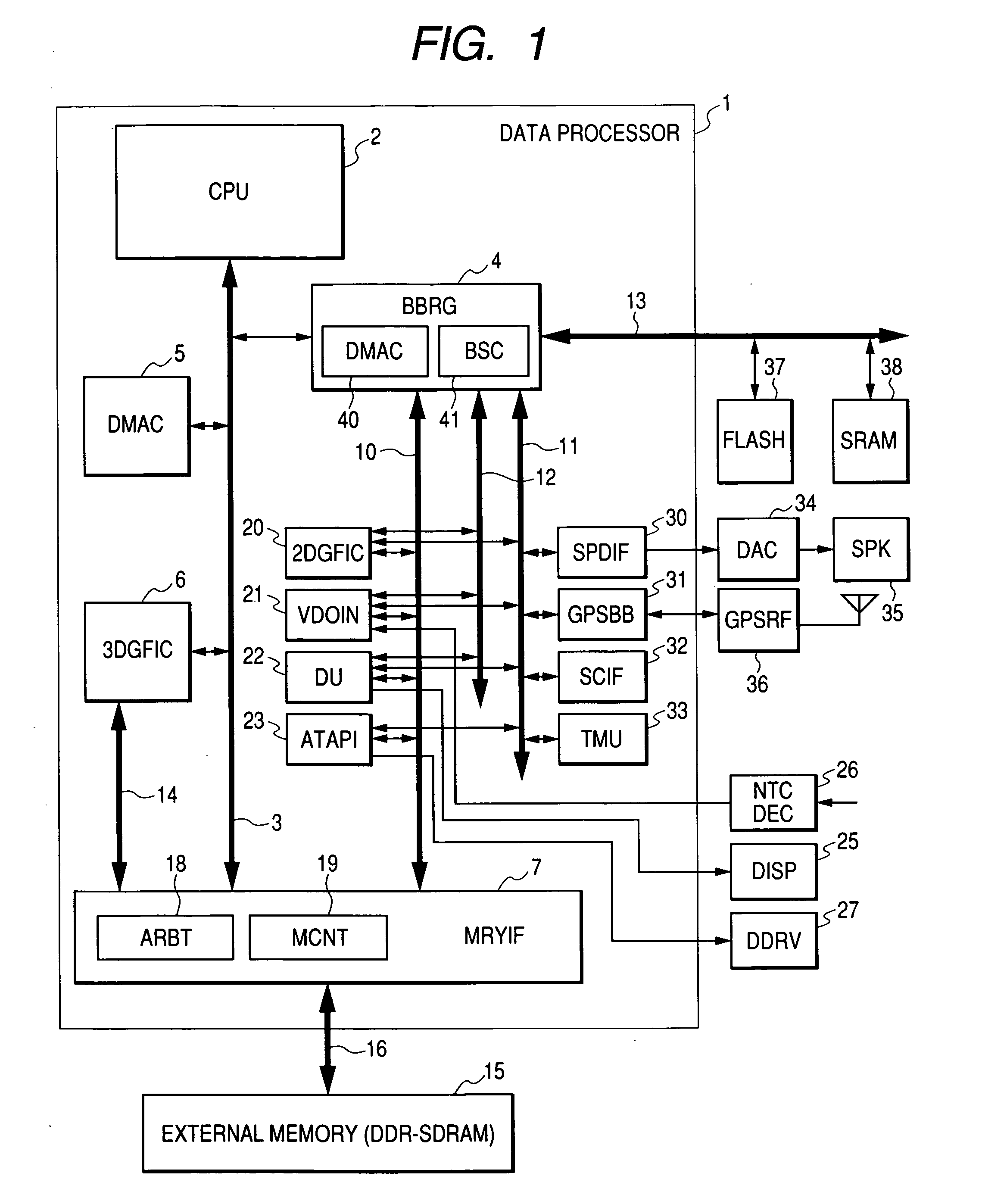

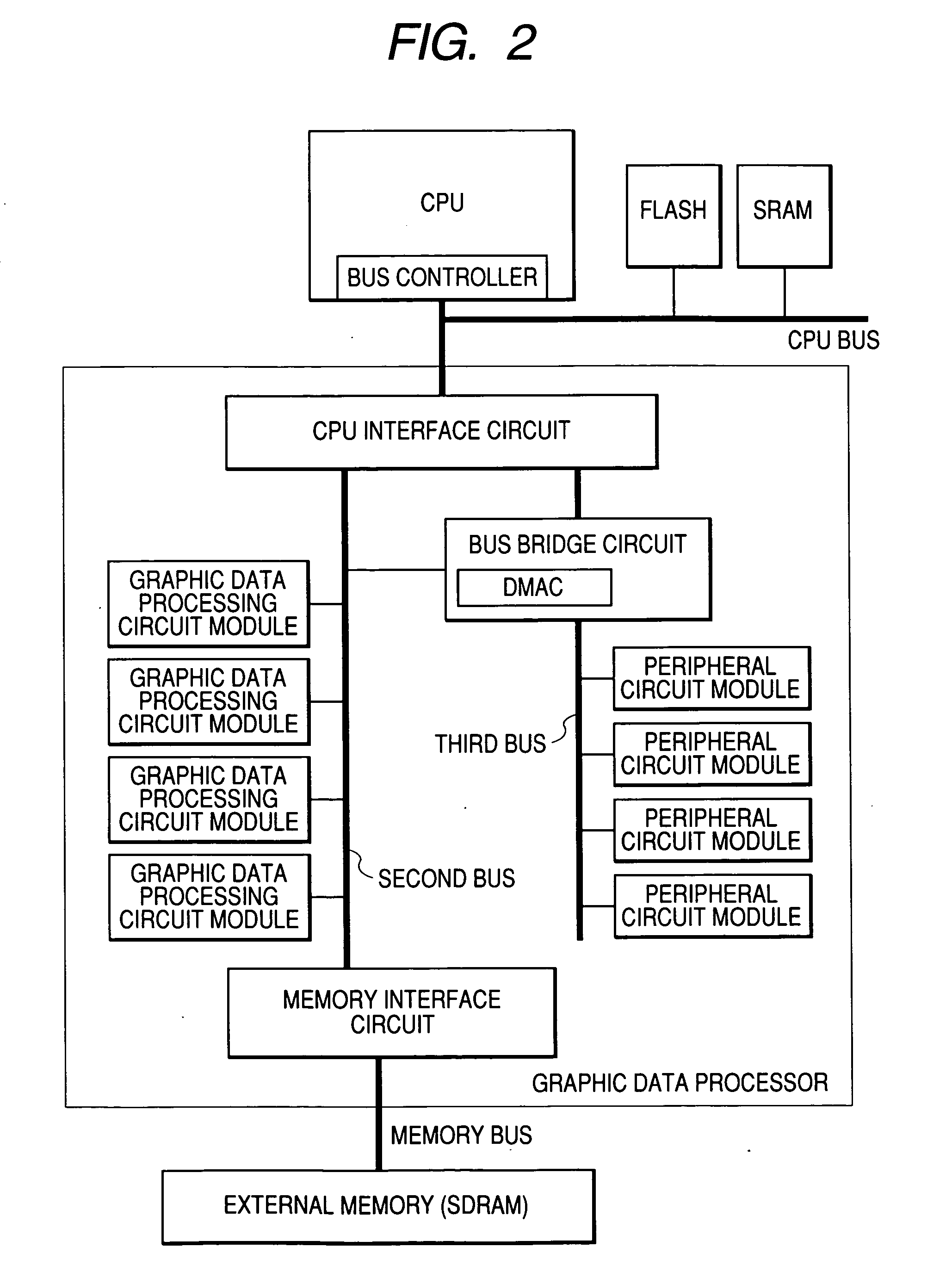

Data processor and graphic data processing device

Active | US20050030311A1 | Improve data processing speed; Data transfer speed is fast; Drawing from basic elements; Processor architectures/configuration; Direct memory access; External storage

An object of the present invention is to improve efficiency of transfer of control information, graphic data, and the like for drawing and display control in a graphic data processor. A graphic data processor includes: a CPU; a first bus coupled to the CPU; a DMAC for controlling a data transfer using the first bus; a bus bridge circuit for transmitting / receiving data to / from the first bus; a three-dimensional graphics module for receiving a command from the CPU via the first bus and performing a three-dimensional graphic process; a second bus coupled to the bus bridge circuit and a plurality of first circuit modules; a third bus coupled to the bus bridge circuit and second circuit modules; and a memory interface circuit coupled to the first and second buses and the three-dimensional graphic module and connectable to an external memory, wherein the bus bridge circuit can control a direct memory access transfer between an external circuit and the second bus.

Owner: RENESAS ELECTRONICS CORP

© 2025 PatSnap. All rights reserved.