DRAM/NVM hierarchical heterogeneous memory access method and system with software-hardware cooperative management

A hierarchical heterogeneous memory and access method, applied in the field of cache performance optimization, that addresses the problems of large hardware cost and relatively high read/write delay, so as to reduce memory access delay and eliminate hardware cost.

Embodiment Construction

[0029] To illustrate the objectives, technical solutions and advantages of the present invention more clearly, the invention is described in further detail below with reference to the figures and examples. It should be noted that the specific examples described here only illustrate the present invention and do not limit its application scenarios.

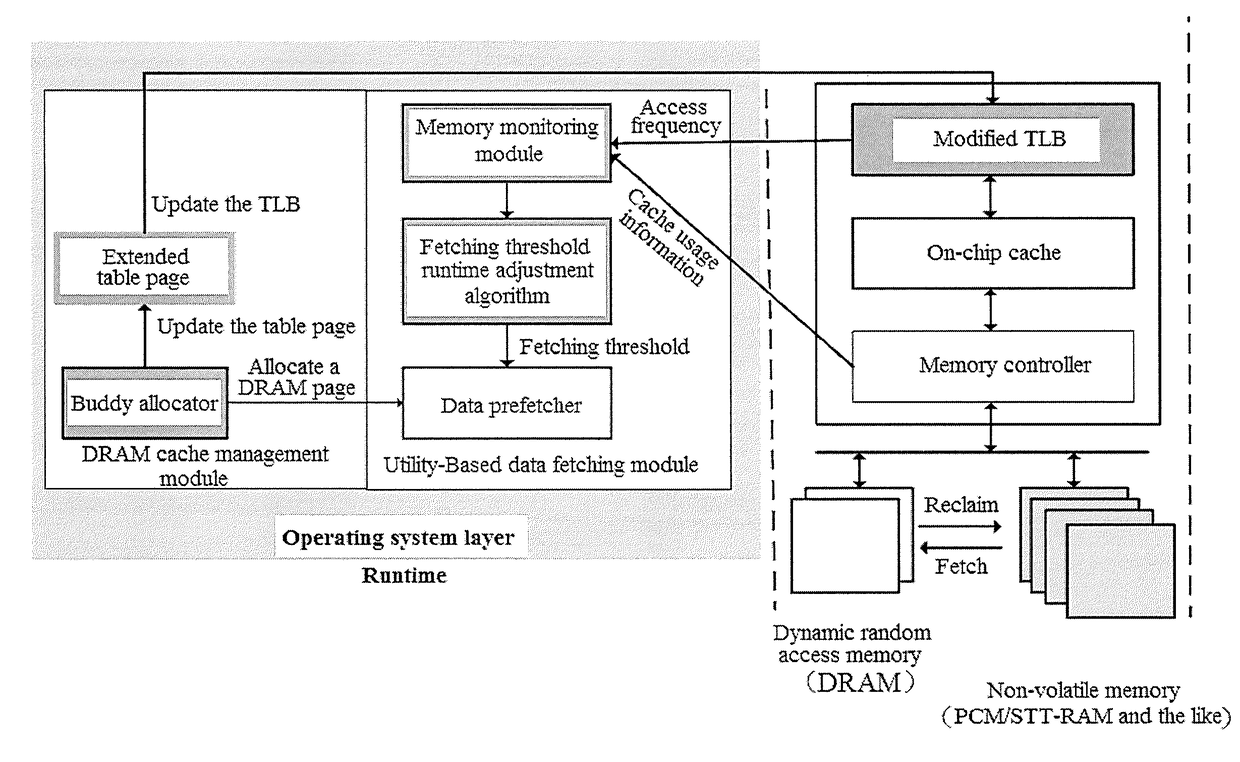

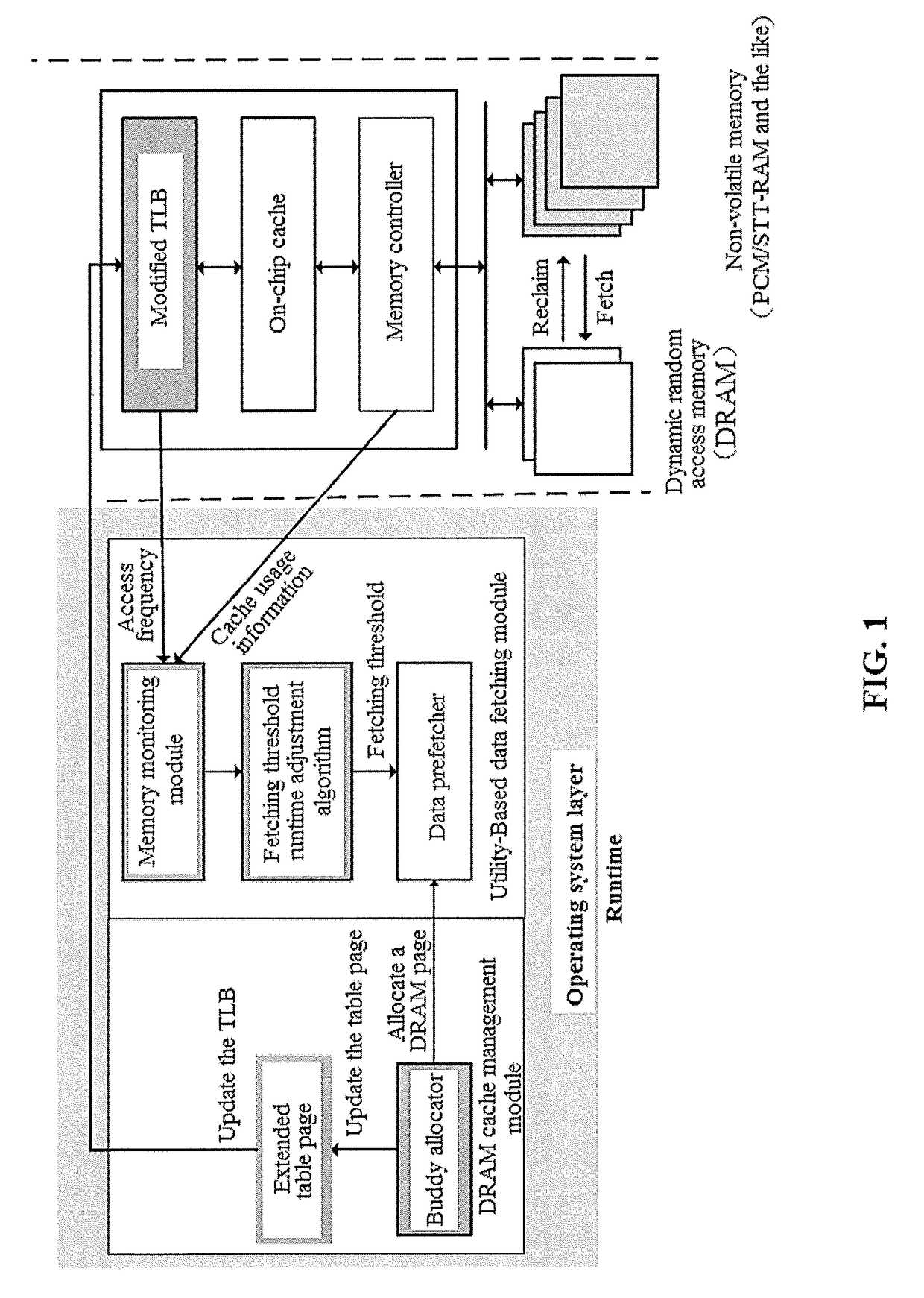

[0030] FIG. 1 shows the system architecture of a DRAM/NVM hierarchical heterogeneous memory system with software-hardware cooperative management. The hardware layer includes a modified TLB, and the software layer includes an extended page table, a utility-based data fetching module, and a DRAM cache management module. The extended page table mainly manages the mapping from virtual pages to physical pages and the mapping from NVM memory pages to DRAM cache pages. The modified TLB caches frequently accessed entries of the extended page table, thereby improving the efficiency of ad...
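The two mappings held by the extended page table, and the TLB that caches its entries, can be illustrated with a small software model. This is a minimal sketch under stated assumptions, not the patented implementation: the entry layout (`ExtendedPTE` with a `dram_cache_ppn` field), the class names `ExtendedPageTable` and `TLB`, the LRU replacement policy, and the explicit `invalidate` call after a page is cached in DRAM are all hypothetical choices made for illustration.

```python
from collections import OrderedDict
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class ExtendedPTE:
    """Hypothetical extended page table entry (layout assumed for illustration)."""
    ppn: int                               # physical page number (DRAM or NVM)
    in_nvm: bool                           # True if ppn refers to an NVM page
    dram_cache_ppn: Optional[int] = None   # DRAM cache page holding a copy of the NVM page


class ExtendedPageTable:
    """Software-managed table: virtual page -> physical page, NVM page -> DRAM cache page."""

    def __init__(self) -> None:
        self.entries: Dict[int, ExtendedPTE] = {}

    def map(self, vpn: int, ppn: int, in_nvm: bool) -> None:
        # Virtual-to-physical mapping.
        self.entries[vpn] = ExtendedPTE(ppn, in_nvm)

    def cache_in_dram(self, vpn: int, dram_ppn: int) -> None:
        # NVM-to-DRAM-cache mapping: record that the NVM page behind vpn
        # now has a copy in DRAM cache page dram_ppn.
        pte = self.entries[vpn]
        if not pte.in_nvm:
            raise ValueError("only NVM pages are cached in DRAM")
        pte.dram_cache_ppn = dram_ppn

    def resolve(self, vpn: int) -> Tuple[str, int]:
        # Page walk: prefer the DRAM cache copy of an NVM page when one exists.
        pte = self.entries[vpn]
        if pte.in_nvm and pte.dram_cache_ppn is not None:
            return ("DRAM", pte.dram_cache_ppn)
        return ("NVM" if pte.in_nvm else "DRAM", pte.ppn)


class TLB:
    """Small LRU cache of translations resolved from the extended page table (policy assumed)."""

    def __init__(self, capacity: int, table: ExtendedPageTable) -> None:
        self.capacity = capacity
        self.table = table
        self.cache: "OrderedDict[int, Tuple[str, int]]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def translate(self, vpn: int) -> Tuple[str, int]:
        if vpn in self.cache:
            self.hits += 1
            self.cache.move_to_end(vpn)       # mark as most recently used
            return self.cache[vpn]
        self.misses += 1
        target = self.table.resolve(vpn)      # miss: walk the extended page table
        self.cache[vpn] = target
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)    # evict the least recently used entry
        return target

    def invalidate(self, vpn: int) -> None:
        # Called when the software layer changes a mapping (e.g. after caching a page in DRAM).
        self.cache.pop(vpn, None)


if __name__ == "__main__":
    pt = ExtendedPageTable()
    pt.map(vpn=0x10, ppn=0x200, in_nvm=True)
    tlb = TLB(capacity=2, table=pt)
    print(tlb.translate(0x10))   # ('NVM', 512)
    pt.cache_in_dram(0x10, dram_ppn=0x3)
    tlb.invalidate(0x10)
    print(tlb.translate(0x10))   # ('DRAM', 3)
```

In this sketch a TLB hit skips the page walk entirely, and a hit on an NVM page that has been cached returns the DRAM cache page directly, which is one way a cached translation could steer accesses toward faster DRAM without a separate hardware tag lookup.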
