78 results about how to "Improve cache utilization" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

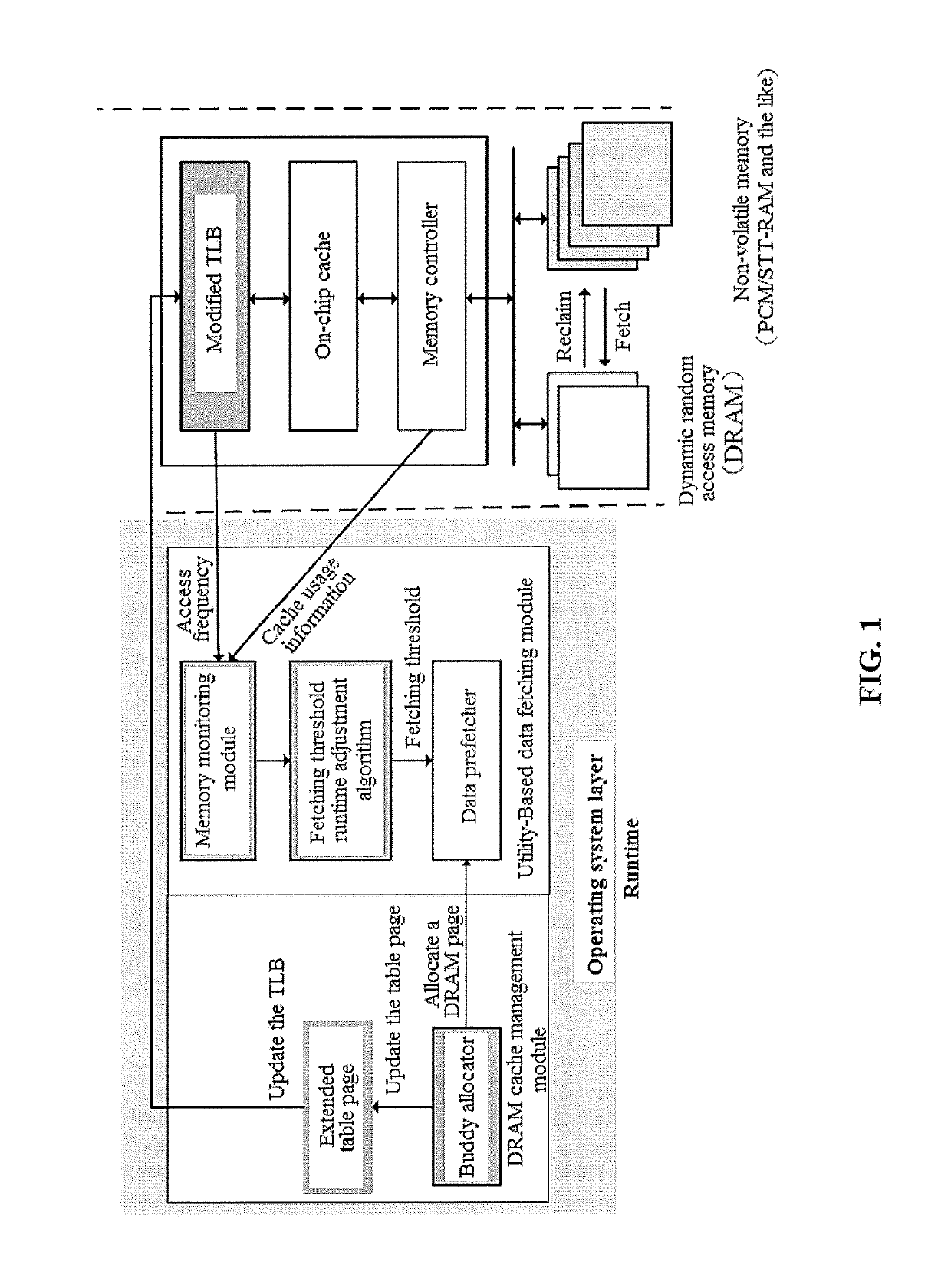

DRAM (dynamic random access memory)-NVM (non-volatile memory) hierarchical heterogeneous memory access method and system adopting software and hardware collaborative management

Active | CN105786717A | Realize unified management | Eliminate overhead | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Page table | Cache management

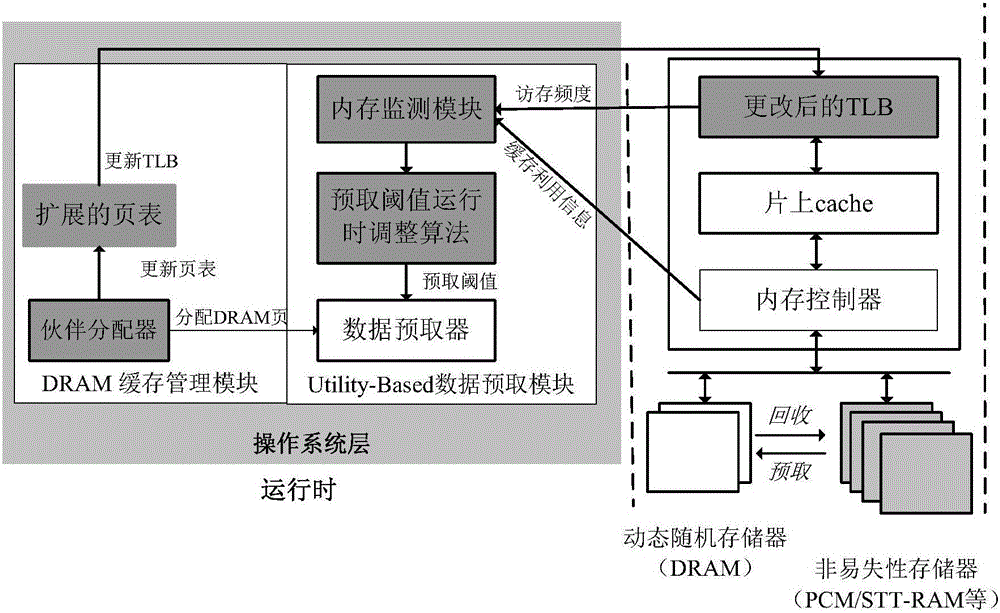

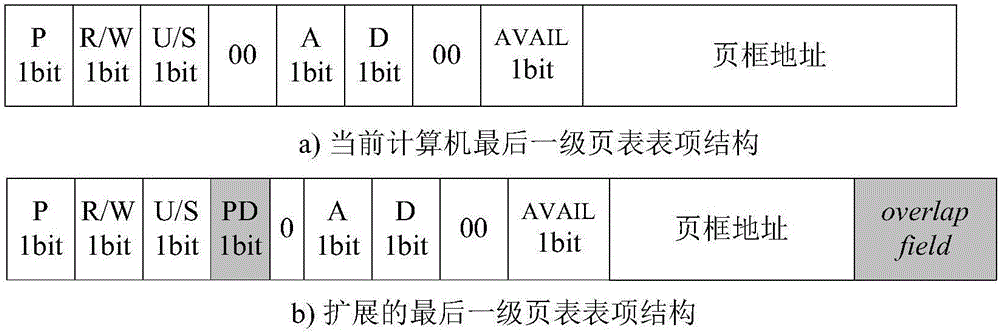

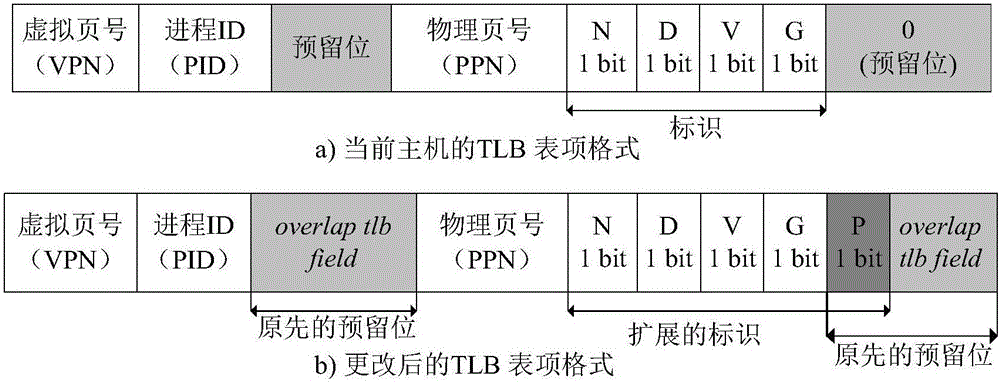

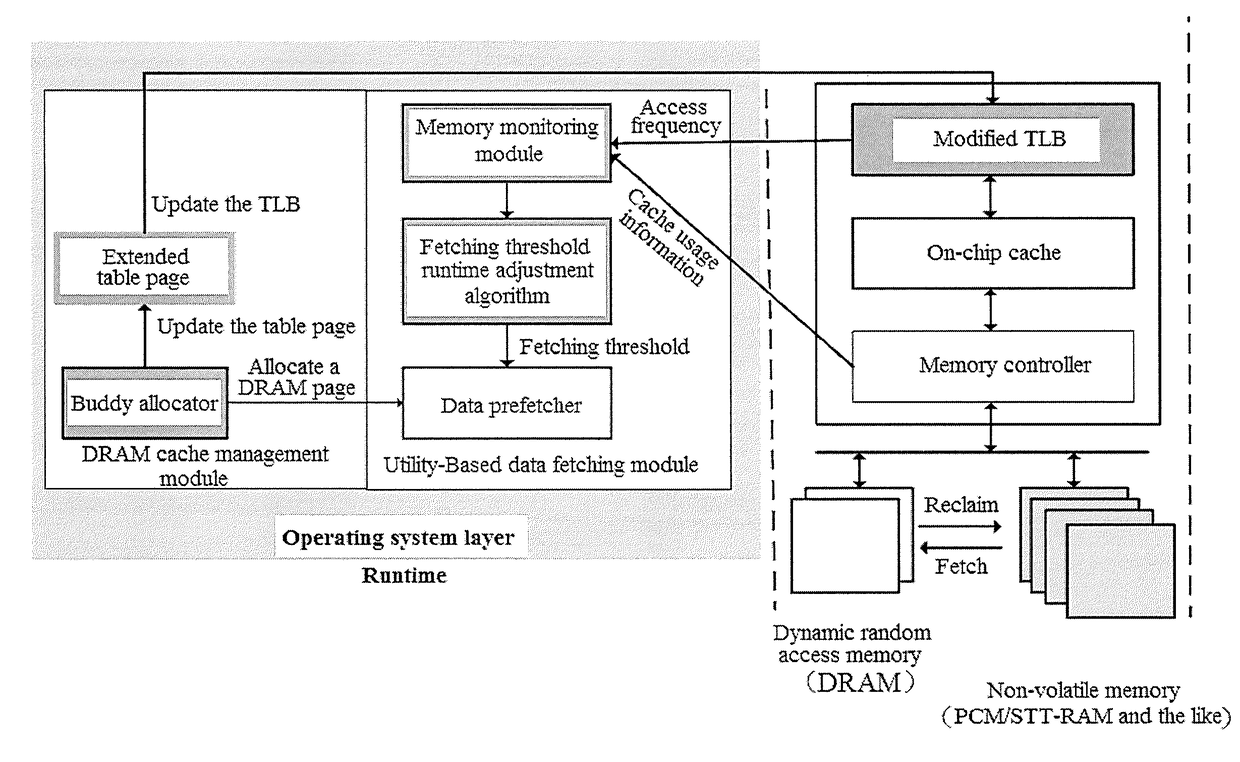

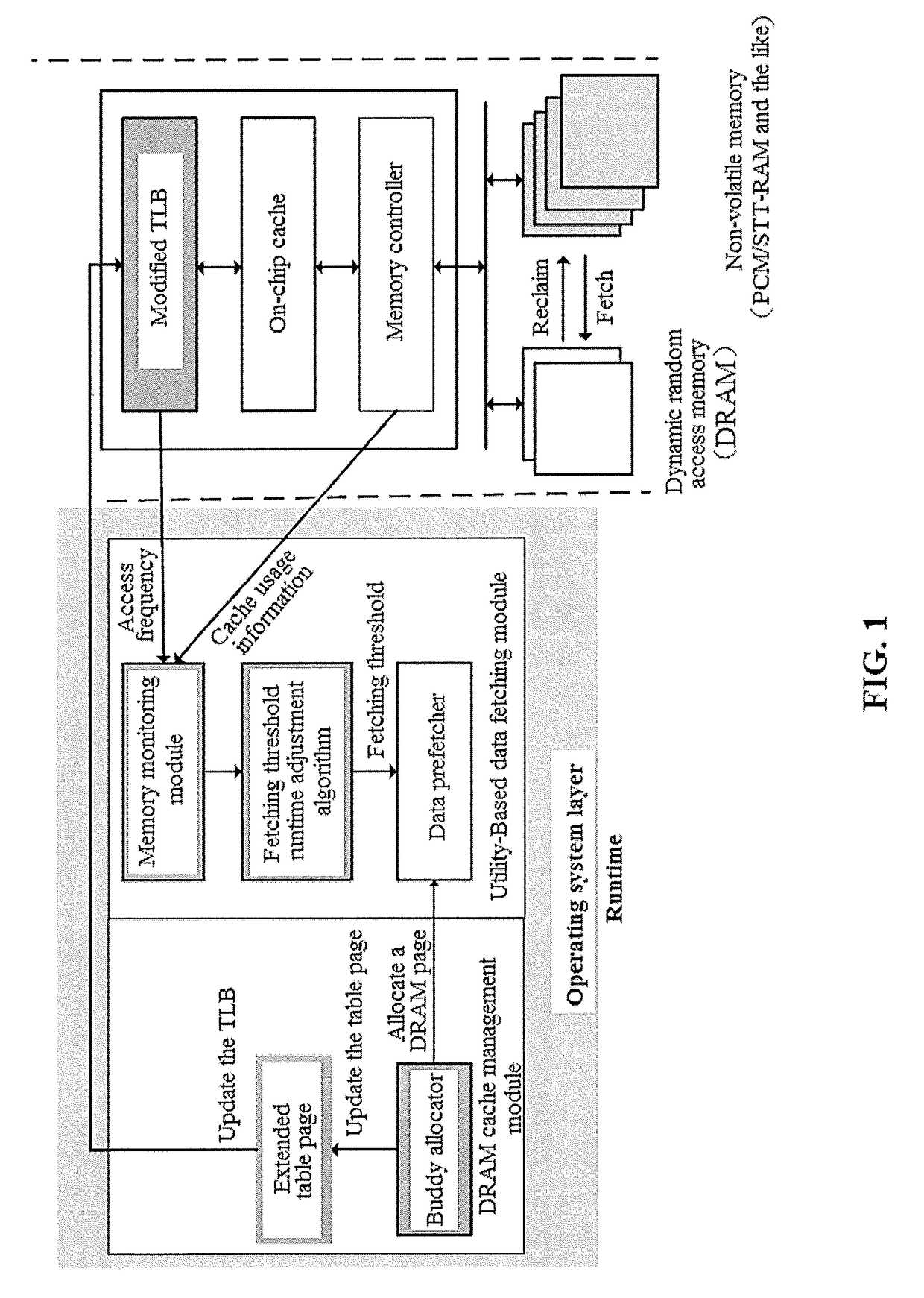

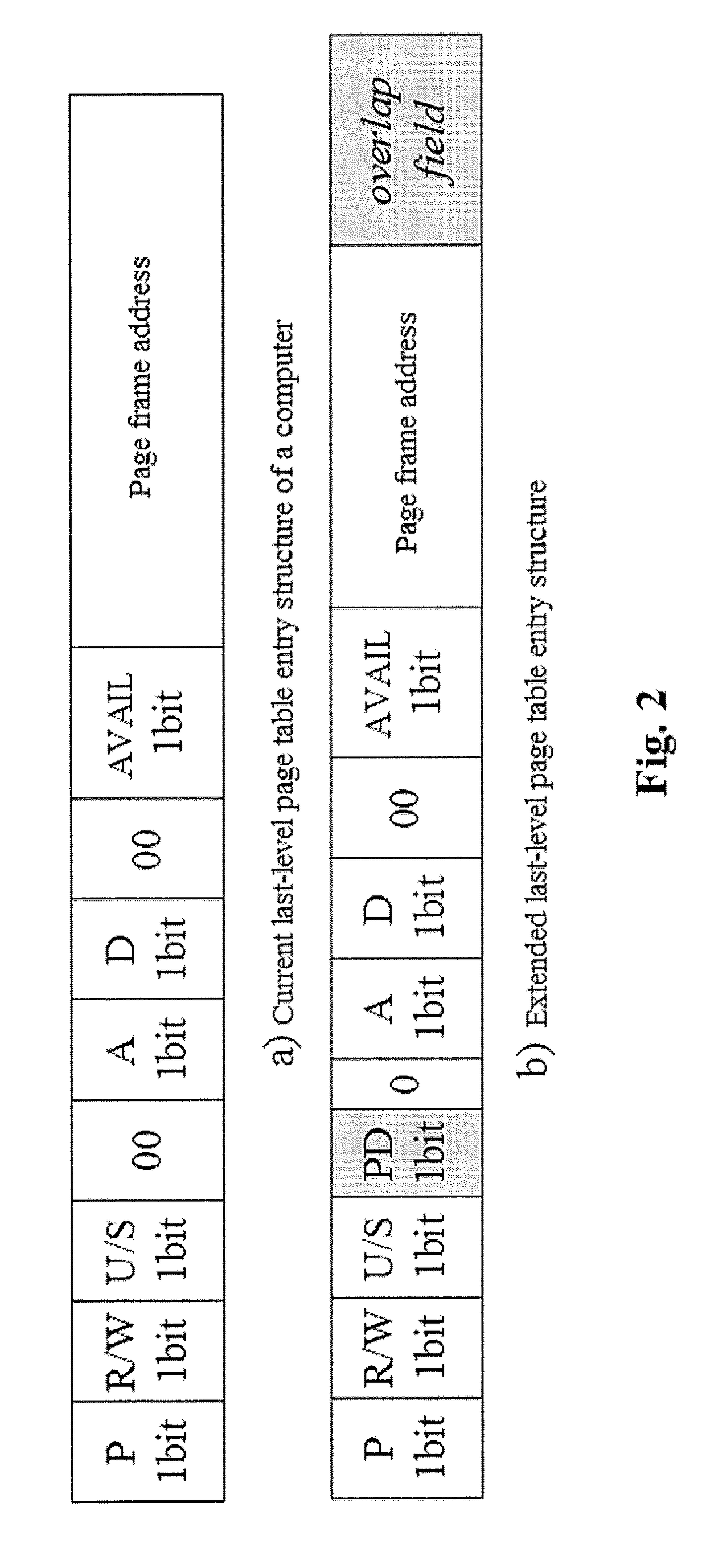

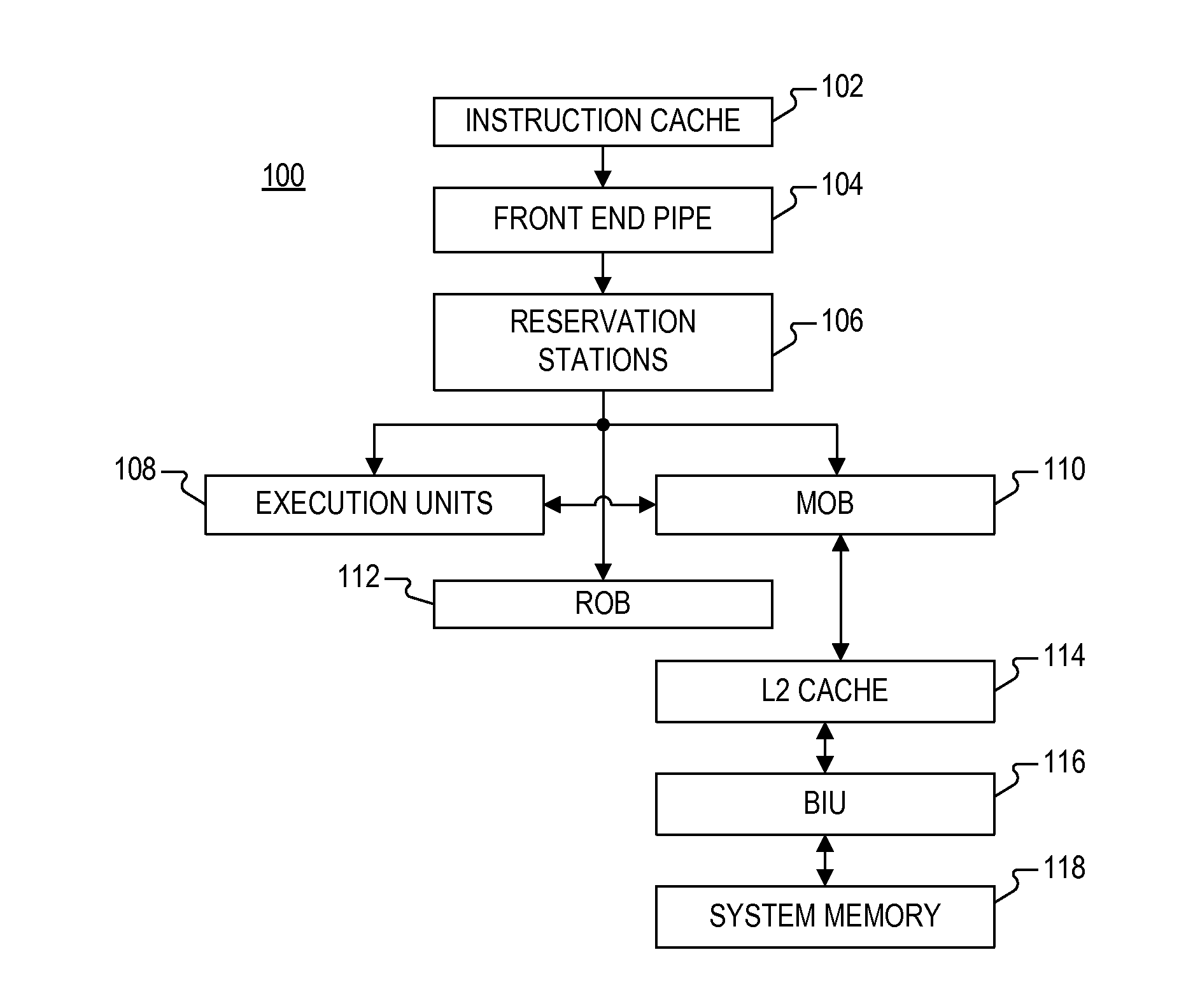

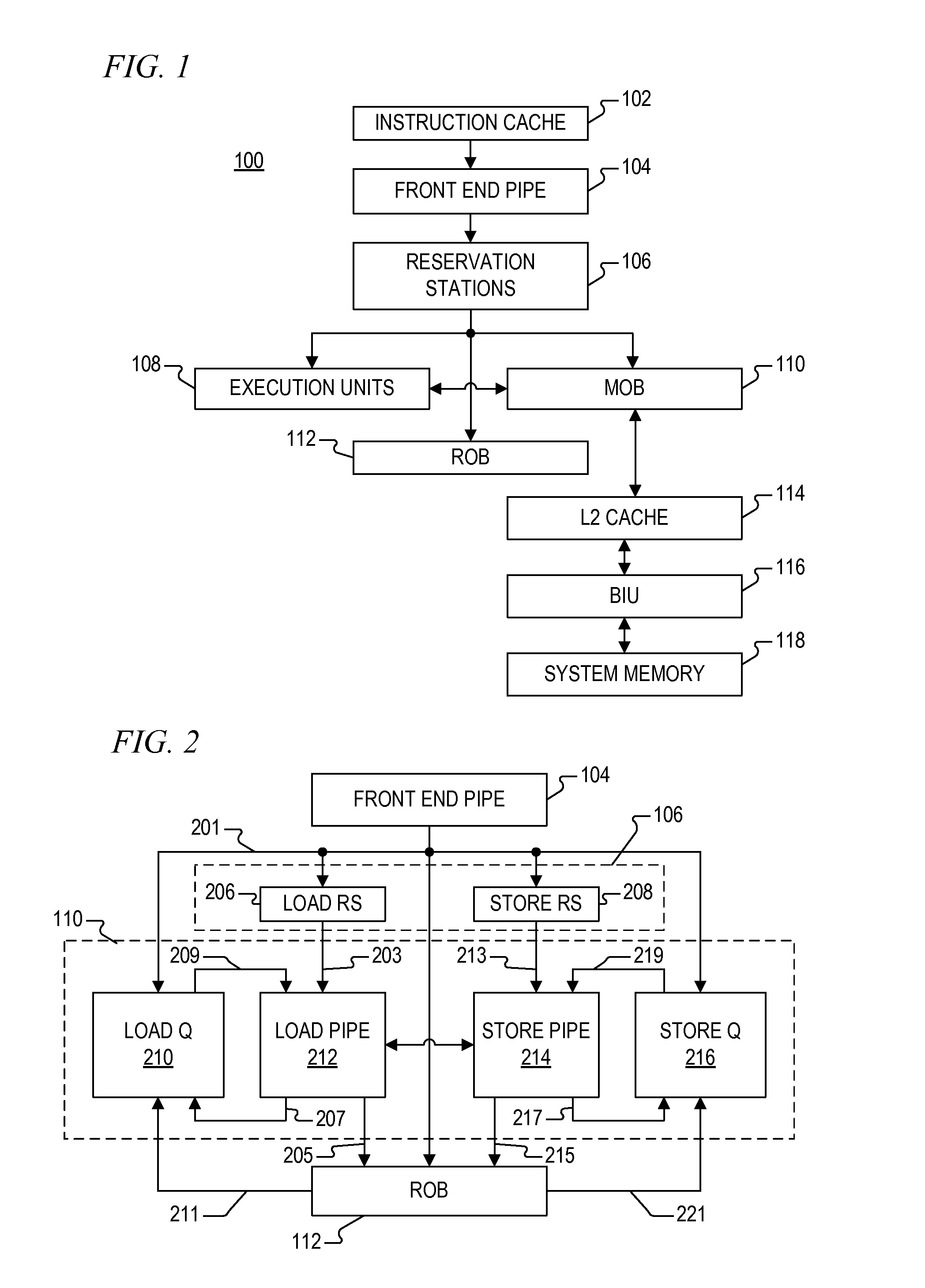

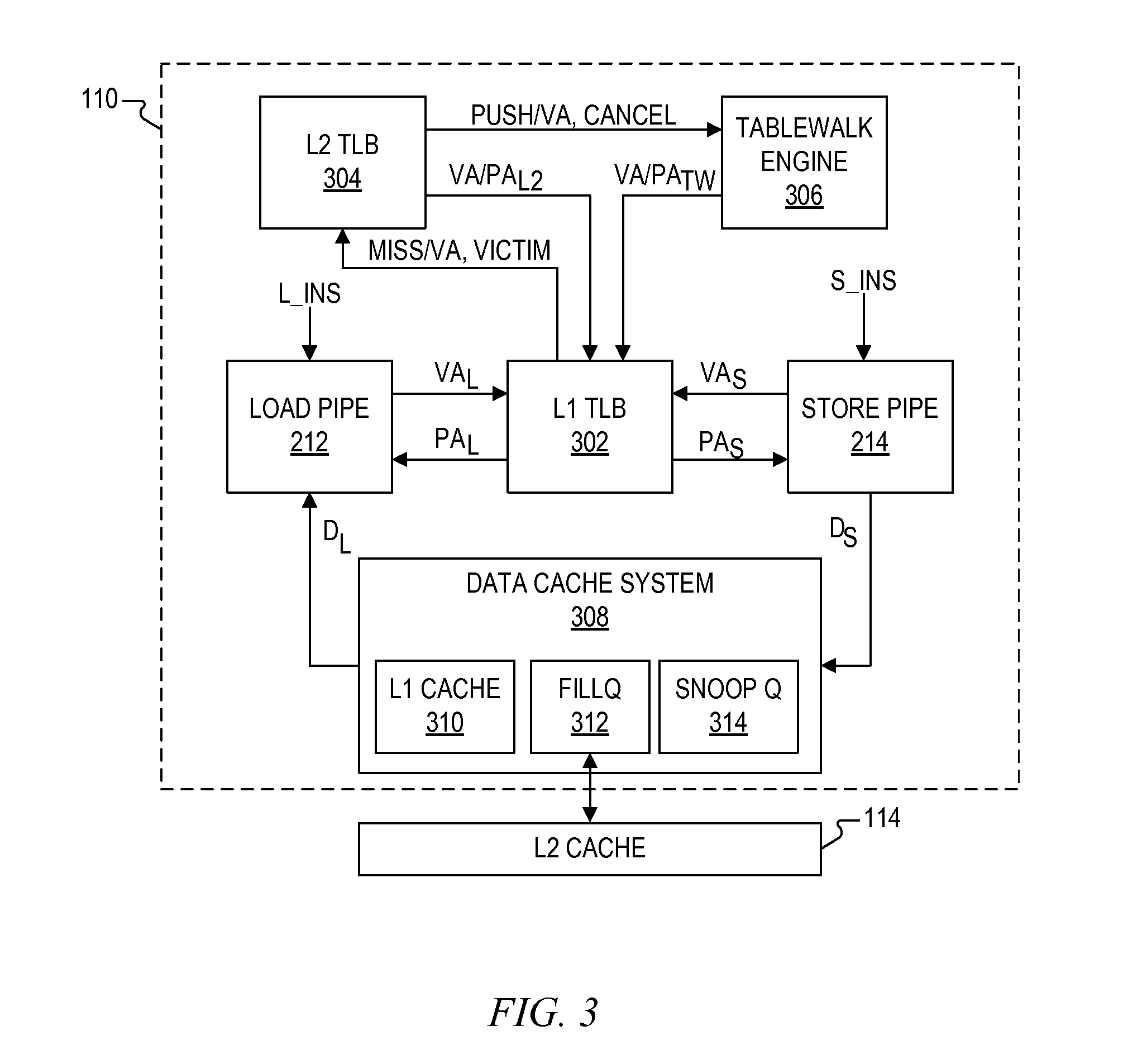

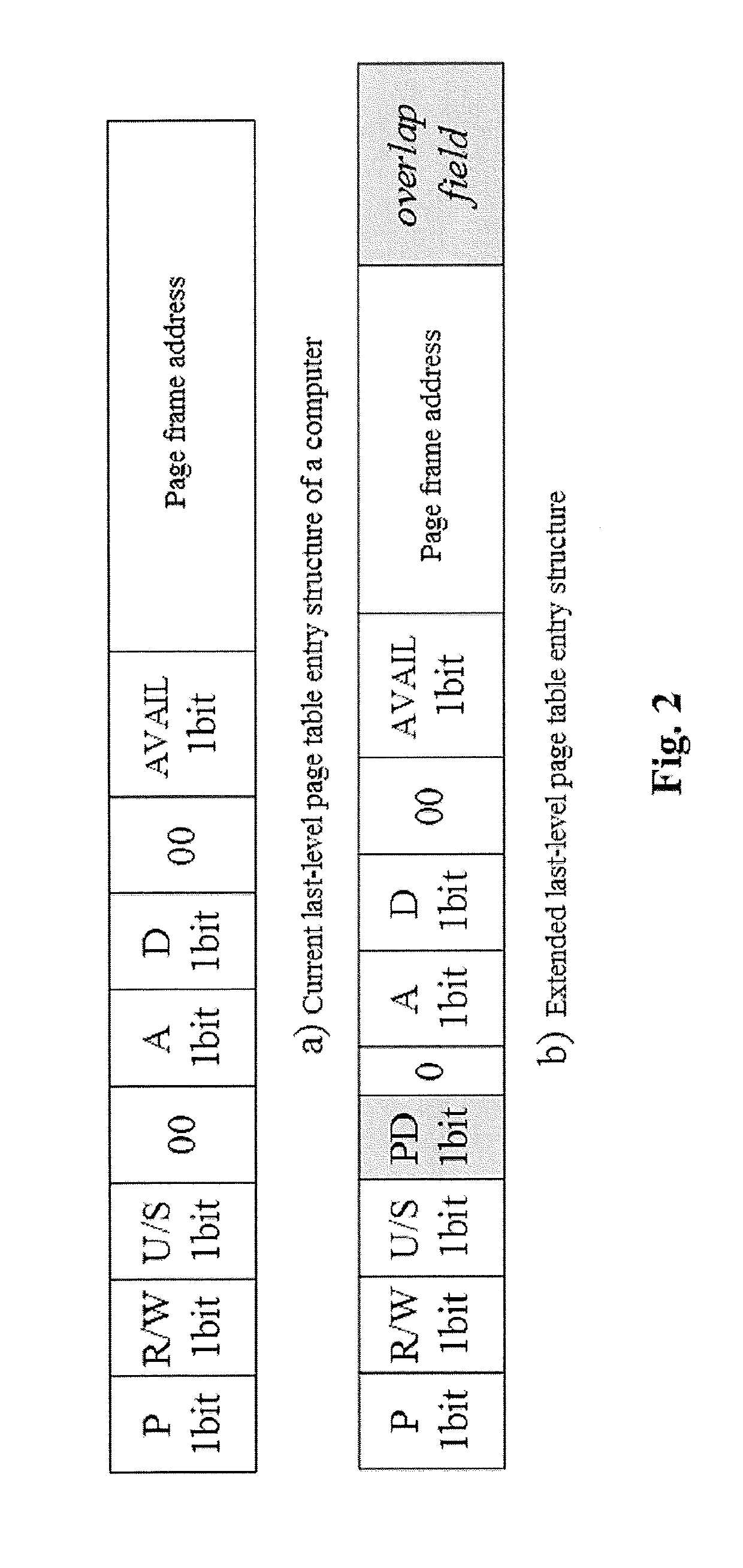

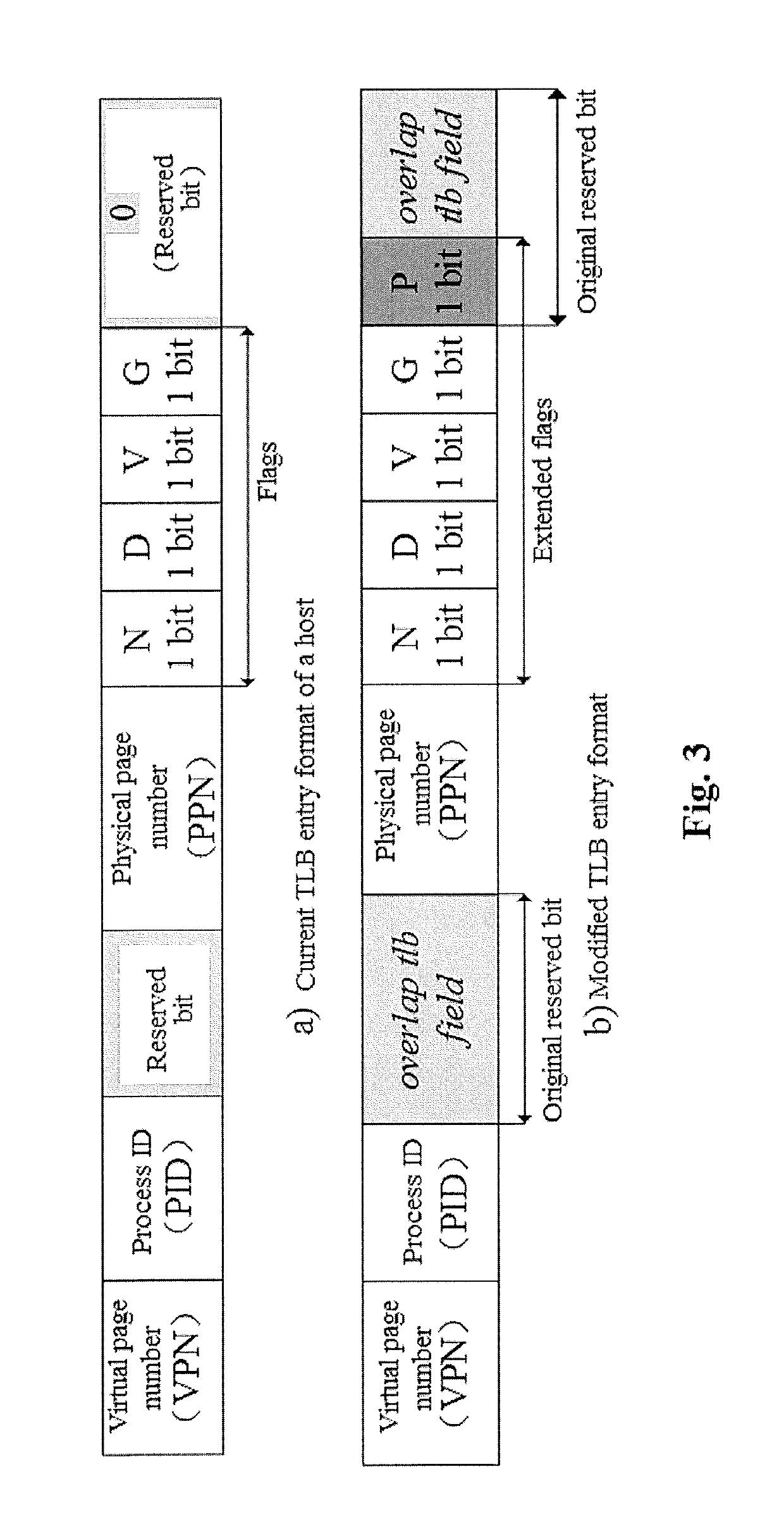

The invention provides a DRAM (dynamic random access memory)-NVM (non-volatile memory) hierarchical heterogeneous memory system adopting software and hardware collaborative management. In this system, NVM serves as large-capacity main memory while DRAM serves as a cache for the NVM. Certain reserved bits in the TLB (translation lookaside buffer) and page table structures are used to eliminate the hardware overhead that a hierarchical heterogeneous memory architecture incurs under traditional hardware management; cache management of the heterogeneous memory system is moved to the software level, and the memory access latency after a last-level cache miss is reduced. Many applications in big data environments have poor data locality, so the traditional demand-based data fetching policy for the DRAM cache can aggravate cache pollution. The system therefore adopts a utility-based data fetching mechanism that decides whether data in the NVM should be cached in the DRAM according to the current memory pressure and the memory access characteristics of the application. This improves the utilization of both the DRAM cache and the bandwidth from the NVM main memory to the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH
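
The reserved-bit idea can be sketched as a small Python model. This is not the patent's actual encoding: the bit positions (frame number in bits 12 to 51, a hypothetical `IN_DRAM` flag in bit 52, a hypothetical 6-bit access counter in bits 53 to 58) are assumptions chosen only to show how spare page-table-entry bits can carry DRAM-cache state that hardware would otherwise track in a separate tag structure.

```python
# Hypothetical 64-bit page-table-entry layout (illustration only, not the patent's):
#   bits 12..51 : frame number (an NVM frame, or a DRAM cache frame when IN_DRAM is set)
#   bit  52     : IN_DRAM flag
#   bits 53..58 : saturating access counter that software can read for fetch decisions
FRAME_SHIFT = 12
FRAME_MASK = (1 << 40) - 1
IN_DRAM_BIT = 1 << 52
COUNTER_SHIFT = 53
COUNTER_MASK = 0x3F


def make_pte(frame: int, in_dram: bool = False, counter: int = 0) -> int:
    pte = (frame & FRAME_MASK) << FRAME_SHIFT
    if in_dram:
        pte |= IN_DRAM_BIT
    return pte | ((counter & COUNTER_MASK) << COUNTER_SHIFT)


def frame_of(pte: int) -> int:
    return (pte >> FRAME_SHIFT) & FRAME_MASK


def is_in_dram(pte: int) -> bool:
    return bool(pte & IN_DRAM_BIT)


def counter_of(pte: int) -> int:
    return (pte >> COUNTER_SHIFT) & COUNTER_MASK


def record_access(pte: int) -> int:
    """Increment the access counter, saturating at its maximum value."""
    count = counter_of(pte)
    if count < COUNTER_MASK:
        pte = (pte & ~(COUNTER_MASK << COUNTER_SHIFT)) | ((count + 1) << COUNTER_SHIFT)
    return pte


def remap_to_dram(pte: int, dram_frame: int) -> int:
    """Point the entry at a DRAM cache copy; the original NVM frame must be kept elsewhere."""
    return make_pte(dram_frame, in_dram=True, counter=counter_of(pte))
```

Because the translation itself records whether a page currently lives in DRAM, a last-level cache miss can be routed to the right device without a separate hardware tag lookup.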

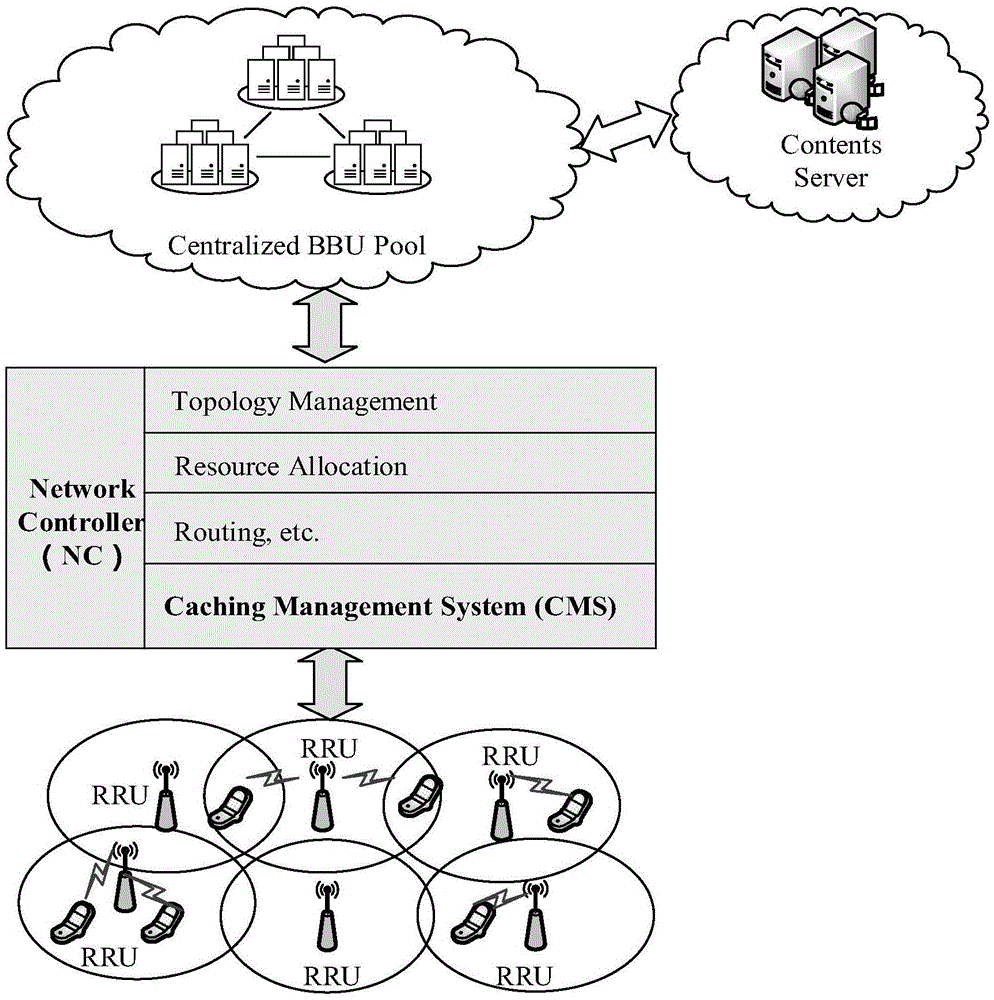

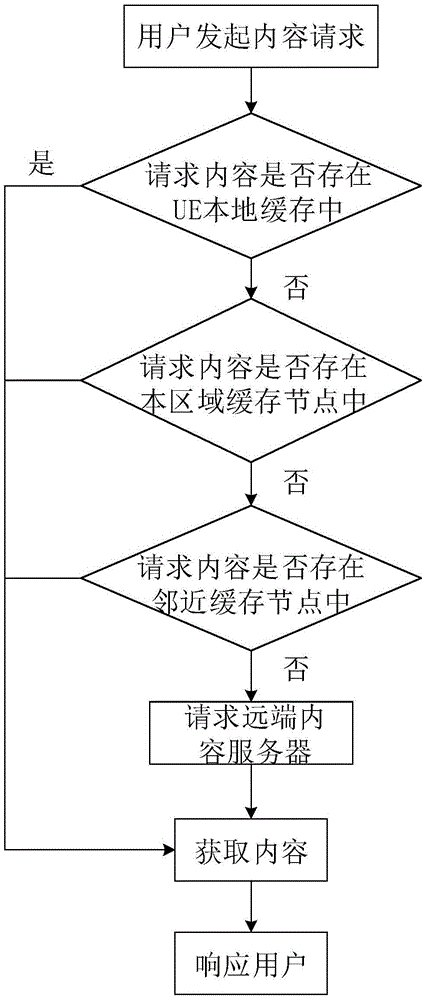

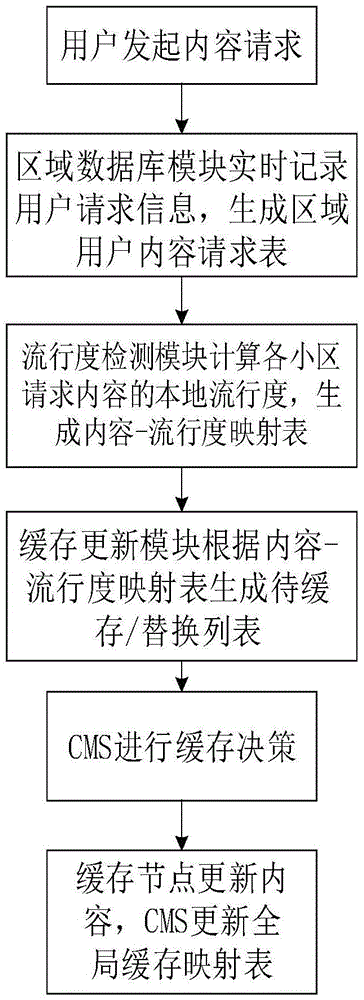

SD-RAN-based whole network collaborative content caching management system and method

The invention discloses an SD-RAN (software defined radio access network)-based whole-network cooperative caching management system and method. A content-popularity mapping table and a to-be-cached/replacement list are generated through detection and content popularity statistics; a caching decision is made from this list and issued to the caching nodes, which cache and update content accordingly. A real-time global caching mapping table is obtained by monitoring the caching decisions, and each caching node responds to content requests from users in its current cell by delivering the corresponding content. The method fully considers the limits on content acquisition delay and how the popularity of different content changes over time and location, and it makes full use of the global network view in the control layer of the software-defined network, which widens the range over which content popularity can be detected. Because content placement is optimized through whole-network cooperative caching, repeated retrieval of content from a remote content server is avoided, network overhead is reduced, and user experience is improved.

Owner:HUAZHONG UNIV OF SCI & TECH
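
A controller-side sketch of the popularity table and the to-be-cached/replacement list, assuming a single caching node whose capacity is counted in content items and popularity measured as raw request counts in one window. The function names are hypothetical, and the patent's time- and location-aware popularity model is not reproduced.

```python
from collections import Counter


def build_popularity_table(requests):
    """Map content ID -> number of requests observed in the current window."""
    return Counter(requests)


def caching_decision(popularity, cached, capacity):
    """Return (to_cache, to_replace) so the node ends up holding the top-`capacity` items."""
    # Rank by descending popularity; ties broken by content ID for determinism.
    ranked = sorted(popularity, key=lambda c: (-popularity[c], c))
    target = set(ranked[:capacity])
    to_cache = sorted(target - cached)     # popular content not yet cached
    to_replace = sorted(cached - target)   # cached content that fell out of the top set
    return to_cache, to_replace
```

The controller would issue `to_cache` and `to_replace` to the node as its caching decision and update the global caching map from them.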

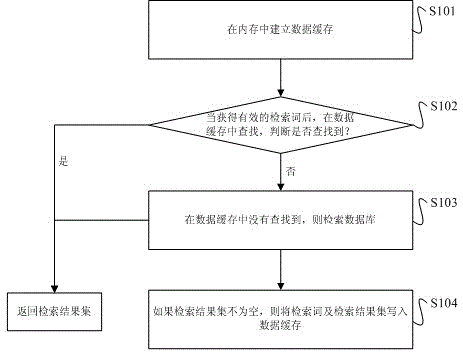

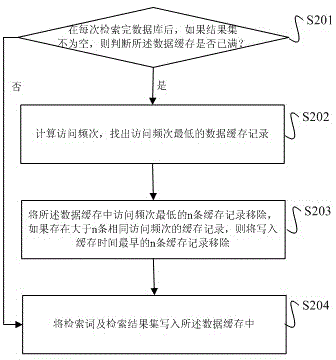

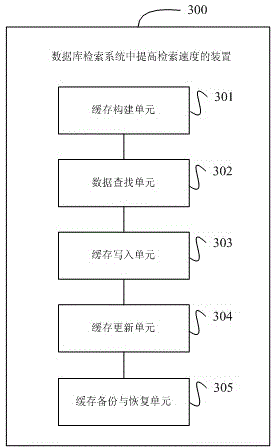

Method and device for increasing retrieval speed in database retrieval system

Inactive | CN103336849A | Improve retrieval speed | Improve cache utilization | Special data processing applications | Result set | Retrieval result

The invention relates to a method and a device for increasing retrieval speed in a database retrieval system and belongs to the technical field of database retrieval. The method comprises the following steps: establishing a data cache in internal memory; after an index word is obtained, searching the data cache; if a cache record for the index word is found, generating and returning a retrieval result set from the cache record and ending this retrieval; if no cache record is found, querying the database and returning the retrieval result set; and, if the retrieval result set is not empty, writing the index word and the retrieval result set into the data cache. The invention also discloses a device for increasing retrieval speed in the database retrieval system. Because entries are cached only after they have been used, the system resources spent on building the cache are reduced and cache utilization is increased; because whole result sets are cached, the caching mechanism is simpler and faster.

Owner:KUNMING UNIV OF SCI & TECH
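
The retrieval flow above is the cache-aside pattern applied to result sets. A minimal sketch, assuming a dictionary as the in-memory data cache and a hypothetical `query_db` callable standing in for the database search:

```python
from typing import Callable, Dict, List


class ResultSetCache:
    """Cache-aside wrapper: result sets are cached only after a database lookup."""

    def __init__(self, query_db: Callable[[str], List[str]]):
        self.query_db = query_db              # hypothetical database search function
        self.cache: Dict[str, List[str]] = {}
        self.db_calls = 0

    def search(self, index_word: str) -> List[str]:
        if index_word in self.cache:          # cache record found: answer from memory
            return self.cache[index_word]
        self.db_calls += 1
        results = self.query_db(index_word)
        if results:                           # only non-empty result sets are written back
            self.cache[index_word] = results
        return results
```

Empty results are not cached, so a repeated miss reaches the database again; this matches the "write only if not empty" rule in the abstract.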

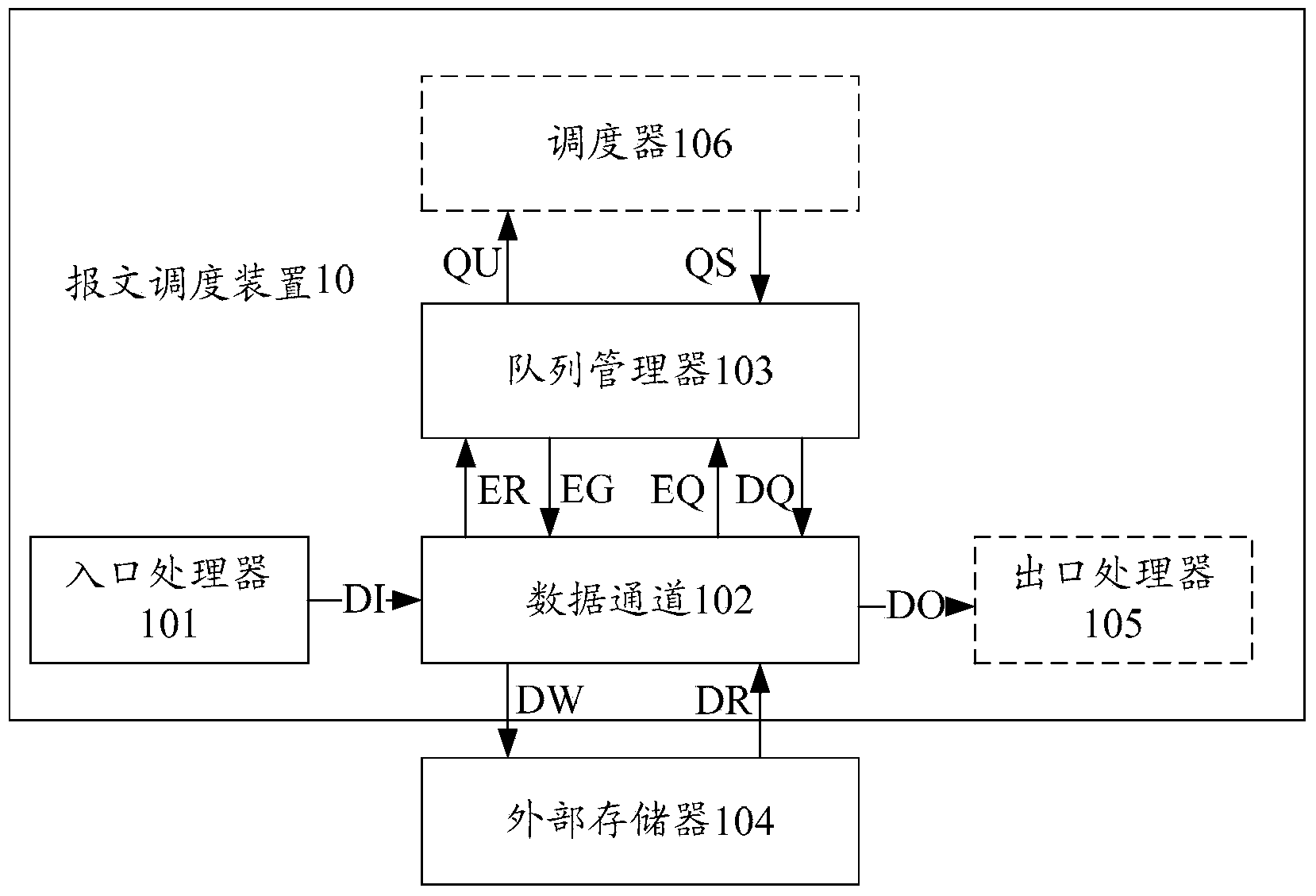

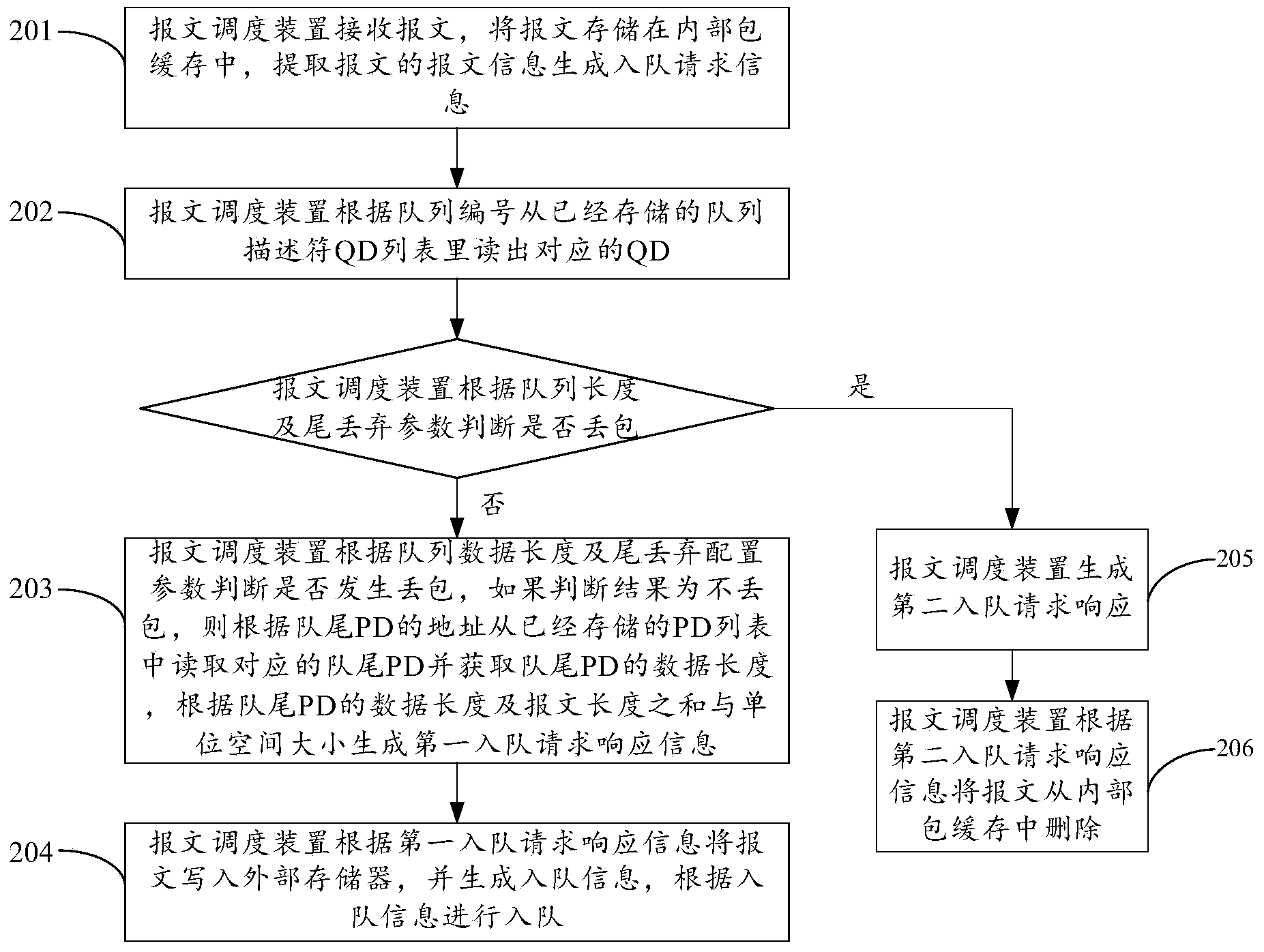

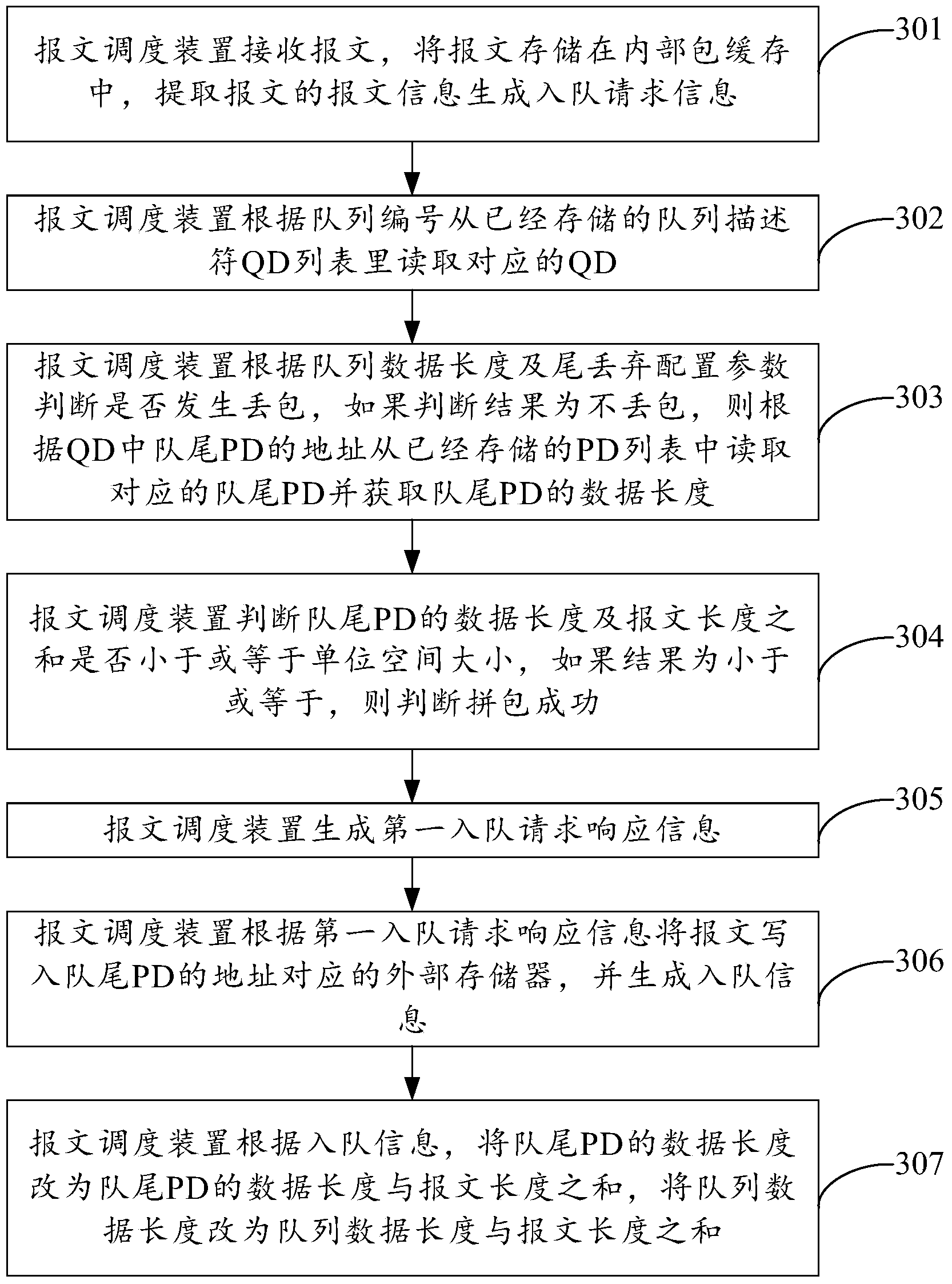

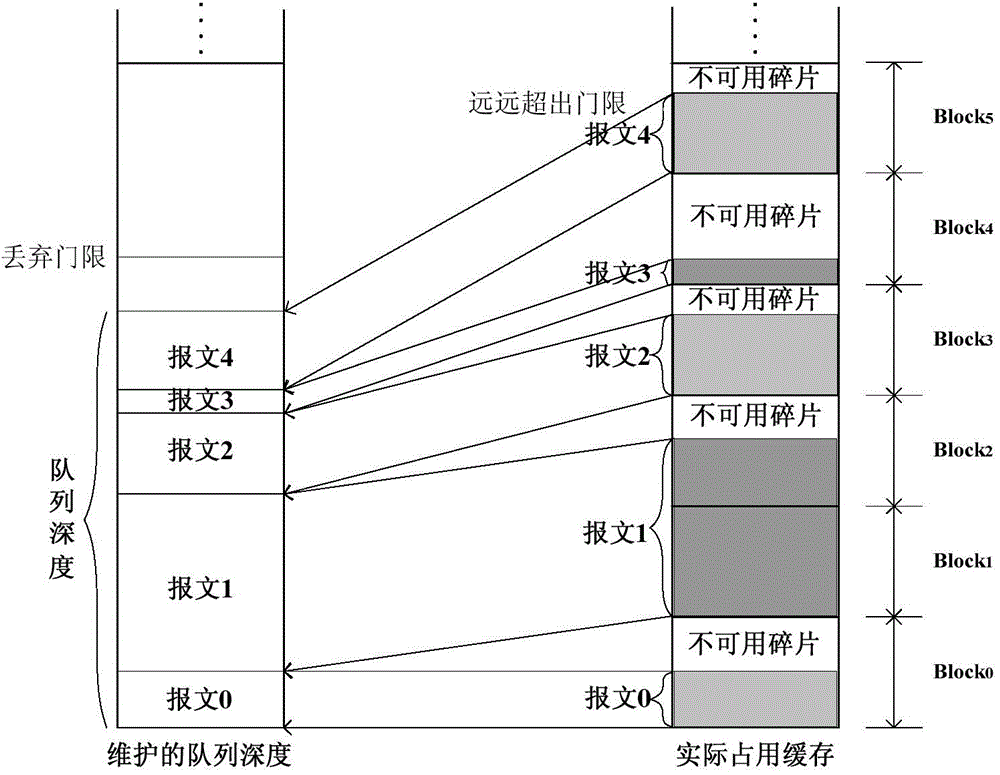

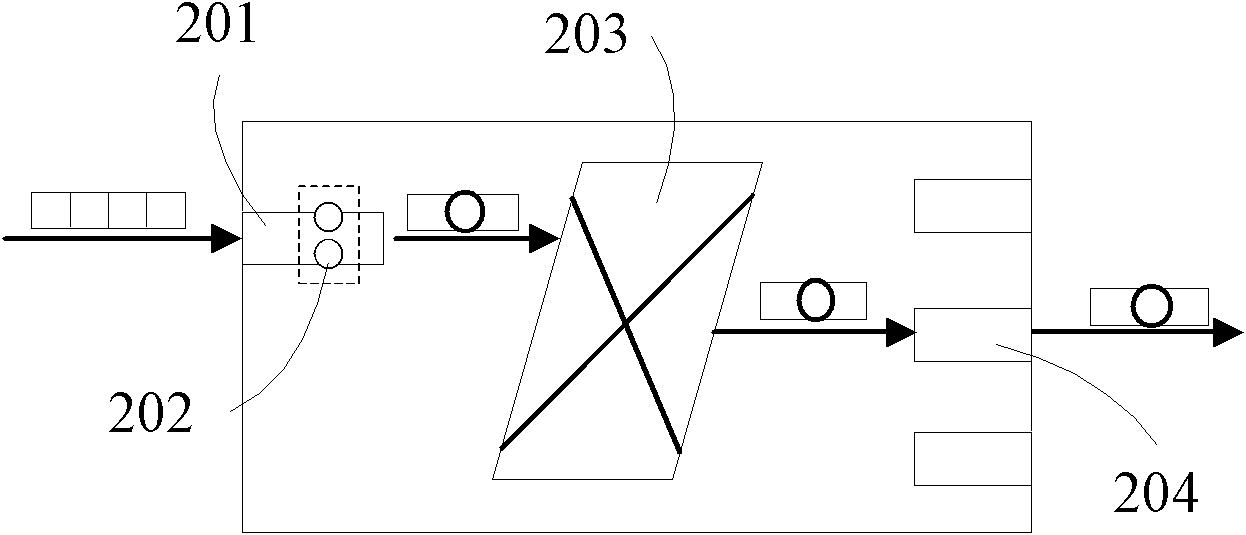

Message dispatching method and device thereof

Active | CN103647726A | Improve cache utilization | Save resources | Data switching networks | Message length | Queue number

The embodiment of the invention discloses a message dispatching method and device in the field of communication, which raise the utilization rate of the data cache and save resources. The method comprises the following steps: (1) the message dispatching device receives a message, stores it in an internal packet cache, extracts the message information, and generates enqueue request information comprising a queue number and a message length; (2) the corresponding queue descriptor is read from the stored queue descriptor list according to the queue number; (3) whether the packet is lost is judged according to the queue descriptor, and if the judgment result is that the packet is lost, first enqueue response information is generated according to the data length, the message length, and the unit space size of the queue-tail message descriptor in the queue message descriptor; (4) the message is written into an external memory according to the first enqueue response information, enqueue information is generated, and the message enters the queue according to that enqueue information. The method and device are used for message dispatching.

Owner:HUAWEI TECH CO LTD
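
Step (3) sizes the enqueue response from the data length already held in the queue-tail unit, the message length, and the unit size. One plausible reading, sketched here as an assumption rather than the patent's exact rule, is that a new message first fills the free space left in the tail unit and only then claims whole new units, so short messages do not each waste a full unit.

```python
def enqueue_response(tail_data_len: int, unit_size: int, msg_len: int):
    """Return (bytes placed in the current tail unit, number of new units to allocate).

    Hypothetical packing rule: fill the tail unit's free space first,
    then allocate whole units for the remainder.
    """
    if not 0 <= tail_data_len <= unit_size:
        raise ValueError("tail data length must fit inside one unit")
    free_in_tail = unit_size - tail_data_len
    packed = min(free_in_tail, msg_len)
    remaining = msg_len - packed
    new_units = -(-remaining // unit_size)  # ceiling division
    return packed, new_units
```

For example, with 256-byte units and 200 bytes already in the tail unit, a 100-byte message puts 56 bytes in the tail and needs one new unit.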

DRAM/NVM hierarchical heterogeneous memory access method and system with software-hardware cooperative management

Active | US20170277640A1 | Eliminates hardware | Reducing memory access delay | Memory architecture accessing/allocation | Memory systems | Term memory | Page table

The present invention provides a DRAM/NVM hierarchical heterogeneous memory system with software-hardware cooperative management. In the system, NVM is used as large-capacity main memory, and DRAM is used as a cache for the NVM. Some reserved bits in the data structures of the TLB and the last-level page table are used to eliminate the hardware costs of the conventional hardware-managed hierarchical memory architecture, and cache management in the heterogeneous memory system is pushed to the software level. The invention also reduces memory access latency on last-level cache misses. Because many applications in big data environments have relatively poor data locality, the conventional demand-based data fetching policy for a DRAM cache can aggravate cache pollution. The invention therefore adopts a utility-based data fetching mechanism that determines whether data in the NVM should be cached in the DRAM according to current DRAM utilization and application memory access patterns, improving the efficiency of both the DRAM cache and the bandwidth between the NVM main memory and the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH
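
A utility-based fetching decision can be sketched as a threshold on per-page access counts that rises as the DRAM cache fills. The linear threshold, the `base` and `max_threshold` values, and the class name are assumptions for illustration; the patent's actual utility model is not given here, and eviction is omitted.

```python
from collections import defaultdict


class UtilityBasedFetcher:
    """Cache an NVM page in DRAM only once its access count clears a pressure-dependent bar.

    Hypothetical policy: the threshold rises linearly from `base` (empty DRAM cache)
    to `max_threshold` (full DRAM cache). Eviction is omitted for brevity.
    """

    def __init__(self, dram_capacity: int, base: int = 2, max_threshold: int = 16):
        self.dram_capacity = dram_capacity
        self.base = base
        self.max_threshold = max_threshold
        self.nvm_accesses = defaultdict(int)  # page -> accesses served from NVM
        self.dram = set()                     # pages currently cached in DRAM

    def threshold(self) -> int:
        used = len(self.dram)
        return self.base + (self.max_threshold - self.base) * used // self.dram_capacity

    def access(self, page: int) -> str:
        if page in self.dram:
            return "dram"
        self.nvm_accesses[page] += 1
        has_room = len(self.dram) < self.dram_capacity
        if has_room and self.nvm_accesses[page] >= self.threshold():
            self.dram.add(page)
            return "fetched"
        return "nvm"
```

A page touched only once or twice is served directly from NVM instead of displacing a hotter page, which is how this kind of policy limits the cache pollution that demand-based fetching causes.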

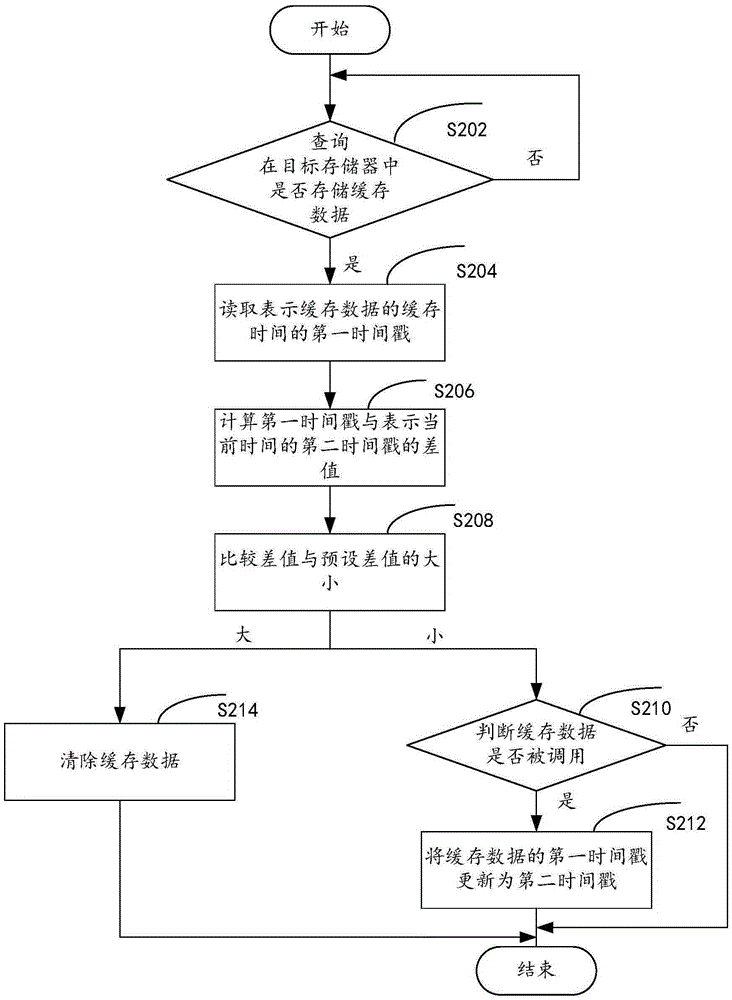

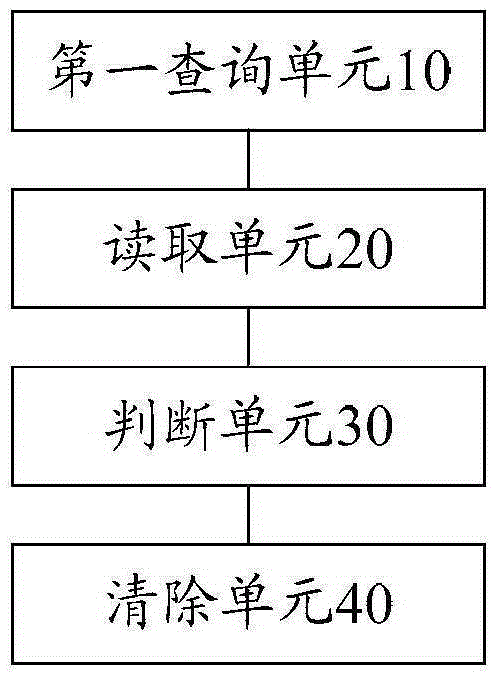

Processing method and processing device for buffered data

Inactive | CN106569733A | Realize automatic query | Improve cache utilization | Input/output to record carriers | Data mining | Time stamping

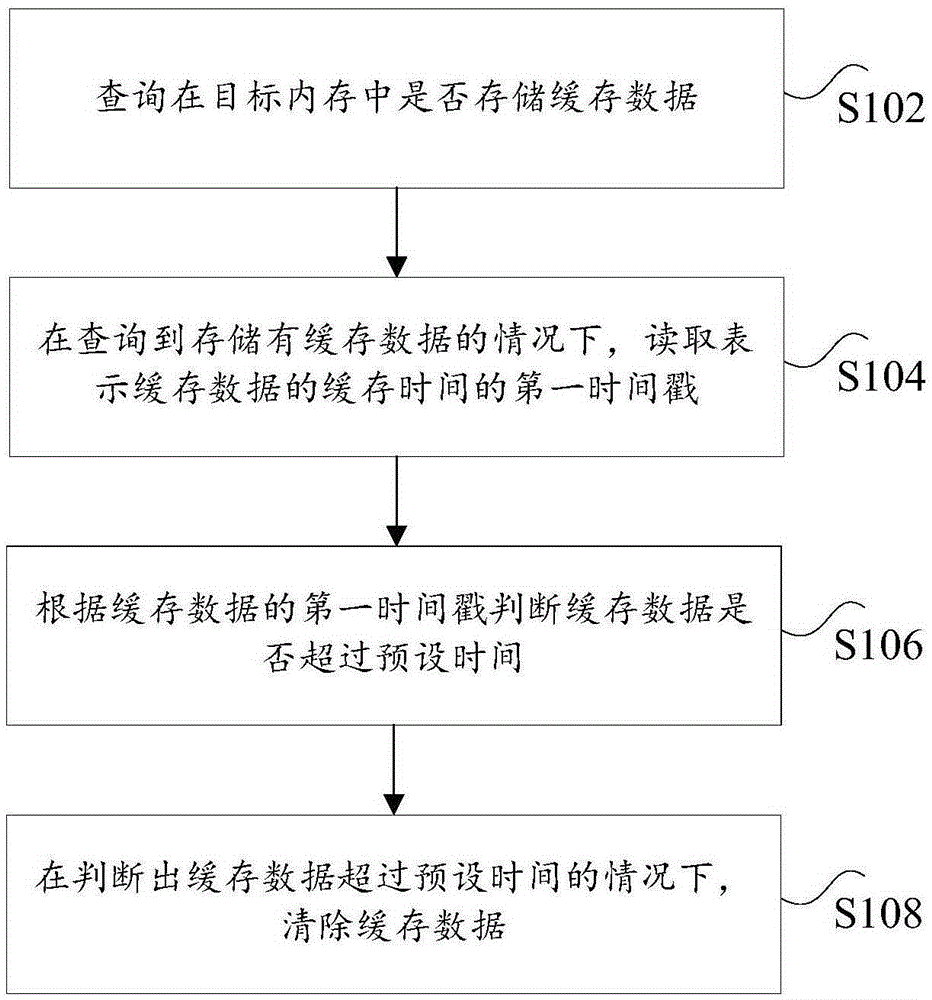

The invention discloses a processing method and a processing device for buffered data. The processing method comprises: querying whether buffered data are stored in a target memory; if buffered data are found, reading a first timestamp that records when the data were buffered, each piece of data having its own first timestamp; determining from that first timestamp whether the buffered data have exceeded a preset time; and, if they have, evicting the buffered data. The method and device solve the prior-art problem that stale buffered data could not be evicted in time.

Owner:BEIJING GRIDSUM TECH CO LTD
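
The timestamp check amounts to a time-to-live rule. A minimal sketch, assuming eviction happens lazily when an entry is looked up; the class name is hypothetical and the clock is injectable so the behavior can be tested.

```python
import time


class TimestampedBuffer:
    """Buffered entries carry a 'first timestamp'; stale ones are evicted on lookup."""

    def __init__(self, max_age_s: float, clock=time.monotonic):
        self.max_age_s = max_age_s     # the preset time
        self.clock = clock             # injectable for testing
        self._entries = {}             # key -> (value, first timestamp)

    def put(self, key, value) -> None:
        self._entries[key] = (value, self.clock())

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, stamp = entry
        if self.clock() - stamp > self.max_age_s:
            del self._entries[key]     # exceeded the preset time: evict
            return None
        return value

    def __contains__(self, key) -> bool:
        return key in self._entries
```

A background sweep over `_entries` would also satisfy the abstract; lazy eviction is chosen here only because it needs no extra thread.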

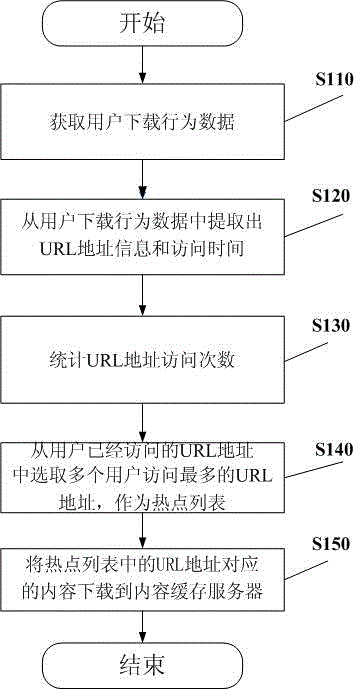

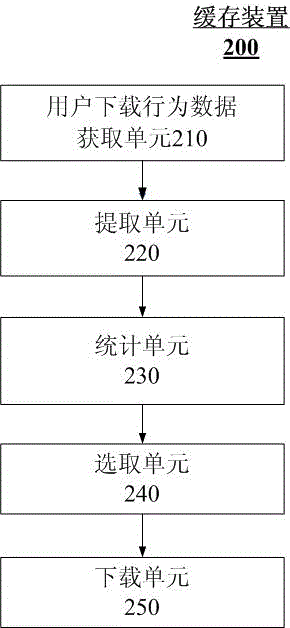

Cache method and device

The invention provides a caching method comprising the following steps: acquiring user download behavior data and extracting URL address information and access times from it; counting, from the extracted URLs and access times, how often each URL was accessed by users within a preset time window; selecting, according to these counts, the most frequently accessed URLs as a hot list; and downloading the content corresponding to the URLs in the hot list to a content cache server. With this method, hot content is identified from a large volume of user download behavior and only that content is cached, which reduces unnecessary content storage and improves cache utilization.

Owner:ALIBABA (CHINA) CO LTD
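
The counting and selection steps can be sketched with `collections.Counter`. Timestamps are plain numbers, the window is half-open, and `top_n` is a hypothetical cutoff for the hot list size; downloading to the content cache server is left out.

```python
from collections import Counter


def hot_list(records, window_start, window_end, top_n):
    """records: iterable of (url, access_time); count accesses inside [window_start, window_end)."""
    counts = Counter(url for url, t in records if window_start <= t < window_end)
    return [url for url, _ in counts.most_common(top_n)]
```

Only URLs in the returned list would be fetched into the cache server, so one-off downloads never occupy cache space.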

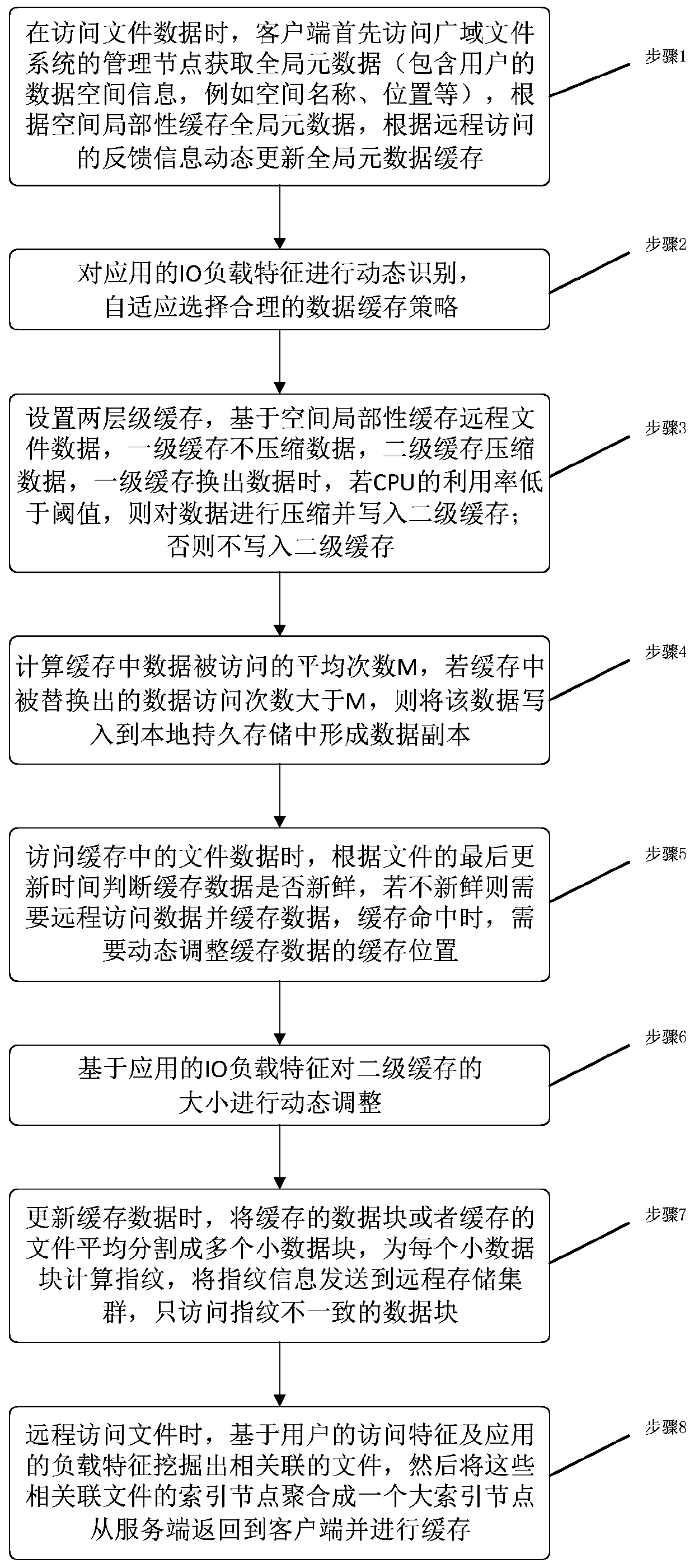

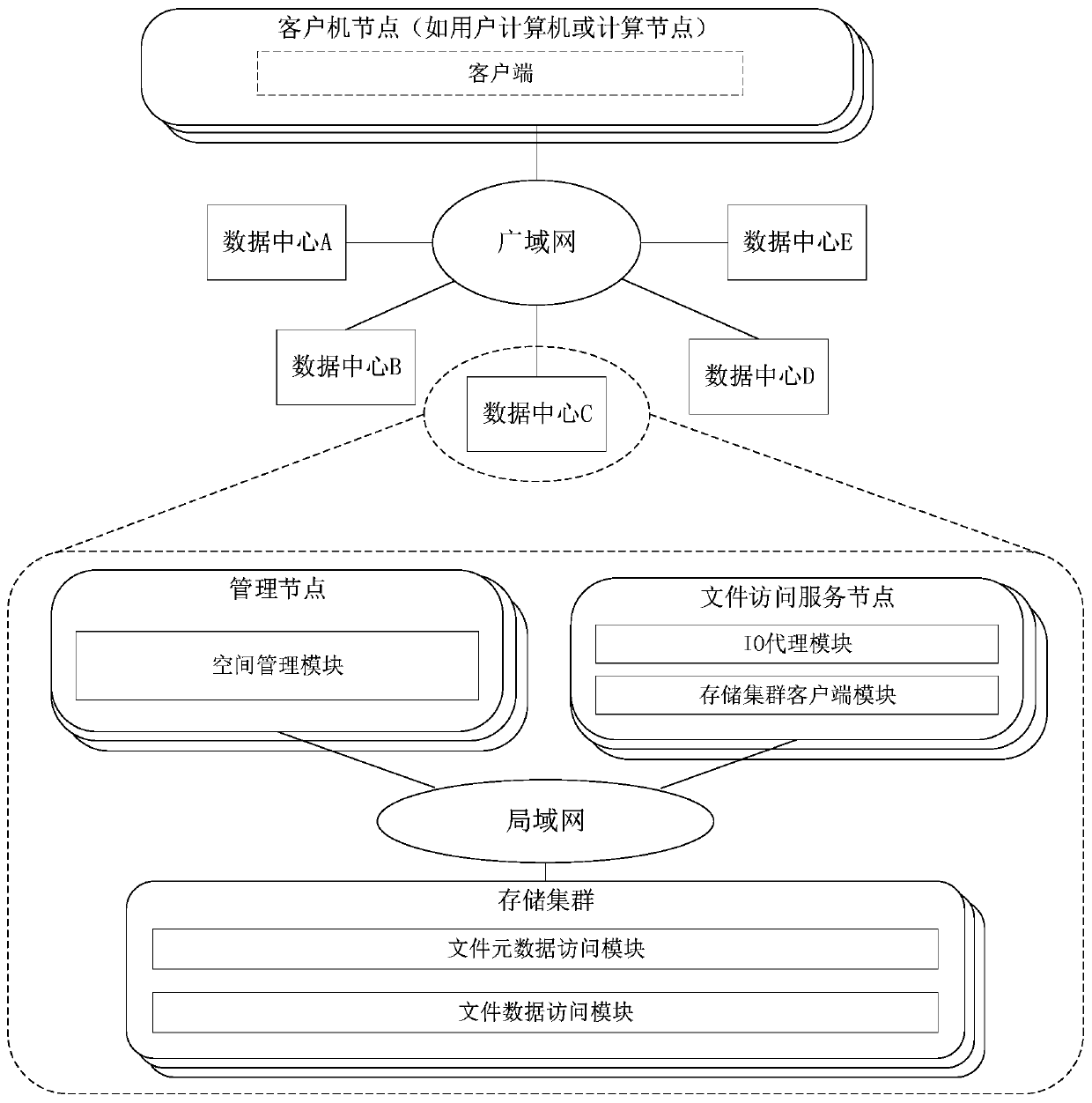

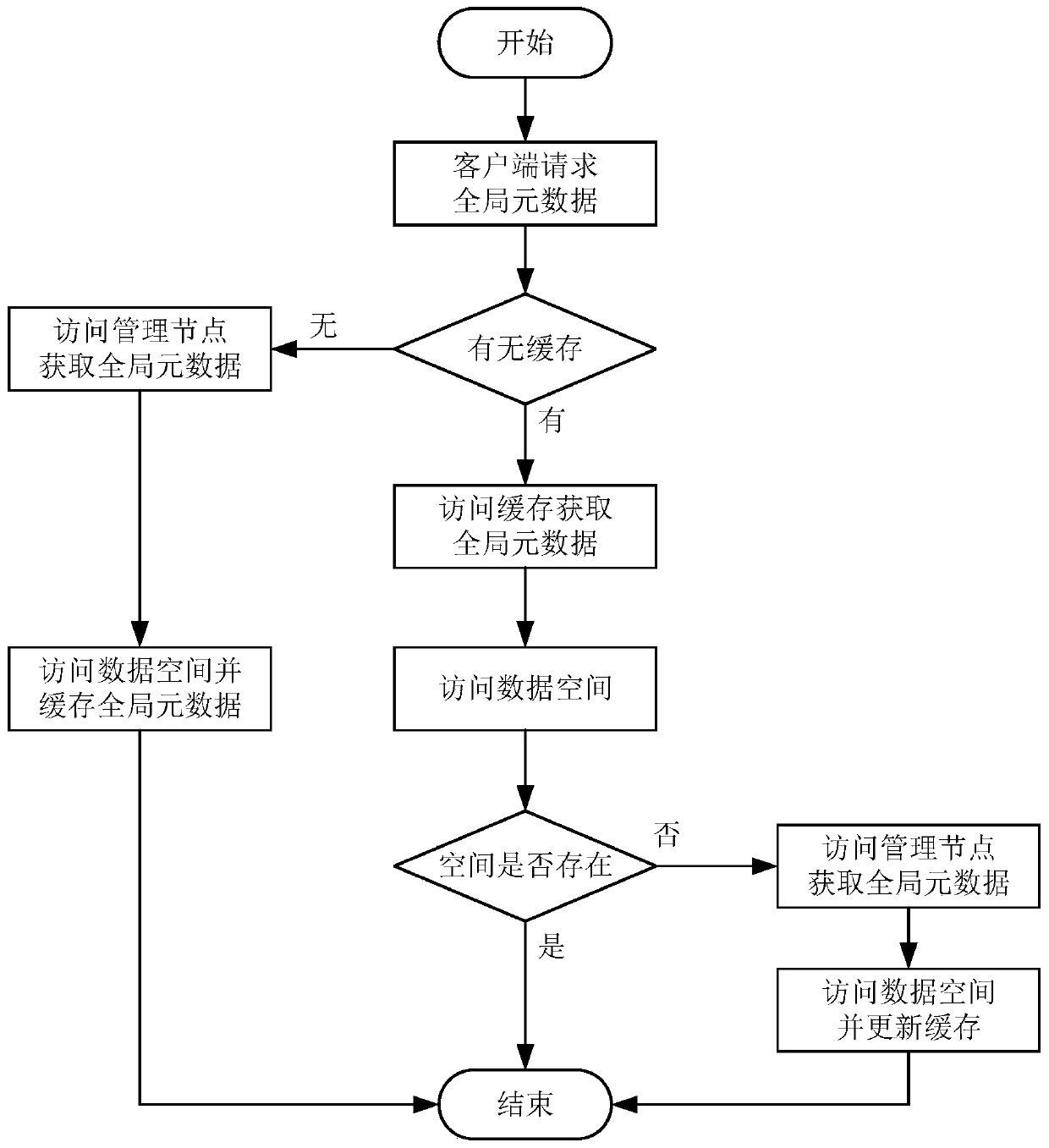

Remote file data access performance optimization method based on efficient caching of client

Active | CN110188080A | Reduce the amount of remote actual transmission | Prevent being | File access structures | Special data processing applications | Adaptive compression | Granularity

The invention provides a remote file data access performance optimization method based on efficient client-side caching. The method comprises: caching global metadata and file data on the client according to the principle of locality; caching file data with a hybrid strategy that uses both data blocks and whole files as the caching granularity; adaptively compressing the cache according to the usage state of computing resources; generating local copy files for frequently accessed data; dynamically adjusting the cache size according to the I/O load characteristics of the application; performing fine-grained updates of expired cache data; and mining associated files from user access characteristics and application load characteristics, aggregating the index nodes of the associated files into one large index node, and caching it on the client. The method can significantly improve remote file data access performance.

Owner:BEIHANG UNIV
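
As one illustration of the mixed block/file granularity, the sketch below caches small files whole and large files block by block. The 64 KiB whole-file cutoff, the 4 KiB block size, and the `fetch_range` remote-read callback are hypothetical; compression, local copies, dynamic sizing, and index-node aggregation are not modeled.

```python
BLOCK_SIZE = 4 * 1024          # hypothetical block granularity
WHOLE_FILE_LIMIT = 64 * 1024   # hypothetical cutoff: files this small are cached whole


class HybridClientCache:
    def __init__(self, fetch_range):
        # fetch_range(path, offset, length) -> bytes stands in for a remote read
        self.fetch_range = fetch_range
        self.files = {}    # path -> entire file contents
        self.blocks = {}   # (path, block index) -> block contents
        self.remote_reads = 0

    def _remote(self, path, offset, length):
        self.remote_reads += 1
        return self.fetch_range(path, offset, length)

    def read(self, path, file_size, offset, length):
        end = min(offset + length, file_size)
        if offset >= end:
            return b""
        if file_size <= WHOLE_FILE_LIMIT:       # file granularity
            if path not in self.files:
                self.files[path] = self._remote(path, 0, file_size)
            return self.files[path][offset:end]
        first, last = offset // BLOCK_SIZE, (end - 1) // BLOCK_SIZE
        out = bytearray()
        for idx in range(first, last + 1):      # block granularity
            key = (path, idx)
            if key not in self.blocks:
                start = idx * BLOCK_SIZE
                self.blocks[key] = self._remote(path, start, min(BLOCK_SIZE, file_size - start))
            out += self.blocks[key]
        skip = offset - first * BLOCK_SIZE
        return bytes(out[skip:skip + (end - offset)])
```

Whole-file caching saves round trips for small files, while block caching avoids pulling an entire large file over the network for a short read.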

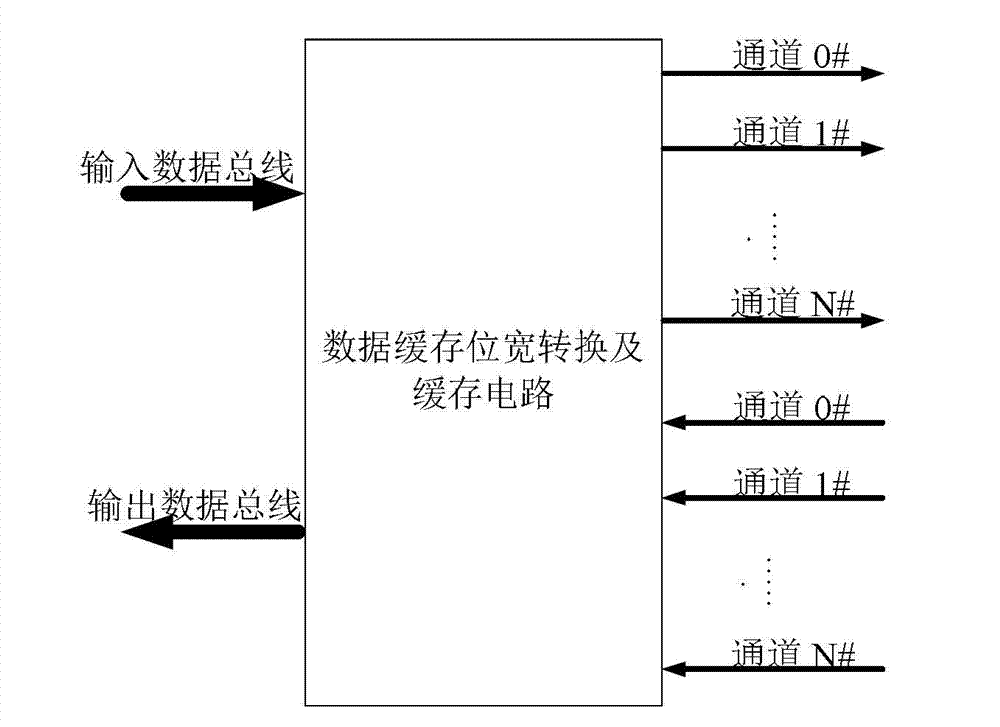

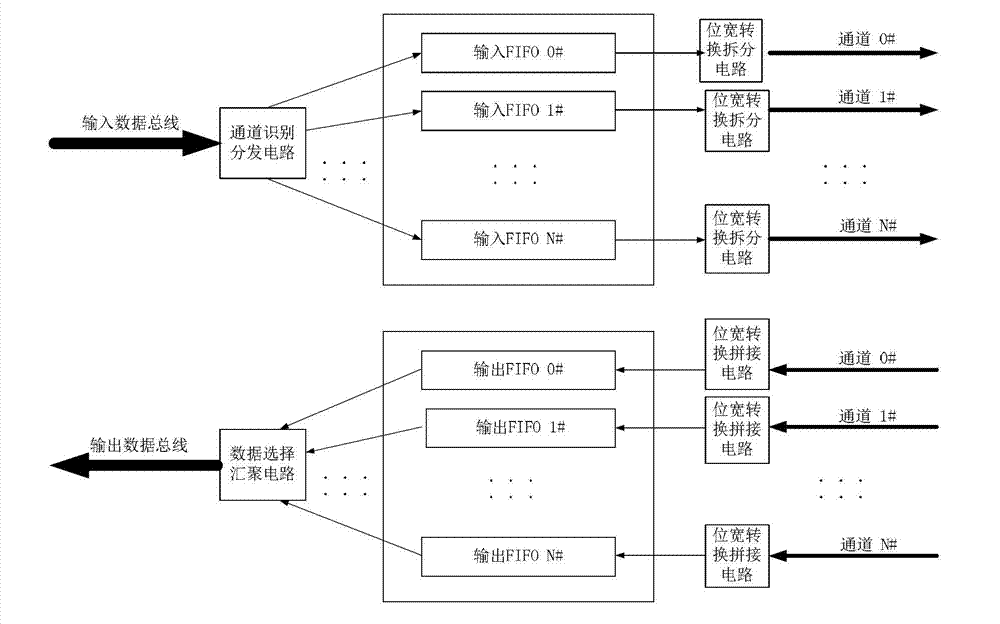

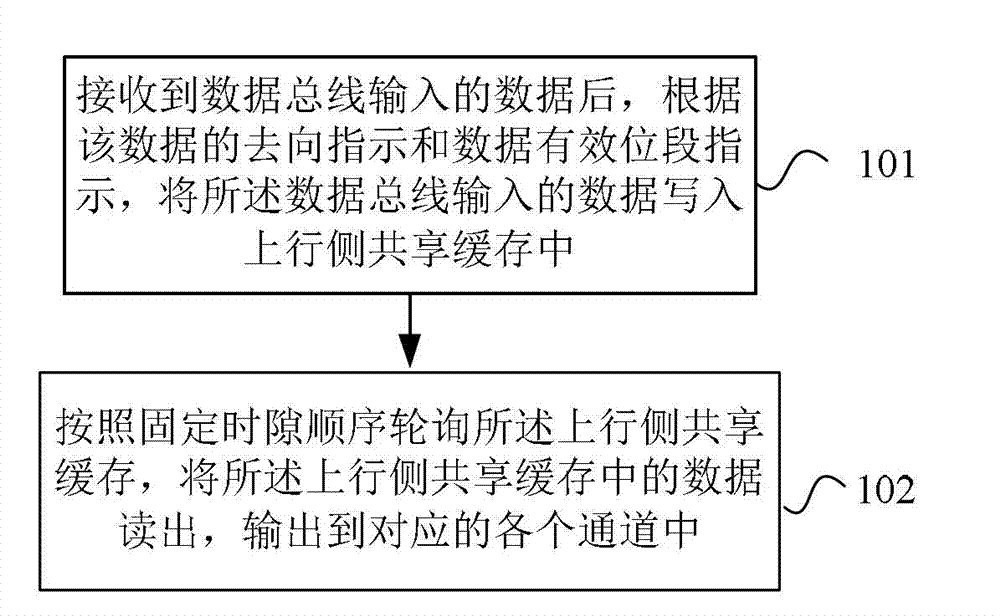

Data processing method and device

Active | CN103714038A | Implement caching | Save cache resources | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer network | Bit field

The invention provides a data processing method and device. The method includes: writing data received from a data bus into an uplink-side shared cache according to the data's heading-direction indication and data-valid bit-field indication; and polling the uplink-side shared cache in a fixed time-slot sequence, reading the data and outputting them to the corresponding channels. The method saves cache resources while achieving reliable data caching and bit-width conversion, lowers area and timing pressure, and increases the cache utilization rate.

Owner:SANECHIPS TECH CO LTD
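
A behavioral sketch of the shared uplink cache, assuming one buffer pool of fixed total capacity shared by all channels, with the direction indication modeled as a channel index and the valid bit as a boolean. Bit-width conversion and hardware timing are not modeled, and the class name is hypothetical.

```python
from collections import deque


class SharedUplinkCache:
    """One buffer pool shared by all channels, drained in a fixed time-slot order."""

    def __init__(self, num_channels: int, capacity: int):
        self.capacity = capacity                         # total words across all channels
        self.queues = [deque() for _ in range(num_channels)]
        self.used = 0

    def write(self, channel: int, word, valid: bool = True) -> bool:
        """Store a word for `channel` (its direction indication) if its valid bit is set."""
        if not valid or self.used >= self.capacity:
            return False
        self.queues[channel].append(word)
        self.used += 1
        return True

    def poll(self, slot_order):
        """Visit channels in the fixed slot order, emitting at most one word per slot."""
        out = []
        for ch in slot_order:
            if self.queues[ch]:
                out.append((ch, self.queues[ch].popleft()))
                self.used -= 1
        return out
```

Sharing one pool means an idle channel does not reserve buffer space that a busy channel could use, which is where the utilization gain comes from.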

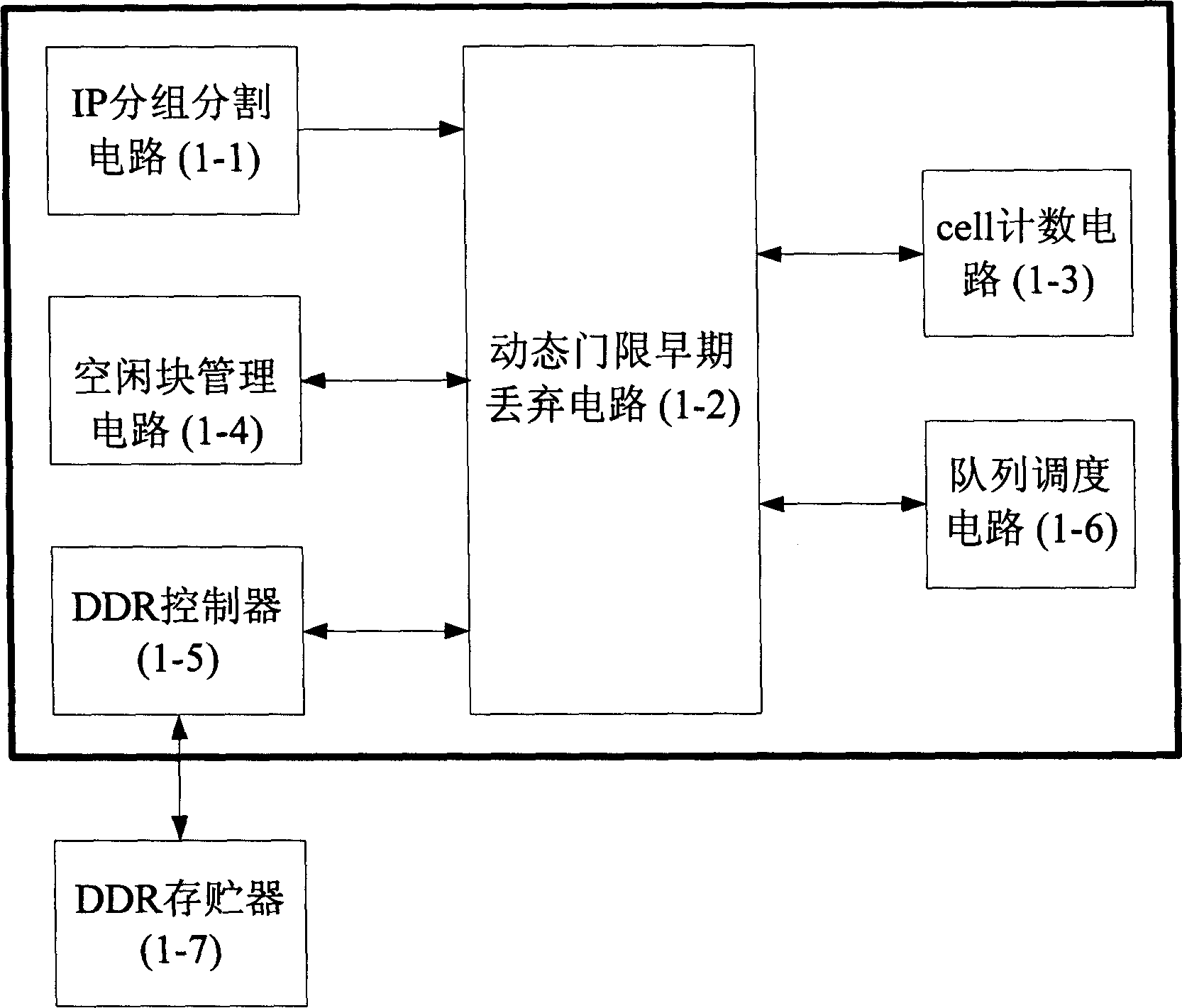

Sharing cache dynamic threshold early drop device for supporting multi queue

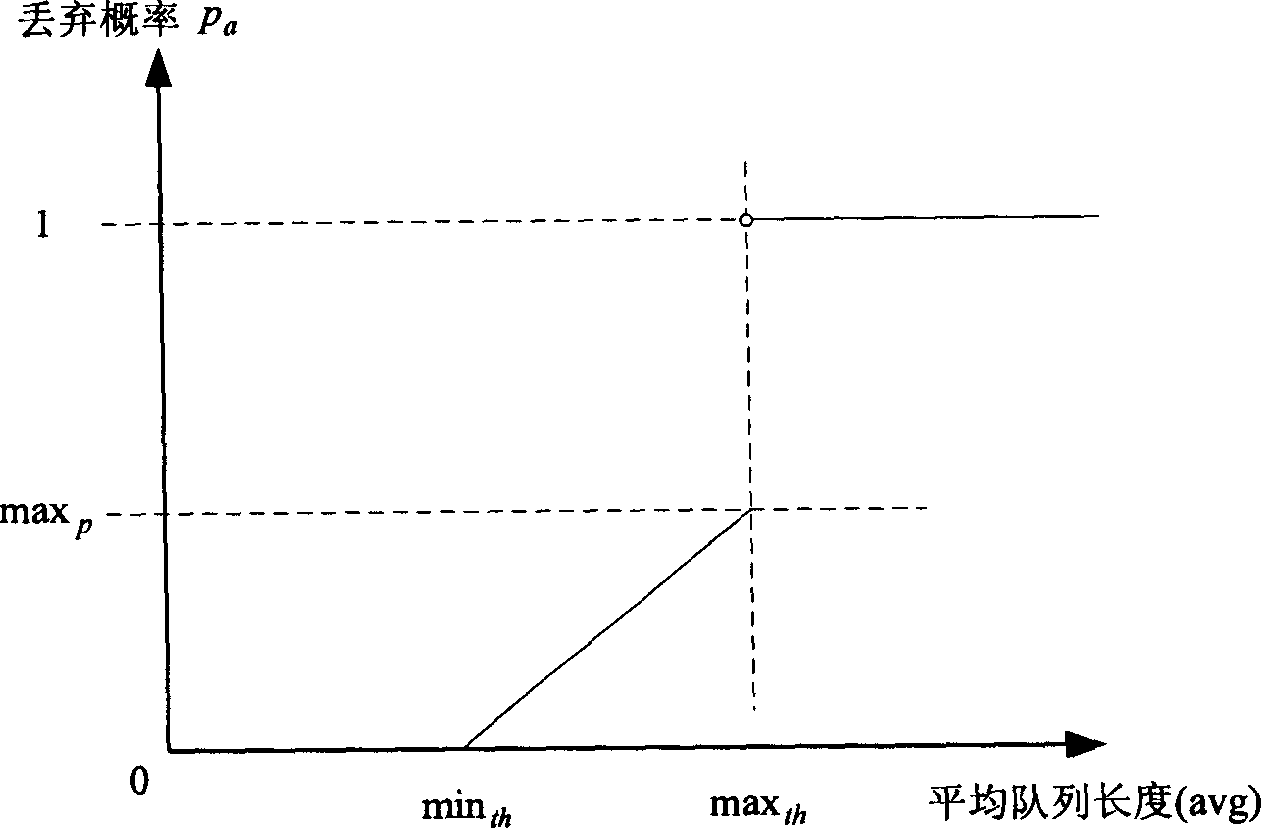

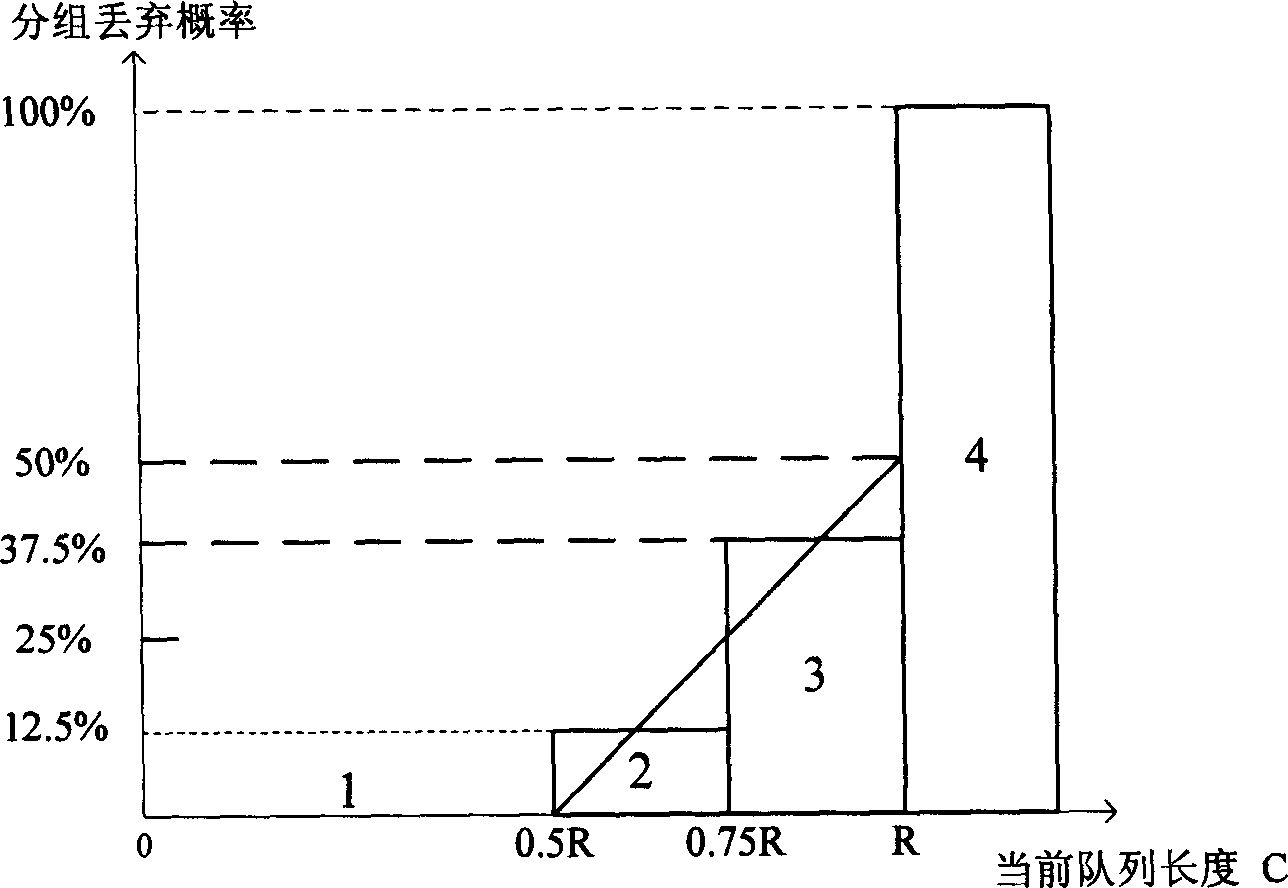

Inactive | CN1777147A | Adaptable | Improve cache utilization | Data switching networks | Random early detection | Packet loss

Belonging to the IP technical field, the invention is implemented on a field programmable gate array (FPGA). The device includes an IP packet circuit, an early-drop circuit with dynamic thresholds, a cell counting circuit, a free-block management circuit, a DDR controller, a queue scheduling circuit, and external DDR storage. Based on the average queue size of each currently active queue and the average queue size of the whole shared buffer, the invention adjusts the parameters of the random early detection (RED) algorithm, yielding a dynamic-threshold early-drop method that supports multiple queues sharing one buffer. The method keeps the advantages of both the RED mechanism and dynamic thresholds, and approximating the drop curve with cascaded segments makes it easy to implement in an FPGA. Its features are a lower packet loss rate, higher buffer utilization, and a balance with fairness.

Owner:TSINGHUA UNIV
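
A software sketch of a dynamic-threshold RED decision. The specific rule is an assumption, not the patent's formula: each queue's maximum threshold is `alpha` times the currently free shared buffer (a common dynamic-threshold form), the minimum threshold is a fixed fraction of it, and the drop probability rises linearly between them as in classic RED. The cascaded piecewise curve used in the FPGA is not modeled.

```python
def ewma(avg: float, sample: float, weight: float = 0.002) -> float:
    """RED-style exponentially weighted moving average of a queue size."""
    return (1 - weight) * avg + weight * sample


def dynamic_red_drop_prob(avg_queue: float, avg_total: float, buffer_size: float,
                          alpha: float = 1.0, min_frac: float = 0.25,
                          max_p: float = 0.1) -> float:
    """Drop probability for one queue whose thresholds track free shared-buffer space."""
    max_th = alpha * (buffer_size - avg_total)   # shrinks as the shared buffer fills
    min_th = min_frac * max_th
    if max_th <= 0 or avg_queue >= max_th:
        return 1.0
    if avg_queue < min_th:
        return 0.0
    return max_p * (avg_queue - min_th) / (max_th - min_th)
```

When other queues fill the shared buffer, `avg_total` grows and every queue's thresholds shrink, so one busy queue cannot monopolize the buffer.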

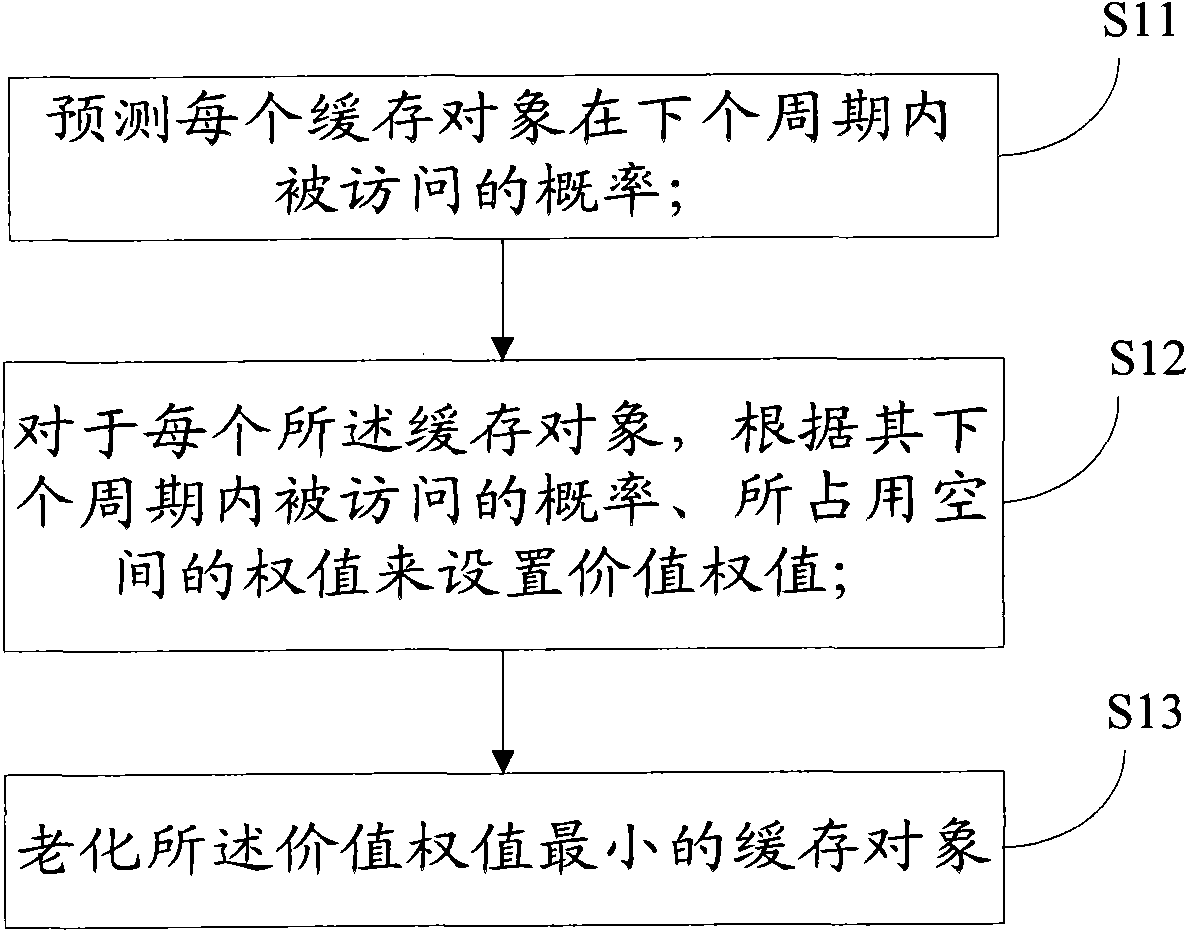

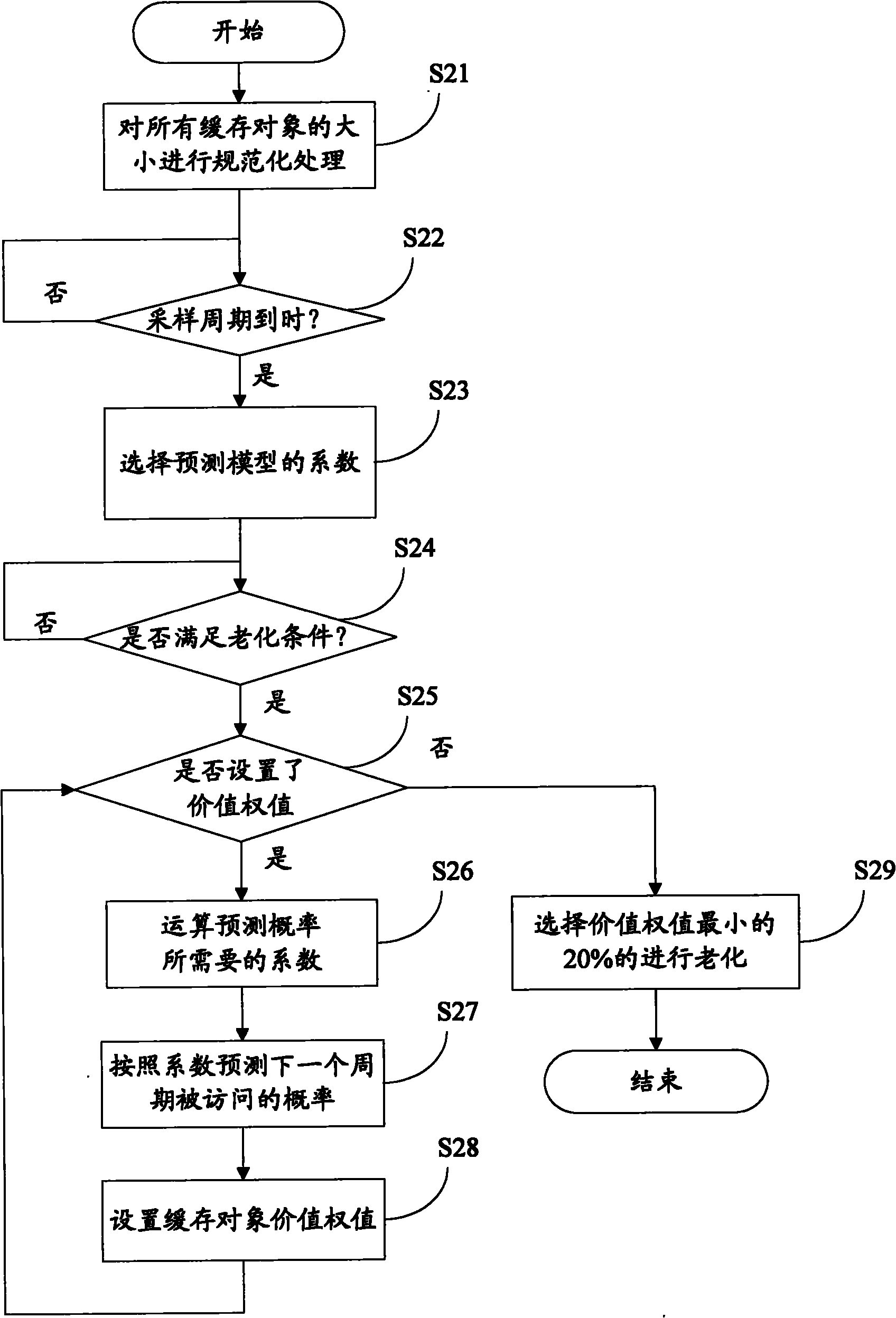

Method and device for aging caching objects

Active | CN101887400A | Improve cache utilization | Memory addressing/allocation/relocation | Computer science | Utilization rate

The invention discloses a method and device for aging cached objects. The method comprises: forecasting the probability that each cached object will be accessed in the next period; assigning each cached object a value weight according to its forecast access probability for the next period and the weight of the space it occupies; and aging (evicting) the cached object with the smallest value weight. The invention also discloses a device for aging cached objects. During aging, the access probability for the next period is forecast from previous access probabilities, and the space occupied by each cached object is taken into account, so that cached objects that have not been used for a long time are aged out, which improves cache utilization.

Owner:ZTE CORP +1
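
One way to combine a forecast access probability with occupied space is a probability-per-byte value weight. The exponential-smoothing forecast and the division by size are assumptions for illustration; the patent's exact weighting is not stated in the abstract.

```python
def predict_next_probability(history, alpha=0.5):
    """Exponential smoothing of past per-period access probabilities (oldest first)."""
    estimate = history[0]
    for p in history[1:]:
        estimate = alpha * p + (1 - alpha) * estimate
    return estimate


def pick_object_to_age(objects, alpha=0.5):
    """objects: name -> (probability history, size in bytes).

    Value weight = predicted access probability per byte occupied; the lowest is aged out.
    """
    def value_weight(name):
        history, size = objects[name]
        return predict_next_probability(history, alpha) / size

    return min(objects, key=value_weight)
```

With this weighting, a large object can be aged out before a small, rarely used one, because it frees more space per unit of expected hits lost.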

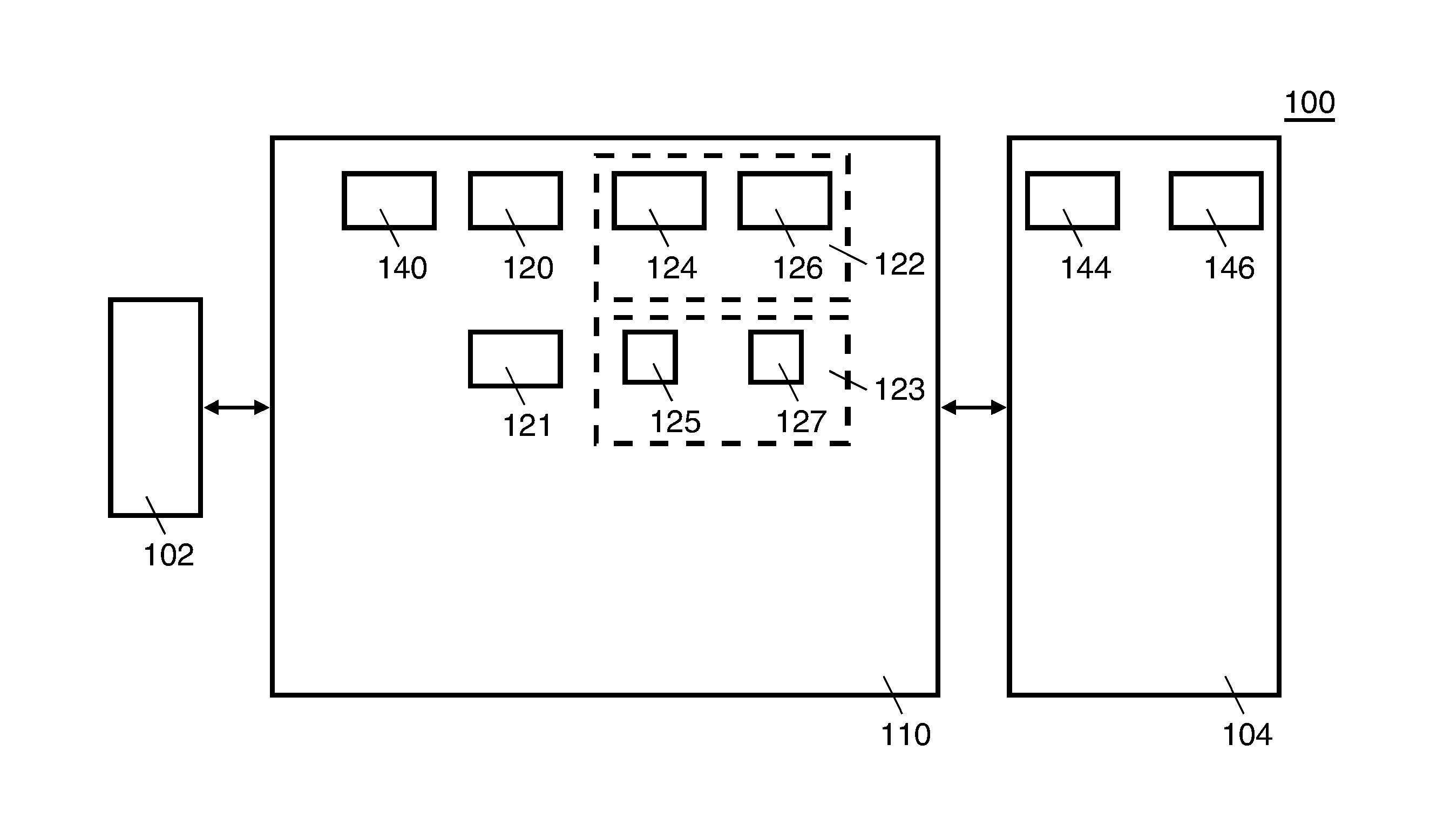

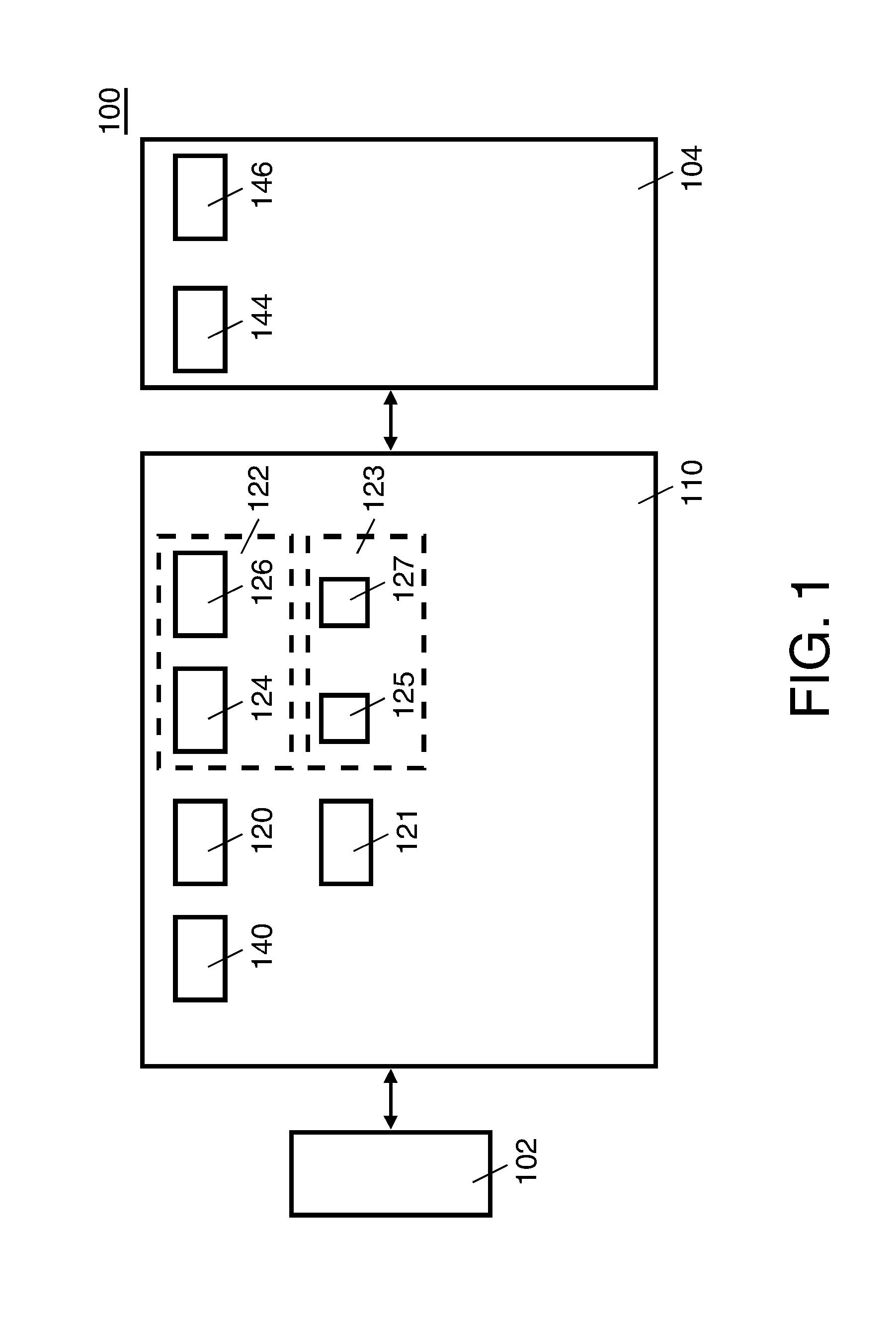

Cache system with a primary cache and an overflow cache that use different indexing schemes

Pending | US20160170884A1 | Improve utility | Improve cache utilization | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Hash table | Memory systems

A cache memory system including a primary cache and an overflow cache that are searched together using a search address. The overflow cache operates as an eviction array for the primary cache. The primary cache is addressed using bits of the search address, and the overflow cache is addressed by a hash index generated by a hash function applied to bits of the search address. The hash function operates to distribute victims evicted from the primary cache to different sets of the overflow cache to improve overall cache utilization. A hash generator may be included to perform the hash function. A hash table may be included to store hash indexes of valid entries in the primary cache. The cache memory system may be used to implement a translation lookaside buffer for a microprocessor.

Owner:VIA ALLIANCE SEMICON CO LTD
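
A software model of a primary cache whose victims go to a hash-indexed overflow cache. The XOR-fold hash, the set and way counts, and LRU replacement are assumptions (the abstract does not specify the hash function), and the two arrays are searched one after the other here, whereas hardware would search them together.

```python
from collections import OrderedDict


class PrimaryWithOverflow:
    """Set-associative primary cache whose LRU victims go to a hash-indexed overflow cache."""

    def __init__(self, primary_sets: int = 4, overflow_sets: int = 4, ways: int = 2):
        self.primary = [OrderedDict() for _ in range(primary_sets)]
        self.overflow = [OrderedDict() for _ in range(overflow_sets)]
        self.ways = ways

    def primary_index(self, addr: int) -> int:
        return addr % len(self.primary)           # plain low-order address bits

    def overflow_index(self, addr: int) -> int:
        # Hypothetical hash: XOR-fold higher address bits into the set index.
        return (addr ^ (addr >> 2) ^ (addr >> 5)) % len(self.overflow)

    def lookup(self, addr: int) -> str:
        pset = self.primary[self.primary_index(addr)]
        if addr in pset:
            pset.move_to_end(addr)
            return "primary"
        if addr in self.overflow[self.overflow_index(addr)]:
            return "overflow"
        return "miss"

    def insert(self, addr: int) -> None:
        self.overflow[self.overflow_index(addr)].pop(addr, None)  # avoid duplicates
        pset = self.primary[self.primary_index(addr)]
        if addr in pset:
            pset.move_to_end(addr)
            return
        if len(pset) >= self.ways:
            victim, _ = pset.popitem(last=False)   # evict the LRU way
            oset = self.overflow[self.overflow_index(victim)]
            if len(oset) >= self.ways:
                oset.popitem(last=False)
            oset[victim] = True
        pset[addr] = True
```

Addresses 0, 4, 8, and 12 all collide in primary set 0, but their victims land in different overflow sets; indexing the overflow array with the same low bits would pile them into one set instead.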

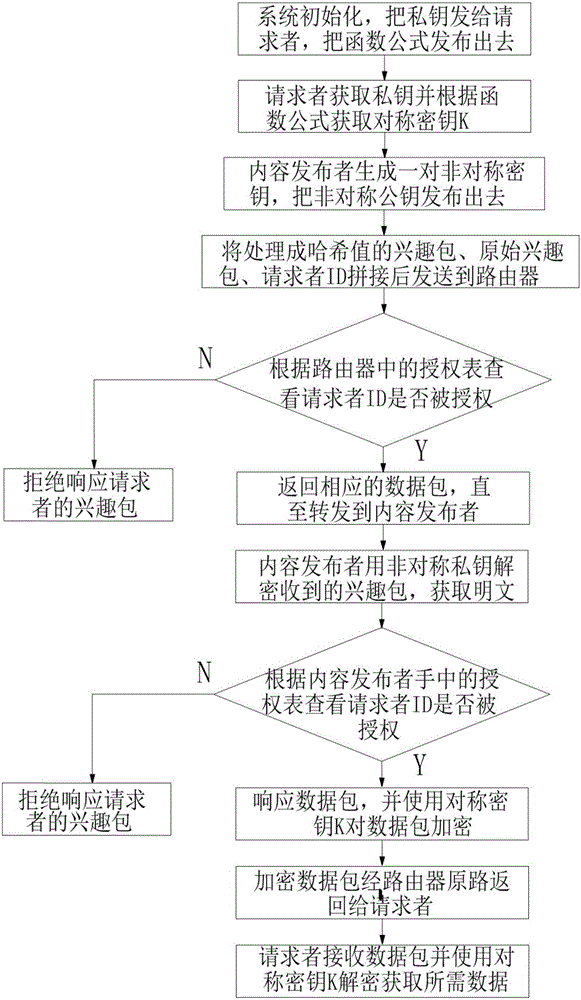

Privacy protection method based on content center

Inactive | CN106657079A | Reduce the burden on | Privacy protection | Transmission | Network packet | Privacy protection

The invention relates to a privacy protection method based on content-centric networking. The method proceeds as follows: a requester encrypts the original interest packet with the asymmetric public key puk_p of the content publisher, and the interest packet is processed into a hash value H(I); a router returns a data packet after querying the ID information of the relevant requester in a requester authorization table; and the content publisher receives the interest packet encrypted with its own public key puk_p and encrypts the data packet with a symmetric secret key K, so that the privacy of both the requester and the publisher is protected to the greatest extent. Furthermore, when the content publisher returns the data packet corresponding to the interest packet along the same route, the router does not need to decrypt it, which relieves the router of storing keys and performing computation while still protecting privacy. Different authorized requesters with the same interest can fully share the cached data, which improves the cache utilization rate, and requesters' access to resources can be controlled dynamically.

Owner:GUANGDONG UNIV OF TECH
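
A sketch of the router-side bookkeeping only, assuming H(I) is a SHA-256 digest of the interest name and that the publisher supplies the set of authorized requester IDs along with the data packet (both assumptions). The public-key and symmetric encryption steps are omitted; the point is that the router stores and returns ciphertext keyed by the digest, so several authorized requesters share one cached copy without the router decrypting anything.

```python
import hashlib


def interest_digest(interest_name: bytes) -> str:
    """H(I): the router only ever sees this opaque digest (SHA-256 is an assumption)."""
    return hashlib.sha256(interest_name).hexdigest()


class Router:
    def __init__(self):
        self.authorized = {}   # digest -> set of requester IDs (requester authorization table)
        self.cache = {}        # digest -> encrypted data packet, stored without decryption

    def on_interest(self, requester_id: str, digest: str):
        """Return cached ciphertext to an authorized requester, or None to forward upstream."""
        if digest in self.cache and requester_id in self.authorized.get(digest, set()):
            return self.cache[digest]
        return None

    def on_data(self, digest: str, ciphertext: bytes, authorized_ids) -> None:
        self.cache[digest] = ciphertext
        self.authorized.setdefault(digest, set()).update(authorized_ids)
```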

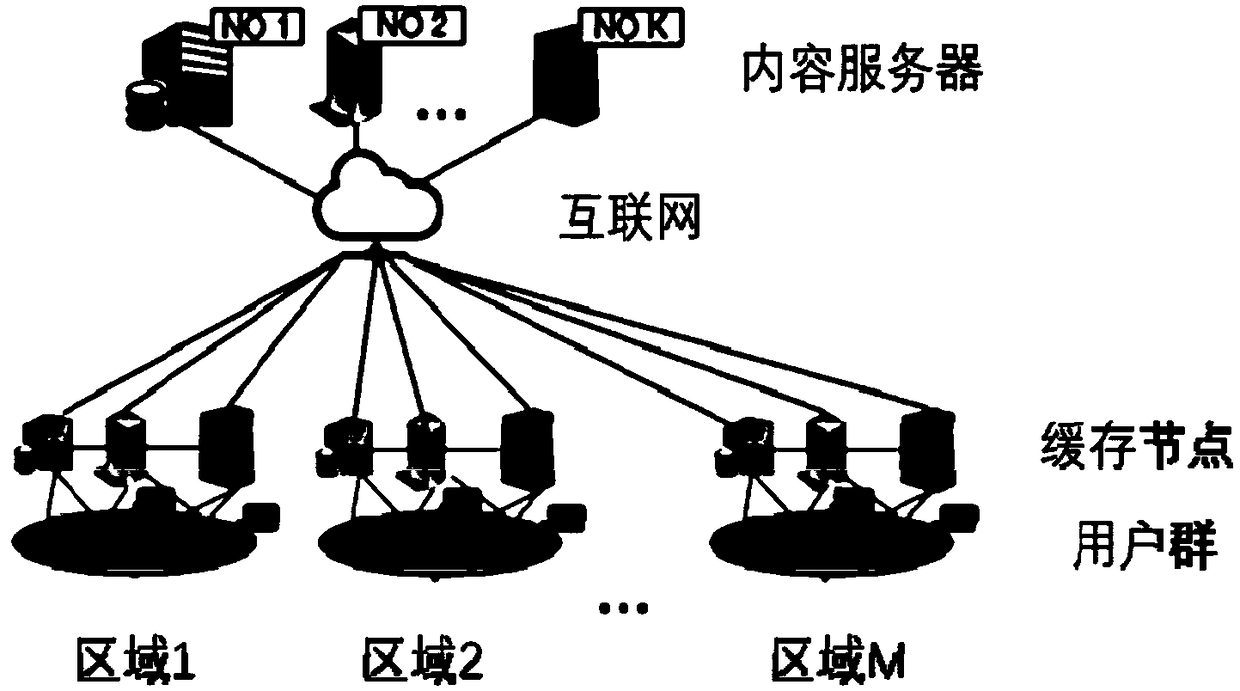

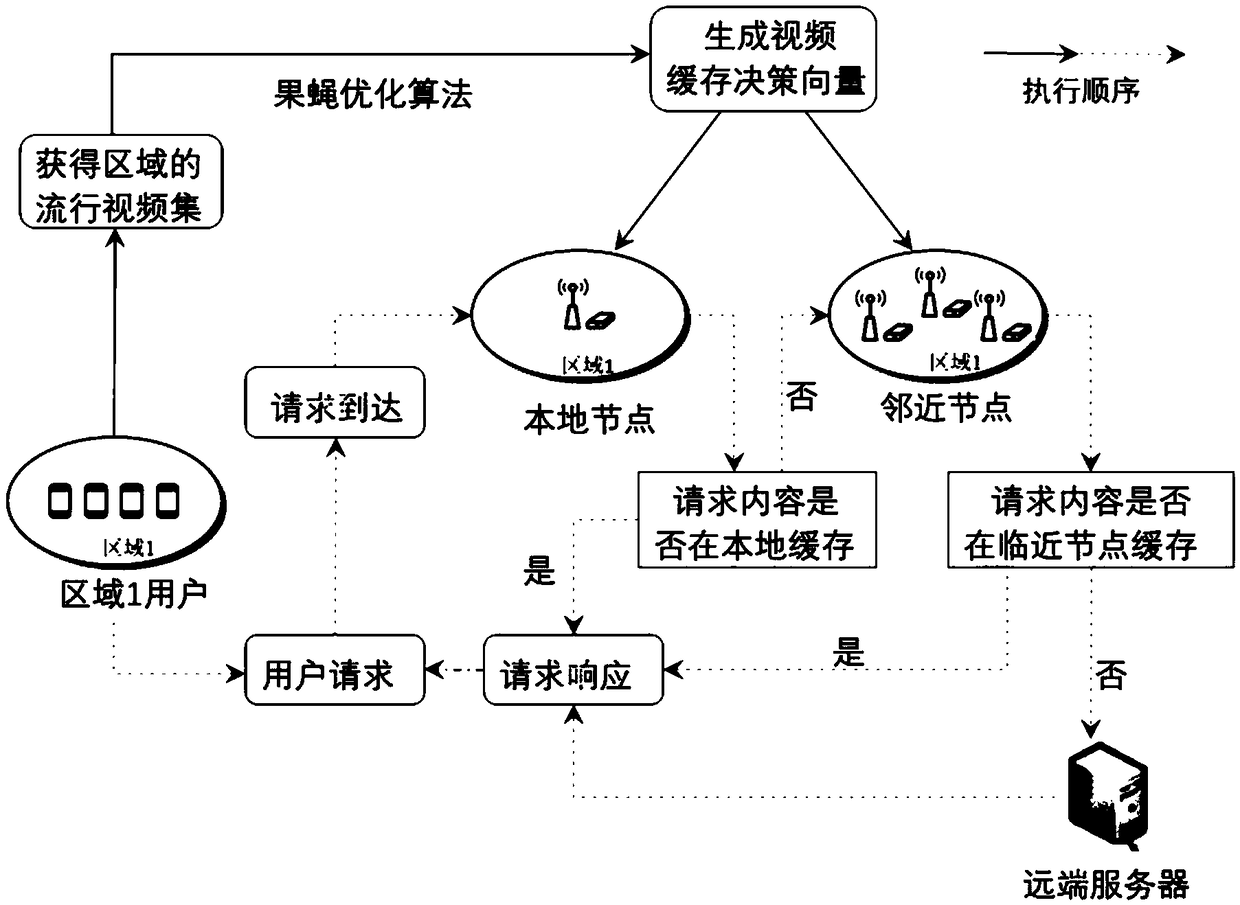

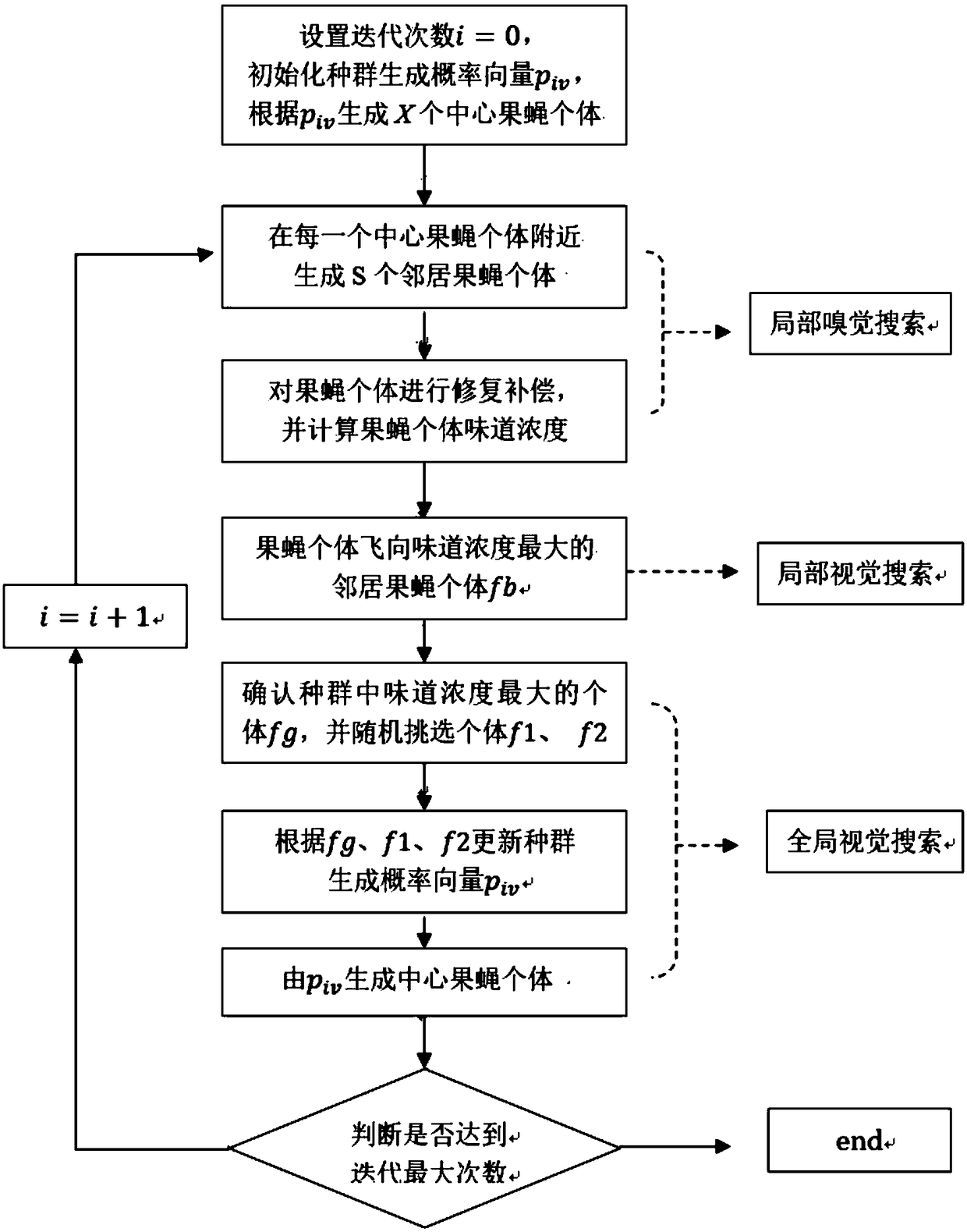

Edge collaboration cache arrangement method based on drosophila optimization algorithm

Active | CN108848395A | Easy to implement | Optimal caching strategy | Artificial life | Selective content distribution | Video transmission | Parallel computing

The invention discloses an edge collaboration cache arrangement method based on the drosophila (fruit fly) optimization algorithm. The method comprises the following steps: (1) obtaining the popular video set and the user demand vector of an area from the historical request information of users in the area; (2) from the popular video set and the user demand vector, formulating an optimization problem whose objective is to maximize the total reduction in video transmission delay in the area, and solving it with the drosophila optimization algorithm to generate a cache arrangement decision; (3) assigning video caching tasks to each cache node according to the cache arrangement decision; and (4) when a user request arrives at a cache node that does not cache the requested content, downloading the content from the neighboring cache node that caches it with the minimum delay, or, if no cache node in the area holds the content, downloading it from a remote server. The method can improve the cache hit rate, reduce the average video transmission delay, and improve the user's quality of experience.

Owner:SOUTHEAST UNIV
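
The fruit fly optimization that produces the arrangement is not reproduced here. The sketch assumes the cache arrangement decision already exists as a dictionary and illustrates only the request-serving rule in step (4); the function name, node names, and delays are hypothetical.

```python
def serve_request(video, node, placement, delay, remote_delay):
    """Step (4): serve locally, else from the lowest-delay neighbor holding the video, else remotely.

    placement: node -> set of cached video IDs (the cache arrangement decision)
    delay: (from_node, to_node) -> transmission delay between edge nodes
    """
    if video in placement[node]:
        return node, 0.0
    holders = [n for n, videos in placement.items() if n != node and video in videos]
    if holders:
        best = min(holders, key=lambda n: delay[(node, n)])
        return best, delay[(node, best)]
    return "remote", remote_delay
```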

Read-write solution for tens of millions of small file data

Inactive | CN104375782A | Improve cache utilization | Improve access performance | Input/output to record carriers | Memory addressing/allocation/relocation | Disk array | Metadata server

The invention provides a read-write solution for tens of millions of small files. When small files are stored, large contiguous regions of disk space are created to hold them, so that logically contiguous data are stored in contiguous space on the disk array as far as possible. The disk space is divided into blocks of 64 KB each. The basic idea is that each small file is stored within a single block and never spans two blocks; each folder can own one or more blocks, which store only that folder's data; and each file's data are stored in contiguous disk space. Compared with the prior art, logically contiguous data are stored on contiguous space of each physical disk as far as possible, caching takes over the role of a metadata server, and cache utilization is improved through simplified file information nodes, which improves small-file access performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
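
The block layout can be sketched as an in-memory allocator. The 64 KB block size, the one-block-per-file rule, and per-folder blocks come from the abstract; first-fit placement within a folder's blocks and the `(block_id, offset, size)` location format are assumptions, and no disk I/O is modeled.

```python
BLOCK_SIZE = 64 * 1024  # 64 KB blocks, as in the abstract


class FolderBlockAllocator:
    """Places small files in per-folder 64 KB blocks; a file never spans two blocks."""

    def __init__(self):
        self.next_block = 0
        self.folder_blocks = {}  # folder -> list of [block_id, bytes_used]
        self.locations = {}      # "folder/name" -> (block_id, offset, size)

    def store(self, folder: str, name: str, size: int):
        if size > BLOCK_SIZE:
            raise ValueError("file does not fit in a single block")
        blocks = self.folder_blocks.setdefault(folder, [])
        for blk in blocks:                      # first fit among this folder's blocks
            if BLOCK_SIZE - blk[1] >= size:
                break
        else:                                   # no room: give the folder a new block
            blk = [self.next_block, 0]
            self.next_block += 1
            blocks.append(blk)
        location = (blk[0], blk[1], size)
        blk[1] += size                          # file bytes stay contiguous in the block
        self.locations[f"{folder}/{name}"] = location
        return location
```

Because the location table is small and fixed-format, it is the kind of compact file information node that can be kept entirely in cache.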

Cache management method and device

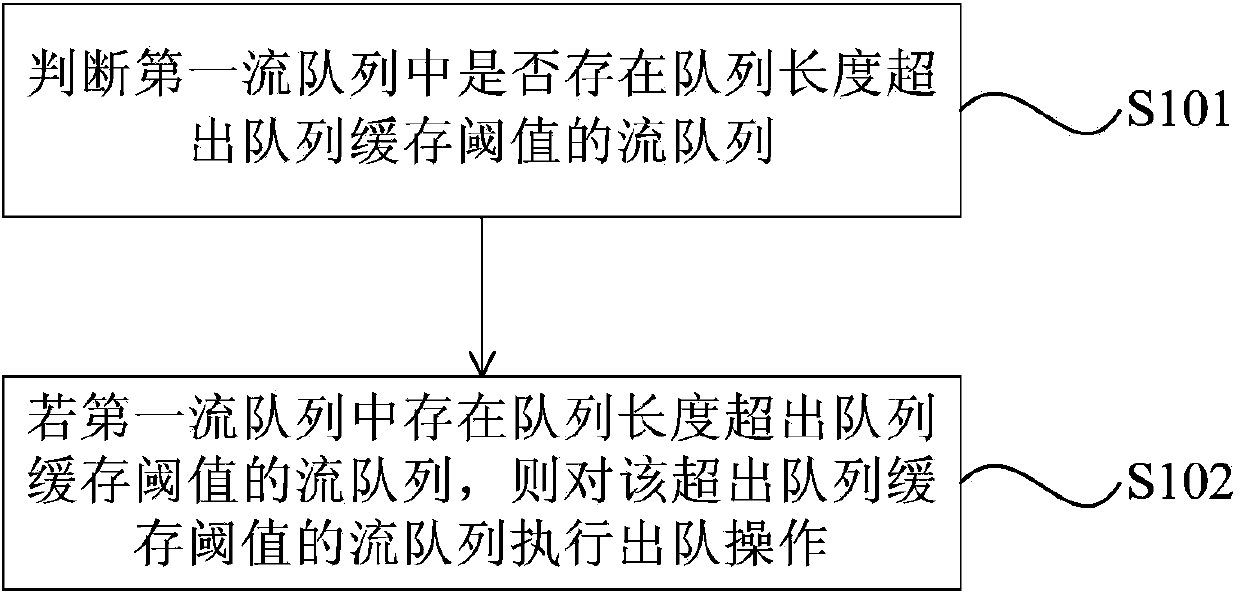

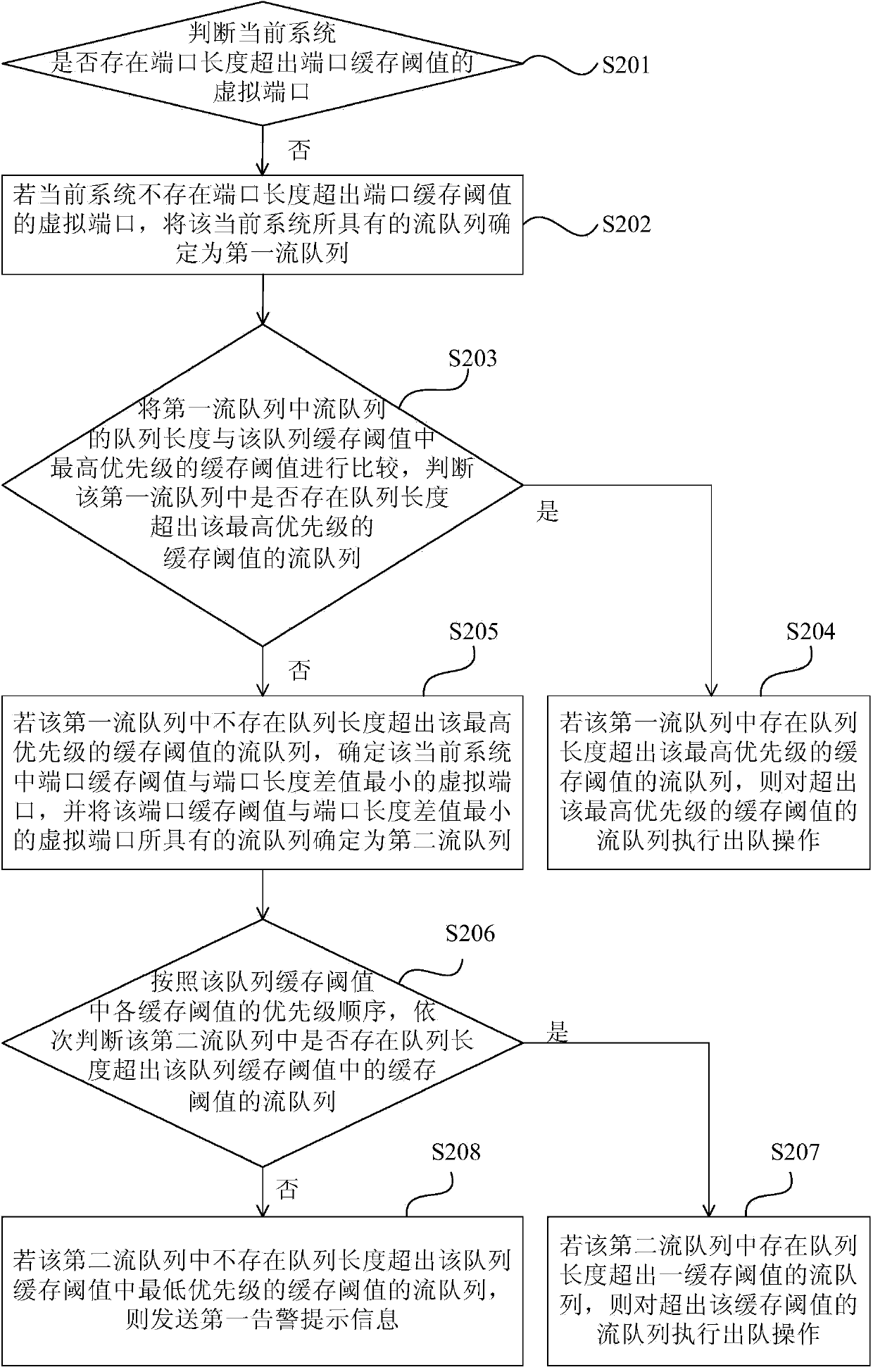

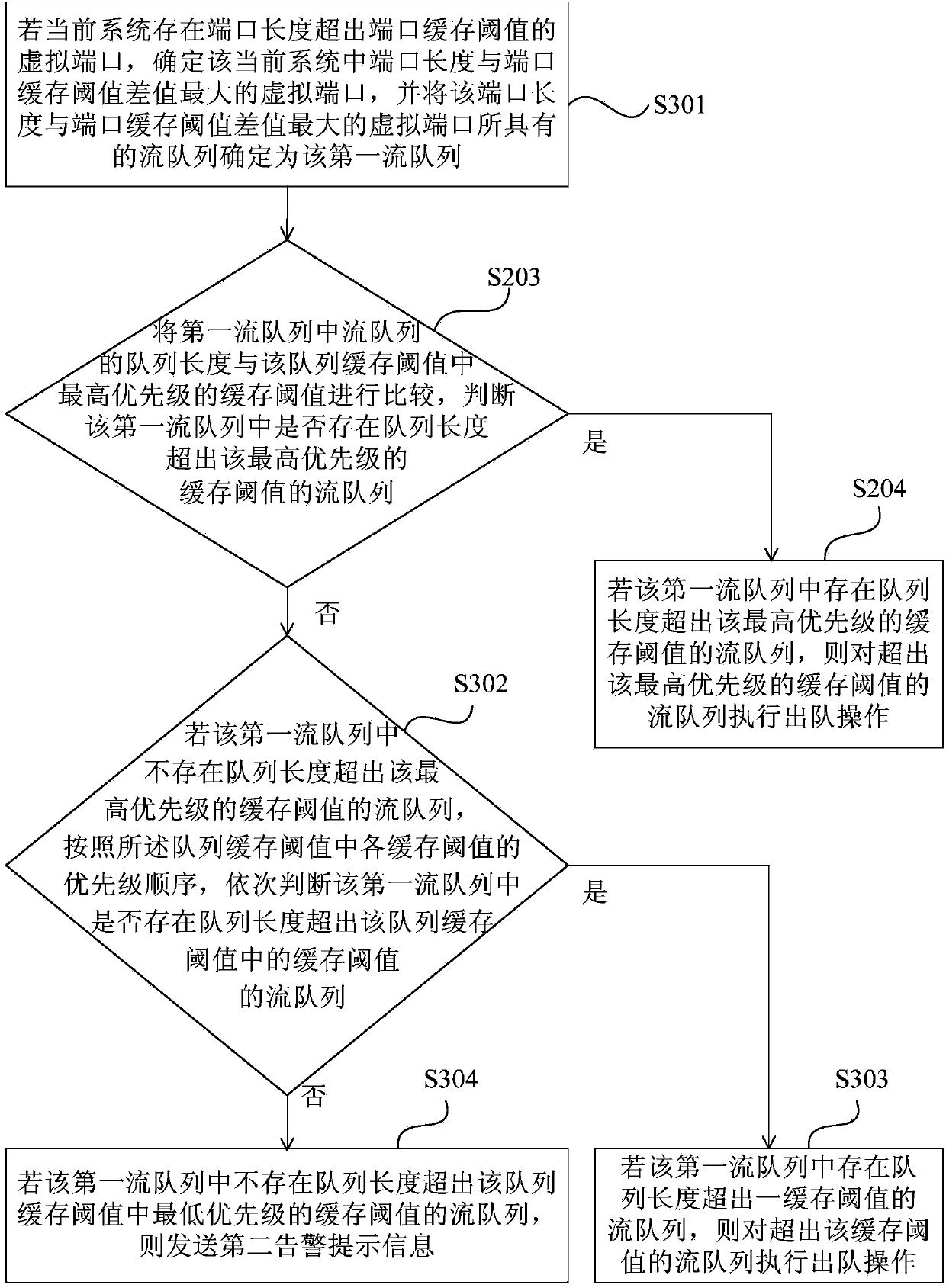

Inactive | CN103685062A | Improve cache utilization | Data switching networks | Cache management | Distributed computing

An embodiment of the invention provides a cache management method and device. The method includes: judging whether any of the first stream queues has a queue length exceeding the queue cache threshold; and, if so, performing a dequeue operation on each stream queue that exceeds the threshold. The method and device can increase cache utilization.

Owner:HUAWEI TECH CO LTD
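
A minimal sketch of the threshold check, assuming each stream queue is a `deque` and that dequeued packets are handed back to the caller for transmission (what happens to them next is not specified in the abstract).

```python
from collections import deque


def dequeue_over_threshold(stream_queues, threshold):
    """Dequeue from every stream queue whose length exceeds `threshold`.

    stream_queues: stream ID -> deque of packets. Returns stream ID -> dequeued packets.
    """
    dequeued = {}
    for stream_id, q in stream_queues.items():
        taken = []
        while len(q) > threshold:
            taken.append(q.popleft())
        if taken:
            dequeued[stream_id] = taken
    return dequeued
```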

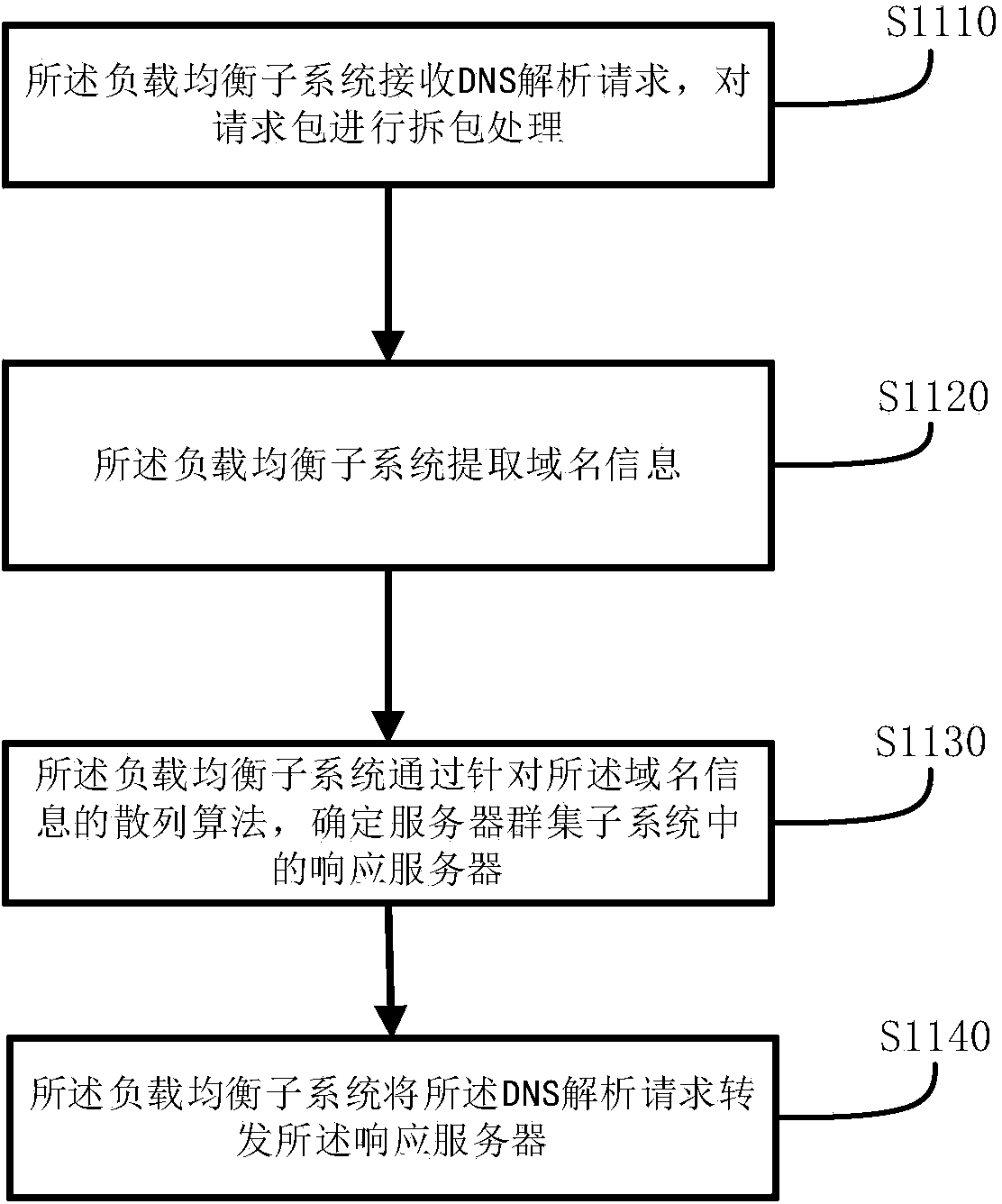

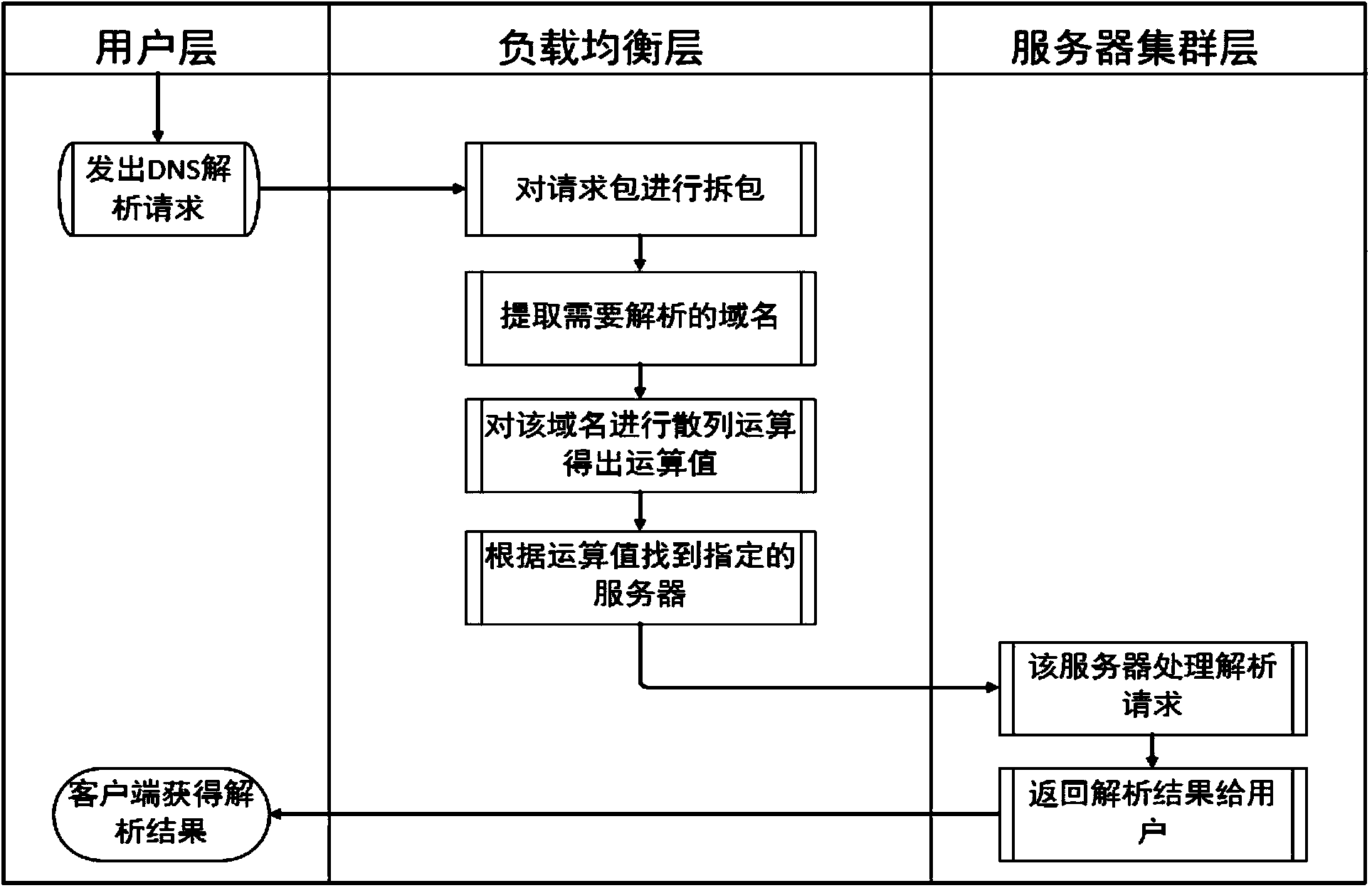

DNS (Domain Name System) load balancing adjusting method and system

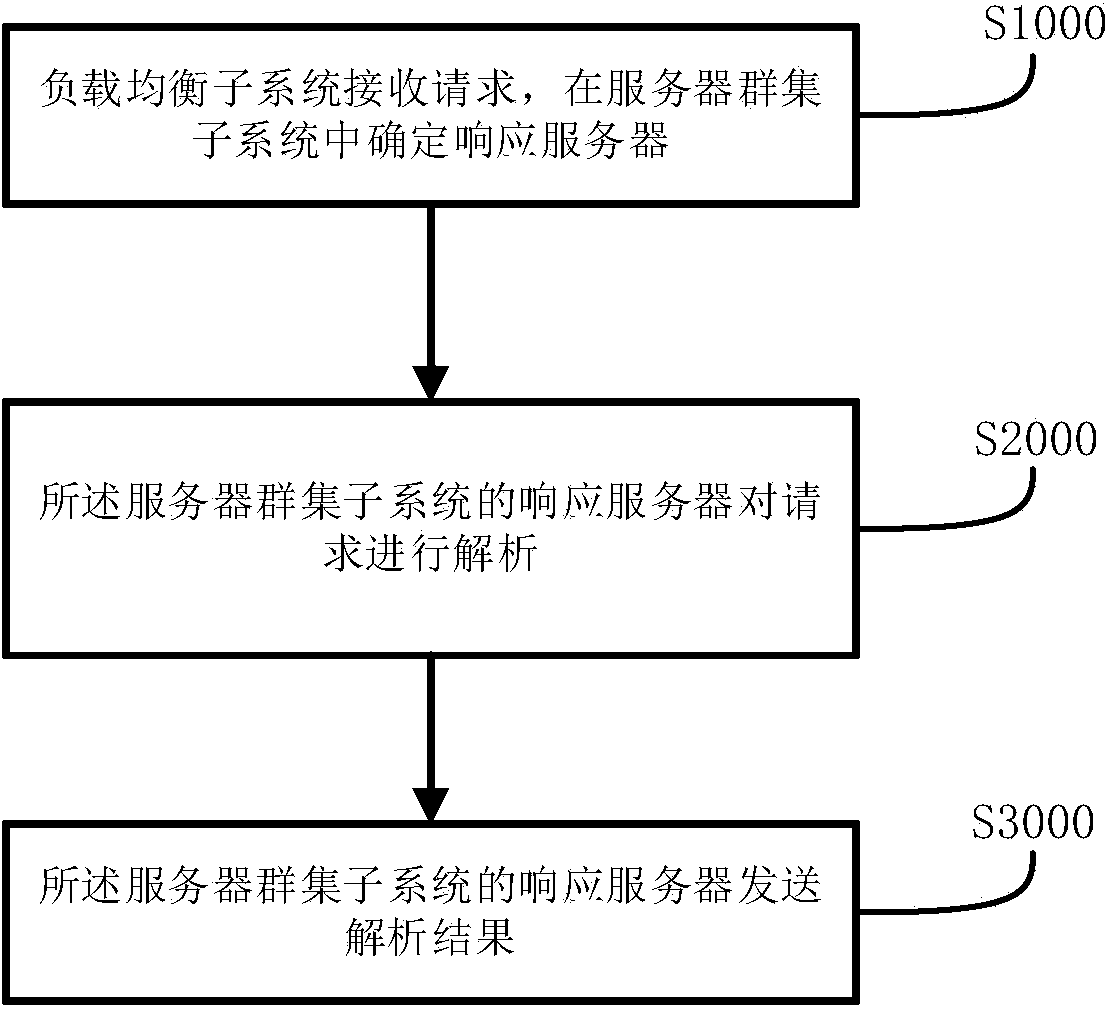

Active | CN104079668A | Reduce the number of recursions | Improve compression performance | Transmission | Distributed computing | Domain Name System

A DNS (Domain Name System) load balancing adjustment method includes the following steps: step S1000, a load balancing subsystem receives a request and determines which server in a server cluster subsystem will respond; step S2000, the responding server of the server cluster subsystem resolves the request; step S3000, the responding server sends the resolution result. The method and system can improve the scheduling and balancing capability of a DNS system.

Owner:QINGYUAN CHUQU INTELLIGENT TECH CO LTD

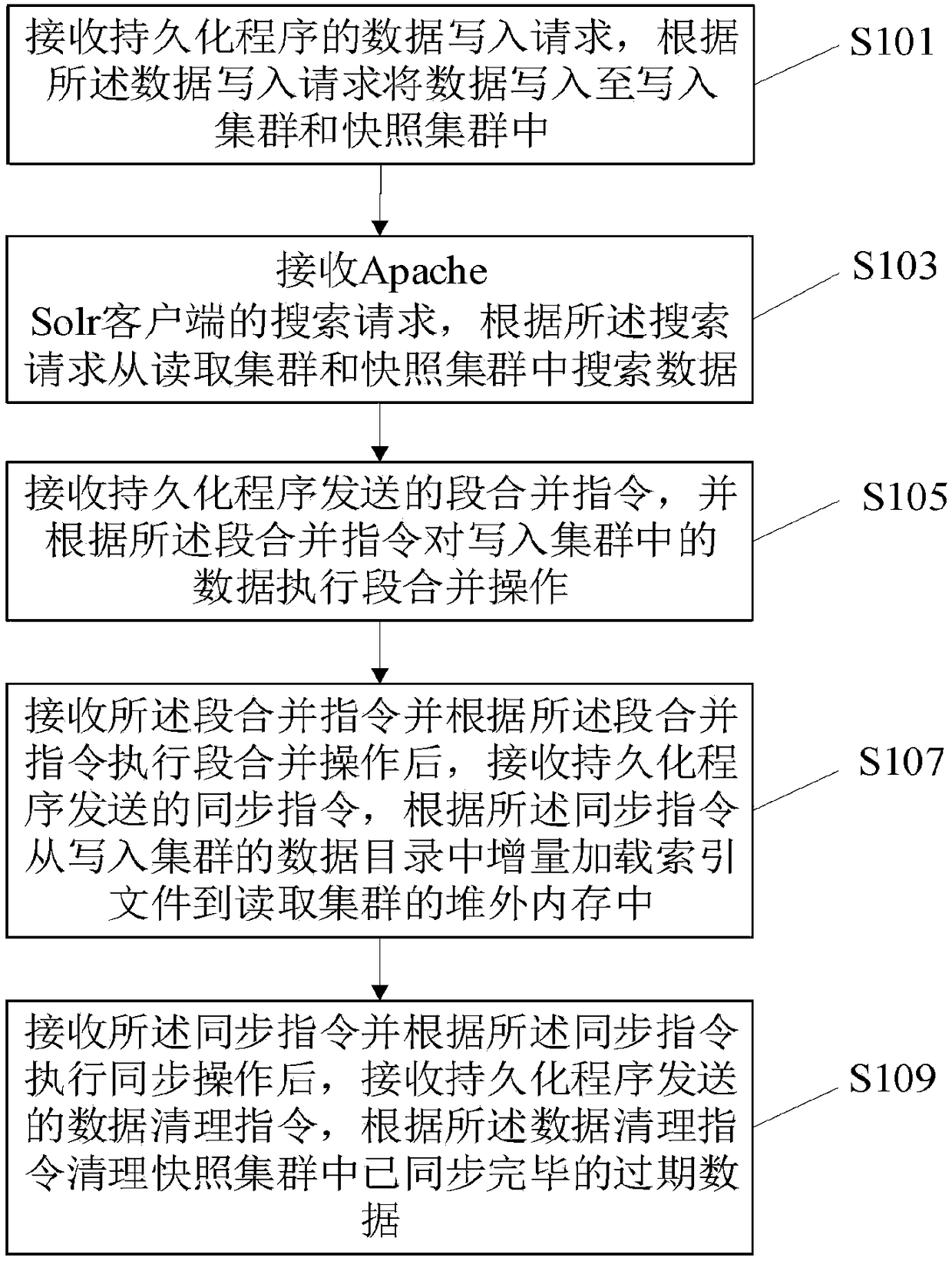

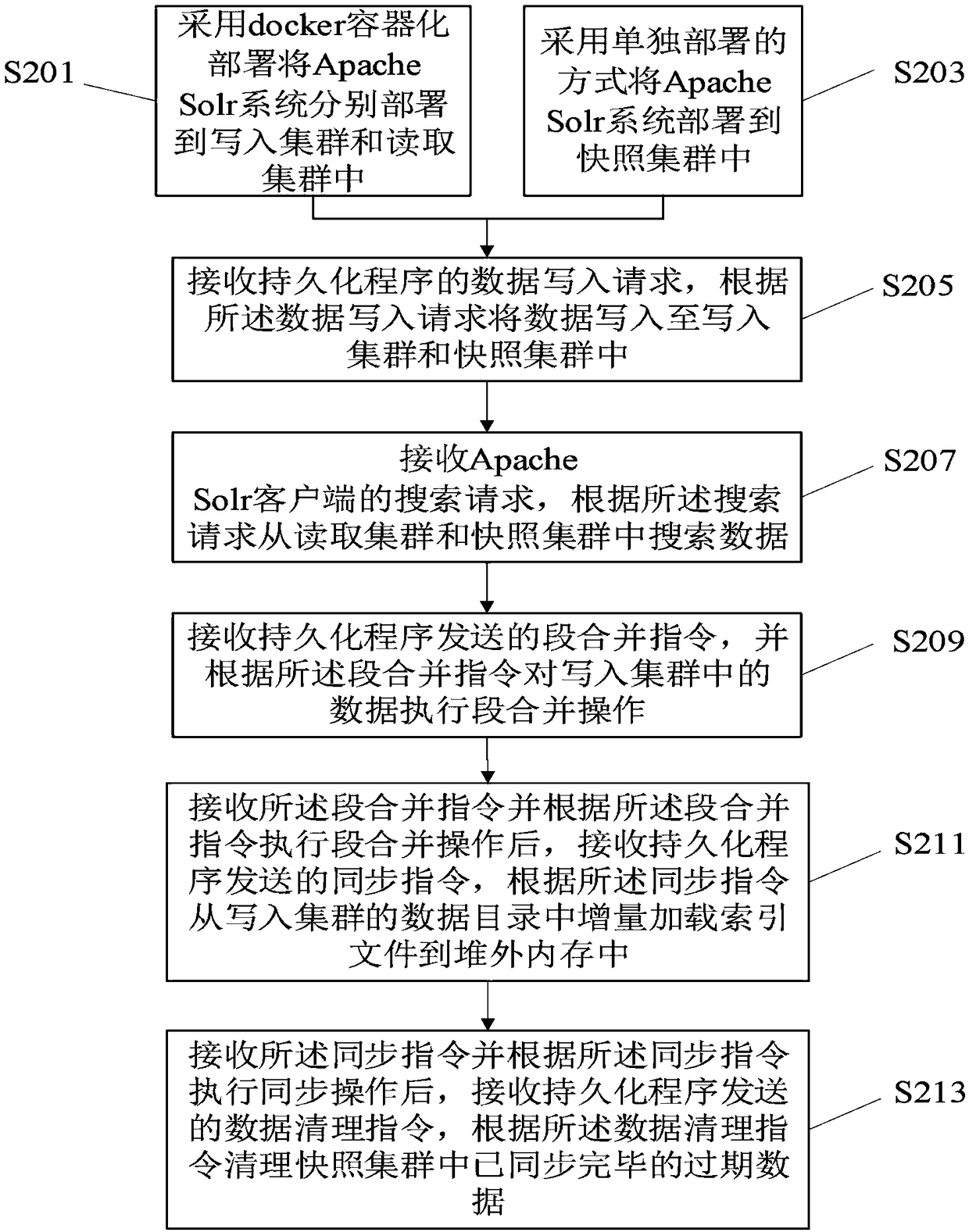

Apache Solr read-write separation method and apparatus

Active | CN108763572A | Improve cache utilization | Guaranteed uptime | Resource allocation | Software simulation/interpretation/emulation | Data synchronization | Database

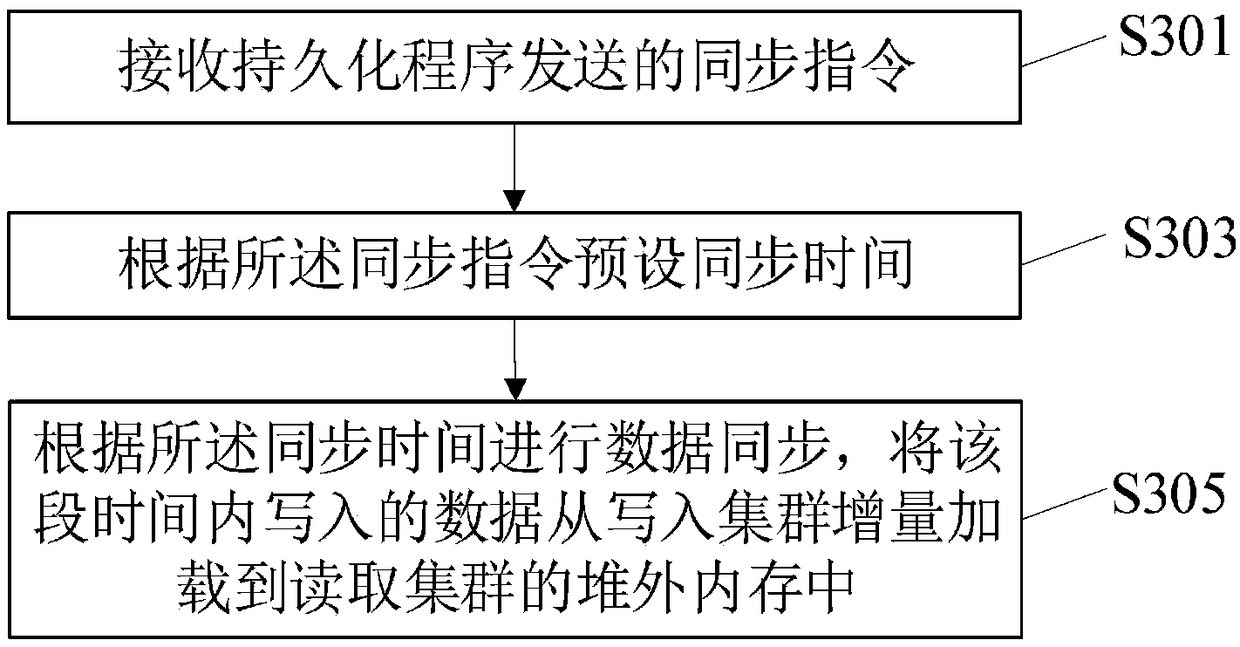

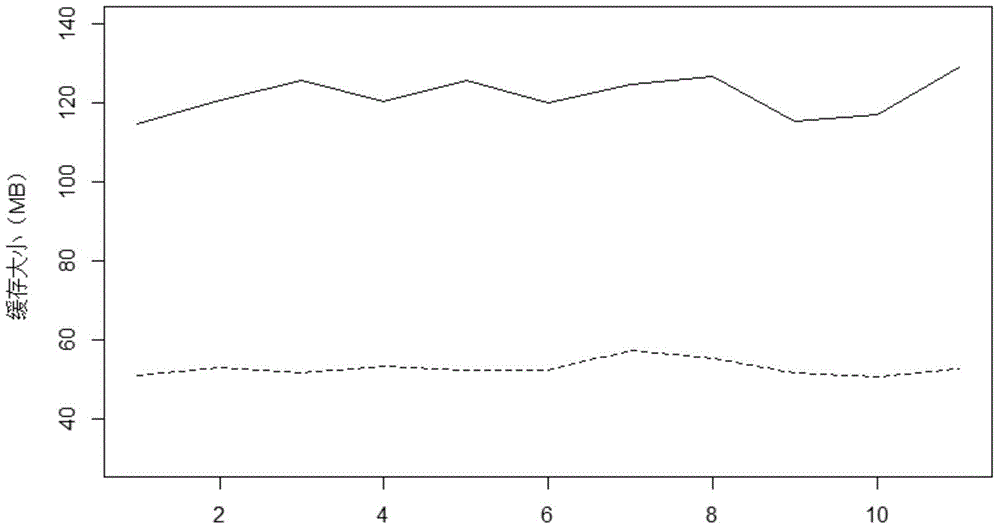

The invention relates to an Apache Solr read-write separation method and apparatus, a computer device, and a storage medium. The method comprises: receiving a data write request from a persistence program and writing the data into a write cluster and a snapshot cluster; receiving a search request from an Apache Solr client and searching for the data in a read cluster and the snapshot cluster; receiving a segment merge instruction from the persistence program and merging segments of the data in the write cluster; receiving a synchronization instruction from the persistence program and incrementally loading index files from the data directory of the write cluster into the off-heap memory of the read cluster; and receiving a data cleaning instruction from the persistence program and removing synchronized, expired data from the snapshot cluster. The method separates read and write operations, which avoids contention for system resources and keeps the server running normally; and because segment merging completes before data synchronization, system crashes caused by segment merging during synchronization are avoided.

Owner:HUNAN ANTVISION SOFTWARE
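
A toy model of the routing between the three clusters. Plain dictionaries stand in for the write, read, and snapshot clusters; this is not the Solr API, segment merging is omitted, and the class and method names are hypothetical.

```python
class ReadWriteSeparatedIndex:
    """Toy model: dicts stand in for the write, read, and snapshot clusters."""

    def __init__(self):
        self.write_cluster = {}
        self.read_cluster = {}
        self.snapshot_cluster = {}
        self.synced = set()

    def write(self, doc_id, doc):
        # Writes land in the write cluster and, for immediate visibility, the snapshot cluster.
        self.write_cluster[doc_id] = doc
        self.snapshot_cluster[doc_id] = doc

    def search(self, predicate):
        # Reads never touch the write cluster: read cluster plus not-yet-synced snapshot docs.
        merged = {**self.read_cluster, **self.snapshot_cluster}
        return sorted(doc_id for doc_id, doc in merged.items() if predicate(doc))

    def synchronize(self):
        # Incremental load: copy only documents the read cluster has not received yet.
        for doc_id, doc in self.write_cluster.items():
            if doc_id not in self.synced:
                self.read_cluster[doc_id] = doc
                self.synced.add(doc_id)

    def clean_snapshot(self):
        # Drop snapshot entries that are now served by the read cluster.
        for doc_id in list(self.snapshot_cluster):
            if doc_id in self.synced:
                del self.snapshot_cluster[doc_id]
```

The snapshot cluster keeps new documents searchable between synchronizations, while the read cluster stays free of write load.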

Caching clearing method and device

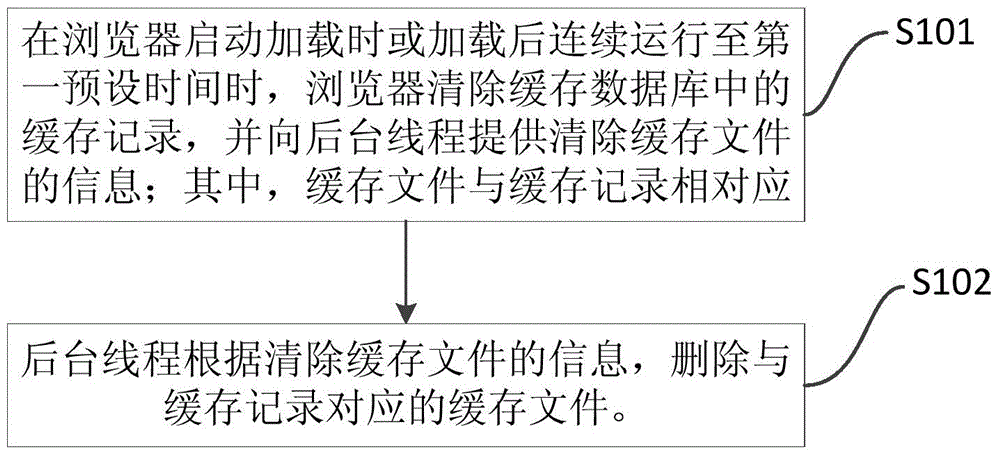

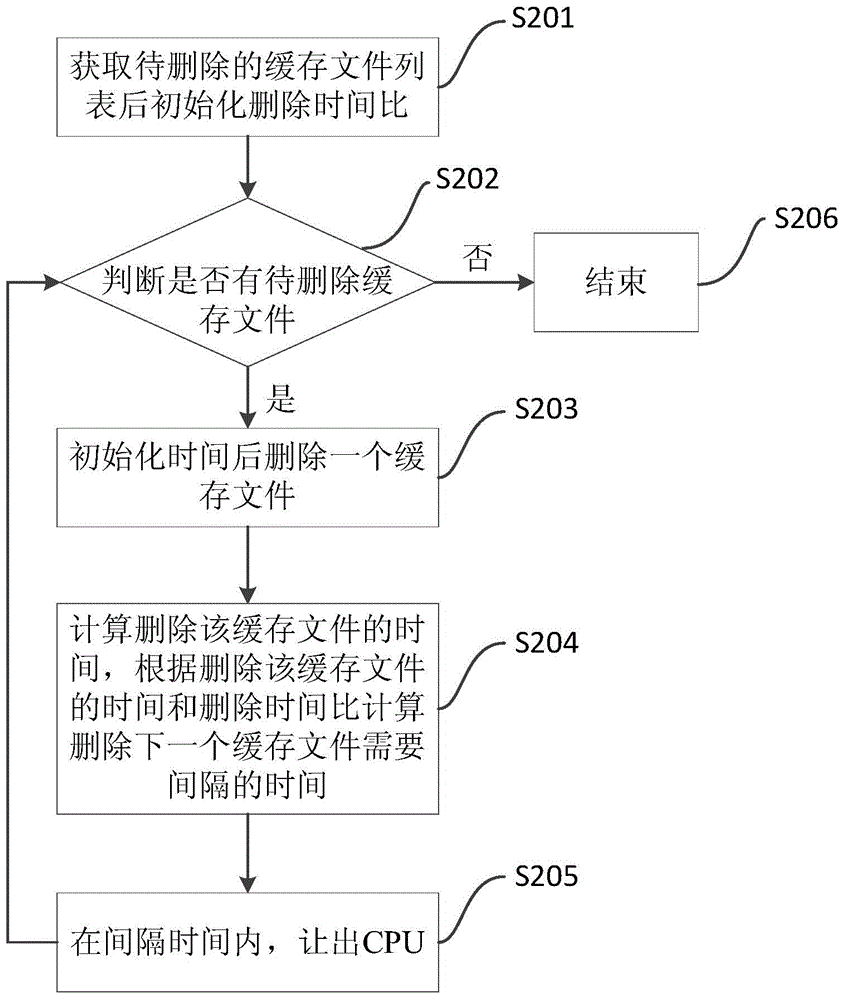

Inactive | CN105447037A | Save storage space | Improve cache utilization | Special data processing applications | Web page | Data library

The invention provides a cache clearing method and device. The method comprises: when the browser starts loading, or when loading has run continuously for a first preset time, the browser clears cache records in the cache database and passes information about the cache files corresponding to the cleared records to a background thread; the background thread then deletes those cache files according to that information. The method and device increase cache utilization and save the user's storage space; and because the cache is cleared with an improved cache-file deletion algorithm, CPU occupancy time is shortened, stuttering while the user browses web pages is avoided, and the browsing experience is improved.

Owner:UCWEB
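
The split between a fast record-clearing step and deferred file deletion can be sketched with a worker thread and a queue. A dictionary stands in for the cache database and a `None` sentinel stops the worker; these, and the function names, are assumptions for illustration.

```python
import os
import queue
import threading


def clear_cache_records(records: dict, delete_queue: "queue.Queue") -> None:
    """Browser-thread step: drop the database records quickly, defer file deletion."""
    paths = list(records.values())
    records.clear()
    for path in paths:
        delete_queue.put(path)


def deletion_worker(delete_queue: "queue.Queue") -> None:
    """Background-thread step: delete cache files until a None sentinel arrives."""
    while True:
        path = delete_queue.get()
        if path is None:
            break
        try:
            os.remove(path)
        except FileNotFoundError:
            pass  # already gone; nothing to do


def start_deleter(delete_queue: "queue.Queue") -> threading.Thread:
    worker = threading.Thread(target=deletion_worker, args=(delete_queue,), daemon=True)
    worker.start()
    return worker
```

The browser thread only does in-memory work, so slow file-system deletes no longer stall page rendering.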

Cache management policy and corresponding device

Active | US8732409B2 | Reduce in quantity | Shorten the time | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Data element

Owner:ENTROPIC COMM INC

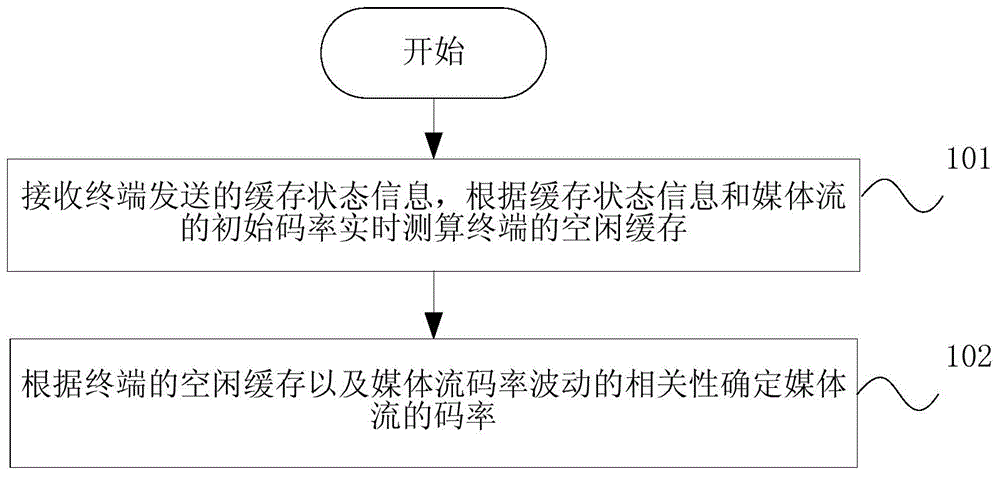

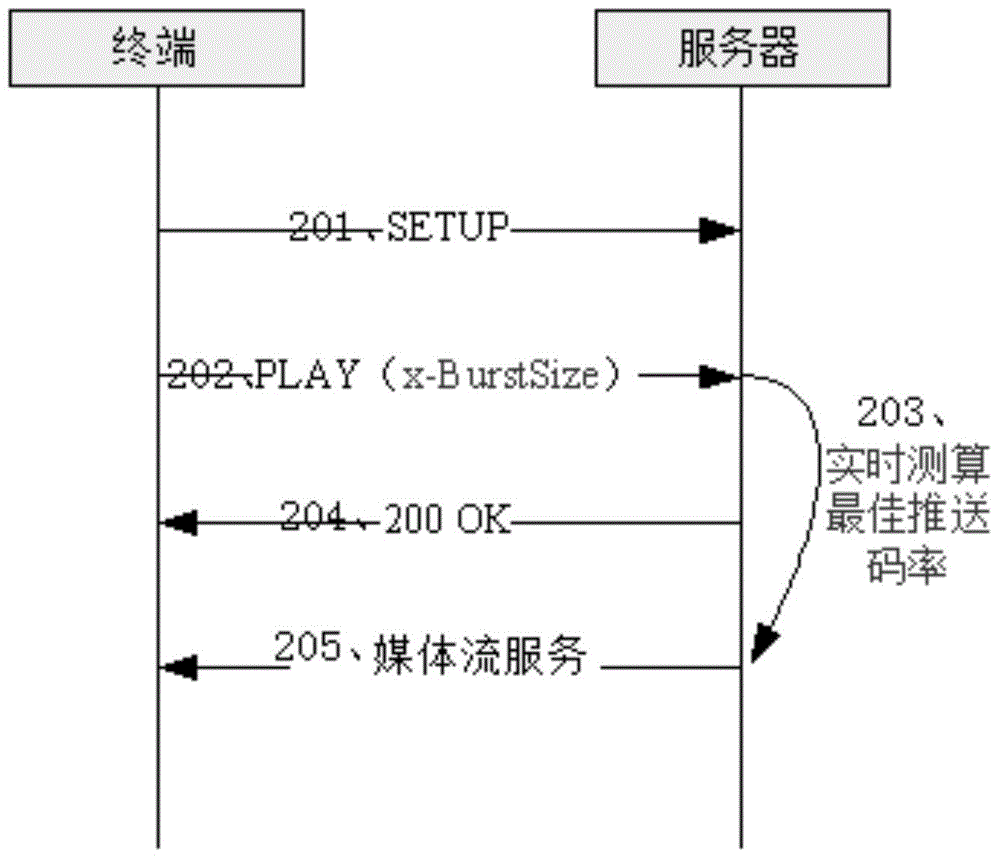

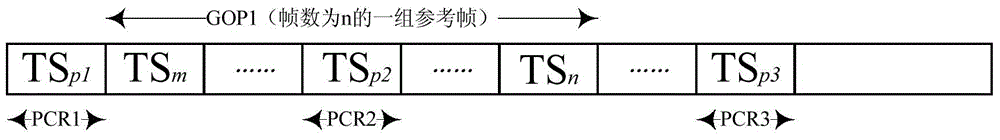

Streaming media code rate control method, streaming media code rate control system and streaming media server

Inactive | CN105338376A | Improve experience | Relieve pressure | Selective content distribution | Control system | Video transmission

The invention discloses a streaming media code rate control method, a streaming media code rate control system, and a streaming media server. Cache state information sent by a terminal is received, and the terminal's idle cache is calculated in real time from the cache state information and the initial code rate (bit rate) of the media stream; the bit rate of the media stream is then determined from the terminal's idle cache and the correlation of the stream's bit-rate fluctuation. Because the bit rate is determined by calculating the terminal's idle cache and the stream fluctuation correlation in real time, overflow of the stream cache is prevented, cache utilization is further improved, and the stream is smoothed. This effectively reduces the pressure that instantaneous peak bit rates place on the transmission network and server bandwidth, guarantees video transmission quality, and improves user experience.

Owner:CHINA TELECOM CORP LTD
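
A sketch of choosing a bit rate from the terminal's idle cache. The rule is an assumption, not the patent's formula: pick the highest rate on a hypothetical bit-rate ladder whose worst-case burst over the next interval, inflated by a fluctuation factor, still fits in the idle buffer.

```python
def idle_buffer(capacity_bytes: int, buffered_bytes: int) -> int:
    """Free space in the terminal's playout buffer, from its reported cache state."""
    return max(0, capacity_bytes - buffered_bytes)


def choose_bitrate(ladder_bps, idle_bytes, interval_s, fluctuation):
    """Highest ladder bit rate whose worst-case burst over `interval_s` fits the idle buffer.

    `fluctuation` is the assumed relative peak above the nominal rate (0.25 = +25%).
    """
    fitting = [r for r in sorted(ladder_bps)
               if r * (1 + fluctuation) * interval_s / 8 <= idle_bytes]
    return fitting[-1] if fitting else min(ladder_bps)
```

With 400,000 idle bytes, a 2-second interval, and 25% fluctuation, 1 Mbit/s fits (312,500 bytes) but 2 Mbit/s (625,000 bytes) would overflow the terminal buffer.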

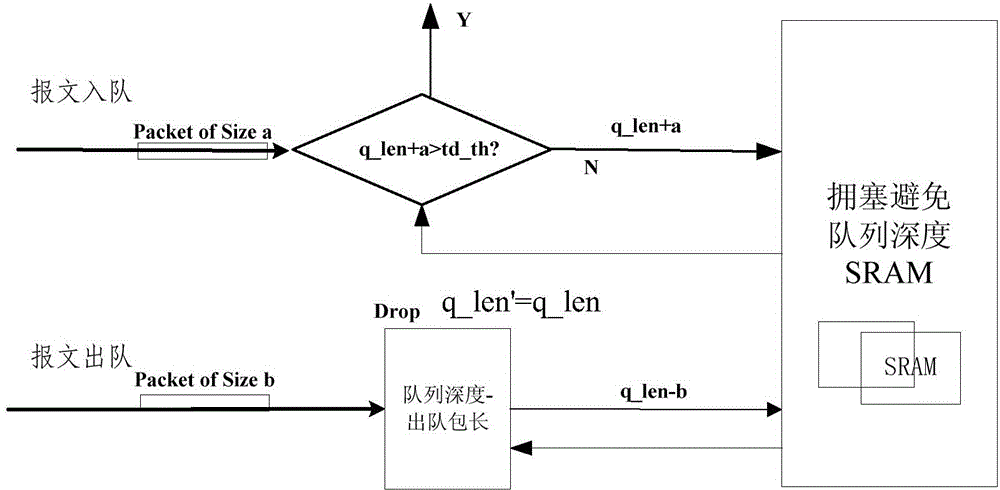

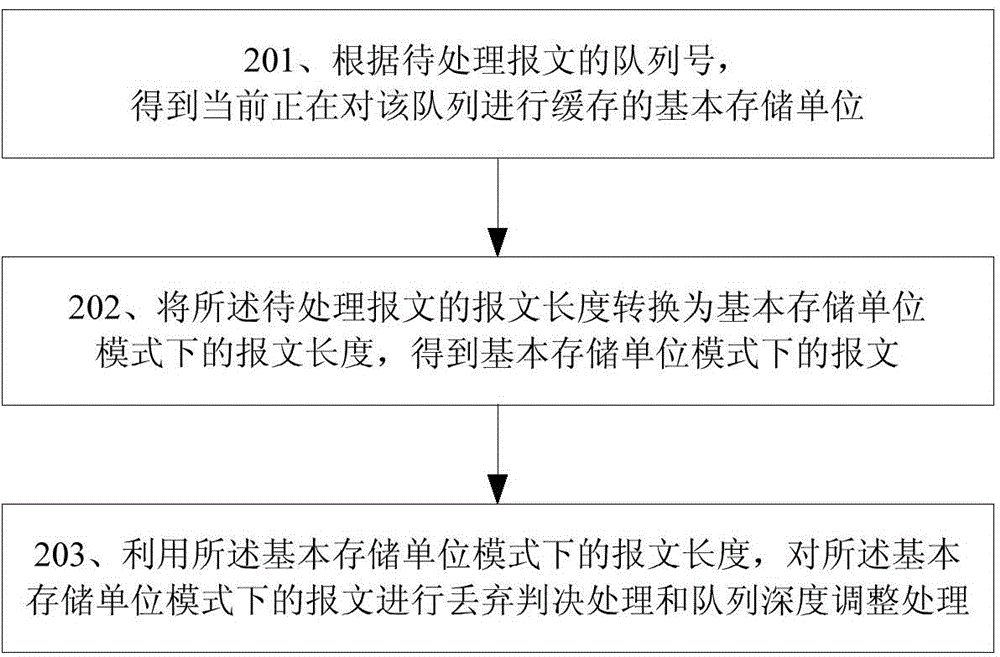

Congestion avoiding method and apparatus of router

Inactive | CN104426796A | Solve the problem of mutual preemption of cache space | Congestion effective | Data switching networks | Distributed computing | Whole systems

Disclosed are a router congestion avoidance method and apparatus in the field of network transmission control. The method comprises: obtaining, according to the queue number of a packet to be processed, the basic storage unit currently used to buffer that queue; converting the packet length into a length expressed in basic storage units; and using that unit-based length to perform discard decision processing and queue depth adjustment for the packet. The invention keeps the maintained queue depth equal to the actual depth of the queue stored in the buffer, which effectively improves congestion avoidance performance and the buffer utilization of the whole system.

Owner:ZTE CORP
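
Accounting in storage units can be sketched with a ceiling division. A simple tail-drop limit stands in for the patent's discard decision (which could, for instance, be RED-based); the class name and the unit and limit values are hypothetical.

```python
def to_units(packet_len: int, unit_size: int) -> int:
    """Packet length expressed in basic storage units (ceiling division)."""
    return -(-packet_len // unit_size)


class UnitAccountedQueue:
    """Queue whose depth is tracked in storage units, matching what the buffer actually holds."""

    def __init__(self, unit_size: int, max_units: int):
        self.unit_size = unit_size
        self.max_units = max_units
        self.depth_units = 0

    def enqueue(self, packet_len: int) -> bool:
        units = to_units(packet_len, self.unit_size)
        if self.depth_units + units > self.max_units:   # discard decision in units
            return False
        self.depth_units += units
        return True

    def dequeue(self, packet_len: int) -> None:
        self.depth_units -= to_units(packet_len, self.unit_size)
```

With 64-byte units, a 100-byte and a 64-byte packet total only 164 bytes but occupy 3 units; counting bytes would understate the real buffer usage.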

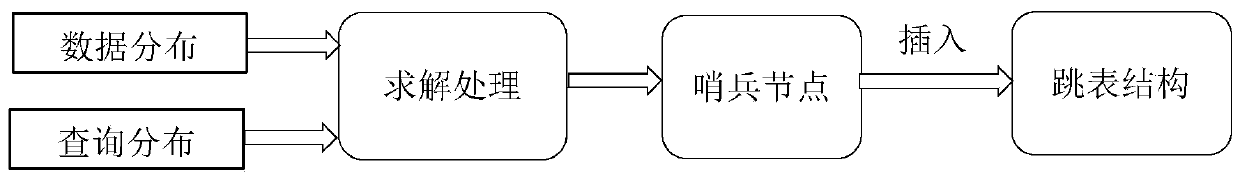

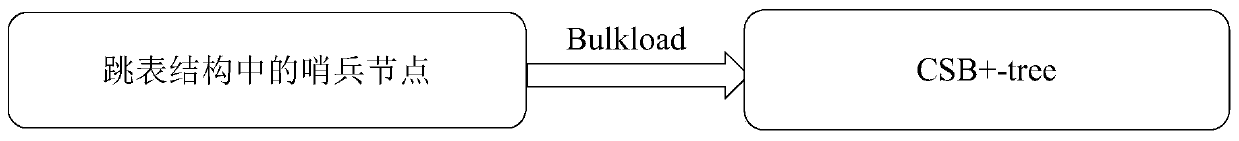

Efficient novel memory index structure processing method

Active | CN110597805A | Increase height | Increase profit | Special data processing applications | Database indexing | Skip list | Sentinel node

The invention discloses an efficient novel in-memory index structure processing method. The method comprises: before skip list processing, computing the query distribution and data distribution from statistical information; selecting the sentinel nodes to insert into the skip list structure, with the optimal sentinel node configuration obtained by minimizing the average operation cost of the skip list after insertion; inserting the sentinel nodes into the bottom-layer skip list structure and, once all of them are inserted, building an upper-layer CSB+ tree bottom-up with a bulkload method so that sentinel nodes can be located quickly; and, for each piece of data to be queried or inserted, finding the nearest sentinel node through the upper-layer CSB+ tree and starting the skip list operation from that node. While keeping the advantages of a traditional skip list, such as simple implementation, good concurrency, and suitability for range queries, the method improves cache utilization throughout the operation process and thus significantly improves in-memory index performance.

Owner:ZHEJIANG UNIV
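
The "start from the nearest sentinel" idea can be sketched on the bottom level alone. Simplifications (all assumptions): sentinels are every `every`-th key rather than a cost-optimized configuration, upper skip-list levels are omitted, and a sorted array searched with `bisect` stands in for the upper-layer CSB+ tree.

```python
import bisect


class Node:
    __slots__ = ("key", "next")

    def __init__(self, key, nxt=None):
        self.key = key
        self.next = nxt


class SentinelIndexedList:
    """Bottom-level sorted linked list with sentinel nodes reachable through a small index."""

    def __init__(self, sorted_keys, every=4):
        self.head = Node(float("-inf"))
        self.sentinel_keys = [self.head.key]
        self.sentinels = [self.head]
        prev = self.head
        for i, key in enumerate(sorted_keys):
            node = Node(key)
            prev.next = node
            prev = node
            if i % every == 0:            # promote this node to a sentinel
                self.sentinel_keys.append(key)
                self.sentinels.append(node)
        self.last_steps = 0

    def _nearest_sentinel(self, key):
        """Rightmost sentinel whose key is <= `key` (always exists: the head is -inf)."""
        return self.sentinels[bisect.bisect_right(self.sentinel_keys, key) - 1]

    def contains(self, key) -> bool:
        node = self._nearest_sentinel(key)
        self.last_steps = 0
        while node.next is not None and node.next.key <= key:
            node = node.next
            self.last_steps += 1
        return node.key == key

    def insert(self, key) -> None:
        node = self._nearest_sentinel(key)
        while node.next is not None and node.next.key < key:
            node = node.next
        node.next = Node(key, node.next)
```

The index is a compact contiguous array, so the search that finds the sentinel touches few cache lines before the short list walk begins.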

DRAM/NVM hierarchical heterogeneous memory access method and system with software-hardware cooperative management

Active | US10248576B2 | Reduce access latency | Improve utilization | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer architecture | Term memory

Owner:HUAZHONG UNIV OF SCI & TECH

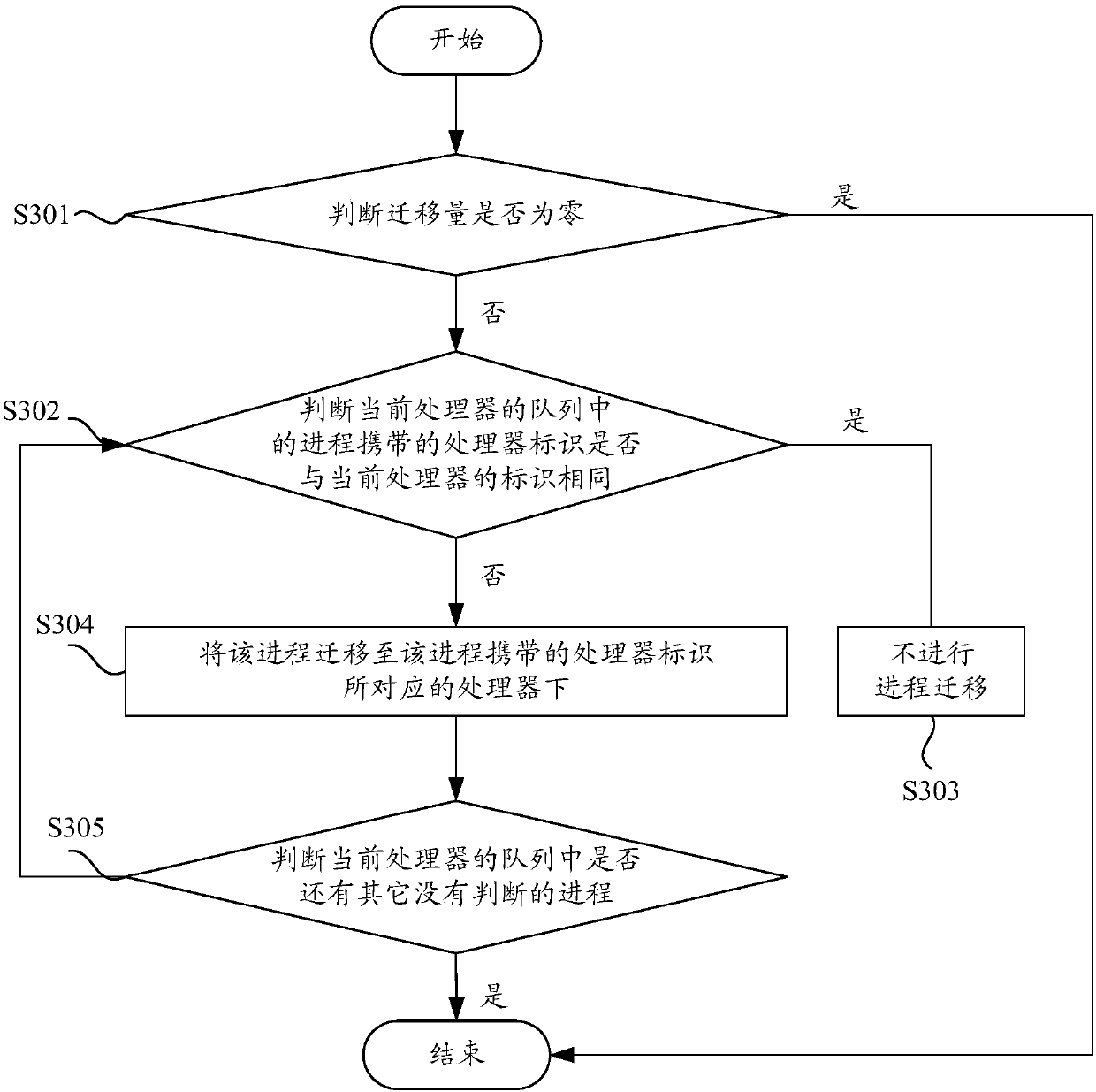

A load balancing method and device for a multi-core processor

Active | CN109840151A | Improve execution efficiency | Improve cache utilization | Program initiation/switching | Resource allocation | Operational system | Data placement

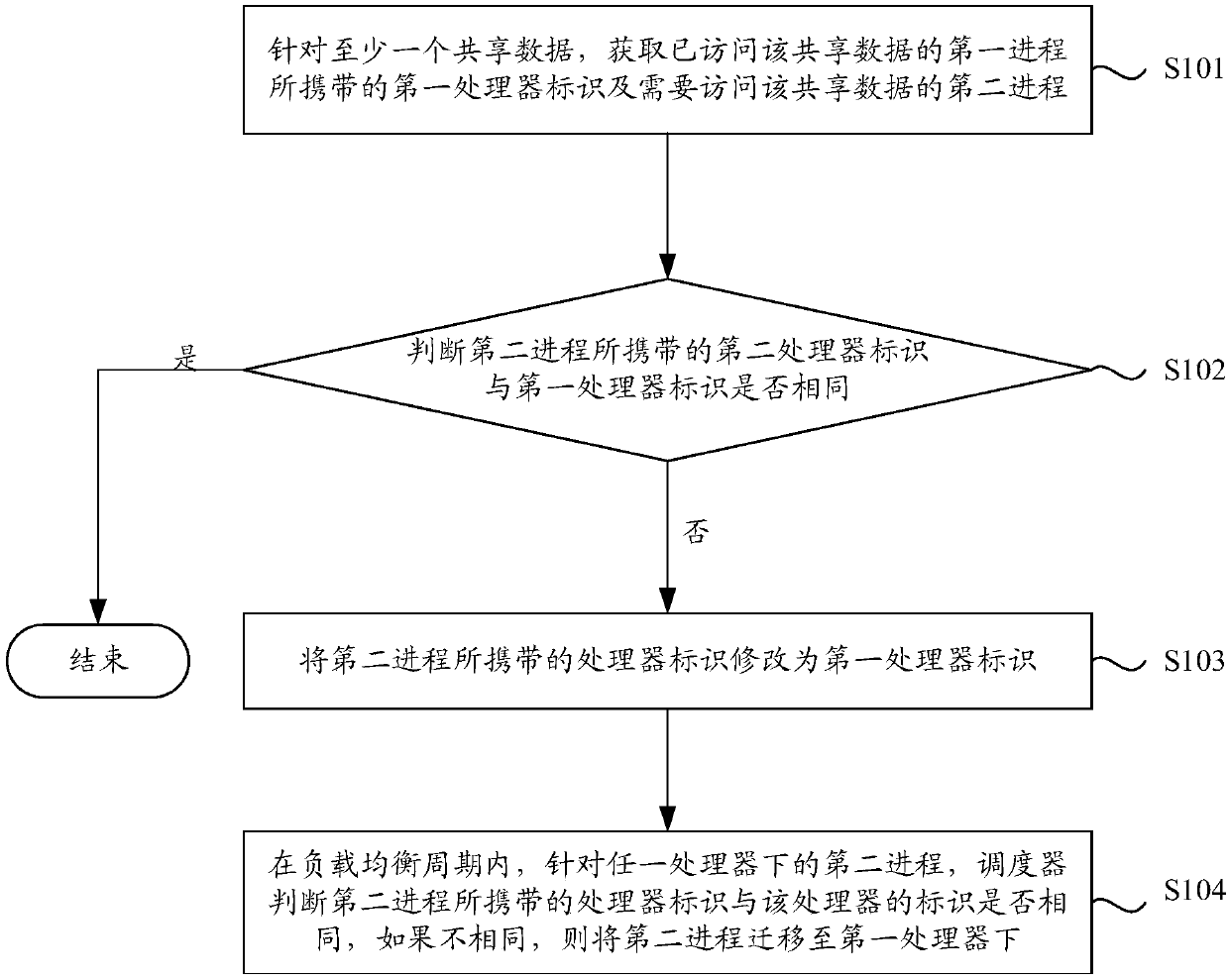

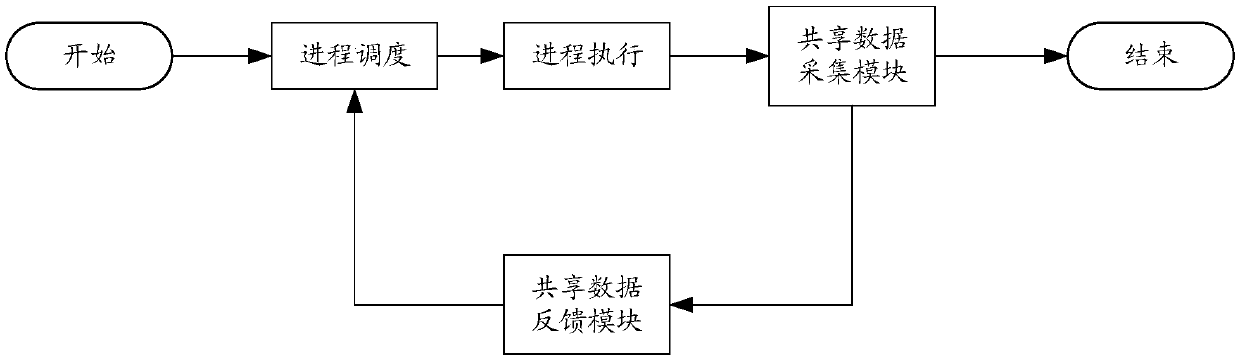

The embodiment of the invention relates to the field of computer technology, in particular to a load balancing method and device for a multi-core processor. The method comprises: for at least one piece of shared data, obtaining the first processor identifier carried by a first process that accesses the shared data, and identifying a second process that needs to access the shared data; judging whether the second processor identifier carried by the second process is the same as the first processor identifier and, if not, changing the processor identifier carried by the second process to the first processor identifier; and, in a load balancing period, migrating the second process to the first processor. In this way, multiple processes that share data can be migrated to the same processor, so the shared data need not be placed in each processor's separate cache but only in the cache of one processor, which increases the cache utilization of the operating system and improves process execution efficiency.

Owner:DATANG MOBILE COMM EQUIP CO LTD
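
A sketch of the retagging and migration steps, assuming processes and shared data are identified by plain IDs and that the balancing period simply places each process on the processor its tag names. The class is hypothetical, and a real scheduler would also weigh overall load before migrating.

```python
class SharedDataAffinity:
    """Tags processes that share data with the processor of the first accessor."""

    def __init__(self):
        self.data_cpu = {}   # shared data ID -> processor ID of the first accessing process
        self.proc_cpu = {}   # process ID -> processor ID carried by the process

    def access(self, pid: int, cpu: int, data_id: str) -> None:
        self.proc_cpu.setdefault(pid, cpu)
        first_cpu = self.data_cpu.setdefault(data_id, self.proc_cpu[pid])
        if self.proc_cpu[pid] != first_cpu:
            self.proc_cpu[pid] = first_cpu    # retag; actual migration waits for balancing

    def balance(self) -> dict:
        """Load-balancing period: place every process on the processor its tag names."""
        run_queues = {}
        for pid, cpu in sorted(self.proc_cpu.items()):
            run_queues.setdefault(cpu, []).append(pid)
        return run_queues
```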

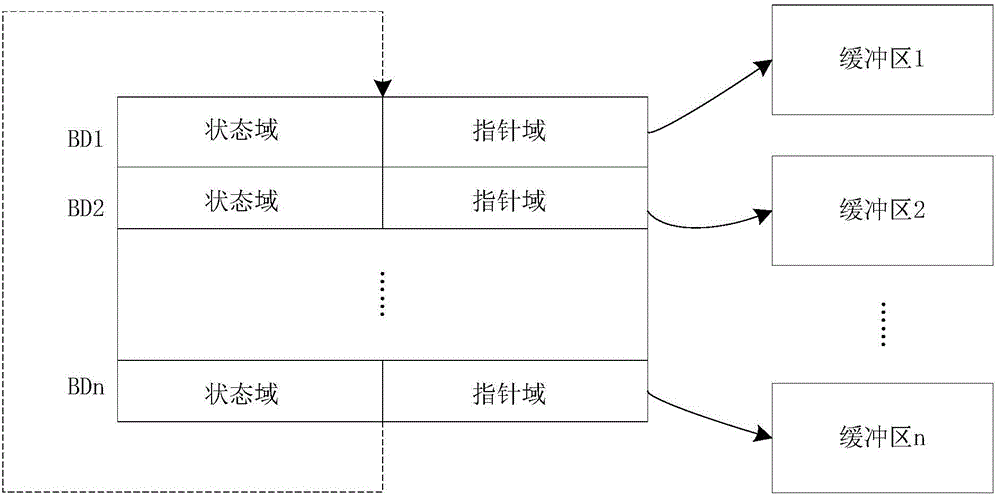

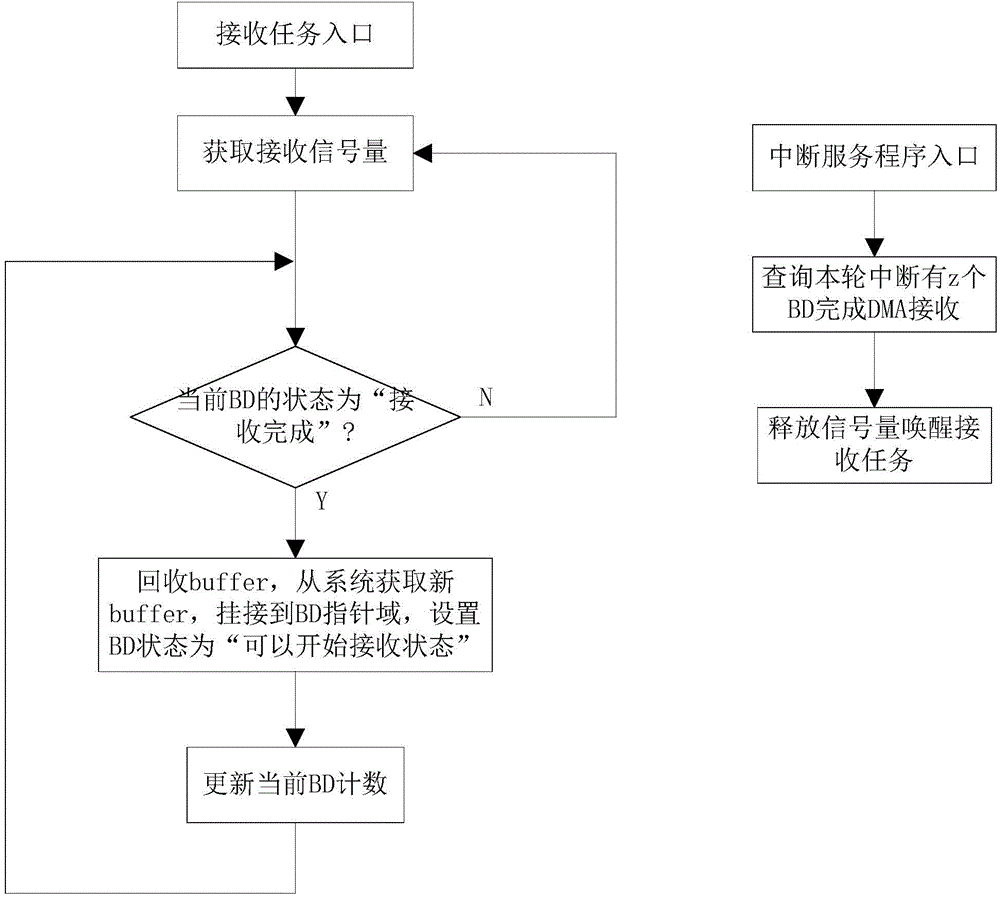

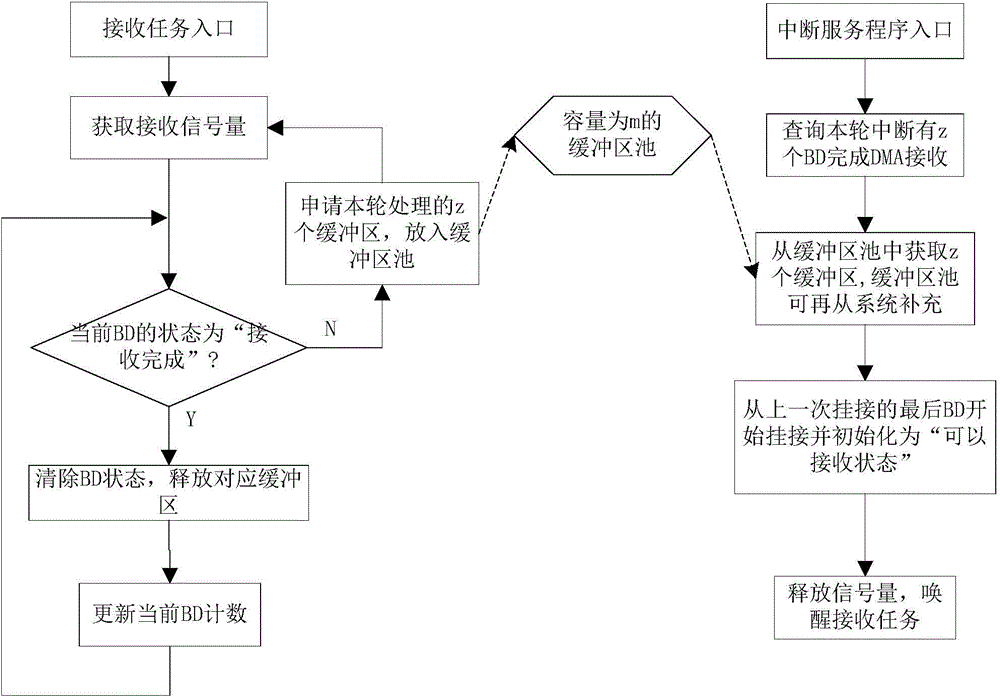

Buffer configuration method and device

Active | CN104468404A | Performance is not affected | Reduce cache usage | Data switching networks | Transport system | Computer science

The invention relates to data transmission technology and discloses a buffer configuration method that addresses the large buffer memory footprint of prior-art DMA data transmission systems. The method comprises: A, establishing a buffer descriptor (BD) ring of length n and initializing it to a non-receivable state, where n is a positive integer determined by system parameters; B, allocating m buffers and storing them in a buffer pool, where m is a positive integer smaller than n; C, allocating k buffers, attaching them to the first k BDs of the BD ring, setting those BDs to a receivable state, and starting hardware reception, where k is a positive integer less than or equal to m; and D, after DMA reception completes on BDs, querying the number z of BDs whose reception has completed, taking z buffers from the buffer pool and attaching them after the kth BD of the BD ring, setting the corresponding BDs to a receivable state, releasing the reception semaphore, and waking the reception task. The algorithm is simple and reduces buffer memory usage without affecting performance.

Owner:MAIPU COMM TECH CO LTD

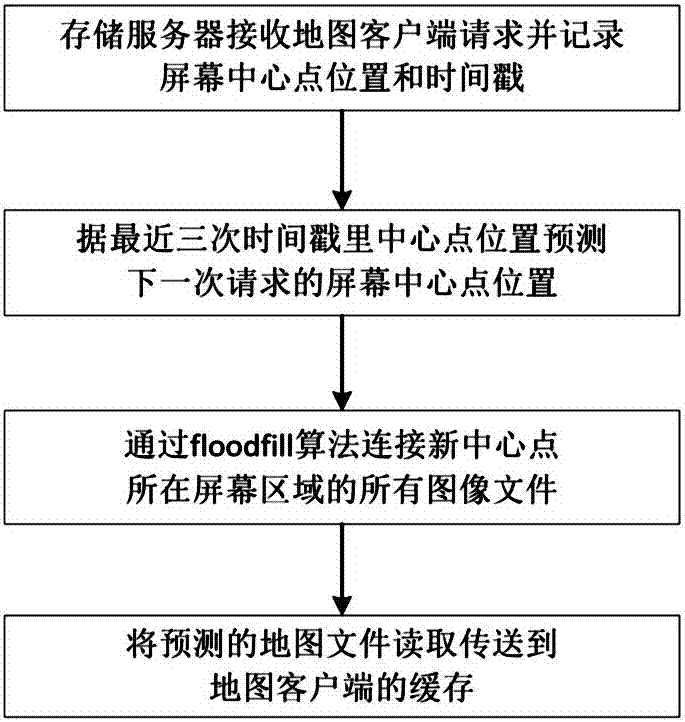

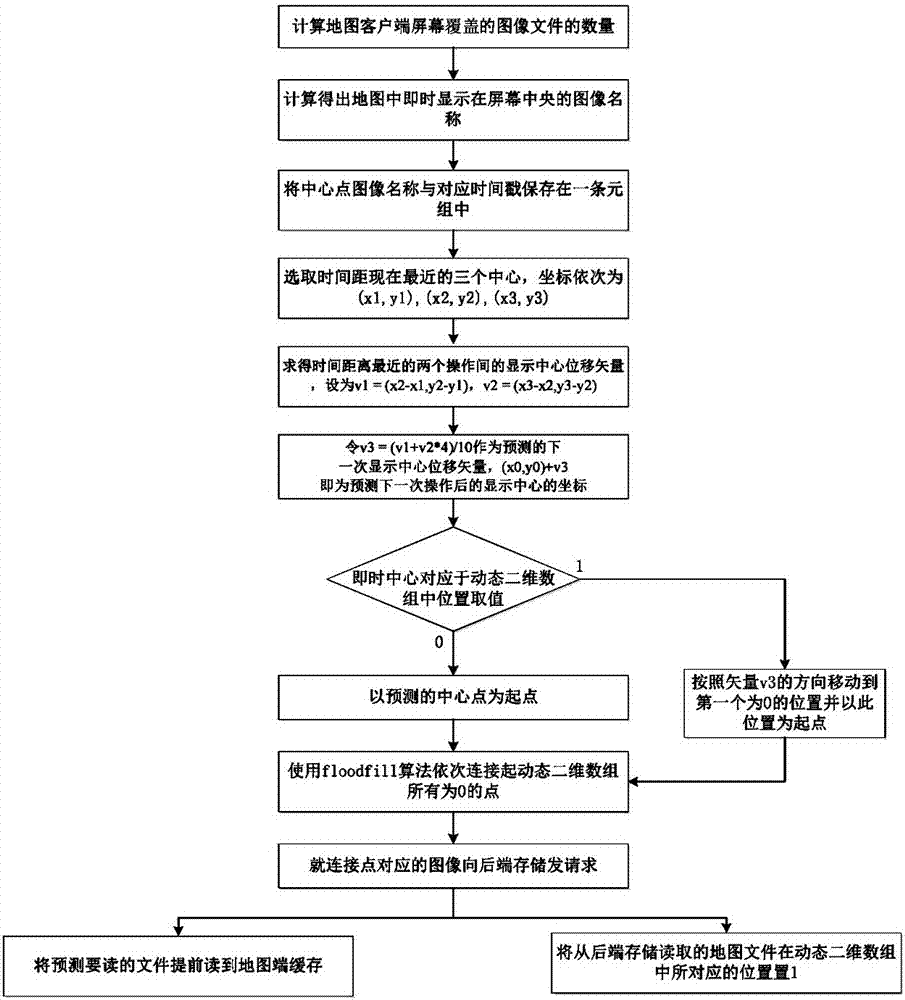

Map file prereading method based on distributed storage

Active | CN107450860A | Lower latency | Improve cache utilization | Input/output to record carriers | Geographical information databases | Timestamp | Client-side

The invention discloses a map file pre-reading method based on distributed storage. When a storage server responds to a request from a map client, it records the user's screen center point location and a timestamp. From the screen center point locations in the latest N requests of the map client, it predicts the screen center point location of the next request. Using a flood fill algorithm, it links all not-yet-read image files among the image files covering the screen area around the predicted center point to form the predicted map files, sends a read request for them to the back-end storage, pre-reads them, and transmits them into the cache of the map client. Because part of the files needed for the page being browsed are pre-read from the storage server and cached on the client in advance, the cache utilization rate increases and the latency of user operations decreases.
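
The prediction and flood fill steps can be sketched as follows. The abstract does not say how the next center is predicted, so `predict_center` assumes a simple linear extrapolation of the mean step over the last N centers; the square tile grid, `tiles_to_preread`, and its parameters are likewise hypothetical.

```python
from collections import deque
from typing import List, Set, Tuple

Point = Tuple[float, float]
Tile = Tuple[int, int]


def predict_center(history: List[Point]) -> Point:
    """Hypothetical predictor: extrapolate the mean step of the last N screen centers."""
    if len(history) < 2:
        return history[-1]
    steps = len(history) - 1
    dx = (history[-1][0] - history[0][0]) / steps
    dy = (history[-1][1] - history[0][1]) / steps
    return history[-1][0] + dx, history[-1][1] + dy


def tiles_to_preread(center: Point, screen_w: float, screen_h: float,
                     tile: float, already_read: Set[Tile]) -> List[Tile]:
    """Flood fill from the tile under `center` across the screen rectangle, keeping unread tiles."""
    cx, cy = center
    x0, x1 = cx - screen_w / 2, cx + screen_w / 2
    y0, y1 = cy - screen_h / 2, cy + screen_h / 2

    def on_screen(tx: int, ty: int) -> bool:
        # Tile (tx, ty) covers [tx*tile, (tx+1)*tile) x [ty*tile, (ty+1)*tile).
        return (tx * tile < x1 and (tx + 1) * tile > x0
                and ty * tile < y1 and (ty + 1) * tile > y0)

    start = (int(cx // tile), int(cy // tile))
    seen, queue, result = {start}, deque([start]), []
    while queue:  # breadth-first flood fill over 4-connected neighbors
        tx, ty = queue.popleft()
        if (tx, ty) not in already_read:
            result.append((tx, ty))
        for nxt in ((tx + 1, ty), (tx - 1, ty), (tx, ty + 1), (tx, ty - 1)):
            if nxt not in seen and on_screen(*nxt):
                seen.add(nxt)
                queue.append(nxt)
    return result
```

Starting the flood fill from the tile under the predicted center and expanding breadth-first returns the nearest tiles first, which is a reasonable order in which to issue read requests to the back end.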

Owner:HUNAN ANCUN TECH CO LTD

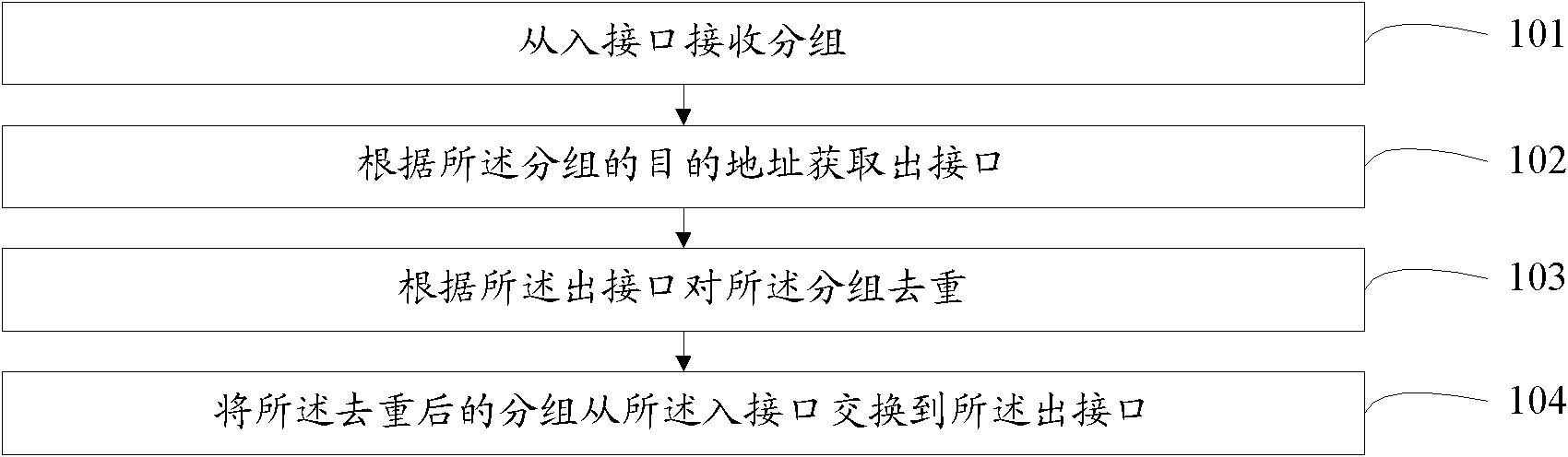

Method and equipment for network duplicate removal

Inactive | CN102833146A | Improve cache utilization | Reduce traffic | Data switching networks | Traffic volume | Line card

The embodiment of the invention discloses a method and equipment for network deduplication. The method comprises: receiving a packet on an incoming interface; acquiring the outgoing interface according to the destination address of the packet; deduplicating the packet according to the outgoing interface; and switching the deduplicated packet from the incoming interface to the outgoing interface. The technical scheme solves two problems of the prior art: traffic deduplication could not be performed inside network equipment and across its interface line cards, and the cache utilization rate of the network equipment was low.
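
One possible reading of per-outgoing-interface deduplication is redundancy elimination: each outgoing interface keeps its own cache of payload fingerprints, and a repeated payload is replaced by its fingerprint. The sketch below assumes that interpretation; `DedupSwitch`, the route-table format, the SHA-256 fingerprints, and the LRU cache size are all hypothetical, and the receiving side would need a matching cache to restore payloads.

```python
import hashlib
import ipaddress
from collections import OrderedDict
from typing import Dict, Tuple


class DedupSwitch:
    """Hypothetical model: egress lookup first, then deduplication per outgoing interface."""

    def __init__(self, routes: Dict[str, str], cache_size: int = 1024):
        # routes: prefix -> outgoing interface name (illustrative table format)
        self.routes = [(ipaddress.ip_network(p), ifname) for p, ifname in routes.items()]
        self.cache_size = cache_size
        self.caches: Dict[str, OrderedDict] = {}  # one fingerprint cache per outgoing interface

    def egress_for(self, dst: str) -> str:
        """Acquire the outgoing interface from the destination address (longest-prefix match)."""
        addr = ipaddress.ip_address(dst)
        matches = [(net.prefixlen, ifname) for net, ifname in self.routes if addr in net]
        if not matches:
            raise LookupError(f"no route to {dst}")
        return max(matches)[1]

    def forward(self, ingress: str, dst: str, payload: bytes) -> Tuple[str, str, tuple]:
        """Deduplicate against the egress cache, then switch from ingress to egress."""
        egress = self.egress_for(dst)
        cache = self.caches.setdefault(egress, OrderedDict())
        digest = hashlib.sha256(payload).digest()
        if digest in cache:
            cache.move_to_end(digest)       # refresh LRU position
            out = ("ref", digest)           # duplicate: send only the fingerprint
        else:
            cache[digest] = True
            if len(cache) > self.cache_size:
                cache.popitem(last=False)   # evict the least recently used fingerprint
            out = ("raw", payload)
        return ingress, egress, out
```

Keying the cache by outgoing interface means that identical traffic arriving on different incoming interfaces or line cards is still recognized, because deduplication happens after the egress lookup.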

Owner:HUAWEI TECH CO LTD

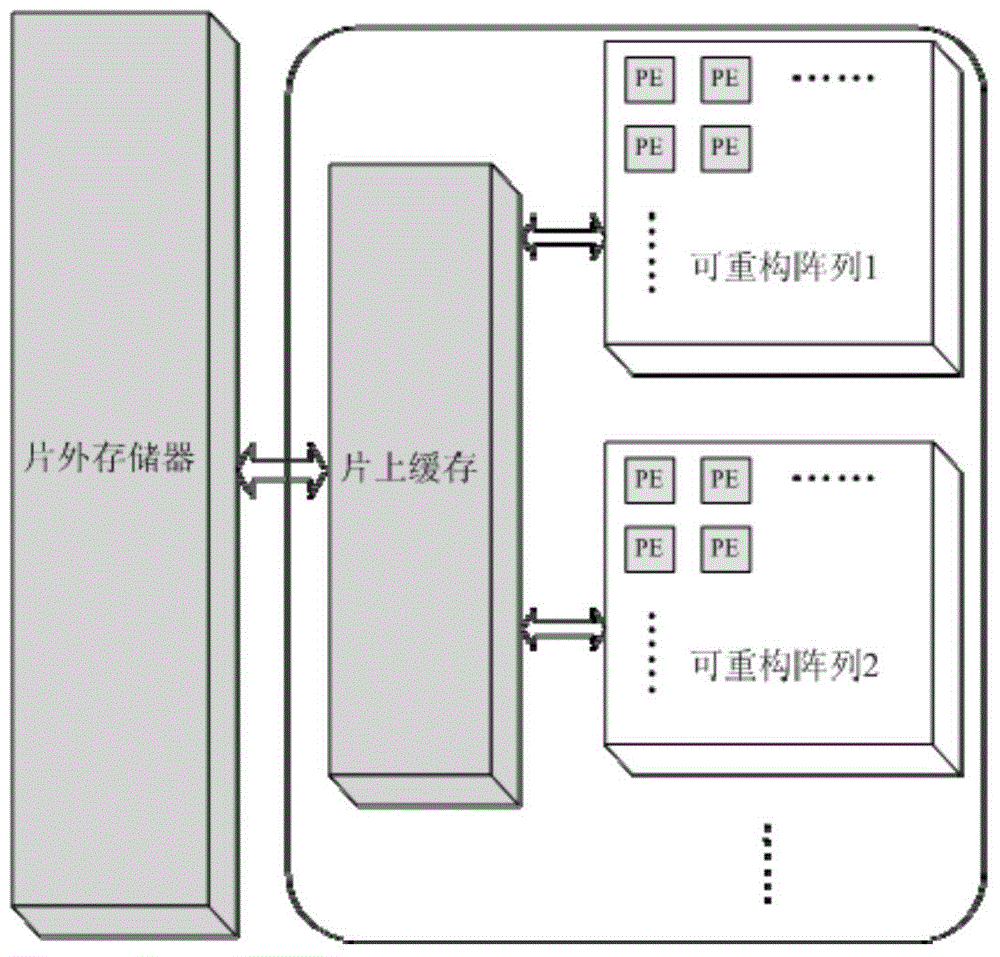

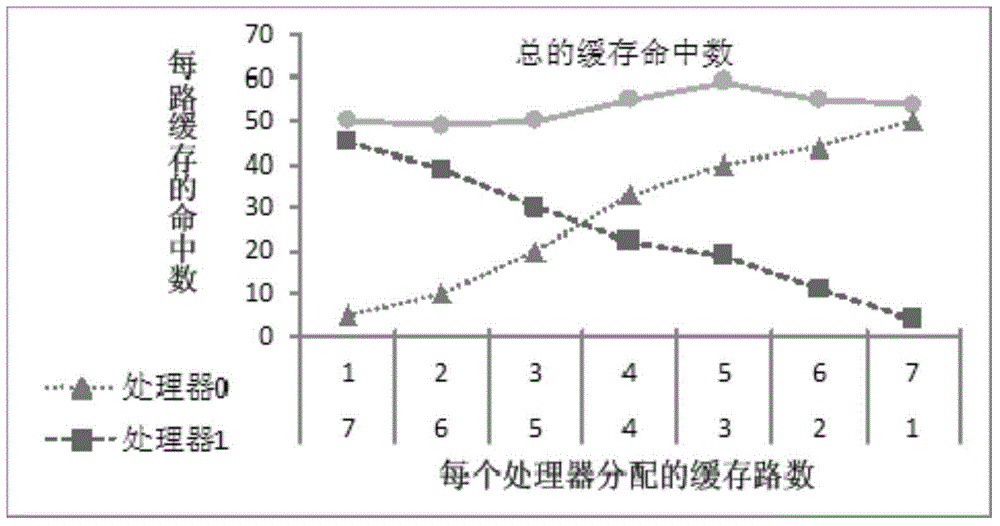

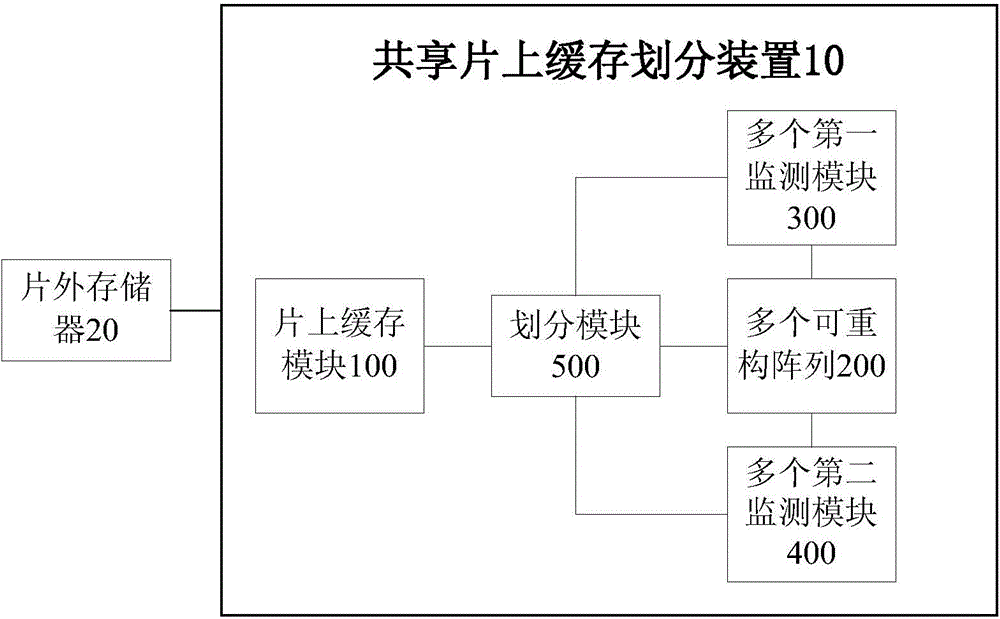

Sharing on-chip cache dividing device

Active | CN104699630A | Solve technical problems | Simple structure | Memory addressing/allocation/relocation | Computer module | Reconfigurable array

The invention discloses a shared on-chip cache dividing device comprising an on-chip cache module, a plurality of reconfigurable arrays, a plurality of first monitoring modules, a plurality of second monitoring modules, and a dividing module. The first monitoring modules track the cache utilization of the application programs executing on the reconfigurable arrays. The second monitoring modules monitor the amount of data that overlaps among the reconfigurable arrays. The dividing module determines the number of shared cache channels allocated to each reconfigurable array from the cache utilization information and the overlapping data amounts. The device solves the problem of reduced cache utilization caused by data overlapping among the reconfigurable arrays, helps reduce total cache misses, improves cache utilization, and is simple in structure and convenient to operate.
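
The abstract does not give the dividing module's allocation rule. The sketch below assumes one plausible rule for illustration only: each array's demand is its monitored usage minus half of the data it shares with each other array (shared data needs to be cached only once per pair), and the channels are split in proportion to demand with largest-remainder rounding. `partition_channels` and its inputs are hypothetical.

```python
from typing import List


def partition_channels(usage: List[float], overlap: List[List[float]], total: int) -> List[int]:
    """Hypothetical rule: split `total` shared cache channels among reconfigurable arrays.

    usage[i]: data footprint reported for array i by its first monitoring module.
    overlap[i][j]: data shared by arrays i and j, as reported by the second monitoring modules.
    """
    n = len(usage)
    if total < n:
        raise ValueError("need at least one channel per array")
    # Data shared by a pair only needs to be cached once, so charge each side half of it.
    demand = [max(usage[i] - 0.5 * sum(overlap[i][j] for j in range(n) if j != i), 0.0)
              for i in range(n)]
    if not any(demand):
        demand = [1.0] * n            # no information: split evenly
    spare = total - n                 # every array first gets one channel
    weight = sum(demand)
    exact = [spare * d / weight for d in demand]
    alloc = [1 + int(e) for e in exact]
    # Largest-remainder rounding hands out the channels lost to truncation.
    leftover = total - sum(alloc)
    order = sorted(range(n), key=lambda i: exact[i] - int(exact[i]), reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc
```

With three arrays of equal usage where arrays 0 and 1 share all of their data, this rule gives the third array the largest share, because the first two can serve each other's overlapping data from a single copy.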

Owner:TSINGHUA UNIV

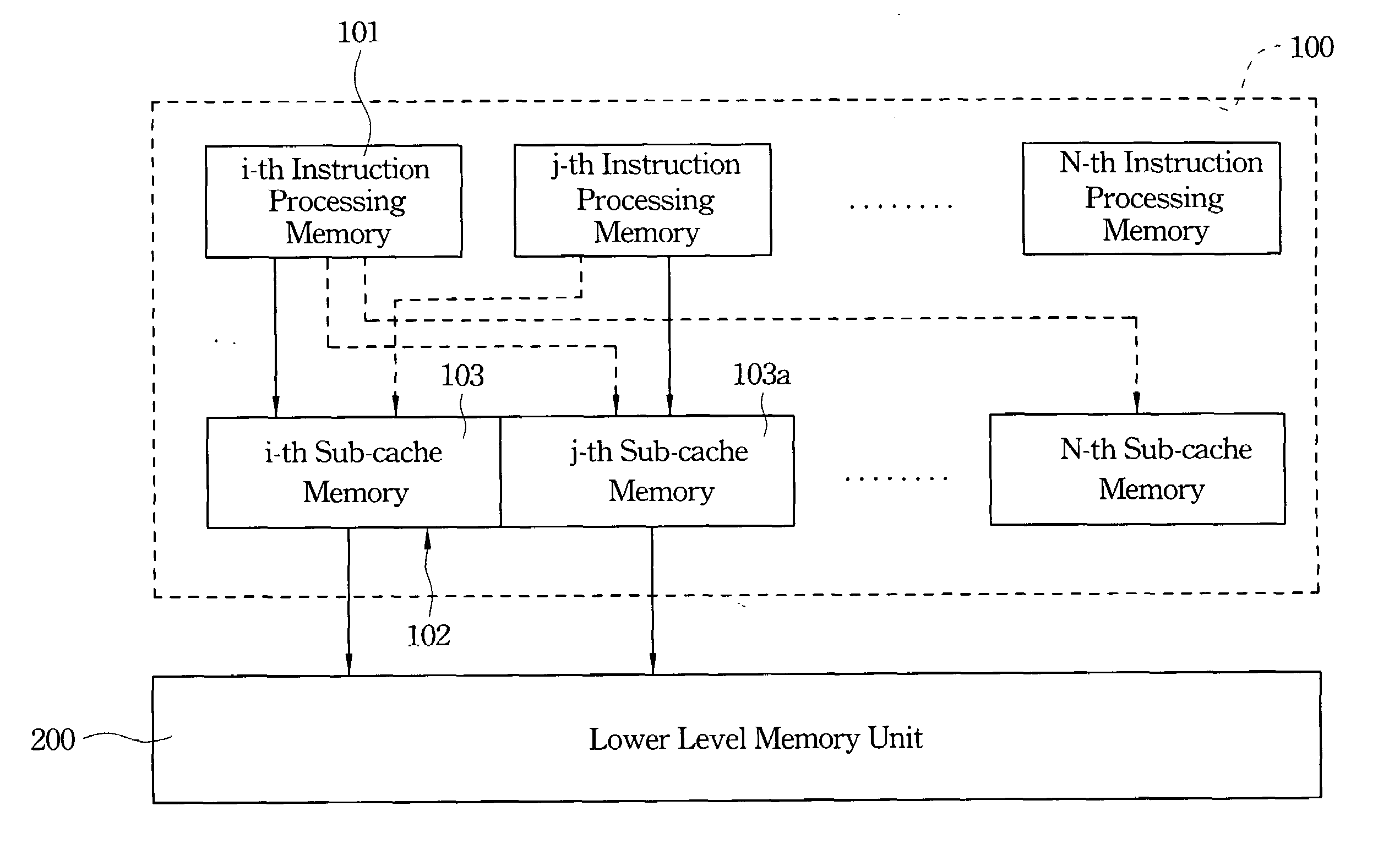

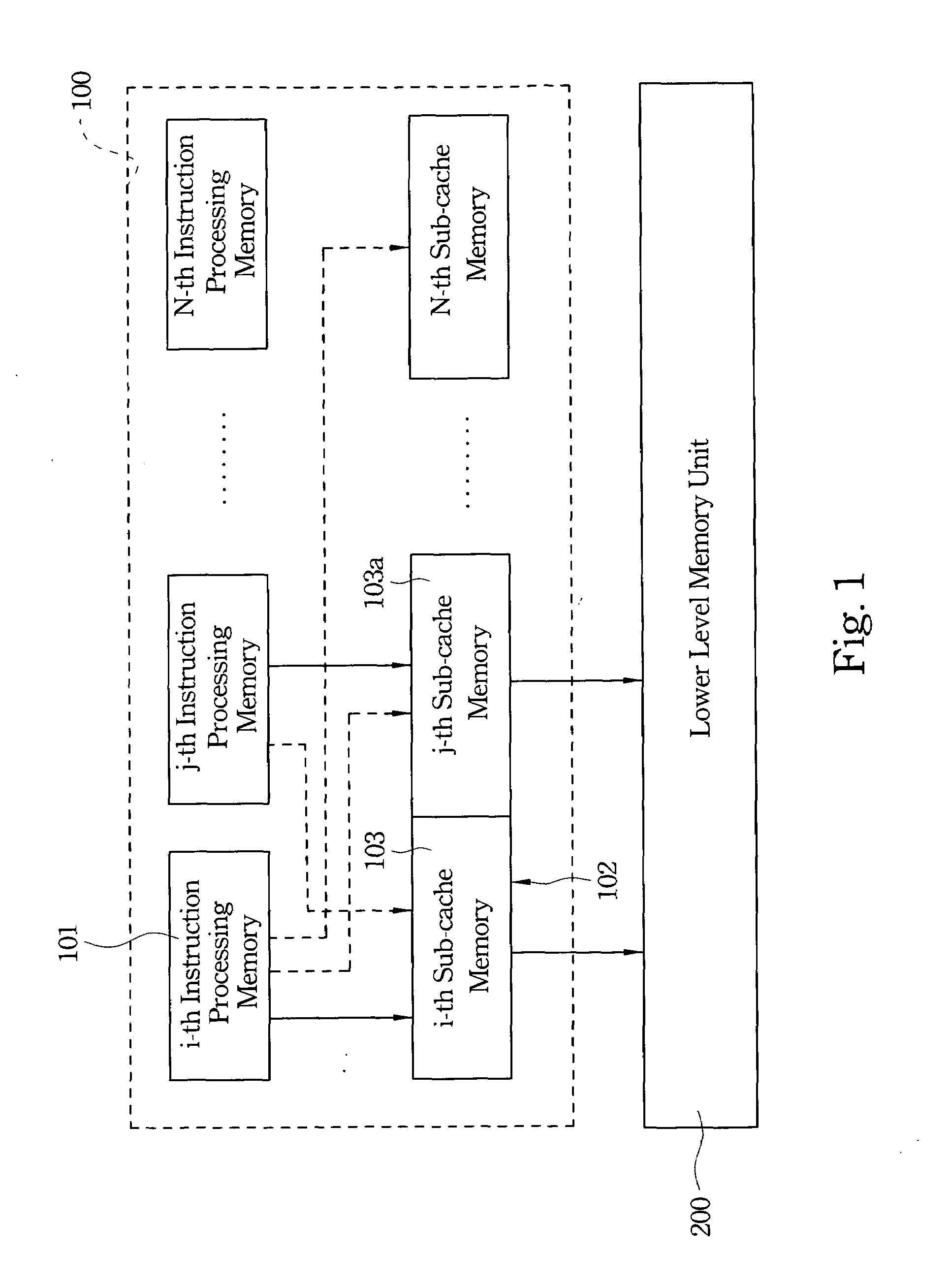

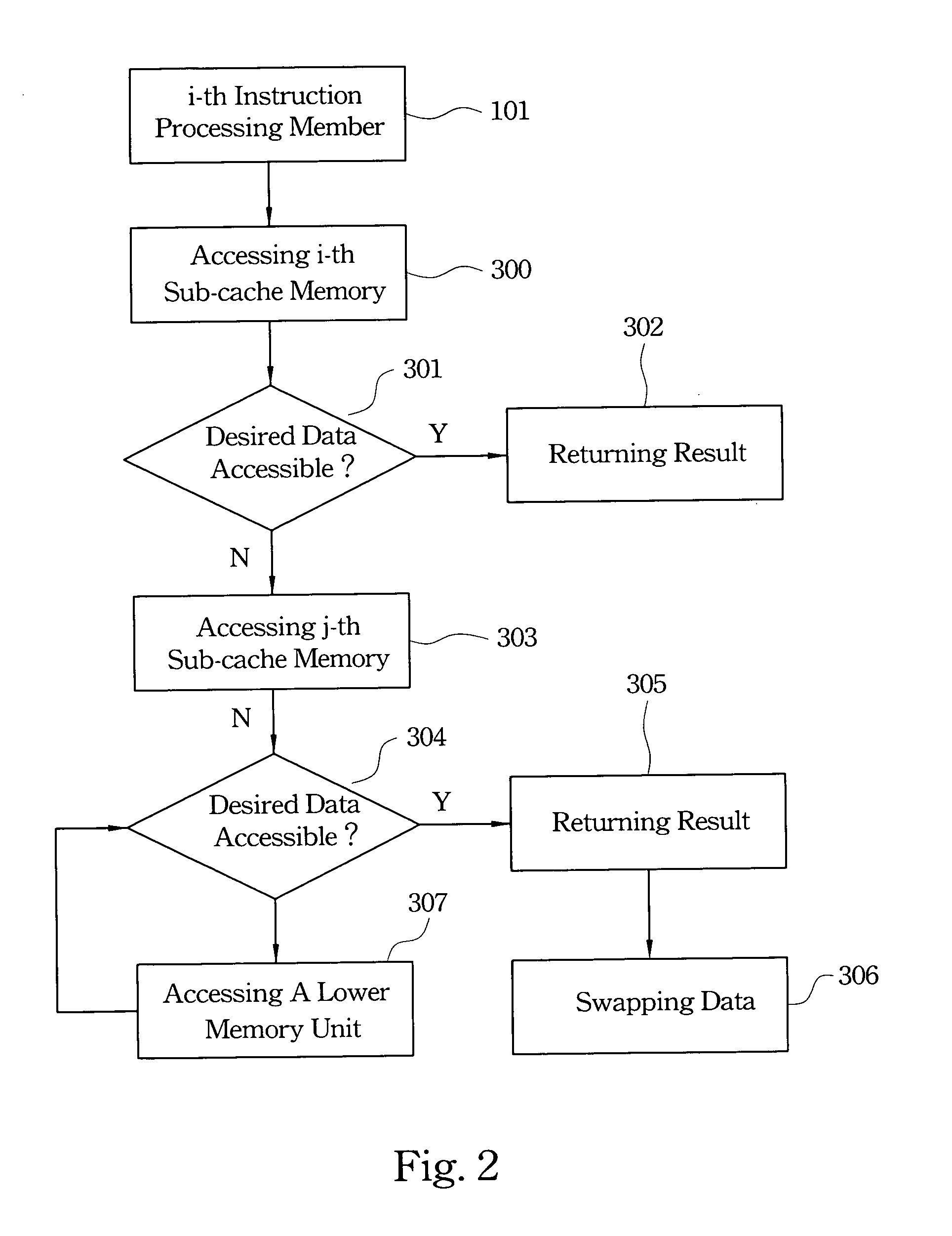

Method of accessing cache memory for parallel processing processors

A method of accessing cache memory for parallel processing processors includes providing a processor and a lower-level memory unit. The processor has multiple instruction processing members and multiple sub-cache memories, each corresponding to one instruction processing member. A first instruction processing member first accesses its corresponding first sub-cache memory. If it does not find the desired data there, it accesses the remaining sub-cache memories; if it does not find the desired data in any sub-cache memory, it accesses the lower-level memory unit until the desired data is obtained. The instruction processing member then returns a result.
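
The lookup order in this method (own sub-cache, then the other sub-caches, then lower-level memory) can be sketched as a small Python model. `SplitCache` and `read` are hypothetical names, and filling the requesting member's sub-cache after a lower-level access is an assumption the abstract does not state.

```python
from typing import Dict, List, Tuple


class SplitCache:
    """Hypothetical model: one sub-cache per instruction processing member."""

    def __init__(self, members: int, lower_memory: Dict[int, int]):
        self.sub_caches: List[Dict[int, int]] = [{} for _ in range(members)]
        self.lower = lower_memory

    def read(self, member: int, addr: int) -> Tuple[int, str]:
        """Return (value, where it was found) following the three-level lookup order."""
        own = self.sub_caches[member]
        if addr in own:                                # 1. the member's own sub-cache
            return own[addr], f"sub-cache {member}"
        for i, other in enumerate(self.sub_caches):    # 2. the remaining sub-caches
            if i != member and addr in other:
                return other[addr], f"sub-cache {i}"
        value = self.lower[addr]                       # 3. the lower-level memory unit
        own[addr] = value                              # assumption: fill the requester's sub-cache
        return value, "lower memory"
```

Checking sibling sub-caches before going to lower-level memory lets one member reuse data another member has already fetched, which is the cache-utilization benefit the method targets.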

Owner:NATIONAL CHUNG CHENG UNIV

© 2025 PatSnap. All rights reserved.