55 results about how to "Save cache resources" patented technology

Pop-up advertisement blocking method and system based on browser and related browser

Status: Active | Publication No.: CN104036030A | Tags: Easy to use; Improve recognition efficiency; Computer security arrangements; Special data processing applications; Third party; Application software

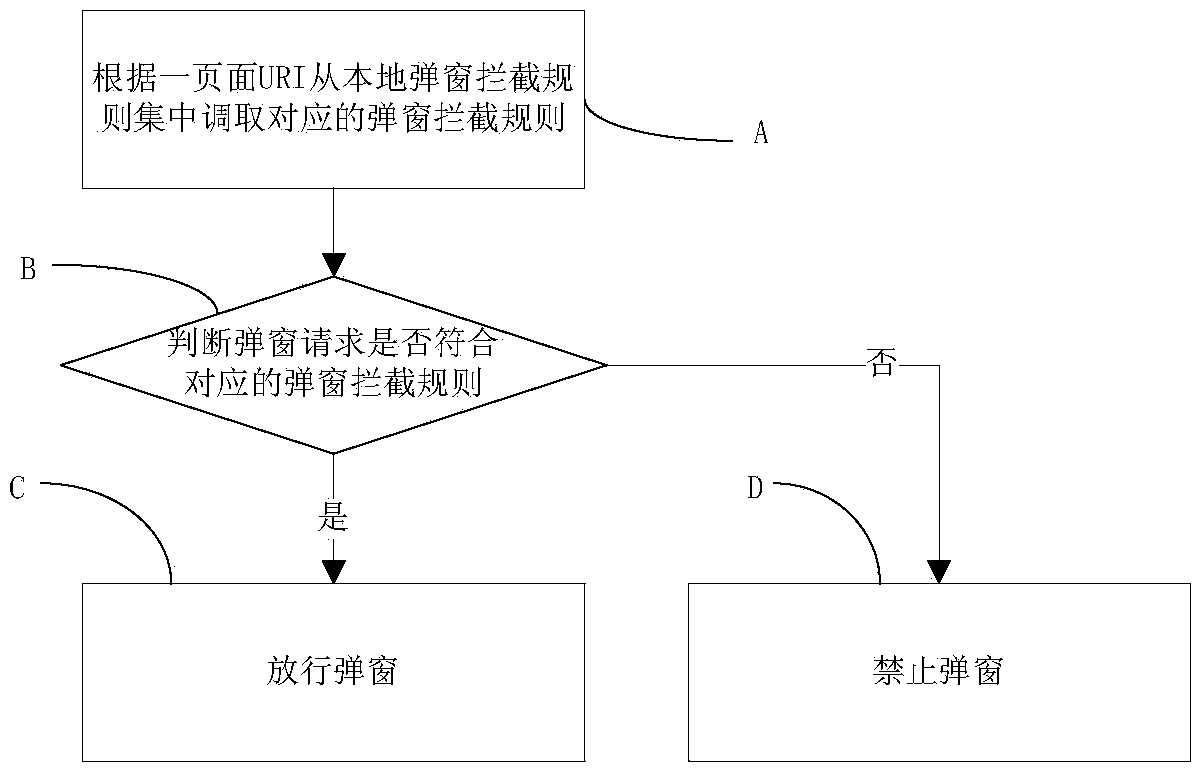

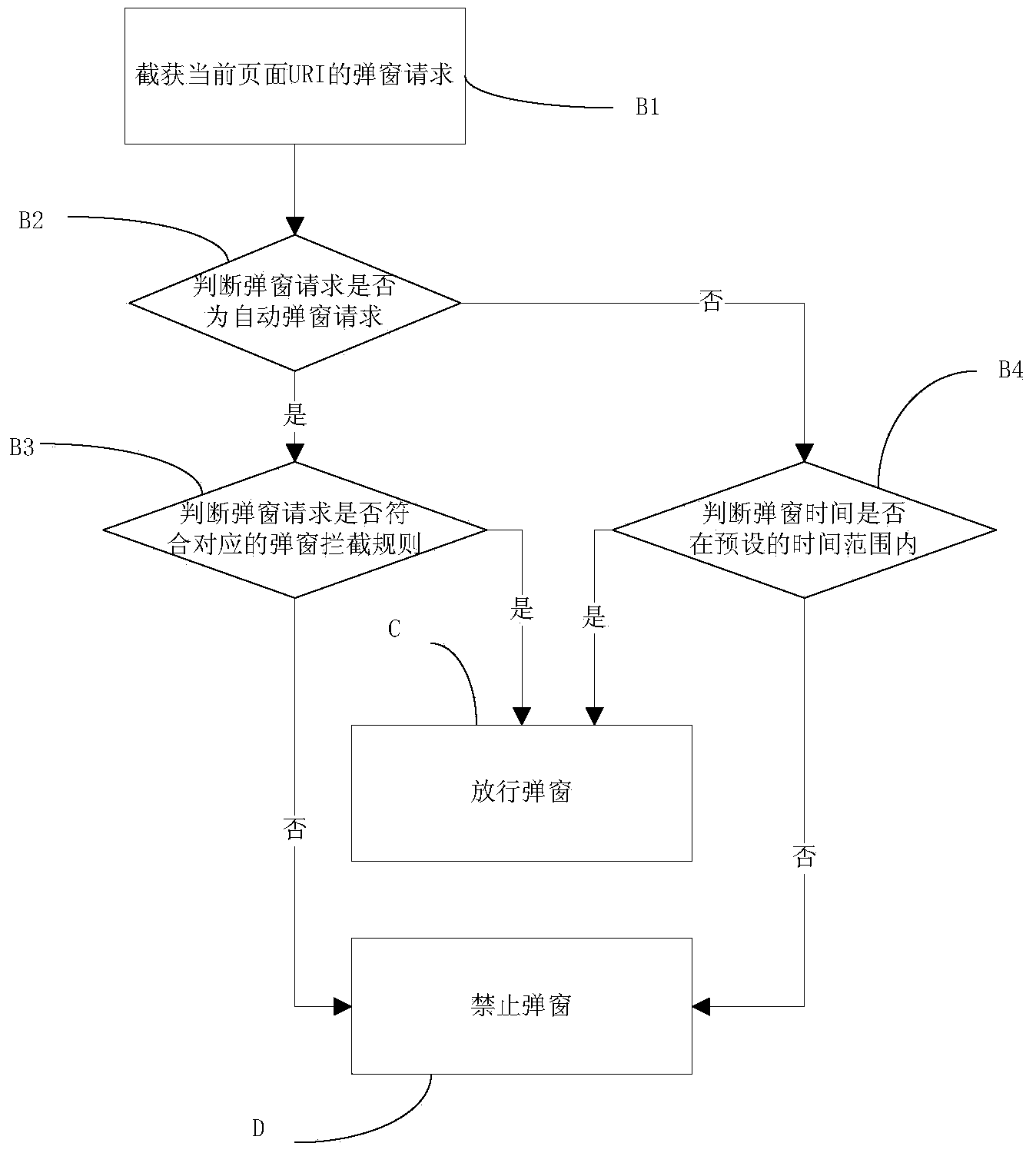

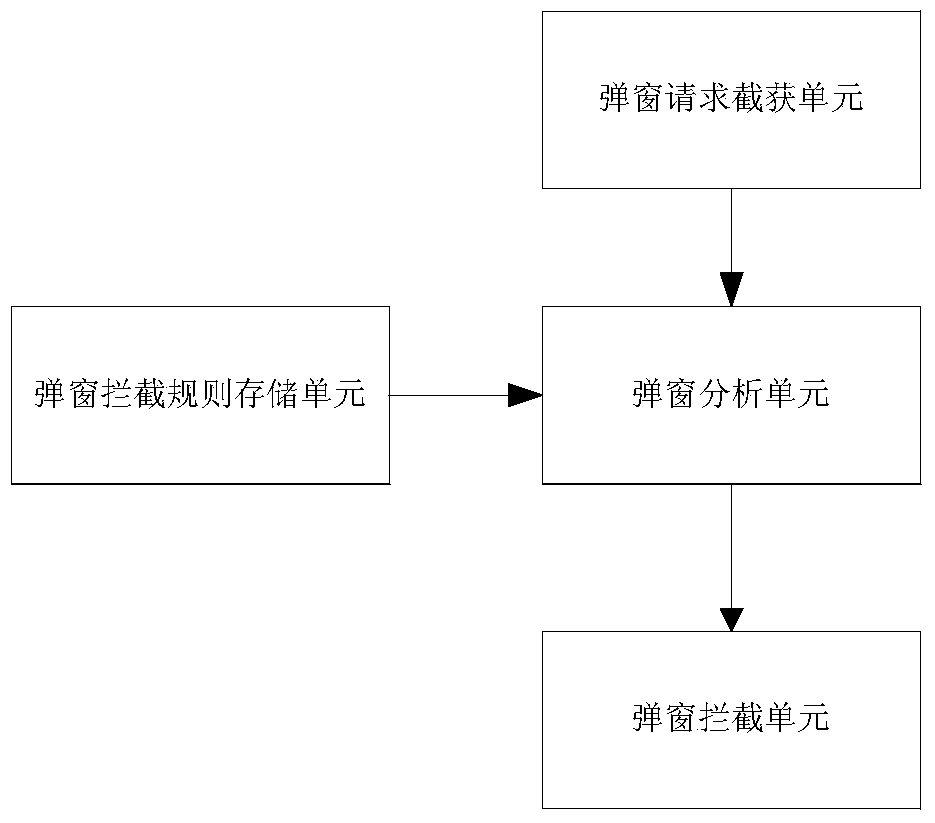

A browser-based method and system for blocking pop-up advertisements comprise the following steps: (A) according to the page URI, the corresponding pop-up blocking rule is retrieved from a centrally stored set of local pop-up blocking rules; (B) a pop-up request from the current page URI is intercepted, and it is determined whether the request conforms to the corresponding pop-up blocking rule; if so, step C is performed, and if not, step D is performed; (C) the pop-up is allowed; (D) the pop-up is blocked. Because pop-ups are identified automatically within the browser itself and pop-ups that do not conform to the pop-up blocking rule are blocked, no third-party plug-in or third-party application software needs to be installed, which makes the method more convenient for users. The invention further relates to a corresponding system and browser.

Owner:SHANGHAI 2345 NETWORK TECH
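For CN104036030A, steps A to D can be sketched as a rule lookup followed by an allow/block decision. This is a minimal sketch under stated assumptions: the rule store (`PopupRuleStore`), its keying by page host, and the rule format (an allow-list of pop-up hosts per page host) are all hypothetical; the abstract does not describe the rule format.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class PopupRuleStore:
    # Hypothetical centralized local rule store: page host -> set of allowed pop-up hosts.
    rules: dict = field(default_factory=dict)

    def rule_for(self, page_uri: str) -> set:
        # Step A: retrieve the rule that applies to the page URI (keyed by host here).
        return self.rules.get(urlparse(page_uri).hostname or "", set())


def handle_popup(store: PopupRuleStore, page_uri: str, popup_uri: str) -> str:
    # Step B: check the intercepted pop-up request against the page's rule.
    allowed_hosts = store.rule_for(page_uri)
    if urlparse(popup_uri).hostname in allowed_hosts:
        return "allow"  # Step C: the request conforms to the rule, so release the pop-up
    return "block"      # Step D: the request does not conform, so forbid the pop-up
```

With `PopupRuleStore({"shop.example": {"pay.example"}})`, a payment pop-up opened from `https://shop.example/cart` is allowed, while any other pop-up from that page, or any pop-up from a page with no rule, is blocked.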

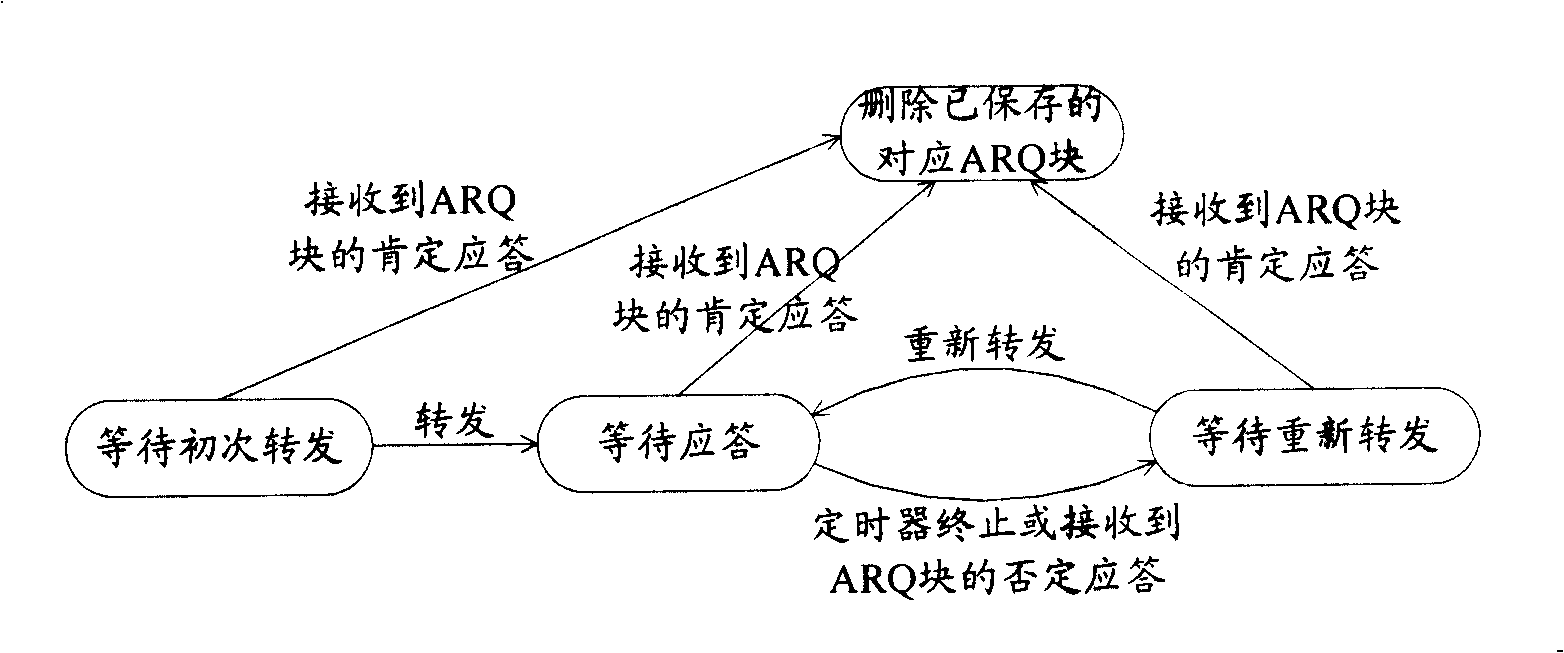

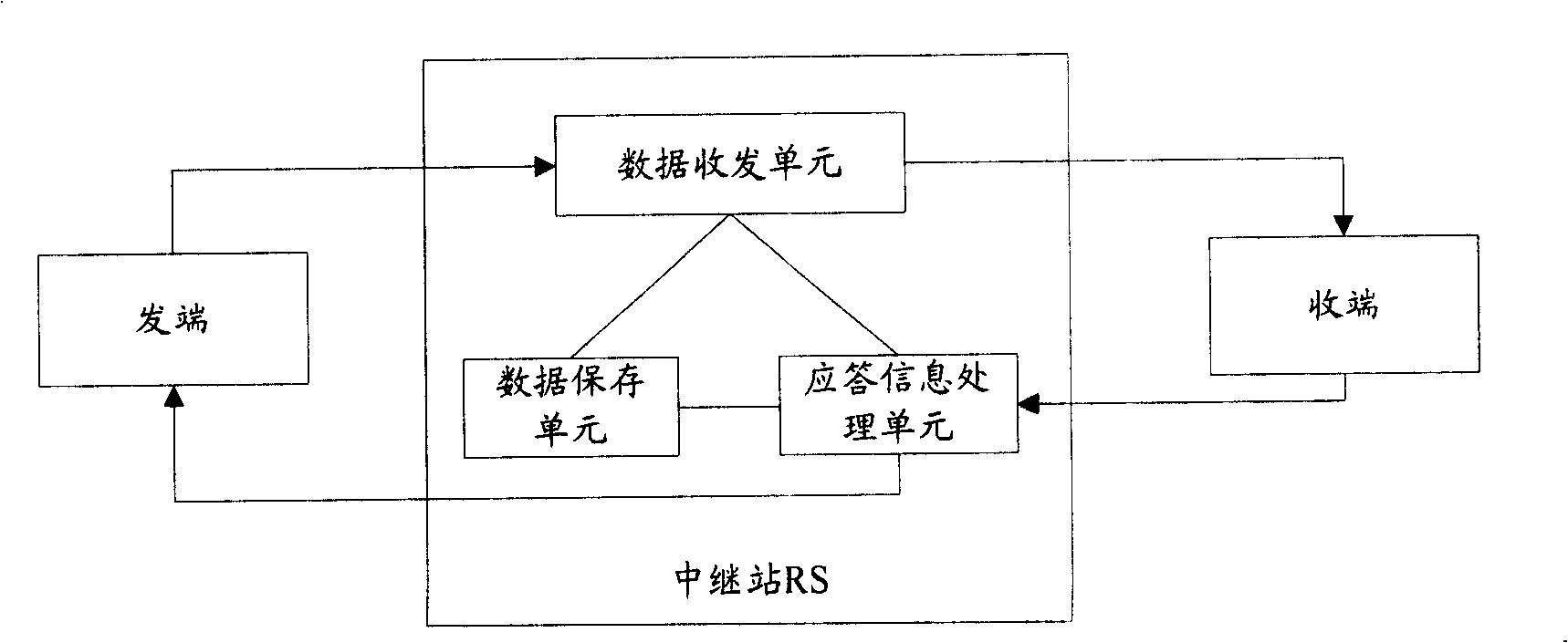

An automatic repeat request method, system and relay station in a relay network

Status: Inactive | Publication No.: CN101267241A | Tags: Avoid data transfer delays; Save cache resources; Error prevention/detection by using return channel; Active radio relay systems; Time delays; Automatic repeat request

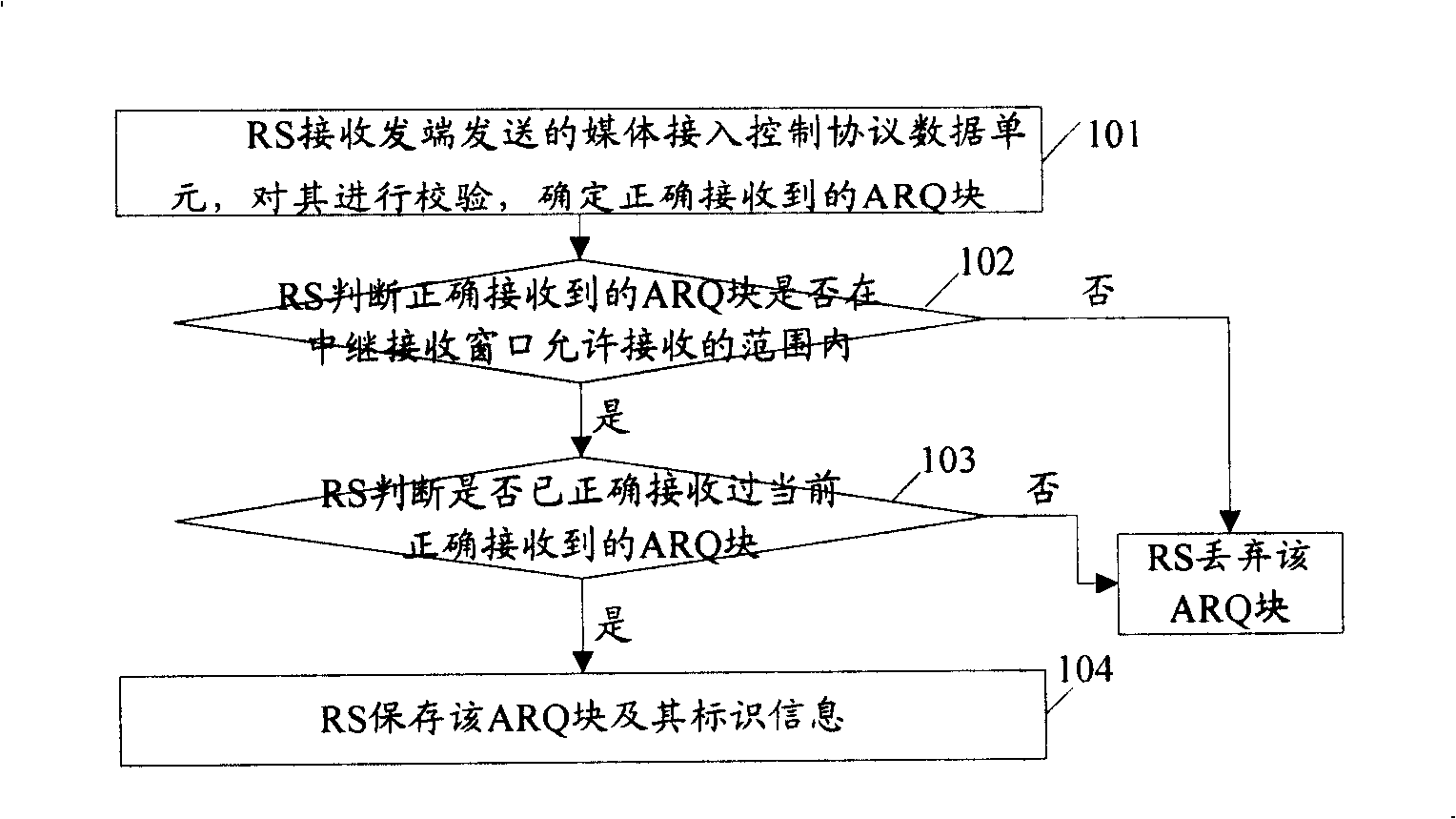

An embodiment of the invention discloses an automatic repeat request (ARQ) method in a relay network. The method comprises the following procedures: when a relay station (RS) confirms that data from the transmitting side has been received correctly, it stores the data and forwards it to the receiving end; when the RS confirms, from response information sent by the receiving end, that the corresponding data has been received correctly, it returns a positive acknowledgement for that data to the transmitting side; and when the RS determines from the response information that data it has stored was not received correctly by the receiving end, it retransmits that data. The embodiment also discloses an ARQ system and a relay station for the relay network. The method, system and relay station avoid both the larger data-transmission delay of the first RS processing mechanism in the prior art and the larger amount of RS cache resources occupied by the second RS processing mechanism in the prior art.

Owner:HUAWEI TECH CO LTD
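The relay-station behaviour in CN101267241A can be sketched as a small state machine: store and forward correctly received data, acknowledge upstream only after the destination confirms, and retransmit locally on a negative response. This is a minimal sketch under stated assumptions: sequence numbers, the `crc_ok` flag, the outbound lists standing in for radio links, and freeing the buffer entry after the upstream ACK are illustrative choices, not details from the abstract.

```python
from dataclasses import dataclass, field


@dataclass
class RelayStation:
    # Packets received correctly from the source, keyed by (hypothetical) sequence number.
    buffer: dict = field(default_factory=dict)
    to_destination: list = field(default_factory=list)  # (seq, payload) sent downstream
    to_source: list = field(default_factory=list)       # positive ACKs sent upstream

    def on_data_from_source(self, seq: int, payload: bytes, crc_ok: bool) -> None:
        # Store and forward only data that the RS itself received correctly.
        if crc_ok:
            self.buffer[seq] = payload
            self.to_destination.append((seq, payload))

    def on_feedback_from_destination(self, seq: int, ack: bool) -> None:
        if seq not in self.buffer:
            return
        if ack:
            # Destination confirmed receipt: ACK the source, then free the buffer slot.
            self.to_source.append(seq)
            del self.buffer[seq]
        else:
            # Destination reported an error: retransmit from the RS's own stored copy.
            self.to_destination.append((seq, self.buffer[seq]))
```

Because retransmissions come from the relay's own copy, the source is not involved in recovering hop-two errors, and each buffered packet is released as soon as the destination acknowledges it.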

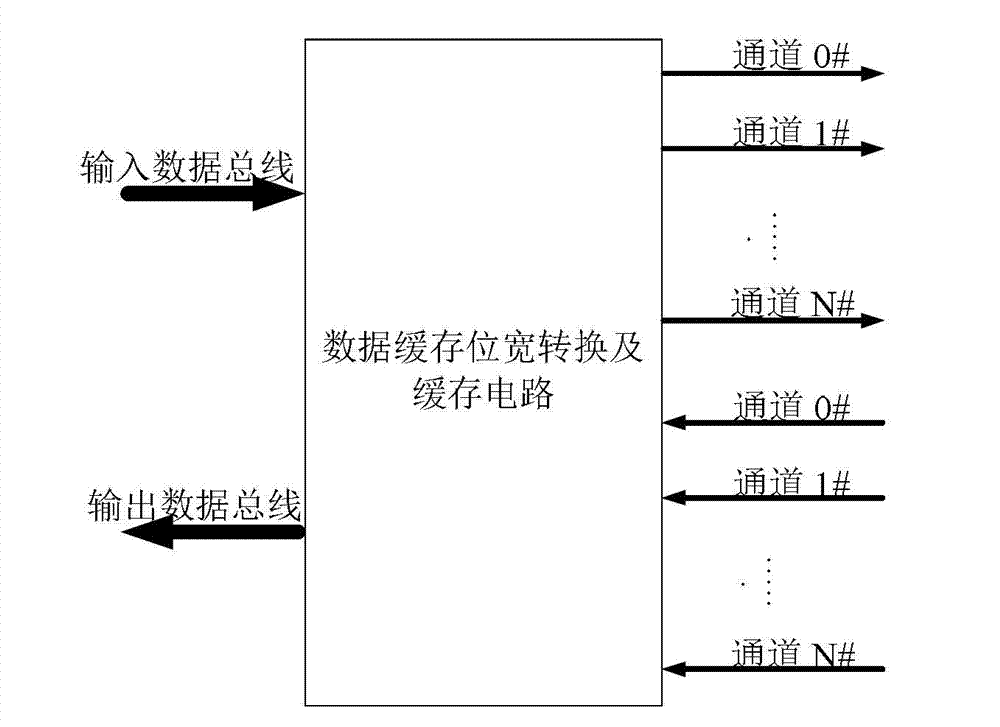

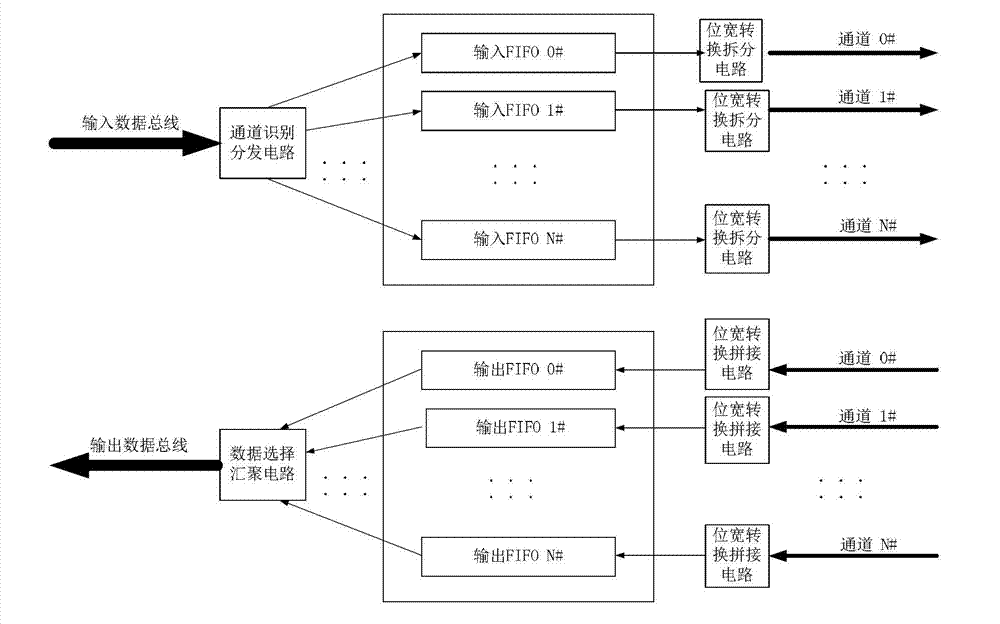

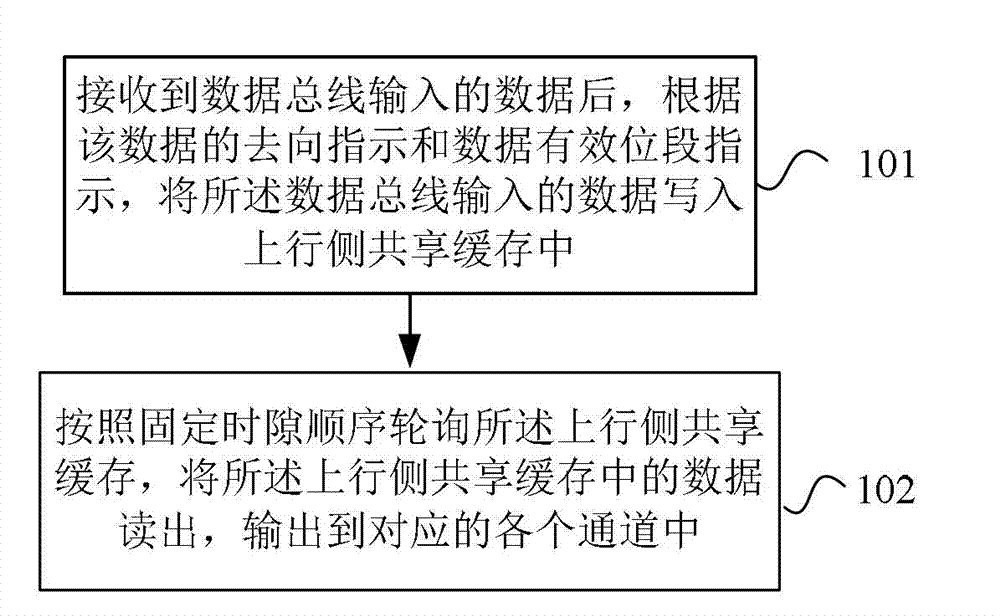

Data processing method and device

Status: Active | Publication No.: CN103714038A | Tags: Implement caching; Save cache resources; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computer network; Bit field

The invention provides a data processing method and device. The method includes: writing data received from a data bus into an uplink-side shared cache according to the data's direction indication and data-valid bit-field indication; and polling the uplink-side shared cache in a fixed time-slot sequence to read out the data and output it to each corresponding channel. The method saves cache resources effectively while achieving reliable data caching and bit-width conversion, reduces area and timing pressure, and increases cache utilization.

Owner:SANECHIPS TECH CO LTD
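The write-then-poll scheme of CN103714038A can be sketched in software as one cache object holding per-channel FIFOs, filled according to a direction indication and a valid-byte bit field, and drained in a fixed slot order. This is a minimal sketch under stated assumptions: `UplinkSharedCache`, the one-bit-per-byte valid mask, and the per-channel FIFO layout are hypothetical, and the hardware bit-width conversion is not modeled.

```python
from collections import deque


class UplinkSharedCache:
    """Hypothetical model: one shared cache object holding a FIFO per output channel."""

    def __init__(self, num_channels: int):
        self.queues = [deque() for _ in range(num_channels)]

    def write(self, word: bytes, channel: int, valid_mask: int) -> None:
        # Keep only the bytes flagged valid by the bit field (bit i -> byte i),
        # then file them under the channel named by the direction indication.
        valid = bytes(b for i, b in enumerate(word) if (valid_mask >> i) & 1)
        if valid:
            self.queues[channel].append(valid)

    def poll(self, slot_sequence) -> list:
        # Visit channels in a fixed time-slot order; each slot drains at most one entry.
        out = []
        for channel in slot_sequence:
            if self.queues[channel]:
                out.append((channel, self.queues[channel].popleft()))
        return out
```

Discarding invalid bytes at write time is what lets a shared cache be smaller than per-channel buffers sized for full bus-width words.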

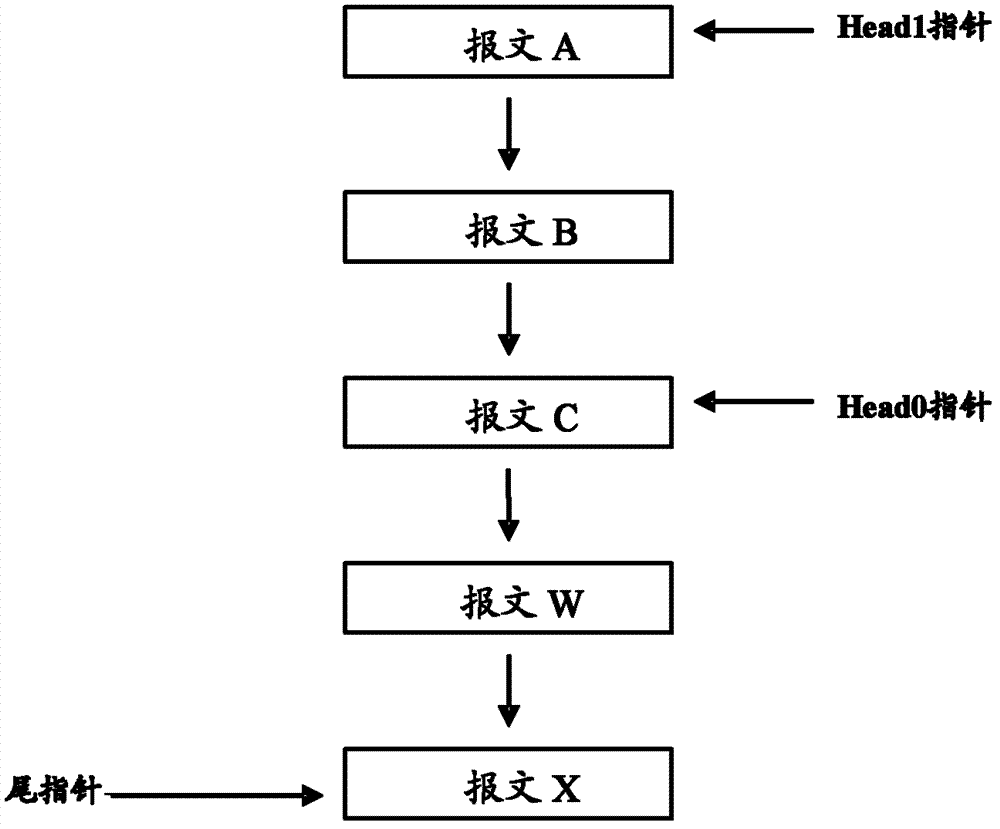

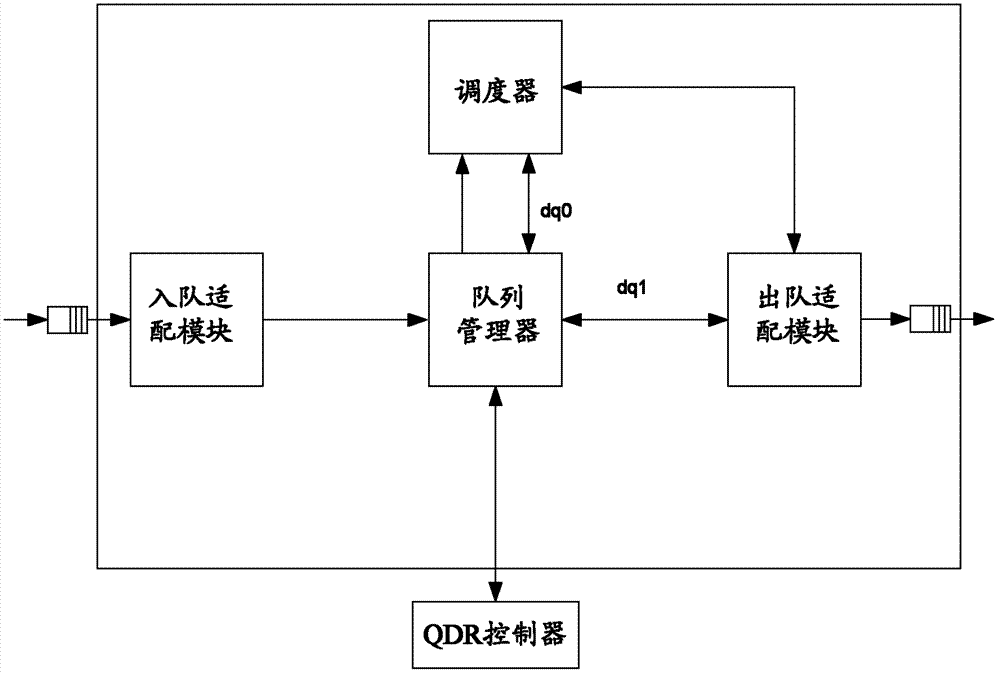

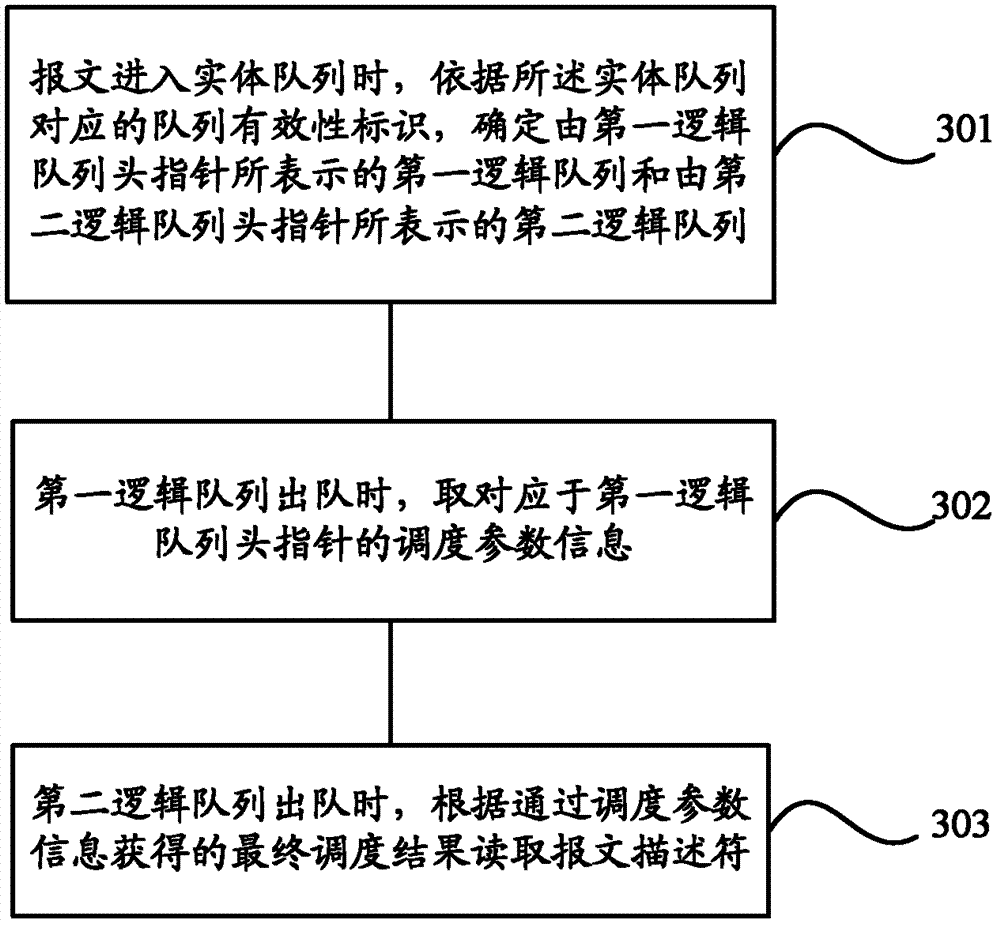

Method and device for queue management

Status: Active | Publication No.: CN102957629A | Tags: Save cache resources; Data switching networks; Queue management system; Distributed computing

The invention provides a method and device for queue management. When a message enters an entity queue, a first logical queue indicated by a first logical-queue head pointer and a second logical queue indicated by a second logical-queue head pointer are determined according to the queue validity identifier corresponding to the entity queue, where the first and second logical queues share the same tail pointer. When the first logical queue dequeues, the scheduling parameter information corresponding to the first logical-queue head pointer is read; when the second logical queue dequeues, a message descriptor is read according to the final scheduling result obtained from that scheduling parameter information. This meets the special requirement of a push-type multi-level scheduler, which must obtain scheduling parameter information before it can schedule and select a queue, while message descriptors are read from the message descriptor table only when the scheduler outputs its final scheduling result. As a result, cache resources are greatly saved.

Owner:XFUSION DIGITAL TECH CO LTD

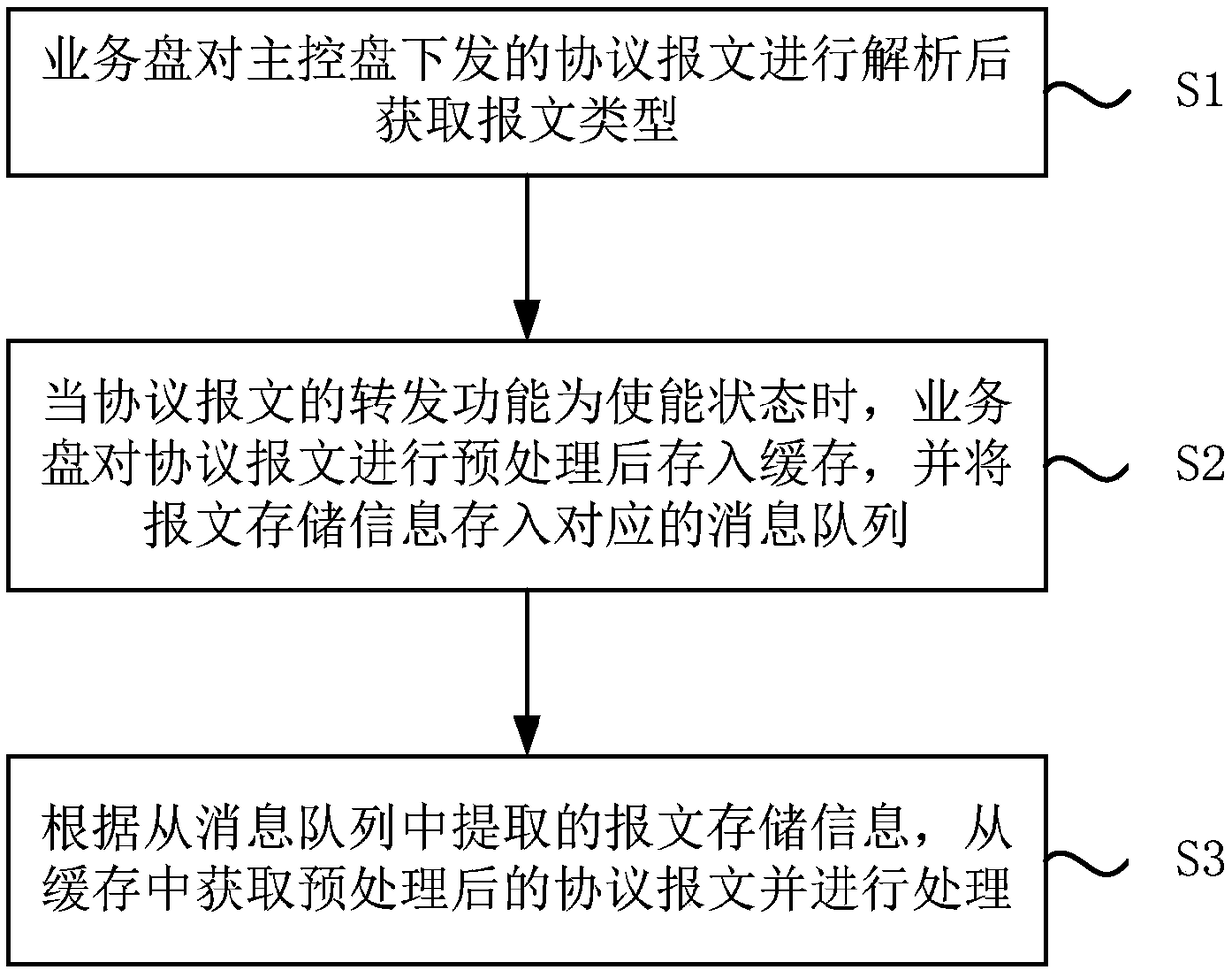

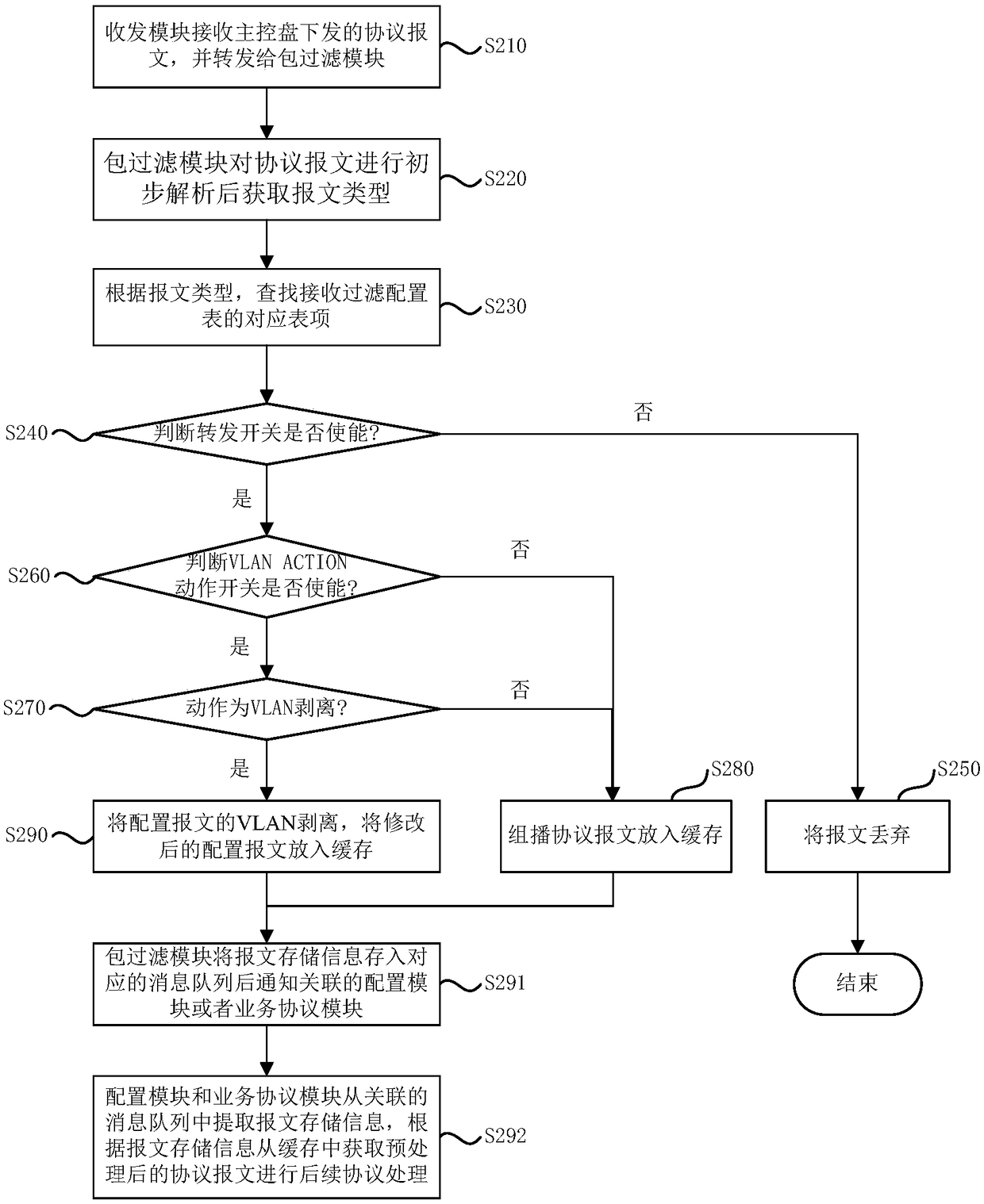

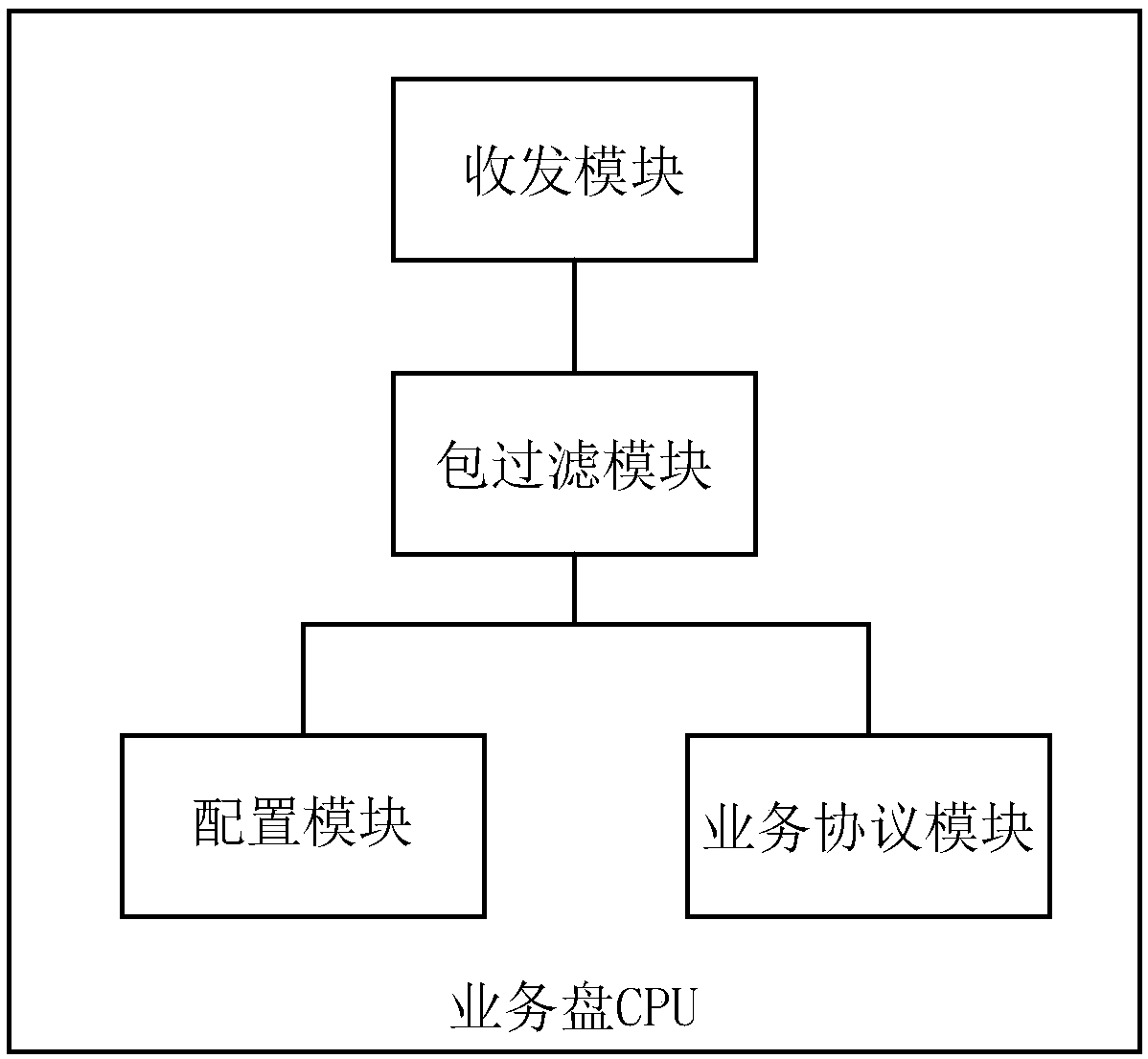

Method and system for processing protocol packet

Status: Inactive | Publication No.: CN108768882A | Tags: Avoid loss; Improve communication efficiency; Networks interconnection; Message queue; Dependability

The invention discloses a method and system for processing protocol packets, in the technical field of communications. The method comprises the following steps: a service board parses a protocol packet sent by the main control board and obtains its packet type; when forwarding is enabled for that protocol packet, the service board preprocesses the packet, stores it in the cache, and stores the packet's storage information in the message queue for its type, each packet type having its own message queue; at the same time or afterwards, the preprocessed protocol packet is fetched from the cache and processed according to the storage information extracted from the message queues. The invention reduces the number of processing steps, avoids loss of management protocol packets, and ensures that configuration messages and event messages are processed first, which improves the communication efficiency and reliability of the service board and facilitates maintenance and management by the operator.

Owner:FENGHUO COMM SCI & TECH CO LTD
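The CN108768882A flow separates packet storage (the cache) from packet bookkeeping (one message queue per packet type), which lets higher-priority types be served first. This is a minimal sketch under stated assumptions: the three type names, their priority order, and the `strip()` call standing in for preprocessing are hypothetical.

```python
from collections import deque

# Hypothetical packet types, listed in processing priority: configuration and events first.
PRIORITY = ["config", "event", "other"]


class ServiceBoard:
    def __init__(self, forwarding_enabled: bool = True):
        self.forwarding_enabled = forwarding_enabled
        self.cache = {}                               # slot id -> preprocessed packet
        self.queues = {t: deque() for t in PRIORITY}  # one message queue per packet type
        self._next_slot = 0

    def receive(self, packet_type: str, payload: str) -> None:
        if not self.forwarding_enabled:
            return
        slot = self._next_slot
        self._next_slot += 1
        self.cache[slot] = payload.strip()            # placeholder for preprocessing
        self.queues[packet_type].append(slot)         # the queue holds storage info only

    def process_next(self):
        # Serve queues in priority order so config/event packets are handled first.
        for t in PRIORITY:
            if self.queues[t]:
                slot = self.queues[t].popleft()
                return t, self.cache.pop(slot)
        return None
```

Keeping only slot identifiers in the queues means reprioritizing work never copies packet payloads.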

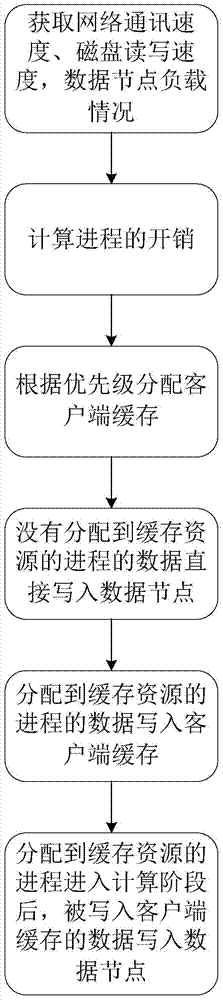

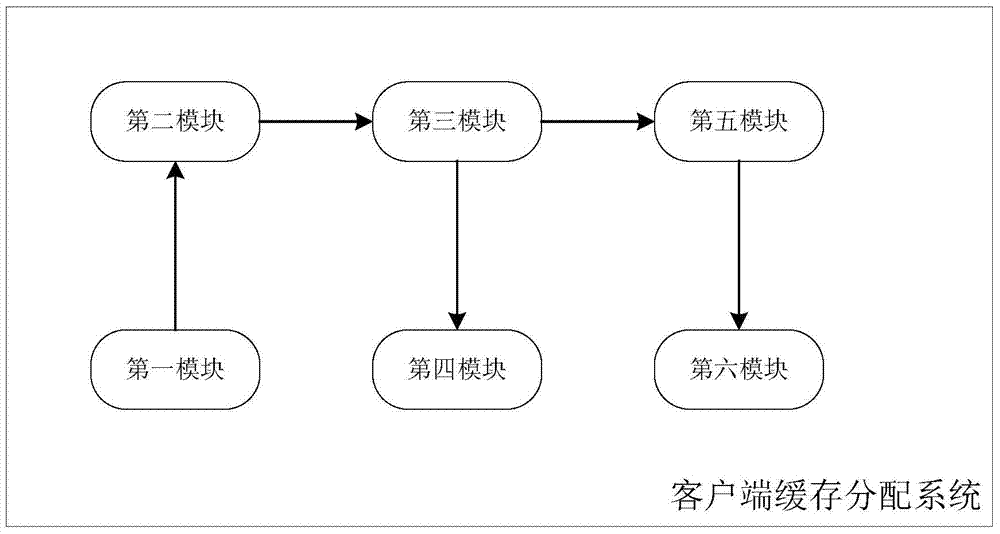

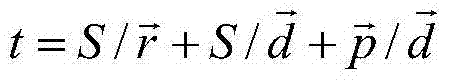

Performance pre-evaluation based client cache distributing method and system

Status: Inactive | Publication No.: CN103685544A | Tags: Reduce occupancy; Improve caching efficiency; Transmission; File system; Parallel computing

The invention discloses a client cache allocation method based on performance pre-evaluation. The method comprises the following procedures: first, counting the loads of the different data nodes in a parallel file system while collecting information such as network speed and disk read/write speed; using the counted and collected information to pre-evaluate the performance of different client cache allocation strategies; selecting, based on the pre-evaluation result, the client cache allocation strategy expected to give the system the greatest performance; assigning different priorities to different write requests based on the selected strategy; allocating the client cache to write requests with relatively high priority; and writing requests with relatively low priority directly to disk. The method addresses the problems of high priority and low efficiency in the client cache allocation strategies of existing parallel file systems and maximizes the performance gain obtainable from a limited client cache.

Owner:HUAZHONG UNIV OF SCI & TECH
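The last three steps of CN103685544A (choose the best pre-evaluated strategy, then route writes by priority) can be sketched as follows. This is a minimal sketch under stated assumptions: the strategy estimates are given as plain numbers, and the greedy fill of the cache in priority order is a hypothetical placement rule; the abstract does not say how the pre-evaluation is computed or how ties and sizes are handled.

```python
def choose_strategy(estimates: dict) -> str:
    # Pick the allocation strategy whose pre-evaluated performance is highest.
    return max(estimates, key=estimates.get)


def place_writes(requests, cache_capacity: int):
    """requests: list of (request_id, size, priority). Returns (cached_ids, disk_ids)."""
    cached, disk, used = [], [], 0
    # Serve higher-priority requests first; they receive client cache while it lasts.
    for rid, size, prio in sorted(requests, key=lambda r: -r[2]):
        if used + size <= cache_capacity:
            cached.append(rid)
            used += size
        else:
            disk.append(rid)  # lower-priority writes go straight to disk
    return cached, disk
```

For example, with 8 units of cache and three 4-unit writes of priorities 1, 3 and 2, the two higher-priority writes are cached and the lowest-priority one is written directly to disk.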

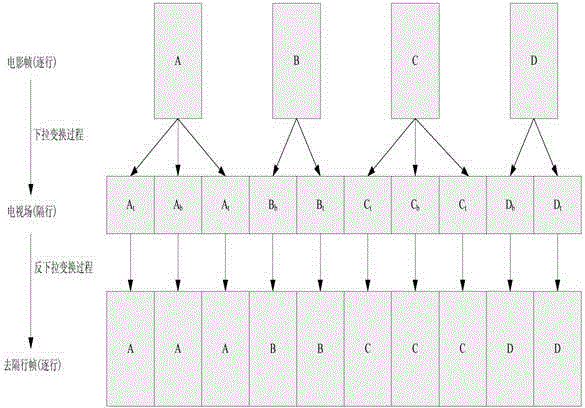

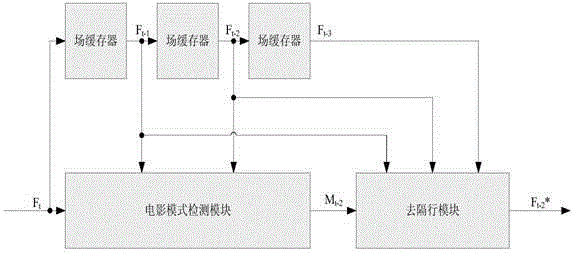

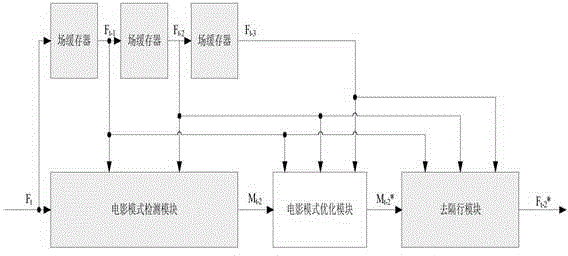

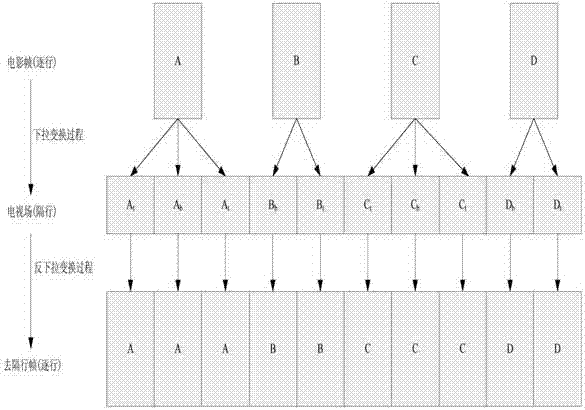

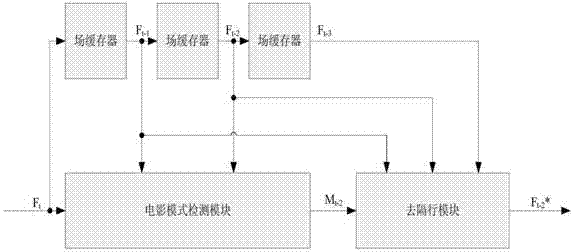

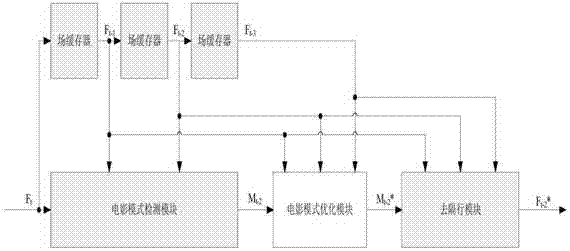

Video detecting and processing method and video detecting and processing device

Status: Active | Publication No.: CN104580978A | Tags: Save cache resources; Save bandwidth resources; Television system details; Brightness and chrominance signal processing circuits; Pattern recognition; Pattern detection

The invention relates to a video detection and processing method comprising the following steps: calculating the overall correlation between the images of adjacent fields to predict whether the current field is a film field; if so, combining the current field and the adjacent field into a frame; detecting comb-tooth artifacts in the synthesized frame pixel by pixel; if no comb-tooth artifact is detected, determining that the current local region is in film mode and using the synthesized frame as the restored video frame; if comb-tooth artifacts are detected, judging that the current local region is in non-film mode, computing an interpolated frame by a motion-adaptive method or a spatial interpolation method, and using the interpolated frame as the restored video frame. The method correctly detects film regions and interlaced regions in mixed video and processes each with a different deinterlacing technique, so that detail in film regions is recovered and combing is avoided. In addition, the film-mode detection module and the deinterlacing module can share the same input, which reduces field caching and DDR bandwidth and saves hardware cost.

Owner:HAIER BEIJING IC DESIGN

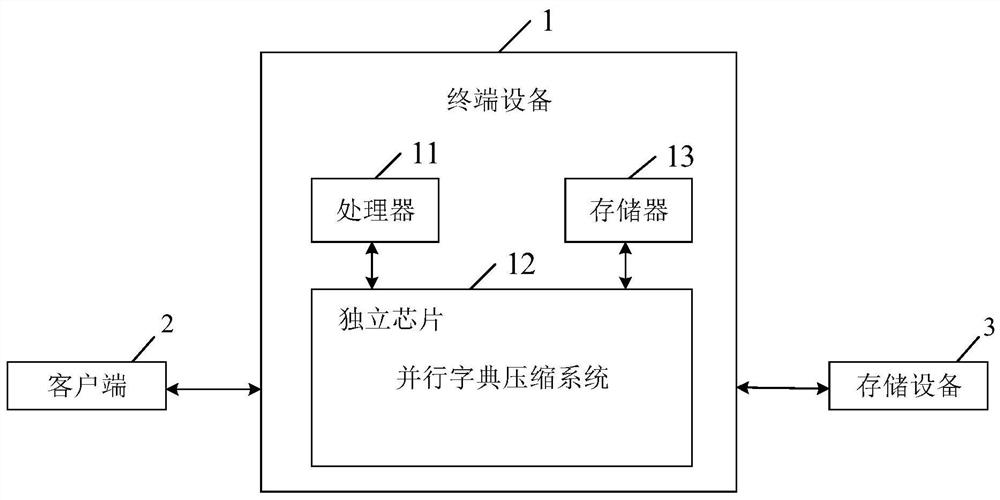

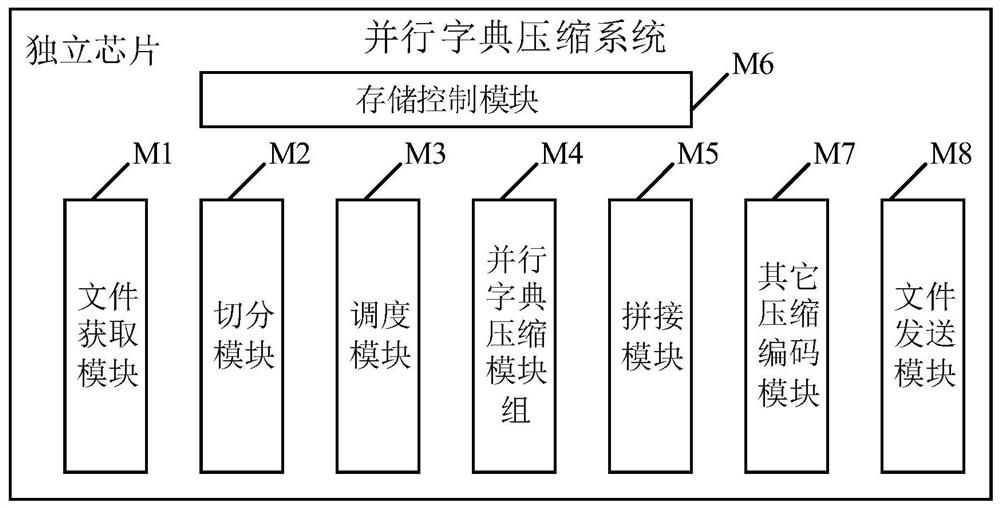

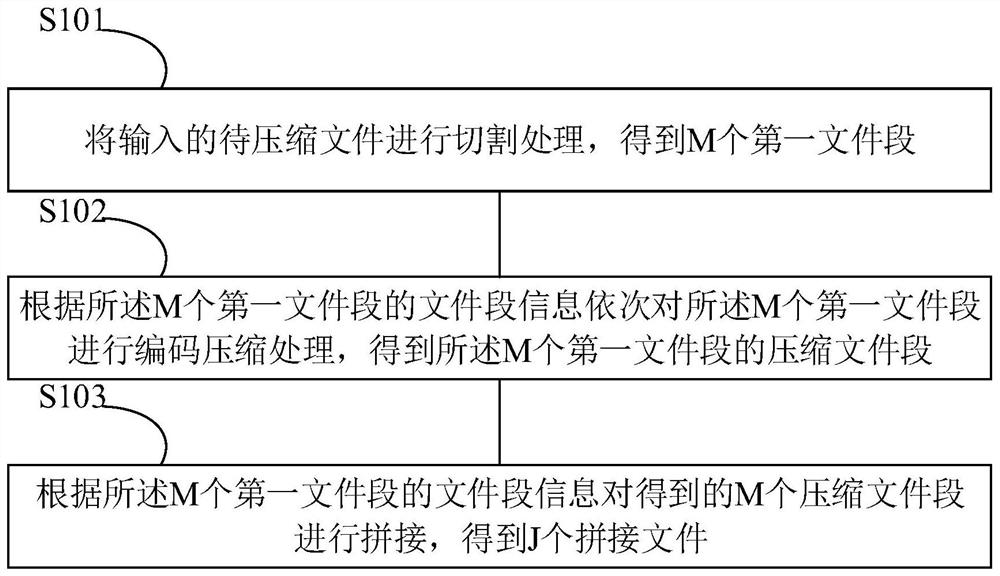

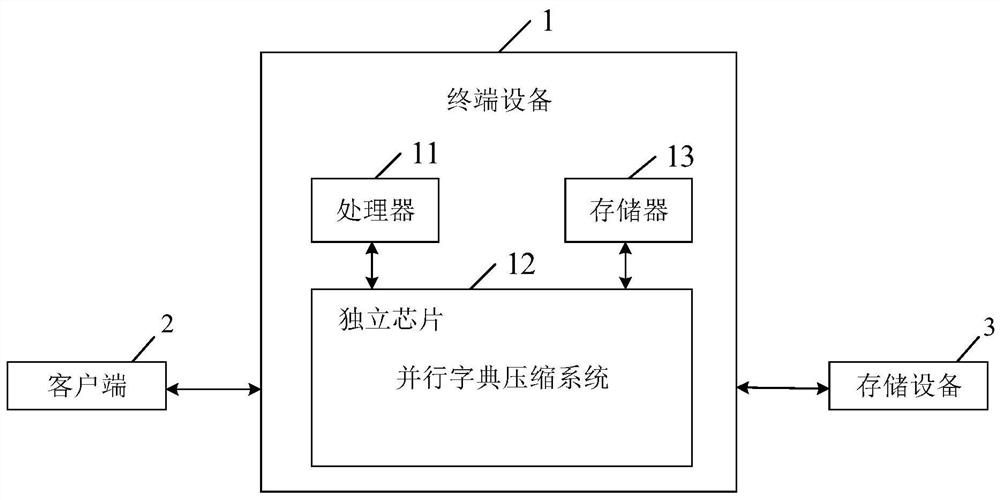

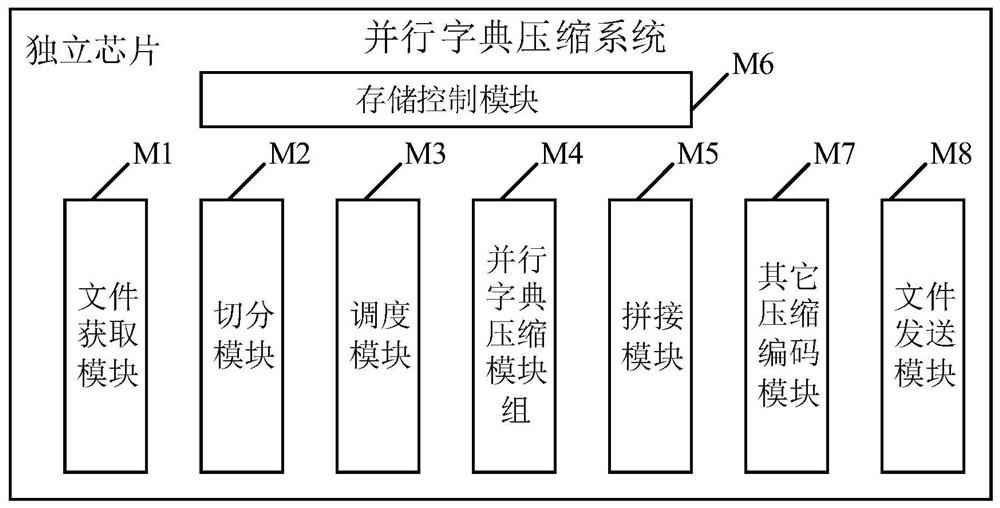

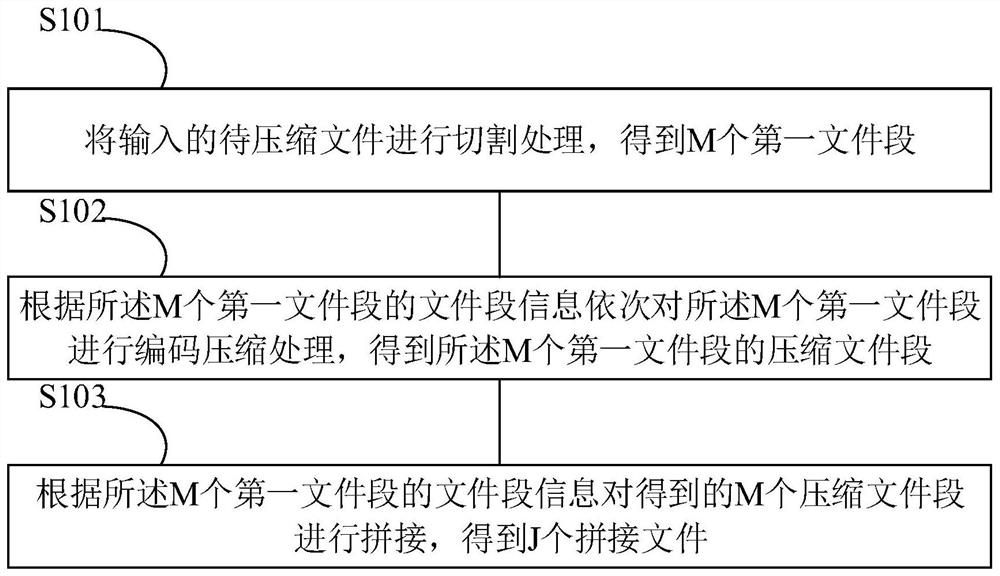

Data compression method and device, terminal equipment and storage medium

Status: Active | Publication No.: CN111723059A | Tags: Shorten compression latency; Easy to compress separately; File/folder operations; Special data processing applications; Computer hardware; Parallel computing

The invention relates to a data processing method and device, terminal equipment and a storage medium in the technical field of data compression. It can effectively improve the efficiency of a compression algorithm, thereby solving the problems of low compression speed and large compression delay in existing compression technology. The method comprises the steps of: cutting an input file to be compressed into M first file segments, each comprising file segment information and file segment data; encoding and compressing the M first file segments according to their file segment information to obtain M compressed file segments; and splicing the M compressed file segments according to the file segment information of the M first file segments to obtain J spliced files.

Owner:SHENZHEN KENAN TECH DEV CO LTD
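The cut/compress/splice pipeline of CN111723059A can be sketched with the standard `zlib` and `struct` modules. This is a minimal sketch under stated assumptions: the 8-byte header (segment index, compressed length) standing in for "file segment information" is hypothetical, zlib is a stand-in codec, and the sketch emits a single spliced stream (J = 1).

```python
import struct
import zlib

# Hypothetical segment information: (segment index, compressed length), big-endian.
HEADER = struct.Struct(">II")


def compress_segments(data: bytes, segment_size: int) -> bytes:
    # Cut the input into M segments, compress each independently, and splice them
    # together, each prefixed by its segment information.
    out = bytearray()
    for index, start in enumerate(range(0, len(data), segment_size)):
        packed = zlib.compress(data[start:start + segment_size])
        out += HEADER.pack(index, len(packed)) + packed
    return bytes(out)


def decompress_segments(blob: bytes) -> bytes:
    parts, pos = {}, 0
    while pos < len(blob):
        index, length = HEADER.unpack_from(blob, pos)
        pos += HEADER.size
        parts[index] = zlib.decompress(blob[pos:pos + length])
        pos += length
    # Reassemble in segment order using the stored index.
    return b"".join(parts[i] for i in sorted(parts))
```

Because each segment is compressed independently, the per-segment `zlib.compress` calls could be dispatched to a worker pool to cut latency, at some cost in compression ratio compared with compressing the whole file as one stream.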

Cache data sharing method and equipment

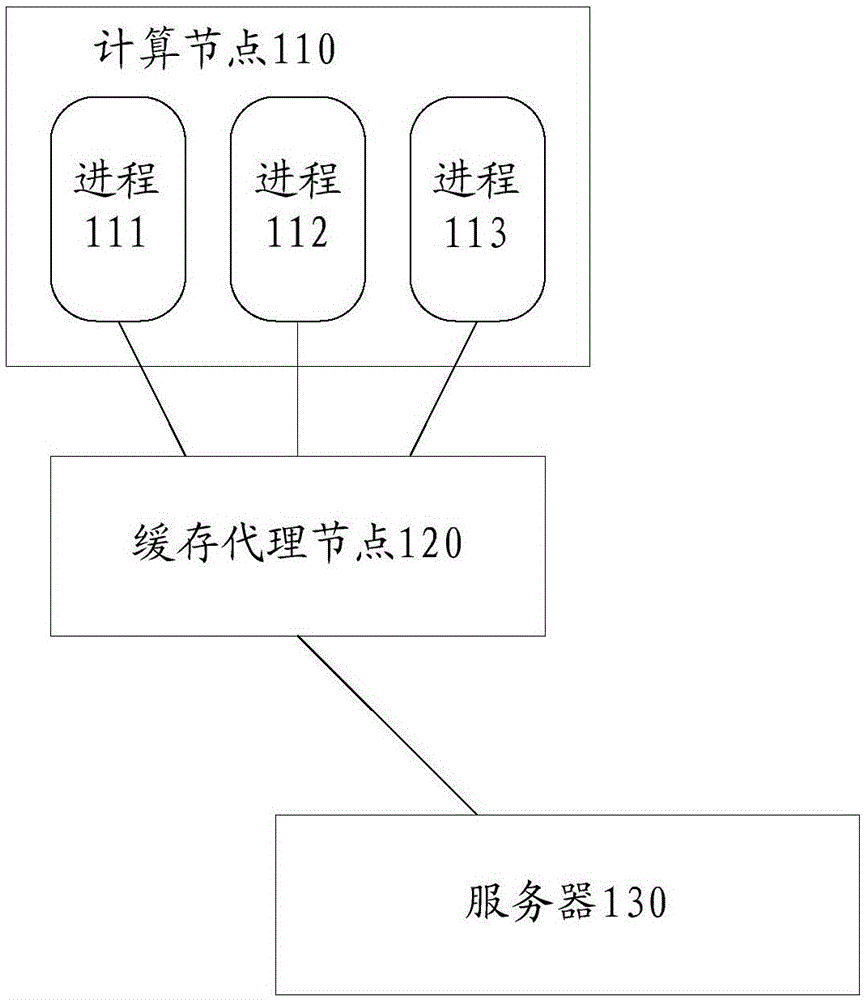

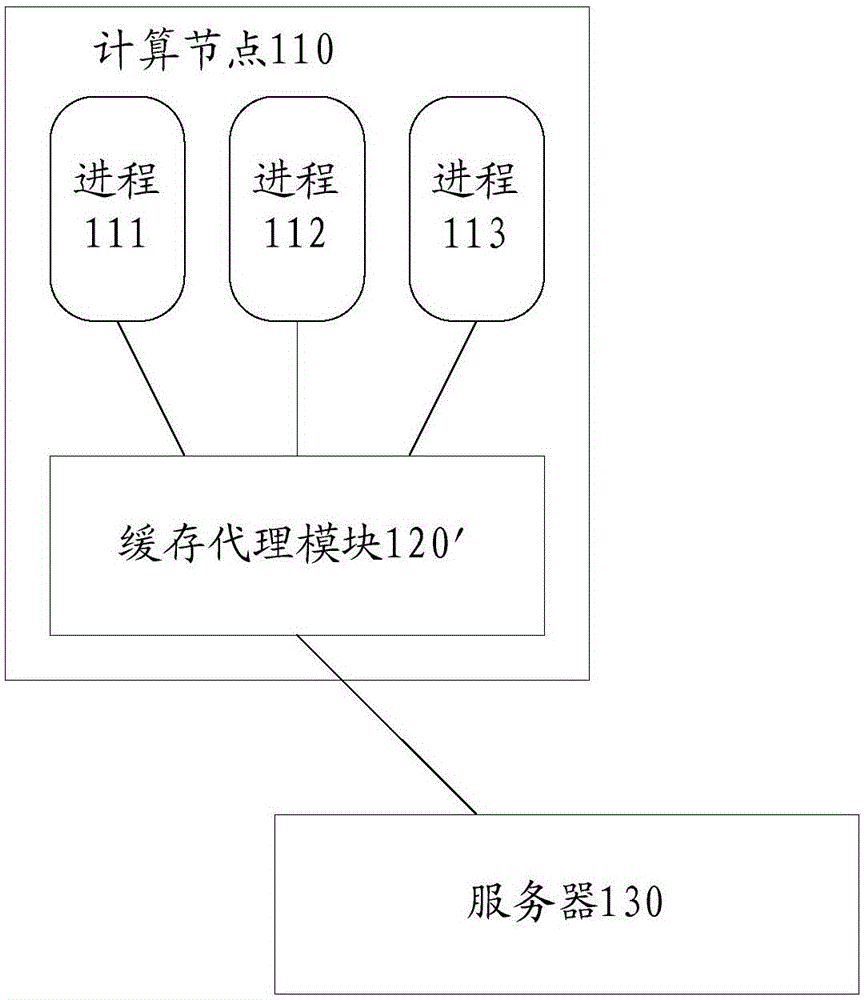

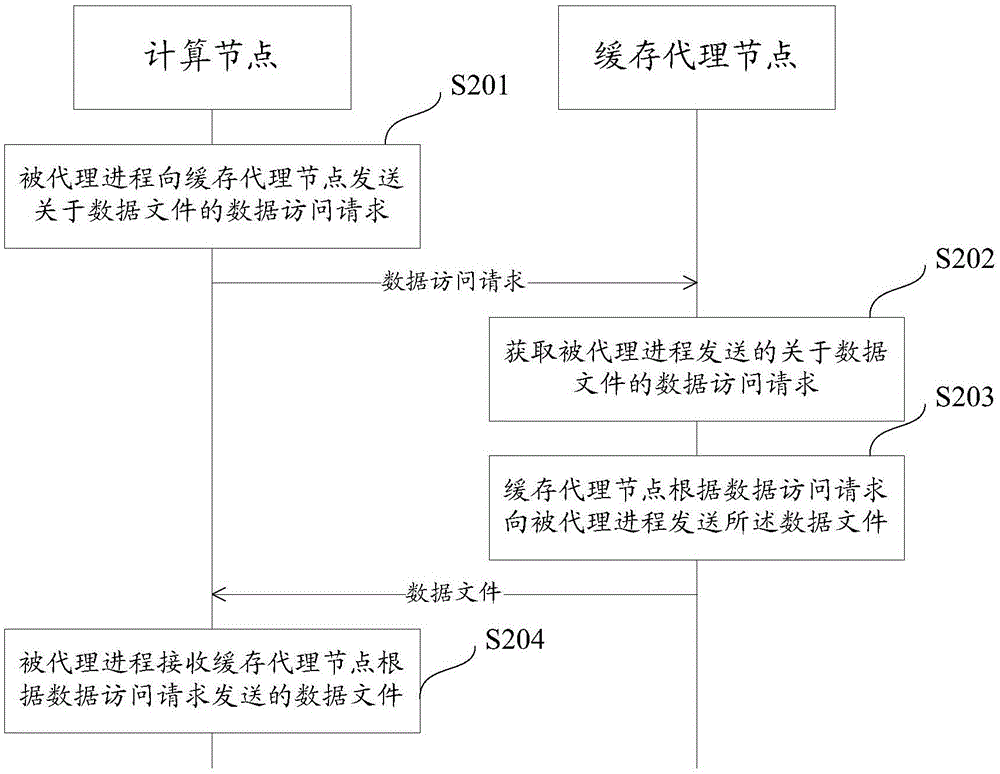

The objective of the invention is to provide a cache data sharing method and equipment. Specifically, a cache agent node acquires a data access request concerning a data file sent by a proxied process, and transmits the data file to the proxied process according to the request. Processes on one or more computing nodes are managed by the cache agent node, and the data files those processes need are saved in the cache agent node's cache, so that processes on the computing nodes need not maintain their own independent cache space; a given data file in the cache agent node's cache can be shared by multiple processes, saving cache and computing resources. Moreover, proxied processes need not connect to the server directly: only one connection to the server is established for all processes managed by the same cache agent node, which reduces the large number of server connections caused by subscription behavior and lowers the server load.

Owner:ALIBABA GRP HLDG LTD

Dynamic cache processing method and device, storage medium and electronic equipment

Status: Pending | Publication No.: CN110688401A | Tags: Improve experience; Improve call cache data efficiency; Digital data information retrieval; Resources; Information processing; Engineering

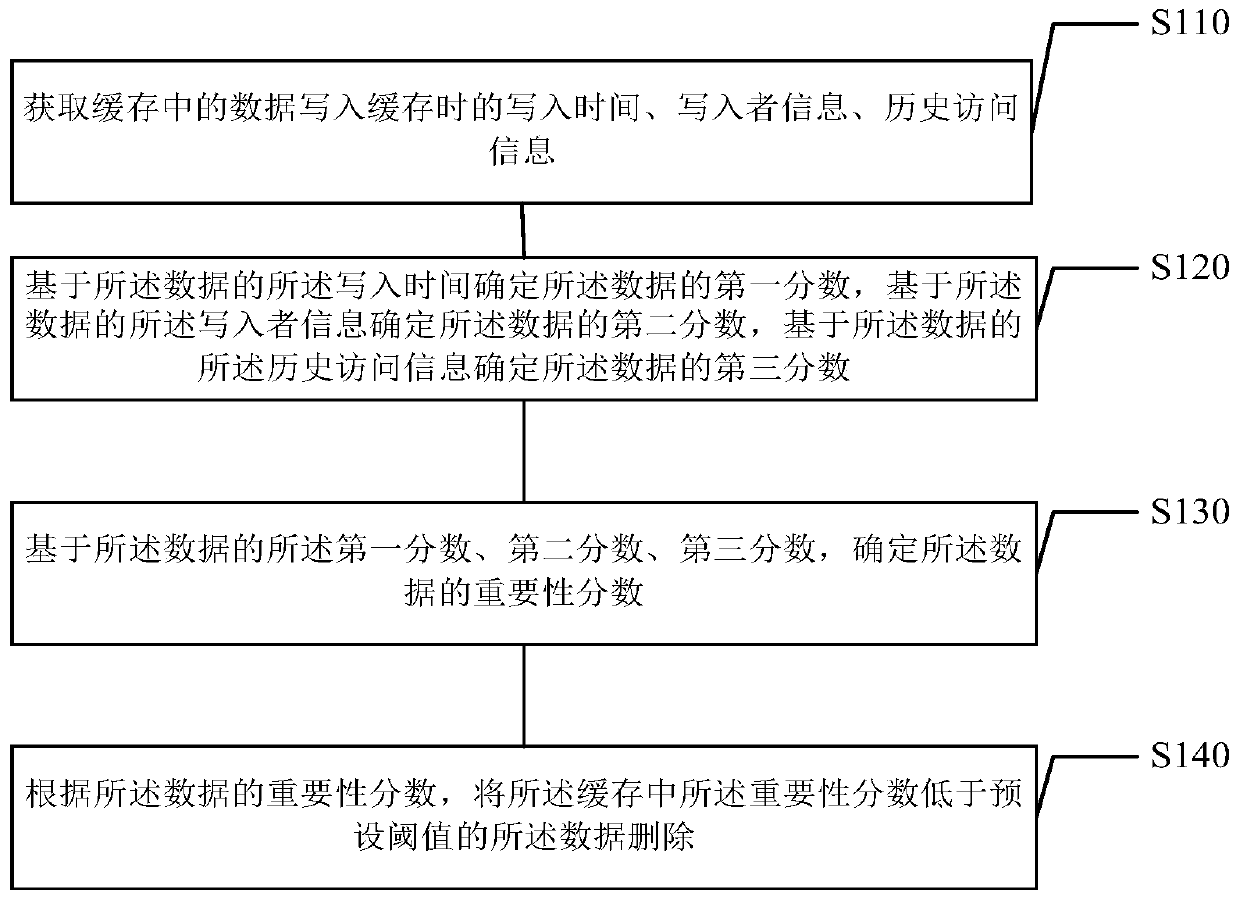

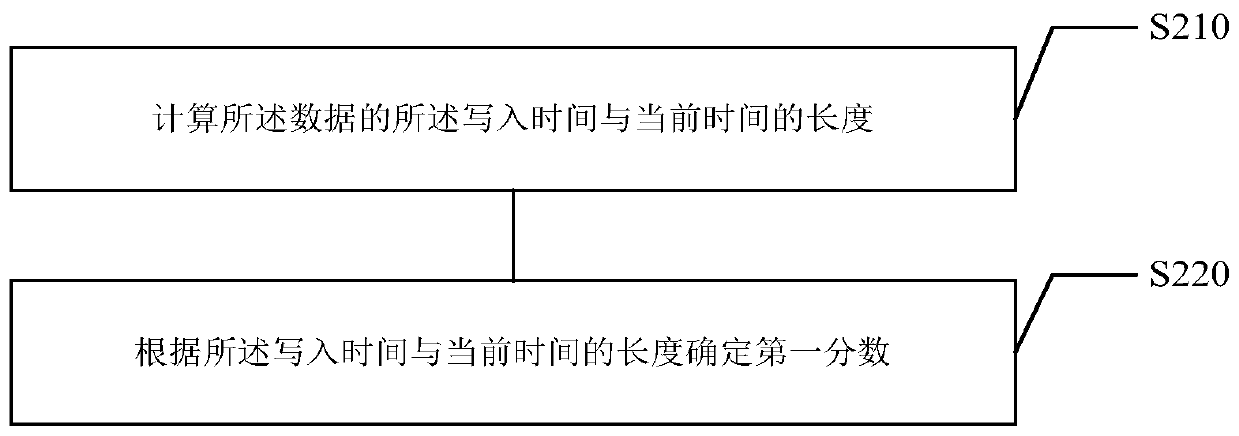

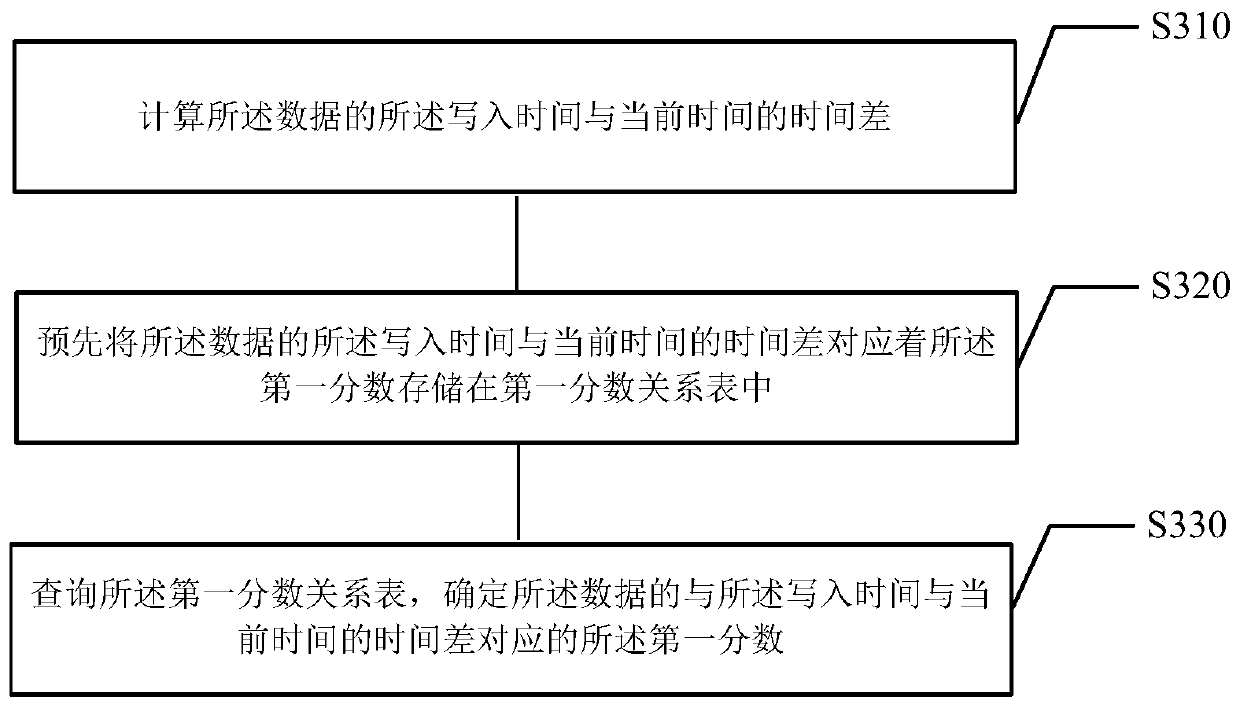

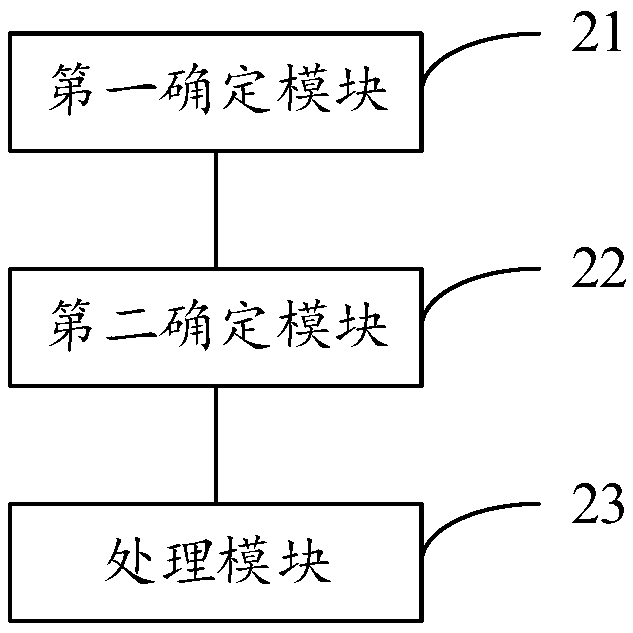

The invention discloses a dynamic cache processing method and device, a storage medium and electronic equipment, in the technical field of information processing. The method comprises the steps of: when data is written into a cache, obtaining its write time, writer information and historical access information; determining a first score of the data based on its write time, a second score based on its writer information, and a third score based on its historical access information; determining an importance score of the data from the first, second and third scores; and deleting from the cache the data whose importance score is lower than a preset threshold. By deleting low-importance data from the cache in a targeted way, the method improves the accuracy of cache clearing, the efficiency of data processing, and the user experience.

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD
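The three-score eviction rule of CN110688401A can be sketched as a weighted sum followed by a threshold sweep. This is a minimal sketch under stated assumptions: the individual scoring functions (age decay per hour, a precomputed writer weight, hits capped at 10) and the weights (0.3, 0.3, 0.4) are hypothetical; the abstract only says that three scores are combined into an importance score.

```python
import time
from dataclasses import dataclass


@dataclass
class Entry:
    write_time: float     # seconds since the epoch when the data was written
    writer_weight: float  # hypothetical score in [0, 1] derived from writer information
    hits: int             # historical access count


def importance(e: Entry, now: float, w=(0.3, 0.3, 0.4)) -> float:
    s1 = 1.0 / (1.0 + (now - e.write_time) / 3600.0)  # first score: newer writes score higher
    s2 = e.writer_weight                               # second score: from writer information
    s3 = min(e.hits / 10.0, 1.0)                       # third score: from access history
    return w[0] * s1 + w[1] * s2 + w[2] * s3


def evict_unimportant(cache: dict, threshold: float, now=None) -> list:
    now = time.time() if now is None else now
    # Collect victims first so the dict is not mutated while being iterated.
    victims = [k for k, e in cache.items() if importance(e, now) < threshold]
    for k in victims:
        del cache[k]
    return victims
```

A recently written, frequently read entry from a trusted writer scores close to 1 and survives, while an old, unread entry falls below the threshold and is removed.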

Shard message forwarding method and device

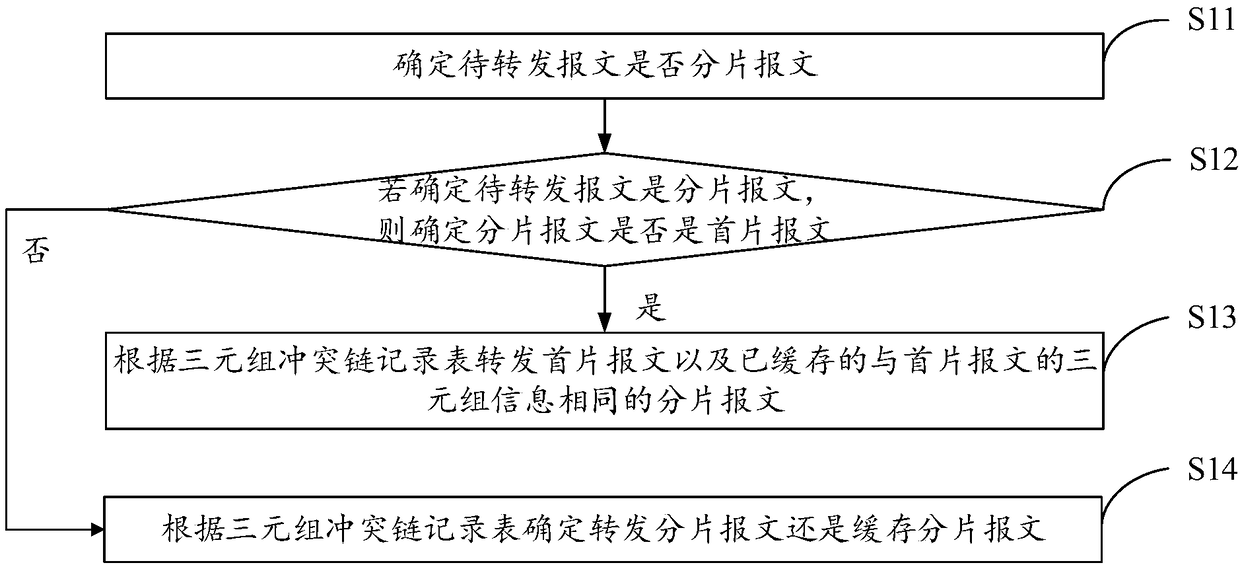

Status: Pending | Publication No.: CN109450814A | Tags: Reduce cache; Save cache resources; Data switching networks; Packet loss; Real-time computing

The invention discloses a shard message forwarding method and device. The method comprises the steps of: determining whether a message to be forwarded is a shard message; if it is, determining whether it is the first shard message; if it is the first shard message, forwarding it together with the cached shard messages whose triple information matches that of the first shard message, according to a triple conflict chain record table; and if it is not the first shard message, deciding according to the triple conflict chain record table whether to forward or cache it. The scheme greatly improves forwarding efficiency, reduces the caching of shard messages, saves the cache resources of network equipment, and avoids packet loss for other messages.

Owner:RUIJIE NETWORKS CO LTD
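The forward-or-cache decision in CN109450814A can be sketched with a set of triples whose first shard has passed and a per-triple list of shards that arrived early. For IP fragments, the first fragment is typically the one carrying the transport-layer header, which is why later fragments may need to wait for it. This is a minimal sketch under stated assumptions: the triple is modeled as (source, destination, ID), "first shard" as offset 0, and a Python dict/set stands in for the triple conflict chain record table (Python's hashing handles the collisions a conflict chain would); a real table would also age out entries.

```python
from collections import defaultdict


class ShardForwarder:
    def __init__(self):
        self.first_seen = set()           # triples whose first shard has been forwarded
        self.pending = defaultdict(list)  # triple -> shards cached before the first one
        self.sent = []                    # stands in for the egress port

    def handle(self, triple, offset: int, payload, is_shard: bool = True) -> None:
        if not is_shard:
            self.sent.append(payload)     # ordinary message: forward at once
        elif offset == 0:
            # First shard: forward it, then flush cached shards with the same triple.
            self.first_seen.add(triple)
            self.sent.append(payload)
            self.sent.extend(self.pending.pop(triple, []))
        elif triple in self.first_seen:
            self.sent.append(payload)     # first shard already passed: forward directly
        else:
            self.pending[triple].append(payload)  # wait for the first shard
```

Only shards that overtake their first shard are ever cached, which is the source of the cache saving the abstract claims.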

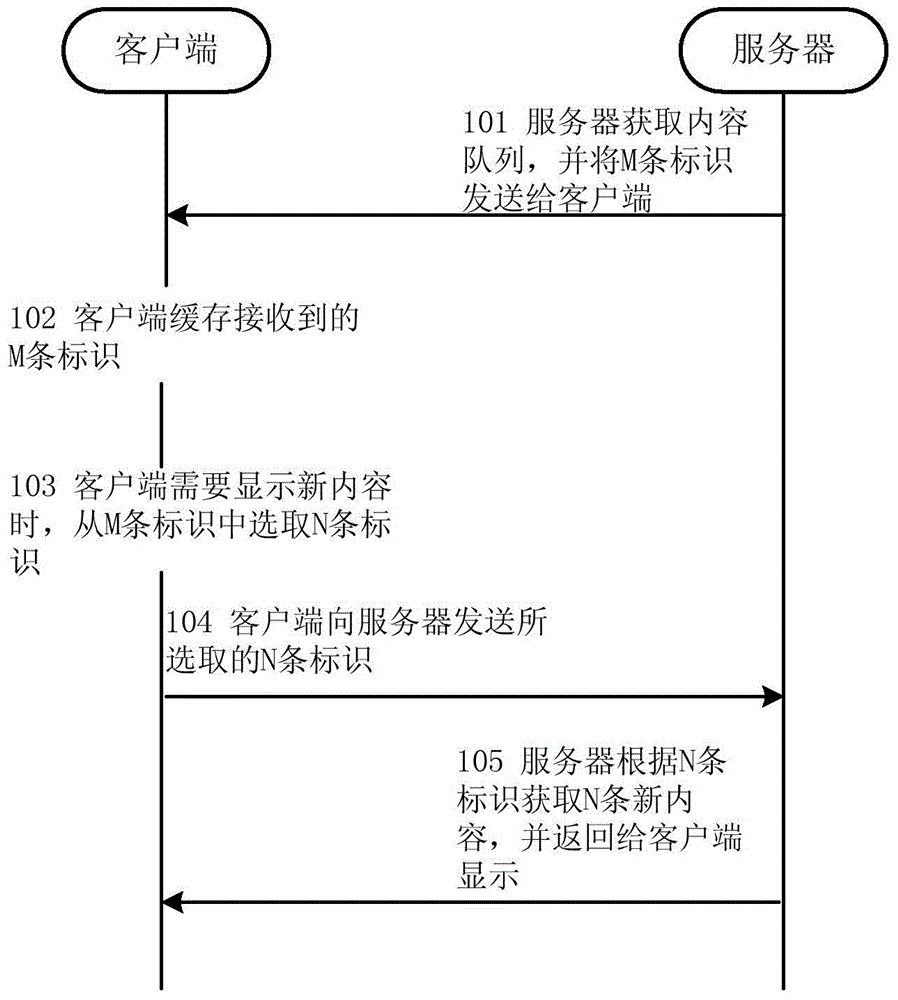

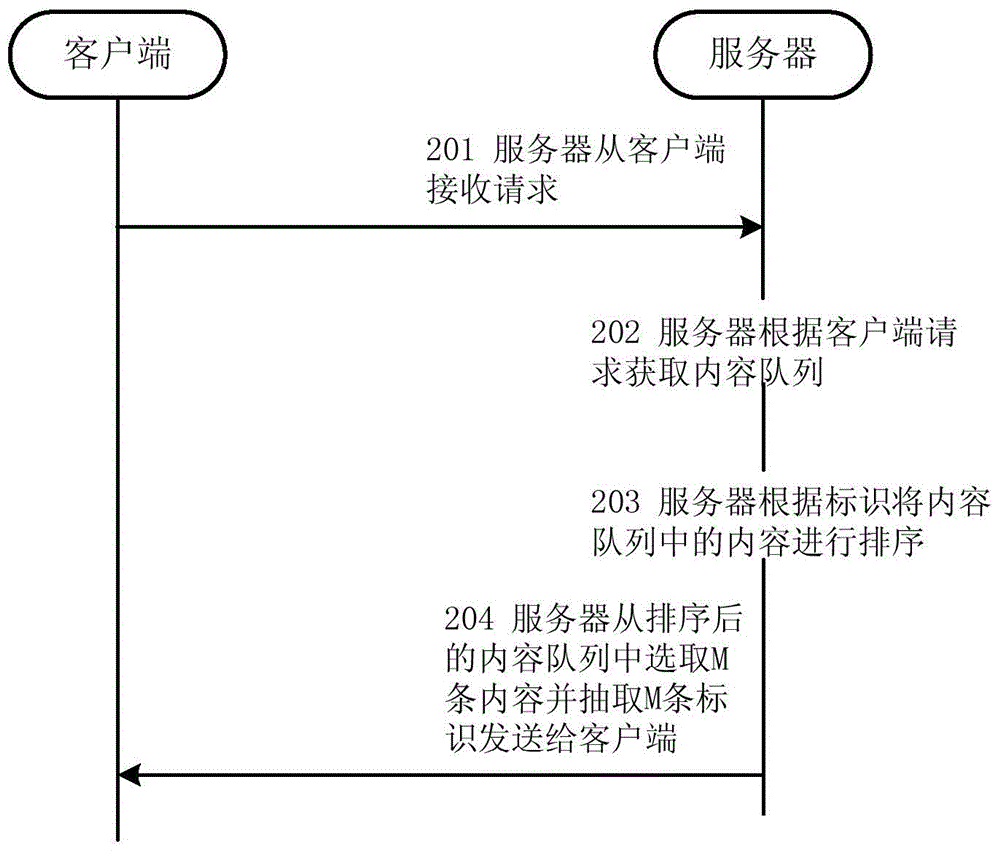

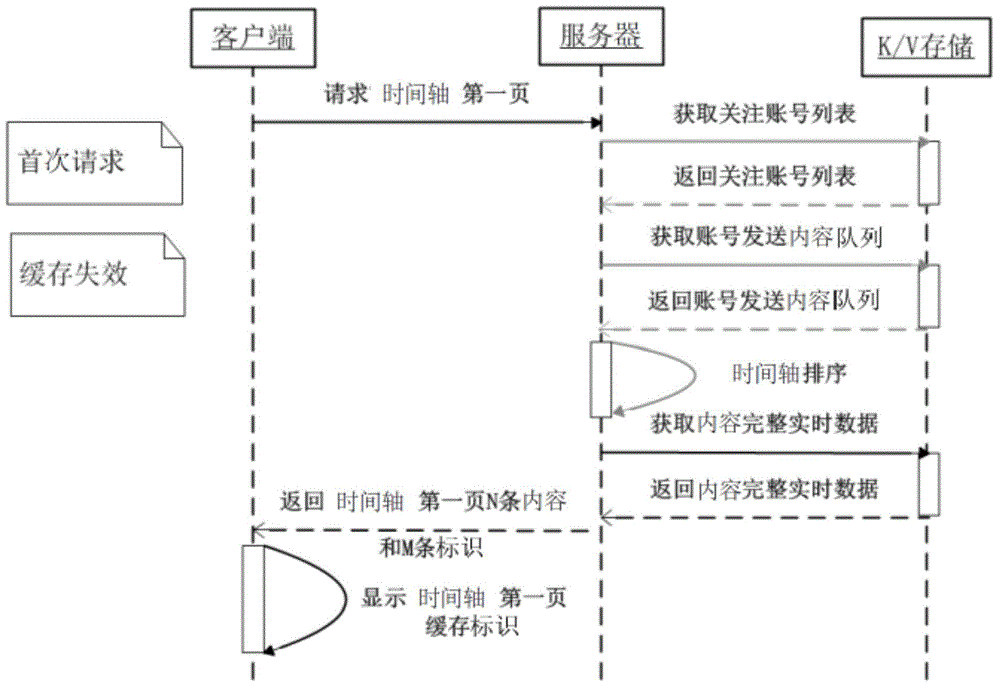

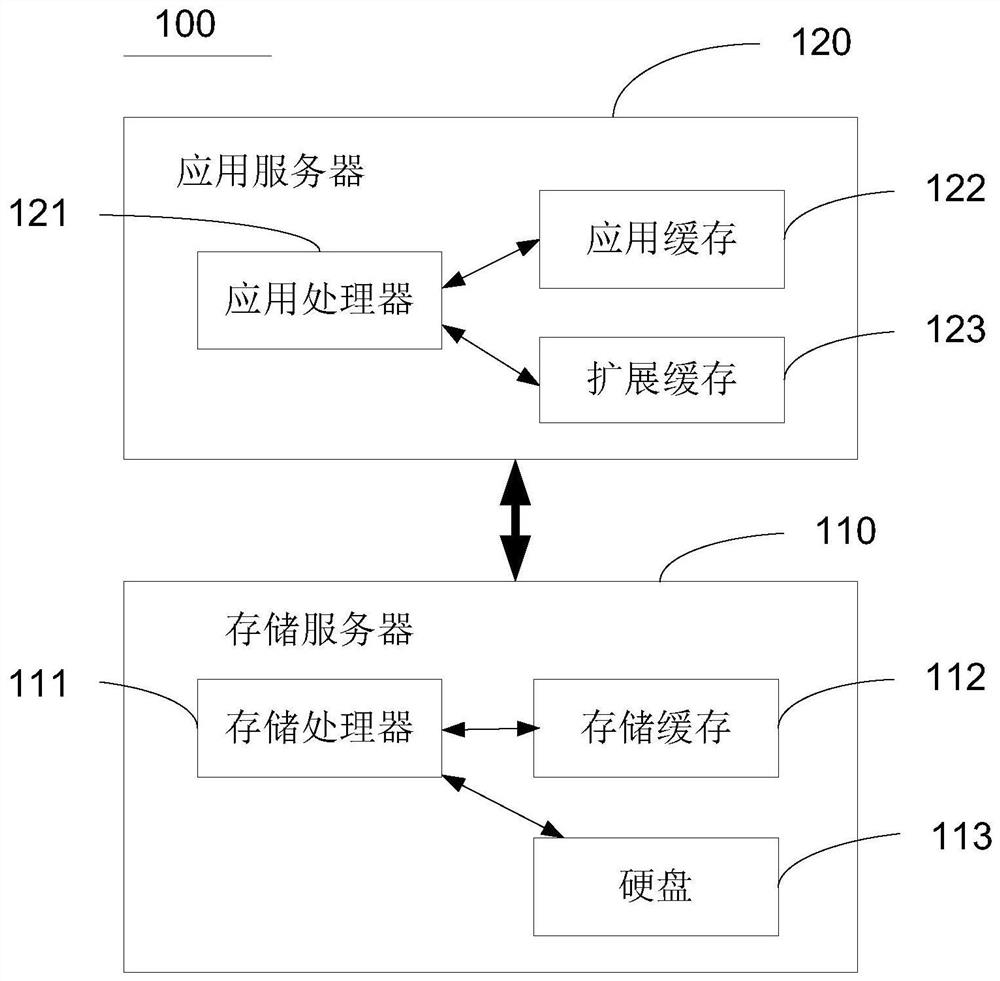

Content caching and transmitting method and system

Status: Active | Publication No.: CN105634981A | Tags: Reduce data transfer; Improve browsing speed experience; Services signalling; Data switching networks; Data transmission; Traffic volume

The invention relates to data caching and transmission technology and discloses a content caching and transmitting method and system. When a client needs to display a new page, it only needs to return the corresponding cached identifier to the server, and the server can obtain the content of the content queue directly from that identifier for the client to display. Because no repeated sorting or computation is needed, traffic and cache resources are saved, data transmission between client and server is reduced, the user's browsing speed and experience are improved, and it becomes easier to scale to a growing number of users.

Owner:ALIBABA GRP HLDG LTD

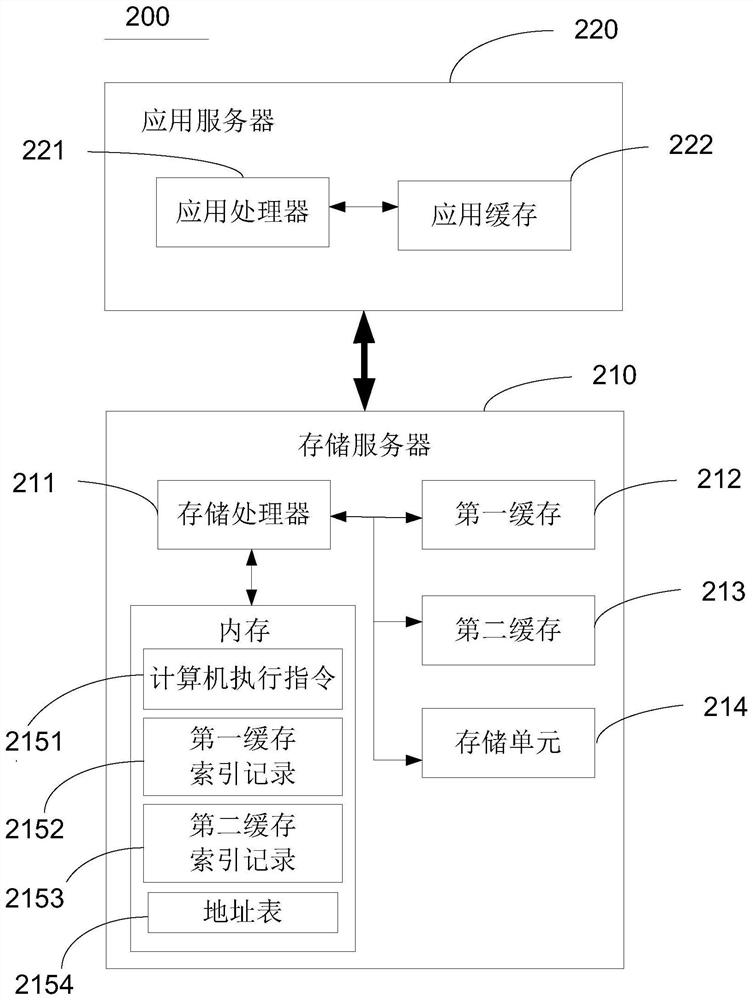

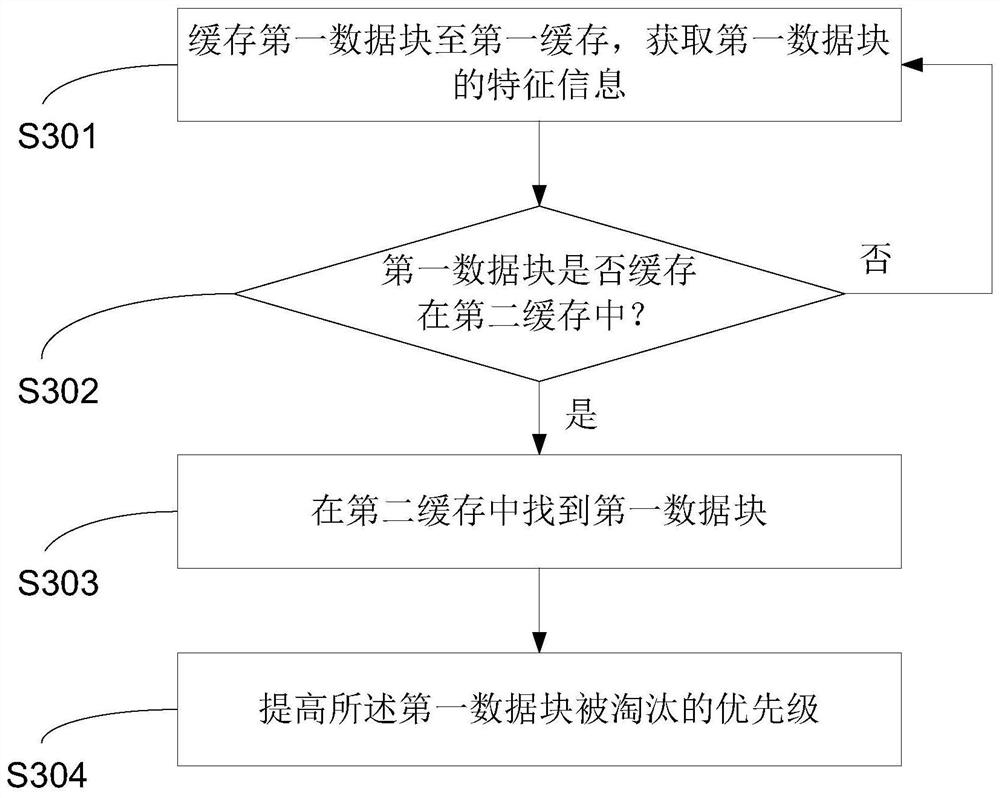

Data caching method, storage control device and storage equipment

Status: Pending | Publication No.: CN112214420A | Tags: Reduce duplicate data; Quick response; Memory addressing/allocation/relocation; Device Monitor; Engineering

The invention discloses a data caching method applied to storage equipment, a control device for the storage equipment, and the storage equipment. The storage equipment comprises a first cache (212) and a second cache (213). When the storage device detects that a first data block is cached in the first cache (212) (S301), that a first data block cached in the first cache (212) is to be deleted (S601), or that the caching time of the first data block in the first cache (212) exceeds a threshold (S801), it obtains feature information of the first data block and determines from that information whether the first data block is stored in the second cache (213); if it is (S303, S603, S803), the eviction priority level of the first data block in the second cache (213) is adjusted (S304, S604, S804). By coordinating the data in the first cache (212) and the second cache (213) of the storage device, the response speed of IO requests is improved.

Owner:HUAWEI TECH CO LTD

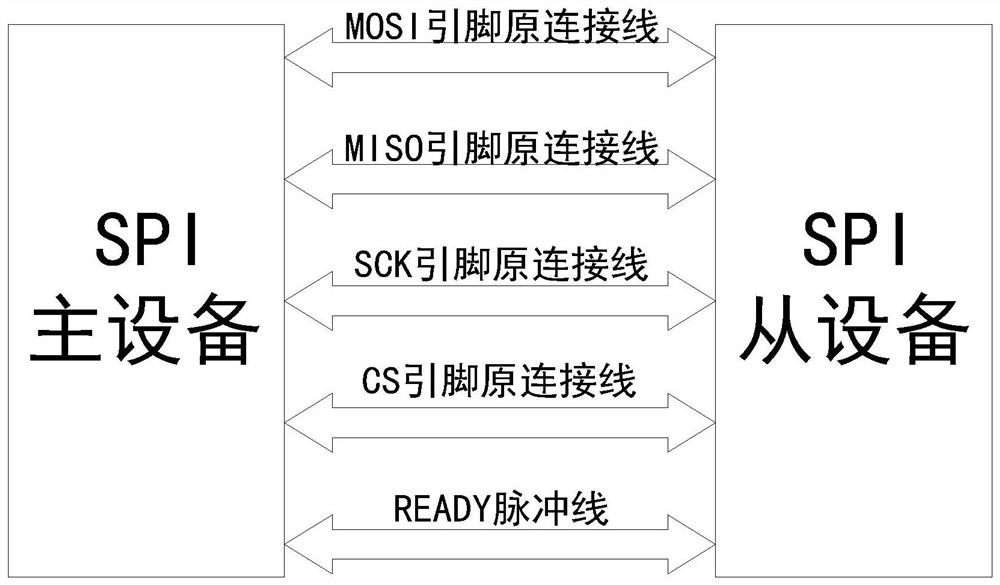

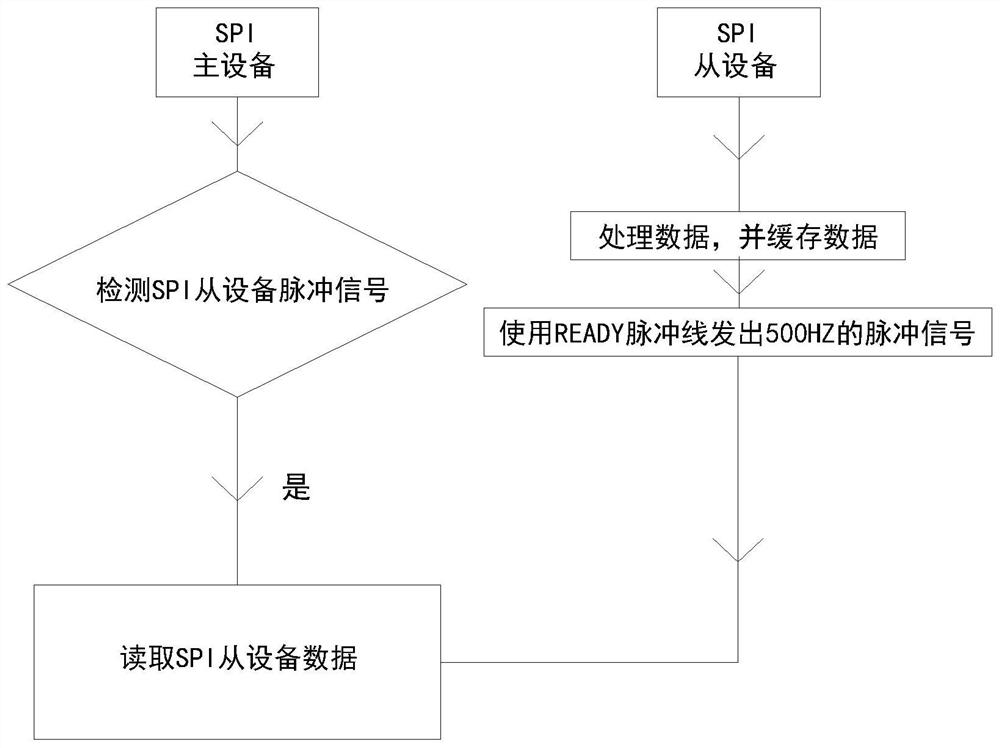

Communication method based on SPI communication

Status: Pending | Publication No.: CN112214440A | Tags: Reduce the number of queries; Save cache resources; Electric digital data processing; Electrical connection; Device Monitor

The invention relates to the technical field of communication, in particular to a communication method based on SPI (Serial Peripheral Interface) communication. It solves the problem of frequent interaction between the master and slave devices when the slave device needs to process data and the master device acquires the data passively, thereby improving system performance. The setup comprises an SPI master device, an SPI slave device and four original connecting lines, whose two ends are electrically connected respectively to the MISO, MOSI, CS and SCK pins of the SPI master device and the MISO, MOSI, CS and SCK pins of the SPI slave device; it additionally includes a READY pulse line electrically connecting the SPI master device and the SPI slave device. In the method, the SPI slave device processes the data, caches it and sends a 500 Hz pulse signal over the READY pulse line, and the SPI master device monitors this signal and then reads the data.

Owner:北京寓科未来智能科技有限公司 (Beijing Yuke Future Intelligent Technology Co., Ltd.)

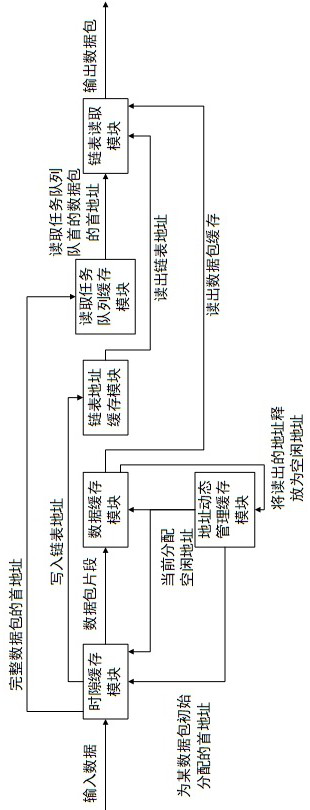

Method for realizing time slot data packet recombination by time division multiplexing cache

Status: Active | Publication No.: CN112269747A | Tags: Achieve reorganization; Increase profit; Memory addressing/allocation/relocation; Transmission; Computer network; Engineering

The invention discloses a method for realizing time-slot data packet reassembly by time-division multiplexing a cache. The cache resources are time-division multiplexed: the same data cache stores data packets of different time slots at different times. Data packets of the different time slots in the input original time-slot-multiplexed communication protocol data are stored in the same data cache; when the packets are reassembled and output, the data cache is read according to linked-list addresses, so that the data packets of a given time slot are output continuously. The addresses of the data cache are managed dynamically: after data is written to an address of the data cache, that address is marked as occupied; after the address is read out, it is marked as released and becomes an idle address; and an idle address of the data cache must be allocated before data is written to the data cache. This scheme achieves reassembly of data packets in a time-slot multiplexing communication protocol, increases cache utilization, saves cache resources, and allows packets of more time slots to be reassembled with limited cache resources.

Owner:TOEC TECH
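The address management described for CN112269747A (allocate an idle address before writing, mark it occupied, chain each time slot's packets in a linked list, release addresses on read) can be sketched as below. This is a minimal sketch under stated assumptions: `TdmReassemblyBuffer`, its head/tail dictionaries, and returning `False` when the cache is full are illustrative choices, not details from the abstract.

```python
from collections import deque


class TdmReassemblyBuffer:
    """One shared data cache, a linked list per time slot, and a pool of idle addresses."""

    def __init__(self, depth: int):
        self.data = [None] * depth
        self.next = [None] * depth        # linked-list pointer stored per address
        self.free = deque(range(depth))   # idle addresses
        self.head, self.tail = {}, {}     # time slot -> first / last address

    def write(self, slot: int, packet) -> bool:
        if not self.free:
            return False                  # no idle address: cache is full
        addr = self.free.popleft()        # allocate an idle address before writing
        self.data[addr], self.next[addr] = packet, None  # address is now occupied
        if slot in self.tail:
            self.next[self.tail[slot]] = addr  # append to this slot's chain
        else:
            self.head[slot] = addr
        self.tail[slot] = addr
        return True

    def read_slot(self, slot: int) -> list:
        # Follow the slot's linked list so its packets come out contiguously.
        out, addr = [], self.head.pop(slot, None)
        self.tail.pop(slot, None)
        while addr is not None:
            out.append(self.data[addr])
            self.data[addr] = None
            self.free.append(addr)        # released: the address becomes idle again
            addr = self.next[addr]
        return out
```

Interleaved writes from different slots share the same physical cache, yet each slot reads back in order, and freed addresses are immediately reusable by any slot.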

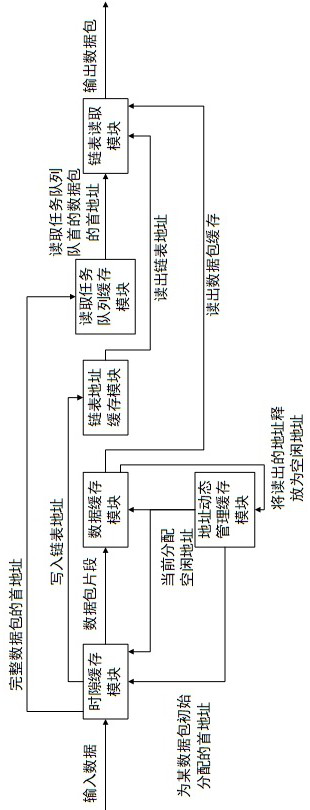

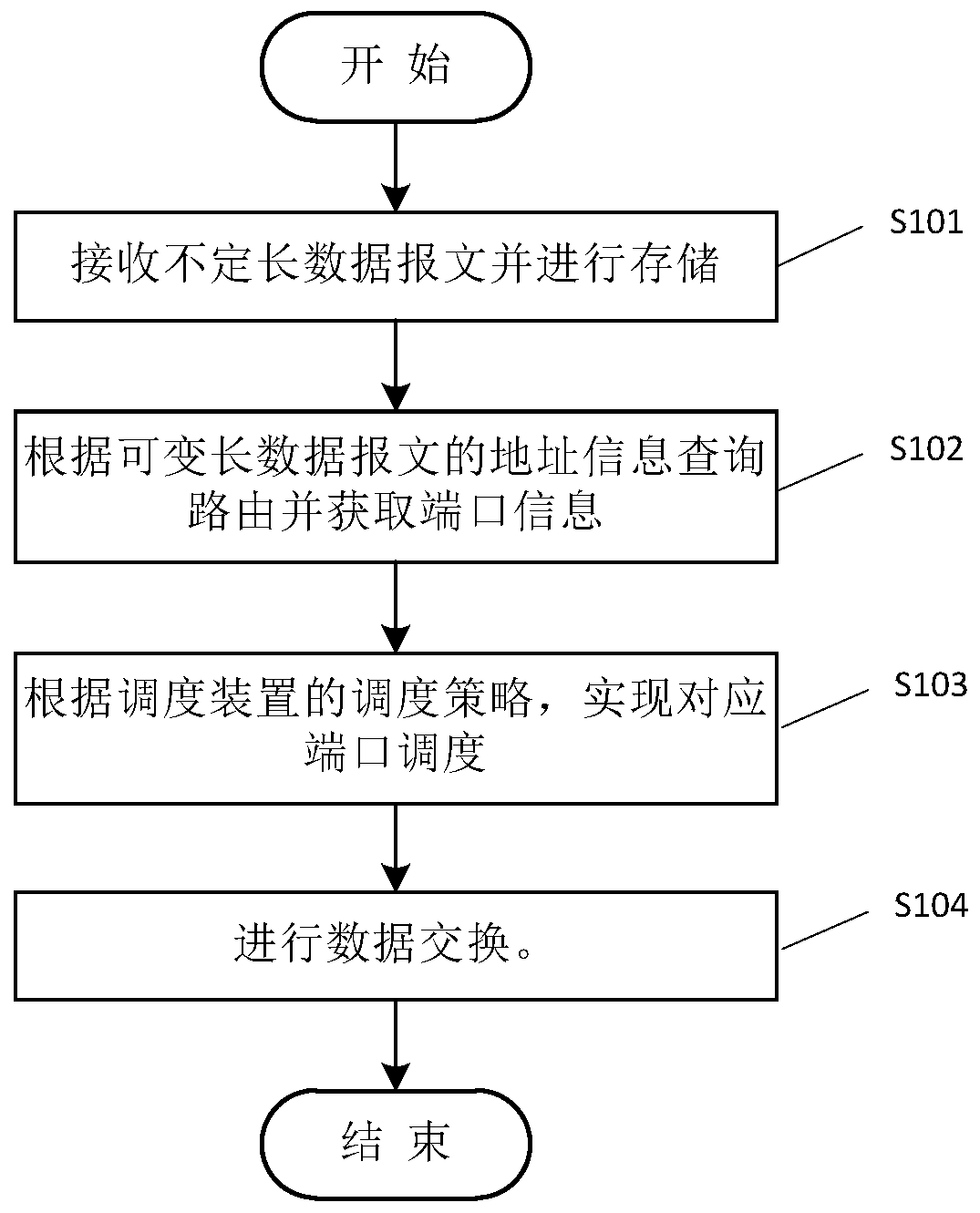

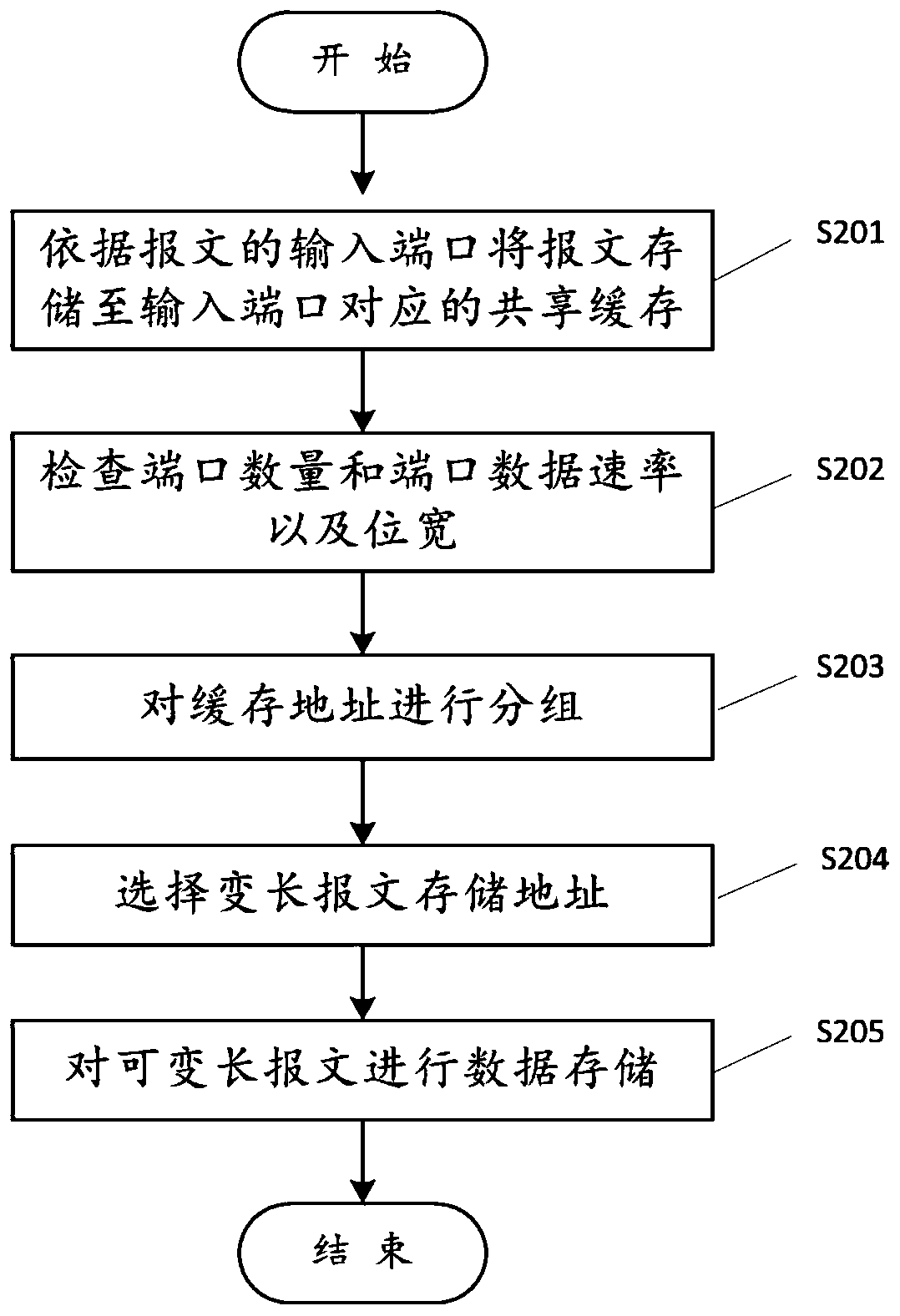

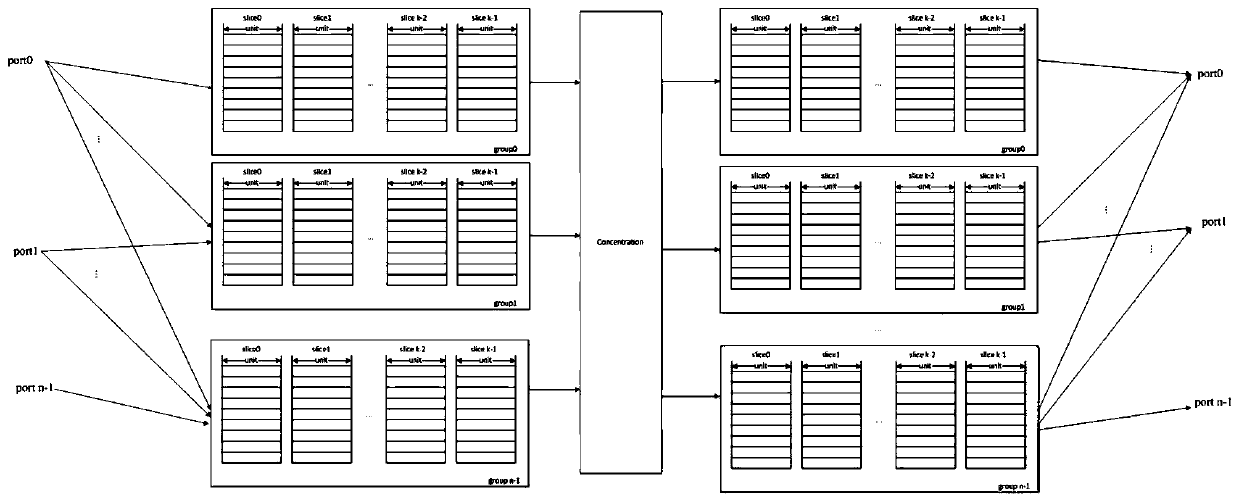

Variable-length message data processing method and scheduling device

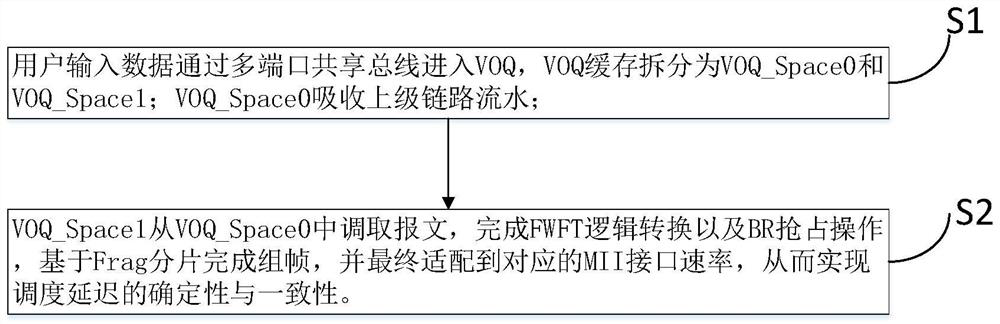

Status: Active | Publication No.: CN110995598A | Tags: Save cache resources; Guaranteed cache utilization; Data switching networks; Virtual Output Queues; Unicast

The invention provides a variable-length message data processing method and a scheduling device in the field of high-speed data communication transmission. The data processing method comprises the following steps: receiving and storing a variable-length message; looking up a route according to the address type information carried by the message and obtaining output port information; performing port scheduling according to the scheduling strategy of the scheduling device; and carrying out data switching. The switching device performs message caching, sorting, switching and copying according to the input port, type and destination port of the variable-length message; within a plane, variable-length messages are stored using a shared cache with virtual output queues, and the cache space is adjusted according to the number of variable ports. The invention enables ordered, non-blocking switching of unicast and multicast variable-length messages according to the port configuration of the switching device.

Owner:ETOWNIP MICROELECTRONICS BEIJING CO LTD

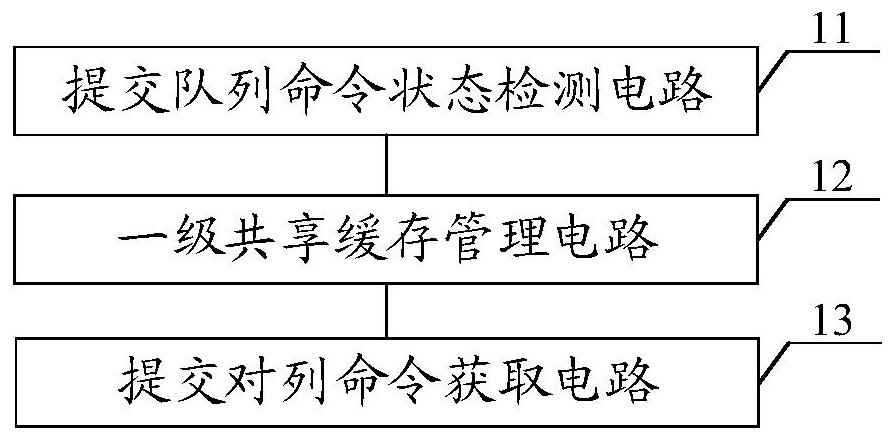

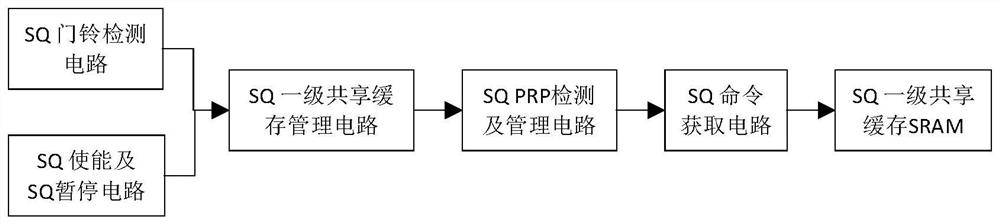

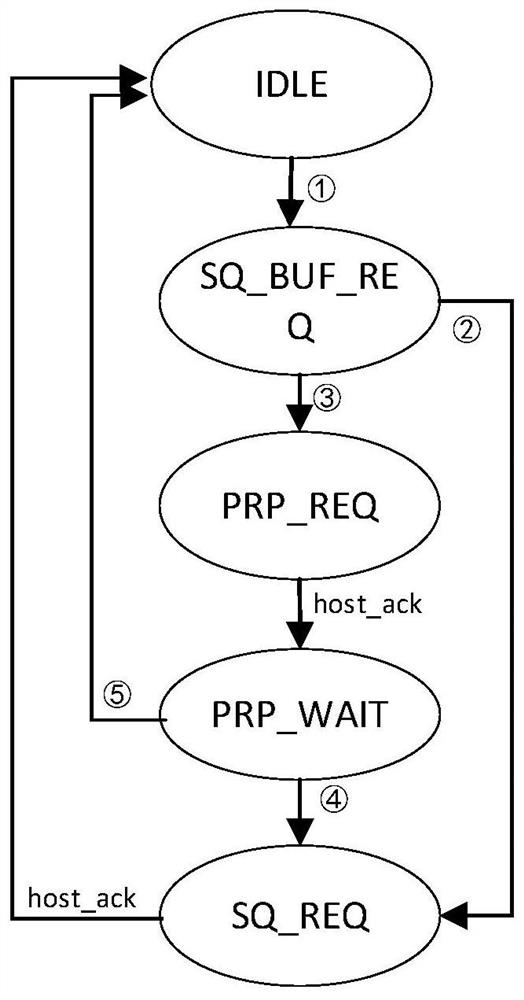

NVMe submission queue control device and method

Status: Pending | Publication No.: CN112612424A | Tags: Reduce difficulty; Increase profit; Input/output to record carriers; Cache management; Operating system

The invention discloses an NVMe submission queue control device and method. The device comprises: a submission queue command state detection circuit, used to detect whether each submission queue is currently non-empty; a first-level shared cache management circuit, used to allocate corresponding first-level cache units within the same first-level shared cache to the different submission queues that are currently non-empty; and a submission queue command acquisition circuit, used to send a submission queue command acquisition request to the host, so that the NVMe controller writes the submission queue commands returned by the host into the corresponding first-level cache units. This increases the utilization of the submission queue cache, saves cache resources, and reduces the difficulty of hardware placement and routing for the submission queue cache.

Owner:江苏国科微电子有限公司 (Jiangsu Guoke Microelectronics Co., Ltd.)

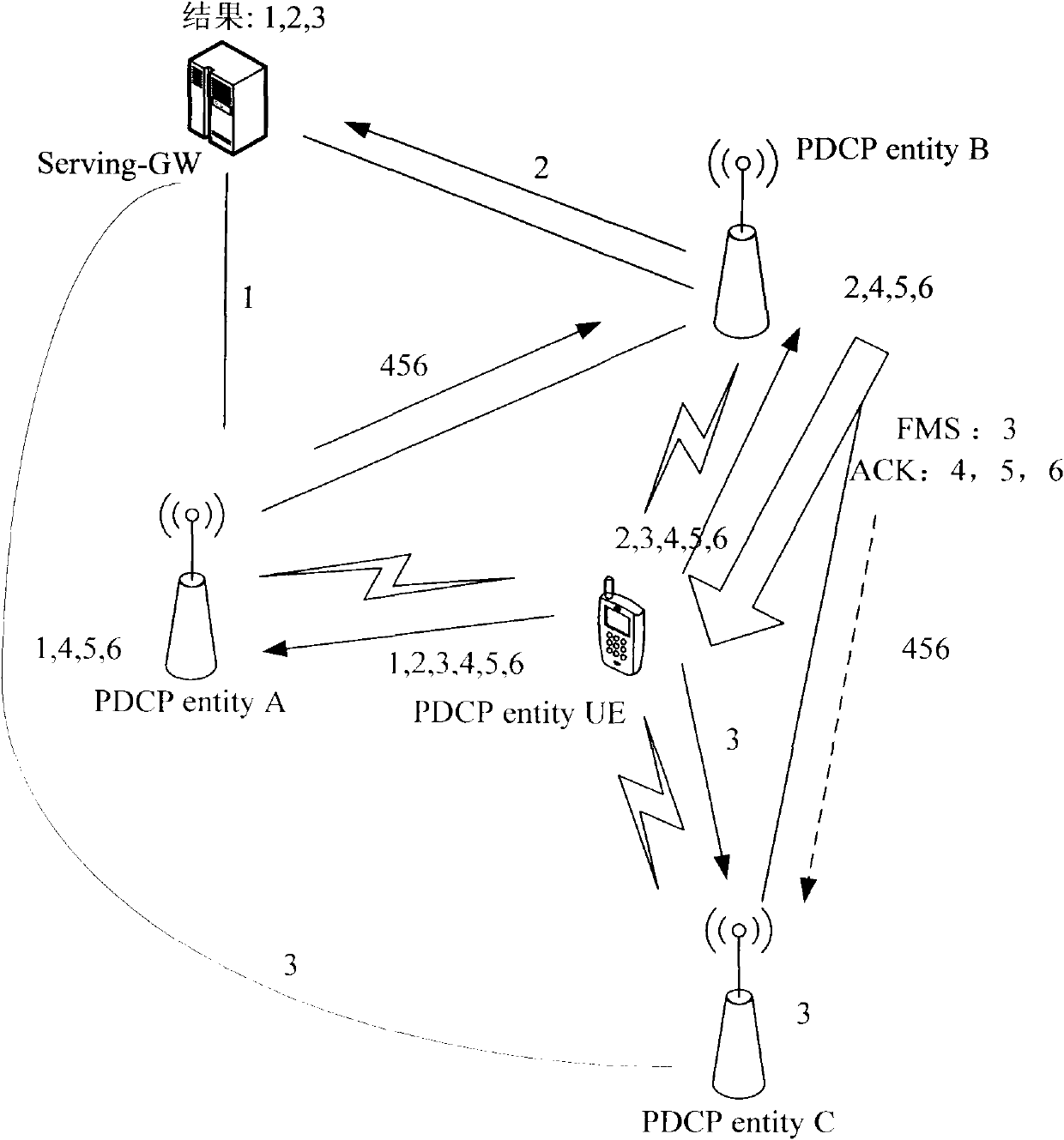

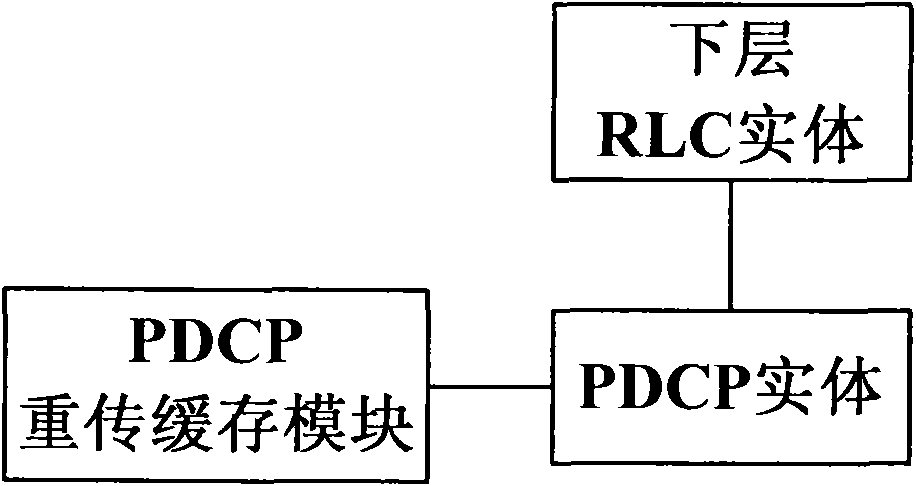

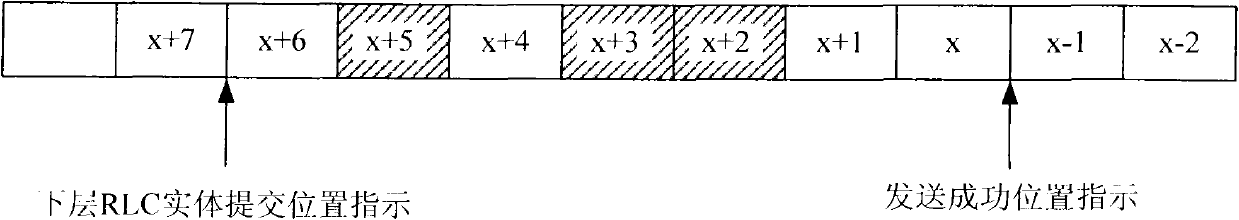

Method and device for avoiding losing uplink data

Status: Inactive | Publication No.: CN101997660B | Tags: Easy to send; Prevent loss; Error prevention/detection by using return channel; Network traffic/resource management; Data loss; User equipment

The invention discloses a method and device for avoiding loss of uplink data in a Long Term Evolution (LTE) system. The method comprises: when the PDCP entity of a user equipment (UE) receives PDCP feedback information during PDCP re-establishment, deleting from the PDCP retransmission cache only those packet data convergence protocol (PDCP) service data units (SDUs) that the feedback information indicates have been received consecutively. The scheme optimizes uplink data transmission, in particular avoiding data loss when the PDCP is re-established repeatedly, and saves PDCP cache resources.

Owner:ZTE CORP
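The deletion rule of CN101997660B can be sketched by treating the feedback as a "first missing sequence number": every SDU below it was received consecutively and may be deleted, while SDUs above it stay in the retransmission cache even if the peer reported them received out of order. This is a minimal sketch under stated assumptions: modeling the feedback this way (similar to the first-missing-SN field of a PDCP status report) is an assumption, since the abstract does not name the format, and sequence-number wraparound is ignored.

```python
def apply_pdcp_feedback(retx_buffer: dict, first_missing_sn: int) -> list:
    """retx_buffer maps PDCP SN -> SDU kept for possible retransmission.

    Deletes only SDUs acknowledged as received consecutively (SN < first_missing_sn);
    SDUs received out of order are kept in case re-establishment happens again.
    SN wraparound is deliberately not handled in this sketch.
    """
    done = [sn for sn in retx_buffer if sn < first_missing_sn]
    for sn in done:
        del retx_buffer[sn]
    return sorted(done)
```

Keeping the out-of-order SDUs costs a little buffer space, but it means a second re-establishment cannot discard data that the receiver has not yet delivered in sequence.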

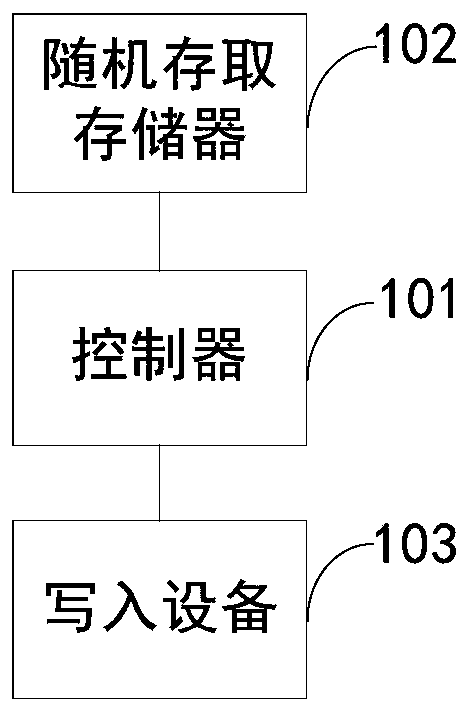

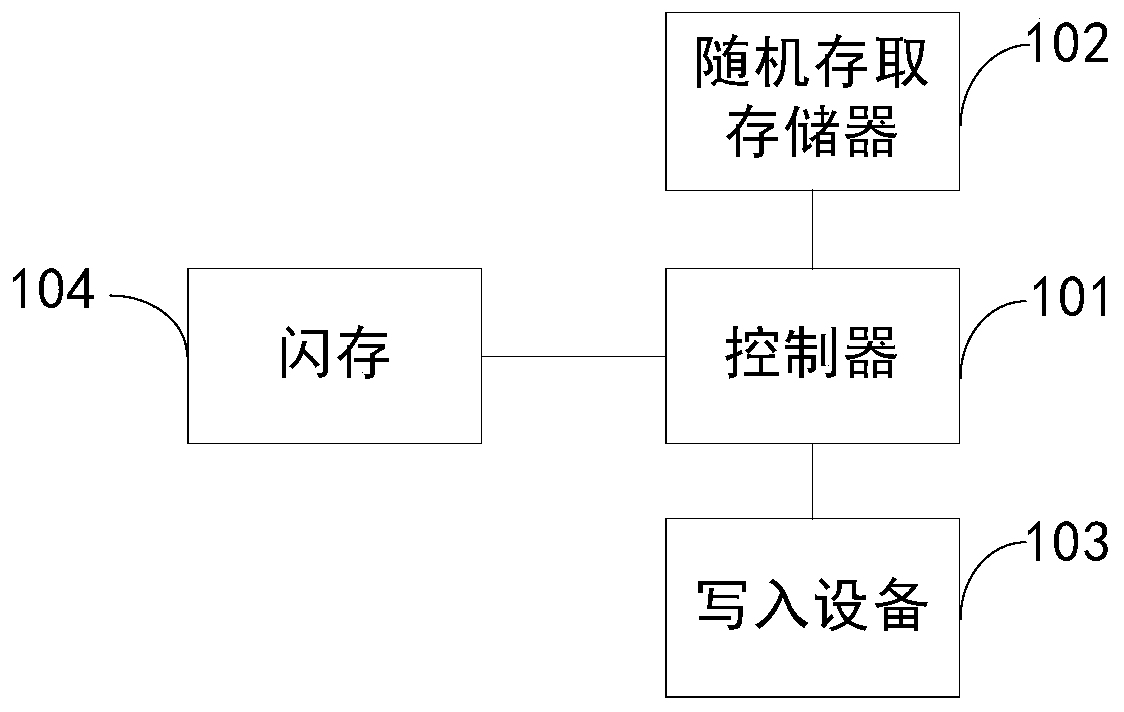

Flash system and engine

Status: Pending | Publication No.: CN110888606A | Tags: Save cache resources; Input/output to record carriers; Random access memory; Engineering

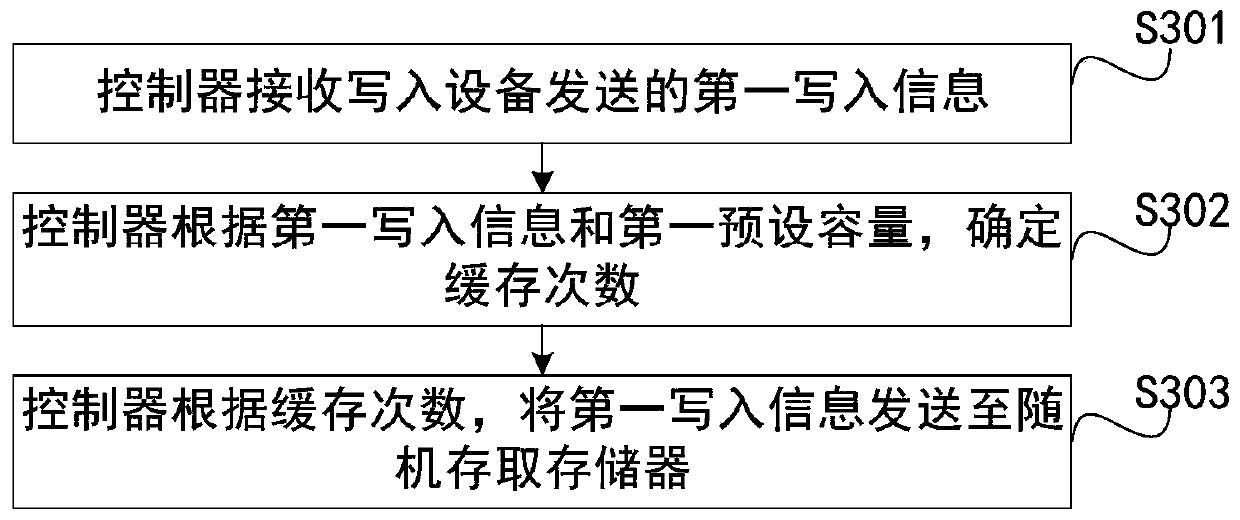

The invention provides a flash system and an engine. The flash system comprises a controller, a random access memory and a writing device, the controller being connected to both the writing device and the random access memory. The controller receives first write-in information sent by the writing device, determines the number of caching passes from the first write-in information and a first preset capacity, and sends the first write-in information to the random access memory according to that number of caching passes. The flash system and engine save the caching resources of the random access memory.

Owner:WEICHAI POWER CO LTD
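One plausible reading of CN110888606A's "number of caching passes" is the ceiling of the write size divided by the preset RAM capacity, with the data then staged through RAM one capacity-sized chunk at a time. This is a minimal sketch under that assumption; the function names are hypothetical and the abstract does not give the formula.

```python
def caching_passes(total_bytes: int, ram_capacity: int) -> int:
    # Number of times the write data must be staged through RAM (ceiling division).
    return -(-total_bytes // ram_capacity)


def stage_through_ram(image: bytes, ram_capacity: int):
    # Yield one RAM-sized chunk per caching pass instead of buffering the whole image.
    for i in range(caching_passes(len(image), ram_capacity)):
        yield image[i * ram_capacity:(i + 1) * ram_capacity]
```

For a 10-byte write and a 4-byte RAM window this gives 3 passes, with chunks of 4, 4 and 2 bytes, so the RAM never needs to hold more than one window of data.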

Data caching method and device

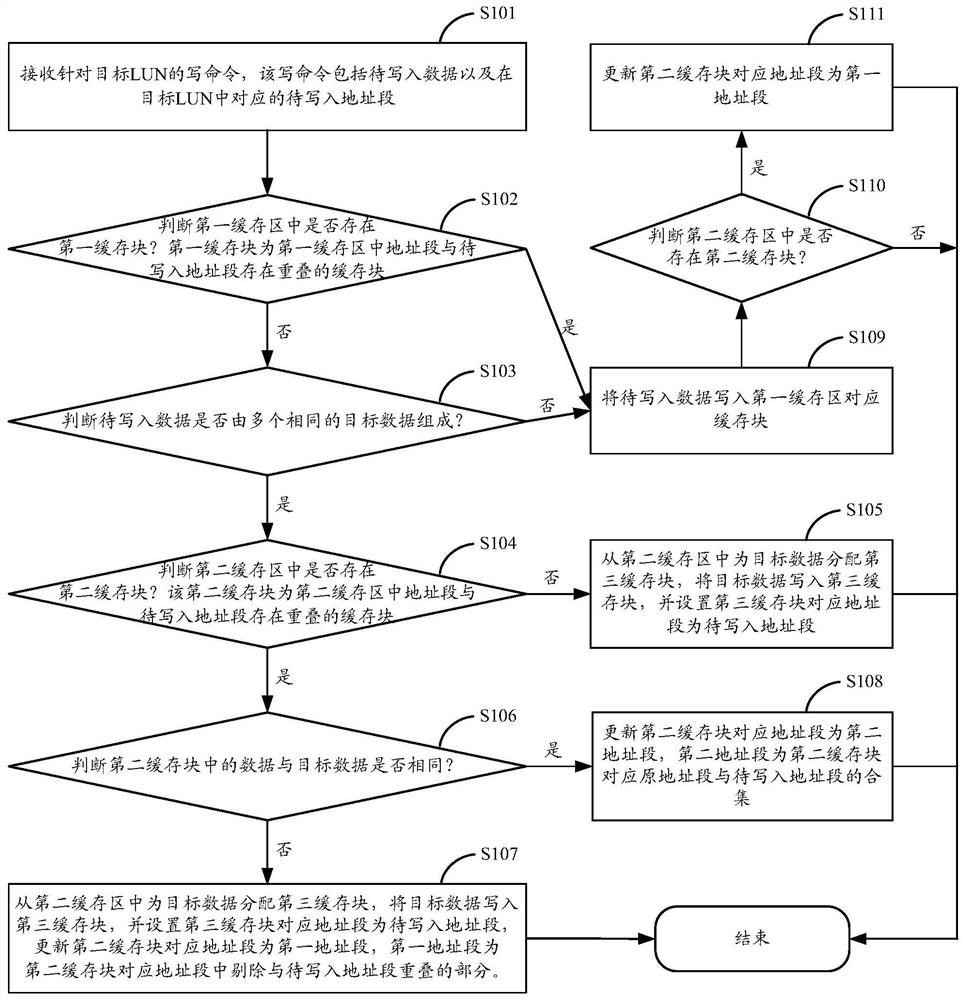

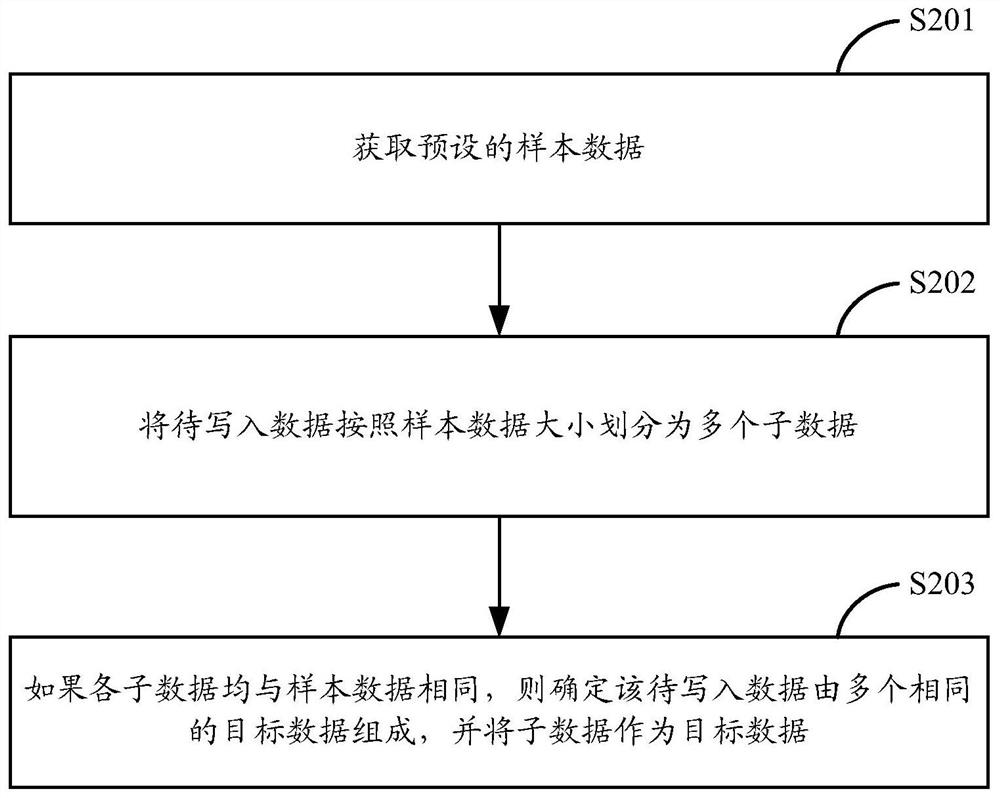

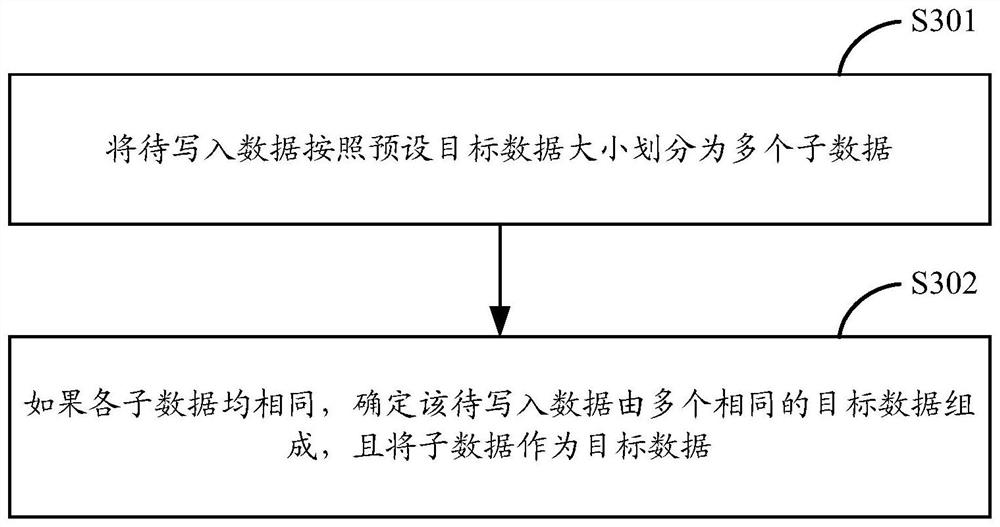

Status: Active | Publication No.: CN112559388A | Tags: Improve performance; Save cache resources; Memory addressing/allocation/relocation; Engineering; Database

The invention provides a data caching method and device applied to a caching module that manages the cache included in storage equipment. When the caching module receives a write command, it identifies the to-be-written data contained in the command; if that data consists of multiple pieces of identical (repeated) data, cache space of a preset size is allocated for only one piece, so that the cache space used is far smaller than the logical space corresponding to the write command. This saves caching resources effectively, increases cache utilization, and improves the overall performance of the storage equipment.

Owner:MACROSAN TECH
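The repeated-data check in CN112559388A can be sketched as testing whether a write payload is N copies of its first fixed-size piece, in which case only that piece and a repeat count need cache space. This is a minimal sketch under stated assumptions: the fixed `piece_size` (for example, a 4 KiB block) and the tuple return format are hypothetical.

```python
def plan_cache(payload: bytes, piece_size: int):
    """Return ("dedup", piece, count) if payload is `count` copies of one piece,
    otherwise ("full", payload, 1)."""
    if piece_size <= 0 or len(payload) % piece_size:
        return ("full", payload, 1)
    piece = payload[:piece_size]
    count = len(payload) // piece_size
    if piece * count == payload:
        # Cache one piece plus a repeat count instead of the whole logical range.
        return ("dedup", piece, count)
    return ("full", payload, 1)
```

A typical case is a zero-fill write: 32 KiB of zeros with a 4 KiB piece size needs cache space for a single 4 KiB block plus a count of 8.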

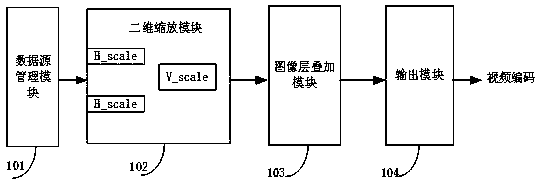

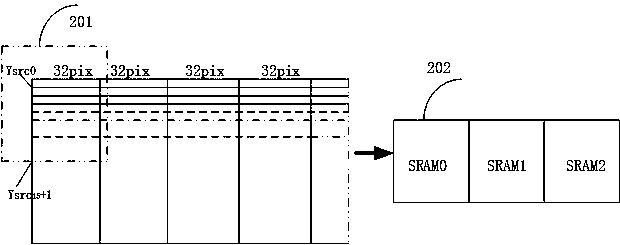

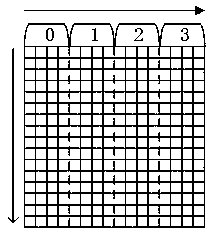

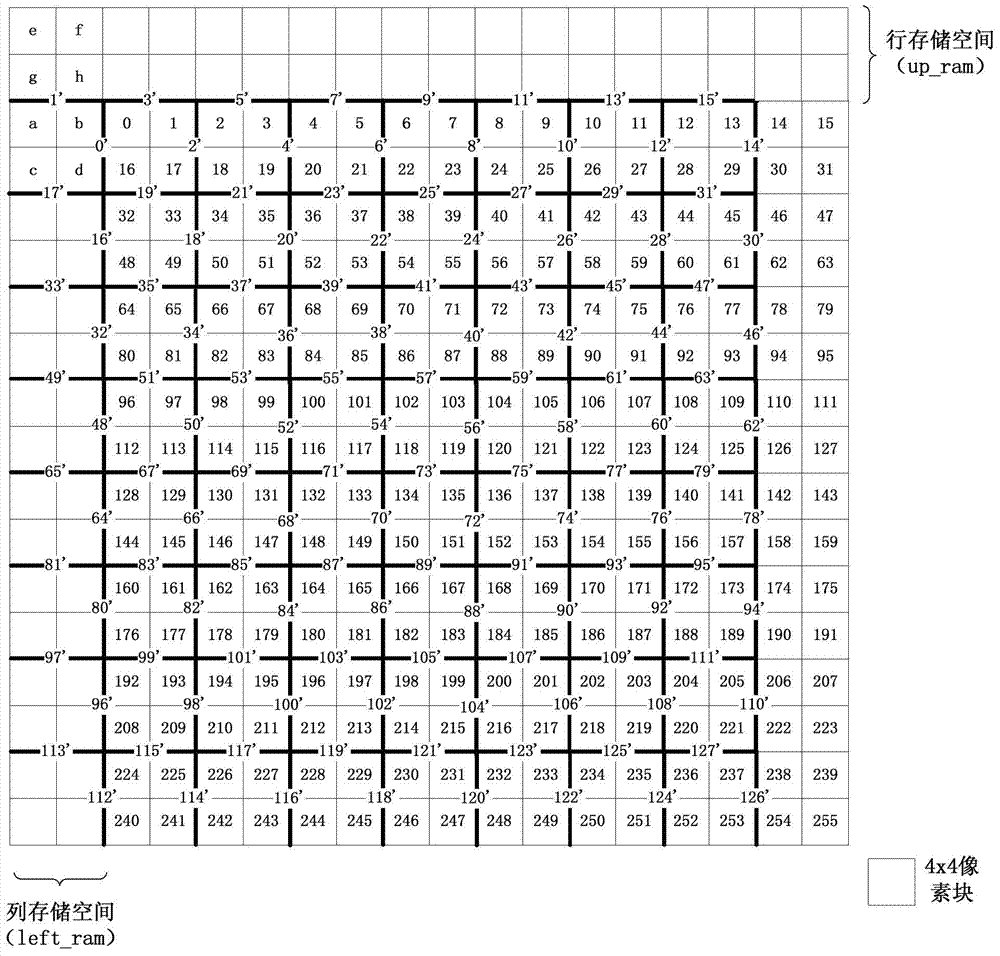

An image preprocessing device suitable for video coding

Status: Pending | Publication No.: CN109040755A | Tags: Reduce bandwidth consumption; Save cache resources; Digital video signal modification; Imaging processing; Computer graphics (images)

The invention relates to the technical field of image processing and provides an image preprocessing device suitable for video coding. The device performs macroblock scaling, image layer overlay and thumbnail output of the source image online, and comprises a data source management module, a two-dimensional scaling module, an image layer overlay module, an output module and other modules. The data source management module pre-generates the source-data read instructions required to output the current target macroblock row and buffers the source data. The image layer overlay module reads and pre-reads the macroblock data of the corresponding overlay layer according to the coordinate position of each image layer. The output module obtains image data of the corresponding size according to a fixed reduction factor, outputs it to the video encoding module and writes it to off-chip memory. Through block partitioning and ping-pong storage of the data source, working together with the pipelined processing of each module, the device reduces bandwidth consumption and meets the real-time requirements of high-definition video coding.

Owner:珠海亿智电子科技有限公司 (Zhuhai Yizhi Electronic Technology Co., Ltd.)

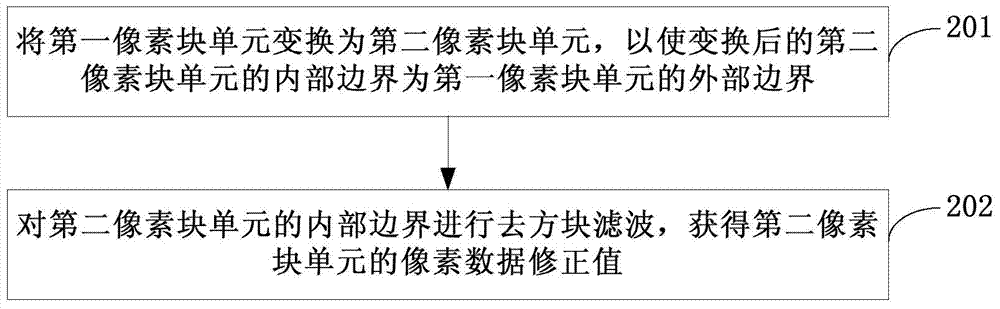

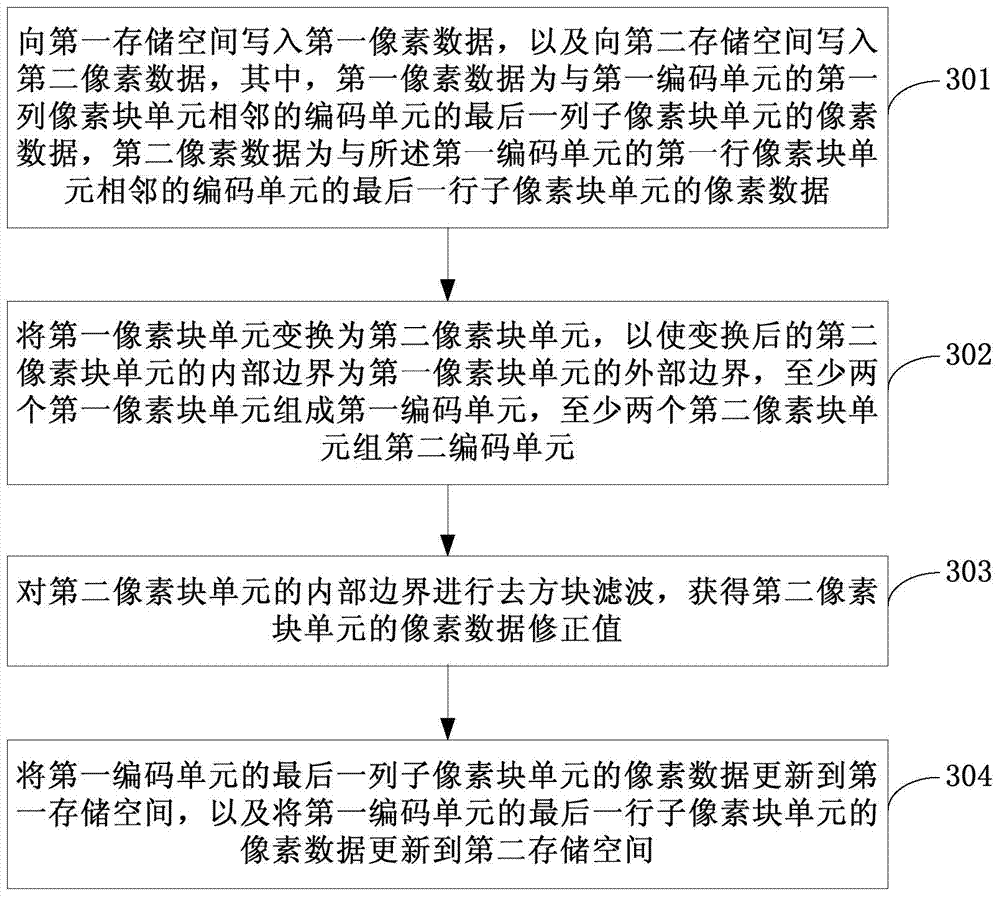

Filtering method, device and equipment

Status: Active | Publication No.: CN103702132A | Tags: Simplify the filtering process; Save cache resources; Digital video signal modification; Filter methods; Parallel computing

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD

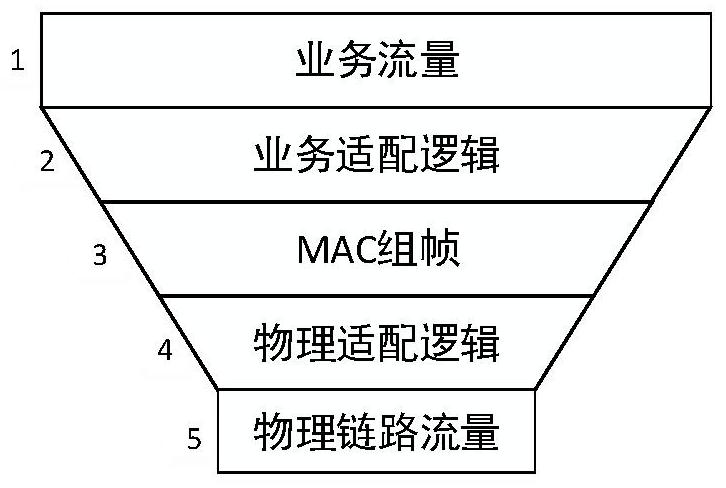

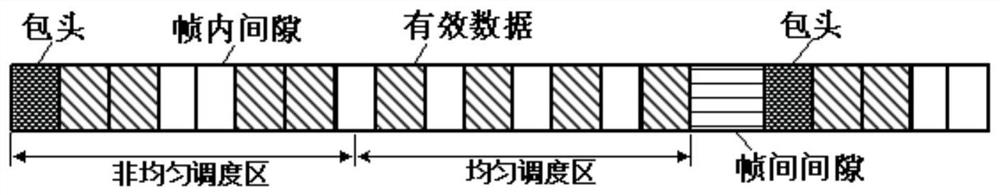

Equal-delay distributed cache Ethernet MAC architecture

Status: Pending | Publication No.: CN114500581A | Tags: Implement the access method; Achieve certainty; Transmission; Computer architecture; Distributed cache

The invention relates to the technical field of communication, in particular to an equal-delay distributed-cache Ethernet MAC (Media Access Control) architecture. Following a distributed caching approach, the part of a traditional adaptive VOQ (Virtual Output Queue) cache that ensures the link is not cut off when back pressure is withdrawn is split up and integrated into each existing downstream functional sub-module. Combined with point-by-point back-pressure scheduling, this achieves step-by-step scheduling of each register and, together with a modular BR preemption sub-module that can be added or removed, delivers the low delay and low jitter required by application scenarios such as TSN networks, making scheduling delay deterministic and consistent.

Owner:芯河半导体科技(无锡)有限公司 (Xinhe Semiconductor Technology (Wuxi) Co., Ltd.)

Data recovery method, device and system

Status: Active | Publication No.: CN109697136A | Tags: Improve recovery efficiency; Reduce data volume; Redundant operation error correction; Recovery method; Data recovery

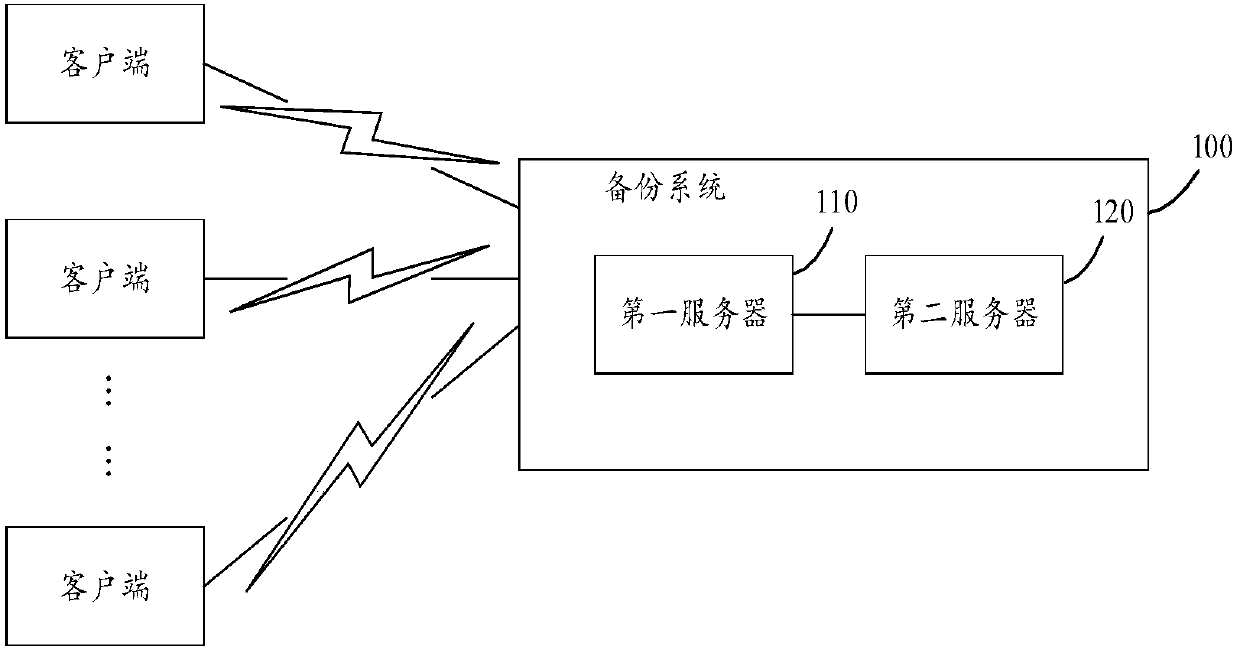

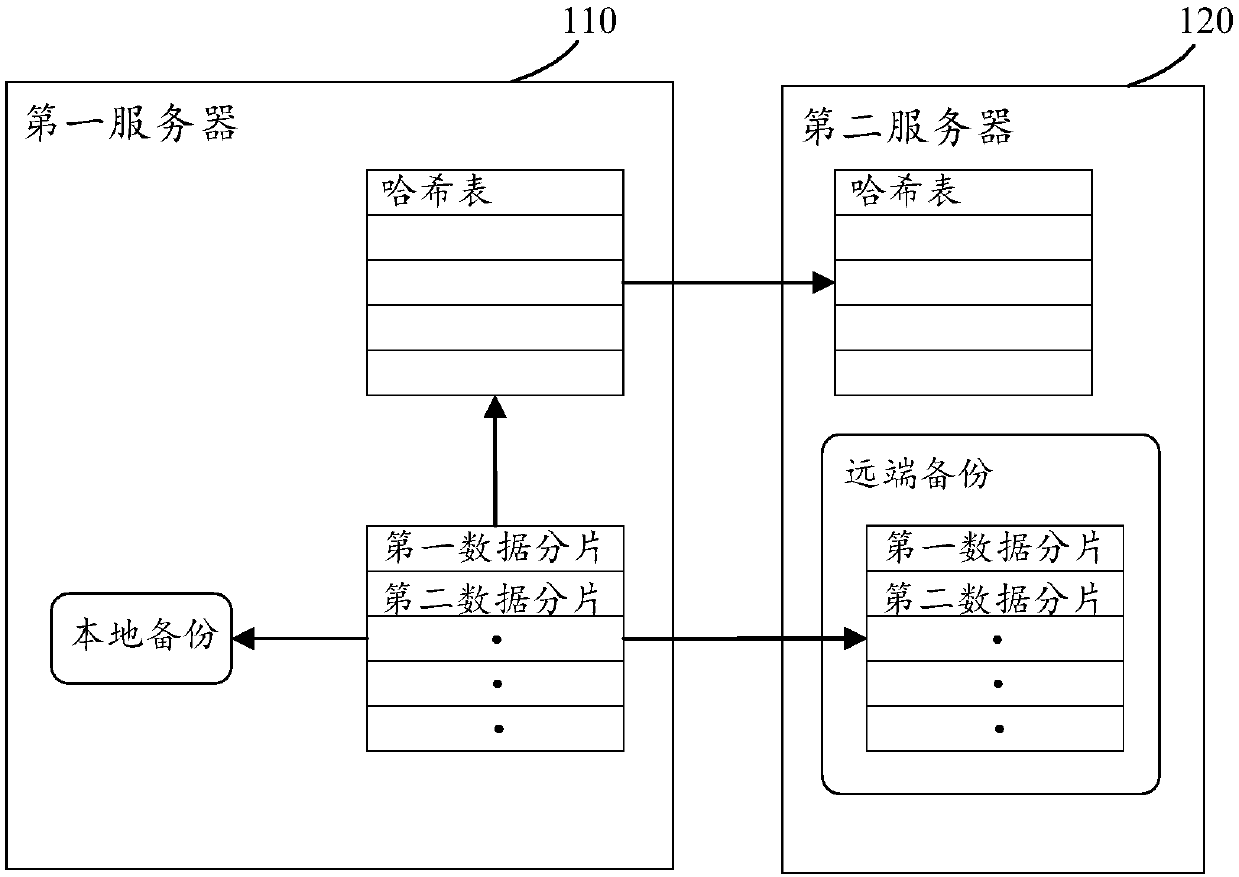

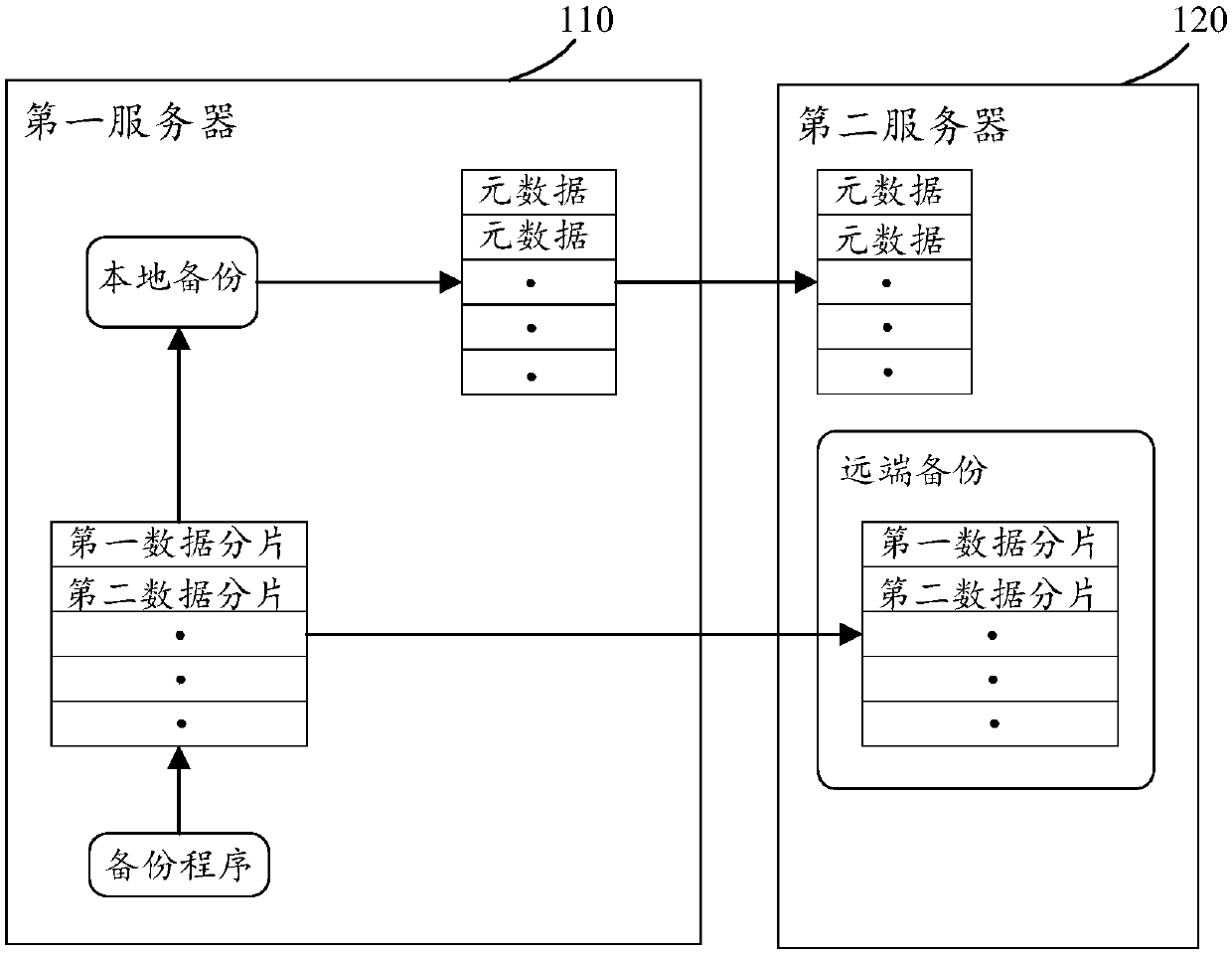

An embodiment of the invention provides a data recovery method, device and system. A first server receives metadata of a first data fragment sent by a second server; the metadata includes first indication information, which is an identifier or storage location of a backup data fragment of the first data fragment in the first server. When the first server determines from this metadata that it does not store the backup data fragment of the first data fragment, it requests the backup data fragment of the first data fragment from the second server and stores it. The identifier or storage location of the backup data fragment carried in the metadata is smaller than a hash value, so transmitting a large amount of metadata from the second server consumes few network resources and data recovery efficiency is improved.

Owner:HUAWEI TECH CO LTD

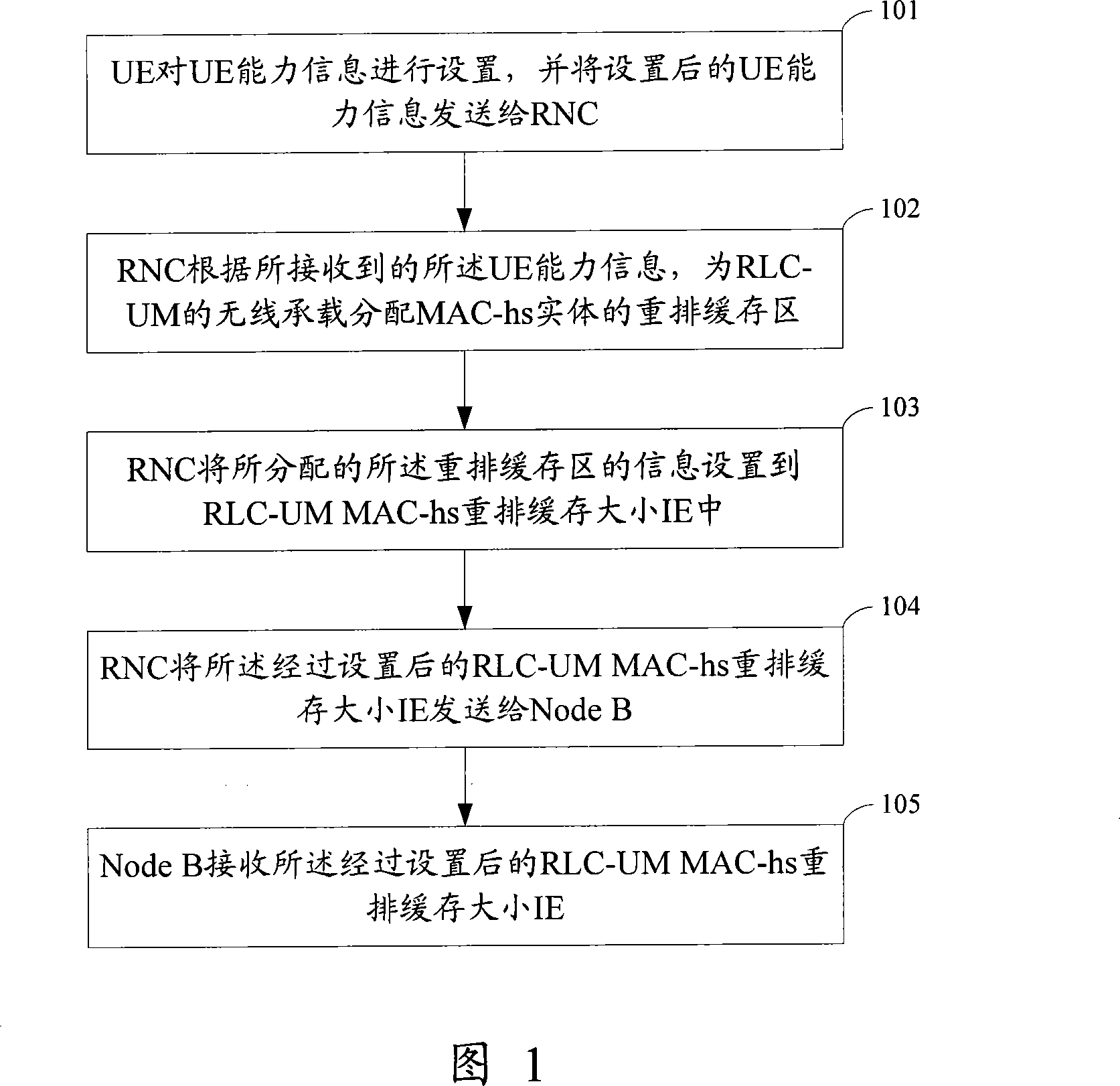

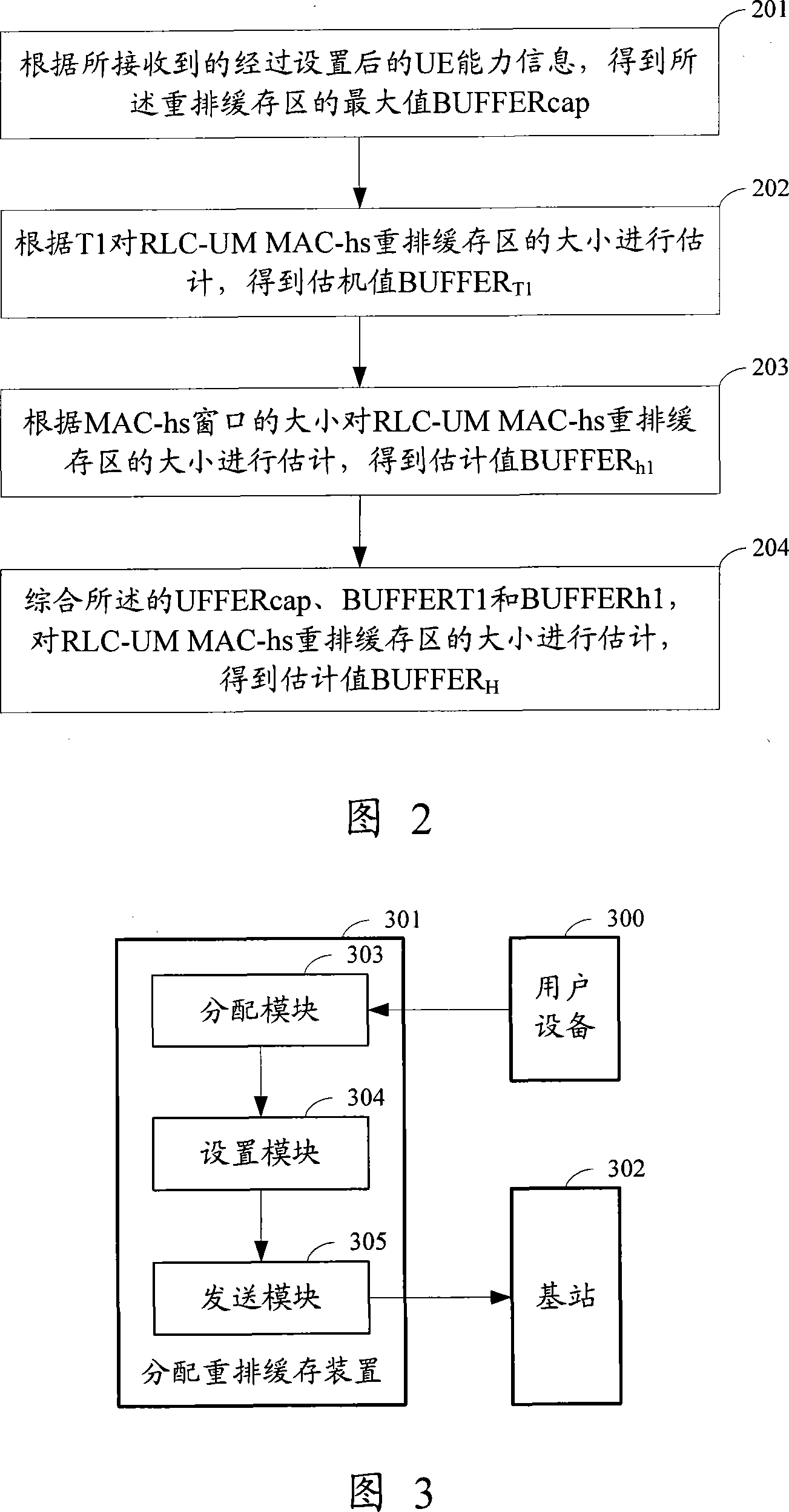

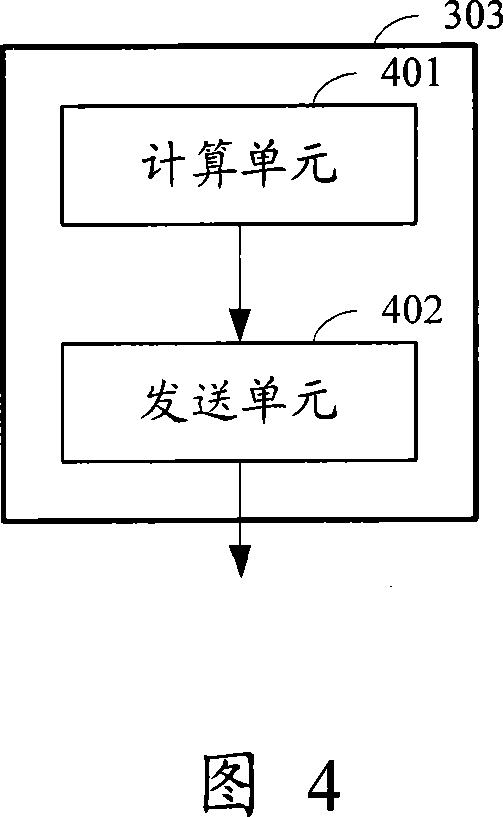

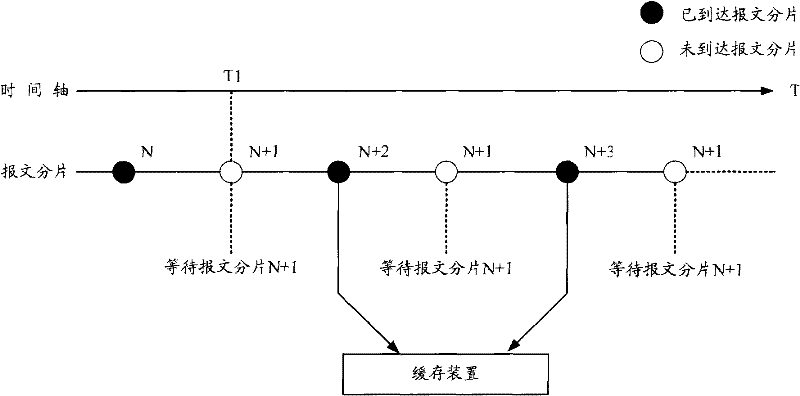

Method and apparatus for sending rearranged cache zone information

Status: Inactive | Publication No.: CN101388826B | Tags: Save cache resources; Error prevention/detection by using return channel; Data switching networks; Operating system; Computer network

An embodiment of the invention discloses a method for sending information about the MAC-hs reordering buffer used in radio link control unacknowledged mode (RLC-UM), comprising the following steps: the RNC receives UE capability information sent by the UE, which includes a total RLC-UM MAC-hs reordering buffer size IE in which the maximum BUFFERcap of the MAC-hs reordering buffer under RLC-UM is set; according to the received UE capability information, the RNC allocates a MAC-hs reordering buffer for each RLC-UM radio bearer, sets the allocation in the RLC-UM MAC-hs reordering buffer size IE, and sends that IE. An embodiment further discloses a device for sending MAC-hs reordering buffer information under RLC-UM. With the method and device, the Node B learns from these information elements the size of the MAC-hs reordering buffer that the RNC has allocated for the RLC-UM radio bearer, which overcomes a deficiency in the protocol.

Owner:TD TECH COMM TECH LTD

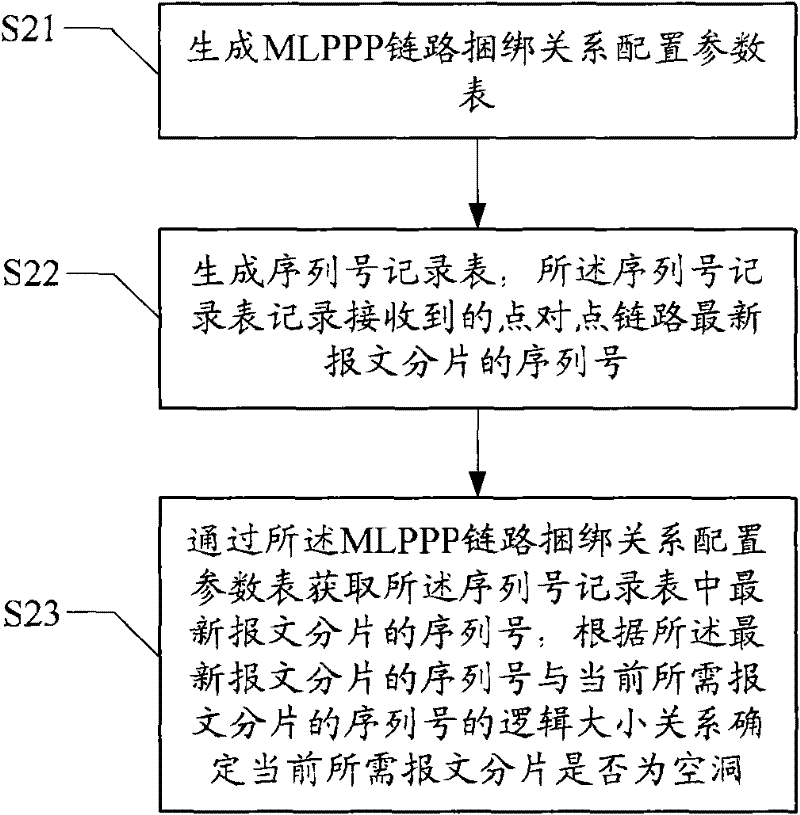

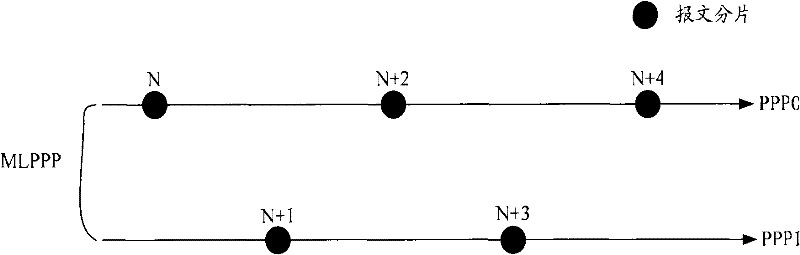

Empty identifying method, device and receiving equipment for MLPPP link

Inactive · CN101656639B · Avoid unnecessary waiting · Save cache resources · Data switching networks · Serial code · Time delayed

An embodiment of the invention discloses a method, a device and receiving equipment for identifying gaps (missing, or "empty", packet fragments) on an MLPPP link, so that gaps can be recognized quickly and packet fragments reassembled. The method comprises: generating an MLPPP link-binding configuration parameter list; generating a sequence-number record list that stores the sequence number of the latest packet fragment received on each point-to-point member link; using the link-binding configuration parameter list to obtain the latest sequence numbers from the record list; and determining whether the currently required packet fragment is a gap from the ordering relationship between those latest sequence numbers and the sequence number of the required fragment. The invention can quickly determine whether a packet fragment is missing and avoids pointless waiting when a gap occurs, which keeps MLPPP link delay low, saves cache resources and improves fault tolerance.
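
The gap test can be illustrated with the lost-fragment rule of the PPP Multilink Protocol (RFC 1990): each member link delivers fragments in order, so once the smallest "latest received" sequence number across all links exceeds the required sequence number, the required fragment can no longer arrive. The sketch below assumes that rule, ignores sequence-number wraparound, and uses hypothetical names (`is_gap`, `bundle_links`, `latest_seq`); the patent's exact comparison logic may differ.

```python
from typing import Dict, List


def is_gap(
    required_seq: int,
    bundle_links: List[str],
    latest_seq: Dict[str, int],
) -> bool:
    """Return True if the fragment numbered required_seq is provably missing.

    bundle_links plays the role of the link-binding configuration list;
    latest_seq records, per member link, the sequence number of the most
    recent fragment received on it. Wraparound is not handled here.
    """
    # A link that has delivered nothing yet could still carry the fragment.
    if any(link not in latest_seq for link in bundle_links):
        return False
    # Minimum of the latest sequence numbers over all member links.
    m = min(latest_seq[link] for link in bundle_links)
    # Every link has moved past required_seq, so it cannot still be in flight.
    return required_seq < m
```

With this check the receiver can skip a missing fragment as soon as the gap is provable, rather than waiting for a reassembly timeout.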

Owner:吴中区横泾博尔机械厂 (Wuzhong District Hengjing Boer Machinery Factory)

A method for time-division multiplexing cache to realize time-slot data packet reassembly

Active · CN112269747B · Achieve reorganization · Increase profit · Memory addressing/allocation/relocation · Transmission · Data pack · Engineering

The invention discloses a method for reassembling time-slot data packets by time-division multiplexing a cache. The buffer resources are time-division multiplexed: the same data buffer stores data packets from different time slots at different times, so data packets from all the time slots of the incoming time-slot-multiplexed communication protocol data are stored in one data buffer. When a packet is reassembled and output, the data buffer is read by following the addresses in a linked list, so that the data packet of a given time slot is output contiguously. Addresses in the data buffer are managed dynamically: after an address is written it is marked as occupied, and after it is read it is released and marked as a free address. A free address of the data buffer must be allocated before each write. This scheme reassembles data packets under a time-slot-multiplexed communication protocol, improves cache utilization and saves cache resources, so that packets from more time slots can be reassembled with limited buffer resources.
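
The shared-buffer scheme can be sketched with one array of cells, a free-address list, and a per-time-slot linked list of occupied addresses. This is a minimal illustration assuming one data word per cell and a FIFO free list; the class and method names are hypothetical, and a hardware design would hold these lists in RAM tables rather than Python objects.

```python
from collections import deque
from typing import Dict, List, Optional


class SharedSlotBuffer:
    """One data buffer time-shared by packets from many time slots."""

    def __init__(self, depth: int) -> None:
        self.data: List[Optional[int]] = [None] * depth
        # Linked-list "next address" pointer for each cell.
        self.next_addr: List[Optional[int]] = [None] * depth
        # Pool of free (released) addresses.
        self.free = deque(range(depth))
        # Per time slot: first and last address of its linked list.
        self.head: Dict[int, int] = {}
        self.tail: Dict[int, int] = {}

    def write(self, slot: int, word: int) -> int:
        """Allocate a free address, store the word, and append it to the slot's list."""
        if not self.free:
            raise RuntimeError("no free address in data buffer")
        addr = self.free.popleft()  # address is now occupied
        self.data[addr] = word
        self.next_addr[addr] = None
        if slot in self.tail:
            self.next_addr[self.tail[slot]] = addr
        else:
            self.head[slot] = addr
        self.tail[slot] = addr
        return addr

    def read_slot(self, slot: int) -> List[int]:
        """Output one slot's words in order by following its list, releasing each address."""
        out: List[int] = []
        addr = self.head.pop(slot, None)
        self.tail.pop(slot, None)
        while addr is not None:
            out.append(self.data[addr])
            nxt = self.next_addr[addr]
            self.data[addr] = None
            self.next_addr[addr] = None
            self.free.append(addr)  # released: marked as a free address
            addr = nxt
        return out
```

Words from different slots can interleave in the buffer in any order; only the per-slot linked lists determine the output order, which is what lets one buffer serve all slots.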

Owner:TOEC TECH

A data compression method, device, terminal equipment and storage medium

Active · CN111723059B · Shorten compression latency · Easy to compress separately · File/folder operations · Special data processing applications · Computer hardware · Parallel computing

Owner:SHENZHEN KENAN TECH DEV CO LTD

A video detection and processing method and device

Active · CN104580978B · Save cache resources · Save bandwidth resources · Television system details · Brightness and chrominance signal processing circuits · Interlaced video · Computer module

Owner:HAIER BEIJING IC DESIGN

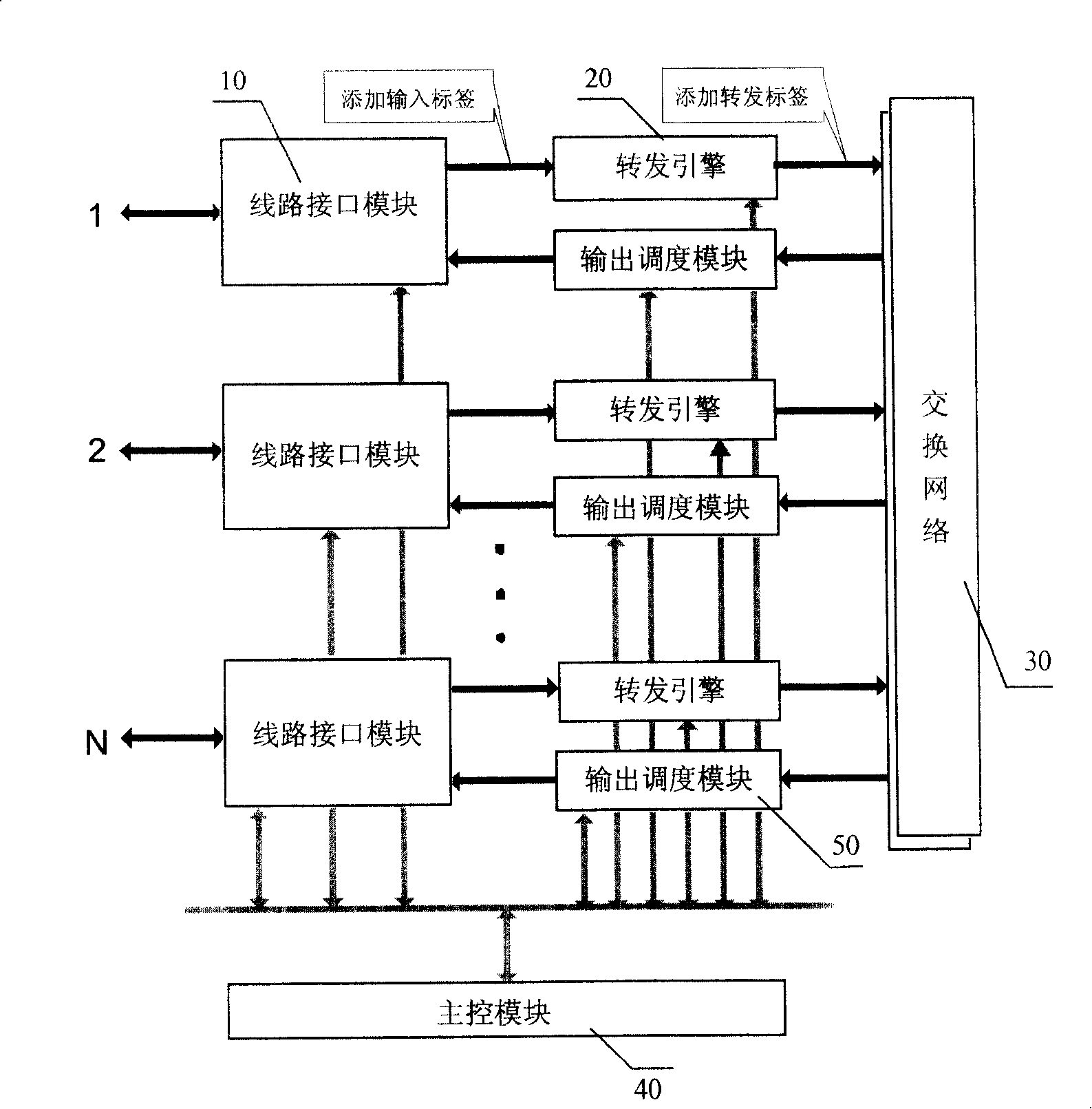

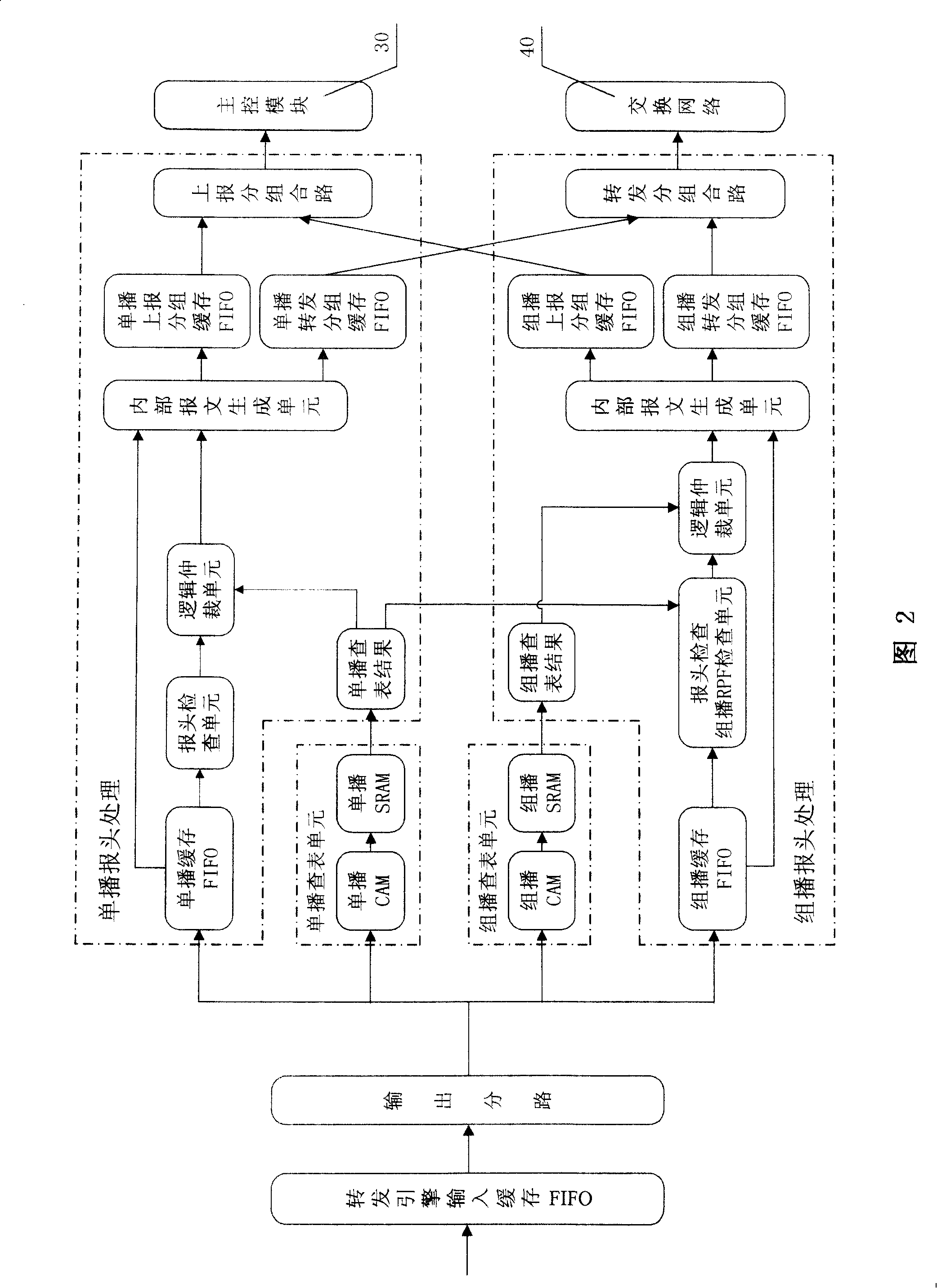

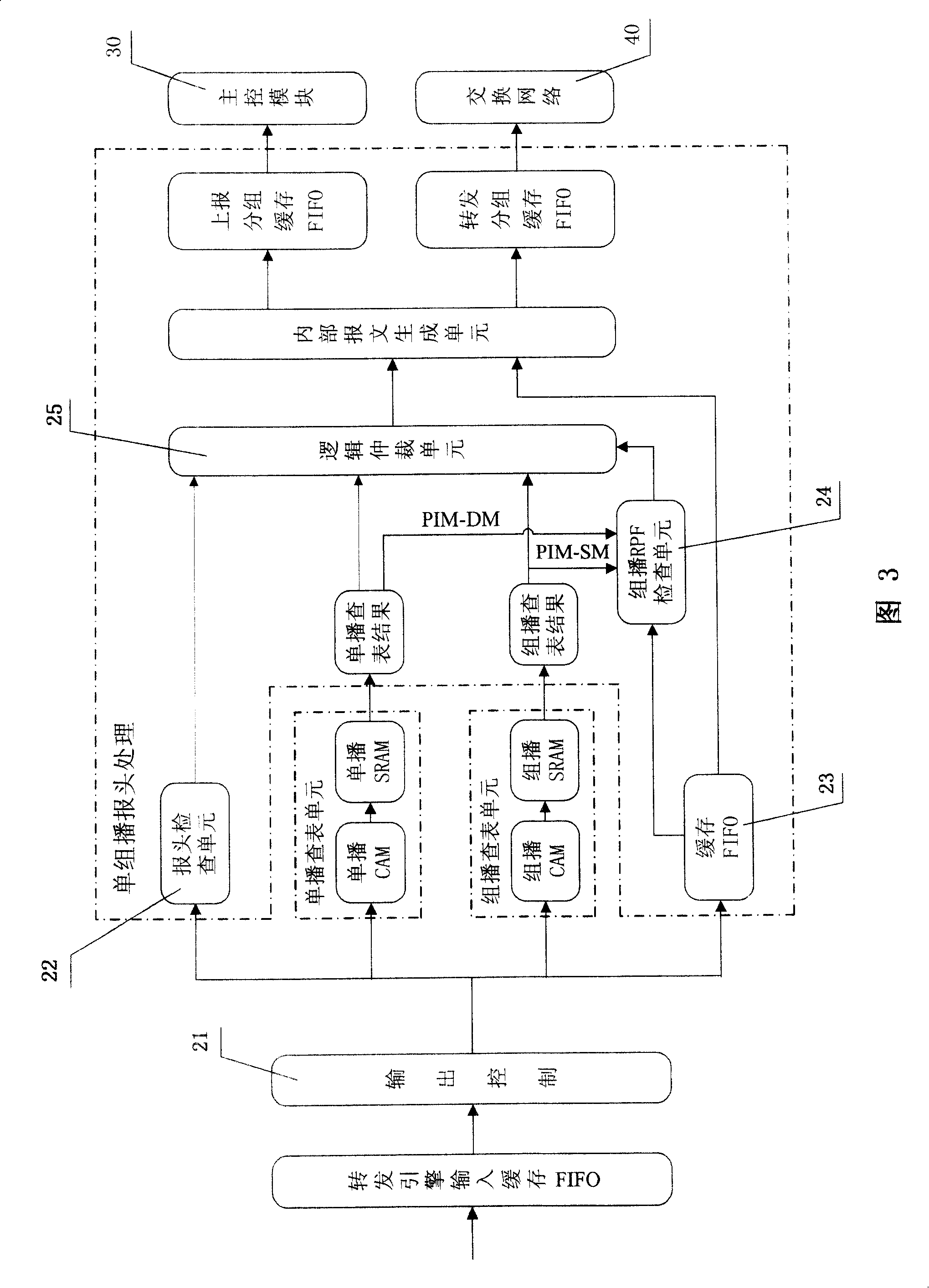

Forwarding method and router supporting wire-speed IPv6 unicast and multicast operation

Inactive · CN100389579C · Save cache resources · Save logic resources · Data switching networks · Wire speed · Exchange network

A packet output from the line interface module is buffered, and an output control unit then reads out the buffered packet. The packet header is written to a header inspection unit, which generates unicast and multicast lookup keys and performs unicast and multicast table lookups; the header is also written into a header-processing first-in, first-out buffer. Using the unicast lookup result, the multicast lookup result and the packet, the multicast RPF (reverse path forwarding) inspection unit produces an RPF check result. A logic arbitration unit combines the header inspection result, the unicast lookup result and the multicast RPF result to generate either an internal report header or an internal forwarding header. The internal packet generation unit re-encapsulates the packet with this internal header, sends packets to be reported to the main control module, and sends packets to be forwarded to the switching network. The invention also discloses an IPv6 router that implements the method.
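
The RPF inspection step can be illustrated with the standard reverse-path-forwarding check: a multicast packet passes only if the unicast route back to its source leaves through the interface on which the packet arrived. This sketch assumes a small software routing table with longest-prefix match built on Python's `ipaddress` module; the table contents and function names are hypothetical, and the router described above performs these lookups in hardware.

```python
import ipaddress
from typing import List, Optional, Tuple

# (prefix, outgoing interface); a hypothetical software routing table entry.
Route = Tuple[ipaddress.IPv6Network, str]


def unicast_lookup(routes: List[Route], addr: str) -> Optional[str]:
    """Longest-prefix match; return the outgoing interface, or None if no route."""
    ip = ipaddress.IPv6Address(addr)
    best: Optional[Route] = None
    for prefix, iface in routes:
        if ip in prefix and (best is None or prefix.prefixlen > best[0].prefixlen):
            best = (prefix, iface)
    return best[1] if best else None


def rpf_check(routes: List[Route], source: str, in_iface: str) -> bool:
    """Pass only if the unicast route toward the source uses the arrival interface."""
    return unicast_lookup(routes, source) == in_iface
```

A packet that fails the check is typically dropped rather than forwarded, which prevents multicast loops; real routers usually compare against the incoming interface stored in the multicast route entry, which is derived from the same unicast lookup.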

Owner:CHINA NAT DIGITAL SWITCHING SYST ENG & TECH R&D CENT

© 2025 PatSnap. All rights reserved.