611 results for patented technology related to "Interlaced video"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

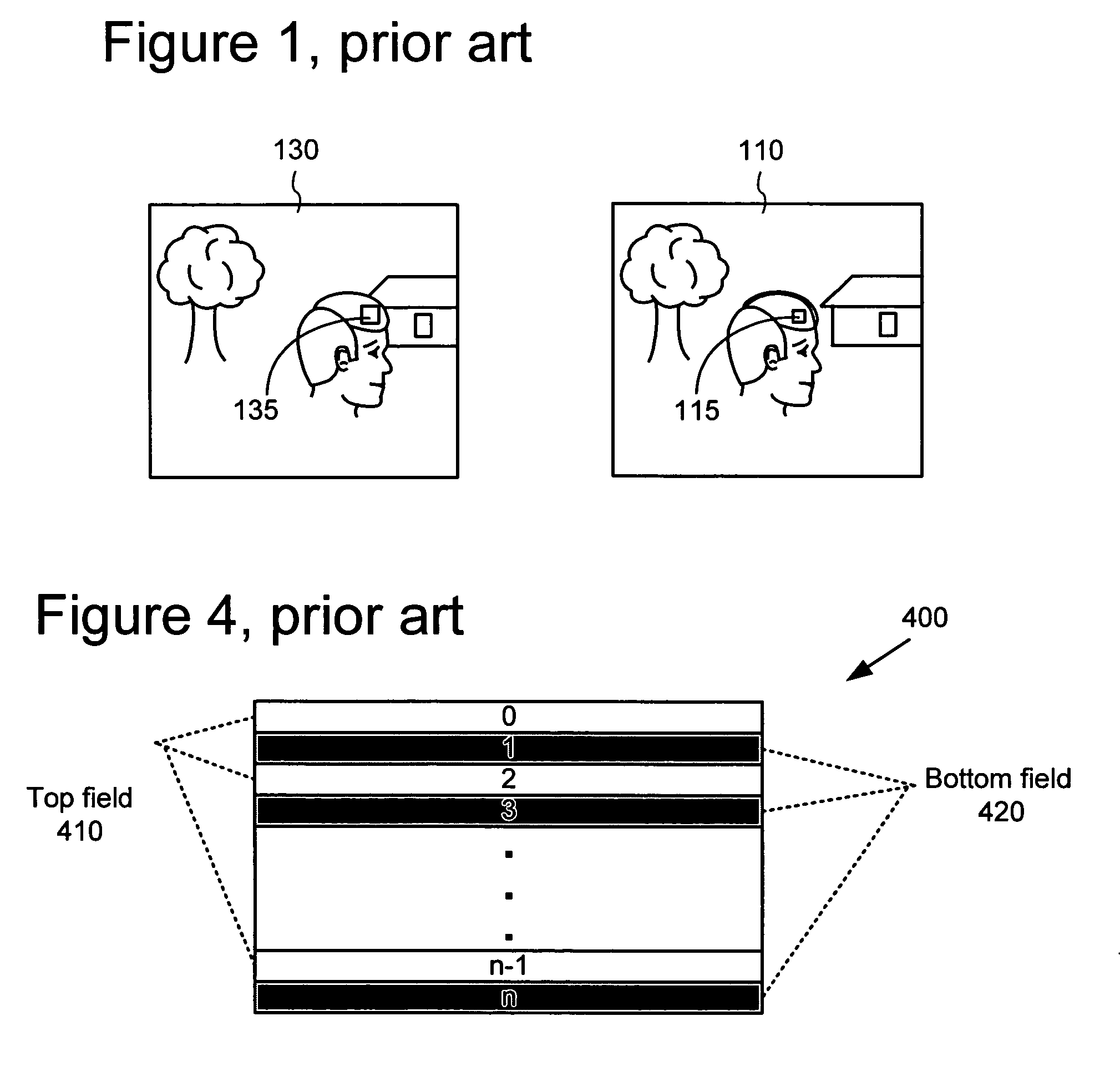

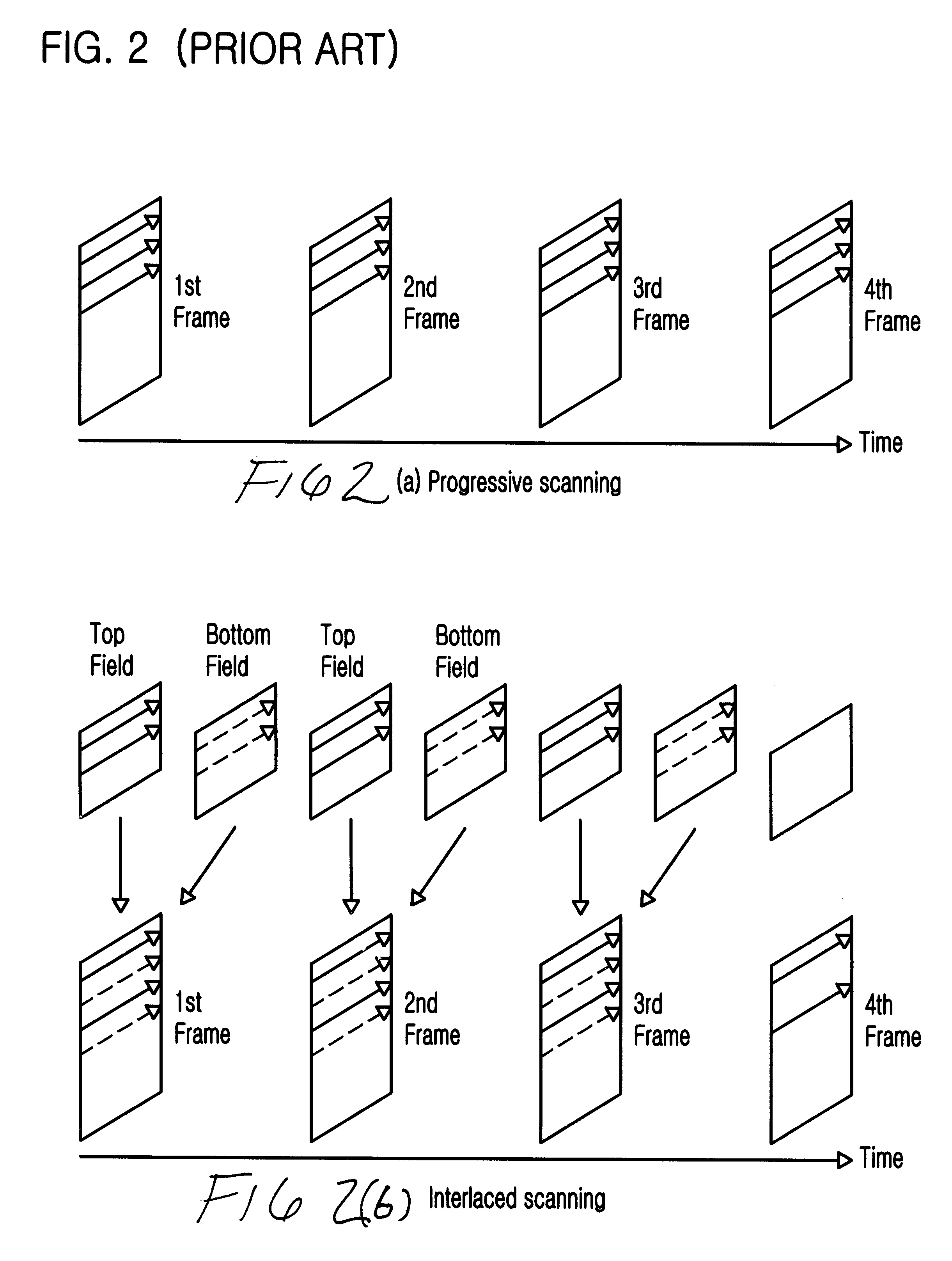

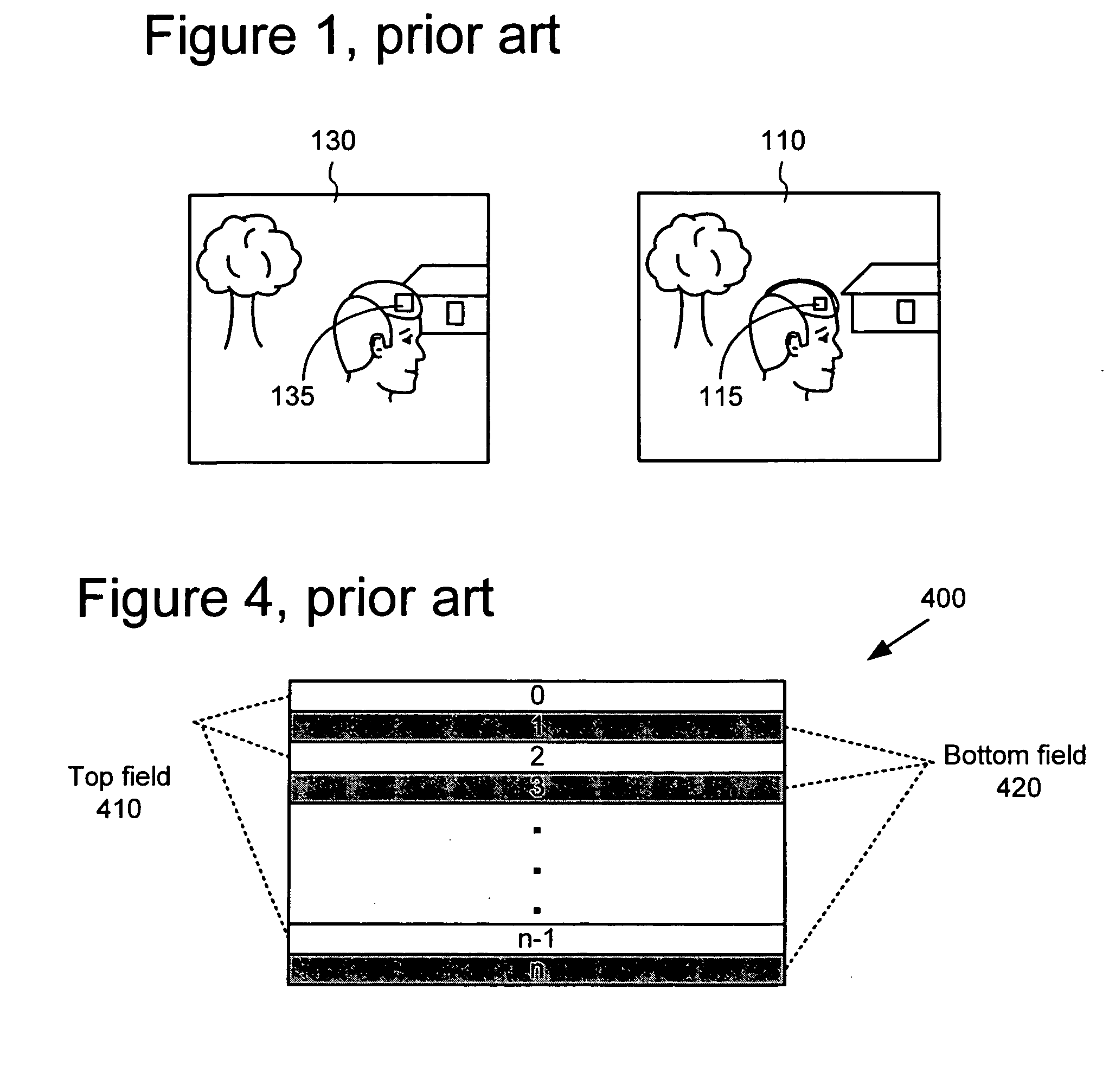

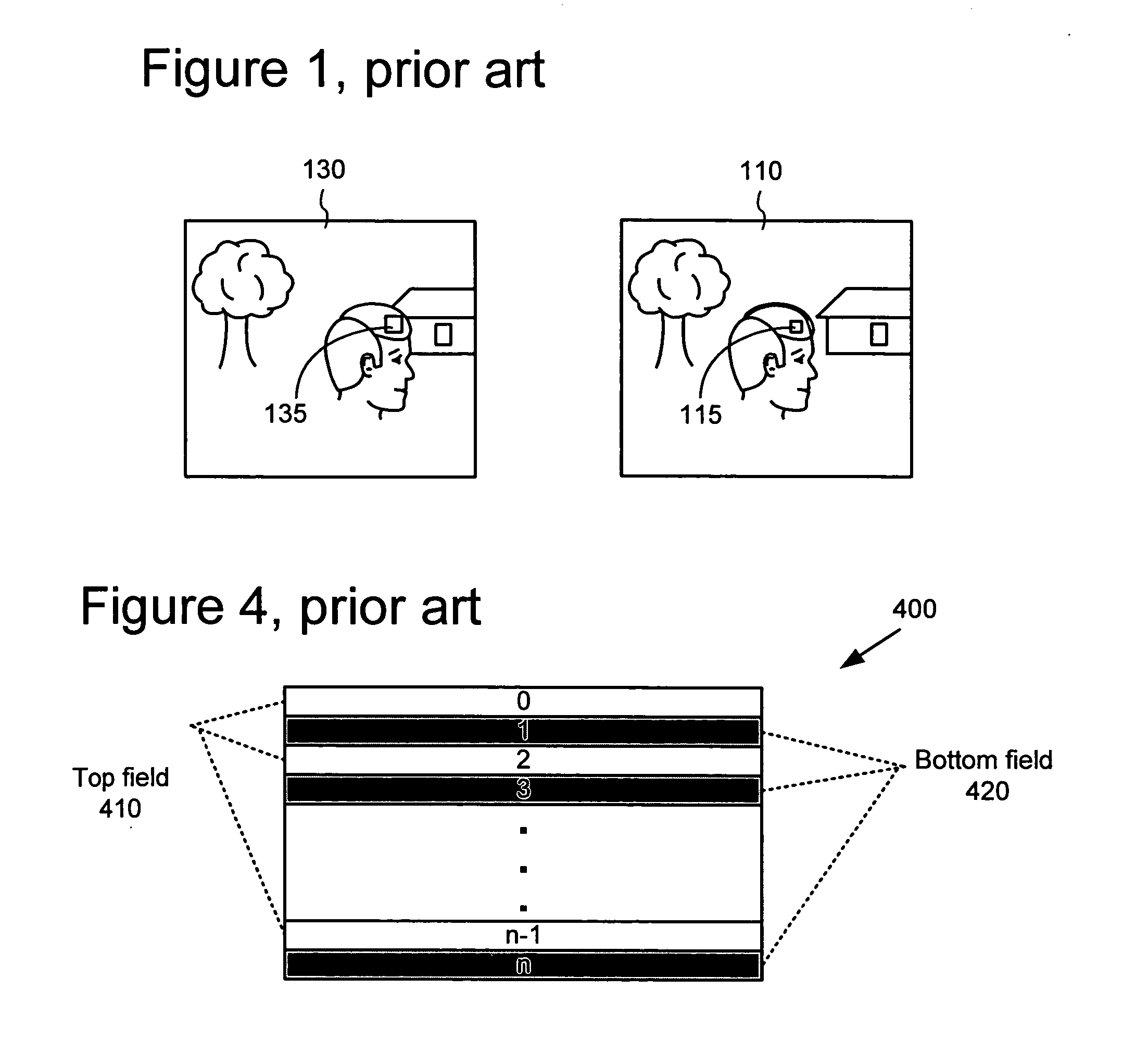

Interlaced video (also known as interlaced scan) is a technique for doubling the perceived frame rate of a video display without consuming extra bandwidth. The interlaced signal contains two fields of a video frame, captured consecutively: one field carries the odd-numbered lines and the other the even-numbered lines. This enhances motion perception for the viewer and reduces flicker by taking advantage of the phi phenomenon.
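A minimal Python sketch of the field structure, assuming a frame is stored as a list of pixel rows and that the top field holds rows 0, 2, 4 and so on. Field order (top-first or bottom-first) varies between formats, and the helper names `split_fields` and `weave_fields` are illustrative only.

```python
from typing import List, Tuple

Frame = List[List[int]]  # rows of pixel values, row 0 at the top


def split_fields(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a frame into its top field (rows 0, 2, 4, ...) and
    bottom field (rows 1, 3, 5, ...)."""
    top = [row[:] for row in frame[0::2]]
    bottom = [row[:] for row in frame[1::2]]
    return top, bottom


def weave_fields(top: Frame, bottom: Frame) -> Frame:
    """Re-interleave two fields line by line into a full-height frame."""
    frame: Frame = []
    for i in range(max(len(top), len(bottom))):
        if i < len(top):
            frame.append(top[i][:])
        if i < len(bottom):
            frame.append(bottom[i][:])
    return frame
```

Weaving is lossless only for static content: in a real interlaced signal the two fields are sampled at different instants, so moving objects show "combing" when the fields are simply woven together.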

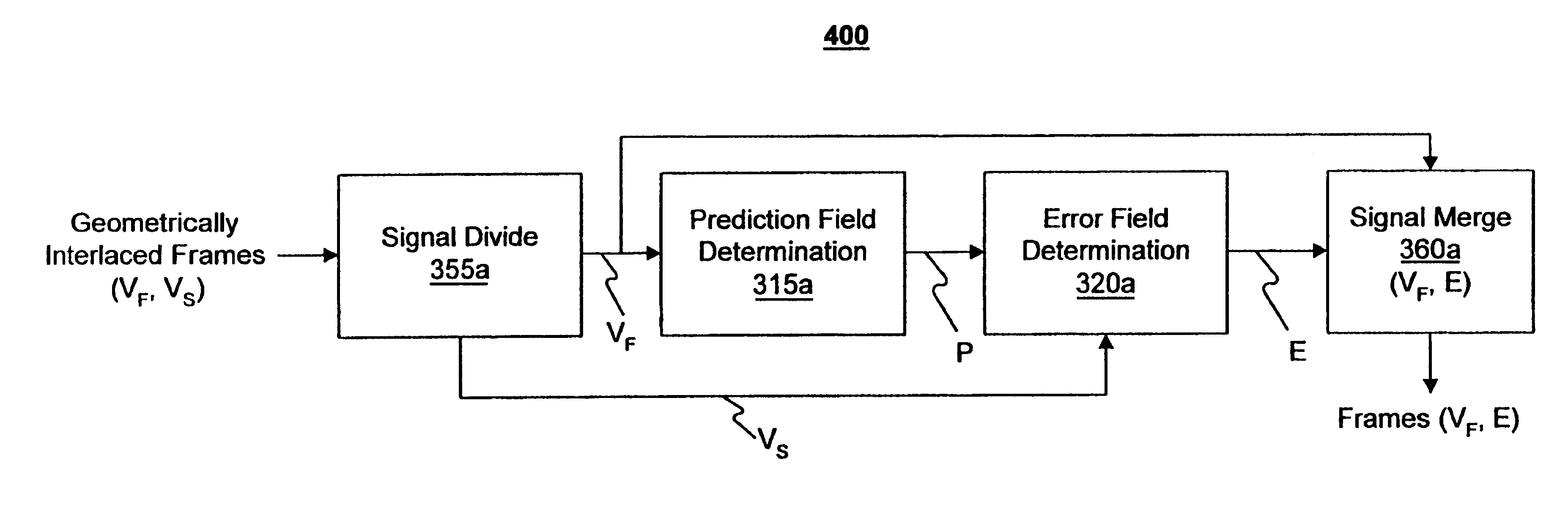

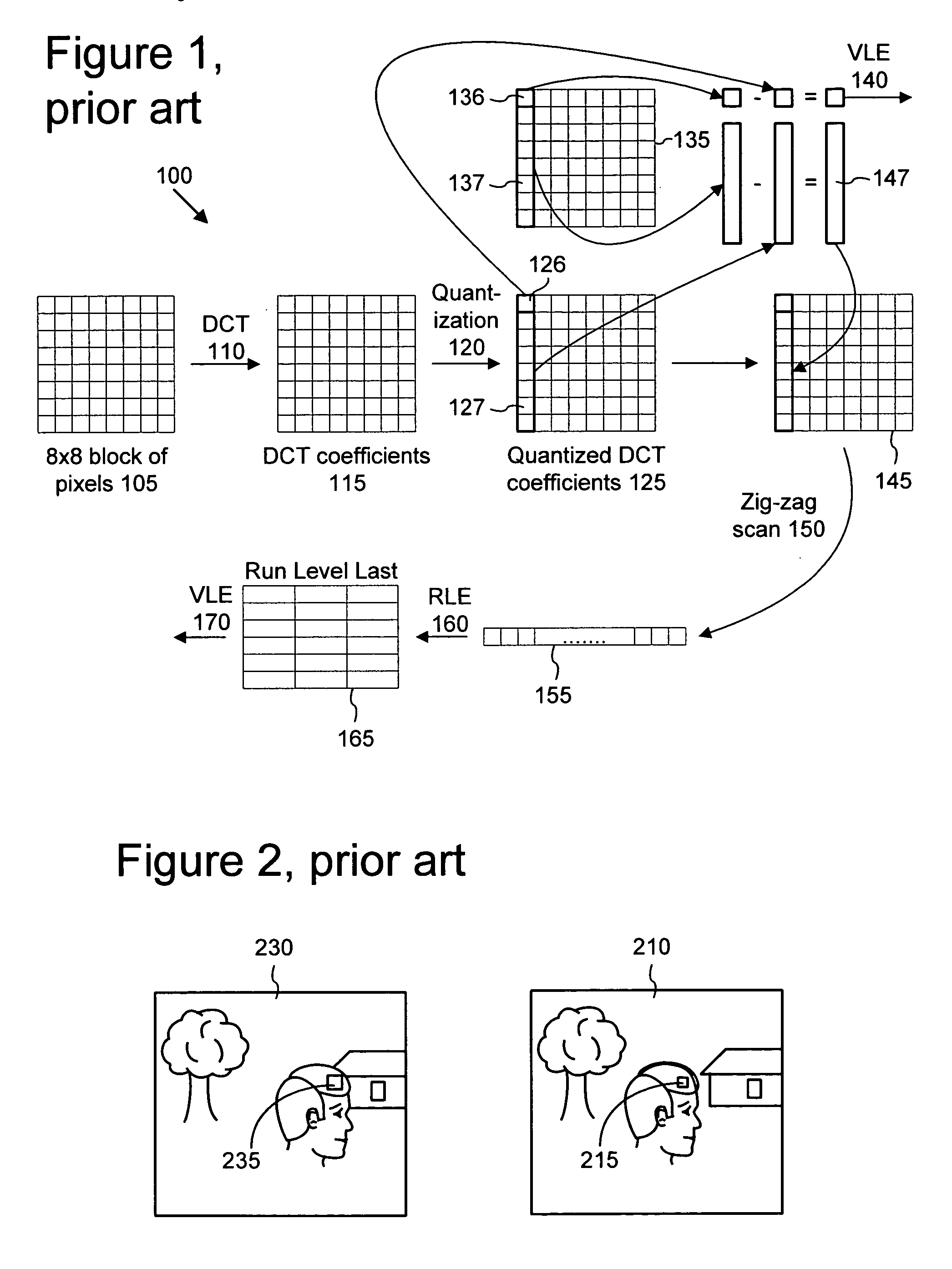

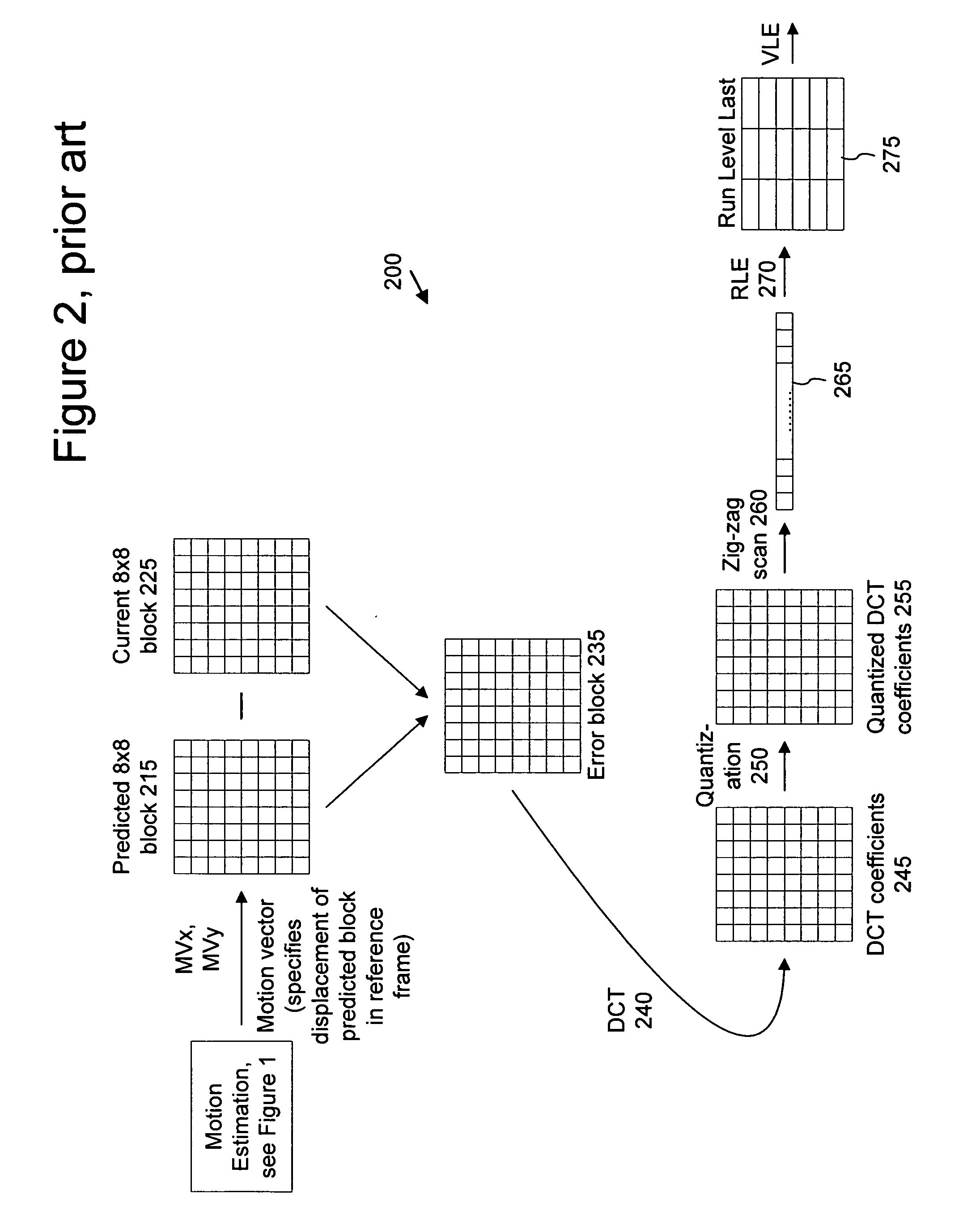

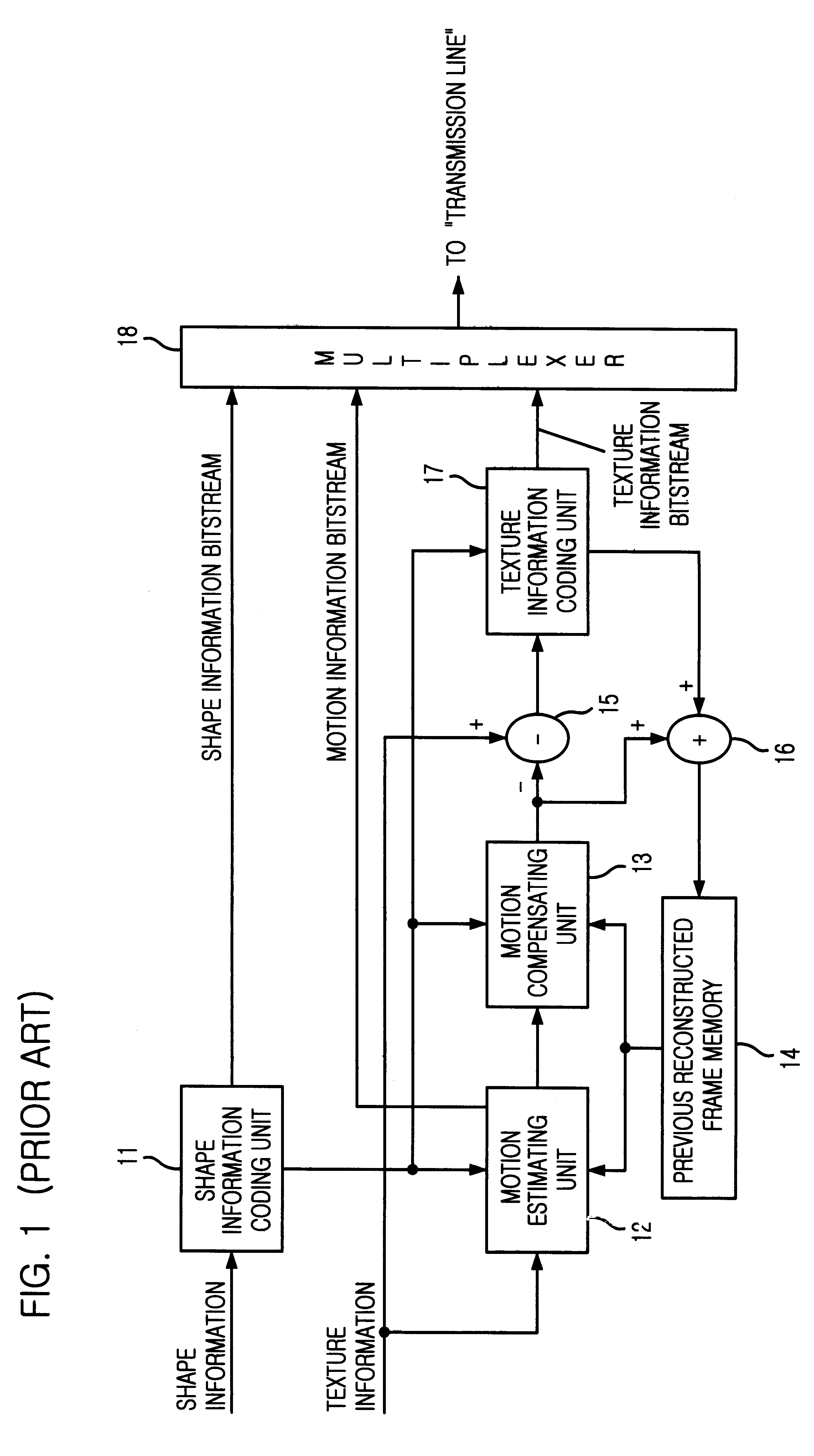

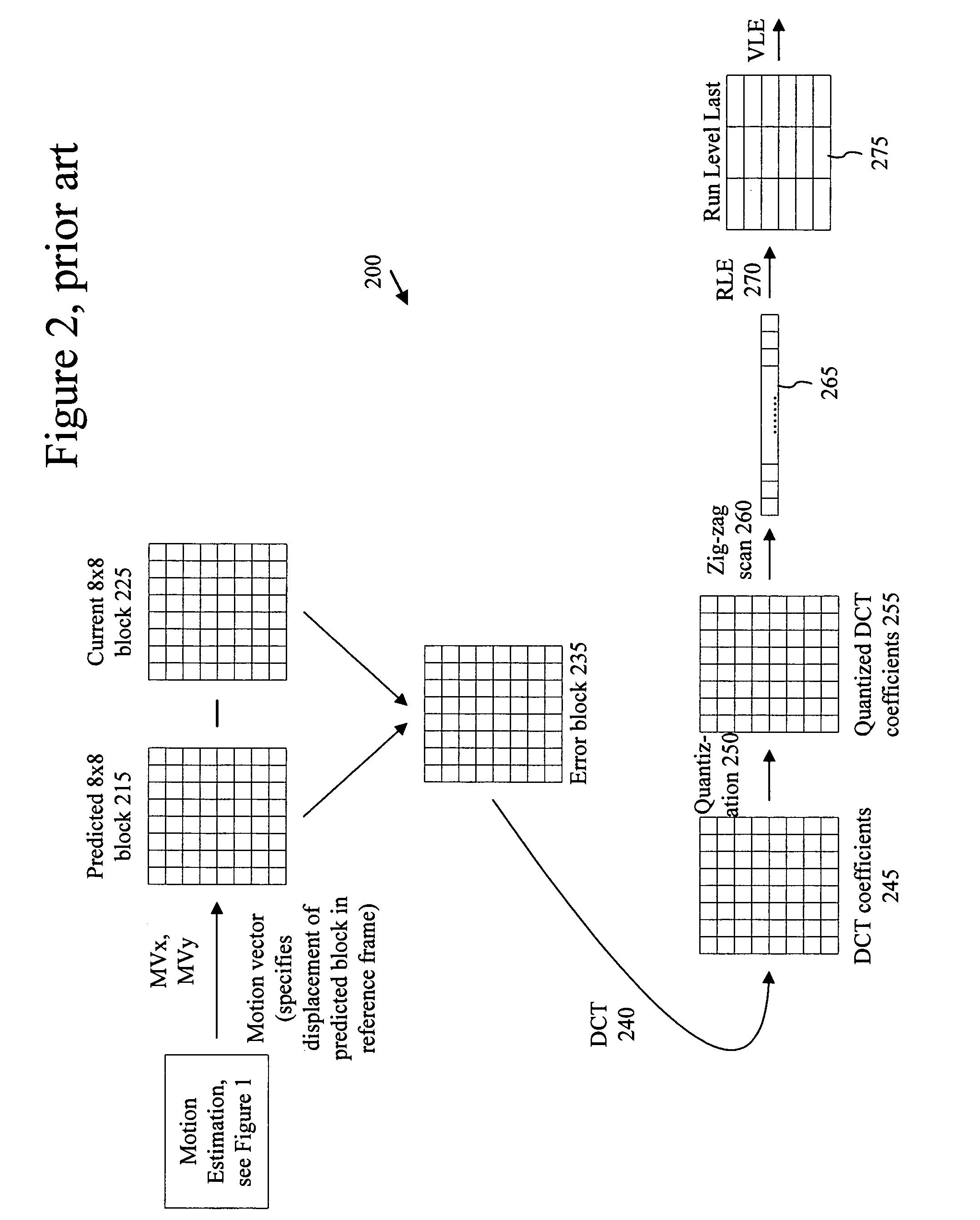

Apparatus and method for optimized compression of interlaced motion images

Status: Inactive | Patent: US6289132B1 | Tags: Easy to compress; Avoid inefficiency; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Imaging processing; Interlaced video

Owner:QUVIS +1

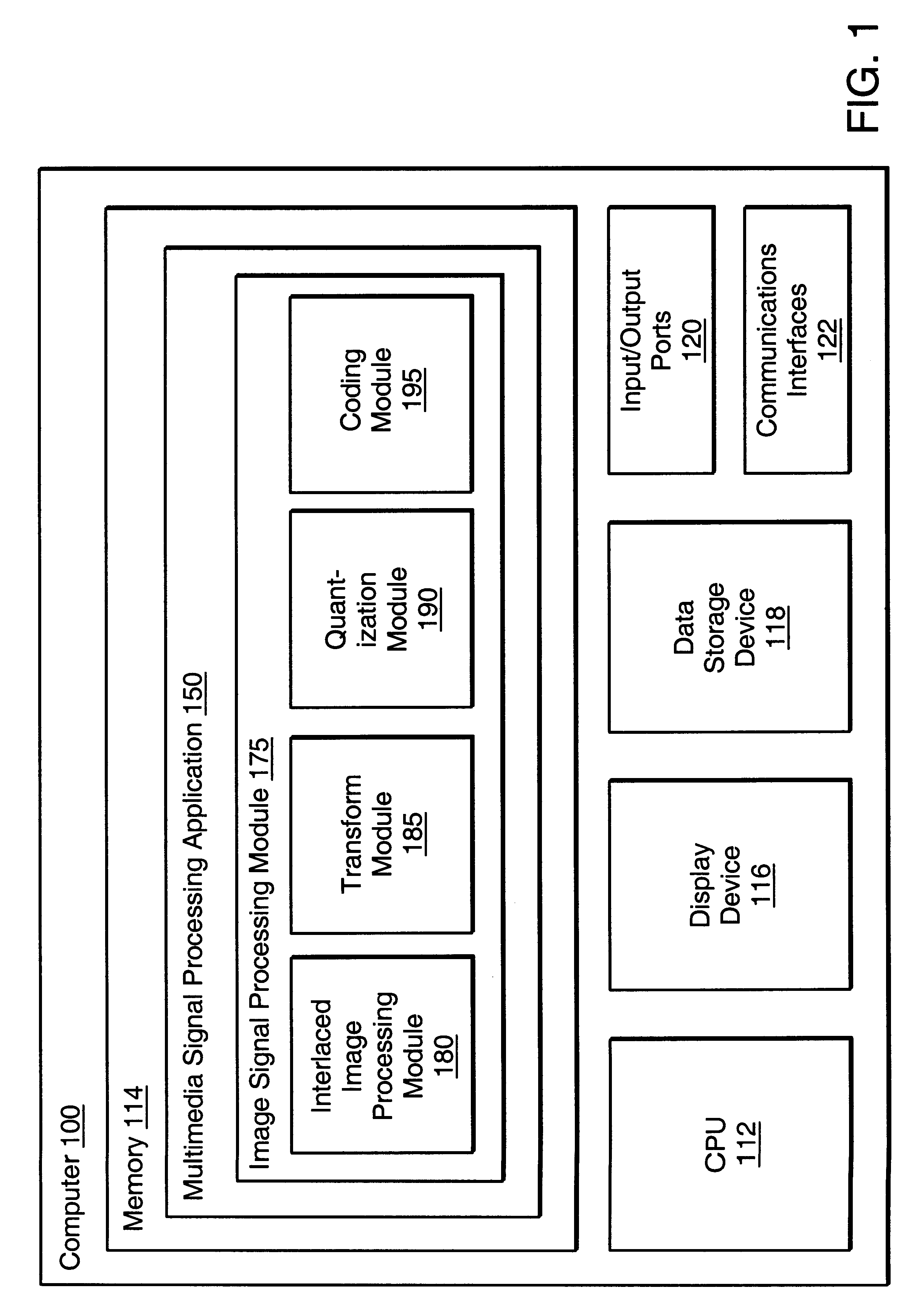

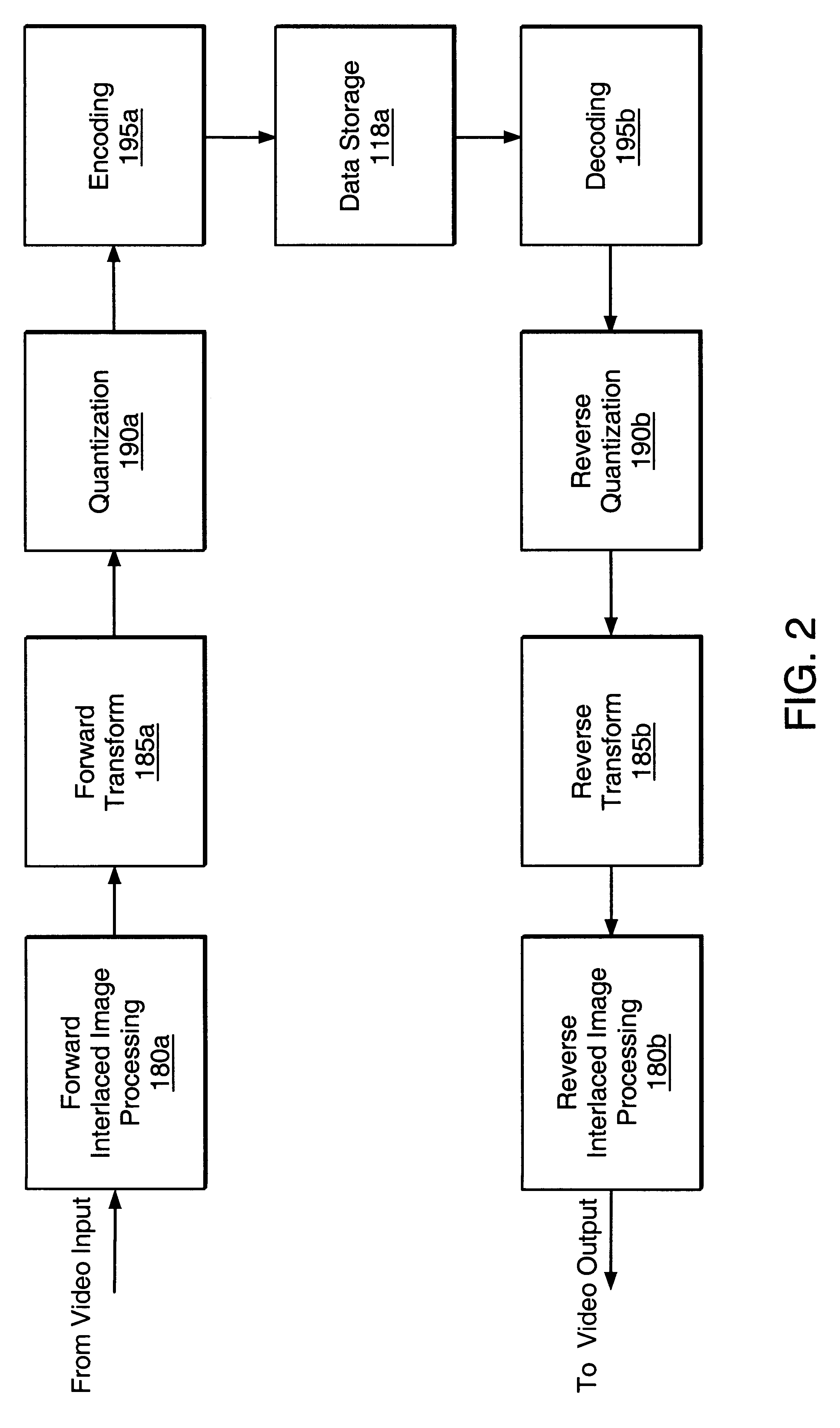

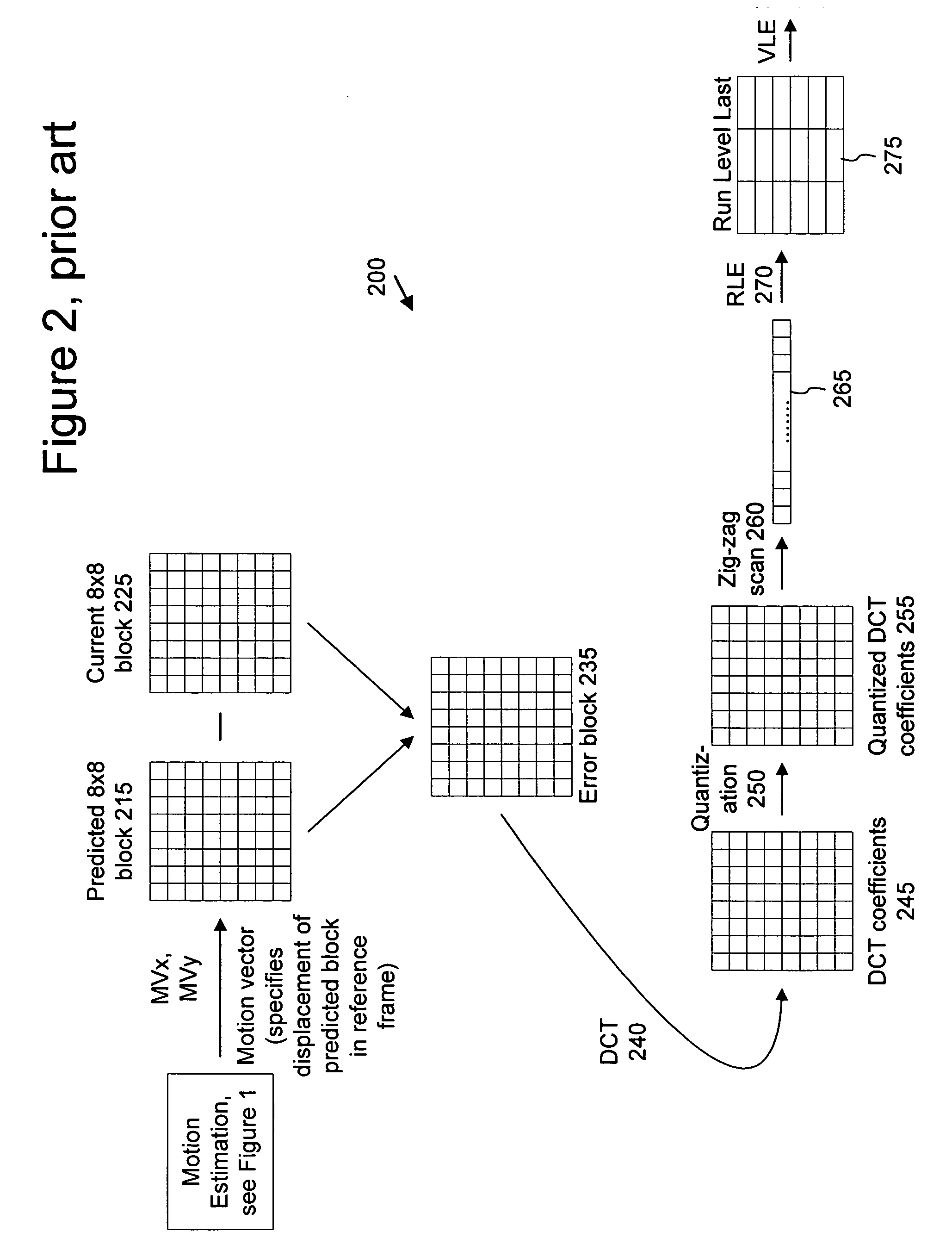

Intraframe and interframe interlace coding and decoding

Status: Active | Patent: US20050013497A1 | Tags: Facilitate decoding; Character and pattern recognition; Television systems; Interlaced video; Motion vector

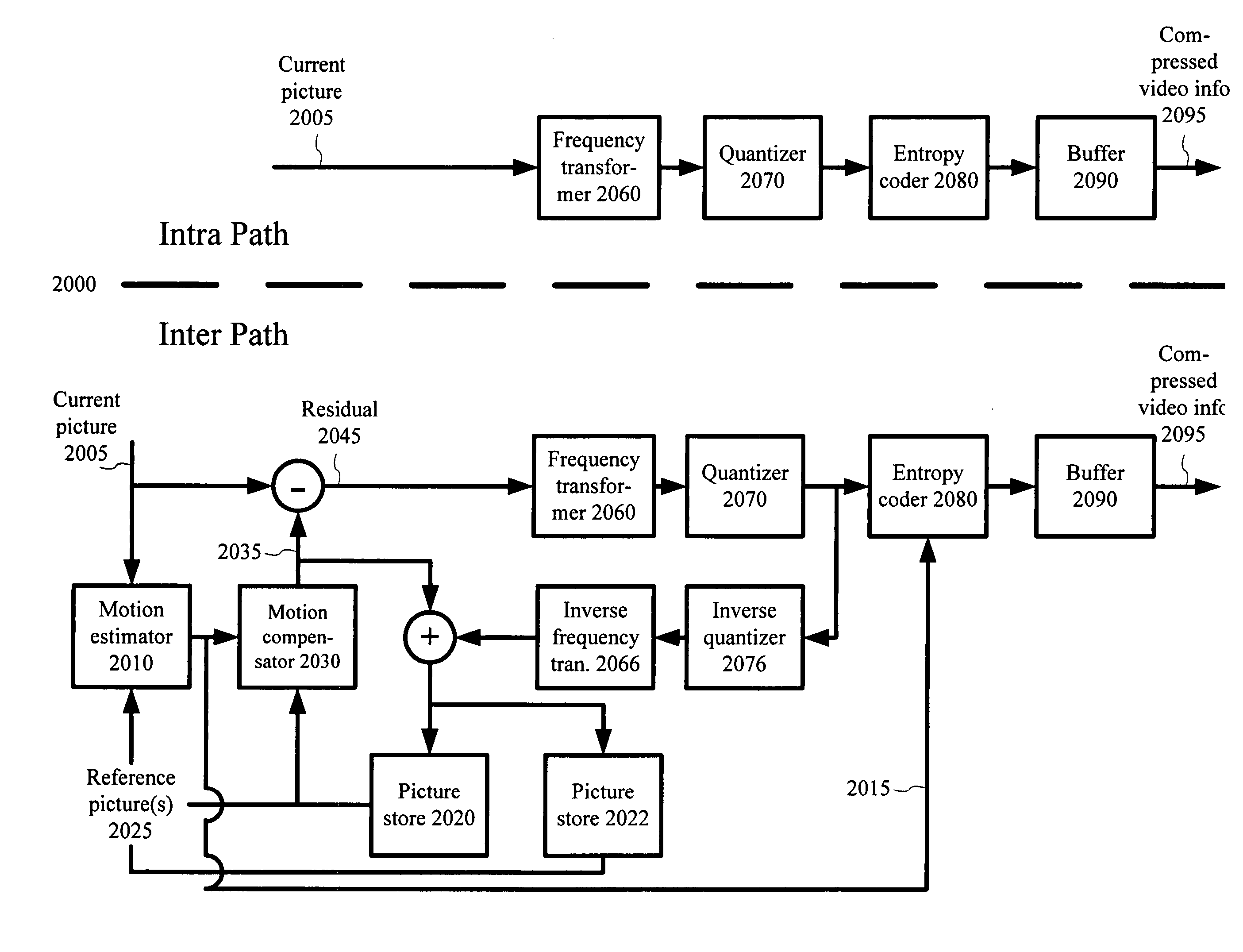

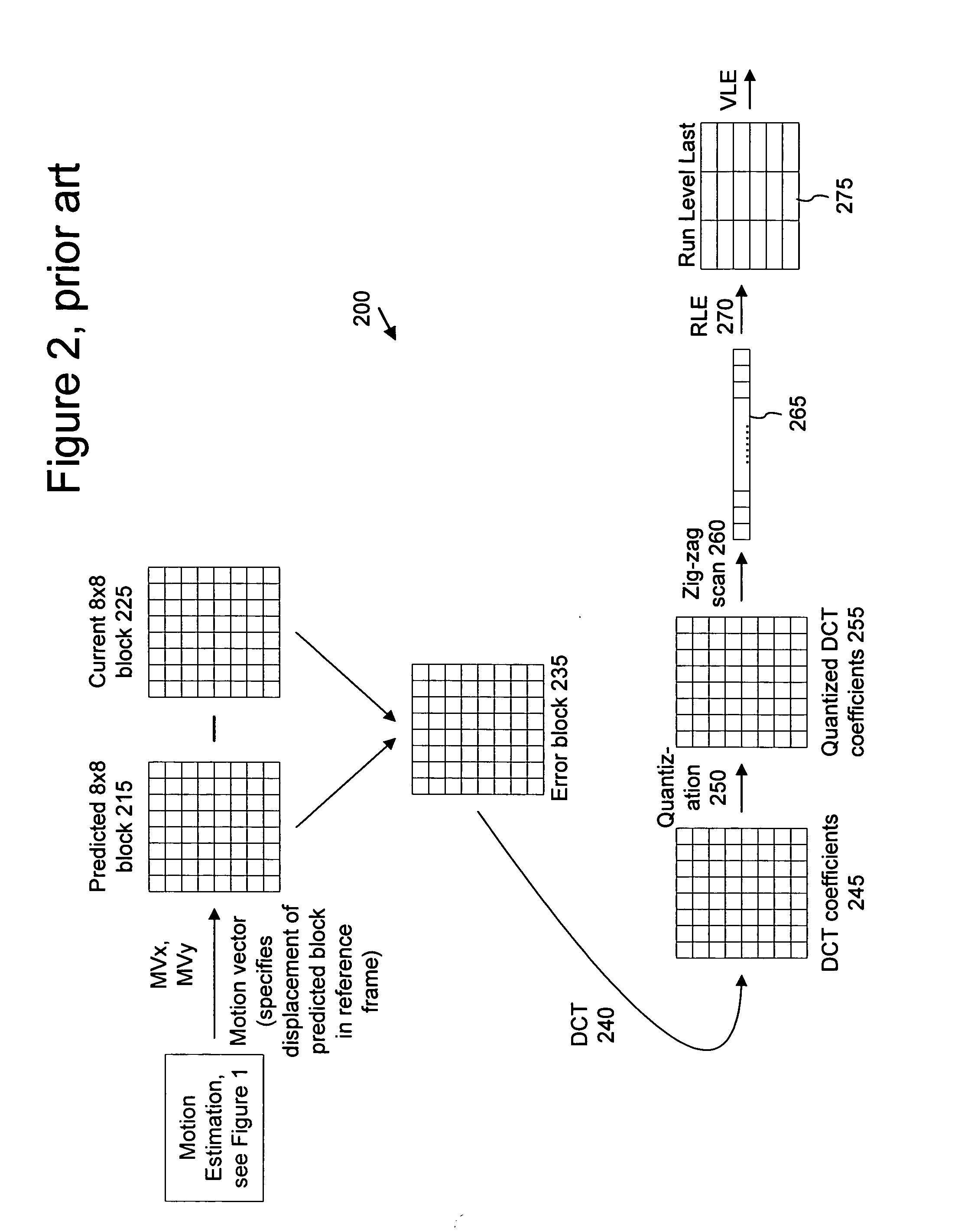

Techniques and tools for encoding and decoding video images (e.g., interlaced frames) are described. For example, a video encoder or decoder processes 4:1:1 format macroblocks comprising four 8×8 luminance blocks and four 4×8 chrominance blocks. In another aspect, fields in field-coded macroblocks are coded independently of one another (e.g., by sending encoded blocks in field order). Other aspects include DC / AC prediction techniques and motion vector prediction techniques for interlaced frames.

Owner:MICROSOFT TECH LICENSING LLC
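The block counts quoted in the abstract follow from the 4:1:1 sampling geometry. The Python sketch below derives them, assuming a 16×16 luma macroblock, 8-sample-tall transform blocks, and a width×height reading of "4×8"; the function name is hypothetical.

```python
from typing import Dict, Tuple


def macroblock_blocks_411(mb_size: int = 16, block: int = 8) -> Dict[str, Tuple[int, int, int]]:
    """Return (count, width, height) of transform blocks per component
    for a 4:1:1 macroblock.

    4:1:1 keeps full vertical chroma resolution but subsamples chroma
    horizontally by 4, so each chroma plane covers (mb_size / 4) x mb_size.
    """
    luma_count = (mb_size // block) ** 2           # 16x16 luma -> four 8x8 blocks
    chroma_w, chroma_h = mb_size // 4, mb_size      # 4 x 16 samples per chroma plane
    per_plane = chroma_h // block                   # split vertically into 4x8 blocks
    return {
        "Y": (luma_count, block, block),
        "Cb": (per_plane, chroma_w, block),
        "Cr": (per_plane, chroma_w, block),
    }
```

With the defaults this yields four 8×8 luma blocks plus two 4×8 blocks for each of Cb and Cr, i.e. the four 4×8 chrominance blocks mentioned above.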

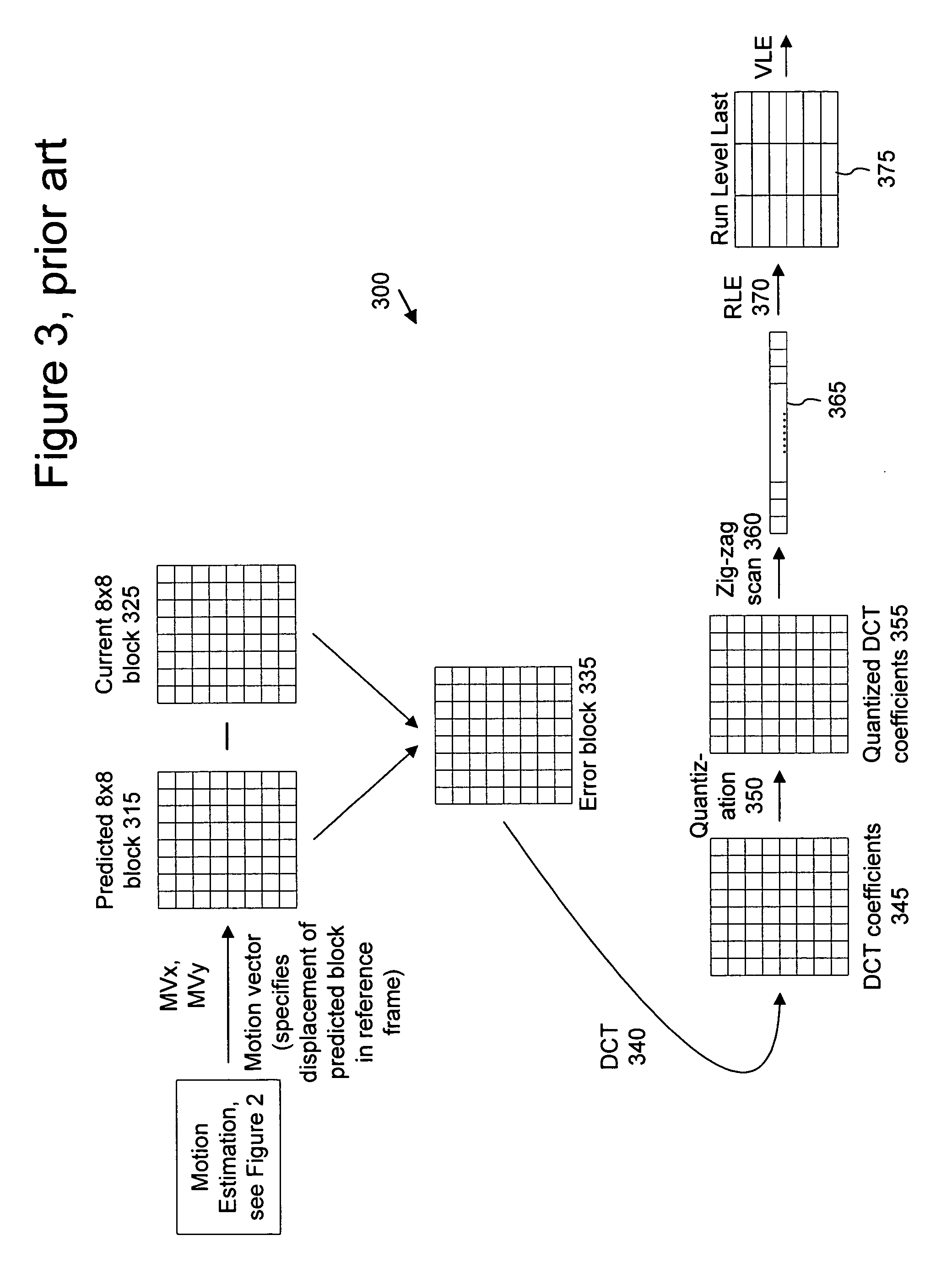

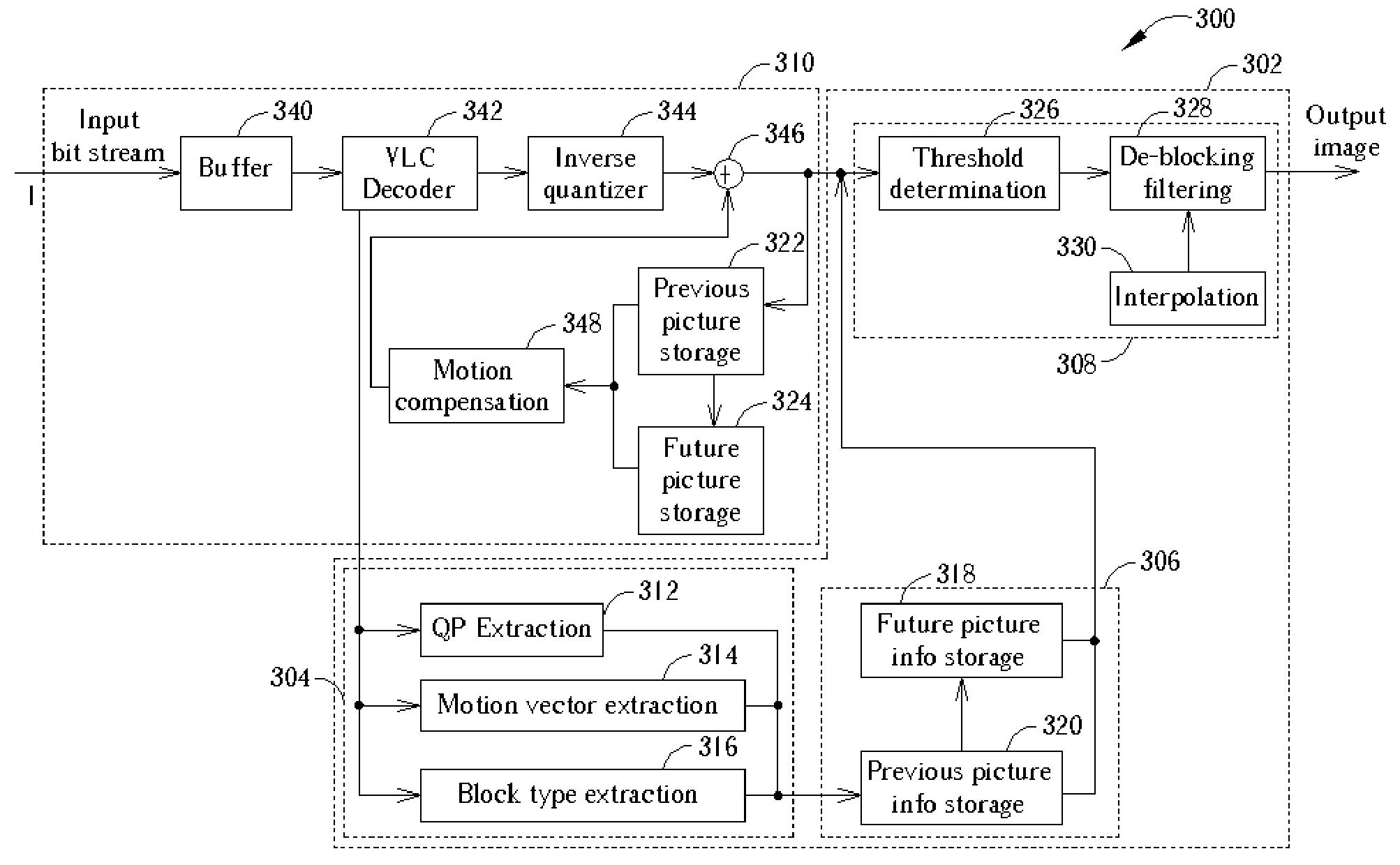

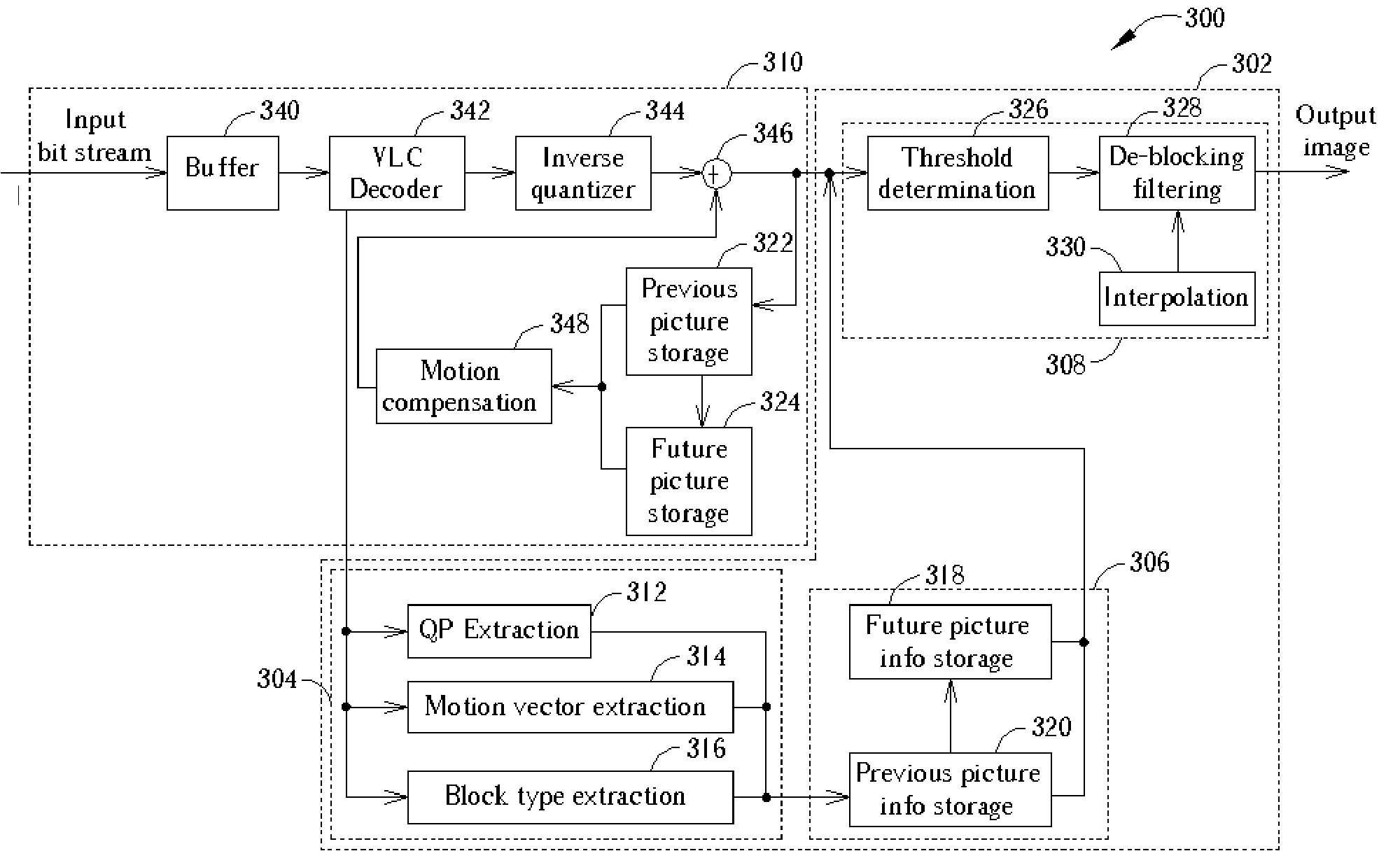

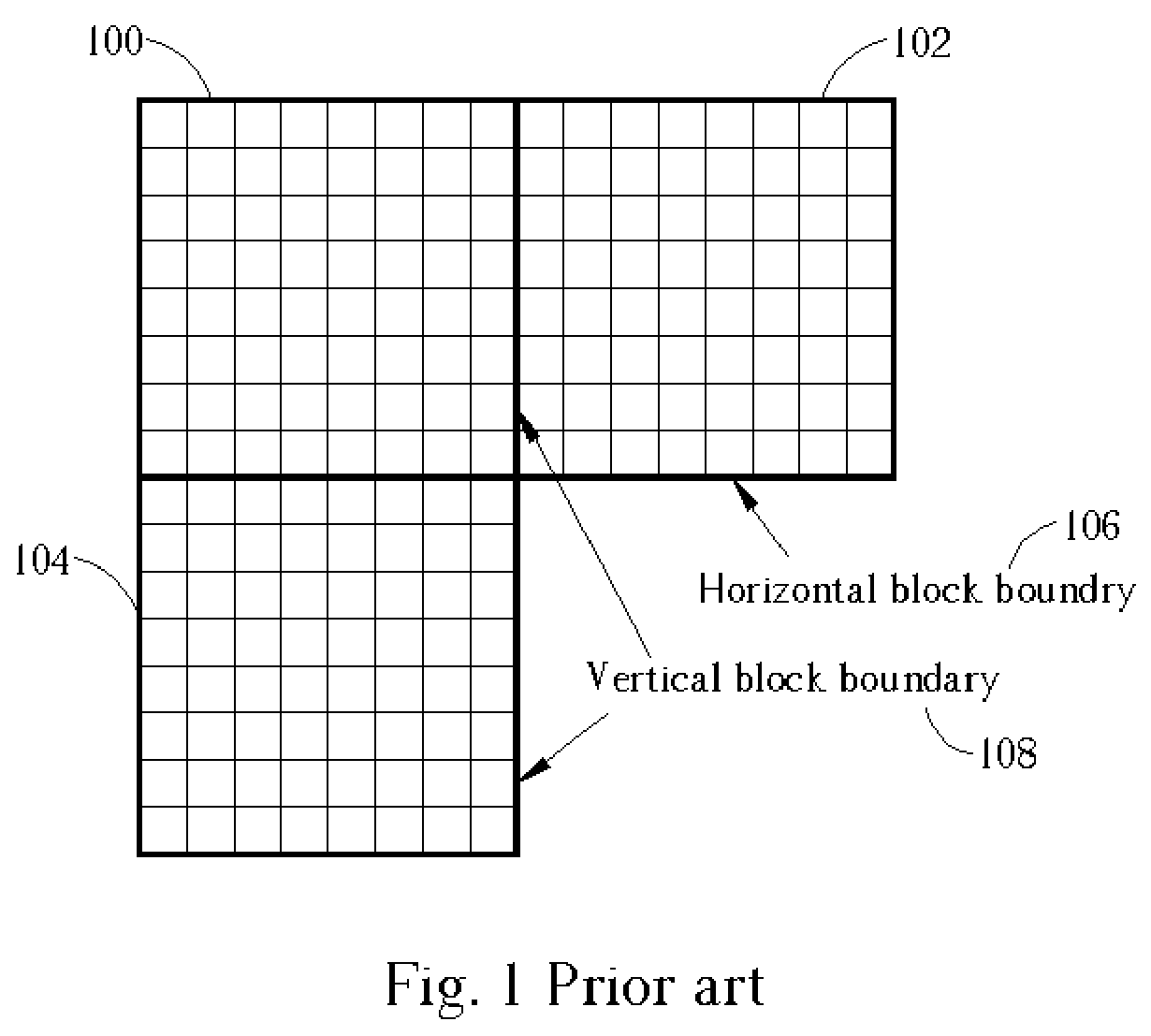

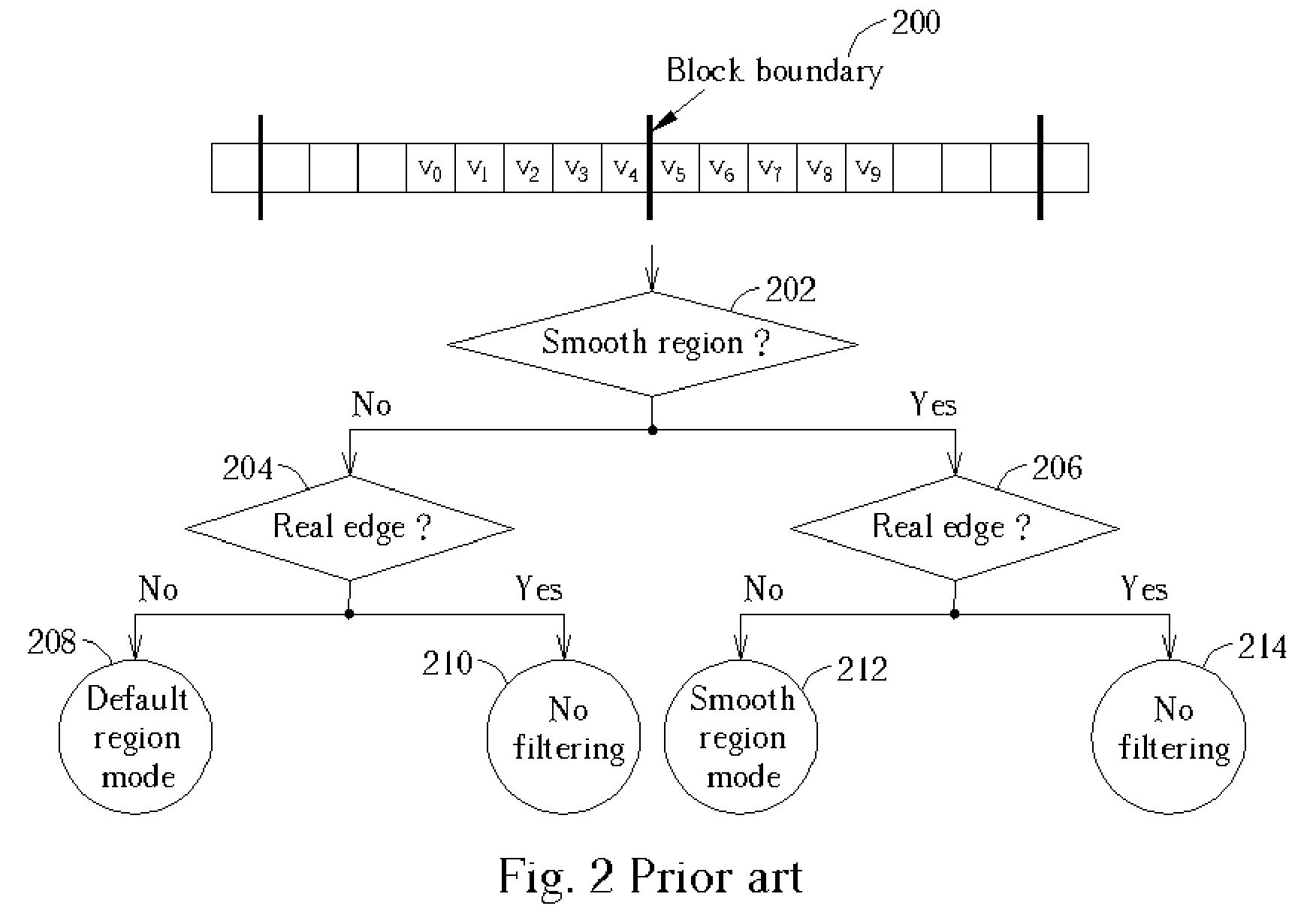

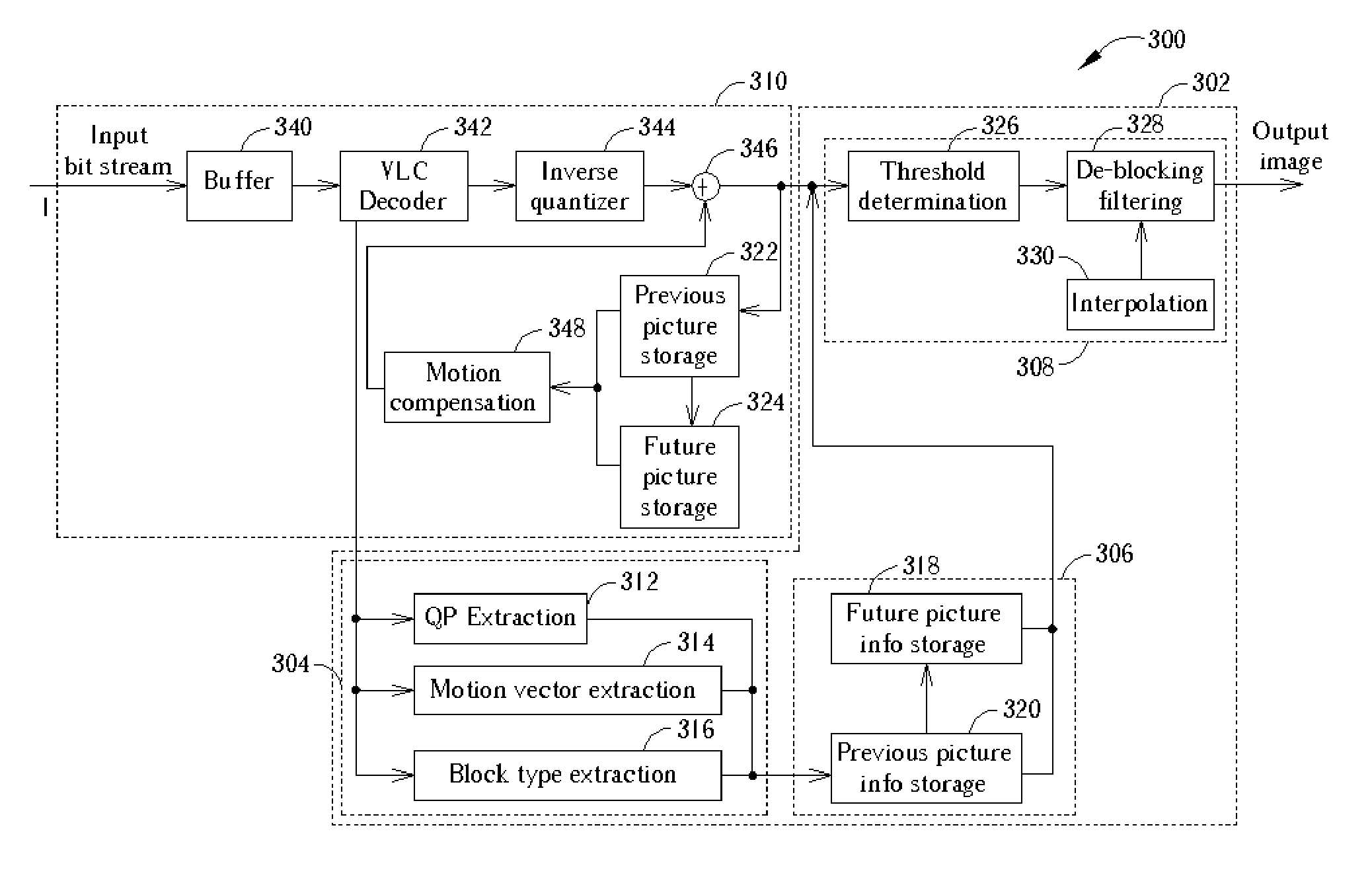

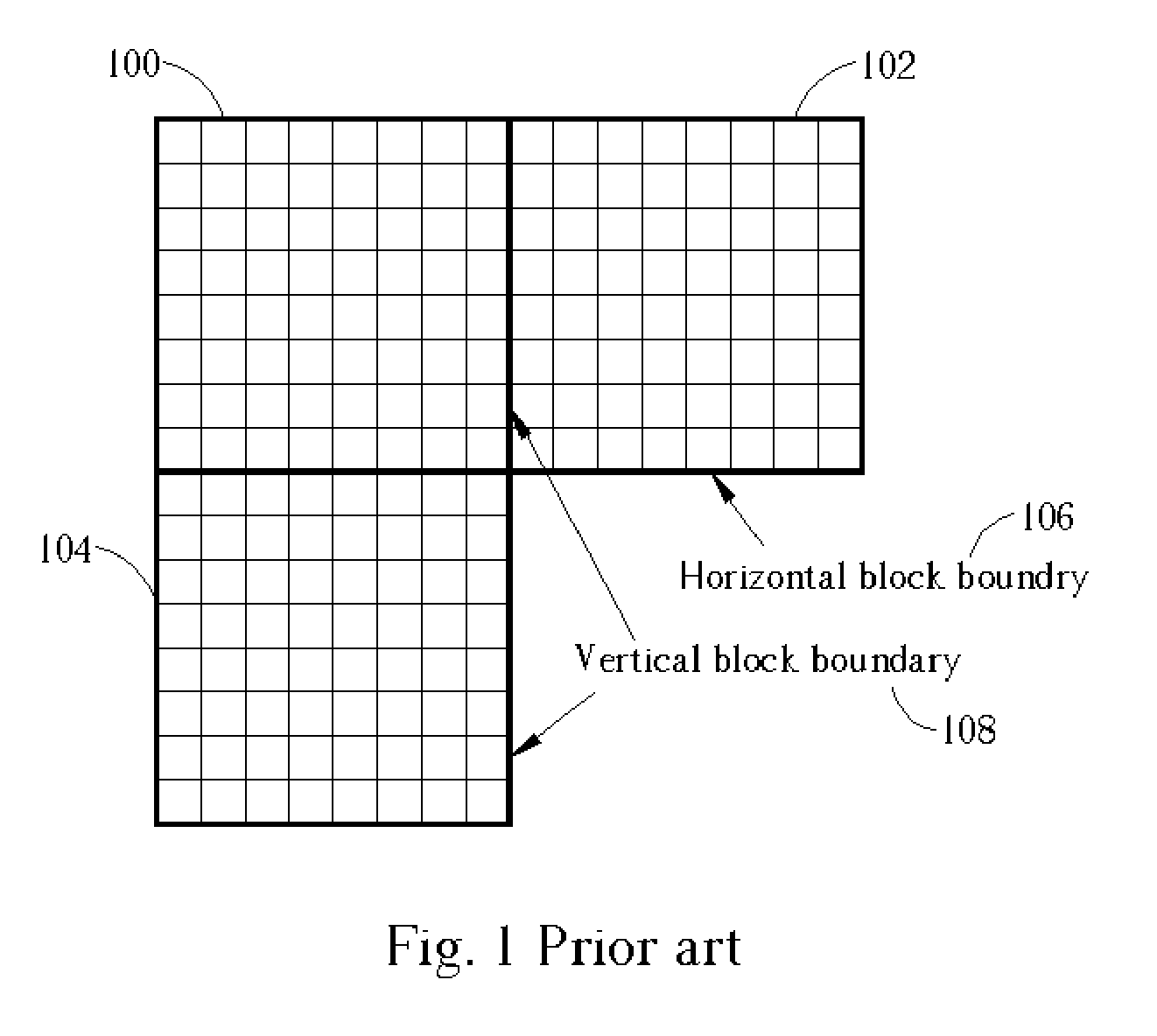

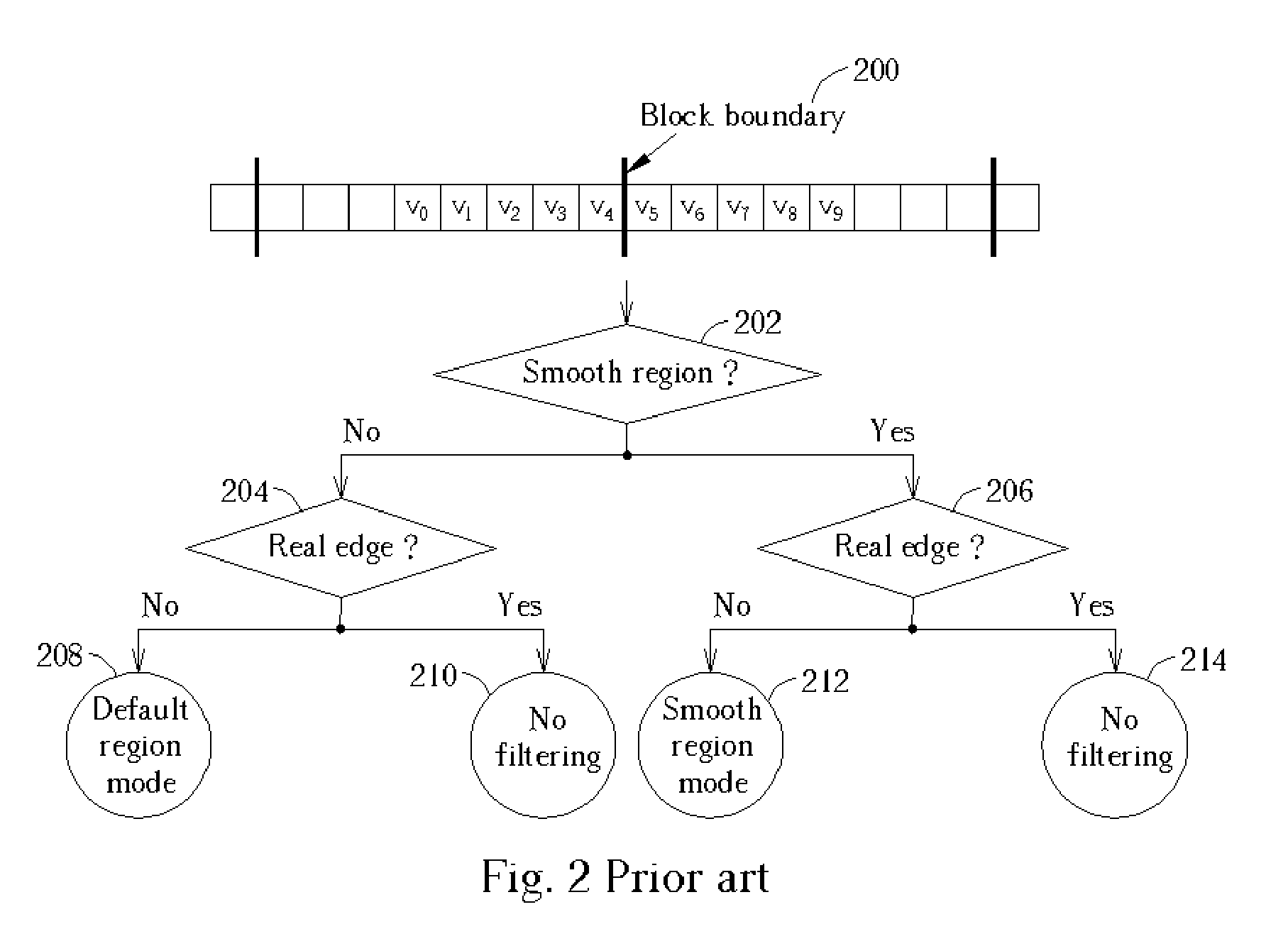

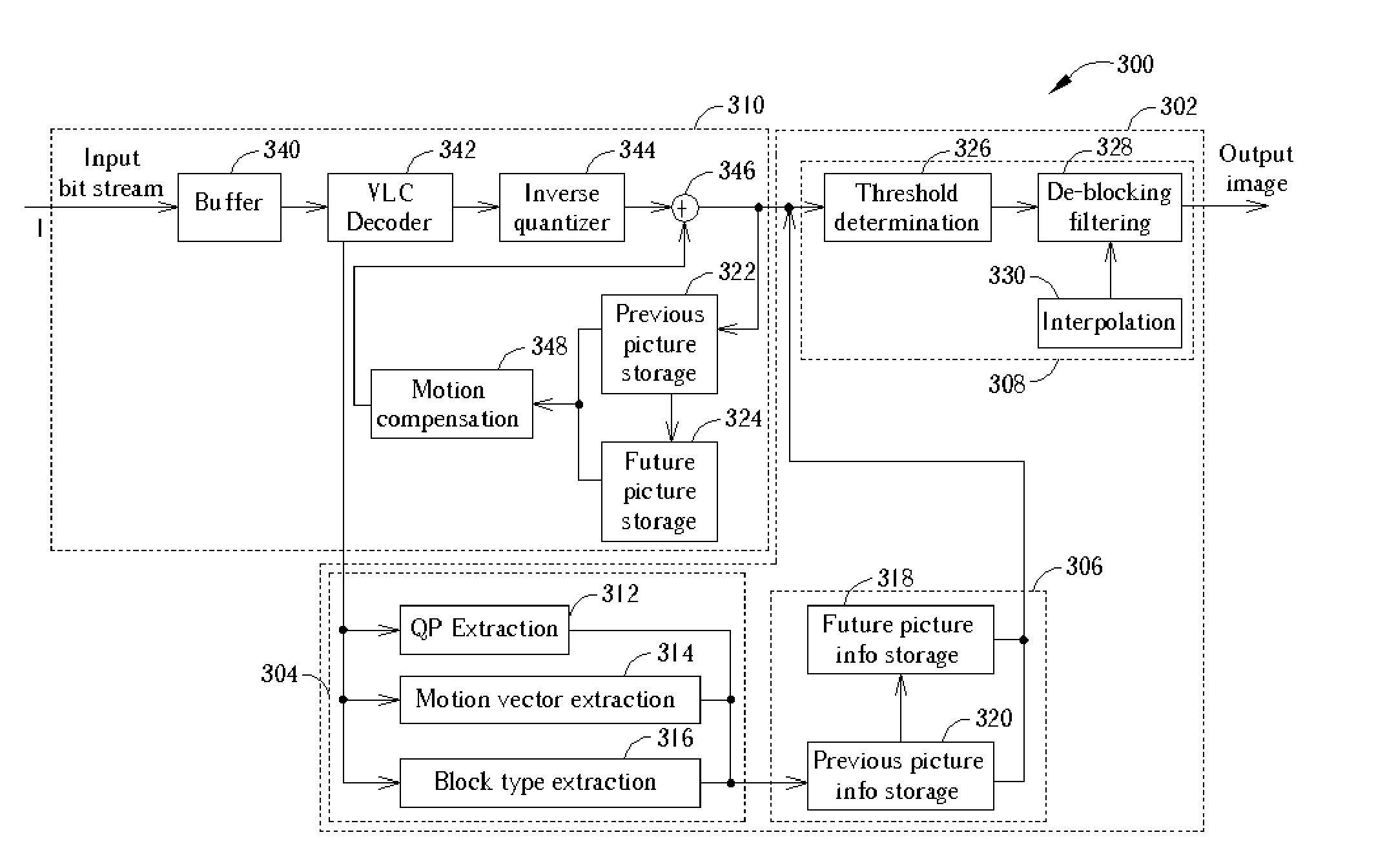

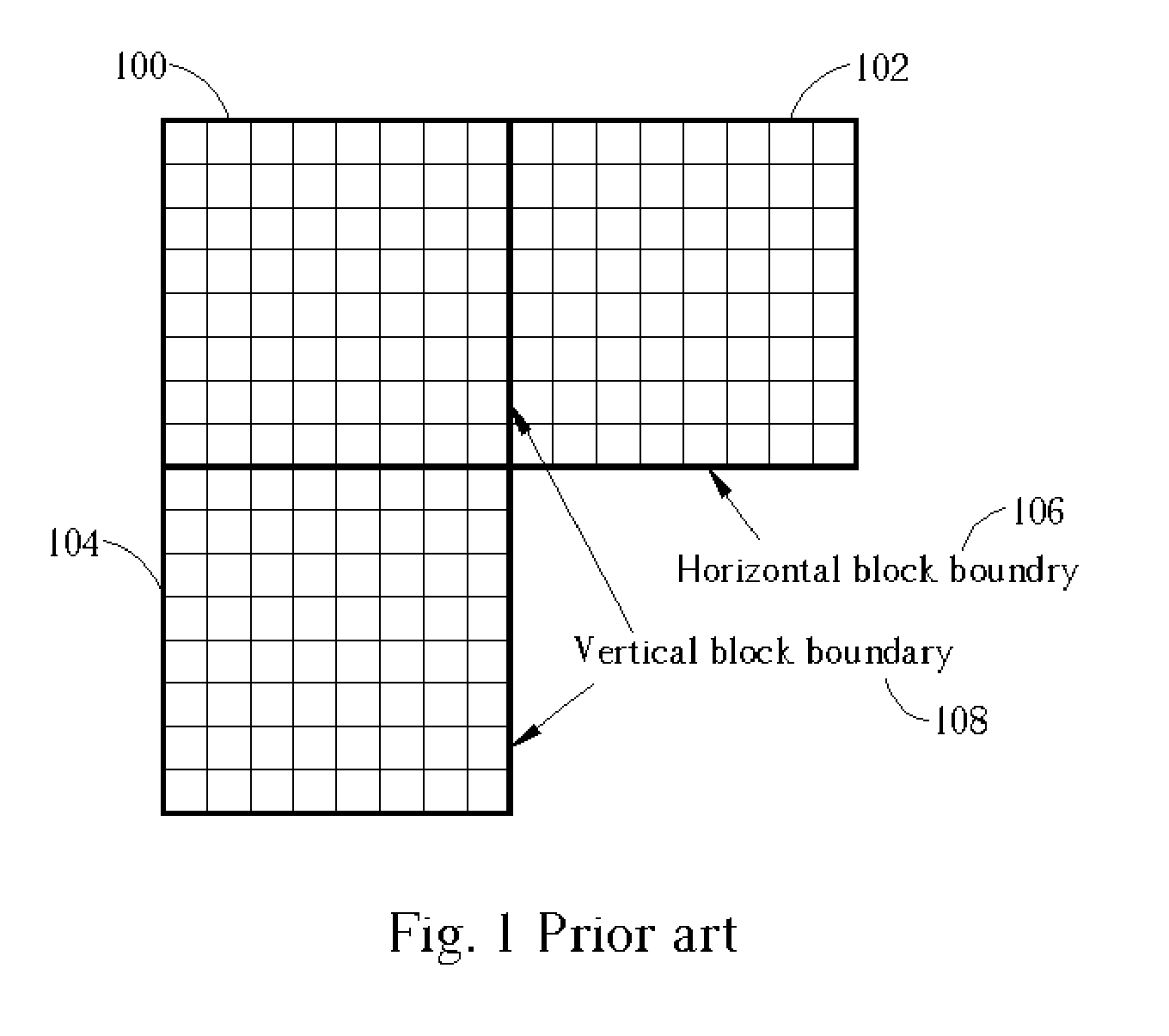

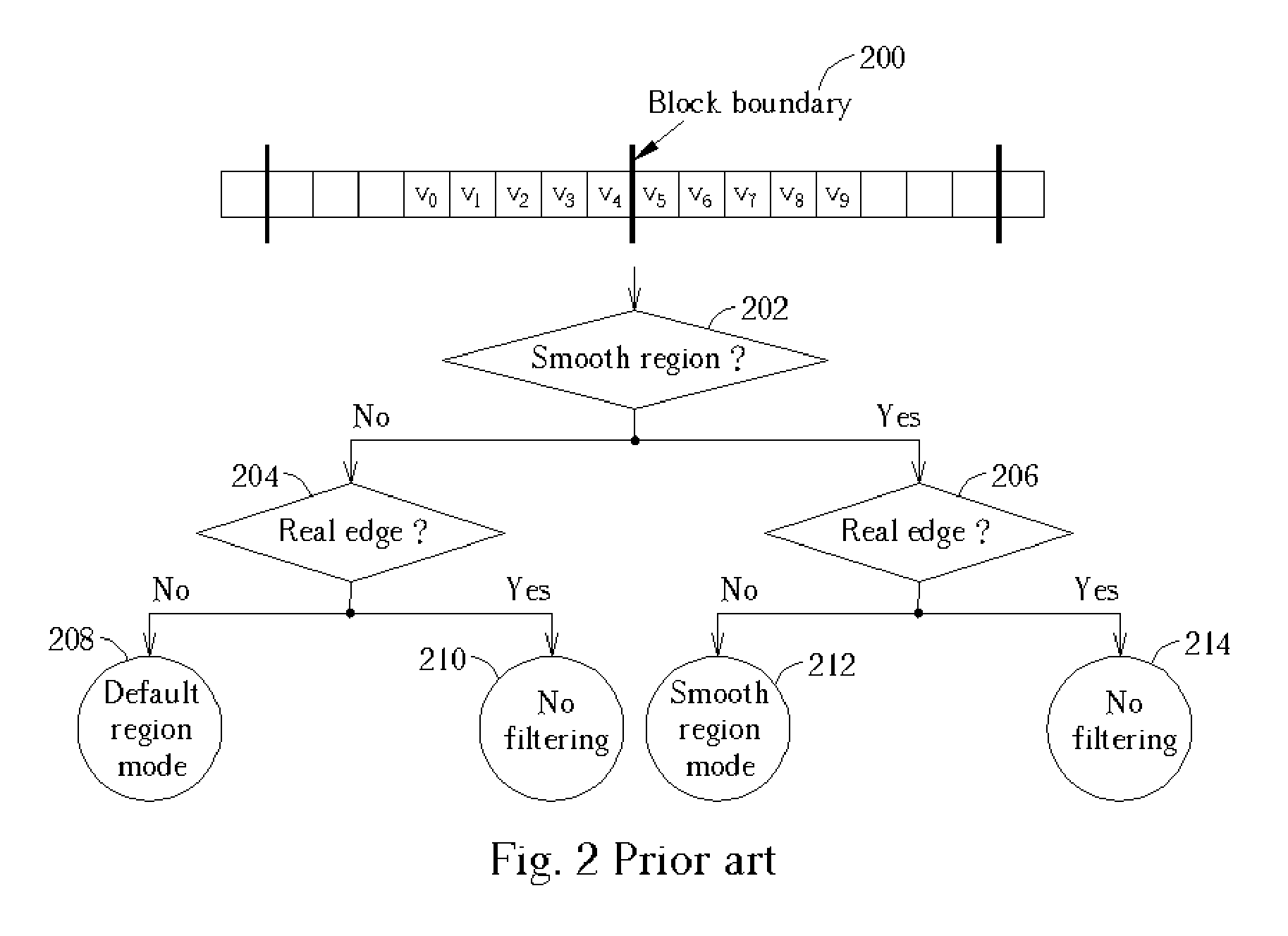

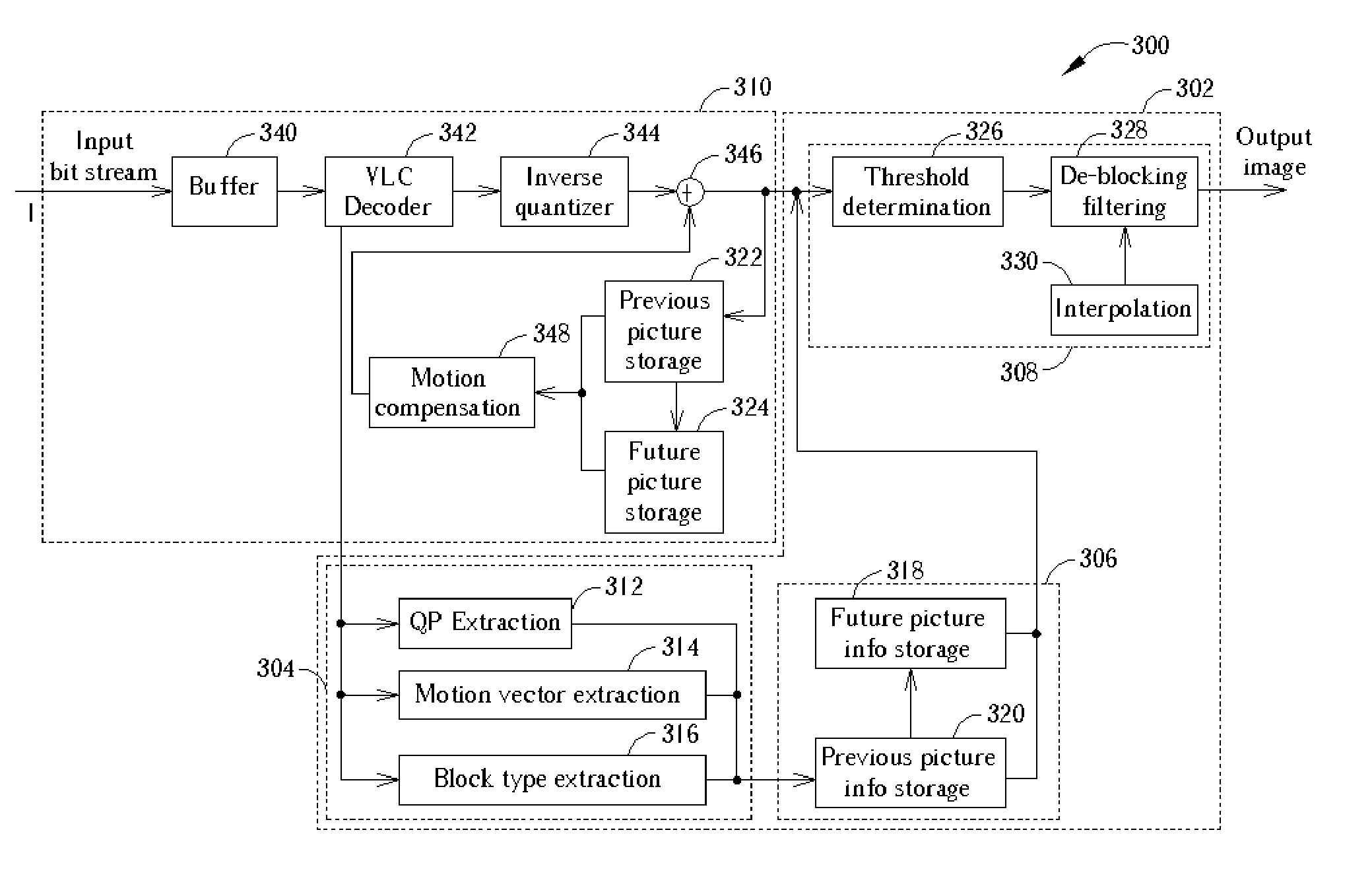

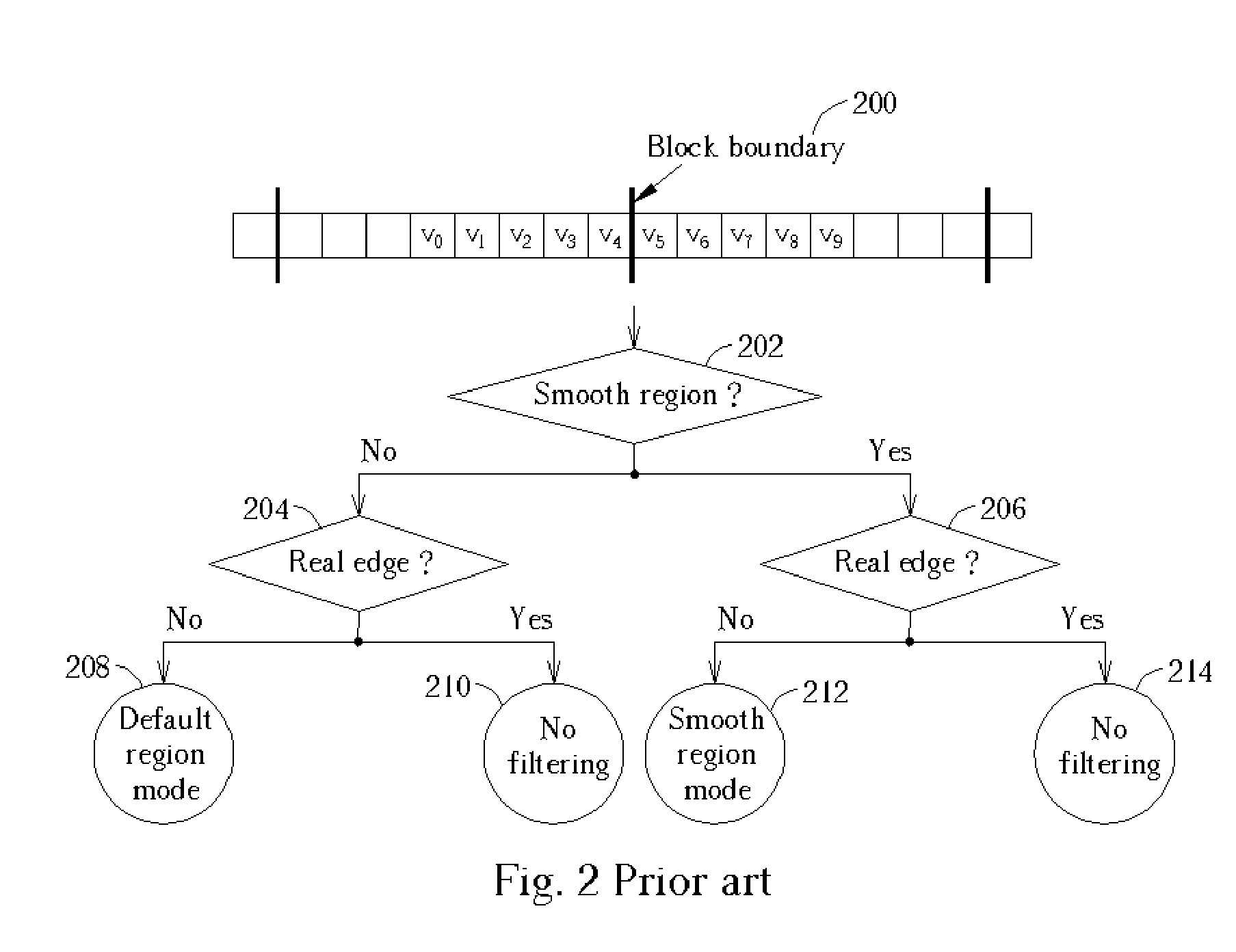

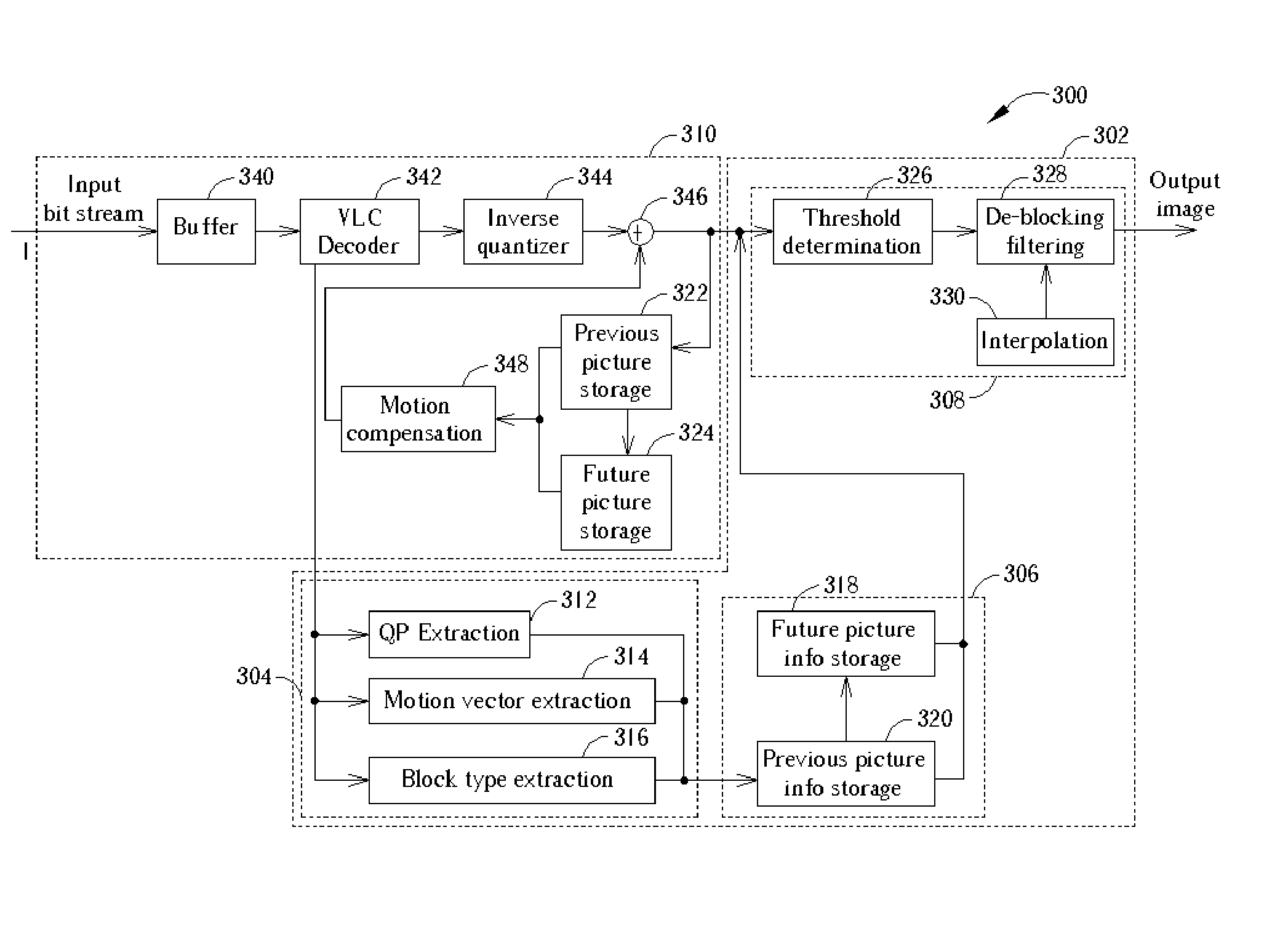

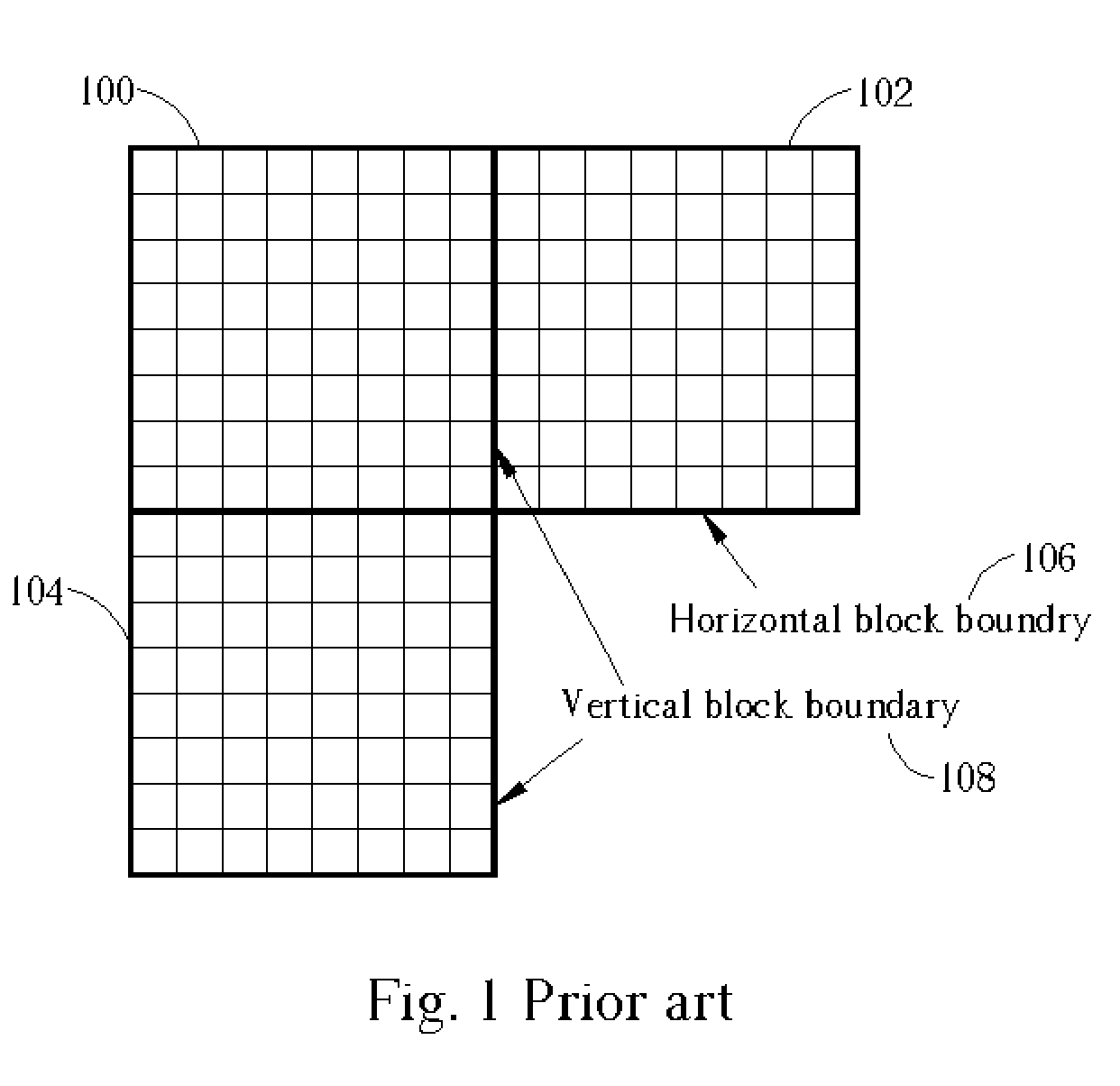

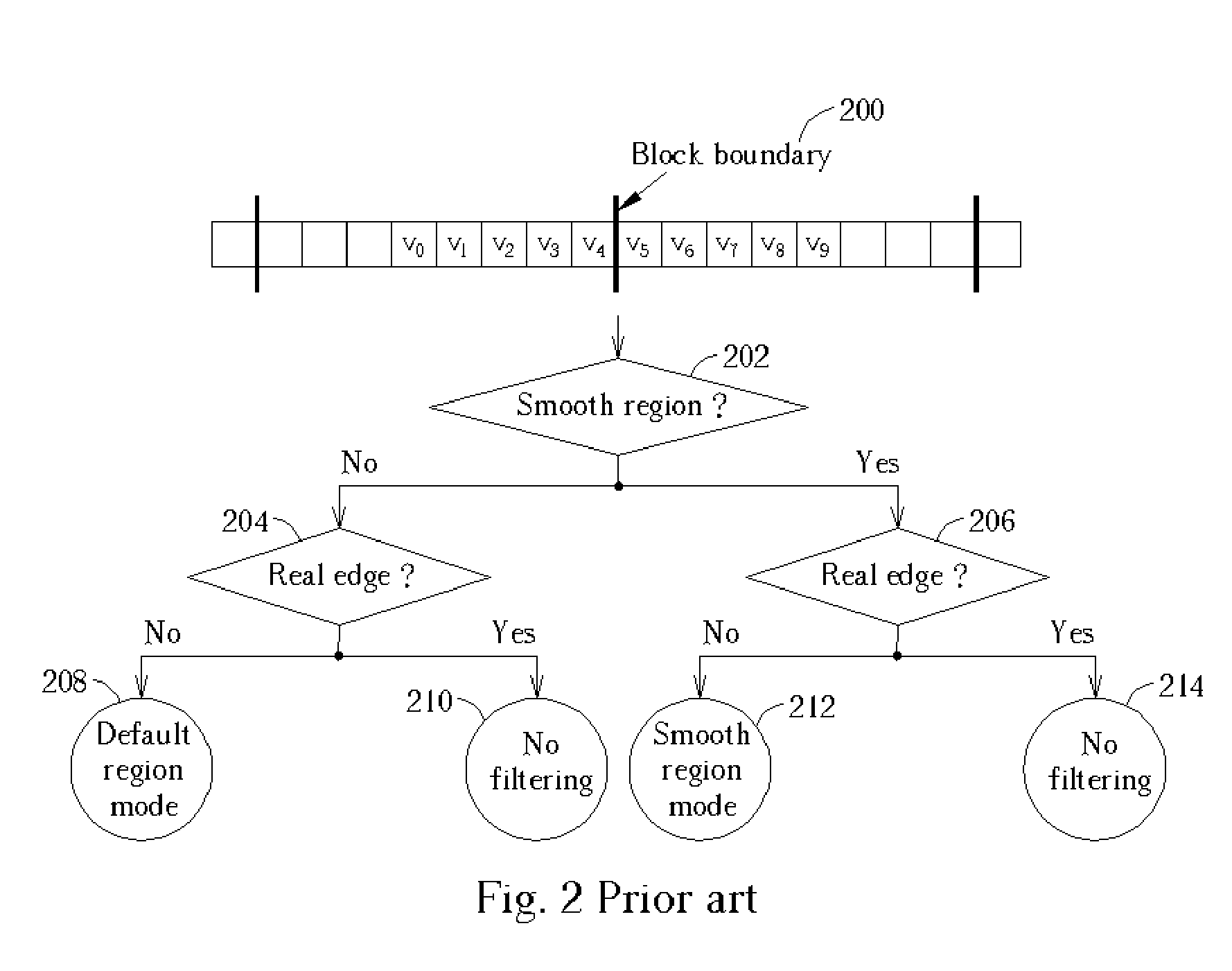

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US7397853B2 | Tags: Reduce complexity; Color television with pulse code modulation; Color television with bandwidth reduction; Interlaced video; Parallel computing

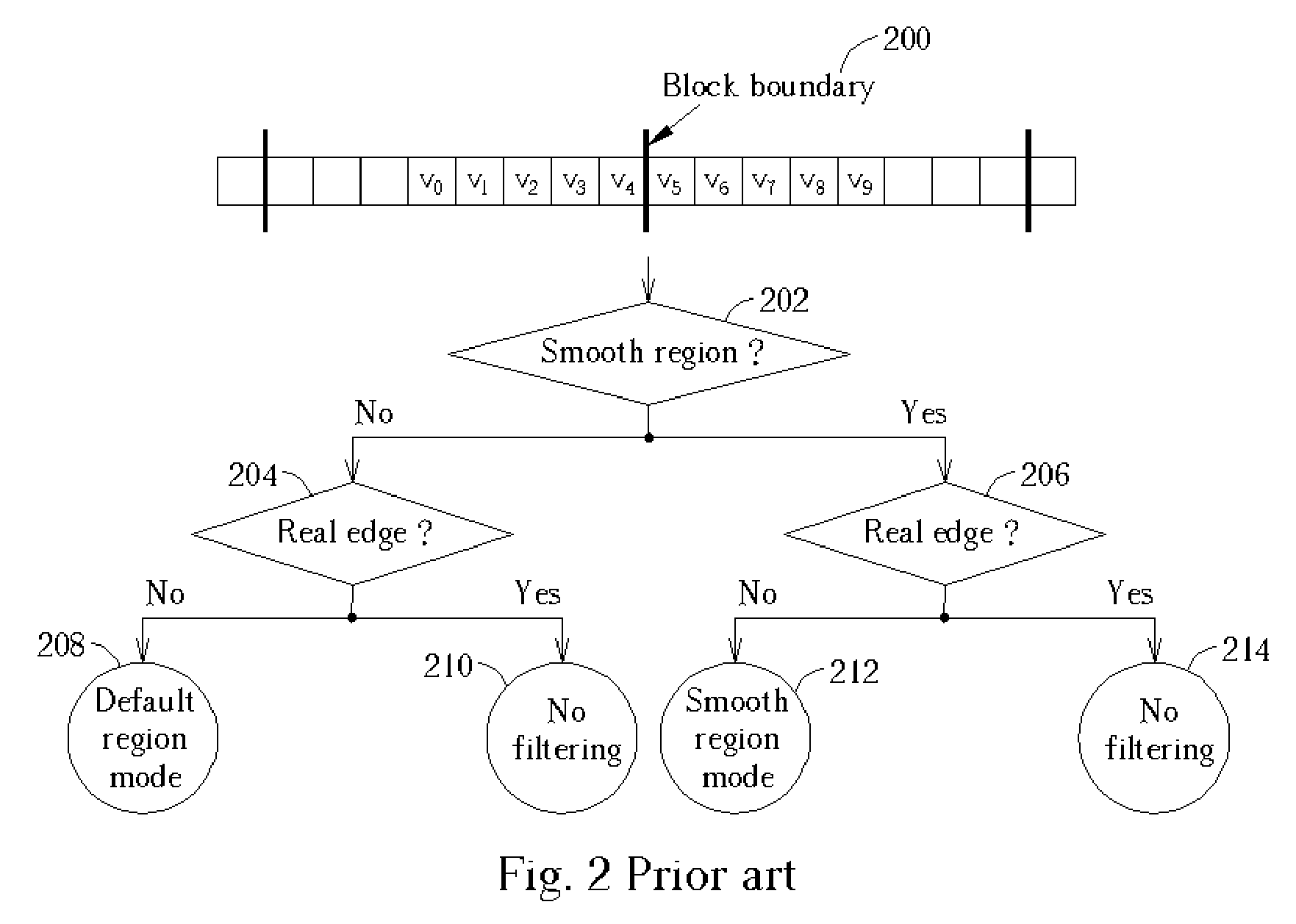

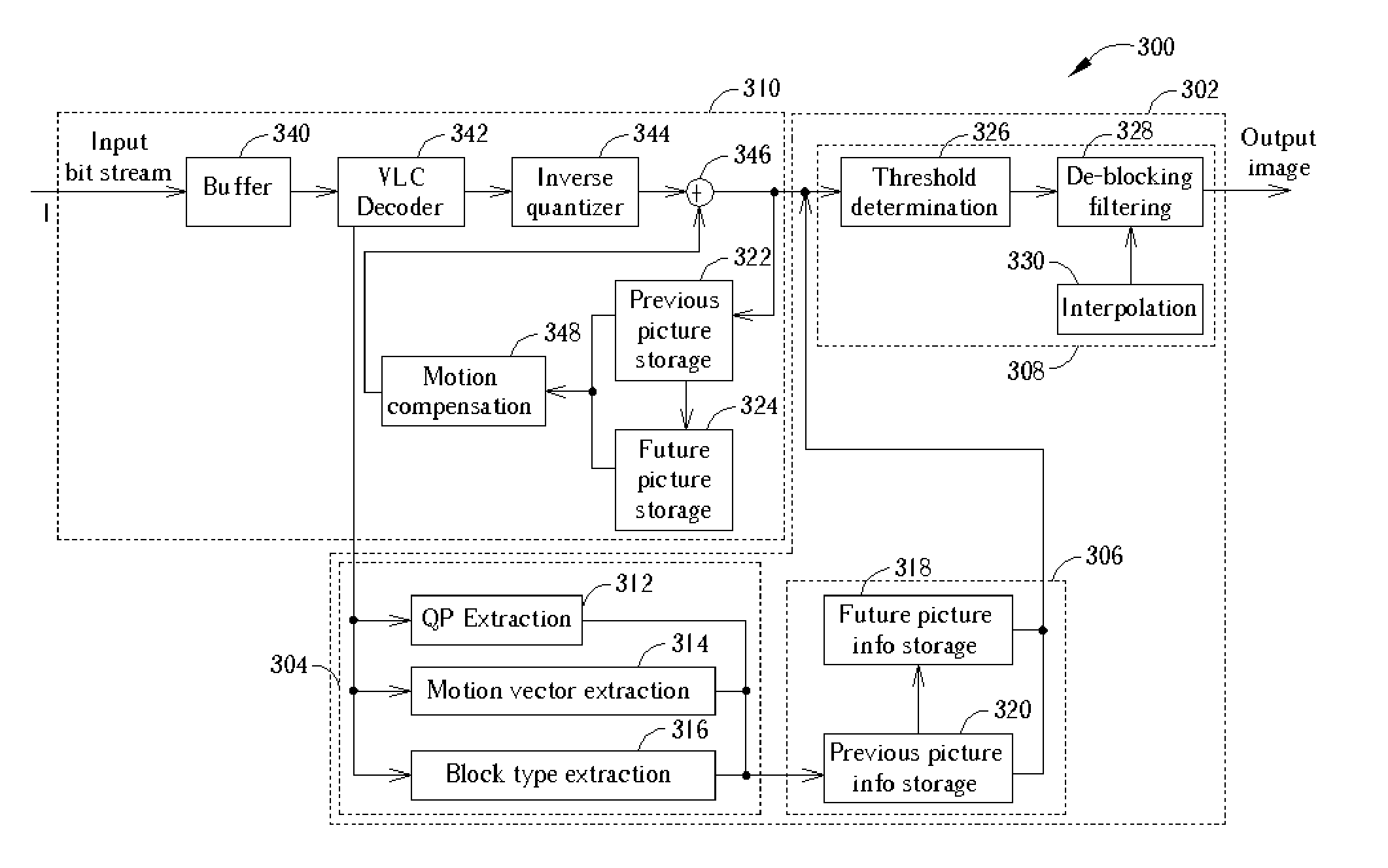

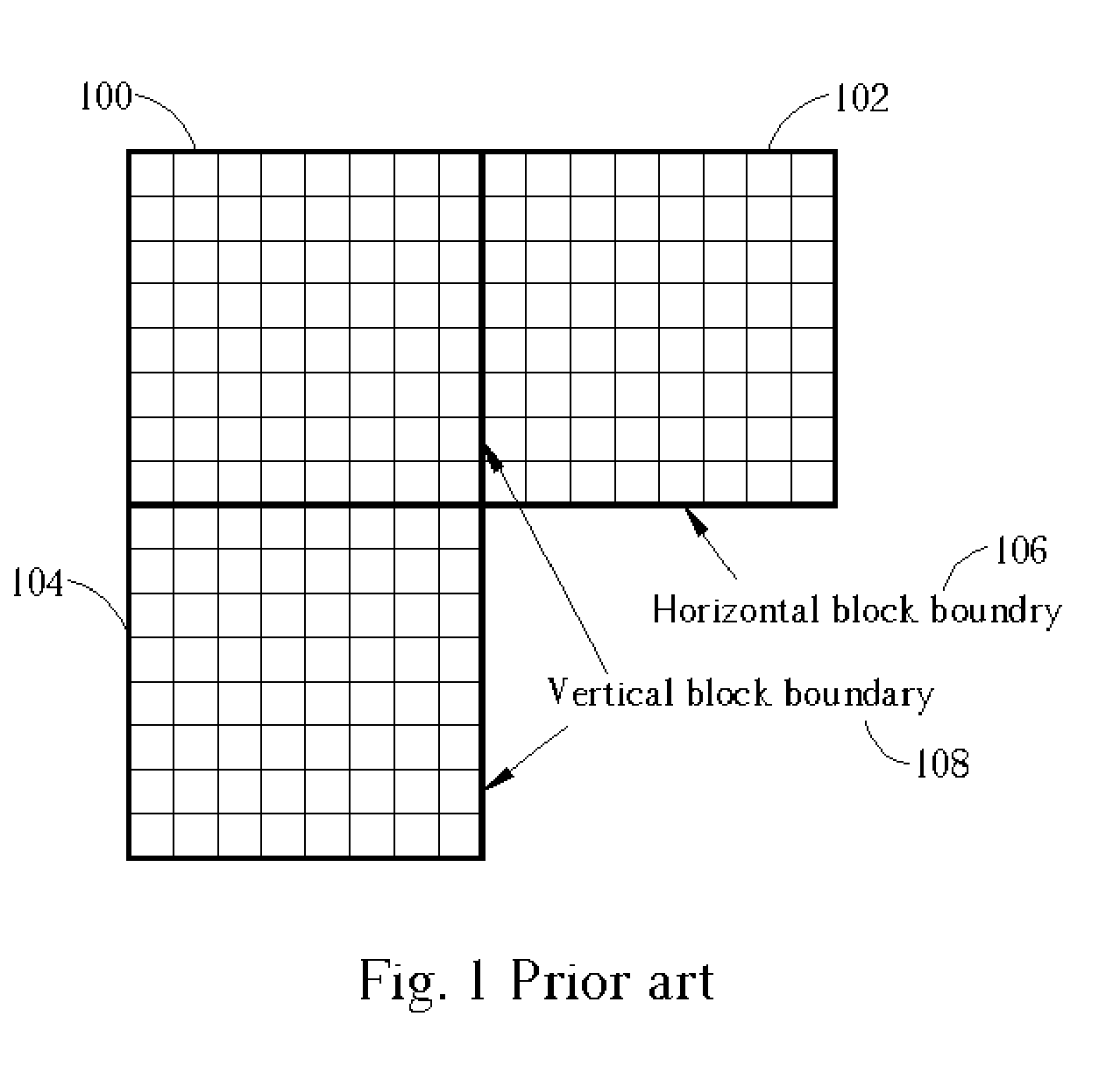

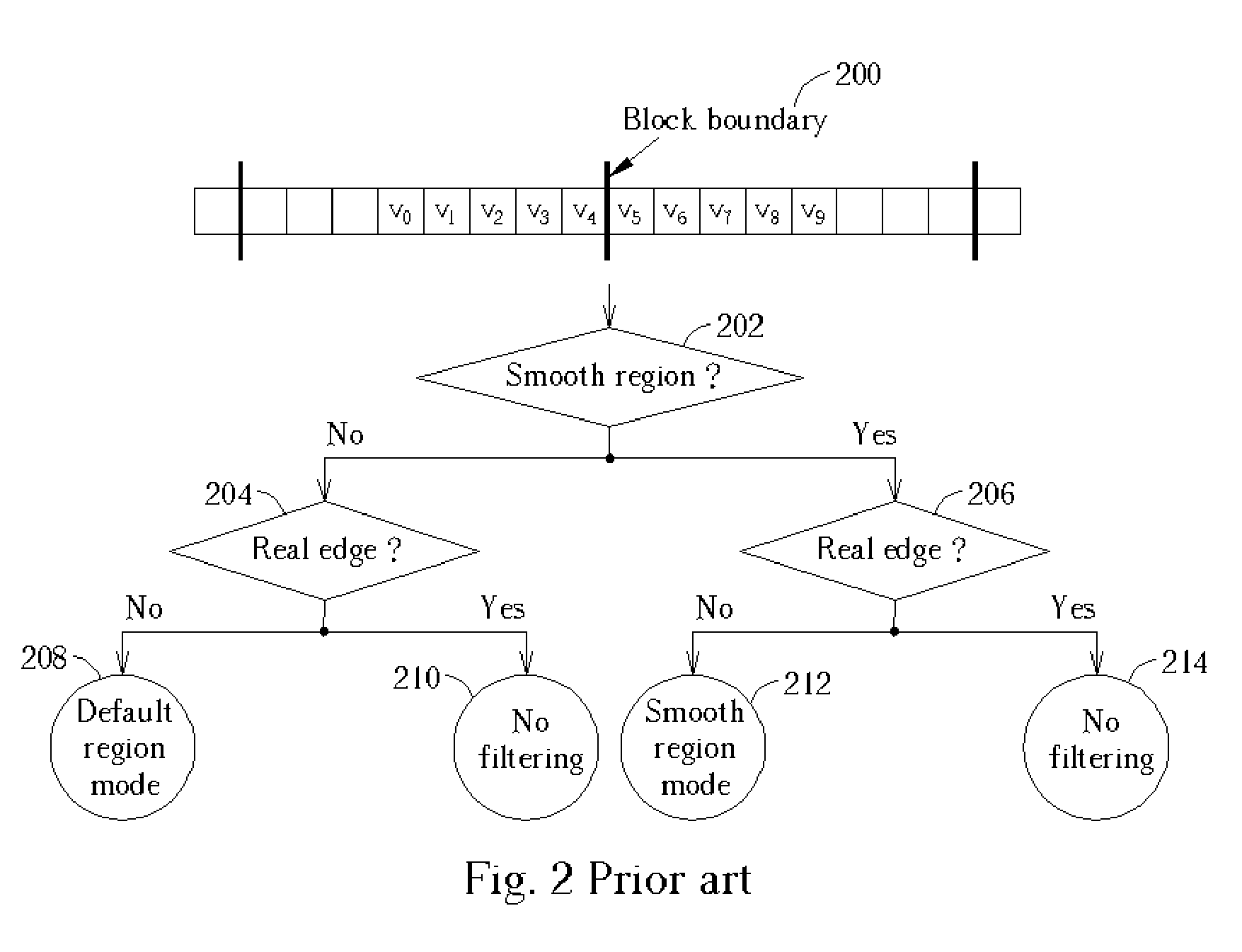

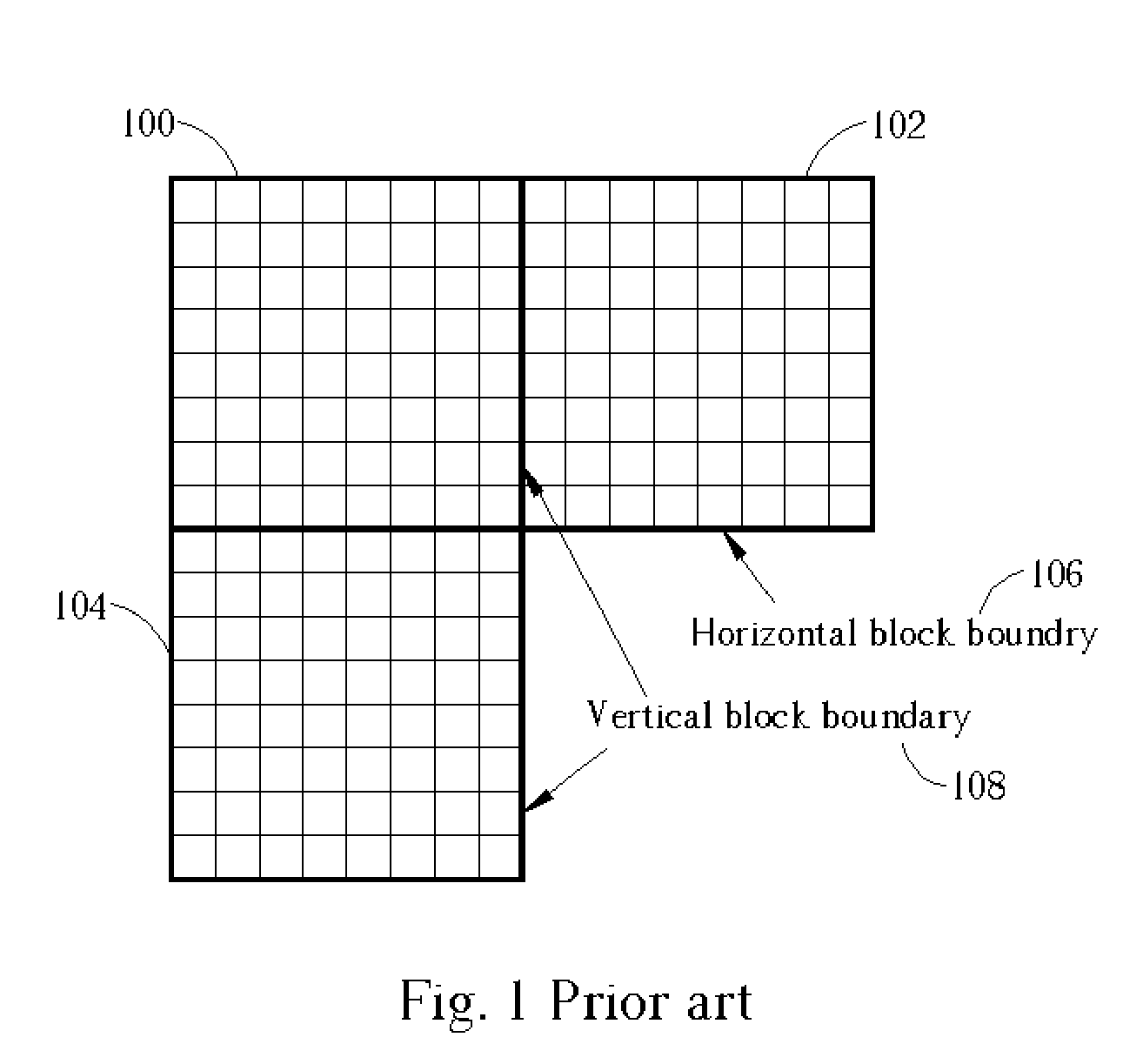

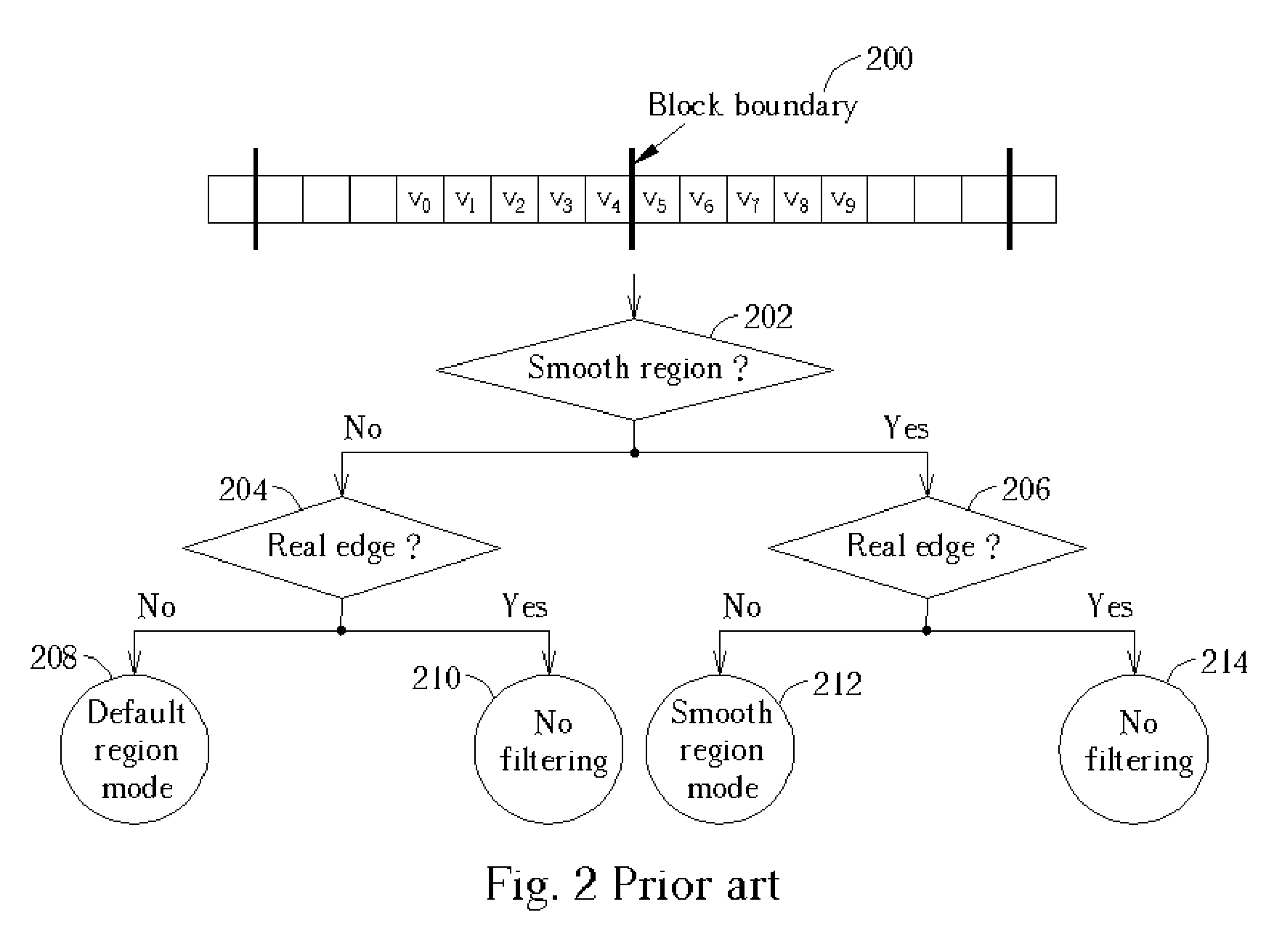

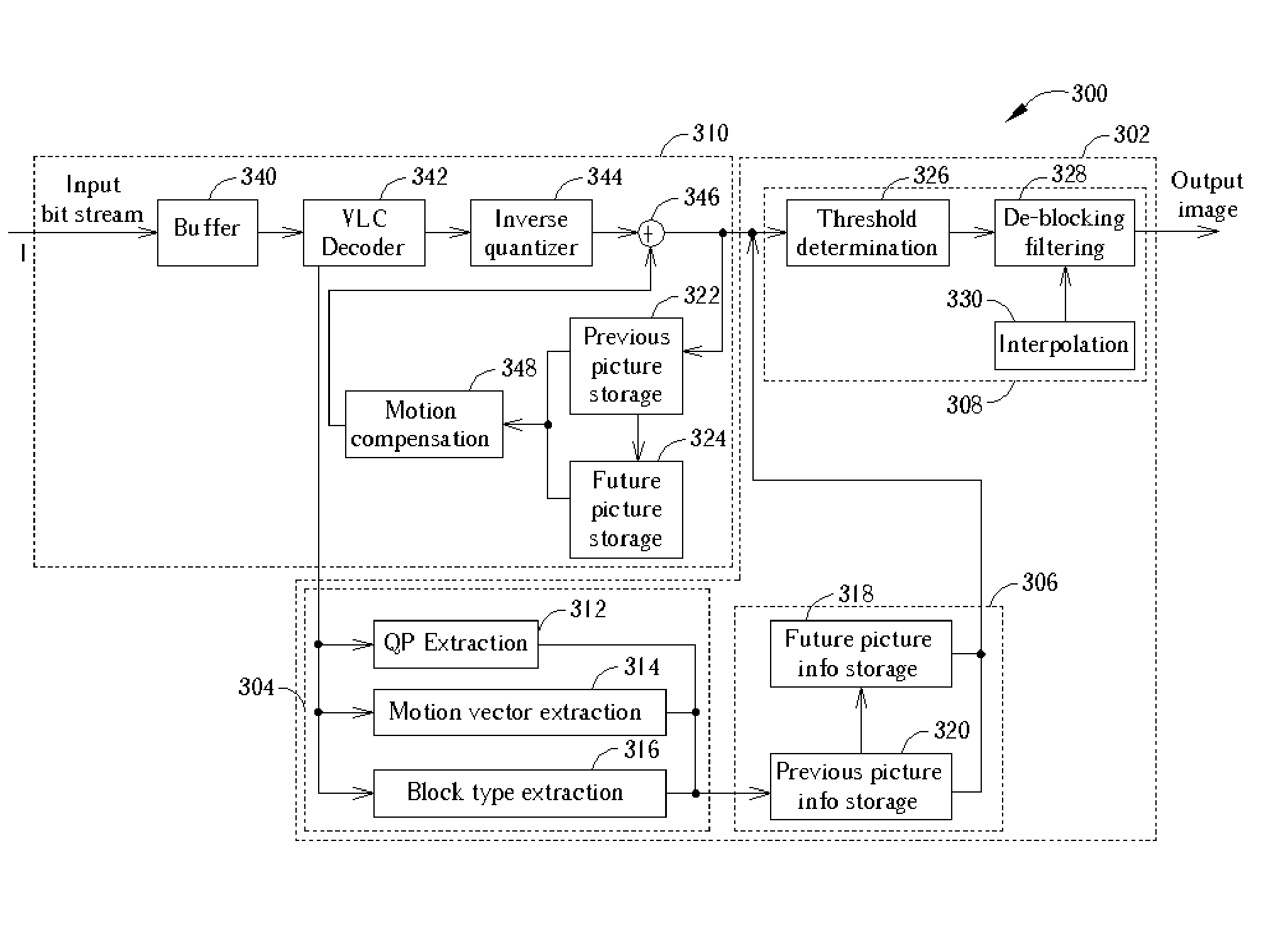

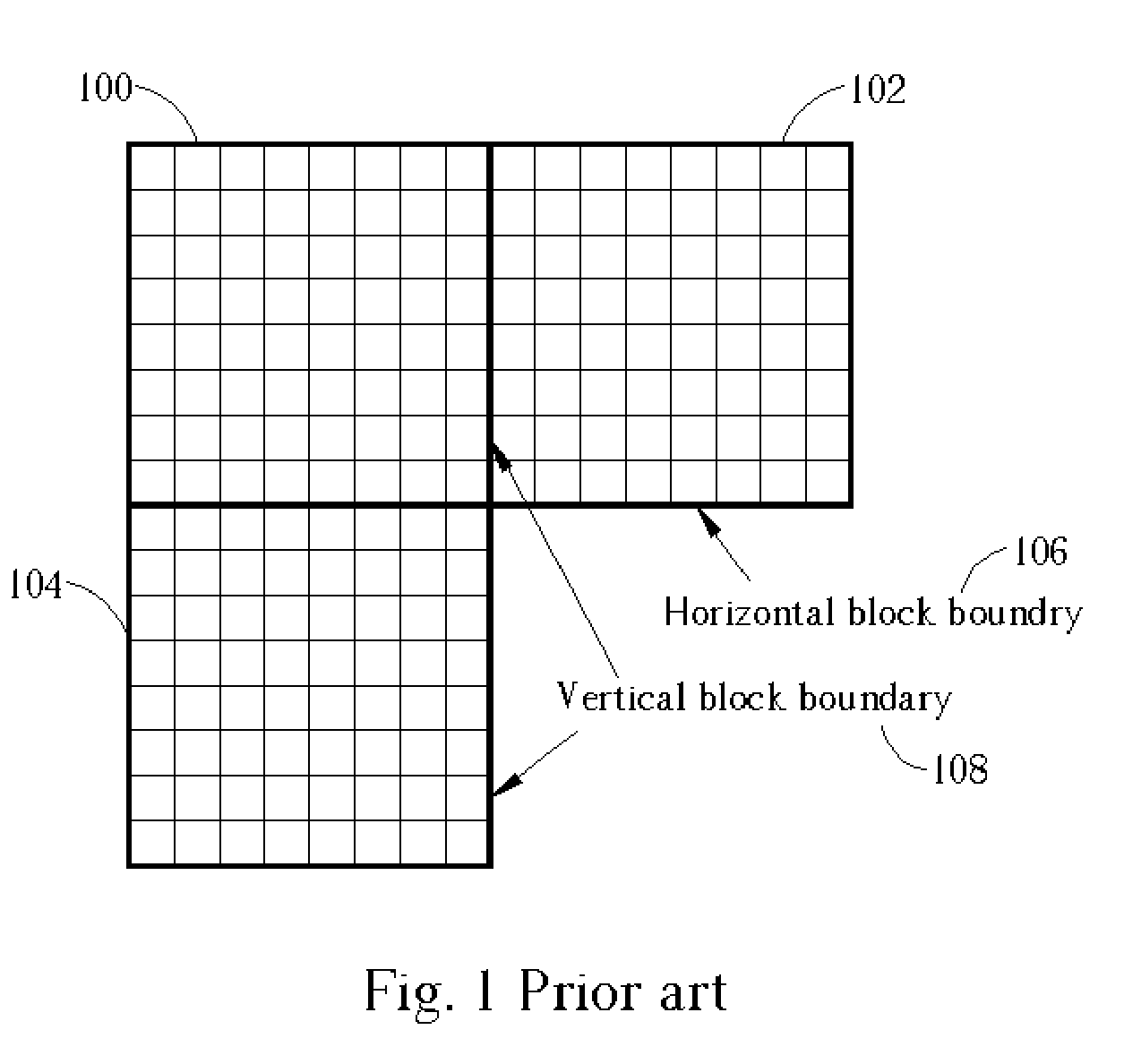

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC
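The abstract does not give the actual threshold or filter formulas, so the Python sketch below invents simple ones purely to show the structure it describes: thresholds derived from the two blocks' QPs plus a user-defined offset (UDO), a region mode chosen from local activity, and one of three filters that differ in how many pixels around the boundary they modify. `thresholds`, `deblock_boundary`, and every constant are hypothetical, and the interlaced-field interpolation unit is not modeled.

```python
from typing import List, Tuple


def thresholds(qp_p: int, qp_q: int, udo: int = 0) -> Tuple[int, int]:
    """Hypothetical rule (not the patented formula): both thresholds grow
    with the average QP and the QP difference; the UDO shifts them."""
    qp_avg = (qp_p + qp_q + 1) // 2
    qp_diff = abs(qp_p - qp_q)
    edge_t = max(0, 2 * qp_avg + qp_diff + udo)  # largest step still treated as blocking
    flat_t = max(1, qp_avg // 2 + udo)           # largest activity still treated as flat
    return edge_t, flat_t


def deblock_boundary(p: List[int], q: List[int], qp_p: int, qp_q: int,
                     udo: int = 0) -> Tuple[List[int], List[int]]:
    """Filter one line of pixels across a block boundary.

    p: pixels before the boundary (p[-1] touches it); q: pixels after it
    (q[0] touches it). Each side must hold at least 3 pixels.
    """
    edge_t, flat_t = thresholds(qp_p, qp_q, udo)
    step = q[0] - p[-1]
    if step == 0 or abs(step) > edge_t:
        return p[:], q[:]  # nothing to smooth, or a genuine image edge
    # Local activity: absolute neighbour differences on both sides.
    activity = sum(abs(a - b) for a, b in zip(p, p[1:]))
    activity += sum(abs(a - b) for a, b in zip(q, q[1:]))
    # Region mode selects one of three filters via its filtering range n.
    if activity <= flat_t:
        n = 3  # flat region: strong, wide filter
    elif activity <= 4 * flat_t:
        n = 2  # moderate texture: default filter
    else:
        n = 1  # busy texture: weak, narrow filter
    p, q = p[:], q[:]
    for k in range(n):
        # Spread the step into a linear ramp over the 2n filtered pixels.
        corr = round(step * (n - k) / (2 * n + 1))
        p[-1 - k] += corr
        q[k] -= corr
    return p, q
```

A flat 100/110 boundary is turned into a gentle ramp, while a large step (a likely real edge) is left alone; a negative UDO lowers the thresholds so that less is filtered.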

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US20050244063A1 | Tags: Reduce computational complexity; Reduce complexity; Character and pattern recognition; Television systems; Interlaced video; Parallel computing

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US20050243912A1 | Tags: Reduce complexity; Color television with pulse code modulation; Color television with bandwidth reduction; Interlaced video; Parallel computing

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

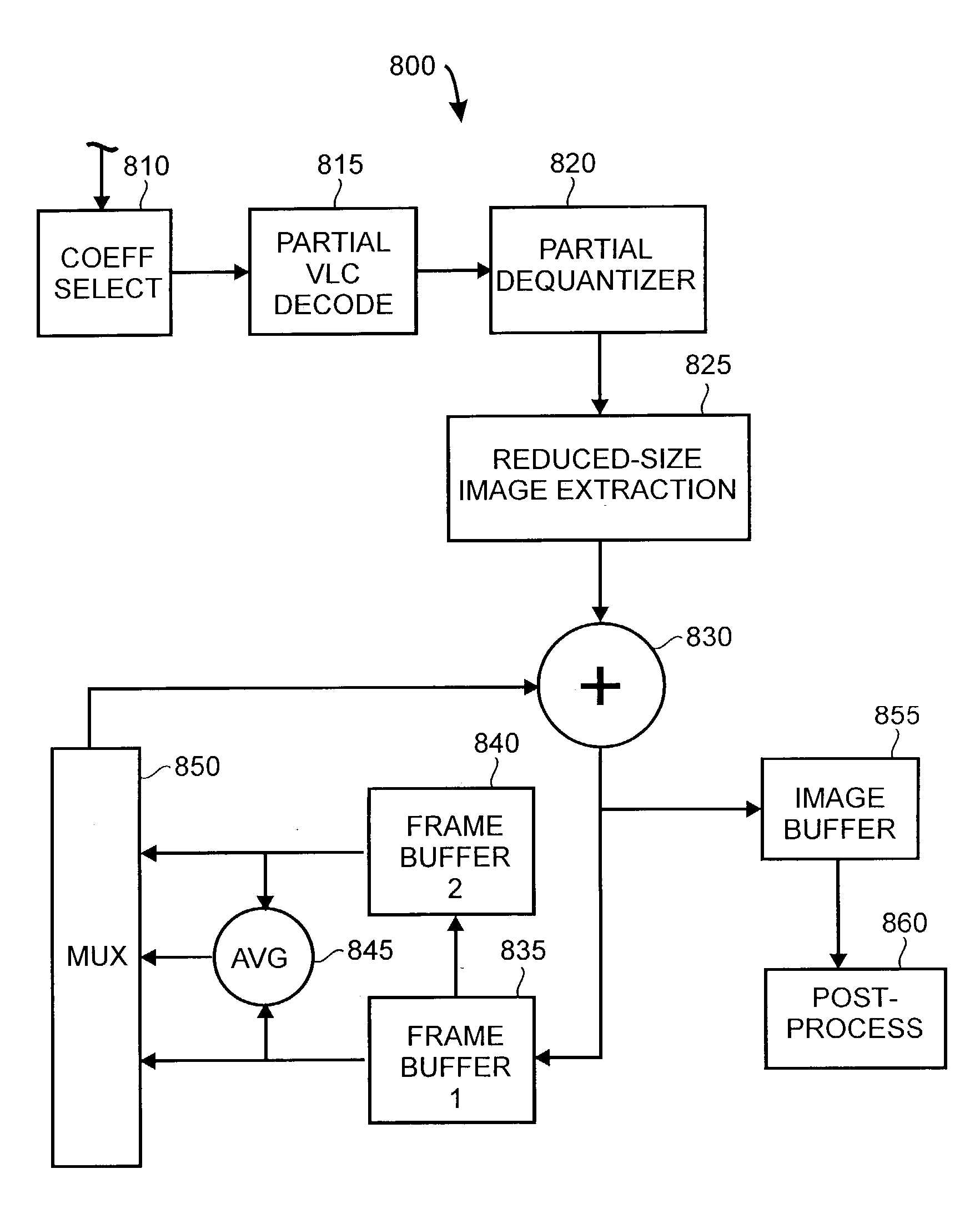

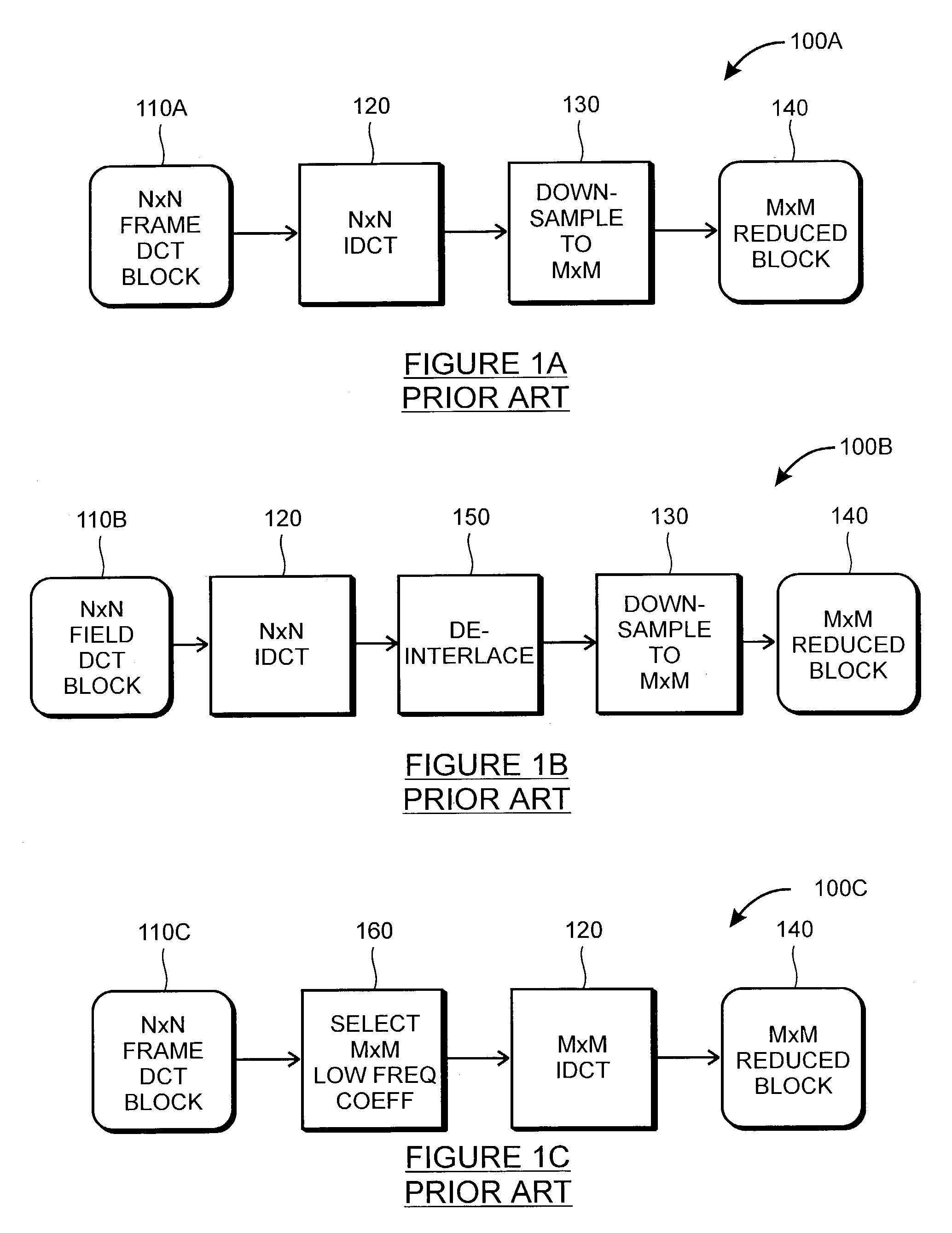

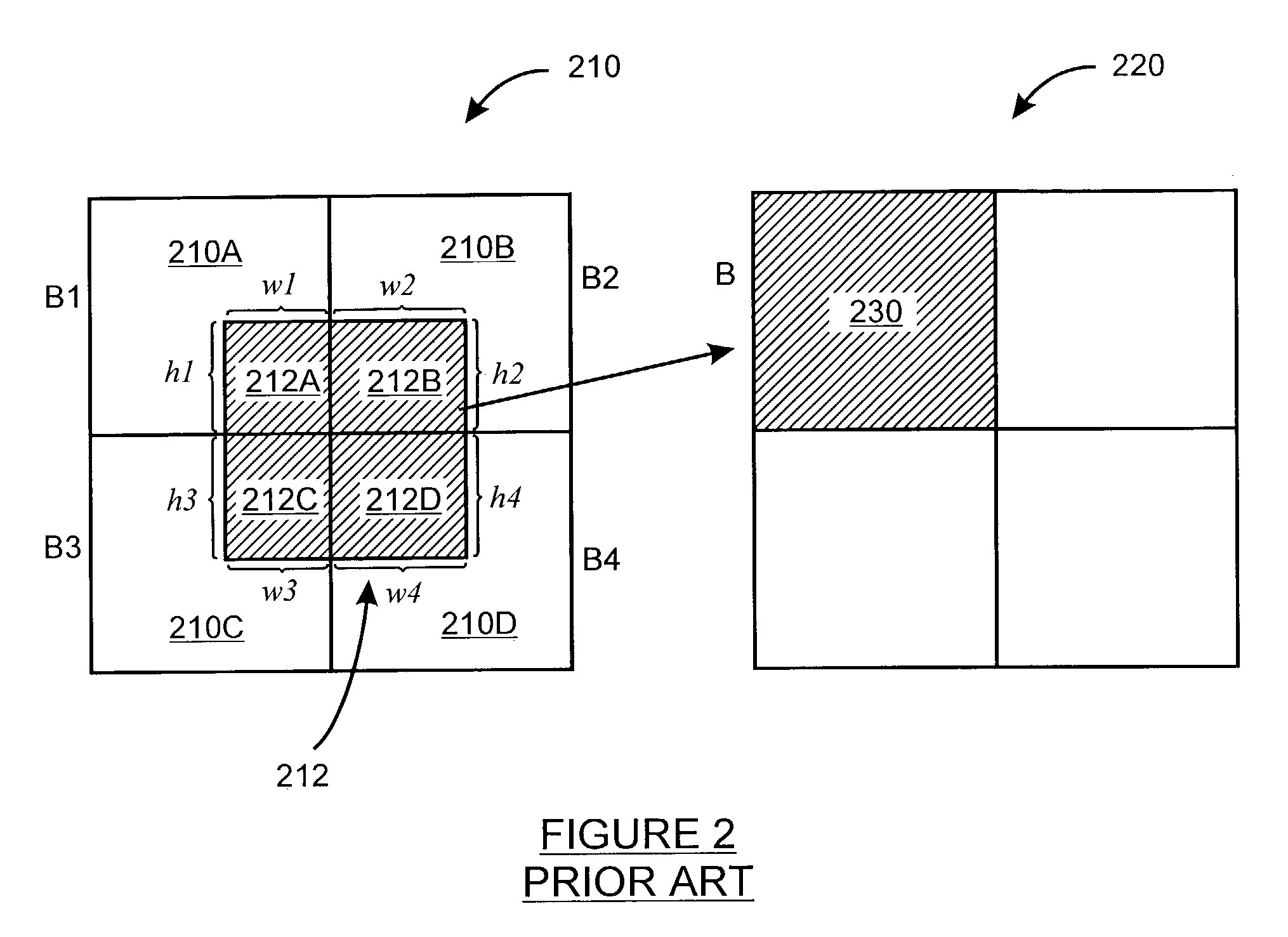

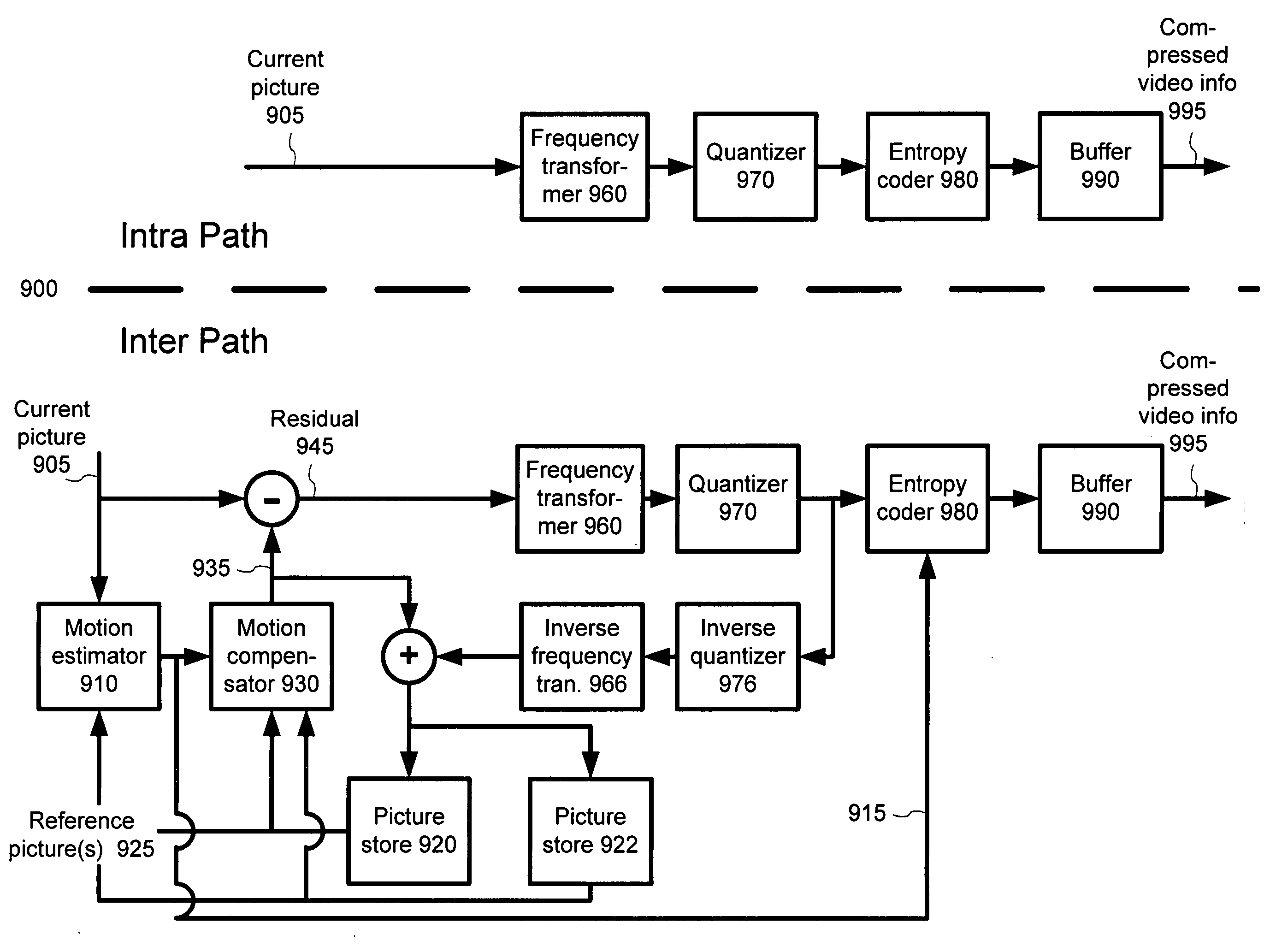

Rapid production of reduced-size images from compressed video streams

Status: Active | Patent: US7471834B2 | Tags: Simple technology; Rapid production; Disc-shaped record carriers; Flat record carrier combinations; Interlaced video; Reduced size

Methods for rapidly generating spatially reduced-size images directly from compressed video streams that support the coding of interlaced frames through transform coding and motion compensation. A sequence of reduced-size images is rapidly generated from compressed video streams by efficiently combining the steps of inverse transform, down-sampling, and construction of each field image. Constructing reduced-size field images also allows efficient motion compensation. The fast generation of reduced-size images is applicable to a variety of low-cost applications such as video browsing, video summaries, fast thumbnail playback, and video indexing.

Owner:SCENERA INC
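One widely used way to get a reduced-size image without a full inverse transform is the "DC image": with an orthonormal 2-D DCT, each block's DC coefficient is proportional to the block mean, so one coefficient per block gives a 1/8-scale picture. The Python sketch below shows only that simpler technique, not the patent's combined inverse-transform/down-sampling/field-construction method; it assumes intra-coded blocks, ignores field/frame DCT modes and motion compensation, and the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def block_dc(block: Matrix) -> float:
    """DC coefficient of an orthonormal N x N 2-D DCT-II.

    The 2-D DC basis function is the constant 1/N, so DC = sum / N
    (for N = 8 that is 8 x the block mean)."""
    n = len(block)
    return sum(sum(row) for row in block) / n


def image_to_dc_grid(image: Matrix, n: int = 8) -> Matrix:
    """Encoder-side helper for testing: the DC coefficient of every n x n block."""
    return [
        [block_dc([row[bx:bx + n] for row in image[by:by + n]])
         for bx in range(0, len(image[0]), n)]
        for by in range(0, len(image), n)
    ]


def dc_thumbnail(dc_grid: Matrix, n: int = 8) -> Matrix:
    """1/n-scale image straight from DC coefficients: no inverse DCT needed."""
    return [[dc / n for dc in row] for row in dc_grid]
```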

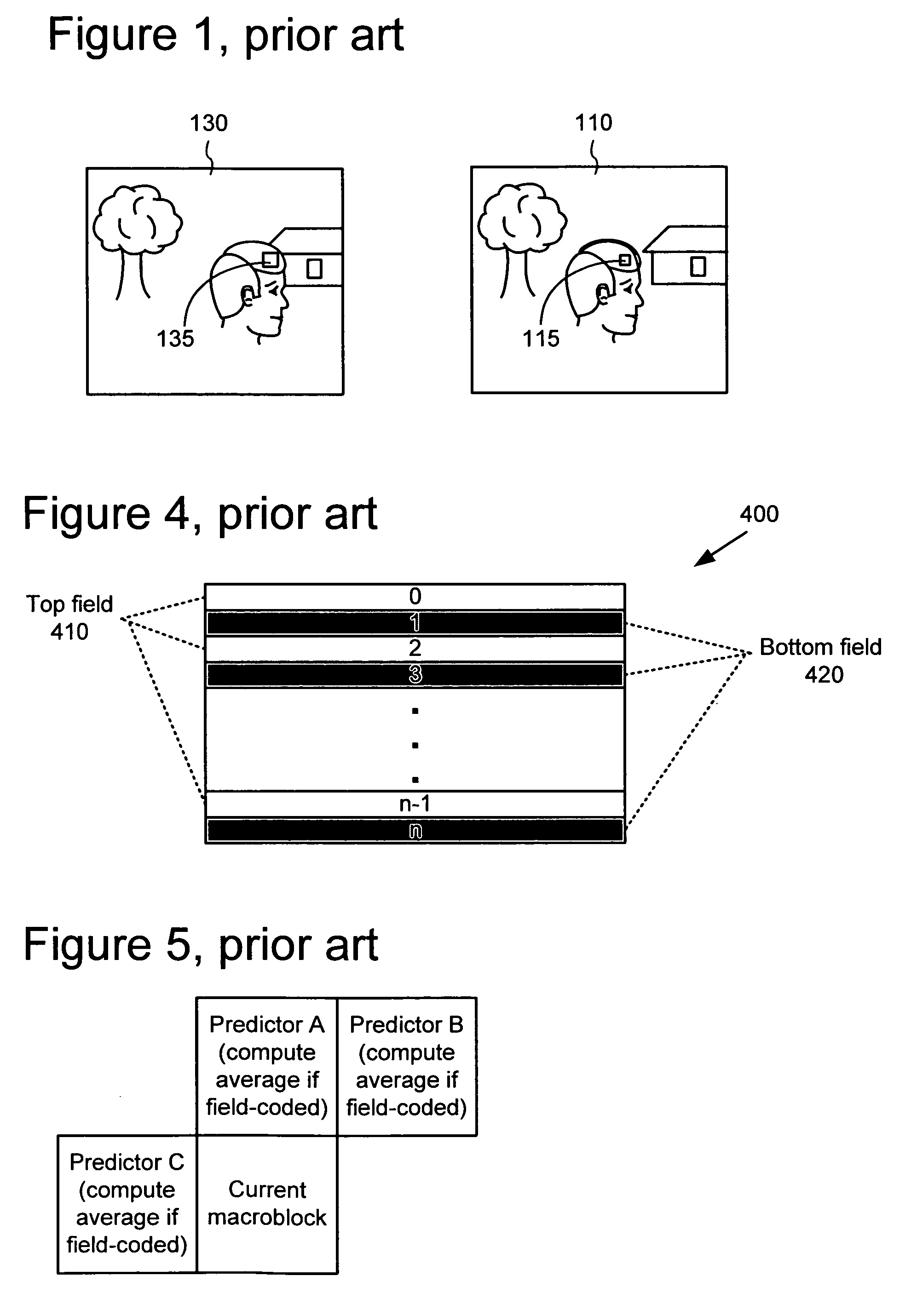

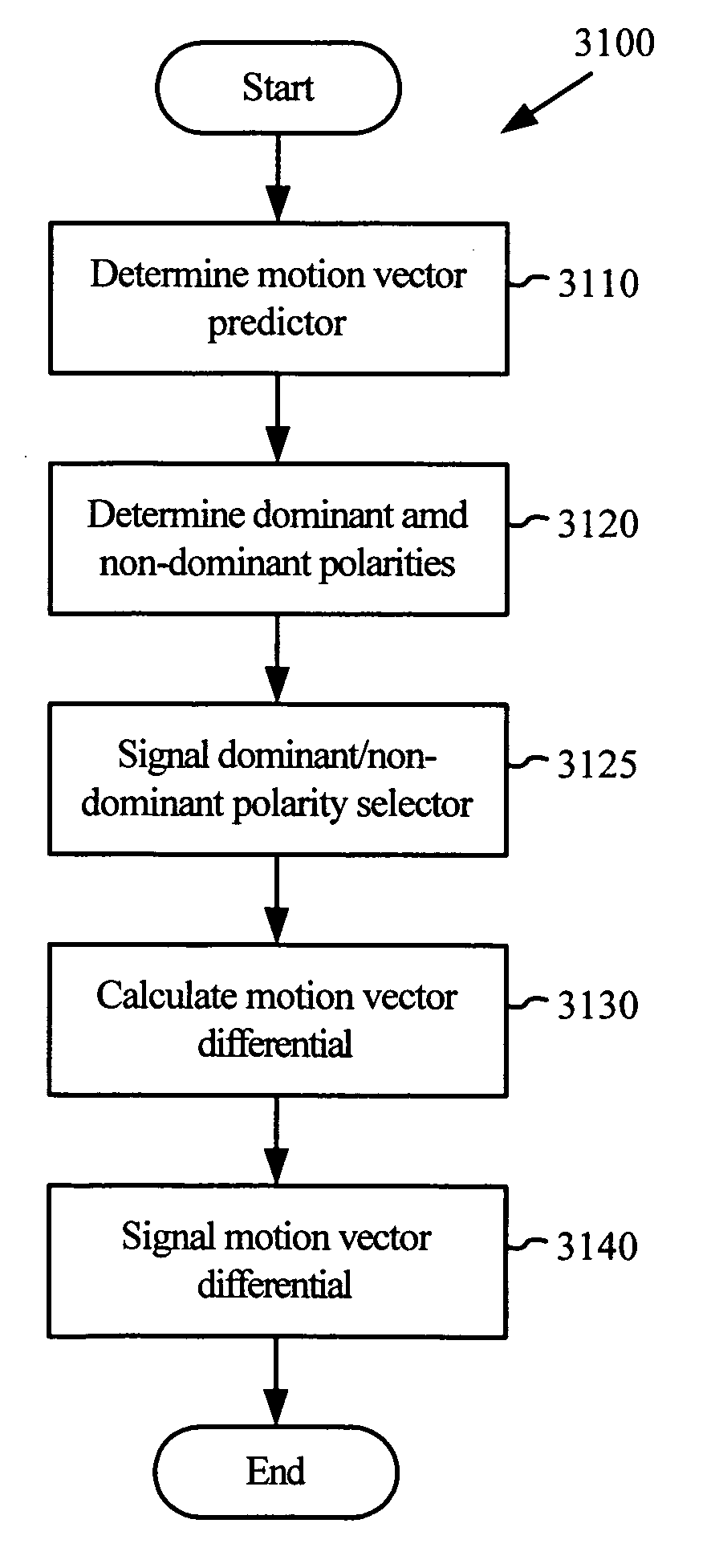

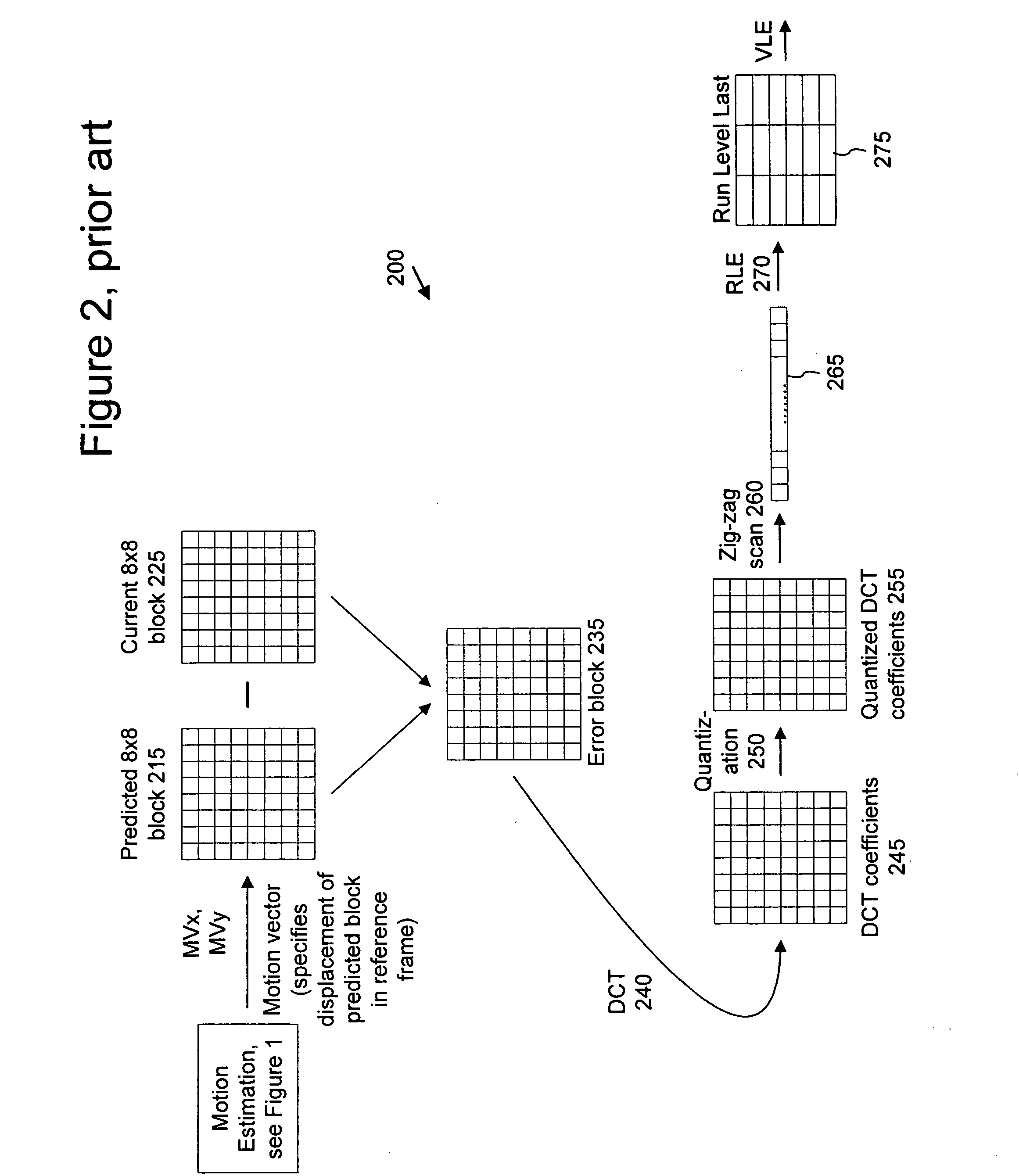

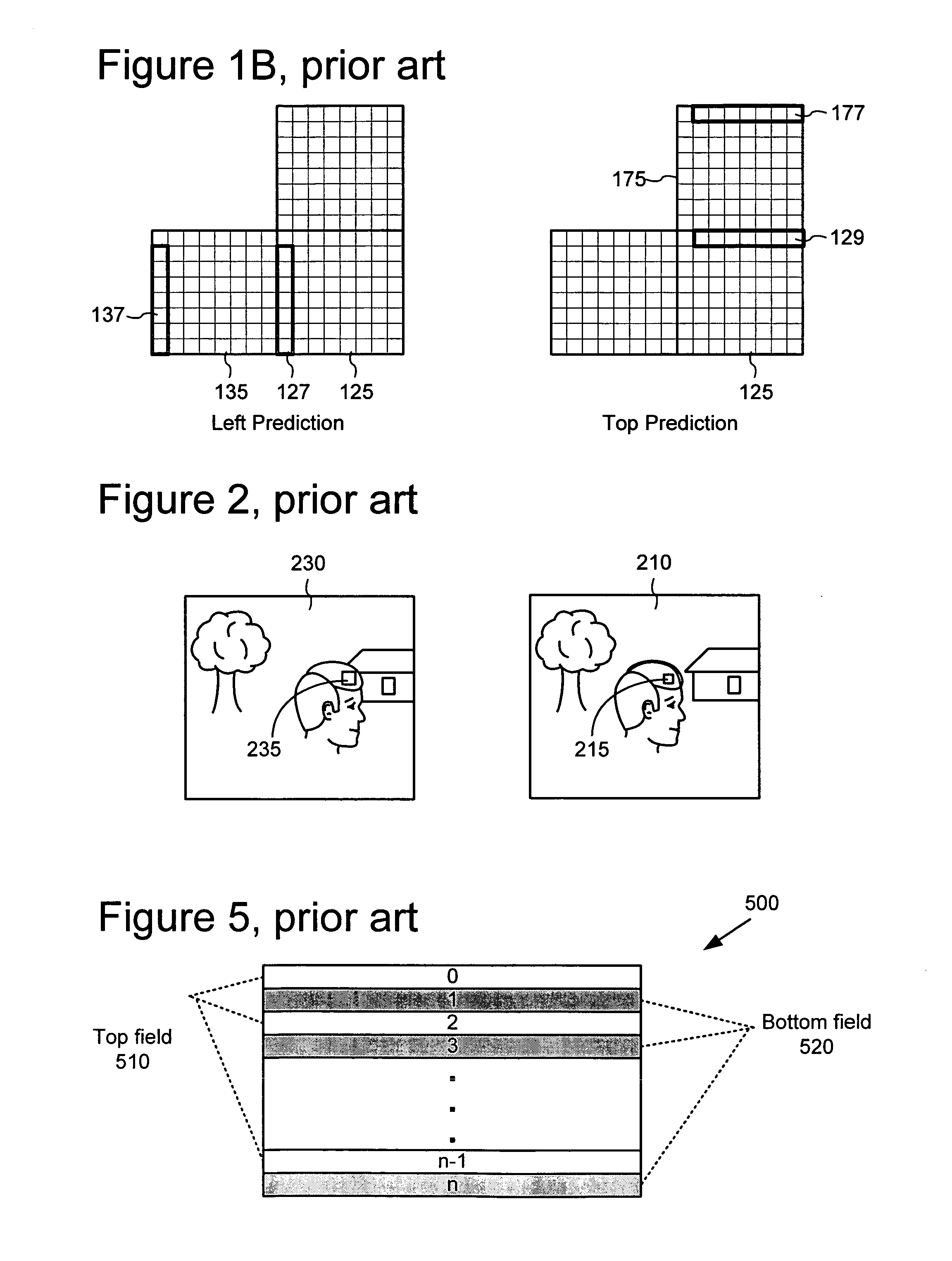

Predicting motion vectors for fields of forward-predicted interlaced video frames

Status: Active | Patent: US20050053137A1 | Tags: Improve accuracy; Reduce bitrate; Picture reproducers using cathode ray tubes; Code conversion; Interlaced video; Encoder decoder

Techniques and tools for encoding and decoding predicted images in interlaced video are described. For example, a video encoder or decoder computes a motion vector predictor for a motion vector for a portion (e.g., a block or macroblock) of an interlaced P-field, including selecting between using a same polarity or opposite polarity motion vector predictor for the portion. The encoder / decoder processes the motion vector based at least in part on the motion vector predictor computed for the motion vector. The processing can comprise computing a motion vector differential between the motion vector and the motion vector predictor during encoding and reconstructing the motion vector from a motion vector differential and the motion vector predictor during decoding. The selecting can be based at least in part on a count of opposite polarity motion vectors for a neighborhood around the portion and / or a count of same polarity motion vectors.

Owner:MICROSOFT TECH LICENSING LLC
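A Python sketch of polarity selection by neighbour count followed by differential coding, as outlined in the abstract. The tie rule (ties favour same polarity), the component-wise lower median, and all function names are assumptions for illustration; a real codec would also scale opposite-polarity candidates and handle unavailable neighbours more carefully.

```python
from statistics import median_low
from typing import List, Tuple

MV = Tuple[int, int]  # (horizontal, vertical) displacement


def predict_field_mv(neighbors: List[Tuple[MV, bool]]) -> Tuple[MV, bool]:
    """Choose a polarity by counting neighbours, then predict from it.

    neighbors: (motion_vector, is_same_polarity) for the available
    neighbouring blocks. Returns (predictor, use_same_polarity)."""
    same = [mv for mv, is_same in neighbors if is_same]
    opposite = [mv for mv, is_same in neighbors if not is_same]
    use_same = len(same) >= len(opposite)  # assumption: ties favour same polarity
    pool = same if use_same else opposite
    if not pool:
        return (0, 0), use_same  # no neighbours: zero predictor
    # Component-wise median (lower median for an even-sized pool).
    pred = (median_low([mv[0] for mv in pool]), median_low([mv[1] for mv in pool]))
    return pred, use_same


def encode_mvd(mv: MV, pred: MV) -> MV:
    """Encoder: only the differential is transmitted."""
    return (mv[0] - pred[0], mv[1] - pred[1])


def decode_mv(mvd: MV, pred: MV) -> MV:
    """Decoder: add the differential back onto the same predictor."""
    return (mvd[0] + pred[0], mvd[1] + pred[1])
```

Because encoder and decoder run the same predictor on the same already-decoded neighbours, only the differential needs to be sent.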

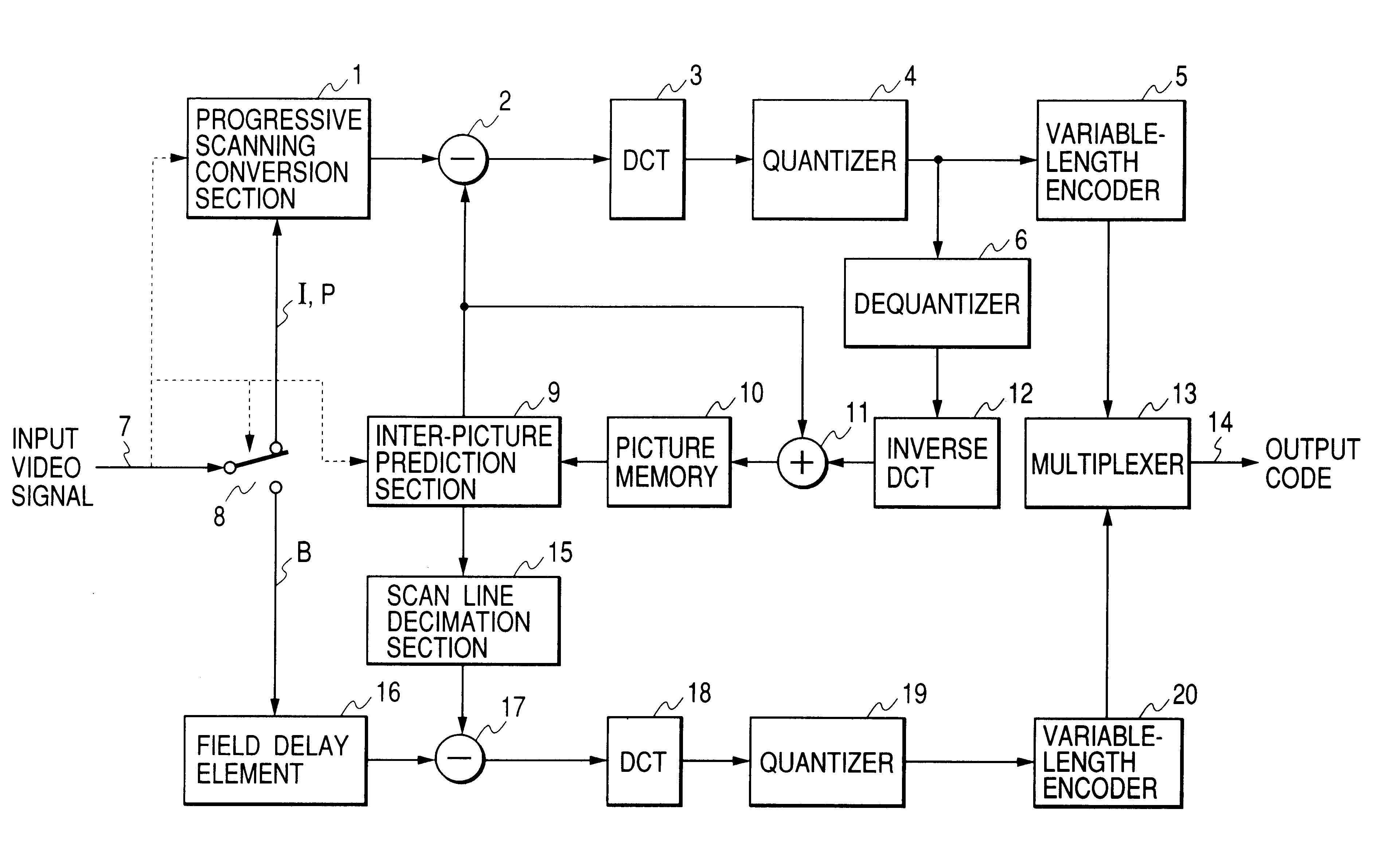

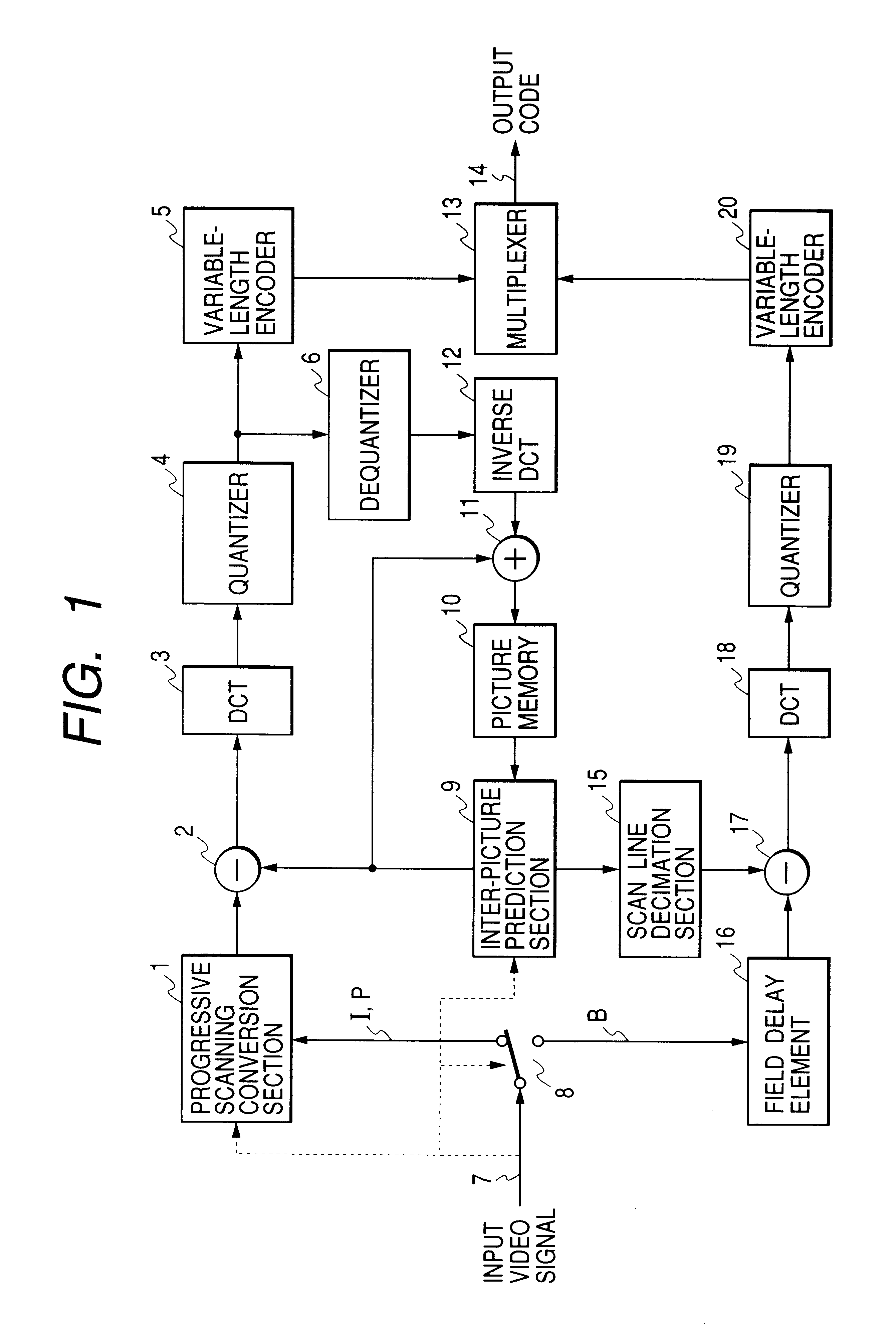

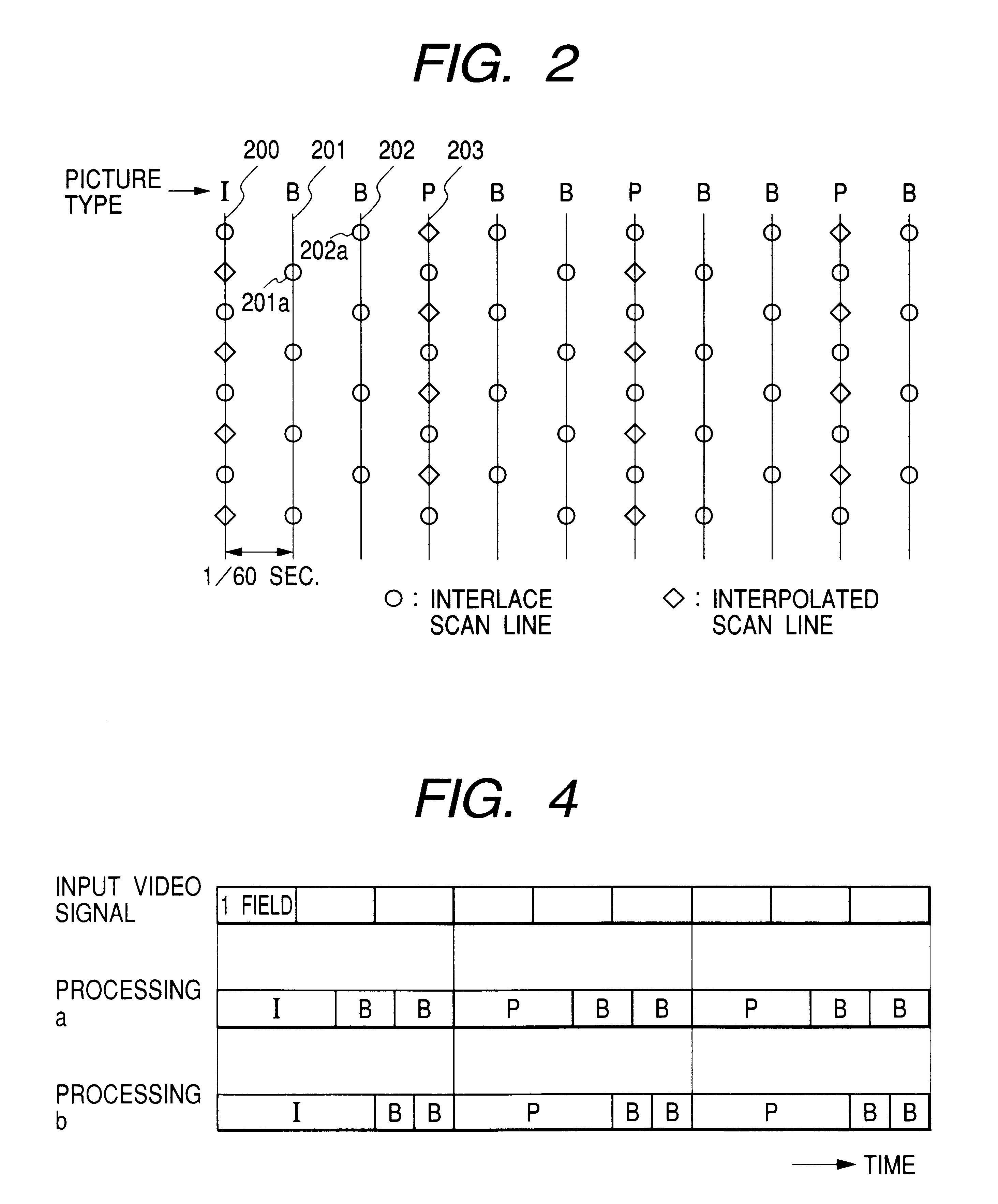

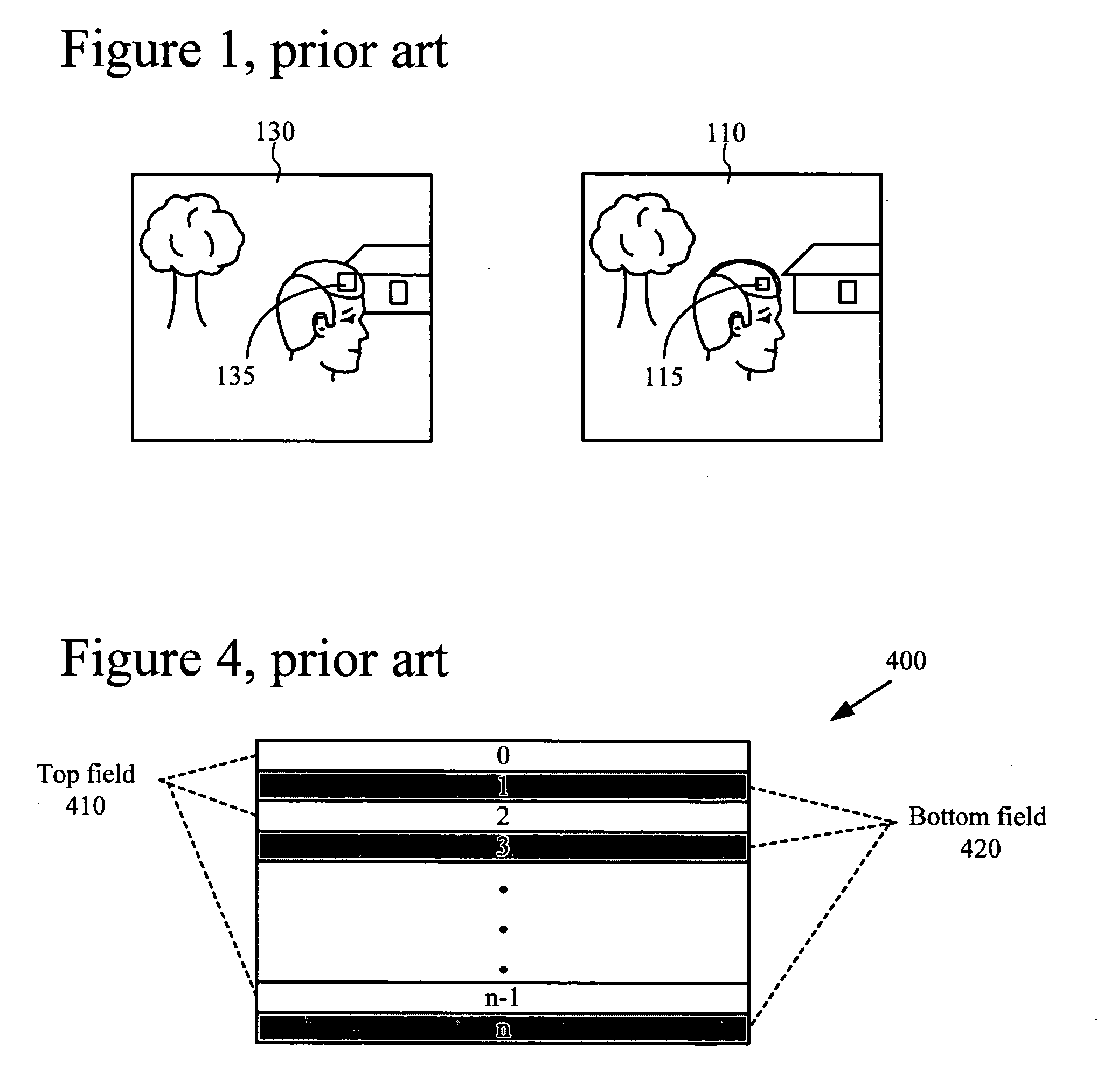

Interlaced video signal encoding and decoding method, and encoding apparatus and decoding apparatus utilizing the method, providing high efficiency of encoding by conversion of periodically selected fields to progressive scan frames which function as reference frames for predictive encoding

Status: Inactive | Patent: US6188725B1 | Tags: Television system details; Picture reproducers using cathode ray tubes; Scan conversion; Interlaced video

An encoding apparatus includes a selector for periodically selecting fields of an interlaced video signal to be converted to respective progressive scanning frames, by a scanning converter which doubles the number of scanning lines per field. The apparatus encodes these frames by intra-frame encoding or unidirectional predictive encoding using preceding ones of the frames, and encodes the remaining fields of the video signal by bidirectional prediction using preceding and succeeding ones of the progressive scanning frames for reference. The resultant code can be decoded by an inverse process to recover the interlaced video signal, or each decoded field can be converted to a progressive scanning frame to thereby enable output of a progressive scanning video signal. Enhanced accuracy of motion prediction for inter-frame encoding can thereby be achieved, and generation of encoded aliasing components suppressed, since all reference pictures are progressive scanning frames rather than pairs of fields constituting interlaced scanning frames.

Owner:RAKUTEN INC
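The selection scheme can be pictured as a coding schedule. The Python sketch below assumes a fixed selection period, makes the first selected field intra-coded and later ones forward-predicted from the previous reference, and uses simple line averaging as the line-doubling scan converter; all of these choices and the function names are illustrative assumptions, not details from the patent.

```python
from typing import List, Optional, Tuple

Plan = Tuple[int, str, Optional[int], Optional[int]]  # (field, role, prev_ref, next_ref)


def schedule_fields(num_fields: int, period: int) -> List[Plan]:
    """Every `period`-th field becomes a progressive reference frame
    ("I" for the first, "P" afterwards, predicted from the previous
    reference); fields in between are "B" fields predicted from the
    surrounding references."""
    plan: List[Plan] = []
    for i in range(num_fields):
        if i % period == 0:
            if i == 0:
                plan.append((i, "I", None, None))
            else:
                plan.append((i, "P", i - period, None))
        else:
            prev_ref = (i // period) * period
            next_ref = prev_ref + period
            plan.append((i, "B", prev_ref, next_ref if next_ref < num_fields else None))
    return plan


def line_double(field: List[List[int]]) -> List[List[int]]:
    """Simple scan conversion: keep each field line and insert the average
    of it and the next line (the last line is averaged with itself)."""
    out: List[List[int]] = []
    for i, row in enumerate(field):
        nxt = field[i + 1] if i + 1 < len(field) else row
        out.append(row[:])
        out.append([(a + b) // 2 for a, b in zip(row, nxt)])
    return out
```

Trailing B fields with no later reference get `None` here; a real encoder would close the sequence with another reference frame.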

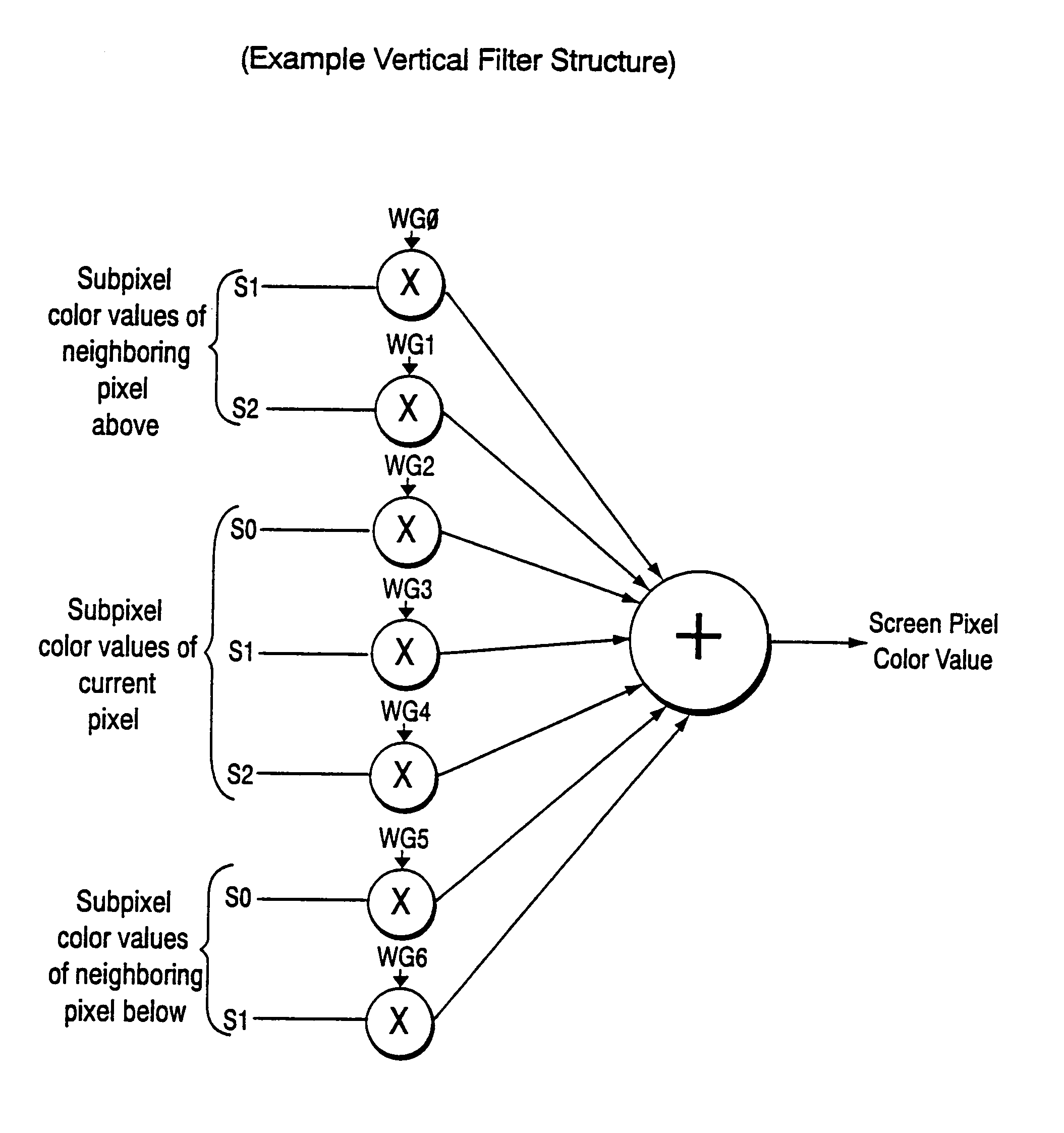

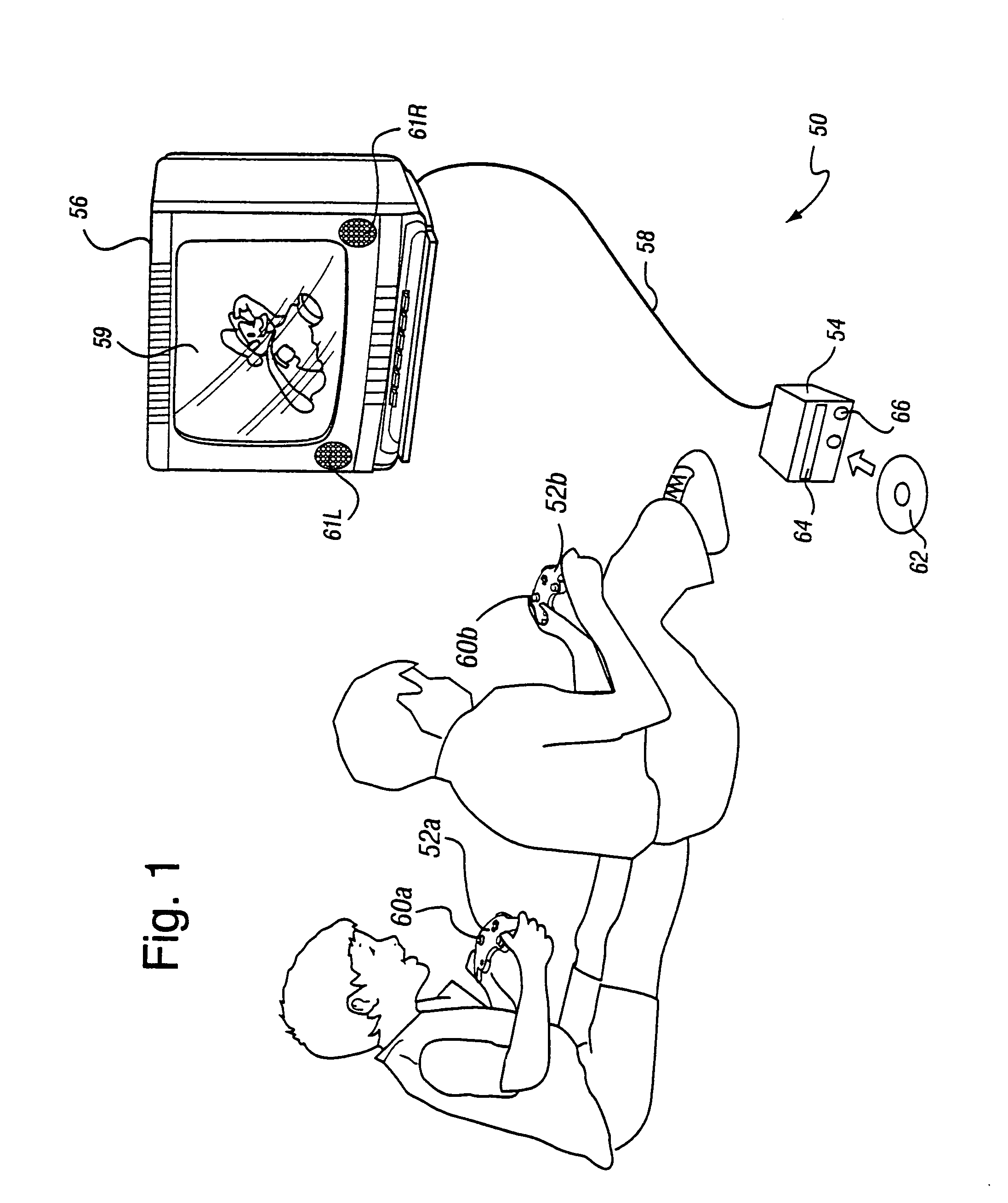

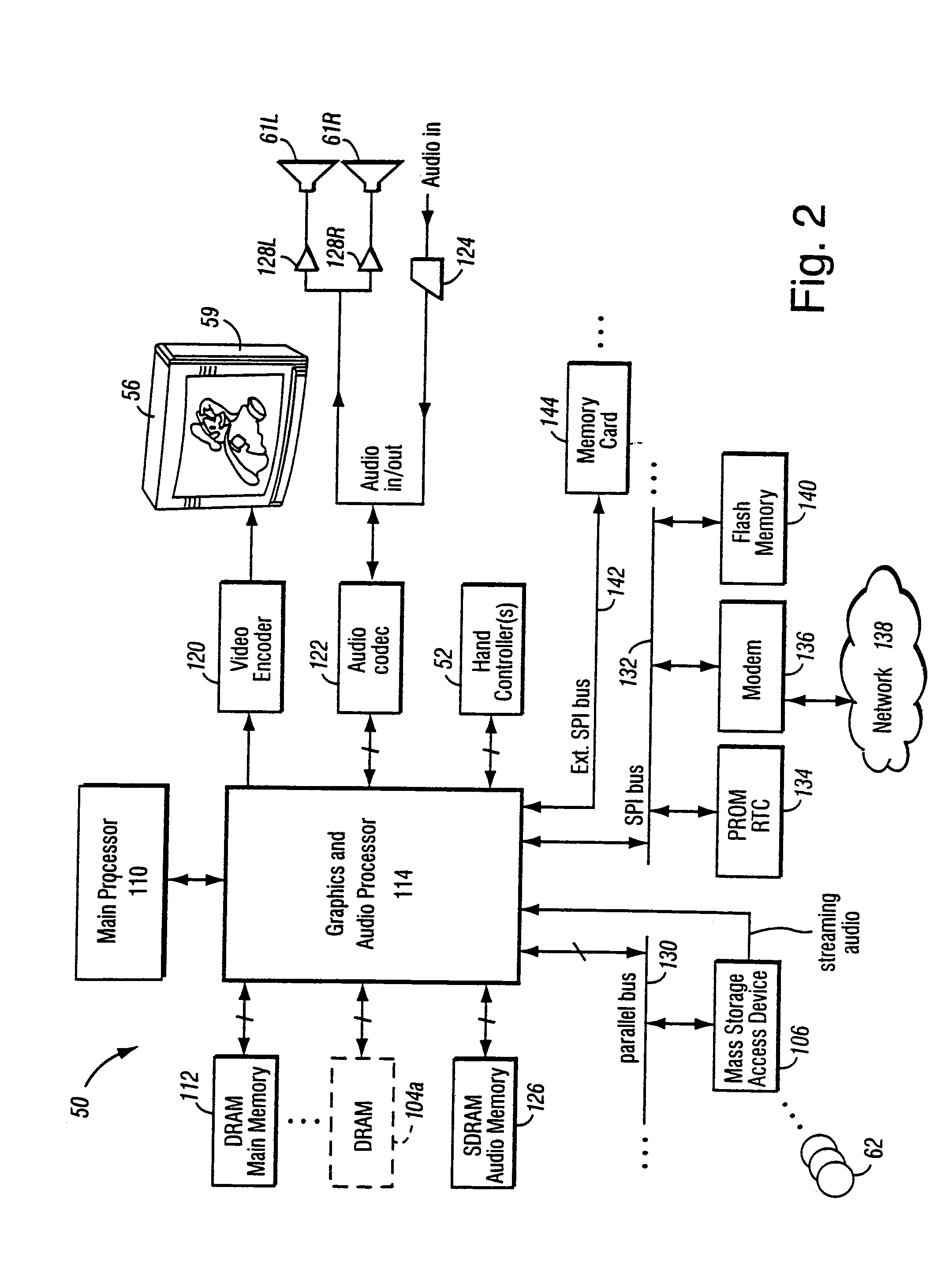

Method and apparatus for anti-aliasing in a graphics system

Status: Inactive | Patent: US6999100B1 | Tags: Low cost; High cost-effective; Image enhancement; Cathode-ray tube indicators; Interlaced video; Anti-aliasing

A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. The system achieves highly efficient full-scene anti-aliasing by implementing a programmable-location super-sampling arrangement and using a selectable-weight vertical-pixel support area blending filter. For a 2×2 pixel group (quad), the locations of three samples within each super-sampled pixel are individually selectable. A twelve-bit multi-sample coverage mask is used to determine which of twelve samples within a pixel quad are enabled based on the portions of each pixel occupied by a primitive fragment and any pre-computed z-buffering. Each super-sampled pixel is filtered during a copy-out operation from a local memory to an external frame buffer using a pixel blending filter arrangement that combines seven samples from three vertically arranged pixels. Three samples are taken from the current pixel, two samples are taken from a pixel immediately above the current pixel and two samples are taken from a pixel immediately below the current pixel. A weighted average is then computed based on the enabled samples to determine the final color for the pixel. The weight coefficients used in the blending filter are also individually programmable. De-flickering of thin one-pixel tall horizontal lines for interlaced video displays is also accomplished by using the pixel blending filter to blend color samples from pixels in alternate scan lines.

Owner:NINTENDO CO LTD
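A Python sketch of the seven-sample vertical blending filter described above: two samples from the pixel above, three from the current pixel, two from the pixel below, with programmable weights and per-sample enable flags. The weight values in the example are hypothetical, and renormalising over the enabled samples is an assumption about how disabled samples are handled.

```python
from typing import List, Sequence

Color = Sequence[float]  # e.g. (r, g, b)


def blend_vertical(above: List[Color], current: List[Color], below: List[Color],
                   weights: List[float], enabled: List[bool]) -> List[float]:
    """Weighted average of seven samples (2 above, 3 current, 2 below).

    `weights` and `enabled` follow the same order as the samples; disabled
    samples are skipped and the remaining weights are renormalised."""
    samples = list(above) + list(current) + list(below)
    if not (len(samples) == len(weights) == len(enabled) == 7):
        raise ValueError("expected 2 + 3 + 2 samples with matching weights and flags")
    total = sum(w for w, on in zip(weights, enabled) if on)
    if total == 0:
        raise ValueError("no enabled samples")
    channels = len(samples[0])
    return [
        sum(s[c] * w for s, w, on in zip(samples, weights, enabled) if on) / total
        for c in range(channels)
    ]
```

Blending a one-pixel white line with its black neighbours spreads its energy over adjacent scan lines, which is how the same filter can reduce interlace flicker on thin horizontal lines.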

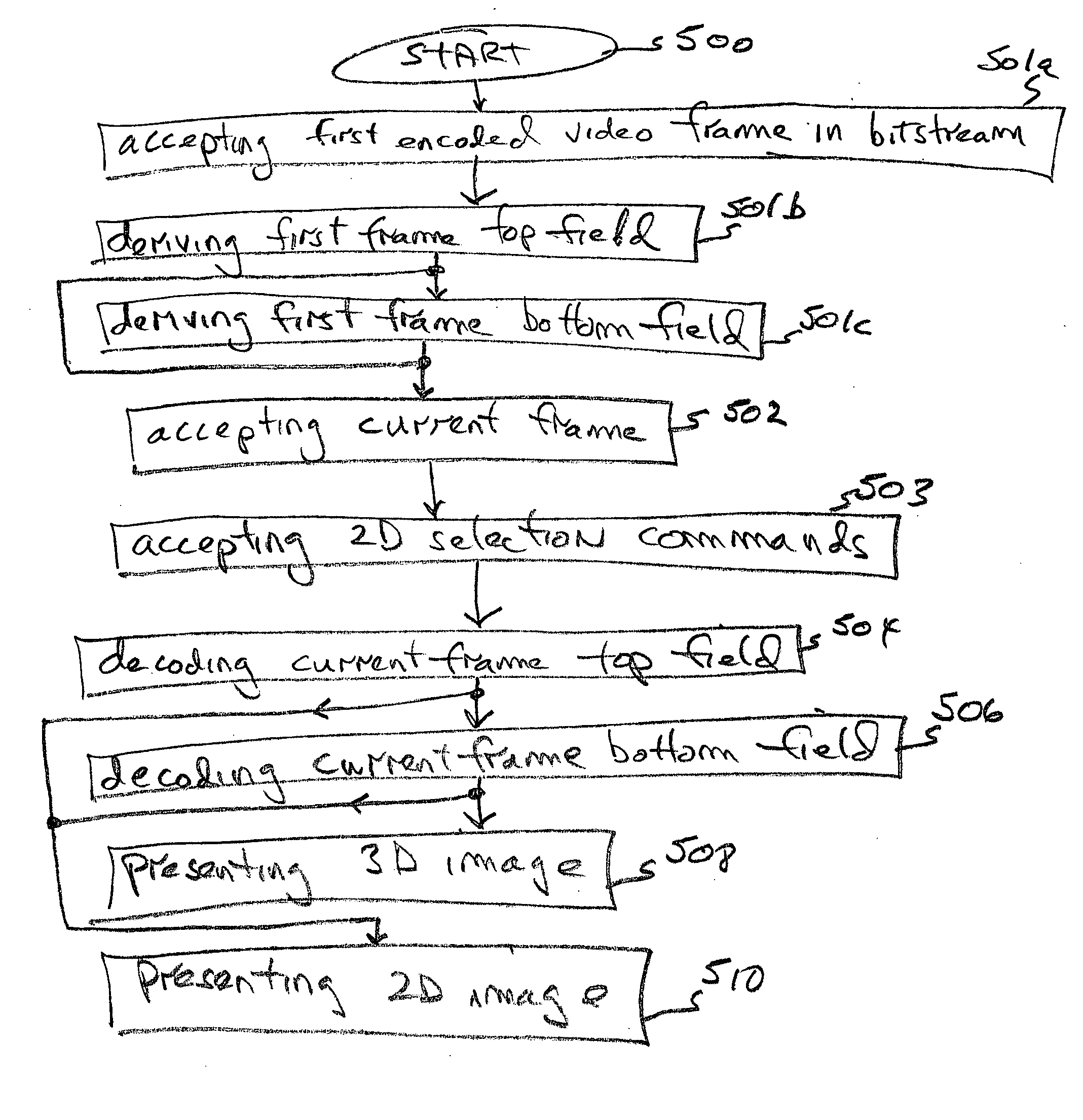

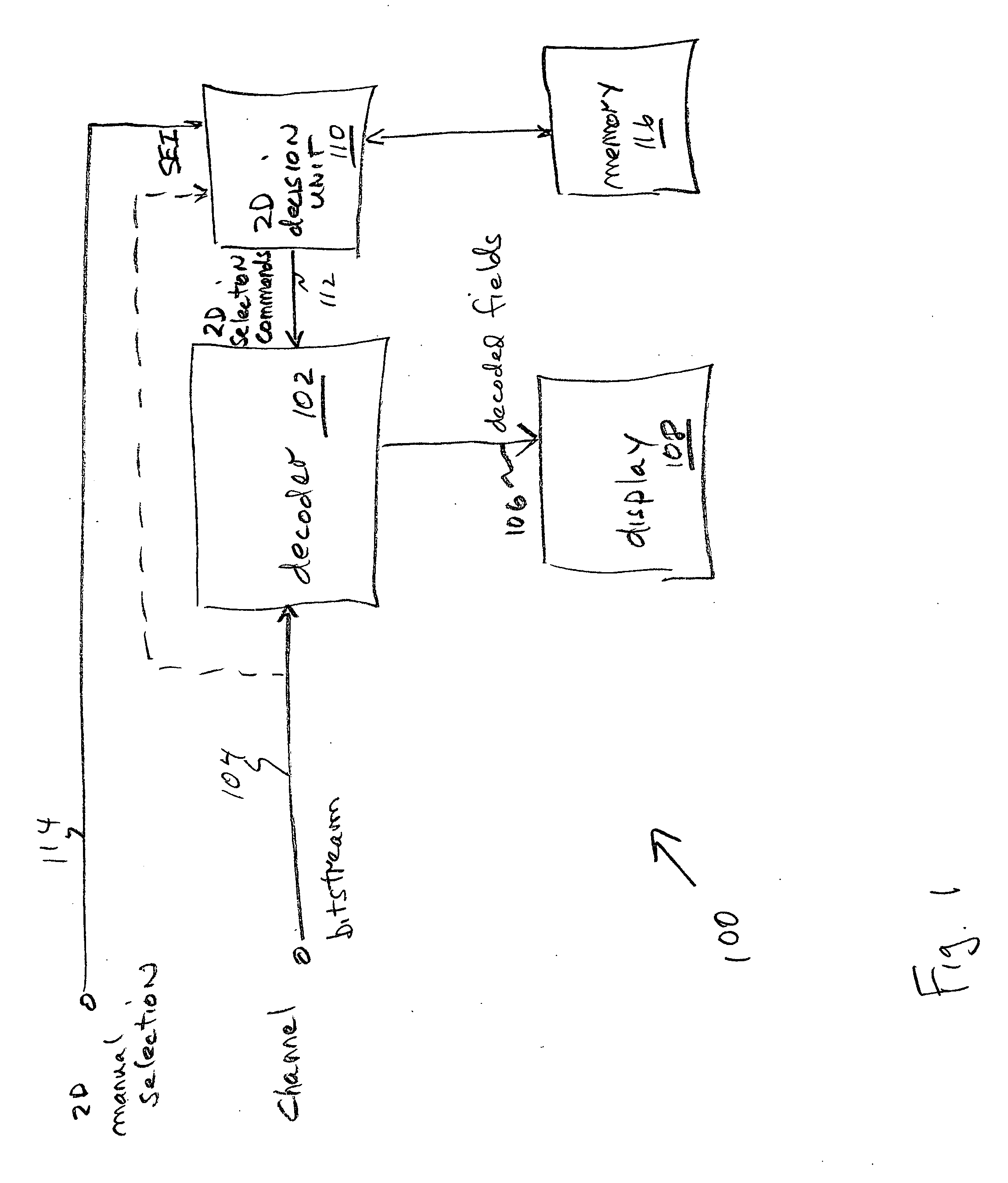

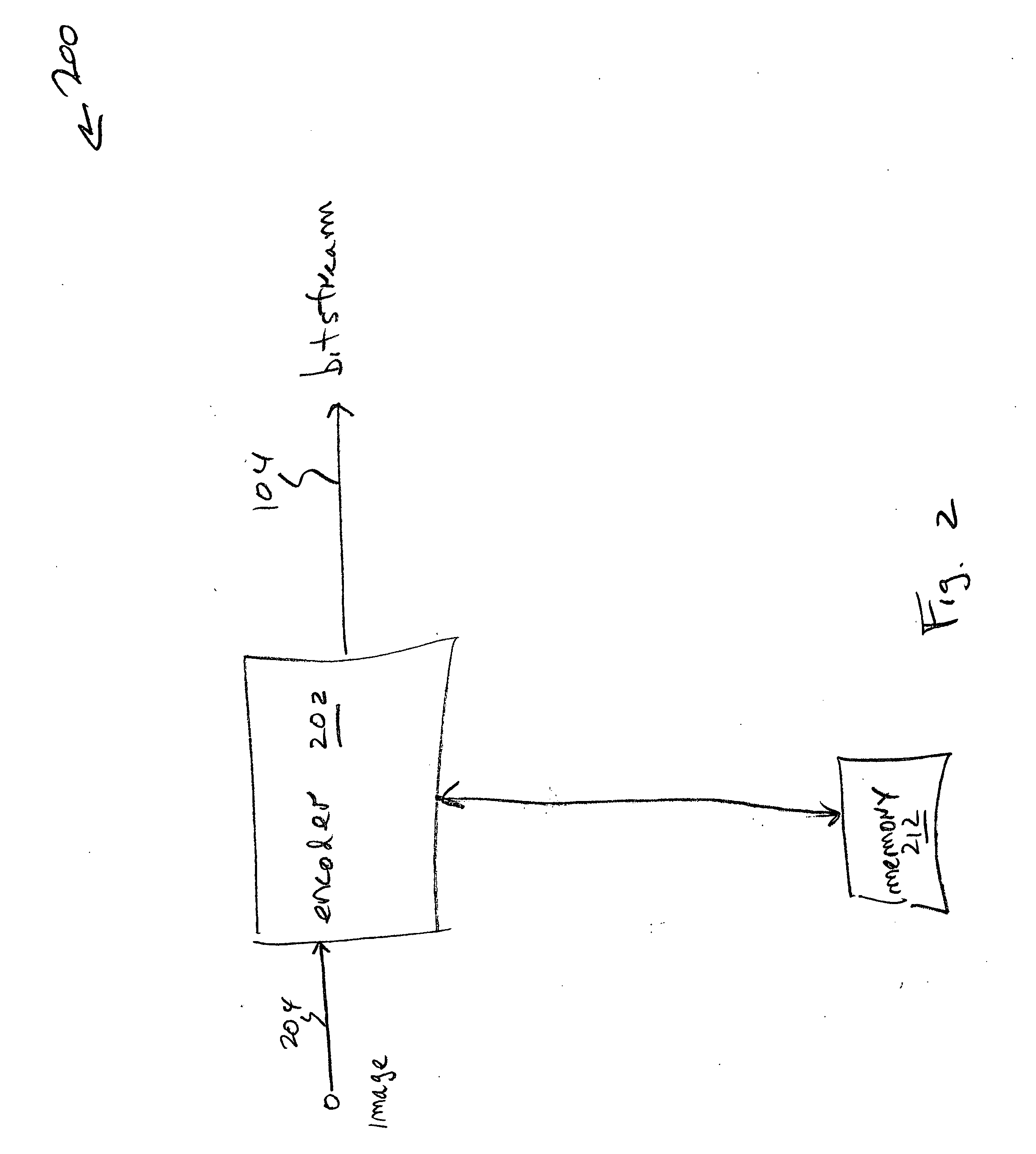

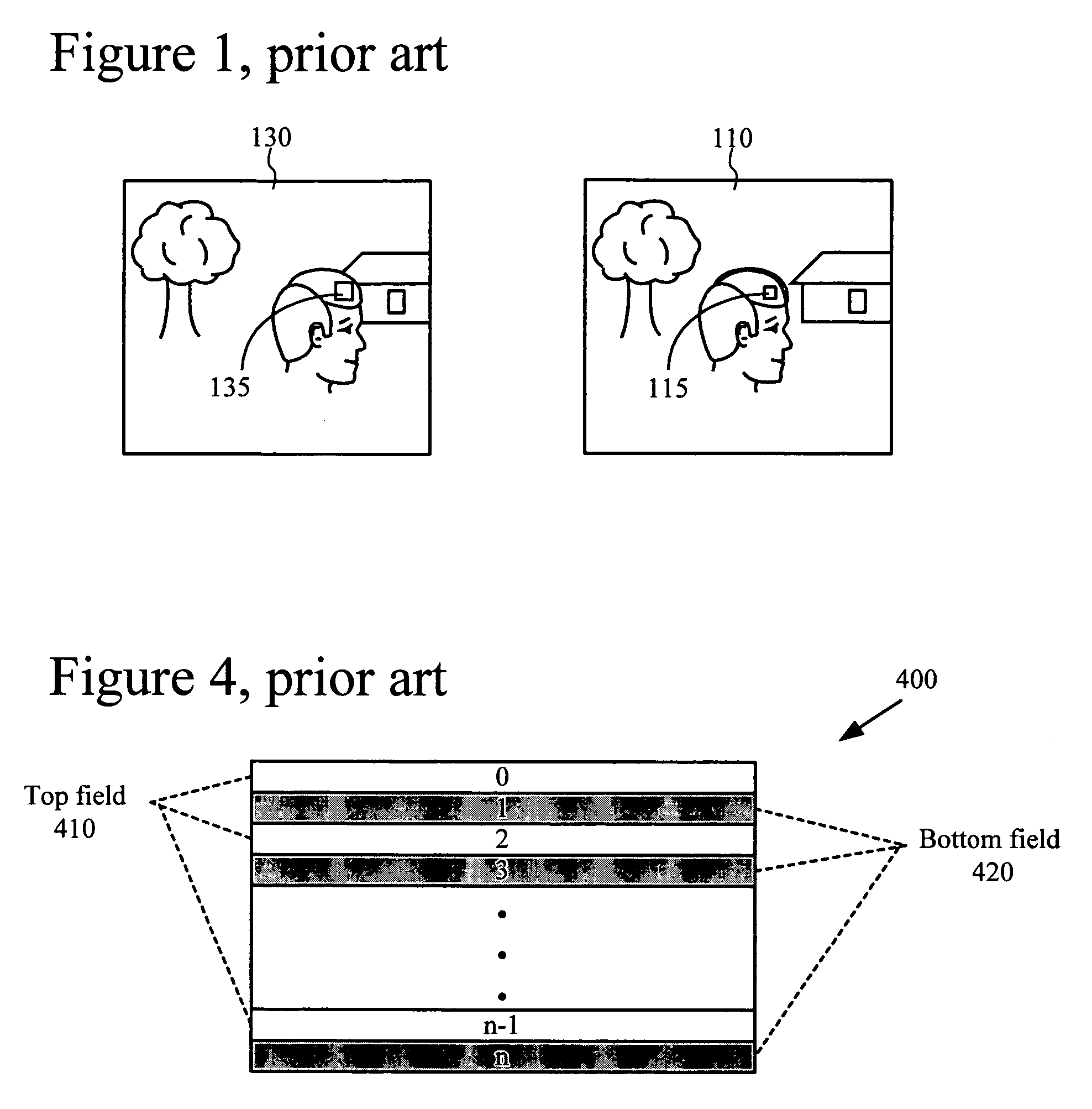

System and method for three-dimensional video coding

Status: Inactive | Patent: US20050084006A1 | Tags: Easy to compress; Minimal restriction; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Interlaced video; Computer graphics (images)

Systems and methods are provided for receiving and encoding 3D video. The receiving method comprises: accepting a bitstream, encoded in the MPEG-2, MPEG-4, or H.264 standard, in which the current video frame is coded as two interlaced fields; decoding the current frame's top field; decoding the current frame's bottom field; and presenting the decoded top and bottom fields as a 3D frame image. In some aspects, the method presents the decoded top and bottom fields as a stereo-view image. In other aspects, the method accepts 2D selection commands in response to a trigger such as receiving a supplemental enhancement information (SEI) message, an analysis of display capabilities, manual selection, or receiver system configuration. Then only one of the current frame's interlaced fields is decoded, and a 2D frame image is presented.

Owner:SHARP KK

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US20050243911A1 | Tags: Reduce computational complexity; Reduce complexity; Color television with pulse code modulation; Color television with bandwidth reduction; Pattern recognition; Interlaced video

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

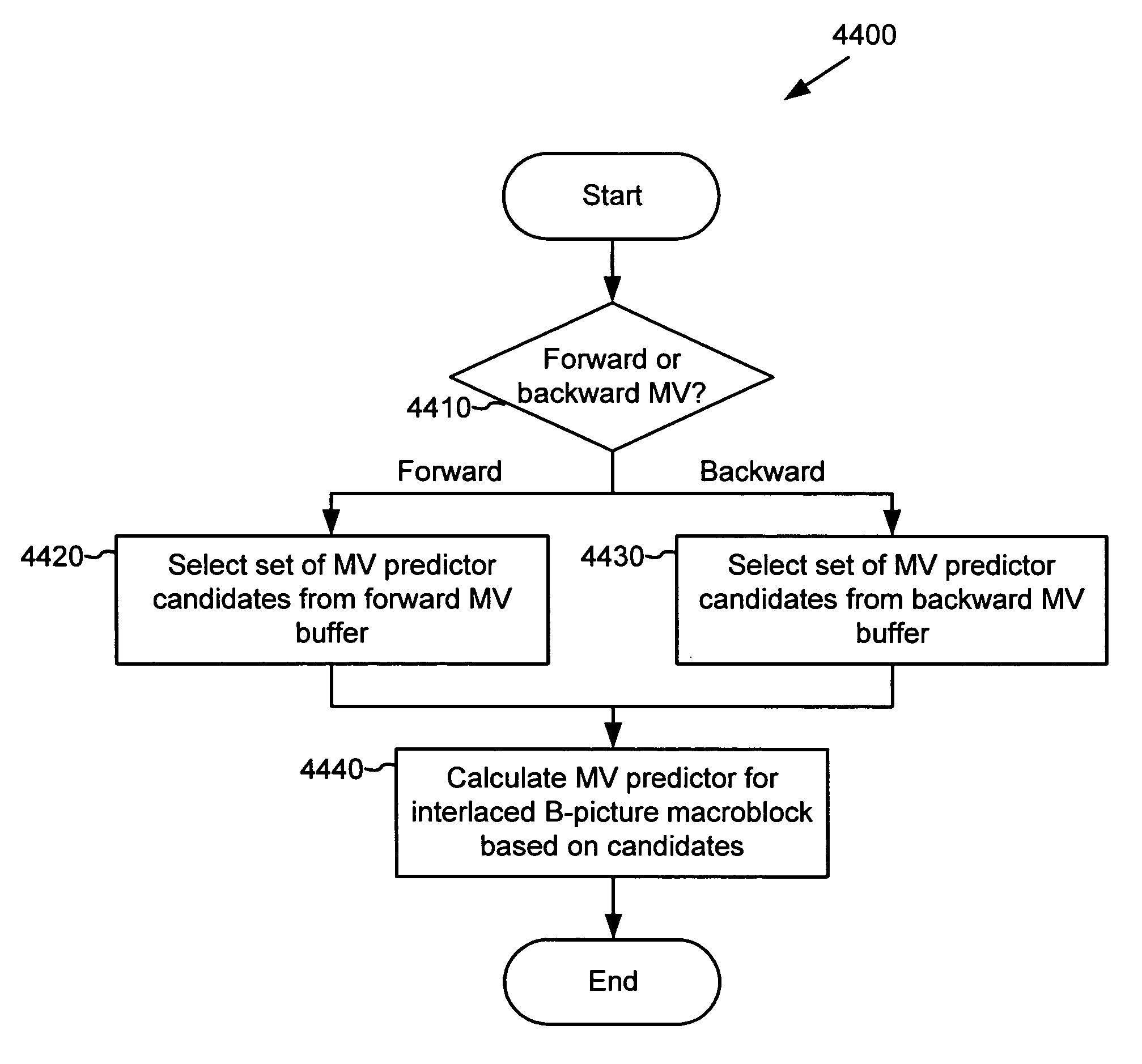

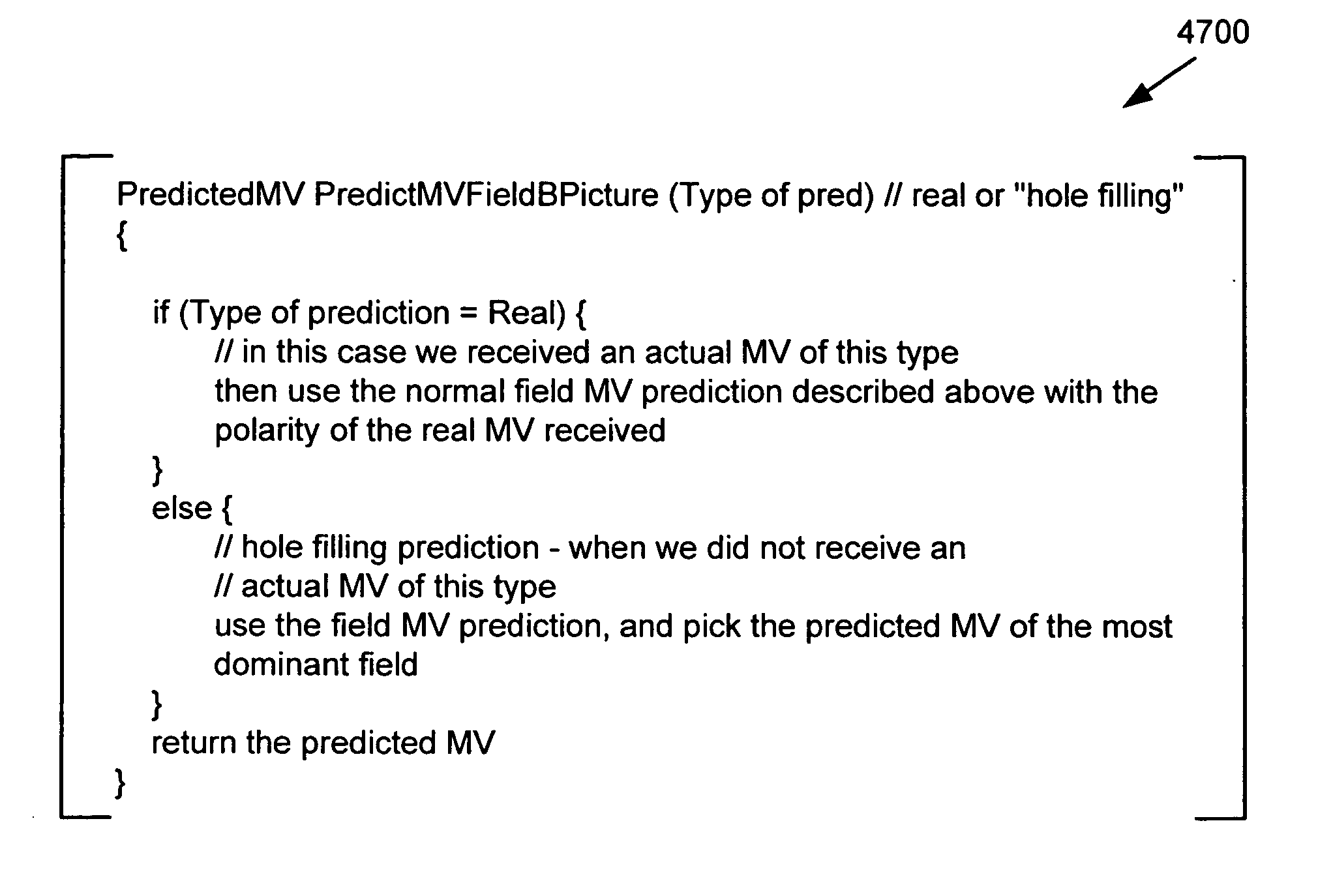

Advanced bi-directional predictive coding of interlaced video

Status: Active | Patent: US20050053292A1 | Tags: Accurate compensation; Reduce encoding overhead; Picture reproducers using cathode ray tubes; Code conversion; Interlaced video; Motion vector

For interlaced B-fields or interlaced B-frames, forward motion vectors are predicted by an encoder / decoder using forward motion vectors from a forward motion vector buffer, and backward motion vectors are predicted using backward motion vectors from a backward motion vector buffer. The resulting motion vectors are added to the corresponding buffer. Holes in motion vector buffers can be filled in with estimated motion vector values. An encoder / decoder switches prediction modes between fields in a field-coded macroblock of an interlaced B-frame. For interlaced B-frames and interlaced B-fields, an encoder / decoder computes direct mode motion vectors. For interlaced B-fields or interlaced B-frames, an encoder / decoder uses 4 MV coding. An encoder / decoder uses “self-referencing” B-frames. An encoder sends binary information indicating whether a prediction mode is forward or not-forward for one or more macroblocks in an interlaced B-field. An encoder / decoder uses intra-coded B-fields [“BI-fields”].

Owner:MICROSOFT TECH LICENSING LLC

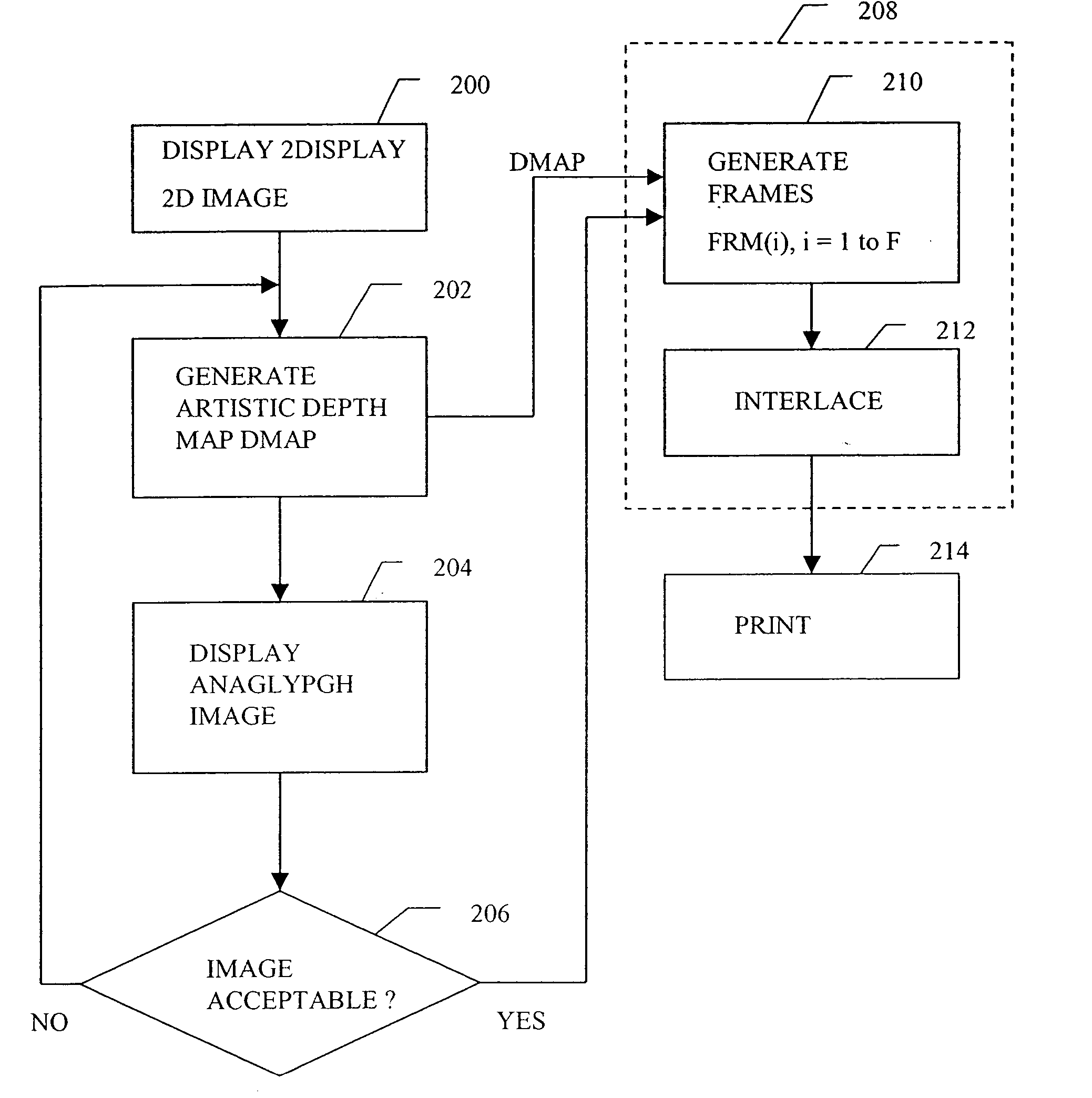

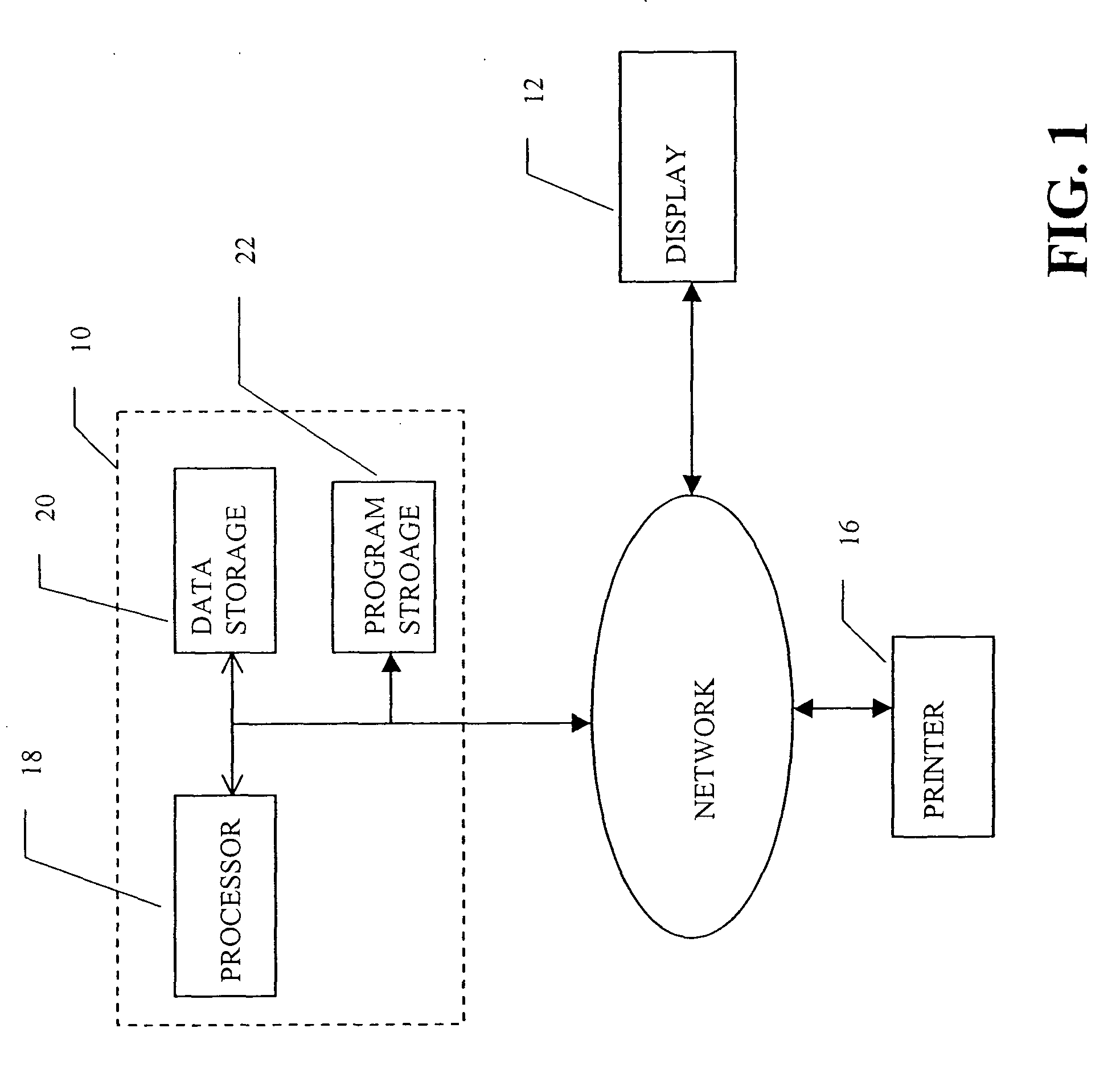

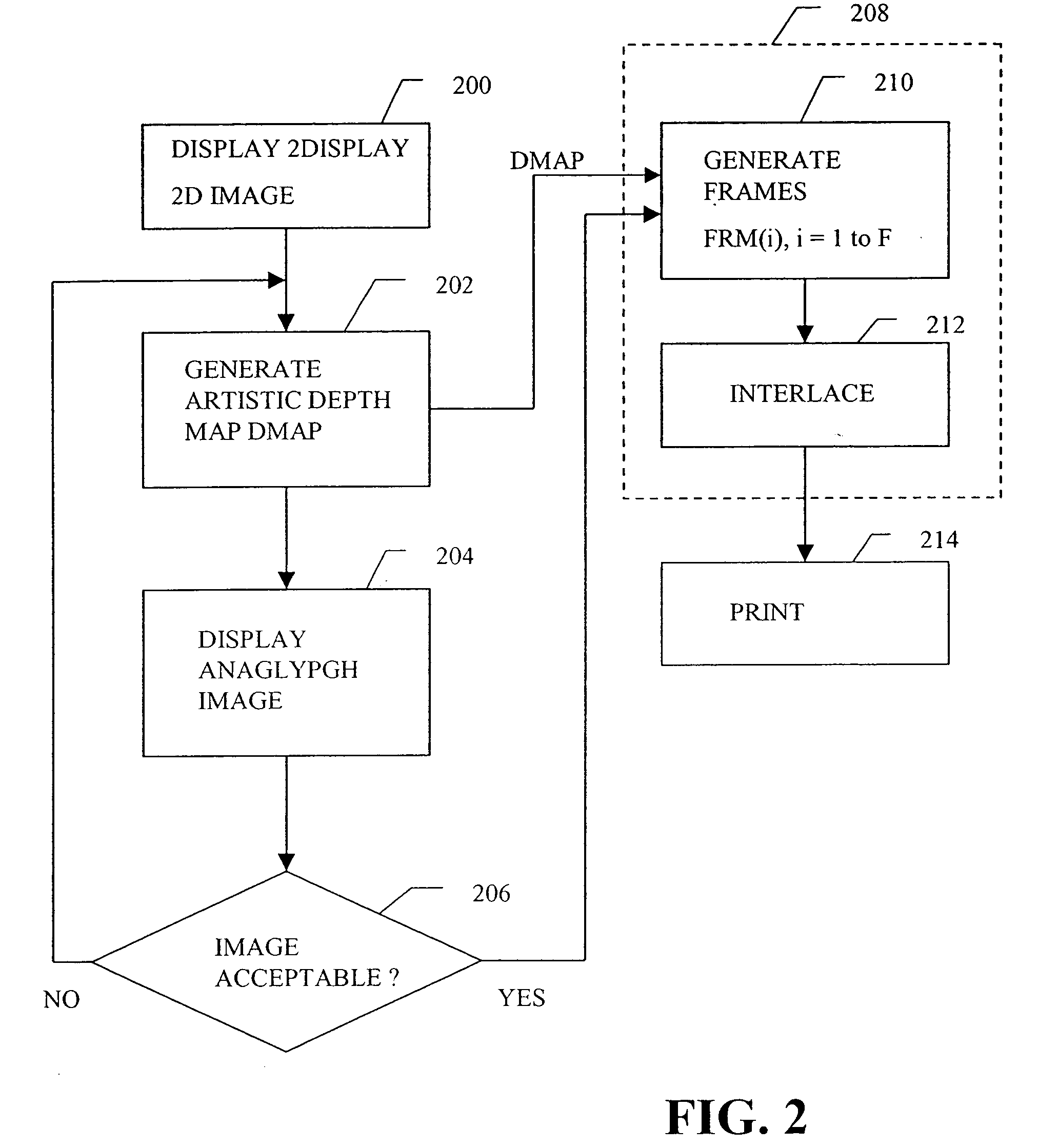

Multi-dimensional images system for digital image input and output

Status: Active | Patent: US20040135780A1 | Tags: Character and pattern recognition; Stereoscopic systems; Interlaced video; Stereo pair

A two-dimensional image is displayed and a grey level image is drawn on said two-dimensional image defining a depth map. A stereo image pair is generated based on the depth map and an anaglyph image of the stereo image pair is displayed. Edits of the depth map are displayed by anaglyph image. A plurality of projection frames are generated based on the depth map, and interlaced and printed for viewing through a micro optical media. Optionally, a layered image is extracted from the two-dimensional image and the plurality of projection frames have an alignment correction shift to center the apparent position of the image. A measured depth map is optionally input, and a stereo pair is optionally input, with a depth map calculated based on the pair. The user selectively modifies the calculated and measured depth map, and the extracted layers, using painted grey levels. A ray trace optionally modifies the interlacing for optimization to a particular media.

Owner:NIMS JERRY C

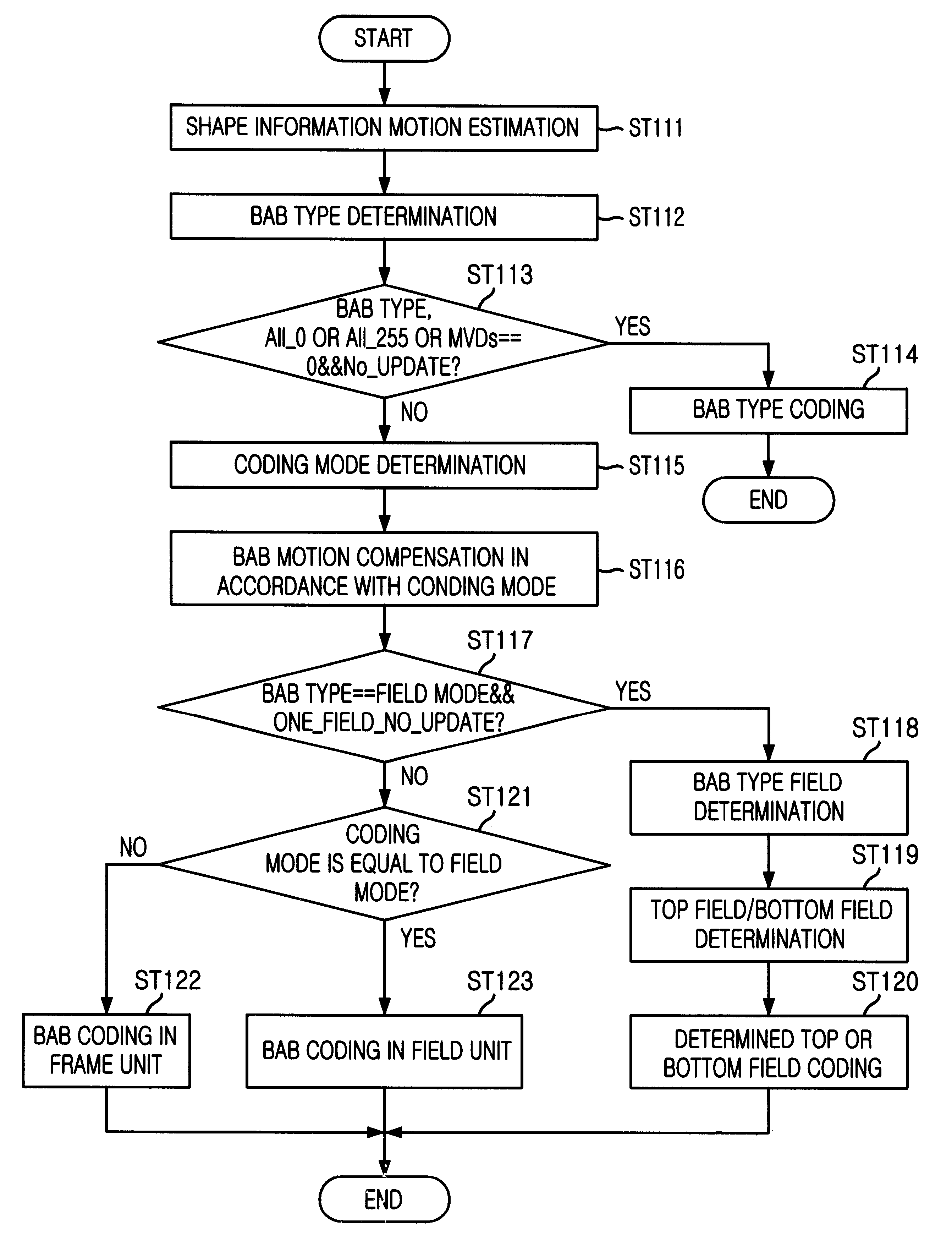

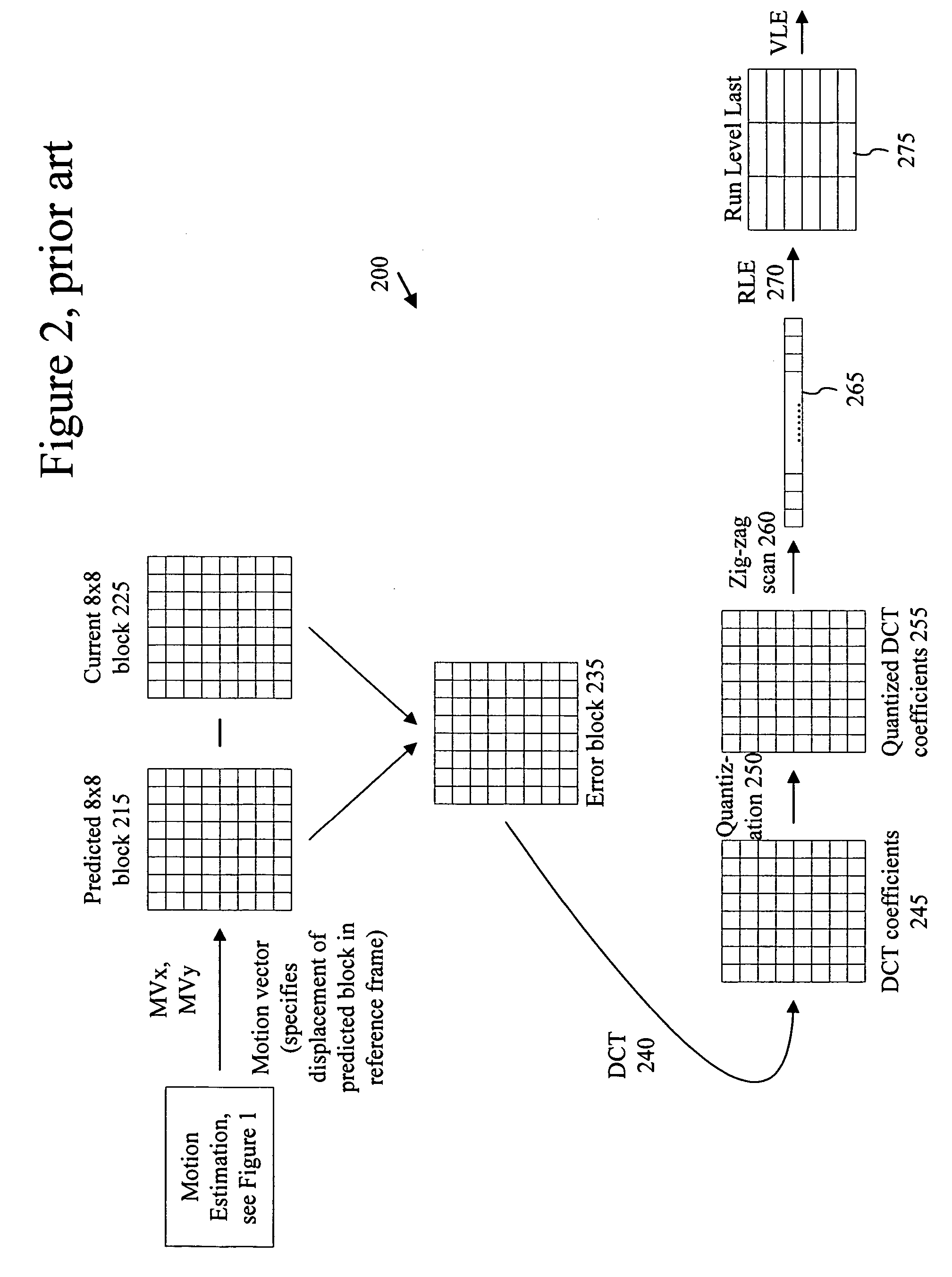

Shaped information coding device for interlaced scanning video and method therefor

Status: Inactive | Patent: US6381277B1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Interlaced video; Motion vector

A shape information coding device and method for interlaced scanning video. When coding interlaced scanning video, the device and method detect the amount of motion of the object video, select the field or frame coding mode according to the detected result, and perform motion compensation in field units if the field mode is selected or in frame units if the frame mode is selected. In addition, when coding shape information for interlaced scanning video, the invention can construct one frame from two fields and then determine a motion vector predictor for shape from the motion information of adjacent blocks, so that the shape motion information is coded effectively. Coding efficiency can be further improved by giving precedence, when determining the motion vector predictor for shape, to a motion vector that has the same motion prediction mode as the current motion vector (and therefore high similarity), and by coding the field block type information and the field discrimination information for only one of the two fields.

Owner:PANTECH CO LTD
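The abstract does not say how the amount of motion is measured. The Python sketch below swaps in a common encoder heuristic: compare differences between adjacent frame lines (which come from opposite fields) with differences between lines two apart (same field). Motion between the fields inflates the first measure, so field mode is chosen. The function name and the tie rule (ties pick frame mode) are assumptions.

```python
from typing import List


def choose_coding_mode(block: List[List[int]]) -> str:
    """Return "field" or "frame" for a block of samples, e.g. a binary
    alpha (shape) map with values 0 and 255."""
    h = len(block)
    # Adjacent frame lines belong to opposite fields.
    frame_act = sum(abs(a - b) for y in range(h - 1) for a, b in zip(block[y], block[y + 1]))
    # Lines two apart belong to the same field.
    field_act = sum(abs(a - b) for y in range(h - 2) for a, b in zip(block[y], block[y + 2]))
    return "field" if field_act < frame_act else "frame"
```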

Chroma motion vector derivation for interlaced forward-predicted fields

Techniques and tools for deriving chroma motion vectors for macroblocks of interlaced forward-predicted fields are described. For example, a video encoder or decoder determines a prevailing polarity among luma motion vectors for a macroblock. The encoder or decoder then determines a chroma motion vector for the macroblock based at least in part upon one or more of the luma motion vectors having the prevailing polarity.

Owner:MICROSOFT TECH LICENSING LLC
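A simplified Python sketch of the prevailing-polarity idea: count the luma motion vectors of each polarity, keep the majority group, average it, and halve the result for 4:2:0 chroma. Averaging, Python's `round`, and the tie rule are assumptions; the patented derivation and its exact rounding are not reproduced here.

```python
from typing import List, Tuple


def derive_chroma_mv(luma_mvs: List[Tuple[int, int, bool]]) -> Tuple[int, int]:
    """luma_mvs: (dx, dy, is_same_polarity) for the macroblock's luma blocks."""
    same = [(x, y) for x, y, s in luma_mvs if s]
    opposite = [(x, y) for x, y, s in luma_mvs if not s]
    if not same and not opposite:
        raise ValueError("no luma motion vectors")
    # Prevailing polarity; assumption: ties favour same polarity.
    prevailing = same if len(same) >= len(opposite) else opposite
    n = len(prevailing)
    avg_x = sum(x for x, _ in prevailing) / n
    avg_y = sum(y for _, y in prevailing) / n
    # 4:2:0 chroma has half the luma resolution in both directions.
    return round(avg_x / 2), round(avg_y / 2)
```

The outlying opposite-polarity vector in a 3-to-1 split is simply ignored, which avoids pulling the chroma vector toward a field the majority of the macroblock does not reference.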

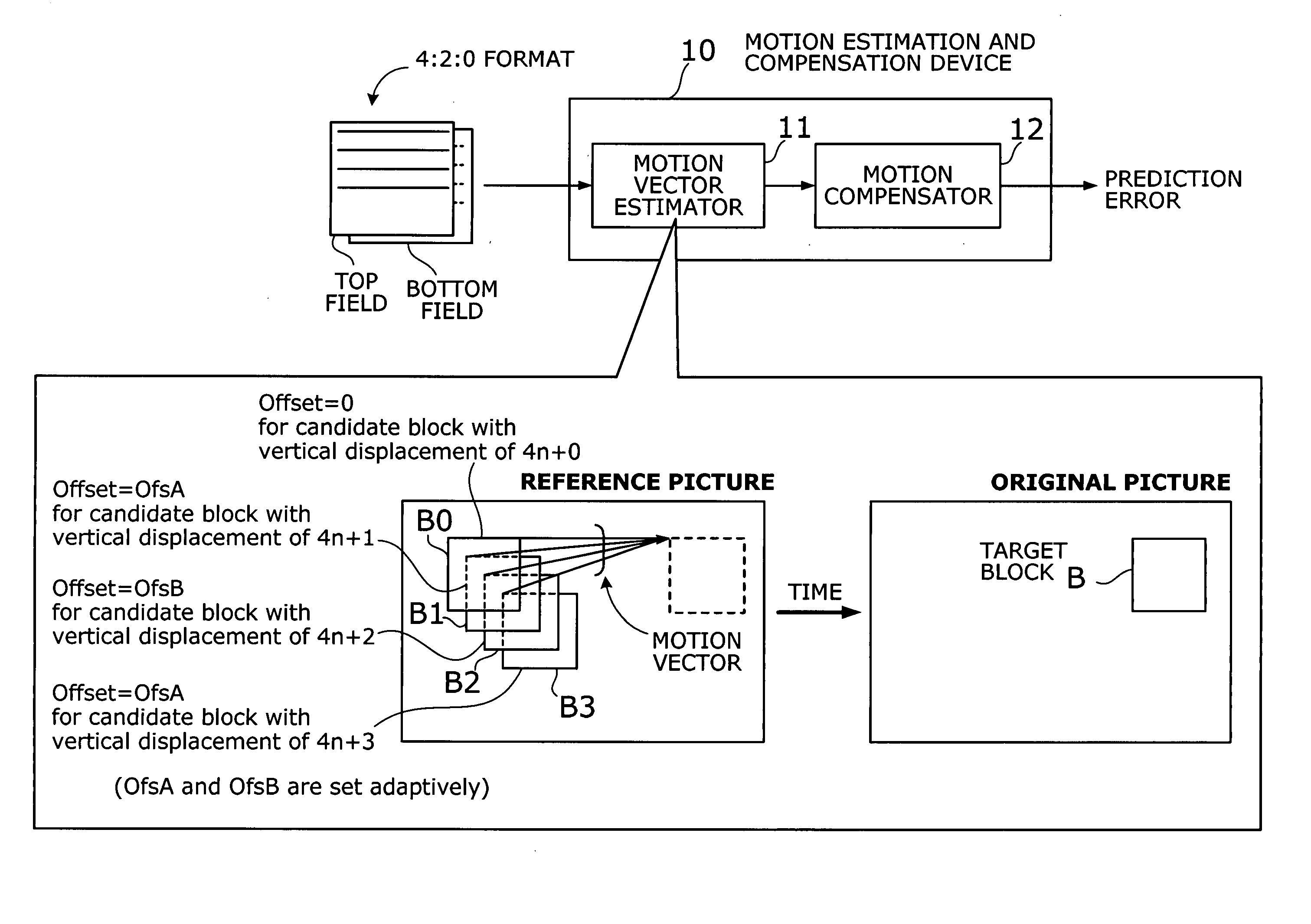

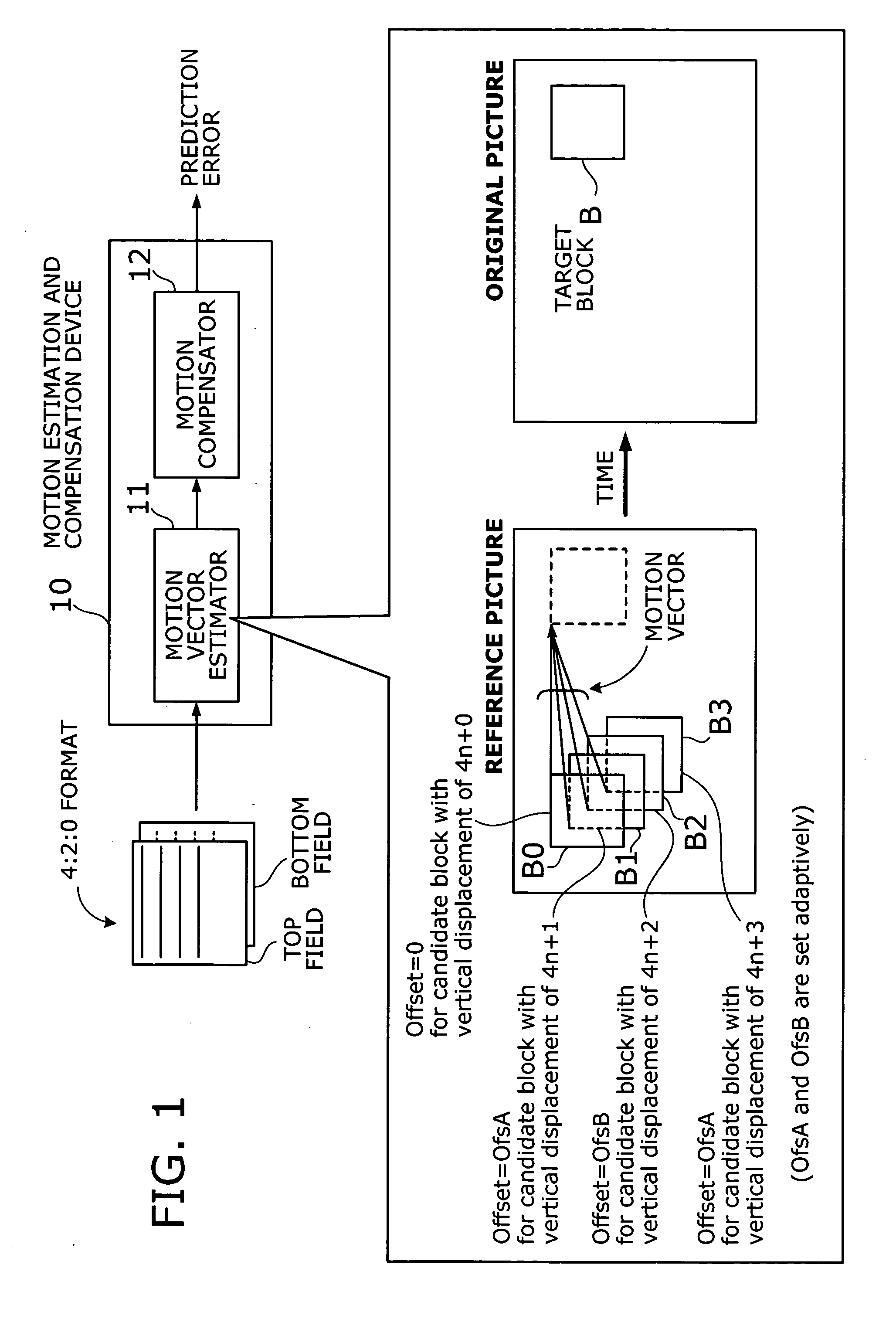

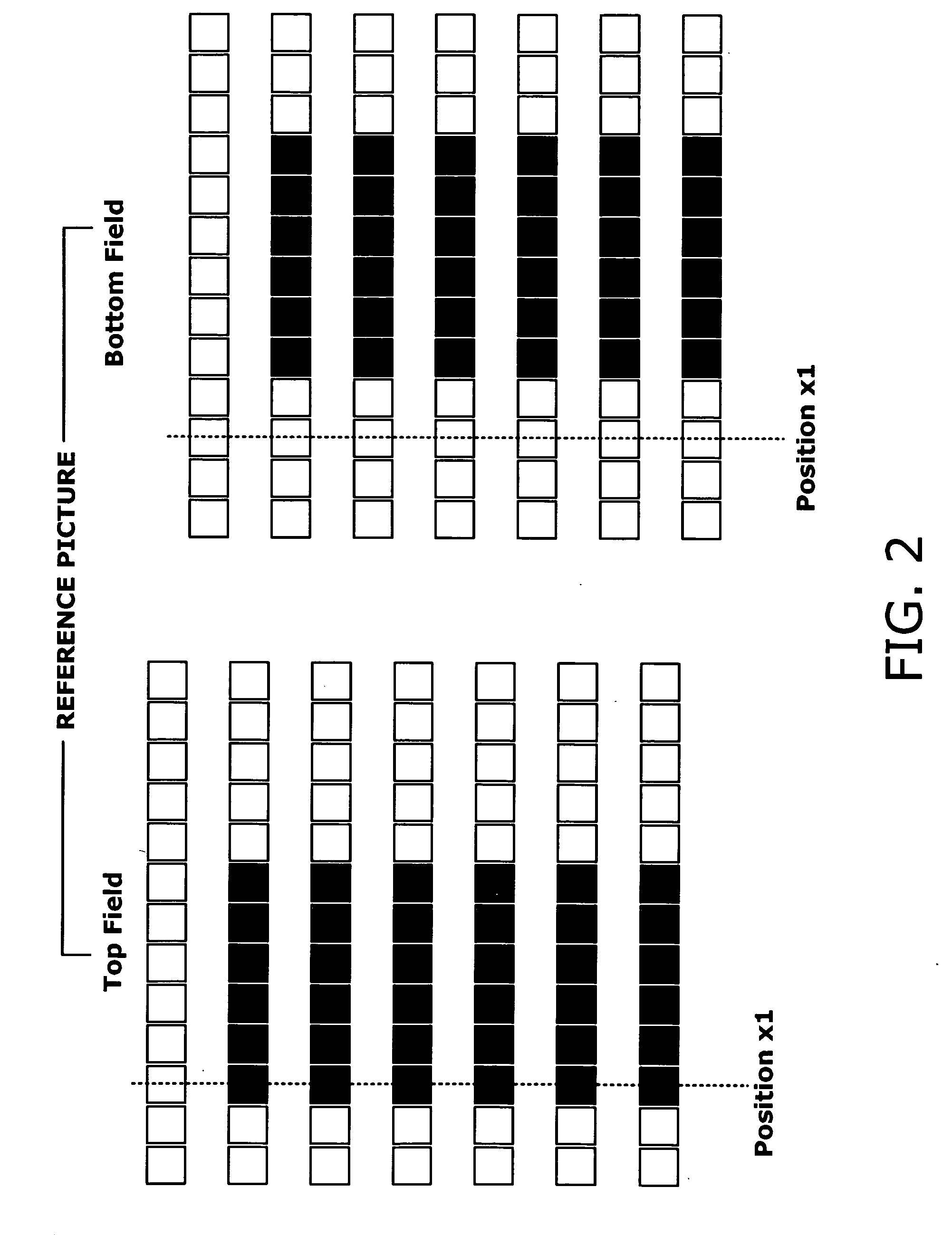

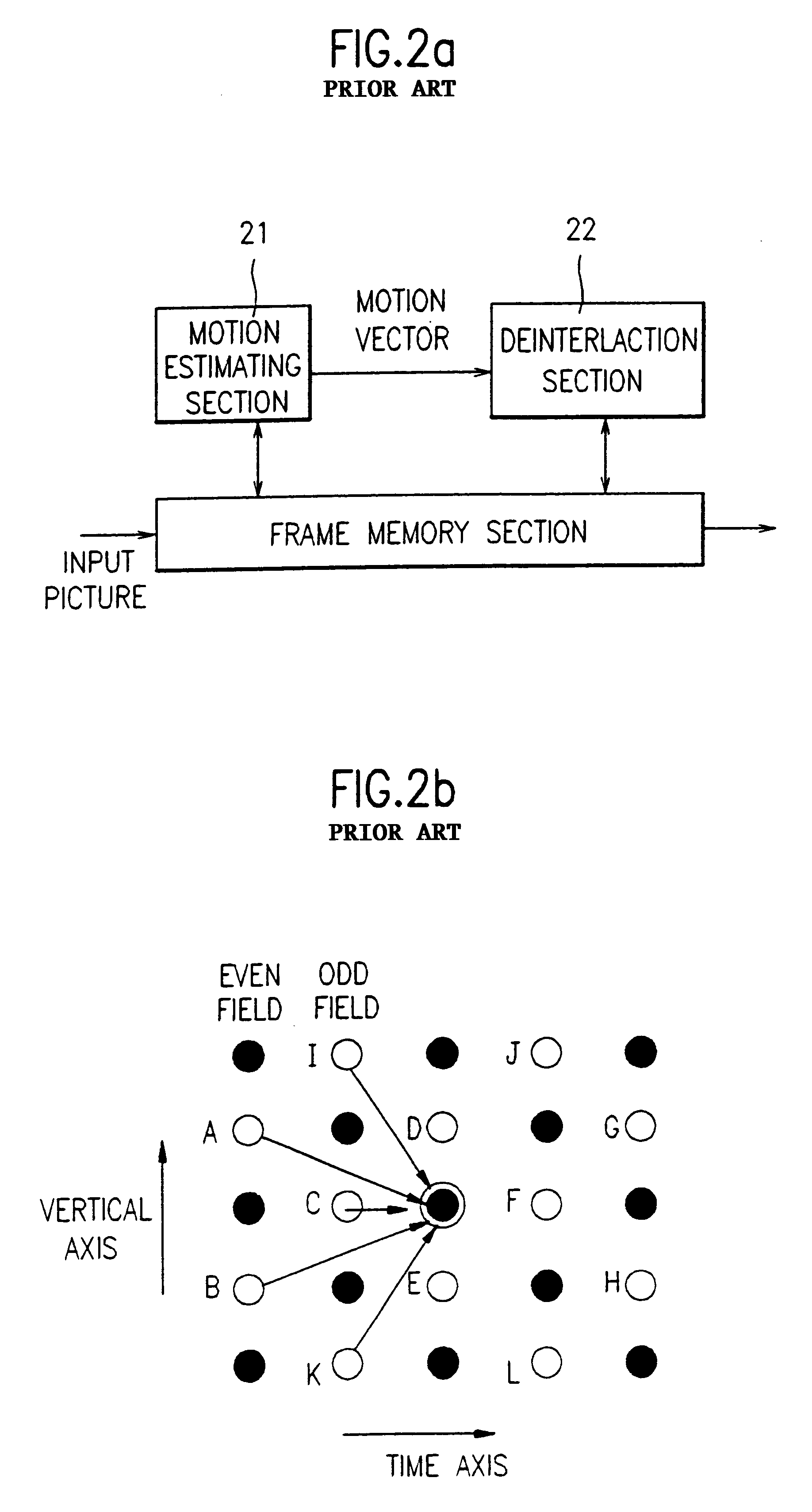

Motion estimation and compensation device with motion vector correction based on vertical component values

Status: Inactive | Patent: US20060023788A1 | Tags: Color television with pulse code modulation; Image analysis; Motion vector; Errors and residuals

A motion estimation and compensation device that avoids discrepancies in chrominance components which could be introduced in the process of motion vector estimation. The device has a motion vector estimator for finding motion vectors in given interlace-scanning chrominance-subsampled video signals. The estimator compares each candidate block in a reference picture with a target block in an original picture by using a sum of absolute differences (SAD) in luminance as similarity metric, chooses a best matching candidate block that minimizes the SAD, and determines its displacement relative to the target block. In this process, the estimator gives the SAD of each candidate block an offset determined from the vertical component of a corresponding motion vector, so as to avoid chrominance discrepancies. A motion compensator then produces a predicted picture using such motion vectors and calculates prediction error by subtracting the predicted picture from the original picture.

Owner:FUJITSU MICROELECTRONICS LTD
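A full-search block-matching sketch in Python showing where the vertical-component offset enters the cost. The offset rule `chroma_risk_offset` (penalise `dy % 4 == 2`) and its penalty are hypothetical placeholders; which vertical components actually cause chroma discrepancies depends on field parity and motion-vector units, which the abstract does not specify.

```python
from typing import Callable, List, Tuple

Picture = List[List[int]]


def sad(target: Picture, ref: Picture, x: int, y: int) -> int:
    """Sum of absolute luminance differences with the candidate at (x, y)."""
    return sum(abs(target[j][i] - ref[y + j][x + i])
               for j in range(len(target)) for i in range(len(target[0])))


def chroma_risk_offset(dy: int, penalty: int = 8) -> int:
    """Hypothetical offset: penalise vertical components with dy % 4 == 2."""
    return penalty if dy % 4 == 2 else 0


def estimate_mv(target: Picture, ref: Picture, bx: int, by: int, search: int,
                offset: Callable[[int], int] = chroma_risk_offset) -> Tuple[int, int]:
    """Full search around (bx, by); cost = luminance SAD + offset(vertical component)."""
    h, w = len(target), len(target[0])
    best_cost, best_mv = None, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            x, y = bx + dx, by + dy
            if x < 0 or y < 0 or x + w > len(ref[0]) or y + h > len(ref):
                continue  # candidate falls outside the reference picture
            cost = sad(target, ref, x, y) + offset(dy)
            if best_cost is None or cost < best_cost:
                best_cost, best_mv = cost, (dx, dy)
    return best_mv
```

With the offset disabled the search picks the exact luminance match; with it enabled, a slightly worse candidate with a "safe" vertical component can win instead.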

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US20050243913A1 | Tags: Reduce computational complexity; Reduce complexity; Color television with pulse code modulation; Color television with bandwidth reduction; Pattern recognition; Interlaced video

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US20050243916A1 | Tags: Reduce computational complexity; Reduce complexity; Color television with pulse code modulation; Color television with bandwidth reduction; Pattern recognition; Interlaced video

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Inactive | Patent: US20050243914A1 | Tags: Reduce computational complexity; Adaptable; Color television with pulse code modulation; Color television with bandwidth reduction; Interlaced video; Parallel computing

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

Motion vector prediction in bi-directionally predicted interlaced field-coded pictures

Status: Active | Patent: US20050053147A1 | Tags: Stable support; Improve performance; Picture reproducers using cathode ray tubes; Code conversion; Interlaced video; Reference field

Forward motion vectors are predicted by an encoder / decoder using previously reconstructed (or estimated) forward motion vectors from a forward motion vector buffer, and backward motion vectors are predicted using previously reconstructed (or estimated) backward motion vectors from a backward motion vector buffer. The resulting motion vectors are added to the corresponding buffer. Holes in motion vector buffers can be filled in with estimated motion vector values. For example, for interlaced B-fields, to choose between different polarity motion vectors (e.g., “same polarity” or “opposite polarity”) for hole-filling, an encoder / decoder selects a dominant polarity field motion vector. The distance between anchors and current frames is computed using various syntax elements, and the computed distance is used for scaling reference field motion vectors.

Owner:MICROSOFT TECH LICENSING LLC
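Scaling a motion vector by a ratio of temporal distances is the core arithmetic behind using computed anchor distances. The Python sketch below uses the classic temporal-direct style split of a co-located anchor vector into forward and backward parts; how the distances are derived from syntax elements is not shown, and the rounding with Python's `round` is an assumption.

```python
from typing import Tuple

MV = Tuple[int, int]


def scale_mv(mv: MV, num: int, den: int) -> MV:
    """Scale a motion vector by the temporal-distance ratio num / den."""
    if den == 0:
        raise ValueError("reference distance must be non-zero")
    return round(mv[0] * num / den), round(mv[1] * num / den)


def direct_mode_mvs(mv_col: MV, trb: int, trd: int) -> Tuple[MV, MV]:
    """Split a co-located anchor vector into forward and backward vectors.

    trd: distance between the two anchors; trb: distance from the previous
    anchor to the current B picture or field."""
    forward = scale_mv(mv_col, trb, trd)
    backward = scale_mv(mv_col, trb - trd, trd)
    return forward, backward
```

For a B picture halfway between anchors (`trb=1`, `trd=2`), an anchor vector of (8, -4) becomes (4, -2) forward and (-4, 2) backward.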

Adaptive de-blocking filtering apparatus and method for MPEG video decoder

Status: Active | Patent: US20050243915A1 | Tags: Reduce computational complexity; Adaptable; Color television with pulse code modulation; Color television with bandwidth reduction; Pattern recognition; Interlaced video

A post processing de-blocking filter includes a threshold determination unit for adaptively determining a plurality of threshold values according to at least differences in quantization parameters QPs of a plurality of adjacent blocks in a received video stream and to a user defined offset (UDO) allowing the threshold levels to be adjusted according to the UDO value; an interpolation unit for performing an interpolation operation to estimate pixel values in an interlaced field if the video stream comprises interlaced video; and a de-blocking filtering unit for determining a filtering range specifying a maximum number of pixels to filter around a block boundary between the adjacent blocks, determining a region mode according to local activity around the block boundary, selecting one of a plurality of at least three filters, and filtering a plurality of pixels around the block boundary according to the filtering range, the region mode, and the selected filter.

Owner:MEDIATEK INC

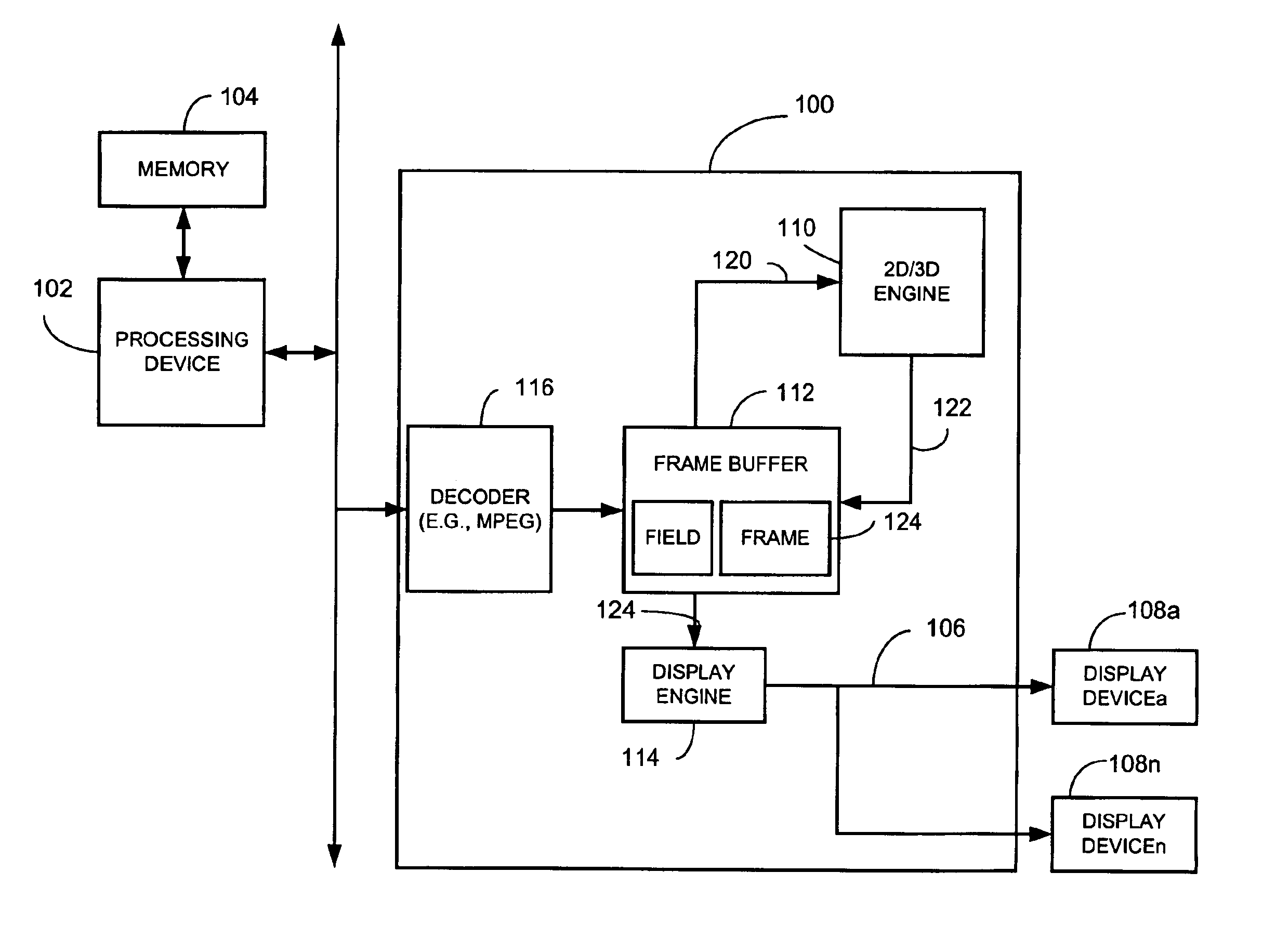

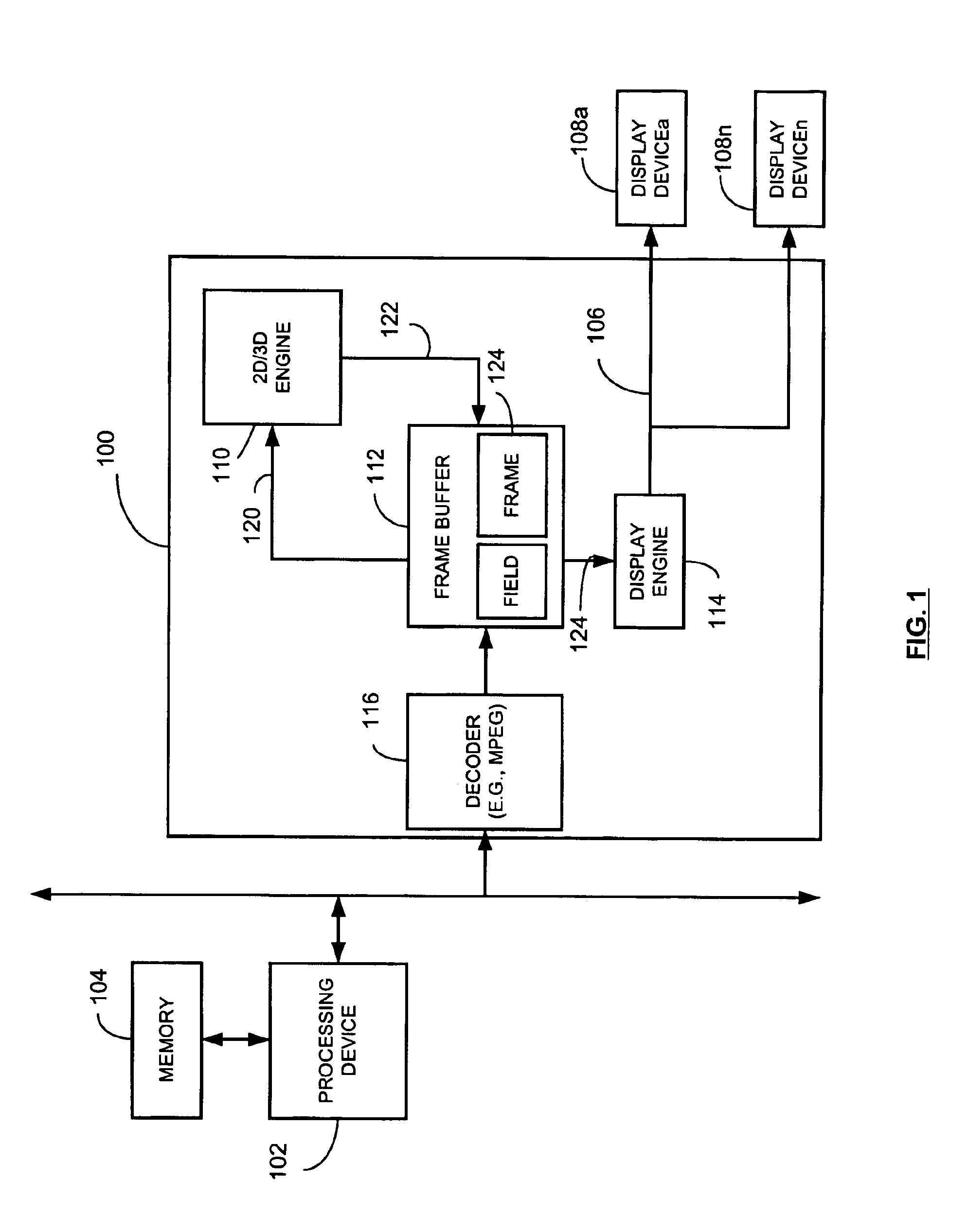

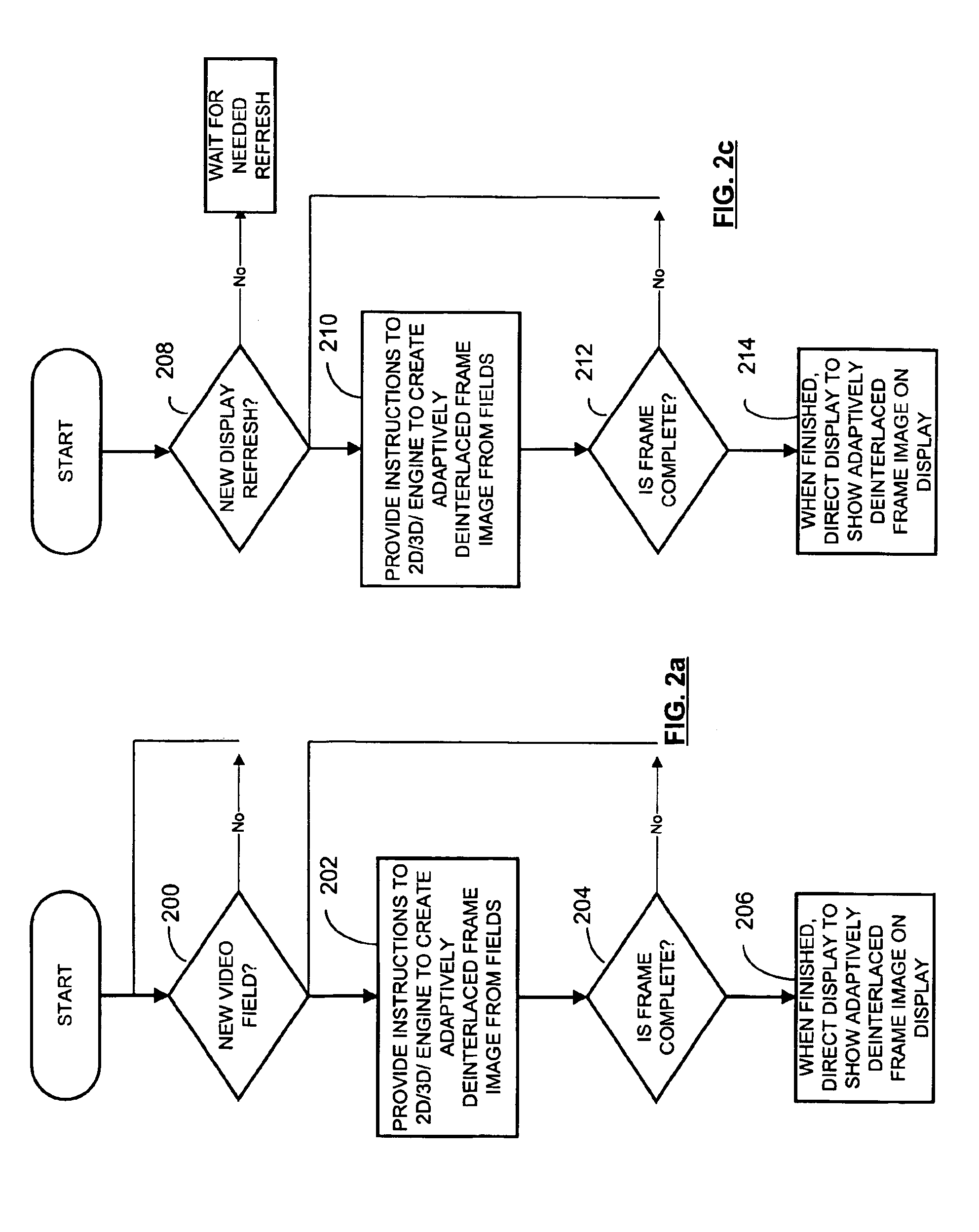

Method for deinterlacing interlaced video by a graphics processor

Status: Inactive | Patent: US6970206B1 | Tags: Television system details; Picture reproducers using cathode ray tubes; Graphics; Interlaced video

A method for deinterlacing interlaced video using a graphics processor includes receiving at least one instruction for a 2D / 3D engine to facilitate creation of an adaptively deinterlaced frame image from at least a first interlaced field. The method also includes performing, by the 2D / 3D engine, at least a portion of adaptive deinterlacing based on at least the first interlaced field, in response to the at least one instruction to produce at least a portion of the adaptively deinterlaced frame image. Once the information is deinterlaced, the method includes retrieving, by a graphics processor display engine, the stored adaptively deinterlaced frame image generated by the 2D / 3D engine, for display on one or more display devices. The method also includes issuing 2D / 3D instructions to the 2D / 3D engine to carry out deinterlacing of lines of video data from interlaced fields. This may be done, for example, by another processing device, such as a host CPU, or any other suitable processing device.

Owner:AVAGO TECH INT SALES PTE LTD
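The per-pixel decision behind adaptive deinterlacing can be illustrated on the CPU. The Python sketch below is a generic motion-adaptive deinterlacer for a top field, not the patent's 2D/3D-engine instruction flow: where the two neighbouring bottom fields agree, the missing line is woven from them; where they differ, it is interpolated within the current field. The threshold and function name are assumptions, and the bottom-field case is symmetric.

```python
from typing import List

Field = List[List[int]]


def deinterlace_top_field(prev_bottom: Field, cur_top: Field, next_bottom: Field,
                          threshold: int = 10) -> Field:
    """Rebuild a full frame around a top field.

    prev_bottom / next_bottom are the bottom fields just before and after
    cur_top; line k of a bottom field sits between top-field lines k and k+1."""
    frame: Field = []
    n = len(cur_top)
    for k in range(n):
        frame.append(cur_top[k][:])
        below = cur_top[k + 1] if k + 1 < n else cur_top[k]
        line = []
        for x in range(len(cur_top[k])):
            a, b = prev_bottom[k][x], next_bottom[k][x]
            if abs(a - b) <= threshold:
                line.append((a + b) // 2)  # static: weave from neighbouring bottom fields
            else:
                line.append((cur_top[k][x] + below[x]) // 2)  # moving: interpolate in-field
        frame.append(line)
    return frame
```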

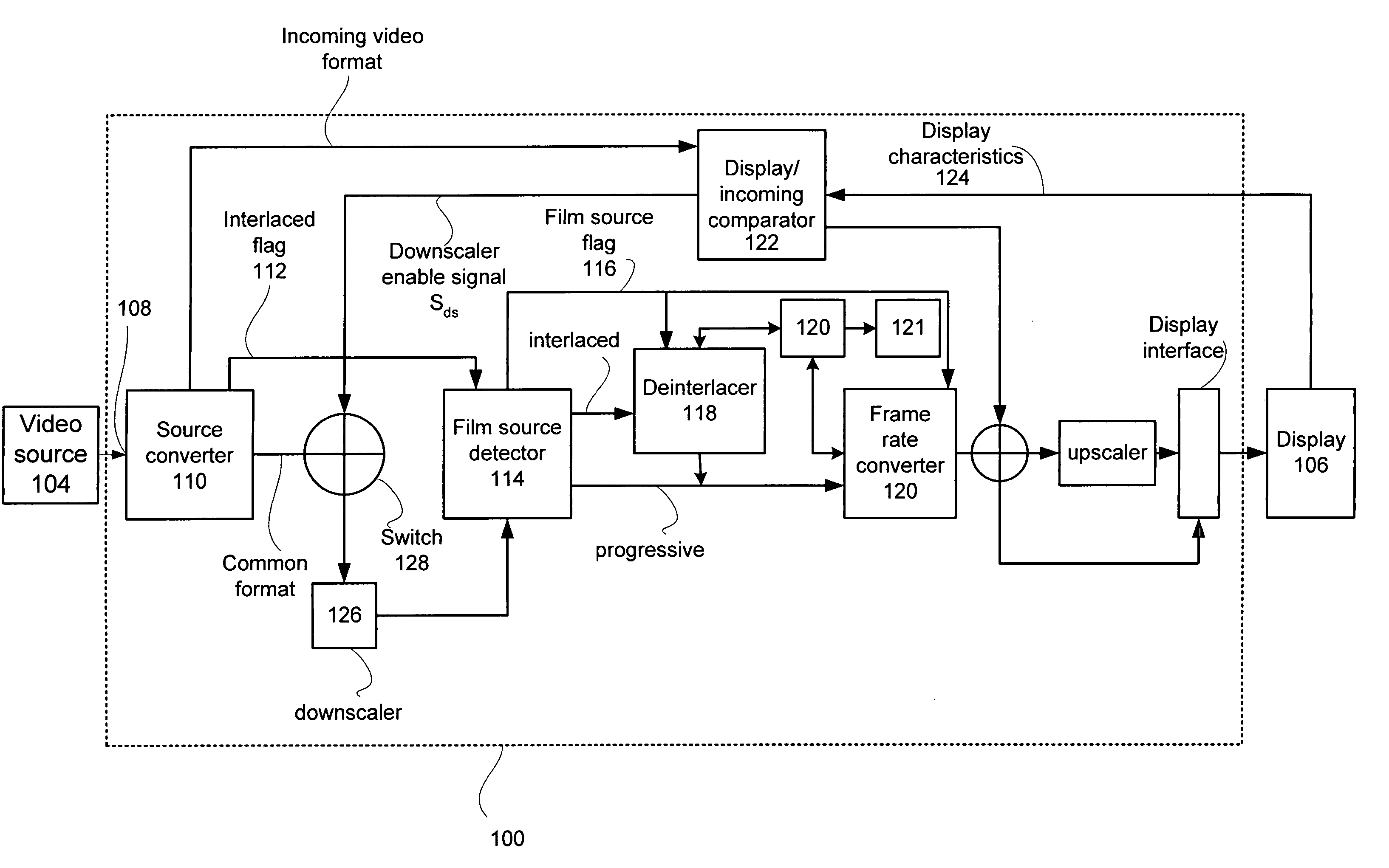

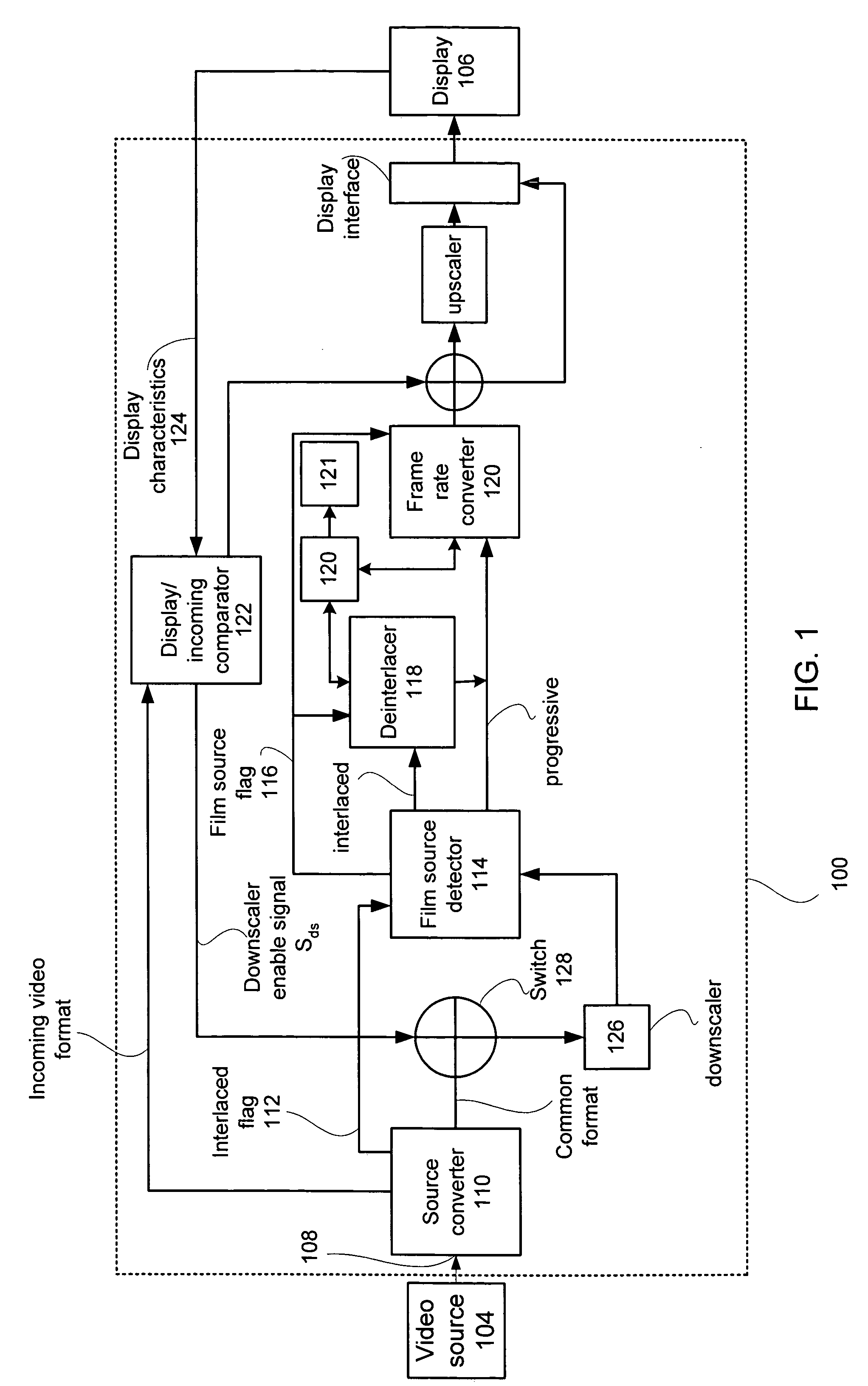

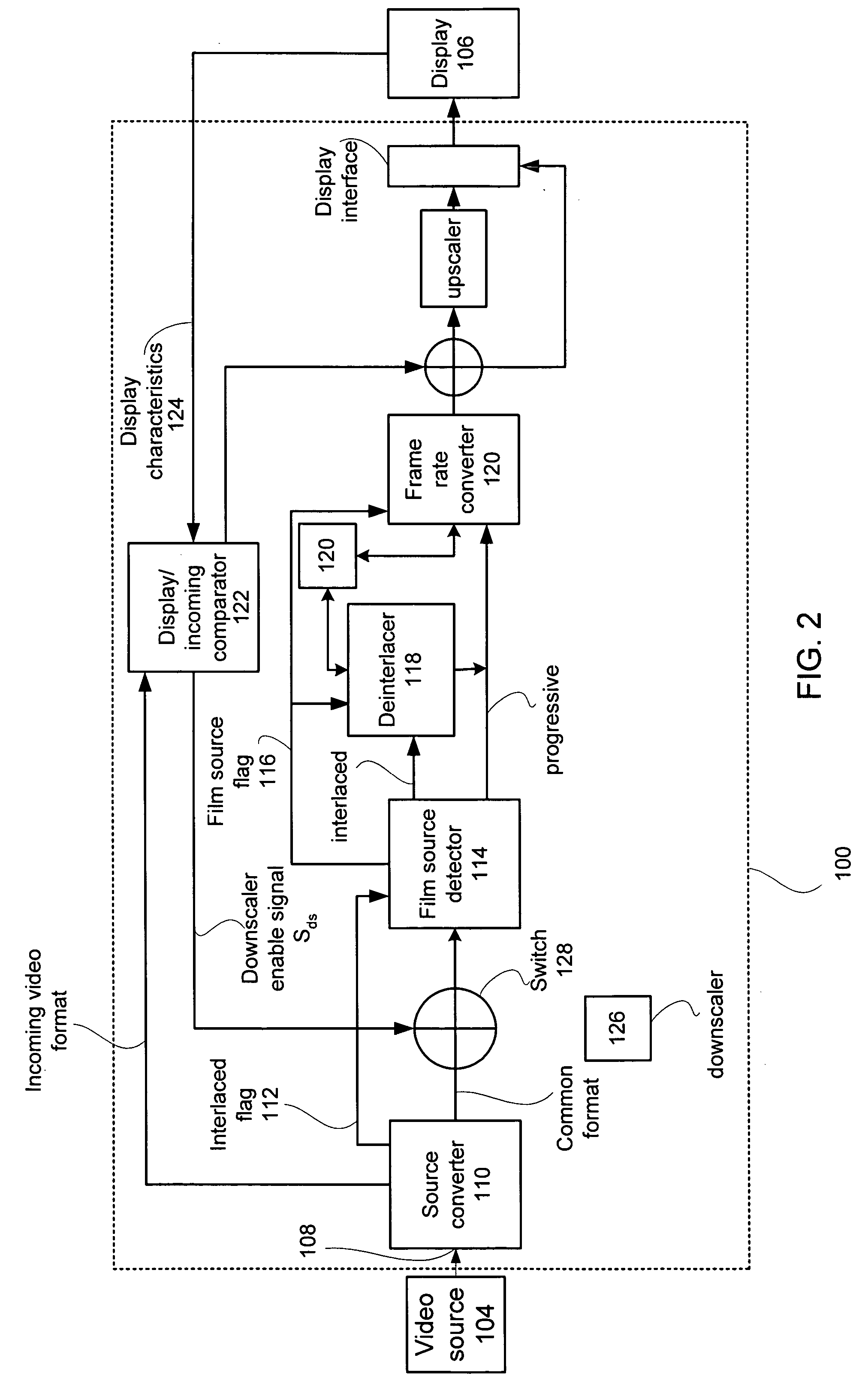

Adaptive display controller

Status: Active | Patent: US20050134735A1 | Tags: Cancel noise; Bandwidth; Television system details; Television system scanning details; Multi-function display; Progressive scan

A multi-function display controller that includes a source detector unit for determining whether the source of an input stream is film-originated or video-originated. A source converter unit, coupled to the source detector unit, converts the input image stream to a common signal processing format. Once the stream is converted to the common signal processing format, a determination is made whether the input image stream is interlaced or non-interlaced (progressive scan). If the input image stream is interlaced, a de-interlace unit converts the interlaced signal to progressive scan using either motion adaptive or motion compensated de-interlacing techniques. It should be noted that in the described embodiment, motion vectors generated for use by the motion compensated de-interlacer can optionally be stored in a memory unit for use in subsequent operations, such as motion compensated frame rate conversion or noise reduction (if any).

Owner:GENESIS MICROCHIP
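One common way to tell film-originated from video-originated interlaced material is to look for the 3:2 pulldown cadence: when 24 fps film is telecined, every fifth field repeats the same-parity field two positions earlier. The Python sketch below detects that pattern; it is not the patent's source detector, and the tolerance, the 1×1-pixel test fields, and all function names are illustrative assumptions. A practical detector would also track cadence phase and cope with scene cuts and edits.

```python
from typing import List

Field = List[List[int]]


def mean_abs_diff(a: Field, b: Field) -> float:
    n = sum(len(row) for row in a)
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n


def detect_32_pulldown(fields: List[Field], tol: float = 1.0) -> bool:
    """fields: fields in display order with alternating parity.

    Near-zero differences between field i and field i - 2 (same parity)
    recurring with period 5 indicate 3:2 pulldown."""
    repeats = [i for i in range(2, len(fields))
               if mean_abs_diff(fields[i], fields[i - 2]) <= tol]
    return len(repeats) >= 2 and all(b - a == 5 for a, b in zip(repeats, repeats[1:]))


def telecine_32(frame_values: List[int]) -> List[Field]:
    """Test helper: expand film frames into 3, 2, 3, 2, ... fields of 1x1
    pixels whose value encodes the source frame and the field parity."""
    fields: List[Field] = []
    for idx, value in enumerate(frame_values):
        for _ in range(3 if idx % 2 == 0 else 2):
            parity = len(fields) % 2
            fields.append([[value * 10 + parity]])
    return fields
```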

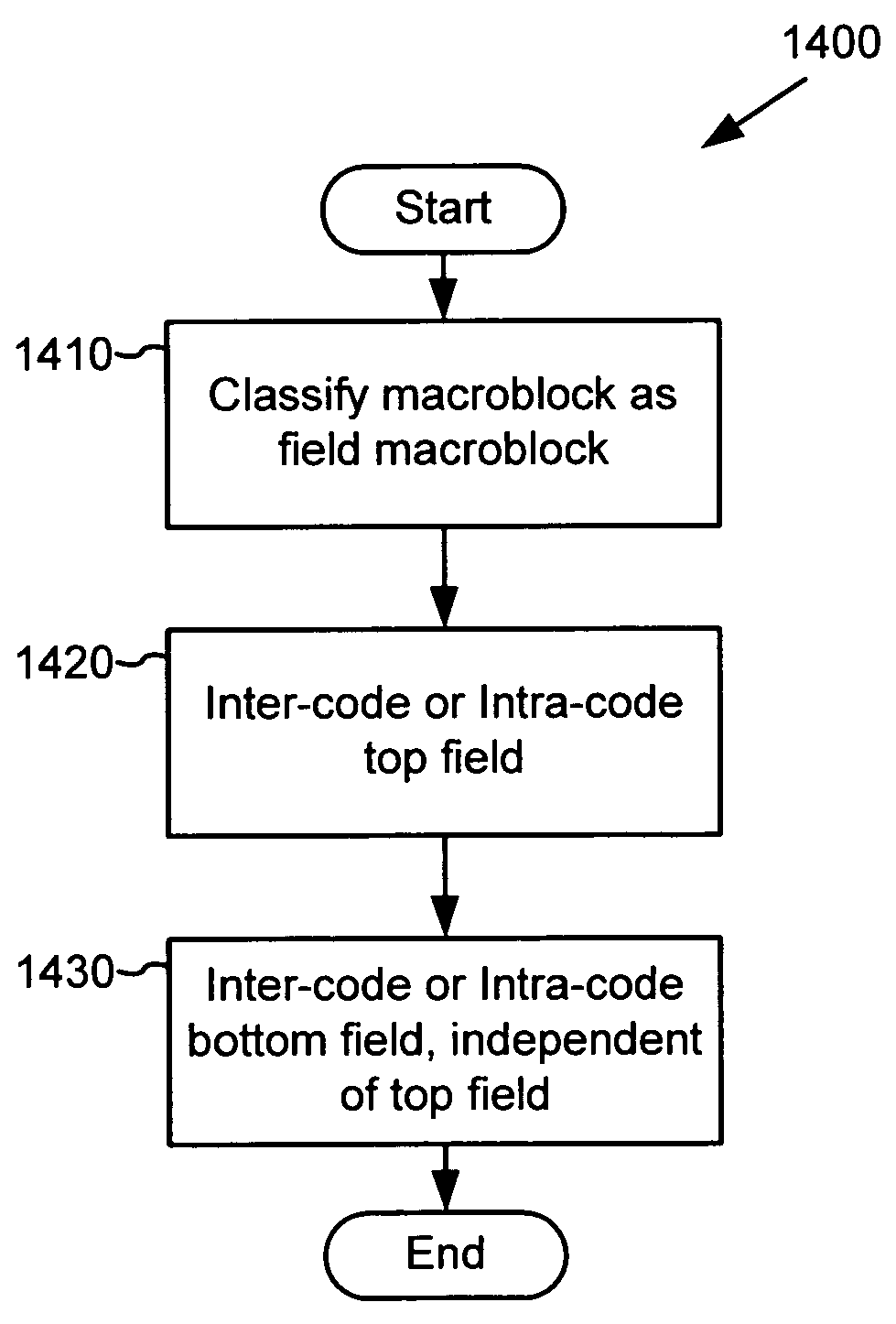

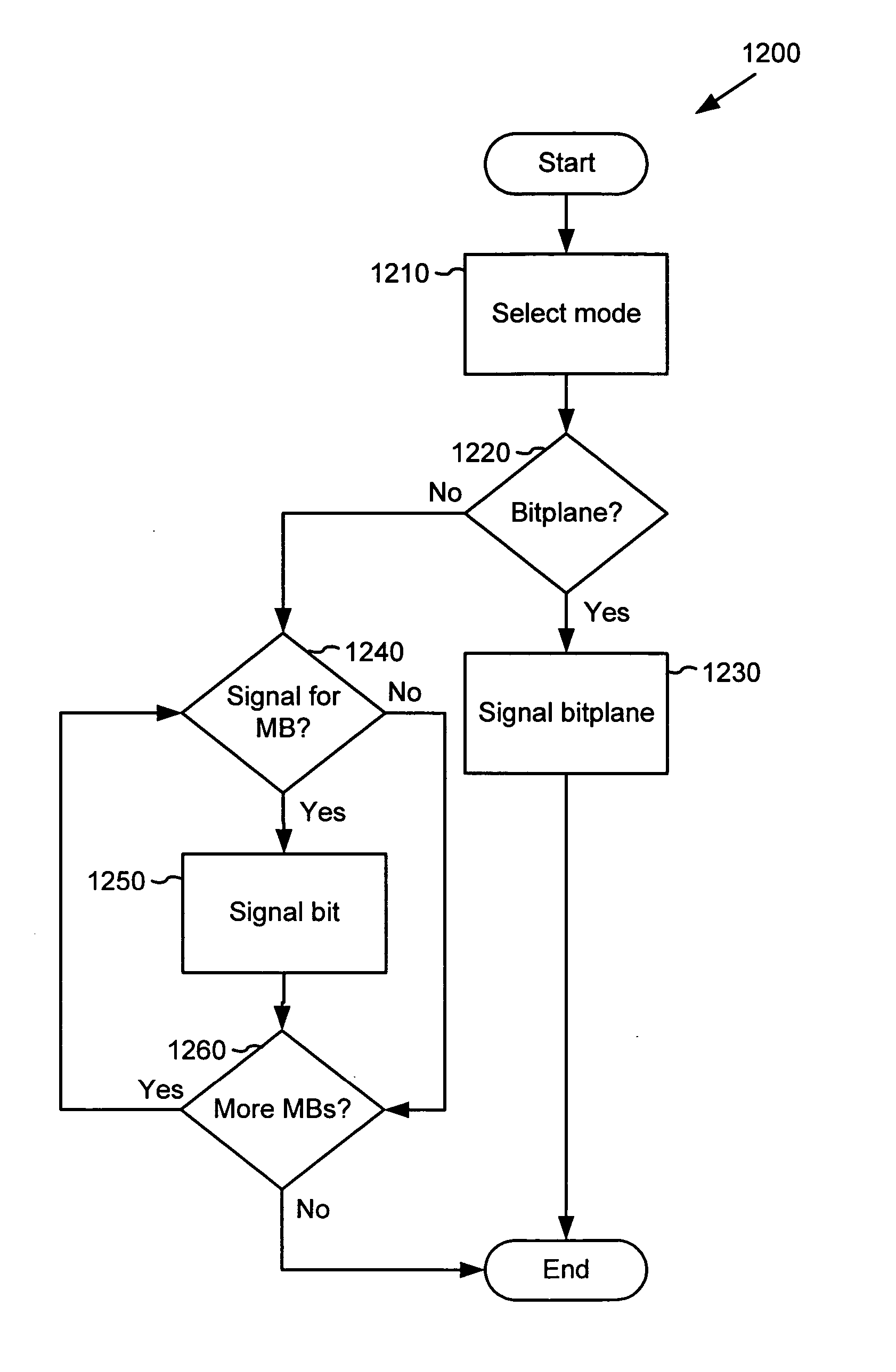

Bitplane coding for macroblock field/frame coding type information

Status: Active | Patent: US20050053296A1 | Tags: Reduced flexibility; Color television with pulse code modulation; Color television with bandwidth reduction; Interlaced video; Computer graphics (images)

In one aspect, for a first interlaced video frame in a video sequence, a decoder decodes a bitplane signaled at frame layer for the first interlaced video frame. The bitplane represents field / frame transform types for plural macroblocks of the first interlaced video frame. For a second interlaced video frame in the video sequence, for each of at least one but not all of plural macroblocks of the second interlaced video frame, the decoder processes a per macroblock field / frame transform type bit signaled at macroblock layer. An encoder performs corresponding encoding.

Owner:MICROSOFT TECH LICENSING LLC
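A bitplane packs one field/frame flag per macroblock into a single frame-level structure that can be compressed as a whole. The Python sketch below shows a row-skip style code, modeled loosely on the row-skip mode found among VC-1 bitplane coding modes; the exact bit layout here is an assumption, not the standard's syntax: each all-zero row costs one bit, and any other row is sent as a flag followed by its raw bits.

```python
from typing import List


def rowskip_encode(plane: List[List[int]]) -> List[int]:
    """One flag per row: 0 = all-zero row (nothing else sent),
    1 = the row's bits follow."""
    out: List[int] = []
    for row in plane:
        if any(row):
            out.append(1)
            out.extend(row)
        else:
            out.append(0)
    return out


def rowskip_decode(bits: List[int], width: int, height: int) -> List[List[int]]:
    """Inverse of rowskip_encode for a plane of the given size."""
    it = iter(bits)
    plane: List[List[int]] = []
    for _ in range(height):
        if next(it):
            plane.append([next(it) for _ in range(width)])
        else:
            plane.append([0] * width)
    return plane
```

For a mostly frame-coded picture (few 1s), this uses fewer bits than sending one raw bit per macroblock.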

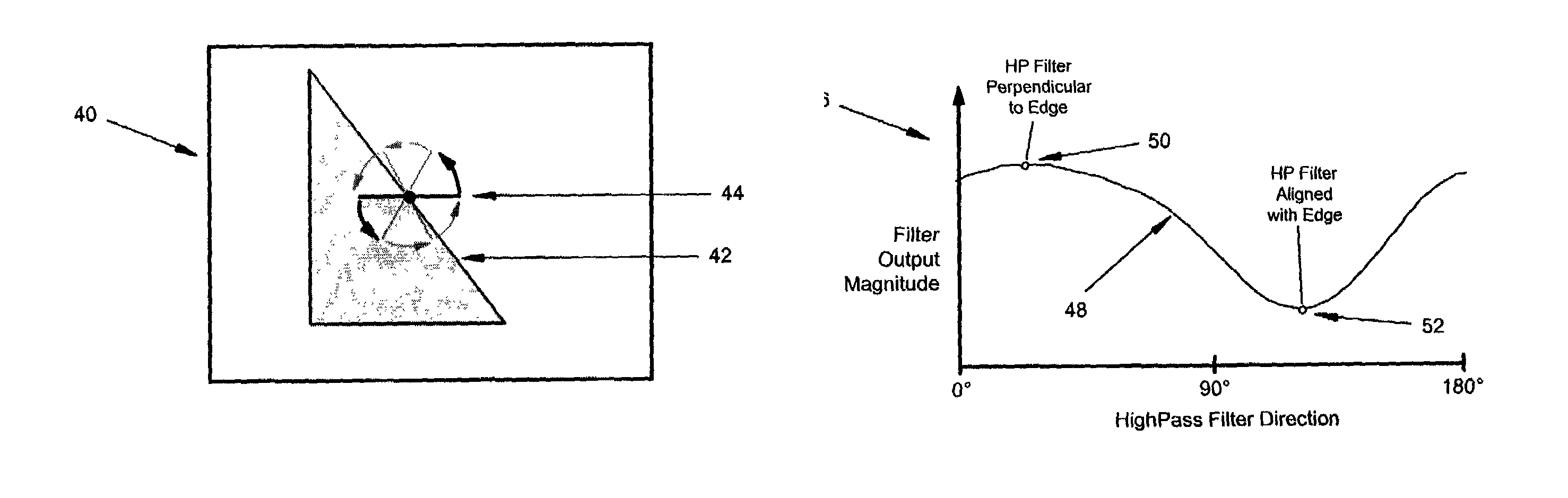

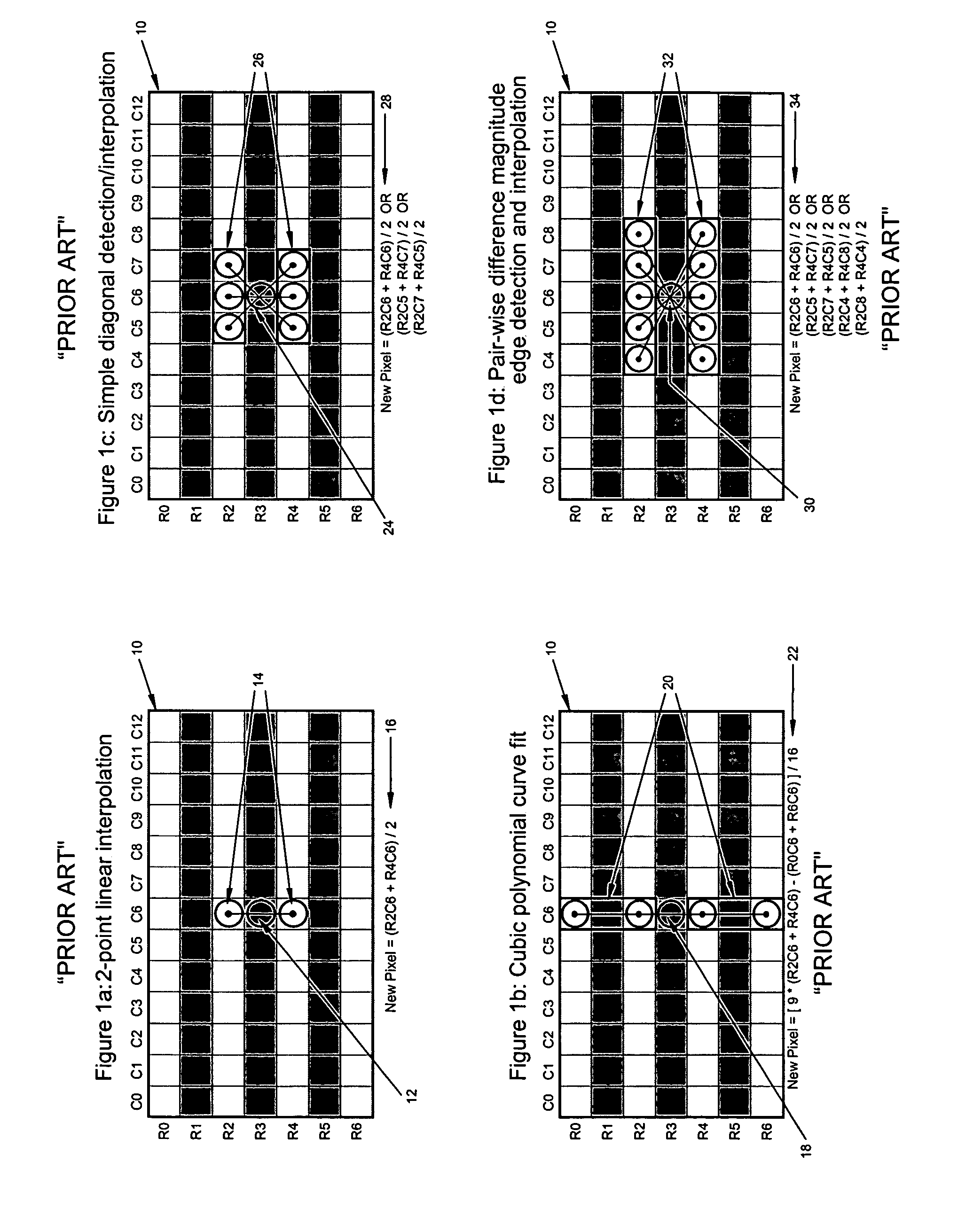

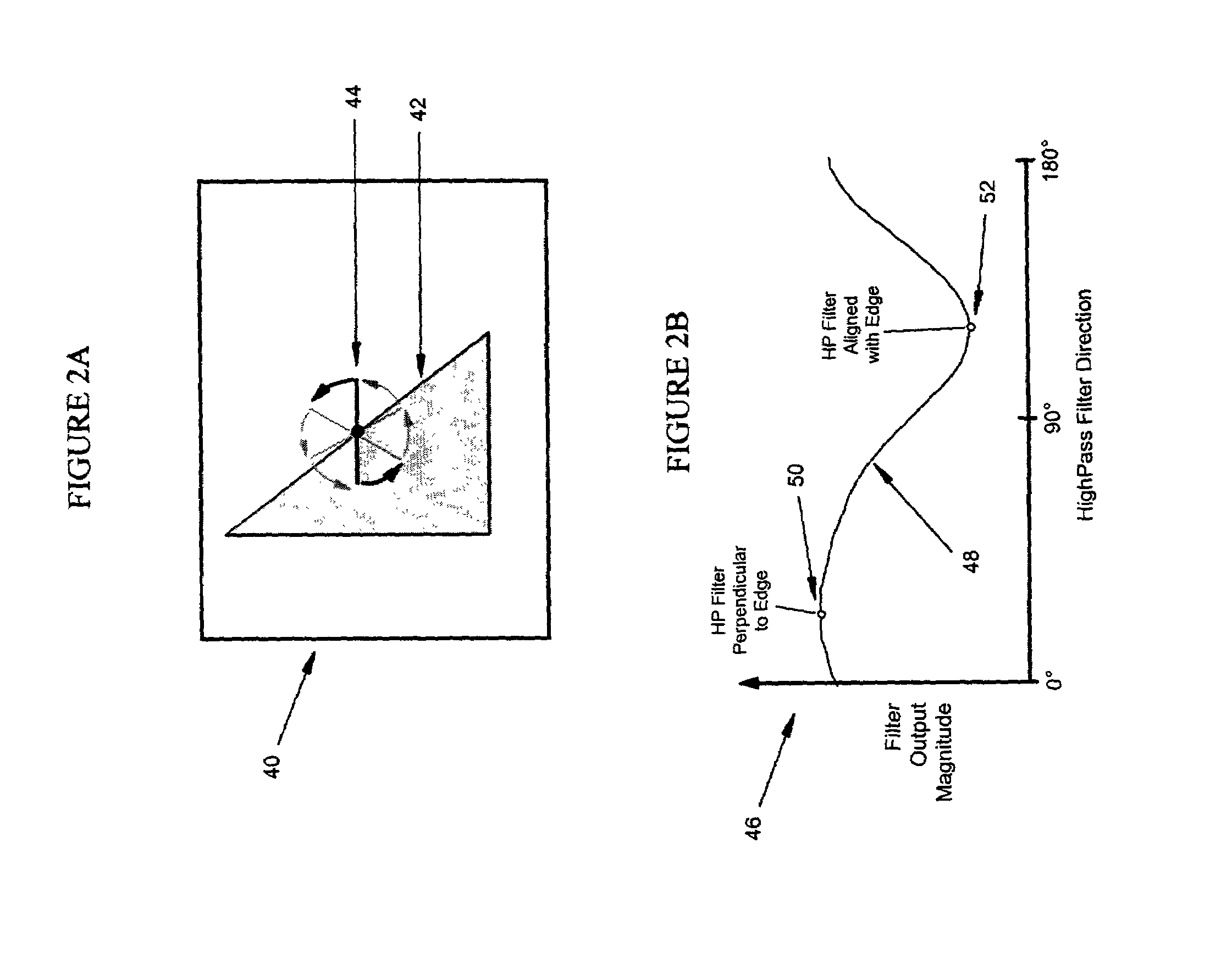

Deinterlacing of video sources via image feature edge detection

Status: Active | Patent: US7023487B1 | Tags: Reduce artifacts; Preserves maximum amount of vertical detail; Image enhancement; Television system details; Interlaced video; Progressive scan

An interlaced to progressive scan video converter which identifies object edges and directions, and calculates new pixel values based on the edge information. Source image data from a single video field is analyzed to detect object edges and the orientation of those edges. A 2-dimensional array of image elements surrounding each pixel location in the field is high-pass filtered along a number of different rotational vectors, and a null or minimum in the set of filtered data indicates a candidate object edge as well as the direction of that edge. A 2-dimensional array of edge candidates surrounding each pixel location is characterized to invalidate false edges by determining the number of similar and dissimilar edge orientations in the array, and then disqualifying locations which have too many dissimilar or too few similar surrounding edge candidates. The surviving edge candidates are then passed through multiple low-pass and smoothing filters to remove edge detection irregularities and spurious detections, yielding a final edge detection value for each source image pixel location. For pixel locations with a valid edge detection, new pixel data for the progressive output image is calculated by interpolating from source image pixels which are located along the detected edge orientation.

Owner:LATTICE SEMICON CORP
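Interpolating along a detected edge direction can be illustrated with edge-based line averaging (ELA), a much simpler, well-known relative of the method above: for each missing pixel, try a few directions through the lines above and below, pick the direction with the smallest difference, and average along it. The patent's multi-direction high-pass filtering, edge validation, and smoothing stages are not reproduced; the three-direction search and the function name are assumptions.

```python
from typing import List


def ela_interpolate(above: List[int], below: List[int]) -> List[int]:
    """Fill the missing line between `above` and `below` (same length)."""
    w = len(above)
    out: List[int] = []
    for x in range(w):
        best_diff, best_val = None, 0
        for d in (0, -1, 1):  # vertical first, so it wins ties
            xa, xb = x + d, x - d
            if 0 <= xa < w and 0 <= xb < w:
                diff = abs(above[xa] - below[xb])
                if best_diff is None or diff < best_diff:
                    best_diff, best_val = diff, (above[xa] + below[xb]) // 2
        out.append(best_val)
    return out
```

On a diagonal edge, plain vertical averaging would blur the transition into intermediate values, while ELA keeps it sharp.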

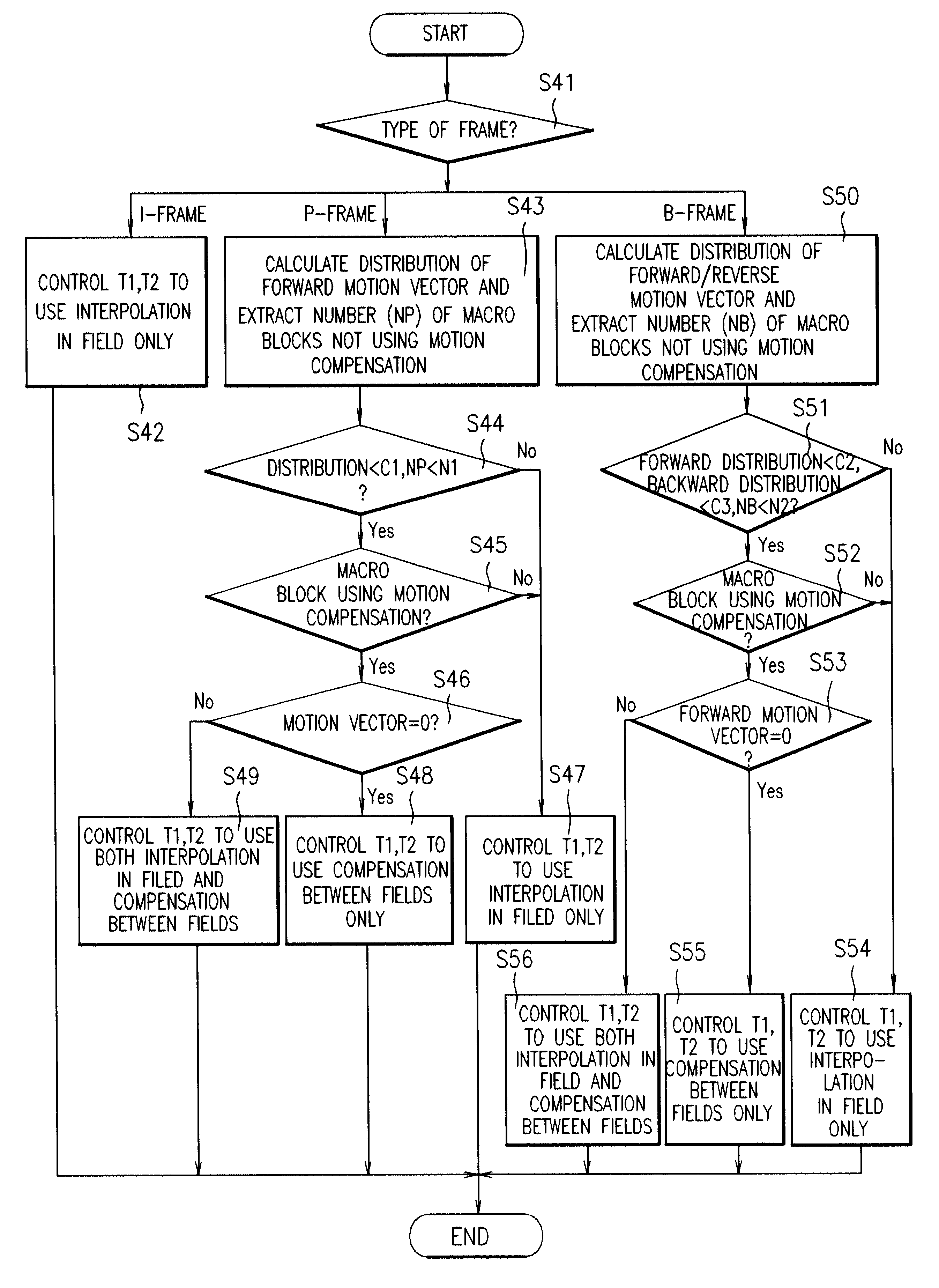

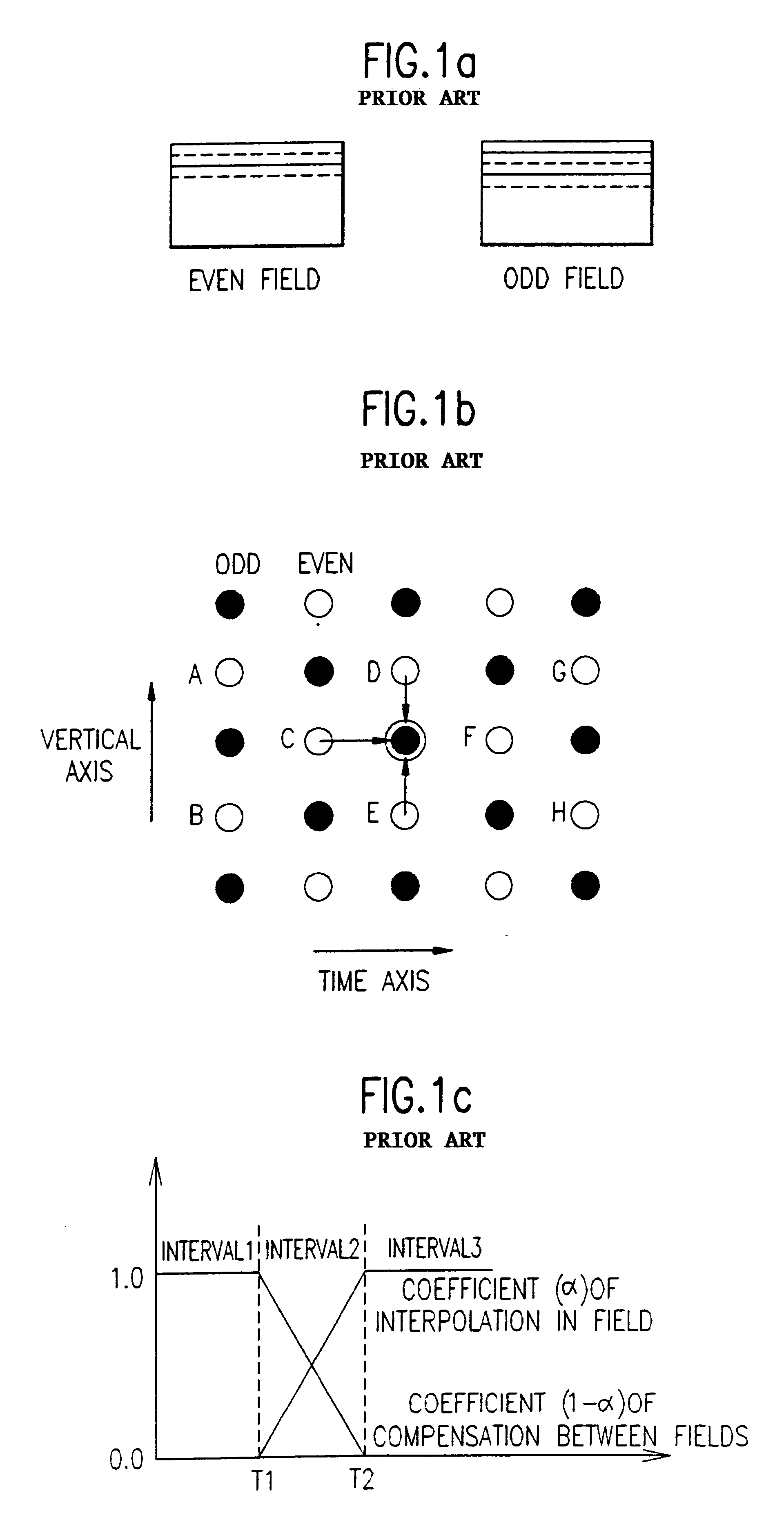

Apparatus for converting picture format in digital TV and method of the same

Status: Inactive | Patent: US6268886B1 | Tags: Television system details; Picture reproducers using cathode ray tubes; Feature extraction; Interlaced video

An apparatus and method for converting a picture format in a digital TV are disclosed. The apparatus includes: a picture characteristic extracting section that analyzes the compressed bit stream of each input picture, scanned in an interlaced pattern, to determine the characteristics of that picture; an interpolation determining section that adaptively selects the interpolation operation using the information extracted from each picture by the picture characteristic extracting section; and an operation performing section that carries out the interpolation selected by the interpolation determining section.

Owner:LG ELECTRONICS INC
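
A hedged Python sketch of the adaptive decision: per-picture information taken from the compressed stream selects between weaving the two fields and interpolating within one field. The inputs are assumptions: `progressive_frame` is loosely modeled on the MPEG-2 flag of that name, while `mean_abs_mv`, `mv_threshold`, and the two interpolation modes are hypothetical choices, not the patent's actual rules.

```python
from enum import Enum
from typing import List

Image = List[List[int]]


class Interp(Enum):
    WEAVE = "weave"                # interleave the two fields unchanged
    LINE_AVERAGE = "line_average"  # rebuild missing lines inside one field


def choose_interpolation(progressive_frame: bool, mean_abs_mv: float,
                         mv_threshold: float = 1.0) -> Interp:
    """Pick an interpolation mode from hypothetical per-picture statistics."""
    if progressive_frame or mean_abs_mv < mv_threshold:
        return Interp.WEAVE  # little or no motion between the fields
    return Interp.LINE_AVERAGE


def weave(top: Image, bottom: Image) -> Image:
    frame: Image = []
    for t, b in zip(top, bottom):
        frame.extend([list(t), list(b)])
    return frame


def line_average(field: Image) -> Image:
    frame: Image = []
    for y, line in enumerate(field):
        nxt = field[y + 1] if y + 1 < len(field) else line  # repeat last line
        frame.append(list(line))
        frame.append([(a + b) // 2 for a, b in zip(line, nxt)])
    return frame


def convert(top: Image, bottom: Image, progressive_frame: bool,
            mean_abs_mv: float) -> Image:
    """Apply whichever interpolation the decision step selected."""
    mode = choose_interpolation(progressive_frame, mean_abs_mv)
    return weave(top, bottom) if mode is Interp.WEAVE else line_average(top)
```

Weaving keeps full vertical resolution for static content, while intra-field averaging avoids combing artifacts when the fields differ because of motion; the threshold that separates the two cases is a tuning assumption.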

Number of reference fields for an interlaced forward-predicted field

Active · US20050053134A1 · Picture reproducers using cathode ray tubes · Code conversion · Interlaced video · Motion vector

Techniques and tools for signaling the number of reference fields for an interlaced forward-predicted field are described. For example, a video decoder processes a first signal indicating whether an interlaced forward-predicted field has one or two reference fields for motion compensation. If the first signal indicates the interlaced forward-predicted field has one reference field, the decoder processes a second signal identifying the one reference field from among the two reference fields. On the other hand, if the first signal indicates the interlaced forward-predicted field has two reference fields, for each of multiple motion vectors of the interlaced forward-predicted field, the decoder processes a third signal for selecting between the two reference fields. A video encoder performs corresponding signaling.

Owner:MICROSOFT TECH LICENSING LLC
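
A minimal Python sketch of the three signals described above, with a matching encoder-side helper. The bit layout, the function names, and the convention that a reference index is a single bit (0 or 1) are illustrative assumptions, not the patent's or any codec's exact syntax.

```python
from typing import Iterator, List


def parse_reference_fields(bits: Iterator[int], num_motion_vectors: int) -> List[int]:
    """Return the reference field index (0 or 1) used by each motion vector."""
    two_refs = next(bits)  # first signal: one or two reference fields?
    if not two_refs:
        ref = next(bits)  # second signal: which single reference field
        return [ref] * num_motion_vectors
    # third signal: a per-motion-vector selector between the two fields
    return [next(bits) for _ in range(num_motion_vectors)]


def signal_reference_fields(refs: List[int]) -> List[int]:
    """Encoder side (hypothetical policy): use the one-reference mode whenever
    every motion vector points to the same field."""
    if refs and len(set(refs)) == 1:
        return [0, refs[0]]
    return [1] + refs
```

Round-tripping shows the saving: a field whose motion vectors all use reference field 1 costs two bits in total, while mixed references cost one bit plus one bit per motion vector.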

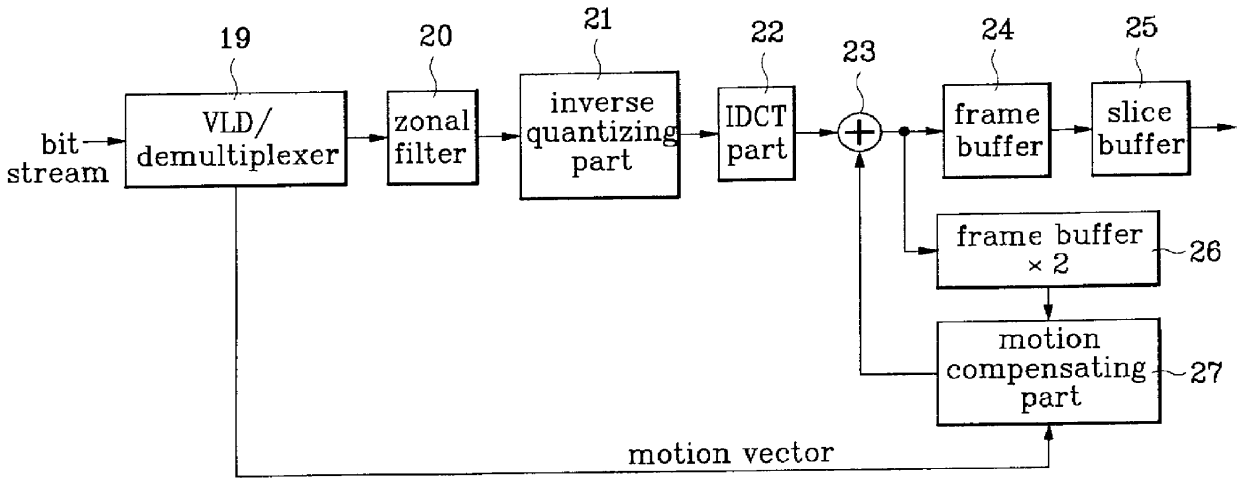

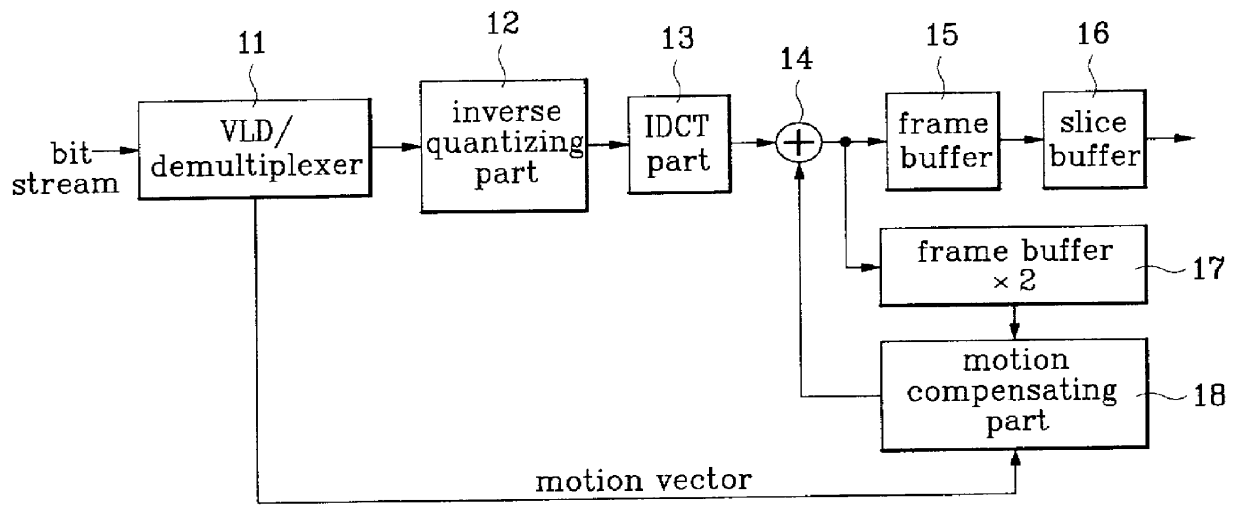

Device and method for decoding HDTV video

Inactive · US6104753A · Improve picture quality · Quality improvement · Pulse modulation television signal transmission · Picture reproducers using cathode ray tubes · Progressive scan · Computer graphics (images)

An HDTV video decoder circuit is disclosed that has a 1/4-size frame memory for progressive-scan or interlace-scan video and can still perform IDCT and motion compensation adapted to the reduced frame memory. A conventional MPEG-2 video decoder that uses a 4x4 IDCT to work with a 1/4-size frame memory loses the field information of an interlace-scanned image when that image is encoded as a frame picture, which significantly degrades picture quality. The disclosed decoder instead preserves the field information, improving picture quality.

Owner:LG ELECTRONICS INC
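
To make the conventional reduced-memory approach concrete, here is a Python sketch of 4x4-IDCT down-conversion, the technique the abstract contrasts against rather than the patented decoder: keep the top-left 4x4 low-frequency coefficients of an 8x8 orthonormal DCT block and inverse-transform them with a 4-point IDCT, giving a half-width, half-height block (1/4 the pixels). The division by 2 restores the original brightness scale. All function names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def dct_matrix(n: int) -> Matrix:
    """Orthonormal DCT-II basis; row k holds basis function k."""
    rows = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def forward_dct8(block: Matrix) -> Matrix:
    c = dct_matrix(8)
    return matmul(matmul(c, block), transpose(c))  # Y = C X C^T


def reduced_idct4(coeffs8: Matrix) -> Matrix:
    """Keep the 4x4 low-frequency corner and apply a 4-point inverse DCT."""
    low = [row[:4] for row in coeffs8[:4]]
    c4 = dct_matrix(4)
    pixels = matmul(matmul(transpose(c4), low), c4)  # X' = C^T Y C
    # Each dimension gains sqrt(8/4) from the basis change, so divide by 2.
    return [[v / 2.0 for v in row] for row in pixels]
```

Because the vertical reduction operates on interleaved frame lines, a frame-coded block of interlaced content has rows from both fields blended together, which is one way to see the loss of field information the abstract attributes to this conventional scheme.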

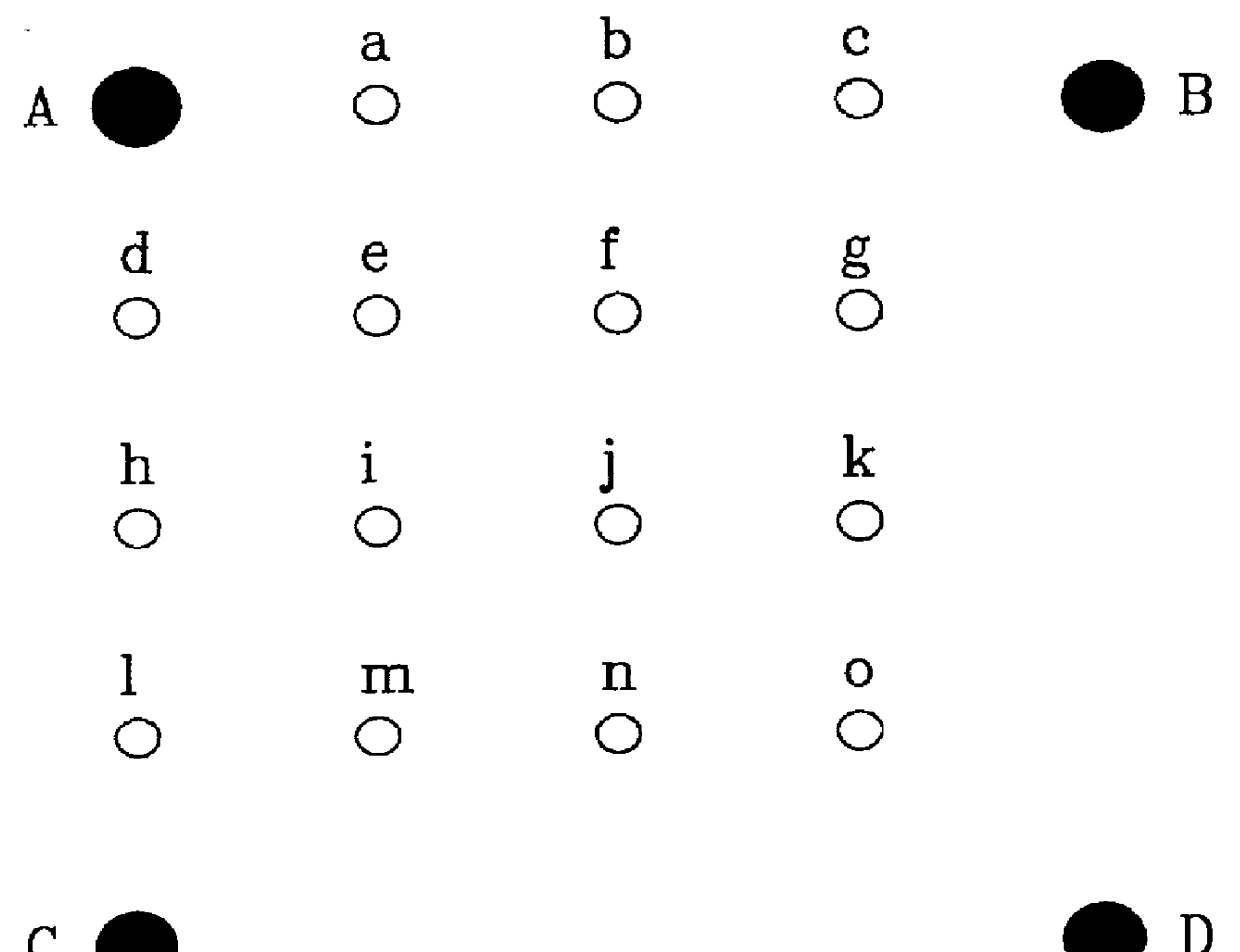

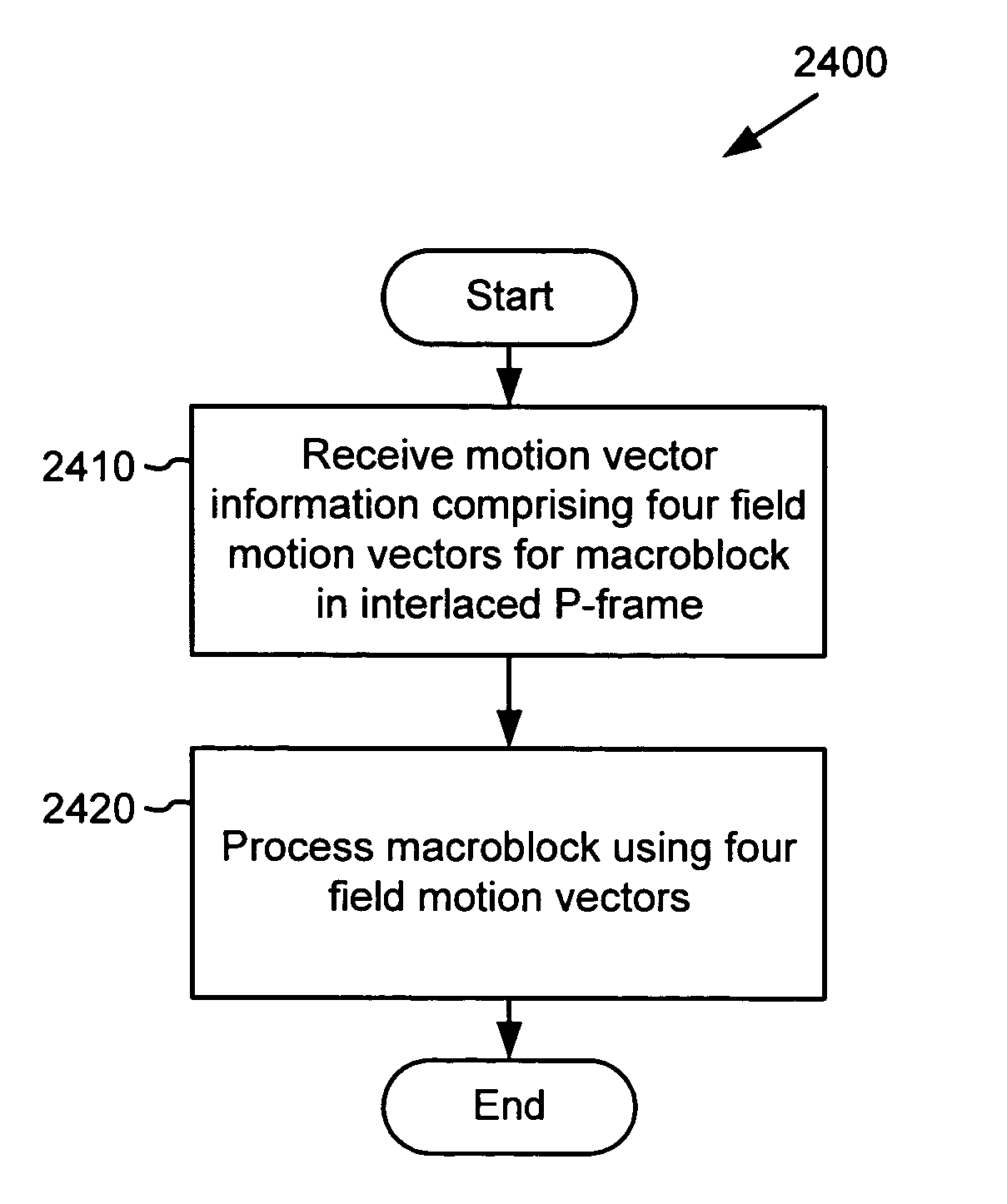

Motion vector coding and decoding in interlaced frame coded pictures

Active · US20050053293A1 · Reduce time redundancy · Reduce entropy · Picture reproducers using cathode ray tubes · Code conversion · Motion vector · Computer science

In one aspect, an encoder/decoder receives information for four field motion vectors for a macroblock in an interlaced frame-coded, forward-predicted picture and processes the macroblock using the four field motion vectors. In another aspect, an encoder/decoder determines the number of valid candidate motion vectors and calculates a field motion vector predictor; it does not perform a median operation on the valid candidates if there are fewer than three of them. In another aspect, an encoder/decoder determines valid candidates, determines field polarities for the valid candidates, and calculates a motion vector predictor based on the field polarities. In another aspect, an encoder/decoder determines one or more valid candidates, determines a field polarity for each individual valid candidate, allocates each valid candidate to one of two sets (e.g., opposite-polarity and same-polarity sets) depending on its field polarity, and calculates a motion vector predictor based on the two sets.

Owner:MICROSOFT TECH LICENSING LLC
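
A Python sketch of two of these aspects, assuming at most three neighboring candidates: the component-wise median is taken only when three valid candidates exist, and polarity-based prediction splits candidates into same- and opposite-polarity sets and predicts from the larger one. The fallbacks (zero when nothing is valid, the first candidate when fewer than three are valid), the tie rule, and all names are assumptions; the sketch also omits any rescaling a real codec might apply to opposite-polarity vectors.

```python
from typing import List, Tuple

MV = Tuple[int, int]
Candidate = Tuple[int, int, bool]  # (x, y, same_polarity)


def median3(a: int, b: int, c: int) -> int:
    return max(min(a, b), min(max(a, b), c))


def predictor_from_valid(valid: List[MV]) -> MV:
    """Median only when three valid candidates exist; fallbacks are assumed."""
    if not valid:
        return (0, 0)    # assumed: zero predictor when nothing is valid
    if len(valid) < 3:
        return valid[0]  # assumed: first valid candidate, no median
    (ax, ay), (bx, by), (cx, cy) = valid[:3]
    return (median3(ax, bx, cx), median3(ay, by, cy))


def predictor_with_polarity(candidates: List[Candidate]) -> MV:
    """Split valid candidates by field polarity and predict from the larger
    set (ties go to the same-polarity set, an assumption)."""
    same = [(x, y) for x, y, s in candidates if s]
    opposite = [(x, y) for x, y, s in candidates if not s]
    dominant = same if len(same) >= len(opposite) else opposite
    return predictor_from_valid(dominant)
```

For example, `predictor_from_valid([(1, 5), (3, 2), (2, 9)])` returns `(2, 5)`, while two valid candidates skip the median entirely.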

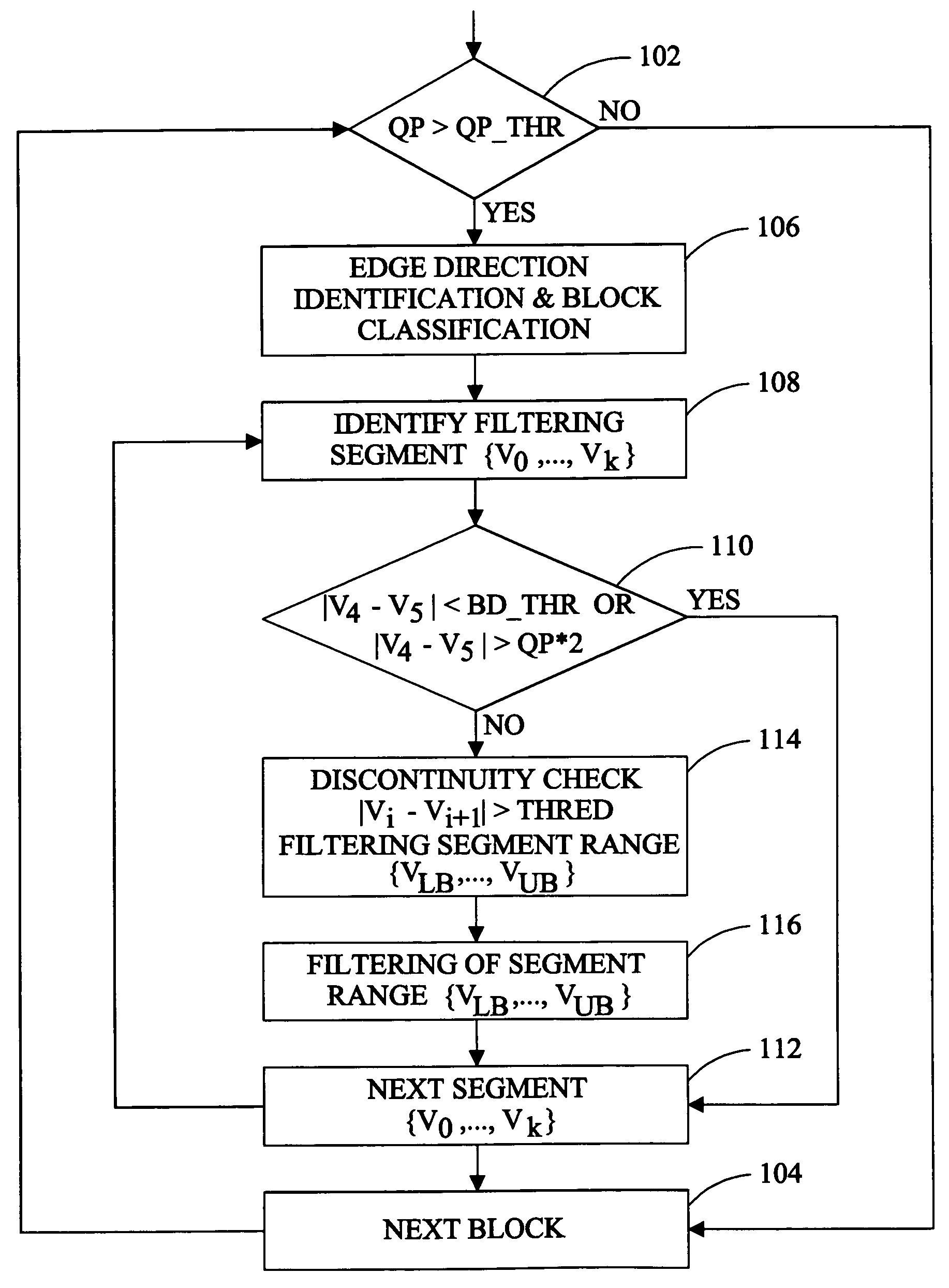

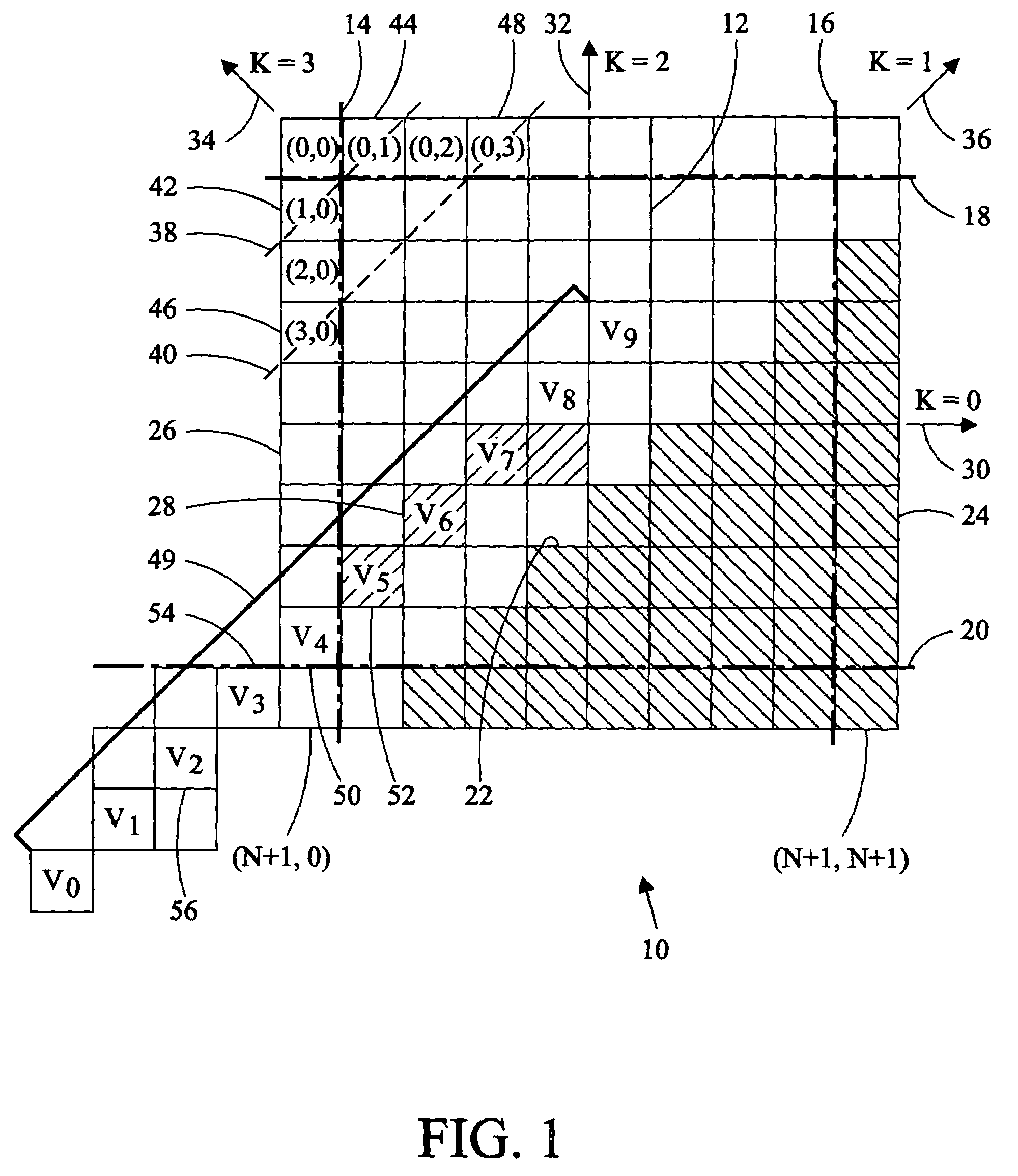

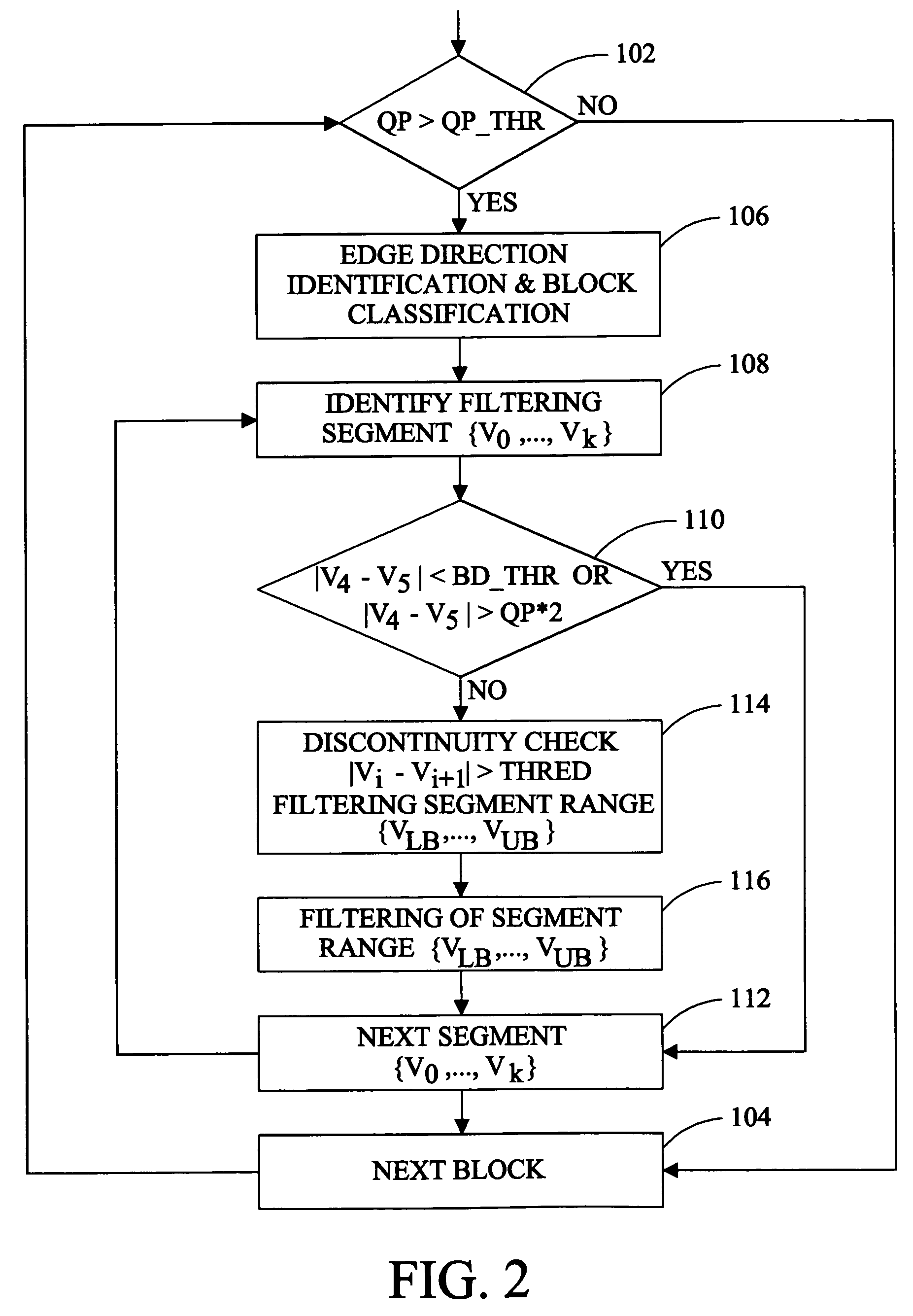

Method of directional filtering for post-processing compressed video

Inactive · US7203234B1 · Minimize impact · Save computing resources · Image enhancement · Television system details · Interlaced video · Ringing artifacts

A method of post-processing decompressed images identifies the direction of an image edge in a pixel block and applies filtering along the block boundary in a direction substantially parallel to the detected edge. Pixels are selected for filtering based on the quantization parameter of the block they belong to, the relative difference between pixels adjacent to the block boundary, and significant changes in pixel values within a filtering segment. Filtering parallel to the detected edge preserves the sharpness of the edge while reducing or eliminating blocking and ringing artifacts. A method of post-processing the fields of interlaced video separately, which eliminates complications arising from the separate compression of the fields, is also disclosed.

Owner:SHARP KK
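
A simplified Python sketch of two ideas from this abstract: gating a block-boundary filter on the quantization parameter and the step across the boundary, and processing the two fields of an interlaced frame separately. It deliberately omits the edge-direction detection, so it filters only horizontally across vertical block boundaries. The `2 * qp` threshold, the averaging taps, the block size, and the even frame height are assumptions.

```python
from typing import List

Image = List[List[int]]


def split_fields(frame: Image):
    return frame[0::2], frame[1::2]  # top field, bottom field


def merge_fields(top: Image, bottom: Image) -> Image:
    frame: Image = []
    for t, b in zip(top, bottom):  # assumes an even frame height
        frame.extend([t, b])
    return frame


def deblock_vertical_boundaries(img: Image, qp: int, block: int = 8) -> Image:
    """Smooth the two pixels straddling each vertical block boundary when the
    step is small relative to qp (likely a blocking artifact, not a real edge)."""
    out = [list(r) for r in img]
    for row_in, row_out in zip(img, out):
        for x in range(block, len(row_in), block):
            p0, q0 = row_in[x - 1], row_in[x]
            if abs(p0 - q0) < 2 * qp:  # assumed threshold
                avg = (p0 + q0 + 1) // 2
                row_out[x - 1] = (p0 + avg + 1) // 2
                row_out[x] = (q0 + avg + 1) // 2
    return out


def postprocess_interlaced(frame: Image, qp: int, block: int = 8) -> Image:
    """Filter each field on its own, then re-interleave the fields."""
    top, bottom = split_fields(frame)
    return merge_fields(deblock_vertical_boundaries(top, qp, block),
                        deblock_vertical_boundaries(bottom, qp, block))
```

A small step such as `10 | 14` across a boundary is softened to `11 | 13`, while a large step such as `0 | 200` is left untouched because it exceeds the threshold and is treated as a genuine edge.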

© 2025 PatSnap. All rights reserved.