183 results about "Video browsing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

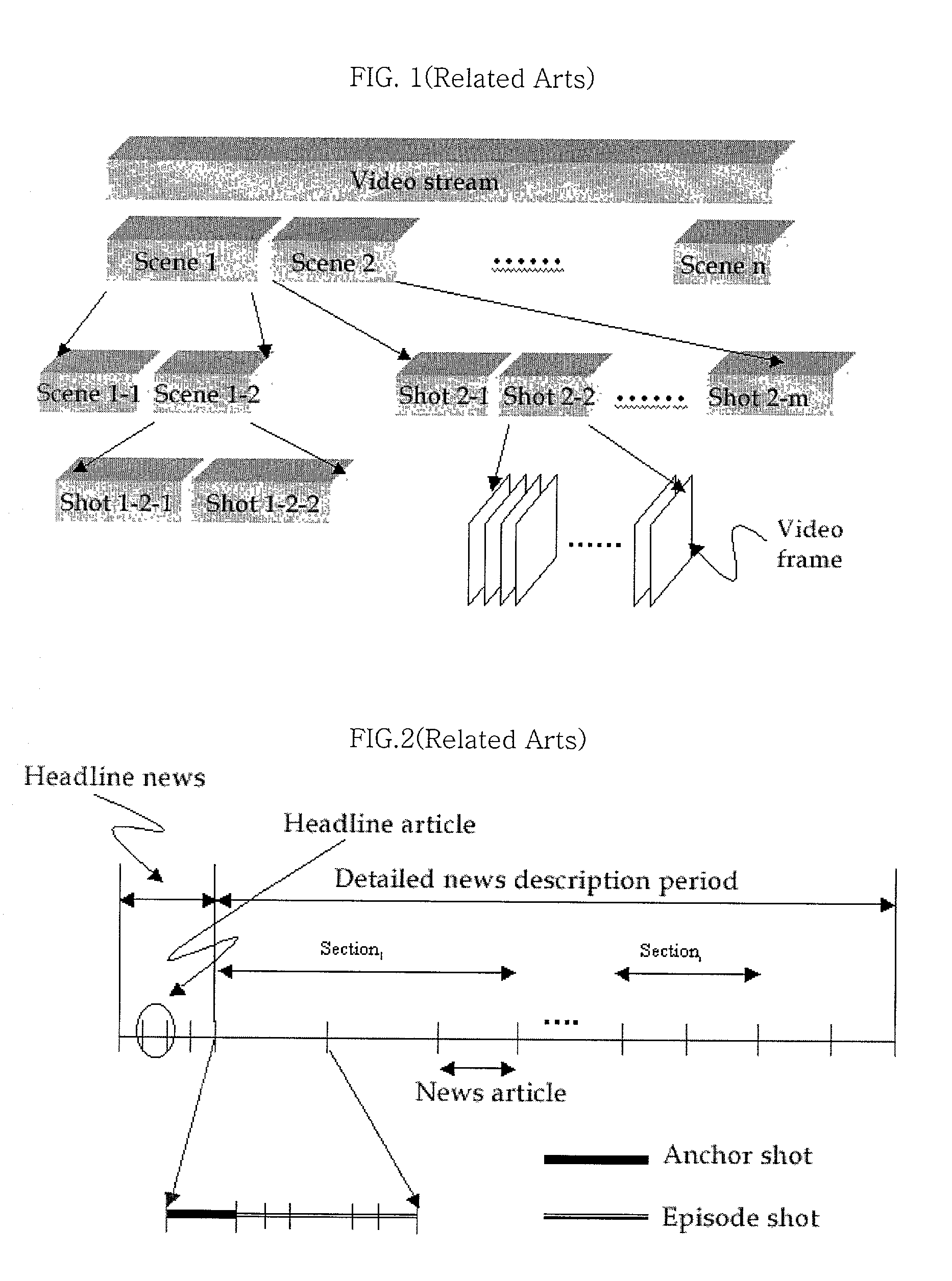

Video browsing, also known as exploratory video search, is the interactive process of skimming through video content to satisfy an information need or to check interactively whether the video content is relevant. While originally proposed to help users inspect a single video through visual thumbnails, modern video browsing tools enable users to quickly find desired information in a video archive through iterative human–computer interaction in an exploratory search approach. Many of these tools presume a skilled user who wants features for interactively inspecting video content as well as automatic content filtering. For that purpose, several video interaction features are usually provided, such as sophisticated in-video navigation or search by content-based query. Video browsing tools often build on lower-level video content analysis, such as shot transition detection, keyframe extraction, and semantic concept detection, and create a structured content overview of the video file or video archive. Furthermore, they usually provide sophisticated navigation features, such as advanced timelines, visual seeker bars, or a list of selected thumbnails, as well as means for content querying. Examples of content queries are shot filtering through visual concepts (e.g., only shots showing cars), through specific characteristics (e.g., color or motion filtering), through user-provided sketches (e.g., a visually drawn sketch), or through content-based similarity search.
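
A rough sketch of concept-based shot filtering (e.g., only shots showing cars), assuming each shot already carries per-concept confidence scores from some semantic concept detector. The `Shot` type, the concept labels, and the 0.5 default threshold are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class Shot:
    """A shot (frame range) with hypothetical concept-detector scores in [0, 1]."""
    start: int
    end: int
    concepts: dict = field(default_factory=dict)


def filter_shots(shots, concept, threshold=0.5):
    """Keep only shots whose confidence for `concept` reaches `threshold`."""
    return [s for s in shots if s.concepts.get(concept, 0.0) >= threshold]


shots = [
    Shot(0, 120, {"car": 0.91, "person": 0.20}),
    Shot(121, 300, {"person": 0.88}),
    Shot(301, 450, {"car": 0.42, "road": 0.75}),
]
print([(s.start, s.end) for s in filter_shots(shots, "car")])  # [(0, 120)]
```

Lowering the threshold trades precision for recall: at 0.4 the third shot would also be returned.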

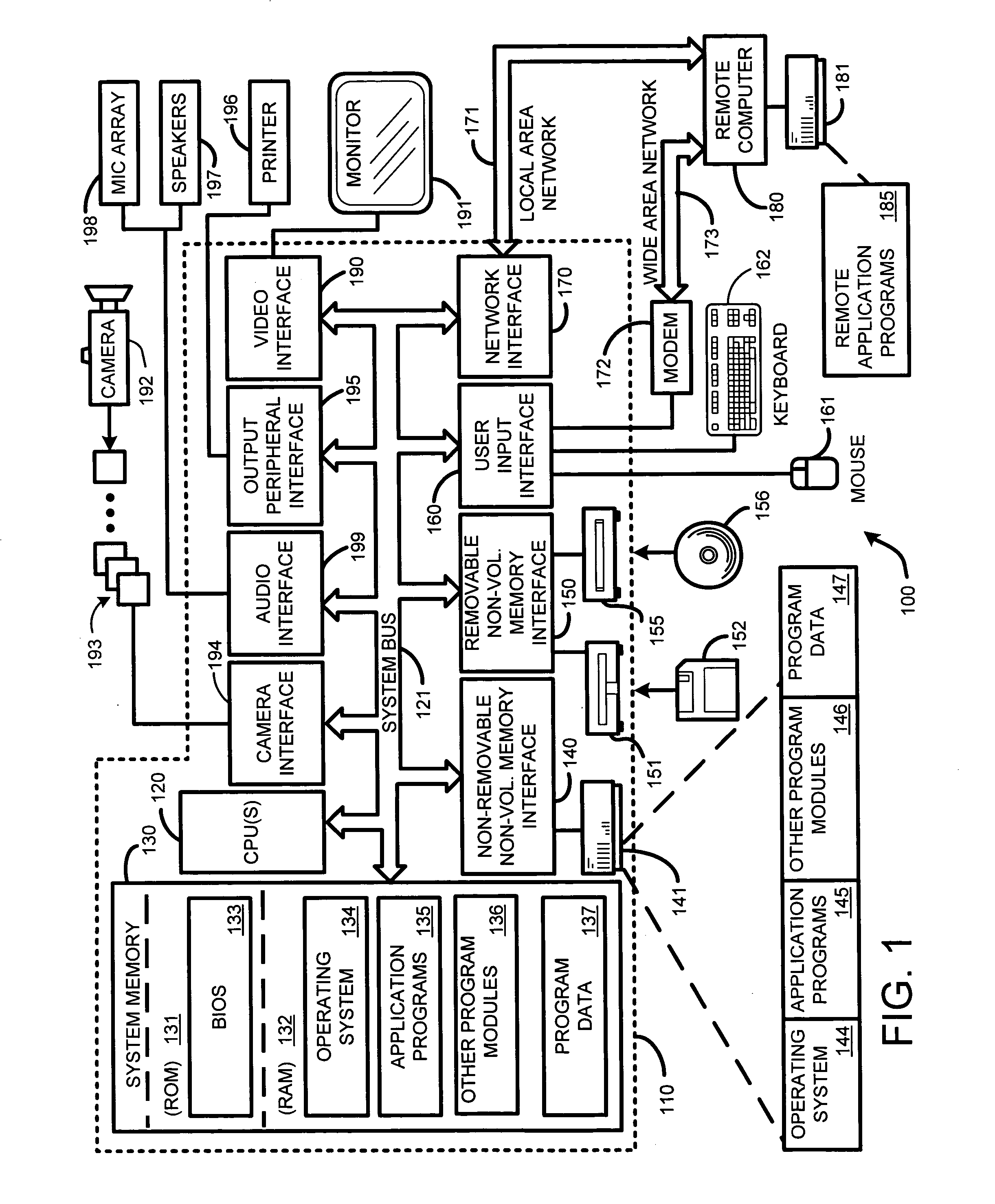

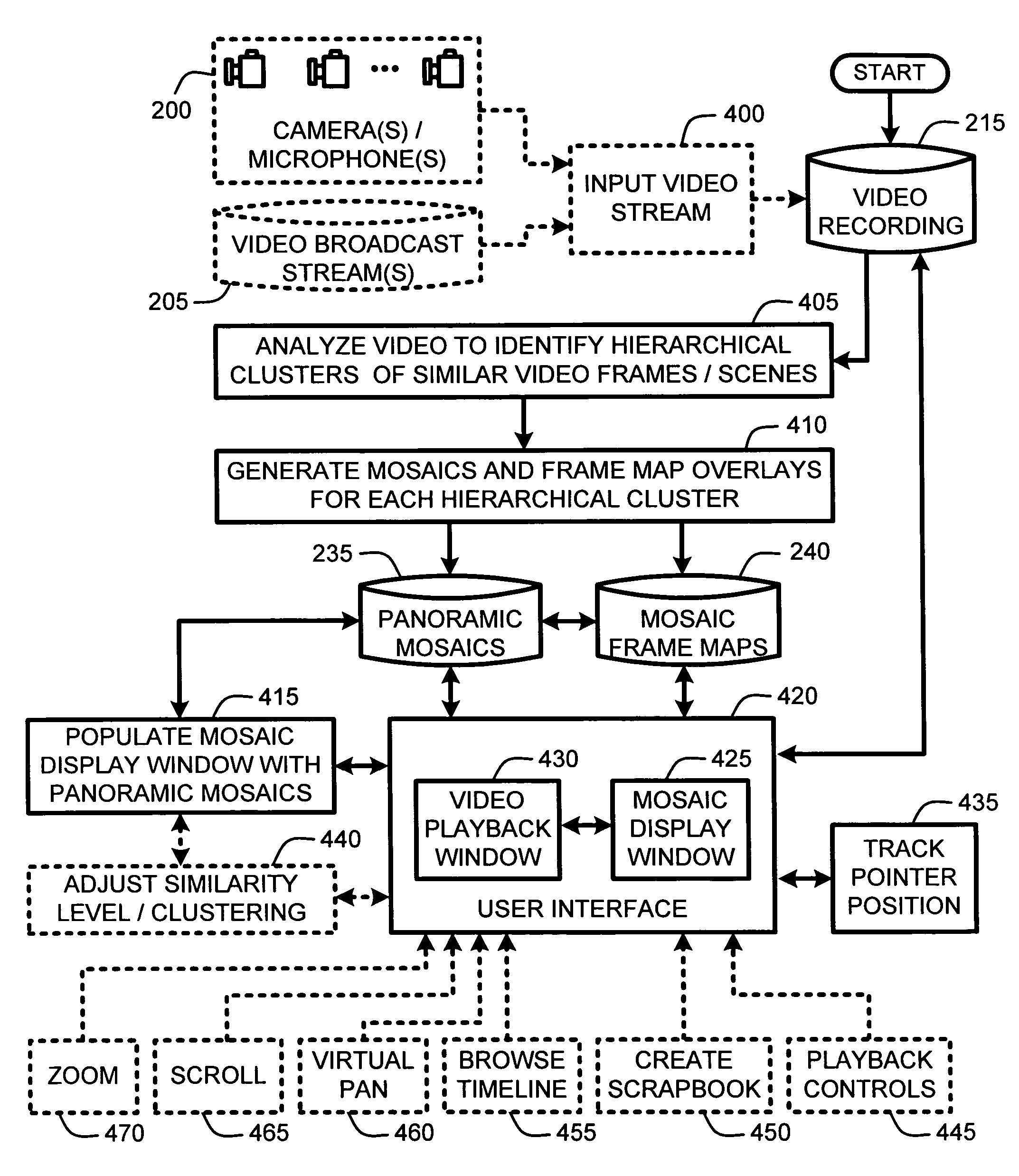

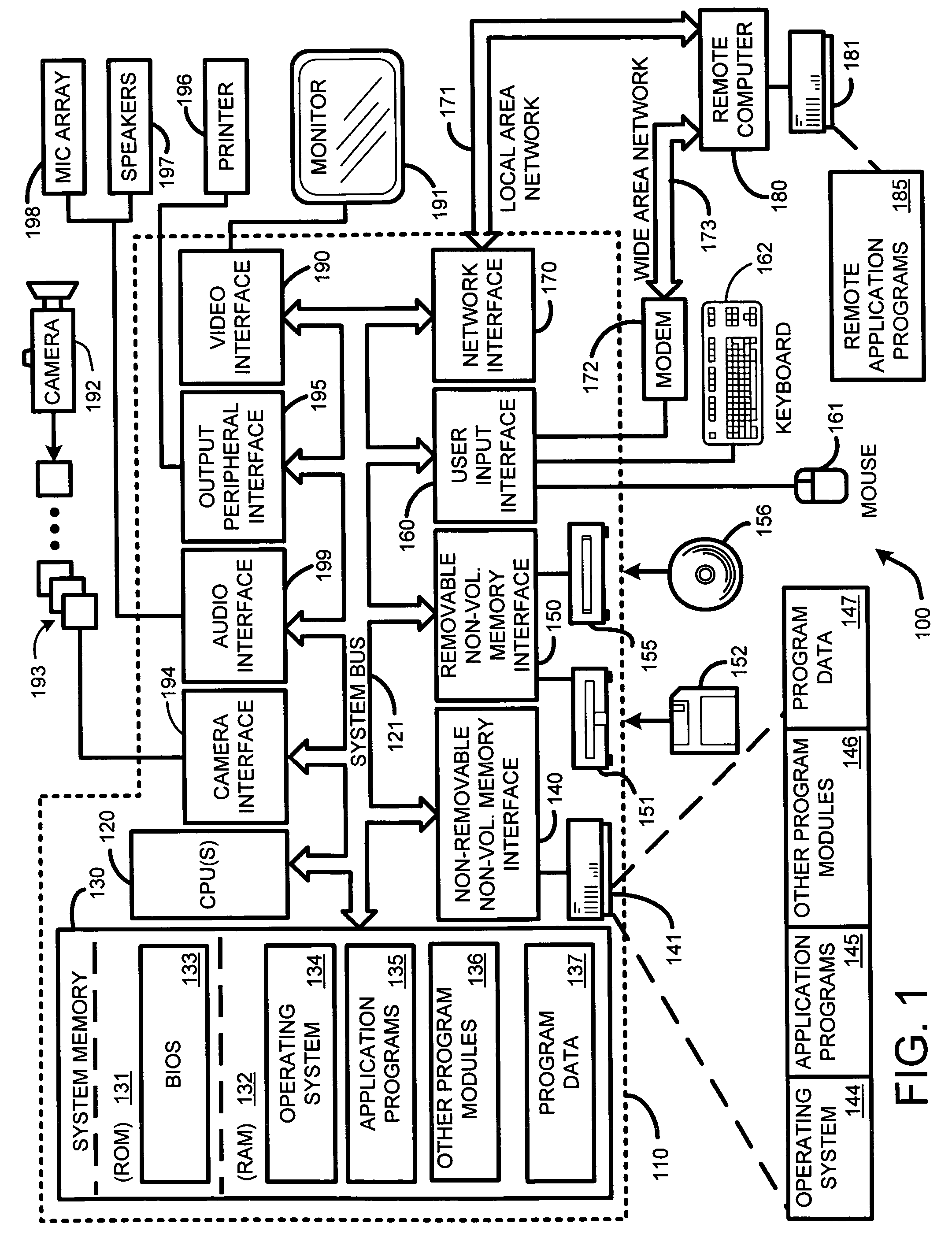

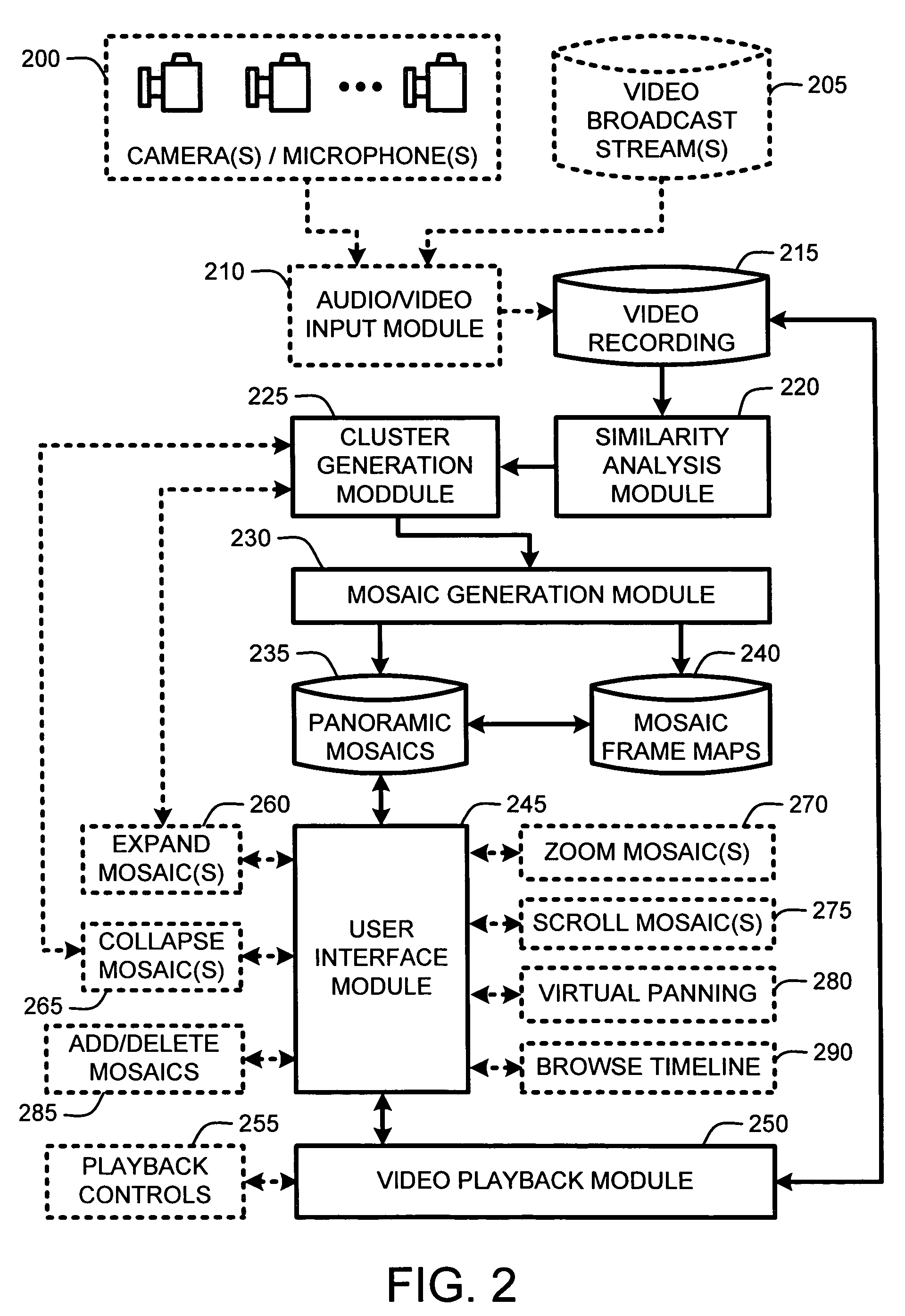

System and method for video browsing using a cluster index

Status: Active | Publication: US20060120624A1 | Tags: Reduce in quantity; Increase the number of; Digital data information retrieval; Character and pattern recognition; Computer graphics (images); Video recording

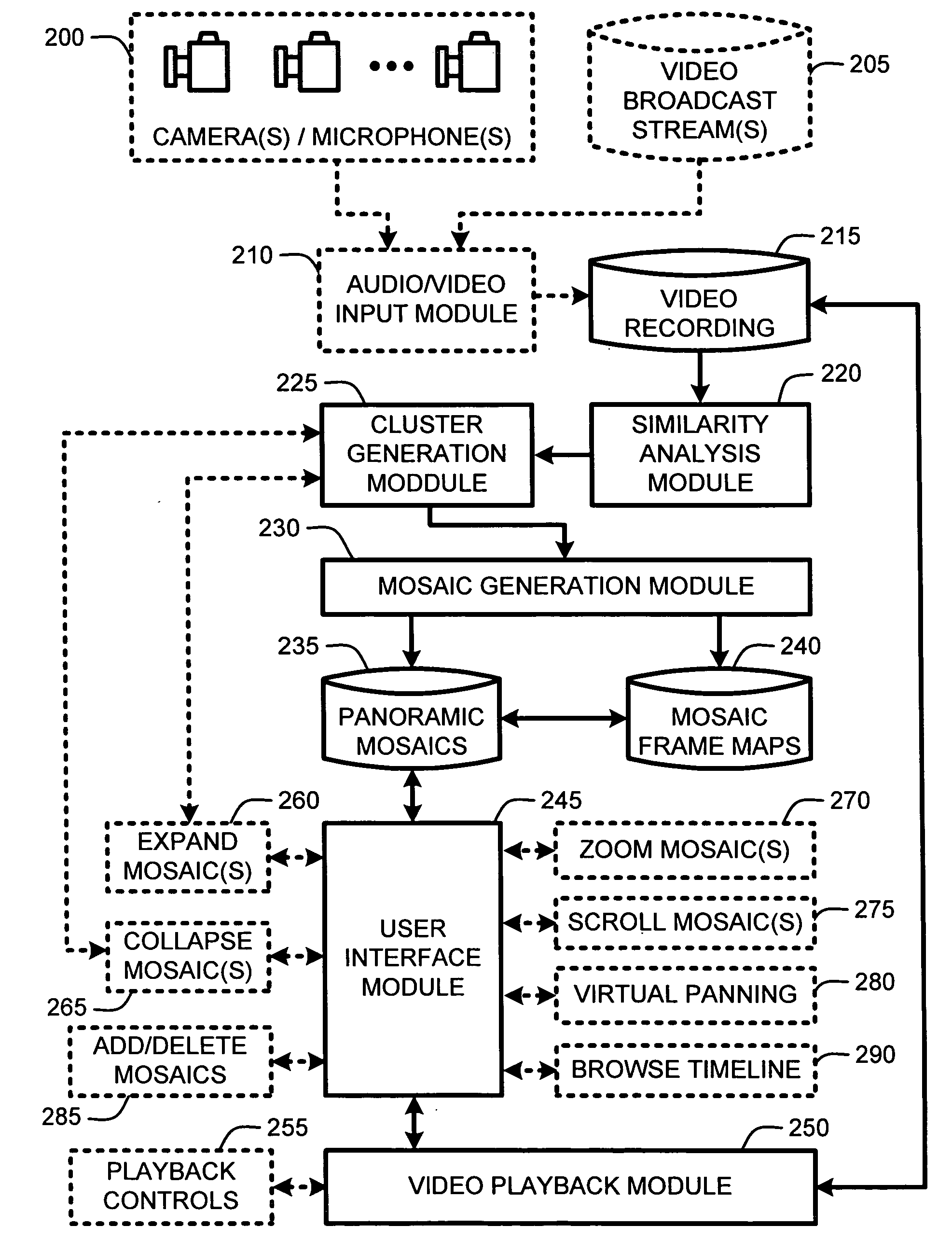

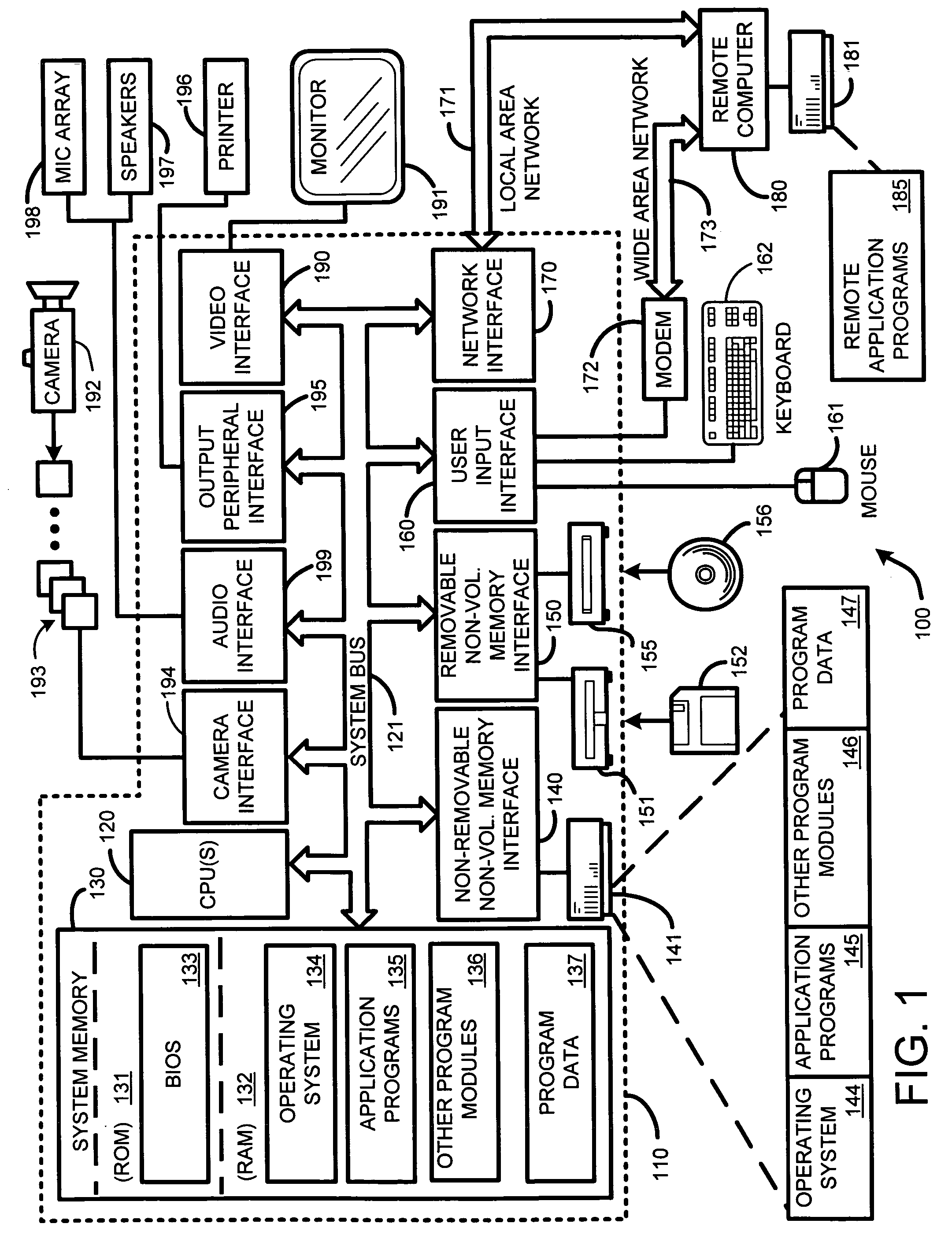

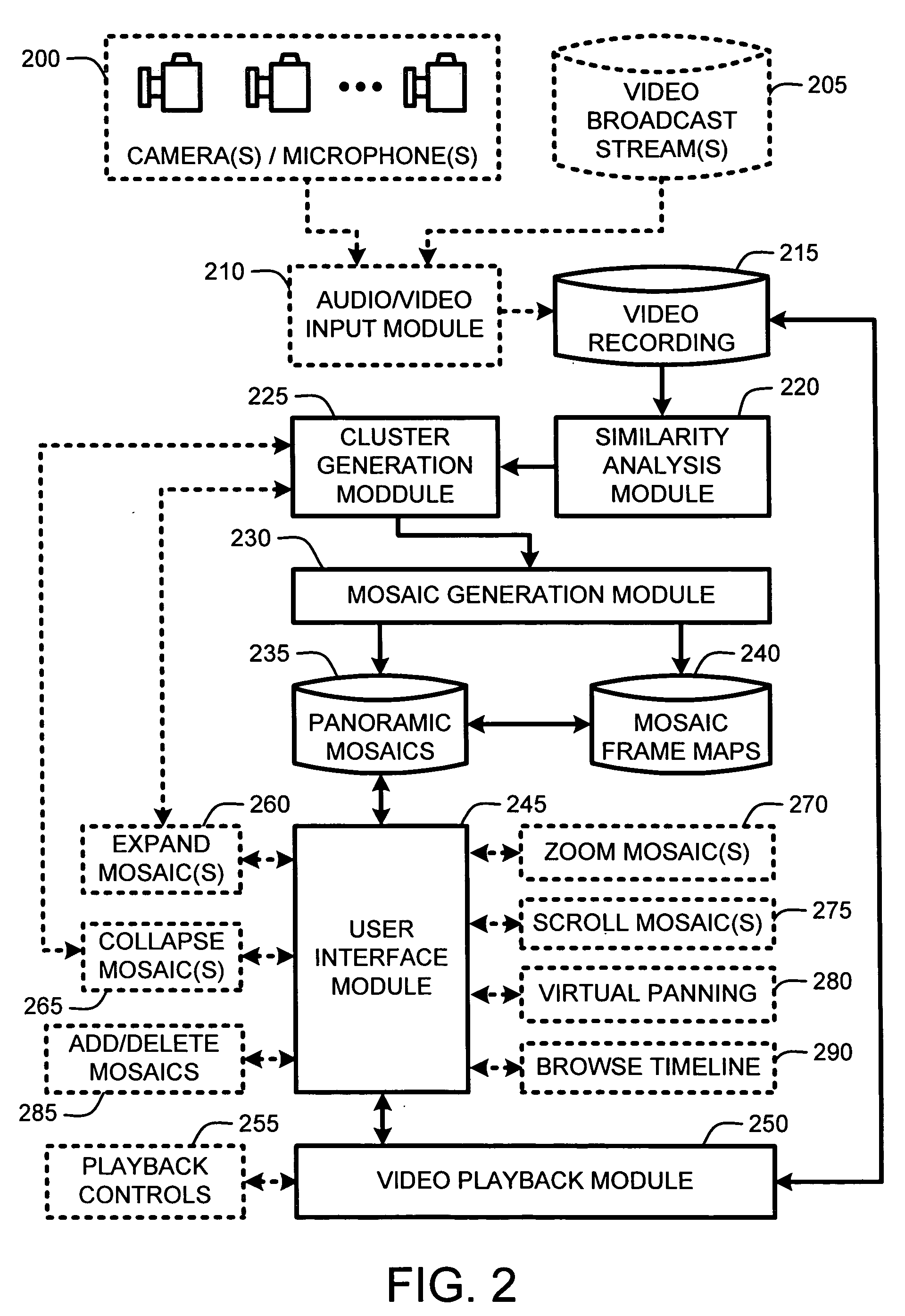

A “Video Browser” provides an intuitive user interface for indexing, and interactive visual browsing, of particular elements within a video recording. In general, the Video Browser operates by first generating a set of one or more mosaic images from the video recording. In one embodiment, these mosaics are further clustered using an adjustable similarity threshold. User selection of a particular video mosaic then initiates a playback of corresponding video frames. However, in contrast to conventional mosaicing schemes which simply play back the set of frames used to construct the mosaic, the Video Browser provides a playback of only those individual frames within which a particular point selected within the image mosaic was observed. Consequently, user selection of a point in one of the image mosaics serves to provide a targeted playback of only those frames of interest, rather than playing back the entire image sequence used to generate the mosaic.

Owner:MICROSOFT TECH LICENSING LLC
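
The point-targeted playback described above can be sketched as follows, under a strong simplifying assumption: each frame's footprint in mosaic coordinates is an axis-aligned rectangle (a real mosaic would register frames with a warp such as a homography). `Frame`, `frames_observing`, and `playback_segments` are hypothetical names, and the mosaic clustering step is not shown.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    index: int
    # Hypothetical footprint in mosaic coordinates, simplified to (x0, y0, x1, y1).
    footprint: tuple


def frames_observing(frames, x, y):
    """Indices of frames whose footprint contains the point (x, y) picked in the mosaic."""
    return [f.index for f in frames
            if f.footprint[0] <= x <= f.footprint[2]
            and f.footprint[1] <= y <= f.footprint[3]]


def playback_segments(indices):
    """Collapse sorted frame indices into contiguous (start, end) runs to play back."""
    segments = []
    for i in indices:
        if segments and i == segments[-1][1] + 1:
            segments[-1] = (segments[-1][0], i)
        else:
            segments.append((i, i))
    return segments


# Usage: a slow pan produces overlapping footprints across the mosaic.
frames = [Frame(0, (0, 0, 100, 80)), Frame(1, (50, 0, 150, 80)), Frame(2, (120, 0, 220, 80))]
print(frames_observing(frames, 60, 40))   # [0, 1]
print(frames_observing(frames, 130, 10))  # [1, 2]
```

Grouping the hits into runs lets a player seek once per run instead of once per frame.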

Rapid production of reduced-size images from compressed video streams

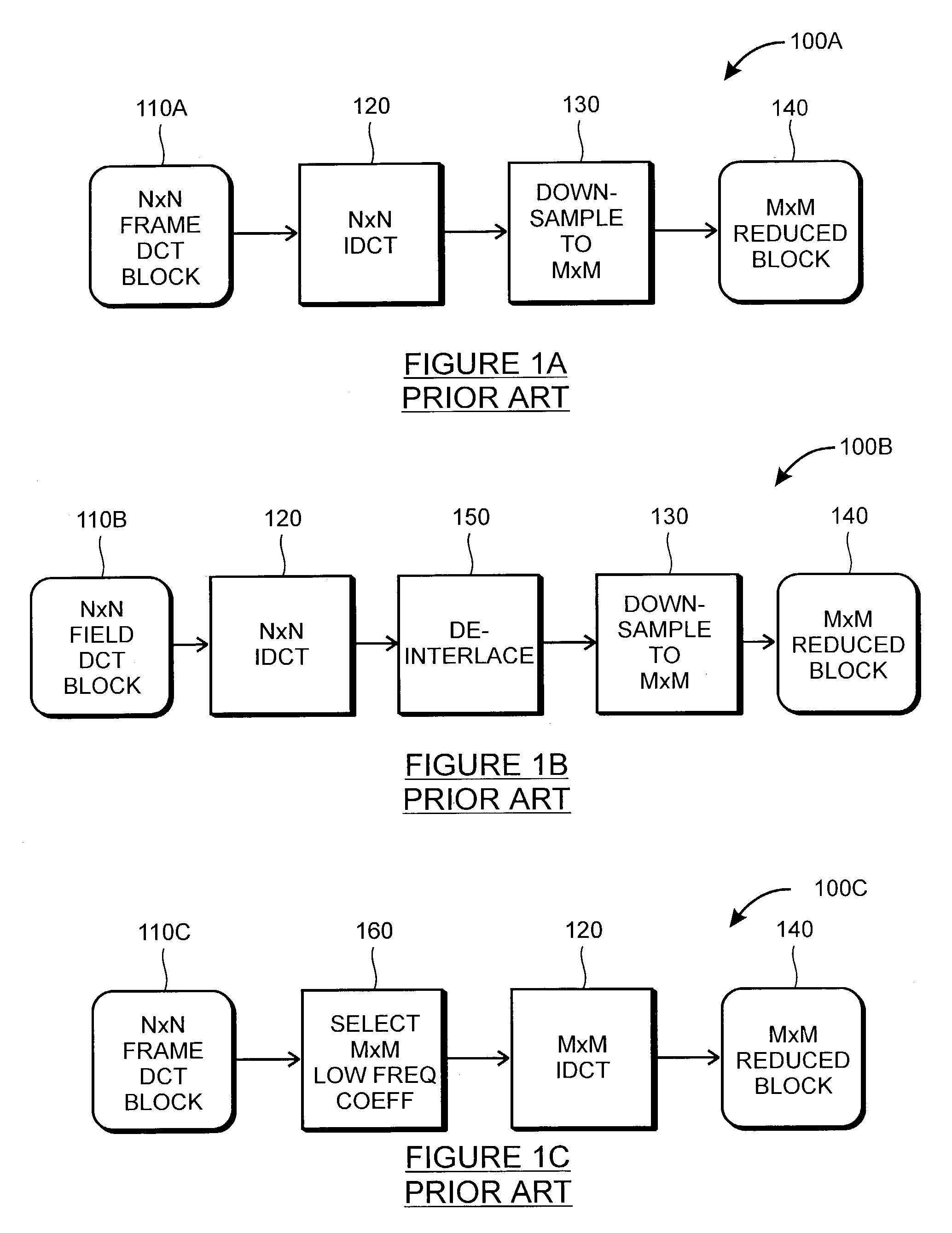

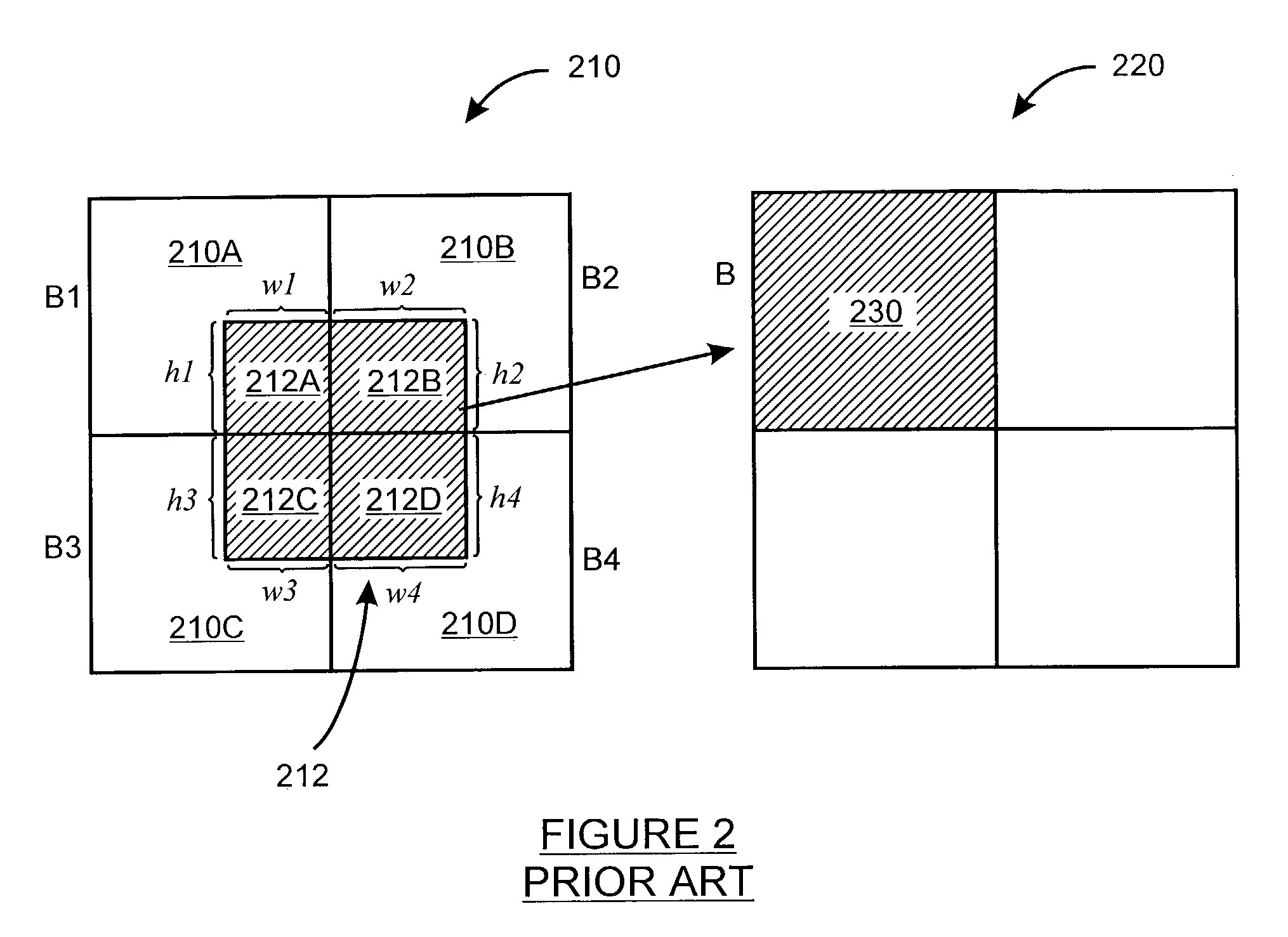

Status: Active | Publication: US7471834B2 | Tags: Simple technology; Rapid production; Disc-shaped record carriers; Flat record carrier combinations; Interlaced video; Reduced size

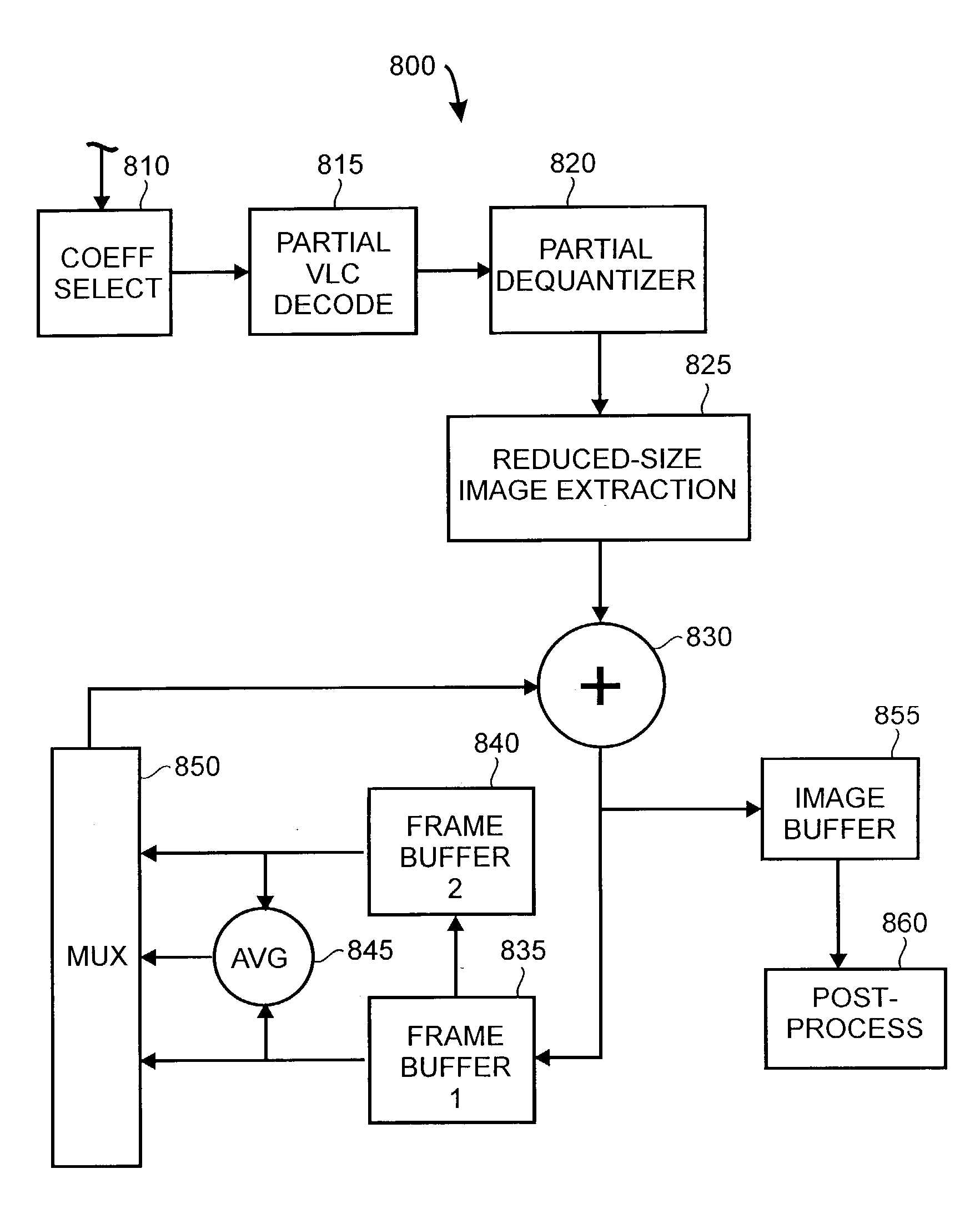

Methods for rapidly generating spatially reduced-size images directly from compressed video streams that support the coding of interlaced frames through transform coding and motion compensation. A sequence of reduced-size images is rapidly generated from compressed video streams by efficiently combining the steps of inverse transform, down-sampling, and construction of each field image. Constructing reduced-size field images also allows efficient motion compensation. The fast generation of reduced-size images is applicable to a variety of low-cost applications such as video browsing, video summarization, fast thumbnail playback, and video indexing.

Owner:SCENERA INC
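
One common way to obtain reduced-size images without a full inverse transform is the DC-image idea: with an orthonormal 8x8 DCT-II, the DC coefficient equals 8 times the block mean, so dividing it by 8 yields one pixel of a 1/8-scale image. The sketch below illustrates only that identity. It assumes frame-coded 8x8 blocks, computes the DC coefficient from pixels purely as a check (a decoder would read it from the bitstream), and does not reproduce the patent's combined inverse-transform, down-sampling, and field-construction procedure or its motion compensation.

```python
import math

N = 8  # 8x8 transform blocks, as in MPEG-style coding


def c(k):
    """Orthonormal DCT-II scale factor."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def dct2_coeff(block, u, v):
    """Coefficient F(u, v) of the orthonormal 2-D DCT-II of an N x N block."""
    total = 0.0
    for x in range(N):
        for y in range(N):
            total += (block[x][y]
                      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                      * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
    return c(u) * c(v) * total


def dc_thumbnail(dc_grid):
    """1/N-scale image from a grid of DC coefficients: F(0, 0) = N * block mean,
    so each thumbnail pixel is simply DC / N (no inverse transform needed)."""
    return [[dc / N for dc in row] for row in dc_grid]


# Usage: a block holding 0..63 has mean 31.5; its DC coefficient recovers it.
block = [[x * N + y for y in range(N)] for x in range(N)]
print(dc_thumbnail([[dct2_coeff(block, 0, 0)]]))  # approximately [[31.5]]
```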

Temporal-context-based video browsing interface for PVR-enabled television systems

Status: Inactive | Publication: US20060109283A1 | Tags: Improve presentation; Improve browsing; Television system details; Digital data information retrieval; Television system; Graphics

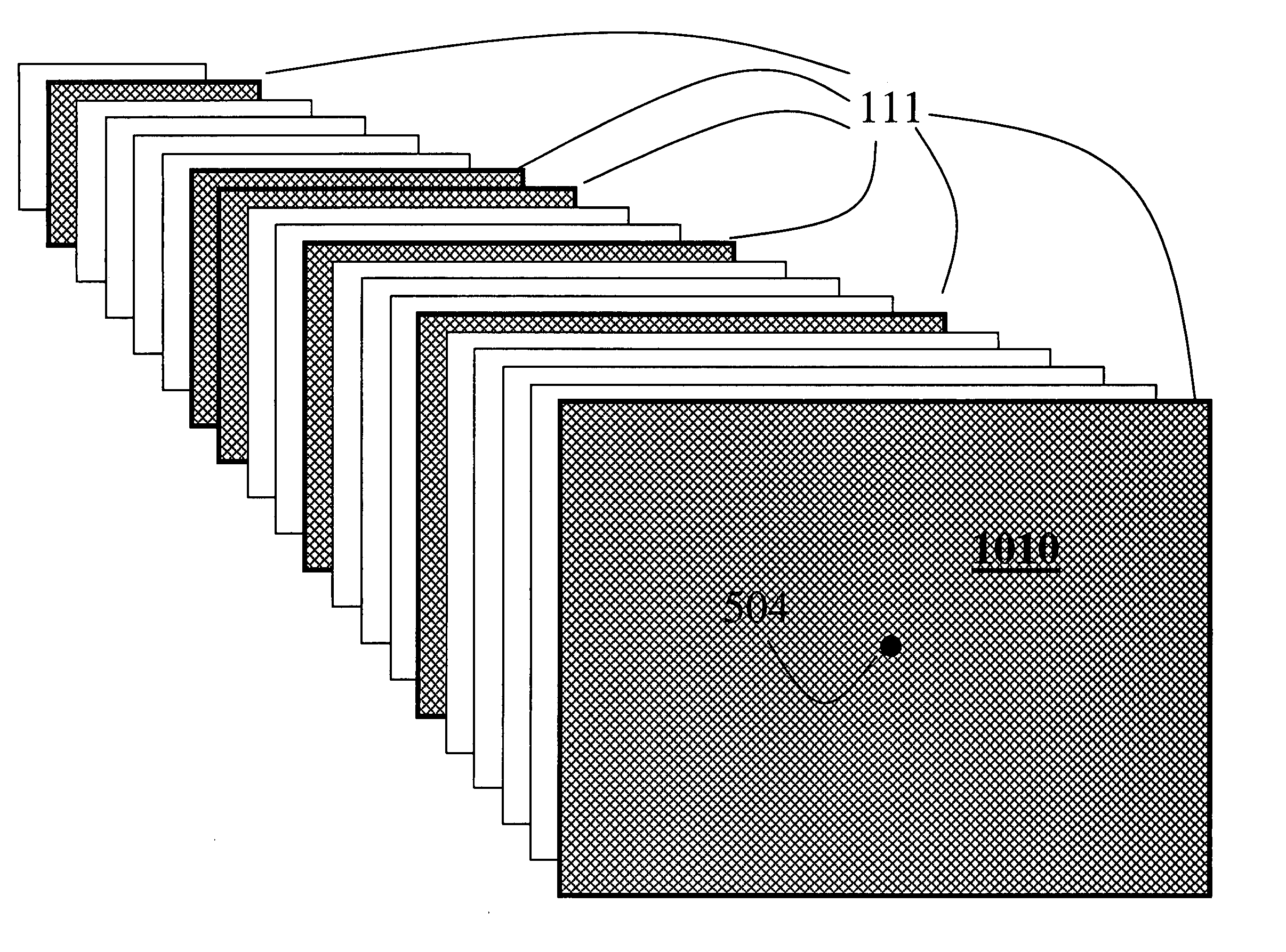

A method and system for presenting a set of graphic images on a television system is presented. A sequence of frames of a video is received. The frames are decoded and scaled to reduced size frames, which are sampled temporally and periodically to provide selected frames. The selected frames are stored in a circular buffer and converted to graphic images. The graphic images are periodically composited and rendered as an output graphic image using a graphic interface.

Owner:MITSUBISHI ELECTRIC RES LAB INC
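
The temporal sampling and circular-buffer steps can be sketched with a bounded `collections.deque`, which discards its oldest entry when full. Decoding, scaling, conversion to graphic images, and compositing are omitted, and `period` and `capacity` are hypothetical parameters.

```python
from collections import deque


def sample_into_ring(frames, period, capacity):
    """Keep every `period`-th decoded frame in a circular buffer of size `capacity`;
    once full, each new sample evicts the oldest one."""
    ring = deque(maxlen=capacity)
    for i, frame in enumerate(frames):
        if i % period == 0:
            ring.append(frame)
    return list(ring)


# Usage: frame numbers stand in for decoded, reduced-size frames.
print(sample_into_ring(range(100), period=10, capacity=4))  # [60, 70, 80, 90]
```

The buffer therefore always holds the most recent `capacity` samples, which is the temporal context a browsing overlay would composite.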

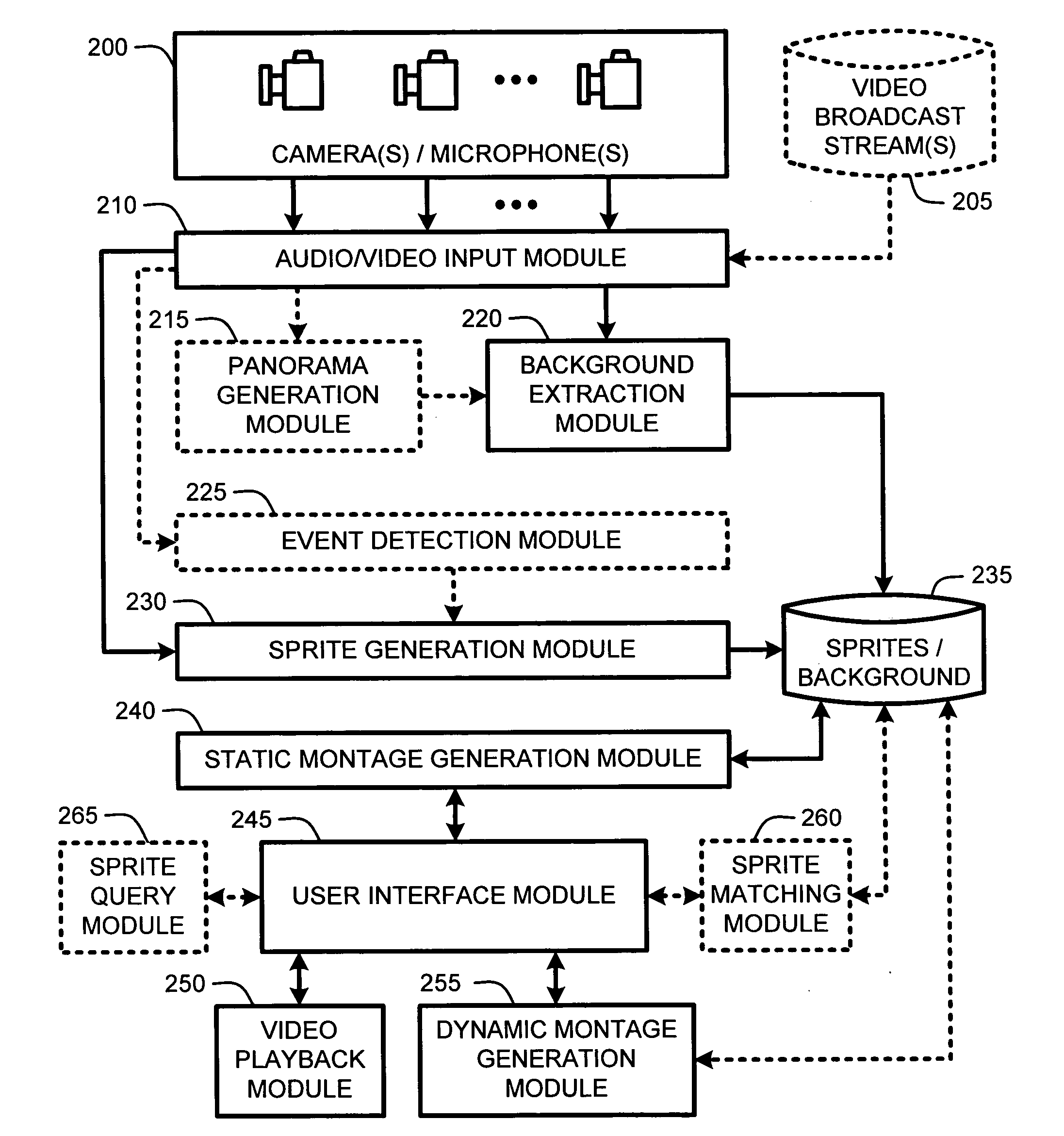

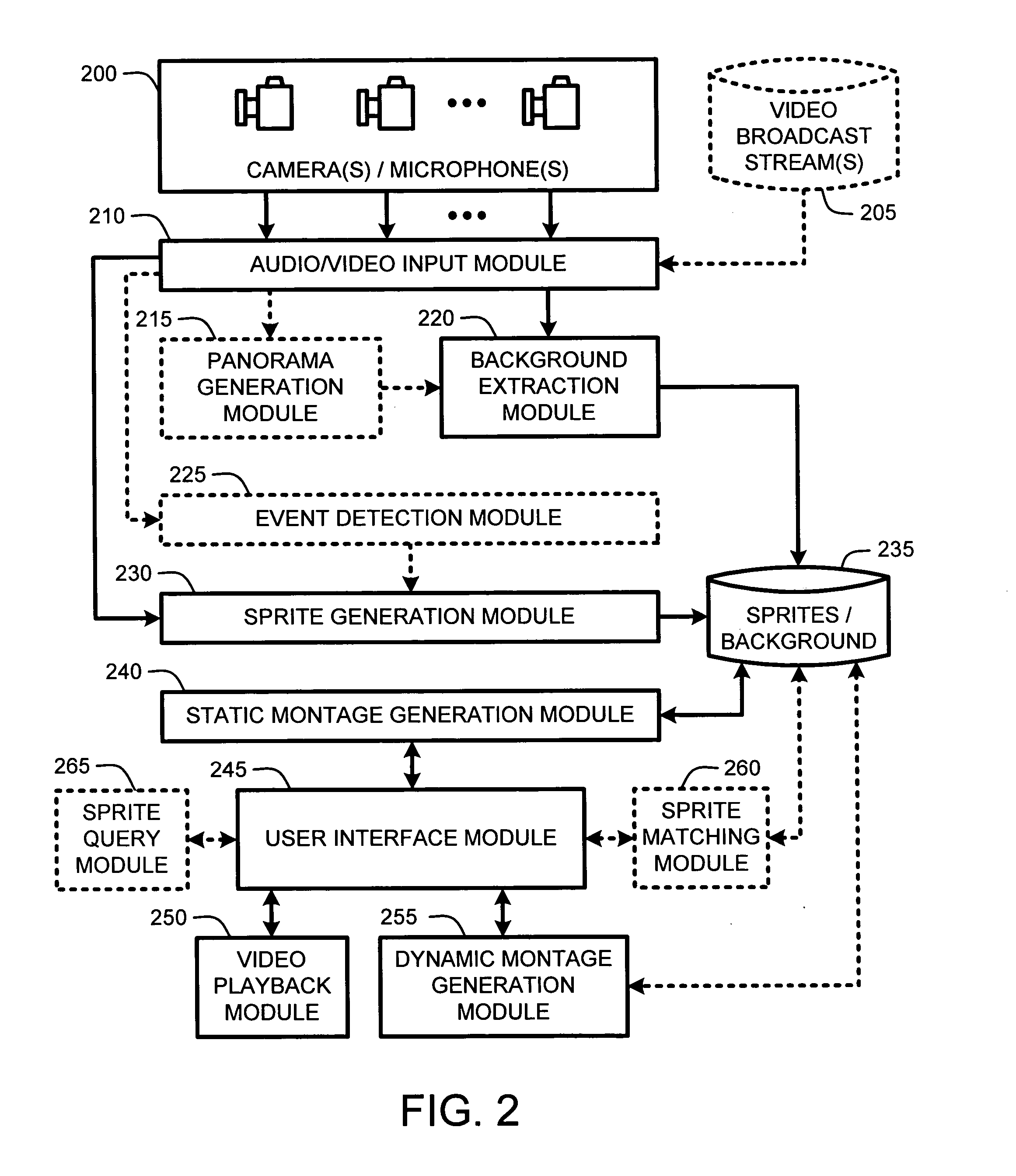

Interactive montages of sprites for indexing and summarizing video

Status: Active | Publication: US20060117356A1 | Tags: Quick review; Avoid normal display; Digital data information retrieval; Electronic editing digitised analogue information signals; Event type; Interactive video

A “Video Browser” provides interactive browsing of unique events occurring within an overall video recording. In particular, the Video Browser processes the video to generate a set of video sprites representing unique events occurring within the overall period of the video. These unique events include, for example, motion events, security events, or other predefined event types, occurring within all or part of the total period covered by the video. Once the video has been processed to identify the sprites, the sprites are then arranged over a background image extracted from the video to create an interactive static video montage. The interactive video montage illustrates all events occurring within the video in a single static frame. User selection of sprites within the montage causes either playback of a portion of the video in which the selected sprites were identified, or concurrent playback of the selected sprites within a dynamic video montage.

Owner:MICROSOFT TECH LICENSING LLC

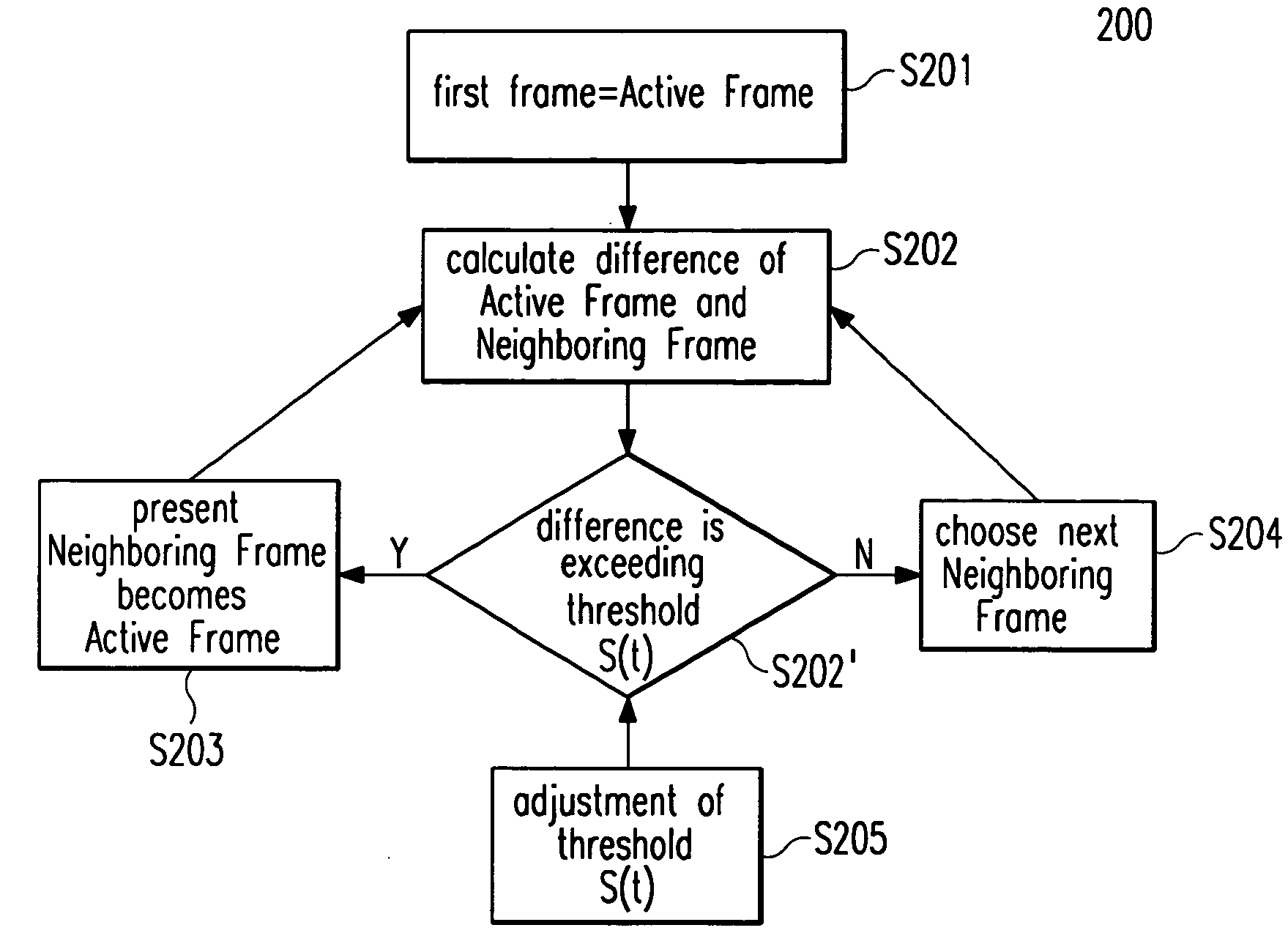

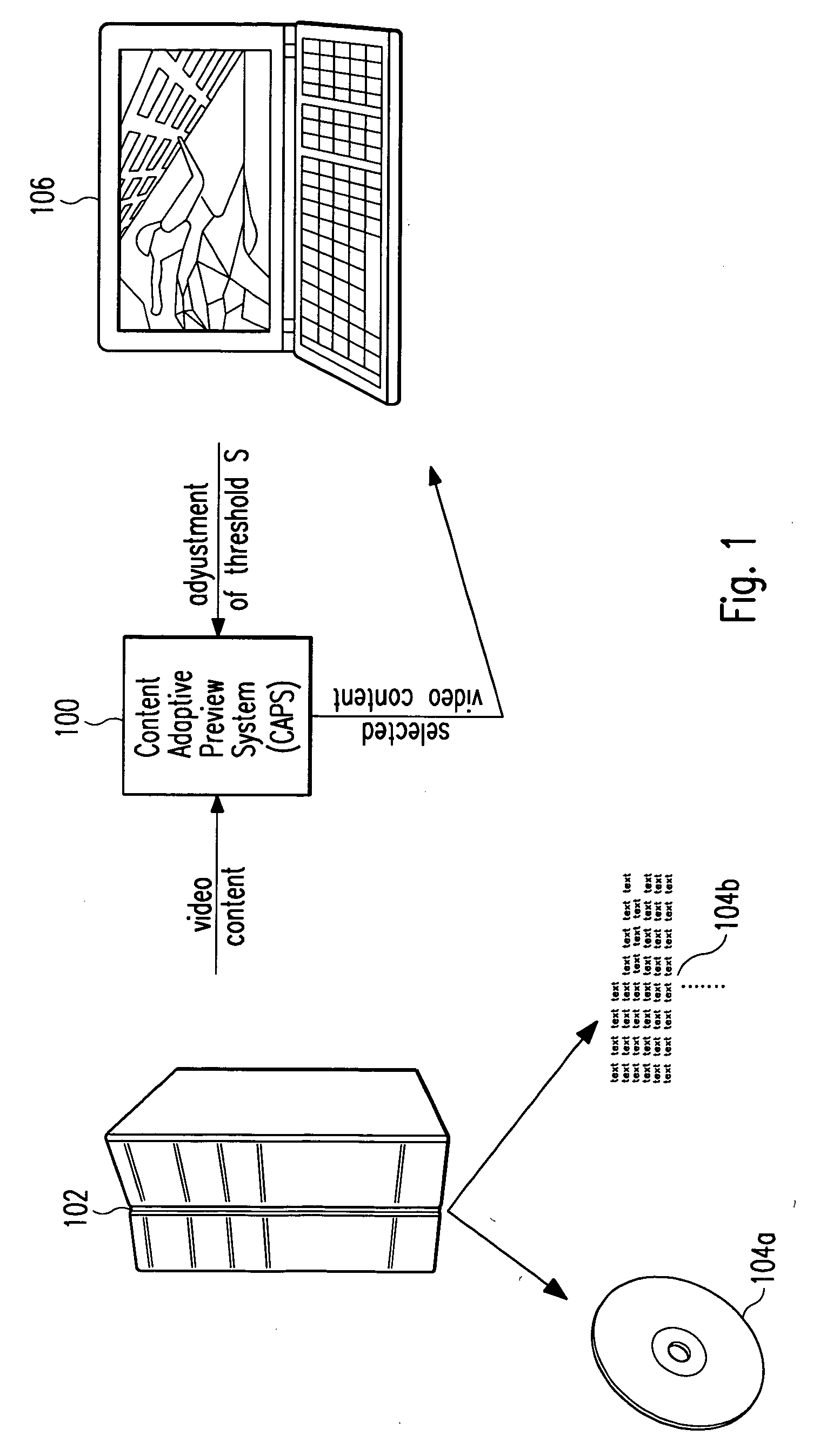

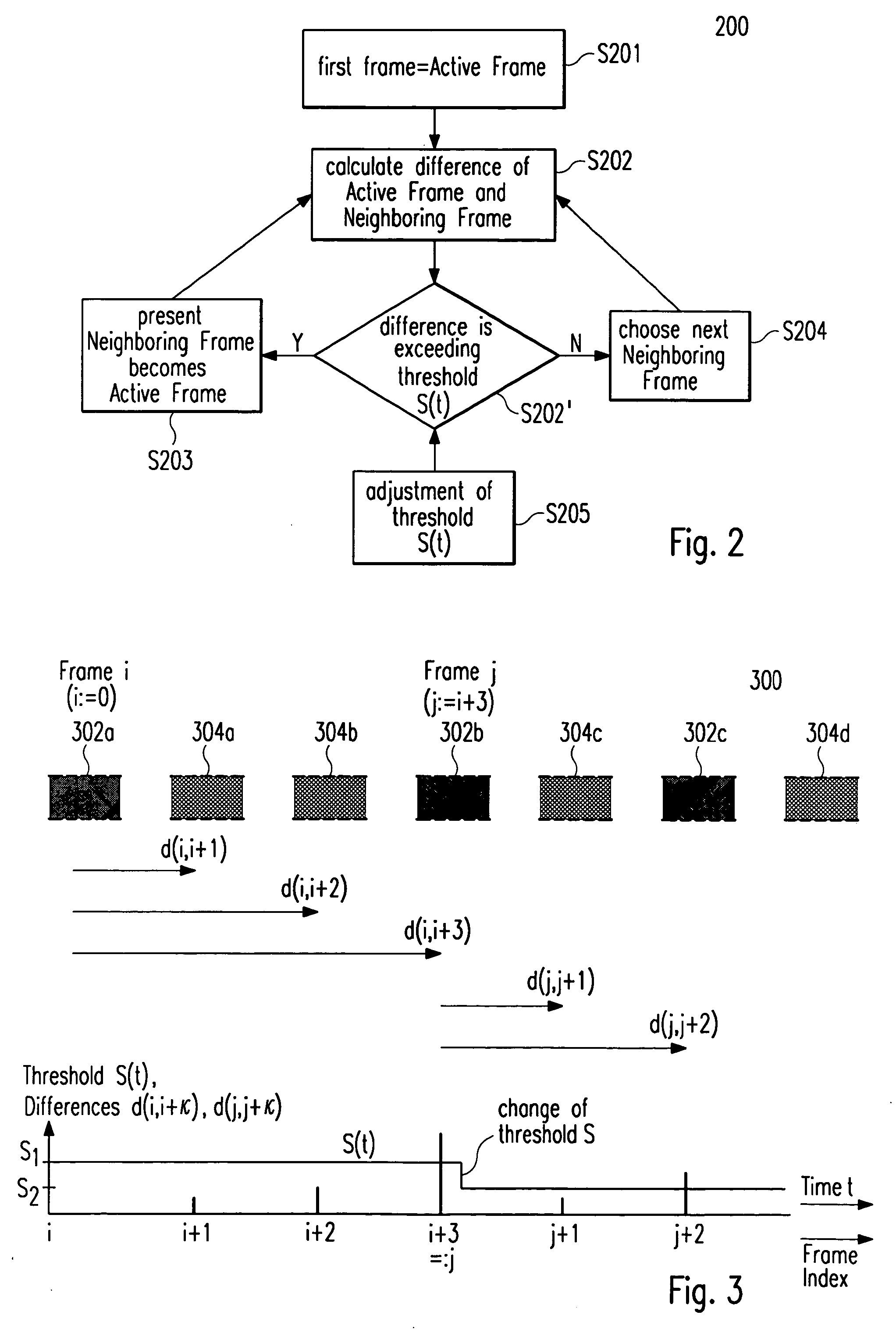

Redundancy elimination in a content-adaptive video preview system

Status: Inactive | Publication: US20050200762A1 | Tags: Reducing visual redundancy; Efficiently content; Television system details; Drawing from basic elements; Adaptive video; Self adaptive

A content-adaptive video preview system (100) lets a user move through a video faster than existing video skimming techniques. The user can interactively adapt (S1) the speed of browsing and/or the abstraction level of presentation. According to one embodiment of the invention, this adaptation procedure (S1) is realized by the following steps: First, differences between precalculated spatial color histograms of each pair of chronologically consecutive video frames in the video file are calculated (S1a). Then, these differences and/or a cumulative difference value representing the sum of these differences are compared (S1b) to a predefined redundancy threshold (S(t)). If the differences in the color histograms of particular video frames (302a-c) and/or the cumulative difference value exceed this redundancy threshold (S(t)), these video frames are selected (S1c) for the preview. Intermediate video frames (304a-d) are removed and/or inserted (S1d) between each pair of selected chronologically consecutive video frames depending on the selected abstraction level of presentation. The redundancy threshold (S(t)) can be adapted (S1b′) to change the speed of browsing and/or the abstraction level of presentation.

Owner:SONY DEUT GMBH
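
A minimal sketch of the threshold-based frame selection (S1a to S1c), assuming normalized color histograms compared with an L1 distance and a cumulative difference that resets after each selected frame; the abstract fixes neither the distance measure nor the reset policy, and intermediate-frame insertion (S1d) is not shown. Raising `threshold` selects fewer frames, which corresponds to faster browsing.

```python
def hist_diff(h1, h2):
    """L1 distance between two normalized color histograms (assumed metric)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))


def select_preview_frames(histograms, threshold):
    """Return indices of frames kept for the preview.

    Differences between consecutive histograms are accumulated; once the
    cumulative difference exceeds `threshold`, the current frame is selected
    and the accumulator is reset (assumed policy).
    """
    if not histograms:
        return []
    selected = [0]  # keep the first frame as the starting point
    cumulative = 0.0
    for i in range(1, len(histograms)):
        cumulative += hist_diff(histograms[i - 1], histograms[i])
        if cumulative > threshold:
            selected.append(i)
            cumulative = 0.0
    return selected


# Usage: two-bin histograms with one abrupt change between frames 2 and 3.
h = [[1.0, 0.0], [0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.2, 0.8], [0.1, 0.9]]
print(select_preview_frames(h, 0.5))  # [0, 3]
print(select_preview_frames(h, 0.3))  # [0, 2, 3]
```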

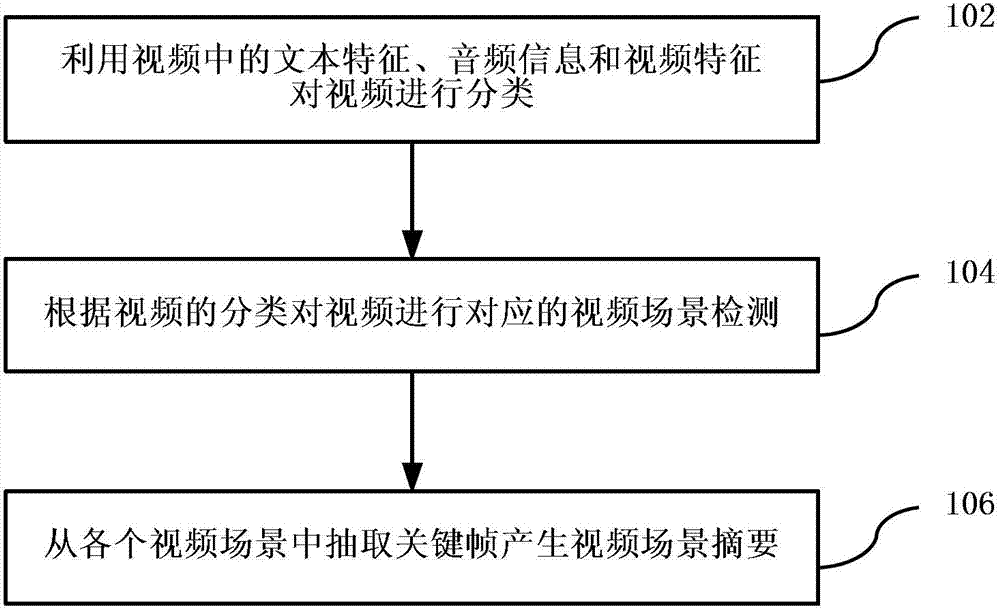

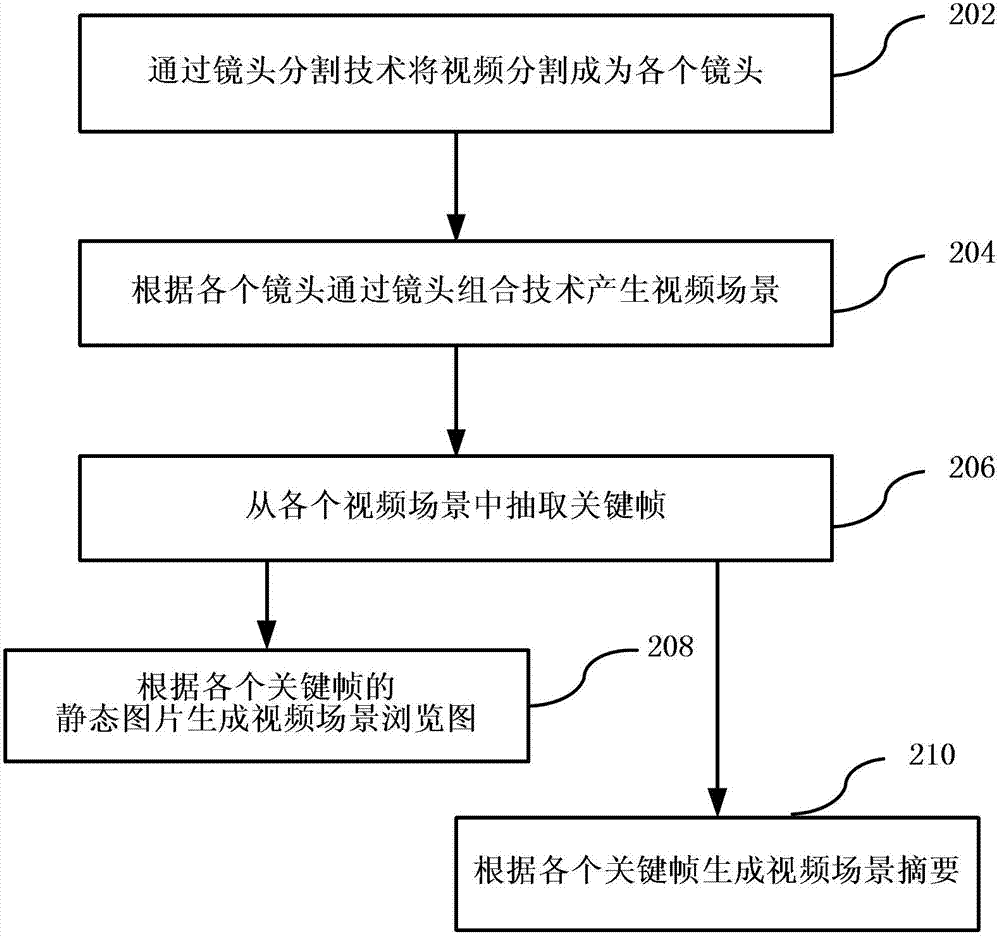

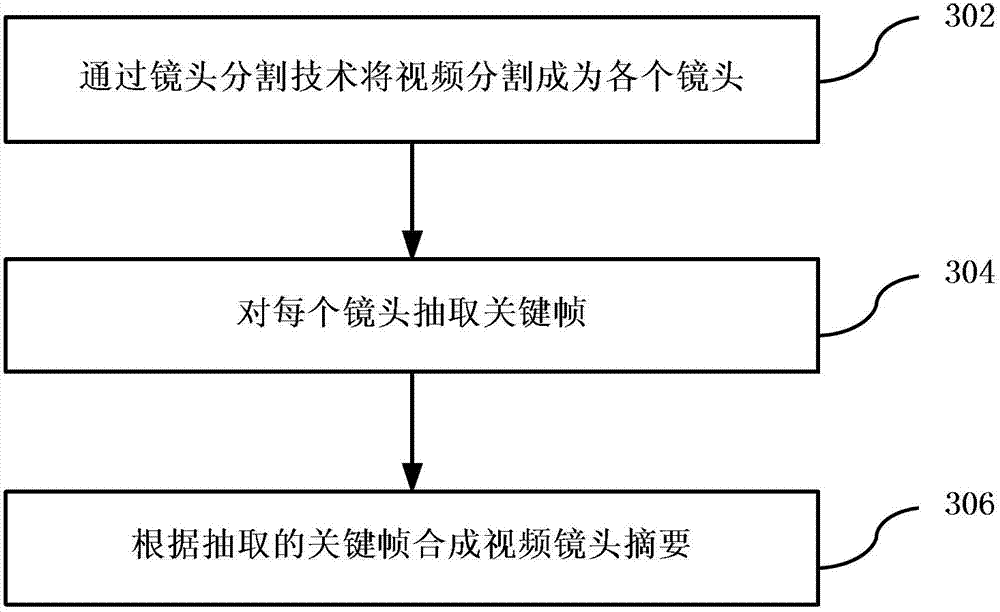

Method and device for generating video summary

Status: Inactive | Publication: CN103200463A | Tags: Improve accuracy; Accurate summary; Selective content distribution; Special data processing applications; Face detection; Computer graphics (images)

The invention discloses a method and a device for generating a video summary and relates to the technical field of video processing. The method comprises determining the category of a video from textual features, audio information, and visual features of the video; performing video scene detection appropriate to that category; and extracting key frames from each video scene to generate a scene summary. The multimedia content analysis involved includes key frame detection, shot boundary detection, image similarity analysis, face detection and recognition, text search, news story segmentation, sports key scene analysis, and the like. Functions for interactive television content, such as video browsing, video summarization, and rapid preview, are thereby generated automatically using multimedia content analysis techniques, avoiding time-consuming and expensive manual editing.

Owner:TVMINING BEIJING MEDIA TECH

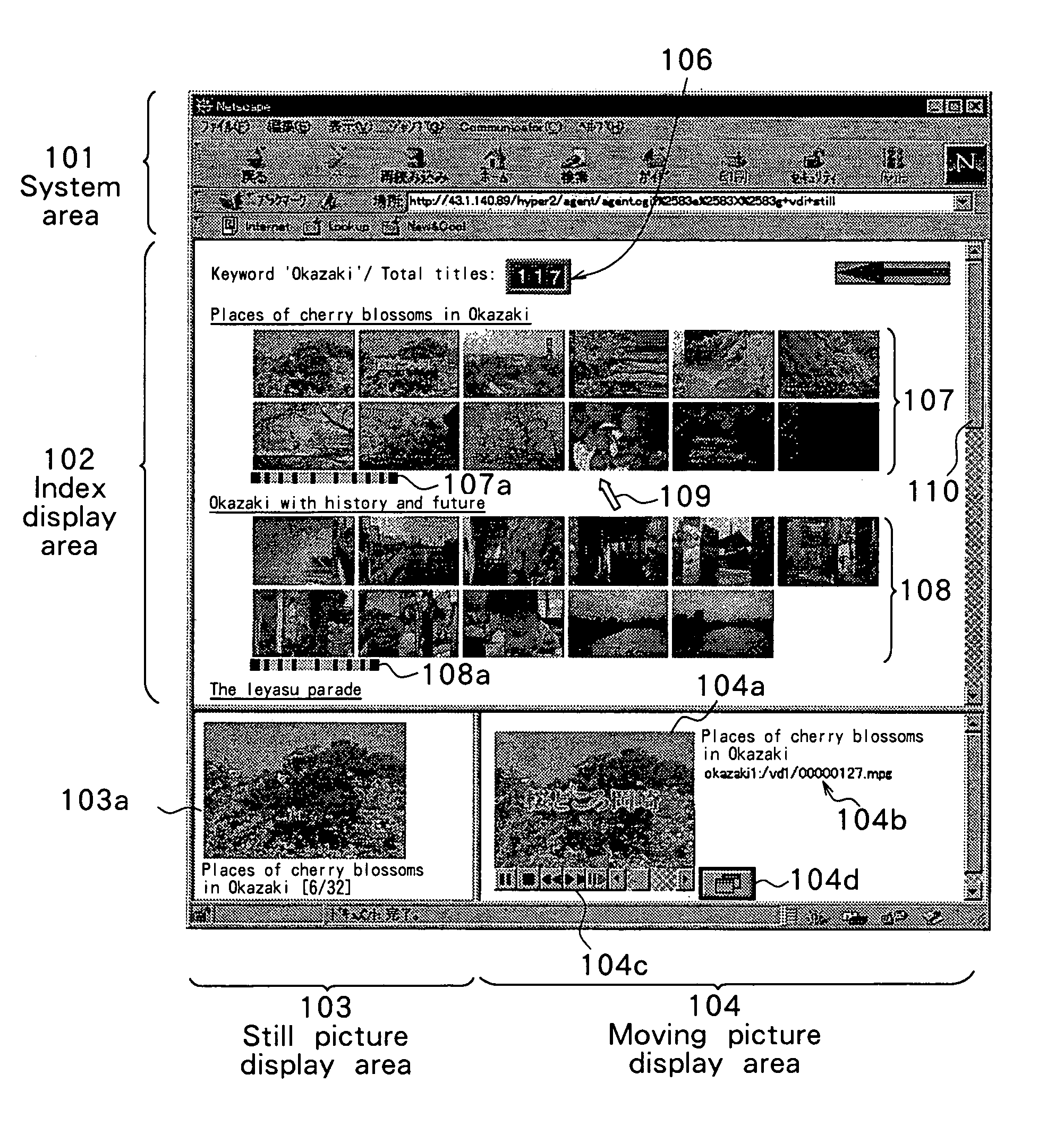

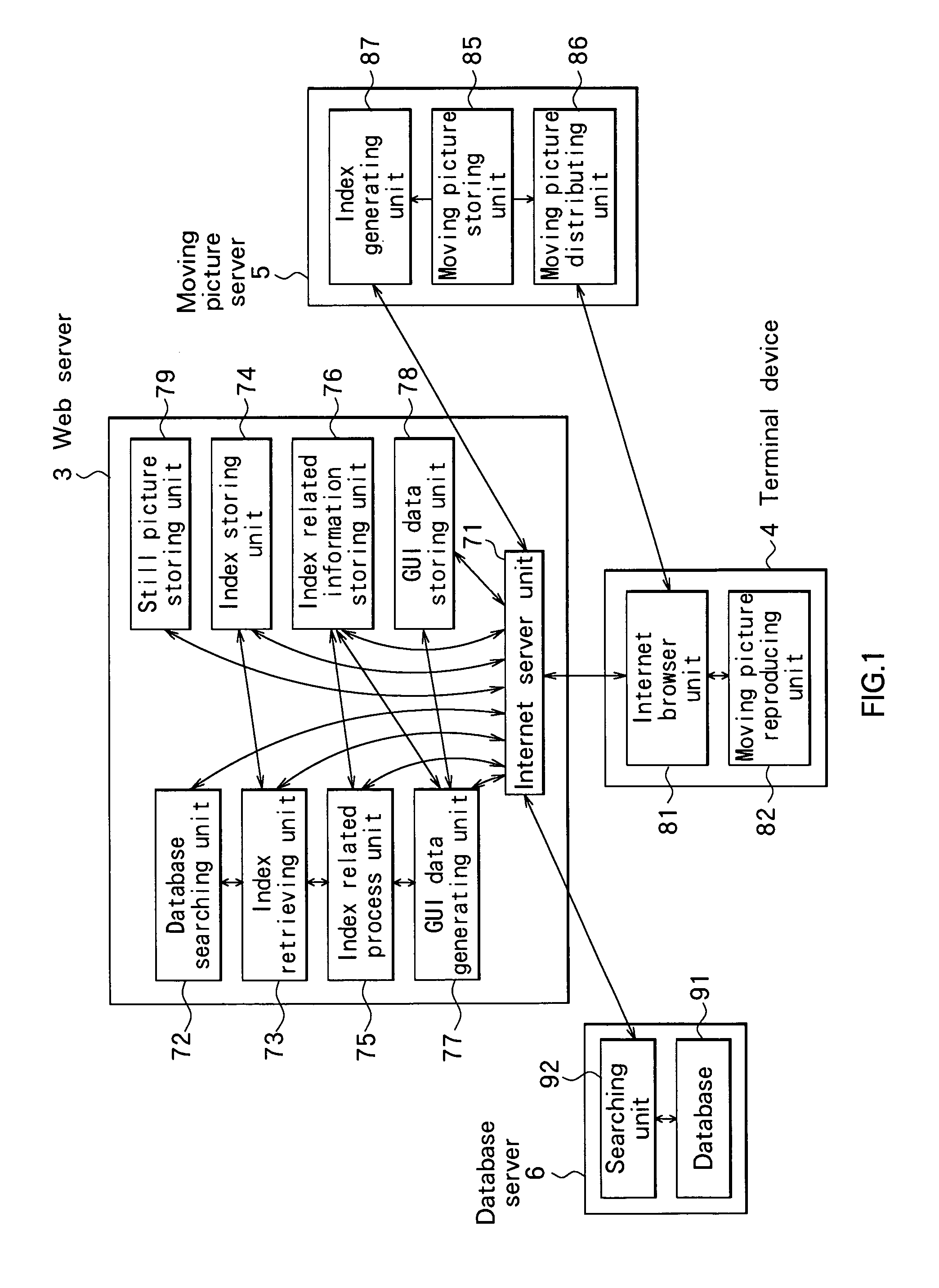

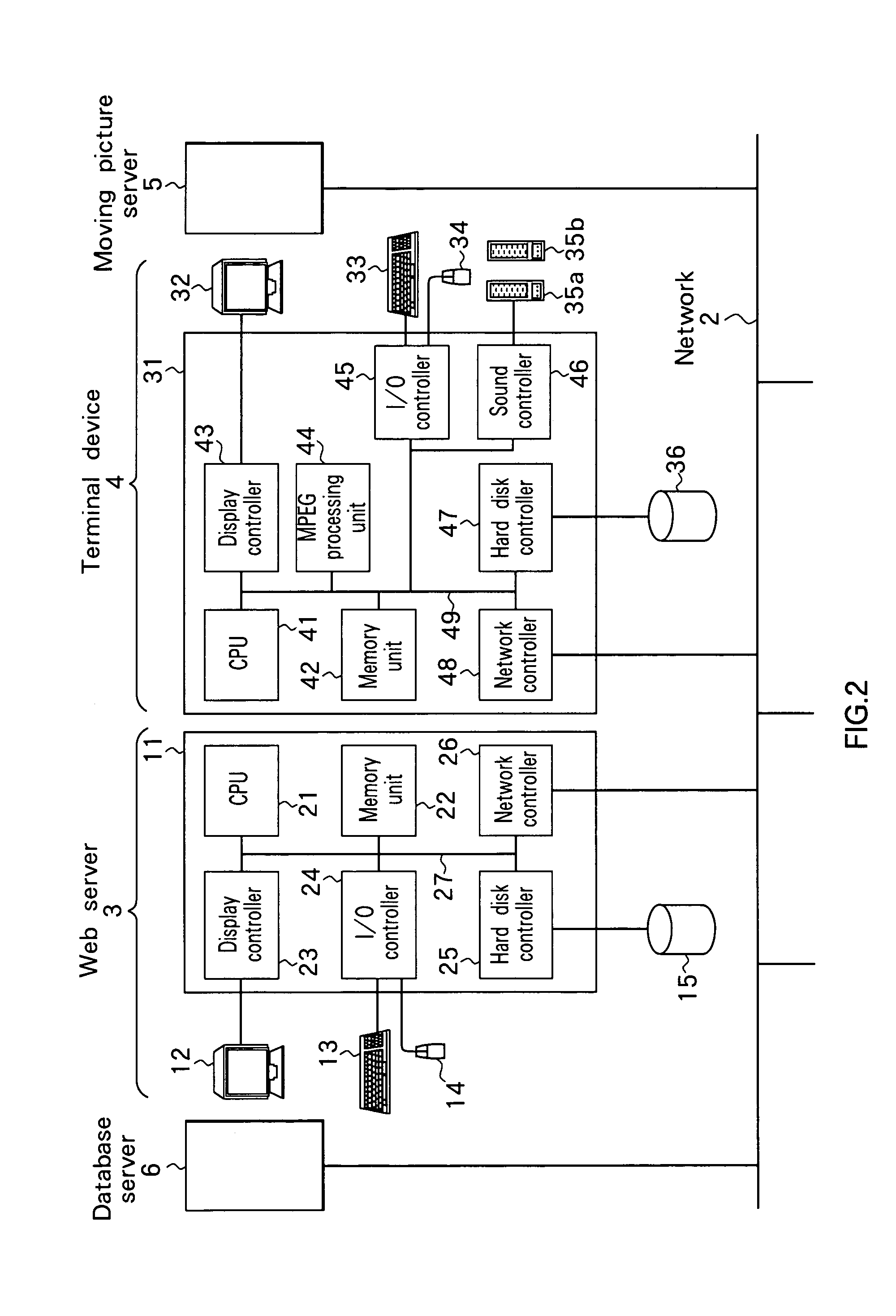

Image generating apparatus and method

Status: Inactive | Publication: US6988244B1 | Tags: Television system details; Digital data information retrieval; Computer graphics (images); Video browsing

An index picture, that is, a still picture that summarizes the contents of a moving picture and is generated using a video browser technique, can be formed in various modes. Several kinds of generating modes for producing an index picture by summarizing the contents of a moving picture are prepared; one of the modes is selected, and the generating process is performed in the selected mode. This allows an index picture to be generated in a form suited to the properties, intended use, and the like of the moving picture material.

Owner:SONY CORP

System and method for video browsing using a cluster index

Status: Active | Publication: US7594177B2 | Tags: Reduce in quantity; Increase the number of; Digital data information retrieval; Character and pattern recognition; Computer graphics (images); Video recording

A “Video Browser” provides an intuitive user interface for indexing, and interactive visual browsing, of particular elements within a video recording. In general, the Video Browser operates by first generating a set of one or more mosaic images from the video recording. In one embodiment, these mosaics are further clustered using an adjustable similarity threshold. User selection of a particular video mosaic then initiates a playback of corresponding video frames. However, in contrast to conventional mosaicking schemes which simply play back the set of frames used to construct the mosaic, the Video Browser provides a playback of only those individual frames within which a particular point selected within the image mosaic was observed. Consequently, user selection of a point in one of the image mosaics serves to provide a targeted playback of only those frames of interest, rather than playing back the entire image sequence used to generate the mosaic.

Owner:MICROSOFT TECH LICENSING LLC

Article-based news video content summarizing method and browsing system

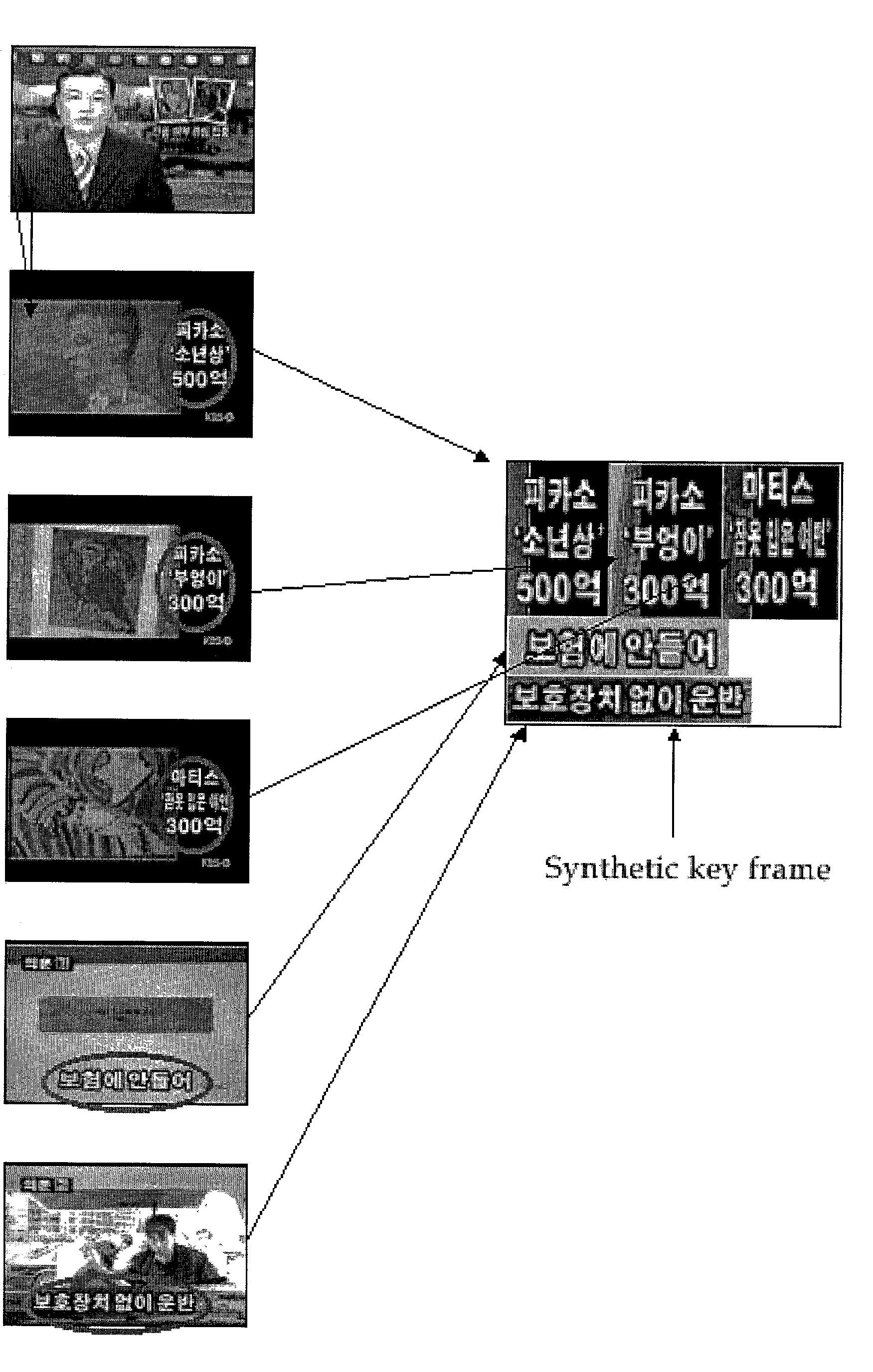

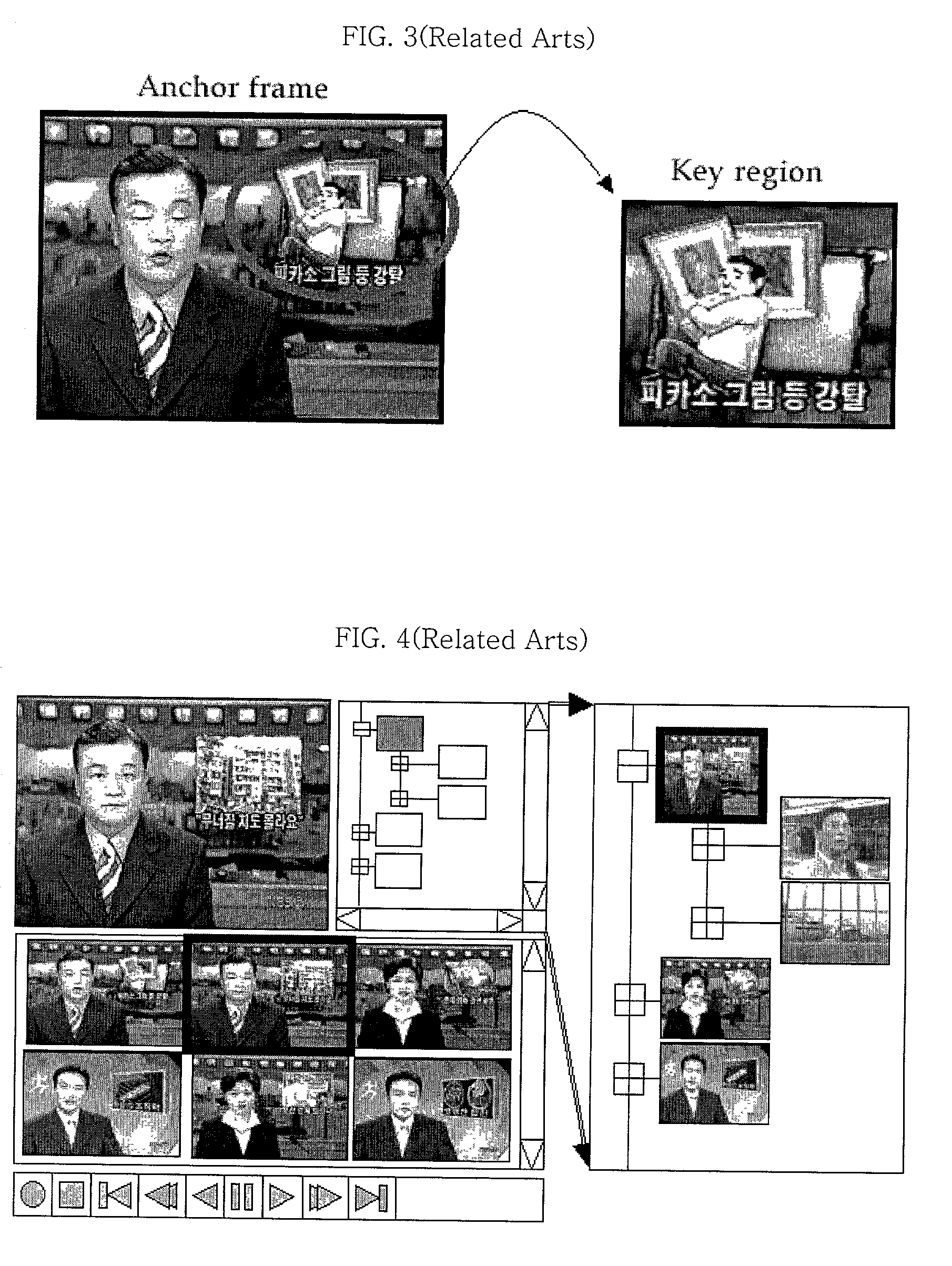

Status: Inactive | Publication: US20020126143A1 | Tags: Effective summarization; Television system details; Cathode-ray tube indicators; Graphics; Key frame

The present invention relates to a method that can effectively summarize news video and to an article-based video browsing system that enables effective searching and filtering using the method. The invention provides an article-based news video content summarization method, browsing interface, and browsing system that summarize and browse at least one news article using an anchor key frame, an episode key frame, a synthetic text key frame, and a news icon of the news video stream. The anchor key frame is extracted from an anchor shot. The episode key frame is extracted from an episode scene. The synthetic text key frame is generated by summarizing important text in the video frames of the news article as an image. The news icon includes an image, graphical element, and so forth, which appears in an anchor shot to summarize the contents of the news article.

Owner:LG ELECTRONICS INC

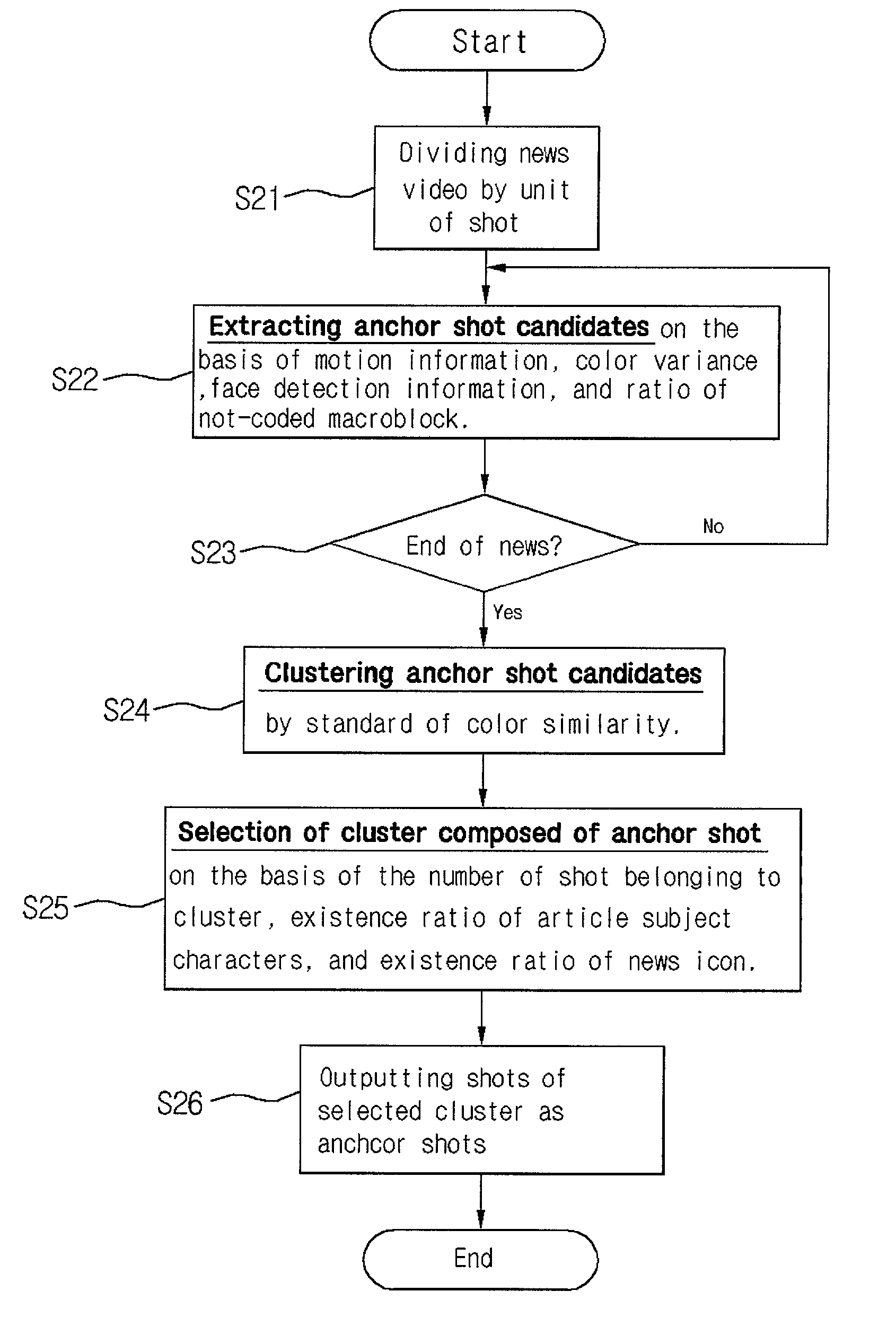

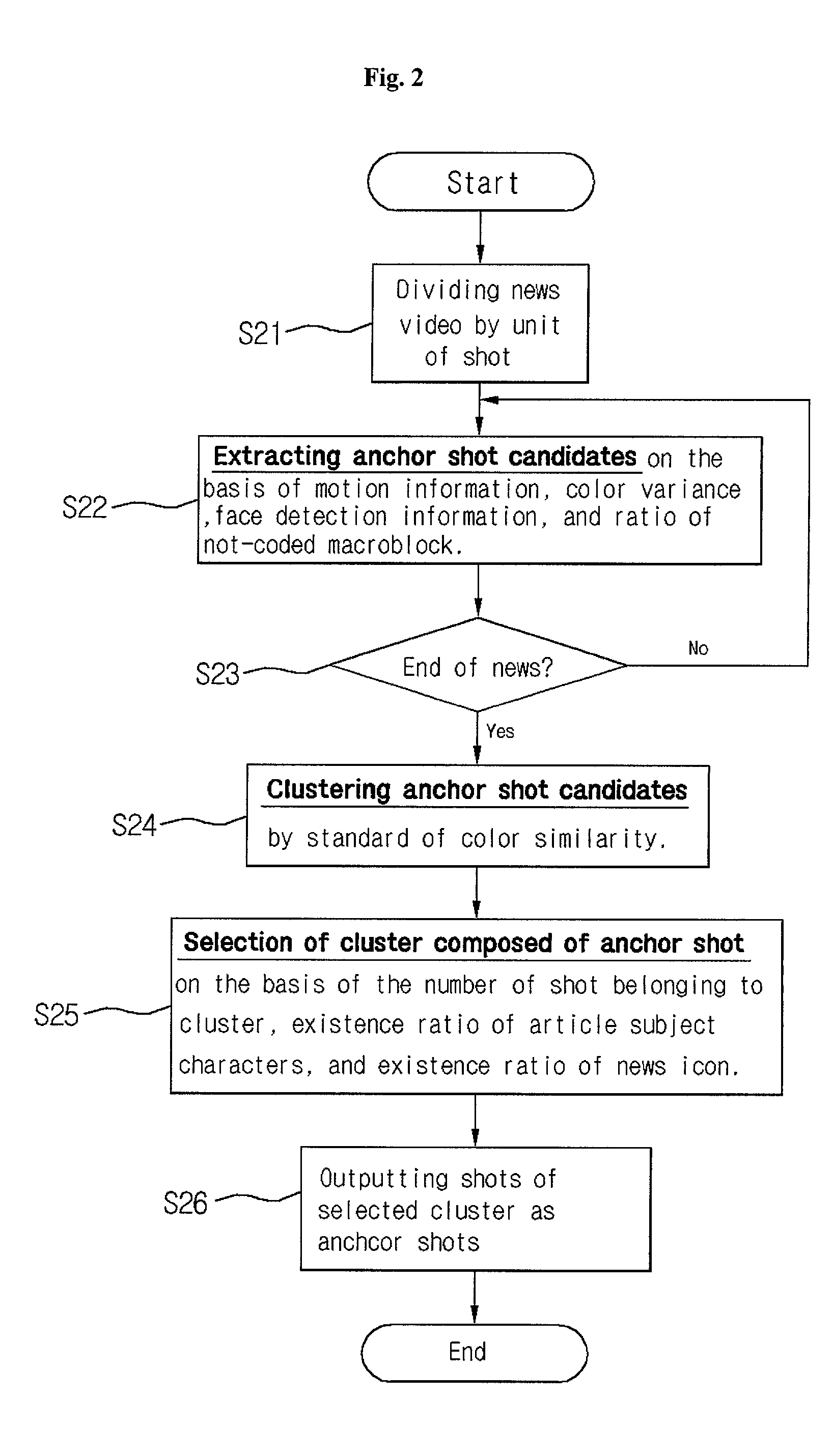

Anchor shot detection method for a news video browsing system

Status: Inactive | Publication: US20020146168A1 | Tags: Reliable method; Effective article-based browsing of news video; Television system details; Character and pattern recognition; Pattern recognition; Face detection

The present invention relates to a method for automatically detecting anchor shots in news video content. The method comprises extracting anchor shot candidates from news video divided into shots, clustering similar shots among the extracted candidates, and determining the anchor shot by selecting the anchor-shot cluster from among the resulting clusters. Anchor shot candidates are extracted on the basis of motion information, color variance, shot length, face detection information, and the ratio of non-coded macroblocks; similar shots are clustered based on the color similarity of the extracted candidates; and the genuine anchor shots are determined by selecting the anchor-shot cluster on the basis of the number of shots in each cluster, the presence ratio of article subject characters, and the presence ratio of a news icon.

Owner:LG ELECTRONICS INC
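
The clustering and cluster-selection steps might look like this, assuming each candidate shot is summarized by a mean RGB color and grouped by a greedy leader-clustering pass with a Euclidean distance cutoff. Both choices, and the `max_dist` value, are assumptions; the sketch picks the cluster by shot count alone and omits the subject-character and news-icon ratios the patent also uses.

```python
import math


def cluster_by_color(colors, max_dist):
    """Greedy leader clustering: each shot joins the first cluster whose first
    member's mean color is within max_dist (Euclidean), else starts a new one."""
    clusters = []  # each cluster is a list of candidate-shot indices
    for i, color in enumerate(colors):
        for members in clusters:
            if math.dist(color, colors[members[0]]) <= max_dist:
                members.append(i)
                break
        else:
            clusters.append([i])
    return clusters


def pick_anchor_cluster(colors, max_dist=30.0):
    """Return the largest cluster (by shot count) as the anchor-shot cluster."""
    clusters = cluster_by_color(colors, max_dist)
    return max(clusters, key=len) if clusters else []


# Usage: mean RGB colors of five anchor-shot candidates; the studio shots recur.
colors = [(20, 40, 120), (22, 41, 118), (200, 30, 30), (19, 42, 121), (90, 90, 90)]
print(pick_anchor_cluster(colors))  # [0, 1, 3]
```

The intuition behind the count criterion is that the anchor returns to the same studio setting many times per broadcast, while field footage rarely repeats.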

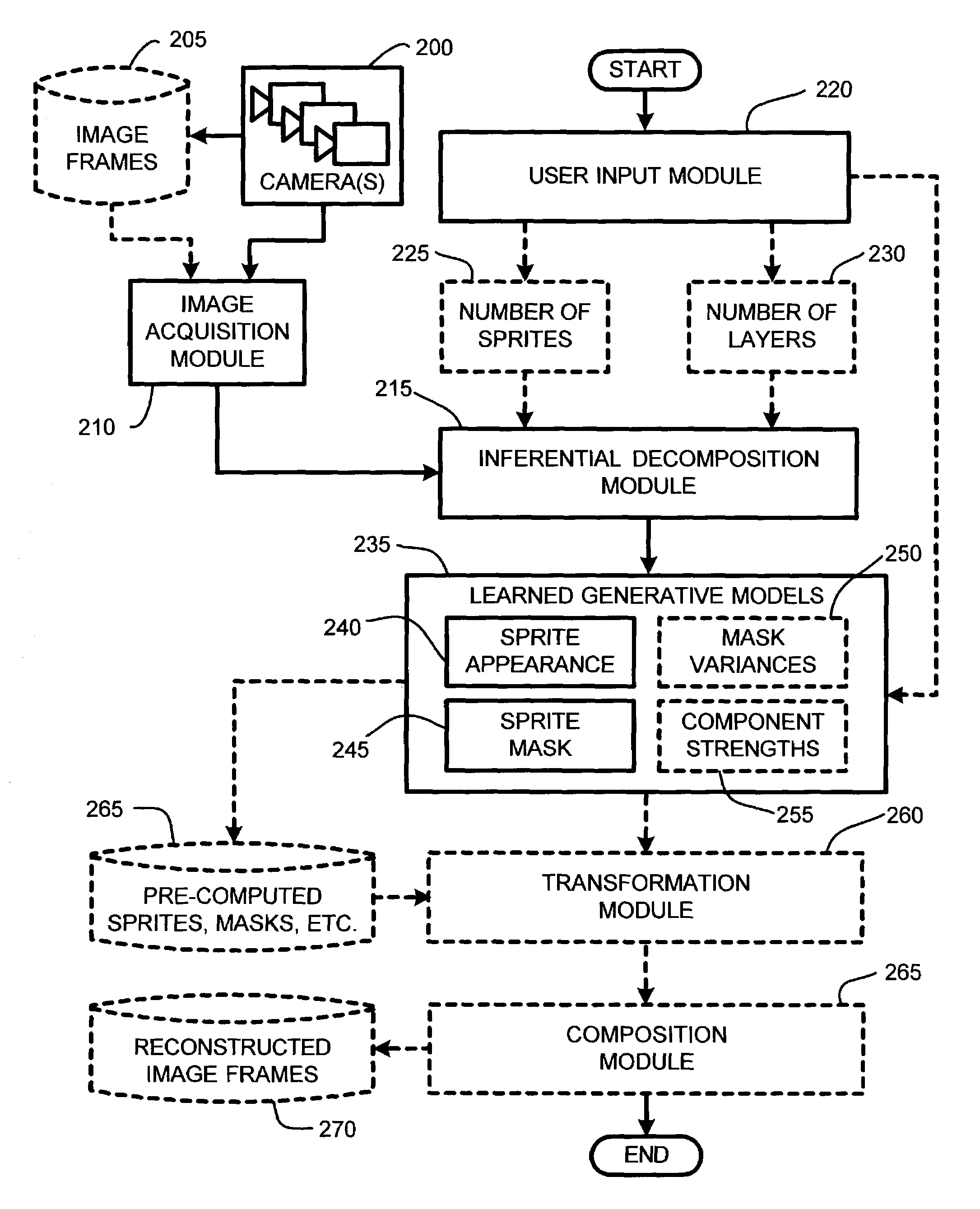

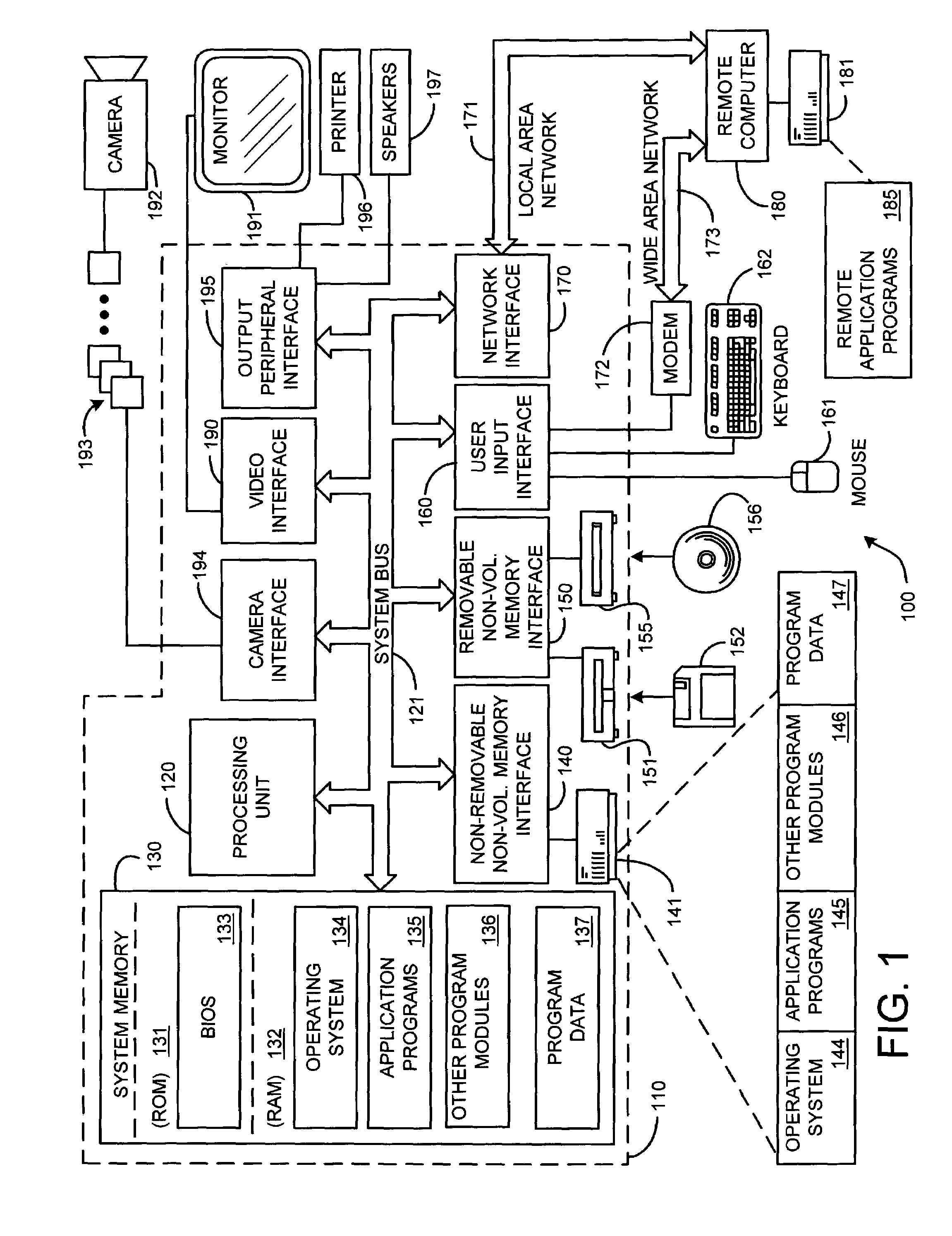

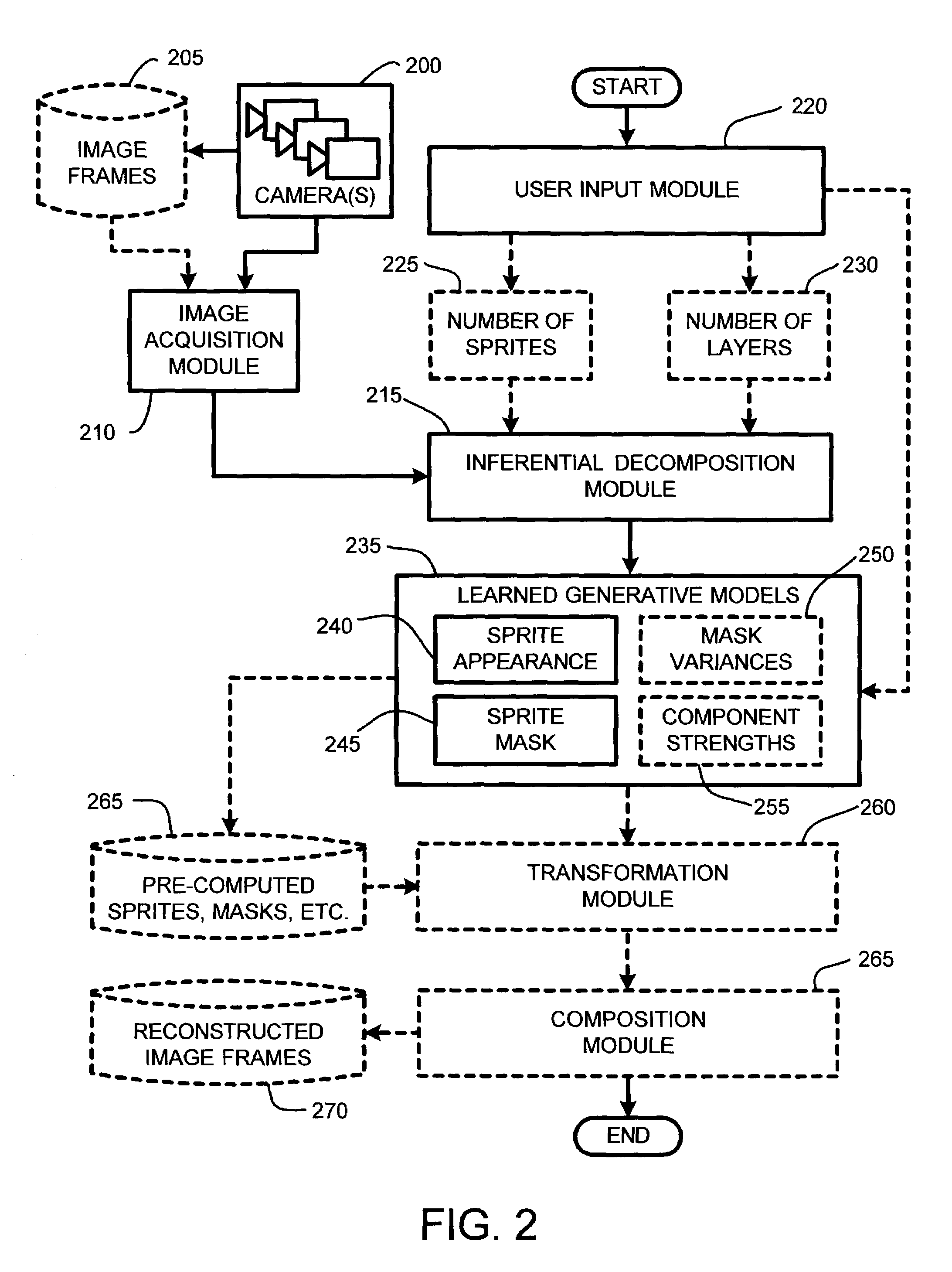

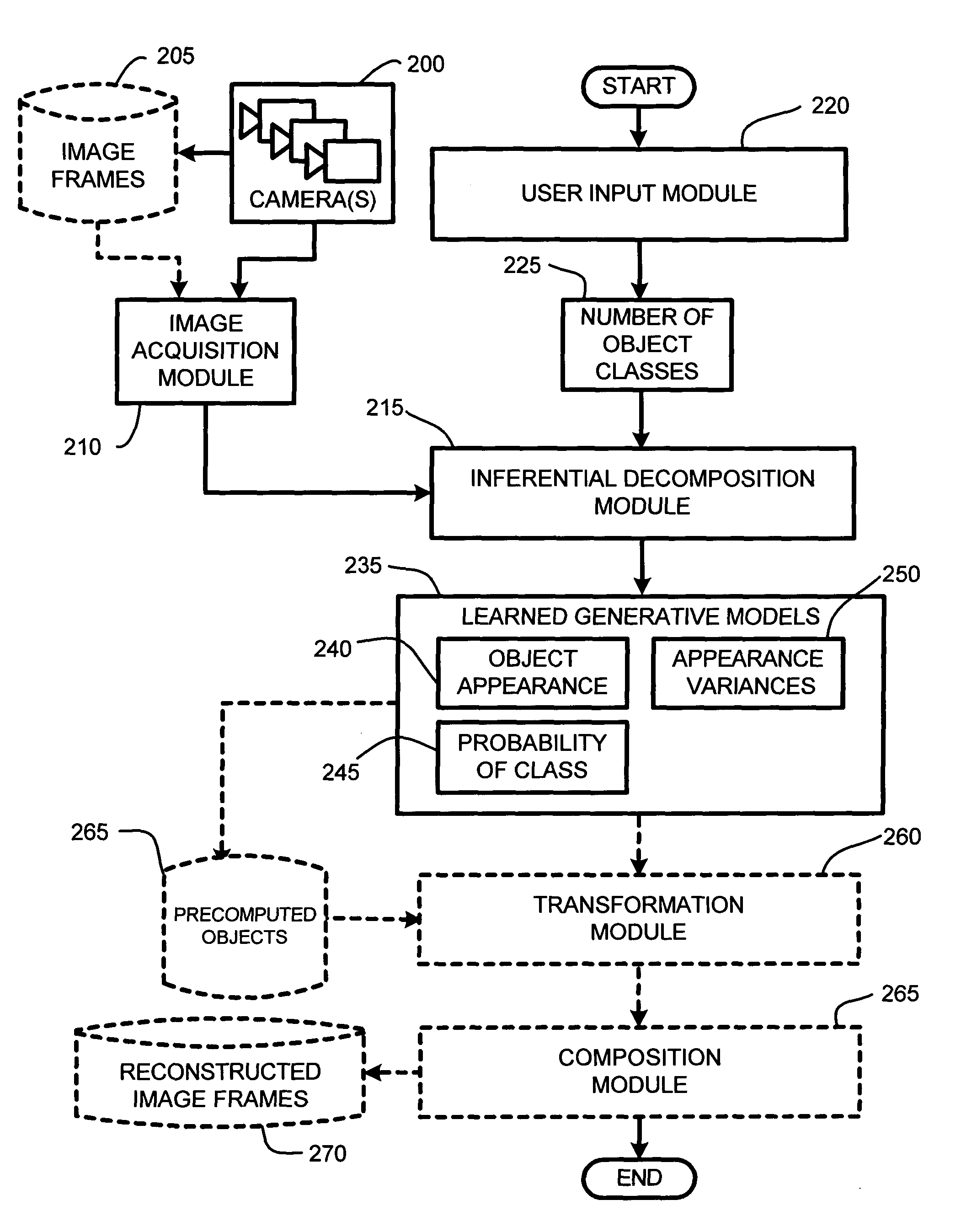

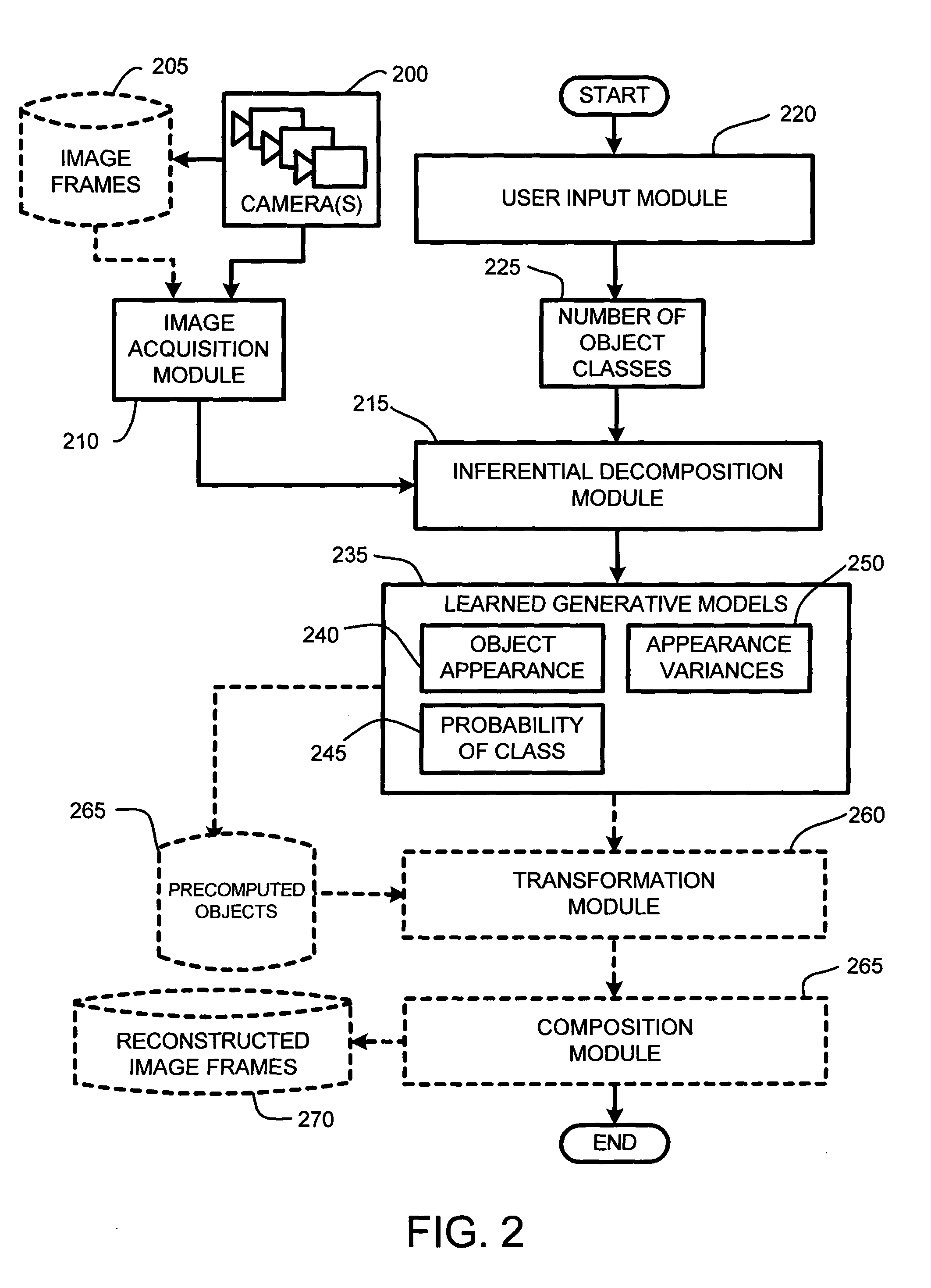

System and method for automatically learning flexible sprites in video layers

Status: Active | Publication: US7113185B2 | Tags: Simple geometry; Simple and efficient to and analyze; Image enhancement; Image analysis; Video sequence; Visual perception

A simplified general model and an associated estimation algorithm are provided for modeling visual data such as a video sequence. Specifically, images or frames in a video sequence are represented as collections of flat moving objects that change their appearance and shape over time and can occlude each other. A statistical generative model is defined for generating such visual data given known parameters such as appearance bit-maps and noise, shape bit-maps and shape variability, etc. When these parameters are unknown, they are estimated from the visual data, without prior pre-processing, using a maximization algorithm. Through parameter estimation and inference in the model, visual data is segmented into components, which facilitates sophisticated applications in video or image editing, such as object removal or insertion, tracking and visual surveillance, video browsing, photo organization, and video compositing.

Owner:MICROSOFT TECH LICENSING LLC

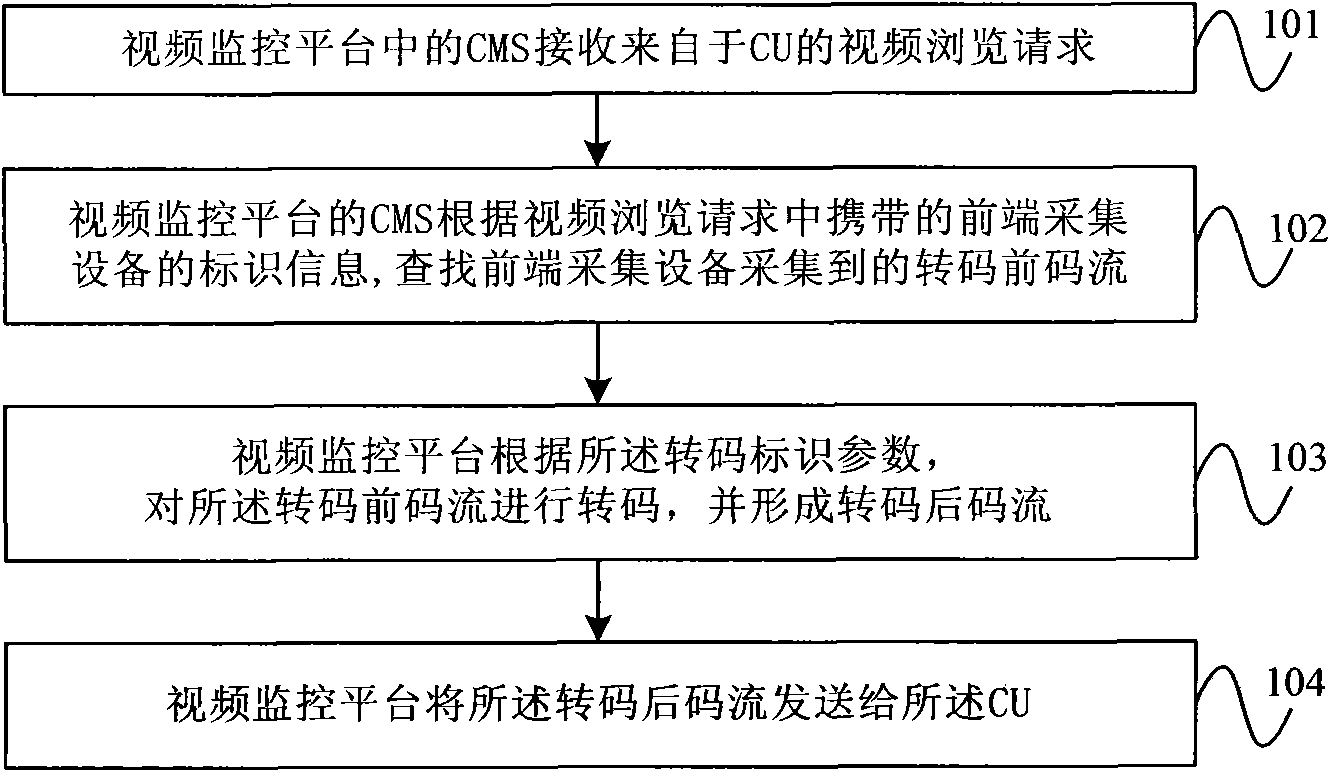

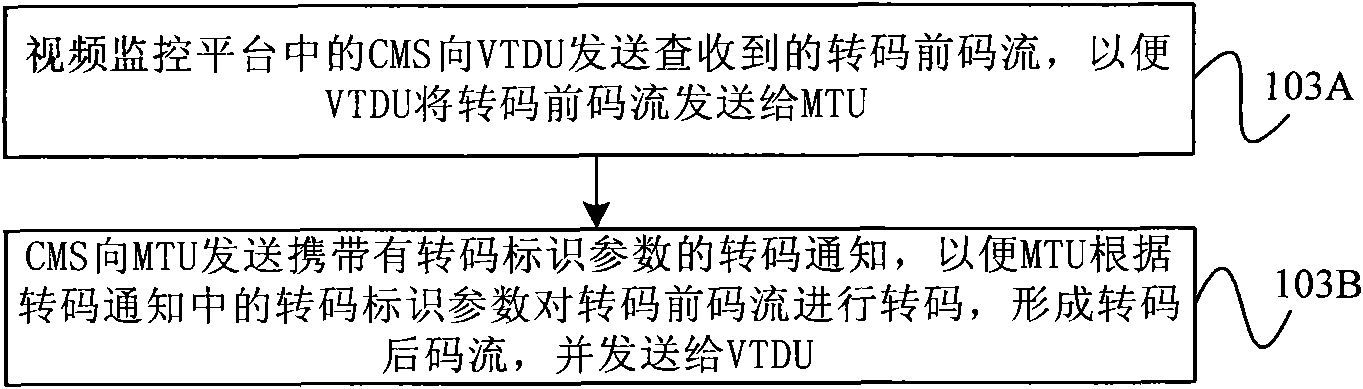

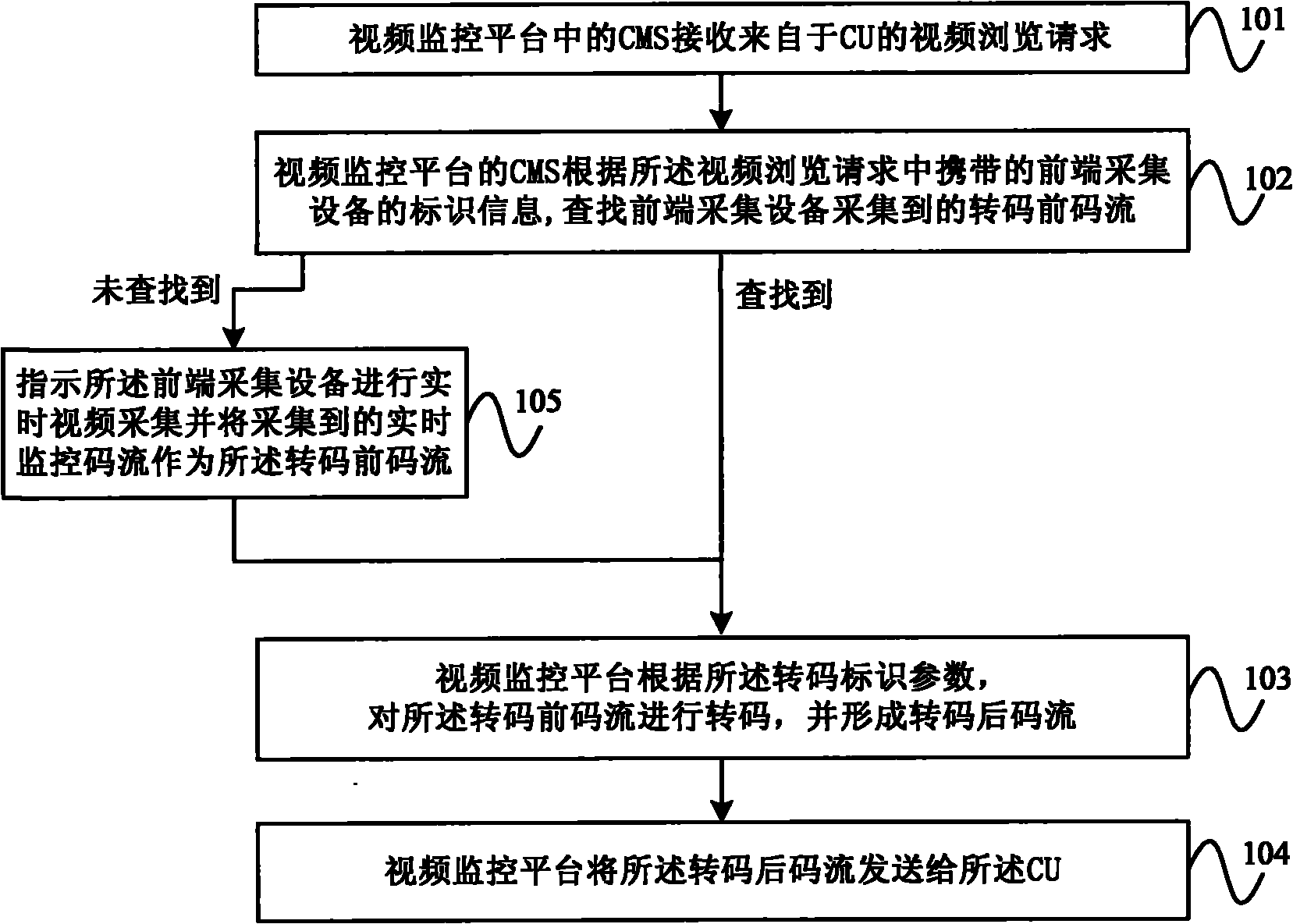

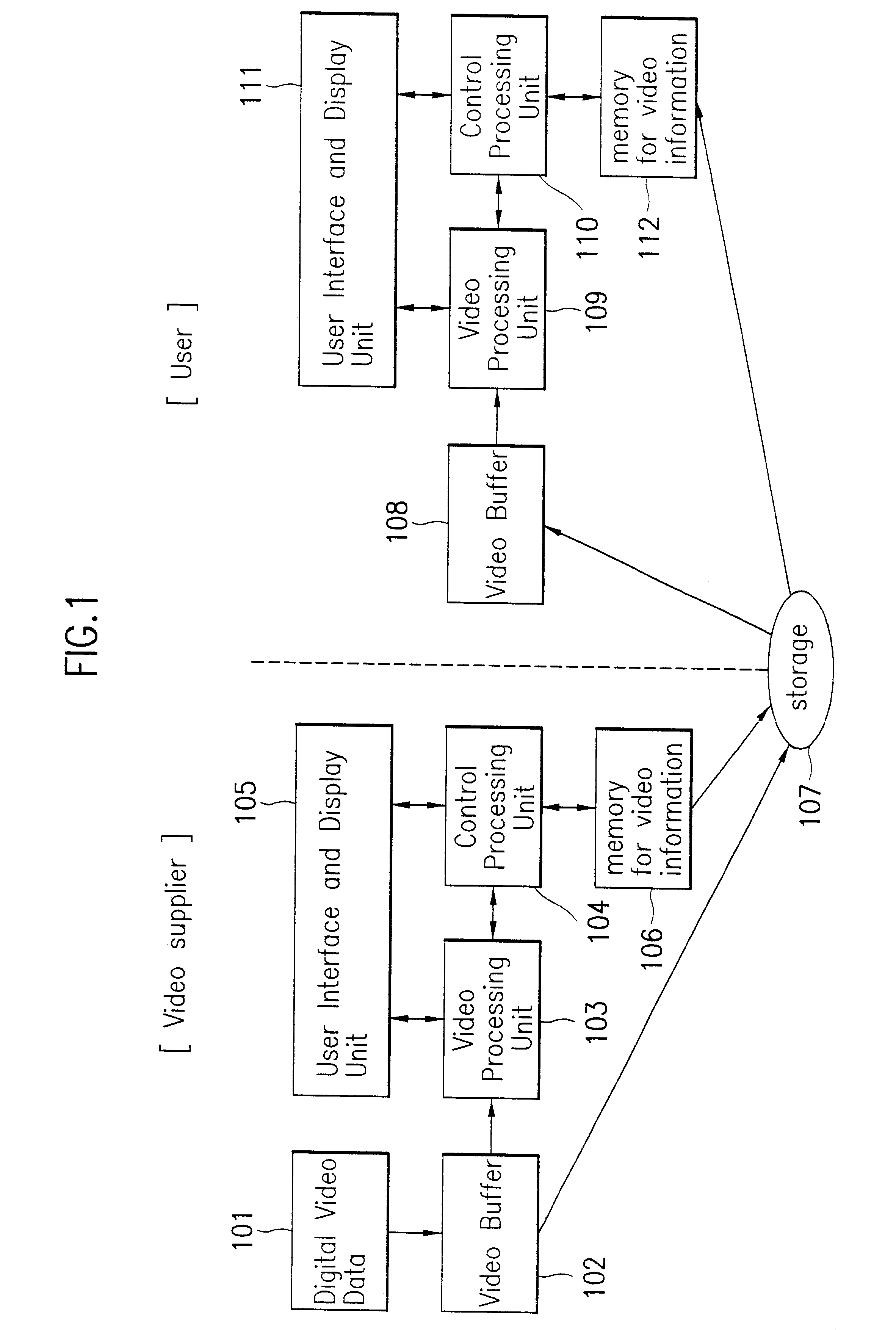

Video monitoring method, system and equipment

Status: Inactive | Publication: CN102045540A | Tags: Achieve uniformity; Simple structure; Pulse modulation television signal transmission; Closed circuit television systems; Computer hardware; Video monitoring

An embodiment of the invention provides a video monitoring method, system, and device. In the method, a video monitoring platform receives a video browsing request from a client unit, where the request carries a transcoding identification parameter; using the identification information of the front-end acquisition device carried in the request, the platform locates the untranscoded code stream acquired by that device; the platform transcodes this code stream according to the transcoding identification parameter; and the platform sends the transcoded code stream to the client unit, which can then receive and play the stream corresponding to its transcoding identification parameter. Because the video monitoring platform performs transcoding centrally for every client unit, transcoding is handled uniformly.

Owner:HUAWEI SOFTWARE TECH

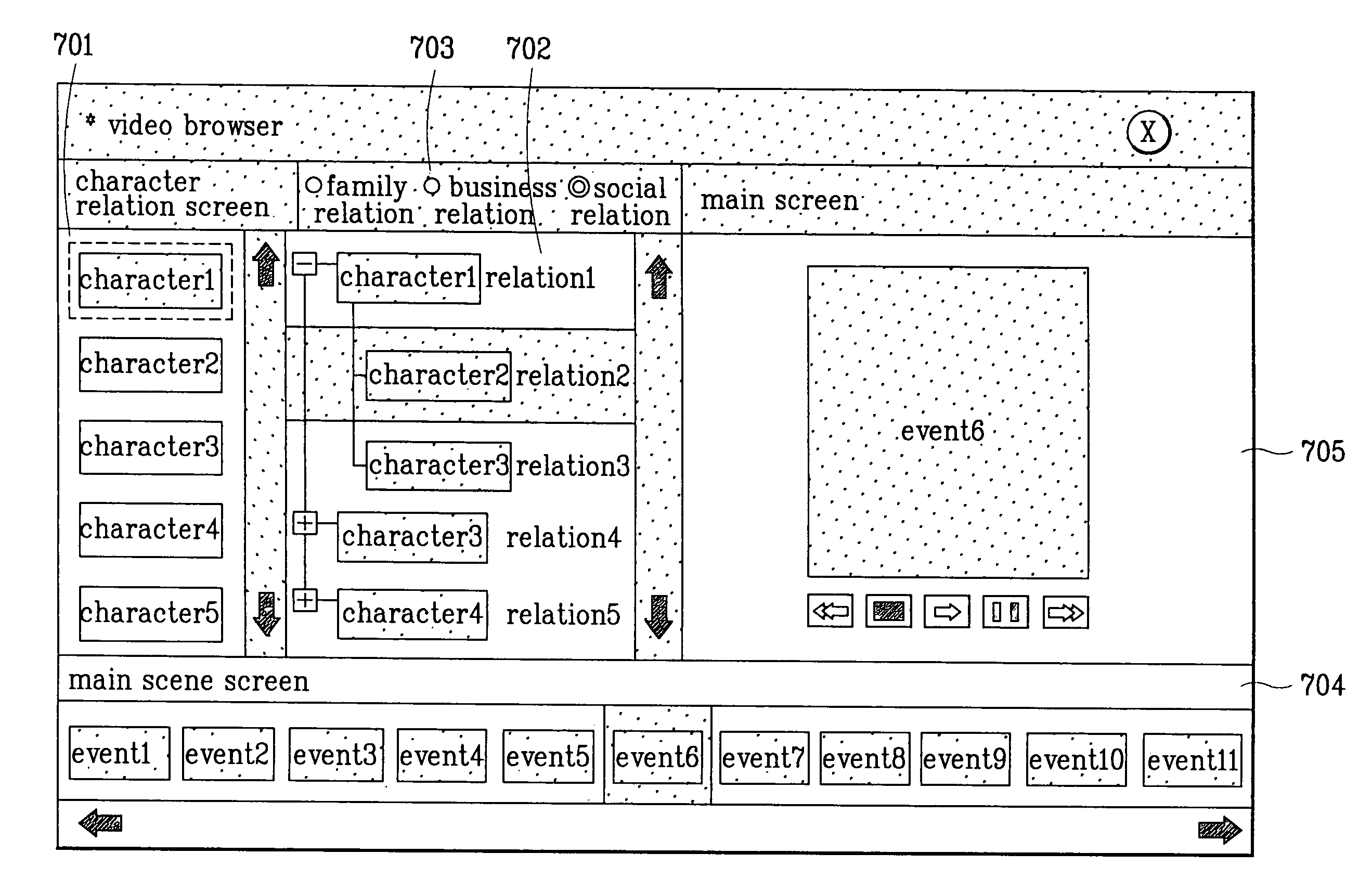

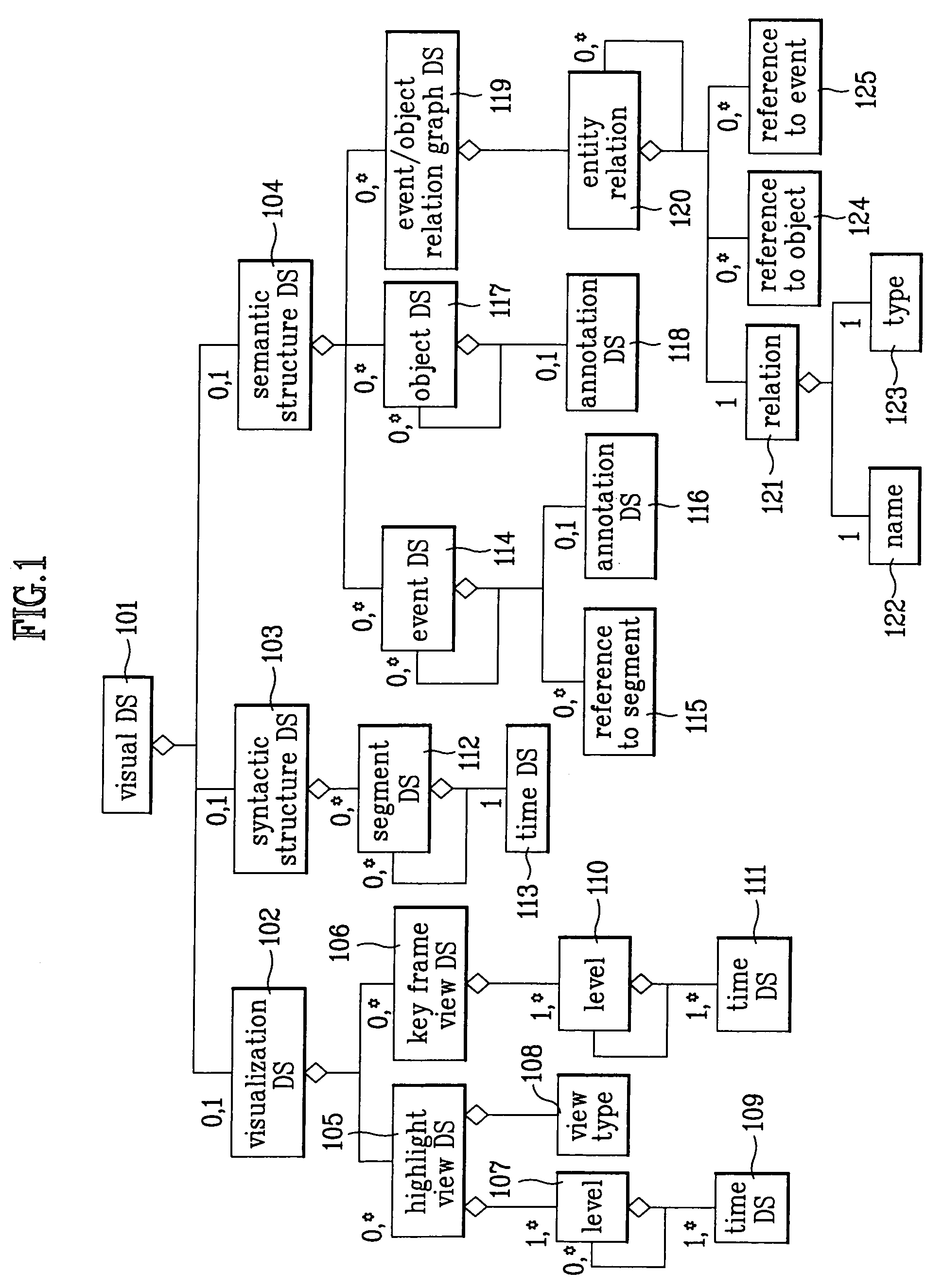

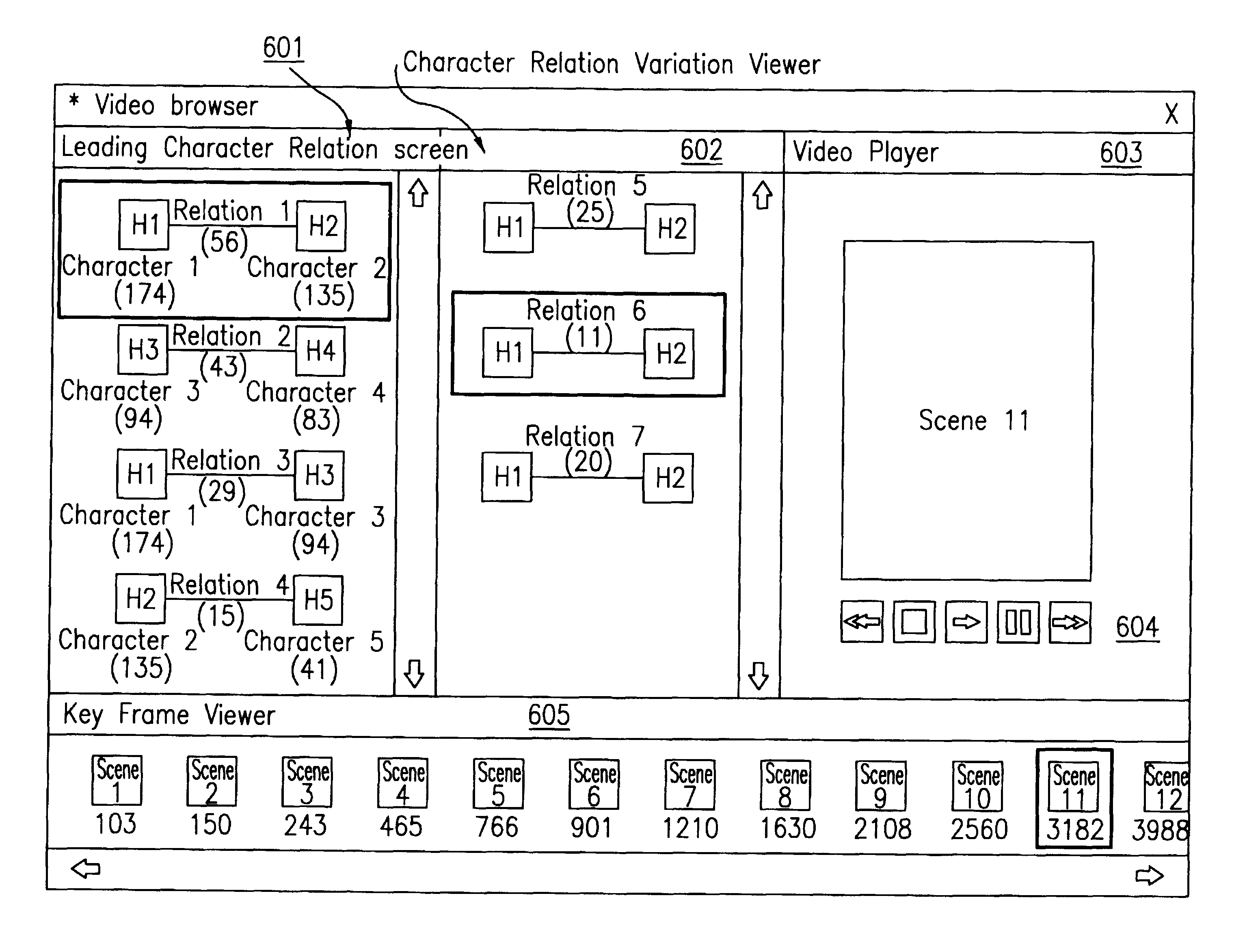

Video browser based on character relation

Status: Inactive | Publication: US7509581B1 | Tags: Pulse modulation television signal transmission; Metadata video data retrieval; Computer graphics (images); Data structure

Owner:LG ELECTRONICS INC

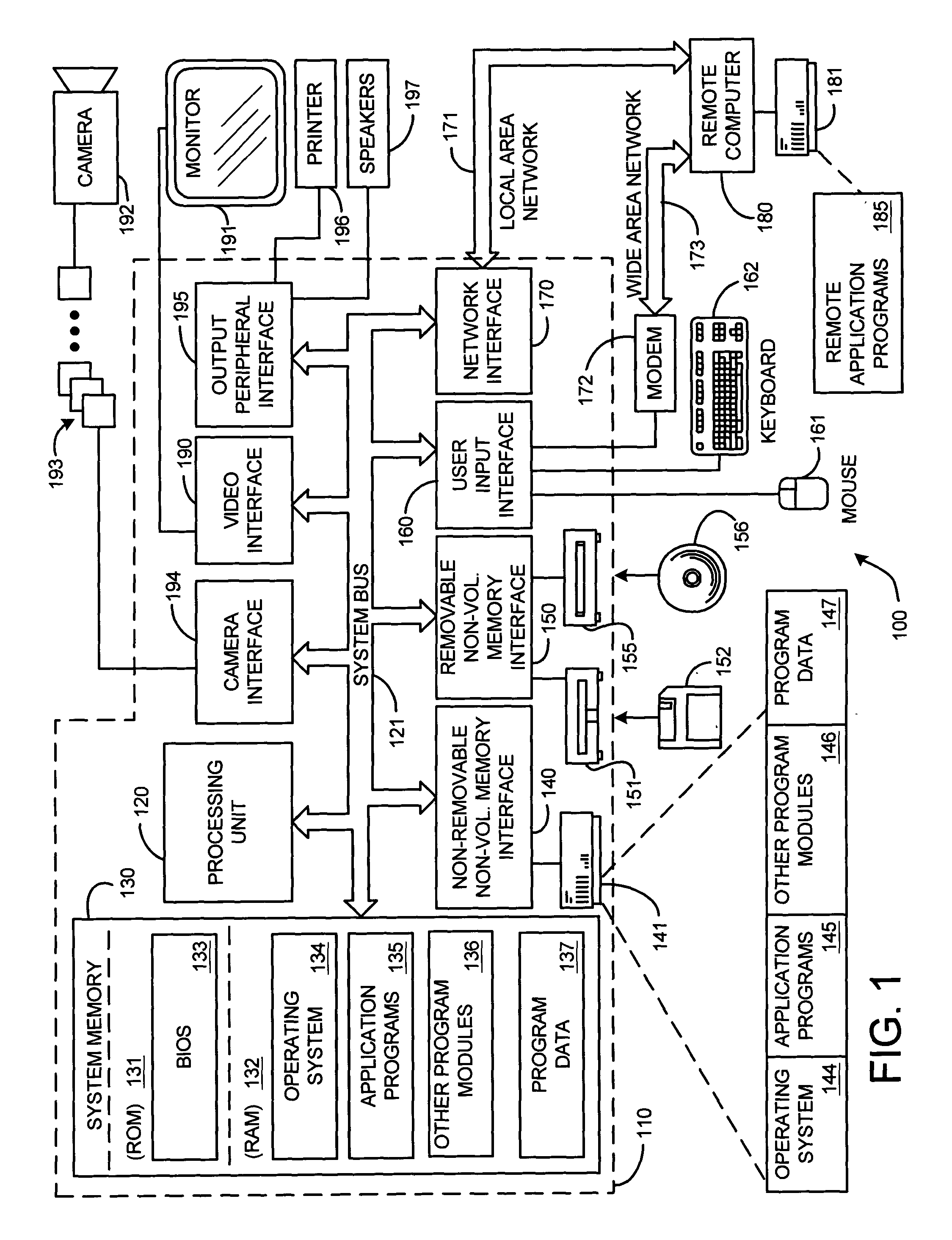

System and method for fast on-line learning of transformed hidden Markov models

Status: Inactive | Publication: US20050047646A1 | Tags: High marginal probability; Reduce decrease; Record information storage; Using detectable carrier information; Batch processing; Latent image

A fast variational on-line learning technique for training a transformed hidden Markov model. A simplified general model and an associated estimation algorithm are provided for modeling visual data such as a video sequence. Specifically, once the model has been initialized, an expectation-maximization (“EM”) algorithm is used to learn one or more object class models so that the video sequence has high marginal probability under the model. In the expectation step (the “E-step”), the model parameters are assumed to be correct, and for an input image, probabilistic inference is used to fill in the values of the unobserved or hidden variables, e.g., the object class and appearance. In one embodiment of the invention, a Viterbi algorithm and a latent image are employed for this purpose. In the maximization step (the “M-step”), the model parameters are adjusted using the values of the unobserved variables calculated in the preceding E-step. Instead of the batch processing typically used in EM, the system and method employ an on-line algorithm that passes through the data only once and introduces new classes as new data is observed. Through parameter estimation and inference in the model, visual data is segmented into components, which facilitates sophisticated applications in video or image editing, such as object removal or insertion, tracking and visual surveillance, video browsing, photo organization, video compositing, and metadata creation.

Owner:MICROSOFT TECH LICENSING LLC

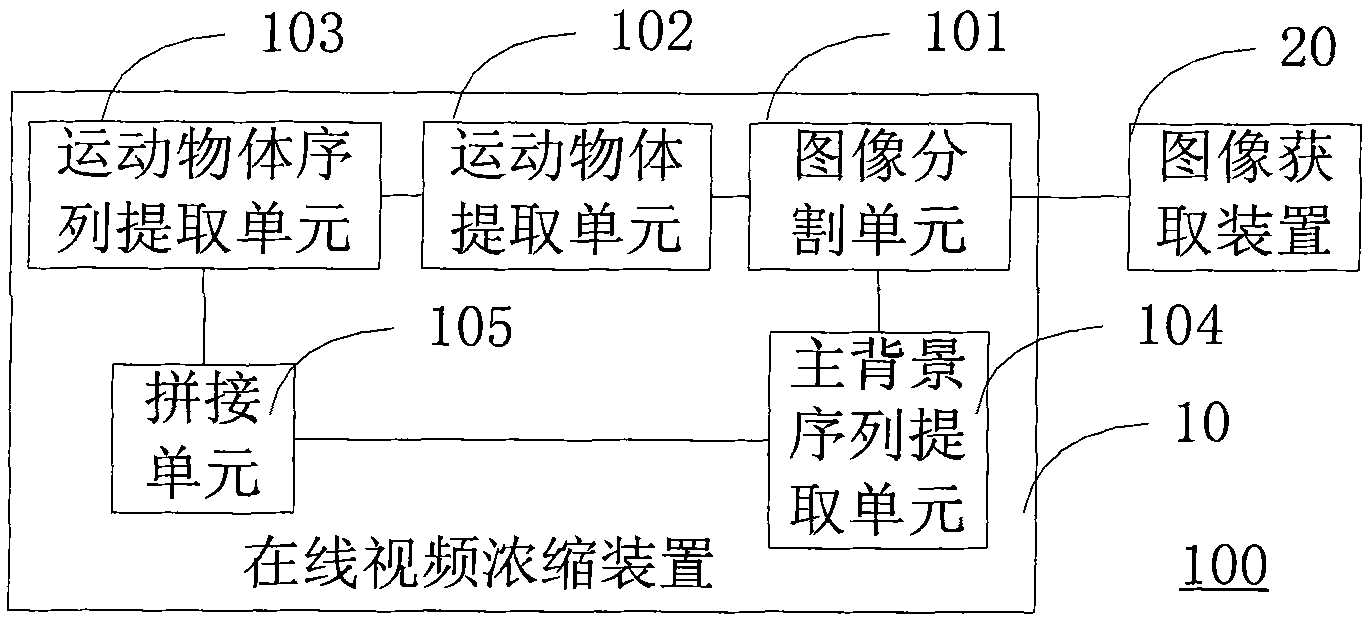

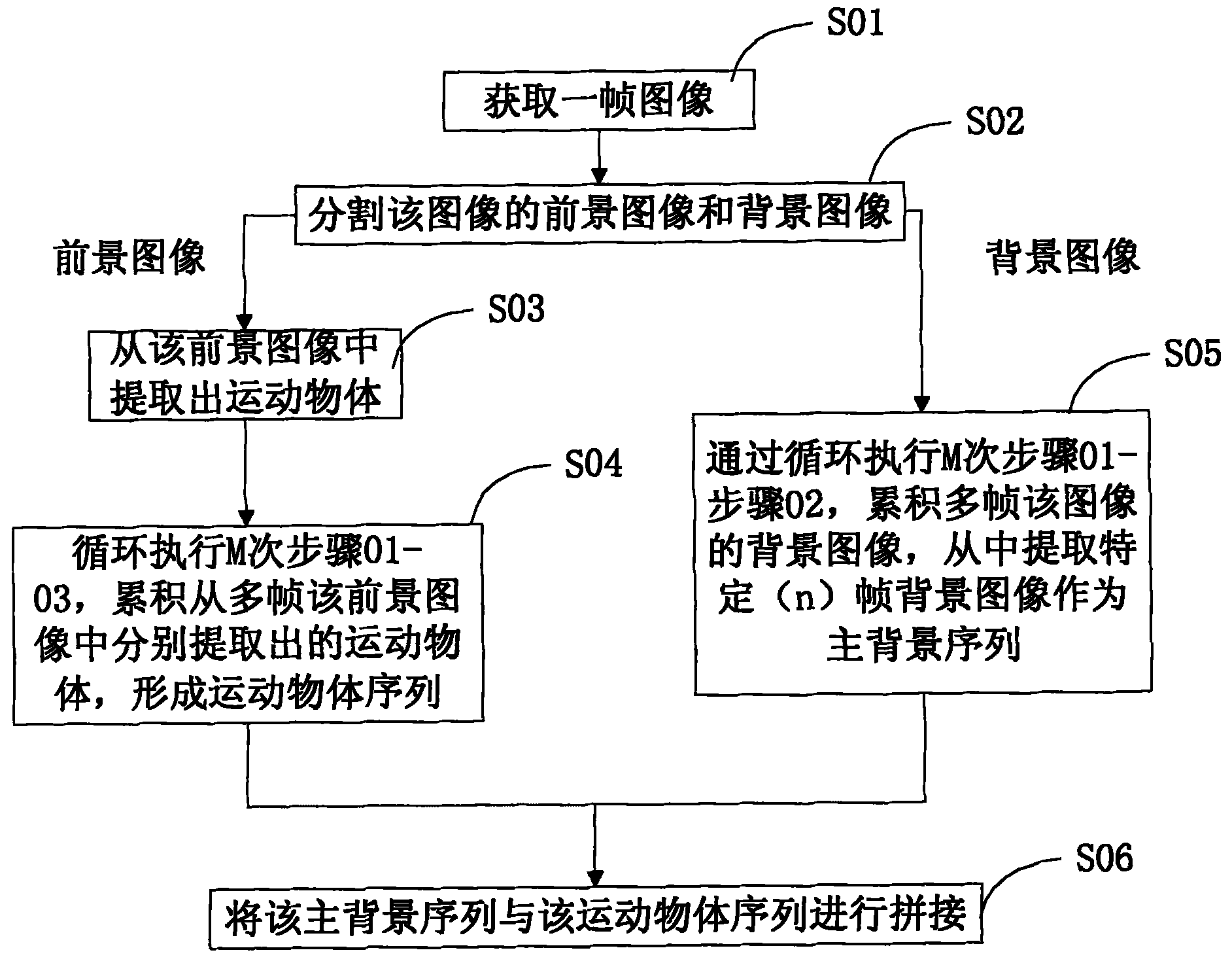

Online video concentration device, system and method

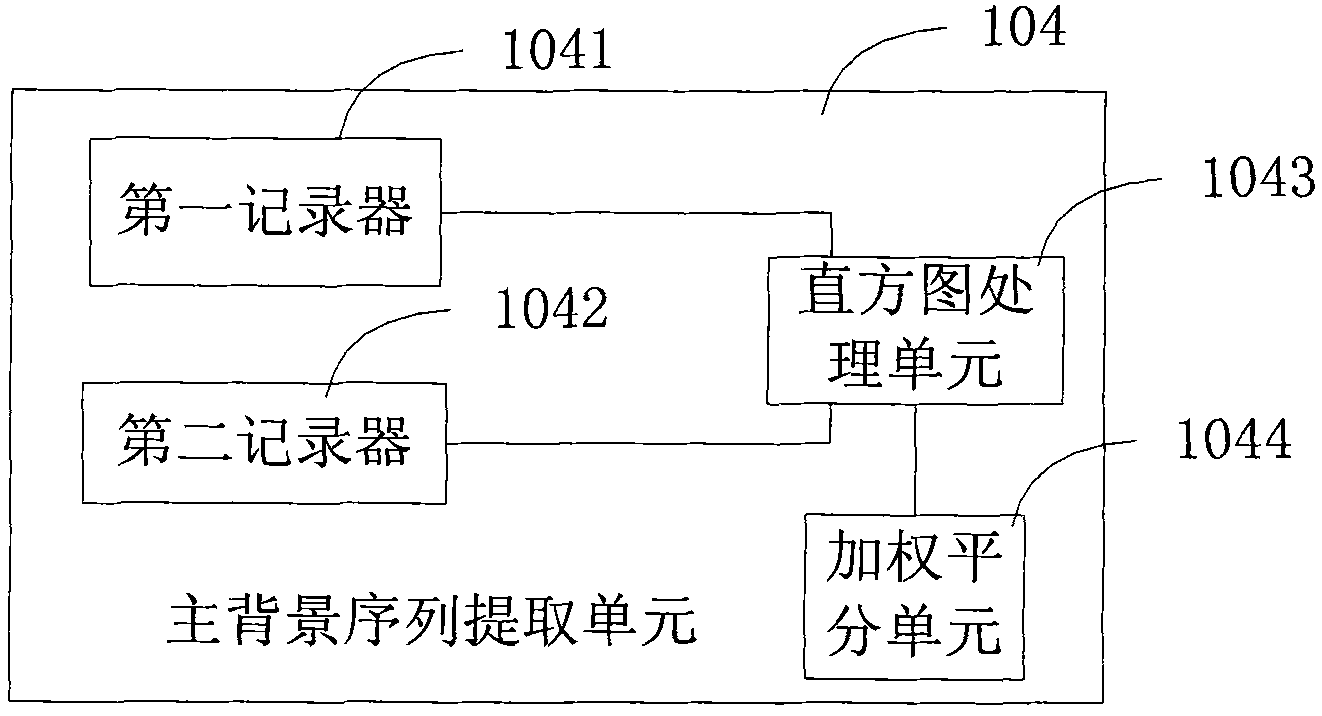

Status: Active | Publication: CN102375816A | Tags: Reduce demand; Save storage space; Image analysis; Character and pattern recognition; Multiple frame; Background image

The invention discloses an online video concentration (i.e., condensation) device, system, and method. The method processes each newly acquired frame sequentially in real time and comprises the following steps: a segmentation step, which separates each image into a background image and a foreground image; an extraction step, which extracts moving objects from each foreground image; a moving-object sequence step, which accumulates the moving objects extracted from the foreground images of multiple frames into a sequence of moving objects; a main-background sequence step, which selects n specific background images from the background images of the multiple frames as the sequence of main backgrounds; and a splicing step, which splices the sequence of main backgrounds with the sequence of moving objects. This online approach shortens the concentrated video while retaining as much information about the moving objects as possible, enabling fast and convenient video browsing and previewing with a better visual effect. It also reduces hardware requirements and algorithmic complexity.

Owner:Beijing Zhongke Aosen Data Technology Co., Ltd. (北京中科奥森数据科技有限公司)
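
The per-frame segmentation step of such an online pipeline can be sketched with a running-average background model, which is one standard technique and not necessarily the one the patent uses. Frames are flat lists of grey levels; `alpha` and `thresh` are hypothetical parameters, and moving-object extraction, main-background selection, and splicing are not shown.

```python
def segment_stream(frames, alpha=0.05, thresh=25):
    """Yield a foreground mask for each frame as it arrives (online processing).

    The background is a running average: bg <- (1 - alpha) * bg + alpha * frame,
    updated only at background pixels so moving objects do not blend into it.
    """
    bg = None
    for frame in frames:
        if bg is None:
            bg = [float(p) for p in frame]  # bootstrap from the first frame
        mask = [abs(p - b) > thresh for p, b in zip(frame, bg)]
        bg = [b if fg else (1 - alpha) * b + alpha * p
              for p, b, fg in zip(frame, bg, mask)]
        yield mask


# Usage: a bright object moves one pixel to the right on a dark 1x4 "image".
frames = [[10, 10, 10, 10], [10, 200, 10, 10], [10, 10, 200, 10]]
for mask in segment_stream(frames):
    print([i for i, fg in enumerate(mask) if fg])  # [], then [1], then [2]
```

Because each frame is handled as soon as it arrives and only the background model is kept between frames, memory use stays constant regardless of video length.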

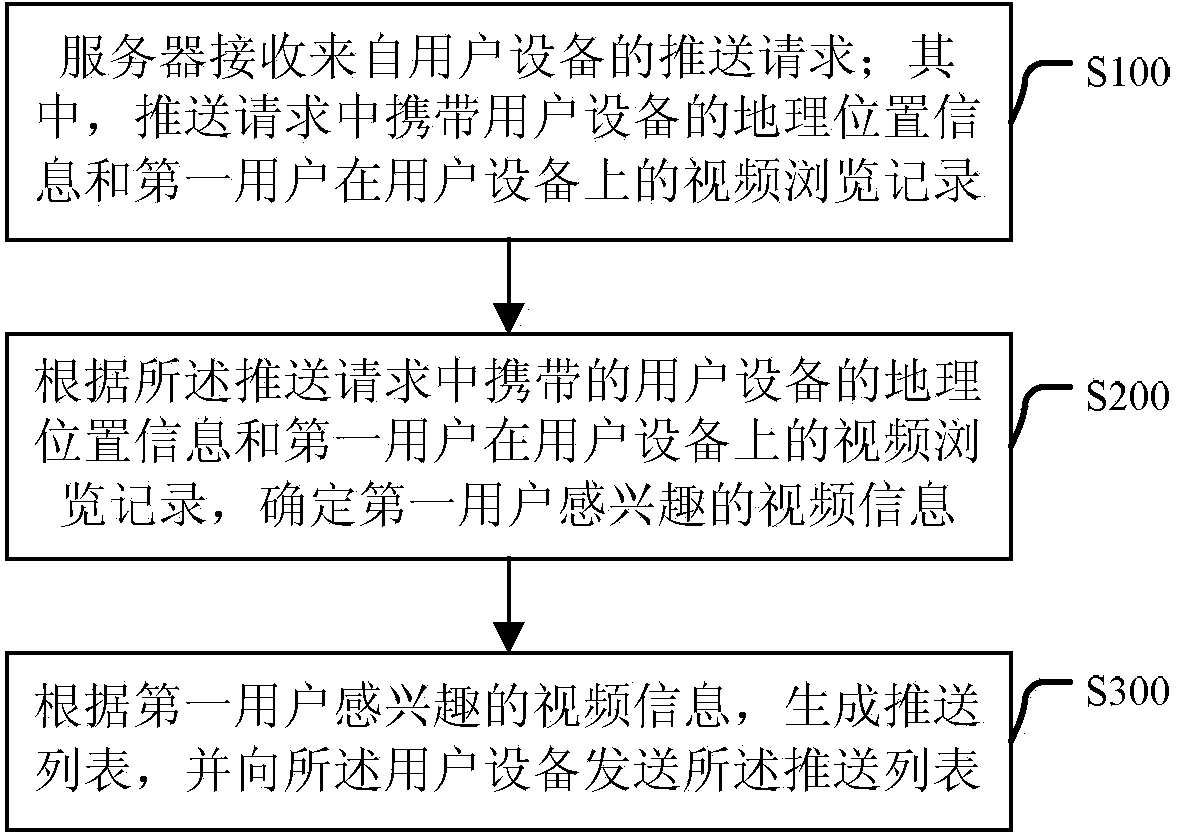

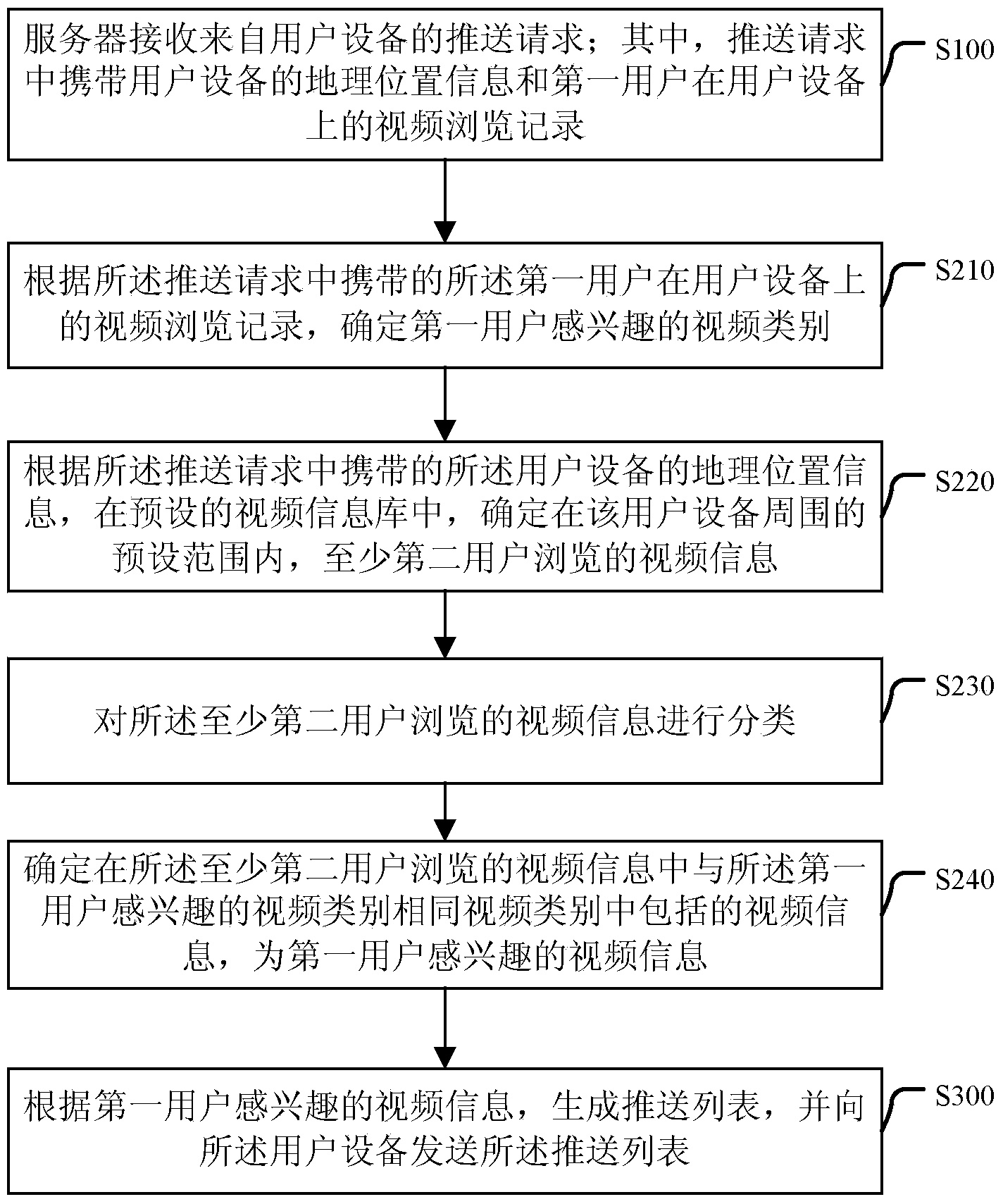

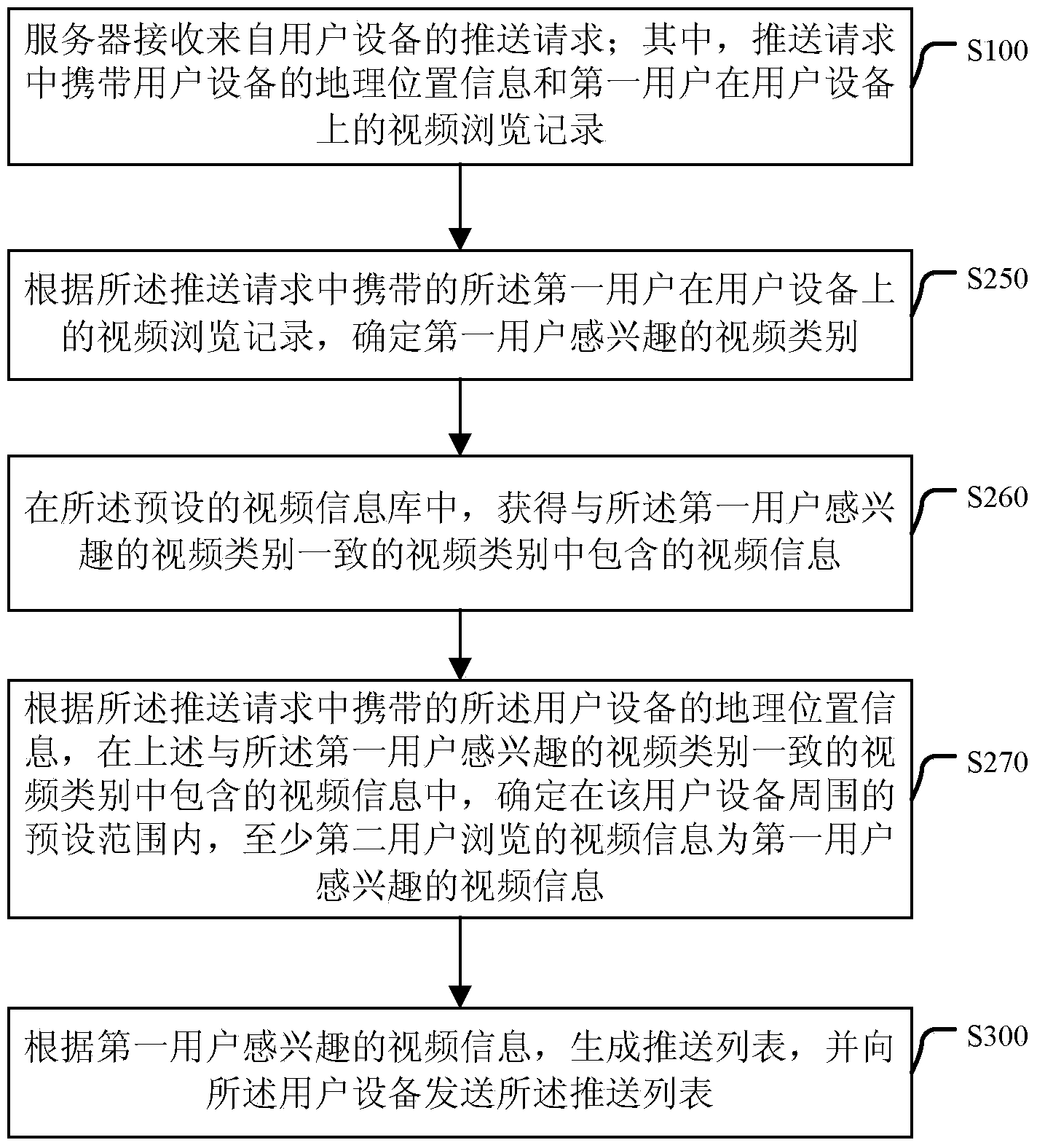

Video information pushing method and device

Status: Active | Publication: CN103888837A | Tags: Easy to browse; Easy to understand; Selective content distribution; User device; Geolocation

An embodiment of the invention discloses a video information pushing method and device, relating to the technical field of network communication. The method includes: first, receiving a push request from a user device, where the request carries the geographical location of the user device and the video browsing records of a first user on that device; second, determining the video information the first user is interested in according to the geographical location and the browsing records carried in the request; and third, generating a push list from that video information and pushing it to the user device. With this method, users can easily learn which videos nearby users are interested in and can browse videos conveniently.

Owner:BEIJING KINGSOFT NETWORK TECH
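
A minimal sketch of how a push list could be derived from location and browsing records: count the videos watched by users within a radius of the requester (great-circle distance via the haversine formula), skip videos the requester has already watched, and keep the top k. The ranking rule, `radius_km`, `k`, and the record format are all assumptions; the abstract does not specify how interest is determined.

```python
import math
from collections import Counter

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def build_push_list(user_loc, user_history, others, radius_km=5.0, k=3):
    """Top-k videos watched by nearby users that the requester has not watched.

    others: list of ((lat, lon), [video_id, ...]) browsing records of other users.
    """
    seen = set(user_history)
    counts = Counter()
    for loc, history in others:
        if haversine_km(*user_loc, *loc) <= radius_km:
            counts.update(v for v in history if v not in seen)
    return [vid for vid, _ in counts.most_common(k)]


# Usage: two nearby users in Beijing and one far away in Shanghai.
others = [((39.91, 116.41), ["a", "b"]),
          ((39.905, 116.40), ["a", "c"]),
          ((31.23, 121.47), ["d", "d", "d"])]
print(build_push_list((39.9042, 116.4074), ["b"], others))  # ['a', 'c']
```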

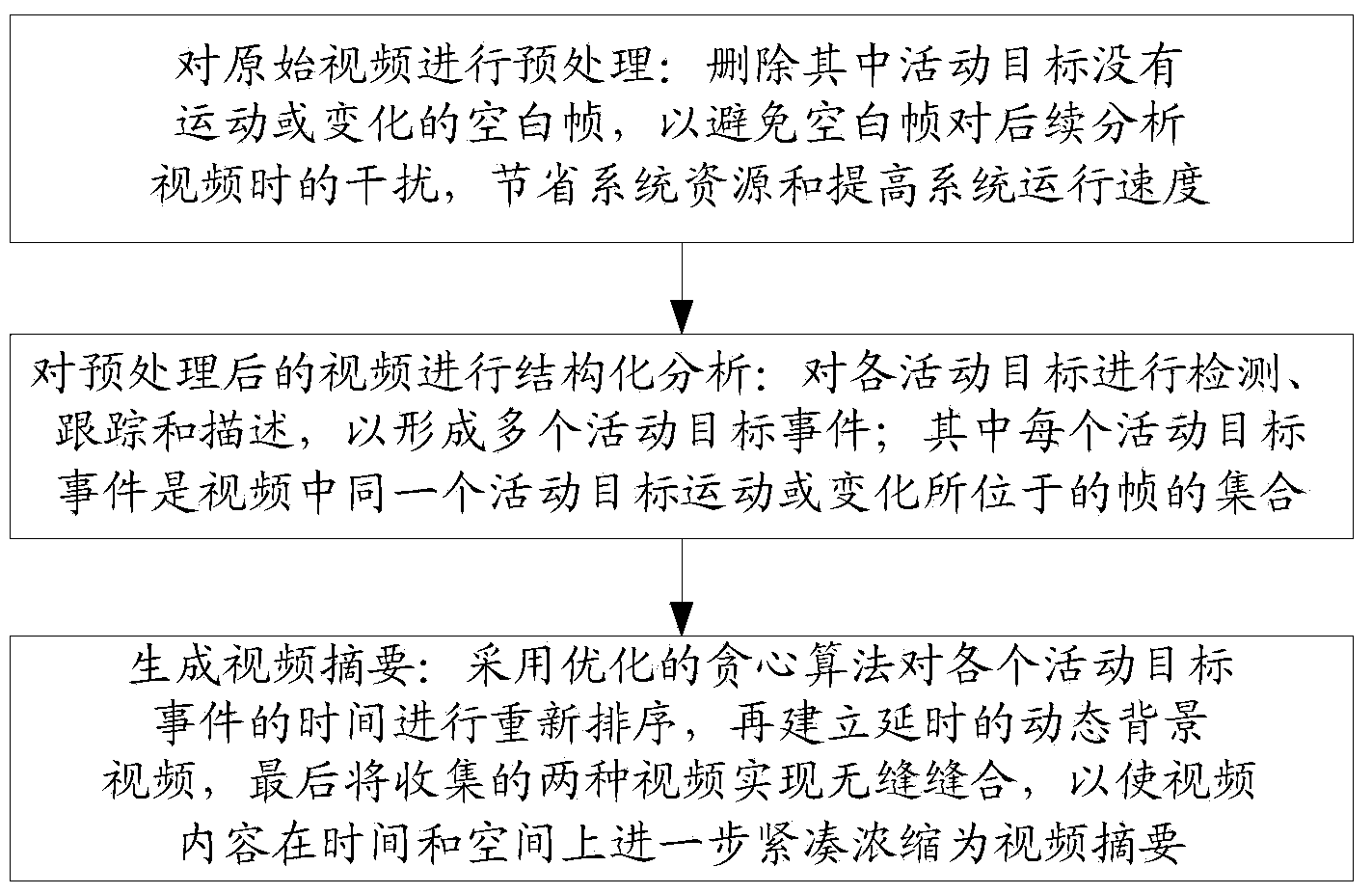

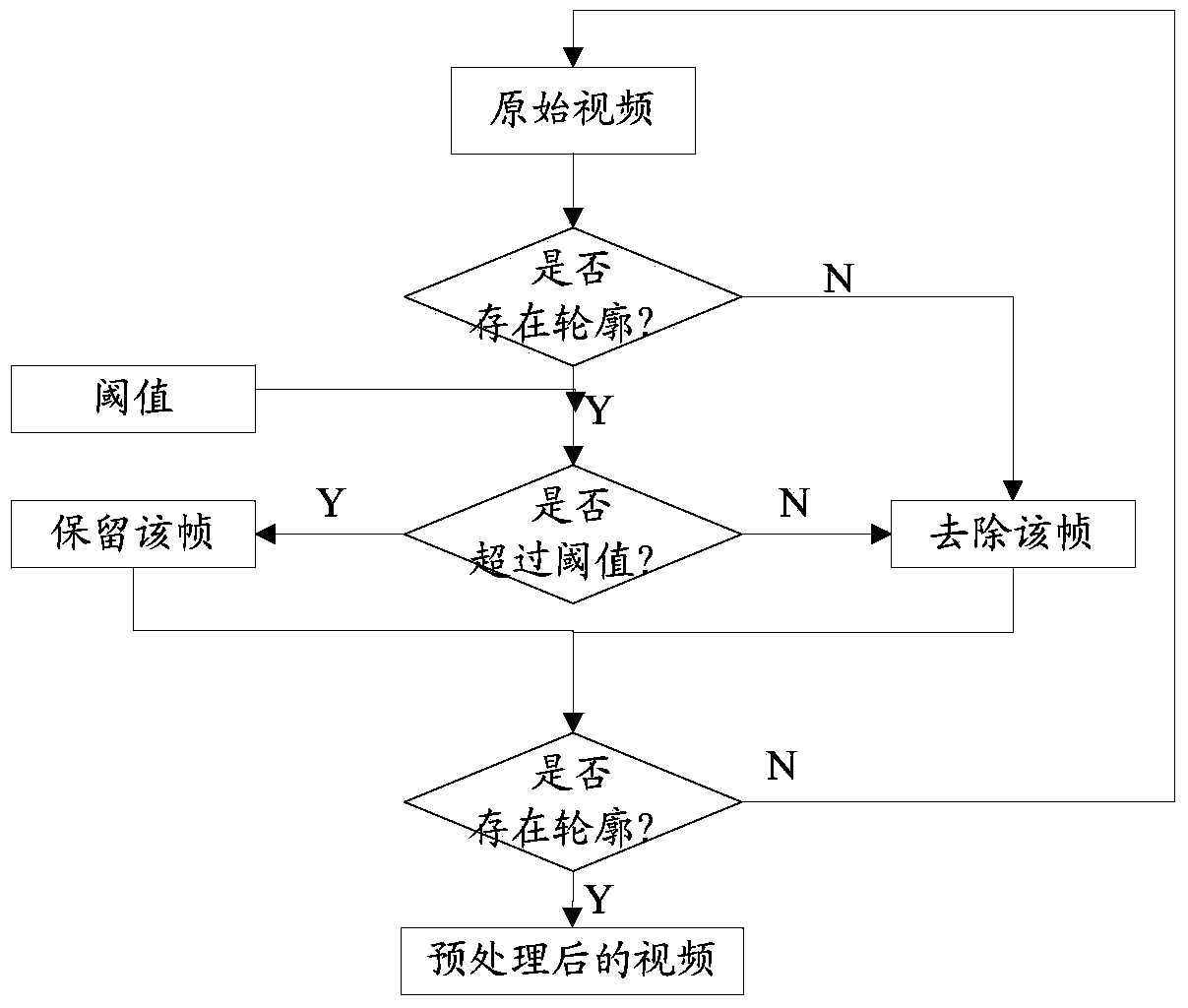

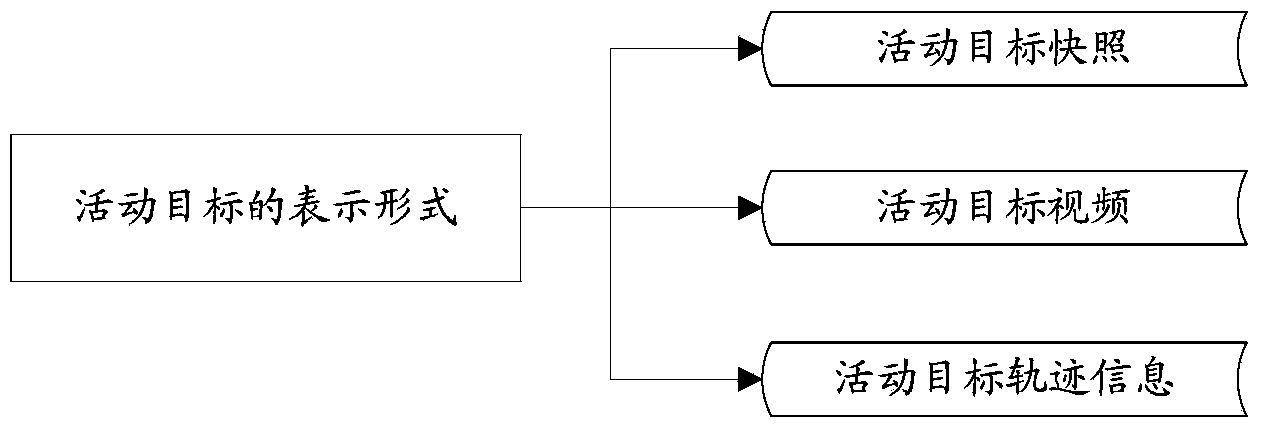

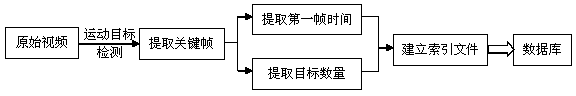

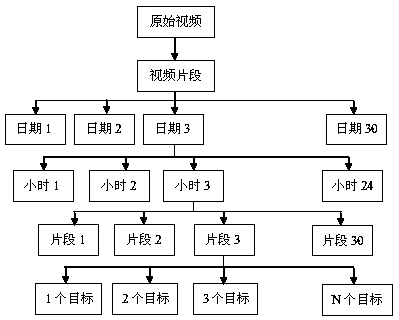

Video abstraction generation method based on space-time recombination of active events

Status: Active | Publication: CN103778237A | Tags: Avoid interference; Save resources; Special data processing applications; Time correlation; Structured analysis

The invention provides a video abstraction generation method based on spatio-temporal recombination of active events. An original video is preprocessed to remove blank frames, and the preprocessed video is then analyzed structurally as follows: taking the moving targets in the original video as objects, the videos of all key moving-target events are extracted, the temporal correlation between the events is weakened, and the events are reordered in time on the principle that their activity ranges must not conflict. Meanwhile, background images are extracted with reference to the user's visual perception, and a delayed dynamic background video is generated. Finally, the moving-target events and the delayed dynamic background video are stitched together seamlessly to form a short, concise, and comprehensive video abstraction in which several moving targets can appear simultaneously. The method can generate video abstractions for video browsing or searching efficiently and rapidly, and the resulting abstraction expresses the semantic information of the video more reasonably and better matches the user's visual perception.

Owner:BEIJING UNIV OF POSTS & TELECOMM

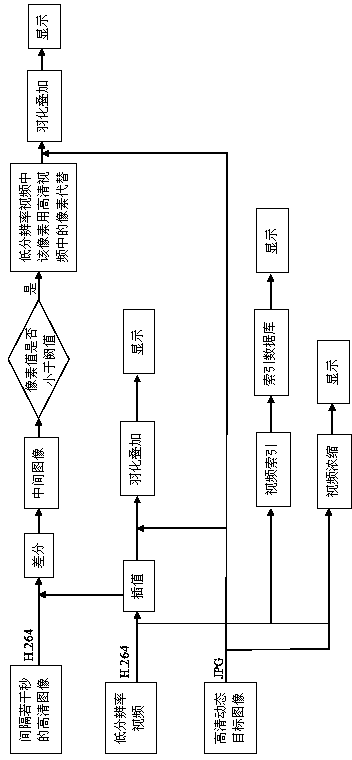

Event-oriented intelligent camera monitoring method

Status: Active | Publication: CN104284158A | Tags: Save browsing time; Improve the efficiency of investigation and evidence collection; Image analysis; Closed circuit television systems; Computer graphics (images); Engineering

The invention provides an event-oriented intelligent camera monitoring method. After video capture, the video is processed into three code streams: a low-resolution video, high-definition images taken at intervals of several seconds, and high-definition images of moving objects. The three code streams are displayed on a display terminal either independently or overlaid and fused. The method provides a video much shorter than the original, greatly shortening browsing time; event clues can be searched quickly by information such as time, improving the efficiency of investigation and evidence collection; spatial information in the scene is fully utilized, spatio-temporal redundancy in the video is reduced, and events that happened at different times are displayed simultaneously, so activities in the video are easy to understand; no activities or events in the original video are lost, so fast playback is achieved without losing video information; and both code-stream bandwidth and the definition of specific areas are taken into account. The method therefore provides strong support for post-event evidence collection and meets a wide range of application needs in video security monitoring.

Owner:NANJING XINBIDA INTELLIGENT TECH

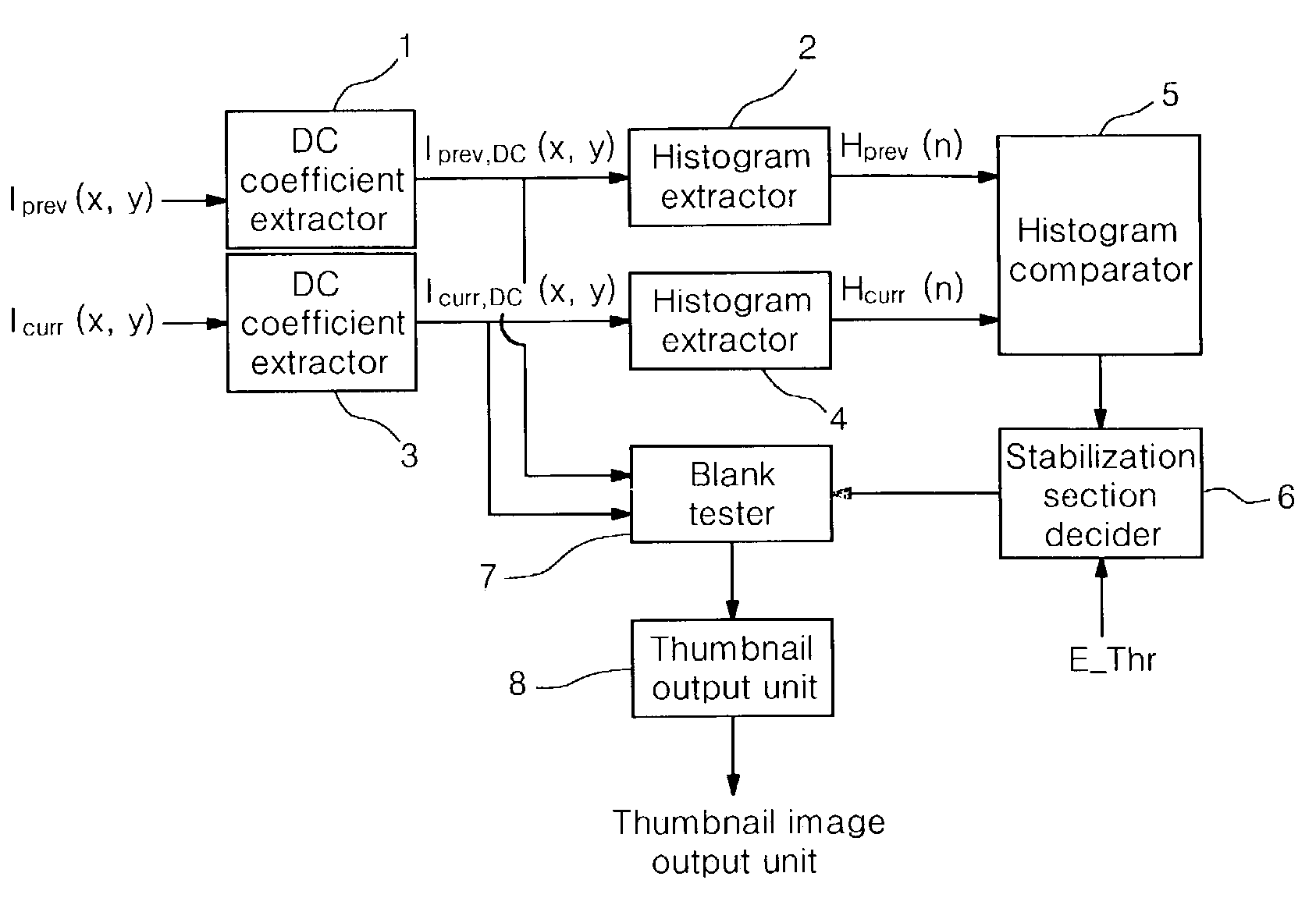

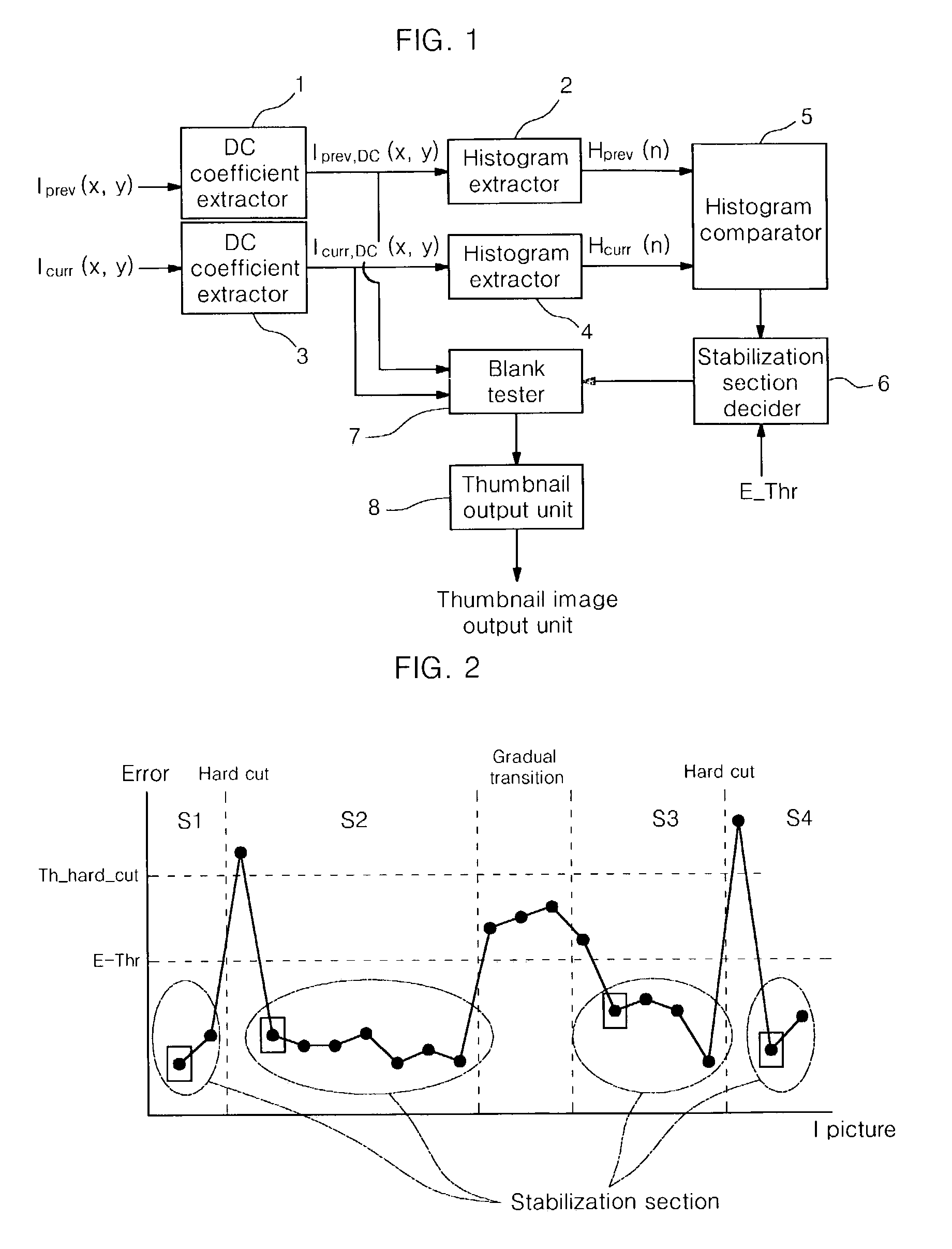

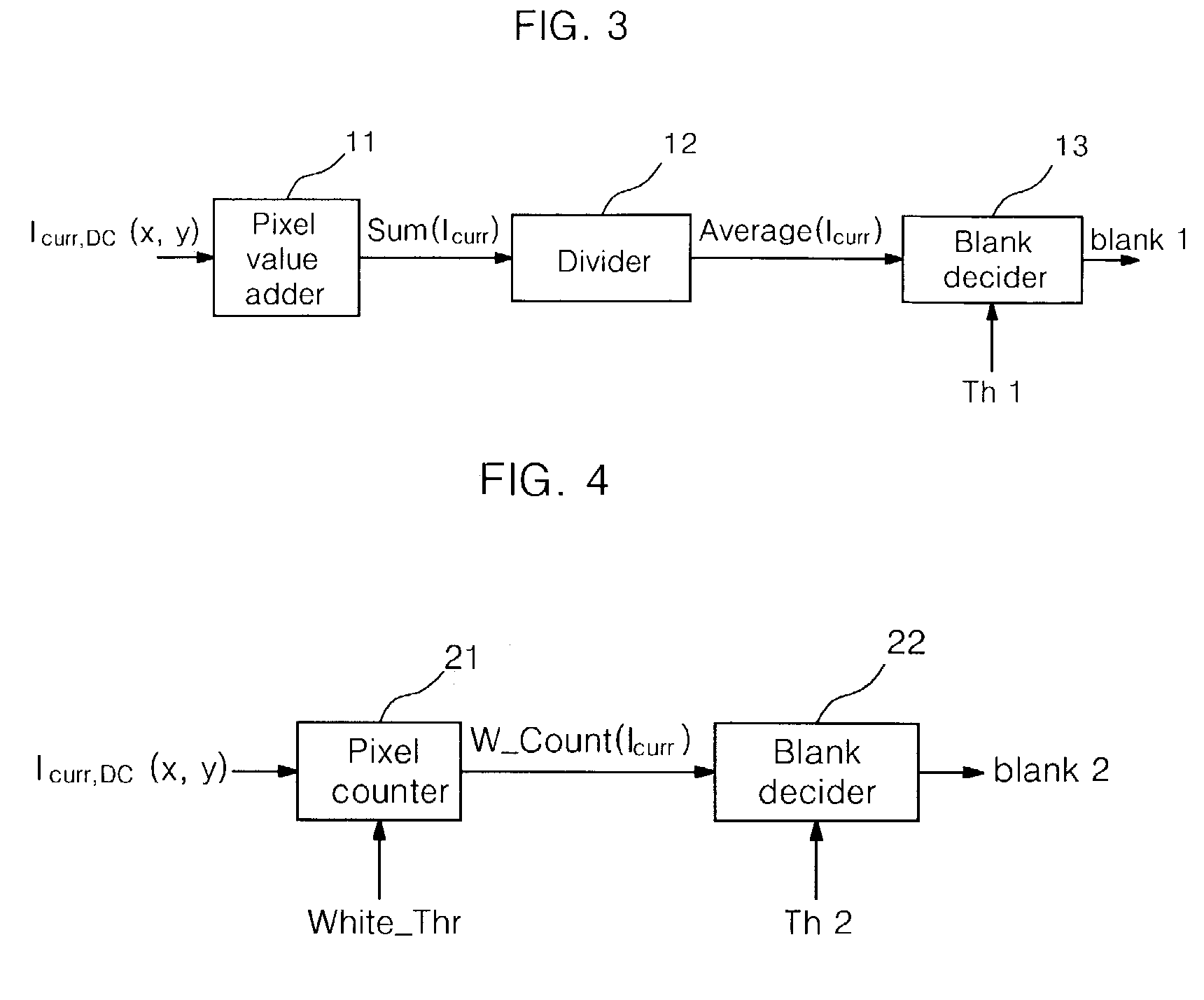

Apparatus and method for generating thumbnail images

Status: Inactive | Publication: US7257261B2 | Tags: Improve information transmission ability; Avoid it happening again; Television system details; Image analysis; Video recording; Thumbnail Image

The present invention discloses an apparatus for automatically generating thumbnail images for a video browser and a video recording and reproducing device, and a method therefor. A difference between histograms of two DC images of a current frame I picture and a previous frame I picture is calculated and compared with a predetermined reference value for deciding a stabilization section. When the difference between the histograms of the current I picture DC image and the previous I picture DC image does not exceed the reference value, the corresponding I picture DC image is outputted as the thumbnail image. Here, a blank test is executed to exclude a dark original image whose contents are indistinguishable. Only the DC image of the I picture passing through the blank test is outputted as the thumbnail image.

Owner:LG ELECTRONICS INC
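
The stabilization check and blank test described above can be sketched as below, treating each I-picture DC image as a flat list of 0 to 255 grey levels. The 16-bin histogram, the L1 histogram distance, and the mean-brightness cutoff used for the blank test are assumptions made for illustration.

```python
def grey_histogram(pixels, bins=16):
    """Histogram of a DC image given as a flat list of 0..255 grey levels."""
    hist = [0] * bins
    for p in pixels:
        hist[p * bins // 256] += 1
    return hist


def hist_distance(h1, h2):
    """L1 distance between two histograms (assumed measure)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))


def pick_thumbnail(dc_images, reference, min_mean=40):
    """Index of the first I-picture DC image that is stable and not blank, else None.

    Stable: histogram difference from the previous I picture <= reference.
    Blank test: mean brightness must reach min_mean, which rejects dark images.
    """
    for i in range(1, len(dc_images)):
        prev, cur = dc_images[i - 1], dc_images[i]
        if hist_distance(grey_histogram(prev), grey_histogram(cur)) <= reference:
            if sum(cur) / len(cur) >= min_mean:
                return i
    return None


# Usage: two dark I pictures, then a cut to a bright scene that stays stable.
dc_images = [[5] * 8, [6] * 8, [200] * 8, [201] * 8]
print(pick_thumbnail(dc_images, reference=2))  # 3
```

Frame 1 is stable but fails the blank test, and frame 2 follows a cut, so frame 3 is the first usable thumbnail.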

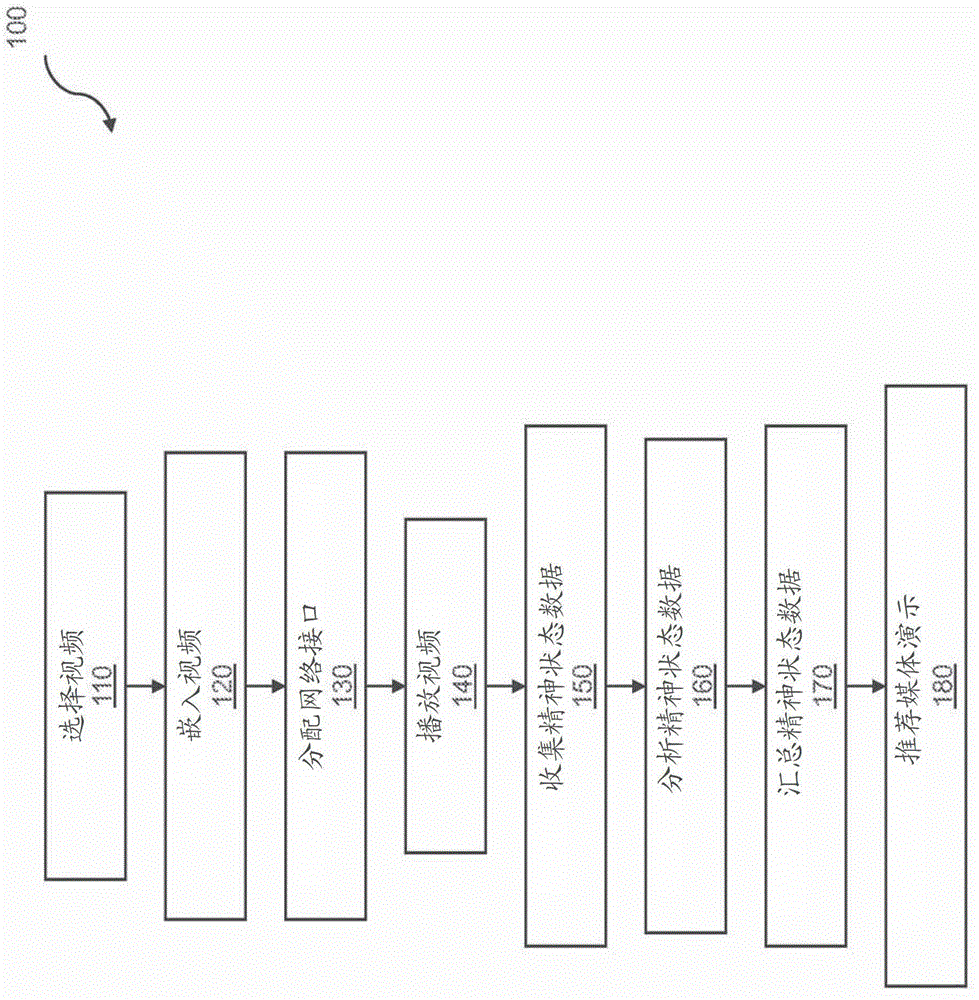

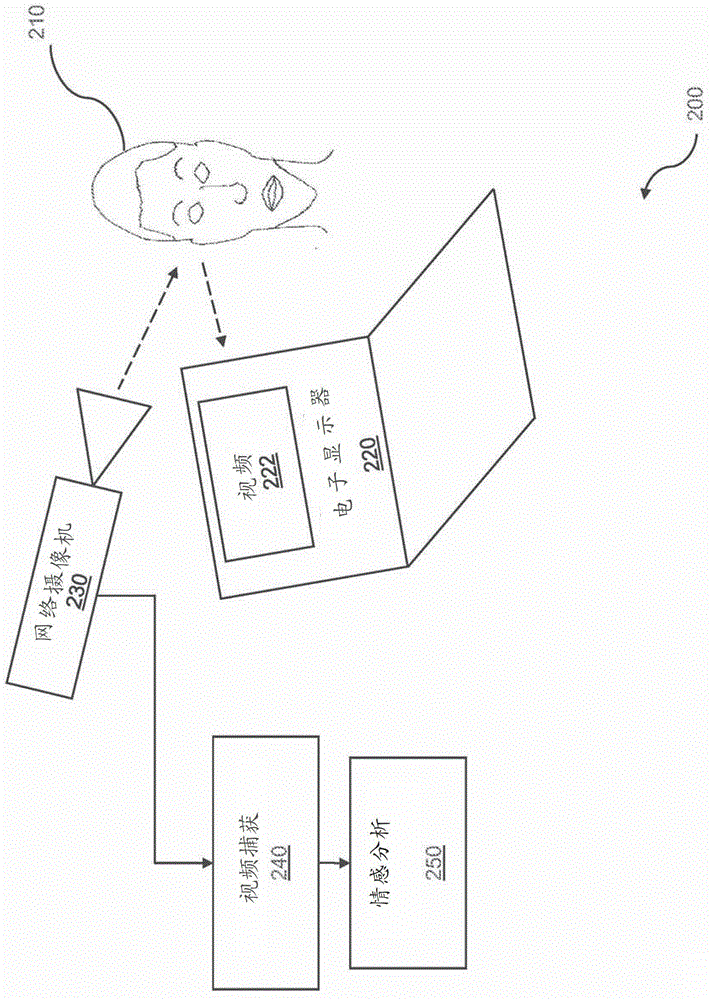

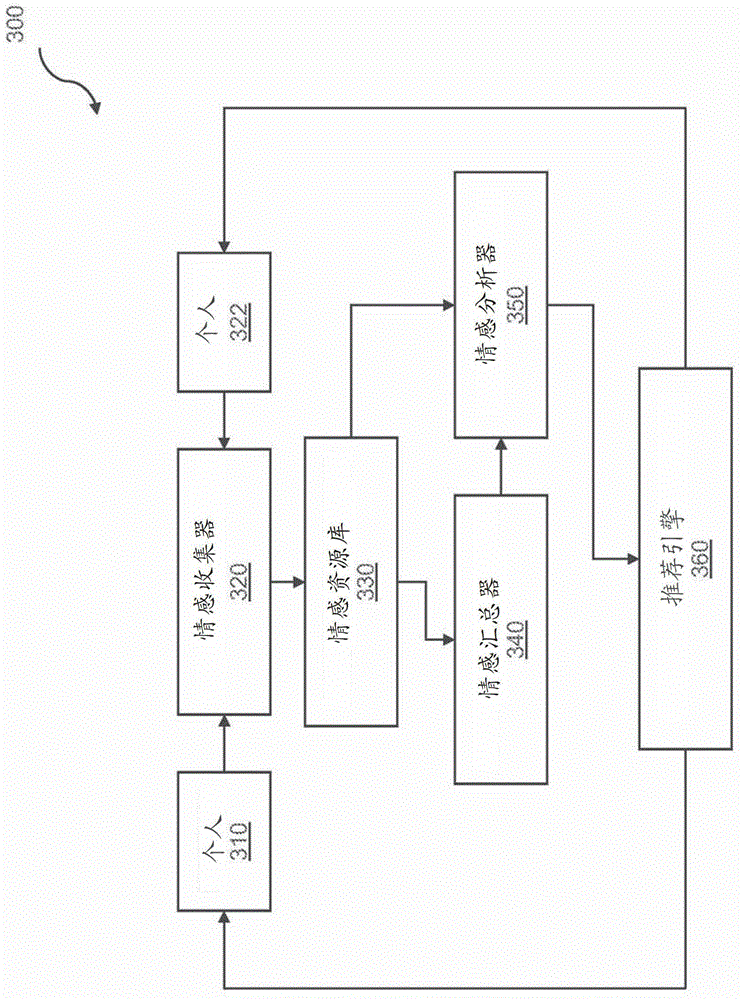

Video recommendation based on affect

Analysis of mental states is provided to enable data analysis pertaining to video recommendation based on affect. Video response may be evaluated based on viewing and sampling various videos. Data is captured for viewers of a video where the data includes facial information and / or physiological data. Facial and physiological information may be gathered for a group of viewers. In some embodiments, demographics information is collected and used as a criterion for visualization of affect responses to videos. In some embodiments, data captured from an individual viewer or group of viewers is used to rank videos.

Owner:AFFECTIVA

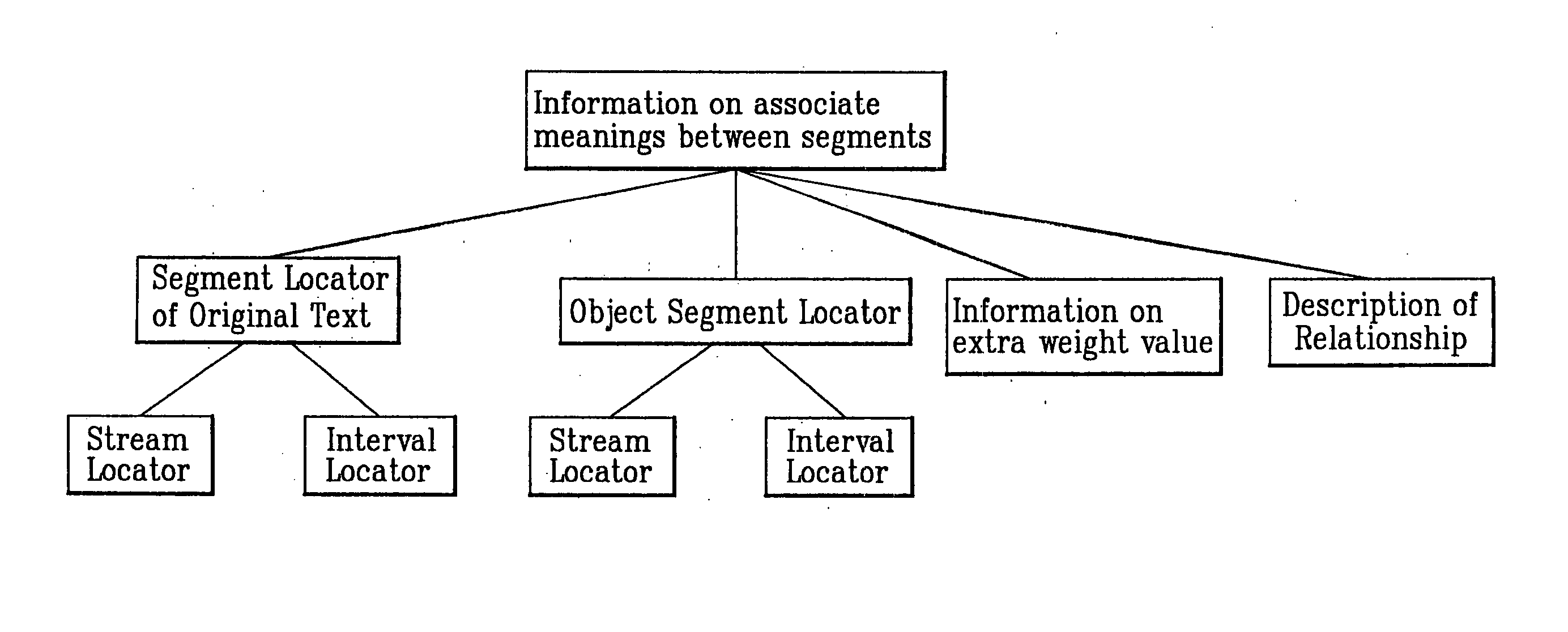

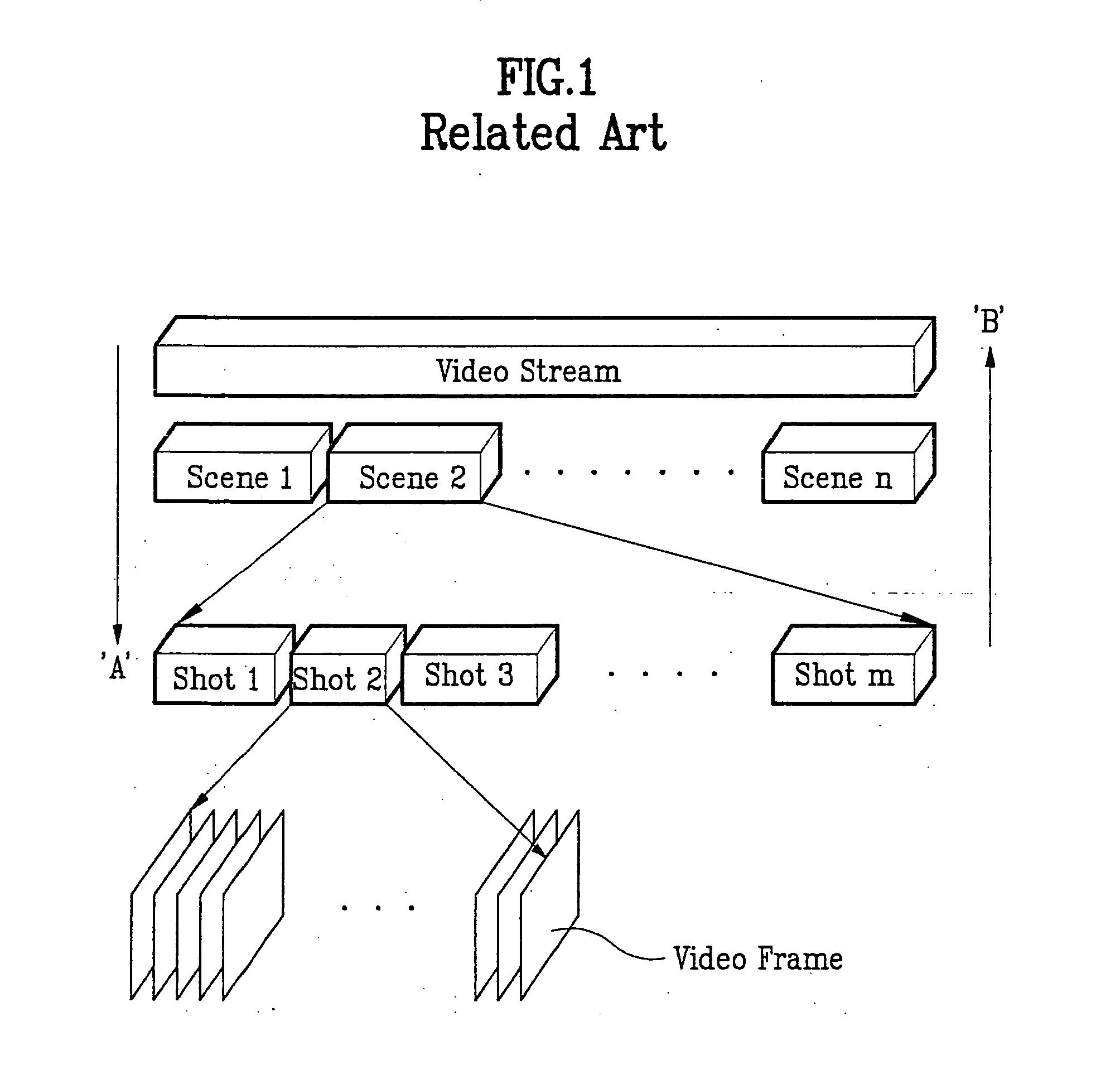

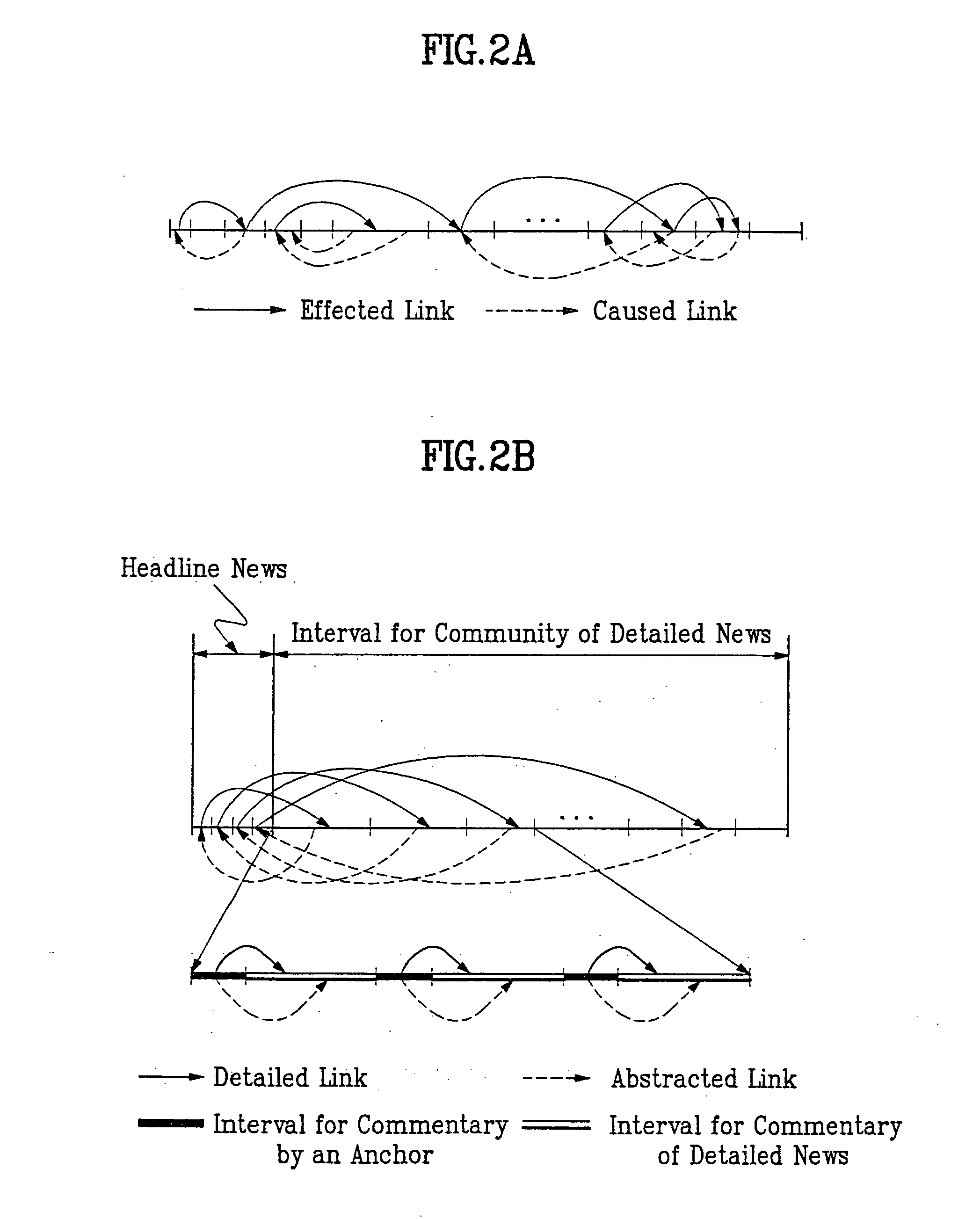

Method of constructing information on associate meanings between segments of multimedia stream and method of browsing video using the same

Status: Inactive | Publication: US20050240980A1 | Tags: Easily search/browse; Short time; Television system details; Digital data information retrieval; Computer graphics (images); Video browsing

Disclosed are a method of constructing information on associate meanings between segments of a multimedia stream, which can describe the cause/effect or abstract/detail relationship between segments of video streams to enable efficient browsing, and a method of browsing a video using that information. The present invention defines cause/effect or abstract/detail relationships between segments, event intervals, scenes, shots, etc., within one video stream or between video streams, and provides a method of describing these relationships in a data region based on the content of a video stream, as well as a method of browsing a video using the relationship information obtained in this way. Video browsing based on associate meanings thus becomes possible with easy manipulation and easy access to a desired part, providing an effective video browsing interface for finding desired segments in a short time.

Owner:LG ELECTRONICS INC

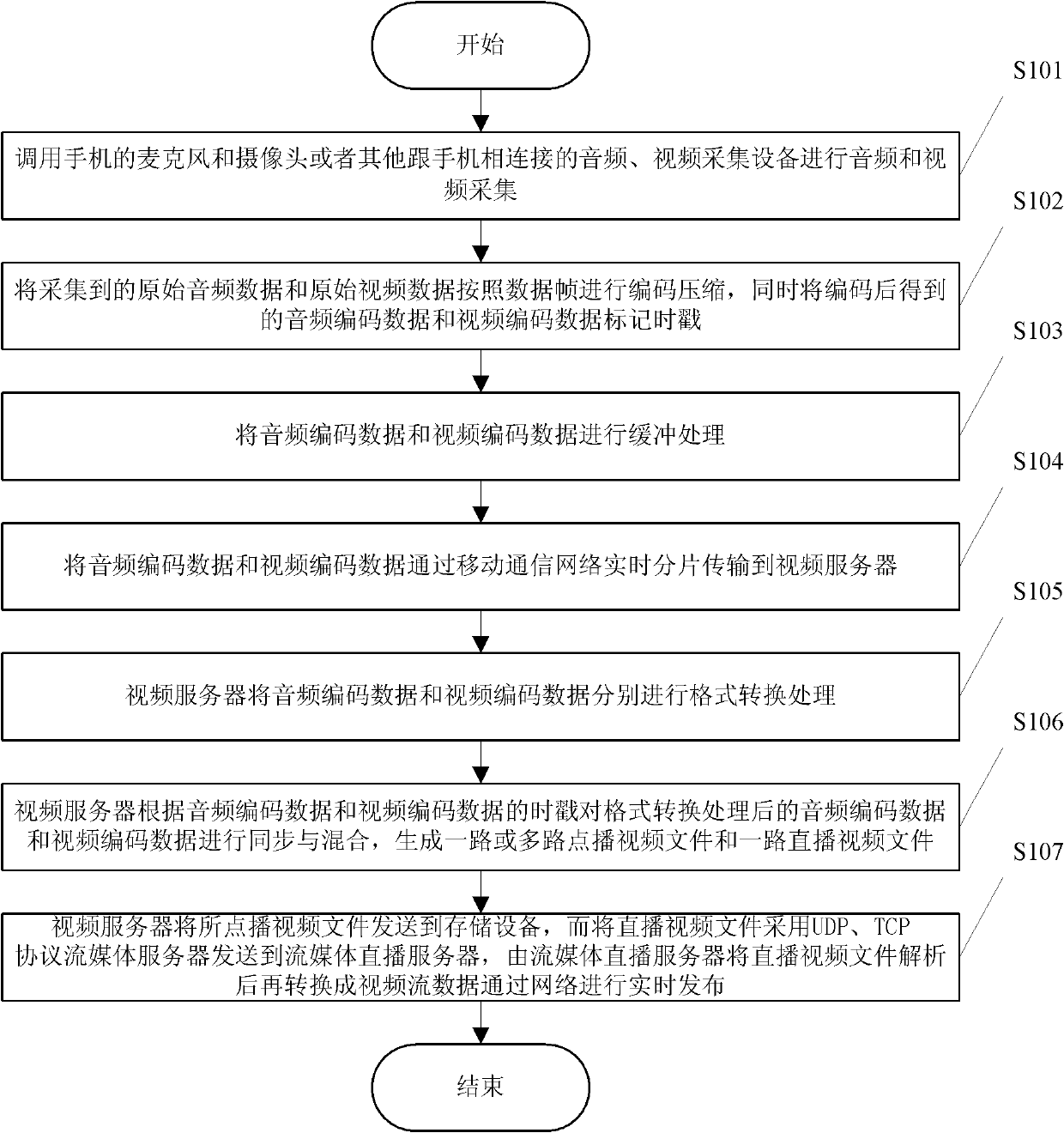

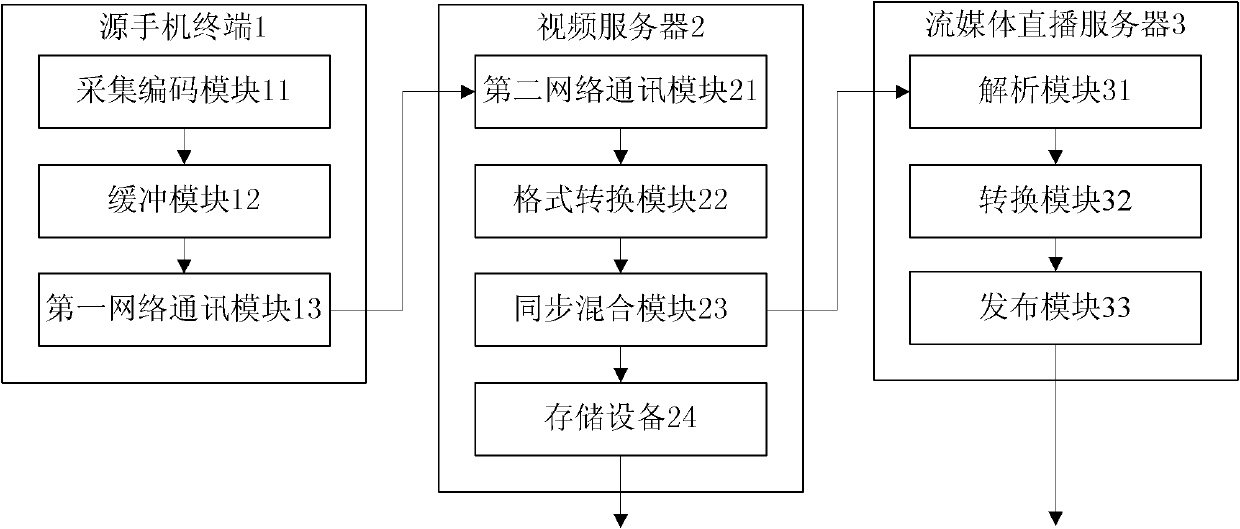

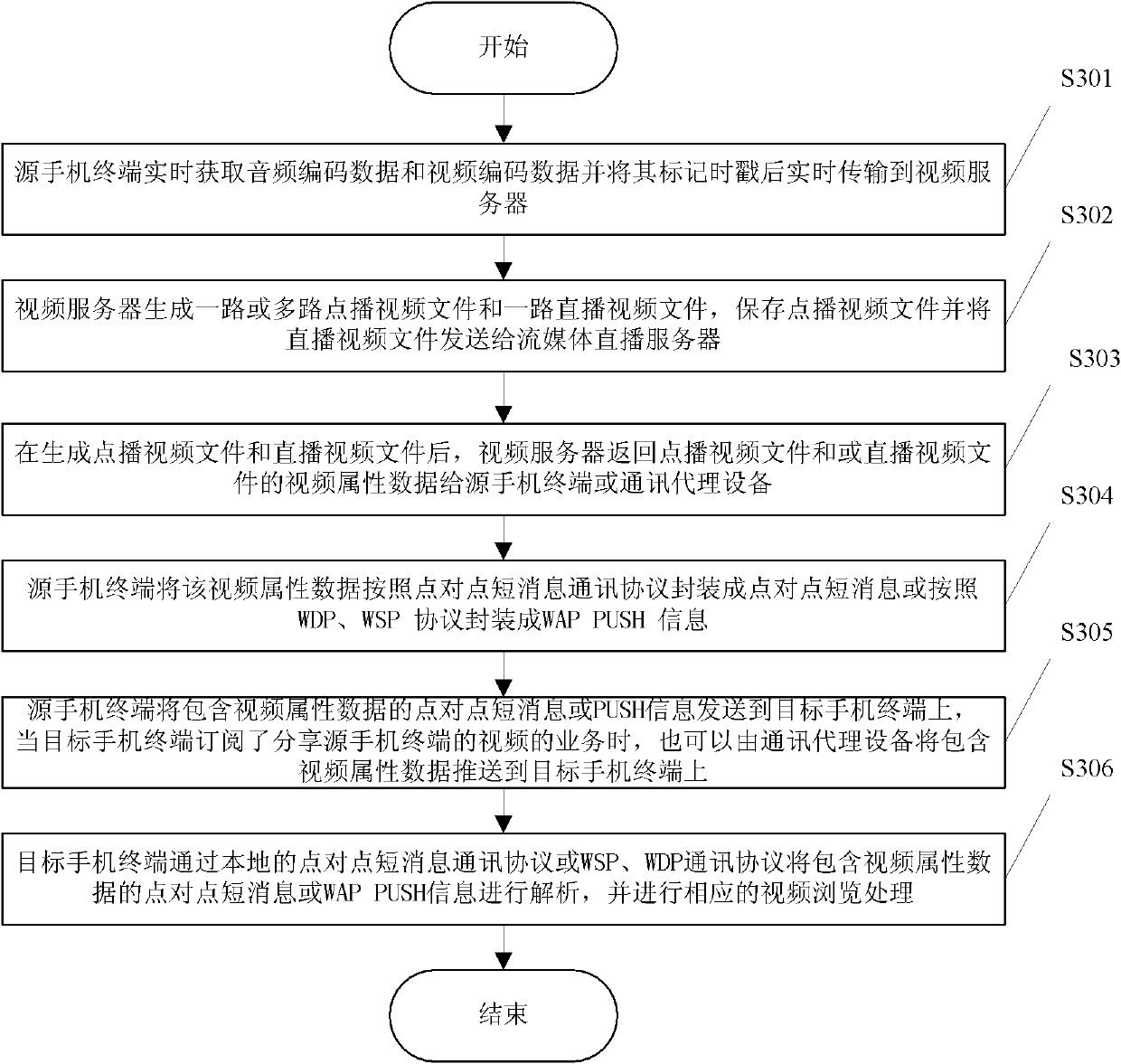

Method for sharing video of mobile phone and system

Status: Inactive | Publication: CN102447956A | Tags: Share in real time; Substation equipment; Selective content distribution; Timestamp; Video encoding

The invention discloses a method and system for sharing mobile phone video. The method comprises the following steps: a source mobile phone terminal acquires audio-coded and video-coded data in real time, marks the data with timestamps, and transmits it to a video server in real time; the audio and video data are used to generate one or more VOD (Video on Demand) video files and one live video file, and the video attribute data of the VOD file(s) and/or the live video file are transmitted to the source mobile phone terminal or to communication agency equipment; the source mobile phone terminal transmits the video attribute data to a target mobile phone terminal, or, when the target mobile phone has subscribed to the video-sharing service of the source terminal, the communication agency equipment pushes a message containing the video attribute data to the target mobile phone terminal; and the target mobile phone terminal parses the message and calls a video browser to view the video. In this way, the invention uses the mobile communication network and connectionless conversion interaction to establish a data channel between two mobile phones for sharing video in real time.

Owner:Beijing Wo'an Technology Co., Ltd. (北京沃安科技有限公司)

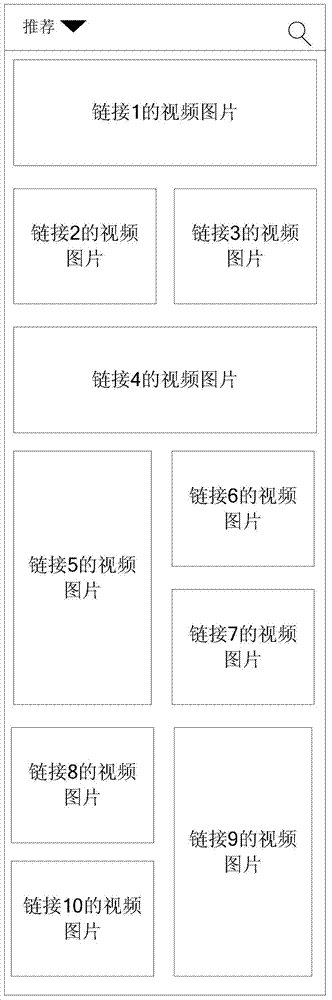

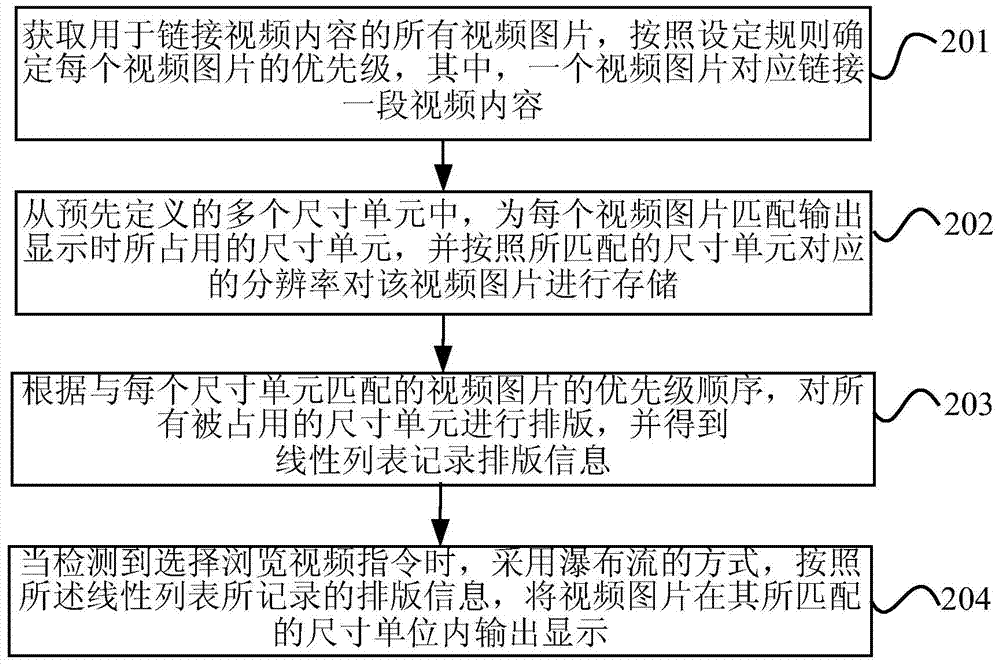

Intelligent terminal and method for displaying video images by same

Status: Inactive | Publication: CN103702216A | Tags: Increase your chances of finding videos of interest; Reduce complexity; Selective content distribution; Computer graphics (images); Image resolution

The invention discloses an intelligent terminal and a method by which it displays video images. The method comprises: acquiring all video images used to link to video content and determining the priority of each video image according to a set rule; matching each video image to the size unit it will occupy on display, chosen from several predefined size units, and storing each video image at the resolution corresponding to its matched size unit; composing all occupied size units in the priority order of their matched video images and recording the composition information in a linear list; and, when a video browsing instruction is detected, displaying the video images in their matched size units in a Pinterest-style layout according to the composition information recorded in the linear list. The intelligent terminal and method effectively reduce interface complexity, save interface space, and increase the probability that a user finds videos of interest.

Owner:LETV INFORMATION TECH BEIJING
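
A sketch of the size-unit matching and Pinterest-style (masonry) composition: videos are sorted by priority, each is mapped to a predefined size unit, and each tile goes into the currently shortest column, with the result recorded as a linear list of placements. The size units, priority cutoffs, and column count are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical predefined size units: name -> tile height in grid rows (width 1).
SIZE_UNITS = {"large": 3, "medium": 2, "small": 1}


@dataclass
class Placement:
    video_id: str
    unit: str
    column: int
    top: int  # row offset of the tile within its column


def choose_unit(priority):
    """Map a priority (higher = more important) to a size unit; cutoffs are assumed."""
    if priority >= 8:
        return "large"
    if priority >= 4:
        return "medium"
    return "small"


def compose(videos, columns=2):
    """Masonry layout of (video_id, priority) pairs in descending priority order.

    Each tile goes into the currently shortest column (leftmost on ties); the
    returned list plays the role of the linear list of composition information.
    """
    heights = [0] * columns
    layout = []
    for vid, prio in sorted(videos, key=lambda v: v[1], reverse=True):
        unit = choose_unit(prio)
        col = heights.index(min(heights))
        layout.append(Placement(vid, unit, col, heights[col]))
        heights[col] += SIZE_UNITS[unit]
    return layout


for p in compose([("a", 9), ("b", 5), ("c", 2), ("d", 7)]):
    print(p)
```

Placing higher-priority videos first gives them the top of the layout, while the shortest-column rule keeps column heights balanced.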

Video abstract generating method and device

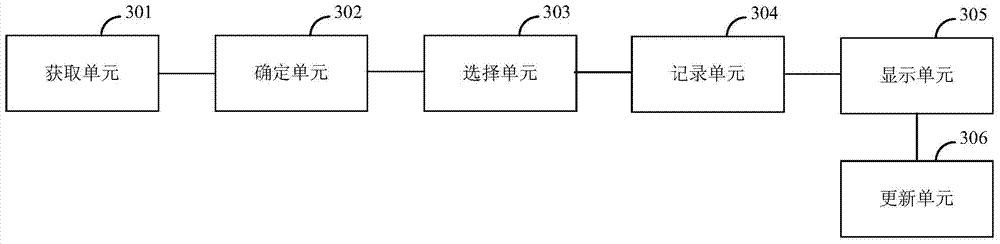

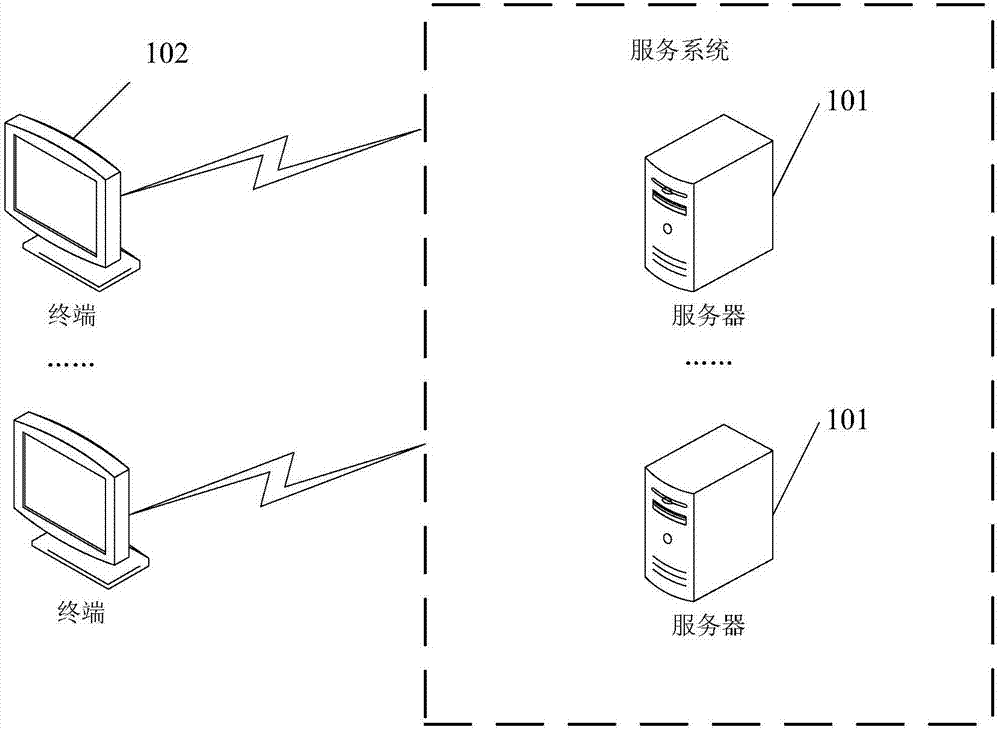

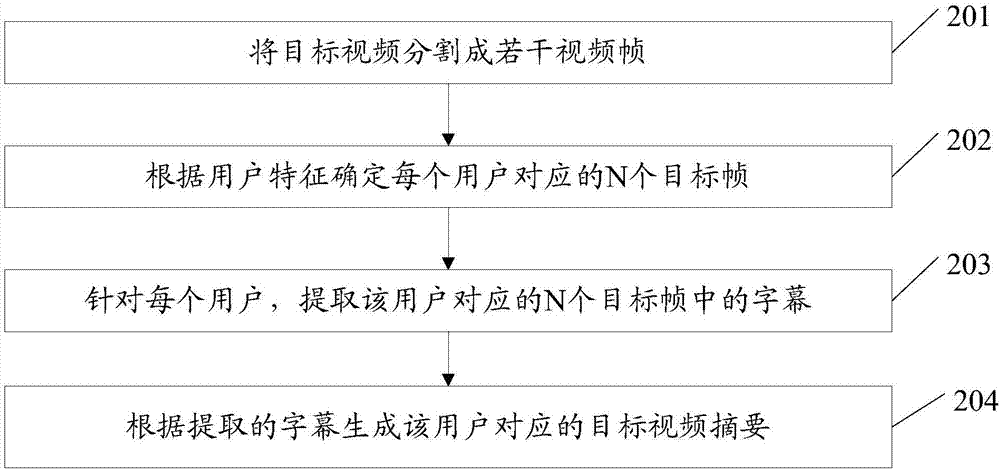

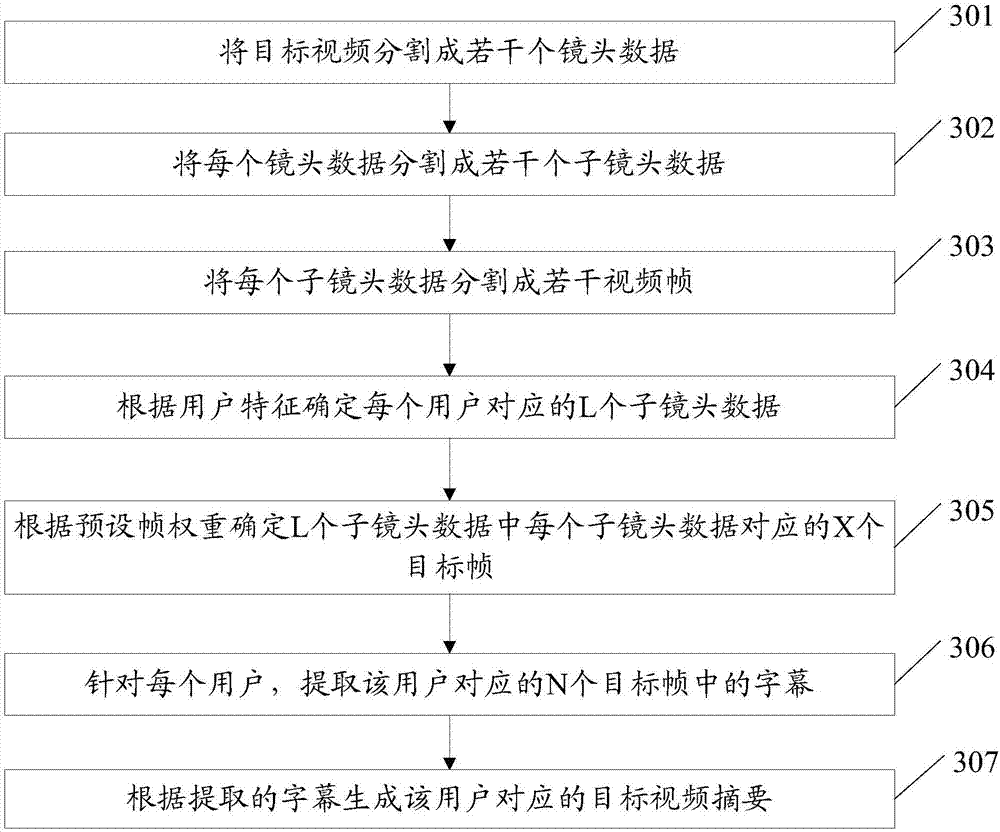

Status: Active | Publication: CN106888407A | Tags: Increase pageviews; Improve production efficiency; Selective content distribution; Computer graphics (images); Video browsing

An embodiment of the invention discloses a video abstract generating method. The technical solution automatically generates different video abstracts for different users, increasing video views, providing useful information to more users, and improving the efficiency of video abstract generation. In one embodiment, the method comprises: dividing a target video into a plurality of video frames; determining, according to the features of each user, N target frames corresponding to that user, where the target frames are among the video frames and N is an integer greater than 1; for each user, extracting the subtitles of the N target frames corresponding to that user; and generating, from those subtitles, a target video abstract for the user. An embodiment of the invention also provides a corresponding video abstract generating device with the same benefits.

Owner:TENCENT TECH (SHENZHEN) CO LTD
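A per-user abstract along the lines of this abstract can be sketched as: score each frame against the user's features, keep the top N frames (N > 1) in temporal order, and join their subtitles. Representing "user features" as a set of interest keywords and scoring by keyword overlap are assumptions made for illustration only; the abstract does not say how target frames are chosen.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    index: int      # position of the frame in the target video
    subtitle: str   # subtitle text attached to this frame


def select_target_frames(frames, user_interests, n):
    """Pick N target frames for one user (hypothetical keyword-overlap scoring)."""
    if n <= 1:
        raise ValueError("N must be an integer greater than 1")

    def score(frame):
        return len(set(frame.subtitle.lower().split()) & user_interests)

    # Highest score first; ties broken by earlier position in the video.
    ranked = sorted(frames, key=lambda f: (-score(f), f.index))
    # Restore temporal order so the abstract reads in sequence.
    return sorted(ranked[:n], key=lambda f: f.index)


def generate_abstract(frames, user_interests, n):
    """Build the user's abstract from the subtitles of their target frames."""
    return " ".join(f.subtitle for f in select_target_frames(frames, user_interests, n))
```

Two users with different interest sets get different abstracts from the same frames, which is the property the abstract emphasizes.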

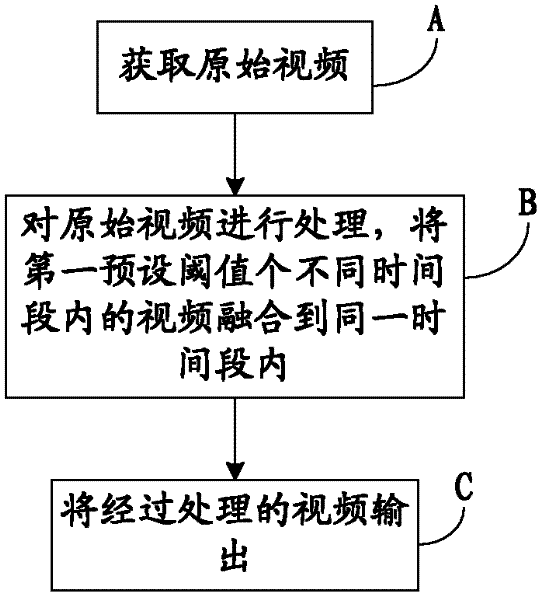

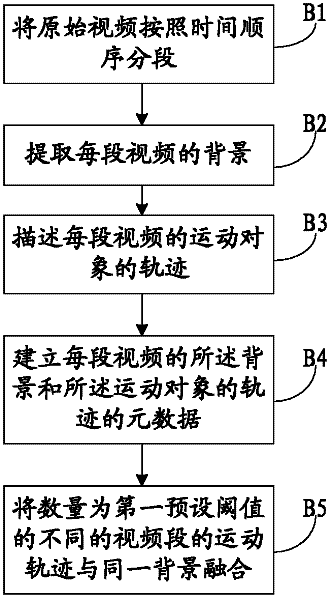

Monitoring image processing method, device and system

Inactive · CN102231820A · Quick View · Television system details · Image analysis · Imaging processing · Video browsing

The invention discloses a monitoring image processing method comprising the following steps: acquiring an original video; processing the original video so that video from a preset threshold number of different time periods is fused into the same time period; and outputting the processed video. The invention also discloses a monitoring image processing device comprising a segmentation module, a background extraction module, a description module, a data storage module and a fusion module, and a monitoring image processing system comprising an input unit, an image processing device and an output unit. The method, device and system can effectively improve video browsing efficiency.

Owner:GUANGZHOU JIAQI INTELLIGENT TECHNOLOGY CO LTD
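The fusion step, where several time periods are condensed into one, resembles video synopsis. The sketch below assumes grayscale frames stored as 2-D lists, estimates the background as a per-pixel median, treats pixels far from that background as foreground, and overlays the foreground from each of k equal-length periods onto a shared background. The thresholding approach and the `threshold` value are assumptions, not the patent's segmentation or description modules.

```python
from statistics import median_low


def estimate_background(frames):
    """Per-pixel low median over all frames (frames are 2-D lists of grayscale values)."""
    h, w = len(frames[0]), len(frames[0][0])
    return [[median_low(f[y][x] for f in frames) for x in range(w)] for y in range(h)]


def fuse_periods(frames, k, threshold=30):
    """Fuse k equal-length time periods into one period of len(frames) // k frames."""
    if k < 1 or len(frames) % k:
        raise ValueError("frame count must be divisible by k")
    bg = estimate_background(frames)
    seg = len(frames) // k
    periods = [frames[i * seg:(i + 1) * seg] for i in range(k)]
    fused = []
    for t in range(seg):
        canvas = [row[:] for row in bg]  # start from the shared background
        for period in periods:
            for y, row in enumerate(period[t]):
                for x, value in enumerate(row):
                    # Foreground = pixels that differ strongly from the background;
                    # later periods overwrite earlier ones where they overlap.
                    if abs(value - bg[y][x]) > threshold:
                        canvas[y][x] = value
        fused.append(canvas)
    return fused
```

With k = 2, the output is half as long as the input but still shows the moving objects from both halves, which is where the browsing-time saving comes from.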

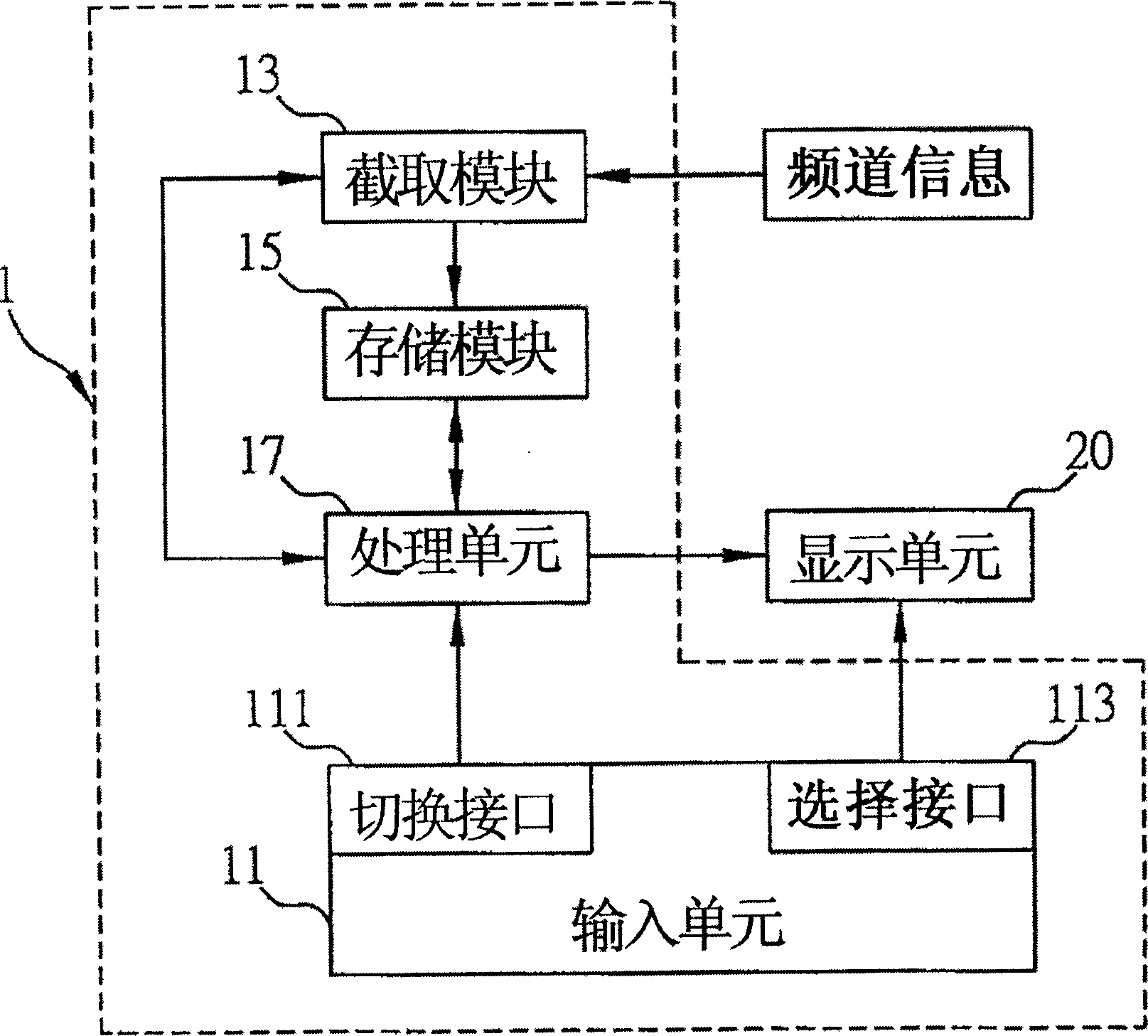

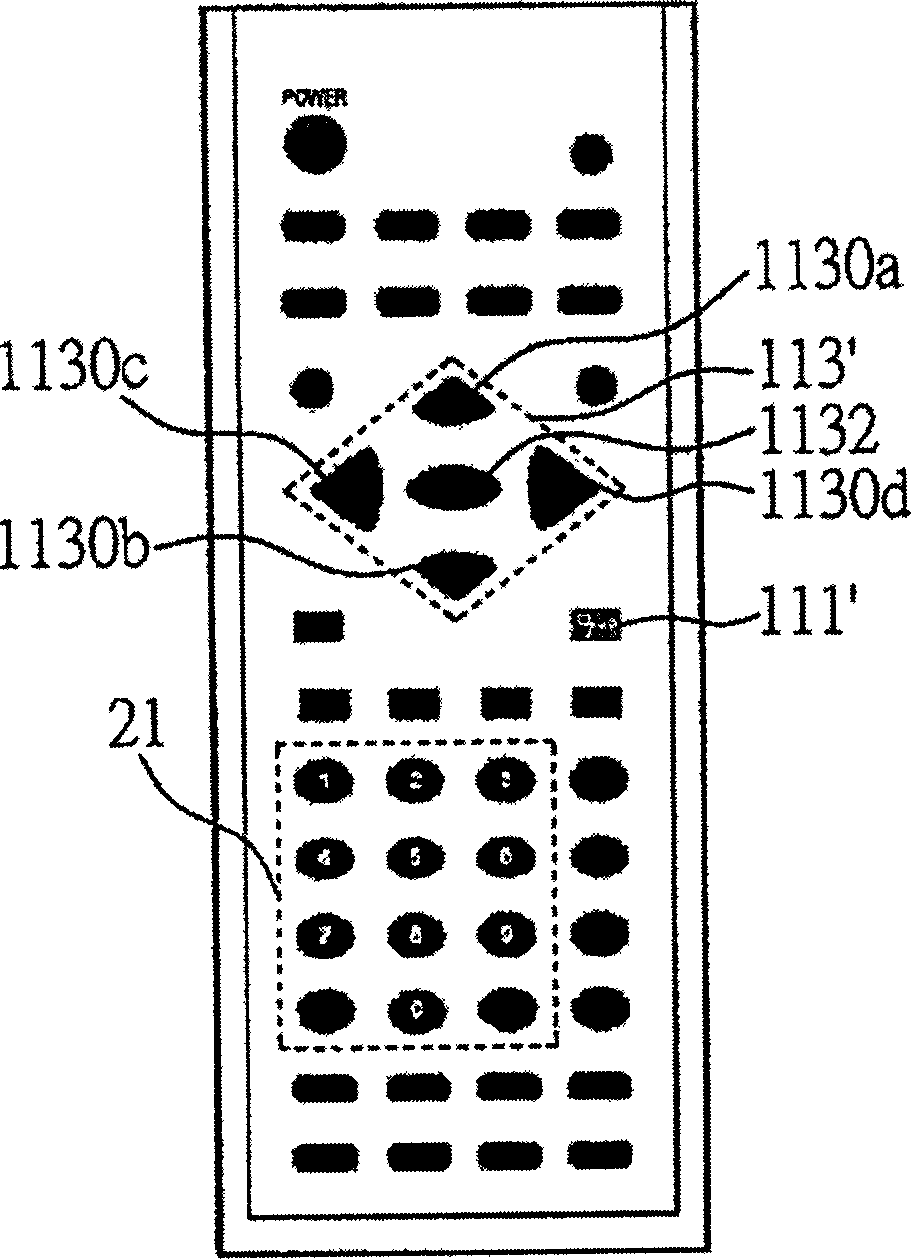

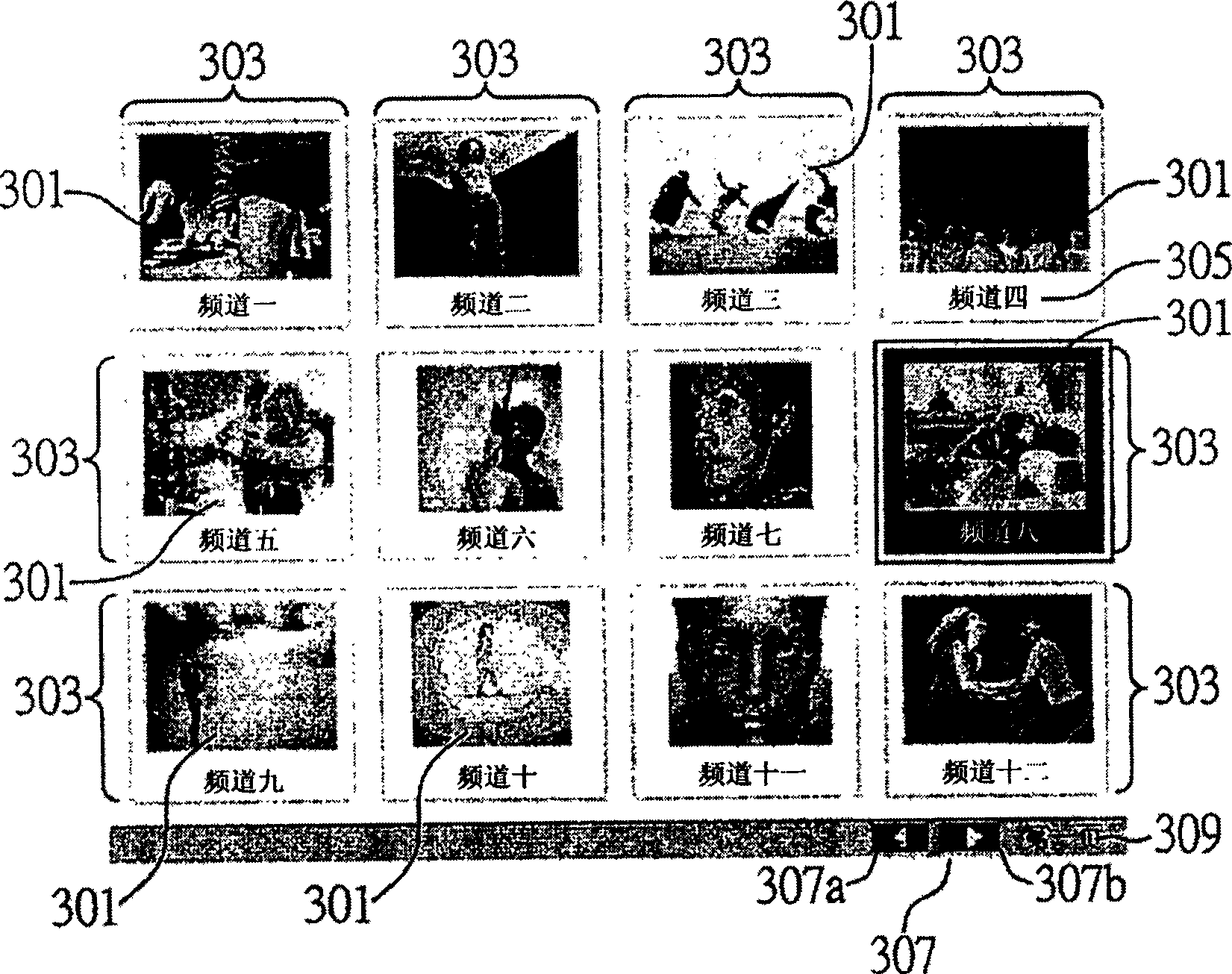

Video browsing system and method

Inactive · CN1901642A · Realize switching · Quick View · Television system details · Color television details · Video browsing · Multimedia

This invention relates to a video browsing system and method. The system includes a processing unit, an input unit, an interception module and a storage unit. By selecting a multi-picture display mode and the channels represented by the pictures, users can dynamically play the content of those channels, enabling quick browsing and effective selection of programs.

Owner:INVENTEC CORP
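The multi-picture display mode can be illustrated with a small layout helper: choose a near-square grid for the number of channels, then map a selection point on screen back to the channel shown in that cell. The grid rule and the function names are hypothetical; the abstract does not describe how pictures are arranged or selected.

```python
import math


def grid_for(n_channels):
    """Smallest near-square grid (rows, cols) that holds n_channels pictures."""
    if n_channels < 1:
        raise ValueError("need at least one channel")
    cols = math.ceil(math.sqrt(n_channels))
    rows = math.ceil(n_channels / cols)
    return rows, cols


def channel_at(channels, screen_w, screen_h, px, py):
    """Map a selection at pixel (px, py) to the channel shown in that grid cell."""
    rows, cols = grid_for(len(channels))
    col = min(int(px * cols // screen_w), cols - 1)
    row = min(int(py * rows // screen_h), rows - 1)
    index = row * cols + col
    return channels[index] if index < len(channels) else None  # None = empty cell
```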

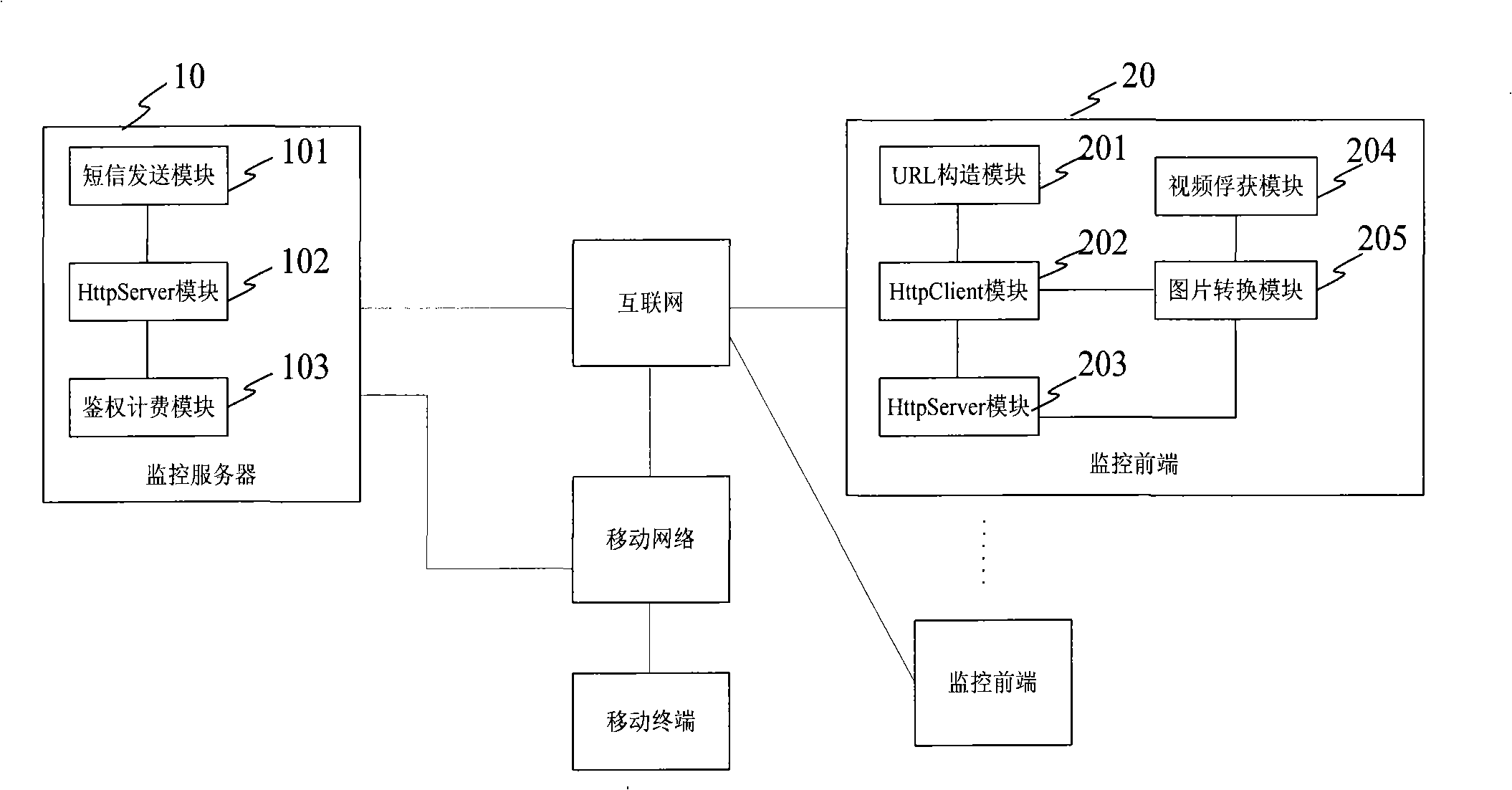

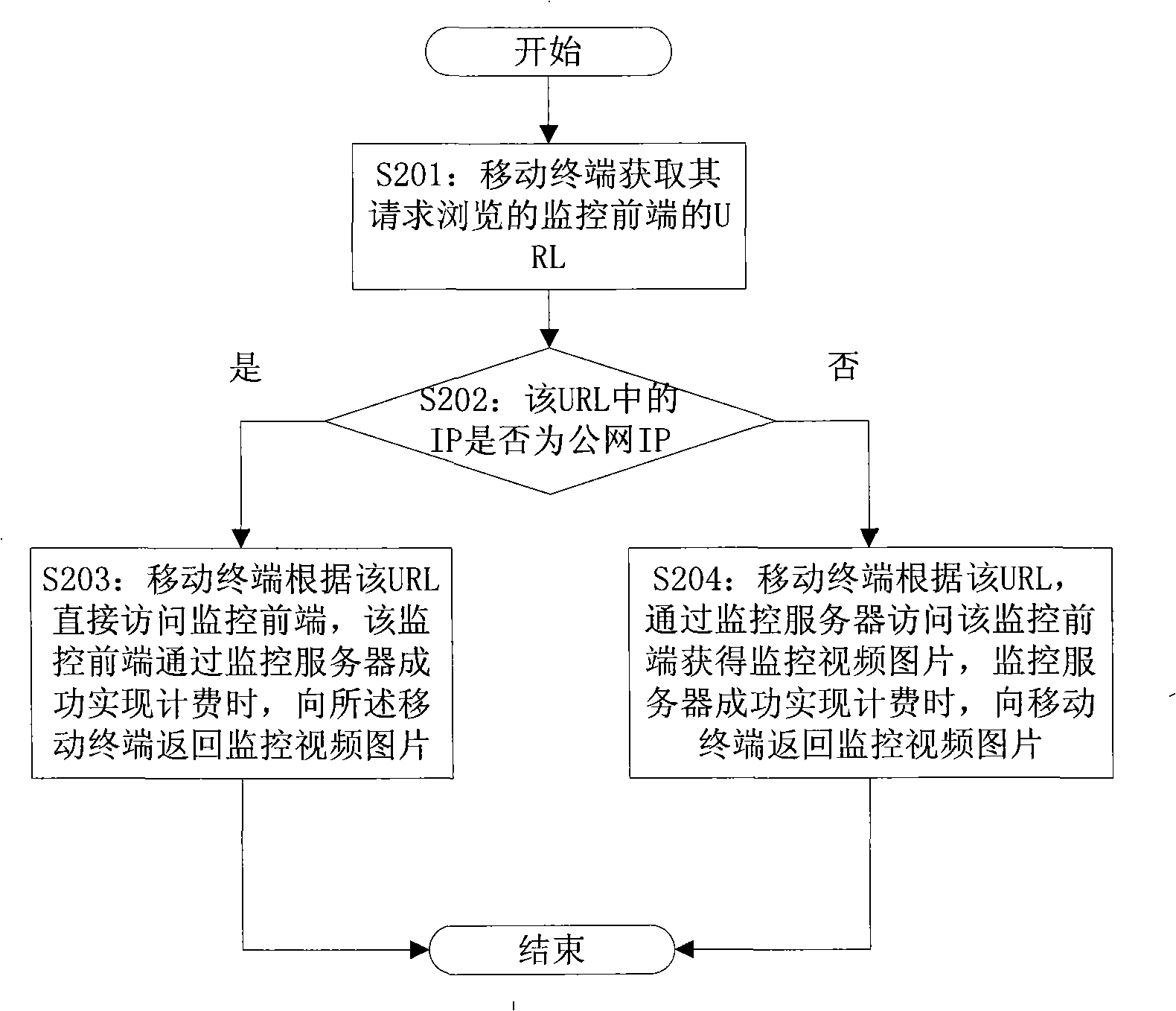

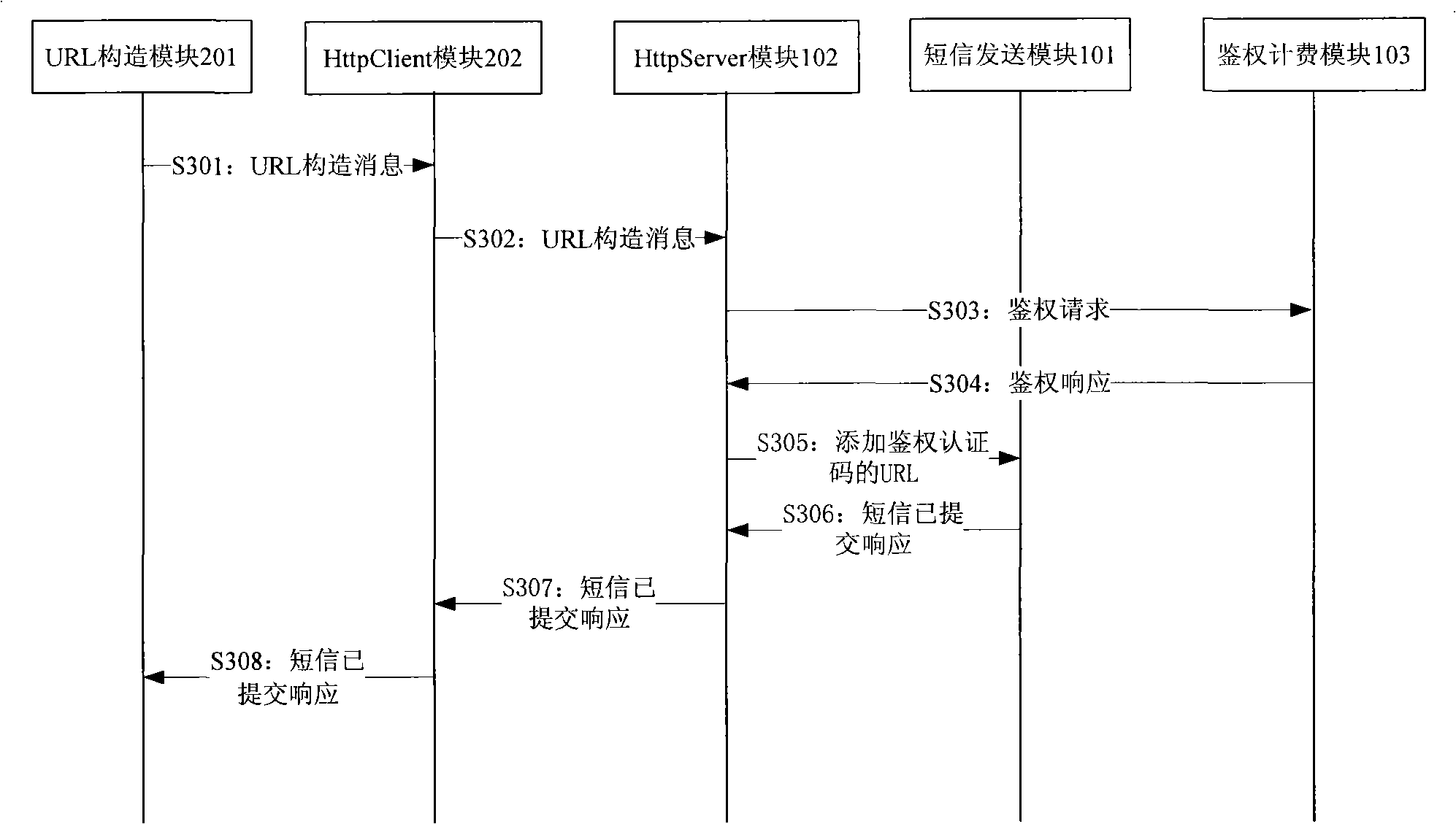

System and method for implementing mobile terminal browsing of monitoring video

Inactive · CN101287003A · Lower requirement · Realize browsing · Closed circuit television systems · Radio/inductive link selection arrangements · Video monitoring · Mobile end

The invention relates to a system that enables a mobile terminal to browse monitoring video, comprising a monitoring server and at least one monitoring front end; the invention also provides a corresponding method. In the method, the mobile terminal obtains the uniform resource locator (URL) of the monitoring front end. When the IP address in the URL is a public-network IP, the mobile terminal accesses the monitoring front end directly according to the URL, and the monitoring front end returns the video monitoring pictures to the mobile terminal once accounting succeeds, completing the monitoring video browsing procedure. When the IP address in the URL is an internal-network IP, the mobile terminal accesses the monitoring front end and obtains the video monitoring pictures through the monitoring server, which returns them to the mobile terminal once accounting succeeds, completing the procedure. The system and method lower the requirements on the mobile terminal for browsing monitoring video, relax the requirements on how the monitoring front end is accessed, and are highly convenient for users.

Owner:ZTE CORP
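The routing decision at the heart of this method (public IP: connect directly; internal IP: go through the monitoring server) can be sketched with Python's standard `ipaddress` and `urllib.parse` modules. The function name and the server URL are hypothetical, accounting is left out, and the sketch assumes the front-end URL carries a literal IP address rather than a hostname.

```python
import ipaddress
from urllib.parse import urlparse


def plan_access(front_end_url, monitoring_server_url):
    """Decide how a mobile terminal reaches a monitoring front end.

    Returns ("direct", url) for a public IP and ("via_server", url) for an
    internal one. Raises ValueError if the URL host is not a literal IP address.
    """
    host = urlparse(front_end_url).hostname
    ip = ipaddress.ip_address(host)
    if ip.is_private:
        # Internal-network front end: not reachable from outside, so use the server.
        return ("via_server", monitoring_server_url)
    return ("direct", front_end_url)
```

Note that `is_private` also covers loopback, link-local and documentation ranges, so a real deployment might need a narrower rule for what counts as "internal".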

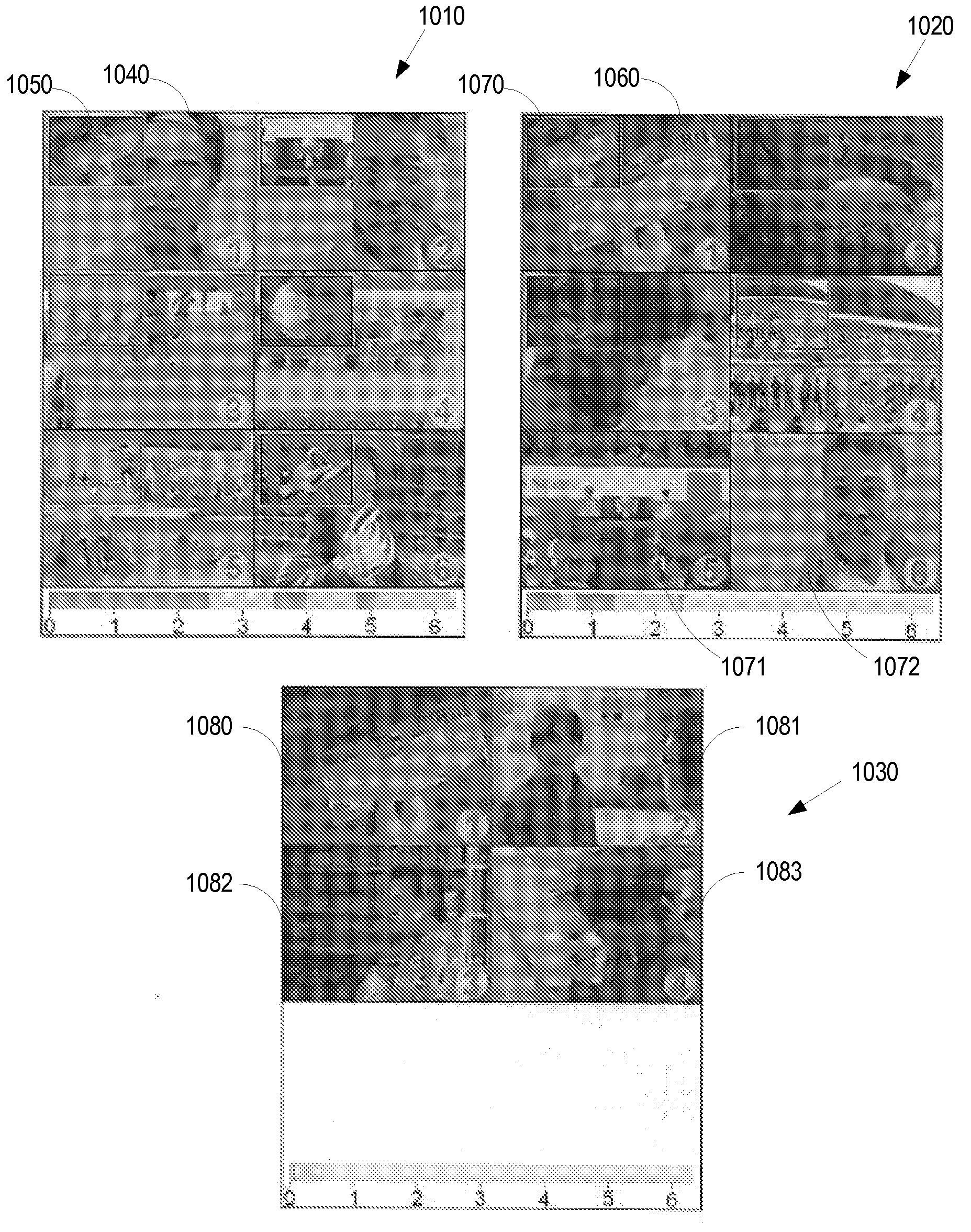

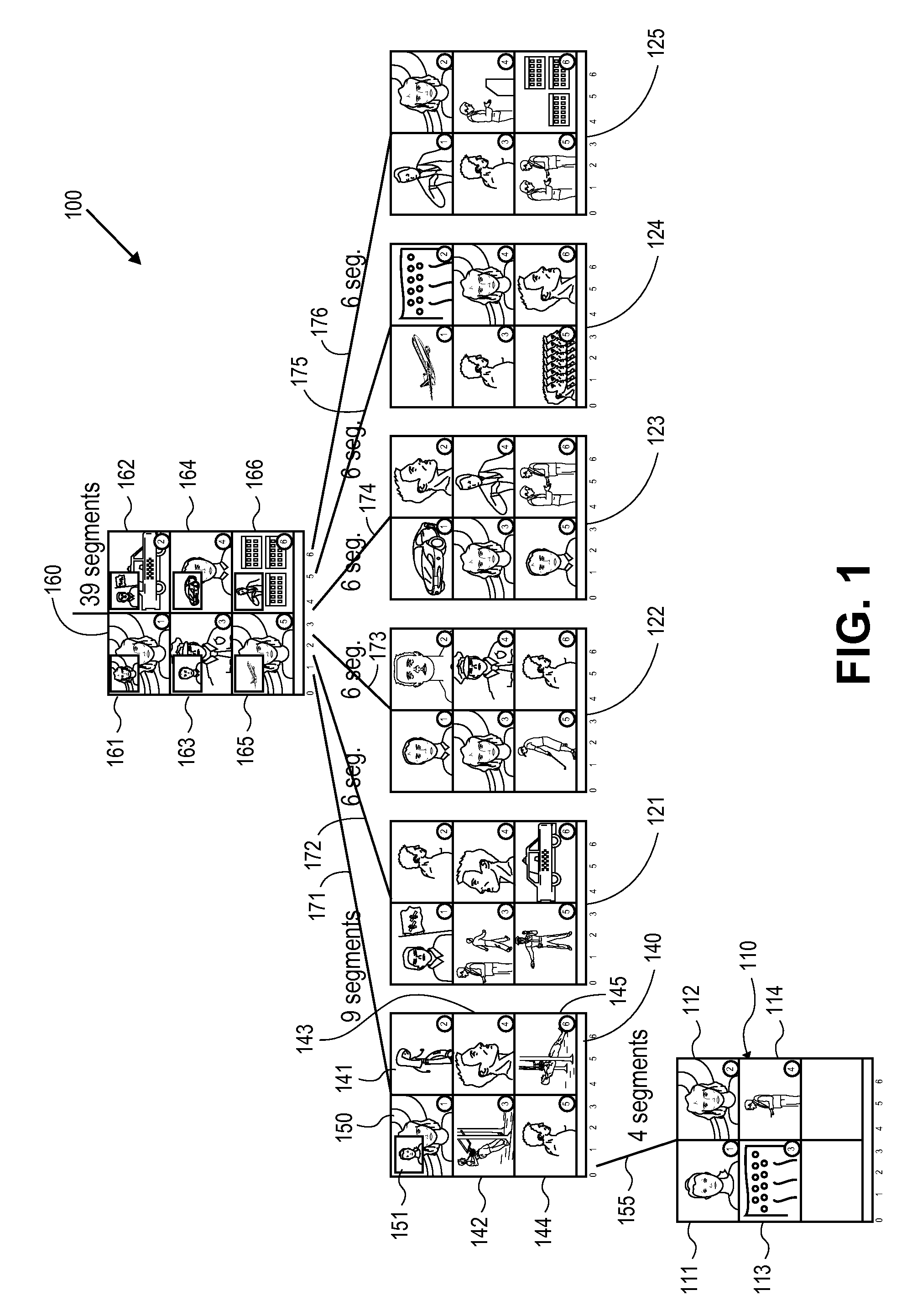

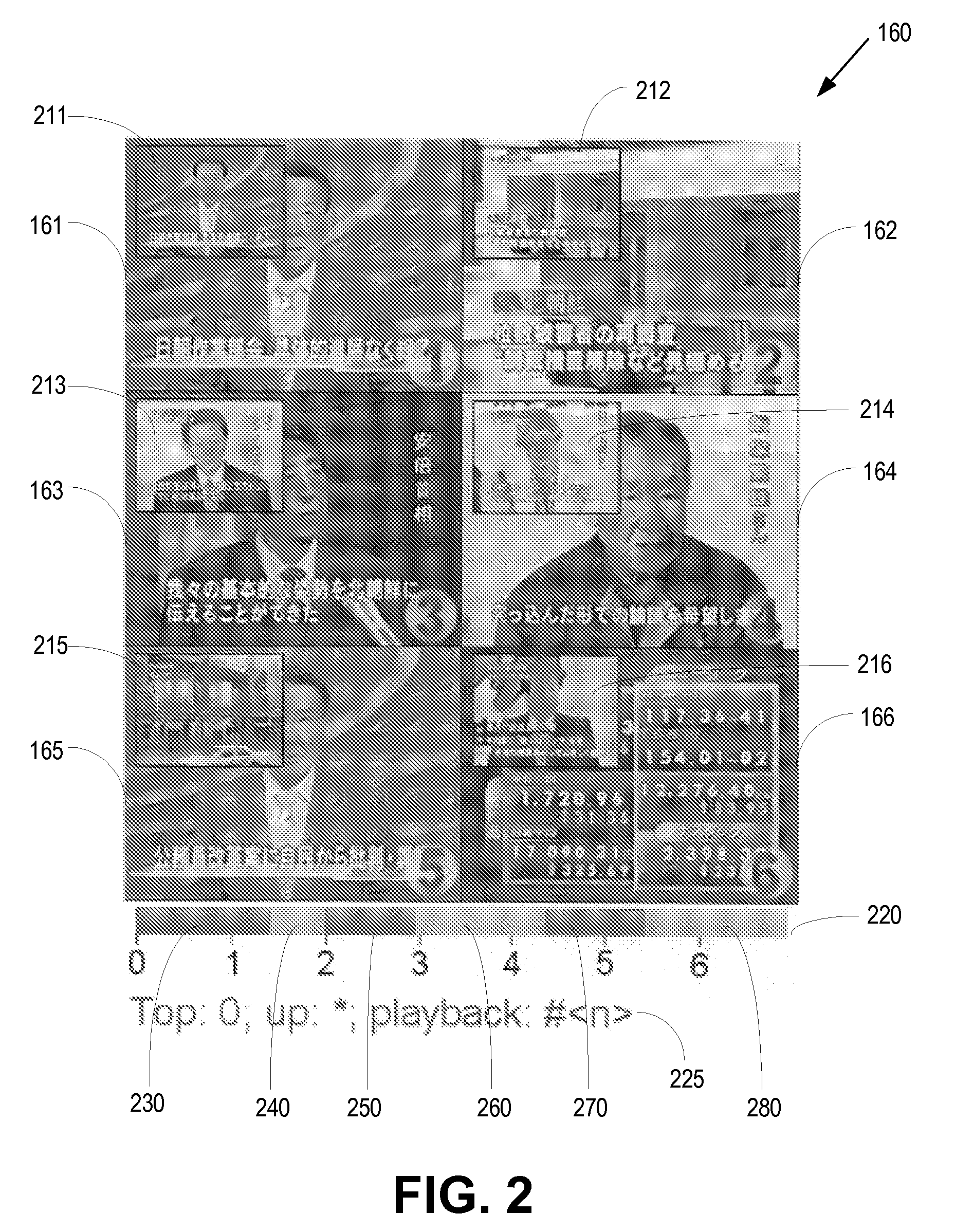

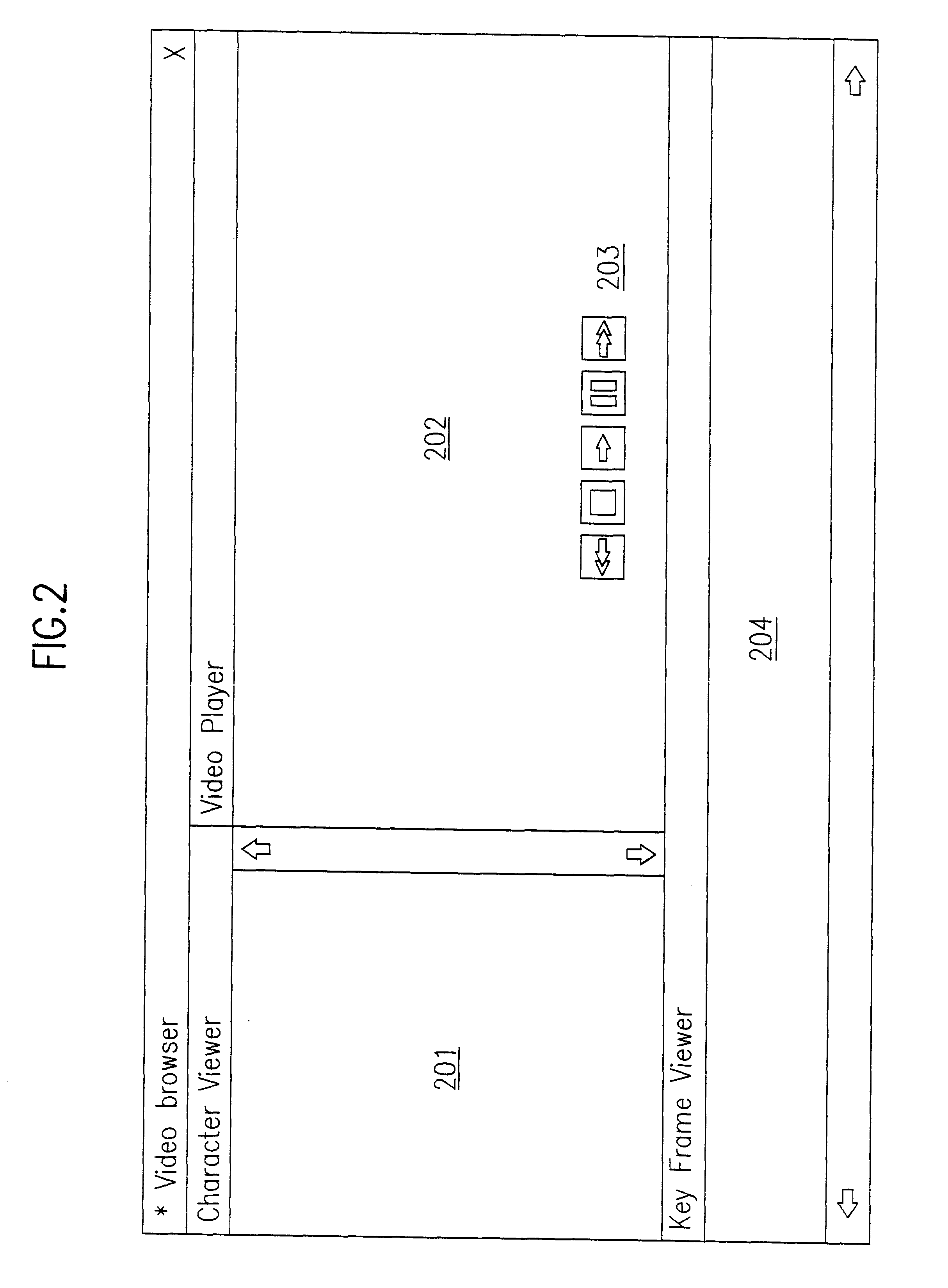

Video browser for navigating linear video on small display devices using a similarity-based navigation hierarchy of temporally ordered video keyframes with short navigation paths

Active · US20090199099A1 · Digital data processing details · Analogue secrecy/subscription systems · Path length · Computer graphics (images)

A computer-based method is provided for enabling navigation of video using a keyframe-based video browser on a display device with a limited screen size, for a video segmented into video shots. The video shots are clustered by similarity, while temporal order of the video shots is maintained. A hierarchically organized navigation tree is produced for the clusters of video shots, while the path lengths of the tree are minimized.

Owner:FUJIFILM BUSINESS INNOVATION CORP
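A navigation hierarchy that groups similar shots while keeping them in temporal order can be approximated by recursively cutting the shot sequence into contiguous groups at its largest visual discontinuities. The sketch assumes each shot is summarized by a numeric feature vector (for example a color histogram) and uses a fixed branching factor. This is a simple substitute technique for illustration, not the patent's similarity clustering or its path-length minimization.

```python
import math


def build_tree(shots, branching=3):
    """Build a navigation tree from temporally ordered (shot_id, feature_vector) pairs.

    Leaves are lists of shot ids; internal nodes are lists of subtrees. Cuts are
    placed only between neighbouring shots, so temporal order is always preserved.
    """
    if branching < 2:
        raise ValueError("branching must be at least 2")
    if len(shots) <= branching:
        return [sid for sid, _ in shots]
    # Dissimilarity between each pair of consecutive shots, with the cut position.
    gaps = [(math.dist(shots[i][1], shots[i + 1][1]), i + 1) for i in range(len(shots) - 1)]
    # Cut at the (branching - 1) largest gaps, then recurse into each contiguous group.
    cuts = sorted(pos for _, pos in sorted(gaps, reverse=True)[:branching - 1])
    bounds = [0] + cuts + [len(shots)]
    return [build_tree(shots[a:b], branching) for a, b in zip(bounds, bounds[1:])]


def depth(tree):
    """Number of levels a user must descend to reach a shot."""
    return 1 + max((depth(c) for c in tree if isinstance(c, list)), default=0)
```

A larger branching factor gives shorter paths at the cost of more keyframes per screen, which is the trade-off a small display forces.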

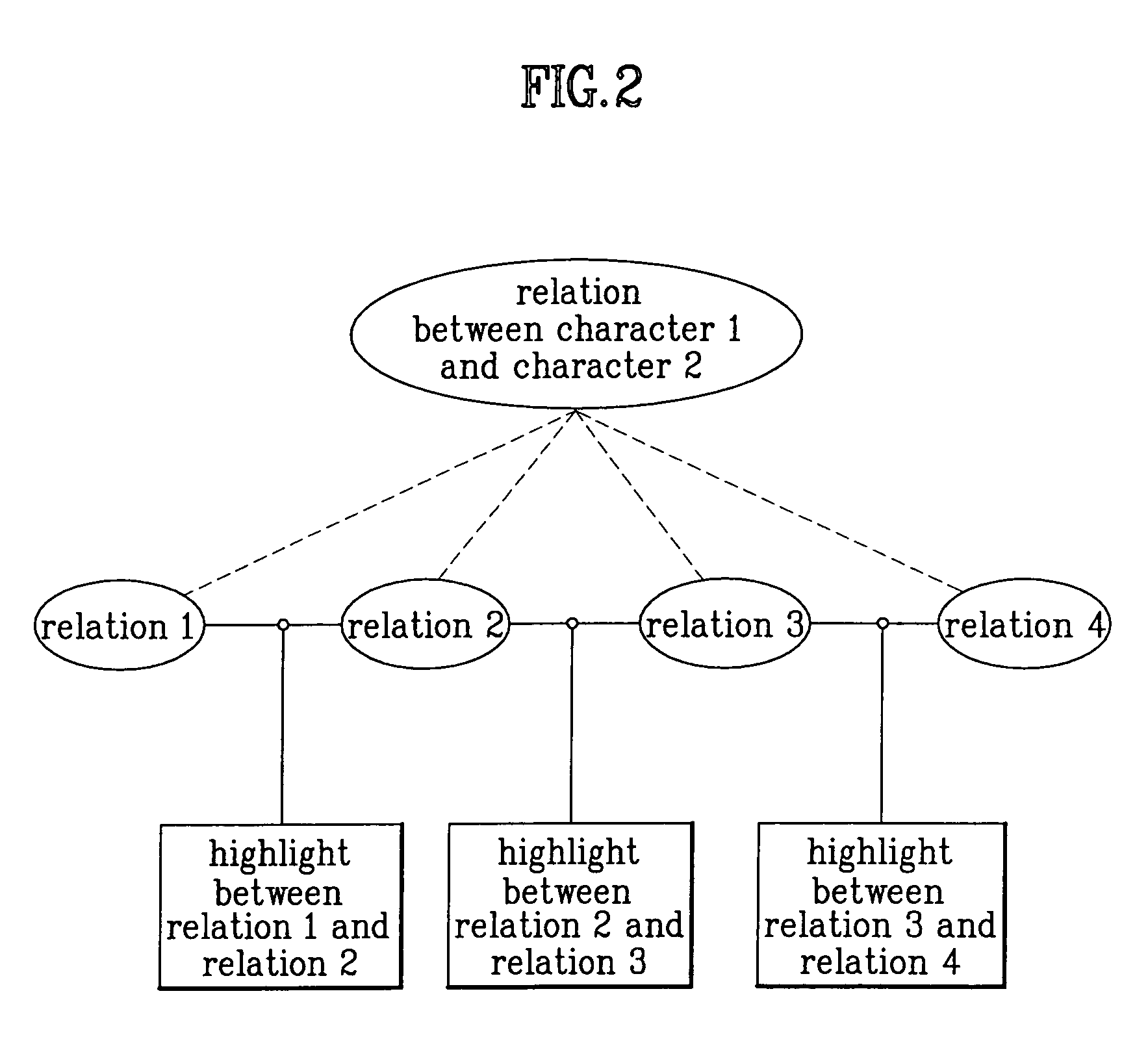

Motional video browsing data structure and browsing method therefor

A motional video browsing data structure based on character relations is disclosed, together with a system and method for video browsing. In the present invention, character relation information, which describes the relations between a character and the other characters that make up the content of a motional video, is formed and displayed, and information on how the relations between characters vary over the course of the motional video is also displayed.

Owner:LG ELECTRONICS INC
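Character relation information and its variation over time can be illustrated by counting how often each pair of characters appears together, segment by segment. Measuring relation strength by scene co-occurrence and splitting the video into equal runs of scenes are assumptions chosen for the sketch; the abstract does not define how relations are derived.

```python
import math
from collections import Counter
from itertools import combinations


def relation_strengths(scenes):
    """Count co-appearances per character pair; scenes are sets of character names."""
    counts = Counter()
    for cast in scenes:
        for a, b in combinations(sorted(cast), 2):
            counts[(a, b)] += 1
    return counts


def relation_changes(scenes, segments):
    """Split scenes into `segments` runs and list each pair's strength per run."""
    size = math.ceil(len(scenes) / segments)
    parts = [relation_strengths(scenes[i:i + size]) for i in range(0, len(scenes), size)]
    pairs = set().union(*parts)
    # A Counter returns 0 for pairs that do not appear in a segment.
    return {pair: [p[pair] for p in parts] for pair in sorted(pairs)}
```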

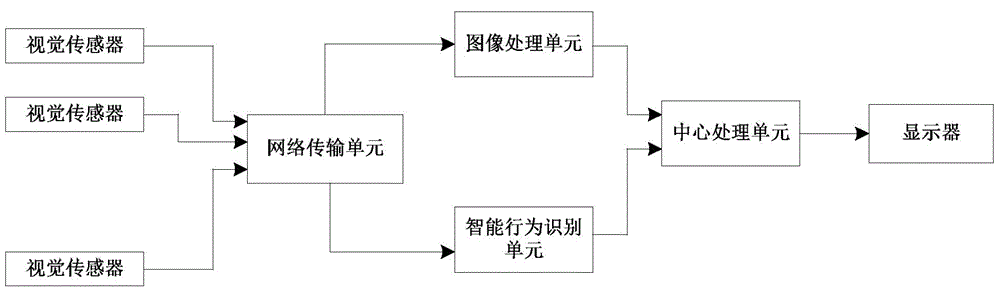

Intelligent monitoring system and method based on combination of 3DGIS with real scene video

Inactive · CN104159067A · Live video browsing · Real time monitoring · Closed circuit television systems · Video monitoring · Broadband transmission

The invention discloses an intelligent monitoring system and method based on combining a 3DGIS with real-scene video. The system comprises a plurality of visual sensors, a network transmission unit, an image processing unit, an intelligent behavior recognition unit, a central processing unit and a display. The method comprises: step S1, using the plurality of visual sensors to collect real-time video signals; step S2, analyzing the real-time video signals and extracting the shape, behavior and coordinate information of a moving target; and step S3, building a moving-target model from that shape, behavior and coordinate information and dynamically displaying the model at the corresponding coordinates on an electronic map. By using technologies such as visual sensors, broadband transmission, image processing, intelligent behavior recognition, photogrammetry and 3D virtual environments, the invention achieves real-time panoramic video browsing and monitoring with good social and economic benefits, and can be applied to a variety of video monitoring systems.

Owner:SHENZHEN BELLSENT INTELLIGENT SYST
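Step S3 places each moving target at map coordinates. One common way to do this, assumed here rather than taken from the patent, is to project the target's ground-contact point (the bottom centre of its bounding box) through a calibrated 3x3 ground-plane homography `H`. The `TargetModel` fields and the example matrix are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TargetModel:
    target_id: int
    width_px: float   # shape information: bounding-box size in the image
    height_px: float
    behavior: str     # label from a behavior recognizer (assumed)
    map_xy: tuple     # position on the electronic map


def image_to_map(H, u, v):
    """Apply a 3x3 ground-plane homography H to image point (u, v)."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return x / w, y / w


def build_target_model(target_id, bbox, behavior, H):
    """bbox = (x0, y0, x1, y1) in pixels; the bottom centre is taken as the ground contact."""
    x0, y0, x1, y1 = bbox
    foot = ((x0 + x1) / 2, y1)
    return TargetModel(target_id, x1 - x0, y1 - y0, behavior, image_to_map(H, *foot))
```

For example, with `H = [[0.5, 0, 100], [0, 0.5, 200], [0, 0, 1]]` (a pure scale-and-shift calibration), a target whose box is `(40, 20, 60, 80)` lands at map position `(125.0, 240.0)`.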

© 2025 PatSnap. All rights reserved.