Client cache allocation method and system based on performance pre-evaluation

A client-side cache allocation technology, applied in transmission systems, electrical components, and similar fields. It addresses excessive occupation of cache resources, low client cache efficiency, and slow write operations, and achieves low cache resource consumption, improved execution efficiency, and fast write operations.


Examples


[0057] To verify the feasibility and effectiveness of the system of the present invention, the system was deployed in a real environment and evaluated using authoritative benchmarks from the field of supercomputing.

[0058] The basic hardware and software configuration of the cluster is shown in Table 1 below:

[0059]

[0060] Table 1

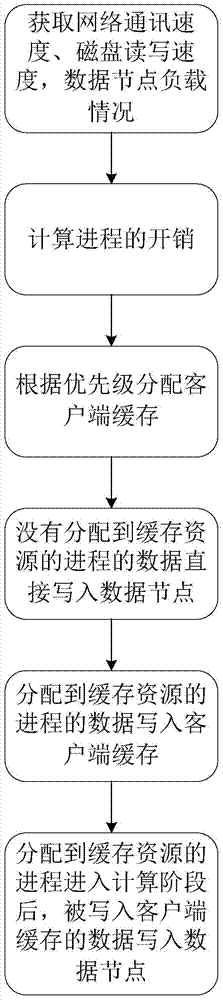

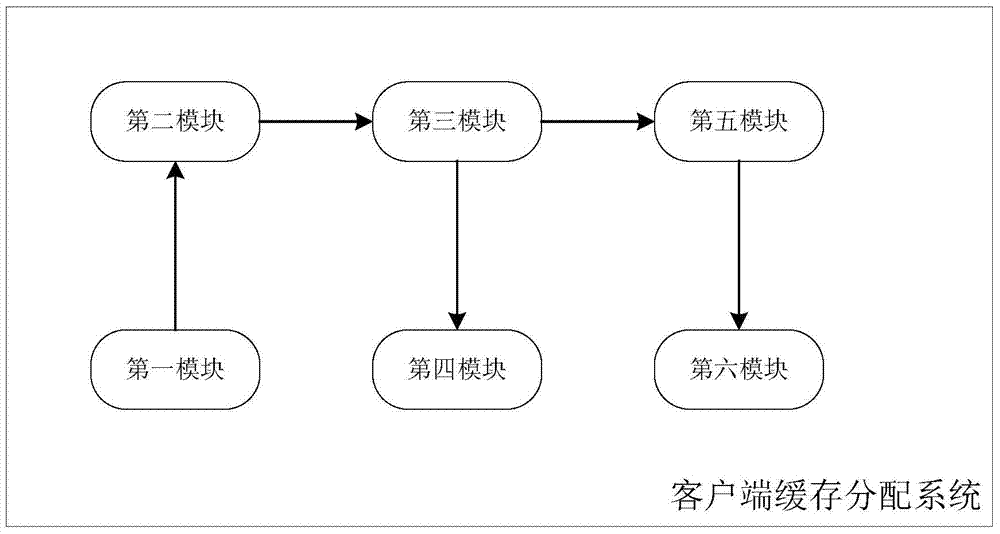

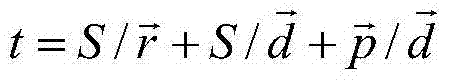

[0061] The invention first analyzes the file write requests that a scientific application issues to the parallel file system and collects statistics on the program's runtime behavior on the cluster. It then uses the various collected parameters to customize the most suitable client cache allocation strategy, so that the limited client cache delivers the greatest possible performance benefit for the program's file write requests. The system quickly, automatically, and effectively provides client-side cache configuration strategies for parallel file systems, reduces the priority of complex client-side cache ope...
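The allocation idea in [0061] (profile each program's write requests, pre-evaluate performance, then divide a limited client cache to maximize write performance) can be illustrated with a minimal sketch. This is not the patented method, whose model and search procedure are not given here: the `WriteProfile` fields, the exponential-saturation model in `predicted_throughput`, the 64 MB chunk size, and the greedy marginal-gain allocator are all hypothetical assumptions chosen for illustration.

```python
import heapq
import math
from dataclasses import dataclass


@dataclass
class WriteProfile:
    """Hypothetical per-application statistics gathered from profiling runs."""
    name: str
    avg_write_size_mb: float  # mean size of one write request (MB)
    writes_per_sec: float     # observed write request rate


def predicted_throughput(p: WriteProfile, cache_mb: int) -> float:
    """Hypothetical pre-evaluation model: estimated write throughput (MB/s)
    for a given client cache size. Throughput rises toward the program's
    write demand and saturates, i.e. it has diminishing returns."""
    demand = p.avg_write_size_mb * p.writes_per_sec  # MB/s the program writes
    scale = 8.0 * p.avg_write_size_mb                # assumed saturation scale
    return demand * (1.0 - math.exp(-cache_mb / scale))


def allocate_cache(profiles, total_mb: int, chunk_mb: int = 64) -> dict:
    """Greedily hand out cache in fixed chunks, each time to the program whose
    predicted throughput would grow the most from one more chunk."""
    alloc = {p.name: 0 for p in profiles}

    def marginal_gain(p: WriteProfile) -> float:
        cur = alloc[p.name]
        return predicted_throughput(p, cur + chunk_mb) - predicted_throughput(p, cur)

    # Max-heap via negated gains; the index breaks ties without comparing objects.
    heap = [(-marginal_gain(p), i, p) for i, p in enumerate(profiles)]
    heapq.heapify(heap)
    remaining = total_mb
    while remaining >= chunk_mb and heap:
        neg_gain, i, p = heapq.heappop(heap)
        if -neg_gain <= 0.0:
            break  # no program benefits from more cache
        alloc[p.name] += chunk_mb
        remaining -= chunk_mb
        heapq.heappush(heap, (-marginal_gain(p), i, p))
    return alloc


if __name__ == "__main__":
    apps = [WriteProfile("heavy", 4.0, 100.0), WriteProfile("light", 1.0, 10.0)]
    print(allocate_cache(apps, total_mb=512))
```

Greedy allocation by marginal gain is optimal here only because the assumed model is concave in cache size; a real pre-evaluation model without diminishing returns would need a different search.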
