Caching system and method for a network storage system

A network storage and cache technology, applied in the field of cache systems for network storage systems. It addresses the problems of slow access times to the target storage device and of caching systems that do not support a separate write cache, and achieves the effects of eliminating traffic over the intermediate network and avoiding local access to local storage devices.
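The summary names two problems: slow access to a remote target storage device, and caching systems without a separate write cache. As a minimal sketch (not the patented design), assuming a block-addressed target reached over a network and a simple write-back policy, the Python below keeps reads and writes in separate caches. `RemoteTarget`, `SplitCache`, `read_capacity` and `flush` are hypothetical names introduced only for illustration.

```python
from collections import OrderedDict


class RemoteTarget:
    """Hypothetical stand-in for a target storage device reached over a network.

    Counts every block transfer so the effect of caching on traffic is visible.
    """

    def __init__(self):
        self.blocks = {}
        self.transfers = 0

    def read(self, lba):
        self.transfers += 1
        return self.blocks.get(lba, b"\x00")

    def write(self, lba, data):
        self.transfers += 1
        self.blocks[lba] = data


class SplitCache:
    """Sketch of a cache with a separate LRU read cache and a write-back write cache."""

    def __init__(self, target, read_capacity=4):
        self.target = target
        self.read_cache = OrderedDict()  # lba -> data, least recently used first
        self.read_capacity = read_capacity
        self.write_cache = {}  # dirty blocks not yet sent to the target

    def read(self, lba):
        # Dirty data in the write cache is the newest copy of the block.
        if lba in self.write_cache:
            return self.write_cache[lba]
        if lba in self.read_cache:
            self.read_cache.move_to_end(lba)  # mark as most recently used
            return self.read_cache[lba]
        data = self.target.read(lba)  # miss: one transfer over the network
        self._fill(lba, data)
        return data

    def write(self, lba, data):
        # Writes are absorbed locally; repeated writes to one block coalesce.
        self.write_cache[lba] = data
        self.read_cache.pop(lba, None)  # drop any stale read-cache copy

    def flush(self):
        # Send each dirty block to the target once, then keep it as clean data.
        for lba, data in self.write_cache.items():
            self.target.write(lba, data)
            self._fill(lba, data)
        self.write_cache.clear()

    def _fill(self, lba, data):
        self.read_cache[lba] = data
        self.read_cache.move_to_end(lba)
        if len(self.read_cache) > self.read_capacity:
            self.read_cache.popitem(last=False)  # evict least recently used
```

For example, three writes to block 7 followed by a read cost no network transfers; one `flush()` then sends a single transfer, and later reads of block 7 are served from the read cache. Keeping the write cache separate lets repeated writes to the same block coalesce into one transfer, which is one way a cache can reduce traffic over the intermediate network.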


Embodiment Construction

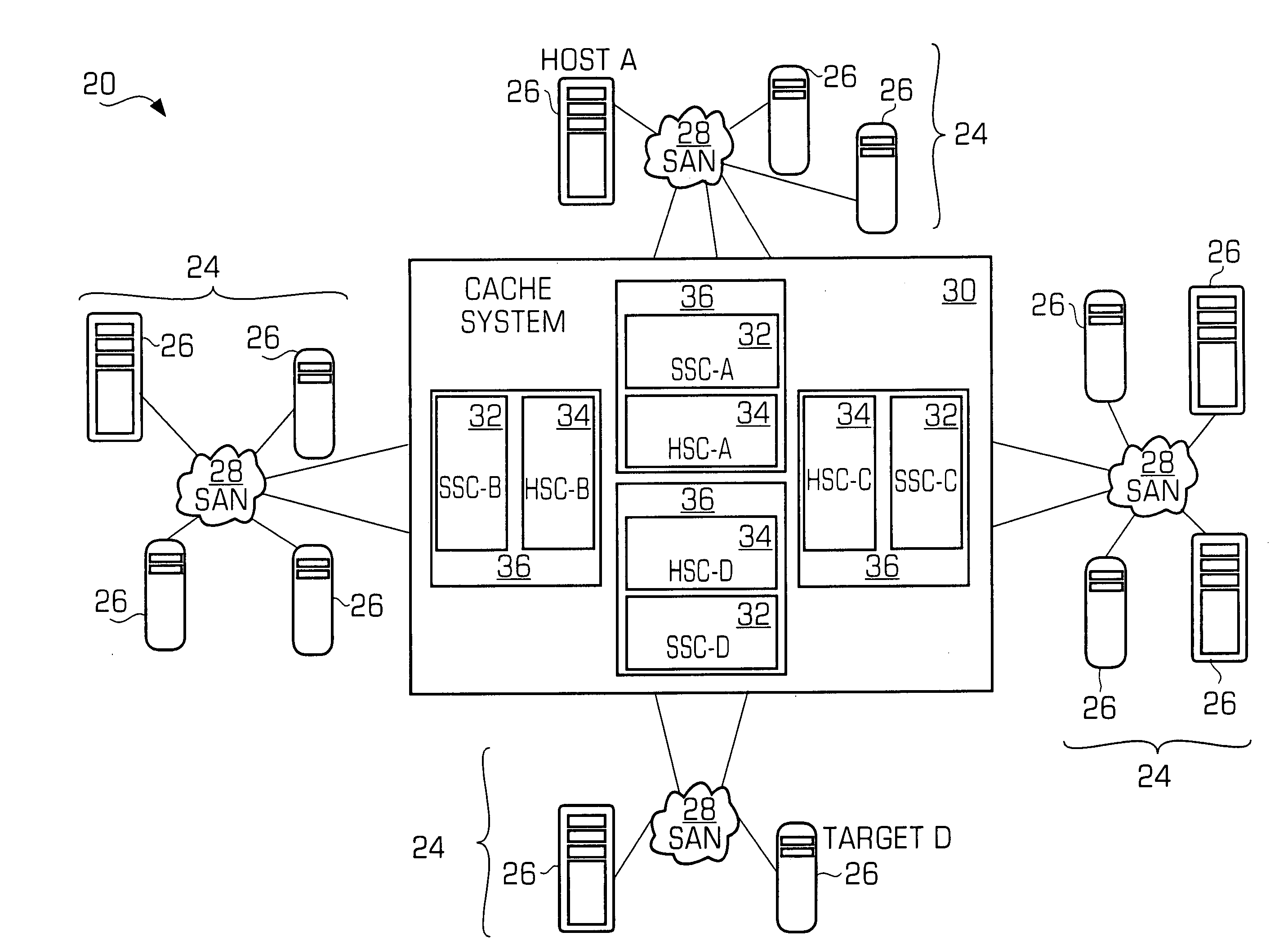

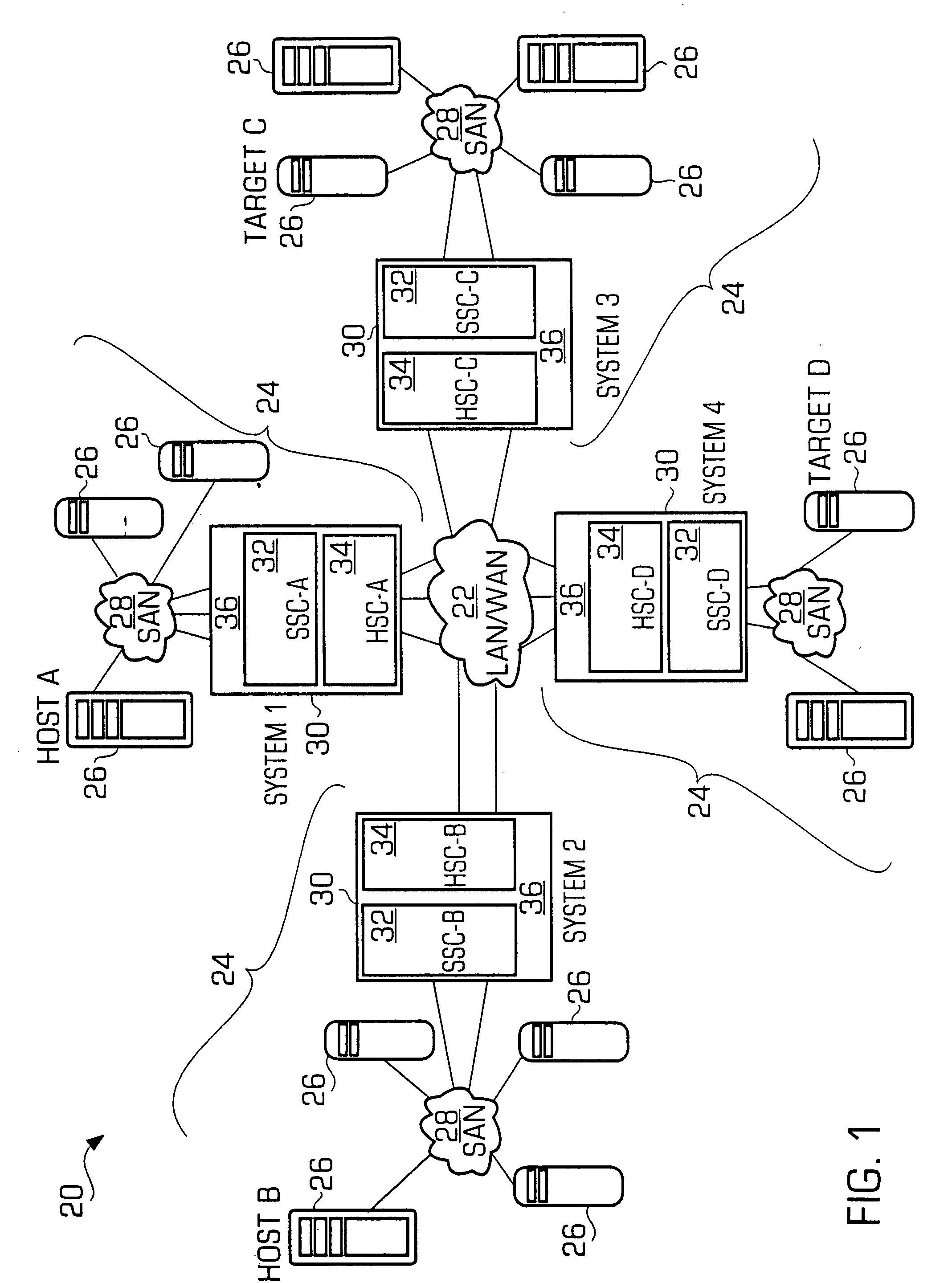

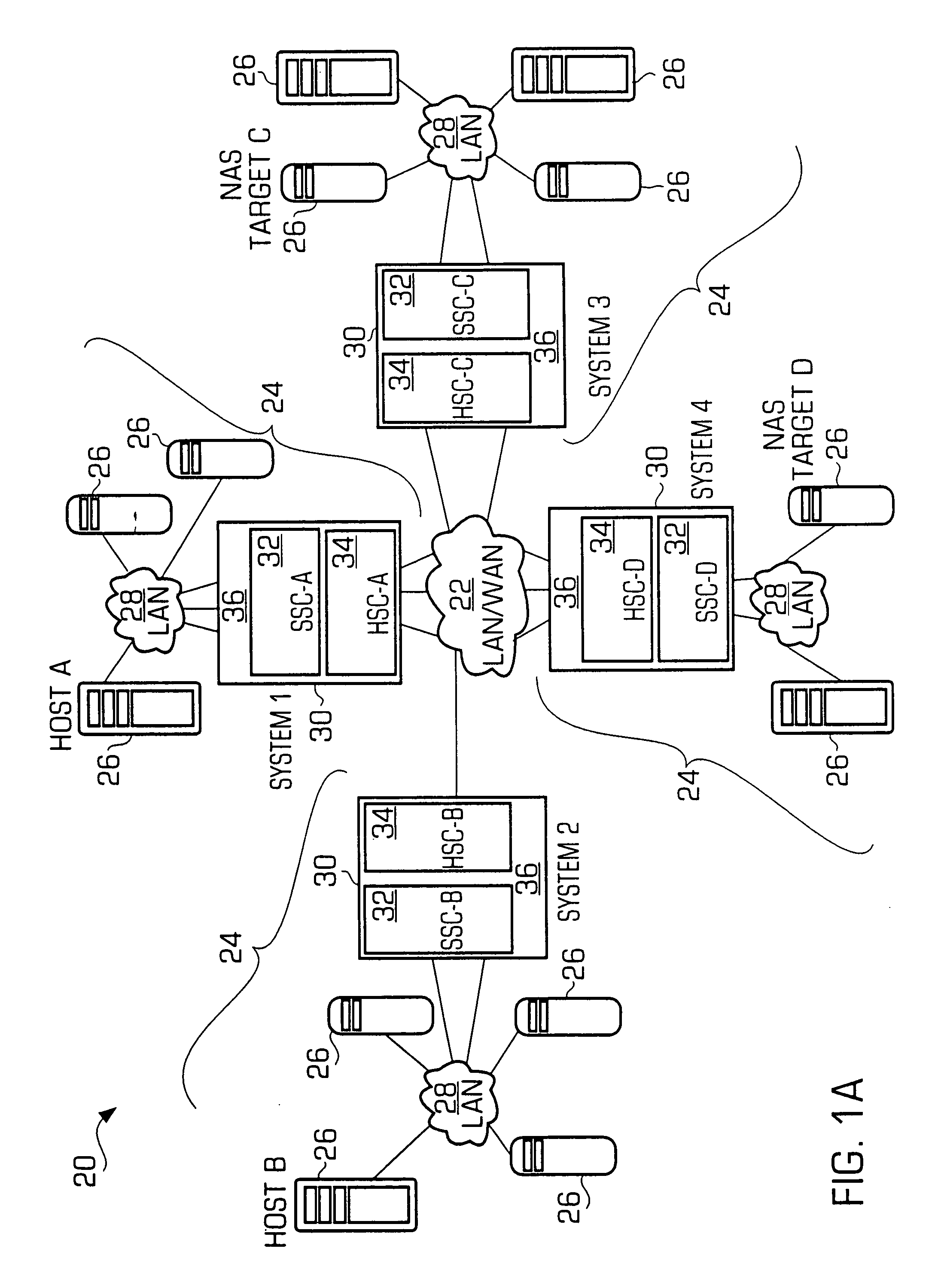

[0031] The invention is particularly applicable to a cache system for a network, and it is in this context that the invention will be described. It will be appreciated, however, that the system and method in accordance with the invention have greater utility.

[0032] The cache storage network system is a networking device that enables all assets in a storage network system to share functional and operational resources with high performance.

[0033] The cache system in accordance with the invention creates an intelligent storage network without requiring a large investment in new infrastructure. It uses reliable protocols to guarantee delivery in traditionally unreliable LAN/WAN environments. The cache system interconnects local storage-centric networks based on Fibre Channel or SCSI with existing or new LAN/WAN IP-based networking infrastructures. The interconnection of these diverse network technologies allows storage networks to be deployed across geographicall...
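Guaranteed delivery over a lossy link is normally built from sequence numbers, acknowledgements and retransmission. The source does not name the protocol the cache system uses, so the Python below is only an illustrative sketch of the simplest such scheme, stop-and-wait ARQ with an alternating sequence bit, run over a simulated unreliable link. `LossyChannel`, `Receiver`, `send_reliably`, the loss rates and `max_tries` are hypothetical.

```python
import random


class LossyChannel:
    """Hypothetical unreliable link that drops each frame with probability `loss`."""

    def __init__(self, loss, seed=0):
        self.loss = loss
        self.rng = random.Random(seed)  # seeded so runs are repeatable

    def send(self, frame):
        """Return the frame if it survives the link, or None if it is dropped."""
        return None if self.rng.random() < self.loss else frame


class Receiver:
    def __init__(self):
        self.expected = 0  # sequence bit of the next new frame
        self.delivered = []

    def on_frame(self, frame):
        seq, payload = frame
        if seq == self.expected:
            self.delivered.append(payload)  # new data: deliver exactly once
            self.expected ^= 1
        # A duplicate (seq != expected) is re-acknowledged but not delivered.
        return seq  # the ACK carries the sequence bit being acknowledged


def send_reliably(payloads, forward, backward, receiver, max_tries=50):
    """Stop-and-wait ARQ: send one frame, retransmit until its ACK arrives."""
    seq = 0
    retransmissions = 0
    for payload in payloads:
        for _ in range(max_tries):
            frame = forward.send((seq, payload))
            ack = None
            if frame is not None:
                ack = backward.send(receiver.on_frame(frame))
            if ack == seq:  # compare by value: an ACK of 0 is valid
                break
            retransmissions += 1  # frame or ACK lost: time out and resend
        else:
            raise TimeoutError(f"no ACK for sequence bit {seq}")
        seq ^= 1
    return retransmissions
```

Because the receiver acknowledges duplicates without delivering them, each payload reaches the application exactly once even when ACKs are lost. A stop-and-wait sender leaves a high-latency WAN link idle while it waits for each ACK, which is why practical reliable protocols use a sliding window to keep many frames in flight.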
