58 results for "Cache invalidation" patented technology

Cache invalidation is a process in a computer system whereby entries in a cache are replaced or removed. It can be done explicitly, as part of a cache coherence protocol. In such a case, a processor changes a memory location and then invalidates the cached values of that memory location across the rest of the computer system.
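The coherence case can be sketched in a few lines of Python. This is a minimal model, not a real protocol: the class names `SharedMemory` and `ProcessorCache` are hypothetical, writes go straight through to memory for simplicity, and hardware protocols such as MESI track more states per cache line.

```python
class SharedMemory:
    """Backing store shared by all processors; remembers which caches are attached."""

    def __init__(self):
        self.data = {}
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)


class ProcessorCache:
    """A per-processor cache using a write-invalidate policy (hypothetical model)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        memory.attach(self)

    def read(self, addr):
        # Miss: fetch from shared memory and keep a local copy.
        if addr not in self.lines:
            self.lines[addr] = self.memory.data.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        # Update memory (write-through, for simplicity) and the local copy...
        self.memory.data[addr] = value
        self.lines[addr] = value
        # ...then invalidate every other processor's cached copy of that location.
        for other in self.memory.caches:
            if other is not self:
                other.lines.pop(addr, None)


mem = SharedMemory()
cpu0, cpu1 = ProcessorCache(mem), ProcessorCache(mem)
cpu1.read(0x10)          # cpu1 caches the old value 0
cpu0.write(0x10, 42)     # cpu0's write invalidates cpu1's copy
print(cpu1.read(0x10))   # 42: cpu1 misses and re-reads memory
```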

Method and system for limiting the use of user-specific software features

Inactive | US20050060266A1 | Preventing unchecked proliferation; Digital data processing details; User identity/authority verification; Schema for Object-Oriented XML; Uniform resource locator

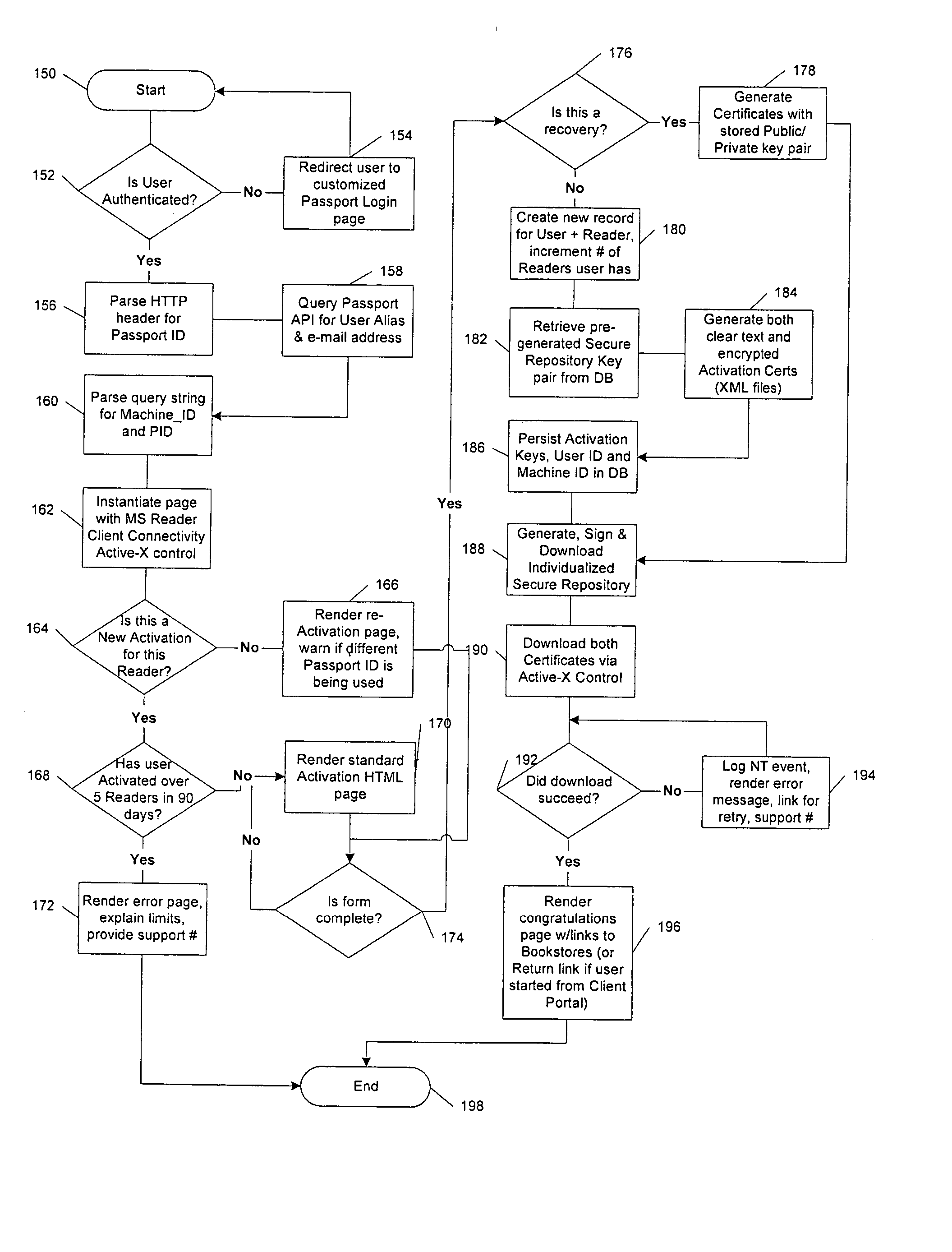

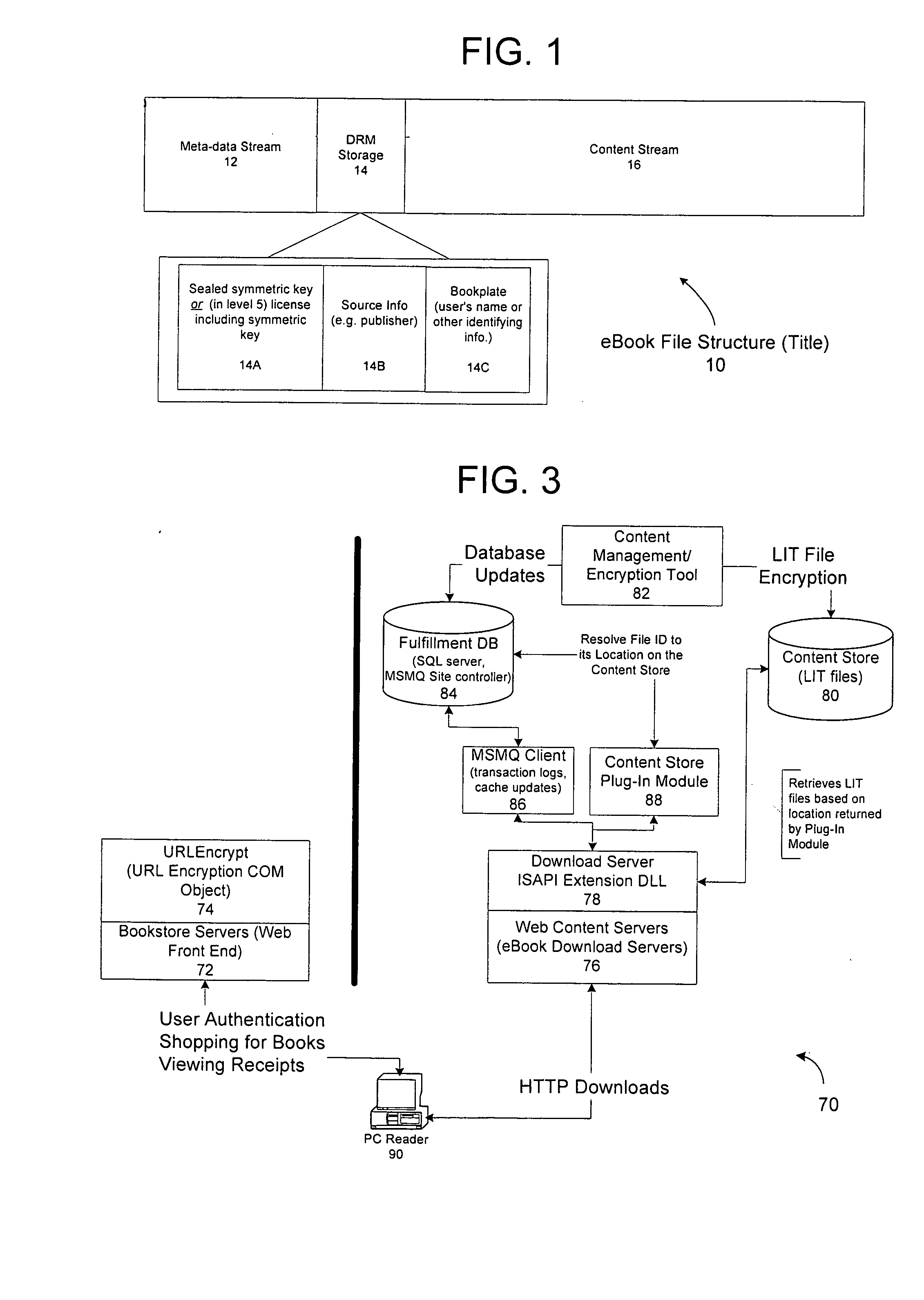

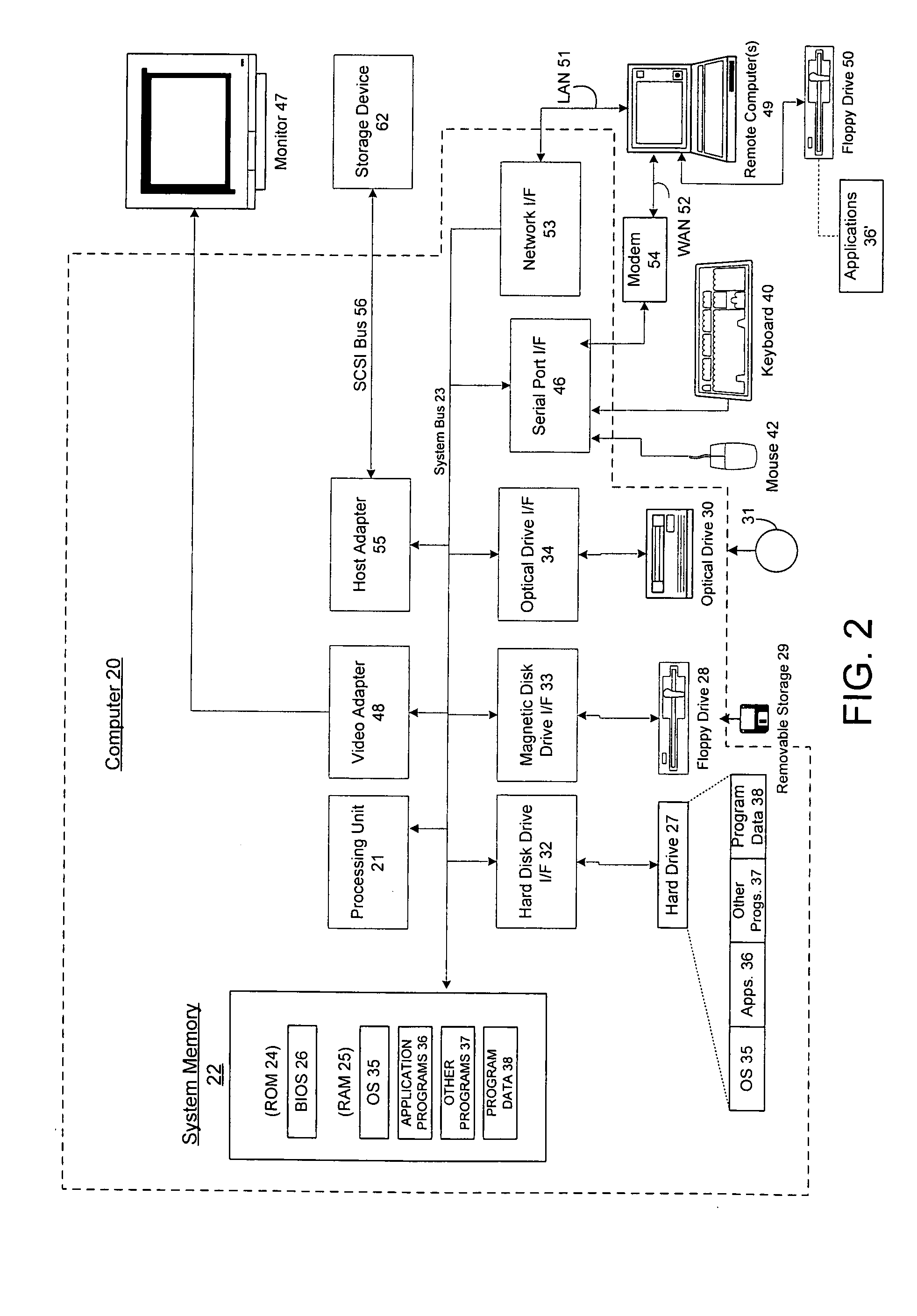

A server architecture for a digital rights management system that distributes and protects rights in content. The server architecture includes a retail site, which sells content items to consumers; a fulfillment site, which provides consumers with the content items sold by the retail site; and an activation site, which enables consumer reading devices to use content items that have an enhanced level of copy protection. Each retail site is equipped with a URL encryption object, which uses a secret symmetric key shared between the retail site and the fulfillment site to encrypt the information the fulfillment site needs to process an order for content sold by the retail site. Upon selling a content item, the retail site sends the purchaser a web page containing a link to a URL made up of the fulfillment site's address and a parameter carrying the encrypted information. When the link is followed, the fulfillment site downloads the ordered content to the consumer, preparing the content if necessary according to the type of security it is to carry. The fulfillment site includes an asynchronous fulfillment pipeline that logs information about processed transactions using a store-and-forward messaging service. The fulfillment site may be implemented as several server devices, each with a cache that stores frequently downloaded content items; in that case, the asynchronous fulfillment pipeline may also be used to invalidate the caches when a change made at one server affects the cached content items. The activation site provides consumer content-rendering devices with an activation certificate and a secure repository executable, which enable those devices to render content that has an enhanced level of copy resistance.
The activation site “activates” client reading devices in a way that binds them to a persona, and limits the number of devices that may be activated for a particular persona, or the rate at which such devices may be activated for that persona.

Owner:MICROSOFT TECH LICENSING LLC

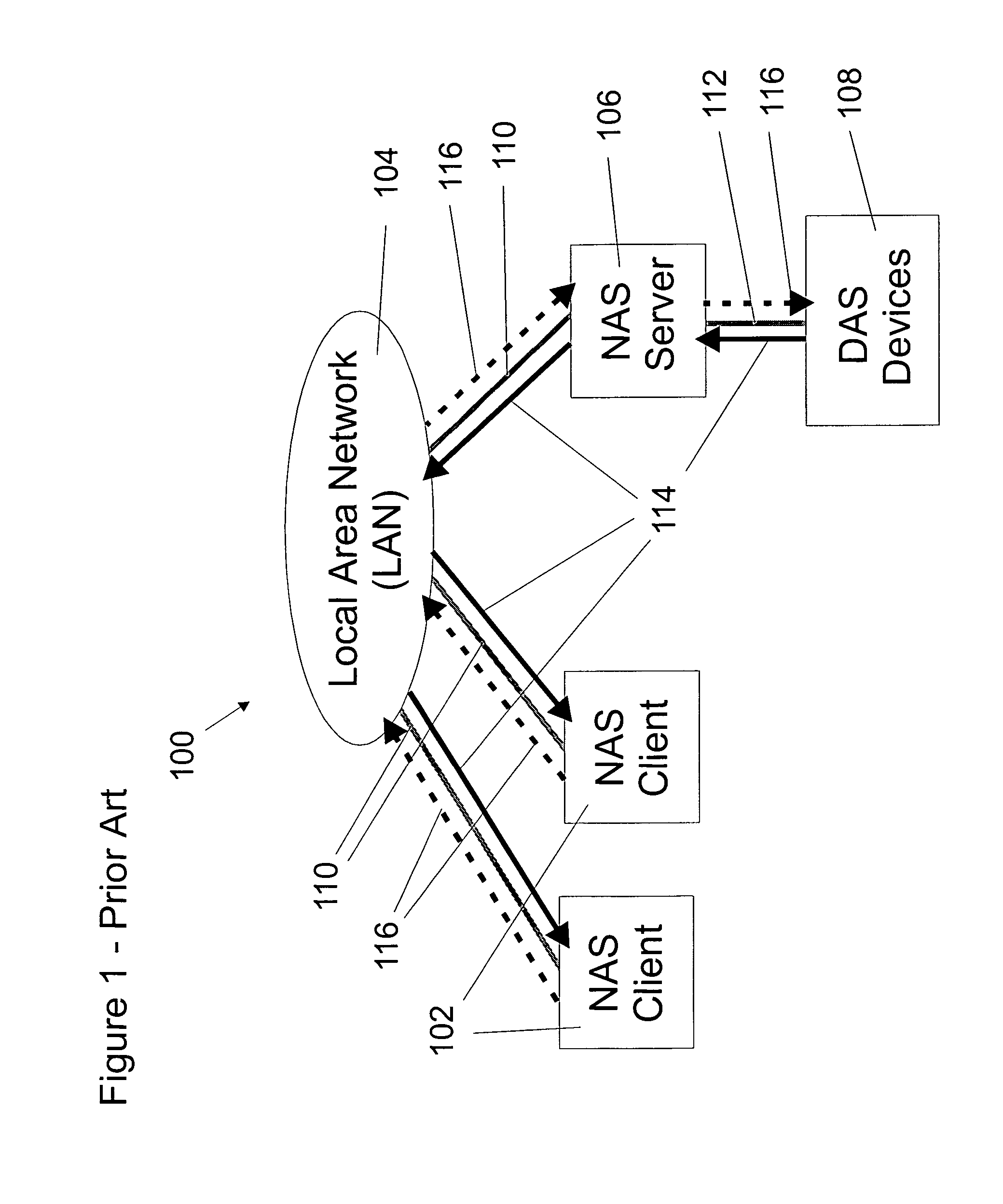

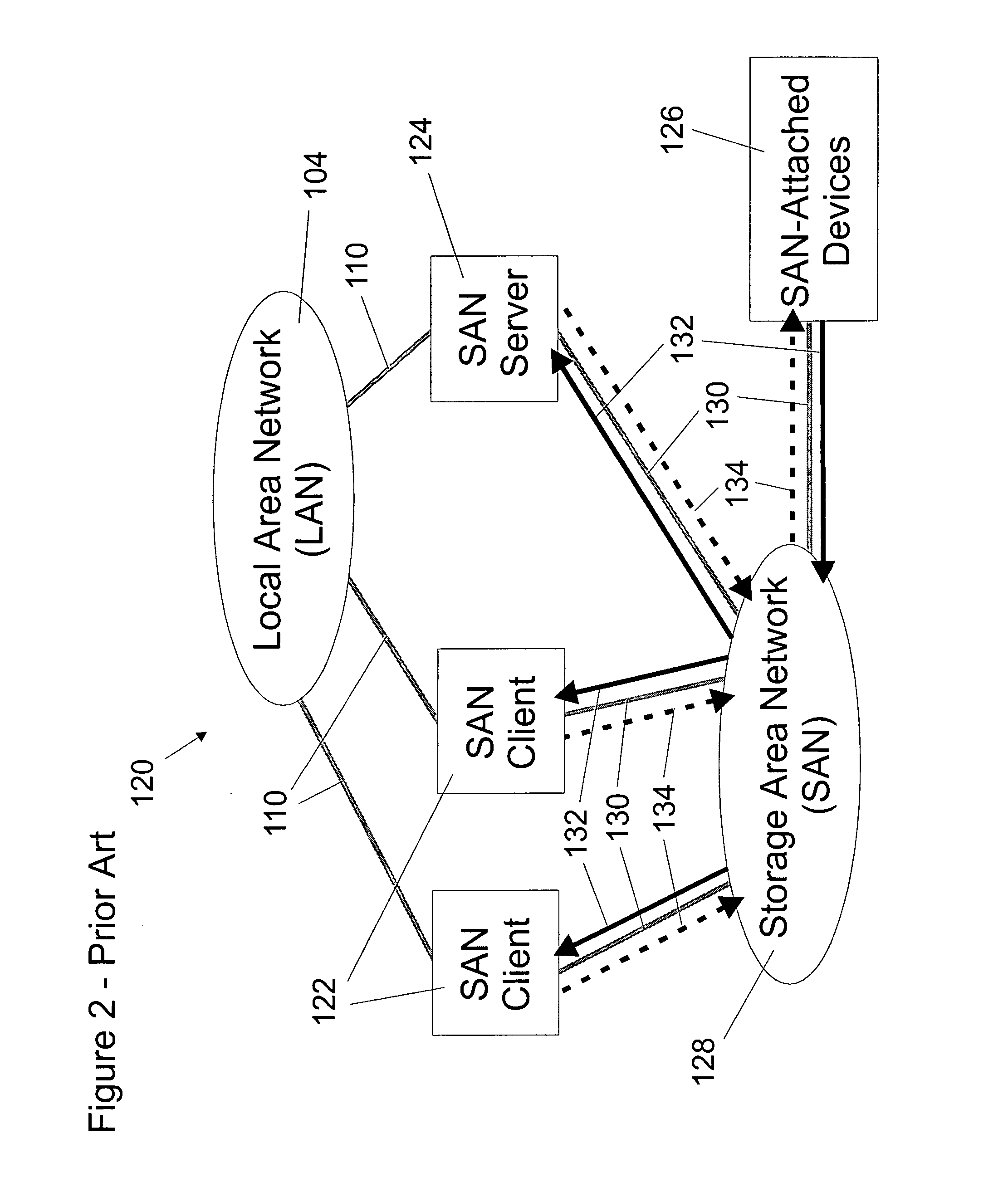

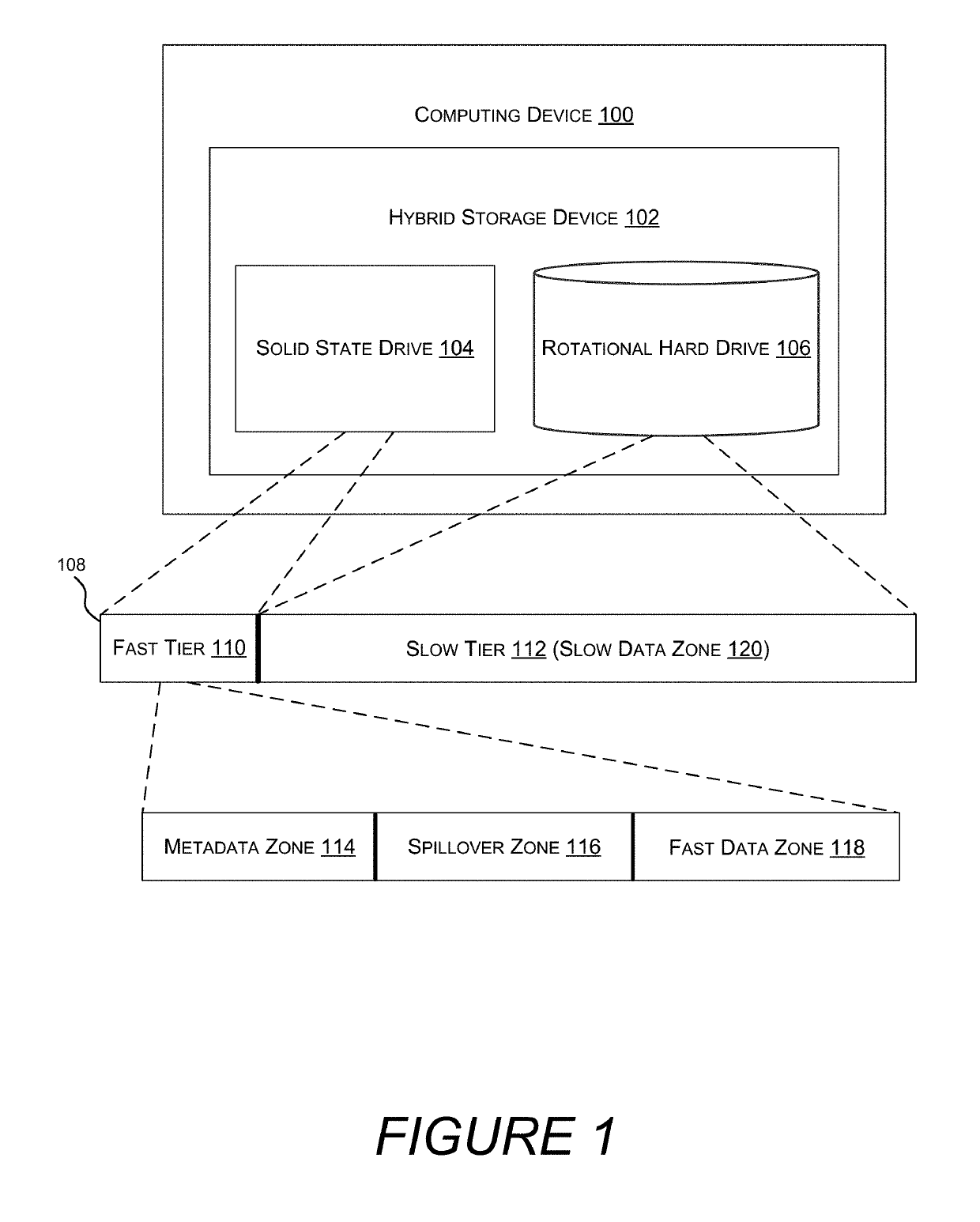

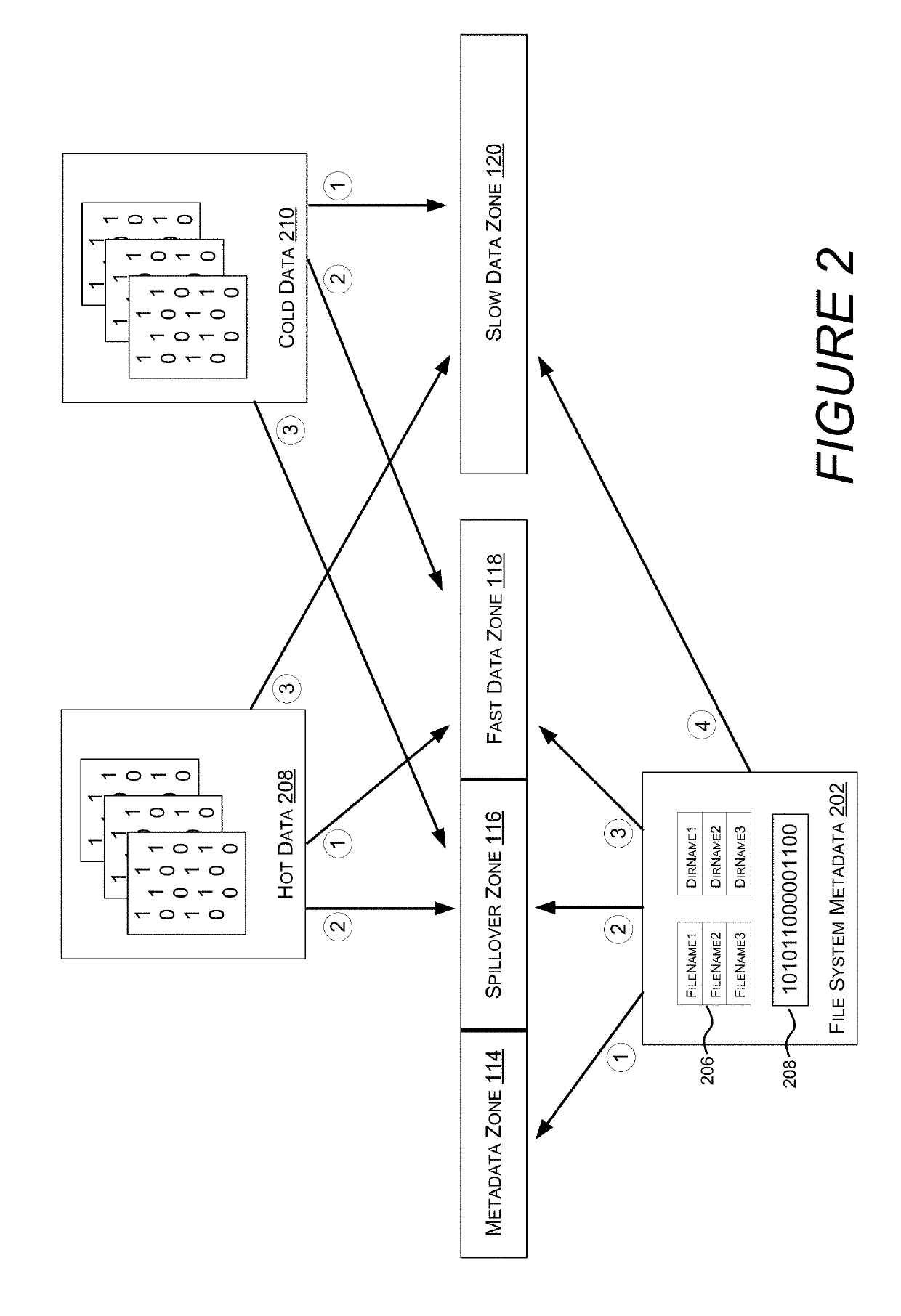

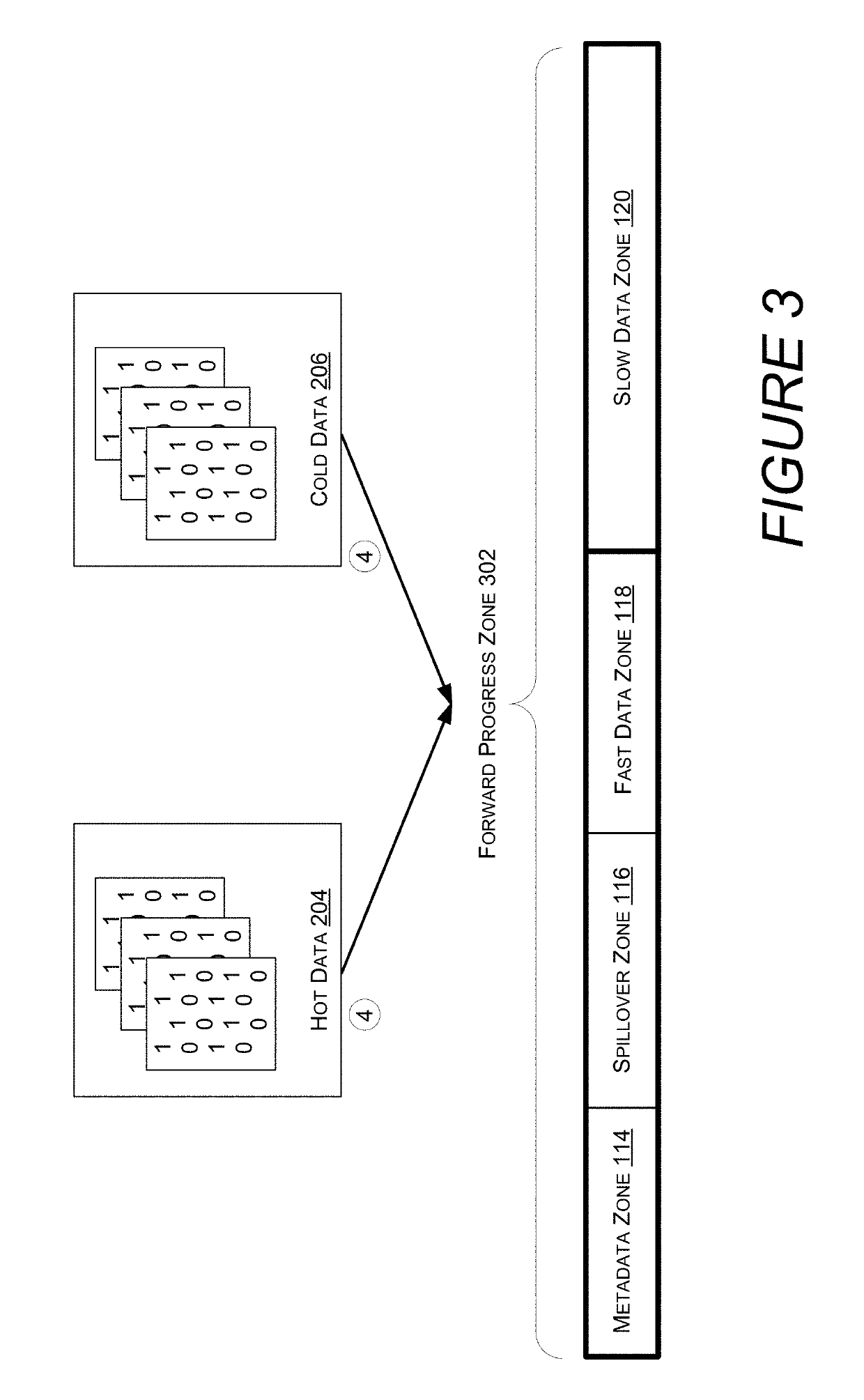

Storage area network file system

Inactive | US20070094354A1 | Read data quickly; Data processing applications; Input/output to record carriers; PathPing; Cache invalidation

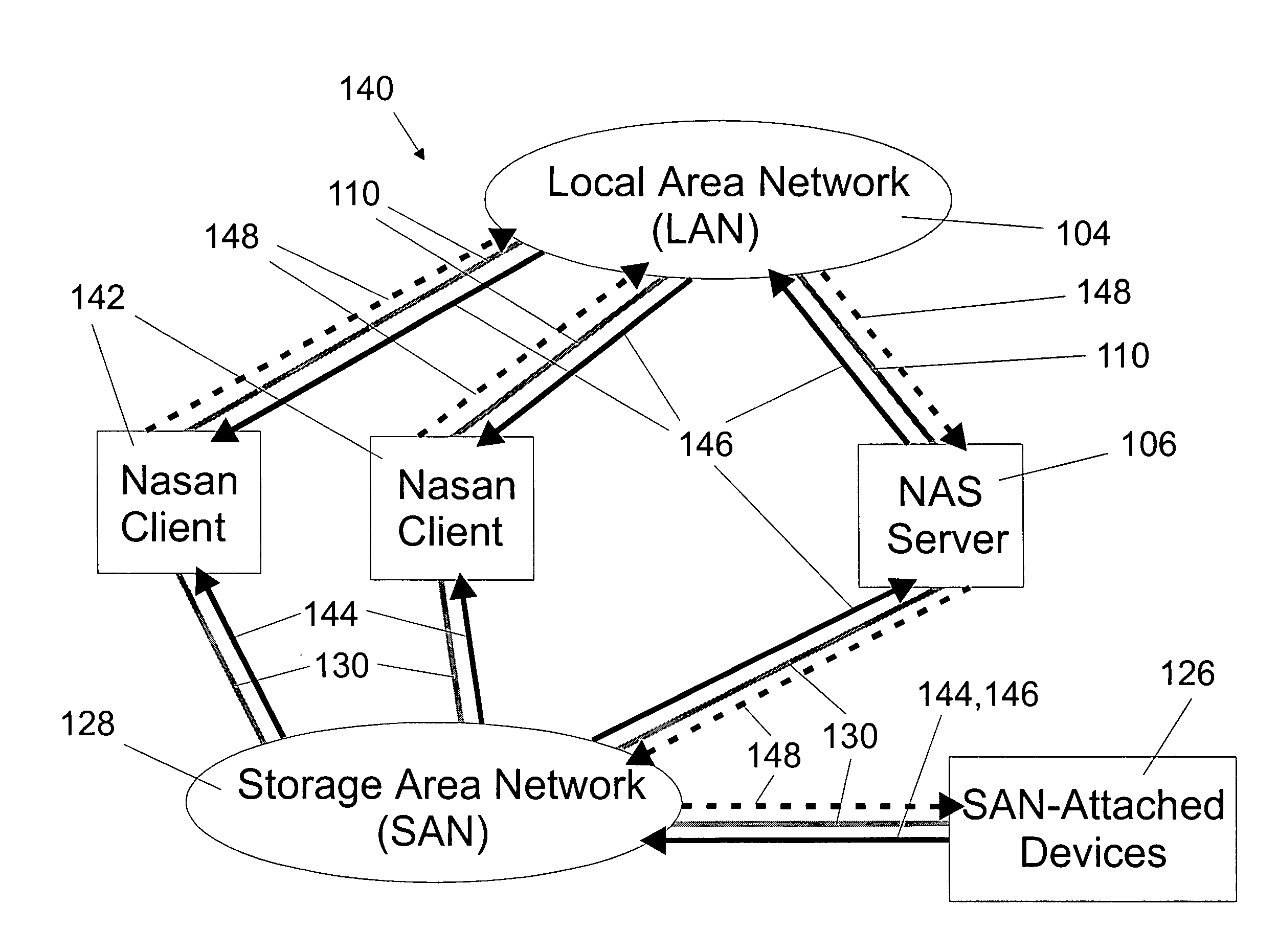

A shared-storage distributed file system is presented that provides applications with transparent access to a storage area network (SAN) attached storage device. This is accomplished by giving clients read access to the device over the SAN while requiring most write activity to be serialized through a network attached storage (NAS) server. Both the clients and the NAS server are connected to the SAN-attached device over the SAN. Direct read access to the SAN-attached device is provided through a local file system on the client; write access is provided through a remote file system on the client that uses the NAS server. A supplemental read path through the NAS server is provided for circumstances in which the local file system cannot provide valid data reads. Consistency is maintained by comparing modification times in the local and remote file systems. Because writes occur through the remote file system, the consistency mechanism can flush data caches in the remote file system and invalidate metadata and real-data caches in the local file system. Unmodified local and remote file systems can be used in the present invention by layering a new file system over them. This new file system need only be installed on each client, allowing the NAS server's file systems to operate unmodified. Alternatively, the new file system can be combined with the local file system.

Owner:DATAPLOW
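The modification-time consistency check in this abstract can be sketched as follows. This is a minimal model under stated assumptions: `RemoteFS` and `ClientCache` are hypothetical names, and for brevity both the direct SAN read and the supplemental NAS read are represented by `RemoteFS.read`.

```python
from dataclasses import dataclass, field


@dataclass
class RemoteFS:
    """Stand-in for the NAS server's file system, which sees every write."""
    files: dict = field(default_factory=dict)  # path -> (data, mtime)

    def write(self, path, data, mtime):
        self.files[path] = (data, mtime)

    def mtime(self, path):
        return self.files[path][1]

    def read(self, path):
        return self.files[path][0]


@dataclass
class ClientCache:
    """Client-side data cache validated by modification time (hypothetical model)."""
    remote: RemoteFS
    entries: dict = field(default_factory=dict)  # path -> (data, mtime when cached)
    invalidations: int = 0

    def read(self, path):
        current = self.remote.mtime(path)
        cached = self.entries.get(path)
        if cached is not None and cached[1] == current:
            return cached[0]              # modification times agree: copy is valid
        if cached is not None:
            del self.entries[path]        # stale copy: invalidate it
            self.invalidations += 1
        data = self.remote.read(path)     # stands in for both read paths
        self.entries[path] = (data, current)
        return data


remote = RemoteFS()
remote.write("/vol/a.txt", b"v1", mtime=1)
client = ClientCache(remote)
client.read("/vol/a.txt")                # miss: caches b"v1" with mtime 1
remote.write("/vol/a.txt", b"v2", mtime=2)
fresh = client.read("/vol/a.txt")        # mtime changed: invalidates and re-reads
```

Comparing a single timestamp keeps the check cheap; a real layered file system would also have to cope with clock granularity and metadata caches, which this sketch ignores.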

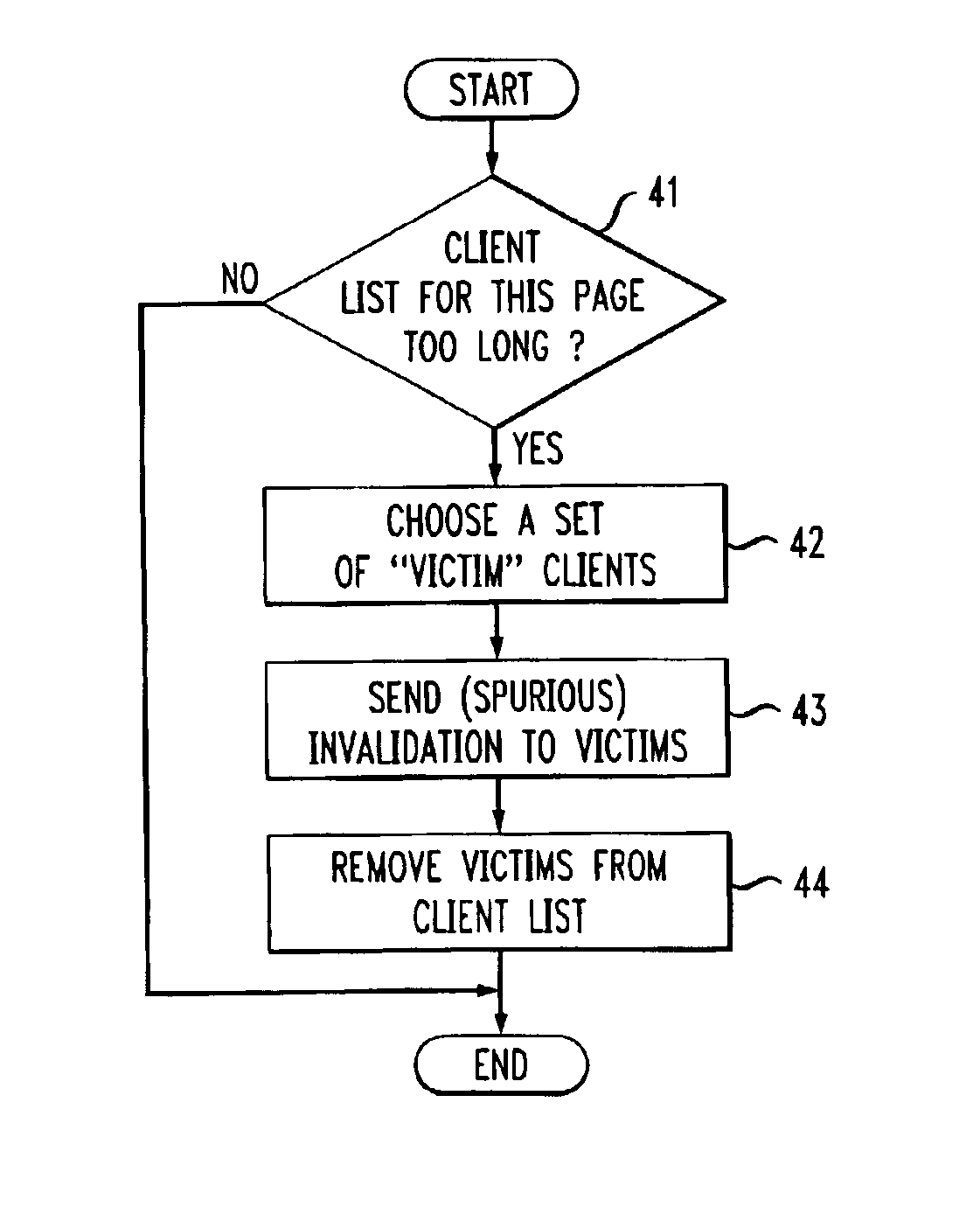

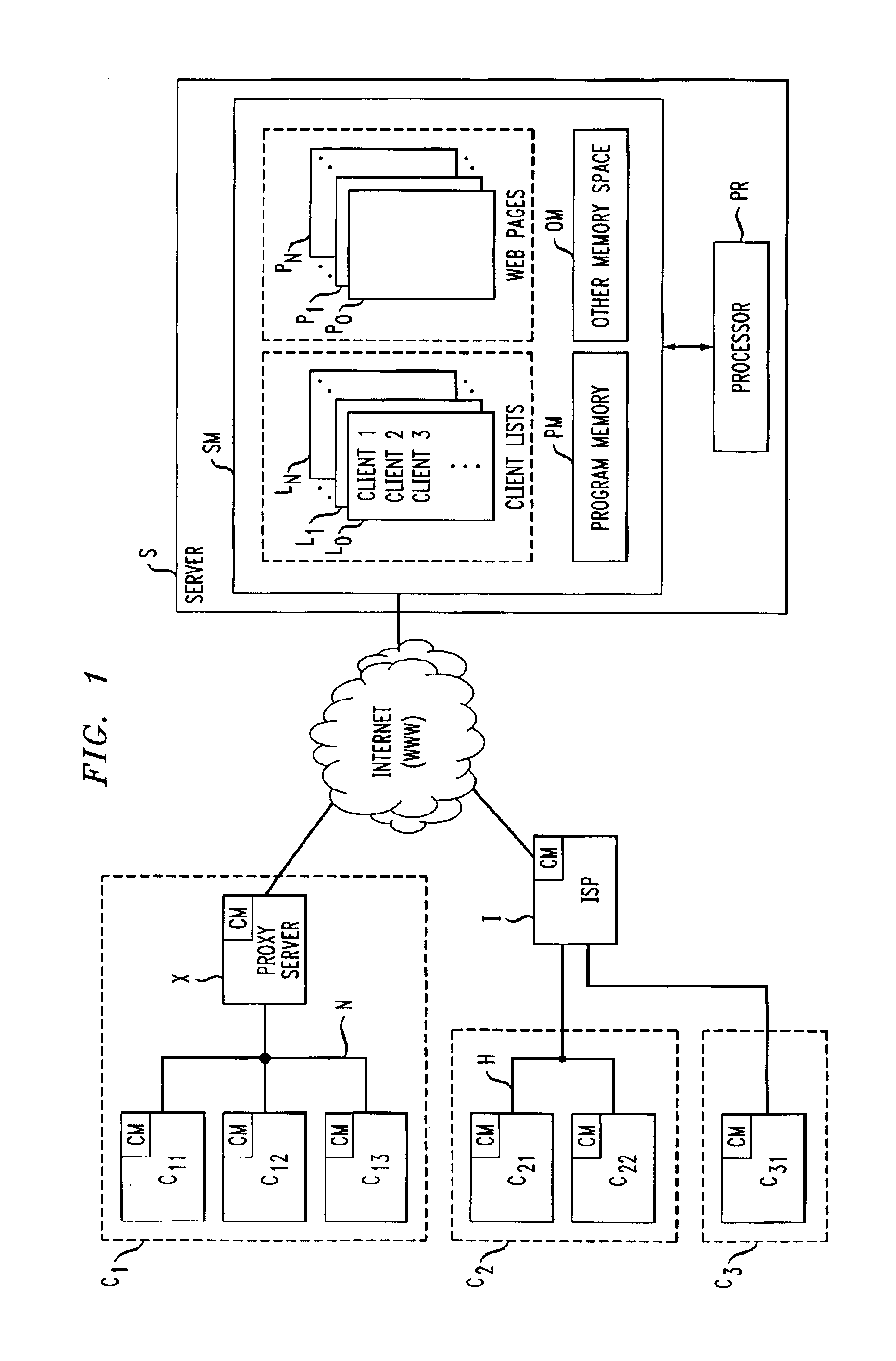

Cache invalidation technique with spurious resource change indications

Active | US6912562B1 | Safely deleted; Manageable size; Memory addressing/allocation/relocation; Multiple digital computer combinations; Cache invalidation; Program planning

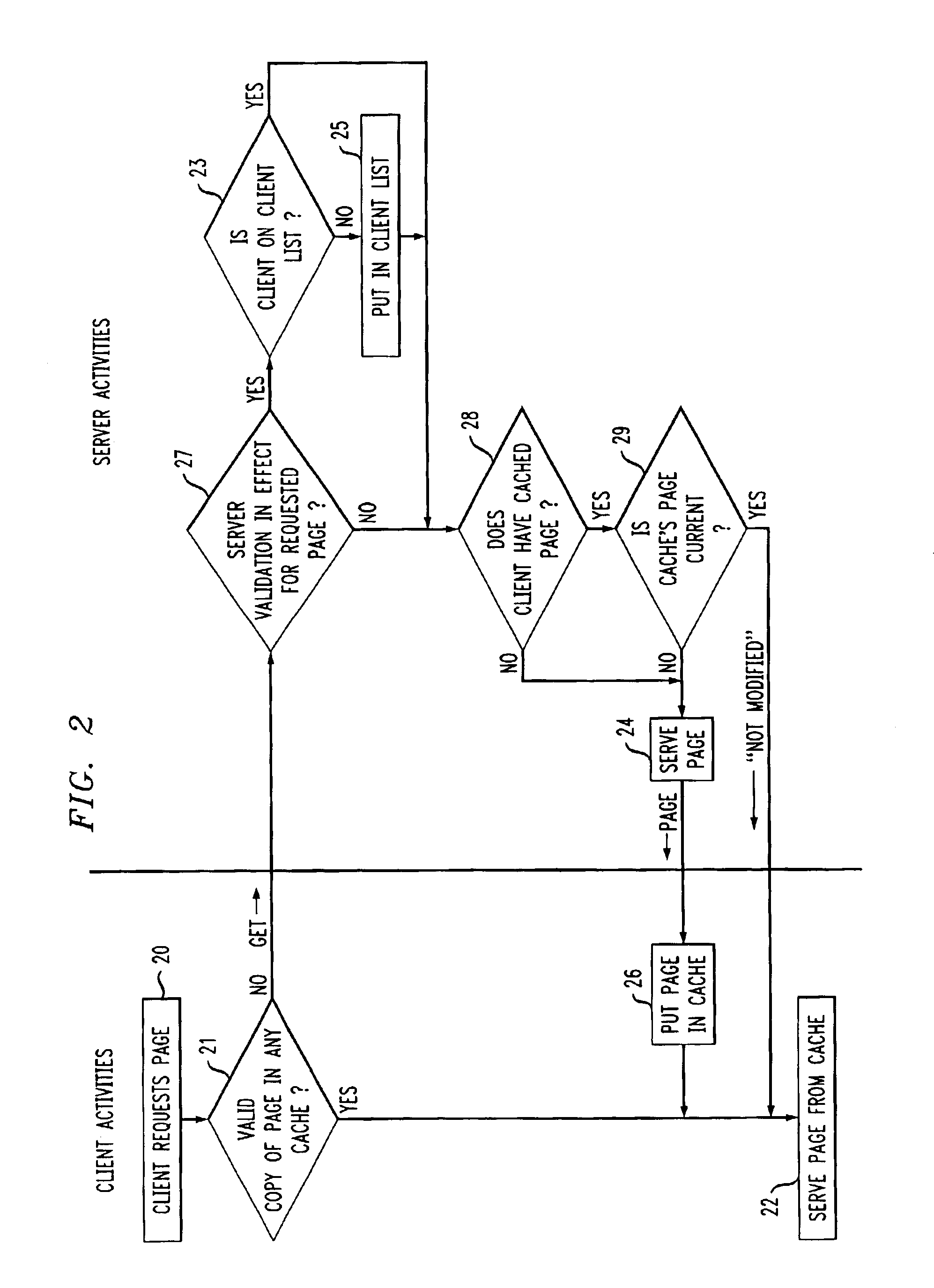

A Web server maintains, for one or more resources, a list of the clients that requested each resource. The server takes on responsibility for notifying all of those clients when the resource in question changes, letting them know that if a user asks for the resource again, an updated copy will have to be requested from the origin server. The server then purges the client list and begins rebuilding it as subsequent requests for the resource arrive. When a list meets a predetermined criterion, such as becoming too large, invalidation messages are sent to selected “victim” clients on the list, regardless of whether the resource has changed. The victim clients may include clients who access the server less frequently than others, clients whose last access to the server lies further in the past than that of other clients (i.e., a first-in-first-out policy), or clients who have not subscribed to a service that exempts them from being victims. A client list can be reviewed against the criterion every time a client is added to it, or on a schedule.

Owner:AMERICAN TELEPHONE & TELEGRAPH CO
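The server-side bookkeeping described above can be sketched in Python. This is an illustration under assumptions: `InvalidationServer` and the `notify` callback are hypothetical, the size limit is the only criterion modeled, and victims are chosen first-in-first-out by most recent request.

```python
from collections import OrderedDict


class InvalidationServer:
    """Tracks which clients hold each resource and sends invalidations (hypothetical model)."""

    def __init__(self, max_clients, notify):
        self.max_clients = max_clients
        self.notify = notify              # callback(client, resource)
        self.client_lists = {}            # resource -> OrderedDict of clients, oldest first

    def on_request(self, resource, client):
        clients = self.client_lists.setdefault(resource, OrderedDict())
        clients.pop(client, None)         # a repeat request moves the client to the end
        clients[client] = True
        # Review the list every time a client is added.
        while len(clients) > self.max_clients:
            victim, _ = clients.popitem(last=False)
            self.notify(victim, resource) # spurious invalidation: resource unchanged

    def on_change(self, resource):
        # Tell every recorded client its copy is stale, then purge the list.
        for client in self.client_lists.pop(resource, {}):
            self.notify(client, resource)


sent = []
server = InvalidationServer(max_clients=2, notify=lambda c, r: sent.append((c, r)))
for client in ["a", "b", "c"]:
    server.on_request("/index.html", client)
# "a" requested longest ago, so it receives a spurious invalidation.
server.on_change("/index.html")
# The real change notifies the remaining clients "b" and "c"; the list is now empty.
```

Sending a spurious invalidation to a victim is safe: the worst case is that the client re-fetches an unchanged resource, in exchange for keeping the per-resource list bounded.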

Accurate invalidation profiling for cost effective data speculation

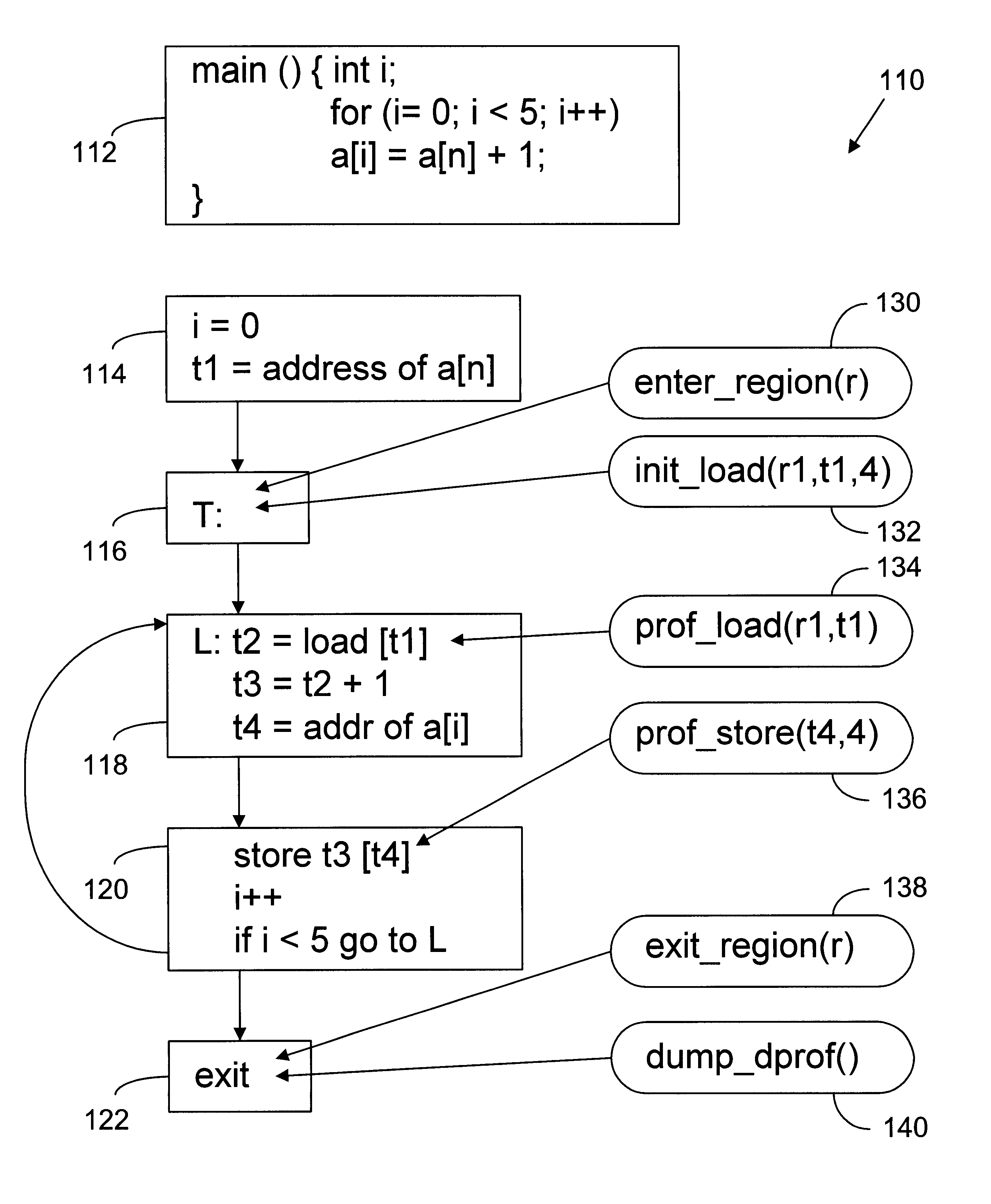

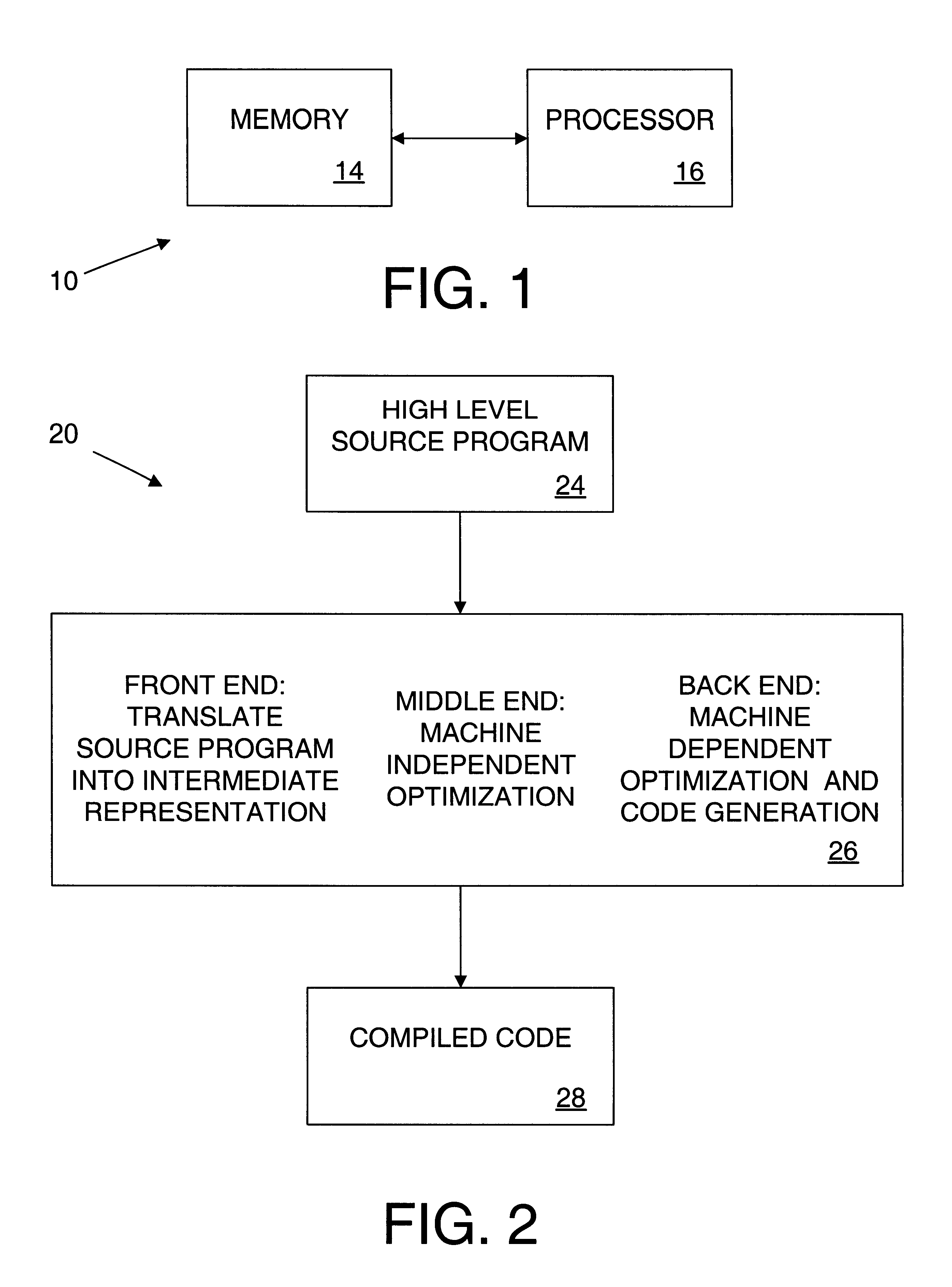

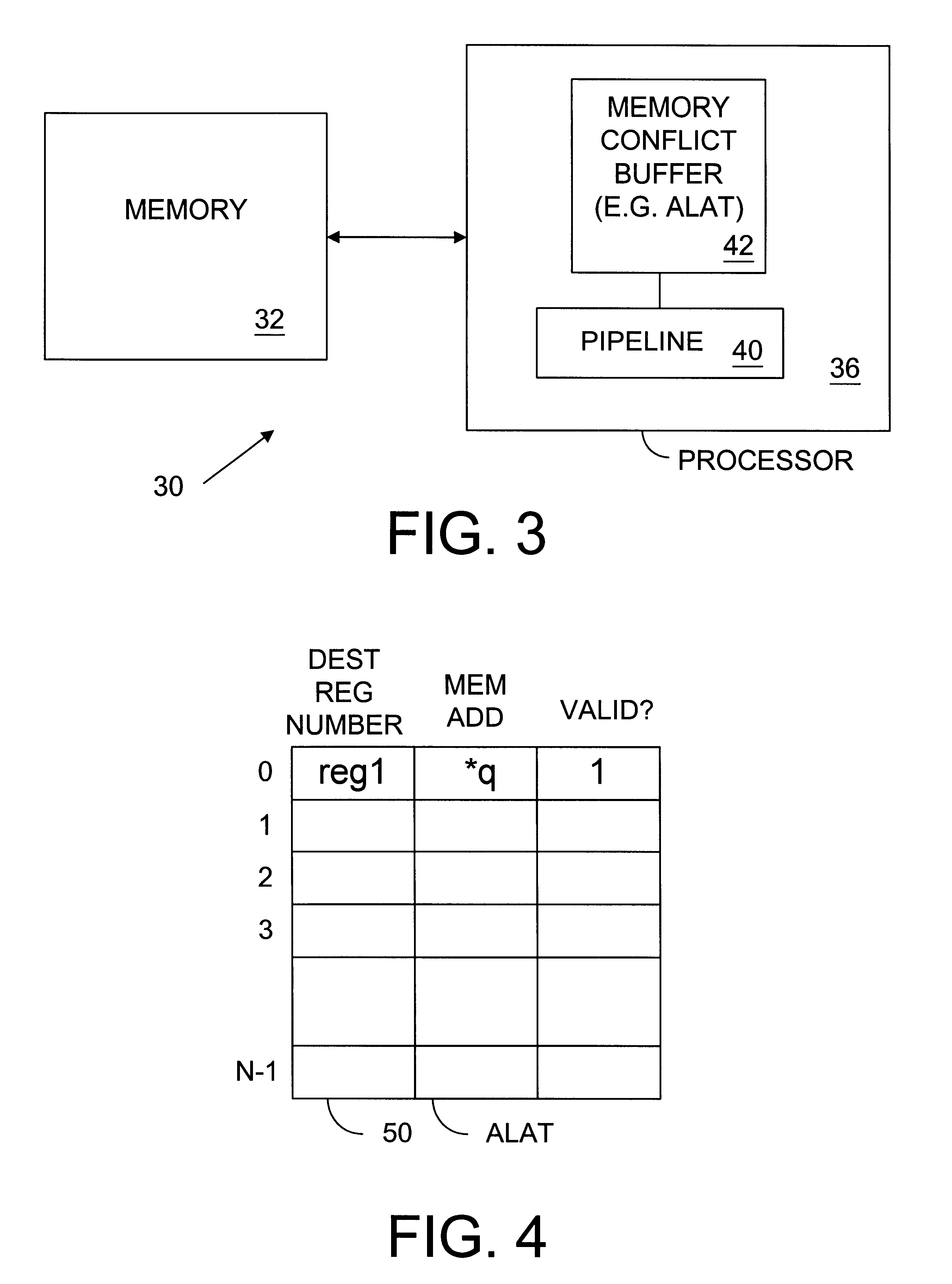

In one implementation, the invention involves a computer implemented method used in compiling a program. The method includes selecting conflict regions of the program. The method further includes performing invalidation profiling of load instructions with respect to certain ones of the conflict regions to determine invalidation rates of the load instructions. The method may further include a feedback step in which the invalidation rates are used by a scheduler of the compiler to determine whether to move the load instructions to target locations.

Owner:INTEL CORP
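Invalidation profiling with a scheduler feedback step might look like the sketch below. It assumes that a load counts as "invalidated" when some store inside its conflict region writes the address the load read (the situation that would invalidate a load hoisted above that region); the class `InvalidationProfiler`, the function `loads_to_hoist`, and the 5% threshold are all hypothetical.

```python
from collections import Counter


class InvalidationProfiler:
    """Counts, per load, how often a store in its conflict region hits the same address."""

    def __init__(self):
        self.executions = Counter()
        self.invalidations = Counter()

    def record(self, load_id, load_addr, region_store_addrs):
        self.executions[load_id] += 1
        # A store to the loaded address would invalidate a speculatively hoisted load.
        if load_addr in region_store_addrs:
            self.invalidations[load_id] += 1

    def rate(self, load_id):
        runs = self.executions[load_id]
        return self.invalidations[load_id] / runs if runs else 0.0


def loads_to_hoist(profiler, load_ids, max_rate=0.05):
    """Feedback step: only rarely invalidated loads are moved (threshold assumed)."""
    return [load for load in load_ids if profiler.rate(load) <= max_rate]


profiler = InvalidationProfiler()
for i in range(100):
    profiler.record("ld_a", 0x1000, {0x2000, 0x3000})              # never aliases
    profiler.record("ld_b", 0x4000, {0x4000} if i % 2 else set())  # aliases half the time
hoisted = loads_to_hoist(profiler, ["ld_a", "ld_b"])
```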

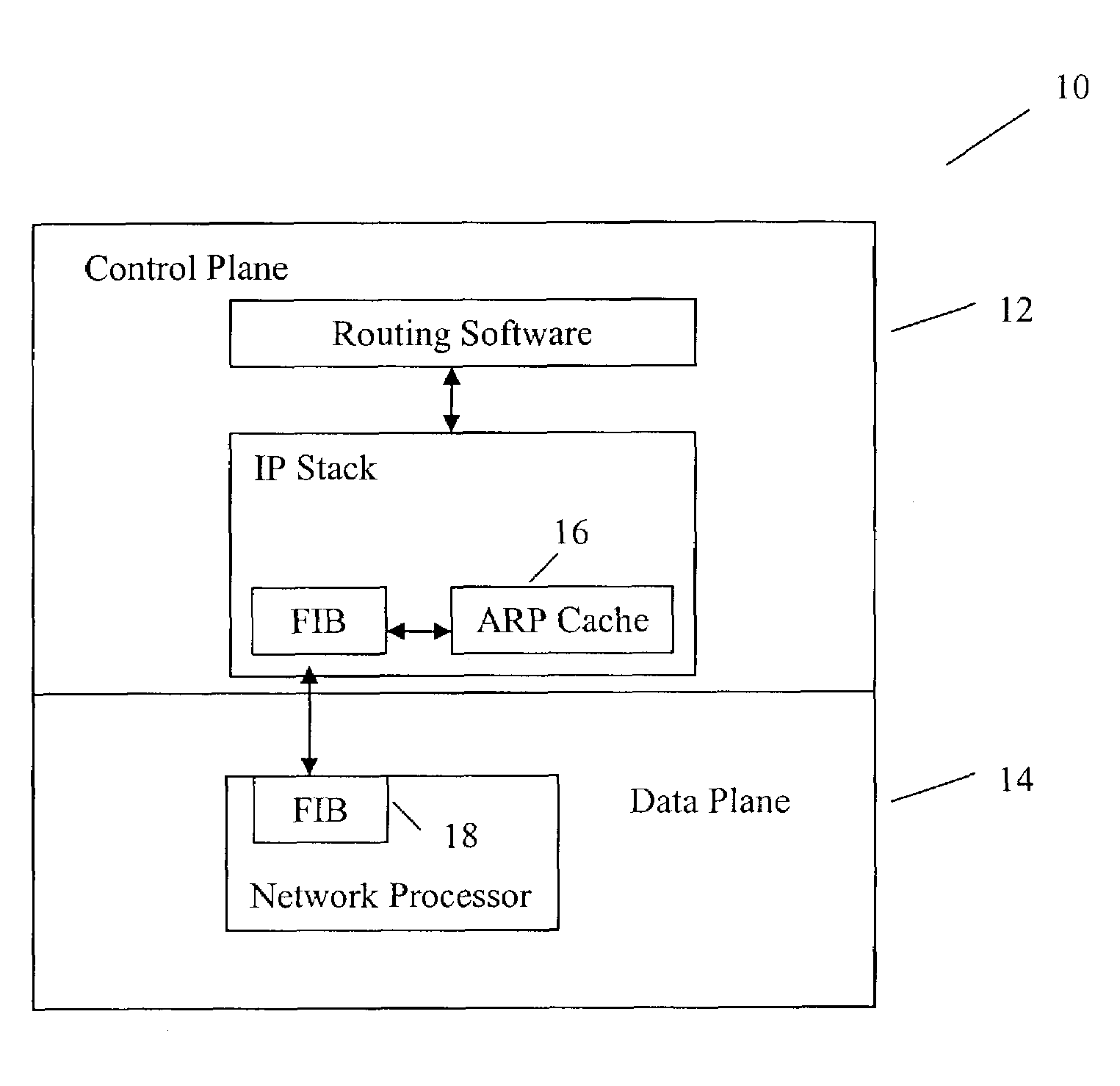

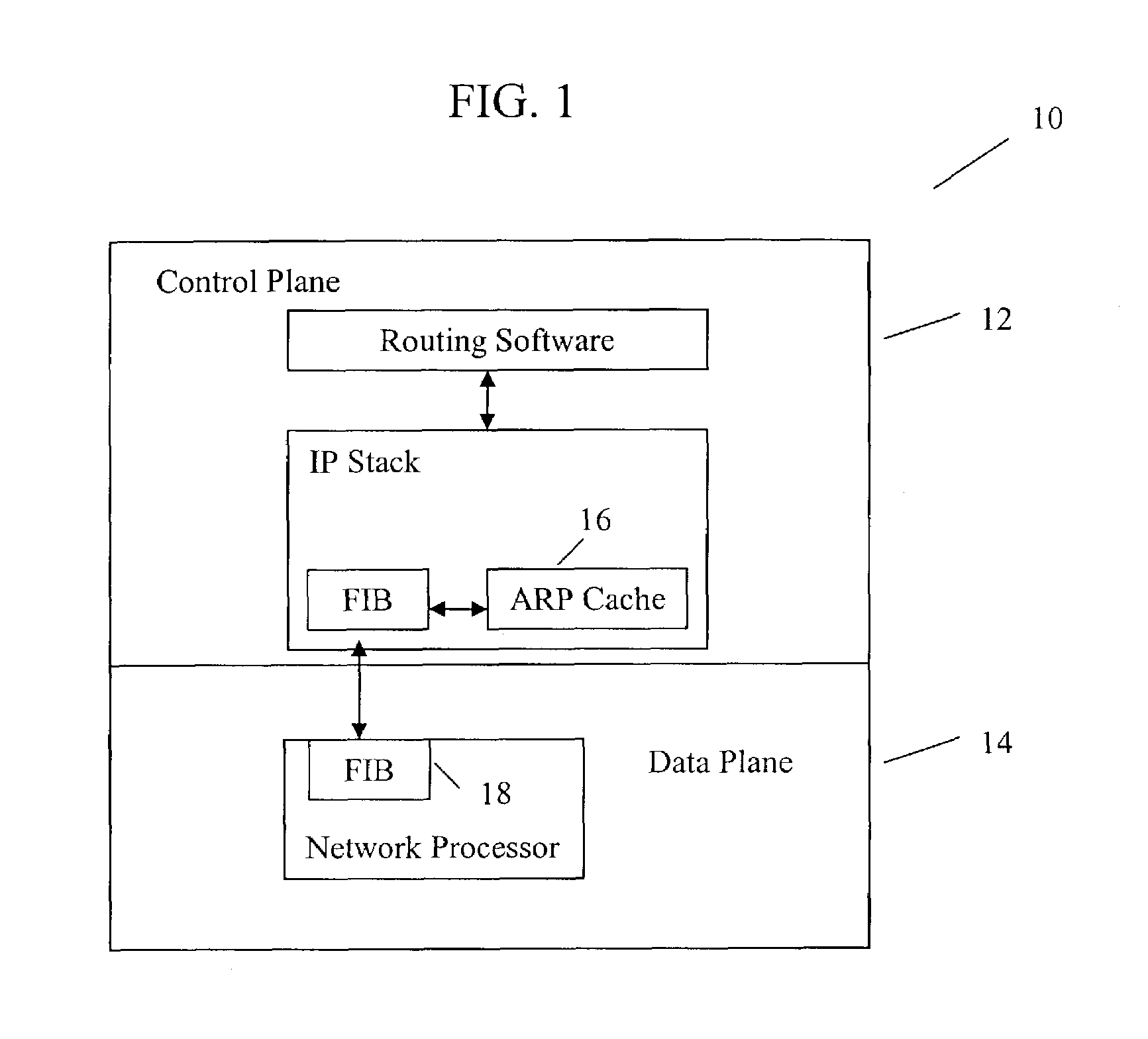

Method and system for optimizing routing table changes due to ARP cache invalidation in routers with split plane architecture

Active | US7415028B1 | Data switching by path configuration; Address Resolution Protocol; Information repository

A method for optimizing routing functions in a router is provided. The router has a split plane architecture including a control plane and a data plane. The control plane includes an Address Resolution Protocol cache and the data plane includes a programmable forwarding information base. According to one aspect, when the control plane obtains information about a route, it evaluates the obtained information about the route to determine if address resolution is needed. If it is determined that address resolution is needed for the route, the control plane performs the needed address resolution to derive additional information about the route. The control plane programs the forwarding information base to incorporate the obtained and additional information. The determination as to whether address resolution is needed for the route is performed regardless of whether packets are to be delivered on the route.

Owner:RIBBON COMM OPERATING CO INC

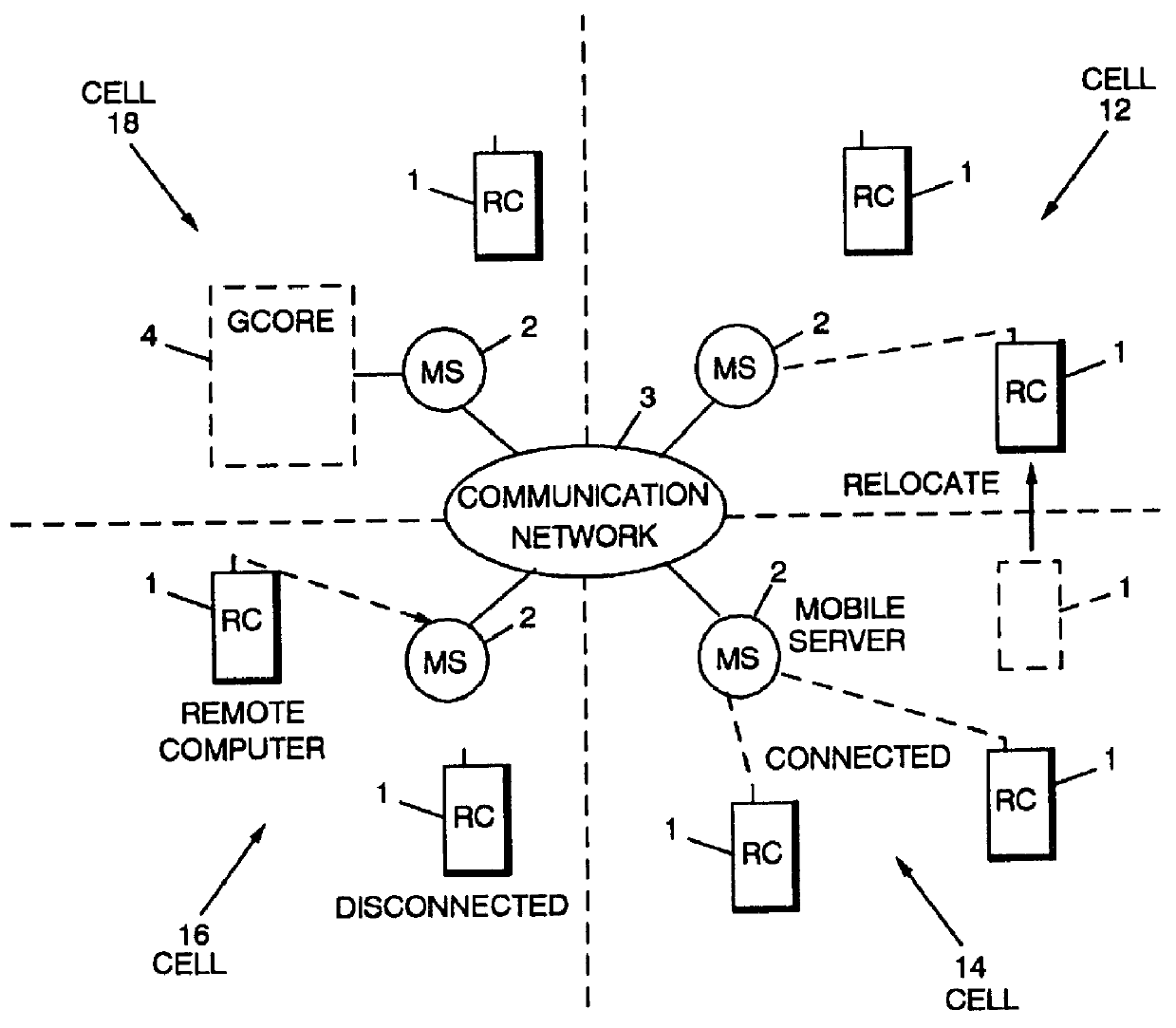

Information handling system and method for maintaining coherency between network servers and mobile terminals

Inactive | US6128648A | Energy efficient ICT; Digital data information retrieval; Cache invalidation; Communications system

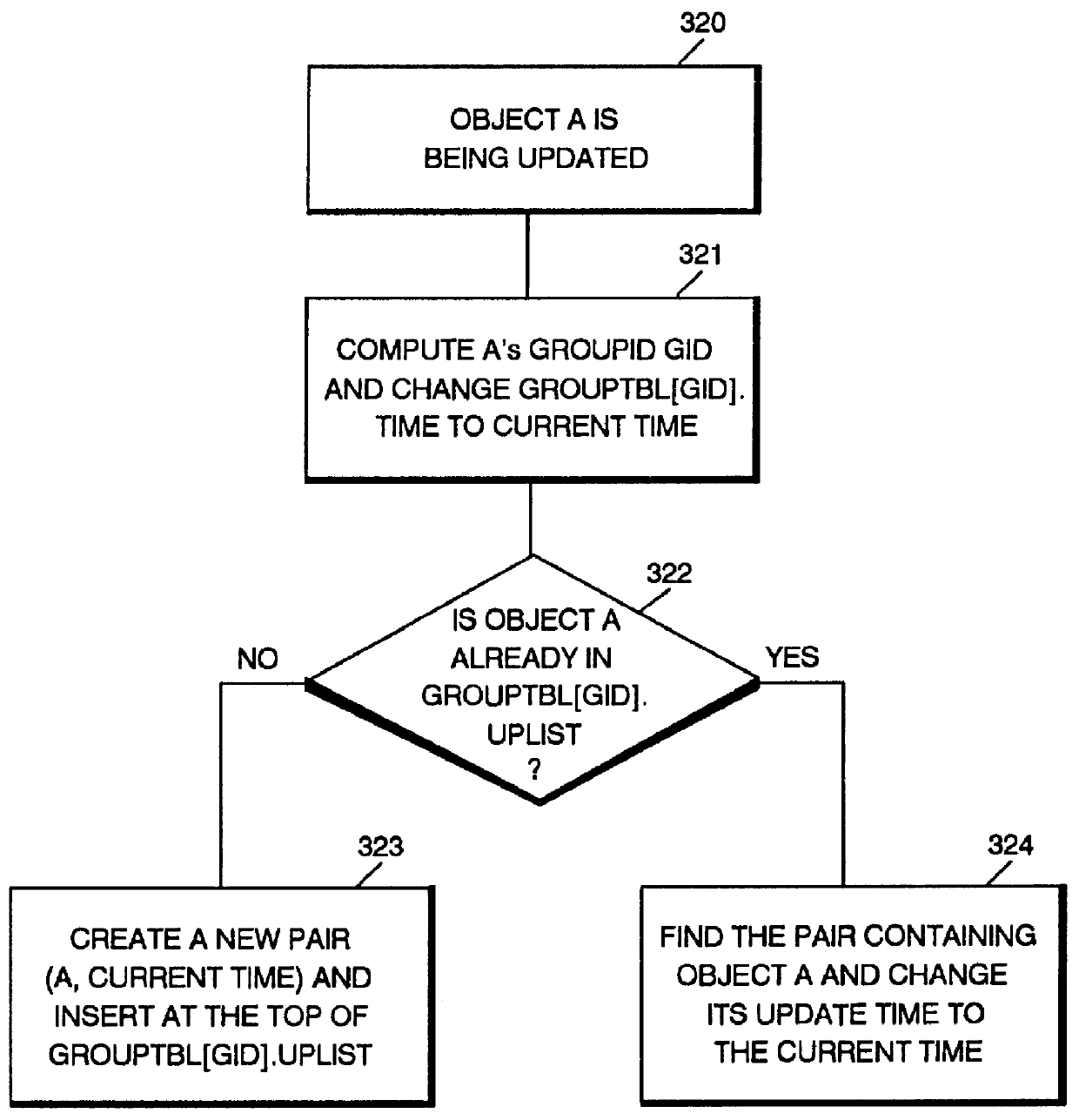

A communications system and method include an efficient cache invalidation technique which allows a computer to relocate and to disconnect without informing the server. The server partitions the entire database into a number of groups. The server also dynamically identifies recently updated objects in a group and excludes them from the group when checking the validity of the group. If these objects have already been included in the most recent invalidation broadcast, the remote computer can invalidate them in its cache before checking the group validity with the server. With the recently updated objects excluded from a group, the server can conclude that the cold objects in the group can be retained in the cache, and validate the rest of the group.

Owner:IBM CORP

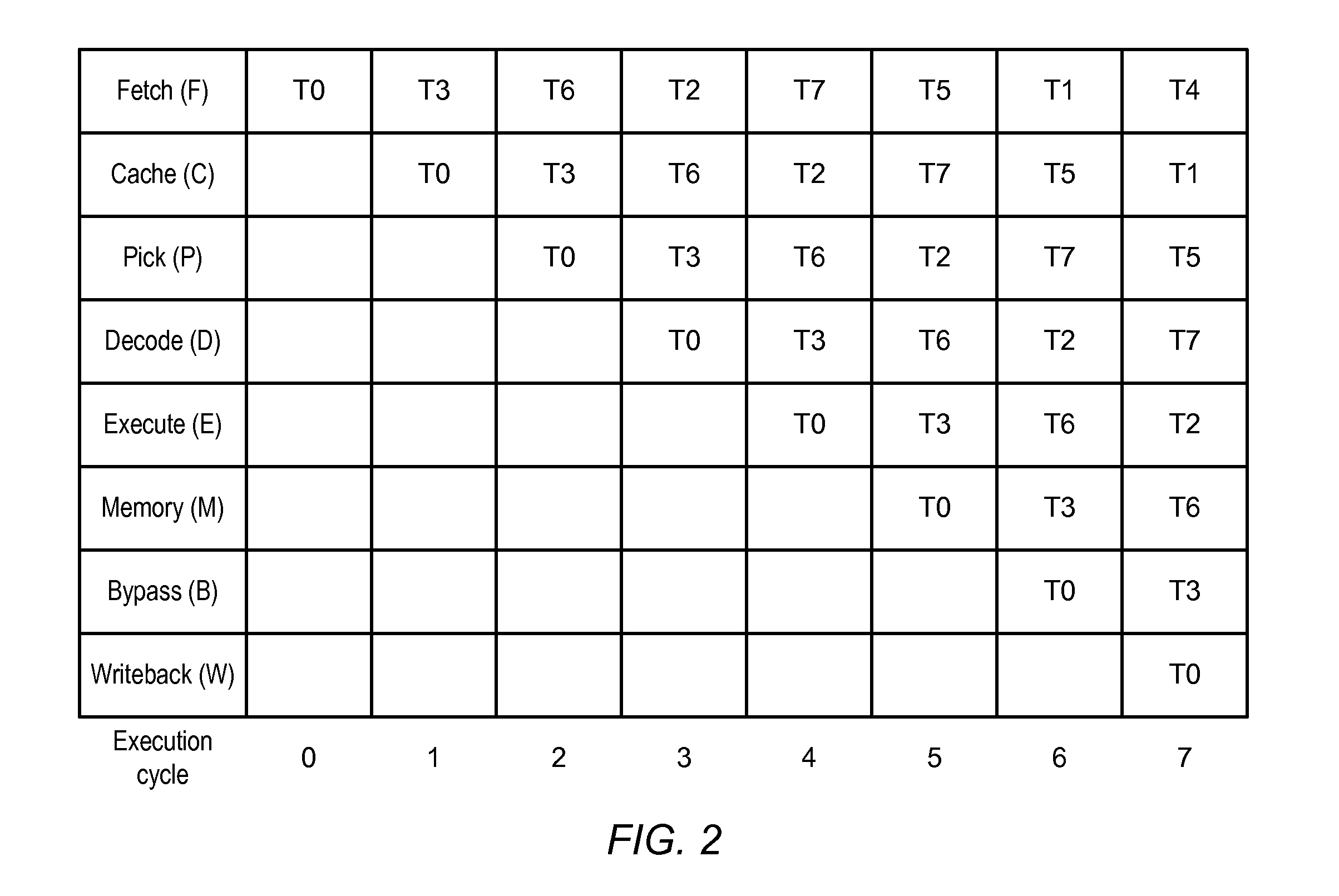

Micro-architecture sensitive thread scheduling (MSTS) method

Inactive | CN102081551A | Improve hit rate; Run fast; Multiprogramming arrangements; Operational system; Relevant information

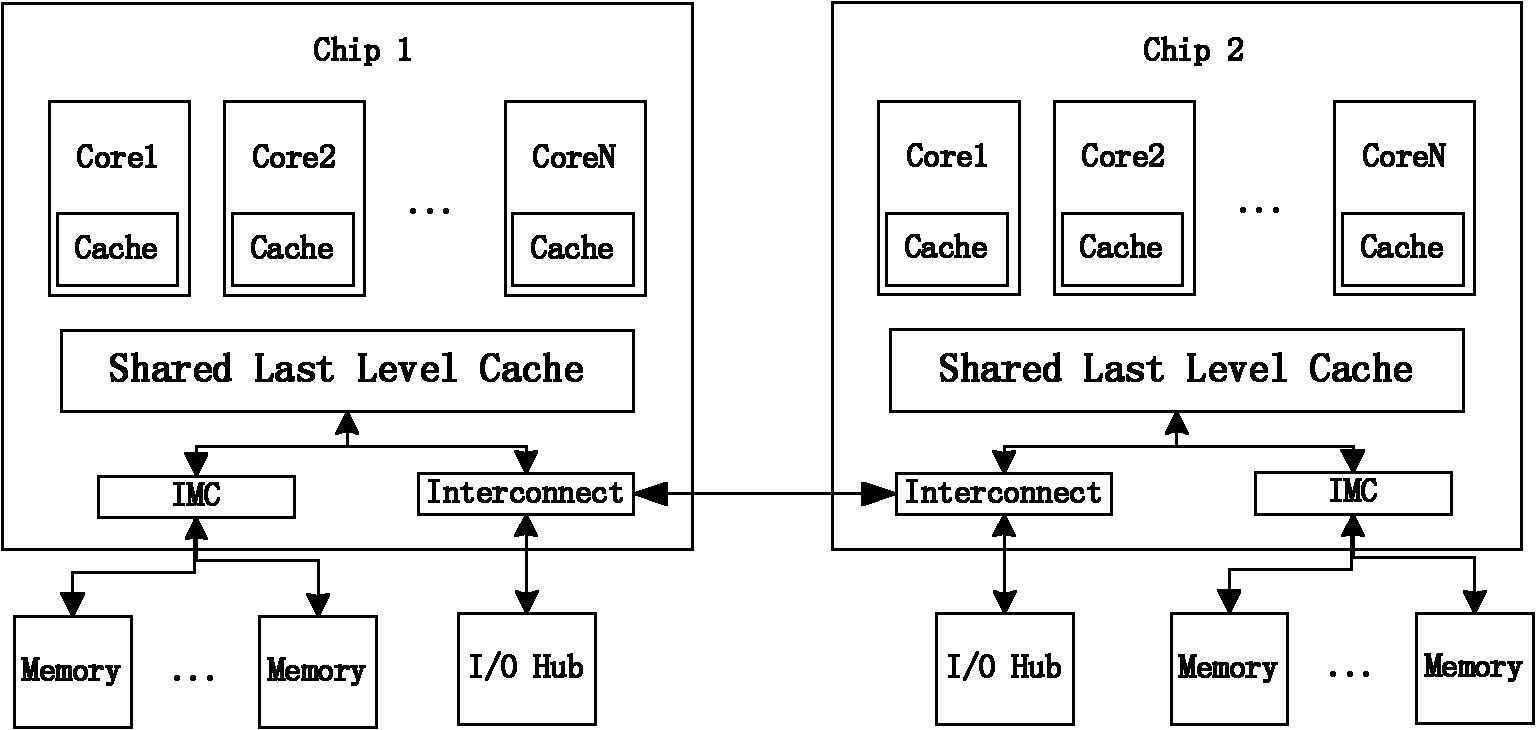

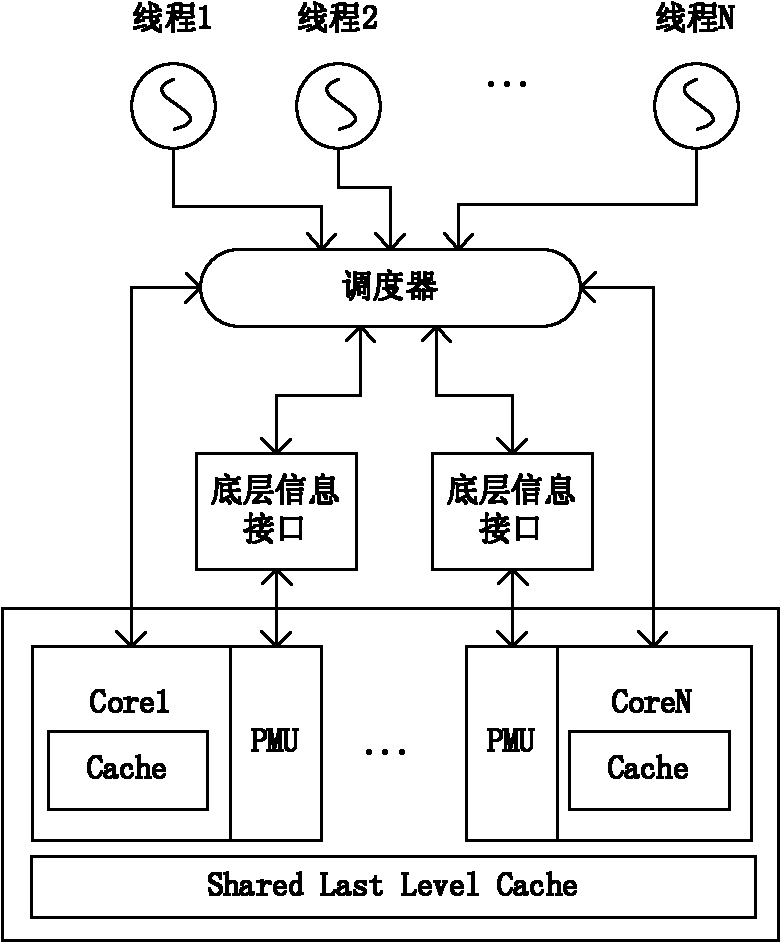

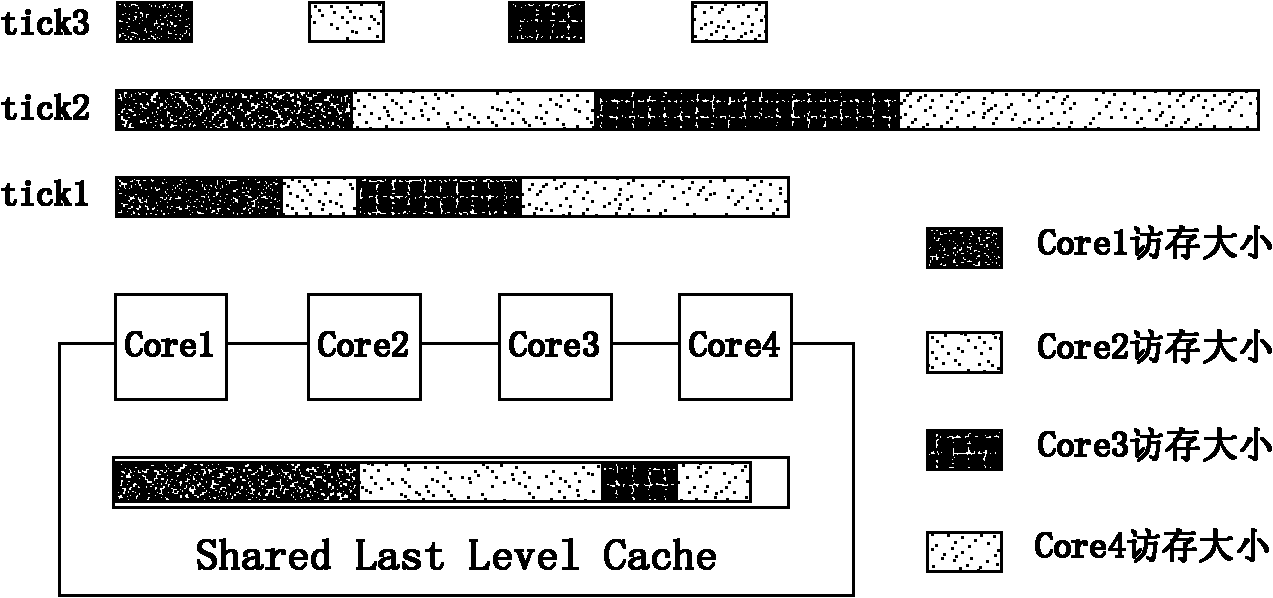

The invention relates to a micro-architecture sensitive thread scheduling (MSTS) method. The method uses a two-level scheduling strategy. Within each node, based on the structural characteristics of the chip multiprocessor (CMP) and on cache invalidation information collected in real time, threads that compete excessively for shared cache resources are staggered in time and space, while threads that can share data effectively with each other run simultaneously or one after another. Between nodes, the memory regions each thread accesses frequently are sampled, and threads are bound, as far as possible, to the nodes where their data reside, reducing remote memory accesses and inter-chip communication. The method reduces the access latency caused by running several threads with heavy shared-cache contention at the same time, and improves thread execution speed and system throughput.

Owner:NAT UNIV OF DEFENSE TECH

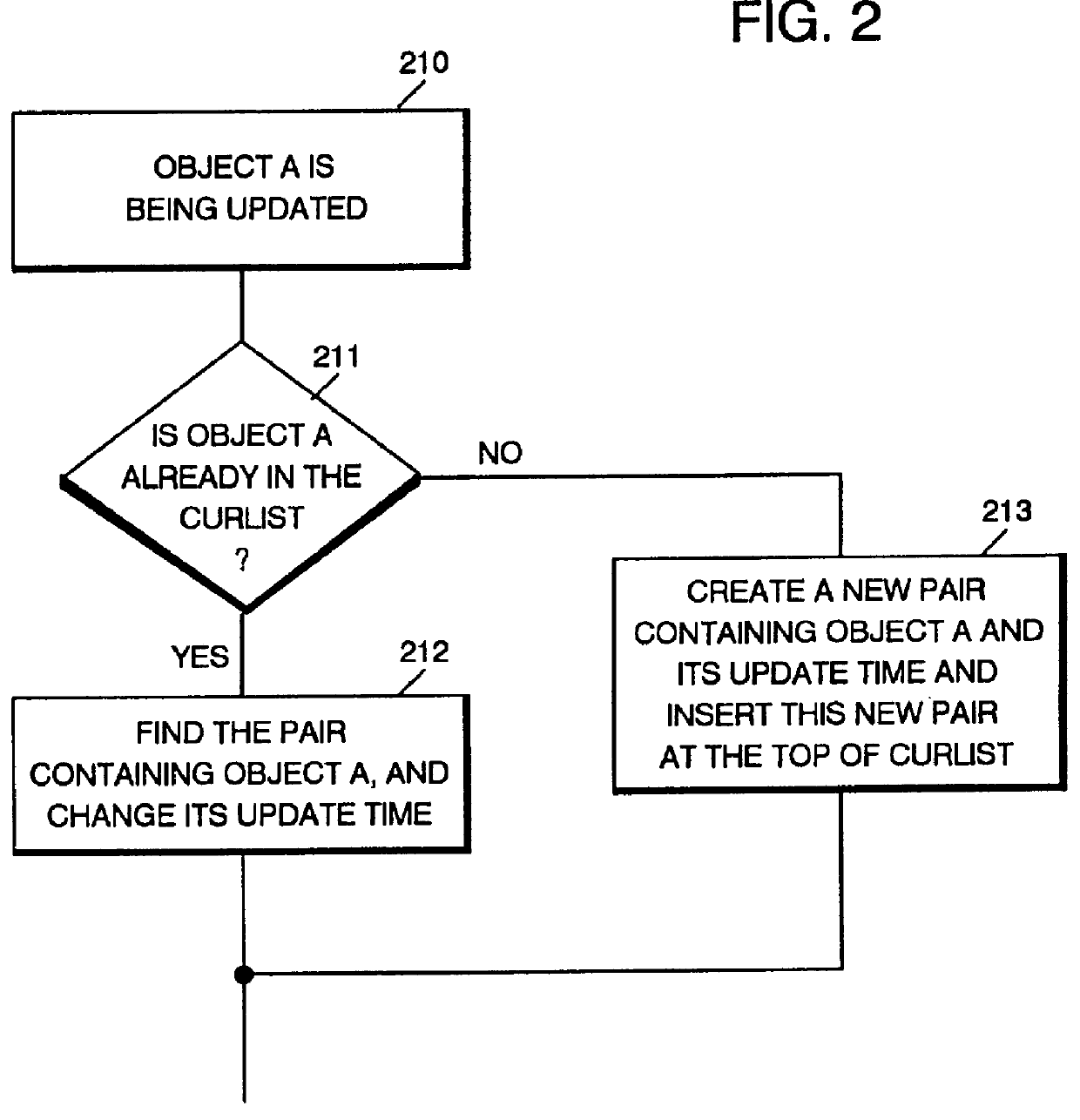

Asynchronous database updates

Inactive | US7043478B2 | Simple method; Database updating; Data processing applications; Cache invalidation; Central database

An improved apparatus and method are provided for updating a database object. A database thread is implemented in a database-dependent application stored in the main memory of a computer. An object cache manager allows the database-dependent application to modify a cached version of a transient object and to queue the corresponding database processing commands. The database thread updates the persistent data in the central database that corresponds to the transient object. A Permit Manager provides concurrency control using a cache invalidation mechanism.

Owner:AUTODESK INC

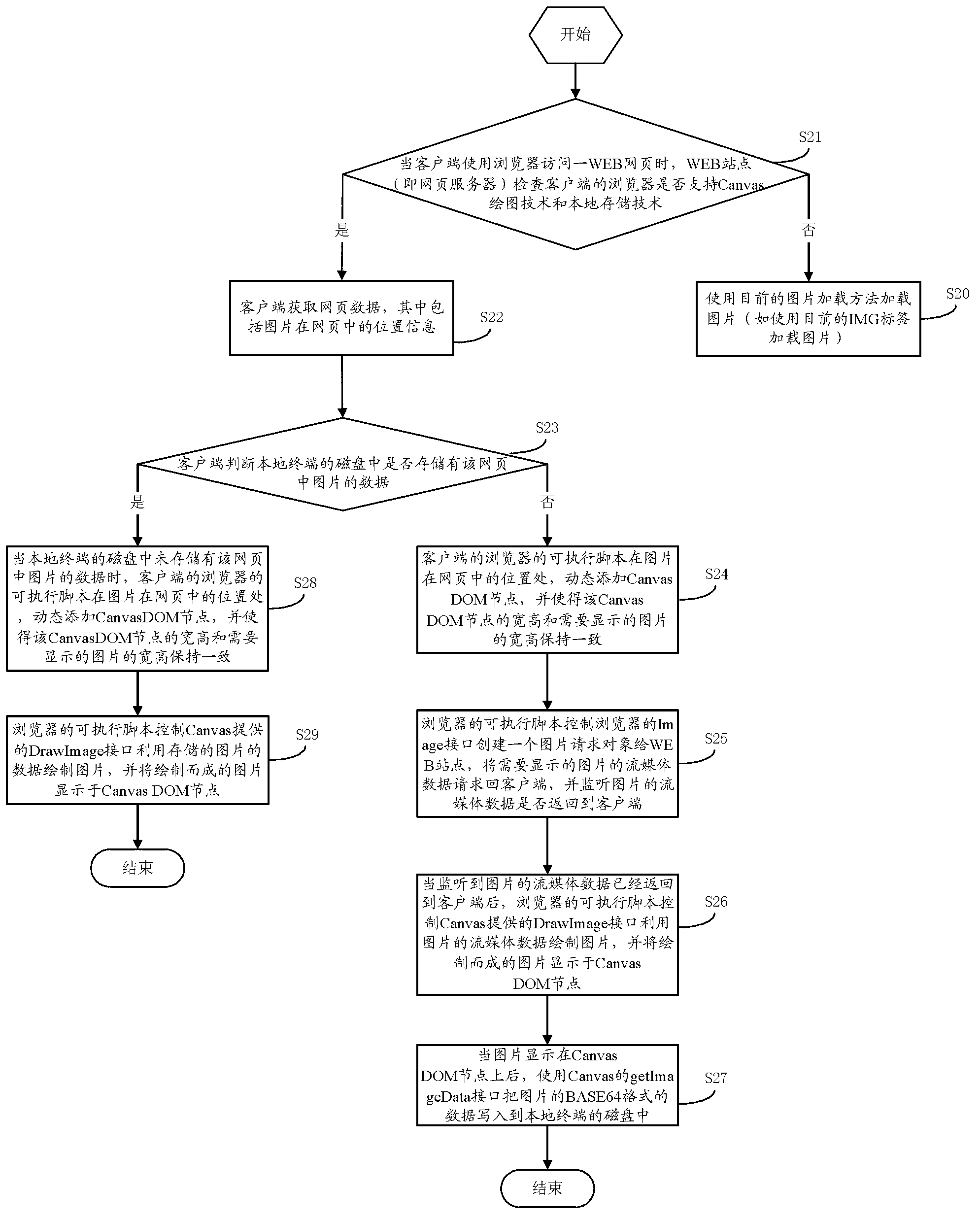

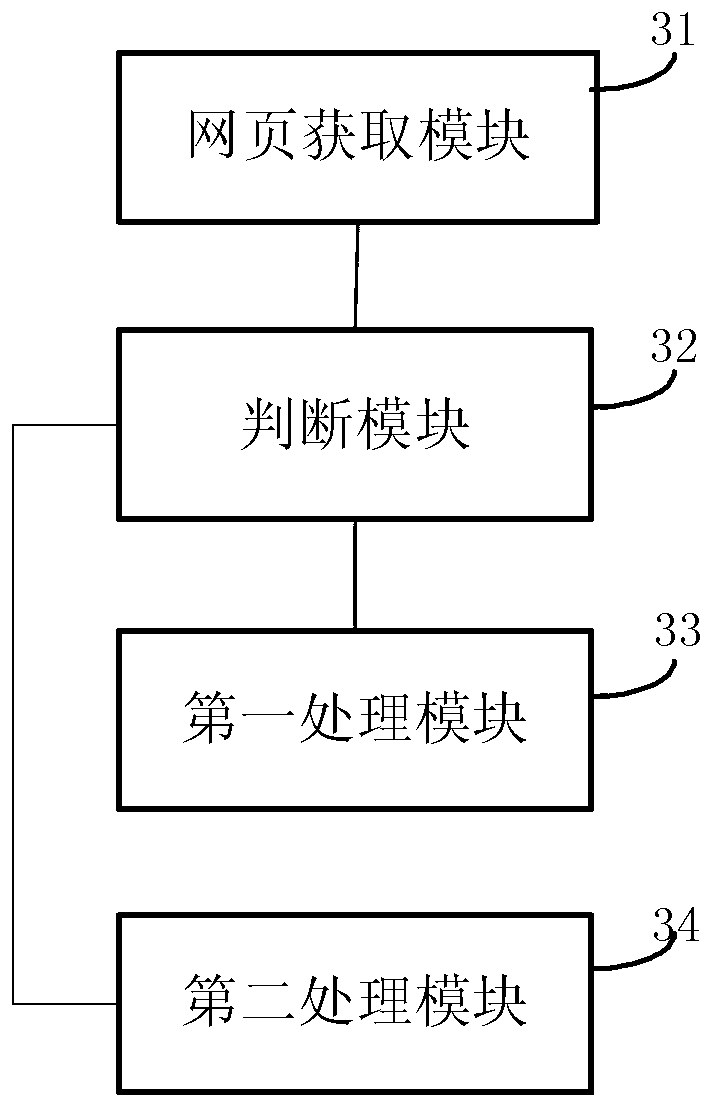

Method, device and terminal device for loading explorer pictures

Inactive | CN103279574A | Improve loading speed; Improve experience; Special data processing applications; Cache invalidation; Computer graphics (images)

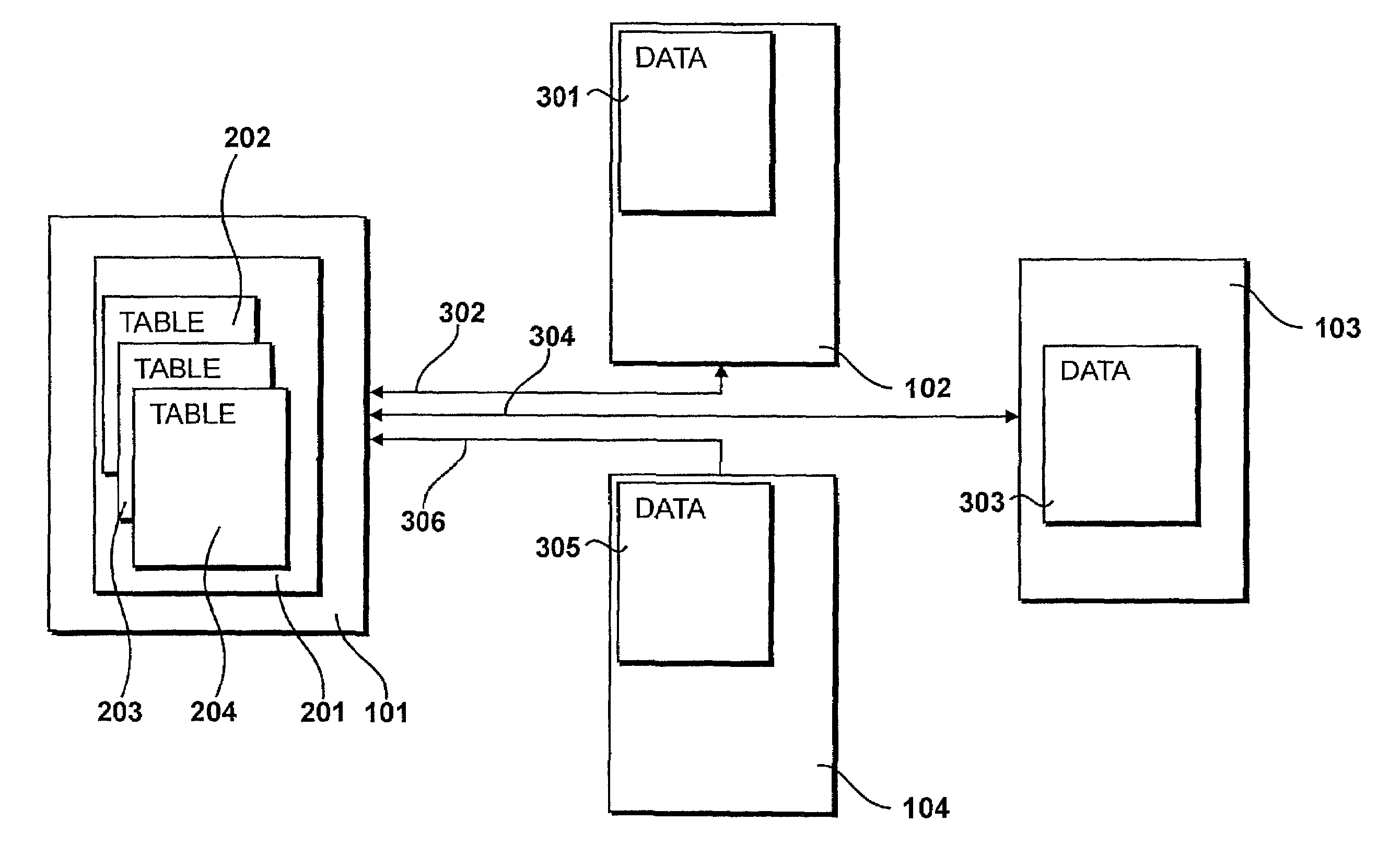

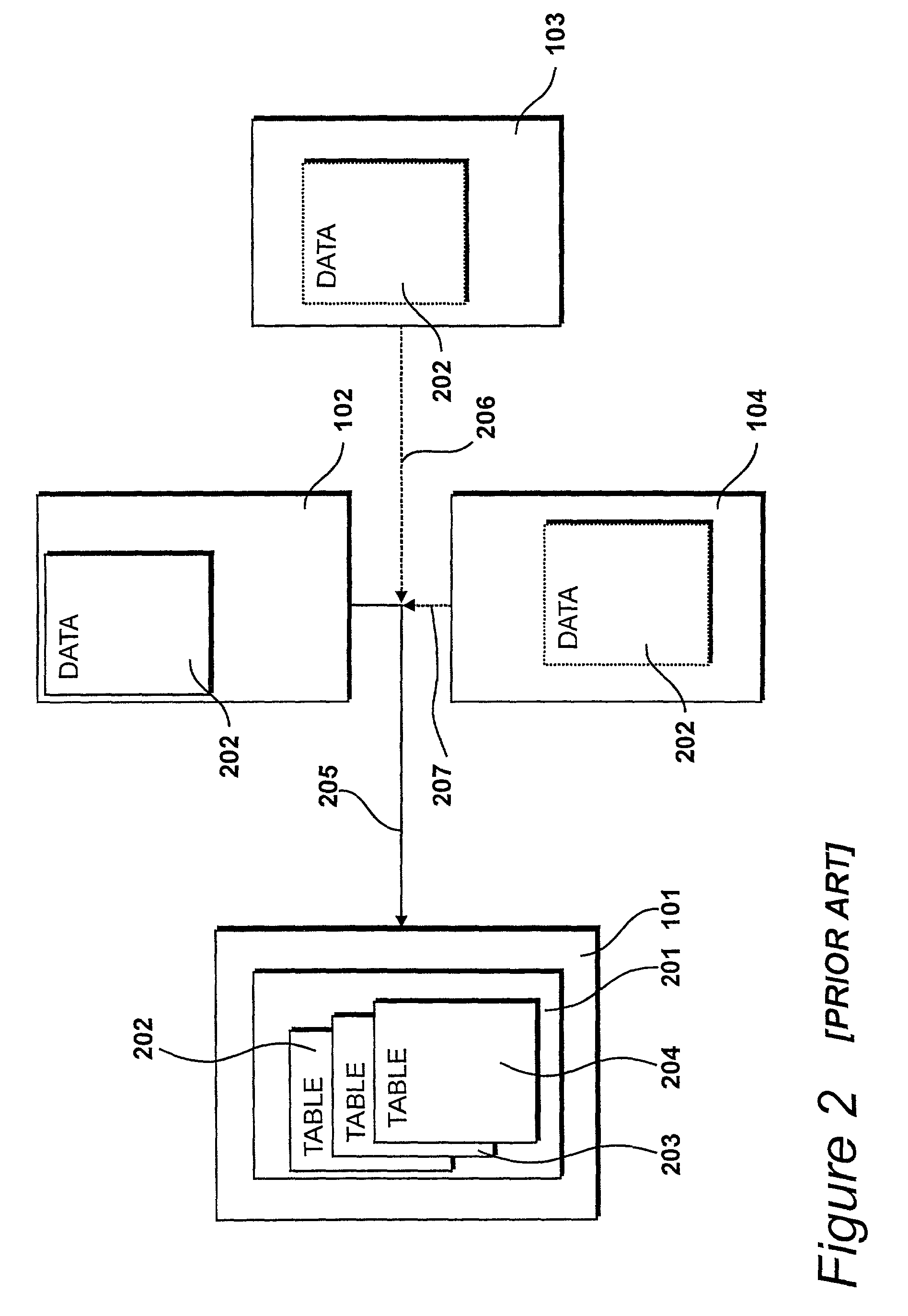

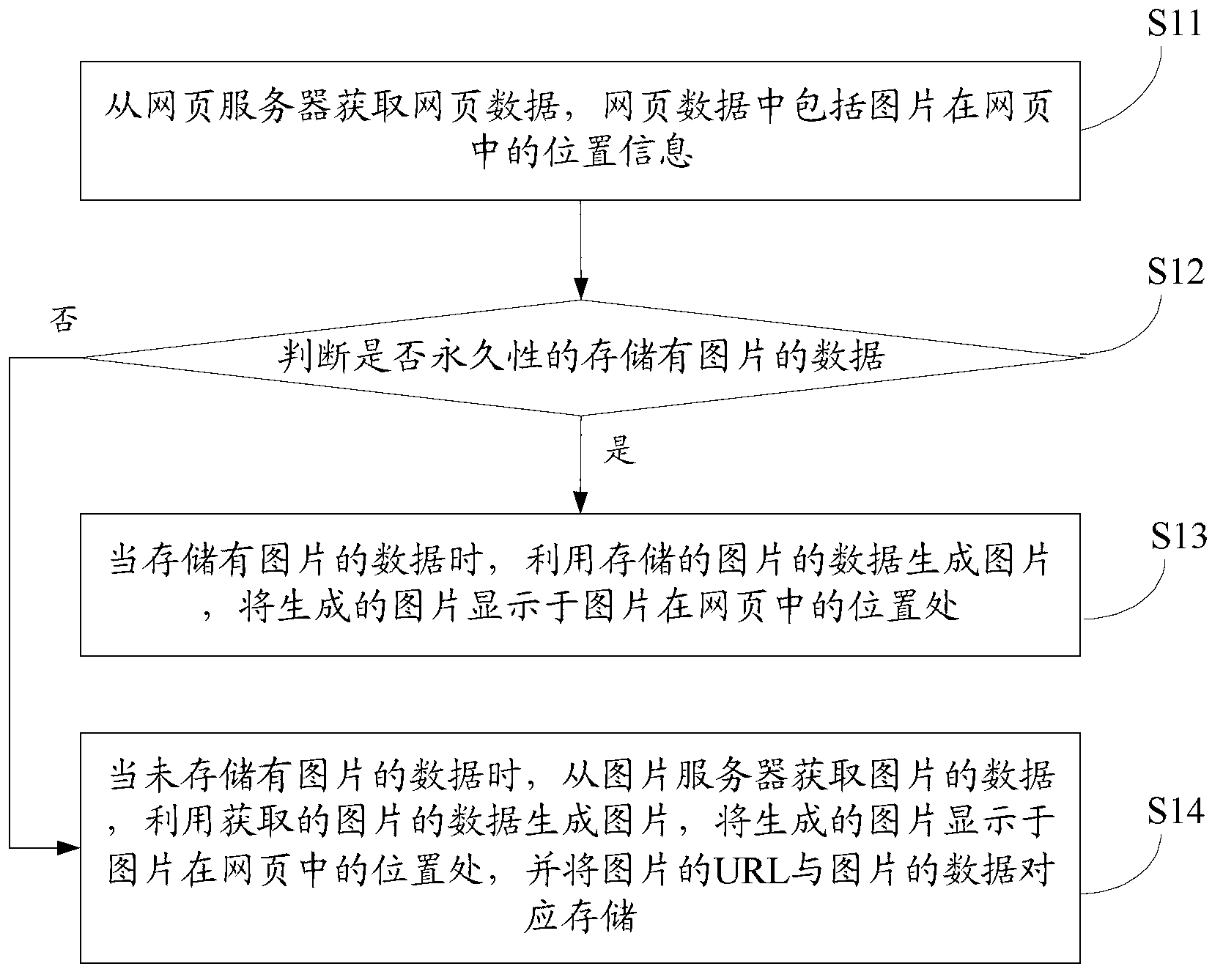

An embodiment of the invention discloses a method, a device, and a terminal device for loading browser pictures. The method includes: acquiring webpage data that includes position information for the pictures in a webpage; when the picture data are already stored, generating the pictures from the stored data and displaying them at the picture positions in the webpage; and when the picture data are not stored, acquiring the picture data, generating the pictures from it, displaying them at the picture positions, and storing each picture's uniform resource locator together with its picture data. This scheme reduces network transmission with the network side, does not limit the number of concurrent requests, and increases picture loading speed. Cache invalidation is avoided, loading is more stable, the user experience improves, and bandwidth pressure on the server that stores the pictures is relieved.

Owner:XIAOMI INC
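The load-from-store-or-fetch flow described above amounts to a URL-keyed cache. The sketch below is a minimal illustration: `PictureLoader` and its `fetch` callback are hypothetical, and "displaying" a picture is reduced to pairing its data with its page position.

```python
from typing import Callable, Dict, List, Tuple

Position = Tuple[int, int]


class PictureLoader:
    """Serves webpage pictures from a URL-keyed local store (hypothetical model)."""

    def __init__(self, fetch: Callable[[str], bytes]):
        self.fetch = fetch                    # downloads picture data for a URL
        self.store: Dict[str, bytes] = {}     # picture URL -> stored picture data
        self.downloads = 0

    def load_page(self, pictures: List[Tuple[str, Position]]) -> List[Tuple[Position, bytes]]:
        """`pictures` holds (url, position) pairs taken from the webpage data."""
        placed = []
        for url, position in pictures:
            data = self.store.get(url)
            if data is None:
                data = self.fetch(url)        # not stored yet: download once
                self.downloads += 1
                self.store[url] = data        # keep URL -> data for later loads
            placed.append((position, data))   # "display" the picture at its position
        return placed


loader = PictureLoader(fetch=lambda url: url.encode("utf-8"))
page = [("https://example.com/logo.png", (0, 0)), ("https://example.com/hero.jpg", (0, 120))]
loader.load_page(page)
second = loader.load_page(page)   # served entirely from the local store
```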

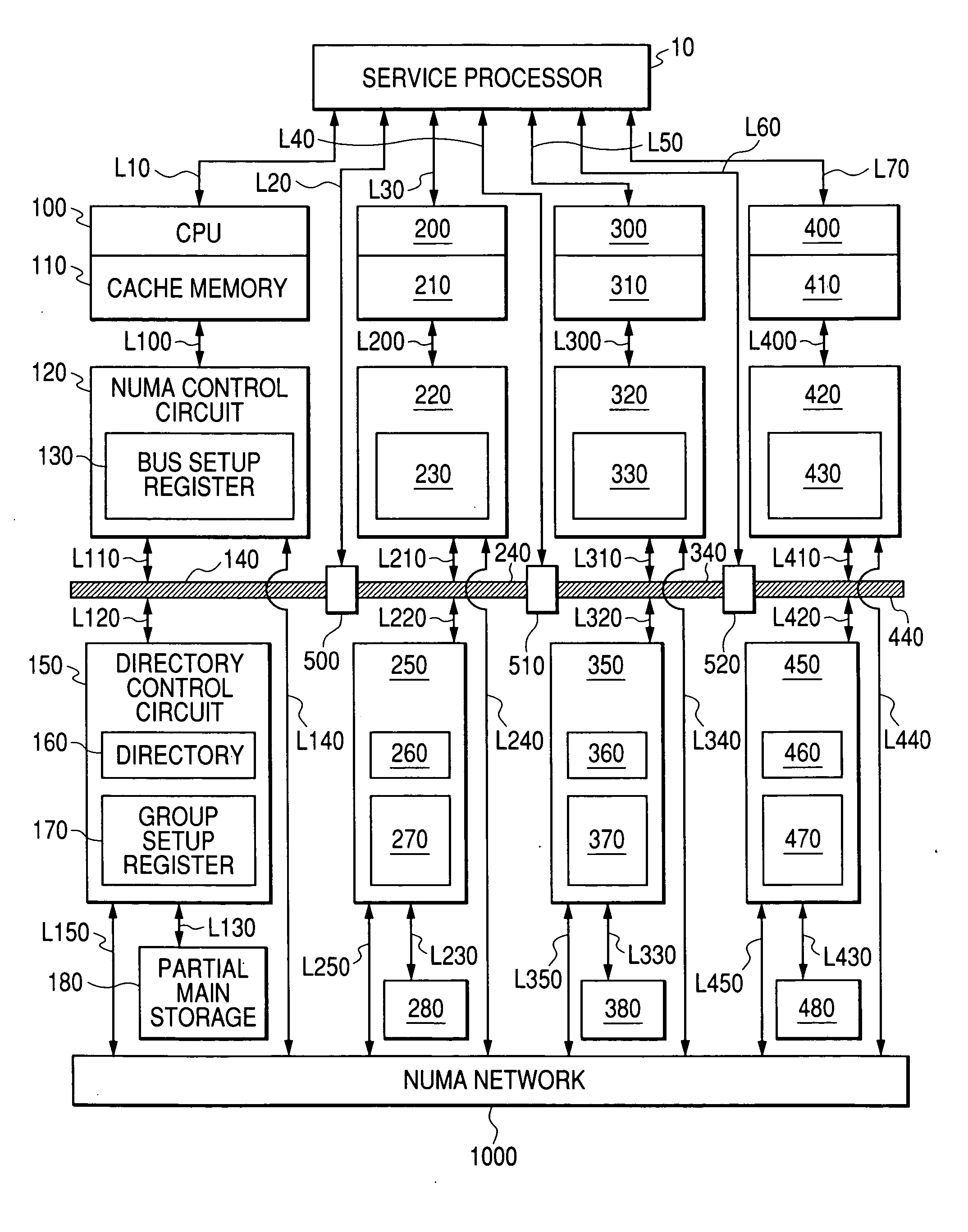

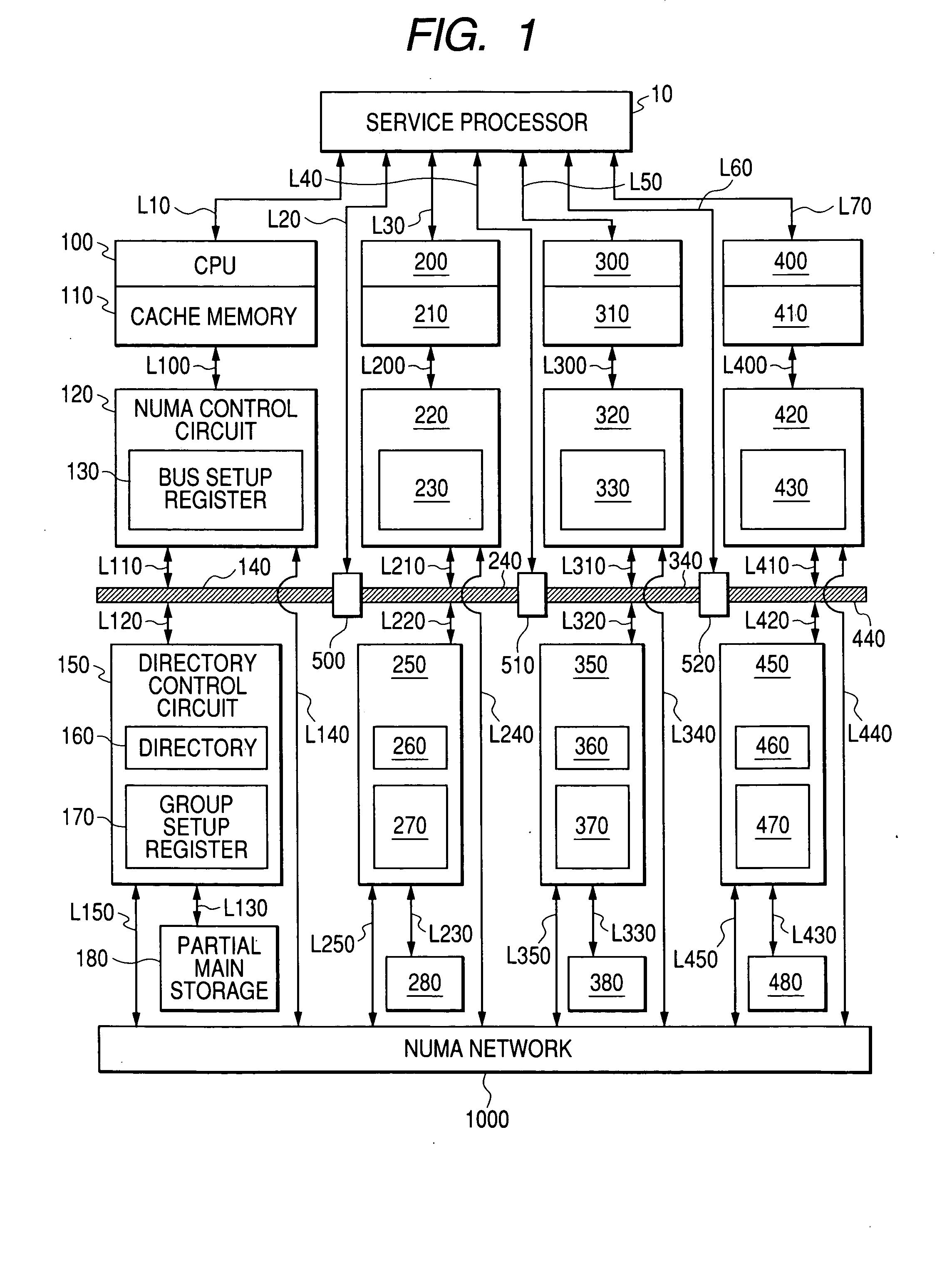

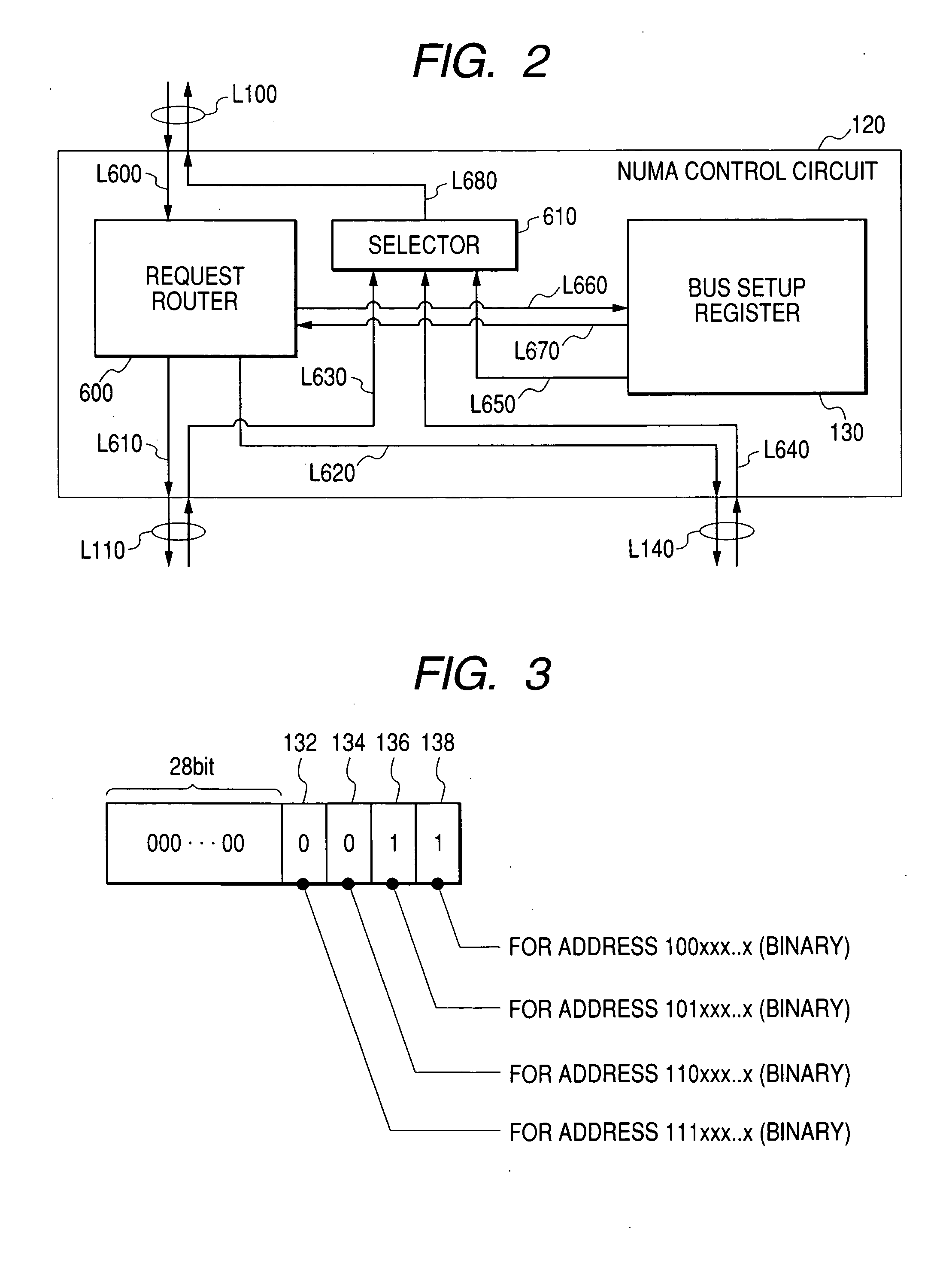

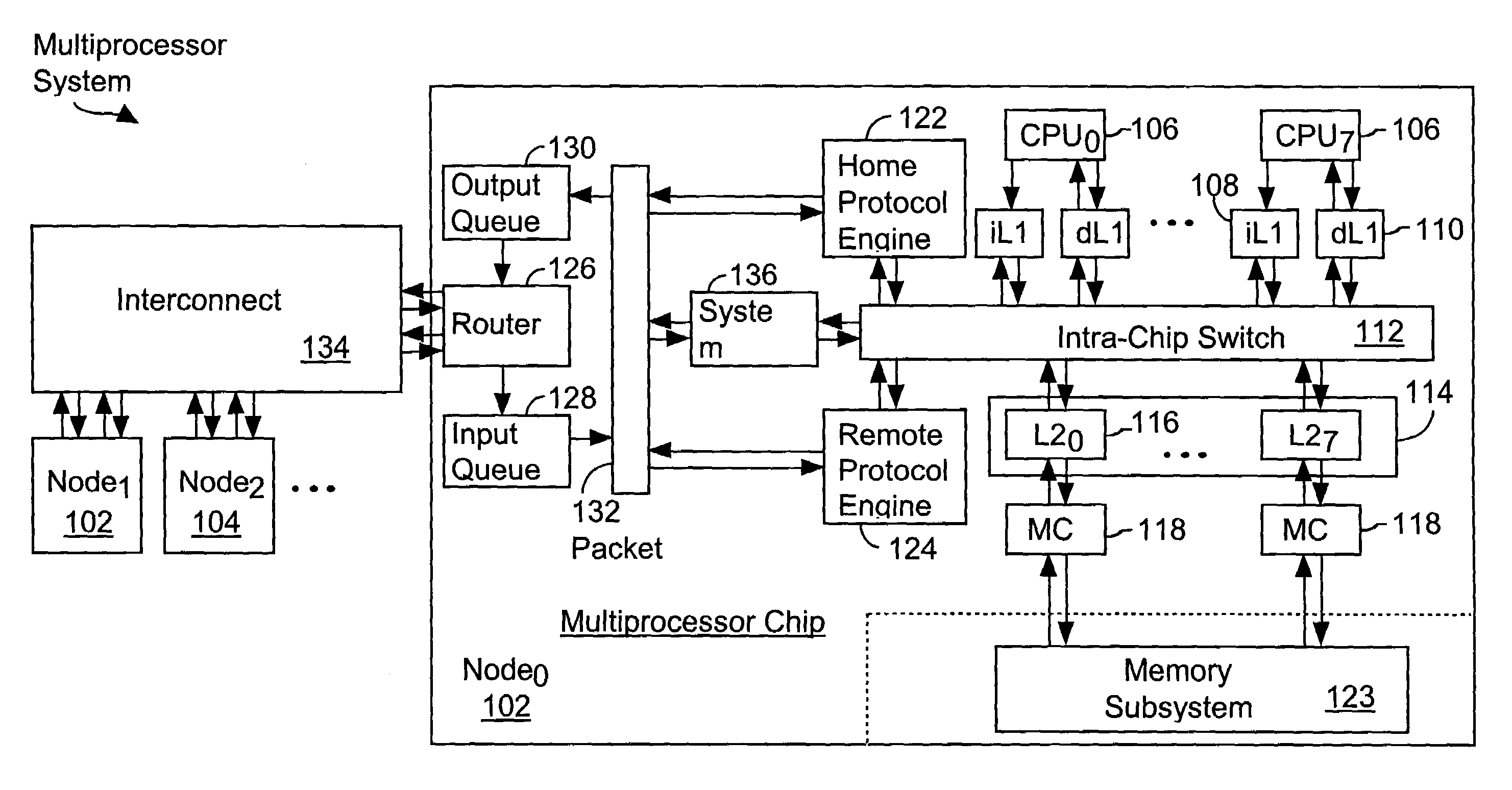

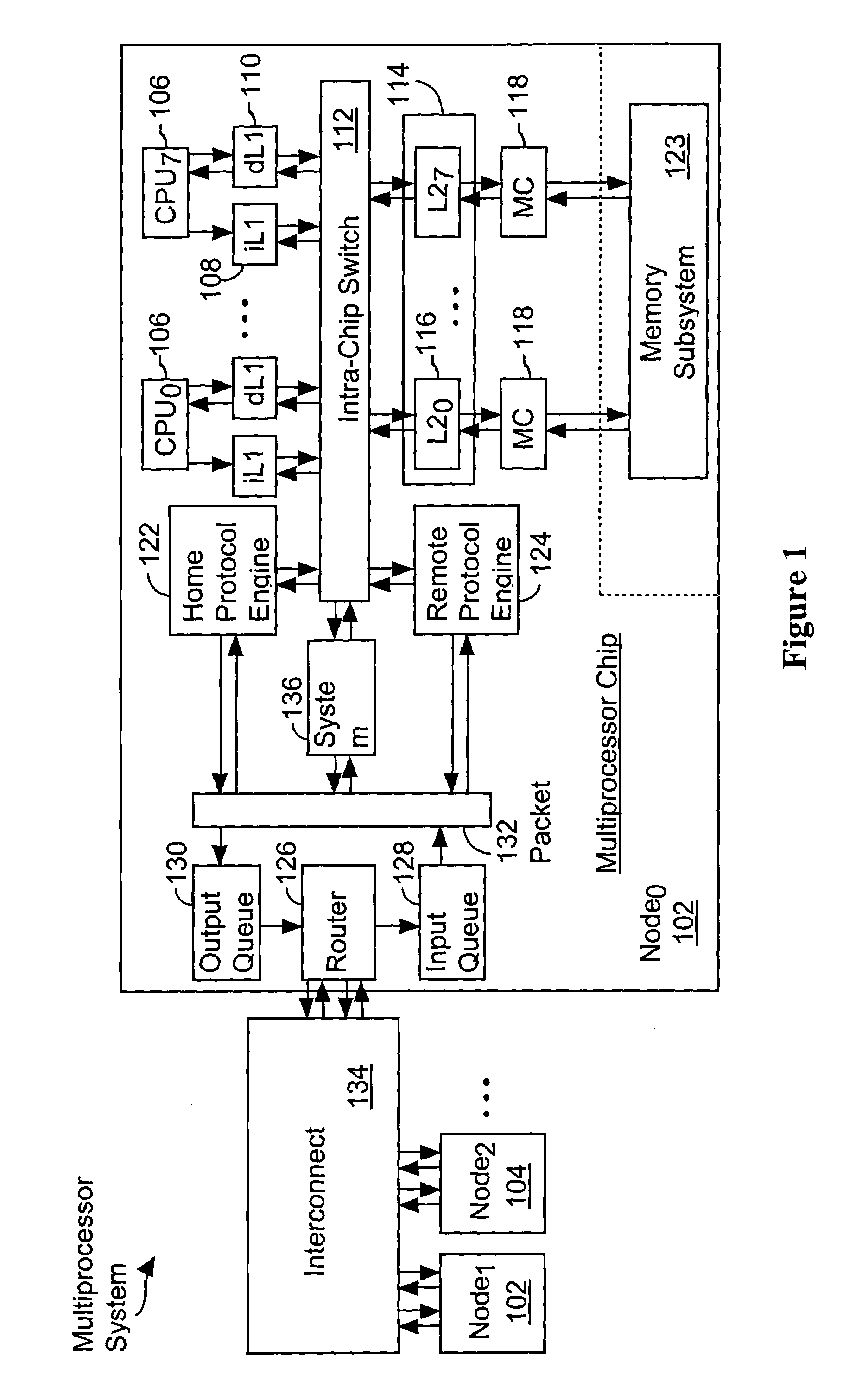

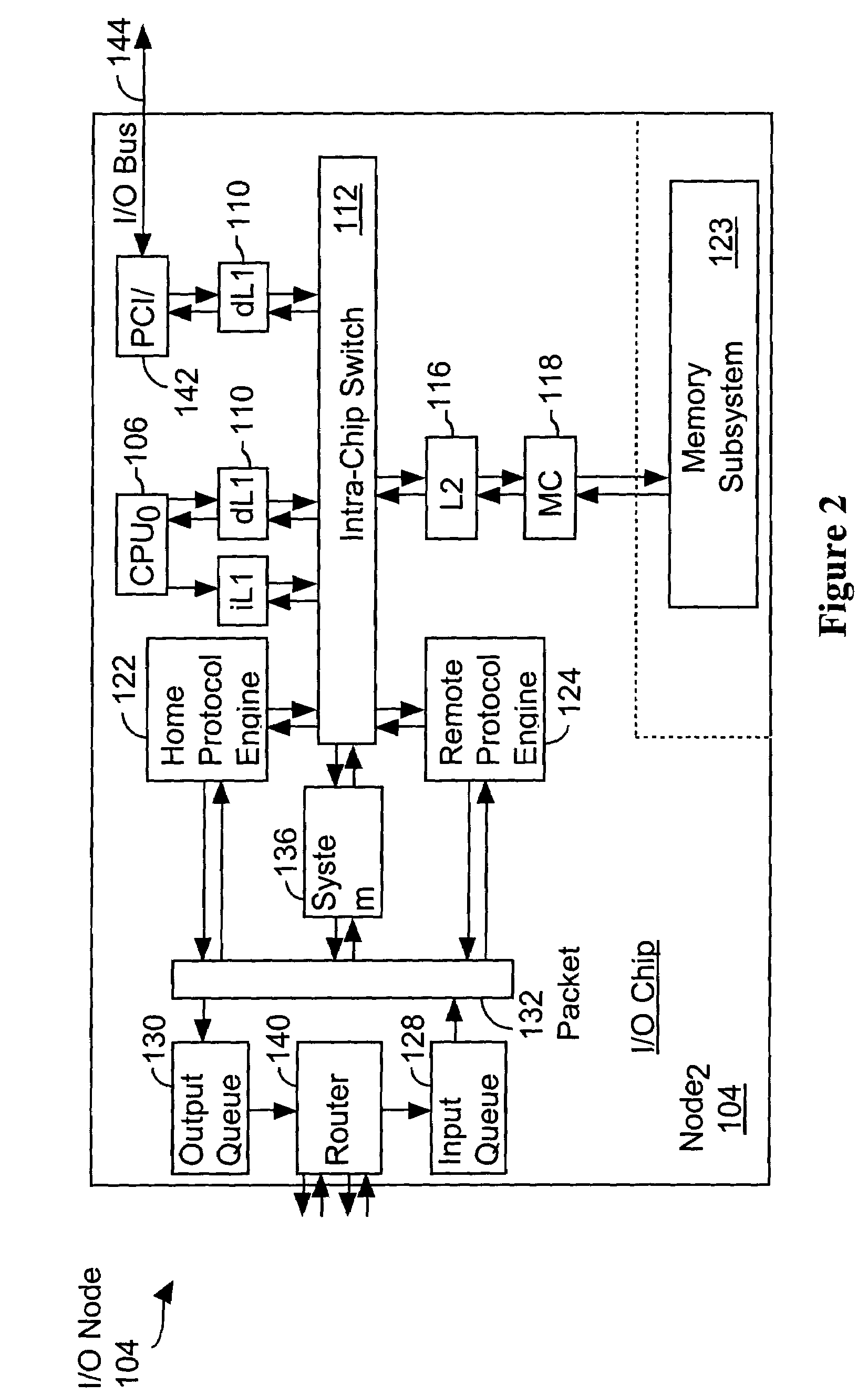

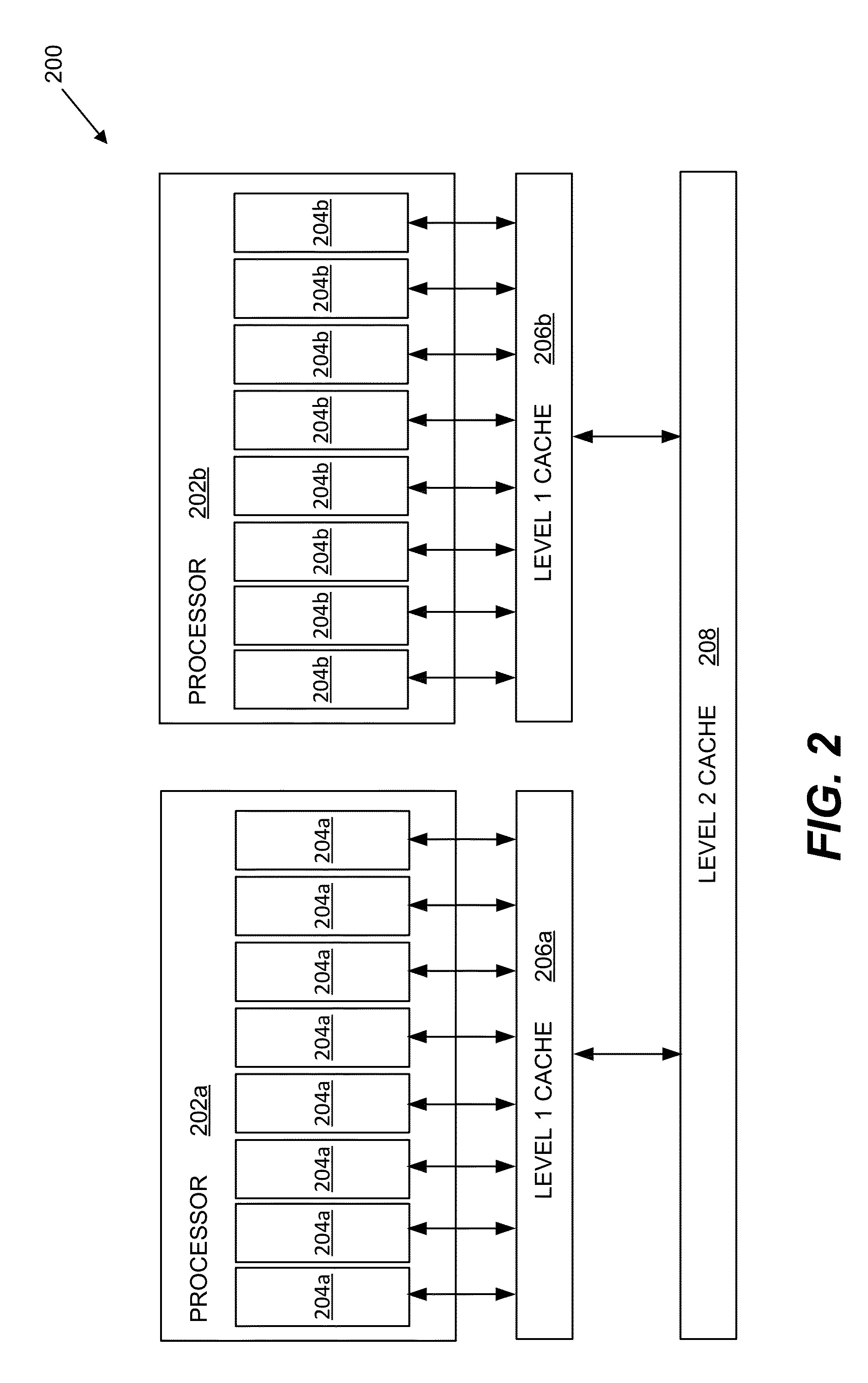

Multiprocessor system

Inactive | US20050102477A1 | High speed machining; Memory addressing/allocation/relocation; Digital computer details; Cache invalidation; Multi processor

A splittable/connectible bus 140 and a network 1000 for transmitting coherence transactions between CPUs are provided between the CPUs, and a directory control circuit 150 that controls cache invalidation contains a directory 160 and a group setup register 170 that stores bus-splitting information. The bus is dynamically set to a split or connected state to fit the execution form of a particular job. In response to this setting, the directory control circuit uses the directory to manage all inter-CPU coherence control sequences while, based on the information in the group setup register, dynamically omitting cache coherence control between CPUs connected by the bus and performing cache coherence control through the network only between CPUs separated by a bus split. This relieves the loss of performance scalability caused by inter-CPU coherence-processing overhead in systems that have multiple CPUs and guarantee inter-CPU cache coherence in hardware.

Owner:HITACHI LTD

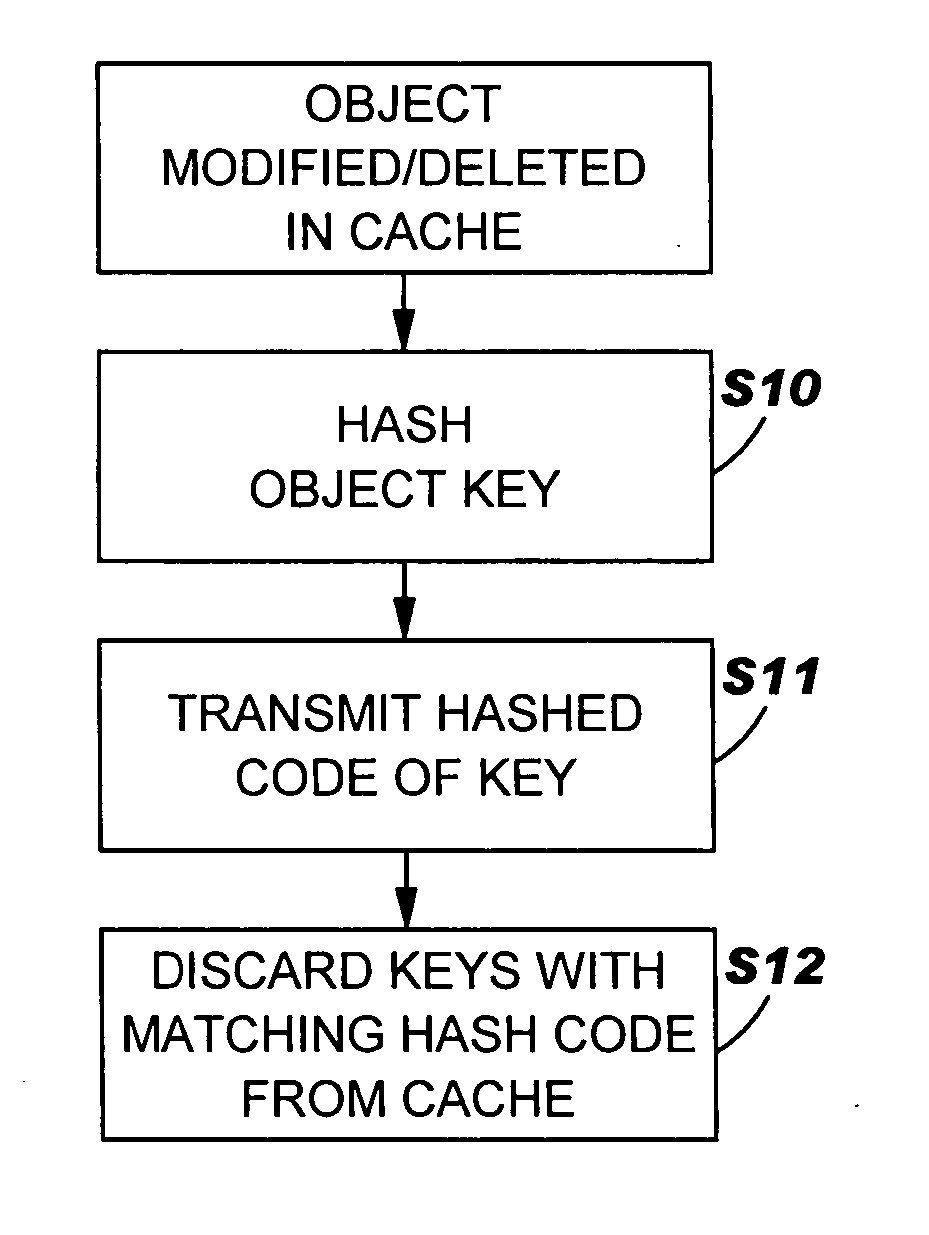

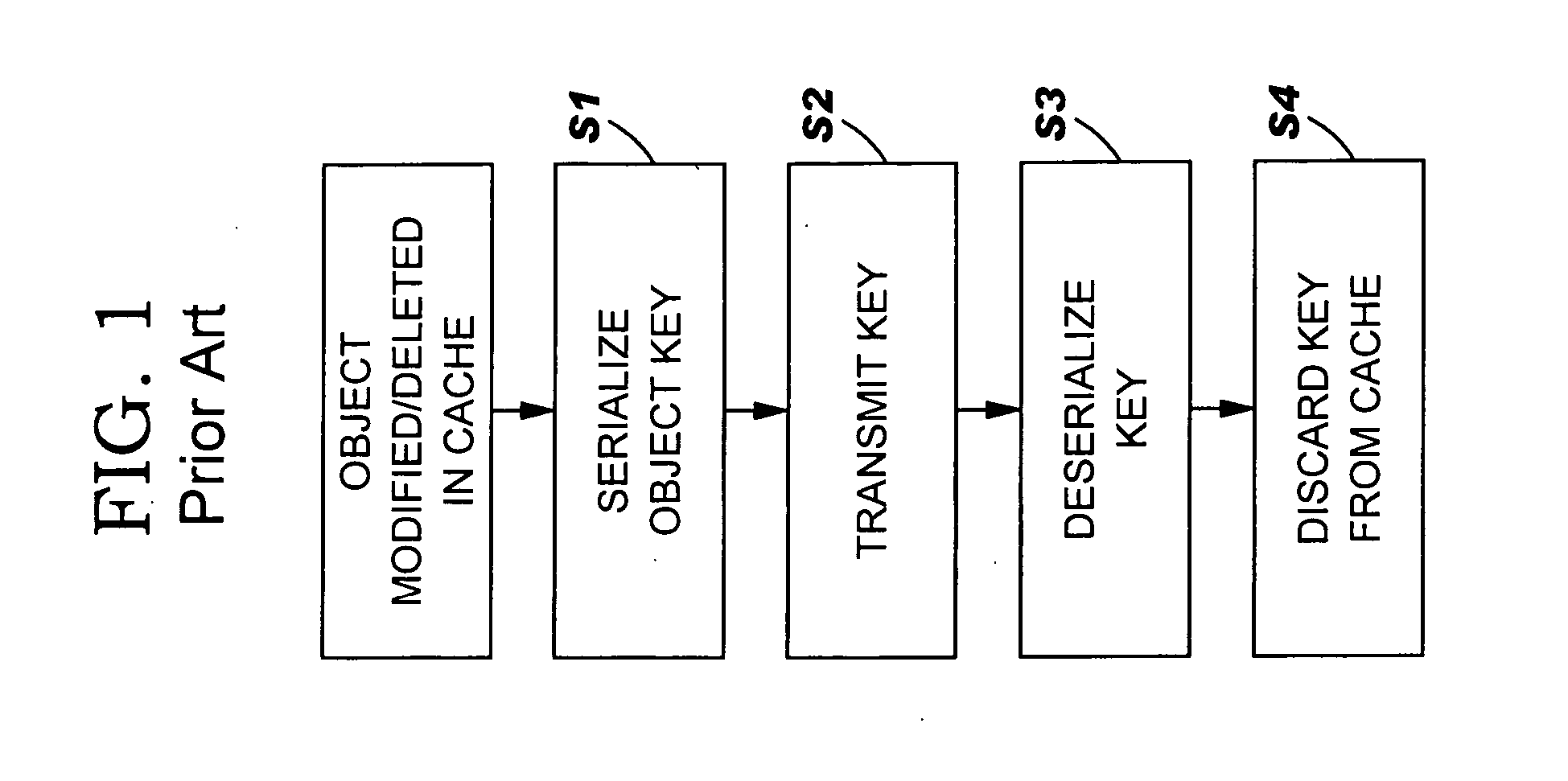

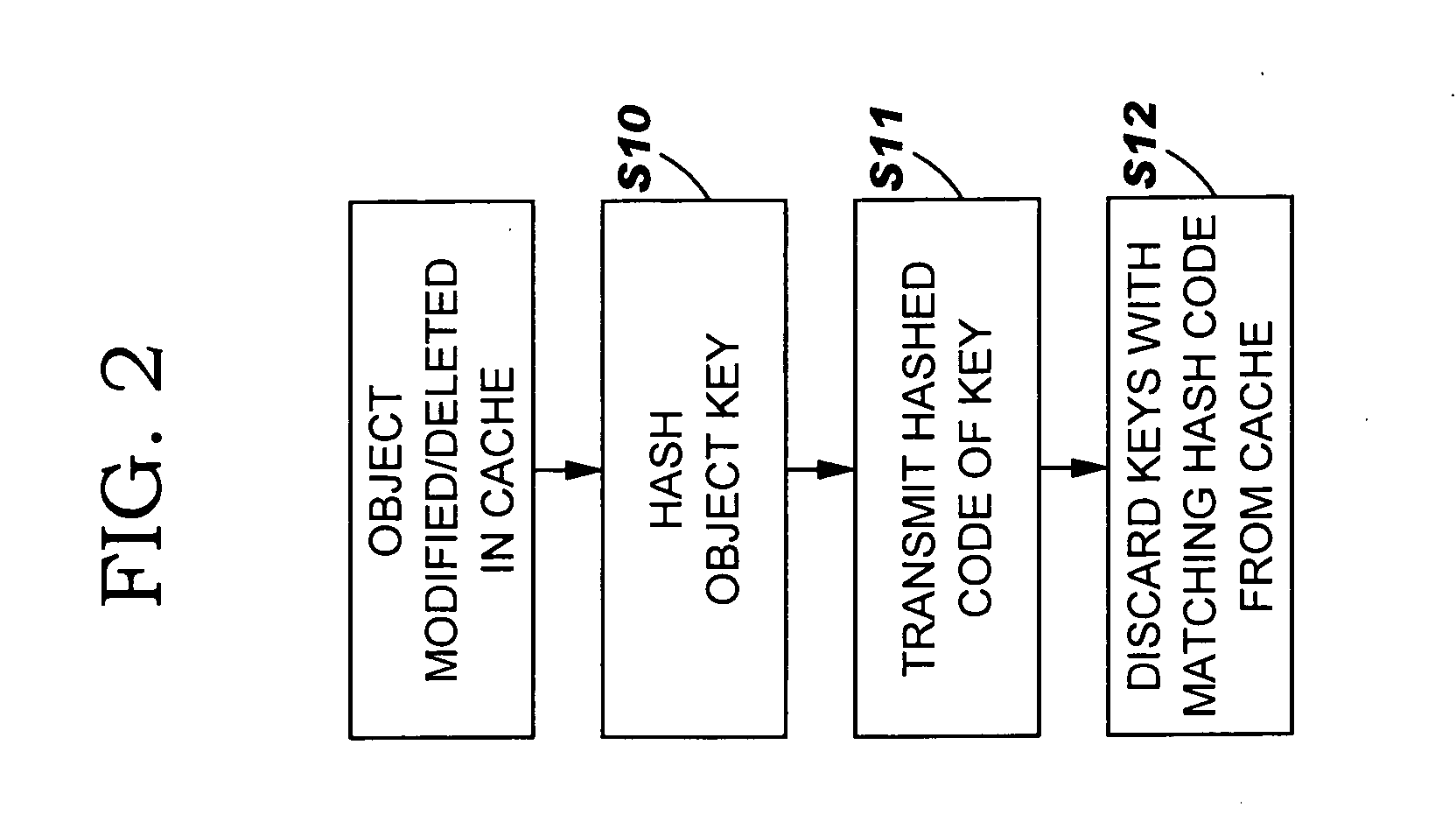

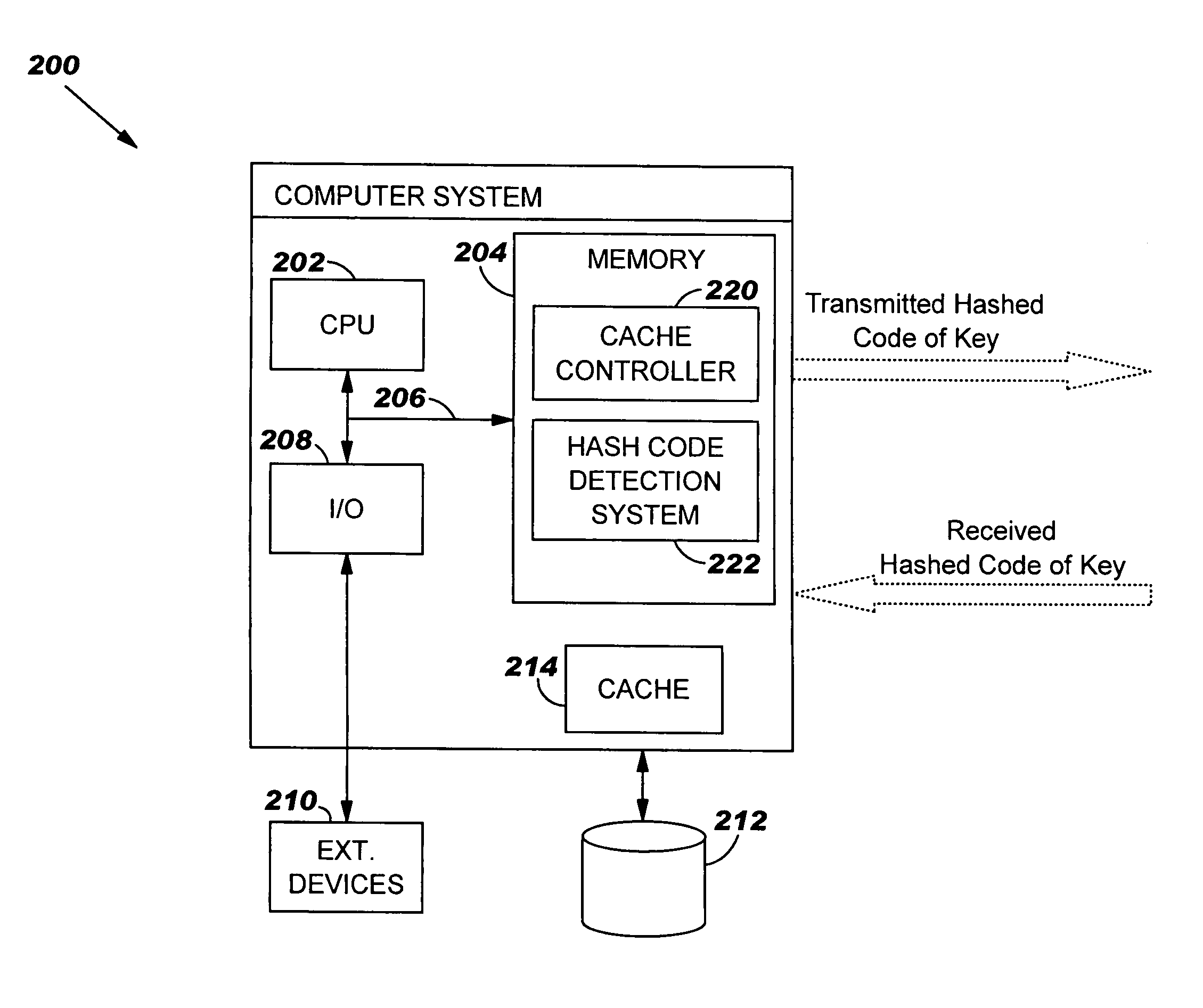

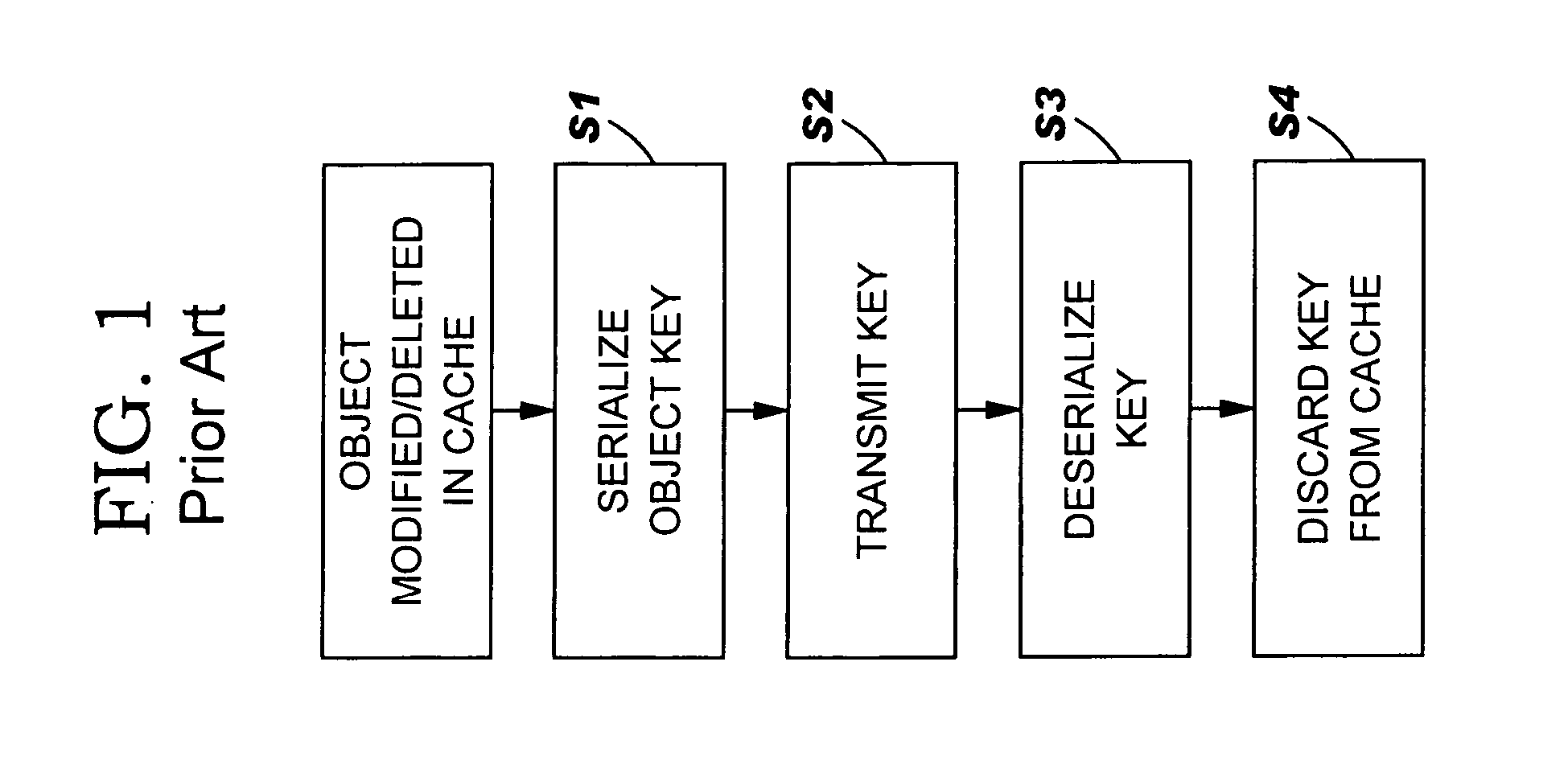

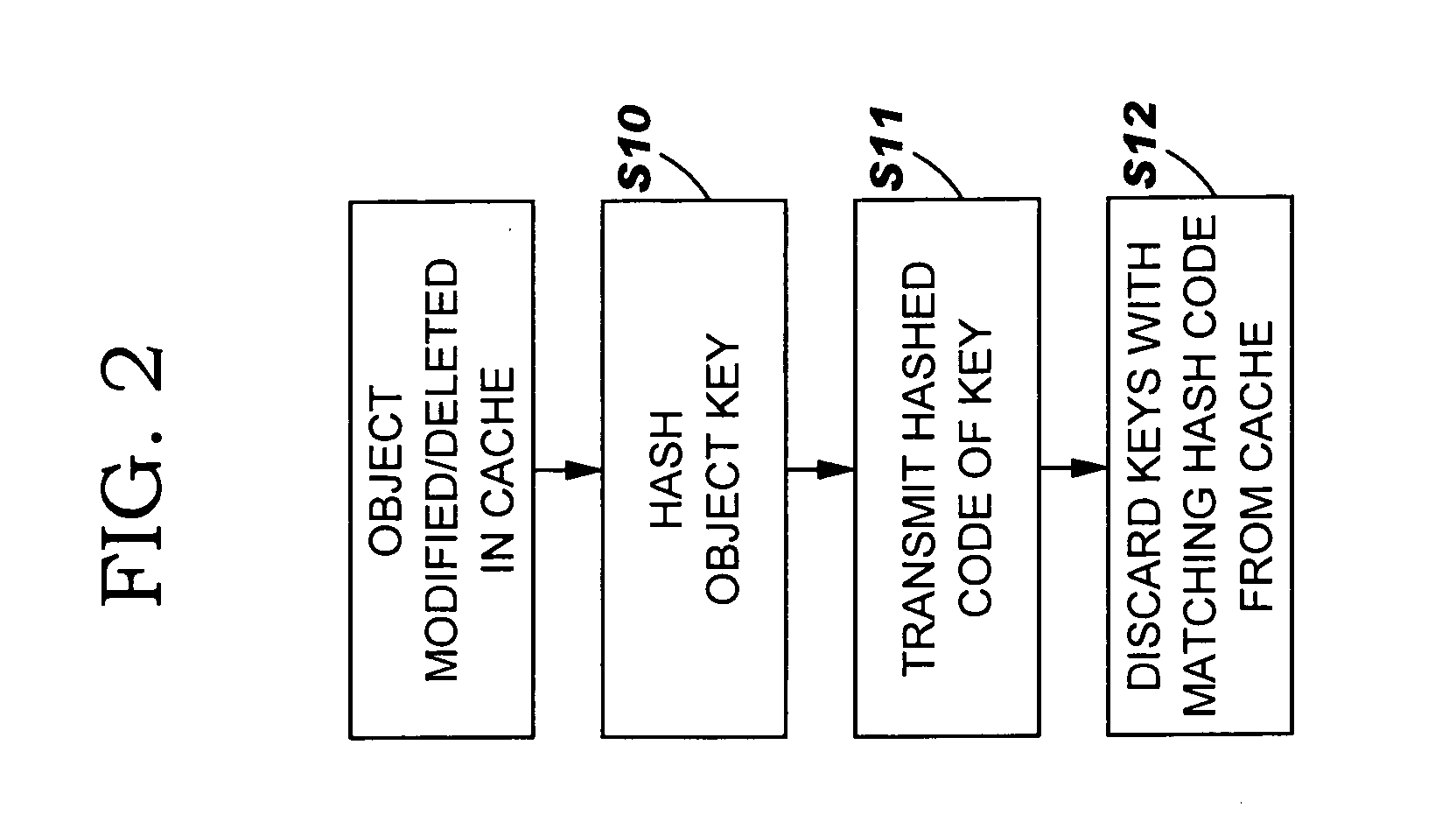

Lower overhead shared cache invalidations

Inactive | US20050204098A1 | Reduce overhead; Memory addressing/allocation/relocation; Micro-instruction address formation; Cache invalidation; Parallel computing

Under the present invention, a system, method, and program product are provided for reducing the overhead of cache invalidations in a shared cache by transmitting a hashed code of a key to be invalidated. The method for shared cache invalidation comprises: hashing a key corresponding to an object in a first cache that has been modified or deleted to provide a hashed code of the key, wherein the first cache forms part of a shared cache; transmitting the hashed code of the key to other caches in the shared cache; comparing the hashed code of the key with entries in the other caches; and dropping any keys in the other caches having a hash code the same as the hashed code of the key.

Owner:IBM CORP
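The hashed-key invalidation steps (hash the key, transmit the hash, compare, drop matches) can be sketched as below. `PeerCache` and `key_hash` are hypothetical names, and CRC32 is an arbitrary stand-in for whatever hash an implementation would use.

```python
import zlib


def key_hash(key: str) -> int:
    # CRC32 is an arbitrary choice of compact, stable hash for this sketch.
    return zlib.crc32(key.encode("utf-8"))


class PeerCache:
    """One member of a shared cache that broadcasts hashed invalidations (hypothetical)."""

    def __init__(self, name: str):
        self.name = name
        self.entries = {}
        self.peers = []

    def put(self, key, value):
        self.entries[key] = value

    def invalidate(self, key: str):
        # The object was modified or deleted here: drop it, then send only its hash.
        self.entries.pop(key, None)
        code = key_hash(key)
        for peer in self.peers:
            peer.on_invalidate(code)

    def on_invalidate(self, code: int):
        # Drop every key with a matching hash; a collision only costs an extra miss.
        for key in [k for k in self.entries if key_hash(k) == code]:
            del self.entries[key]


a, b = PeerCache("a"), PeerCache("b")
a.peers, b.peers = [b], [a]
for cache in (a, b):
    cache.put("user:1", "Alice")
    cache.put("user:2", "Bob")
a.invalidate("user:1")   # b receives only crc32("user:1"), not the key itself
```

The design trades precision for message size: a fixed-width hash replaces an arbitrarily long key, and a hash collision can only drop an extra, still-valid entry, never keep a stale one.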

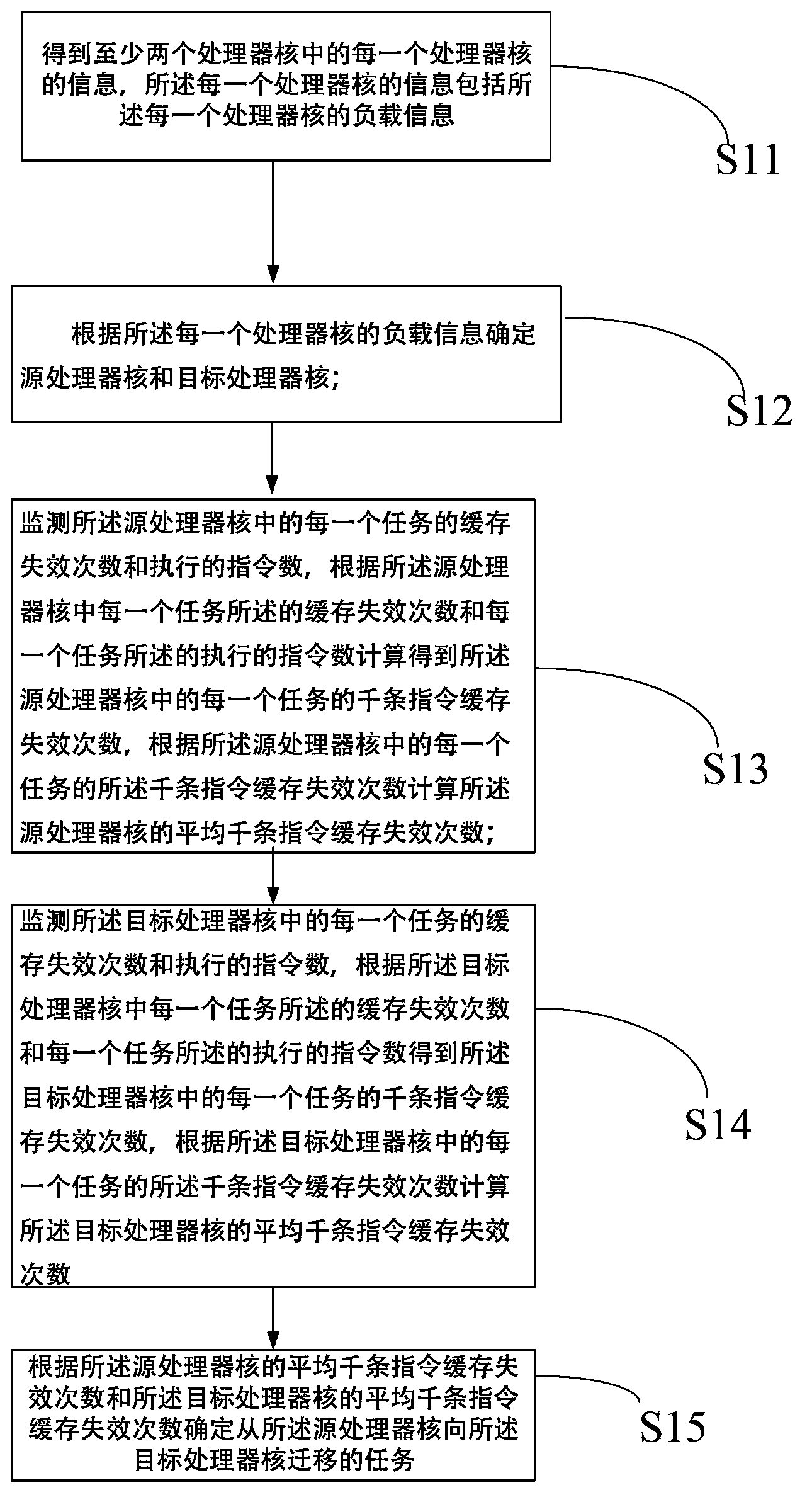

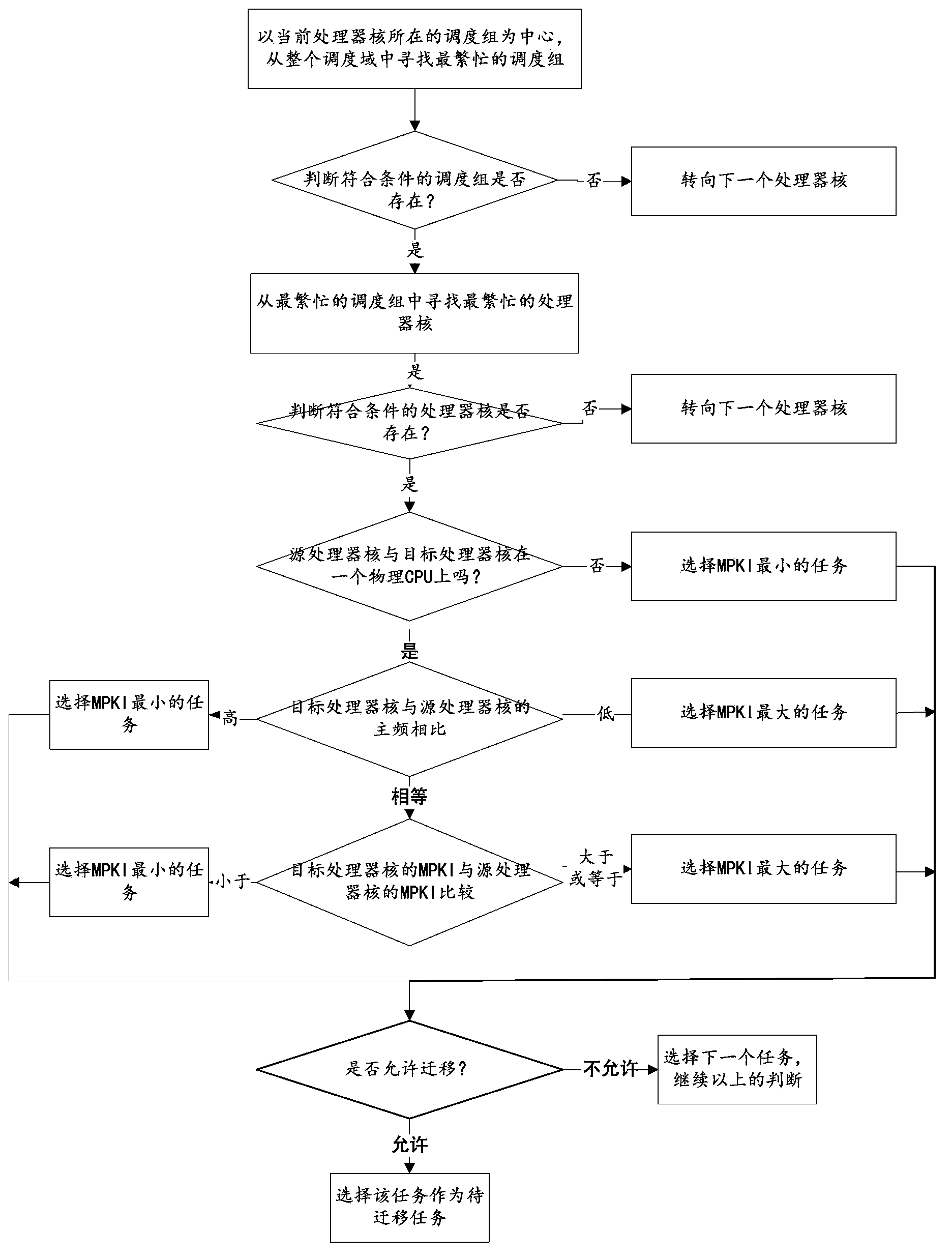

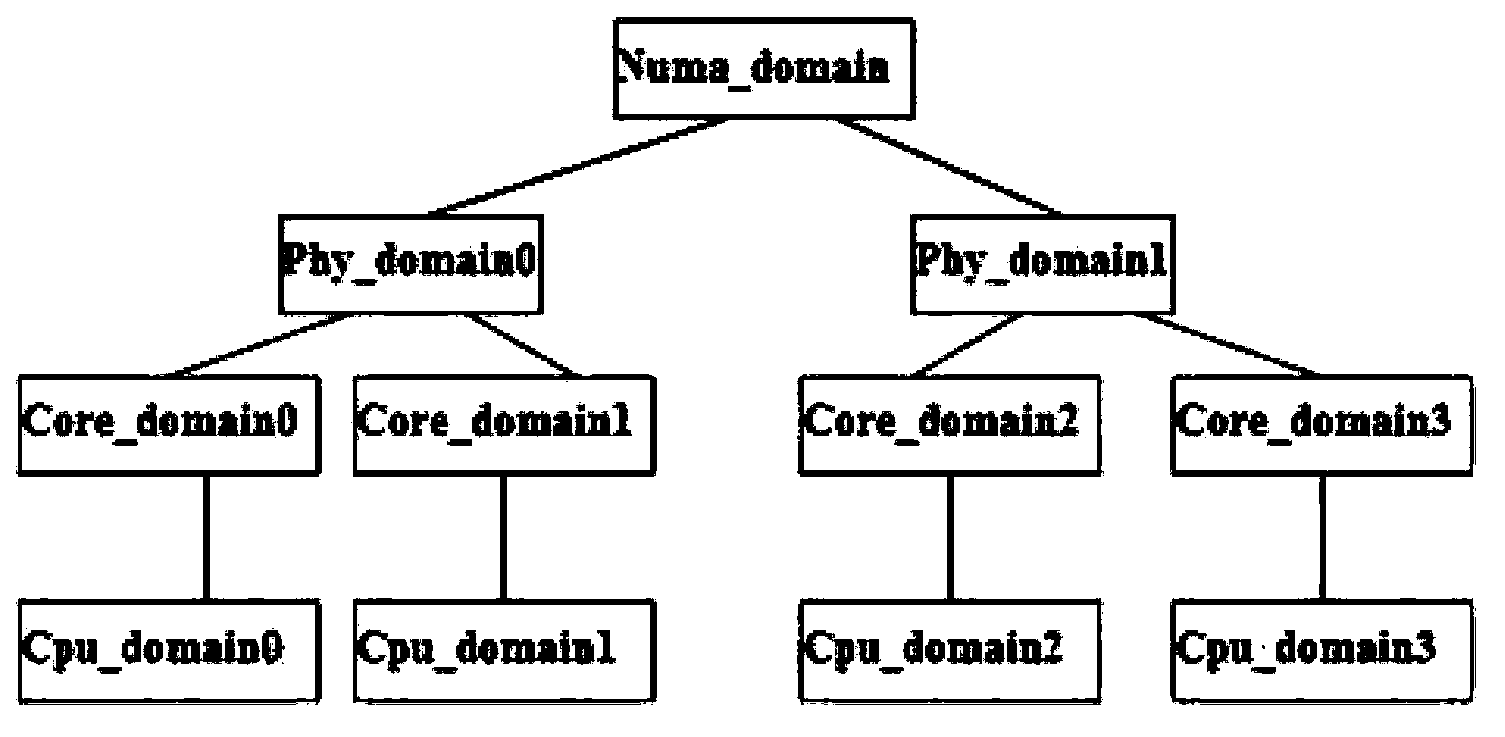

Method and device for determining tasks to be migrated based on cache perception

Active | CN103729248A | Reduce the probability of resource contention; Improve performance; Resource allocation; Operational system; Cache invalidation

The invention discloses a method for determining which tasks to migrate based on cache awareness. A source processor core and a target processor core are selected according to the load on each core. For each task on the source and target cores, the number of cache invalidations (cache misses) and the number of instructions executed are monitored, giving each task's cache invalidations per thousand instructions. From these, the average number of cache invalidations per thousand instructions is computed for the source core and for the target core, and the tasks to migrate from the source core to the target core are chosen based on these two averages. The method lets the operating system perceive program behavior, so that more suitable tasks are selected for migration. The invention further discloses a device for determining tasks to migrate based on cache awareness.

Owner:HUAWEI TECH CO LTD +1
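The per-thousand-instruction metric and per-core averages can be computed as below. The abstract does not state the final selection rule, so `pick_task_to_migrate` uses a hypothetical policy, labeled as such; `TaskStats` and the sample numbers are also illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskStats:
    name: str
    cache_misses: int   # the abstract's "cache invalidations"
    instructions: int   # instructions executed in the sampling window

    @property
    def mpki(self) -> float:
        """Cache misses per thousand instructions."""
        return self.cache_misses * 1000 / self.instructions


def average_mpki(tasks: List[TaskStats]) -> float:
    return sum(t.mpki for t in tasks) / len(tasks) if tasks else 0.0


def pick_task_to_migrate(source: List[TaskStats], target: List[TaskStats]) -> TaskStats:
    """Hypothetical policy: the abstract does not spell out the selection rule.

    If the source core is more cache-intensive than the target core, move its
    heaviest task to spread cache pressure; otherwise move its lightest task so
    the target core's cache is disturbed as little as possible.
    """
    if average_mpki(source) > average_mpki(target):
        return max(source, key=lambda t: t.mpki)
    return min(source, key=lambda t: t.mpki)


source_core = [TaskStats("db", 500, 100_000), TaskStats("shell", 50, 100_000)]  # 5.0 and 0.5
target_core = [TaskStats("editor", 100, 100_000)]                               # 1.0
chosen = pick_task_to_migrate(source_core, target_core)
```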

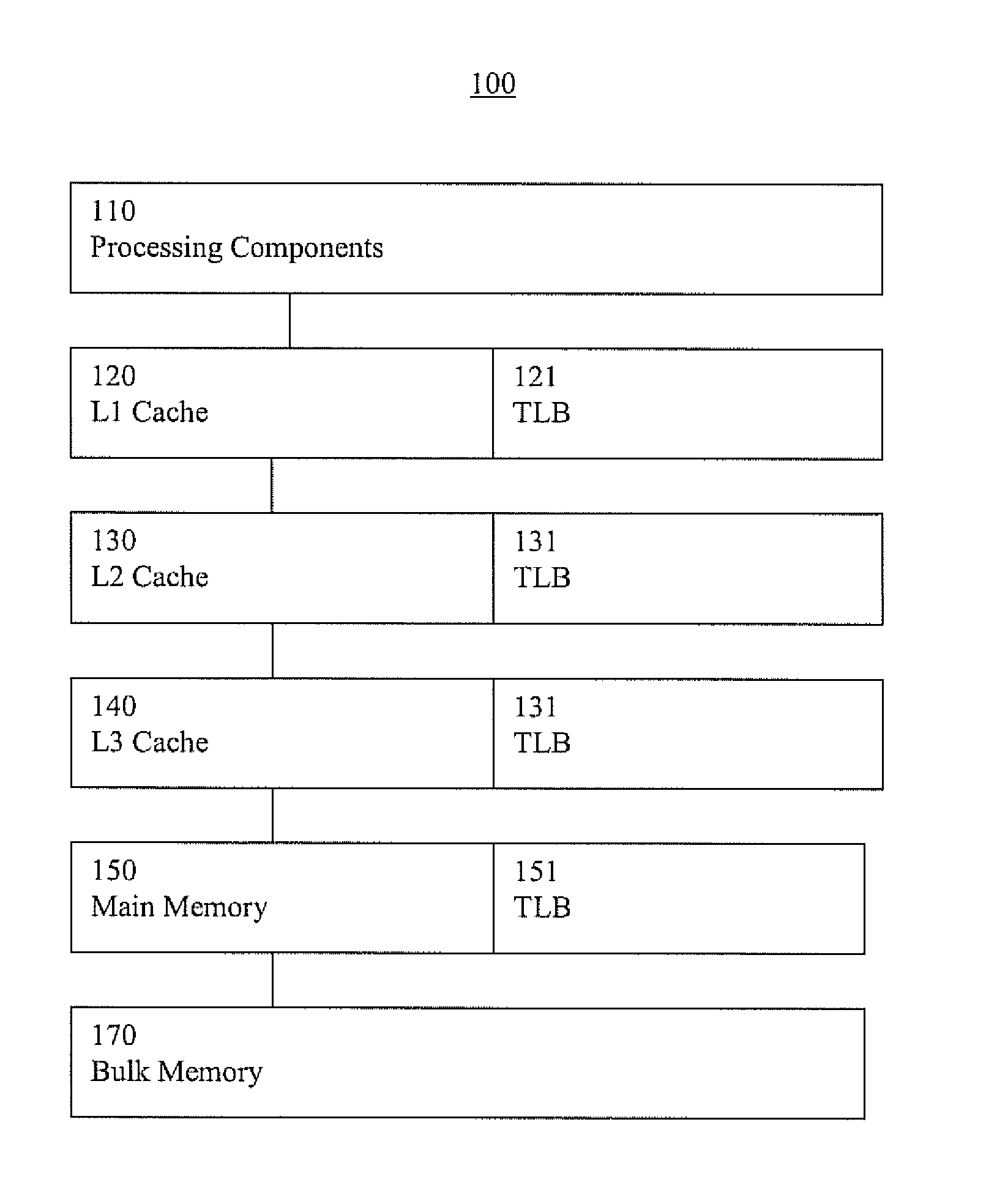

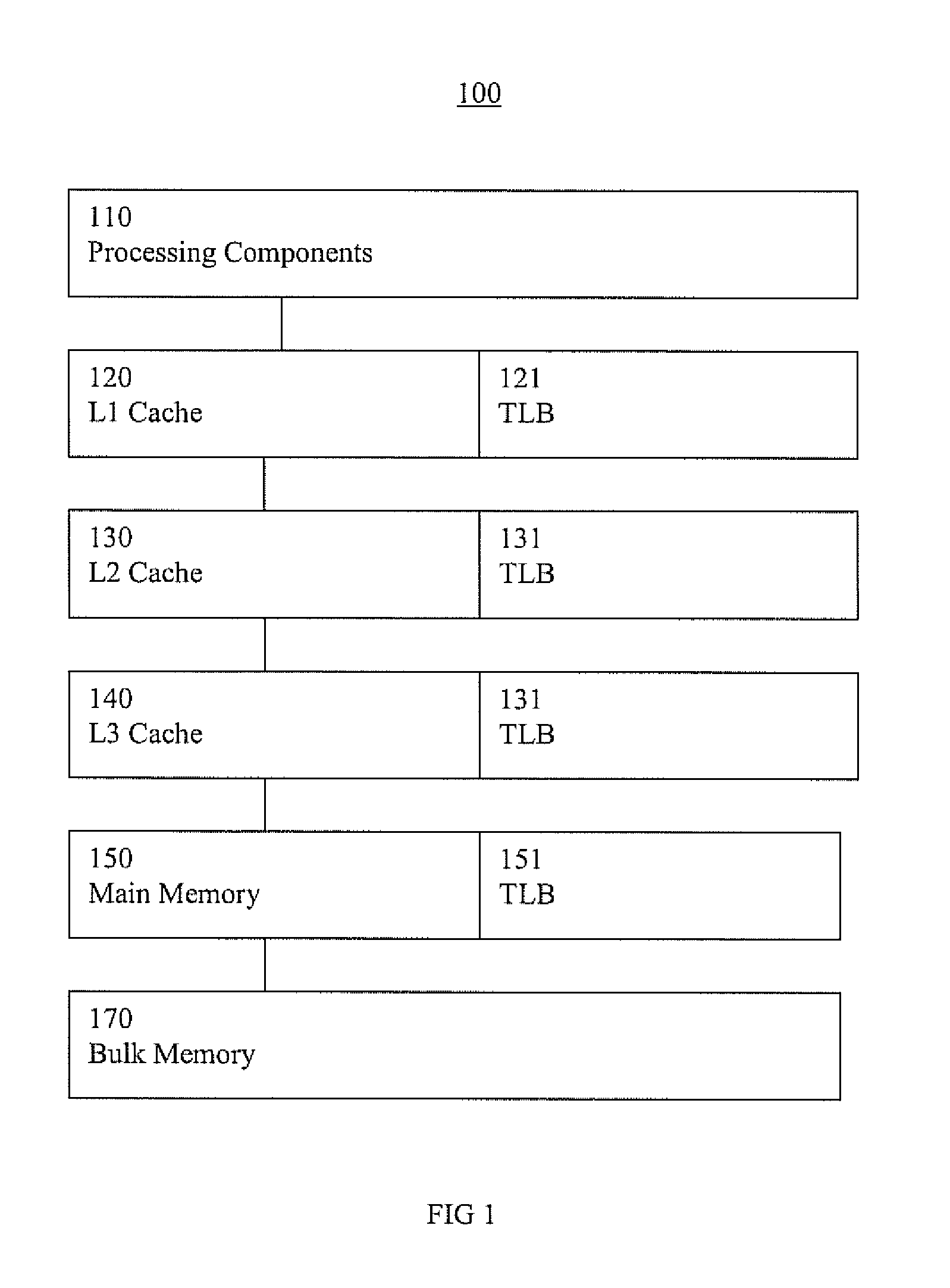

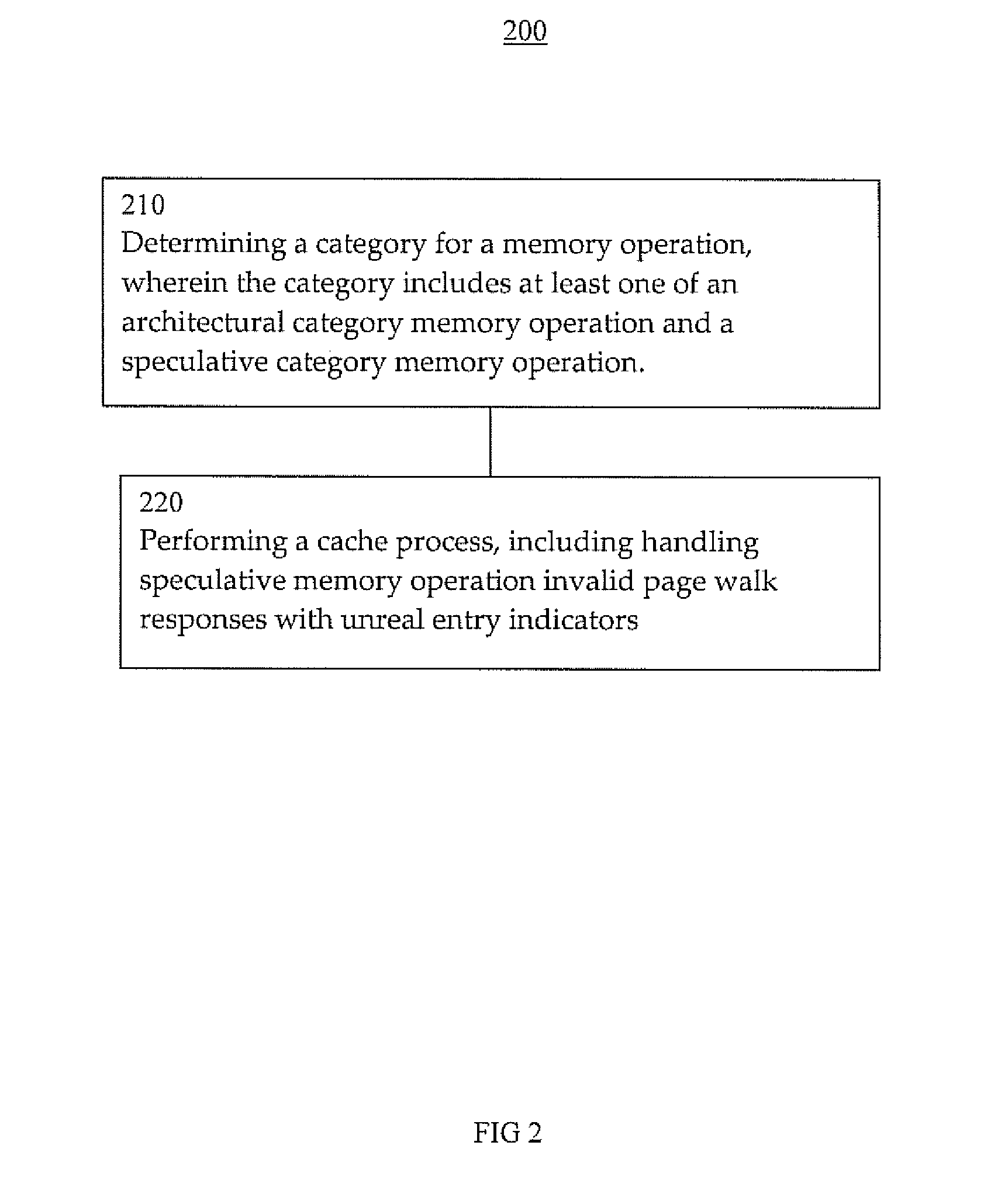

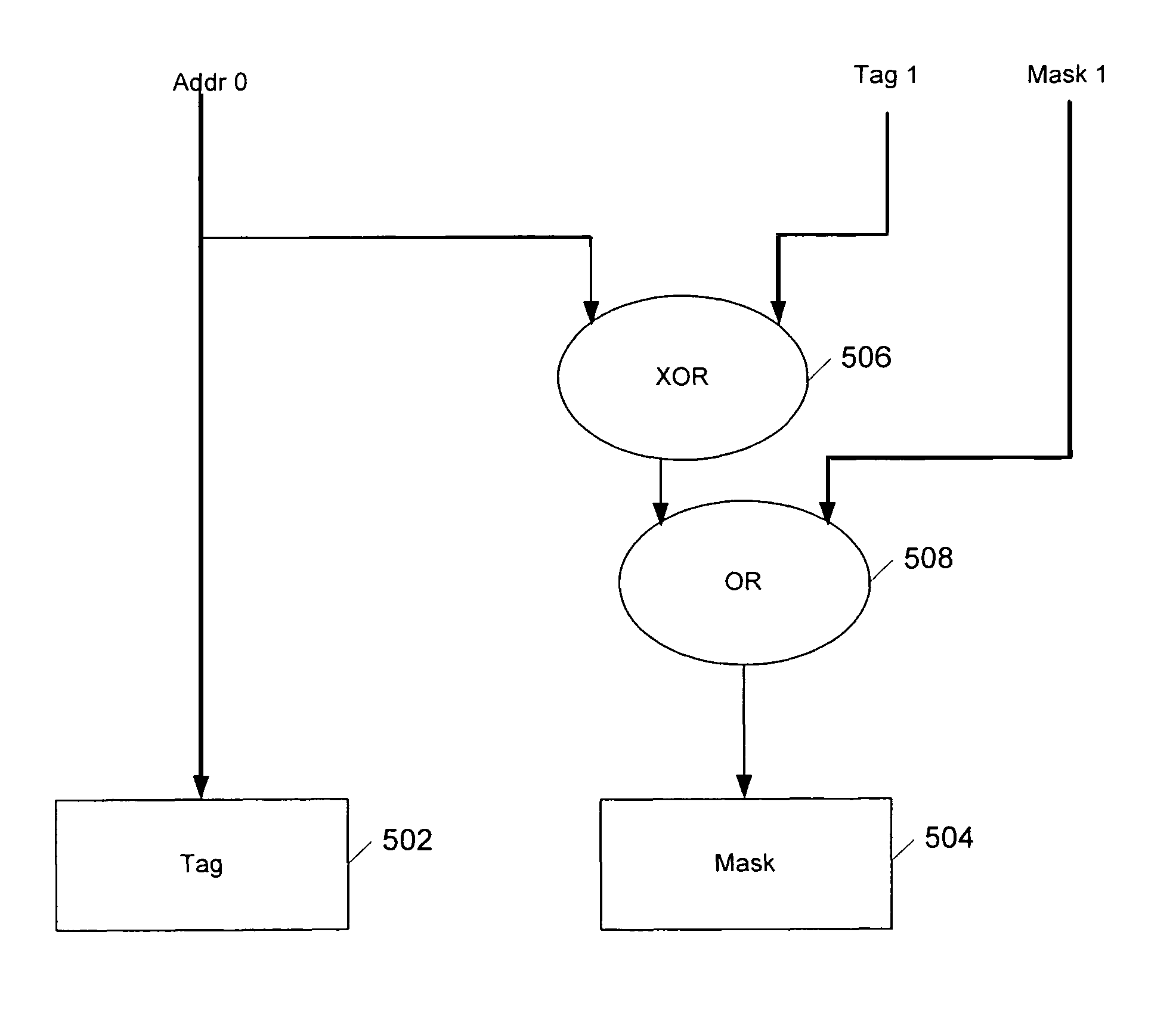

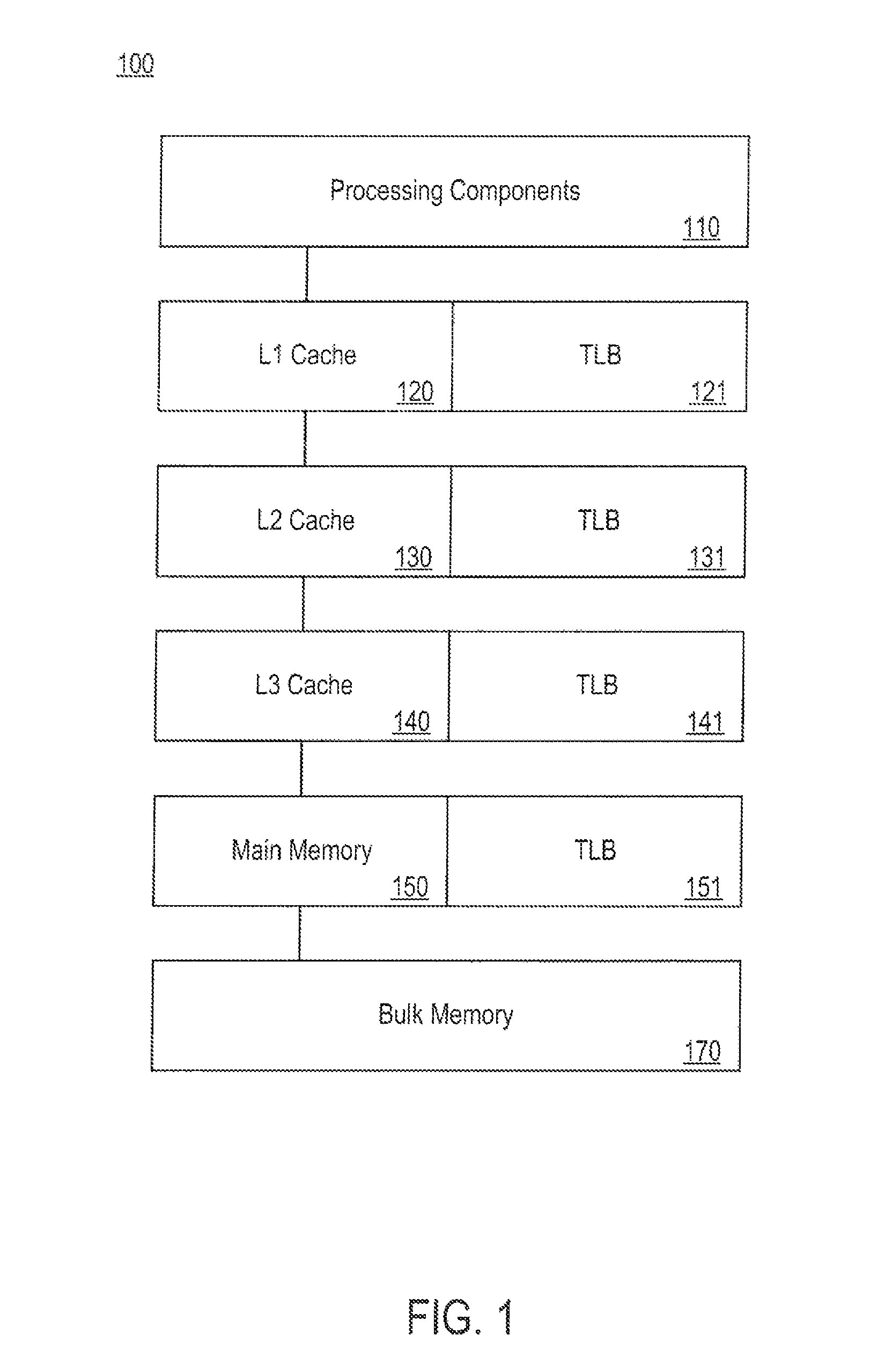

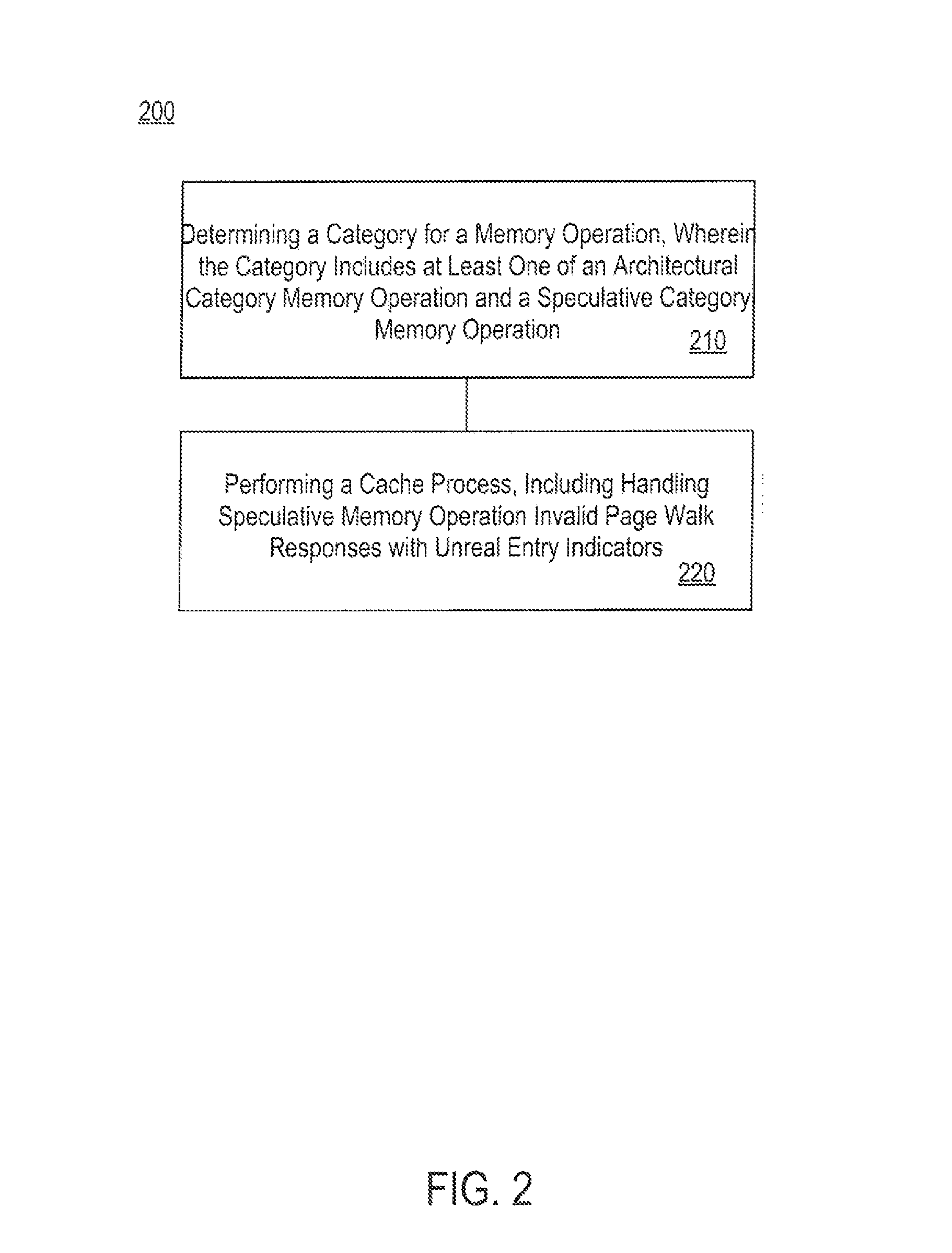

Translation lookaside buffer entry systems and methods

Active | US20140281259A1 | Improve efficiency; Effective caching; Memory addressing/allocation/relocation; Cache invalidation; Computer science

Presented systems and methods can facilitate efficient information storage and tracking operations, including translation lookaside buffer (TLB) operations. In one embodiment, the systems and methods effectively allow invalid entries to be cached (with attendant benefits regarding, e.g., power, resource usage, and stalls) while maintaining the illusion that the TLBs do not in fact cache invalid entries (i.e., remaining compliant with architectural rules). In one exemplary implementation, an “unreal” TLB entry serves as a hint that the linear address in question currently has no valid mapping. In one exemplary implementation, speculative operations that hit an unreal entry are discarded; architectural operations that hit an unreal entry discard the entry and perform a normal page walk, which either obtains a valid entry or raises an architectural fault.

Owner:NVIDIA CORP
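The handling of "unreal" entries can be modeled in software as follows. This is a hypothetical sketch, not NVIDIA's design: `UnrealTLB`, `PageFault`, and the `page_walk` callback are invented for illustration, and a TLB is reduced to a dictionary from virtual page to physical page.

```python
from typing import Callable, Dict, Optional


class PageFault(Exception):
    pass


class UnrealTLB:
    """TLB that may cache 'unreal' (no valid mapping) entries as hints (hypothetical model)."""

    UNREAL = object()   # marker: this linear address currently has no valid mapping

    def __init__(self, page_walk: Callable[[int], Optional[int]]):
        self.page_walk = page_walk         # returns a physical page, or None if unmapped
        self.entries: Dict[int, object] = {}

    def lookup(self, vpage: int, speculative: bool) -> Optional[int]:
        entry = self.entries.get(vpage)
        if entry is self.UNREAL:
            if speculative:
                return None                # speculative op hitting the hint is discarded
            del self.entries[vpage]        # architectural op: drop the hint...
            entry = None                   # ...and fall through to a normal page walk
        if entry is None:
            phys = self.page_walk(vpage)
            if phys is None:
                self.entries[vpage] = self.UNREAL
                if speculative:
                    return None
                raise PageFault(vpage)     # architectural fault
            self.entries[vpage] = phys
            return phys
        return entry


page_table = {1: 100}
tlb = UnrealTLB(page_table.get)
tlb.lookup(2, speculative=True)           # no mapping: caches an unreal hint, returns None
page_table[2] = 200                       # the OS later maps page 2
tlb.lookup(2, speculative=True)           # still None: speculative hit on the hint is discarded
phys = tlb.lookup(2, speculative=False)   # architectural: drops hint, walks, gets 200
```

The usage shows why architectural operations must never trust the hint: the mapping was created without any TLB flush, yet the architectural lookup still sees it.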

Tiled cache invalidation

Active | US20140122812A1 | Reduce the amount of memory; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Graphics; Cache invalidation

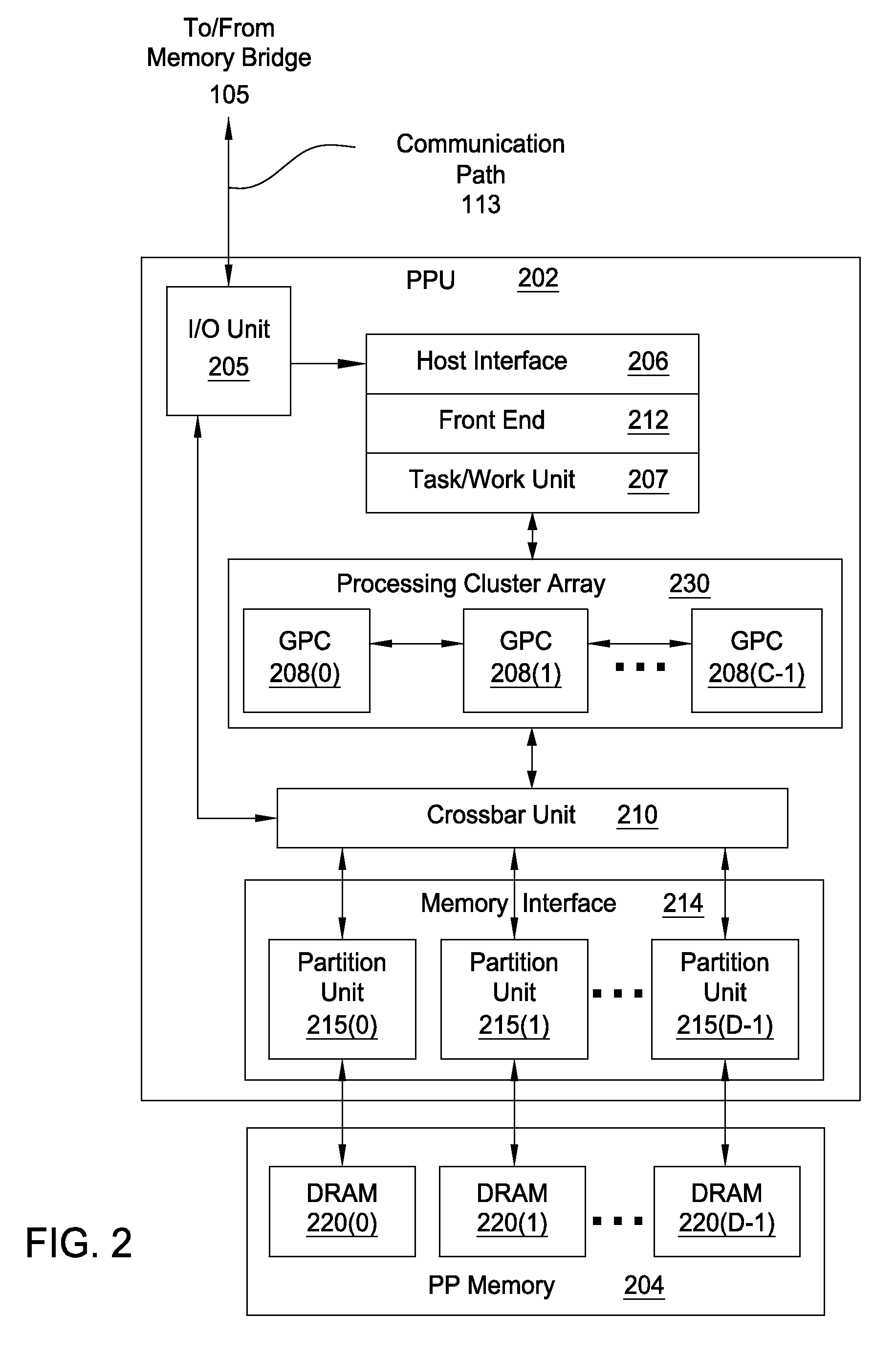

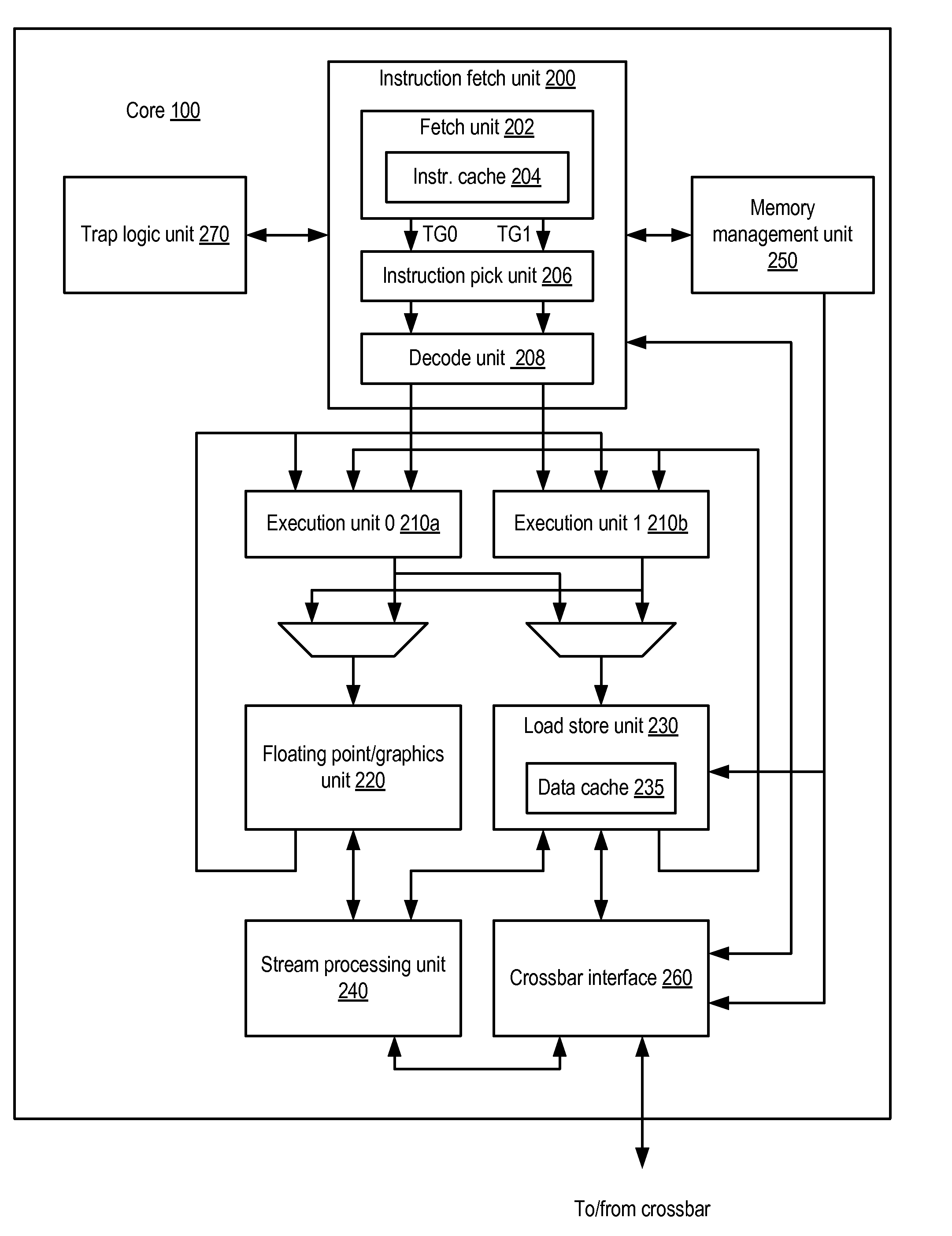

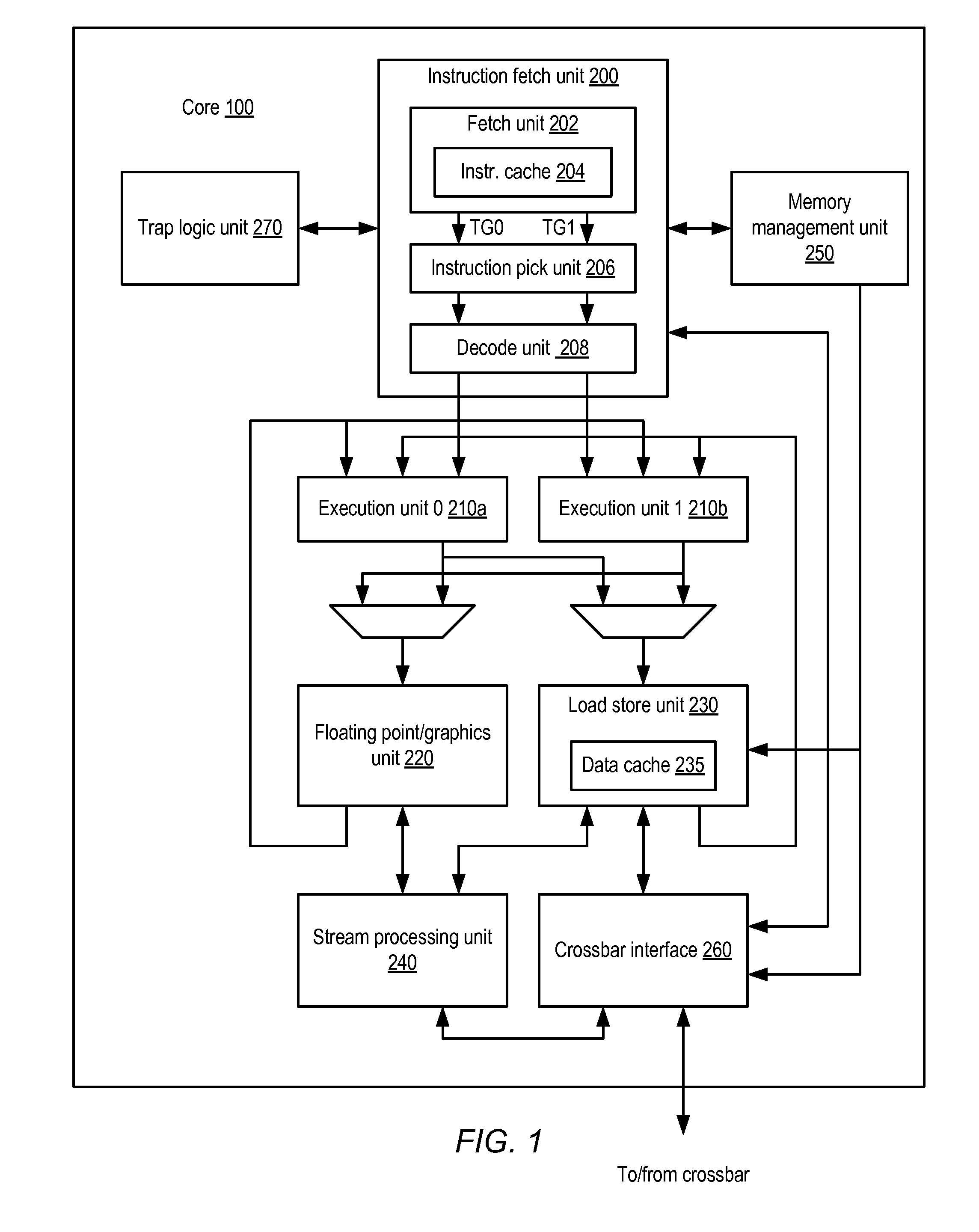

One embodiment of the present invention sets forth a graphics subsystem. The graphics subsystem includes a first tiling unit associated with a first set of raster tiles and a crossbar unit. The crossbar unit is configured to transmit a first set of primitives to the first tiling unit and to transmit a first cache invalidate command to the first tiling unit. The first tiling unit is configured to determine that a second bounding box associated with primitives included in the first set of primitives overlaps a first cache tile and that the first bounding box overlaps the first cache tile. The first tiling unit is further configured to transmit the primitives and the first cache invalidate command to a first screen-space pipeline associated with the first tiling unit for processing. The screen-space pipeline processes the cache invalidate command to invalidate cache lines specified by the cache invalidate command.

Owner:NVIDIA CORP

Reducing implementation costs of communicating cache invalidation information in a multicore processor

Active | US20110153942A1 | Low implementation cost; Energy efficient ICT; Memory addressing/allocation/relocation; Cache invalidation; Multi-core processor

Owner:ORACLE INT CORP

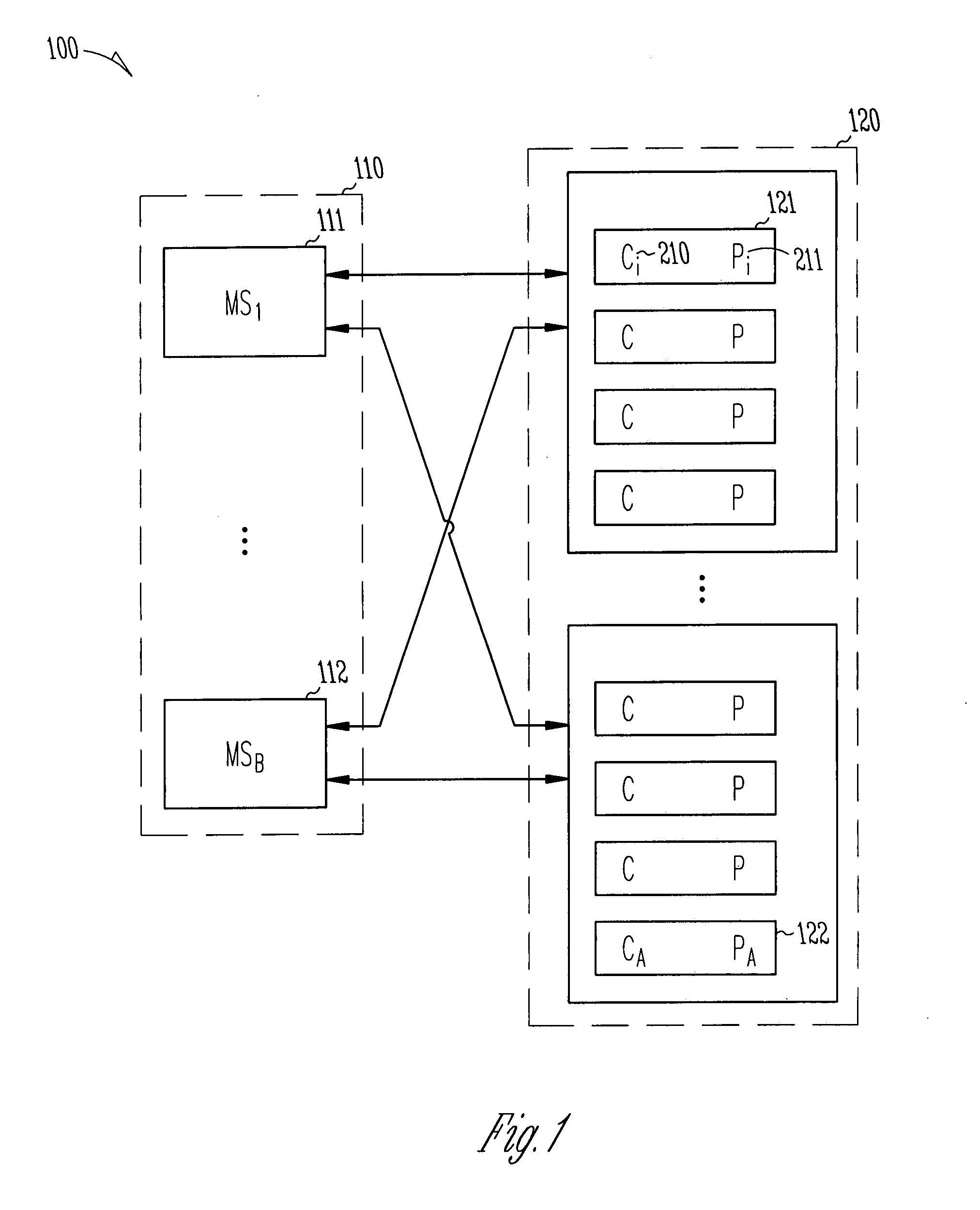

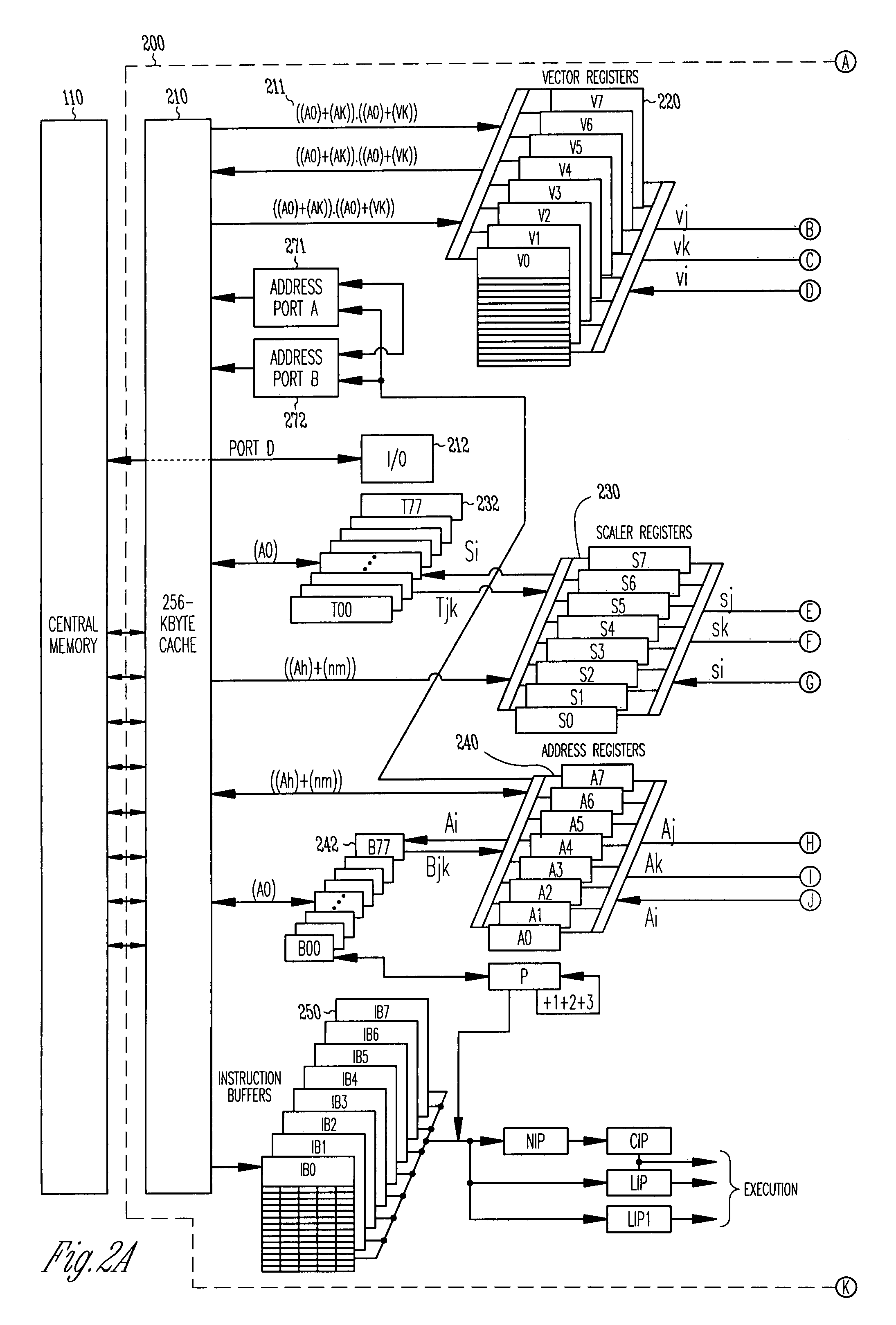

System and method for limited fanout daisy chaining of cache invalidation requests in a shared-memory multiprocessor system

Inactive | US7389389B2 | Memory architecture accessing/allocation; Digital data processing details; Cache invalidation; Multi processor

A protocol engine is provided for use in each node of a computer system having a plurality of nodes. Each node includes an interface to a local memory subsystem that stores memory lines of information, a directory, and a memory cache. The directory includes an entry associated with a memory line of information stored in the local memory subsystem. The directory entry includes an identification field for identifying sharer nodes that potentially cache the memory line of information. The identification field has a plurality of bits at associated positions within the identification field. Each respective bit of the identification field is associated with one or more nodes. The protocol engine furthermore sets each bit in the identification field for which the memory line is cached in at least one of the associated nodes. In response to a request for exclusive ownership of a memory line, the protocol engine sends an initial invalidation request to no more than a first predefined number of the nodes associated with set bits in the identification field of the directory entry associated with the memory line.

Owner:SK HYNIX INC
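The limited-fanout step can be sketched in two parts: expanding the coarse identification field into candidate sharer nodes, then grouping them so that the home node sends no more than `fanout` initial requests. How the remaining nodes are reached is an assumption here (each chain member forwards to the next one), and `sharer_nodes` and `plan_invalidation_chains` are hypothetical helpers.

```python
from typing import Dict, List


def sharer_nodes(bits: int, groups: Dict[int, List[int]]) -> List[int]:
    """Expand the directory's identification field: bit i covers every node in groups[i]."""
    nodes: List[int] = []
    for bit, members in sorted(groups.items()):
        if bits & (1 << bit):
            nodes.extend(members)
    return nodes


def plan_invalidation_chains(nodes: List[int], fanout: int) -> List[List[int]]:
    """Split potential sharers into at most `fanout` daisy chains.

    The home node sends an initial invalidation only to each chain's head; each
    node is then assumed to forward the request to the next node in its chain.
    """
    if fanout < 1:
        raise ValueError("fanout must be at least 1")
    chains: List[List[int]] = [[] for _ in range(min(fanout, len(nodes)))]
    for i, node in enumerate(nodes):
        chains[i % len(chains)].append(node)
    return chains


groups = {0: [0, 1], 1: [2, 3], 2: [4, 5]}   # each bit covers two nodes
nodes = sharer_nodes(0b101, groups)          # bits 0 and 2 set -> nodes 0, 1, 4, 5
chains = plan_invalidation_chains(nodes, fanout=3)
heads = [chain[0] for chain in chains]       # nodes that receive the initial requests
```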

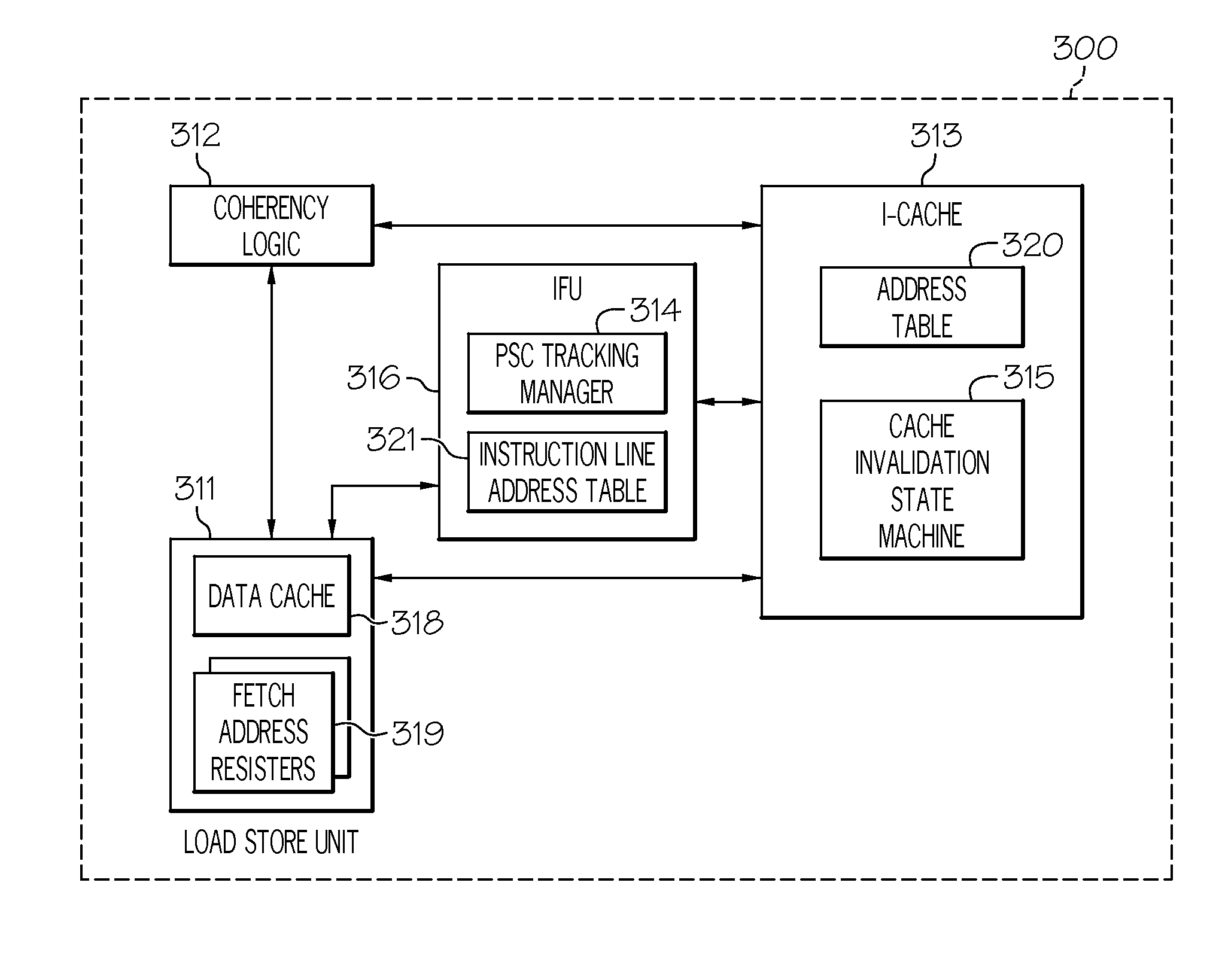

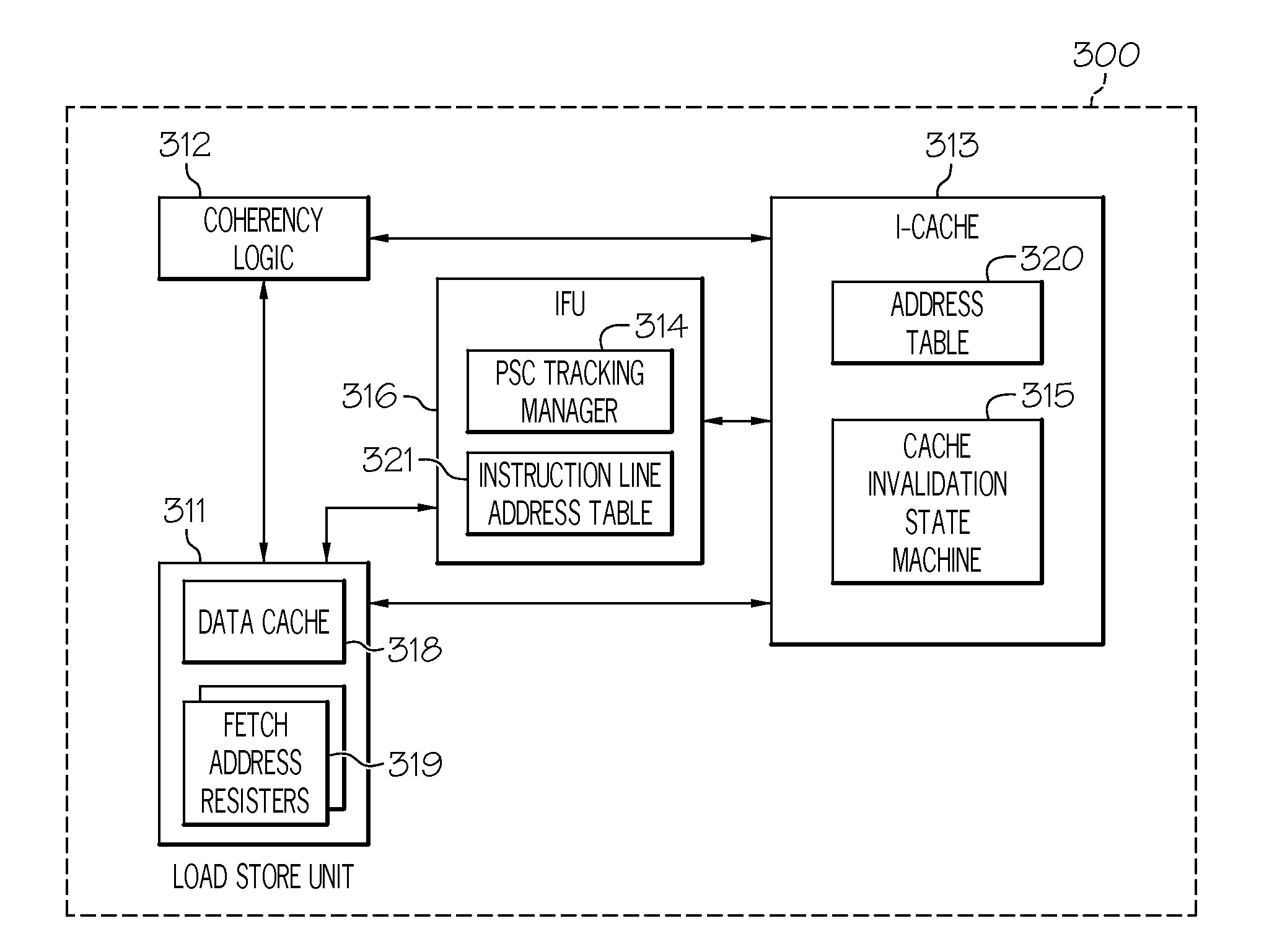

Managing cache coherency for self-modifying code in an out-of-order execution system

Inactive | US8417890B2 | Memory architecture accessing/allocation; Digital computer details; Self-modifying code; Cache invalidation

A method, system, and computer program product for managing cache coherency for self-modifying code in an out-of-order execution system are disclosed. A program-store-compare (PSC) tracking manager identifies a set of addresses of pending instructions in an address table that match an address requested to be invalidated by a cache invalidation request. The PSC tracking manager receives a fetch address register identifier associated with a fetch address register for the cache invalidation request. The fetch address register is associated with the set of addresses and is a PSC tracking resource reserved by a load store unit (LSU) to monitor an exclusive fetch for a cache line in a high level cache. The PSC tracking manager determines that the set of entries in an instruction line address table associated with the set of addresses is invalid and instructs the LSU to free the fetch address register.

Owner:INT BUSINESS MASCH CORP

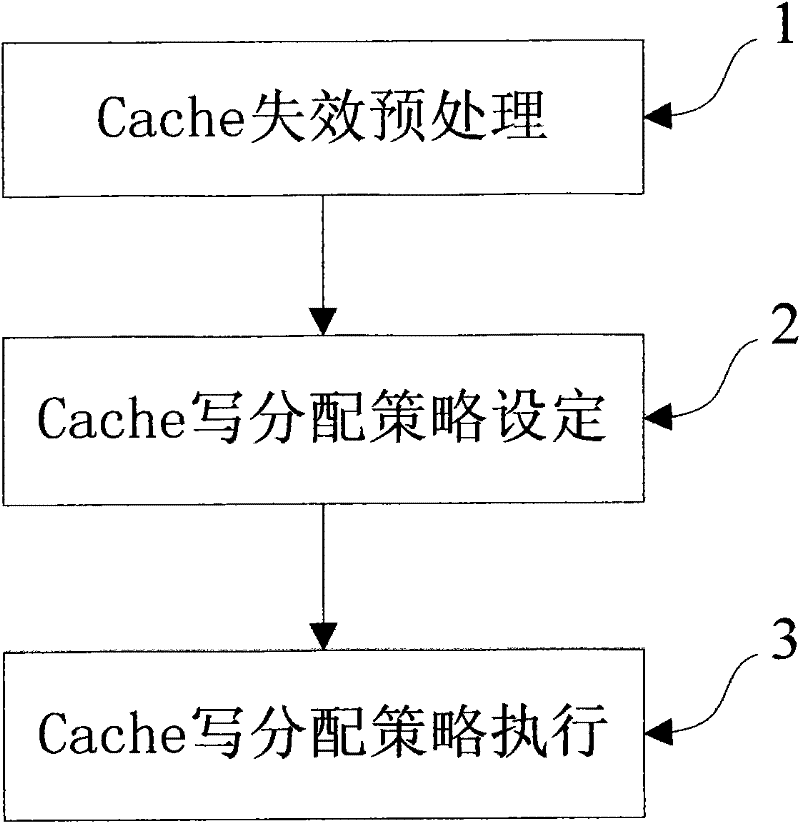

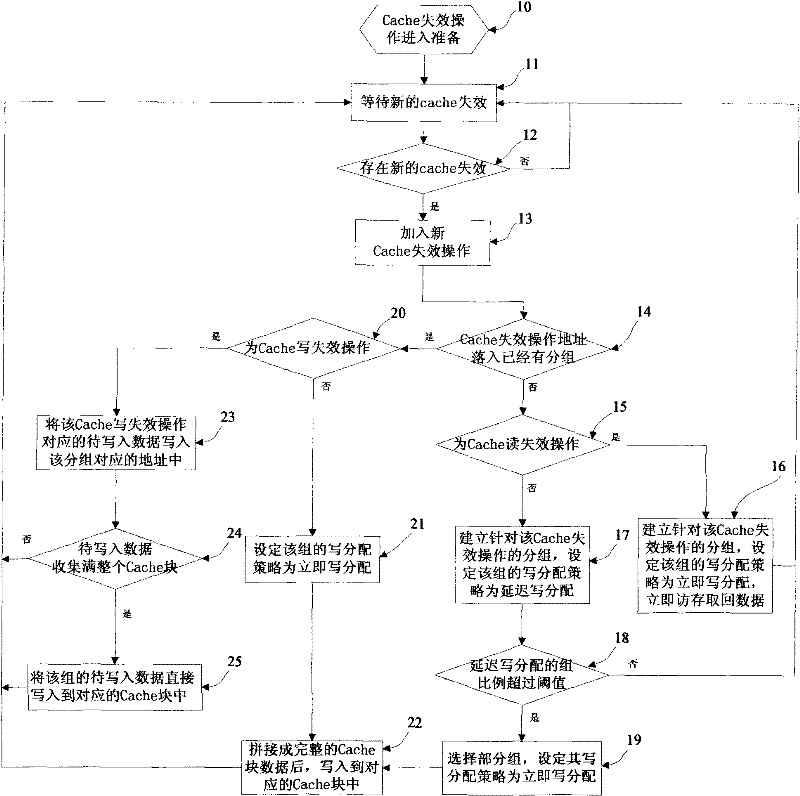

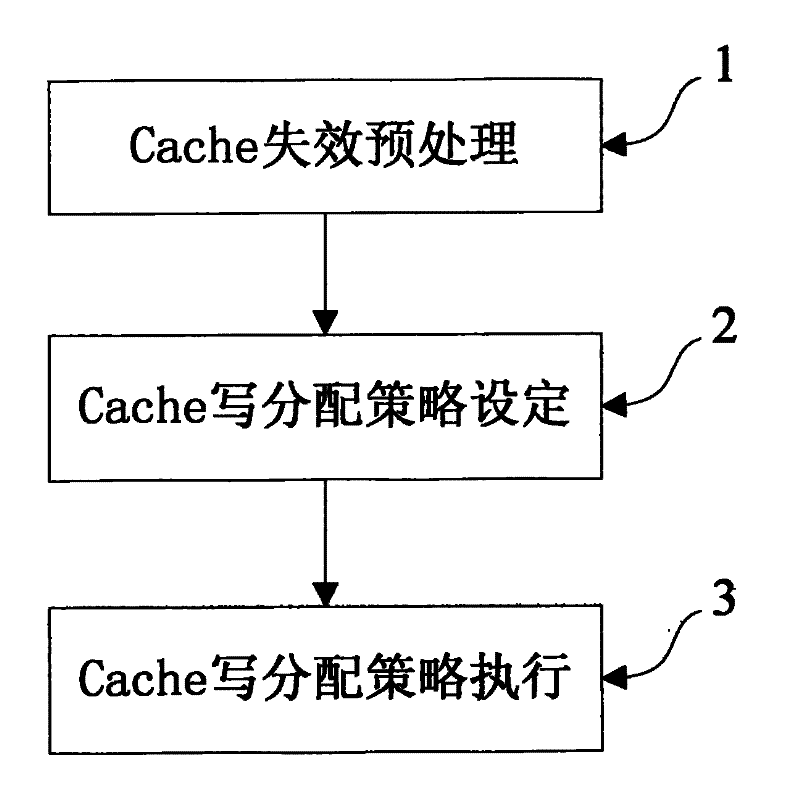

Processor Cache write-in invalidation processing method based on memory access history learning

Active | CN101751245A | Reduce bandwidth waste; Improve performance; Memory systems; Machine execution arrangements; Missing data; Cache invalidation

A processor cache write-miss (write-in invalidation) handling method based on memory access history learning includes the following procedures: (1) cache write-miss preprocessing; (2) setting the cache write-allocation strategy, in which each group is assigned either immediate write-allocation or delayed write-allocation; (3) for a group using immediate write-allocation, the corresponding cache block is accessed in memory immediately, the missing data are read back and merged with the written data to form a complete cache block, and that block is written into the corresponding cache block; for a group using delayed write-allocation, the write data of the write-miss operations assigned to the group are collected, and once a whole cache block's worth of write data has been collected for a group, it is written directly into the corresponding cache block. The invention avoids many unnecessary reads of cache blocks from memory during write-miss handling, reducing wasted bandwidth and improving application performance.

Owner:LOONGSON TECH CORP

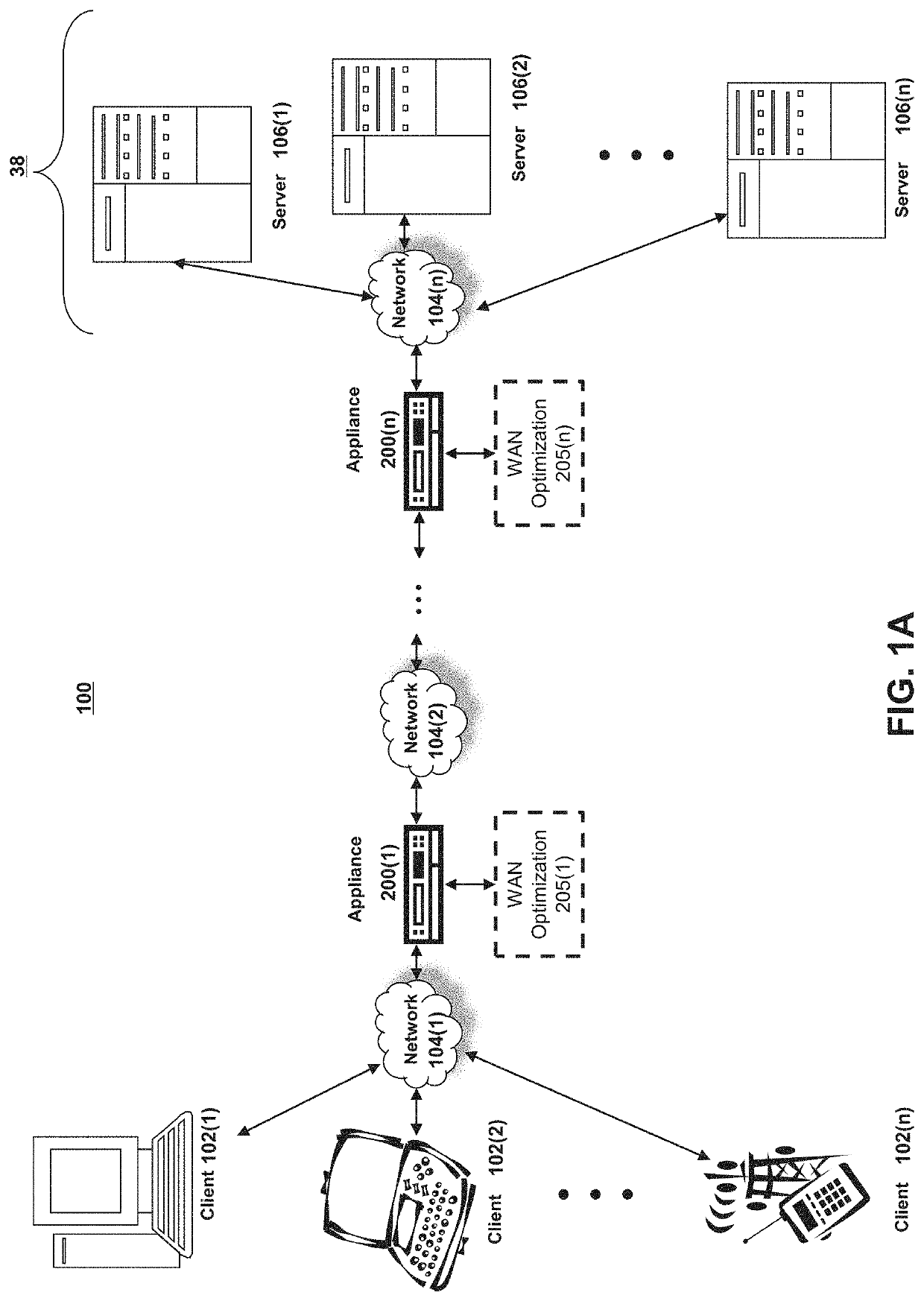

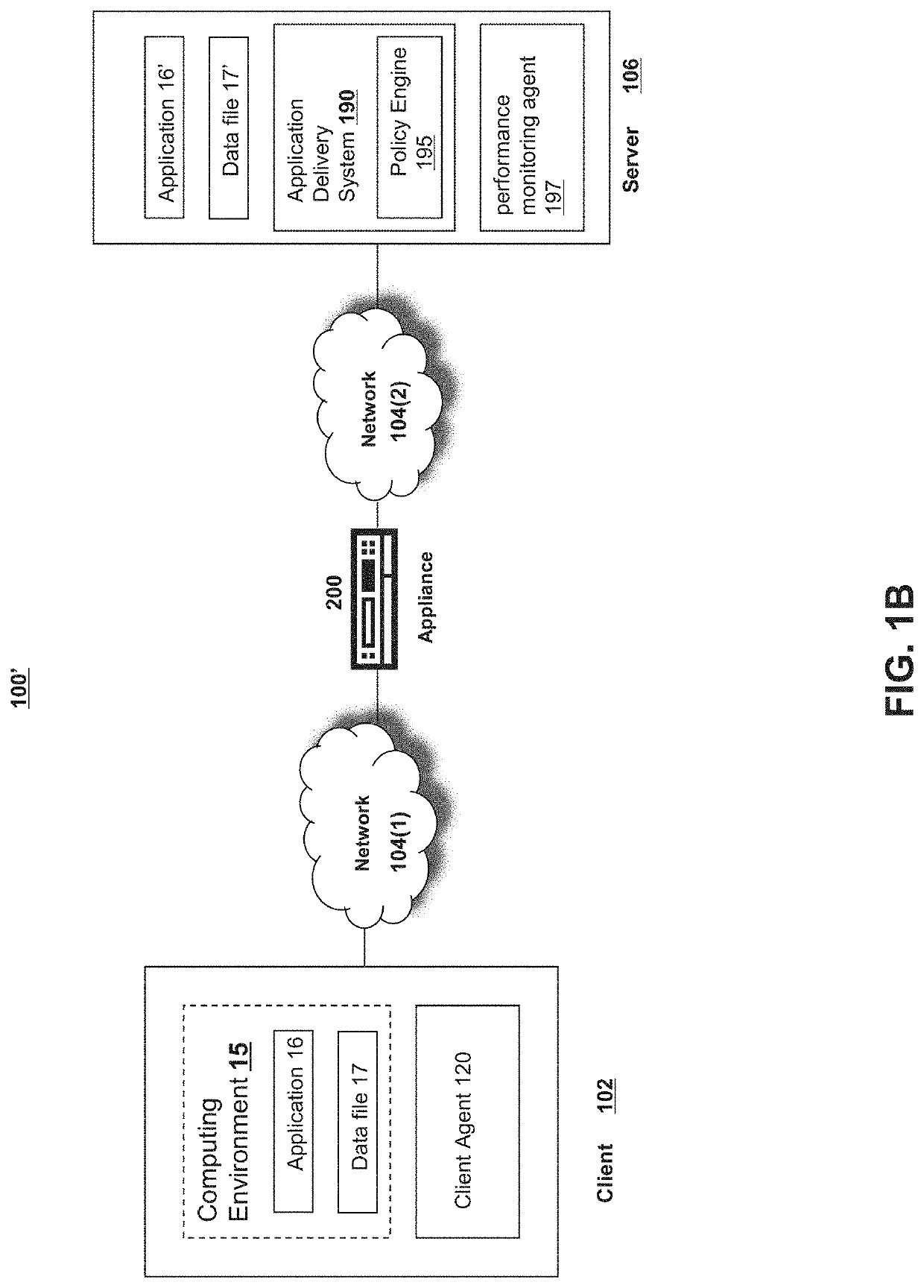

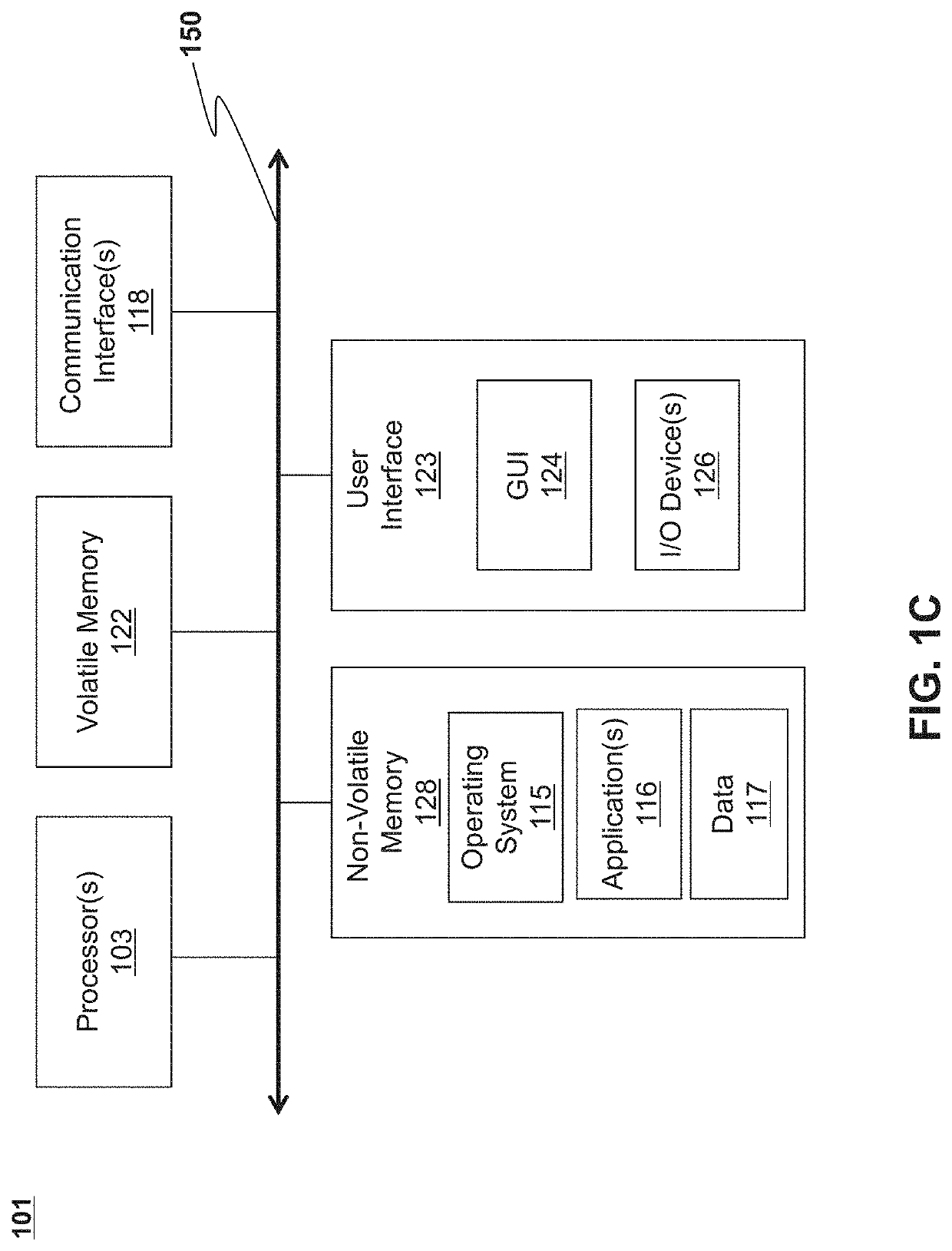

Systems and methods to operate devices with domain name system (DNS) caches

Described embodiments provide systems and methods for invalidating a cache of domain name system (DNS) information based on changes in internet protocol (IP) families. A mobile device that has one or more network interfaces configured to communicate over a plurality of networks using a plurality of IP families is configured to maintain a cache storing DNS information for one or more IP addresses of a first IP family, which the mobile device uses for a connection to a first network. The device can detect that its connection has changed from the first network, using the first IP family, to a second network using a different, second IP family, and flush at least the DNS information for IP addresses of the first IP family from the cache, preventing the mobile device from using an IP address that corresponds to an invalid cache entry.

Owner:CITRIX SYST INC
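Flushing DNS entries by IP family on a network change can be sketched with Python's standard `ipaddress` module. `DnsCache` and its methods are hypothetical, and the addresses are from the documentation ranges.

```python
import ipaddress
from typing import Dict, List, Optional


class DnsCache:
    """Device DNS cache flushed by IP family when the network changes (hypothetical)."""

    def __init__(self) -> None:
        self.entries: Dict[str, List[str]] = {}   # hostname -> cached addresses
        self.family: Optional[int] = None         # 4 or 6, family of the current network

    def put(self, hostname: str, addresses: List[str]) -> None:
        self.entries[hostname] = list(addresses)

    def on_network_change(self, new_family: int) -> None:
        old, self.family = self.family, new_family
        if old is None or old == new_family:
            return
        # Flush addresses of the old family so they cannot be used on the new network.
        for host in list(self.entries):
            kept = [a for a in self.entries[host] if ipaddress.ip_address(a).version != old]
            if kept:
                self.entries[host] = kept
            else:
                del self.entries[host]


cache = DnsCache()
cache.on_network_change(4)
cache.put("example.com", ["192.0.2.10", "2001:db8::10"])
cache.put("v4only.example", ["198.51.100.7"])
cache.on_network_change(6)   # moved to an IPv6 network: drop IPv4 answers
```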

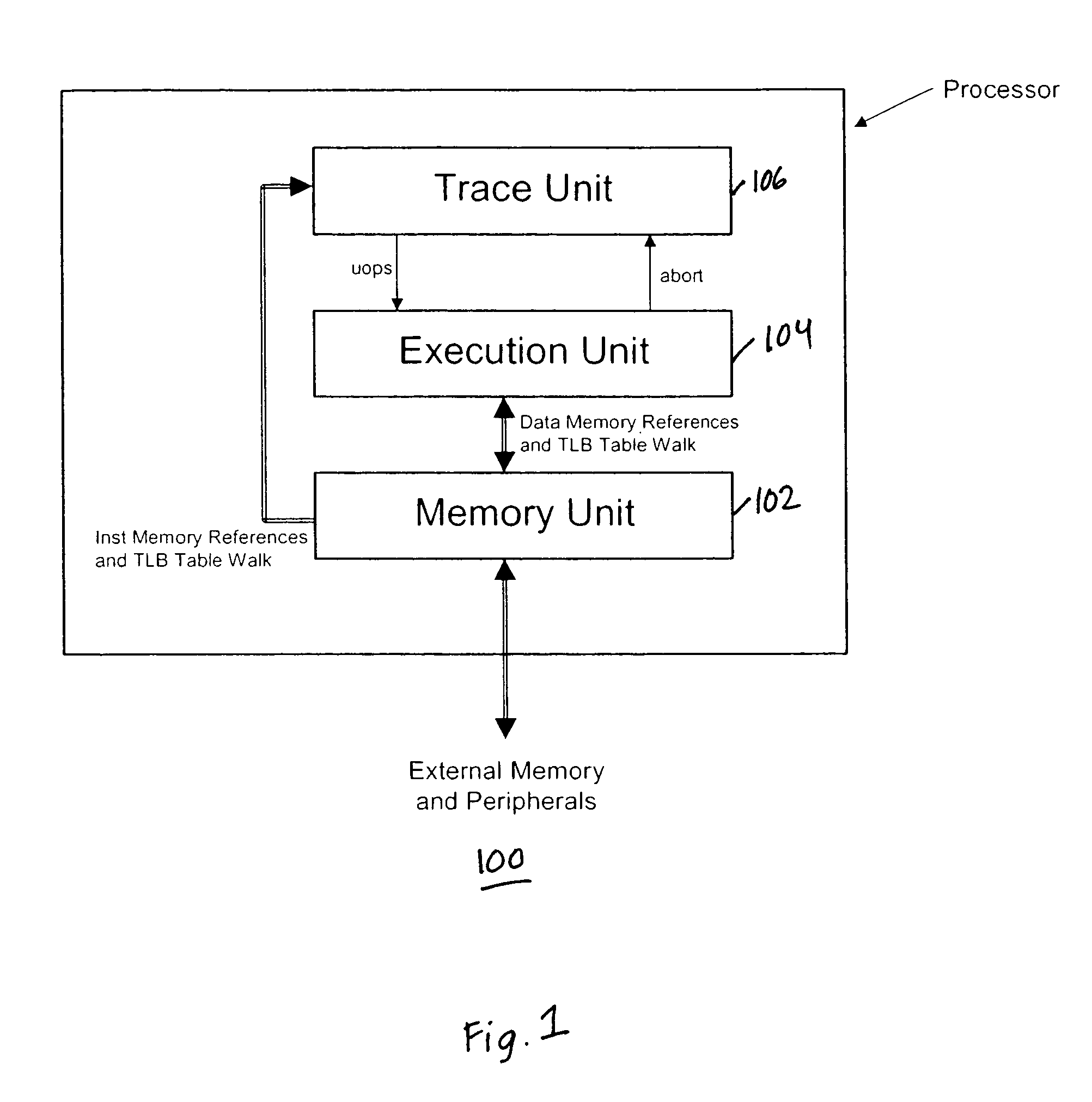

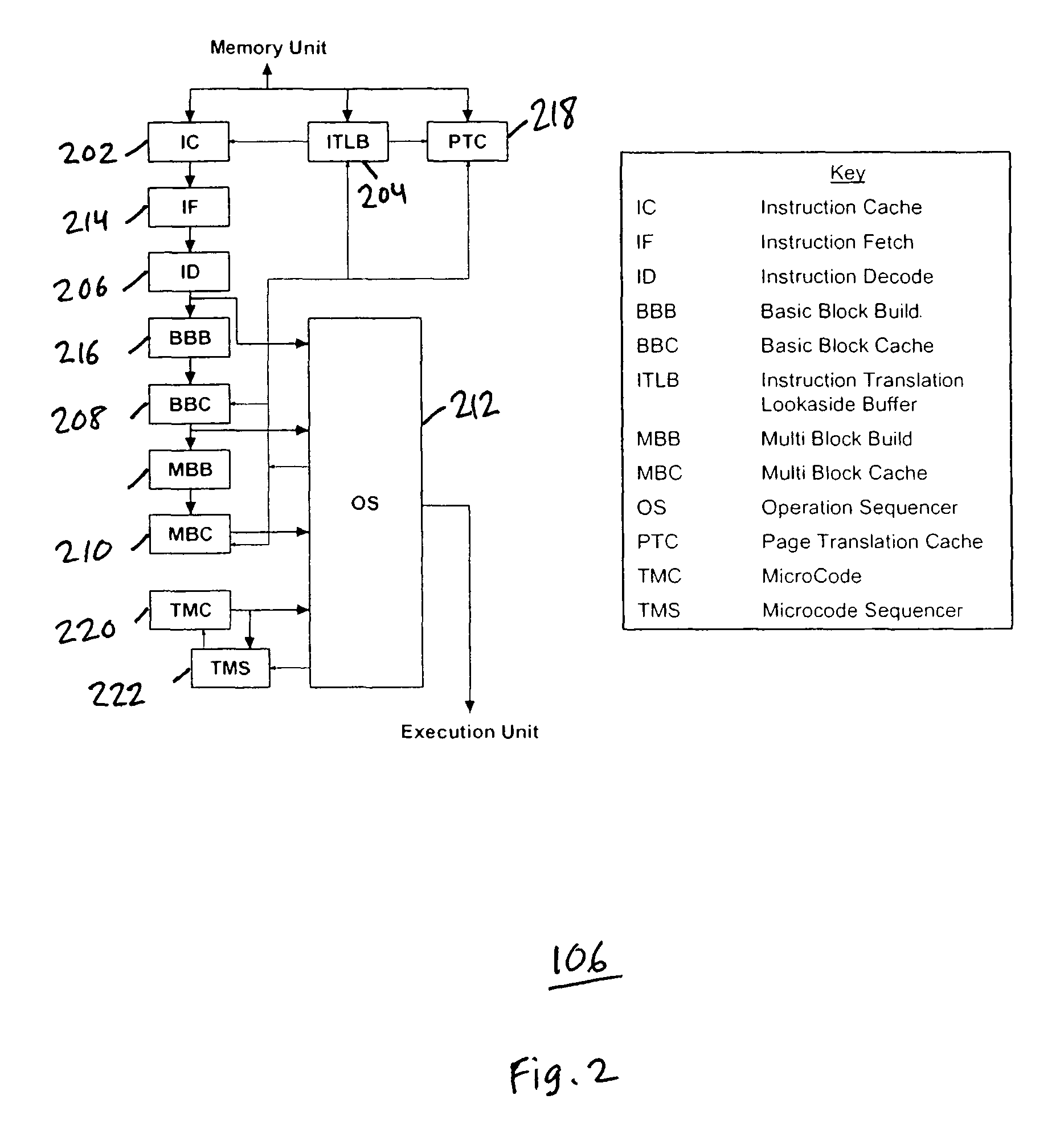

Maintaining memory coherency with a trace cache

Active | US7747822B1 | Energy efficient ICT; Memory addressing/allocation/relocation; Power efficient; Cache invalidation

A method and system for maintaining memory coherence in a trace cache is disclosed. The method and system comprise monitoring a plurality of entries in a trace cache and selectively invalidating at least one trace cache entry upon detecting a modification of that entry. If modifications are detected, only the corresponding trace cache entries are invalidated, rather than the entire trace cache. Trace cache coherency is thus maintained with respect to memory in a performance- and power-efficient manner. The monitoring also accounts for situations where more than one trace cache entry depends on a single cache line, so that a modification to that cache line invalidates all of the dependent trace cache entries.

Owner:SUN MICROSYSTEMS INC
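Selective invalidation with several traces depending on one cache line can be sketched with a reverse map from cache line to trace IDs. `TraceCache`, its methods, and the 64-byte line size are assumptions made for illustration.

```python
from collections import defaultdict


class TraceCache:
    """Trace cache with per-line selective invalidation (hypothetical model)."""

    def __init__(self, line_size=64):
        self.line_size = line_size
        self.traces = {}                  # trace id -> instruction addresses it was built from
        self.by_line = defaultdict(set)   # cache-line address -> ids of dependent traces

    def _line(self, addr):
        return addr - addr % self.line_size

    def add_trace(self, trace_id, instr_addrs):
        self.traces[trace_id] = list(instr_addrs)
        for addr in instr_addrs:
            self.by_line[self._line(addr)].add(trace_id)

    def on_store(self, addr):
        """A store modified code memory: drop only the traces built from that line."""
        victims = self.by_line.pop(self._line(addr), set())
        for trace_id in victims:
            for a in self.traces.pop(trace_id, []):
                # Unlink the dropped trace from the other lines it also depended on.
                dependents = self.by_line.get(self._line(a))
                if dependents is not None:
                    dependents.discard(trace_id)
        return victims


tc = TraceCache()
tc.add_trace("t1", [0x1000, 0x1004])
tc.add_trace("t2", [0x1008, 0x2000])   # spans two cache lines
tc.add_trace("t3", [0x3000])
dropped = tc.on_store(0x1010)          # same 64-byte line as t1 and part of t2
```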

Translation lookaside buffer entry systems and methods

Active | US9547602B2 | Improve efficiency; Effective caching; Memory addressing/allocation/relocation; Cache invalidation; Translation lookaside buffer

Presented systems and methods can facilitate efficient information storage and tracking operations, including translation lookaside buffer (TLB) operations. In one embodiment, the systems and methods effectively allow invalid entries to be cached (with attendant benefits regarding, e.g., power, resource usage, and stalls) while maintaining the illusion that the TLBs do not in fact cache invalid entries (i.e., remaining compliant with architectural rules). In one exemplary implementation, an “unreal” TLB entry serves as a hint that the linear address in question currently has no valid mapping. In one exemplary implementation, speculative operations that hit an unreal entry are discarded; architectural operations that hit an unreal entry discard the entry and perform a normal page walk, which either obtains a valid entry or raises an architectural fault.

Owner:NVIDIA CORP

Managing cache coherency for self-modifying code in an out-of-order execution system

Inactive | US20110307662A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Self-modifying code; Cache invalidation

A method, system, and computer program product for managing cache coherency for self-modifying code in an out-of-order execution system are disclosed. A program-store-compare (PSC) tracking manager identifies a set of addresses of pending instructions in an address table that match an address requested to be invalidated by a cache invalidation request. The PSC tracking manager receives a fetch address register identifier associated with a fetch address register for the cache invalidation request. The fetch address register is associated with the set of addresses and is a PSC tracking resource reserved by a load store unit (LSU) to monitor an exclusive fetch for a cache line in a high level cache. The PSC tracking manager determines that the set of entries in an instruction line address table associated with the set of addresses is invalid and instructs the LSU to free the fetch address register.

Owner:IBM CORP

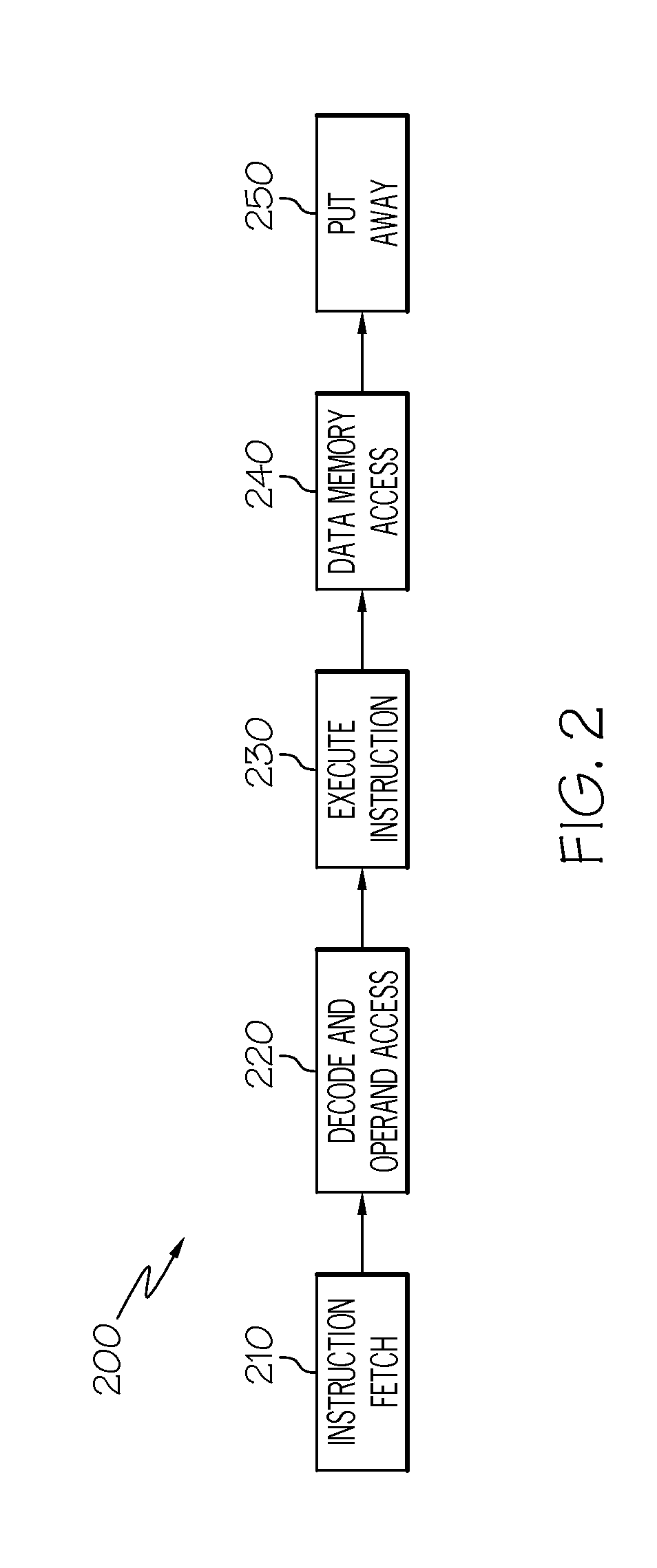

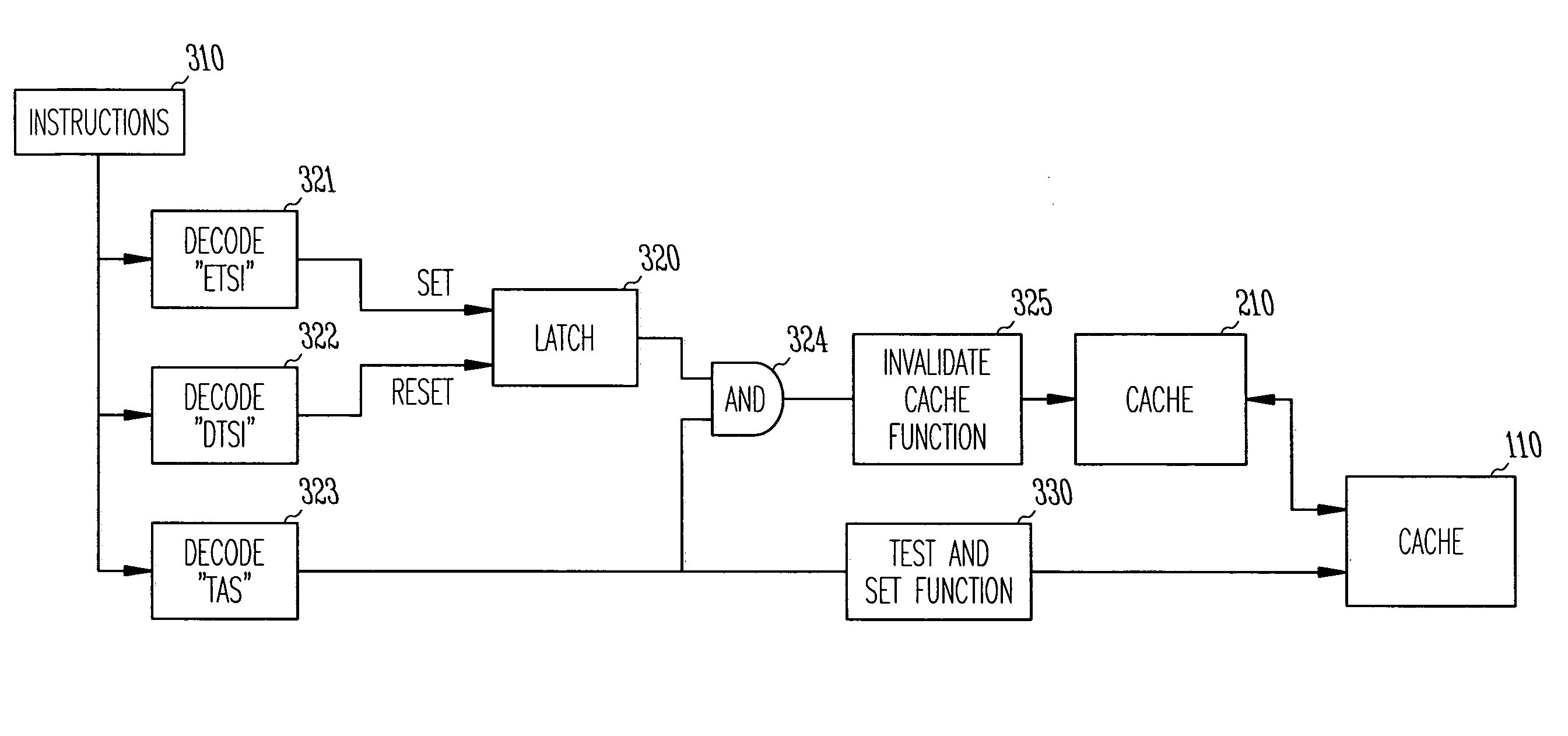

Instructions for test & set with selectively enabled cache invalidate

Inactive | US7234027B2 | Program synchronisation; Memory addressing/allocation/relocation; Cache invalidation; Test-and-set

A method and system for selectively enabling a cache-invalidate function supplement to a resource-synchronization instruction such as test-and-set. Some embodiments include a first processor, a first memory, at least a first cache between the first processor and the first memory, wherein the first cache caches data accessed by the first processor from the first memory, wherein the first processor executes: a resource-synchronization instruction, an instruction that enables a cache-invalidate function to be performed upon execution of the resource-synchronization instruction, and an instruction that disables the cache-invalidate function from being performed upon execution of the resource-synchronization instruction.

Owner:CRAY

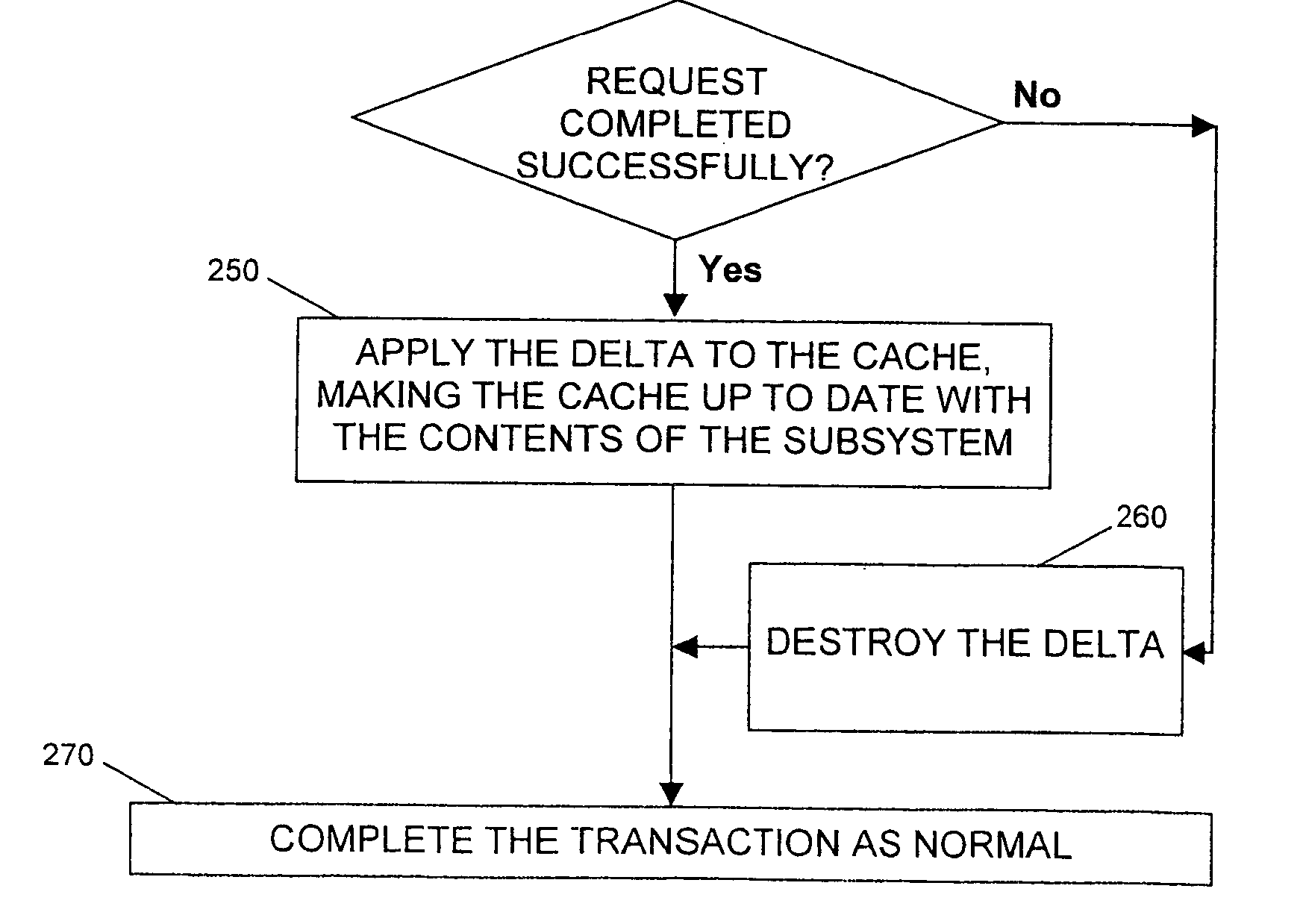

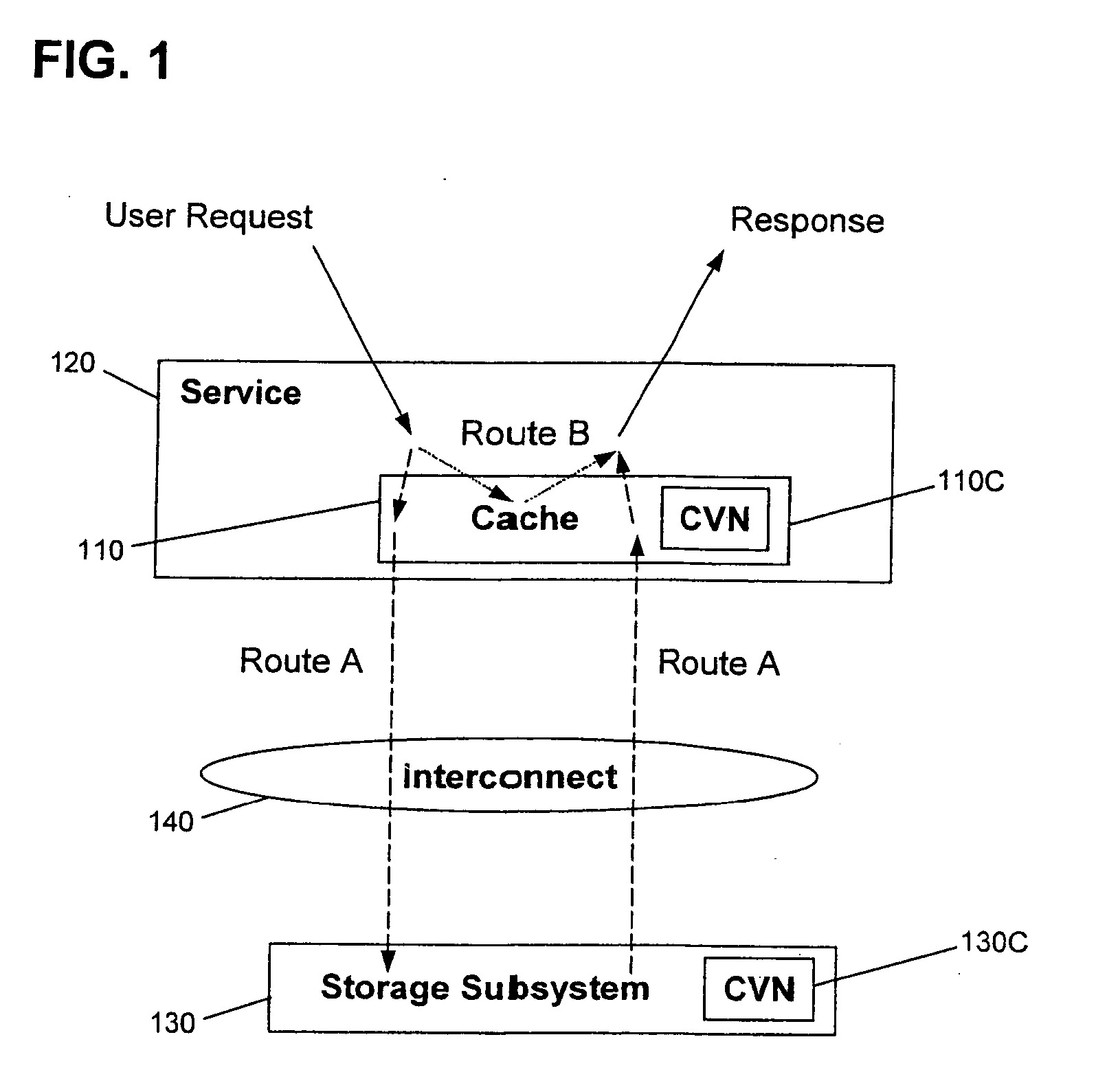

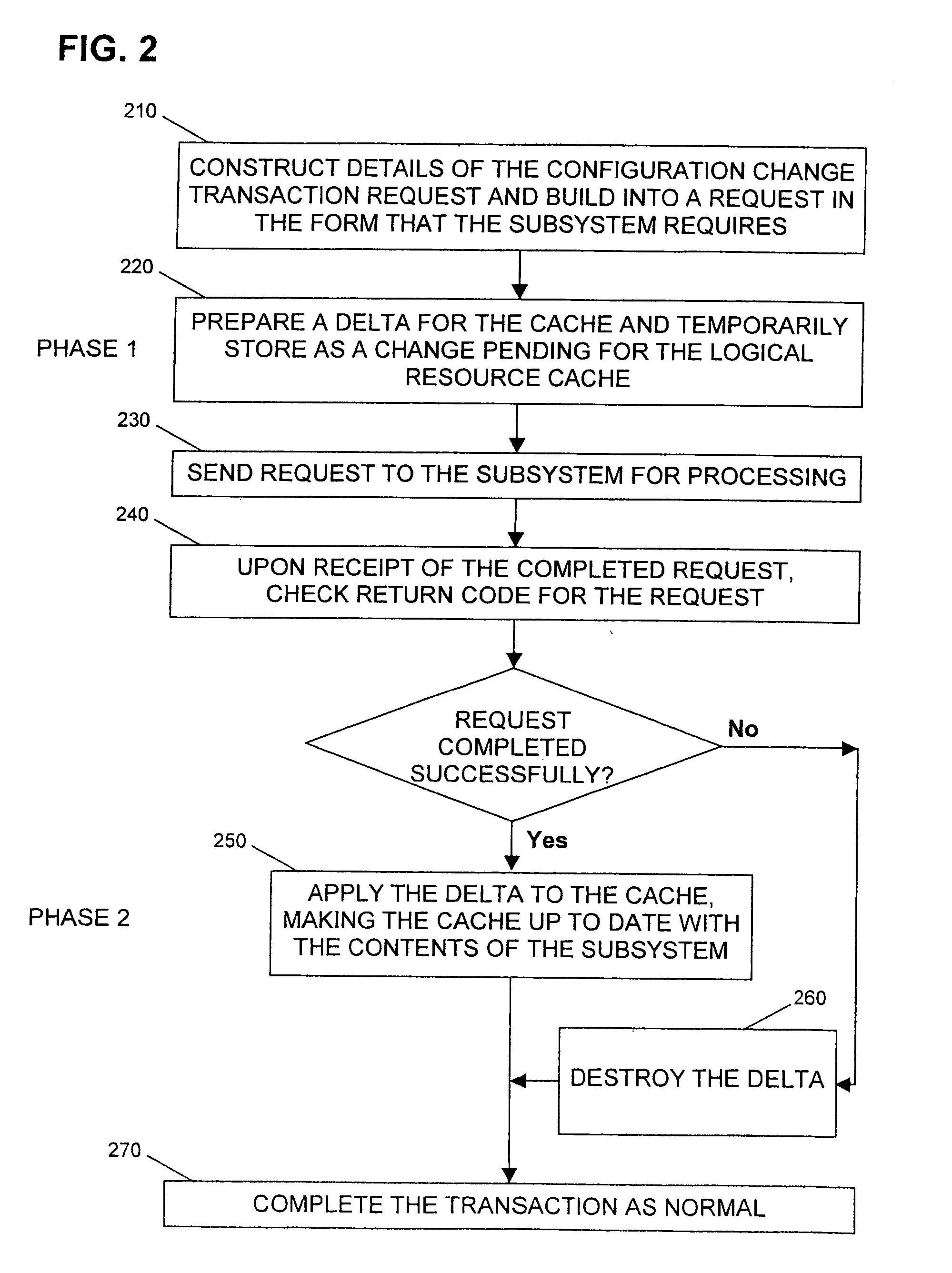

Arrangement and method for update of configuration cache data

An arrangement and method for updating configuration cache data in a disk storage subsystem, in which a cache memory (110) is updated using a two-phase commit technique (220, 250). The advantage is that known changes to the subsystem do not require an invalidate-and-rebuild operation on the cache. This is especially important where a change would otherwise invalidate the entire cache.

Owner:IBM CORP
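A two-phase commit update of cached configuration data might look like the sketch below. The abstract does not say which parties take part in the commit, so this sketch assumes several controllers, each holding its own copy of the configuration cache; `ConfigCache`, `two_phase_update`, and the configuration keys are hypothetical.

```python
class ConfigCache:
    """Configuration cache on one controller, updated in place (hypothetical model)."""

    def __init__(self, config):
        self.entries = dict(config)
        self.pending = None

    def prepare(self, changes):
        """Phase 1: validate and stage the change; nothing is applied yet."""
        if self.pending is not None:
            raise RuntimeError("another update is already in progress")
        unknown = [key for key in changes if key not in self.entries]
        if unknown:
            raise KeyError(f"unknown configuration items: {unknown}")
        self.pending = dict(changes)

    def commit(self):
        """Phase 2: apply the staged change directly to the cached entries."""
        if self.pending is None:
            raise RuntimeError("commit without a prepared update")
        self.entries.update(self.pending)
        self.pending = None

    def abort(self):
        self.pending = None


def two_phase_update(caches, changes):
    """Coordinator: commit only if every cache prepared successfully."""
    prepared = []
    try:
        for cache in caches:
            cache.prepare(changes)
            prepared.append(cache)
    except (KeyError, RuntimeError):
        for cache in prepared:
            cache.abort()
        return False
    for cache in caches:
        cache.commit()
    return True


primary = ConfigCache({"raid_level": 5, "disks": 8})
mirror = ConfigCache({"raid_level": 5, "disks": 8})
ok = two_phase_update([primary, mirror], {"disks": 10})
```

Because the change is applied to the cached entries in place, no copy ever has to be invalidated and rebuilt; a failed prepare simply leaves every copy as it was.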

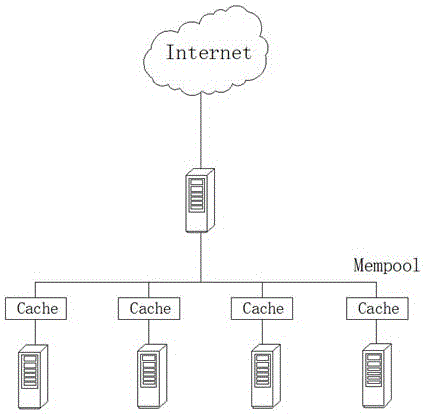

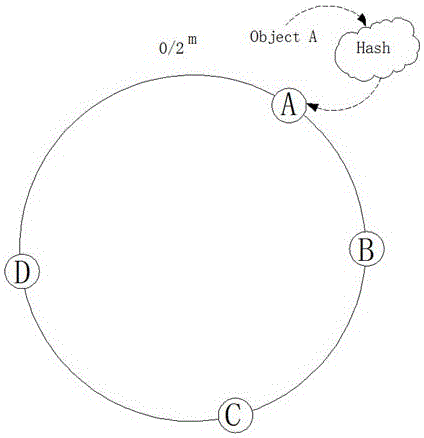

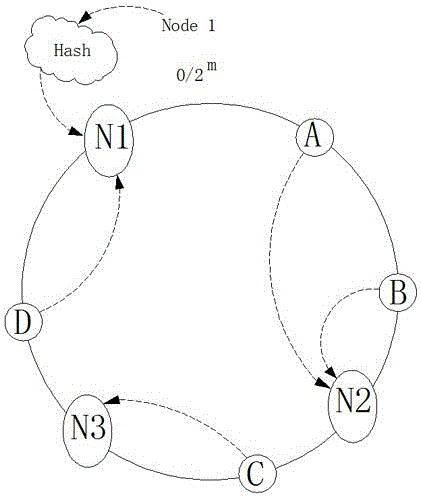

Network cache design method based on consistent hash

Inactive | CN105007328A | Improve I/O performance; Eliminate the effects of failure; Transmission; Resource pool; Cache invalidation

The invention discloses a network cache design method based on consistent hashing. The method comprises the following steps: the caches of a server cluster are pooled into a unified resource pool, in which all resources can be scheduled by any one resource, and the caches are managed using a NUMA (Non-Uniform Memory Access) architecture; hash values are placed on a ring-shaped space of size 2^m, and each server is treated as an object, hashed, and placed on the hash ring; and the key of each data block is computed with a hash algorithm and placed in the same hash space. The technique provides cache sharing and cache balancing for the server cluster: it can speed up large-scale data access, eliminate the impact of cache invalidation on a single node, and effectively improve the cluster's I/O performance, providing a unified storage space while distributing the caches relatively evenly across the server nodes.

Owner:SHANDONG CHAOYUE DATA CONTROL ELECTRONICS CO LTD
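The hash-ring placement of servers and data blocks can be sketched as follows. The ring size exponent `M = 32`, the SHA-256-based `ring_position`, and the `HashRing` class are illustrative choices, since the abstract does not give m or the hash algorithm.

```python
import bisect
import hashlib

M = 32  # the ring has 2**M positions; m is not given in the source, 32 is arbitrary


def ring_position(value: str) -> int:
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % (2 ** M)


class HashRing:
    """Maps data-block keys to cache servers by consistent hashing."""

    def __init__(self, servers):
        self.points = sorted((ring_position(s), s) for s in servers)
        self.positions = [pos for pos, _ in self.points]

    def server_for(self, block_key: str) -> str:
        # Walk clockwise from the block's position to the first server point.
        i = bisect.bisect_left(self.positions, ring_position(block_key))
        return self.points[i % len(self.points)][1]


ring = HashRing(["node-1", "node-2", "node-3", "node-4"])
keys = [f"block-{i}" for i in range(1000)]
before = {k: ring.server_for(k) for k in keys}
# Losing one node only remaps the blocks that node owned.
after = HashRing(["node-1", "node-2", "node-4"])
moved = [k for k in keys if before[k] != after.server_for(k)]
```

This property is what limits the impact of a single node's cache going away: only the blocks mapped to that node move. Production systems usually place several virtual points per server on the ring to even out the load.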

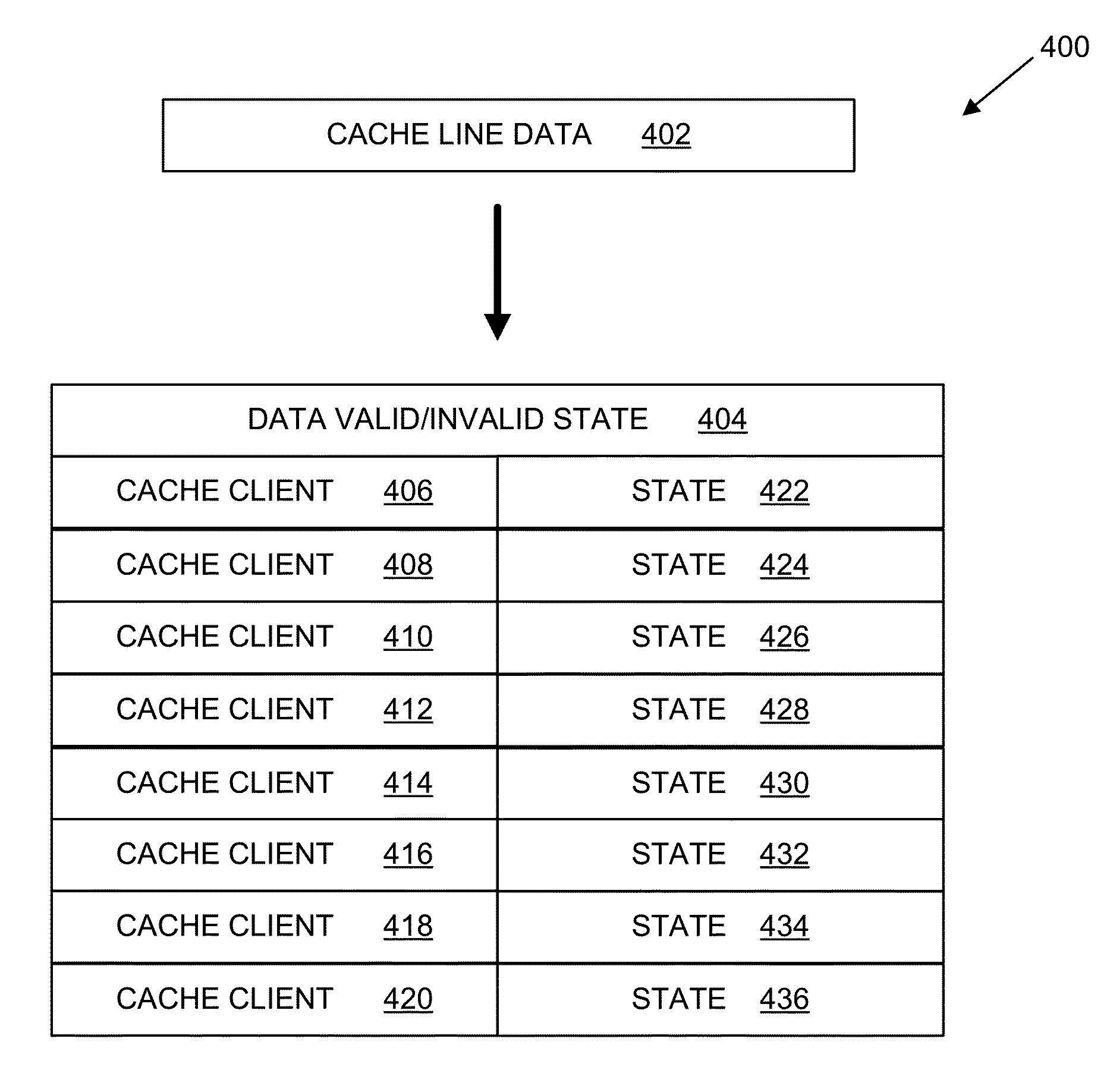

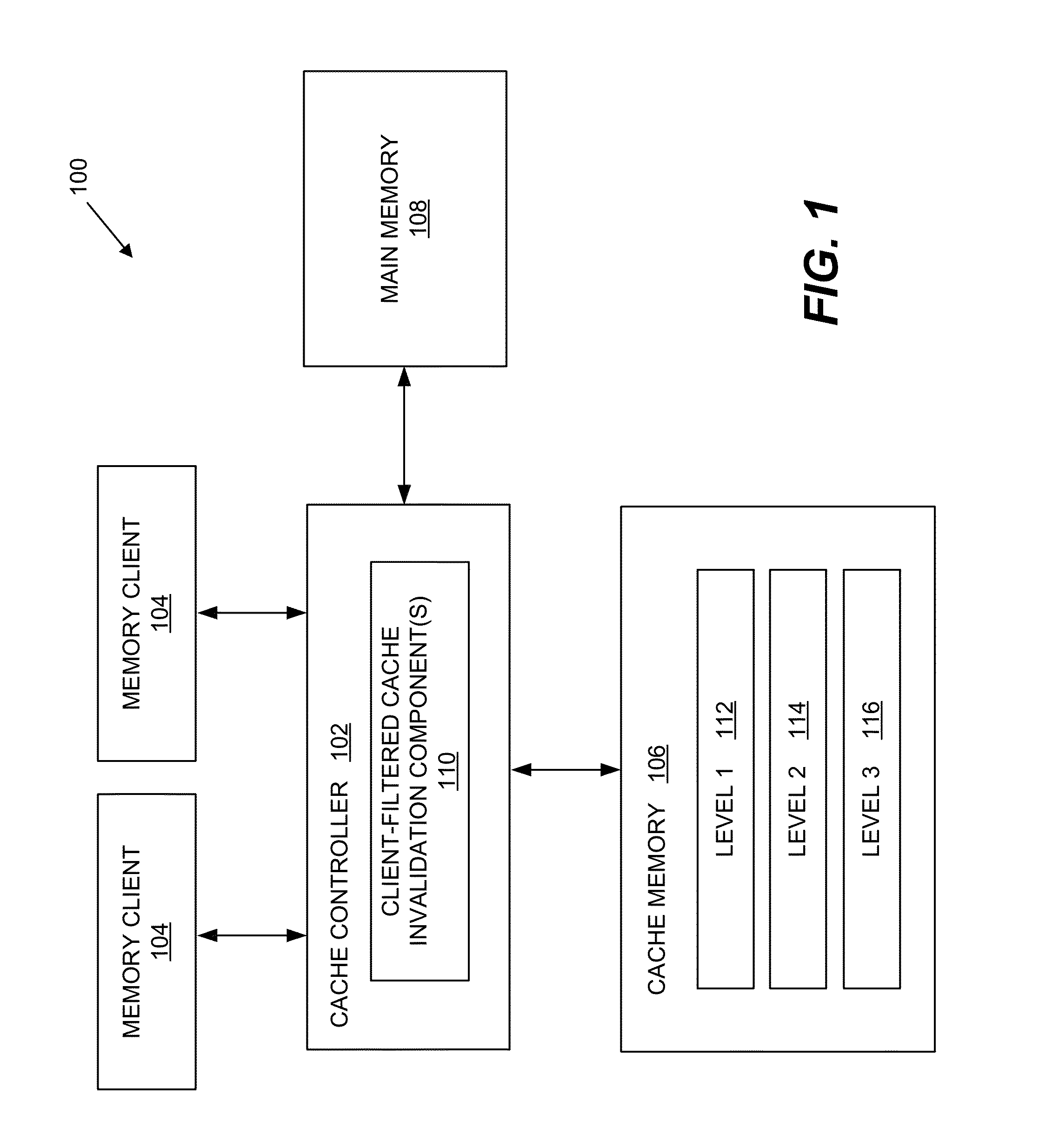

Systems, methods, and computer programs for providing client-filtered cache invalidation

Inactive | US20150363322A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Cache invalidation; Filter cache

A method and system include generating a cache entry comprising cache line data for a plurality of cache clients and receiving a cache invalidate instruction from a first of the cache clients. In response to the cache invalidate instruction, the data valid/invalid state for the first cache client is changed to invalid while the data valid/invalid state for each of the other cache clients is left valid. A read instruction may then be received from a second cache client; in response, a value stored in the cache line data is returned to the second cache client while the first cache client's state is invalid and the second cache client's state is valid.

Owner:QUALCOMM INC
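The per-client valid state in this abstract can be modeled as one valid bit per client on each shared cache line. In this hypothetical Python model (the `ClientFilteredCache` class, the client names, and the convention that a miss returns `None` are all assumptions), an invalidate from one client clears only that client's bit, so other clients keep hitting the same line.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional


@dataclass
class CacheLine:
    data: int
    valid: Dict[str, bool] = field(default_factory=dict)  # client id -> valid bit


class ClientFilteredCache:
    """Hypothetical model: one shared copy of each line, one valid bit per client."""

    def __init__(self, clients: Iterable[str]):
        self.clients = list(clients)
        self.lines: Dict[int, CacheLine] = {}  # address -> line

    def fill(self, address: int, data: int) -> None:
        """Create an entry that starts out valid for every client."""
        self.lines[address] = CacheLine(data, {c: True for c in self.clients})

    def invalidate(self, address: int, client: str) -> None:
        """Clear only the requesting client's bit; other clients' bits are untouched."""
        line = self.lines.get(address)
        if line is not None:
            line.valid[client] = False

    def read(self, address: int, client: str) -> Optional[int]:
        """Return the cached value if this client's bit is set; None models a miss."""
        line = self.lines.get(address)
        if line is None or not line.valid.get(client, False):
            return None
        return line.data
```

Keeping a single copy of the data with per-client bits means one client's invalidate does not force a refetch for clients that still consider the line valid.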

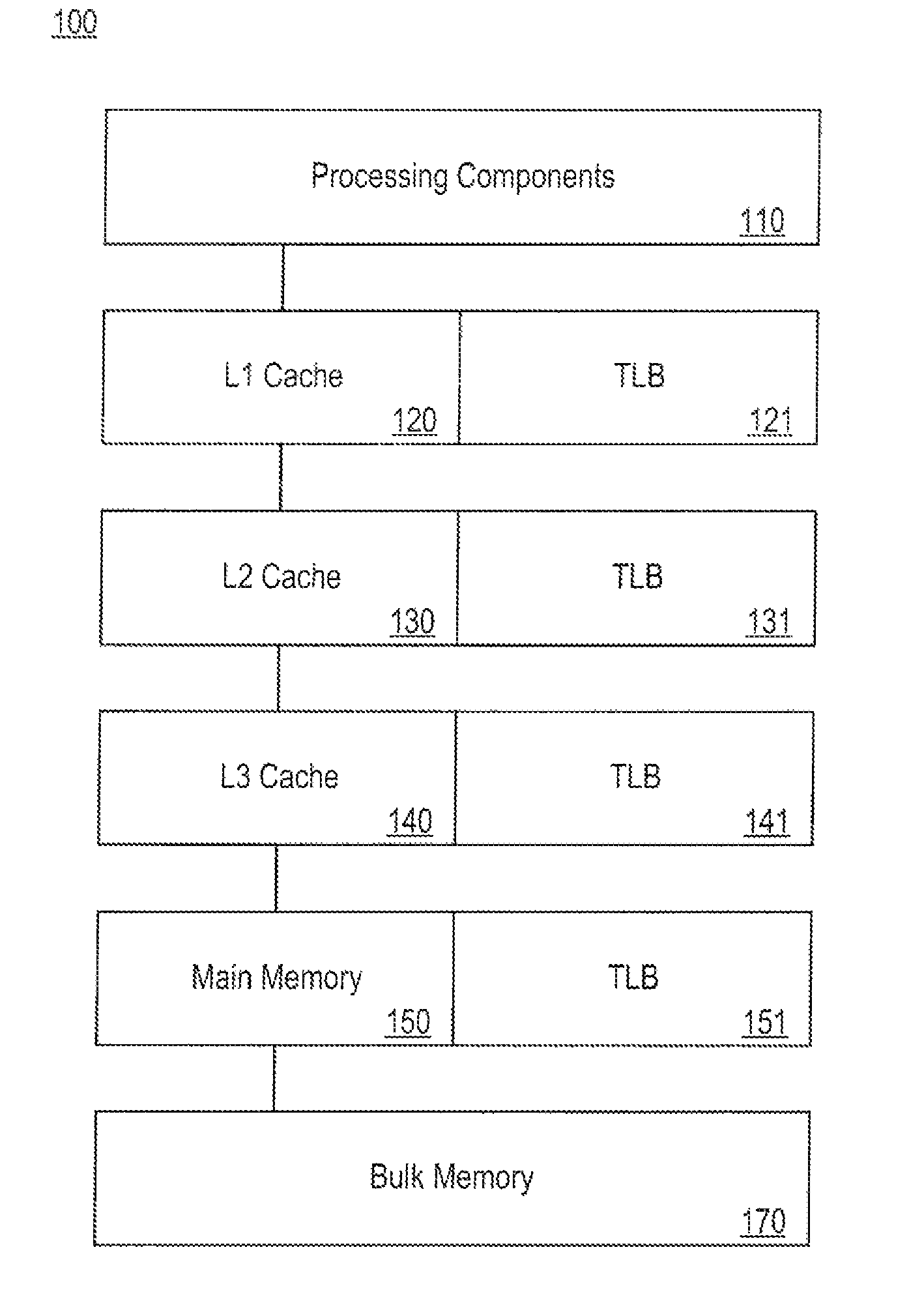

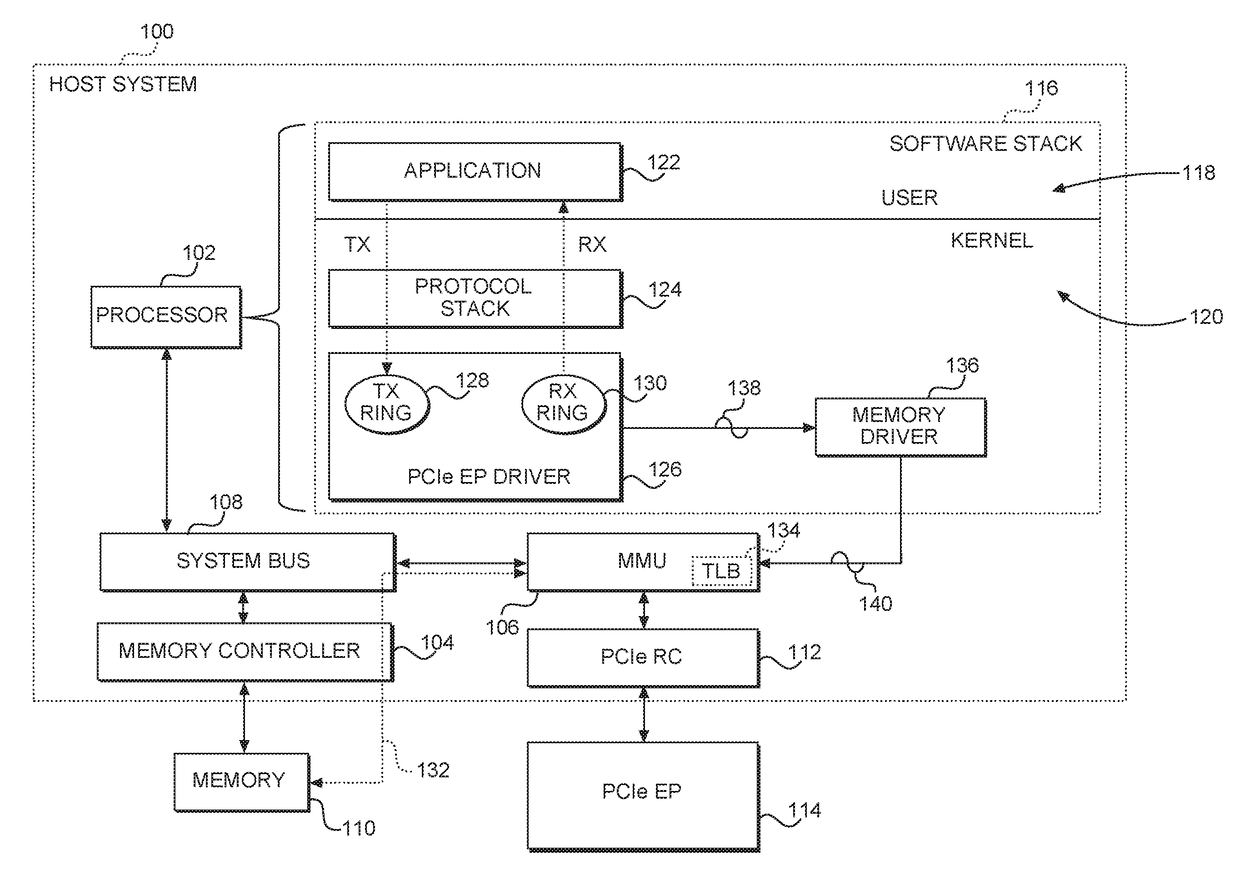

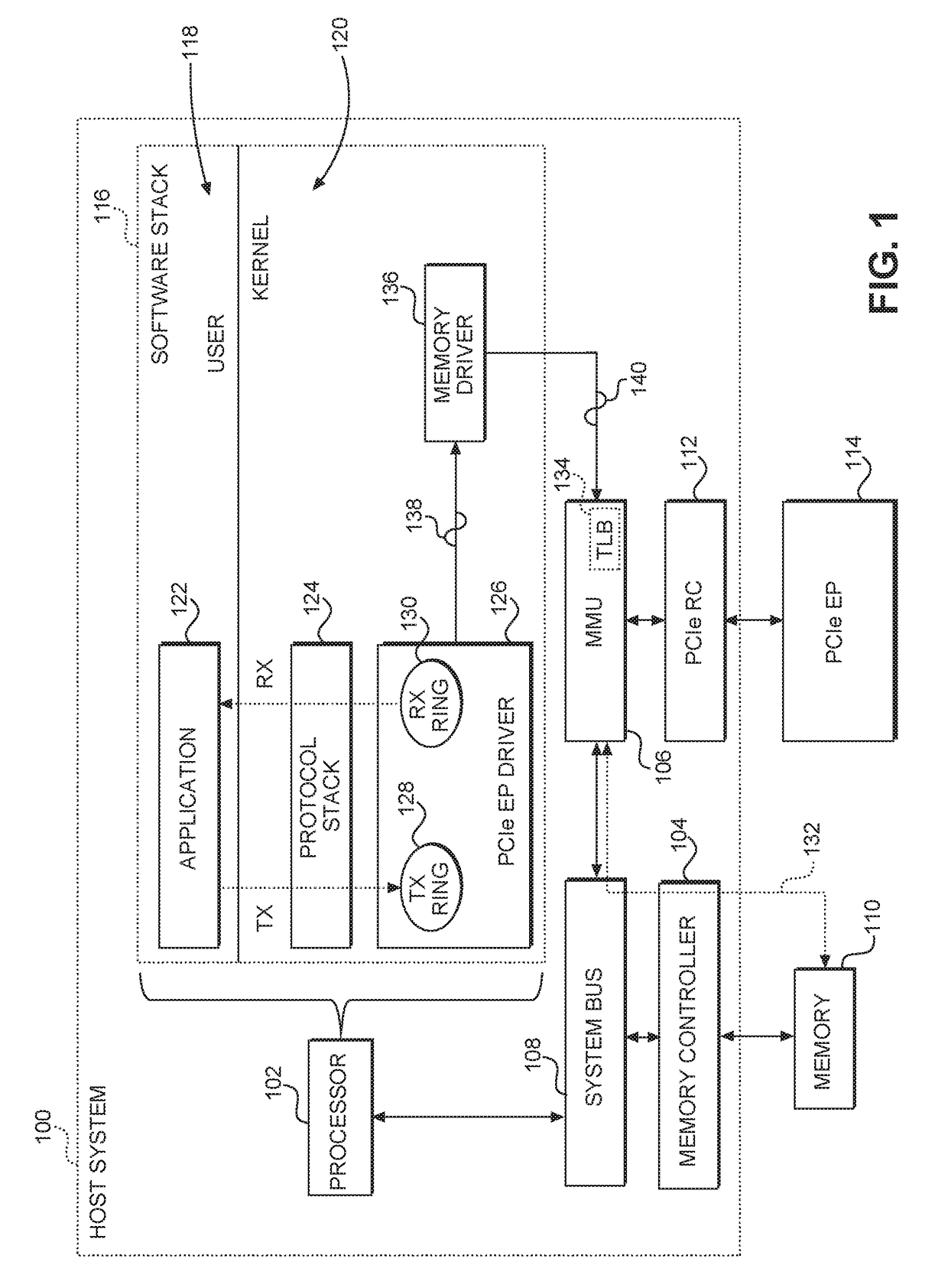

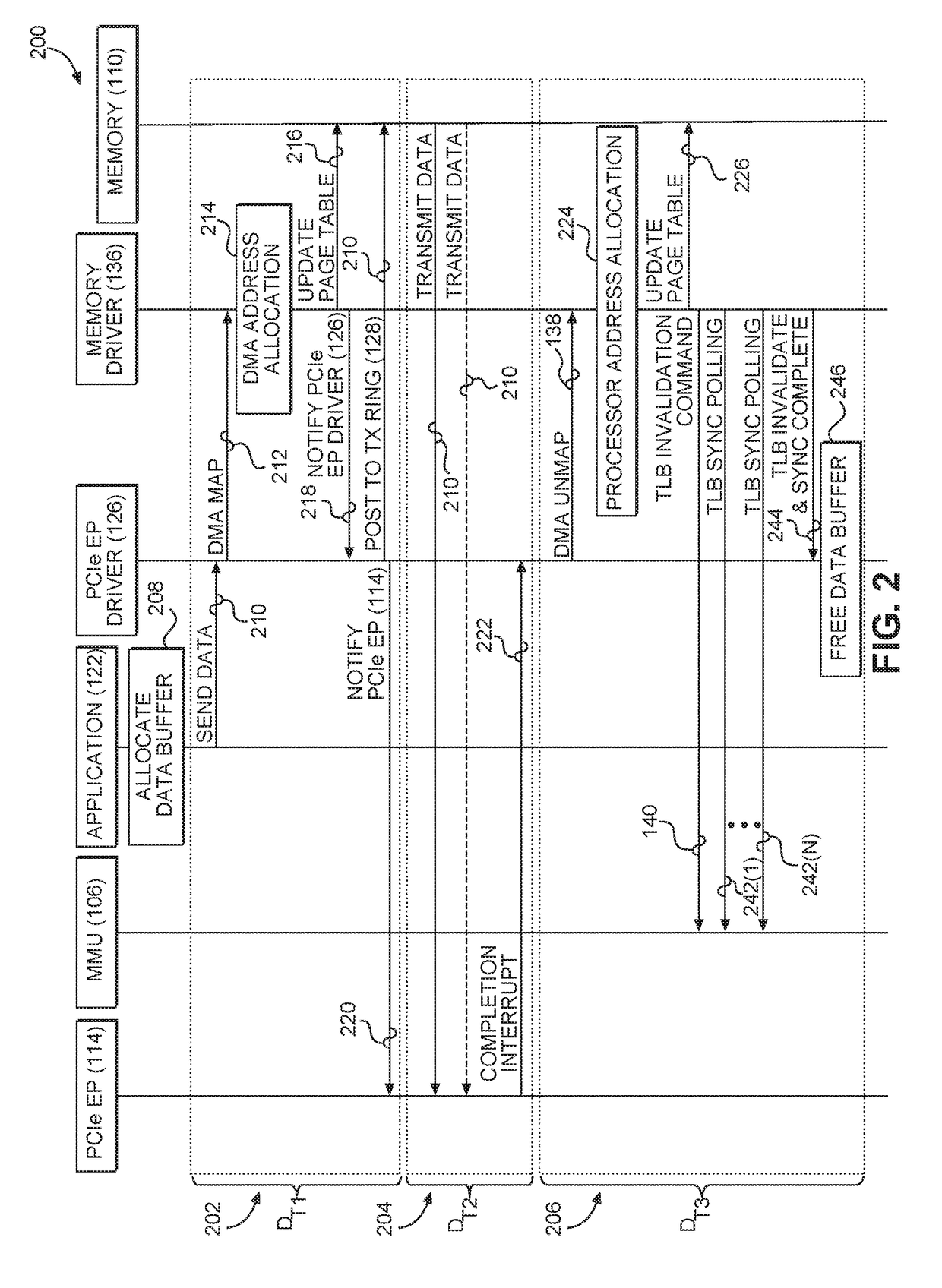

Hardware-based translation lookaside buffer (TLB) invalidation

Active | US20170286314A1 | Reduce TLB invalidation delay; Improve data throughput; Memory architecture accessing/allocation; Input/output to record carriers; Cache invalidation; Memory management unit

Hardware-based translation lookaside buffer (TLB) invalidation techniques are disclosed. A host system is configured to exchange data with a peripheral component interconnect express (PCIe) endpoint (EP). A memory management unit (MMU), which is a hardware element, is included in the host system to provide address translation according to at least one TLB. In one aspect, the MMU is configured to invalidate the at least one TLB in response to receiving at least one TLB invalidation command from the PCIe EP. In another aspect, the PCIe EP is configured to determine that the at least one TLB needs to be invalidated and provide the TLB invalidation command to invalidate the at least one TLB. By implementing hardware-based TLB invalidation in the host system, it is possible to reduce TLB invalidation delay, thus leading to increased data throughput, reduced power consumption, and improved user experience.

Owner:QUALCOMM INC
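A toy software model of the flow in this abstract, in which the endpoint, rather than host software, triggers the TLB invalidation. Everything here is hypothetical: 4 KiB pages, dictionaries standing in for the TLB and page table, and the `TlbInvalidateCmd` format. It does not model any real PCIe message format or the patent's hardware.

```python
from dataclasses import dataclass
from typing import Dict, Optional

PAGE_SHIFT = 12  # assumed 4 KiB pages


@dataclass(frozen=True)
class TlbInvalidateCmd:
    """Hypothetical command: invalidate one page, or the whole TLB if va is None."""
    va: Optional[int] = None


class Mmu:
    def __init__(self, page_table: Dict[int, int]):
        self.page_table = page_table   # virtual page number -> physical frame number
        self.tlb: Dict[int, int] = {}  # cached subset of page_table
        self.walks = 0                 # page-table walks, i.e. TLB misses

    def translate(self, va: int) -> int:
        vpn, offset = va >> PAGE_SHIFT, va & ((1 << PAGE_SHIFT) - 1)
        if vpn not in self.tlb:
            self.walks += 1
            self.tlb[vpn] = self.page_table[vpn]  # fill from the page table on a miss
        return (self.tlb[vpn] << PAGE_SHIFT) | offset

    def on_invalidate(self, cmd: TlbInvalidateCmd) -> None:
        """Handle an invalidation command received directly from the endpoint."""
        if cmd.va is None:
            self.tlb.clear()
        else:
            self.tlb.pop(cmd.va >> PAGE_SHIFT, None)


class Endpoint:
    """Toy PCIe endpoint that decides itself when a TLB entry must be invalidated."""

    def __init__(self, mmu: Mmu):
        self.mmu = mmu

    def release_buffer(self, va: int) -> None:
        # Send the command straight to the MMU, with no host-software round trip.
        self.mmu.on_invalidate(TlbInvalidateCmd(va))
```

Until the endpoint's command arrives, a changed page-table entry is still shadowed by the stale TLB entry; after the command, the next access walks the page table and picks up the new mapping.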

Lower overhead shared cache invalidations

Inactive | US7120747B2 | Reduce overhead; Memory addressing/allocation/relocation; Micro-instruction address formation; Cache invalidation; Parallel computing

Under the present invention, a system, method, and program product are provided for reducing the overhead of cache invalidations in a shared cache by transmitting a hashed code of the key to be invalidated. The method for shared cache invalidation comprises: hashing the key of an object that has been modified or deleted in a first cache, which forms part of the shared cache, to produce a hashed code of the key; transmitting the hashed code to the other caches in the shared cache; comparing the hashed code with the entries in those caches; and dropping any keys in those caches whose hash code matches the transmitted code.

Owner:INT BUSINESS MASCH CORP
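A minimal sketch of hash-code invalidation across peer caches, assuming CRC-32 (`zlib.crc32`) as the key hash; `SharedCache` and `PeerCache` are hypothetical names. Only the 32-bit code travels to the peers, which drop every key with a matching code. A hash collision can drop an unrelated key as well, but that only costs an extra miss; it never leaves stale data behind.

```python
import zlib
from typing import Dict, List


def key_code(key: str) -> int:
    """Hash a key to a 32-bit code; CRC-32 is an assumed choice of hash."""
    return zlib.crc32(key.encode("utf-8"))


class PeerCache:
    def __init__(self) -> None:
        self.entries: Dict[str, object] = {}

    def drop_by_code(self, code: int) -> List[str]:
        """Drop every key whose code matches and return the dropped keys."""
        victims = [k for k in self.entries if key_code(k) == code]
        for k in victims:
            del self.entries[k]
        return victims


class SharedCache:
    """Hypothetical group of peer caches that exchange only hashed key codes."""

    def __init__(self, n_caches: int) -> None:
        self.caches = [PeerCache() for _ in range(n_caches)]

    def _broadcast(self, origin: int, code: int) -> None:
        for i, peer in enumerate(self.caches):
            if i != origin:
                peer.drop_by_code(code)

    def modify(self, origin: int, key: str, value: object) -> None:
        self.caches[origin].entries[key] = value
        self._broadcast(origin, key_code(key))  # only the code is "transmitted"

    def delete(self, origin: int, key: str) -> None:
        self.caches[origin].entries.pop(key, None)
        self._broadcast(origin, key_code(key))
```

This sketch scans every entry per message for clarity; a real cache would more likely keep an index from code to keys.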

Central processing unit cache friendly multithreaded allocation

Active | US20190258579A1 | Improve performance; Impact performance; Memory architecture accessing/allocation; Concurrent instruction execution; Cache invalidation; Parallel computing

A cluster allocation bitmap indicates which clusters in a band of storage remain unallocated. Concurrent access to the same bitmap, however, can cause CPU stalls: when one CPU allocates from the bitmap, the copies of it held in other CPUs' level 1 (L1) caches are invalidated. In one embodiment, cluster allocation bitmaps are divided into chunks sized and aligned to L1 cache lines, and each core of a multicore CPU is directed at random to allocate space from one chunk. Because the chunks are cache-line aligned, the odds that the same portion of the bitmap is loaded into the L1 caches of multiple cores are reduced, which in turn reduces the odds of an L1 cache invalidation. The number of CPU cores allocating from a given bitmap is limited according to the number of chunks that still have unallocated space.

Owner:MICROSOFT TECH LICENSING LLC
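The chunking policy in this abstract can be modeled as below, assuming a 64-byte L1 line (so 512 cluster bits per chunk) and a seeded `random.Random` standing in for per-core random chunk selection. Python cannot control physical cache-line alignment, so this sketch (the `ChunkedBitmap` class is hypothetical) only illustrates the bookkeeping: random chunk choice, lowest-free-bit allocation within a chunk, and capping the number of allocating cores at the number of chunks with free space.

```python
import random
from typing import List, Optional

CACHE_LINE_BYTES = 64                  # assumed L1 line size
BITS_PER_CHUNK = CACHE_LINE_BYTES * 8  # 512 clusters tracked per line-sized chunk


class ChunkedBitmap:
    """Cluster allocation bitmap split into line-sized chunks (logical model only)."""

    def __init__(self, n_chunks: int):
        self.chunks: List[int] = [0] * n_chunks  # bit i set => cluster allocated
        self.free: List[int] = [BITS_PER_CHUNK] * n_chunks

    def chunks_with_space(self) -> List[int]:
        return [i for i, f in enumerate(self.free) if f > 0]

    def max_allocating_cores(self, n_cores: int) -> int:
        """Allow at most one allocating core per chunk that still has space."""
        return min(n_cores, len(self.chunks_with_space()))

    def allocate(self, rng: random.Random) -> Optional[int]:
        """Pick a random chunk with space, claim its lowest free bit, return the cluster."""
        candidates = self.chunks_with_space()
        if not candidates:
            return None
        c = rng.choice(candidates)
        bits = self.chunks[c]
        bit = (~bits & (bits + 1)).bit_length() - 1  # index of the lowest zero bit
        self.chunks[c] = bits | (1 << bit)
        self.free[c] -= 1
        return c * BITS_PER_CHUNK + bit
```

Spreading cores over distinct chunks means two cores usually write to different cache lines, which is the effect the abstract relies on; the cap on allocating cores keeps cores from piling onto the last few chunks.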

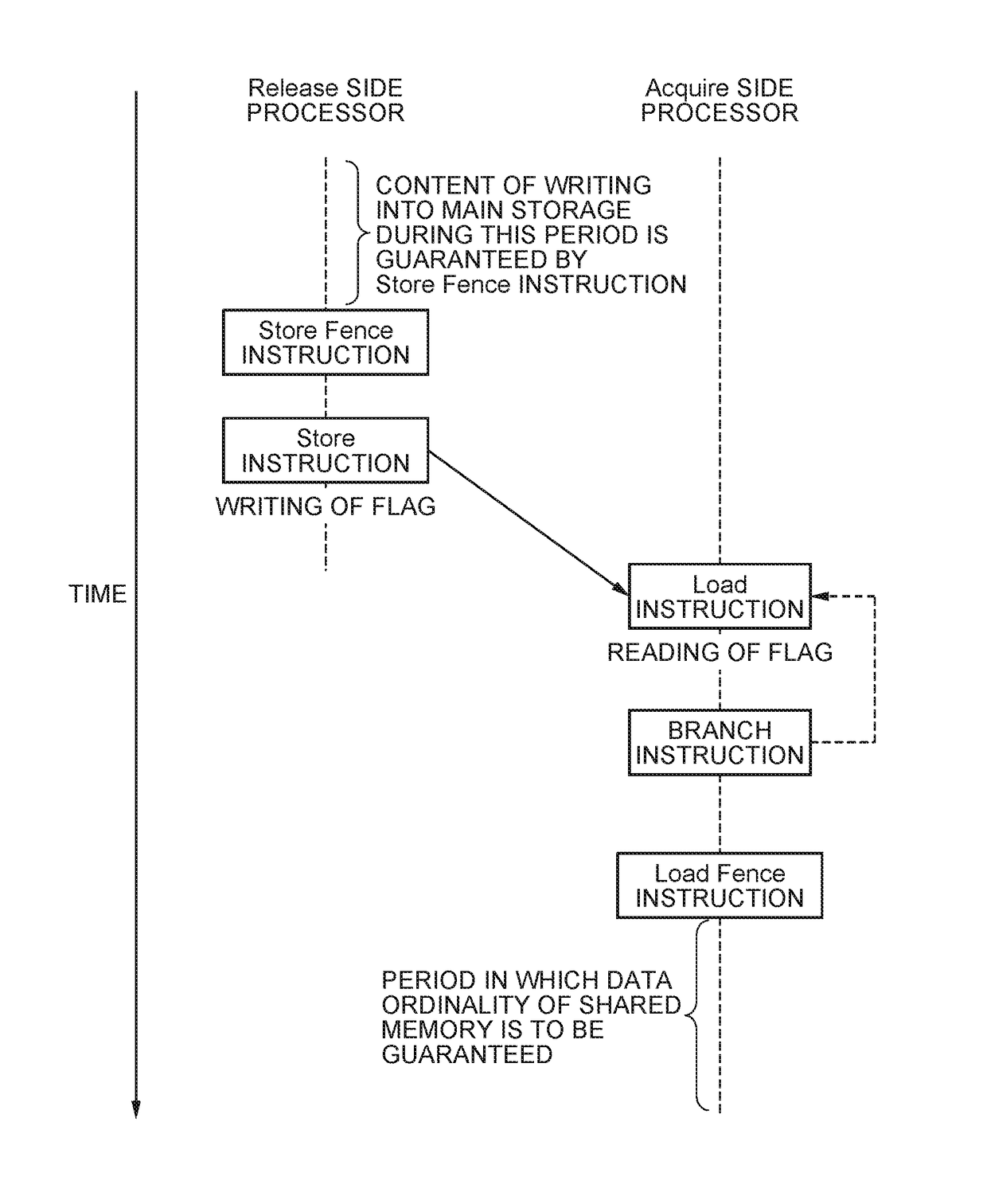

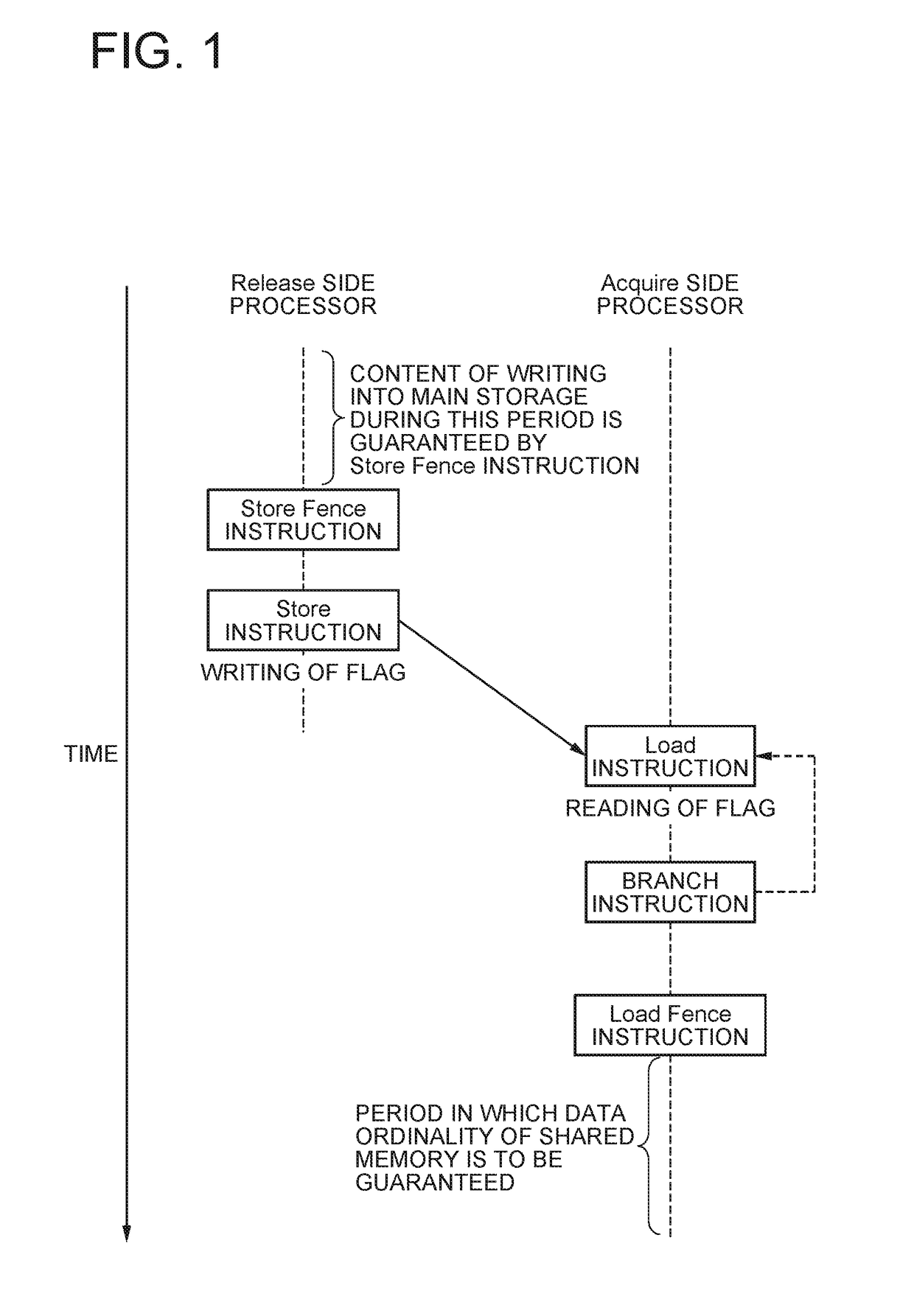

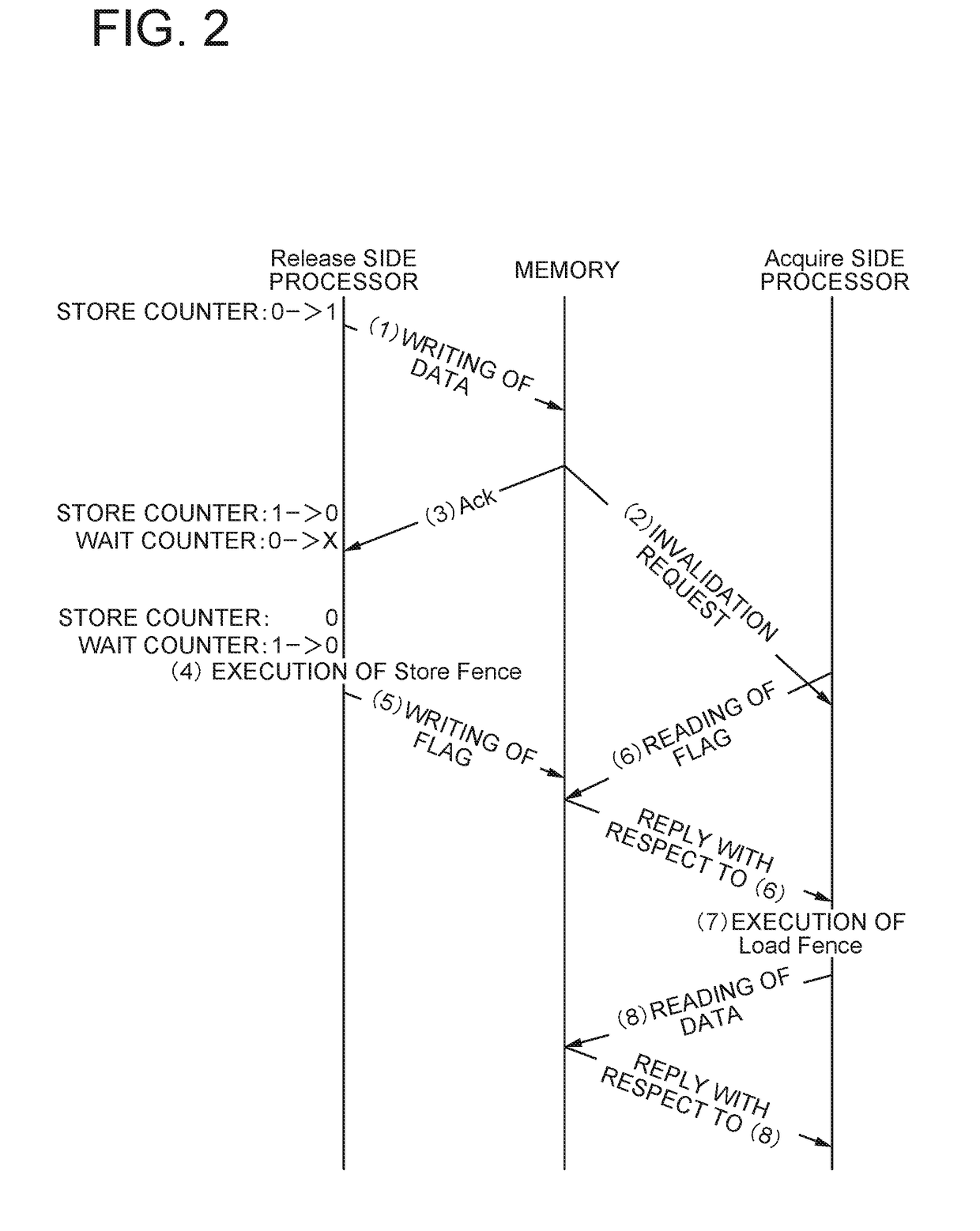

Information processing device

Active | US20180225208A1 | Reduce load; Memory architecture accessing/allocation; General purpose stored program computer; Information processing; Cache invalidation

On receiving a Store instruction from a Release side processor, a shared memory transmits a cache invalidation request to an Acquire side processor, increments an execution counter, and transmits the count value to the Release side processor asynchronously with respect to receipt of the Store instruction. The Release side processor has two counters: a store counter, which is incremented each time a Store instruction is issued and decremented by the count value whenever the execution counter's value is received; and a wait counter, which is set to a value representing a predetermined time when the store counter reaches 0 and is then decremented every unit of time. When both counters reach 0, the Release side processor issues a Store Fence instruction to request a guarantee that invalidation of the Acquire side processor's cache has completed.

Owner:NEC CORP
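The counter handshake in this abstract can be simulated step by step. In this sketch, the class names, the tick-based unit of time, and the choice to reset the execution counter after each report (so the Release side never subtracts the same stores twice) are assumptions, not details taken from the patent.

```python
class SharedMemory:
    def __init__(self) -> None:
        self.execution_counter = 0
        self.invalidations_sent = 0

    def receive_store(self) -> None:
        self.invalidations_sent += 1  # invalidation request to the Acquire side
        self.execution_counter += 1

    def flush_count(self) -> int:
        """Report the stores handled since the last report (assumed reset-on-report)."""
        count, self.execution_counter = self.execution_counter, 0
        return count


class ReleaseProcessor:
    def __init__(self, memory: SharedMemory, wait_time: int) -> None:
        self.memory = memory
        self.wait_time = wait_time  # the "predetermined time", in ticks
        self.store_counter = 0
        self.wait_counter = 0

    def store(self) -> None:
        self.store_counter += 1
        self.memory.receive_store()

    def receive_count(self, count: int) -> None:
        """Apply an asynchronous count report from the shared memory."""
        if count == 0:
            return
        self.store_counter -= count
        if self.store_counter == 0:
            self.wait_counter = self.wait_time  # start the wait period

    def tick(self) -> None:
        if self.wait_counter > 0:
            self.wait_counter -= 1

    def can_issue_store_fence(self) -> bool:
        return self.store_counter == 0 and self.wait_counter == 0
```

In this model the Store Fence becomes issuable only after every issued store has been acknowledged by count and the wait counter has then run down to zero.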

© 2025 PatSnap. All rights reserved.