Network cache design method based on consistent hash

A cache design method based on consistent hashing, applied in the field of computer networks. It addresses problems such as heavy system load, drastic changes in cached data resources, and mismatches between cached data and servers, and it improves IO performance.

Examples

Embodiment 1

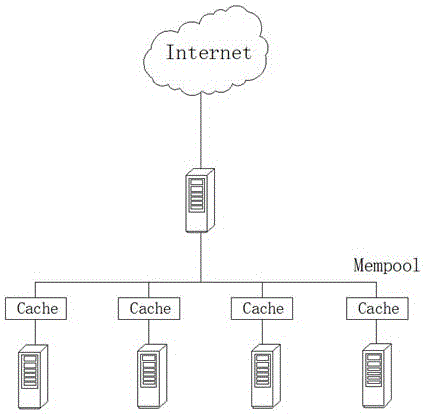

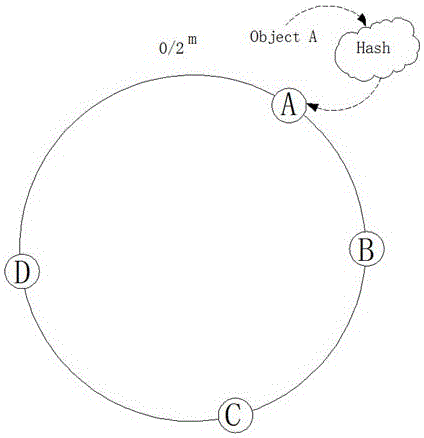

[0025] A network cache design method based on consistent hashing. First, the caches of the server cluster are centralized into a unified resource pool; all resources in the pool are available to any resource-scheduling operation, and NUMA is used for cache management. Hash values are placed in a ring space of size 2^m. Each server is treated as an object, hashed, and placed on the hash ring. The data blocks Object A, B, C and D are then passed through the hash algorithm to obtain their key values, which are also placed in the hash space. After these two operations, the server nodes and the data blocks are mapped into the same hash space, and each data block is placed on the corresponding server node found in the clockwise direction, as shown in Figures 2 and 3.
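The mapping described in [0025] can be sketched as follows. This is a minimal illustration, not the patented implementation: the choice of MD5, the exponent `M = 32`, the server names `N1` to `N3`, and the `HashRing` class are all assumptions made for the example, and the NUMA-managed resource pool is not modeled.

```python
import bisect
import hashlib

M = 32  # assumed exponent; the ring space has 2**M positions


def ring_hash(key: str, m: int = M) -> int:
    """Map a string to a position in the ring space [0, 2**m)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** m)


class HashRing:
    """Servers and data blocks share one hash space (hypothetical helper)."""

    def __init__(self, servers, m: int = M):
        self.m = m
        # Each server is hashed like any other object and placed on the ring.
        self._points = sorted((ring_hash(s, m), s) for s in servers)
        self._keys = [pos for pos, _ in self._points]

    def lookup(self, block_key: str) -> str:
        """Return the first server found clockwise from the block's position."""
        pos = ring_hash(block_key, self.m)
        # The modulo wraps past the highest position back to the ring start.
        i = bisect.bisect_left(self._keys, pos) % len(self._points)
        return self._points[i][1]


servers = ["N1", "N2", "N3"]
ring = HashRing(servers)
placement = {block: ring.lookup(block) for block in ["A", "B", "C", "D"]}
print(placement)
```

Which server each block lands on depends entirely on the hash function, so the printed placement will generally not match the layout drawn in the figures.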

[0026] A server cluster is a collection of multiple servers that together provide a service yet appear to the client as a single server. Clusters can operate in parallel, and clustered operat...

Embodiment 2

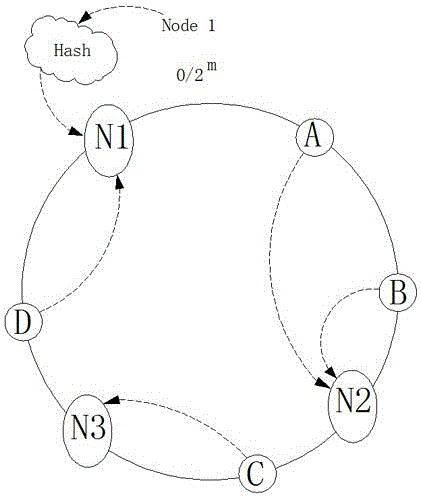

[0029] On the basis of Embodiment 1, this embodiment addresses node removal and addition. In the operation of any cluster, a single server may go down, but server downtime must not affect the performance and services of the cluster, so the failed server has to be removed. If a server in the cluster goes down and its cached content becomes invalid, which affects the work of all servers, cache migration occurs. Similarly, when the number of servers in the cluster is insufficient to provide adequate service to the outside world, nodes must be added to expand the cluster. The process is as follows:

[0030] If node N2 goes down and fails, as shown in Figure 4, data blocks A and B, which originally pointed to N2, must be re-cached on N3, and cache migration occurs. Data blocks C and D remain cached on their original nodes, so not all data is migrated, which reduces system resource consumption;

[0031] If t...
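The node-failure case in [0030] can be reproduced with a small sketch. The ring positions below are hypothetical values picked by hand so that A and B fall on N2, as in the text; a real system would derive them from a hash function. The `assign` helper is likewise an assumption for illustration.

```python
import bisect

# Hypothetical ring positions, hand-picked to mirror the scenario in [0030].
SERVER_POS = {"N1": 50, "N2": 120, "N3": 200}
BLOCK_POS = {"A": 70, "B": 100, "C": 150, "D": 220}


def assign(server_pos, block_pos):
    """Place each block on the first server found clockwise from it."""
    points = sorted((pos, name) for name, pos in server_pos.items())
    keys = [pos for pos, _ in points]
    result = {}
    for block, pos in block_pos.items():
        # The modulo wraps blocks past the last server back to the first.
        i = bisect.bisect_left(keys, pos) % len(points)
        result[block] = points[i][1]
    return result


before = assign(SERVER_POS, BLOCK_POS)

# N2 goes down: drop it from the ring and recompute the placement.
alive = {name: pos for name, pos in SERVER_POS.items() if name != "N2"}
after = assign(alive, BLOCK_POS)

migrated = sorted(b for b in BLOCK_POS if before[b] != after[b])
print("before:", before)
print("after: ", after)
print("migrated:", migrated)  # → ['A', 'B']
```

Only the blocks that were held by the failed node move to the next node clockwise; C and D keep their placement, which is the property the embodiment relies on to limit migration cost.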

Embodiment 3

[0033] On the basis of Embodiment 1 or 2, the method of this embodiment derives virtual nodes from each physical node by means of an algorithm and maps the virtual nodes into the hash space. Adding virtual nodes prevents a large amount of data from being cached on a single server node, so the data load is balanced.
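One common way to realize virtual nodes is sketched below. The source does not specify the algorithm that derives virtual nodes from a physical node, so the `"server#i"` naming scheme, the replica count of 100, the MD5 hash, and the `VirtualNodeRing` class are all assumptions for illustration.

```python
import bisect
import hashlib
from collections import Counter


def ring_hash(key: str, m: int = 32) -> int:
    """Map a string to a position in the ring space [0, 2**m)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** m)


class VirtualNodeRing:
    """Ring where each physical server owns several virtual nodes (hypothetical)."""

    def __init__(self, servers, replicas: int = 100):
        # Assumed derivation: virtual node i of server s is hashed as "s#i".
        # Every ring point remembers the physical server that owns it.
        self._points = sorted(
            (ring_hash(f"{s}#{i}"), s) for s in servers for i in range(replicas)
        )
        self._keys = [pos for pos, _ in self._points]

    def lookup(self, block_key: str) -> str:
        """Return the physical server owning the first virtual node clockwise."""
        pos = ring_hash(block_key)
        i = bisect.bisect_left(self._keys, pos) % len(self._points)
        return self._points[i][1]


servers = ["N1", "N2", "N3"]
ring = VirtualNodeRing(servers, replicas=100)

# Count how many of 10,000 synthetic block keys land on each physical server.
load = Counter(ring.lookup(f"block-{n}") for n in range(10_000))
print(load)
```

Because each physical server is scattered across many ring positions, its share of the key space tends toward an even split as the replica count grows; rerunning with `replicas=1` typically shows a noticeably more uneven distribution.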
