72 results about "CPU cache" patented technology

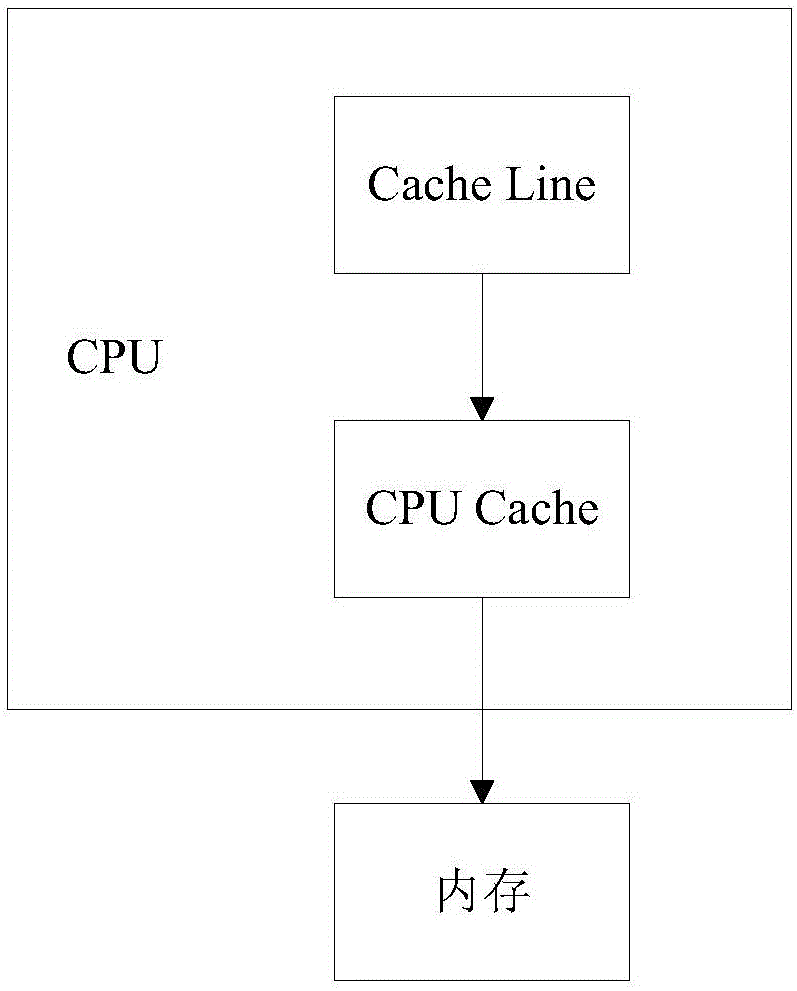

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) of accessing data from main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have several independent caches, including instruction and data caches, where the data cache is usually organized as a hierarchy of cache levels (L1, L2, L3, L4, etc.).
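As a rough illustration of the idea, the following Python sketch models a cache that keeps copies of recently used memory lines and counts hits and misses. It is a toy model rather than a description of any real processor: the class name `ToyCache`, the 64-byte line size, and the fully associative least-recently-used (LRU) replacement policy are all assumptions made for the example.

```python
from collections import OrderedDict


class ToyCache:
    """Toy fully associative cache with LRU replacement (illustrative only)."""

    def __init__(self, num_lines, line_size=64):
        self.num_lines = num_lines    # how many lines the cache can hold
        self.line_size = line_size    # bytes per cache line (assumed value)
        self.lines = OrderedDict()    # line number -> True, oldest entry first
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Simulate one memory access; return True on a hit."""
        line = address // self.line_size
        if line in self.lines:
            self.lines.move_to_end(line)      # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self.lines[line] = True               # fill the line from "main memory"
        if len(self.lines) > self.num_lines:
            self.lines.popitem(last=False)    # evict the least recently used line
        return False


cache = ToyCache(num_lines=2)
for addr in (0, 8, 64, 128, 0):
    cache.access(addr)
print(cache.hits, cache.misses)
```

In this run, address 8 hits because it shares a line with address 0, while the final access to address 0 misses because that line was evicted to make room for address 128.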

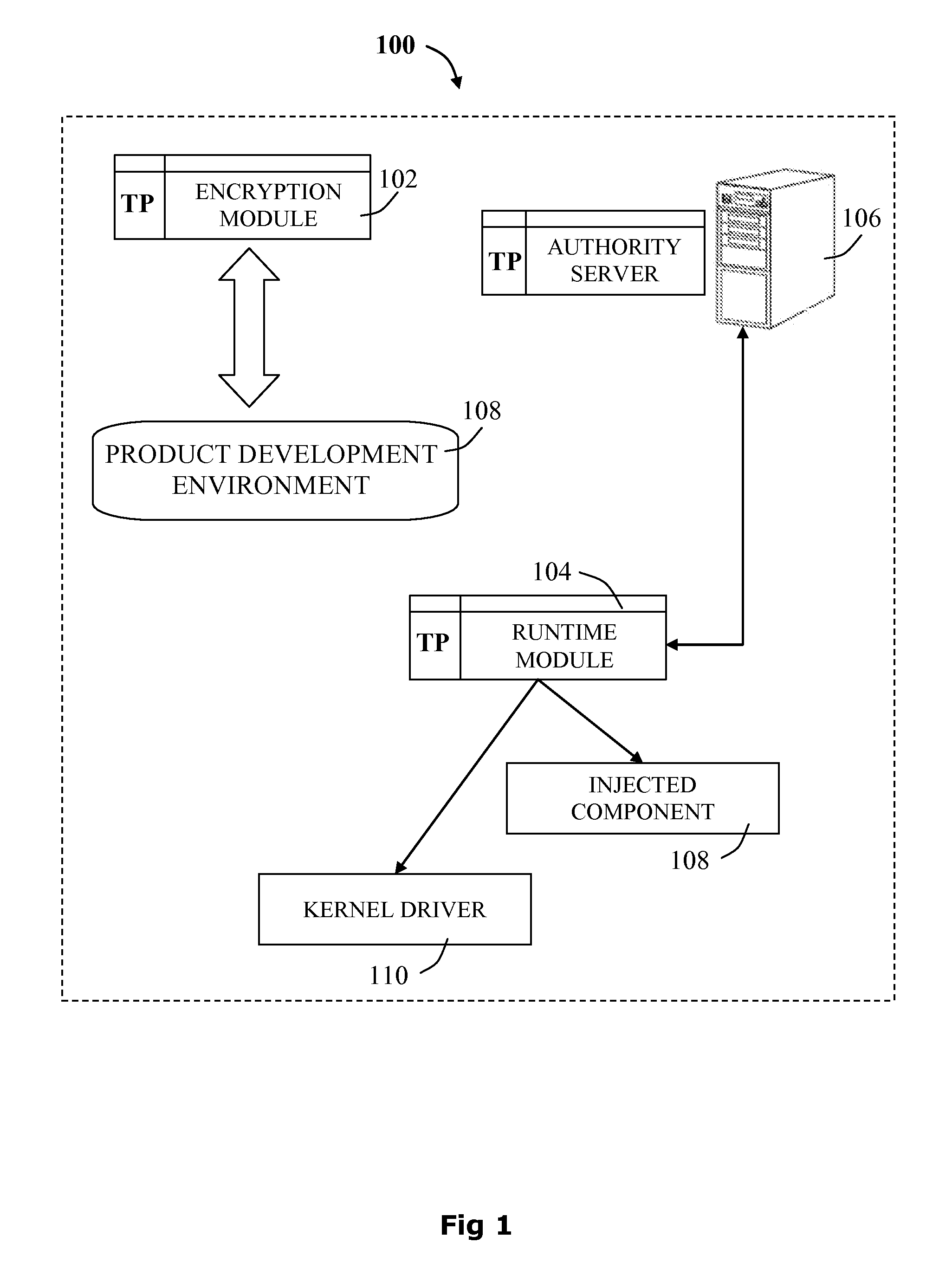

System and methods for CPU copy protection of a computing device

Active | US20150149732A1 | Avoiding eviction | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Operability | Copy protection

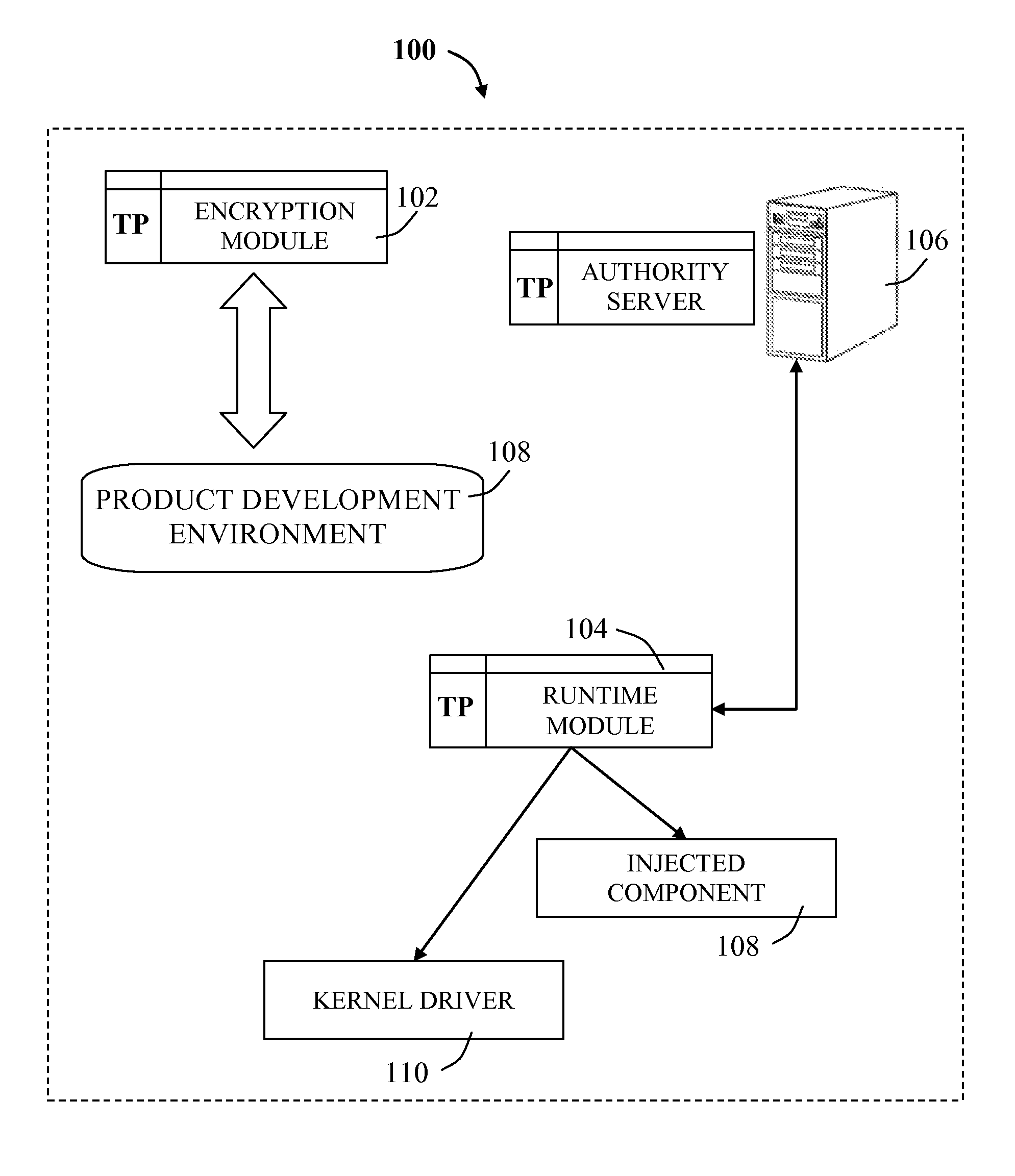

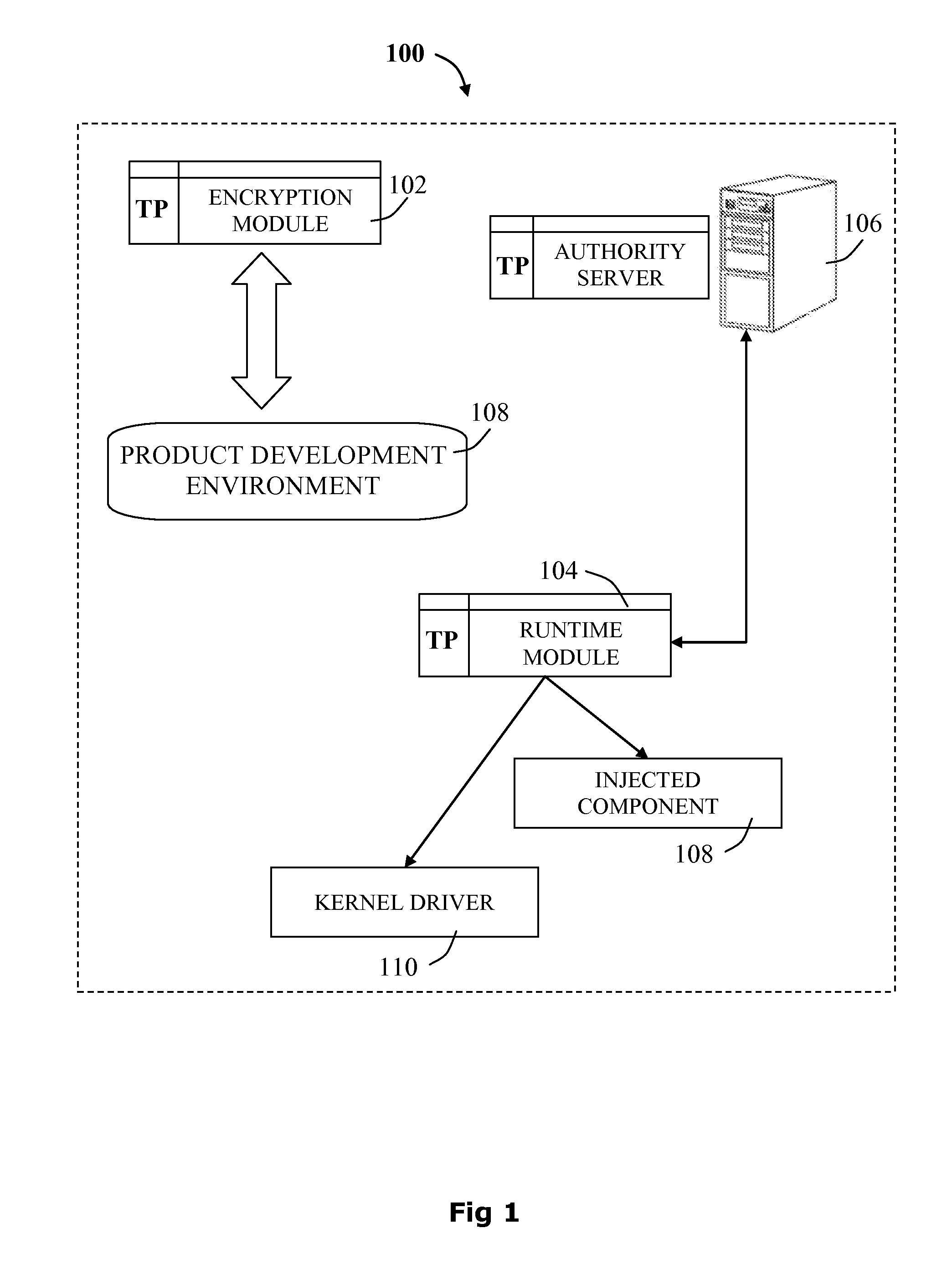

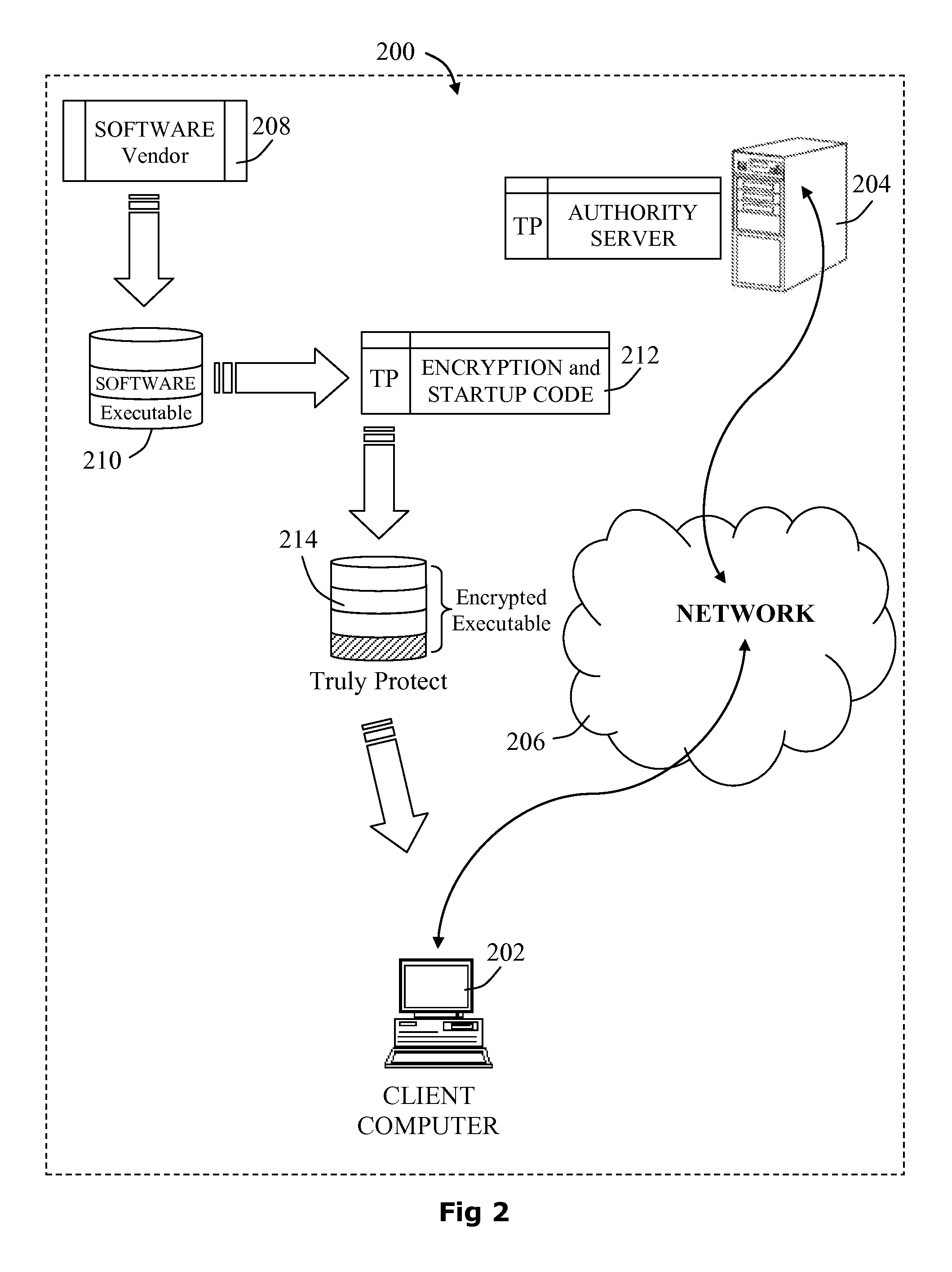

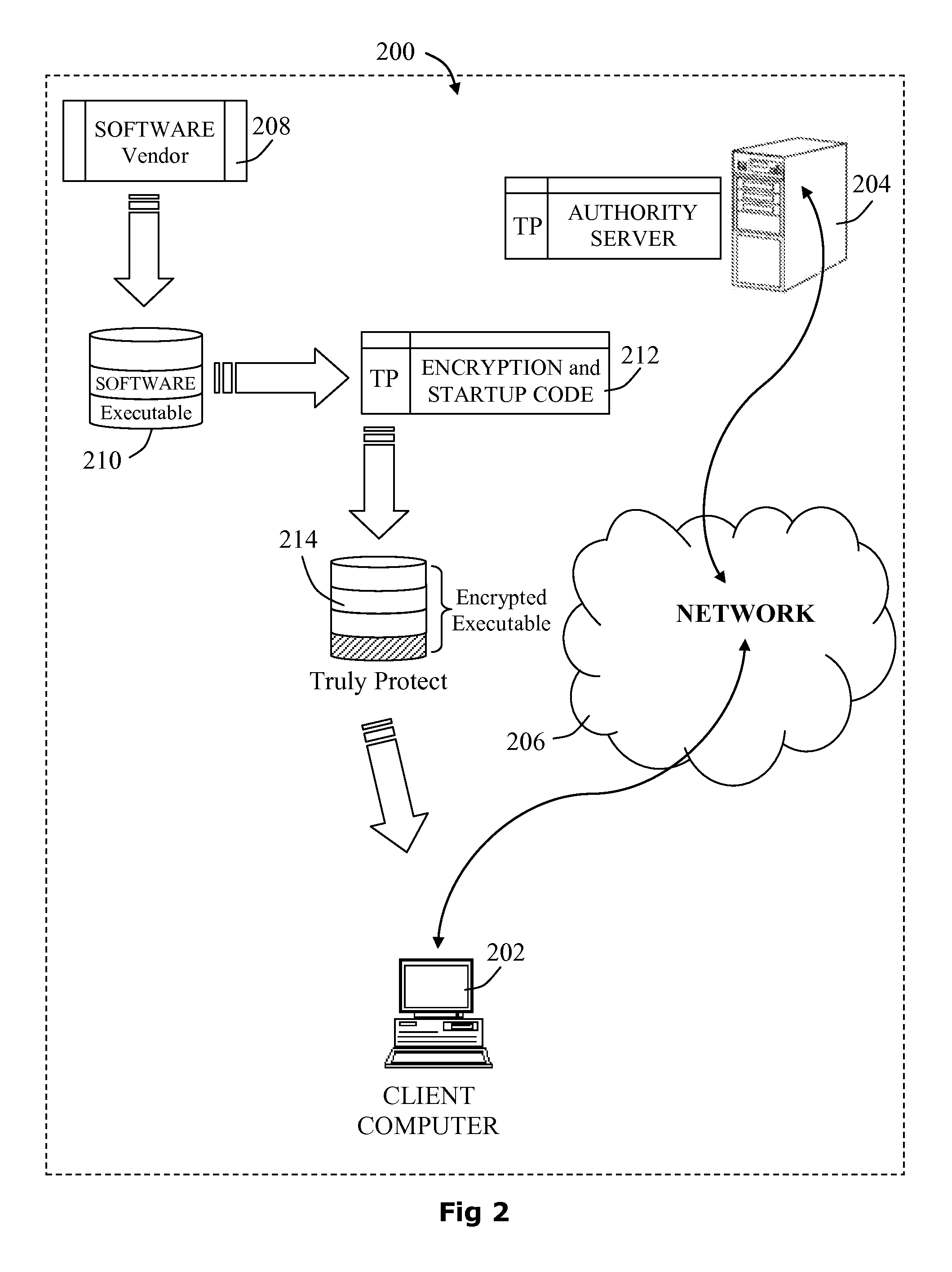

The present disclosure relates to systems and methods for software-based management of the insertion of protected data blocks into the memory cache mechanism of a computerized device. In particular, the disclosure relates to preventing protected data blocks from being altered and evicted from the CPU cache, coupled with buffered software execution. The technique is based upon identifying at least one conflicting data block having a memory mapping indication to a designated memory cache line and preventing the conflicting data block from being cached. Functional characteristics of a vendor's software product, such as gaming or video, may be partially encrypted to allow protected, functional operation and to prevent hacking and malicious use by non-licensed users.

Owner:TRULY PROTECT
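The conflict check described in this abstract can be sketched in a few lines of Python: a data block conflicts with a protected block when both addresses map to the same cache set. This is only an illustration under stated assumptions, not the patented method. The function names and the cache geometry (64-byte lines, 64 sets, simple modulo indexing) are hypothetical, and real processors may index their caches differently.

```python
def cache_set_index(addr, line_size=64, num_sets=64):
    """Set index of an address under simple modulo indexing (assumed geometry)."""
    return (addr // line_size) % num_sets


def conflicting_blocks(protected_addrs, candidate_addrs, line_size=64, num_sets=64):
    """Return the candidates that map to a set already used by protected data."""
    protected_sets = {
        cache_set_index(a, line_size, num_sets) for a in protected_addrs
    }
    return [
        a for a in candidate_addrs
        if cache_set_index(a, line_size, num_sets) in protected_sets
    ]


# 0x1000 and 0x2000 both map to set 0, while 0x2040 maps to set 1.
print([hex(a) for a in conflicting_blocks([0x1000], [0x2000, 0x2040, 0x0])])
```

A software layer following the abstract would then keep the returned blocks out of the cache, for example by treating them as uncacheable.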

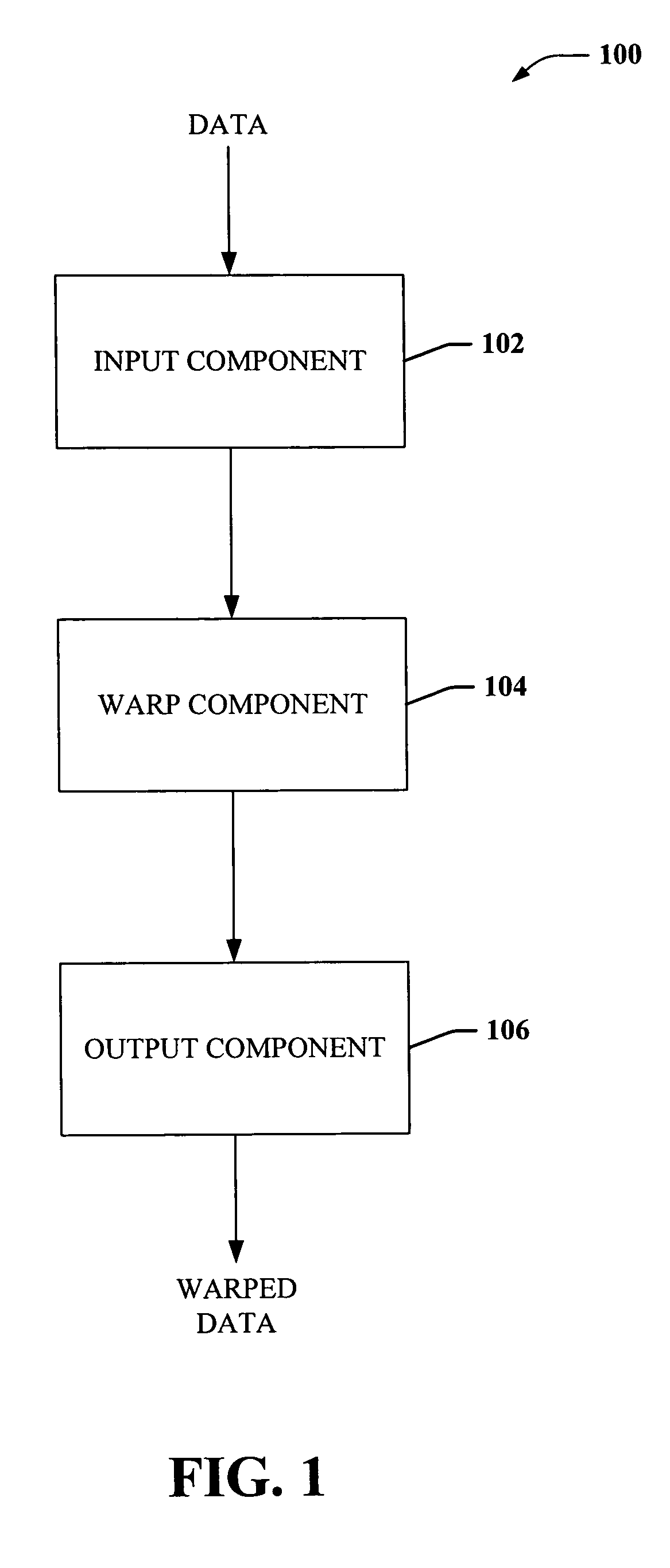

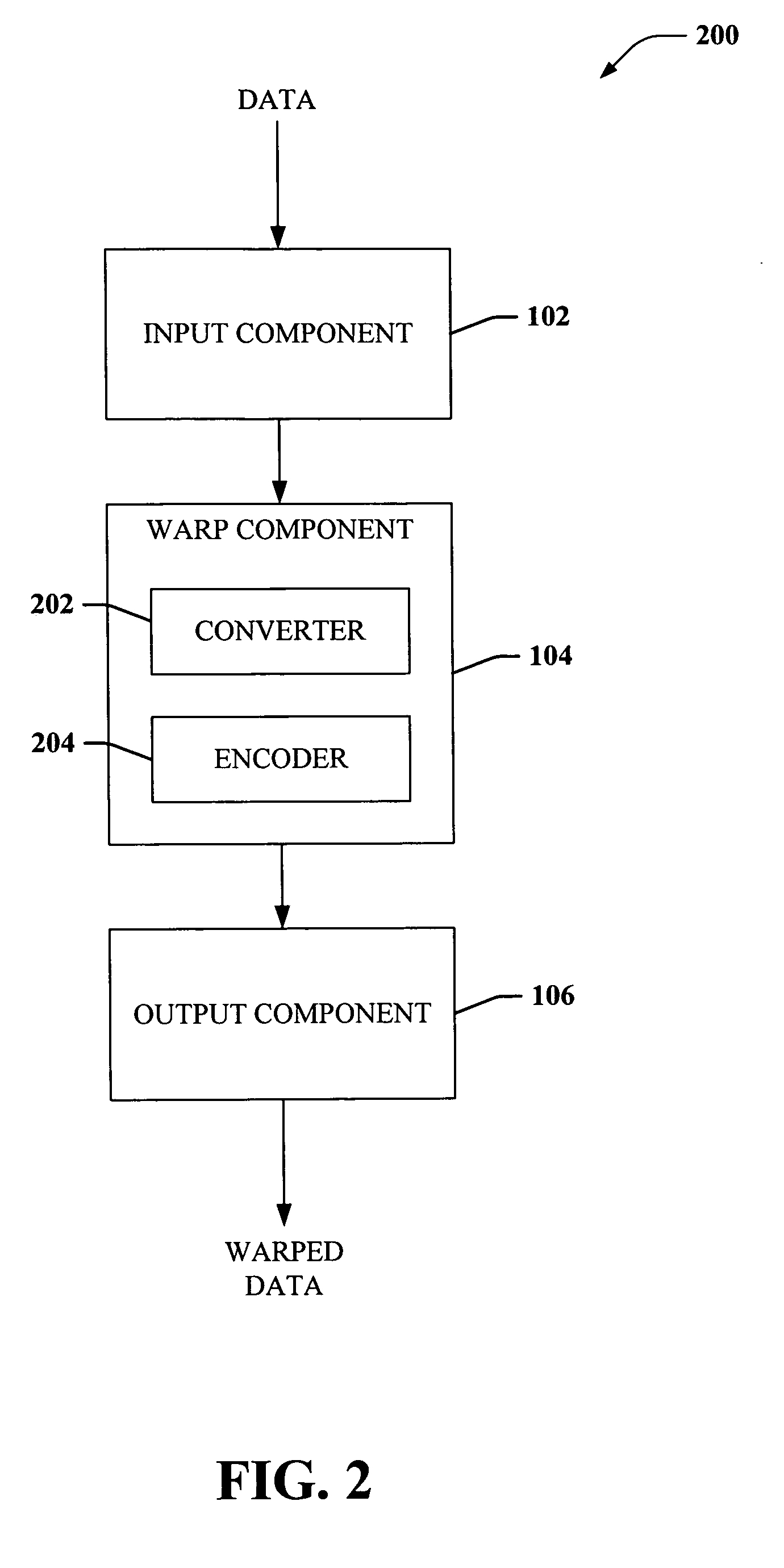

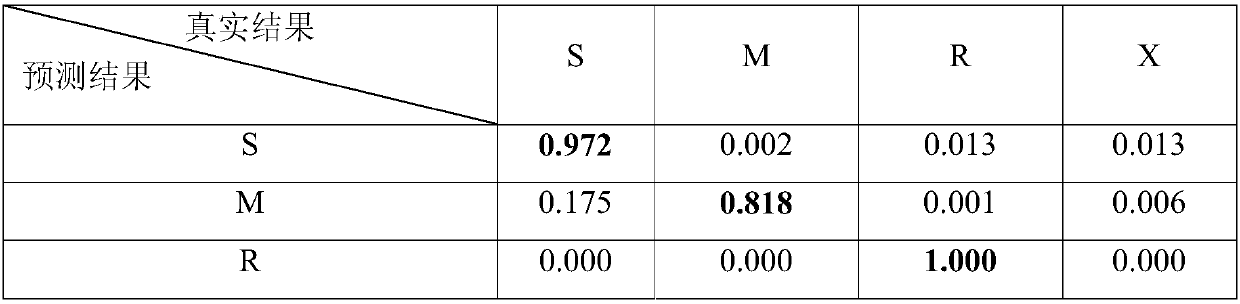

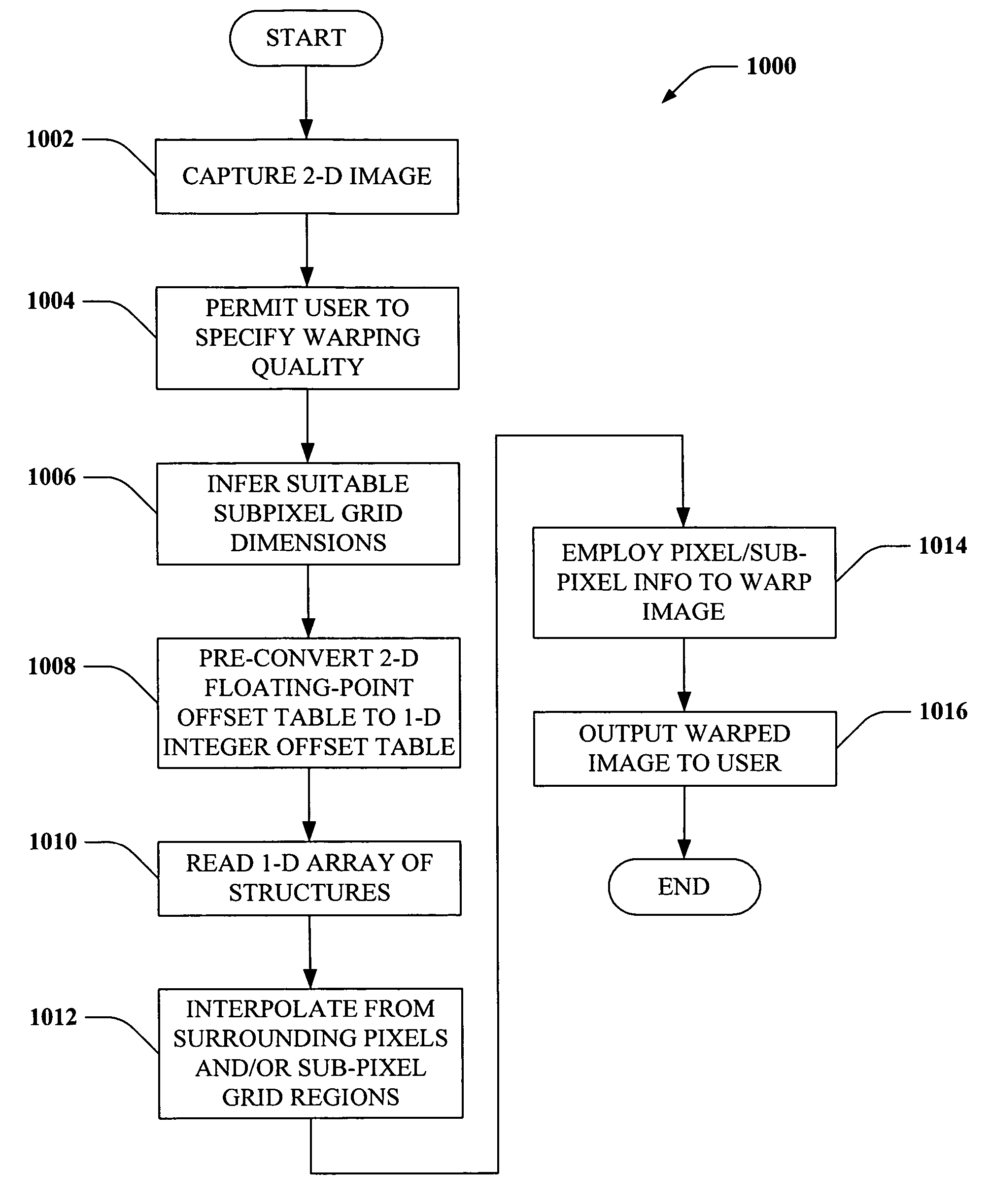

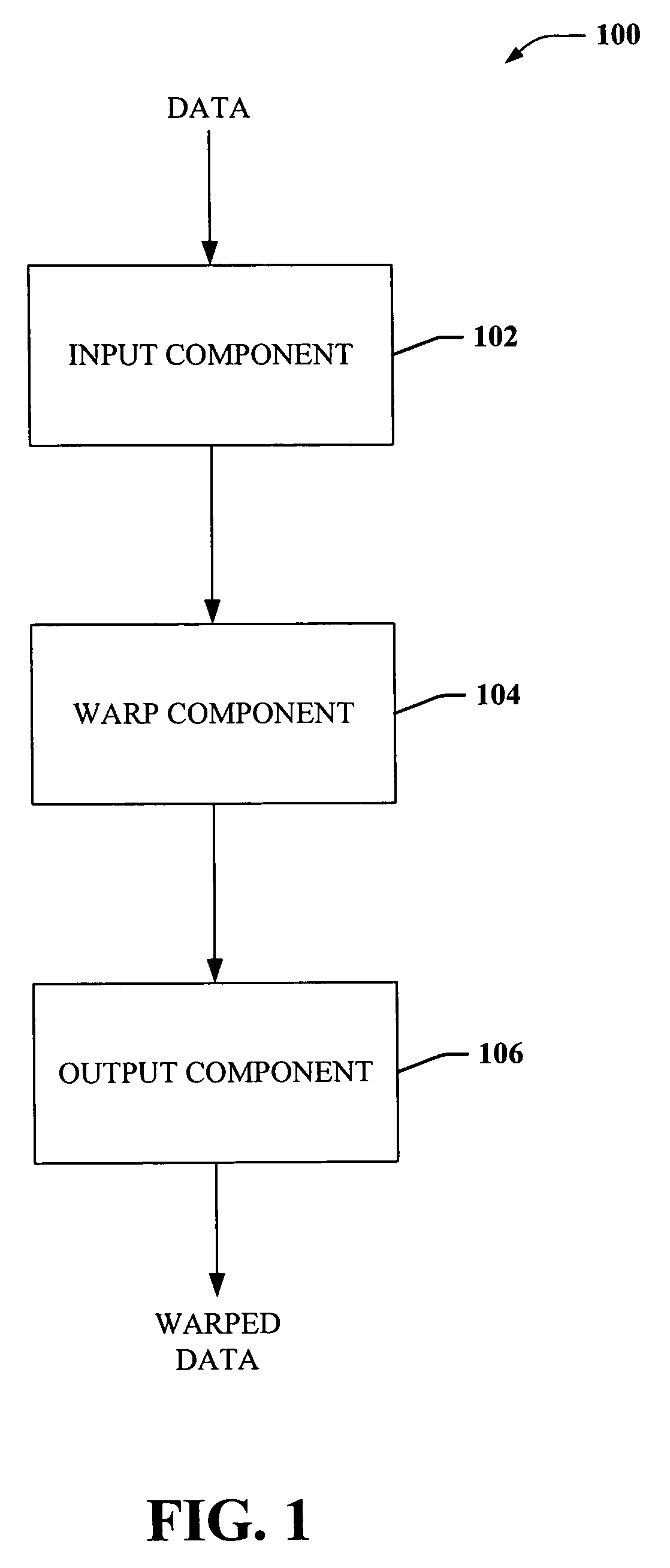

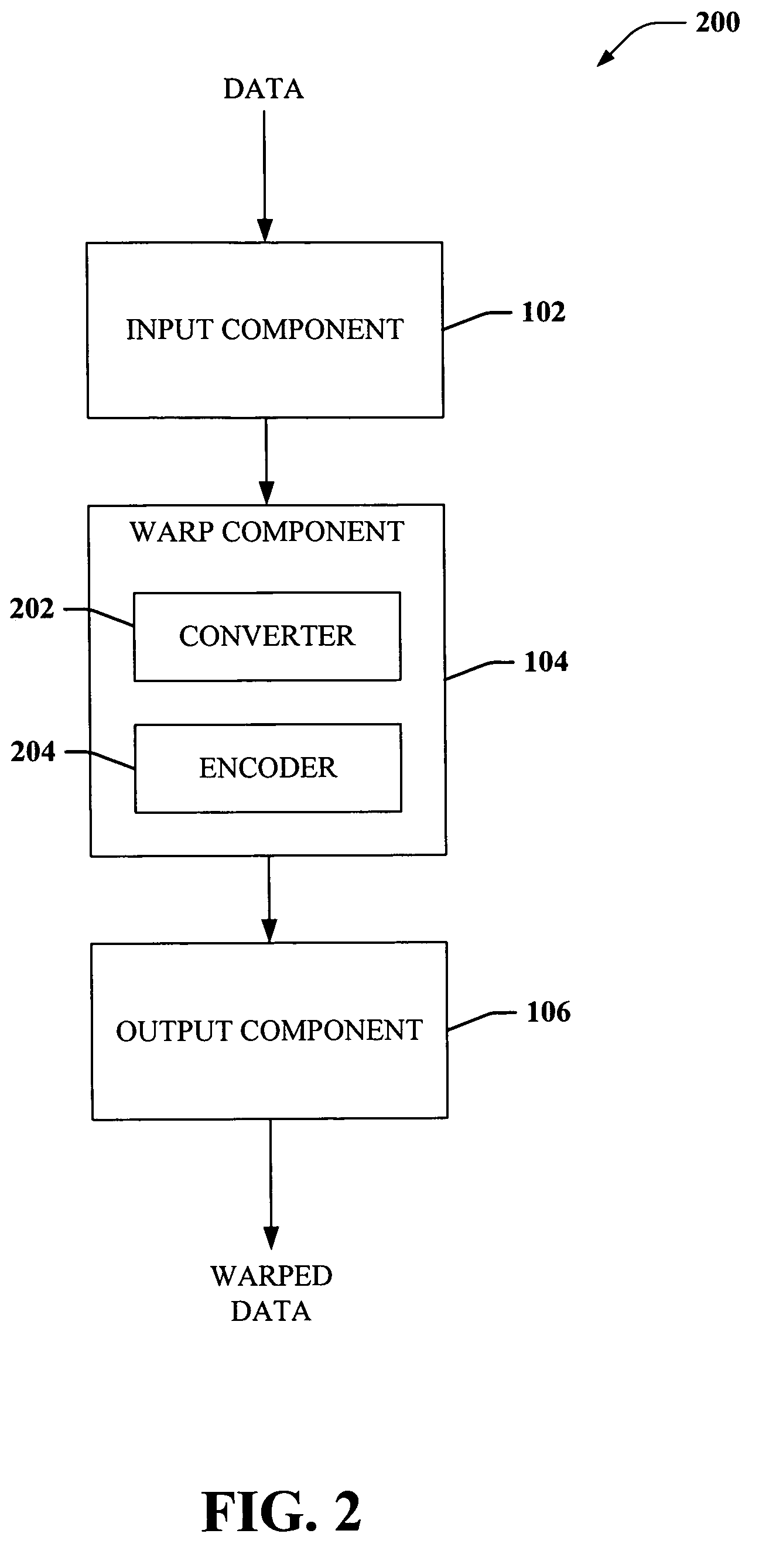

Novel method to quickly warp a 2-D image using only integer math

Inactive | US20050243103A1 | Good relief | Enhance the image | Color signal processing circuits | Geometric image transformation | Computer graphics (images) | Floating point

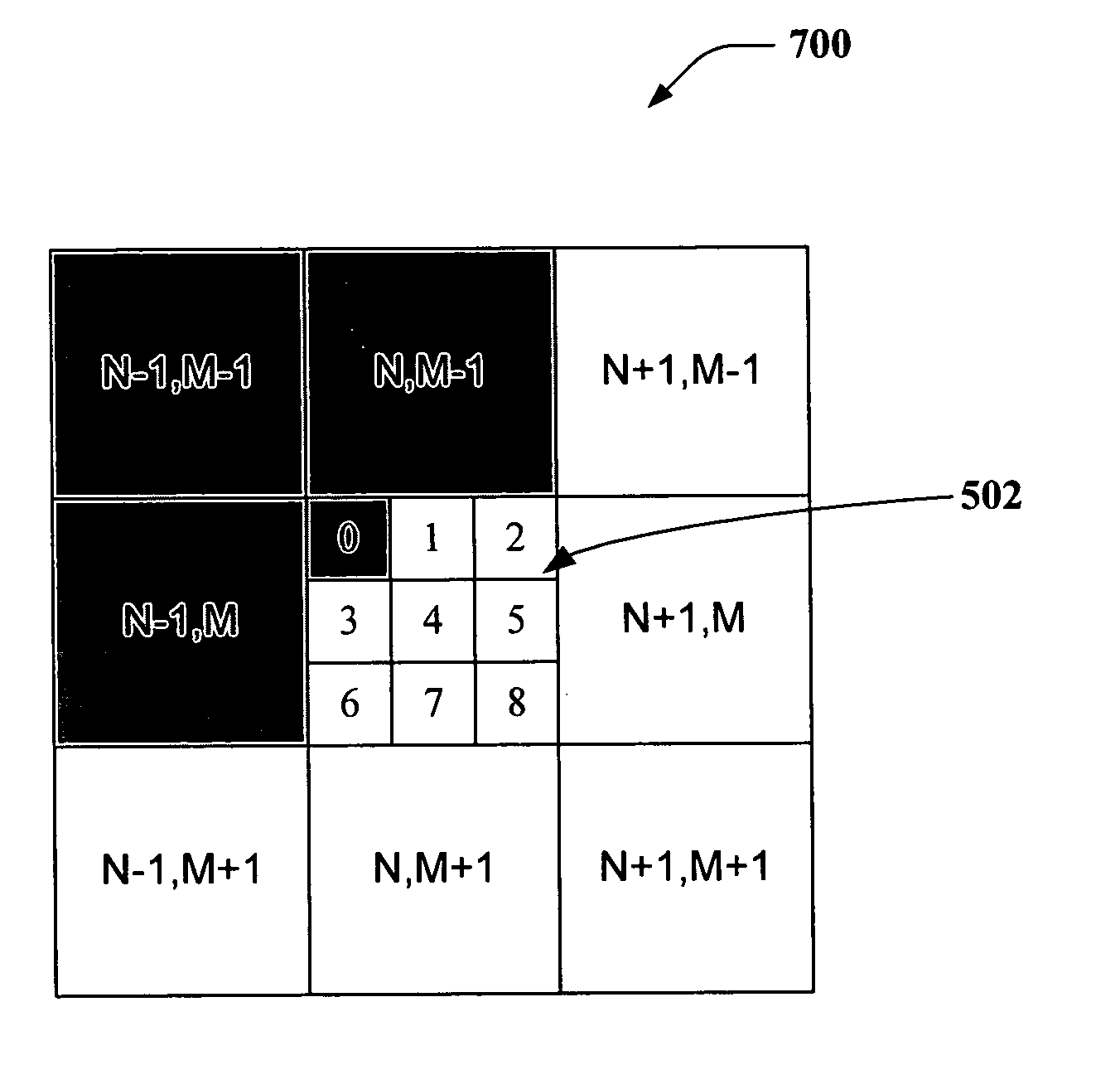

Systems and methods are disclosed that facilitate rapidly warping a two-dimensional image using integer math. A warping table can contain two-dimensional floating point output pixel offset values that are mapped to respective input pixel locations in a captured image. The warping table values can be pre-converted to integer offset values and integer grid values mapped to a sub-pixel grid. During warping, each output pixel can be looked up via its integer offset value, and a one-dimensional table lookup for each pixel can be performed to interpolate pixel data based at least in part on the integer grid value of the pixel. Due to the small size of the lookup tables, lookups can potentially be stored in and retrieved from a CPU cache, which stores most recent instructions to facilitate extremely rapid warping and fast table lookups.

Owner:MICROSOFT TECH LICENSING LLC
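A minimal Python sketch of the integer-only warp described above follows. It is an interpretation under stated assumptions, not the patent's implementation: the sub-pixel grid resolution `GRID = 4`, the bilinear weighting, the function names, and the flat row-major image layout are all chosen for the example. Source coordinates are assumed to be non-negative and at least one pixel away from the right and bottom edges.

```python
GRID = 4  # sub-pixel steps per axis (assumed resolution)


def build_weight_table():
    """One-dimensional table: grid index -> four integer bilinear weights."""
    table = []
    for gy in range(GRID):
        for gx in range(GRID):
            table.append((
                (GRID - gx) * (GRID - gy),  # top-left neighbour
                gx * (GRID - gy),           # top-right neighbour
                (GRID - gx) * gy,           # bottom-left neighbour
                gx * gy,                    # bottom-right neighbour
            ))
    return table  # each entry sums to GRID * GRID


def quantize_warp(float_coords, width):
    """Pre-convert float source coordinates to (integer offset, grid index)."""
    entries = []
    for x, y in float_coords:
        ix, gx = divmod(int(x * GRID), GRID)
        iy, gy = divmod(int(y * GRID), GRID)
        entries.append((iy * width + ix, gy * GRID + gx))
    return entries


def warp(src, width, entries, weights):
    """Warp using integer math only: two lookups and a weighted sum per pixel."""
    out = []
    for offset, grid in entries:
        w00, w10, w01, w11 = weights[grid]
        acc = (w00 * src[offset] + w10 * src[offset + 1]
               + w01 * src[offset + width] + w11 * src[offset + width + 1])
        out.append(acc // (GRID * GRID))
    return out


src = [0, 16, 32, 48, 64, 80, 96, 112, 128]  # 3x3 image, row-major
entries = quantize_warp([(0.0, 0.0), (0.5, 0.0), (0.5, 0.5), (1.25, 1.0)], width=3)
print(warp(src, 3, entries, build_weight_table()))
```

The weight table here has only 16 entries, which is the kind of small lookup structure the abstract expects to stay resident in the CPU cache.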

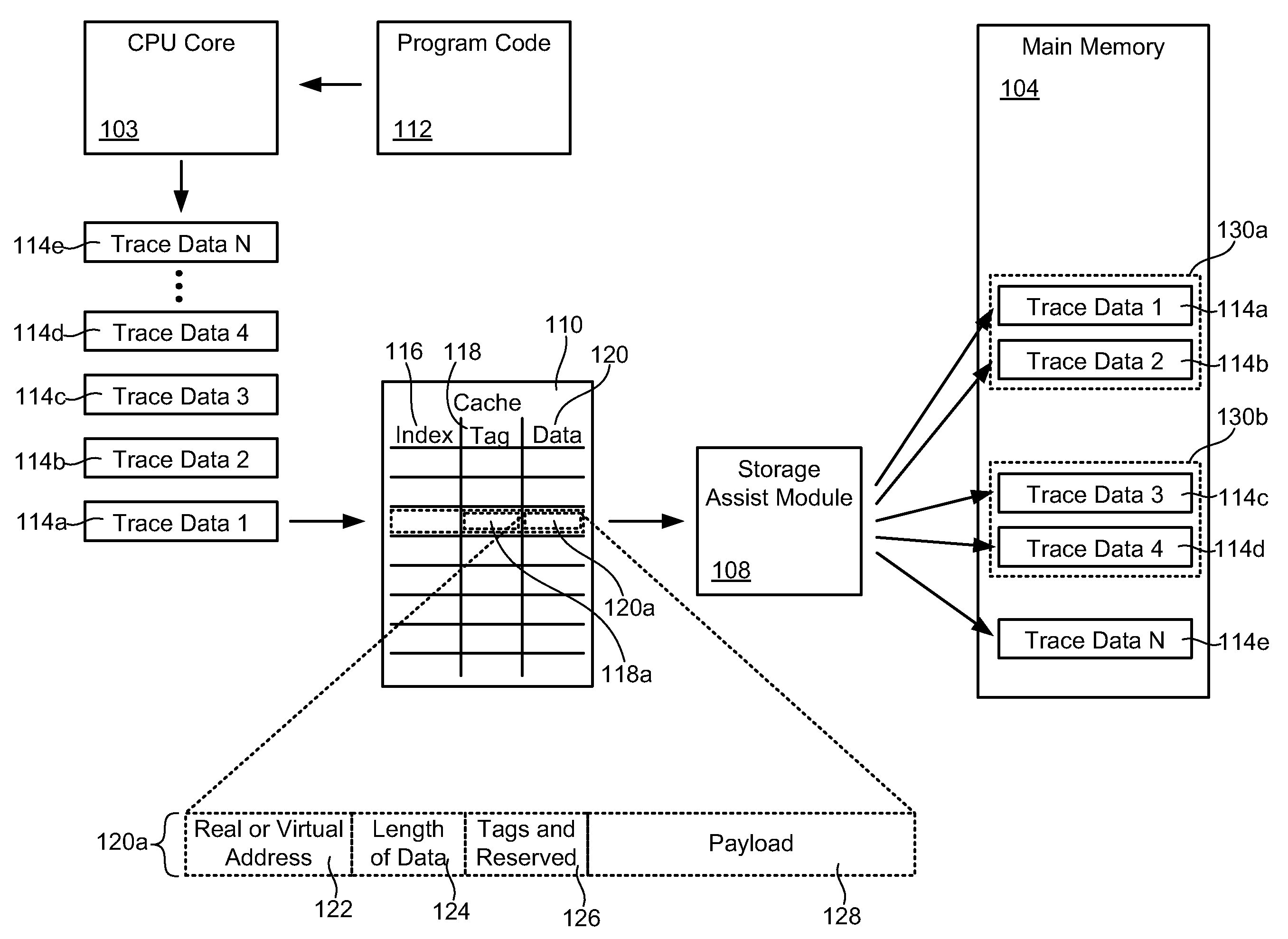

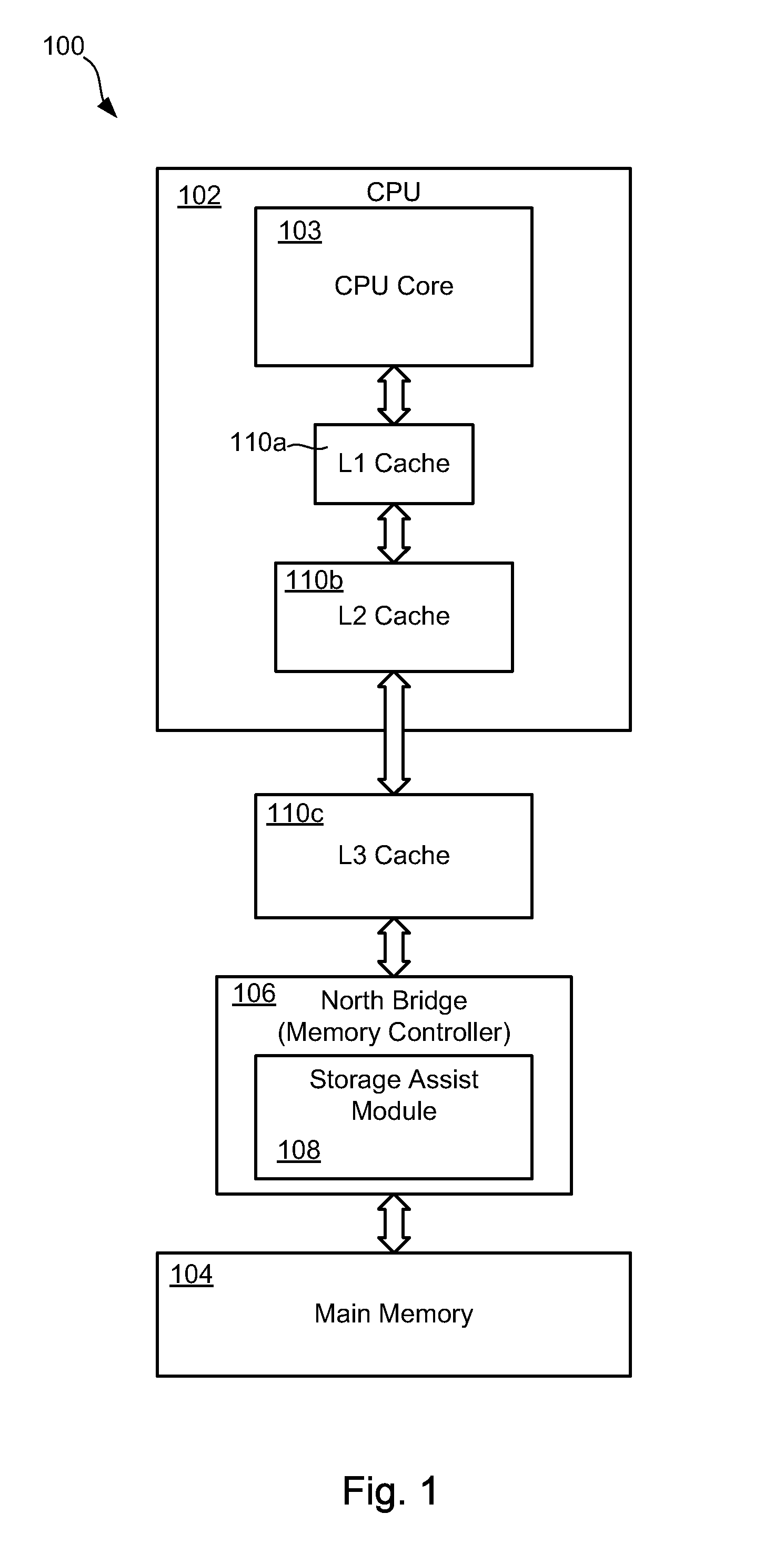

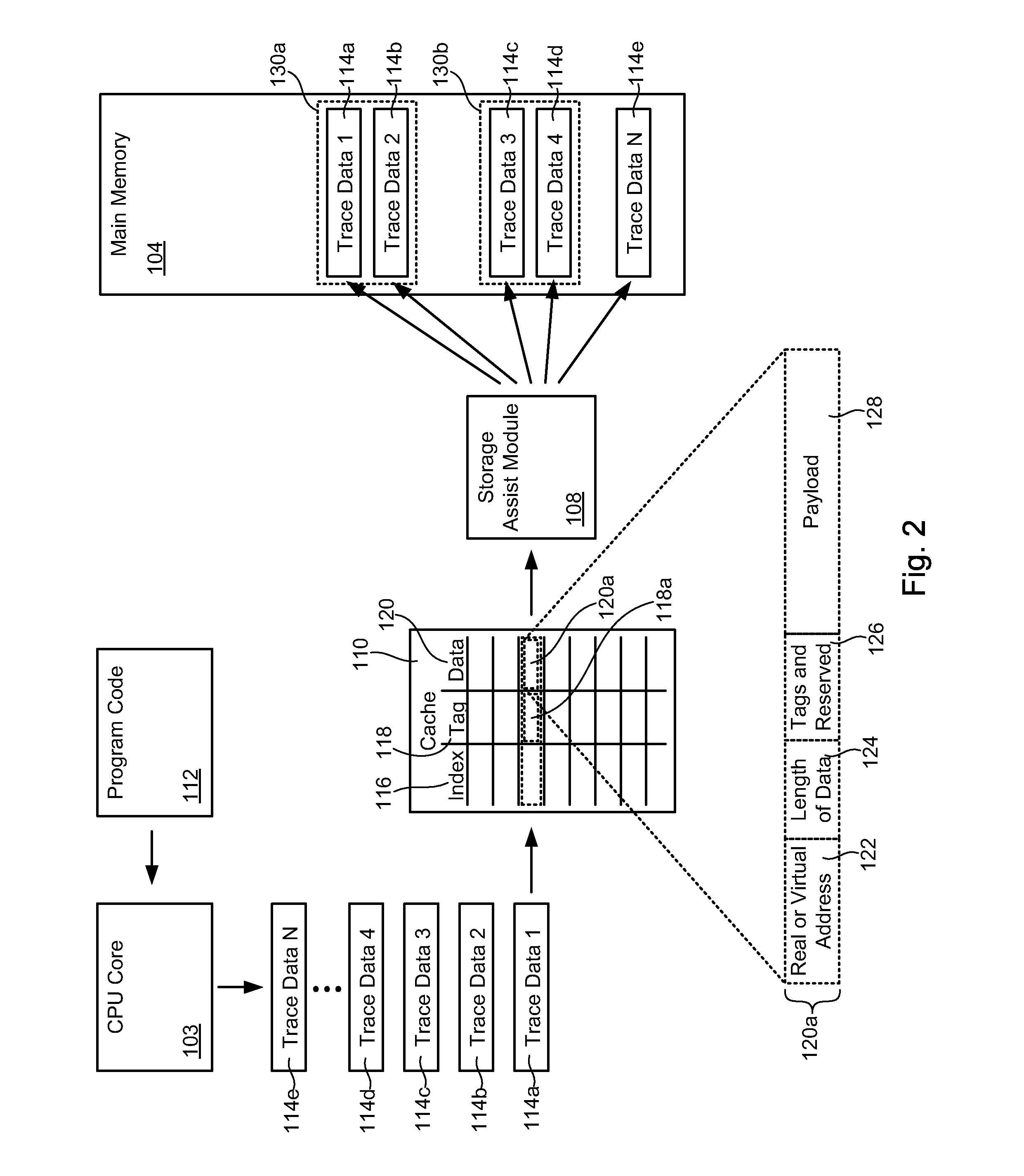

Assisted trace facility to improve CPU cache performance

Inactive | US20080065810A1 | Error detection/correction | Memory addressing/allocation/relocation | Parallel computing | CPU cache

A system and method for recording trace data while conserving cache resources includes generating trace data and creating a cache line containing the trace data. The cache line is assigned a tag which corresponds to an intermediate address designated for processing the trace data. The cache line also contains embedded therein an actual address in memory for storing the trace data, which may include either a real address or a virtual address. The cache line may be received at the intermediate address and parsed to read the actual address. The trace data may then be written to a location in memory corresponding to the actual address. By routing trace data through a designated intermediate address, CPU cache may be conserved for other more important or more frequently accessed data.

Owner:IBM CORP

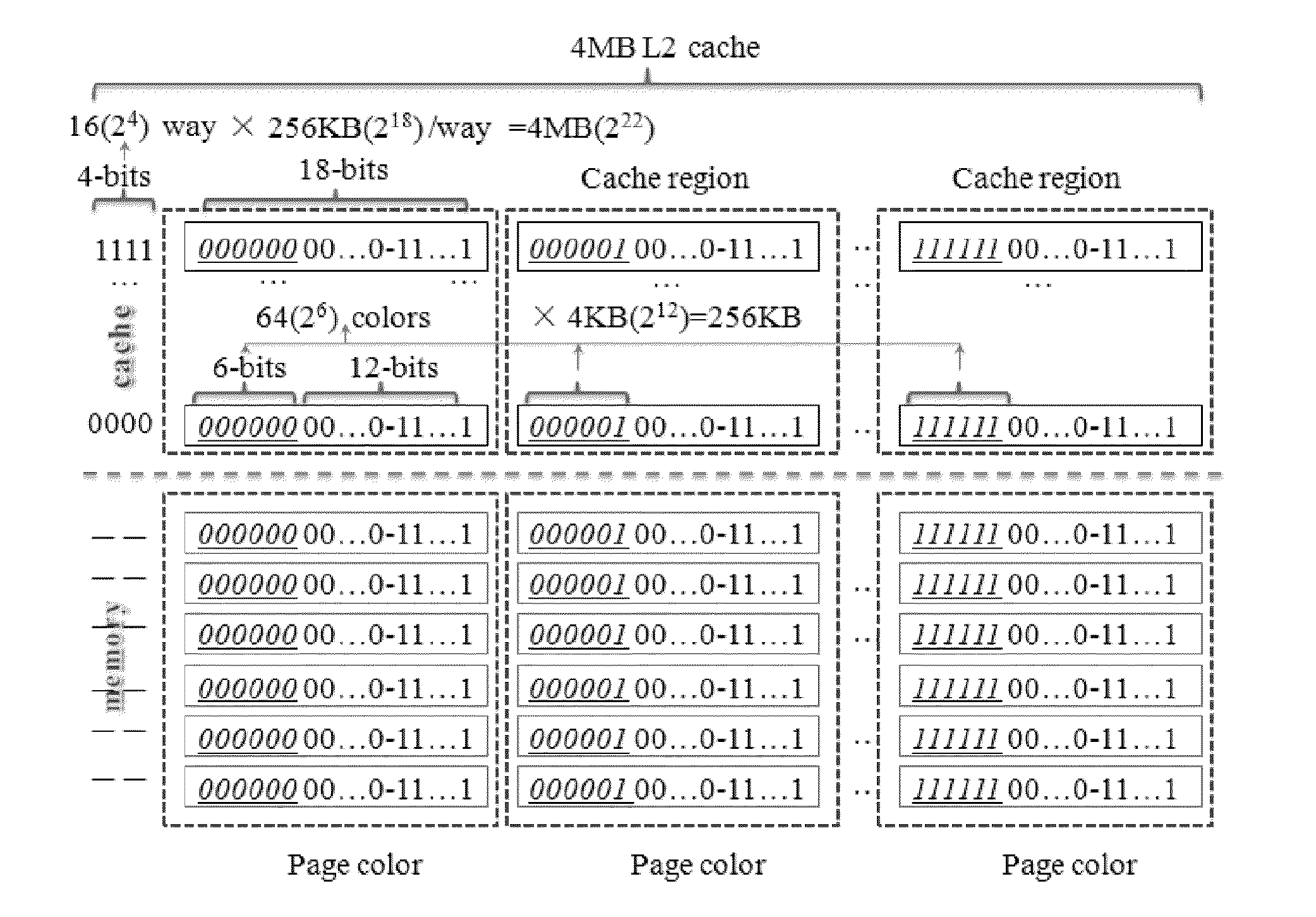

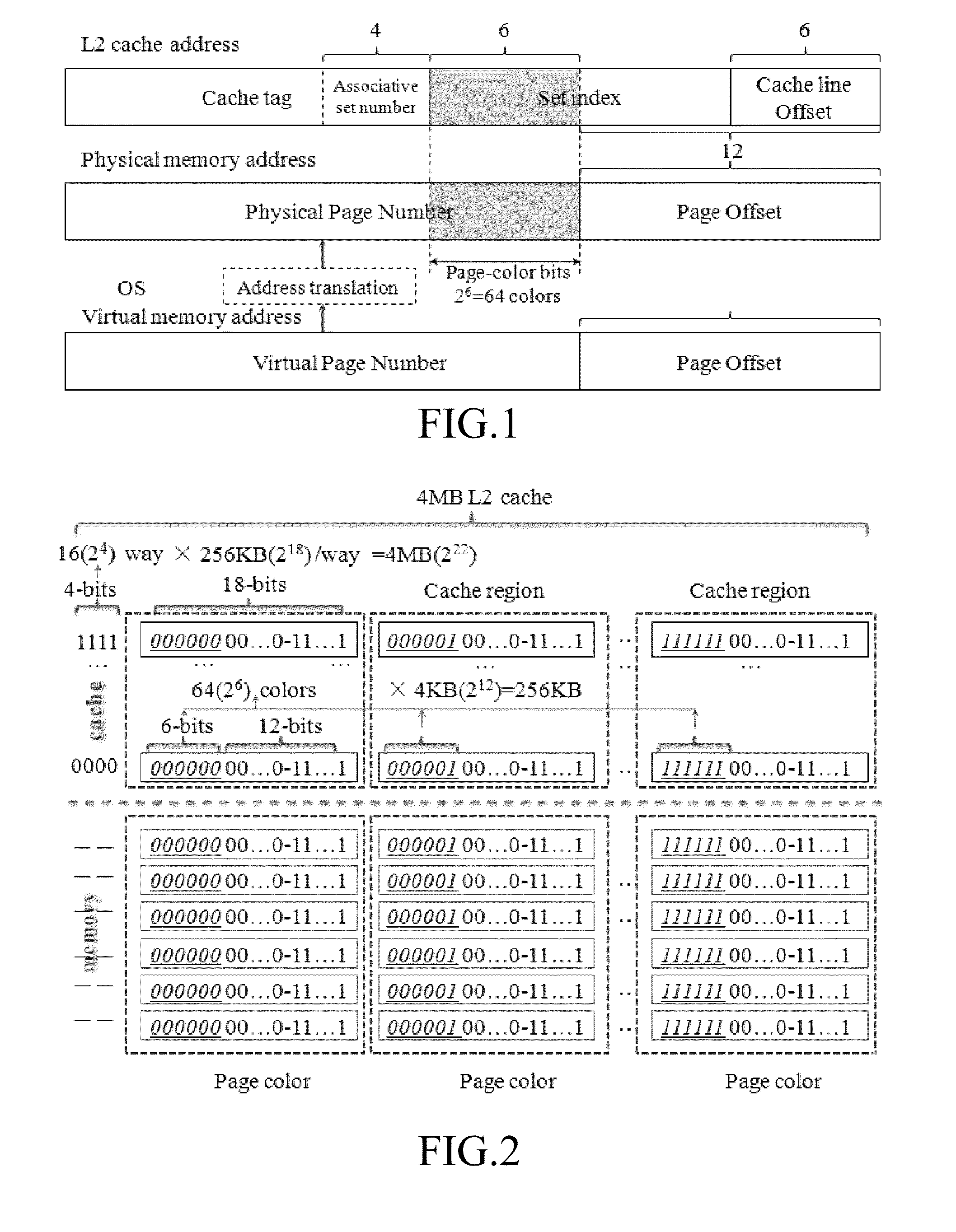

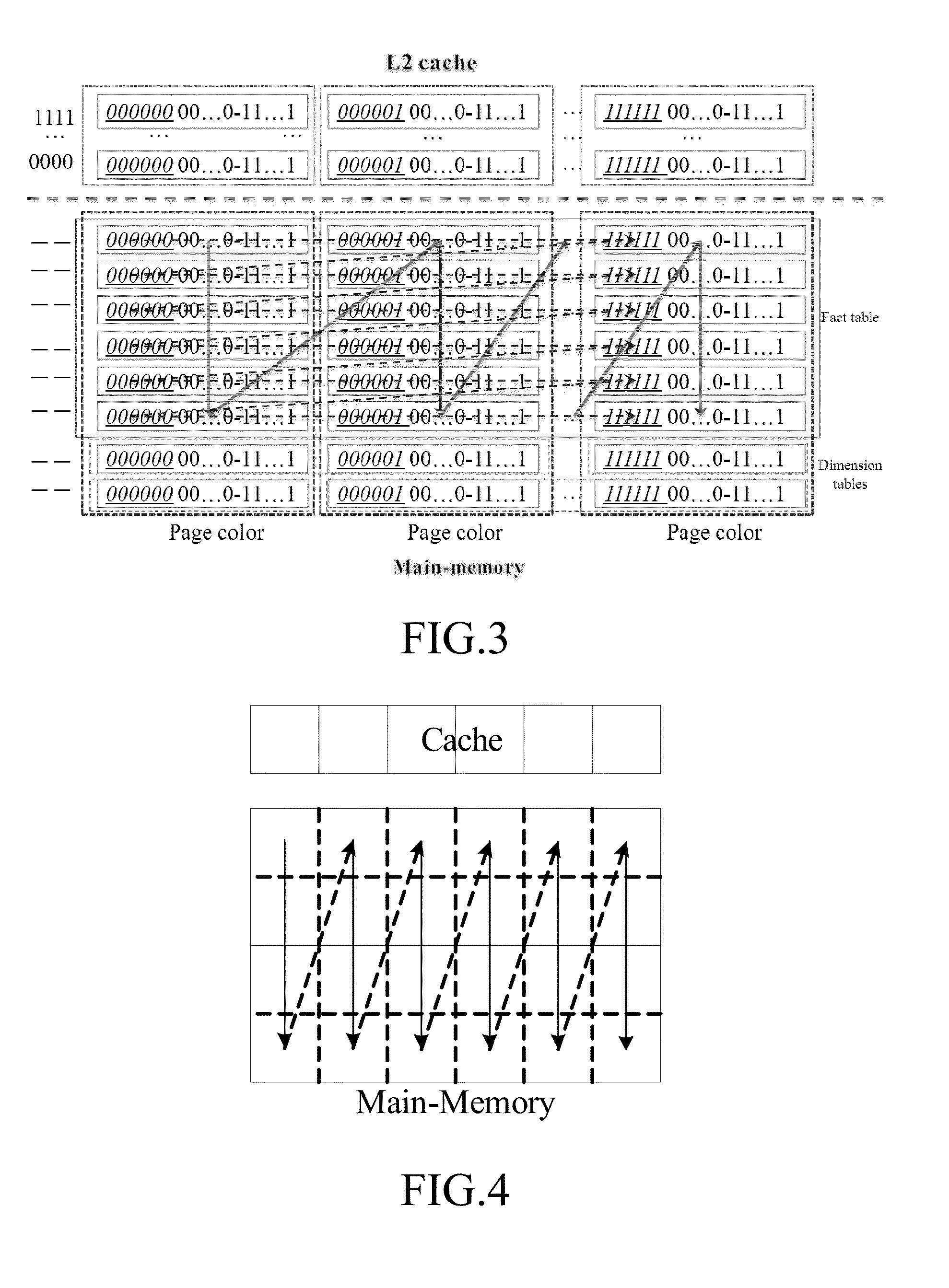

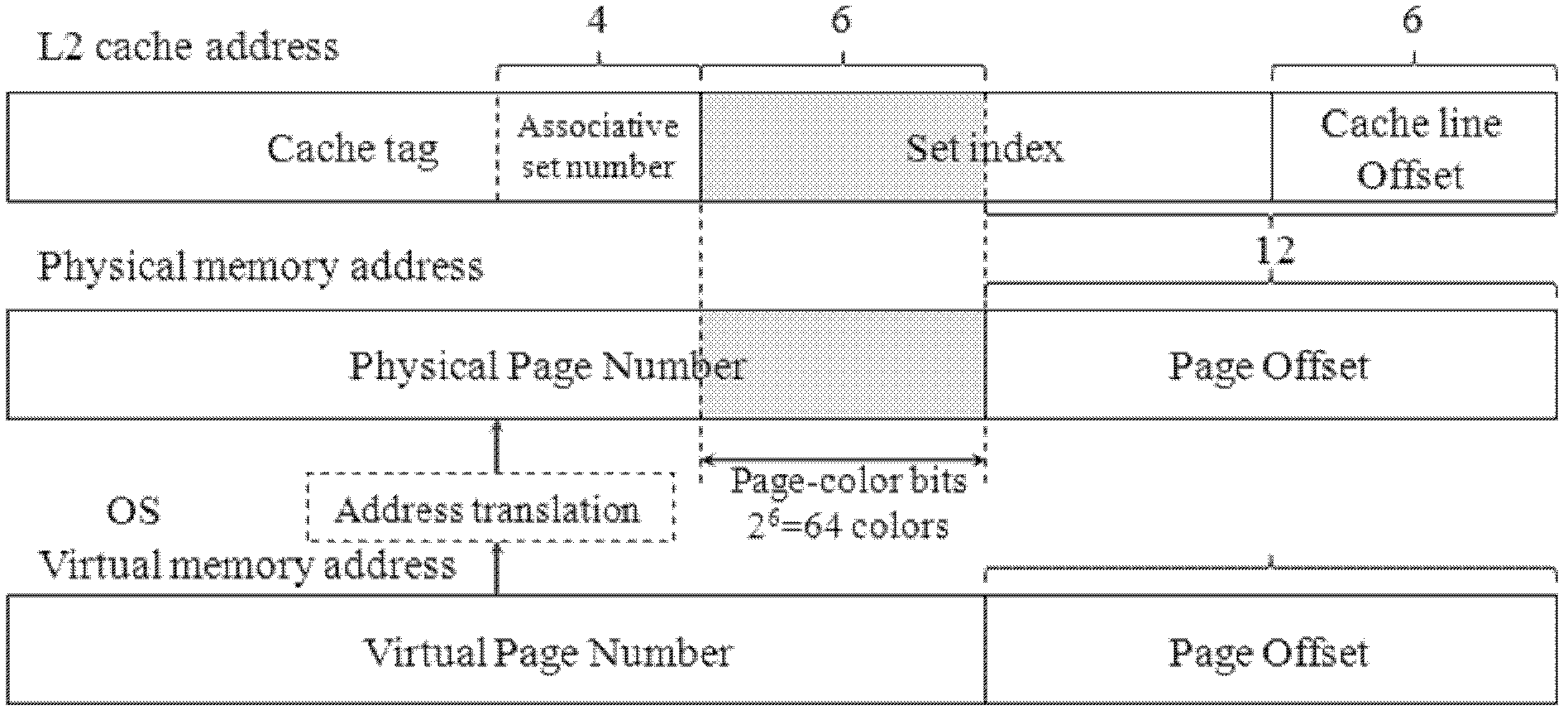

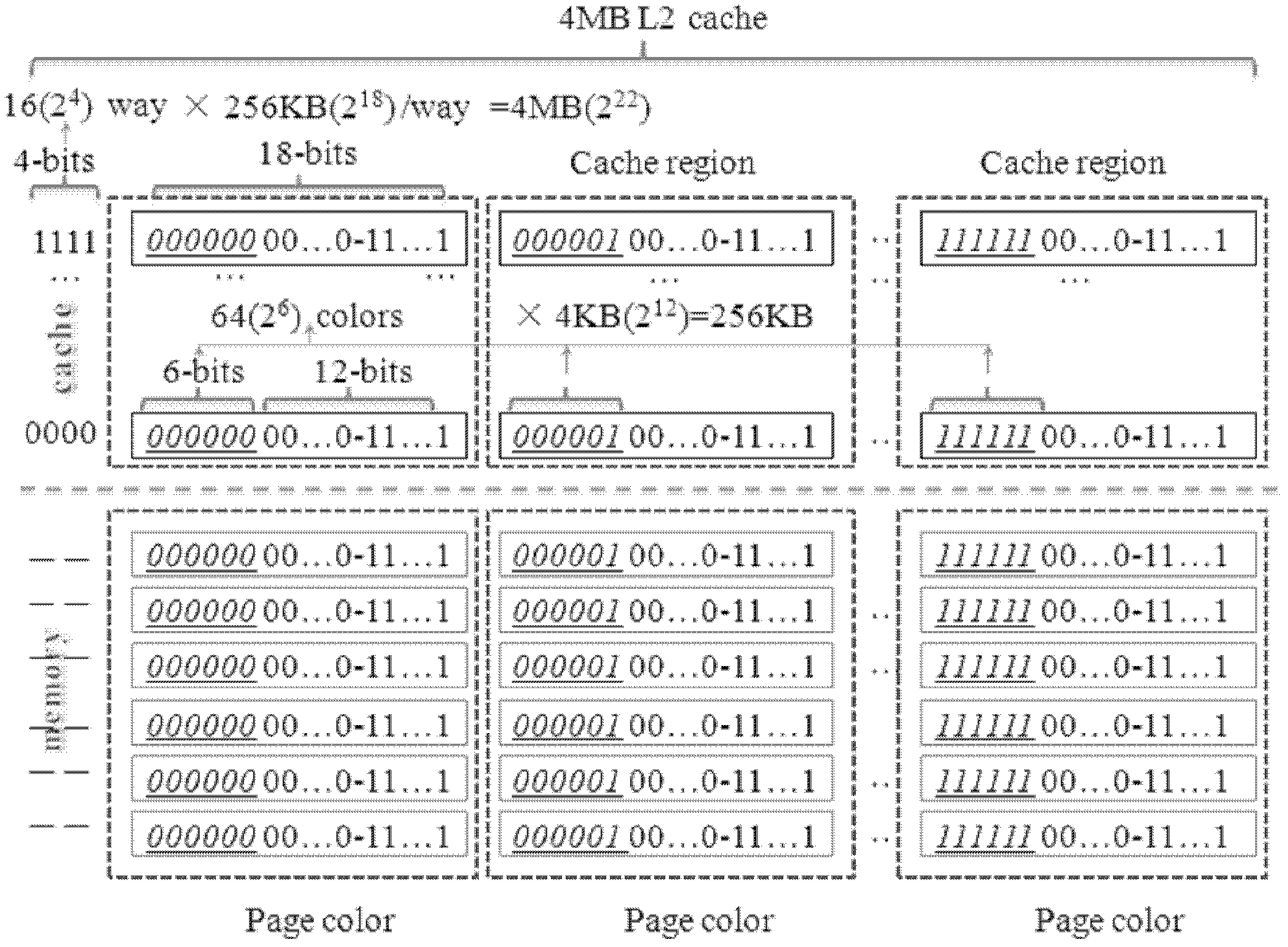

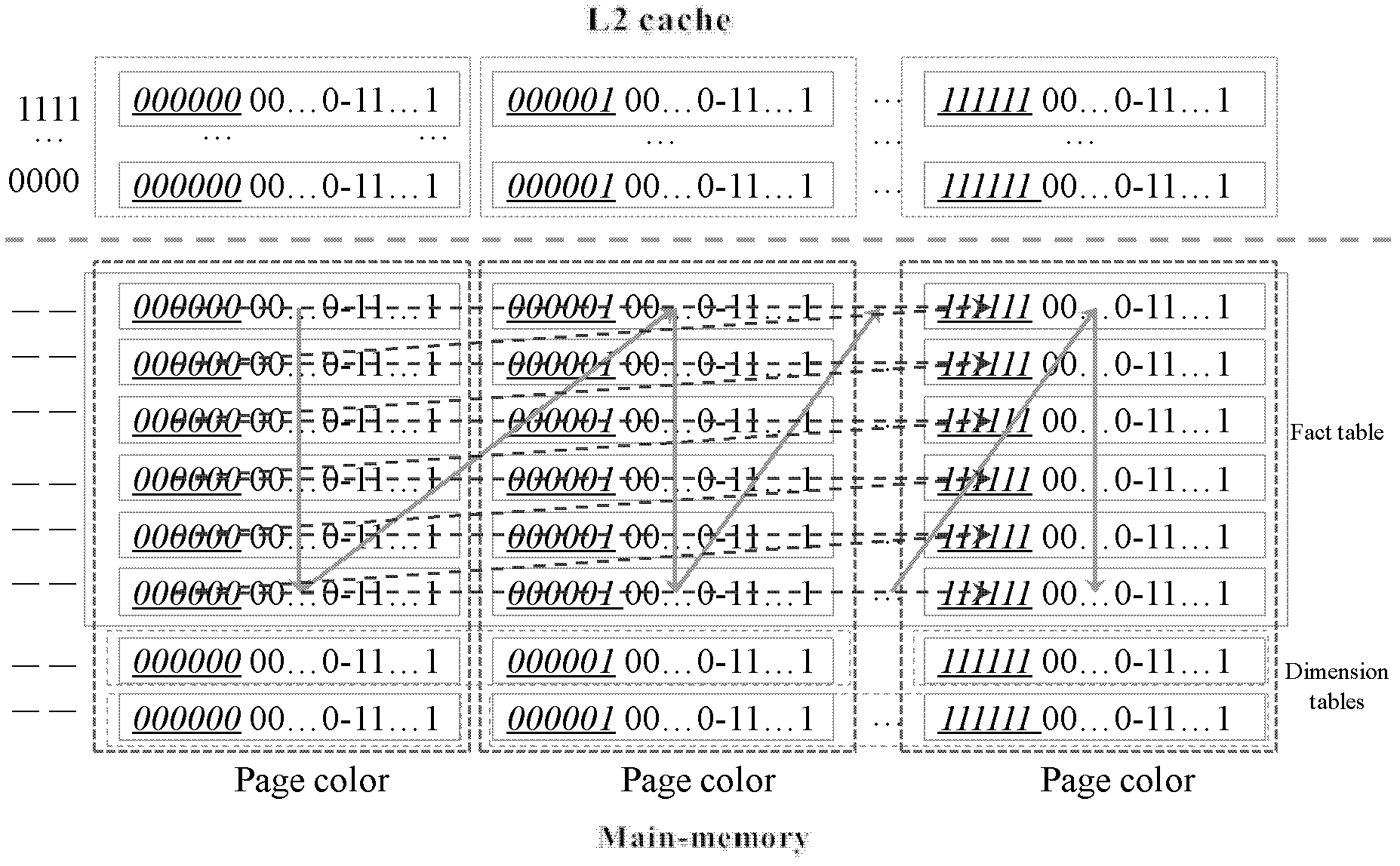

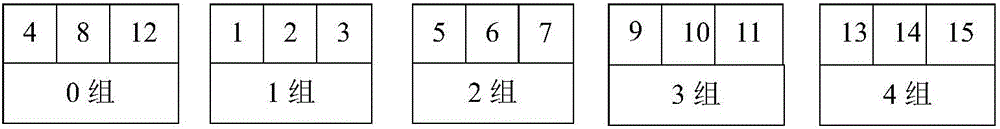

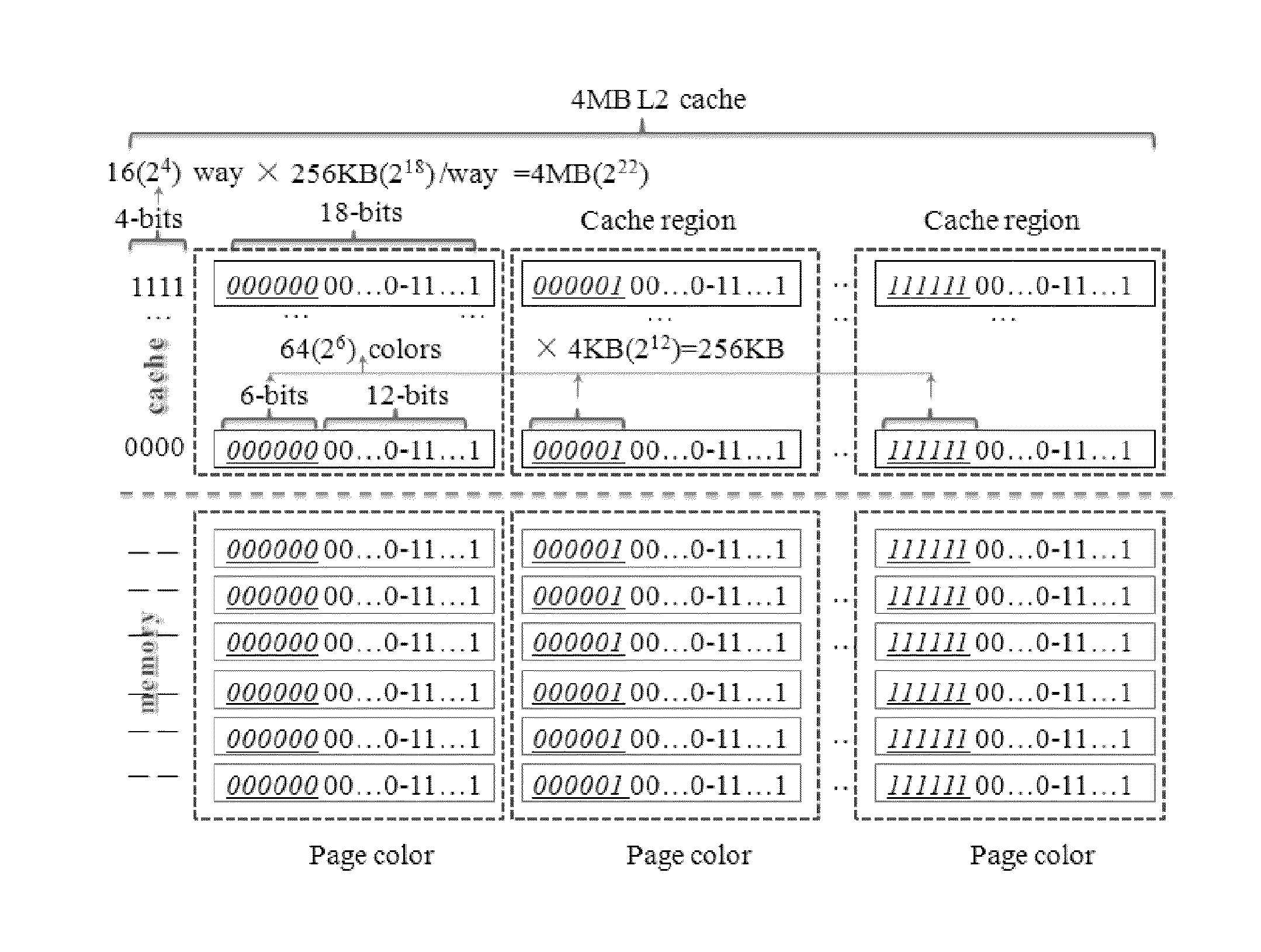

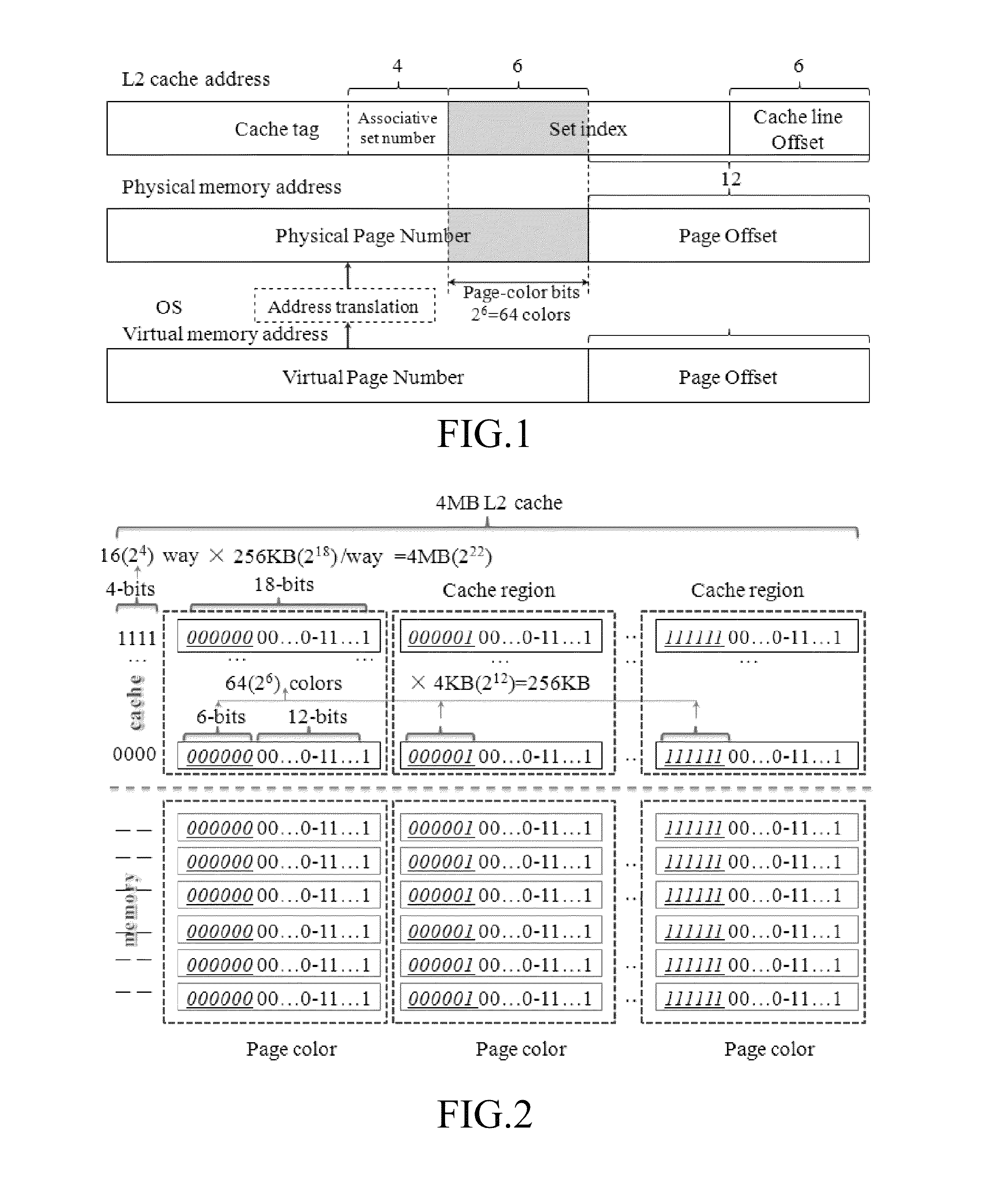

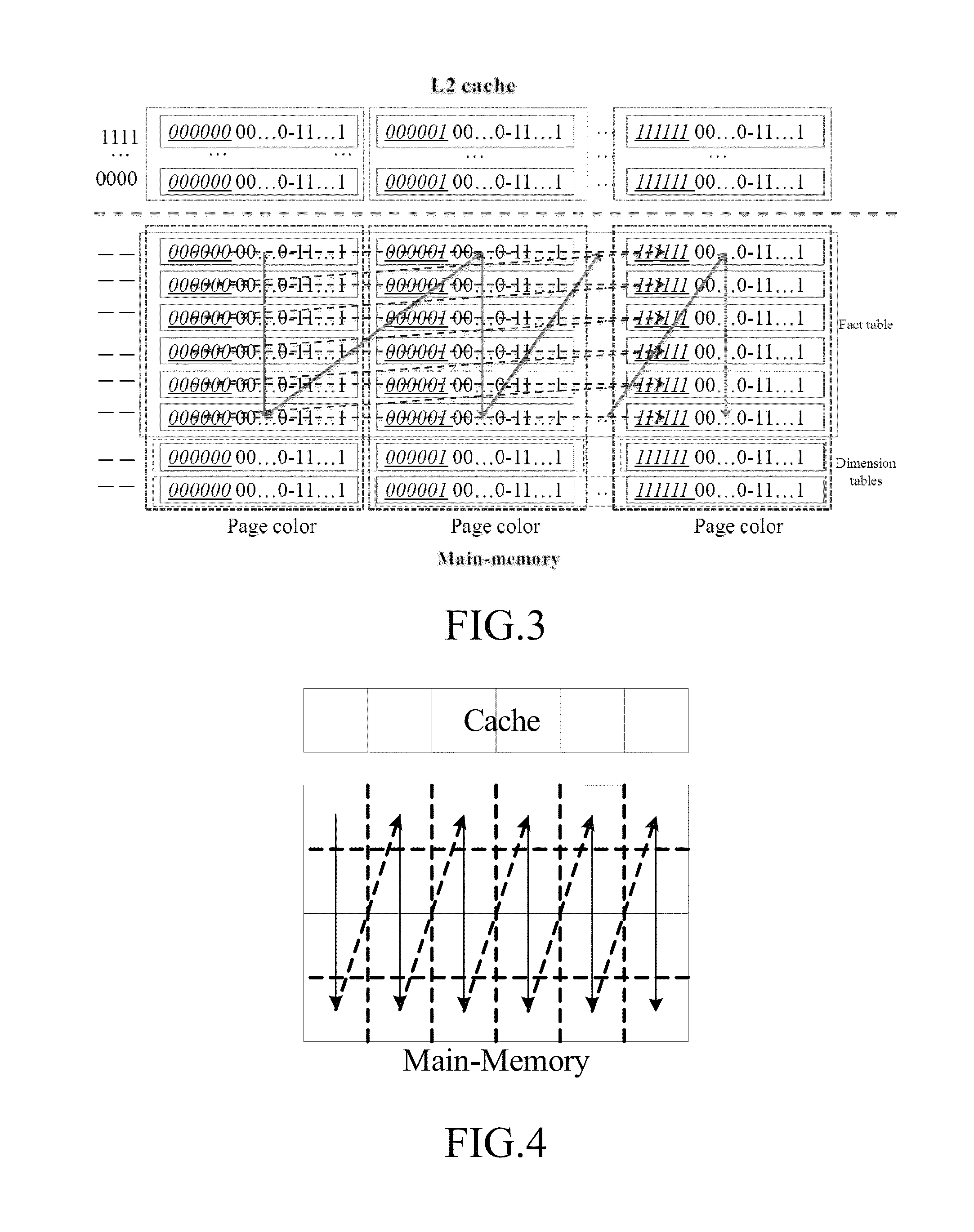

Access Optimization Method for Main Memory Database Based on Page-Coloring

Inactive | US20130275649A1 | Reduce conflict | Reduce cache miss rate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Complete data | Data set

An access optimization method for a main memory database based on page-coloring is described. An access sequence of all data pages of a weak locality dataset is ordered by page-color, and all the data pages are grouped by page-color, and then all the data pages of the weak locality dataset are scanned in a sequence of page-color grouping. Further, a number of memory pages having the same page-color are preset as a page-color queue, in which the page-color queue serves as a memory cache before a memory page is loaded into a CPU cache; the data page of the weak locality dataset first enters the page-color queue in an asynchronous mode, and is then loaded into the CPU cache to complete data processing. Accordingly, cache conflicts between datasets with different data locality strengths can be effectively reduced.

Owner:RENMIN UNIVERSITY OF CHINA
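The grouping step in this abstract can be illustrated with a short Python sketch. A page's color is commonly taken as its physical page number modulo the number of colors, where the number of colors is the cache size divided by the product of associativity and page size. The function names, the 4 KiB page size, and the example cache geometry are assumptions for illustration, and the physical addresses are hypothetical inputs, since ordinary user-space code cannot normally observe them.

```python
from collections import defaultdict


def num_page_colors(cache_bytes, ways, page_bytes=4096):
    """Number of distinct page colors for a physically indexed cache."""
    return cache_bytes // (ways * page_bytes)


def page_color(phys_addr, colors, page_bytes=4096):
    """Color of the page that contains the given physical address."""
    return (phys_addr // page_bytes) % colors


def scan_order_by_color(page_addrs, colors, page_bytes=4096):
    """Group pages by color and return them in color-group order."""
    groups = defaultdict(list)
    for addr in page_addrs:
        groups[page_color(addr, colors, page_bytes)].append(addr)
    return [addr for color in sorted(groups) for addr in groups[color]]


colors = num_page_colors(2 * 1024 * 1024, ways=16)   # 2 MiB, 16-way: 32 colors
pages = [n * 4096 for n in (0, 33, 1, 32, 2)]
print([addr // 4096 for addr in scan_order_by_color(pages, colors)])
```

Scanning a weak-locality dataset in this order confines its cache footprint to one color at a time, which is the effect the abstract relies on to reduce conflicts with other datasets.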

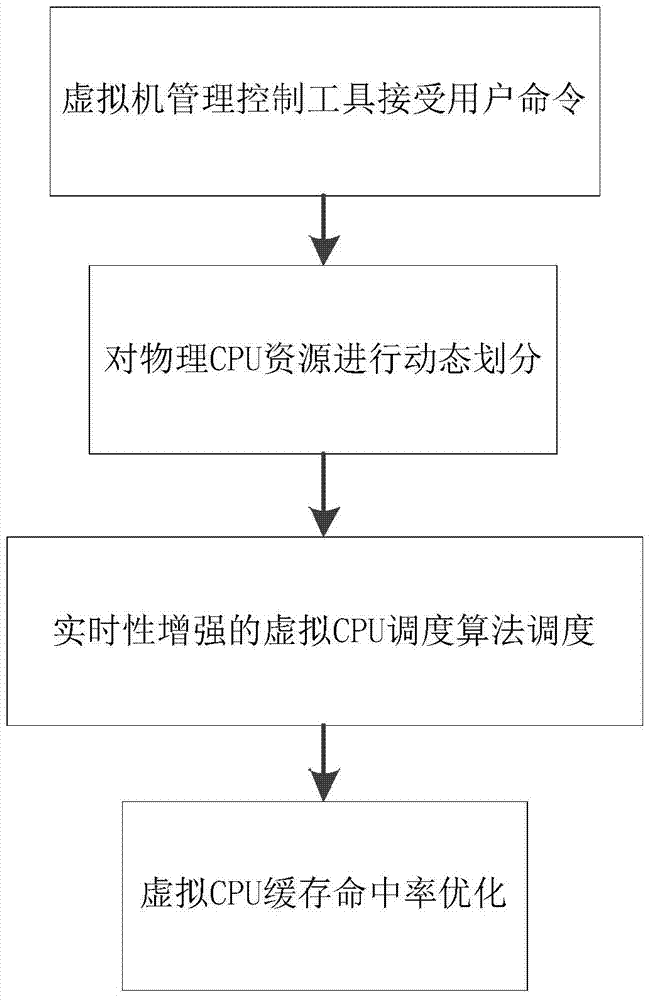

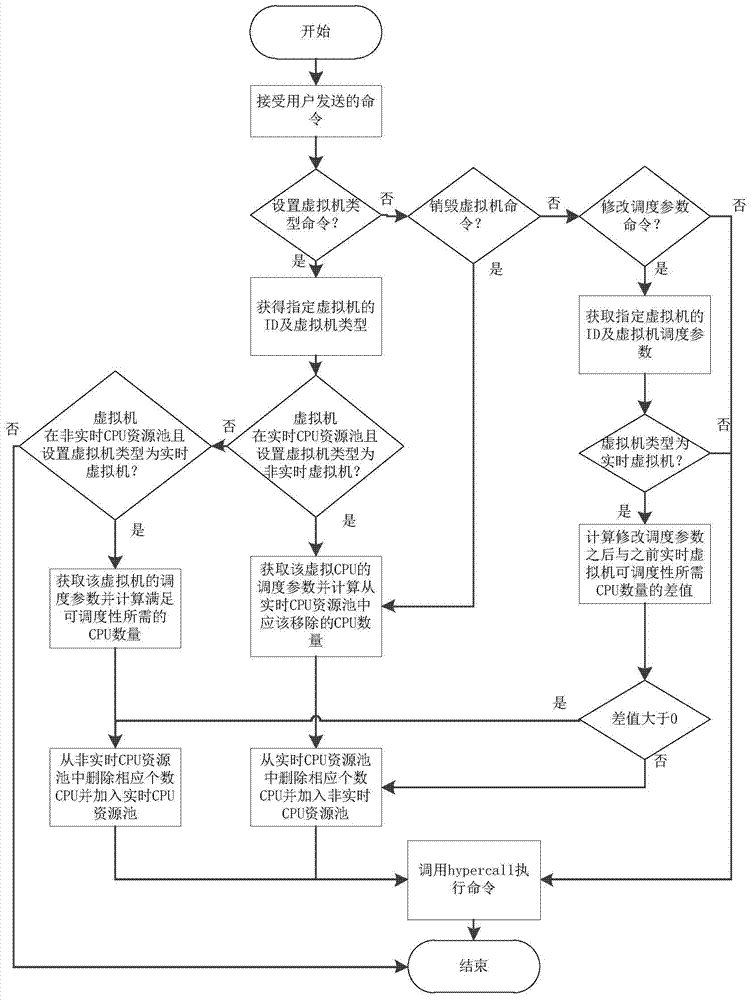

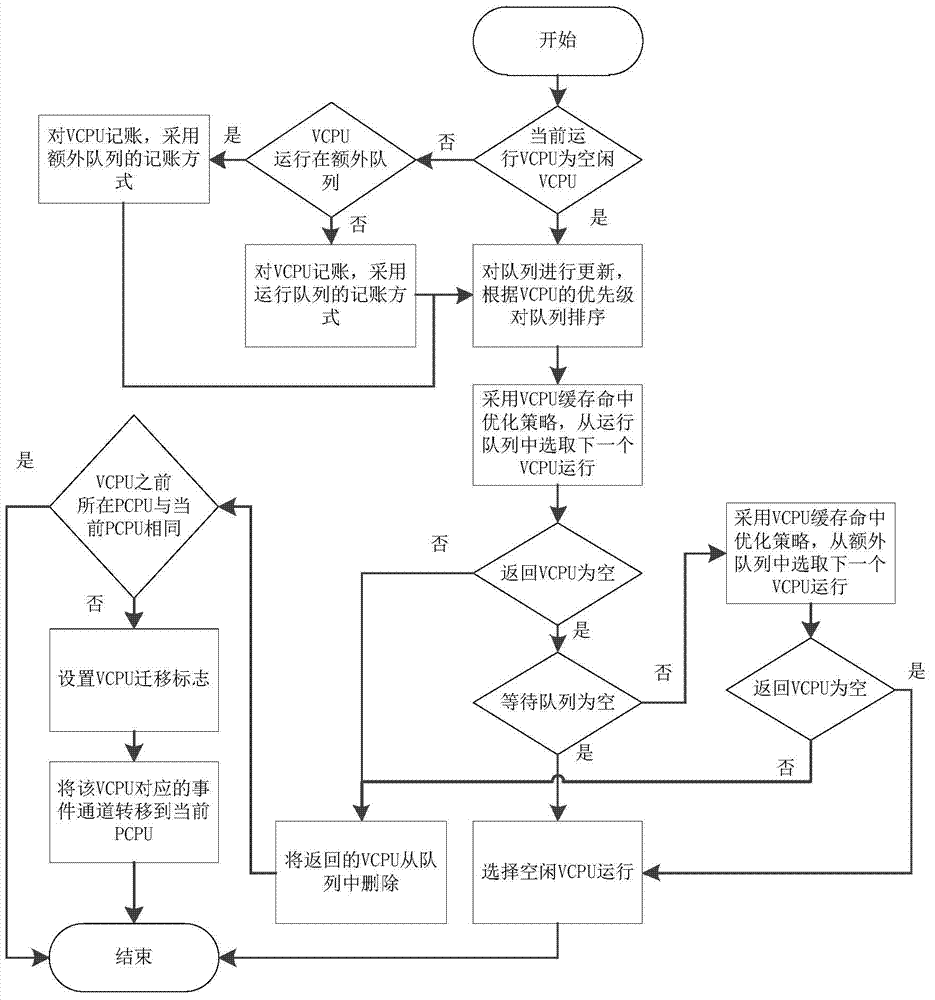

Virtual CPU scheduling method capable of enhancing real-time performance

Active | CN103678003A | Flexible settings | Conducive to differential treatment | Resource allocation | Software simulation/interpretation/emulation | Non real time | Resource pool

The invention discloses a virtual CPU scheduling method capable of enhancing real-time performance. The method comprises the following steps: a virtual machine management control tool accepts a user's command to operate a virtual machine, together with scheduling parameters; the tool judges whether the command relates to a real-time virtual machine and, if so, calculates the conditions for meeting the schedulability of the real-time virtual machine and dynamically partitions the physical CPU resources according to the result; scheduling is then carried out by applying a global earliest-deadline-first scheduling algorithm to the CPU resource pool running real-time virtual machines and a limit scheduling algorithm to the CPU resource pool running non-real-time virtual machines; and the global earliest-deadline-first algorithm is optimized for virtual CPU cache hits. The method guarantees the real-time performance of the real-time virtual machine, provides good isolation when partitioning CPU resources, and reduces the impact on the performance of non-real-time virtual machines.

Owner:HUAZHONG UNIV OF SCI & TECH
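A minimal Python sketch of one scheduling decision under global earliest-deadline-first follows. The abstract does not say how the cache-hit optimization works, so the affinity rule used here (keep a selected virtual CPU on the physical CPU it last ran on when that CPU is free) is an assumption, as are the function name `pick_vcpus` and the data shapes.

```python
import heapq


def pick_vcpus(ready, num_cpus, last_cpu):
    """Choose which virtual CPUs run next and where.

    ready    -- list of (deadline, vcpu_id) pairs for runnable virtual CPUs
    num_cpus -- number of physical CPUs in the real-time pool
    last_cpu -- dict vcpu_id -> physical CPU it last ran on (hypothetical input)
    Returns a dict mapping physical CPU -> vcpu_id.
    """
    chosen = heapq.nsmallest(num_cpus, ready)   # earliest deadlines win
    free = set(range(num_cpus))
    assignment = {}
    pending = []
    for _deadline, vcpu in chosen:
        cpu = last_cpu.get(vcpu)
        if cpu in free:                          # previous CPU is free: reuse its warm cache
            assignment[cpu] = vcpu
            free.remove(cpu)
        else:
            pending.append(vcpu)
    for vcpu, cpu in zip(pending, sorted(free)): # place the rest on any free CPU
        assignment[cpu] = vcpu
    return assignment


ready = [(30, "a"), (10, "b"), (20, "c"), (40, "d")]
print(pick_vcpus(ready, num_cpus=2, last_cpu={"a": 0, "b": 1, "c": 1}))
```

Here `b` and `c` have the earliest deadlines; `b` stays on CPU 1 where it ran before, and `c` takes the remaining CPU 0.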

Main memory database access optimization method on basis of page coloring technology

Active | CN102663115A | Resolve dependencies | Solve space problems | Special data processing applications | Memory systems | In-memory database | Data set

The invention discloses a main memory database access optimization method based on page coloring. The method comprises the following steps: first, sorting the access sequence of all data pages of a weak-locality dataset by page color and grouping the data pages by page color; then scanning all data pages of the weak-locality dataset in page-color group order; furthermore, presetting a number of memory pages with the same page color as a page-color queue, which serves as a memory cache before memory pages are loaded into the CPU (central processing unit) cache; and having the data pages of the weak-locality dataset first enter the page-color queue asynchronously and then be loaded into the CPU cache to complete the data processing. The invention addresses the problem that, in main memory database applications, cache address space cannot be allocated by page color to processes, threads, or datasets in an optimized way, and it effectively reduces cache conflicts between datasets with different data locality strengths.

Owner:RENMIN UNIVERSITY OF CHINA

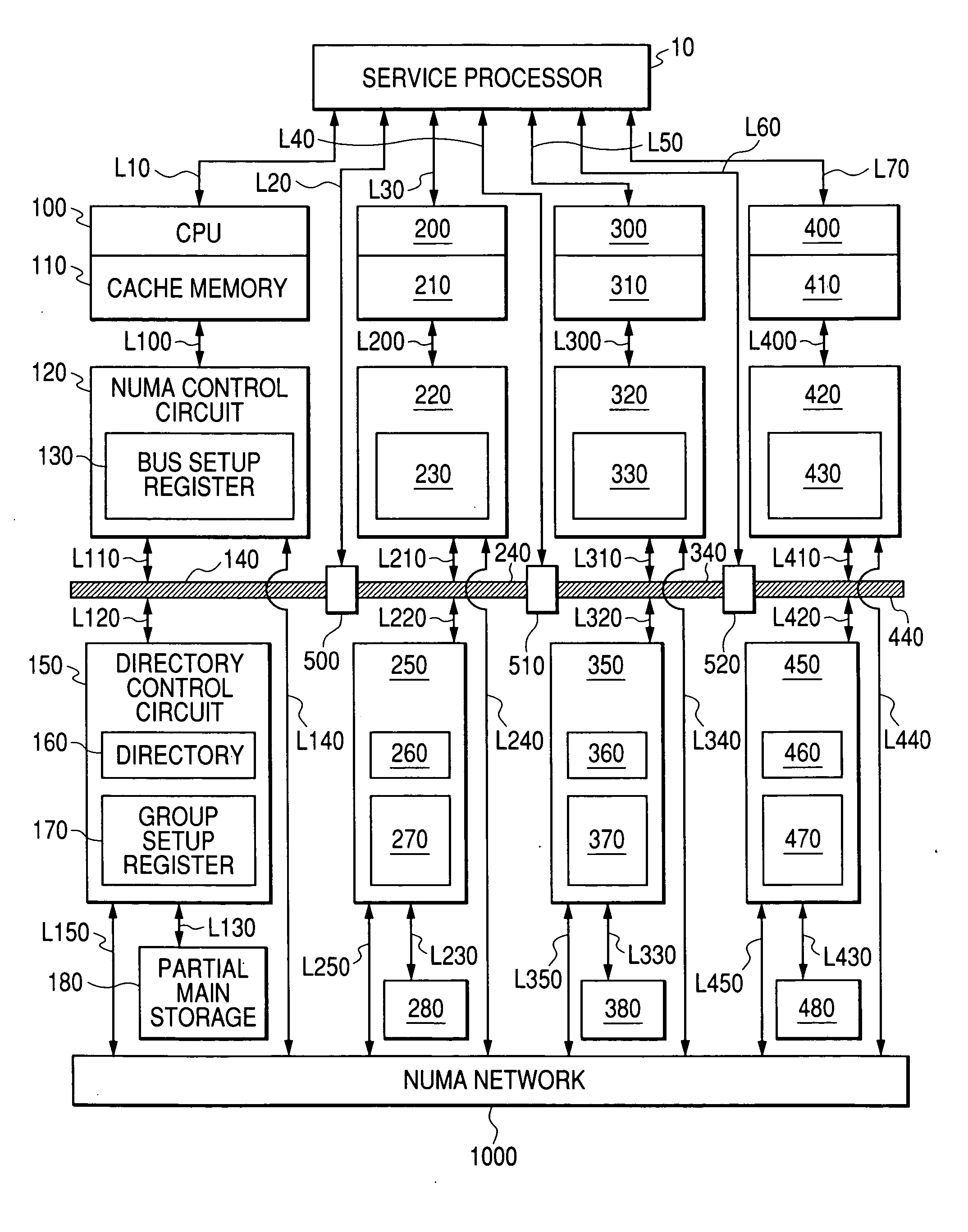

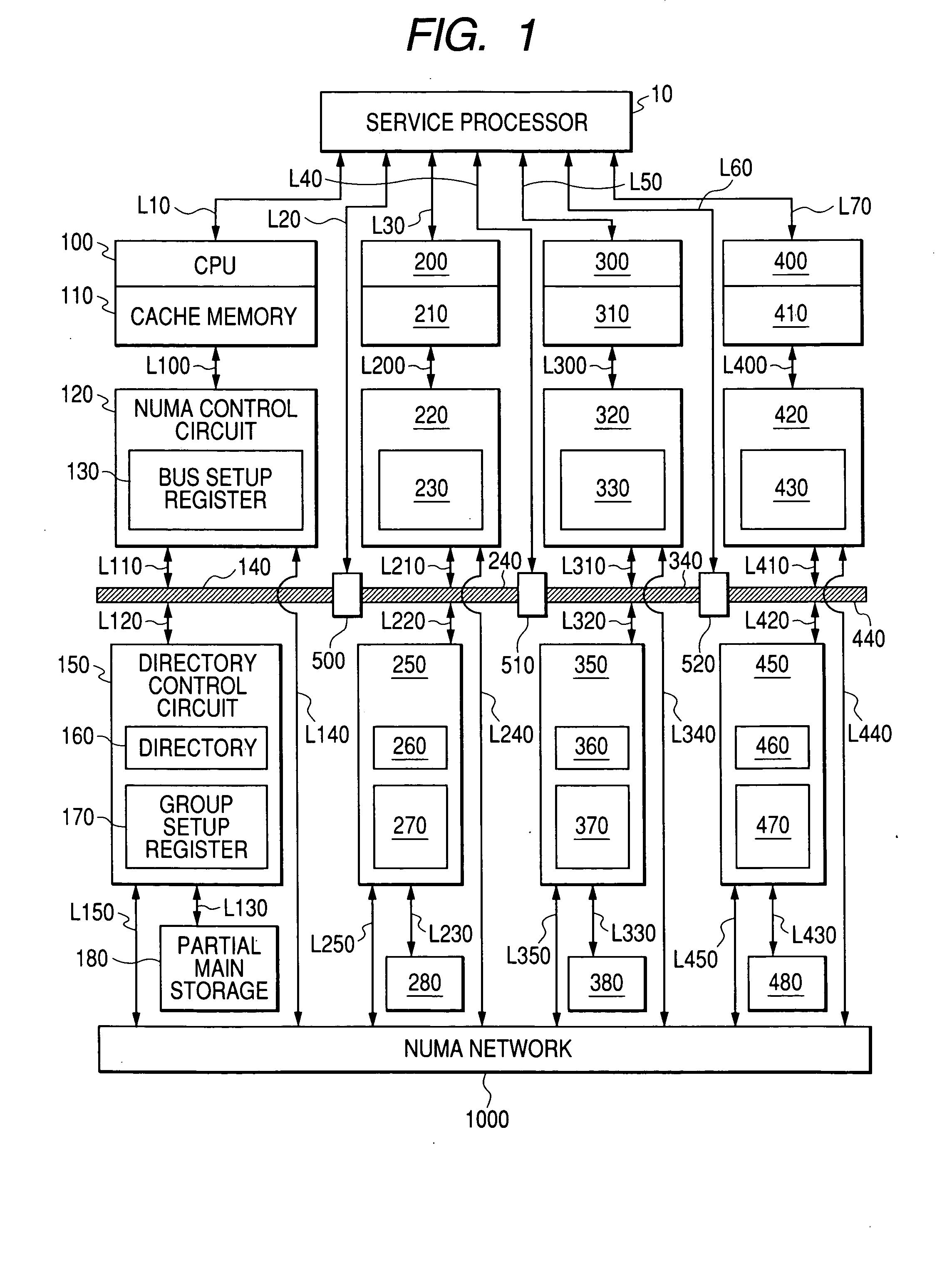

Multiprocessor system

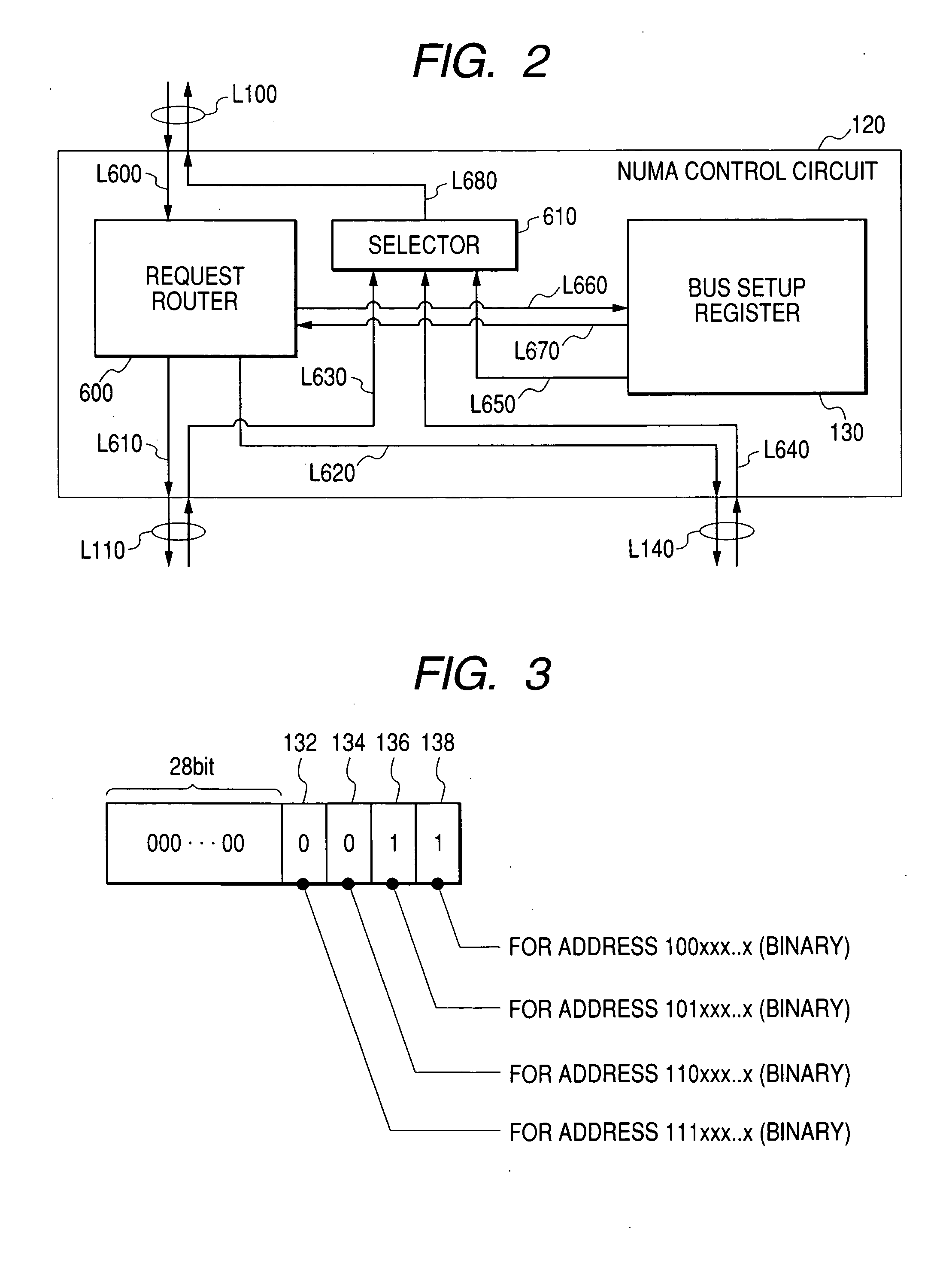

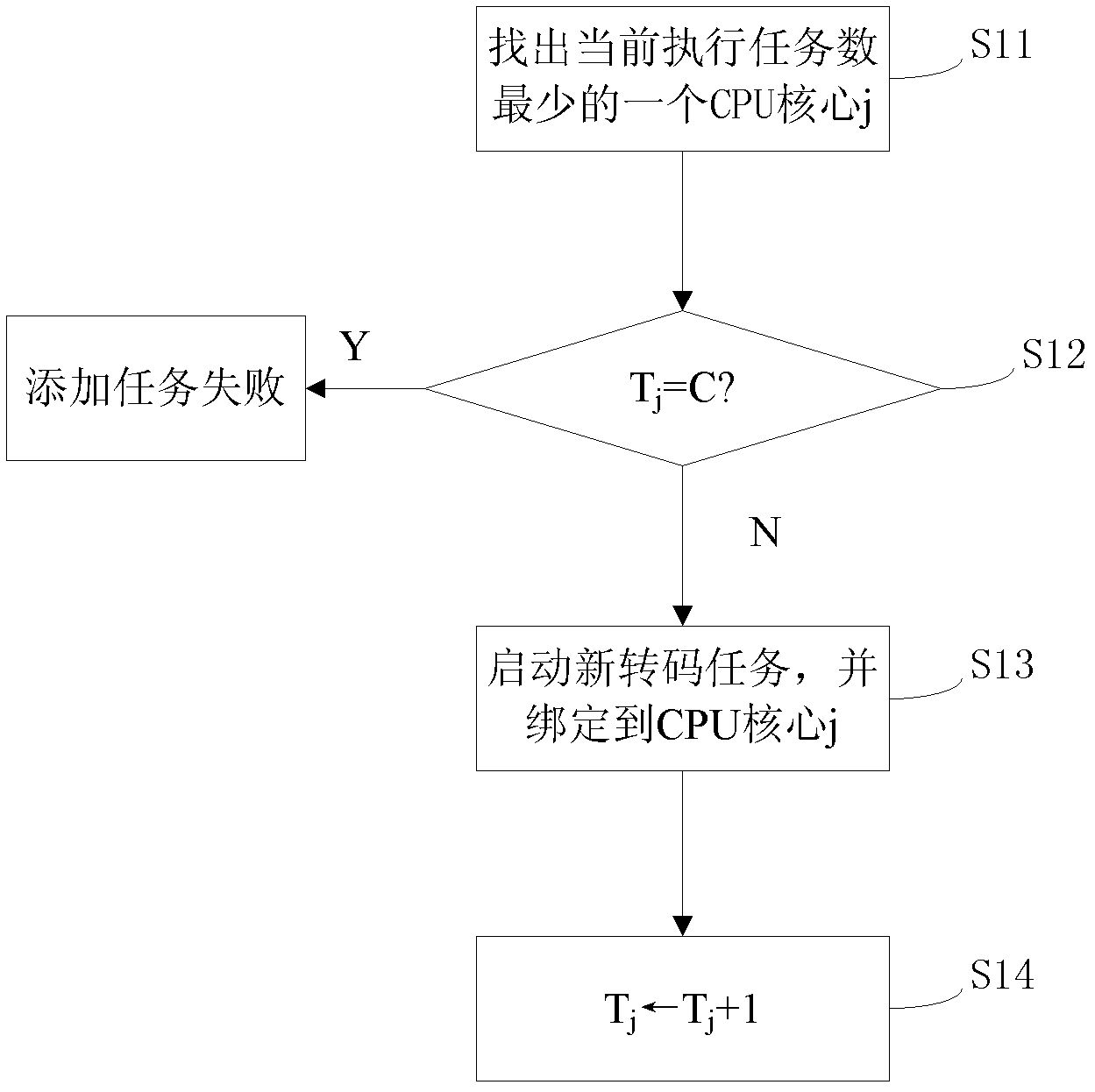

Inactive | US20050102477A1 | High speed machining | Memory addressing/allocation/relocation | Digital computer details | Cache invalidation | Multi processor

A splittable / connectible bus 140 and a network 1000 for transmitting coherence transactions between CPUs are provided between the CPUs, and a directory 160 and a group setup register 170 for storing bus-splitting information are provided in a directory control circuit 150 that controls cache invalidation. The bus is dynamically set to a split or connected state to fit a particular execution form of a job, and the directory control circuit uses the directory in order to manage all inter-CPU coherence control sequences in response to the above setting, while at the same time, in accordance with information of the group setup register, omitting dynamically bus-connected CPU-to-CPU cache coherence control, and conducting only bus-split CPU-to-CPU cache coherence control through the network. Thus, decreases in performance scalability due to an inter-CPU coherence-processing overhead are relieved in a system having multiple CPUs and guaranteeing inter-CPU cache coherence by use of hardware.

Owner:HITACHI LTD

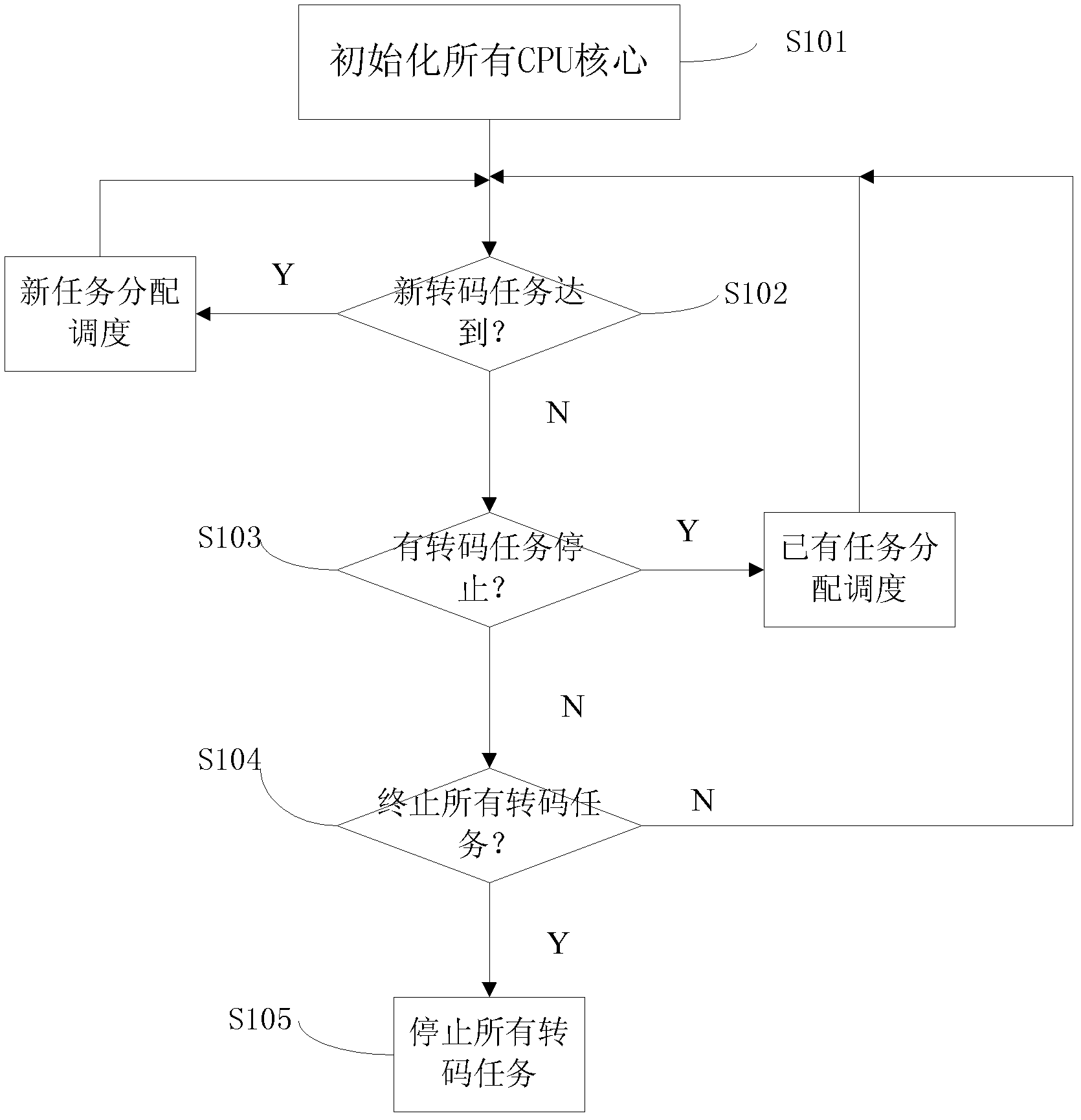

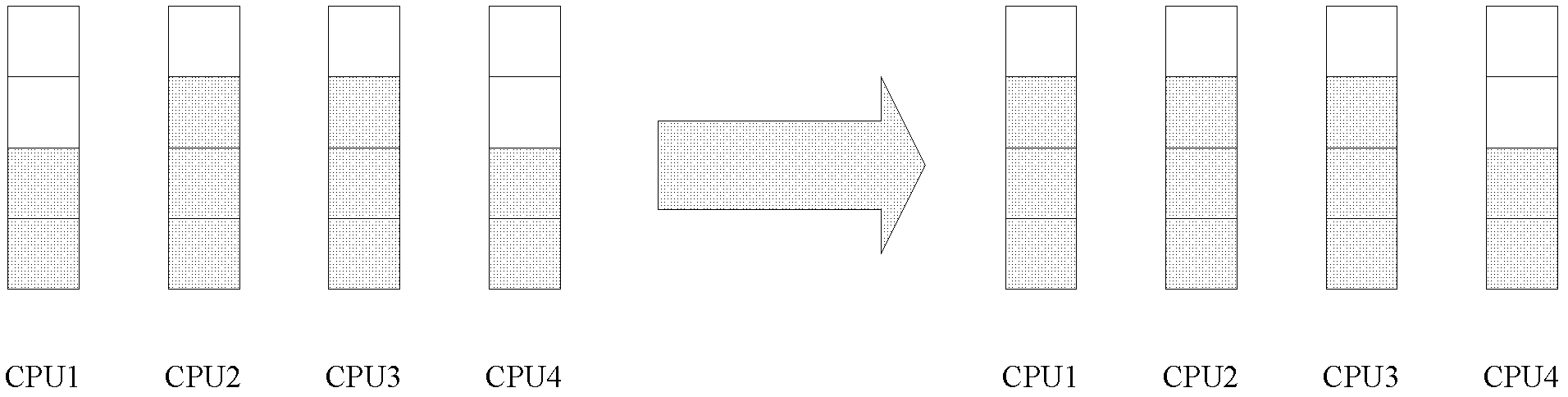

Multi-core CPU (central processing unit) video transcoding scheduling method and multi-core CPU video transcoding scheduling system

Inactive | CN102325255A | Load balancing | Improve hit rate | Resource allocation | Television systems | Transcoding | CPU cache

The invention discloses a multi-core CPU video transcoding scheduling method, which belongs to the field of video transcoding. Before the scheduling scheme is deployed, a static stress test is run on the transcoding server to measure the maximum number of concurrent transcoding tasks a single CPU core can handle. The scheduling scheme is then started and the number of currently executing tasks on every CPU core is initialized to 0. If a new transcoding task arrives, it is scheduled according to the allocation and scheduling method for new tasks; if a transcoding task stops, scheduling follows the allocation and scheduling method for existing tasks; if a message to stop all transcoding tasks is received, the program exits; otherwise the method checks again whether a new transcoding task has arrived and repeats the loop. The invention ensures, as far as possible, that a transcoding task runs on the same CPU core, which effectively increases the CPU cache hit rate and the operating efficiency of the system, and it balances the load across all CPU cores as far as possible.

Owner:深圳烘酷达科技技术有限公司

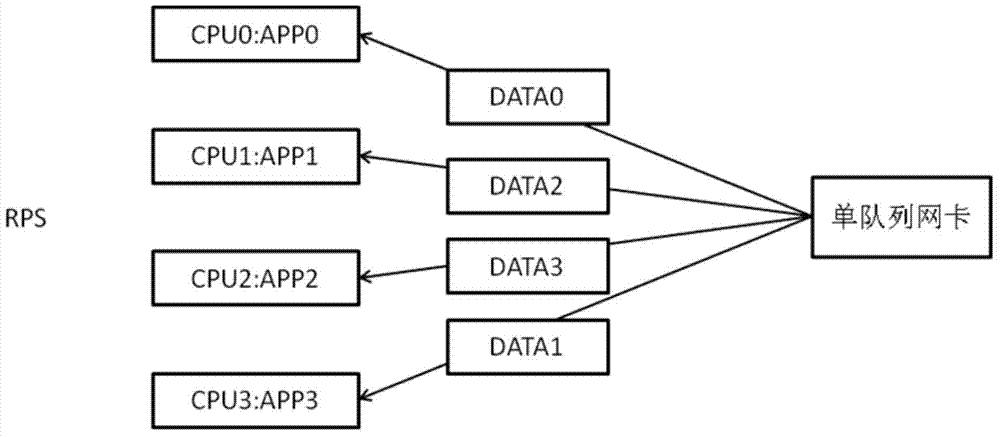

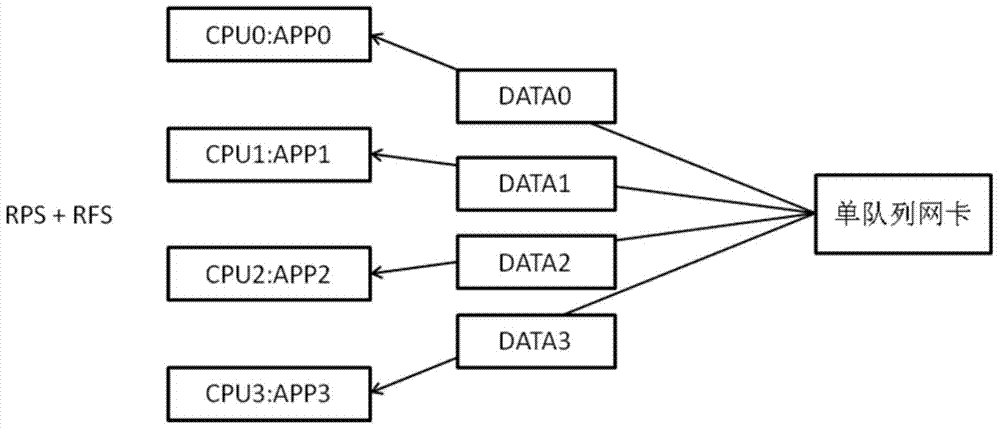

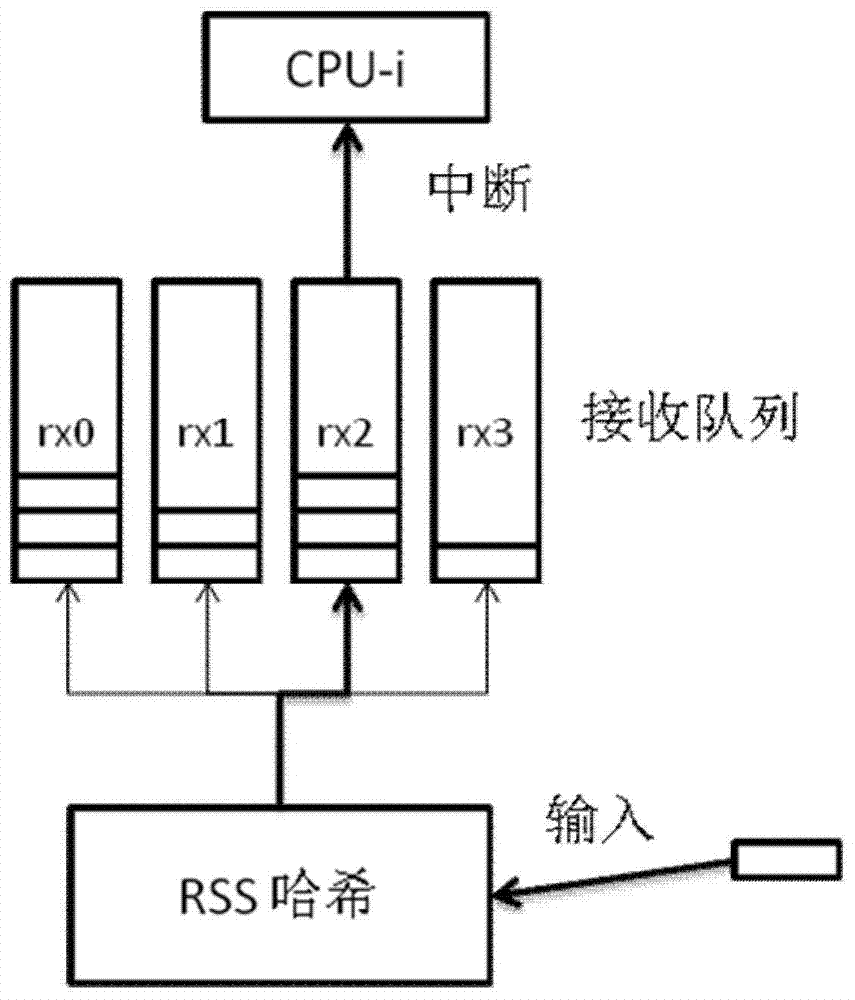

Method for improving performance of multiprocess programs of application delivery communication platforms

Inactive | CN104281493A | Improve cache hit ratio | Program initiation/switching | Resource allocation | Network packet | Cache hit rate

The invention discloses a method for improving the performance of multi-process programs on application delivery communication platforms. The method includes: hashing data packets to network card queues according to source IP address; binding the data packets in the network card queues to corresponding CPU (central processing unit) cores; binding the data packets received by each CPU core to a corresponding process for handling; creating a service program for each process, setting the REUSEPORT option on the service processes, and binding IP addresses and ports; running the modified service programs and adjusting the number of queues enabled on the multi-queue network cards according to the number of service processes; and binding each service process to one CPU core. With this method, the hard and soft interrupts of the network cards can be balanced and the same CPU cores are used for receiving and sending data packets, so the CPU cache hit ratio is increased.

Owner:般固(北京)网络科技有限公司
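The first step, hashing packets to queues by source IP address, can be sketched in Python as below. In practice this distribution is typically done by the network card or the kernel rather than by application code, so the sketch only illustrates the mapping. The function name and the choice of a plain modulo as the hash are assumptions made for the example.

```python
import ipaddress


def queue_for_source(src_ip, num_queues):
    """Map a source IP address to a queue index (queue i pairs with core i)."""
    return int(ipaddress.ip_address(src_ip)) % num_queues


# Every packet from the same source lands on the same queue, hence the same core.
for ip in ("10.0.0.5", "10.0.0.5", "192.168.1.10"):
    print(ip, "-> queue", queue_for_source(ip, num_queues=4))
```

Because the mapping is deterministic, a given client's packets are always handled by one core and one service process, which is what keeps that core's cache contents relevant.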

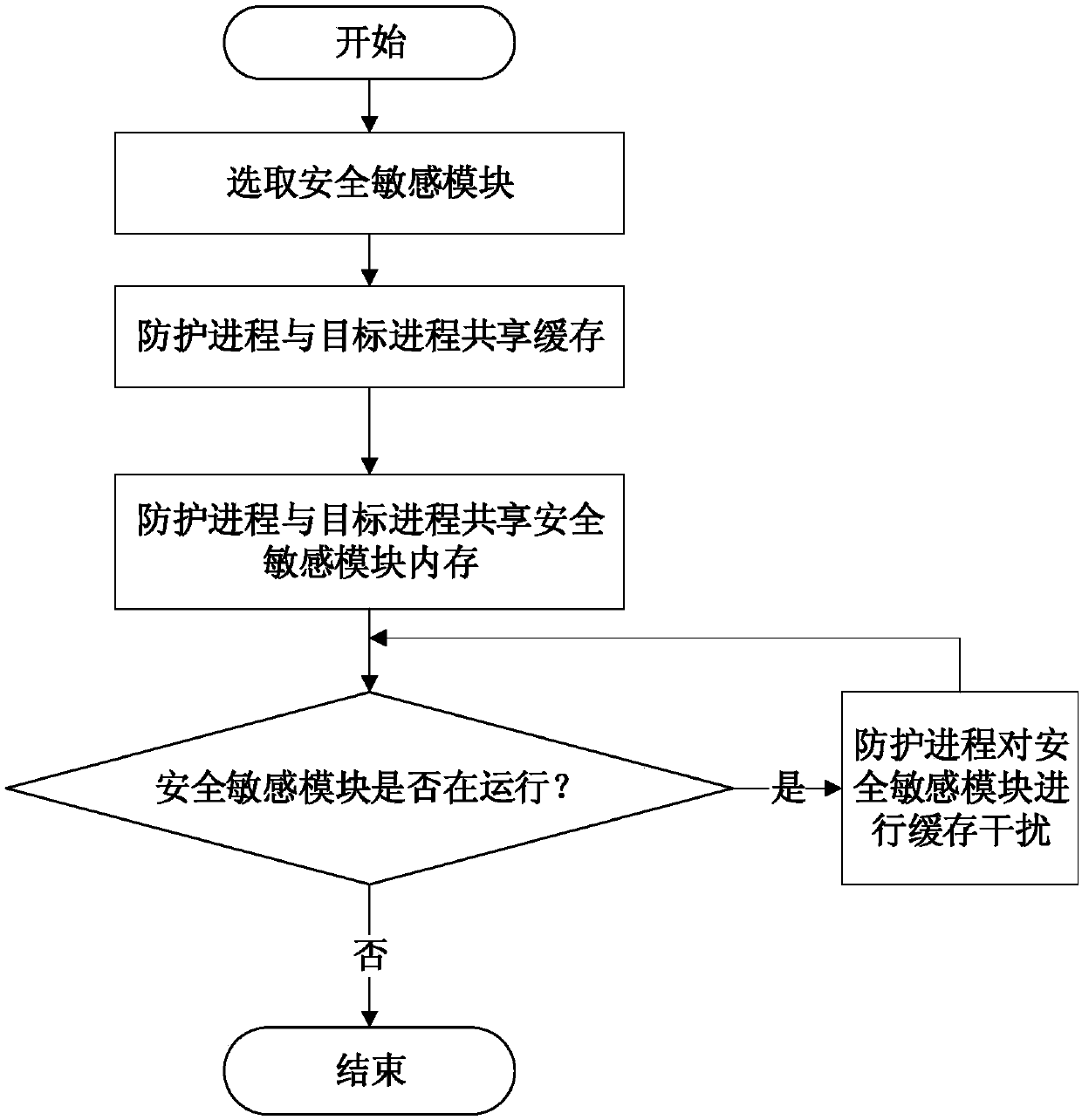

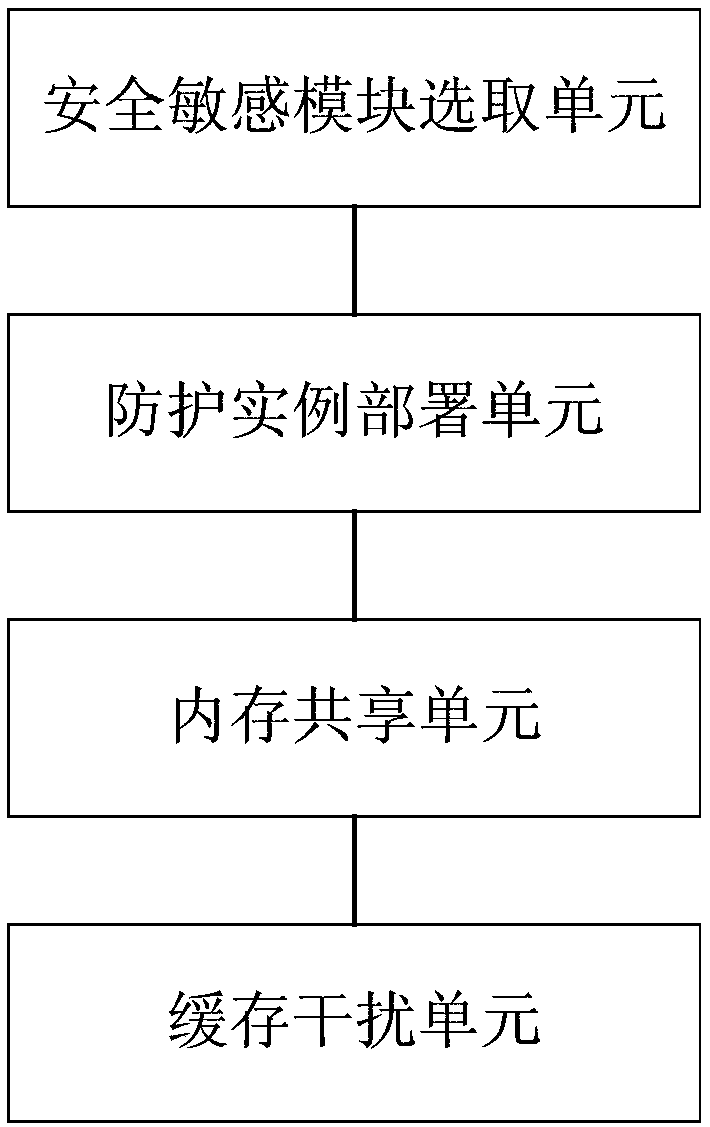

Flush-Reload cache side channel attack defense method and device in cloud environment

Active | CN107622199A | Protect private information | Reduce aggression | Interprogram communication | Platform integrity maintenance | User privacy | Computer module

The invention relates to a Flush-Reload cache side channel attack defense method and device in a cloud environment. The method comprises the following steps: 1) selecting a safety-sensitive module to be protected; 2) sharing CPU (Central Processing Unit) caches between a protection process and a target process; 3) sharing the memory of the safety-sensitive module between the protection process and the target process; and 4) when the target process runs the safety-sensitive module, having the protection process obfuscate the shared memory of the safety-sensitive module according to a certain strategy to interfere with the cache state and so defend against Flush-Reload cache side channel attacks. With this method and device, noise is continuously introduced into the high-speed cache channel used by Flush-Reload attacks to interfere with the attack instance, so that user privacy information can be protected effectively.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI

Method to quickly warp a 2-D image using only integer math

Inactive | US7379623B2 | Good relief | Enhance the image | Color signal processing circuits | Geometric image transformation | Computer graphics (images) | Floating point

Systems and methods are disclosed that facilitate rapidly warping a two-dimensional image using integer math. A warping table can contain two-dimensional floating point output pixel offset values that are mapped to respective input pixel locations in a captured image. The warping table values can be pre-converted to integer offset values and integer grid values mapped to a sub-pixel grid. During warping, each output pixel can be looked up via its integer offset value, and a one-dimensional table lookup for each pixel can be performed to interpolate pixel data based at least in part on the integer grid value of the pixel. Due to the small size of the lookup tables, lookups can potentially be stored in and retrieved from a CPU cache, which stores most recent instructions to facilitate extremely rapid warping and fast table lookups.

Owner:MICROSOFT TECH LICENSING LLC

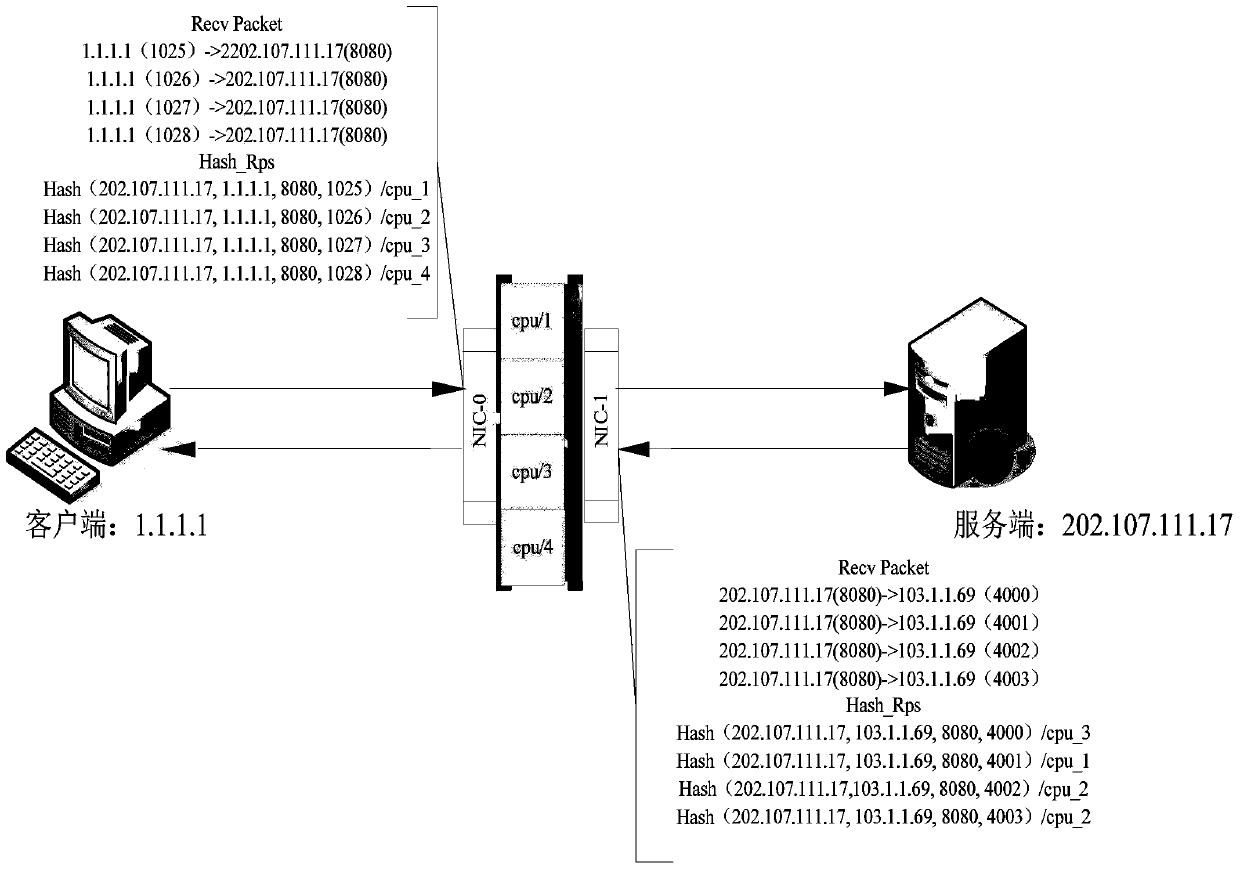

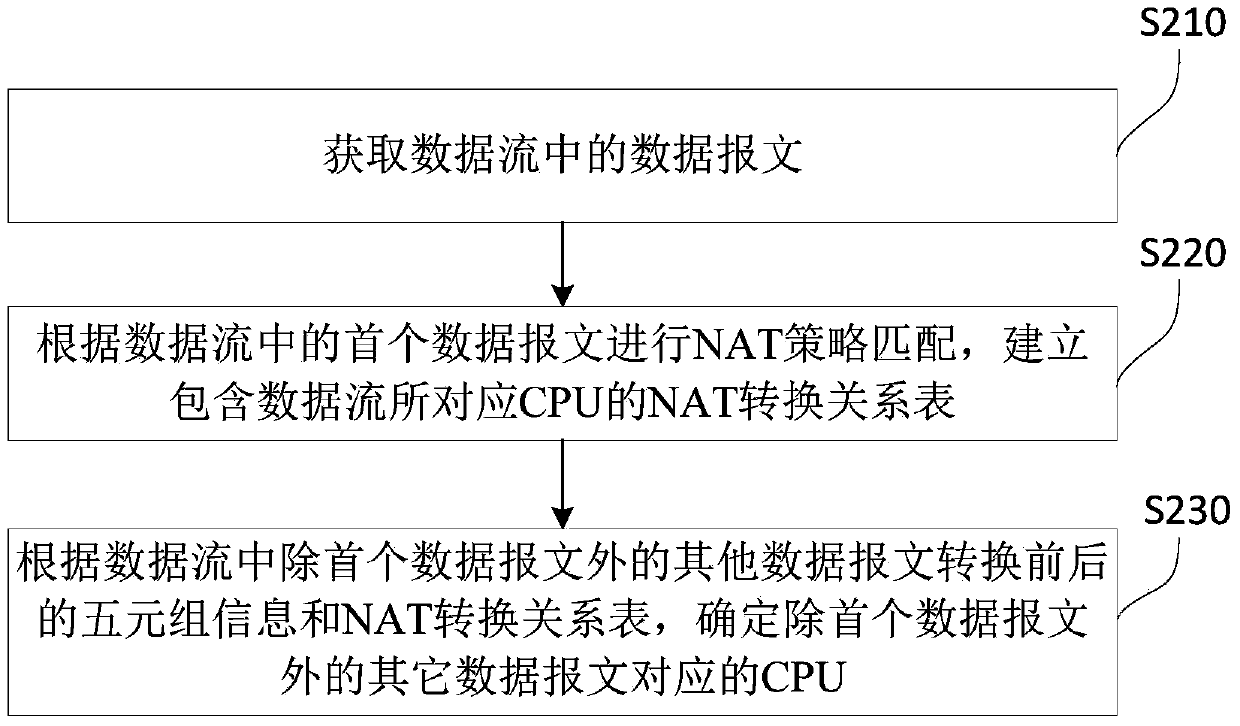

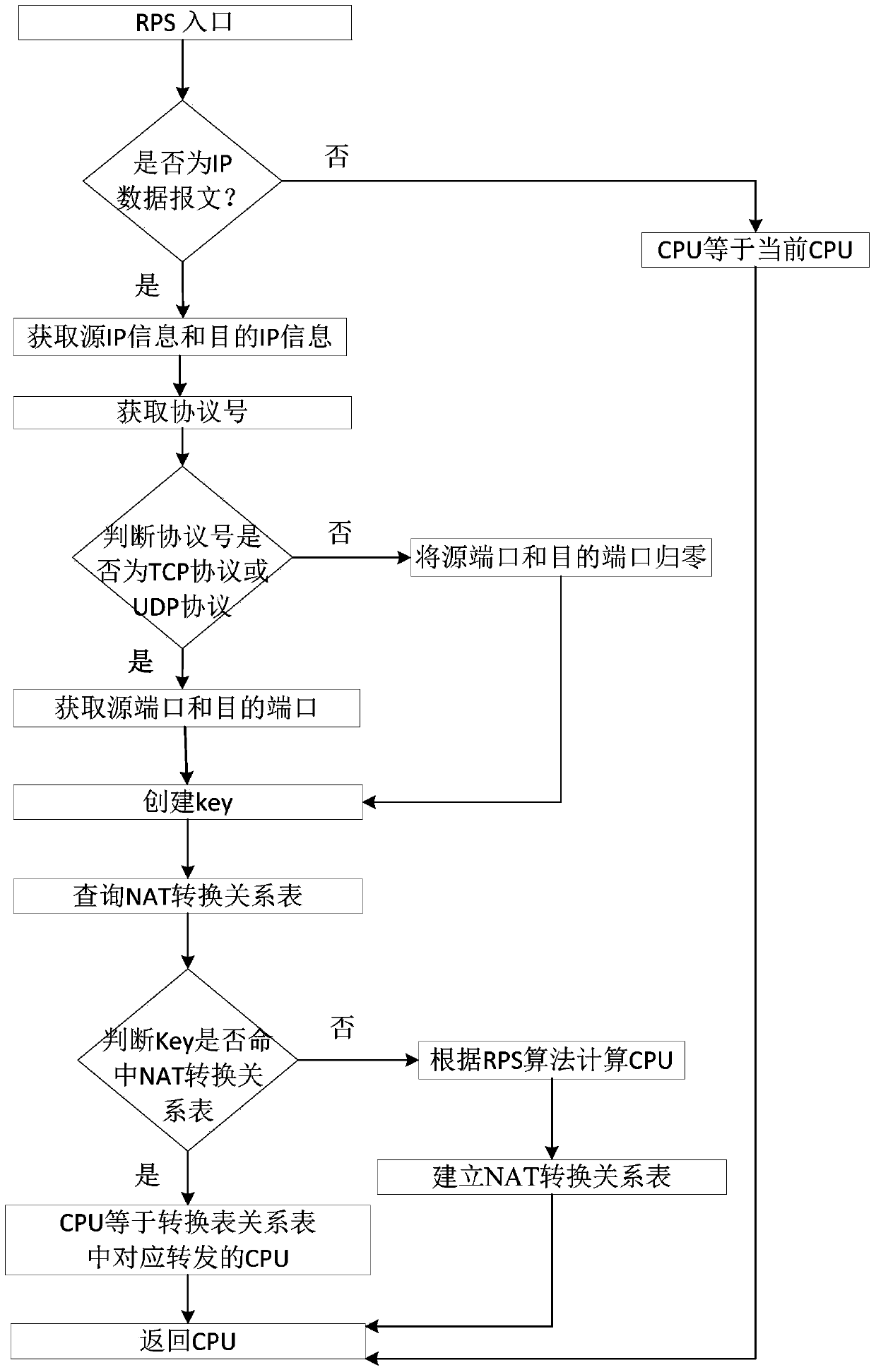

Method, device and system for forwarding network data messages

Active | CN103475586A | Reduce usage | Reduce jitter | Data switching networks | Network address port translation | CPU cache

The invention provides a method, a device and a system for forwarding network data messages. The method comprises the following steps: acquiring the data messages in a received data stream; performing network address translation (NAT) strategy matching on the first data message in the data stream and establishing a NAT relation table; and determining the central processing unit (CPU) that forwards each subsequent data message in the stream according to that message's 5-tuple information before and after translation and the NAT relation table. With this method, device and system, the data messages of the same data stream can be distributed to the same CPU for processing whether or not a network address port translation (NAPT) / NAT scenario is present, and lock usage and CPU cache jitter are reduced, so that network forwarding performance is improved.

Owner:NEUSOFT CORP
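One way to picture the NAT relation table is as a lookup that maps both the pre-translation 5-tuple and the reply direction of the post-translation 5-tuple to the same CPU. The Python sketch below is an assumption-laden illustration, not the patented design: the class `NatFlowTable`, the CRC32-based CPU choice, and the tuple layout `(src_ip, src_port, dst_ip, dst_port, proto)` are all hypothetical.

```python
import zlib


def reverse(flow):
    """Swap source and destination to get the reply direction of a flow."""
    src_ip, src_port, dst_ip, dst_port, proto = flow
    return (dst_ip, dst_port, src_ip, src_port, proto)


class NatFlowTable:
    """Maps 5-tuples before and after translation to one CPU per stream."""

    def __init__(self, num_cpus):
        self.num_cpus = num_cpus
        self.cpu_by_flow = {}

    def add_flow(self, original, translated):
        """Called for the first message of a stream, after NAT strategy matching."""
        cpu = zlib.crc32(repr(original).encode()) % self.num_cpus
        self.cpu_by_flow[original] = cpu             # outbound, before translation
        self.cpu_by_flow[reverse(translated)] = cpu  # replies to the translated address
        return cpu

    def cpu_for(self, flow):
        """CPU for a later message, or None if the stream is unknown."""
        return self.cpu_by_flow.get(flow)


table = NatFlowTable(num_cpus=4)
original = ("10.0.0.5", 40000, "93.184.216.34", 80, "tcp")
translated = ("203.0.113.7", 50001, "93.184.216.34", 80, "tcp")
cpu = table.add_flow(original, translated)
print(cpu == table.cpu_for(reverse(translated)))
```

Since both directions resolve to one CPU, per-stream state can stay in that CPU's cache and does not need a lock shared across CPUs.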

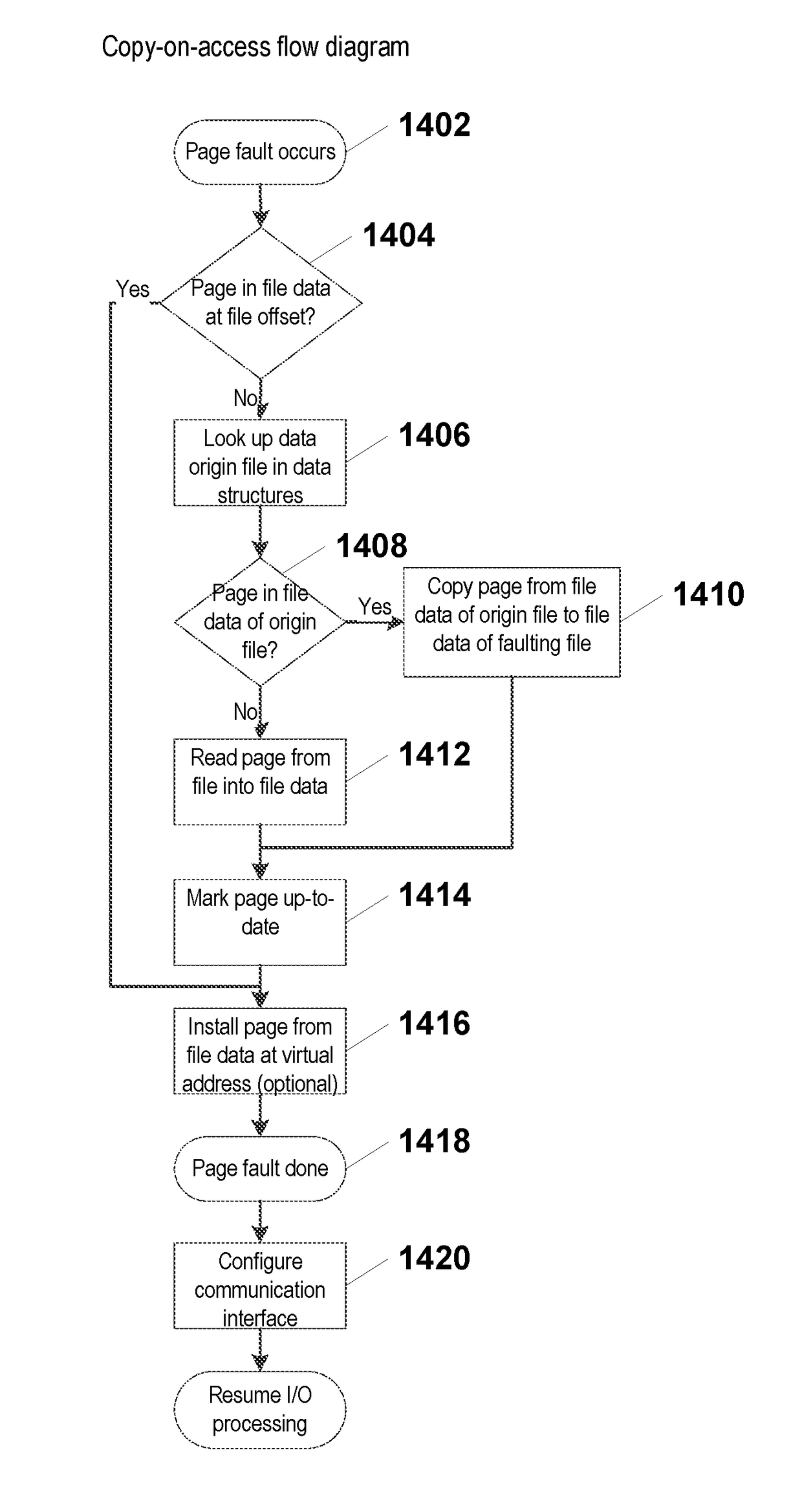

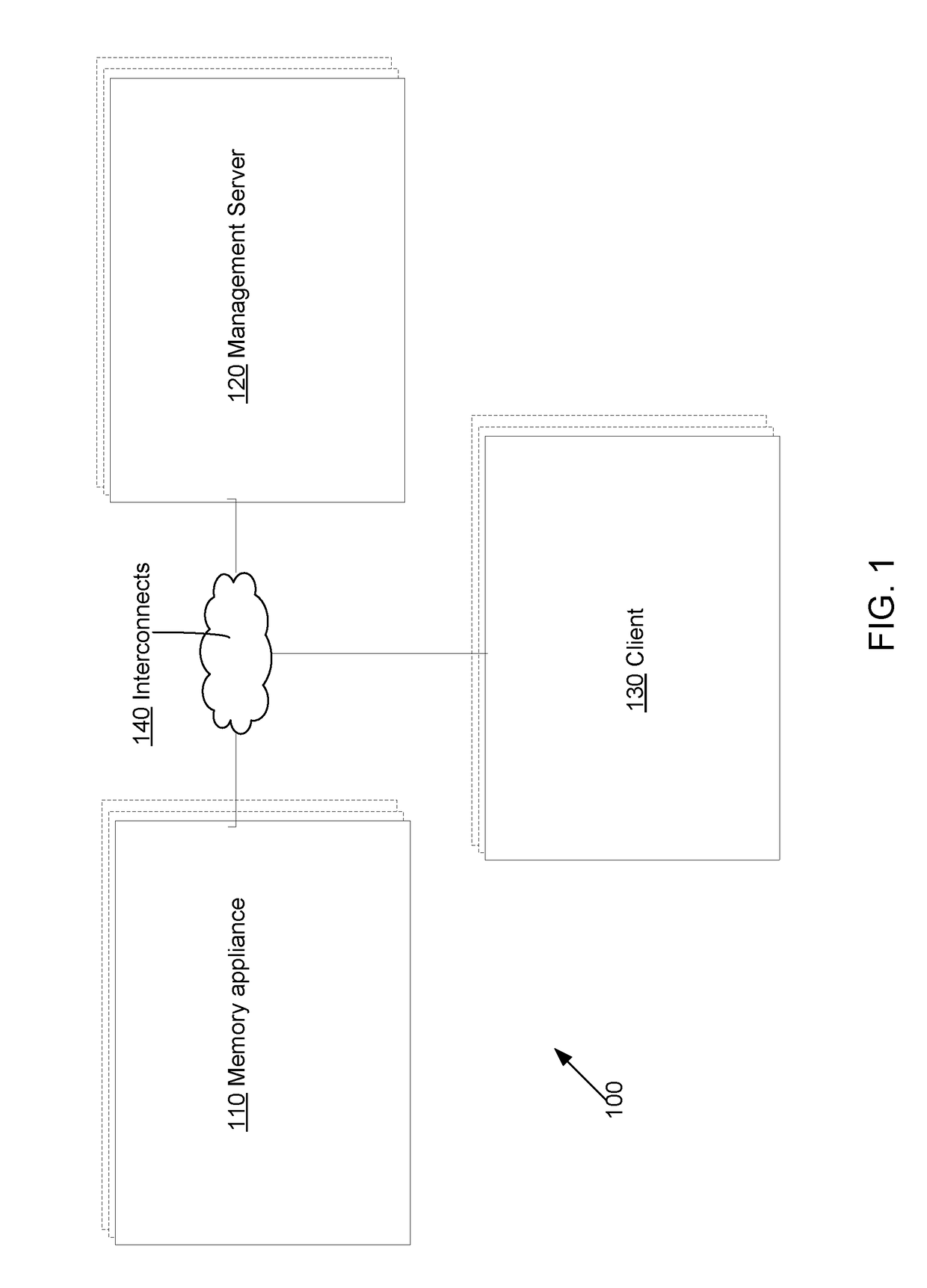

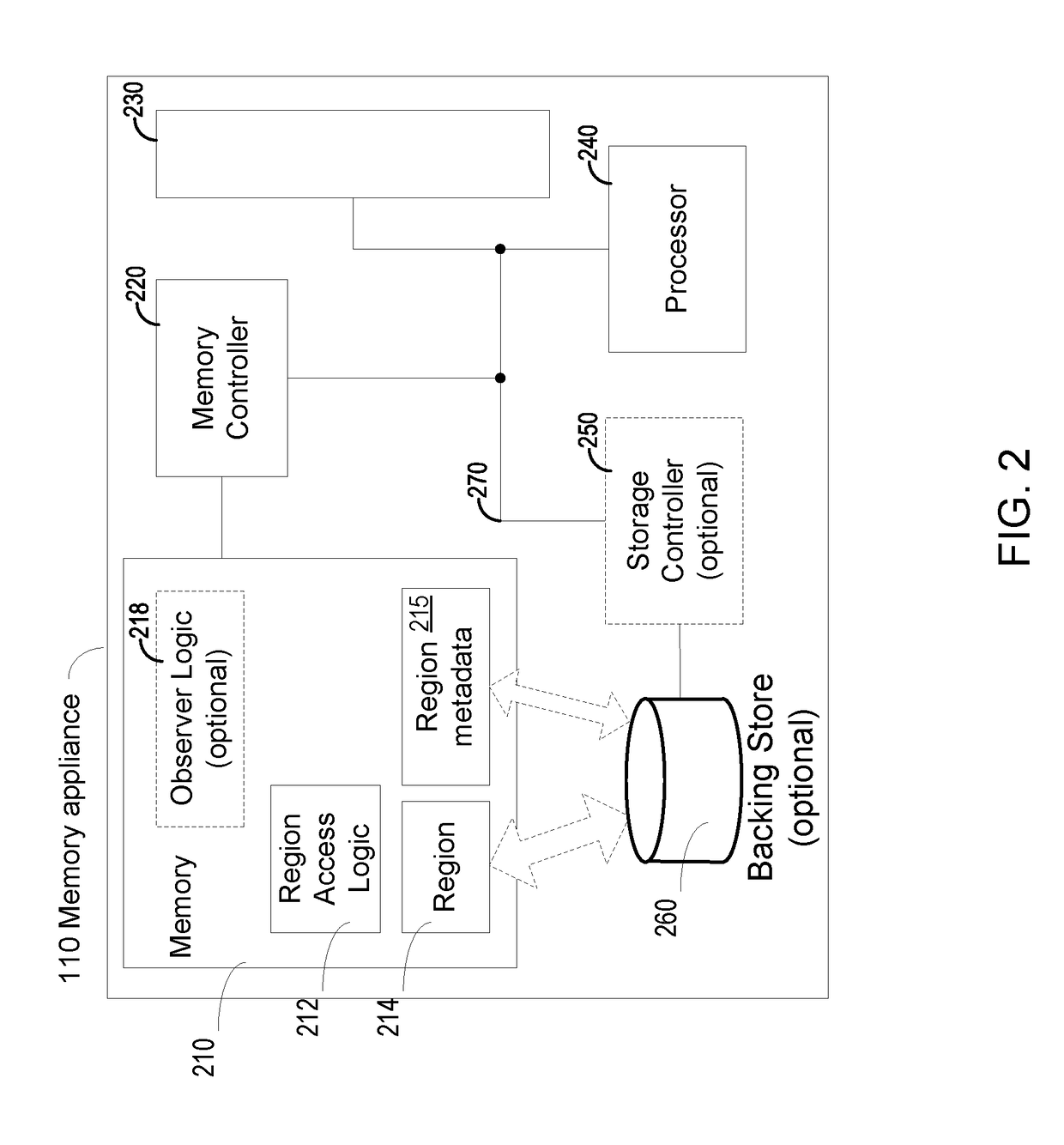

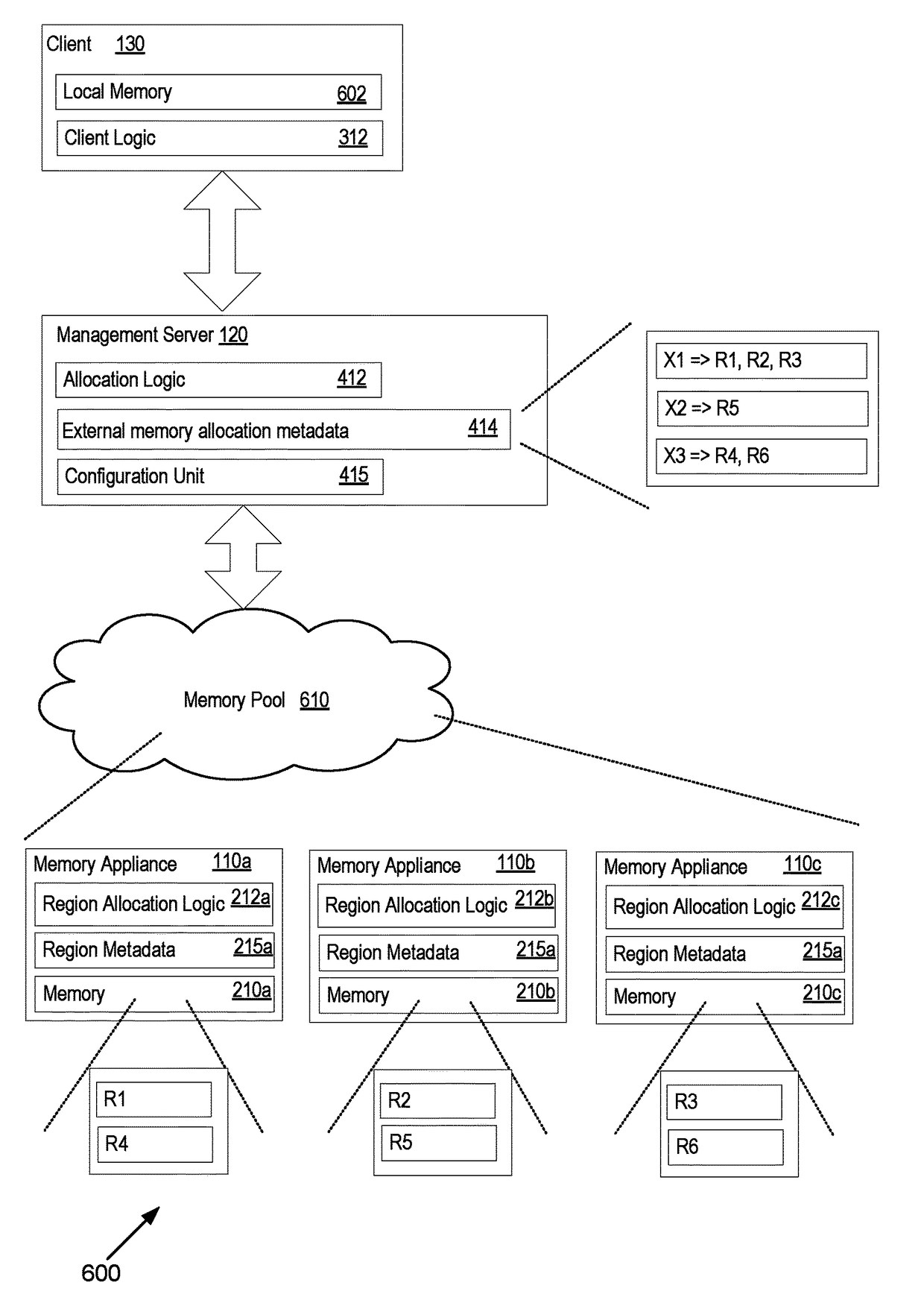

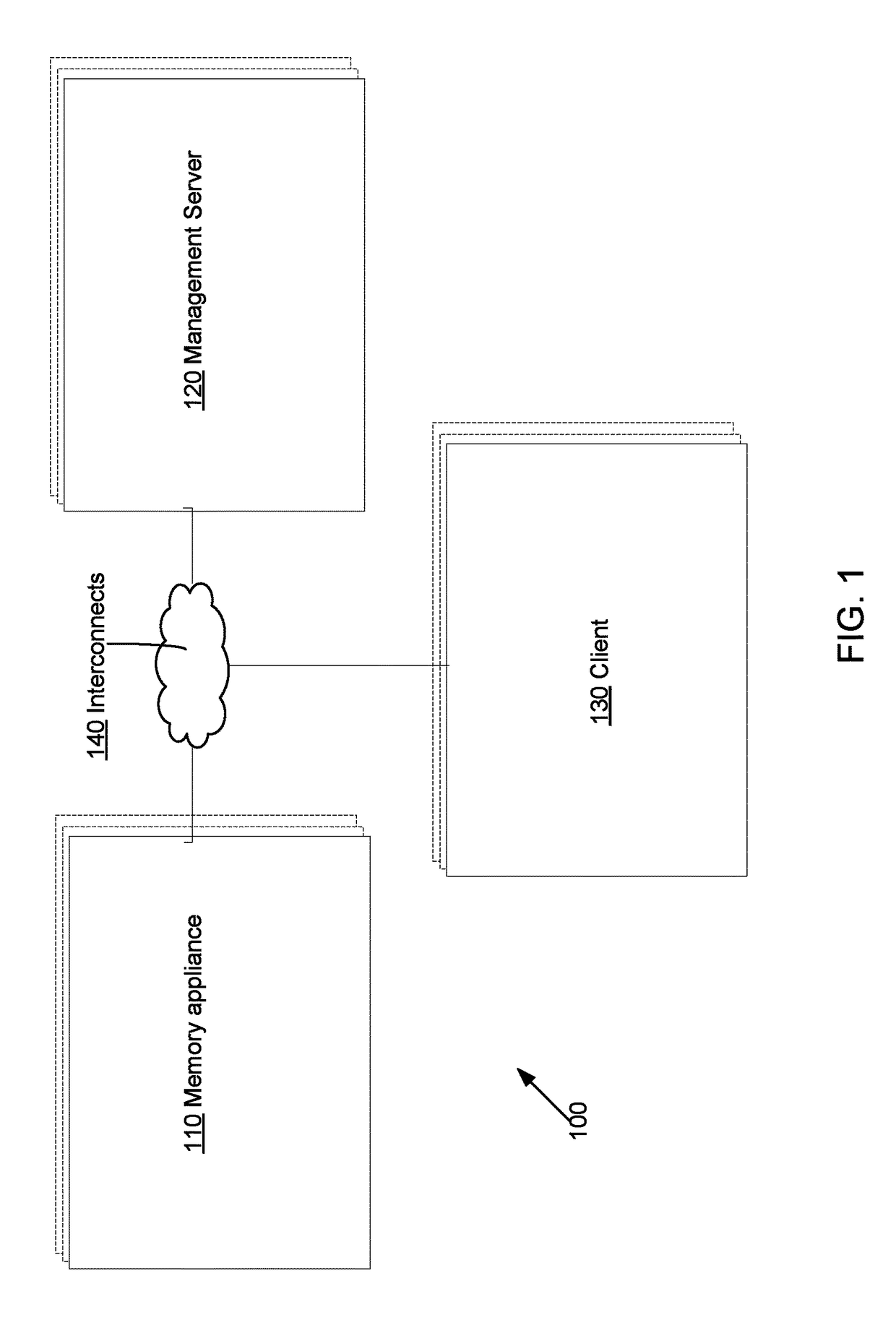

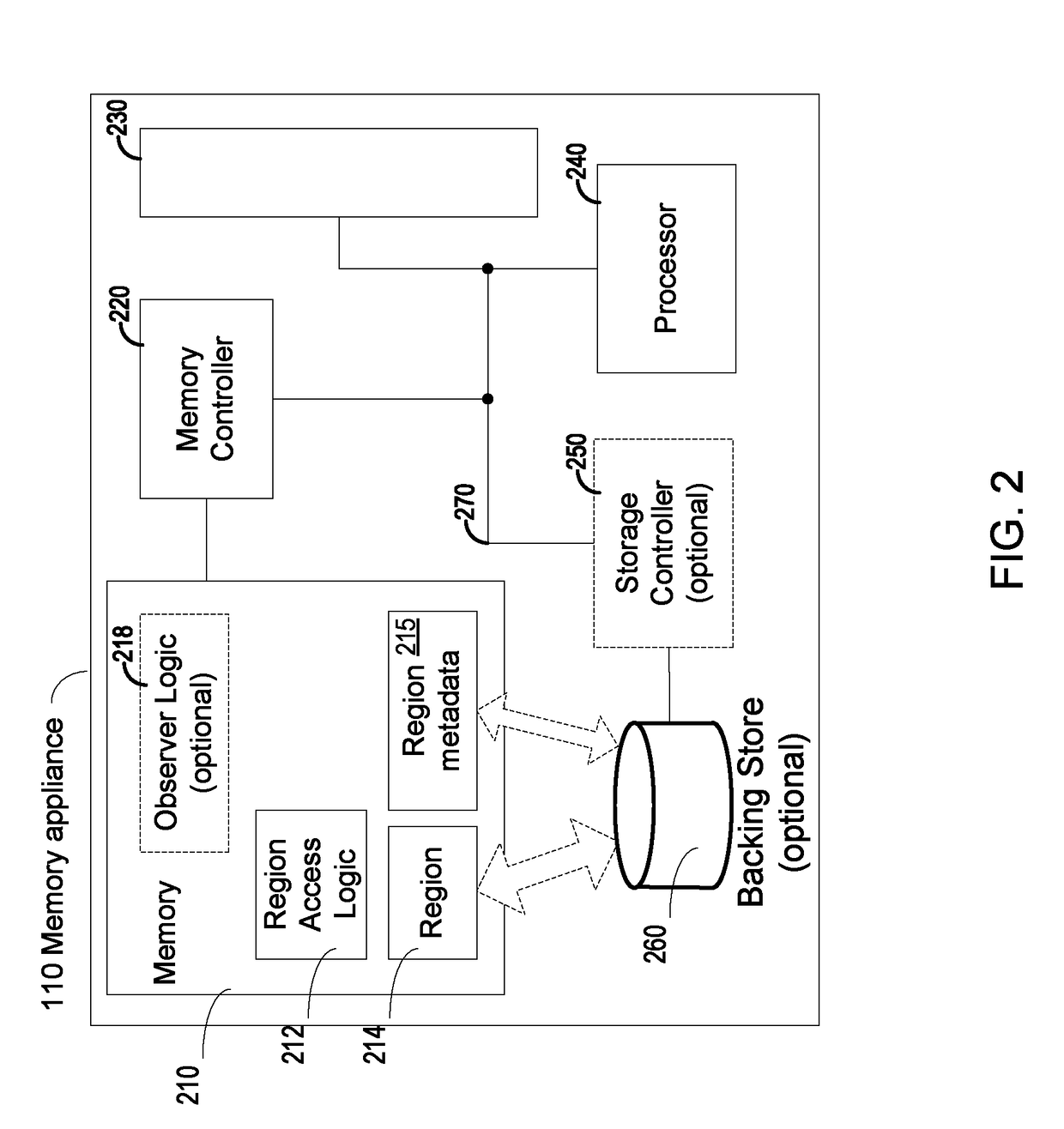

Local primary memory as CPU cache extension

Active | US20170285997A1 | Memory architecture accessing/allocation | Input/output to record carriers | Parallel computing | Client-side

Owner:KOVE IP

A method and apparatus for using a CPU cache memory for non-CPU related tasks

Inactive | US20150324287A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | CPU cache | Control unit

There is provided a processor for use in a computing system, said processor including at least one Central Processing Unit (CPU), a cache memory coupled to the at least one CPU, and a control unit coupled to the cache memory and arranged to obscure the existing data in the CPU cache memory, and assign control of the CPU cache memory to at least one other entity within the computing system. There is also provided a method of using a CPU cache memory for non-CPU related tasks in a computing system.

Owner:NXP USA INC

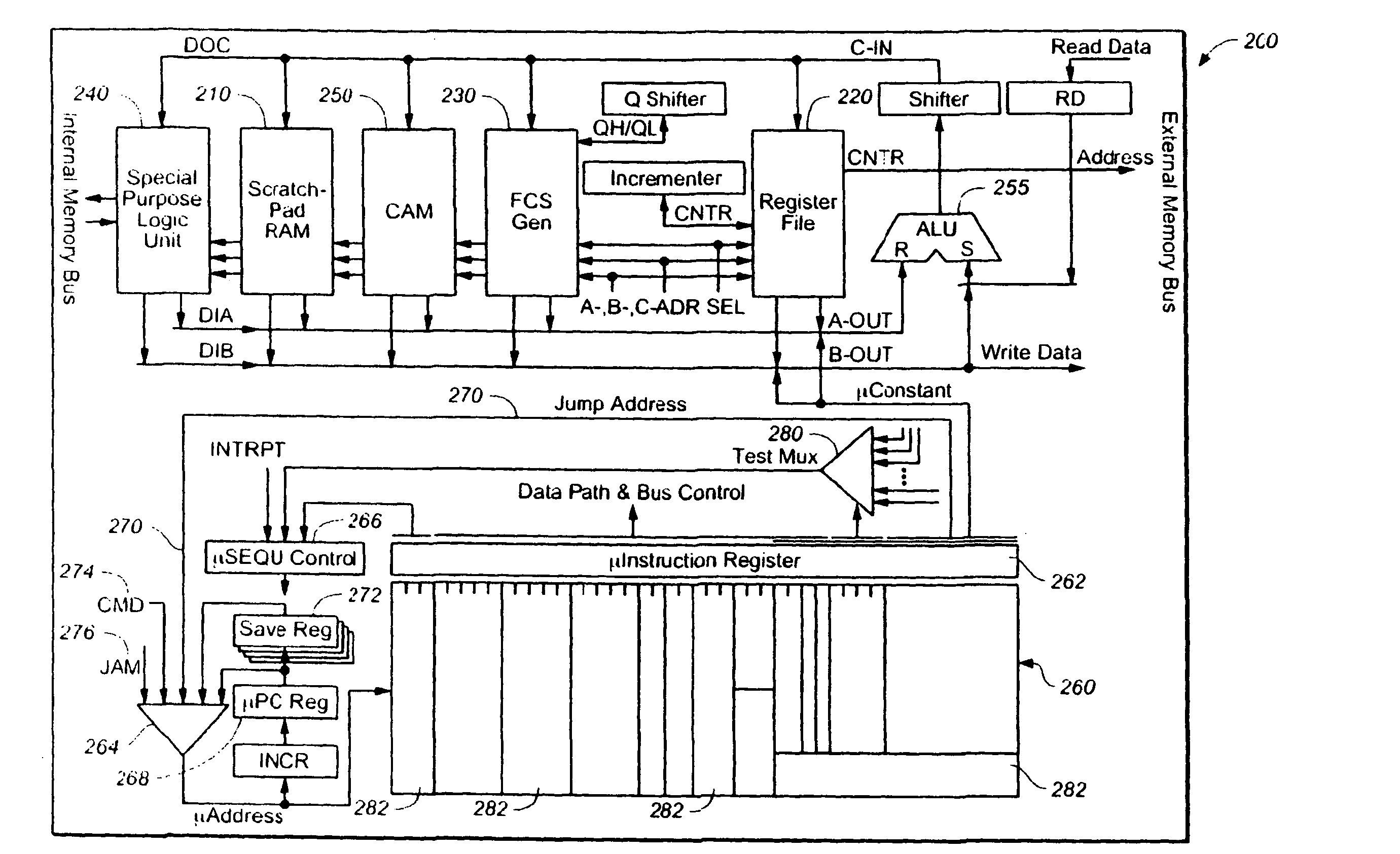

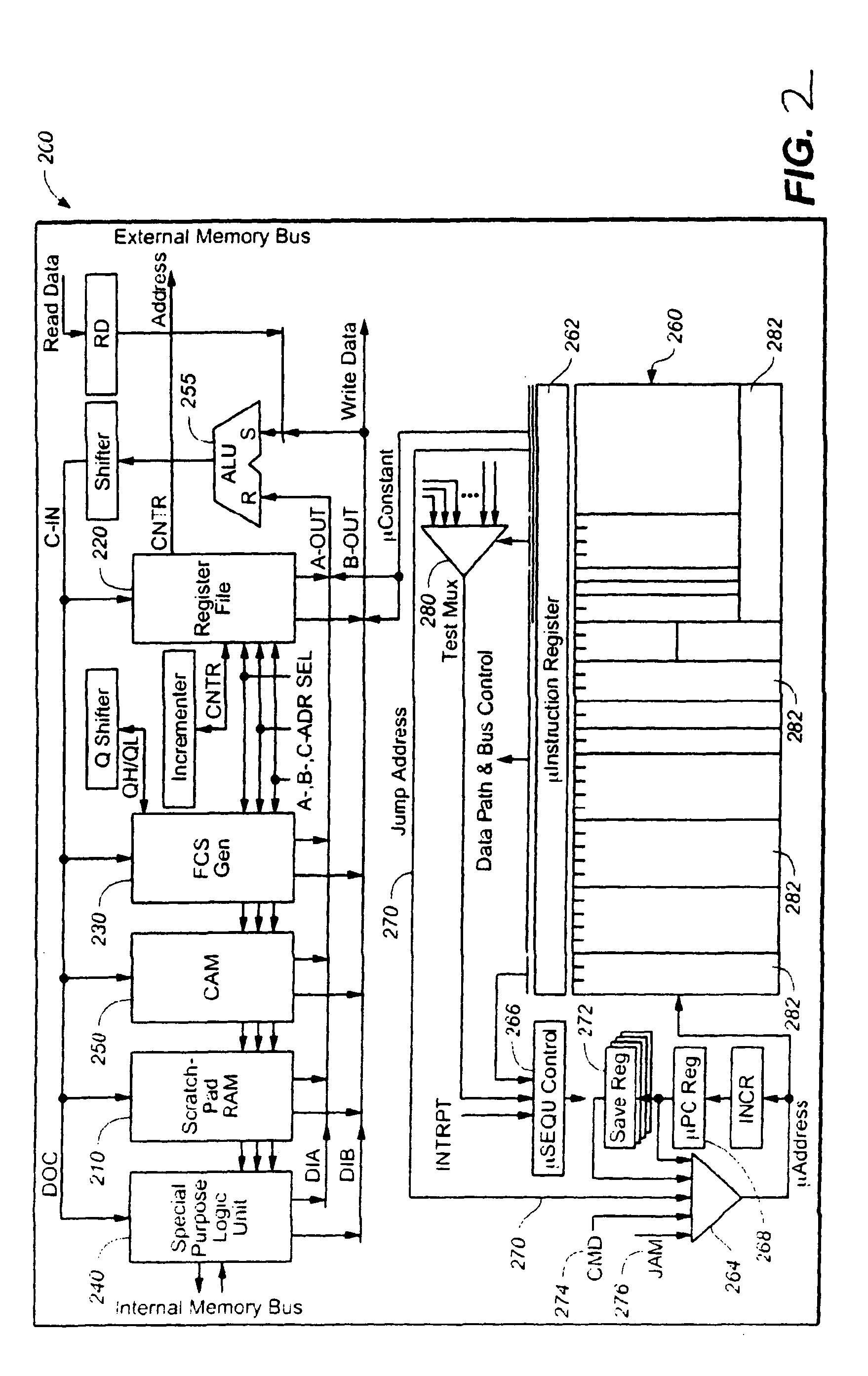

System and method for large microcoded programs

Pending | US20090228693A1 | Consumes power | Approach is slow | Instruction analysis | Digital computer details | Virtual memory | Software-defined radio

An improved architectural approach for implementation of a microarchitecture for a low power, small footprint microcoded processor for use in packet switched networks in software defined radio MANeTs. A plurality of on-board CPU caches and a system of virtual memory allows the microprocessor to employ a much larger program size, up to 64k words or more, given the size and power footprint of the microprocessor.

Owner:ROCKWELL COLLINS INC

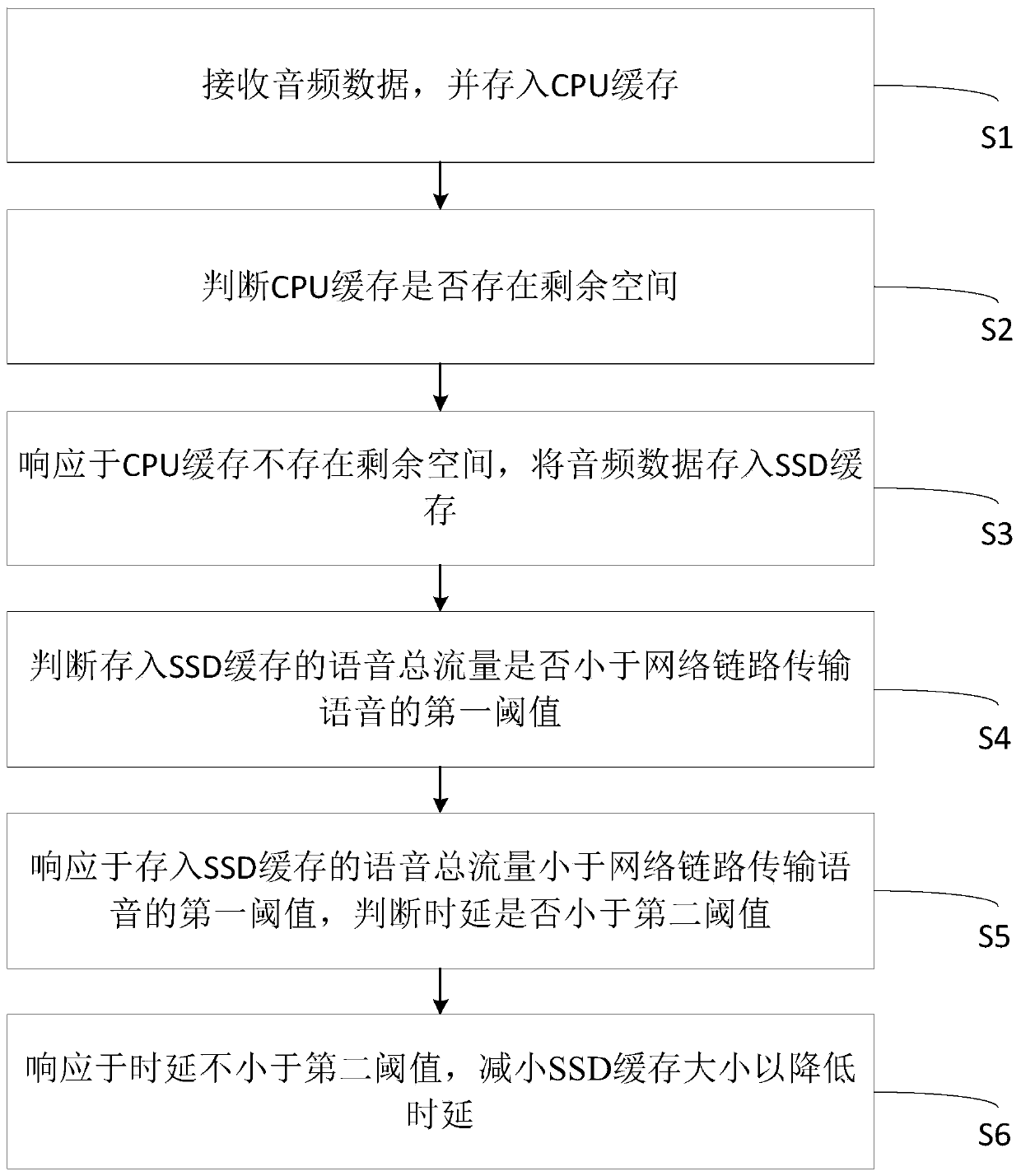

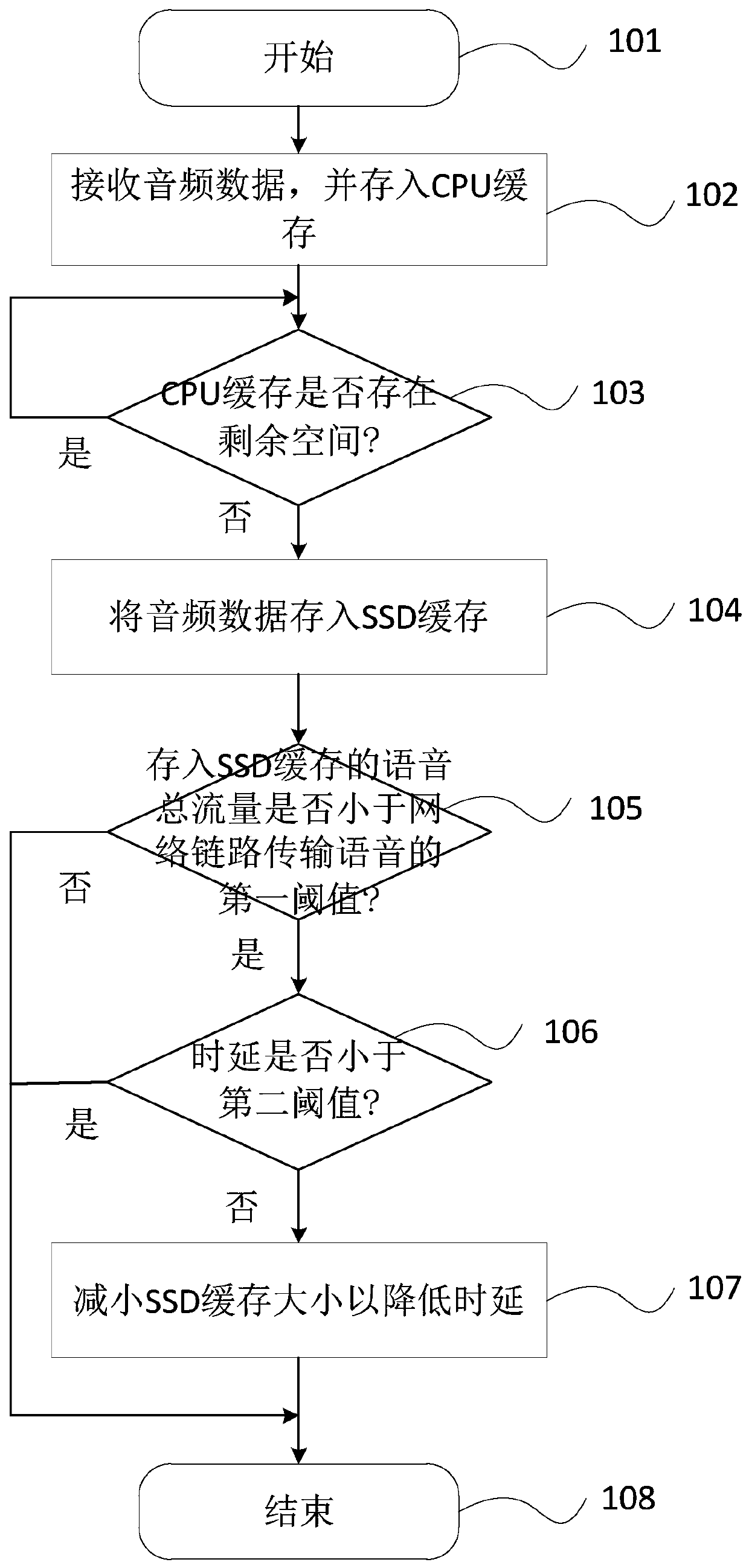

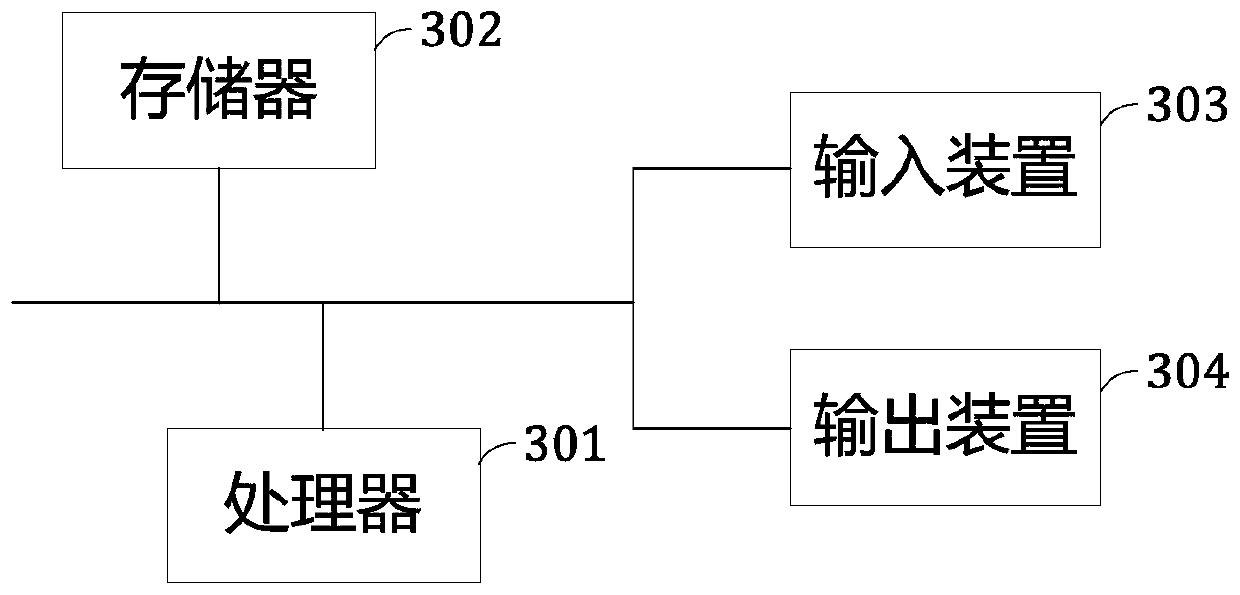

Method and device for improving audio quality, and medium

Active | CN110620793A | Voice jitter quality is good | Improve performance | Television conference systems | Two-way working systems | Computer hardware | Network link

The invention discloses a method and device for improving audio quality and a readable storage medium. The method comprises the following steps: receiving audio data and storing the audio data in a CPU cache; judging whether the CPU cache has remaining space; in response to the CPU cache having no remaining space, storing the audio data in an SSD cache; judging whether the total voice flow stored in the SSD cache is smaller than a first threshold for voice transmitted over the network link; in response to the total voice flow stored in the SSD cache being smaller than the first threshold, judging whether the time delay is smaller than a second threshold; and in response to the time delay not being smaller than the second threshold, reducing the SSD cache size to reduce the time delay. The method, device and medium use a multi-level cache and dynamically adjust the size of the secondary cache according to the voice time delay, the uplink and downlink voice flow and the voice compression condition, so that the best overall balance of voice jitter quality and voice time delay is achieved and audio quality is improved as far as possible.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD

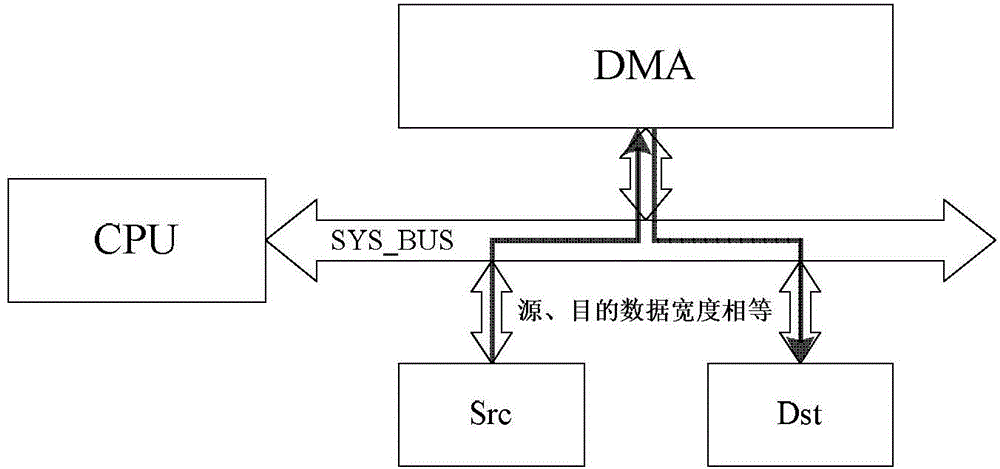

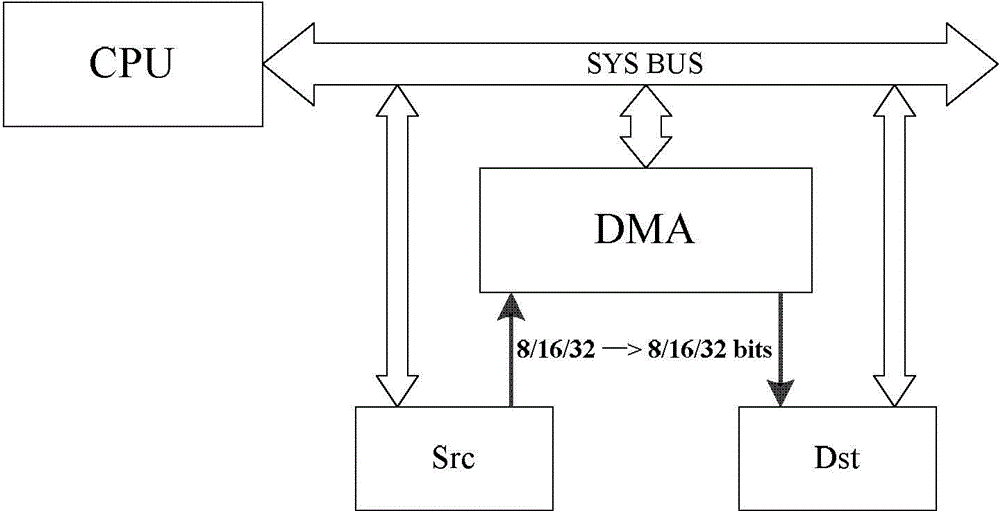

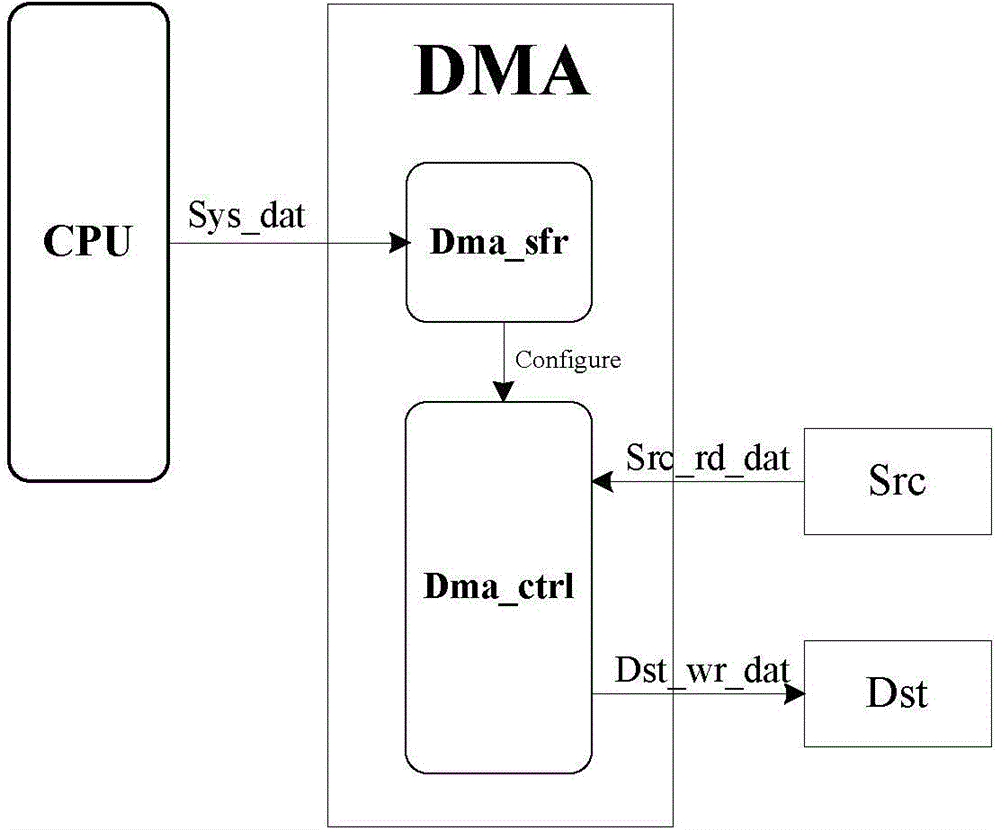

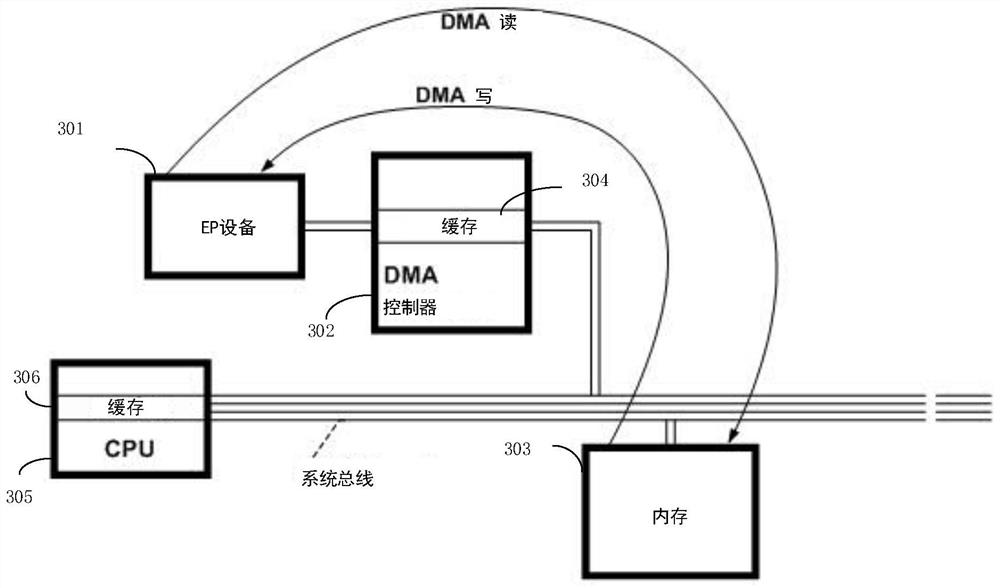

Method for controlling data transmission and DMA controller

Active | CN104021099A | Increase speed | Reduce intermediate links | Electric digital data processing | Control data | Data transmission

The invention provides a method for controlling data transmission and a DMA controller. The method comprises the steps that configuration information from a CPU is received and analyzed, wherein the configuration information includes transmission width conversion parameters; data are read from a source peripheral, converted to the corresponding data width according to the transmission width conversion parameters, and written to a target peripheral. The transmission mode adopted by the DMA controller exchanges data directly between memories, between memories and peripherals, and between peripherals; no CPU read-write commands are executed and the CPU cache is not used, which reduces intermediate links. Only the DMA controller needs to be configured, and all read-write timing sequences are executed by hardware, so the data transmission rate is greatly increased.

Owner:DATANG MICROELECTRONICS TECH CO LTD

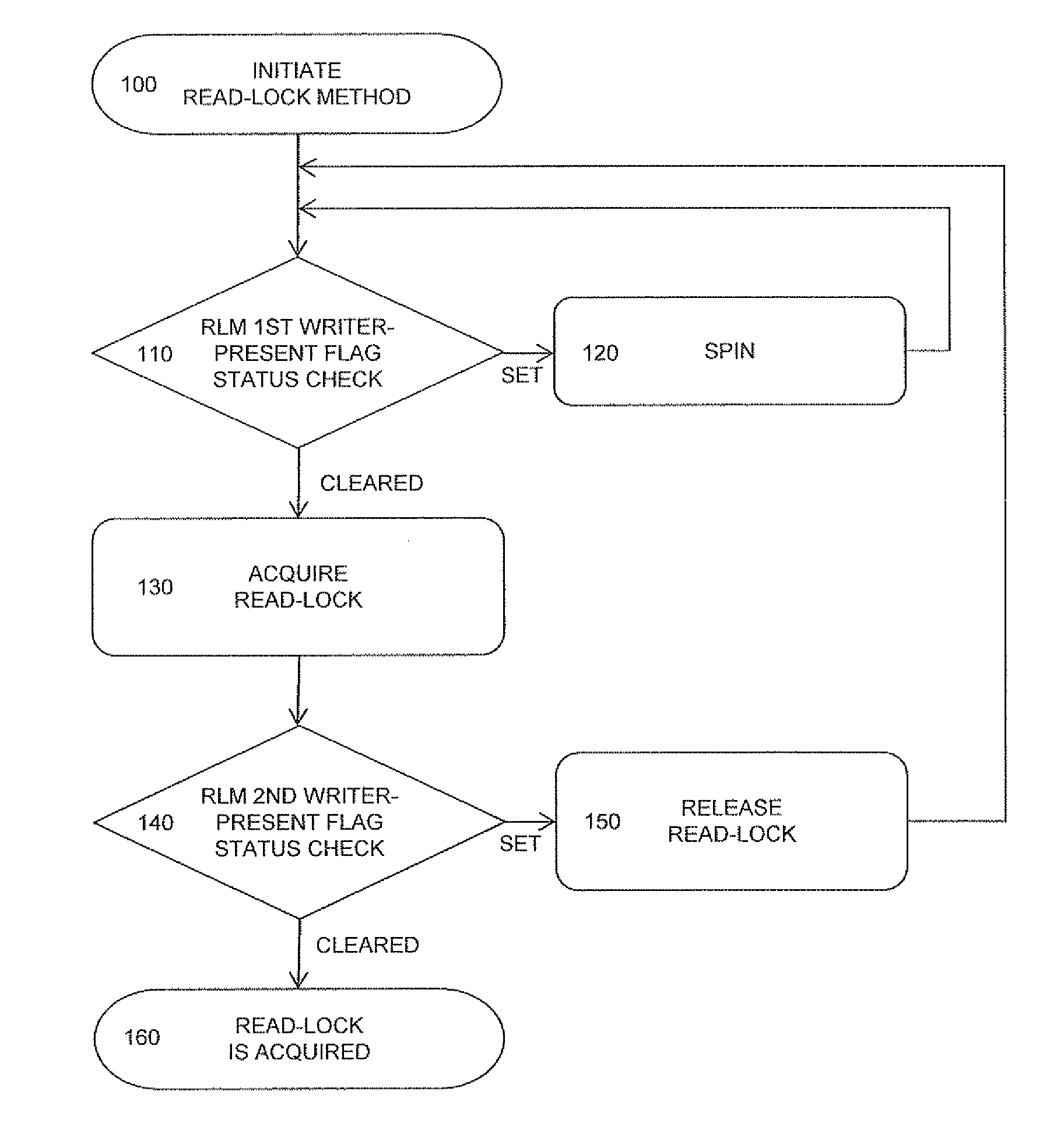

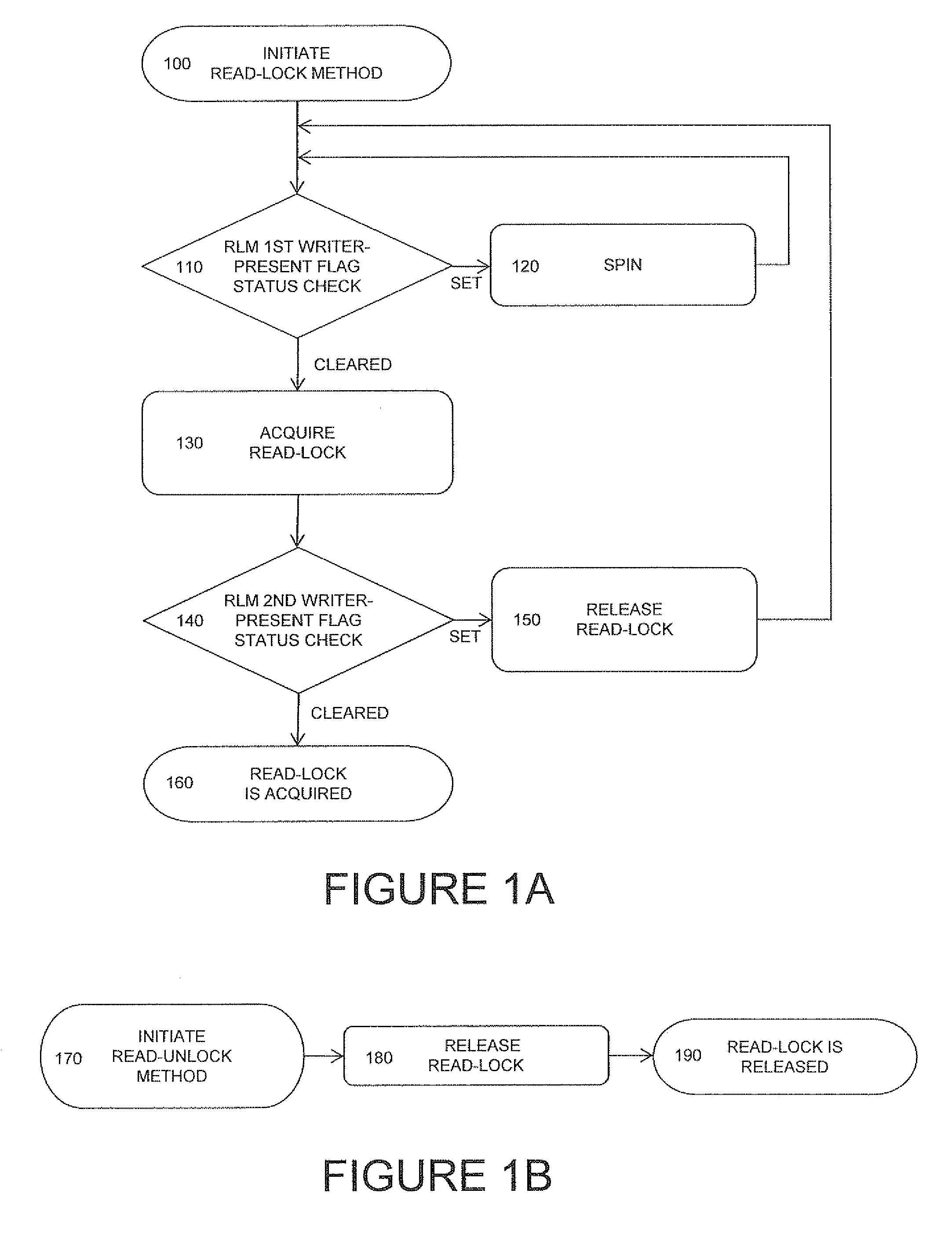

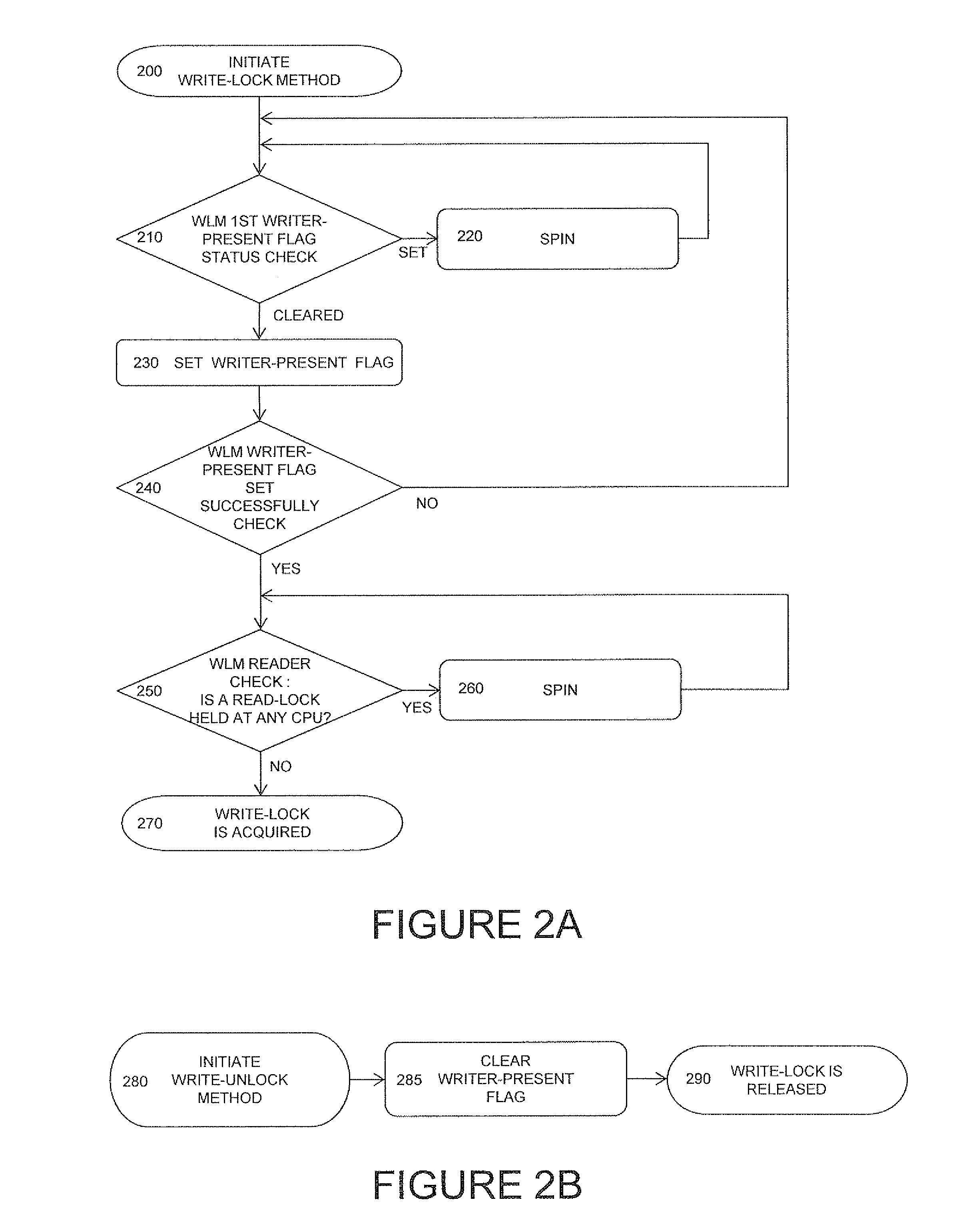

Optimization of Data Locks for Improved Write Lock Performance and CPU Cache usage in Multi Core Architectures

Active | US20160098361A1 | Memory architecture accessing/allocation | Resource allocation | Multicore architecture | Data access

Data access optimization features the innovative use of a writer-present flag when acquiring read-locks and write-locks. Setting a writer-present flag indicates that a writer desires to modify a particular data item. This serves as an indicator to readers and writers waiting to acquire read-locks or write-locks not to acquire a lock, but rather to continue waiting (i.e., spinning) until the writer-present flag is cleared. As opposed to conventional techniques in which readers and writers are not locked out until the writer acquires the write-lock, the writer-present flag locks out other readers and writers once a writer begins waiting for a write-lock (that is, sets a writer-present flag). This feature allows a write-lock method to acquire a write-lock without having to contend with waiting readers and writers trying to obtain read-locks and write-locks, such as when using conventional spinlock implementations.

Owner:CHECK POINT SOFTWARE TECH LTD
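The writer-present idea can be sketched in Python as a small reader-writer lock. This is an illustration of the concept only: the abstract describes spinning, whereas this sketch blocks on a `threading.Condition`, which is a deliberately swapped-in technique that suits Python better. The class and method names, including the non-blocking `try_acquire_read` helper, are hypothetical.

```python
import threading


class WriterPresentRWLock:
    """Reader-writer lock in which a waiting writer locks out newcomers."""

    def __init__(self):
        self._cond = threading.Condition()
        self._readers = 0
        self._writer_present = False   # set as soon as a writer starts waiting

    def try_acquire_read(self):
        """Non-blocking read acquire; fails while a writer is present."""
        with self._cond:
            if self._writer_present:
                return False
            self._readers += 1
            return True

    def acquire_read(self):
        with self._cond:
            while self._writer_present:       # wait until the flag is cleared
                self._cond.wait()
            self._readers += 1

    def release_read(self):
        with self._cond:
            self._readers -= 1
            if self._readers == 0:
                self._cond.notify_all()       # a waiting writer may proceed

    def acquire_write(self):
        with self._cond:
            while self._writer_present:       # another writer announced itself first
                self._cond.wait()
            self._writer_present = True       # lock out new readers and writers
            while self._readers > 0:          # only existing readers remain to drain
                self._cond.wait()

    def release_write(self):
        with self._cond:
            self._writer_present = False
            self._cond.notify_all()


lock = WriterPresentRWLock()
lock.acquire_write()
print(lock.try_acquire_read())   # a writer is present, so the read attempt fails
lock.release_write()
```

The design point is that the flag is raised before the writer actually holds the lock, so the writer competes only with readers that were already inside, not with a steady stream of new arrivals.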

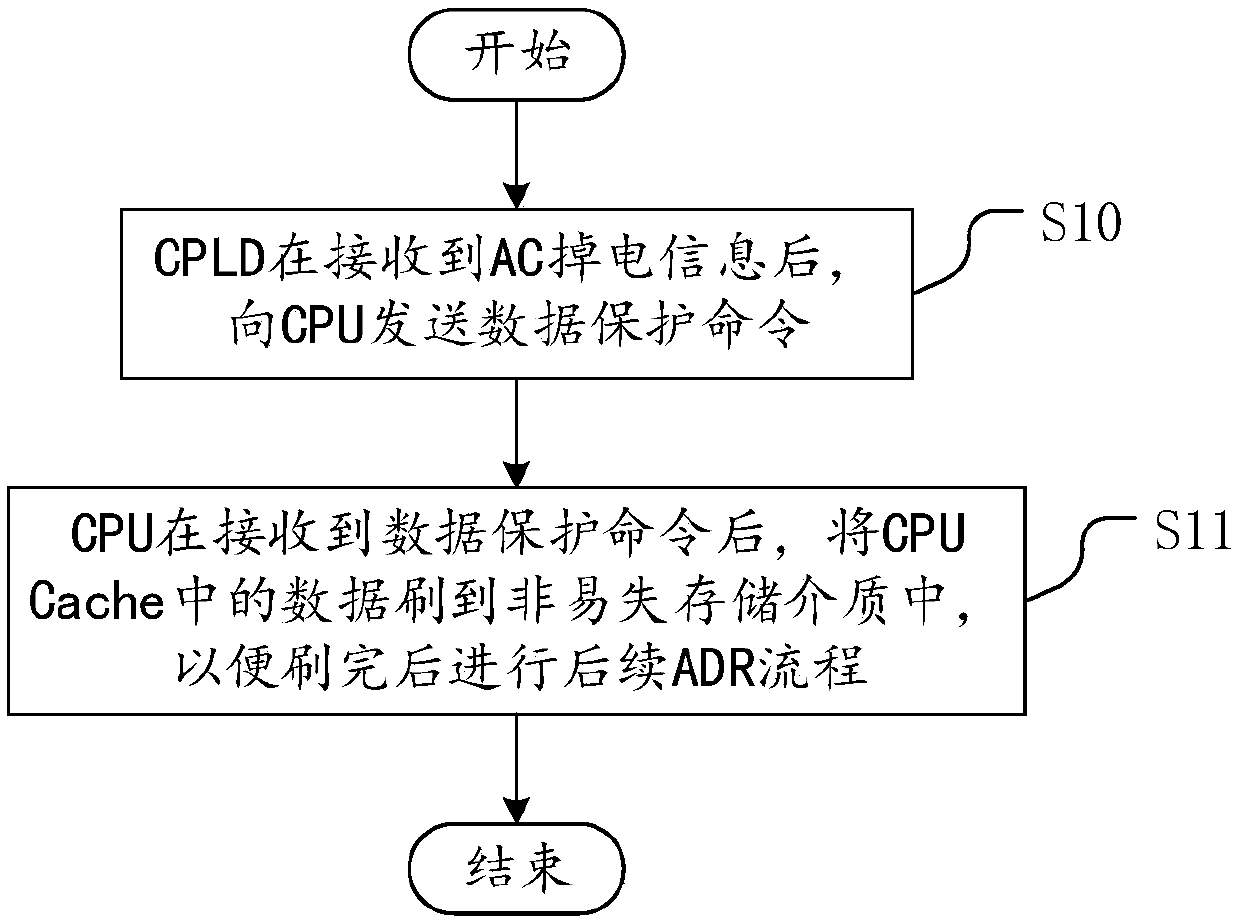

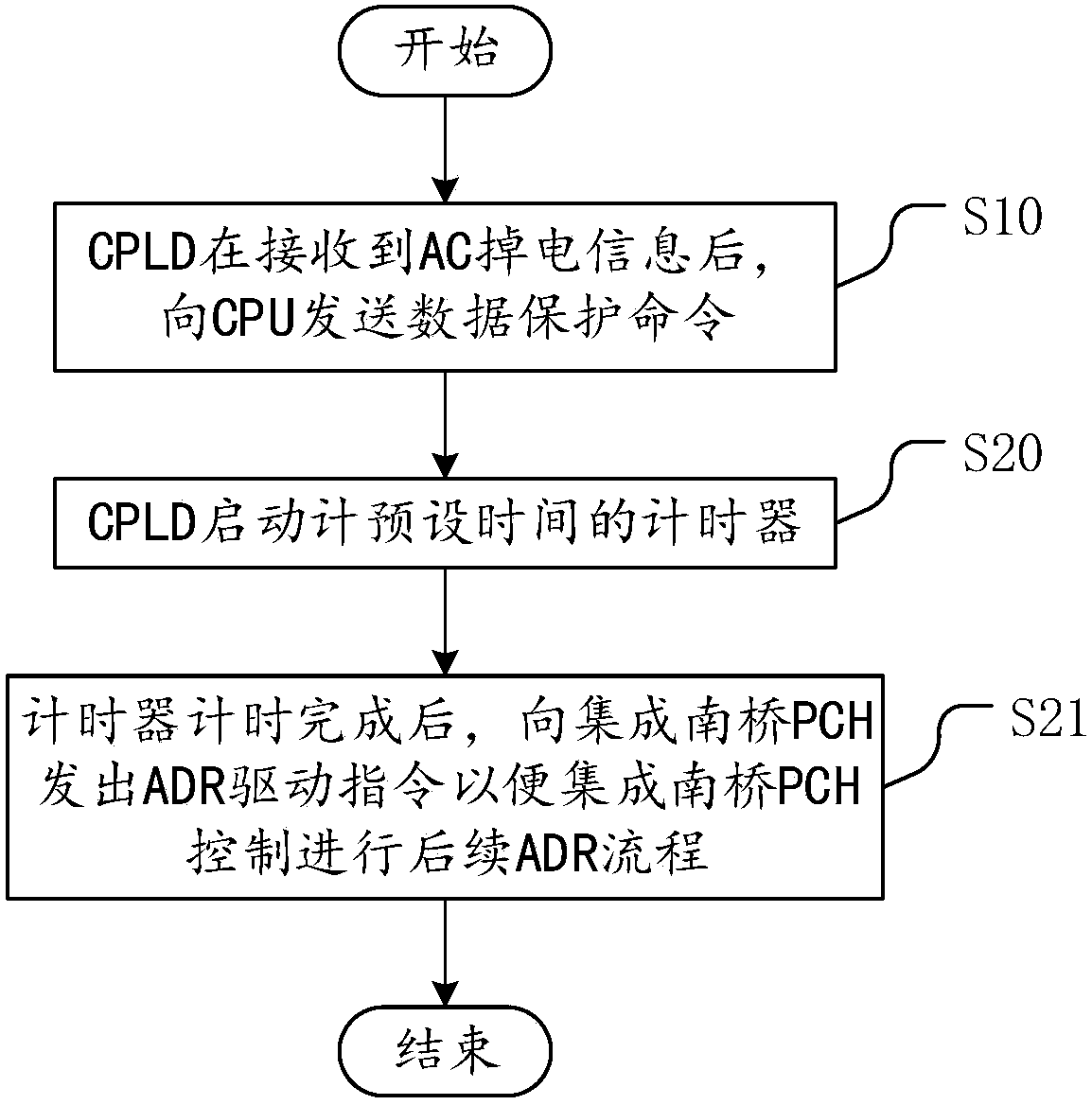

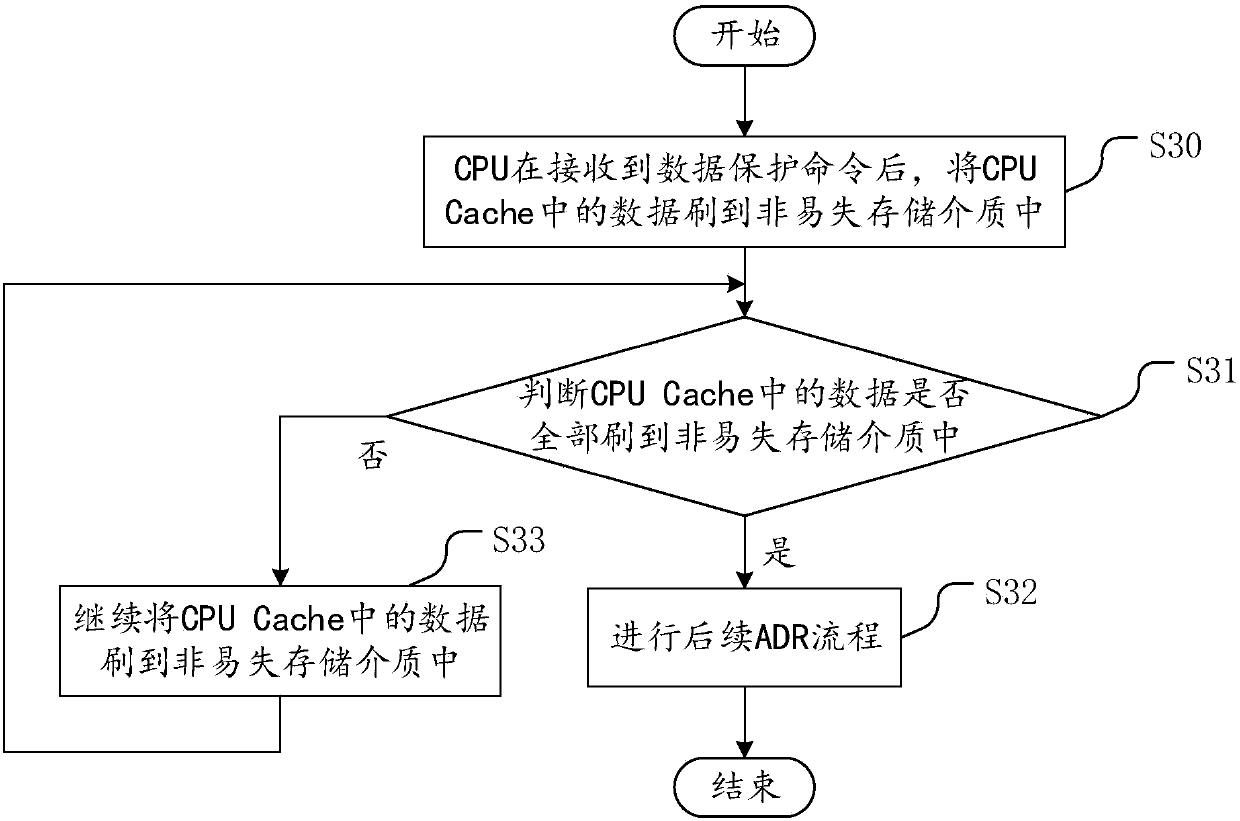

Method and system for protecting CPU Cache data after AC power failure

Inactive | CN107807863A | Effective protection | Read and write performance does not affect | Memory loss protection | Redundant data error correction | Electricity | Computer hardware

The invention discloses a method for protecting CPU Cache data after AC power failure. The method comprises the steps that a CPLD sends a data protection command to a CPU after receiving AC power failure information, and the CPU, after receiving the data protection command, flushes the data in the CPU Cache to a non-volatile storage medium and performs the subsequent ADR process once the flush is finished. With this method, the data in the CPU Cache can be effectively protected after an AC power failure simply by adding the step of flushing the CPU Cache data to the non-volatile storage medium, without changing the read-write performance of the non-volatile storage medium. The data in the CPU Cache is thus protected from loss, the read-write performance of NVDIMM-N or other non-volatile storage media is not affected, and the delay is shortened. The invention furthermore discloses a system for protecting CPU Cache data after AC power failure, which has the same beneficial effects.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

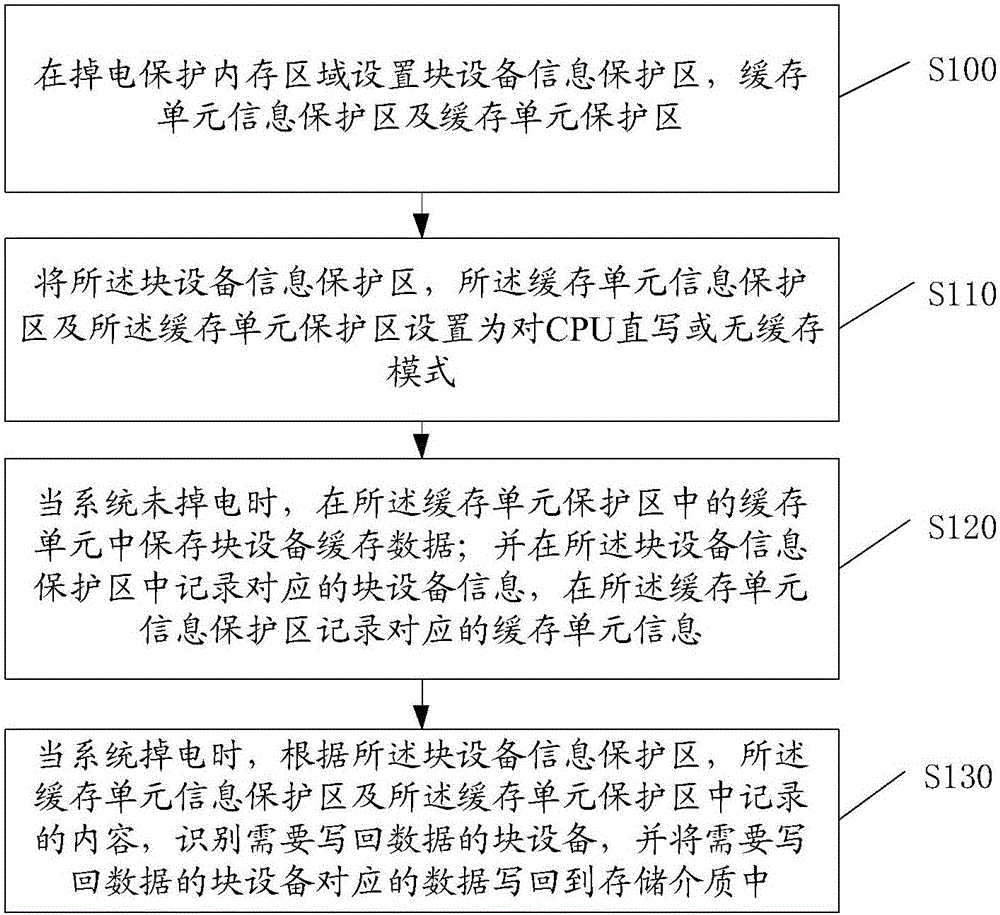

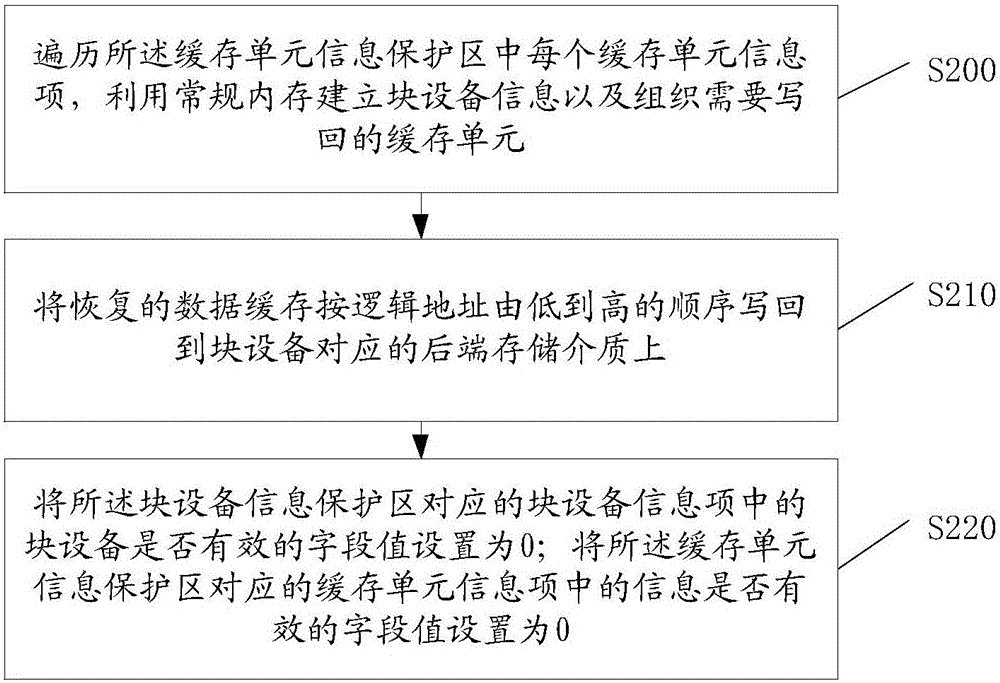

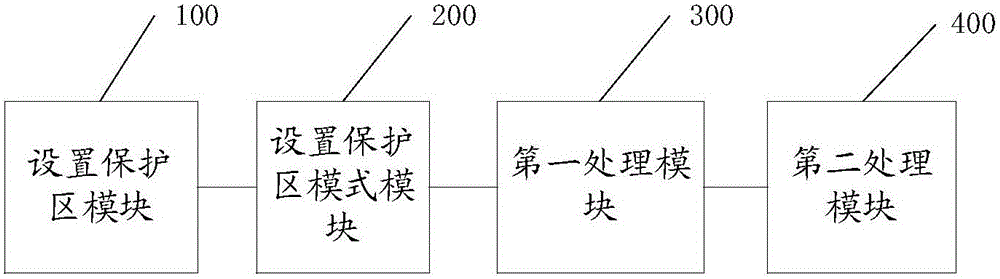

Block device data cache power-down protection method and system

Inactive | CN105740172A | Integrity guaranteed | Avoid security issues | Memory loss protection | Electricity | Complete data

The invention discloses a block device data cache power-down protection method and system. The method comprises the steps of setting a block device information protection region, a cache unit information protection region and a cache unit protection region in a power-down protection memory region; setting the block device information protection region, the cache unit information protection region and the cache unit protection region to be in a CPU direct-writing or no-cache mode; when a system does not lose power, storing block device cache data in a cache unit of the cache unit protection region; recording corresponding block device information in the block device information protection region and recording corresponding cache unit information in the cache unit information protection region; and when the system loses the power, according to the content recorded in the block device information protection region, the cache unit information protection region and the cache unit protection region, identifying a block device required to be subjected to data back-writing, and writing back the corresponding data of the block device into a storage medium. Therefore, the problems on asynchronism and protection of CPU caches can be solved and the complete data power-down protection is ensured; and the method and system have relatively good performance.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

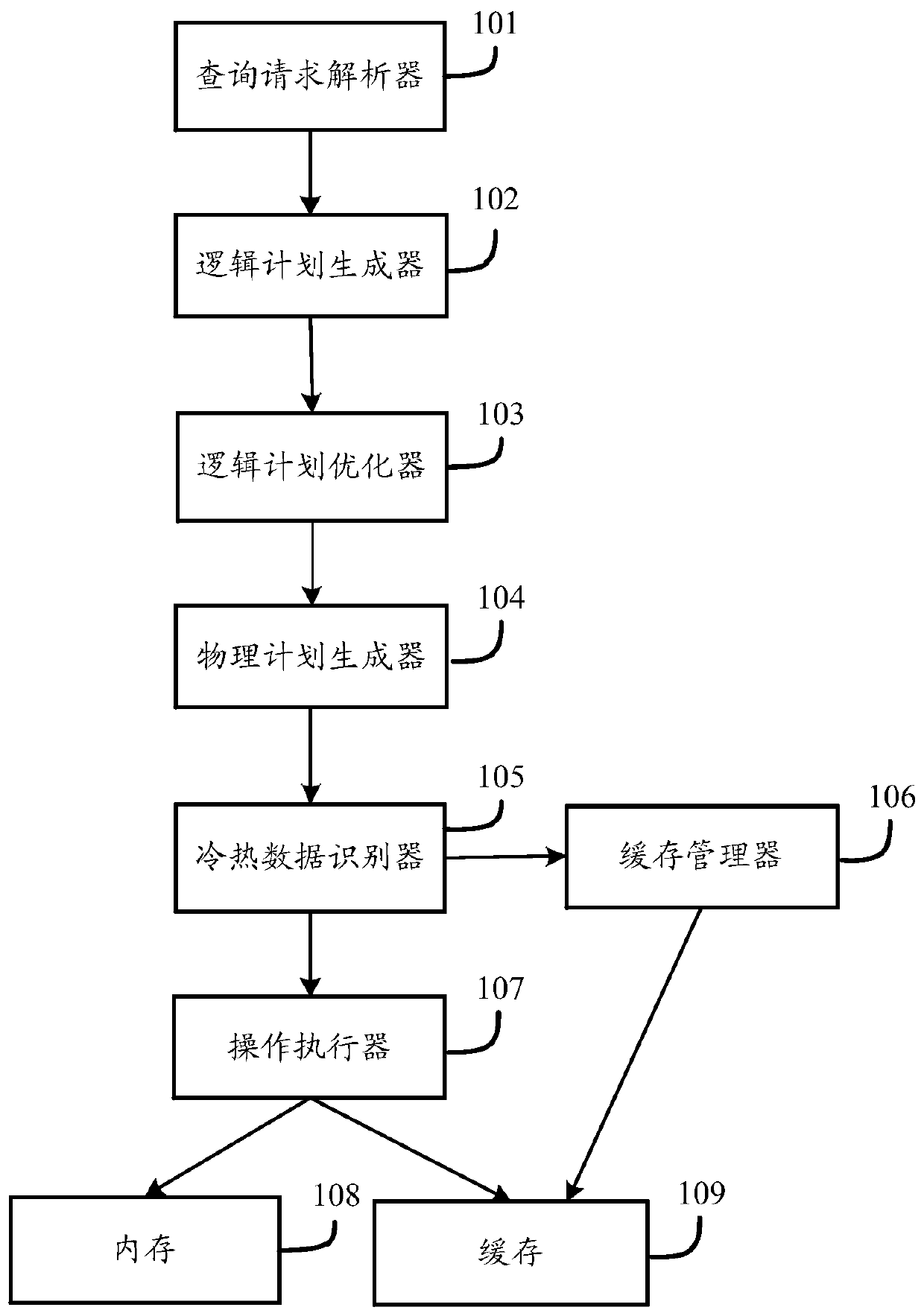

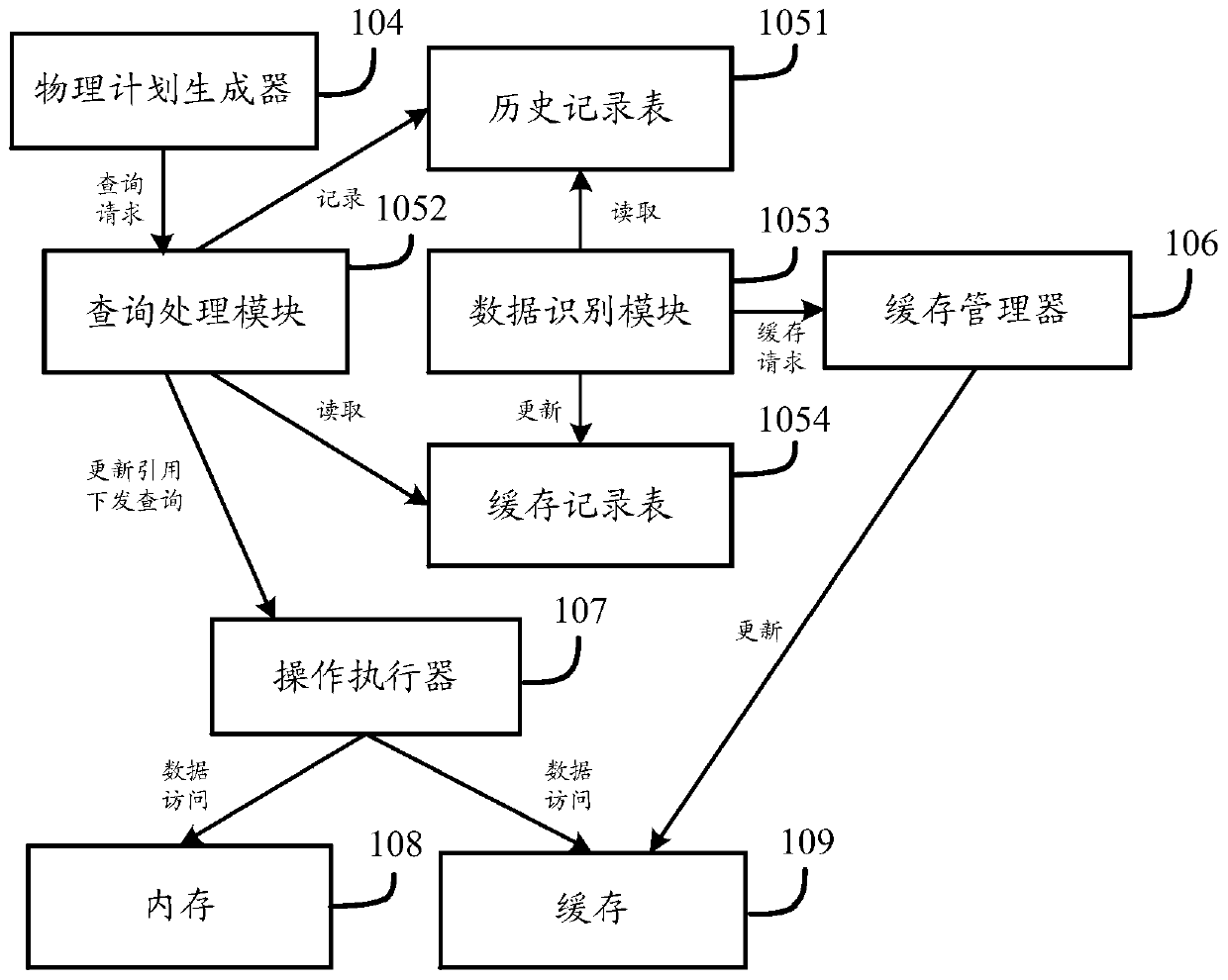

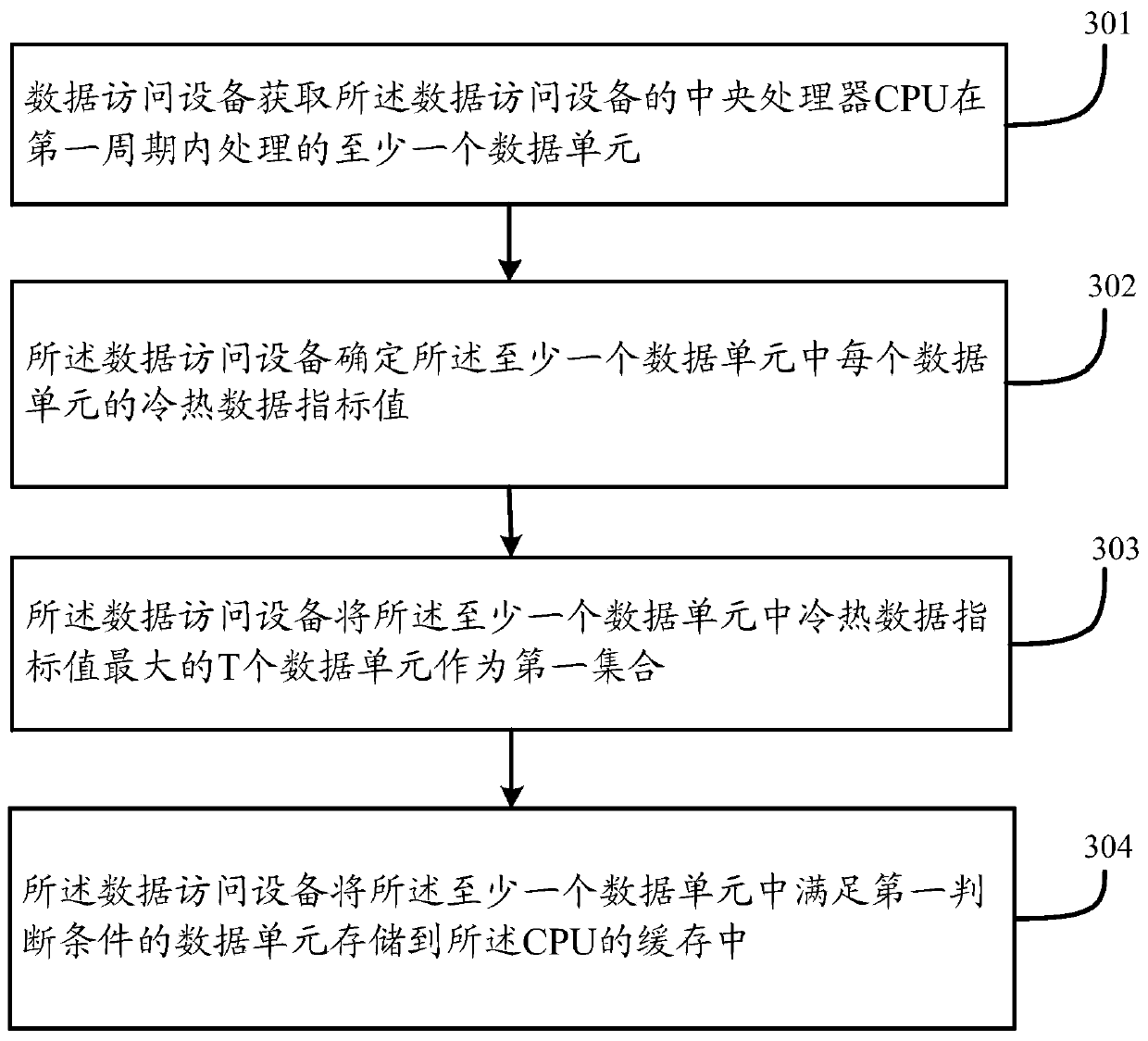

A data processing method and device

Inactive | CN109739646A | Improve access efficiency | Guaranteed accuracy | Resource allocation | Data access | CPU cache

The invention discloses a data processing method and device. The method comprises the steps that data access equipment acquires at least one data unit processed by a central processing unit (CPU) of the data access equipment in a first period; the data access device determines a cold and hot data index value of each data unit in the at least one data unit; the data access equipment takes T data units with maximum cold and hot data index values in the at least one data unit as a first set; aiming at a first data unit in the at least one data unit; if the data access device determines that the first data unit is located in the first set in N continuous periods before the first period and is not stored in the CPU cache, the first data unit is stored in the CPU cache; wherein the first data unit is any one of the at least one data unit.

Owner:NSFOCUS INFORMATION TECHNOLOGY CO LTD +1
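The promotion rule in this abstract (a data unit that ranked among the top T for N consecutive earlier periods is stored in the CPU cache if it is not there already) can be modelled with a short Python sketch. The abstract does not define the cold and hot data index or how software places data in the cache, so the per-period `heat` scores, the `cached` set standing in for the CPU cache, and the class name `HotDataTracker` are all assumptions.

```python
from collections import defaultdict


class HotDataTracker:
    """Tracks how many consecutive periods each unit stayed in the top T."""

    def __init__(self, top_t, n_periods):
        self.top_t = top_t
        self.n_periods = n_periods
        self.streak = defaultdict(int)   # unit -> consecutive top-T periods so far
        self.cached = set()              # stand-in for "stored in the CPU cache"

    def end_period(self, heat):
        """heat: dict unit -> index value for this period. Returns promoted units."""
        promoted = []
        for unit in heat:
            # Promote units that were hot in the N periods before this one.
            if self.streak[unit] >= self.n_periods and unit not in self.cached:
                self.cached.add(unit)
                promoted.append(unit)
        # Rebuild streaks: only units in this period's top T keep counting.
        hot = sorted(heat, key=heat.get, reverse=True)[: self.top_t]
        new_streak = defaultdict(int)
        for unit in hot:
            new_streak[unit] = self.streak[unit] + 1
        self.streak = new_streak
        return sorted(promoted)


tracker = HotDataTracker(top_t=1, n_periods=2)
for heat in ({"a": 5, "b": 1}, {"a": 4, "b": 2}, {"a": 1, "b": 9}):
    print(tracker.end_period(heat))
```

Unit `a` is promoted in the third period because it was the hottest unit in both preceding periods, even though it is no longer the hottest in the period where the promotion happens.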

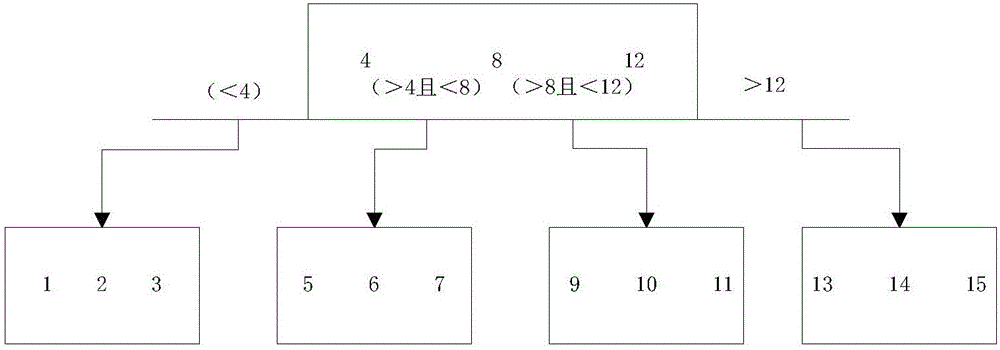

Data search method

Inactive | CN106708749A | Reduce the number of interactions | Accurate capture | Memory addressing/allocation/relocation | Internal memory | Parallel computing

The invention relates to a data search method in which data is organized and stored according to the fractal tree principle. The method includes: a CPU receives a data reading request and searches for the requested data in the Cache Line; if the requested data is found in the Cache Line, the data is read and the search terminates; if the requested data is not found in the Cache Line, the possible storage position of the data in the CPU Cache is determined through numeric comparison, and the corresponding interval in the CPU Cache is searched; if the requested data is found in the CPU Cache, the data is read and the search terminates; if the requested data is not found in the CPU Cache, the possible storage position of the data in internal memory is determined, and the corresponding interval in internal memory is searched; if the requested data is found in internal memory, the data is read and the search terminates; if the requested data is not found in internal memory, the data is searched for on the hard disk. With this method, the number of data exchanges between the Cache and internal memory can be reduced and CPU speed can be increased.

Owner:量子云未来(北京)信息科技有限公司 +1

Local primary memory as CPU cache extension

Active | US9921771B2 | Memory architecture accessing/allocation | Input/output to record carriers | Parallel computing | Client-side

A system may be provided comprising: a local primary memory; an interconnect; and a processor, the processor configured to cause, in response to a memory allocation request from an application, allocation of a region of an external primary memory included in a memory appliance, the external primary memory in the memory appliance accessible by the system over the interconnect with client-side memory access, wherein the client-side memory access is independent of a central processing unit of the memory appliance, wherein the external primary memory is memory that is external to the system and primary memory to the system, and wherein the processor is further configured to operate the local primary memory as a cache for data accessed in the external primary memory included in the memory appliance.

Owner:KOVE IP

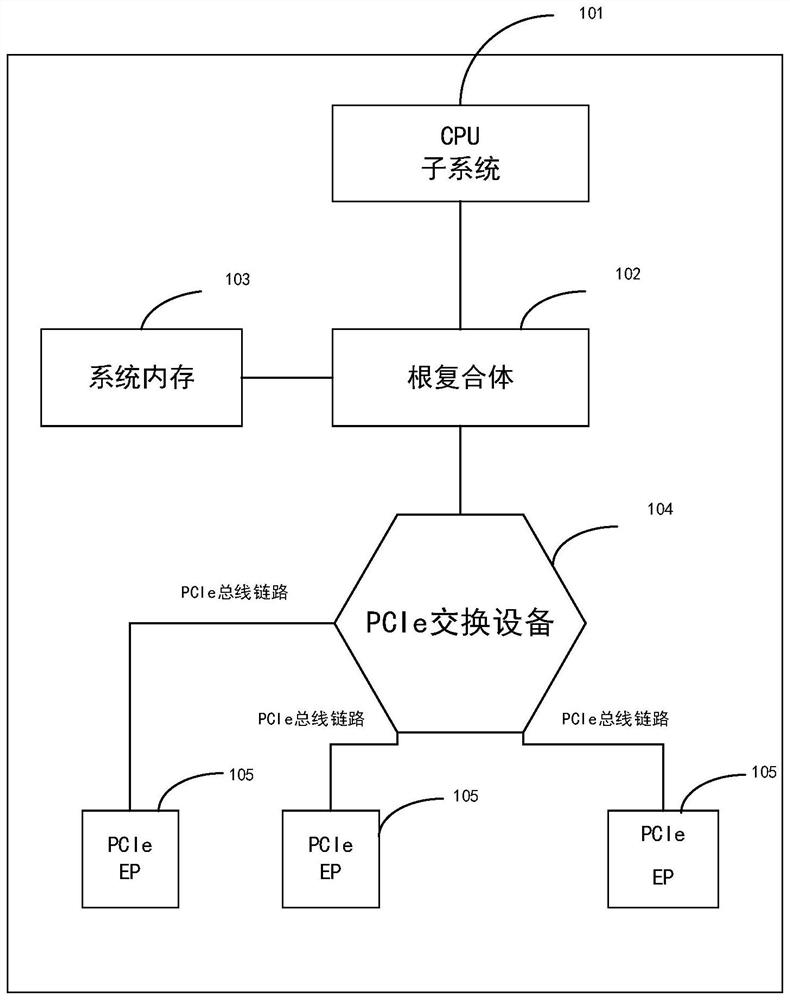

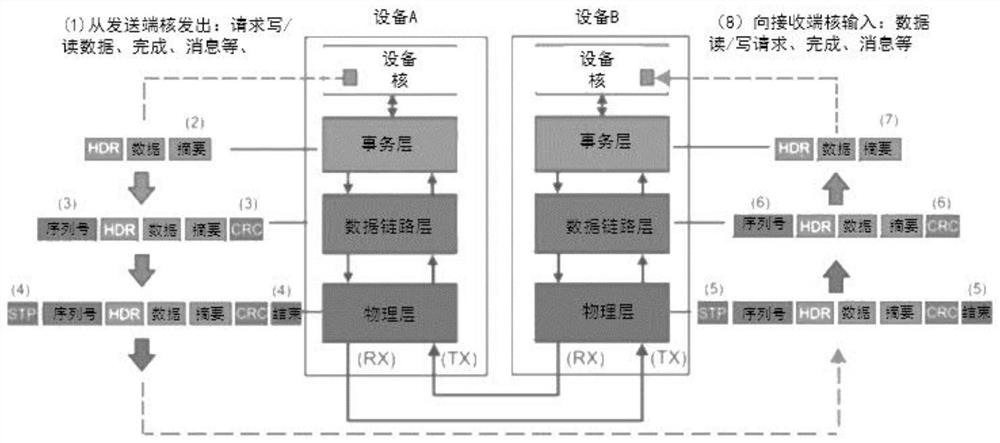

System, method and medium for realizing cache consistency of PCIe equipment

Provided are a system, method and medium for implementing cache consistency of a PCIe device, the system comprising: a CPU cache master controller configured to send a first read-write request of a CPU for a first address to a memory cache slave controller through a cache command of an internal bus; the memory cache slave controller is configured to update the state of the memory cache line of the first address according to the state of the memory cache line of the first address of the first read-write request, and send the first address and a command for updating the state of the cache line of the PCIe equipment to be the first state to the input / output bridge controller through a cache command of an internal bus; the input / output bridge controller is configured to send the first address and a command for updating the state of the cache line of the PCIe equipment to be in a first state to the PCIe equipment through a first PCIe bus message, receive first data from the first address of the PCIe equipment through a second PCIe bus message and receive a response for updating the state of the cache line of the PCIe equipment to be in the first state, and the cache command of the internal bus is sent to the memory cache slave controller.

Owner:HYGON INFORMATION TECH CO LTD

Access optimization method for main memory database based on page-coloring

Inactive | US8966171B2 | Reduce conflict | Improve performance | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Complete data | Data set

Owner:RENMIN UNIVERSITY OF CHINA

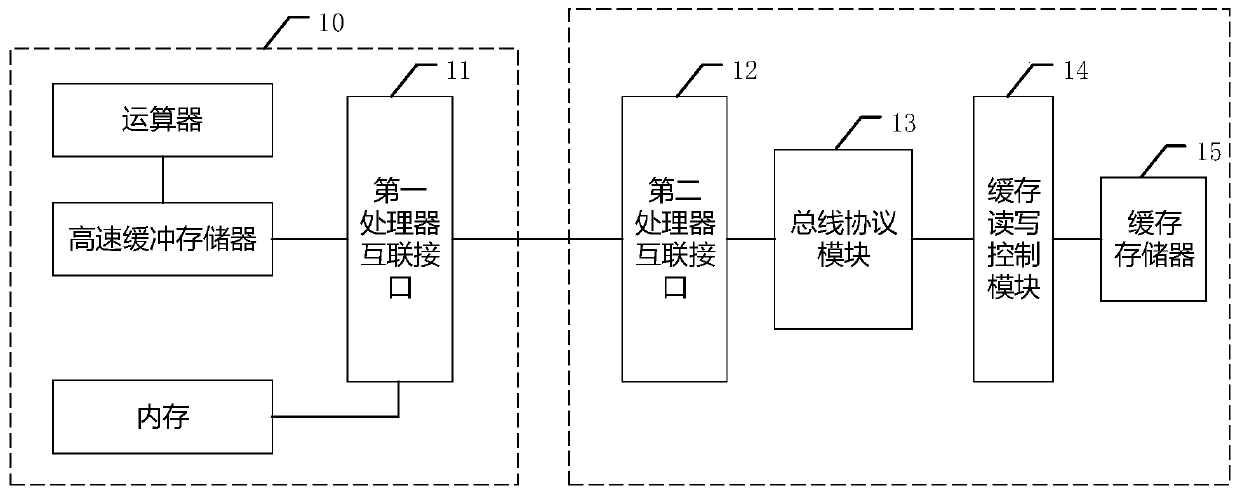

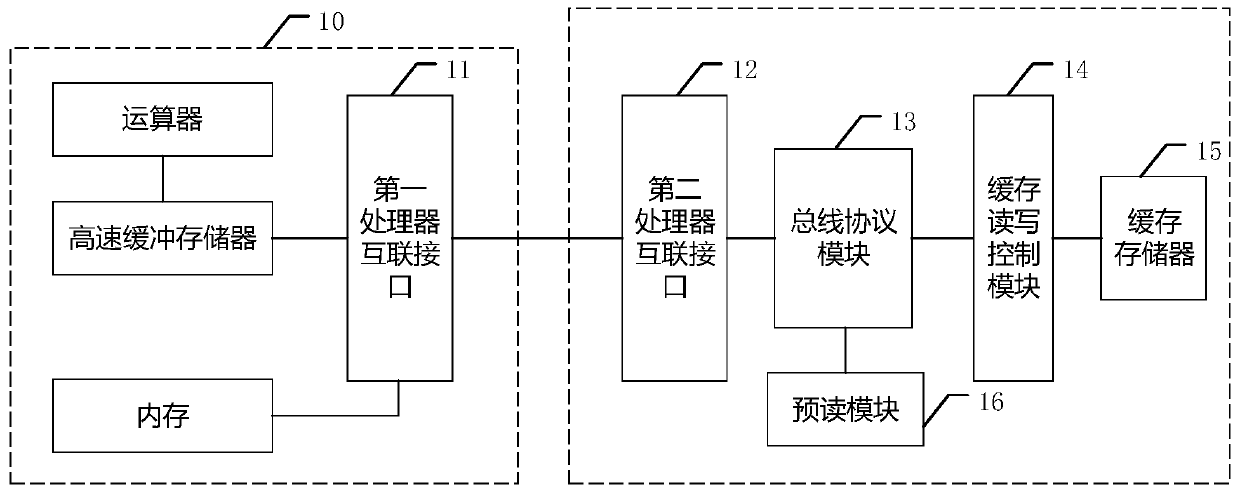

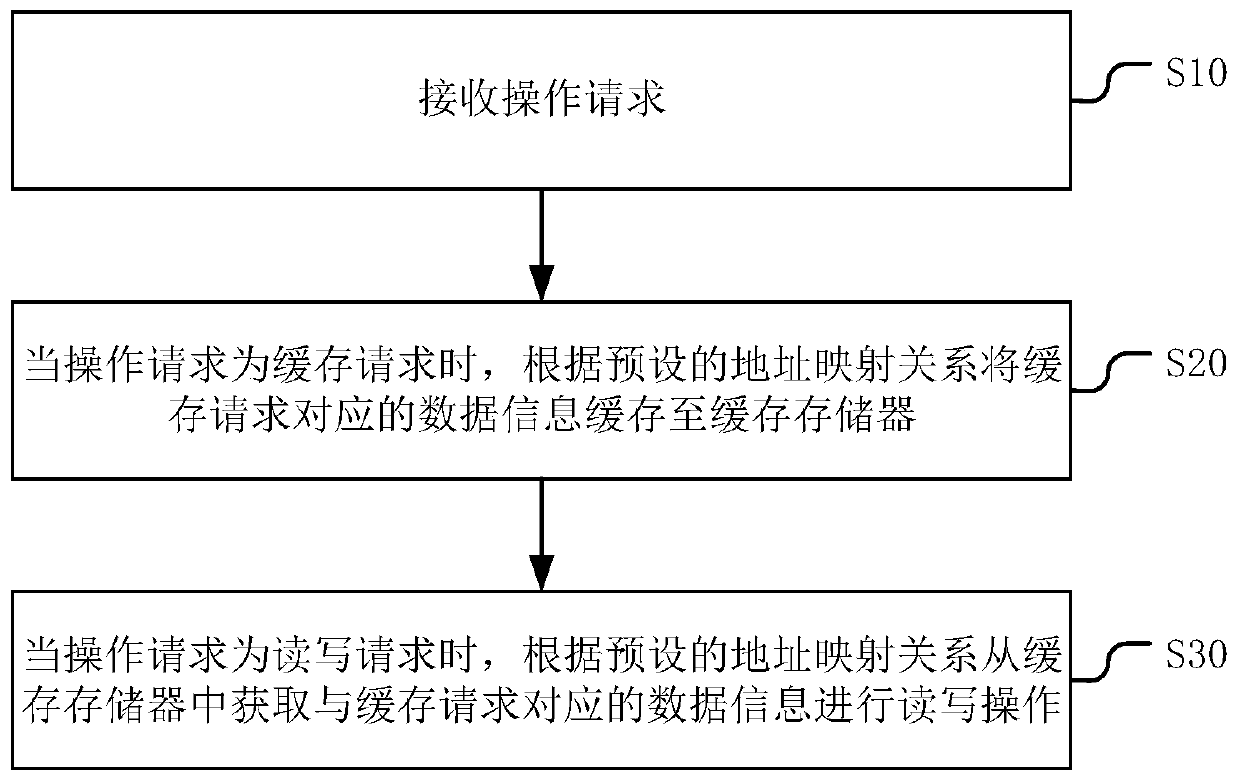

CPU, electronic equipment and CPU cache control method

Active | CN110399314A | Increase cache space | Improve hit rate | Memory architecture accessing/allocation | Memory systems | Data information | Computer module

The invention discloses a CPU which comprises a CPU body and further comprises: a second processor interconnection interface detachably connected with a first processor interconnection interface of the CPU body, a bus protocol module which is connected with the second processor interconnection interface and used for providing a bus protocol, a cache read-write control module which is connected with the bus protocol module and used for controlling read-write operation; and a cache memory which is connected with the cache read-write control module and is used for caching data information. According to the CPU provided by the invention, the cache space can be increased or reduced according to actual requirements, and the cache space of the CPU can be conveniently and flexibly adjusted, so that on one hand, the hit rate of the cache space of the CPU can be improved, the working efficiency of the CPU is further improved, and the system performance is improved; and on the other hand, waste of the cache space can be avoided. The invention also discloses an electronic device and a CPU cache control method, which have the above beneficial effects.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

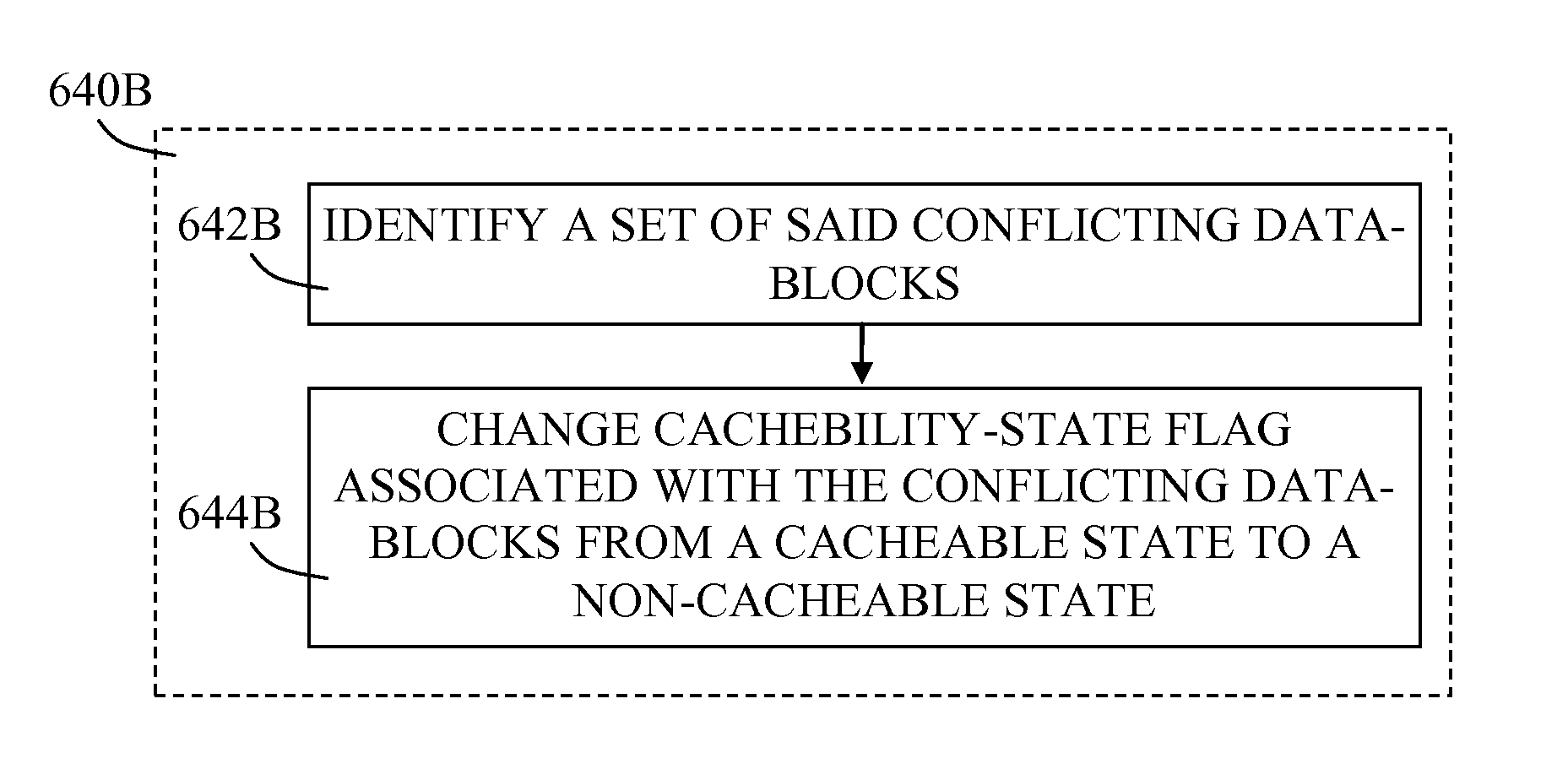

System and methods for CPU copy protection of a computing device

Active | US9471511B2 | Avoiding eviction | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Operability | Copy protection

The present disclosure relates to systems and methods for software-based management of the insertion of protected data blocks into the memory cache mechanism of a computerized device. In particular, the disclosure relates to preventing protected data blocks from being altered or evicted from the CPU cache, coupled with buffered software execution. The technique is based upon identifying at least one conflicting data block that has a memory mapping indication to a designated memory cache line and preventing that conflicting data block from being cached. Functional characteristics of a vendor's software product, such as a game or video, may be partially encrypted to allow protected, functional operation and to avoid hacking and malicious usage by non-licensed users.

Owner:TRULY PROTECT
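
The conflict identification named in the abstract above can be illustrated with a minimal sketch. It assumes a simple physically indexed, set-associative cache model; the constants `LINE_SIZE` and `NUM_SETS` and both function names are hypothetical and are not taken from the patent. How a conflicting block is then kept out of the cache is not shown.

```python
from typing import Iterable, List

# Assumed cache geometry for illustration only (not from the patent).
LINE_SIZE = 64   # bytes per cache line
NUM_SETS = 64    # number of cache sets


def cache_set_index(addr: int) -> int:
    """Return the cache set that a byte address maps to in this model."""
    return (addr // LINE_SIZE) % NUM_SETS


def find_conflicting_blocks(protected_addr: int,
                            candidate_addrs: Iterable[int]) -> List[int]:
    """List candidate addresses that map to the same cache set as the
    protected block but belong to a different cache line, i.e. blocks
    that could evict the protected block if they were cached."""
    target_set = cache_set_index(protected_addr)
    protected_line = protected_addr // LINE_SIZE
    return [
        addr for addr in candidate_addrs
        if cache_set_index(addr) == target_set
        and addr // LINE_SIZE != protected_line
    ]
```

In this model, a protected block at `0x1000` conflicts with blocks at `0x2000` and `0x3000` (same set, different line) but not with `0x1040` (different set) or `0x1010` (same line).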

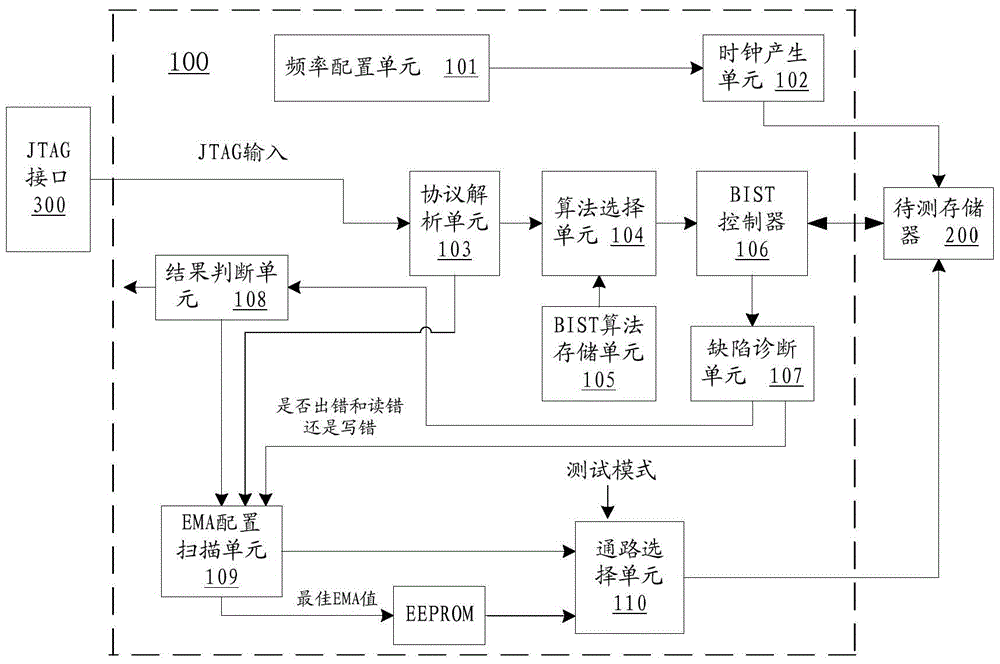

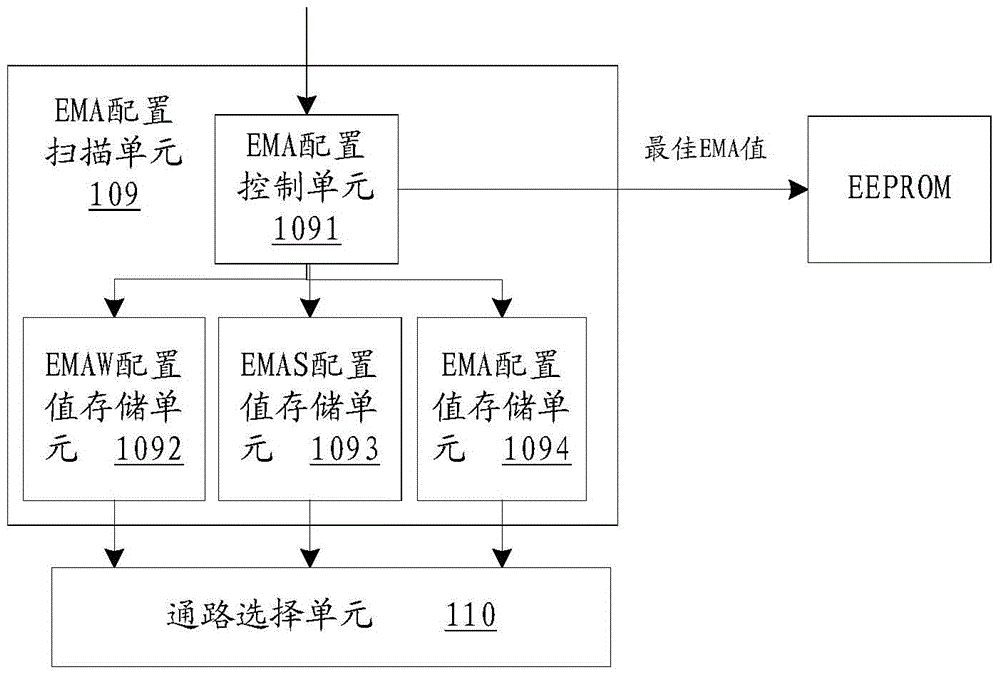

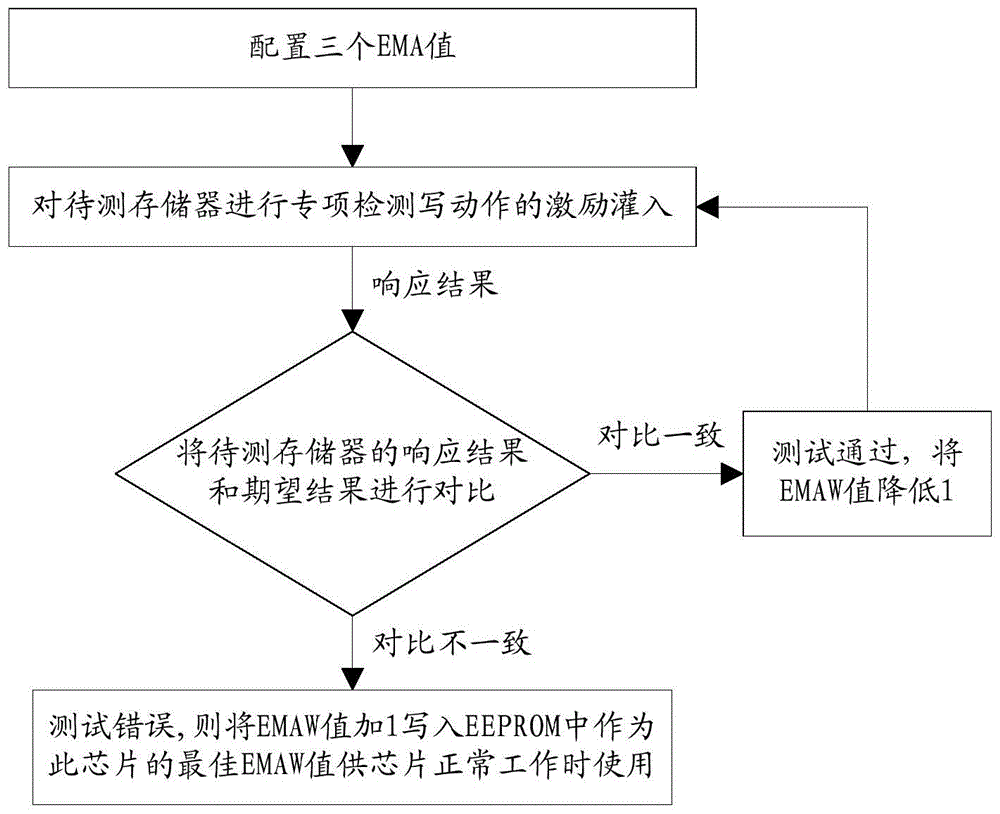

Self-adaptive testing method and device of CPU cache memory

The invention provides a self-adaptive testing method and device for CPU cache memory. The method includes: before testing, setting the frequency allocation corresponding to a test target frequency; when testing starts, injecting a BIST start command through a JTAG interface; parsing the JTAG command, according to the protocol, into a direct control signal; and using the direct control signal to run a BIST testing process with EMA (extra margin adjustment) scanning. If an optimal EMA is found during the BIST testing process, the test is judged to pass, and the internal EEPROM can store the optimal EMA configuration value for that frequency so that the chip can use it when entering normal working mode; otherwise, the test is judged to fail and the chip is classified as unsatisfactory. Because the optimal EMA value obtained through testing is used to configure the chip during normal operation, each chip works at the EMA value specific to itself and reaches a balance point between the best memory performance and stability.

Owner:FUZHOU ROCKCHIP SEMICON
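
The scan-and-store flow in the abstract above can be sketched as follows. This is only a software model under stated assumptions: `run_bist` is a hypothetical callback standing in for the hardware BIST run, the EEPROM is modelled as a plain dictionary, and "optimal" is assumed to mean the first passing value when candidates are tried in order of preference. The abstract does not specify the actual selection rule.

```python
from typing import Callable, Dict, Iterable, Optional


def scan_ema(run_bist: Callable[[int], bool],
             ema_values: Iterable[int]) -> Optional[int]:
    """Try EMA settings in the given order and return the first one for
    which BIST passes, or None if every setting fails.
    Assumption: the caller orders ema_values from most to least preferred."""
    for ema in ema_values:
        if run_bist(ema):
            return ema
    return None


def qualify_chip(freq_mhz: int,
                 run_bist_at: Callable[[int, int], bool],
                 ema_values: Iterable[int],
                 eeprom: Dict[int, int]) -> bool:
    """Run the EMA scan at one target frequency. On success, record the
    chosen EMA value for that frequency in the (simulated) EEPROM and
    return True; otherwise return False and leave the EEPROM untouched."""
    best = scan_ema(lambda ema: run_bist_at(freq_mhz, ema), ema_values)
    if best is None:
        return False
    eeprom[freq_mhz] = best
    return True
```

A chip for which `qualify_chip` returns `False` would be binned as unsatisfactory; one that passes reads its stored EMA value back from the EEPROM in normal operation.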

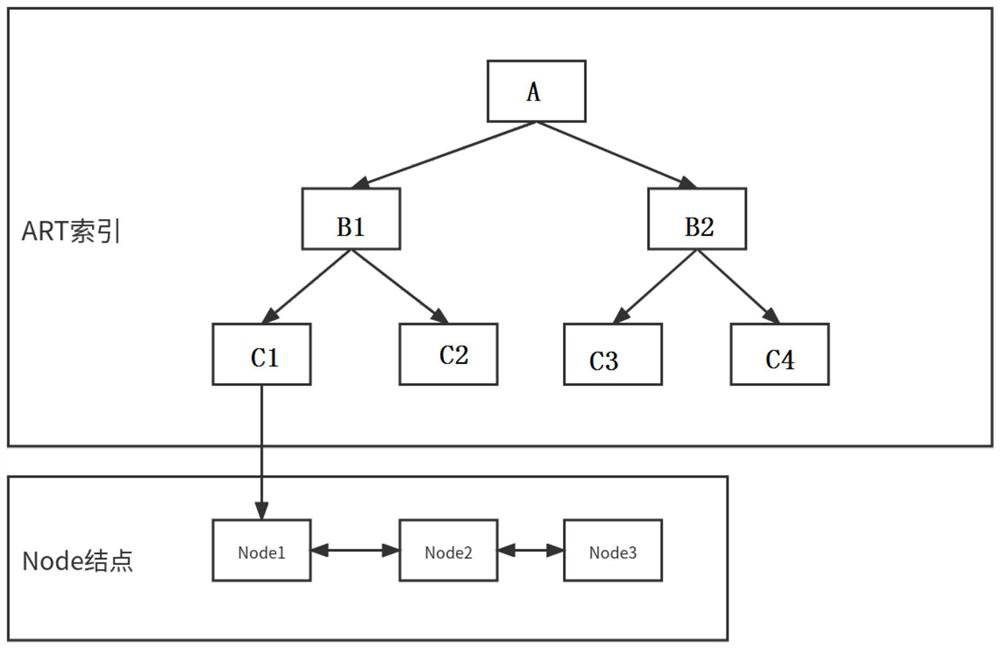

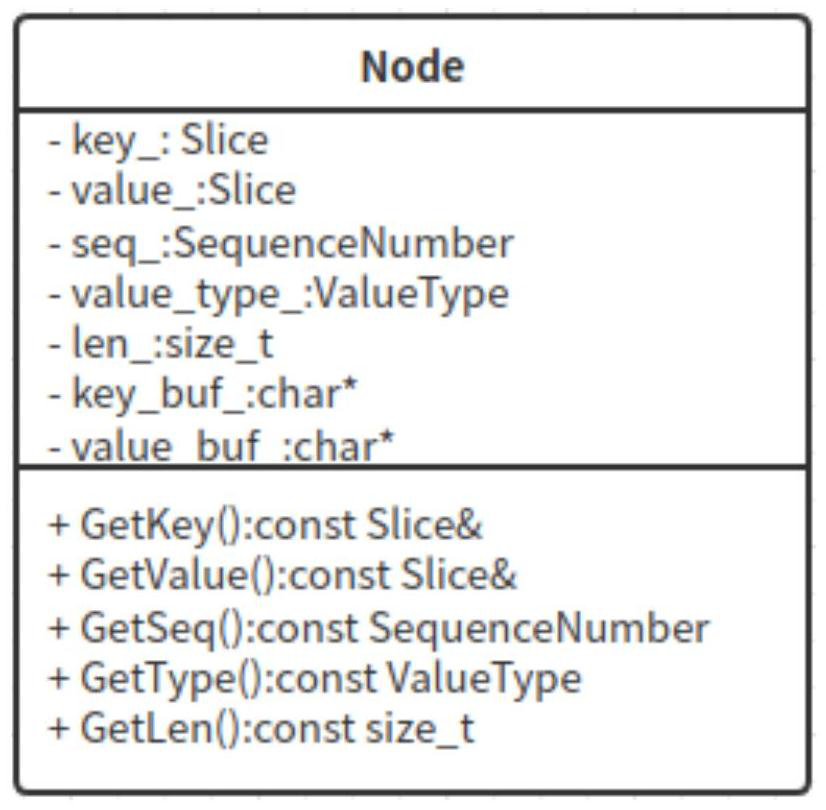

Key-value separated storage method and system

Pending | CN114138792A | Reduce consumption | Improve hit rate | Special data processing applications | Database indexing | Parallel computing | Tree (data structure)

The invention relates to a key-value separated storage method and system. An ART prefix tree is used as the basic data structure of the data index. In the node of each piece of data, space is allocated to store the key and the value, and the corresponding Sequence Number and Value Type are also stored in the node, which achieves key-value separation. No encoding is performed during data storage: the key, the Sequence Number, the Value Type and the value are stored and read directly, which improves data storage efficiency. Likewise, no information needs to be decoded during data read-write operations, because it can be obtained directly from the node. As a result, the I/O consumption of the disk is reduced, the I/O throughput is reduced, the CPU cache hit rate is increased, and the read-write performance of the database is improved.

Owner:上海沄熹科技有限公司
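
The node layout described in the abstract above, with key, Sequence Number, Value Type and value kept as separate fields rather than packed into one encoded record, might look like the following sketch. Everything here is illustrative: a plain dictionary stands in for the ART prefix tree, and the `ValueType` members, class names and method names are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, Optional


class ValueType(IntEnum):
    # Hypothetical value types; the abstract does not enumerate them.
    DELETION = 0
    VALUE = 1


@dataclass
class Node:
    # Each field is stored as-is, so reads need no decoding step.
    key: bytes
    sequence_number: int
    value_type: ValueType
    value: bytes


class KVIndex:
    """Toy index. A dict stands in for the ART prefix tree (assumption)."""

    def __init__(self) -> None:
        self._nodes: Dict[bytes, Node] = {}
        self._next_seq = 1

    def _store(self, key: bytes, value_type: ValueType, value: bytes) -> Node:
        node = Node(key, self._next_seq, value_type, value)
        self._next_seq += 1
        self._nodes[key] = node
        return node

    def put(self, key: bytes, value: bytes) -> Node:
        return self._store(key, ValueType.VALUE, value)

    def delete(self, key: bytes) -> Node:
        # A deletion is recorded as a node whose type marks it as deleted.
        return self._store(key, ValueType.DELETION, b"")

    def get(self, key: bytes) -> Optional[bytes]:
        node = self._nodes.get(key)
        if node is None or node.value_type == ValueType.DELETION:
            return None
        return node.value  # read directly from the node, no decode
```

The point of the layout is that `get` touches only the node's fields; there is no packed internal key to split apart before the value type or sequence number can be inspected.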

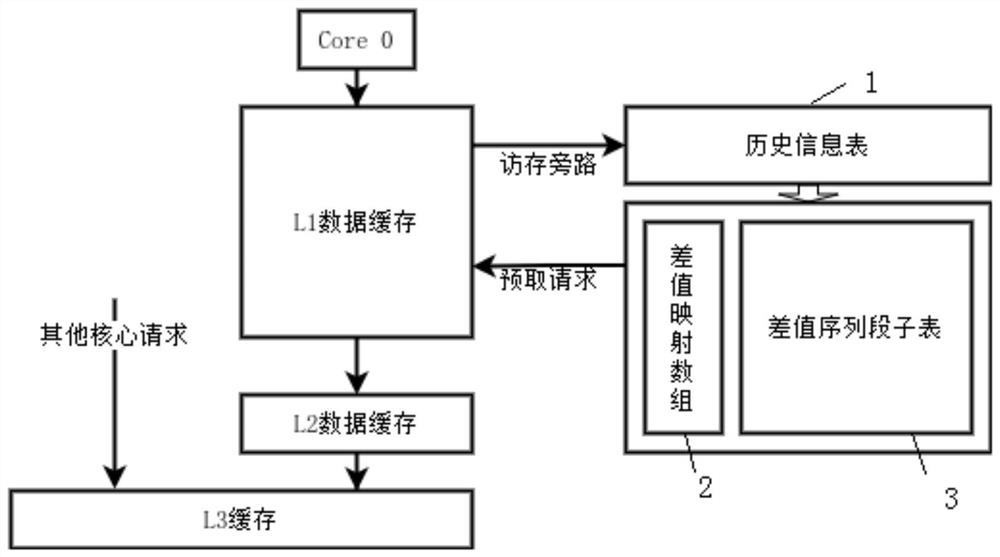

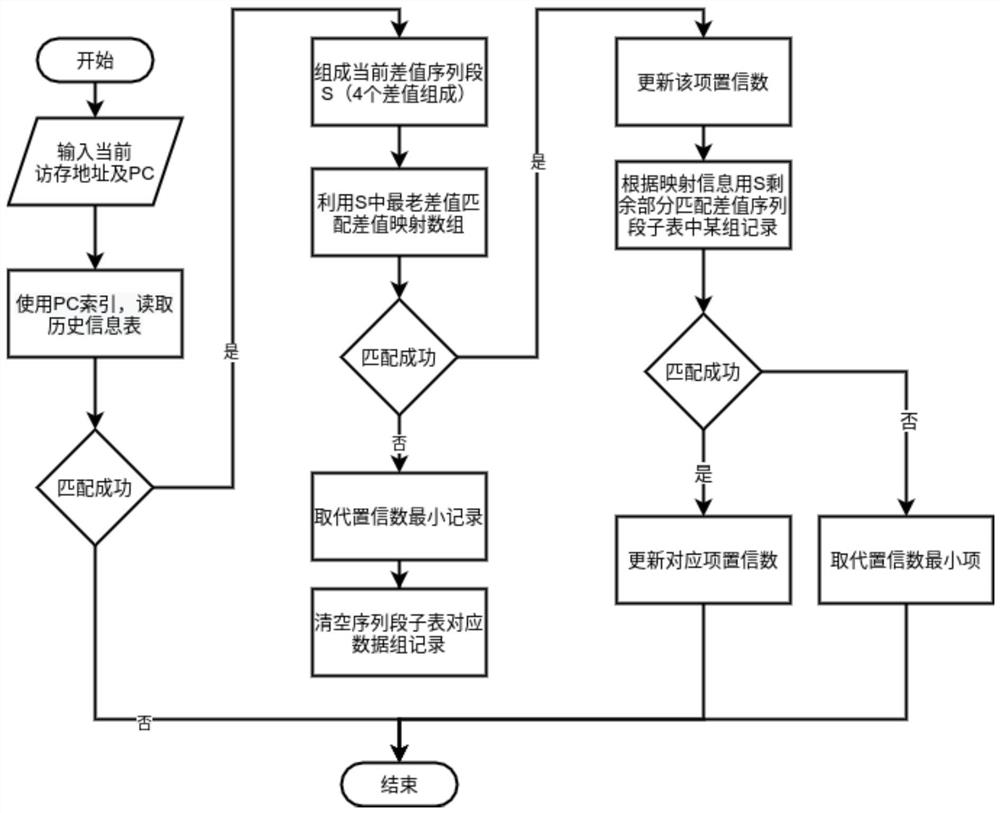

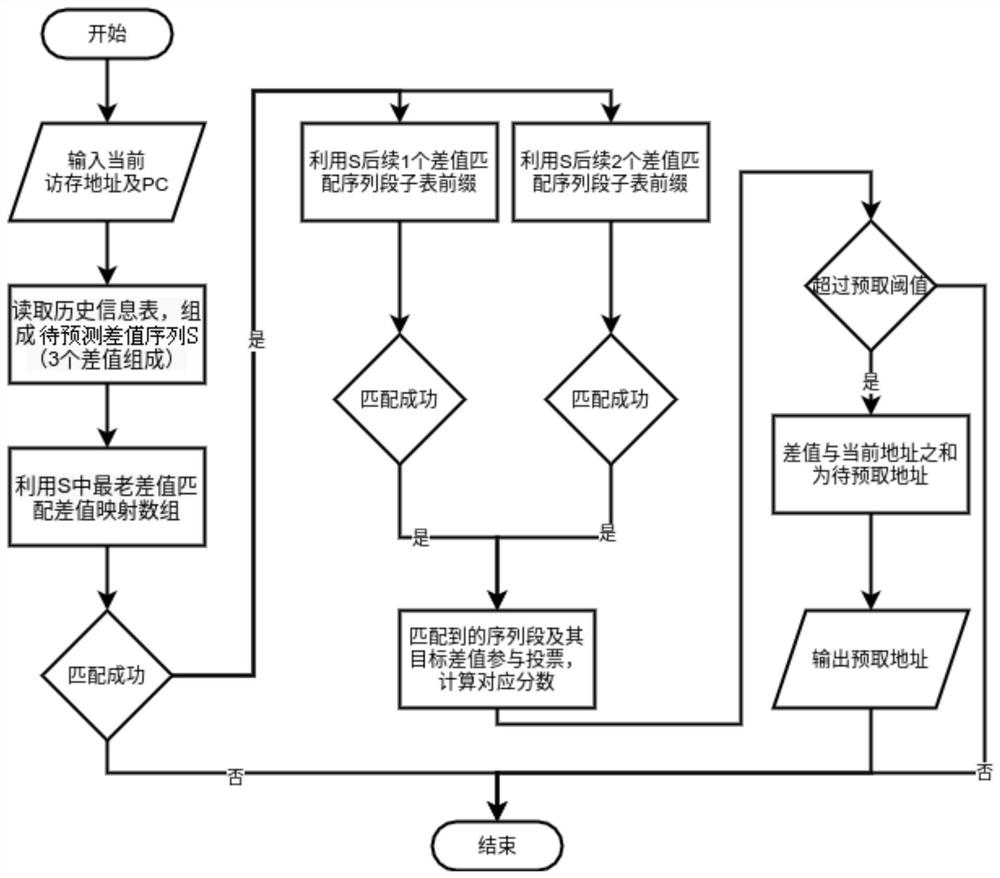

Method for prefetching CPU cache data based on merged address difference value sequence

Active | CN113656332A | Increase match odds | Increase coverage | Memory architecture accessing/allocation | Energy efficient computing | Theoretical computer science | Data mining

The invention provides a CPU cache data prefetching method based on a merged address difference value sequence. The method comprises the following steps: collecting the current memory access information of the data cache to be prefetched and, when it is collected, trying to obtain information from a historical information table in order to update and obtain the current difference value sequence segment; according to the current difference value sequence segment, updating the historical information table, the difference value mapping array and the difference value sequence segment sub-table, and removing the first difference value to obtain a to-be-predicted difference value sequence; using the to-be-predicted difference value sequence to perform multiple matching against prefix subsequences of the complete sequence segments stored in the dynamic mapping mode table, obtaining the best-matching complete sequence segment and the corresponding predicted target difference value; and adding the predicted target difference value to the memory access address in the current memory access information to obtain the predicted target address. This solves the prior-art problem that multiple cascaded tables are needed to store difference value sequences of multiple lengths: only one table is used for storage, which simplifies the storage and query logic for memory access patterns.

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI
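
The general idea in the abstract above, predicting the next address by matching recent address differences against stored difference sequences of several lengths held in one table, can be sketched in a much simplified form. This is not the patented algorithm: it uses a single dictionary keyed by difference tuples and a longest-match lookup, and the class, method and parameter names are all hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def deltas(addresses: Sequence[int]) -> List[int]:
    """Differences between consecutive memory access addresses."""
    return [b - a for a, b in zip(addresses, addresses[1:])]


class DeltaPatternTable:
    """One table holding difference sequences of length 1..max_len,
    each mapped to the difference that followed it."""

    def __init__(self, max_len: int = 4) -> None:
        self.max_len = max_len
        self.patterns: Dict[Tuple[int, ...], int] = {}

    def train(self, delta_seq: Sequence[int]) -> None:
        # For each position, record every preceding run of up to max_len
        # differences together with the difference that came next.
        for i in range(1, len(delta_seq)):
            for n in range(1, min(self.max_len, i) + 1):
                self.patterns[tuple(delta_seq[i - n:i])] = delta_seq[i]

    def predict(self, recent: Sequence[int]) -> Optional[int]:
        # Prefer the longest recent run of differences that has been seen.
        for n in range(min(self.max_len, len(recent)), 0, -1):
            key = tuple(recent[-n:])
            if key in self.patterns:
                return self.patterns[key]
        return None


def predict_next_address(table: DeltaPatternTable,
                         addresses: Sequence[int]) -> Optional[int]:
    """Add the predicted difference to the most recent address."""
    if not addresses:
        return None
    d = table.predict(deltas(addresses))
    return None if d is None else addresses[-1] + d
```

After training on an access stream whose differences alternate between 8 and 16, the table predicts the next address of a new stream that follows the same pattern from a different base address, and returns `None` for a difference it has never seen.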

