Method for improving performance of multiprocess programs of application delivery communication platforms

A technique for application delivery communication platforms, in the field of computer networks, that addresses the problems of low CPU cache efficiency and degraded system performance and improves the cache hit rate.


Detailed Description of the Embodiments

[0034] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to the embodiments and the accompanying drawings.

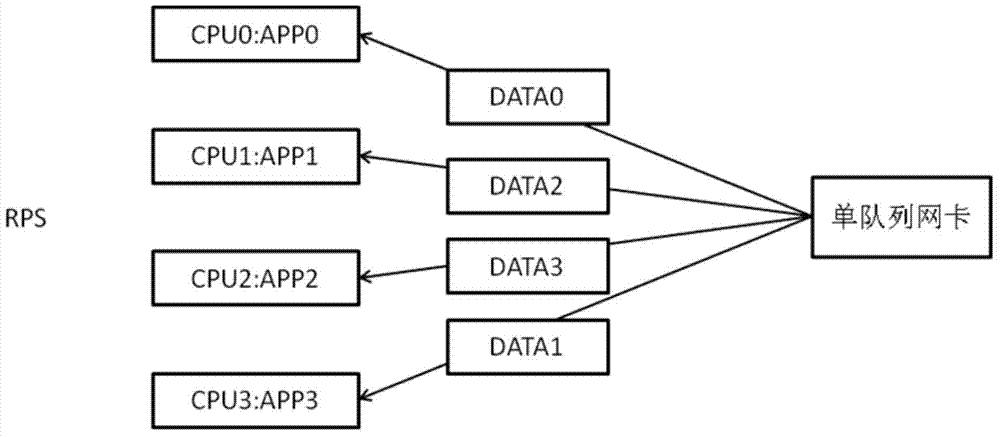

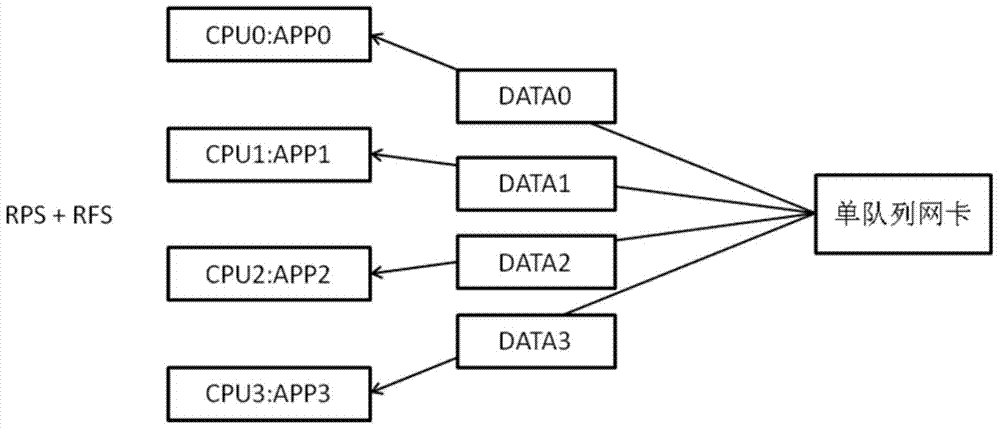

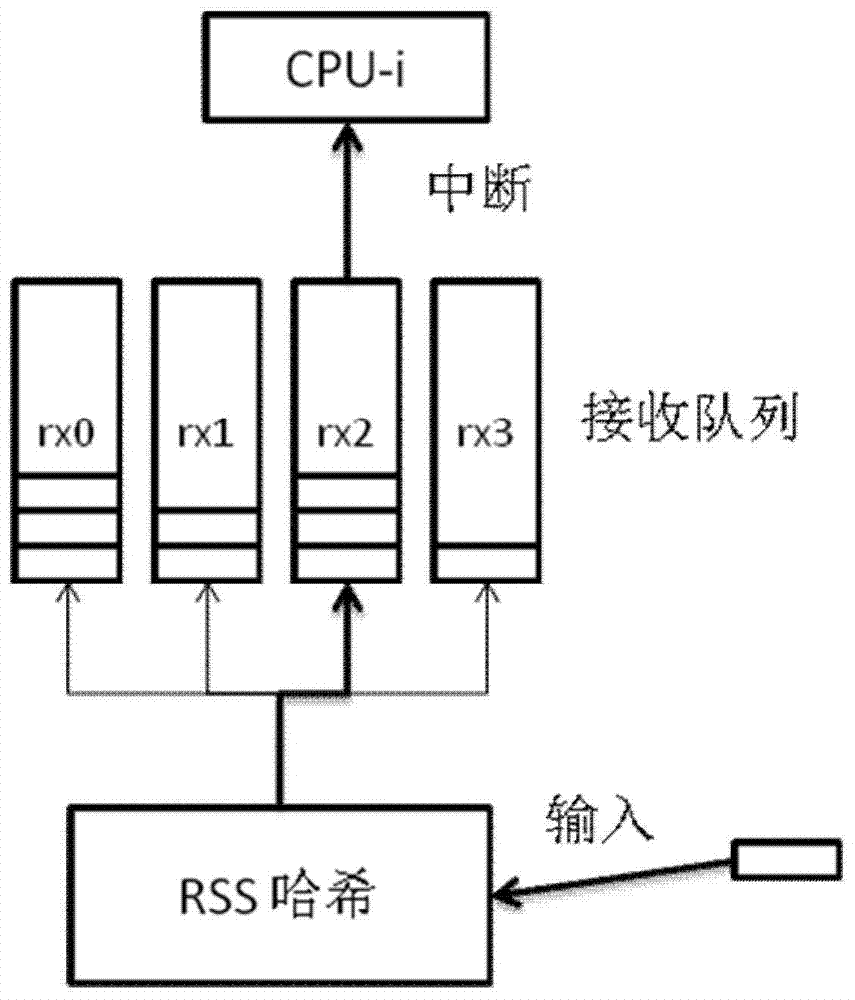

[0035] As shown in Figure 5, the method for improving the performance of the multi-process program of the application delivery communication platform includes the following steps:

[0036] Step 10: hash each data packet to a network card queue according to its source IP address;

[0037] To ensure that the hard interrupts and soft interrupts for data packets from the same source IP are handled by the same processor core, the default RSS hash algorithm in the Linux kernel network card driver module, which hashes on the source IP, source port, destination IP, and destination port, is replaced with a hash on the source IP address alone.
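Step 10 can be illustrated with a minimal Python sketch. It assumes the NIC uses the Toeplitz hash common to RSS implementations together with the widely published 40-byte default RSS key; the patent does not say which hash function or key its modified driver uses, and the names `toeplitz_hash`, `four_tuple_hash`, `src_ip_hash`, and `select_queue` are hypothetical. The sketch contrasts the default four-tuple input with the source-IP-only input: once the ports are dropped from the hash input, every packet from a given source IP maps to the same queue.

```python
import ipaddress

# Assumption: the widely published default 40-byte RSS key. The patent does
# not state which key (if any) its modified driver uses.
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0d0ca2bcb"
    "ae7b30b477cb2da38030f20c6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """Toeplitz hash as used by RSS: for every set bit i of the input
    (most significant bit first), XOR in the 32-bit key window that
    starts at bit i of the key."""
    key_bits = len(key) * 8
    data_bits = len(data) * 8
    if data_bits + 32 > key_bits:
        raise ValueError("key is too short for this input length")
    key_int = int.from_bytes(key, "big")
    result = 0
    for i in range(data_bits):
        if data[i // 8] & (0x80 >> (i % 8)):
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def four_tuple_hash(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> int:
    """Default RSS input for TCP/IPv4: source IP, destination IP, source port, destination port."""
    data = (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + src_port.to_bytes(2, "big")
        + dst_port.to_bytes(2, "big")
    )
    return toeplitz_hash(RSS_KEY, data)


def src_ip_hash(src_ip: str) -> int:
    """Modified input described in Step 10: the source IP address only."""
    return toeplitz_hash(RSS_KEY, ipaddress.IPv4Address(src_ip).packed)


def select_queue(hash_value: int, n_queues: int) -> int:
    """Simplification: NICs usually look up low-order hash bits in an
    indirection table; a plain modulo stands in for that table here."""
    return hash_value % n_queues


if __name__ == "__main__":
    # Same client, three connections that differ only in source port.
    for sport in (40001, 40002, 40003):
        q_default = select_queue(four_tuple_hash("192.0.2.10", "198.51.100.1", sport, 443), 8)
        q_src_ip = select_queue(src_ip_hash("192.0.2.10"), 8)
        print(f"sport={sport}: 4-tuple queue={q_default}, source-IP queue={q_src_ip}")
```

With the four-tuple input, connections from one client can be spread across several queues (and therefore cores); with the source-IP input the queue depends only on the client address.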

[0038] Step 20: bind the data packets in each network card queue to the corresponding CPU core;

[0039] The hard interrupts and s...
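Step 20 can be sketched in the same hedged way. On Linux, an interrupt's CPU affinity is set by writing a hexadecimal CPU bitmask to `/proc/irq/<irq>/smp_affinity`, and the NET_RX soft interrupt normally runs on the core that took the hardware interrupt (when RPS is not in use). The sketch assumes one IRQ per network card queue and a simple "queue i → core (i mod number of cores)" policy; the IRQ numbers, the policy, and the helper names `cpu_mask_hex`, `plan_queue_affinity`, and `apply_affinity` are hypothetical, since the patent does not specify how the binding is performed. The writer defaults to a dry run because writing the real files requires root privileges.

```python
import os


def cpu_mask_hex(cpu: int) -> str:
    """Single-CPU bitmask in the format used by /proc/irq/<irq>/smp_affinity:
    hexadecimal, in comma-separated 32-bit groups, most significant group first."""
    if cpu < 0:
        raise ValueError("cpu index must be non-negative")
    mask = 1 << cpu
    groups = []
    while True:
        groups.append(f"{mask & 0xFFFFFFFF:08x}")
        mask >>= 32
        if mask == 0:
            break
    return ",".join(reversed(groups))


def plan_queue_affinity(queue_irqs: dict[int, int], n_cpus: int) -> dict[int, int]:
    """Hypothetical policy: queue i -> CPU core (i mod n_cpus). Returns {irq: cpu}."""
    if n_cpus <= 0:
        raise ValueError("n_cpus must be positive")
    return {irq: queue % n_cpus for queue, irq in queue_irqs.items()}


def apply_affinity(irq_to_cpu: dict[int, int], proc_root: str = "/proc/irq",
                   dry_run: bool = True) -> list[tuple[str, str]]:
    """Write each CPU mask to <proc_root>/<irq>/smp_affinity.
    With dry_run=True (the default) nothing is written; the planned writes are returned."""
    writes = []
    for irq, cpu in sorted(irq_to_cpu.items()):
        path = os.path.join(proc_root, str(irq), "smp_affinity")
        value = cpu_mask_hex(cpu)
        if not dry_run:
            with open(path, "w") as f:  # requires root on a real system
                f.write(value)
        writes.append((path, value))
    return writes


if __name__ == "__main__":
    # Hypothetical IRQ numbers for the four receive queues of one NIC.
    queue_irqs = {0: 120, 1: 121, 2: 122, 3: 123}
    for path, value in apply_affinity(plan_queue_affinity(queue_irqs, n_cpus=4)):
        print(f"{path} <- {value}")
```

On a real system the queue-to-IRQ mapping can be read from `/proc/interrupts`, where the naming of per-queue vectors depends on the driver; a running `irqbalance` daemon may also overwrite manually set masks.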
