Main-memory database access optimization method based on page coloring technology

A page coloring and access optimization technology, applied in the field of database management, that addresses problems such as quota conflicts, the small number of available page colors, and the difficulty of allocating large data sets with weak locality, with the effect of reducing cache conflicts.
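Some background on why page colors are scarce. In a physically indexed n-way set-associative cache, a page's color is set by the set-index bits that lie above the page offset. The relations below assume power-of-two sizes and plain modulo set indexing. The symbols $C$ (cache capacity), $n$ (associativity), $L$ (line size), $P$ (page size) and the example values are illustrative assumptions, not taken from the patent. Real last-level caches may also hash addresses across slices, so treat this as an approximation.

$$
S = \frac{C}{n\,L}, \qquad
N_{\text{colors}} = \frac{S}{P/L} = \frac{C}{n\,P}, \qquad
\operatorname{color}(p) = \operatorname{PFN}(p) \bmod N_{\text{colors}}
$$

For example, $C = 8\ \text{MiB}$, $n = 16$ and $P = 4\ \text{KiB}$ give $N_{\text{colors}} = 2^{23} / (2^{4} \cdot 2^{12}) = 2^{7} = 128$ colors.

With 2 MiB huge pages, $C/(nP) < 1$, so the whole set index lies inside the page offset. Every page then has the same single color.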

Embodiment Construction

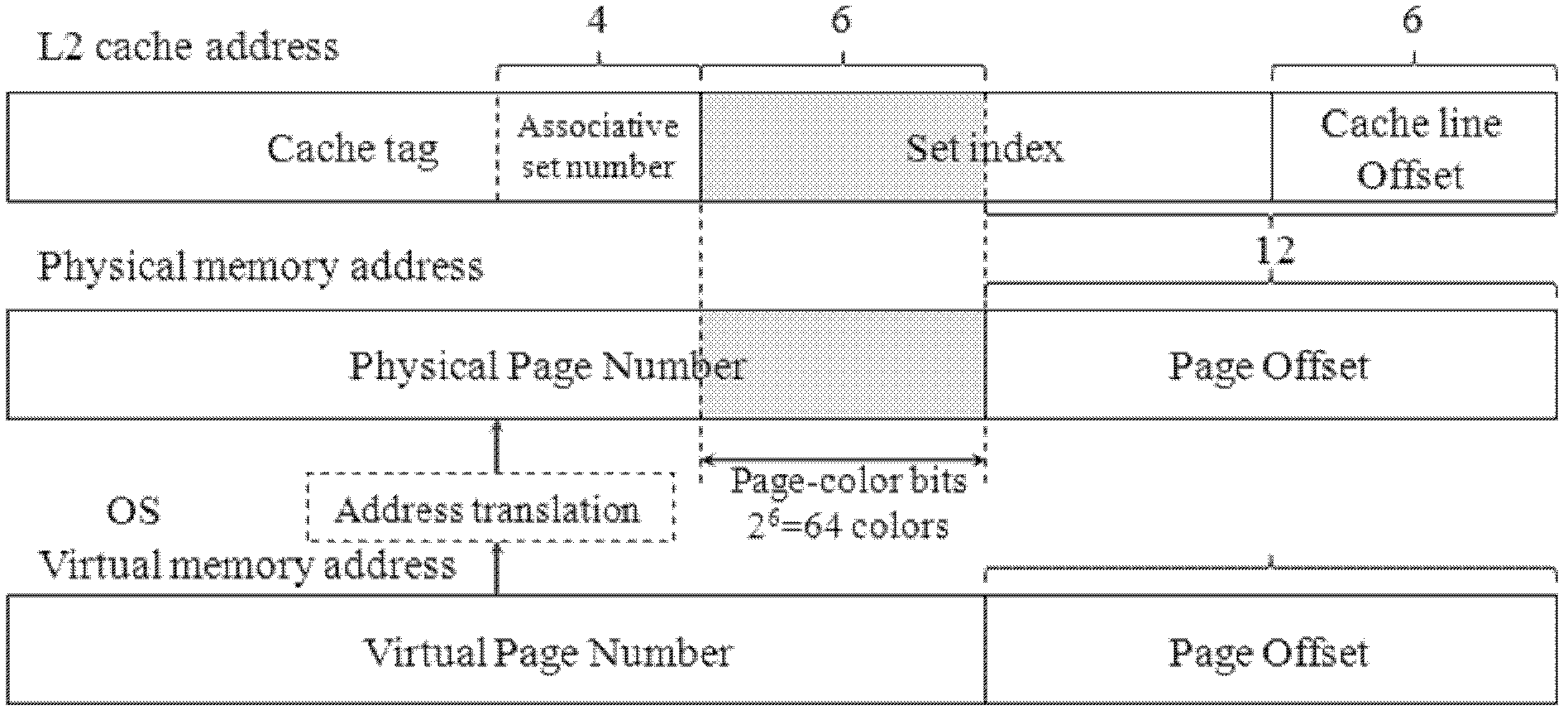

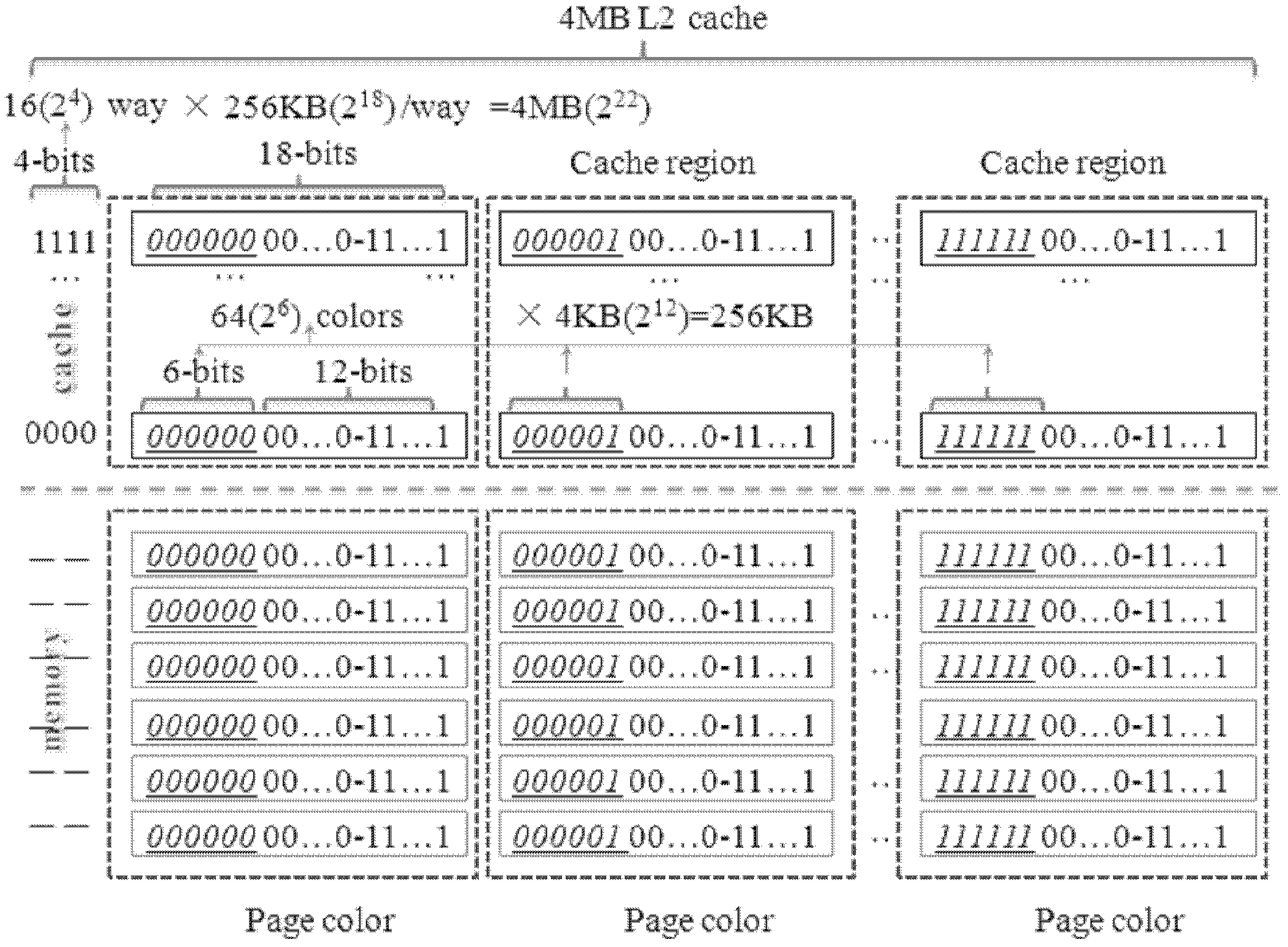

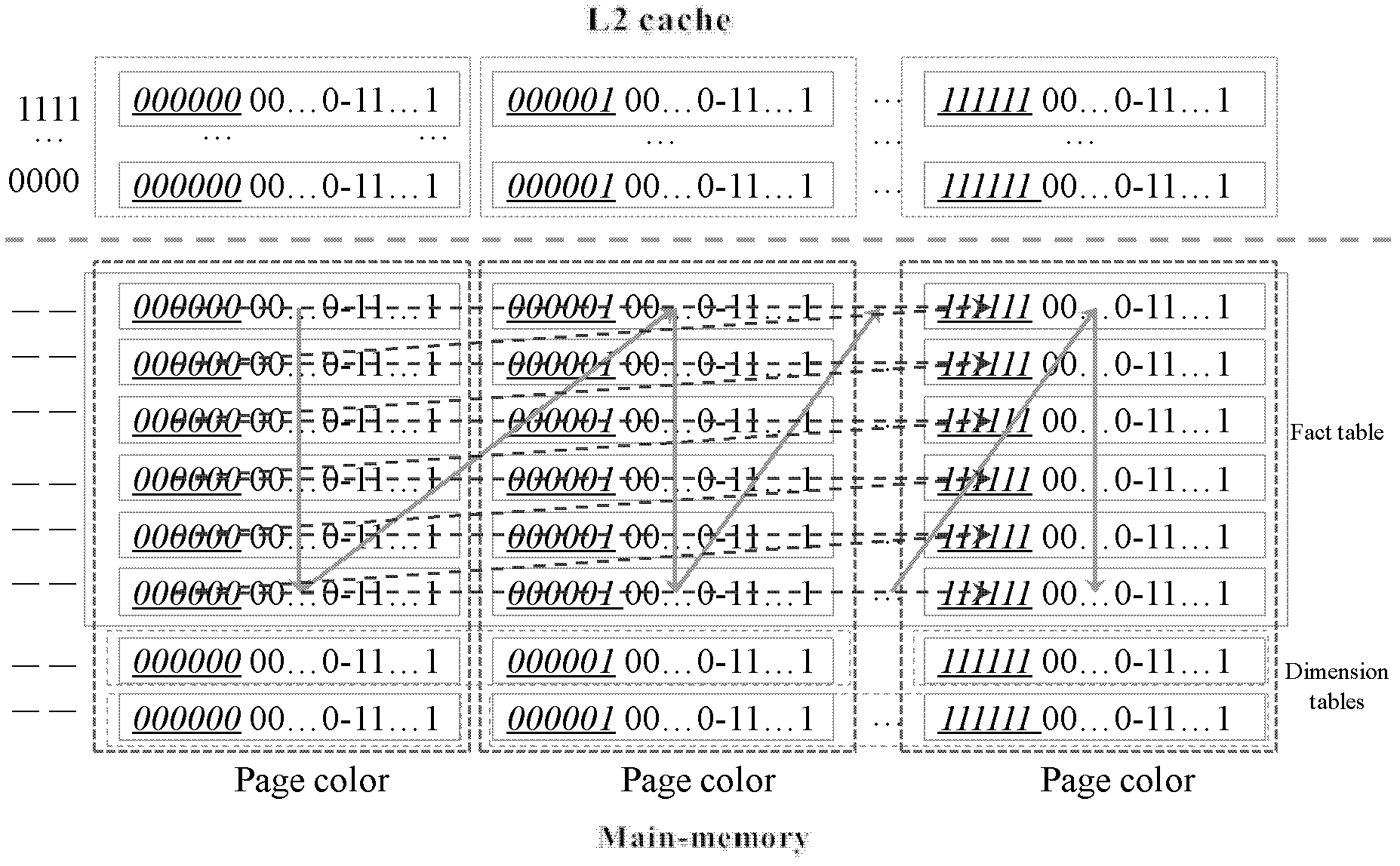

[0033] In the present invention, the cache mainly refers to the CPU cache at the processor hardware level. This differs from the database's software-level buffer. Access to the cache is controlled by hardware instructions, so database software cannot manage it actively the way it manages a buffer. The underlying goal of cache access optimization is to tune the access patterns of data sets that have different access characteristics, so process-level cache optimization techniques still leave considerable room for improvement. For this reason, the present invention provides a cache access optimization method for in-memory databases. The method builds on the n-way set associative algorithm, which is the mainstream cache replacement scheme for the shared CPU cache of current multi-core processors. It reorders the sequence in which data pages are accessed according to the page color of the in-memory data, thereby reducing cache access conflicts during OLAP query processing and improving the over...
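The paragraph above says data pages are reordered by page color but does not spell out the ordering rule. The sketch below shows one hypothetical way to do it. Pages are grouped by color, and the colors are then visited round-robin so that consecutive page accesses map to different groups of cache sets. The function names, the 4 KiB page size and the modulo color formula are assumptions for illustration and are not the patent's method.

```python
from collections import defaultdict
from typing import Dict, List

PAGE_SIZE = 4096  # assumed 4 KiB pages (illustrative, not from the patent)


def num_page_colors(cache_bytes: int, ways: int, page_size: int = PAGE_SIZE) -> int:
    """Page colors of an n-way set-associative cache: C / (n * P), at least 1.

    Assumes power-of-two geometry and plain modulo set indexing.
    """
    return max(1, cache_bytes // (ways * page_size))


def page_color(phys_addr: int, n_colors: int, page_size: int = PAGE_SIZE) -> int:
    """Color = page frame number modulo the number of colors."""
    return (phys_addr // page_size) % n_colors


def color_interleaved_order(phys_pages: List[int], n_colors: int) -> List[int]:
    """Hypothetical reordering: bucket pages by color, then take one page
    per color in turn, so consecutive accesses hit different cache-set groups.
    Pages keep their original relative order within each color."""
    buckets: Dict[int, List[int]] = defaultdict(list)
    for addr in phys_pages:
        buckets[page_color(addr, n_colors)].append(addr)

    order: List[int] = []
    colors = sorted(buckets)
    depth = max((len(b) for b in buckets.values()), default=0)
    for i in range(depth):
        for c in colors:
            if i < len(buckets[c]):
                order.append(buckets[c][i])
    return order


if __name__ == "__main__":
    print(num_page_colors(8 * 1024 * 1024, 16))  # 128
    frames = [0, 4, 8, 1, 5, 2]
    pages = [f * PAGE_SIZE for f in frames]
    # With 4 colors: frames 0,4,8 -> color 0; 1,5 -> color 1; 2 -> color 2
    print([a // PAGE_SIZE for a in color_interleaved_order(pages, 4)])  # [0, 1, 2, 4, 5, 8]
```

Round-robin interleaving is only one plausible policy. A real system might instead confine a weak-locality scan to a few colors so it does not evict hot data. Either approach needs physical page frame numbers. On Linux these come from `/proc/self/pagemap`, which normally requires privileged access.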
