Method for prefetching CPU cache data based on merged address difference value sequence

A CPU cache data prefetching technology, applied in electrical digital data processing, memory systems, instruments, and related fields, that improves prediction accuracy and coverage and reduces the cache miss rate.


Embodiment Construction

[0038] The present invention will be described in further detail below through specific examples and accompanying drawings.

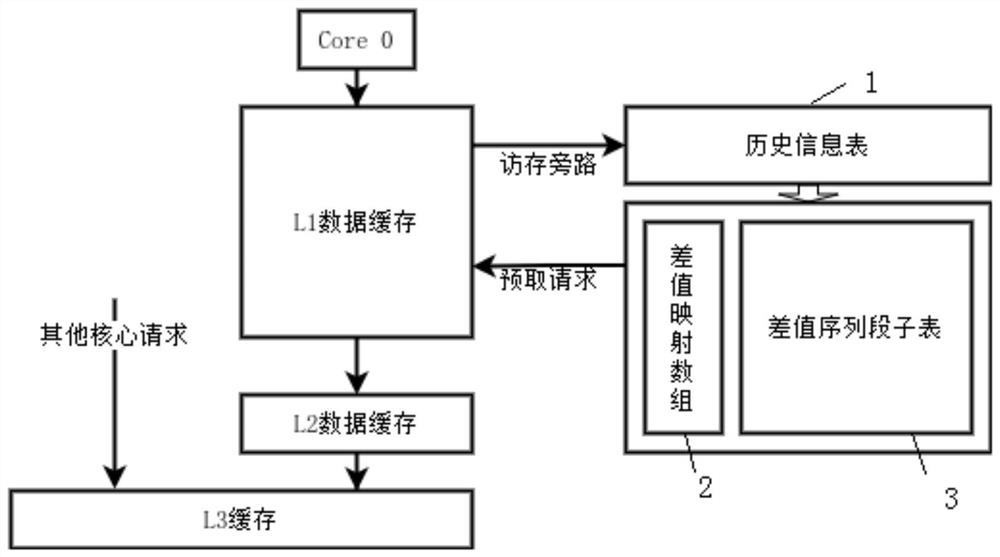

[0039] Figure 1 shows the applicable environment and design architecture of the CPU cache data prefetching method based on the merged address difference sequence of the present invention. The method is applicable to the L1 data cache of a core in a conventional CPU (in this embodiment, the core is core No. 0, Core0). It can also be applied to the L2 and L3 data caches, although performance is correspondingly reduced. Each core in a conventional CPU can adopt its own independent instance of the method, so that data prefetching is realized for every core in the CPU.
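To make the per-core arrangement concrete, the following is a minimal sketch, not the claimed method. It assumes that an "address difference" is the difference between consecutive access addresses seen by one core's L1 data cache, and it substitutes a plain stride rule (predict when the last two differences match) for the patent's merging step, which this excerpt does not describe. The names `DeltaPrefetcher`, `observe`, and `history_len` are hypothetical.

```python
from collections import deque


class DeltaPrefetcher:
    """Hypothetical per-core state: a short history of address differences."""

    def __init__(self, history_len=4):
        self.last_addr = None                     # previous access address, if any
        self.deltas = deque(maxlen=history_len)   # most recent address differences

    def observe(self, addr):
        """Record one data-cache access; return a predicted address or None."""
        if self.last_addr is not None:
            self.deltas.append(addr - self.last_addr)
        self.last_addr = addr
        # Assumed stand-in rule: predict only when the two latest differences agree.
        # This is a simple stride check, not the patent's merged-sequence scheme.
        if len(self.deltas) >= 2 and self.deltas[-1] == self.deltas[-2]:
            return addr + self.deltas[-1]
        return None


# One independent instance per core, mirroring the arrangement in [0039].
prefetchers = {core_id: DeltaPrefetcher() for core_id in range(2)}

# Core0 sees a constant 0x40 stride; Core1 sees too few accesses to predict.
core0_predictions = [prefetchers[0].observe(a) for a in (0x1000, 0x1040, 0x1080)]
core1_predictions = [prefetchers[1].observe(a) for a in (0x2000, 0x2008)]
```

Because each core owns its own history, the access stream of Core1 never disturbs the differences recorded for Core0, which is the property the paragraph above relies on.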

[0040] The CPU cache data prefet...
