Central processing unit cache friendly multithreaded allocation

A multi-threaded allocation technique that is friendly to the central processing unit (CPU) cache, applied in the field of CPU cache friendly multi-threaded allocation. It addresses the problem of increasing filesystem latency and aims to improve the performance of a hybrid storage device, affecting the overall performan...

Example clauses

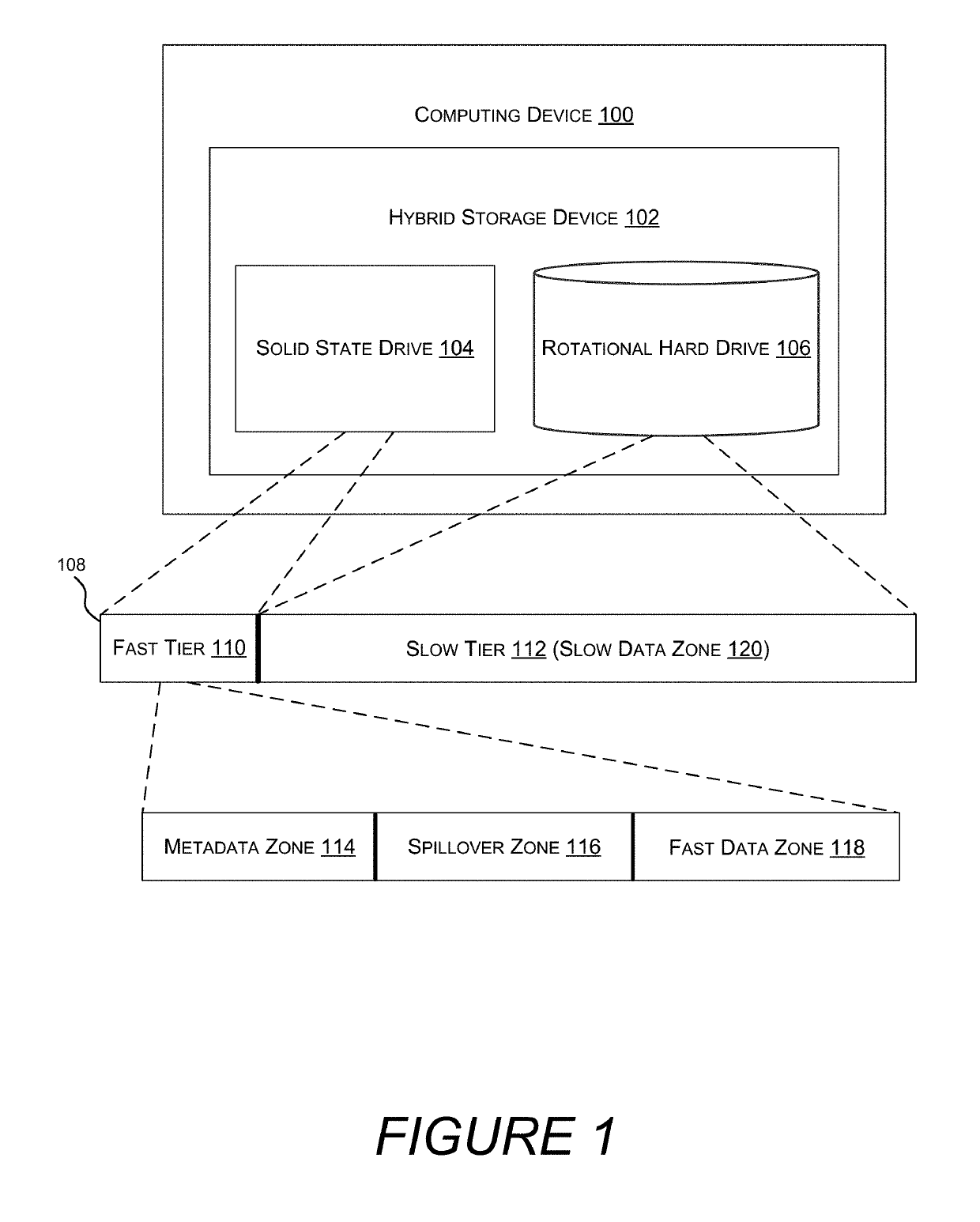

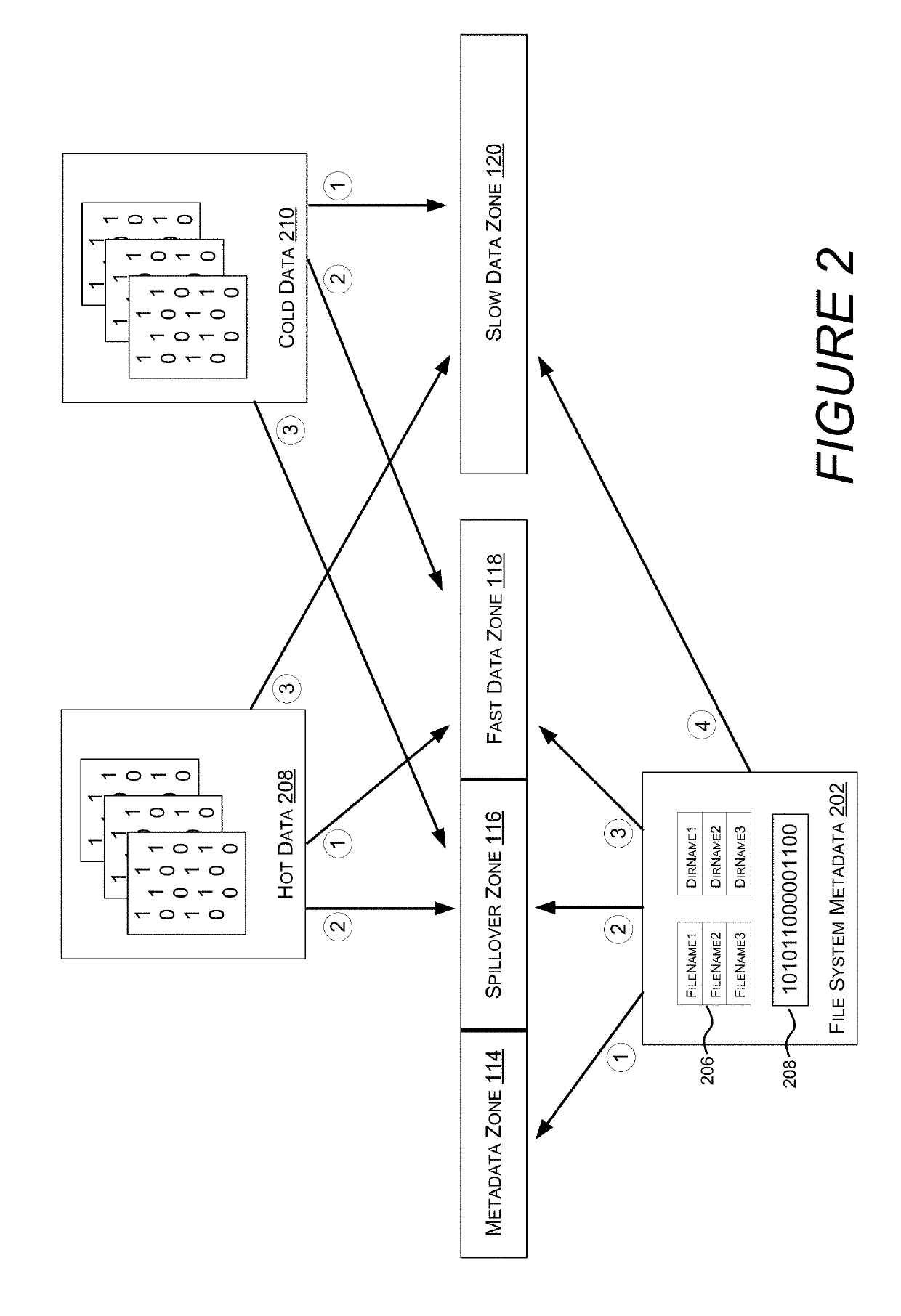

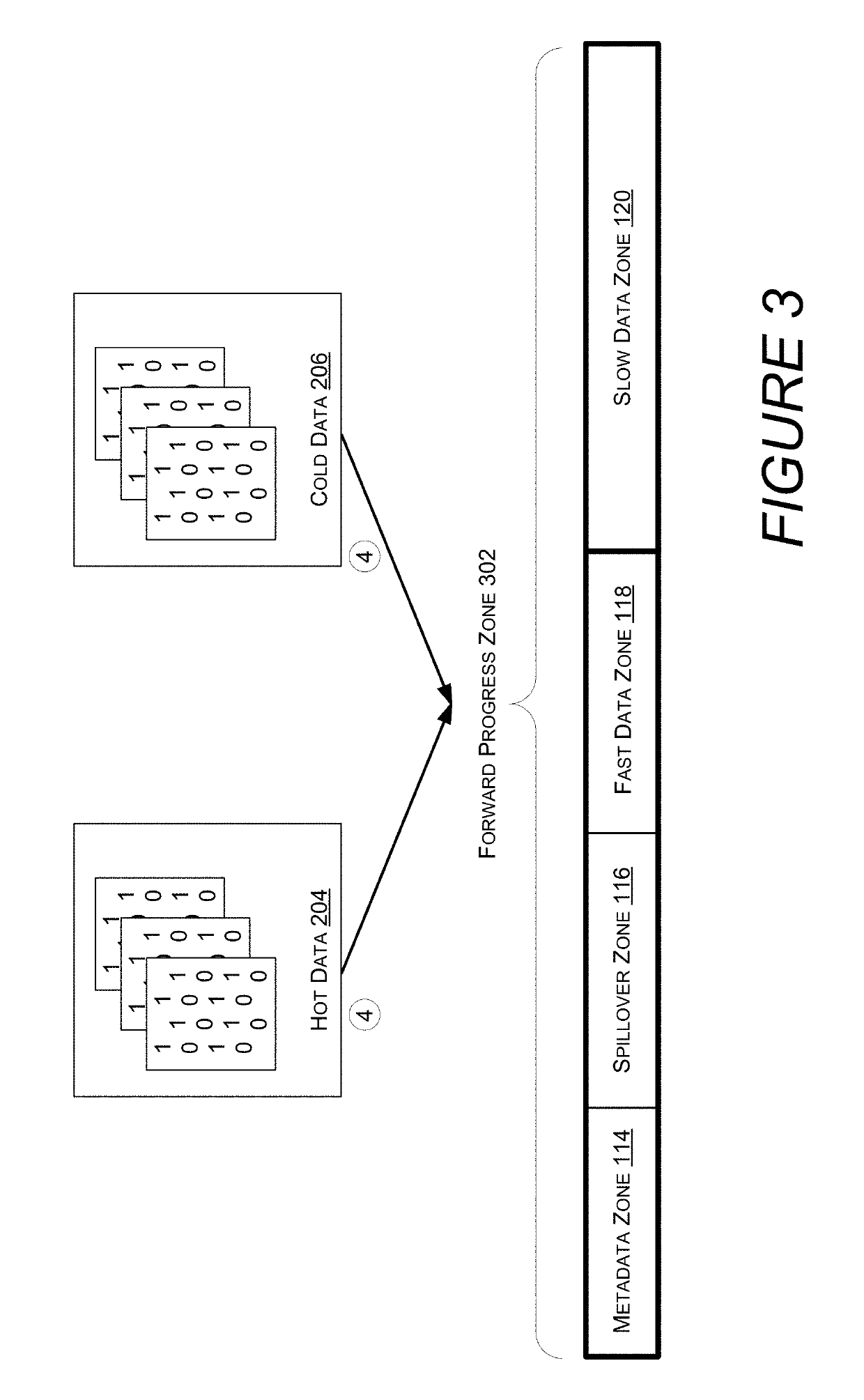

[0122] Example Clause A: a method for storage allocation on a computing device comprising a multi-core central processing unit (CPU), each CPU core having a non-shared cache, the method comprising:

receiving, at a filesystem allocator, a plurality of storage allocation requests, each executing on a different core of the multi-core CPU, wherein the storage allocation requests are for a file system volume that is divided into bands composed of a plurality of storage clusters, and wherein, for each band, storage clusters are marked as allocated or unallocated by a corresponding cluster allocation bitmap;

dividing a cluster allocation bitmap into a plurality of chunks, wherein each chunk is the size of a cache line of the non-shared cache, wherein each chunk is aligned in system memory with the non-shared cache lines of the non-shared cache, and wherein a chunk status bitmap indicates which of the plurality of chunks has at least one unallocated cluster;

determining a maximum number of s...
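The bitmap layout described in Example Clause A can be illustrated with a small Python model. This is only a sketch under stated assumptions, not the claimed implementation: the 64-byte cache-line size, the class name `BandBitmap`, and the helpers `allocate_in_chunk` and `free_chunks` are all hypothetical. Python cannot express the memory alignment of chunks to cache lines, so that part of the clause is not modeled, and the clause is cut off before it says how chunks are assigned to requests, so the sketch stops at listing candidate chunks.

```python
from typing import List, Optional

# Assumption: a 64-byte cache line. The clause only says "the size of a cache line".
CACHE_LINE_BYTES = 64
BITS_PER_CHUNK = CACHE_LINE_BYTES * 8  # clusters tracked by one chunk


class BandBitmap:
    """Toy model of one band's cluster allocation bitmap, split into chunks."""

    def __init__(self, num_clusters: int) -> None:
        if num_clusters <= 0 or num_clusters % BITS_PER_CHUNK != 0:
            raise ValueError("num_clusters must be a positive multiple of BITS_PER_CHUNK")
        self.num_chunks = num_clusters // BITS_PER_CHUNK
        # Cluster allocation bitmap: one bit per cluster, 1 = allocated, 0 = unallocated.
        self.bits = bytearray(num_clusters // 8)
        # Chunk status bitmap: one bit per chunk, 1 = at least one unallocated cluster.
        self.chunk_status = (1 << self.num_chunks) - 1

    def chunk_has_free(self, chunk: int) -> bool:
        if not 0 <= chunk < self.num_chunks:
            raise IndexError("chunk index out of range")
        return bool((self.chunk_status >> chunk) & 1)

    def free_chunks(self) -> List[int]:
        """Return the indices of chunks that still have an unallocated cluster."""
        return [c for c in range(self.num_chunks) if self.chunk_has_free(c)]

    def allocate_in_chunk(self, chunk: int) -> Optional[int]:
        """Allocate one cluster from a chunk; return its index, or None if the chunk is full."""
        if not self.chunk_has_free(chunk):
            return None
        start = chunk * CACHE_LINE_BYTES
        end = start + CACHE_LINE_BYTES
        for byte_index in range(start, end):
            byte = self.bits[byte_index]
            if byte == 0xFF:
                continue  # all eight clusters in this byte are already allocated
            bit = next(b for b in range(8) if not (byte >> b) & 1)
            self.bits[byte_index] = byte | (1 << bit)
            # Clear the status bit once every cluster in the chunk is allocated.
            if all(b == 0xFF for b in self.bits[start:end]):
                self.chunk_status &= ~(1 << chunk)
            return byte_index * 8 + bit
        return None
```

In this model, two requests that work in different chunks touch disjoint 64-byte regions of the bitmap. That separation is presumably the motivation for sizing chunks to the cache lines of the non-shared caches, since cores then avoid writing to the same cache line. The sketch itself is single-threaded and performs no locking.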

