25839 results about "Digital output to display device" patented technology

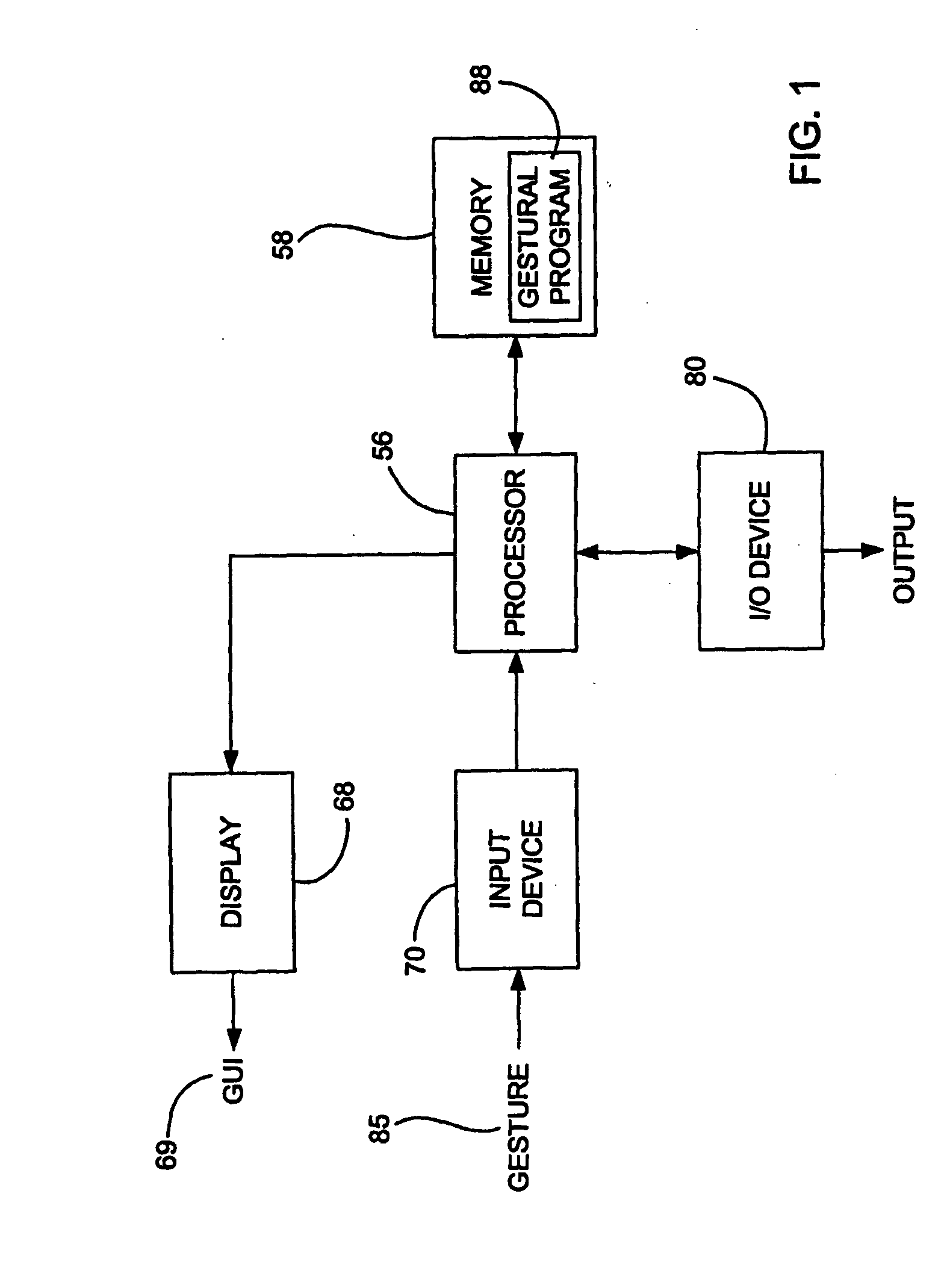

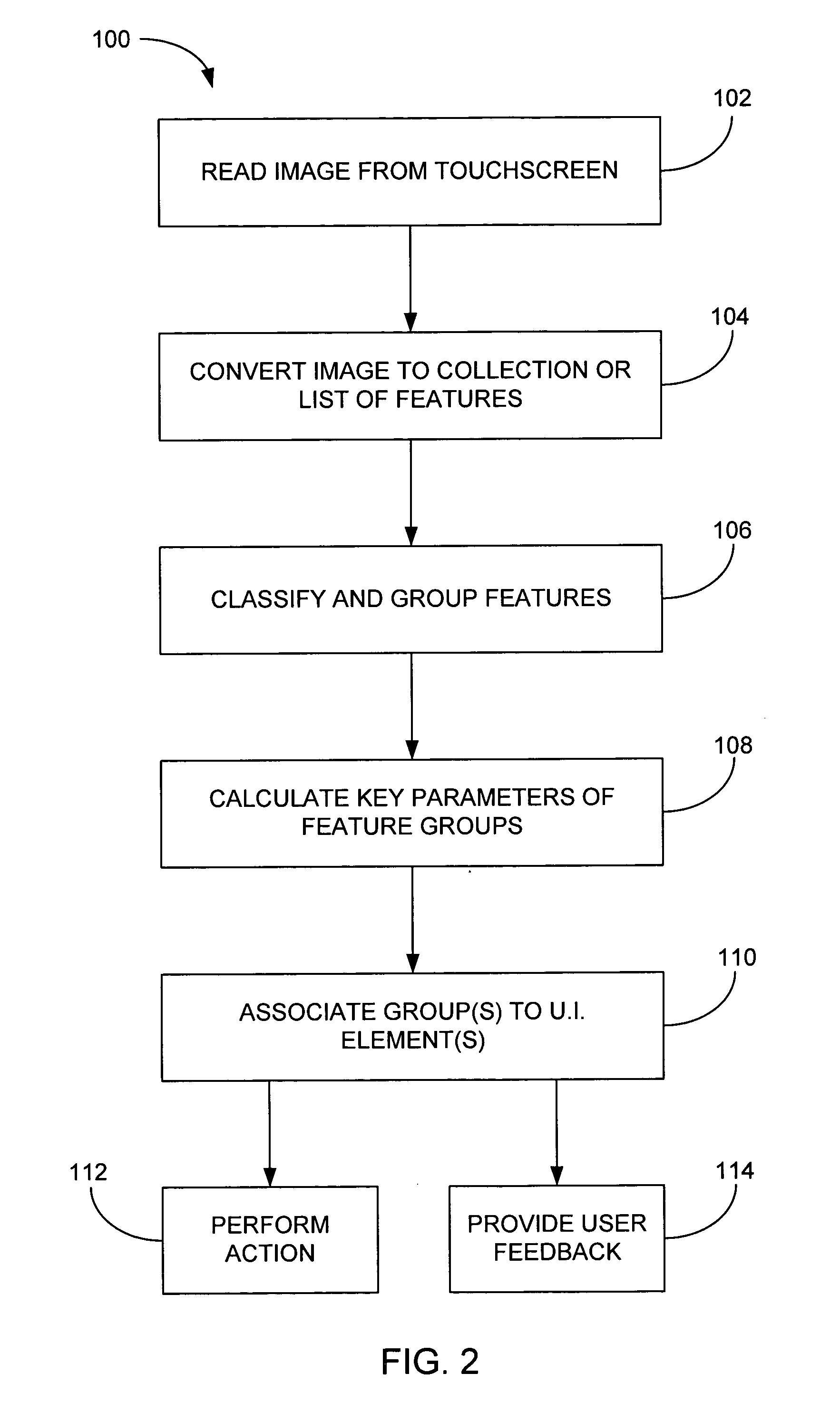

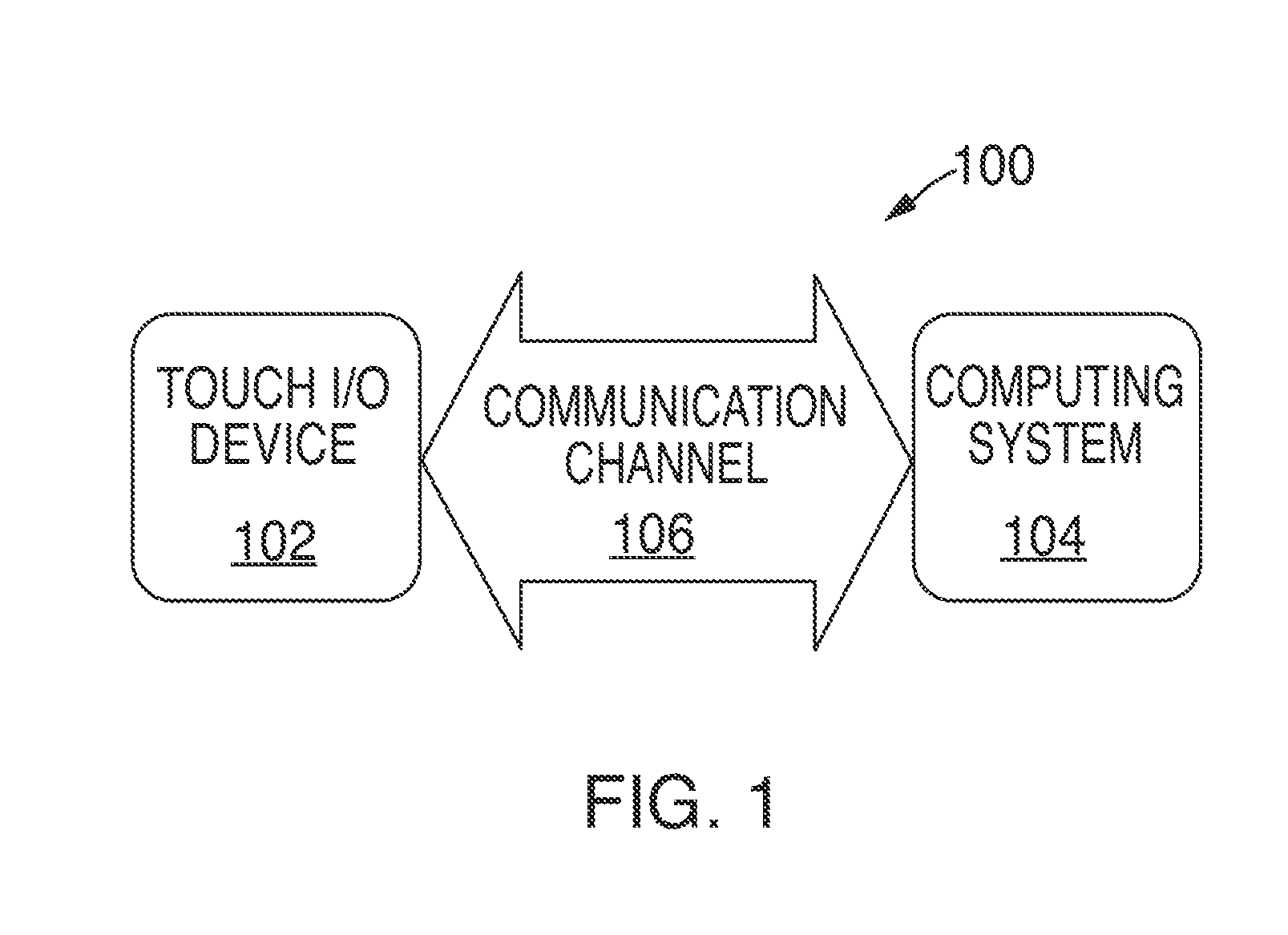

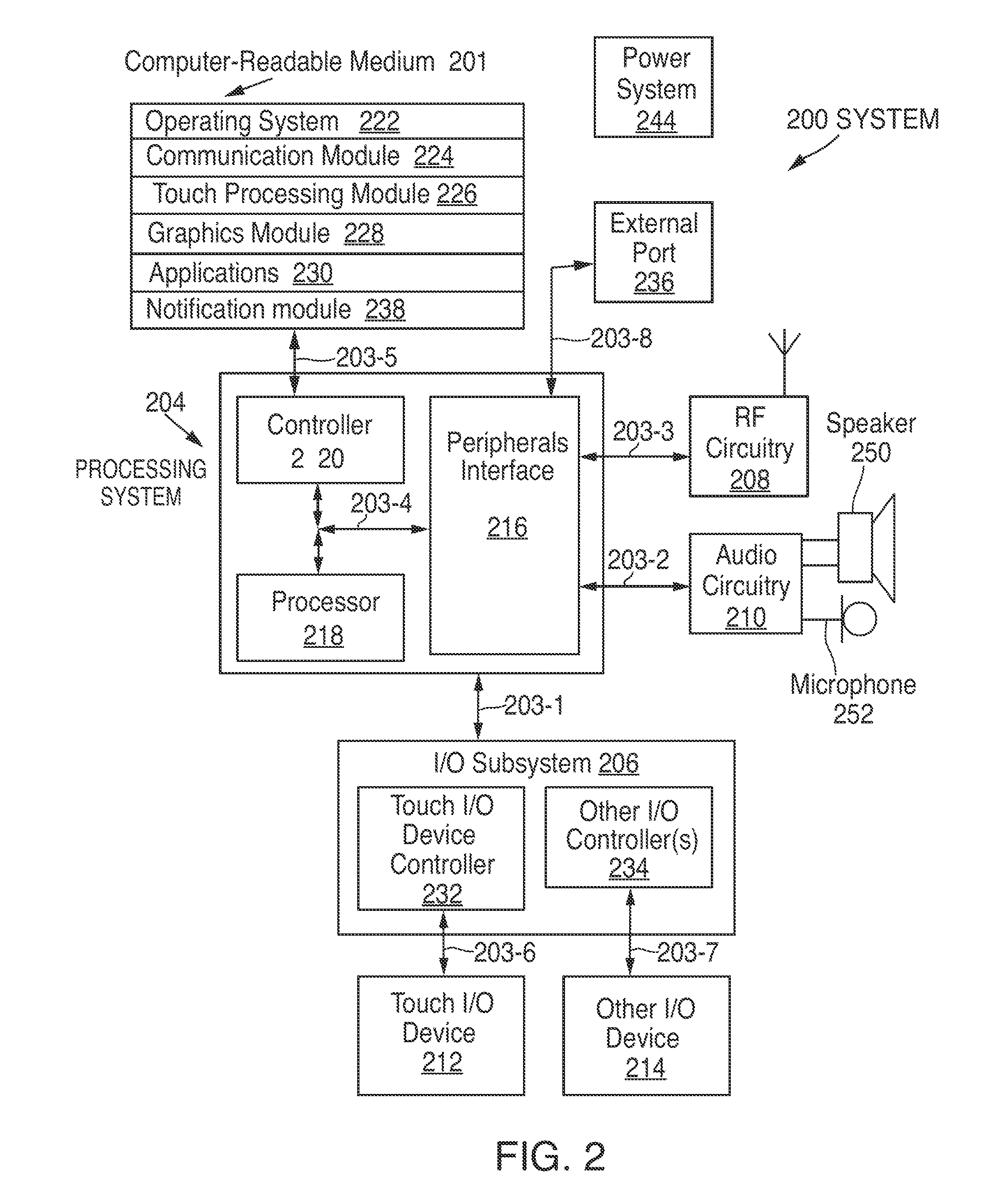

Mode-based graphical user interfaces for touch sensitive input devices

Active | US20060026535A1 | Input/output for user-computer interaction | Graph reading | Graphics | Graphical user interface

A user interface method is disclosed. The method includes detecting a touch and then determining a user interface mode when a touch is detected. The method further includes activating one or more GUI elements based on the user interface mode and in response to the detected touch.

Owner: APPLE INC

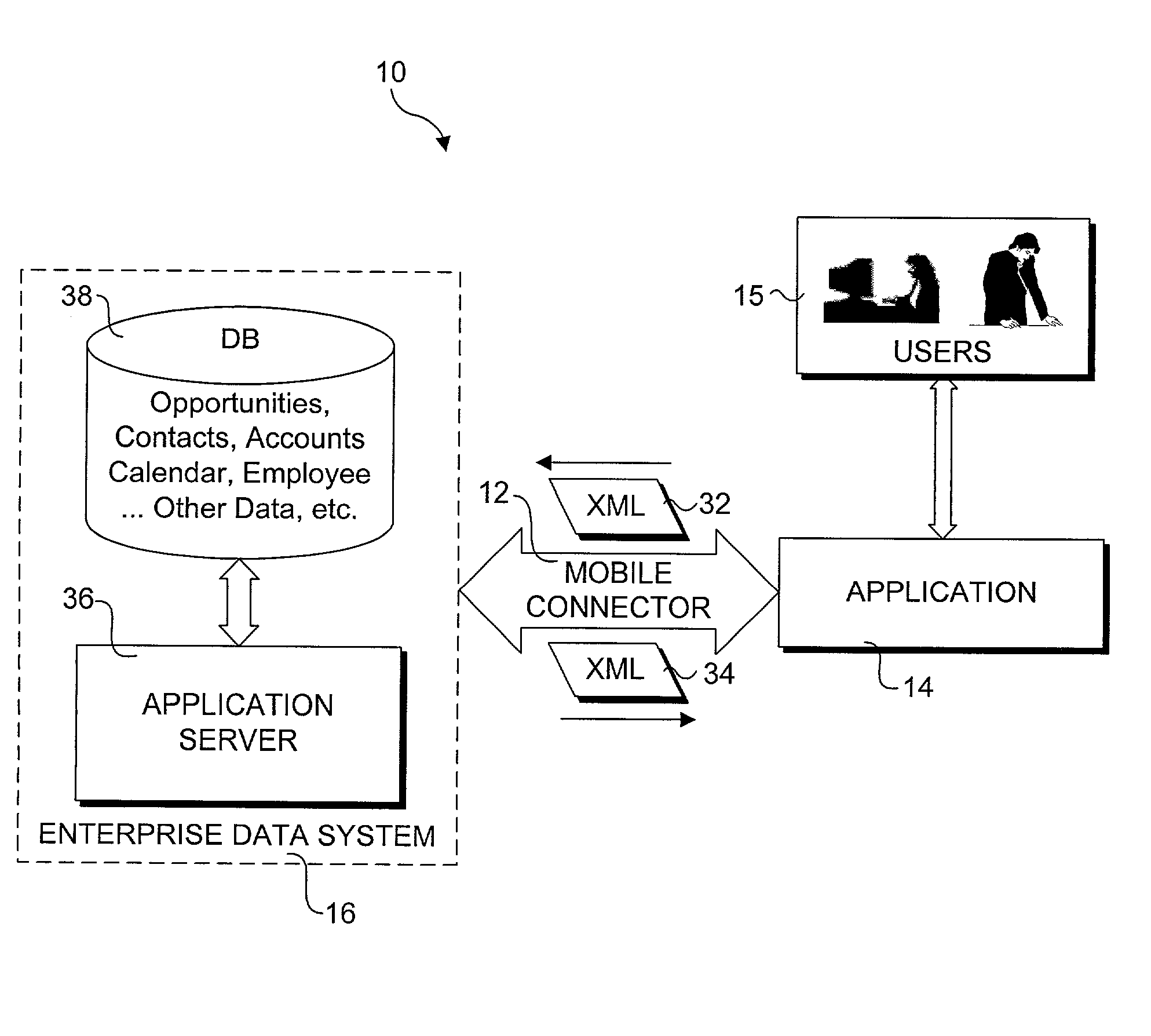

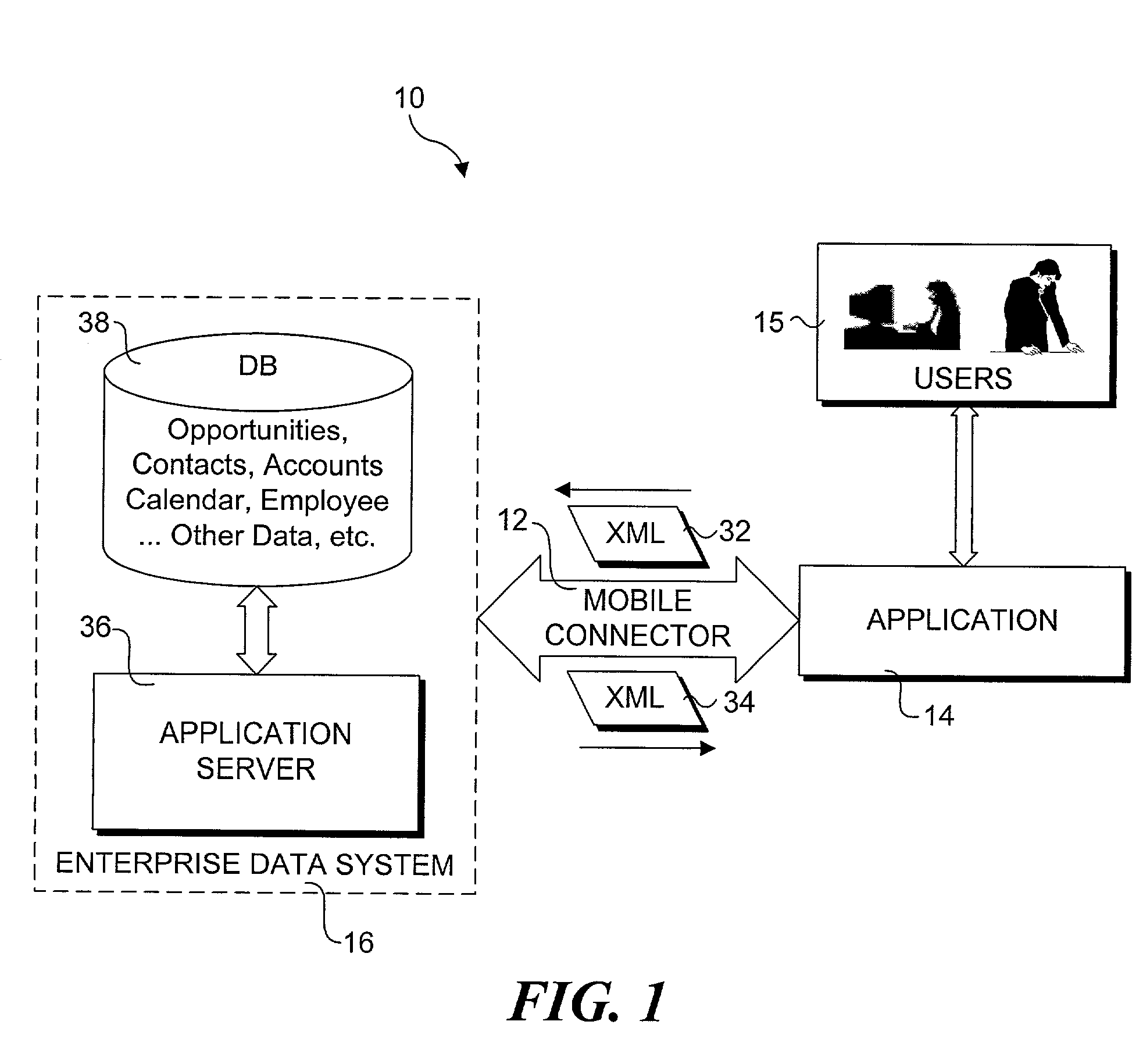

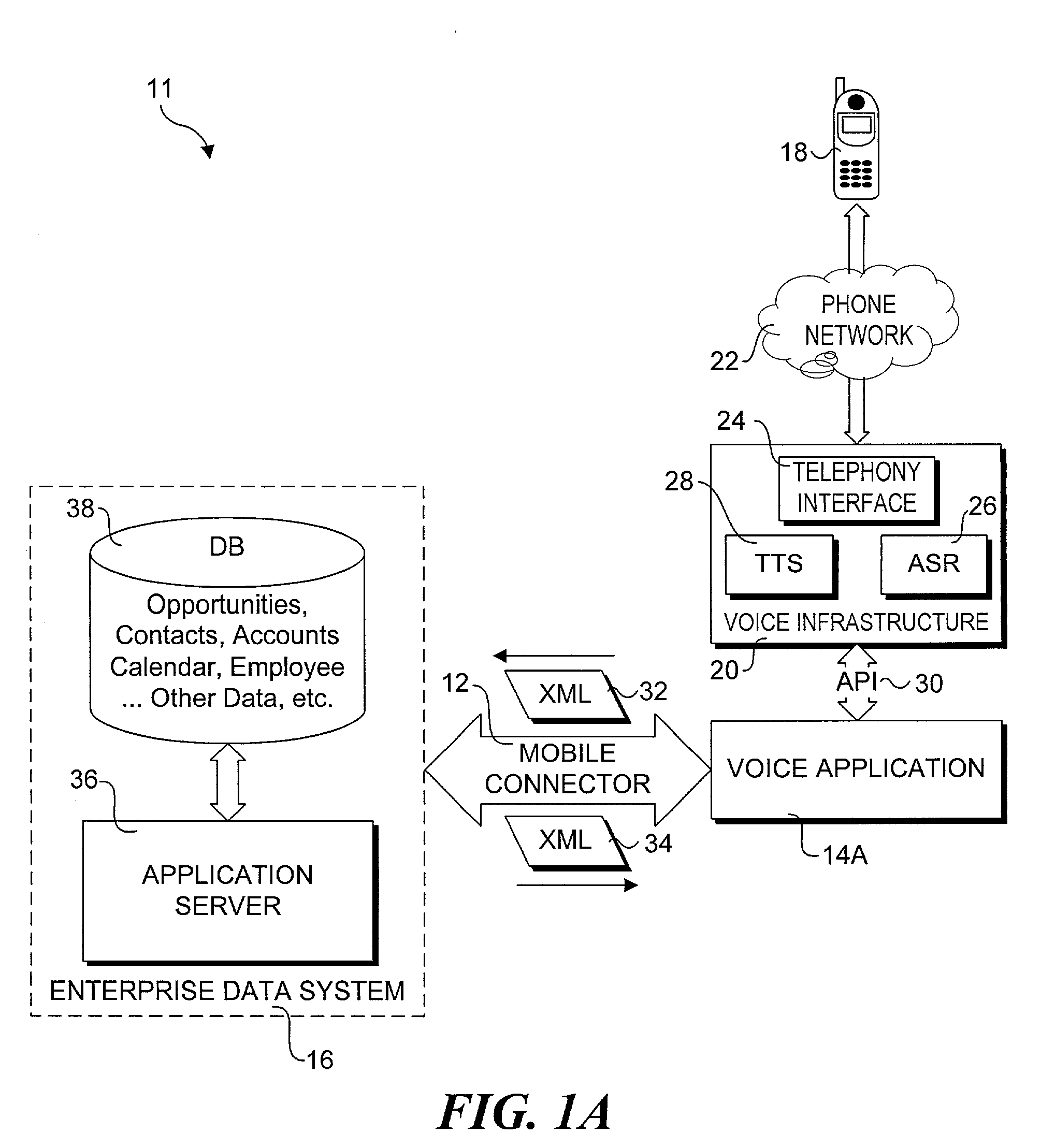

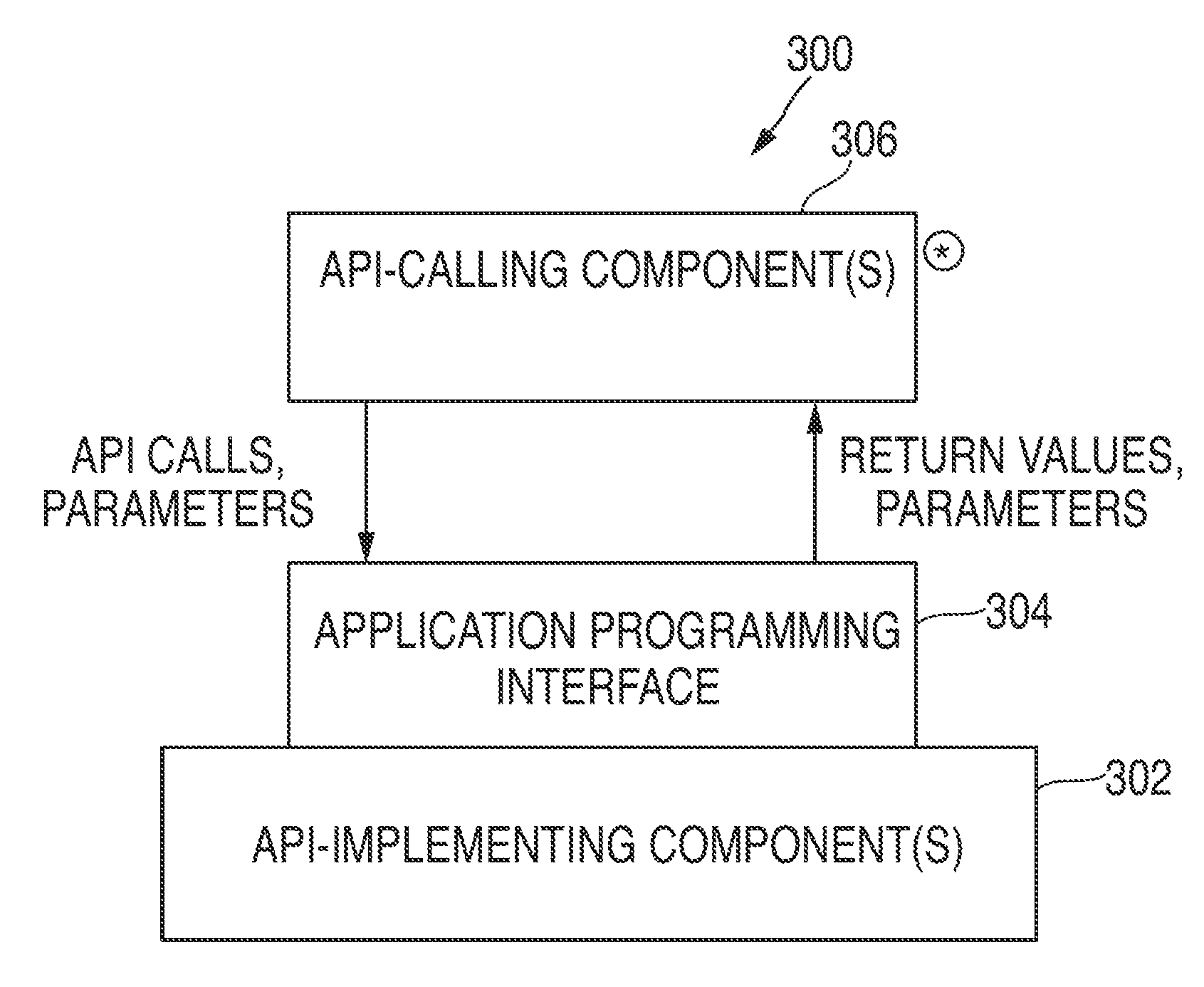

Method and system for enabling connectivity to a data system

A method and system provide filtered data from a data system. In one embodiment the system includes an API (application programming interface) and associated software modules to enable third party applications to access an enterprise data system. Administrators are enabled to select specific user interface (UI) objects, such as screens, views, applets, columns and fields to voice or pass-through enable via a GUI that presents a tree depicting a hierarchy of the UI objects within a user interface of an application. An XSLT style sheet is then automatically generated to filter out data pertaining to UI objects that were not voice or pass-through enabled. In response to a request for data, unfiltered data are retrieved from the data system and a specified style sheet is applied to the unfiltered data to return filtered data pertaining to only those fields and columns that are voice or pass-through enabled.

Owner: ORACLE INT CORP
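
The filtering step in the abstract above can be illustrated with a small sketch. It deliberately swaps the generated XSLT style sheet for plain `xml.etree.ElementTree` filtering, so it is not the patented mechanism. The `record` element name, the field names, and the `filter_fields` helper are all hypothetical.

```python
import xml.etree.ElementTree as ET


def filter_fields(xml_text, enabled):
    """Drop every field of each <record> whose tag is not in `enabled`.

    Assumption: unfiltered data arrives as <data><record>...</record></data>,
    and `enabled` holds the field names an administrator has enabled.
    """
    root = ET.fromstring(xml_text)
    for record in root.iter("record"):
        # Copy the child list so elements can be removed while looping.
        for field in list(record):
            if field.tag not in enabled:
                record.remove(field)
    return ET.tostring(root, encoding="unicode")


unfiltered = (
    "<data><record><name>Ann</name><phone>555</phone>"
    "<salary>9</salary></record></data>"
)
print(filter_fields(unfiltered, {"name", "phone"}))
```

In a real deployment the same selection would presumably be expressed once as a style sheet and applied per request; the sketch only shows the effect on the returned data.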

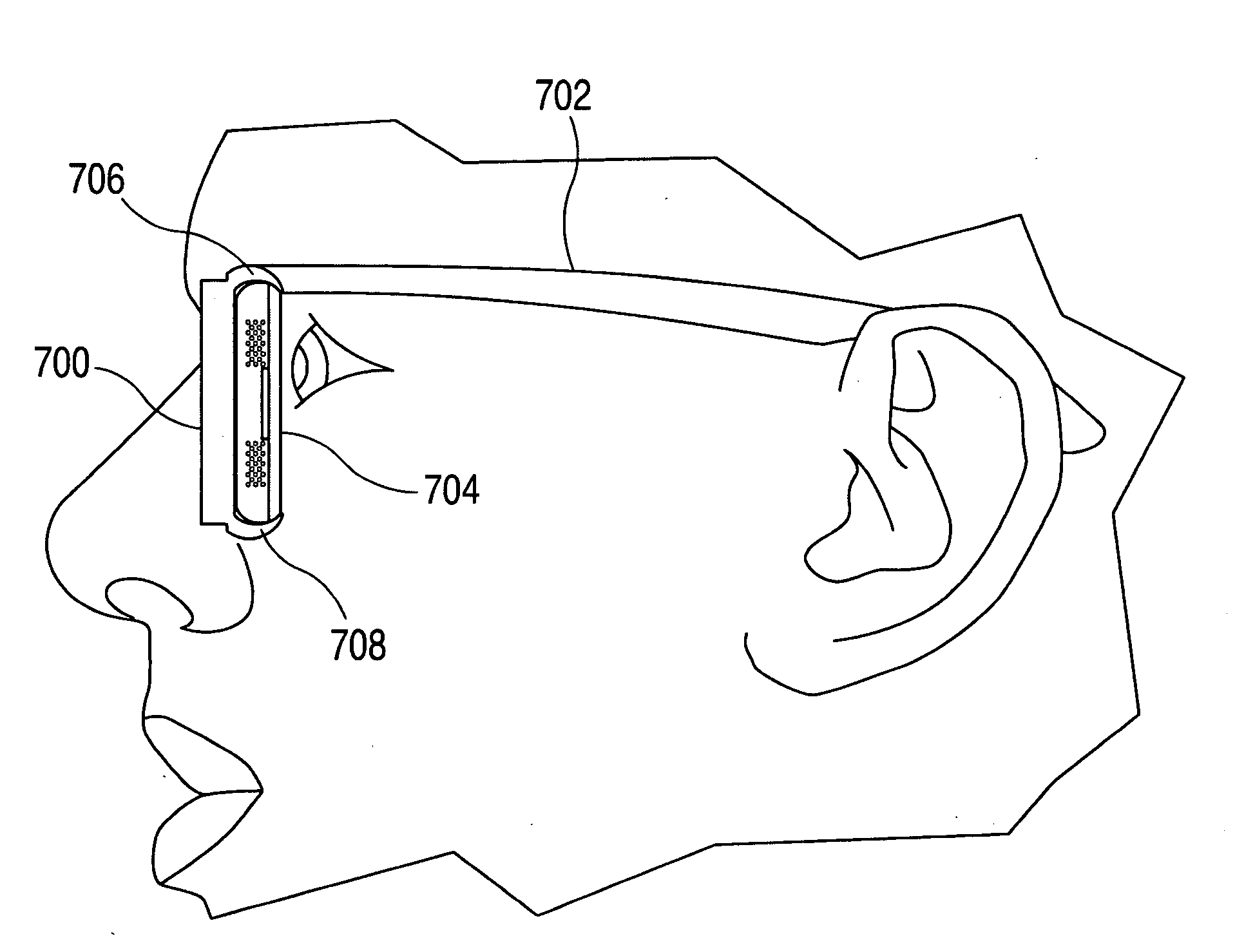

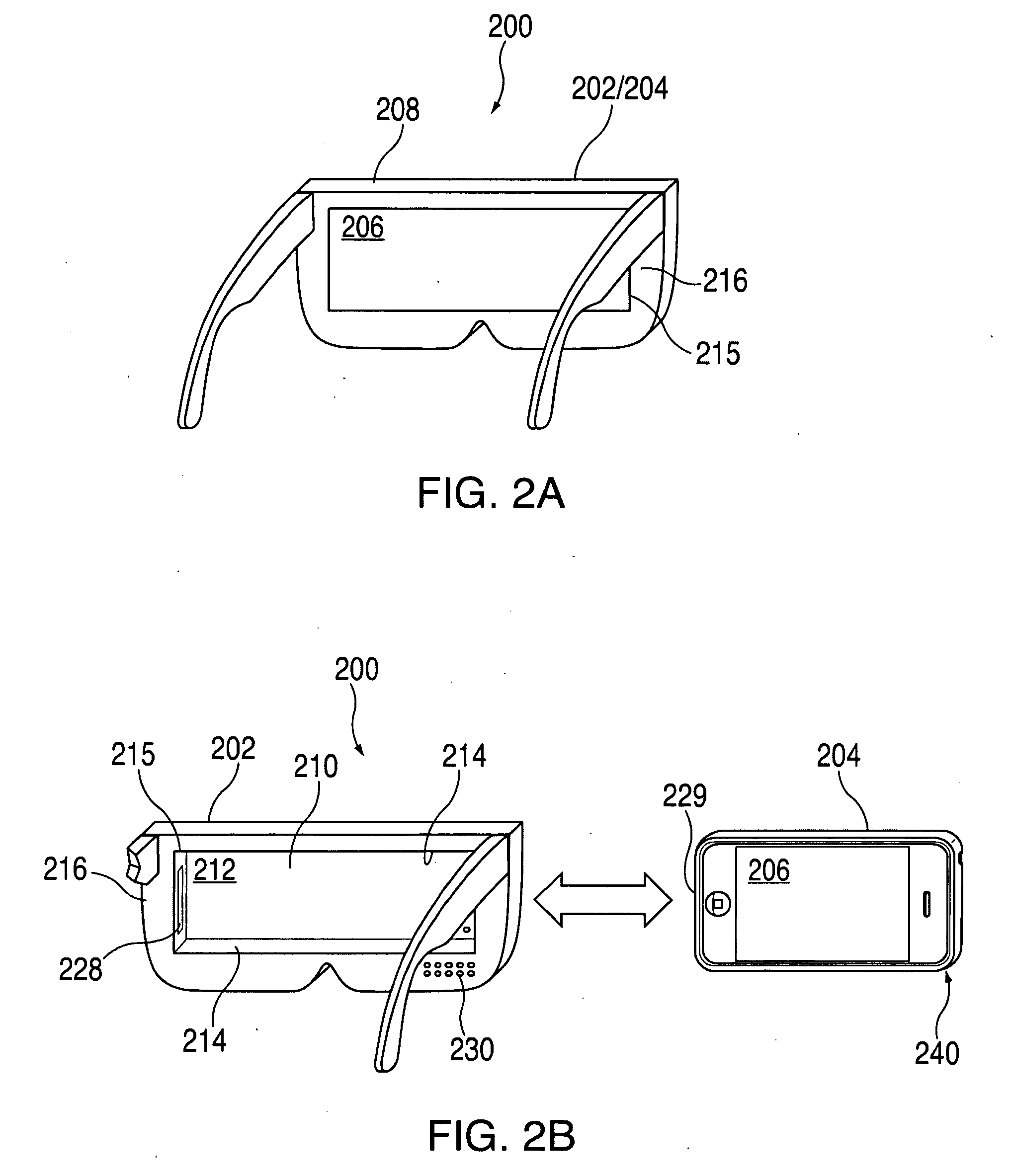

Head-mounted display apparatus for retaining a portable electronic device with display

Owner: APPLE INC

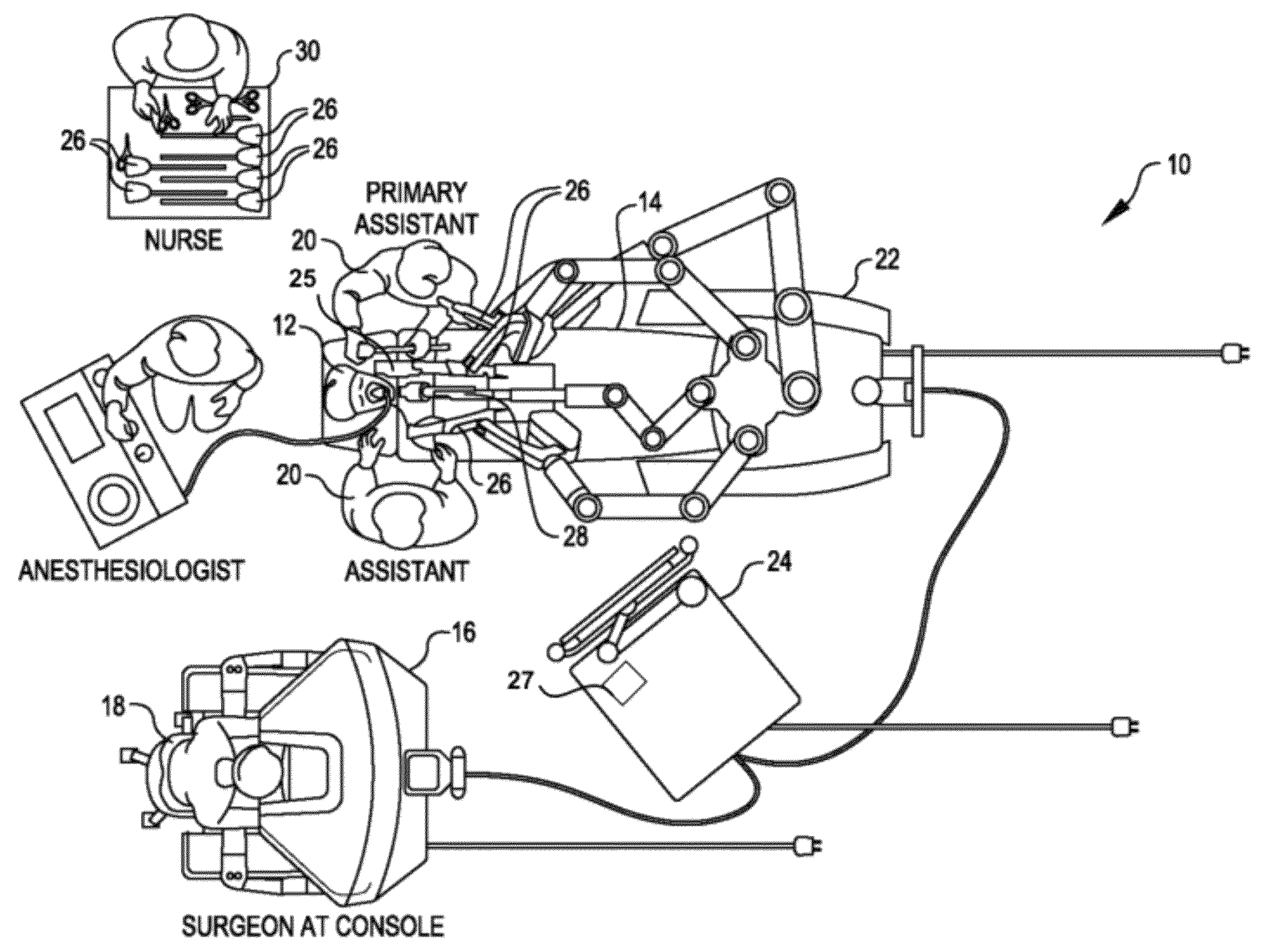

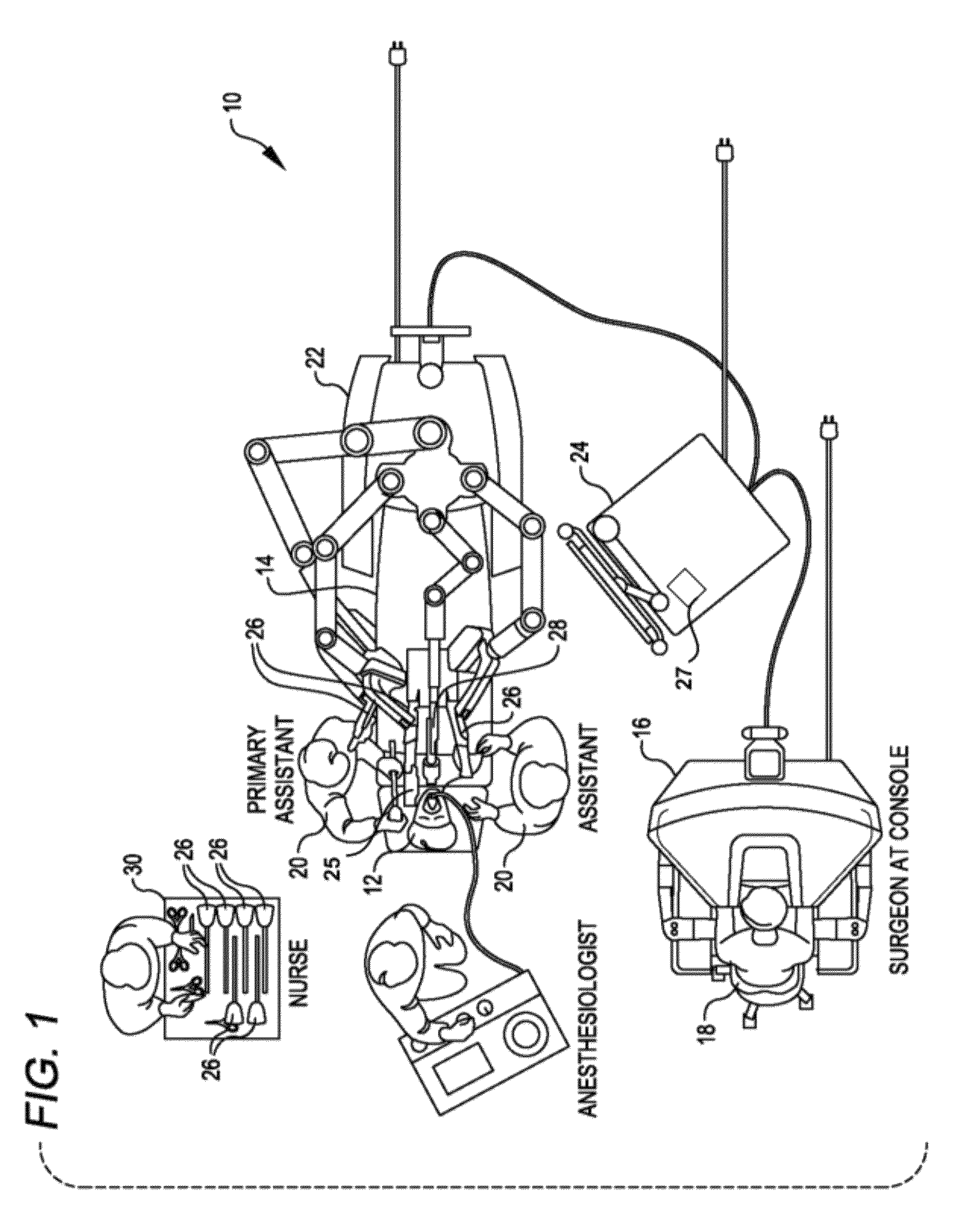

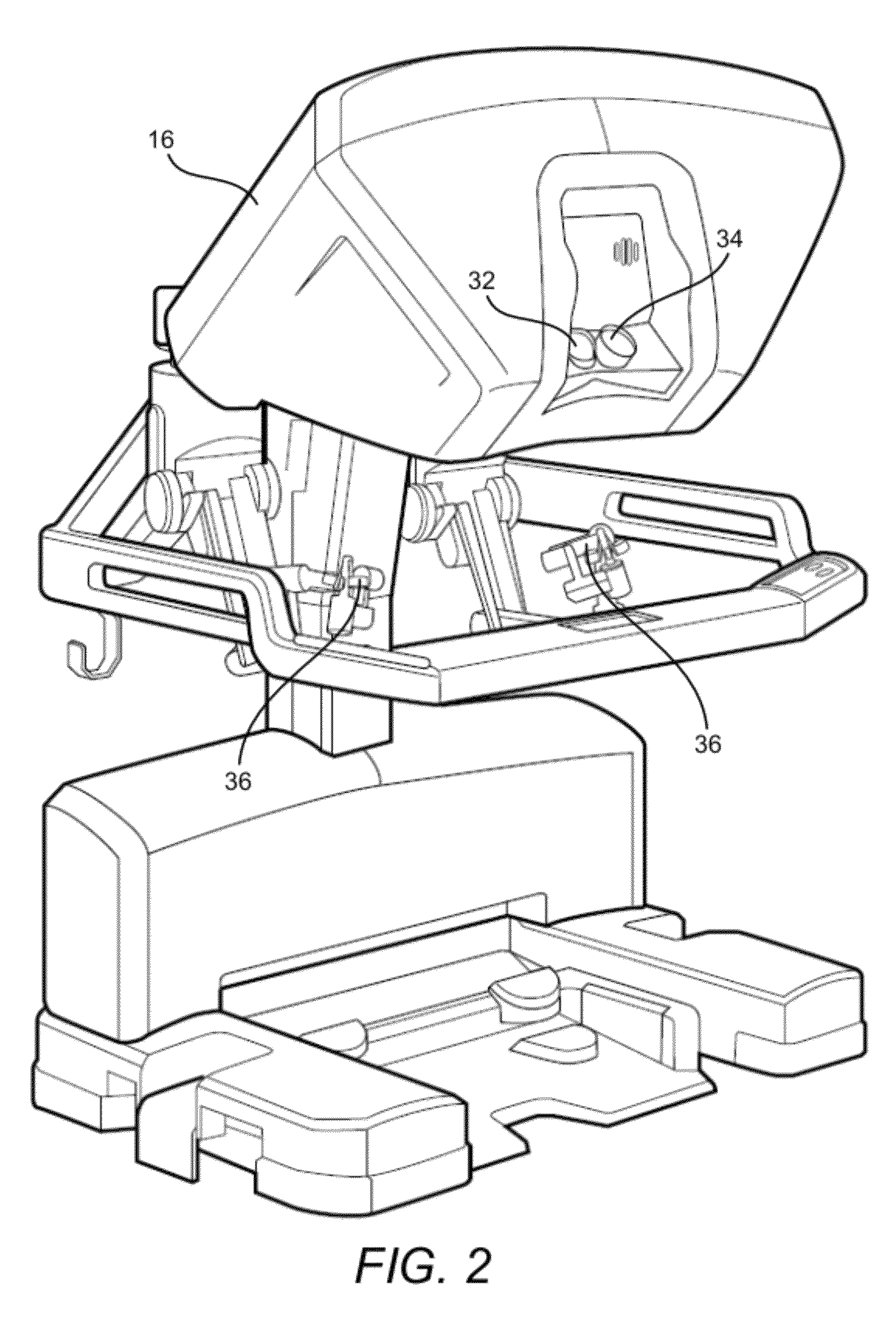

Methods and systems for indicating a clamping prediction

Active | US8989903B2 | Reduce the amount required | Reduce bleeding during stapling | Programme-controlled manipulator | Diagnostics | User interface | Body tissue

Owner: INTUITIVE SURGICAL OPERATIONS INC

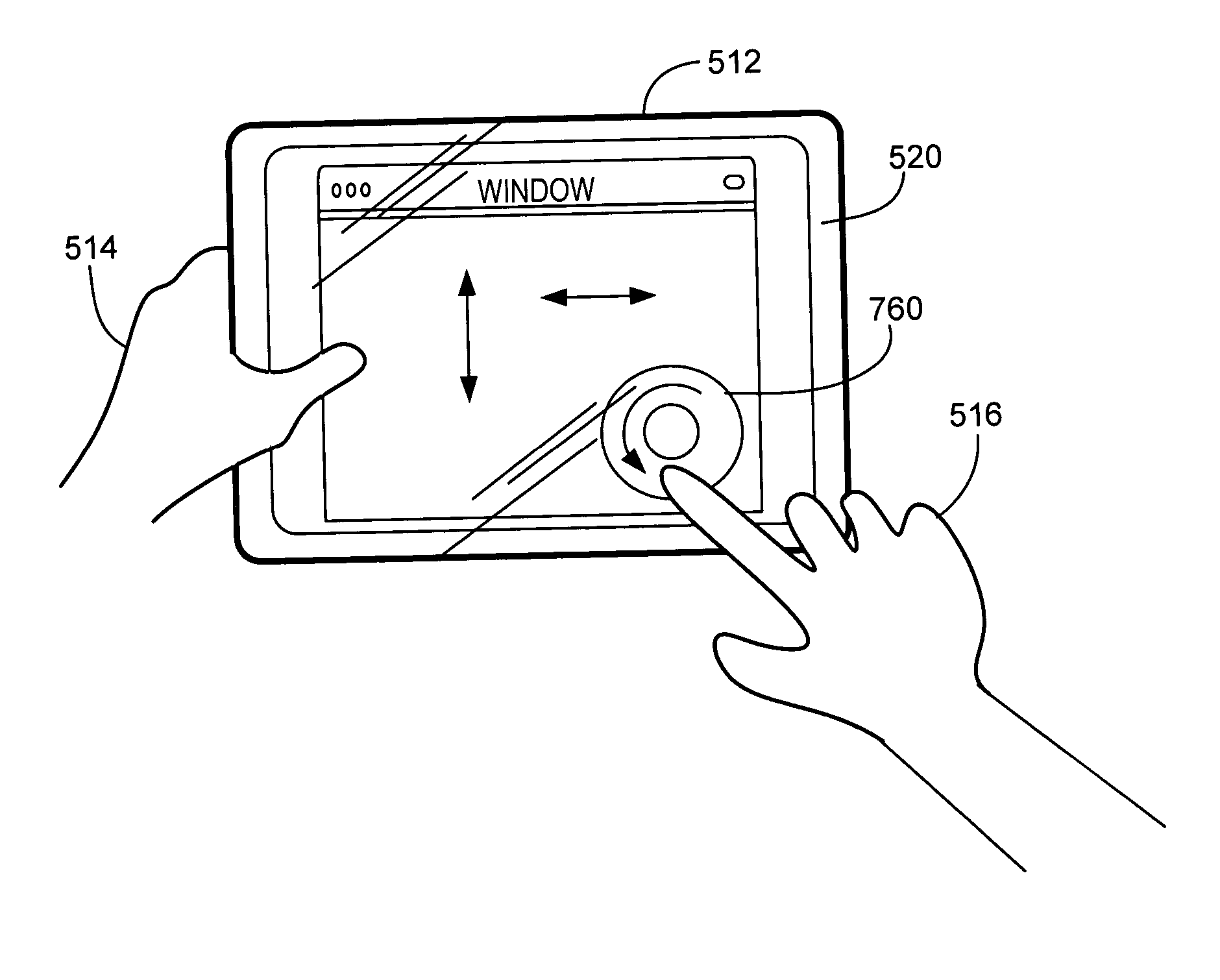

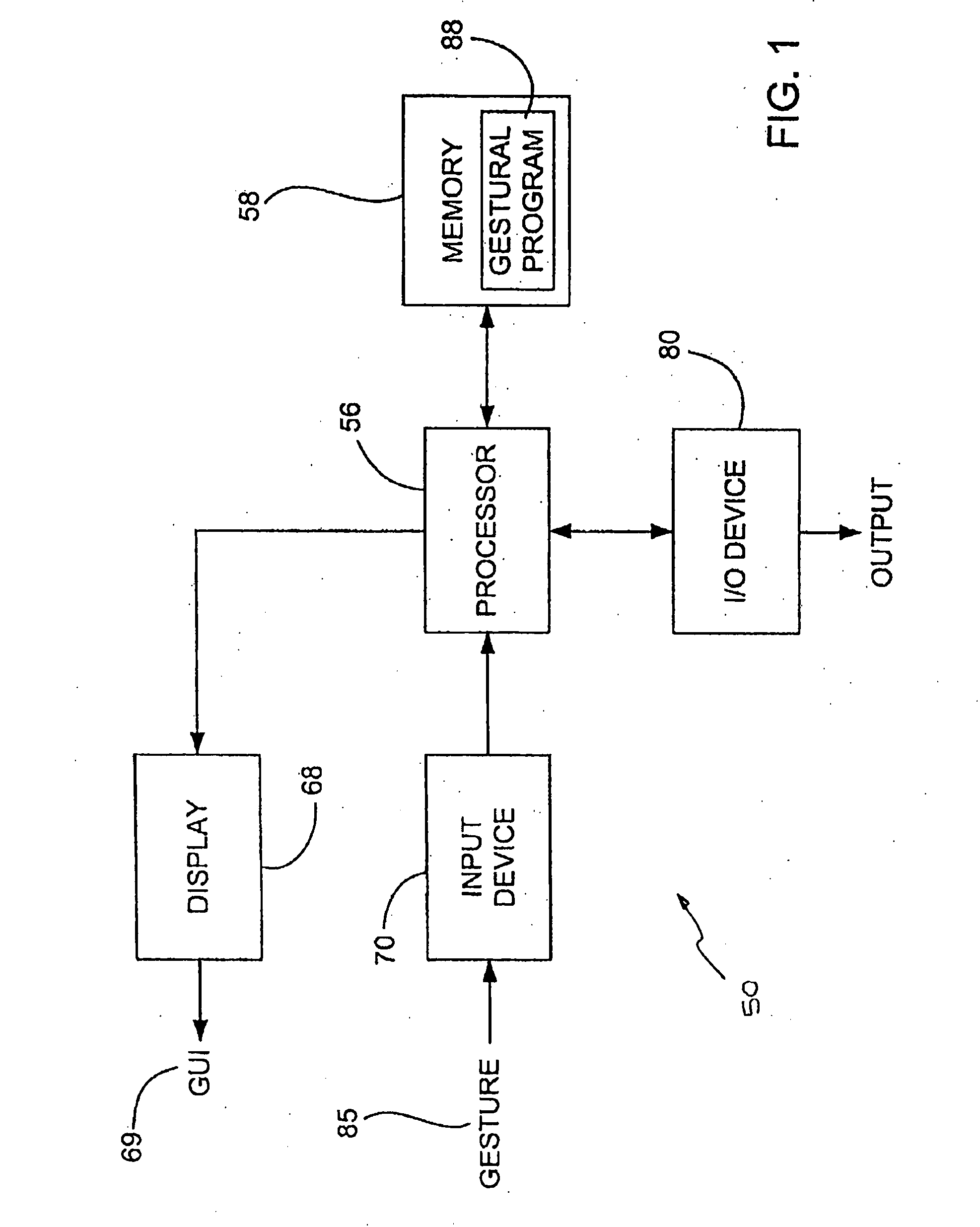

Detecting and interpreting real-world and security gestures on touch and hover sensitive devices

Inactive | US20080168403A1 | Digital output to display device | Computer graphics (images) | Application software

“Real-world” gestures such as hand or finger movements/orientations that are generally recognized to mean certain things (e.g., an “OK” hand signal generally indicates an affirmative response) can be interpreted by a touch or hover sensitive device to more efficiently and accurately effect intended operations. These gestures can include, but are not limited to, “OK gestures,” “grasp everything gestures,” “stamp of approval gestures,” “circle select gestures,” “X to delete gestures,” “knock to inquire gestures,” “hitchhiker directional gestures,” and “shape gestures.” In addition, gestures can be used to provide identification and allow or deny access to applications, files, and the like.

Owner: APPLE INC

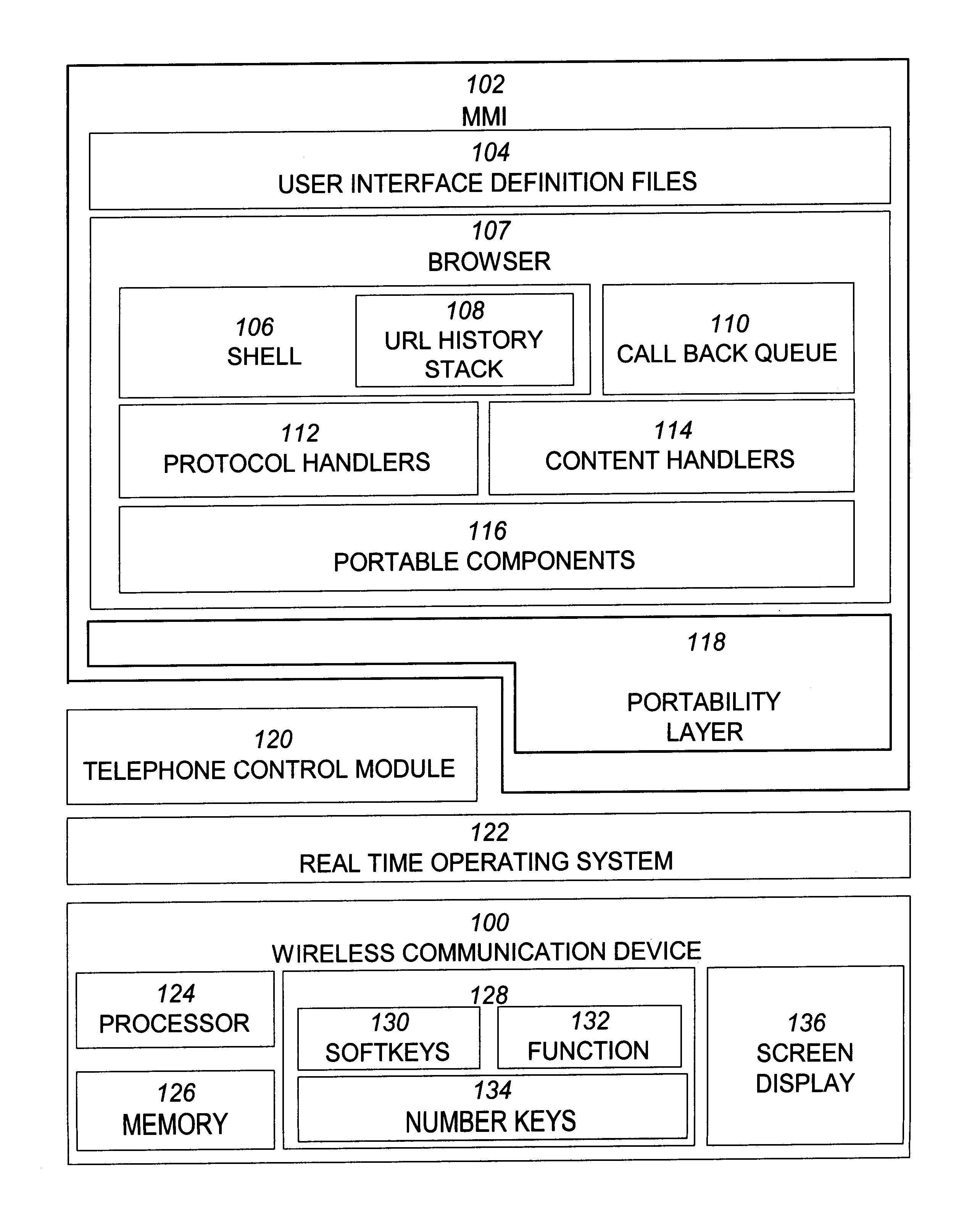

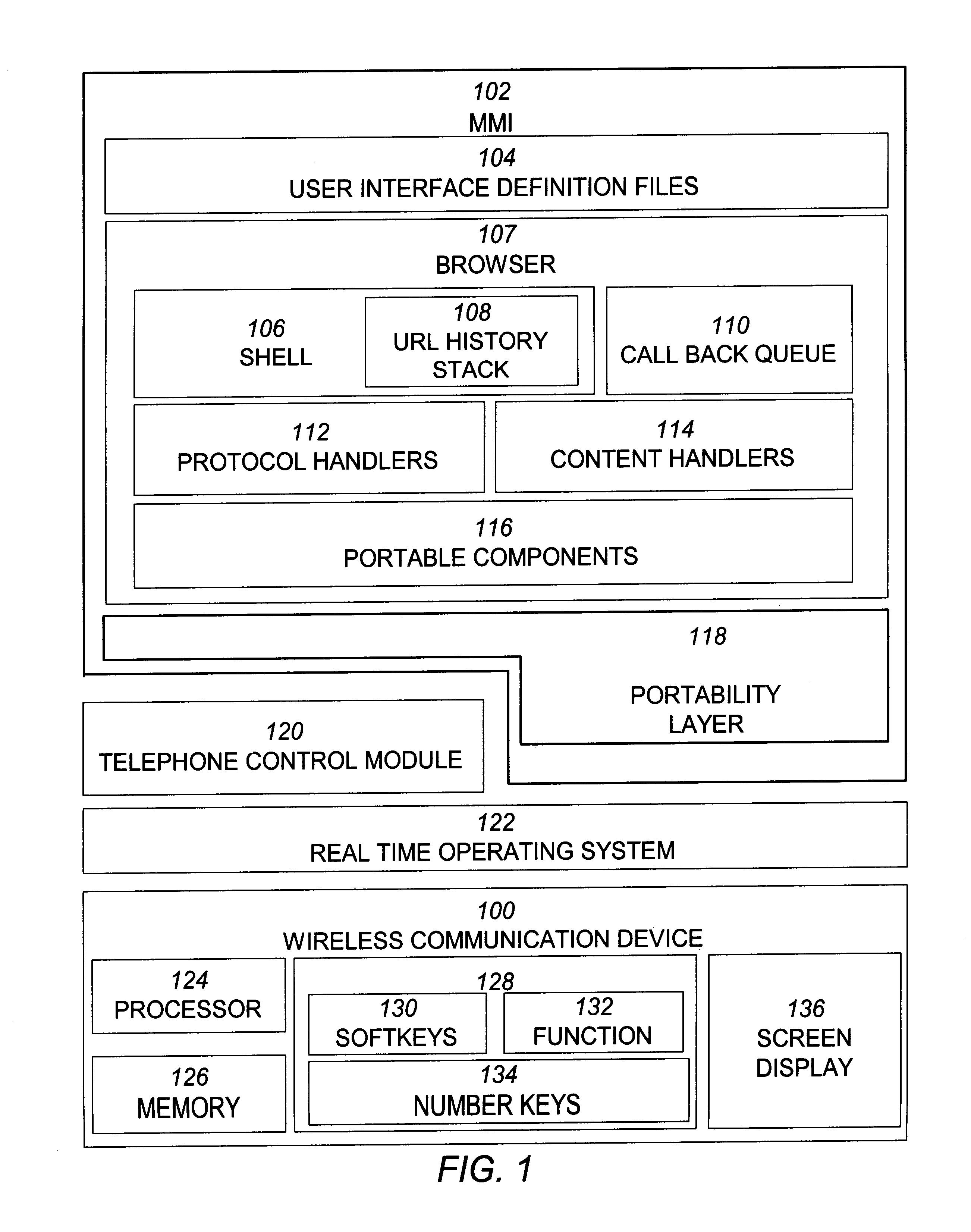

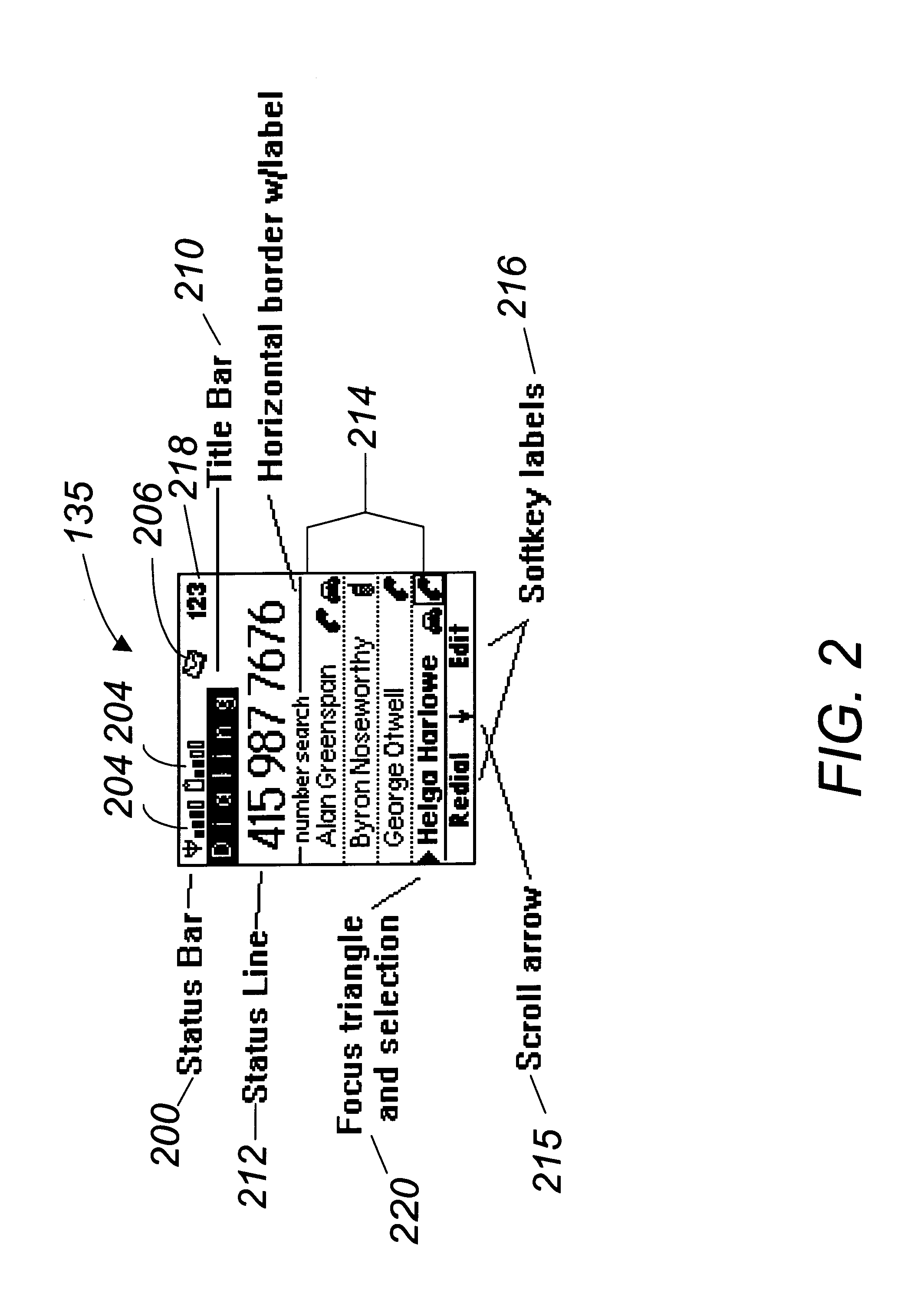

Wireless communication device with markup language based man-machine interface

A system, method, and software product provide a wireless communications device with a markup language based man-machine interface. The man-machine interface provides a user interface for the various telecommunications functionality of the wireless communication device, including dialing telephone numbers, answering telephone calls, creating messages, sending messages, receiving messages, and establishing configuration settings. The interface is defined in a markup language, such as HTML, and accessed through a browser program executed by the wireless communication device. This feature enables direct access to Internet and World Wide Web content, such as Web pages, to be directly integrated with telecommunication functions of the device, and allows Web content to be seamlessly integrated with other types of data, since all data presented to the user via the user interface is presented via markup language-based pages. The browser processes an extended form of HTML that provides new tags and attributes that enhance the navigational, logical, and display capabilities of conventional HTML, and particularly adapt HTML to be displayed and used on wireless communication devices with small screen displays. The wireless communication device includes the browser, a set of portable components, and a portability layer. The browser includes protocol handlers, which implement different protocols for accessing various functions of the wireless communication device, and content handlers, which implement various content display mechanisms for fetching and outputting content on a screen display.

Owner: ACCESS

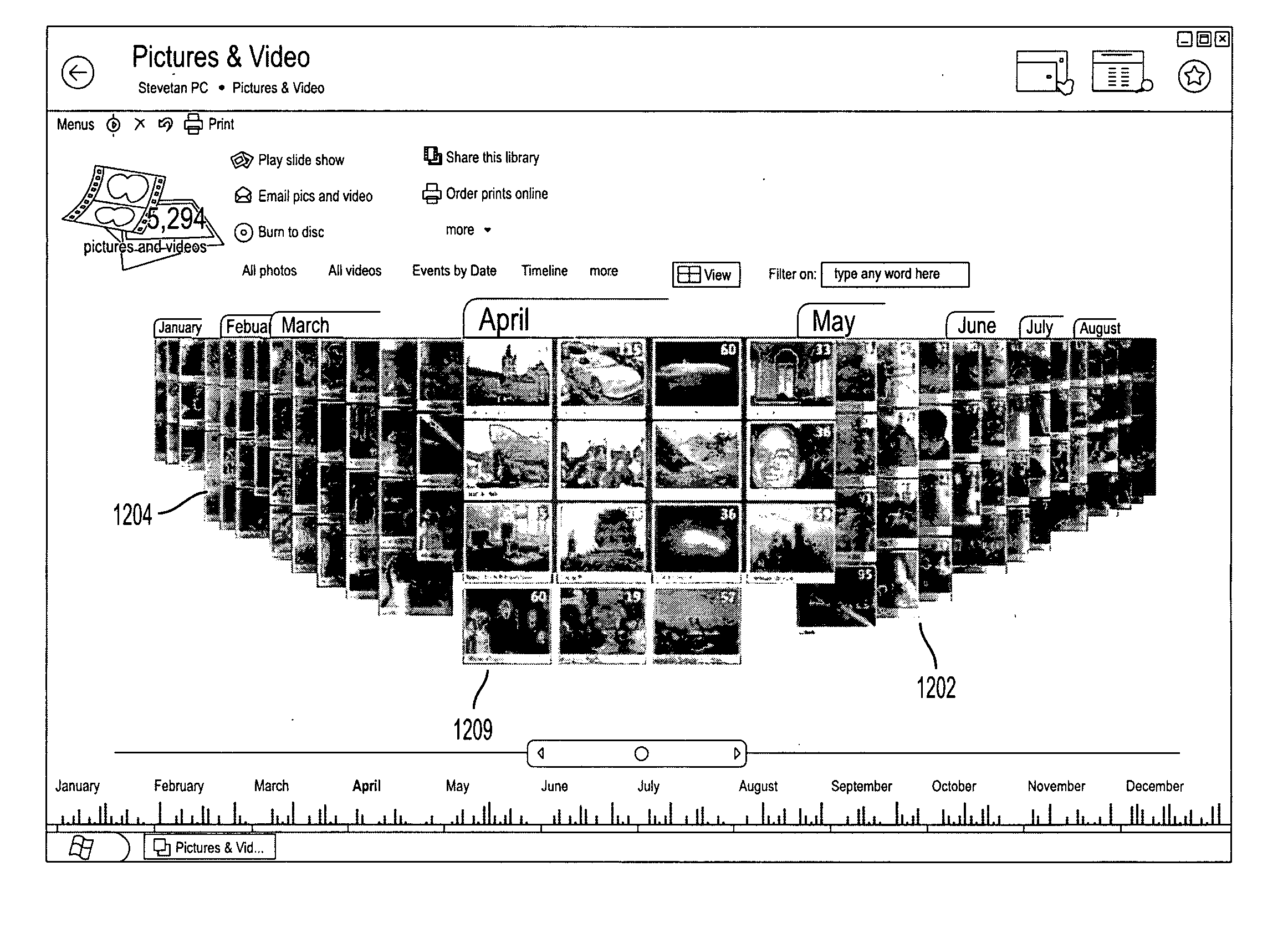

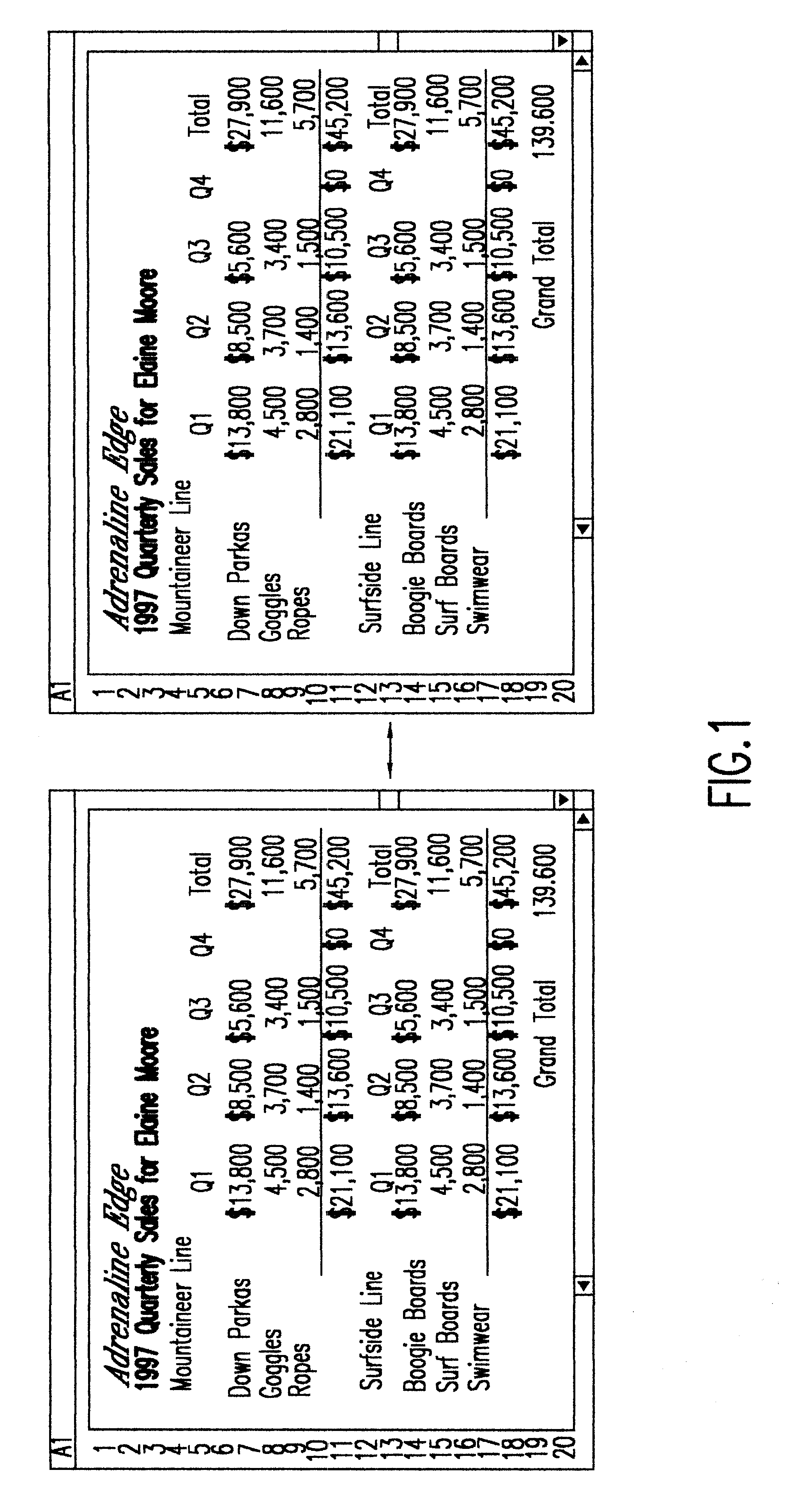

Graphical user interface for 3-dimensional view of a data collection based on an attribute of the data

A three-dimensional (3D) view of a data collection based on an attribute is disclosed. A timeline is provided for displaying files and folders. The timeline may include a focal group that displays detailed information about its contents to the user. Remaining items on the timeline are displayed in less detail and may be positioned to appear further away from the user. A histogram may be provided as part of the view to allow the user to more easily navigate the timeline to find a desired file or folder.

Owner: MICROSOFT TECH LICENSING LLC

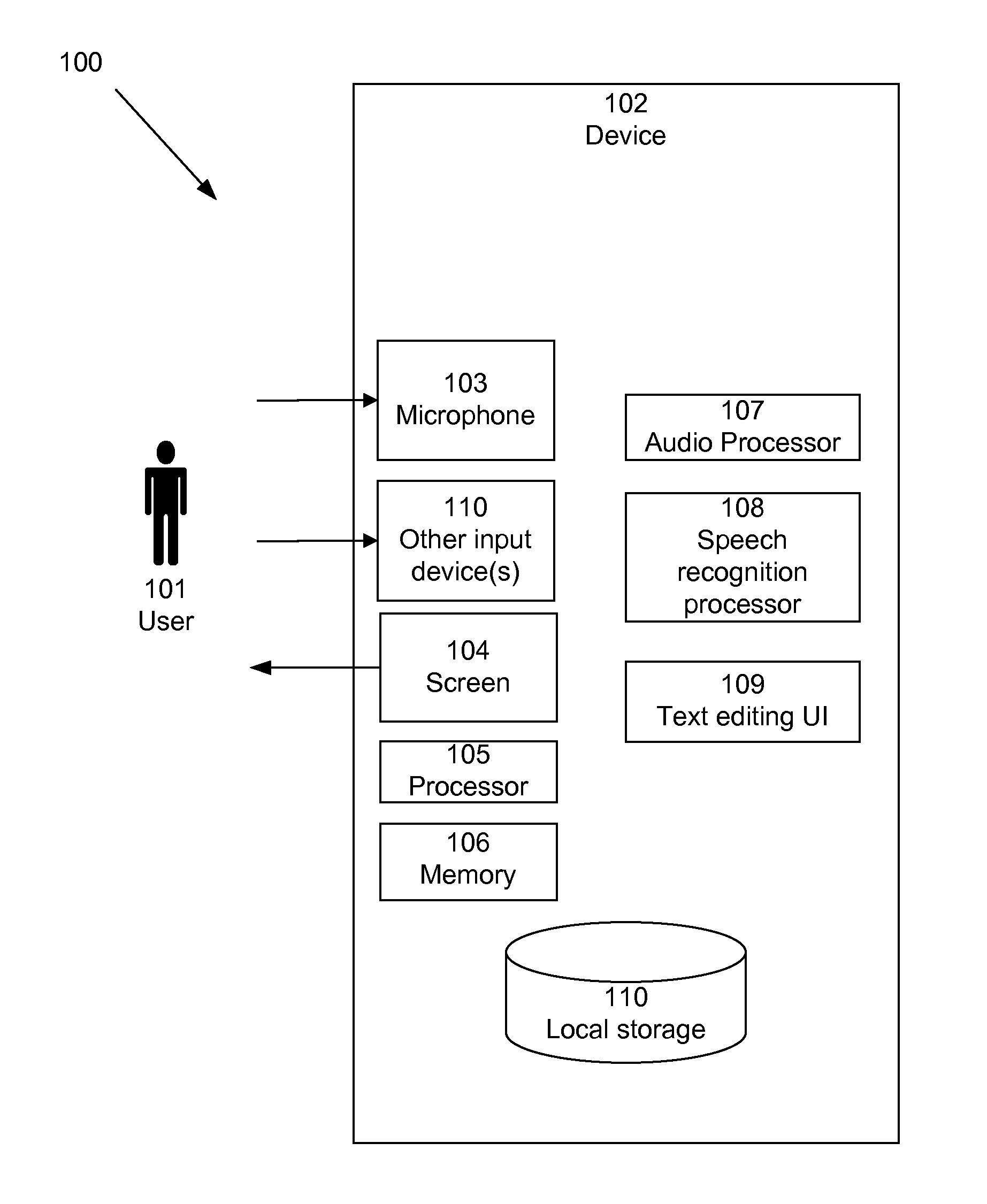

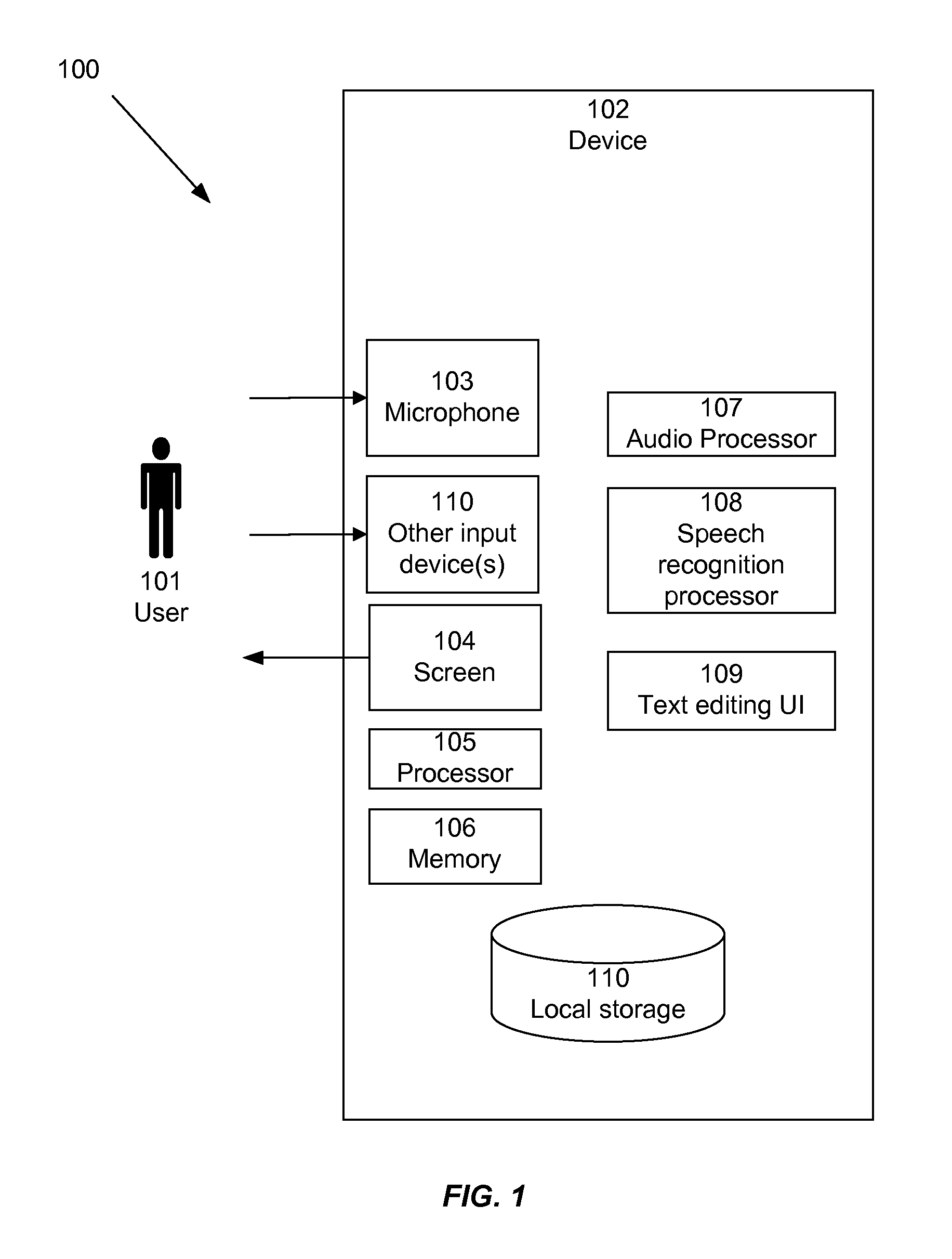

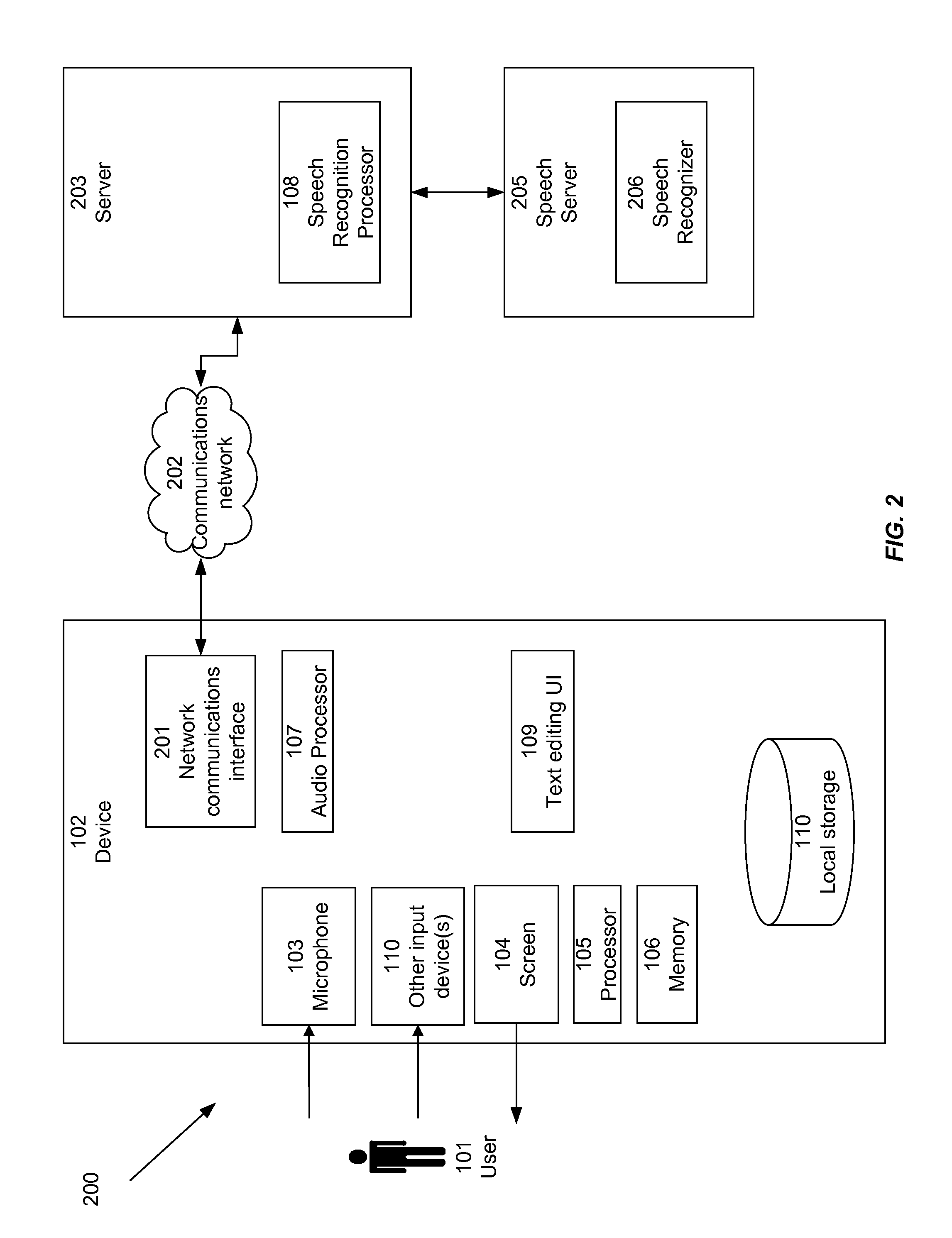

Consolidating Speech Recognition Results

Inactive | US20130073286A1 | Redundant elements are minimized or eliminated | Choose simple | Speech recognition | Sound input/output | Recognition algorithm | Speech identification

Candidate interpretations resulting from application of speech recognition algorithms to spoken input are presented in a consolidated manner that reduces redundancy. A list of candidate interpretations is generated, and each candidate interpretation is subdivided into time-based portions, forming a grid. Those time-based portions that duplicate portions from other candidate interpretations are removed from the grid. A user interface is provided that presents the user with an opportunity to select among the candidate interpretations; the user interface is configured to present these alternatives without duplicate elements.

Owner: APPLE INC
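
The grid consolidation described in the abstract above can be sketched as follows. This is a minimal illustration, assuming each candidate interpretation has already been split into aligned time-based portions (one grid column per time slot). The `consolidate` helper and the sample phrases are hypothetical, and the alignment step itself is not shown.

```python
def consolidate(grid):
    """Blank out portions that duplicate an earlier row in the same column.

    grid: list of rows, one per candidate interpretation; each row is a list
    of time-based portions (strings). Returns a new grid in which repeated
    portions are replaced by None, so a UI could render only the differences.
    """
    width = max((len(row) for row in grid), default=0)
    seen_per_column = [set() for _ in range(width)]
    consolidated = []
    for row in grid:
        new_row = []
        for col, portion in enumerate(row):
            if portion in seen_per_column[col]:
                new_row.append(None)  # duplicate of an earlier candidate
            else:
                seen_per_column[col].add(portion)
                new_row.append(portion)
        consolidated.append(new_row)
    return consolidated


candidates = [
    ["call", "mom", "at work"],
    ["call", "tom", "at work"],
    ["call", "mom", "network"],
]
for row in consolidate(candidates):
    print(row)
```

Keeping the first occurrence in each column is one possible policy; the abstract only states that duplicated portions are removed from the grid.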

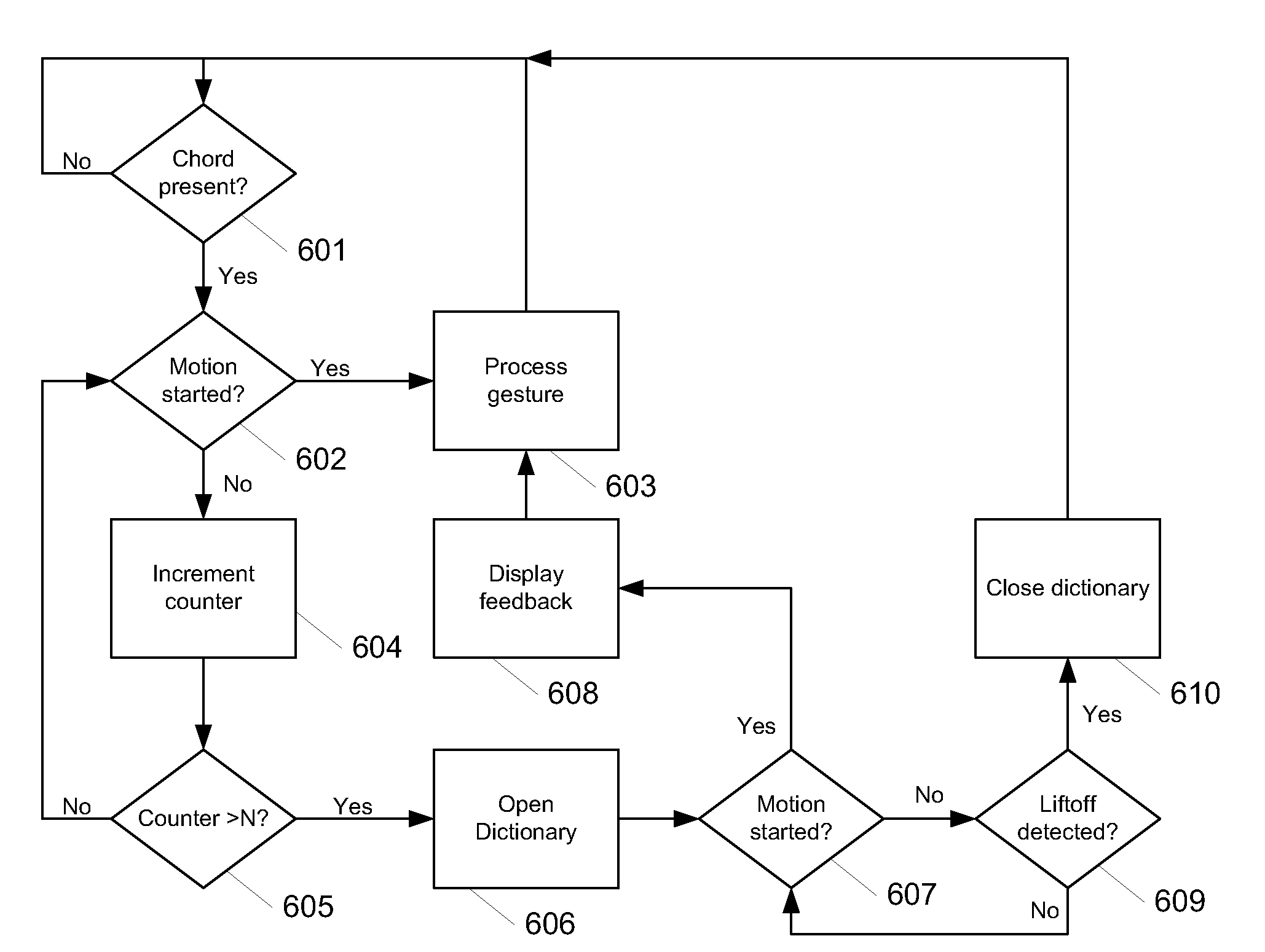

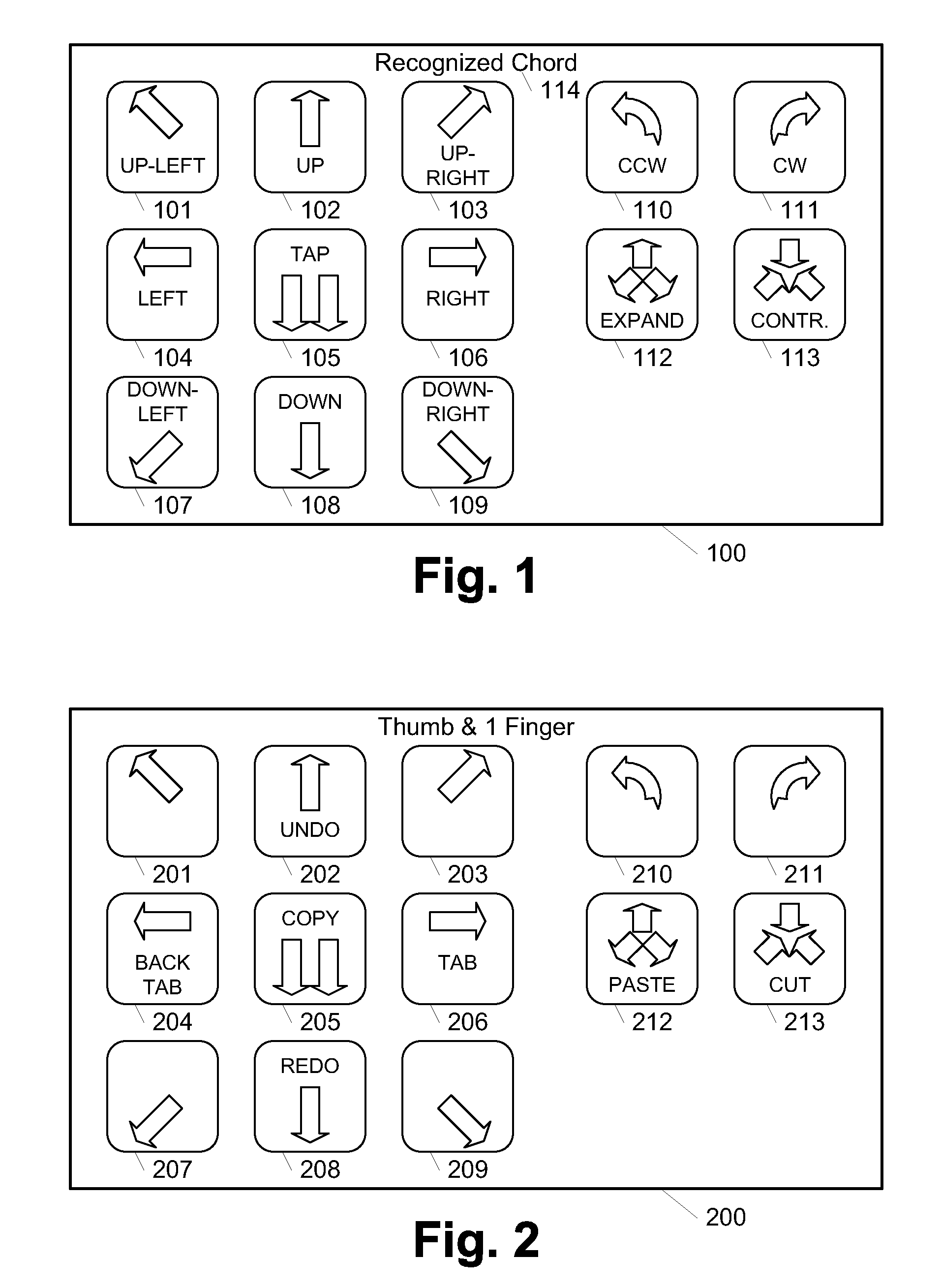

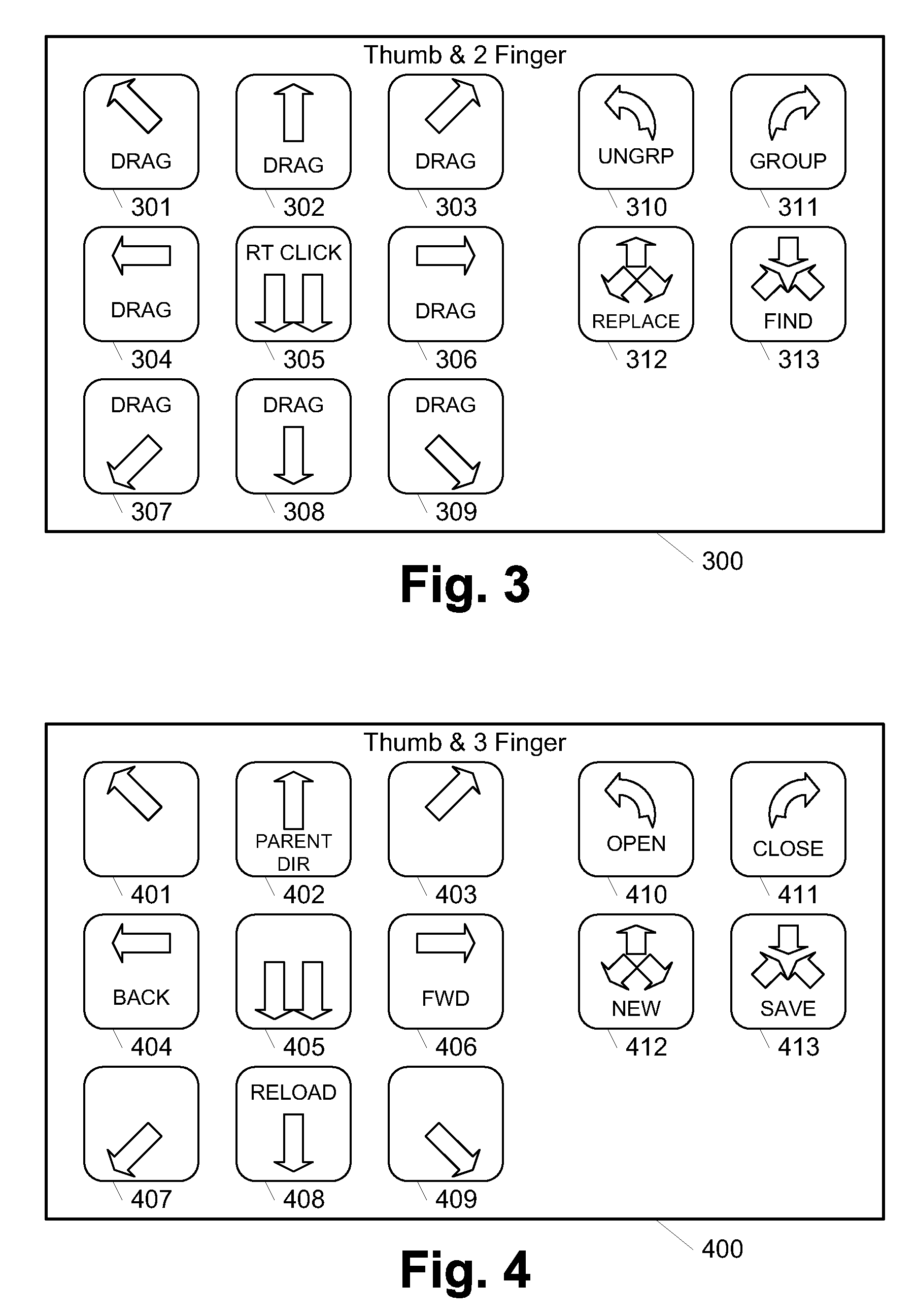

Multi-touch gesture dictionary

Active | US20070177803A1 | Easy to implement | Character and pattern recognition | Digital output to display device | Tablet computer | Computerized system

Owner: APPLE INC

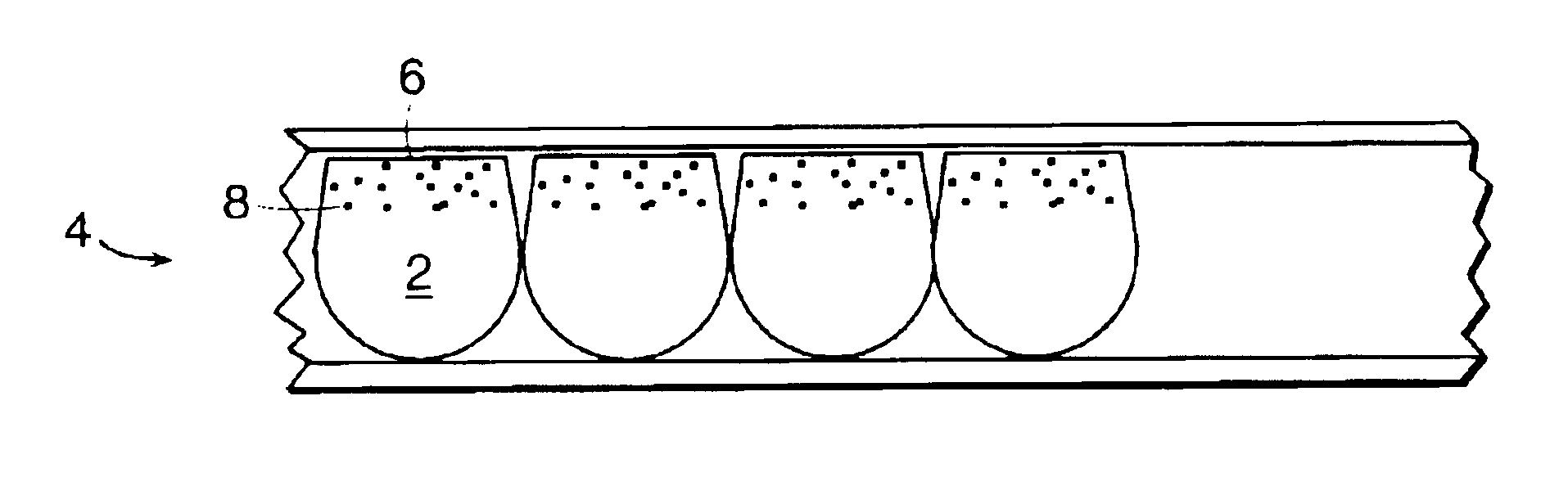

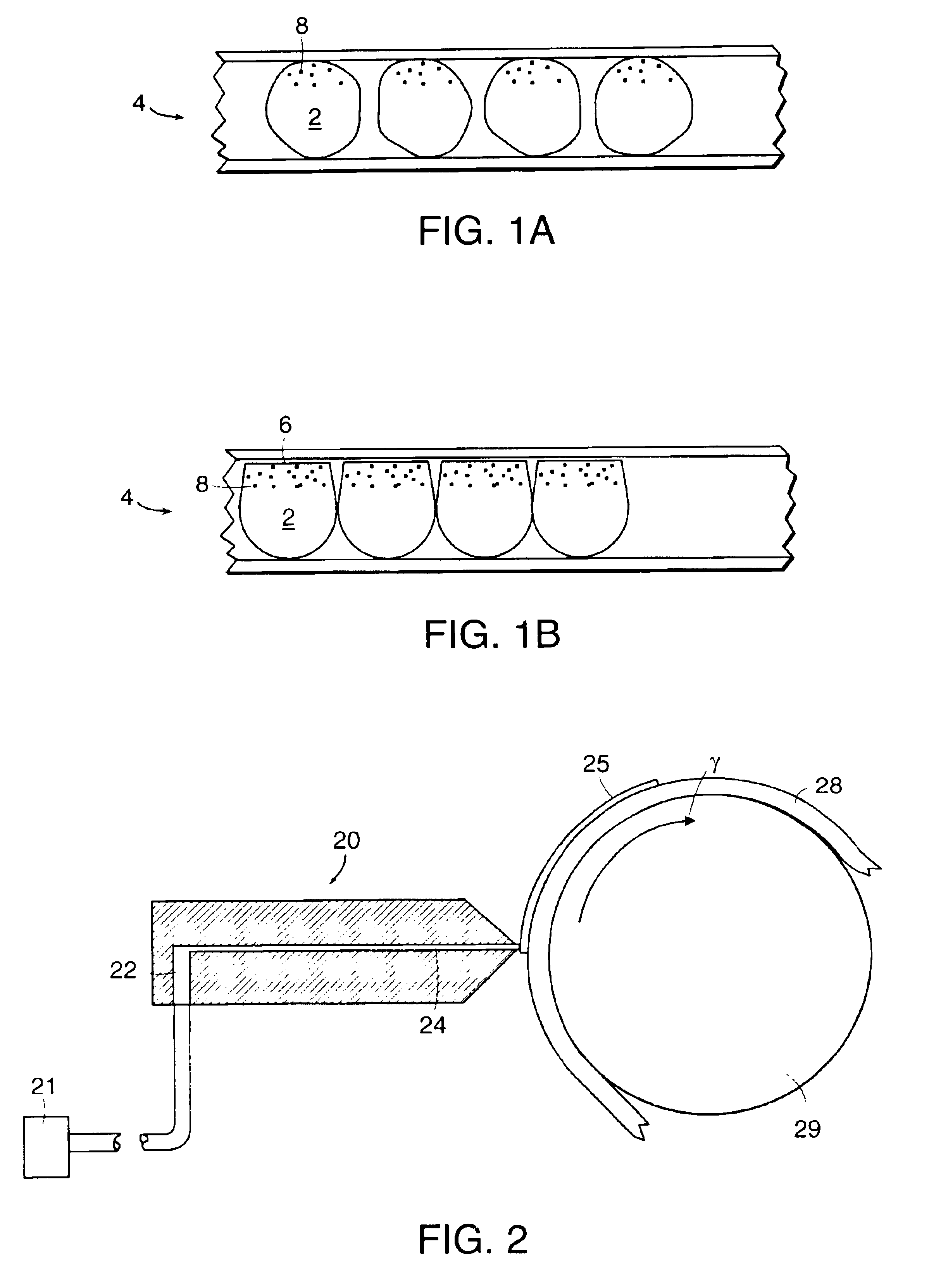

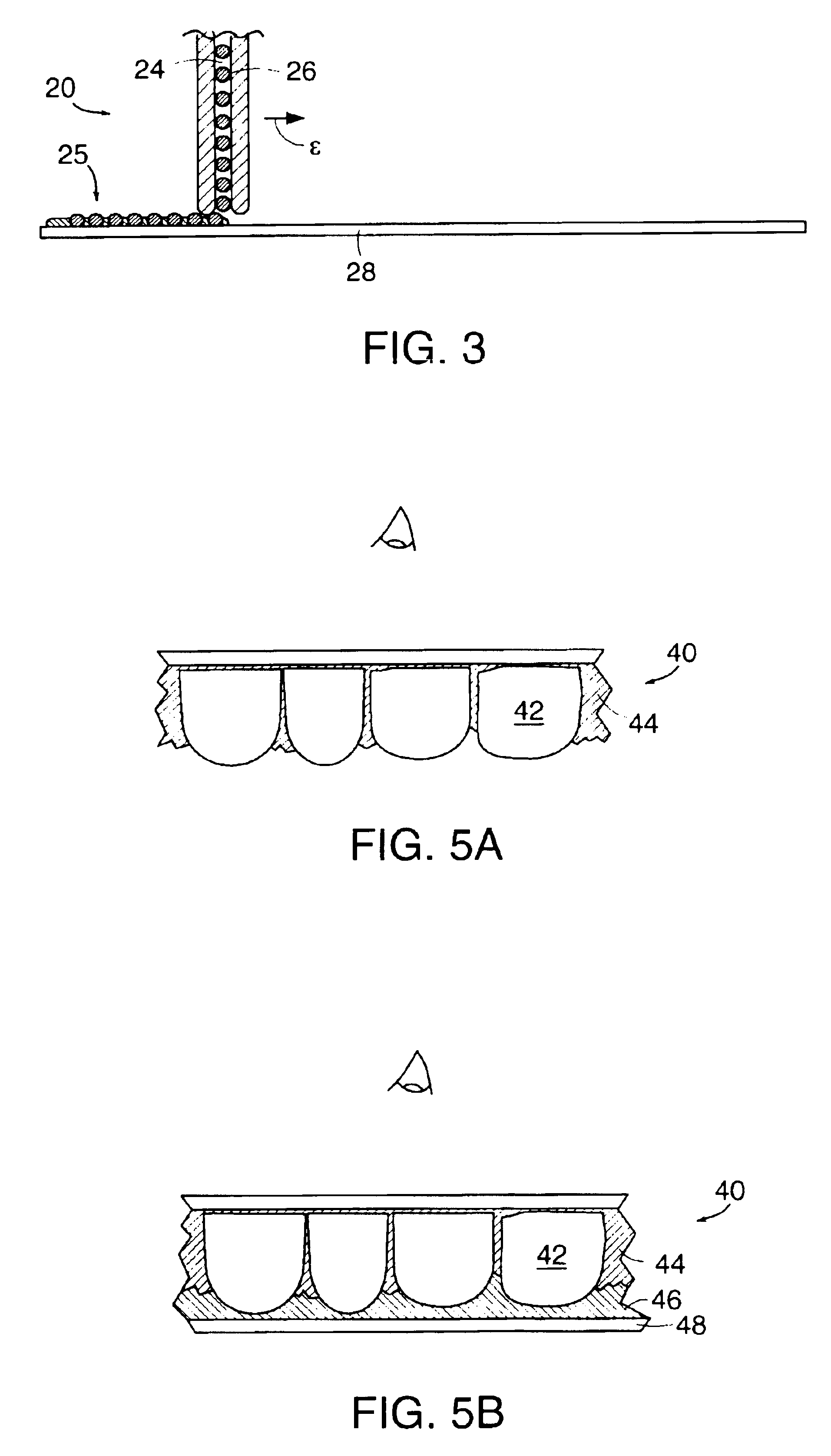

Encapsulated electrophoretic displays having a monolayer of capsules and materials and methods for making the same

Inactive | US6839158B2 | Static indicating devices | Time-pieces with integrated devices | Electrophoresis | Display device

Owner: E INK CORPORATION

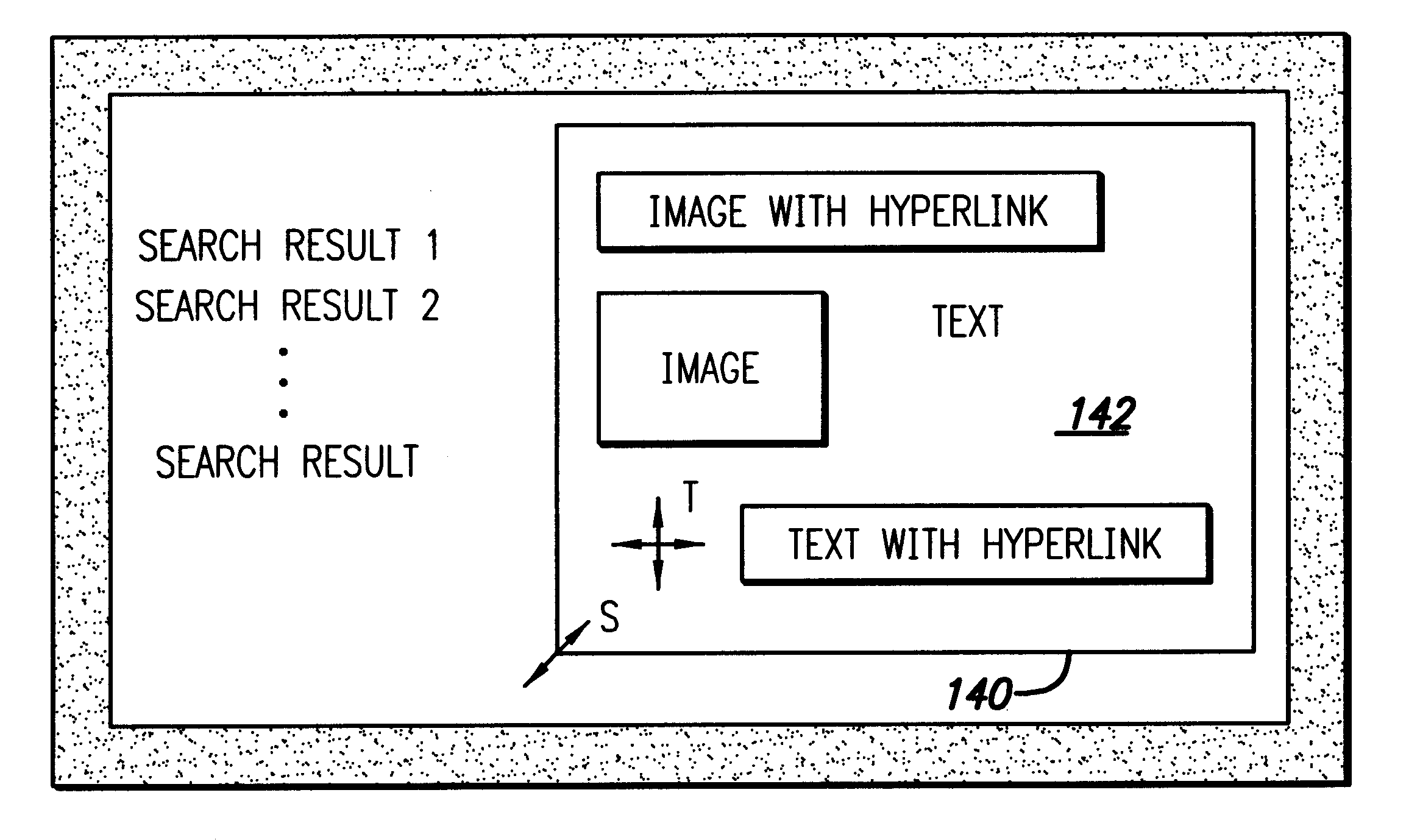

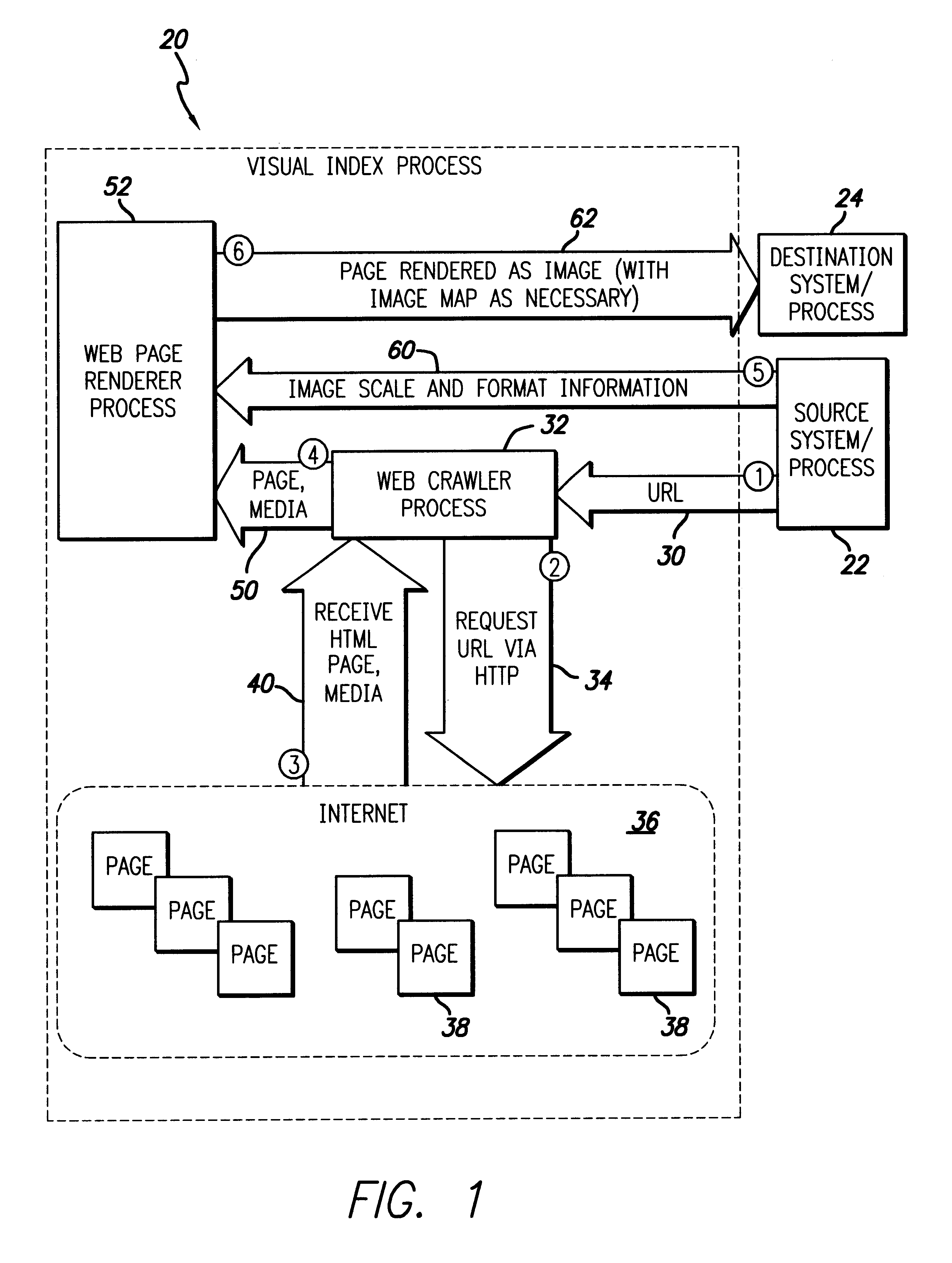

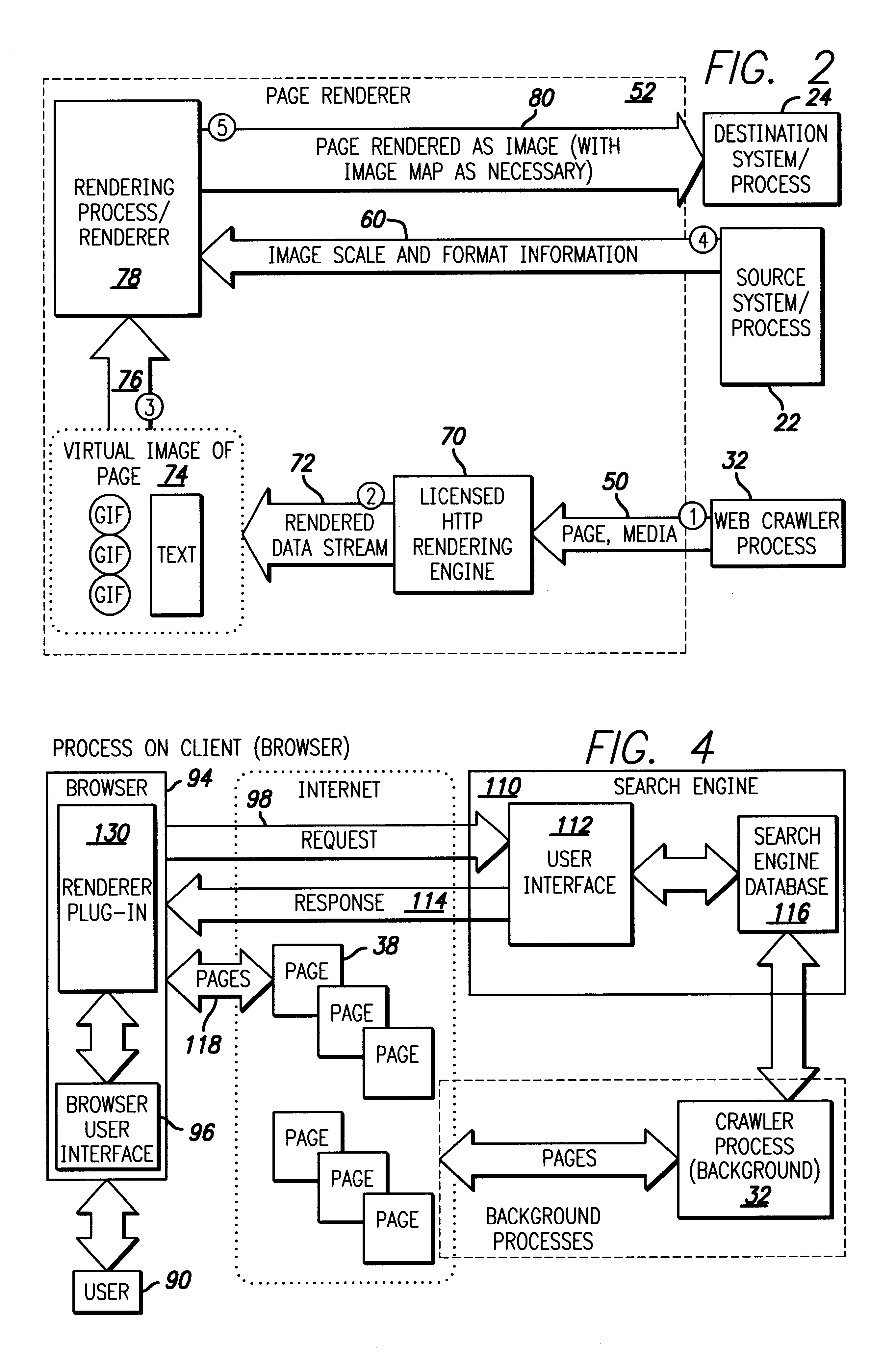

Graphical search engine visual index

Inactive | US6271840B1 | Faster perusal | Quick review | Web data indexing | Special data processing applications | Hyperlink | Graphics

A visual index method provides graphical output from search engine results or other URL lists. Search engine results or a list of URLs are passed to a web crawler that retrieves the web page and other media information present at the associated URL. The web crawler then passes this information to a page renderer which also receives image scale and format information regarding the web pages present at the URLs. The graphical information as well as other media information is then rendered into a reduced graphical form so that the page may be summarily reviewed by the user. Media, visual, or other information may also be scaled down or rendered in its original form as appropriate (such as with audio data streams). A variety of convenient formats allows the user to quickly and readily scan the presentation of the web pages or other data present at the URLs. Image maps associated with the reduced images may also provide hyperlink access to the linked web page and/or multimedia, allowing the links present on the original web page to be accessed through the reduced image provided by the web page renderer.

Owner: HYPER SEARCH LLC

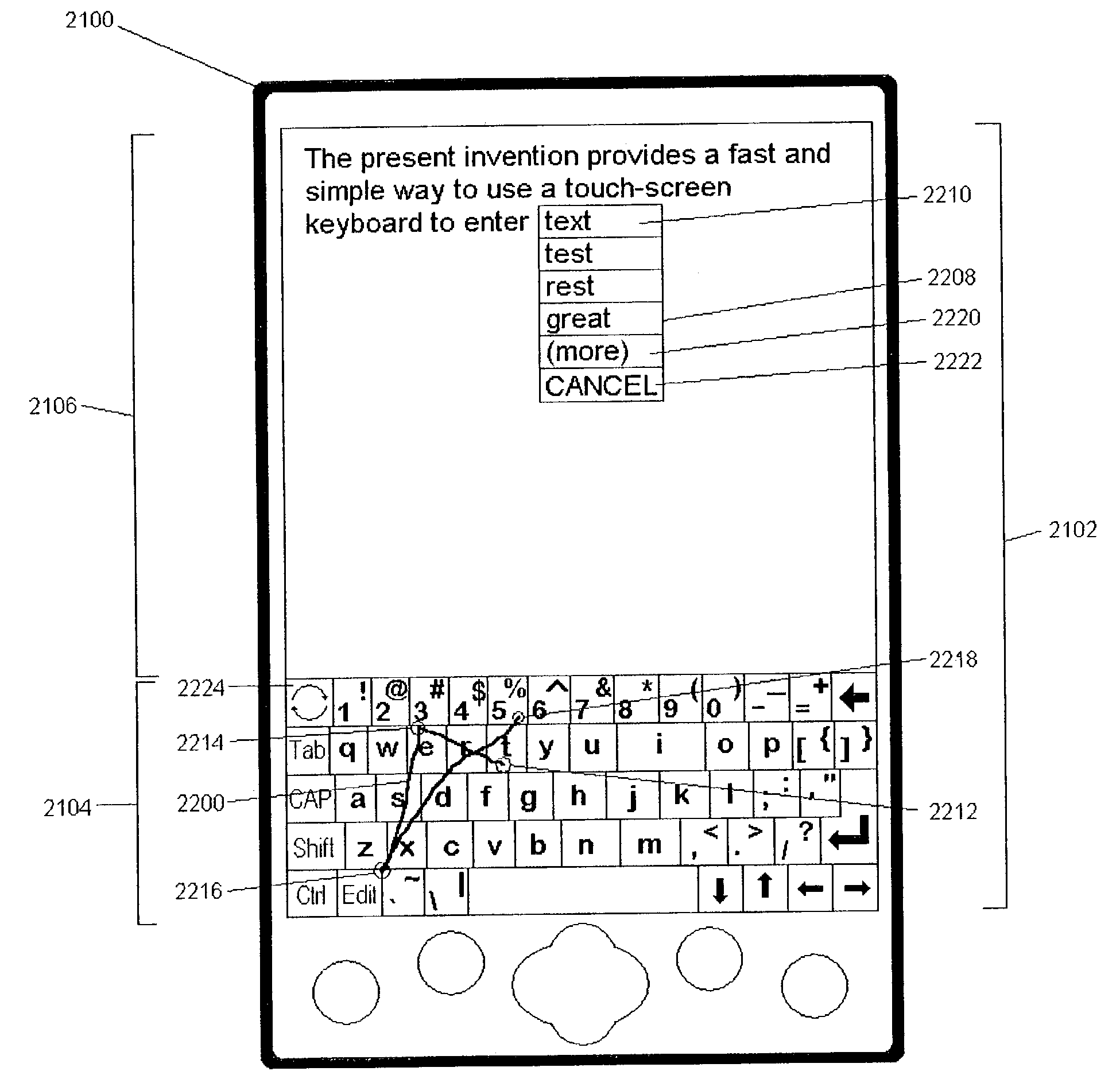

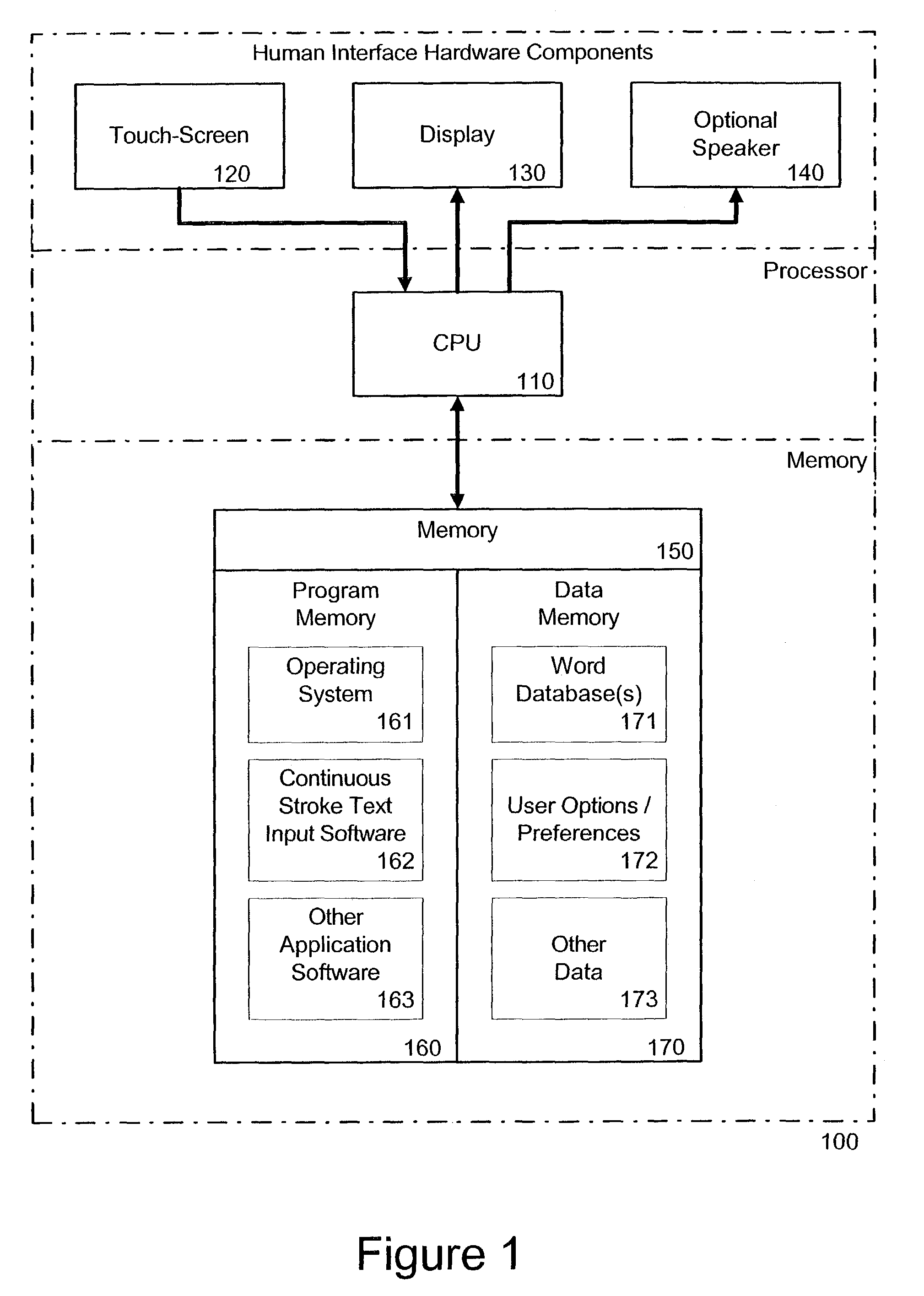

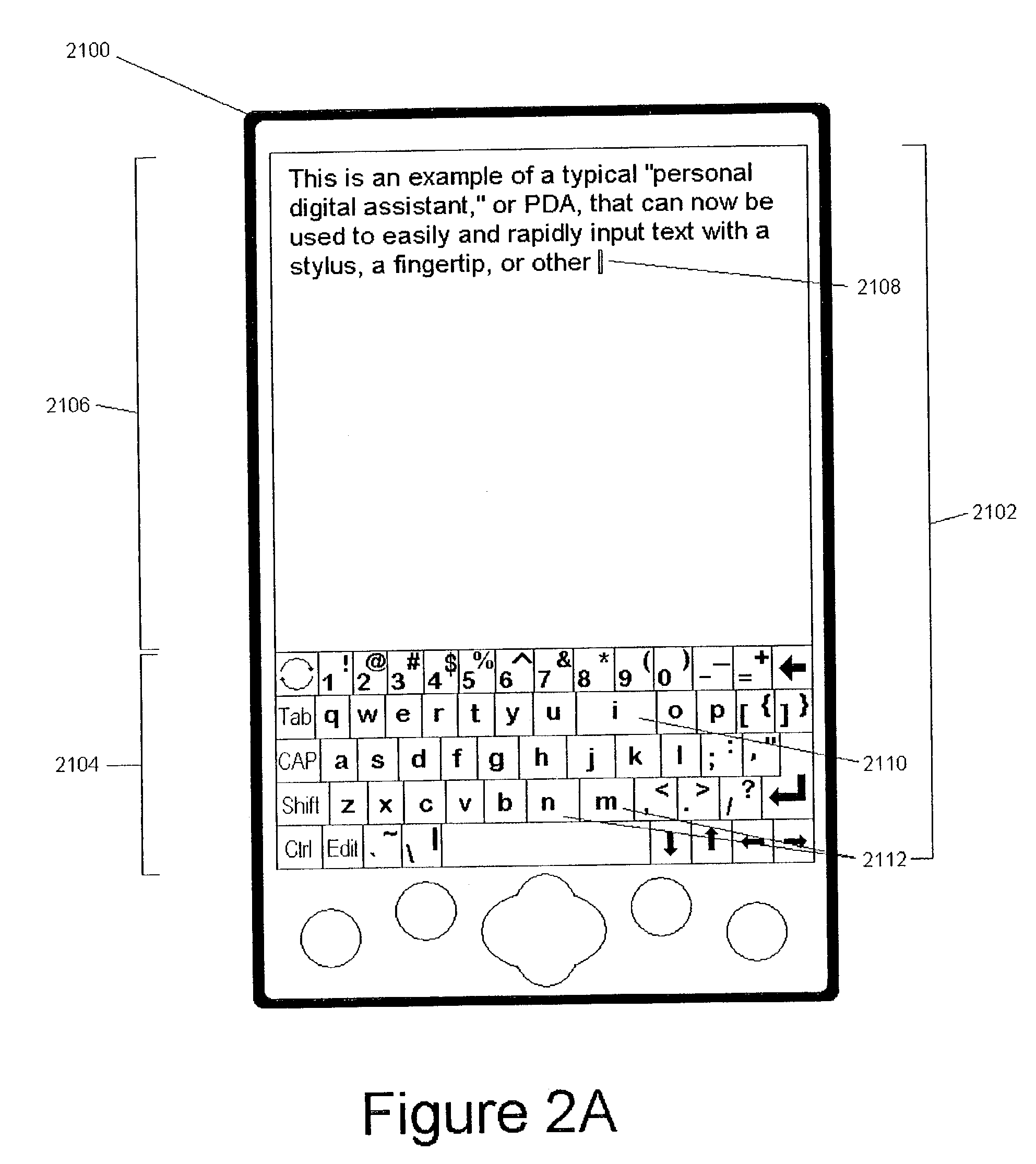

System and method for continuous stroke word-based text input

Active | US7098896B2 | Reduce in quantity | Increase text entry speed | Input/output for user-computer interaction | Cathode-ray tube indicators | Text entry | Lettering

A method and system of inputting alphabetic text using a virtual keyboard on a touch sensitive screen. The virtual keyboard includes a set of keys where each letter of the alphabet is associated with at least one key. The present invention allows someone to use the virtual keyboard with continuous contact of the touch sensitive screen. The user traces an input pattern for a word by starting at or near the first letter of the desired word and then tracing through or near each letter in sequence. The present invention then generates a list of possible words associated with the entered pattern and presents it to the user for selection.

Owner: CERENCE OPERATING CO
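
The candidate-generation step in the abstract above can be sketched roughly as follows. This is a heavily simplified illustration, not the patented matcher: it assumes the trace has already been reduced to the ordered string of keys it passed over, and it matches words by exact first and last key plus an in-order subsequence test rather than by distance ("at or near"). The `stroke_candidates` helper and the sample lexicon are hypothetical.

```python
from itertools import groupby


def stroke_candidates(path, lexicon):
    """Return lexicon words whose letters occur, in order, along `path`.

    path: keys crossed by one continuous stroke, e.g. "hgfdertyuiklo".
    A word qualifies if it starts on the first key, ends on the last key,
    and its letters (with doubled letters collapsed) form a subsequence
    of the path.
    """
    if not path:
        return []
    matches = []
    for word in lexicon:
        # A doubled letter cannot be traced twice in one stroke, so collapse it.
        letters = "".join(key for key, _ in groupby(word))
        if not letters or letters[0] != path[0] or letters[-1] != path[-1]:
            continue
        remaining = iter(path)
        # `ch in remaining` consumes the iterator, enforcing left-to-right order.
        if all(ch in remaining for ch in letters):
            matches.append(word)
    return matches


print(stroke_candidates("hgfdertyuiklo", ["hello", "halo", "hero", "help"]))
```

A fuller matcher would score candidates by how closely the trace passes each key and by word frequency before presenting the list for selection.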

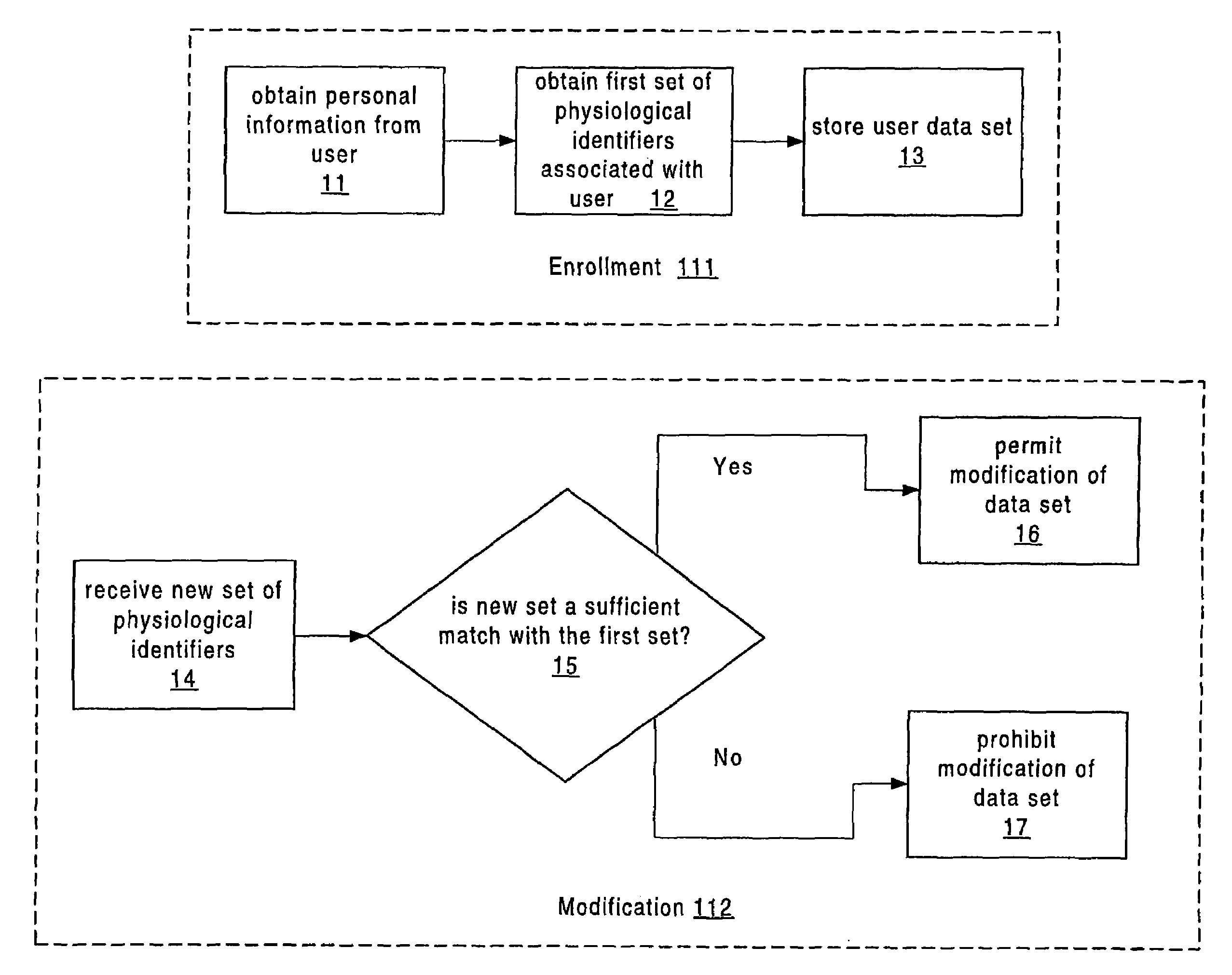

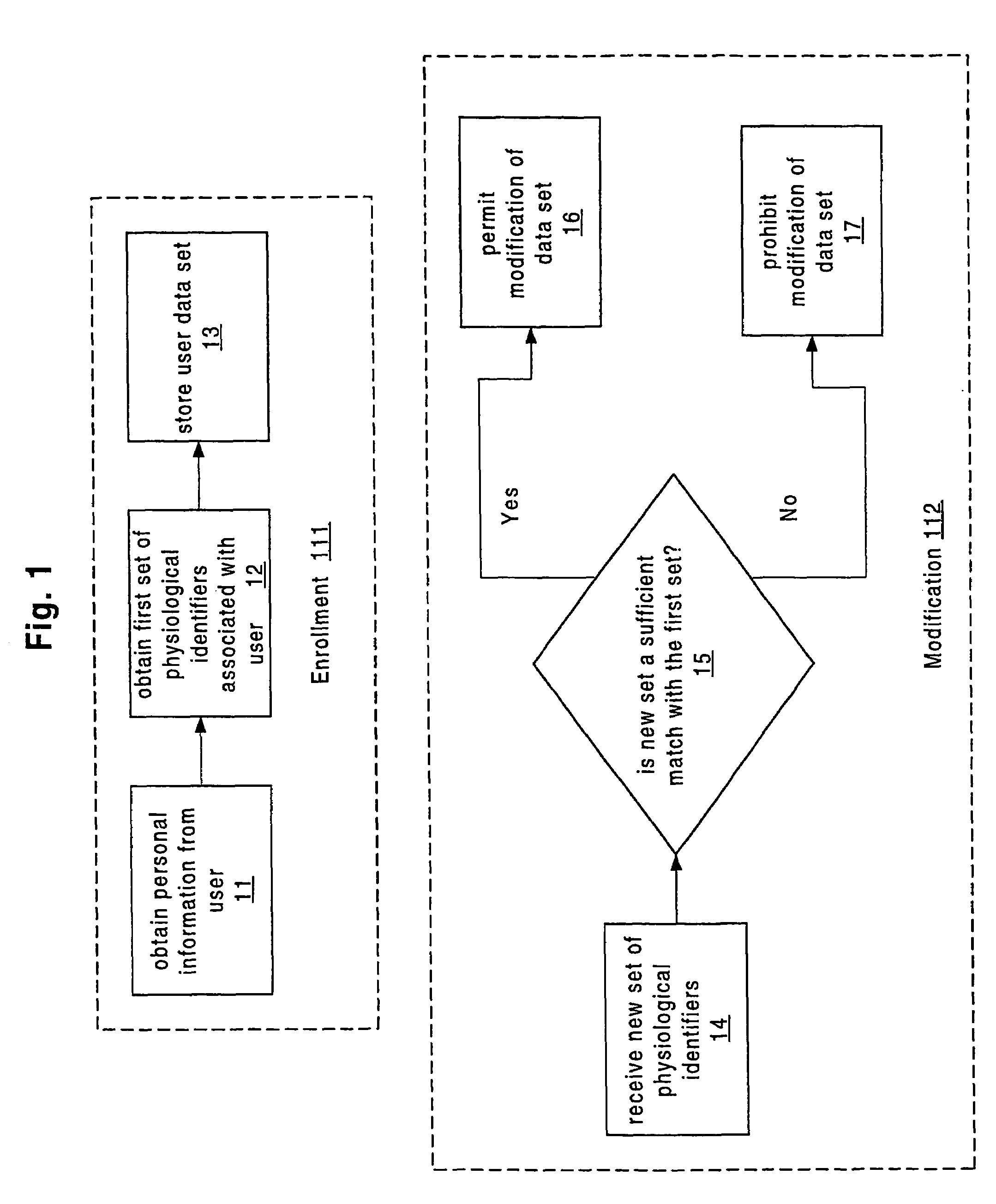

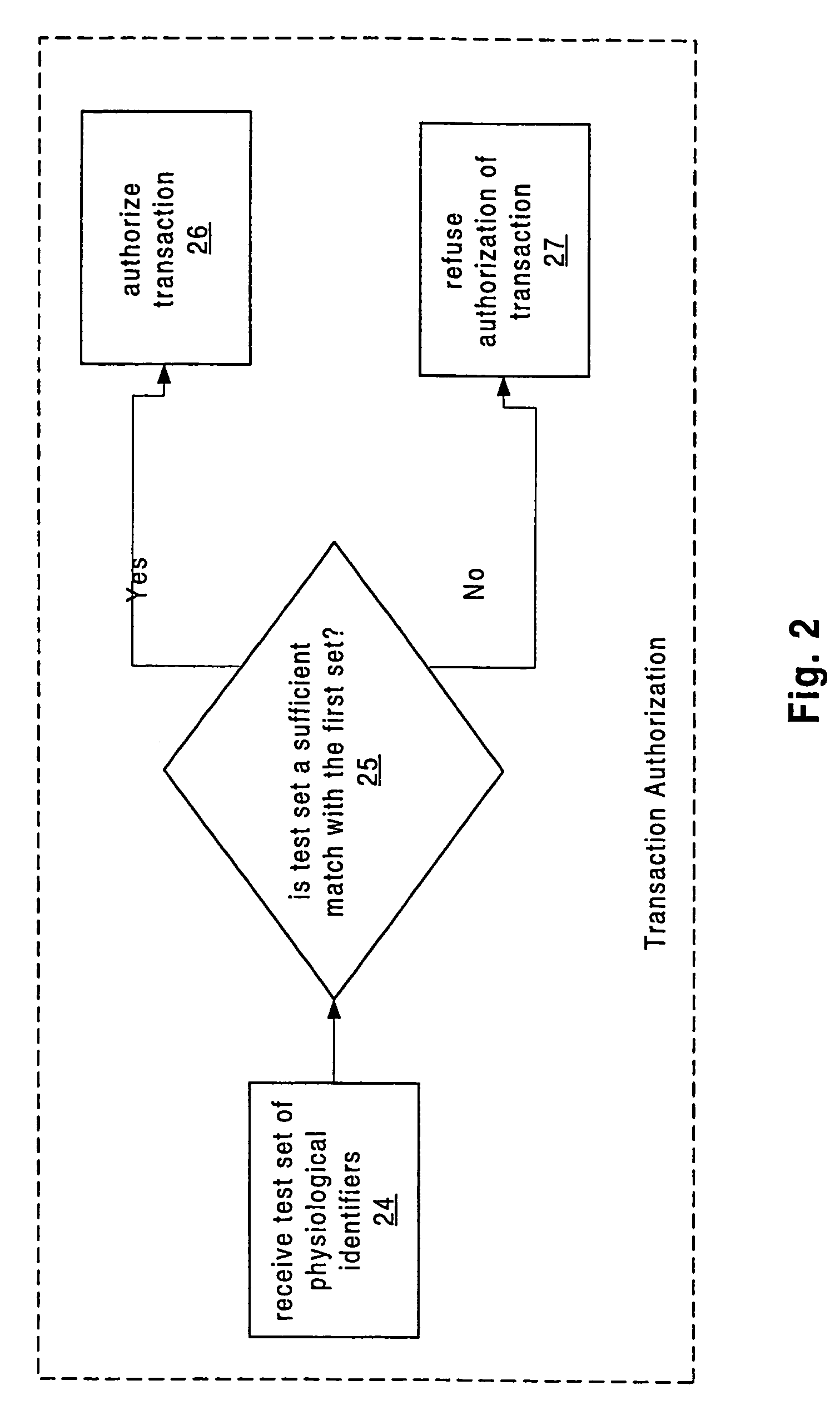

Apparatus and method for authenticated multi-user personal information database

Inactive | US6985887B1 | Improve reliability | Lower level of reliability | Stamps | Error prevention | Internet privacy | Multi-user

Owner: GOLD STANDARD TECH

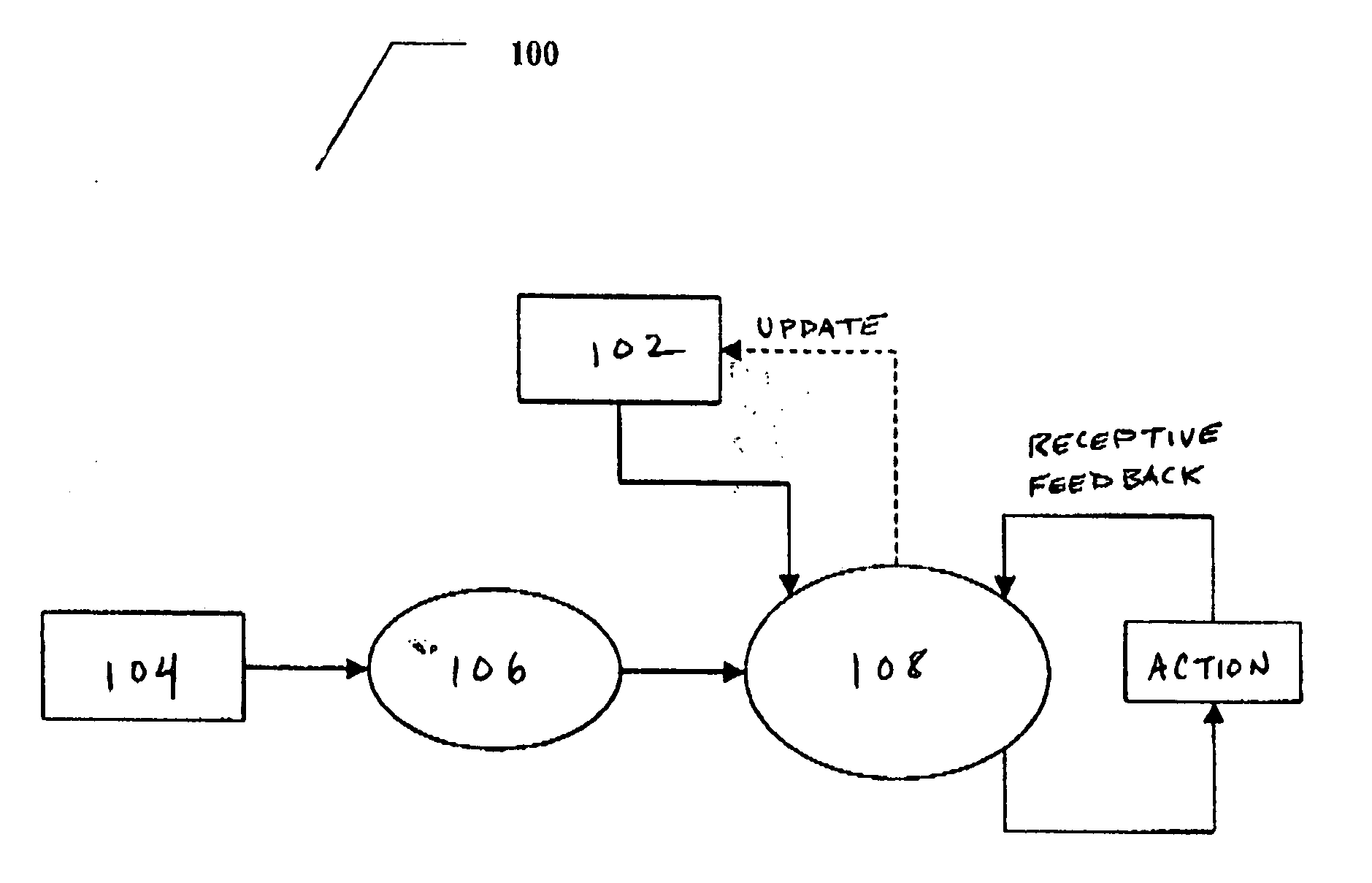

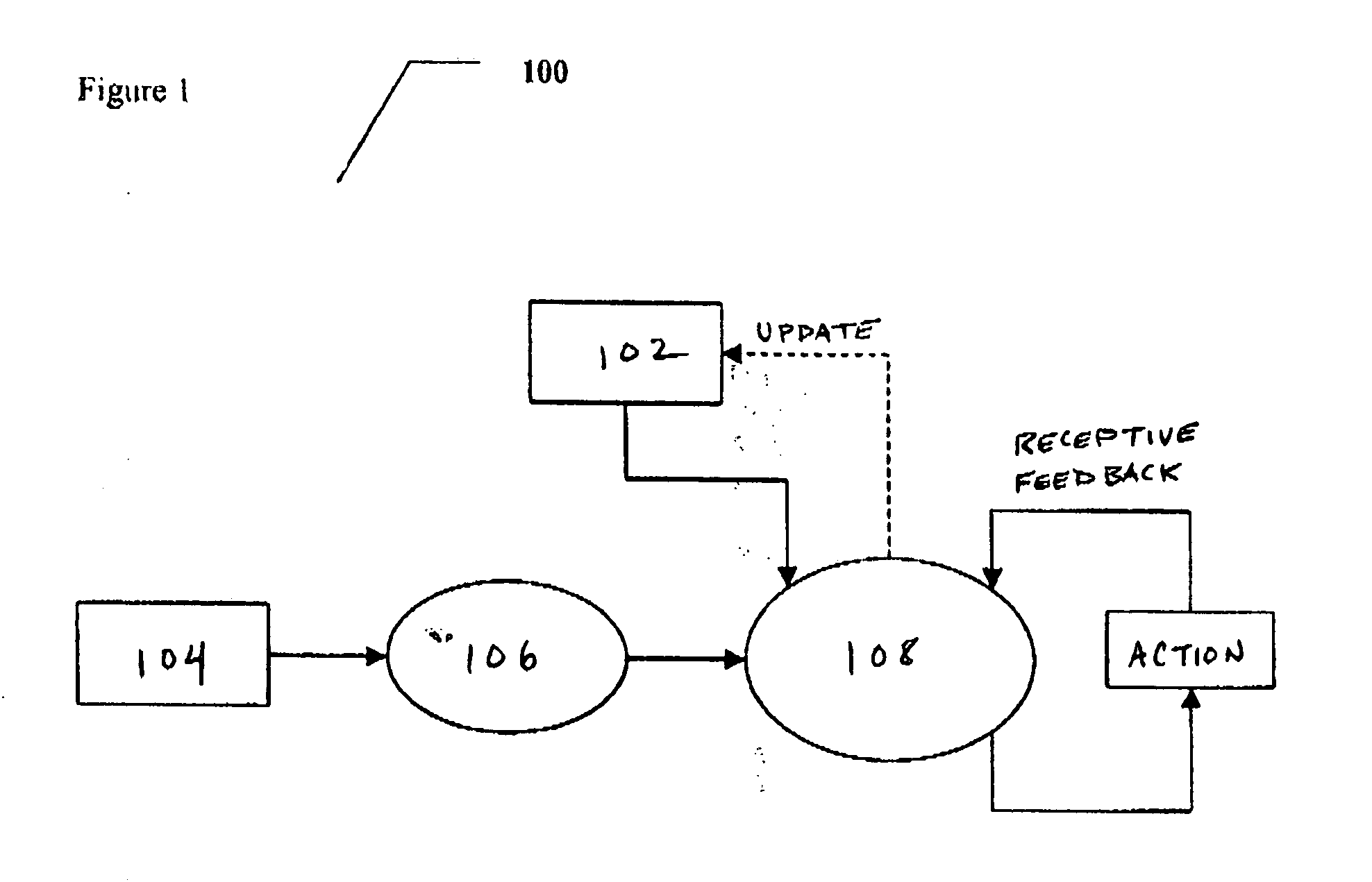

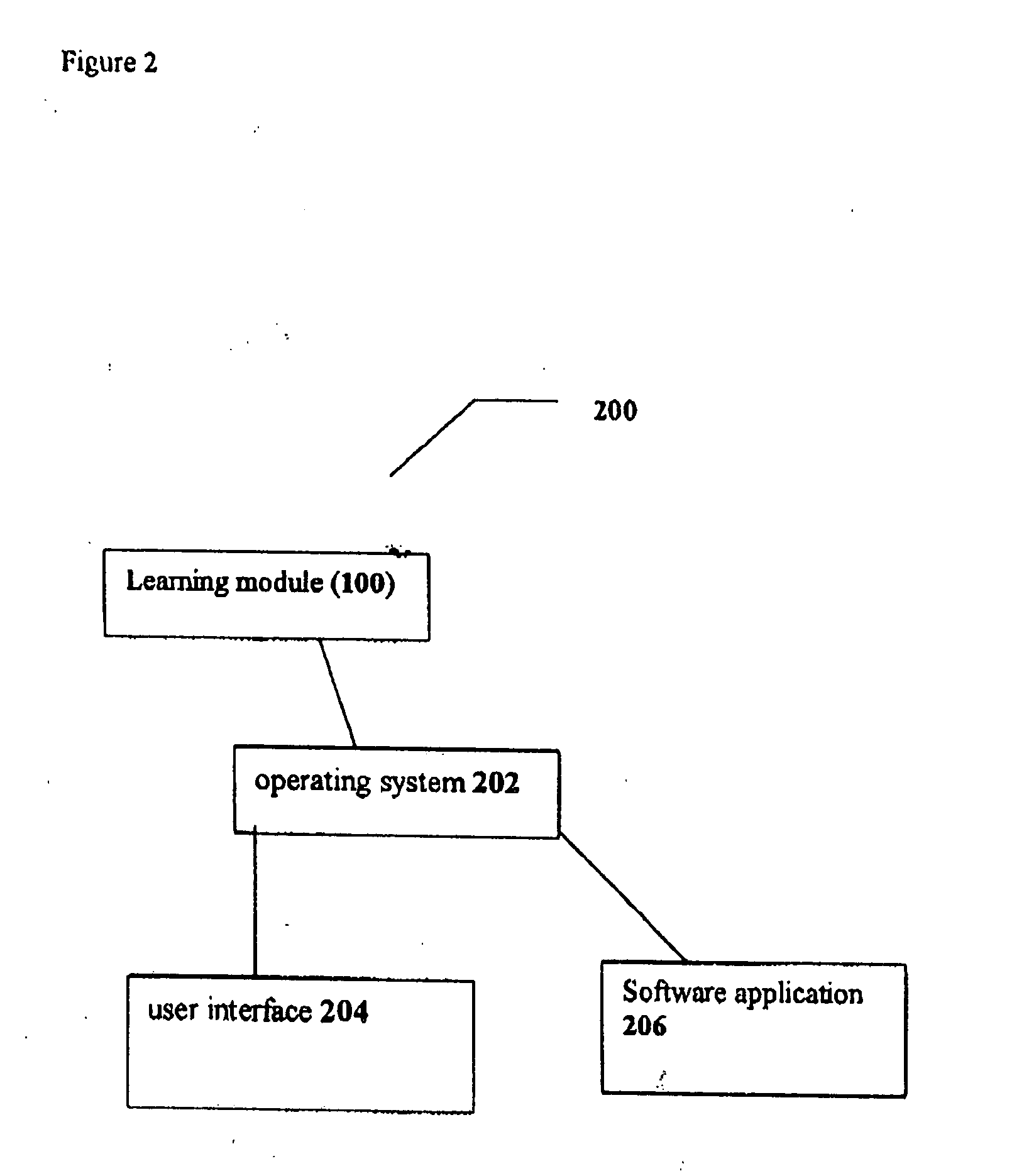

Proactive user interface

Inactive | US20050054381A1 | Increase sense of intelligence | Improve experience | Input/output for user-computer interaction | Devices with sensor | Display device | Touchscreen

A proactive user interface is disclosed, which could optionally be installed in (or otherwise control and/or be associated with) any type of computational device. The proactive user interface actively makes suggestions to the user, based upon prior experience with a particular user and/or various preprogrammed patterns from which the computational device could select, depending upon user behavior. These suggestions could optionally be made by altering the appearance of at least a portion of the display, for example by changing a menu or a portion thereof; providing different menus for display; and/or altering touch screen functionality. The suggestions could also optionally be made audibly.

Owner: SAMSUNG ELECTRONICS CO LTD

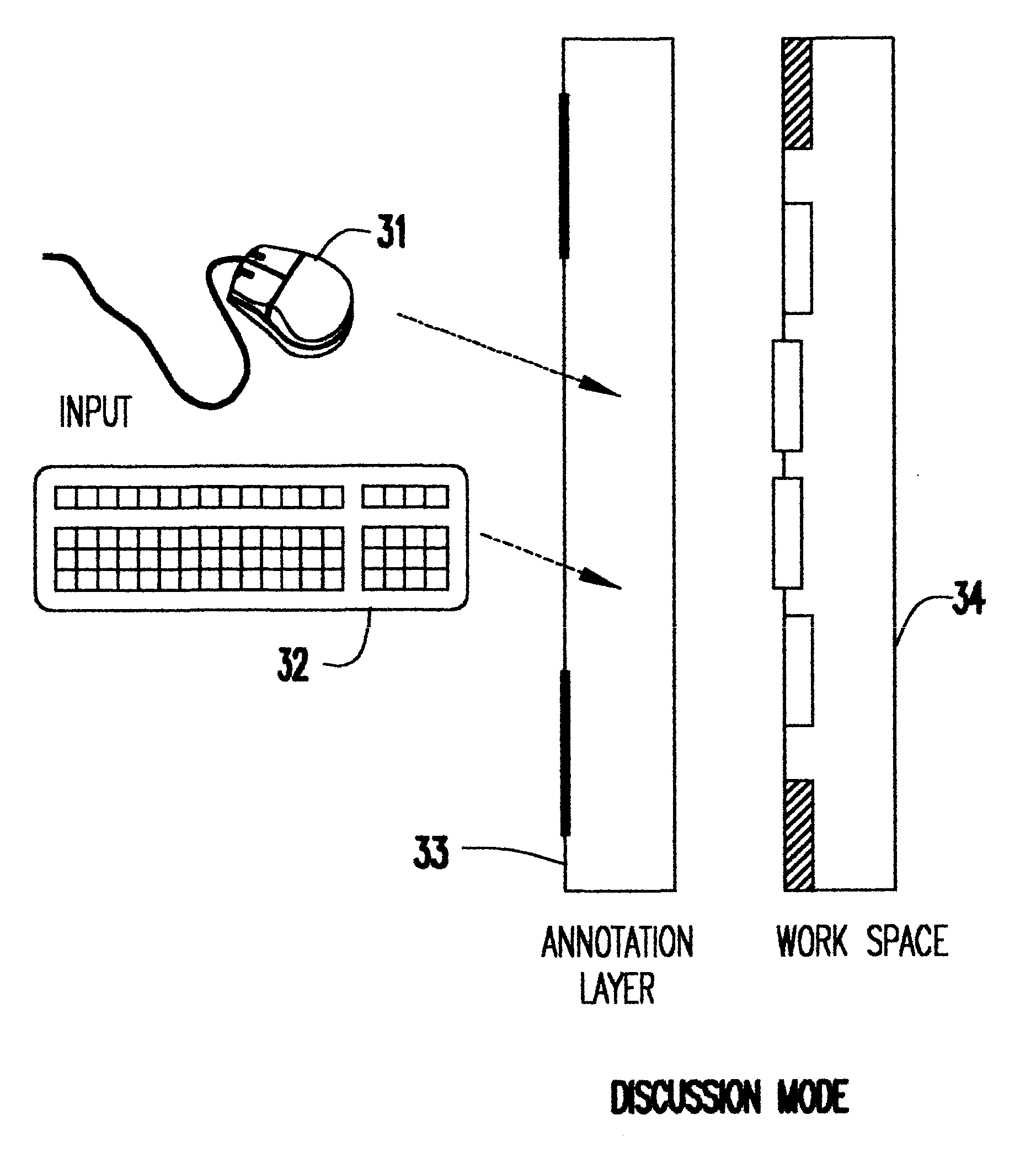

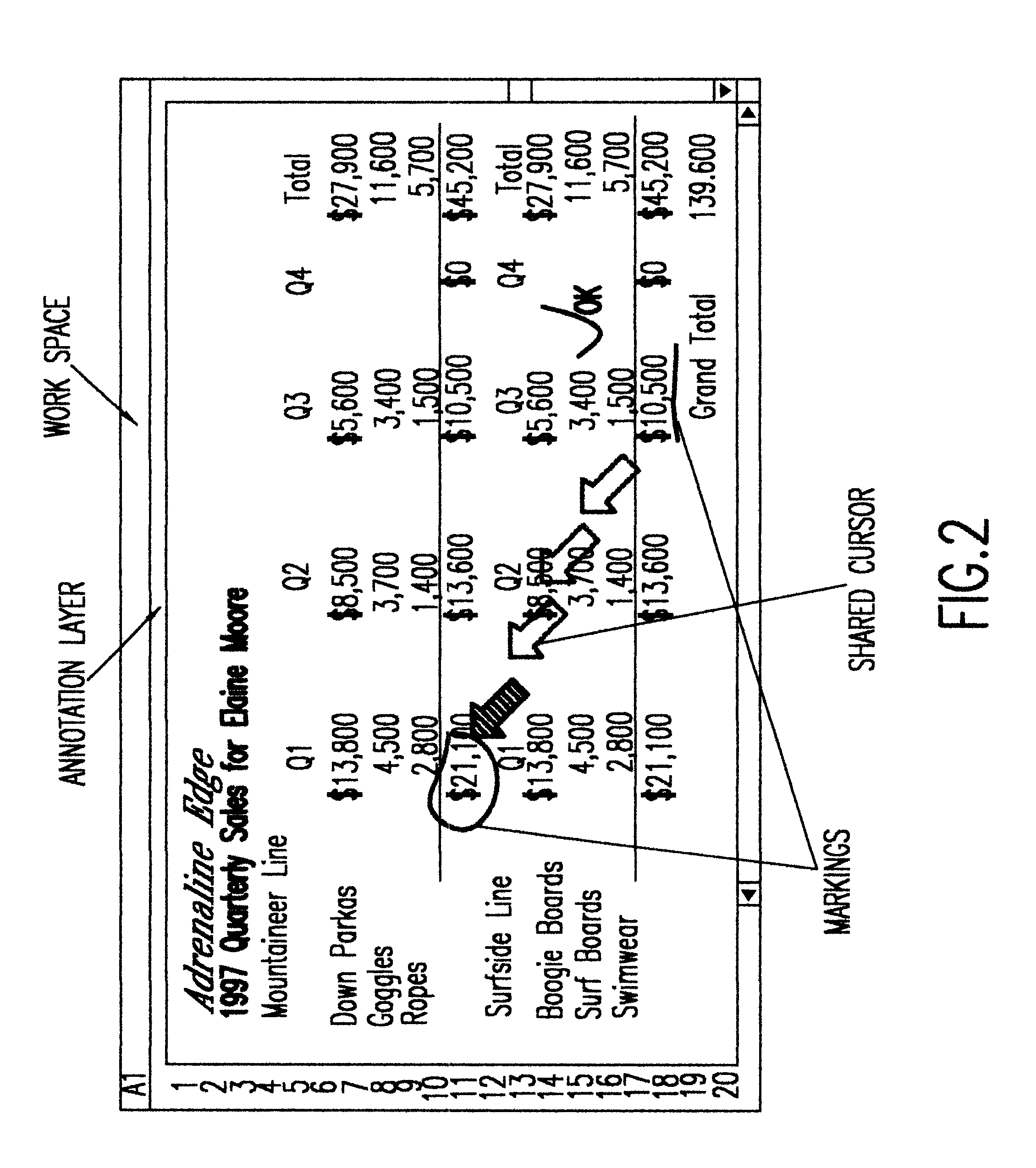

Annotation layer for synchronous collaboration

Inactive | US6342906B1 | Special data processing applications | Digital output to display device | User input | Operation mode

Effective real-time collaboration across remote sites in which any type of data can be shared in a common work space in a consistent manner is made possible by an annotation layer having multiple distinct modes of operation during a collaborative session with two or more people sharing the same work space. One mode is a discussion mode in which one or more users simply share a common view of the shared data and manipulate the view independent of the shared data. During the discussion mode, all user input is handled by the annotation layer which interprets user inputs to move common cursors, create, move or delete markings and text which, since the annotation layer is transparent, appear over the application. Another mode is an edit mode in which one or more users actually edit the shared data. The applications and the data are synchronized among all clients to display the same view. Manipulating the view includes moving a common cursor and placing markings on the common view using text and/or drawing tools.

Owner: IBM CORP

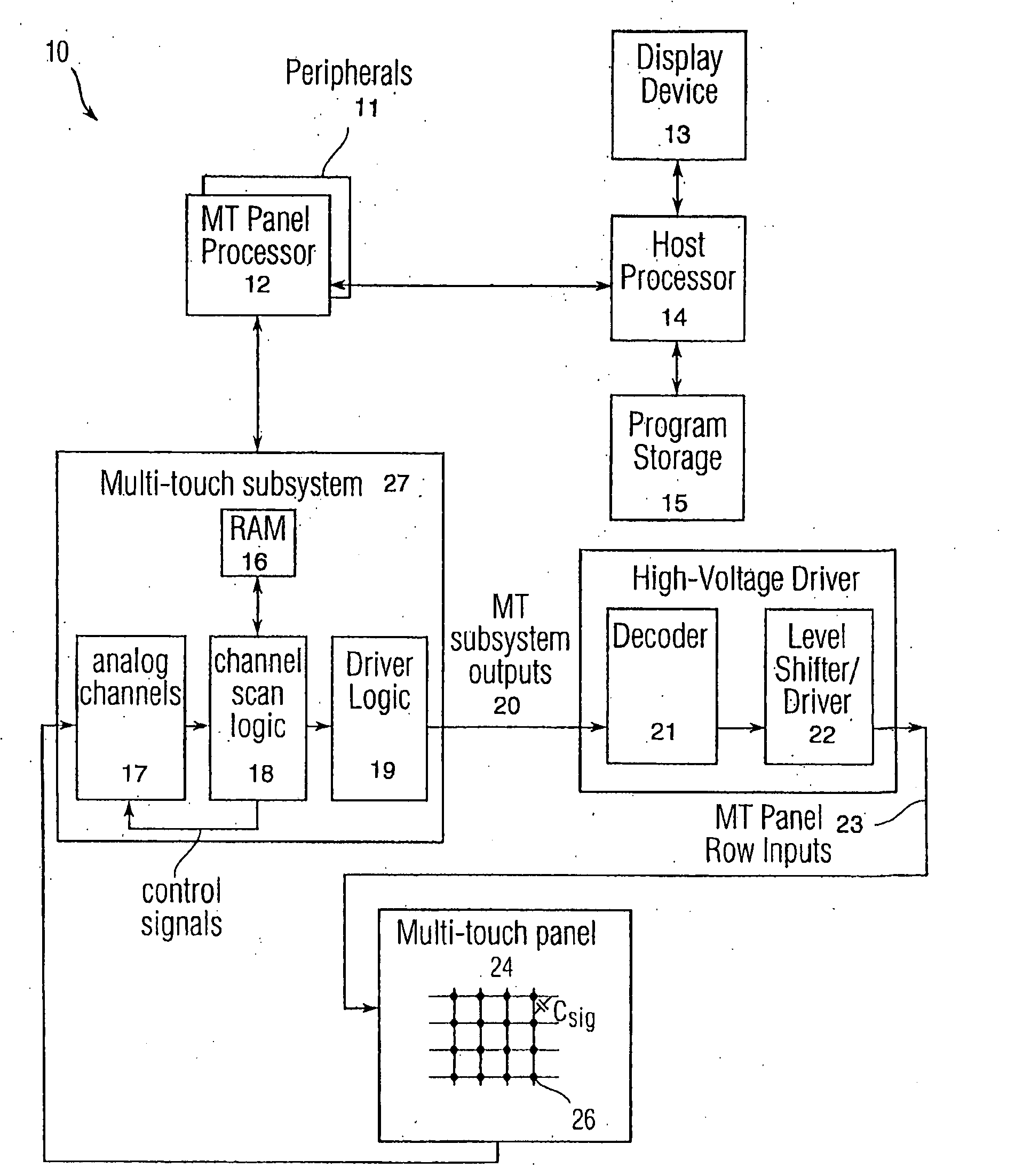

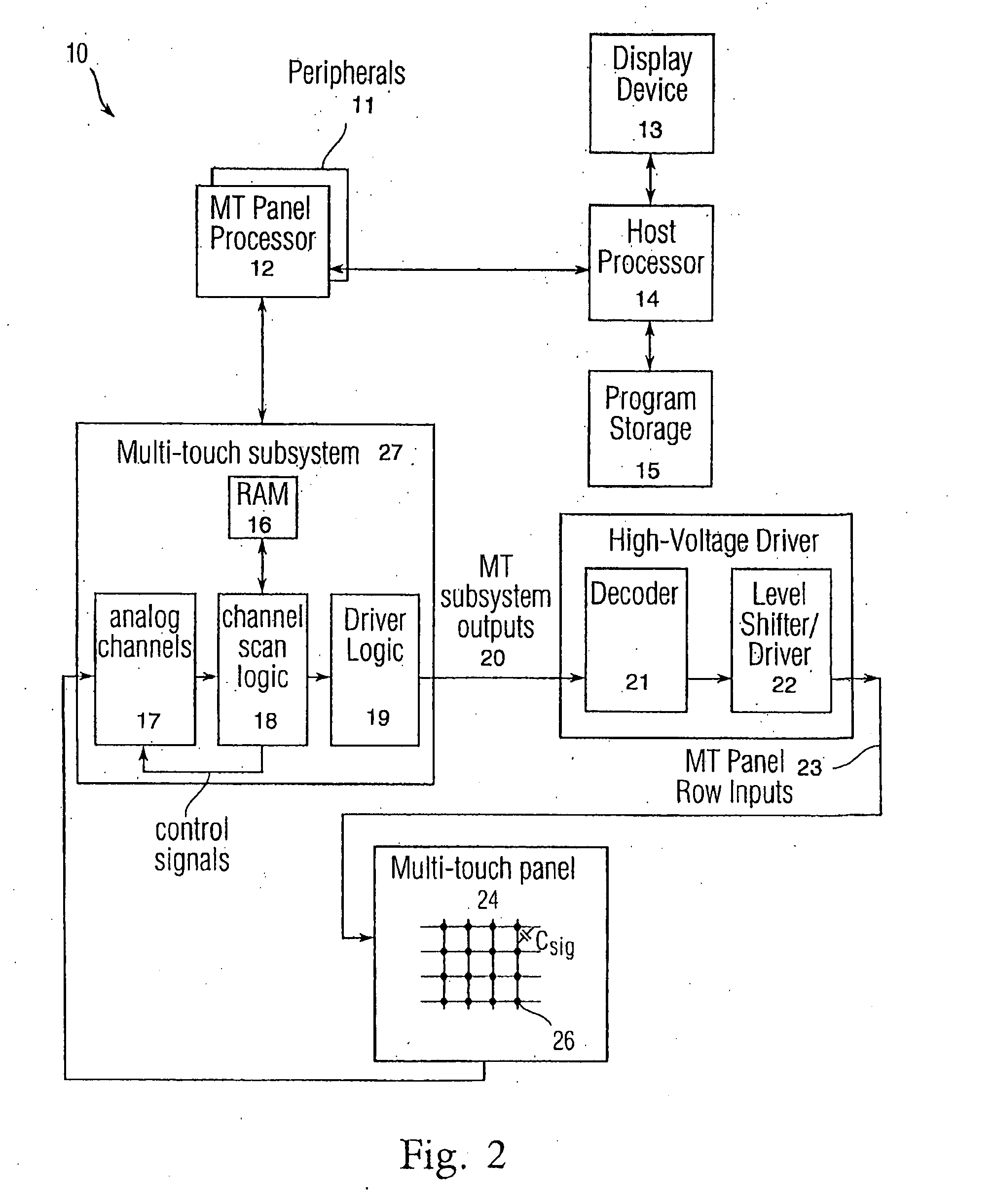

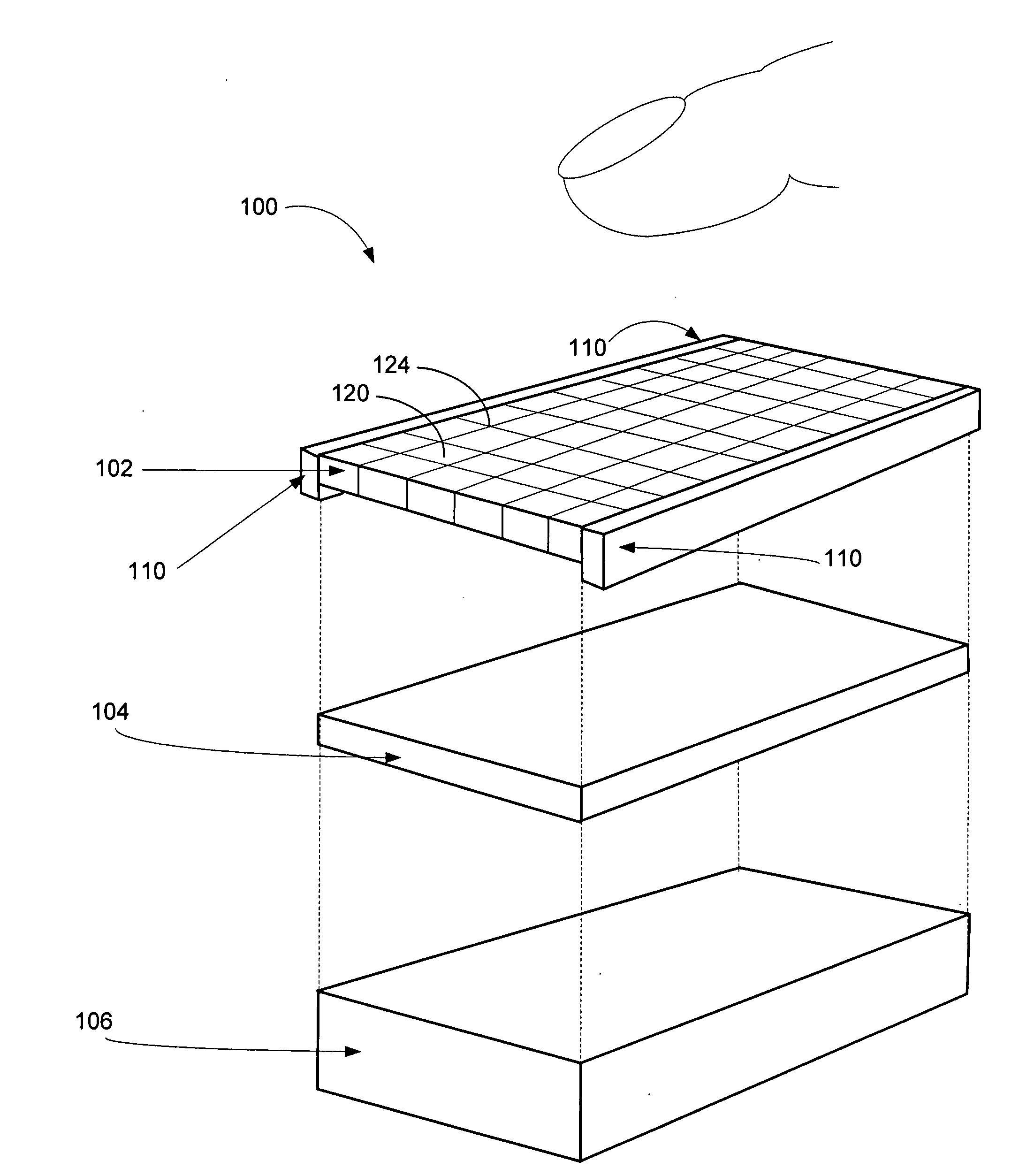

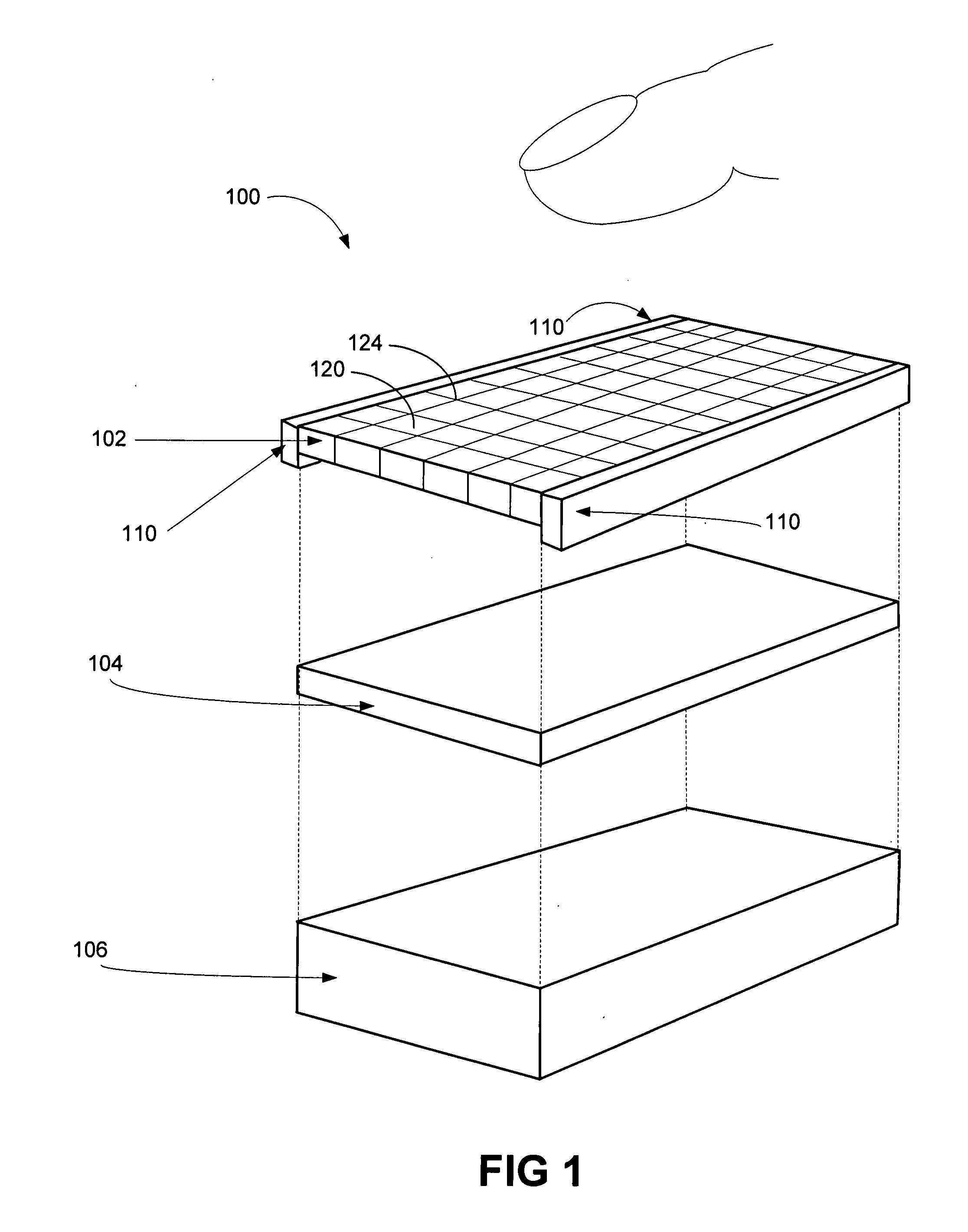

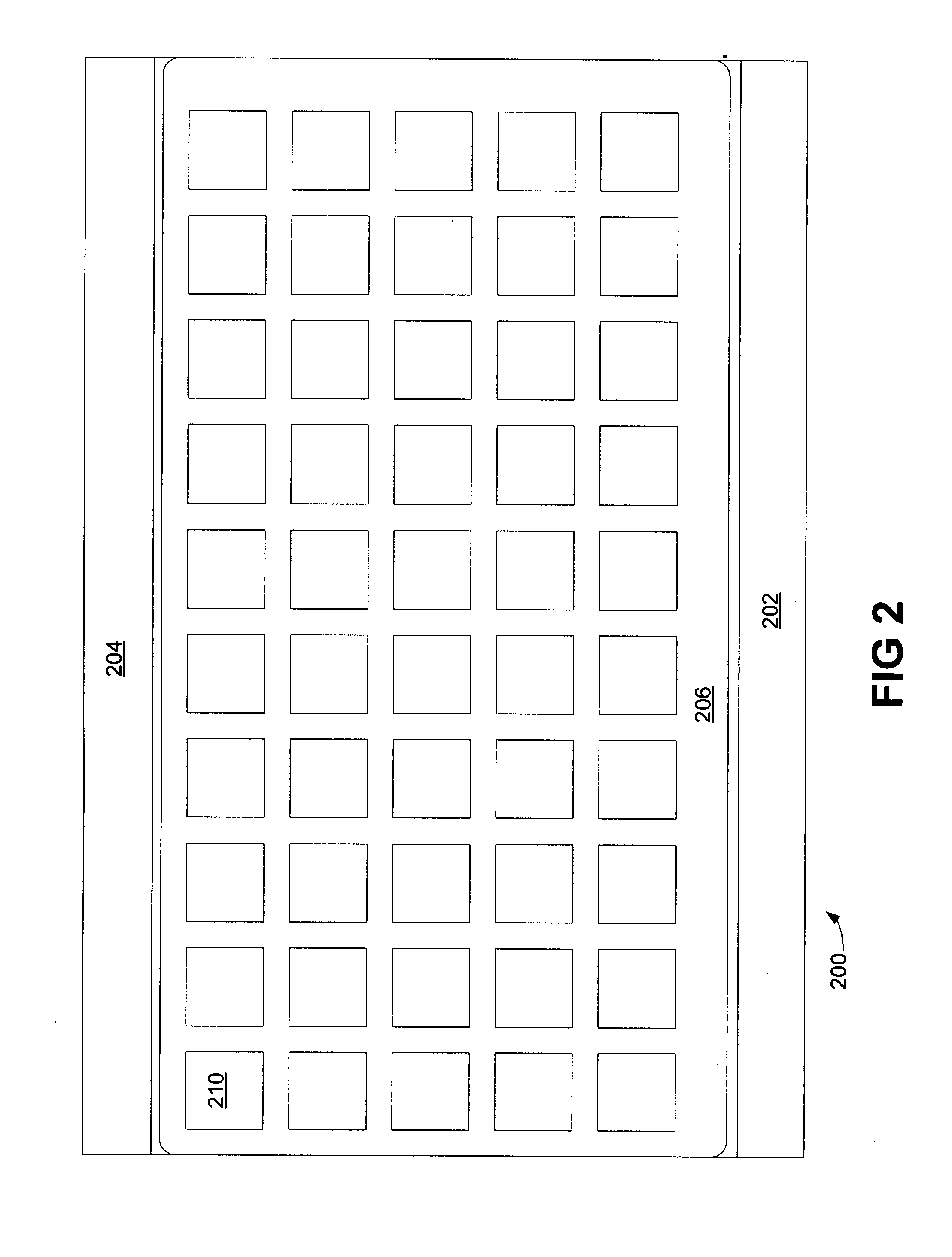

Method and apparatus for multi-touch tactile touch panel actuator mechanisms

A method and apparatus of actuator mechanisms for a multi-touch tactile touch panel are disclosed. The tactile touch panel includes an electrical insulated layer and a tactile layer. The top surface of the electrical insulated layer is capable of receiving an input from a user. The tactile layer includes a grid or an array of haptic cells. The top surface of the haptic layer is situated adjacent to the bottom surface of the electrical insulated layer, while the bottom surface of the haptic layer is situated adjacent to a display. Each haptic cell further includes at least one piezoelectric material, Micro-Electro-Mechanical Systems (“MEMS”) element, thermal fluid pocket, MEMS pump, resonant device, variable porosity membrane, laminar flow modulation, or the like. Each haptic cell is configured to provide a haptic effect independent of other haptic cells in the tactile layer.

Owner: IMMERSION CORPORATION

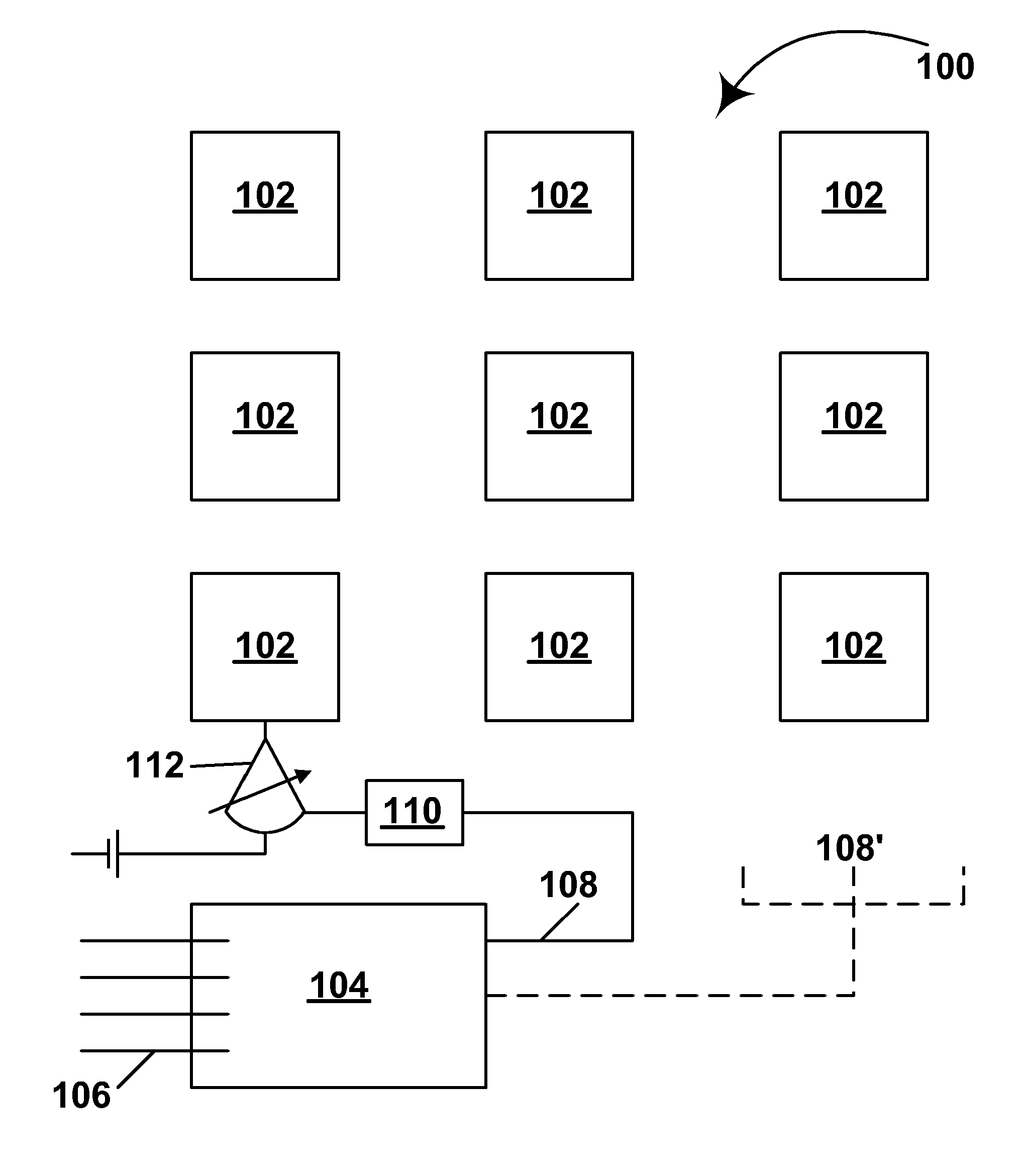

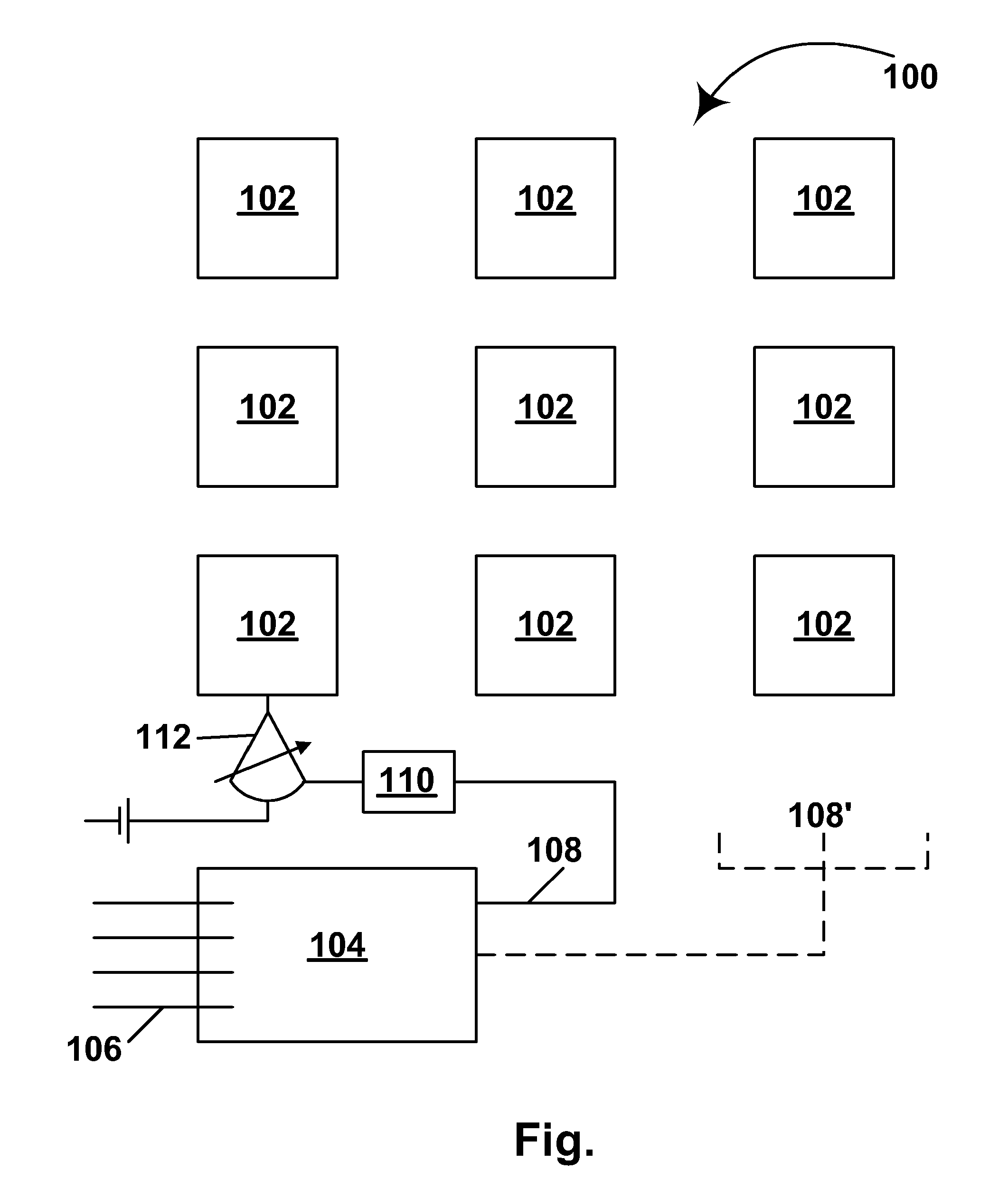

Tiled displays and methods for driving same

Inactive | US20050253777A1 | Reduce variation | Cathode-ray tube indicators | Digital output to display device | Display device | Engineering

Owner: E INK CORPORATION +1

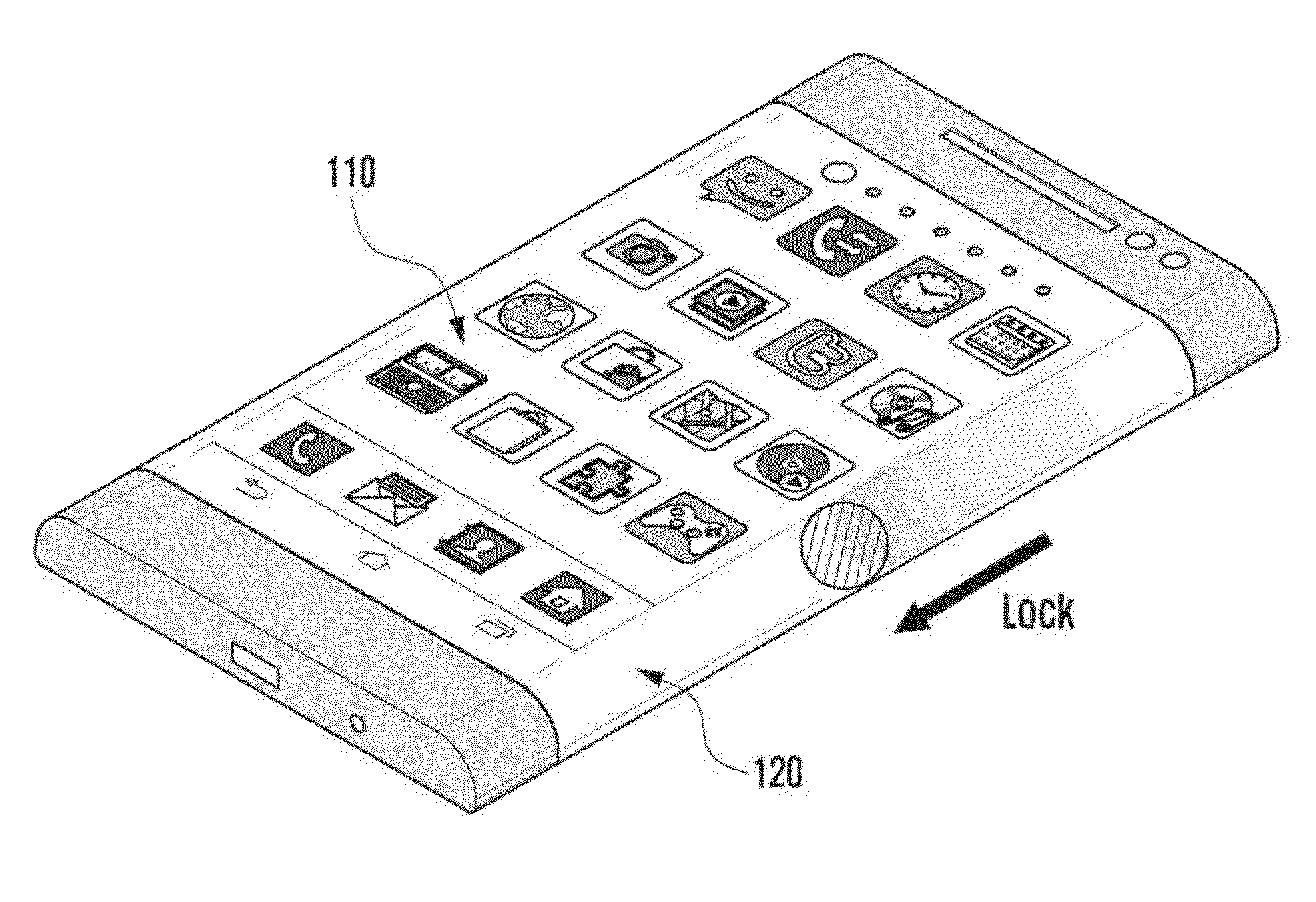

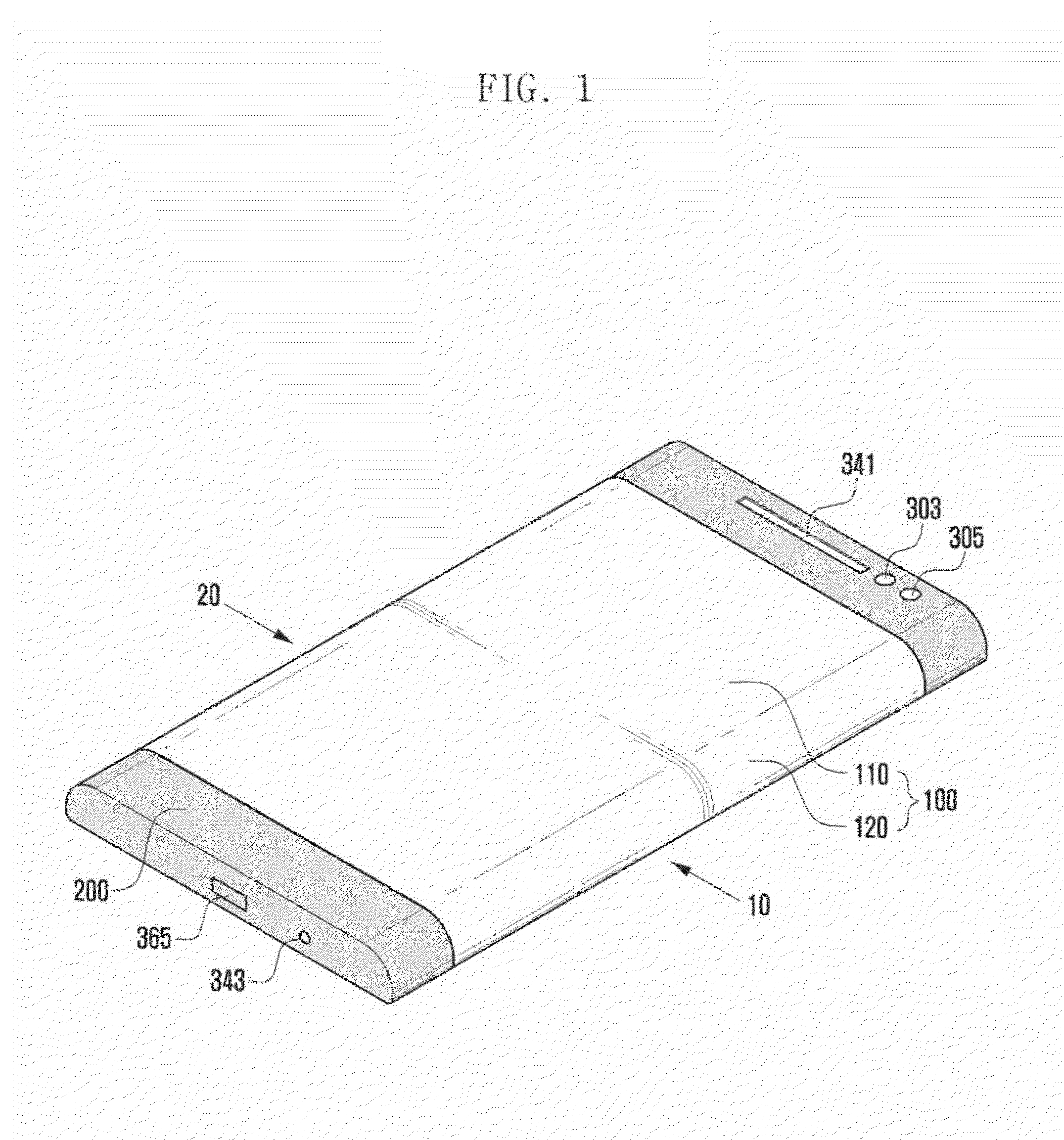

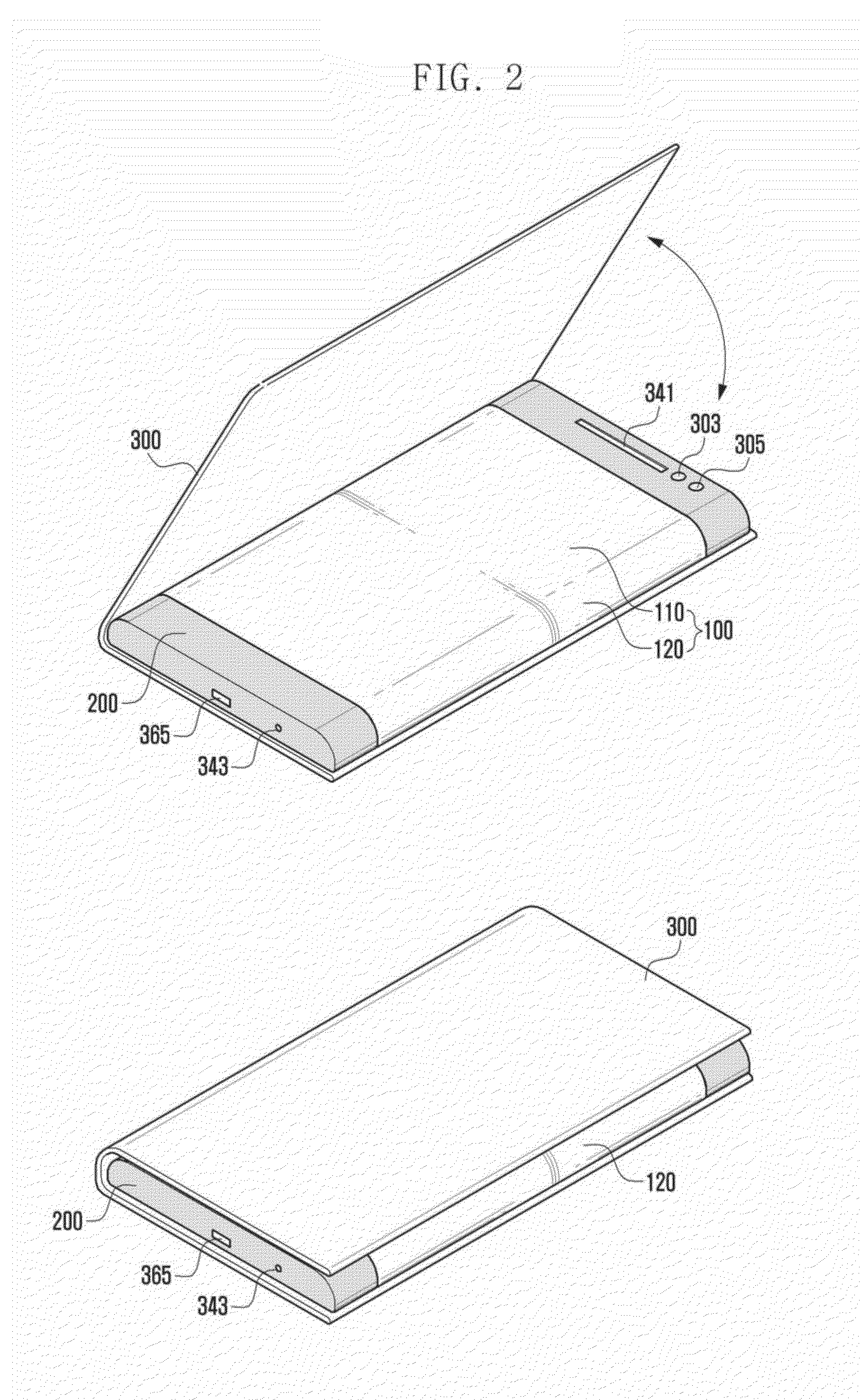

Method and apparatus for operating functions of portable terminal having bended display

Inactive | US20130300697A1 | Improve usability | Improve operation | Digital data processing details | Transmission | Display device | Computer science

Owner: SAMSUNG ELECTRONICS CO LTD

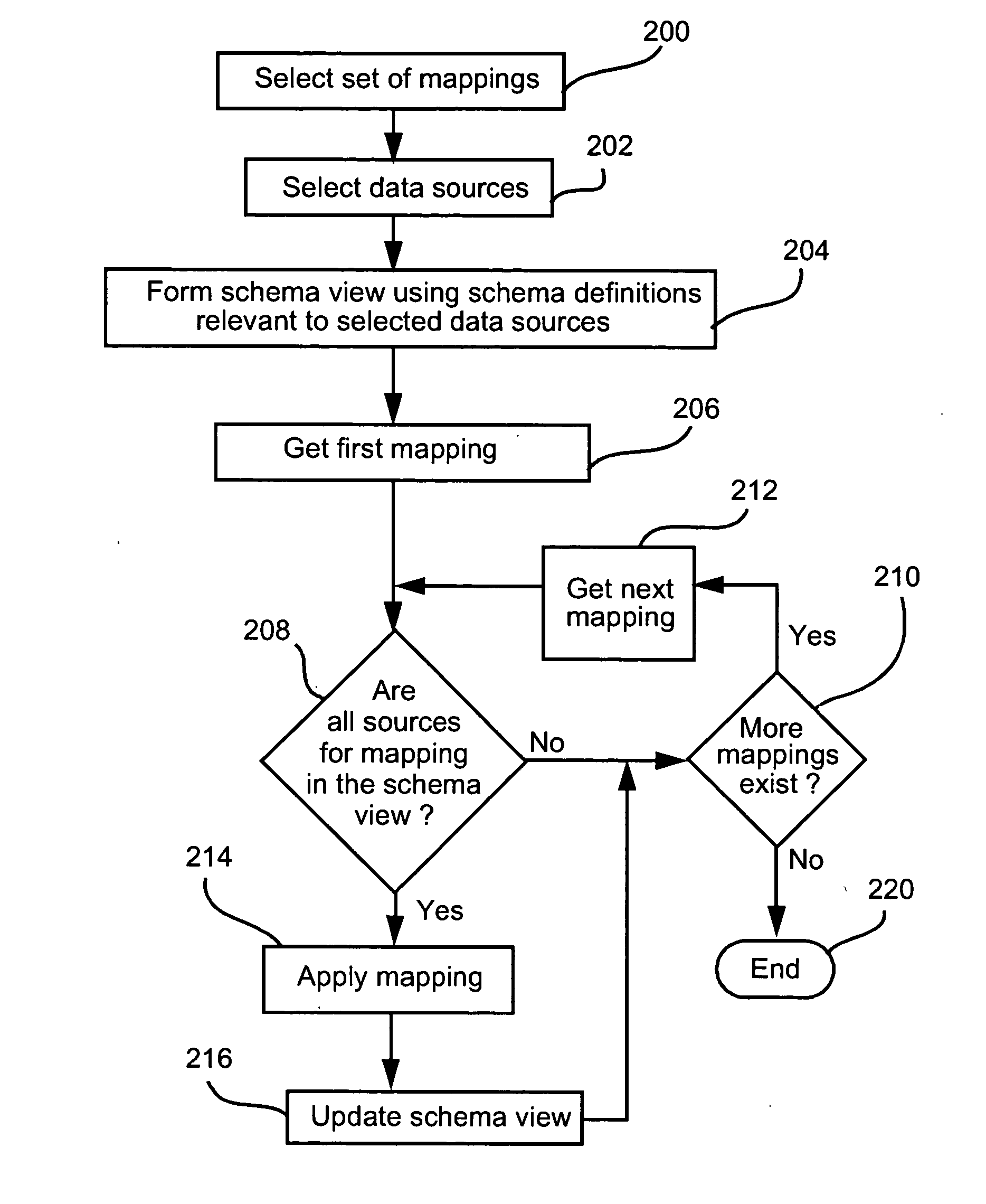

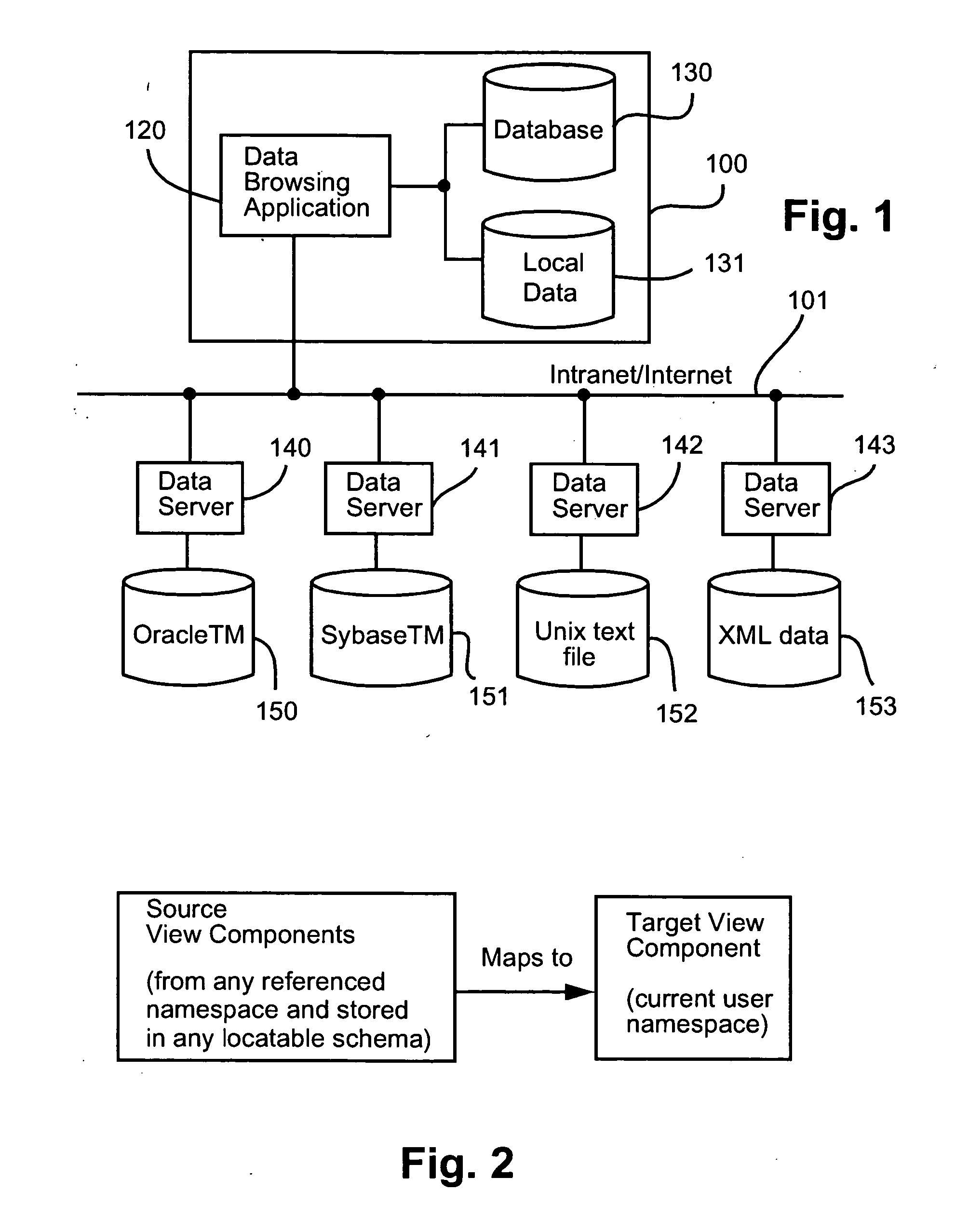

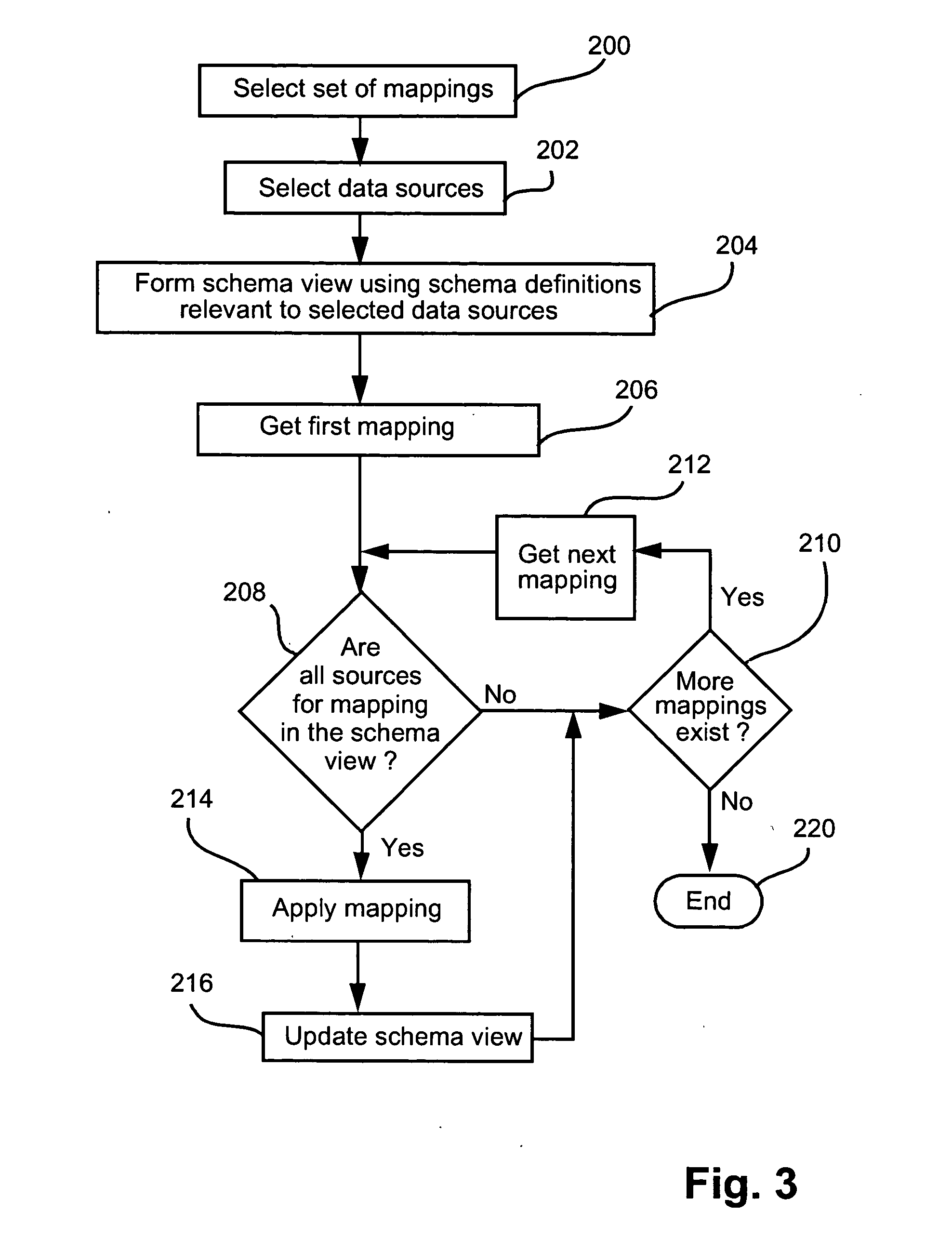

Method for presenting hierarchical data

Inactive | US20050060647A1 | Easy to operate | Database management systems | Digital computer details | Hyperlink | Display device

Methods, programs (120) and apparatus (100) are disclosed for accessing heterogeneous data sources (150-153) and presenting information obtained therefrom. Specifically, the data sources may have hierarchical data, which may be presented by identifying a context data node from the data, the context data node having one or more descendant data nodes. At least one data pattern is determined in the descendant data nodes. At least one display type is assigned to the current context data node on the basis of the at least one data pattern. Thereafter, the method presents at least a subset of the descendant data nodes according to one of the assigned display types. Also disclosed is a method of browsing a hierarchically represented data source. A user operation is interpreted to identify a context data node from the data source, the context data node having one or more descendant data nodes. At least one data pattern in the descendant data nodes is then determined and at least one display type is assigned to the current context data node on the basis of the at least one data pattern. A subset of the descendant data nodes is then presented according to one of the assigned display types, the subset including at least one hyperlink (3401, 3402) having as its target a descendant data node of the current context data node. A further user operation is then interpreted to select the at least one hyperlink, the selection resulting in the current context data node being replaced with the data node corresponding to the target of the selected hyperlink. These steps may be repeated until no further hyperlinks to descendant data nodes are included in the subset. Other methods associated with access and presentation are also disclosed.

Owner: CANON KK

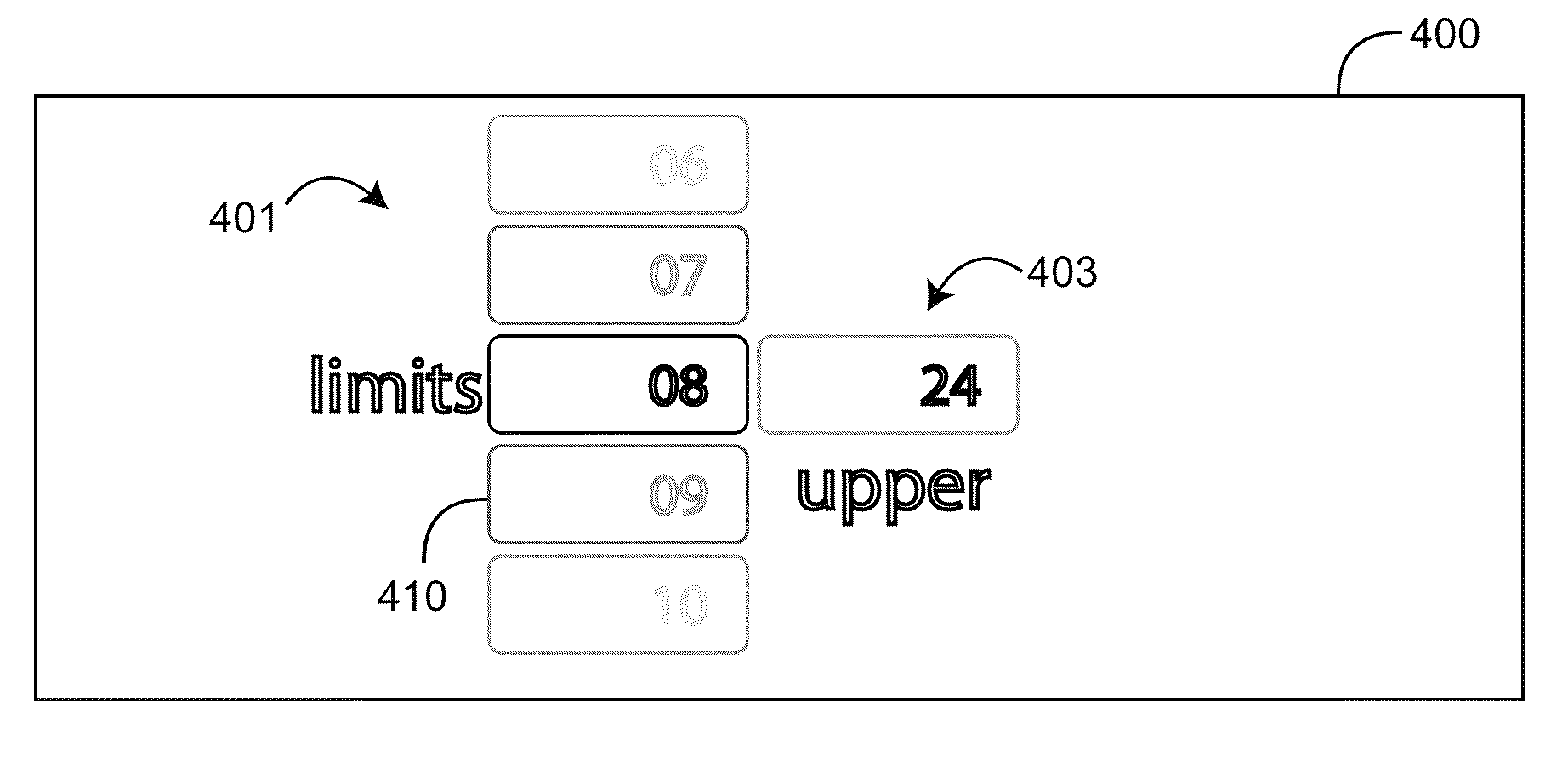

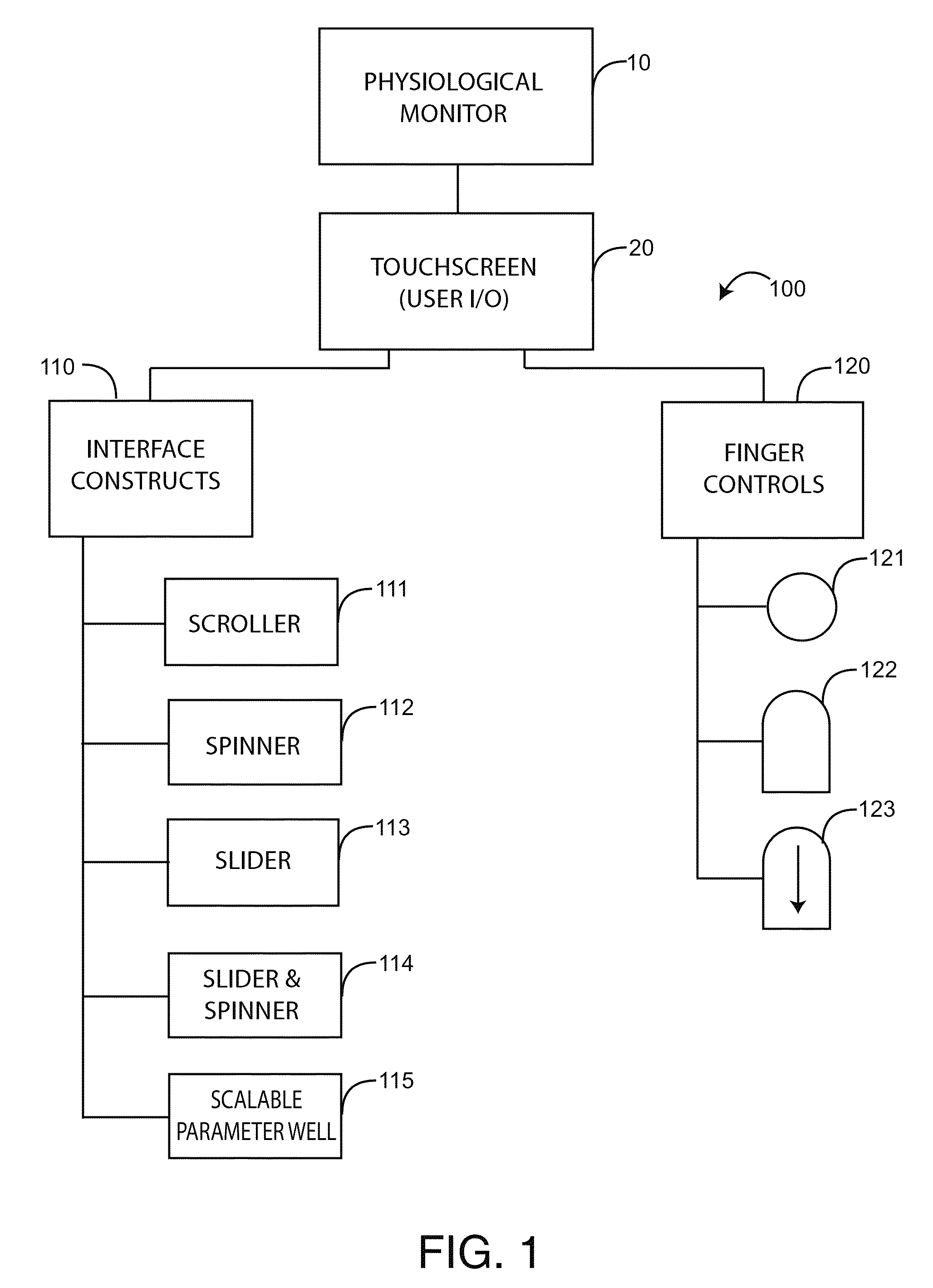

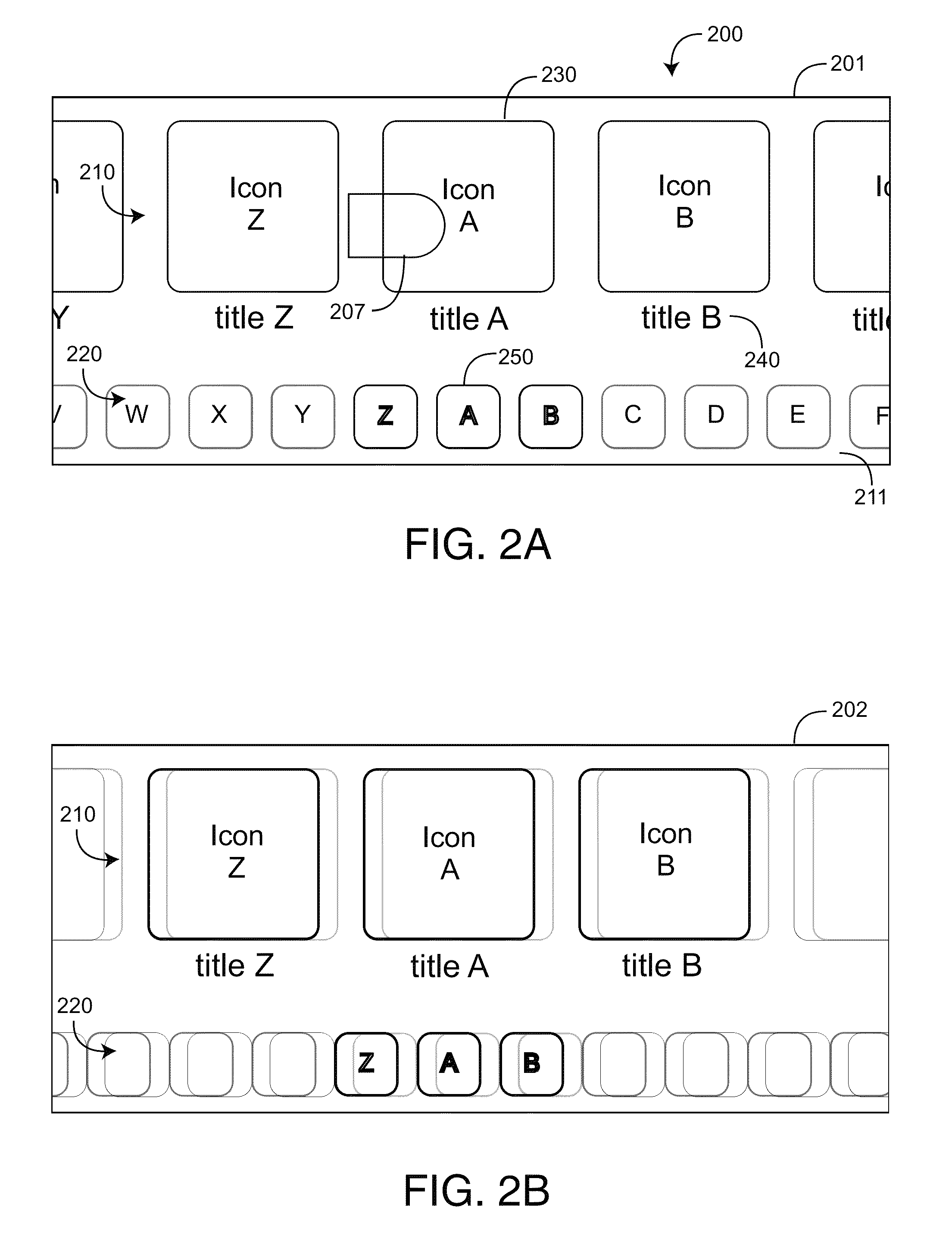

Physiological monitor touchscreen interface

A physiological monitor touchscreen interface which presents interface constructs on a touchscreen display that are particularly adapted to finger gestures. The finger gestures operate to change at least one of a physiological monitor operating characteristic and a physiological touchscreen display characteristic. The physiological monitor touchscreen interface includes a first interface construct operable to select a menu item from a touchscreen display and a second interface construct operable to define values for the selected menu item. The first interface construct can include a first scroller that presents a rotating set of menu items in a touchscreen display area and a second scroller that presents a rotating set of thumbnails in a display well. The second interface construct can operate to define values for a selected menu item.

Owner: JPMORGAN CHASE BANK NA

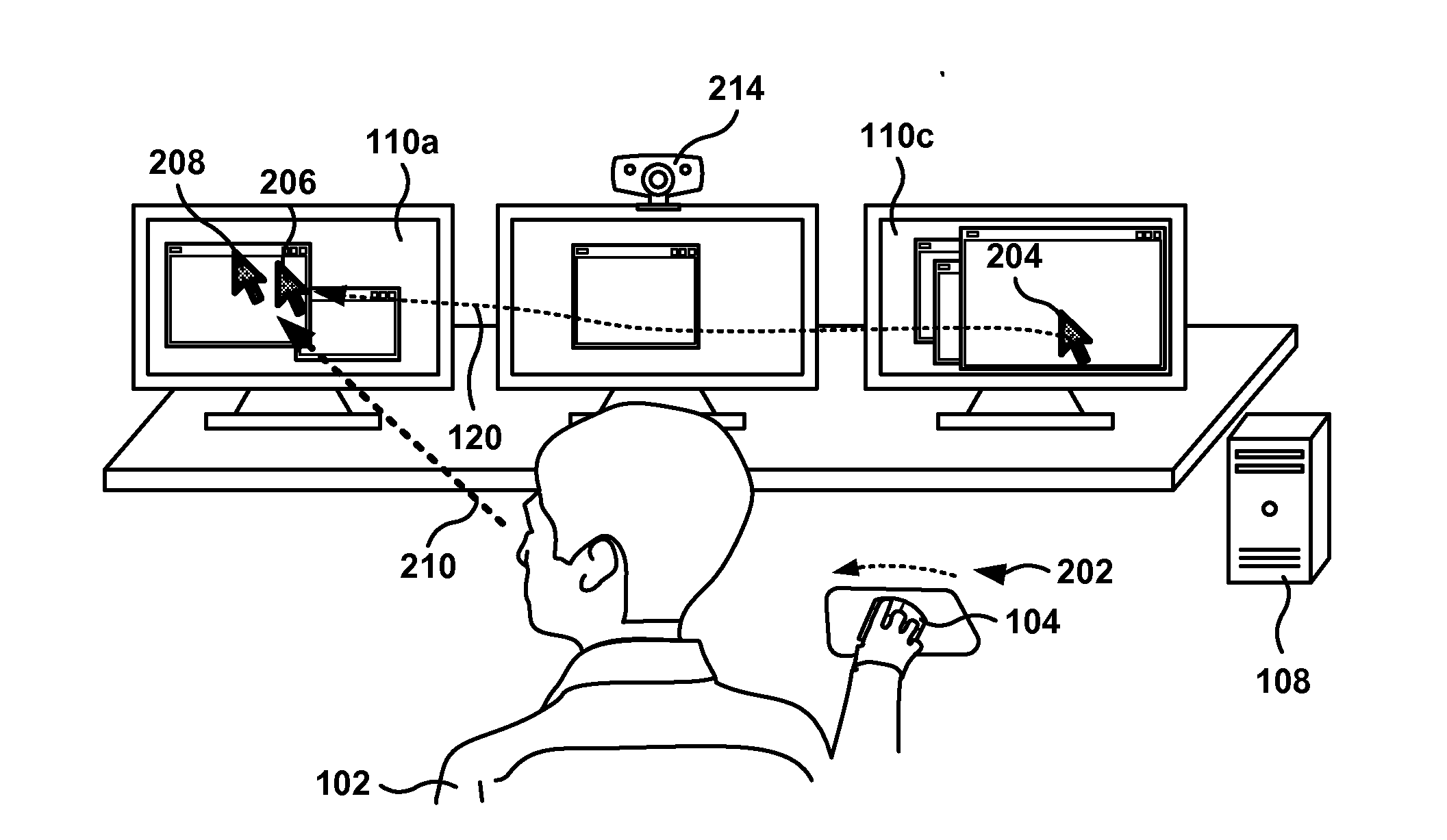

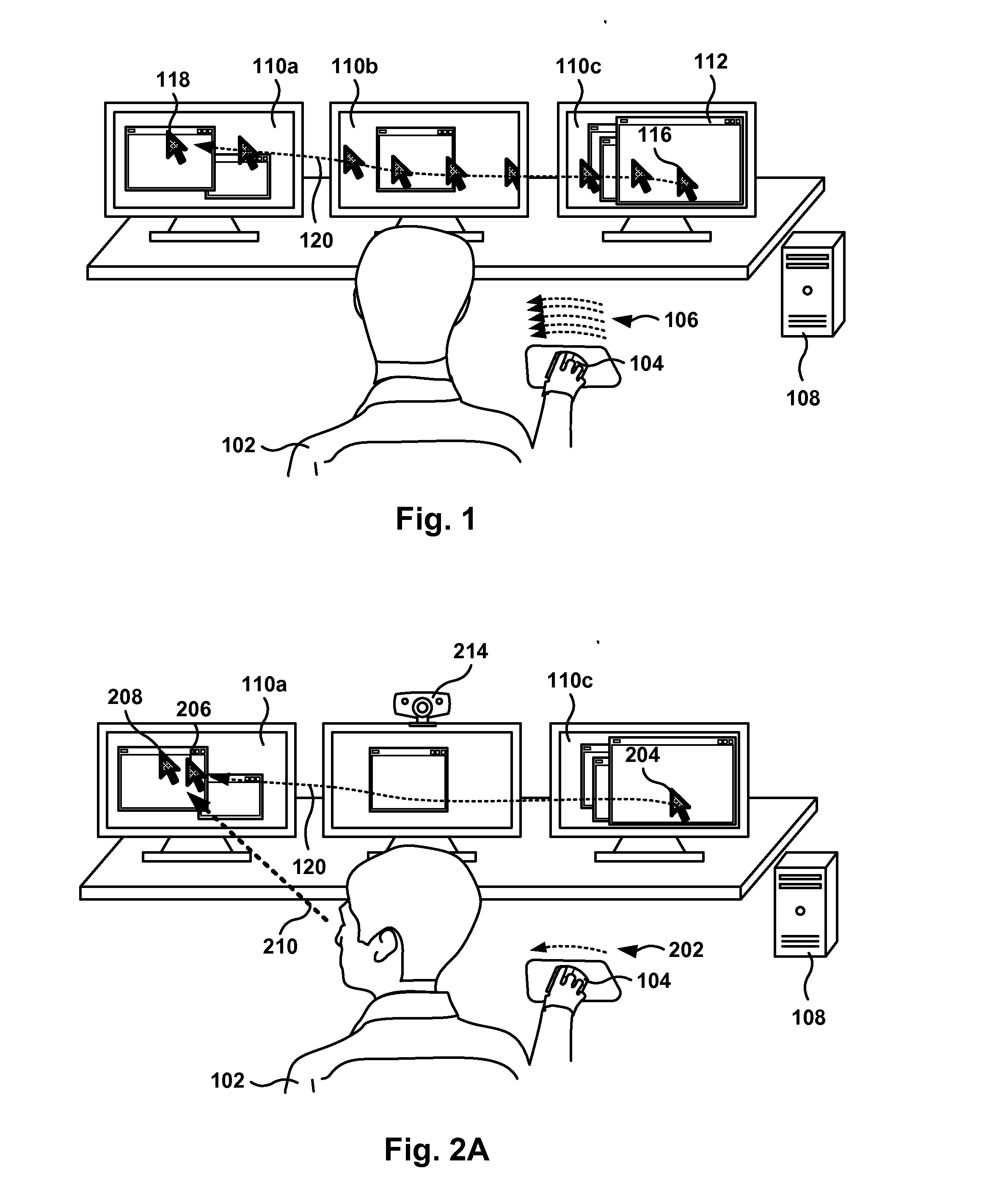

Gaze-Assisted Computer Interface

Active | US20120272179A1 | Input/output for user-computer interaction | Cathode-ray tube indicators | Graphics | Graphical user interface

Methods, systems, and computer programs for interfacing a user with a Graphical User Interface (GUI) are provided. One method includes an operation for identifying the point of gaze (POG) of the user. The initiation of a physical action by the user, to move a position of a cursor on a display, is detected, where the cursor defines a focus area associated with a computer program executing the GUI. Further, the method includes an operation for determining if the distance between the current position of the cursor and the POG is greater than a threshold distance. The cursor is moved from the current position to a region proximate to the POG in response to the determination of the POG and to the detection of the initiation of the physical action.

Owner: SONY COMPUTER ENTERTAINMENT INC
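
The decision rule in the abstract above (jump the cursor toward the point of gaze only when a physical action starts and the gaze is farther away than a threshold) can be sketched as below. The function name, the pixel coordinates, and the choice to land exactly on the POG rather than in a "region proximate" to it are assumptions made for illustration.

```python
import math


def next_cursor_position(cursor, gaze, threshold, action_started):
    """Return where the cursor should be after applying the gaze-assist rule.

    cursor, gaze: (x, y) screen coordinates of the cursor and the point of gaze.
    threshold: minimum cursor-to-gaze distance that triggers a jump.
    action_started: True once the user begins a physical move (e.g. mouse motion).
    """
    if not action_started:
        return cursor  # no physical action detected: leave the cursor alone
    if math.dist(cursor, gaze) > threshold:
        return gaze  # far from where the user is looking: jump to the gaze point
    return cursor  # already close: let ordinary pointer movement take over


print(next_cursor_position((0, 0), (300, 400), threshold=100, action_started=True))
```

Gating on `action_started` keeps the cursor from chasing every glance; only an intentional movement combined with a distant gaze point triggers the jump.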

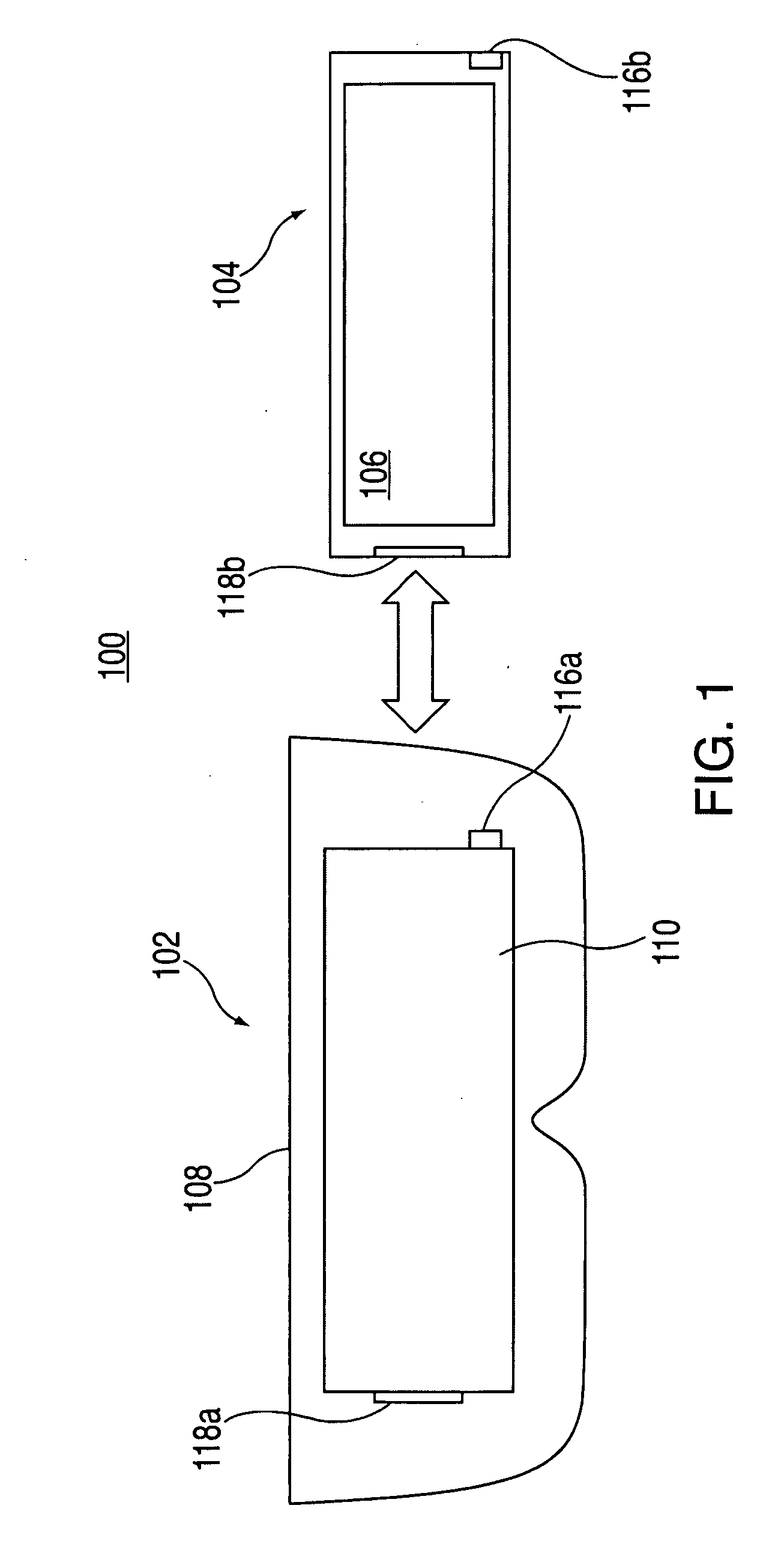

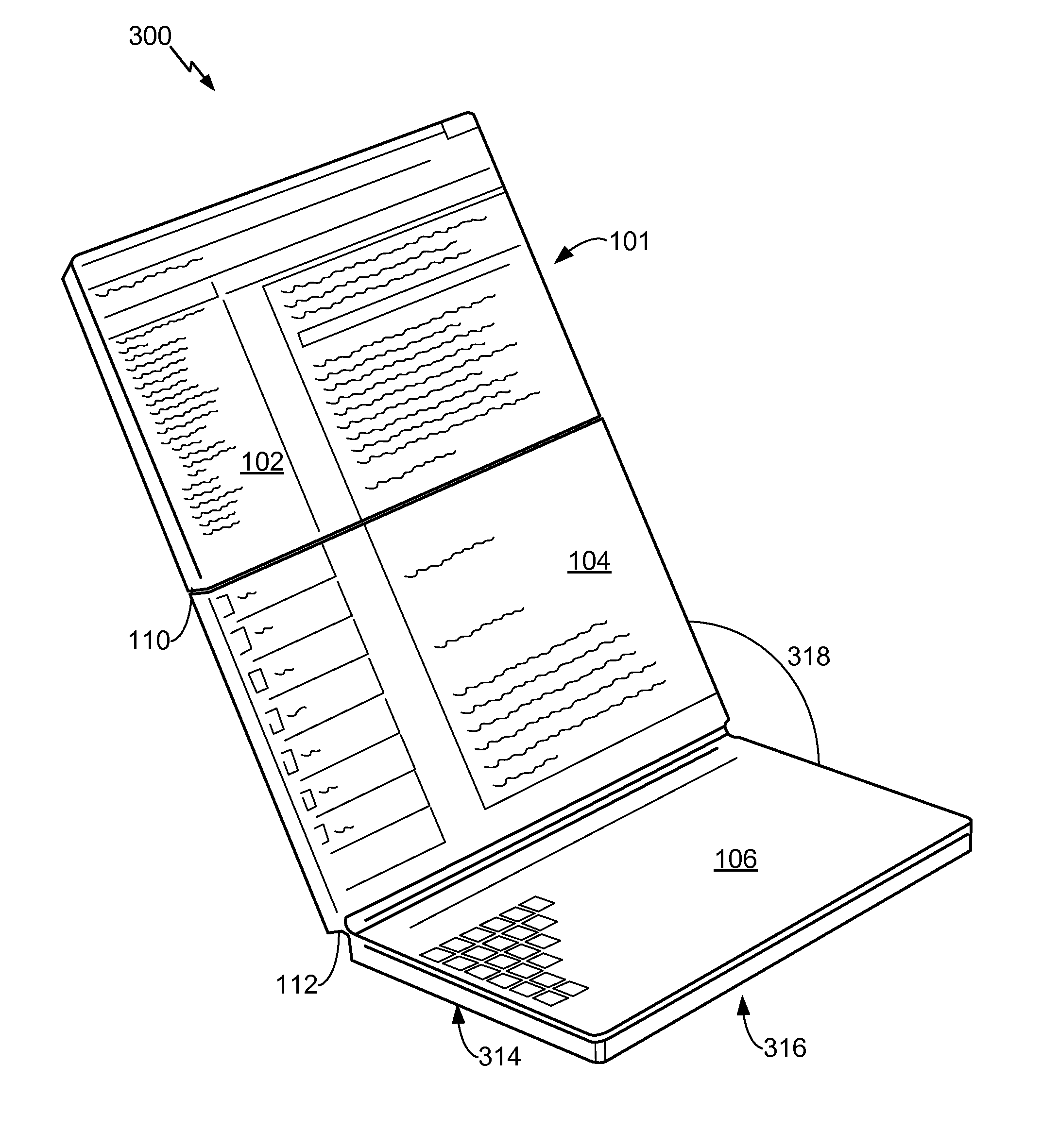

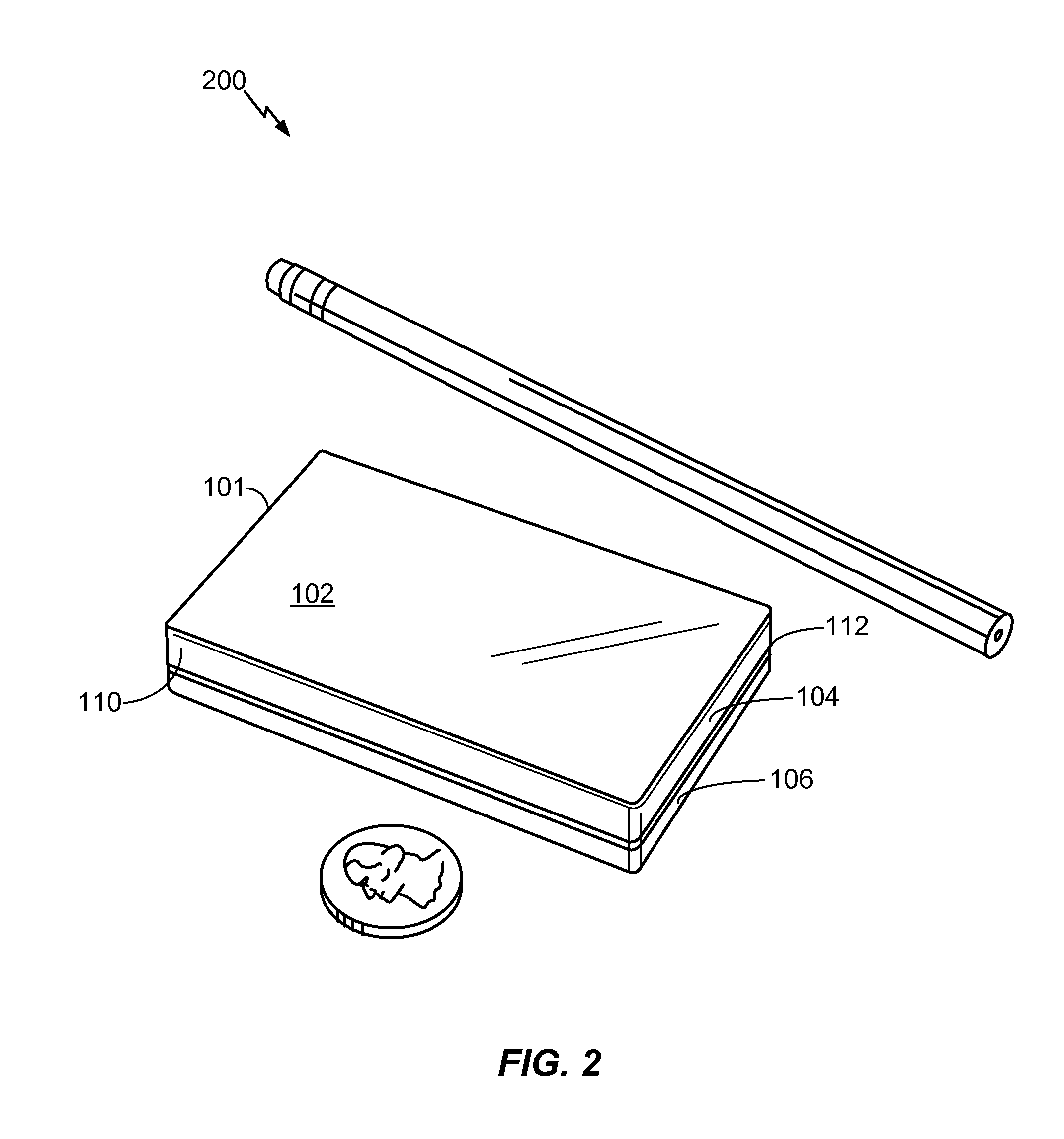

Sending a parameter based on screen size or screen resolution of a multi-panel electronic device to a server

Active | US20110216064A1 | Easy to operate | Devices with multiple display units | Static indicating devices | Computer hardware | Image resolution

In a particular embodiment, a method includes detecting a hardware configuration change at an electronic device. The electronic device includes at least a first panel having a first display surface and a second panel having a second display surface. An effective screen size or a screen resolution corresponding to a viewing area that includes the first display surface and the second display surface is modified in response to the hardware configuration change. The method also includes sending at least one parameter associated with or based on the modified effective screen size or the modified screen resolution to a server.

Owner: QUALCOMM INC
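
As a rough illustration of the abstract above, the sketch below recomputes an effective resolution when the number of unfolded panels changes and encodes it as request parameters. The side-by-side layout, the parameter names `screen_width` and `screen_height`, and both helper functions are hypothetical; the abstract does not specify how the parameter is formatted or transmitted.

```python
from urllib.parse import urlencode


def effective_resolution(panels, unfolded):
    """Combine the first `unfolded` panels, assumed to sit side by side.

    panels: list of (width, height) in pixels, one per display surface.
    unfolded: number of panels (at least 1) currently forming the viewing area.
    """
    active = panels[:unfolded]
    width = sum(w for w, _ in active)
    height = min(h for _, h in active)
    return width, height


def server_parameters(panels, unfolded):
    """Encode the effective resolution as a query string for a server request."""
    width, height = effective_resolution(panels, unfolded)
    return urlencode({"screen_width": width, "screen_height": height})


panels = [(480, 800), (480, 800)]
print(server_parameters(panels, unfolded=1))  # folded: one panel visible
print(server_parameters(panels, unfolded=2))  # unfolded: both panels visible
```

A server receiving these values could then choose a layout suited to the current configuration, which appears to be the motivation for sending the parameter.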

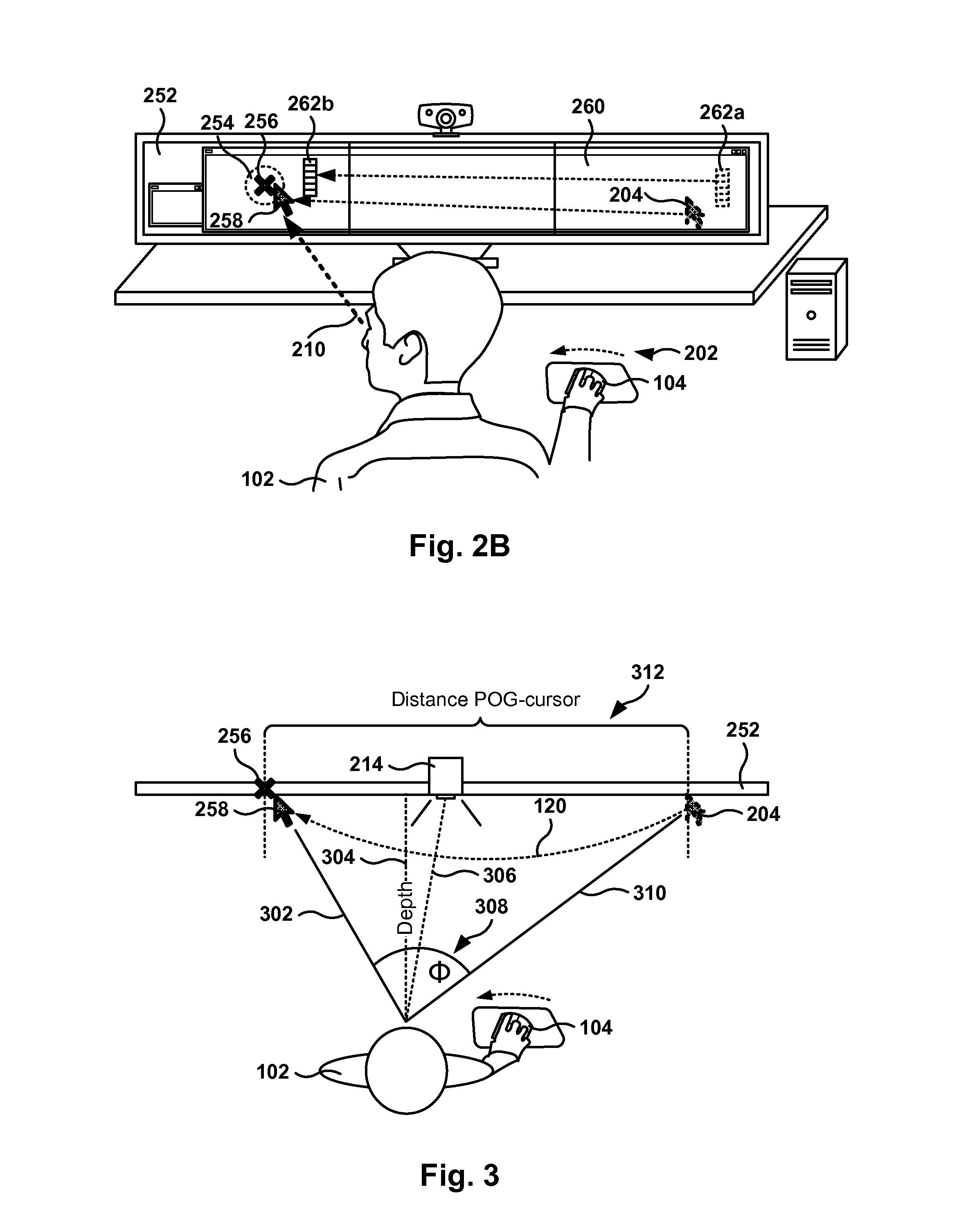

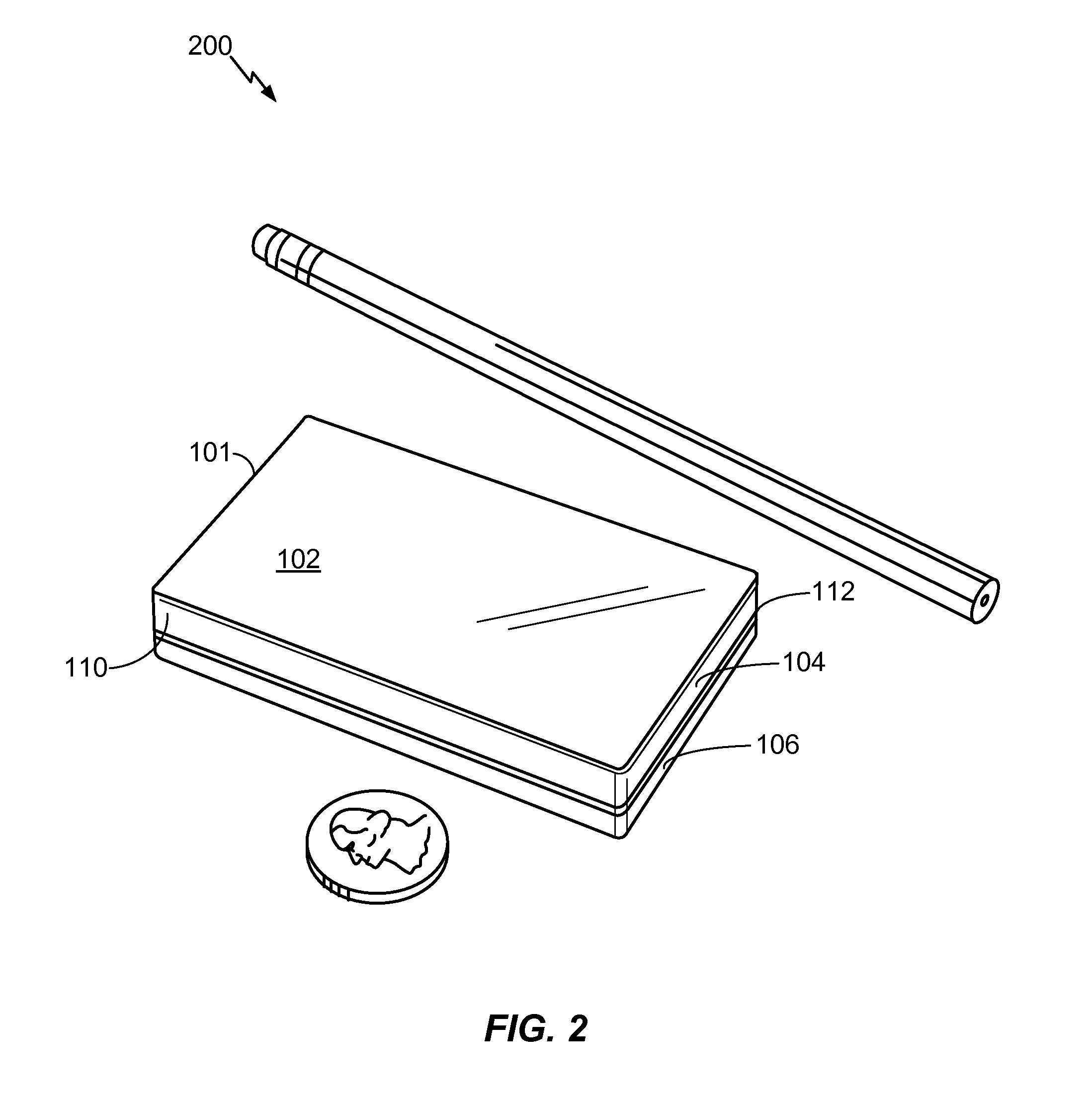

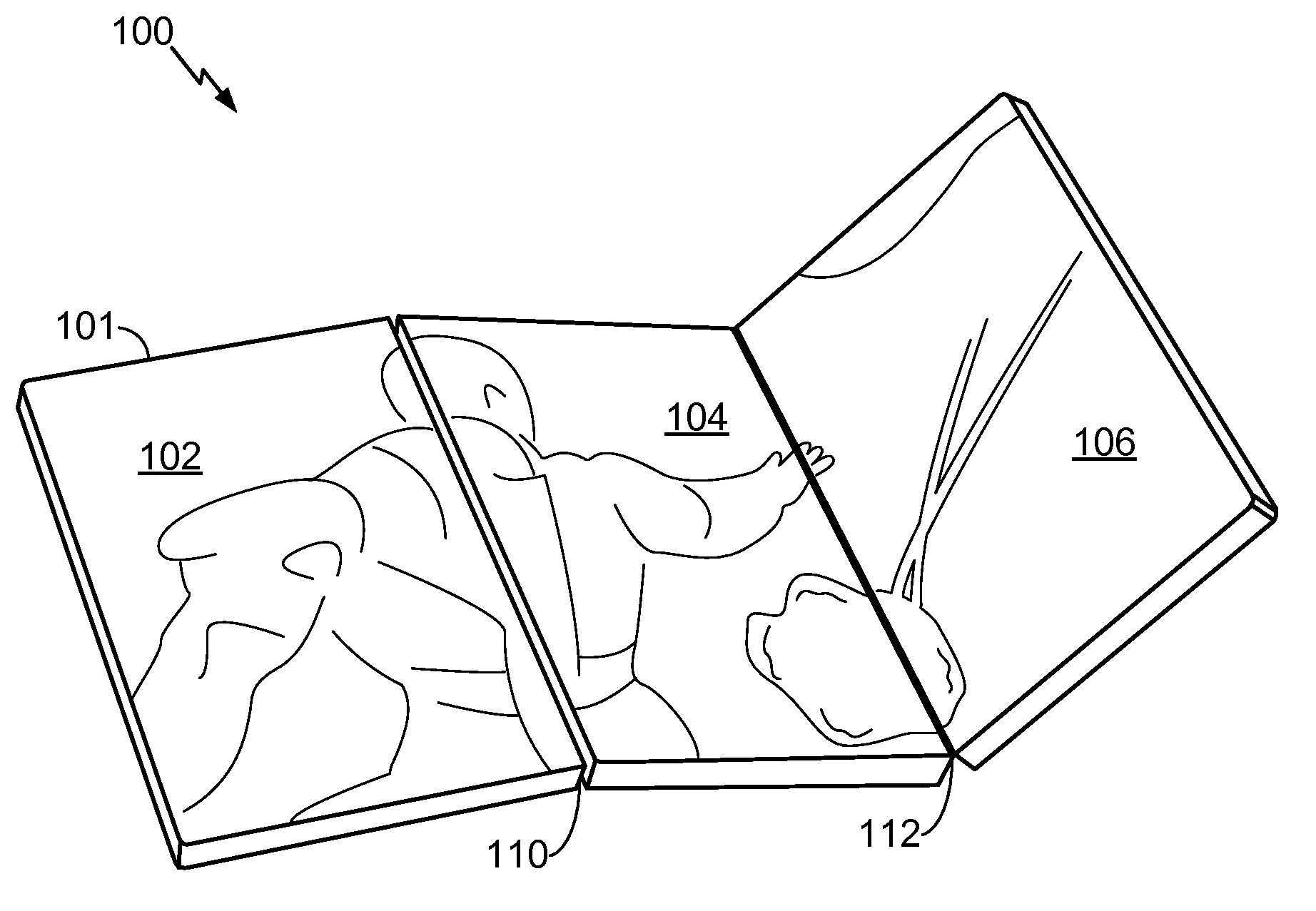

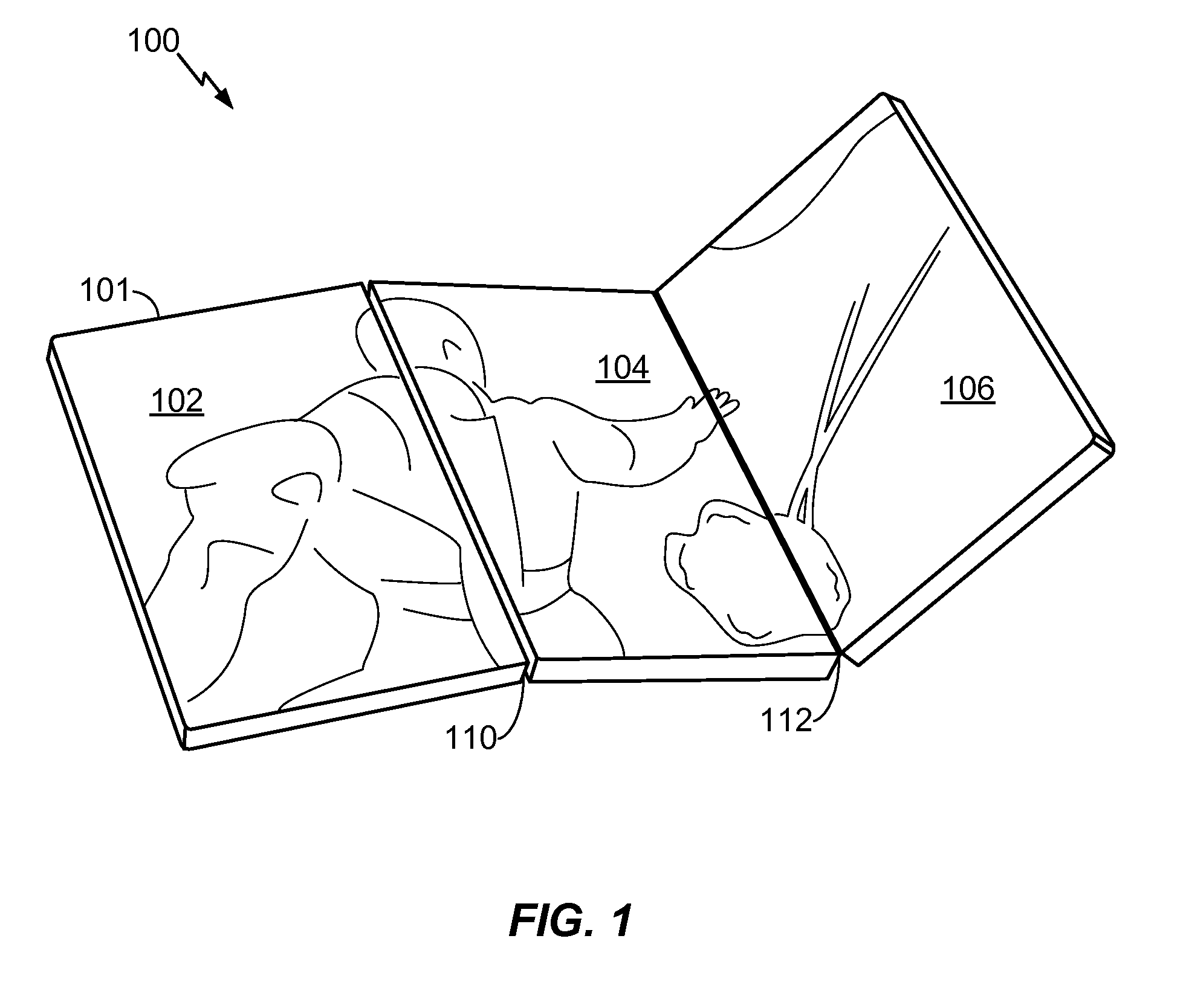

Multi-panel electronic device

Active | US20110126141A1 | Devices with multiple display units | Devices with sensor | Graphics | Graphical user interface

Methods, apparatuses, and computer-readable storage media for displaying an image at an electronic device are disclosed. In a particular embodiment, an electronic device is disclosed that includes a first panel having a first display surface to display a graphical user interface element associated with an application. The electronic device also includes a second panel having a second display surface. The first display surface is separated from the second display surface by a gap. A processor is configured to execute program code including a graphical user interface. The processor is configured to launch or close the application in response to user input causing a movement of the graphical user interface element in relation to the gap.

Owner: QUALCOMM INC

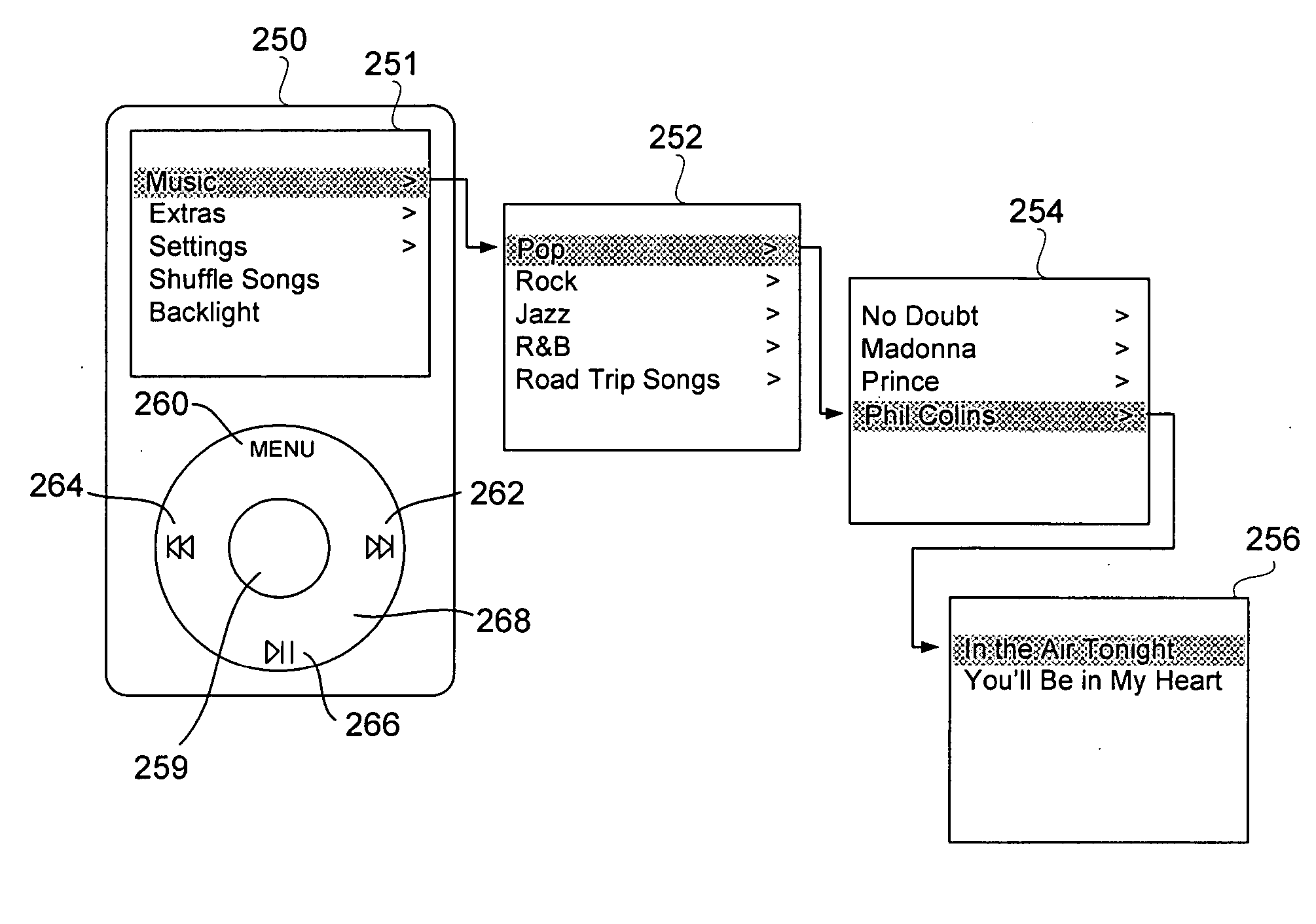

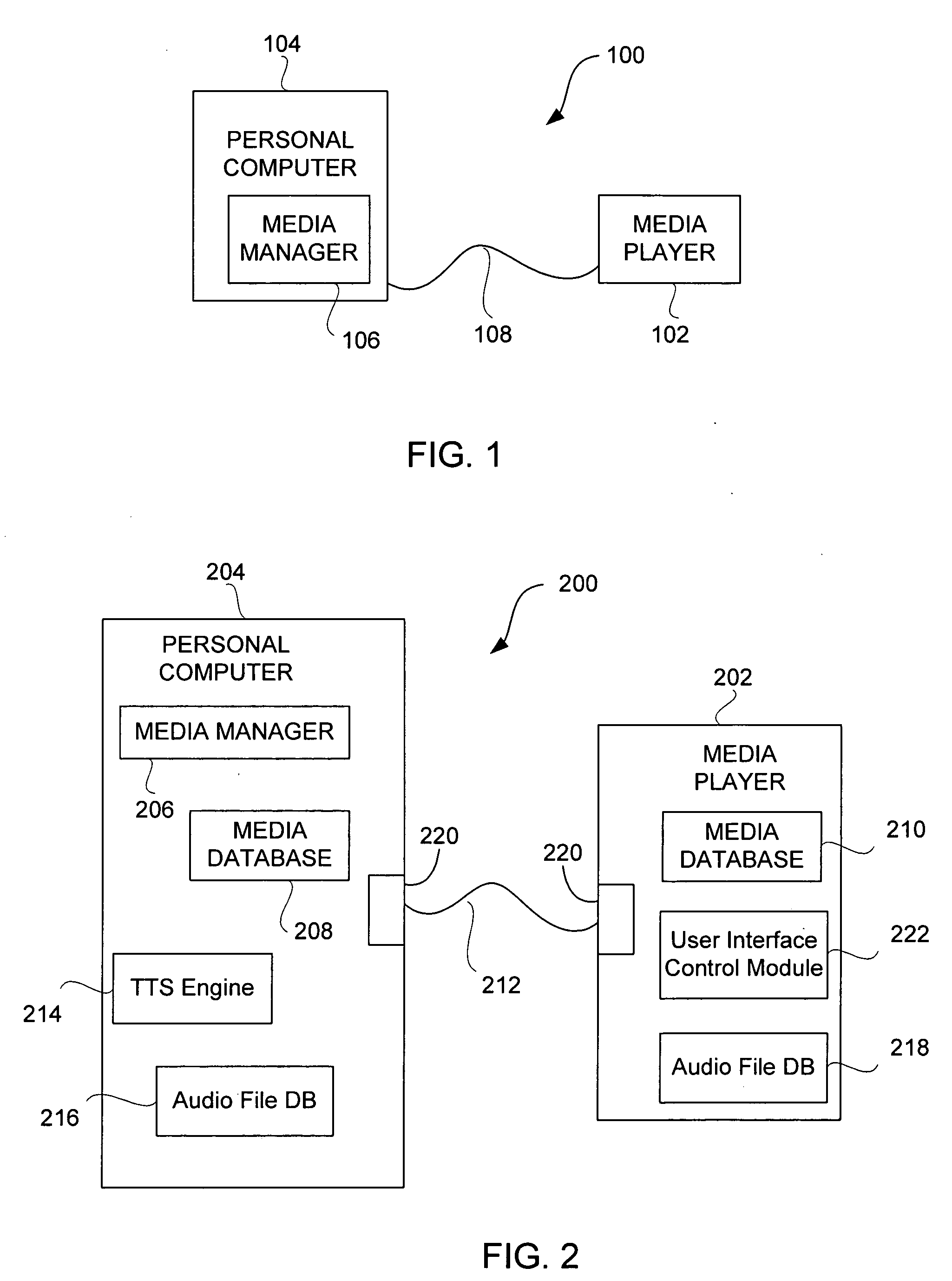

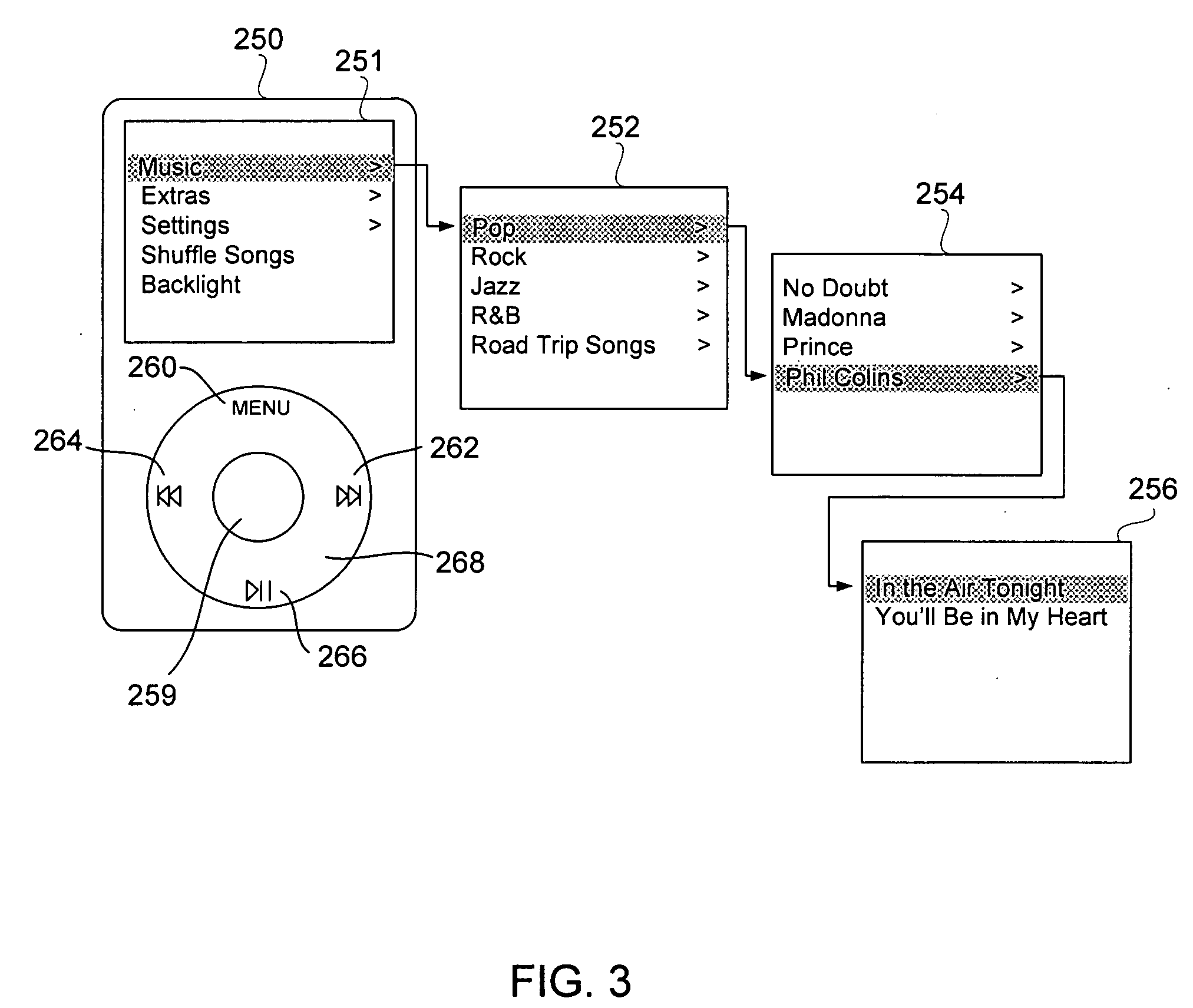

Audio user interface for computing devices

Active | US20060095848A1 | Easy to carry | Efficient leveraging | Record information storage | Carrier indicating arrangements | Hand held | Hand held devices

An audio user interface that generates audio prompts that help a user interact with a user interface of a device is disclosed. One aspect of the present invention pertains to techniques for providing the audio user interface by efficiently leveraging the computing resources of a host computer system. The relatively powerful computing resources of the host computer can convert text strings into audio files that are then transferred to the computing device. The host system performs the processing-intensive text-to-speech conversion so that a computing device, such as a hand-held device, only needs to perform the less intensive task of playing the audio file. The computing device can be, for example, a media player such as an MP3 player, a mobile phone, or a personal digital assistant.

Owner: APPLE INC

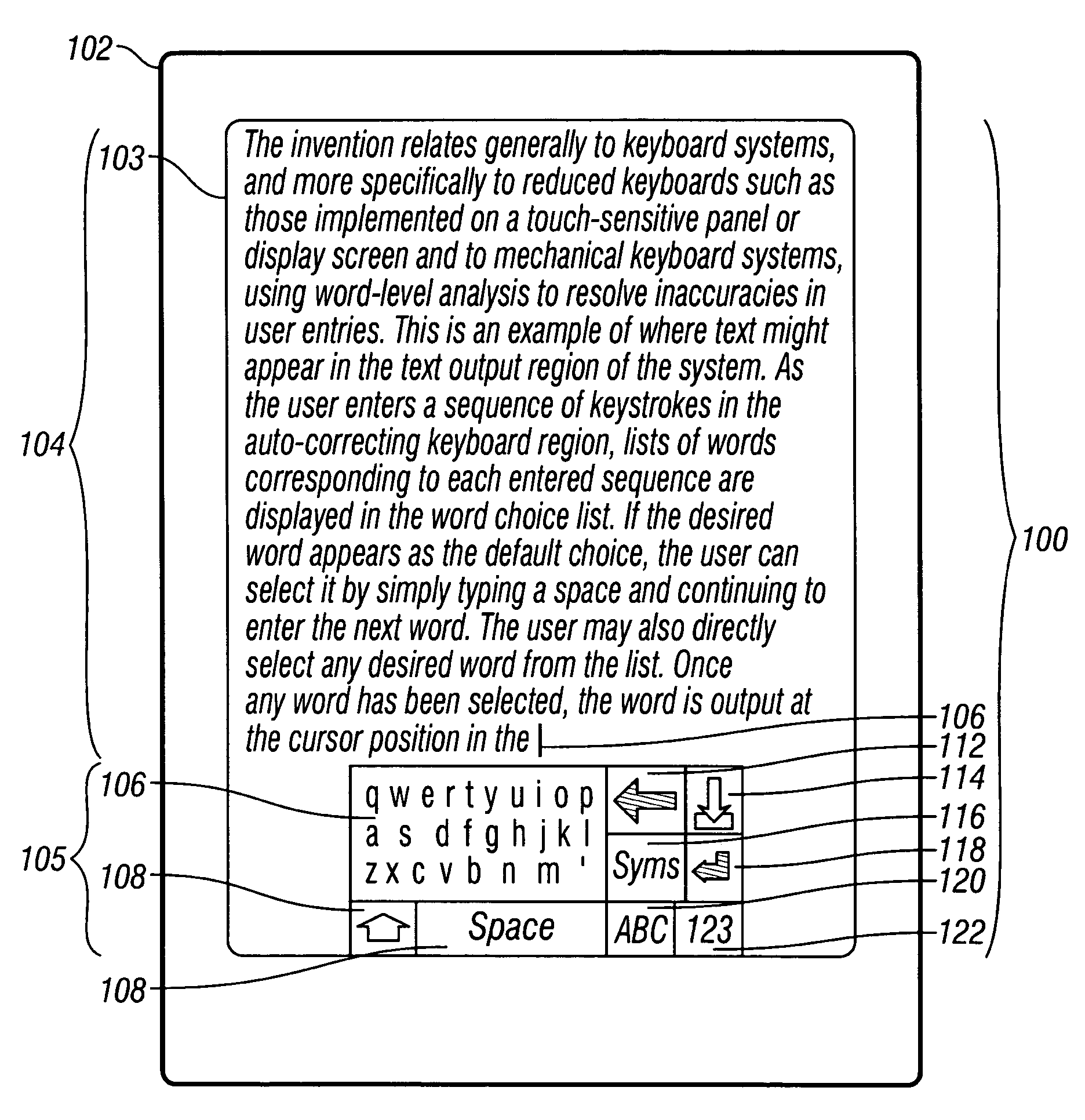

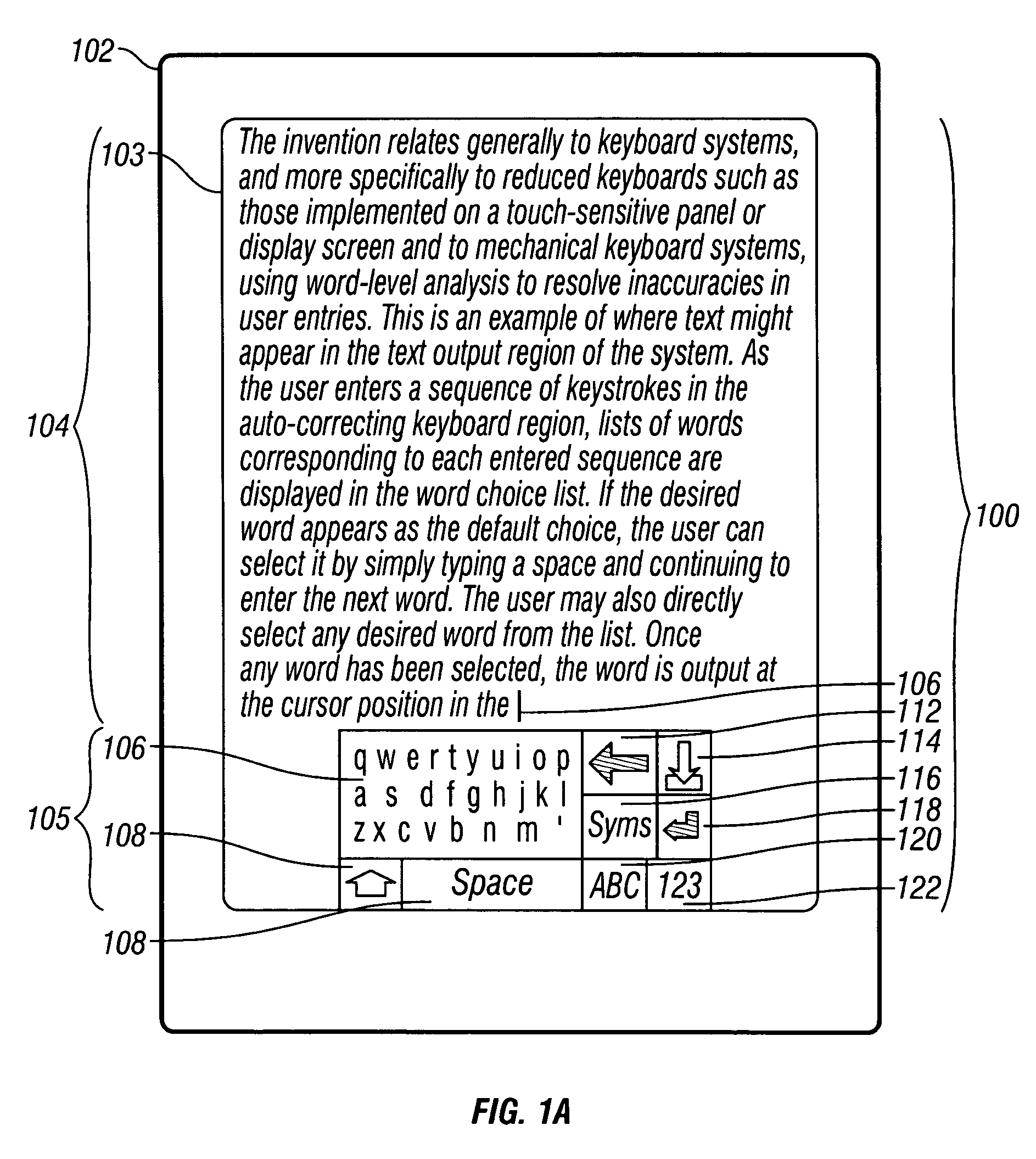

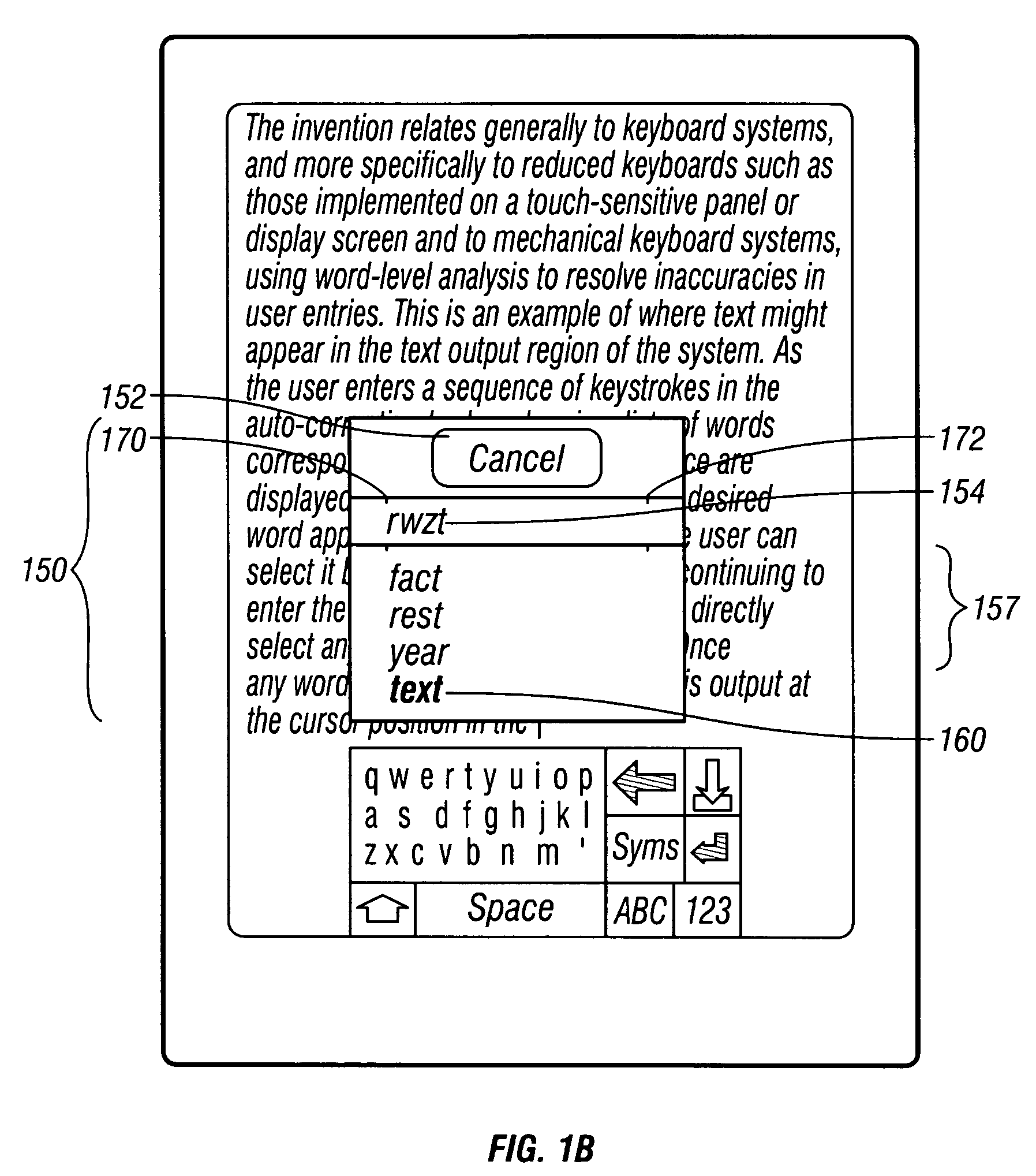

Keyboard system with automatic correction

Inactive | US7277088B2 | Reduce in quantity | Character and pattern recognition | Electronic switching | Autocorrection | Computer science

A method and system are defined which determine one or more alternate textual interpretations of each sequence of inputs detected within a designated auto-correcting keyboard region. The actual contact locations for the keystrokes may occur outside the boundaries of the specific keyboard key regions associated with the actual characters of the word interpretations proposed or offered for selection, where the distance from each contact location to each corresponding intended character may in general increase with the expected frequency of the intended word in the language or in a particular context. Likewise, in a mechanical keyboard system, the keys actuated may differ from the keys actually associated with the letters of the word interpretations. Each such sequence corresponds to a complete word, and the user can easily select the intended word from among the generated interpretations.

Owner: NUANCE COMM INC +1
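
One way to picture the ranking idea in the abstract above is to score each candidate word by how far the contact points fall from its letters, and to relax that penalty for more frequent words. The sketch below is only an illustration under stated assumptions: the simplified key layout, the scoring formula (total distance divided by the log of frequency), the `rank_words` helper, and the frequencies are all hypothetical and are not taken from the patent.

```python
import math

# Hypothetical key centres: three staggered rows, one unit per key.
ROWS = [("qwertyuiop", 0.0), ("asdfghjkl", 0.5), ("zxcvbnm", 1.5)]
KEY_CENTERS = {
    ch: (offset + i, float(row))
    for row, (letters, offset) in enumerate(ROWS)
    for i, ch in enumerate(letters)
}


def rank_words(contacts, lexicon):
    """Order same-length lexicon words from most to least plausible.

    contacts: list of (x, y) touch locations, one per keystroke.
    lexicon: dict mapping word -> positive frequency count.
    Lower score is better; frequent words tolerate larger total distance.
    """
    scored = []
    for word, freq in lexicon.items():
        if len(word) != len(contacts):
            continue
        total = sum(
            math.dist(point, KEY_CENTERS[ch]) for point, ch in zip(contacts, word)
        )
        scored.append((total / math.log(1 + freq), word))
    scored.sort()
    return [word for _, word in scored]


taps = [(4.3, 0.2), (5.2, 0.9), (2.4, 0.1)]  # sloppy taps near t, h, e
print(rank_words(taps, {"the": 1000, "tie": 50, "thr": 1}))
```

Dividing by a function of frequency is just one simple way to let common words win ties; a production system would likely use a calibrated probabilistic model.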

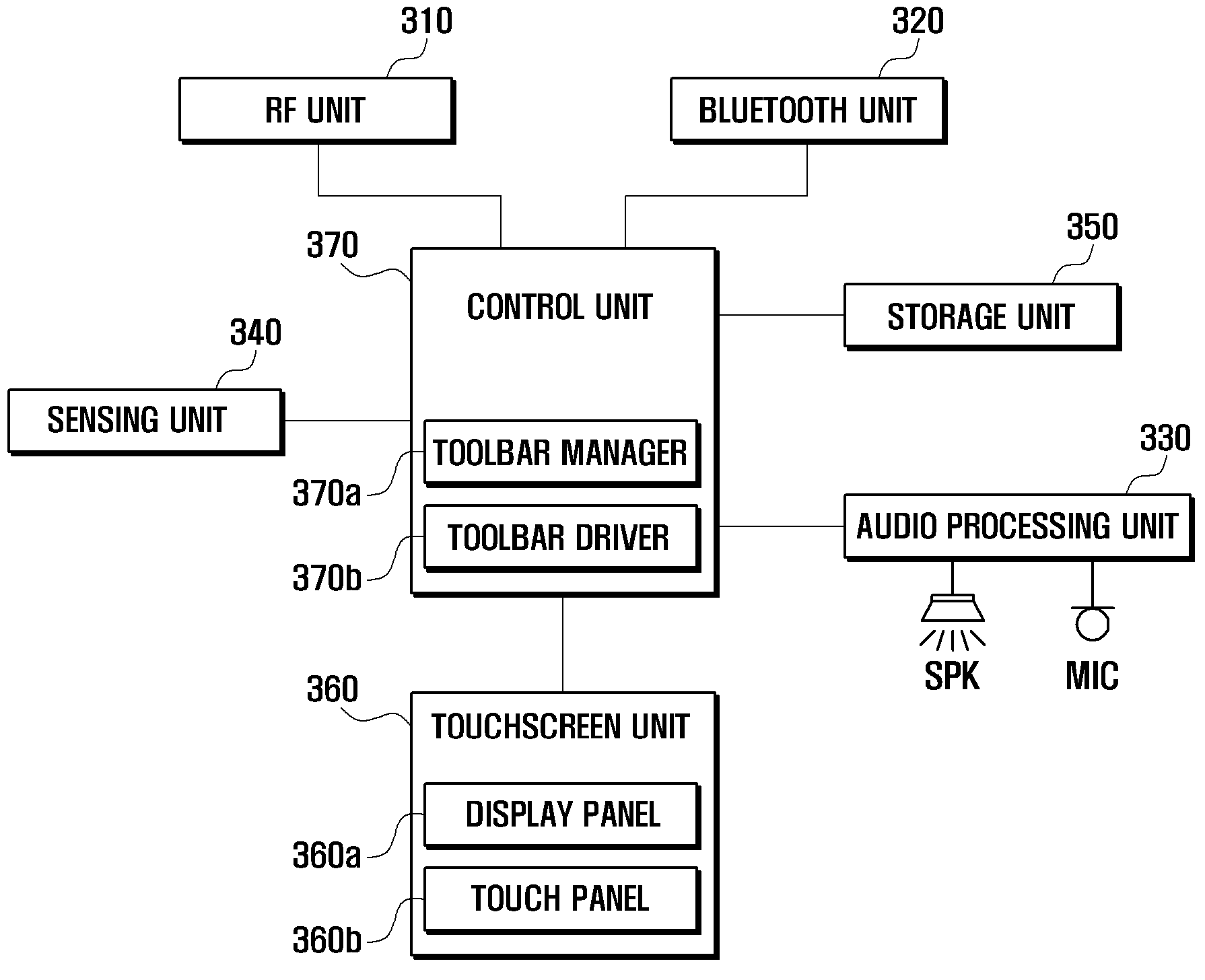

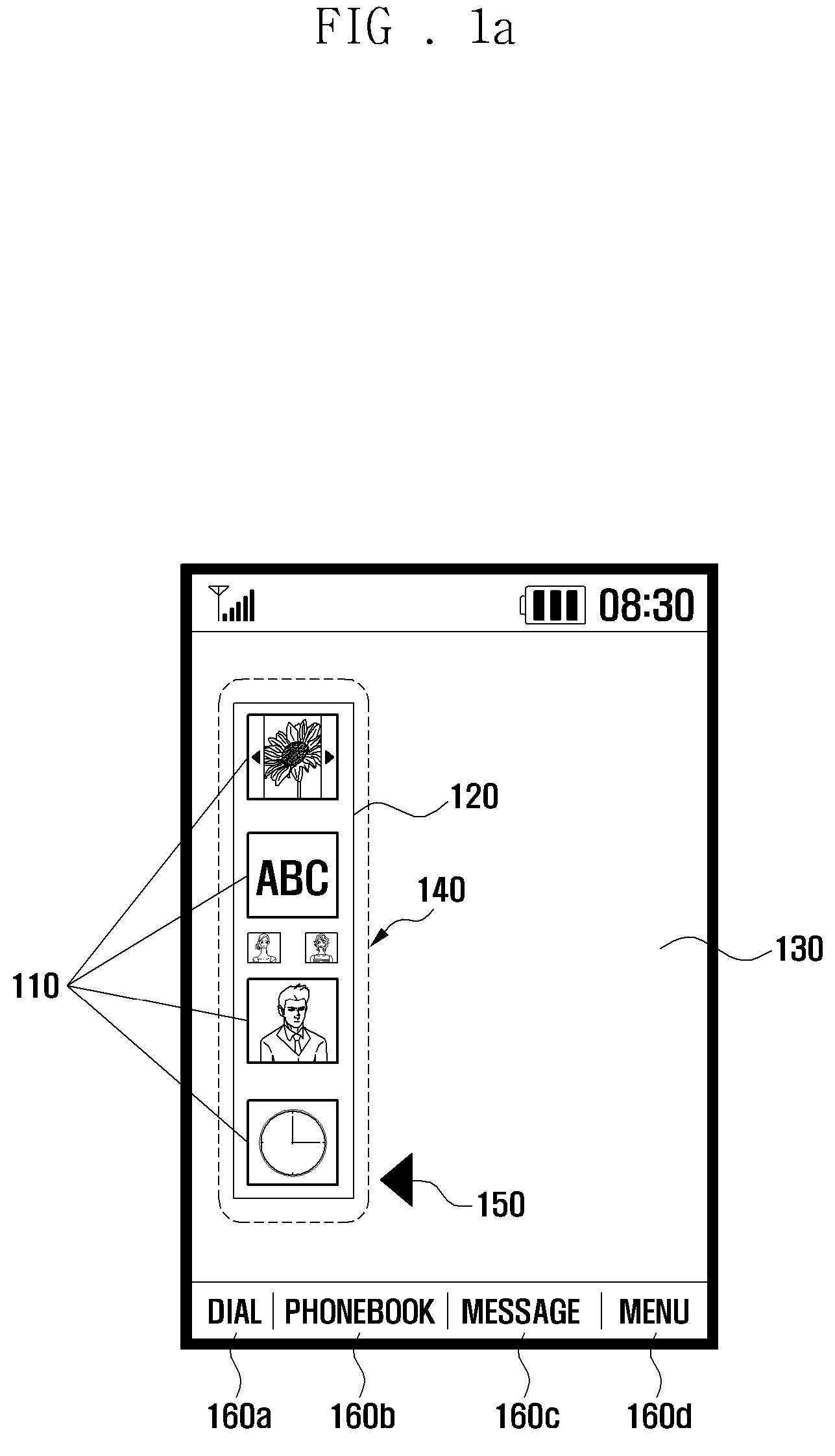

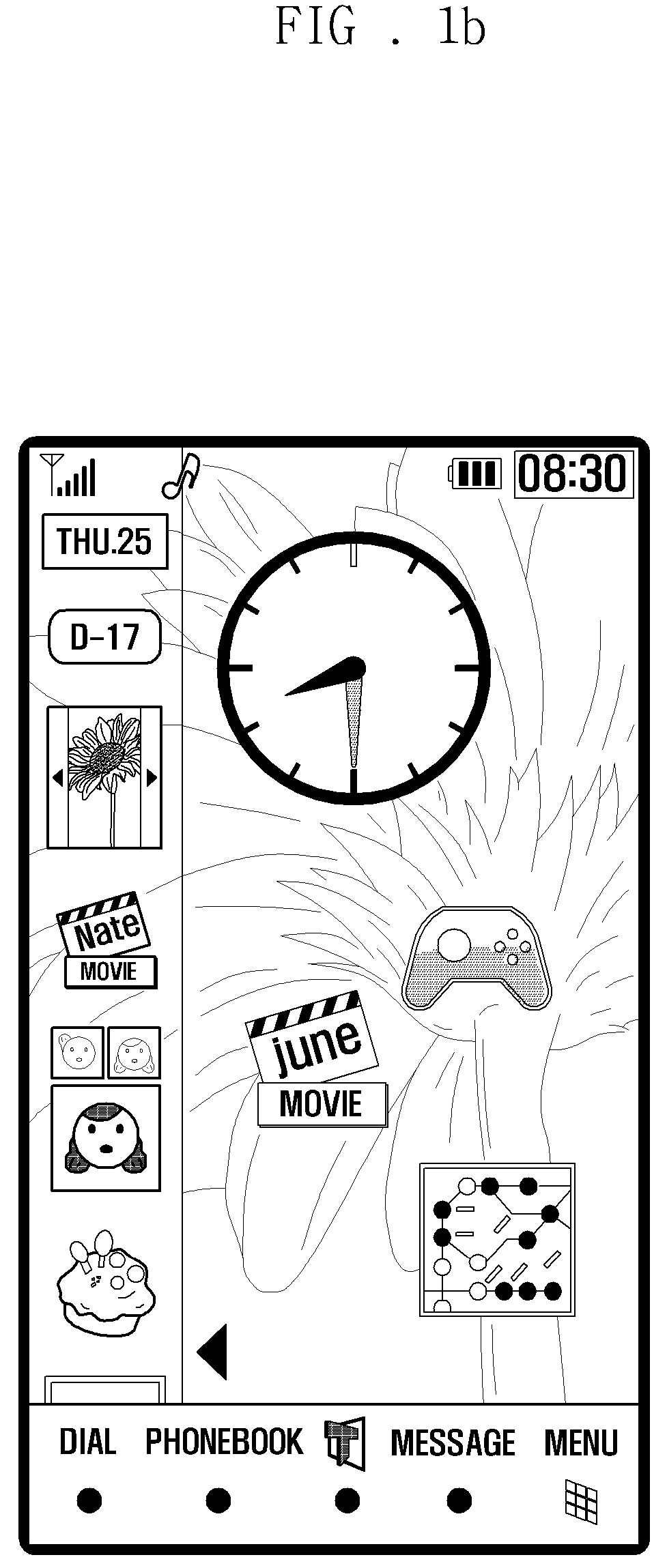

User interface method and apparatus for mobile terminal having touchscreen

Active | US20090228820A1 | Improve interactivity | Geometric image transformation | Substation equipment | Graphics | Drag and drop

A user interface method and apparatus for a mobile terminal having a touchscreen are provided. The apparatus and method improve interactivity using a toolbar menu mode screen which allows a user to execute functions and commands with drag and drop behaviors on the touchscreen to graphical objects such as toolbar, icons, and other active objects. An interface apparatus includes a touchscreen unit that displays a screen including a second region for presenting a toolbar having at least one User Interface (UI) element representing a specific function and a first region for activating, when the UI element is dragged from the toolbar and dropped in the first region on the touchscreen, the function represented by the UI element. The interface apparatus also includes a control unit which detects a drag and drop action of the UI element and activates, when the drag and drop action is detected, the function associated with the UI element in the form of an active function object. The interface apparatus of the present invention registers the frequently used functions with the toolbar in the form of icons such that, when an icon is dragged from the toolbar to the main window, the function represented by the icon is activated.

Owner: SAMSUNG ELECTRONICS CO LTD

Systems and methods for displaying notifications received from multiple applications

Systems and methods are disclosed for displaying notifications received from multiple applications. In some embodiments, an electronic device can monitor notifications that are received from the multiple applications. Responsive to receiving the notifications, the electronic device can control the manner in which the notifications are displayed while the device is operating in a locked or an unlocked state. In some embodiments, the electronic device can allow users to customize how notifications are to be displayed while the device is in the locked and/or unlocked states.

Owner: APPLE INC

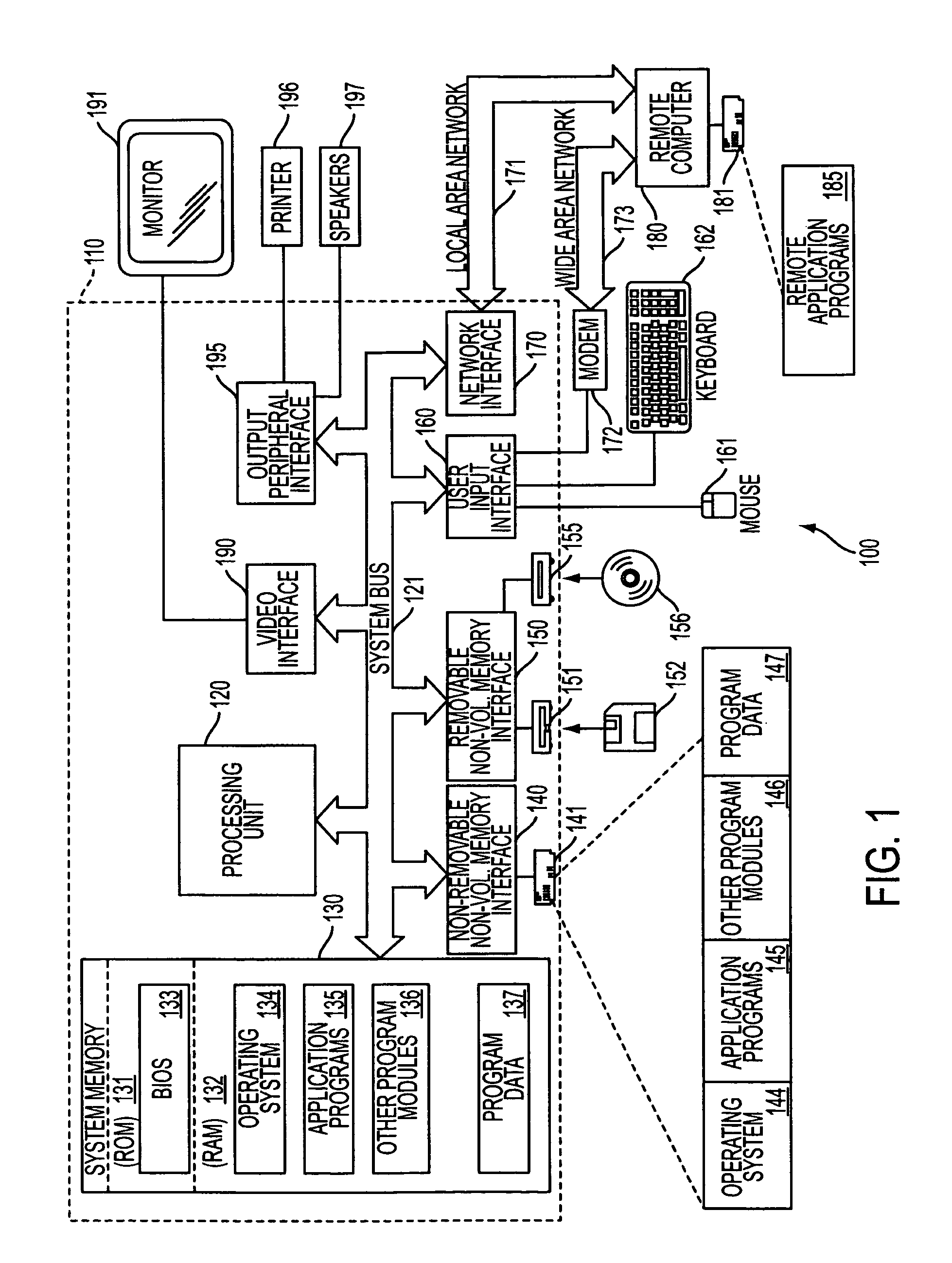

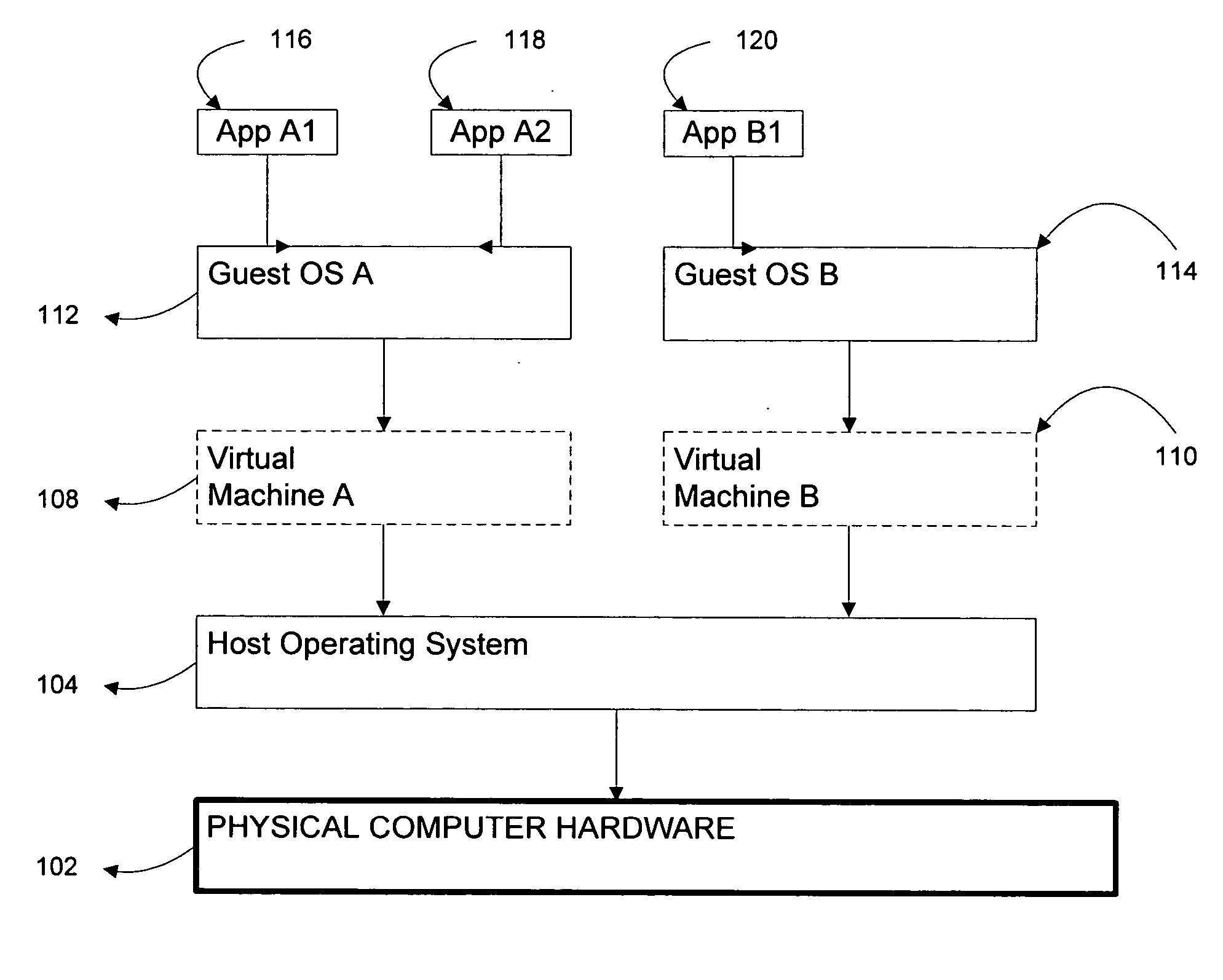

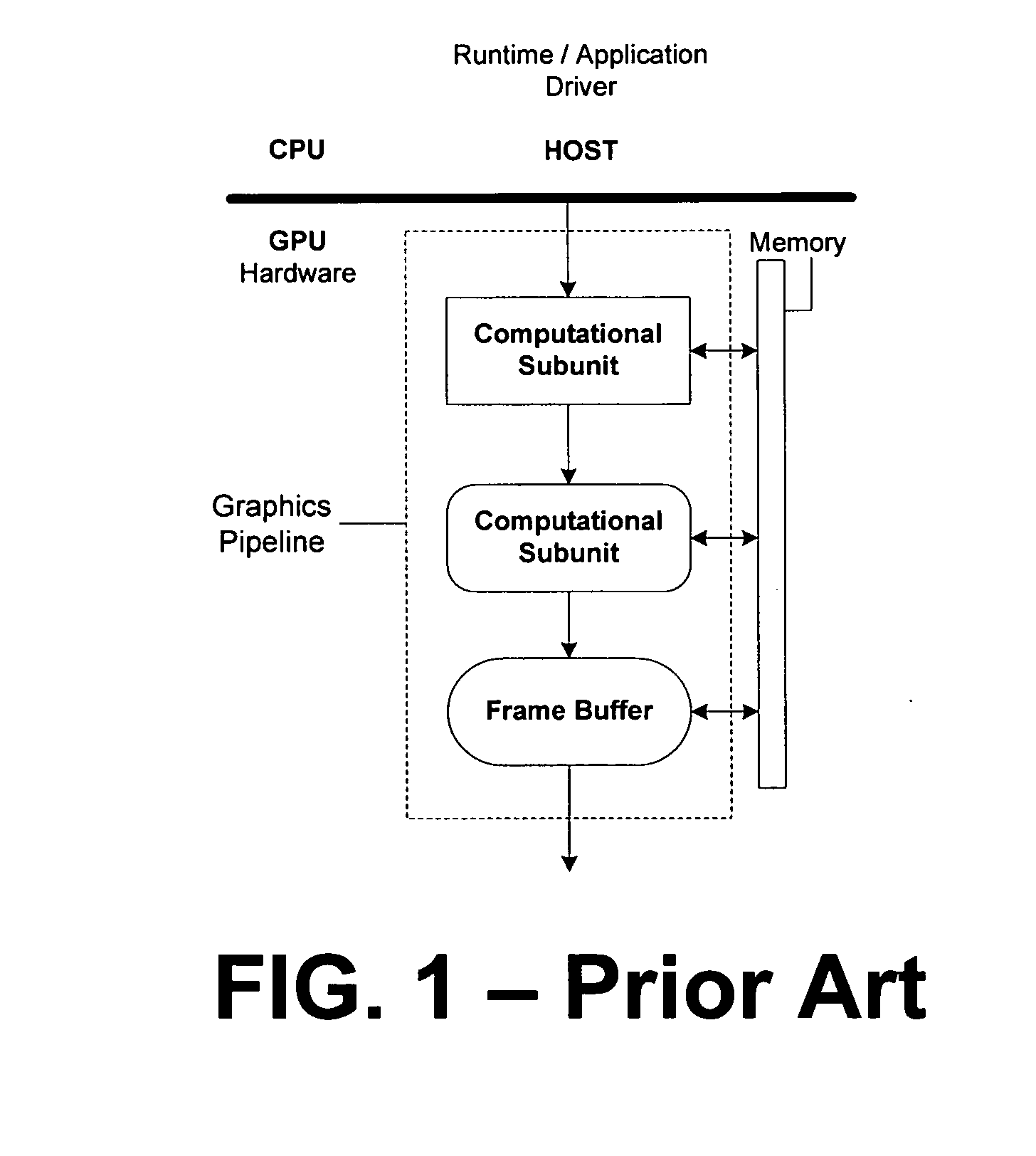

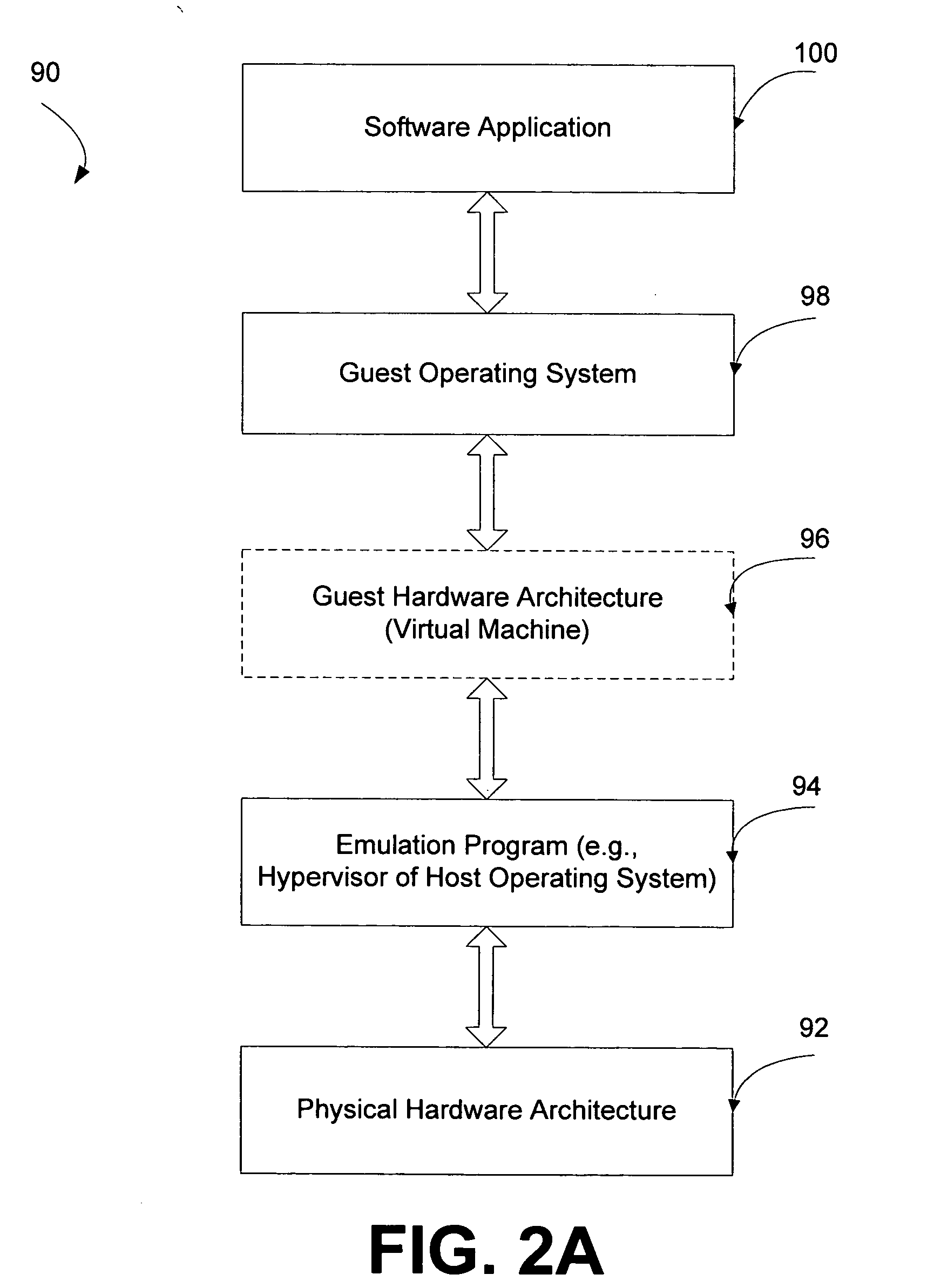

Systems and methods for virtualizing graphics subsystems

Active | US20060146057A1 | Program control using stored programs | Processor architectures/configuration | Virtualization | Operational system

Systems and methods for applying virtual machines to graphics hardware are provided. In various embodiments of the invention, while supervisory code runs on the CPU, the actual graphics work items are run directly on the graphics hardware and the supervisory code is structured as a graphics virtual machine monitor. Application compatibility is retained using virtual machine monitor (VMM) technology to run a first operating system (OS), such as an original OS version, simultaneously with a second OS, such as a new version OS, in separate virtual machines (VMs). VMM technology applied to host processors is extended to graphics processing units (GPUs) to allow hardware access to graphics accelerators, ensuring that legacy applications operate at full performance. The invention also provides methods to make the user experience cosmetically seamless while running multiple applications in different VMs. In other aspects of the invention, by employing VMM technology, the virtualized graphics architecture of the invention is extended to provide trusted services and content protection.

Owner: MICROSOFT TECH LICENSING LLC

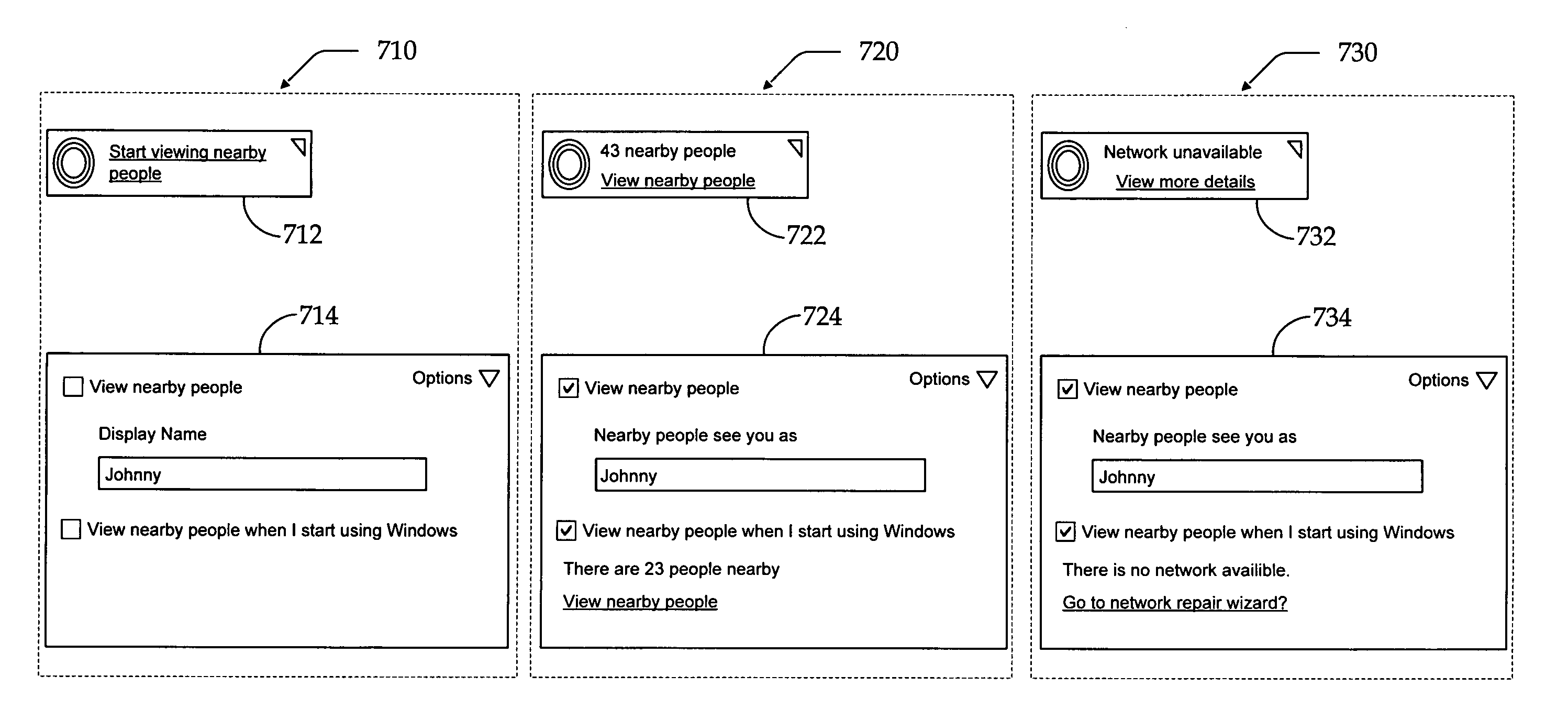

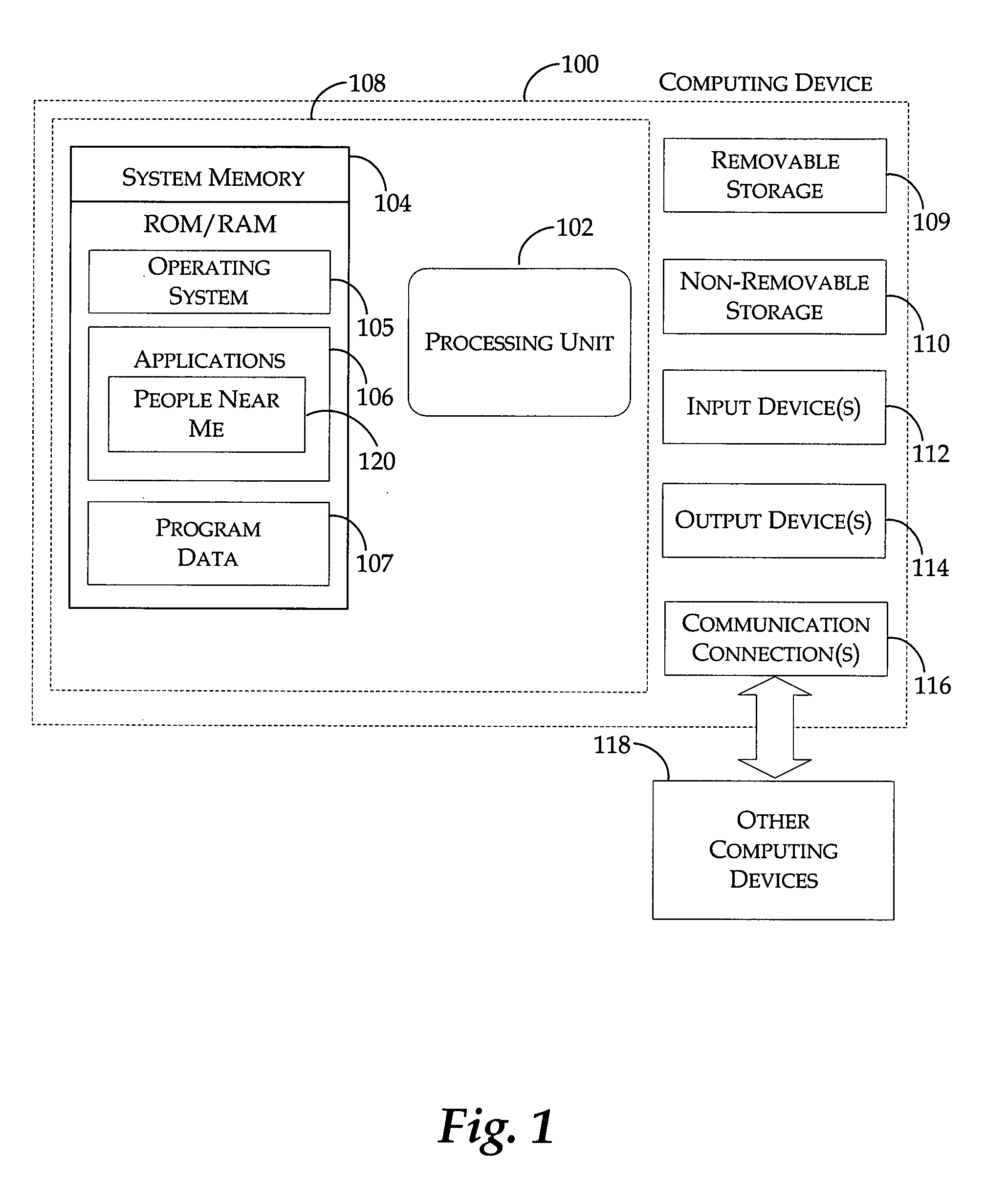

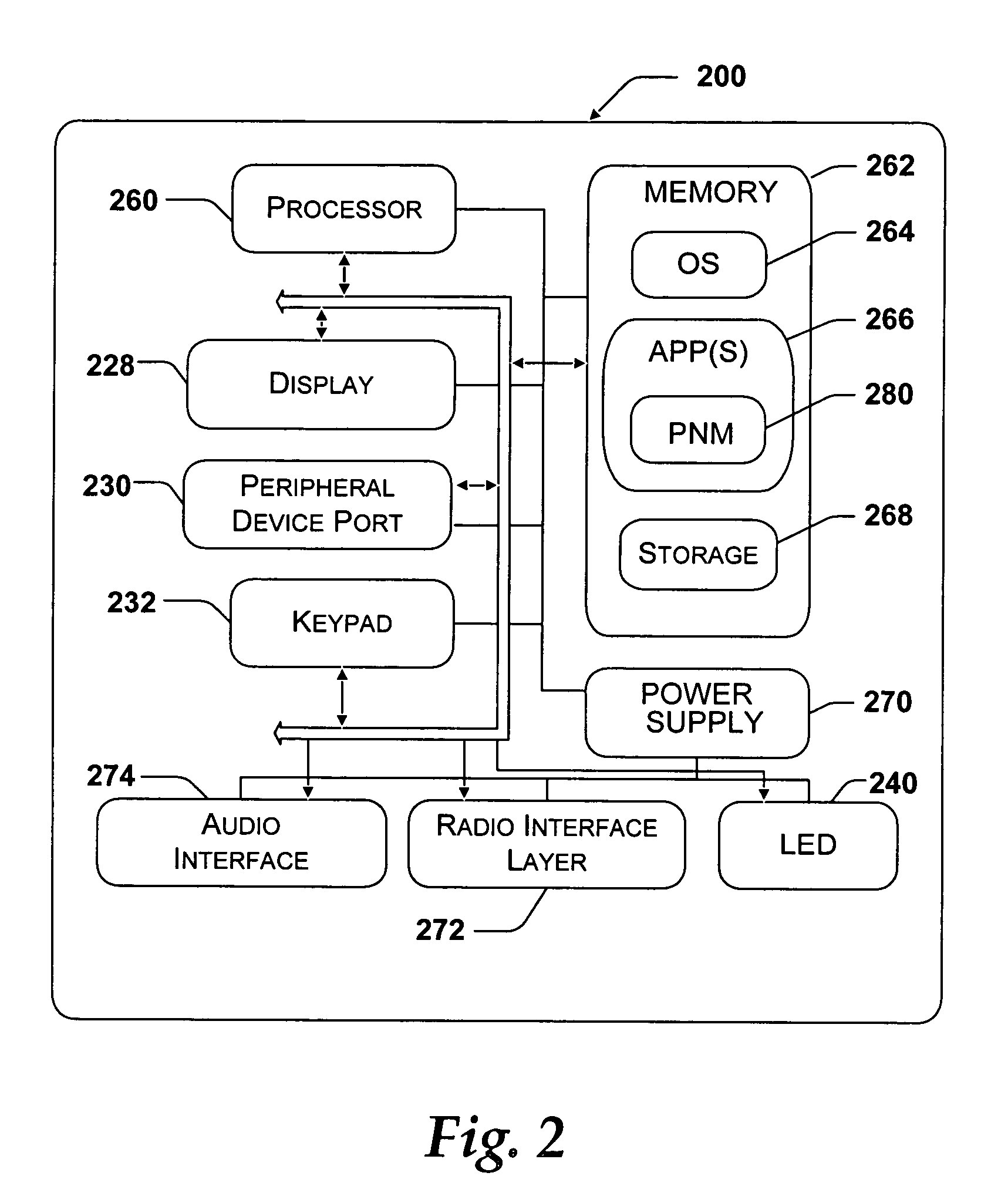

System and method for a user interface directed to discovering and publishing presence information on a network

A system and method are provided for a user interface directed to publication and discovery of the presence of users on a network. A sidebar tile is provided that peripherally and unobtrusively displays the presence information of nearby users on the network. The sidebar tile is also used to notify a local user that their information is also being published on the network. The sidebar tile provides options for selecting to change, enable, or disable the presence discovery service.

Owner: MICROSOFT TECH LICENSING LLC

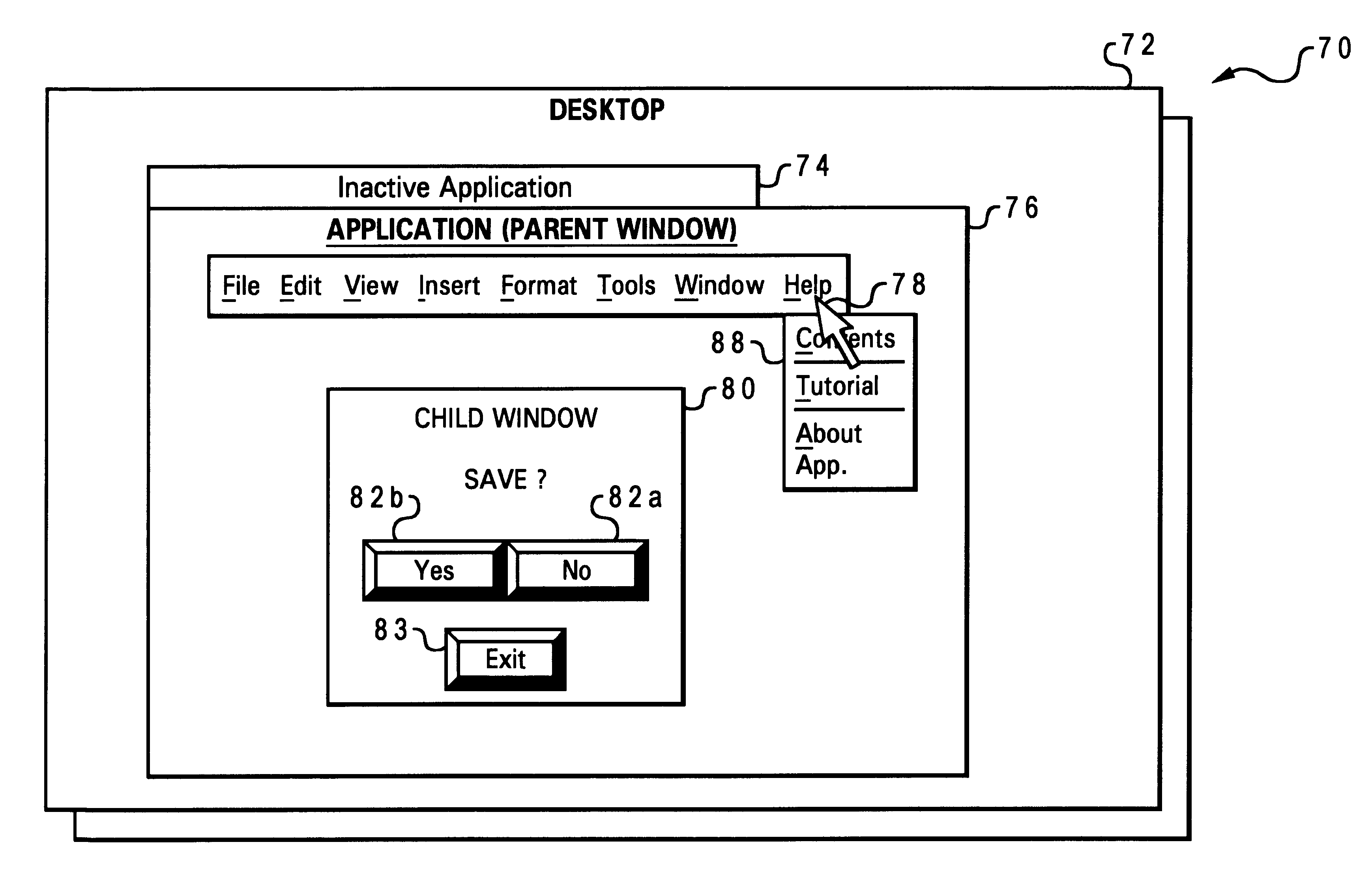

Variable modality child windows

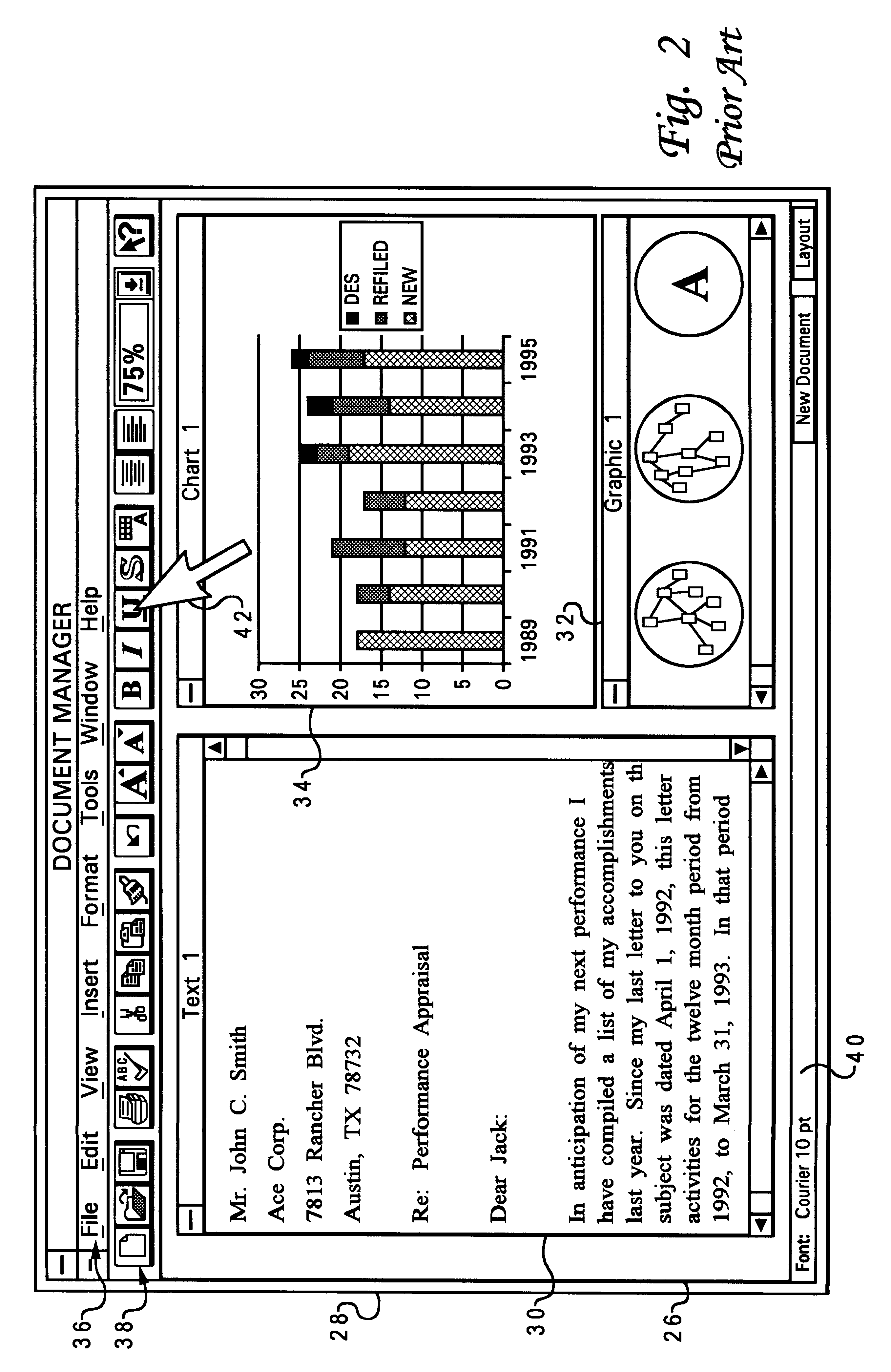

Inactive | US6232971B1 | Execution for user interfaces | Digital output to display device | Graphics | Graphical user interface

A method and system for generating variable modality child windows. An application is executed utilizing a graphical user interface (GUI) which enables a parent window. One or more child windows may be available to the user during execution. The level of modality of the child window is determined during programming by the application developer. The operating system is modified to permit a variable level of modality during application execution. The developer selects variable modality for one or more of the child windows. When the child window is opened, the user is allowed to interact with the other windows based on the developer's determination of the level of modality. The variability may be either application-wide or system-wide. The user is thus permitted to interface with only some of the functions on the other windows on the desktop while the child window is open.

Owner: IBM CORP

© 2025 PatSnap. All rights reserved.