Local data cache management method and device

A local data cache management method and device in the fields of electrical digital data processing, memory systems, and memory addressing/allocation/relocation. It addresses the inflexibility of storing keywords and their values in a strict one-to-one correspondence, and achieves fast data access and high cache performance.


Embodiment 1

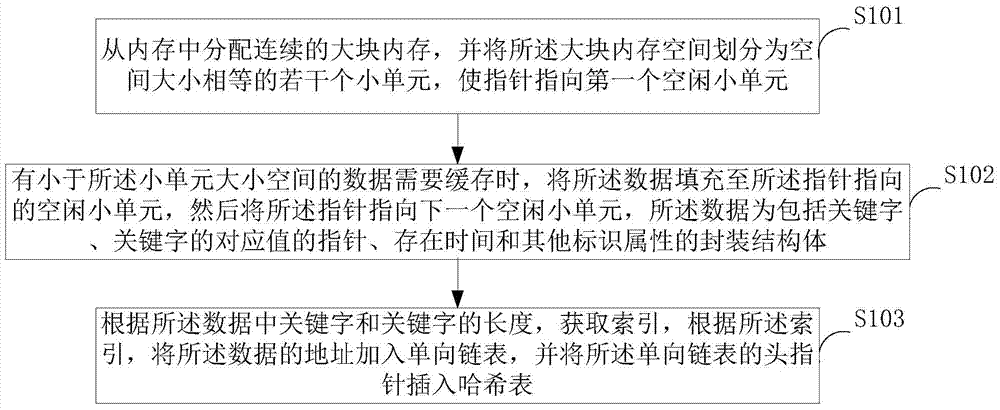

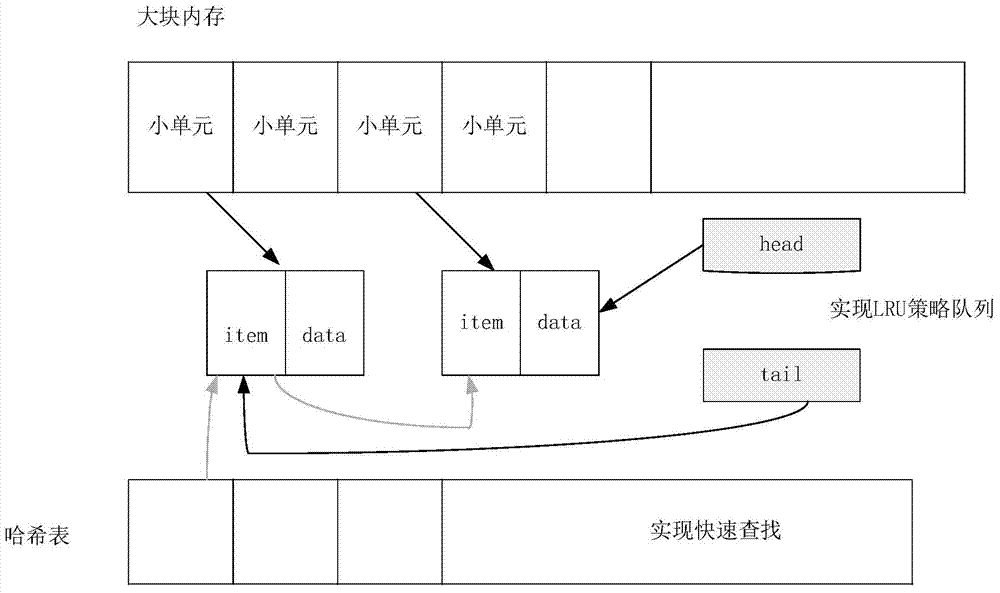

[0022] Figure 1 shows the implementation process of the local data cache management method provided by the first embodiment of the present invention. The details are as follows:

[0023] It should be noted that the present invention is especially suitable for running on a single server whose memory space is extremely limited.

[0024] In step S101, a large contiguous block of memory is allocated, the block is divided into several small units of equal size, and a pointer is set to point to the first free small unit.

[0025] In this embodiment, the large block of memory is a contiguous memory space allocated in memory, and its size is set according to the server environment: the larger the server memory, the larger the block. Preferably, the size of the large block is in the range of 1-10 MB. A large block of memory is equivalent to a slab in Memcached. The small unit is several equal-sized sub...
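The allocation described in step S101 can be sketched in C as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the names `block_pool`, `pool_init`, `pool_unit`, and `pool_destroy` are hypothetical, and the pointer to the first free small unit is modeled as an index.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical names; the patent text does not specify any of these. */
typedef struct {
    unsigned char *base;   /* start of the contiguous large block */
    size_t unit_size;      /* size of every small unit, in bytes */
    size_t unit_count;     /* number of small units in the block */
    size_t next_free;      /* index of the first free small unit */
} block_pool;

/* Allocate one contiguous block and divide it into equal-sized units.
   Returns 0 on success, -1 on failure. */
int pool_init(block_pool *p, size_t block_size, size_t unit_size) {
    assert(p != NULL);
    if (unit_size == 0 || block_size < unit_size) return -1;
    p->base = malloc(block_size);
    if (p->base == NULL) return -1;
    p->unit_size = unit_size;
    p->unit_count = block_size / unit_size;
    p->next_free = 0;      /* the pointer starts at the first free unit */
    return 0;
}

/* Address of the unit at index i, or NULL if i is out of range. */
void *pool_unit(const block_pool *p, size_t i) {
    return i < p->unit_count ? p->base + i * p->unit_size : NULL;
}

/* Release the large block. */
void pool_destroy(block_pool *p) {
    free(p->base);
    p->base = NULL;
    p->unit_count = 0;
    p->next_free = 0;
}
```

Because every unit has the same size, the address of any unit follows from its index by a single multiplication, which is what makes the unit boundaries predictable.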

Embodiment 2

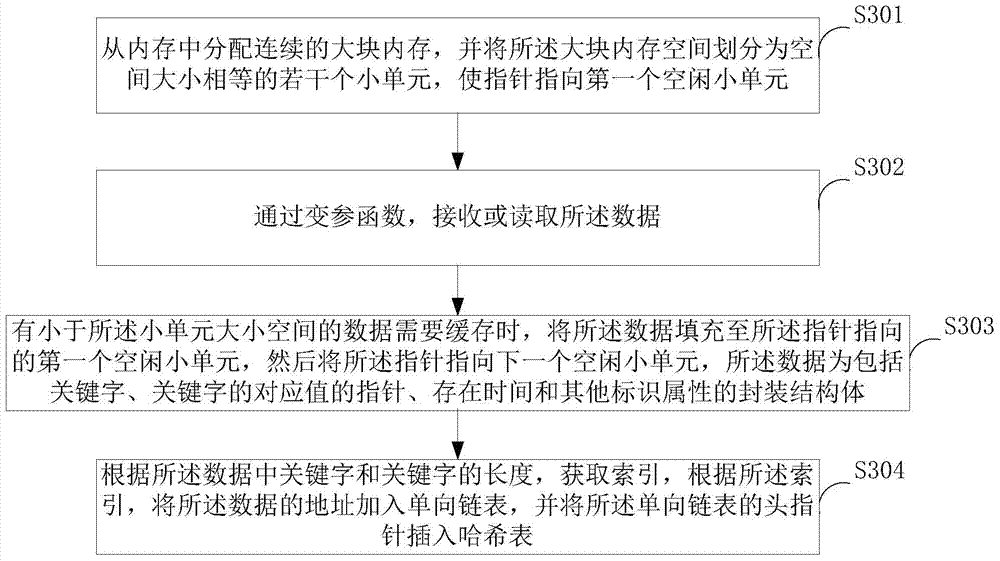

[0041] Figure 3 shows the implementation process of the local data cache management method provided by the second embodiment of the present invention. The details are as follows:

[0042] In step S301, a large contiguous block of memory is allocated, the block is divided into several small units of equal size, and a pointer is set to point to the first free small unit.

[0043] In this embodiment, step S301 is executed in a similar way to step S101 in the first embodiment. For details, refer to the description of the first embodiment above.

[0044] In step S302, the data is received or read through a variable-parameter (variadic) function.

[0045] Specifically, since the large block of memory is requested in a controlled way and the boundaries of each small unit are clear, knowing the data type also determines the location and size the data occupies in the memory space, according t...
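One way to picture step S302 is a pair of C variadic functions that pack typed values into a small unit and unpack them again. The sketch below is an assumption for illustration only: the function names `unit_write` and `unit_read`, and the format codes `'i'` (int) and `'d'` (double), are hypothetical and do not come from the patent text.

```c
#include <assert.h>
#include <stdarg.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical type codes: 'i' = int, 'd' = double. 0 means unknown. */
static size_t field_size(char code) {
    switch (code) {
    case 'i': return sizeof(int);
    case 'd': return sizeof(double);
    default:  return 0;
    }
}

/* Pack the variadic arguments into one unit, in the order given by fmt.
   Returns the bytes written, or 0 if fmt has an unknown code or the
   unit is too small (the unit may then be partially written). */
size_t unit_write(void *unit, size_t unit_size, const char *fmt, ...) {
    unsigned char *dst = unit;
    size_t used = 0;
    va_list ap;
    assert(unit != NULL && fmt != NULL);
    va_start(ap, fmt);
    for (; *fmt != '\0'; ++fmt) {
        size_t n = field_size(*fmt);
        if (n == 0 || used + n > unit_size) { used = 0; break; }
        if (*fmt == 'i') { int v = va_arg(ap, int); memcpy(dst + used, &v, n); }
        else { double v = va_arg(ap, double); memcpy(dst + used, &v, n); }
        used += n;
    }
    va_end(ap);
    return used;
}

/* Unpack fields from a unit. Each variadic argument is a pointer
   (int * for 'i', double * for 'd'). Returns the bytes read, or 0 on error. */
size_t unit_read(const void *unit, size_t unit_size, const char *fmt, ...) {
    const unsigned char *src = unit;
    size_t used = 0;
    va_list ap;
    assert(unit != NULL && fmt != NULL);
    va_start(ap, fmt);
    for (; *fmt != '\0'; ++fmt) {
        size_t n = field_size(*fmt);
        if (n == 0 || used + n > unit_size) { used = 0; break; }
        if (*fmt == 'i') memcpy(va_arg(ap, int *), src + used, n);
        else memcpy(va_arg(ap, double *), src + used, n);
        used += n;
    }
    va_end(ap);
    return used;
}
```

For example, `unit_write(unit, 64, "id", 42, 3.5)` stores an int followed by a double, and `unit_read(unit, 64, "id", &id, &score)` recovers them. The offset of each field follows from the types that precede it, so no per-field metadata is stored in the unit.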

Embodiment 3

[0054] Figure 4 shows a specific structural block diagram of the local data cache management apparatus provided in Embodiment 3 of the present invention. For convenience of description, only the parts related to the embodiment of the present invention are shown. In this embodiment, the local data cache management apparatus includes: an allocation unit 41, a storage unit 42, an index unit 43, an elimination unit 44, a space calculation unit 45, a judgment unit 46, a reading unit 47, and an interface unit 48.

[0055] The allocation unit 41 is used to allocate a large contiguous block of memory, divide the block into several small units of equal size, and set a pointer to point to the first free small unit;

[0056] The storage unit 42 is used to fill data into the free small unit pointed to by the pointer when data smaller than the size of a small unit needs to be cached, and then point the p...
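The behavior of the storage unit 42 can be sketched in C as below. The source text is truncated, so this is only a guess at its shape: it assumes that the pointer moves to the next small unit after a write, and it simply reports failure when no unit is free (the role of the elimination unit 44 is not modeled). The names `unit_cache`, `cache_store`, `UNIT_SIZE`, and `UNIT_COUNT` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define UNIT_SIZE  64   /* assumed size of one small unit, in bytes */
#define UNIT_COUNT 4    /* assumed number of units, kept tiny for the demo */

typedef struct {
    unsigned char block[UNIT_SIZE * UNIT_COUNT]; /* stands in for the large block */
    size_t next_free;                            /* index of the first free unit */
} unit_cache;

/* Copy len bytes into the free unit under the pointer, then advance the
   pointer by one unit (an assumption about the truncated source text).
   Returns the index of the unit used, or -1 if the data does not fit in
   one unit or no free unit remains. */
long cache_store(unit_cache *c, const void *data, size_t len) {
    size_t idx;
    assert(c != NULL && data != NULL);
    if (len > UNIT_SIZE) return -1;              /* too large for one unit */
    if (c->next_free >= UNIT_COUNT) return -1;   /* block is full */
    idx = c->next_free;
    memcpy(c->block + idx * UNIT_SIZE, data, len);
    c->next_free = idx + 1;
    return (long)idx;
}
```

A caller would zero the structure once (for example with `memset`) and then call `cache_store` for each item. The returned index is the kind of value an index structure could map a keyword to, although how the index unit 43 works is not described in the visible text.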
