81 results about "Multilevel queue" patented technology


A multilevel queue, in use since at least the late 1950s or early 1960s, is a queue with a predefined number of levels. Unlike in a multilevel feedback queue, each item is assigned to a particular level at insertion (using some predefined algorithm) and cannot be moved to another level afterwards. Items are removed by draining one level completely before moving on to the next; if an item is added to a higher level in the meantime, fetching restarts from that level. Each level is free to use its own scheduling policy, which adds flexibility beyond merely having multiple levels in a queue.
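The behaviour described above can be illustrated with a minimal Python sketch. The class name `MultilevelQueue`, the `classify` callback, and the choice of plain FIFO order inside every level are assumptions made for this example only; a real scheduler could give each level its own policy.

```python
from collections import deque


class MultilevelQueue:
    """Fixed number of levels; level 0 is the highest priority."""

    def __init__(self, num_levels, classify):
        # `classify` is a hypothetical callback mapping an item to a level index.
        self.levels = [deque() for _ in range(num_levels)]
        self.classify = classify

    def put(self, item):
        # The level is chosen once, at insert time, and never changes.
        self.levels[self.classify(item)].append(item)

    def get(self):
        # Always rescan from the top, so an item added to a higher level
        # is fetched before the remaining lower-level items.
        for level in self.levels:
            if level:
                return level.popleft()
        raise IndexError("get from an empty MultilevelQueue")


mq = MultilevelQueue(3, classify=lambda item: item[0])
for job in [(2, "batch"), (1, "interactive"), (2, "report")]:
    mq.put(job)
first = mq.get()        # taken from level 1, the highest non-empty level
mq.put((0, "system"))   # arrives at a higher level while draining
drained = [first, mq.get(), mq.get(), mq.get()]
```

In this run the late `(0, "system")` item is fetched before the two level-2 items, which is the "fetching restarts from there" behaviour.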

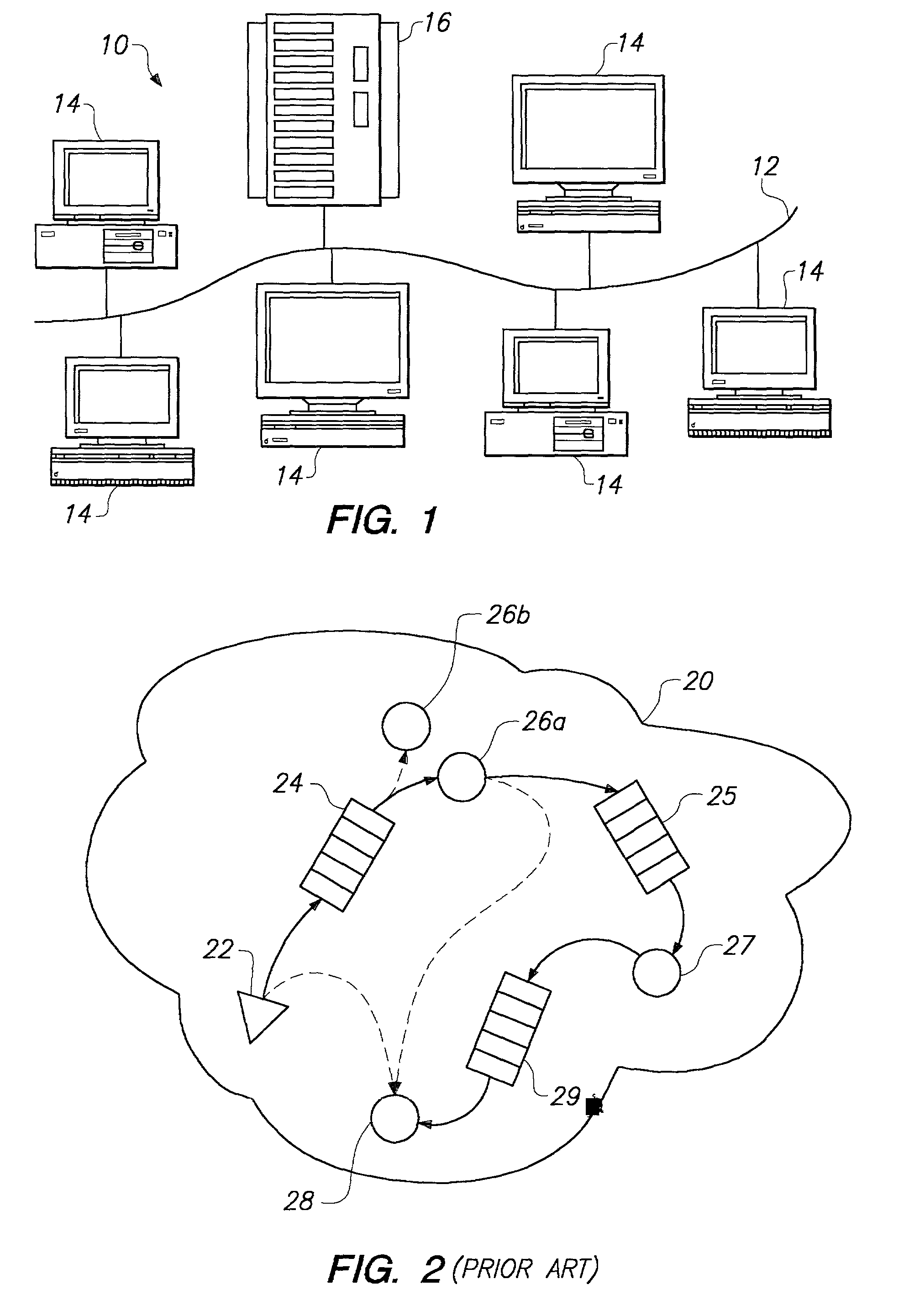

System and method for managing multiple queues of non-persistent messages in a networked environment

Active | US20120198004A1 | Data switching by path configuration | Multiple digital computer combinations | Message queue | Multilevel queue

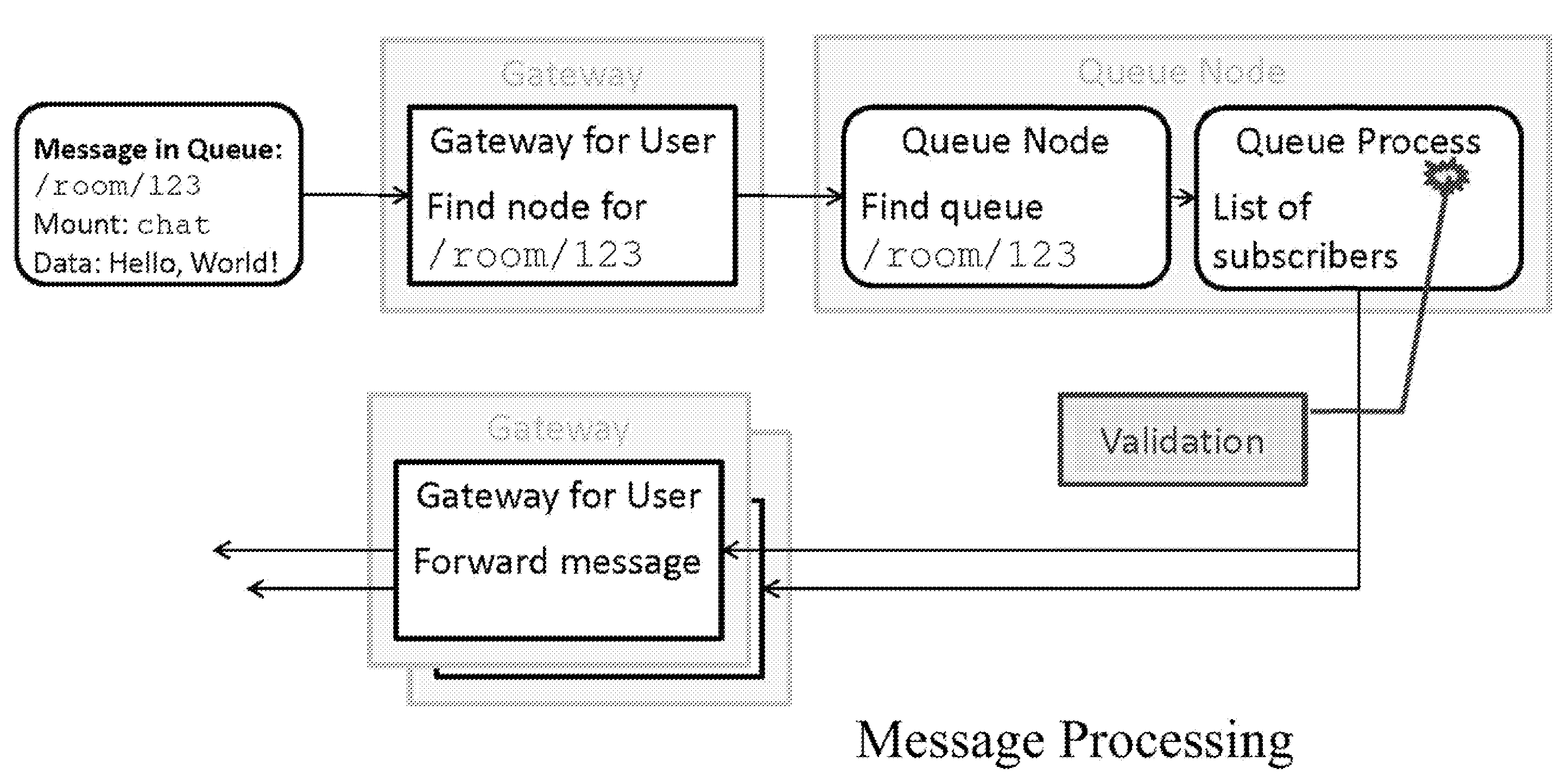

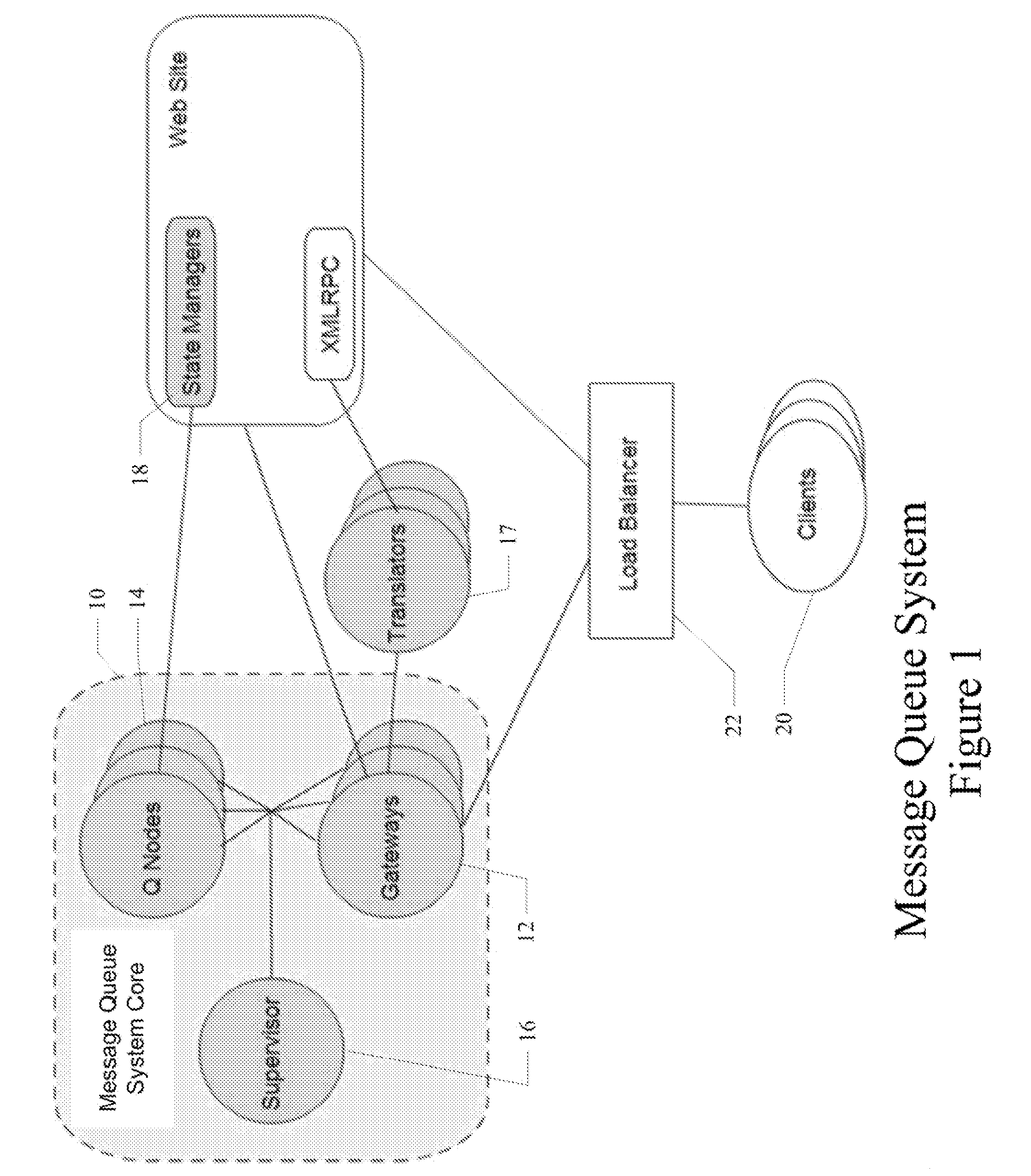

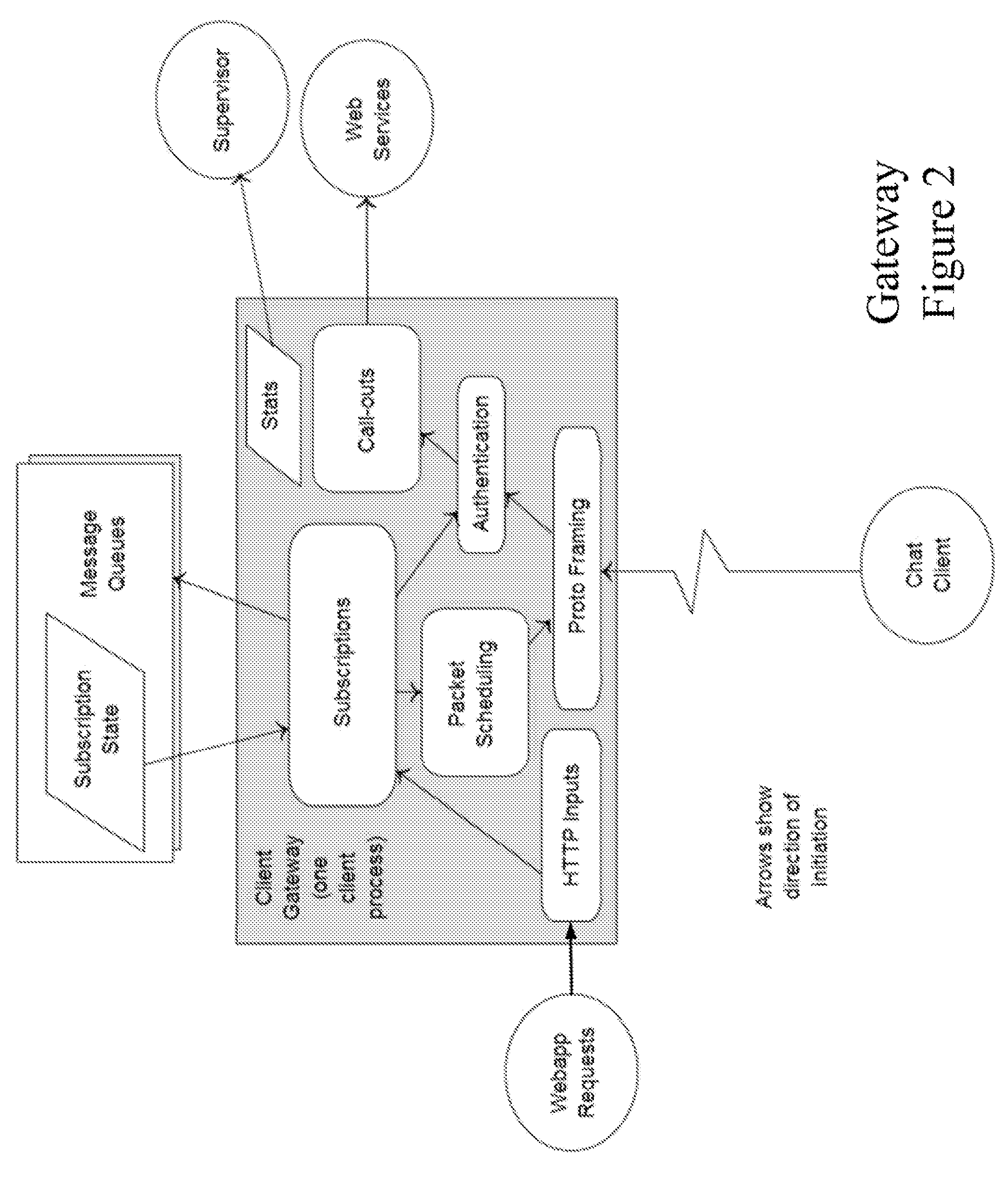

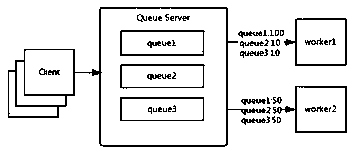

A system and method for managing multiple queues of non-persistent messages in a networked environment are disclosed. A particular embodiment includes: receiving a non-persistent message at a gateway process, the message including information indicative of a named queue; mapping, by use of a data processor, the named queue to a queue node by use of a consistent hash of the named queue; mapping the message to a queue process at the queue node; accessing, by use of the queue process, a list of subscriber gateways; and routing the message to each of the subscriber gateways in the list.
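As a rough illustration of the mapping step, the sketch below places queue nodes on a hash ring and routes a message to the subscriber gateways of its named queue. It is not the patented implementation: the `HashRing` class, the use of SHA-256, the `replicas` parameter, and the node and gateway names are all assumptions chosen for the example.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # First 8 bytes of SHA-256 as an integer position on the ring.
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent hash ring with virtual points per node."""

    def __init__(self, nodes, replicas=64):
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, queue_name: str) -> str:
        # The first ring point clockwise from the key's hash owns the queue.
        idx = bisect.bisect_right(self._points, _hash(queue_name)) % len(self._ring)
        return self._ring[idx][1]


def route(ring, subscribers, queue_name, message):
    """Return one (gateway, queue_node, message) delivery per subscriber gateway."""
    node = ring.node_for(queue_name)
    return [(gateway, node, message) for gateway in subscribers.get(queue_name, [])]


nodes = ["node-a", "node-b", "node-c"]
ring = HashRing(nodes)
subscribers = {"chat/room-1": ["gateway-1", "gateway-2"]}
owner = ring.node_for("chat/room-1")
deliveries = route(ring, subscribers, "chat/room-1", "hello")
```

The point of a consistent hash is that removing a node other than `owner` leaves the mapping of `chat/room-1` unchanged, so only the queues of the removed node have to move.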

Owner:IMVU

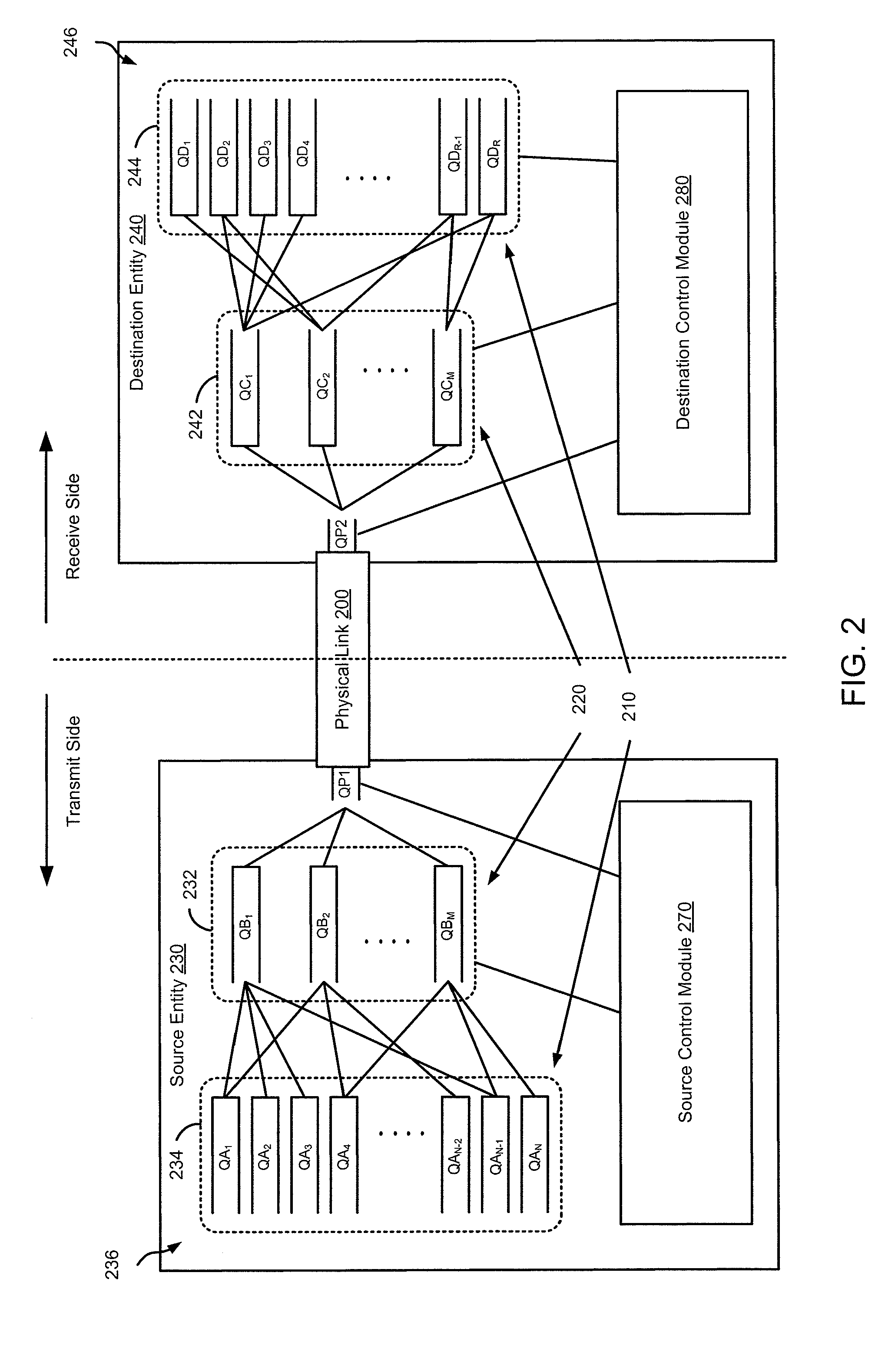

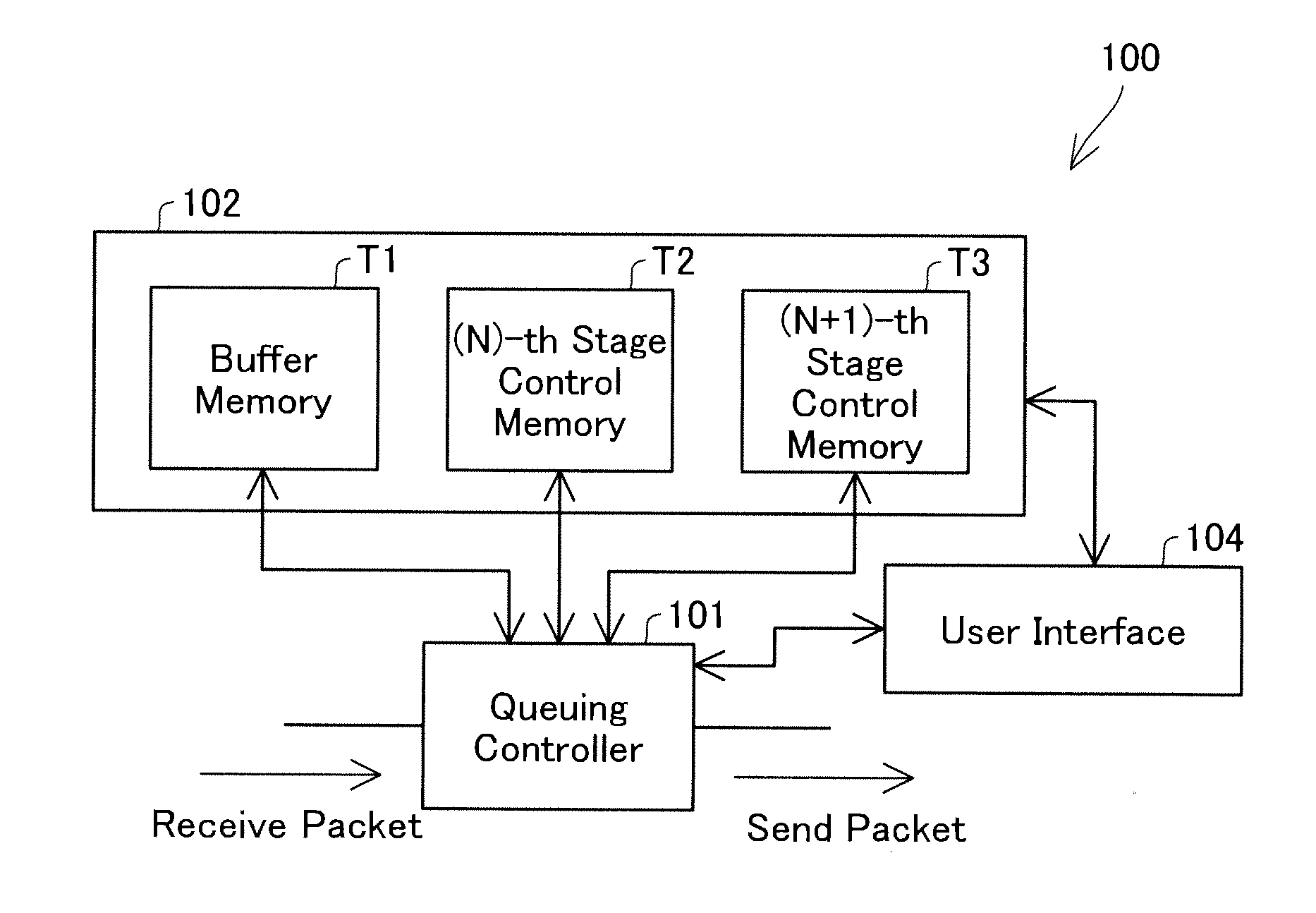

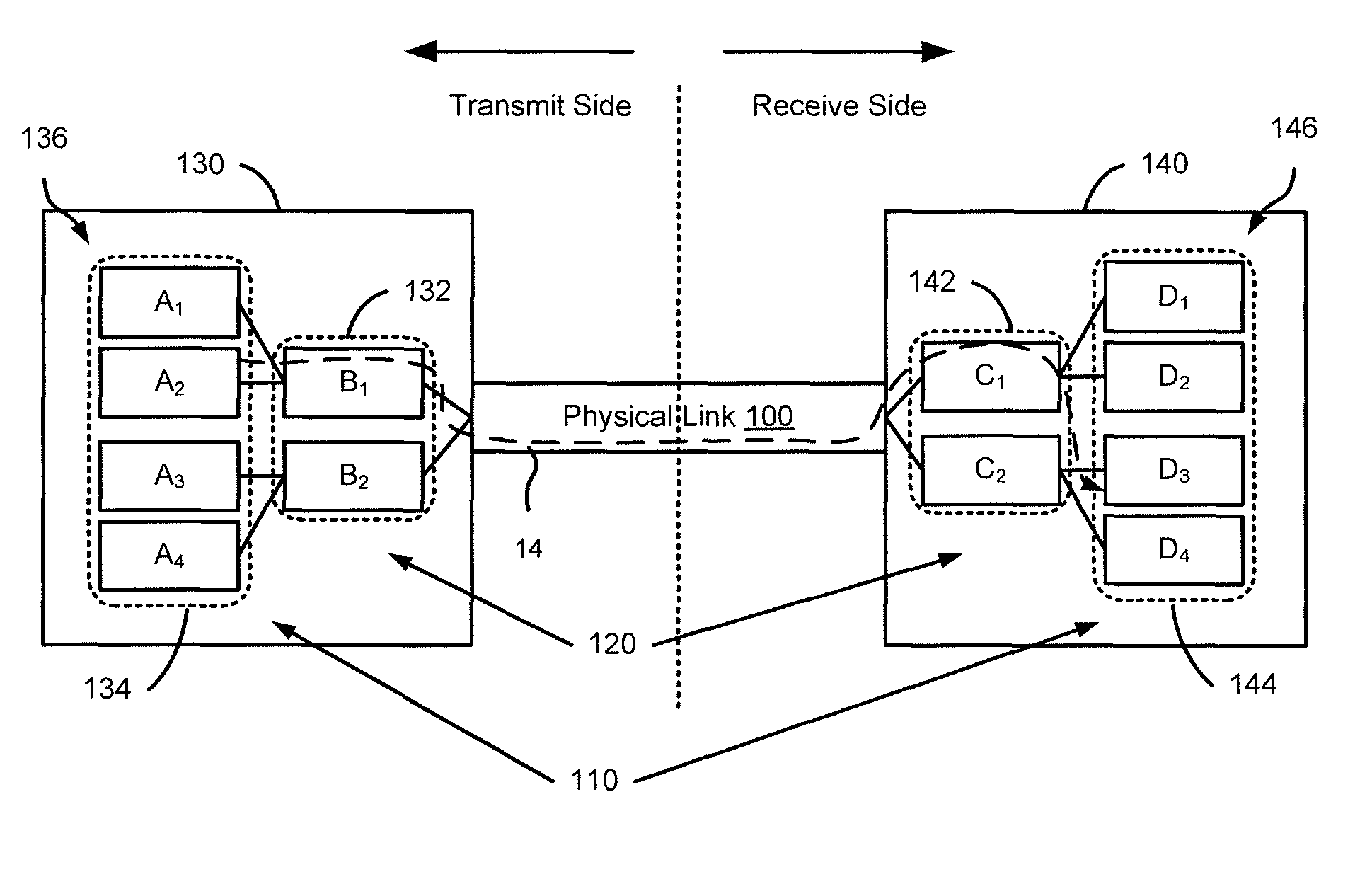

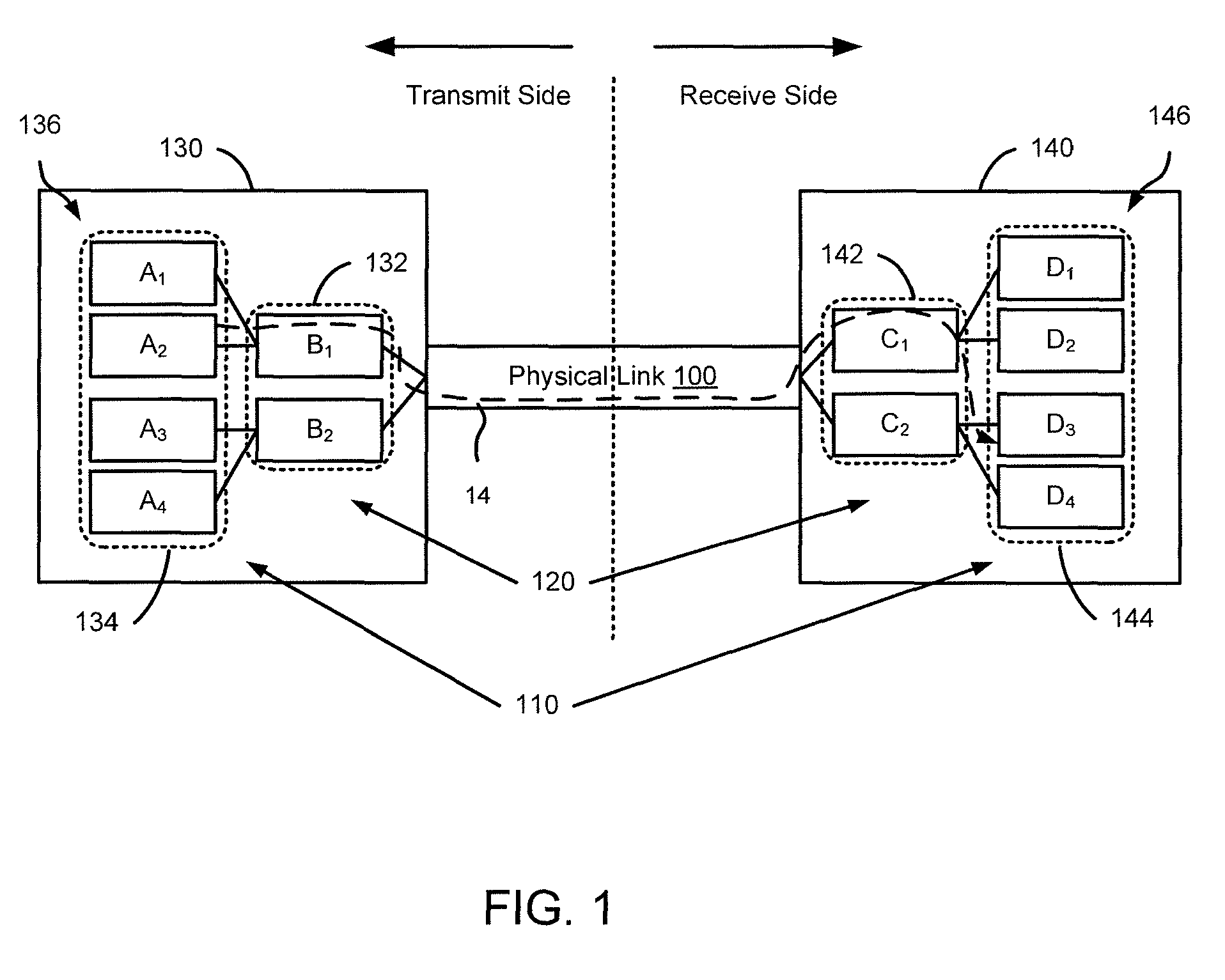

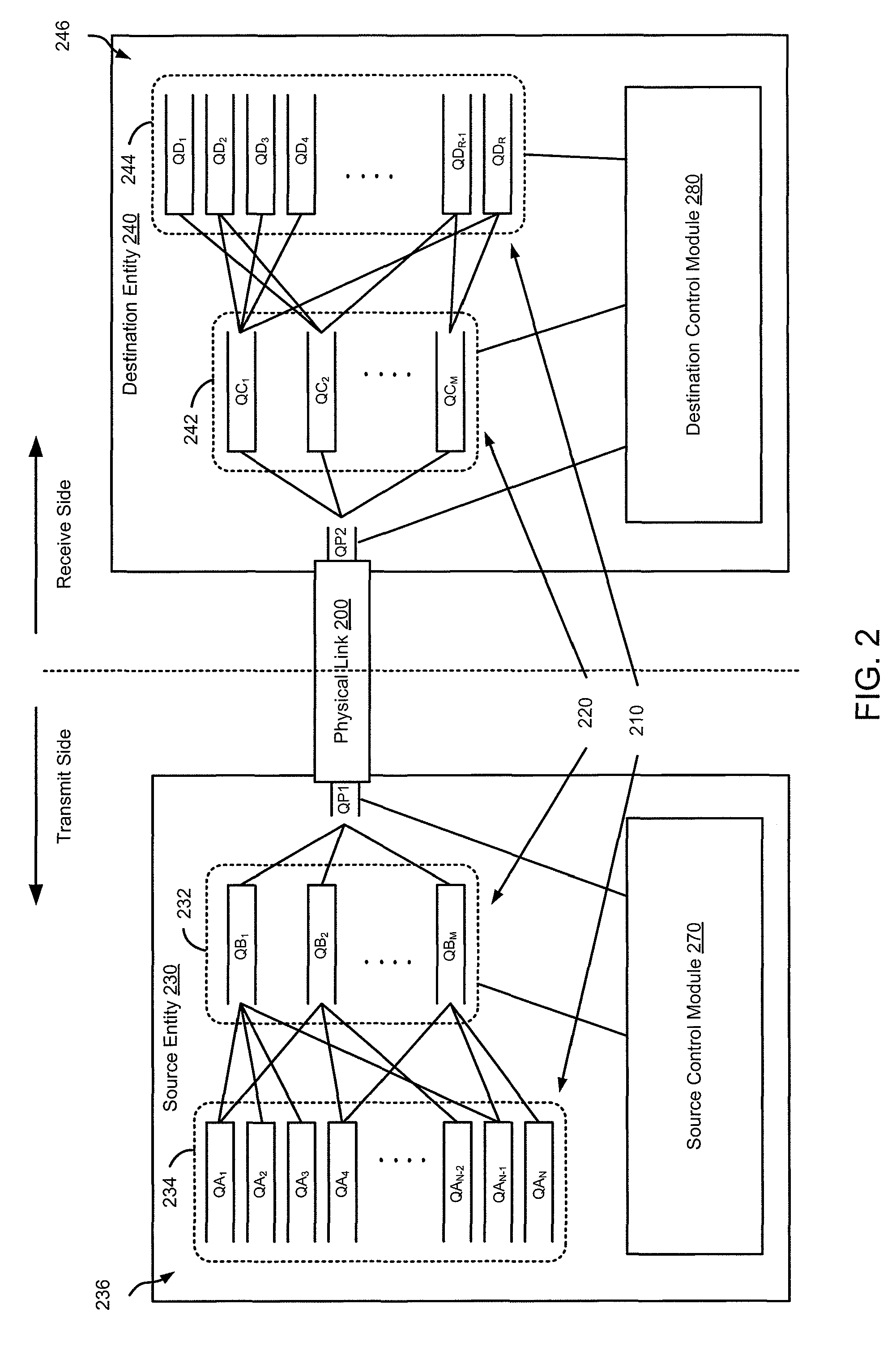

Methods and apparatus for flow control associated with multi-staged queues

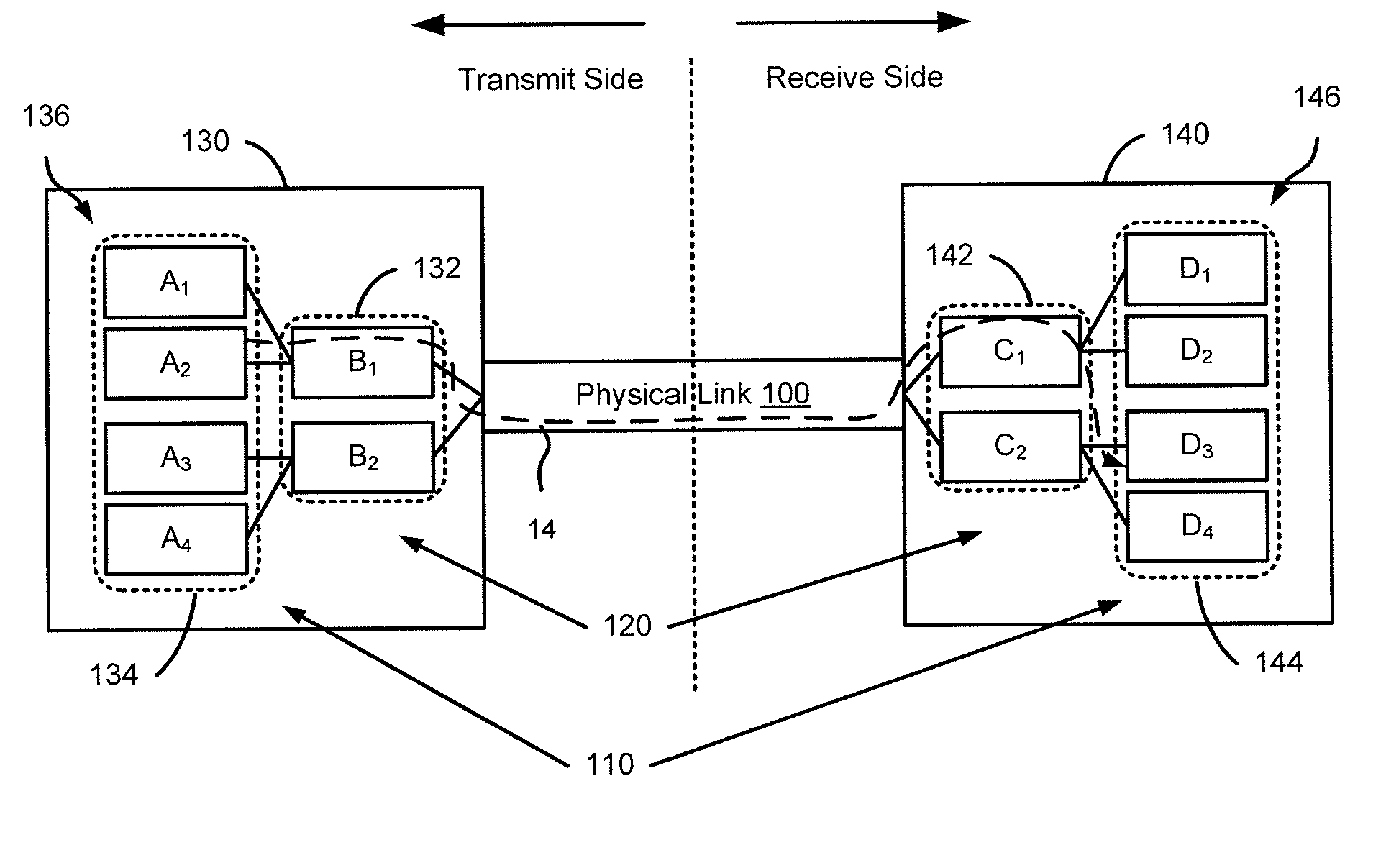

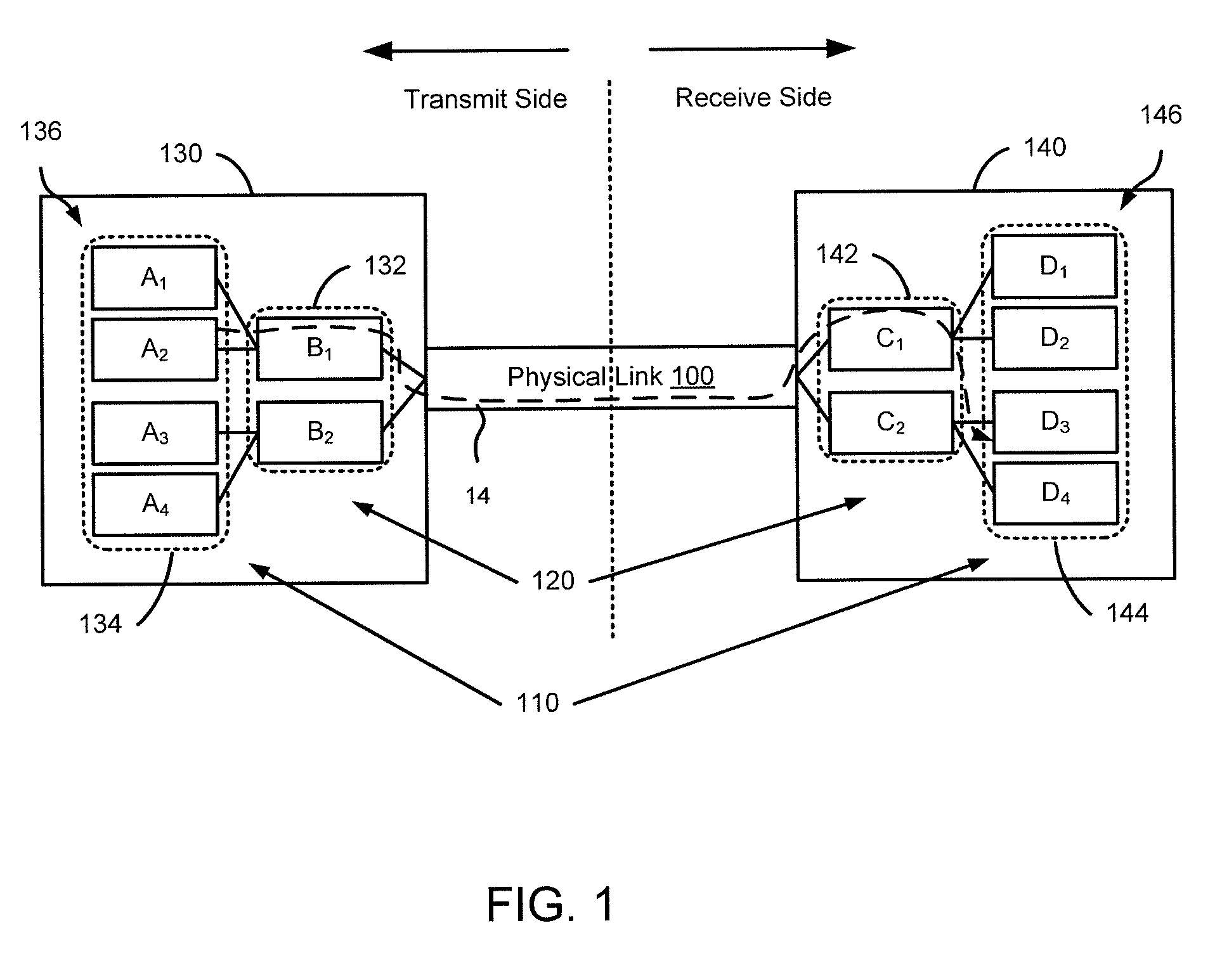

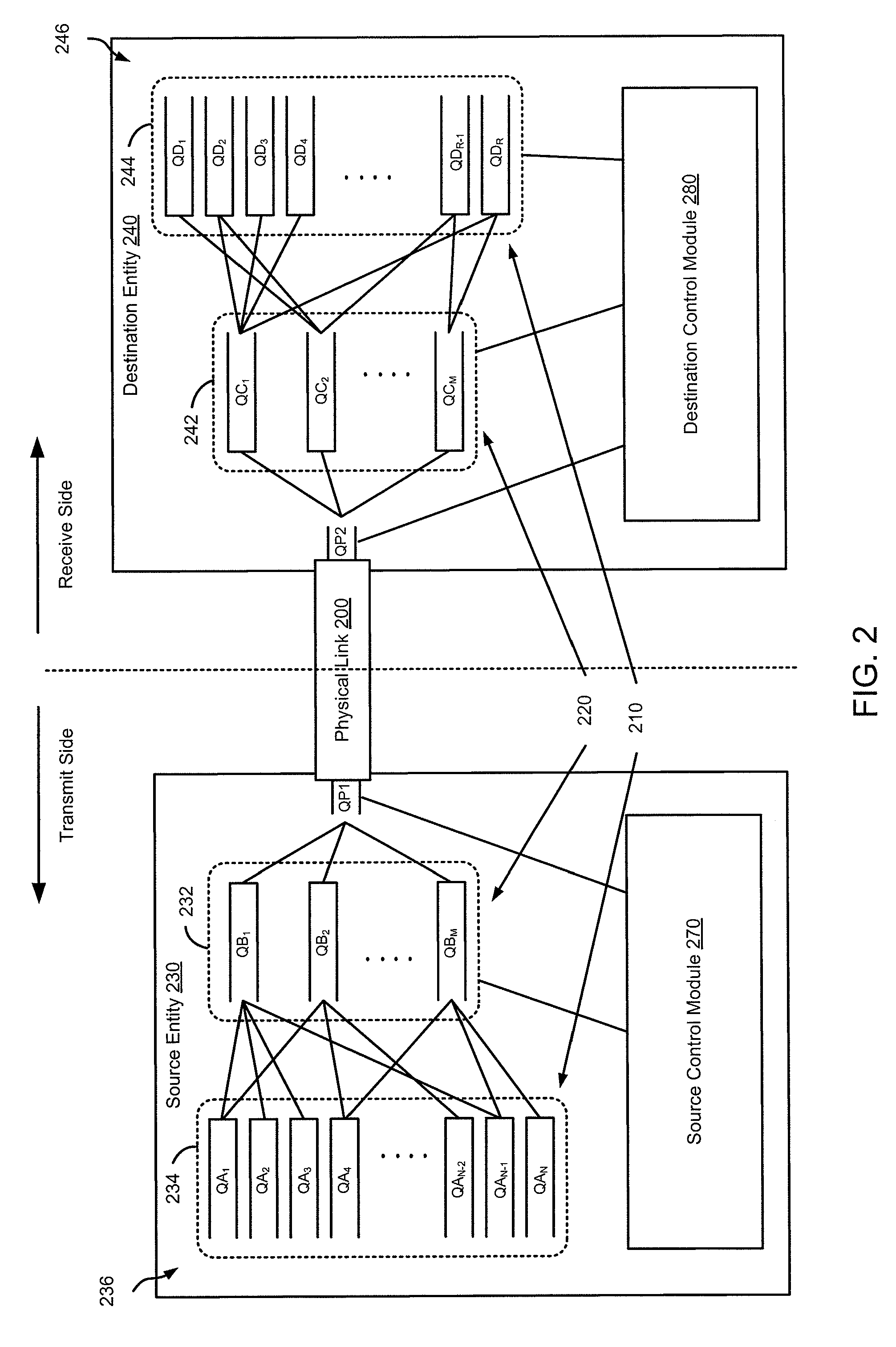

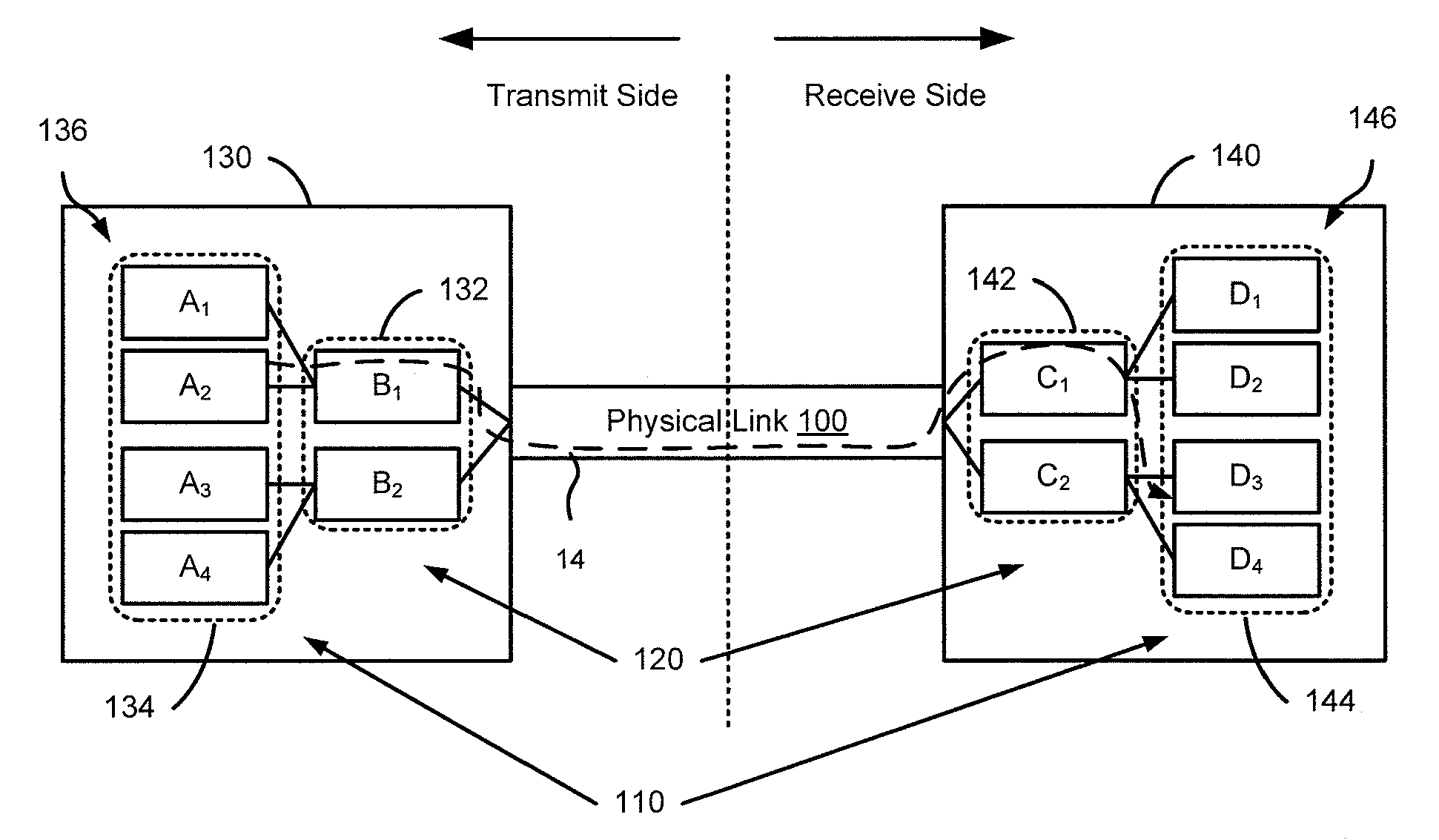

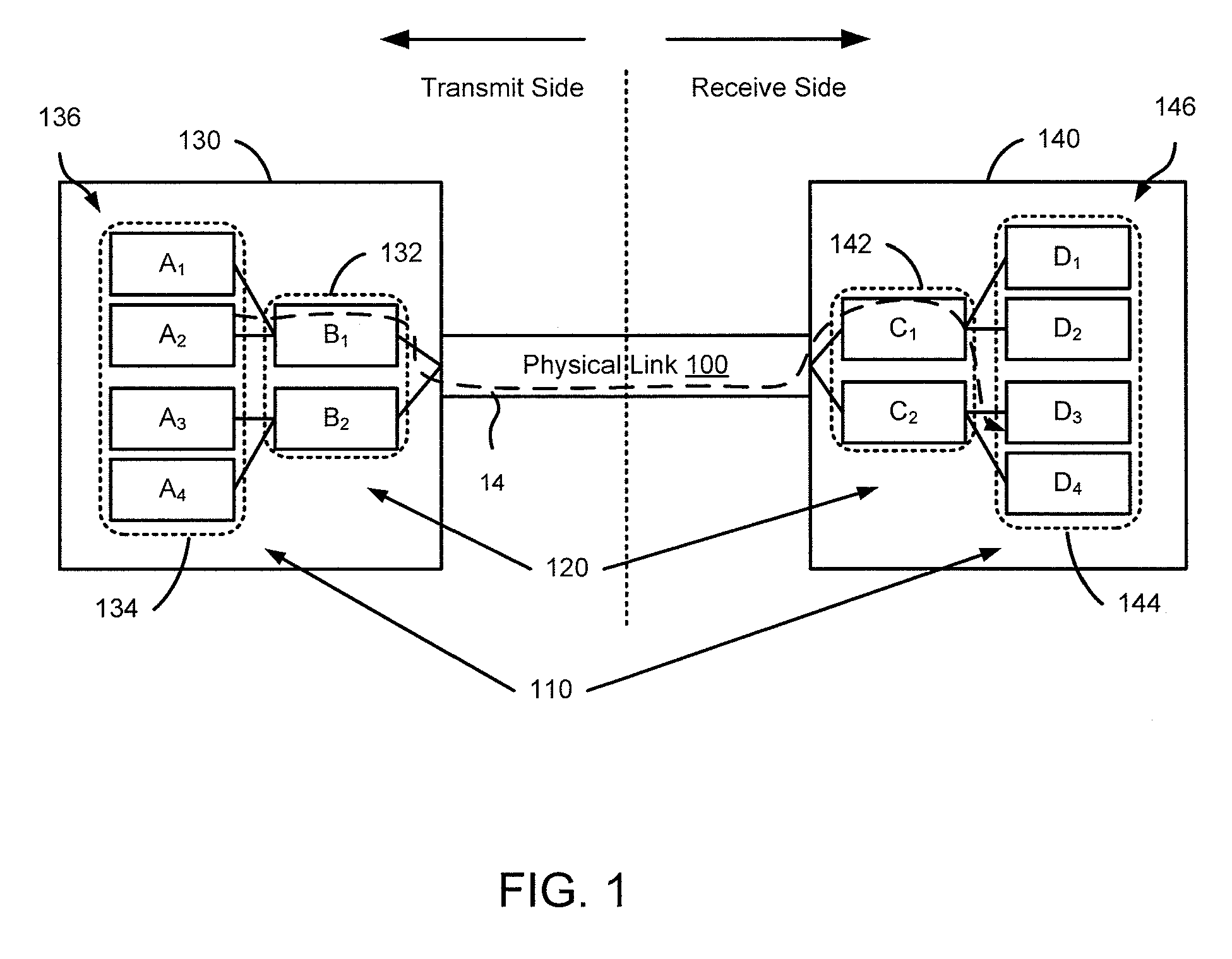

In one embodiment, a method comprises receiving, at a receive side of a physical link, a request to suspend transmission of data from a queue within a transmit side of a first stage of queues and to suspend transmission via a path including the physical link, a portion of the first stage of queues, and a portion of a second stage of queues. The method includes sending, in response to the request, a flow control signal to a flow control module configured to schedule transmission of the data from the queue within the transmit side of the first stage of queues. The flow control signal is associated with a first control loop that includes the path and differs from a second control loop that excludes the first stage of queues.

Owner:JUNIPER NETWORKS INC

Methods and apparatus for flow-controllable multi-staged queues

In one embodiment, a method includes sending a first flow control signal to a first stage of transmit queues when a receive queue is in a congestion state. The method also includes sending a second flow control signal to a second stage of transmit queues different from the first stage of transmit queues when the receive queue is in the congestion state.

Owner:JUNIPER NETWORKS INC

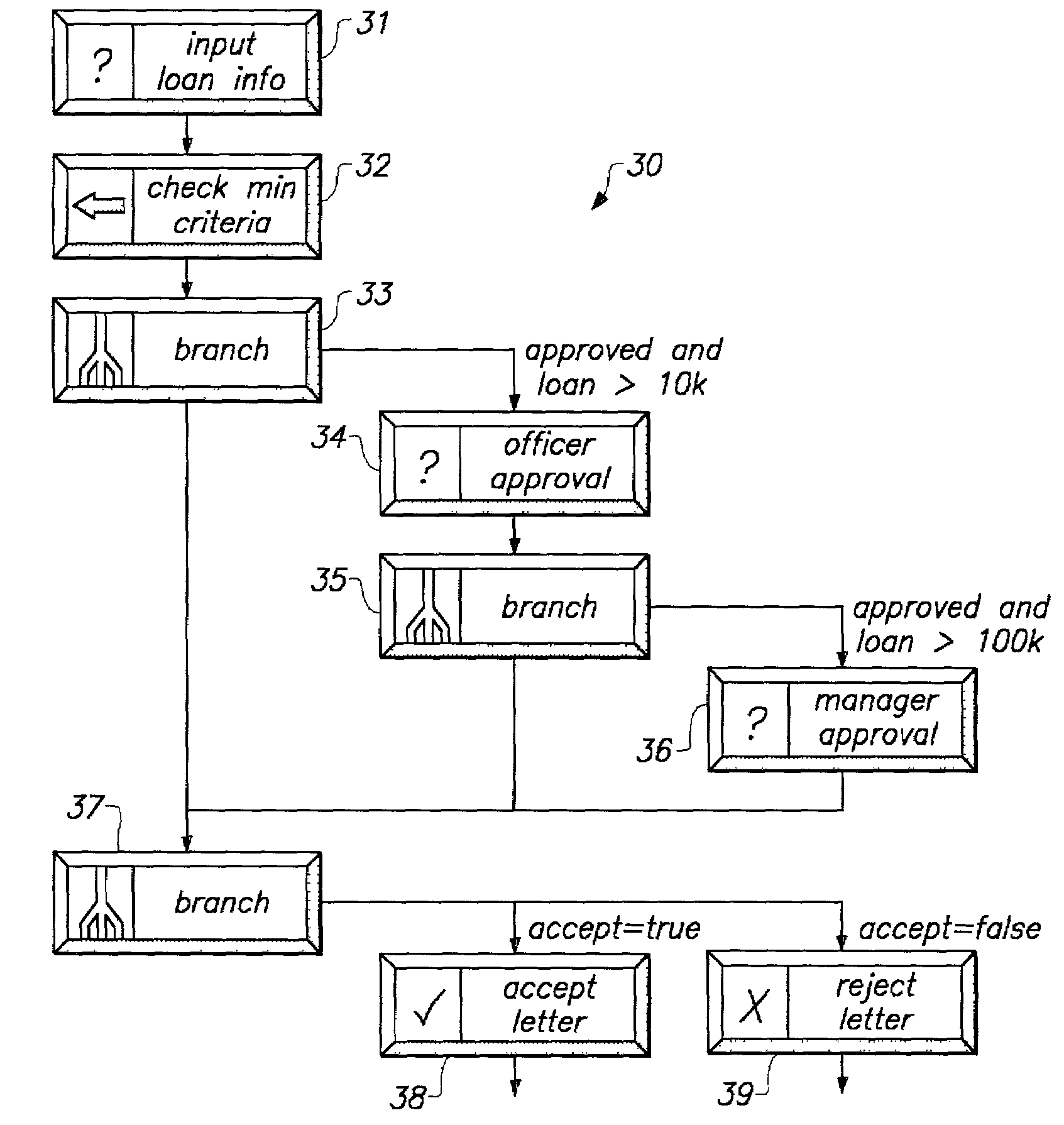

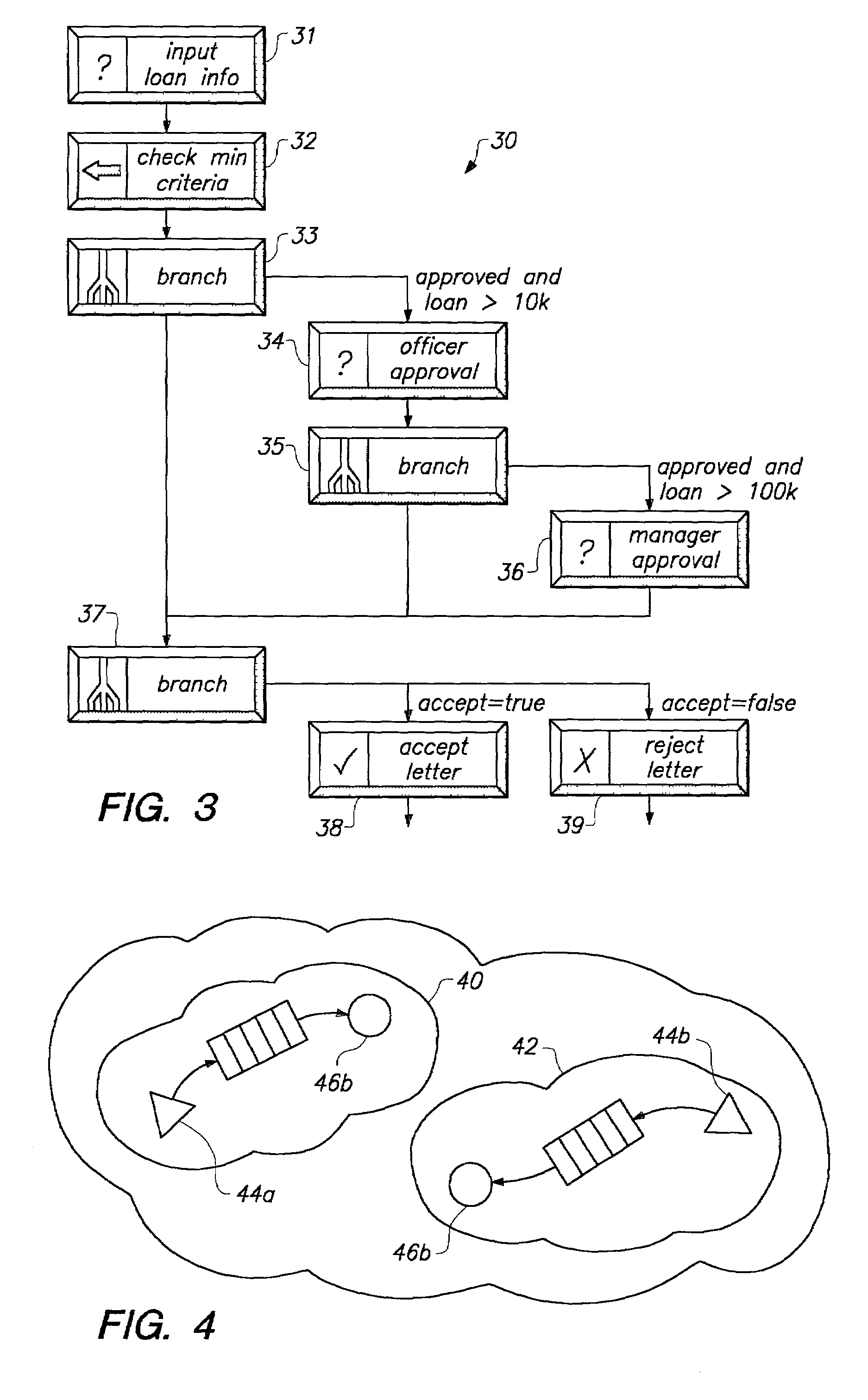

Multilevel queuing system for distributing tasks in an enterprise-wide work flow automation

Inactive | US7010602B2 | Improve scalability | Efficient communication | Multiprogramming arrangements | Multiple digital computer combinations | Web site | Multilevel queue

Methods and apparatus are provided for an enterprise-wide work flow system that may encompass multiple geographically separate sites. The sites may be either permanently or transiently linked. A single computer network may accommodate multiple work flow systems, and a single work flow system may be distributed over multiple local area networks. The system maintains the paradigm of one global queue per service and allows individual work flow systems to export services to one another in an enterprise.

Owner:SERVICENOW INC

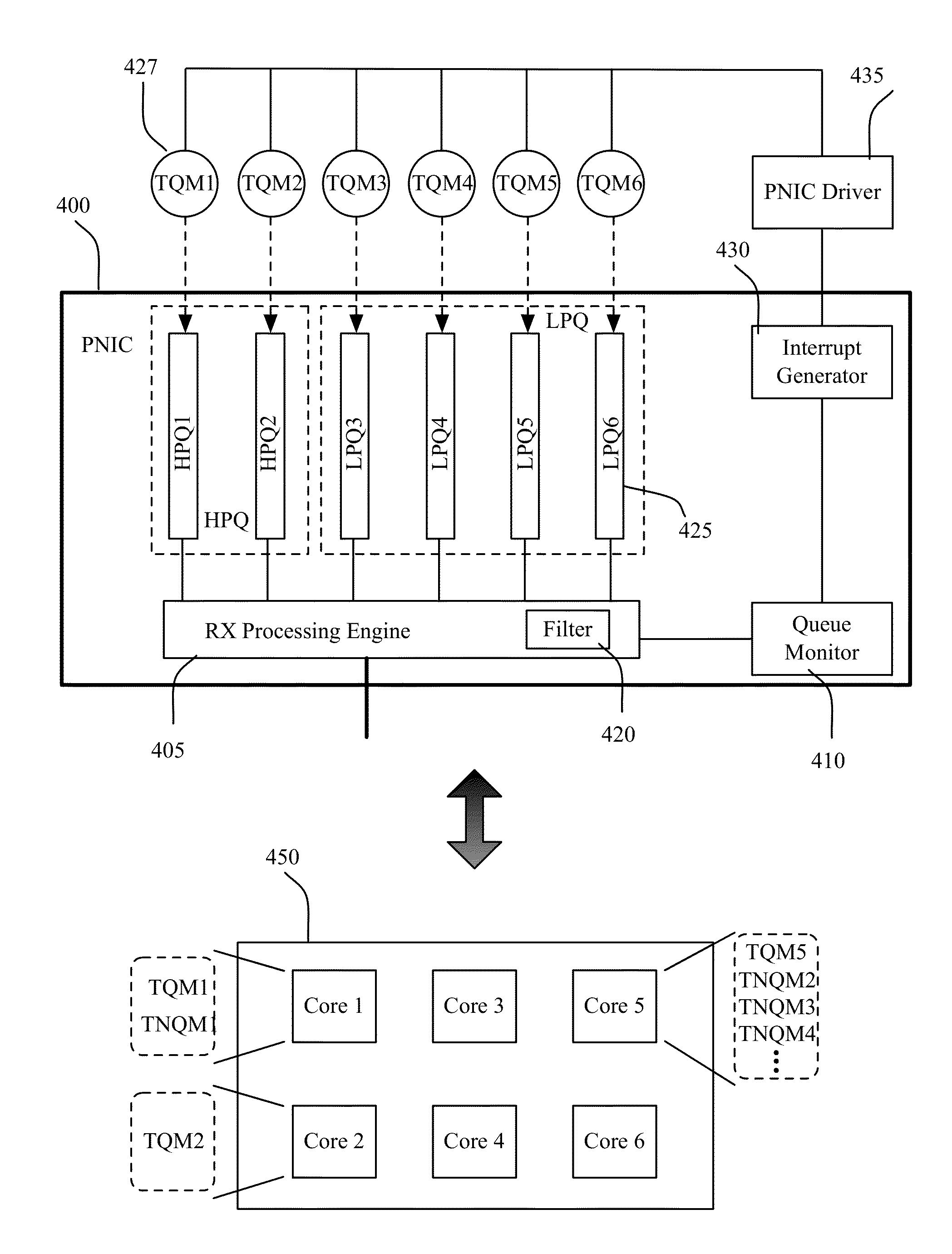

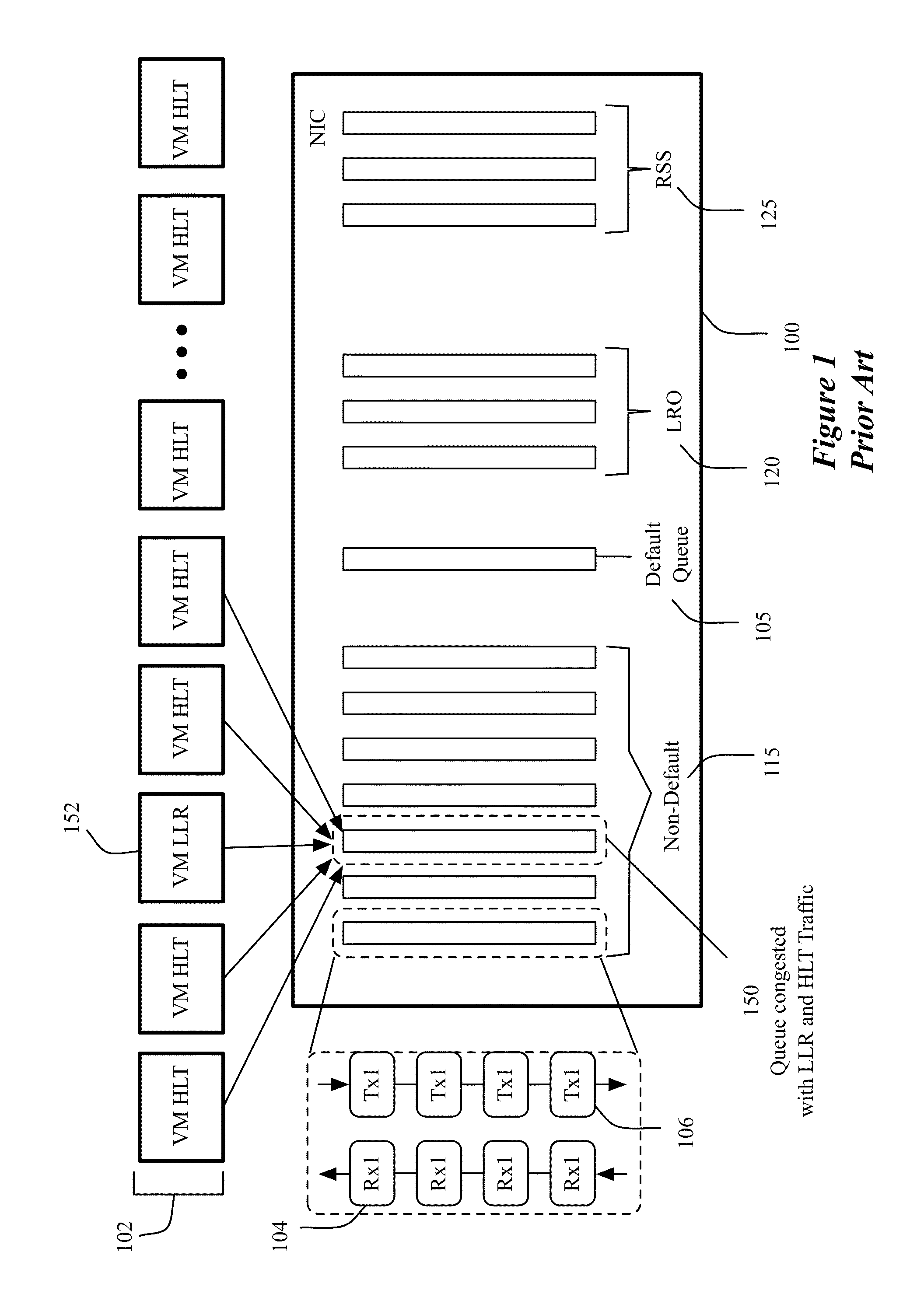

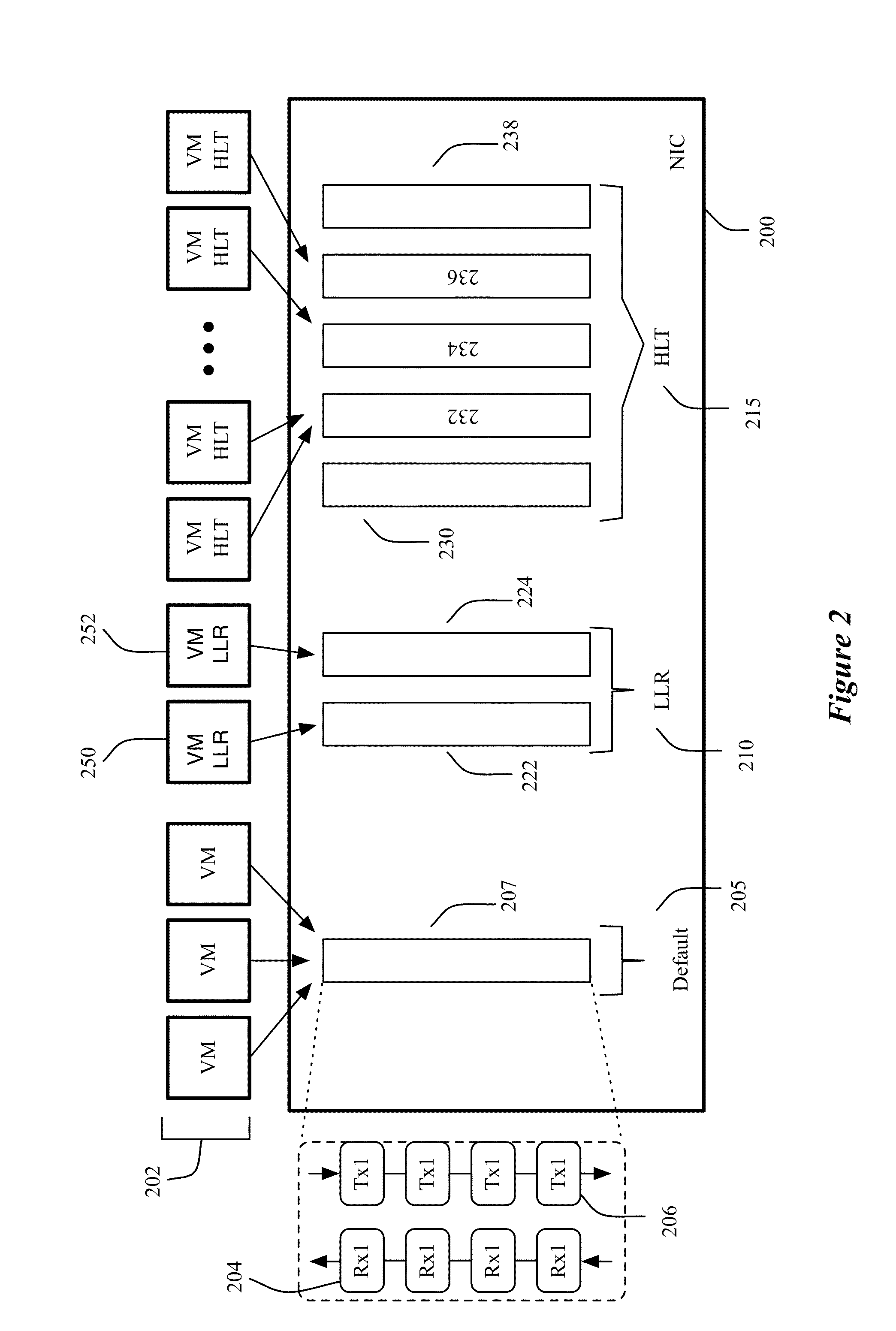

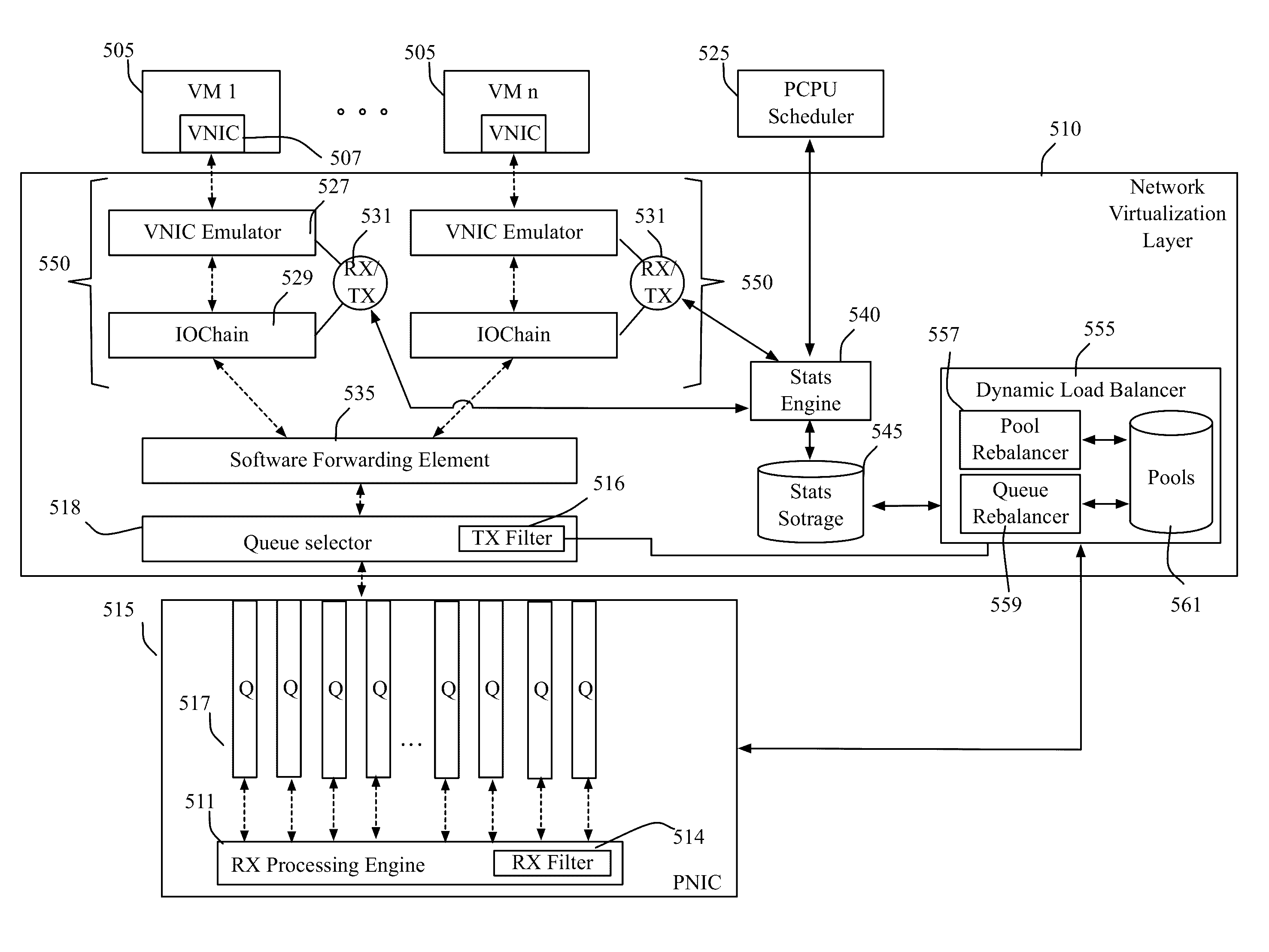

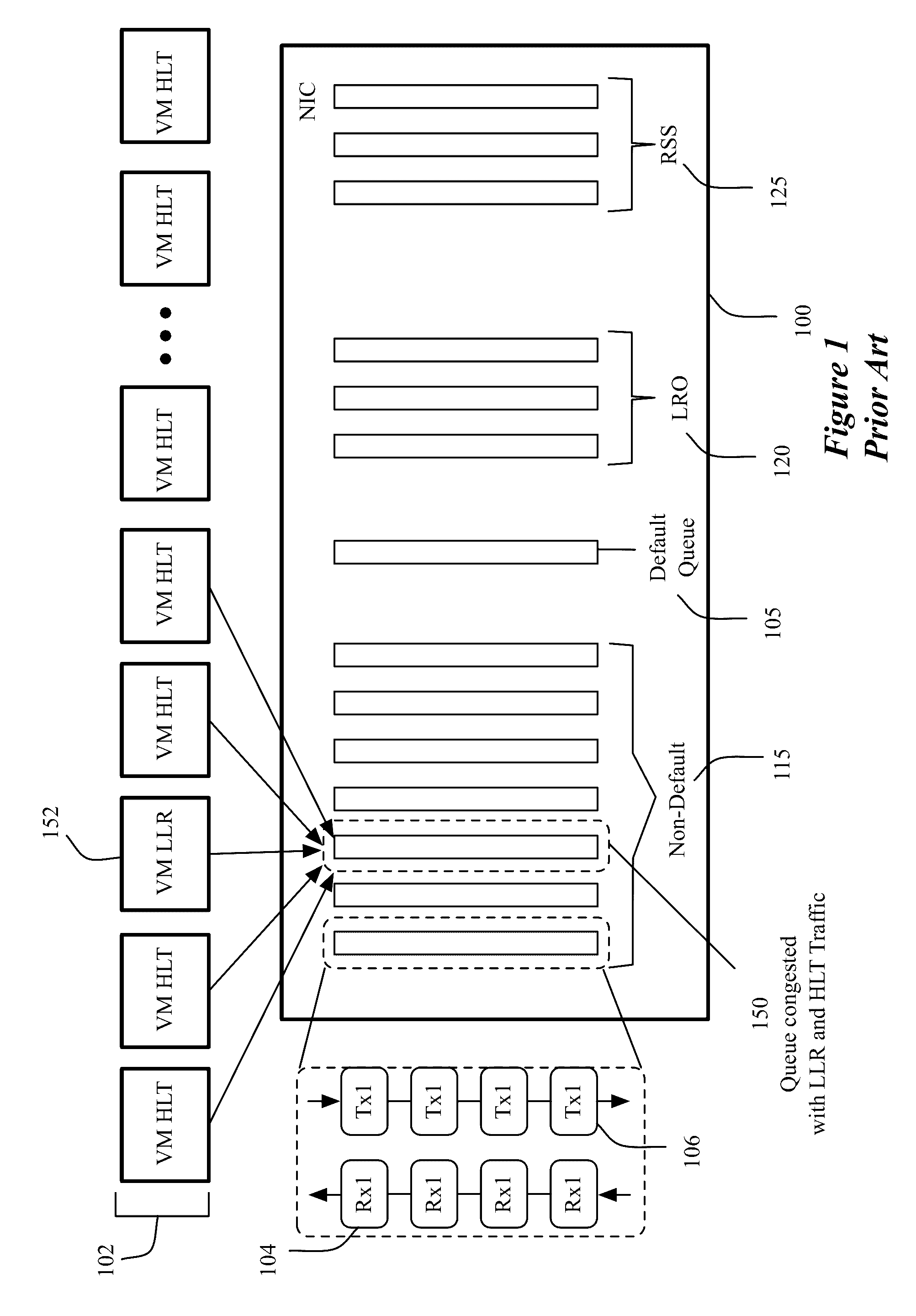

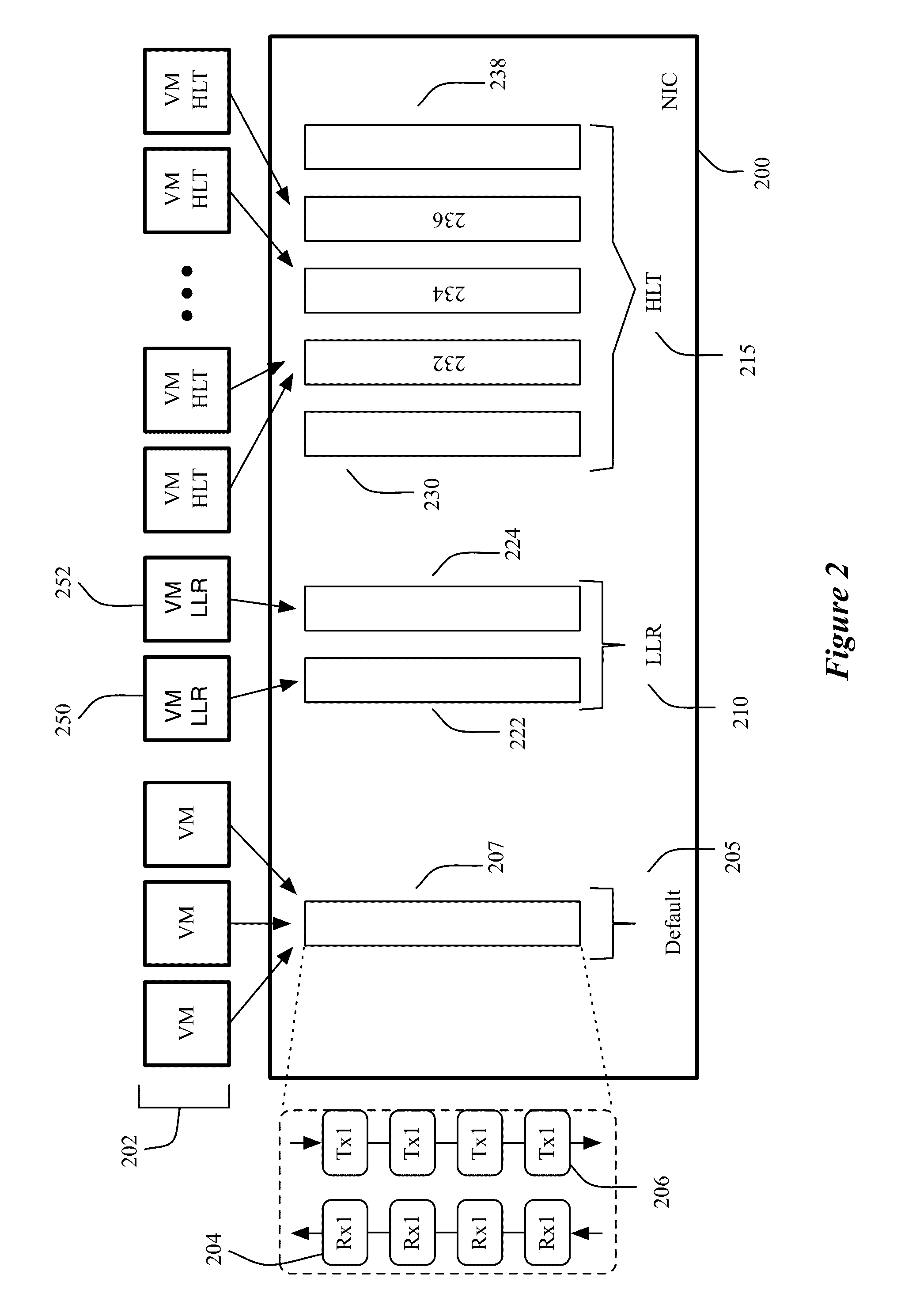

Traffic and load aware dynamic queue management

Some embodiments provide a queue management system that efficiently and dynamically manages multiple queues that process traffic to and from multiple virtual machines (VMs) executing on a host. This system manages the queues by (1) breaking up the queues into different priority pools, with the higher priority pools reserved for particular types of traffic or VM (e.g., traffic for VMs that need low latency), (2) dynamically adjusting the number of queues in each pool (i.e., dynamically adjusting the size of the pools), and (3) dynamically reassigning a VM to a new queue based on one or more optimization criteria (e.g., criteria relating to the underutilization or overutilization of the queue).

Owner:VMWARE INC

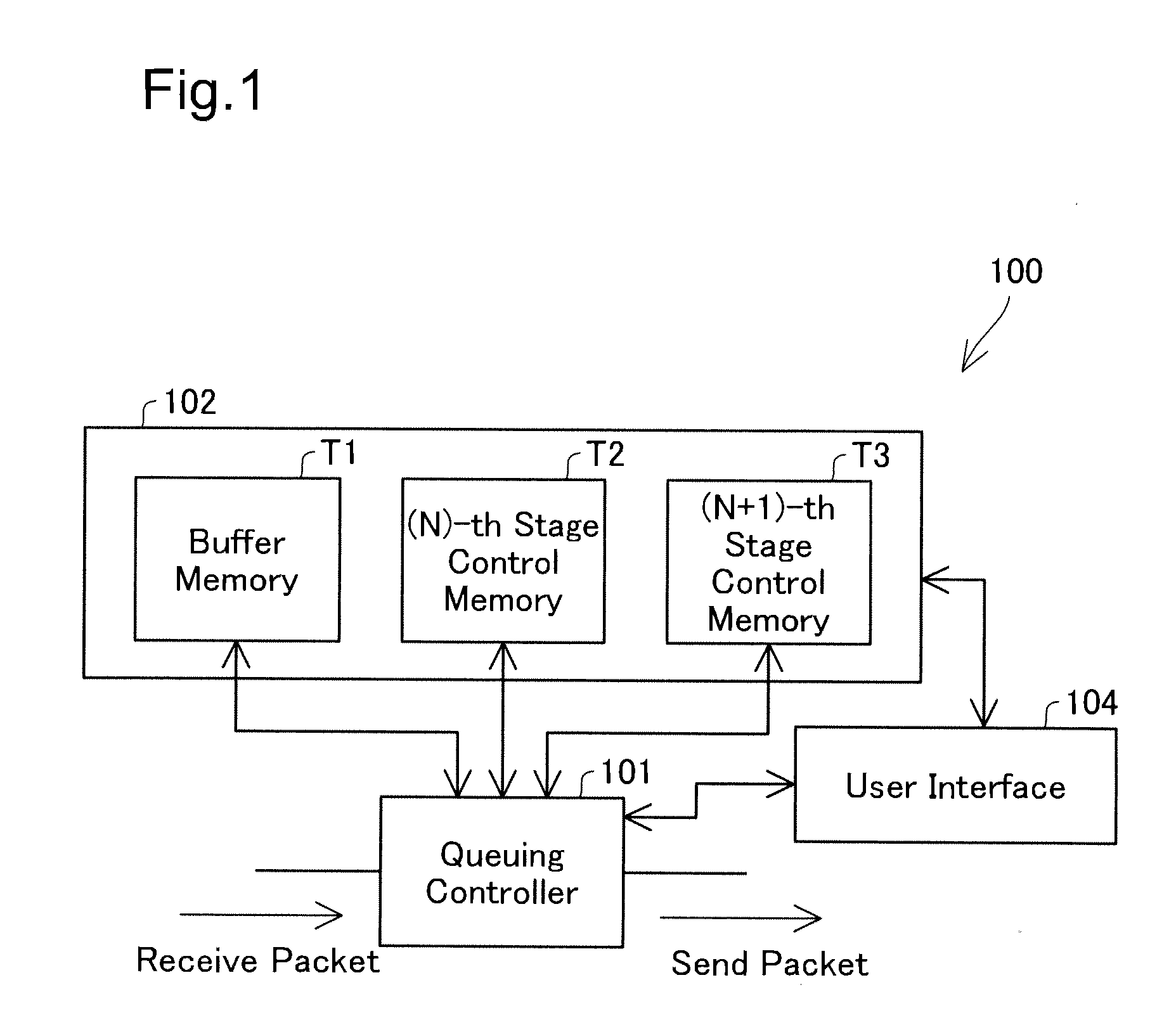

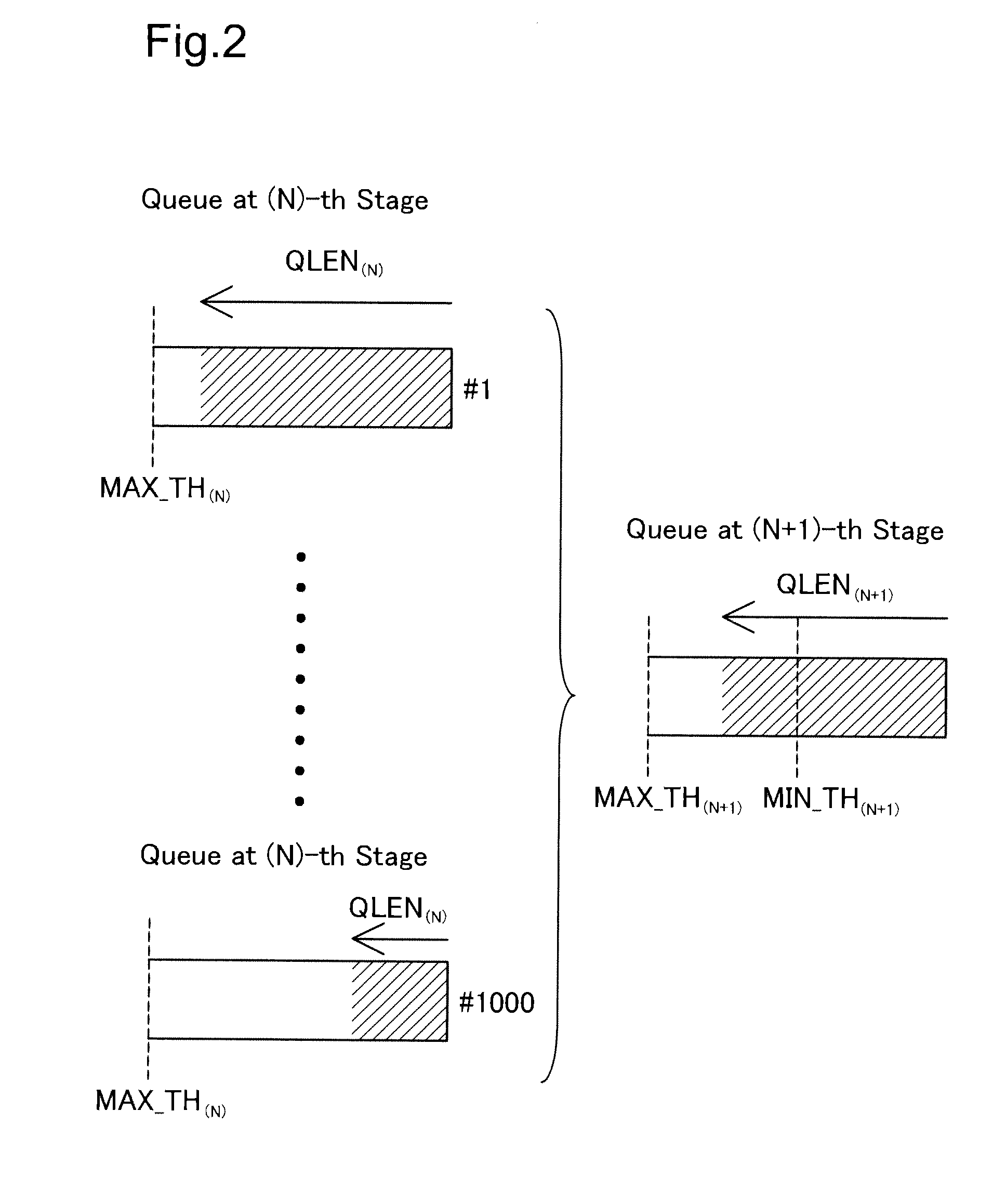

Packet relay apparatus and method of relaying packet

Inactive | US20110176554A1 | Control deterioration | Data switching by path configuration | Network packet | Multilevel queue

A packet relay apparatus is provided. The packet relay apparatus includes a receiver that receives a packet, and a determiner that determines whether to drop the received packet without storing it in a queue of the multi-stage queue. The determiner determines whether to drop the received packet at a latter stage based on former-stage queue information, representing the state of a queue at any former stage to which the received packet belongs, and latter-stage queue information, representing the state of a queue at the latter stage to which the received packet belongs.

Owner:ALAXALA NETWORKS

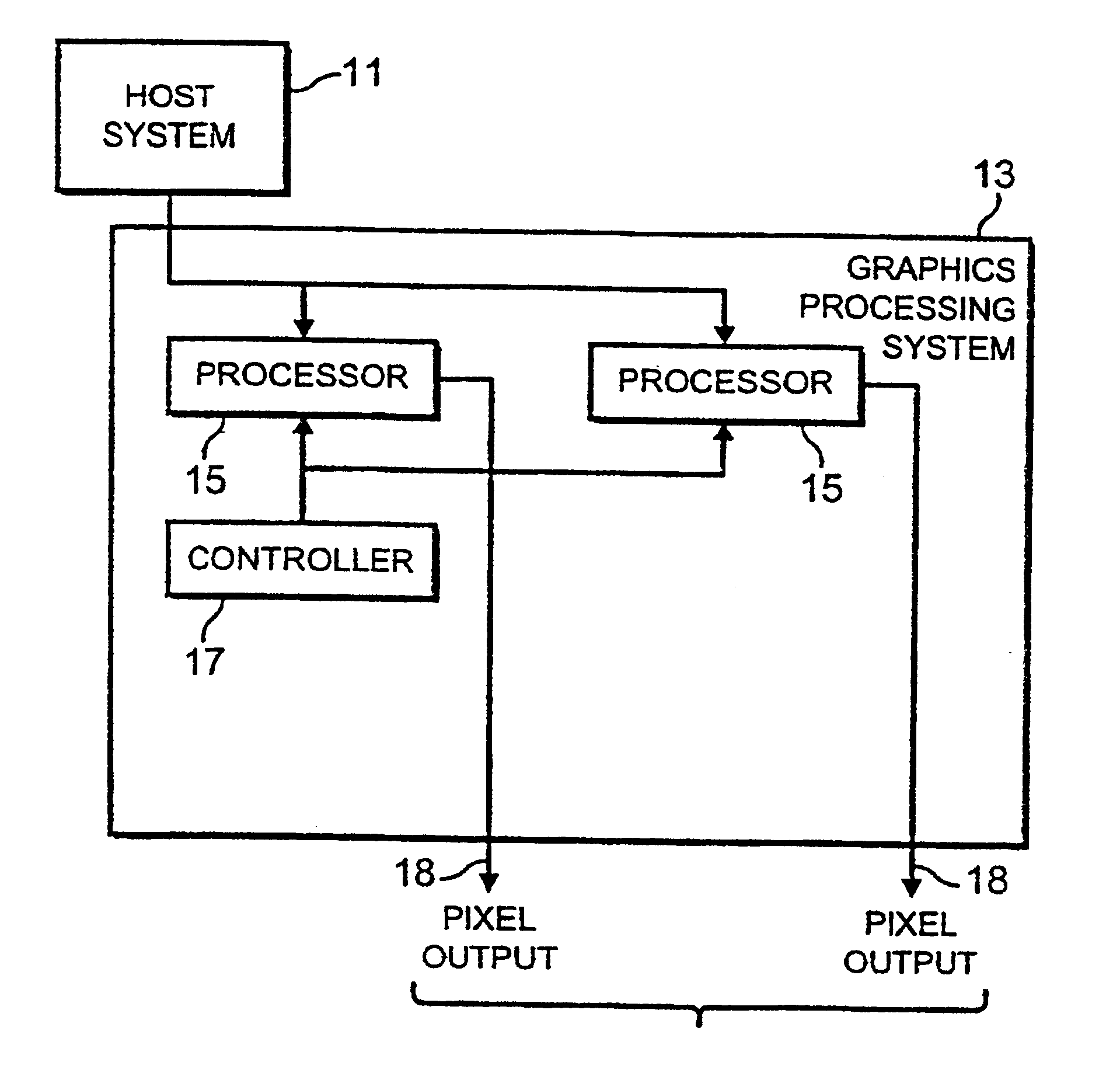

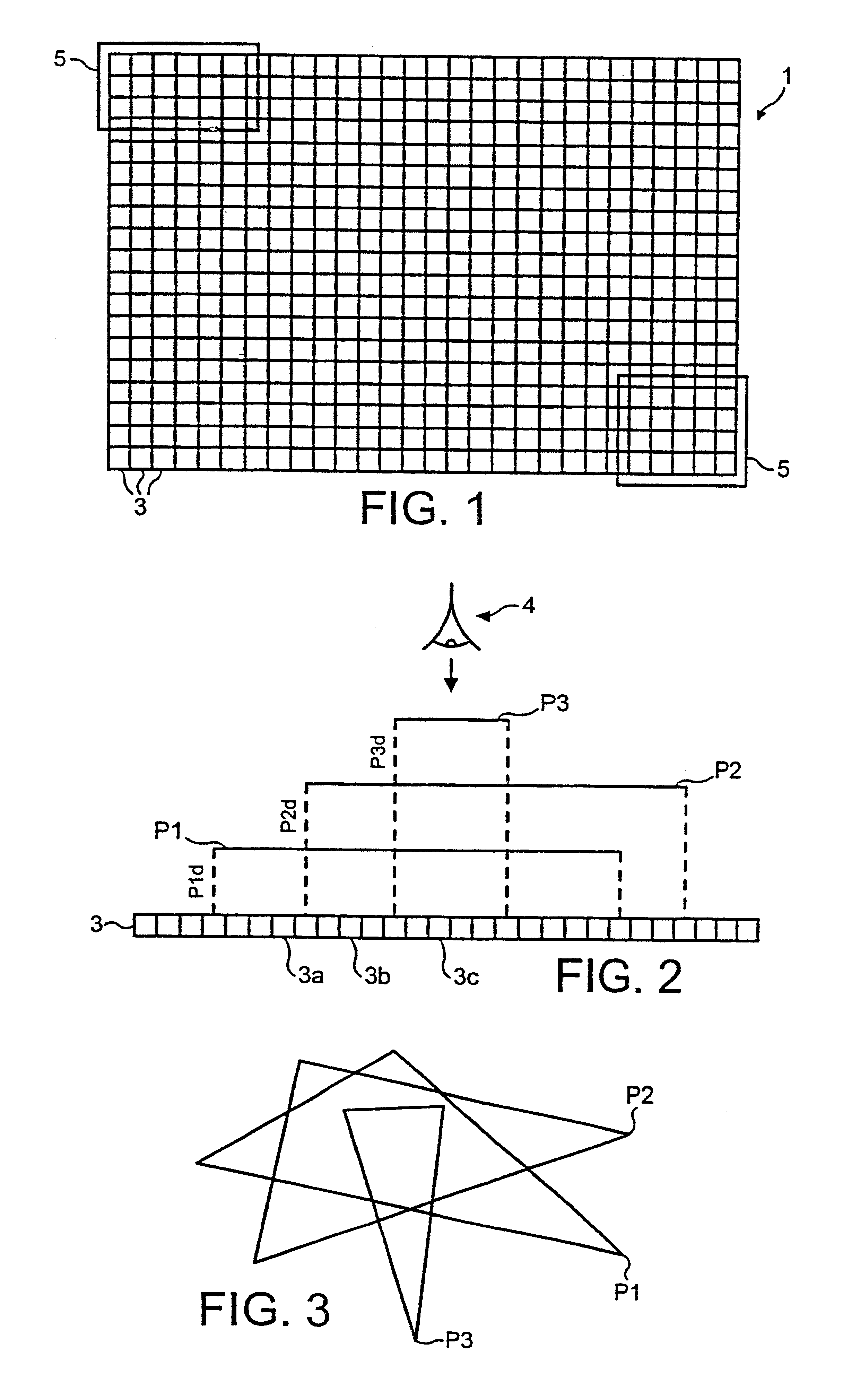

Method and apparatus for SIMD processing using multiple queues

Inactive | US6898692B1 | Operational speed enhancement | General purpose stored program computer | Graphics | Multilevel queue

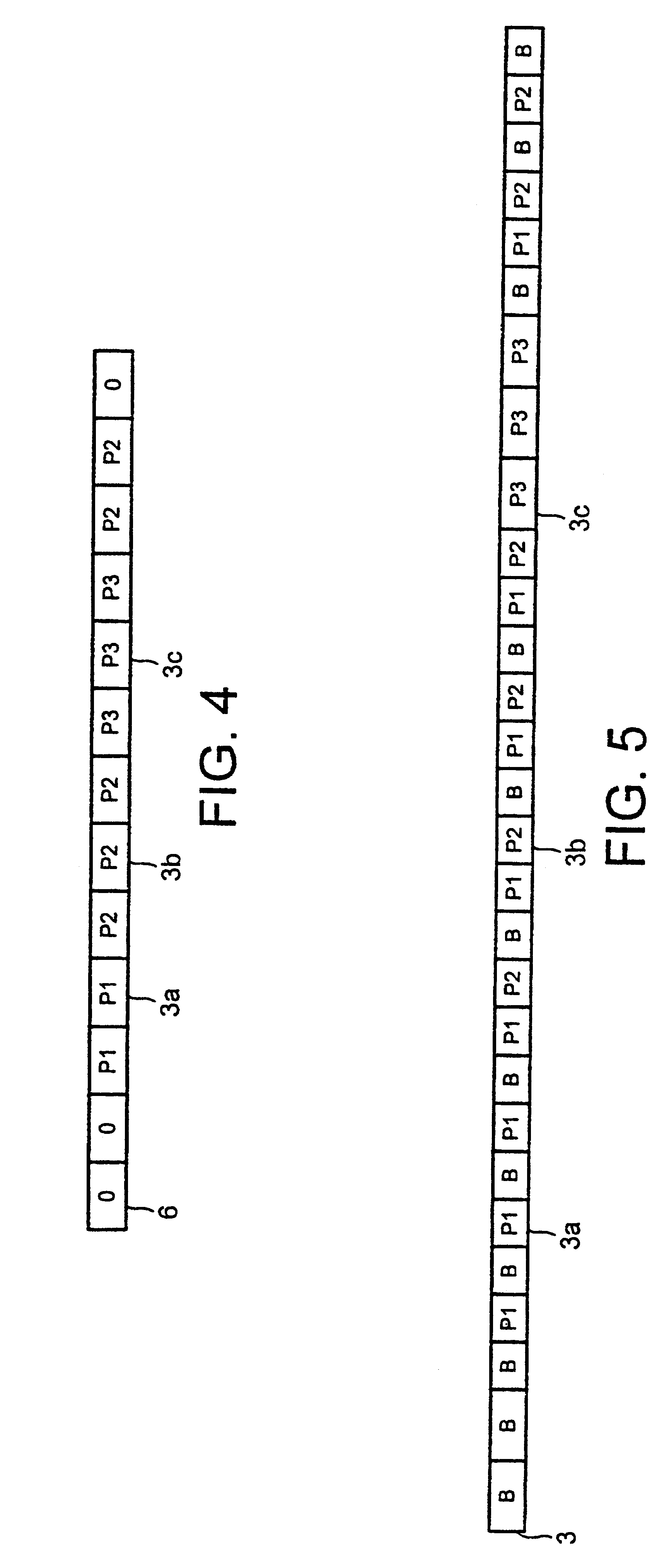

A method of processing data relating to graphical primitives to be displayed on a display device, using a region-based SIMD multiprocessor architecture, defers the shading and blending operations until rasterization of the available graphical primitive data is completed. For example, the method may comprise the steps of: a) defining a data queue having a predetermined number of locations therein; b) receiving fragment information belonging to an image to be displayed by the pixel; c) determining whether the fragment information belongs to an opaque image or to a blended image; d) if the fragment information relates to a blended image, storing the fragment information in the next available location in the queue; e) if the fragment information relates to an opaque image, clearing the locations of the queue and storing the fragment information in the first location in the queue; f) repeating steps b) to e) for new fragment information until fragment information is stored in all the locations in the data queue or until no further fragment information is available; and g) processing in turn the fragment information stored in the locations of the queue to produce respective pixel display values.
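Steps a) to g) can be sketched for a single pixel as follows. This is only an illustration under stated assumptions: the dictionary layout of a fragment, the single-channel colour value, and the simple alpha blend used in `shade` are hypothetical and not taken from the patent.

```python
def fill_fragment_queue(fragments, capacity):
    """Steps b) to f): build the per-pixel queue from incoming fragments."""
    queue = []
    for frag in fragments:
        if frag["opaque"]:
            # Step e): an opaque fragment hides everything queued so far.
            queue.clear()
        # Steps d) and e): store in the next free (or the first) location.
        queue.append(frag)
        if len(queue) == capacity:
            break  # Step f): every location in the queue is in use.
    return queue


def shade(queue, background=0.0):
    """Step g): process the queued fragments in order (single-channel colour)."""
    value = background
    for frag in queue:
        if frag["opaque"]:
            value = frag["value"]
        else:
            alpha = frag["alpha"]  # assumed blend: alpha * source + (1 - alpha) * destination
            value = alpha * frag["value"] + (1.0 - alpha) * value
    return value


fragments = [
    {"opaque": False, "value": 0.2, "alpha": 0.5},
    {"opaque": True, "value": 1.0},
    {"opaque": False, "value": 0.0, "alpha": 0.5},
]
queue = fill_fragment_queue(fragments, capacity=4)
pixel = shade(queue)
```

Here the first blended fragment is discarded when the opaque fragment arrives, so only two fragments are shaded once rasterization has finished.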

Owner:RAMBUS INC

Methods and systems for managing network traffic by multiple constraints

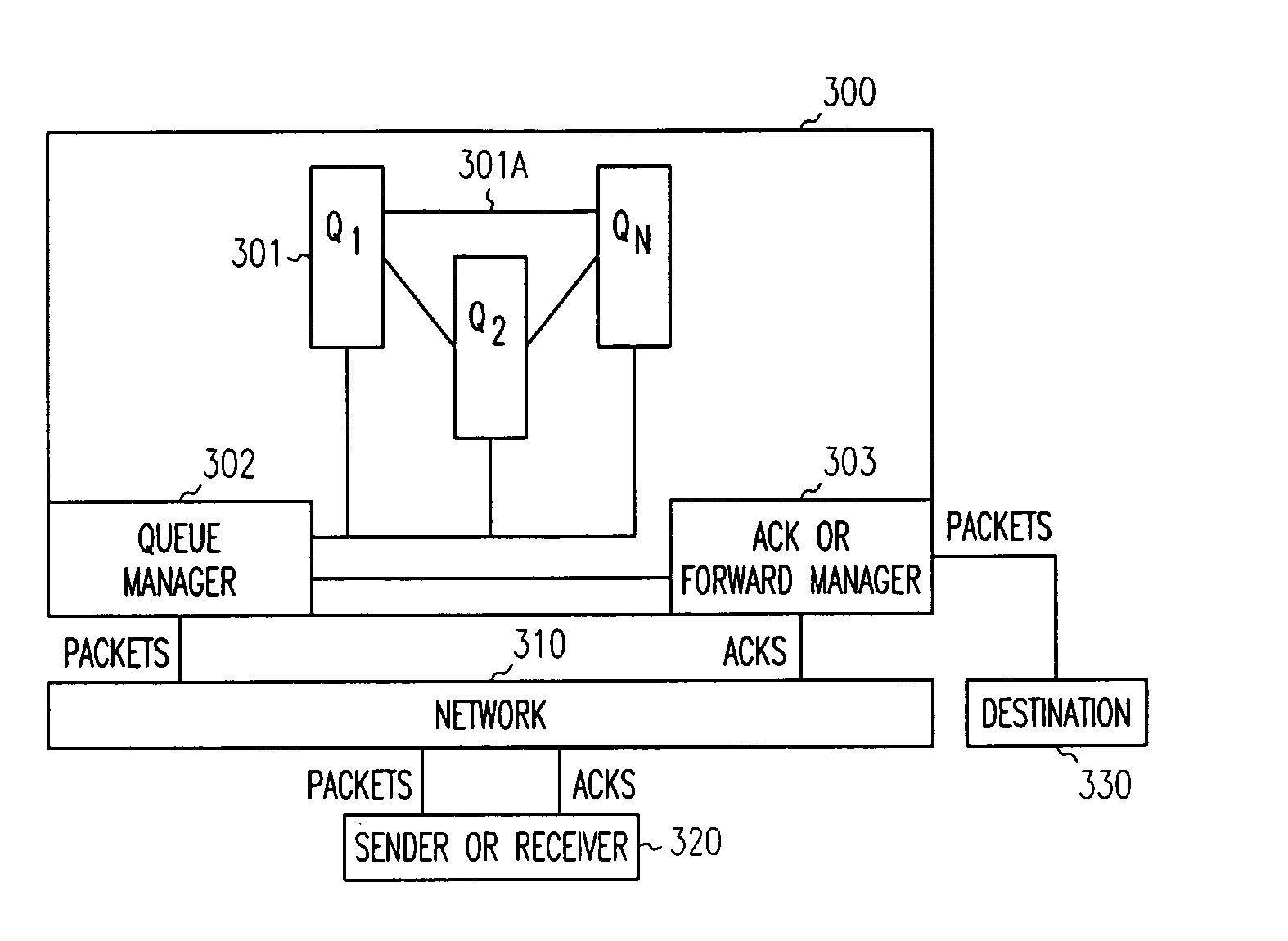

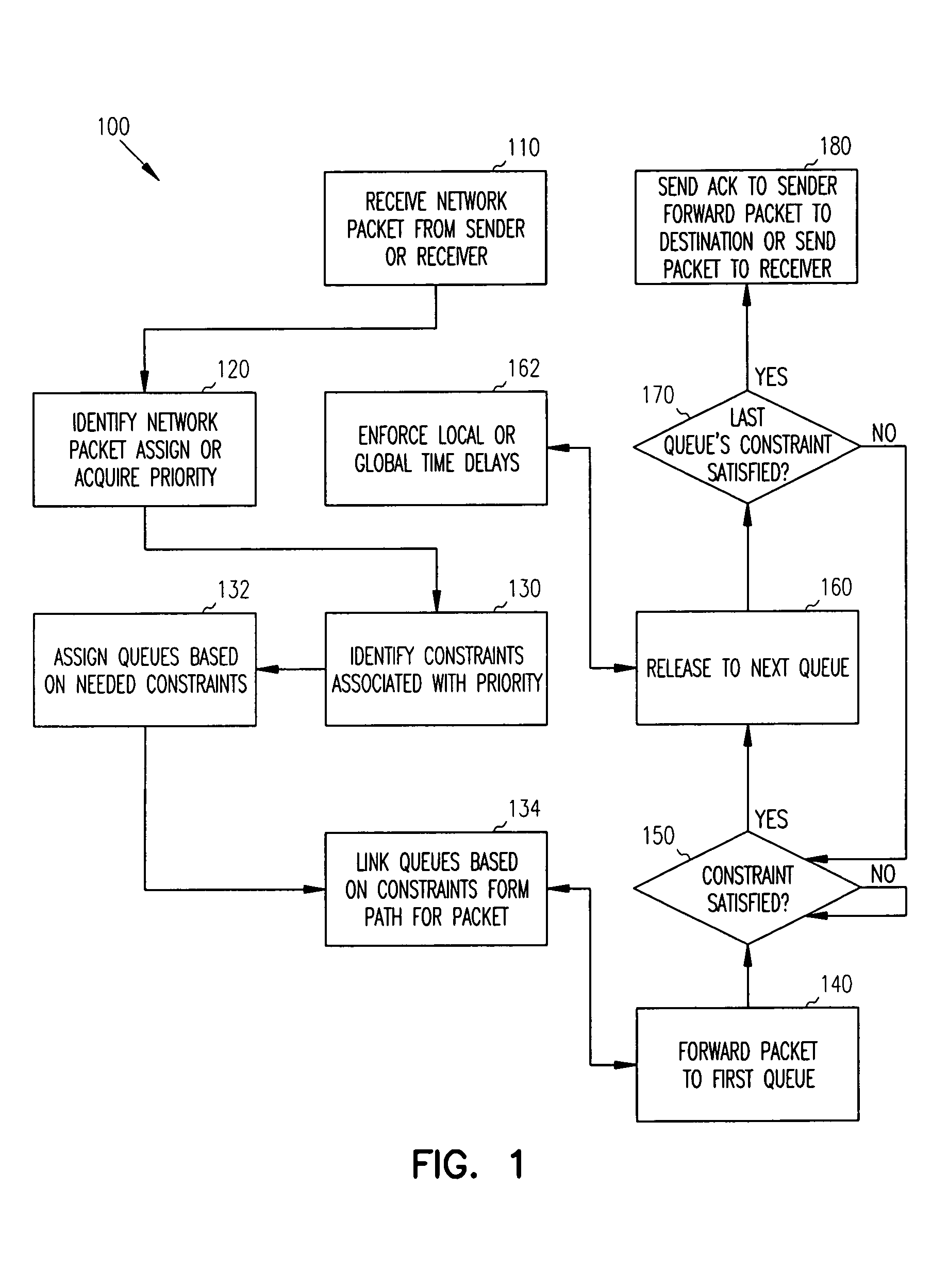

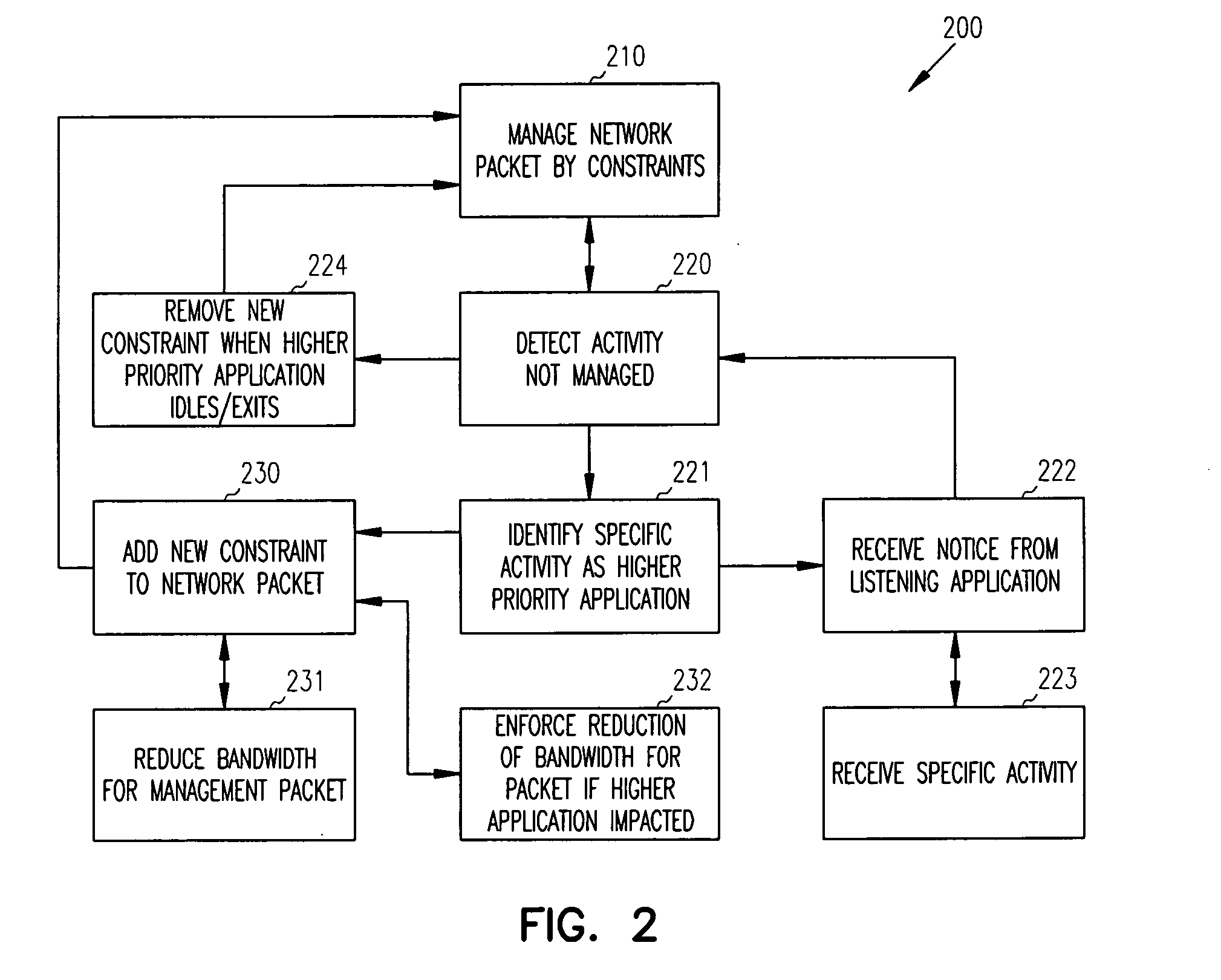

Methods and systems for managing network traffic by multiple constraints are provided. A received network packet is assigned to multiple queues where each queue is associated with a different constraint. A network packet traverses the queues once it satisfies the constraint associated with that queue. Once the network packet has traversed all its assigned queues the network packet is forwarded to its destination. Also, network activity, associated with higher priority applications which are not managed, is detected. When such activity is detected, additional constraints are added to the network packet as it traverses its assigned queues.

Owner:EMC IP HLDG CO LLC

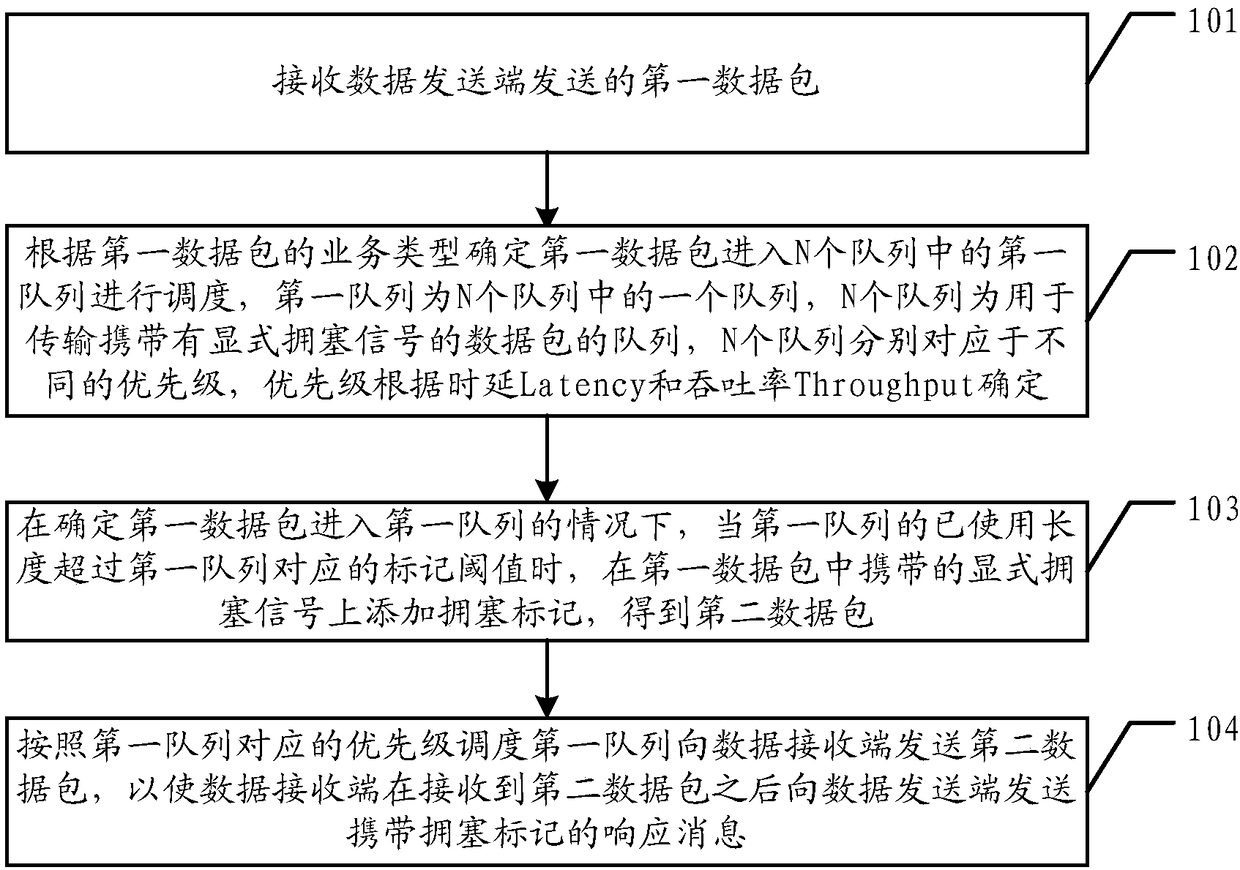

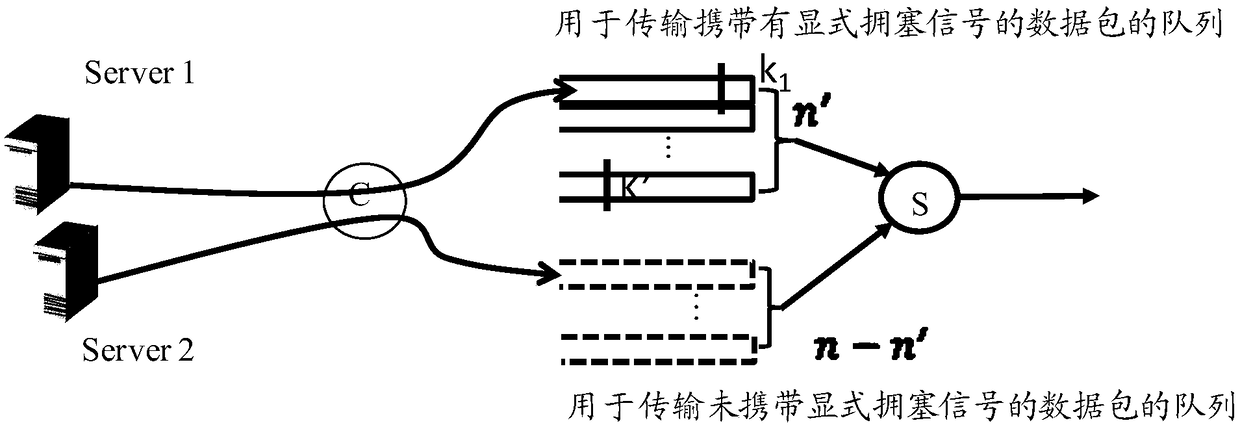

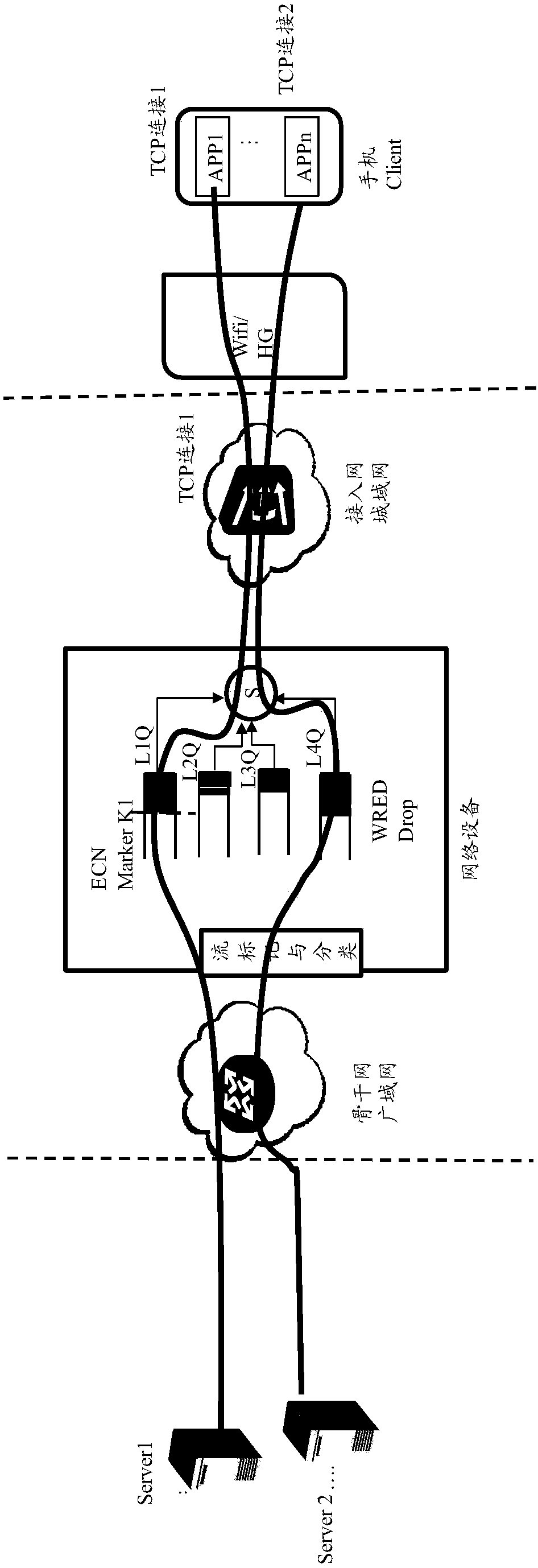

Data transmission method and network equipment

Inactive | CN108259383A | Easy to deploy | Reduce latency | Data switching networks | Multilevel queue | Data transmission

The invention discloses a data transmission method and network equipment that meet the latency and throughput requirements of data packets when multiple queues are used. First, a first data packet sent by a data sending end is received. According to the service type of the first data packet, the packet is assigned to a first queue of N queues for scheduling; the N queues are used for transmitting data packets carrying an explicit congestion signal, and each queue has a different priority, determined according to latency and throughput. When the first data packet enters the first queue and the used length of the first queue exceeds the marking threshold corresponding to that queue, a congestion mark is added to the explicit congestion signal carried in the first data packet to obtain a second data packet. The first queue is scheduled, according to its priority, to send the second data packet to a data receiving end, and the data receiving end sends a response message carrying the congestion mark to the data sending end after receiving the second data packet.
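The per-queue marking rule can be sketched as below. The `Packet` and `MarkingQueue` types, the service names, the threshold values, and the convention that a lower priority number is scheduled first are assumptions for illustration; the abstract also leaves open whether the used length is measured before or after the packet is stored, and this sketch measures it after.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Packet:
    service: str
    congestion_marked: bool = False  # stands in for the congestion mark on the ECN field


@dataclass
class MarkingQueue:
    priority: int        # assumption: a lower number is scheduled first
    mark_threshold: int  # per-queue marking threshold, in packets
    packets: deque = field(default_factory=deque)

    def enqueue(self, pkt: Packet) -> None:
        self.packets.append(pkt)
        # Mark once the used length exceeds this queue's own threshold.
        if len(self.packets) > self.mark_threshold:
            pkt.congestion_marked = True


def dequeue_next(queues):
    """Send from the highest-priority non-empty queue, or return None."""
    for q in sorted(queues.values(), key=lambda q: q.priority):
        if q.packets:
            return q.packets.popleft()
    return None


queues = {
    "voice": MarkingQueue(priority=0, mark_threshold=2),
    "bulk": MarkingQueue(priority=1, mark_threshold=1),
}
bulk = [Packet("bulk") for _ in range(3)]
for pkt in bulk + [Packet("voice")]:
    queues[pkt.service].enqueue(pkt)  # the service type selects the queue
marks = [p.congestion_marked for p in bulk]
first_out = dequeue_next(queues)
```

A receiver that sees `congestion_marked` set would echo the mark back to the sender in its response, which is the feedback loop the abstract describes.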

Owner:BEIJING HUAWEI DIGITAL TECH

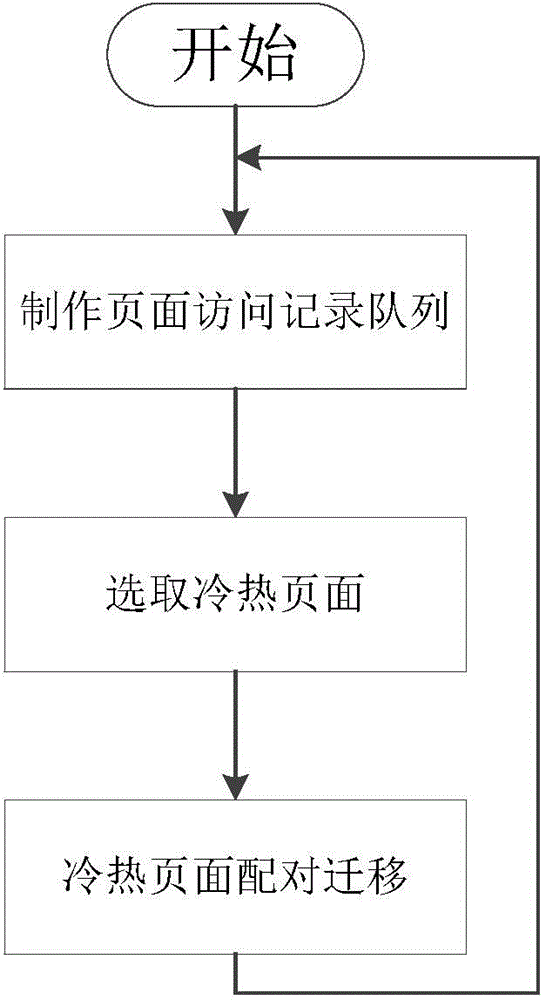

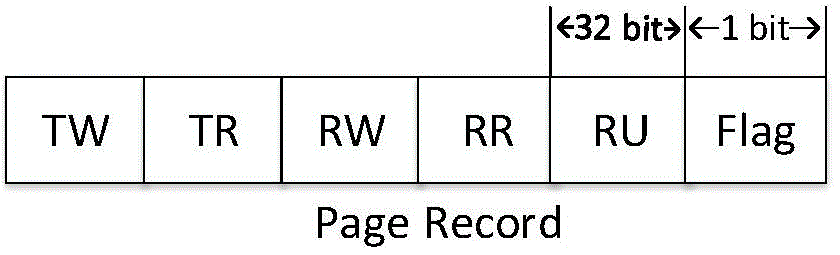

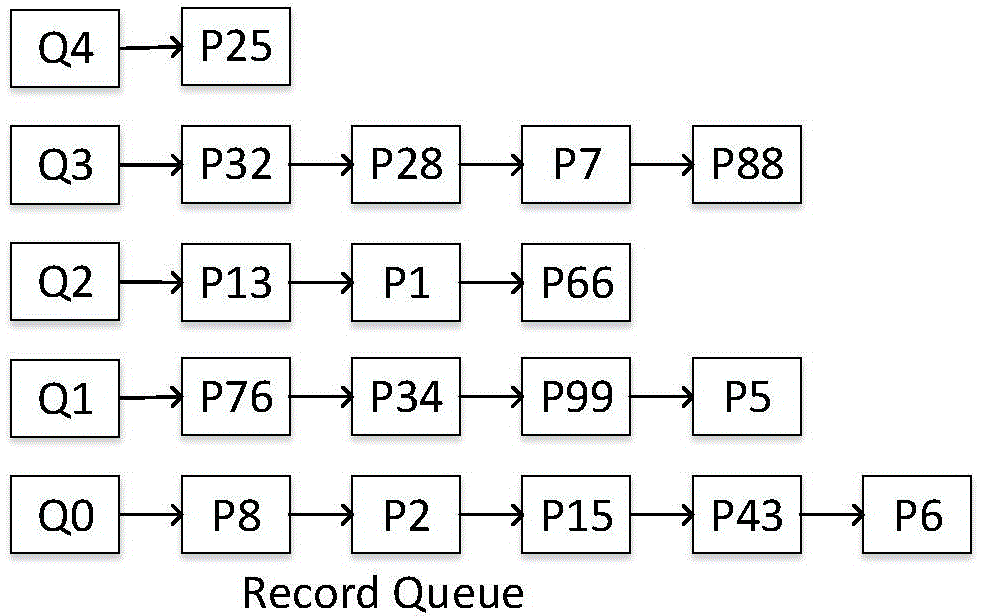

Page hot degree based heterogeneous memory management method

Active | CN104699424A | Intuitive selection | Improve performance | Input/output to record carriers | Multilevel queue | Energy consumption

The invention discloses a page hot degree based heterogeneous memory management method. The method includes the steps of: (1) for the memory pages of a heterogeneous memory, collecting the total accessed read-write data, partial accessed read-write data and recent accessed read-write data of the memory pages, and storing the read-write data in a multi-level queue to form a page access record queue; (2) determining page hot degree values and cold degree values according to the partial read-write data and the recent read-write data, and sequentially reserving N pages whose hot degree values exceed a preset hot degree threshold value as hot pages and N pages whose cold degree values exceed a preset cold degree threshold value as cold pages; (3) pairing a candidate cold page with each hot page, estimating the energy-saving value of each pairing, sorting the energy-saving values in descending order, selecting the cold and hot pages to be matched, and migrating the pages according to the match results so as to reduce energy consumption. With this method, the high performance of dynamic random access memory can be fully used, and the overall performance of a heterogeneous memory system can be improved.

Owner:HUAZHONG UNIV OF SCI & TECH


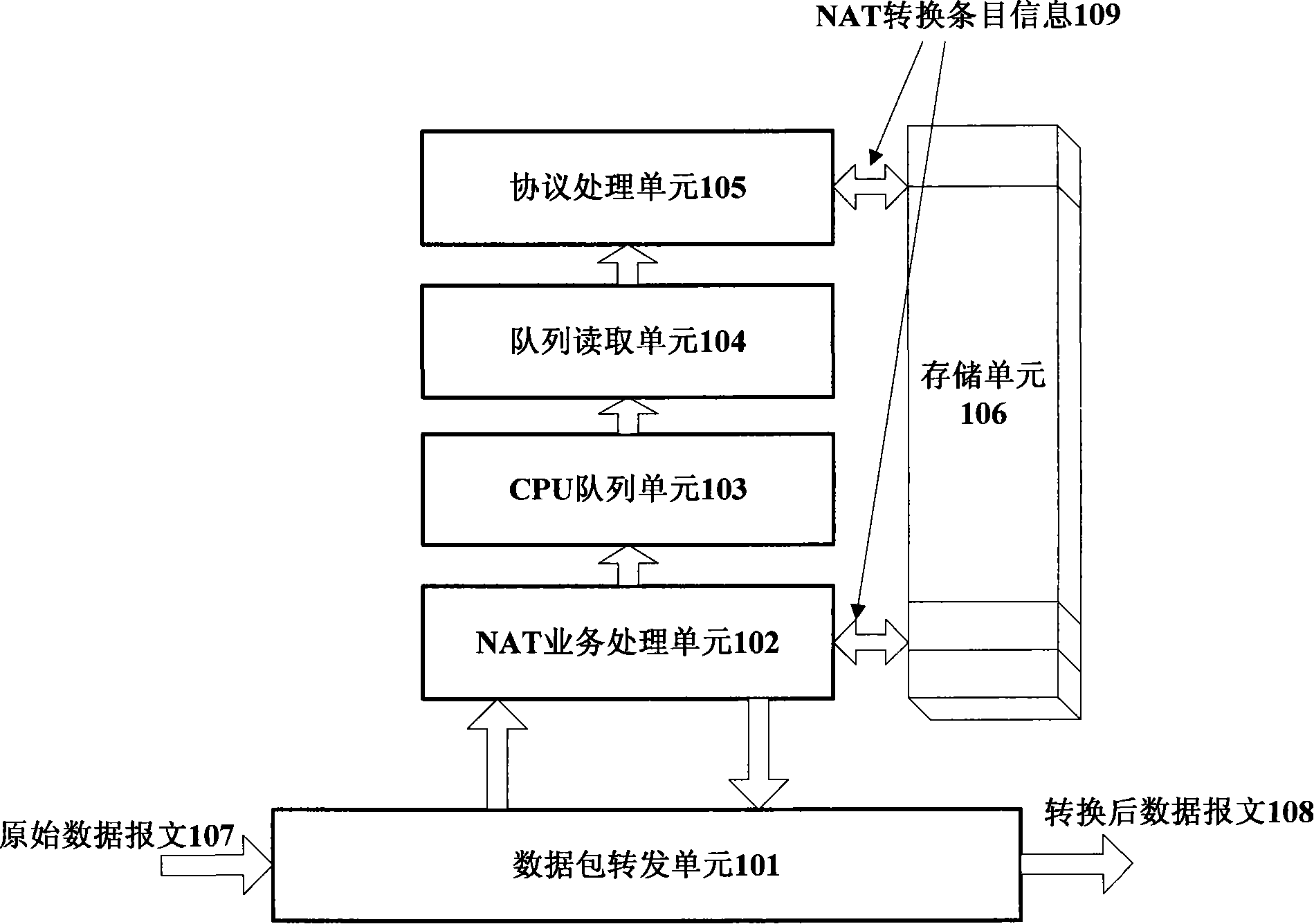

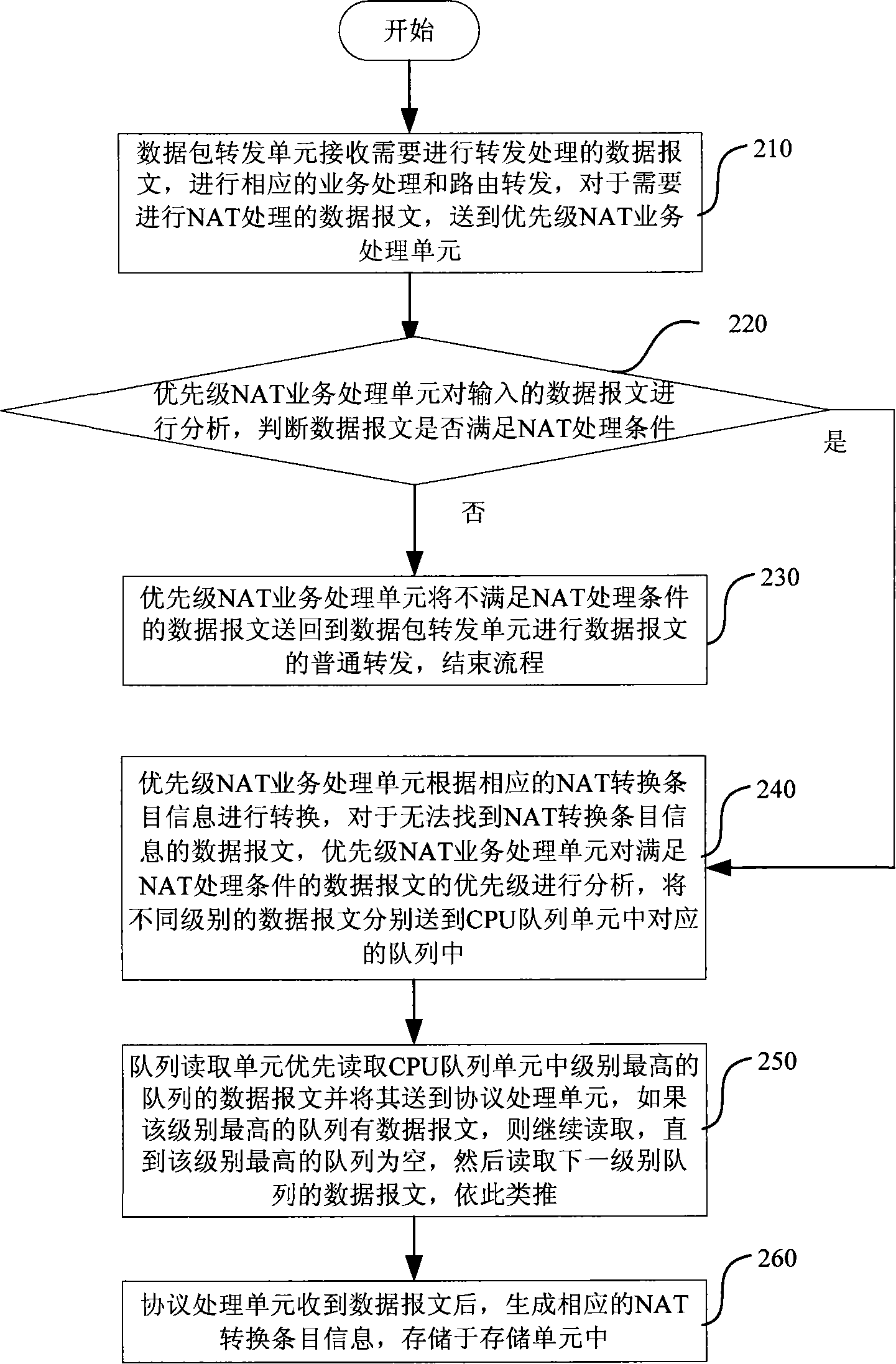

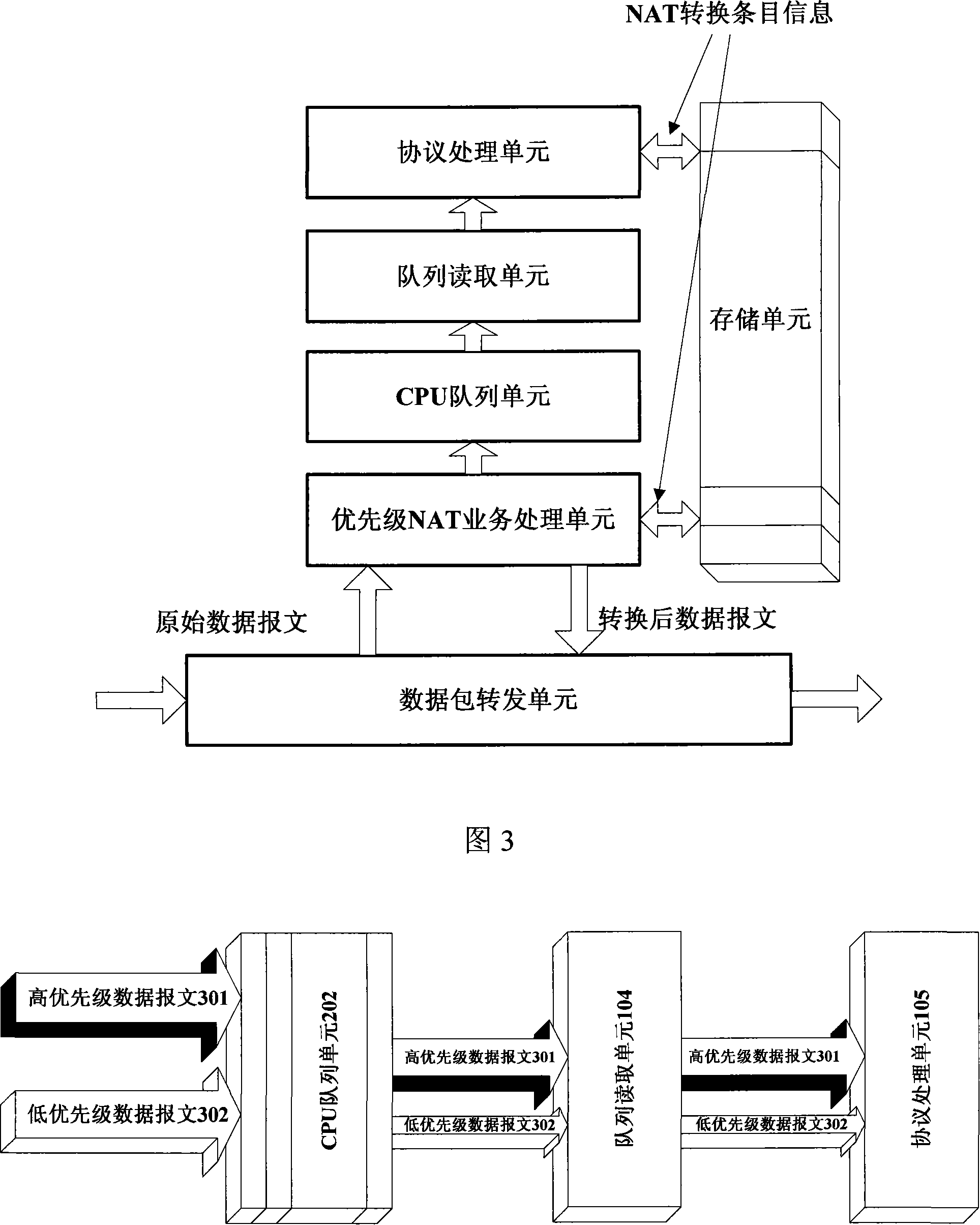

A network address conversion method and system based on priority queue

Inactive | CN101242360A | Guaranteed normal generation | Guaranteed support | Data switching networks | Multilevel queue | Network addressing

The invention discloses a method and a system for network address translation based on priority queues. The method comprises the following steps: first, a NAT service processing unit divides the received data messages that need NAT processing and meet the NAT processing condition into multiple queues with different priorities, and sends the data messages of each priority to the corresponding queue of a CPU queue unit; second, a queue reading unit reads the data messages of the highest-priority queue in the CPU queue unit, and these data messages are sent upward and network address translated until the highest-priority queue is empty; then the data messages of the next-priority queue are read, sent upward and network address translated, and the process repeats for the remaining queues. The invention improves the way data messages are sent upward, and better guarantees the upward sending of high-priority data messages, the generation of the corresponding NAT translation entry information, and the applications of high-priority users.

Owner:付丽敏

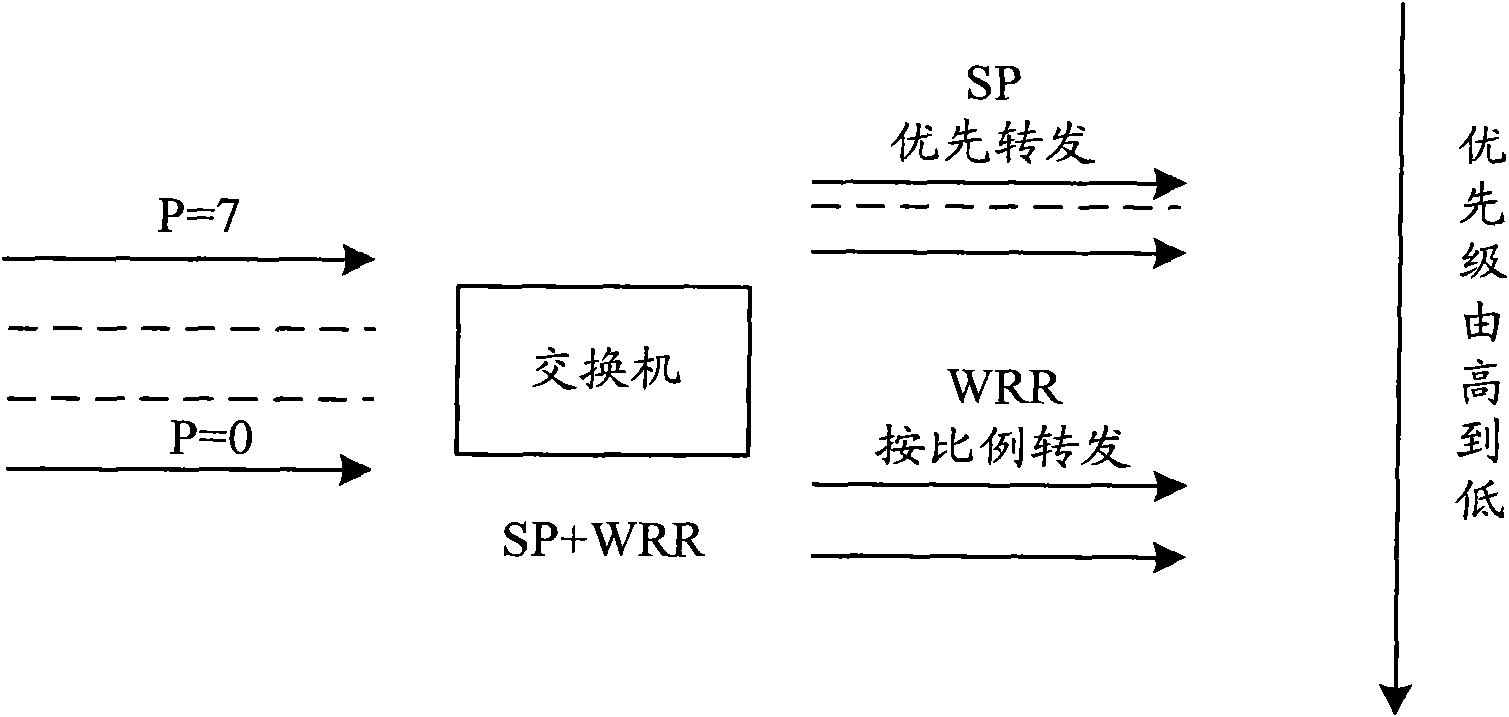

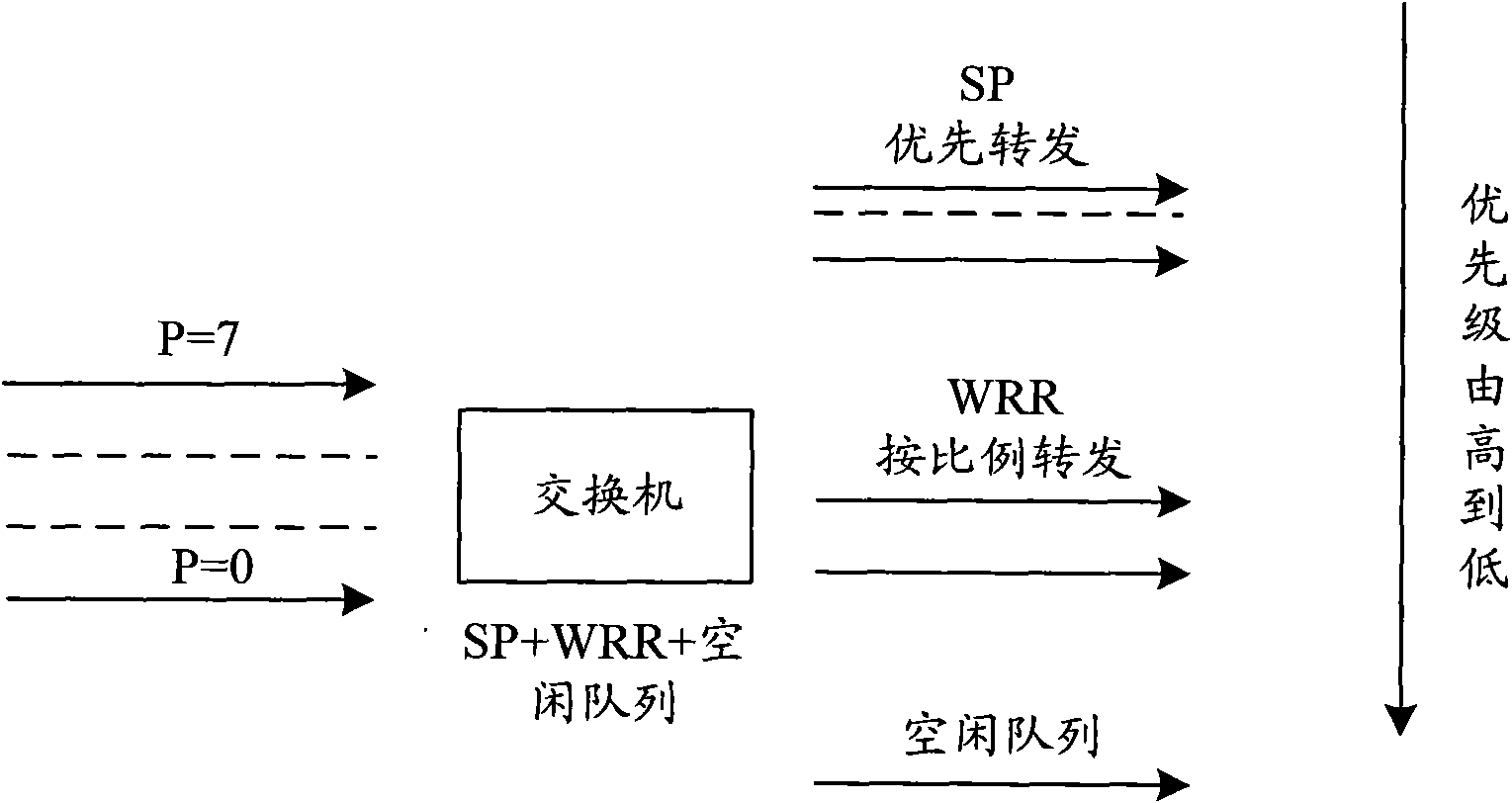

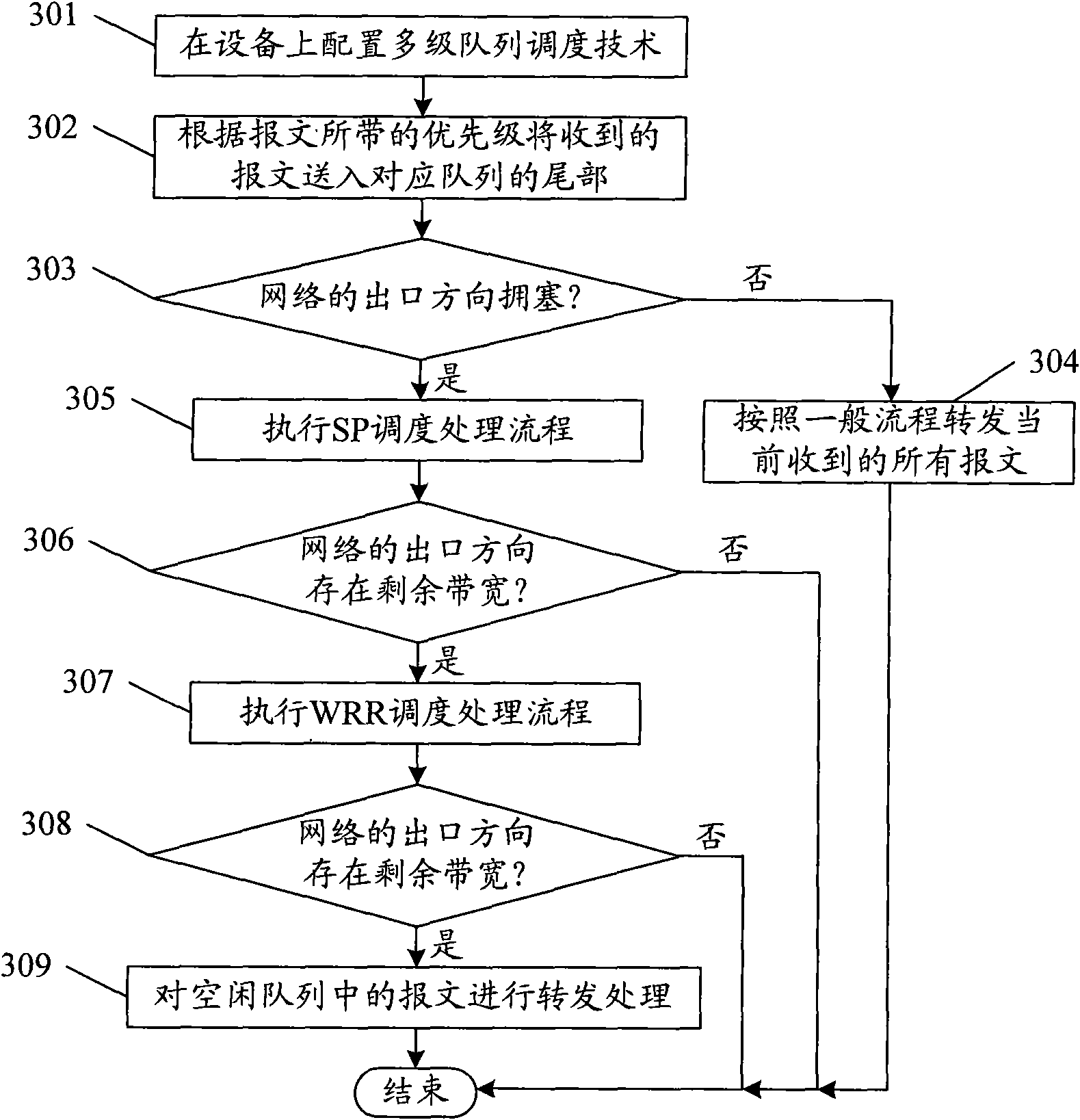

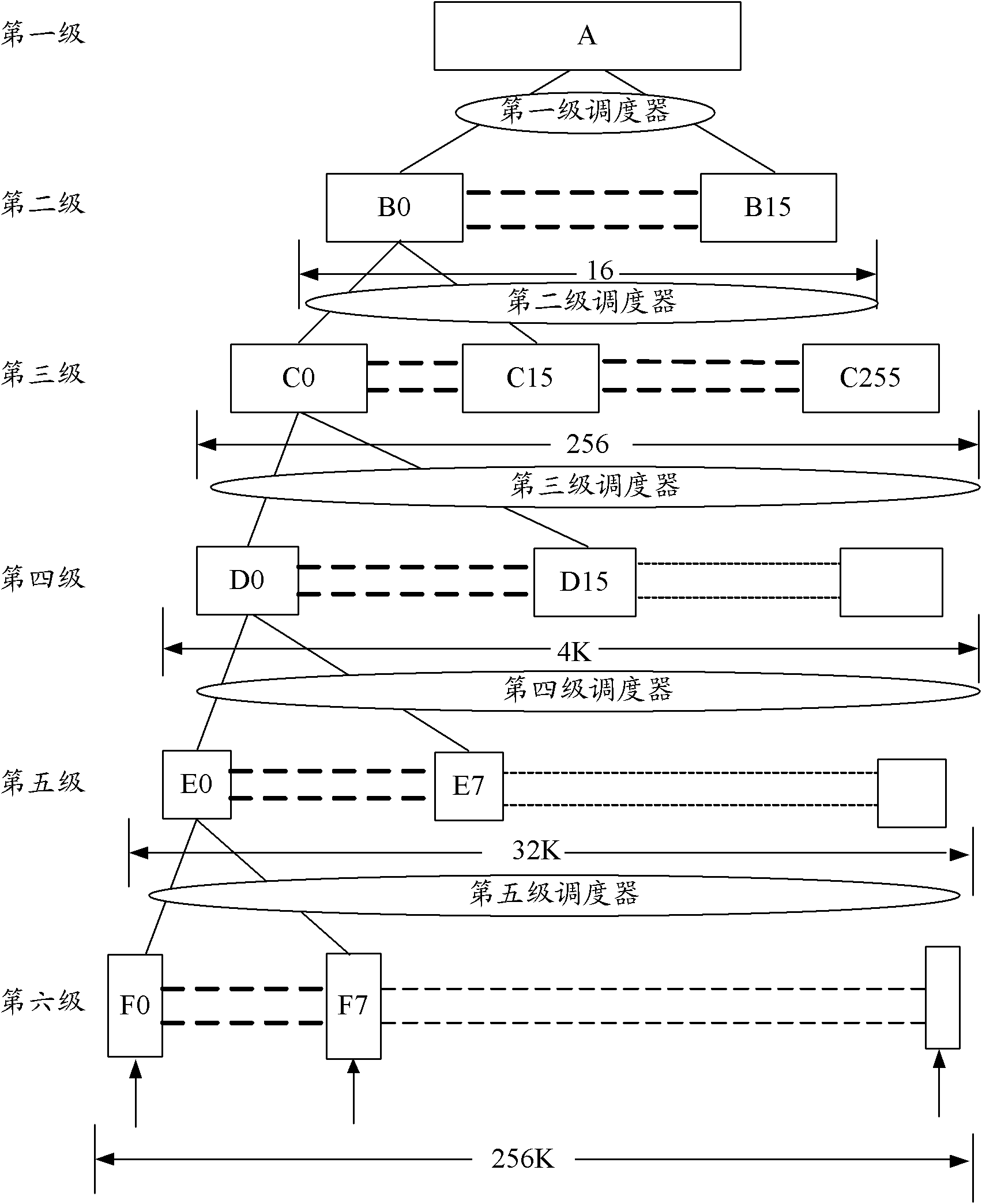

Method for realizing multilevel queue scheduling in data network and device

Inactive | CN101557340A | Increase flexibility | Meet needs | Data switching networks | Multilevel queue | Distributed computing

The invention discloses a method for realizing multilevel queue scheduling in a data network. The method comprises the following steps of: sending the message into the tail part of a corresponding queue according to the priority of the received message; if the exit direction of the current network is not congested, forwarding the message received currently; otherwise, according to the queue scheduling mode of strict priority queues, weighted fair queues and idle queues which are preset, firstly executing scheduling processing flow of the strict priority queues; then, if the exit direction of the current network also has residual bandwidth, executing scheduling processing flow of the weighted fair queues; and then, if the exit direction of the current network also has residual bandwidth, carrying out forward processing to the messages in the idle queues. The invention also provides a device used for realizing multilevel queue scheduling in the data network. The method can lead the messages without need of guarantee not to preempt the bandwidth, thus improving the flexible application capability of queue scheduling.
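The three-stage order for a congested egress can be sketched as follows. This is a simplification under stated assumptions: messages are `(name, size)` tuples, `budget` stands for the bandwidth available in one scheduling round, and the weighted stage splits the residual budget in proportion to the weights rather than implementing a full weighted fair queueing algorithm with virtual finish times.

```python
from collections import deque


def _drain(queue, allowance):
    """Send messages from the head of `queue` while they fit in `allowance`."""
    sent, used = [], 0
    while queue and queue[0][1] <= allowance - used:
        name, size = queue.popleft()
        used += size
        sent.append(name)
    return sent, used


def schedule_congested_round(sp_queues, weighted_queues, idle_queue, budget):
    """Return the names of the messages forwarded in one congested round."""
    sent = []
    # 1. Strict-priority queues, highest priority first.
    for queue in sp_queues:
        names, used = _drain(queue, budget)
        sent += names
        budget -= used
    # 2. Residual bandwidth is split across the weighted queues by weight.
    residual = budget
    total_weight = sum(weight for weight, _ in weighted_queues)
    for weight, queue in weighted_queues:
        names, used = _drain(queue, residual * weight // total_weight)
        sent += names
        budget -= used
    # 3. Whatever is still left goes to the idle queue.
    names, _ = _drain(idle_queue, budget)
    return sent + names


order = schedule_congested_round(
    sp_queues=[deque([("sp1", 300)])],
    weighted_queues=[
        (3, deque([("a1", 300), ("a2", 300)])),
        (1, deque([("b1", 150), ("b2", 150)])),
    ],
    idle_queue=deque([("i1", 100), ("i2", 100)]),
    budget=1000,
)
```

Because the idle queue is served only from leftover budget, messages that need no guarantee cannot take bandwidth away from the strict-priority or weighted queues.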

Owner:ZTE CORP

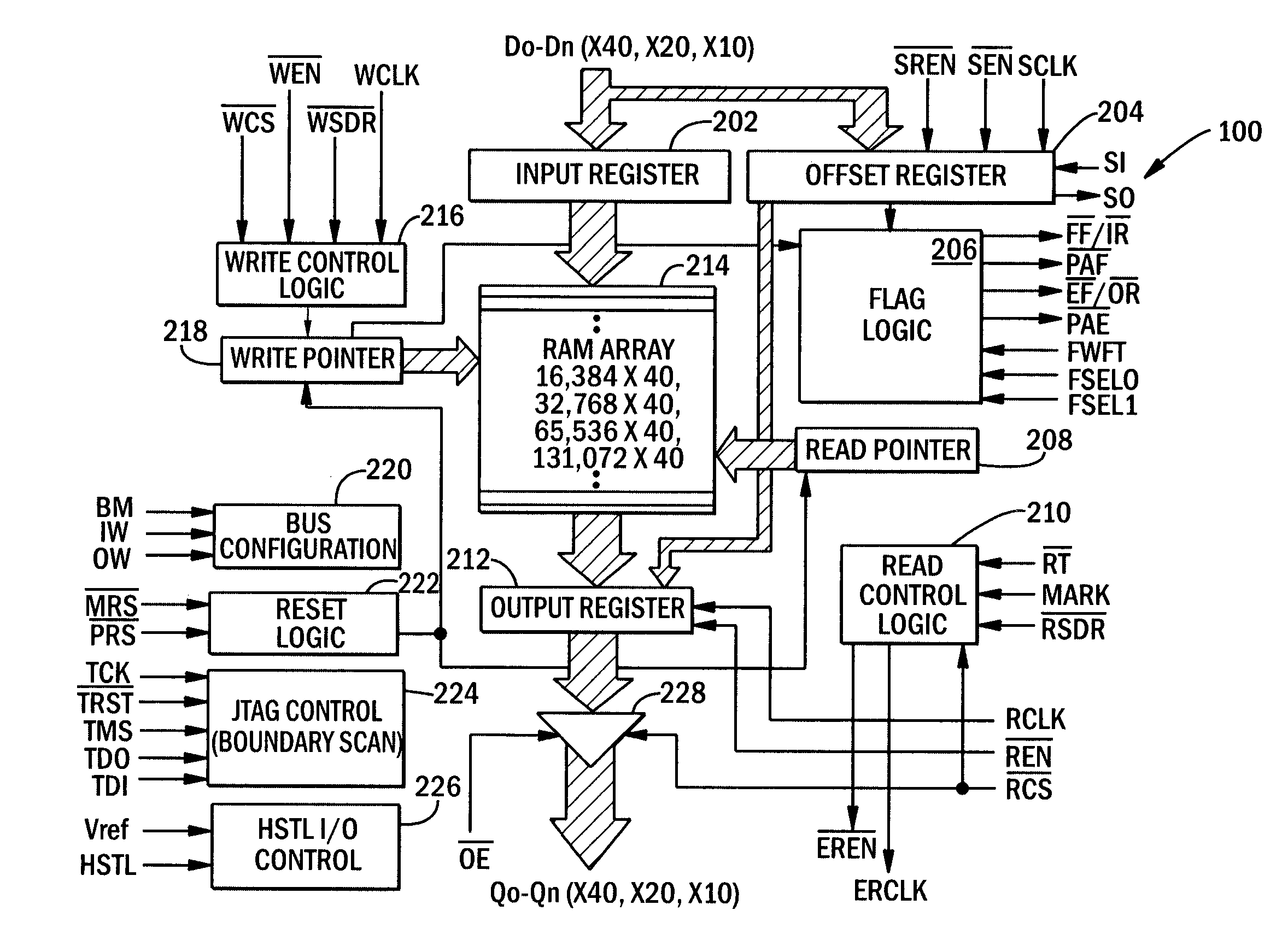

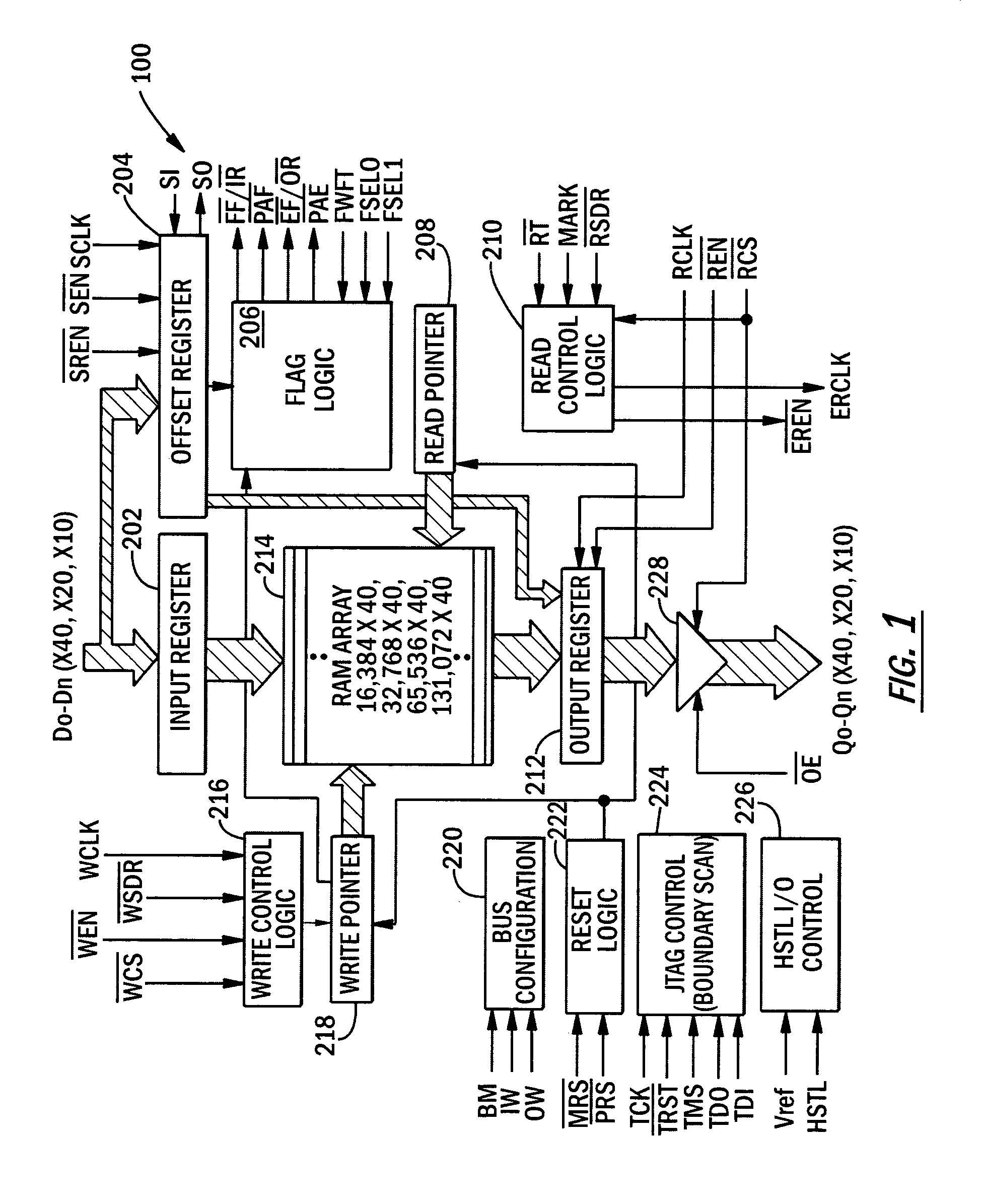

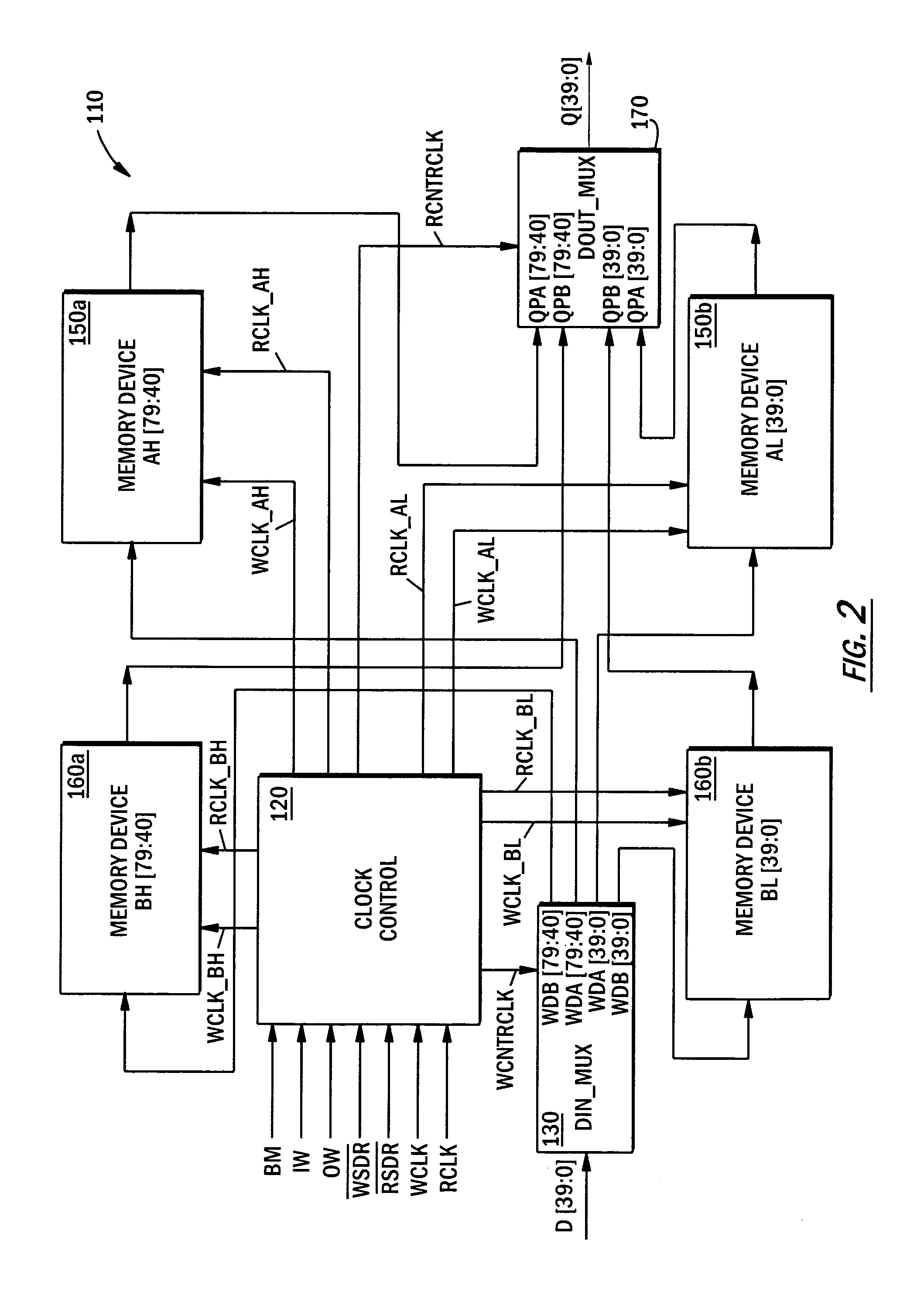

Integrated DDR/SDR flow control managers that support multiple queues and MUX, DEMUX and broadcast operating modes

Inactive | US7082071B2 | High data rate | Extending of output | Digital storage | Data conversion | Multilevel queue | Multiplexer

An integrated circuit chip includes a plurality of independent FIFO memory devices that are each configured to support all four combinations of DDR and SDR write modes and DDR and SDR read modes and collectively configured to support all four multiplexer, demultiplexer, broadcast and multi-Q operating modes.

Owner:INTEGRATED DEVICE TECH INC

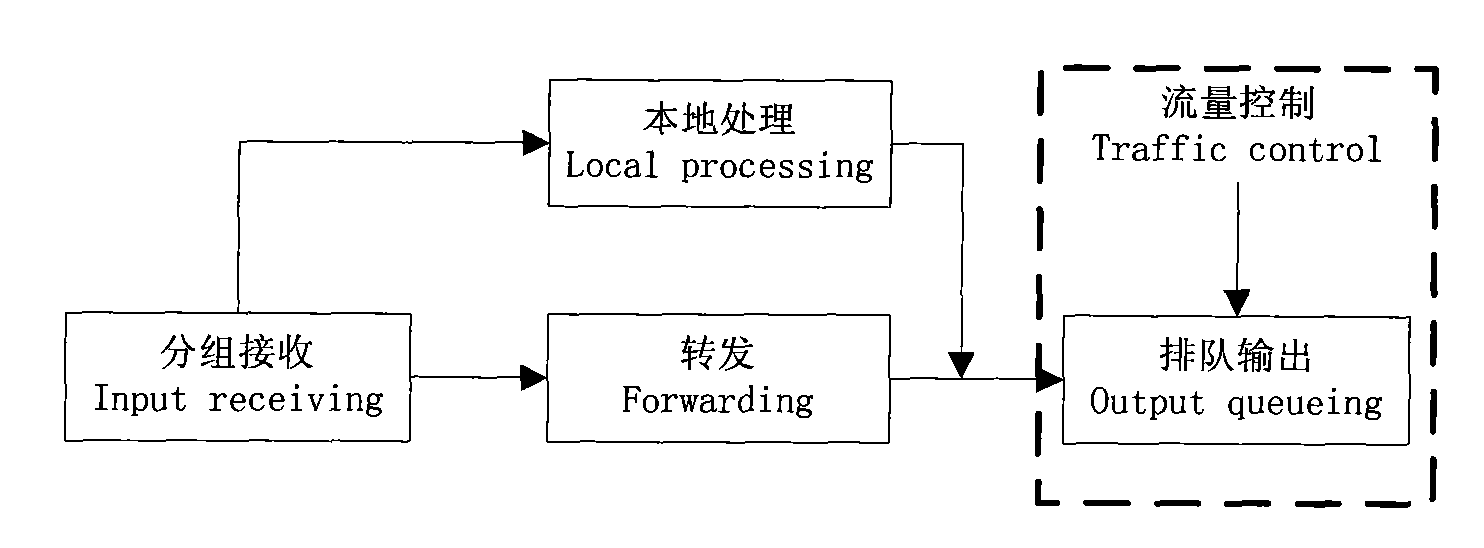

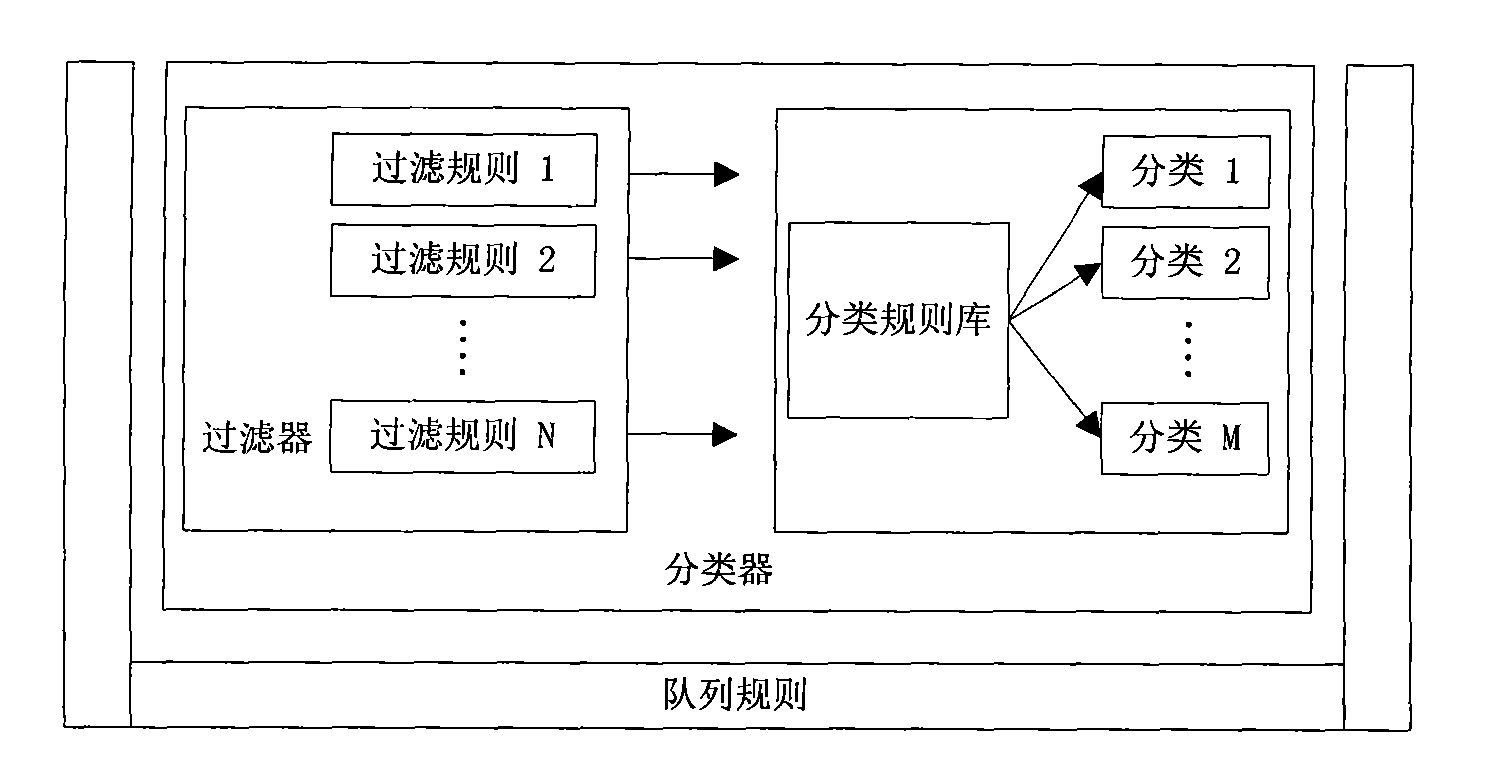

User and service based QoS (quality of service) system in Linux environment

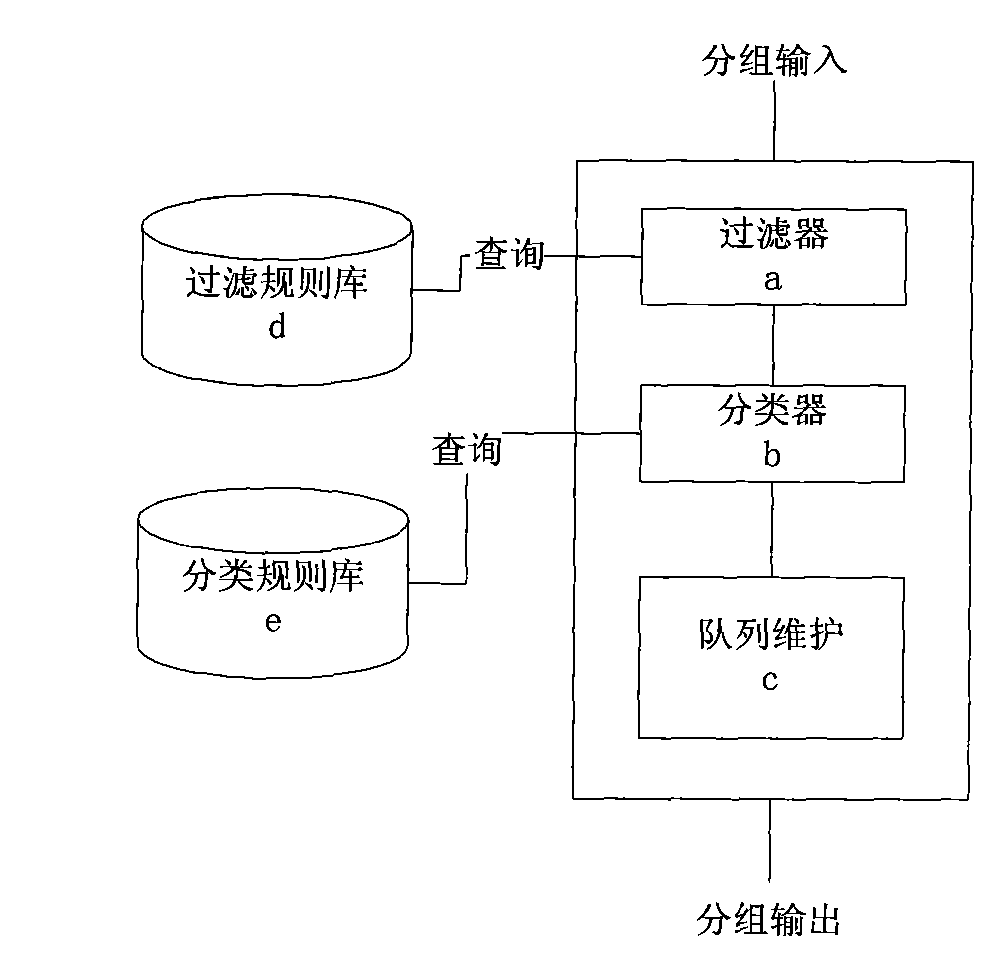

Inactive | CN102082765A | Guaranteed Latency Requirements | Reduce complexity | Transmission | Quality of service | GNU/Linux

The invention relates to a user and service based QoS (quality of service) system in a Linux environment, which comprises a filter rule library, a filter, a classification rule library, a classifier and a queue maintaining module, wherein the filter rule library is connected with the filter; the classification rule library is connected with the classifier; the filter, the classifier and the queue maintaining module are successively connected; and the queue maintaining module adopts a structure of multistage queue plus LLQ (low latency queuing) and adopts a DWRR (deficit weighted round robin) algorithm for scheduling and sending messages. Compared with the prior art, the system provided by the invention has the advantages of minimizing the resources required for system realization, ensuring system stability and the like.
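Assuming that DWRR here denotes deficit weighted round robin, as is usual in QoS scheduling, the dequeue side of such a module can be sketched as below. The function name `dwrr`, the `(name, size)` message tuples and the quantum values are illustrative assumptions, and the low latency queue (LLQ) of the described system is not modelled.

```python
from collections import deque


def dwrr(queues, quanta, rounds):
    """Deficit weighted round robin over `queues` of (name, size) messages.

    `quanta[i]` is the number of bytes credited to queue i per round; a larger
    quantum gives that queue a proportionally larger share of the link.
    """
    deficits = [0] * len(queues)
    sent = []
    for _ in range(rounds):
        for i, queue in enumerate(queues):
            if not queue:
                deficits[i] = 0  # an empty queue does not save up credit
                continue
            deficits[i] += quanta[i]
            # Send while the head-of-line message fits in the accumulated credit.
            while queue and queue[0][1] <= deficits[i]:
                name, size = queue.popleft()
                deficits[i] -= size
                sent.append(name)
            if not queue:
                deficits[i] = 0
    return sent


queues = [
    deque([("a1", 500), ("a2", 500), ("a3", 500)]),
    deque([("b1", 500), ("b2", 500)]),
]
order = dwrr(queues, quanta=[1000, 500], rounds=2)
```

With quanta of 1000 and 500 bytes, the first queue sends two messages per round for every one sent by the second queue while both are backlogged.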

Owner:SHANGHAI UNIV

Method for client terminal applying server for serve and system thereof

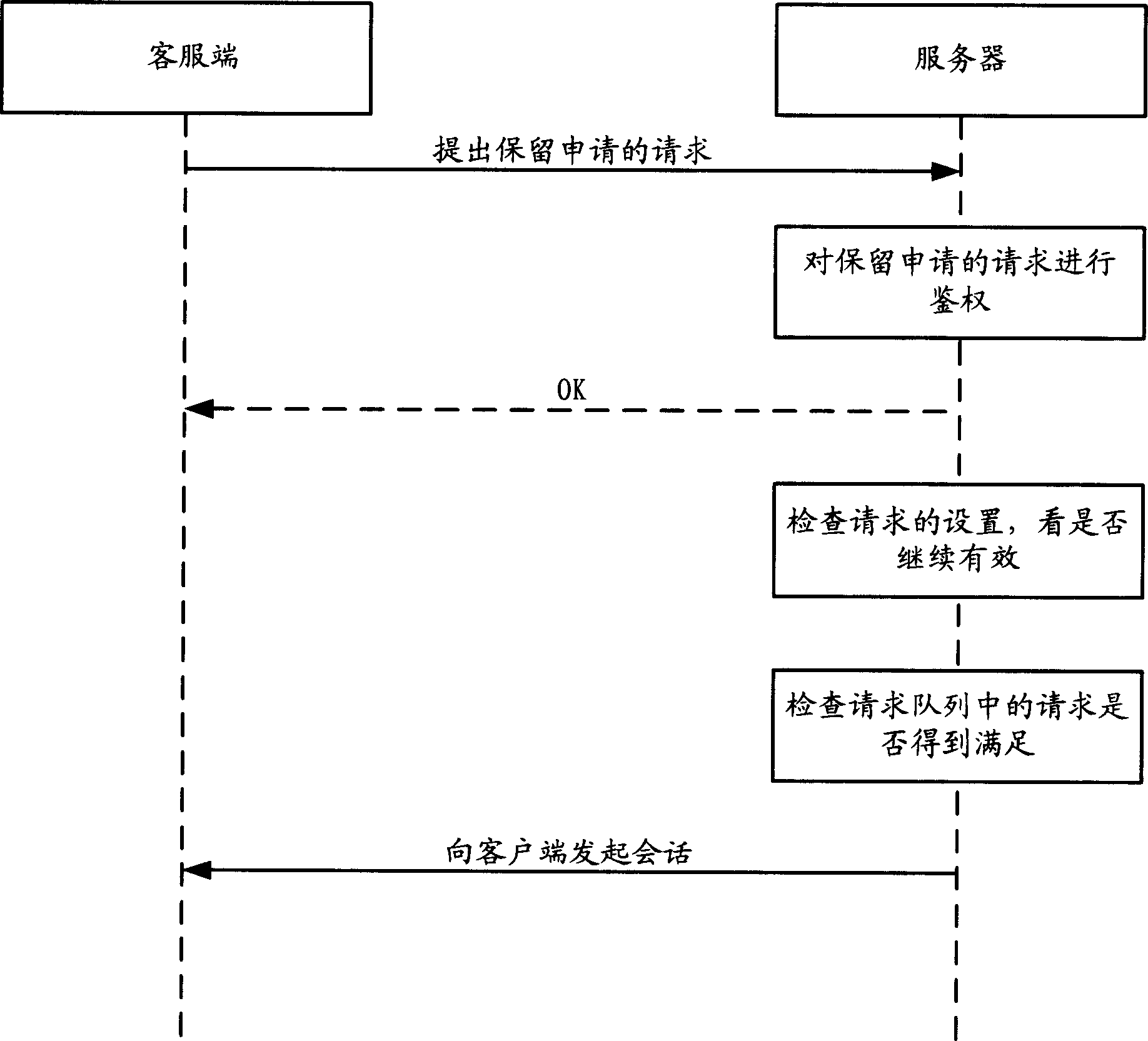

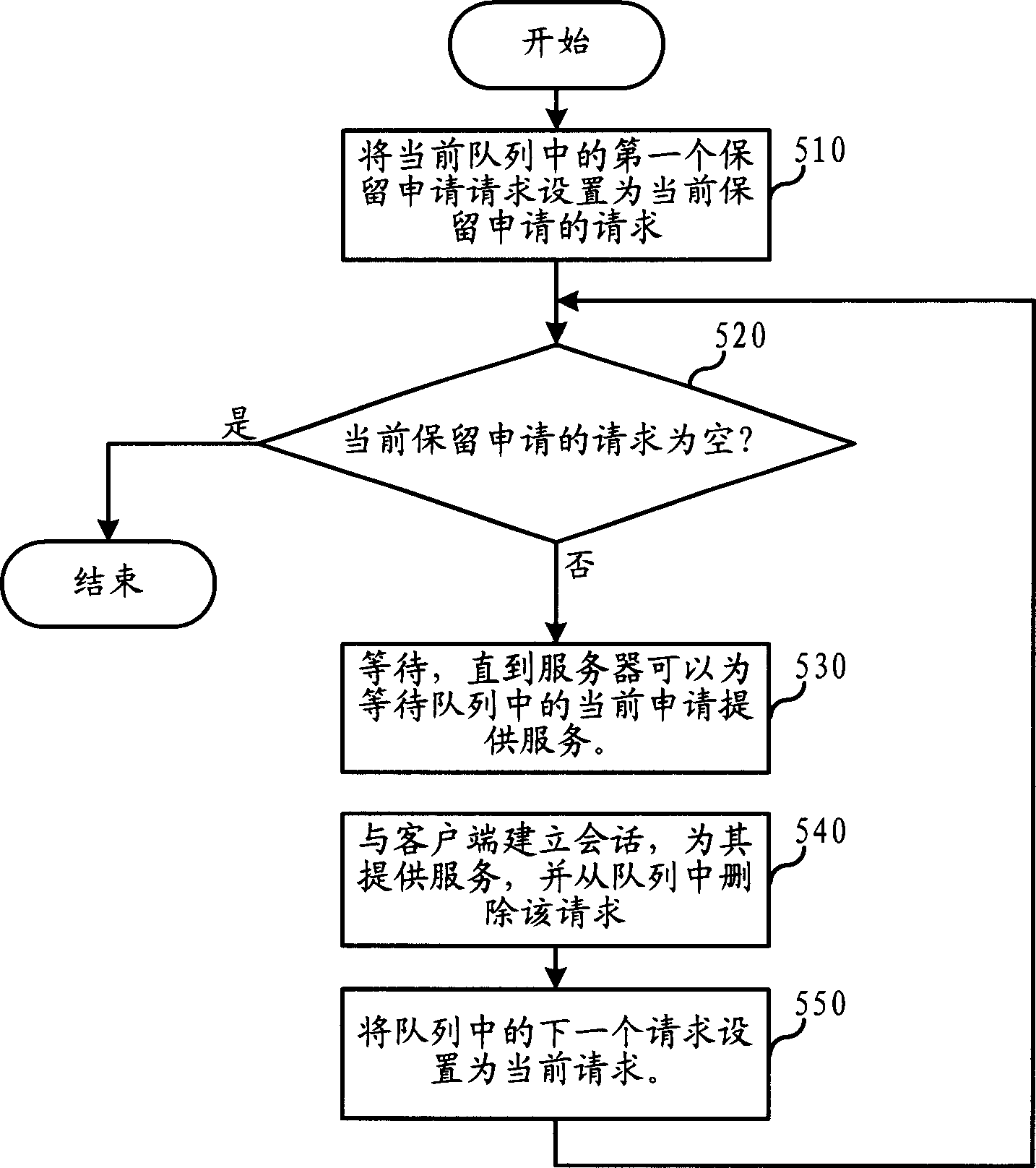

Active | CN1863072A | Reduce waiting time | Improve efficiency | Data switching networks | Single stage | Multilevel queue

The invention discloses a method and system for a client to request service from a server. When a request from the client is rejected by the server, a hold request is sent to the server, and the server adds the request to a waiting queue until the service condition is met. The hold condition can be set by the user; the server periodically checks the validity of the queued requests and deletes invalid ones. The waiting queue can be single-stage or multi-stage, and the first request of the highest priority is served first.

Owner:HUAWEI TECH CO LTD

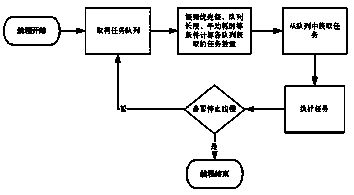

Dispatching management method for task queue priority of large data set

Inactive | CN105511950A | Avoid wasting | Efficient use of | Program initiation/switching | Resource allocation | Data set | Multilevel queue

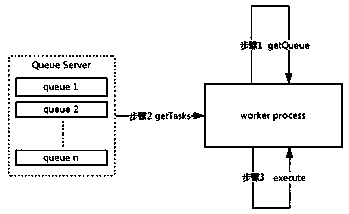

The invention discloses a dispatching management method for task queue priority of a large data set. According to the dispatching management method, a circulating thread for task acquisition is operated at each node of a task acquisition end, tasks are acquired from different queues according to priority of the queues, the tasks can be preferentially acquired from the high-priority queues, and meanwhile, the condition that the low-priority tasks cannot be blocked by the high-priority tasks is also taken into consideration. The queue service can support multiple queues of multiple types, the priority of each queue can be set, and average operating time of one queue can be acquired according to operating records; the number of the tasks acquired from each queue is dynamically adjusted according to multiple conditions including the task priority, queue length, the average operating time of each queue, the maximum number of the tasks acquired every time and the like, the tasks are dispatched reasonably, and resource waste is prevented.

Owner:HYLANDA INFORMATION TECH

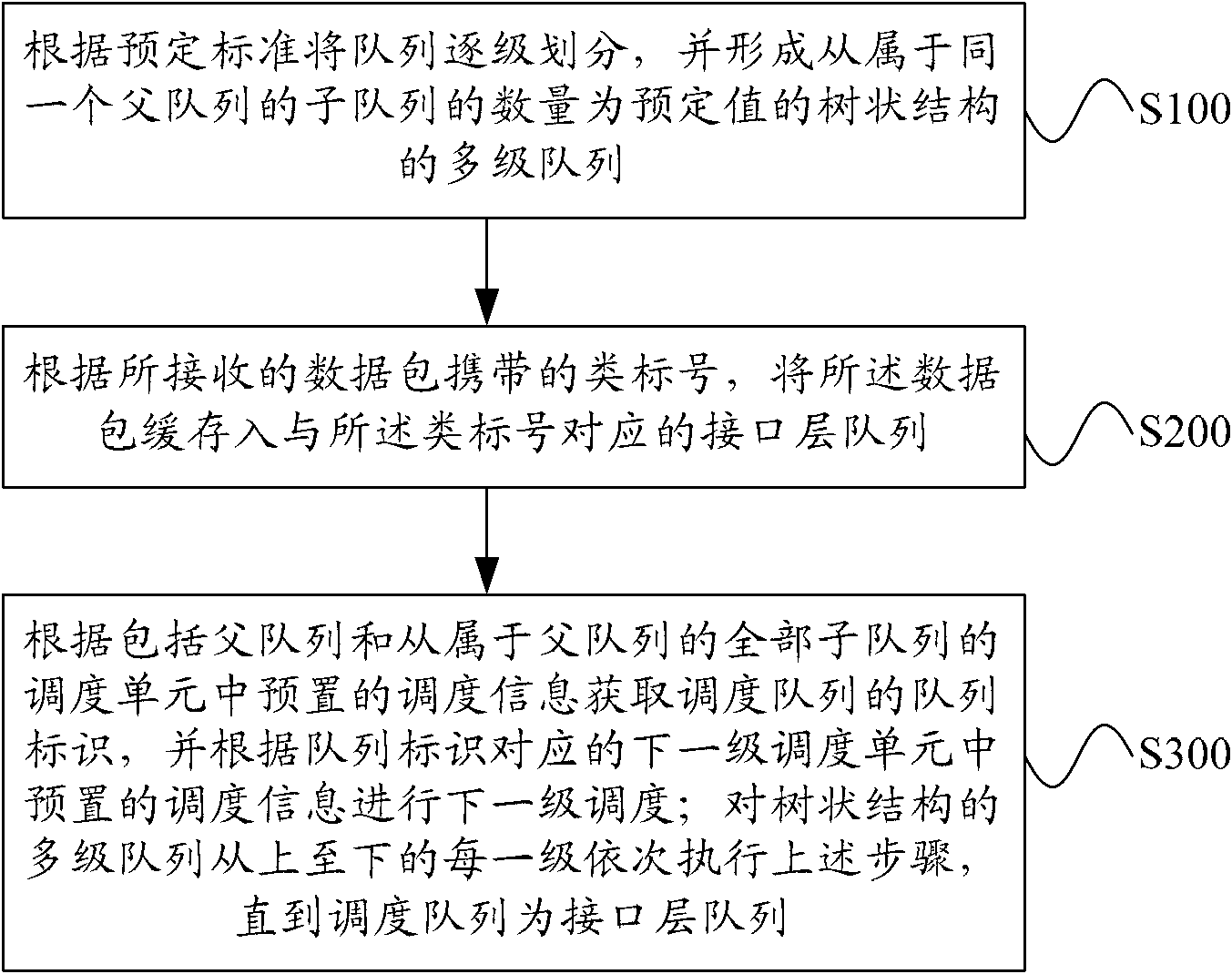

Queue scheduling method and system

Inactive | CN102025639A | Relieve schedule pressure | Improve QOS | Data switching networks | Quality of service | Message queue

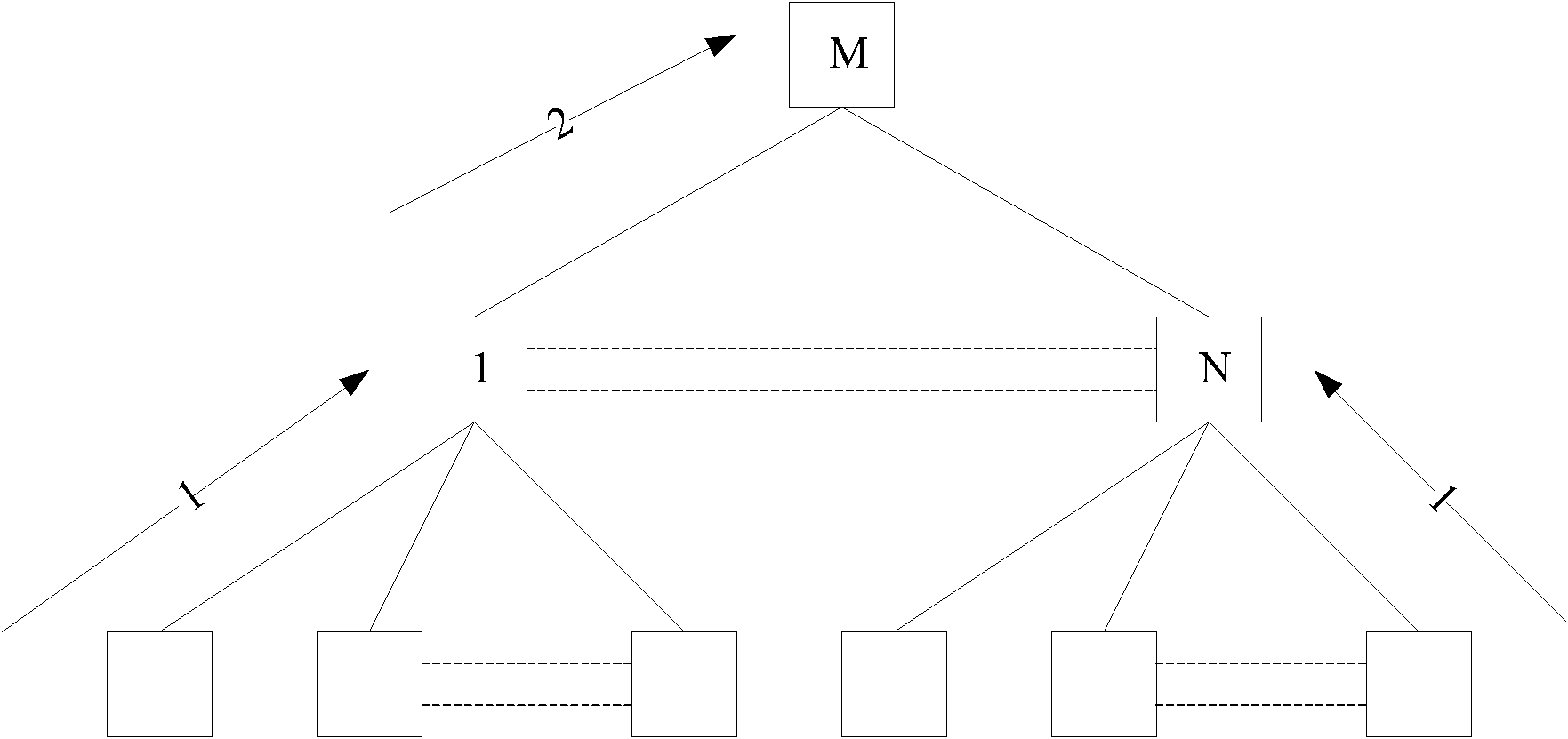

The invention provides a queue scheduling method and system. The queue scheduling method comprises the following steps: gradually dividing a queue according to a preset standard and forming a tree-like multi-stage queue in which the number of sub-queues subordinate to the same parent queue is a preset value; caching data packets into interface layer queues corresponding to class mark numbers according to the class mark numbers carried in the received data packet; acquiring a queue marker of a scheduling queue according to scheduling information preset in a scheduling unit comprising the parent queue and all the sub-queues subordinate to the same parent queue, and carrying out the next-stage scheduling according to the scheduling information preset in the next-stage scheduling unit corresponding to the queue marker; and carrying out the above steps on each of the tree-like multi-stage queue from top to bottom until the scheduling queue is the interface layer queue. By the queue scheduling method and system in the invention, effective scheduling is carried out in a complicated multi-stage system, and quality of service (QOS) of a network is improved.

Owner:BEIJING XINWANG RUIJIE NETWORK TECH CO LTD

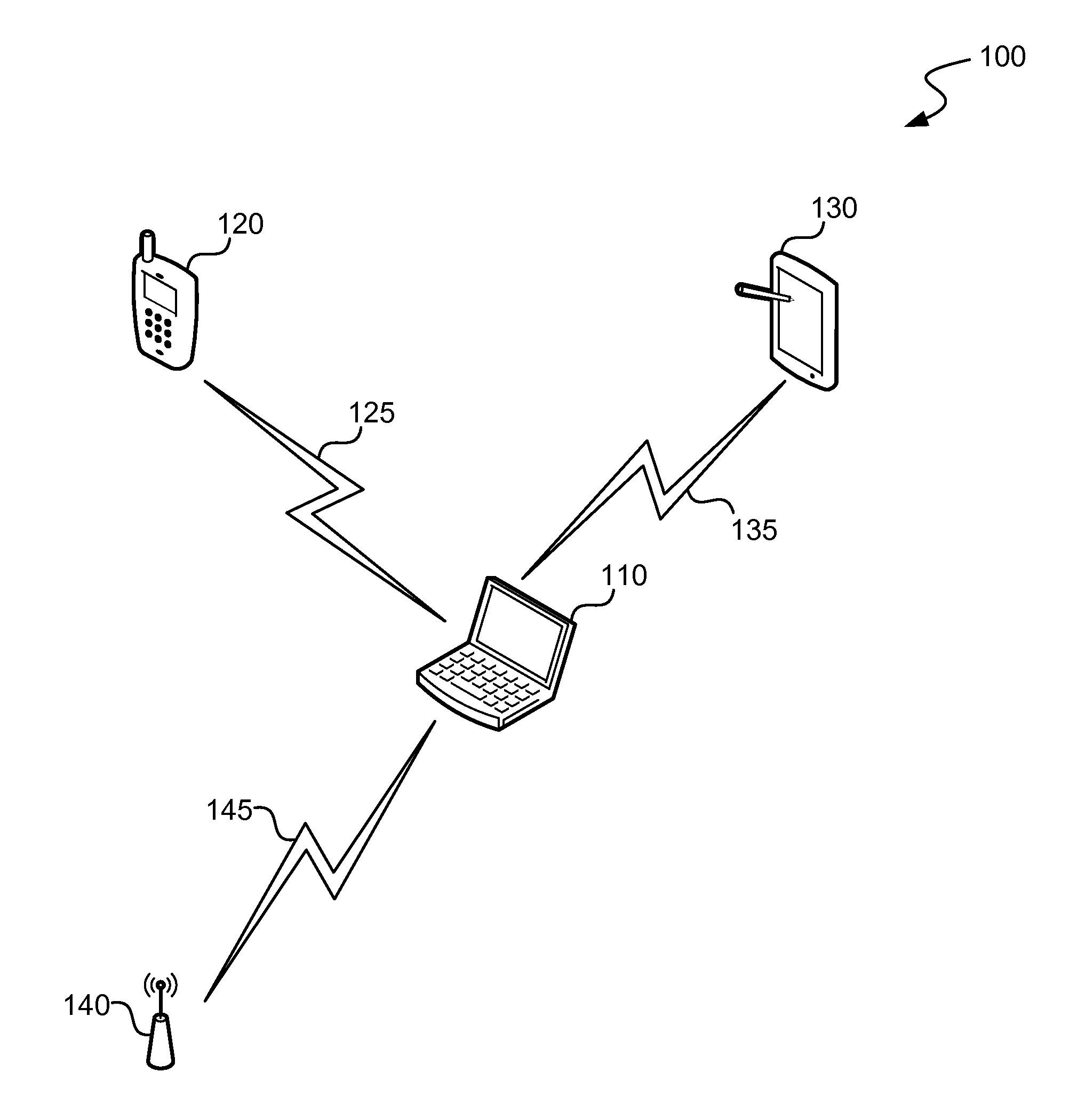

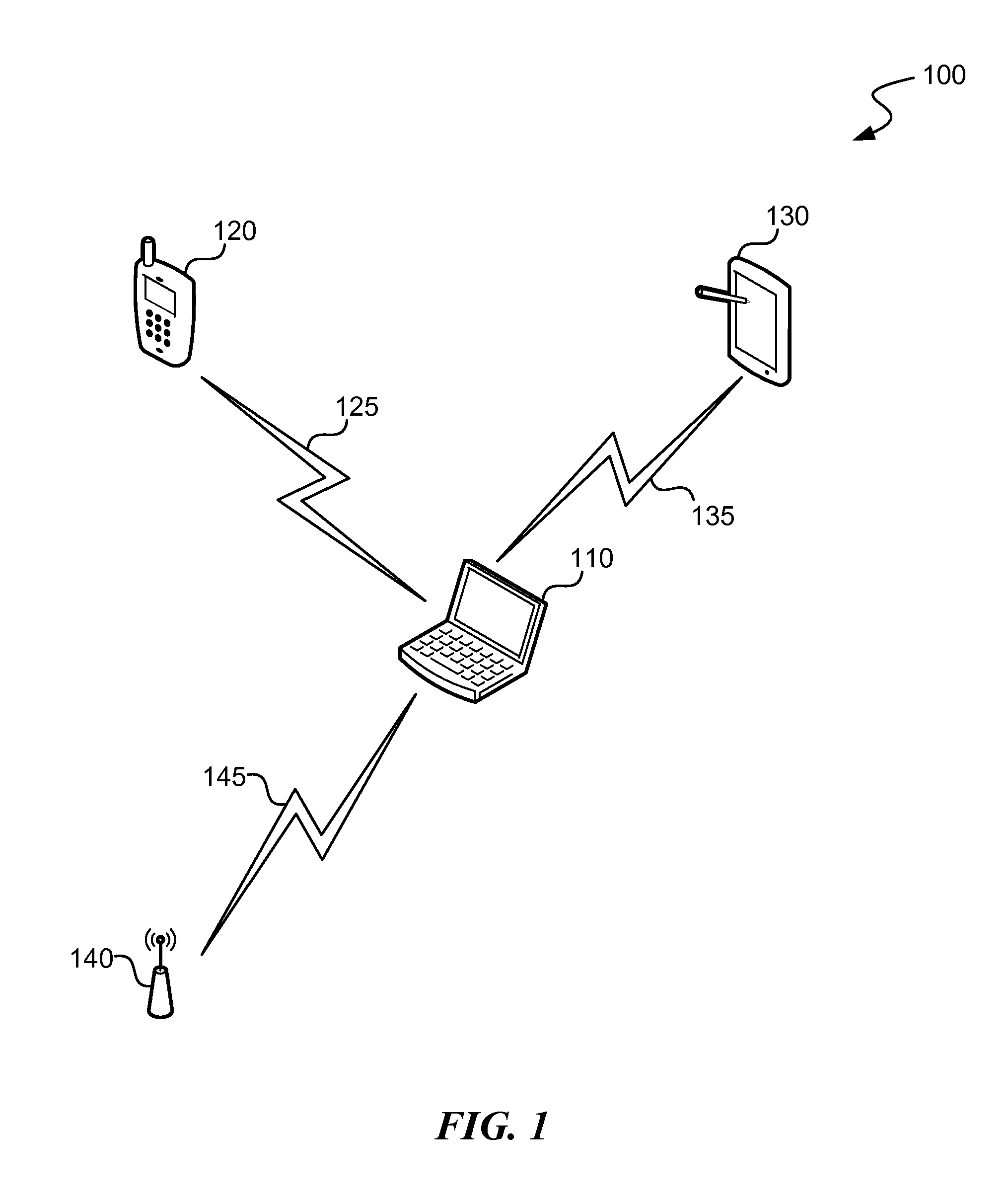

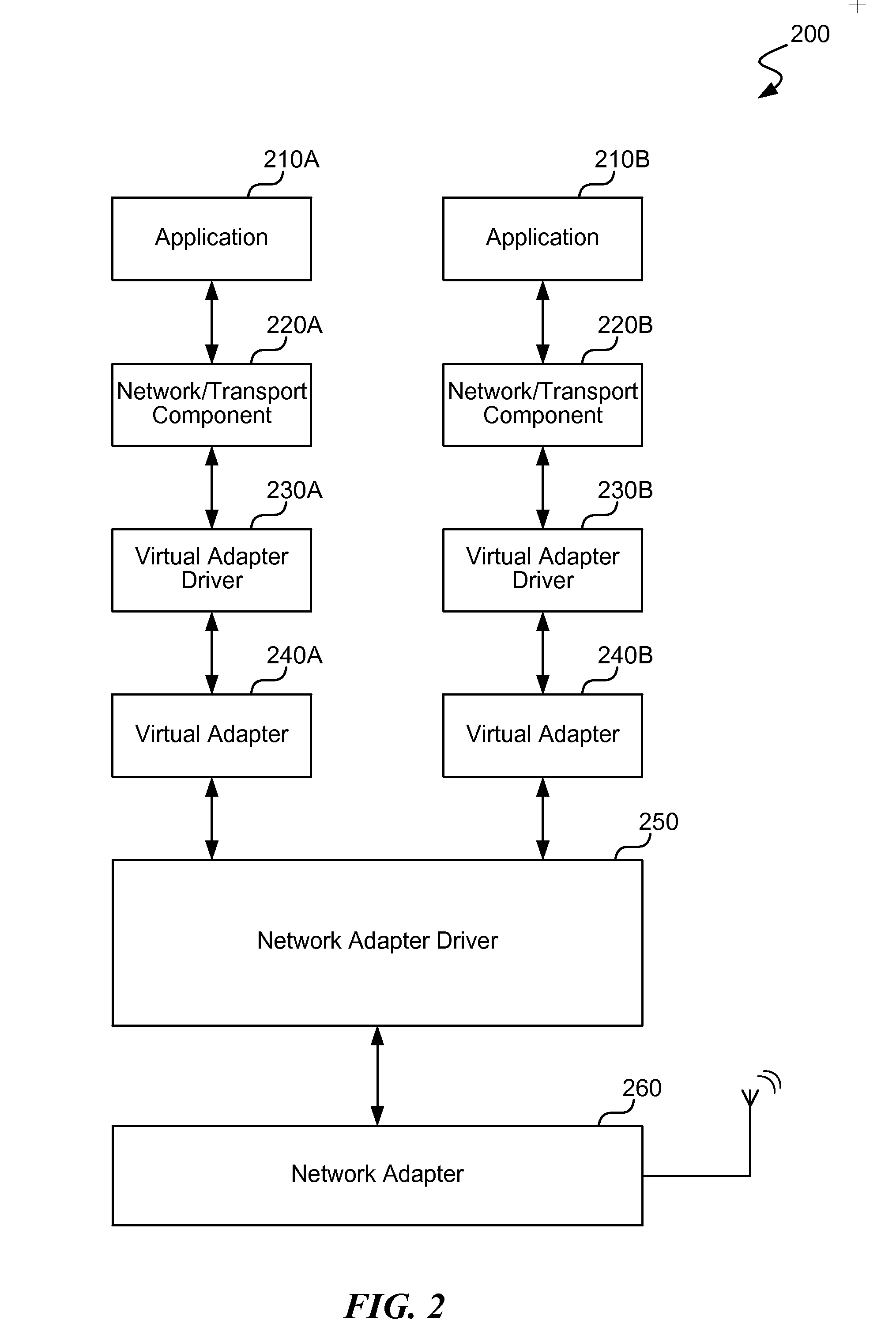

Management of multilevel queues for shared network adapters

Active | US20140359160A1 | Guaranteed normal transmission | Digital computer details | Data switching networks | Multilevel queue | Data transmission

Technology for managing queuing resources of a shared network adapter is disclosed. The technology includes selectively transferring data from data transmission sources to a queue of the shared network adapter based on status indications from the shared network adapter regarding availability of queuing resources at the shared network adapter. In addition, the technology also includes features for selectively controlling transfer rates of data to the shared network adapter from applications, virtual network stations, other virtual adapters, or other data transmission sources. As one example, this selective control is based on how efficiently data from these data transmission sources are transmitted from the shared network adapter.

Owner:MICROSOFT TECH LICENSING LLC

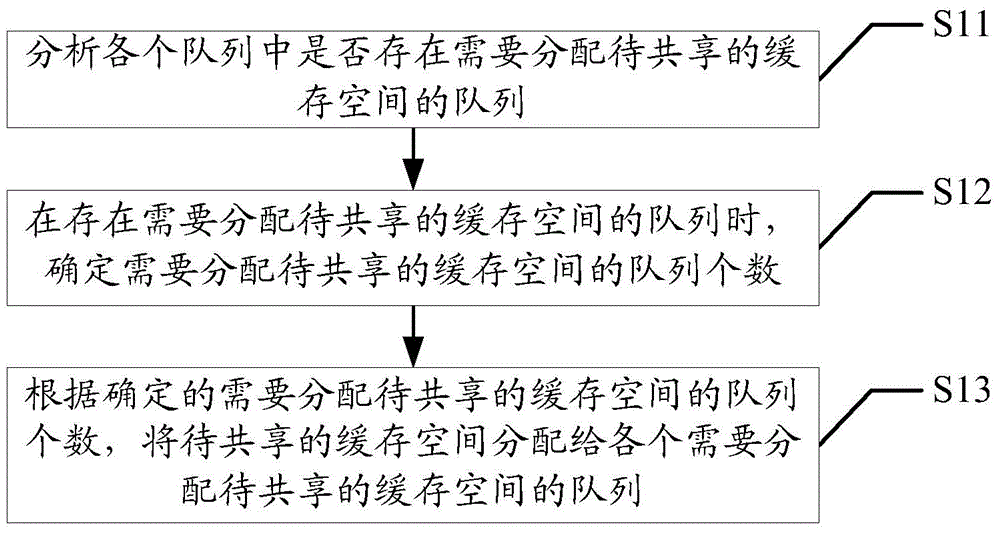

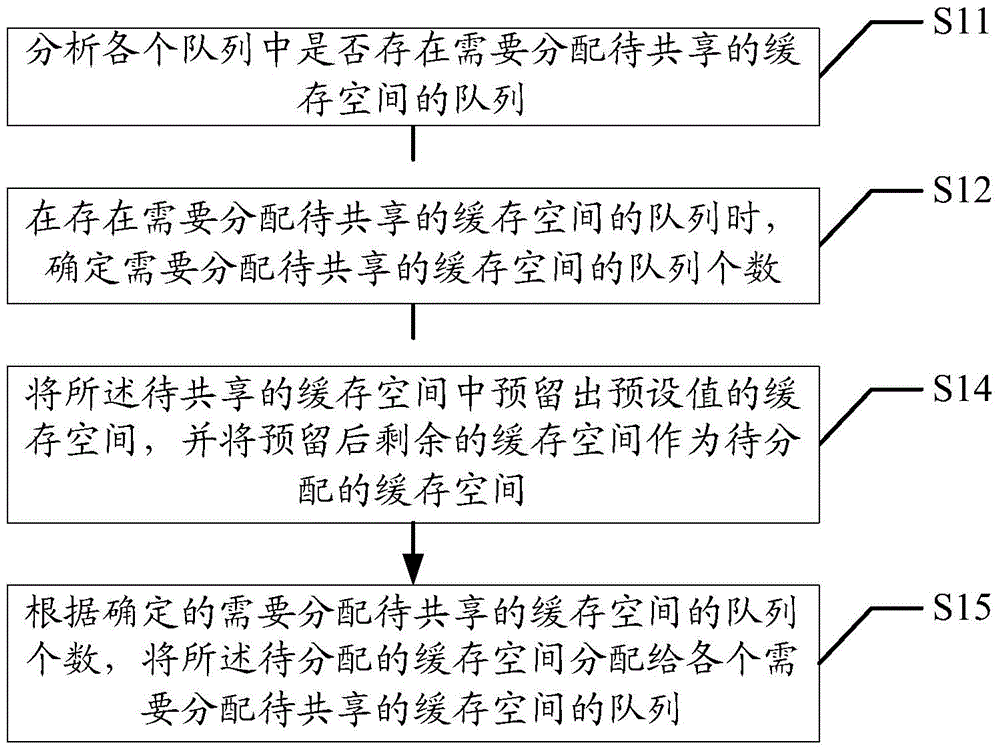

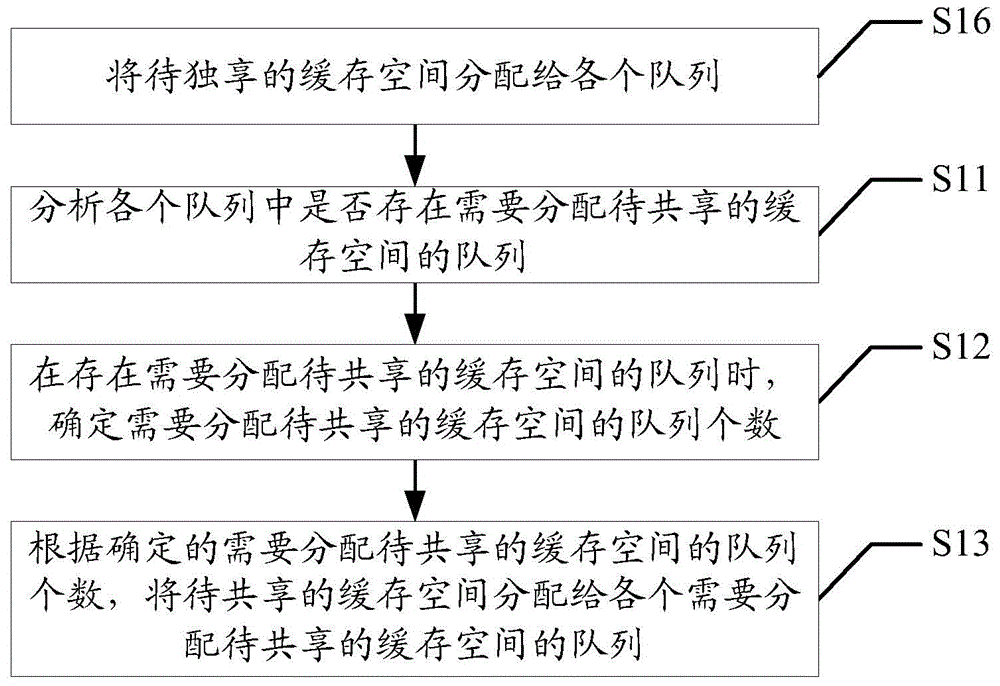

Method and device for carrying out distribution control on cache space with multiple queues

Active | CN104426790A | Realize automatic adjustment allocation | Increase profit | Data switching networks | Distribution control | Multilevel queue

The embodiments of the present invention provide a method and device for controlling the allocation of a caching space and a computer storage medium. The method comprises: analyzing whether a queue to which a caching space to be shared is required to be allocated exists in each queue or not; when it is determined that the queue to which the caching space to be shared is required to be allocated exists, determining the number of queues to which the caching space to be shared is required to be allocated; and according to the determined number of the queues to which the caching space to be shared is required to be allocated, allocating the caching space to be shared to the queue to which the caching space to be shared is required to be allocated.

Owner:SANECHIPS TECH CO LTD


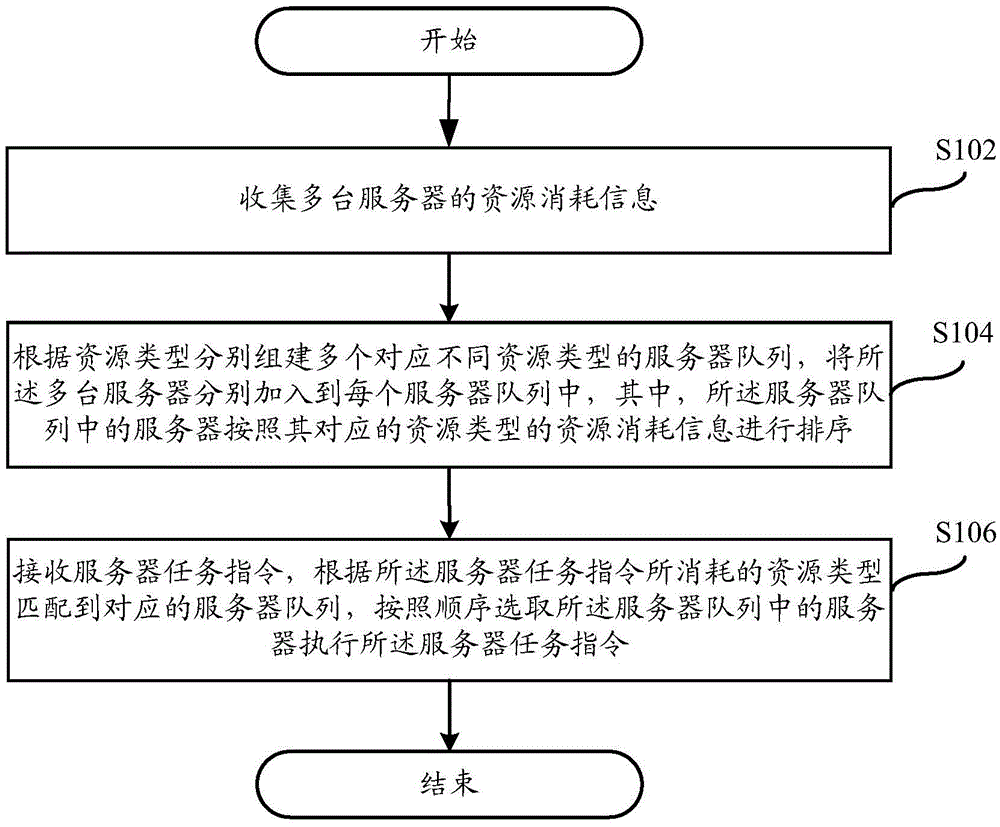

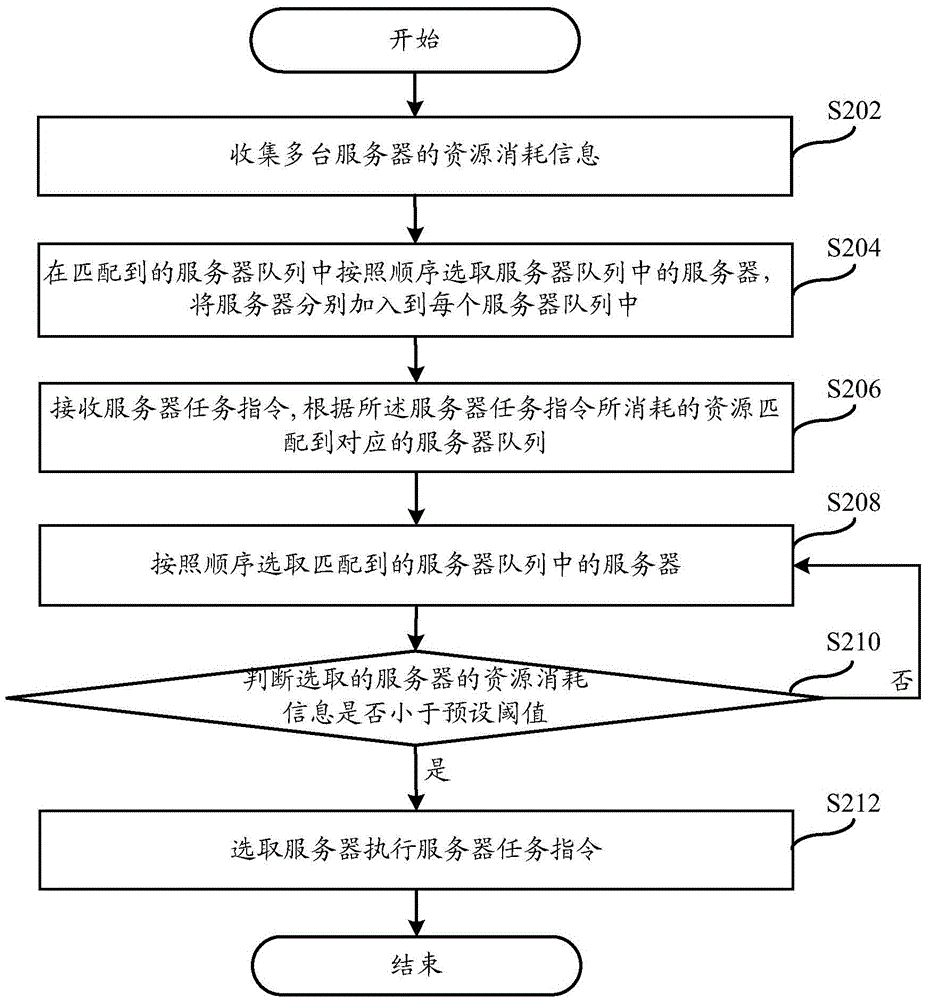

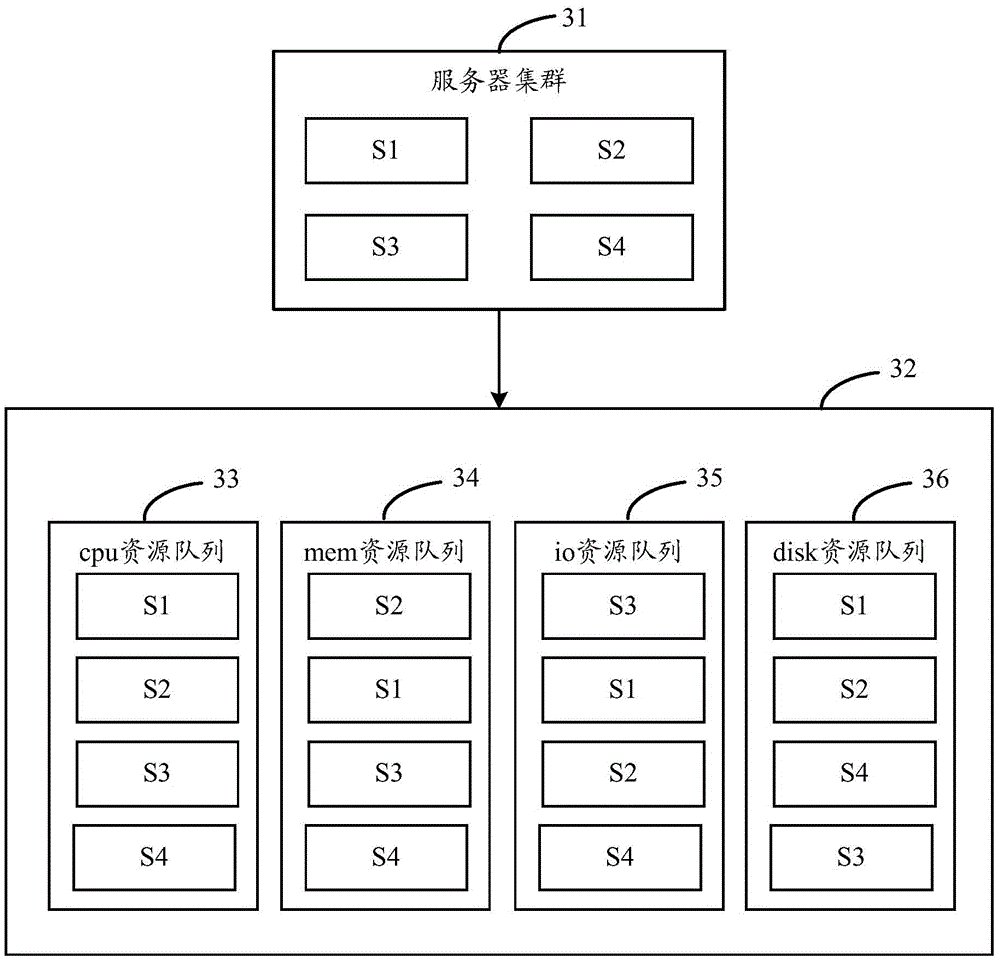

Server scheduling method and system

The invention discloses a server scheduling method and system. The method comprises the following steps: collecting resource consumption information of multiple servers; establishing, according to resource types, multiple server queues corresponding to the different resource types, and adding the multiple servers into each server queue, wherein the servers in each server queue are ordered according to the resource consumption information of the corresponding resource type; and receiving a server task instruction, matching it to the corresponding server queue according to the resource type the instruction consumes, and selecting servers from that queue in order to execute the server task instruction. According to the invention, the servers with the best performance in a server cluster can be selected for executing the server task instruction.

Owner:ALIBABA GRP HLDG LTD

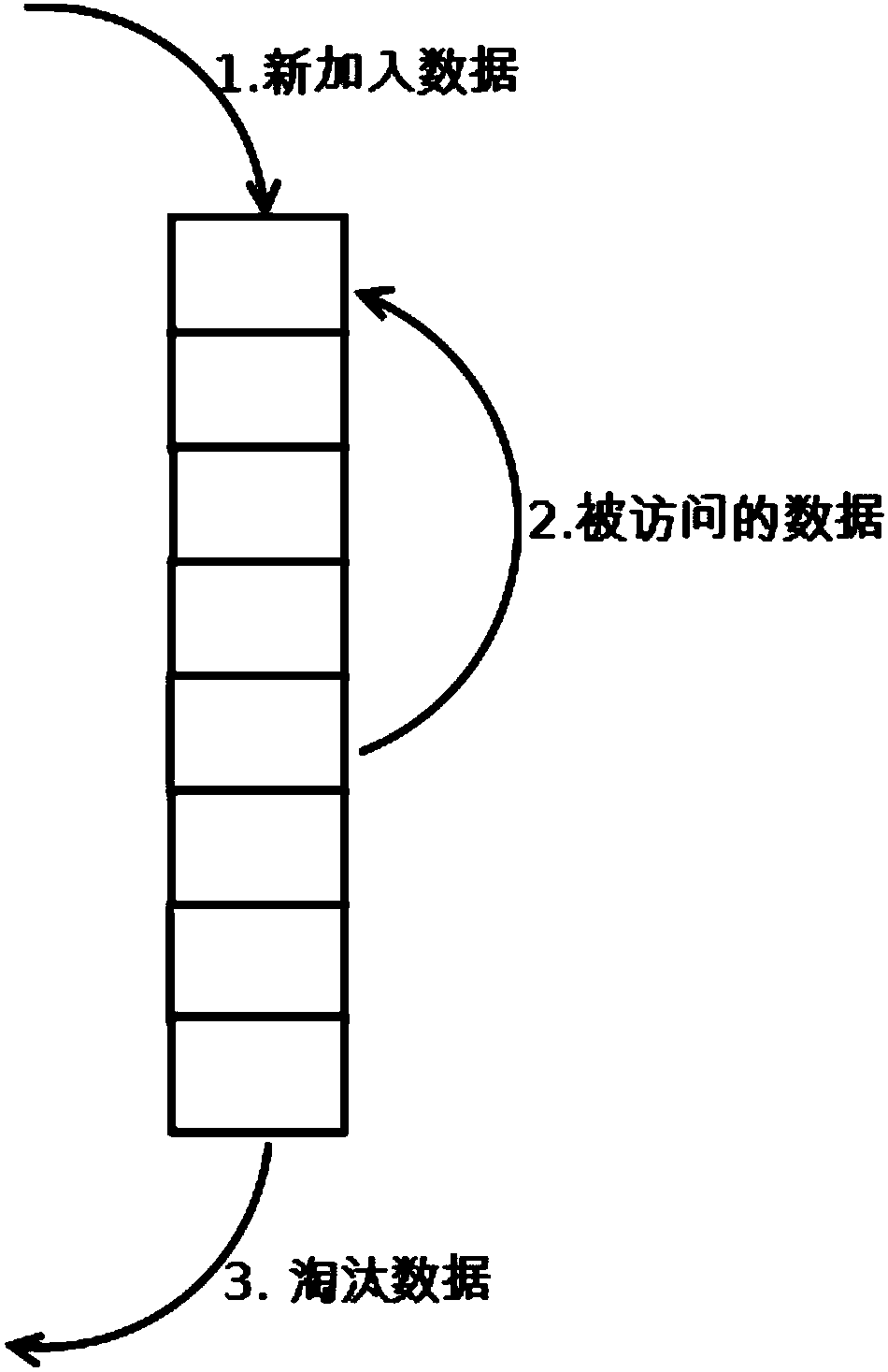

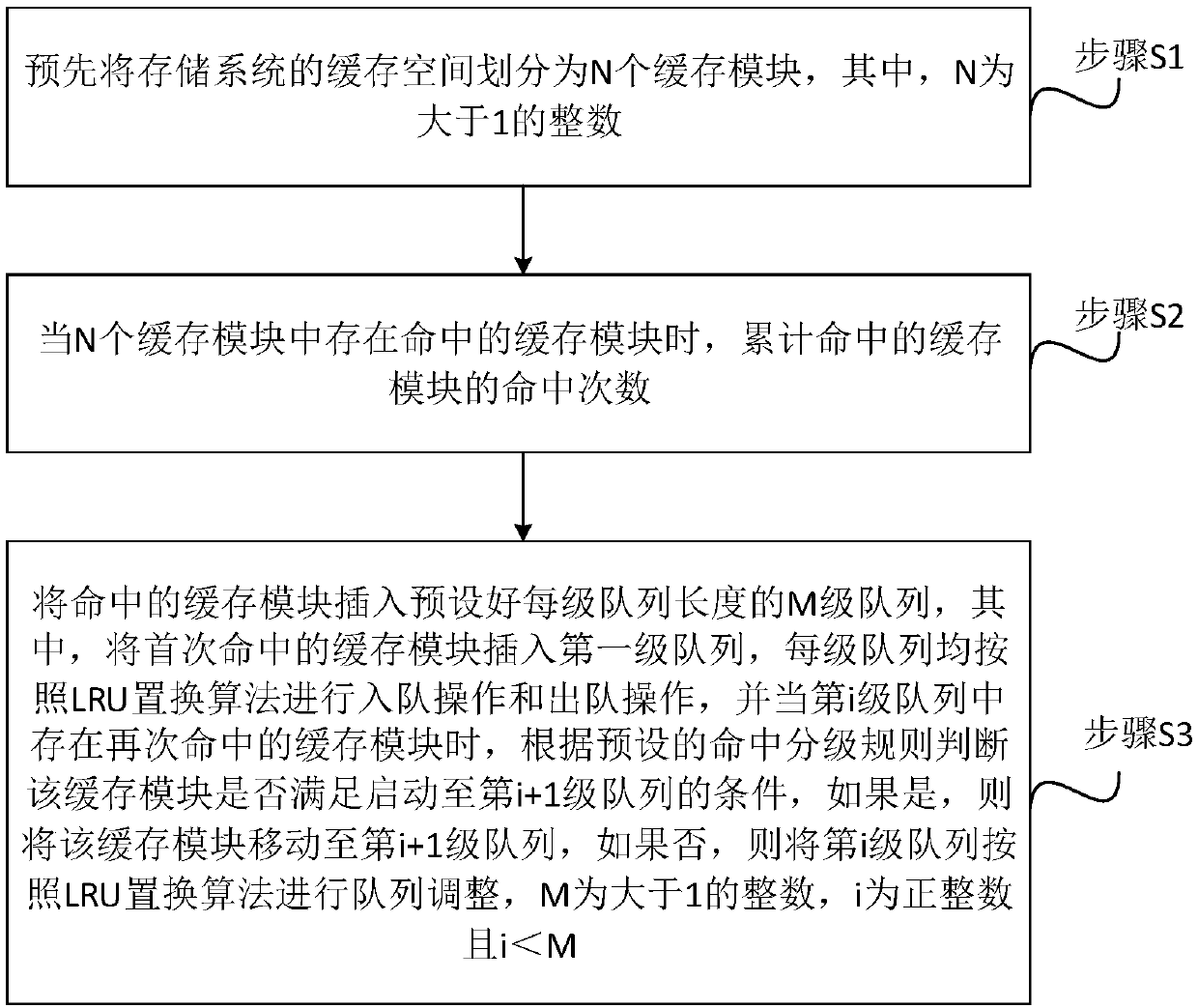

Replacement method and system for cached data in storage system, and storage system

Inactive | CN107704401A | Avoid the jitter problem of access performance | The frequency of replacement is reduced | Memory architecture accessing/allocation | Memory systems | Multilevel queue | Parallel computing

The invention discloses a replacement method and system for cached data in a storage system, and the storage system. The method comprises the steps of dividing a cache space of the storage system into N cache modules in advance, and when a hit cache module exists in the N cache modules, accumulating a hit count of the hit cache module; inserting the hit cache module into an M-level queue with preset queue lengths for all levels, wherein a cache module hit for the first time is inserted into the first-level queue, and the queue of each level performs enqueuing and dequeuing operations according to an LRU replacement algorithm; and when a re-hit cache module exists in the ith-level queue, judging according to a preset hit grading rule whether the cache module meets the condition for entering the (i+1)th-level queue, and if yes, moving the cache module to the (i+1)th-level queue, or otherwise adjusting the ith-level queue according to the LRU replacement algorithm. The jitter problem of IO access performance is avoided, and the system access efficiency is improved.
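The M-level queue can be sketched in Python as follows. The class name `MultiLevelLRU`, the `promote_hits` thresholds standing in for the "preset hit grading rule", and the decision to forget a module's hit count once it is dequeued from a level are assumptions for this example; the abstract does not specify them.

```python
from collections import OrderedDict


class MultiLevelLRU:
    """M LRU queues; a module climbs one level when its hit count qualifies."""

    def __init__(self, level_capacities, promote_hits):
        # promote_hits[i]: hits needed to move from level i to level i + 1
        # (a hypothetical stand-in for the preset hit grading rule).
        self.levels = [OrderedDict() for _ in level_capacities]
        self.capacities = list(level_capacities)
        self.promote_hits = list(promote_hits)
        self.hits = {}
        self.where = {}  # module -> index of the level that currently holds it

    def hit(self, module):
        self.hits[module] = self.hits.get(module, 0) + 1
        level = self.where.get(module)
        if level is None:
            self._insert(0, module)  # first hit: enter the first-level queue
        elif (level + 1 < len(self.levels)
              and self.hits[module] >= self.promote_hits[level]):
            del self.levels[level][module]
            self._insert(level + 1, module)
        else:
            self.levels[level].move_to_end(module)  # plain LRU adjustment

    def _insert(self, level, module):
        queue = self.levels[level]
        queue[module] = True
        self.where[module] = level
        if len(queue) > self.capacities[level]:
            victim, _ = queue.popitem(last=False)  # dequeue the least recently used
            del self.where[victim]
            del self.hits[victim]  # assumption: a dequeued module starts over


cache = MultiLevelLRU(level_capacities=[2, 2], promote_hits=[3])
for module in ["a", "b", "a", "a", "c", "d"]:
    cache.hit(module)
```

After this sequence module `a` sits in the second-level queue, so the burst of new modules `c` and `d` can only push out `b` from the first level.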

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

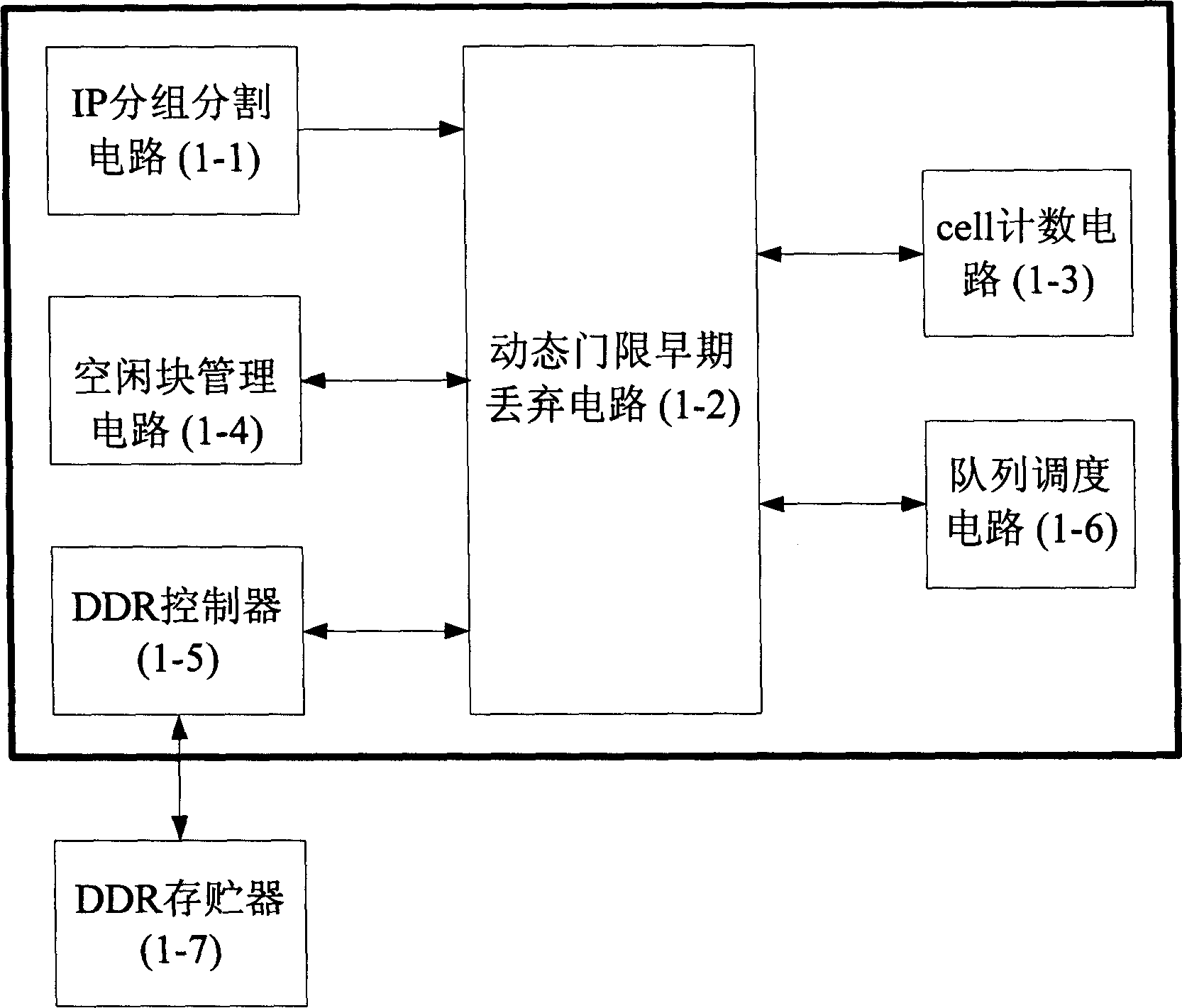

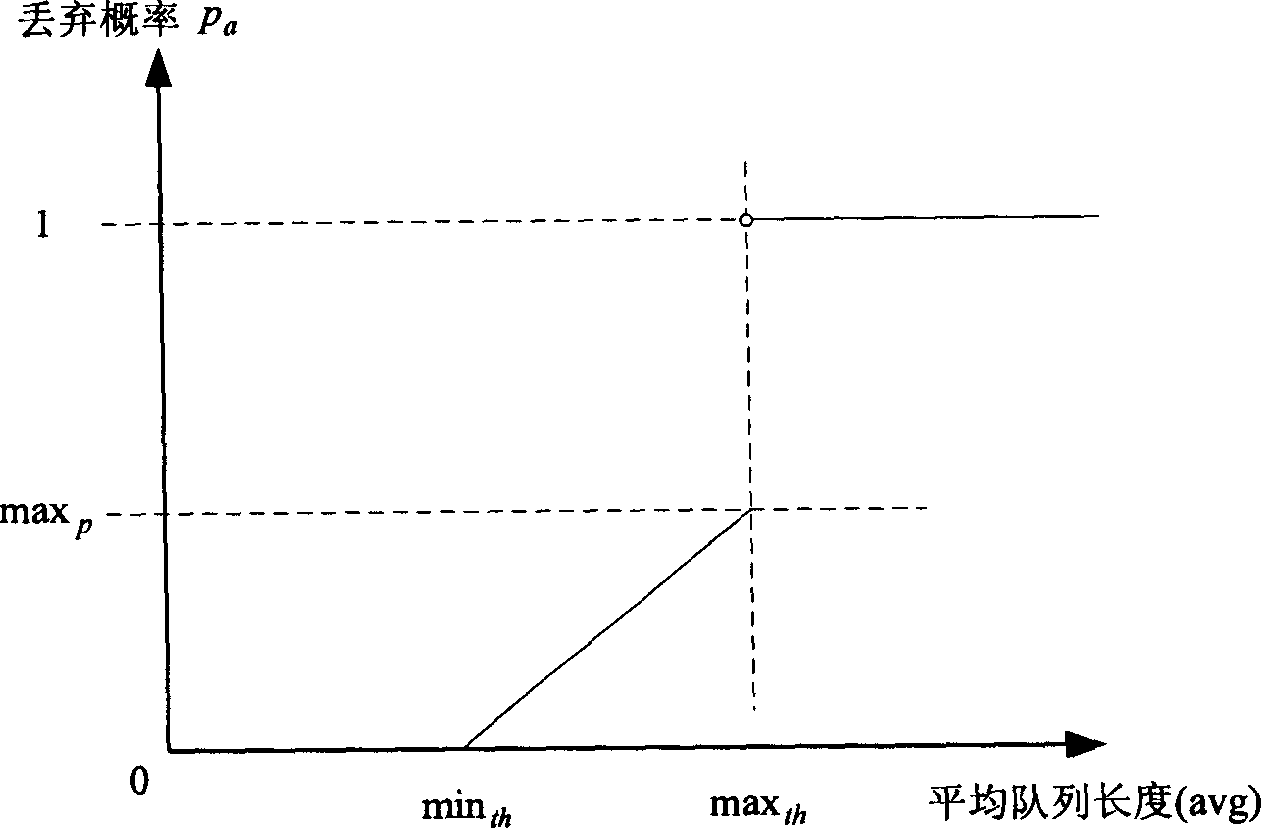

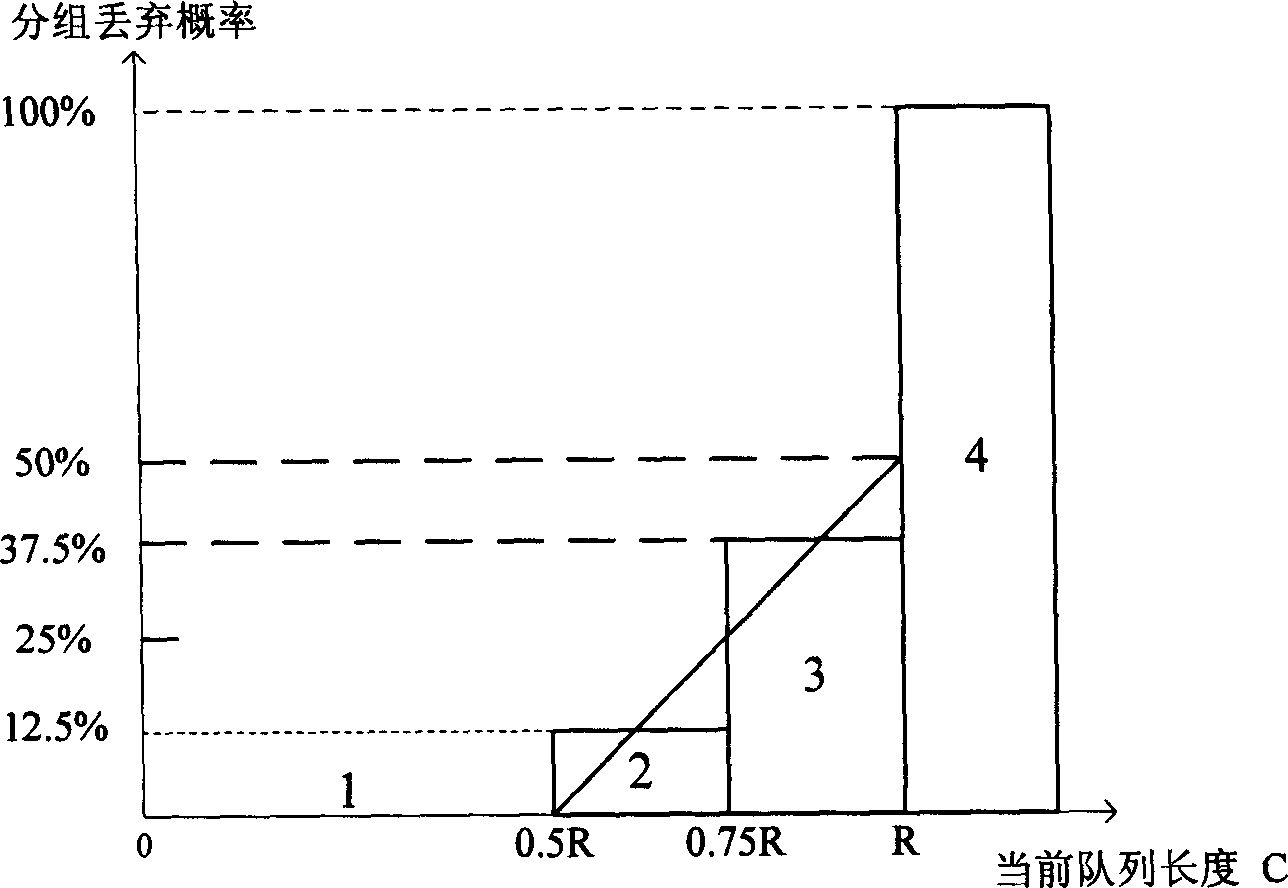

Sharing cache dynamic threshold early drop device for supporting multi queue

Inactive | CN1777147A | Adaptable | Improve cache utilization | Data switching networks | Random early detection | Packet loss

Belonging to the IP technical area, the invention is realized on a field programmable gate array (FPGA). The device includes an IP grouping circuit, an early discarding circuit with dynamic threshold, a cell counting circuit, an idle block management circuit, a DDR controller, a queue dispatch circuit and external DDR storage. Based on the average queue size of each currently active queue and the average queue size in the whole shared buffer area, the invention adjusts the parameters of the random early detection (RED) algorithm to provide a dynamic-threshold early discarding method that supports multiple queues sharing a buffer. The method keeps the advantages of both the RED mechanism and the dynamic threshold. Using a cascaded approximation of the discarding curve makes it well suited to realization in an FPGA. Features are: a smaller packet loss rate, a higher buffer utilization, and a compromise on fairness.

Owner:TSINGHUA UNIV

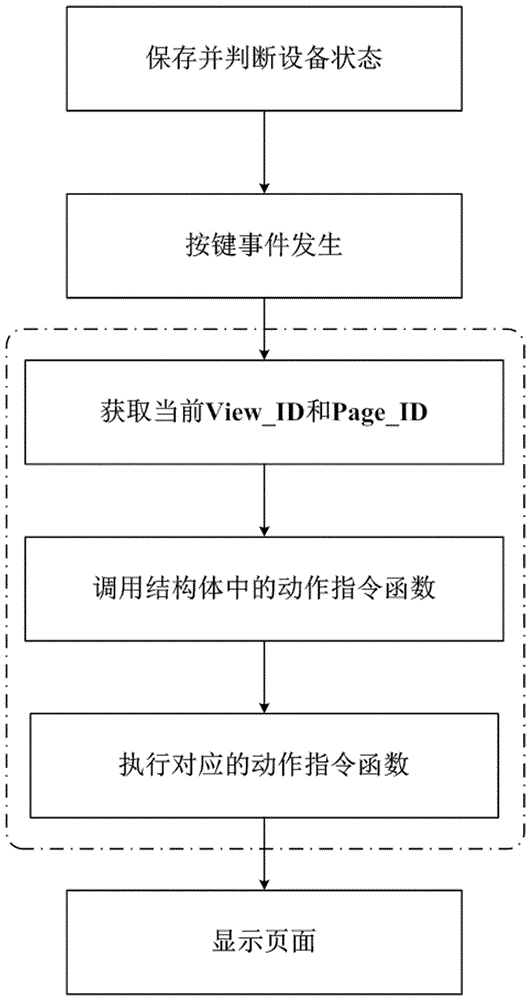

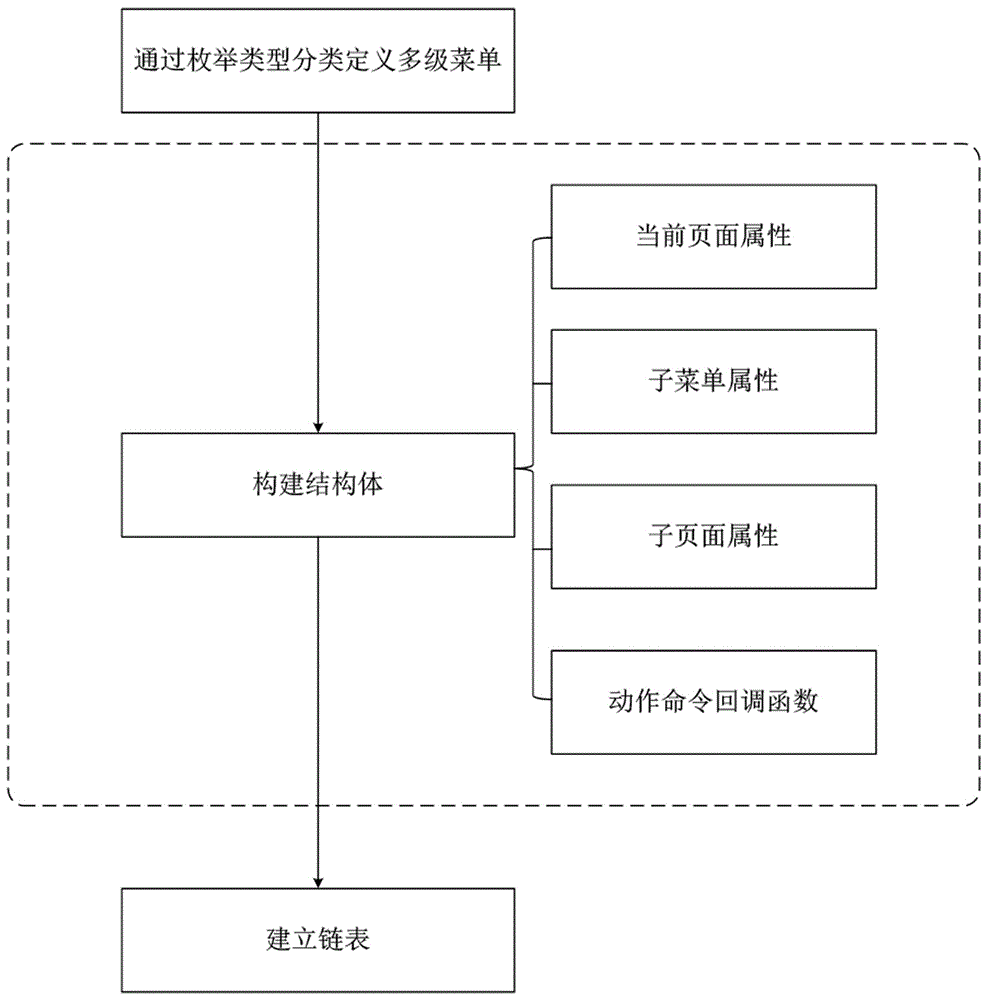

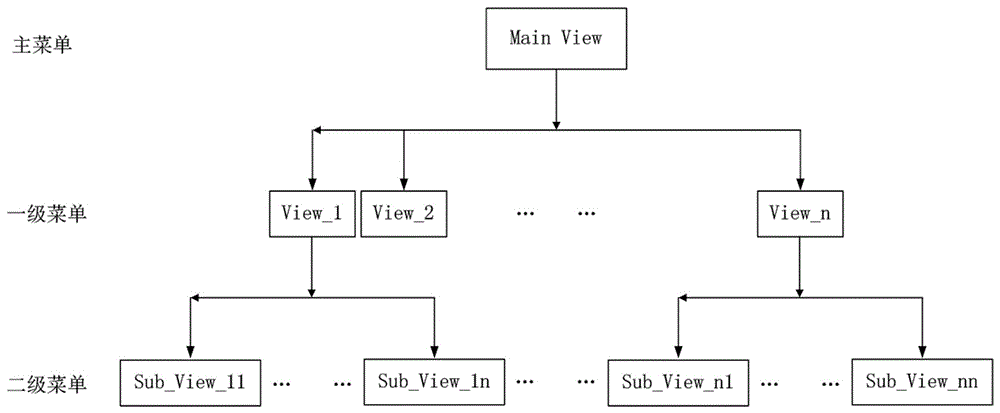

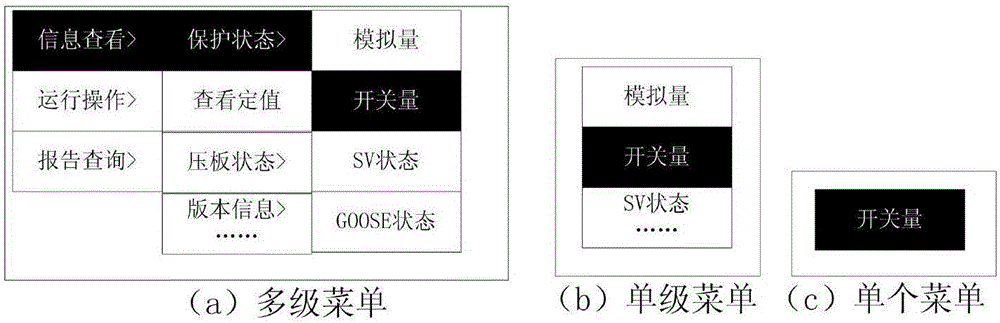

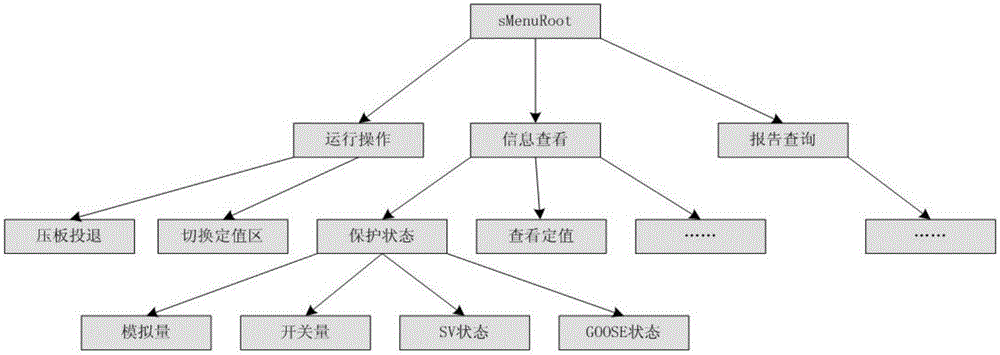

Multilevel menu page display method for intelligent wearable device, and intelligent wearable device

Active | CN106484422A | Clear data structure | Achieve division | Software engineering | Specific program execution arrangements | Multilevel model | Multilevel queue

The invention provides a multilevel menu page display method for an intelligent wearable device. A menu comprises multiple levels of pages which are displayed according to the levels and can execute device action instructions. The display method comprises the steps of storing and judging a current device state; and calling an action instruction callback function in a structure body, formed according to the multilevel menu, the corresponding pages of the multilevel menu and the action instructions, according to the current device state and a device action event, thereby enabling a device display module to perform recovery, maintenance or skip display of a page corresponding to a first-level menu in the multi-level menu. The invention furthermore discloses the intelligent wearable device. According to the method and the intelligent wearable device, the menu is divided through enumerated classification, so that the process is simplified and the multilevel menu is clearer; the skip or switching among different levels of pages is realized through a matching relationship between a structure body variable and the callback function; and the multilevel menu page display method for the intelligent wearable device, provided by the invention, has the advantages that the data structure is clearer, the control is easier, and the later addition and maintenance are facilitated.

Owner:GEER TECH CO LTD

Construction and display method of multilevel menu in embedded system

Inactive | CN106598570A | Easy maintenance | For quick maintenance | Software engineering | Specific program execution arrangements | Multilevel model | Array data structure

The invention relates to a convenient method for realizing multi-level menu construction and display based on a hybrid data structure of a linked list, a tree and an array in an embedded system. By use of the method disclosed by the invention, a sub-menu structural body, a single-level menu array for constructing a sub-menu, a tree structure for constructing the multi-level menu, a structural body for recording a selected menu, a linked list for recording an array mode of a menu selection path, and a group of expression functions for maintaining above mentioned data structures are constructed; the group of expression functions provide the running software environment of the mentioned multi-level menu of the invention. The construction and display of the multilevel menu can be rapidly and conveniently realized by use of the method disclosed by the invention, the addition, deletion, and shifting and other operations can be conveniently and rapidly performed on the individual menu and the single-level menu. The software and hardware development environments in different types are isolated from each other through an abstract data structure, so that the convenience, the fastness, the individuation and humanization of the menu maintenance are realized.

Owner:INTEGRATED ELECTRONICS SYST LAB

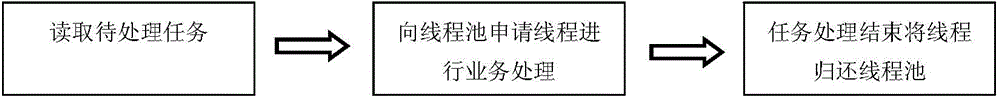

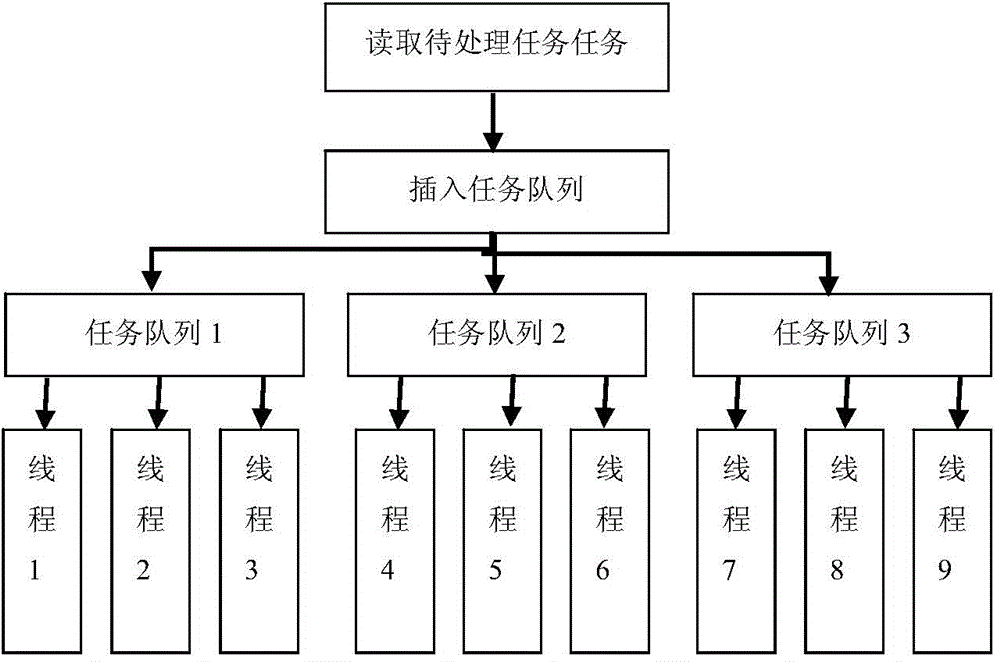

Parallel task processing method based on task decomposition

The invention discloses a parallel task processing method based on task decomposition. According to the method, event trigger objects do not need to be sequentially transmitted by a plurality of queues, the queues into which the objects are inserted are decided by state parameters of the objects, threads do not need to know the task queues from which the objects operated by the threads are transmitted or the task queues from which the objects operated by the threads are about to be inserted, and the threads only need to complete self task processing. The event trigger objects, the task queues and the threads do not influence one another, and class packing is facilitated. When multiple subtasks of services have no order dependencies, the subtasks can be inserted into multiple queues at the same time, and parallelization processing of the subtasks is carried out. Processing thread sets of a fixed number are distributed to the tasks, and a dedicated thread does not need to be created for each object.

Owner:ZHEJIANG UNIV

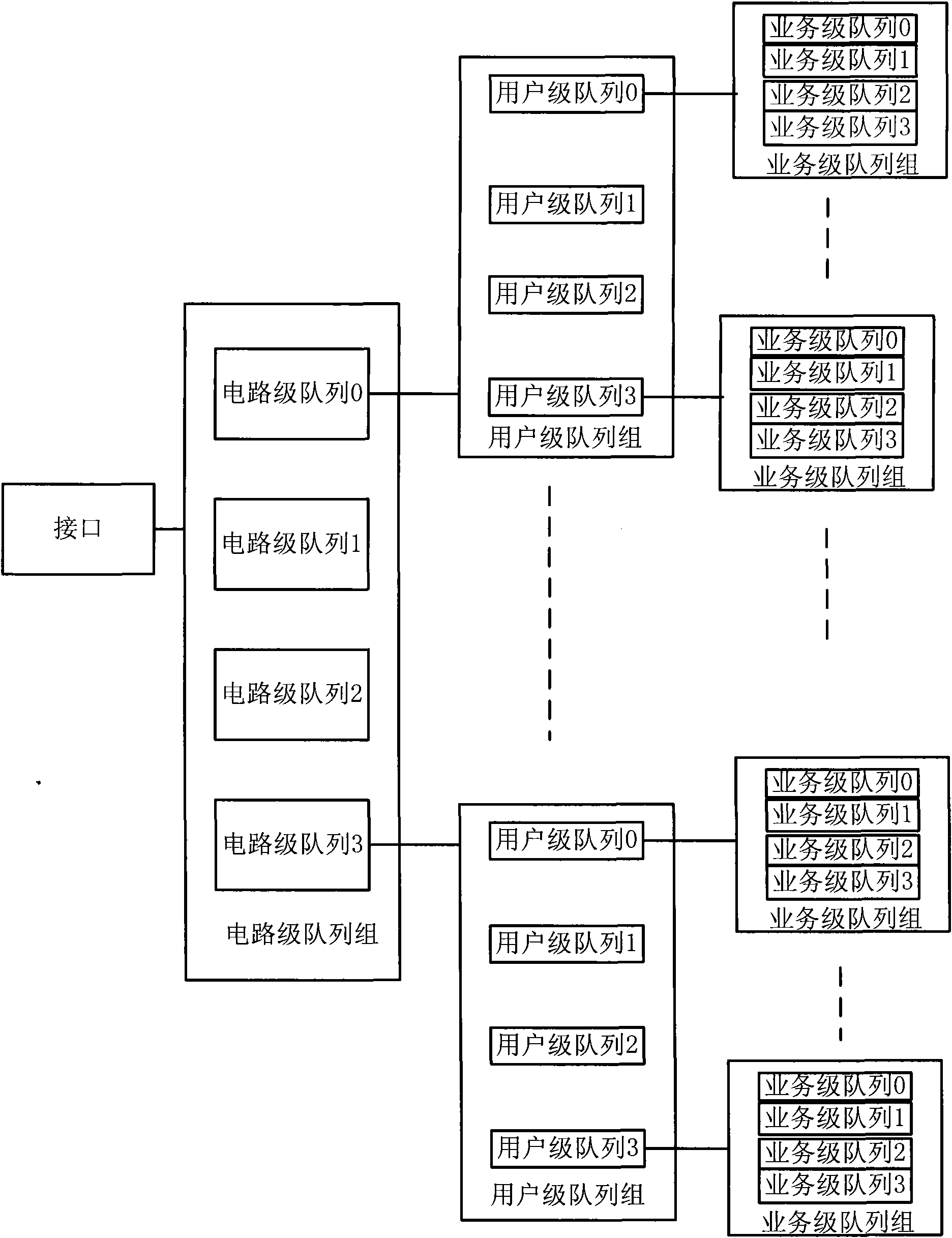

Multi-queue based scheduling method and system

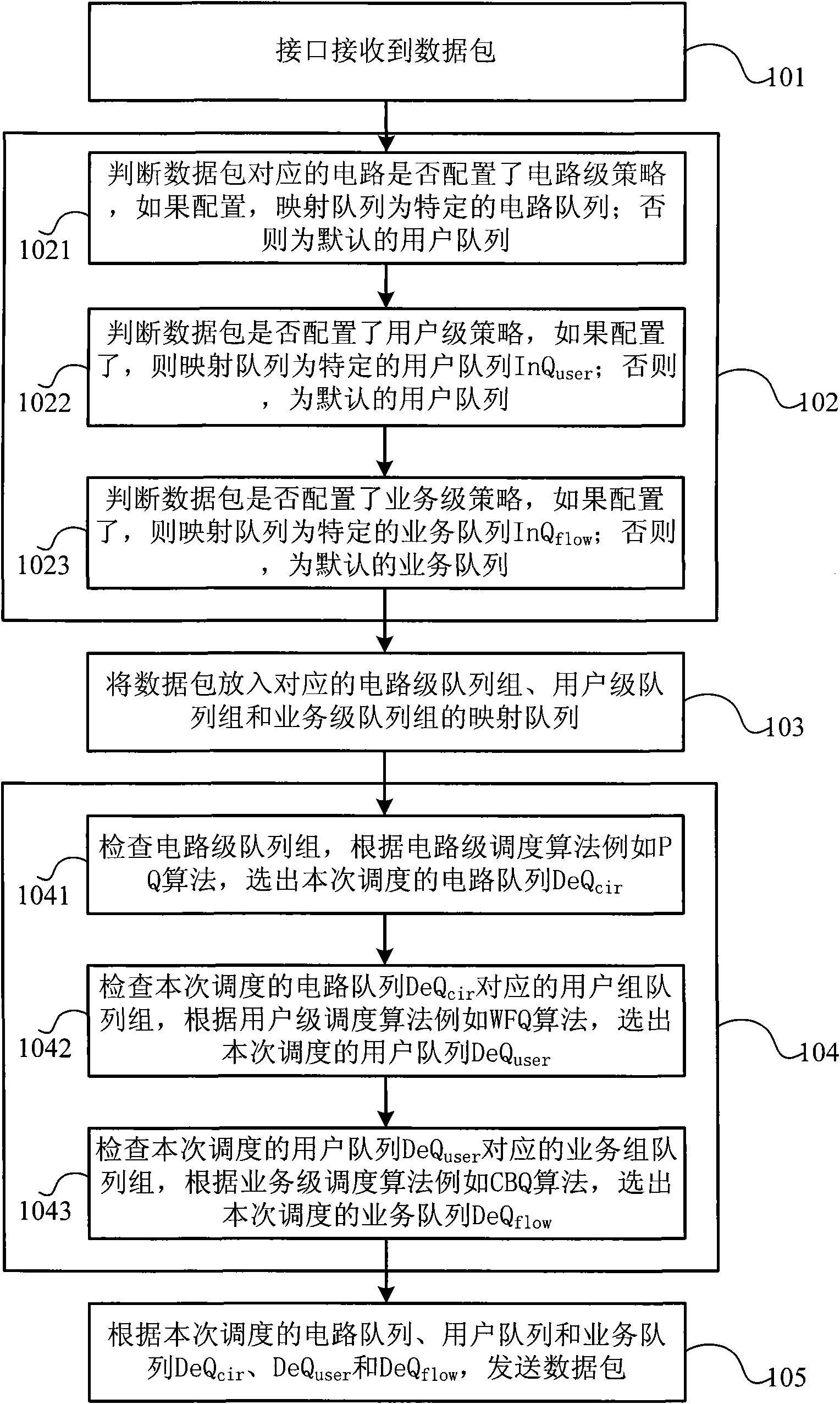

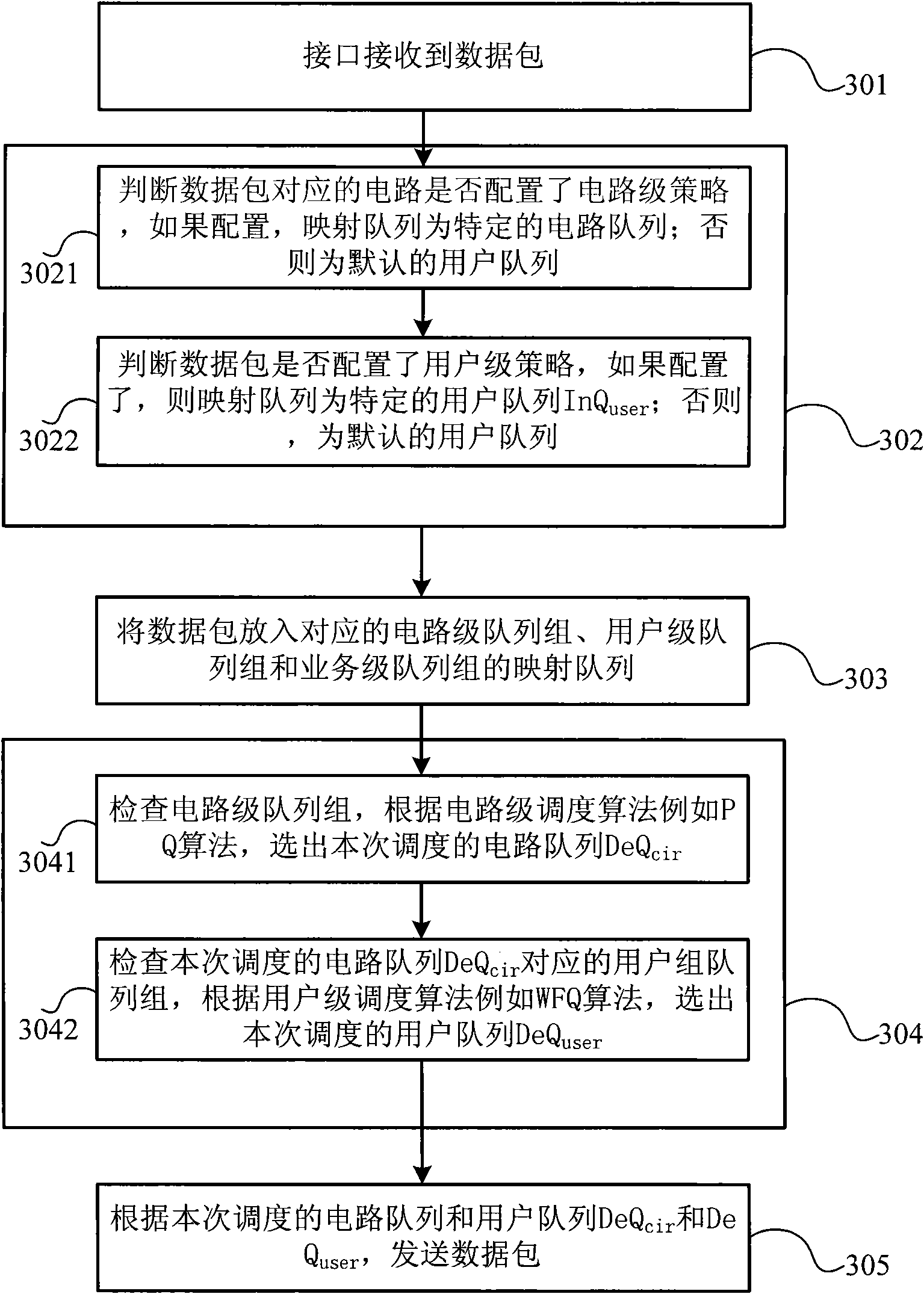

The invention discloses a multi-queue based scheduling method, which comprises the following steps: according to a multi-level stream classification strategy, performing circuit level mapping, user level mapping and/or service level mapping on a data packet, and putting the data packet in a mapping queue; and performing the scheduling in the circuit level, the user level and/or the service level according to a multi-queue scheduling algorithm. The invention also discloses a multi-queue based scheduling system, which comprises a multi-level strategy mapping module, a queue management module and a multi-level scheduling module, wherein the multi-level strategy mapping module is used for performing the circuit level mapping, the user level mapping and/or the service level mapping according to the multi-level stream classification strategy; the queue management module is connected with the multi-level strategy mapping module, and is used for putting the data packet in the mapping queue; and the multi-level scheduling module is connected with the queue management module, and is used for performing the scheduling in the circuit level, the user level and/or the service level according to the multi-queue scheduling algorithm. Therefore, the method and the system can schedule data in the circuit level, the user level and the service level, realize complex traffic scheduling, and meet increasingly complex QoS requirements.

Owner:ZTE CORP

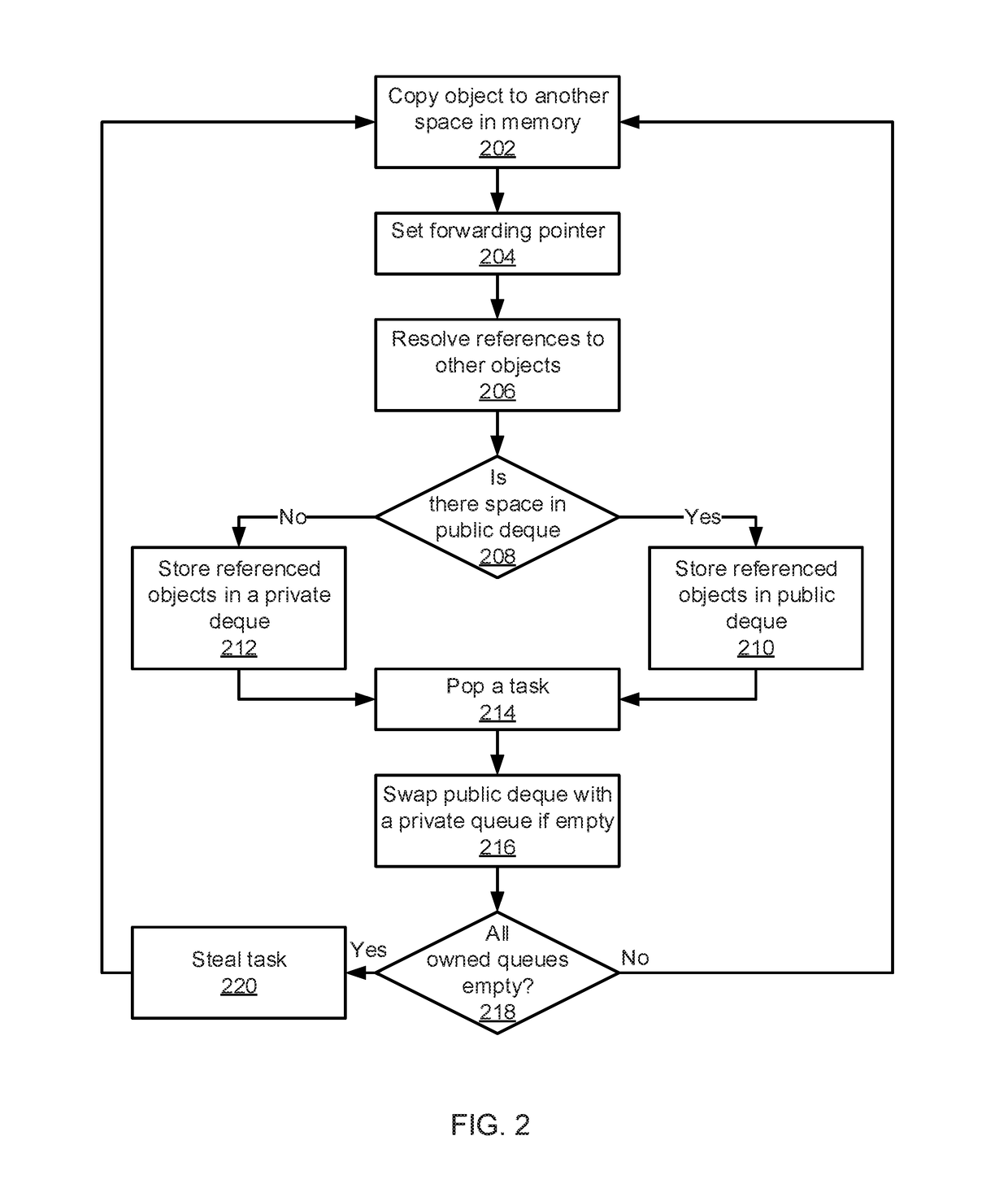

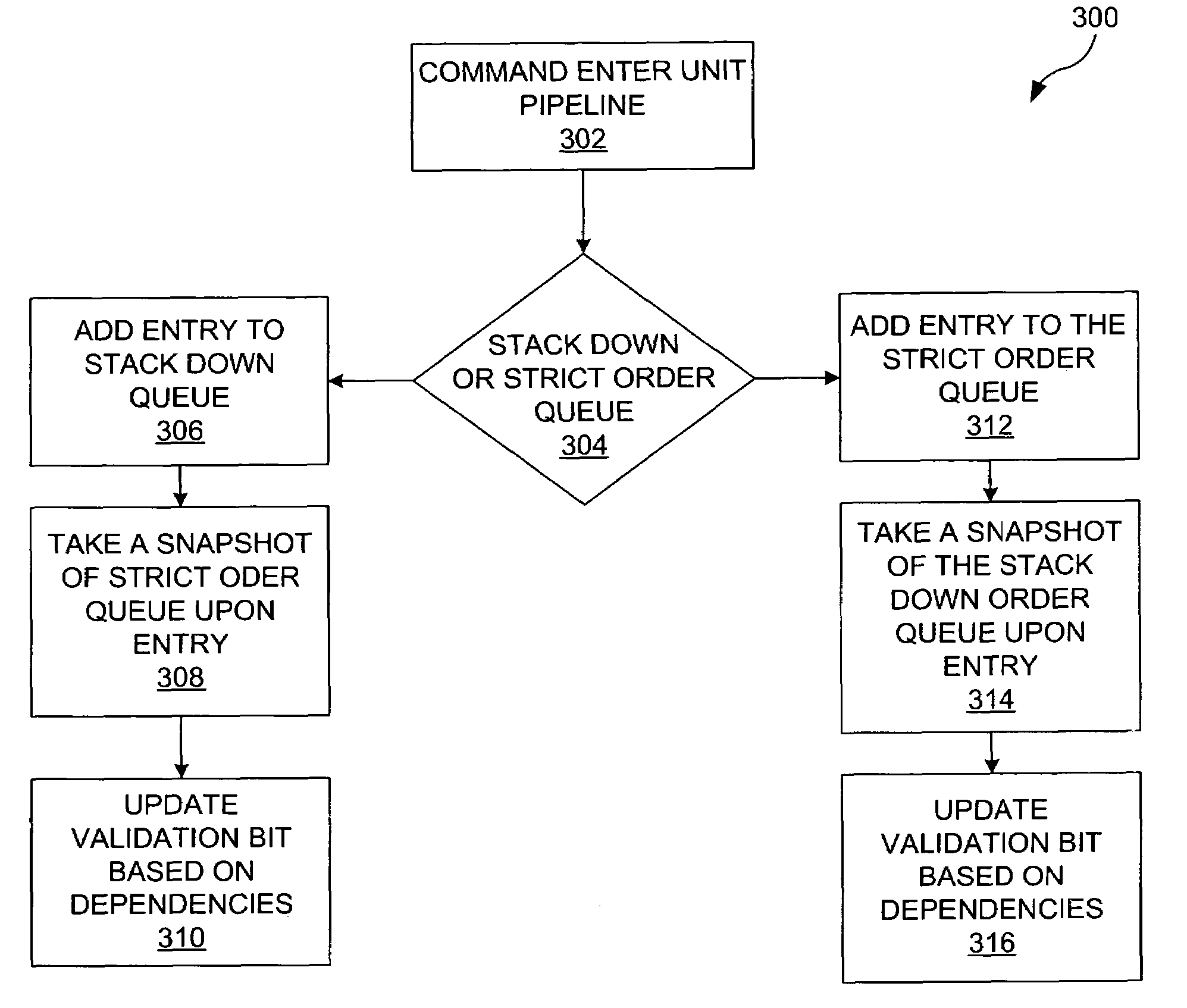

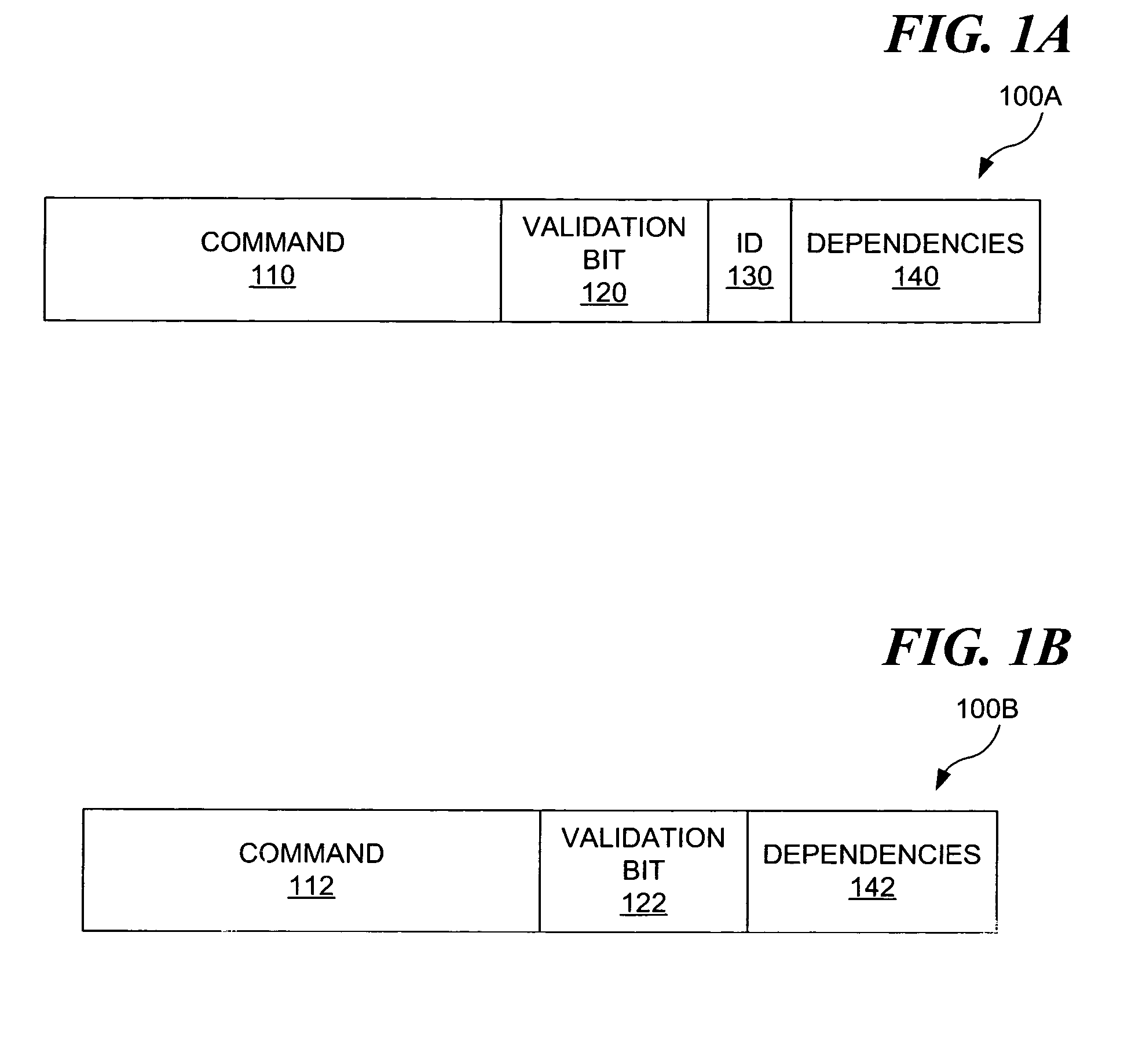

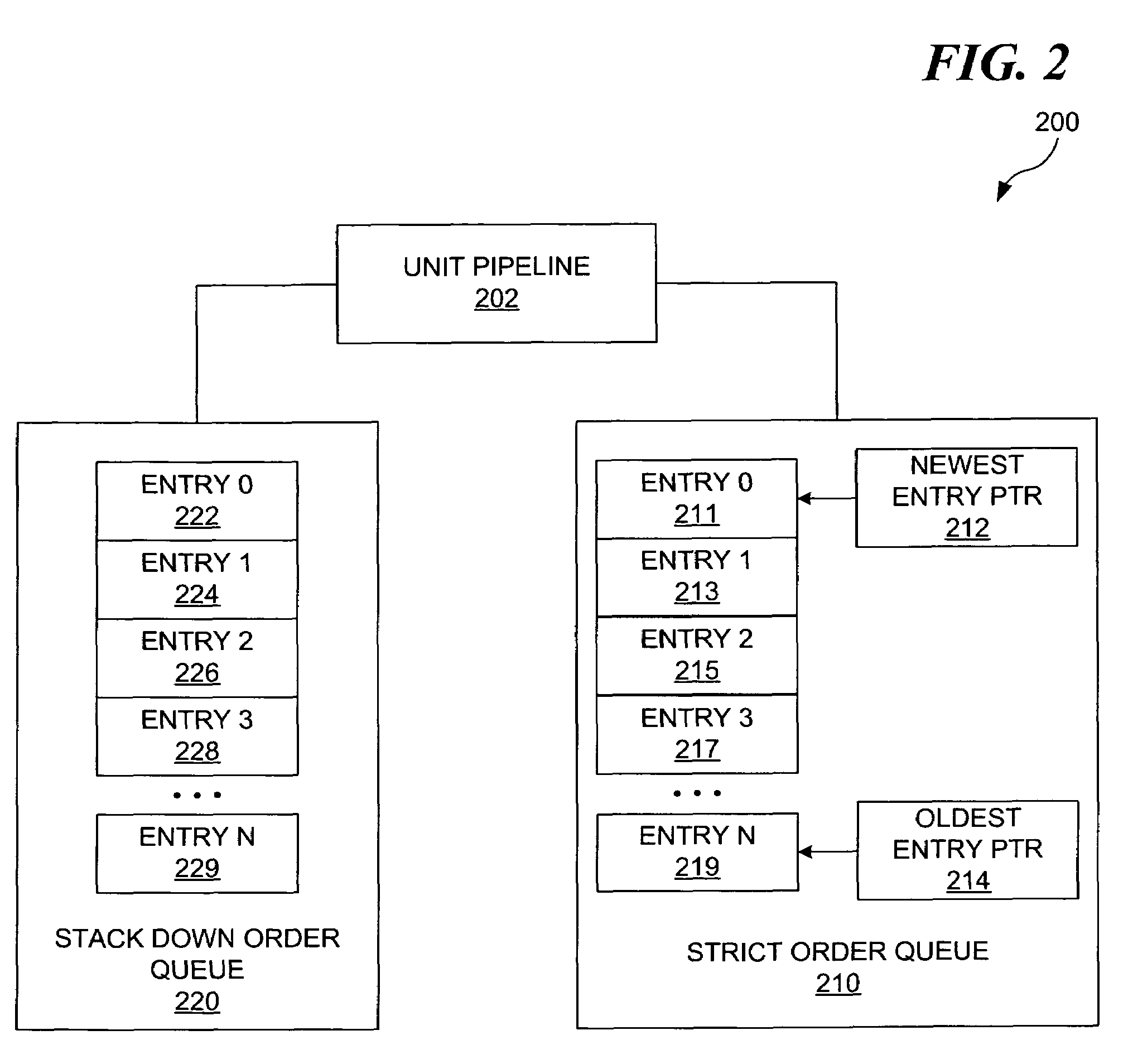

Balanced double deques for eliminating memory fences in garbage collection

Active | US20180314633A1 | Memory architecture accessing/allocation | Program initiation/switching | Multilevel queue | Waste collection

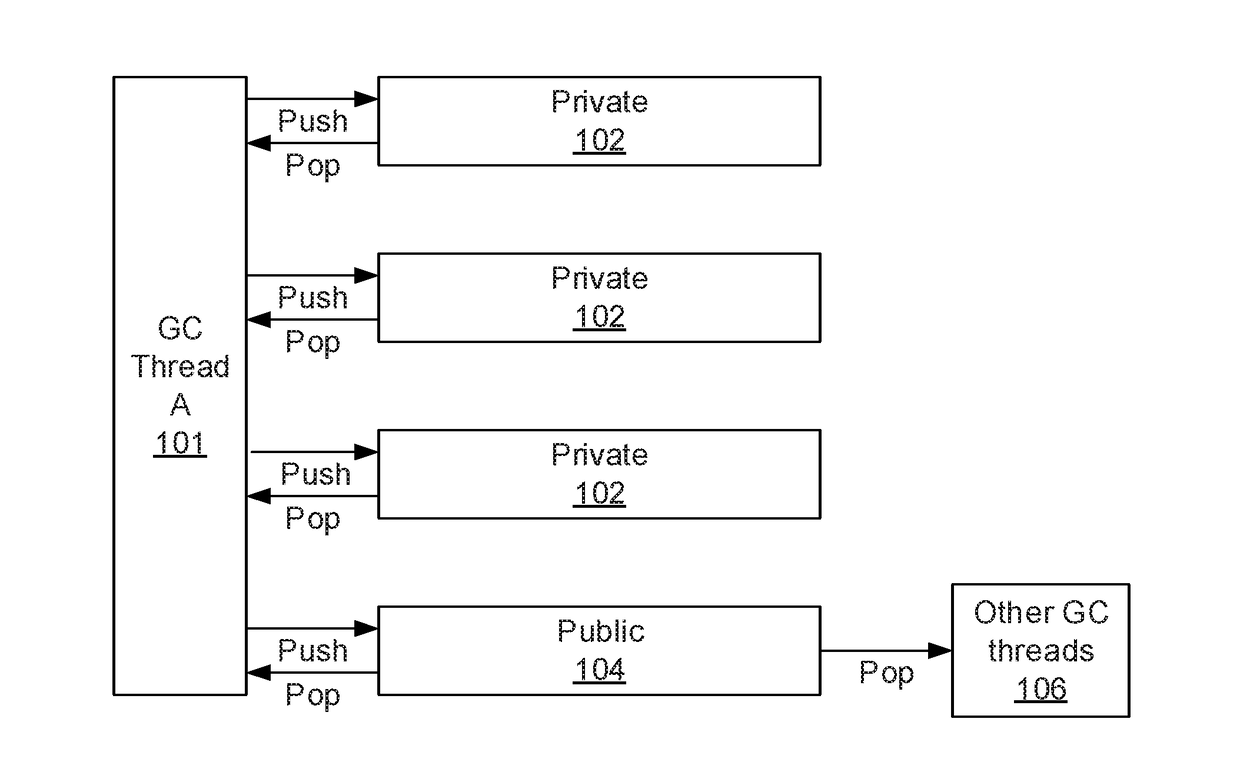

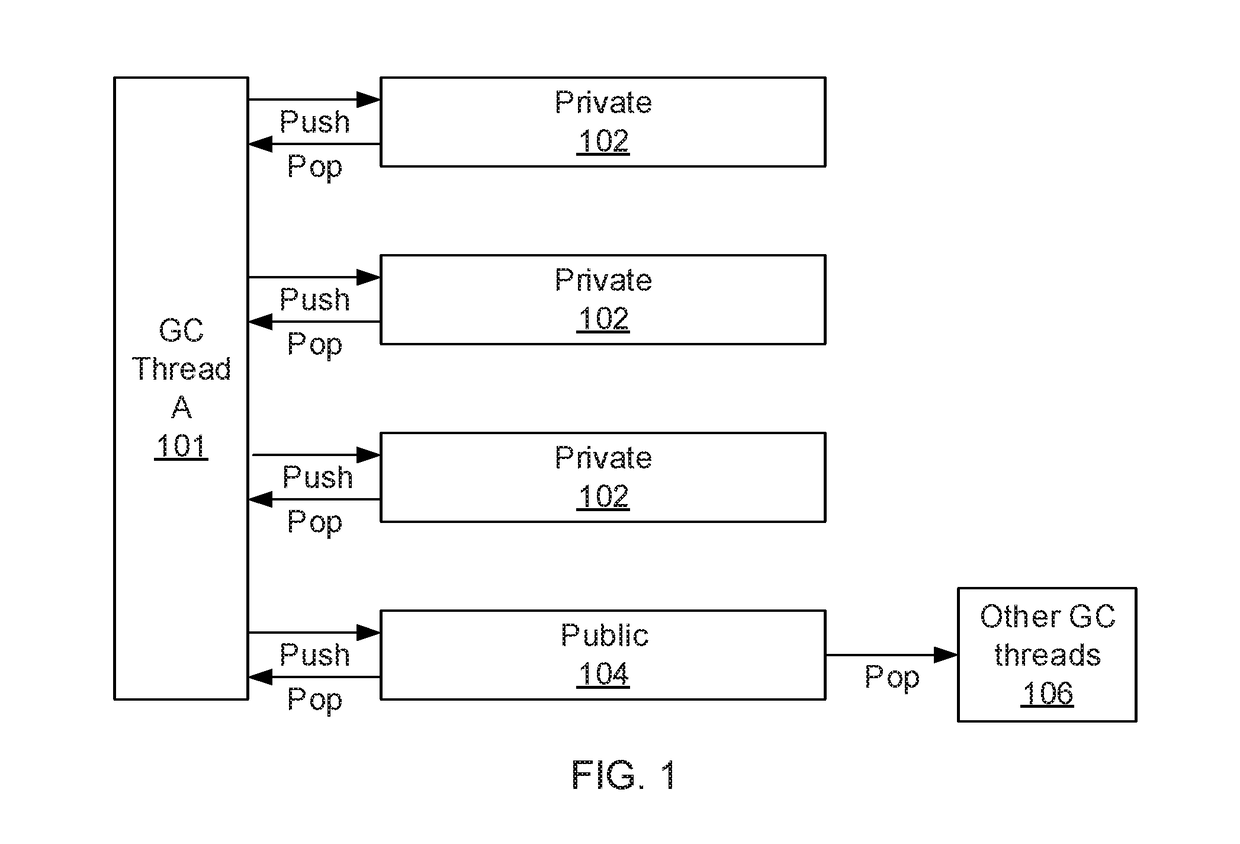

Garbage collection methods include adding a data object to one of multiple queues owned by a first garbage collection thread. The queues include a public queue and multiple private queues. A task is popped from one of the plurality of queues to perform garbage collection. The public queue is swapped with one of the plurality of private queues if there are no tasks in the public queue.

Owner:IBM CORP
