Task queue priority scheduling management method for large data sets

A task queue scheduling management technique in the field of task queue priority scheduling management. It addresses problems such as underutilized worker nodes, reduced queue performance and high load. Its effects are preventing resource waste, using computing resources efficiently, and keeping the architecture simple.

Embodiment Construction

[0022] Hereinafter, the present invention will be described in detail through the drawings and specific embodiments.

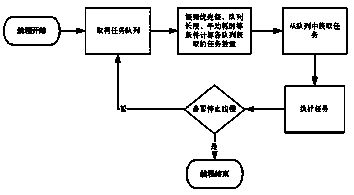

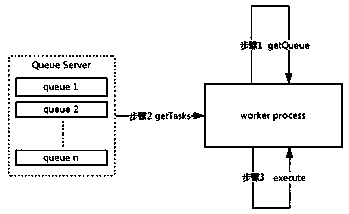

[0023] As shown in Figure 1 to Figure 3, in the task queue priority scheduling management method for large data sets of the present invention, each node on the task acquisition side runs a worker process with a loop thread that acquires tasks. The loop thread performs the following steps:

[0024] A. Start the thread and obtain the task queues (GetQueue in Figure 2);

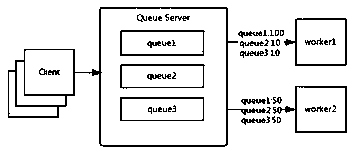

[0025] B. Use the execution engine to run different algorithms that calculate how many tasks to acquire from each queue;

[0026] C. Get the tasks from the queue server (QueueServer) corresponding to each task queue (getTask);

[0027] D. Execute the tasks (execute);

[0028] E. After the tasks are completed, check whether the thread has been stopped; if it has not, loop back to step A to obtain the task queues again.
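The loop in steps A to E can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: `QueueServer` here is a hypothetical in-memory stand-in for the real queue server, and the names `get_queue`, `allocate`, `run_once`, `worker_loop` and the idle back-off are choices made only for this sketch. The step B calculation is passed in as a function because the method allows different algorithms at that point.

```python
import threading
import time
from collections import deque
from typing import Callable, Dict, List

Task = Callable[[], None]


class QueueServer:
    """Hypothetical in-memory stand-in for the QueueServer behind one task queue."""

    def __init__(self, tasks: List[Task]) -> None:
        self._tasks = deque(tasks)
        self._lock = threading.Lock()  # several worker threads may poll the same queue

    def get_task(self, n: int) -> List[Task]:
        """Step C (getTask): remove and return up to n tasks from this queue."""
        with self._lock:
            return [self._tasks.popleft() for _ in range(min(n, len(self._tasks)))]


def run_once(
    servers: Dict[str, QueueServer],
    get_queue: Callable[[], List[str]],
    allocate: Callable[[List[str]], Dict[str, int]],
) -> int:
    """Run steps A to D once and return how many tasks were executed."""
    queues = get_queue()          # Step A (GetQueue): names of the queues to poll
    counts = allocate(queues)     # Step B: pluggable algorithm -> tasks per queue
    executed = 0
    for name, n in counts.items():
        for task in servers[name].get_task(n):  # Step C (getTask)
            task()                              # Step D (execute)
            executed += 1
    return executed


def worker_loop(
    stop: threading.Event,
    servers: Dict[str, QueueServer],
    get_queue: Callable[[], List[str]],
    allocate: Callable[[List[str]], Dict[str, int]],
    idle_sleep: float = 0.05,
) -> None:
    """Step E: after each pass, check whether the thread was stopped; if not, go back to step A."""
    while not stop.is_set():
        if run_once(servers, get_queue, allocate) == 0:
            time.sleep(idle_sleep)  # assumption: back off briefly when no task was fetched
```

A `threading.Event` serves as the stop flag checked in step E, so another thread can request shutdown by calling `stop.set()`; the worker finishes its current pass before it exits.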

[0029] The priority of tasks is preset in the task queue. The priority of the queue is c...
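This excerpt does not specify how step B turns the preset priorities into per-queue task counts. Purely as a hypothetical example of such a calculation, the sketch below splits a fixed number of task slots per loop (`batch_size`, an assumption) across queues in proportion to assumed non-negative integer priority weights, using the largest-remainder method. The function name, the weights and the batch size are all illustrative and do not come from the source.

```python
from typing import Dict


def allocate_by_priority(priorities: Dict[str, int], batch_size: int) -> Dict[str, int]:
    """Split batch_size task slots across queues in proportion to their priority weights.

    Hypothetical rule: the largest-remainder method, so the counts always sum to
    batch_size (or are all zero when every weight is zero).
    """
    if batch_size < 0 or any(w < 0 for w in priorities.values()):
        raise ValueError("batch_size and priority weights must be non-negative")
    total = sum(priorities.values())
    if total == 0:
        return {name: 0 for name in priorities}
    # Floor of each queue's exact share, and the remainder lost to flooring (scaled by total).
    shares = {name: batch_size * w // total for name, w in priorities.items()}
    remainders = {name: batch_size * w % total for name, w in priorities.items()}
    leftover = batch_size - sum(shares.values())
    # Give one extra slot to each of the `leftover` queues with the largest remainders;
    # ties go to the higher priority, then to the name, so the result is deterministic.
    order = sorted(priorities, key=lambda q: (-remainders[q], -priorities[q], q))
    for name in order[:leftover]:
        shares[name] += 1
    return shares
```

For example, `allocate_by_priority({"high": 3, "mid": 2, "low": 1}, 10)` returns `{"high": 5, "mid": 3, "low": 2}`. Largest-remainder rounding keeps the total fixed, so rounding never drops or adds slots; note that a low-priority queue can still receive zero slots when the batch is small relative to the total weight.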
