Parallel task processing method based on task decomposition

A parallel task processing technique based on task decomposition, applicable to the field of computer applications. It addresses problems such as business logic that is hard to update and maintain and designs that run counter to established programming principles, and it makes the processing logic easy to encapsulate.

Embodiment Construction

[0021] The present invention will be further described in detail below with reference to the accompanying drawings and specific embodiments.

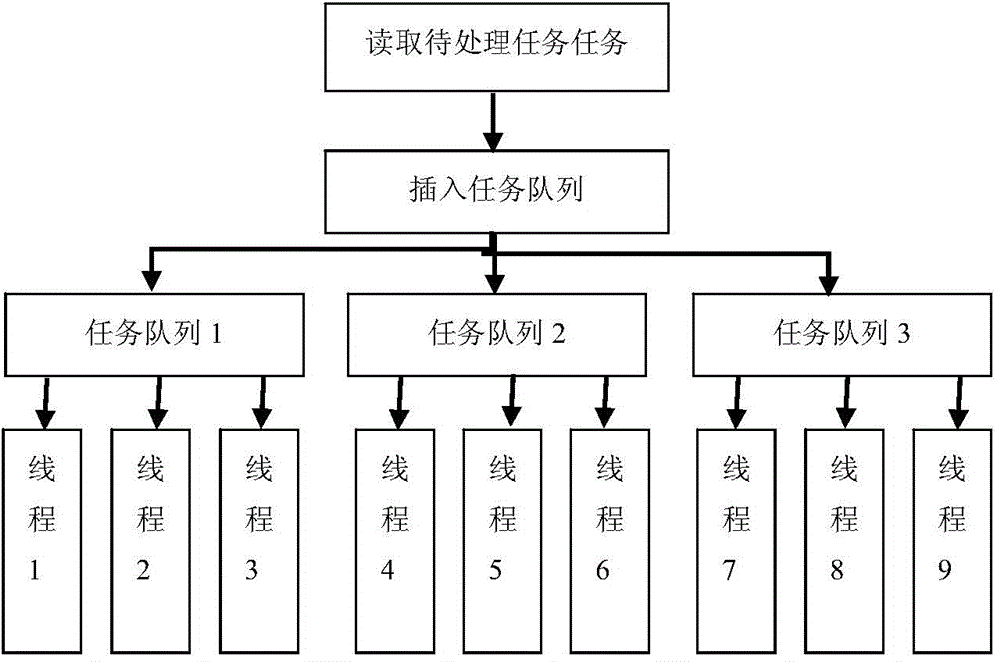

[0022] The parallel task processing method based on task decomposition according to the present invention comprises the following steps:

[0023] (1) Create a task decomposition queue, a task allocation queue, a response filtering queue, and the various task processing queues, all of which are implemented on top of blocking queues;

[0024] (2) Create task decomposition threads, task allocation threads, response filtering thread groups and various task processing thread groups;

[0025] (3) The task decomposition thread takes a task object from the task decomposition queue, decomposes it into subtasks according to the task code of the task object, and stores the subtasks in the task hash table. After the decomposition is completed, the task obj...
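Steps (1) to (3) can be sketched roughly as follows. This is only an illustrative sketch, not the patented implementation: it uses Python's `queue.Queue` as the blocking queue, and the `Task` class, the `DECOMPOSITION_RULES` table, the task code `"ORDER"`, the subtask names, and the choice to key the task hash table by task id are all hypothetical. What happens to the task object after decomposition is not shown, because the source text is cut off at that point.

```python
import queue
import threading

# Hypothetical rules: task code -> kinds of subtask it decomposes into.
DECOMPOSITION_RULES = {"ORDER": ["check_stock", "charge_payment"]}

_SHUTDOWN = object()  # sentinel that tells the worker thread to exit


class Task:
    """Hypothetical task object: an id plus a task code."""

    def __init__(self, task_id, code):
        self.task_id = task_id
        self.code = code


# Step (1): every queue is a blocking queue (queue.Queue.get() blocks when empty).
decomposition_queue = queue.Queue()
allocation_queue = queue.Queue()          # created for completeness, unused here
response_filter_queue = queue.Queue()     # created for completeness, unused here
processing_queues = {kind: queue.Queue()  # one per kind of subtask (assumption)
                     for kinds in DECOMPOSITION_RULES.values() for kind in kinds}

# Task hash table (assumed layout): task id -> list of (task id, subtask kind).
task_table = {}
task_table_lock = threading.Lock()


def decompose_worker():
    """Step (3): take a task, split it by task code, store the subtasks."""
    while True:
        task = decomposition_queue.get()  # blocks until a task arrives
        if task is _SHUTDOWN:
            break
        kinds = DECOMPOSITION_RULES.get(task.code, [])
        subtasks = [(task.task_id, kind) for kind in kinds]
        with task_table_lock:
            task_table[task.task_id] = subtasks


# Step (2): create and start the task decomposition thread.
decomposer = threading.Thread(target=decompose_worker, daemon=True)
decomposer.start()

# Usage: submit one task, then shut the worker down and wait for it.
decomposition_queue.put(Task(1, "ORDER"))
decomposition_queue.put(_SHUTDOWN)
decomposer.join(timeout=5)
```

Because the queue is first-in, first-out and the shutdown sentinel is enqueued after the task, the worker has finished decomposing the task by the time it exits, so `task_table` can be read safely after `join` returns.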
