FPGA-based binary neural network acceleration system

A binary neural network acceleration system, applied in the field of integrated circuit design, addresses the problems that calculation speed is easily limited by serial calculation, that the critical calculation path is long, and that resource occupation is large. Its effects are to reduce the calculation cost, reduce the number of calculations, and increase the calculation speed.


Embodiment Construction

[0037] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific implementation examples.

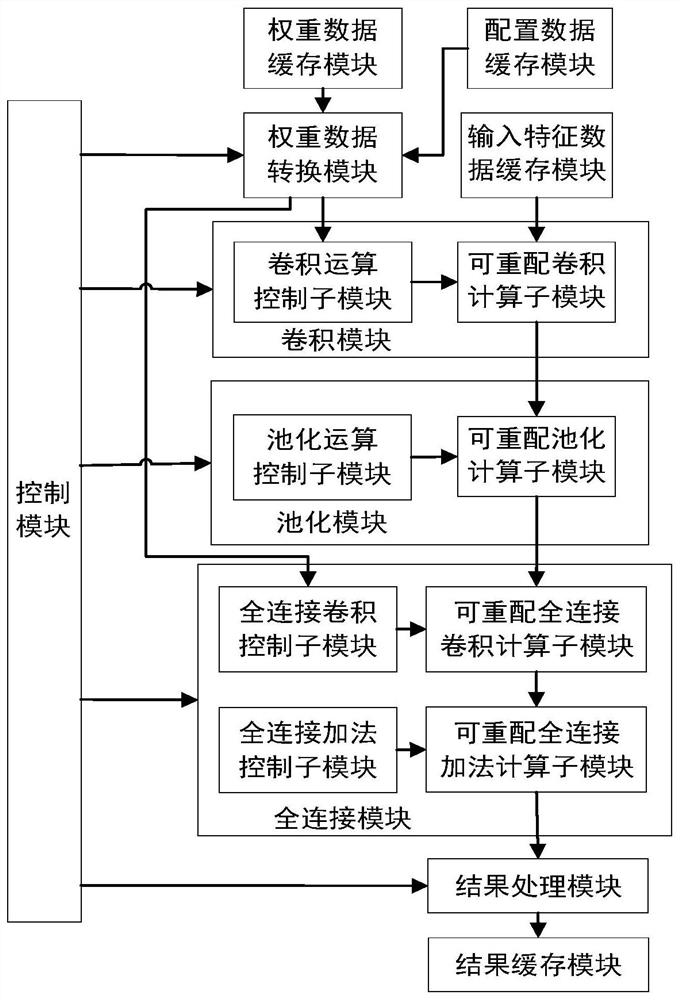

[0038] Referring to Figure 1, the present invention includes a weight data cache module, an input feature data cache module, a configuration data cache module, a weight data conversion module, a convolution module, a pooling module, a full connection module, a result processing module, a result cache module, and a control module, all realized by an FPGA, where:

[0039] The weight data cache module caches the convolutional layer weight data and fully connected layer weight data of the binary neural network in the DDR memory on the FPGA;

[0040] The input feature data cache module caches the input feature data of the binary neural network in the DDR memory on the FPGA;
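
The visible text does not state how the cached binary weights and input features are encoded or multiplied. As a general illustration only, the following minimal sketch assumes the common binary-neural-network convention in which each value is +1 or -1 and is stored as a single bit, so that a dot product reduces to an XNOR followed by a bit count. The function names `pack_bits` and `binary_dot` are hypothetical and are not taken from the invention.

```python
def pack_bits(values):
    """Pack a sequence of +1/-1 values into one integer (+1 -> bit 1, -1 -> bit 0).

    Assumed encoding for illustration; the source does not specify one.
    """
    word = 0
    for i, v in enumerate(values):
        if v > 0:
            word |= 1 << i
    return word


def binary_dot(word_a, word_b, n):
    """Dot product of two length-n +1/-1 vectors given in packed form."""
    mask = (1 << n) - 1
    # XNOR marks the positions where the two vectors agree (product = +1).
    xnor = ~(word_a ^ word_b) & mask
    matches = bin(xnor).count("1")
    # Agreements contribute +1 and disagreements -1: matches - (n - matches).
    return 2 * matches - n


weights = [1, -1, 1, 1, -1]
features = [1, 1, -1, 1, -1]
result = binary_dot(pack_bits(weights), pack_bits(features), len(weights))
print(result)
```

Under this assumed encoding, one stored word holds many weights, which is one reason binary networks are attractive for memory-limited hardware such as FPGAs.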

[0041] In this embodiment, the convolutional layer weight data, fully connected layer weight data, and input feature data o...
