Binocular stereo vision matching method based on neural network and operation framework thereof

A neural-network-based binocular stereo vision matching method and its computing framework, applied in the technical field of binocular stereo vision and neural networks

Examples

Embodiment 1

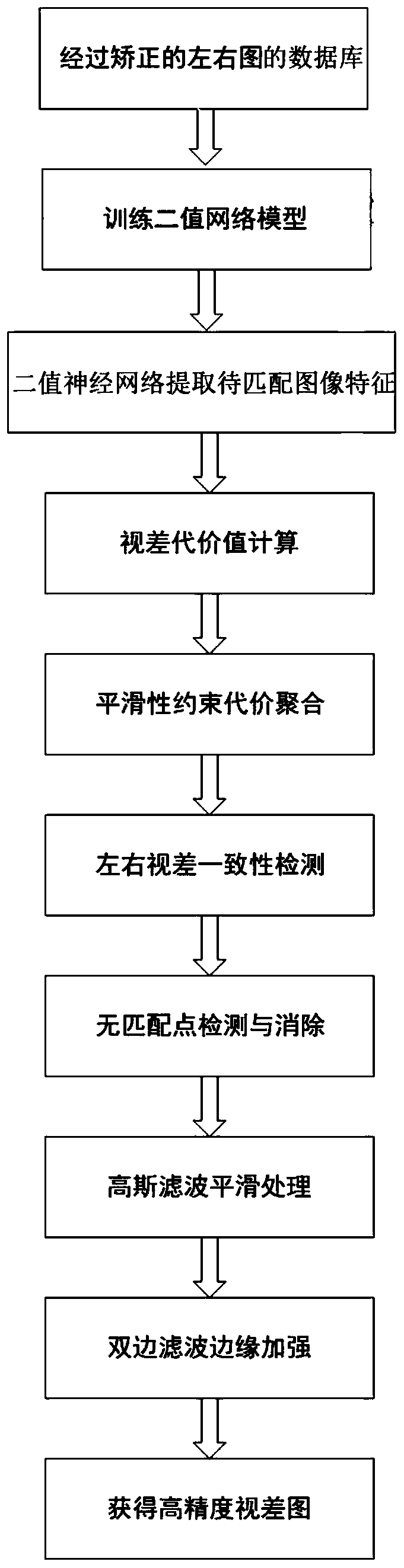

[0060] As shown in Figure 1, an embodiment of the neural-network-based binocular stereo vision matching method comprises the following steps:

[0061] Step 1: Construct a neural network computing framework, then construct and train a binary neural network. The 194 pairs of images with accurate depth provided in the KITTI2012 database are processed to construct a total of 24,472,099 positive and negative sample pairs, which are used for training to obtain the binary neural network model.

[0062] Step 2: Input the left image and the right image into the binary neural network for image feature extraction, to obtain binary sequences that serve as feature descriptors of the image pixels;

[0063] Step 3: The binary neural network matches the left image and the right image through a matching algorithm, specifically:

[0064] S1: Disparity cost calculation: a similarity calculation is performed on the binary sequences of two pixels, and the calculated similarity score represents the similar...
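
The excerpt does not state which similarity measure is applied to the binary sequences. As a minimal sketch, assuming a Hamming-style measure (the fraction of matching bits) and a single rectified scanline, the cost of a left-image pixel could be evaluated over candidate disparities as follows. The function names `hamming_similarity` and `disparity_costs` and the array layout are hypothetical and are not taken from the patent.

```python
import numpy as np


def hamming_similarity(a, b):
    """Fraction of matching bits between two equal-length 0/1 descriptors.

    Assumption: the similarity score is Hamming-based; the source text
    does not name the measure.
    """
    a = np.asarray(a, dtype=np.uint8)
    b = np.asarray(b, dtype=np.uint8)
    if a.shape != b.shape:
        raise ValueError("descriptors must have the same length")
    return 1.0 - np.count_nonzero(a != b) / a.size


def disparity_costs(left_desc, right_desc, x, max_disp):
    """Matching cost of left pixel x against right pixels x - d.

    left_desc, right_desc: (width, n_bits) arrays of 0/1 values for one
    scanline. Returns one cost per disparity d = 0..max_disp, stopping
    early where x - d would fall outside the image.
    """
    costs = []
    for d in range(max_disp + 1):
        if x - d < 0:
            break
        # Lower cost means more similar descriptors.
        costs.append(1.0 - hamming_similarity(left_desc[x], right_desc[x - d]))
    return np.array(costs)


# Toy scanline: the right view is the left view shifted by 2 pixels.
left = np.eye(8, dtype=np.uint8)
right = np.roll(left, -2, axis=0)
best_disparity = int(np.argmin(disparity_costs(left, right, x=5, max_disp=3)))
```

In this toy scanline the lowest cost for pixel 5 falls at disparity 2, which is the shift used to build the right view.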

Embodiment 2

[0092] The computing framework used in Embodiment 1. In Step 1 of Embodiment 1, the neural network computing framework is built as a modular neural network computing framework; the data are compressed by channel packaging, and the computing time is reduced by laminar flow technology.
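
The excerpt gives no detail on how channel packaging is implemented. One plausible reading, sketched here under that assumption, is that binary feature maps are packed along the channel axis so that eight 0/1 channels occupy a single byte. The helper names `pack_channels` and `unpack_channels` are hypothetical, and NumPy stands in for whatever storage the framework actually uses.

```python
import numpy as np


def pack_channels(bits):
    """Pack a (C, H, W) array of 0/1 values into (ceil(C / 8), H, W) bytes.

    Assumption: 'channel packaging' means bit-packing along the channel
    axis; the source does not spell this out.
    """
    bits = np.asarray(bits, dtype=np.uint8)
    return np.packbits(bits, axis=0)


def unpack_channels(packed, n_channels):
    """Inverse of pack_channels; drops the zero padding added by packbits."""
    return np.unpackbits(packed, axis=0)[:n_channels]


# 12 binary channels over a 3 x 4 feature map.
rng = np.random.default_rng(0)
features = rng.integers(0, 2, size=(12, 3, 4), dtype=np.uint8)
packed = pack_channels(features)
restored = unpack_channels(packed, n_channels=12)
```

Stored one value per byte, the example feature map takes 144 bytes; packed, it takes 24, because the 12 channels are padded to 16 bits per pixel.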

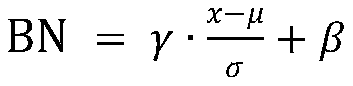

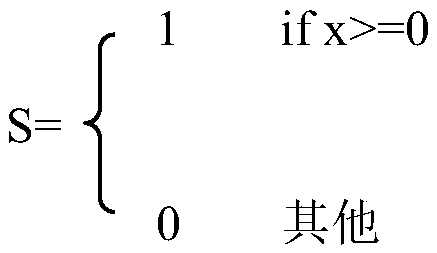

[0093] Specifically, the modular neural network computing framework is organized, from coarse to fine granularity, into networks, layers, tensors, and data blocks. Within the framework, a network is divided into several layers, and the corresponding parameters are set in each layer; the data in the framework are held in tensors and stored as data blocks. The framework uses its own GPU memory management and recovery system: during initialization it requests a block of memory from the GPU and manages it through memory pointers inside the framework. The dat...
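
The memory scheme described above, one up-front allocation managed through pointers inside the framework, resembles a memory pool. The following is a minimal sketch of that idea only: the class `MemoryPool`, its first-fit policy, and the use of a NumPy byte array as a stand-in for GPU memory are all assumptions for illustration, not details taken from the patent.

```python
import numpy as np


class MemoryPool:
    """Hypothetical pool: one buffer reserved at start-up, handed out by offset."""

    def __init__(self, capacity):
        # Stand-in for the single block requested from the GPU at initialization.
        self.buffer = np.zeros(capacity, dtype=np.uint8)
        self.free_blocks = [(0, capacity)]  # (offset, size), kept sorted by offset
        self.used = {}                      # offset -> size of live allocations

    def alloc(self, size):
        """First-fit allocation; returns the offset that acts as the 'pointer'."""
        for i, (offset, block) in enumerate(self.free_blocks):
            if block >= size:
                if block == size:
                    self.free_blocks.pop(i)
                else:
                    self.free_blocks[i] = (offset + size, block - size)
                self.used[offset] = size
                return offset
        raise MemoryError("pool exhausted")

    def free(self, offset):
        """Return a block to the pool and merge it with adjacent free blocks."""
        size = self.used.pop(offset)
        self.free_blocks.append((offset, size))
        self.free_blocks.sort()
        merged = [self.free_blocks[0]]
        for off, block in self.free_blocks[1:]:
            last_off, last_block = merged[-1]
            if last_off + last_block == off:
                merged[-1] = (last_off, last_block + block)
            else:
                merged.append((off, block))
        self.free_blocks = merged

    def view(self, offset):
        """Byte view of a live allocation, e.g. to back a tensor's data block."""
        return self.buffer[offset:offset + self.used[offset]]
```

A tensor in such a design would keep only an offset into the pool, so creating and releasing intermediate data blocks during inference would not require further requests to the device allocator.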
