Binary neural network compression method based on weight sensitivity

A binary neural network hardware compression technology, applied in instruments, adaptive control, control/regulation systems, etc. It can solve problems such as high hardware resource overhead and power consumption, erroneous weights, and reduced recognition accuracy, with the effect of ensuring recognition accuracy.

Examples

Embodiment 1

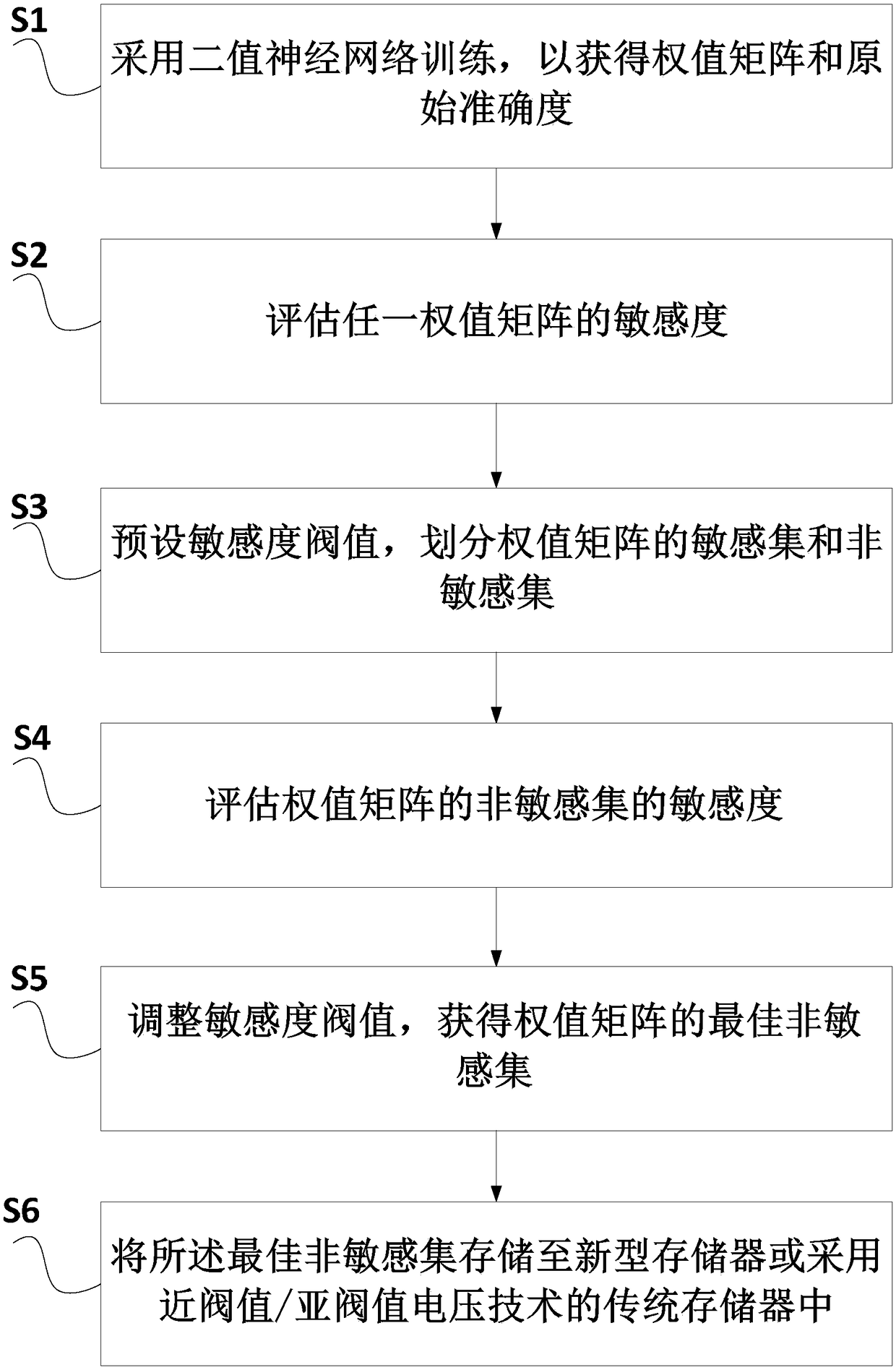

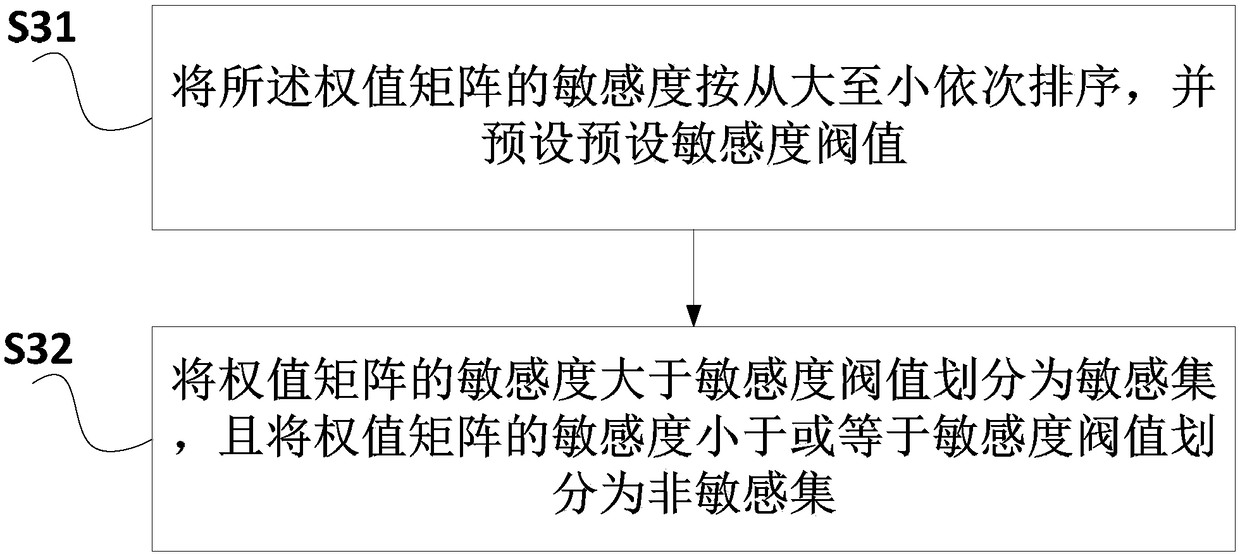

[0069] As shown in Figure 1 to Figure 5, this embodiment provides a binary neural network hardware compression method based on weight sensitivity. Ordinal terms such as "first", "second", and "third" in this embodiment are used only to distinguish parts of the same kind. The method includes the following steps:

[0070] In the first step, the binary neural network is trained to obtain its weight matrices and its original accuracy.

[0071] In the second step, the sensitivity of each weight matrix is evaluated as follows:

[0072] (21) Preset an error probability P to model the unreliability of emerging memory devices and of near-threshold/sub-threshold supply voltages, where 0 < P < 1; that is, each weight in the weight matrix is flipped (1→-1 or -1→1) with probability P.

[0073] (22) Errors are injected with error probability P into the binary weights of each weight matrix in turn, to obtain the ...
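Steps (21) and (22) amount to a fault-injection experiment, which can be sketched as below. This is a minimal sketch under assumptions not stated in the source: the network is a tiny fully connected binary network stored as nested Python lists of ±1 weights, hidden layers use a sign activation, flips are independent, and the sensitivity of a matrix is taken as the mean accuracy drop over a hypothetical number of trials while all other matrices stay error-free. All function names are illustrative. The toy data are labeled by the network itself, so the original accuracy is exactly 1.0.

```python
import random

def sign(v):
    # Binary activation; mapping 0 to +1 is an assumed convention.
    return 1 if v >= 0 else -1

def forward(weights, x):
    """Tiny fully connected BNN (hypothetical): each matrix is a list of rows of
    +/-1 weights; hidden layers apply sign(), the last layer returns raw scores."""
    h = x
    for k, w in enumerate(weights):
        scores = [sum(wij * hj for wij, hj in zip(row, h)) for row in w]
        h = scores if k == len(weights) - 1 else [sign(s) for s in scores]
    return h

def accuracy(weights, data):
    # Fraction of samples whose arg-max score matches the label (first index wins ties).
    correct = 0
    for x, label in data:
        scores = forward(weights, x)
        correct += max(range(len(scores)), key=scores.__getitem__) == label
    return correct / len(data)

def flip_with_probability(w, p, rng):
    # Step (21): each weight flips (1 -> -1, -1 -> 1) independently with probability p.
    return [[-v if rng.random() < p else v for v in row] for row in w]

def matrix_sensitivity(weights, data, p, trials=20, seed=0):
    """Step (22), sketched: inject errors into one weight matrix at a time and
    record the mean accuracy drop (the trial count and the use of the mean are
    assumptions, not taken from the patent)."""
    rng = random.Random(seed)
    base = accuracy(weights, data)
    drops = []
    for k in range(len(weights)):
        total = 0.0
        for _ in range(trials):
            faulty = list(weights)  # shallow copy; only matrix k is replaced
            faulty[k] = flip_with_probability(weights[k], p, rng)
            total += base - accuracy(faulty, data)
        drops.append(total / trials)
    return base, drops

# Usage on random toy data labeled by the network itself, so base accuracy is 1.0.
rng = random.Random(1)
layers = [[[rng.choice((-1, 1)) for _ in range(8)] for _ in range(6)],
          [[rng.choice((-1, 1)) for _ in range(6)] for _ in range(3)]]
inputs = [[rng.choice((-1, 1)) for _ in range(8)] for _ in range(50)]
data = [(x, max(range(3), key=forward(layers, x).__getitem__)) for x in inputs]
base, sens = matrix_sensitivity(layers, data, p=0.05)
print(base, sens)
```

A matrix whose errors cause a larger mean drop is more sensitive; under this sketch, such matrices would be the poorer candidates for unreliable, low-power storage.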

Embodiment 2

[0088] As shown in Figure 6, this embodiment provides a binary neural network hardware compression method based on weight sensitivity, which combines sensitivity analysis with binary particle swarm optimization to search for combinations of weight matrices to which the recognition accuracy of the binary neural network has low sensitivity. The binary particle swarm optimization algorithm uses a swarm of M particles to search for the optimum in a D-dimensional target space: the position of each particle is updated according to the velocity update formula, a fitness function evaluates the quality of each candidate solution, and the optimum is found through iterative updates. Ordinal terms such as "fourth" and "fifth" in this embodiment are used only to distinguish similar components. The binary neural network hardware compression method includes the following steps:

[0089] The binary neural ...
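The binary particle swarm optimization described in [0088] can be sketched as follows. The velocity update and sigmoid-based position update follow the common BPSO form (Kennedy and Eberhart's binary variant, here with an inertia weight); whether the patent uses exactly this form is not visible in this excerpt. The parameter values, the meaning of each bit (1 = store weight matrix d in error-prone, low-power hardware), the per-matrix sensitivities, and the fitness function with its accuracy-drop budget are all hypothetical and illustrative, not results or definitions from the patent.

```python
import math
import random

def bpso(fitness, d, m=20, iters=50, w=0.7, c1=1.5, c2=1.5, vmax=4.0, seed=0):
    """Binary PSO: maximize fitness over bit vectors of length d with m particles."""
    rng = random.Random(seed)
    x = [[rng.randint(0, 1) for _ in range(d)] for _ in range(m)]
    v = [[rng.uniform(-vmax, vmax) for _ in range(d)] for _ in range(m)]
    pbest = [row[:] for row in x]
    pbest_fit = [fitness(row) for row in x]
    g = max(range(m), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    for _ in range(iters):
        for i in range(m):
            for j in range(d):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: inertia + pull toward personal and global bests.
                vel = (w * v[i][j]
                       + c1 * r1 * (pbest[i][j] - x[i][j])
                       + c2 * r2 * (gbest[j] - x[i][j]))
                v[i][j] = max(-vmax, min(vmax, vel))
                # Sigmoid transfer: probability that bit j of particle i becomes 1.
                x[i][j] = 1 if rng.random() < 1 / (1 + math.exp(-v[i][j])) else 0
            f = fitness(x[i])
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = x[i][:], f
                if f > gbest_fit:
                    gbest, gbest_fit = x[i][:], f
    return gbest, gbest_fit

# Hypothetical inputs: illustrative per-matrix accuracy drops and an allowed budget.
sensitivity = [0.002, 0.15, 0.01, 0.004, 0.09, 0.003]
budget = 0.02

def fitness(bits):
    # Reward each matrix moved to low-power storage; heavily penalize exceeding the budget.
    drop = sum(s for s, b in zip(sensitivity, bits) if b)
    return sum(bits) - (100 * (drop - budget) if drop > budget else 0)

best, best_fit = bpso(fitness, d=len(sensitivity))
print(best, best_fit)
```

Treating the summed per-matrix sensitivities as the accuracy estimate keeps each fitness evaluation cheap; a more faithful variant would re-measure accuracy with errors injected into all selected matrices at once.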

Embodiment 3

[0108] As shown in Figure 7, this embodiment provides a binary neural network hardware compression method based on weight sensitivity. Ordinal terms such as "first", "second", and "third" in this embodiment are used only to distinguish similar parts. Specifically, the method includes the following steps:

[0109] In the first step, the binary neural network is trained to obtain its weight matrices and its original accuracy.

[0110] In the second step, the sensitivity of each weight matrix is evaluated as follows:

[0111] (21) Preset an error probability P to model the unreliability of emerging memory devices and of near-threshold/sub-threshold supply voltages, where 0 < P < 1; that is, each weight in the weight matrix is flipped (1→-1 or -1→1) with probability P.

[0112] (22) Errors are injected with error probability P into the binary weights of each weight matrix in turn, to obtain the first accuracy of the bin...
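Because step (21) gives every weight the same flip probability P, the number of errors a matrix receives follows directly. Assuming the flips are independent (the text does not state this), and writing N for the number of weights in a matrix and K for the number of flipped weights, K is binomially distributed:

```latex
\Pr(K = k) = \binom{N}{k} P^{k} (1 - P)^{N - k}, \qquad
\mathbb{E}[K] = N P, \qquad
\Pr(K \ge 1) = 1 - (1 - P)^{N}.
```

For example, with P = 10^-3 and N = 10^4, a matrix receives 10 flipped weights on average, and the probability that it stays entirely error-free is (1 - 10^-3)^(10^4) ≈ 4.5 × 10^-5, so in practice every sufficiently large matrix is affected and its sensitivity, rather than the mere presence of errors, determines the accuracy loss.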
