49 results about "Branch history table" patented technology

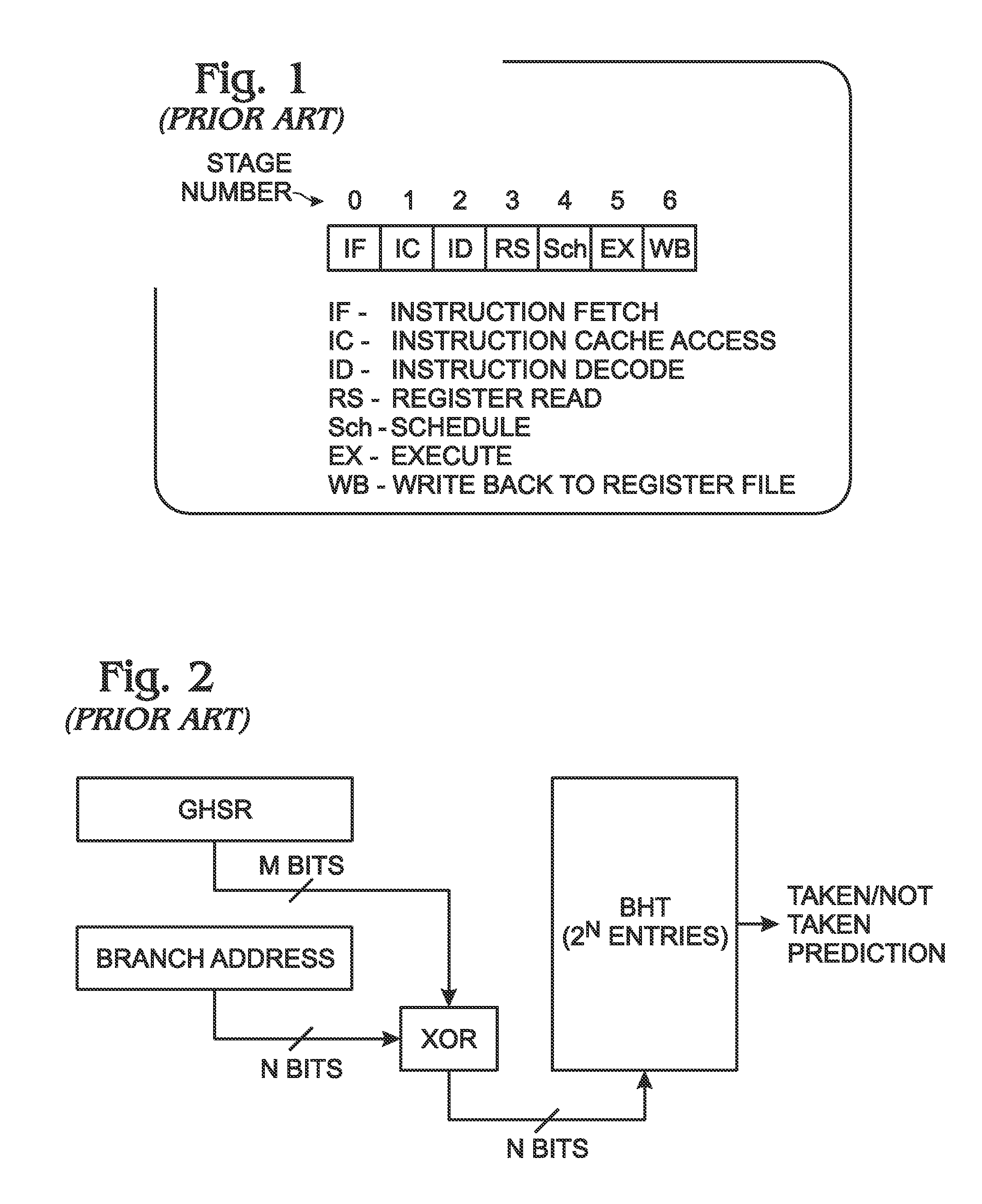

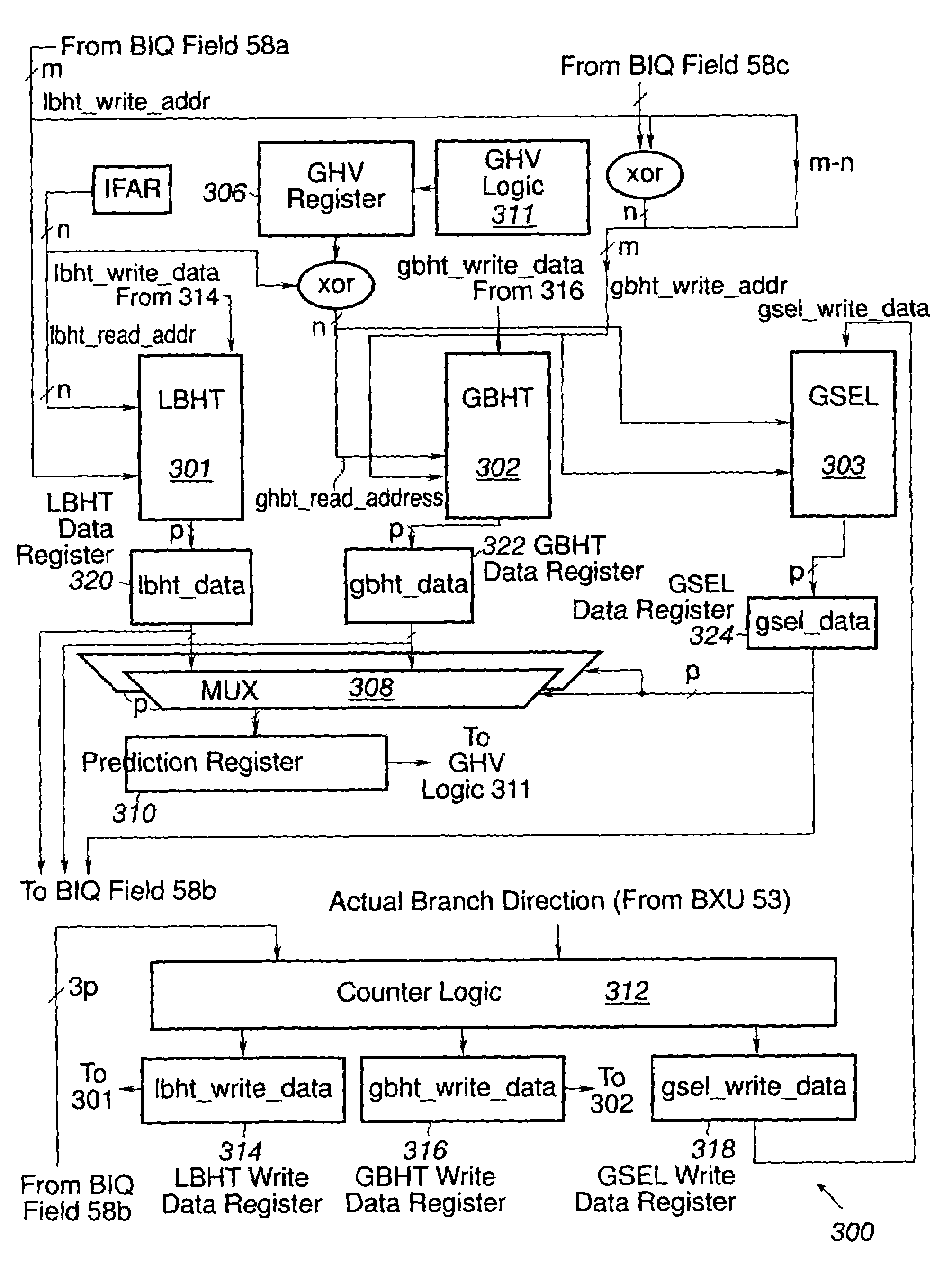

Speculative hybrid branch direction predictor

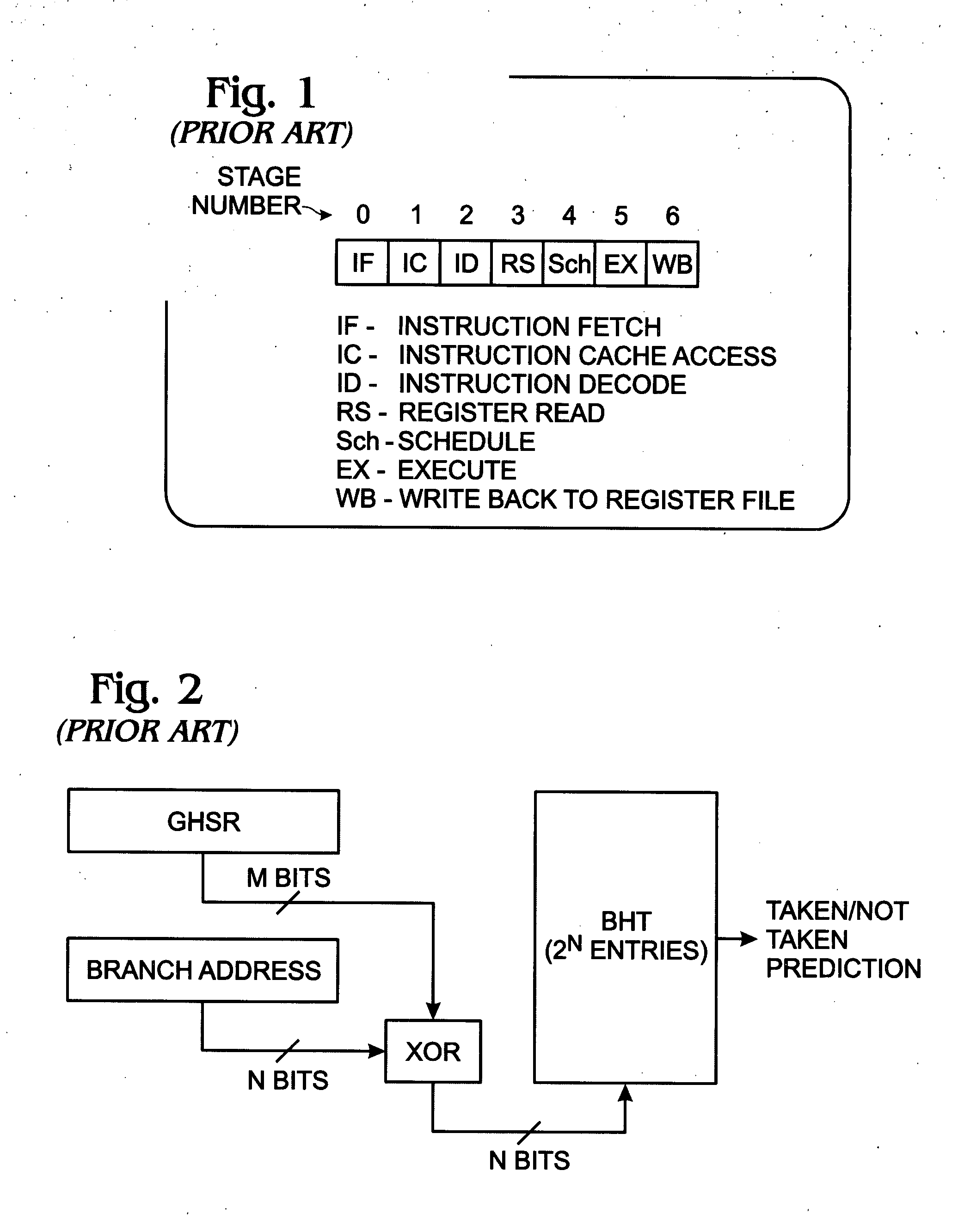

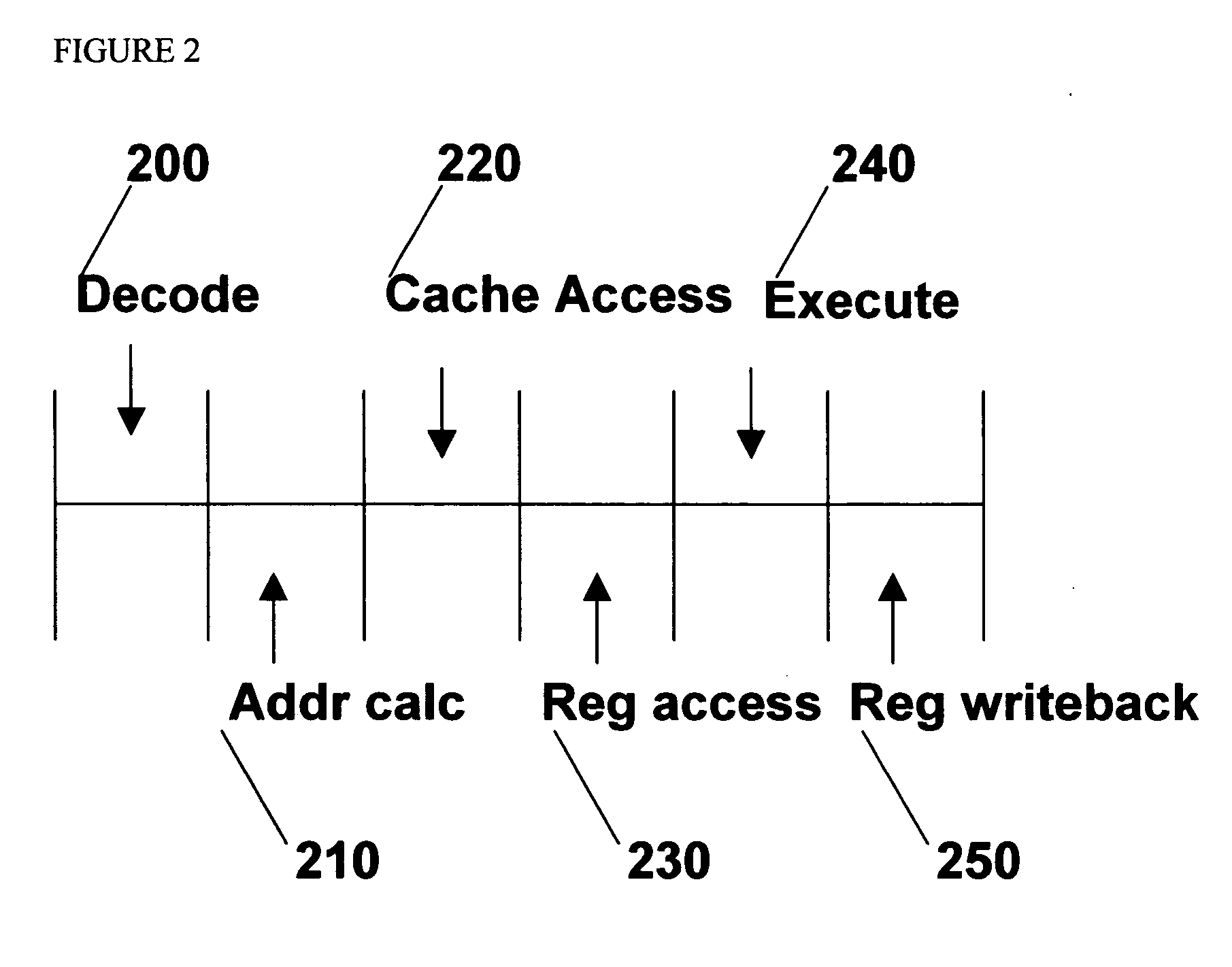

Inactive | US20050132175A1 | Improve accuracy | Reducing overall branch penalty | Instruction analysis | Digital computer details | Cache access | Parallel computing

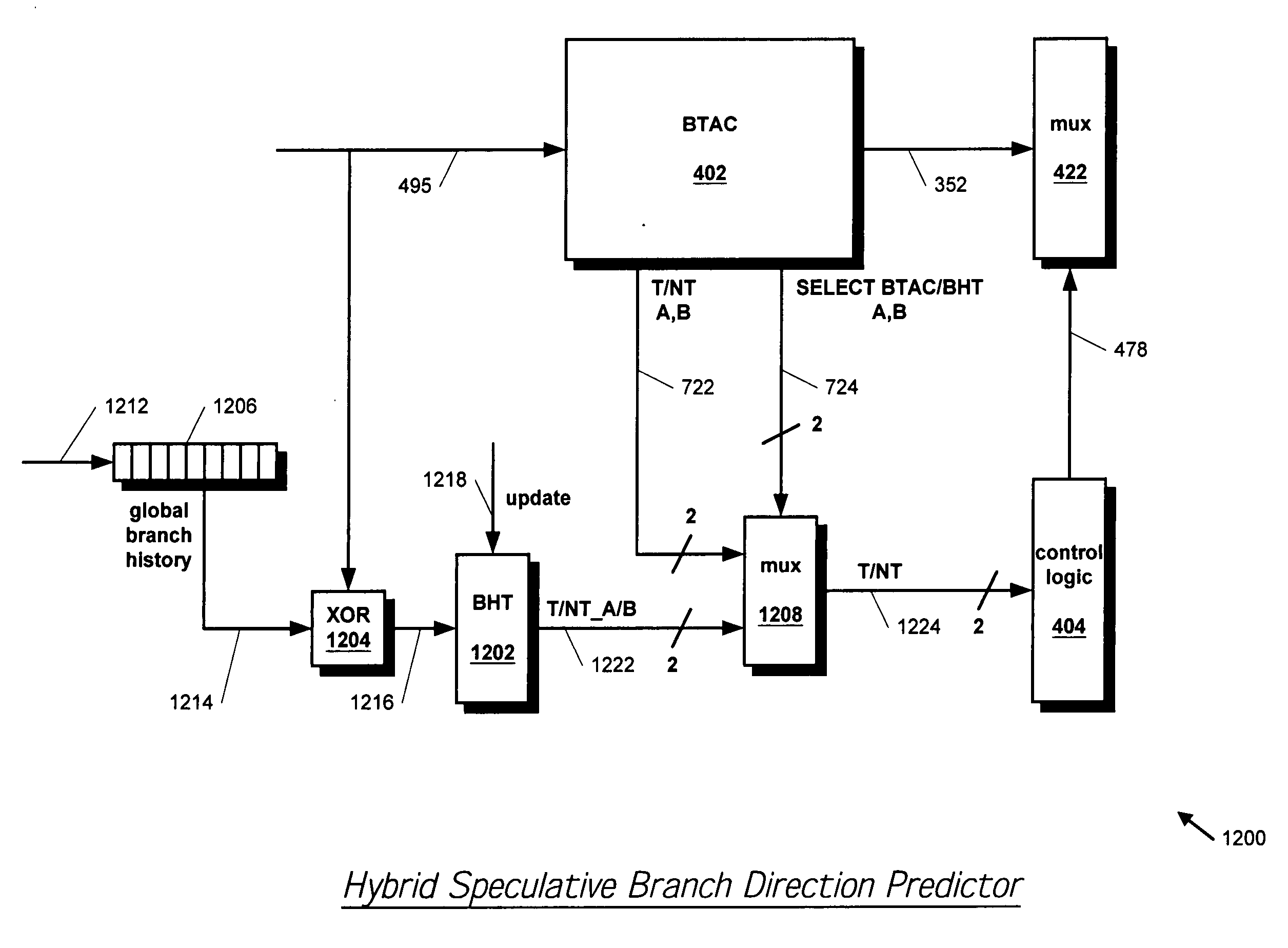

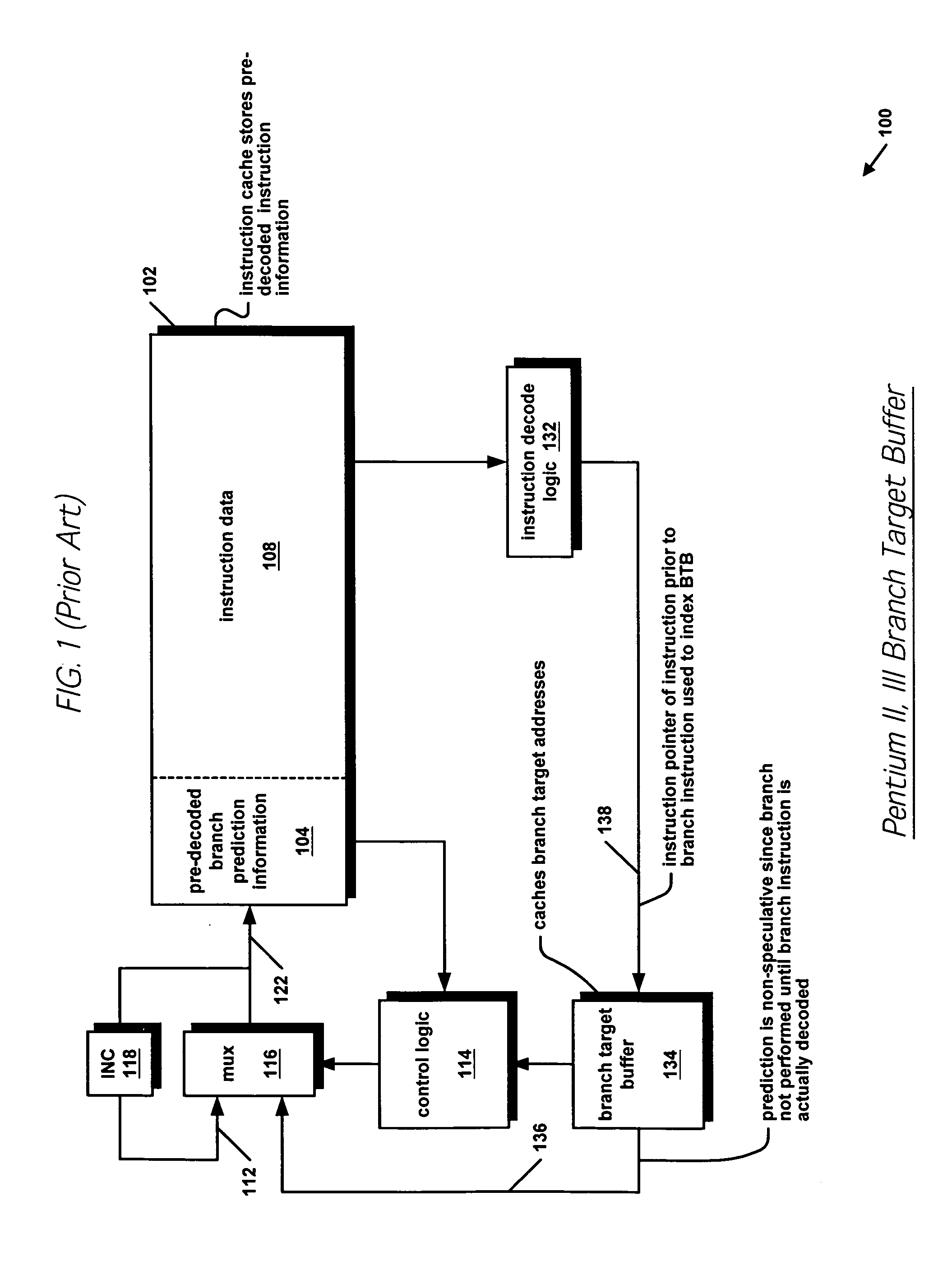

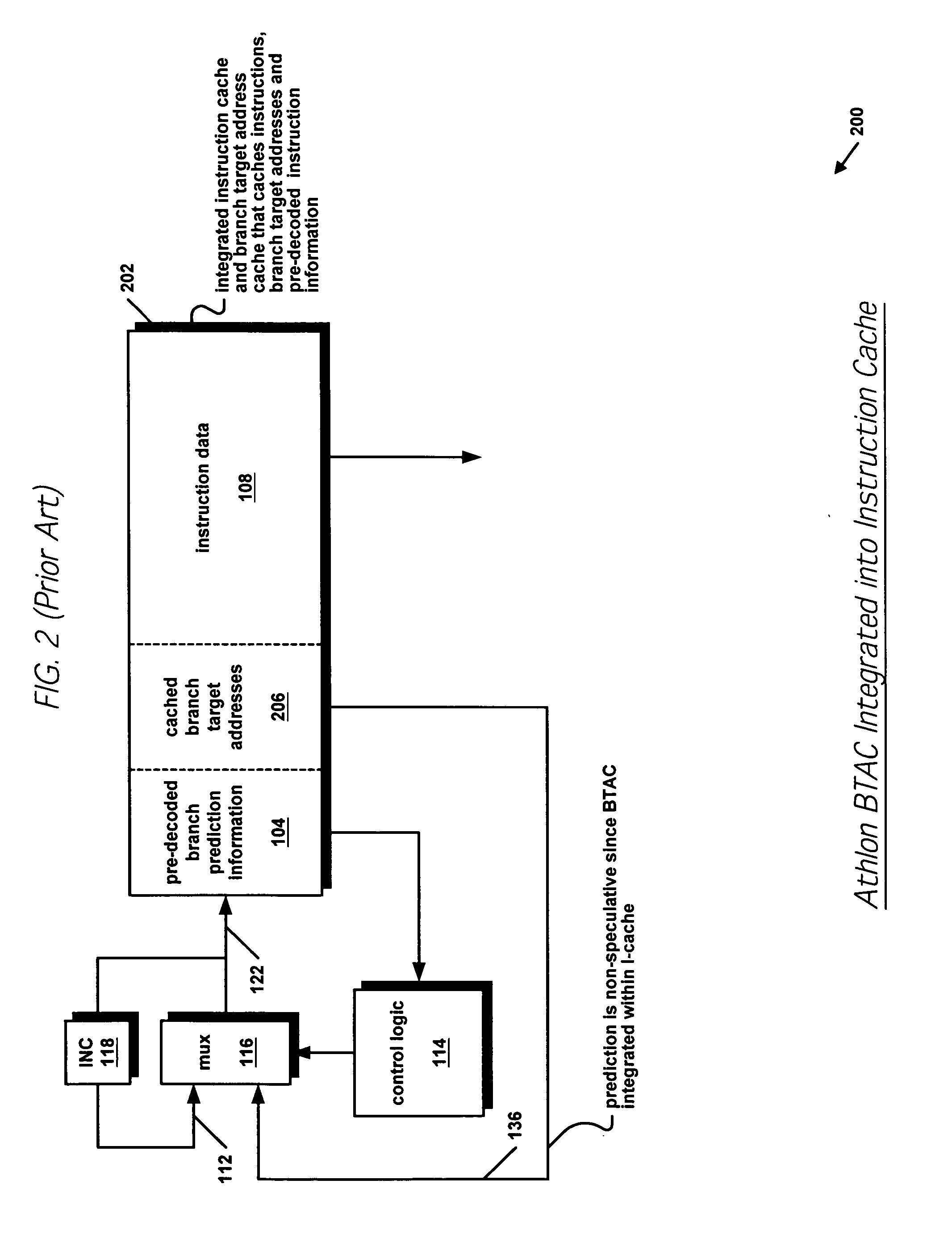

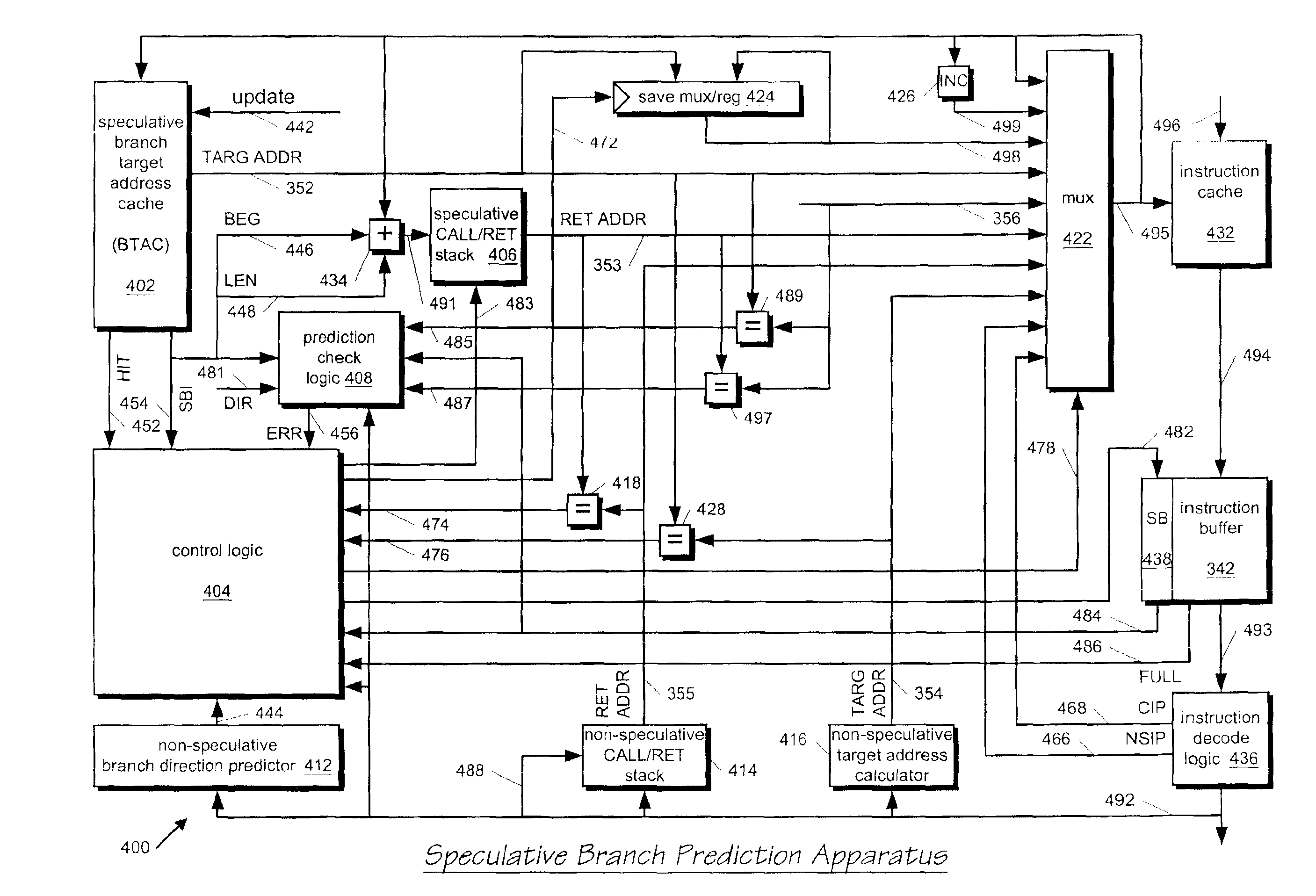

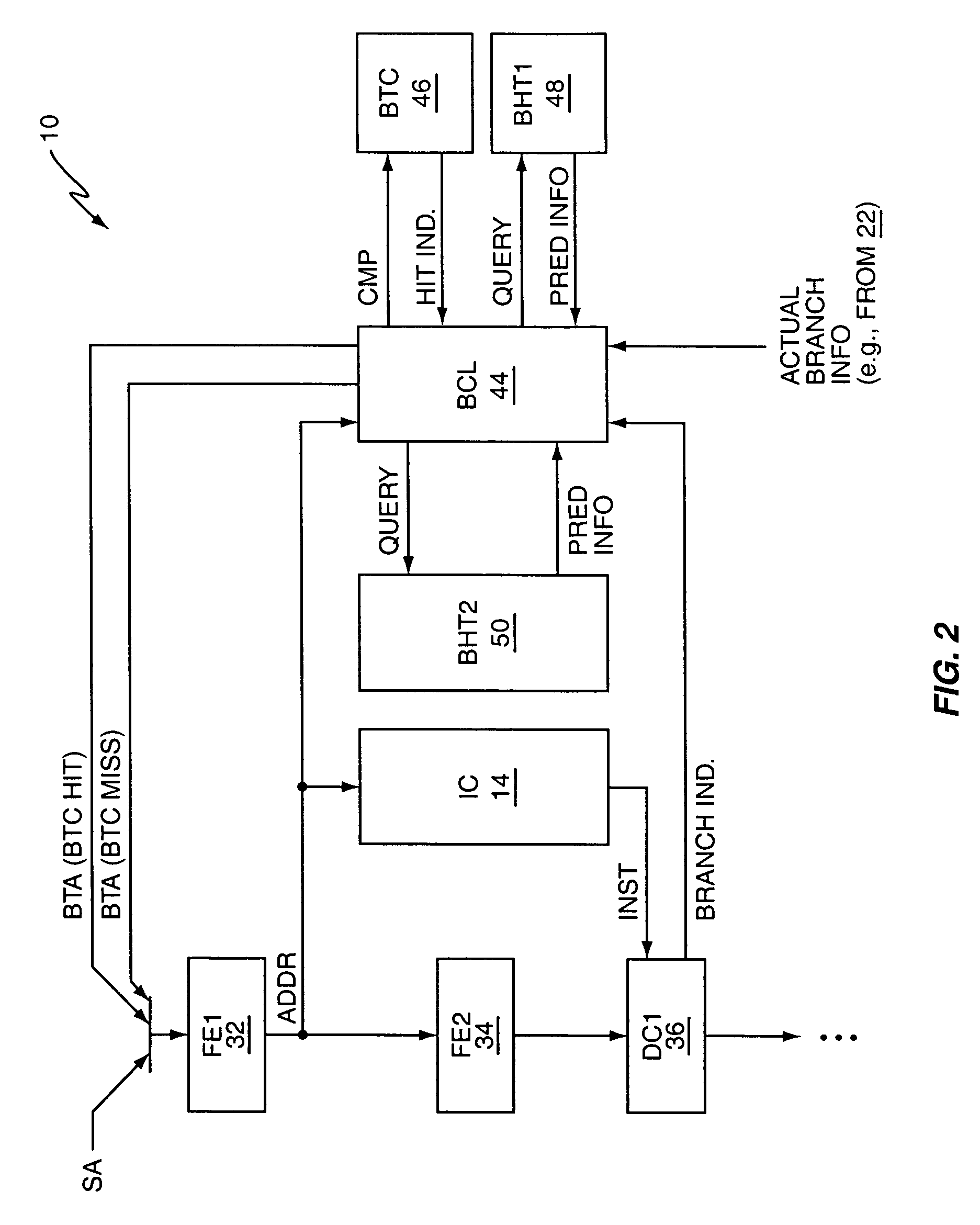

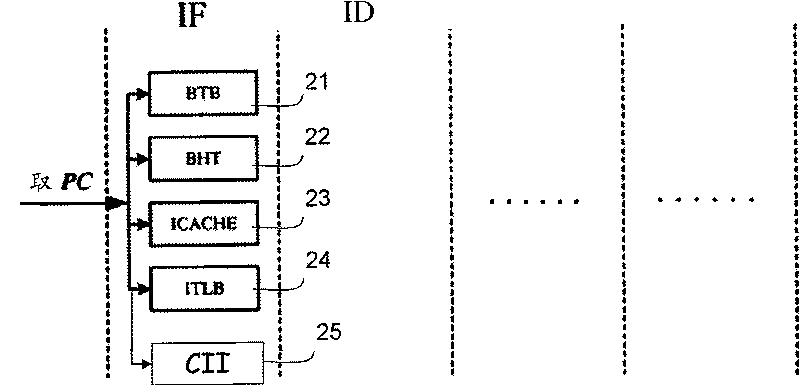

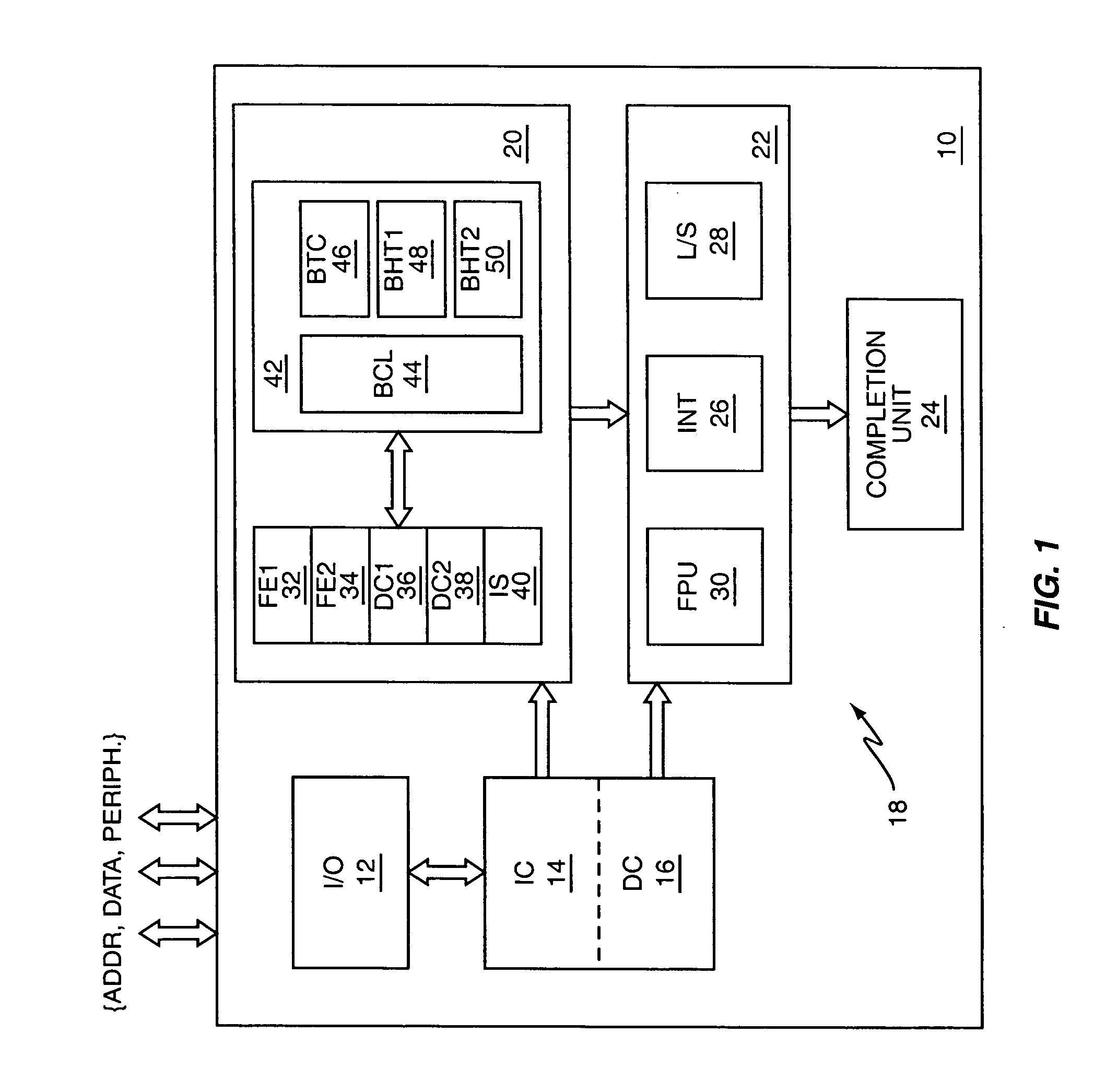

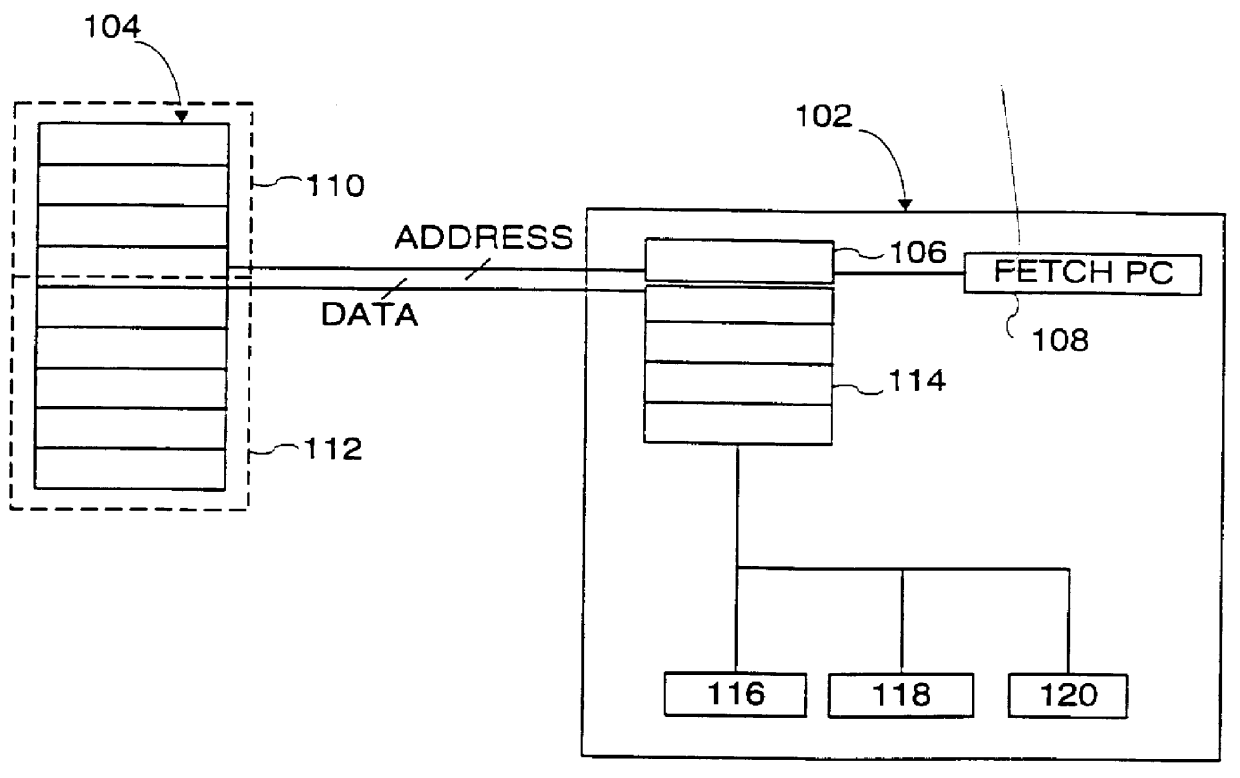

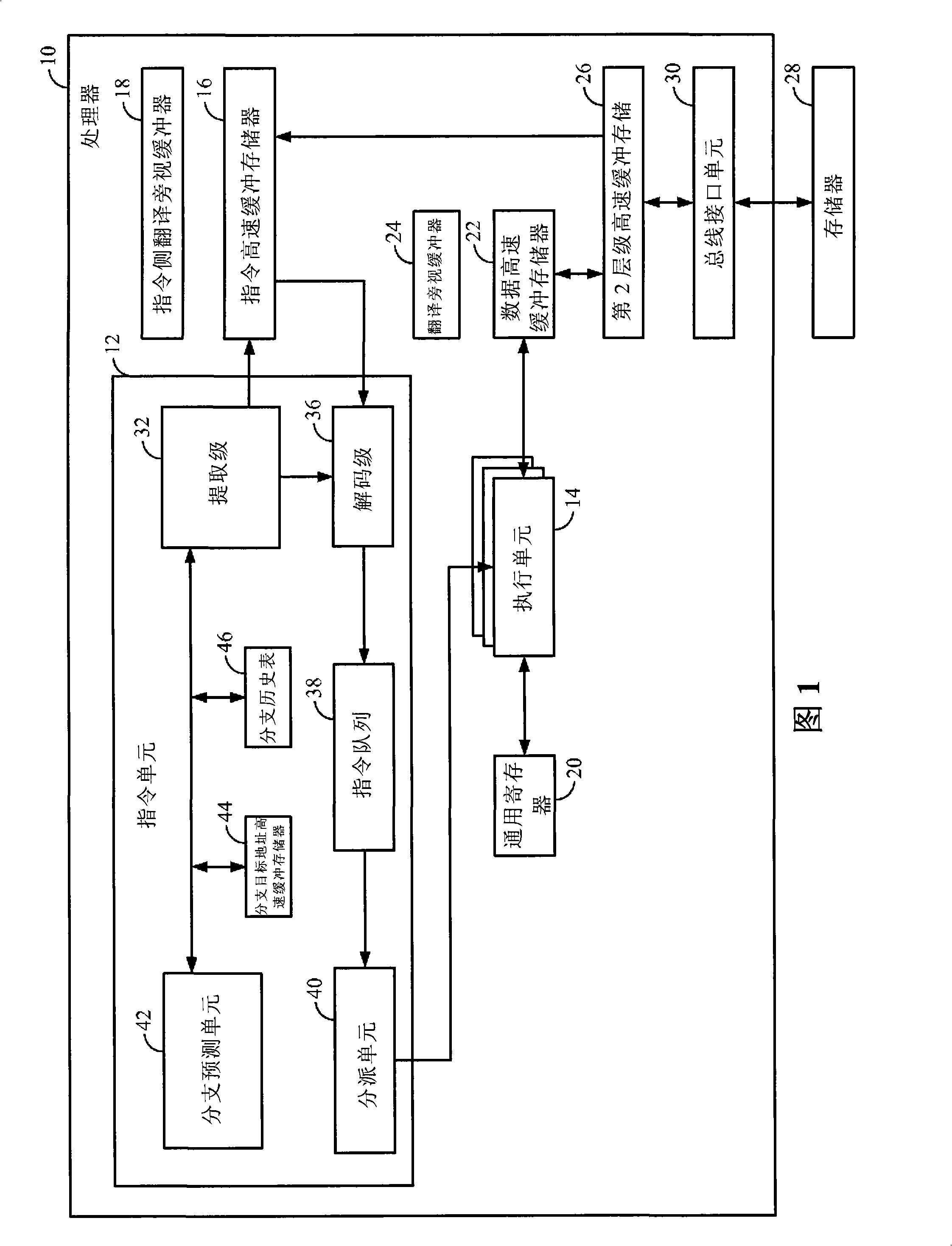

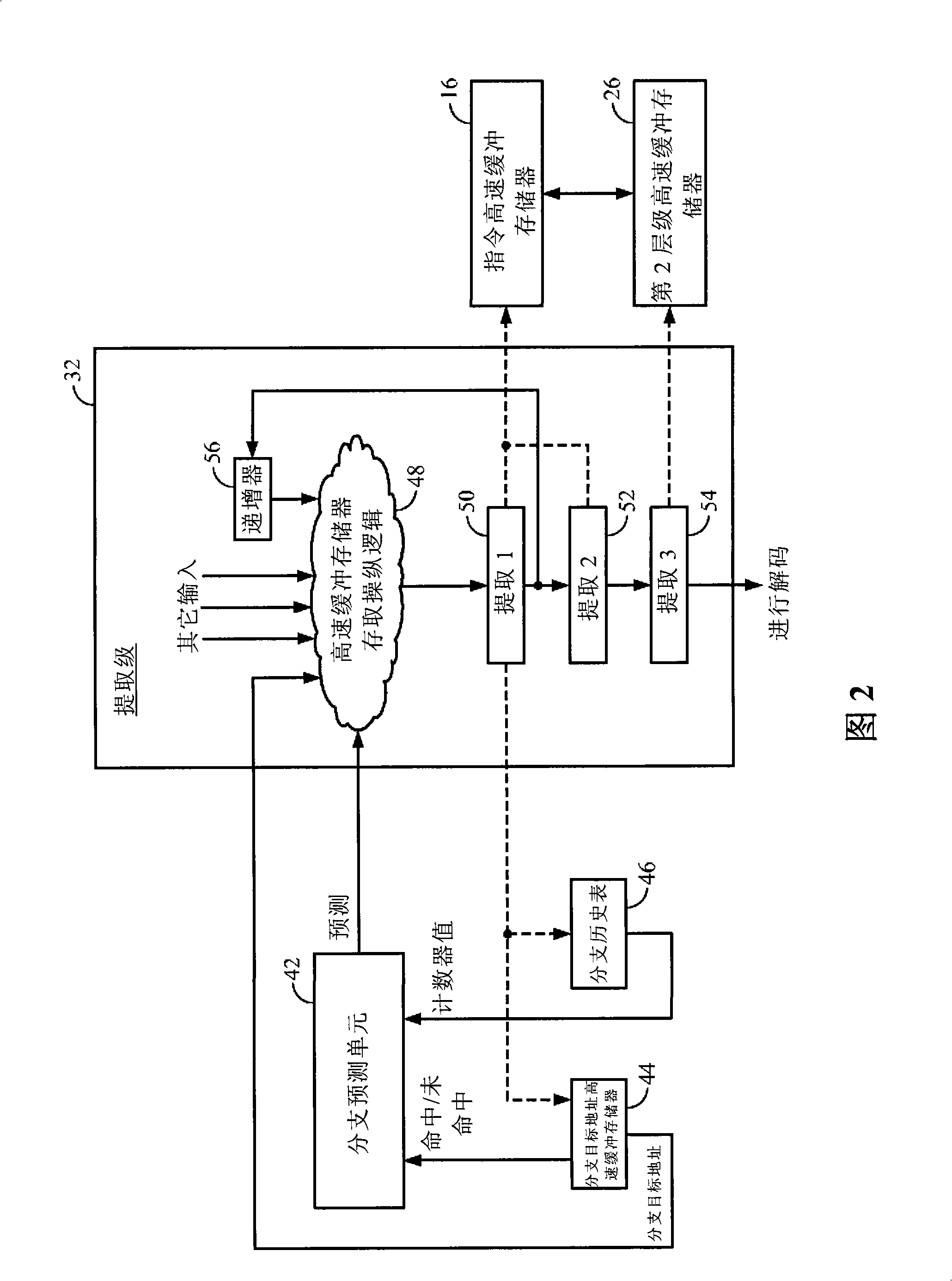

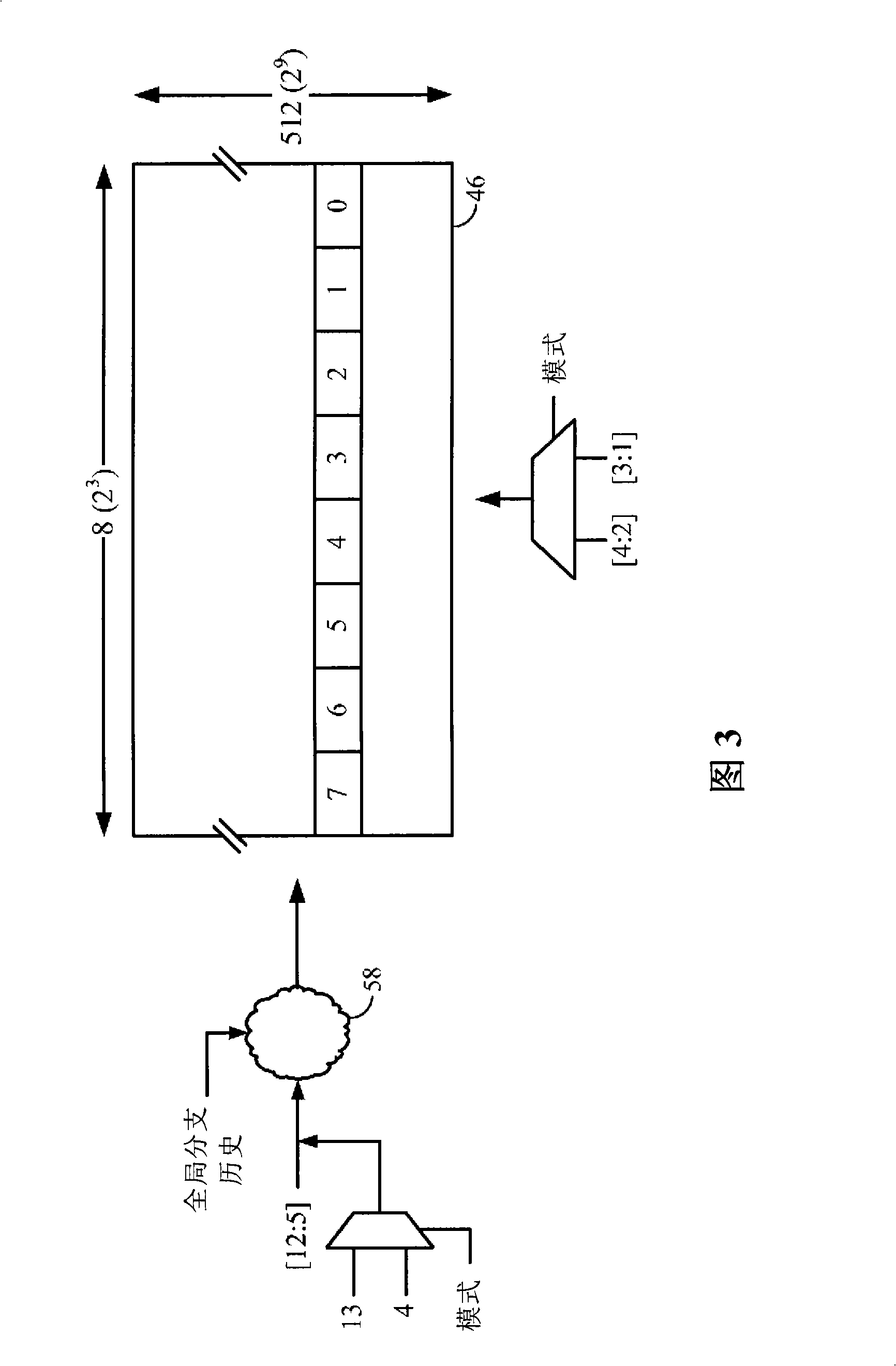

An apparatus for speculatively predicting the direction of a branch instruction in a pipelined microprocessor in a hybrid fashion. A branch target address cache (BTAC) stores a direction prediction about executed branch instructions. The BTAC is indexed by an instruction cache fetch address. The BTAC is accessed in parallel with the instruction cache access, such that the direction prediction is provided before the actual instruction is decoded which is presumed to be a branch instruction corresponding to the direction prediction stored in the BTAC. In parallel with the BTAC access, a branch history table (BHT) is accessed to provide a second speculative direction prediction. The BHT is indexed with a gshare function of the instruction cache fetch address and a global branch history stored in a global branch history register. The BTAC also provides a selector that selects between the two speculative direction predictions.

Owner:IP FIRST
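
The abstract above combines three pieces: a direction bit stored per BTAC entry, a BHT indexed by a gshare function (fetch address XOR global history), and a per-entry selector. The following is a minimal software sketch of that arrangement, not the patented circuit. The 2-bit counters, the table size, the class and method names, and the update policy for the selector are all assumptions made for illustration.

```python
class HybridDirectionPredictor:
    """Toy model: a BTAC direction bit, a gshare-indexed BHT of 2-bit
    counters, and a per-entry selector that picks between the two."""

    def __init__(self, index_bits=10):
        self.mask = (1 << index_bits) - 1
        self.bht = [1] * (1 << index_bits)  # 2-bit counters, start weakly not-taken
        self.ghr = 0                        # global branch history register
        self.btac = {}                      # fetch address -> entry (assumed layout)

    def gshare_index(self, fetch_addr):
        # gshare: XOR the fetch address with the global history.
        return (fetch_addr ^ self.ghr) & self.mask

    def predict(self, fetch_addr):
        entry = self.btac.get(fetch_addr)
        if entry is None:
            return None  # BTAC miss: no speculative prediction available
        bht_taken = self.bht[self.gshare_index(fetch_addr)] >= 2
        return bht_taken if entry["use_bht"] else entry["taken"]

    def update(self, fetch_addr, taken):
        idx = self.gshare_index(fetch_addr)
        bht_correct = (self.bht[idx] >= 2) == taken
        entry = self.btac.setdefault(fetch_addr, {"taken": taken, "use_bht": False})
        btac_correct = entry["taken"] == taken
        # Assumed policy: retrain the selector only when the two disagree.
        if btac_correct != bht_correct:
            entry["use_bht"] = bht_correct
        entry["taken"] = taken  # BTAC keeps the last observed direction
        step = 1 if taken else -1
        self.bht[idx] = max(0, min(3, self.bht[idx] + step))
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask
```

On a strictly alternating branch, the last-direction bit is always wrong while the history-indexed counters learn the pattern, so the selector in this sketch settles on the BHT.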

Speculative hybrid branch direction predictor

Inactive | US6886093B2 | Improve accuracy | Reducing overall branch penalty | Instruction analysis | Digital computer details | Cache access | Processor register

An apparatus for speculatively predicting the direction of a branch instruction in a pipelined microprocessor in a hybrid fashion. A branch target address cache (BTAC) stores a direction prediction about executed branch instructions. The BTAC is indexed by an instruction cache fetch address. The BTAC is accessed in parallel with the instruction cache access, such that the direction prediction is provided before the actual instruction is decoded which is presumed to be a branch instruction corresponding to the direction prediction stored in the BTAC. In parallel with the BTAC access, a branch history table (BHT) is accessed to provide a second speculative direction prediction. The BHT is indexed with a gshare function of the instruction cache fetch address and a global branch history stored in a global branch history register. The BTAC also provides a selector that selects between the two speculative direction predictions.

Owner:IP FIRST

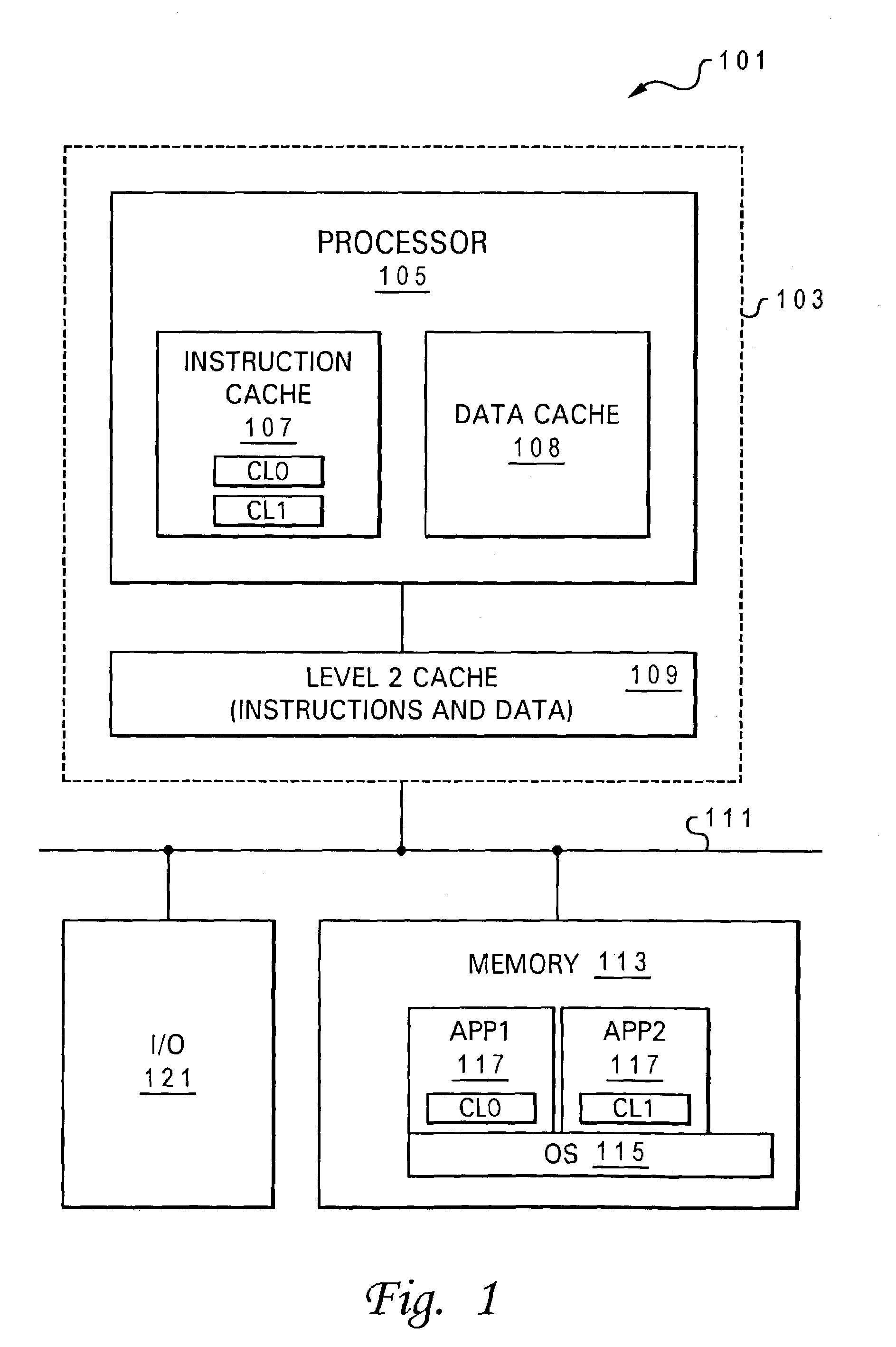

Branch prediction apparatus and process for restoring replaced branch history for use in future branch predictions for an executing program

Inactive | US6877089B2 | Saving and fast utilization | Improve programming speed | Digital computer details | Concurrent instruction execution | Parallel computing | Semiconductor

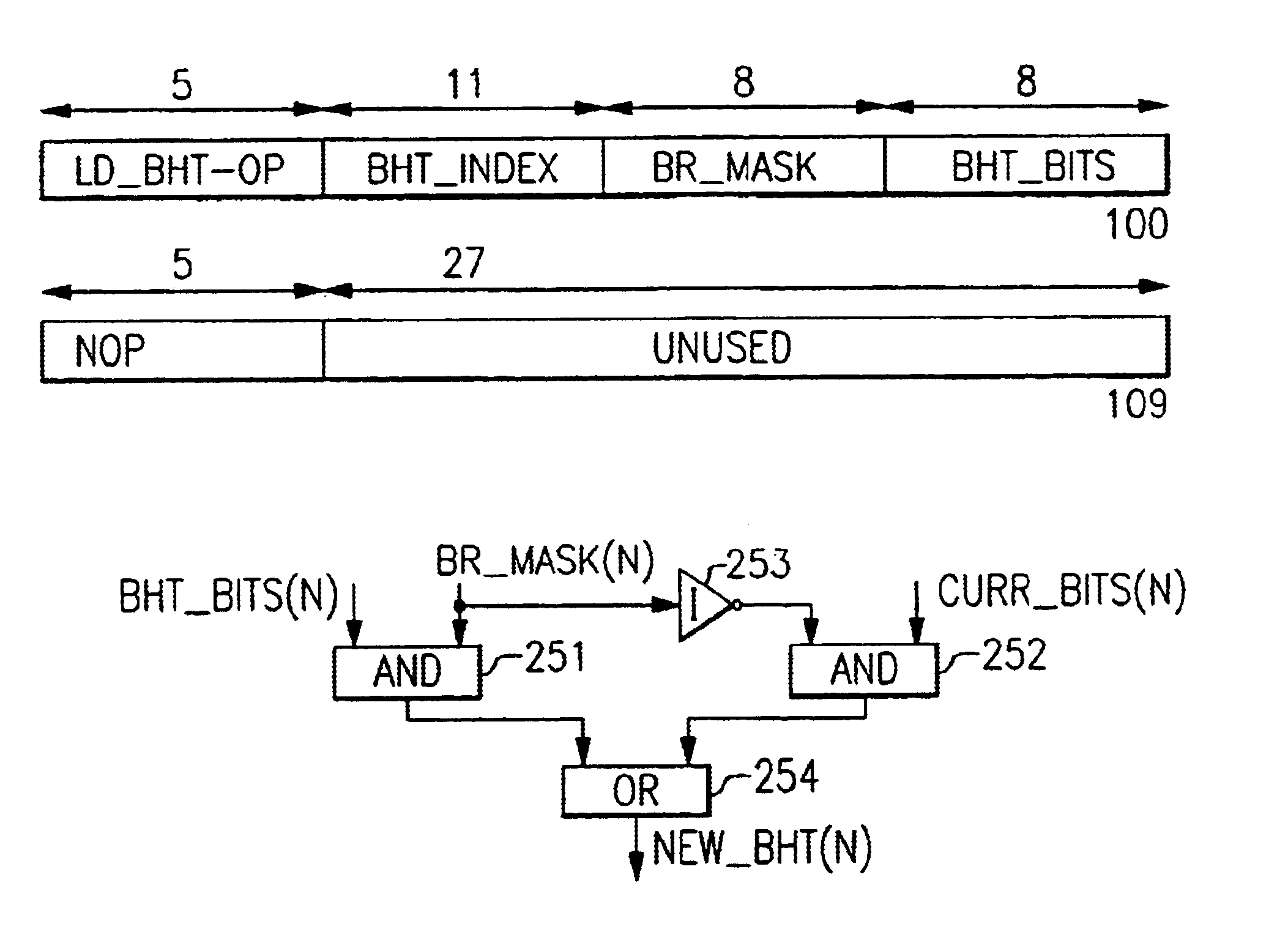

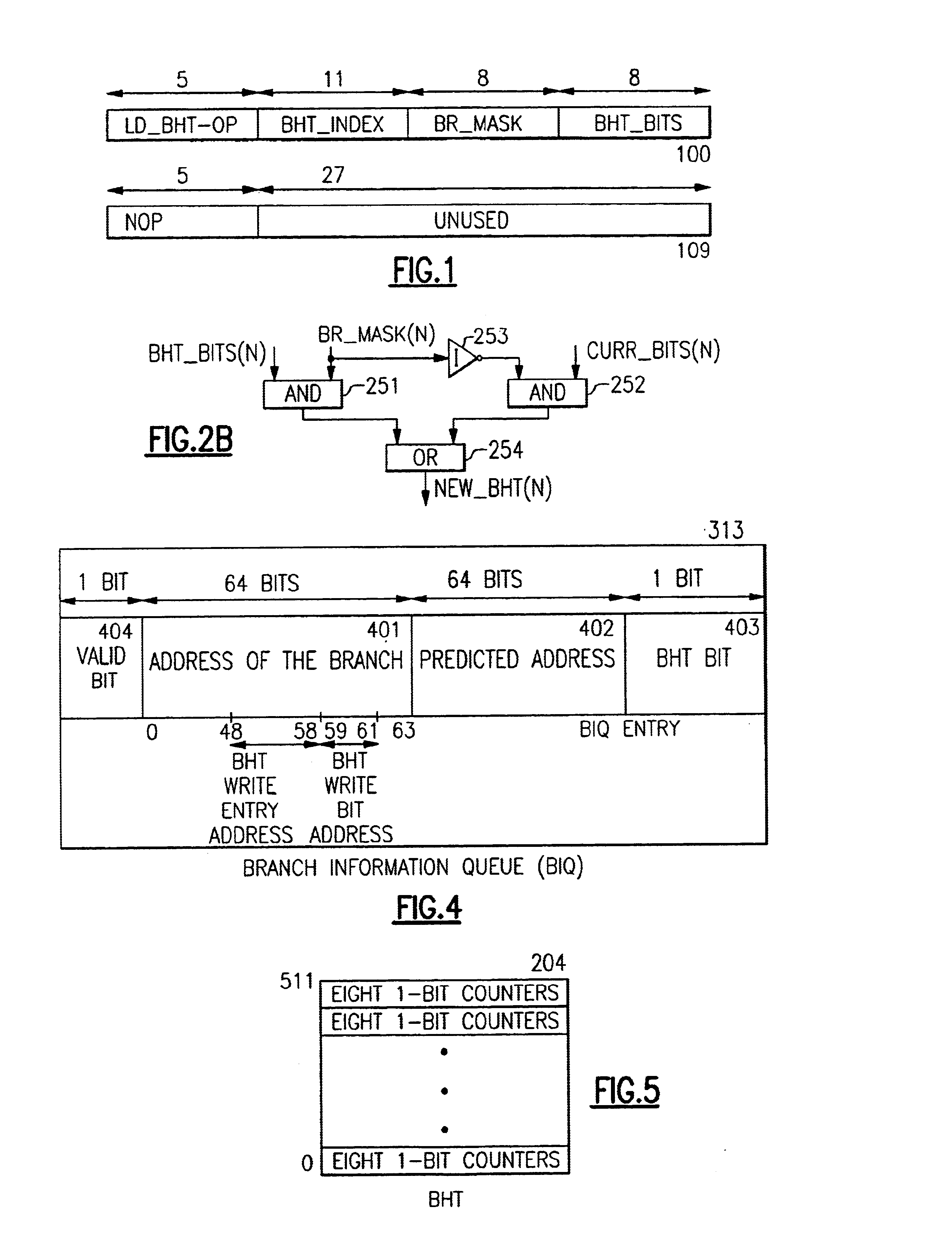

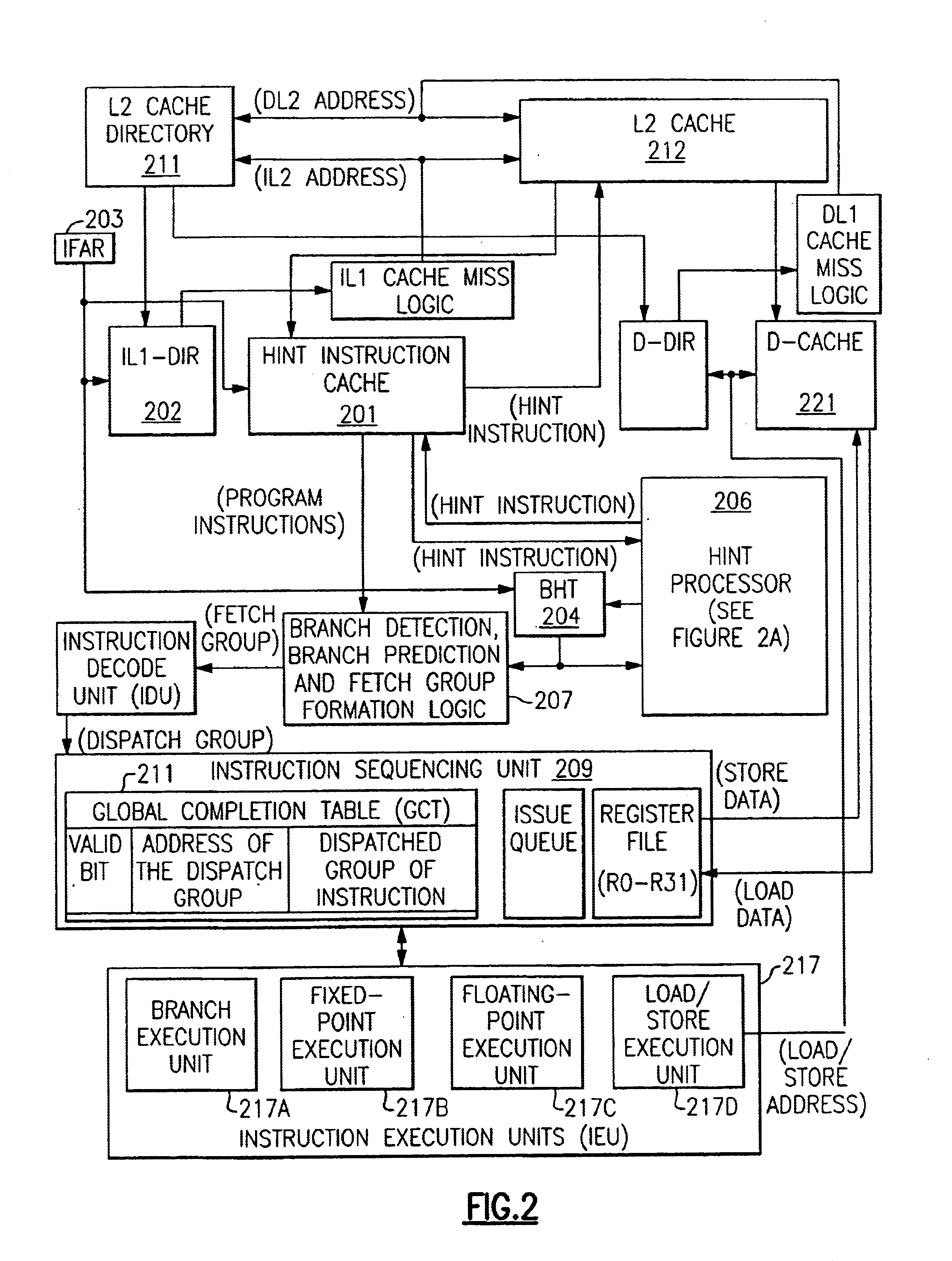

Apparatus and methods implemented in a processor semiconductor logic chip for providing novel “hint instructions” that uniquely preserve and reuse branch predictions replaced in a branch history table (BHT). A branch prediction is lost in the BHT after its associated instruction is replaced in an instruction cache. The unique “hint instructions” are generated and stored in a unique instruction cache which associates each hint instruction with a line of instructions. The hint instructions contain the latest branch history for all branch instructions executed in an associated line of instructions, and they are stored in the instruction cache during instruction cache hits in the associated line. During an instruction cache miss in an instruction line, the associated hint instruction is stored in a second level cache with a copy of the associated instruction line being replaced in the instruction cache. In the second level cache, the copy of the line is located through the instruction cache directory entry associated with the line being replaced in the instruction cache. Later, when the instruction line is fetched again from the second level cache, its associated hint instruction is retrieved with it and used to restore the latest branch predictions for that instruction line. In the prior art this branch prediction would have been lost. It is estimated that this invention improves program performance for each replaced branch prediction by about 80%, due to increasing the probability of BHT bits correctly predicting the branch paths in the program from about 50% to over 90%. Each incorrect BHT branch prediction may result in the loss of many execution cycles, resulting in additional instruction re-execution overhead when incorrect branch paths are belatedly discovered.

Owner:IBM CORP

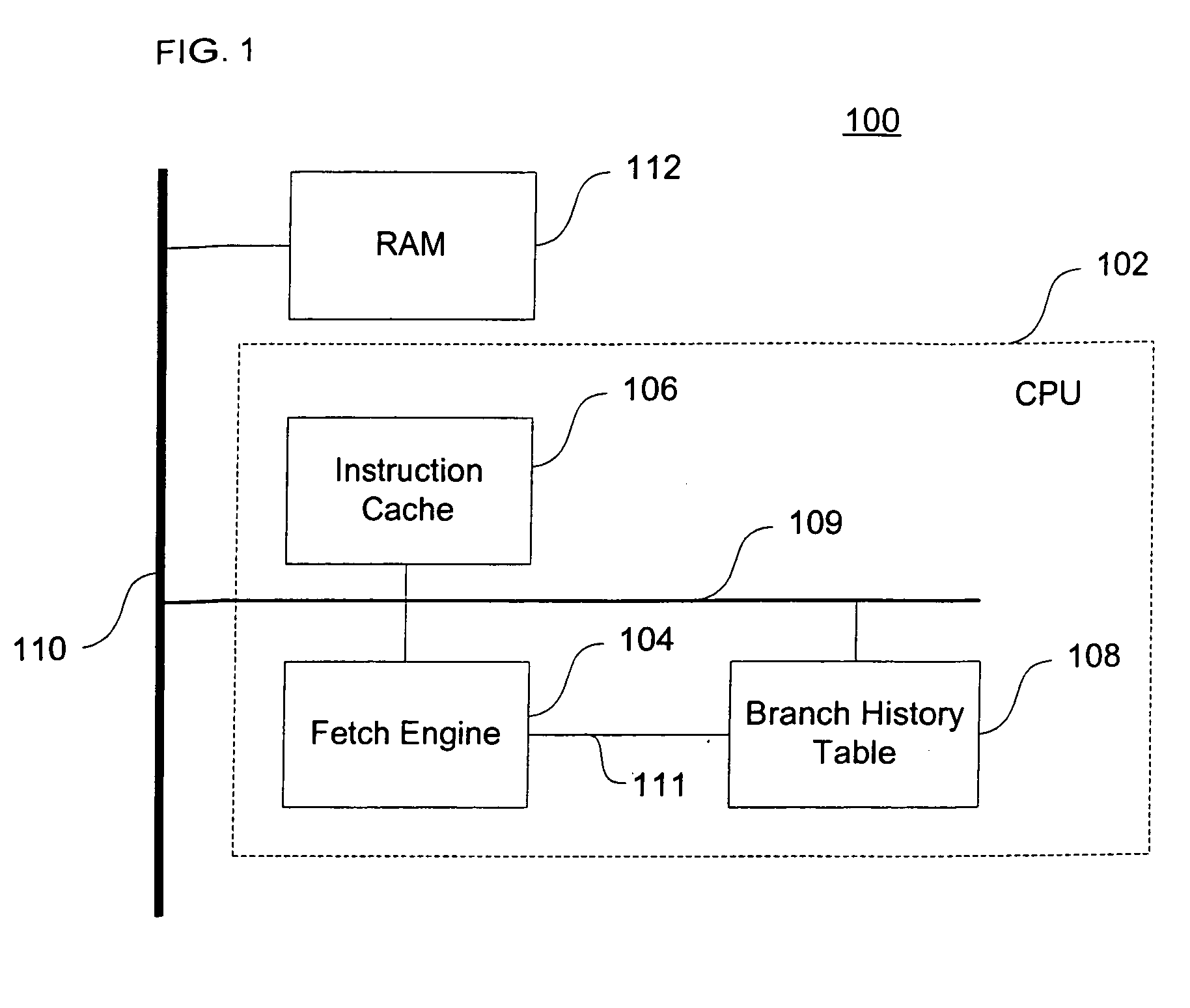

Method and apparatus for prefetching branch history information

Inactive | US7493480B2 | Digital computer details | Concurrent instruction execution | Parallel computing | Cycle time

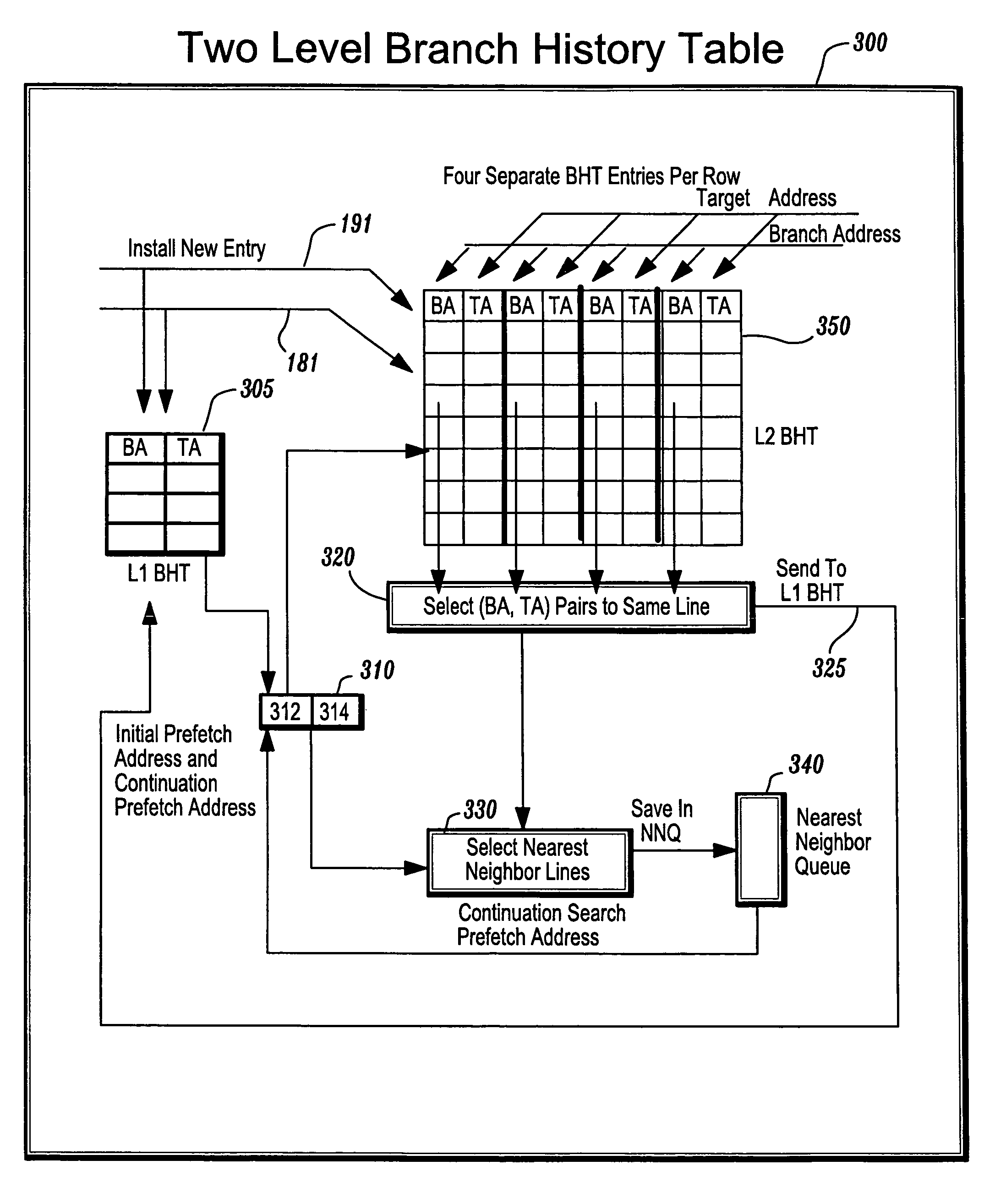

A two level branch history table (TLBHT) is substantially improved by providing a mechanism to prefetch entries from the very large second level branch history table (L2 BHT) into the active (very fast) first level branch history table (L1 BHT) before the processor uses them in the branch prediction process, and at the same time to prefetch cache misses into the instruction cache. A TLBHT is successful because it can prefetch branch entries into the L1 BHT sufficiently ahead of the time the entry is needed. This feature of the TLBHT is also used to prefetch instructions into the cache ahead of their use. In fact, the timeliness of the prefetches produced by the TLBHT can be used to remove most of the cycle time penalty incurred by cache misses.

Owner:IBM CORP

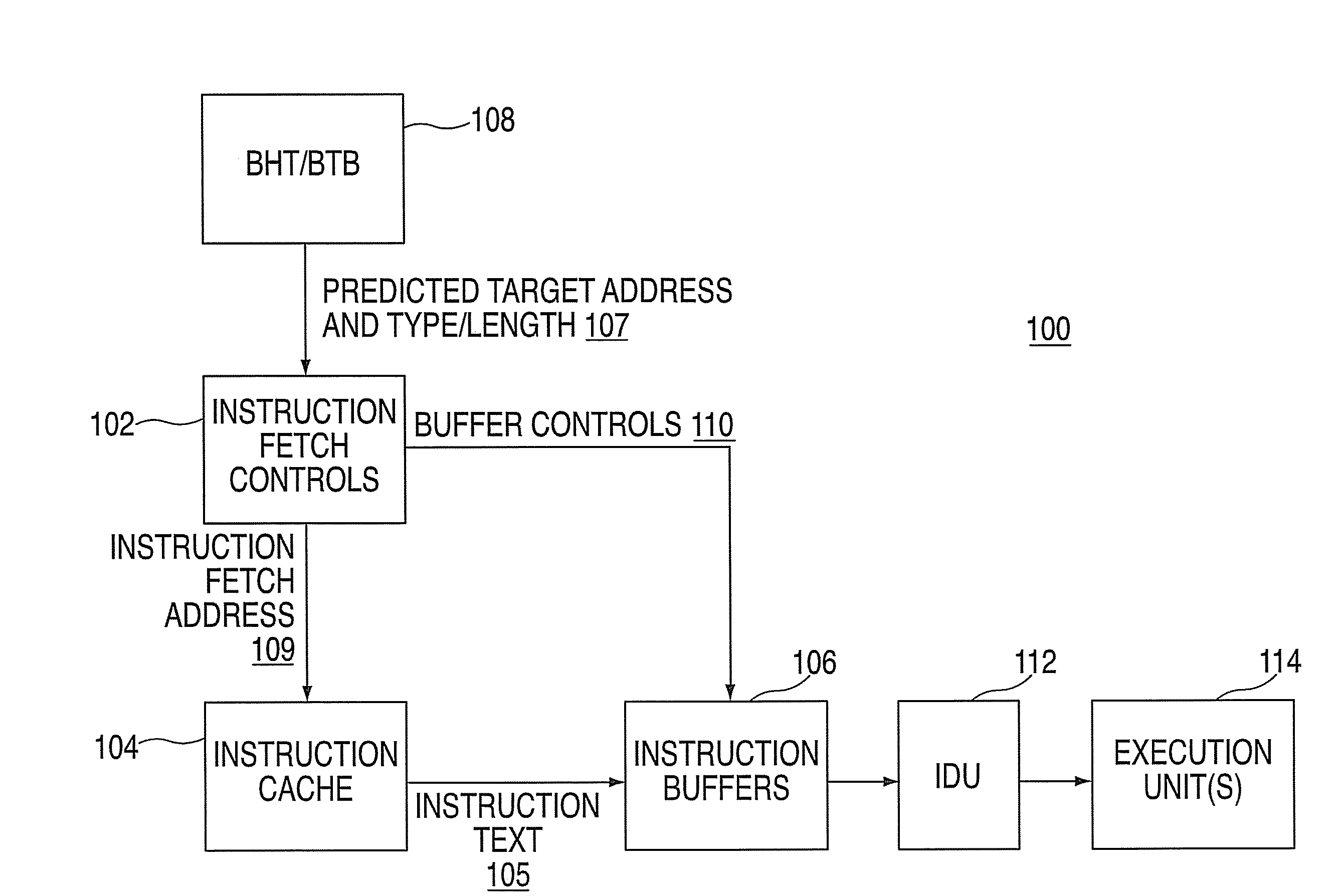

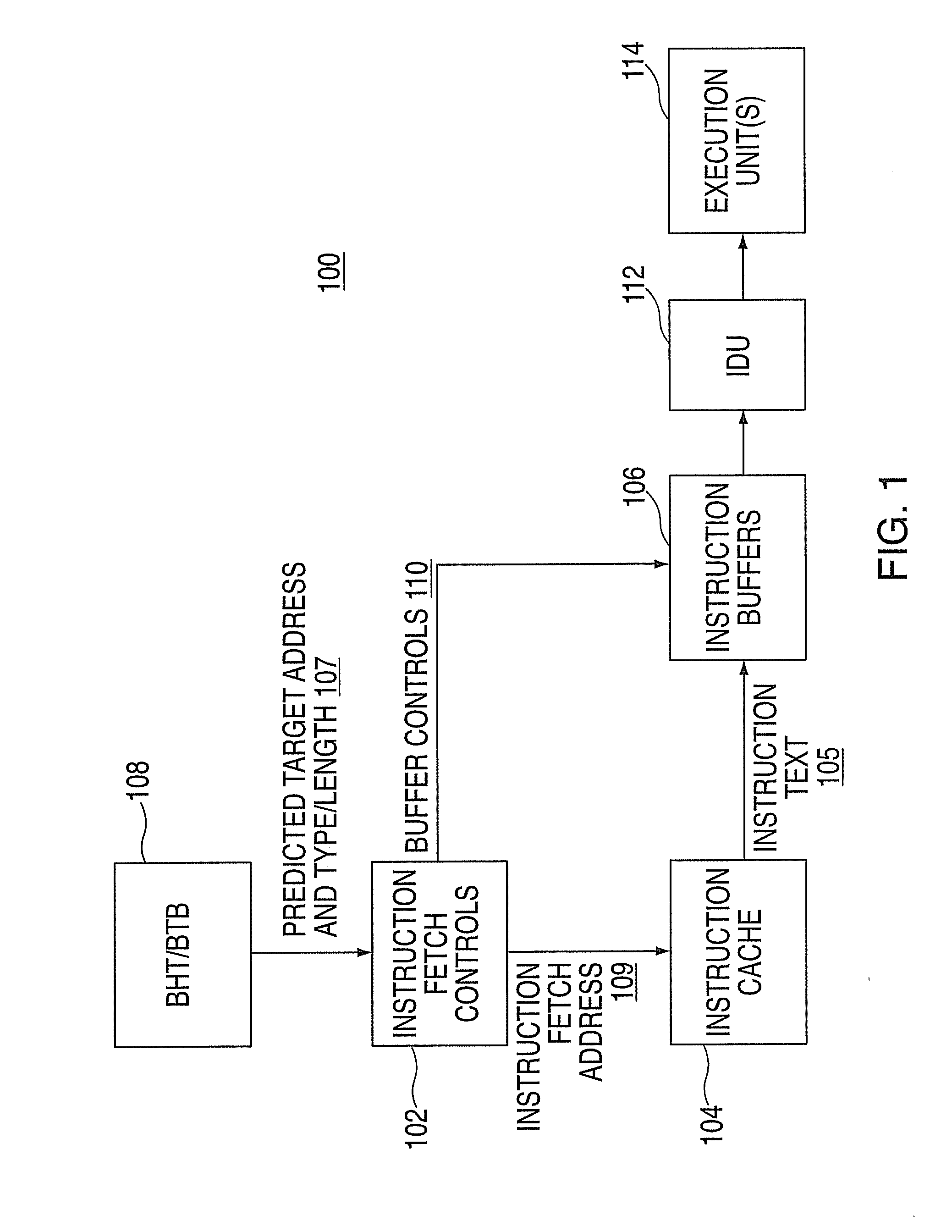

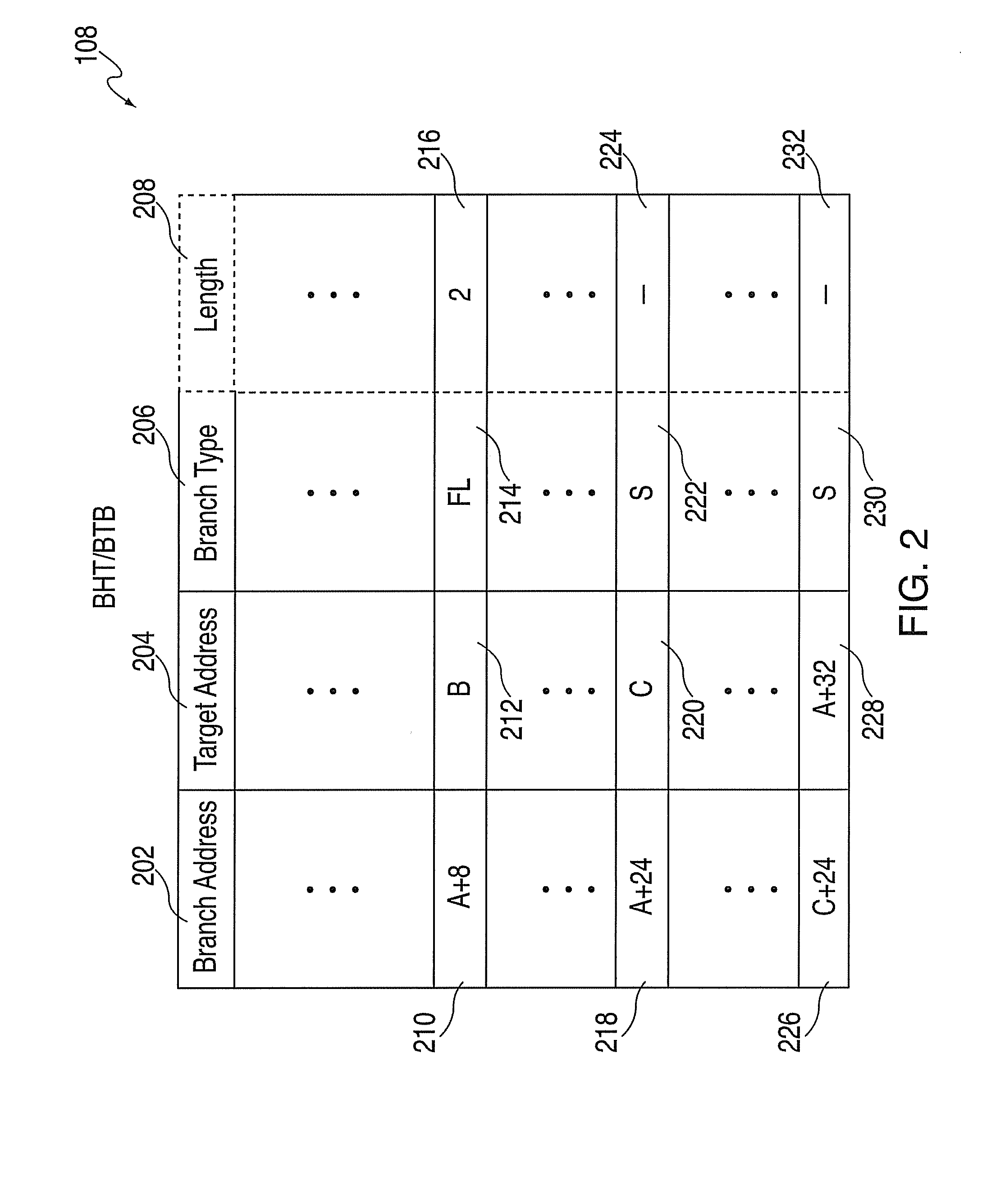

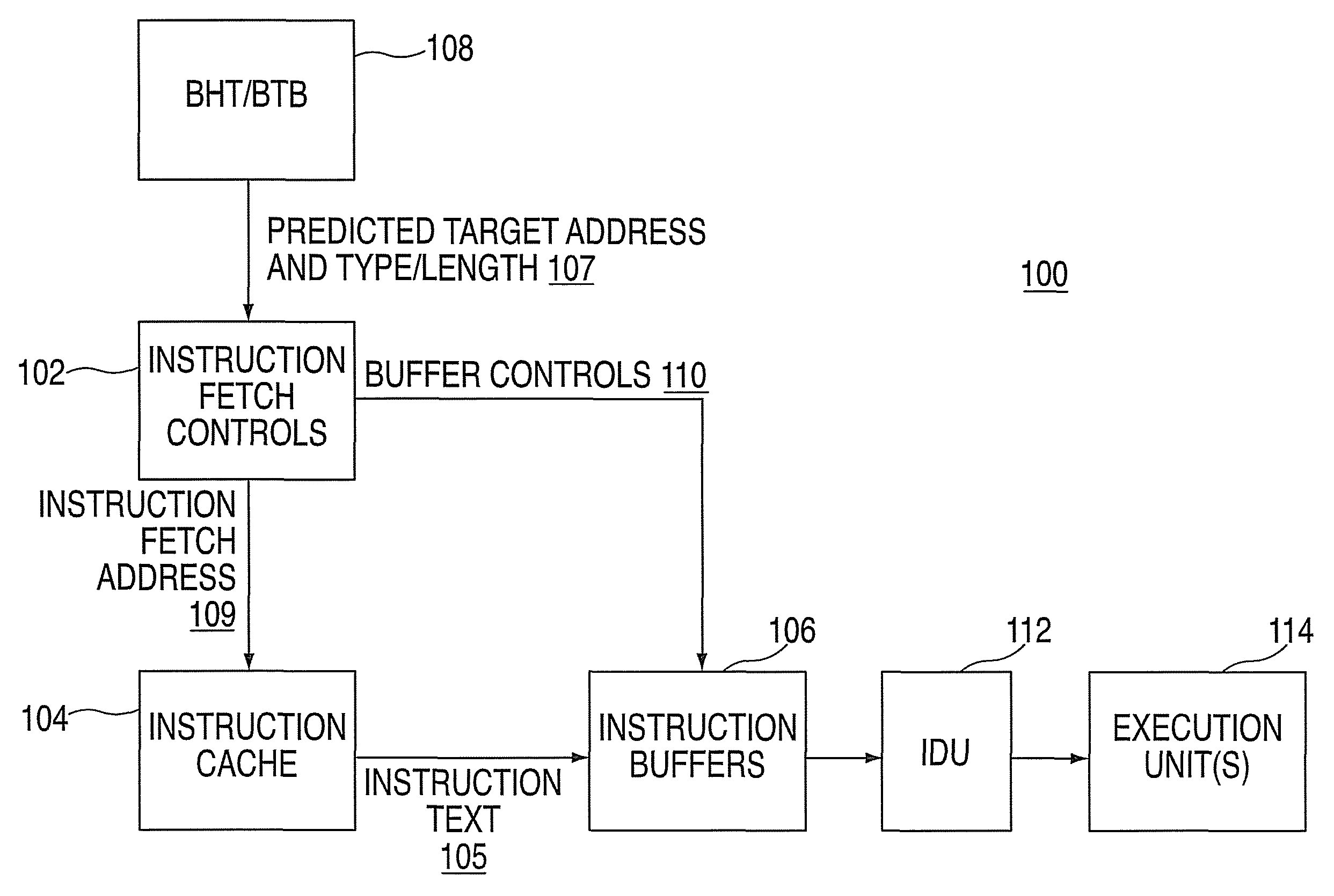

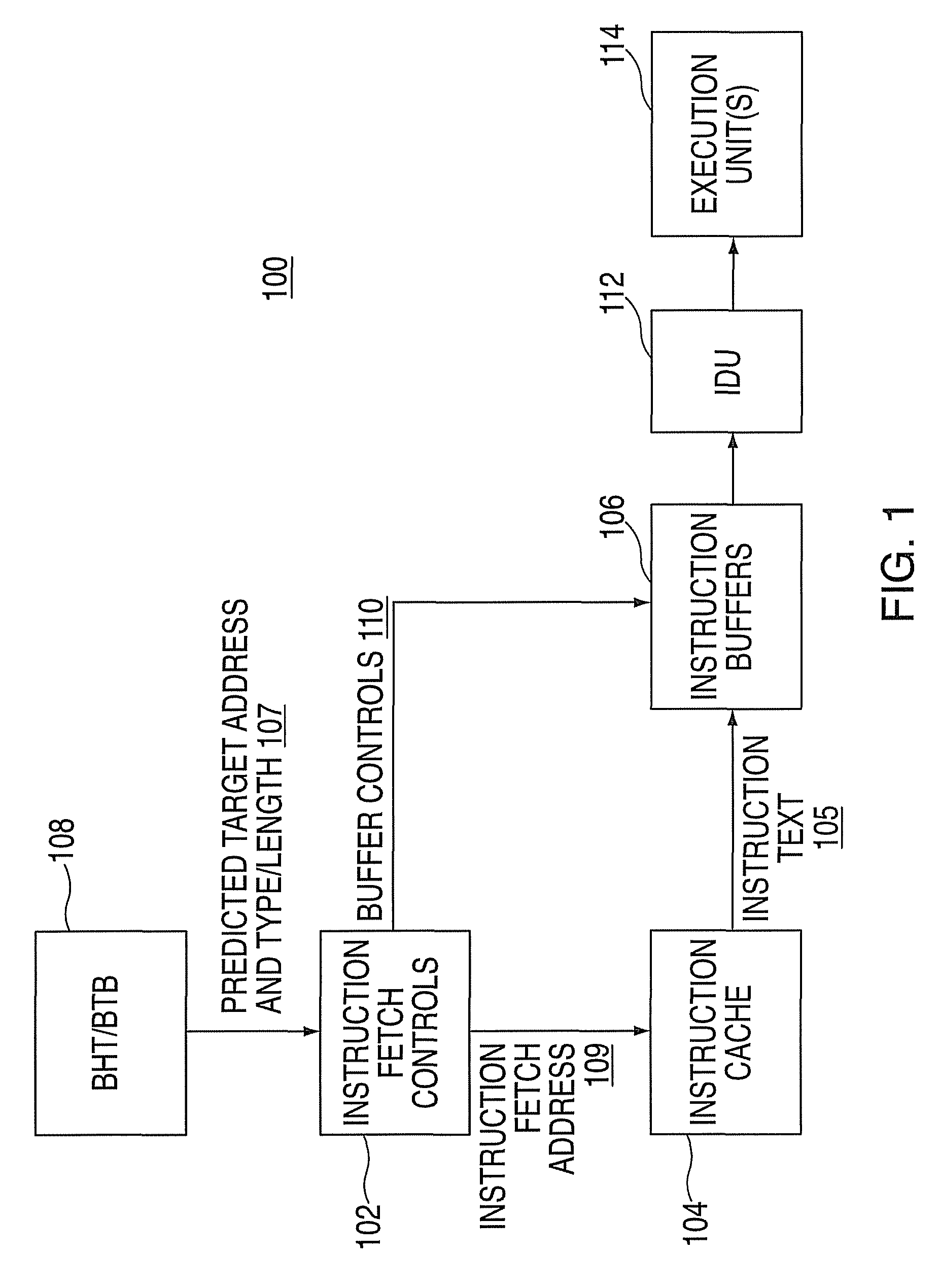

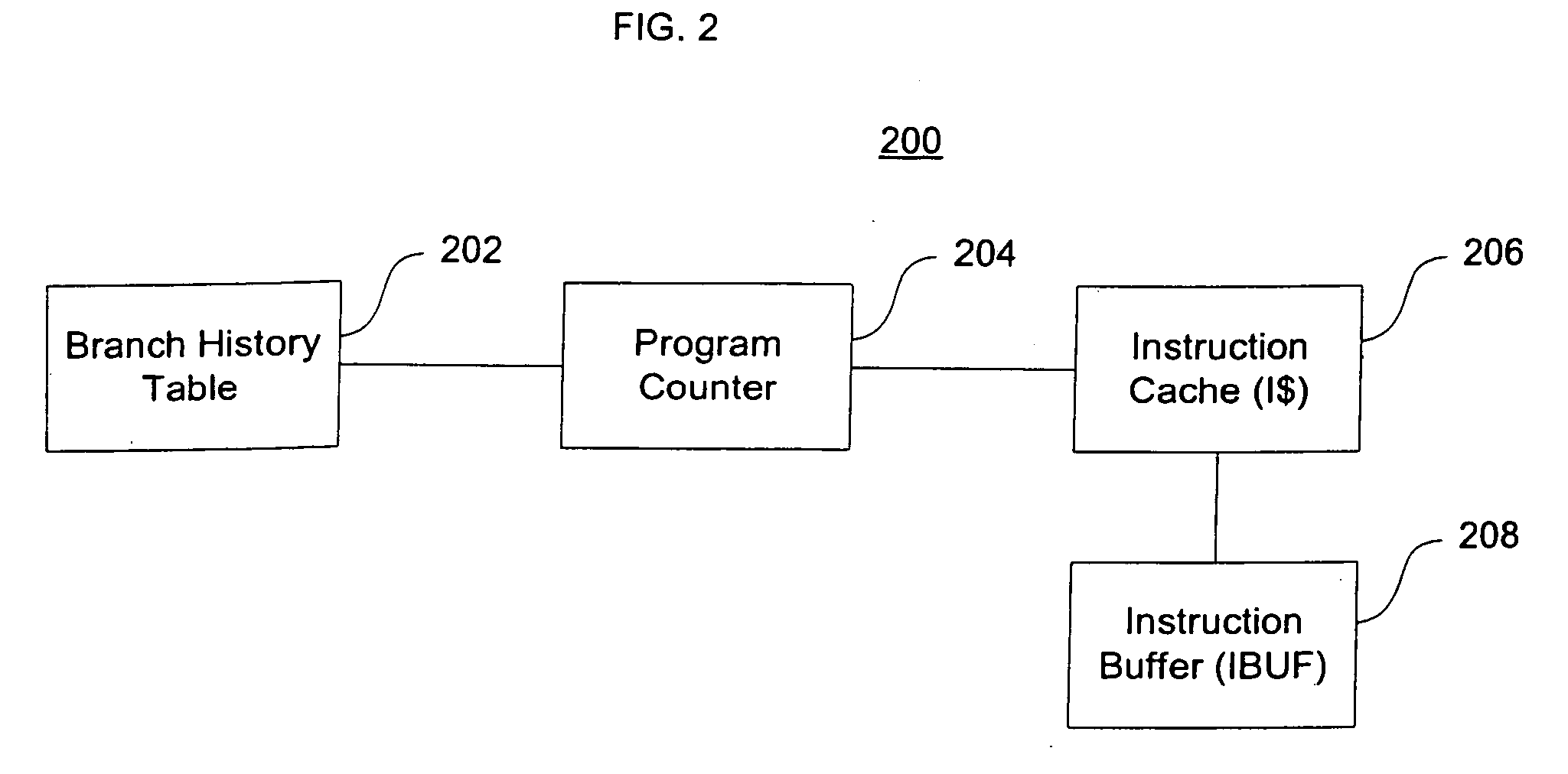

Method, system and computer program product for an implicit predicted return from a predicted subroutine

Inactive | US20090210661A1 | Digital computer details | Specific program execution arrangements | Parallel computing | Instruction buffer

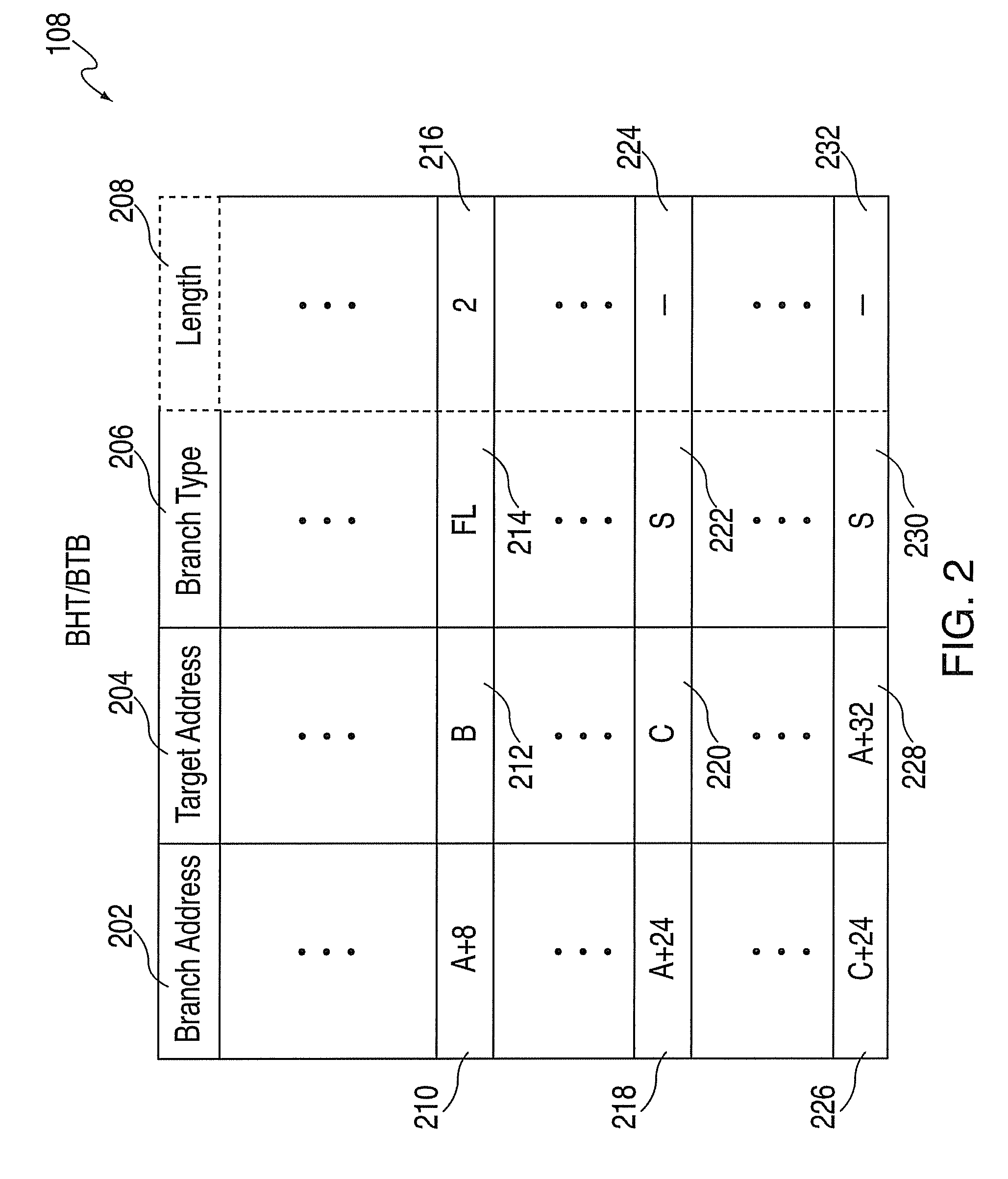

A method, system and computer program product for performing an implicit predicted return from a predicted subroutine are provided. The system includes a branch history table / branch target buffer (BHT / BTB) to hold branch information, including a target address of a predicted subroutine and a branch type. The system also includes instruction buffers, and instruction fetch controls to perform a method including fetching a branch instruction at a branch address and a return-point instruction. The method also includes receiving the target address and the branch type, and fetching a fixed number of instructions in response to the branch type. The method further includes referencing the return-point instruction within the instruction buffers such that the return-point instruction is available upon completing the fetching of the fixed number of instructions absent a re-fetch of the return-point instruction.

Owner:IBM CORP

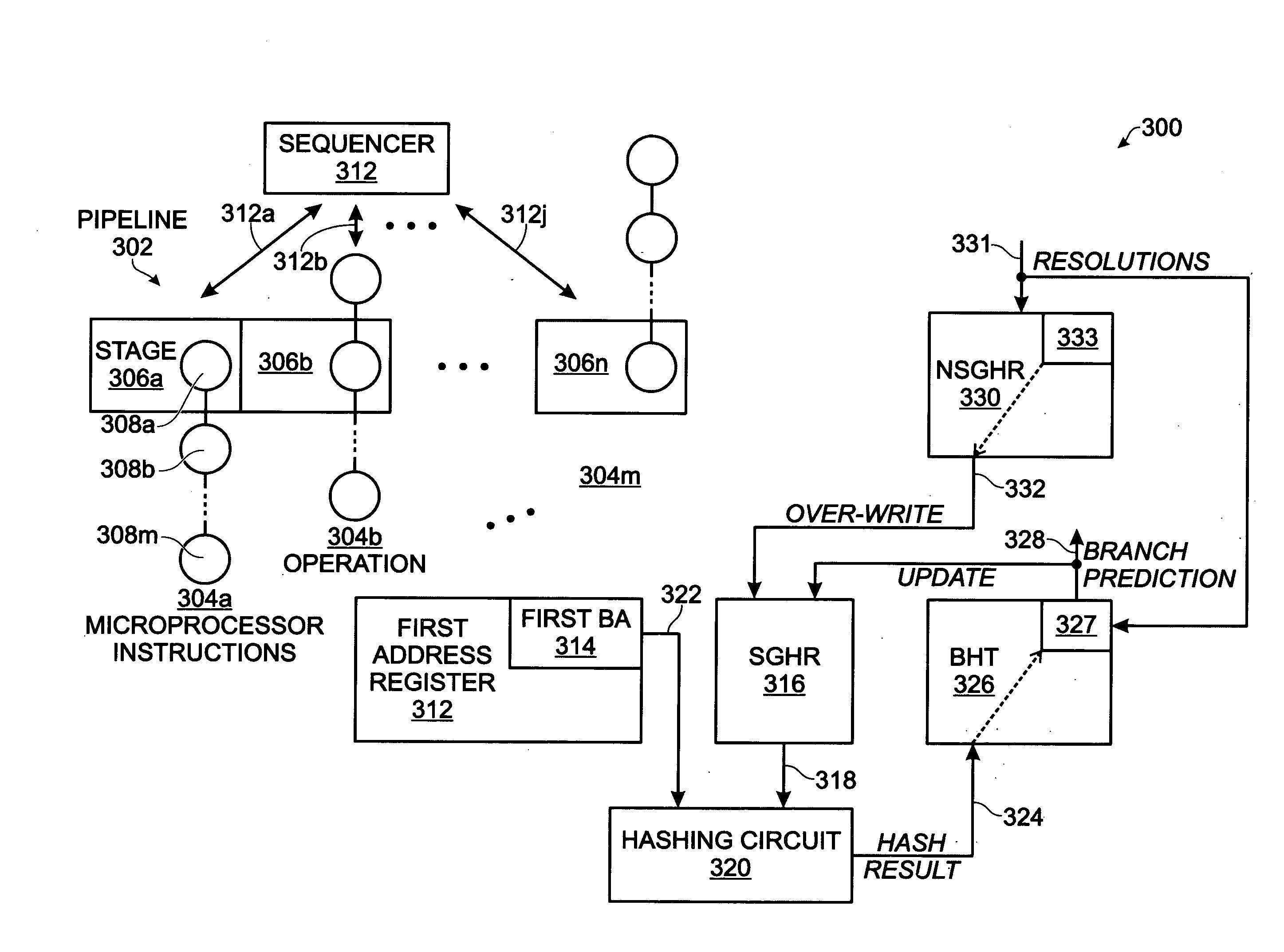

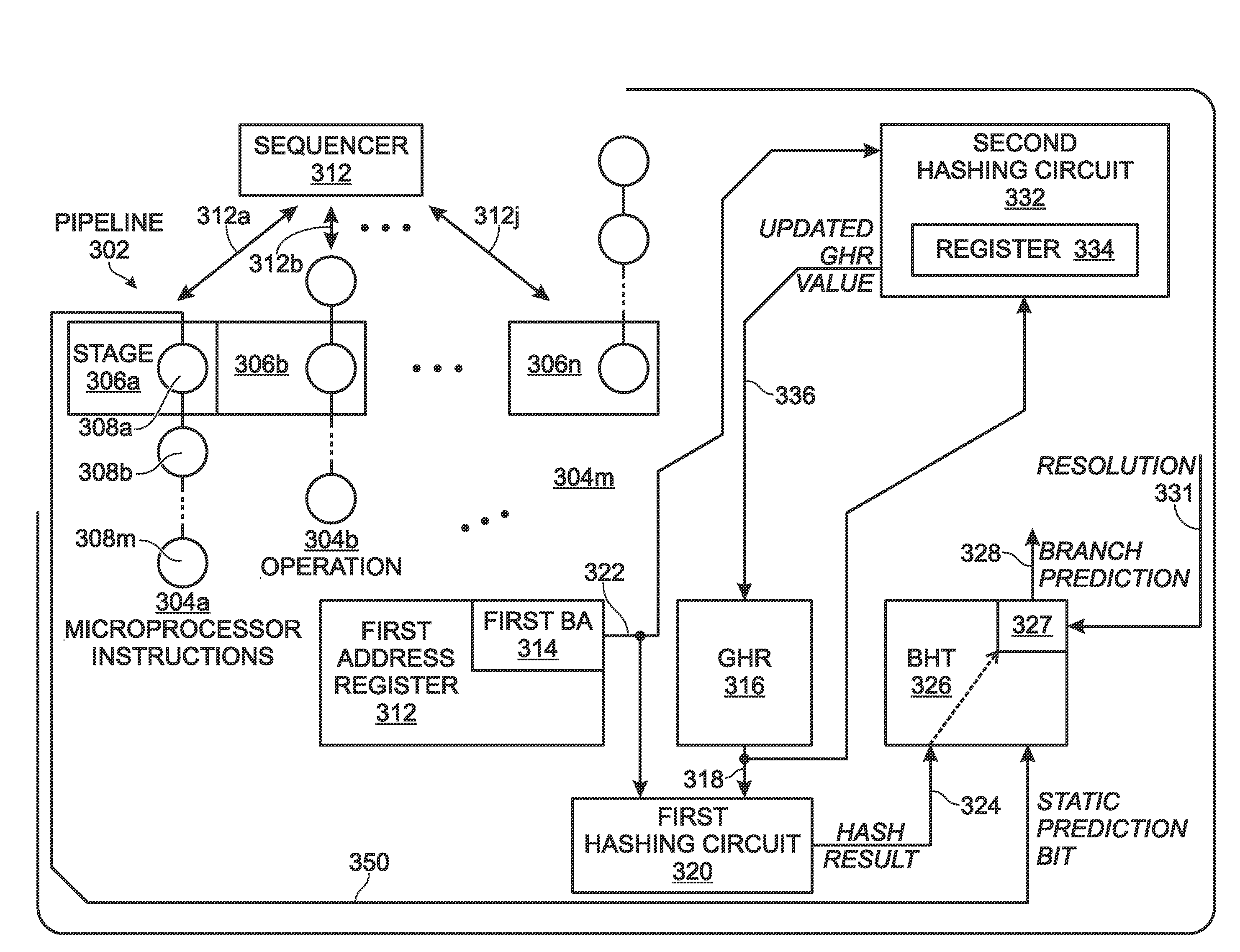

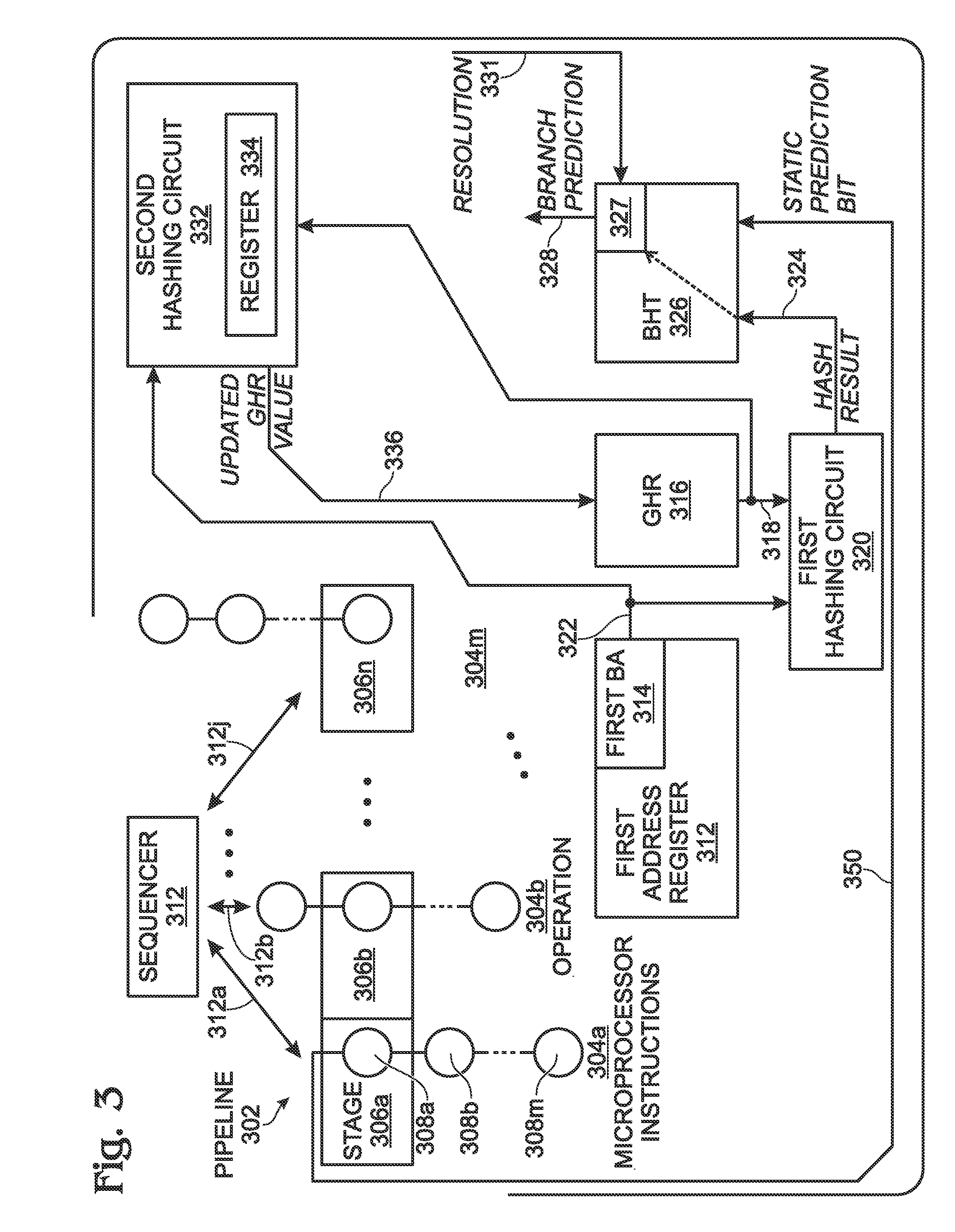

System and method for speculative global history prediction updating

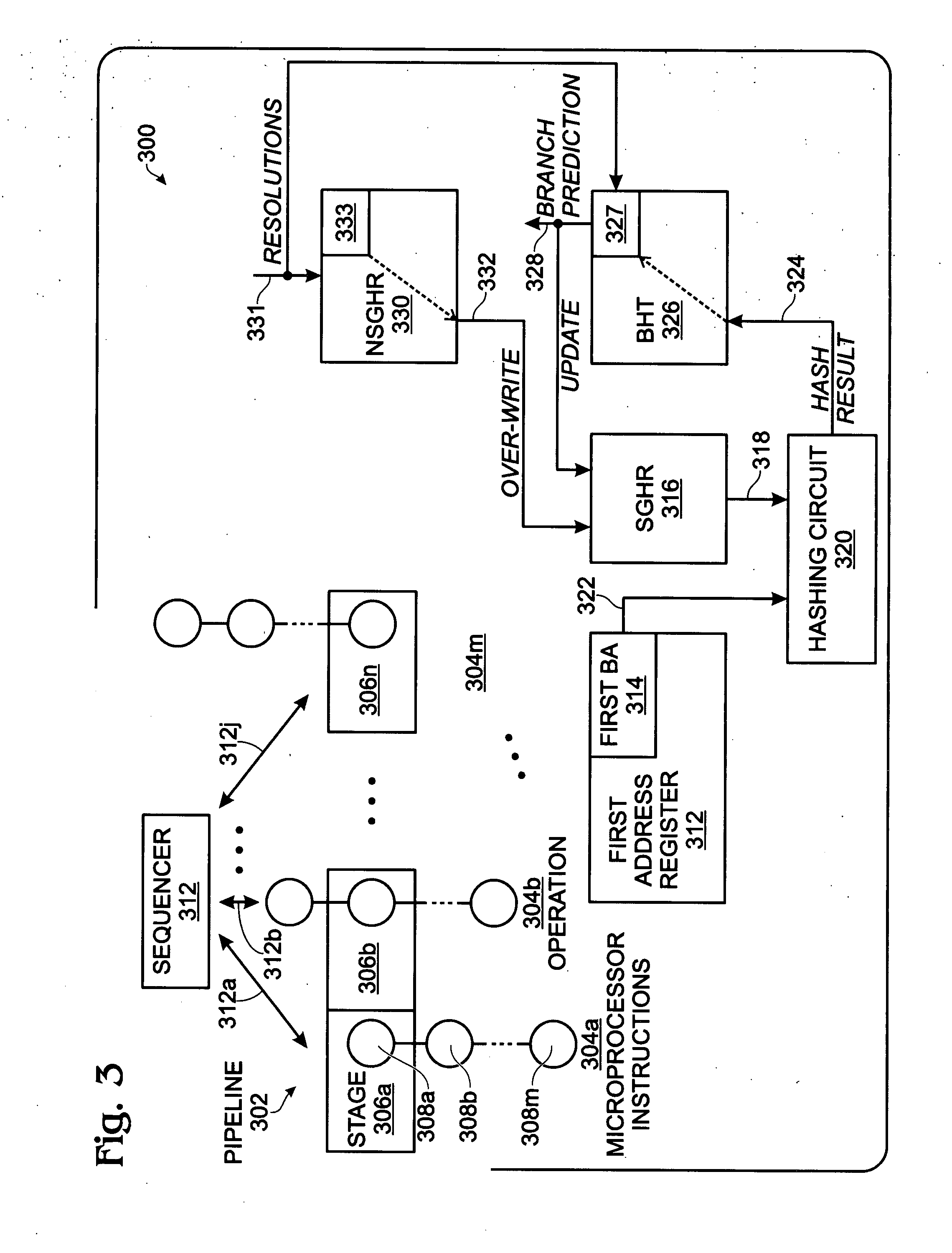

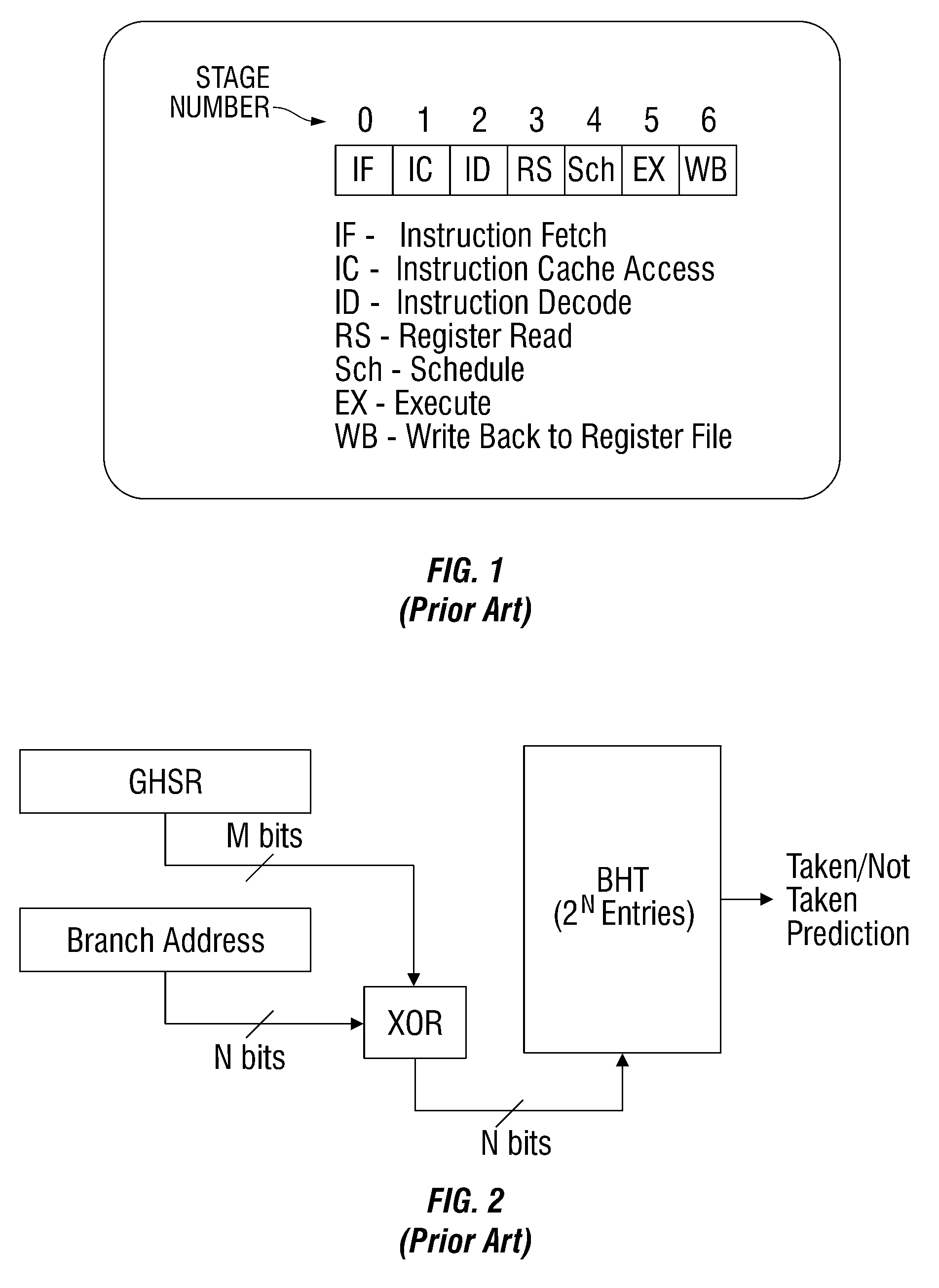

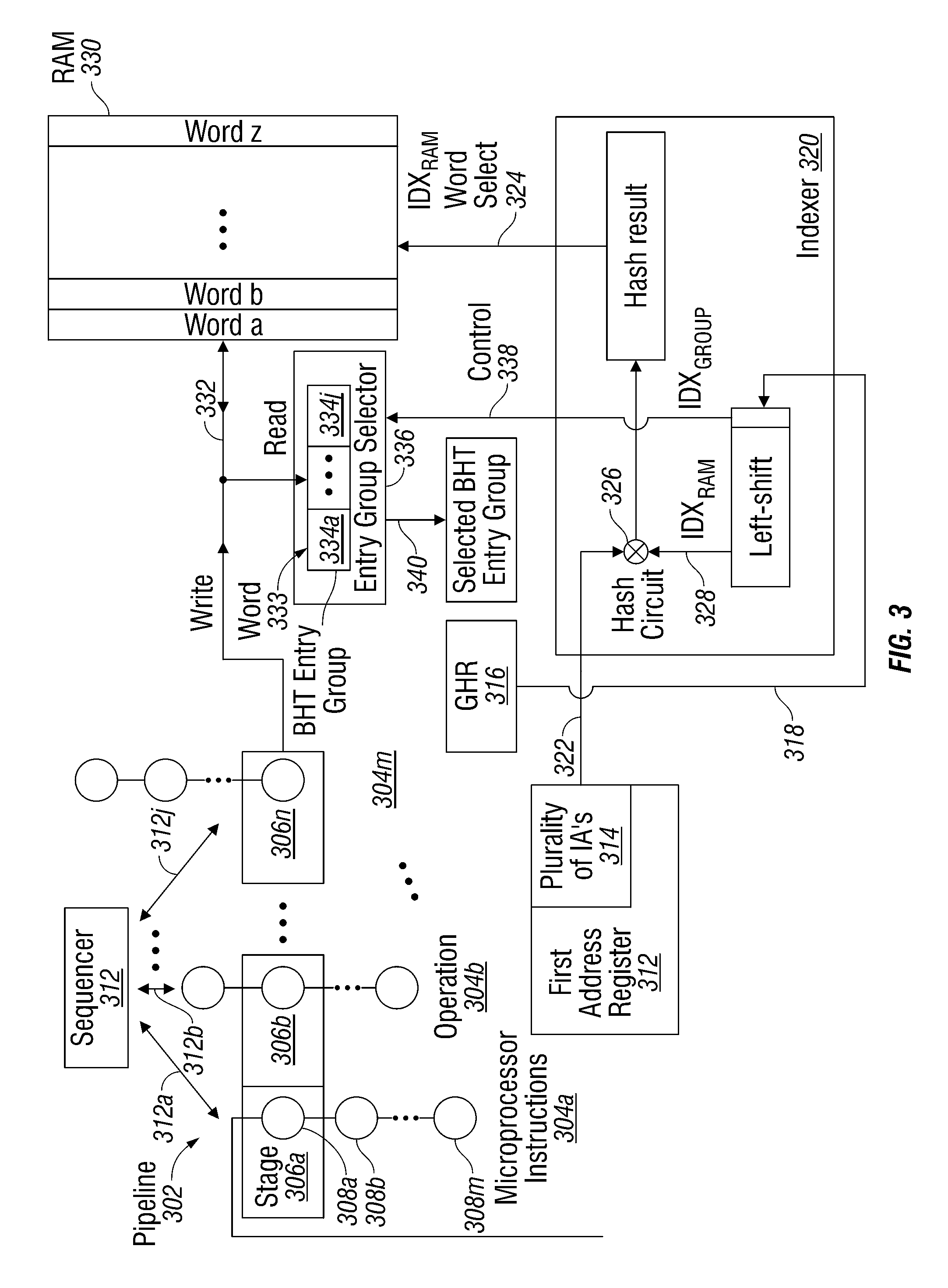

Active | US20090125707A1 | Digital computer details | Concurrent instruction execution | Image resolution | Conditional branch

A system and method are provided for updating a speculative global history prediction record in a microprocessor system using pipelined instruction processing. The method accepts microprocessor instructions with consecutive operations, including a conditional branch operation with an associated first branch address. A speculative global history record (SGHR) of conditional branch resolutions and predictions is accessed and hashed with the first branch address, creating a first hash result. The first hash result is used to index a branch history table (BHT) of previous first branch resolutions. As a result, a first branch prediction is made, and the SGHR is updated with the first branch prediction. A non-speculative global history record (NSGHR) of branch resolutions is updated with the resolution of the first branch operation, and if the first branch prediction is incorrect, the SGHR is corrected using the NSGHR.

Owner:MACOM CONNECTIVITY SOLUTIONS LLC
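
The abstract above describes two history records: one updated with predictions (SGHR) and one updated with resolutions (NSGHR), with the latter used to repair the former after a misprediction. A rough sketch of that bookkeeping follows. The XOR hash, the 2-bit counters, the history width, and all names are assumptions for illustration; the abstract does not specify them.

```python
class SpeculativeHistoryPredictor:
    """Toy model of a speculative history (SGHR) repaired from a
    non-speculative history (NSGHR) after a misprediction."""

    def __init__(self, history_bits=8):
        self.mask = (1 << history_bits) - 1
        self.bht = [1] * (1 << history_bits)  # 2-bit counters (assumed)
        self.sghr = 0    # updated at prediction time
        self.nsghr = 0   # updated at resolution time

    def predict(self, branch_addr):
        # Hash the speculative history with the branch address (XOR assumed).
        index = (branch_addr ^ self.sghr) & self.mask
        taken = self.bht[index] >= 2
        # Record the prediction in the speculative history immediately.
        self.sghr = ((self.sghr << 1) | int(taken)) & self.mask
        return taken, index

    def resolve(self, index, predicted, actual):
        step = 1 if actual else -1
        self.bht[index] = max(0, min(3, self.bht[index] + step))
        # The non-speculative history only ever sees real outcomes.
        self.nsghr = ((self.nsghr << 1) | int(actual)) & self.mask
        if predicted != actual:
            # Misprediction: discard speculative bits and restart from the
            # resolved history.
            self.sghr = self.nsghr
```

Copying the NSGHR into the SGHR on a misprediction is the simplest repair; it relies on younger in-flight predictions being flushed at the same time.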

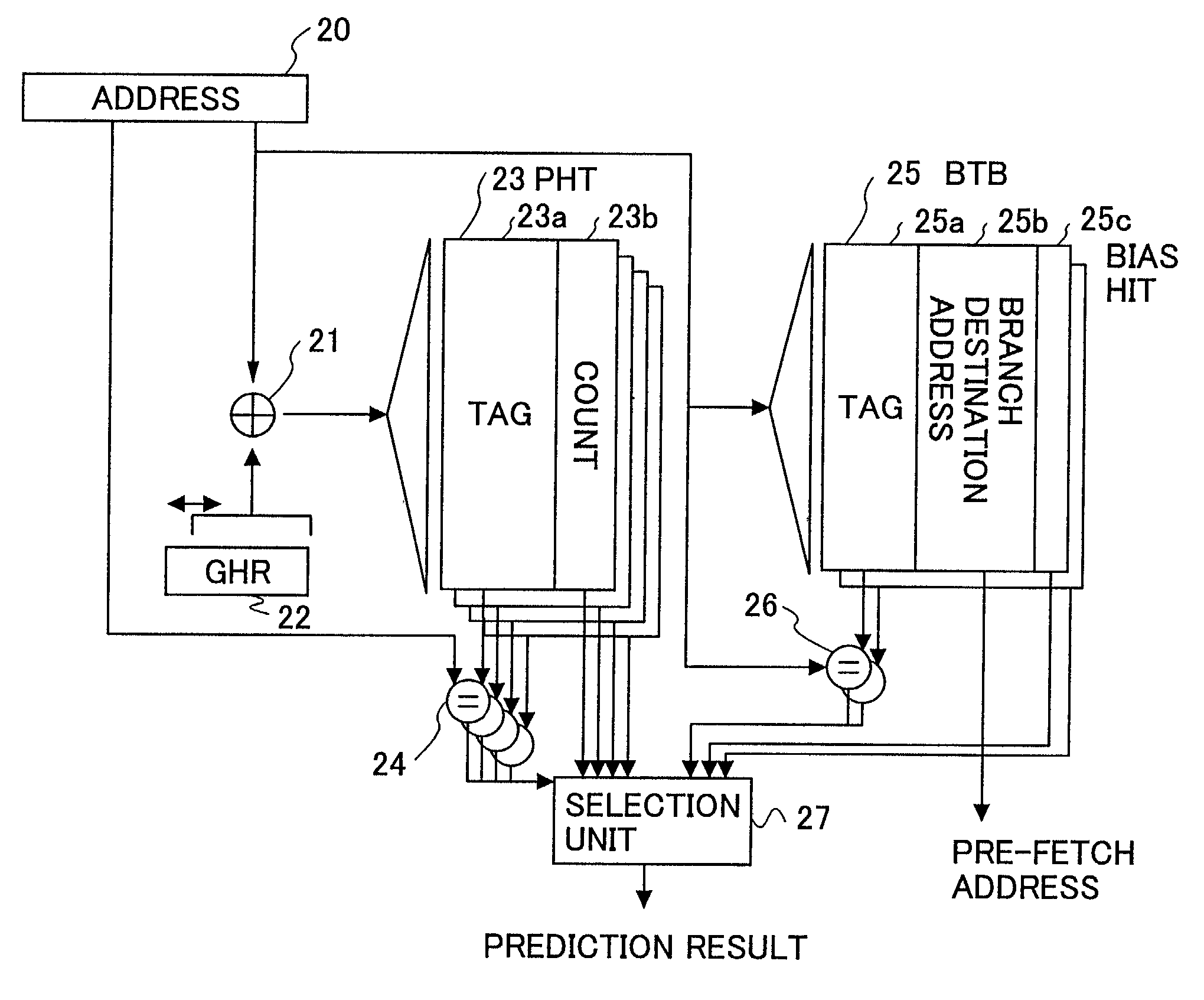

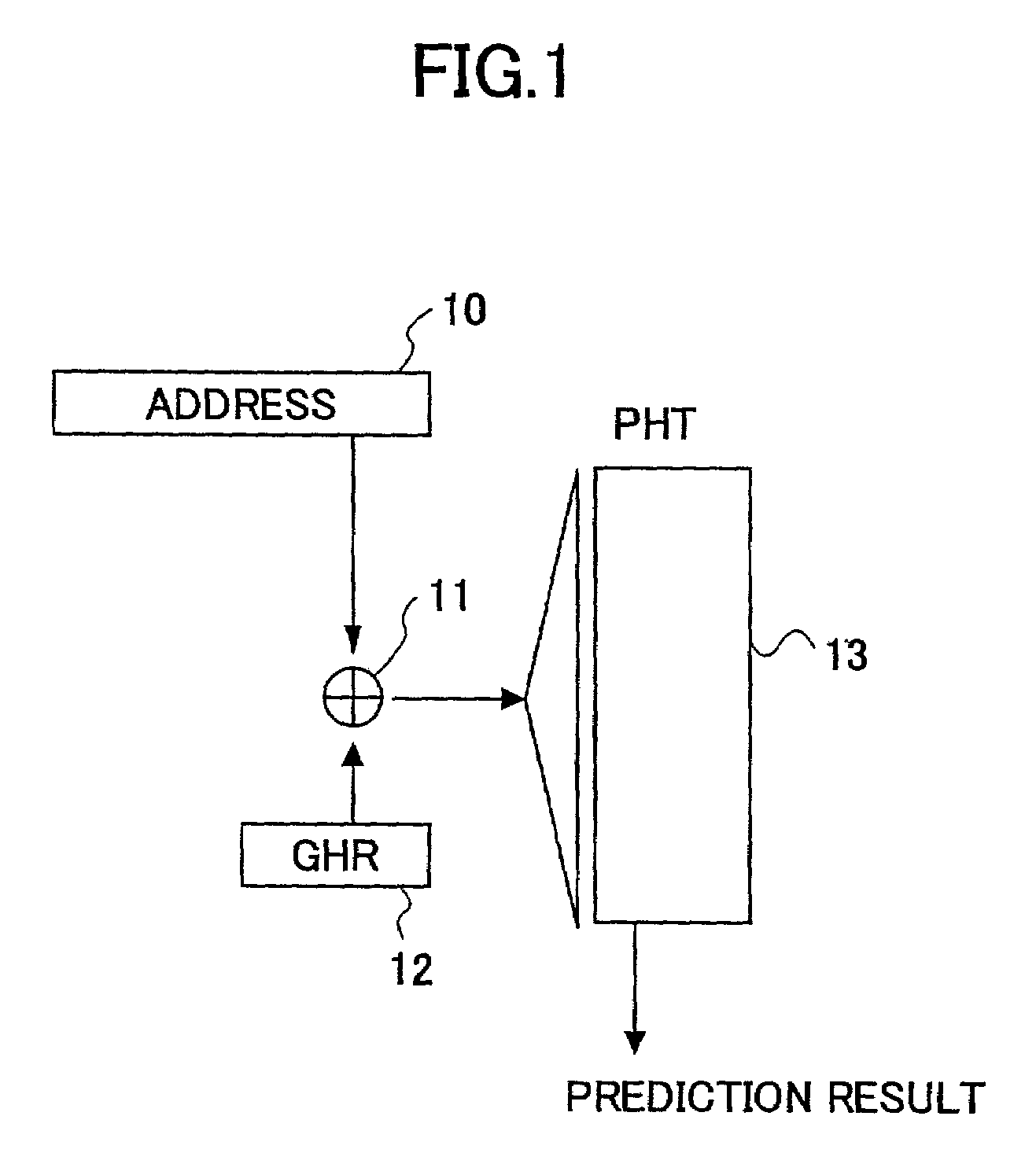

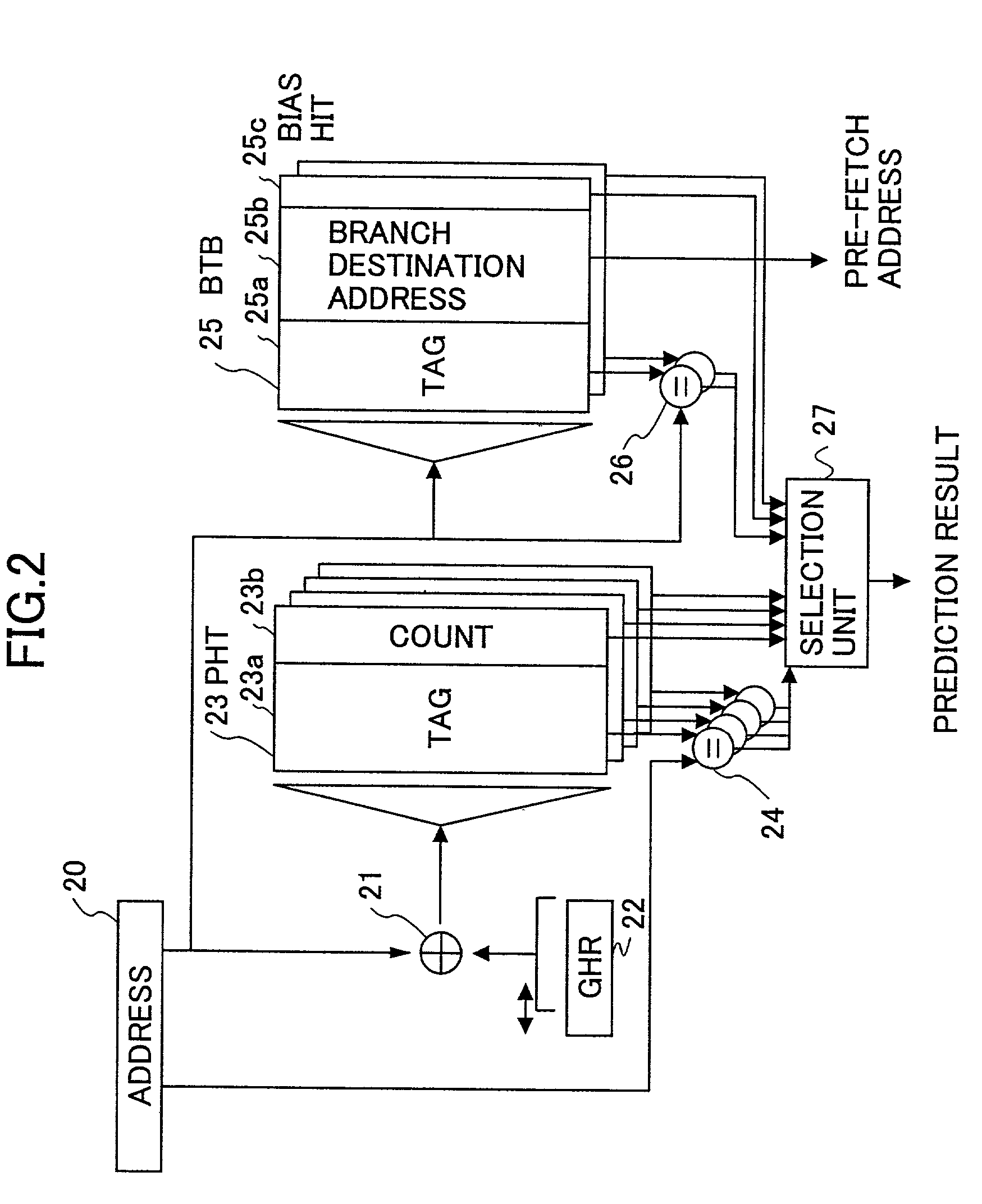

Apparatus and method for branch prediction where data for predictions is selected from a count in a branch history table or a bias in a branch target buffer

Inactive | US7055023B2 | Avoid interference | Highly accurate branch prediction | Digital computer details | Next instruction address formation | Processor register | Parallel computing

An apparatus for branch prediction includes a history register which stores therein history of previous branch instructions, an index generation circuit which generates a first index from an instruction address and the history stored in the history register, a history table which stores therein a portion of the instruction address as a tag and a first value indicative of likelihood of branching in association with the first index, a branch destination buffer which stores therein a branch destination address or predicted branch destination address of an instruction indicated by the instruction address and a second value indicative of likelihood of branching in association with a second index that is at least a portion of the instruction address, and a selection unit which makes a branch prediction by selecting one of the first value and the second value.

Owner:FUJITSU LTD

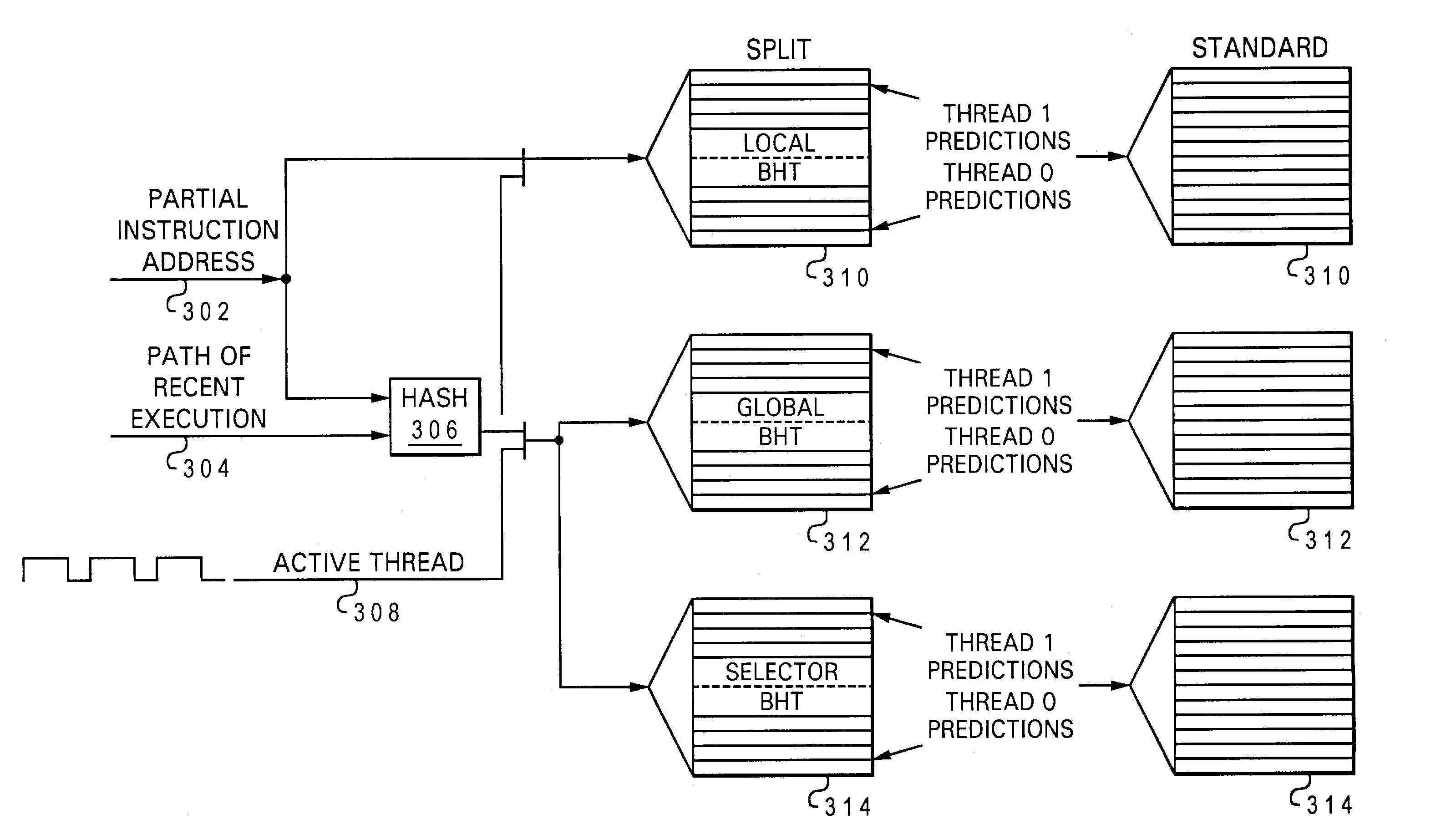

Thread-specific branch prediction by logically splitting branch history tables and predicted target address cache in a simultaneous multithreading processing environment

Inactive | US7120784B2 | Promote results | Accelerates branch prediction | Memory addressing/allocation/relocation | Multiprogramming arrangements | Array data structure | Parallel computing

Owner:IBM CORP

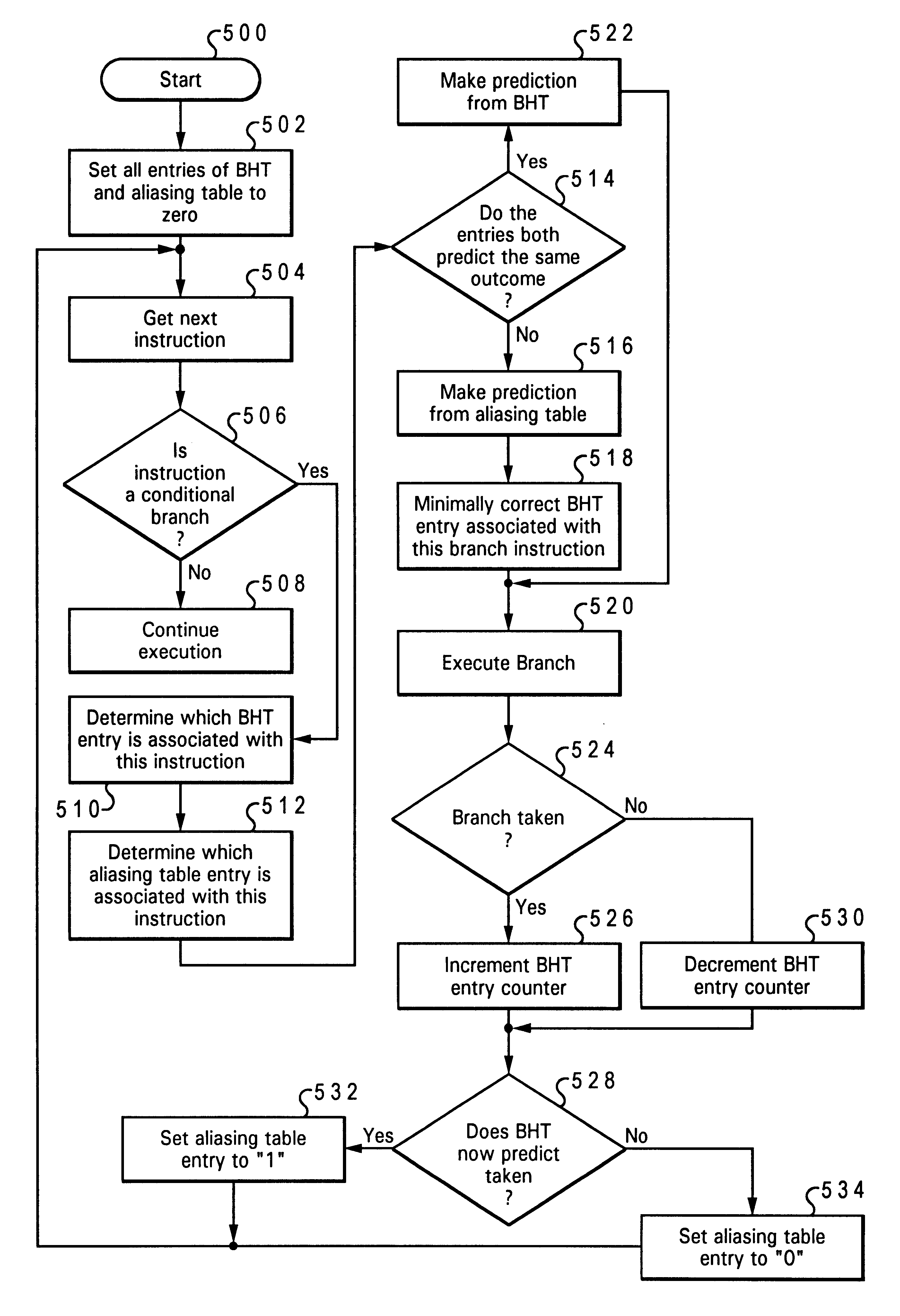

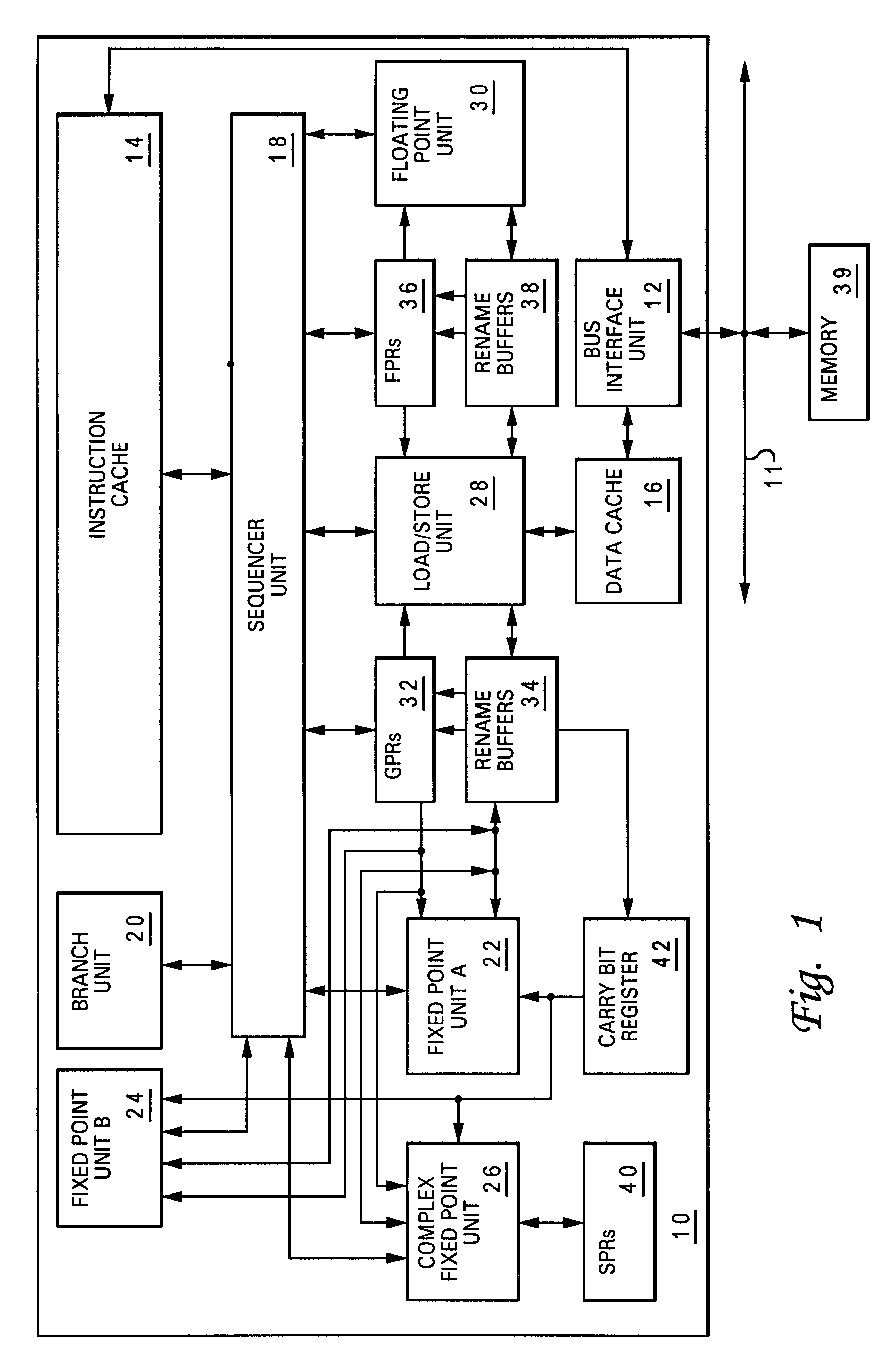

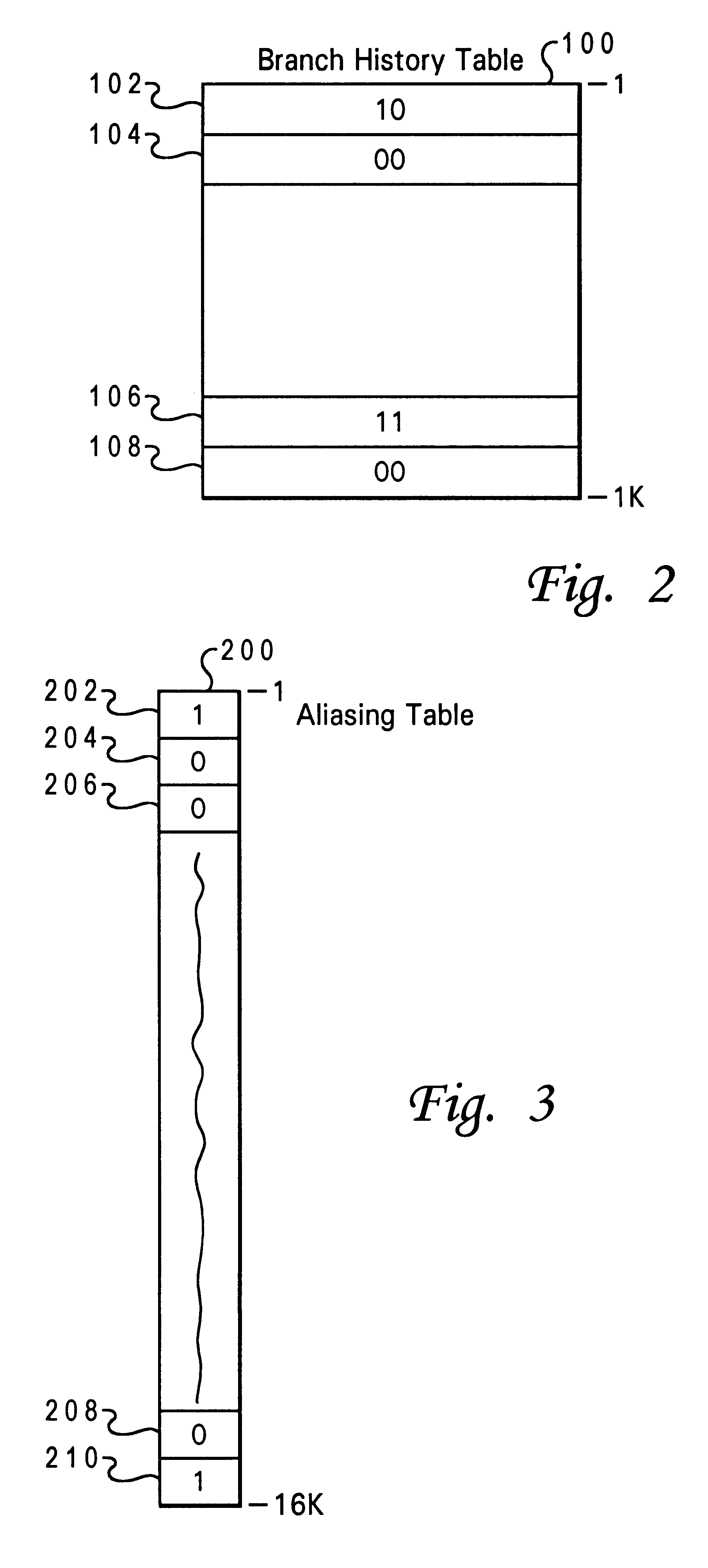

Apparatus and method of branch prediction utilizing a comparison of a branch history table to an aliasing table

Inactive | US6484256B1 | Digital computer details | Concurrent instruction execution | Multiple criteria | Conditional branch

Improved conditional branch instruction prediction by detecting branch aliasing in a branch history table. Each entry in an aliasing table is associated with only one of a plurality of conditional branch instructions tracked by the branch history table. Prior to executing a conditional branch instruction, outcome of the execution of the conditional branch instruction is predicted utilizing the branch history table entry associated with the conditional branch instruction. Outcome of the execution of the conditional branch instruction is also predicted utilizing the aliasing table entry associated with the conditional branch instruction. Branch aliasing is detected by comparing the prediction made utilizing the branch history table with the prediction made utilizing the aliasing table. In response to the predictions being different, a determination is made that branch aliasing occurred, and the prediction made utilizing the aliasing table is utilized for predicting the outcome of the execution of the conditional branch instruction.

Owner:IBM CORP
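
The aliasing check described above can be illustrated with a shared, untagged BHT alongside a table whose entries each belong to exactly one branch. This is a simplified sketch: the unbounded dictionary standing in for the aliasing table, the 2-bit counters, and the low-order-bit BHT index are assumptions, and a real design would bound the aliasing table.

```python
class AliasingAwarePredictor:
    """Toy model: compare a shared BHT prediction with a per-branch
    aliasing-table prediction and prefer the latter when they differ."""

    def __init__(self, bht_bits=4):
        self.mask = (1 << bht_bits) - 1
        self.bht = [1] * (1 << bht_bits)  # shared 2-bit counters
        self.alias = {}                   # branch address -> private 2-bit counter

    def predict(self, addr):
        bht_taken = self.bht[addr & self.mask] >= 2
        if addr not in self.alias:
            return bht_taken, False
        alias_taken = self.alias[addr] >= 2
        aliased = alias_taken != bht_taken  # disagreement signals aliasing
        return (alias_taken if aliased else bht_taken), aliased

    def update(self, addr, taken):
        step = 1 if taken else -1
        i = addr & self.mask
        self.bht[i] = max(0, min(3, self.bht[i] + step))
        self.alias[addr] = max(0, min(3, self.alias.get(addr, 1) + step))


# Two branches that collide in the BHT but behave in opposite ways.
predictor = AliasingAwarePredictor(bht_bits=4)
for _ in range(4):
    predictor.update(0x10, True)   # always taken
    predictor.update(0x20, False)  # never taken
```

After this training, `predictor.predict(0x10)` reports aliasing and follows the private counter, while `predictor.predict(0x20)` agrees with the shared BHT.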

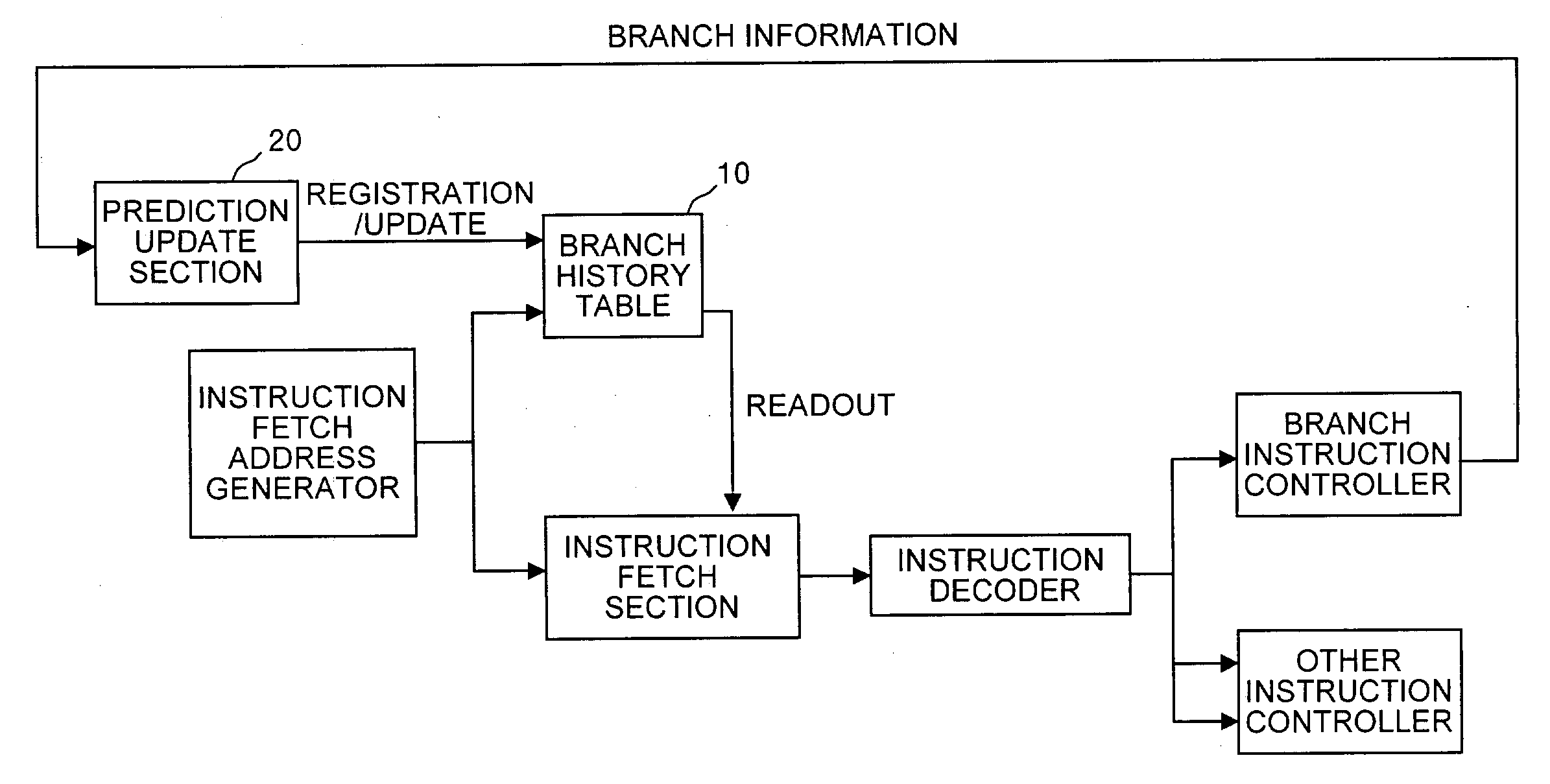

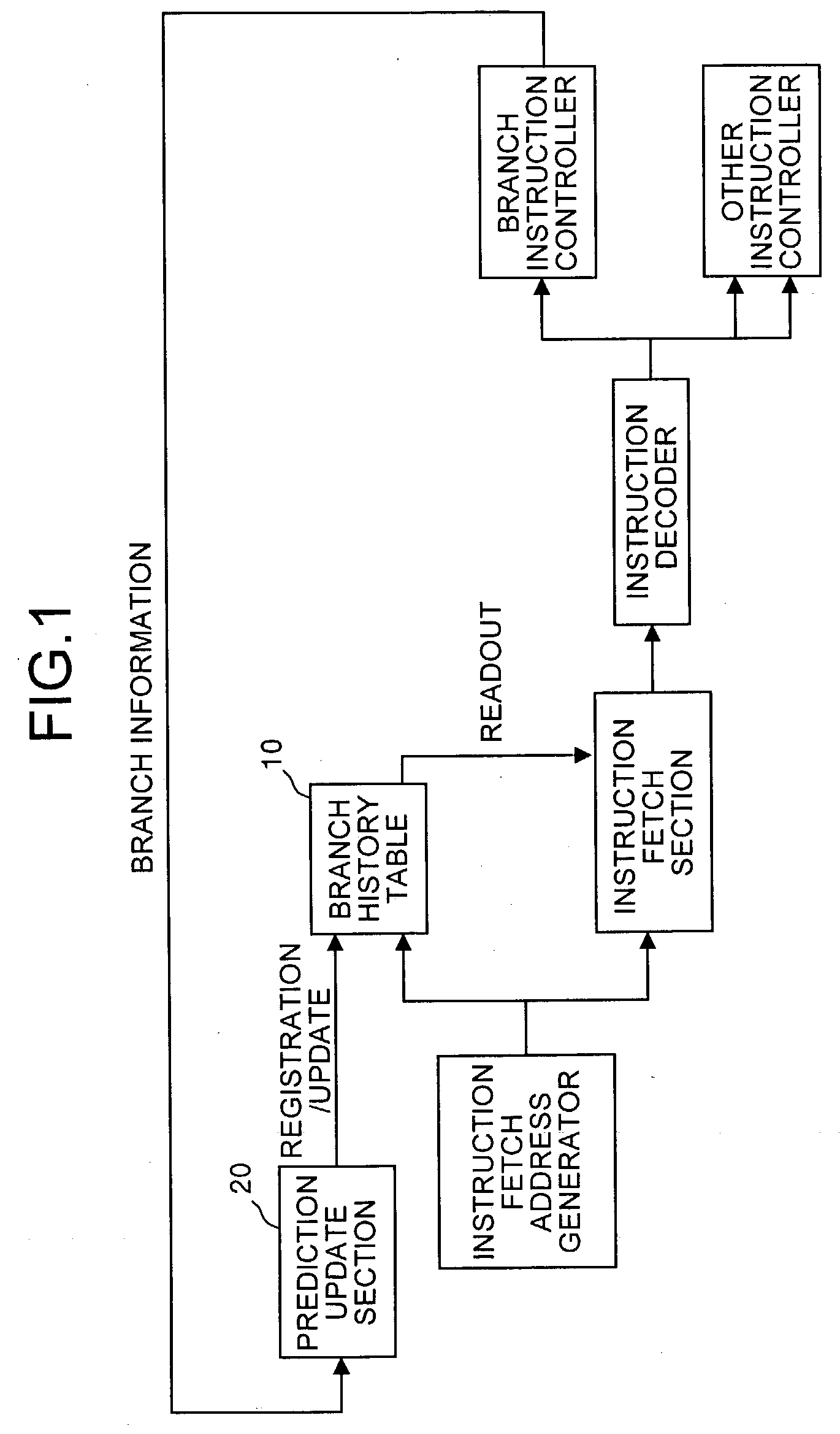

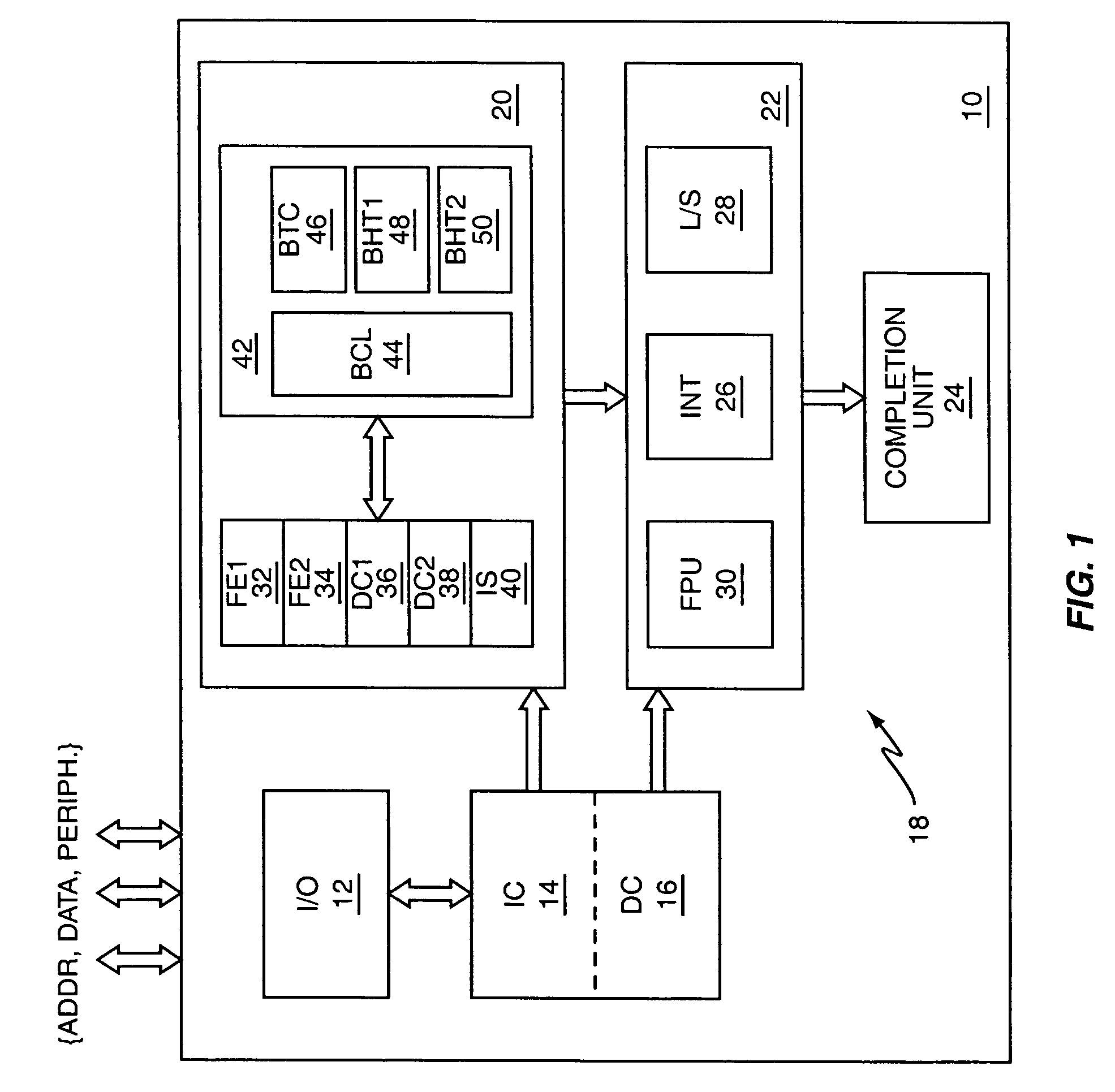

Branch prediction apparatus and branch prediction method

Inactive | US20040003218A1 | Digital computer details | Concurrent instruction execution | Operating system | Branch history table

A branch history memory stores the branch history, which represents the results of past branch instructions. When processing of a branch instruction is finished, a branch history update section updates the branch history corresponding to the branch instruction, based on the processing result. A branch history table update section updates the branch history in a branch history table. The branch history stores the number of recent consecutive branch successes and the number of recent consecutive branch failures.

Owner:FUJITSU LTD

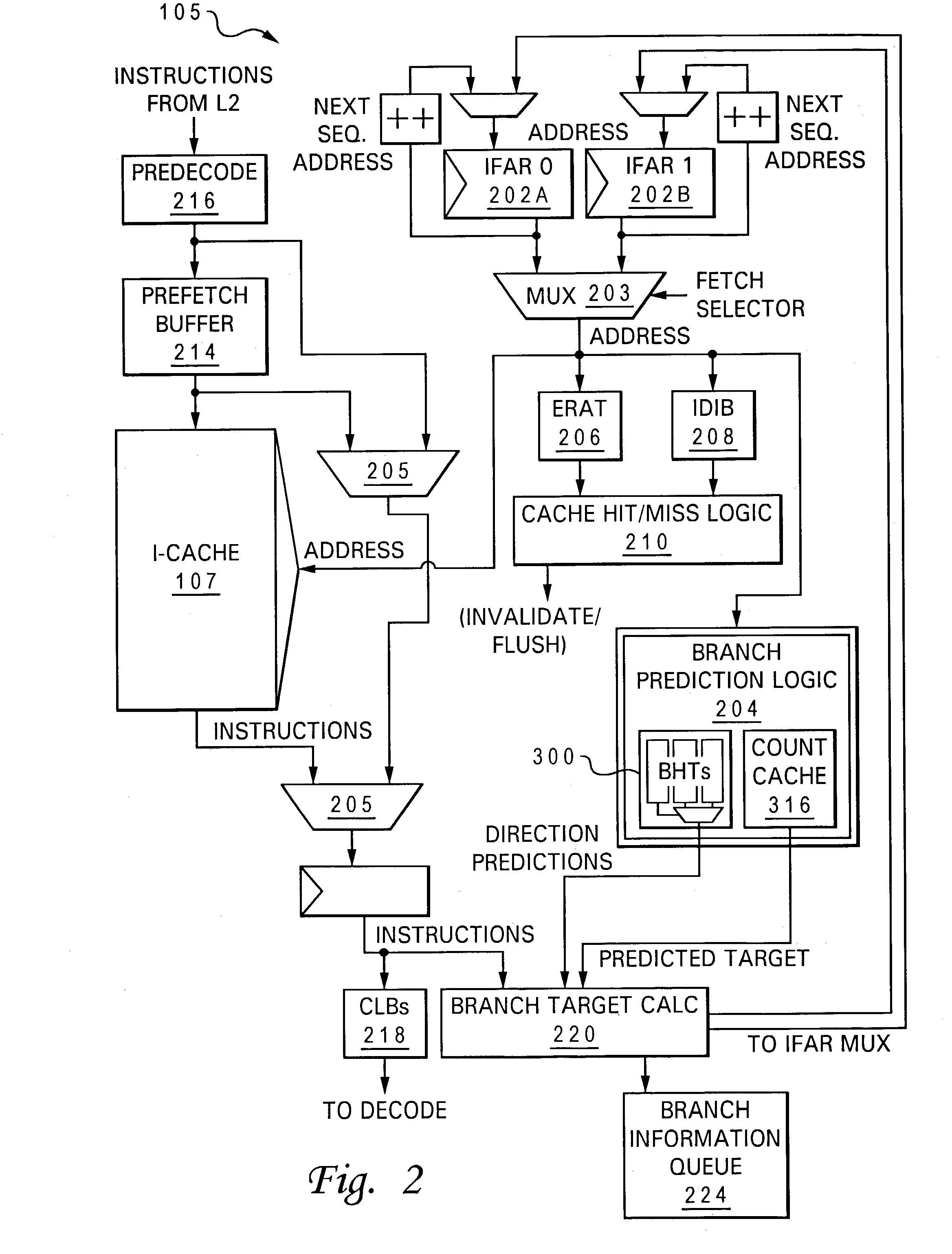

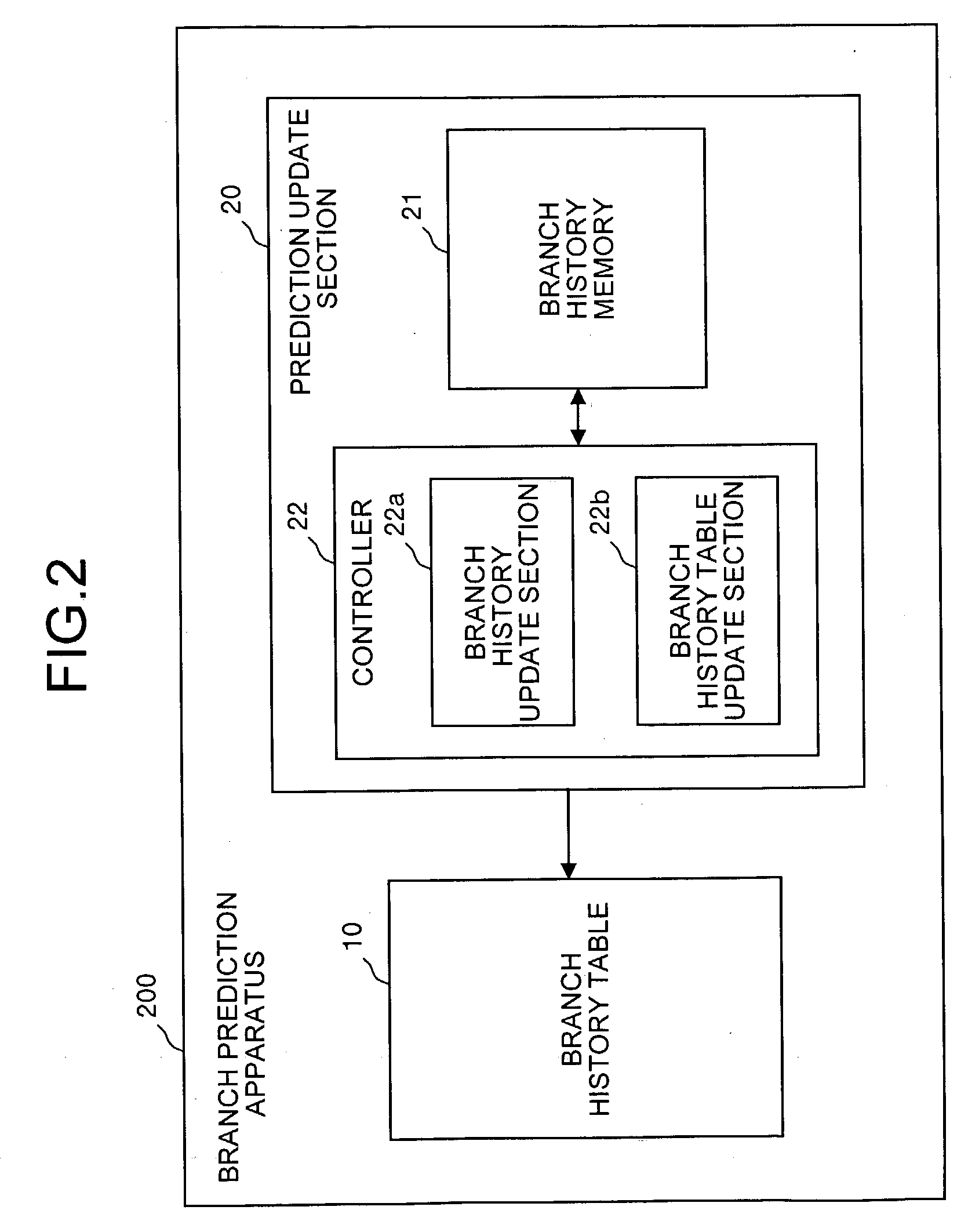

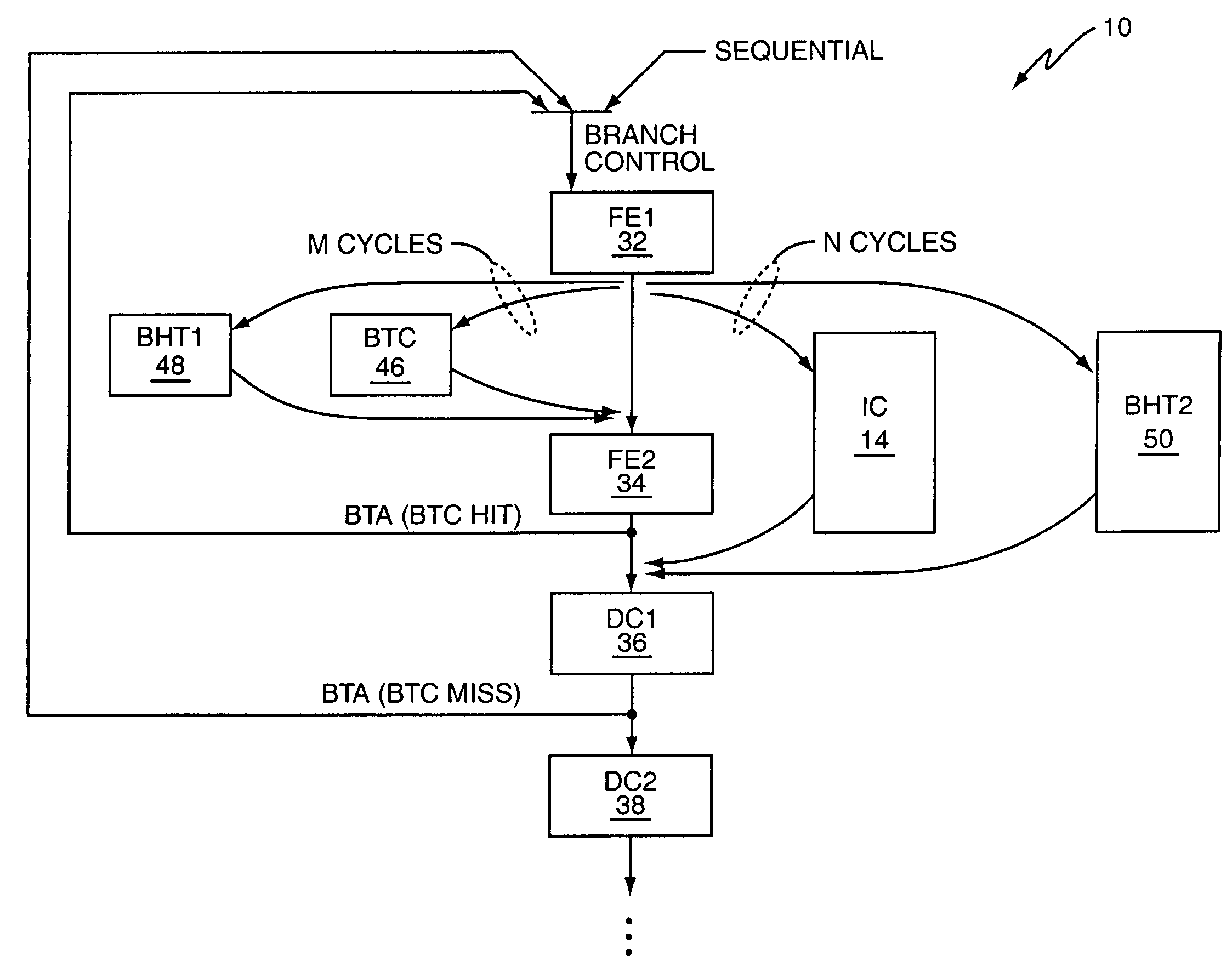

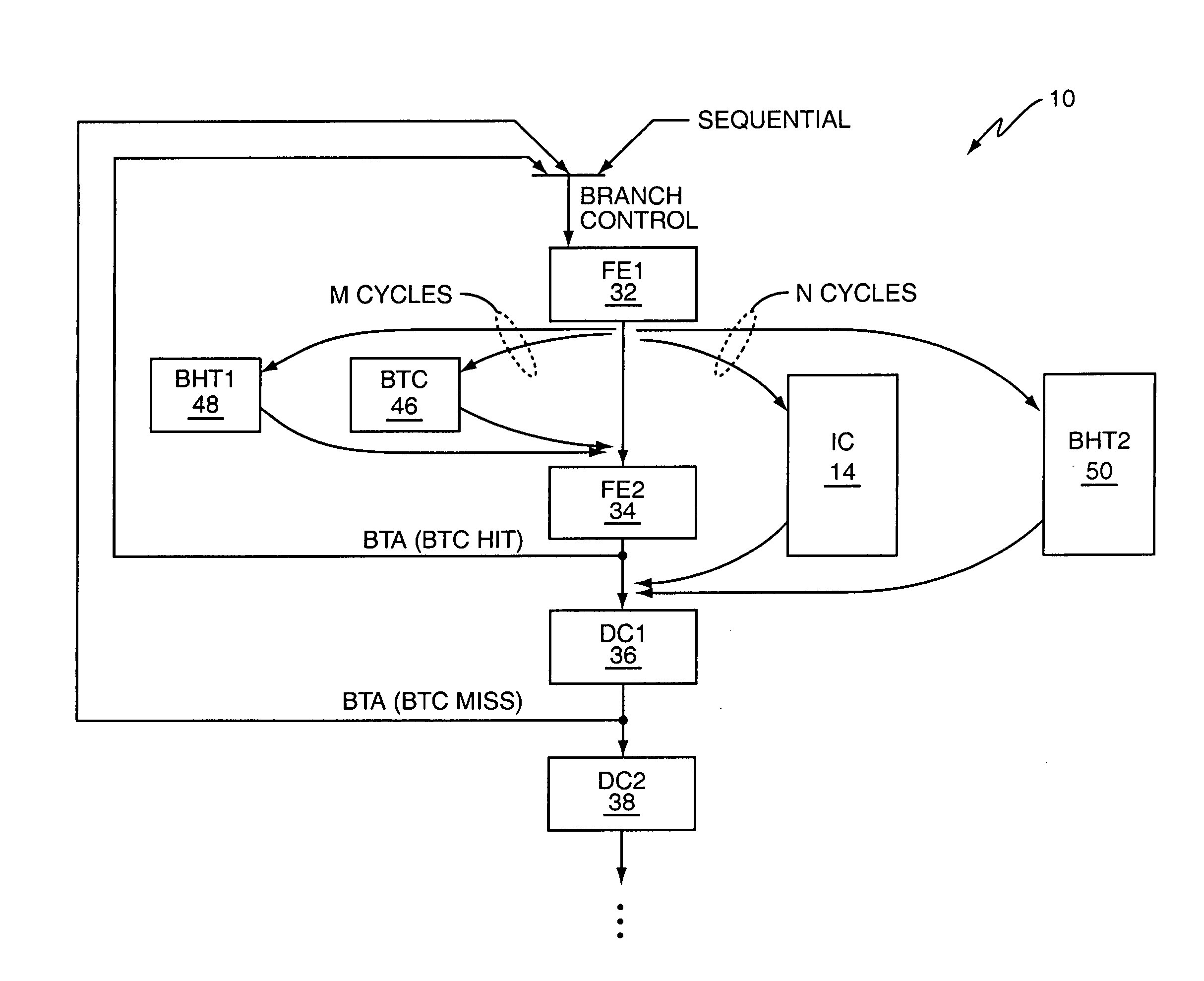

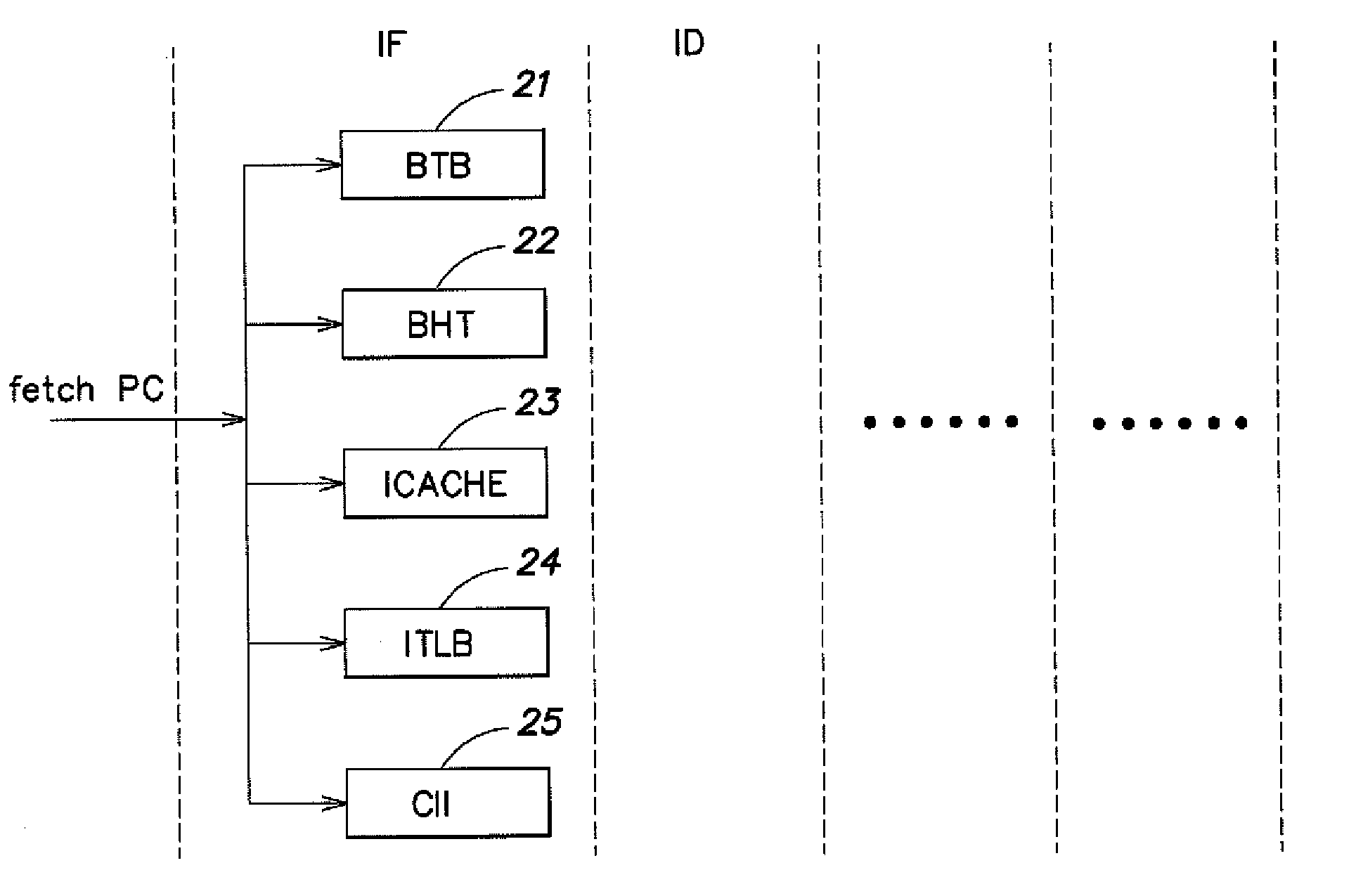

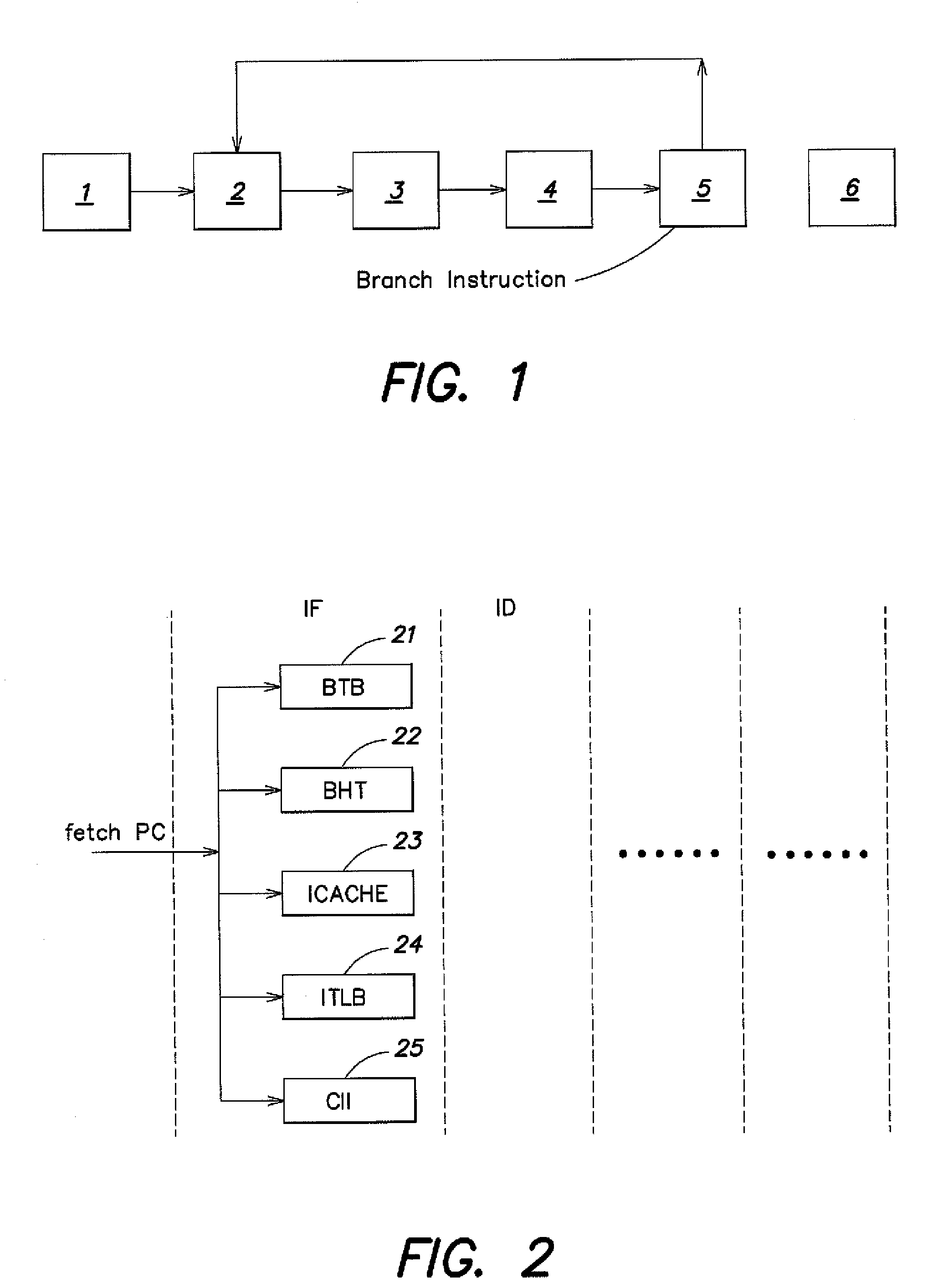

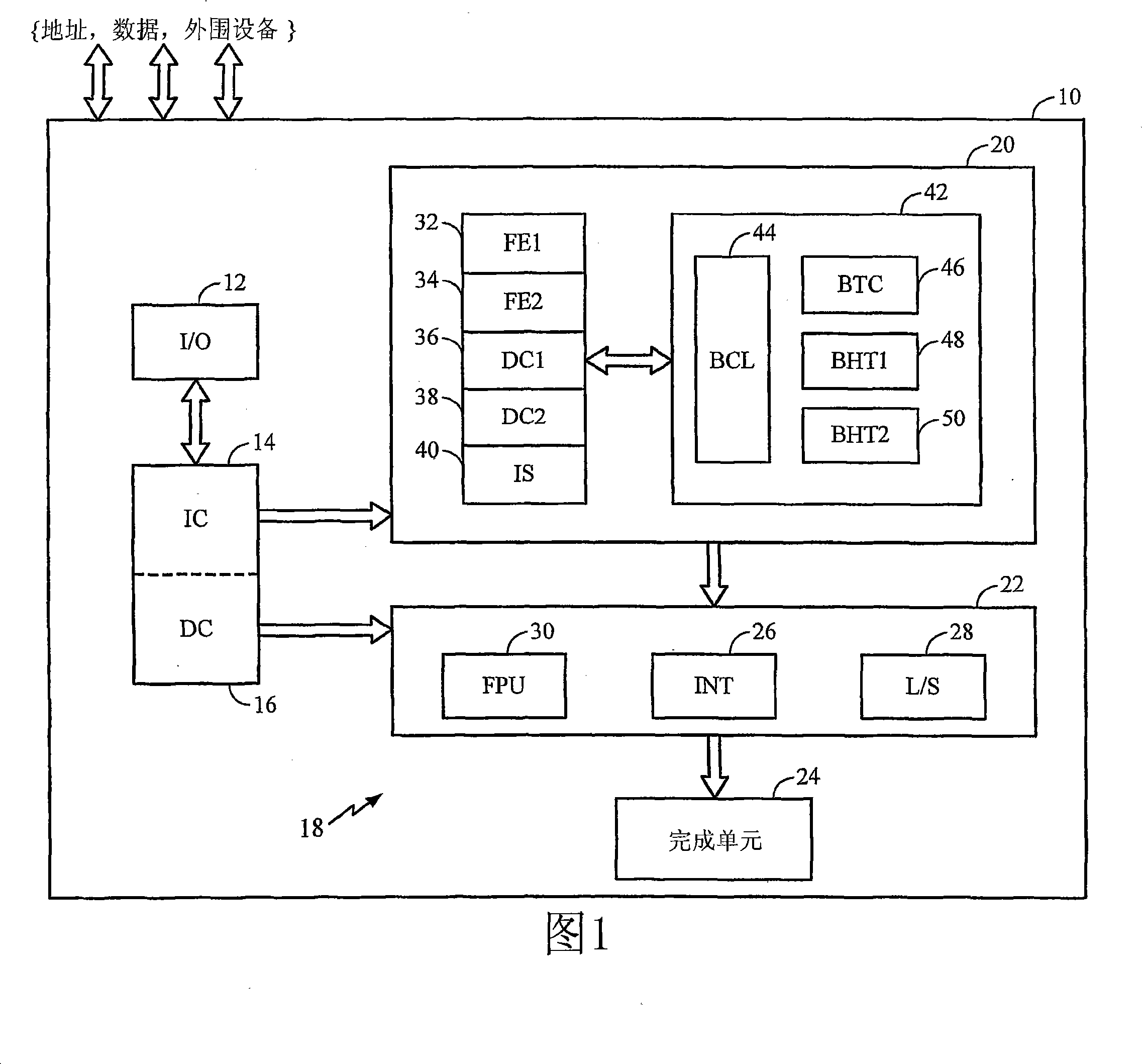

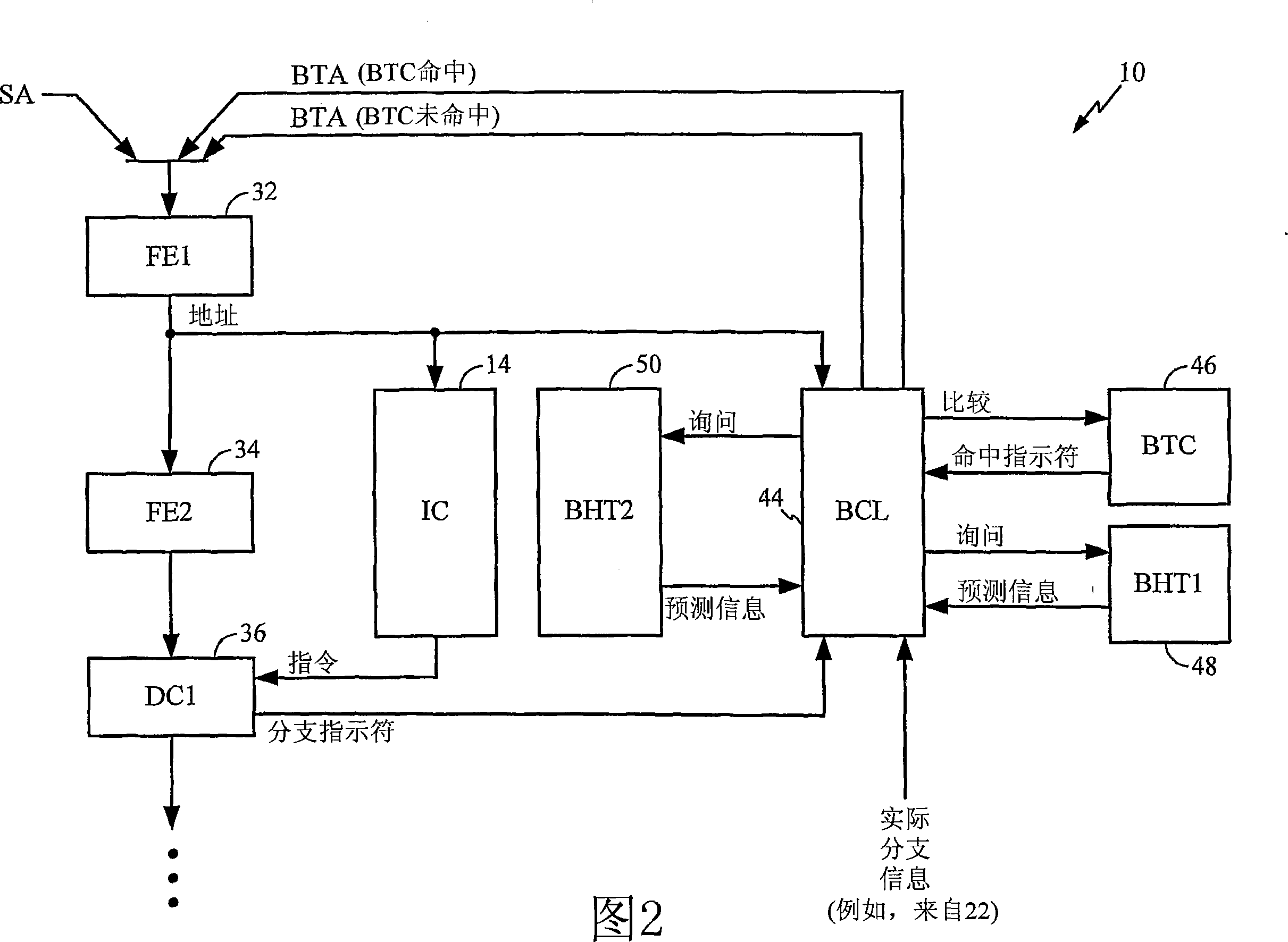

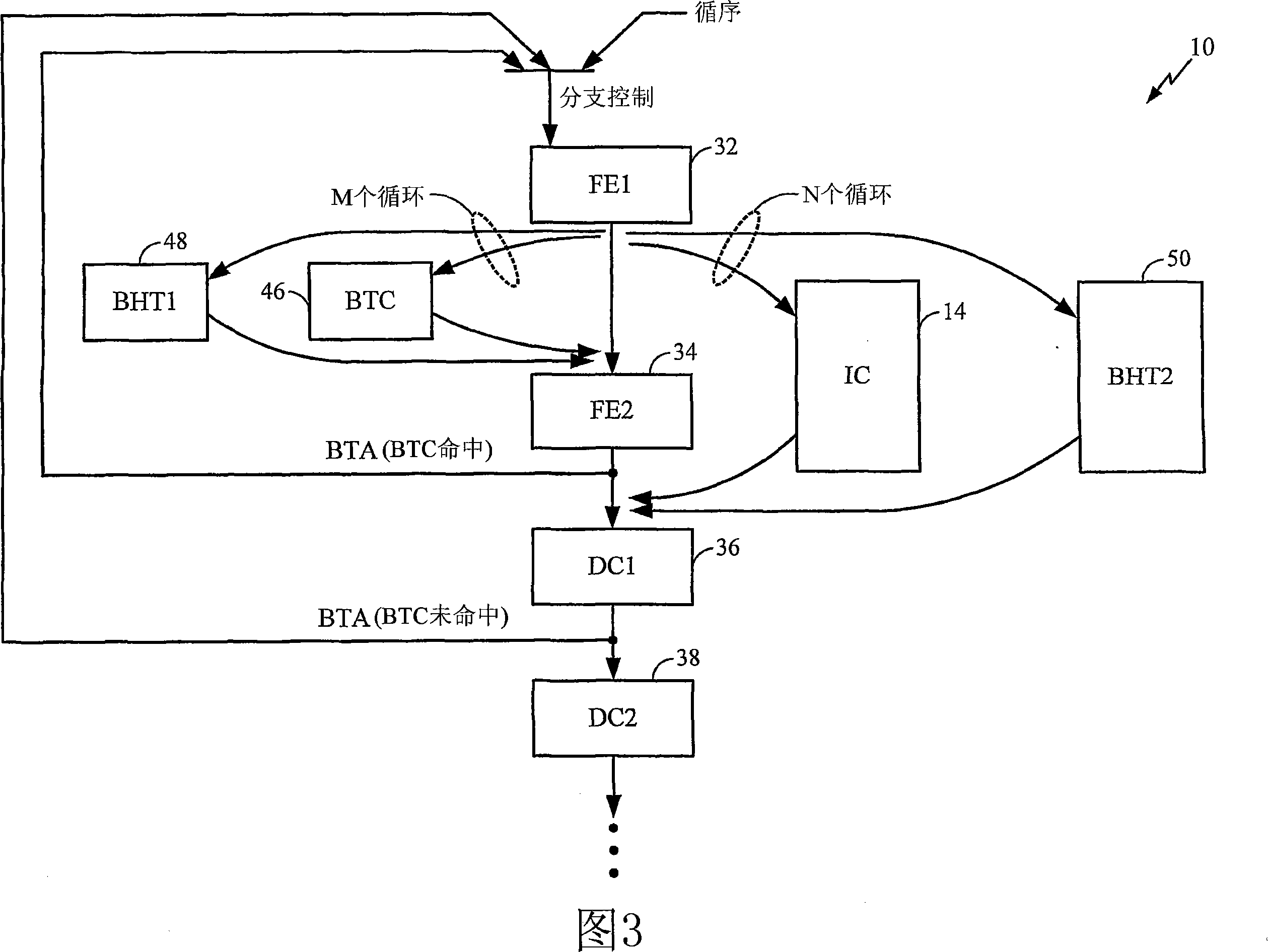

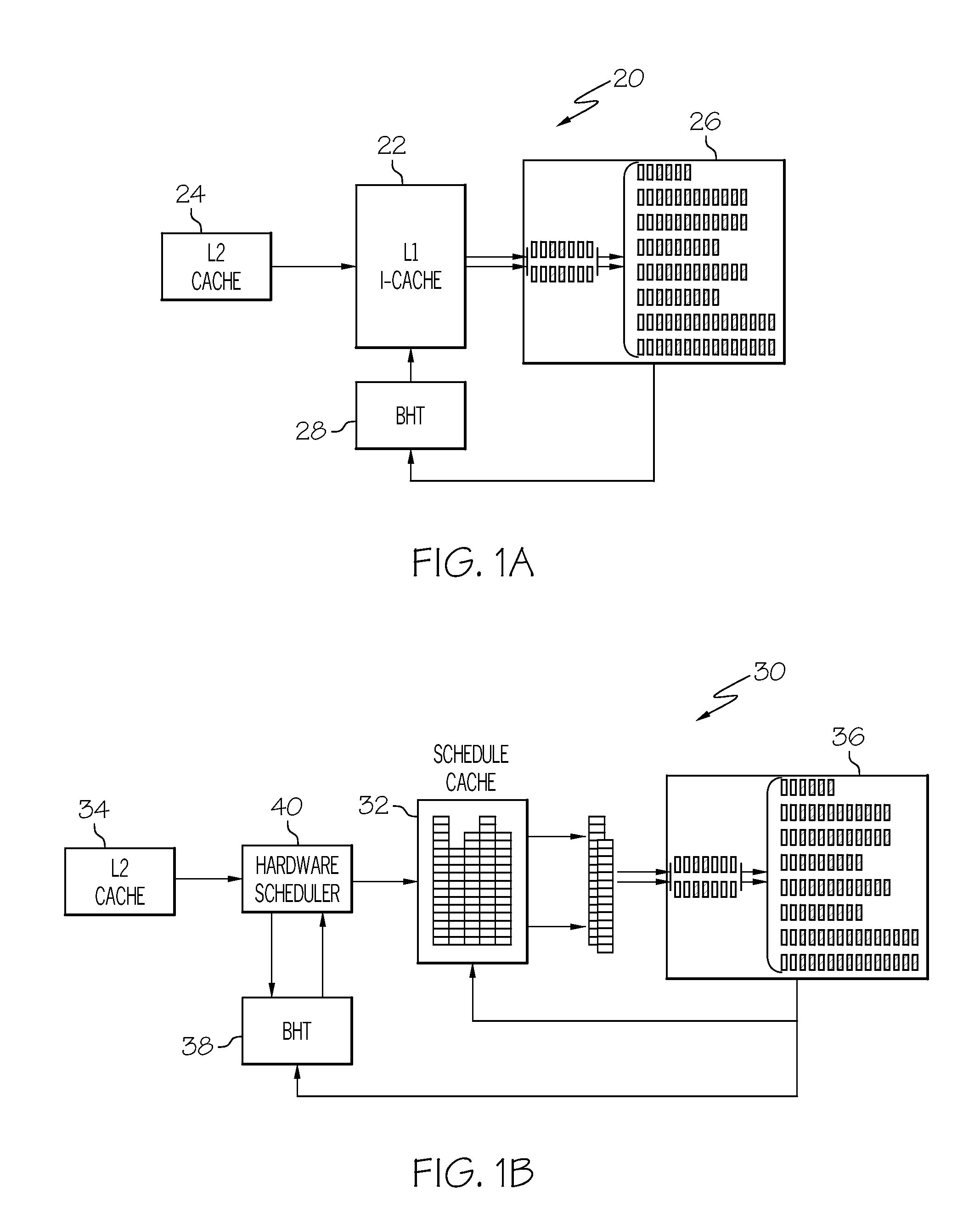

Method and apparatus for efficiently accessing first and second branch history tables to predict branch instructions

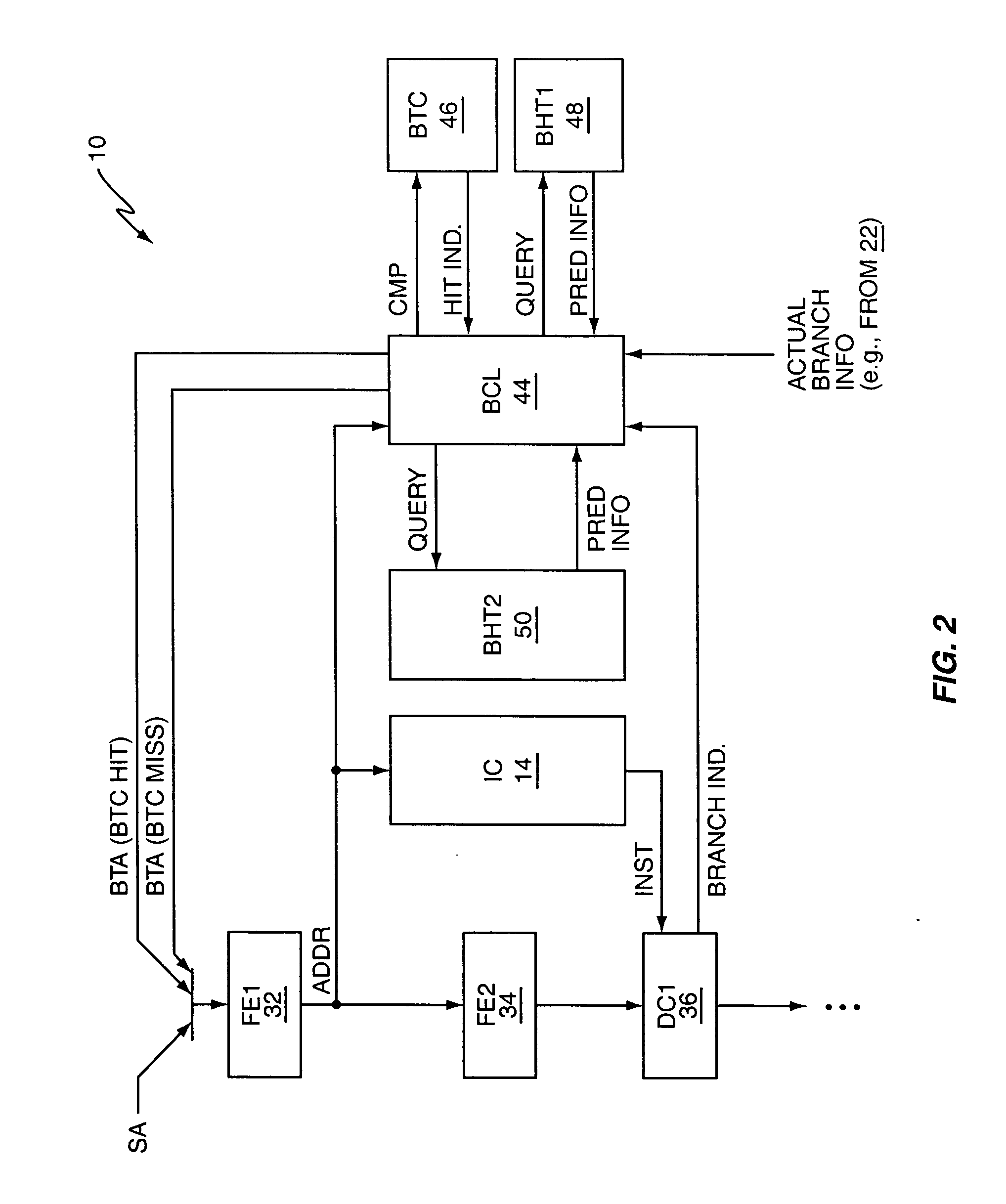

Active | US7278012B2 | Flexible configuration | Digital computer details | Specific program execution arrangements | Instruction pipeline | Branch target address

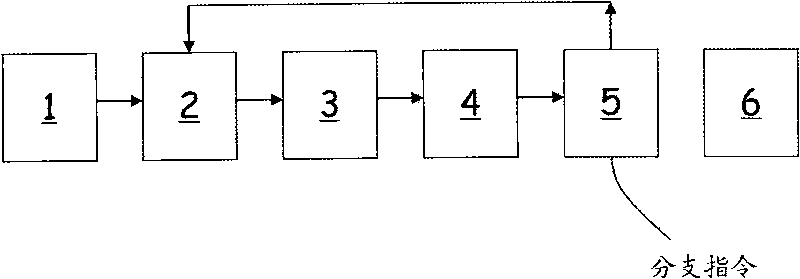

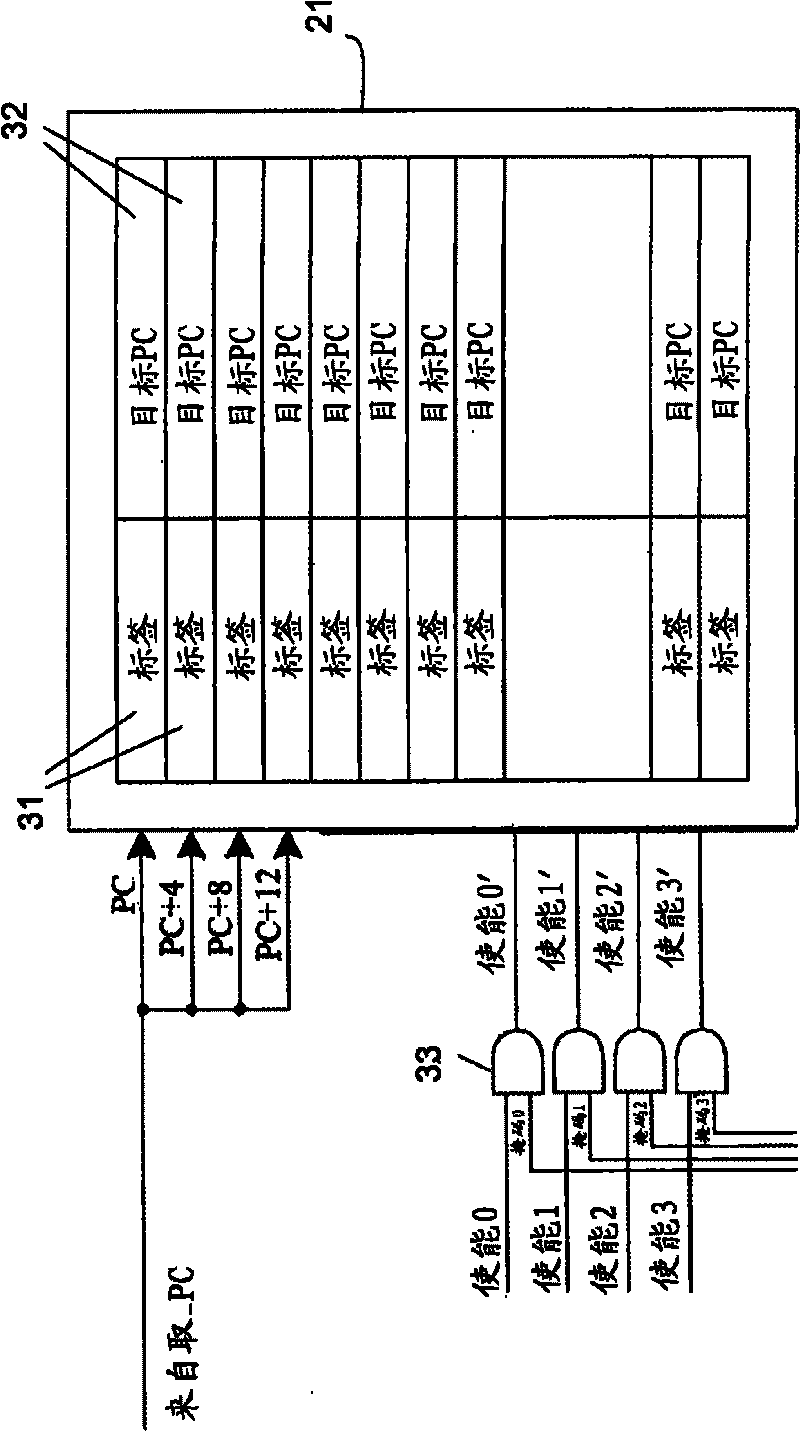

A microprocessor includes two branch history tables, and is configured to use a first one of the branch history tables for predicting branch instructions that are hits in a branch target cache, and to use a second one of the branch history tables for predicting branch instructions that are misses in the branch target cache. As such, the first branch history table is configured to have an access speed matched to that of the branch target cache, so that its prediction information is timely available relative to branch target cache hit detection, which may happen early in the microprocessor's instruction pipeline. The second branch history table thus need only be as fast as is required for providing timely prediction information in association with recognizing branch target cache misses as branch instructions, such as at the instruction decode stage(s) of the instruction pipeline.

Owner:QUALCOMM INC

Reducing branch checking for non-control-flow instructions

Inactive | CN101763249A | Next instruction address formation | Concurrent instruction execution | Parallel computing | Industrial engineering

The invention relates to reducing branch checking for non-control-flow instructions. Some microprocessors check branch prediction information in a branch history table and / or a branch target buffer. To check the branch prediction information, a microprocessor can identify which instructions are control flow instructions and which are non-control-flow instructions. To reduce power consumption in the branch history table and / or the branch target buffer, the branch history table and / or the branch target buffer can check the branch prediction information corresponding to control flow instructions, but not the branch prediction information corresponding to non-control-flow instructions.

Owner:STMICROELECTRONICS BEIJING R&D

Global History Branch Prediction Updating Responsive to Taken Branches

Inactive | US20090198984A1 | Digital computer details | Specific program execution arrangements | Image resolution | Conditional branch

A system and method are provided for updating a global history prediction record in a microprocessor system using pipelined instruction processing. The method accepts a microprocessor instruction of consecutive operations, including a conditional branch operation with an associated branch address, at a first stage in a pipelined microprocessor execution process. A global history record (GHR) of conditional branch resolutions and predictions is accessed and hashed with the branch address, creating a first hash result. The first hash result is used to access an indexed branch history table (BHT) of branch direction counts and the BHT is used to make a branch prediction. If the branch prediction is “taken”, the current GHR value is left-shifted and hashed with the branch address, creating a second hash result which is used in creating an updated GHR.

Owner:MACOM CONNECTIVITY SOLUTIONS LLC
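
The update rule in the abstract above (shift and hash only when the prediction is "taken") can be written in a few lines. In this sketch the hash is assumed to be XOR, the history width is assumed to be 8 bits, and the second hash result is used directly as the new GHR; the abstract only says it is "used in creating" the updated value, so the function name and these details are hypothetical.

```python
def update_ghr(ghr, branch_addr, predicted_taken, bits=8):
    """Return the next global history value.

    Not-taken predictions leave the history unchanged; taken predictions
    left-shift it and fold in the branch address (XOR is an assumption).
    """
    mask = (1 << bits) - 1
    if not predicted_taken:
        return ghr
    return ((ghr << 1) ^ branch_addr) & mask
```

Because only taken branches modify the record, a run of not-taken branches does not push older, more informative history out of the register.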

Method and apparatus for predicting branch instructions

Active | US20060277397A1 | Flexible configuration | Digital computer details | Specific program execution arrangements | Instruction pipeline | Microprocessor

A microprocessor includes two branch history tables, and is configured to use a first one of the branch history tables for predicting branch instructions that are hits in a branch target cache, and to use a second one of the branch history tables for predicting branch instructions that are misses in the branch target cache. As such, the first branch history table is configured to have an access speed matched to that of the branch target cache, so that its prediction information is timely available relative to branch target cache hit detection, which may happen early in the microprocessor's instruction pipeline. The second branch history table thus need only be as fast as is required for providing timely prediction information in association with recognizing branch target cache misses as branch instructions, such as at the instruction decode stage(s) of the instruction pipeline.

Owner:QUALCOMM INC

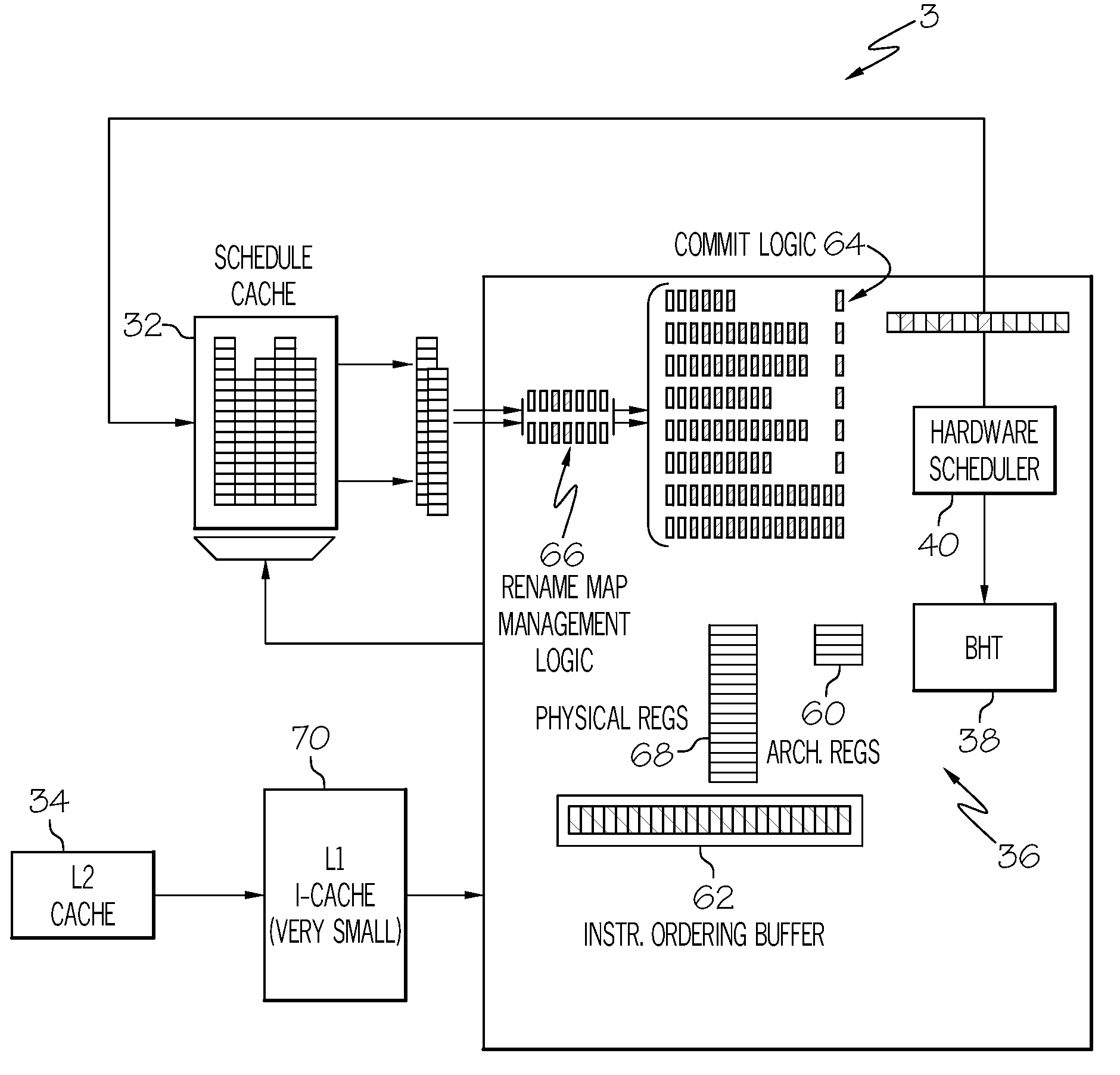

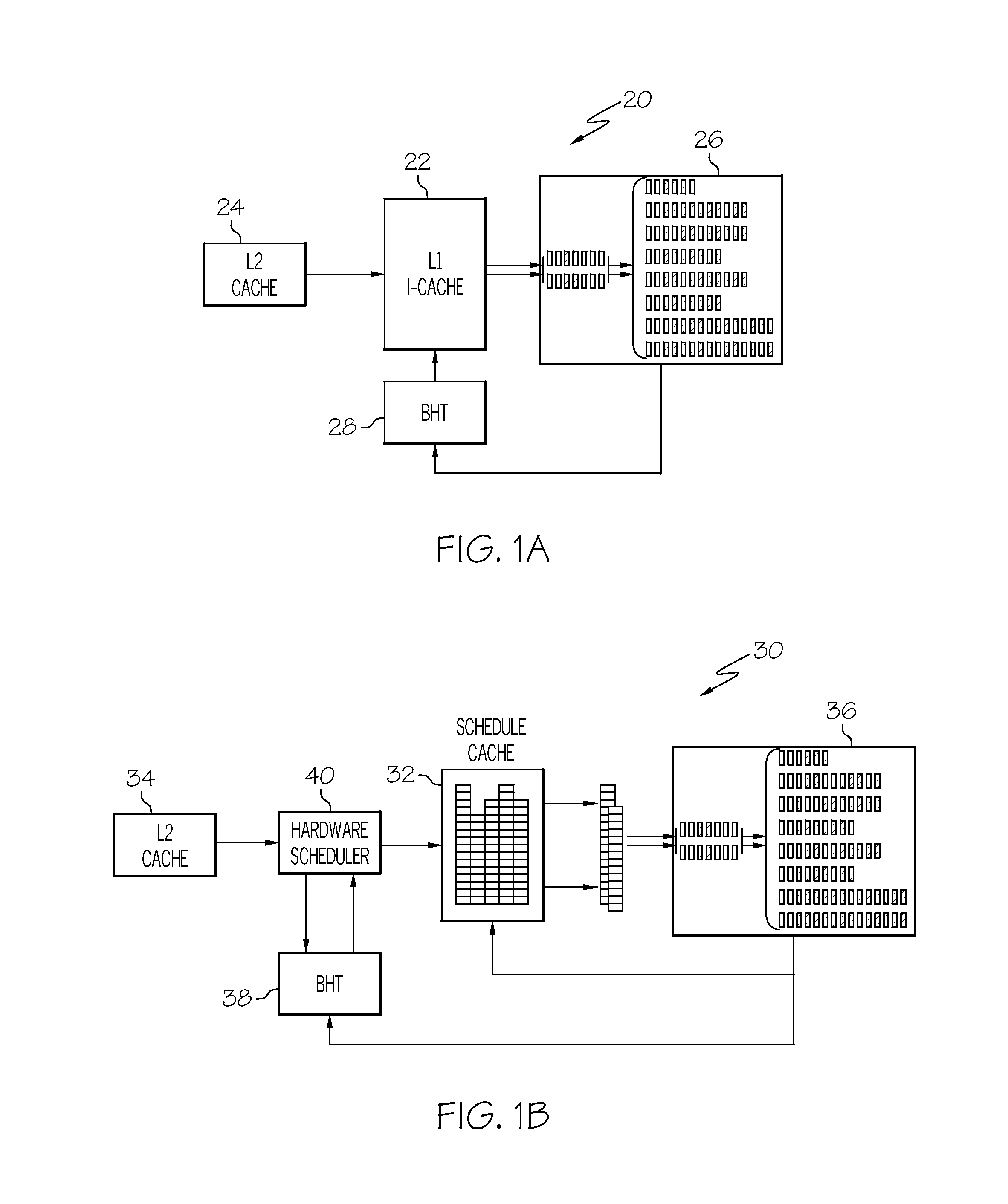

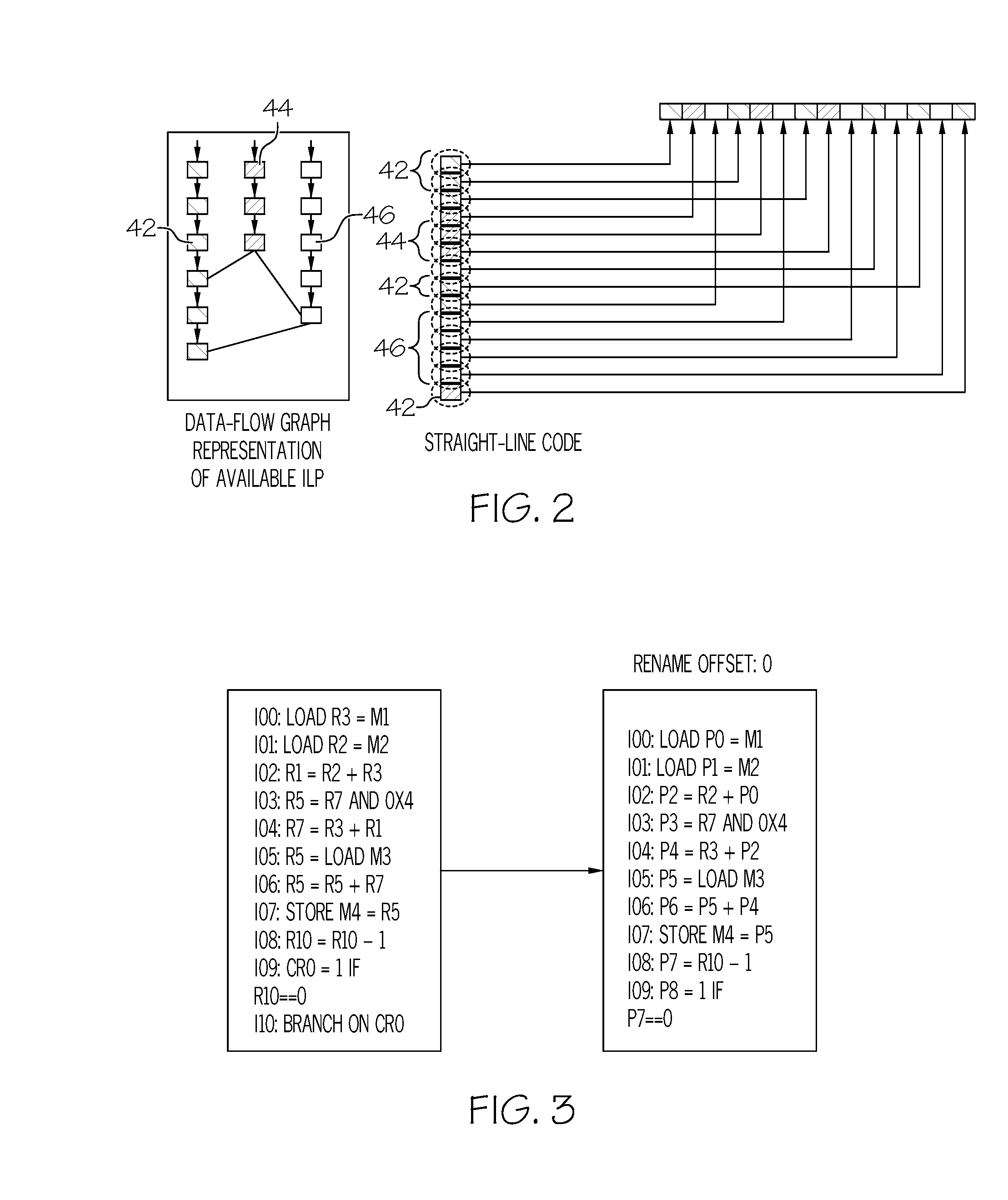

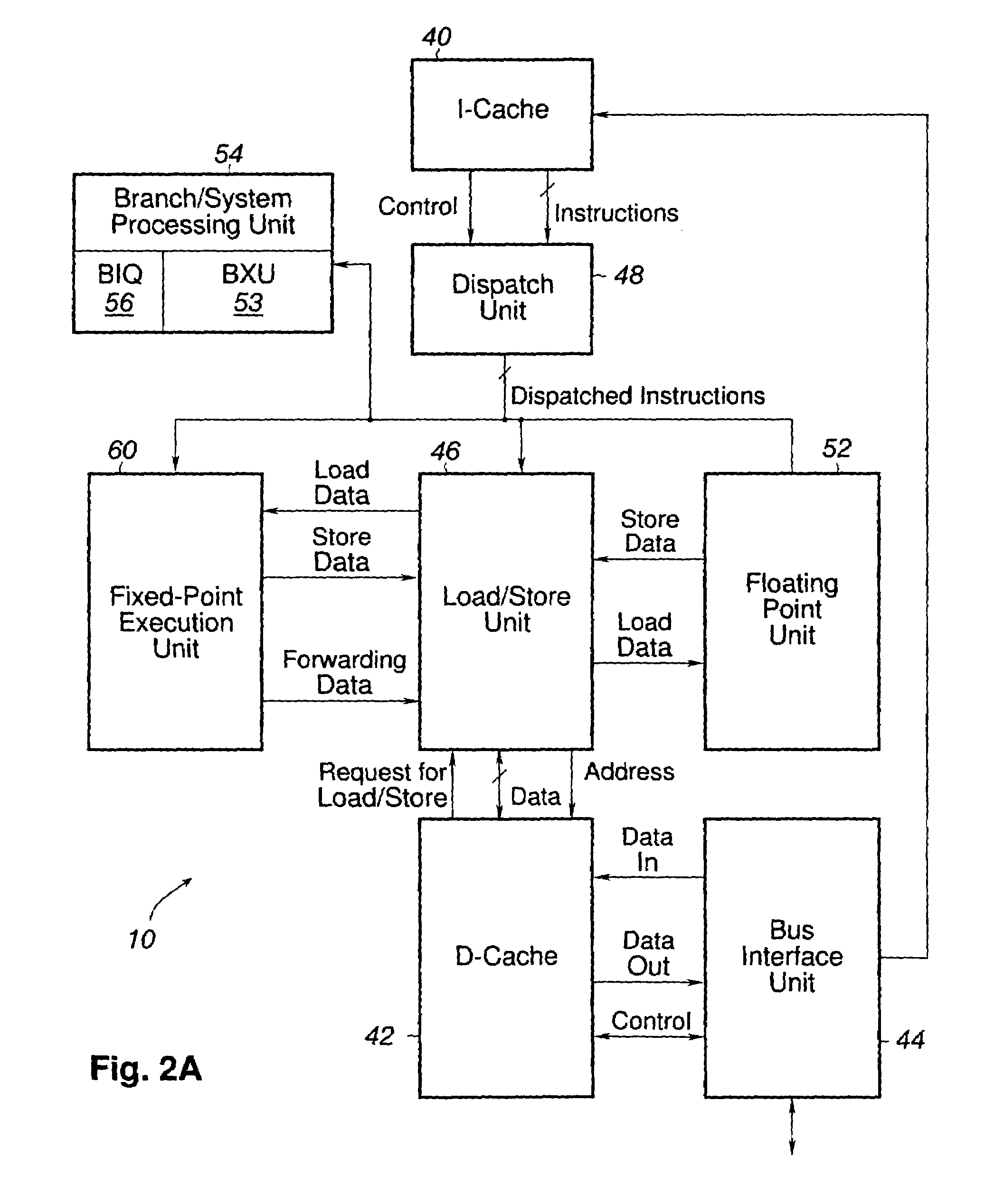

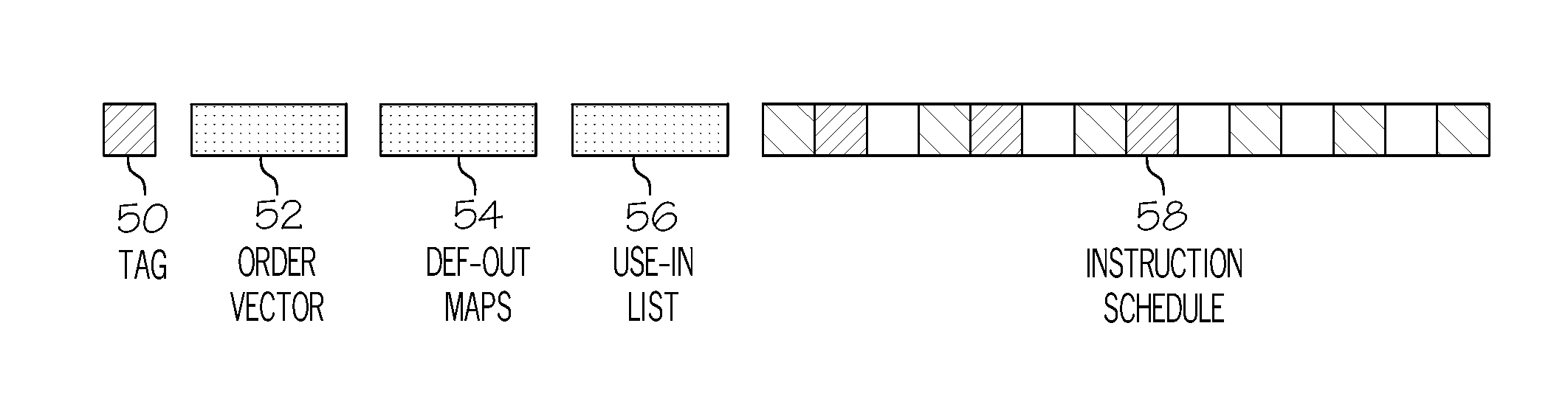

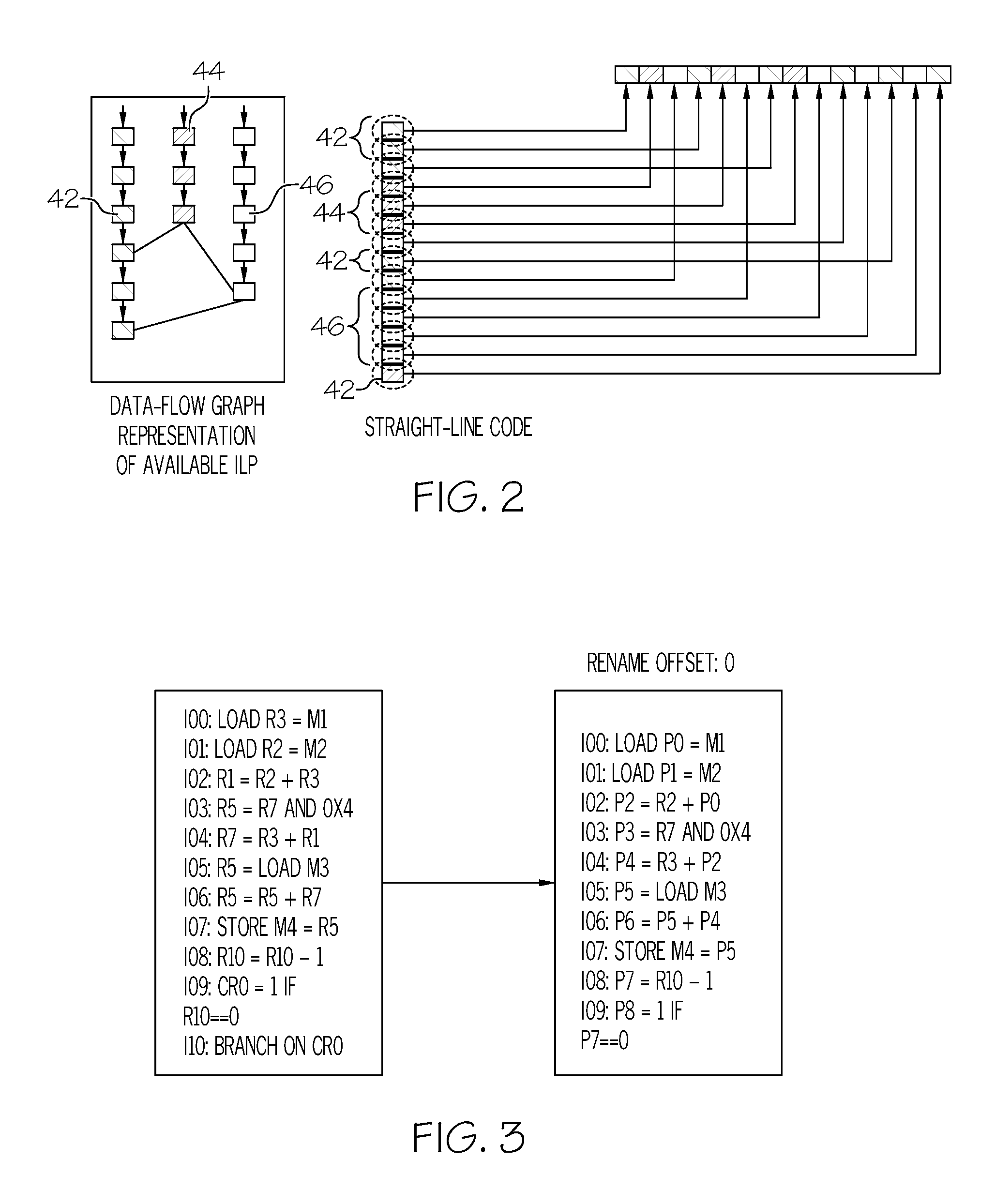

Computer processing system employing an instruction schedule cache

Inactive | US20080162884A1 | Efficient execution | Reduce complexity | Digital computer details | Memory systems | Time schedule | Scheduling instructions

A processor core and method of executing instructions, both of which utilize schedules, are presented. Each of the schedules includes a sequence of instructions, an address of a first of the instructions in the schedule, an order vector of an original order of the instructions in the schedule, a rename map of registers for each register in the schedule, and a list of register names used in the schedule. The schedule exploits instruction-level parallelism in executing out-of-order instructions. The processor core includes a schedule cache that is configured to store schedules, a shared cache configured to store both I-side and D-side cache data, and an execution resource for requesting a schedule to be executed from the schedule cache. The processor core further includes a scheduler disposed between the schedule cache and the cache. The scheduler creates the schedule using branch execution history from a branch history table to create the instructions when the schedule requested by the execution resource is not found in the schedule cache. The processor core executes the instructions according to the schedule being executed. The method includes requesting a schedule from a schedule cache. The method further includes fetching the schedule, when the schedule is found in the schedule cache; and creating the schedule, when the schedule is not found in the schedule cache. The method also includes renaming the registers in the schedule to avoid false dependencies in a processor core, mapping registers to renamed registers in the schedule, and stitching register values in and out of another schedule according to the list of register names and the rename map of registers.

Owner:IBM CORP

Circuits, systems and methods for performing branch predictions by selectively accessing bimodal and fetch-based history tables

Inactive | US6976157B1 | Minimized circuit | Digital computer details | Specific program execution arrangements | Processor register | Theoretical computer science

Branch prediction circuitry including a bimodal branch history table, a fetch-based branch history table and a selector table is provided. The local branch history table includes a plurality of entries, each for storing a prediction value and accessed by selected bits of a branch address. The fetch-based branch history table includes a plurality of entries for storing a prediction value and accessed by a pointer generated from selected bits of the branch address and bits from a history register. The selector table includes a plurality of entries, each for storing a selection bit and accessed by a pointer generated from selected bits from the branch address and bits from the history register. Each selector bit is used for selecting between a prediction value accessed from the local history table and a prediction value accessed from the fetch-based history table.

Owner:INTEL CORP

Predicting for all branch instructions in a bunch based on history register updated once for all of any taken branches in a bunch

Inactive | US6055629A | Reduce complexity | Shorten the time | Dry-cleaning apparatus | Other washing machines | Processor register | Parallel computing

A method for a prediction correlation between a first group of branch instructions in a bunch of instructions and a second group of branch instructions in a bunch of instructions is disclosed. The method includes indicating a direction of a plurality of branch instructions in a bunch of instructions. More particularly, the method includes building an address composed of an instruction fetch address and bits in a history register. The method accesses a bunch of instructions using the fetch address and accesses a set of prediction bits from a branch history table using the composed address. The accessed bunch of instructions is processed. Further, the history register and the branch history table are updated to correlate a first group of branch instructions in the accessed bunch of instructions to a second group of branch instructions in a next group of branch instructions in the bunch of instructions.

Owner:FUJITSU LTD

Reducing branch checking for non control flow instructions

Active | US20100169625A1 | Digital computer details | Specific program execution arrangements | Control flow | Parallel computing

Some microprocessors check branch prediction information in a branch history table and / or a branch target buffer. To check for branch prediction information, a microprocessor can identify which instructions are control flow instructions and which instructions are non control flow instructions. To reduce power consumption in the branch history table and / or branch target buffer, the branch history table and / or branch target buffer can check for branch prediction information corresponding to the control flow instructions and not the non control flow instructions.

Owner:STMICROELECTRONICS BEIJING R&D

Branch target buffer allocation

Inactive | US20100031010A1 | Digital computer details | Next instruction address formation | Data processing system | Parallel computing

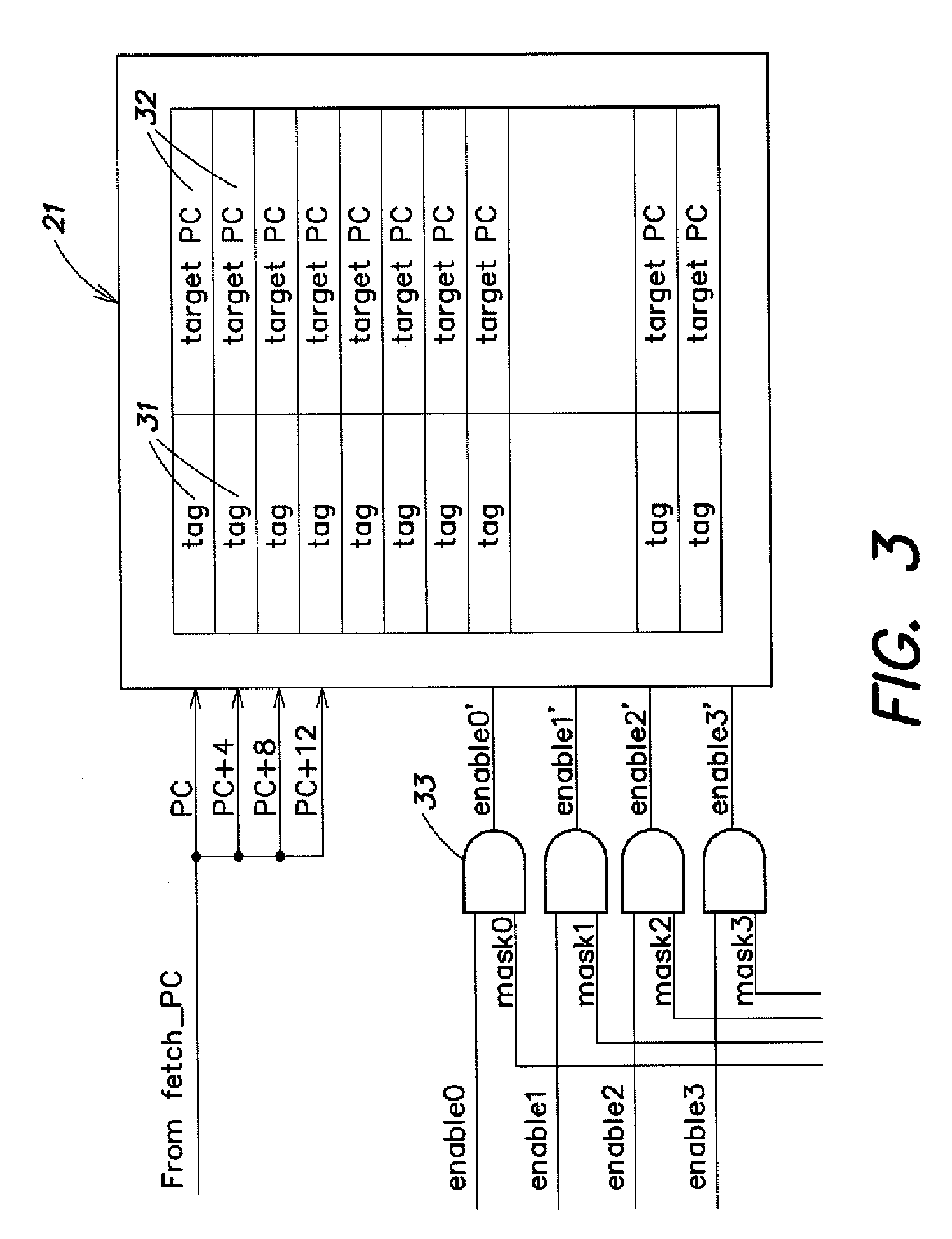

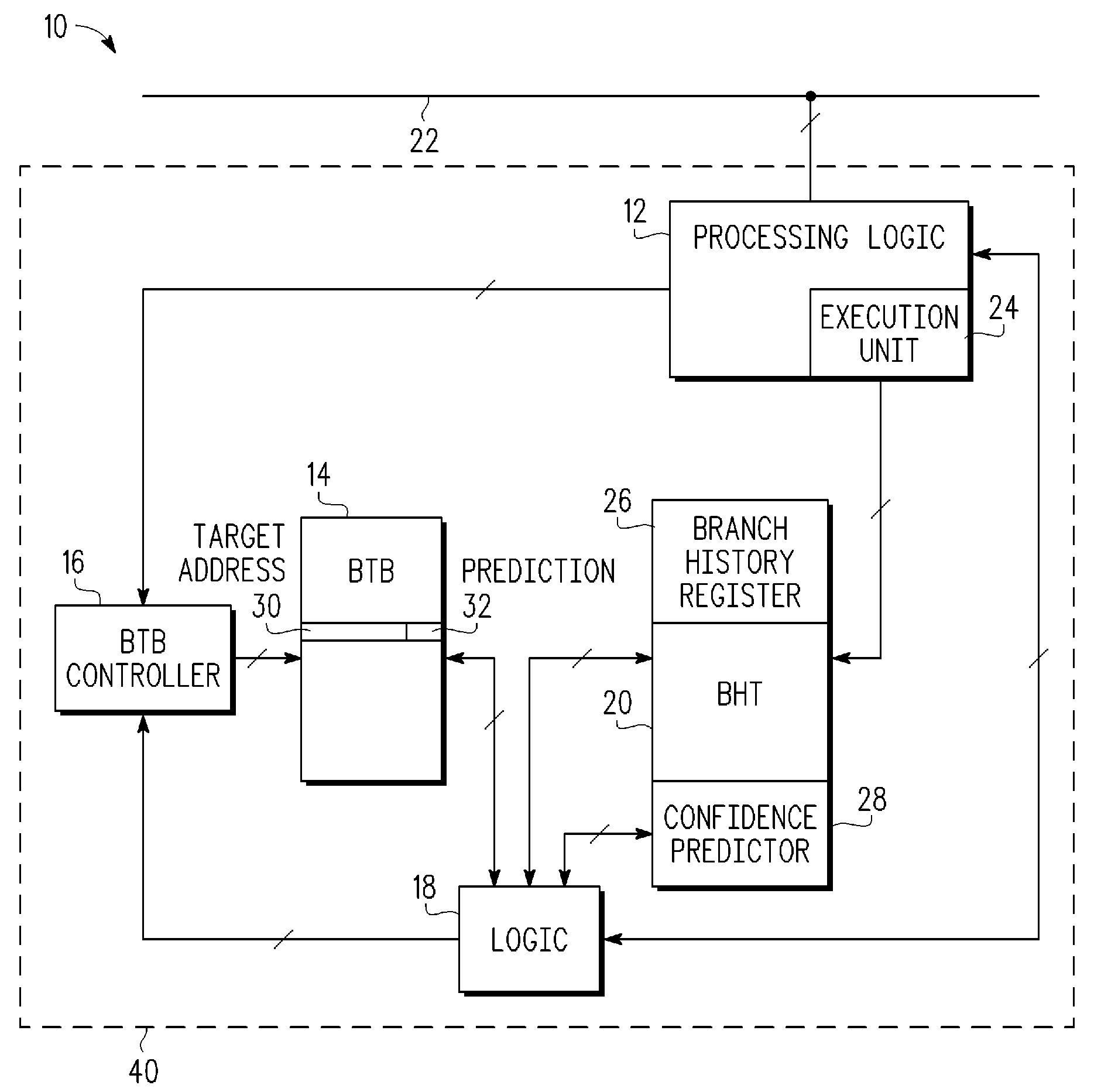

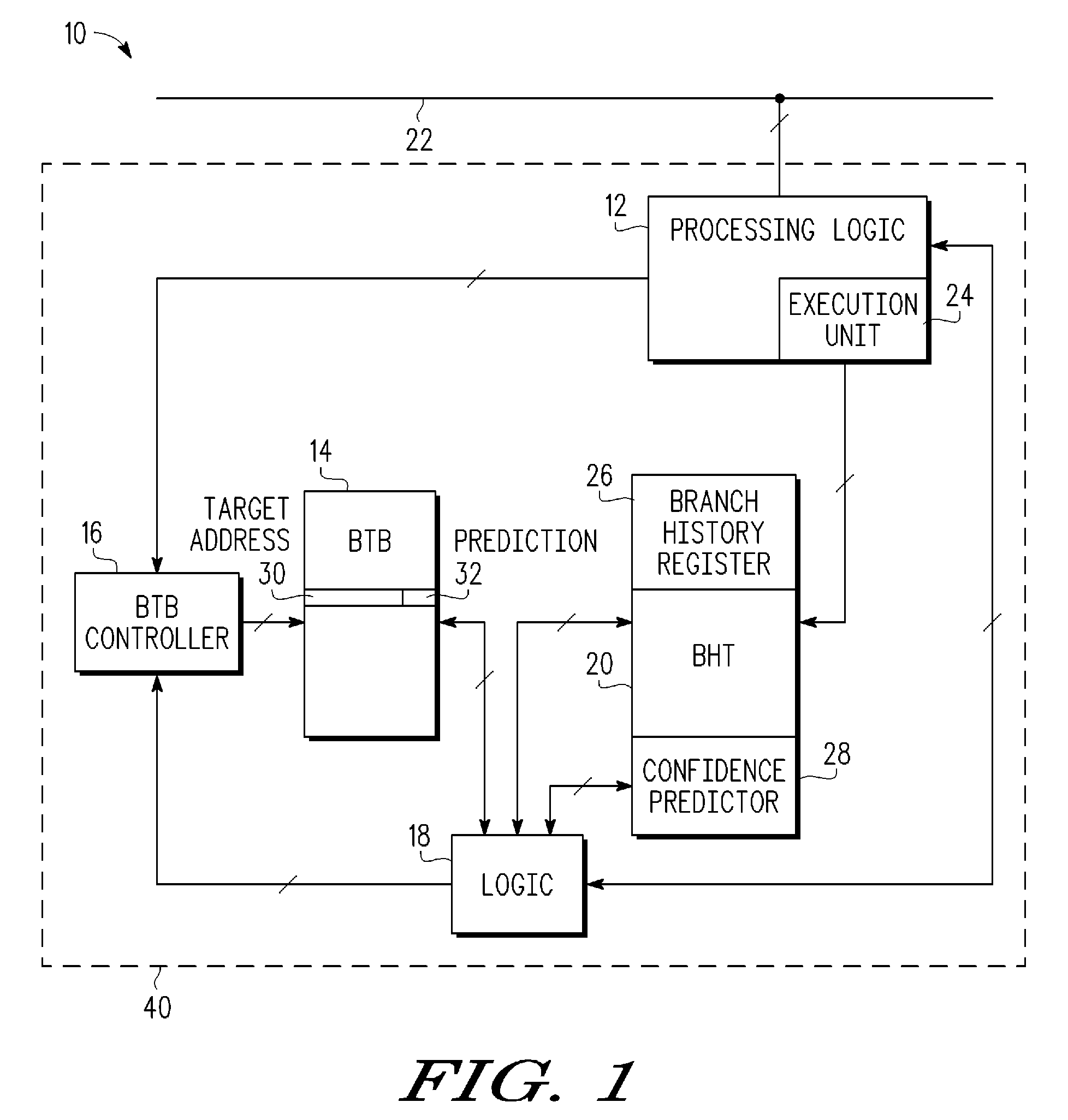

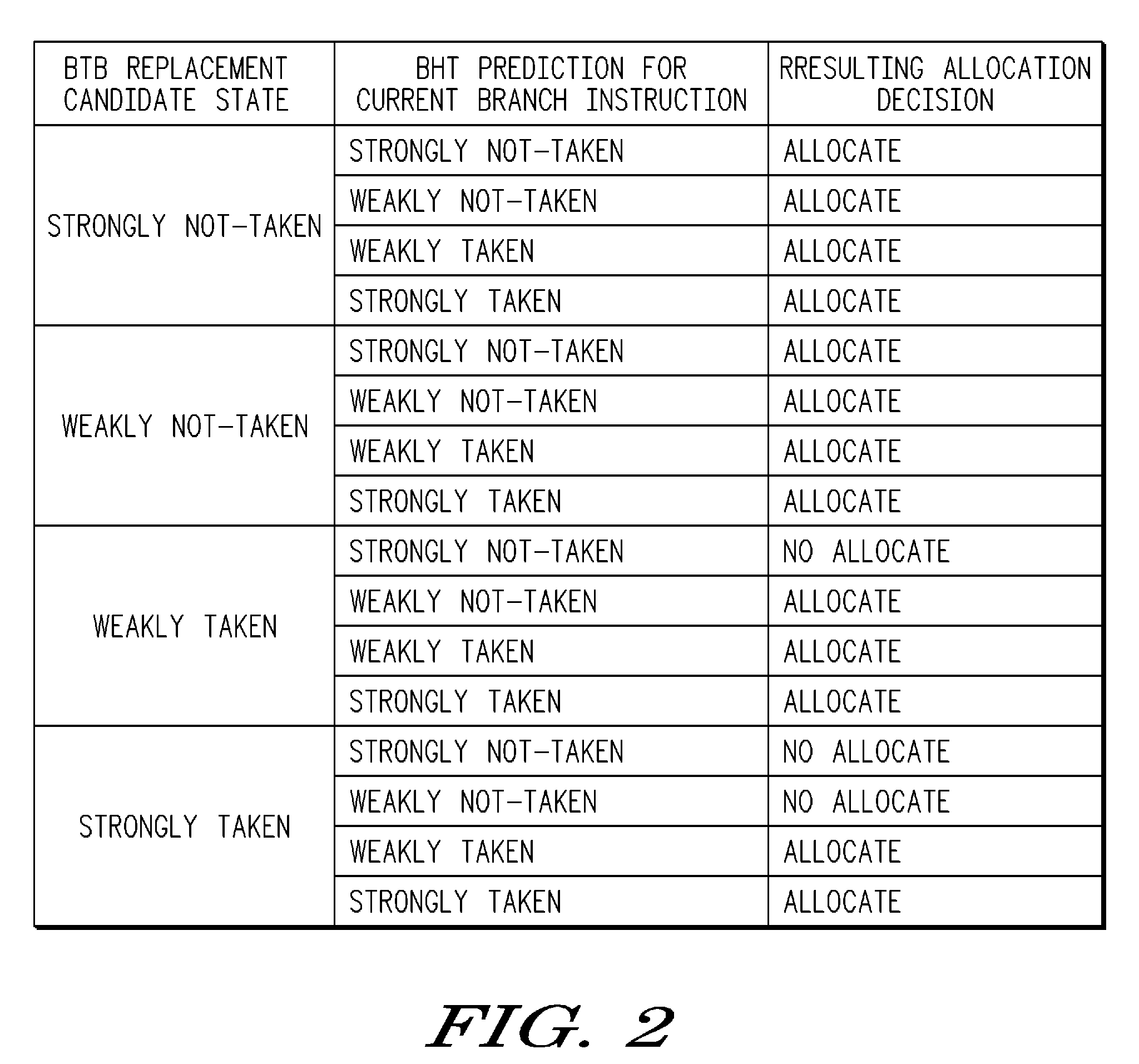

A data processing system and method are provided for allocating an entry in a branch target buffer (BTB). The method comprises: receiving a branch instruction to be executed in a data processor; determining that the BTB does not include an entry corresponding to the branch instruction; identifying an entry in the BTB for allocation, the identified entry in the BTB comprising a target identifier and a first prediction value for a previously received branch instruction; determining whether to allocate the branch instruction to the identified entry in the BTB based on a comparison of the first prediction value to a second prediction value, wherein the second prediction value is generated from a branch history table (BHT); and allocating the branch instruction to the identified entry if the second prediction value indicates a more strongly taken prediction than the first prediction value.

Owner:NORTH STAR INNOVATIONS
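
The allocation test in the abstract above compares the candidate victim's stored prediction value with a value read from the BHT for the incoming branch. A minimal sketch of that decision follows, assuming both values are 2-bit saturating counters where a larger number means more strongly taken. The entry layout, the handling of an empty slot, and the function name are illustrative assumptions.

```python
def allocate_on_miss(btb, victim_index, branch_addr, target, bht_counter):
    """Replace the victim BTB entry only if the new branch is predicted
    more strongly taken than the entry it would evict.

    Counters are assumed to be 2-bit values (0 = strongly not-taken,
    3 = strongly taken).
    """
    victim = btb[victim_index]
    # An empty slot is always filled (assumption; not stated in the abstract).
    if victim is None or bht_counter > victim["counter"]:
        btb[victim_index] = {
            "tag": branch_addr,
            "target": target,
            "counter": bht_counter,
        }
        return True
    return False


# Hypothetical one-entry BTB holding a weakly-taken branch.
btb = [{"tag": 0x100, "target": 0x200, "counter": 2}]
kept = allocate_on_miss(btb, 0, 0x300, 0x400, bht_counter=1)      # victim stays
replaced = allocate_on_miss(btb, 0, 0x300, 0x400, bht_counter=3)  # victim evicted
```

The effect is that a rarely taken branch does not displace an entry for a branch that is more likely to need its target address.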

Dynamic branch hints using branches-to-nowhere conditional branch

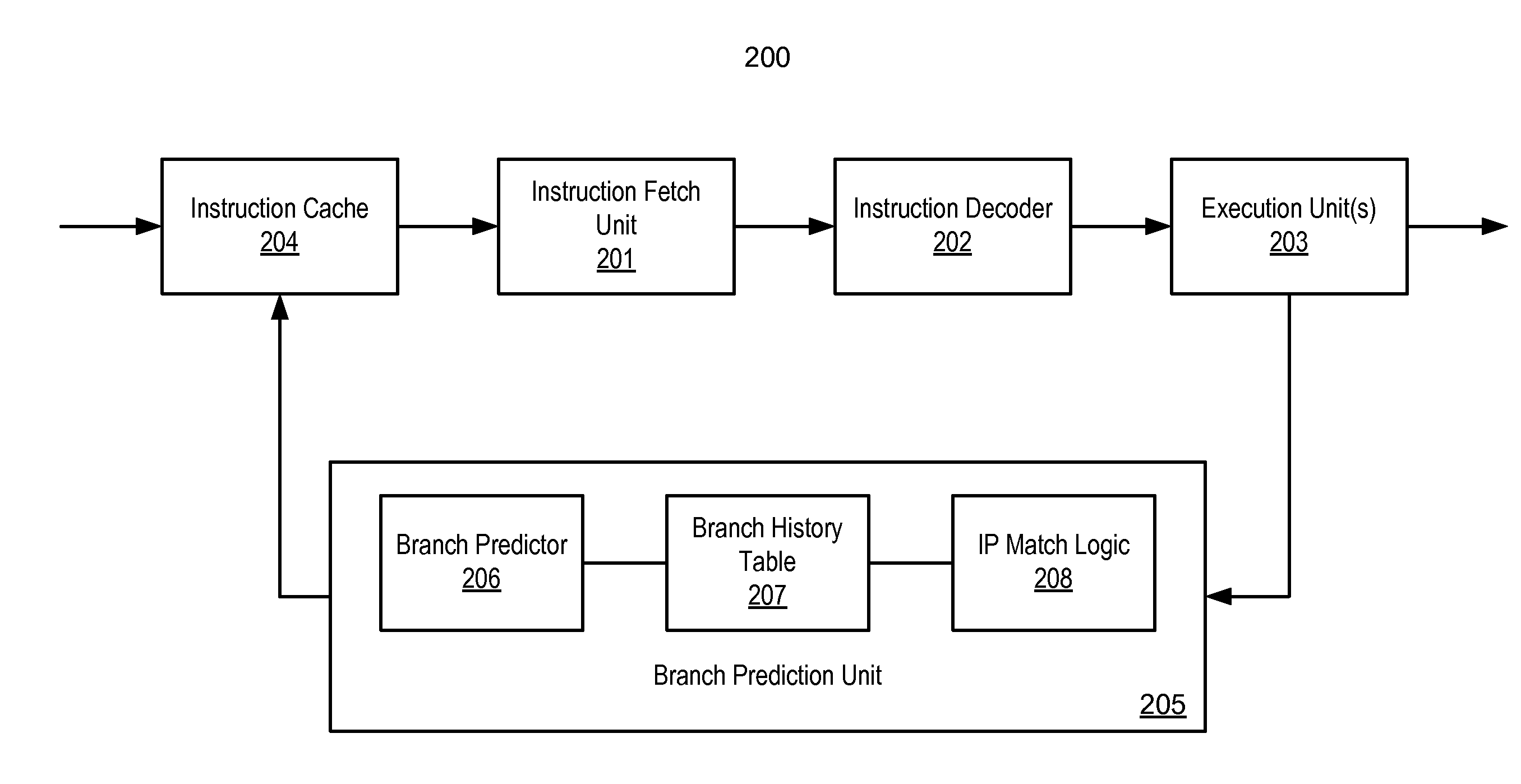

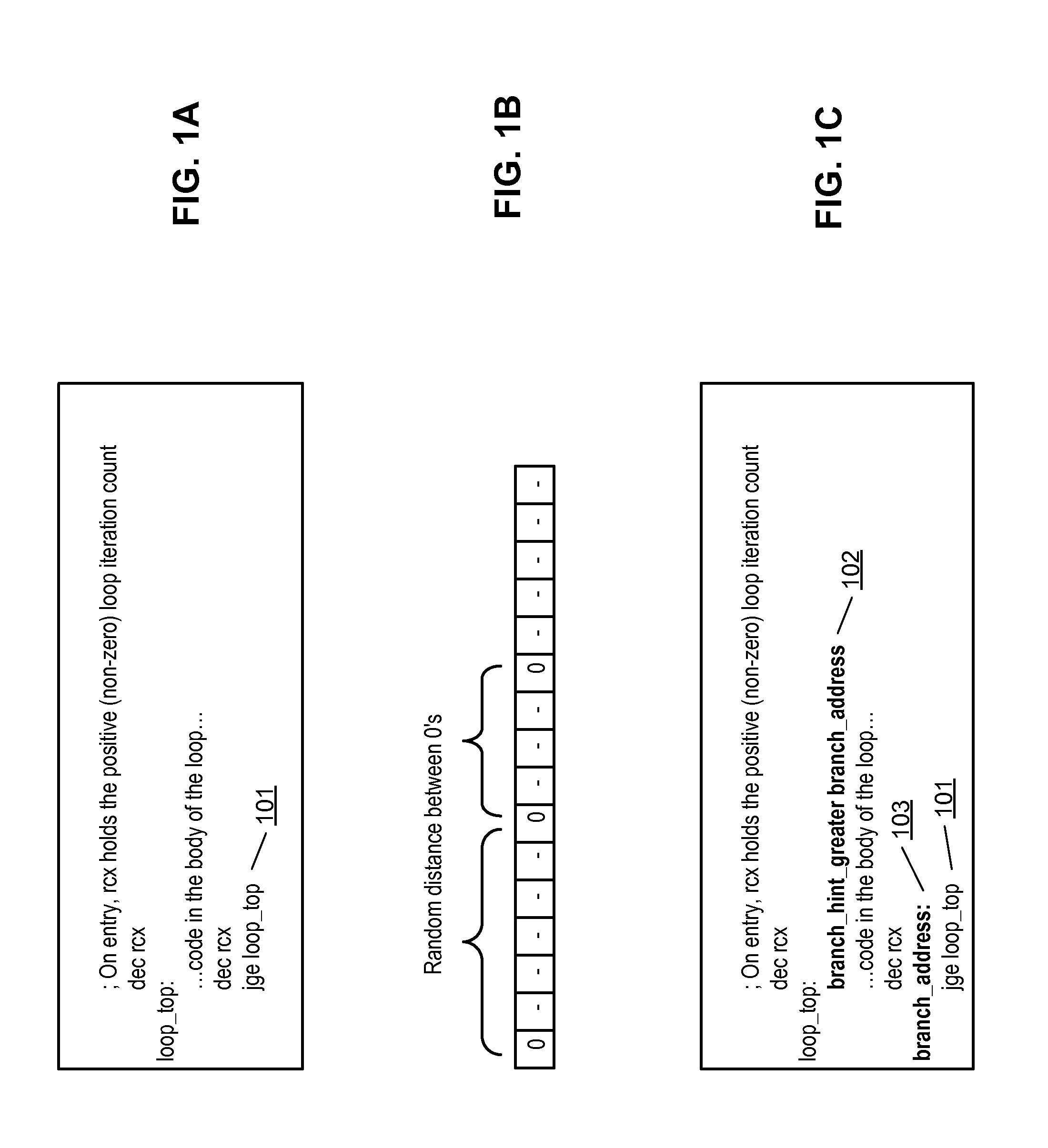

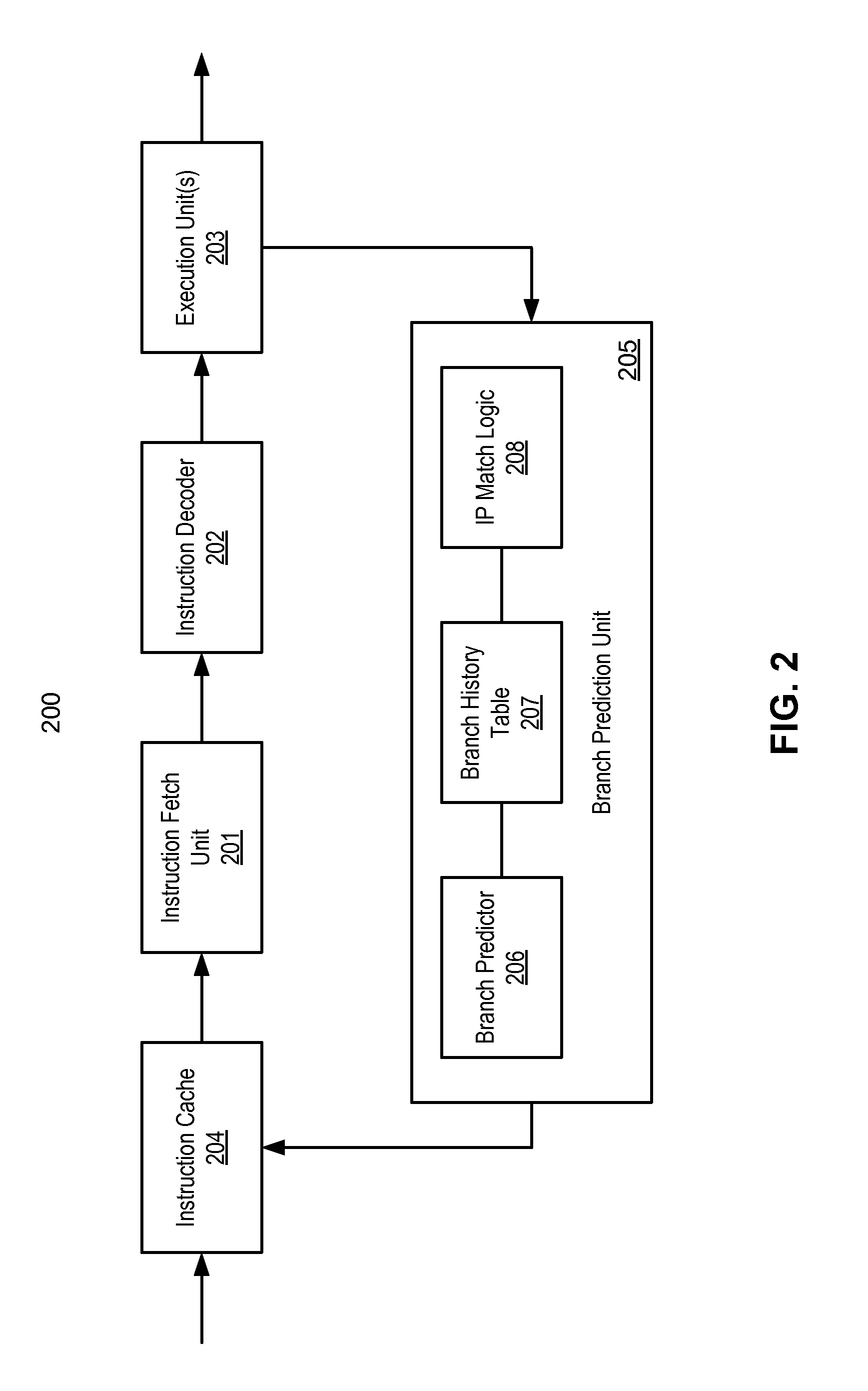

Inactive | US20140229721A1 | Digital computer details | Concurrent instruction execution | Branch predication | Execution unit

A processor includes an execution pipeline having one or more execution units to execute the instructions and a branch prediction unit coupled to the execution units. The branch prediction unit includes a branch history table to store prior branch predictions, a branch predictor, in response to a conditional branch instruction, to predict a branch target address of the conditional branch instruction based on the branch history table, and address match logic to compare the predicted branch target address with an address of a next instruction executed immediately following the conditional branch instruction. The address match logic is to cause the execution pipeline to be flushed if the predicted branch target address does not match the address of the next instruction to be executed.

Owner:INTEL CORP

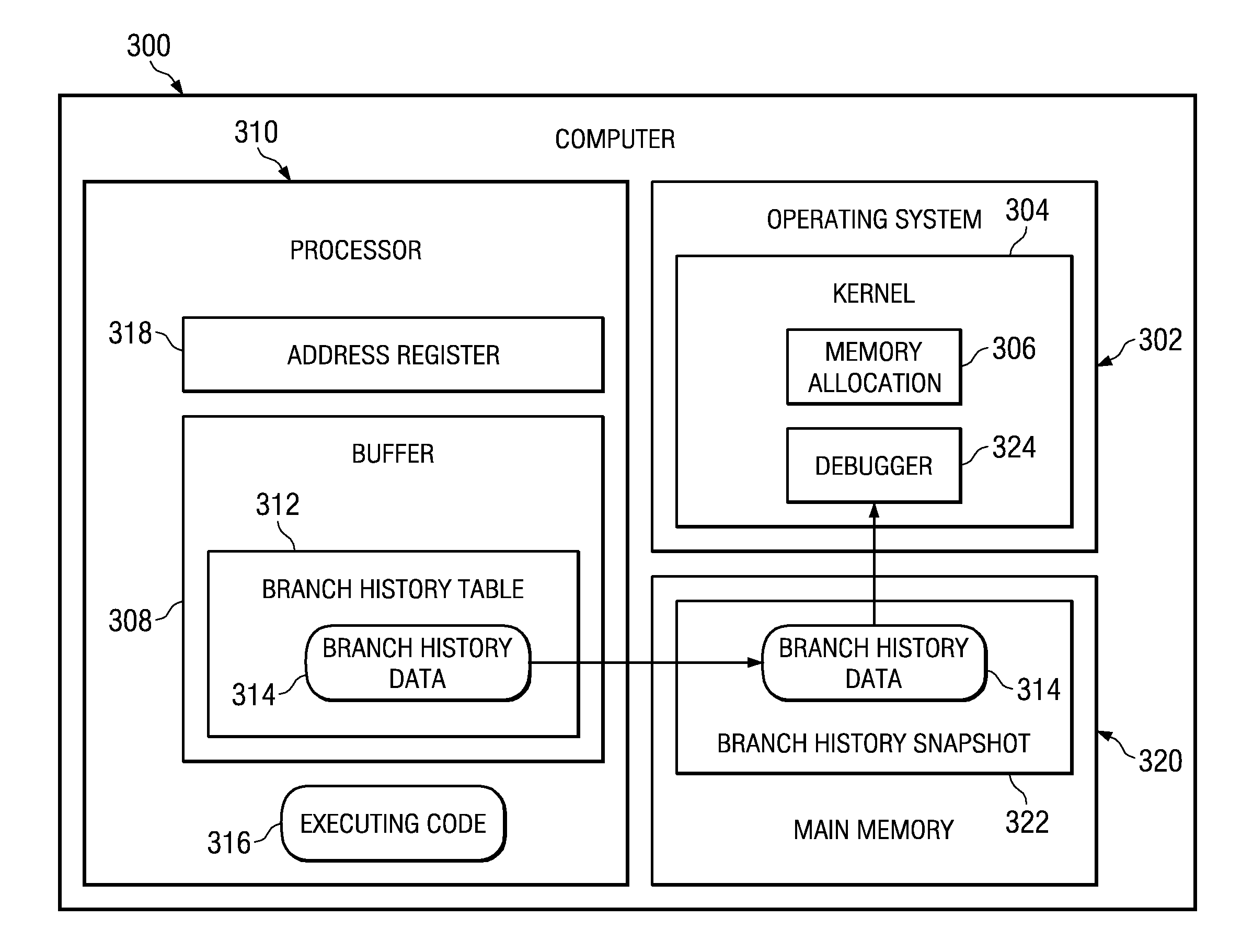

Branch history table for debug

Inactive | US20080114971A1 | Digital computer details | Concurrent instruction execution | Computer program | Data preservation

A computer implemented method, apparatus, and computer program product for preserving branch history data. The process creates a branch history table in a buffer. The process saves an address for each executed branch instruction that occurs during execution of code in the branch history table to form branch history data. In response to detecting an exception, the process saves the branch history data to an allocated memory space to form a branch history snapshot.

Owner:IBM CORP
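
The debug use of a branch history table described above amounts to logging branch addresses into a buffer and copying that buffer out when an exception is detected. A small sketch follows; the fixed-capacity ring buffer, the class name, and the method names are assumptions, since the abstract only says the table is created "in a buffer".

```python
from collections import deque


class BranchTrace:
    """Toy model: record executed branch addresses and snapshot them
    when an exception is detected."""

    def __init__(self, capacity=4):
        # Bounded buffer: the oldest addresses fall off (assumed behavior).
        self.buffer = deque(maxlen=capacity)
        self.snapshots = []

    def record_branch(self, addr):
        self.buffer.append(addr)

    def on_exception(self):
        # Copy the live history into separately held storage.
        snapshot = list(self.buffer)
        self.snapshots.append(snapshot)
        return snapshot


trace = BranchTrace(capacity=4)
for addr in (0x100, 0x104, 0x200, 0x208, 0x300, 0x304):
    trace.record_branch(addr)
snapshot = trace.on_exception()
```

The snapshot is a copy, so branches recorded after the exception do not alter the preserved history.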

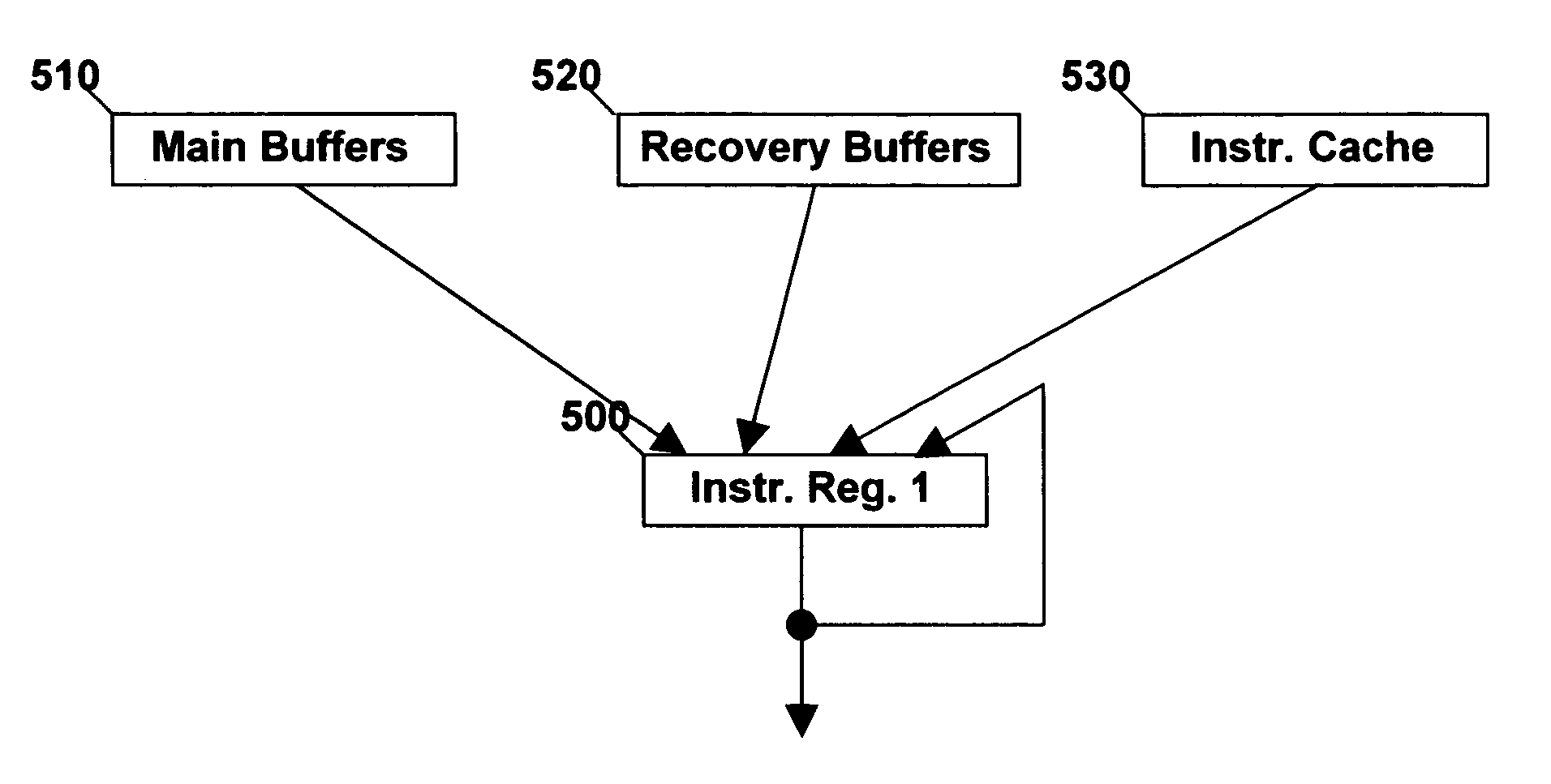

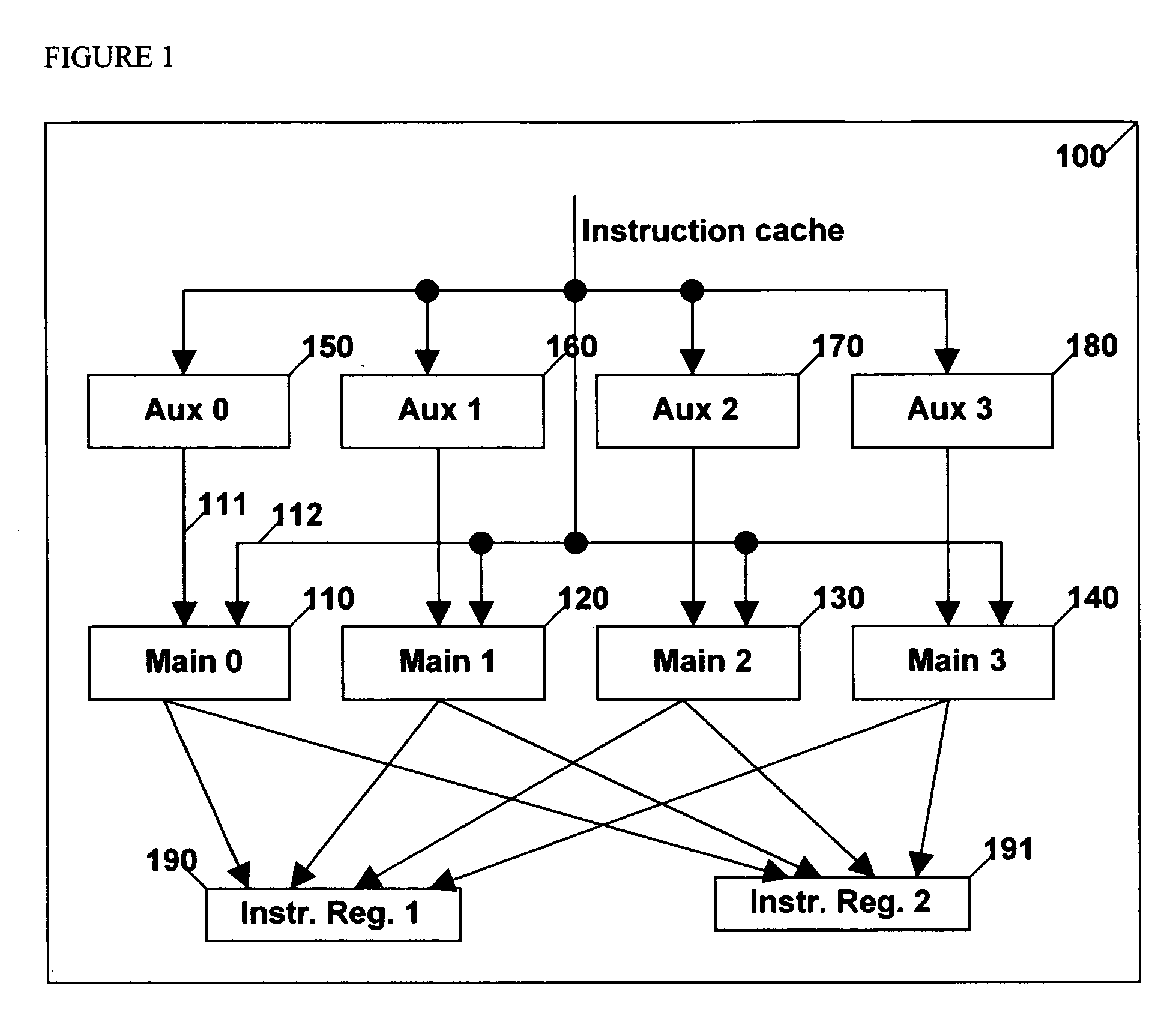

Linked instruction buffering of basic blocks for asynchronous predicted taken branches

Inactive | US20050257035A1 | Preventing unnecessary fetching | Overcomes shortcoming | Digital computer details | Concurrent instruction execution | Basic block | Trace Cache

A method and apparatus for creating a dynamic buffer structure that takes an instruction cache organized by instruction address and, through the interaction of an asynchronous branch target buffer (BTB) and branch history table (BHT), forms a series of instructions in the buffer structure that resembles a trace cache. By allowing the dynamic creation of a predicted code sequence trace in the buffer structure, based on the past behavior of the instruction code, fetching is used efficiently and the instruction cache makes optimal use of area while reducing latency penalties associated with taken branches and branches which are predicted in the improper direction.

Owner:IBM CORP

Effective use of a BHT in a processor having variable length instruction set execution modes

Inactive | CN101517534A | Program control using stored programs | Instruction analysis | Multiplexing | Variable length

In a processor executing instructions in at least a first instruction set execution mode having a first minimum instruction length and a second instruction set execution mode having a smaller, second minimum instruction length, line and counter index addresses are formed that access every counter in a branch history table (BHT), and reduce the number of index address bits that are multiplexed based on the current instruction set execution mode. In one embodiment, counters within a BHT line are arranged and indexed in such a manner that half of the BHT can be powered down for each access in one instruction set execution mode.

Owner:QUALCOMM INC

RAM Block Branch History Table in a Global History Branch Prediction System

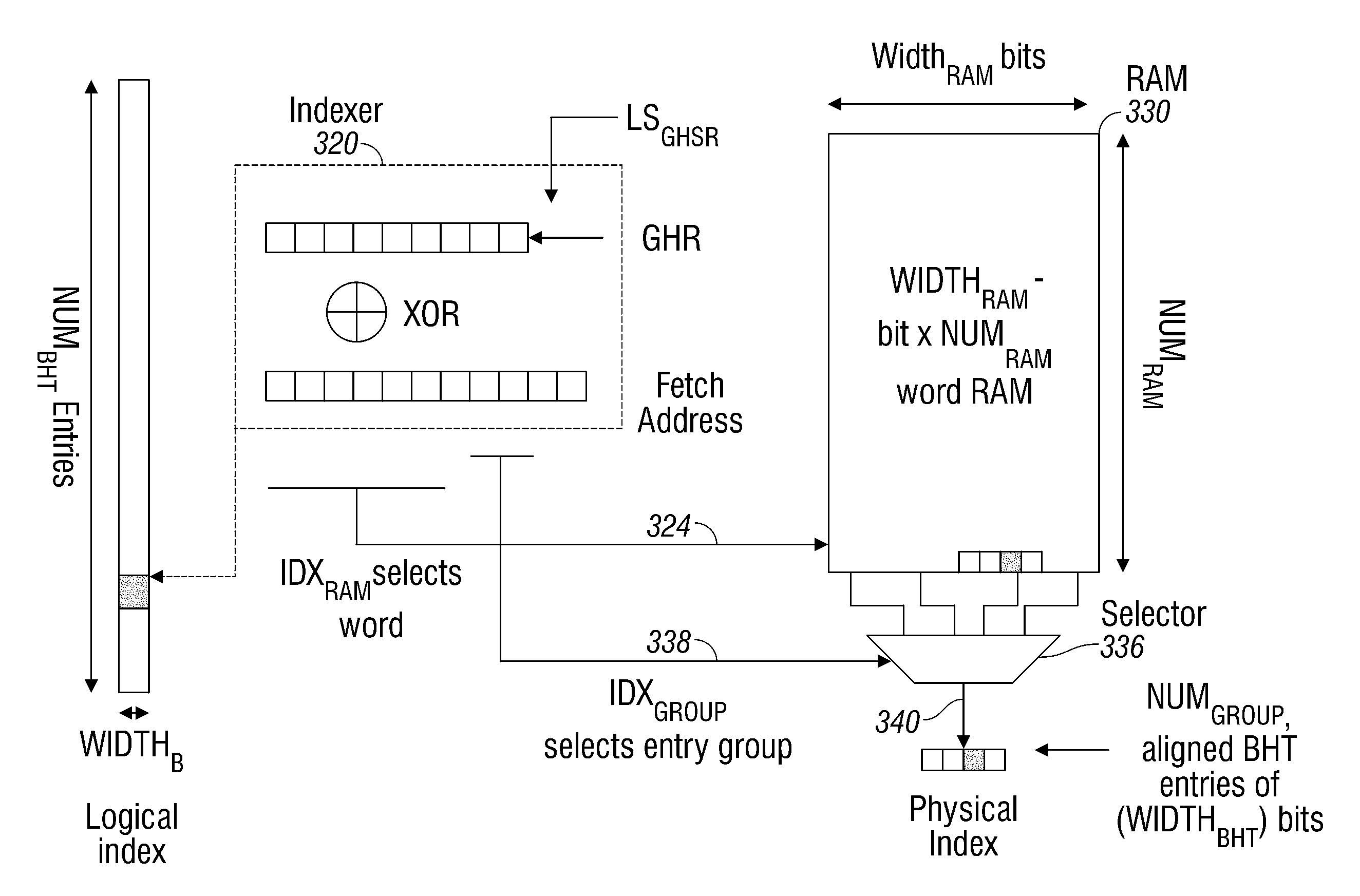

Inactive | US20090276611A1 | Simple design | Low cost | Digital computer details | Specific program execution arrangements | Image resolution | Random access memory

Owner:MACOM CONNECTIVITY SOLUTIONS LLC

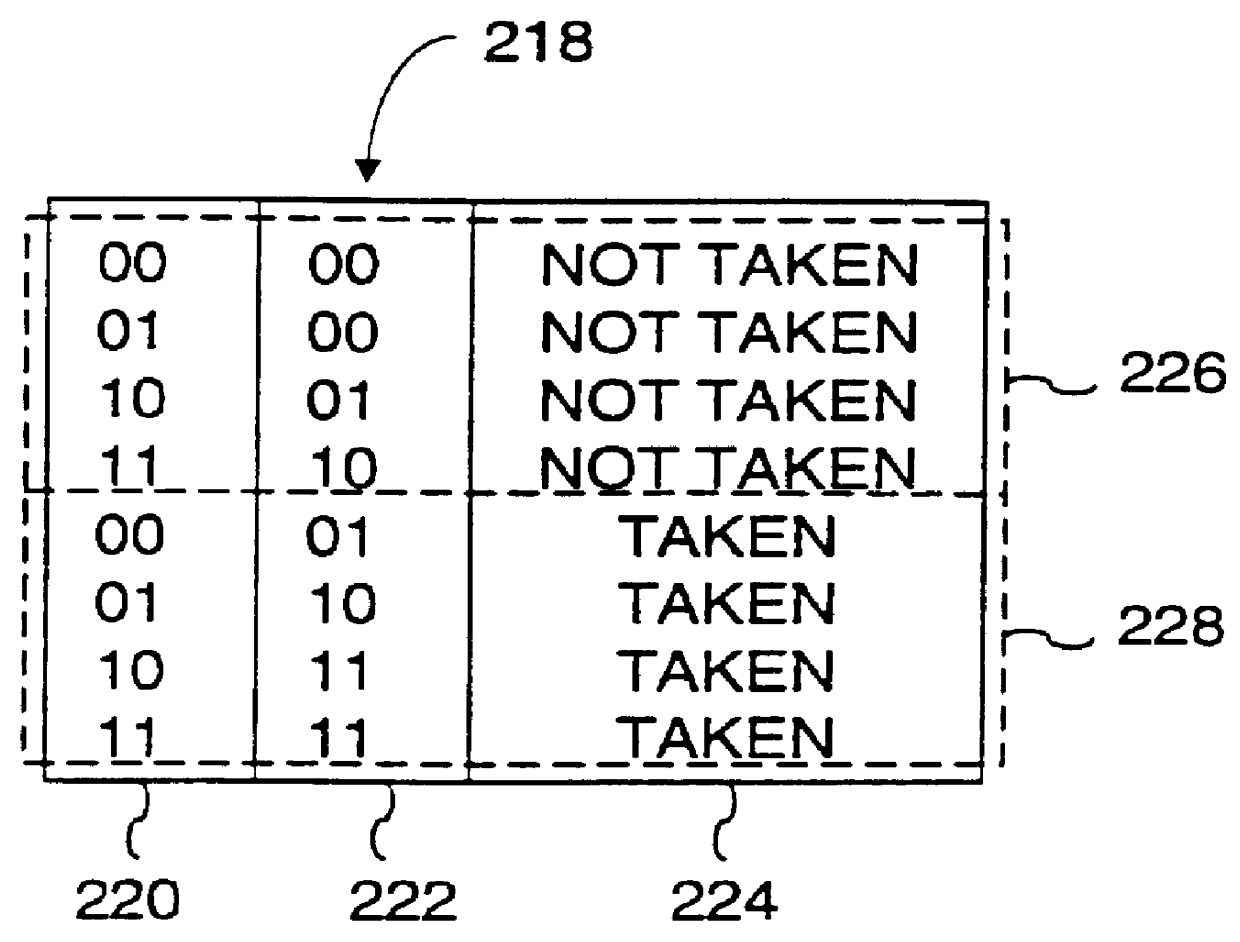

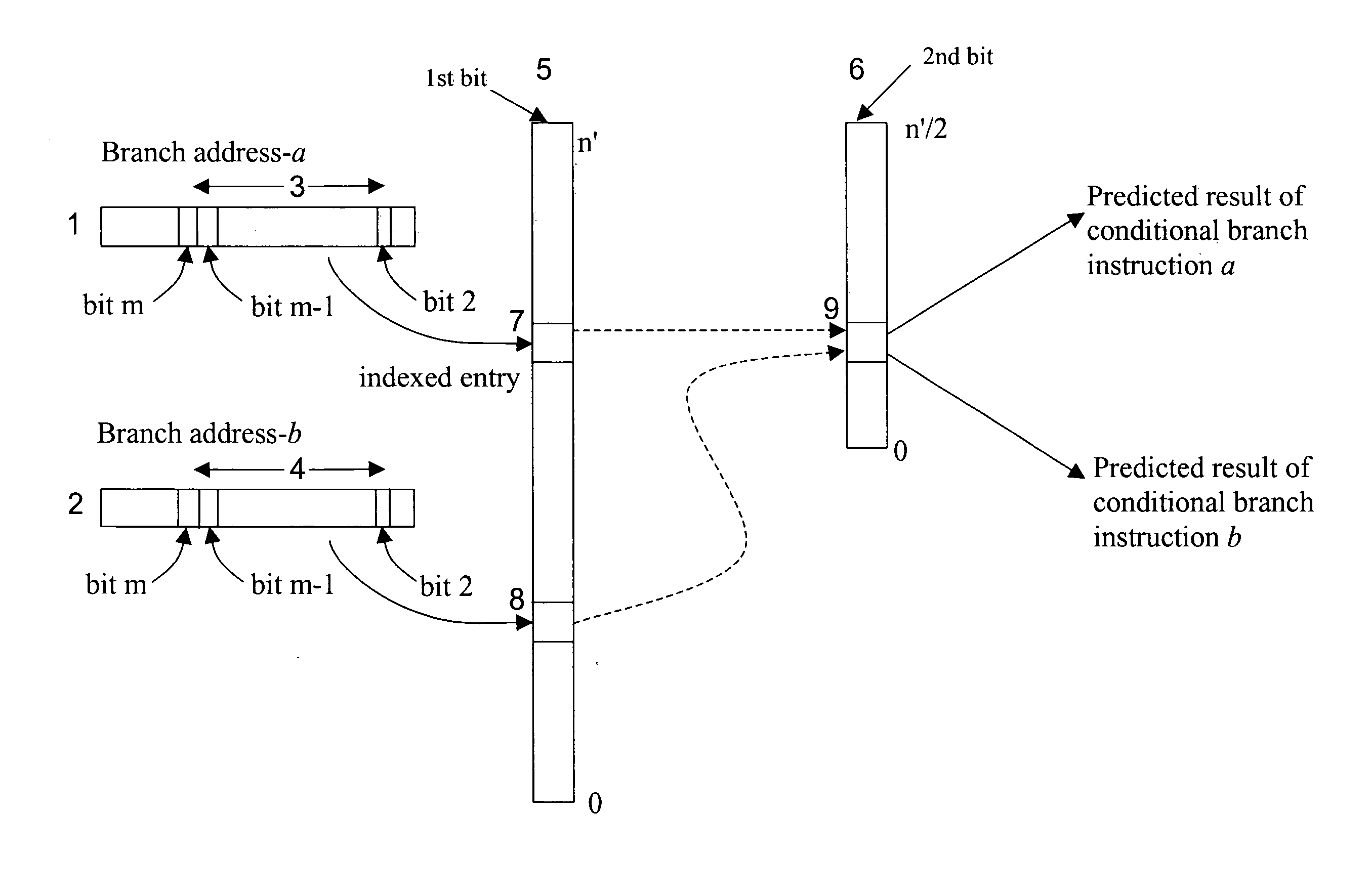

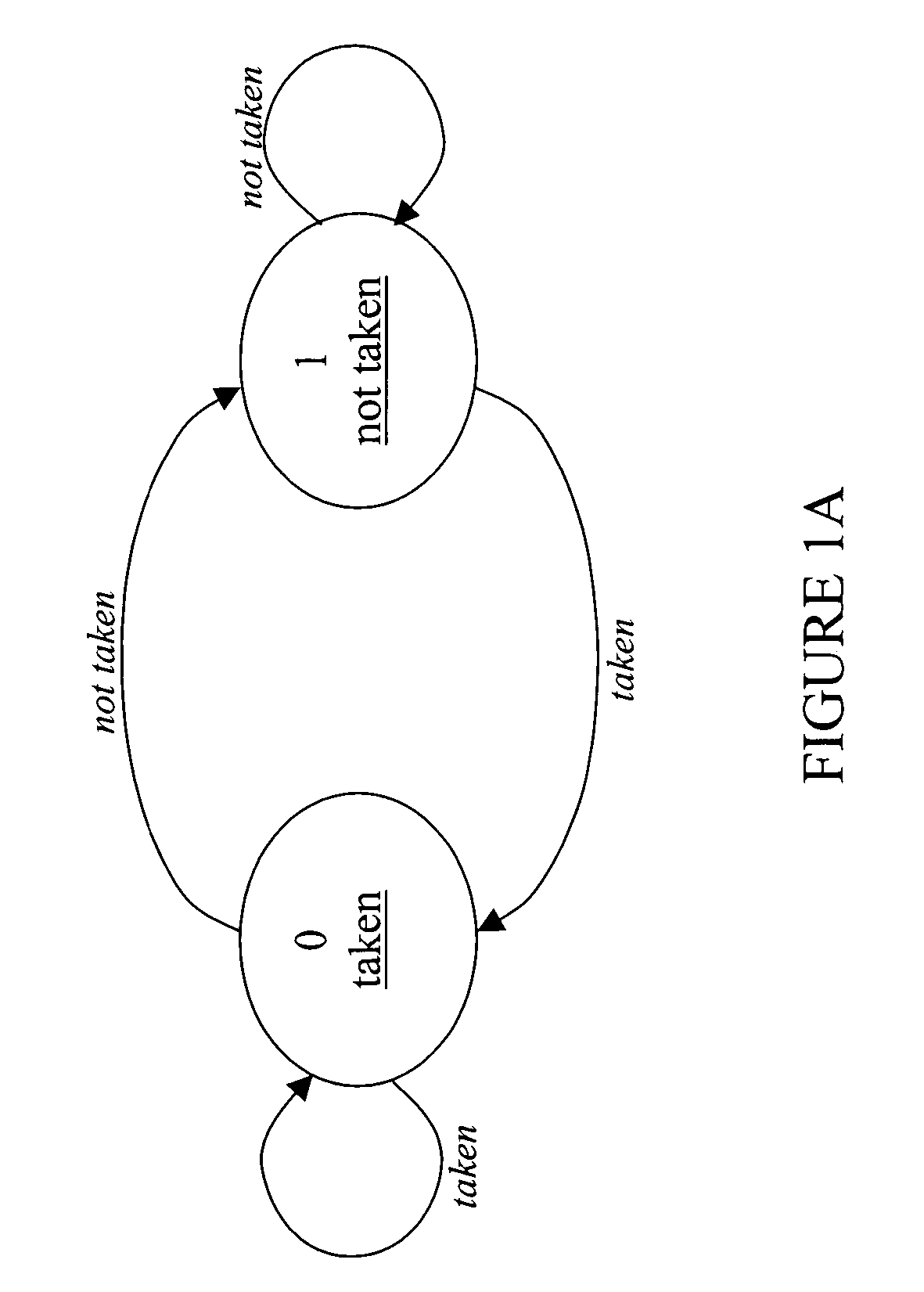

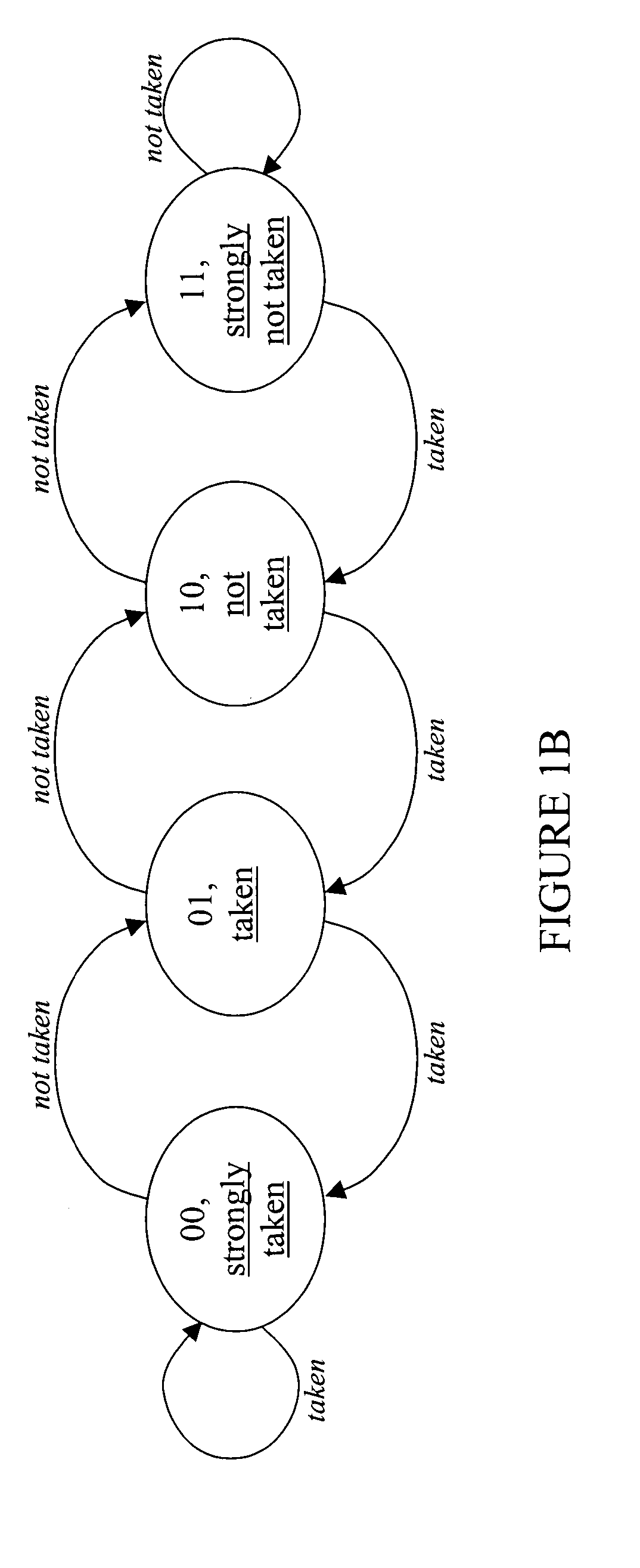

Two-bit branch prediction scheme using reduced memory size

Inactive | US20050015578A1 | Digital computer details | Concurrent instruction execution | Bit array | Conditional branch

One or more methods and systems of reducing the size of memory used in implementing a predictive scheme for executing conditional branch instructions are presented. In one embodiment, a conditional branch instruction addresses a first bit array and a second bit array of a branch history table. The branch history table comprises a first bit array and a second bit array in which the second bit array contains a fraction of the number of entries of said first bit array. In one or more embodiments, the size of the branch history table is reduced by at least twenty five percent, resulting in a reduction of memory required for implementing the predictive scheme.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
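
The "at least twenty five percent" figure above follows from simple arithmetic if the baseline is a conventional two-bit scheme with two full-size bit arrays. The helper below works that out under that assumption; the function name and the example table size are hypothetical.

```python
def bht_size_reduction(entries, fraction):
    """Fractional memory saving versus two full-size bit arrays.

    entries  -- number of entries in the first bit array
    fraction -- size of the second bit array relative to the first
    """
    baseline_bits = 2 * entries                       # two full arrays
    reduced_bits = entries + int(entries * fraction)  # full array + partial array
    return 1 - reduced_bits / baseline_bits


half = bht_size_reduction(1024, 0.5)      # second array has half the entries
quarter = bht_size_reduction(1024, 0.25)  # second array has a quarter of the entries
```

With the second array at half size the saving is exactly 25%, and it grows as the second array shrinks, which is consistent with the "at least" wording.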

Method, system and computer program product for an implicit predicted return from a predicted subroutine

Inactive | US7882338B2 | Digital computer details | Next instruction address formation | Parallel computing | Instruction buffer

A method, system and computer program product for performing an implicit predicted return from a predicted subroutine are provided. The system includes a branch history table / branch target buffer (BHT / BTB) to hold branch information, including a target address of a predicted subroutine and a branch type. The system also includes instruction buffers, and instruction fetch controls to perform a method including fetching a branch instruction at a branch address and a return-point instruction. The method also includes receiving the target address and the branch type, and fetching a fixed number of instructions in response to the branch type. The method further includes referencing the return-point instruction within the instruction buffers such that the return-point instruction is available upon completing the fetching of the fixed number of instructions absent a re-fetch of the return-point instruction.

Owner:IBM CORP

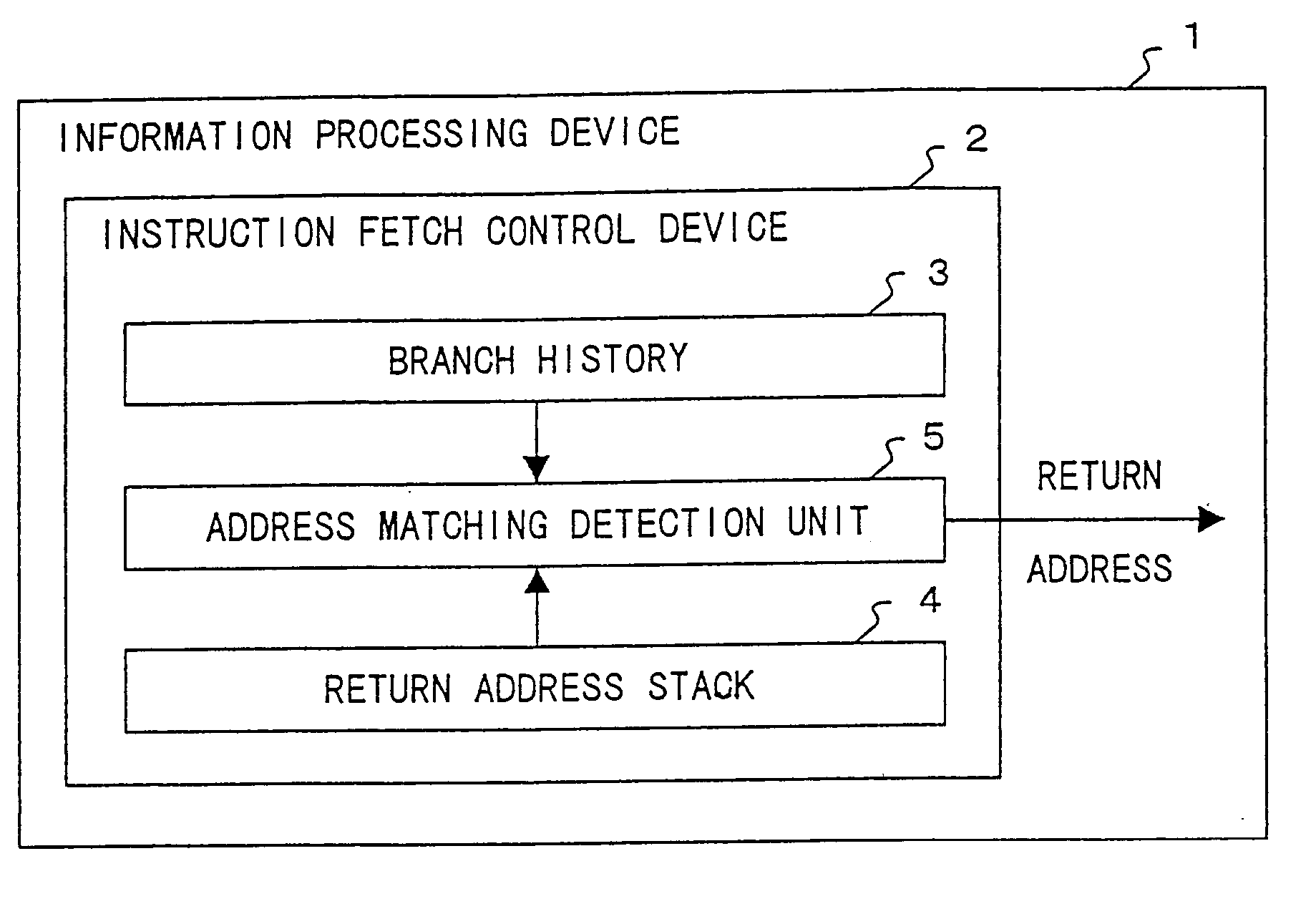

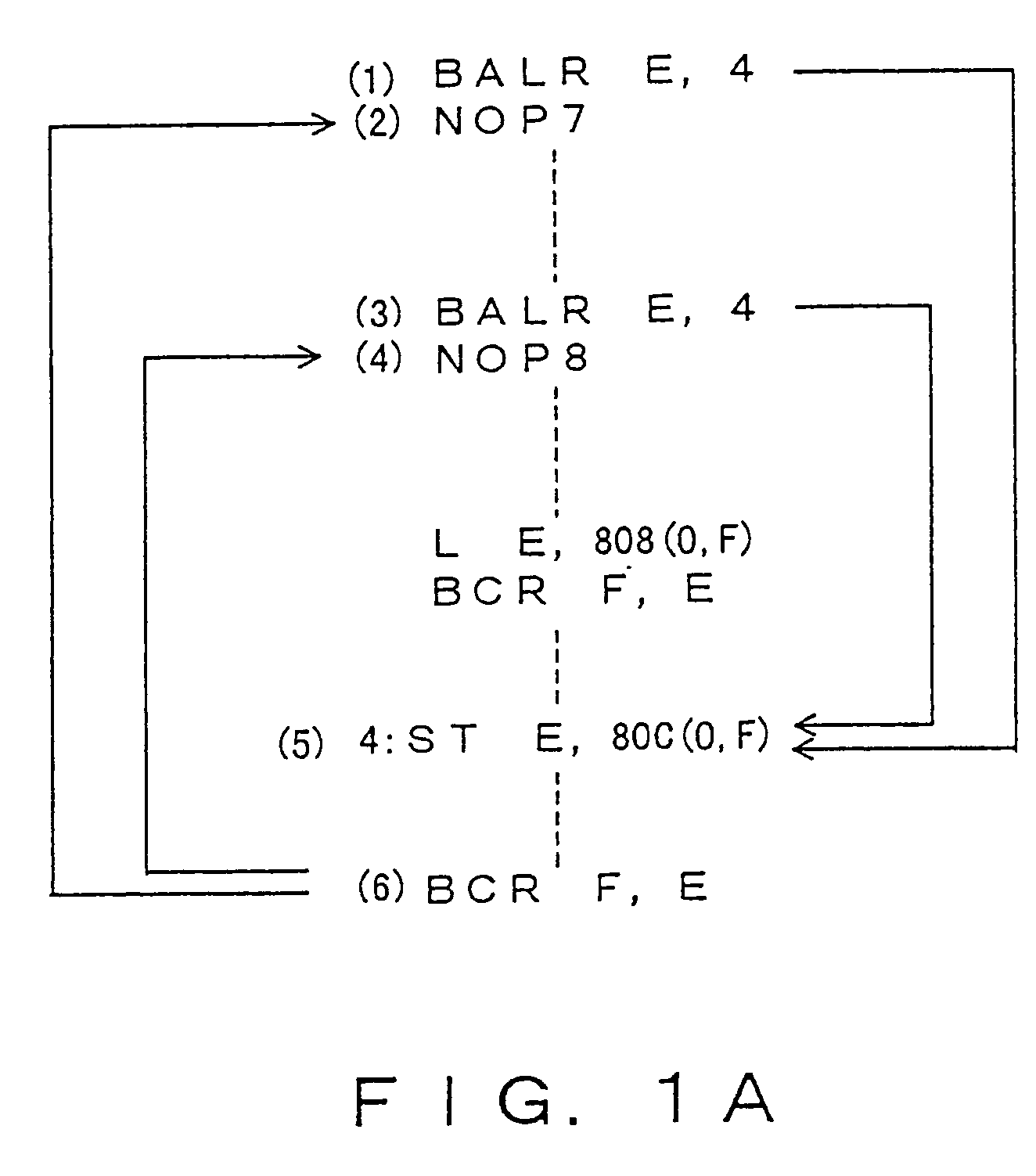

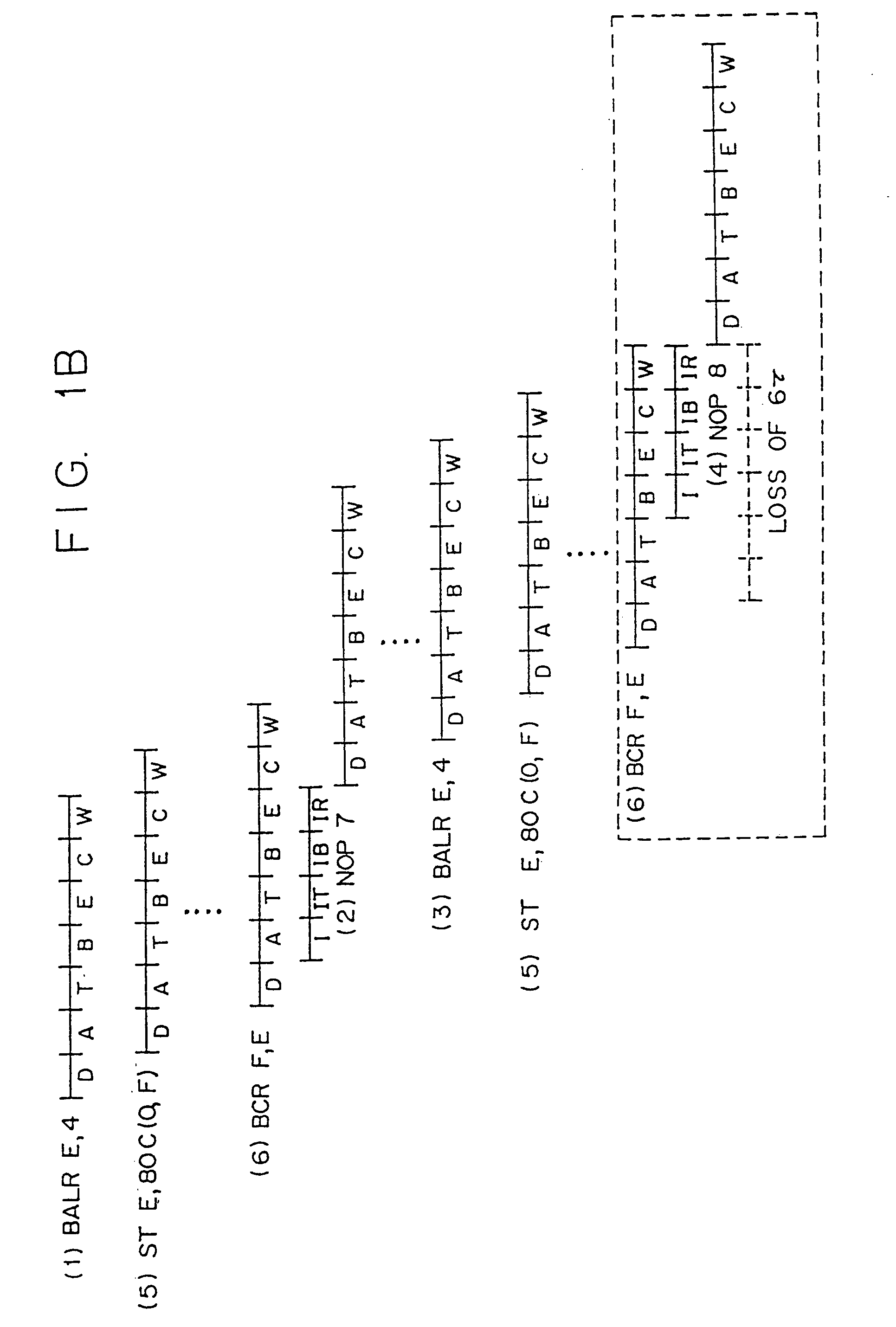

Predicted return address selection upon matching target in branch history table with entries in return address stack

Inactive | US20050278516A1 | High speed machining | Digital computer details | Next instruction address formation | Information processing | Return address stack

An information processing apparatus is capable of speculatively performing an execution, such as a pipeline / superscalar / out-of-order execution and equipped with a branch prediction mechanism (a branch history). The information processing apparatus, in order to process an instruction sequence that includes a subroutine at a high speed, is further equipped with a return address stack, of which the stack operation is activated at a time of completing execution of a subroutine call / return correspondent instruction and an entry designating unit (pointer), in order to adjust a time difference resulting from an instruction fetch being executed prior to completing an instruction, pointing to a position relative to the stack front and adjusting a time difference between an instruction fetch performed speculatively in advance and completion of an instruction both at a time of completing execution of a branch instruction that is correspondent to a subroutine call / return and at a time of predicting a subroutine call / return in synchrony with the instruction fetch. An entry position correspondent to a stack position pointed to by the entry designation unit is adopted as a subroutine call / return prediction address and consequently the prediction of the subroutine return address becomes more accurate and the processing speed becomes higher.

Owner:FUJITSU LTD

A method and apparatus for predicting branch instructions

A microprocessor includes two branch history tables and is configured to use a first one of the branch history tables to predict branch instructions that are hits in a branch target cache, and to use a second one of the branch history tables to predict branch instructions that are misses in the branch target cache. As such, the first branch history table is configured to have an access speed that matches that of the branch target cache, so that its prediction information is available in time relative to branch target cache hit detection, which may occur early in the microprocessor's instruction pipeline. The second branch history table thus only needs to be as fast as is needed to provide timely prediction information in association with recognizing branch target cache misses as branch instructions, for example at an instruction decode stage of the instruction pipeline.

Owner:QUALCOMM INC
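
The hit/miss split between the two tables can be sketched as follows. This is a minimal illustration, not the patented design: the class `TwoTablePredictor`, the 2-bit saturating counters, the modulo indexing, the table sizes, and the update policy (training both tables and allocating a branch target cache entry on a taken branch) are all assumptions made for the example.

```python
class TwoTablePredictor:
    """Toy model: fast BHT for branch target cache hits, second BHT for misses."""

    def __init__(self, fast_entries=16, slow_entries=64):
        self.btc = {}                     # branch target cache: pc -> target address
        self.fast = [1] * fast_entries    # 2-bit counters, start weakly not-taken
        self.slow = [1] * slow_entries    # larger table, only needed by decode time

    def predict(self, pc):
        """Return (pipeline stage, predicted taken, predicted target or None)."""
        if pc in self.btc:
            # Hit: the first table is read in step with the cache, early in the pipeline.
            taken = self.fast[pc % len(self.fast)] >= 2
            return ("fetch", taken, self.btc[pc] if taken else None)
        # Miss: the prediction is used once decode recognizes the instruction as a branch.
        taken = self.slow[pc % len(self.slow)] >= 2
        return ("decode", taken, None)

    def update(self, pc, taken, target):
        """Train both tables with the resolved outcome (assumed policy)."""
        for table in (self.fast, self.slow):
            i = pc % len(table)
            table[i] = min(3, table[i] + 1) if taken else max(0, table[i] - 1)
        if taken:
            self.btc[pc] = target


predictor = TwoTablePredictor()
before = predictor.predict(0x40)          # not in the cache yet: second table, at decode
predictor.update(0x40, taken=True, target=0x80)
after = predictor.predict(0x40)           # now a cache hit: first table, at fetch
```

The point of the split is that only the first table has to meet the early-pipeline timing; the second can be larger or slower because its result is not consumed until decode.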

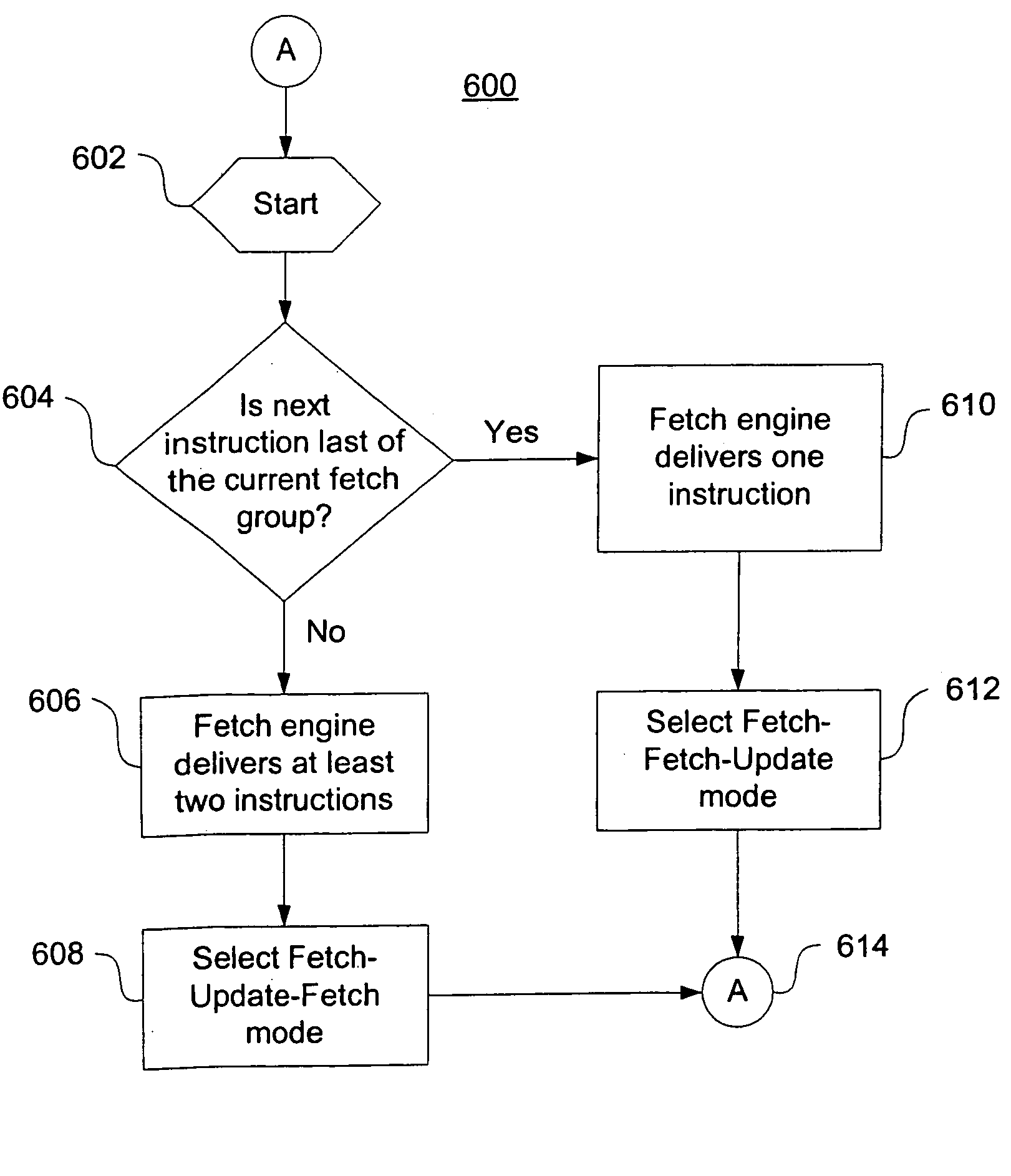

Methods and apparatus for updating of a branch history table

Inactive · US20060010311A1 · Digital computer details · Specific program execution arrangements · Processing Instruction · Parallel computing

Methods and apparatus are provided for enhanced instruction handling in processing environments. If a branch misprediction occurs during instruction processing, a branch history table may be updated based upon the number of instructions to be fetched. The branch history table may be updated in accordance with a first mode if at least two instructions are available, and in accordance with a second mode if fewer than two instructions are available. A compiler can assist the processing by aligning instructions. The instructions can be aligned across multiple instruction fetch groups so that instructions are available for fetching and the branch history table is updated prior to performing a branching operation.

Owner:SONY COMPUTER ENTERTAINMENT INC

Computer processing system employing an instruction schedule cache

Inactive · US7454597B2 · Efficient execution · Reduce complexity · Digital computer details · Concurrent instruction execution · Time schedule · Scheduling instructions

A processor core and a method of executing instructions, both of which utilize schedules, are presented. Each schedule includes a sequence of instructions, the address of the first instruction in the schedule, an order vector recording the original order of the instructions in the schedule, a rename map of registers for each register in the schedule, and a list of register names used in the schedule. The schedule exploits instruction-level parallelism in executing out-of-order instructions. The processor core includes a schedule cache configured to store schedules, a shared cache configured to store both I-side and D-side cache data, and an execution resource for requesting a schedule to be executed from the schedule cache. The processor core further includes a scheduler disposed between the schedule cache and the shared cache. When the schedule requested by the execution resource is not found in the schedule cache, the scheduler creates the schedule, using branch execution history from a branch history table to create the instructions. The processor core executes the instructions according to the schedule being executed. The method includes requesting a schedule from a schedule cache; fetching the schedule when it is found in the schedule cache; and creating the schedule when it is not found in the schedule cache. The method also includes renaming the registers in the schedule to avoid false dependencies in a processor core, mapping registers to renamed registers in the schedule, and stitching register values in and out of another schedule according to the list of register names and the rename map of registers.

Owner:INT BUSINESS MASCH CORP
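
The schedule fields and the fetch-or-create flow listed in the abstract above can be sketched in a few lines. Everything named here is hypothetical: `Schedule`, `create_schedule`, `ScheduleCache`, the `(opcode, dest, sources)` instruction tuples, and the `p0, p1, ...` physical register names are illustrative assumptions. The sketch also leaves out the branch history table lookup and any actual reordering; it only shows the data carried by a schedule, register renaming, and creation on a cache miss.

```python
from dataclasses import dataclass


@dataclass
class Schedule:
    start_address: int      # address of the first instruction in the schedule
    instructions: list      # renamed instructions as (opcode, dest, sources)
    order_vector: list      # original program order of each instruction
    rename_map: dict        # architectural register -> latest renamed register
    register_names: list    # architectural registers used in the schedule


def create_schedule(start_address, instructions):
    """Rename every destination register so false dependencies disappear."""
    rename_map, renamed, used = {}, [], set()
    for position, (opcode, dest, sources) in enumerate(instructions):
        used.update(sources)
        used.add(dest)
        # Sources read the most recent renamed value, if one exists.
        new_sources = tuple(rename_map.get(s, s) for s in sources)
        rename_map[dest] = f"p{position}"   # fresh name per write (assumed scheme)
        renamed.append((opcode, rename_map[dest], new_sources))
    return Schedule(start_address, renamed, list(range(len(renamed))),
                    rename_map, sorted(used))


class ScheduleCache:
    """Return a cached schedule on a hit; build and store one on a miss."""

    def __init__(self):
        self.entries, self.hits, self.misses = {}, 0, 0

    def request(self, address, load_instructions):
        if address in self.entries:
            self.hits += 1
            return self.entries[address]
        self.misses += 1
        schedule = create_schedule(address, load_instructions(address))
        self.entries[address] = schedule
        return schedule


code = {0x100: [("add", "r1", ("r2", "r3")), ("mul", "r1", ("r1", "r4"))]}
cache = ScheduleCache()
first = cache.request(0x100, code.__getitem__)    # miss: schedule is created
second = cache.request(0x100, code.__getitem__)   # hit: same schedule is reused
```

After renaming, the two writes to `r1` target different registers, so only the true dependency from `add` to `mul` remains.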


© 2025 PatSnap. All rights reserved.