236 results about "Instruction prefetch" patented technology

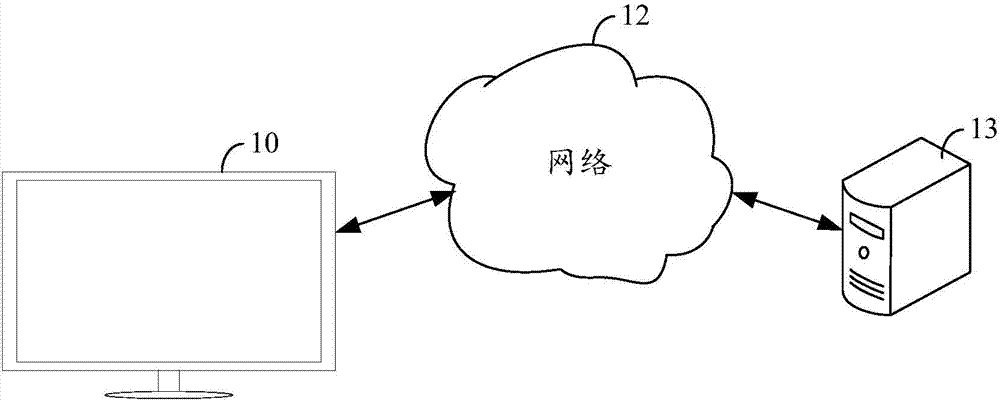

Cache prefetching is a technique used by computer processors to boost execution performance by fetching instructions or data from their original storage in slower memory into a faster local memory before they are actually needed (hence the term 'prefetch'). Most modern processors have fast, local cache memory in which prefetched instructions or data are held until required. The source for the prefetch operation is usually main memory. Because caches are small and sit close to the processor core, accessing them is typically much faster than accessing main memory, so prefetching data and then reading it from a cache is usually much faster, often by an order of magnitude or more, than reading it directly from main memory.
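To make the benefit concrete, here is a minimal simulation sketch of next-line prefetching on straight-line code. The `Cache` class, the 64-byte line size, the 8-line capacity, and the LRU policy are all illustrative assumptions, not details taken from any patent listed on this page.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed for illustration)


class Cache:
    """Tiny fully associative LRU cache that tracks line addresses only."""

    def __init__(self, num_lines, prefetch_next_line=False):
        self.num_lines = num_lines
        self.prefetch_next_line = prefetch_next_line
        self.lines = OrderedDict()
        self.misses = 0

    def _install(self, line):
        # Refresh the LRU position if present, otherwise insert (evicting if full).
        if line in self.lines:
            self.lines.move_to_end(line)
            return
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # evict least recently used line
        self.lines[line] = True

    def fetch(self, address):
        line = address // LINE_SIZE
        if line not in self.lines:
            self.misses += 1  # demand miss: the core would stall here
        self._install(line)
        if self.prefetch_next_line:
            self._install(line + 1)  # bring in the next line ahead of use


def count_misses(addresses, prefetch):
    cache = Cache(num_lines=8, prefetch_next_line=prefetch)
    for address in addresses:
        cache.fetch(address)
    return cache.misses


# Straight-line code: 4-byte instructions spanning 16 consecutive lines.
straight_line = range(0, LINE_SIZE * 16, 4)
print(count_misses(straight_line, prefetch=False))
print(count_misses(straight_line, prefetch=True))
```

In this idealized model the prefetching cache only misses on the very first line, because every later line was installed before the first instruction in it was fetched. Real hardware also has to account for prefetch latency and wasted bandwidth, which this sketch ignores.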

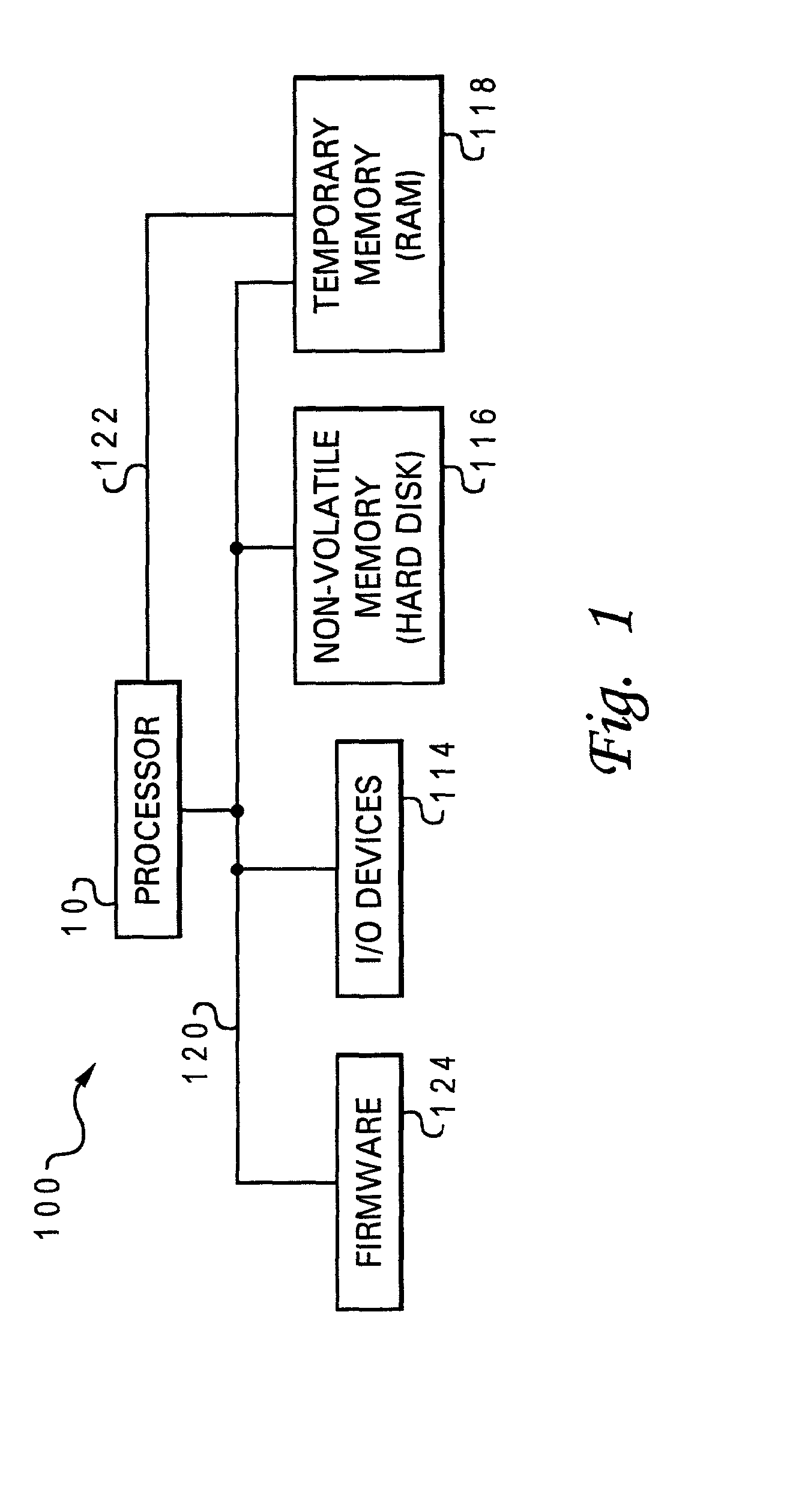

Instruction prefetch caching for remote memory

Active | US7240161B1 | Digital computer details | Concurrent instruction execution | Memory address | Control system

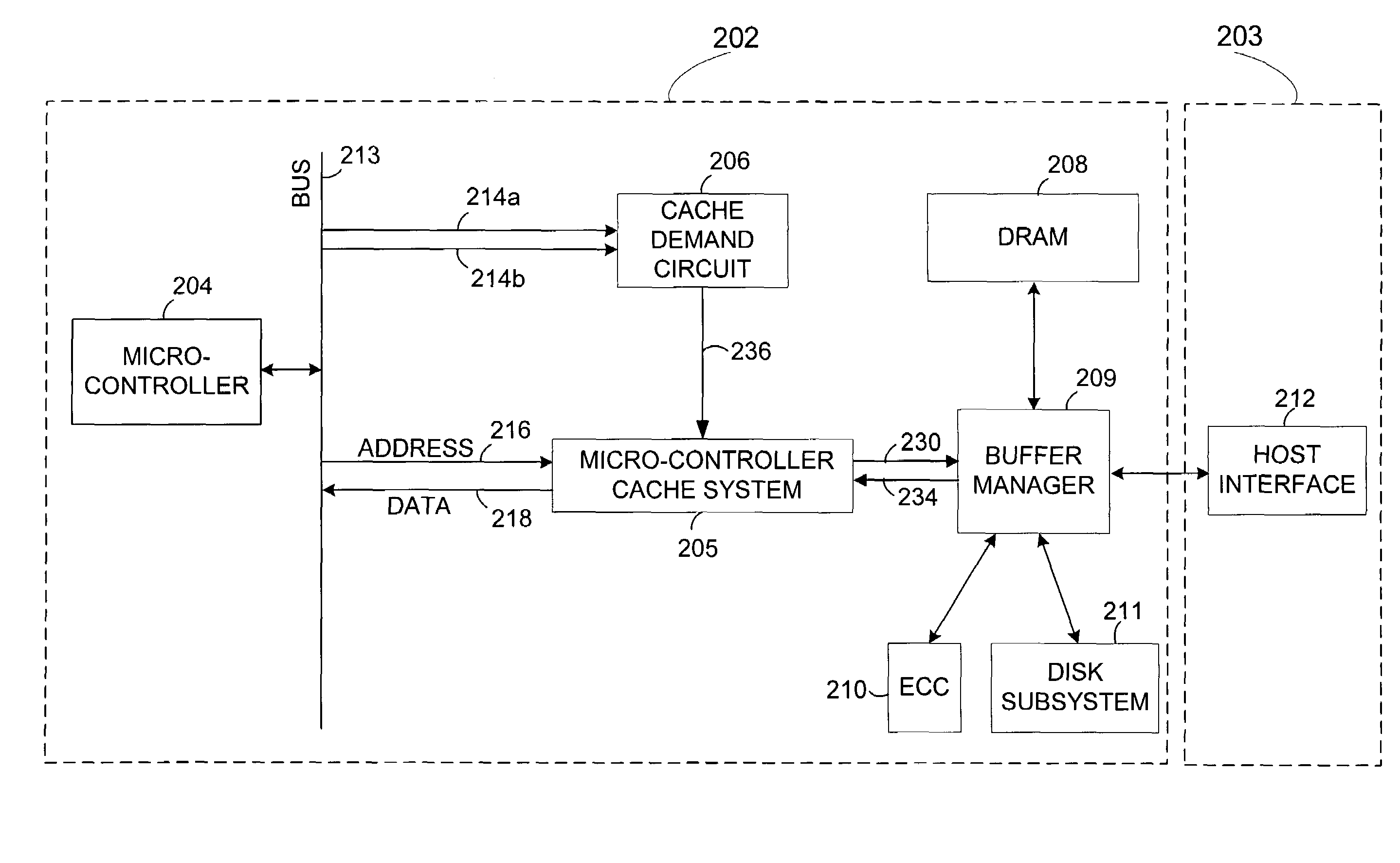

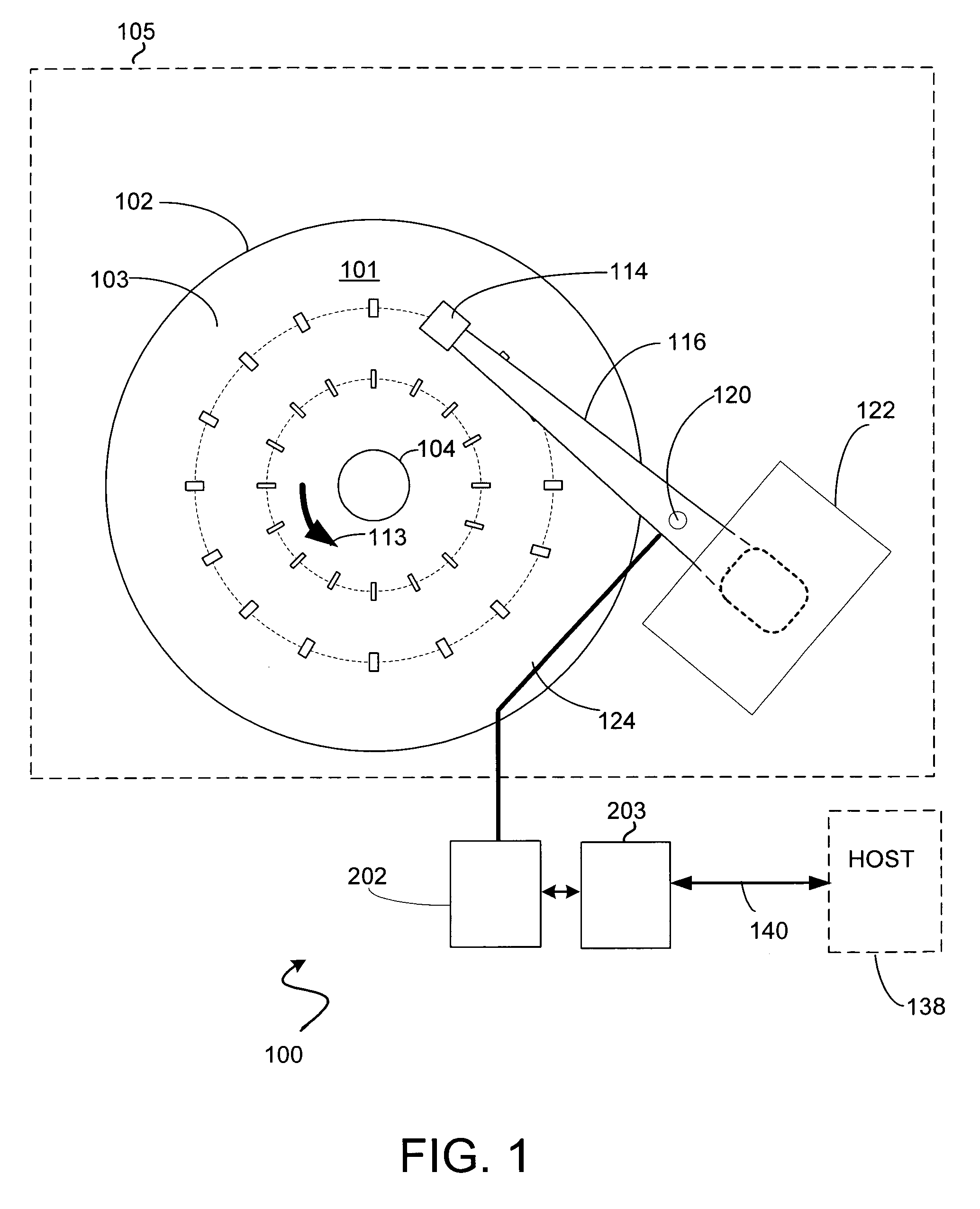

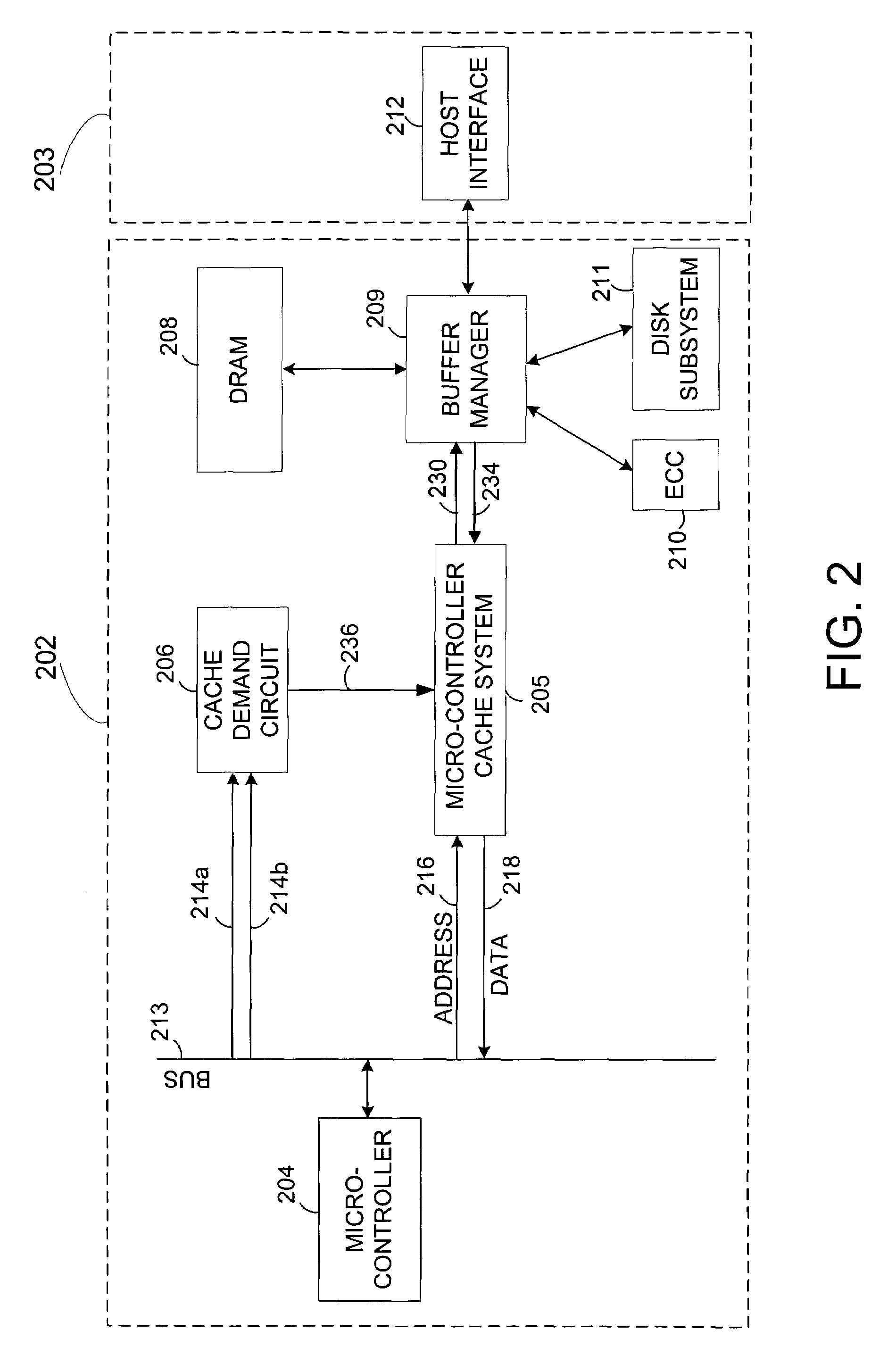

A disk drive control system comprising a micro-controller, a micro-controller cache system adapted to store micro-controller data for access by the micro-controller, a buffer manager adapted to provide the micro-controller cache system with micro-controller requested data stored in a remote memory, and a cache demand circuit adapted to: a) receive a memory address and a memory access signal, and b) cause the micro-controller cache system to fetch data from the remote memory via the buffer manager based on the received memory address and memory access signal prior to a micro-controller request.

Owner:WESTERN DIGITAL TECH INC

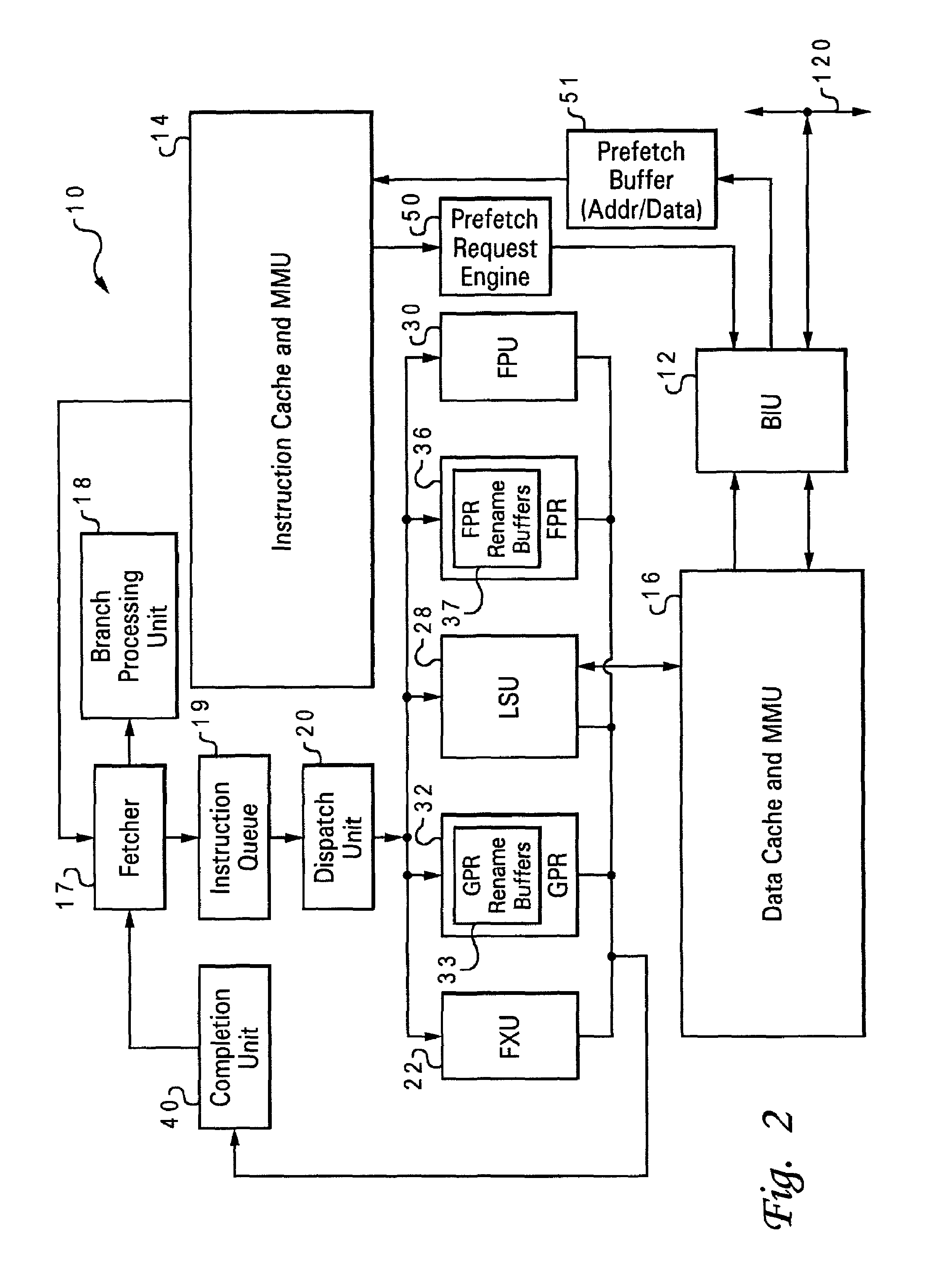

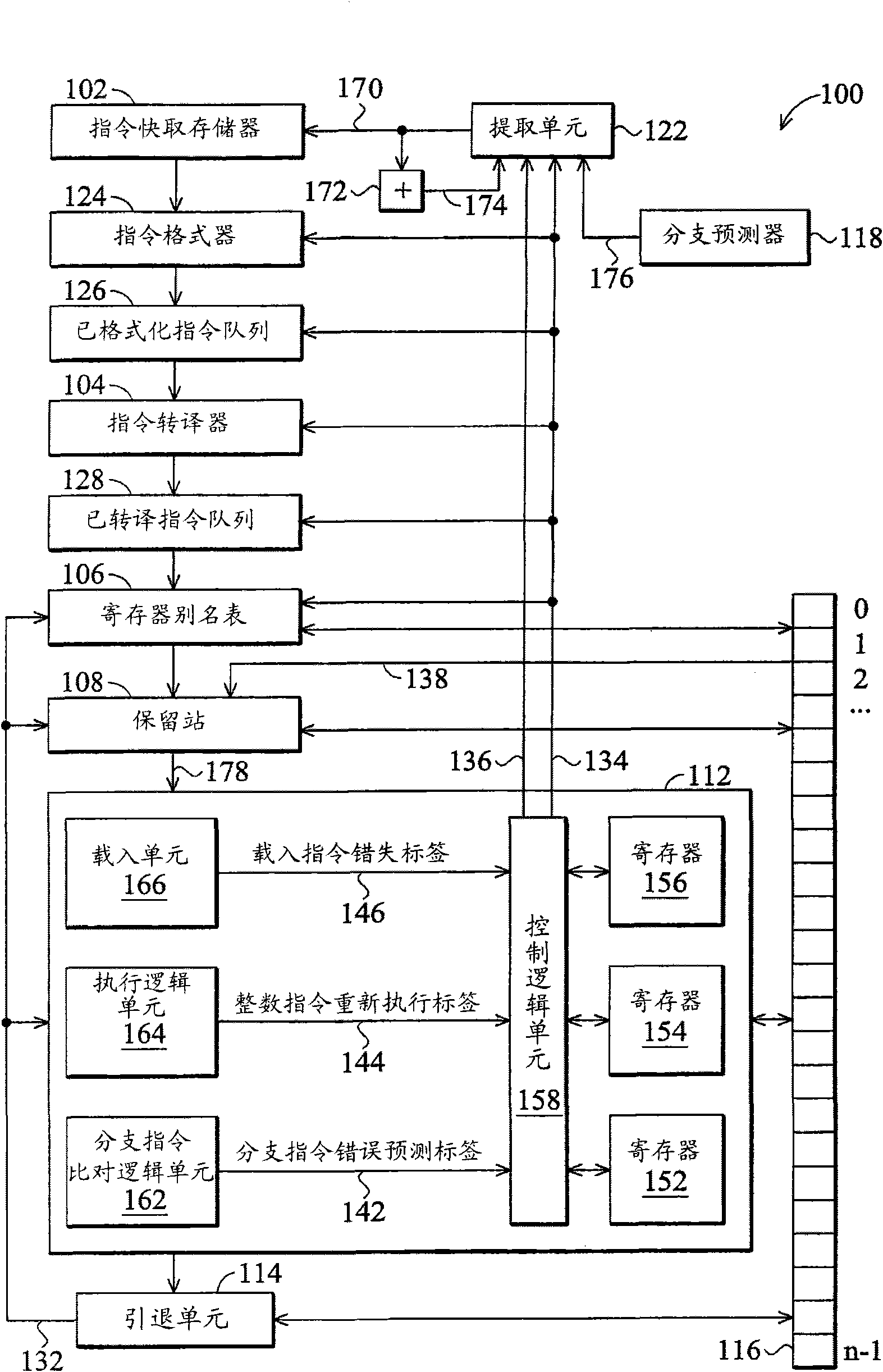

Method and logical apparatus for switching between single-threaded and multi-threaded execution states in a simultaneous multi-threaded (SMT) processor

Inactive | US7155600B2 | Program initiation/switching | General purpose stored program computer | Post processor | Control logic

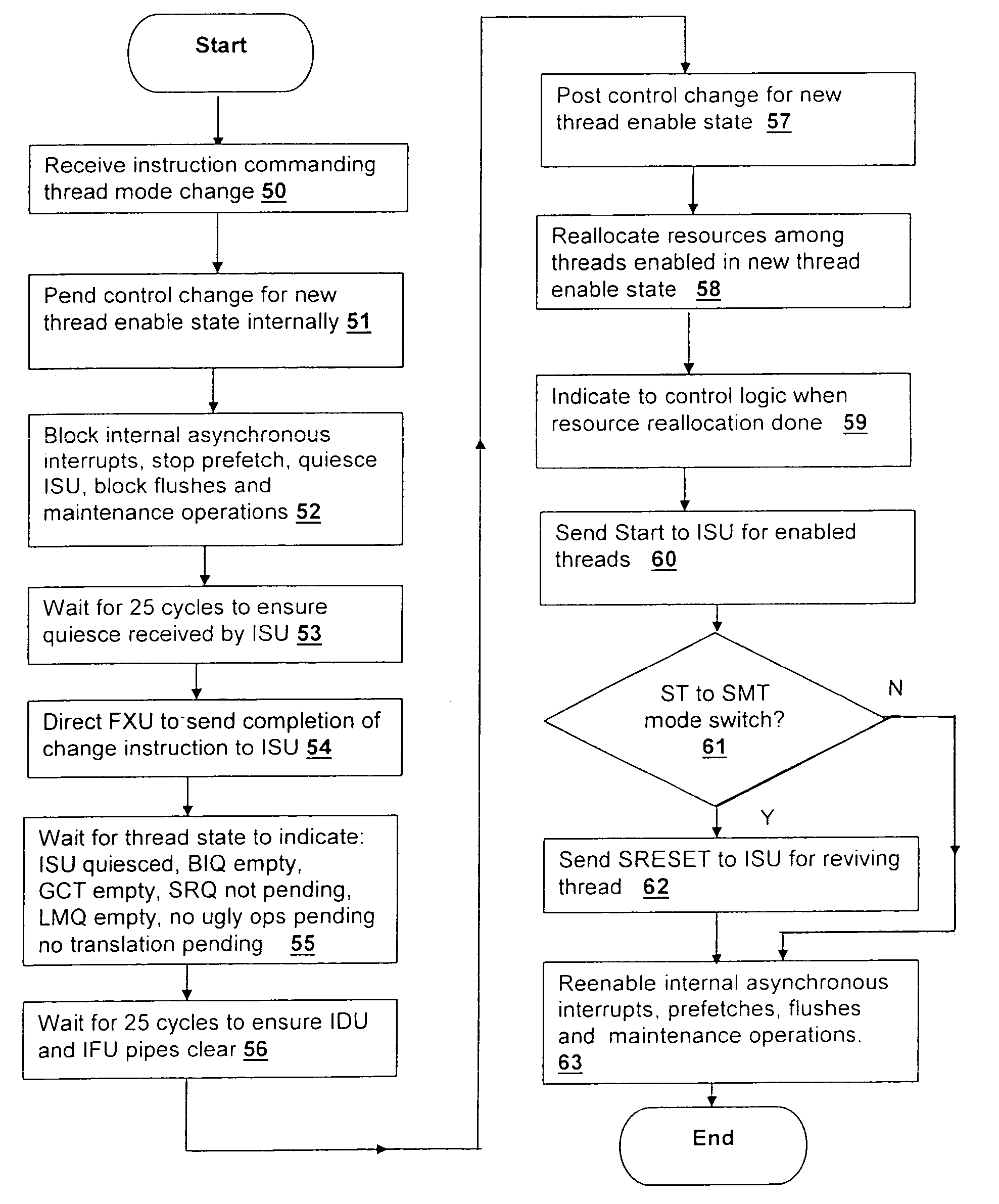

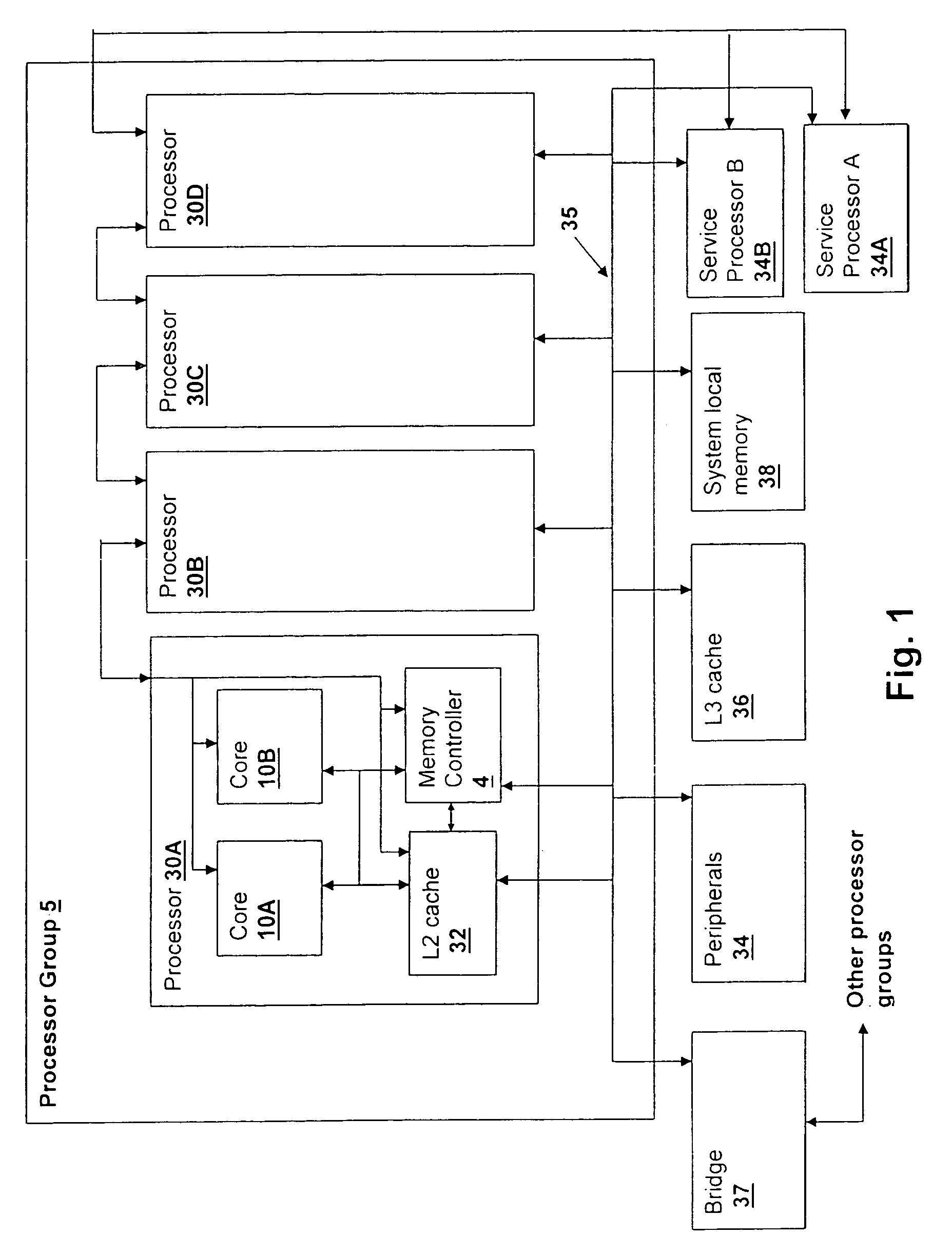

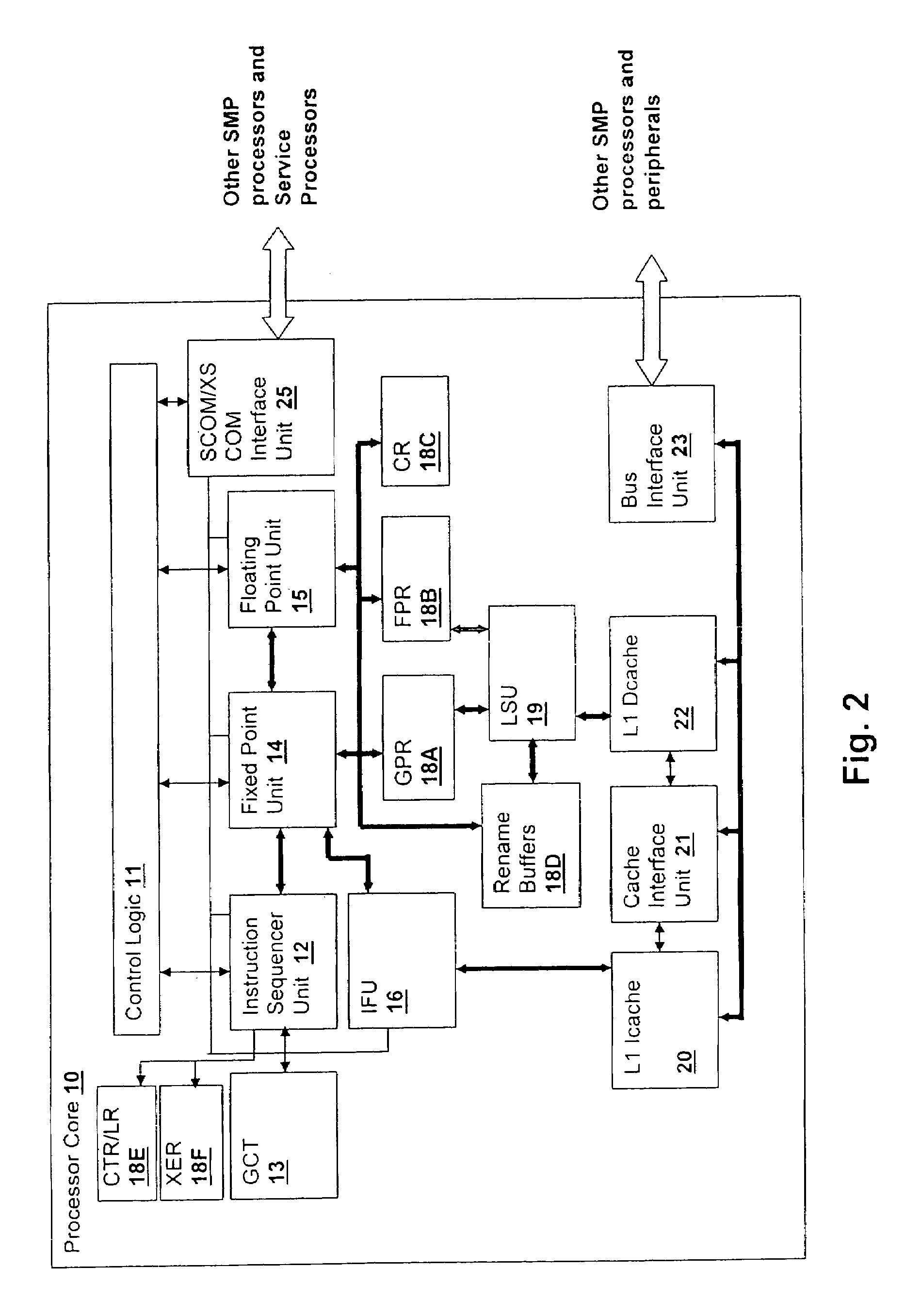

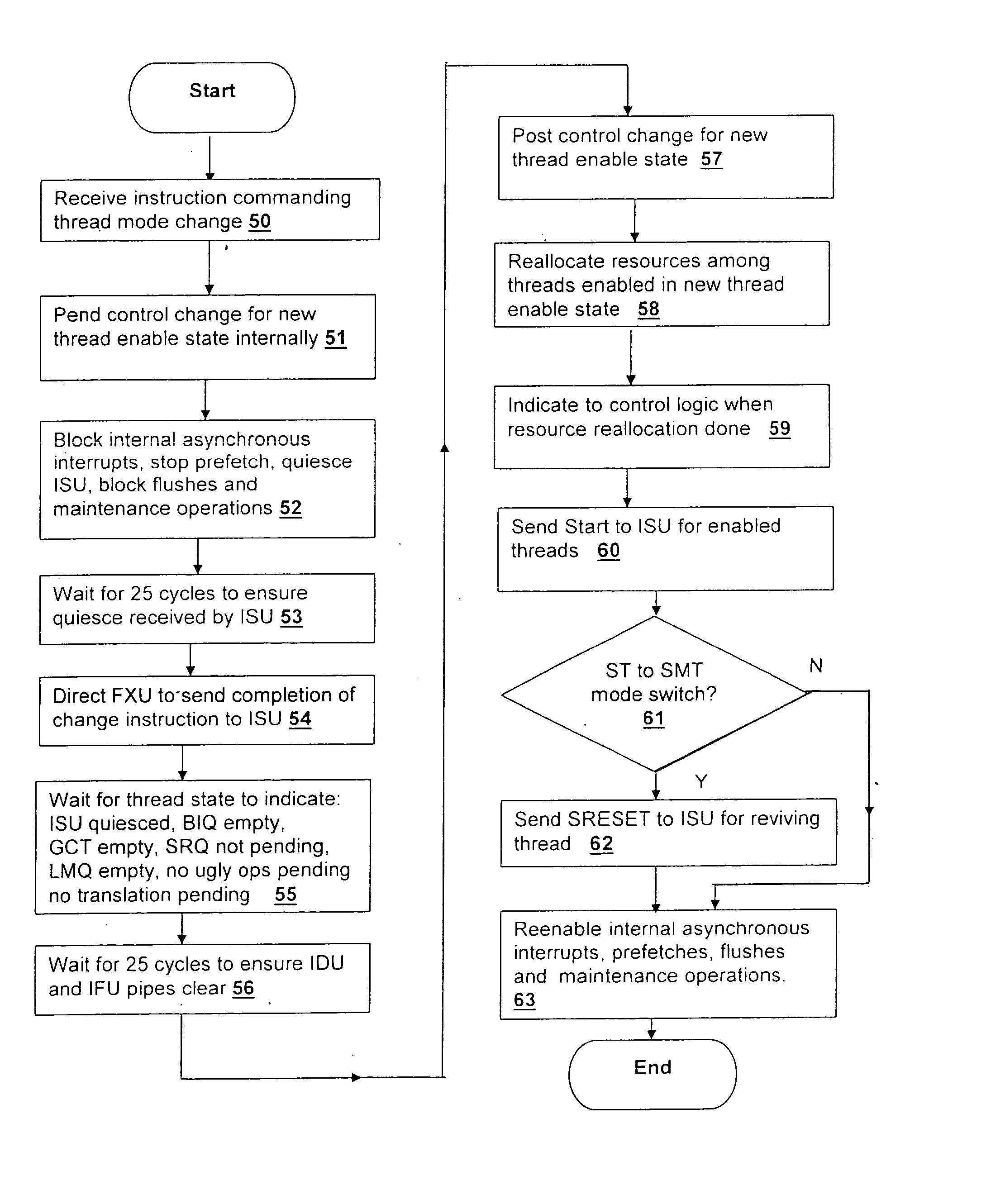

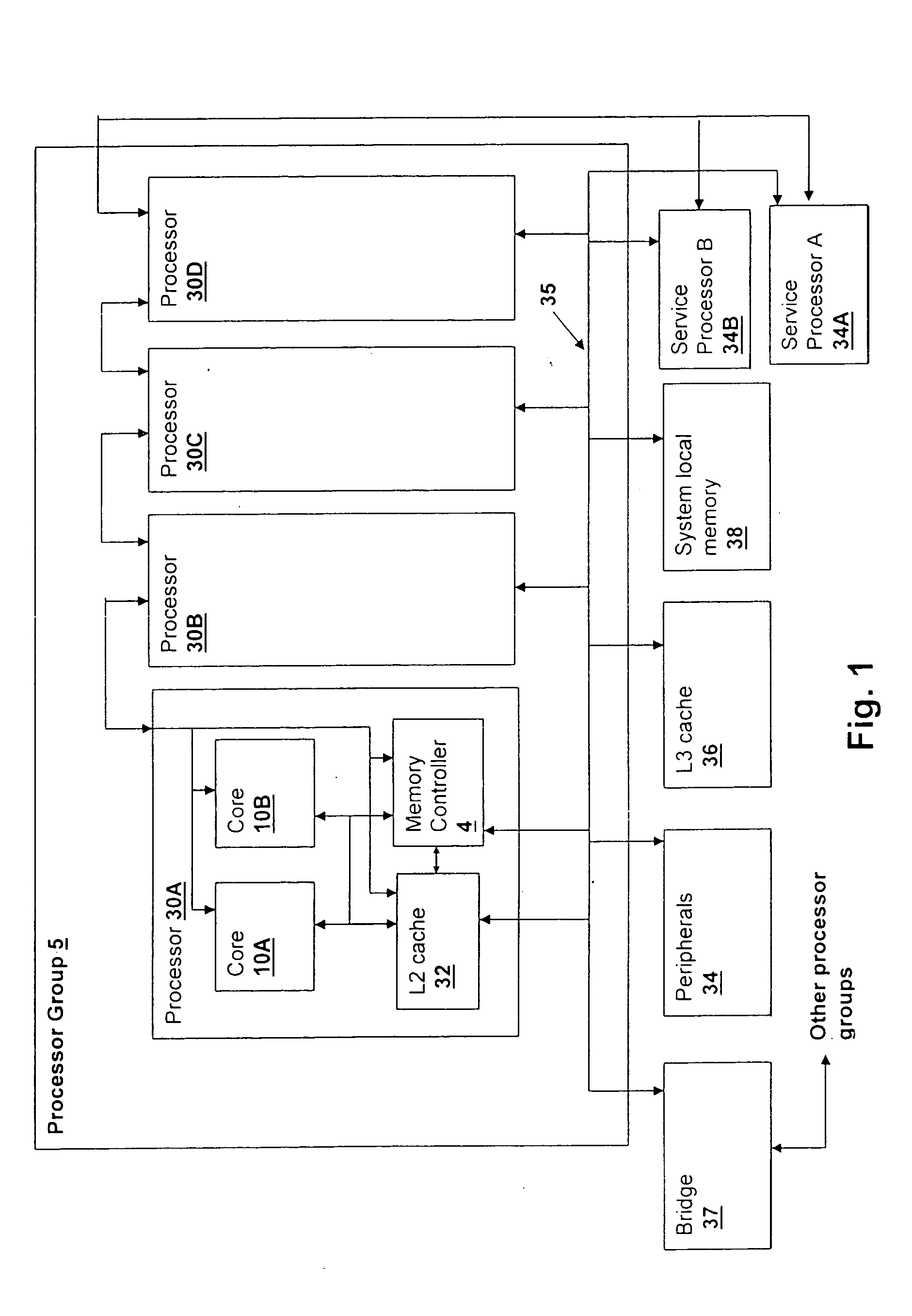

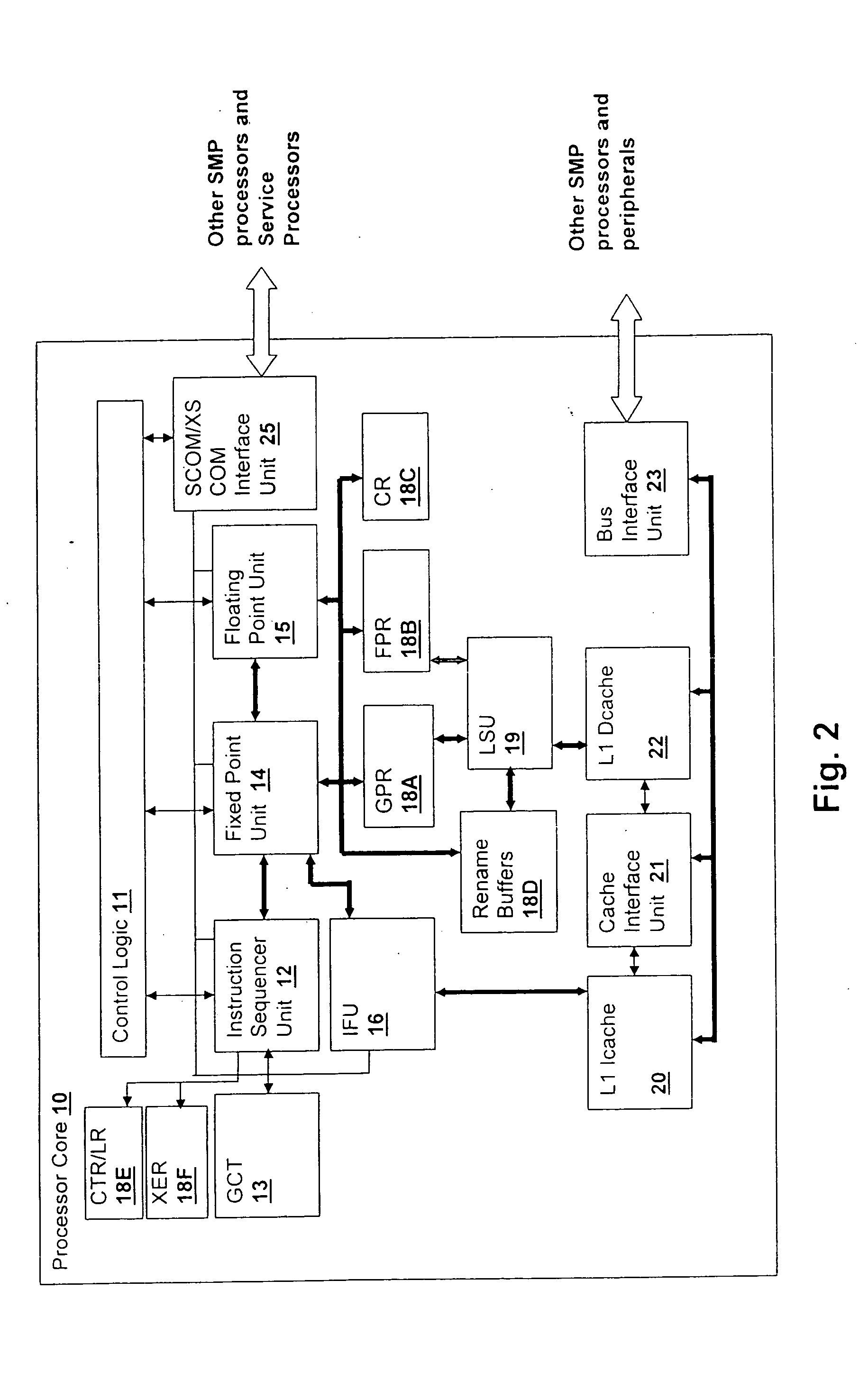

A method and logical apparatus for switching between single-threaded and multi-threaded execution states within a simultaneous multi-threaded (SMT) processor provides a mechanism for switching between single-threaded and multi-threaded execution. The processor receives an instruction specifying a transition from a single-threaded to a multi-threaded mode or vice-versa and halts execution of all threads executing on the processor. Internal control logic controls a sequence of events that ends instruction prefetching, dispatch of new instructions, interrupt processing and maintenance operations and waits for operation of the processor to complete for instructions that are in process. Then, the logic determines one or more threads to start in conformity with a thread enable state specifying the enable state of multiple threads and reallocates various resources, dividing them between threads if multiple threads are specified for further execution (multi-threaded mode) or allocating substantially all of the resources to a single thread if further execution is specified as single-threaded mode. The processor then starts execution of the remaining enabled threads.

Owner:INT BUSINESS MASCH CORP

Branch prediction mechanism for predicting indirect branch targets

Inactive | US20110078425A1 | Digital computer details | Concurrent instruction execution | Execution unit | Branch target address

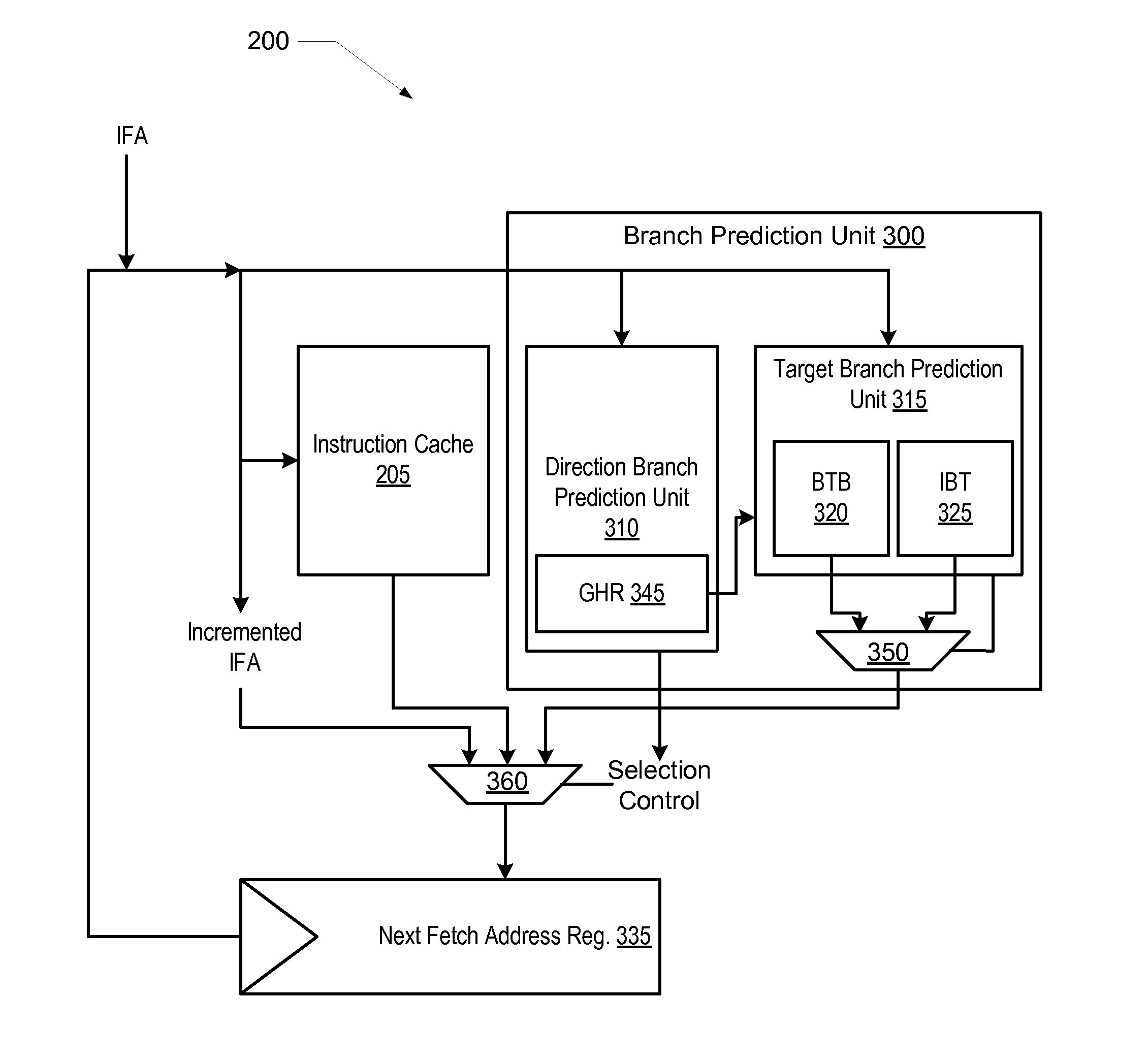

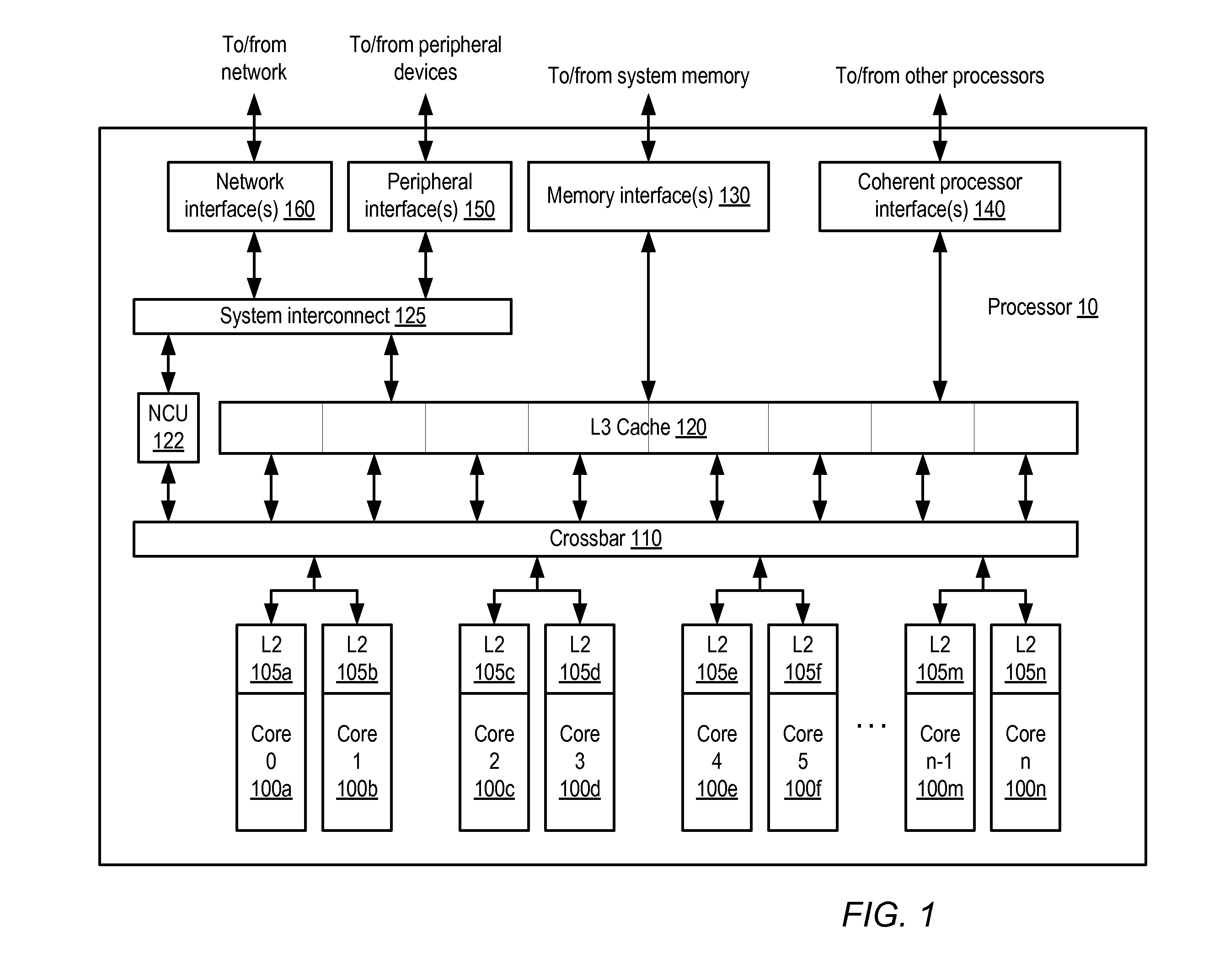

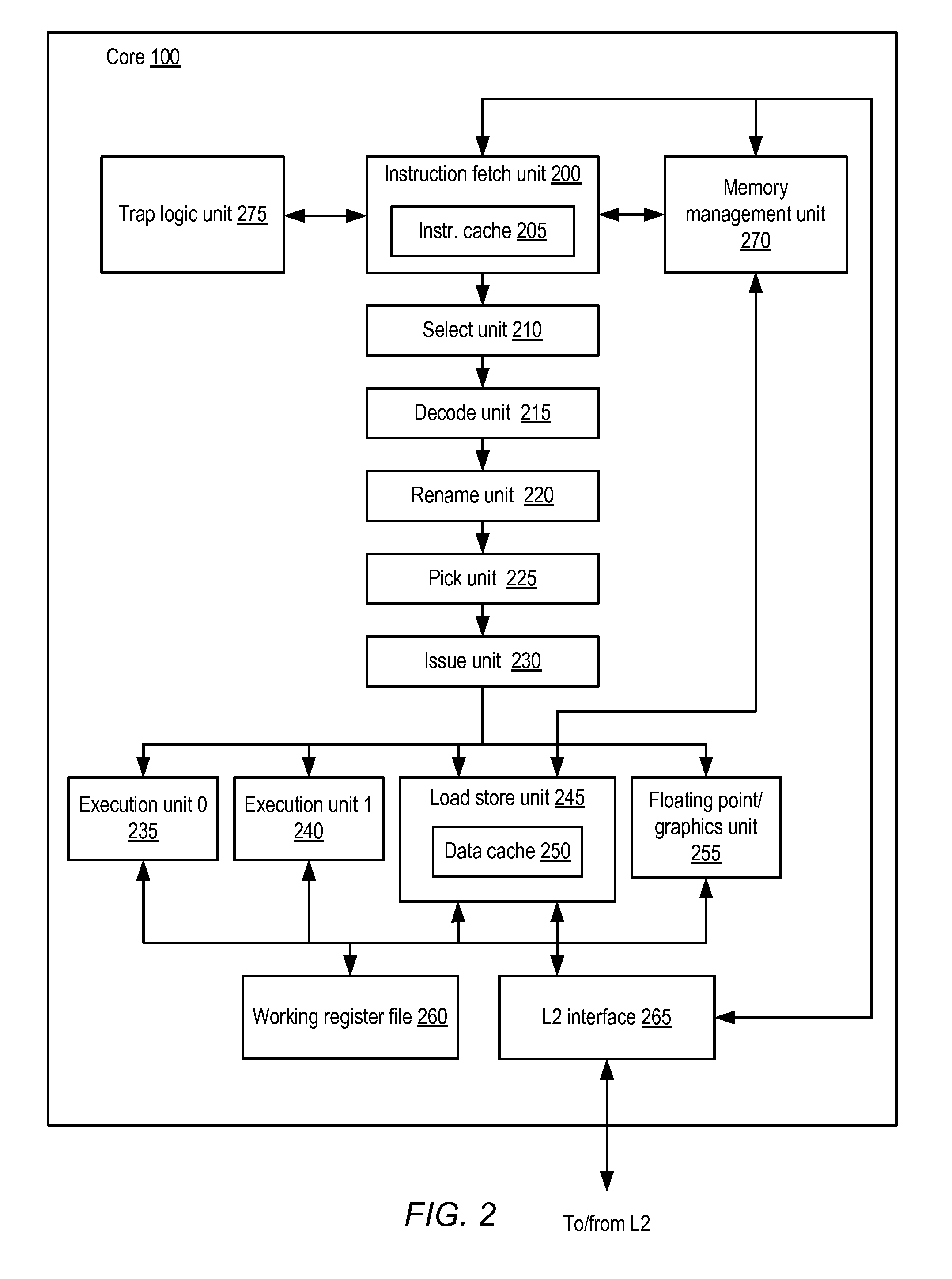

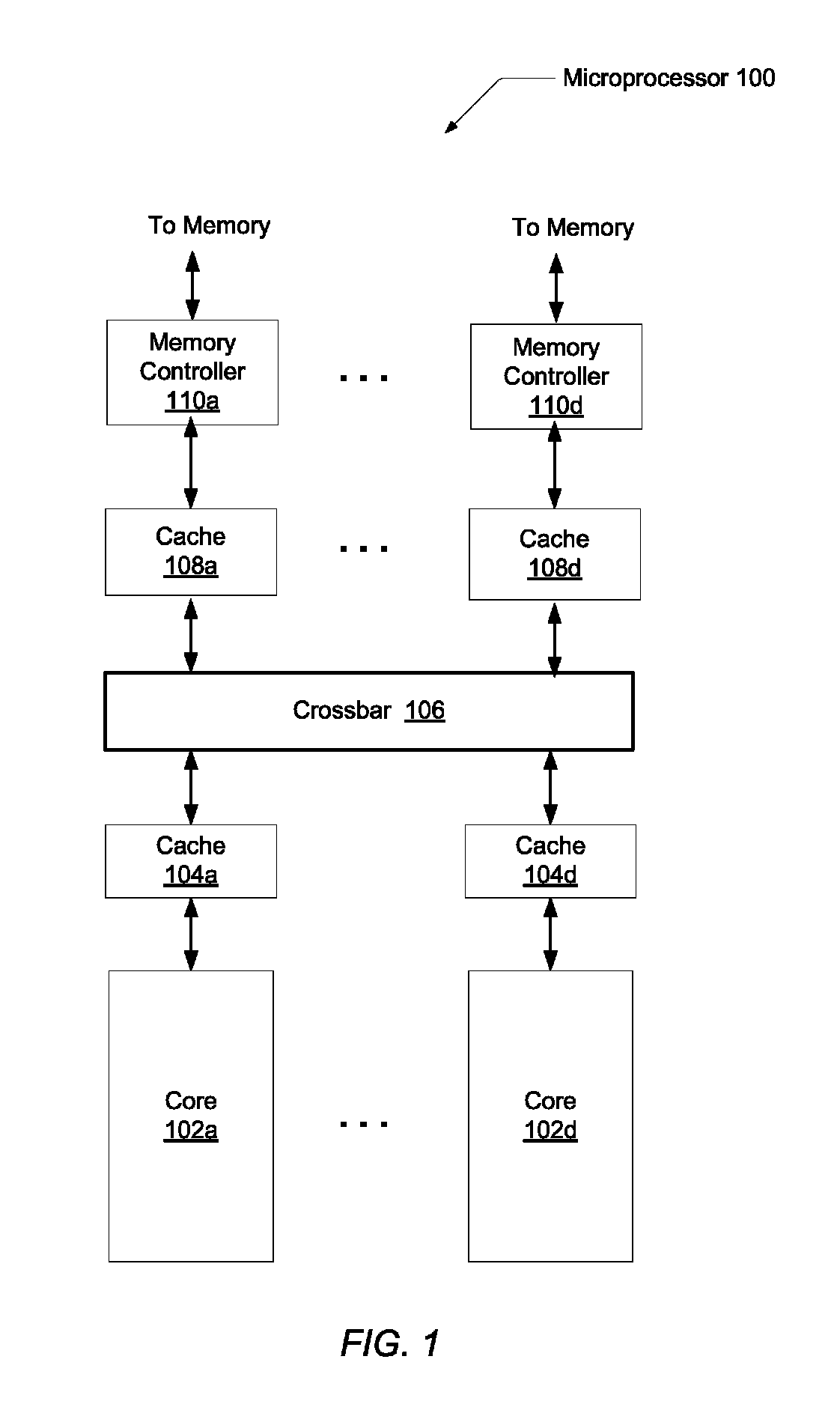

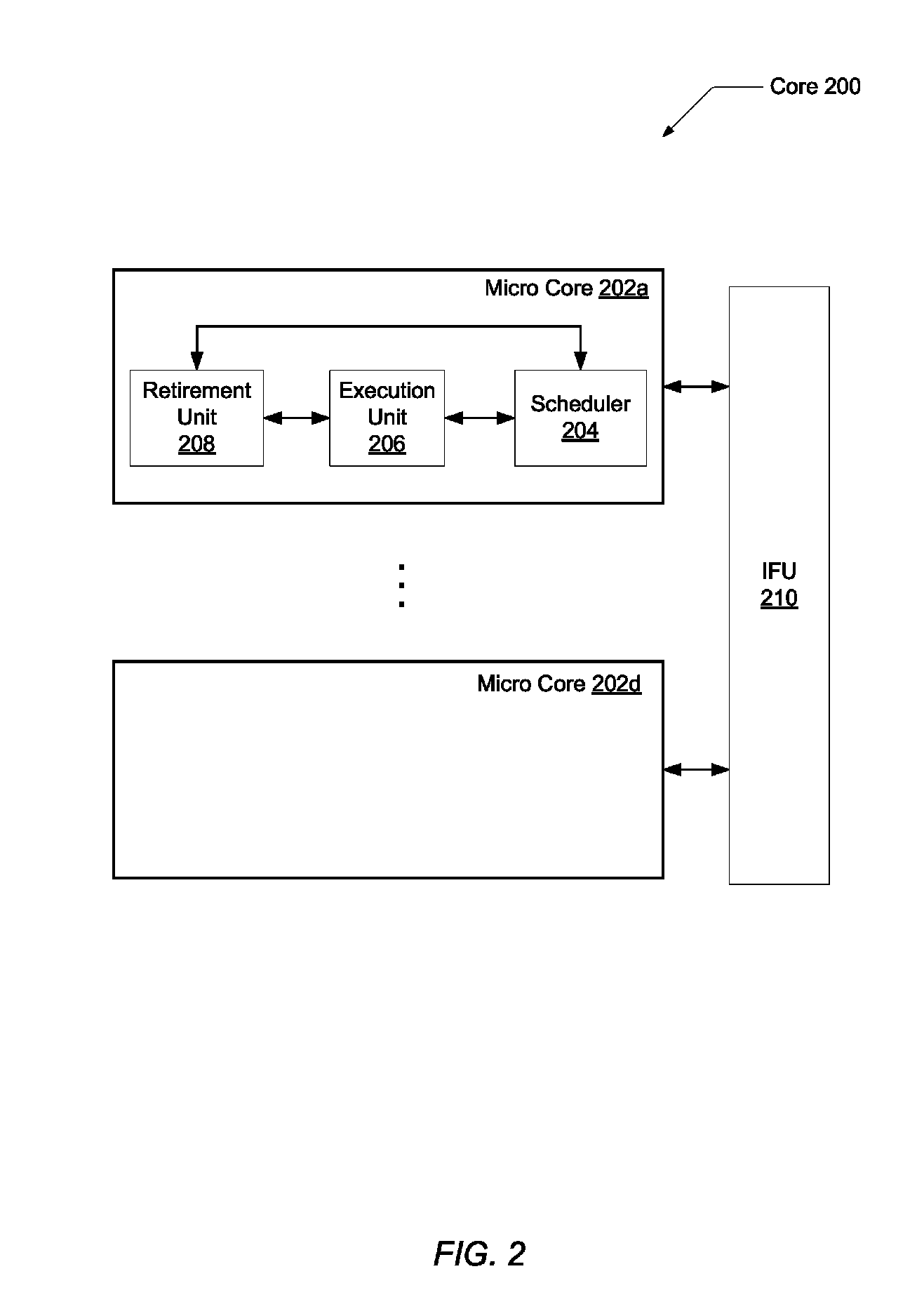

A multithreaded microprocessor includes an instruction fetch unit that may fetch and maintain a plurality of instructions belonging to one or more threads and one or more execution units that may concurrently execute the one or more threads. The instruction fetch unit includes a target branch prediction unit that may provide a predicted branch target address in response to receiving an instruction fetch address of a current indirect branch instruction. The branch prediction unit includes a primary storage and a control unit. The storage includes a plurality of entries, and each entry may store a predicted branch target address corresponding to a previous indirect branch instruction. The control unit may generate an index value for accessing the storage using a portion of the instruction fetch address of the current indirect branch instruction, and branch direction history information associated with a currently executing thread of the one or more threads.

Owner:SUN MICROSYSTEMS INC

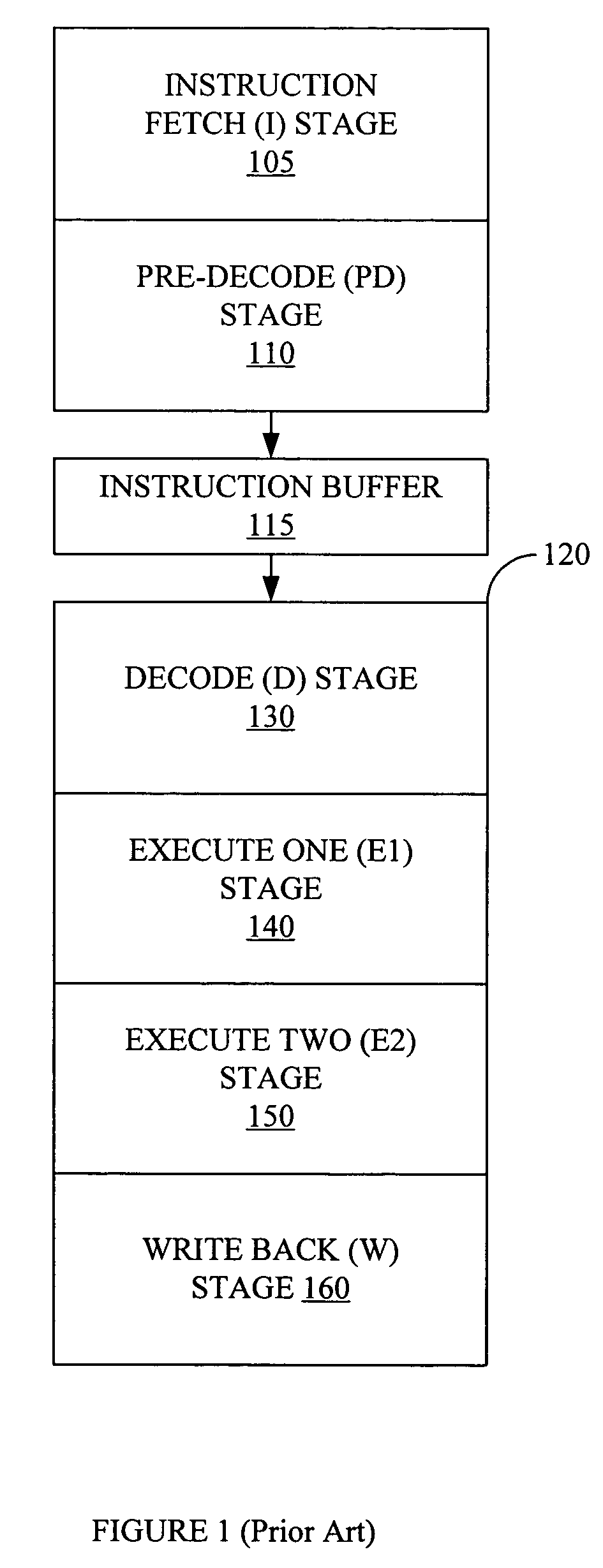

Method and logical apparatus for managing thread execution in a simultaneous multi-threaded (SMT) processor

Inactive | US20040215932A1 | Program initiation/switching | General purpose stored program computer | Simultaneous multithreading | Instruction prefetch

A method and logical apparatus for managing thread execution within a simultaneous multi-threaded (SMT) processor provides a mechanism for switching between single-threaded and multi-threaded execution. The processor receives an instruction specifying a transition from a single-threaded to a multi-threaded mode or vice-versa and halts execution of all threads executing on the processor. Internal control logic controls a sequence of events that ends instruction prefetching, dispatch of new instructions, interrupt processing and maintenance operations and waits for operation of the processor to complete for instructions that are in process. Then, the logic determines one or more threads to start in conformity with a thread enable state specifying the enable state of multiple threads and reallocates various resources, dividing them between threads if multiple threads are specified for further execution (multi-threaded mode) or allocating substantially all of the resources to a single thread if further execution is specified as single-threaded mode. The processor then starts execution of the remaining enabled threads.

Owner:IBM CORP

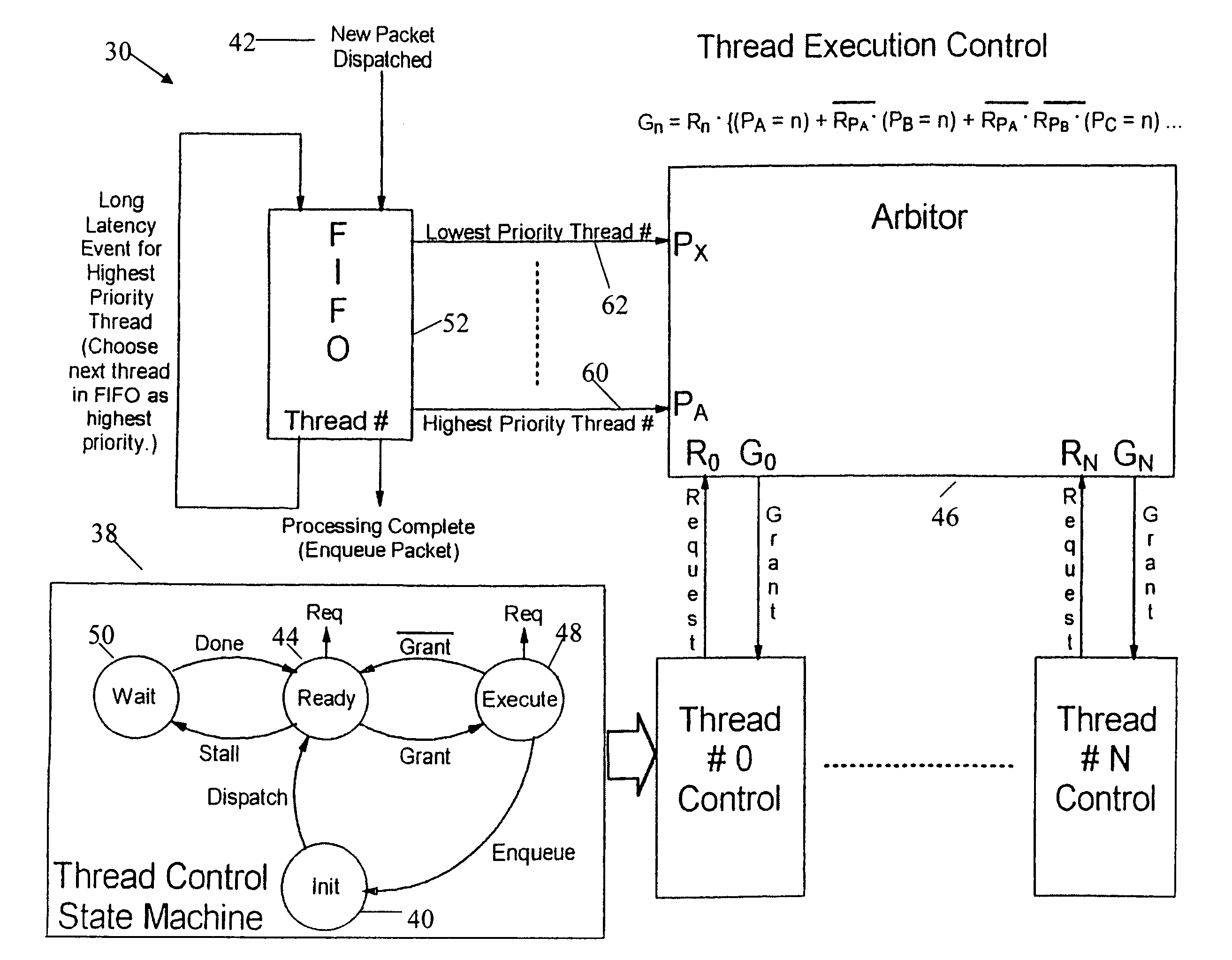

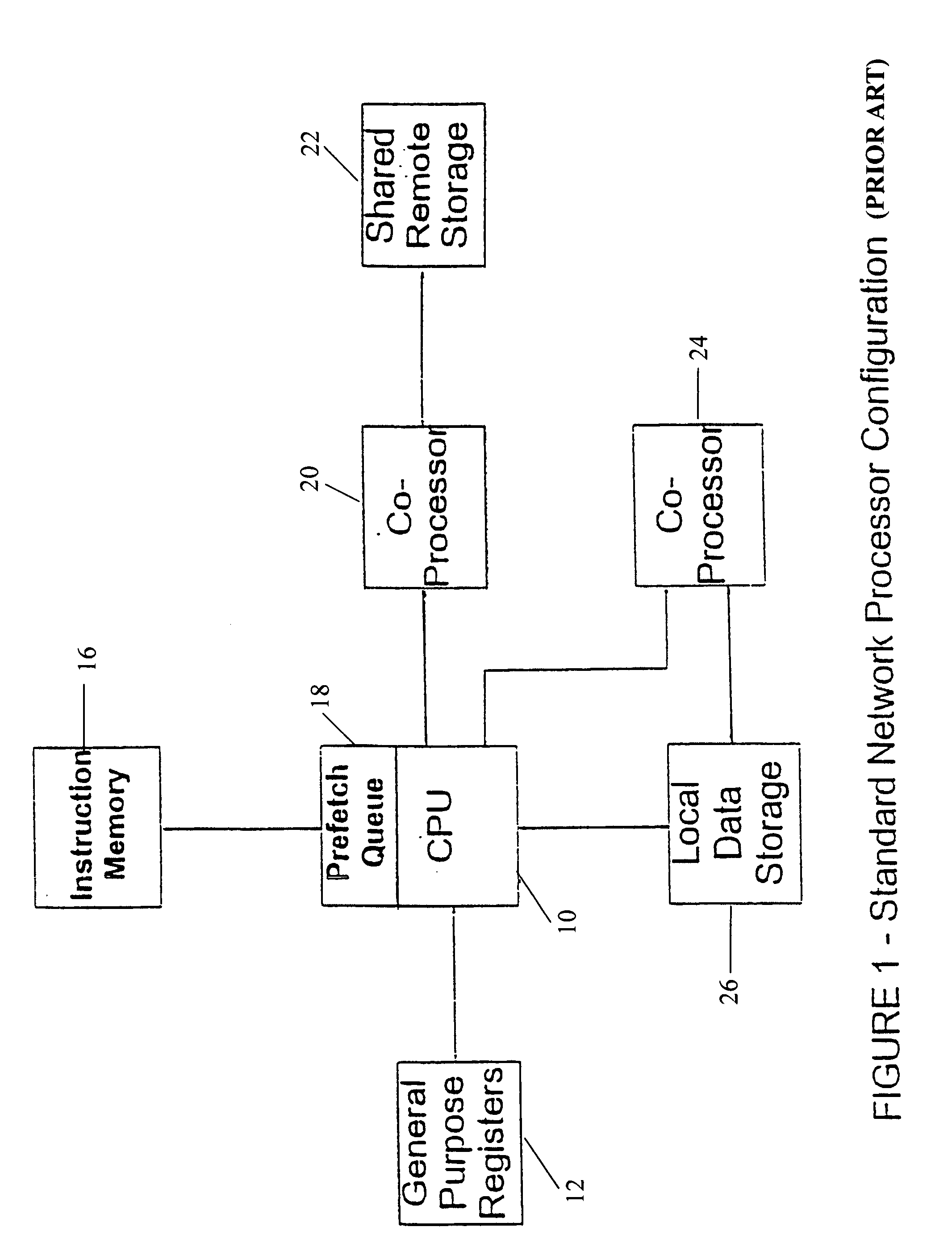

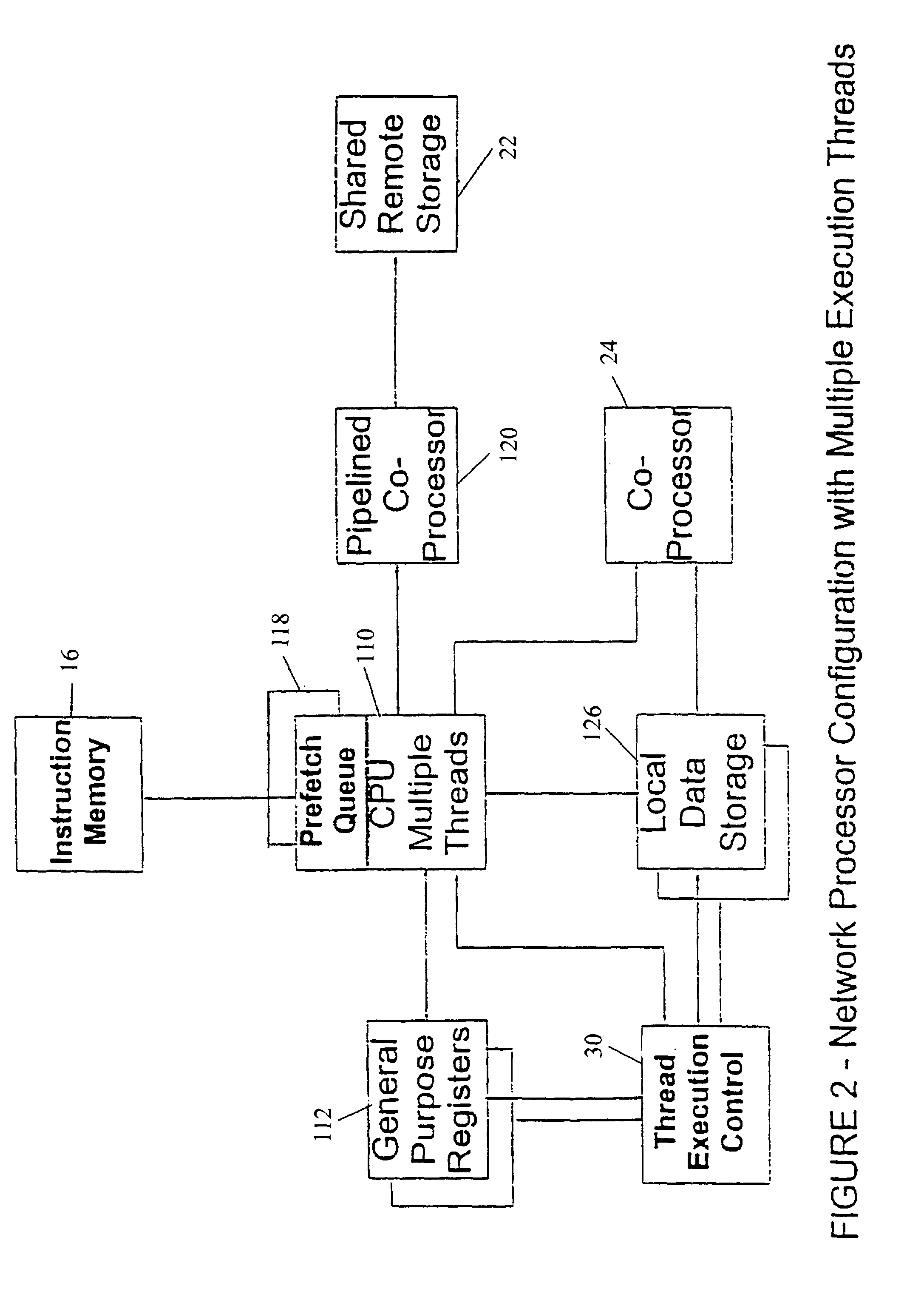

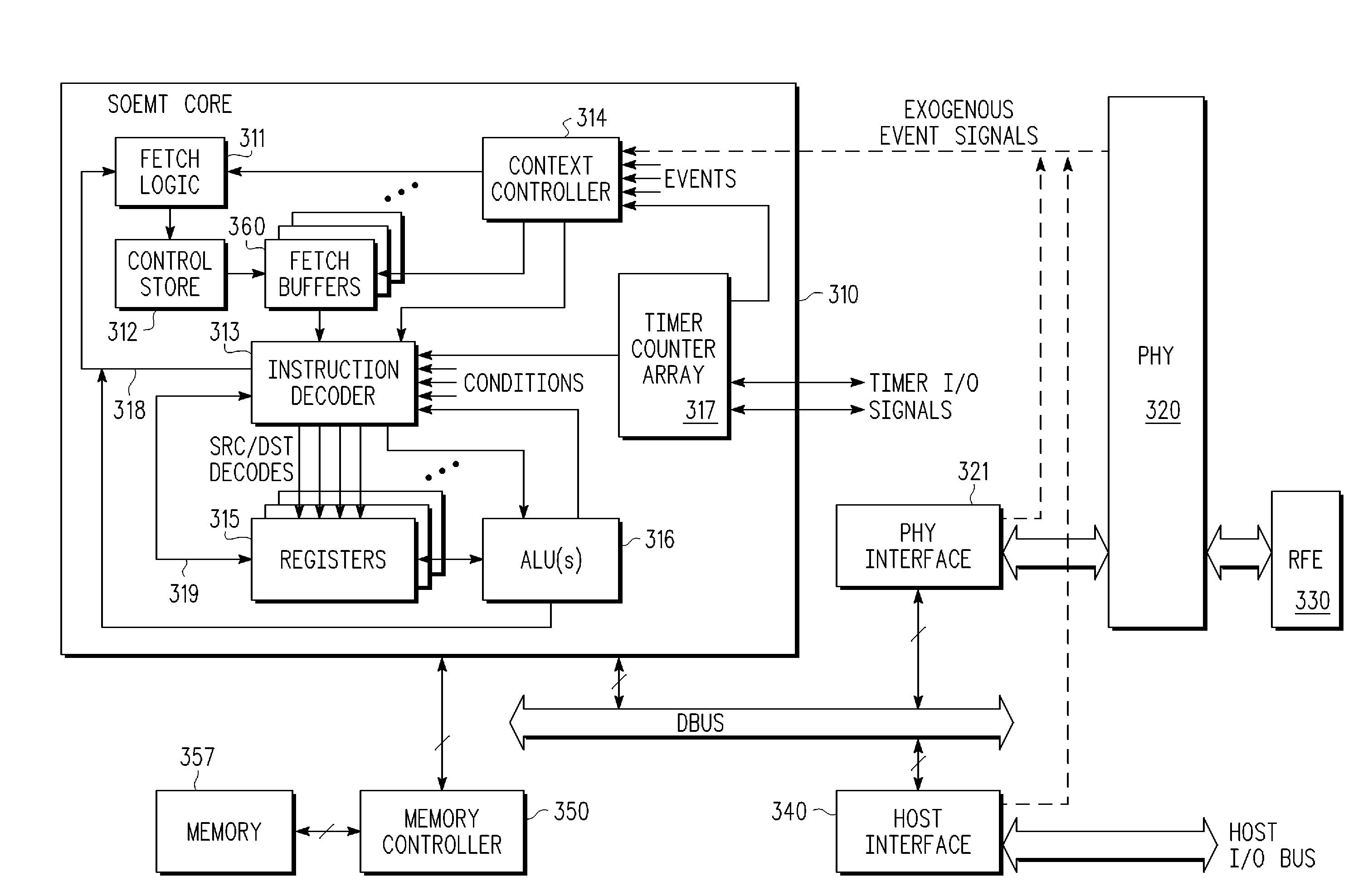

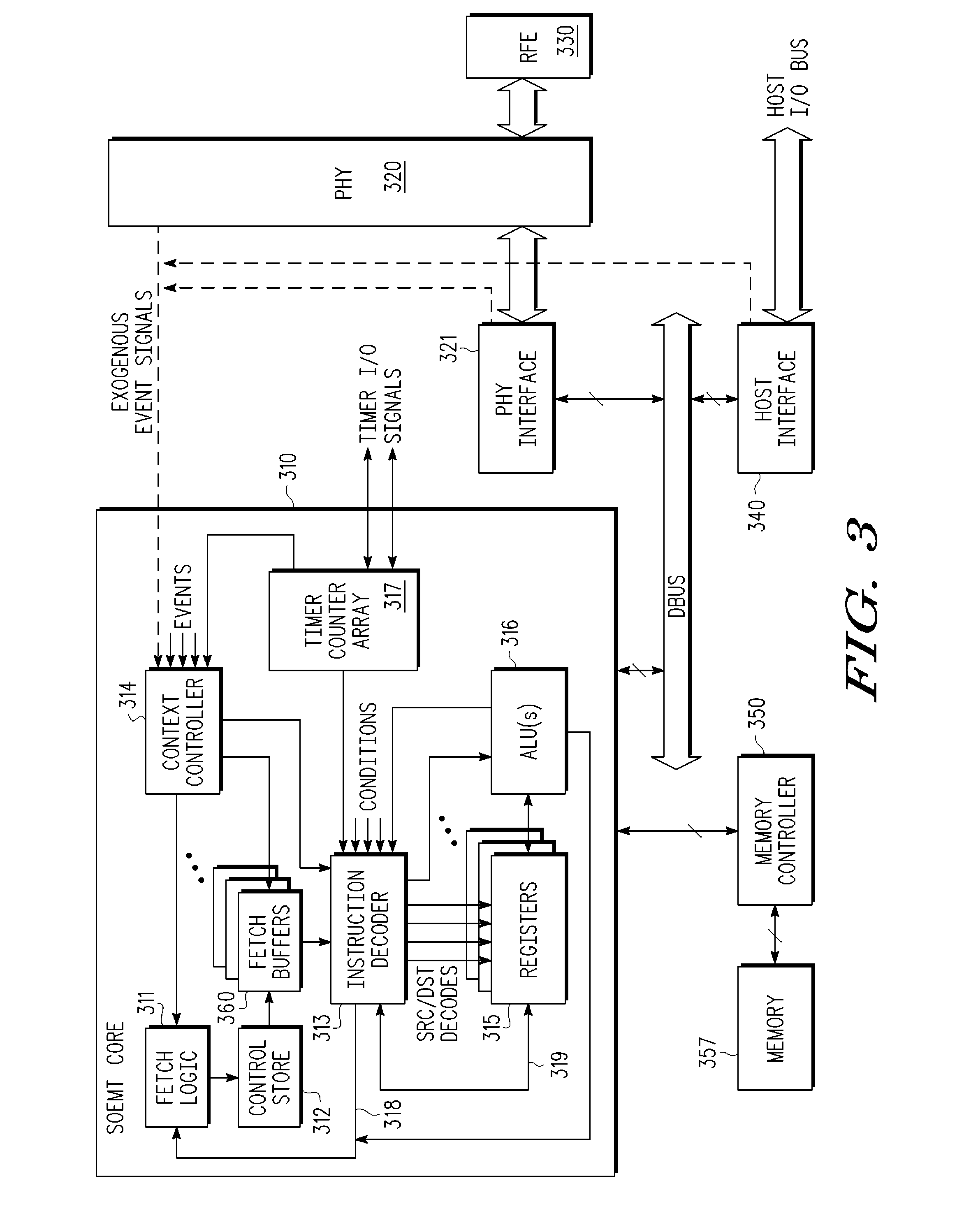

Network processor which makes thread execution control decisions based on latency event lengths

Inactive | US7093109B1 | Easy to use | Zero overhead | Digital computer details | Multiprogramming arrangements | Long latency | Coprocessor

A control mechanism is established between a network processor and a tree search coprocessor to deal with latencies in accessing the data such as information formatted in a tree structure. A plurality of independent instruction execution threads are queued to enable them to have rapid access to the shared memory. If execution of a thread becomes stalled due to a latency event, full control is granted to the next thread in the queue. The grant of control is temporary when a short latency event occurs or full when a long latency event occurs. Control is returned to the original thread when a short latency event is completed. Each execution thread utilizes an instruction prefetch buffer that collects instructions for idle execution threads when the instruction bandwidth is not fully utilized by an active execution thread. The thread execution control is governed by the collective functioning of a FIFO, an arbiter and a thread control state machine.

Owner:INTEL CORP

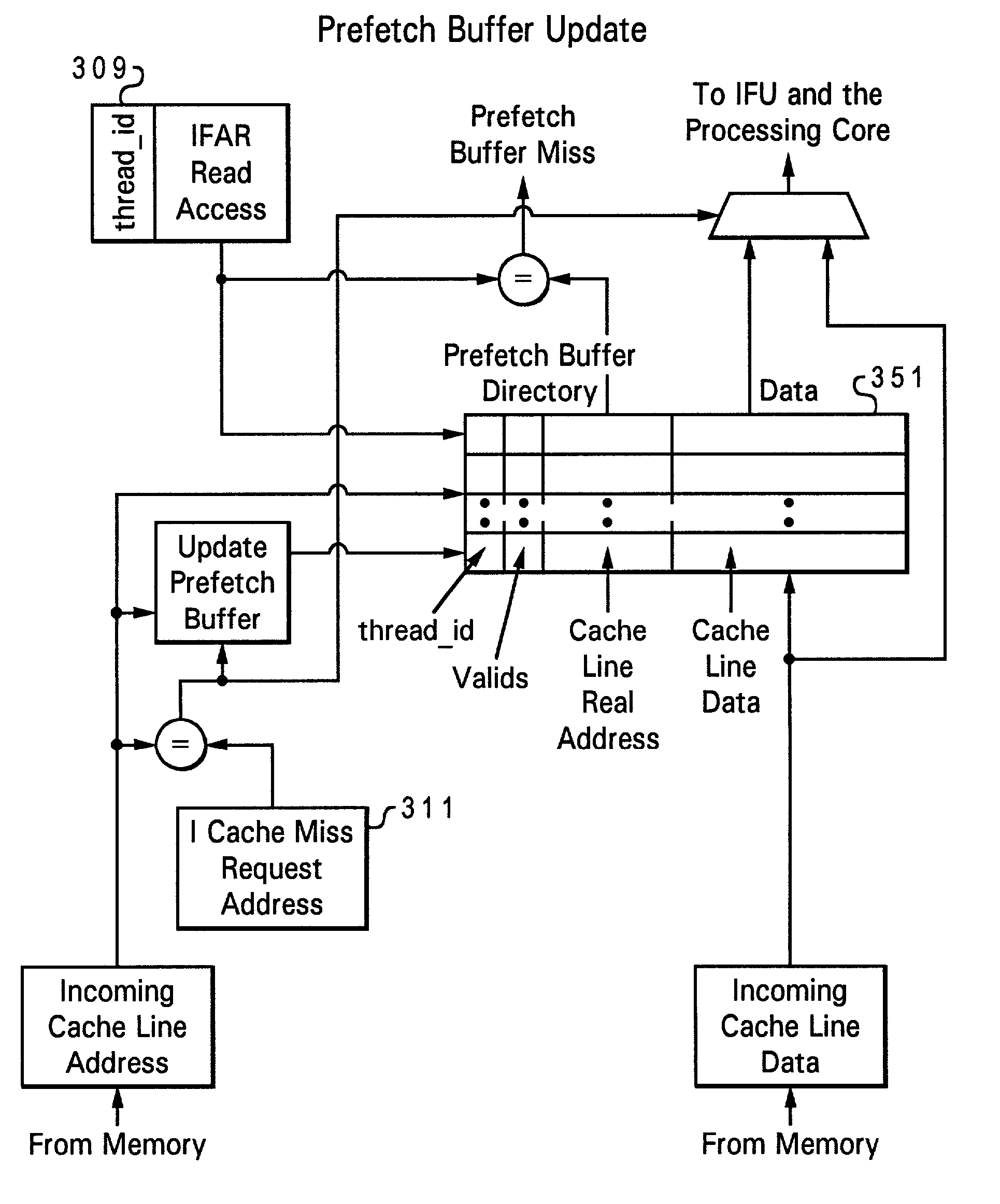

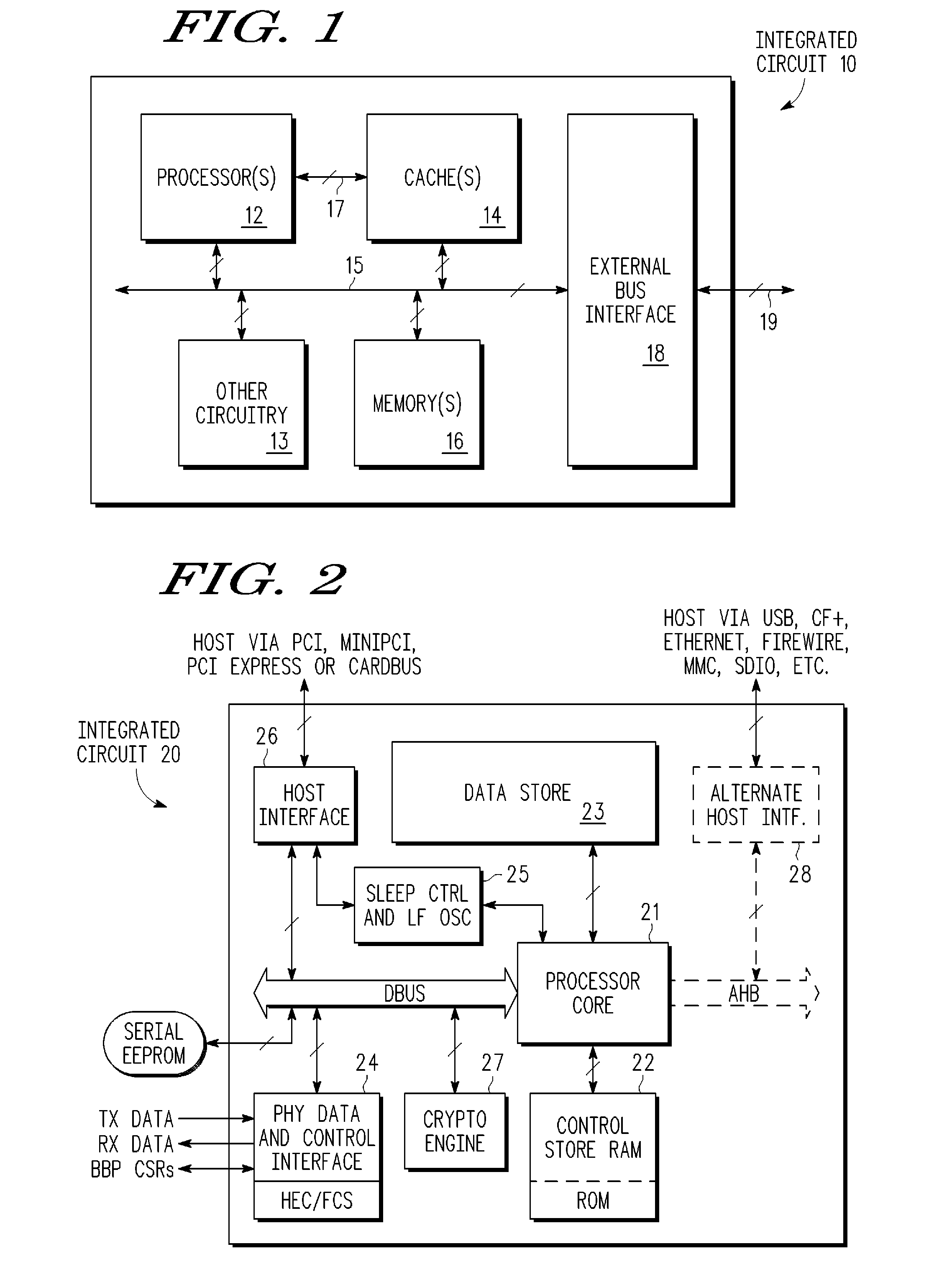

Multithreaded processor efficiency by pre-fetching instructions for a scheduled thread

Inactive | US6965982B2 | Ensure proper implementation | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer architecture | Flip-flop

Owner:INTEL CORP

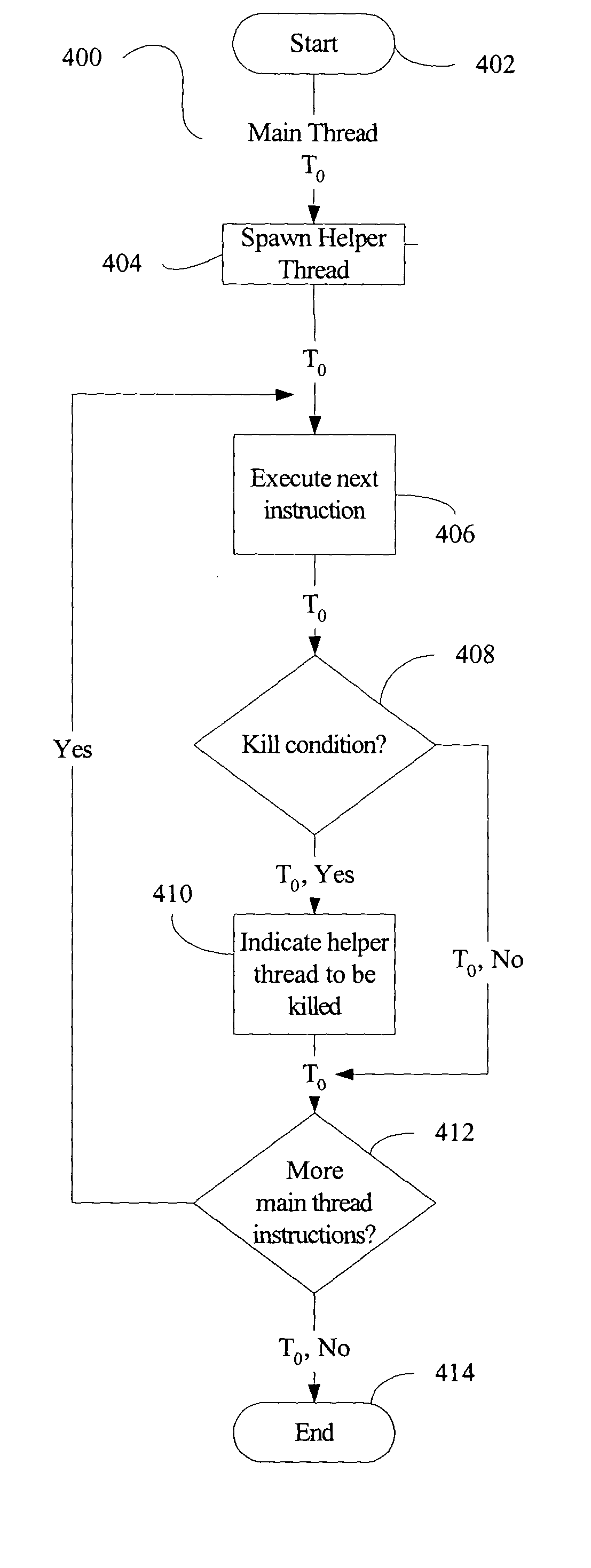

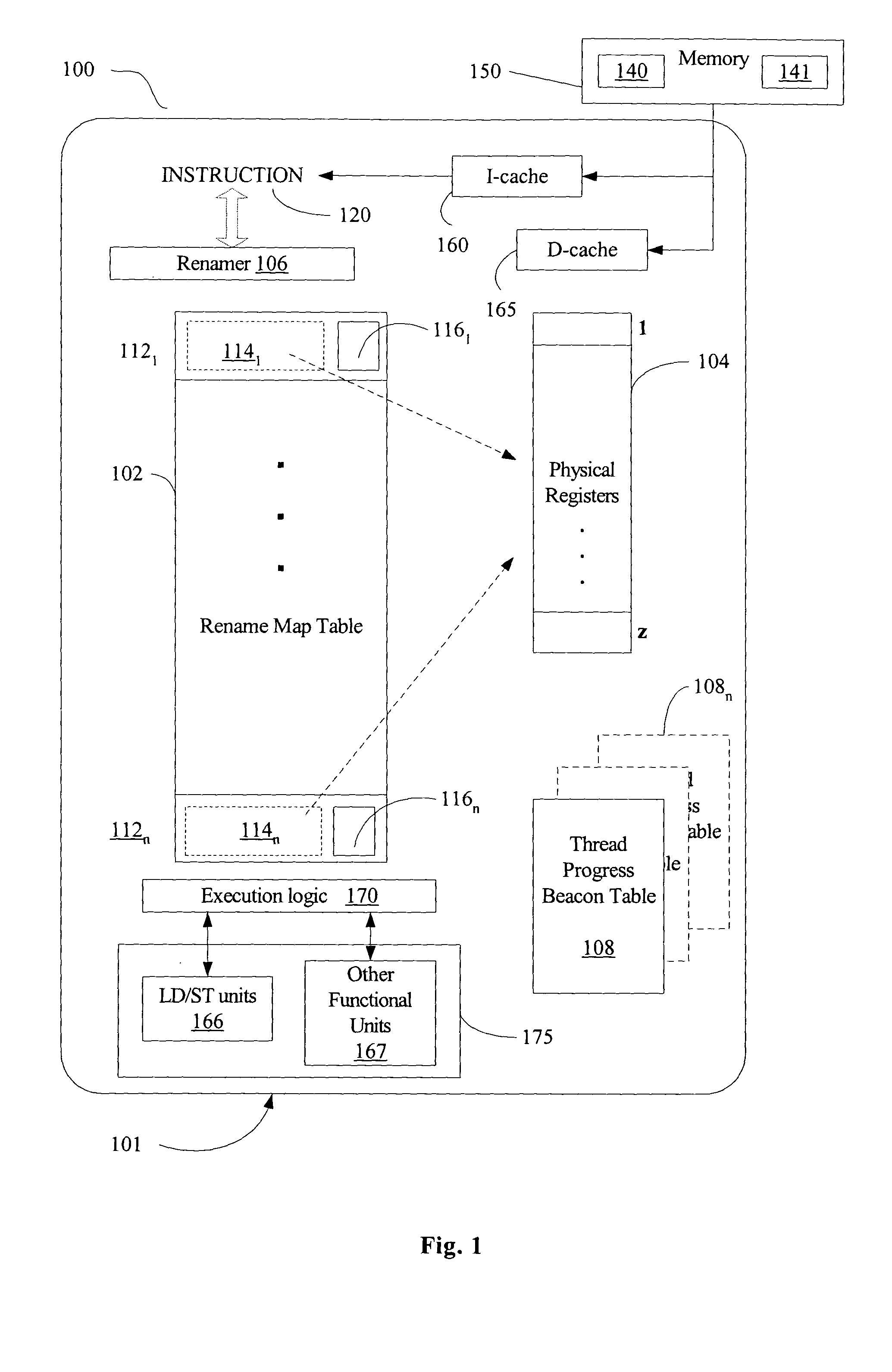

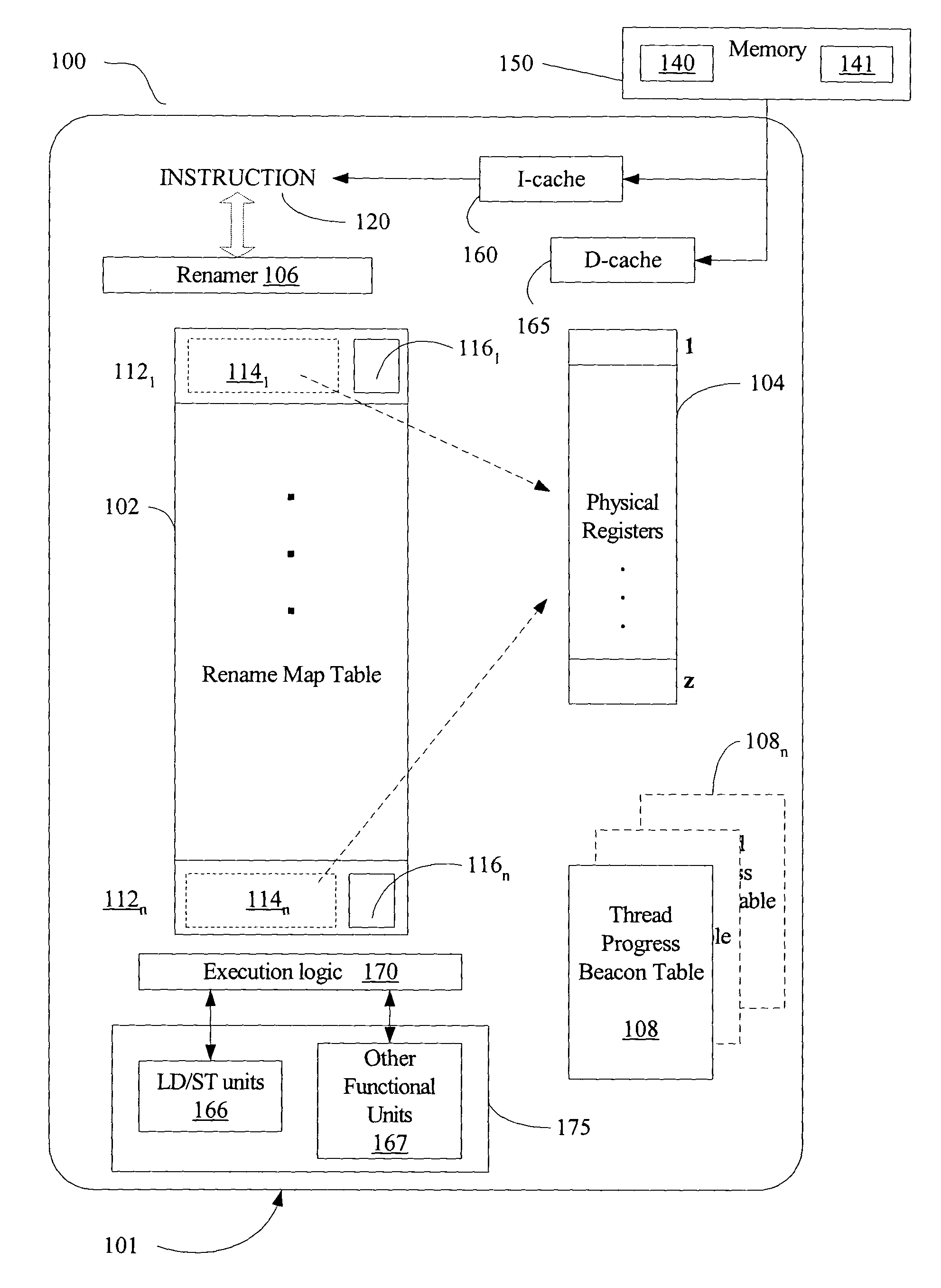

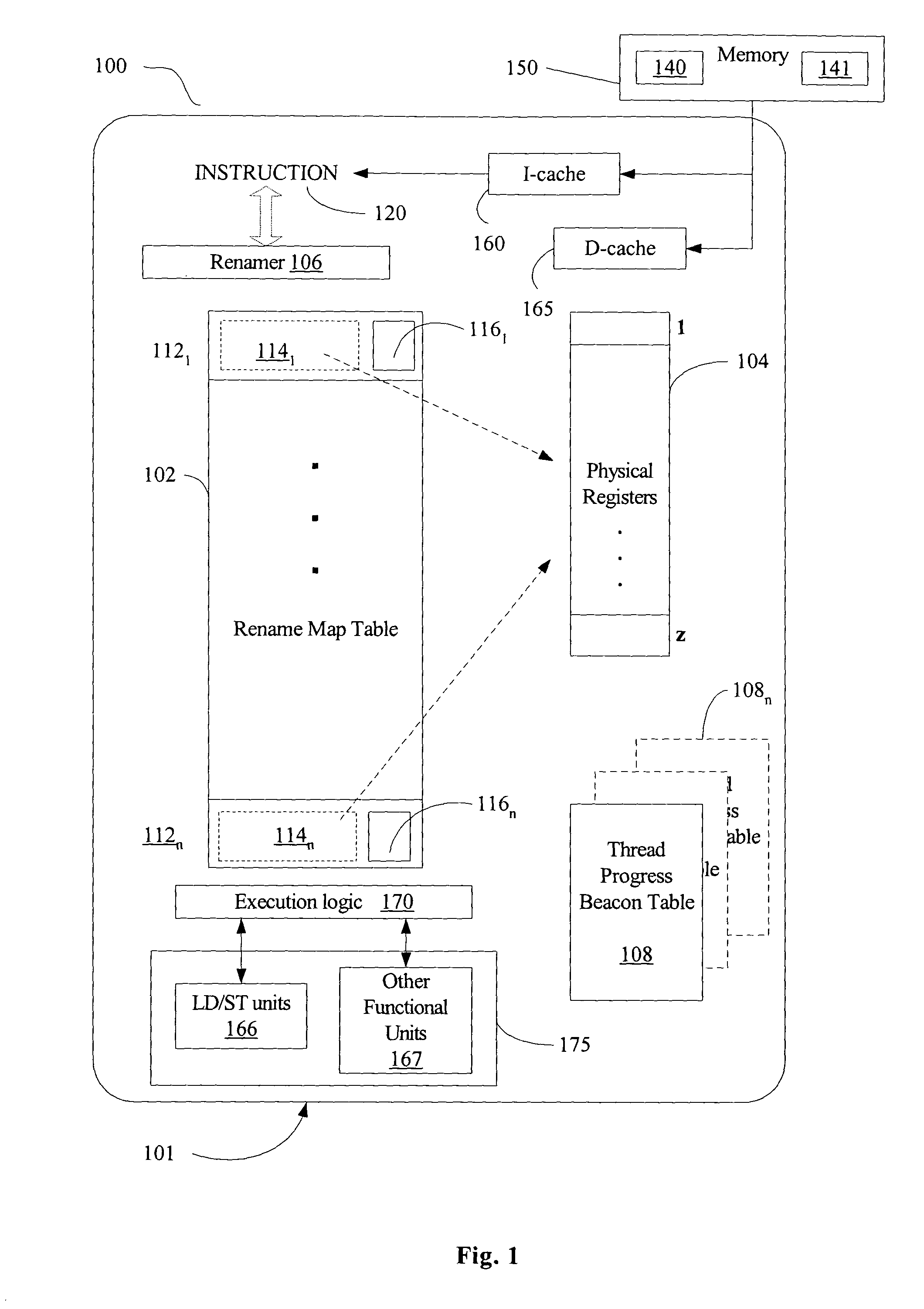

Method and apparatus for efficient utilization for prescient instruction prefetch

Active | US20050055541A1 | Digital computer details | Concurrent instruction execution | Resource utilization | Operating system

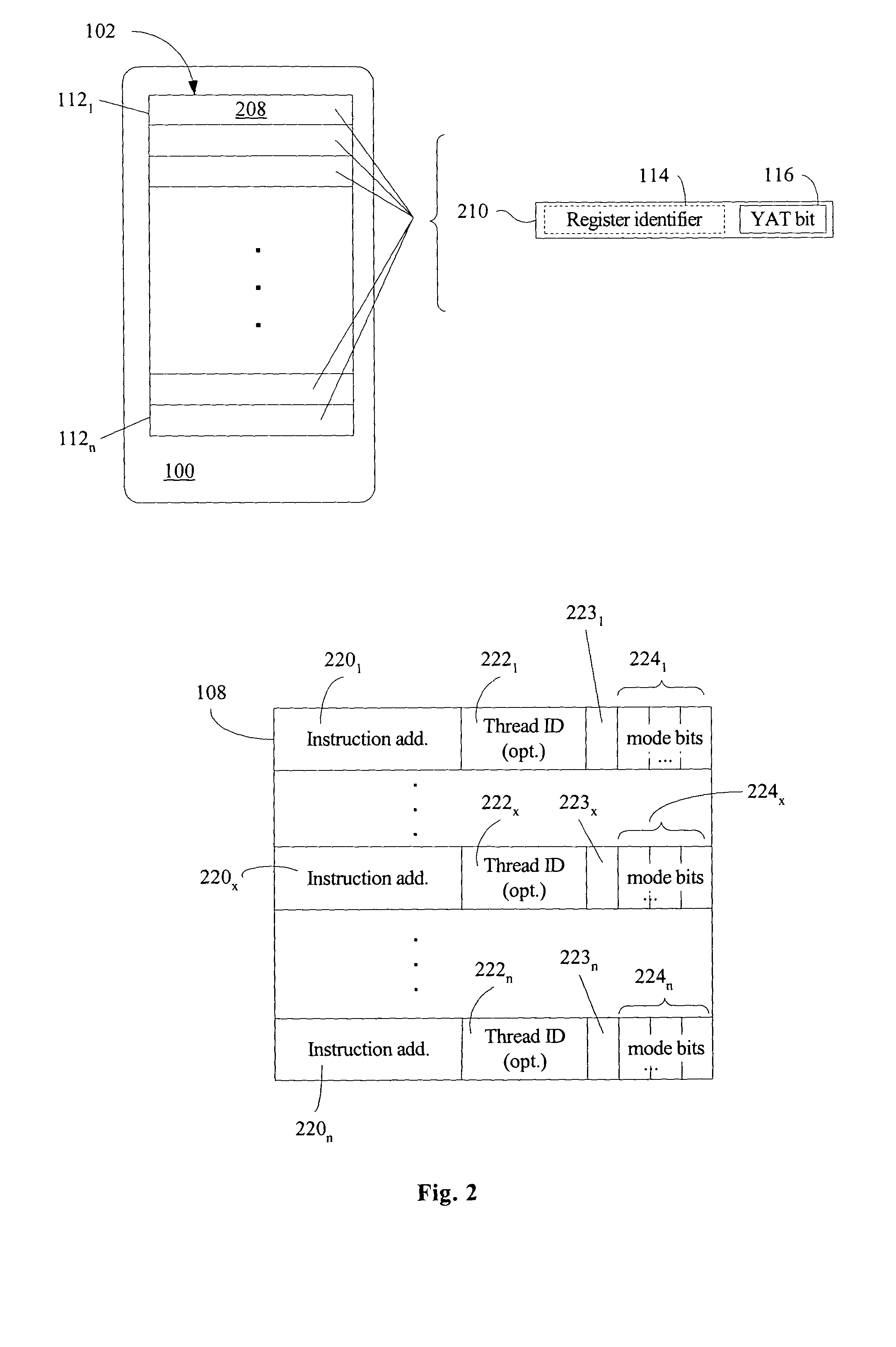

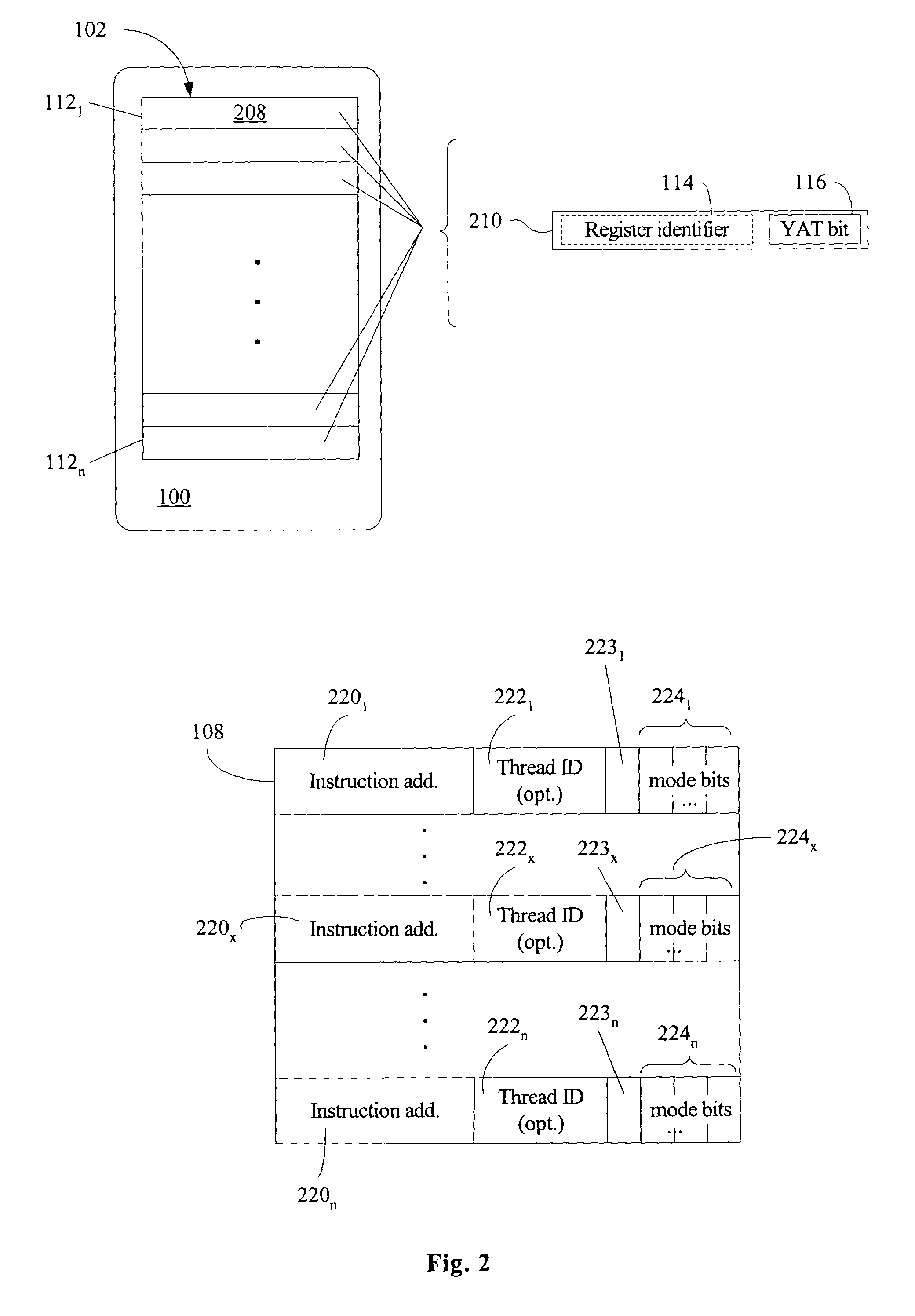

Embodiments of an apparatus, system and method enhance the efficiency of processor resource utilization during instruction prefetching via one or more speculative threads. Renamer logic and a map table are utilized to perform filtering of instructions in a speculative thread instruction stream. The map table includes a yes-a-thing bit to indicate whether the associated physical register's content reflects the value that would be computed by the main thread. A thread progress beacon table is utilized to track relative progress of a main thread and a speculative helper thread. Based upon information in the thread progress beacon table, the main thread may effect termination of a helper thread that is not likely to provide a performance benefit for the main thread.

Owner:TAHOE RES LTD

Dual thread processor

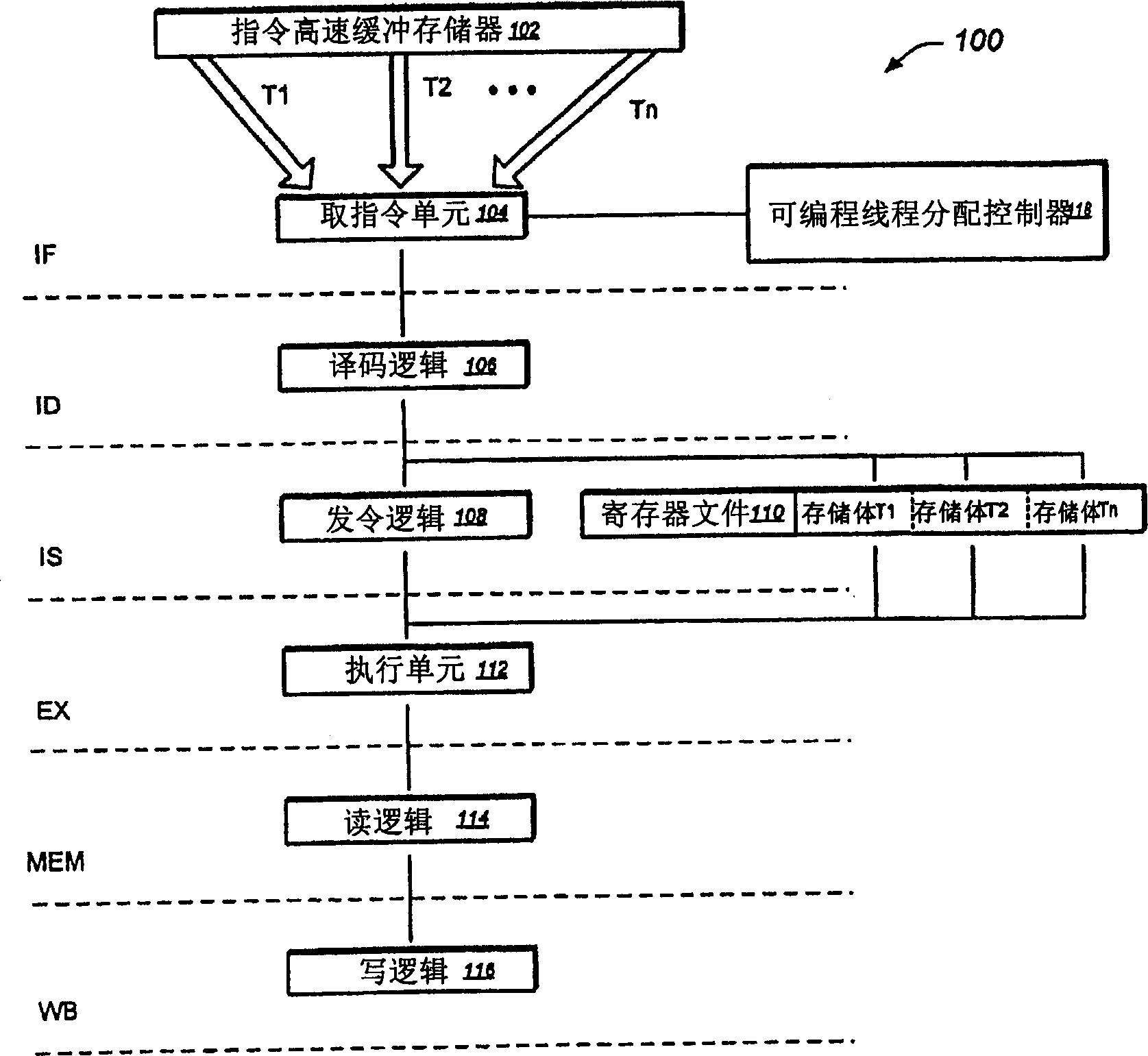

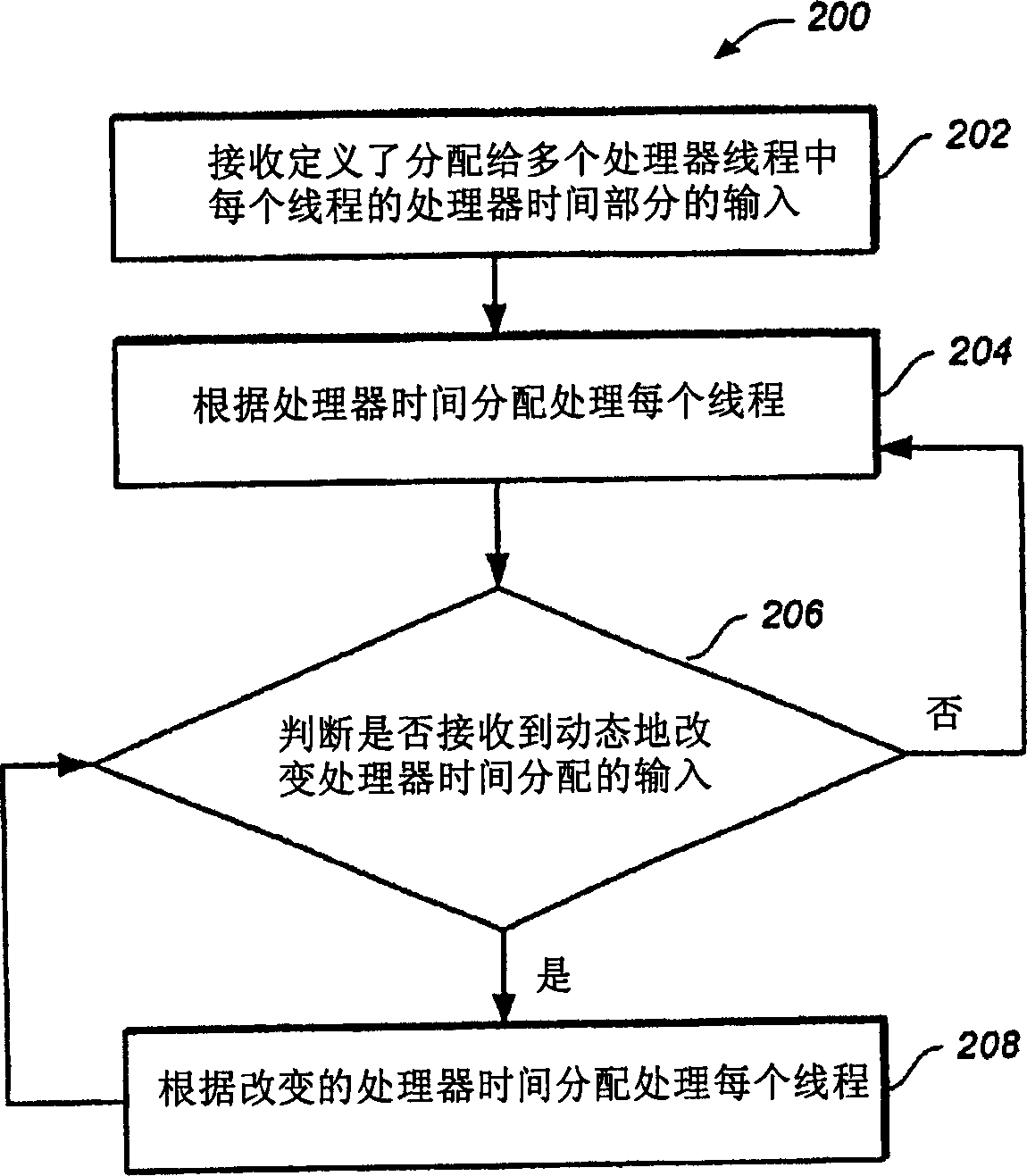

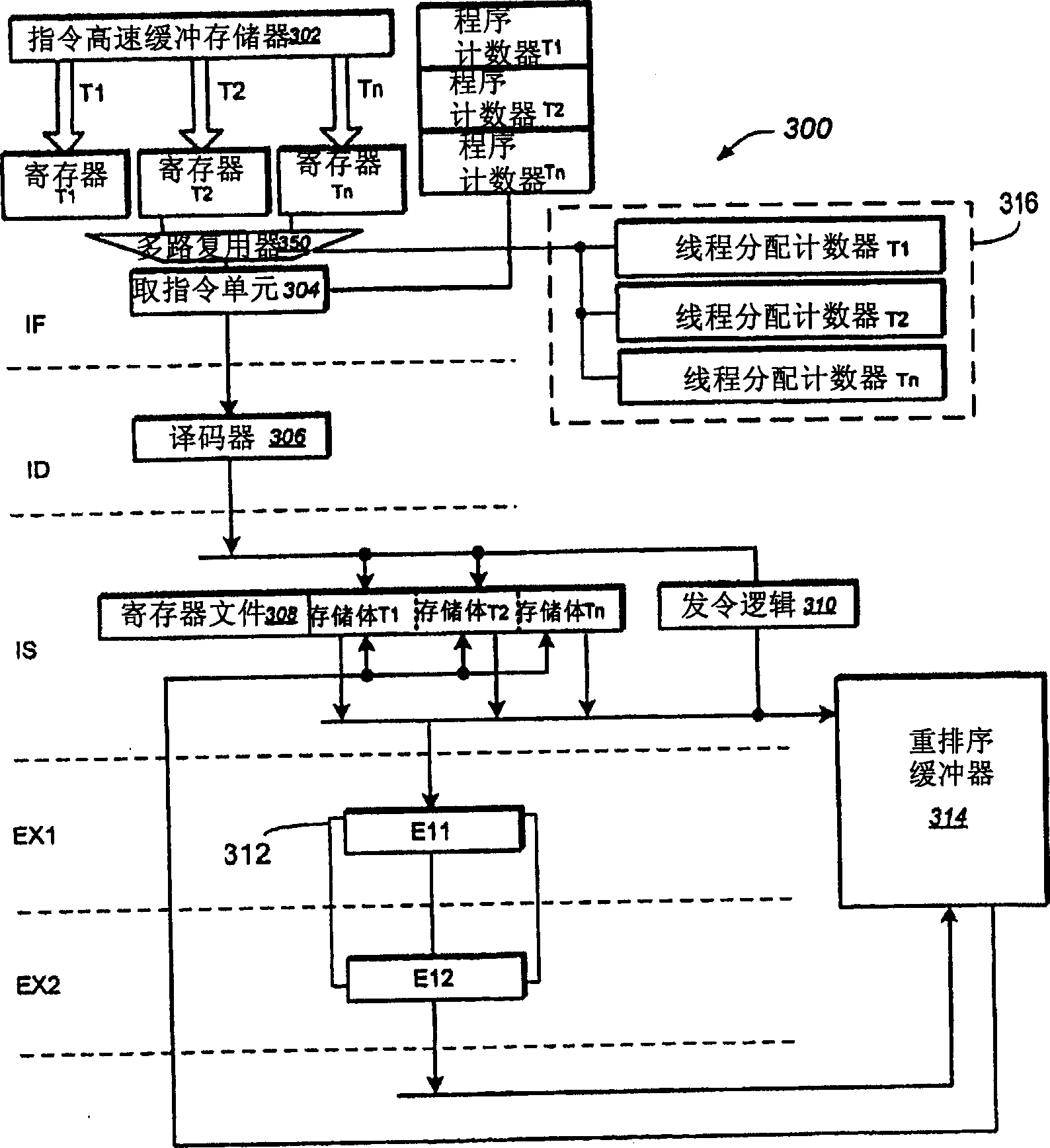

Active | CN1841314A | Register arrangements | Concurrent instruction execution | Instruction unit | Processor register

A pipeline processor architecture, processor, and methods are provided. In one implementation, a processor is provided that includes an instruction fetch unit operable to fetch instructions associated with a plurality of processor threads, a decoder responsive to the instruction fetch unit, issue logic responsive to the decoder, and a register file including a plurality of banks corresponding to the plurality of processor threads. Each bank is operable to store data associated with a corresponding processor thread. The processor can include a set of registers corresponding to each of a plurality of processor threads. Each register within a set is located either before or after a pipeline stage of the processor.

Owner:MARVELL ASIA PTE LTD

Instruction unit with instruction buffer pipeline bypass

Inactive | US20110320771A1 | Memory addressing/allocation/relocation | Digital computer details | Instruction unit | Method selection

A circuit arrangement and method selectively bypass an instruction buffer for selected instructions so that bypassed instructions can be dispatched without having to first pass through the instruction buffer. Thus, for example, in the case that an instruction buffer is partially or completely flushed as a result of an instruction redirect (e.g., due to a branch mispredict), instructions can be forwarded to subsequent stages in an instruction unit and / or to one or more execution units without the latency associated with passing through the instruction buffer.

Owner:IBM CORP

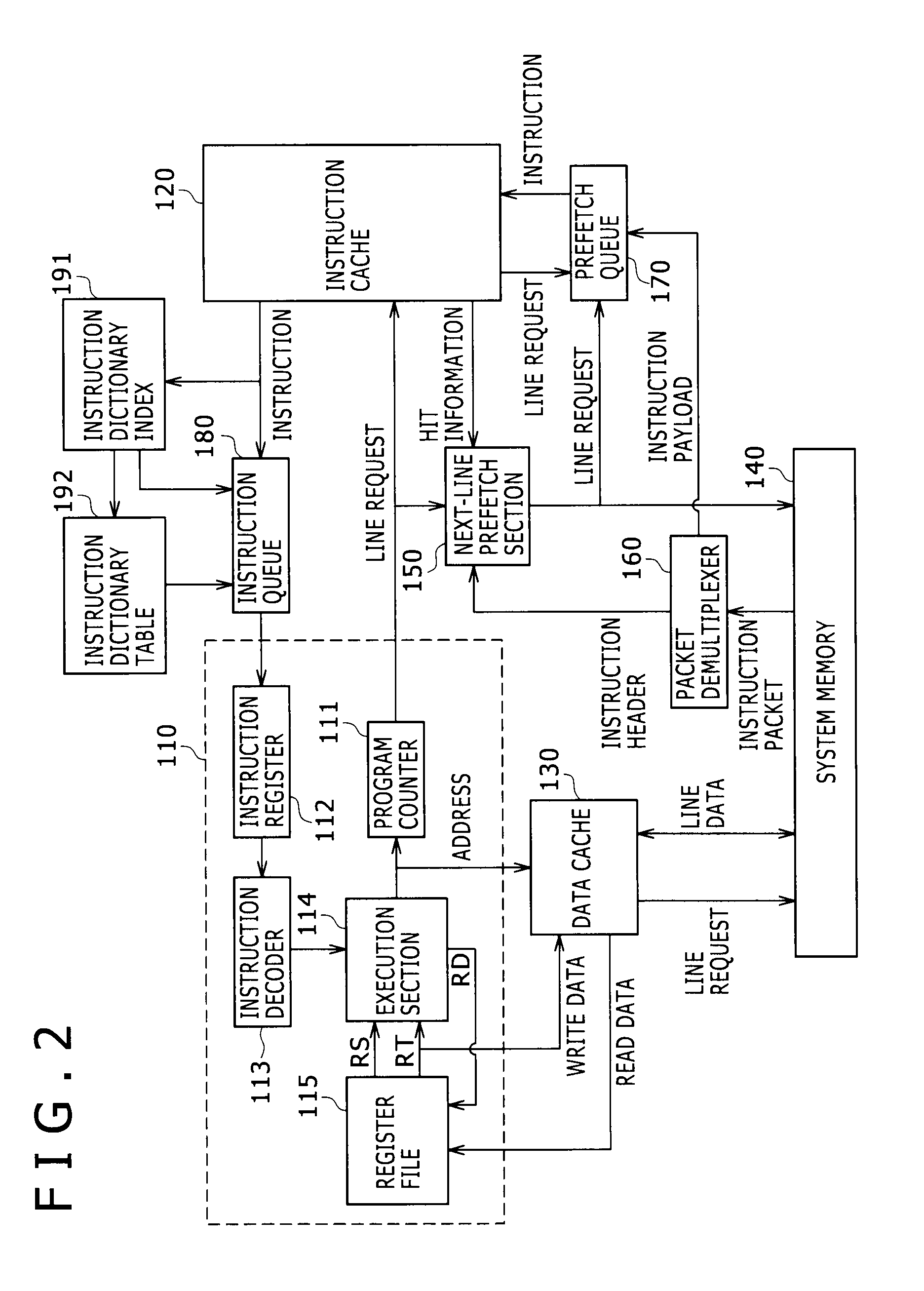

Apparatus for controlling instruction fetch reusing fetched instruction

Inactive | US7676650B2 | Reducing performance of information processing | Quickly executing instruction | Digital computer details | Concurrent instruction execution | Short loop | Parallel computing

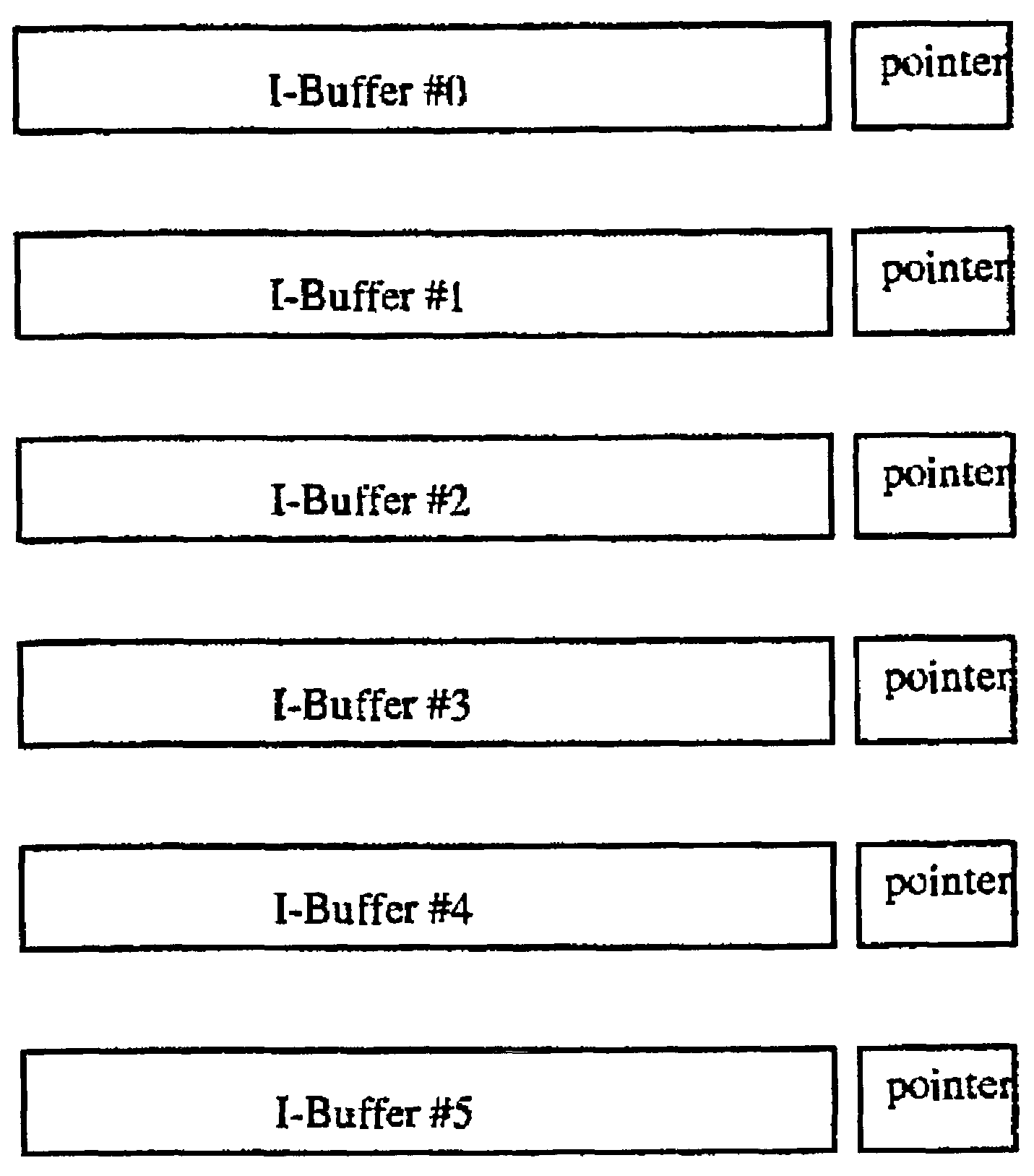

When an instruction stored in a specific instruction buffer is the same as another instruction stored in another instruction buffer and logically subsequent to the instruction in the specific instruction buffer, a connection is made from the instruction buffer storing a logically and immediately preceding instruction, not the instruction in the other instruction buffer, to the specific instruction buffer without the instruction in the other instruction buffer, and a loop is generated by instruction buffers, thereby performing a short loop in an instruction buffer system capable of arbitrarily connecting a plurality of instruction buffers.

Owner:FUJITSU LTD

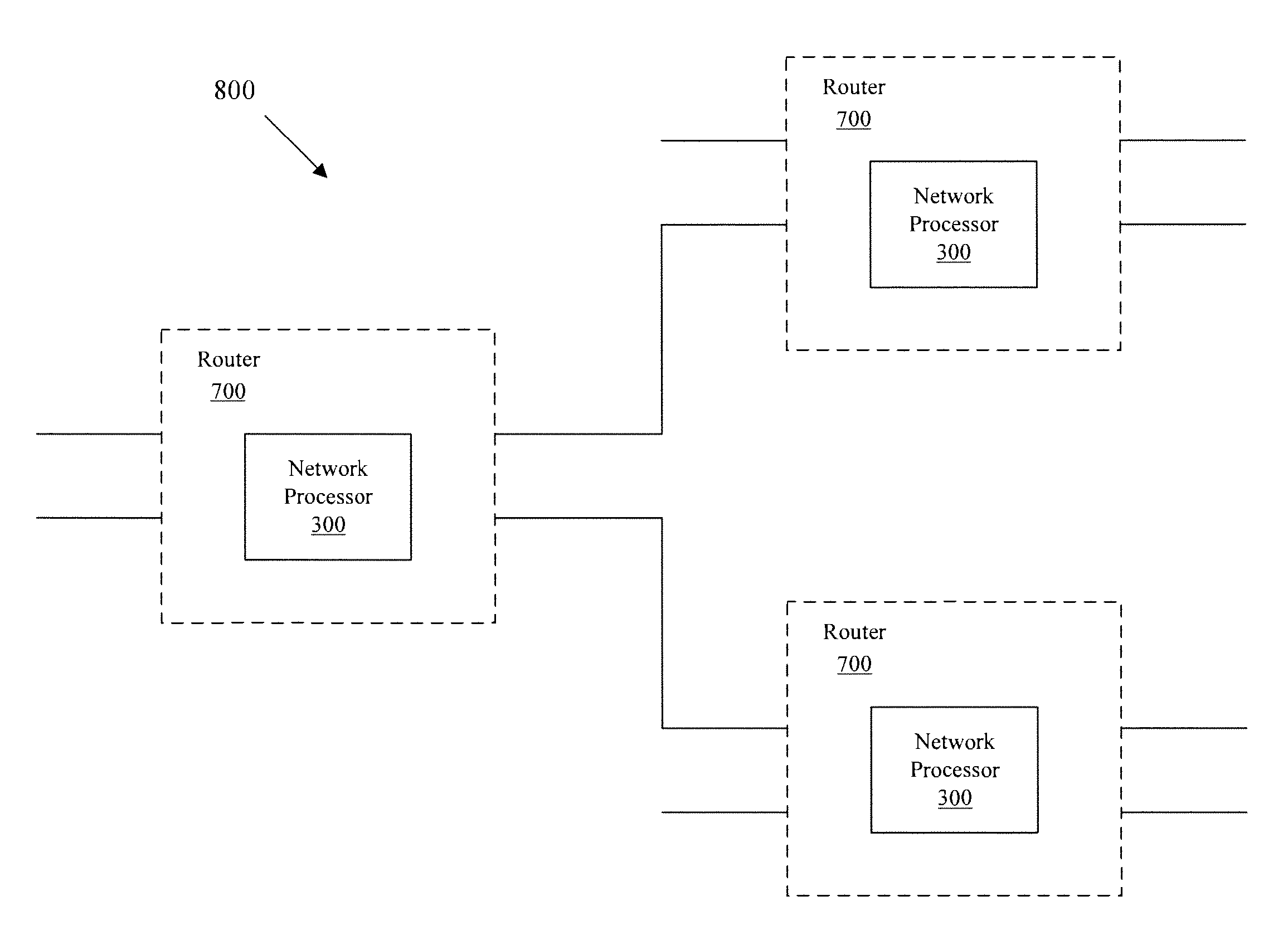

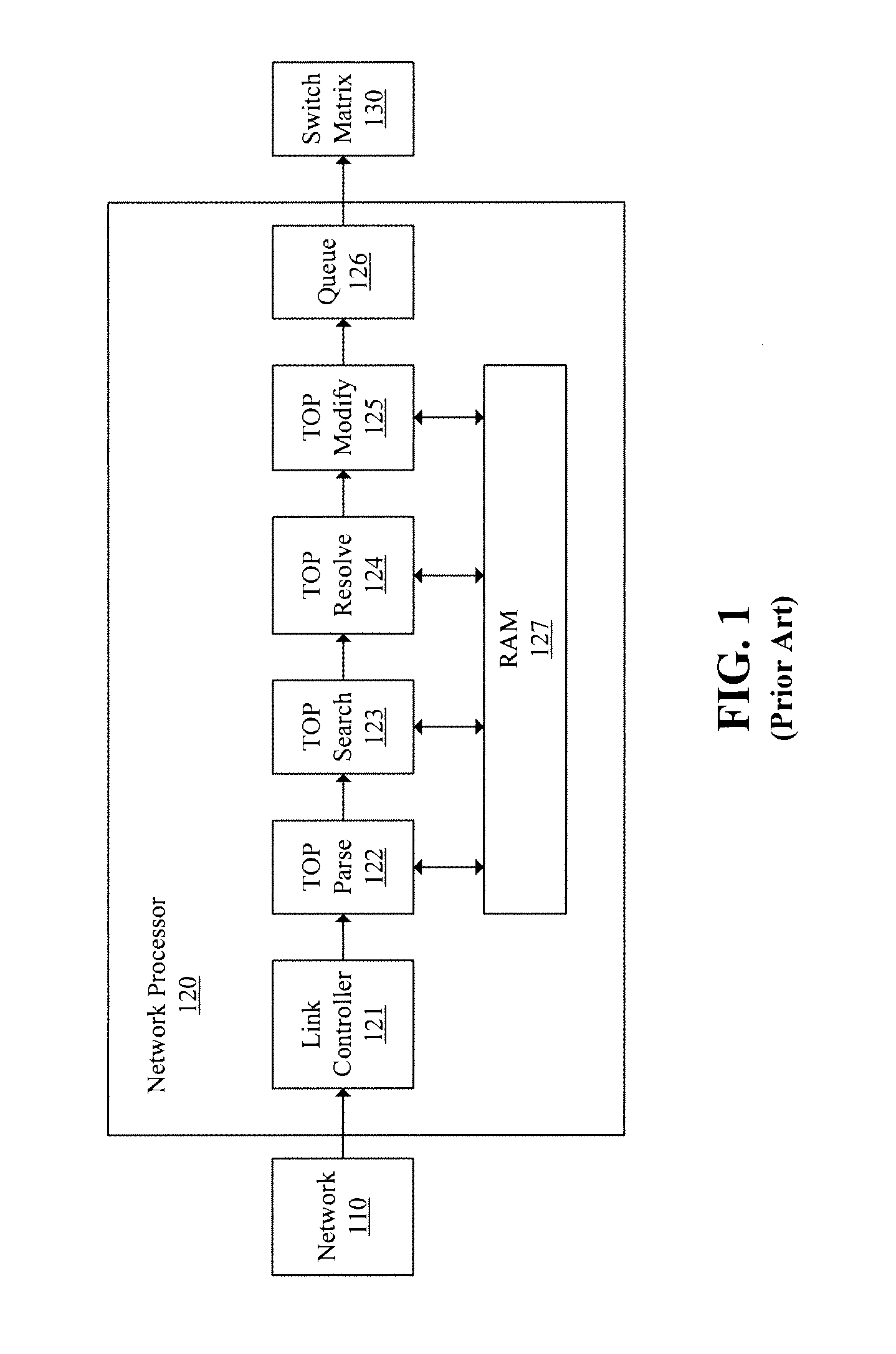

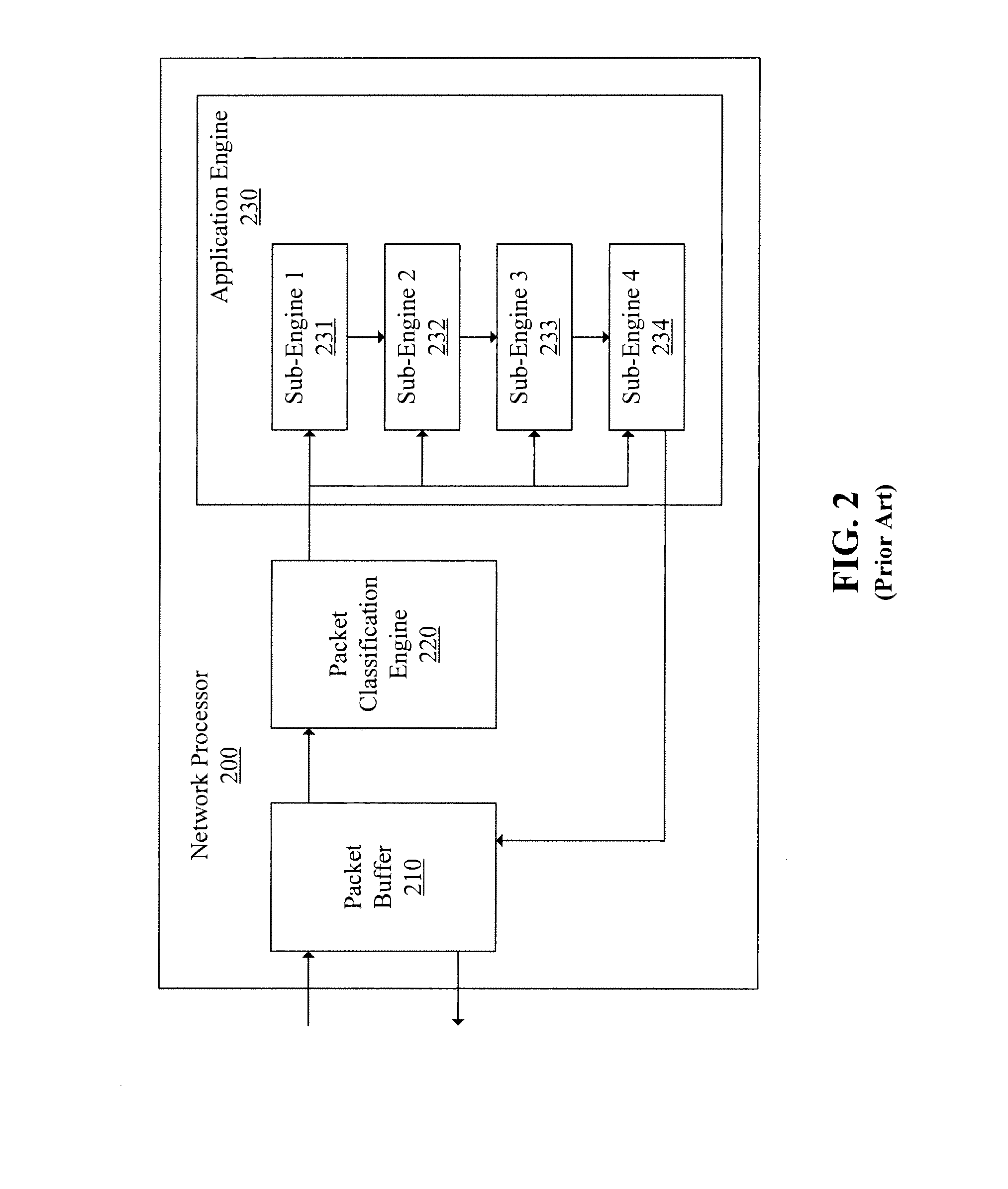

Network Processors and Pipeline Optimization Methods

A network processor of an embodiment includes a packet classification engine, a processing pipeline, and a controller. The packet classification engine allows for classifying each of a plurality of packets according to packet type. The processing pipeline has a plurality of stages for processing each of the plurality of packets in a pipelined manner, where each stage includes one or more processors. The controller allows for providing the plurality of packets to the processing pipeline in an order that is based at least partially on: (i) packet types of the plurality of packets as classified by the packet classification engine and (ii) estimates of processing times for processing packets of the packet types at each stage of the plurality of stages of the processing pipeline. A method in a network processor allows for prefetching instructions into a cache for processing a packet based on a packet type of the packet.

Owner:SOBAJE JUSTIN MARK

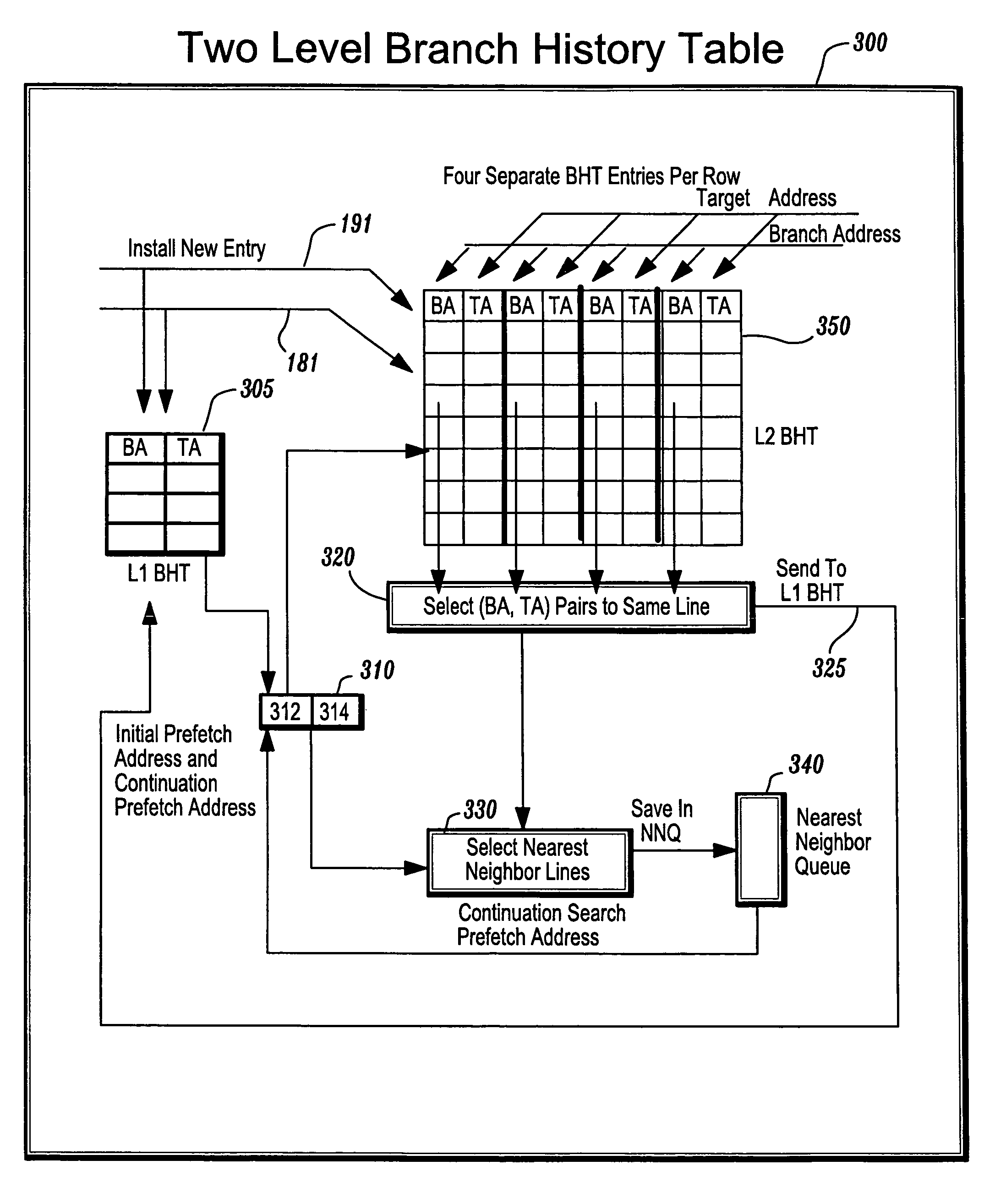

Method and apparatus for prefetching branch history information

Inactive | US7493480B2 | Digital computer details | Concurrent instruction execution | Parallel computing | Cycle time

A two level branch history table (TLBHT) is substantially improved by providing a mechanism to prefetch entries from the very large second level branch history table (L2 BHT) into the active (very fast) first level branch history table (L1 BHT) before the processor uses them in the branch prediction process and at the same time prefetch cache misses into the instruction cache. The mechanism prefetches entries from the very large L2 BHT into the very fast L1 BHT before the processor uses them in the branch prediction process. A TLBHT is successful because it can prefetch branch entries into the L1 BHT sufficiently ahead of the time the entry is needed. This feature of the TLBHT is also used to prefetch instructions into the cache ahead of their use. In fact, the timeliness of the prefetches produced by the TLBHT can be used to remove most of the cycle time penalty incurred by cache misses.

Owner:IBM CORP

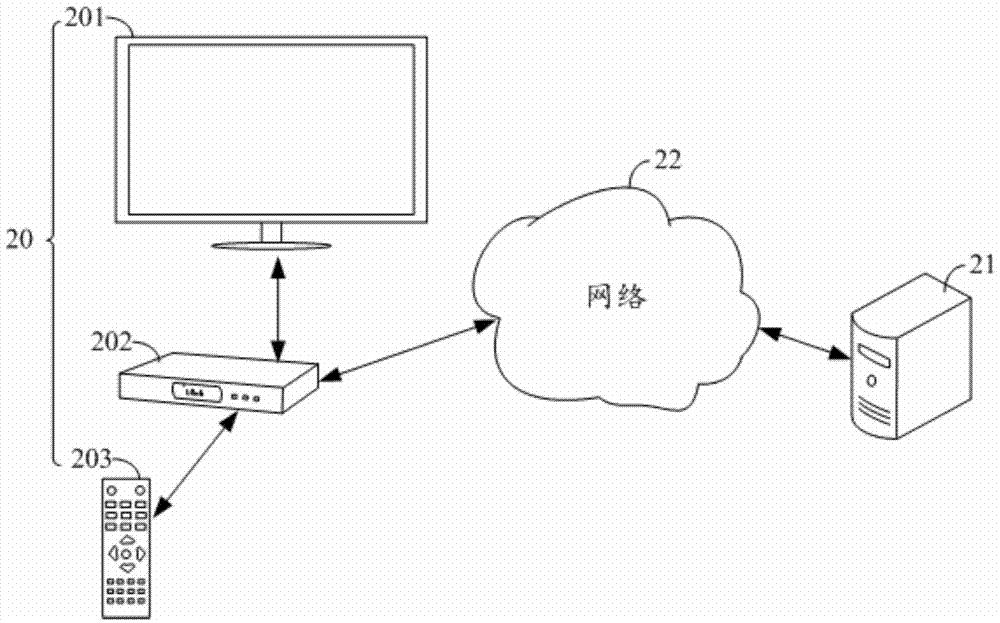

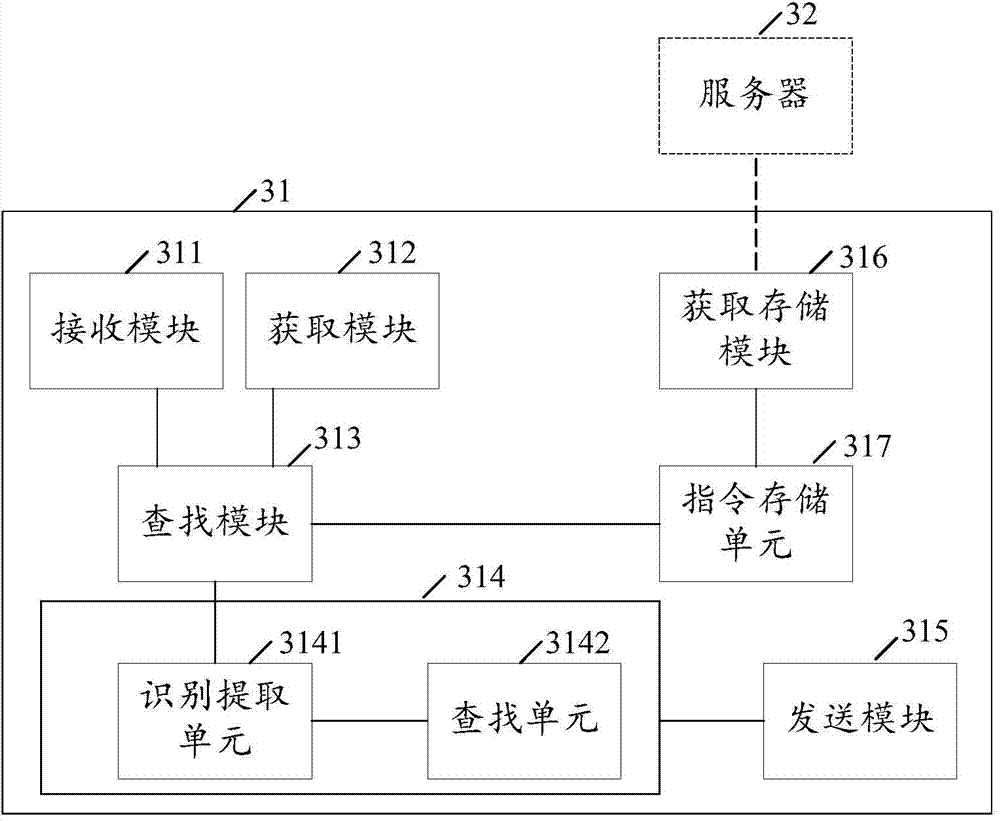

Voice control method and device of application program

Inactive | CN103885783A | Easy to control | Realize voice operation | Speech recognition | Selective content distribution | Application software | Speech sound

The invention discloses a voice control method and device of an application program. The method includes the steps that an input voice instruction is received; an identifier of a currently running application program is obtained; according to the identifier, an instruction set supported by the application program and an identifier of the application program are sought in an instruction storage unit, wherein the instruction storage unit is used for storing instruction sets supported by application programs; if the instruction set is found, an instruction matched with the voice instruction is searched for in the found instruction set; if the matched instruction is found, the matched instruction is sent to the application program so as to enable the application program to perform operations according to the matched instruction. Through the mode, the voice control method and device of the application program can achieve voice control over the application program and is very convenient to use.

Owner:SHENZHEN SITUATIONAL INTELLIGENCE CO LTD

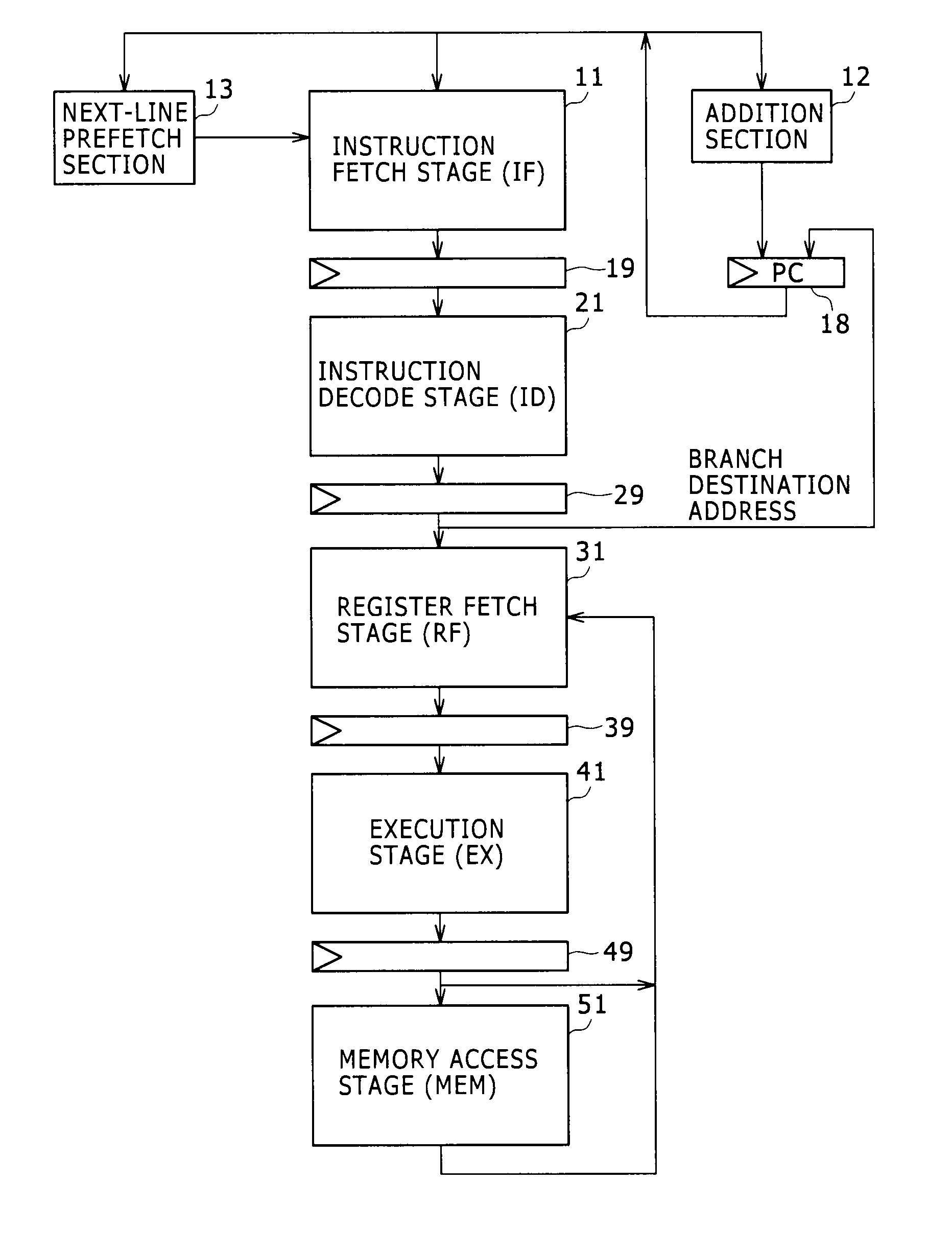

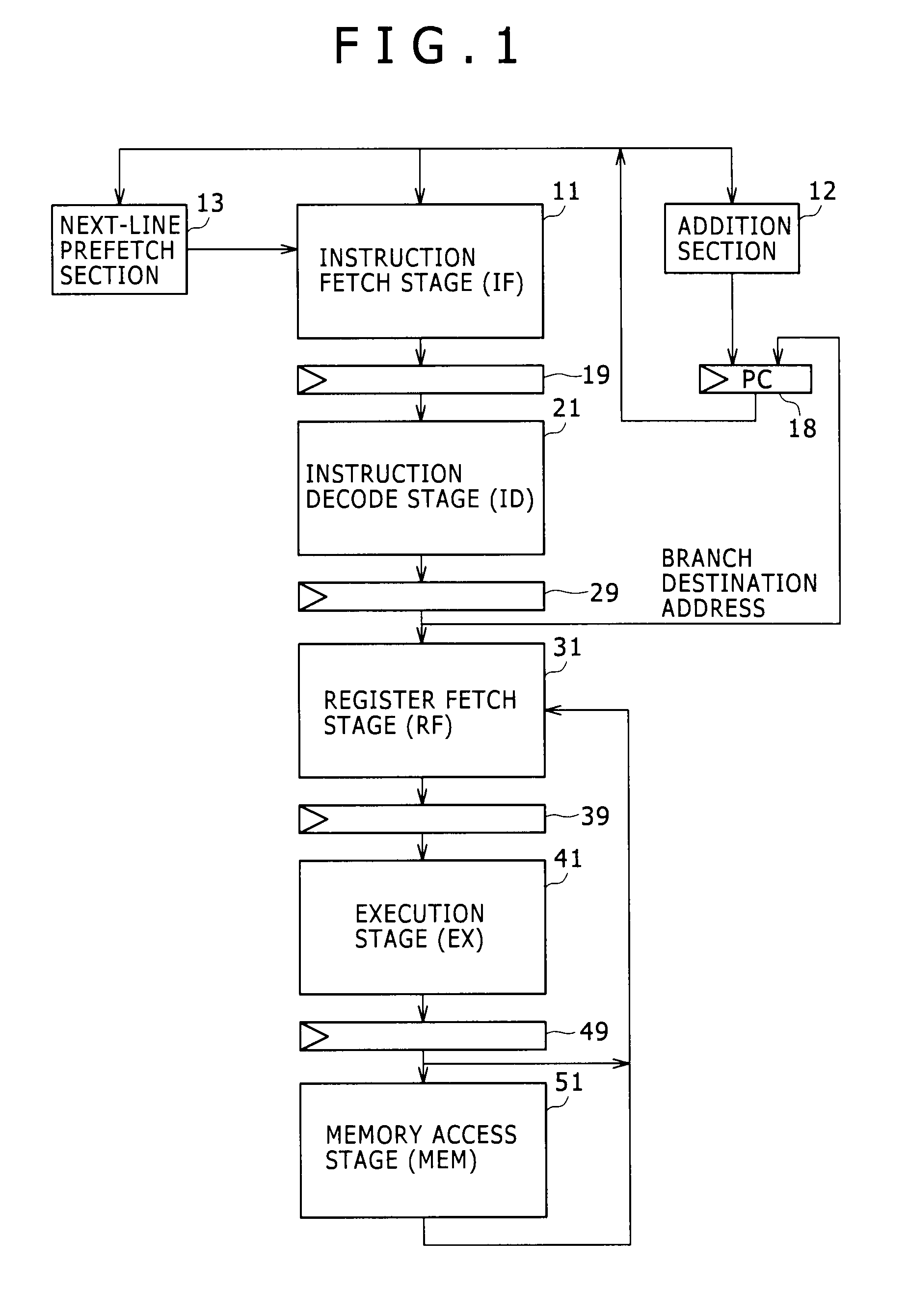

Instruction fetch apparatus and processor

Inactive | US20110238953A1 | Minimizing penalty | Easy access | Digital computer details | Memory systems | Parallel computing | Instruction prefetch

An instruction fetch apparatus is disclosed which includes: a detection state setting section configured to set the execution state of a program of which an instruction prefetch timing is to be detected; a program execution state generation section configured to generate the current execution state of the program; an instruction prefetch timing detection section configured to detect the instruction prefetch timing in the case of a match between the current execution state of the program and the set execution state thereof upon comparison therebetween; and an instruction prefetch section configured to prefetch the next instruction upon detection of the instruction prefetch timing.

Owner:SONY CORP

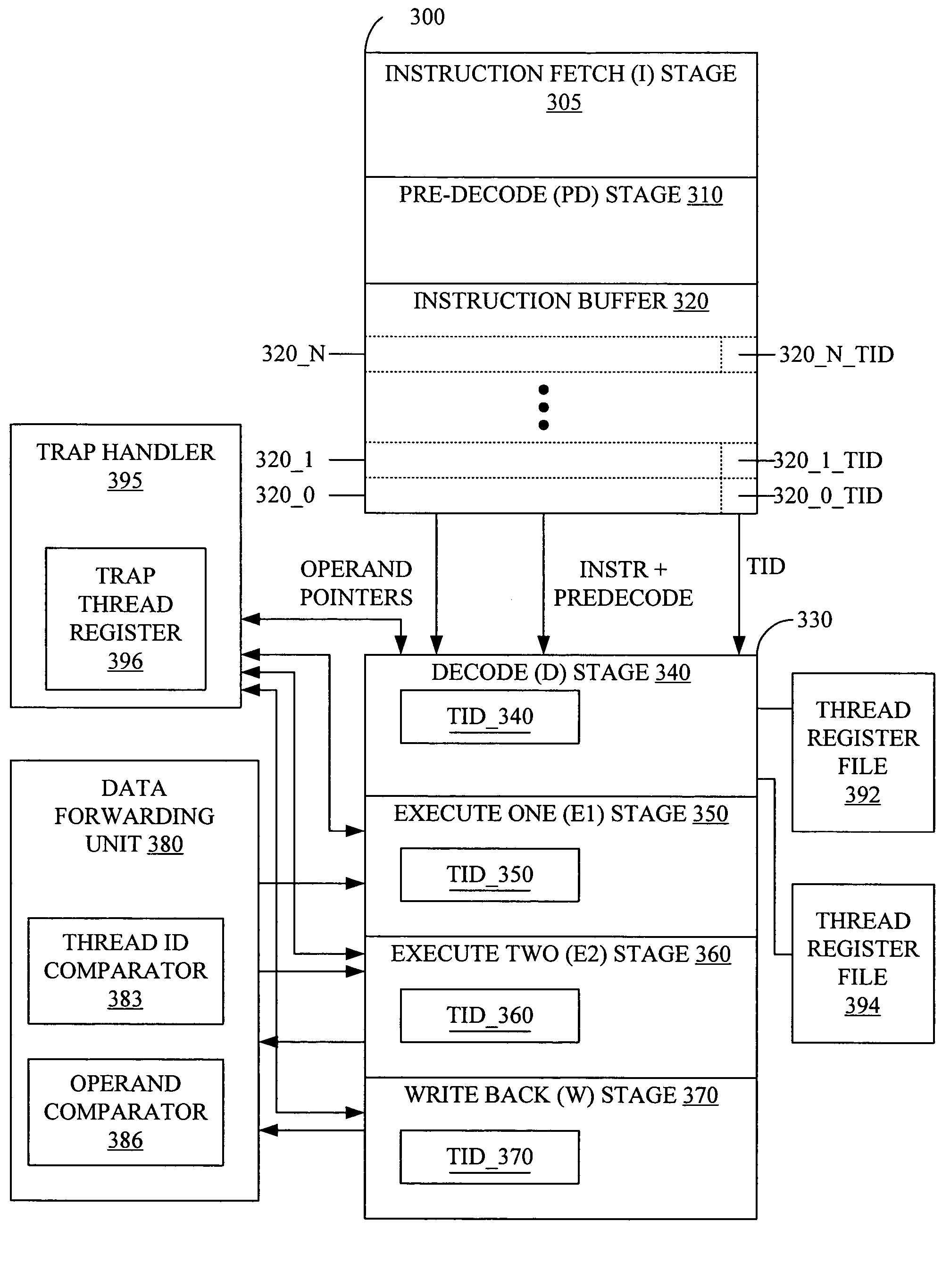

Thread ID in a multithreaded processor

Active | US7263599B2 | Easy maintenance | Hinder resolution | Program initiation/switching | Digital computer details | Operand | Program trace

A multithreaded processor includes a thread ID for each set of fetched bits in an instruction fetch and issue unit. The thread ID attaches to the instructions and operands of the set of fetched bits. Pipeline stages in the multithreaded processor store the thread ID associated with each operand or instruction in the pipeline stage. The thread IDs are used to maintain data coherency and to generate program traces that include thread information for the instructions executed by the multithreaded processor.

Owner:INFINEON TECH AG

Arithmetic Branch Fusion

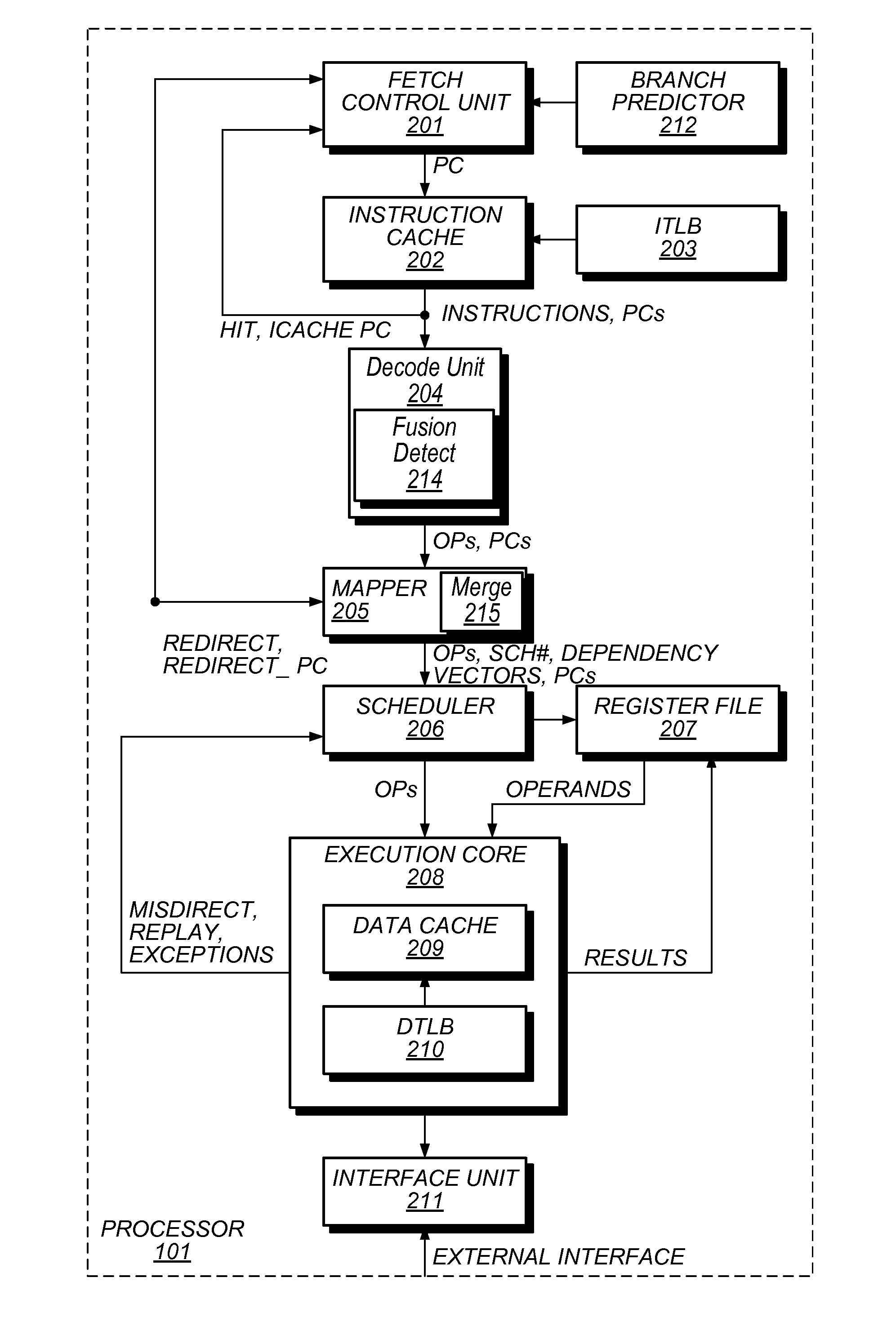

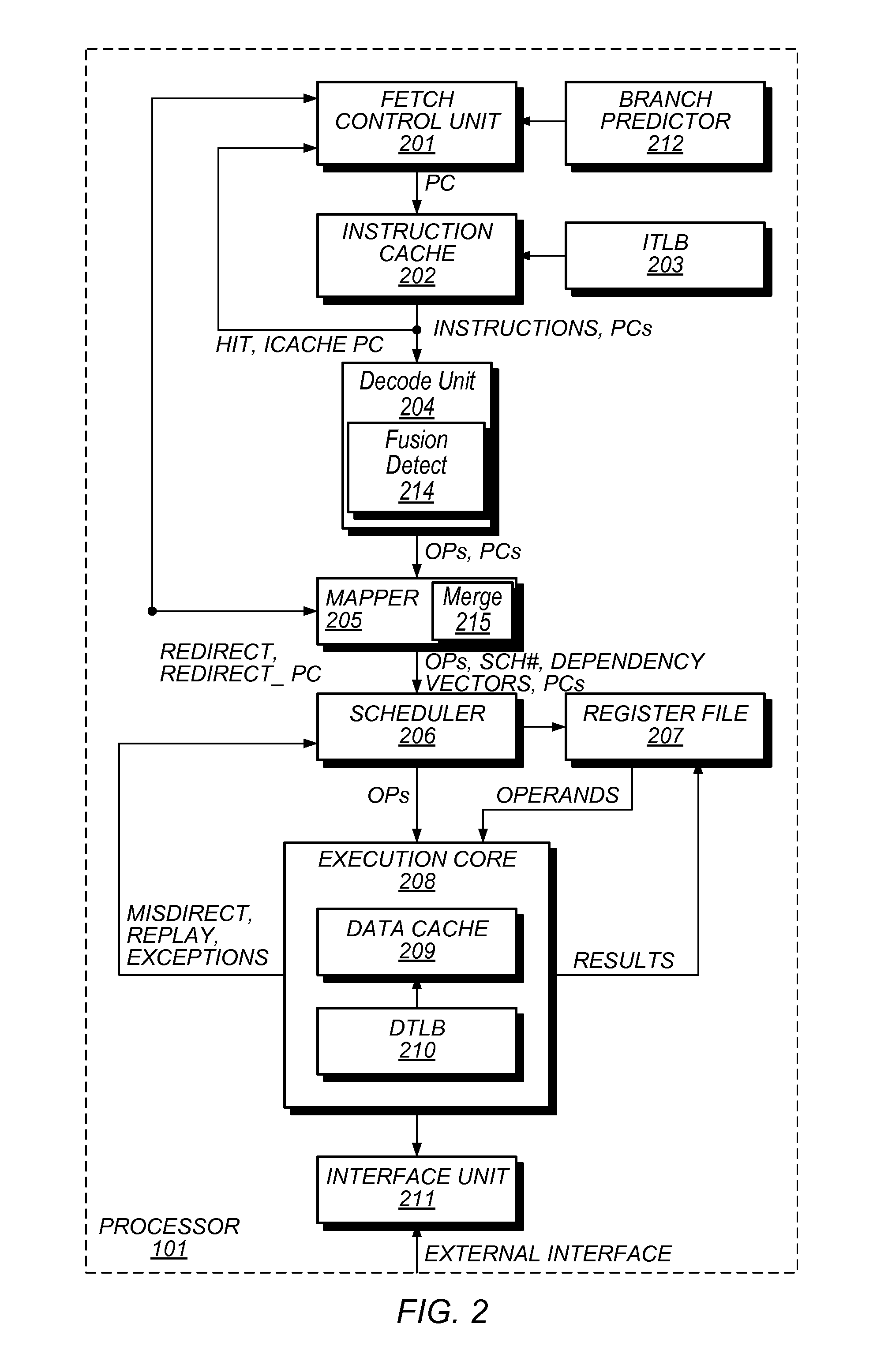

Active | US20140208073A1 | Conditional code generation | Instruction analysis | Micro-operation | Parallel computing

A processor and method for fusing together an arithmetic instruction and a branch instruction. The processor includes an instruction fetch unit configured to fetch instructions. The processor may also include an instruction decode unit that may be configured to decode the fetched instructions into micro-operations for execution by an execution unit. The decode unit may be configured to detect an occurrence of an arithmetic instruction followed by a branch instruction in program order, wherein the branch instruction, upon execution, changes a program flow of control dependent upon a result of execution of the arithmetic instruction. In addition, the processor may further be configured to fuse together the arithmetic instruction and the branch instruction such that a single micro-operation is formed. The single micro-operation includes execution information based upon both the arithmetic instruction and the branch instruction.

Owner:APPLE INC
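The fusion step described in this abstract can be sketched as a decode pass over an instruction stream. The toy instruction format, the opcode sets, and the rule that the branch must test the register the arithmetic instruction just wrote are simplifying assumptions for illustration, not the patent's actual encoding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    """Hypothetical instruction record for a toy ISA."""
    op: str
    dest: str = ""   # register written by an arithmetic instruction
    src: str = ""    # register tested by a conditional branch
    target: int = 0  # branch target address


ARITH_OPS = {"add", "sub", "and", "or"}
BRANCH_OPS = {"beqz", "bnez"}


def decode(instrs):
    """Return micro-ops, fusing an arithmetic op with a dependent branch."""
    uops = []
    i = 0
    while i < len(instrs):
        cur = instrs[i]
        nxt = instrs[i + 1] if i + 1 < len(instrs) else None
        fusible = (
            cur.op in ARITH_OPS
            and nxt is not None
            and nxt.op in BRANCH_OPS
            and nxt.src == cur.dest  # branch outcome depends on the arithmetic result
        )
        if fusible:
            uops.append(("fused", cur, nxt))  # one micro-op carrying both instructions
            i += 2
        else:
            uops.append(("single", cur))
            i += 1
    return uops


program = [
    Instr("sub", dest="r1"),
    Instr("bnez", src="r1", target=0x40),  # depends on r1: fused with the sub
    Instr("add", dest="r2"),
    Instr("beqz", src="r3", target=0x80),  # tests r3, not r2: not fused
]
kinds = [uop[0] for uop in decode(program)]
```

Four instructions become three micro-ops here, which is the source of the saving: the fused pair occupies one slot in the downstream pipeline instead of two.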

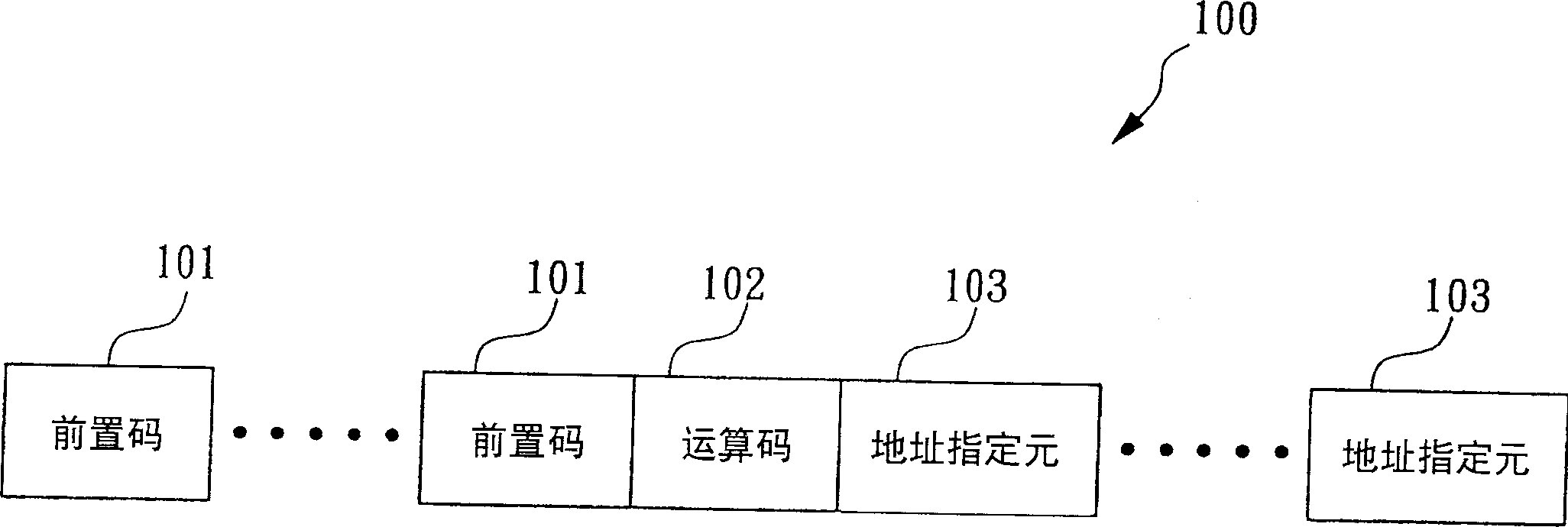

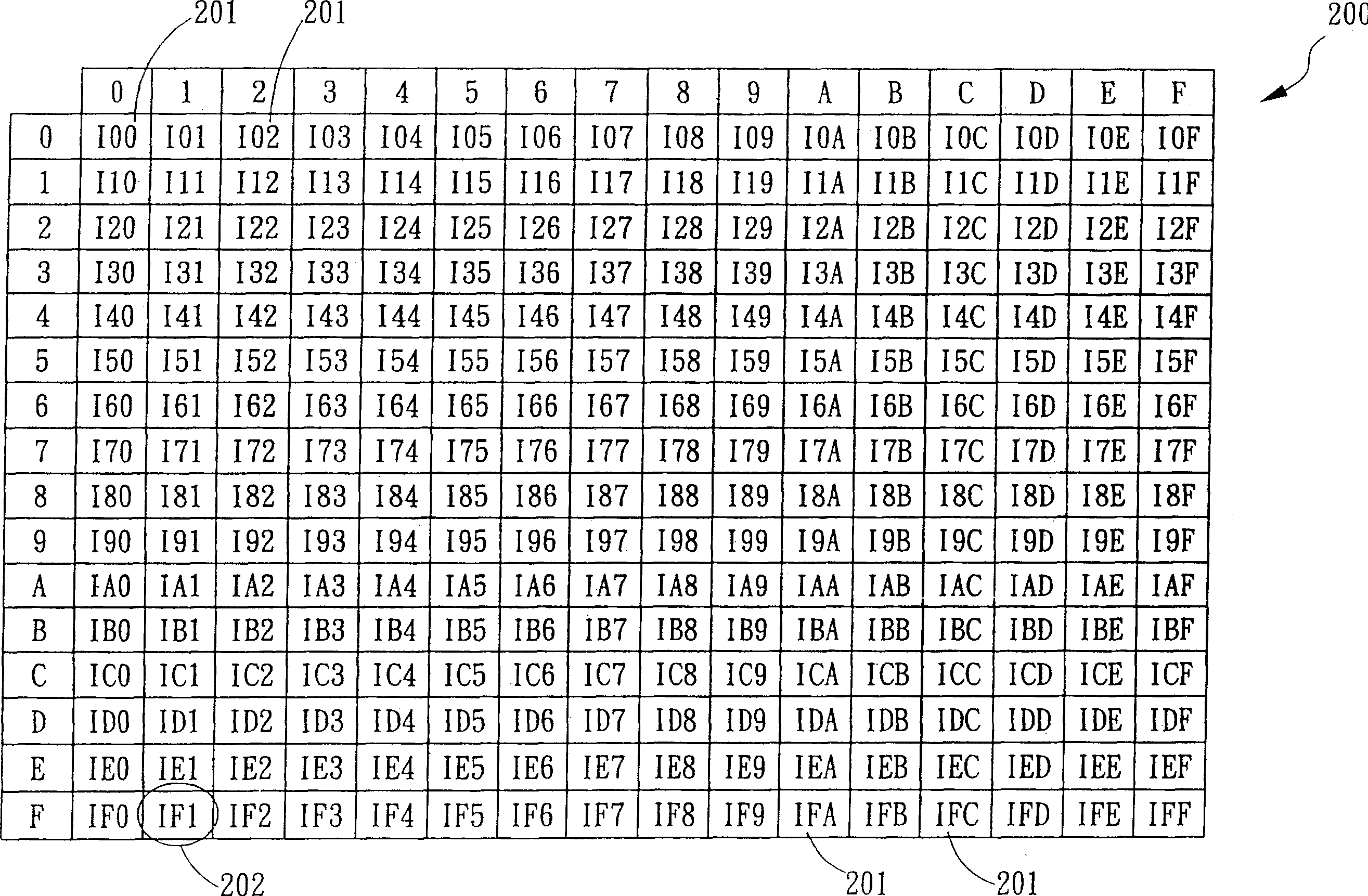

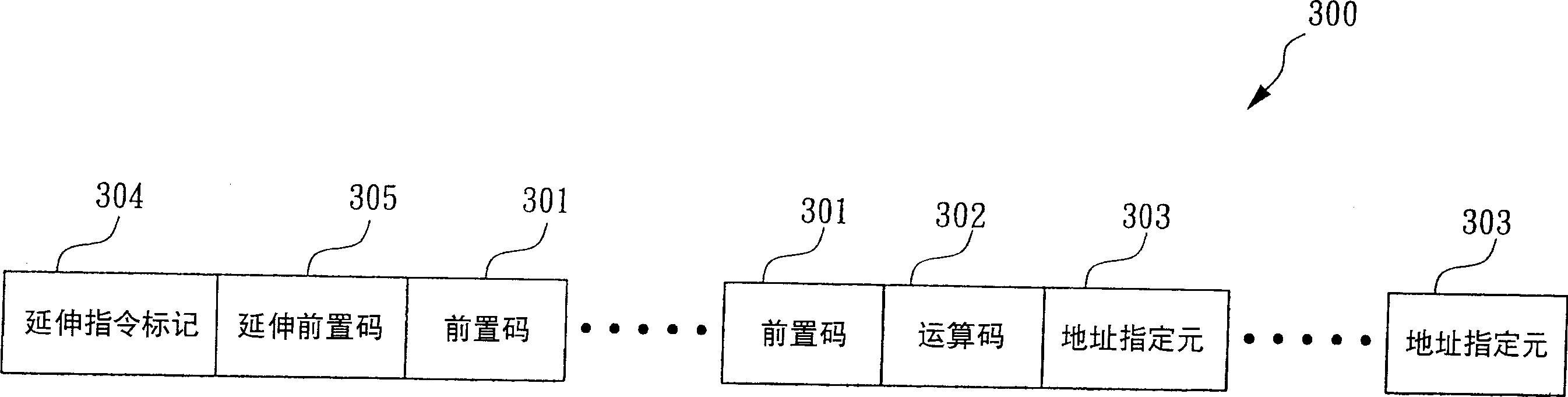

Apparatus and method of extending microprocessor data mode

An apparatus and method are provided for extending a microprocessor instruction set beyond its current capabilities to allow for extended size operands specifiable by programmable instructions in the microprocessor instruction set. The apparatus includes translation logic and extended execution logic. The translation logic translates an extended instruction into corresponding micro instructions for execution by the microprocessor. The extended instruction has an extended prefix and an extended prefix tag. The extended prefix specifies an extended operand size for an operand corresponding to a prescribed operation, where the extended operand size cannot be specified by an existing instruction set. The extended prefix tag indicates the extended prefix, where the extended prefix tag is an otherwise architecturally specified opcode within the existing instruction set. The extended execution logic is coupled to the translation logic. The extended execution logic receives the corresponding micro instructions and performs the prescribed operation using the operand.

Owner:IP FIRST

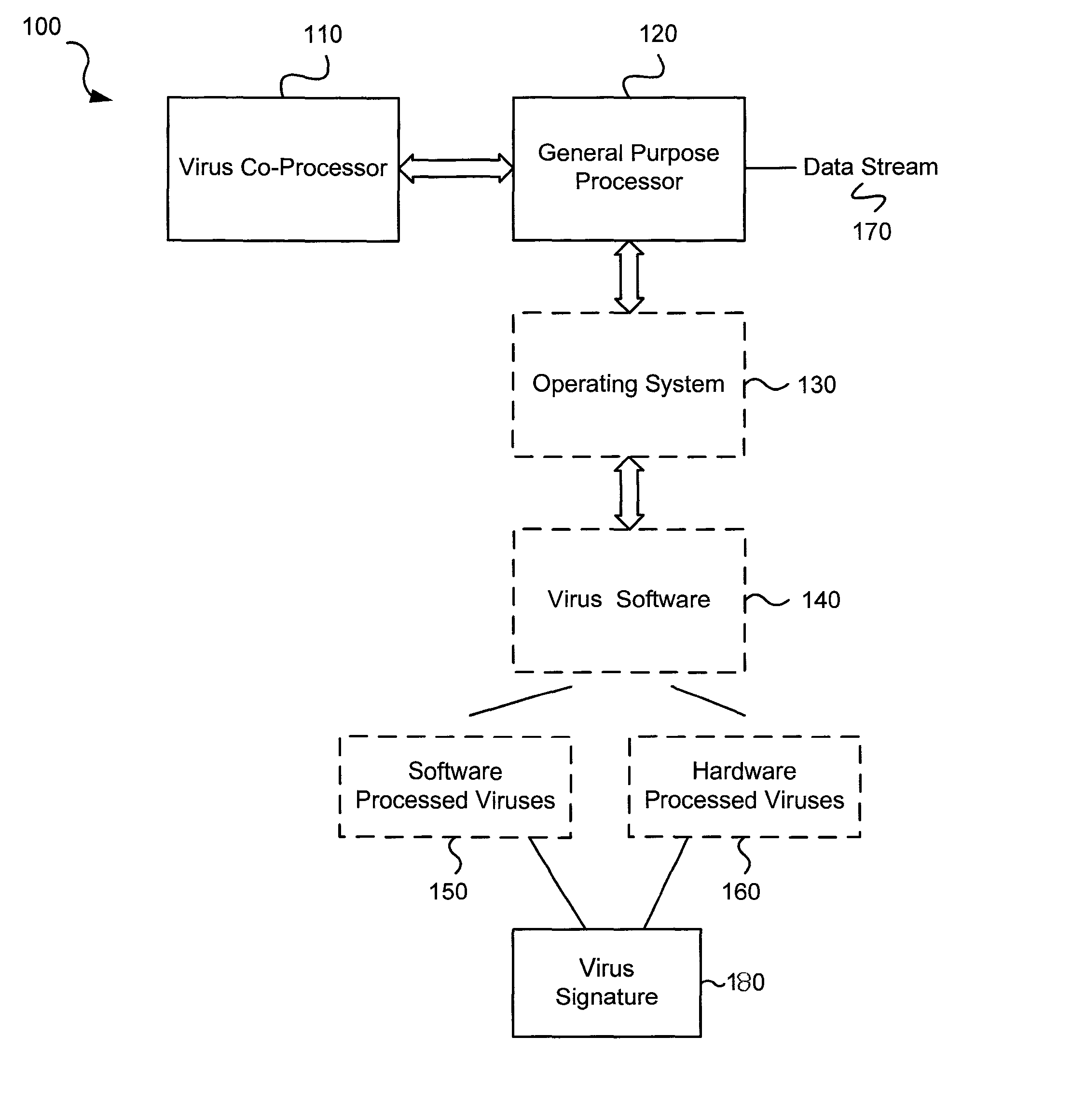

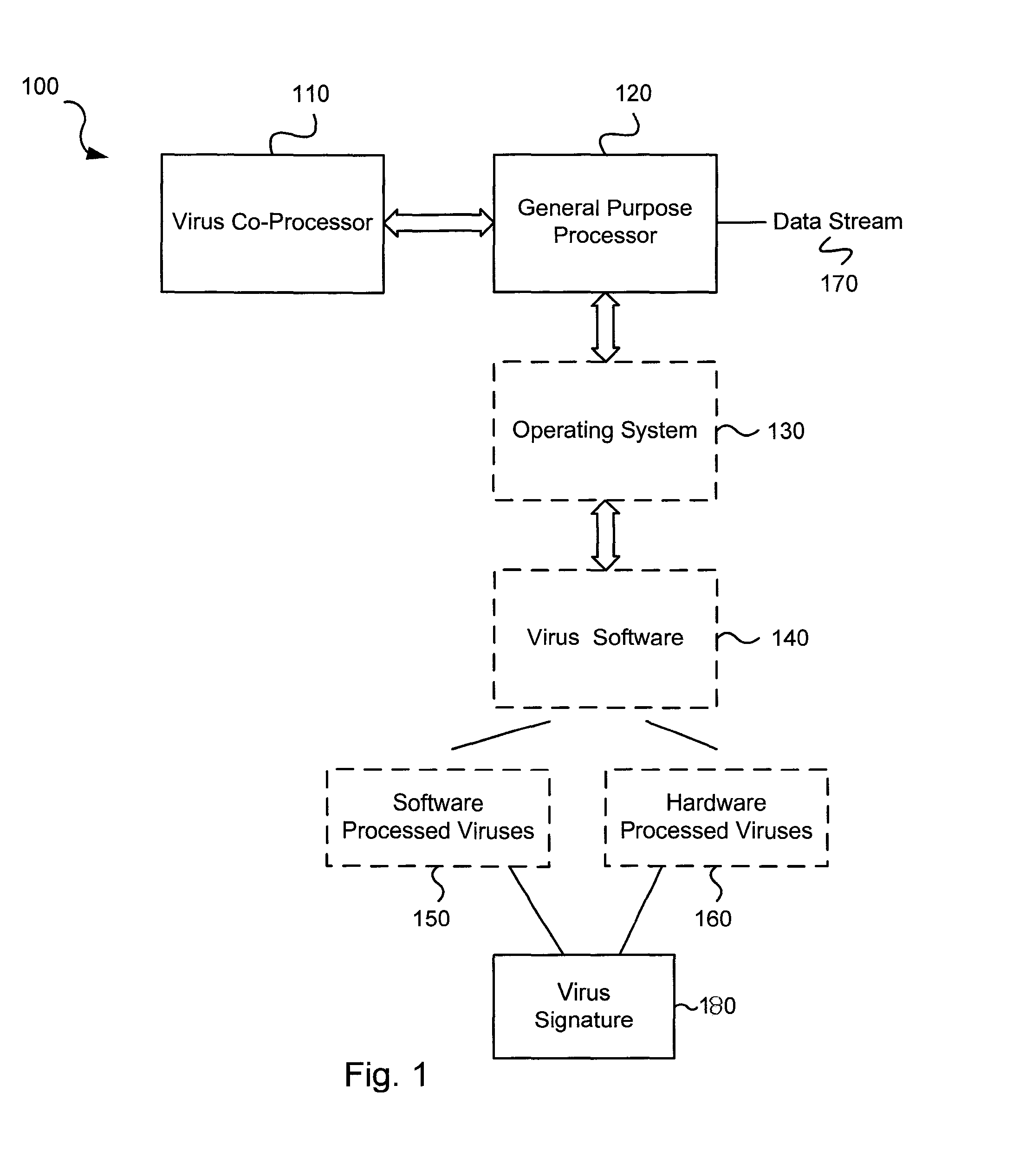

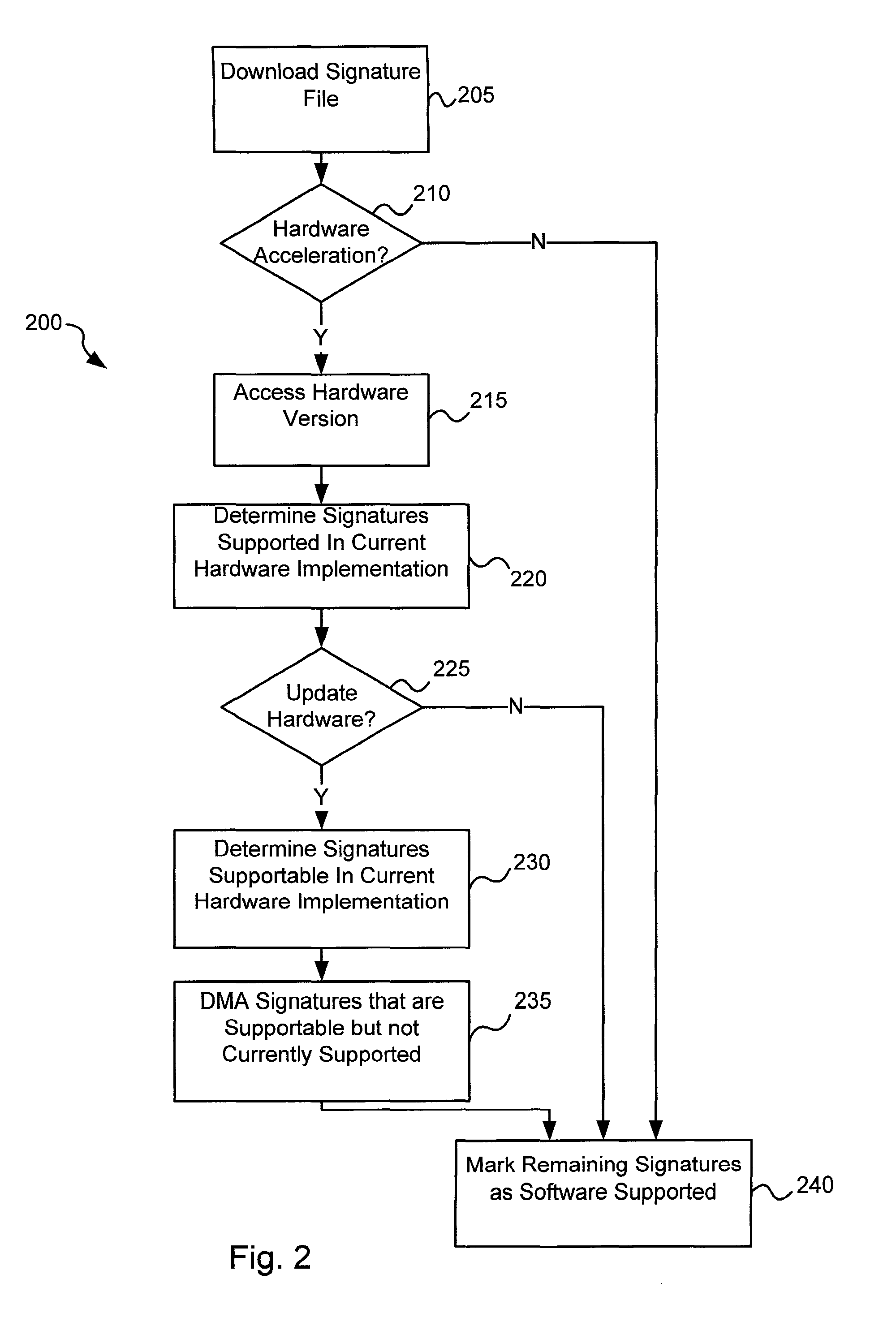

Virus co-processor instructions and methods for using such

Active | US8079084B1 | Memory loss protection | Unauthorized memory use protection | Load instruction | Instruction unit

Various embodiments of the present invention provide elements that may be utilized for improved virus processing. As one example, a computer readable medium is disclosed that includes a virus signature compiled for execution on a virus co-processor. The virus signature includes at least one primitive instruction and at least one CPR instruction stored at contiguous locations in the computer readable medium. The CPR instruction is one of an instruction set that includes, but is not limited to: a compare string instruction, compare buffer instruction; perform checksum instruction; a seek instruction; and a test instruction. The primitive instruction may be, but is not limited to, an add instruction, a branch instruction, a jump instruction, a load instruction, a move instruction, a logic AND instruction, a logic OR instruction, and / or a logic XOR instruction.

Owner:FORTINET

Instruction method for facilitating efficient coding and instruction fetch of loop construct

Inactive | US20100122066A1 | Digital computer details | Concurrent instruction execution | Machine instruction | Conditional loop

Instruction set techniques have been developed to identify explicitly the beginning of a loop body and to code a conditional loop-end in ways that allow a processor implementation to efficiently manage an instruction fetch buffer and / or entries in an instruction cache. In particular, for some computations and processor implementations, a machine instruction is defined that identifies a loop start, stores a corresponding loop start address on a return stack (or in other suitable storage) and directs fetch logic to take advantage of the identification by retaining in a fetch buffer or instruction cache the instruction(s) beginning at the loop start address, thereby avoiding usual branch delays on subsequent iterations of the loop. A conditional loop-end instruction can be used in conjunction with the loop start instruction to discard (or simply mark as no longer needed) the loop start address and the loop body instructions retained in the fetch buffer or instruction cache.

Owner:FREESCALE SEMICON INC

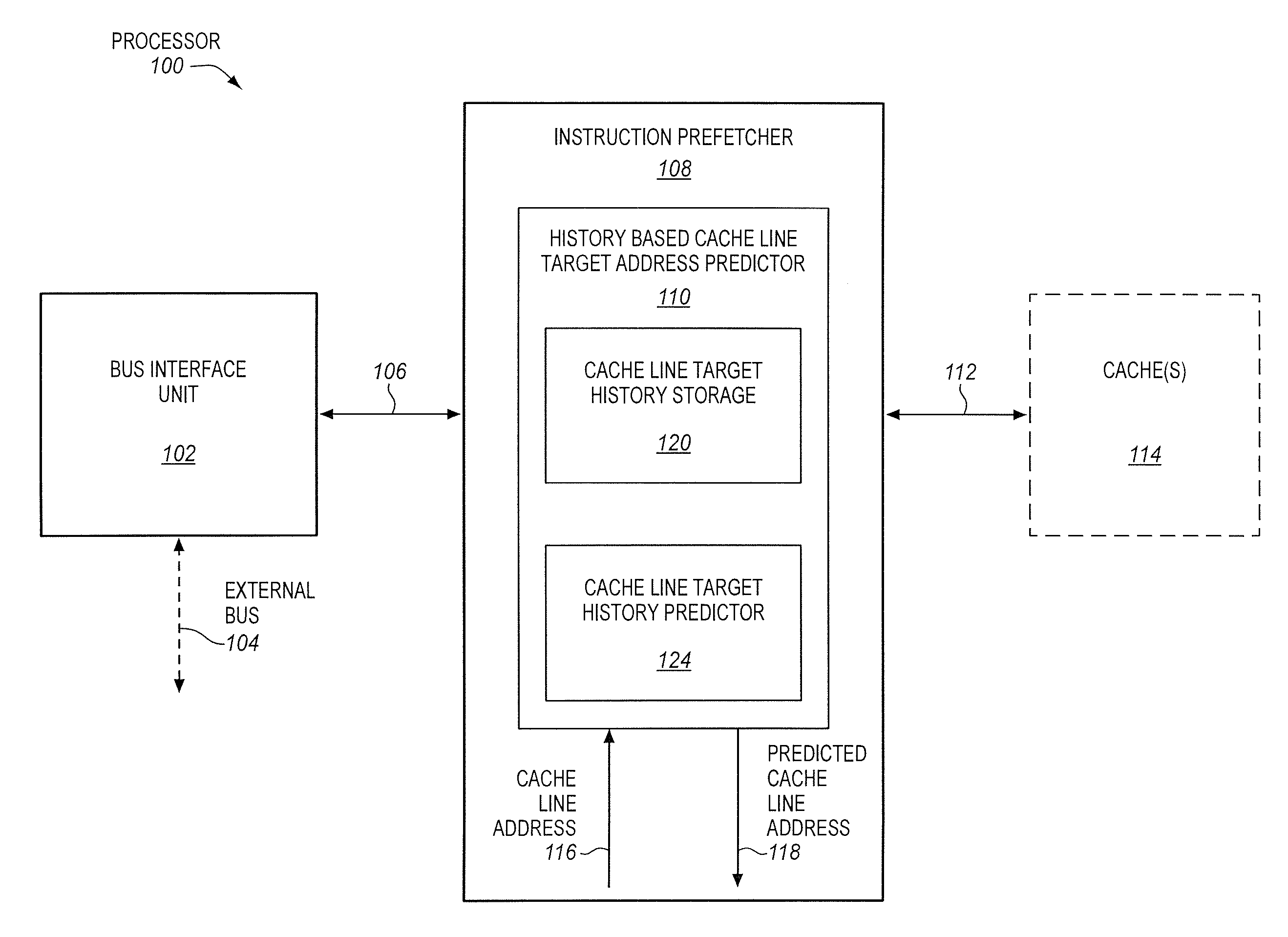

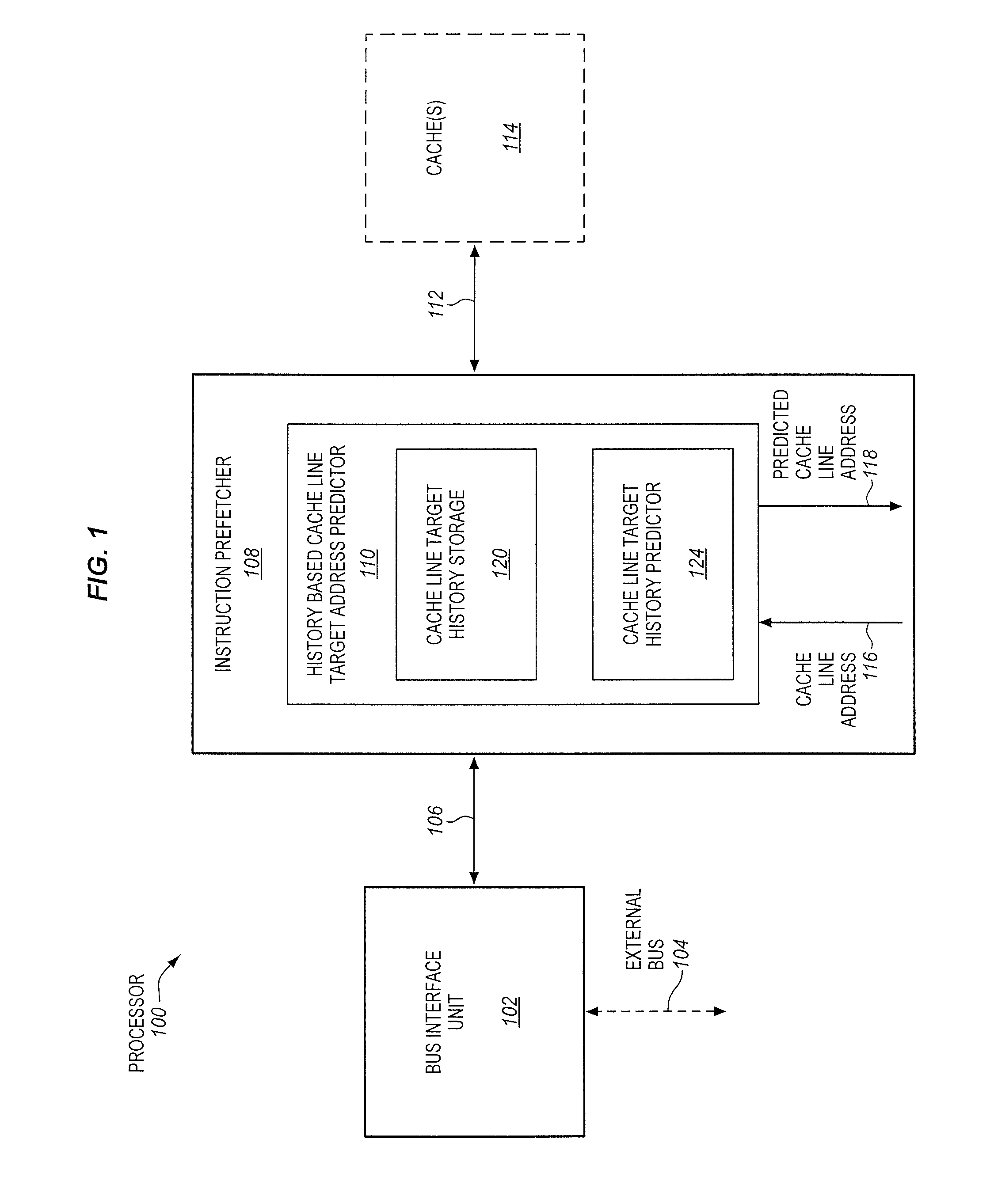

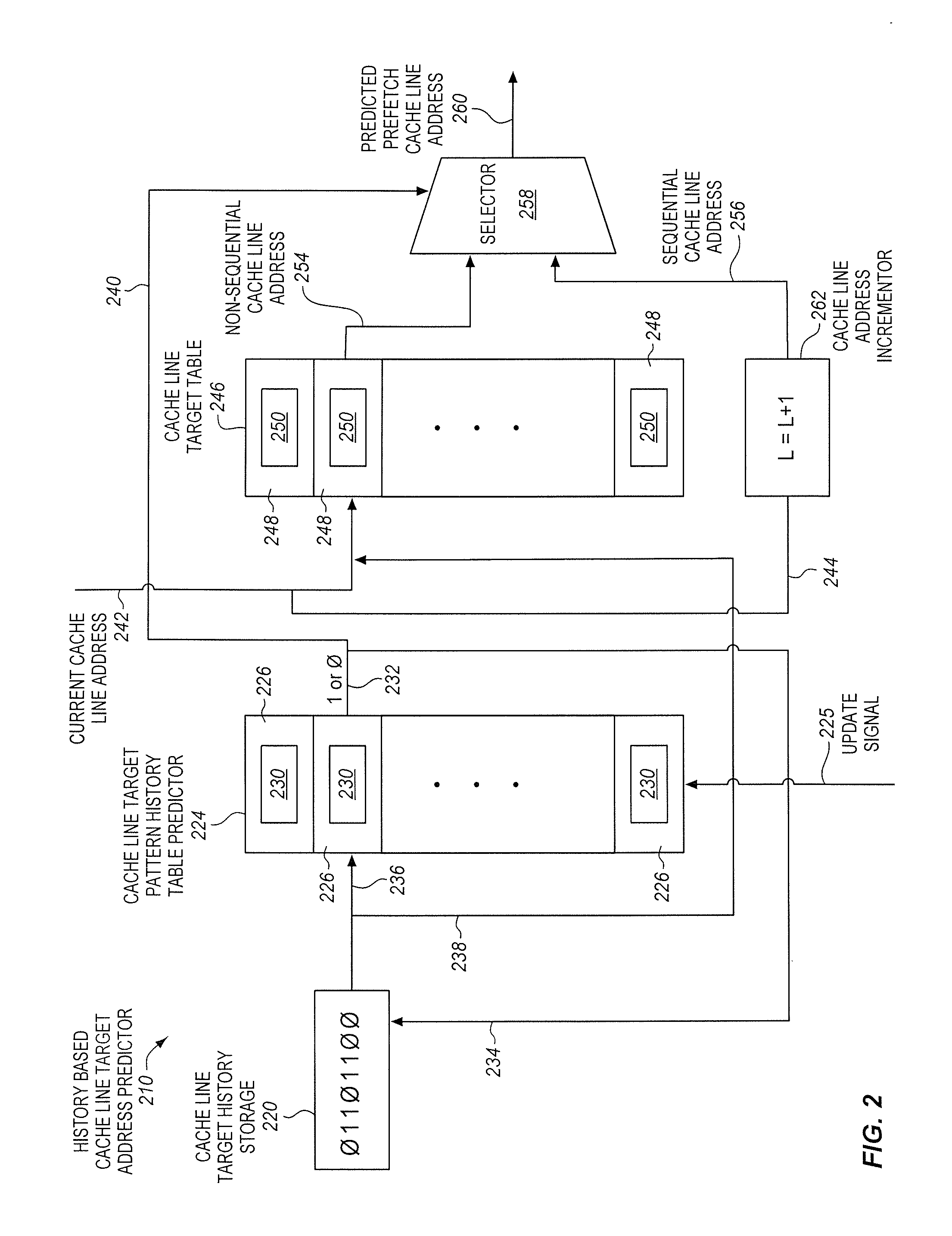

Instruction Prefetching Using Cache Line History

Inactive | US20120084497A1 | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Byte

Owner:INTEL CORP

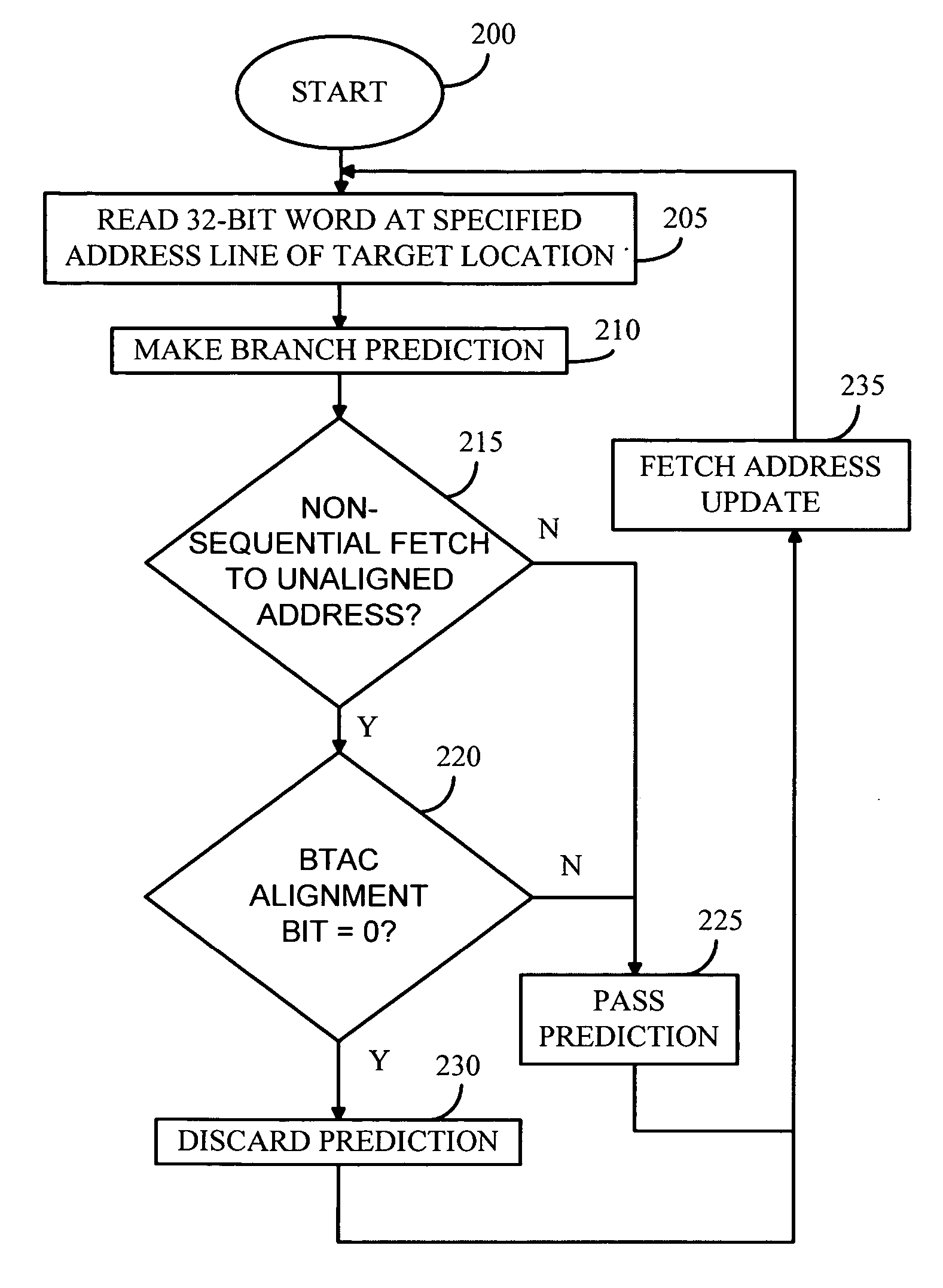

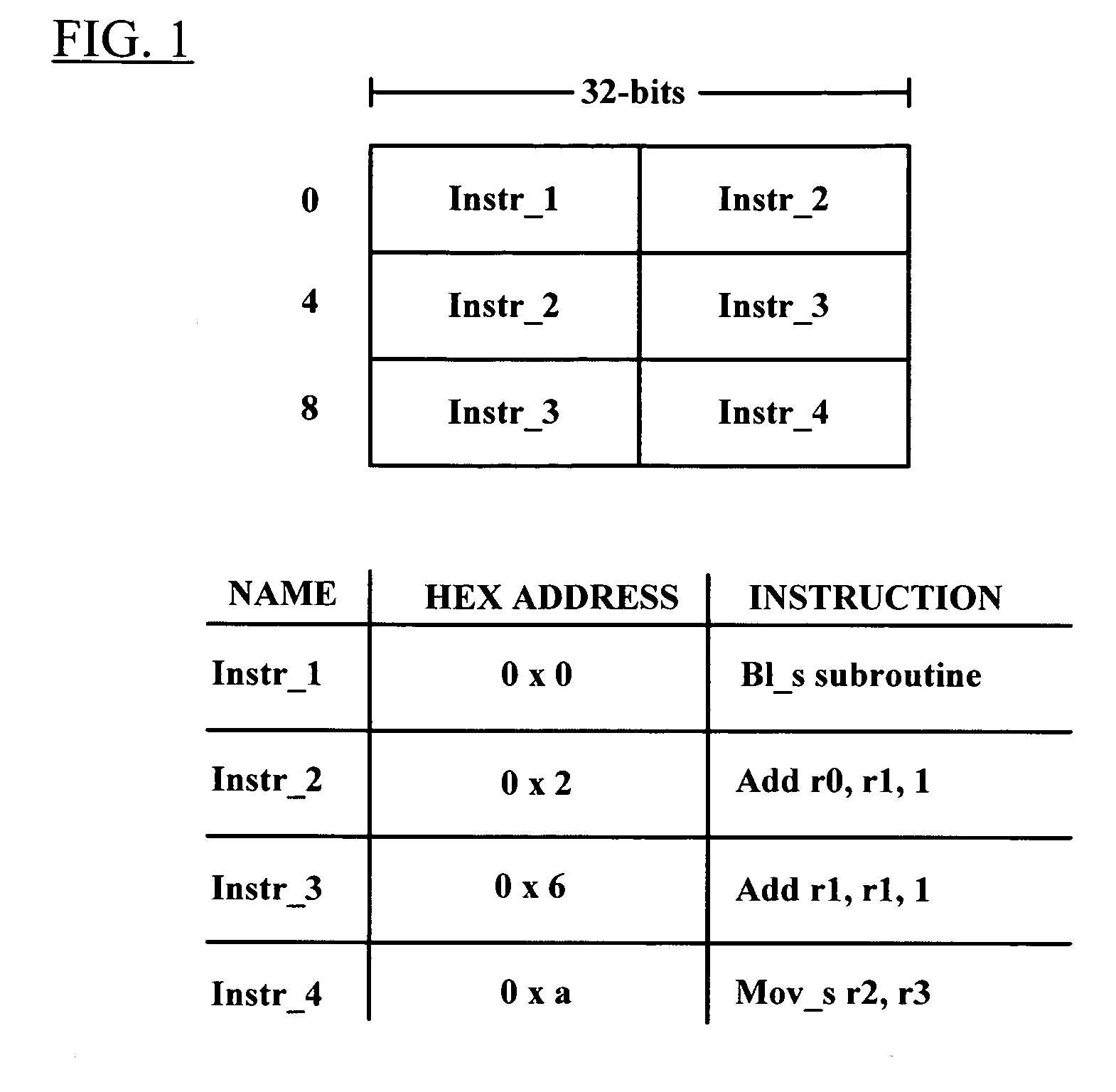

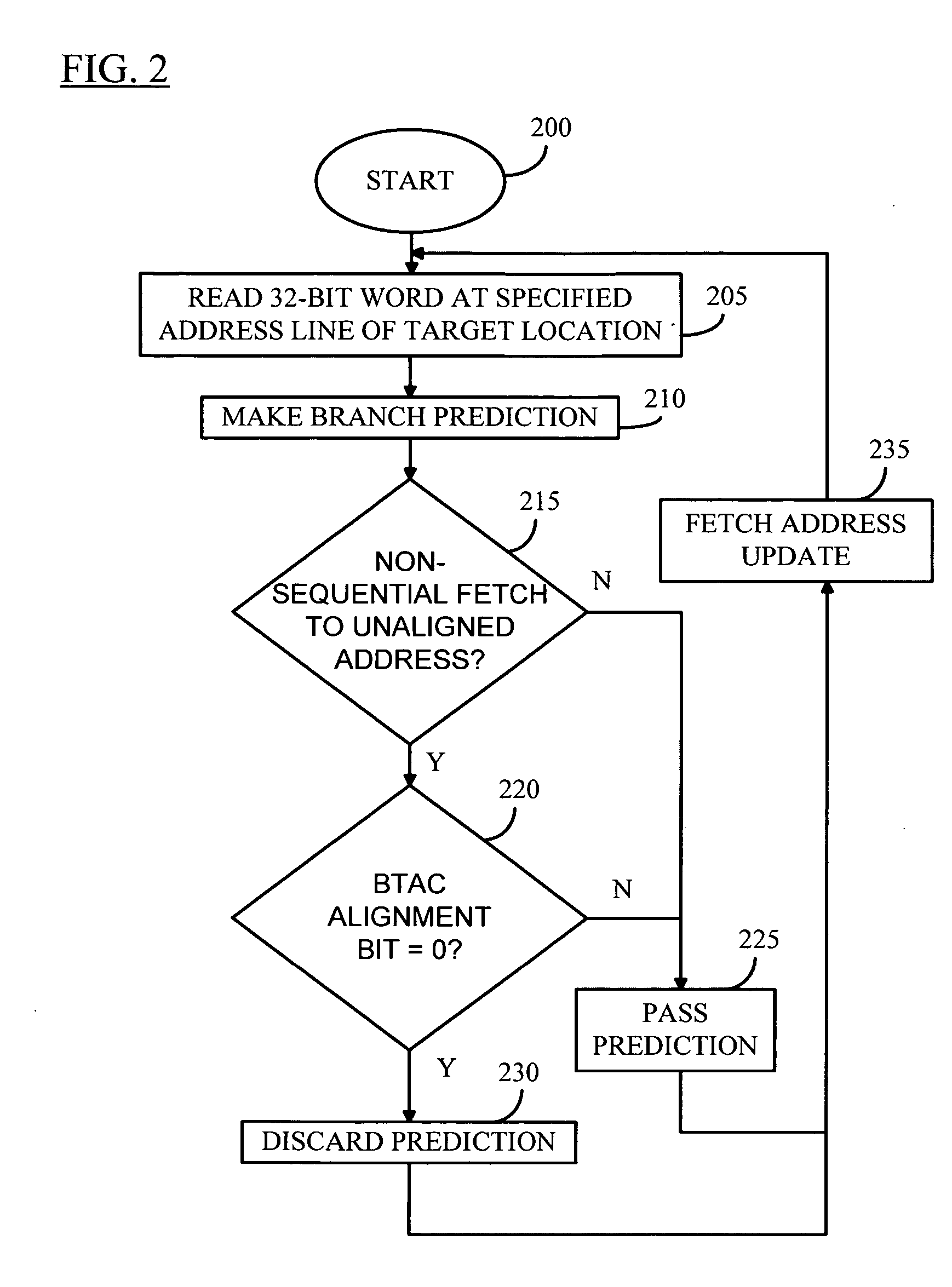

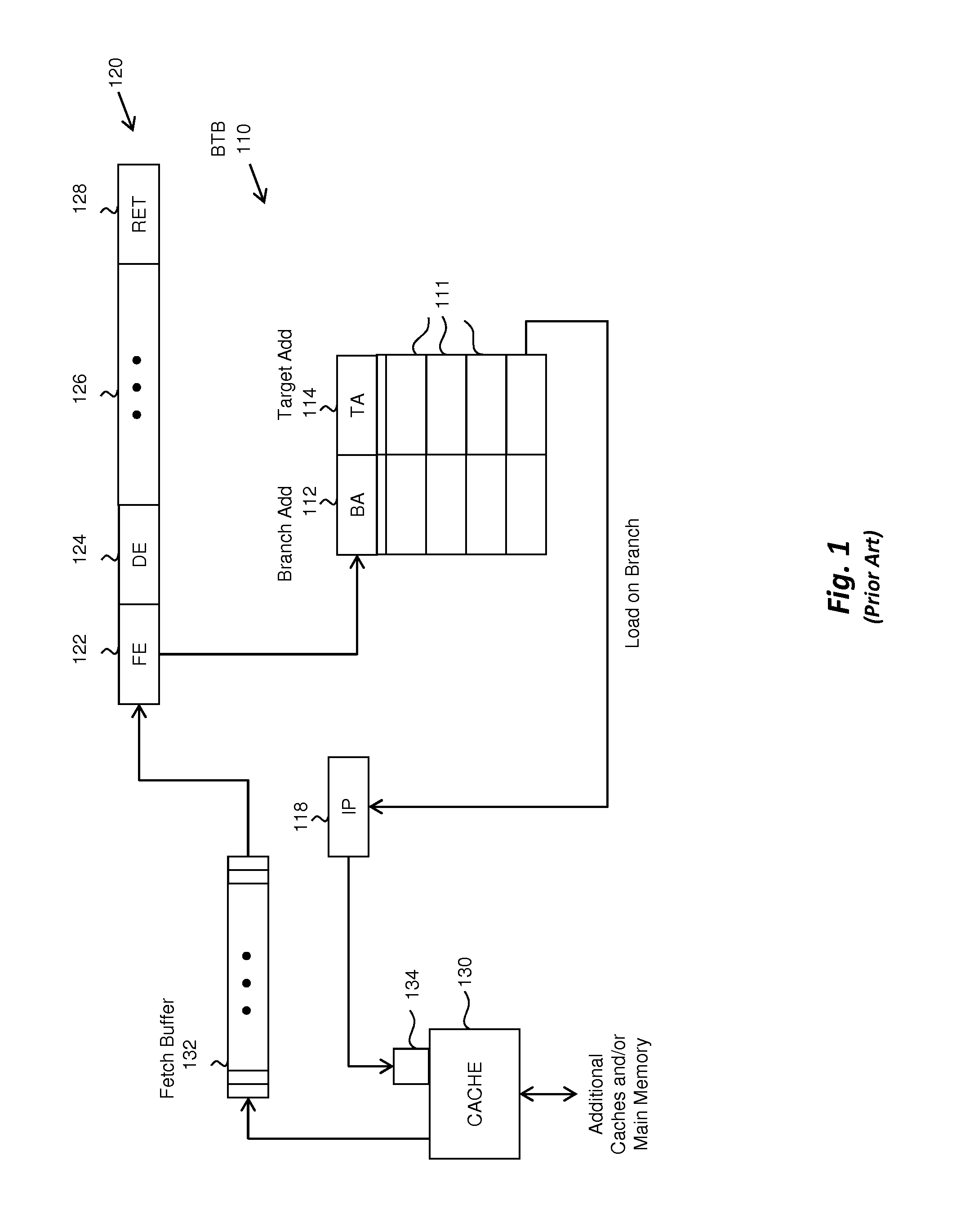

Systems and methods for performing branch prediction in a variable length instruction set microprocessor

Inactive | US20050278517A1 | Reduce power consumption | Improve performance | Energy efficient ICT | Code conversion | Variable length | Instruction set

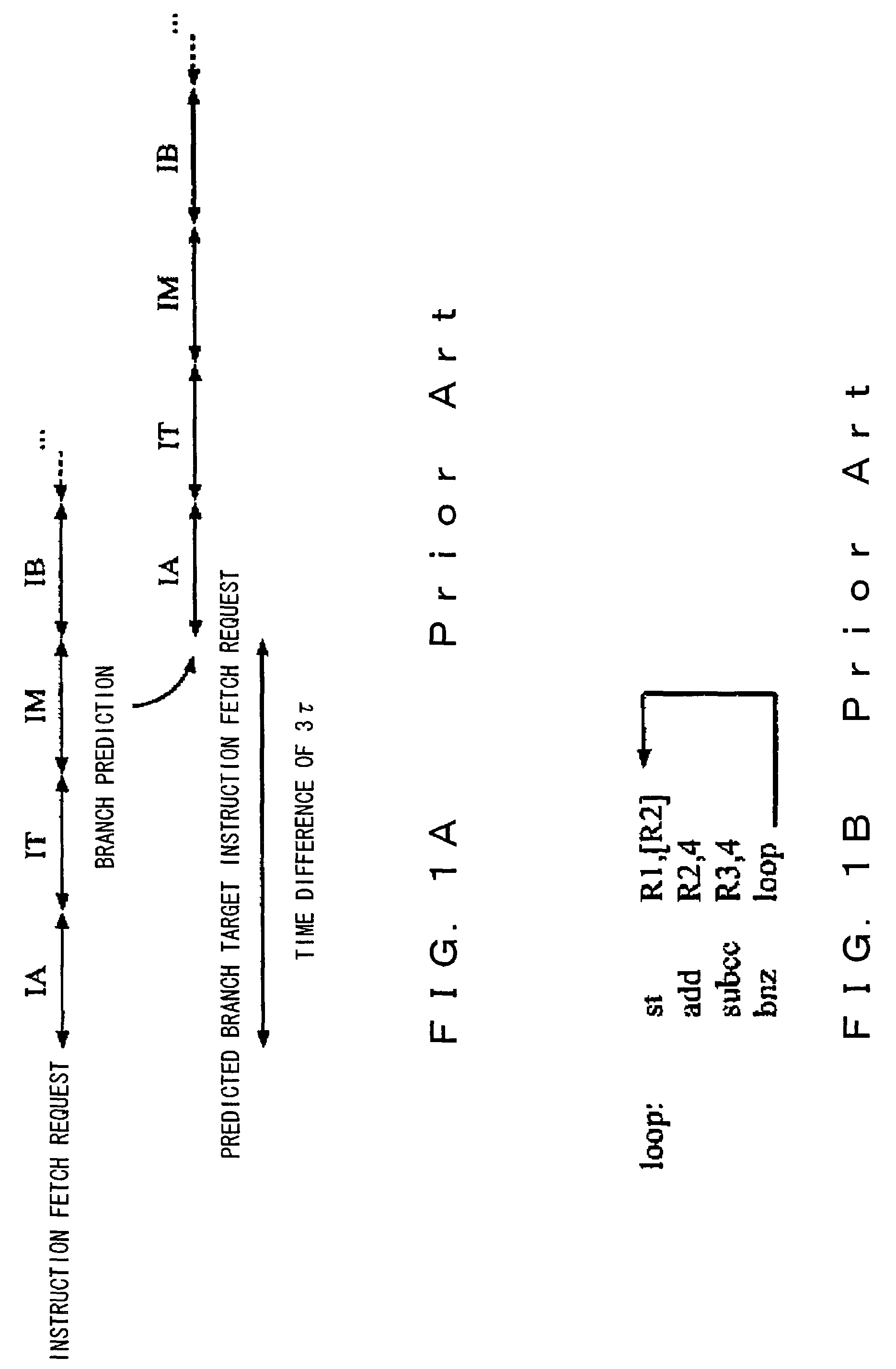

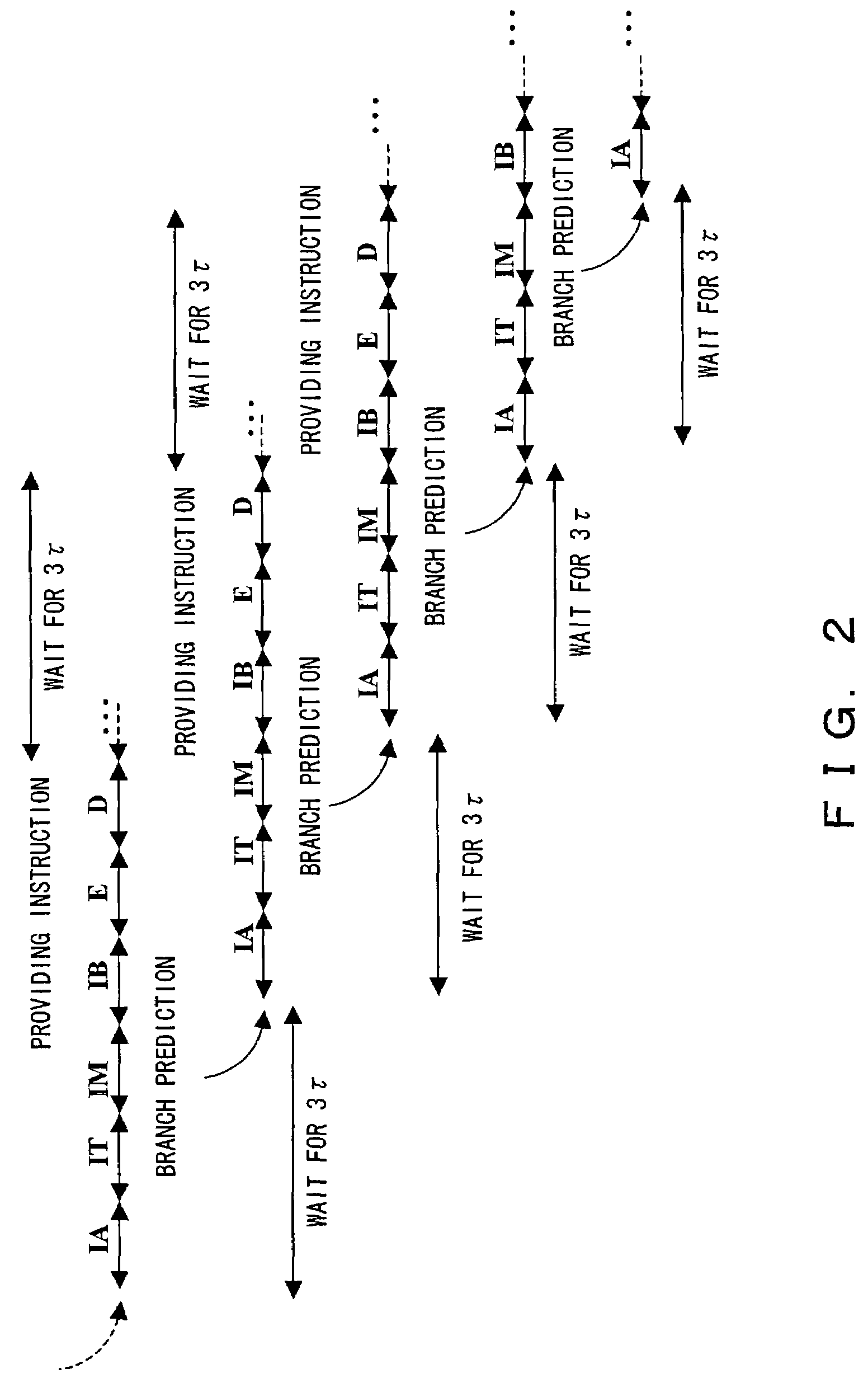

A method of performing branch prediction in a microprocessor using variable length instructions is provided. An instruction is fetched from memory based on a specified fetch address and a branch prediction is made based on the address. The prediction is selectively discarded if the look-up was based on a non-sequential fetch to an unaligned instruction address and a branch target alignment cache (BTAC) bit of the instruction is equal to zero. In order to remove the inherent latency of branch prediction, an instruction prior to a branch instruction may be fetched concurrently with a branch prediction unit look-up table entry containing prediction information for a next instruction word. Then, the branch instruction is fetched and a prediction is made on this branch instruction based on information fetched in the previous cycle. The predicted target instruction is fetched on the next clock cycle. If zero-overhead loops are used, a look-up table of a branch prediction unit is updated whenever the zero-overhead loop mechanism is updated. The last fetch address of the last instruction of a zero-overhead loop body is stored in the branch prediction look-up table. Then, whenever an instruction fetch hits the end of a loop body, the instruction fetch is predictively redirected to the start of the loop body. The last fetch address of the loop body is derived from the address of the first instruction after the end of the loop.

Owner:ARC INT LTD

Microprocessor and execution method thereof

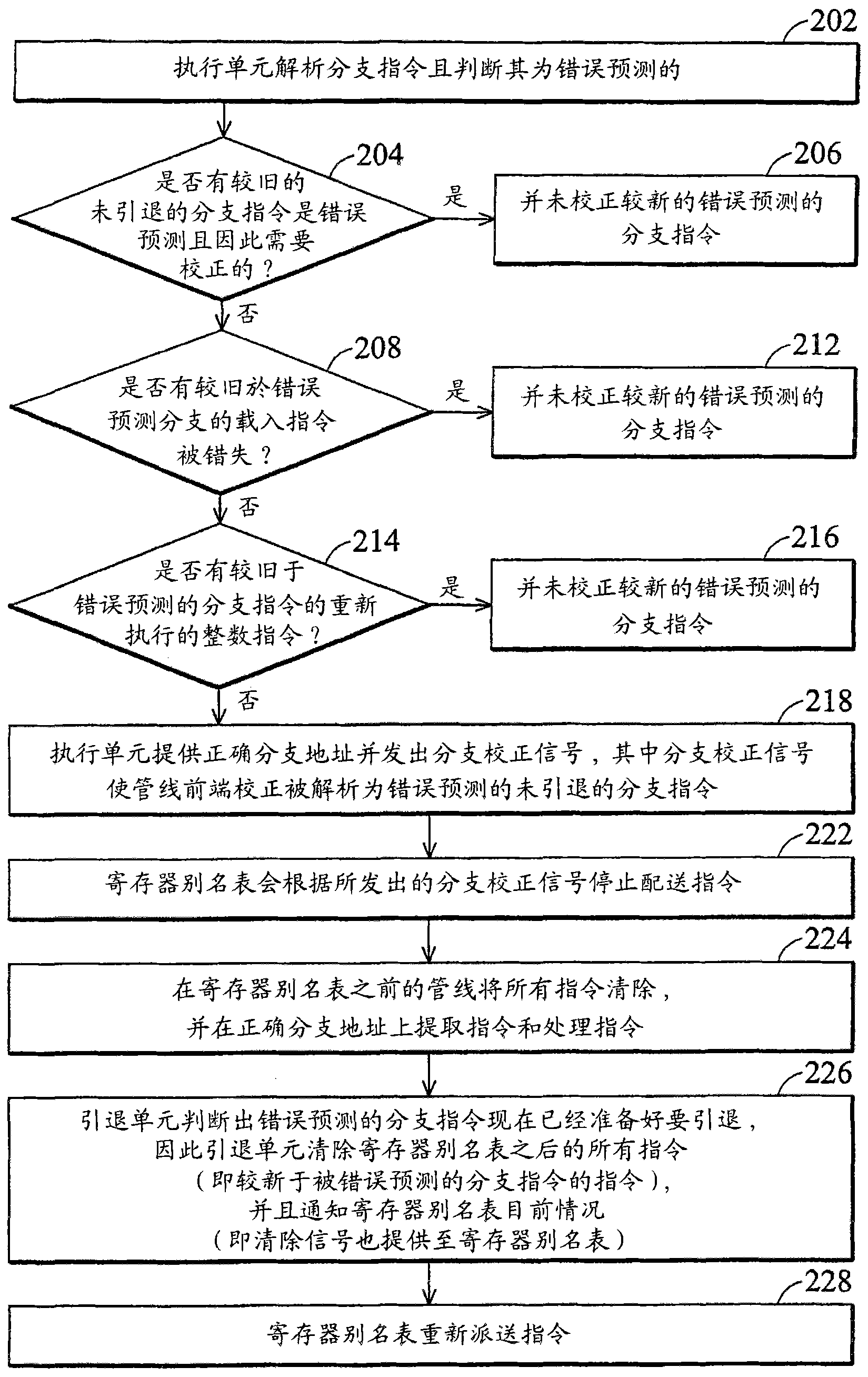

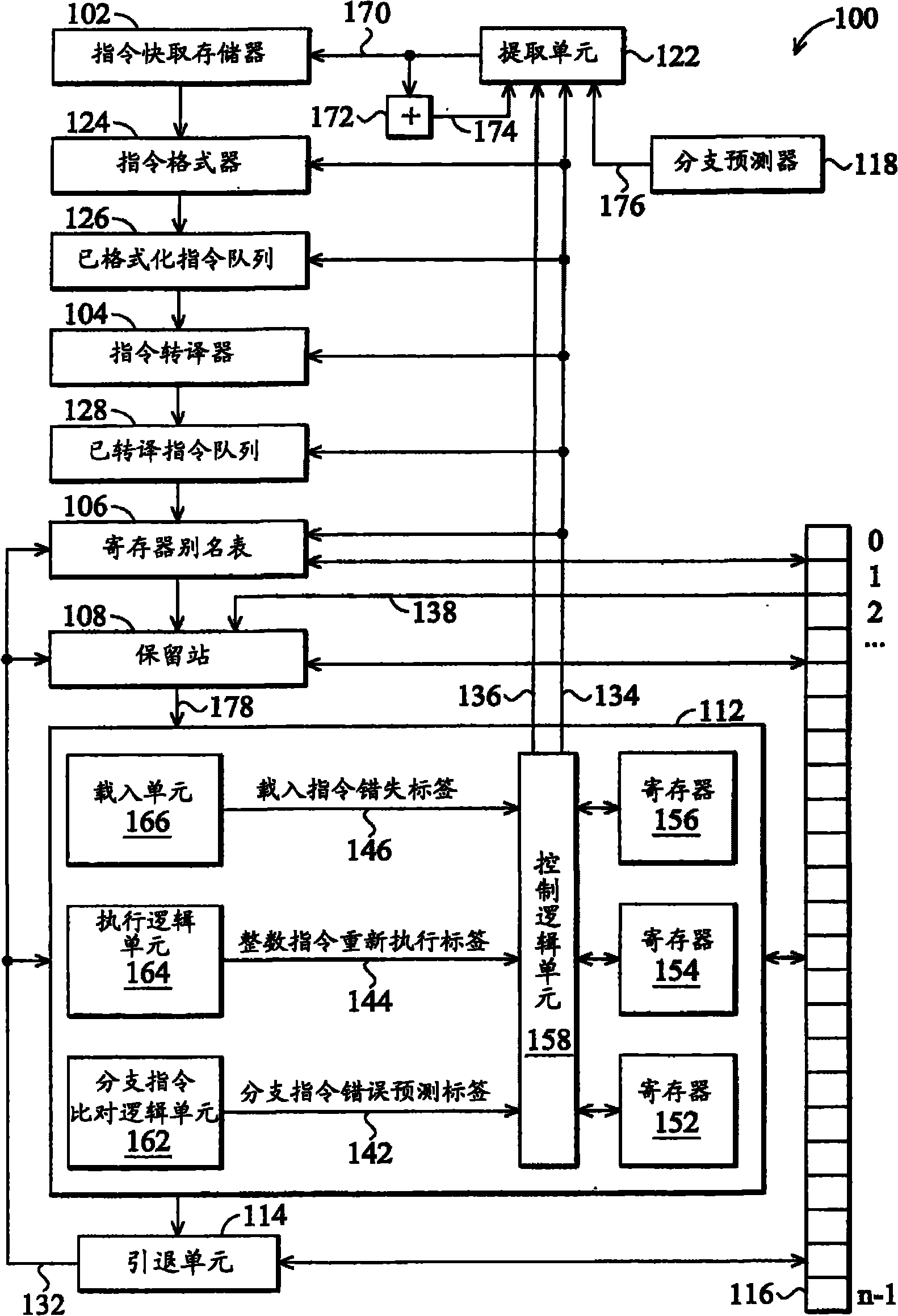

A microprocessor and an execution method thereof are provided for pipelined out-of-order execution with in-order retirement. The microprocessor includes a branch predictor that predicts a target address of a branch instruction, a fetch unit that fetches instructions at the predicted target address, and an execution unit that: resolves a target address of the branch instruction and detects that the predicted and resolved target addresses are different; determines, in response to detecting that the predicted and resolved target addresses are different, whether there is an unretired instruction that must be corrected and that is older in program order than the branch instruction; executes the branch instruction by flushing instructions fetched at the predicted target address and causing the fetch unit to fetch from the resolved target address, if there is no such unretired instruction; and otherwise refrains from executing the branch instruction.

Owner:VIA TECH INC

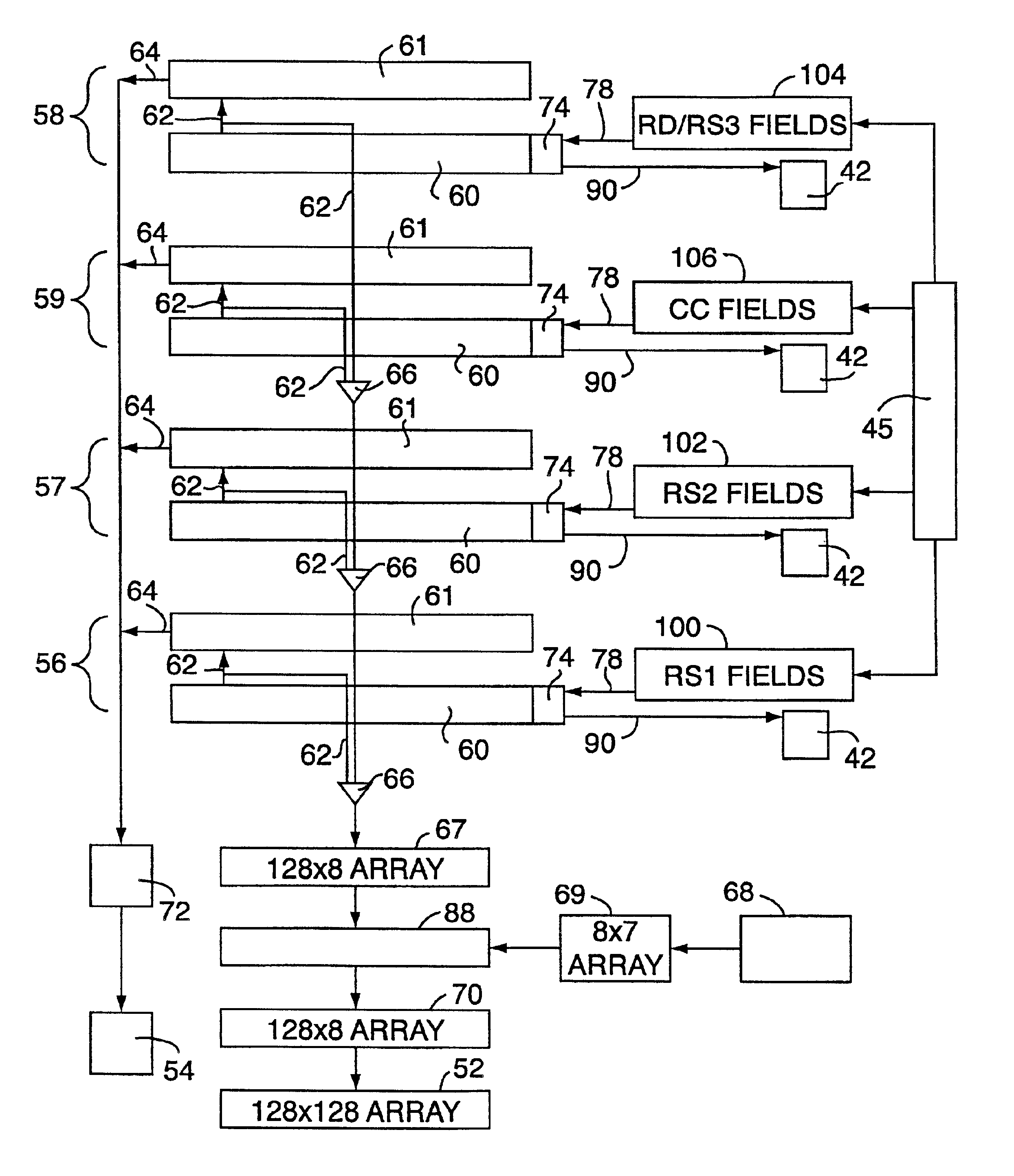

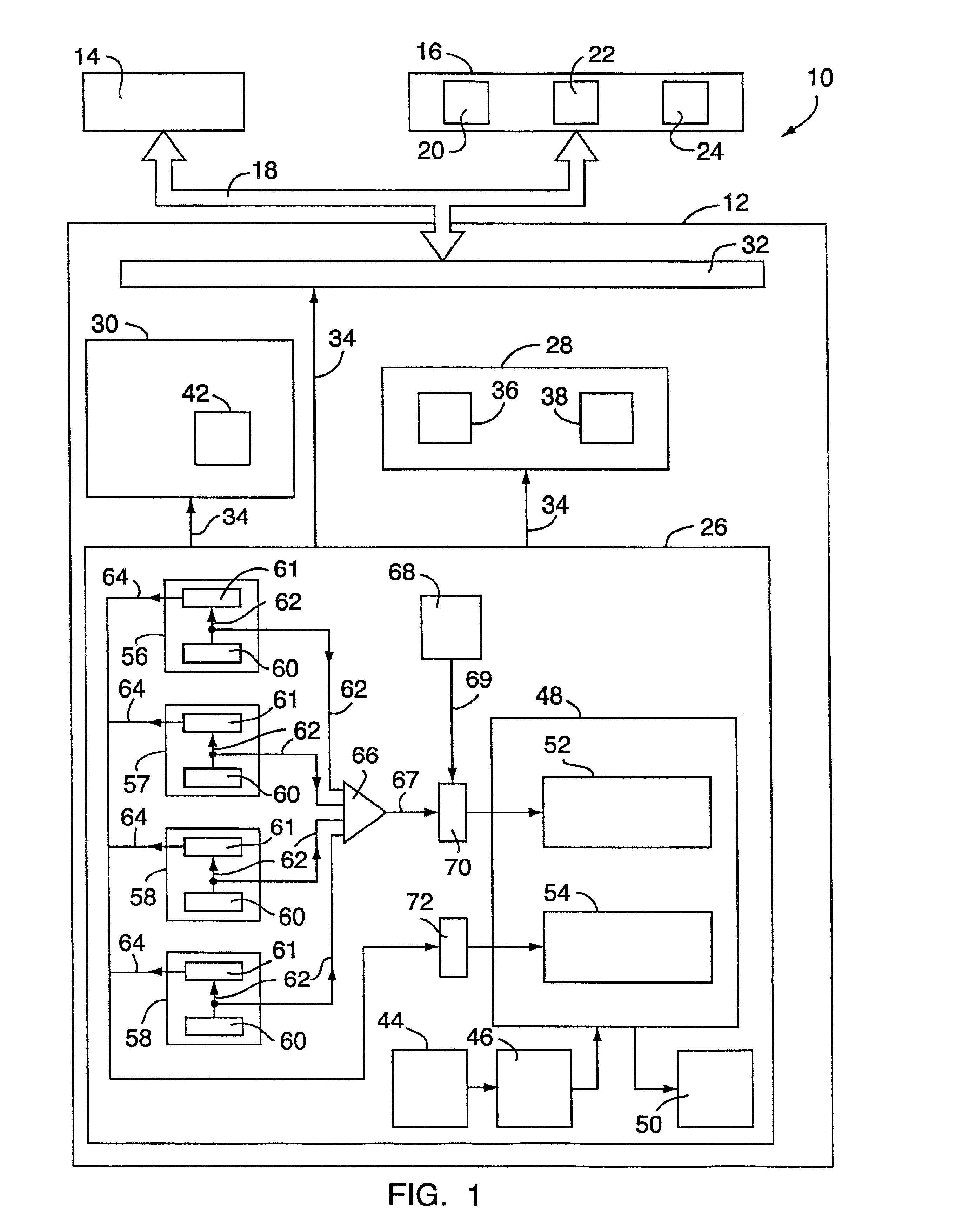

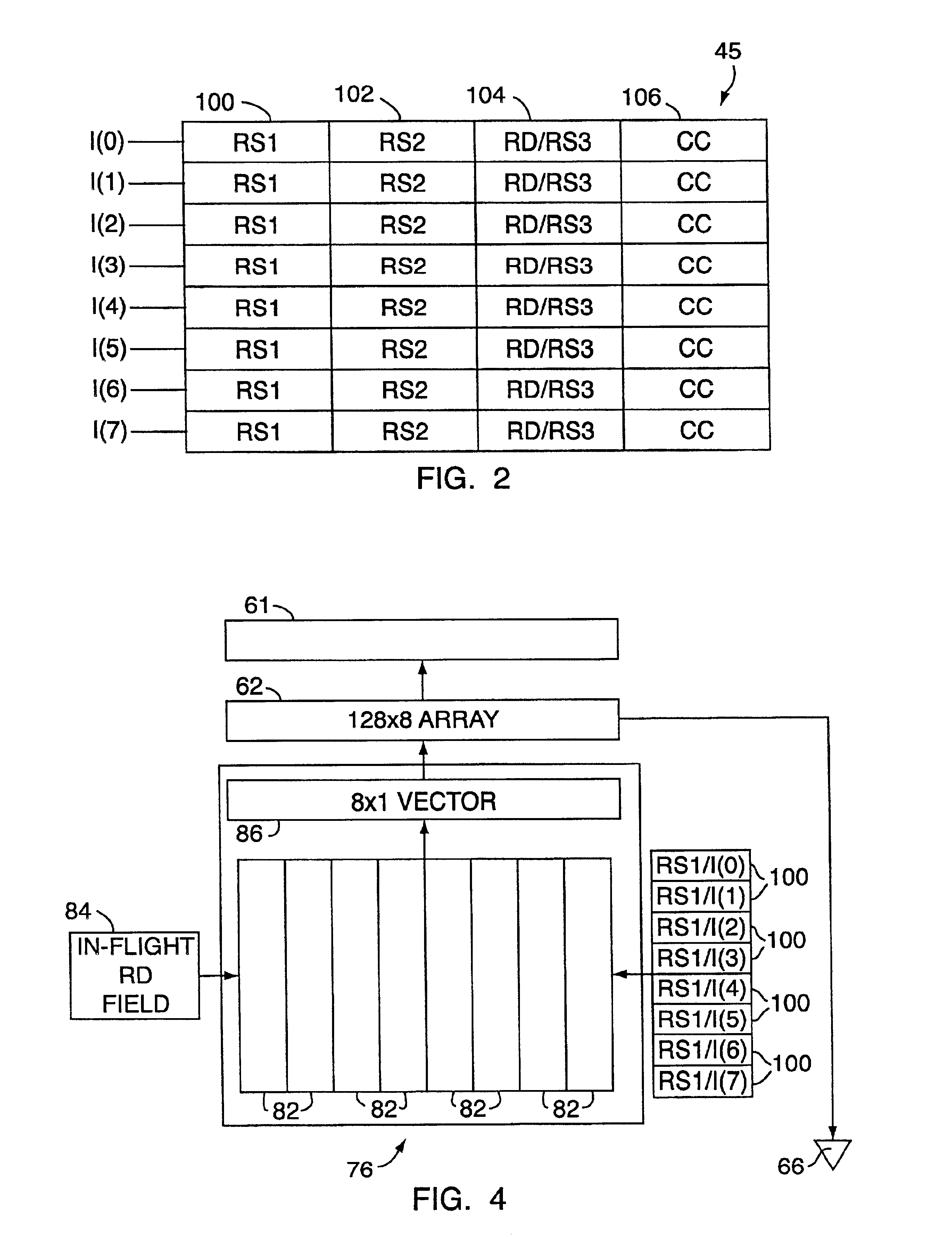

Superscalar processor having content addressable memory structures for determining dependencies

Inactive | US6862676B1 | Lower requirement | Rapid design | Memory addressing/allocation/relocation | General purpose stored program computer | Scalar processor | Order processing

A superscalar processor having a content addressable memory structure that transmits a first and second output signal is presented. The superscalar processor performs out of order processing on an instruction set. From the first output signal, the dependencies between currently fetched instructions of the instruction set and previous in-flight instructions can be determined and used to generate a dependency matrix for all in-flight instructions. From the second output signal, the physical register addresses of the data required to execute an instruction, once the dependencies have been removed, may be determined.

Owner:ORACLE INT CORP

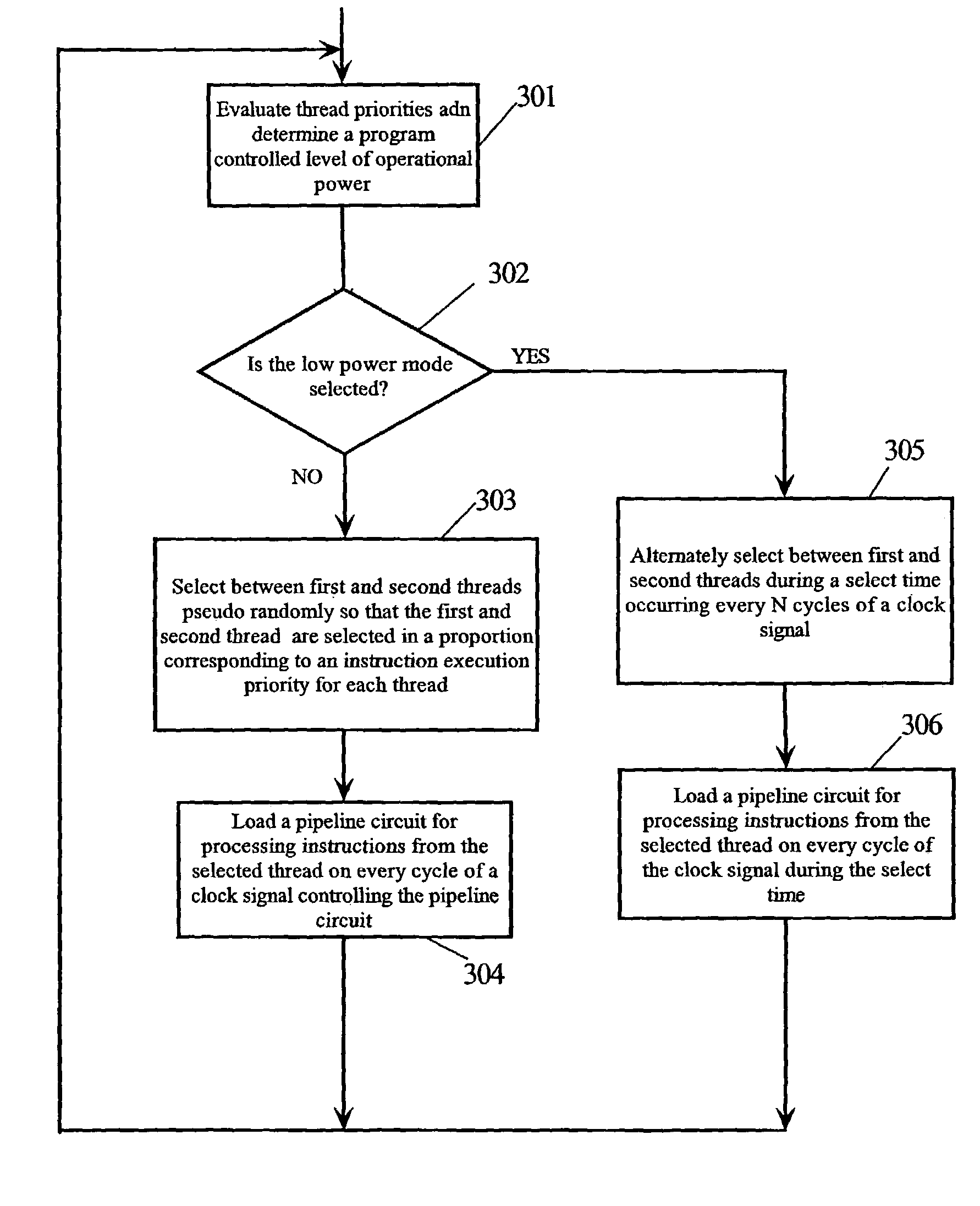

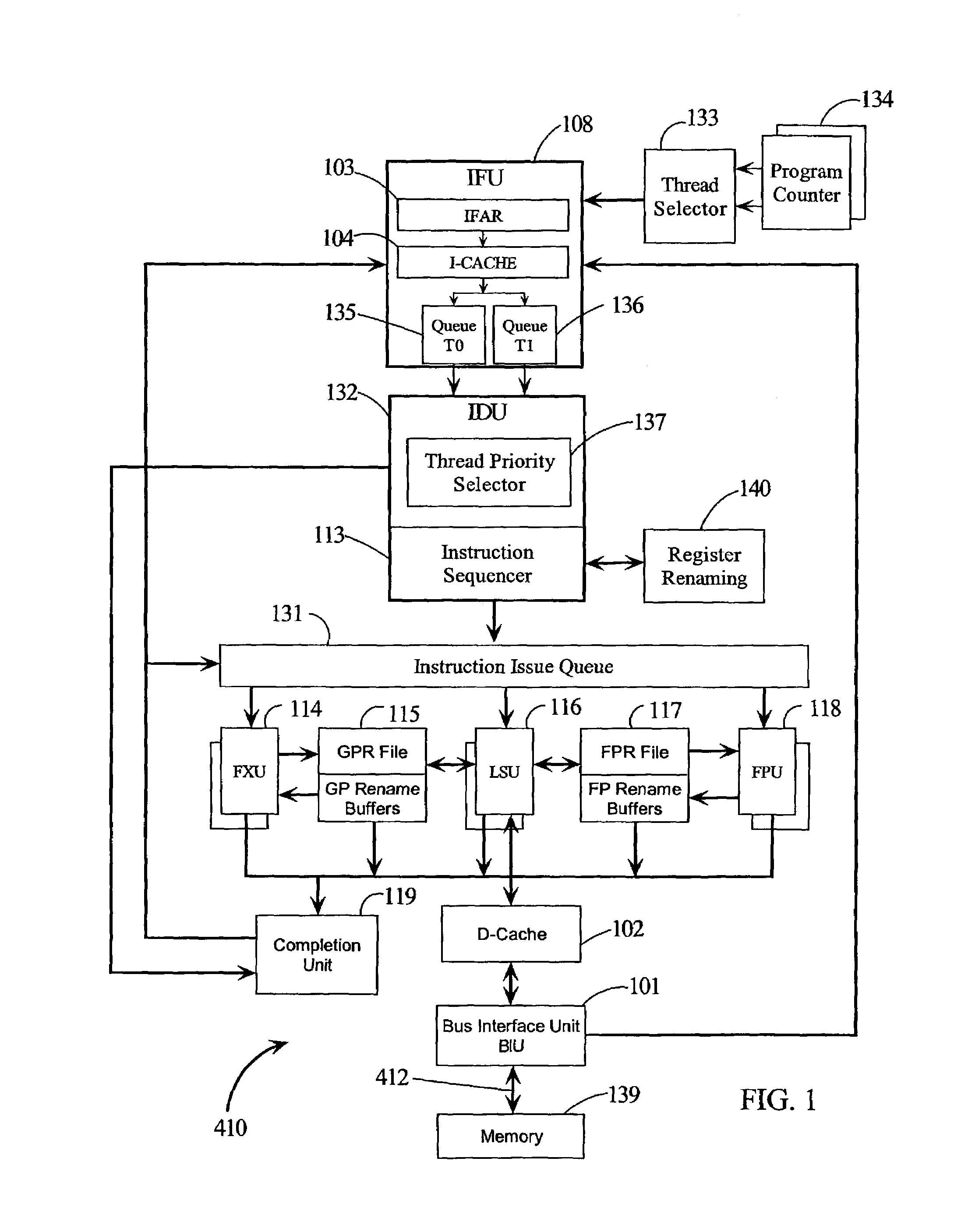

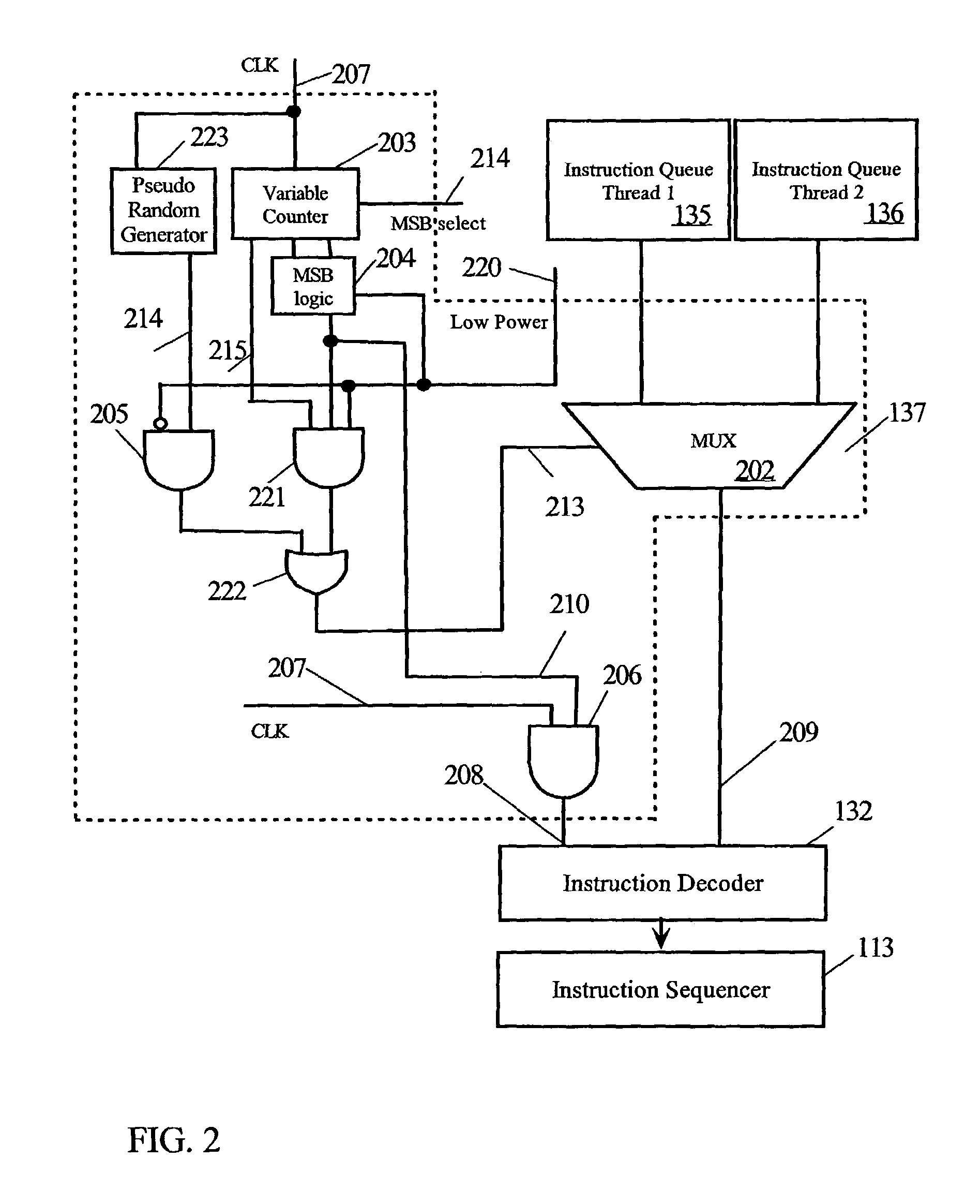

Method for managing power in a simultaneous multithread processor by loading instructions into pipeline circuit during select times based on clock signal frequency and selected power mode

Active | US7013400B2 | Save power | Volume/mass flow measurement | Digital computer details | Power mode | Load instruction

A register in the control unit of the CPU that is used to keep track of the address of the current or next instruction is called a program counter. In an SMT system having two threads, the CPU has program counters for both threads and means for alternately selecting between program counters to determine which thread supplies an instruction to the instruction fetch unit (IFU). The software for the SMT assigns a priority to threads entering the code stream. Instructions from the threads are read from the instruction queues pseudo-randomly and proportional to their execution priorities in the normal power mode. If both threads have a lowest priority, a low power mode is set generating a gated select time every N clock cycles of a clock when valid instructions are loaded. N may be adjusted to vary the amount of power savings and the gated select time.

Owner:IBM CORP
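The low-power gating described in this abstract can be illustrated with a short sketch. The priority encoding (`LOWEST_PRIORITY = 1`), the function names, and the simplification that normal mode allows a load on every cycle are assumptions; the abstract itself describes priority-proportional pseudo-random selection in normal mode, which is not modeled here.

```python
LOWEST_PRIORITY = 1  # assumed encoding of the lowest thread priority


def in_low_power_mode(priority_a, priority_b):
    """Low-power mode applies only when both threads have the lowest priority."""
    return priority_a == LOWEST_PRIORITY and priority_b == LOWEST_PRIORITY


def select_cycles(num_cycles, n, priority_a, priority_b):
    """Return the clock cycles on which instructions may be loaded.

    n must be a positive integer. In low-power mode a gated select time
    occurs once every n cycles; otherwise every cycle is eligible (a
    simplification of the normal mode).
    """
    if not in_low_power_mode(priority_a, priority_b):
        return list(range(num_cycles))
    return [cycle for cycle in range(num_cycles) if cycle % n == 0]
```

Raising `n` spaces the select times further apart, trading throughput of the two low-priority threads for reduced pipeline activity.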

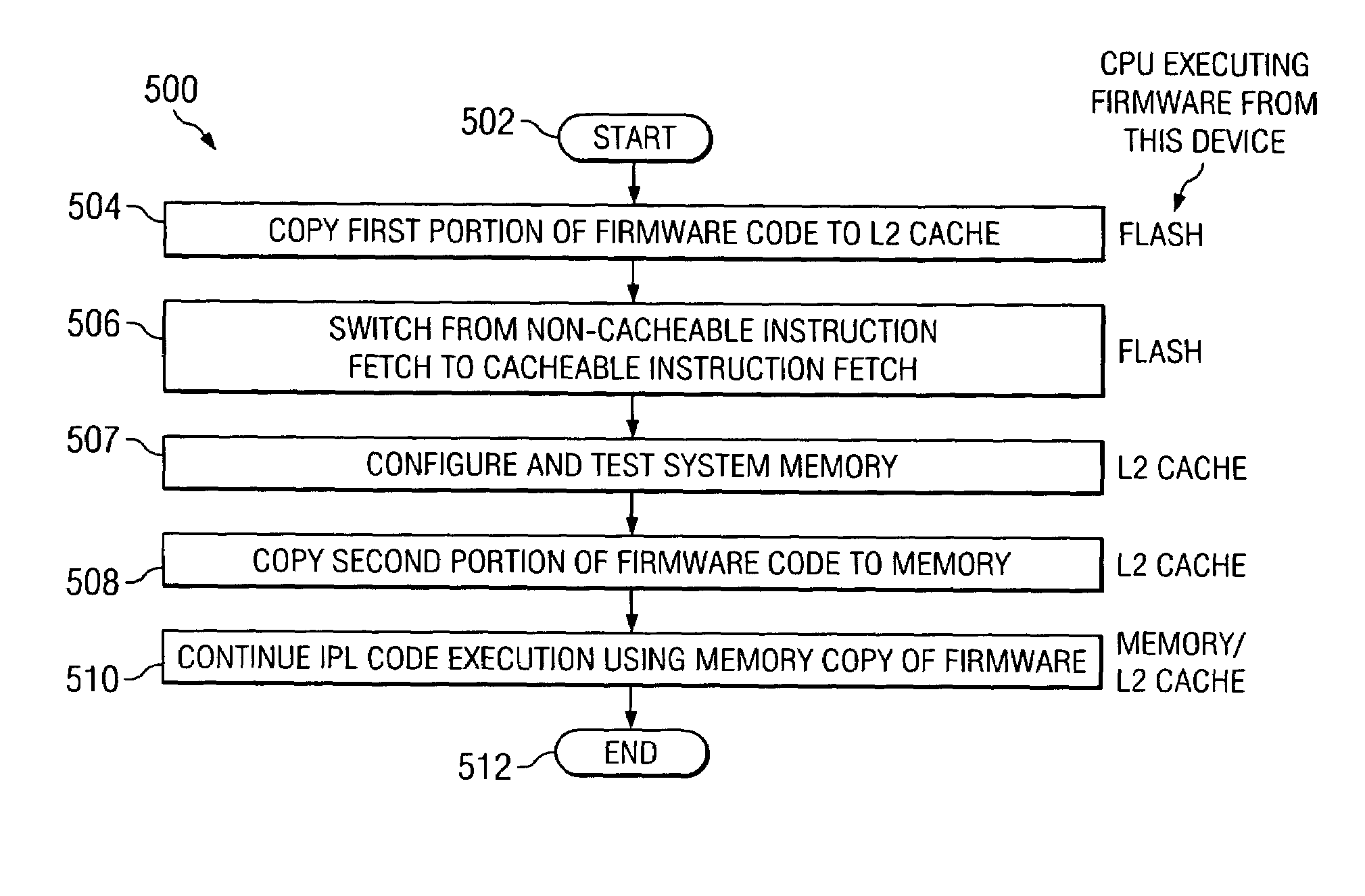

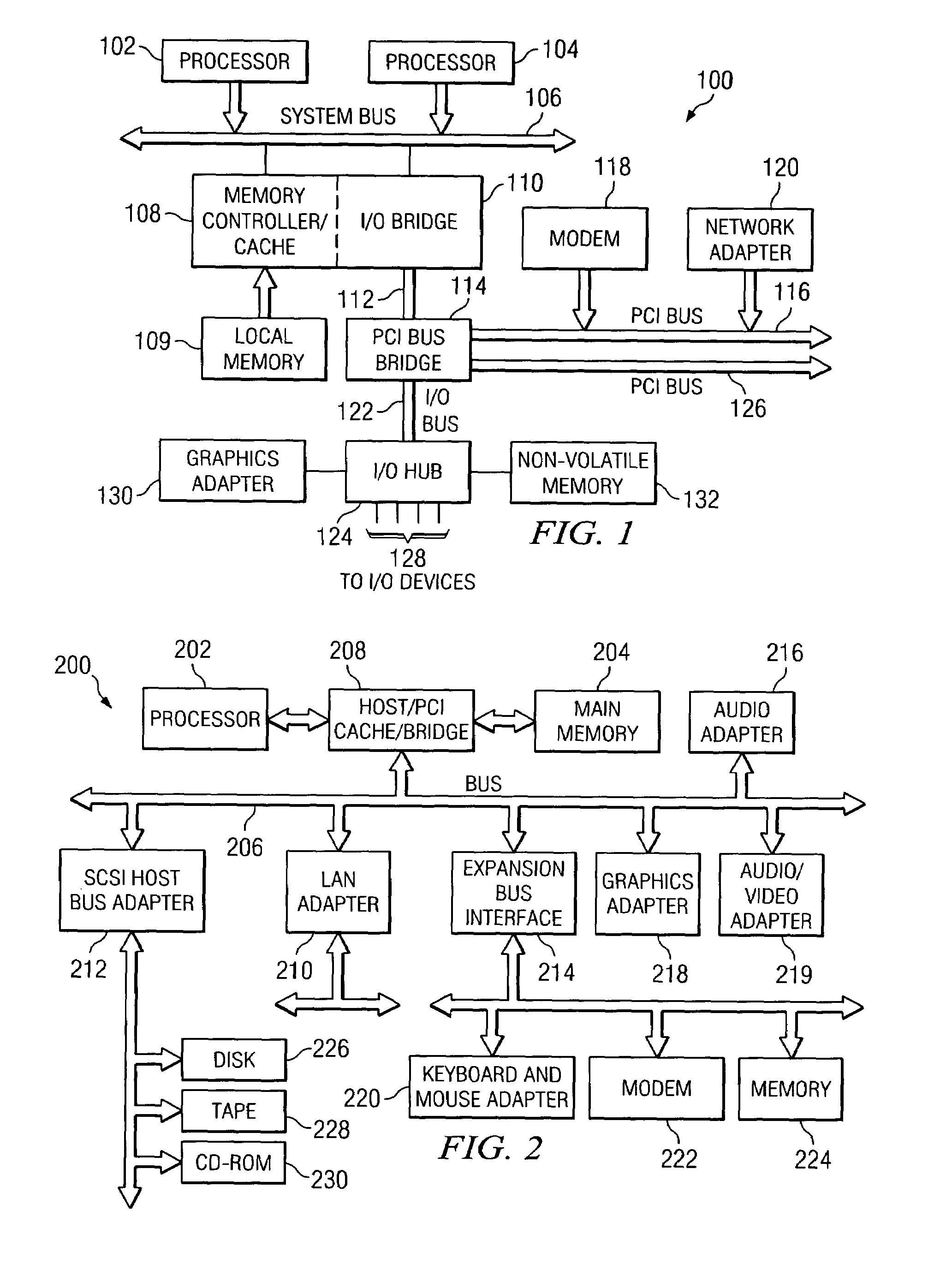

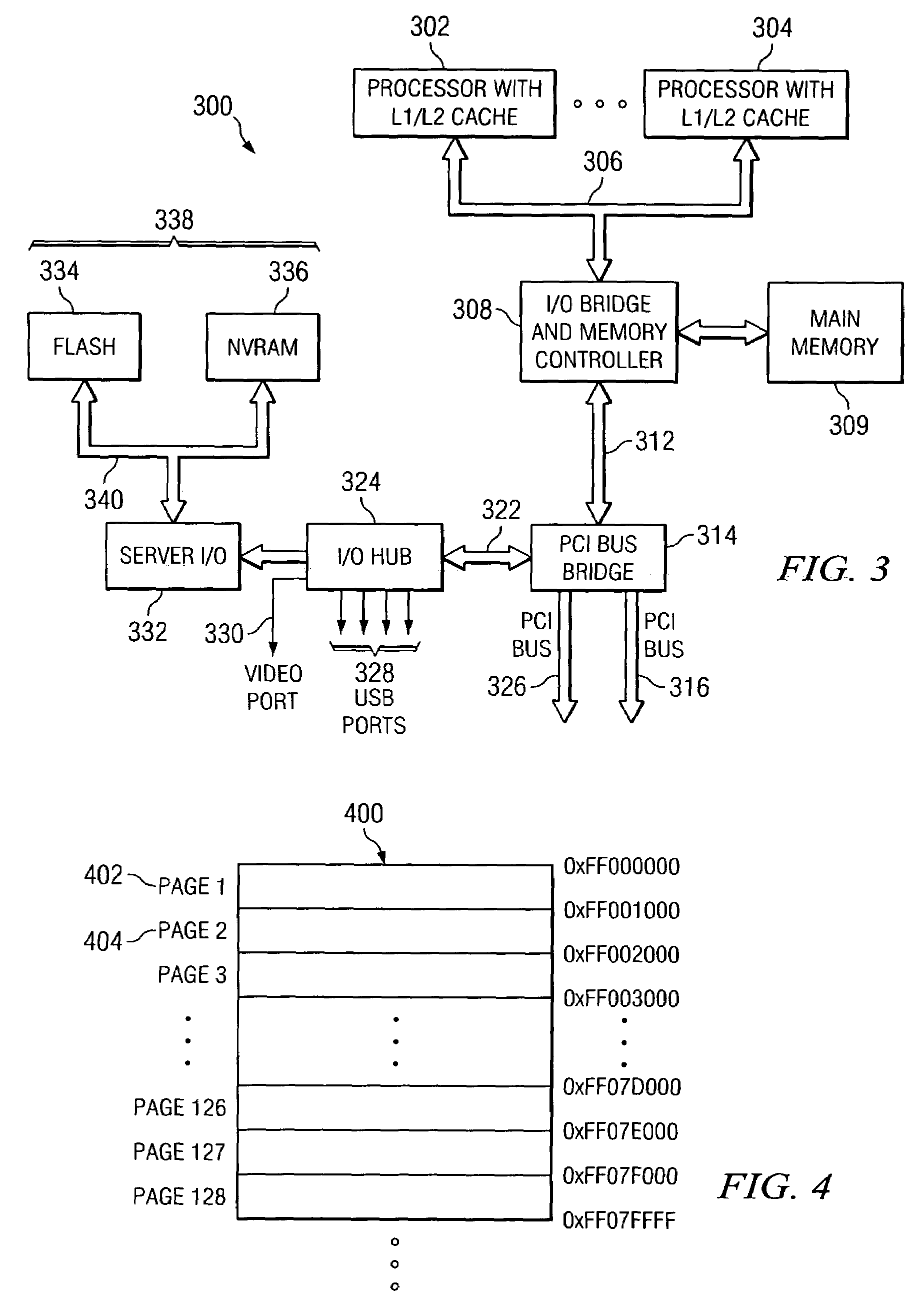

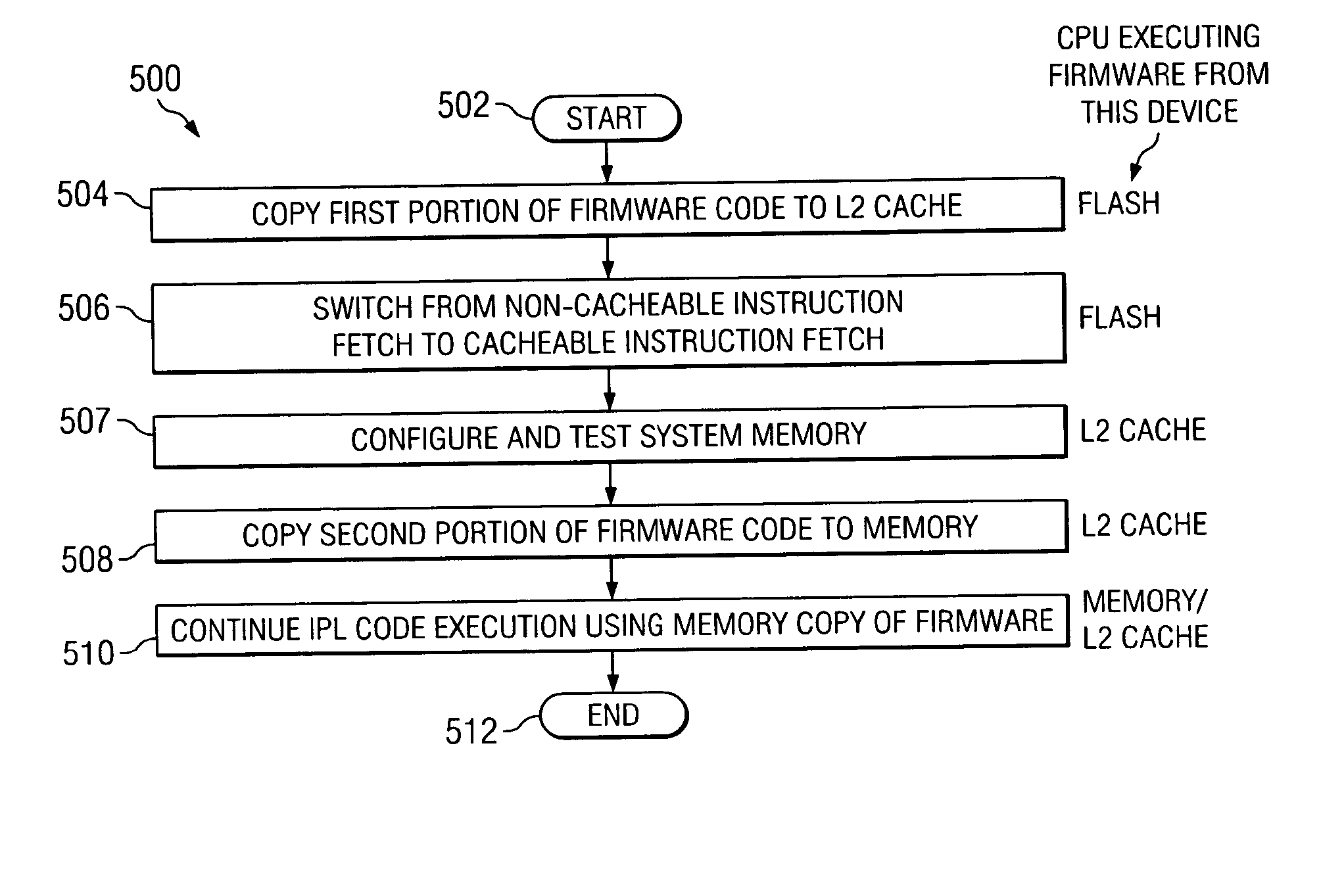

Technique for system initial program load or boot-up of electronic devices and systems

Inactive | US7139909B2 | Lower performance requirements | Improve system performance | Data resetting | Bootstrapping | Parallel computing | System hardware

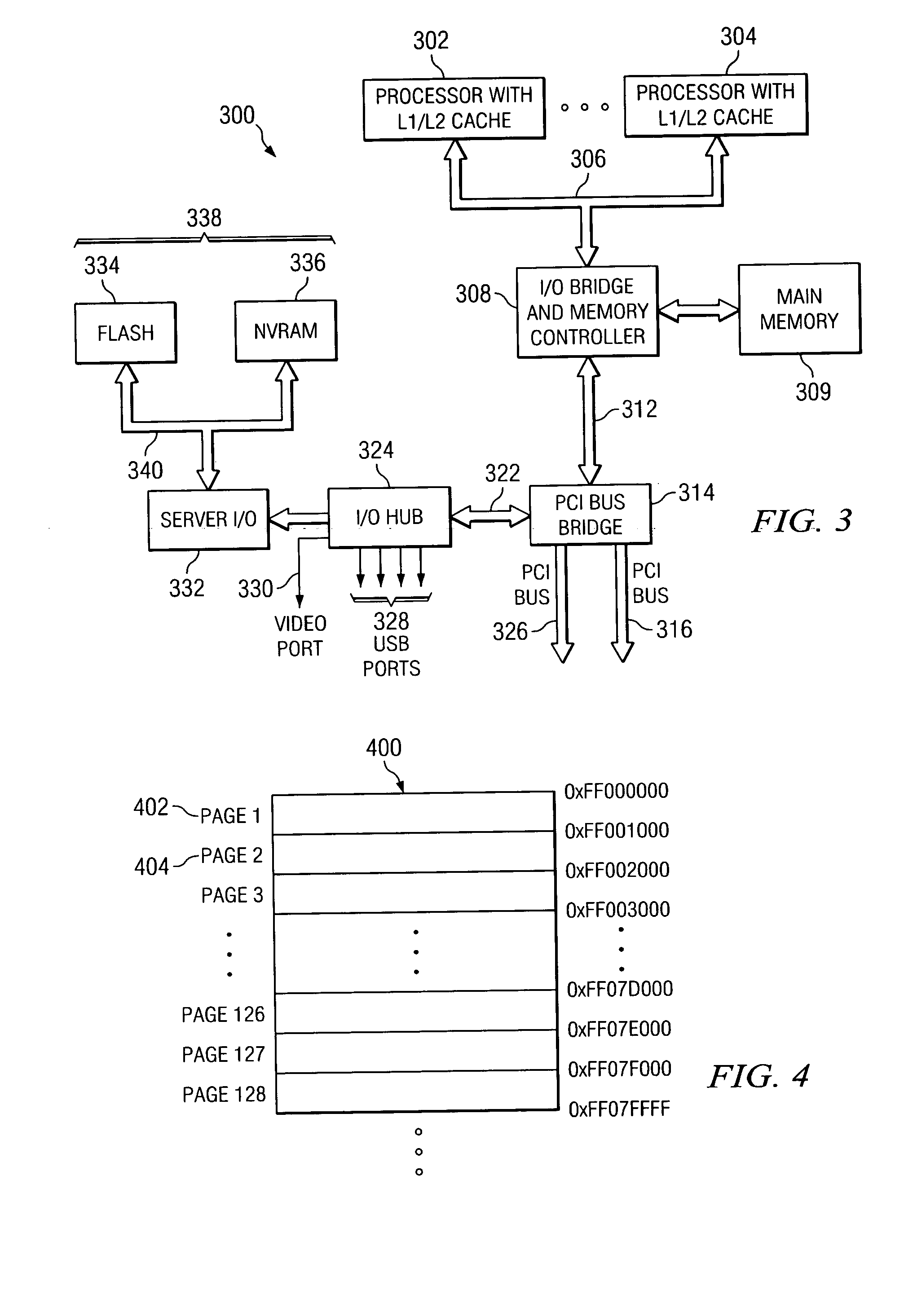

A means for minimizing time for a system/device initial program load (IPL) in a system that will not support instruction prefetching when executing IPL code out of non-volatile memory. The IPL code is organized into a first portion and second portion. The first portion is executed from the non-volatile memory device to configure system memory; the first portion also provides initial control of cache inhibit and cache enable by way of software control. The cache-enabling code is strategically located at a memory page boundary such that the system hardware will disable instruction prefetching in an adjoining page just past this cache-enabling software code. After the first portion of IPL code configures system memory, the second portion is copied into memory through the L2 cache and executed from memory with cache enabled to allow speculative instruction prefetching.

Owner:LINKEDIN

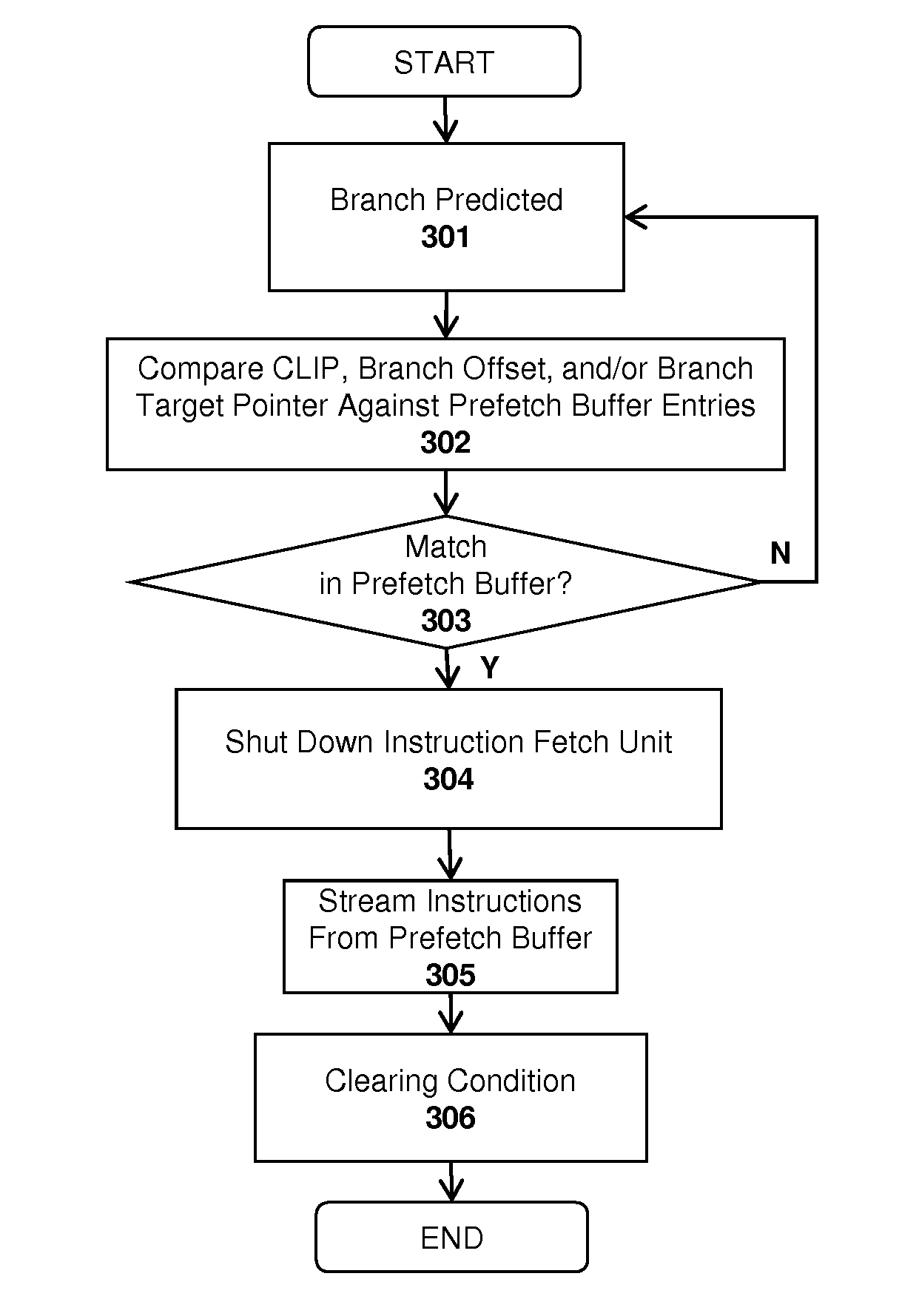

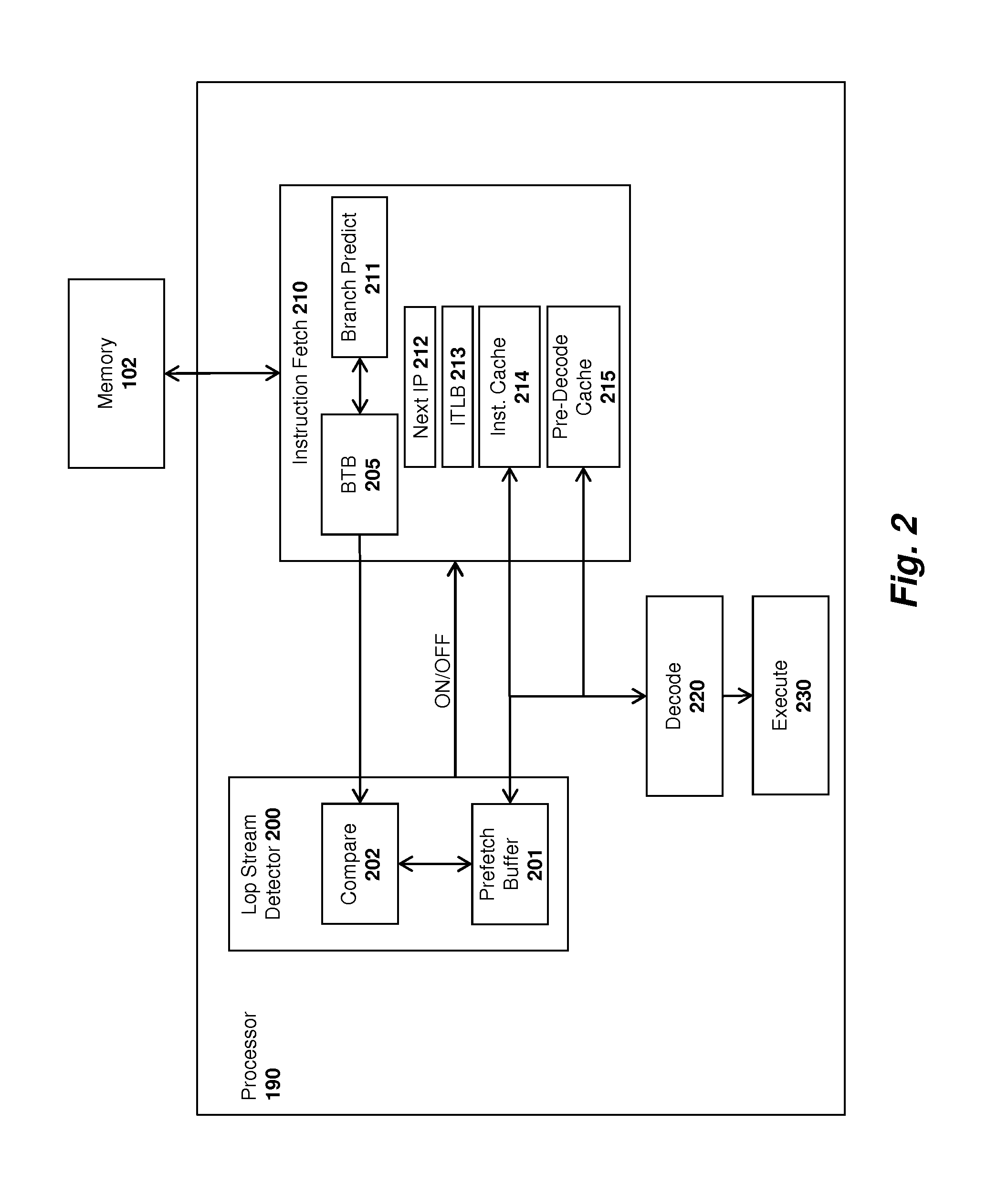

Method and apparatus for reducing power consumption in a processor by powering down an instruction fetch unit

Inactive | US20120079303A1 | Energy efficient ICT | Program control using stored programs | Electricity | Instruction unit

An apparatus and method are described for reducing power consumption in a processor by powering down an instruction fetch unit. For example, one embodiment of a method comprises: detecting a branch, the branch having addressing information associated therewith; comparing the addressing information with entries in an instruction prefetch buffer to determine whether an executable instruction loop exists within the prefetch buffer; wherein if an instruction loop is detected as a result of the comparison, then powering down an instruction fetch unit and/or components thereof; and streaming instructions directly from the prefetch buffer until a clearing condition is detected.

Owner:INTEL CORP
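The comparison step in this abstract can be sketched as a check of a branch's addresses against the contents of a prefetch buffer. The `PrefetchBuffer` class, its FIFO replacement, and the criterion used here (a backward branch whose own address and target are both still buffered) are illustrative assumptions, and the sketch further assumes the buffer holds sequentially fetched instructions.

```python
class PrefetchBuffer:
    """Hypothetical FIFO of (address, instruction) pairs in fetch order."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = []

    def push(self, address, instruction):
        if len(self.entries) == self.capacity:
            self.entries.pop(0)  # drop the oldest entry
        self.entries.append((address, instruction))

    def contains(self, address):
        return any(entry_address == address for entry_address, _ in self.entries)


def loop_fits_in_buffer(buffer, branch_address, target_address):
    """True if a backward branch and its target are both resident in the buffer."""
    is_backward = target_address < branch_address
    return (
        is_backward
        and buffer.contains(target_address)
        and buffer.contains(branch_address)
    )


buffer = PrefetchBuffer(capacity=8)
for address in range(0x100, 0x120, 4):  # eight sequential 4-byte instructions
    buffer.push(address, f"insn@{address:#x}")

# When this returns True, the fetch unit could be powered down and the loop
# streamed from the buffer until a clearing condition (e.g. loop exit) occurs.
fetch_unit_can_sleep = loop_fits_in_buffer(buffer, branch_address=0x11C, target_address=0x108)
```

A branch whose target has already been evicted from the buffer fails the check, so the fetch unit stays powered and fetches the target normally.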

Method and apparatus for efficient utilization for prescient instruction prefetch

Active | US7404067B2 | Digital computer details | Multiprogramming arrangements | Resource utilization | Computer science

Embodiments of an apparatus, system and method enhance the efficiency of processor resource utilization during instruction prefetching via one or more speculative threads. Renamer logic and a map table are utilized to perform filtering of instructions in a speculative thread instruction stream. The map table includes a yes-a-thing bit to indicate whether the associated physical register's content reflects the value that would be computed by the main thread. A thread progress beacon table is utilized to track relative progress of a main thread and a speculative helper thread. Based upon information in the thread progress beacon table, the main thread may effect termination of a helper thread that is not likely to provide a performance benefit for the main thread.

Owner:TAHOE RES LTD

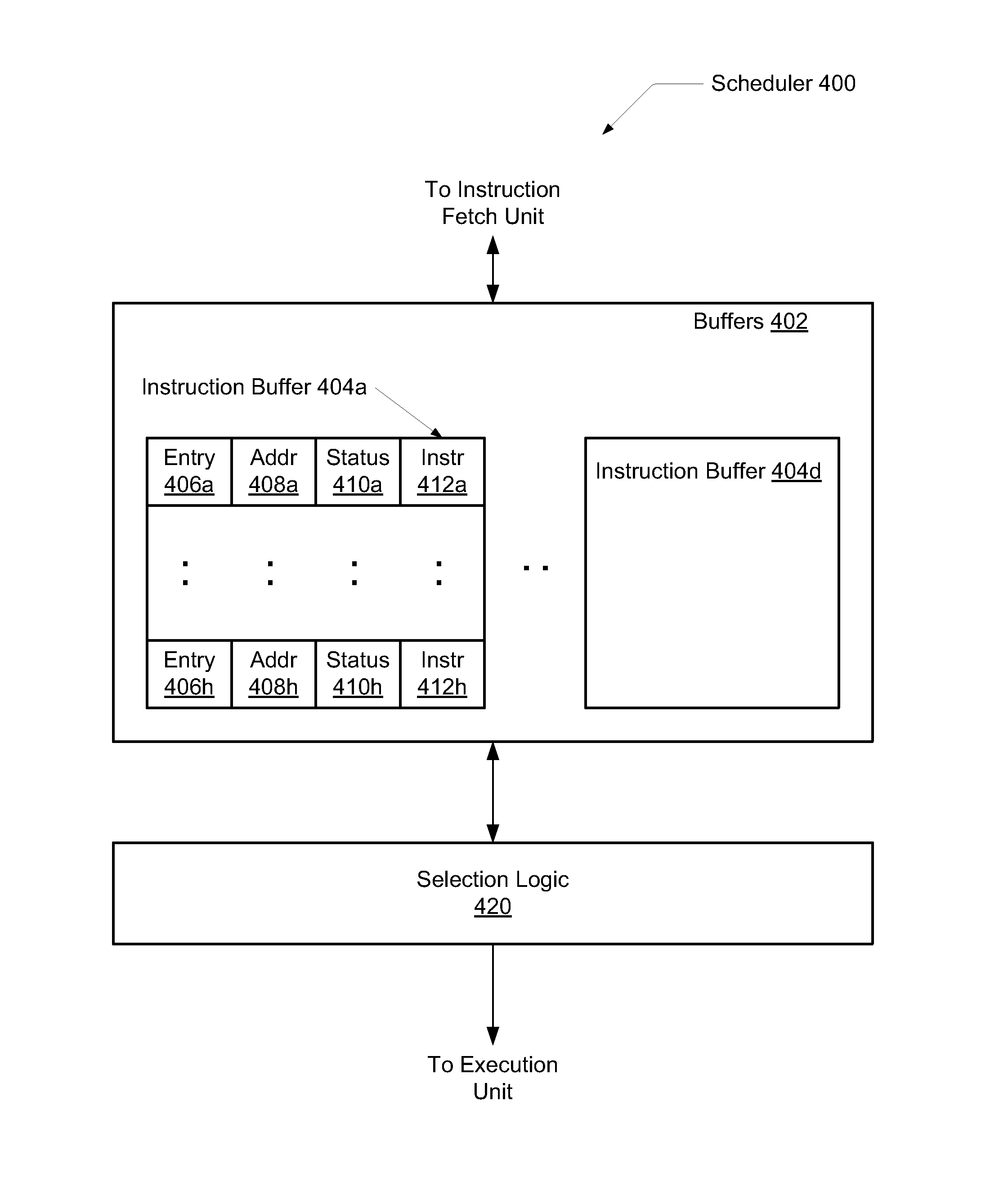

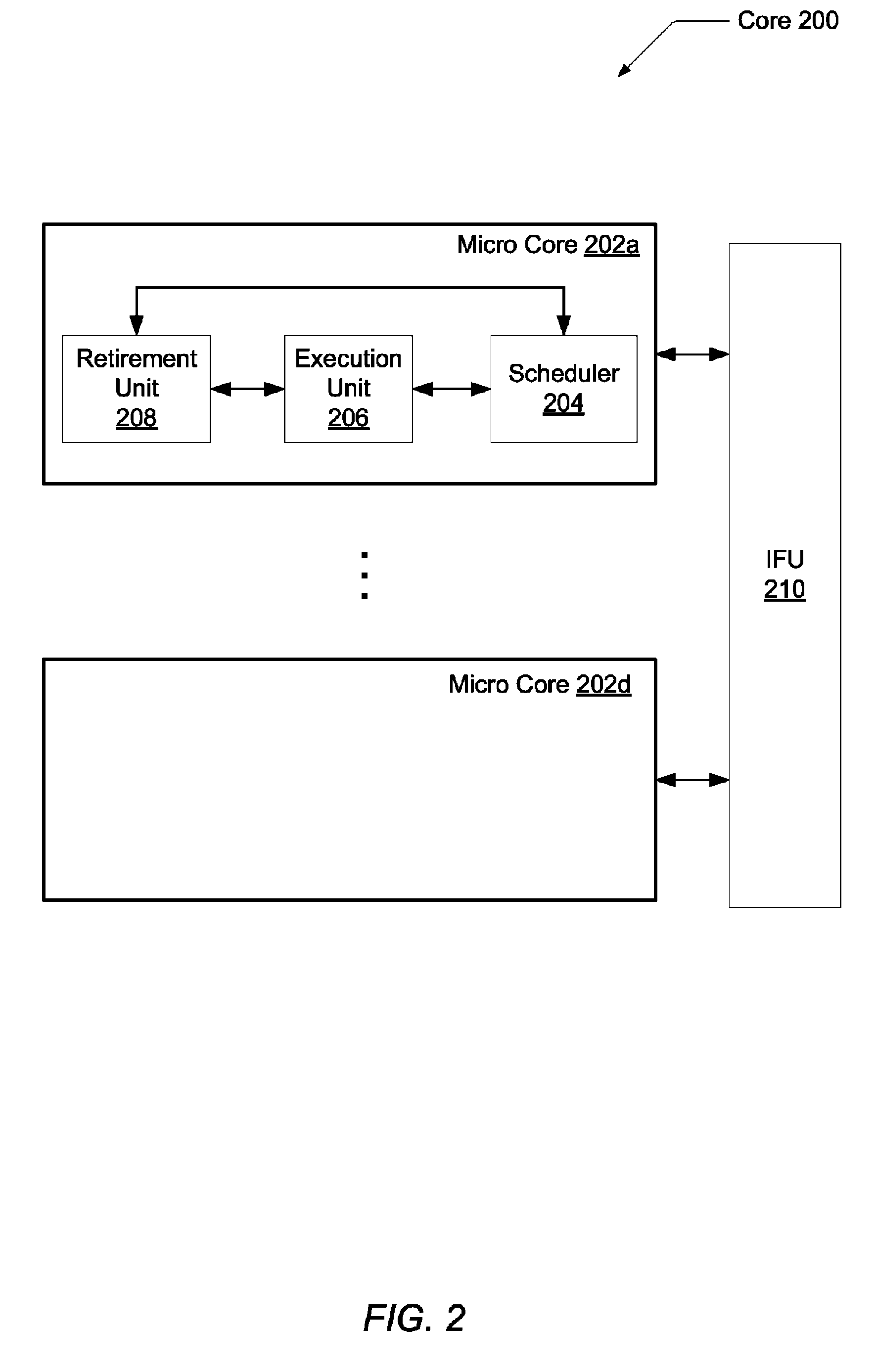

Simultaneous speculative threading light mode

Active | US8006073B1 | Architecture with single central processing unit | Specific program execution arrangements | Parallel computing | Computer science

A system and method for management of resource allocation of threads for efficient execution of instructions. Prior to dispatching decoded instructions of a first thread from the instruction fetch unit to a buffer within a scheduler, logic within the instruction fetch unit may determine the buffer is already full of dispatched instructions. However, the logic may also determine that a buffer for a second thread within the core or micro core is available. The second buffer may receive and issue decoded instructions for the first thread until the buffer becomes unavailable. While the second buffer receives and issues instructions for the first thread, the throughput of the system for the first thread may increase due to a reduction in wait cycles.

Owner:ORACLE INT CORP

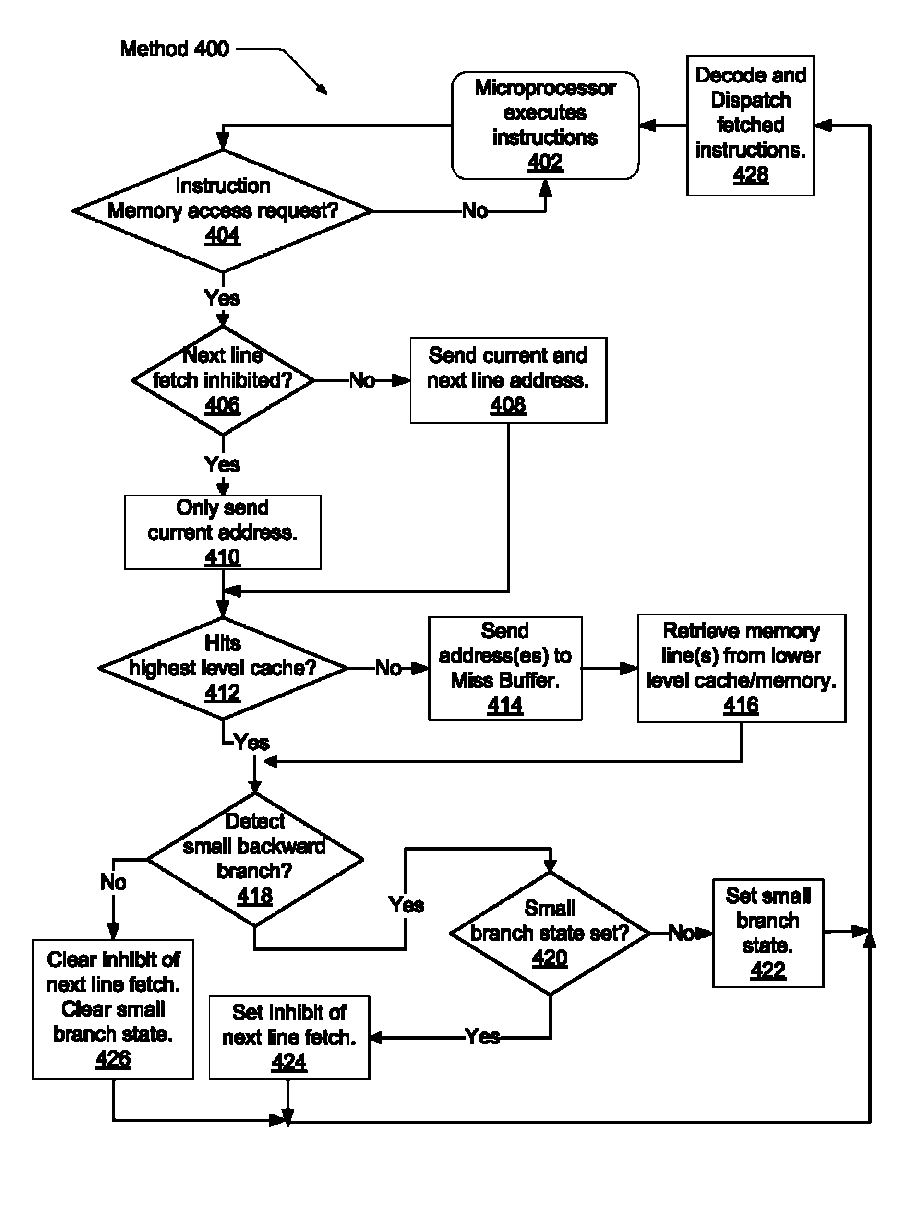

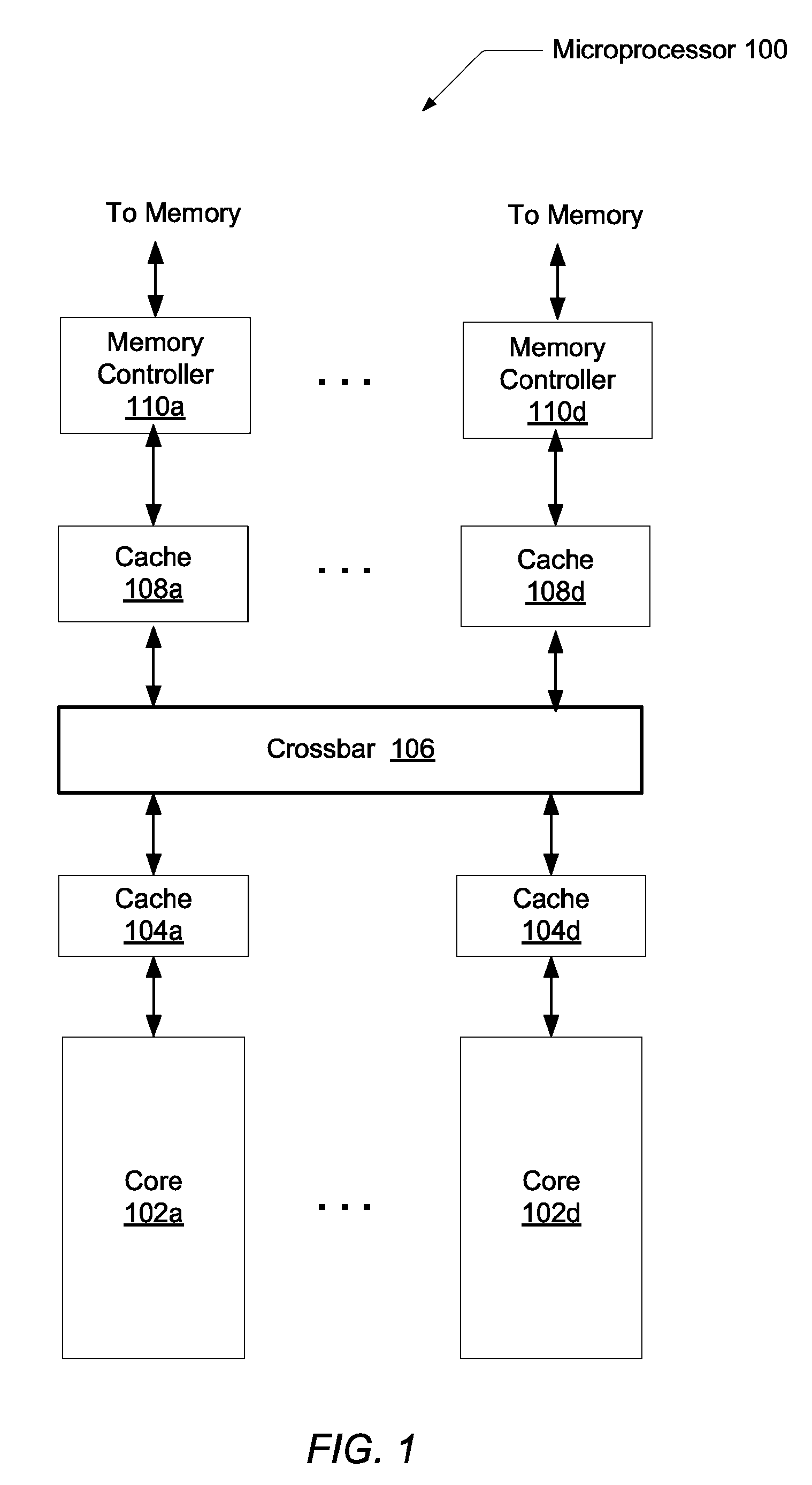

Branch loop performance enhancement

A system and method for management of resource allocation for speculative fetched instructions following small backward branch instructions. An instruction fetch unit speculatively prefetches a memory line for each fetched memory line. Each memory line may have a small backward branch instruction, which is a backward branch instruction that has a target instruction within the same memory line. For each fetched memory line, the instruction fetch unit determines if a small backward branch instruction exists among the instructions within the memory line. If a small backward branch instruction is found and predicted taken, then the instruction fetch unit inhibits the speculative prefetch for that particular thread. The speculative prefetch may resume for that thread after the branch loop is completed. System resources may be better allocated during the iterations of the small backward branch loop.

Owner:ORACLE INT CORP
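The detection rule in this abstract (a backward branch whose target lies in the same memory line, predicted taken, inhibits the speculative prefetch) can be sketched as below. The 64-byte line size and the function names are assumptions made for illustration.

```python
LINE_BYTES = 64  # assumed memory line size


def line_of(address):
    return address // LINE_BYTES


def is_small_backward_branch(branch_address, target_address):
    """A backward branch whose target sits in the same memory line."""
    return (
        target_address < branch_address
        and line_of(target_address) == line_of(branch_address)
    )


def should_prefetch_next_line(branches_in_line):
    """Decide whether to speculatively prefetch after fetching a line.

    branches_in_line: iterable of (branch_address, target_address, predicted_taken)
    tuples for the branches found in the fetched line.
    """
    for branch_address, target_address, predicted_taken in branches_in_line:
        if predicted_taken and is_small_backward_branch(branch_address, target_address):
            return False  # the loop stays inside this line; a prefetch would be wasted
    return True
```

For example, a predicted-taken branch at `0x1030` targeting `0x1010` stays within one 64-byte line, so the prefetch is inhibited until the loop completes; a branch to a different line leaves prefetching enabled.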

Technique for system initial program load or boot-up of electronic devices and systems

Inactive | US20050086464A1 | Lower performance requirements | Improve system performance | Data resetting | Bootstrapping | Parallel computing | Term memory

A method, apparatus and program product for decreasing overall time for performing a system / device boot-up or initial program load (IPL). The system / device IPL code is organized into a plurality of portions, including a first portion and second portion. The first portion contains code to configure system memory, and is initially copied into the system's L2 cache. This first portion also provides initial control of cache inhibit and cache enable by way of software control. This first portion is executed from a non-volatile memory device to configure system memory and enable instruction caching by way of software control. The cache-enabling code is strategically located at a memory page boundary such that the system / device hardware will disable instruction prefetching in an adjoining page just past this cache enabling software code. After the system memory is configured by the initial portion of the IPL code, the second portion of the IPL code is copied into memory through the L2 cache and executed from memory with cache enabled to allow both normal and speculative instruction prefetching, thus improving overall system performance during system IPL.

Owner:LINKEDIN


© 2025 PatSnap. All rights reserved.