177 results about "Procedure sequence" patented technology

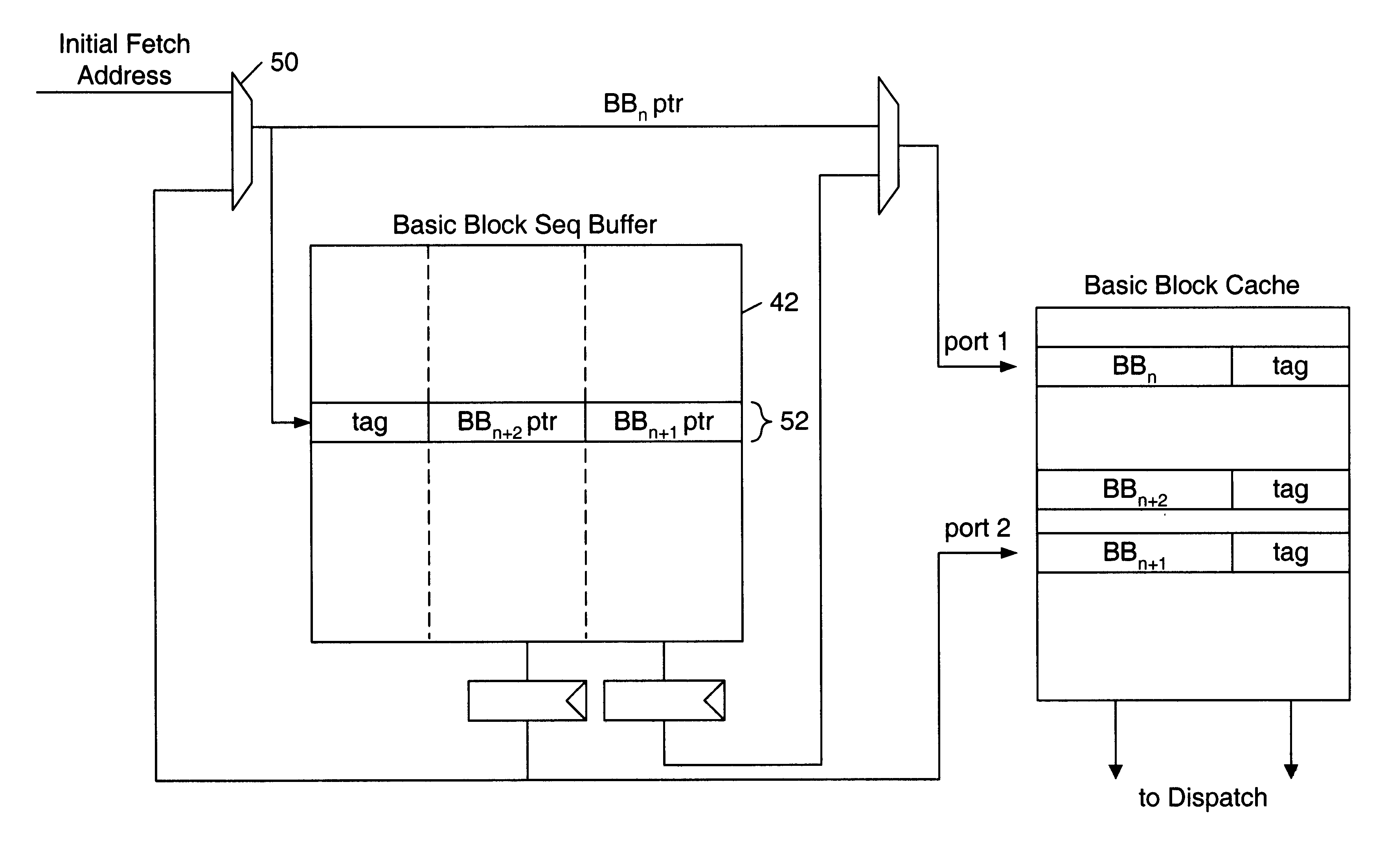

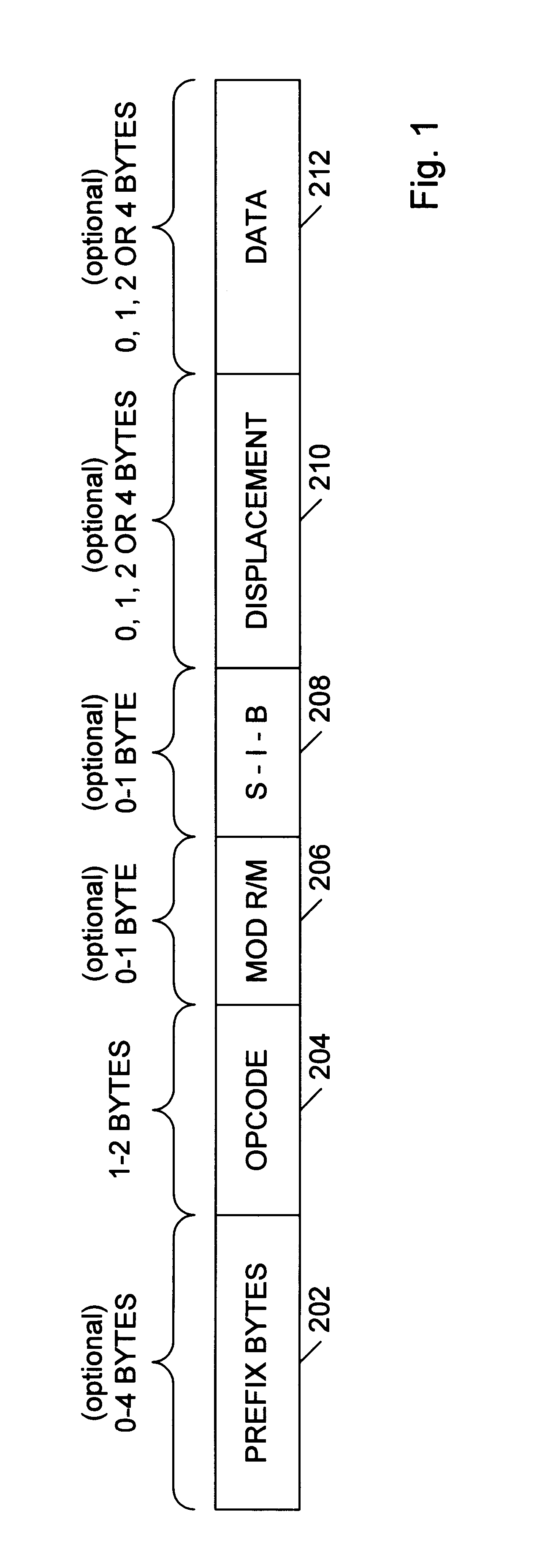

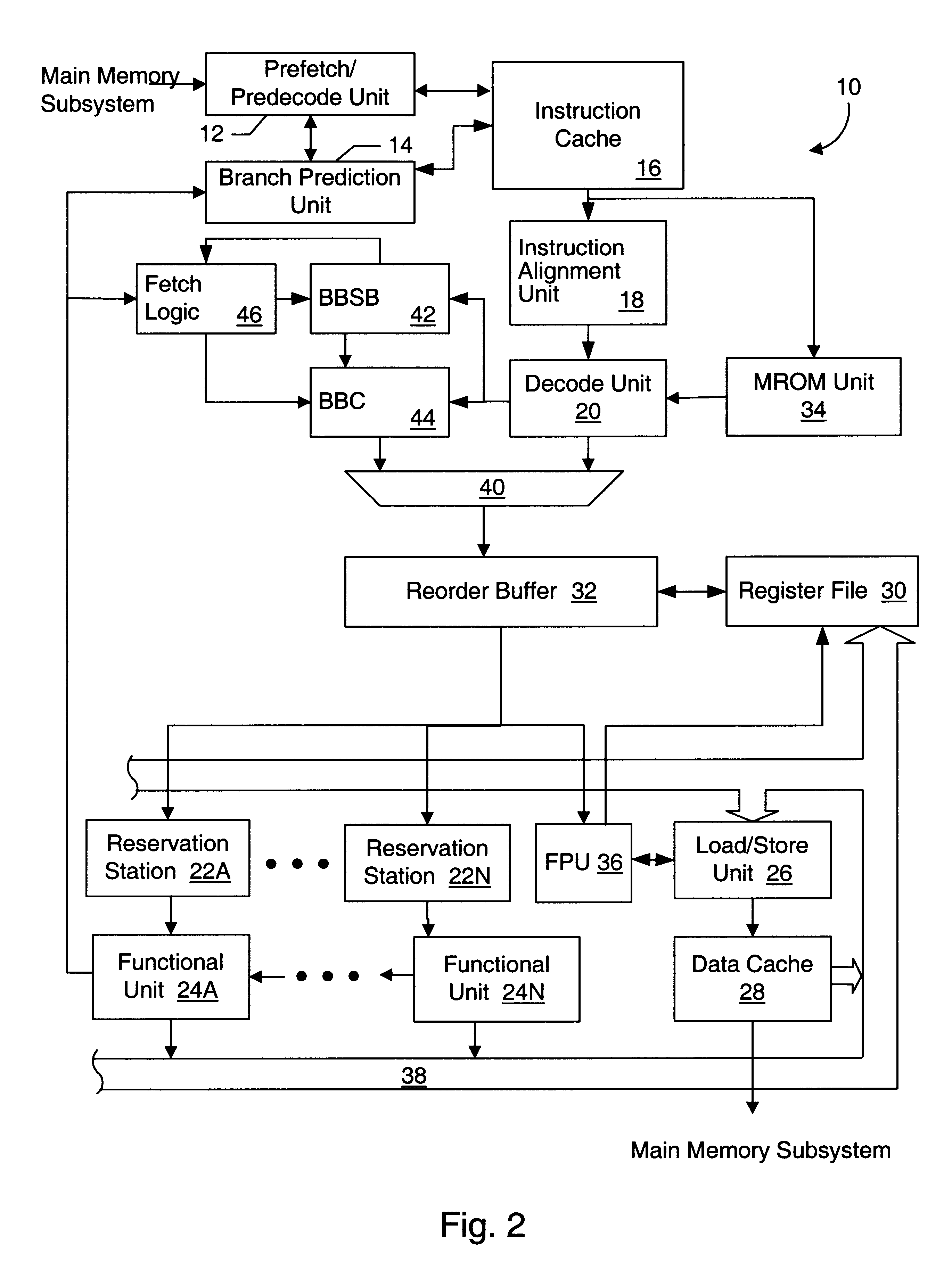

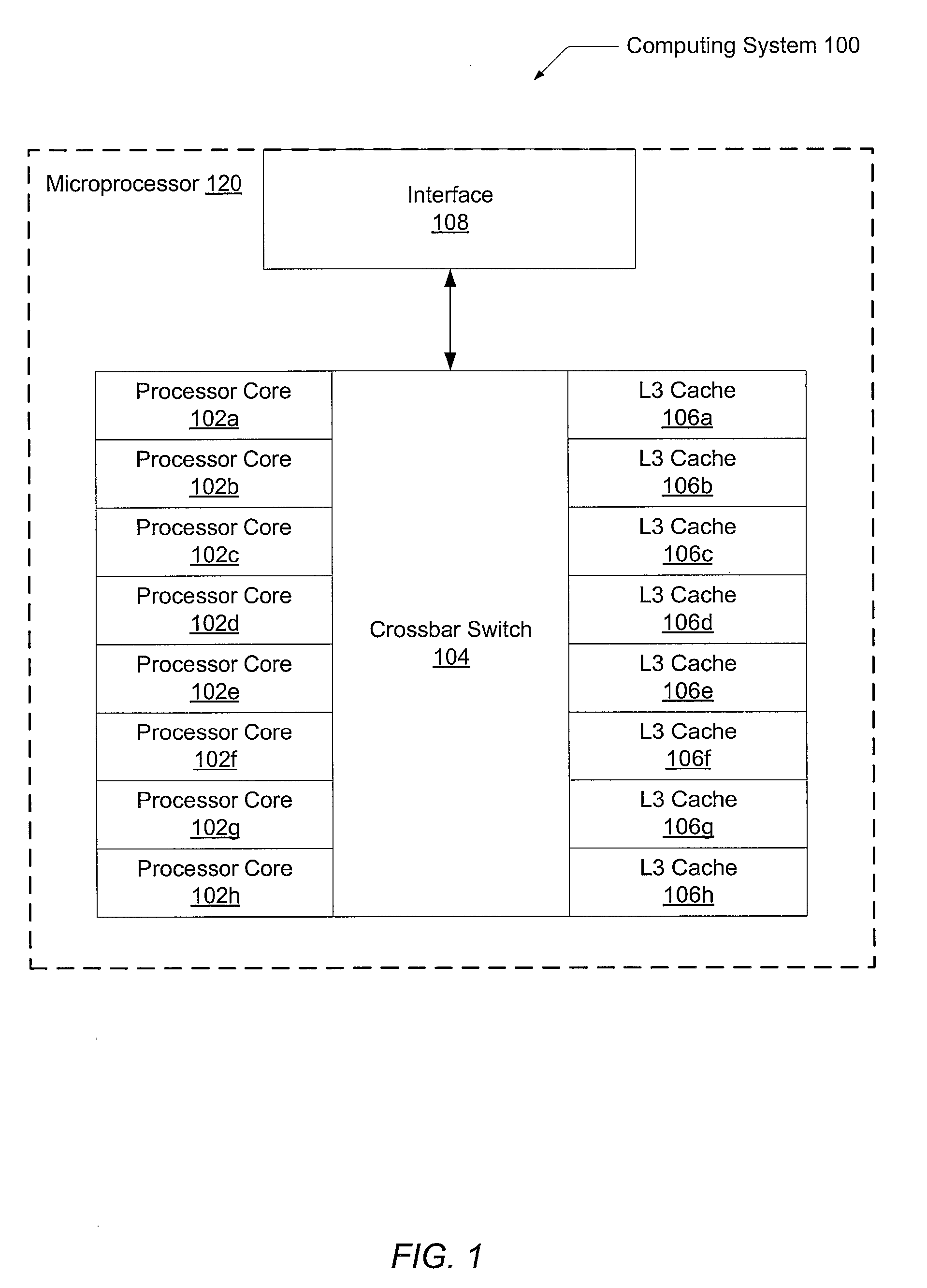

Basic block oriented trace cache utilizing a basic block sequence buffer to indicate program order of cached basic blocks

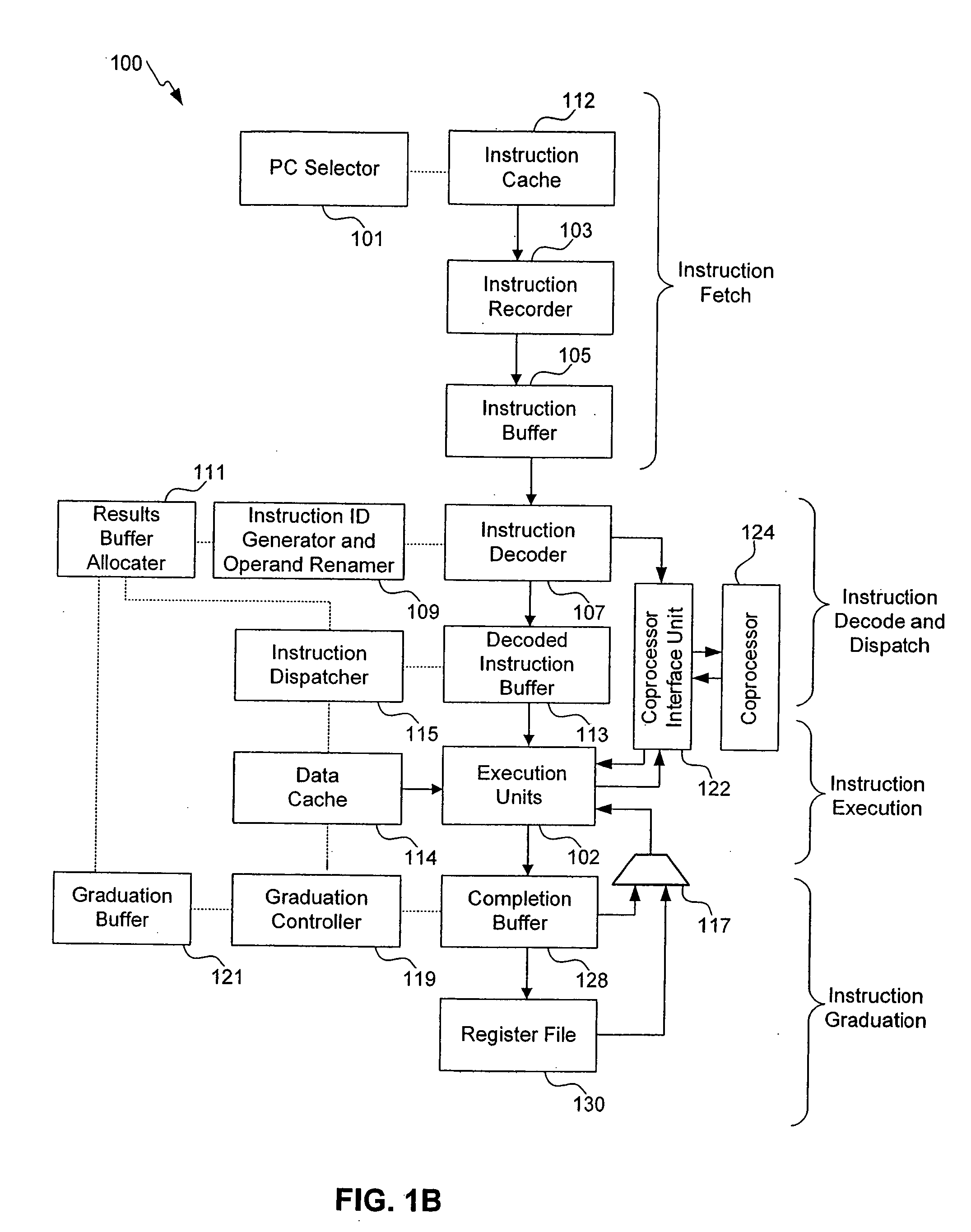

A cache memory configured to access stored instructions according to basic blocks is disclosed. Basic blocks are natural divisions in instruction streams resulting from branch instructions. The start of a basic block is a target of a branch, and the end is another branch instruction. A microprocessor configured to use a basic block oriented cache may comprise a basic block cache and a basic block sequence buffer. The basic block cache may have a plurality of storage locations configured to store basic blocks. The basic block sequence buffer also has a plurality of storage locations, each configured to store a block sequence entry. The block sequence entry may comprise an address tag and one or more basic block pointers. The address tag corresponds to the fetch address of a particular basic block, and the pointers point to basic blocks that follow the particular basic block in a predicted order. A system using the microprocessor and a method for caching instructions in a block oriented manner rather than conventional power-of-two memory blocks are also disclosed.

Owner:GLOBALFOUNDRIES INC
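
The block sequence entry described in the abstract above can be pictured with a small software model. This is an illustrative sketch only, not taken from the patent: the class names `BlockSequenceEntry` and `BasicBlockCache`, the use of list indices as "pointers", and the dictionary lookup by address tag are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class BlockSequenceEntry:
    address_tag: int  # fetch address of a particular basic block
    # "Pointers" (here: slot indices, an assumption) to the basic blocks
    # predicted to follow that block, in predicted order.
    next_blocks: list = field(default_factory=list)


class BasicBlockCache:
    """Toy model: slots hold whole basic blocks, and a sequence buffer
    maps a fetch address to the predicted successor blocks."""

    def __init__(self):
        self.slots = []     # basic block cache storage locations
        self.sequence = {}  # address_tag -> BlockSequenceEntry

    def store_block(self, fetch_address, instructions):
        self.slots.append((fetch_address, list(instructions)))
        return len(self.slots) - 1  # slot index serves as the pointer

    def link(self, fetch_address, successor_slots):
        self.sequence[fetch_address] = BlockSequenceEntry(
            fetch_address, list(successor_slots)
        )

    def fetch_predicted(self, fetch_address):
        entry = self.sequence.get(fetch_address)
        if entry is None:
            return []  # no sequence entry for this fetch address
        return [ins for slot in entry.next_blocks for ins in self.slots[slot][1]]
```

In this model, linking fetch address `0x100` to two stored blocks makes `fetch_predicted(0x100)` return the instructions of both blocks in predicted order, while an address with no sequence entry yields an empty list.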

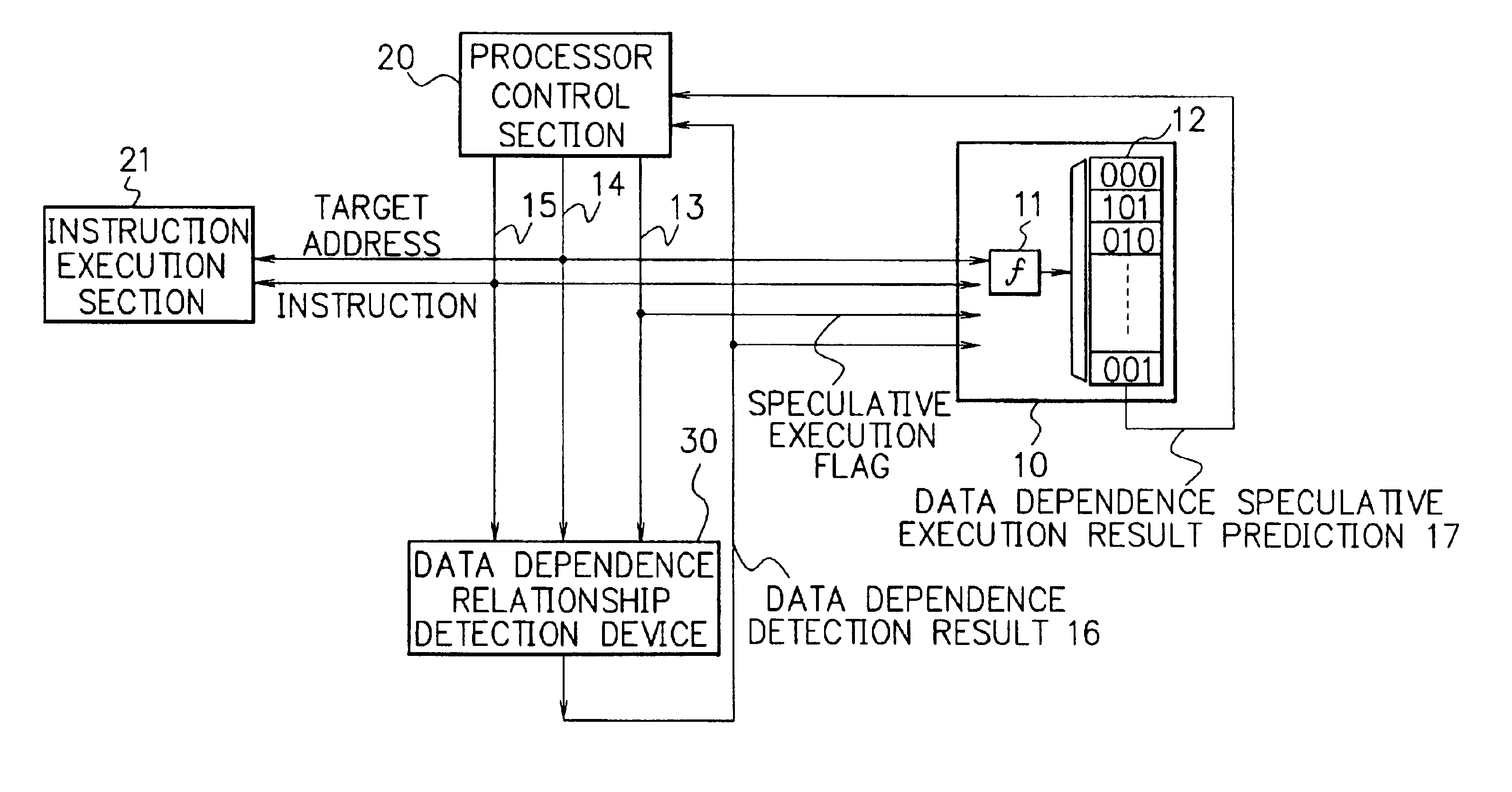

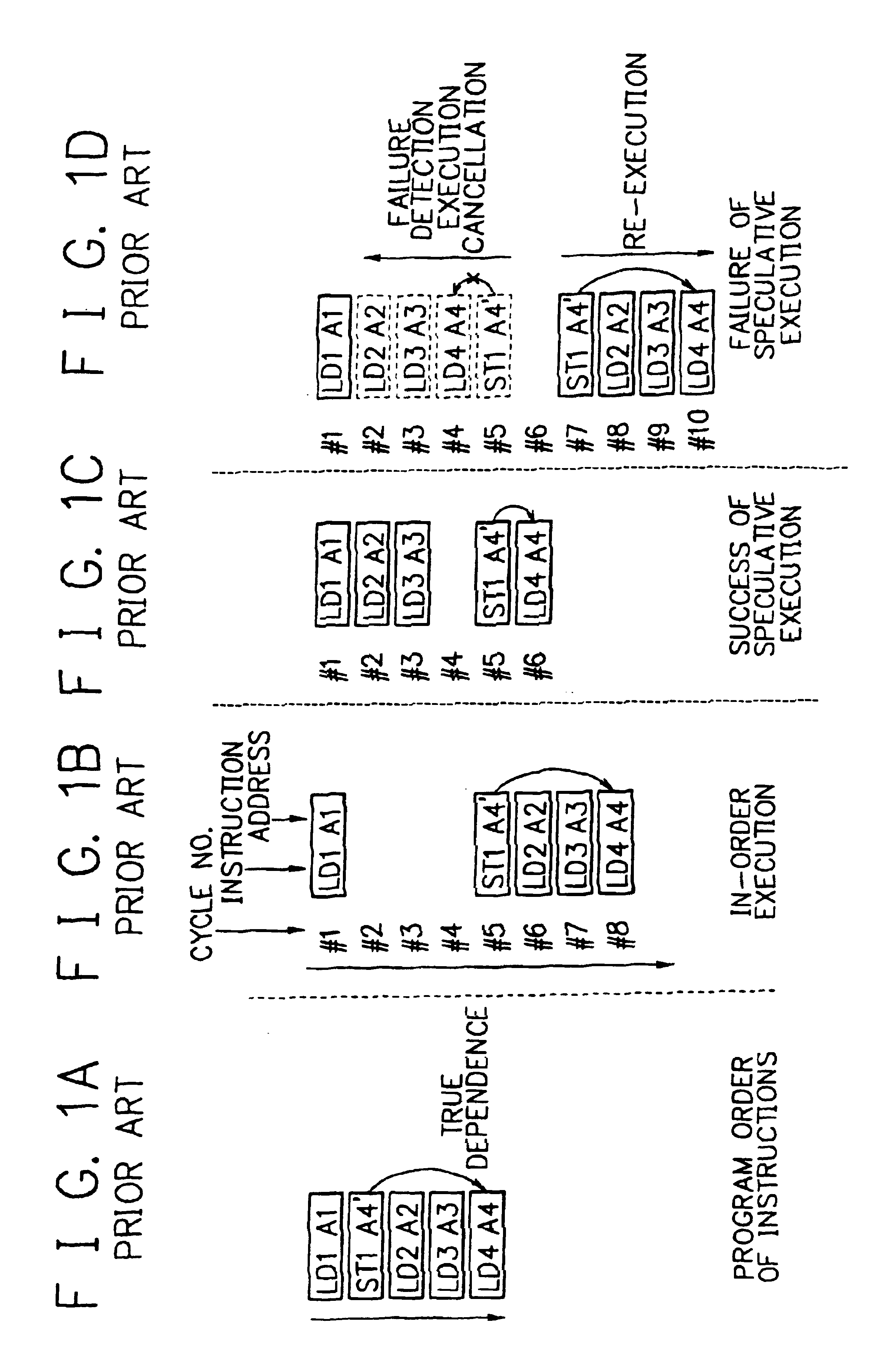

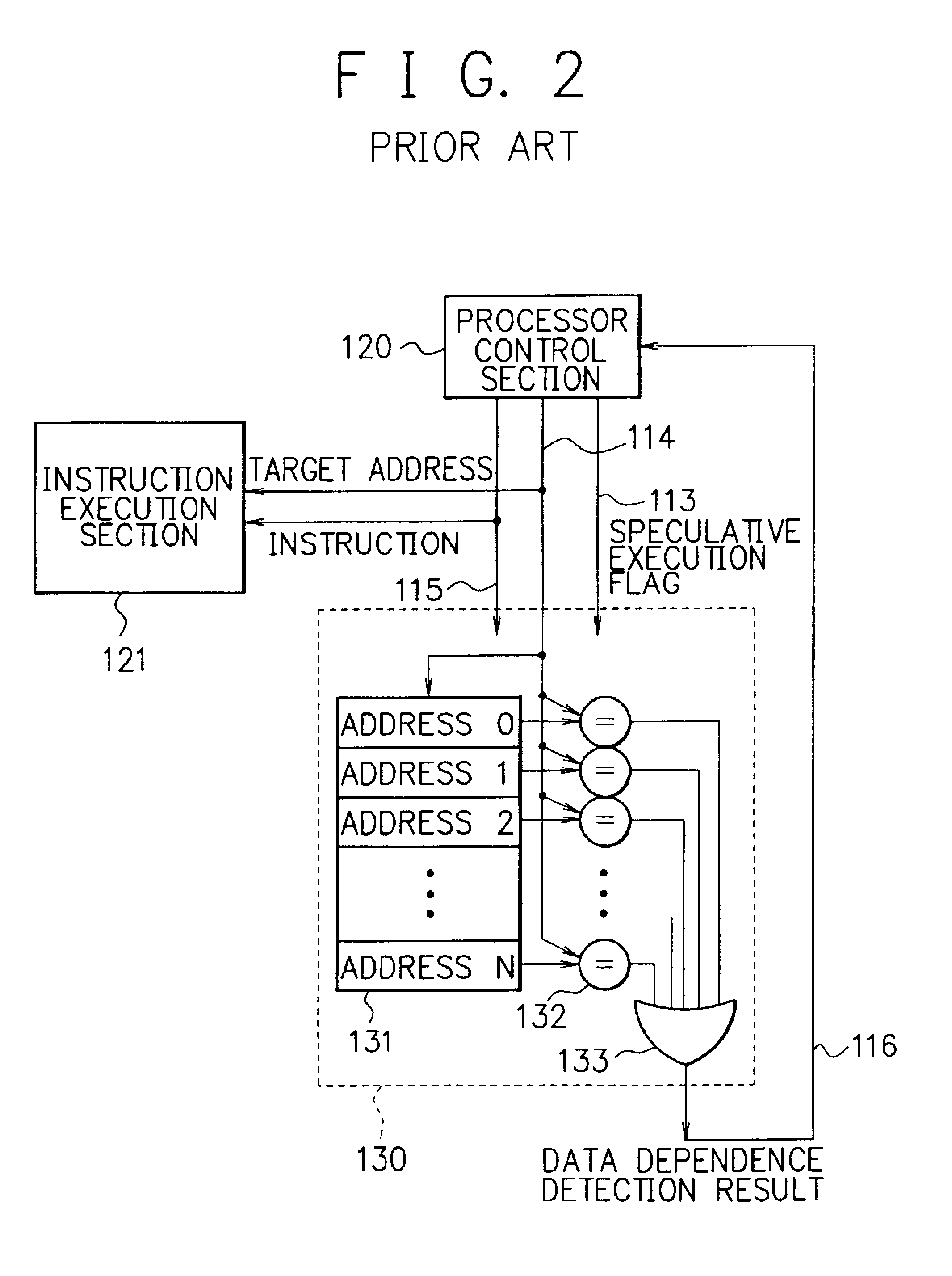

Processor, multiprocessor system and method for speculatively executing memory operations using memory target addresses of the memory operations to index into a speculative execution result history storage means to predict the outcome of the memory operation

Inactive | US6970997B2 | Low failure rate | Improve execution performance | Digital computer details | Concurrent instruction execution | Memory address | Speculative execution

When a processor executes a memory operation instruction by means of data dependence speculative execution, a speculative execution result history table, which stores history information concerning success/failure results of past speculative executions of memory operation instructions, is consulted to predict whether the speculative execution will succeed or fail. In the prediction, the target address of the memory operation instruction is converted by a hash function circuit into an entry number of the speculative execution result history table (allowing the existence of aliases), and the table entry designated by that entry number is referred to. If the prediction is “success”, the memory operation instruction is executed speculatively out of order (with regard to the data dependence relationship between the instructions). If the prediction is “failure”, the speculative execution is canceled and the memory operation instruction is executed later, non-speculatively, in program order. Whether the speculative execution of the memory operation instructions has succeeded or failed is judged by detecting the data dependence relationship between the memory operation instructions, and the speculative execution result history table is updated taking the judgment into account.

Owner:NEC CORP
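
The address-hashed history table in the abstract above can be approximated in software as follows. This is a minimal sketch under stated assumptions: the table size, the XOR-fold hash in `_index`, the one-bit "last outcome" entry, and the initial "success" state are hypothetical choices for illustration, not details from the patent.

```python
class SpeculationHistoryTable:
    """Toy predictor indexed by a hash of the memory target address."""

    def __init__(self, num_entries=16):
        self.num_entries = num_entries
        # True means "the last speculation mapped to this entry succeeded".
        # Starting optimistic is an assumption of this sketch.
        self.entries = [True] * num_entries

    def _index(self, target_address):
        # Hypothetical hash: fold the address and reduce modulo the table
        # size. Different addresses may share an entry (aliases are allowed).
        return (target_address ^ (target_address >> 4)) % self.num_entries

    def predict_success(self, target_address):
        return self.entries[self._index(target_address)]

    def update(self, target_address, succeeded):
        # Record the judged outcome of the speculative execution.
        self.entries[self._index(target_address)] = succeeded
```

With this particular hash, addresses `0x40` and `0x04` map to the same entry, so recording a failure for one also changes the prediction for the other, which is the aliasing the abstract tolerates.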

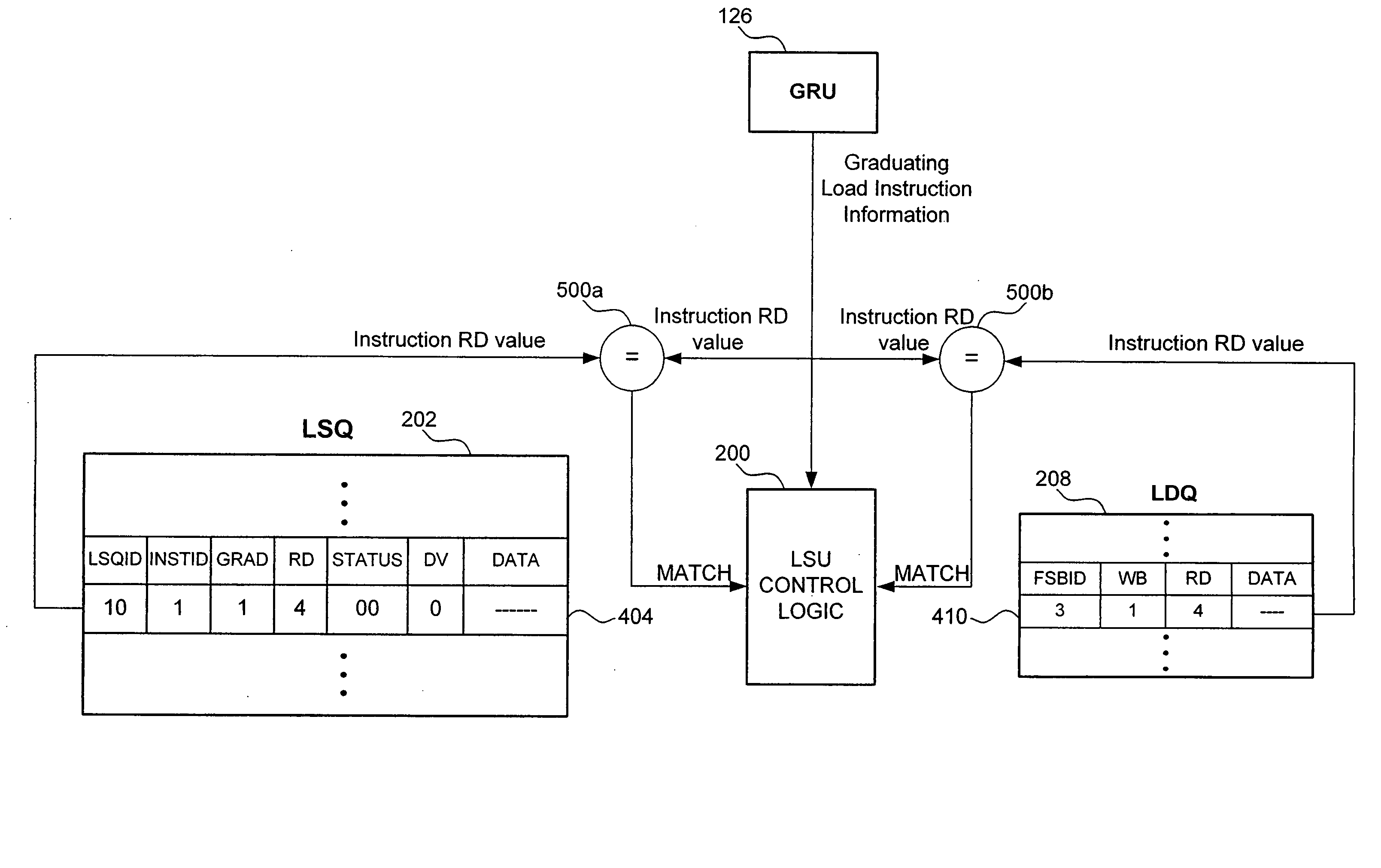

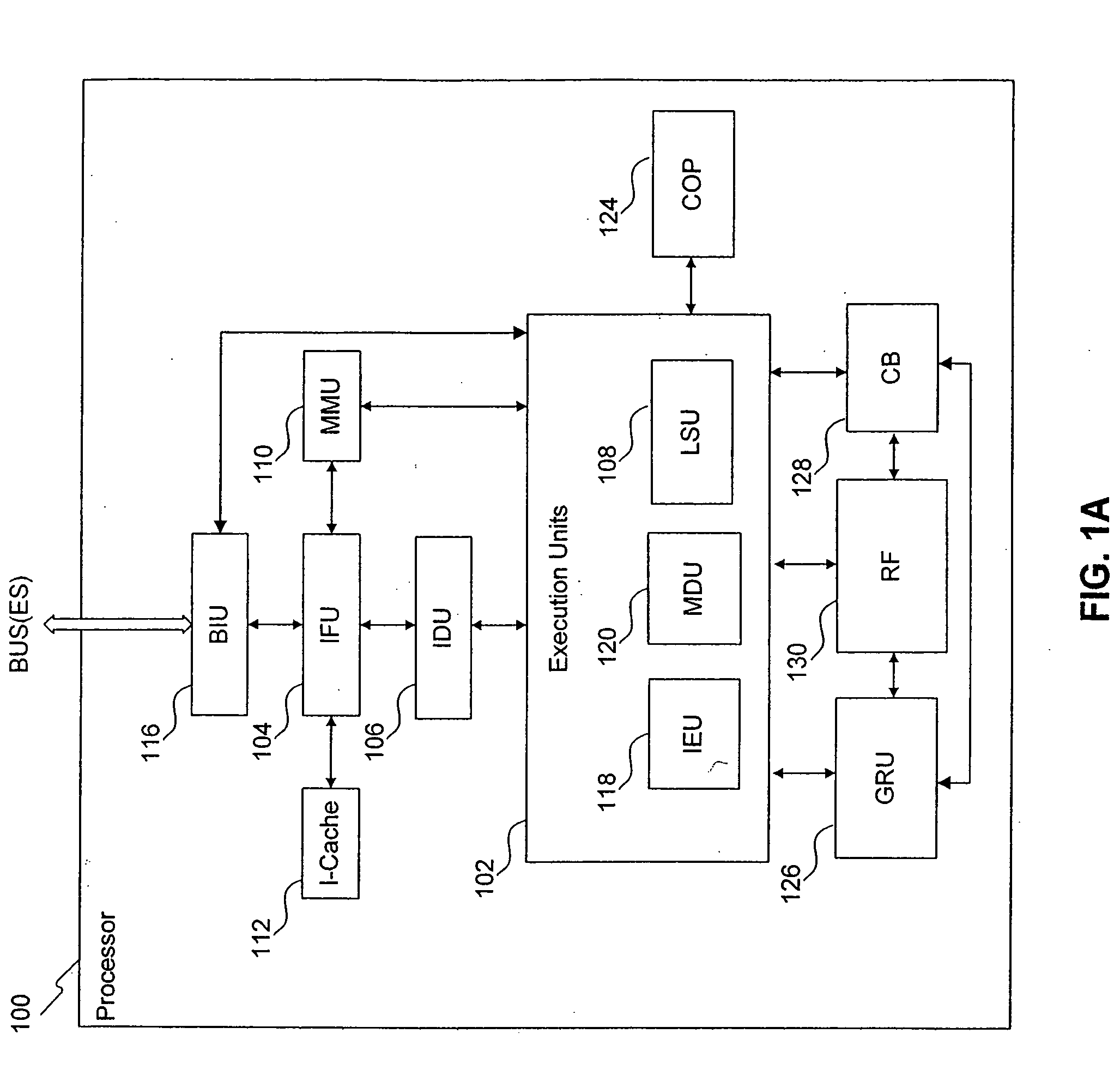

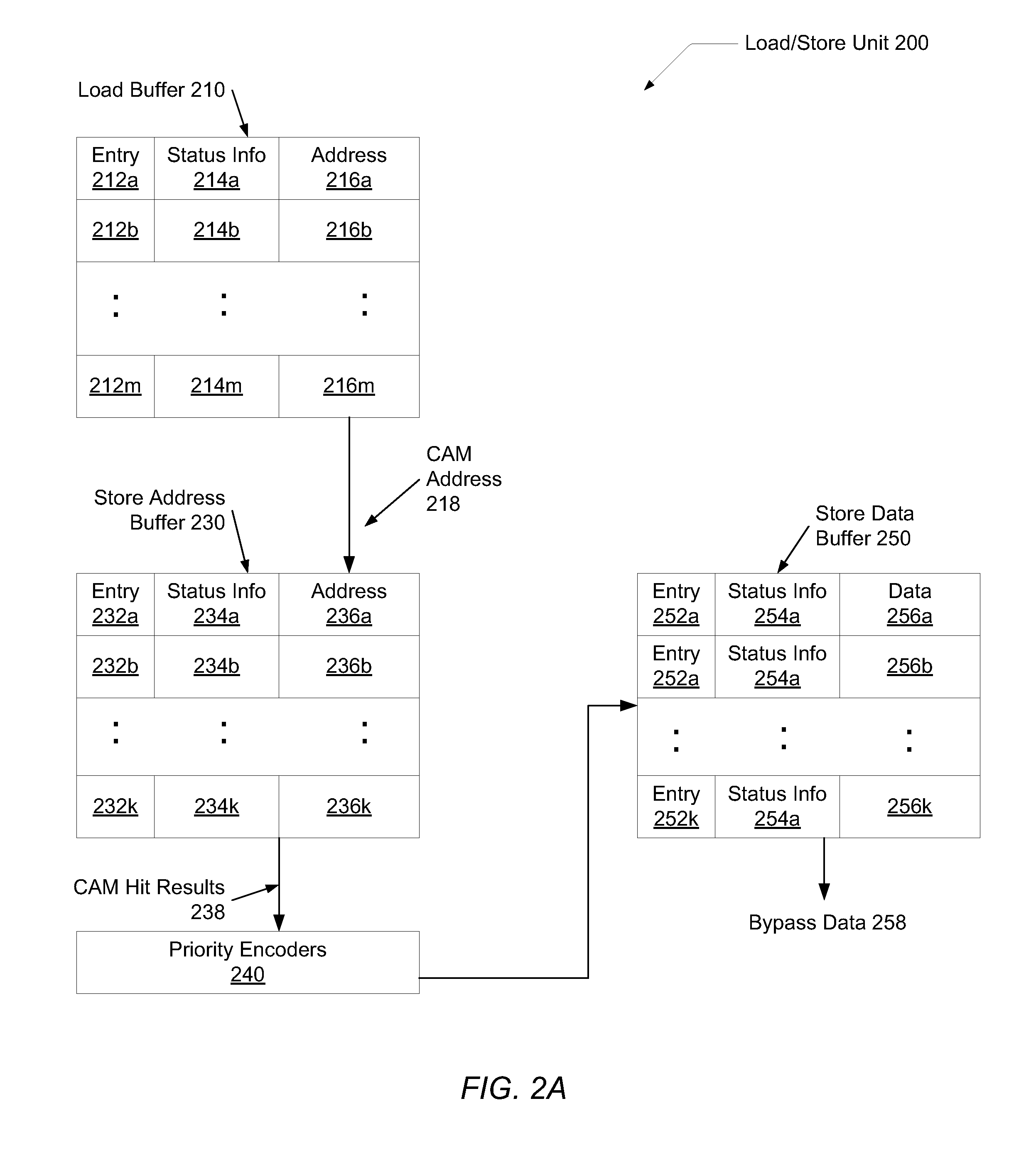

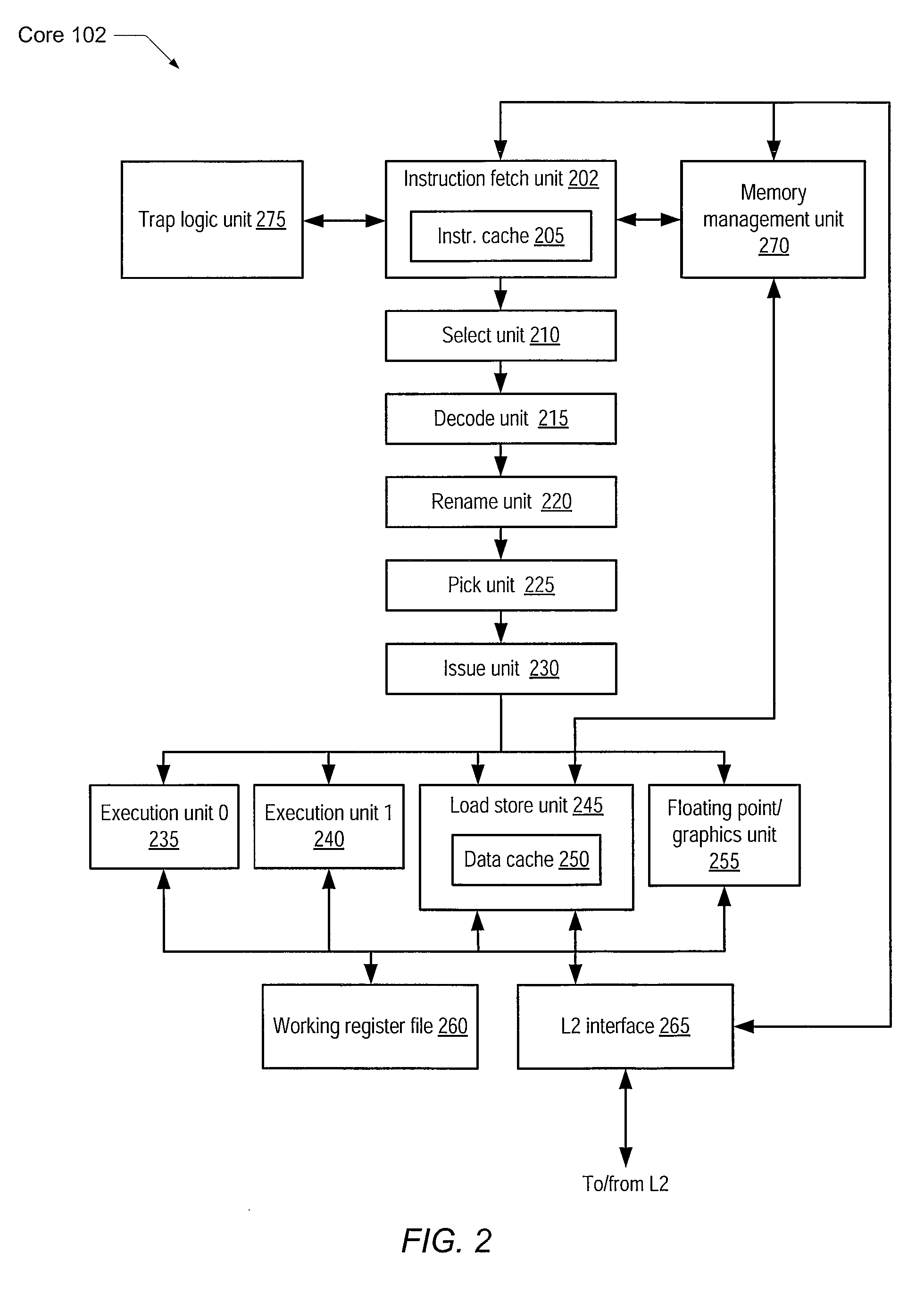

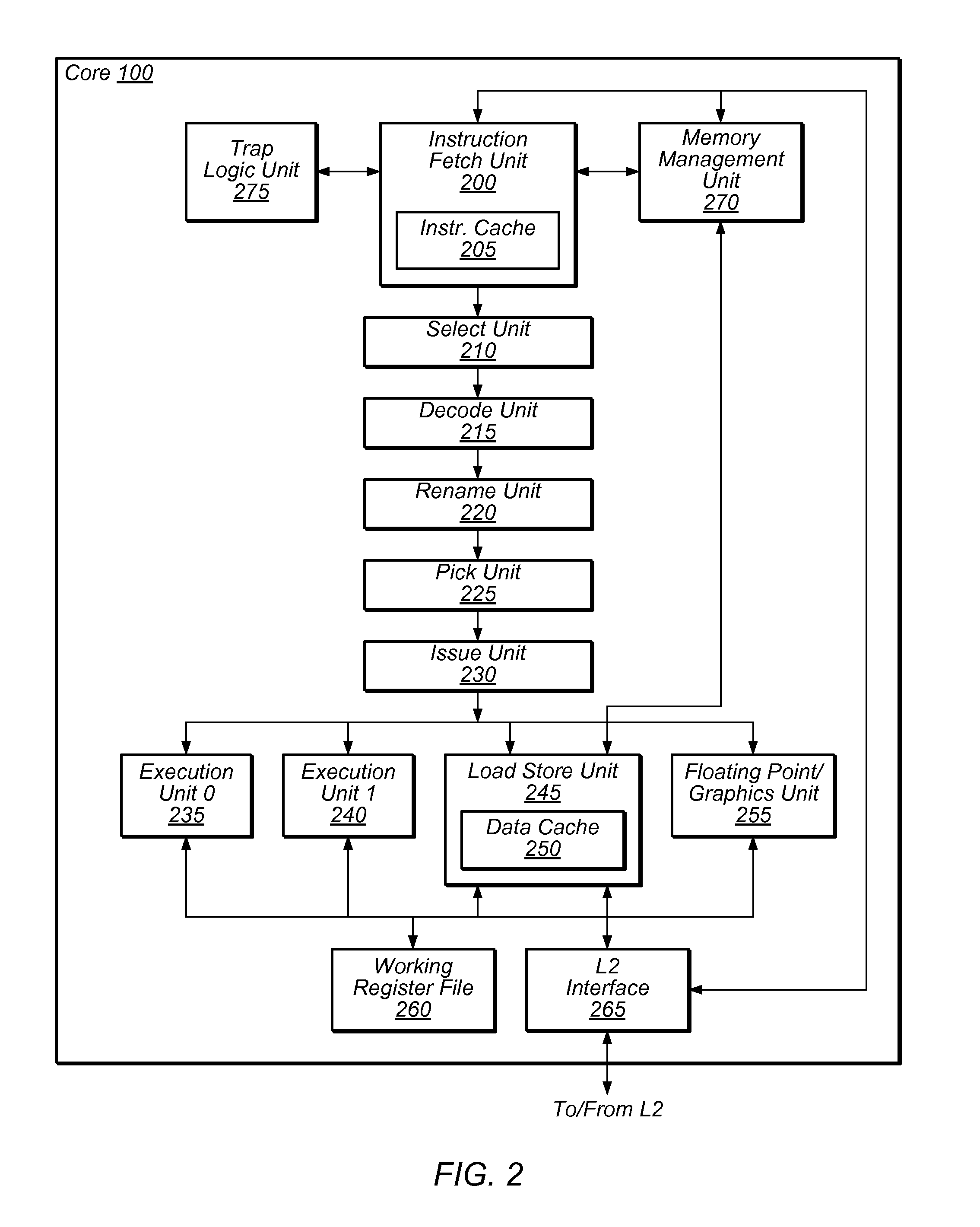

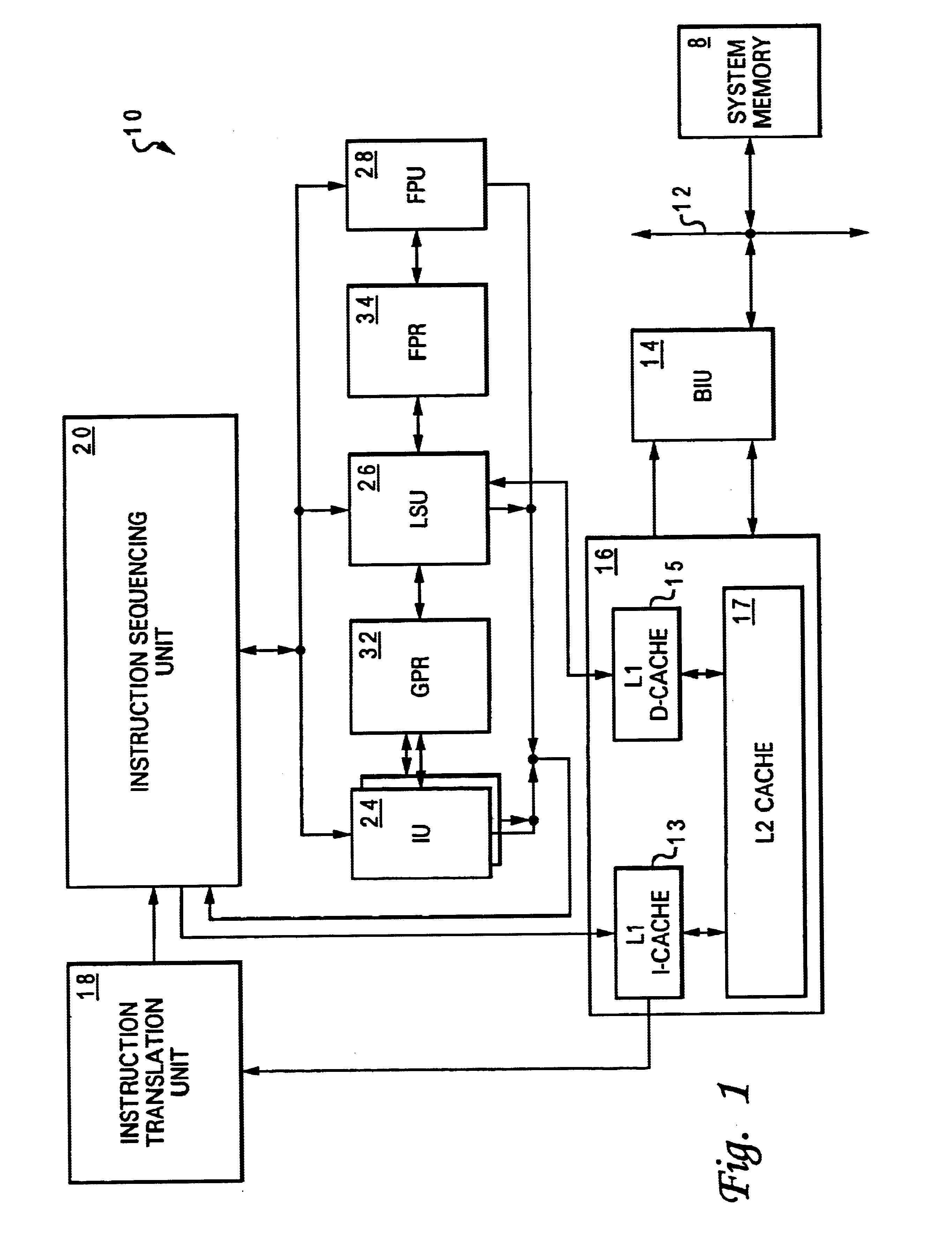

Load/store unit for a processor, and applications thereof

Active | US20080082794A1 | Digital computer details | Specific program execution arrangements | Data storing | Data store

A load/store unit for a processor, and applications thereof. In an embodiment, the load/store unit includes a load/store queue configured to store information and data associated with a particular class of instructions. Data stored in the load/store queue can be bypassed to dependent instructions. When an instruction belonging to the particular class of instructions graduates and the instruction is associated with a cache miss, control logic causes a pointer to be stored in a load/store graduation buffer that points to an entry in the load/store queue associated with the instruction. The load/store graduation buffer ensures that graduated instructions access a shared resource of the load/store unit in program order.

Owner:ARM FINANCE OVERSEAS LTD

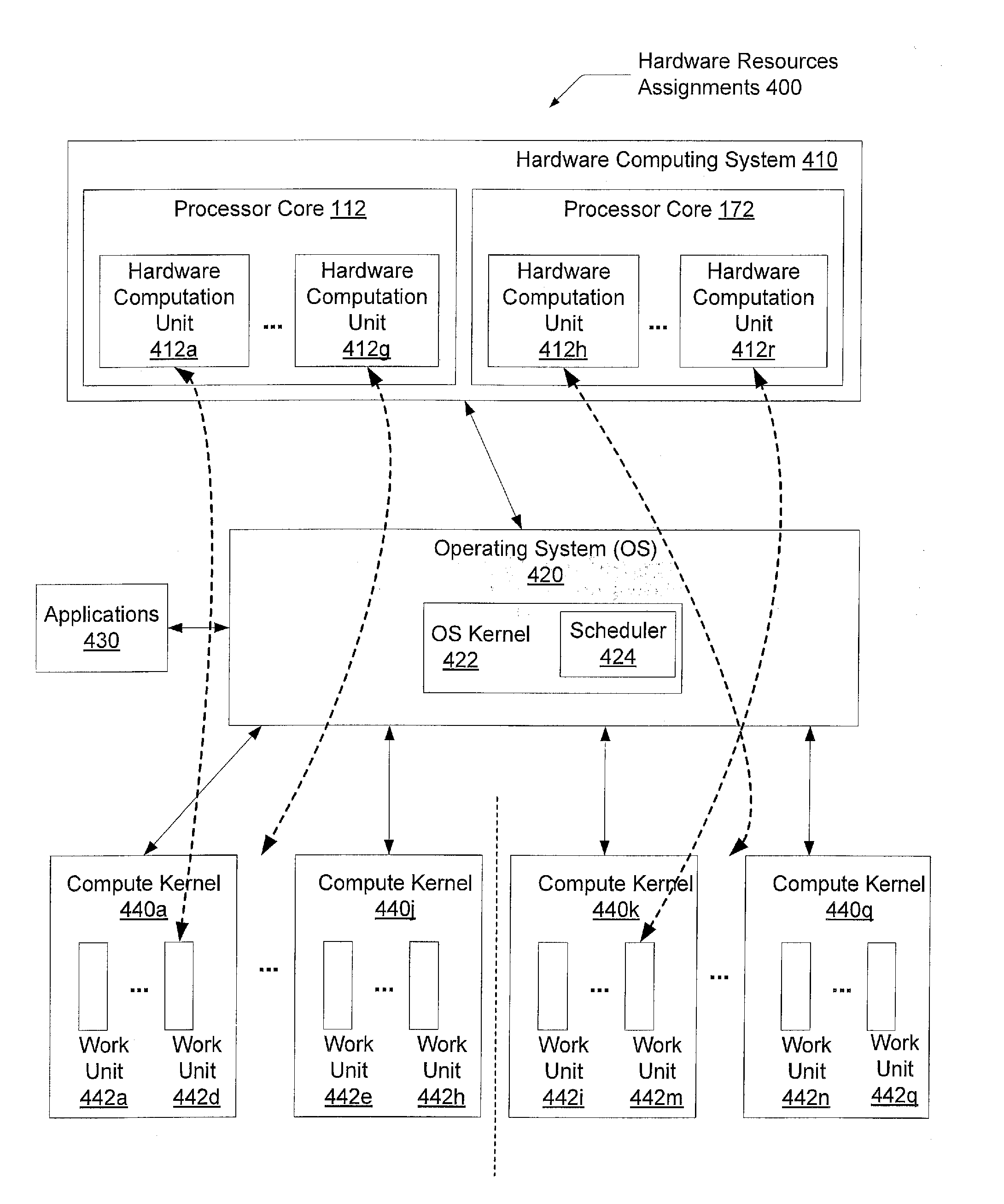

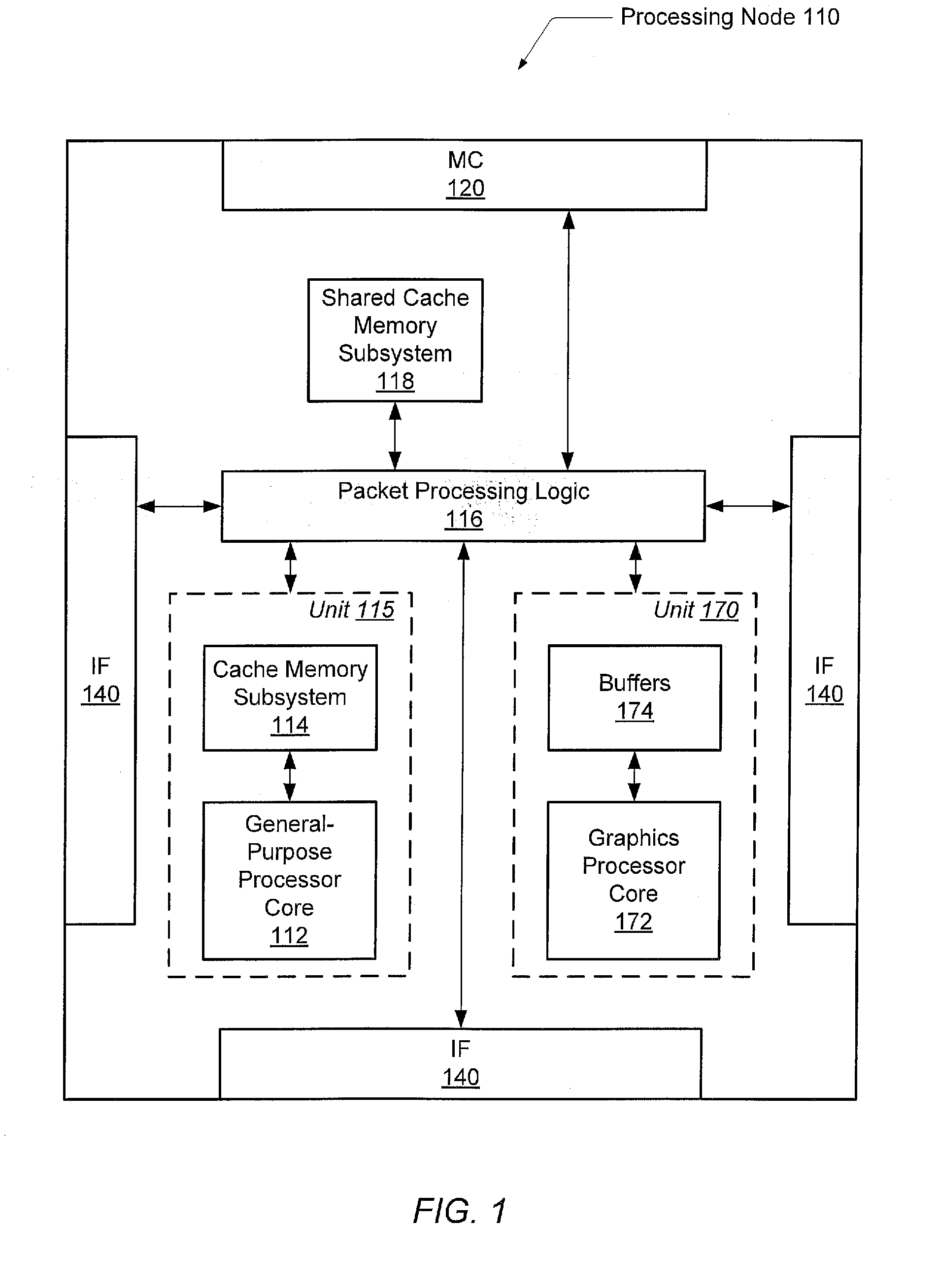

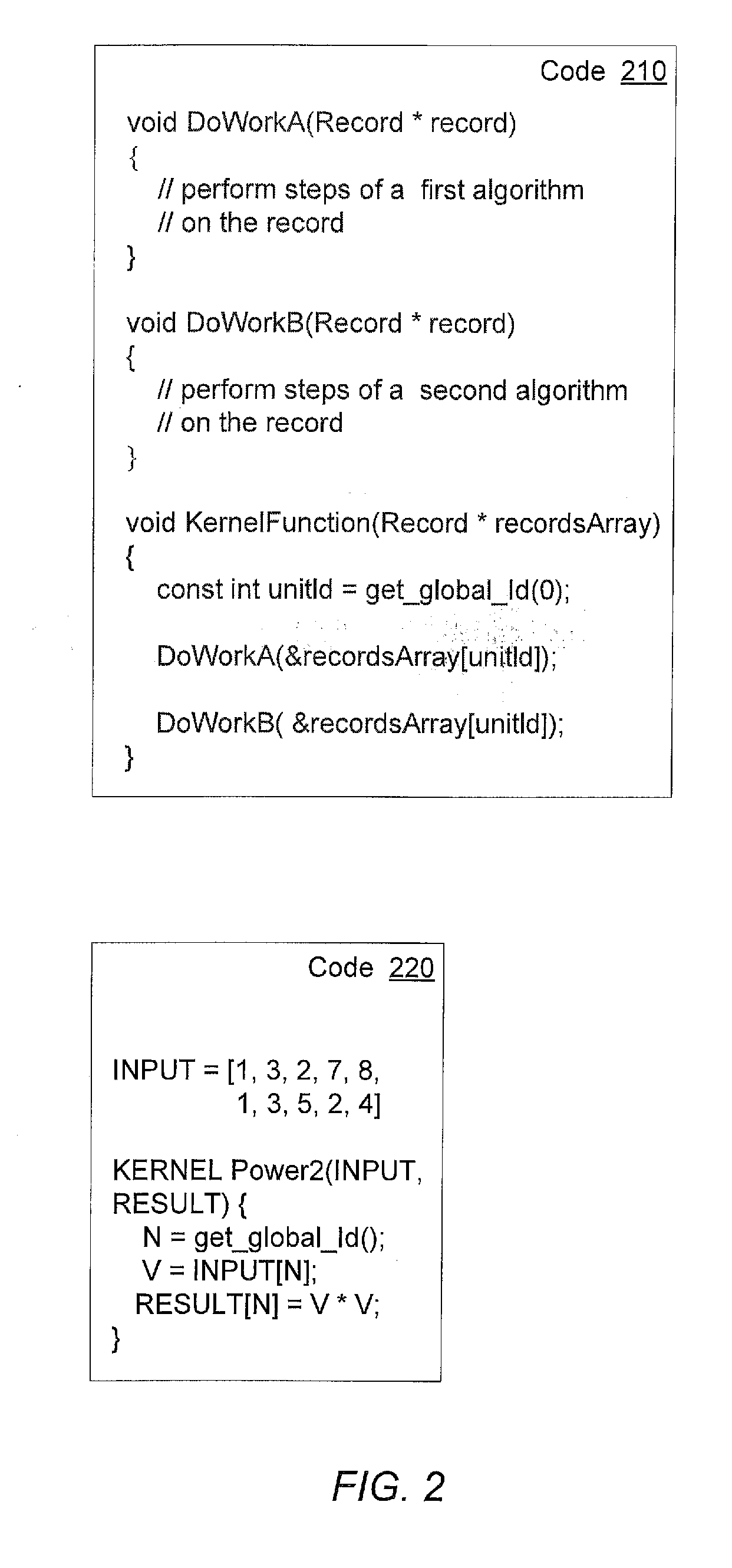

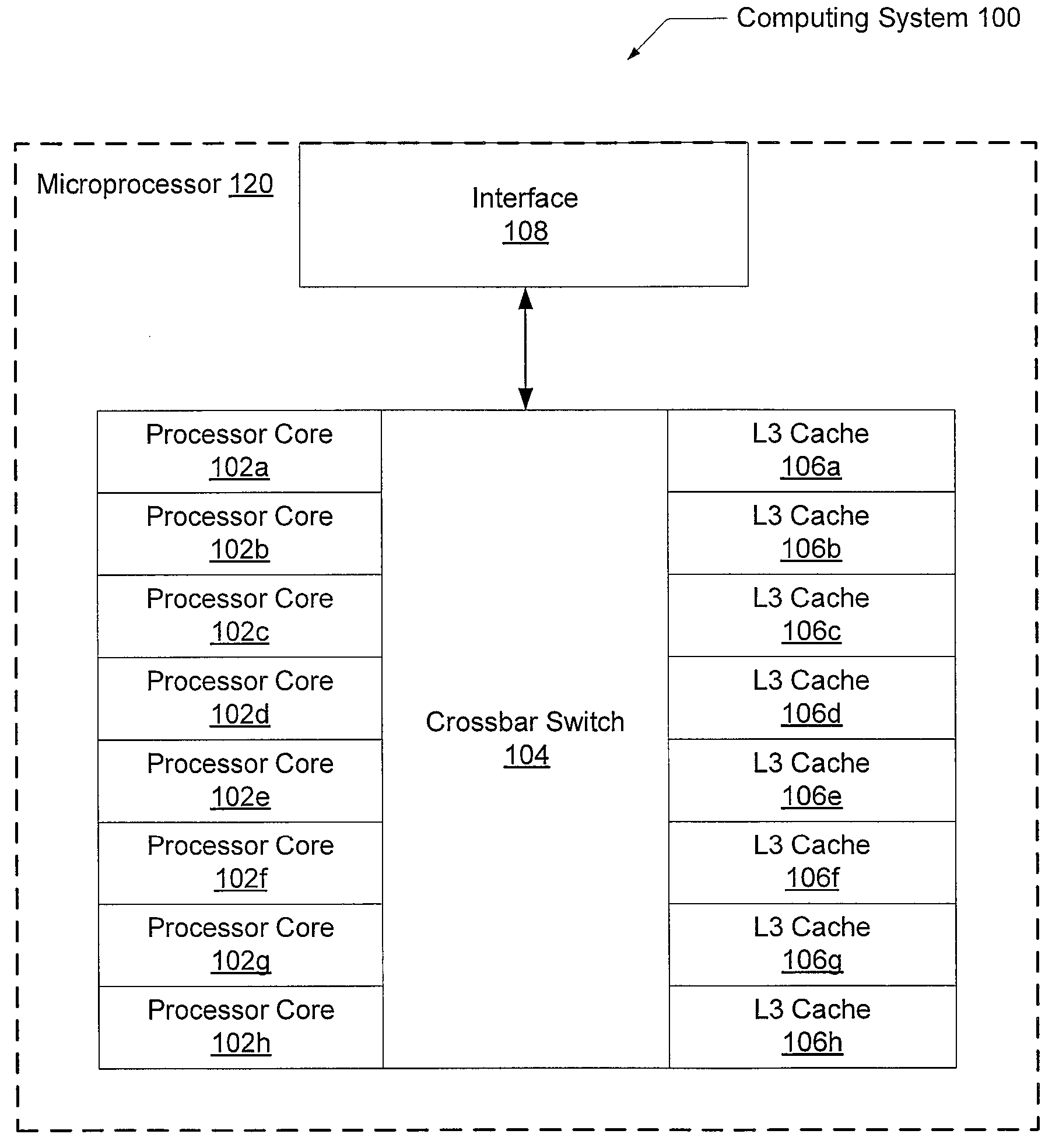

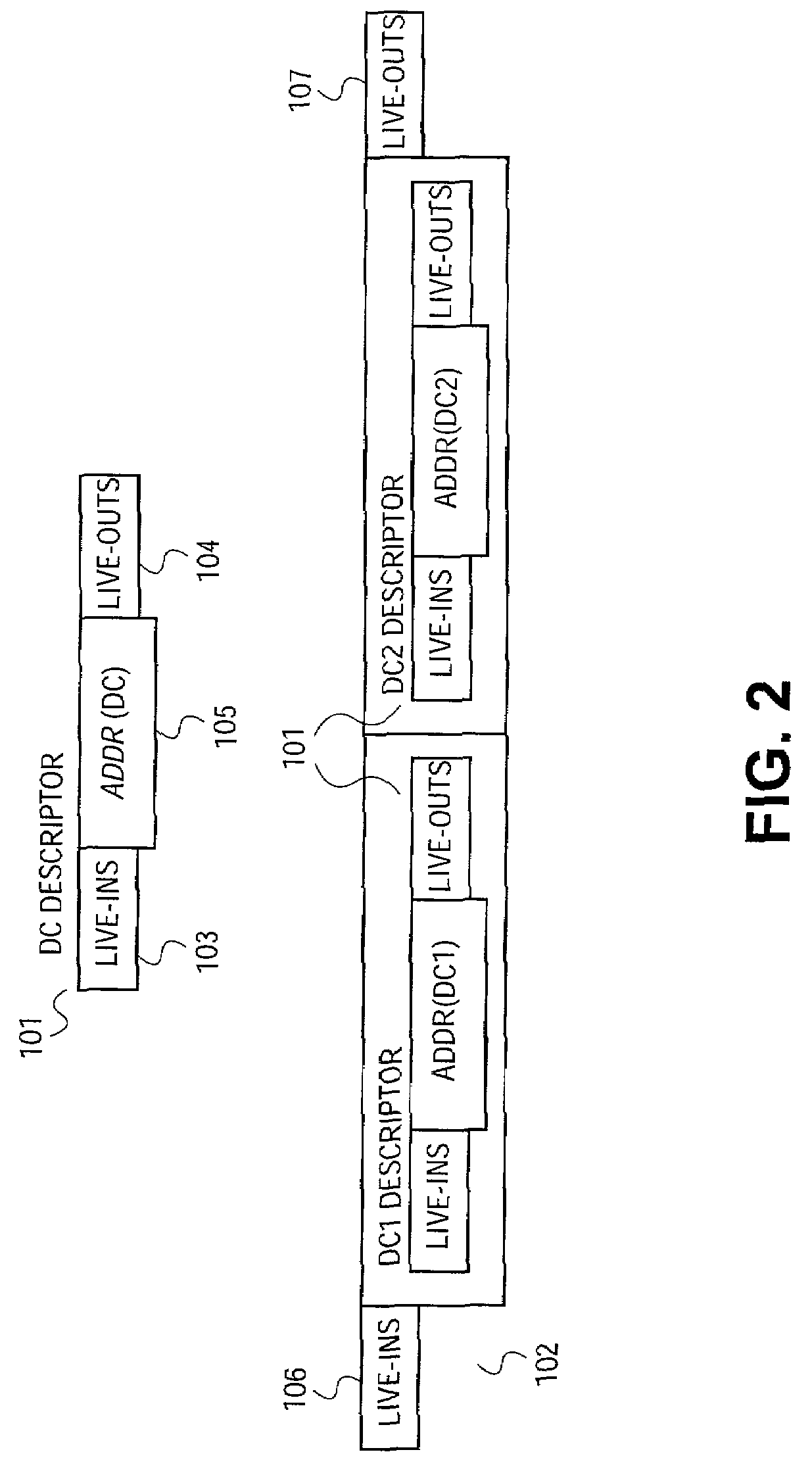

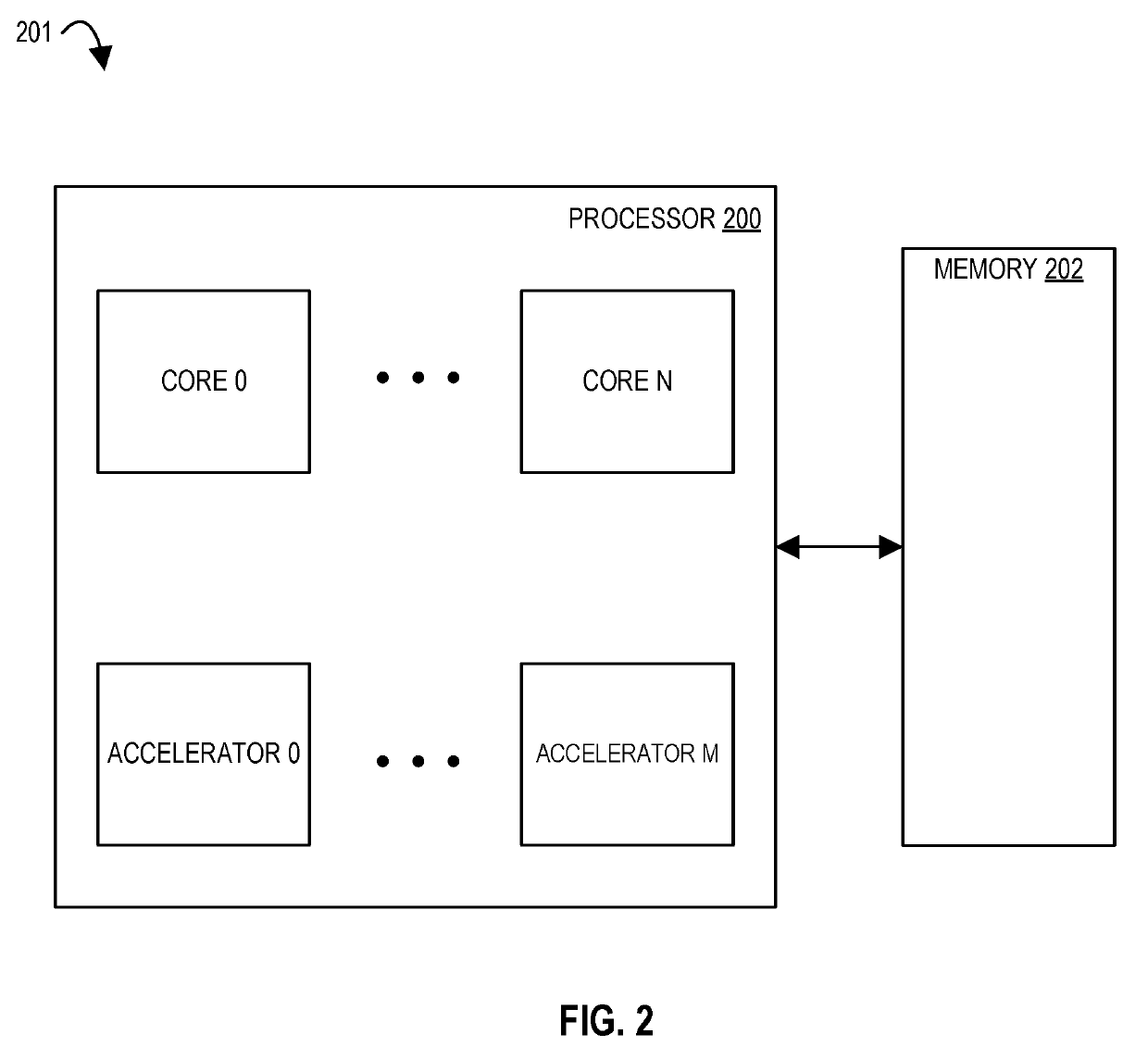

Automatic kernel migration for heterogeneous cores

Active | US20120297163A1 | Specific program execution arrangements | Memory systems | General purpose | Operational system

A system and method for automatically migrating the execution of work units between multiple heterogeneous cores. A computing system includes a first processor core with a single instruction multiple data micro-architecture and a second processor core with a general-purpose micro-architecture. A compiler predicts that execution of a function call in a program will migrate, at a given location, to a different processor core. The compiler creates a data structure to support moving live values associated with the execution of the function call at the given location. An operating system (OS) scheduler schedules at least code before the given location in program order to the first processor core. In response to receiving an indication that a condition for migration is satisfied, the OS scheduler moves the live values to a location indicated by the data structure for access by the second processor core and schedules code after the given location to the second processor core.

Owner:ADVANCED MICRO DEVICES INC

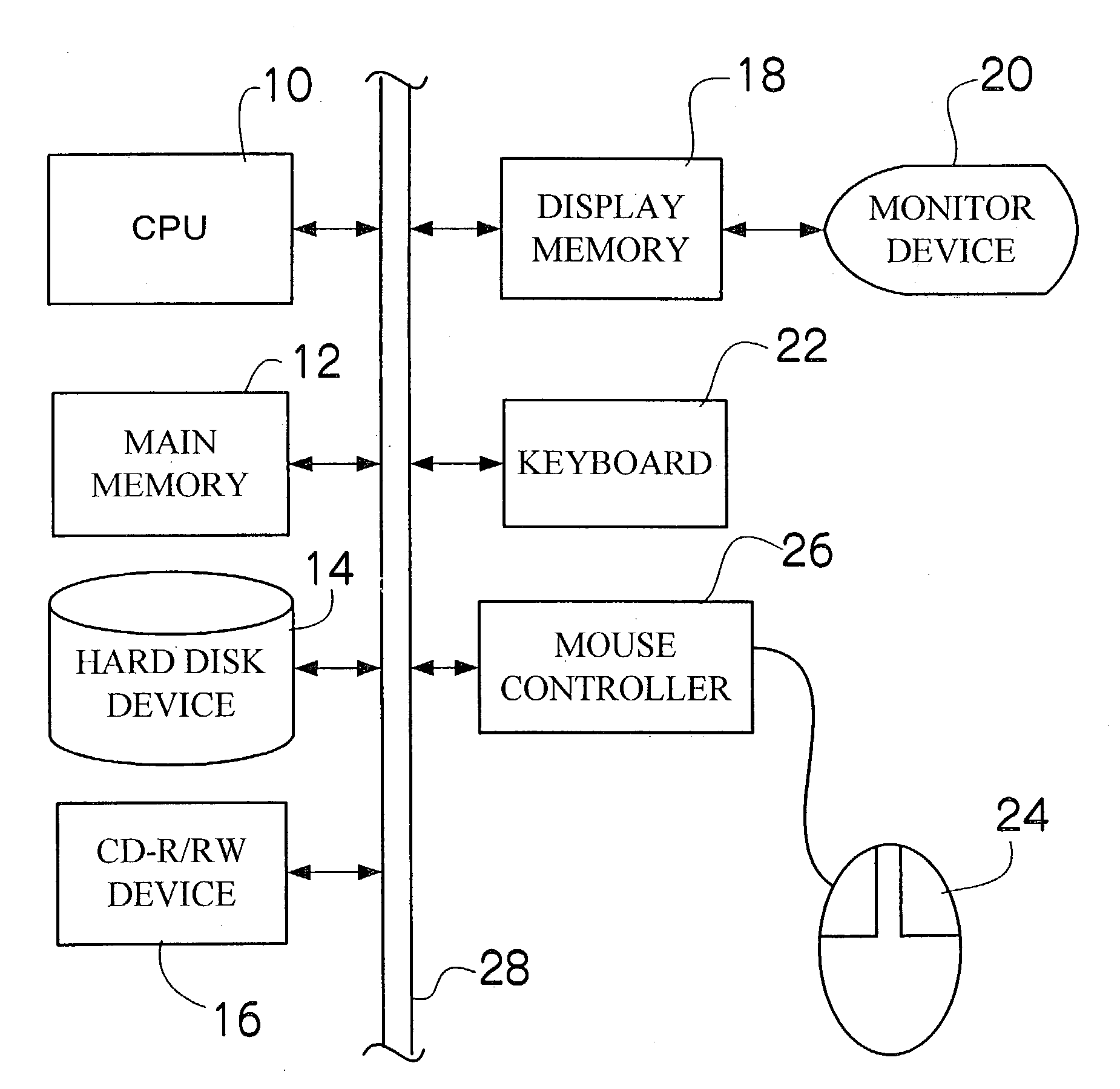

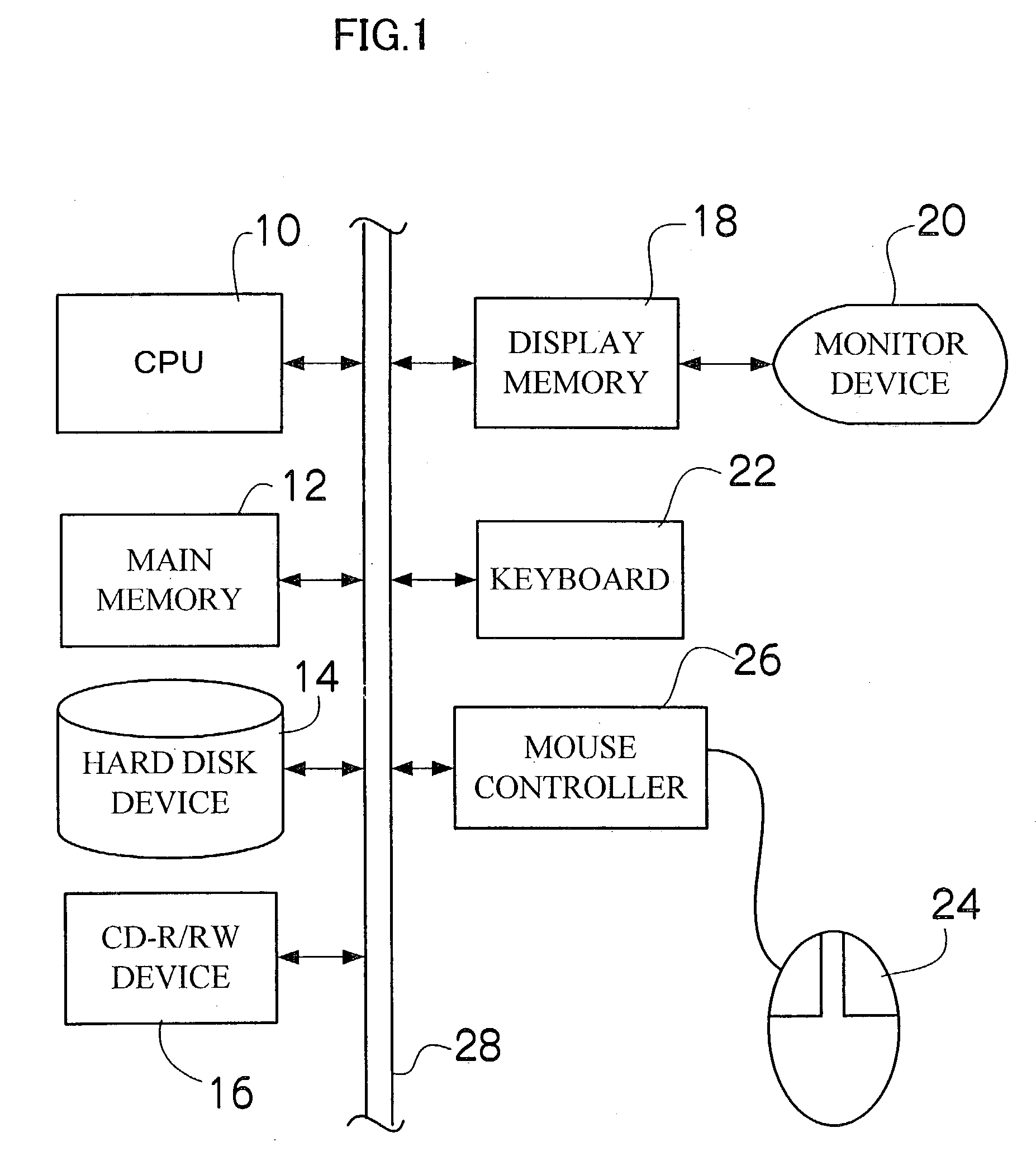

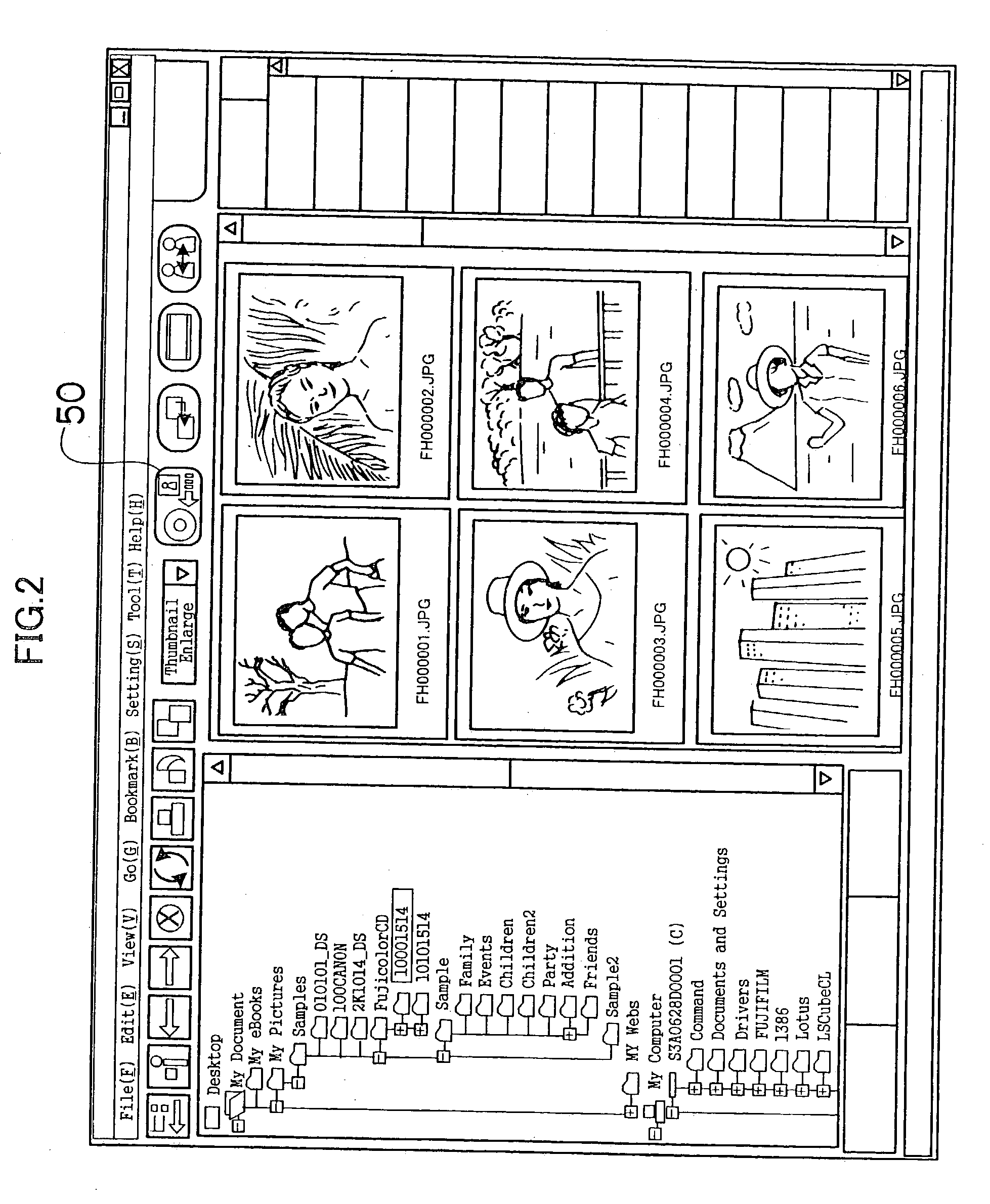

Album generation program and apparatus and file display apparatus

Inactive | US20030142953A1 | Optimized storage medium to be browsed | Television system details | Electronic editing digitised analogue information signals | Recordable CD | Stored procedure

A computer is directed to select one or more folders that contain an image file on at least one lower hierarchical level and, when a storing process is specified, to record the image files contained in and below the selected folders on a CD-R, thereby generating a CD album. When the image files are recorded on the CD-R, the album generation program sequentially searches for folders that have an image file immediately below them, generates a new folder for each such folder, and copies each target image file into the new folder as a new image file, giving the new folder and the new image file a folder name and a file name automatically generated according to the DCF rule.

Owner:FUJIFILM CORP

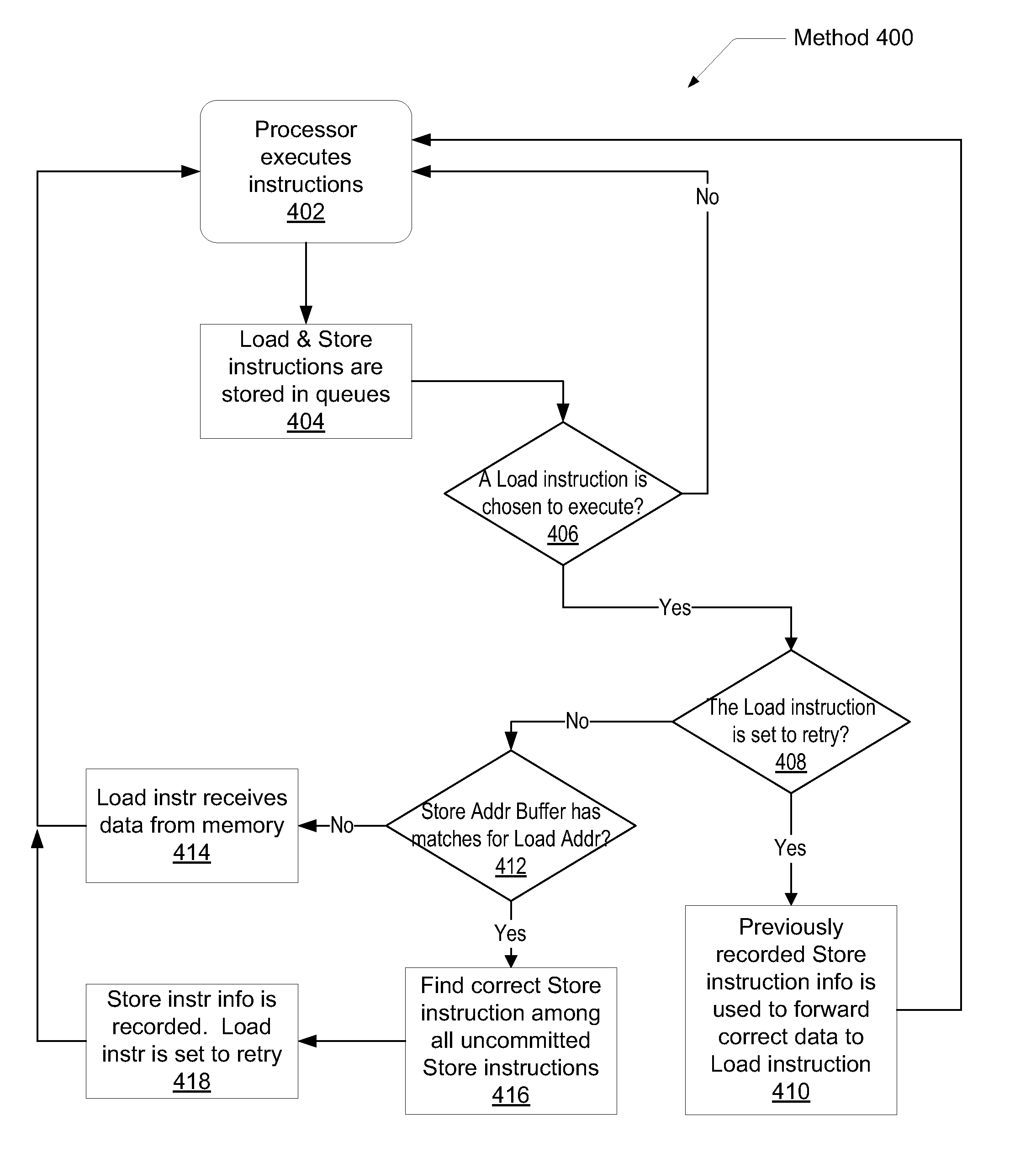

System and method of load-store forwarding

Active | US20090037697A1 | Improve processor performance | Improve performance | Digital computer details | Memory systems | Load instruction | Store and forward

A system and method for data forwarding from a store instruction to a load instruction during out-of-order execution, when the load instruction address matches multiple older uncommitted store addresses or when forwarding fails during the first pass for any other reason. In a first pass, the youngest store instruction in program order among all store instructions older than the load instruction is found, and an indication of the store buffer entry holding information of that youngest store instruction is recorded. In a second pass, the recorded indication is used to index the store buffer and the store bypass data is forwarded to the load instruction. Simultaneously, it is verified that no new store, younger than the previously identified store and older than the load, has been issued due to out-of-order execution.

Owner:ADVANCED MICRO DEVICES INC
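
The two-pass forwarding scheme in the abstract above can be sketched in software. The following is a simplified model, not the patented circuit: the `StoreEntry` fields, the integer `seq` used to encode program order (smaller means older), and the function names are assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class StoreEntry:
    seq: int      # assumed program-order sequence number (smaller = older)
    address: int
    data: int


def find_forwarding_store(store_buffer, load_seq, load_address):
    """First pass: index of the youngest matching store that is older than
    the load, or None if no older store matches the load address."""
    best = None
    for index, store in enumerate(store_buffer):
        if store.address == load_address and store.seq < load_seq:
            if best is None or store.seq > store_buffer[best].seq:
                best = index
    return best


def forward(store_buffer, recorded_index, load_seq, load_address):
    """Second pass: forward data from the recorded entry, unless a matching
    store that sits between the recorded store and the load has appeared."""
    recorded = store_buffer[recorded_index]
    for store in store_buffer:
        if store.address == load_address and recorded.seq < store.seq < load_seq:
            return None  # recorded choice is stale; forwarding must be retried
    return recorded.data
```

The second function performs the verification step alongside the forwarding: if a store issued out of order lands between the recorded store and the load in program order, the recorded entry is no longer the right source.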

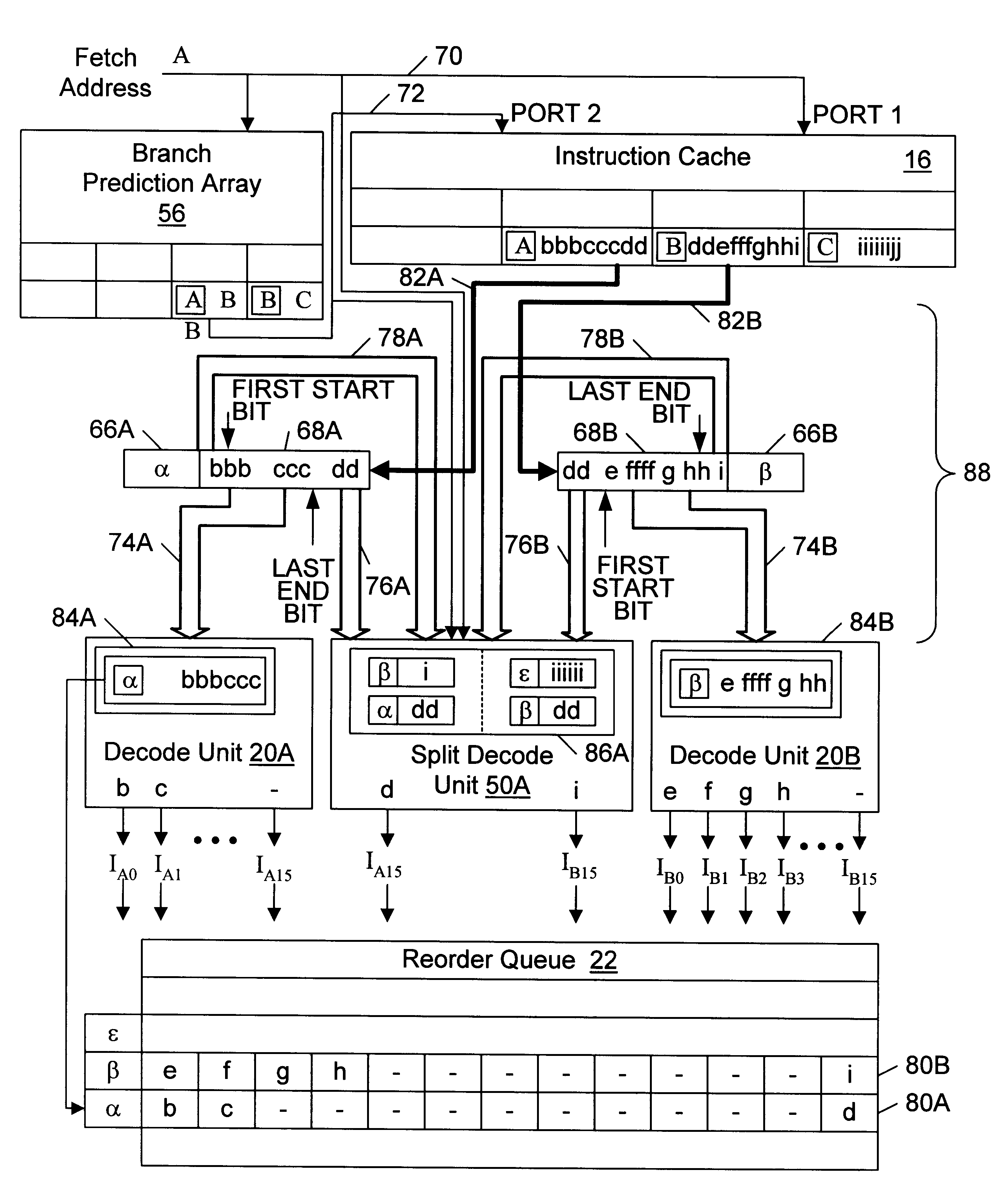

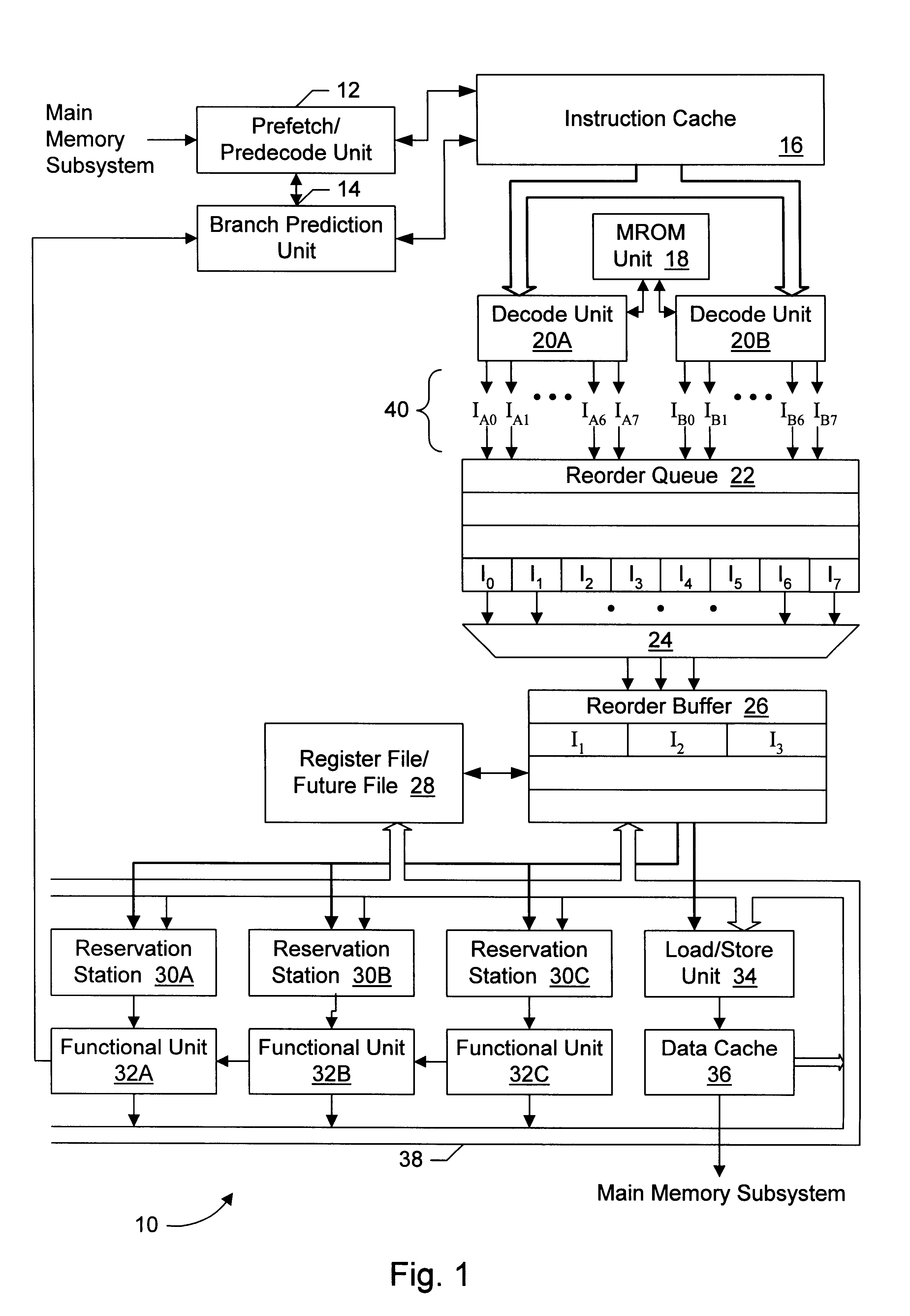

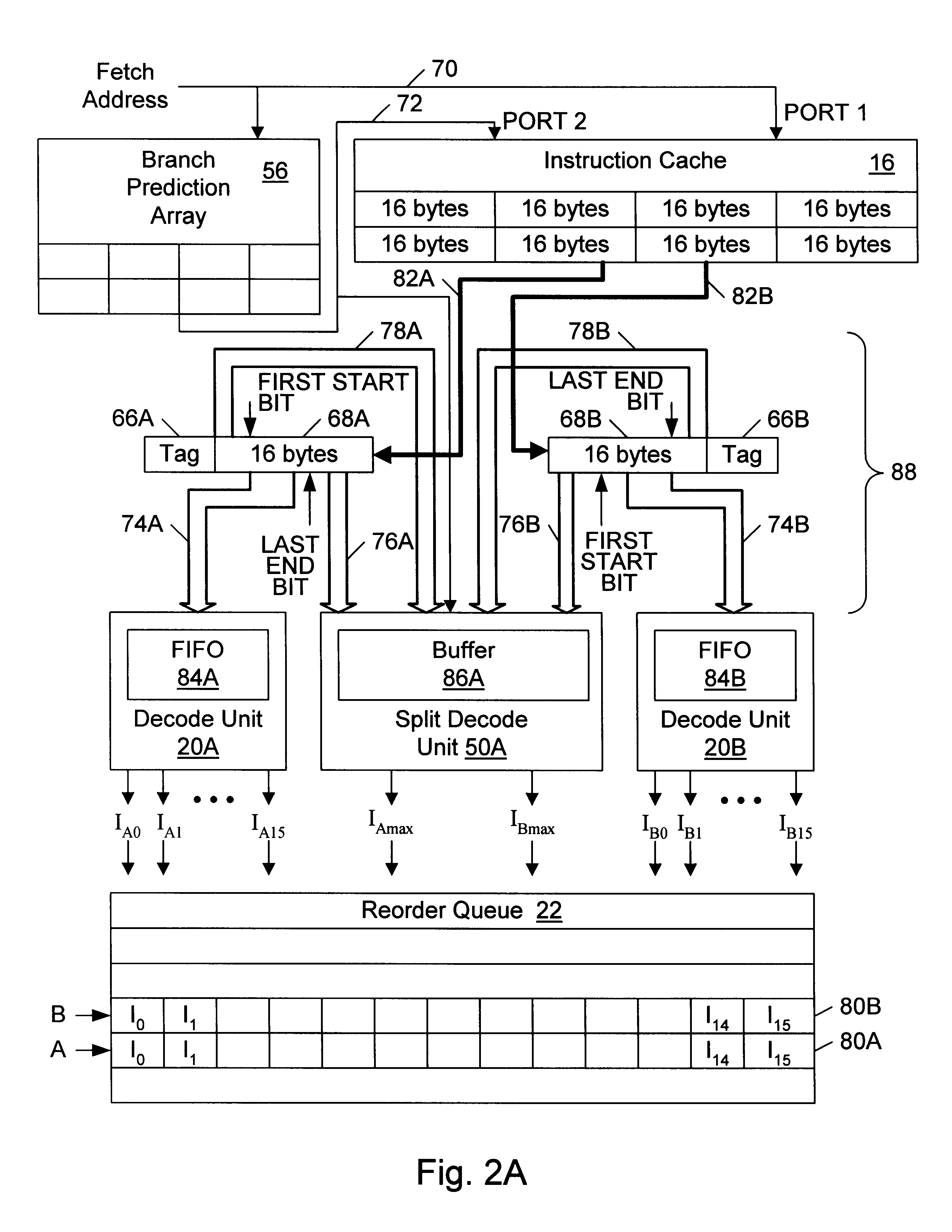

Using multiple decoders and a reorder queue to decode instructions out of order

A microprocessor capable of out-of-order instruction decoding and in-order dependency checking is disclosed. The microprocessor may include an instruction cache, two decode units, a reorder queue, and dependency checking logic. The instruction cache is configured to output cache line portions to the decode units. The decode units operate independently and in parallel. One of the decode units may be a split decoder that receives all instruction bytes from instructions that extend across cache line portion boundaries. The split decode unit may be configured to reassemble the instruction bytes into instructions. These instructions are then decoded by the split decode unit. A reorder queue may be used to store the decoded instructions according to their relative cache line positions. The decoded instructions are read out of the reorder queue in program order, thereby enabling the dependency checking logic to perform dependency checking in program order.

Owner:GLOBALFOUNDRIES INC

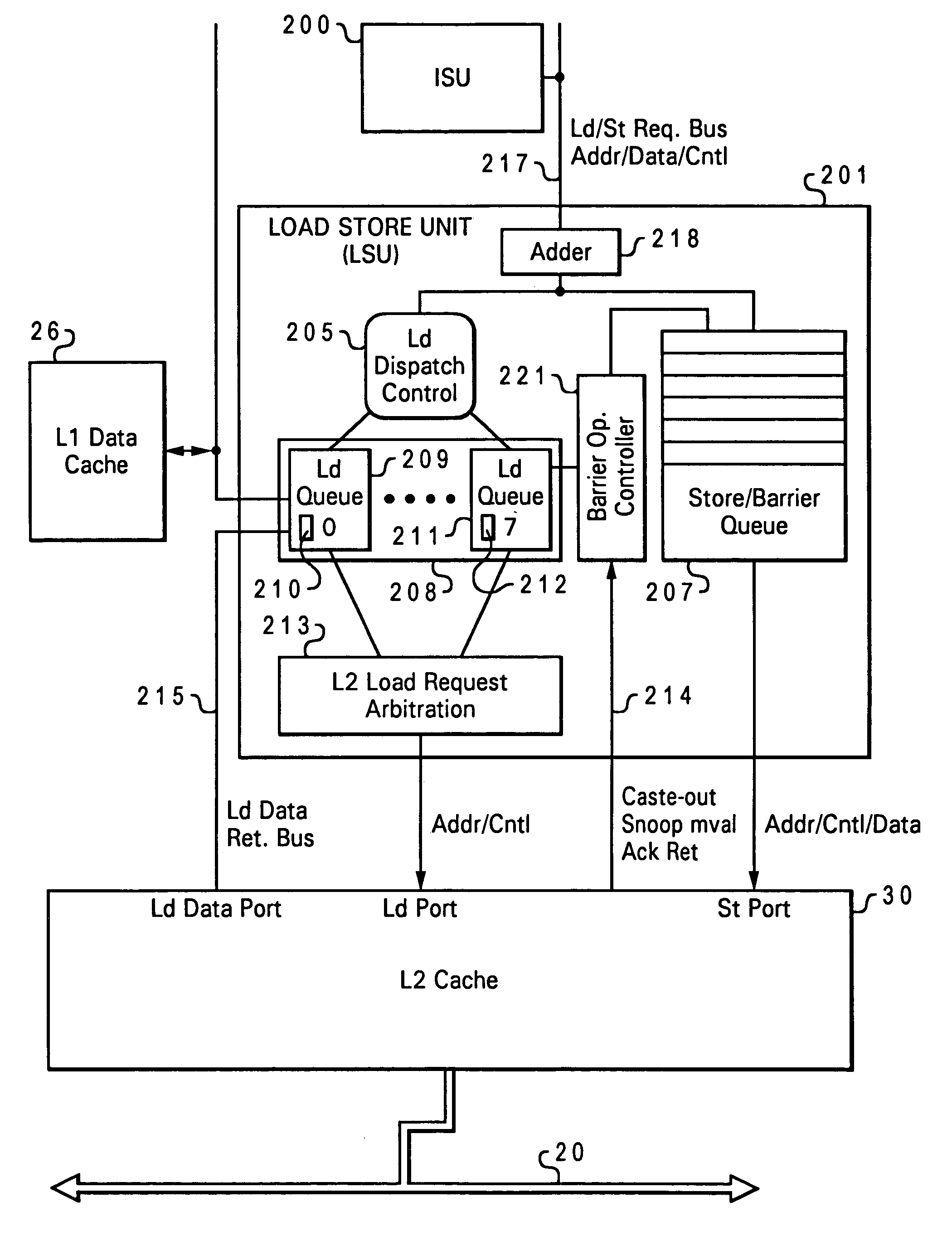

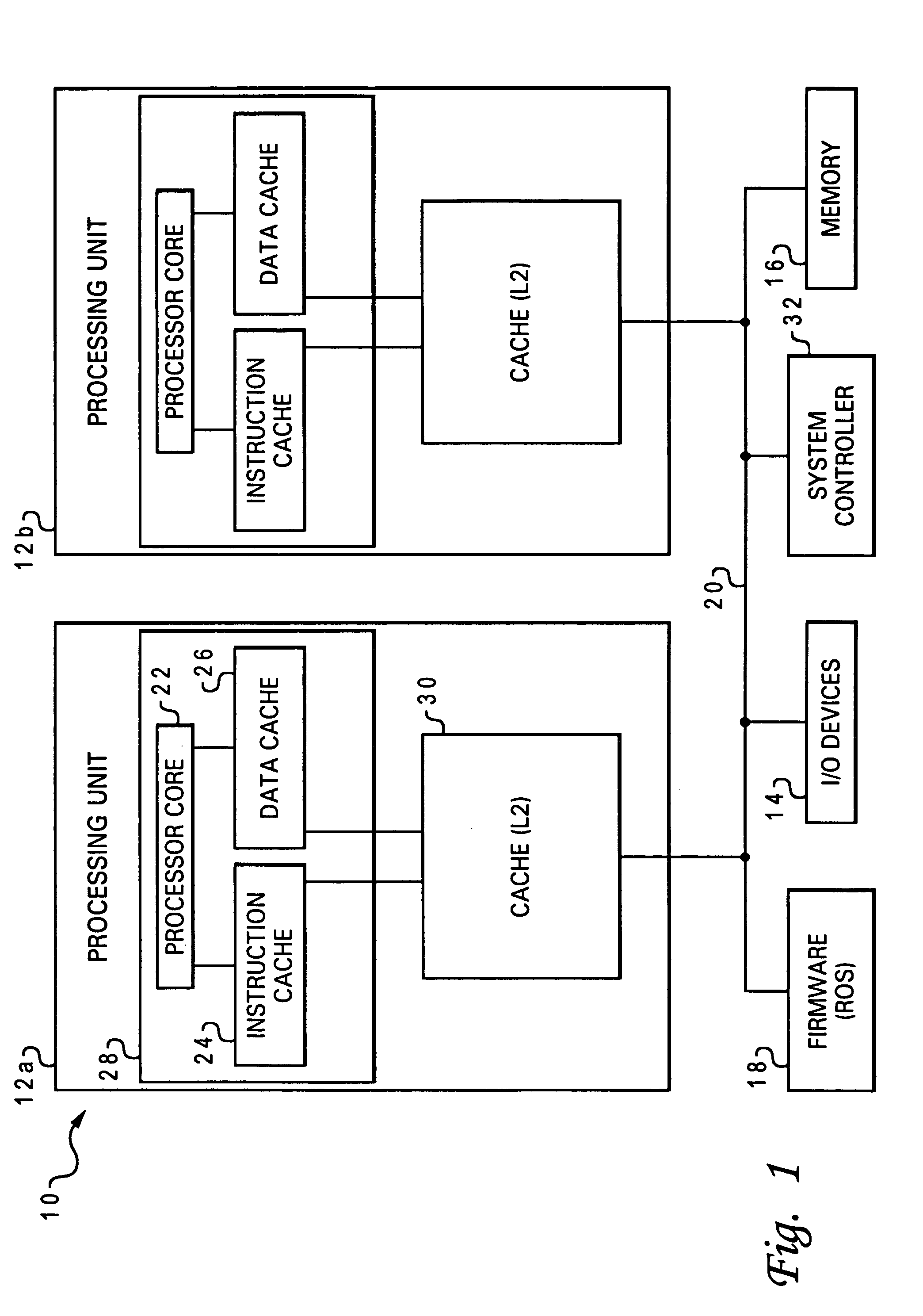

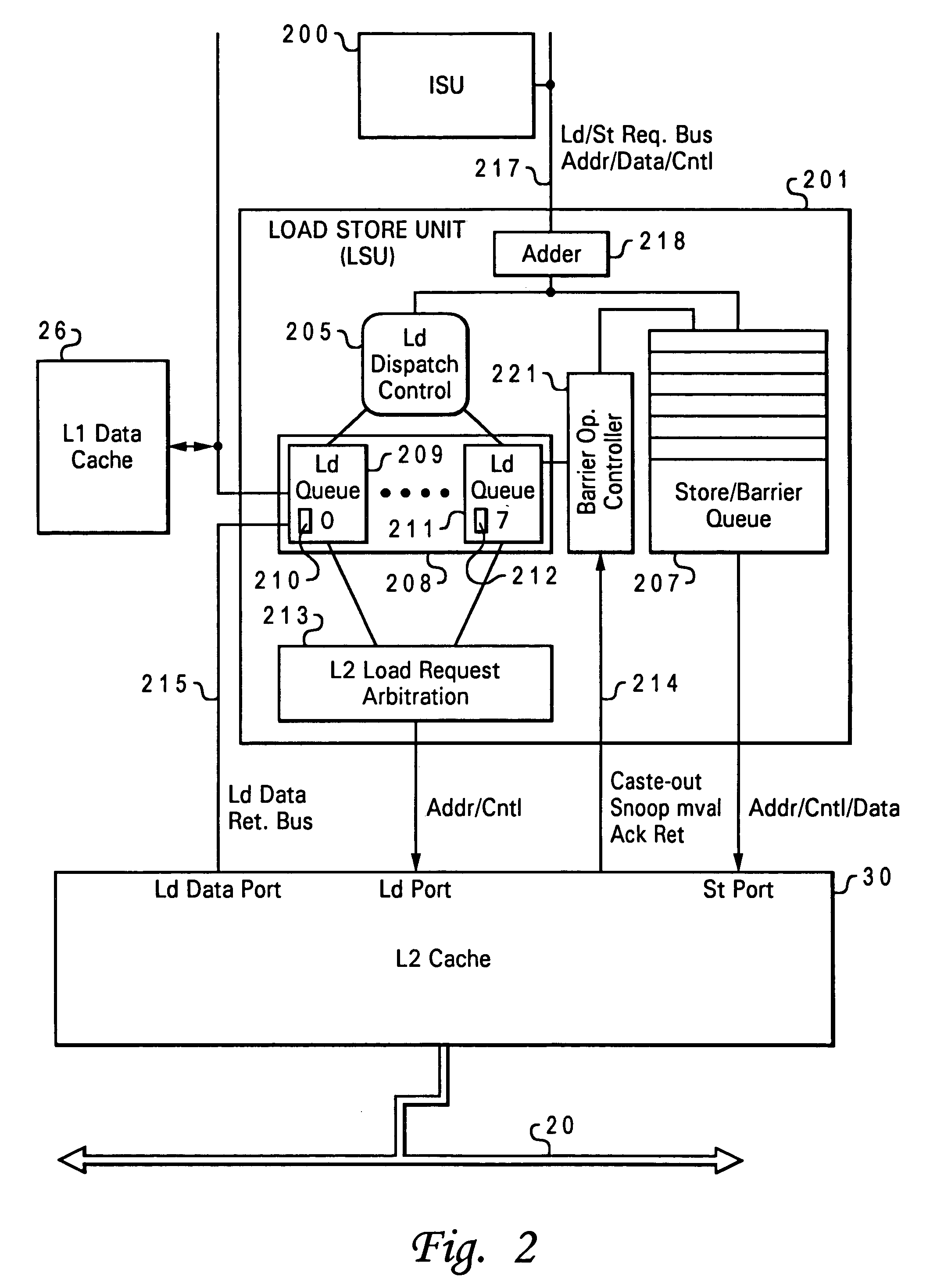

System and method for enabling weak consistent storage advantage to a firmly consistent storage architecture

Inactive | US6963967B1 | Efficient processing | Digital computer details | Concurrent instruction execution | Data processing system | Processing Instruction

Disclosed is a method of processing instructions in a data processing system. An instruction sequence that includes a memory access instruction is received at a processor in program order. In response to receipt of the memory access instruction a memory access request and a barrier operation are created. The barrier operation is placed on an interconnect after the memory access request is issued to a memory system. After the barrier operation has completed, the memory access request is completed in program order. When the memory access request is a load request, the load request is speculatively issued if a barrier operation is pending. Data returned by the speculatively issued load request is only returned to a register or execution unit of the processor when an acknowledgment is received for the barrier operation.

Owner:IBM CORP

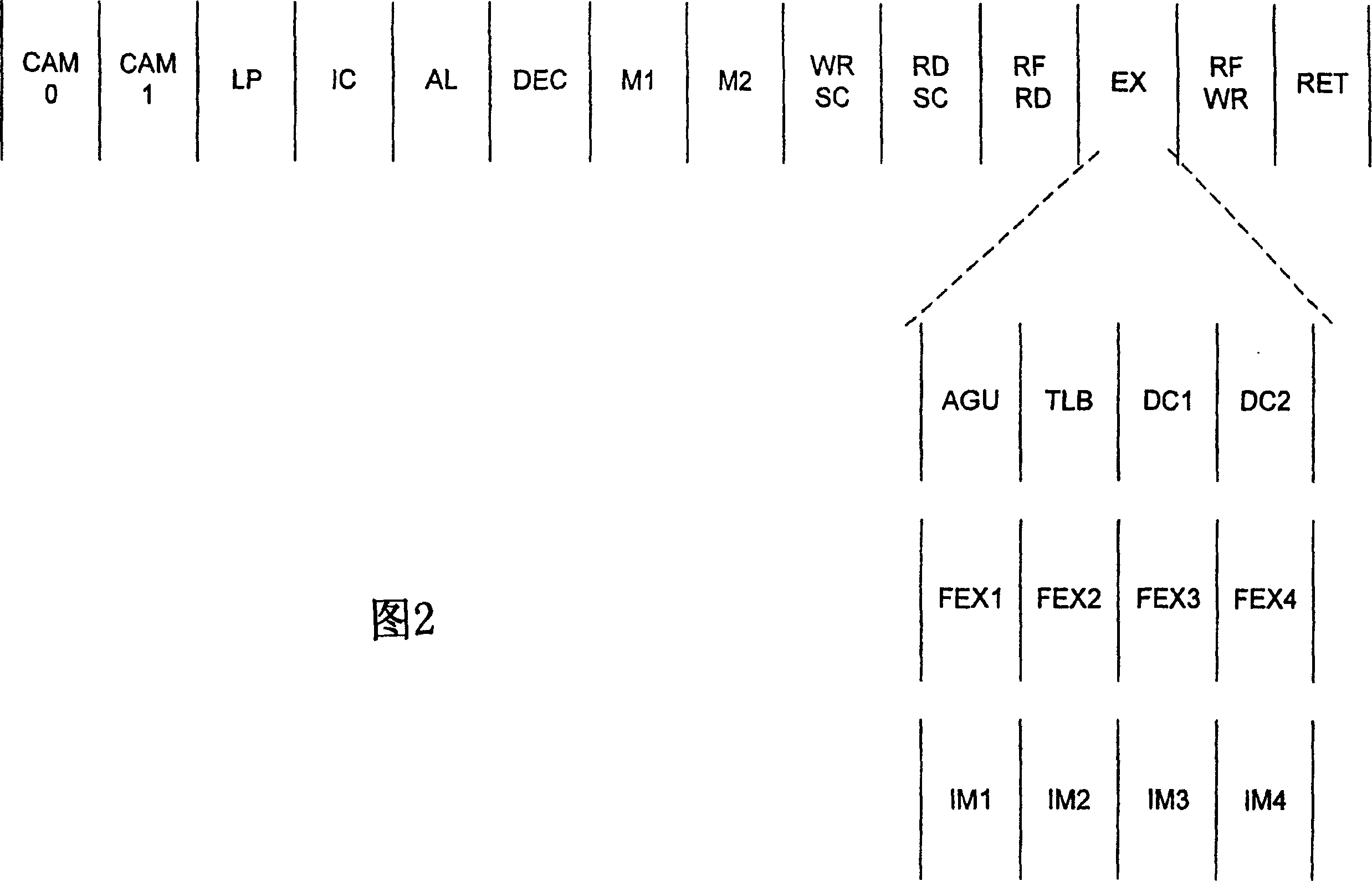

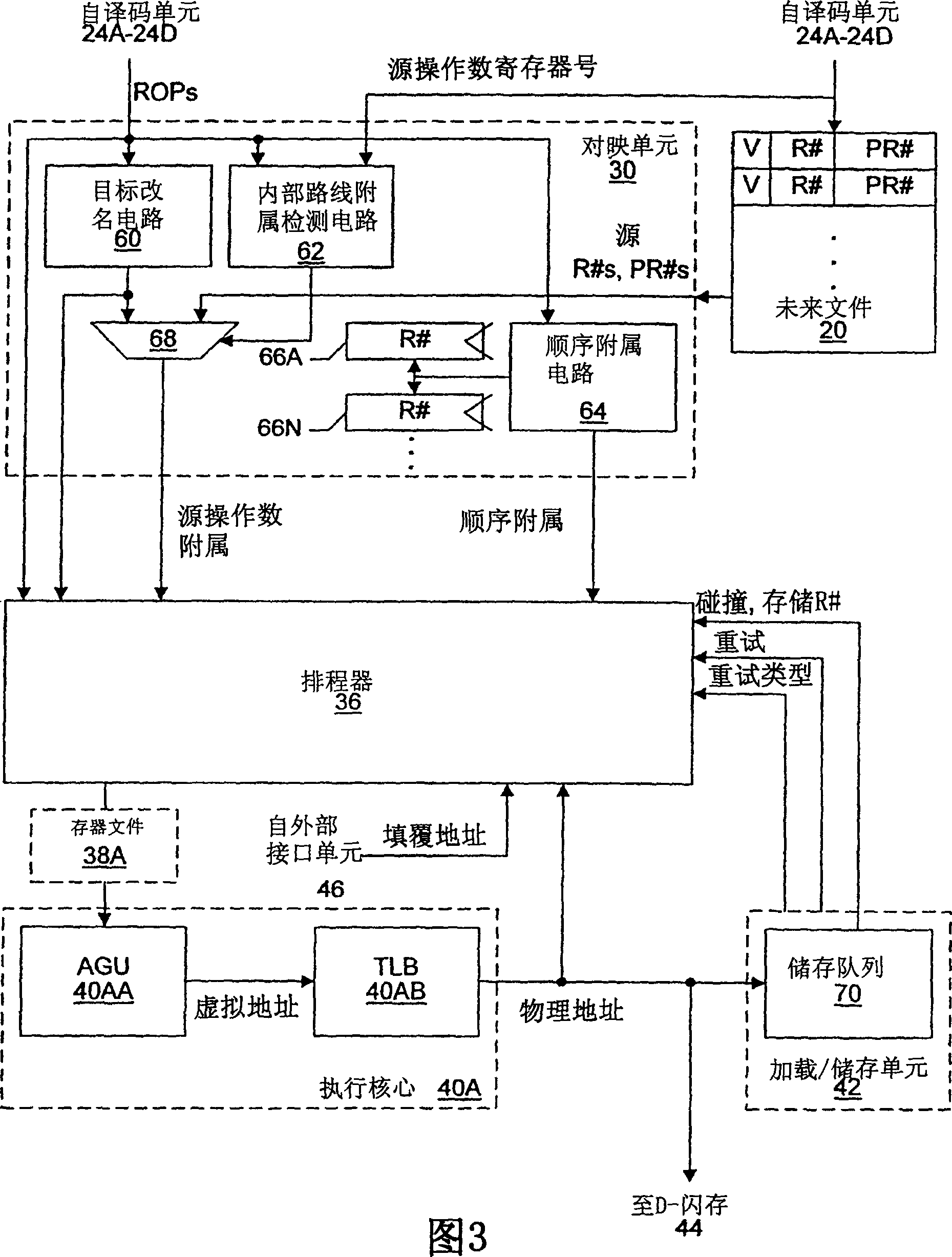

Unordered load/store queue

Active | US20090013135A1 | Digital computer details | Specific program execution arrangements | Procedure sequence | Store instruction

A method and processor for providing full load/store queue functionality to an unordered load/store queue for a processor with out-of-order execution. Load and store instructions are inserted in a load/store queue in execution order. Each entry in the load/store queue includes an identification corresponding to a program order. Conflict detection in such an unordered load/store queue may be performed by searching a first CAM for all addresses that are the same or overlap with the address of the load or store instruction to be executed. A further search may be performed in a second CAM to identify those entries that are associated with younger or older instructions with respect to the sequence number of the load or store instruction to be executed. The output results of the Address CAM and Age CAM are logically ANDed.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST
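
The Address CAM / Age CAM combination in the abstract above amounts to intersecting two per-entry match vectors. A rough software analogue is sketched below; the tuple layout `(seq, address, size)`, the byte-range overlap test, and the function name `conflicting_entries` are assumptions for illustration rather than details from the patent.

```python
def conflicting_entries(queue, address, size, seq, want_older=True):
    """Return indices of queue entries that overlap the access and are
    older (or younger) than it.

    queue: list of (seq, address, size) tuples, stored in execution order,
           so list position says nothing about program order.
    """
    # "Address CAM": one match bit per entry whose byte range overlaps.
    address_match = [a < address + size and address < a + s
                     for (_, a, s) in queue]
    # "Age CAM": one match bit per entry on the requested side of seq.
    age_match = [(q < seq) if want_older else (q > seq)
                 for (q, _, _) in queue]
    # Logical AND of the two match vectors.
    return [i for i, (am, gm) in enumerate(zip(address_match, age_match))
            if am and gm]
```

A load would typically ask for older overlapping stores, and a store for younger overlapping loads; the `want_older` flag selects between the two searches in this sketch.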

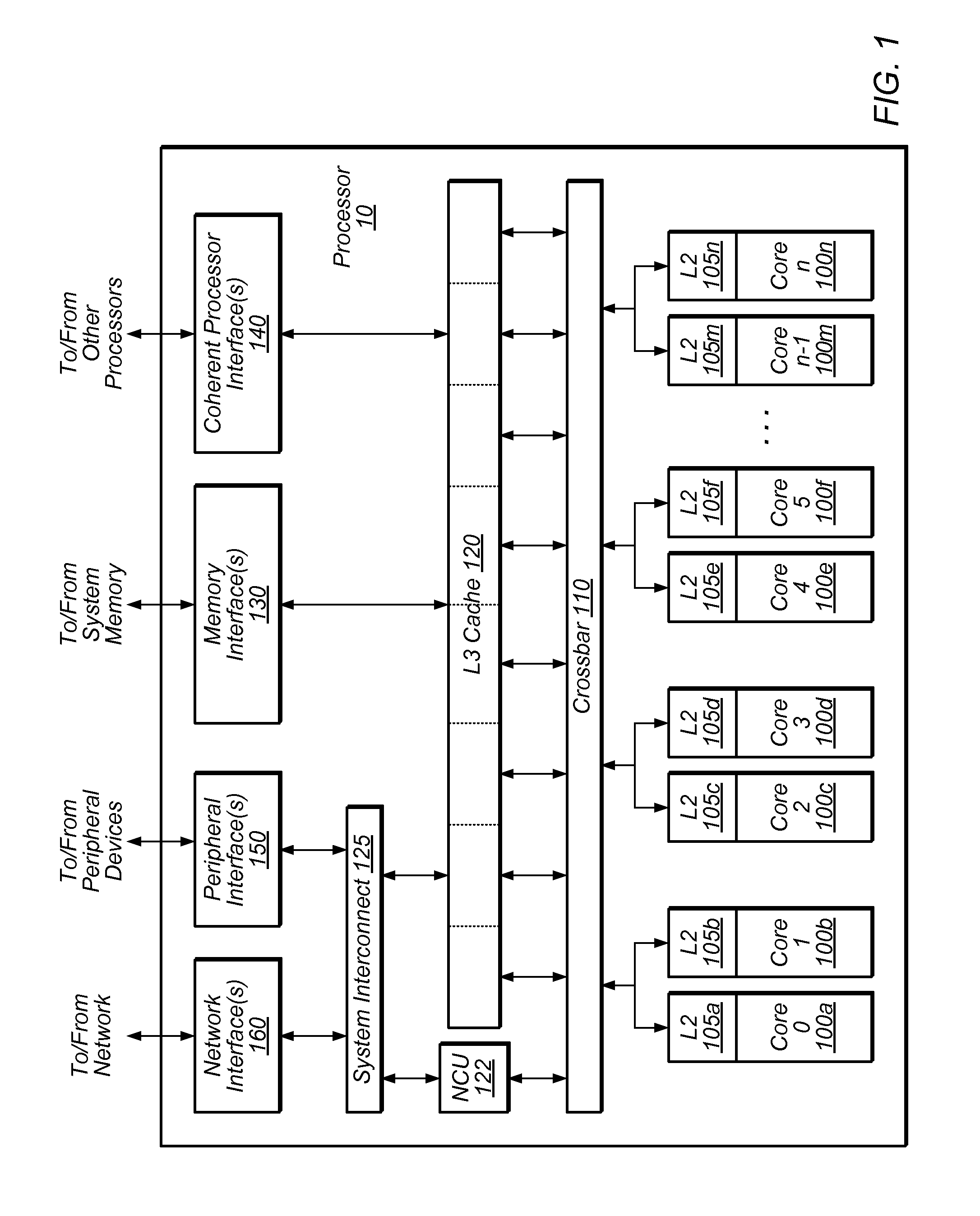

Load/store ordering in a threaded out-of-order processor

Active | US20100293347A1 | Memory addressing/allocation/relocation | Program control | Array data structure | Load instruction

Systems and methods for efficient load-store ordering. A processor comprises a store buffer that includes an array. The store buffer dynamically allocates any entry of the array for an out-of-order (o-o-o) issued store instruction independent of a corresponding thread. Circuitry within the store buffer determines a first set of entries of the array entries that have store instructions older in program order than a particular load instruction, wherein the store instructions have a same thread identifier and address as the load instruction. From the first set, the logic locates a single final match entry of the first set corresponding to the youngest store instruction of the first set, which may be used for read-after-write (RAW) hazard detection.

Owner:ORACLE INT CORP

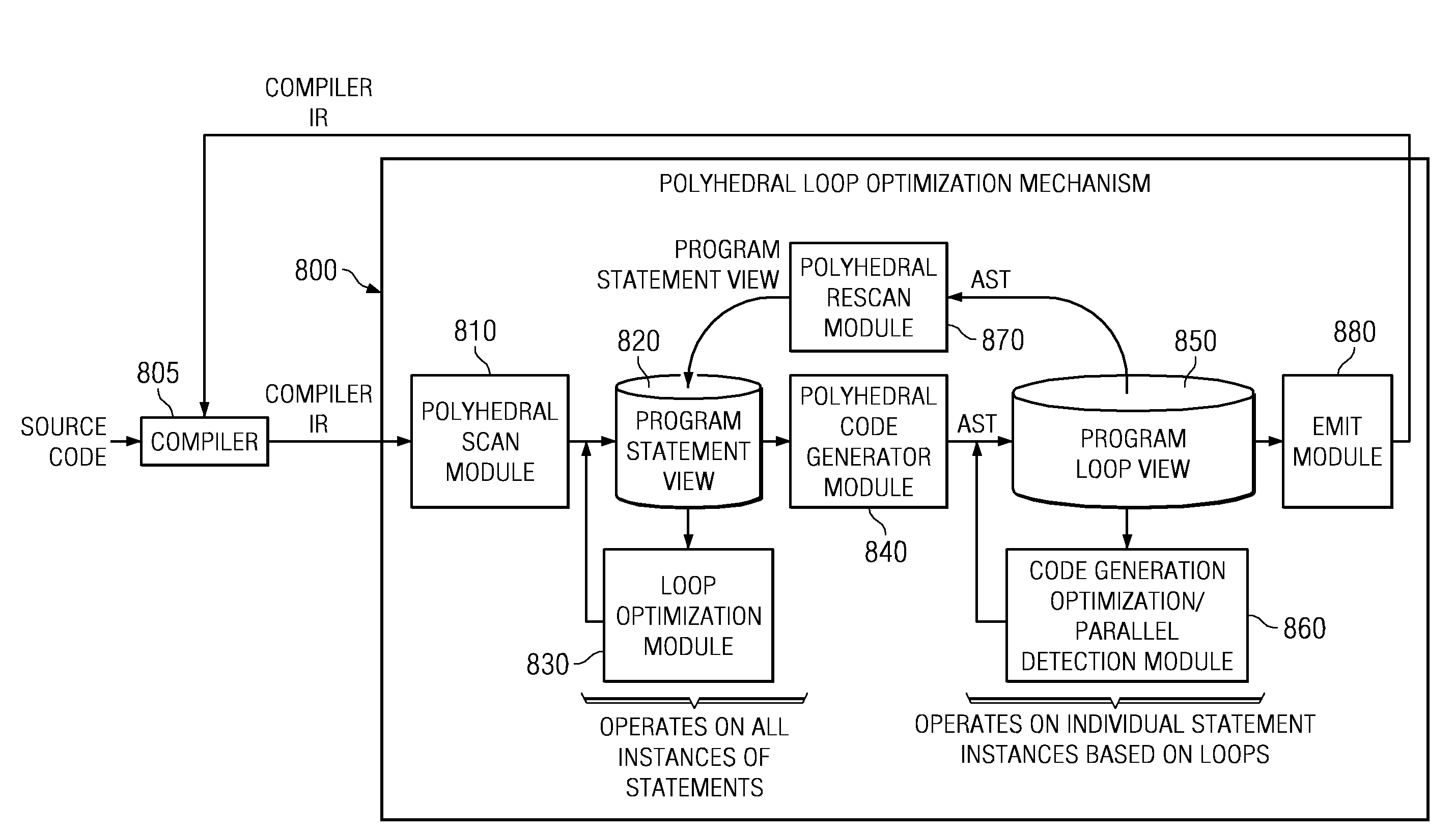

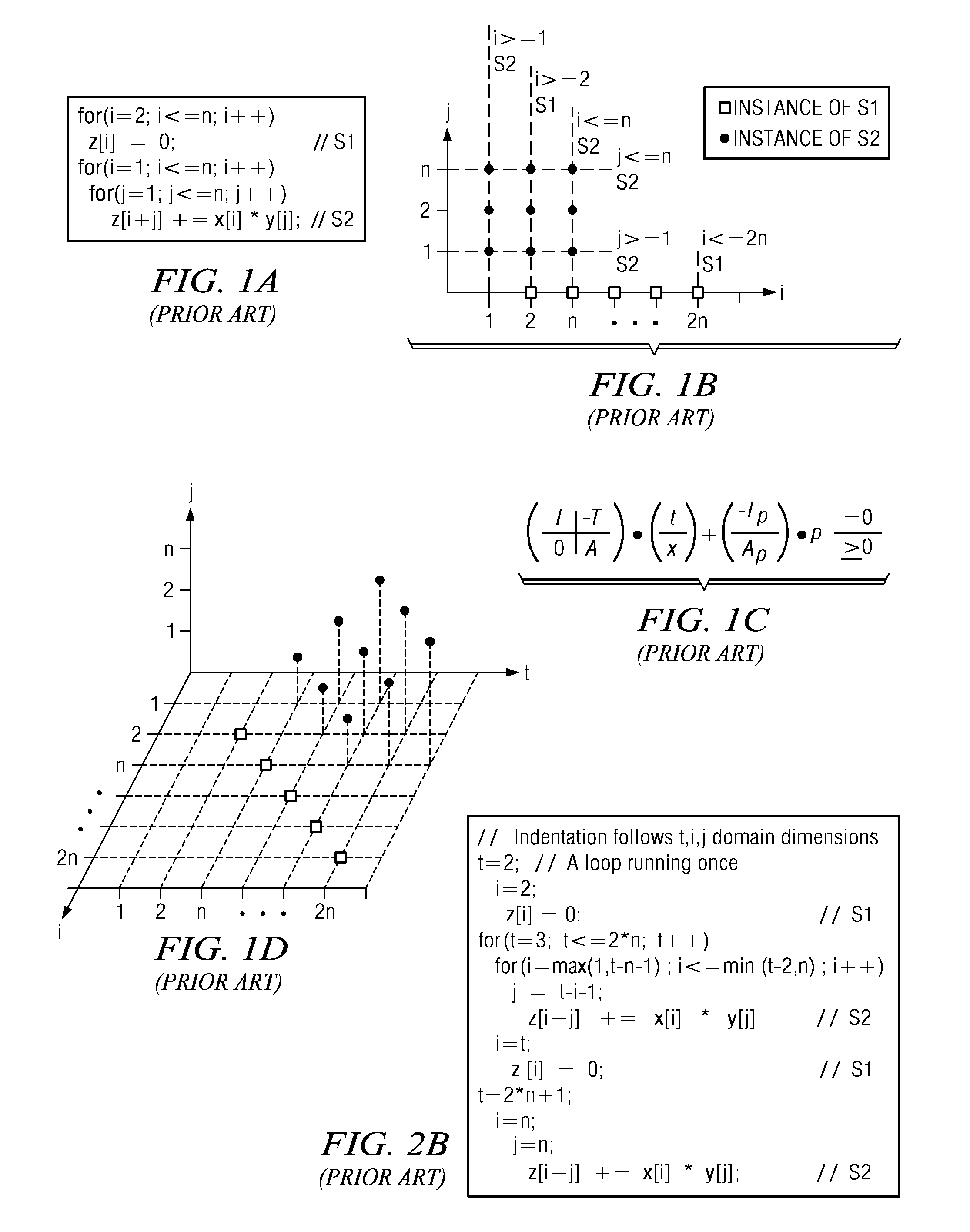

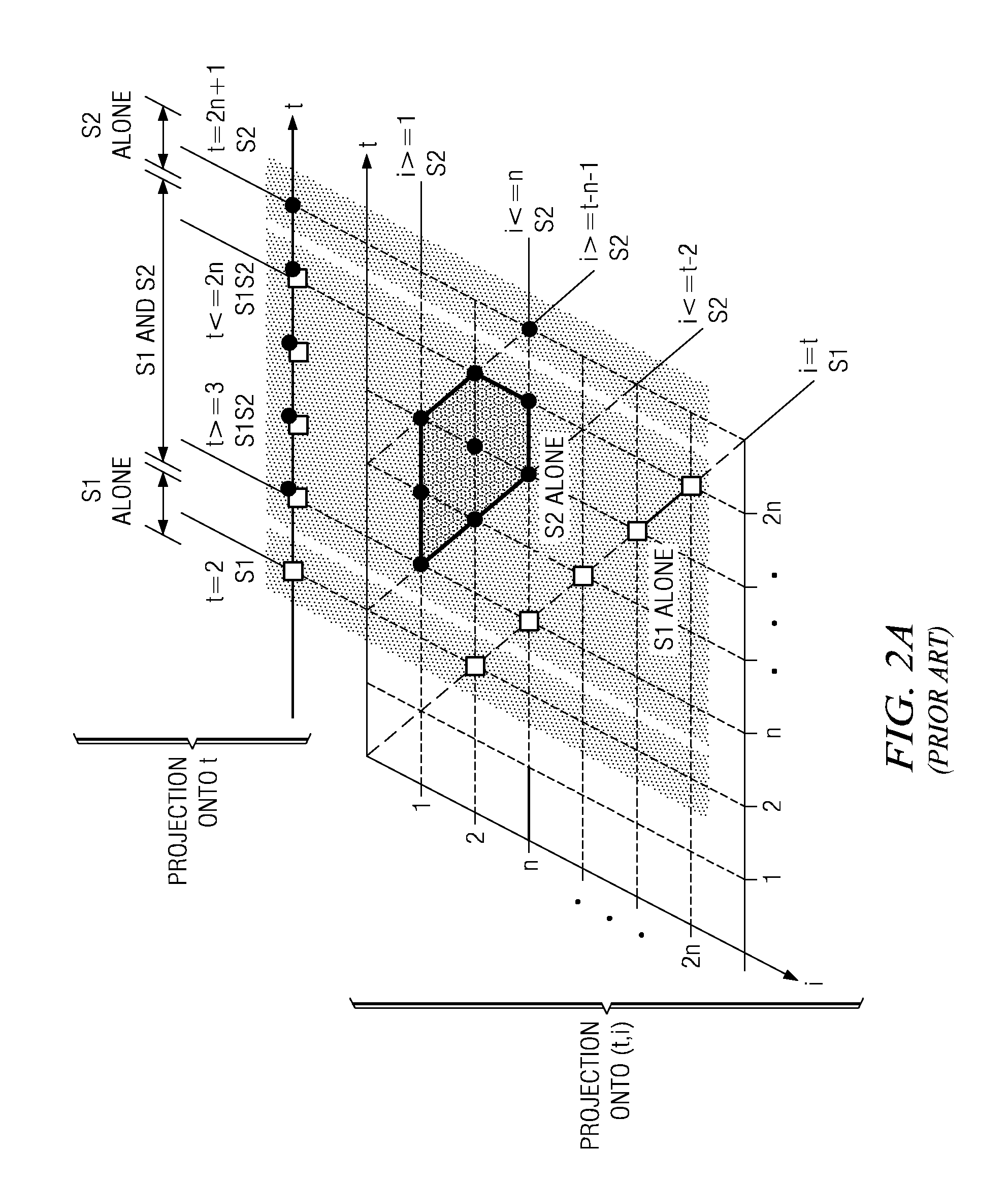

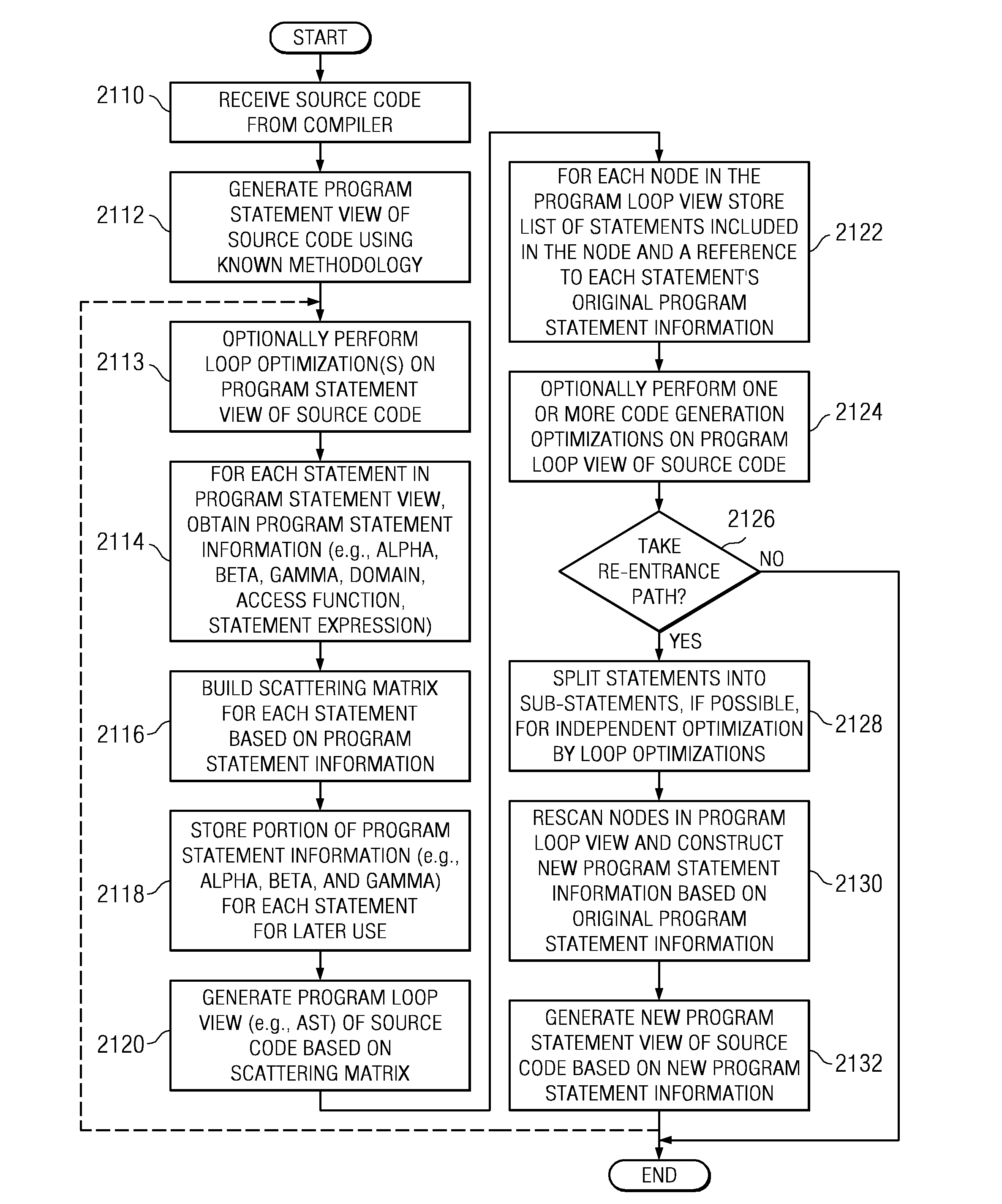

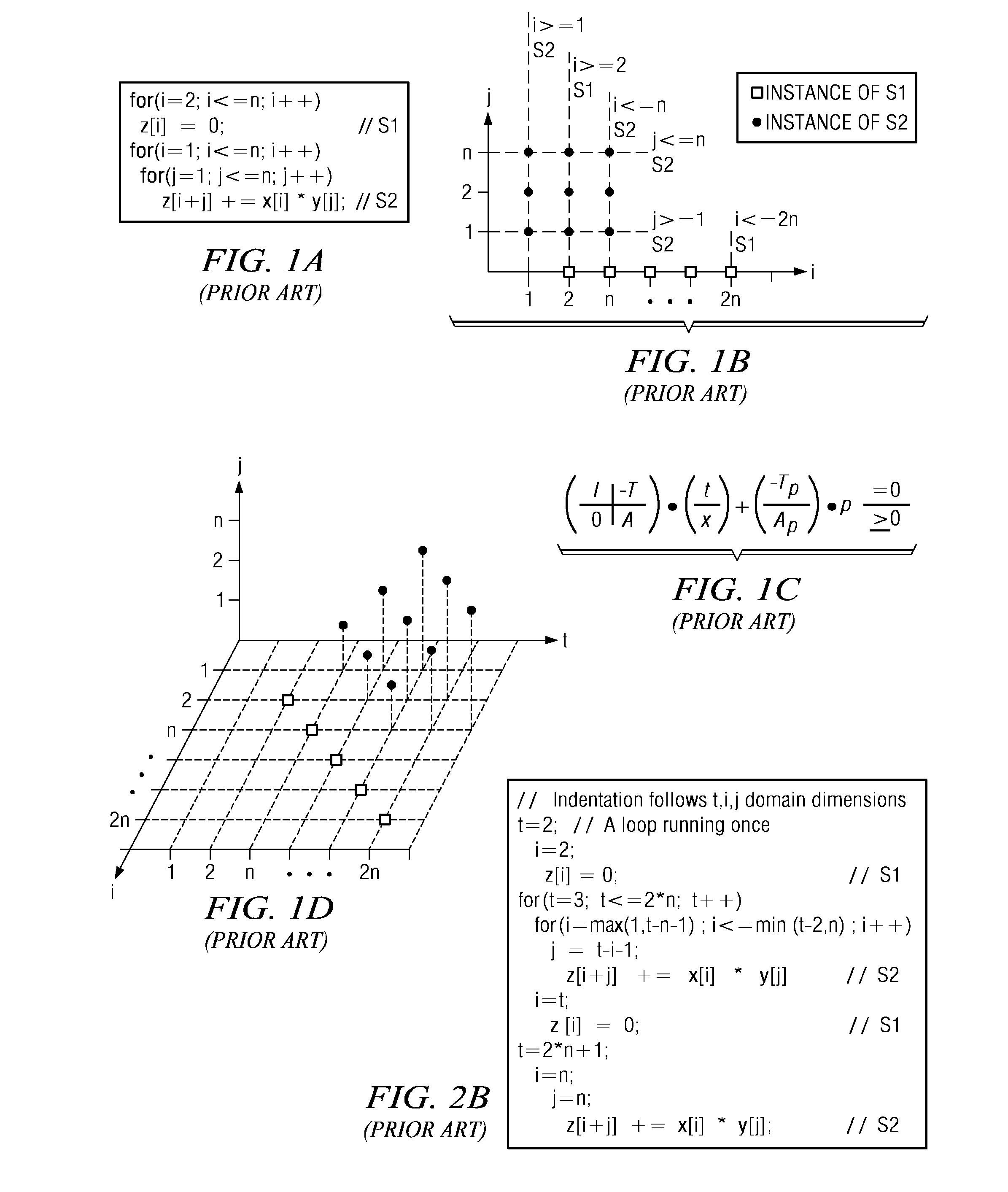

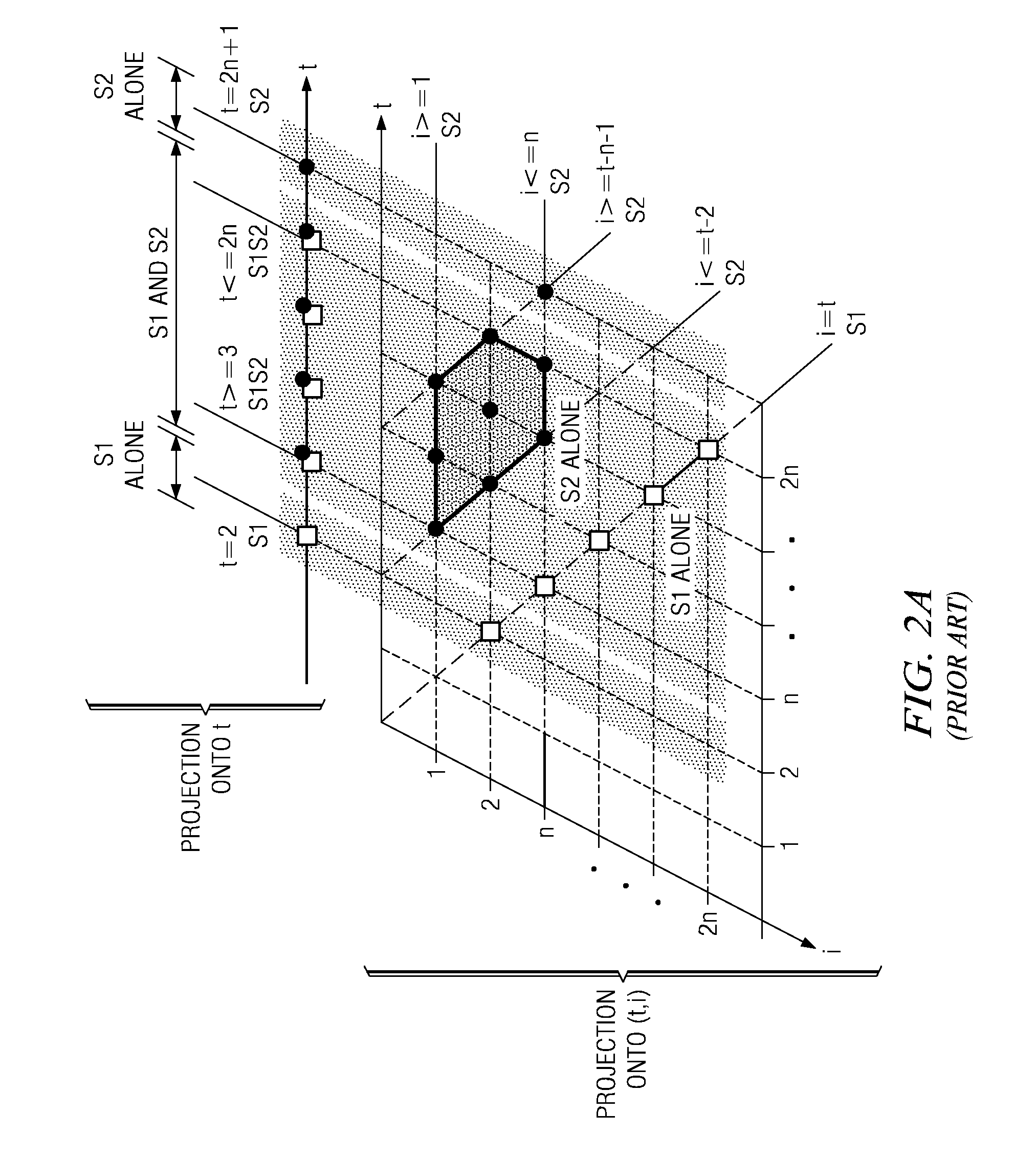

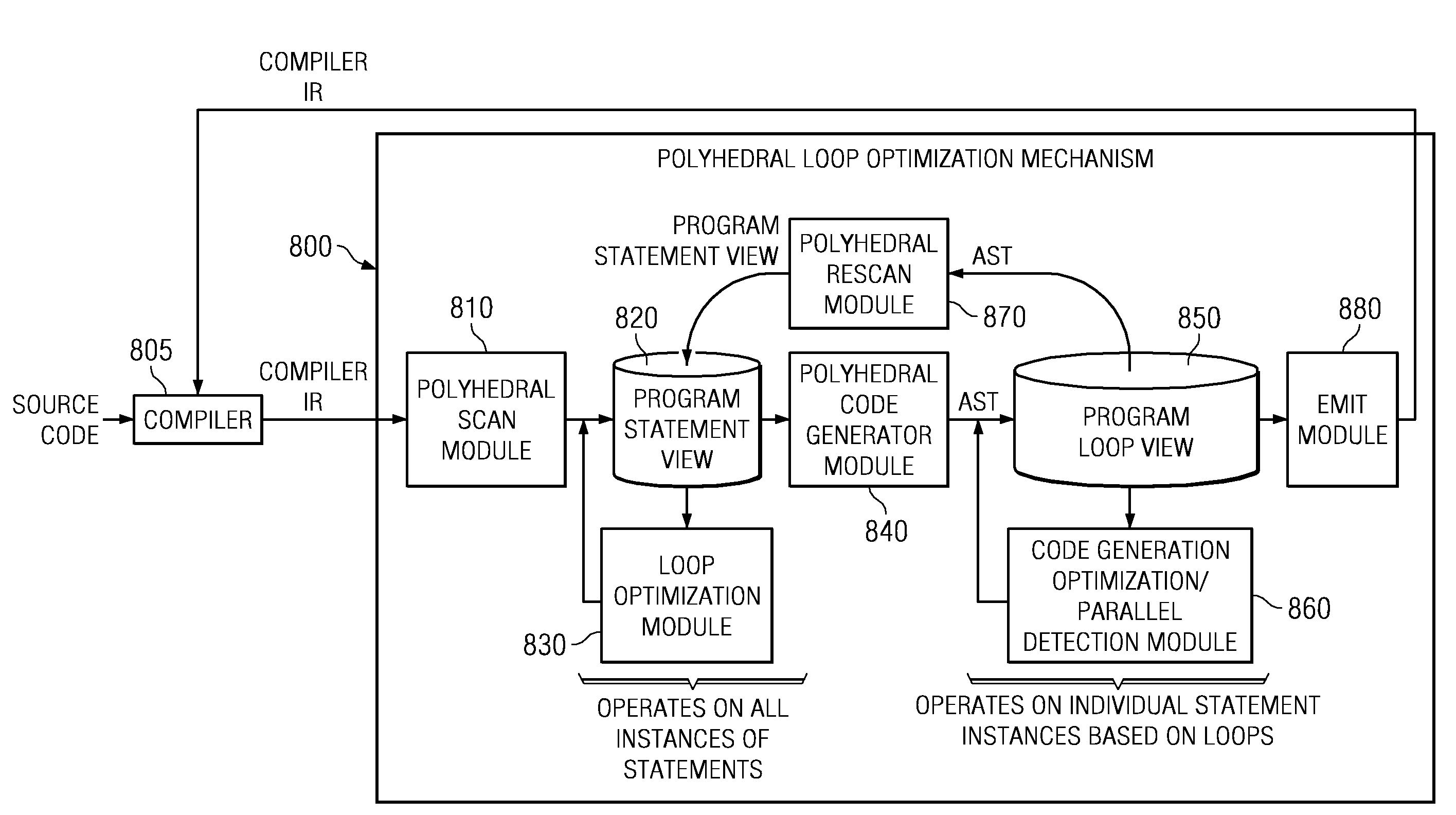

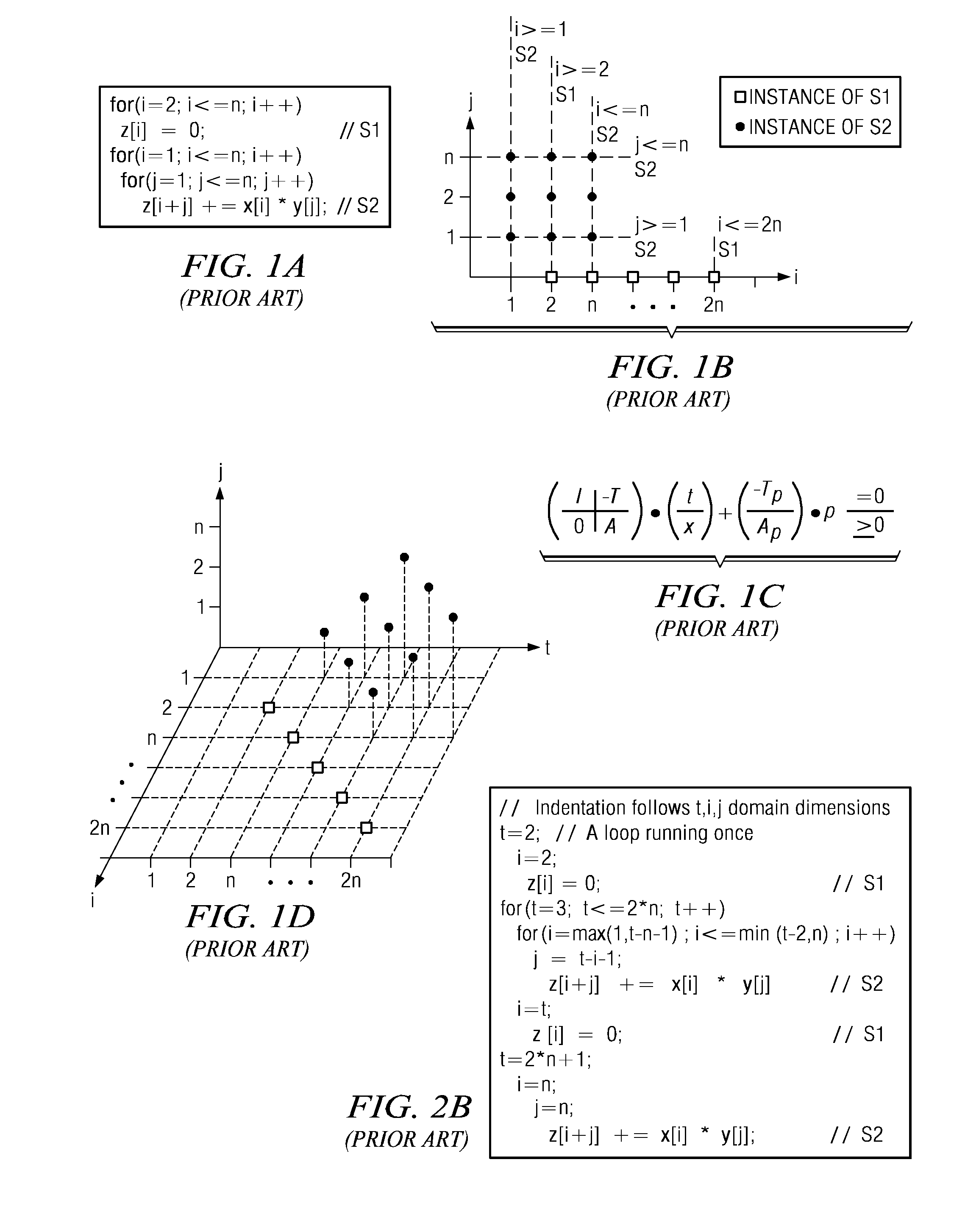

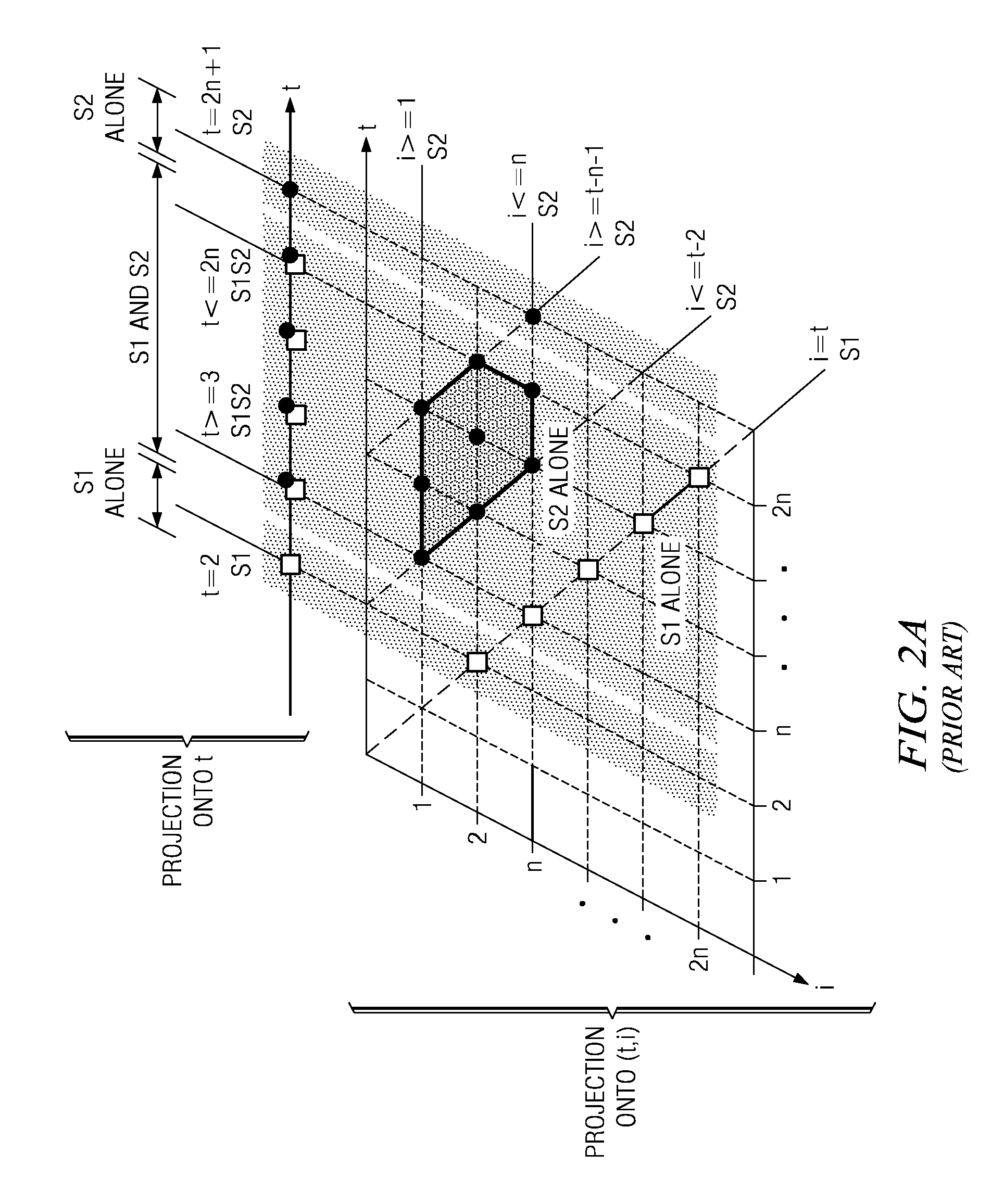

System and Method for Domain Stretching for an Advanced Dual-Representation Polyhedral Loop Transformation Framework

Inactive | US20090307673A1 | Software engineering | Program control | Loop transformation | Theoretical computer science

A system and method for domain stretching for an advanced dual-representation polyhedral loop transformation framework are provided. The mechanisms of the illustrative embodiments address the weaknesses of the known polyhedral loop transformation based approaches by providing mechanisms for performing code generation transformations on individual statement instances in an intermediate representation generated by the polyhedral loop transformation optimization of the source code. These code generation transformations have the important property that they do not change program order of the statements in the intermediate representation. This property allows the result of the code generation transformations to be provided back to the polyhedral loop transformation mechanisms in a program statement view, via a new re-entrance path of the illustrative embodiments, for additional optimization. In addition, mechanisms are provided for stretching the domains of statements in a program loop view of the source code to thereby normalize the domains.

Owner:IBM CORP

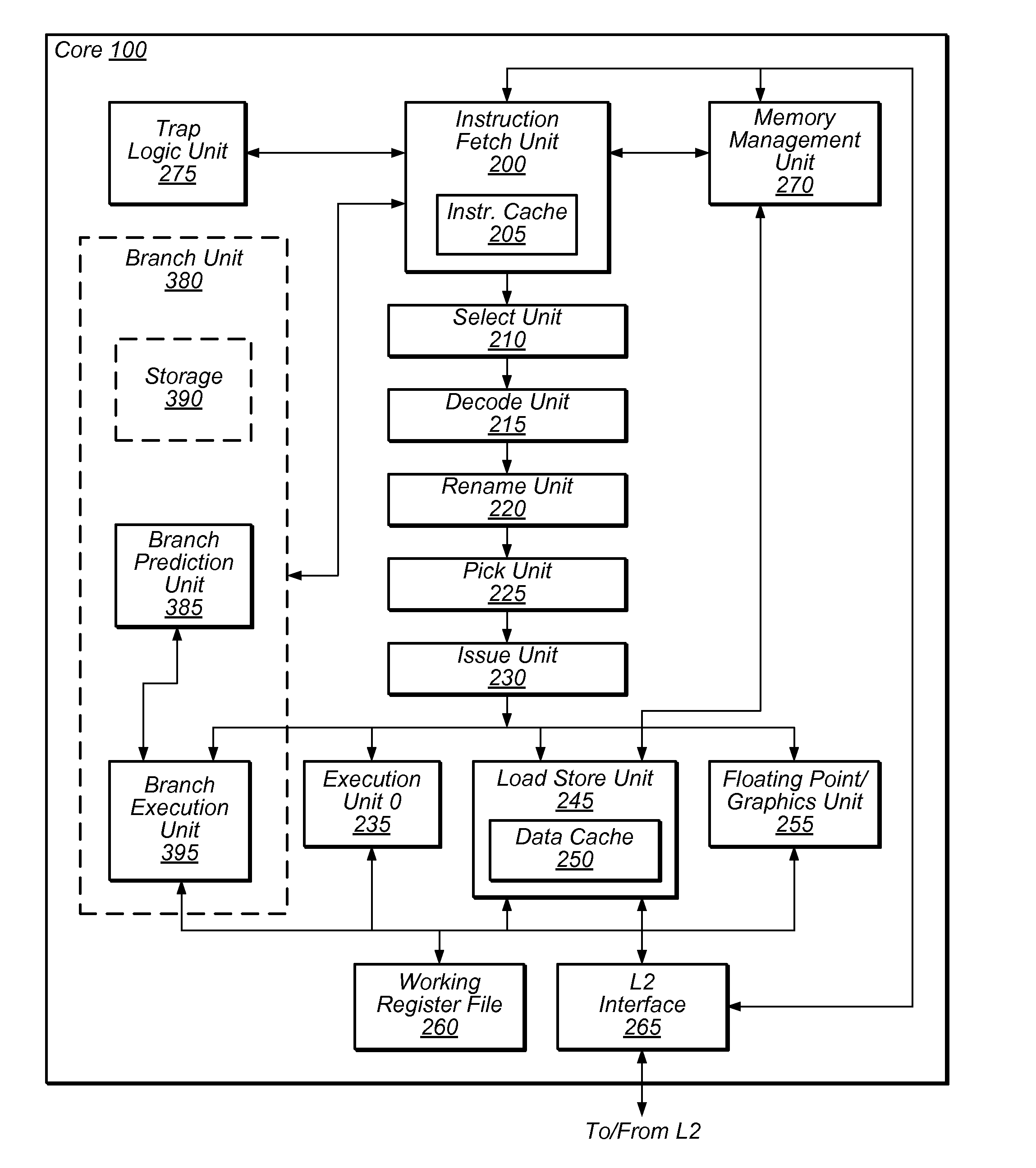

Suppression of control transfer instructions on incorrect speculative execution paths

Active | US20120290820A1 | Suppresses result | Eliminates spurious flush | Digital computer details | Concurrent instruction execution | Speculative execution | Execution control

Owner:ORACLE INT CORP

Selective code generation optimization for an advanced dual-representation polyhedral loop transformation framework

Inactive | US8087010B2 | Software engineering | Specific program execution arrangements | Loop transformation | Source code

Mechanisms for selective code generation optimization for an advanced dual-representation polyhedral loop transformation framework are provided. The mechanisms of the illustrative embodiments address the weaknesses of the known polyhedral loop transformation based approaches by providing mechanisms for performing code generation transformations on individual statement instances in an intermediate representation generated by the polyhedral loop transformation optimization of the source code. These code generation transformations have the important property that they do not change program order of the statements in the intermediate representation. This property allows the result of the code generation transformations to be provided back to the polyhedral loop transformation mechanisms in a program statement view, via a new re-entrance path of the illustrative embodiments, for additional optimization.

Owner:INT BUSINESS MASCH CORP

System and Method for Advanced Polyhedral Loop Transformations of Source Code in a Compiler

Inactive | US20090083724A1 | Control flow overhead | Software engineering | Program control | Loop transformation | Source code

A system and method for advanced polyhedral loop transformations of source code in a compiler are provided. The mechanisms of the illustrative embodiments address the weaknesses of the known polyhedral loop transformation based approaches by providing mechanisms for performing code generation transformations on individual statement instances in an intermediate representation generated by the polyhedral loop transformation optimization of the source code. These code generation transformations have the important property that they do not change program order of the statements in the intermediate representation. This property allows the result of the code generation transformations to be provided back to the polyhedral loop transformation mechanisms in a program statement view, via a new re-entrance path of the illustrative embodiments, for additional optimization.

Owner:IBM CORP

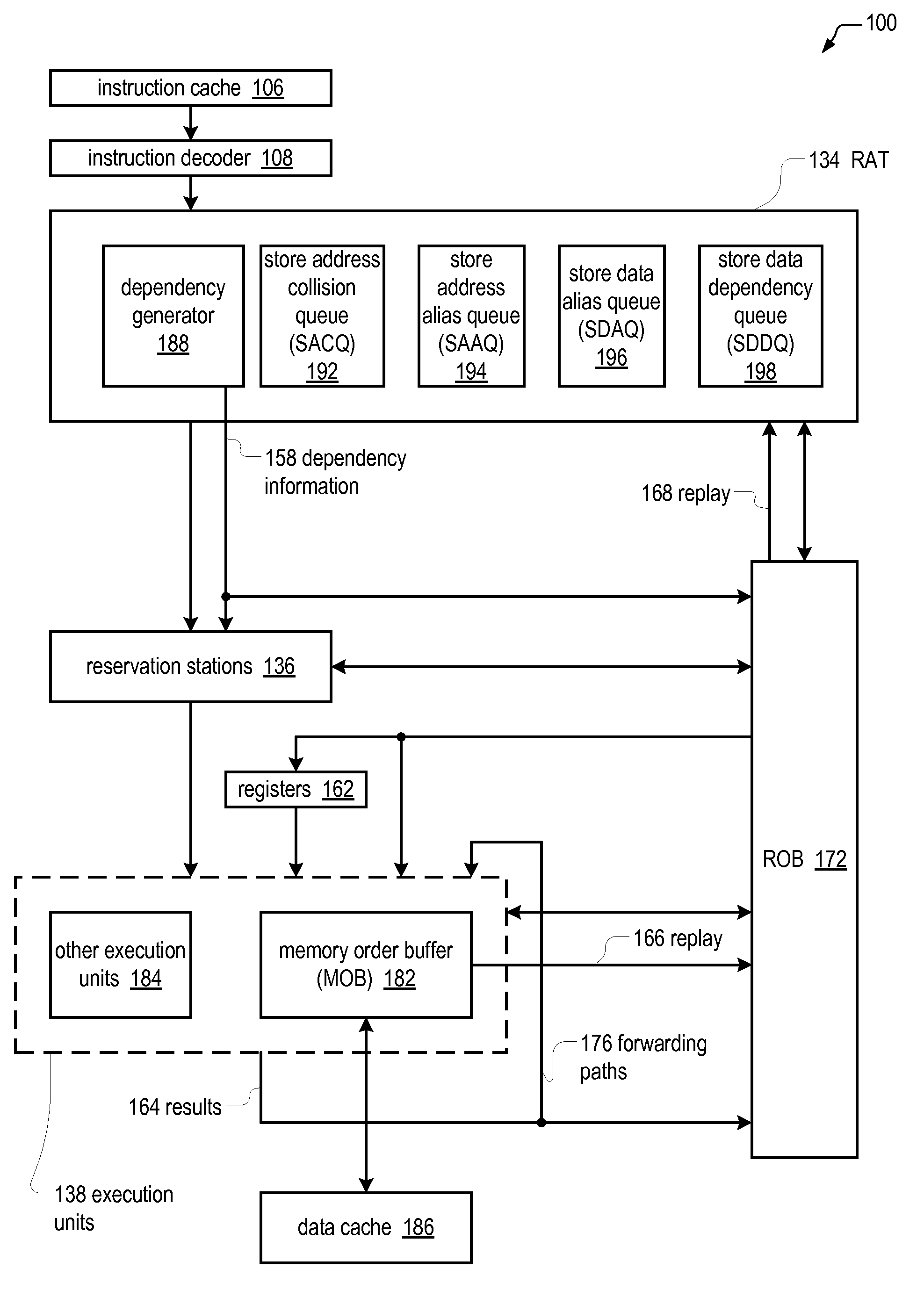

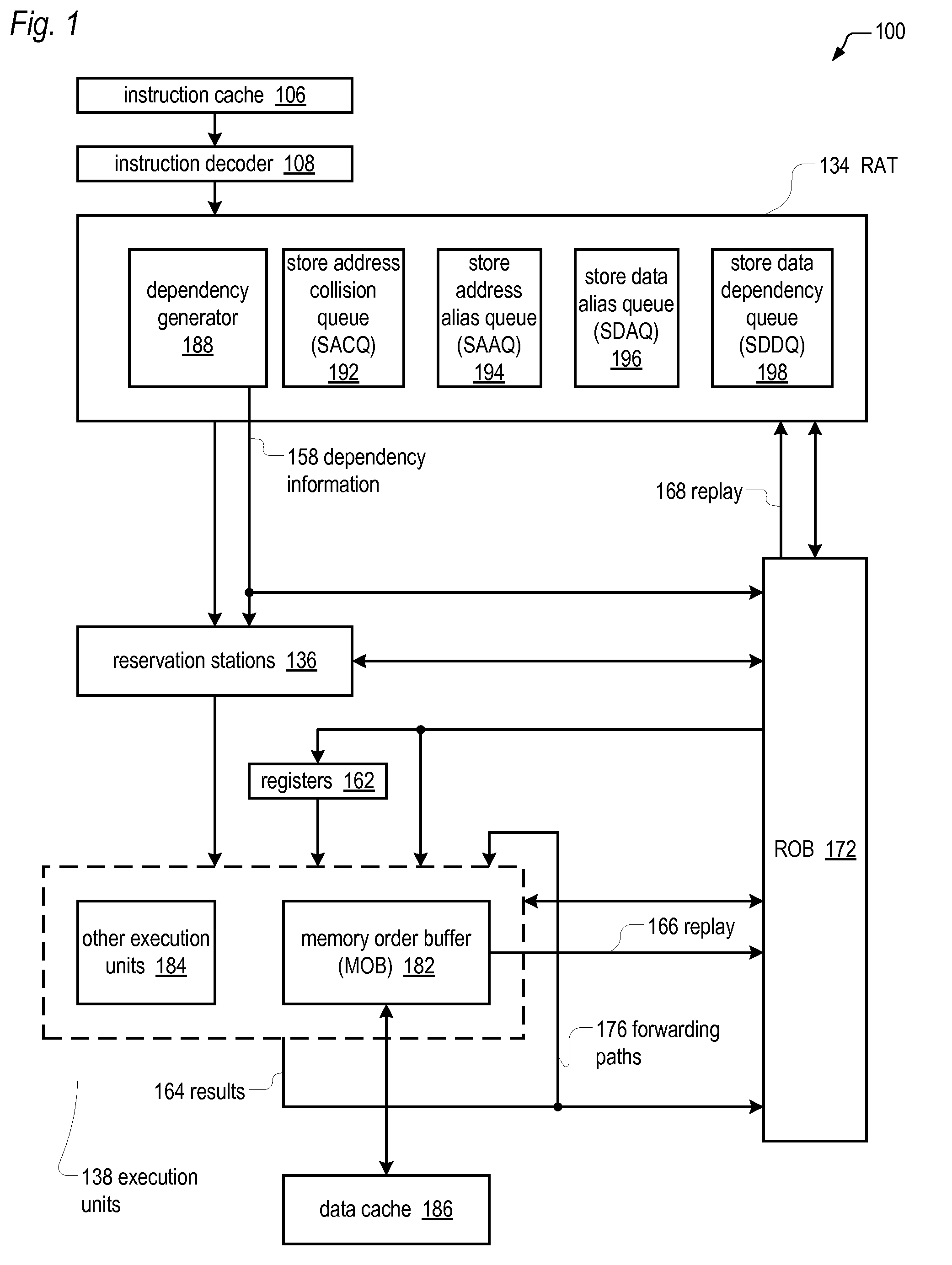

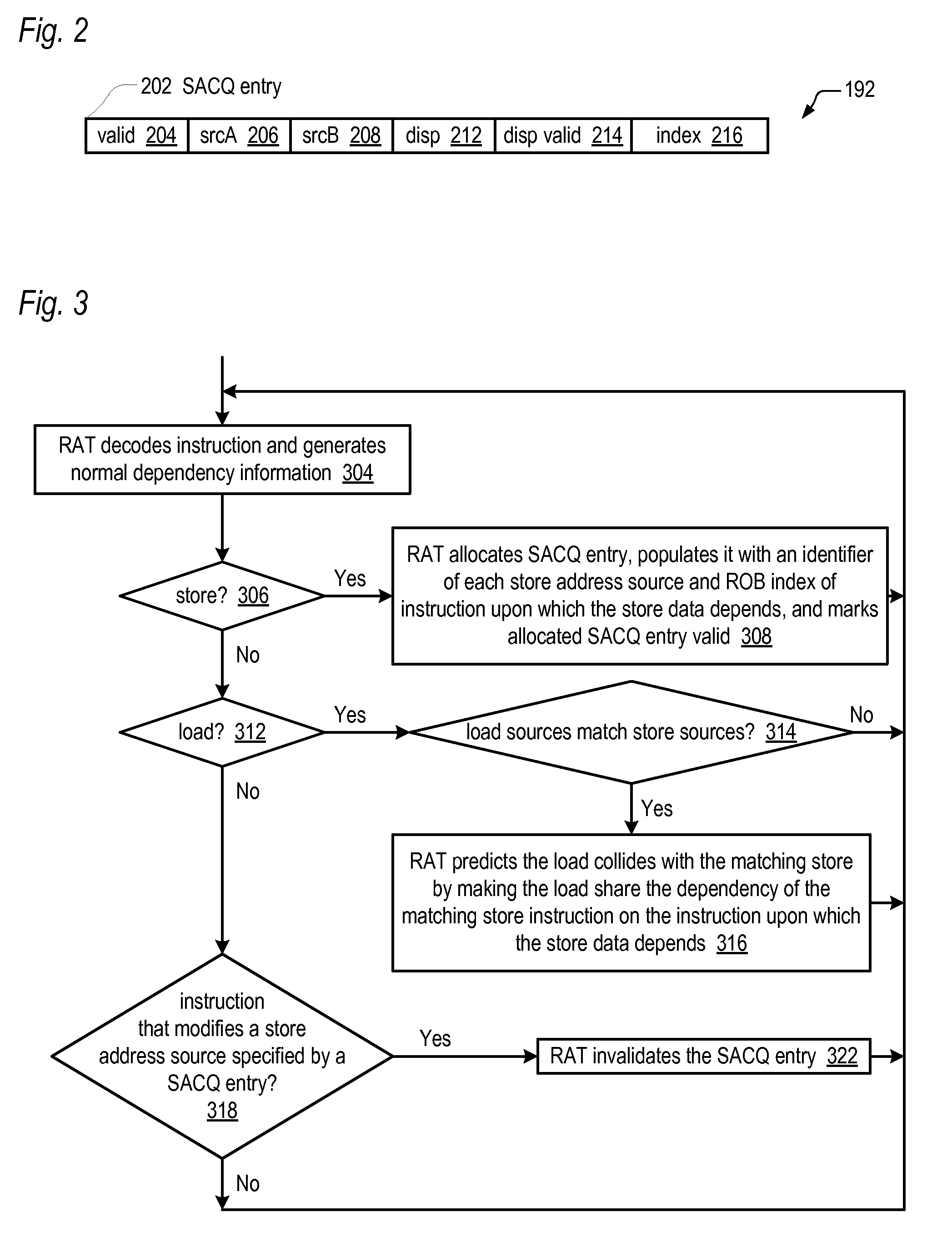

Out-of-order execution microprocessor with reduced store collision load replay reduction

Inactive | US20100306509A1 | Reduce the possibility | Digital computer details | Memory systems | Load instruction | Storage violation

An out-of-order execution microprocessor for reducing the likelihood of having to replay a load instruction due to a store collision. The microprocessor includes a queue of entries, each entry configured to hold an instruction pointer of a load instruction and to hold information useable to identify a store instruction that caused the load instruction to be replayed on a first instance of the load instruction. A register alias table (RAT) encounters instructions in program order and generates dependencies used to determine when the instructions may execute out of program order. The RAT encounters the load instruction on a second instance, determines that the load instruction second instance instruction pointer matches the instruction pointer of an entry of the queue, and causes the load instruction on the second instance to have a dependency on the store instruction identified by the information in the matching entry.

Owner:VIA TECH INC

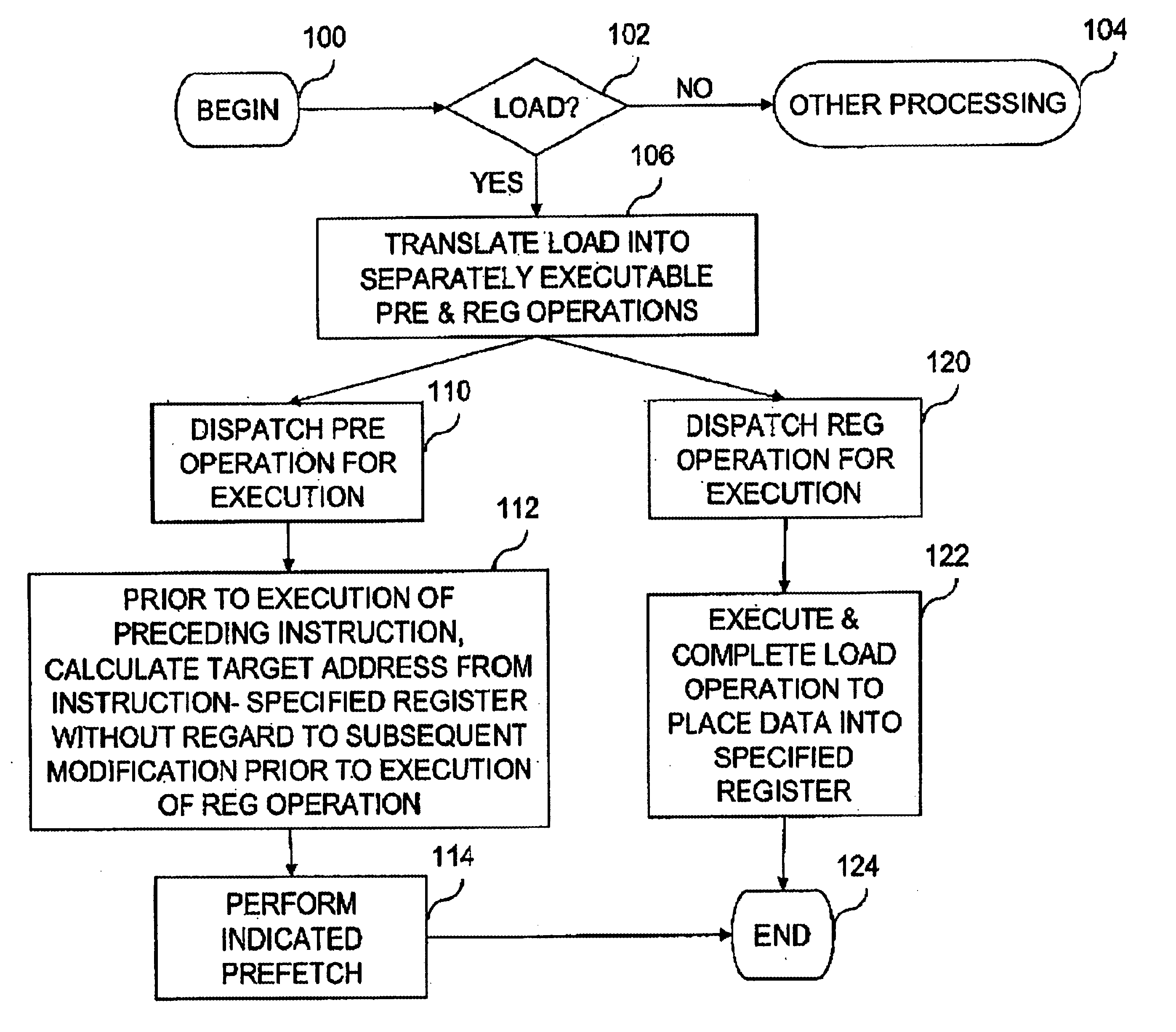

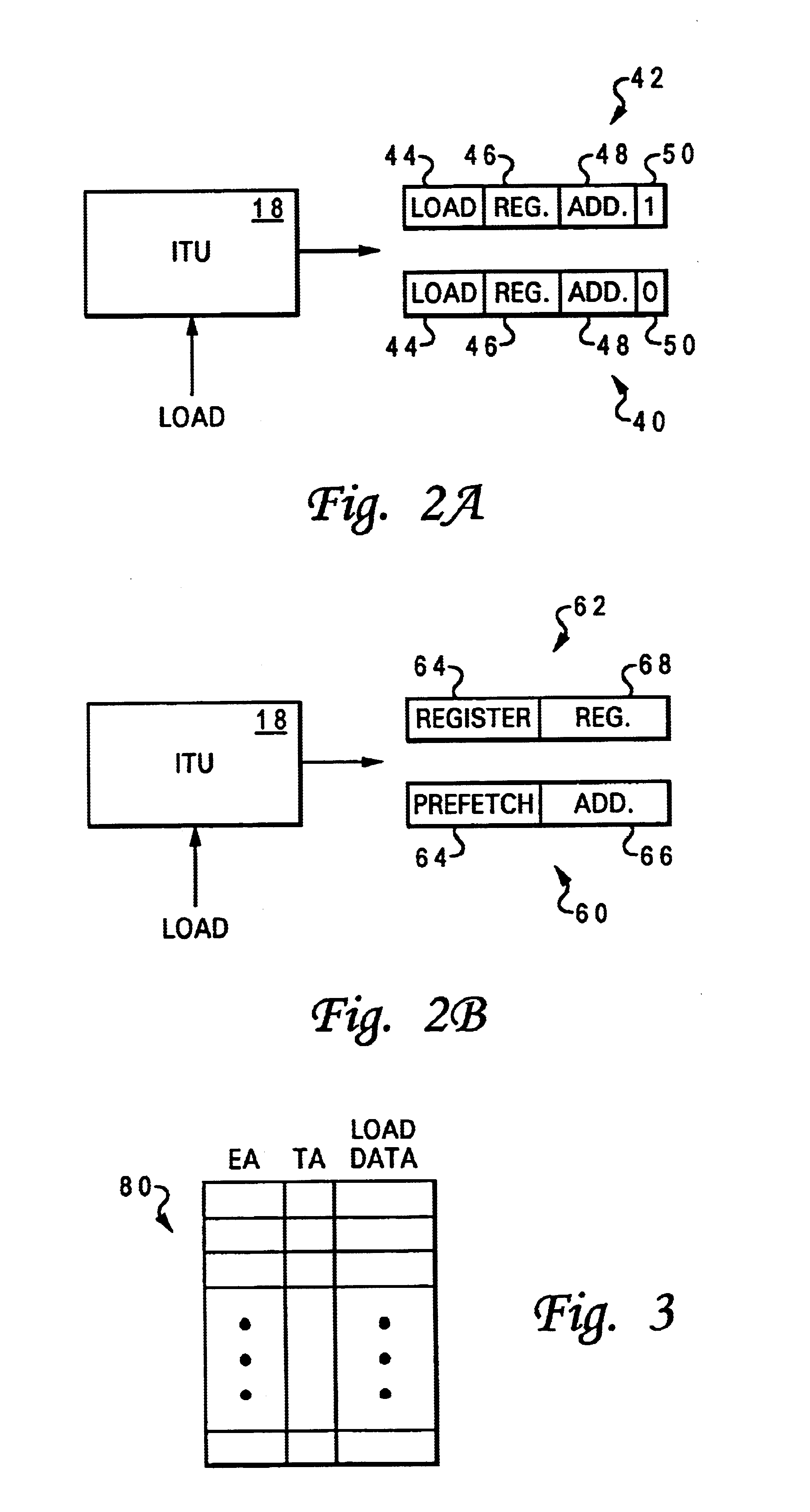

Processor and method of executing a load instruction that dynamically bifurcate a load instruction into separately executable prefetch and register operations

Inactive | US6871273B1 | Lower performance requirements | More complex | Runtime instruction translation | Digital computer details | Load instruction | Processor register

A processor implementing an improved method for executing load instructions includes execution circuitry, a plurality of registers, and instruction processing circuitry. The instruction processing circuitry fetches a load instruction and a preceding instruction that precedes the load instruction in program order, and in response to detecting the load instruction, translates the load instruction into separately executable prefetch and register operations. The execution circuitry performs at least the prefetch operation out-of-order with respect to the preceding instruction to prefetch data into the processor and subsequently separately executes the register operation to place the data into a register specified by the load instruction. In an embodiment in which the processor is an in-order machine, the register operation is performed in-order with respect to the preceding instruction.

Owner:IBM CORP

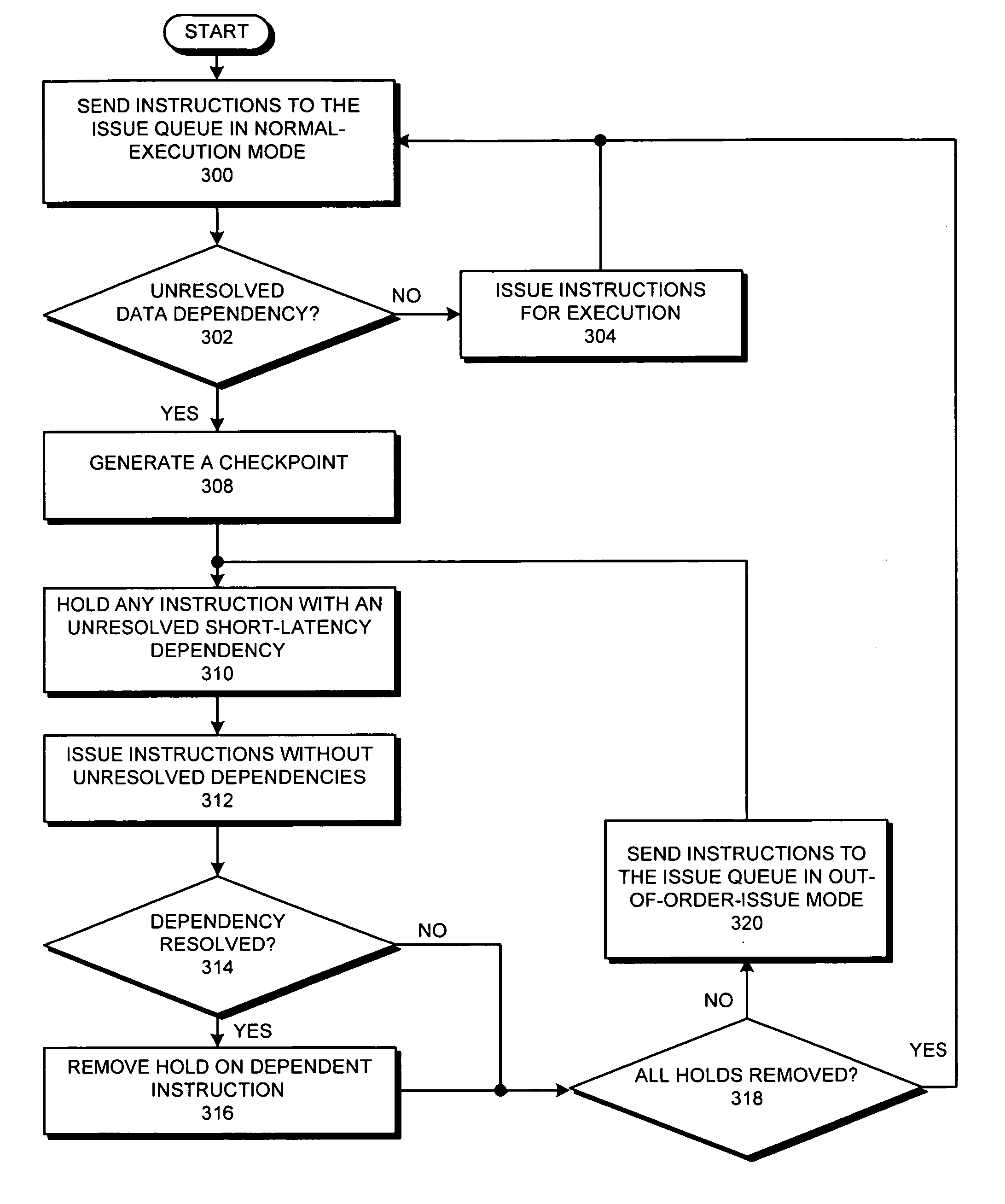

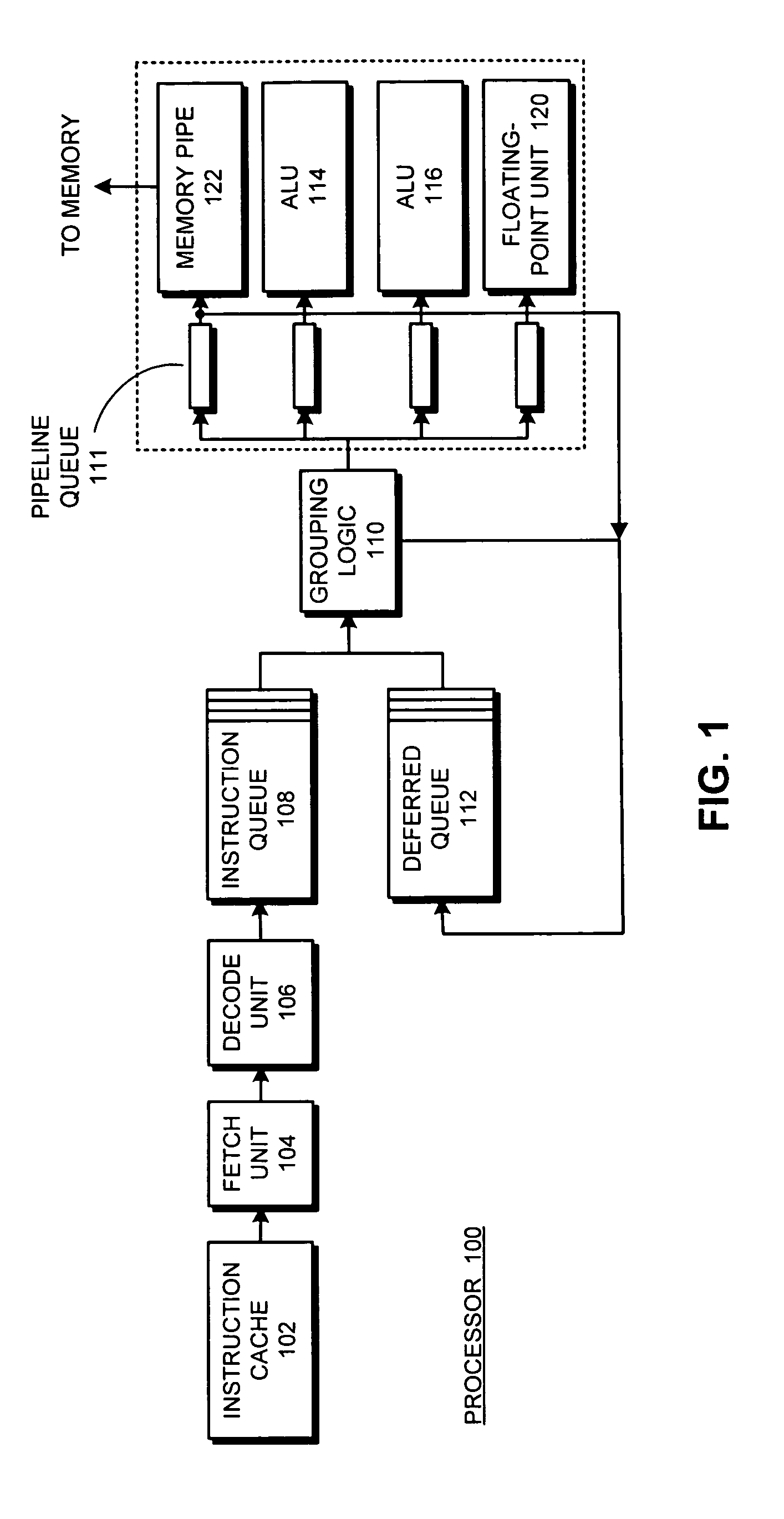

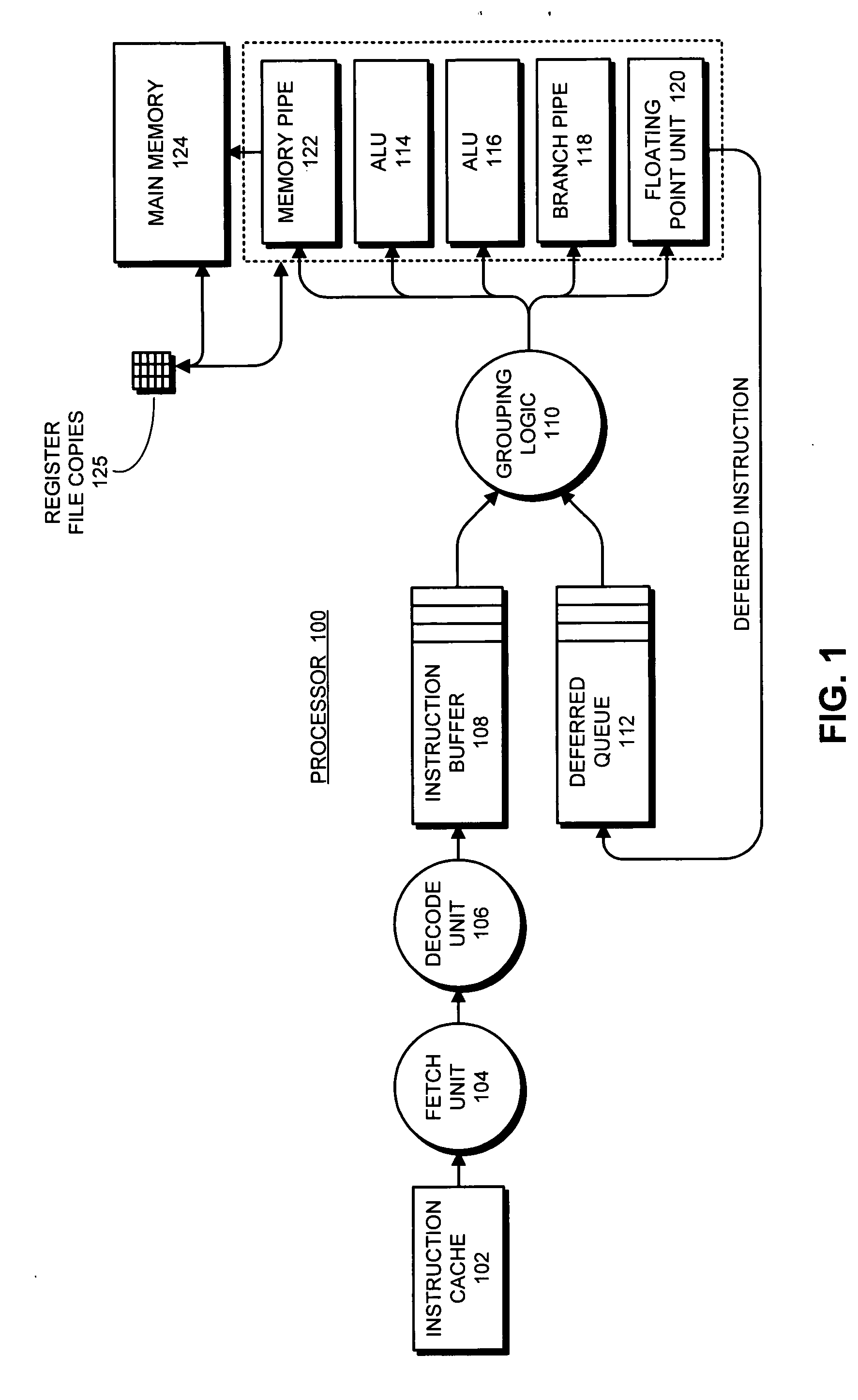

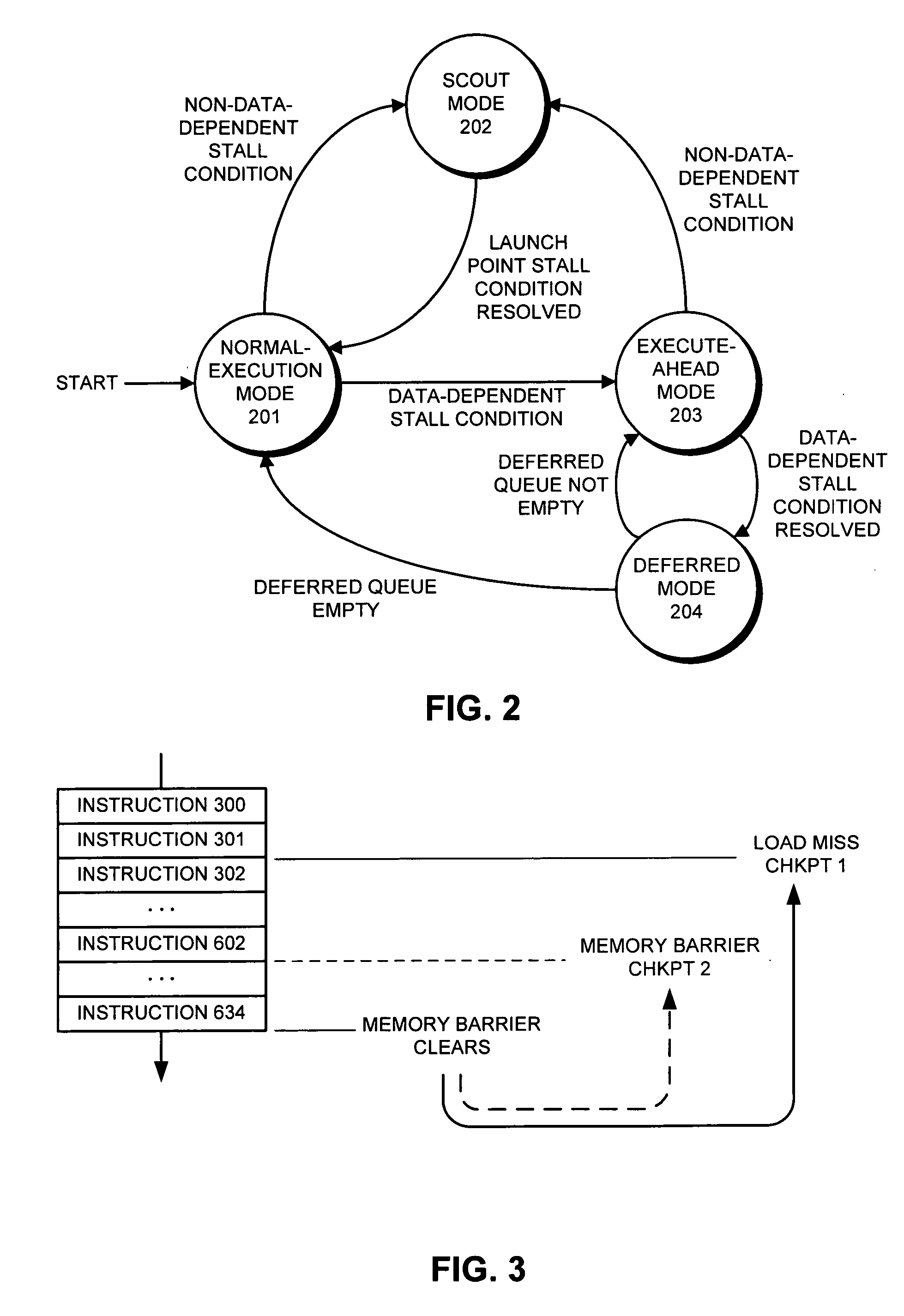

Supporting out-of-order issue in an execute-ahead processor

Inactive | US20070186081A1 | Digital computer details | Specific program execution arrangements | Waiting time | Procedure sequence

One embodiment of the present invention provides a system which supports out-of-order issue in a processor that normally executes instructions in-order. The system starts by issuing instructions from an issue queue in program order during a normal-execution mode. While issuing the instructions, the system determines if any instruction in the issue queue has an unresolved short-latency data dependency which depends on a short-latency operation. If so, the system generates a checkpoint and enters an out-of-order-issue mode, wherein instructions in the issue queue with unresolved short-latency data dependencies are held and not issued, and wherein other instructions in the issue queue without unresolved data dependencies are allowed to issue out-of-order.

Owner:SUN MICROSYSTEMS INC

Store prefetching via store queue lookahead

Active | US20100306477A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Waiting time

Systems and methods for efficient handling of store misses. A processor comprises a store queue that stores data for committed store instructions. Coupled to the store queue is a cache responsible for ensuring consistent ordering of store operations for all consumers, which may be accomplished by ensuring that a corresponding cache line is in an exclusive state before executing a store operation. In response to a first committed store instruction missing in the cache, the store queue is configured to convey to the cache a second entry of the plurality of queue entries as a speculative prefetch instruction. This second entry corresponds to a committed store instruction that follows, in program order, the first committed store instruction of a given thread. If the prefetch instruction misses in the cache, the latency for acquiring a corresponding cache line overlaps with the latency of the first store instruction.

Owner:ORACLE INT CORP

Dynamically allocated store queue for a multithreaded processor

Active | US20100299508A1 | Digital computer details | Concurrent instruction execution | Data shipping | Instruction distribution

Systems and methods for storage of writes to memory corresponding to multiple threads. A processor comprises a store queue, wherein the queue dynamically allocates a current entry for a committed store instruction in which entries of the array may be allocated out of program order. For a given thread, the store queue conveys store data to a memory in program order. The queue is further configured to identify an entry of the plurality of entries that corresponds to an oldest committed store instruction for a given thread and determine a next entry of the array that corresponds to a next committed store instruction in program order following the oldest committed store instruction of the given thread, wherein said next entry includes data identifying the entry. The queue marks an entry as unfilled upon successful conveying of store data to the memory.

Owner:ORACLE INT CORP

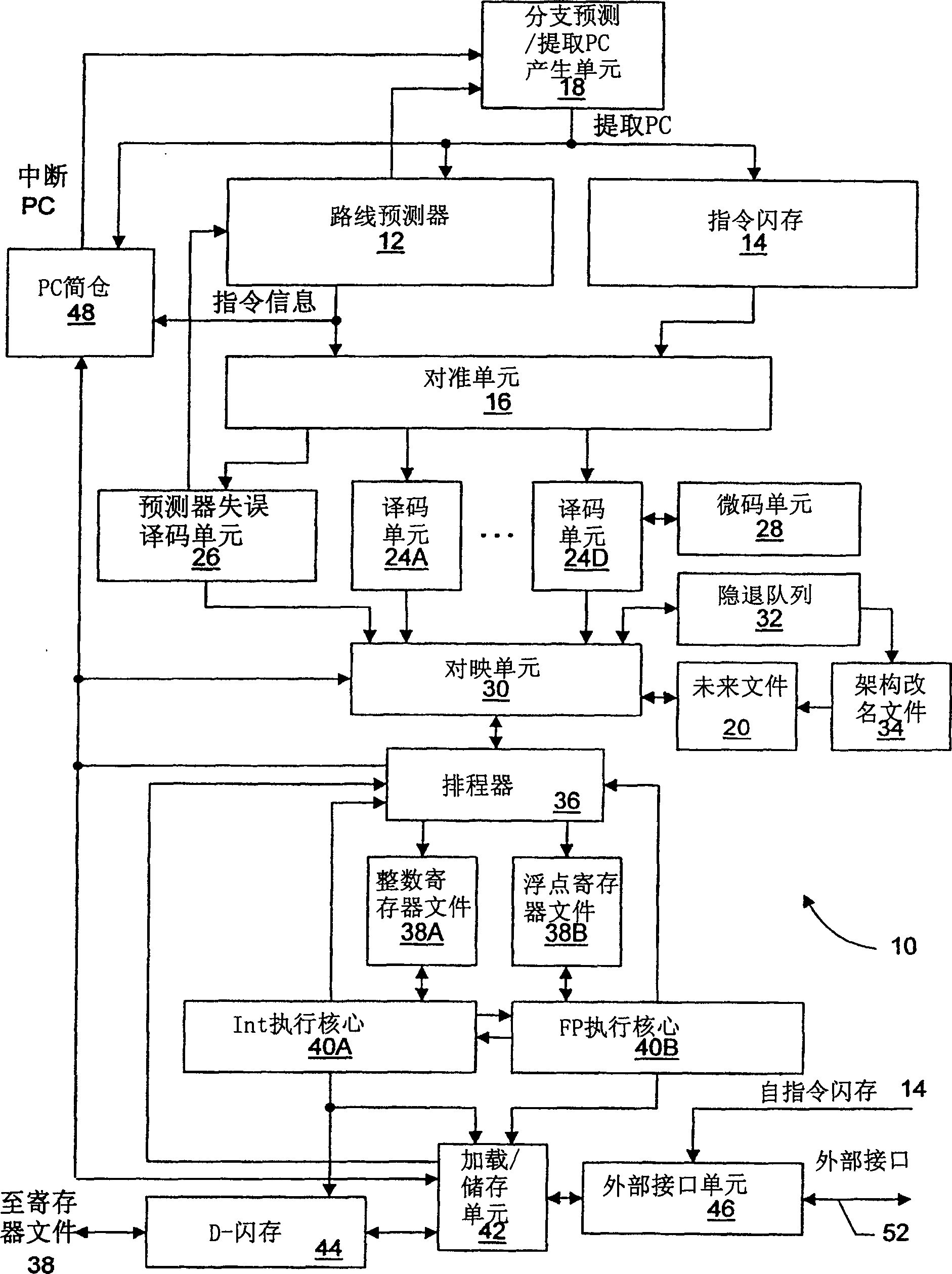

Scheduler capable of issuing and reissuing dependency chains

Inactive | CN1451115A | Improve performance | Concurrent instruction execution | Parallel computing | Procedure sequence

Owner:GLOBALFOUNDRIES INC

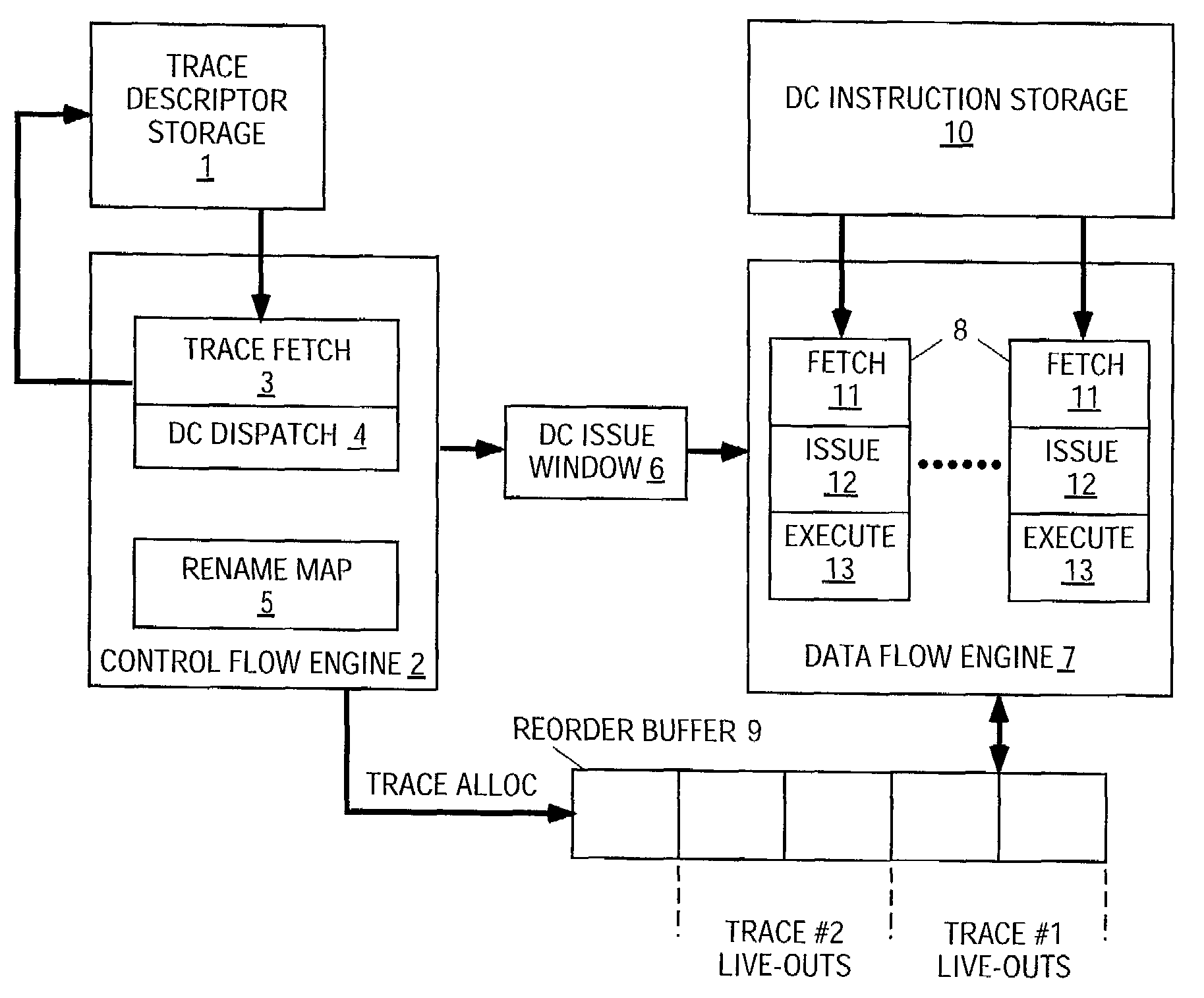

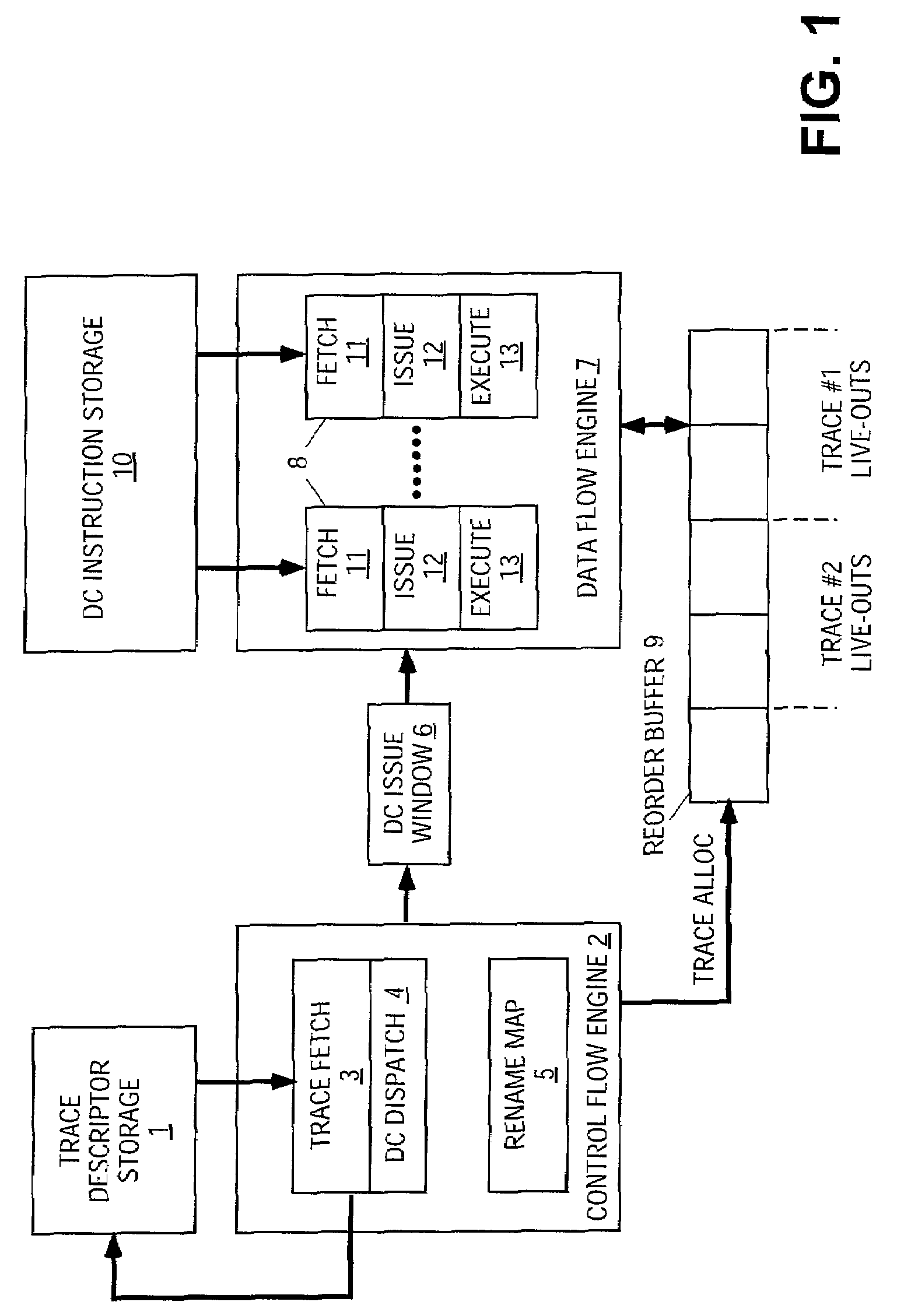

Dependence-chain processing using trace descriptors having dependency descriptors

Inactive | US7363467B2 | Digital computer details | Concurrent instruction execution | Program segment | Data stream

An apparatus and method for a processor microarchitecture that quickly and efficiently takes large steps through program segments without fetching all intervening instructions. The microarchitecture processes descriptors of trace sequences in program order so as to locate and dispatch descriptors of dependence chains that are used to fetch and execute the instructions of the dependence chain in data flow order.

Owner:INTEL CORP

Dynamic tag allocation in a multithreaded out-of-order processor

Active | US20100333098A1 | Memory addressing/allocation/relocation | Multiprogramming arrangements | Instruction distribution | Procedure sequence

Various techniques for dynamically allocating instruction tags and using those tags are disclosed. These techniques may apply to processors supporting out-of-order execution and to architectures that support multiple threads. A group of instructions may be assigned a tag value from a pool of available tag values. A tag value may be usable to determine the program order of a group of instructions relative to other instructions in a thread. After the group of instructions has been (or is about to be) committed, the tag value may be freed so that it can be re-used on a second group of instructions. Tag values are dynamically allocated between threads; accordingly, a particular tag value or range of tag values is not dedicated to a particular thread.

Owner:ORACLE INT CORP
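
The shared tag pool in the abstract above can be modeled with a free list plus per-thread ordering information. The sketch below is only one possible reading: the `TagAllocator` class, the per-thread list that records allocation order, and the choice to return `None` when the pool is empty are assumptions for illustration and are not described in the abstract.

```python
from collections import deque


class TagAllocator:
    """Toy model of a tag pool shared by all threads."""

    def __init__(self, num_tags):
        self.free = deque(range(num_tags))  # pool of available tag values
        self.in_flight = {}  # thread id -> tags in allocation (program) order

    def allocate(self, thread_id):
        if not self.free:
            return None  # pool exhausted; a real design would stall
        tag = self.free.popleft()
        self.in_flight.setdefault(thread_id, []).append(tag)
        return tag

    def is_older(self, thread_id, tag_a, tag_b):
        # Program order within a thread is recovered from allocation order,
        # not from the numeric tag value (an assumption of this sketch).
        order = self.in_flight[thread_id]
        return order.index(tag_a) < order.index(tag_b)

    def commit_oldest(self, thread_id):
        # Free the tag of the oldest in-flight group so it can be reused.
        tag = self.in_flight[thread_id].pop(0)
        self.free.append(tag)
        return tag
```

Because freed tags return to a common pool, a tag value committed by one thread can be handed to a different thread on the next allocation, so no tag range is dedicated to a particular thread.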

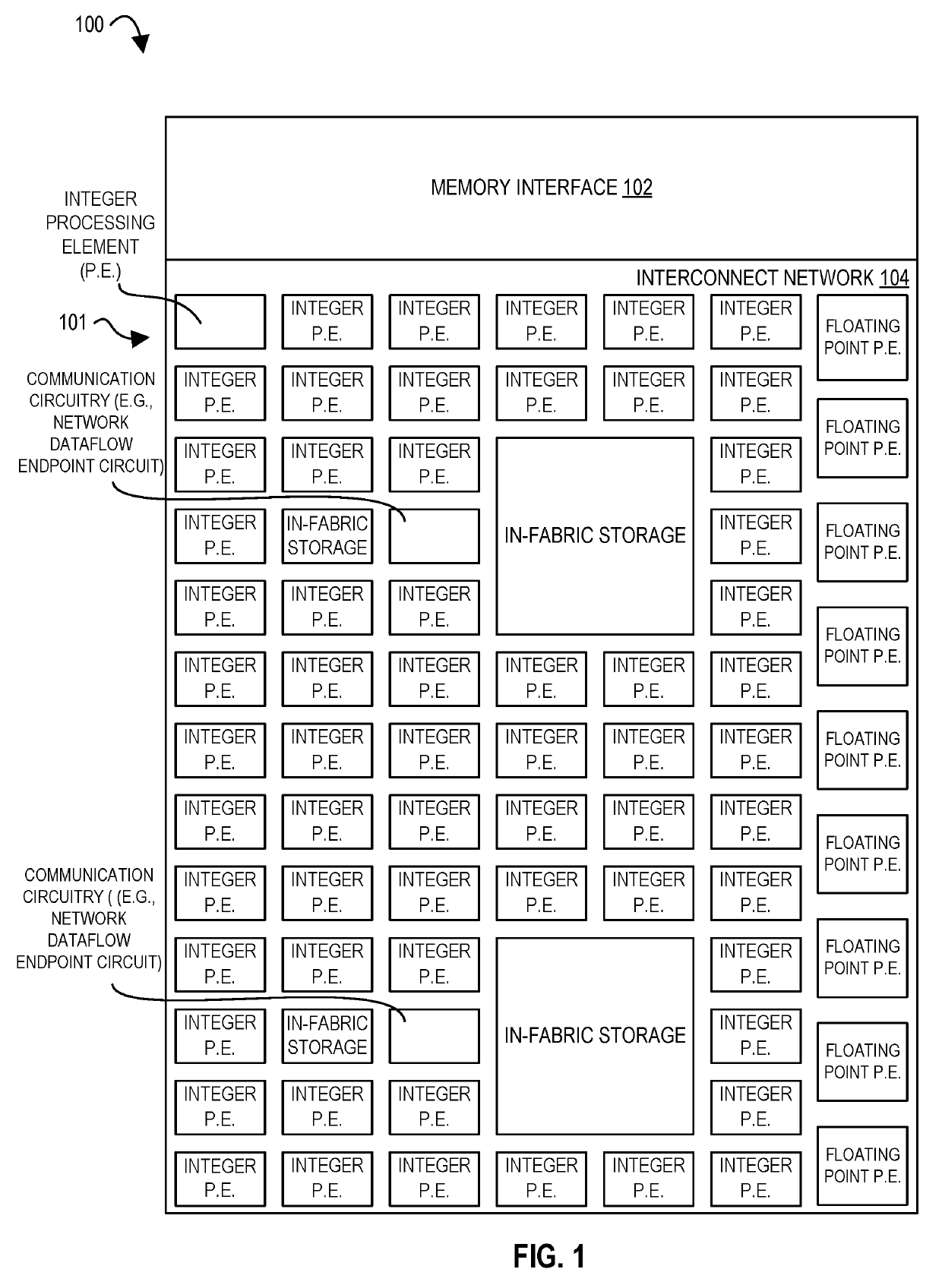

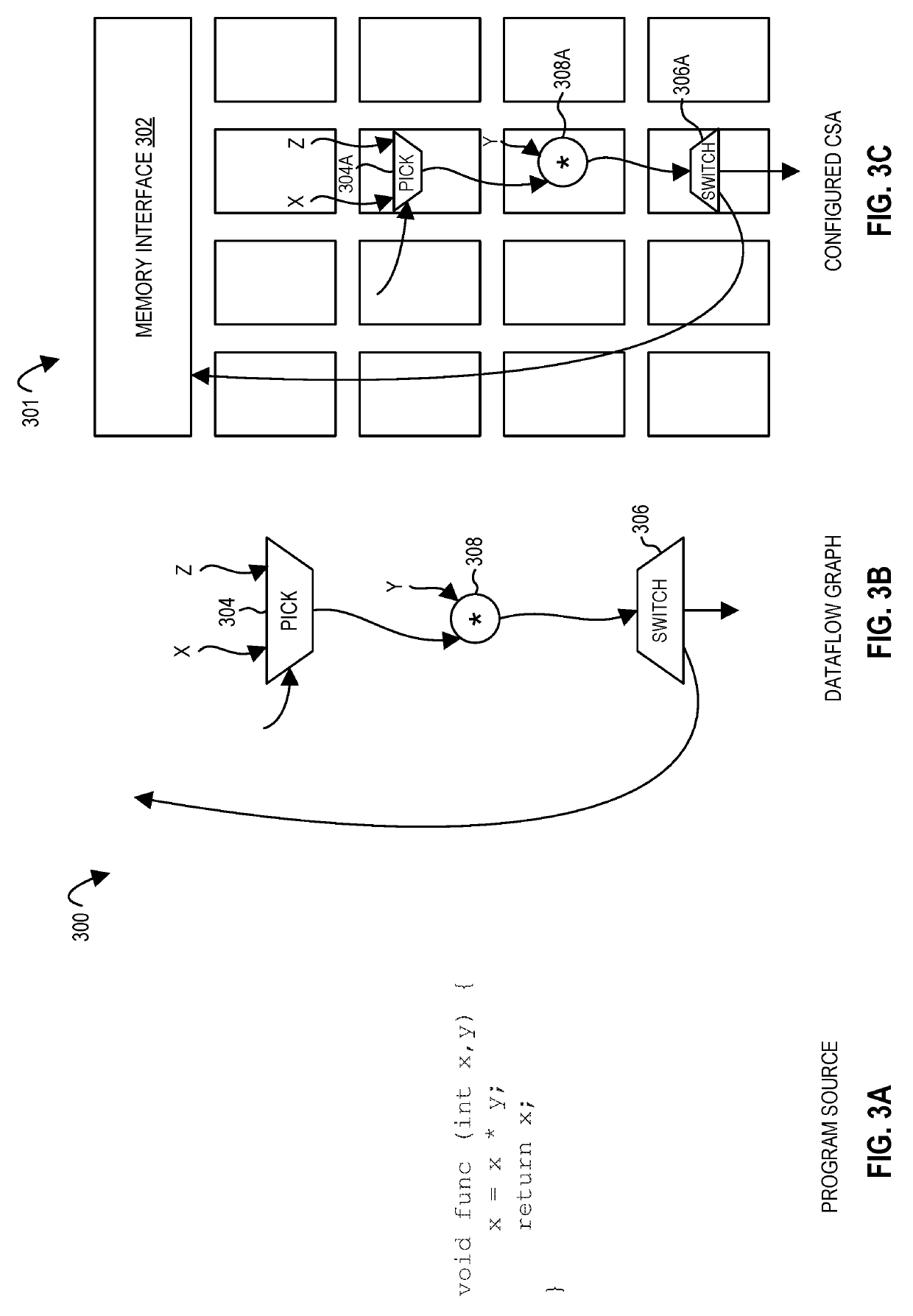

Apparatus, methods, and systems for memory consistency in a configurable spatial accelerator

Active | US20190205284A1 | Easy to adapt | Improve performance | Interprogram communication | Digital computer details | Computer science | Procedure sequence

Owner:INTEL CORP

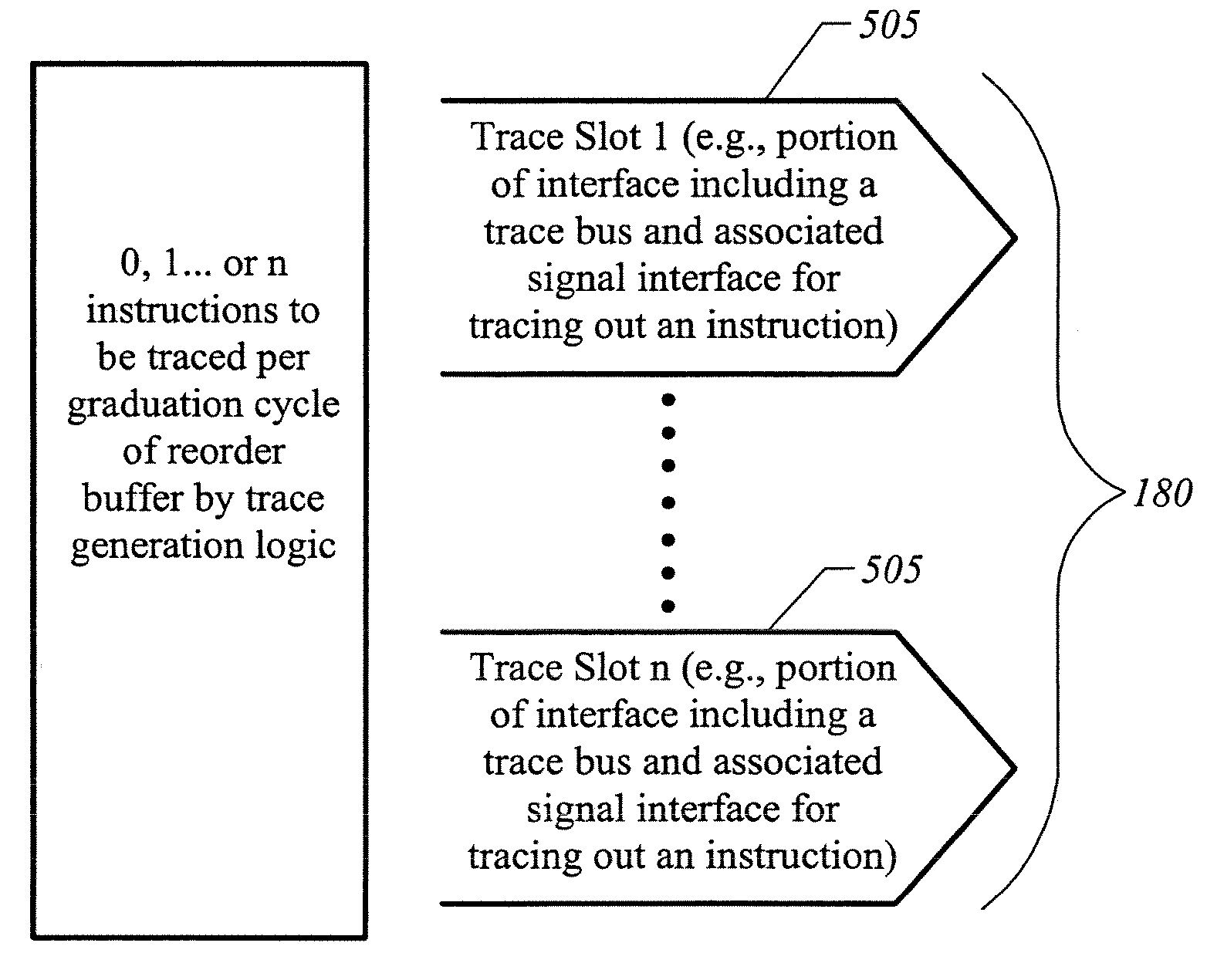

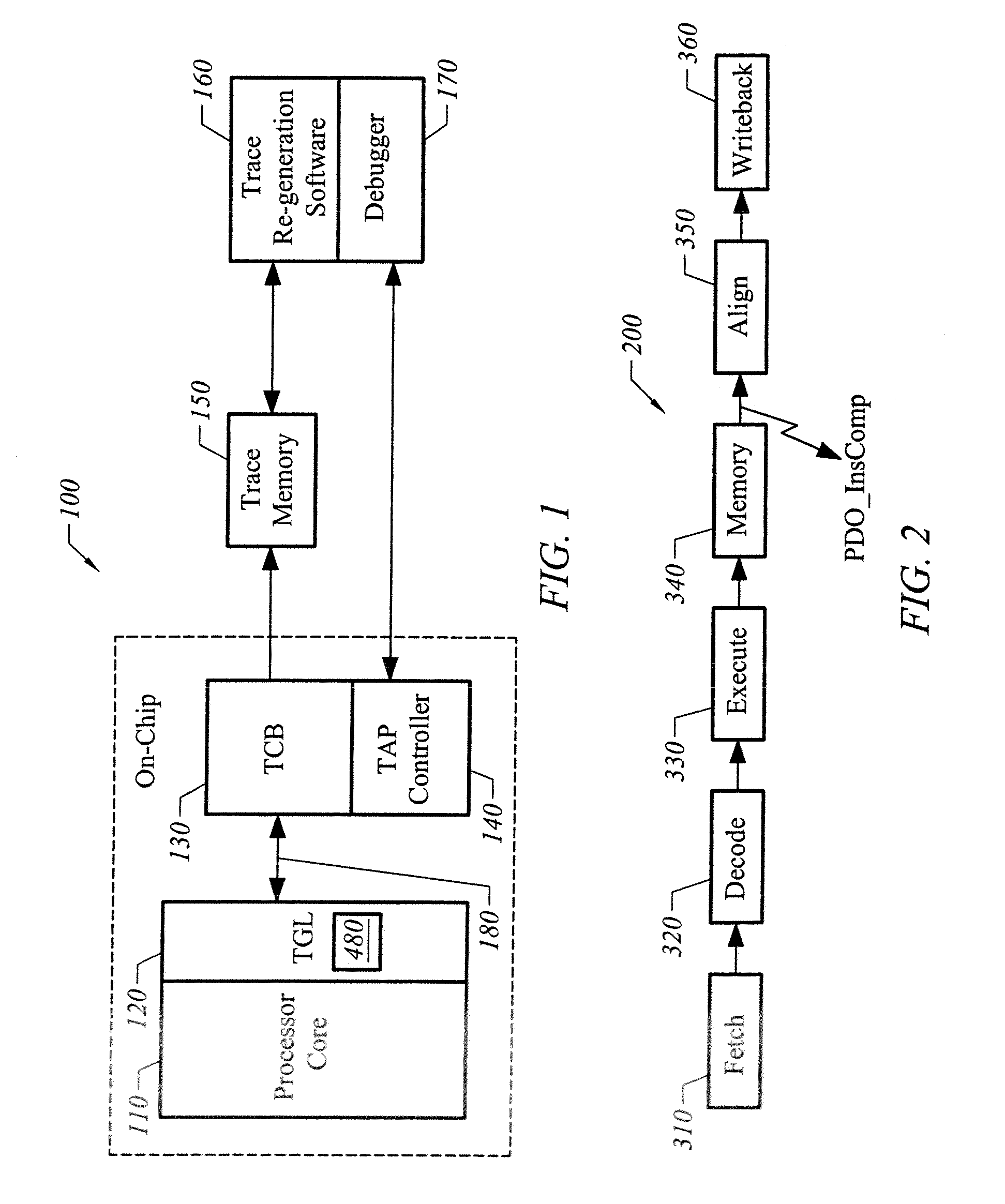

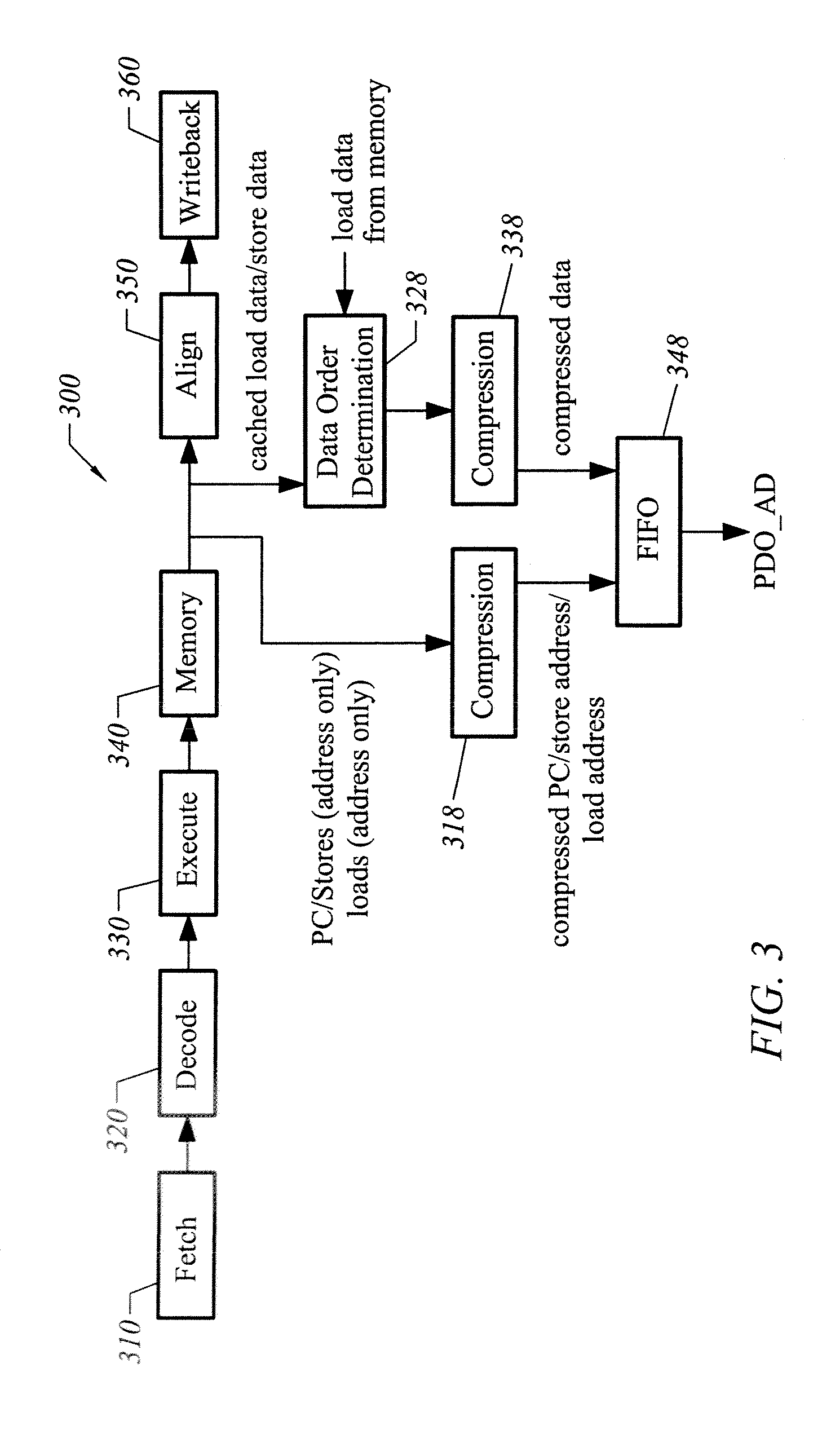

Apparatus and method to trace high performance multi-issue processors

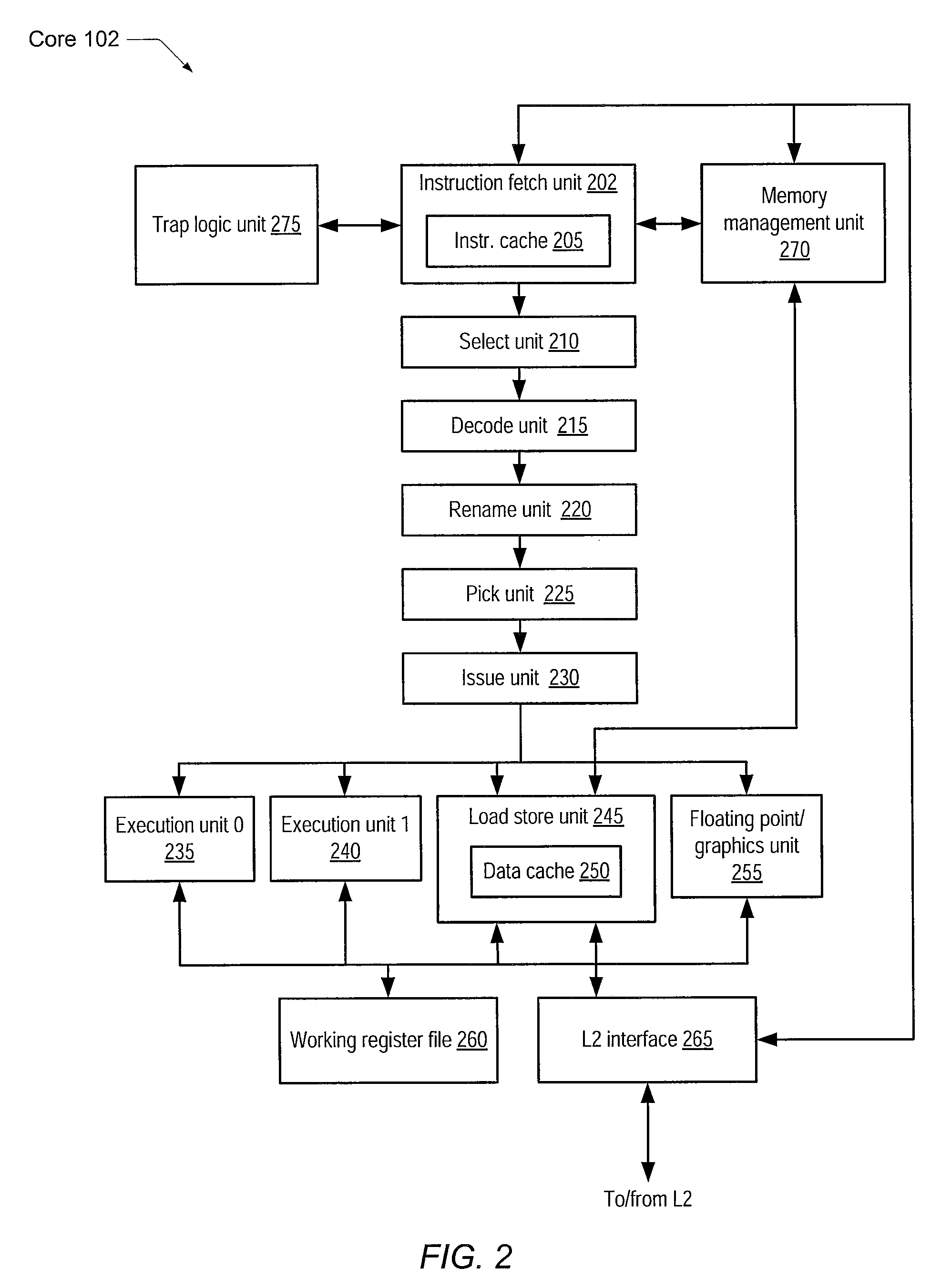

Inactive | US20070089095A1 | Easy to track | Error detection/correction | Specific program execution arrangements | Visibility | Parallel computing

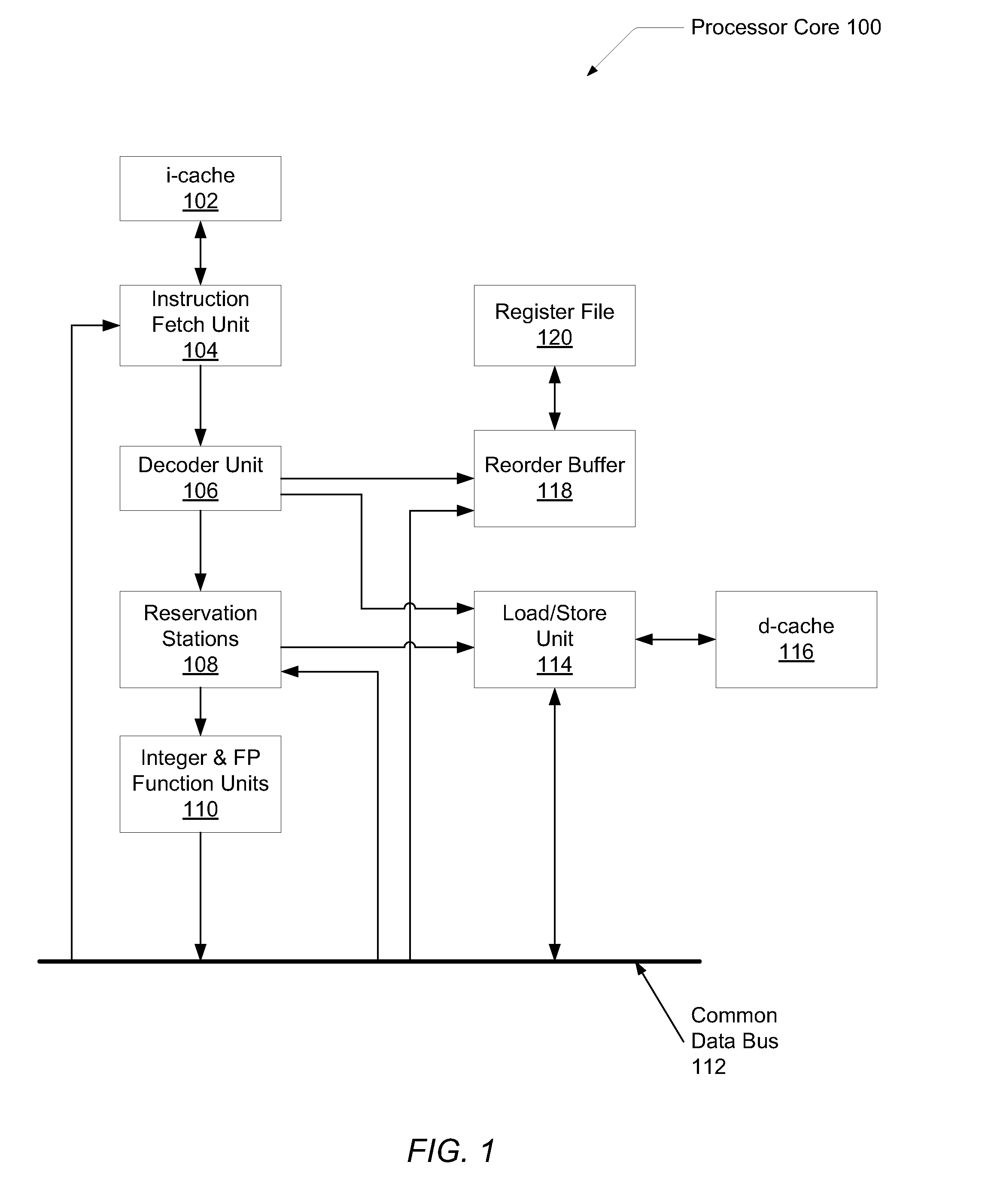

A system and method for program counter and data tracing in a multi-issue processor is disclosed. Instructions are traced in program sequence order. In one embodiment instructions are traced in graduation order from a reorder buffer. The tracing mechanism of the present invention enables increased visibility into the hardware and software state of the processor core.

Owner:MIPS TECH INC

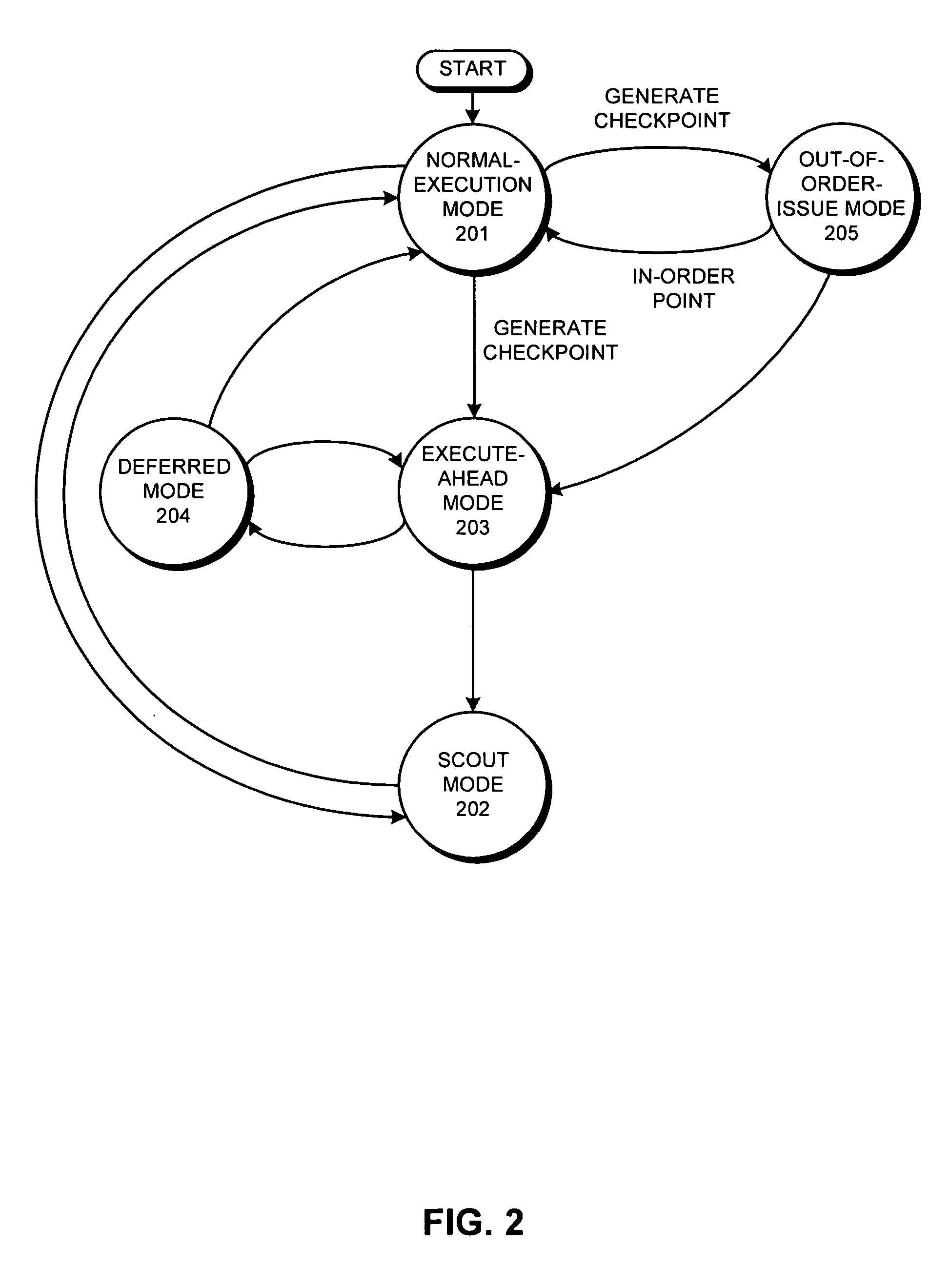

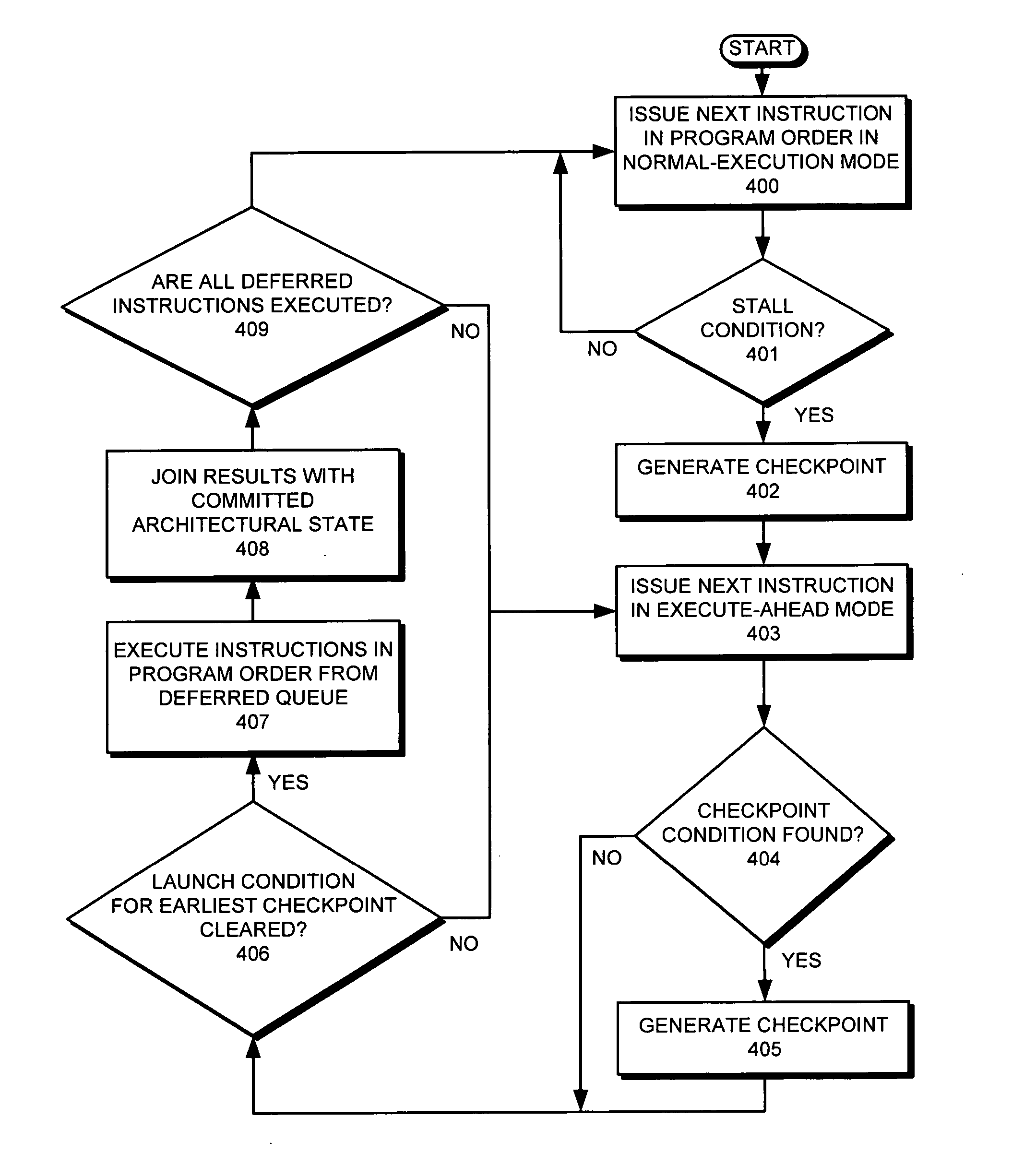

Generation of multiple checkpoints in a processor that supports speculative execution

Active | US20060212688A1 | Digital computer details | Specific program execution arrangements | Speculative execution | Procedure sequence

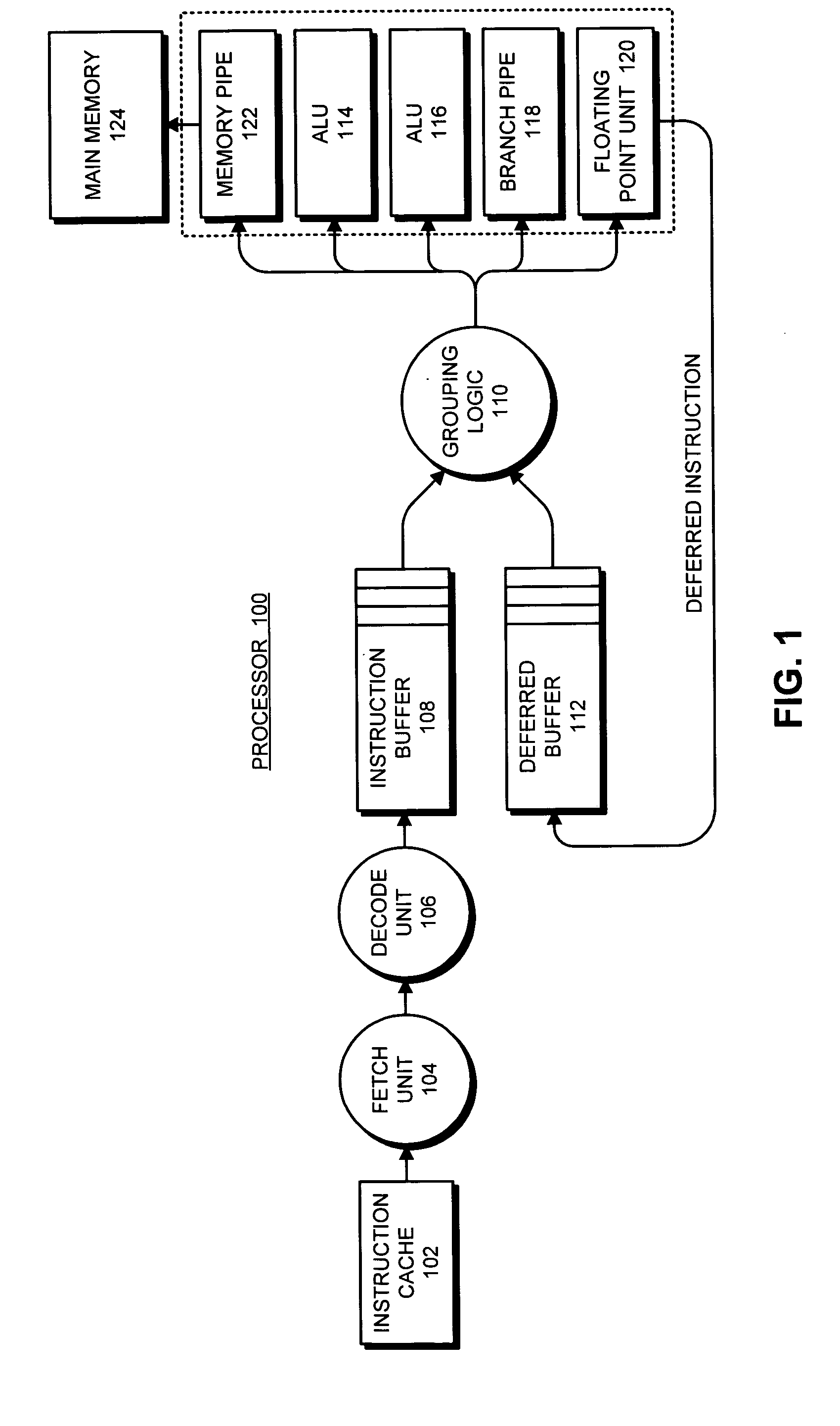

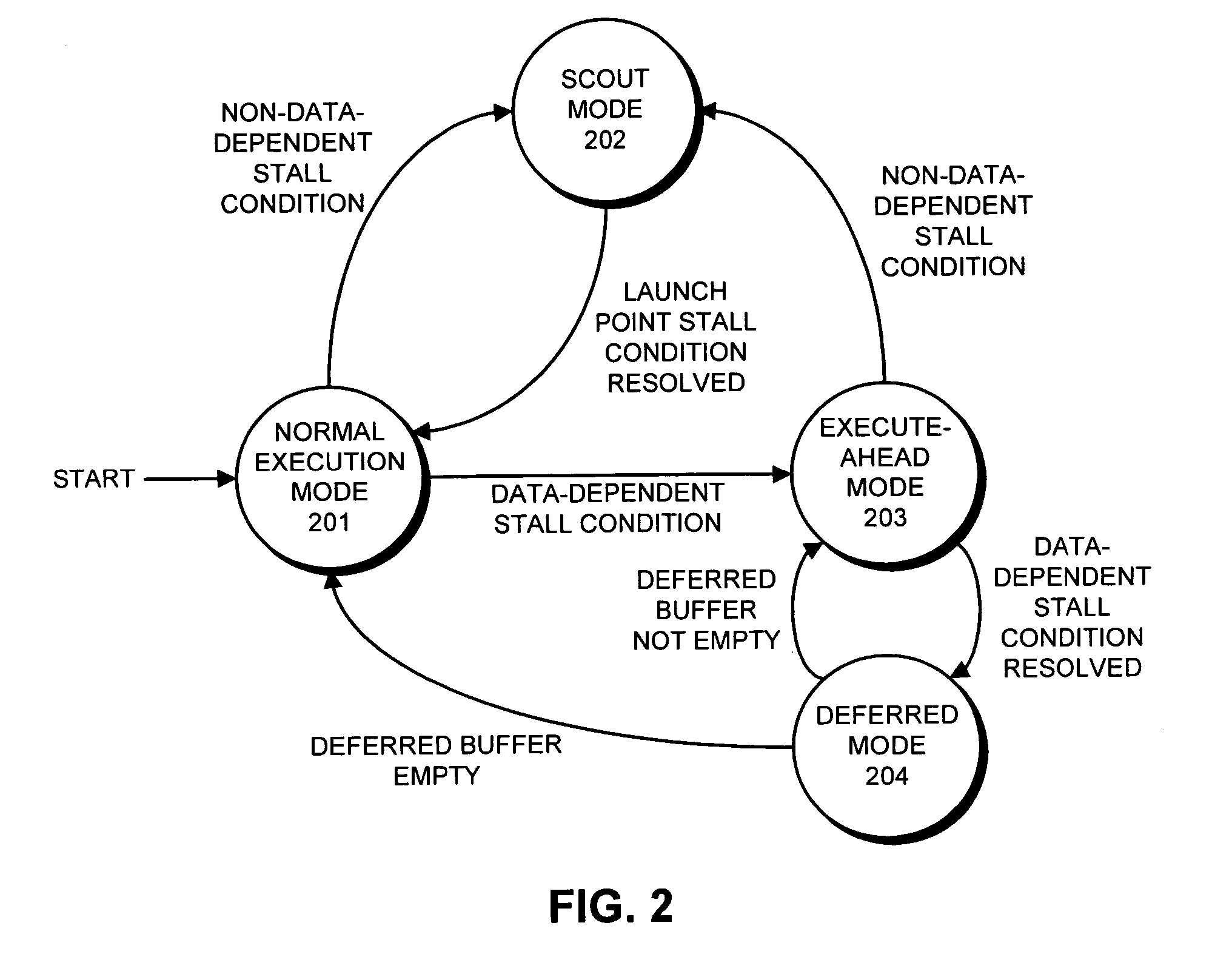

One embodiment of the present invention provides a system which creates multiple checkpoints in a processor that supports speculative-execution. The system starts by issuing instructions for execution in program order during execution of a program in a normal-execution mode. Upon encountering a launch condition during an instruction which causes a processor to enter execute-ahead mode, the system performs an initial checkpoint and commences execution of instructions in execute-ahead mode. Upon encountering a predefined condition during execute-ahead mode, the system generates an additional checkpoint and continues to execute instructions in execute-ahead mode. Generating the additional checkpoint allows the processor to return to the additional checkpoint, instead of the previous checkpoint, if the processor subsequently encounters a condition that requires the processor to return to a checkpoint. Returning to the additional checkpoint prevents the processor from having to re-execute instructions between the previous checkpoint and the additional checkpoint.

Owner:ORACLE INT CORP
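
The benefit of an additional checkpoint, as described in the abstract above, can be shown with a toy register-file model. This sketch is an assumption-laden illustration: the `ExecuteAheadCore` class, the dictionary of registers, and the list of `(pc, snapshot)` pairs are hypothetical and do not reflect how the patent stores architectural state.

```python
class ExecuteAheadCore:
    """Toy core that can roll back to its most recent checkpoint."""

    def __init__(self):
        self.registers = {}
        self.checkpoints = []  # (pc, register snapshot), oldest first

    def take_checkpoint(self, pc):
        # Copy the register state so later writes do not alter the snapshot.
        self.checkpoints.append((pc, dict(self.registers)))

    def rollback(self):
        # Return to the latest checkpoint rather than the initial one, so
        # work done between the two checkpoints is not re-executed.
        pc, snapshot = self.checkpoints[-1]
        self.registers = dict(snapshot)
        return pc
```

If an initial checkpoint is taken at program counter 100 and an additional one at 140, a later rollback resumes from 140 with the register values captured there, leaving the work between 100 and 140 intact.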

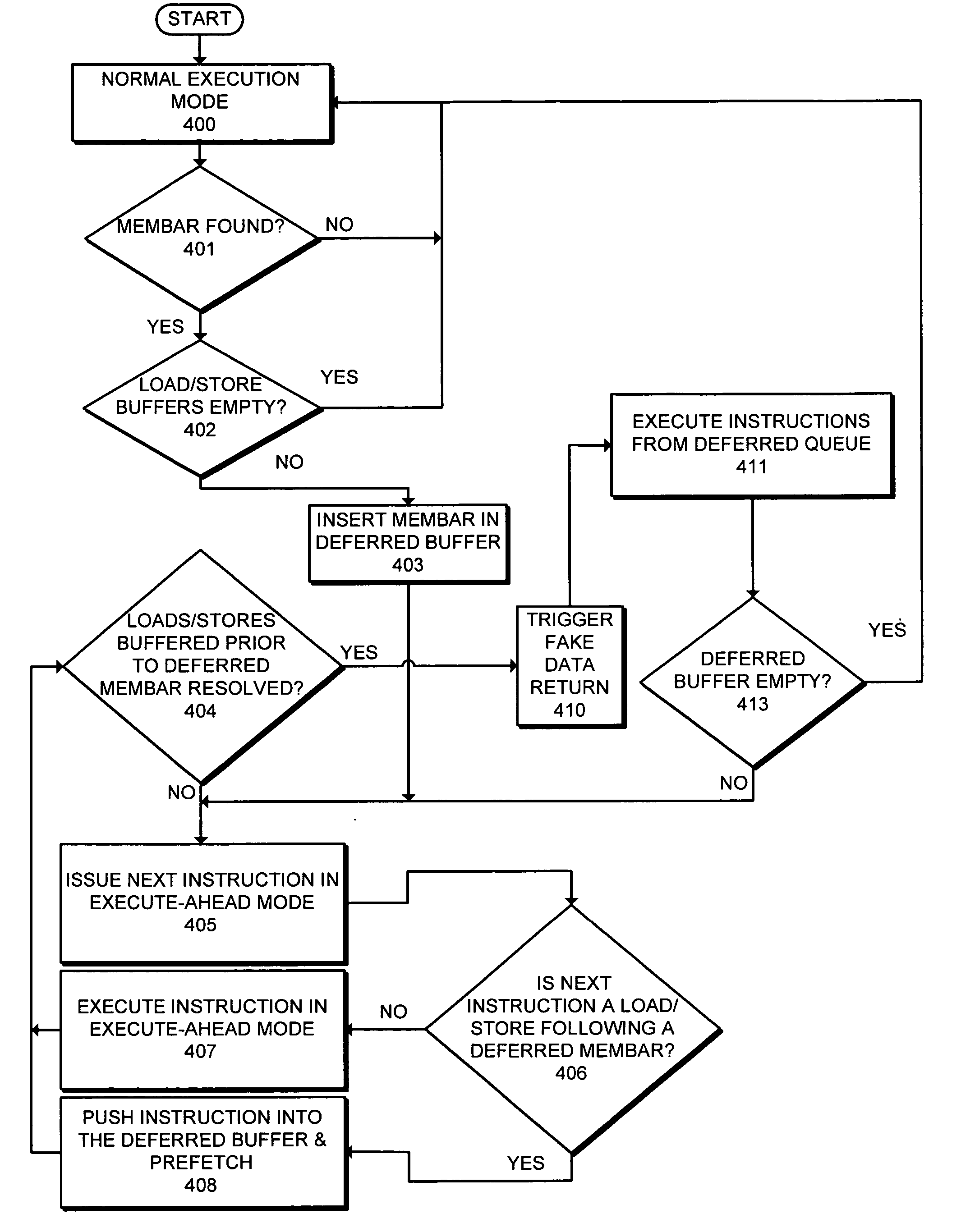

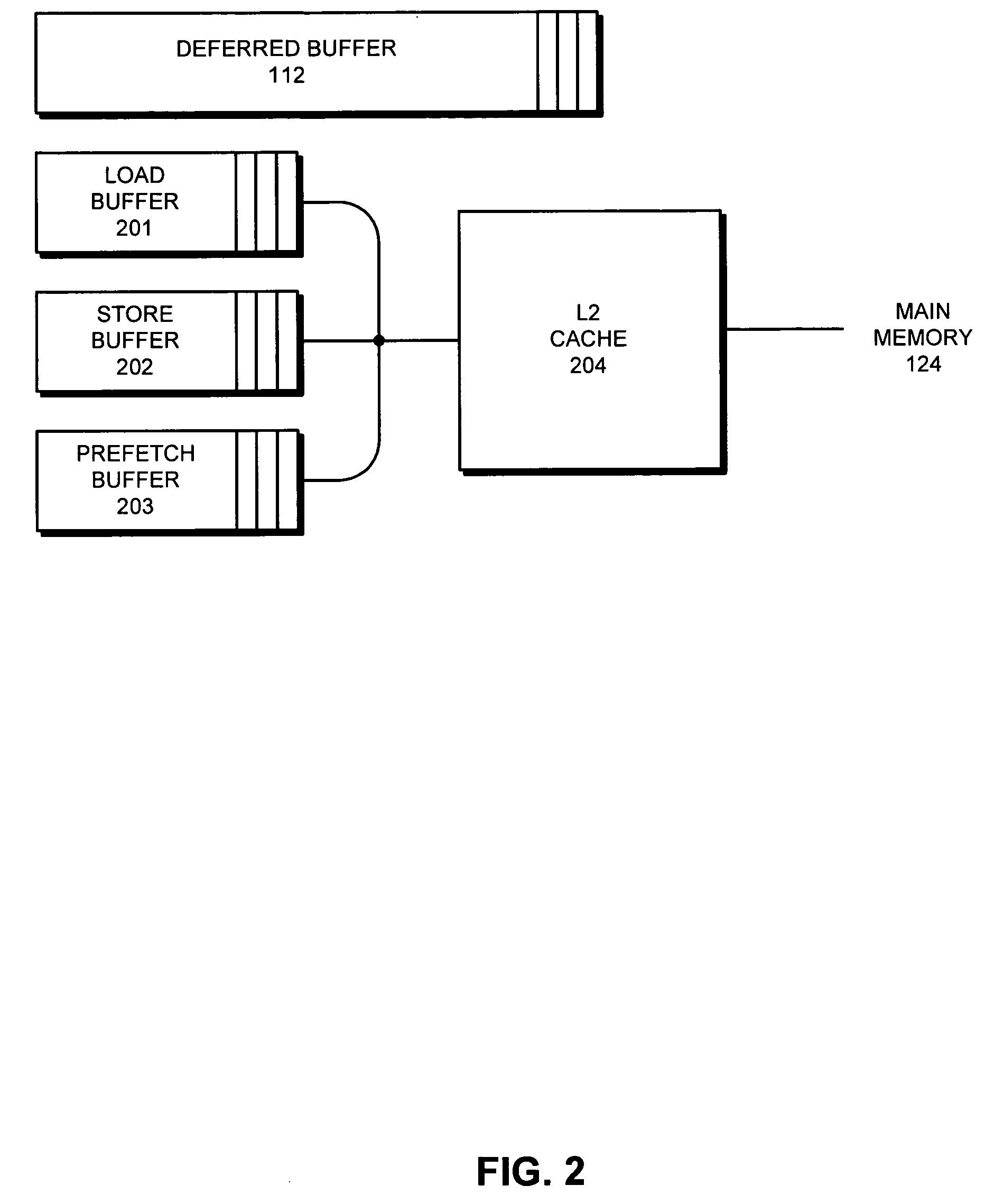

Method and apparatus for enforcing membar instruction semantics in an execute-ahead processor

Active | US20050273583A1 | Memory addressing/allocation/relocation | Digital computer details | Program instruction | Semantics

One embodiment of the present invention provides a system that facilitates executing a memory barrier (membar) instruction in an execute-ahead processor, wherein the membar instruction forces buffered loads and stores to complete before allowing a following instruction to be issued. During operation in a normal-execution mode, the processor issues instructions for execution in program order. Upon encountering a membar instruction, the processor determines if the load buffer and store buffer contain unresolved loads and stores. If so, the processor defers the membar instruction and executes subsequent program instructions in execute-ahead mode. In execute-ahead mode, instructions that cannot be executed because of an unresolved data dependency are deferred, and other non-deferred instructions are executed in program order. When all stores and loads that precede the membar instruction have been committed to memory from the store buffer and the load buffer, the processor enters a deferred mode and executes the deferred instructions, including the membar instruction, in program order. If all deferred instructions have been executed, the processor returns to the normal-execution mode and resumes execution from the point where the execute-ahead mode left off.

Owner:ORACLE INT CORP

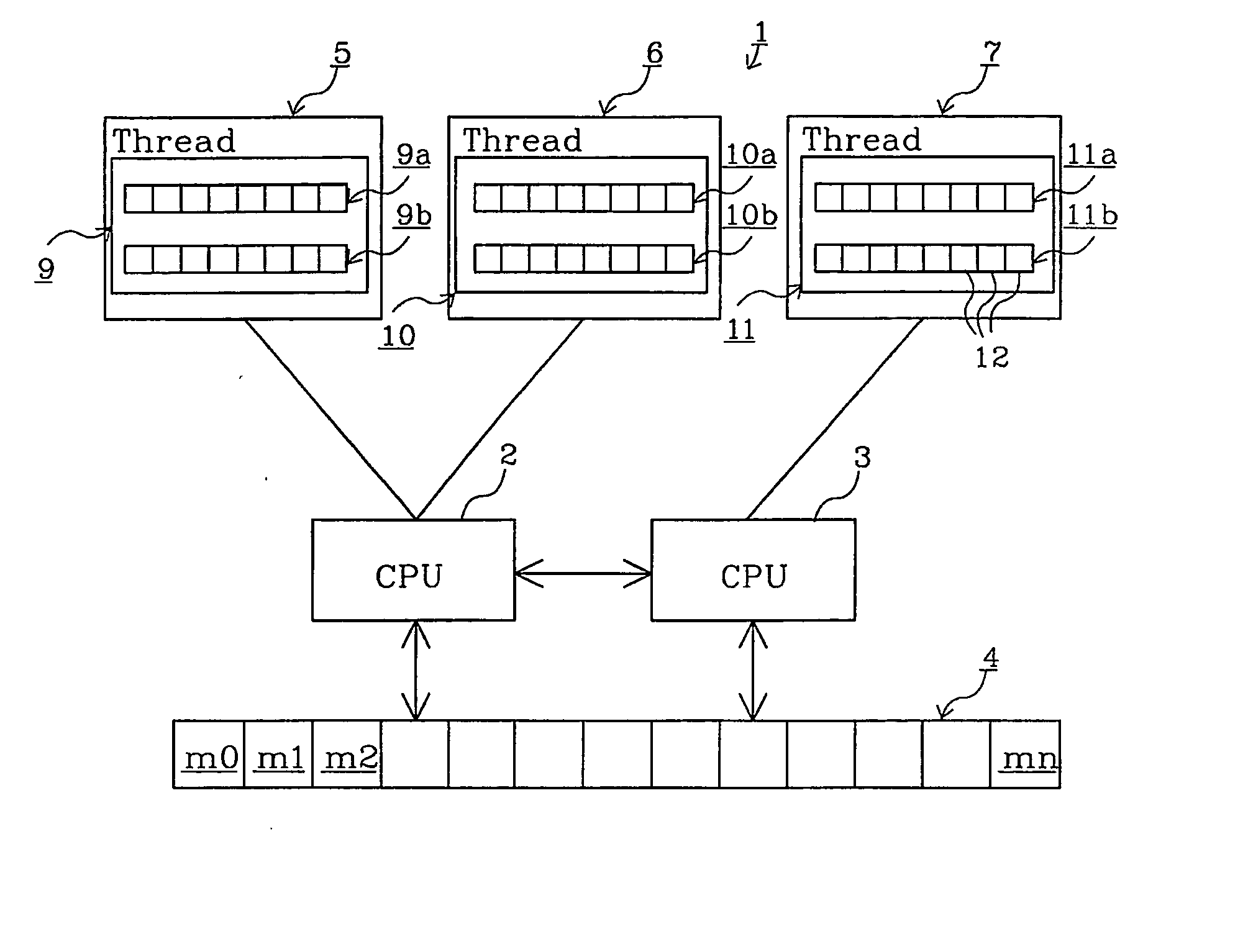

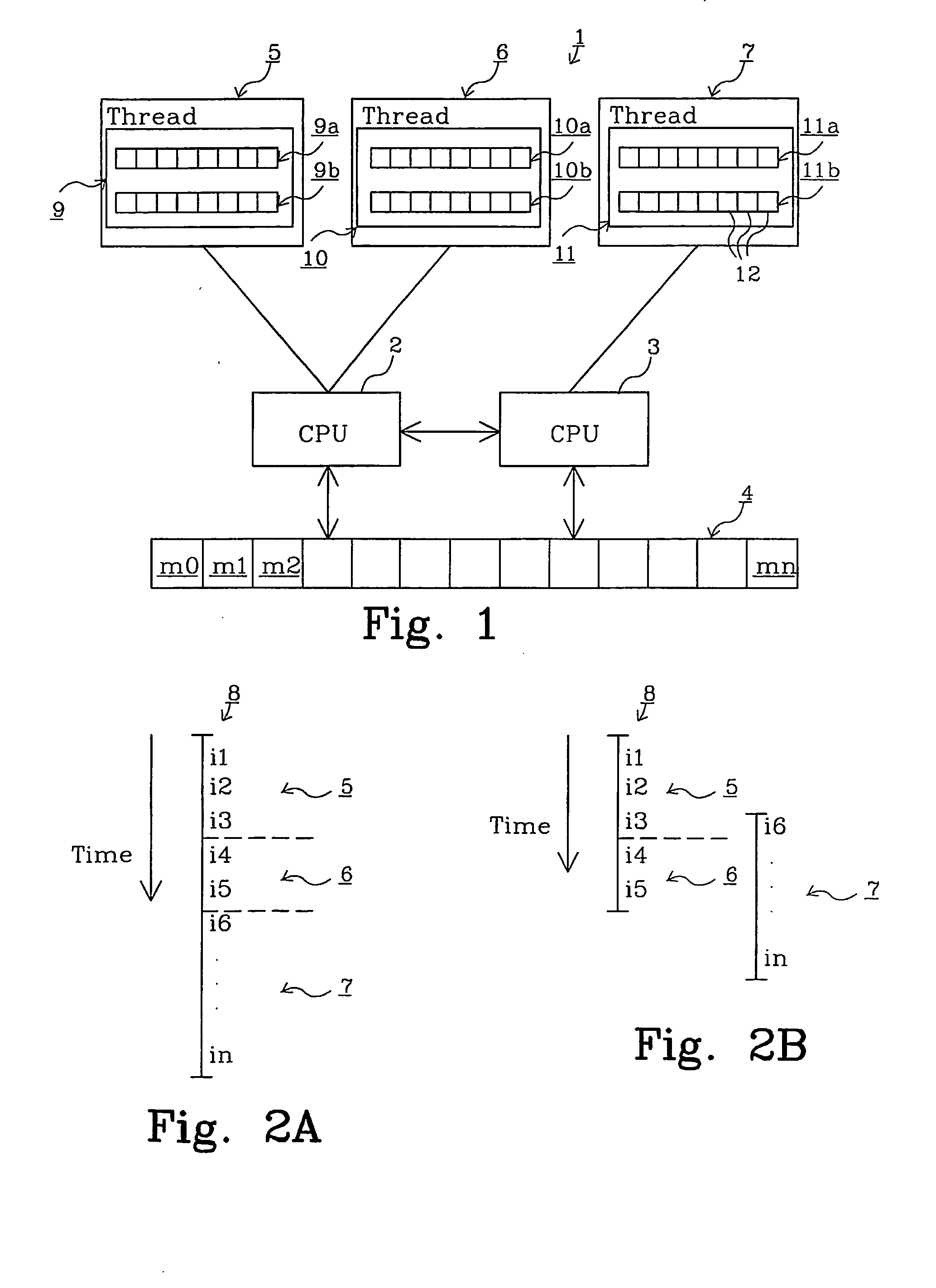

Collision handling apparatus and method

Inactive | US20050055490A1 | Reduce need | Easy to implement | Memory addressing/allocation/relocation | Concurrent instruction execution | Collision detection | Procedure sequence

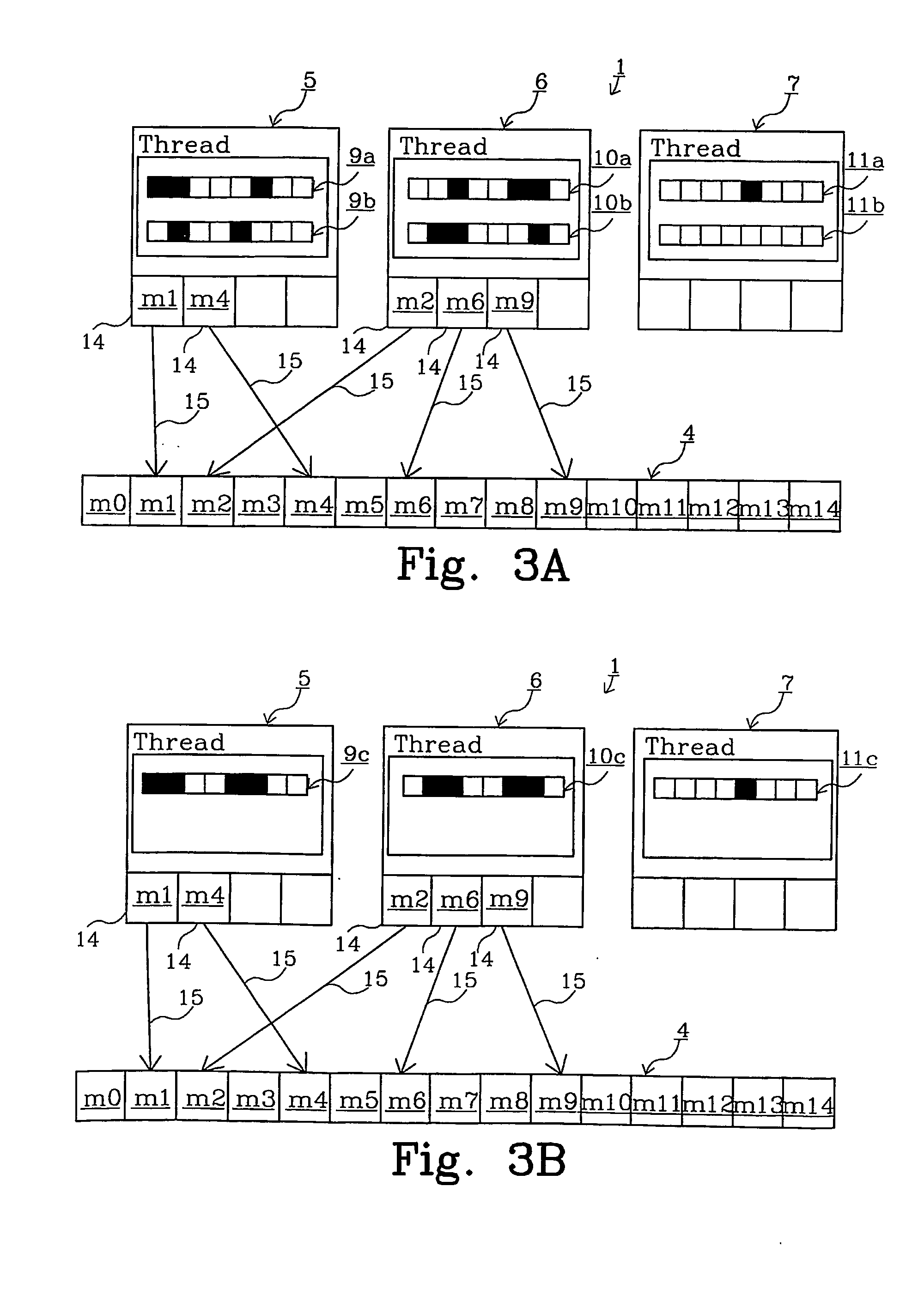

The present invention relates to mechanisms for handling and detecting collisions between threads (5, 6, 7) that execute computer program instructions out of program order. According to an embodiment of the present invention, each of a plurality of threads (5, 6, 7) is associated with a respective data structure (9, 10, 11) comprising a number of bits (12) that correspond to memory elements (m0, m1, m2, mn) of a shared memory (4). When a thread accesses a memory element in the shared memory, it sets the bit in its associated data structure that corresponds to the accessed memory element. This indicates that the memory element has been accessed by the thread. Collision detection may be carried out after the thread has finished executing by comparing the data structure of the thread with the data structures of other threads on which the thread may depend.

Owner:TELEFON AB LM ERICSSON (PUBL)
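
The per-thread bit structure in the abstract above maps naturally onto integer bitmasks. The sketch below is a simplified illustration under stated assumptions: the `ThreadAccessMap` class, the single combined read/write bit per memory element, and the `collides` helper are hypothetical names and simplifications, not the patented design.

```python
class ThreadAccessMap:
    """One bit per shared-memory element, set when the thread accesses it."""

    def __init__(self, num_elements):
        self.num_elements = num_elements
        self.bits = 0

    def record_access(self, element_index):
        if not 0 <= element_index < self.num_elements:
            raise IndexError("memory element out of range")
        self.bits |= 1 << element_index


def collides(finished, others):
    """After a thread finishes, compare its map with the maps of the threads
    it may depend on. Any shared set bit signals a possible collision."""
    return any(finished.bits & other.bits for other in others)
```

Comparing maps with a bitwise AND makes the check proportional to the number of threads compared rather than to the number of individual accesses, at the cost of treating every shared access as a potential collision in this simplified form.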

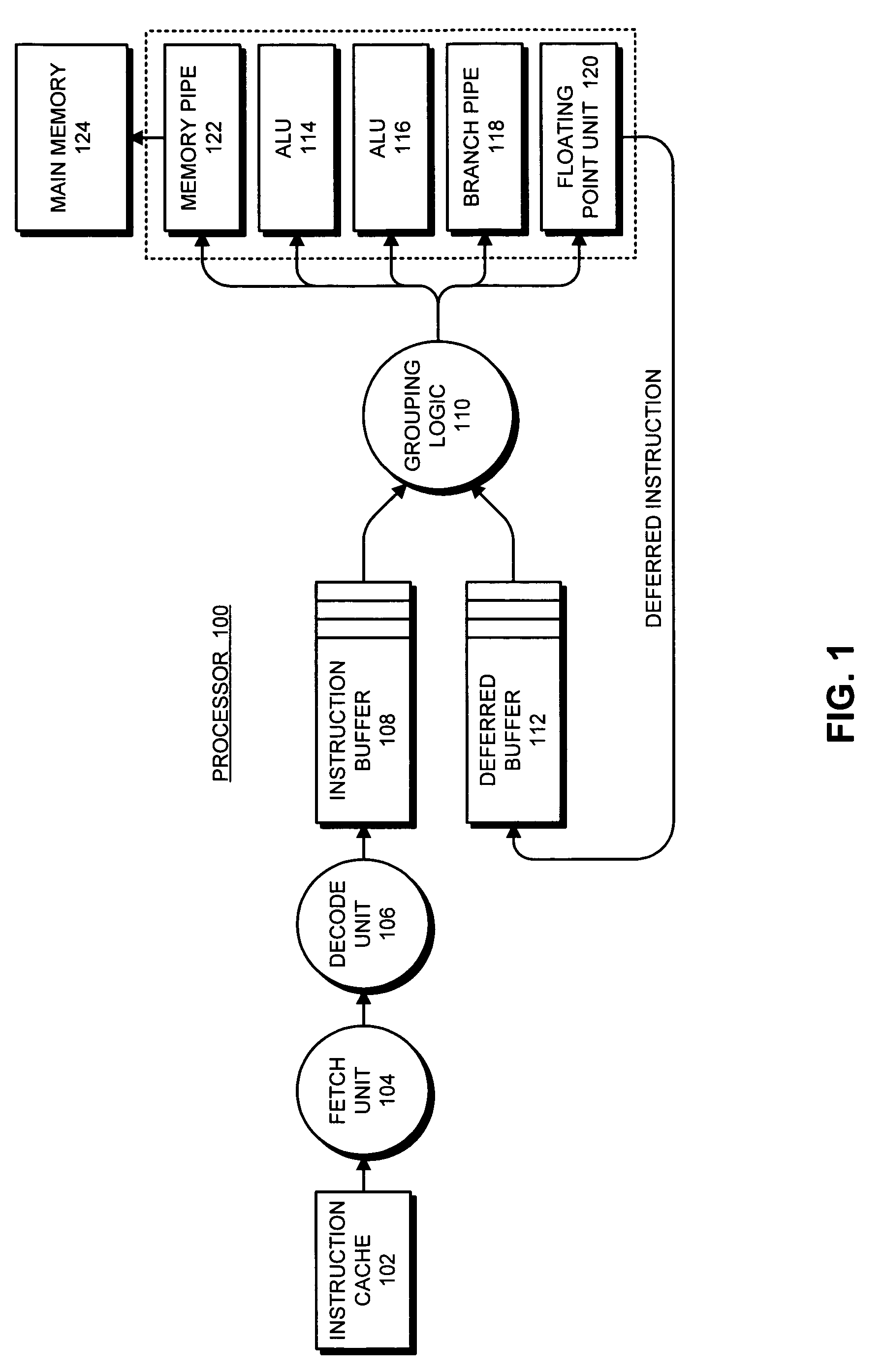

Selective execution of deferred instructions in a processor that supports speculative execution

Active | US20060010309A1 | Digital computer details | Specific program execution arrangements | Speculative execution | Long latency

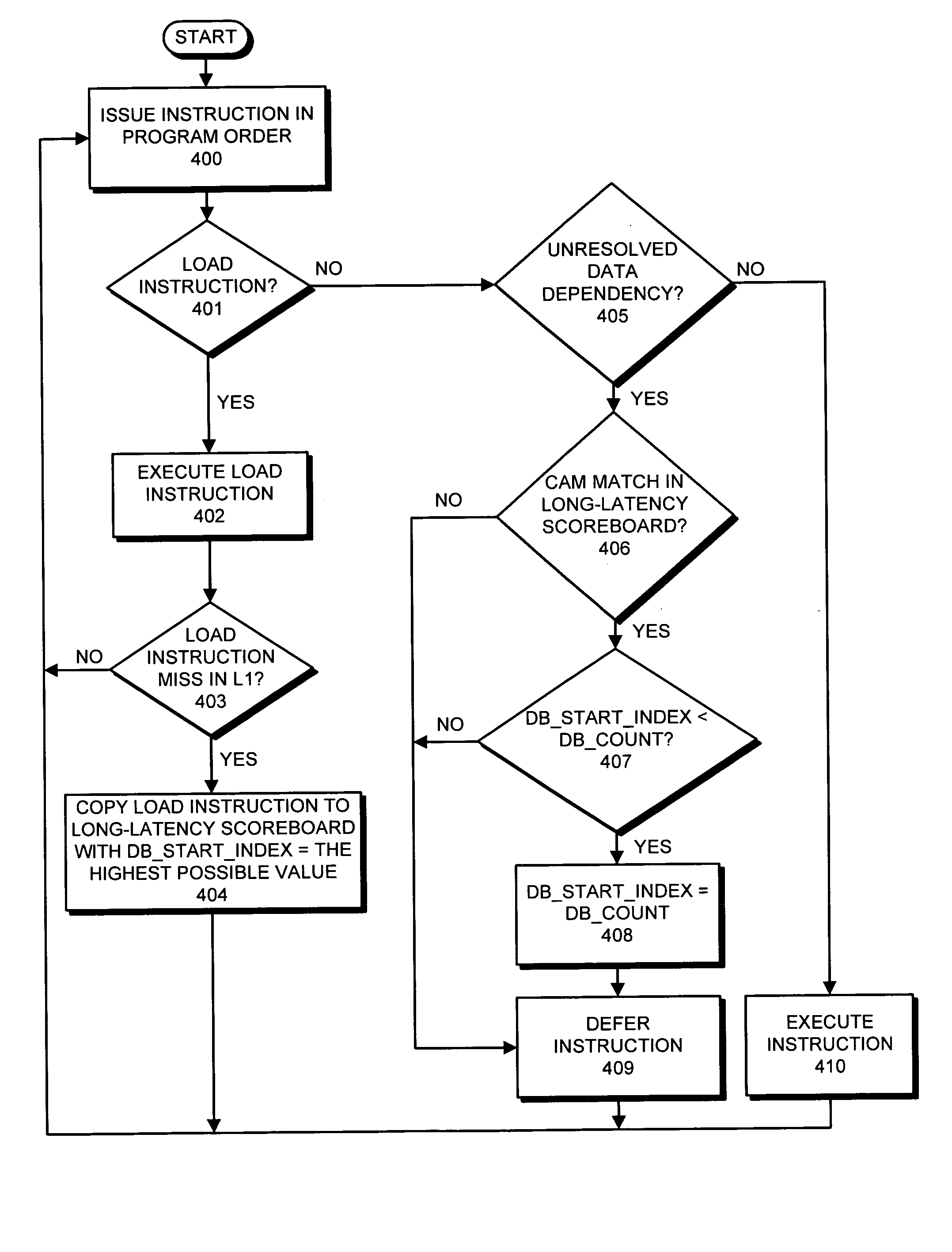

One embodiment of the present invention provides a system which selectively executes deferred instructions following a return of a long-latency operation in a processor that supports speculative execution. During normal-execution mode, the processor issues instructions for execution in program order. When the processor encounters a long-latency operation, such as a load miss, the processor records the long-latency operation in a long-latency scoreboard, wherein each entry in the long-latency scoreboard includes a deferred buffer start index. Upon encountering an unresolved data dependency during execution of an instruction, the processor performs a checkpointing operation and executes subsequent instructions in an execute-ahead mode, wherein instructions that cannot be executed because of the unresolved data dependency are deferred into a deferred buffer, and wherein other non-deferred instructions are executed in program order. Upon encountering a deferred instruction that depends on a long-latency operation within the long-latency scoreboard, the processor updates a deferred buffer start index associated with the long-latency operation to point to the position in the deferred buffer occupied by the deferred instruction. When a long-latency operation returns, the processor executes instructions in the deferred buffer starting at the deferred buffer start index for the returning long-latency operation.

Owner:ORACLE INT CORP

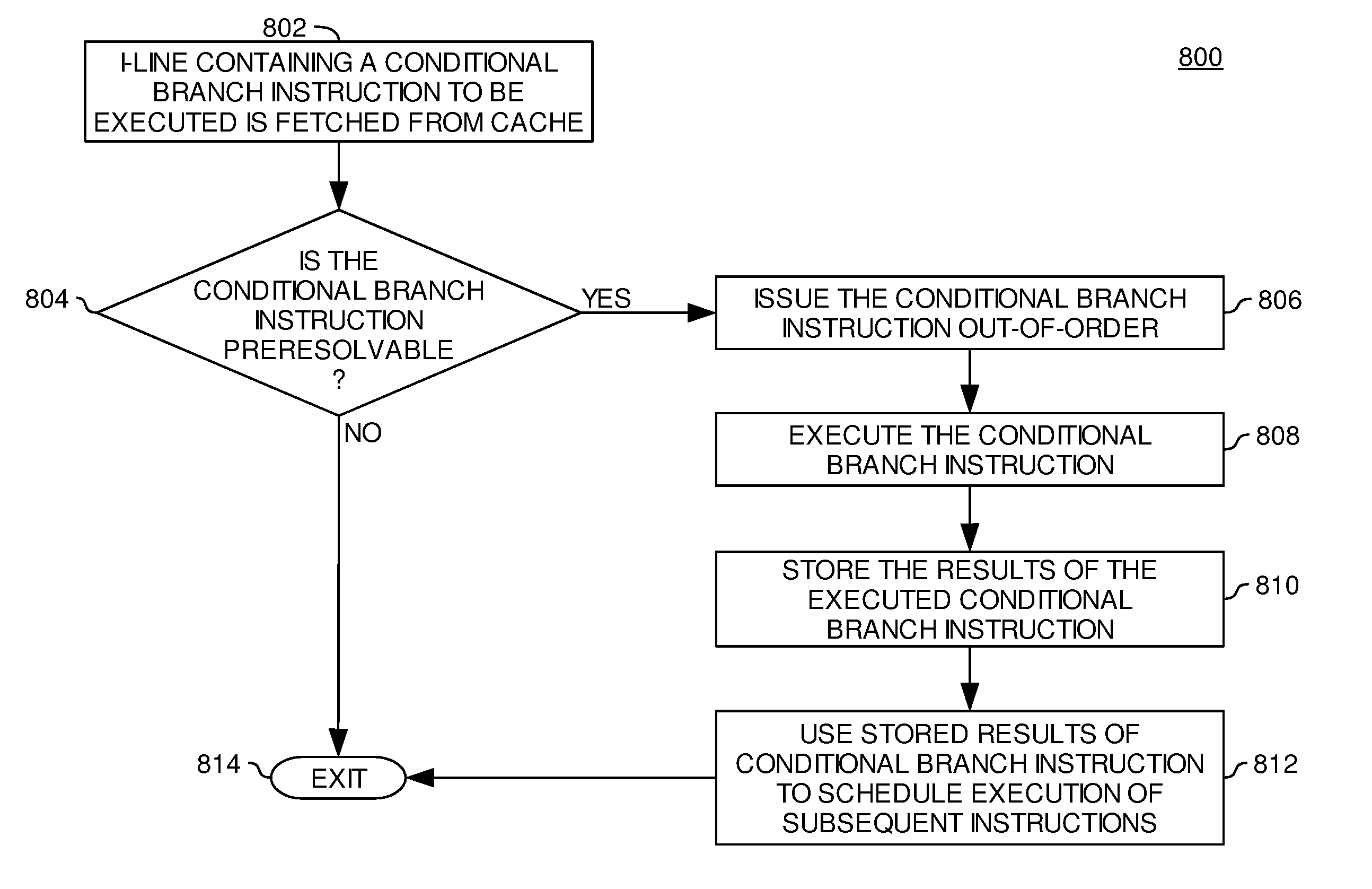

Early Conditional Branch Resolution

Inactive | US20070288733A1 | Digital computer details | Specific program execution arrangements | Execution unit | Procedure sequence

A method and apparatus for executing branch instructions is provided. In one embodiment, the method includes receiving the branch instruction to be executed in a program order and, before execution of the branch instruction in the program order, issuing the branch instruction to an execution unit to determine a predicted outcome of the branch instruction. The method further includes using the predicted outcome of the branch instruction to schedule execution of one or more instructions succeeding the branch instruction in the program order.

Owner:IBM CORP

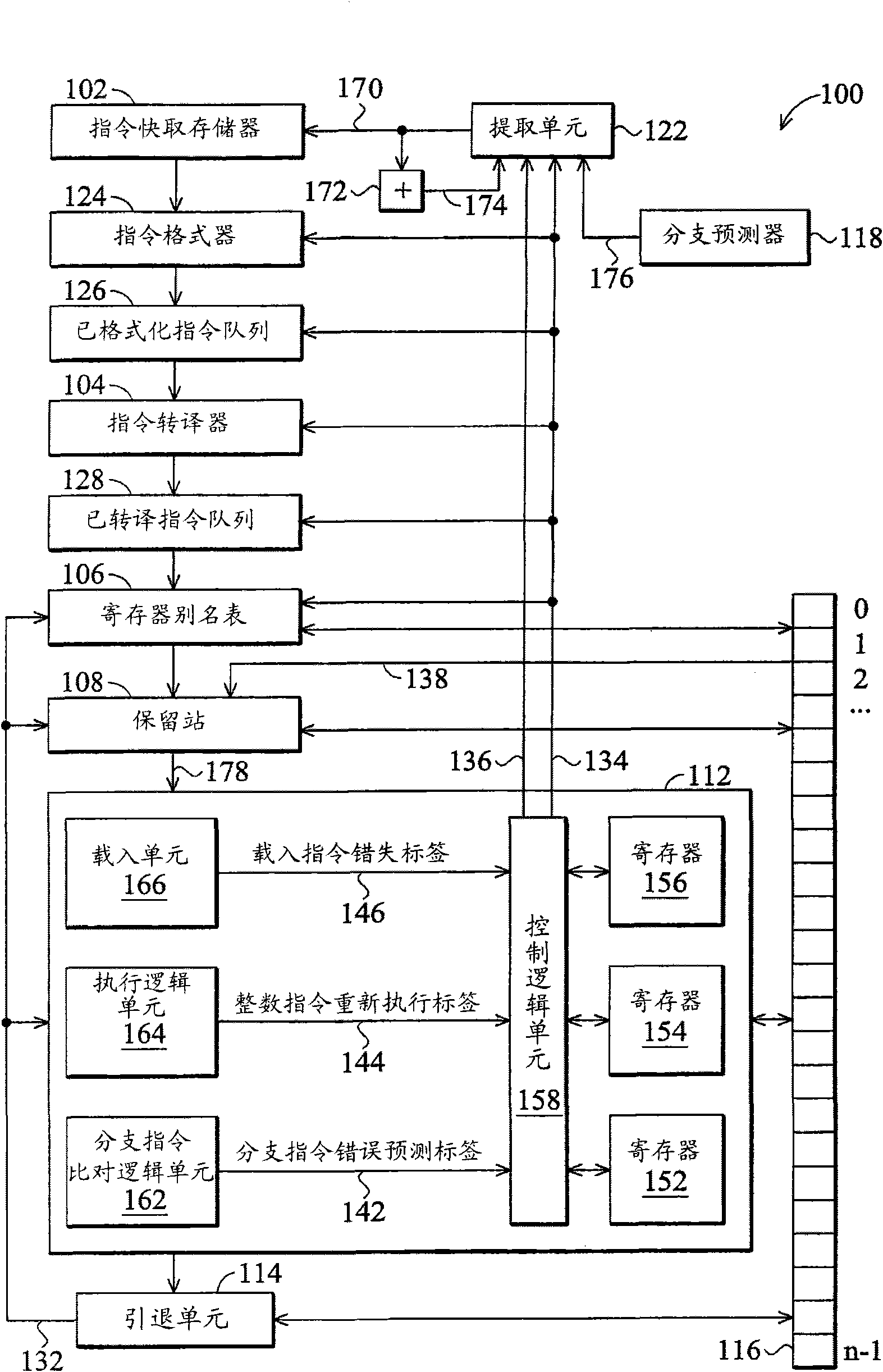

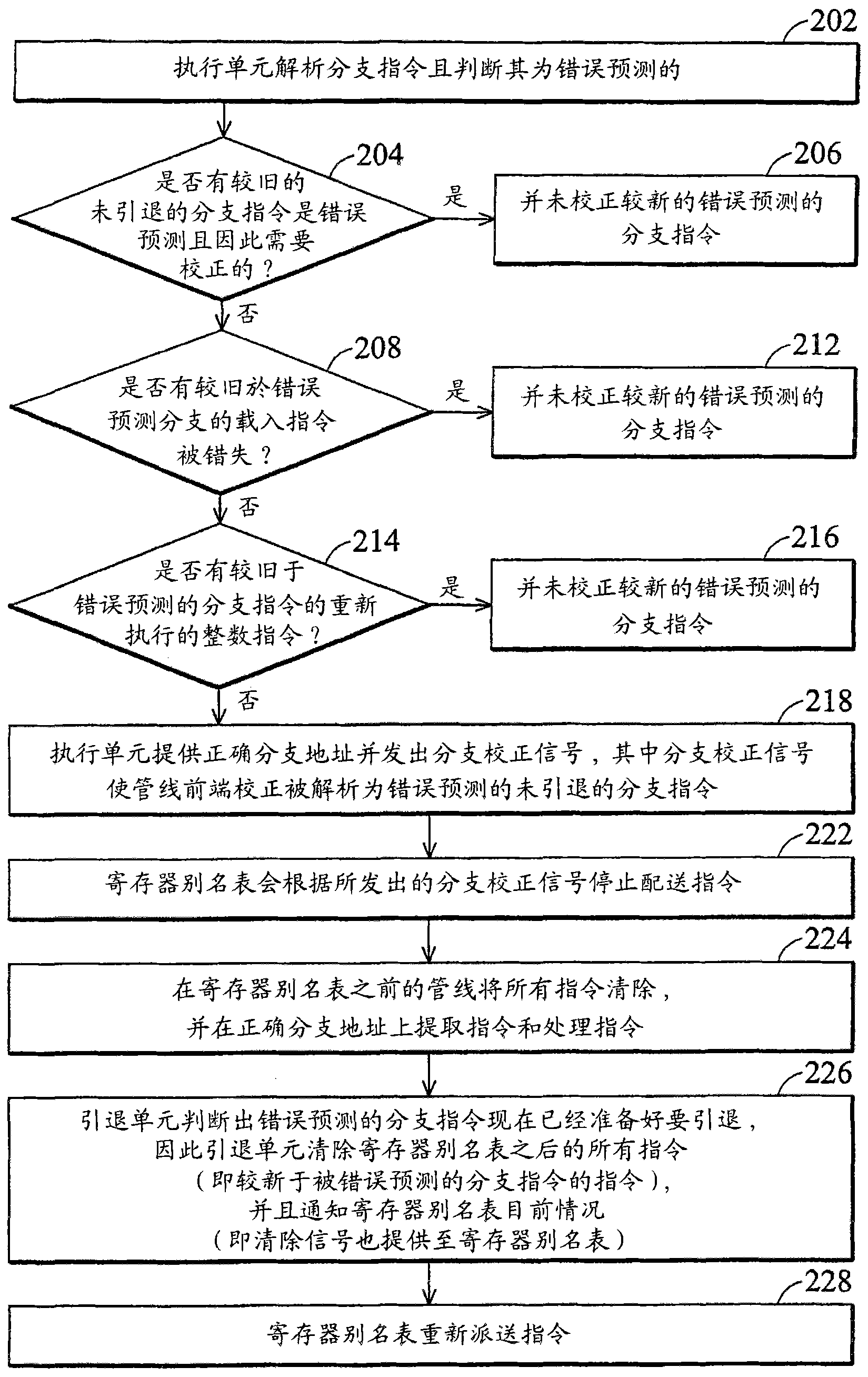

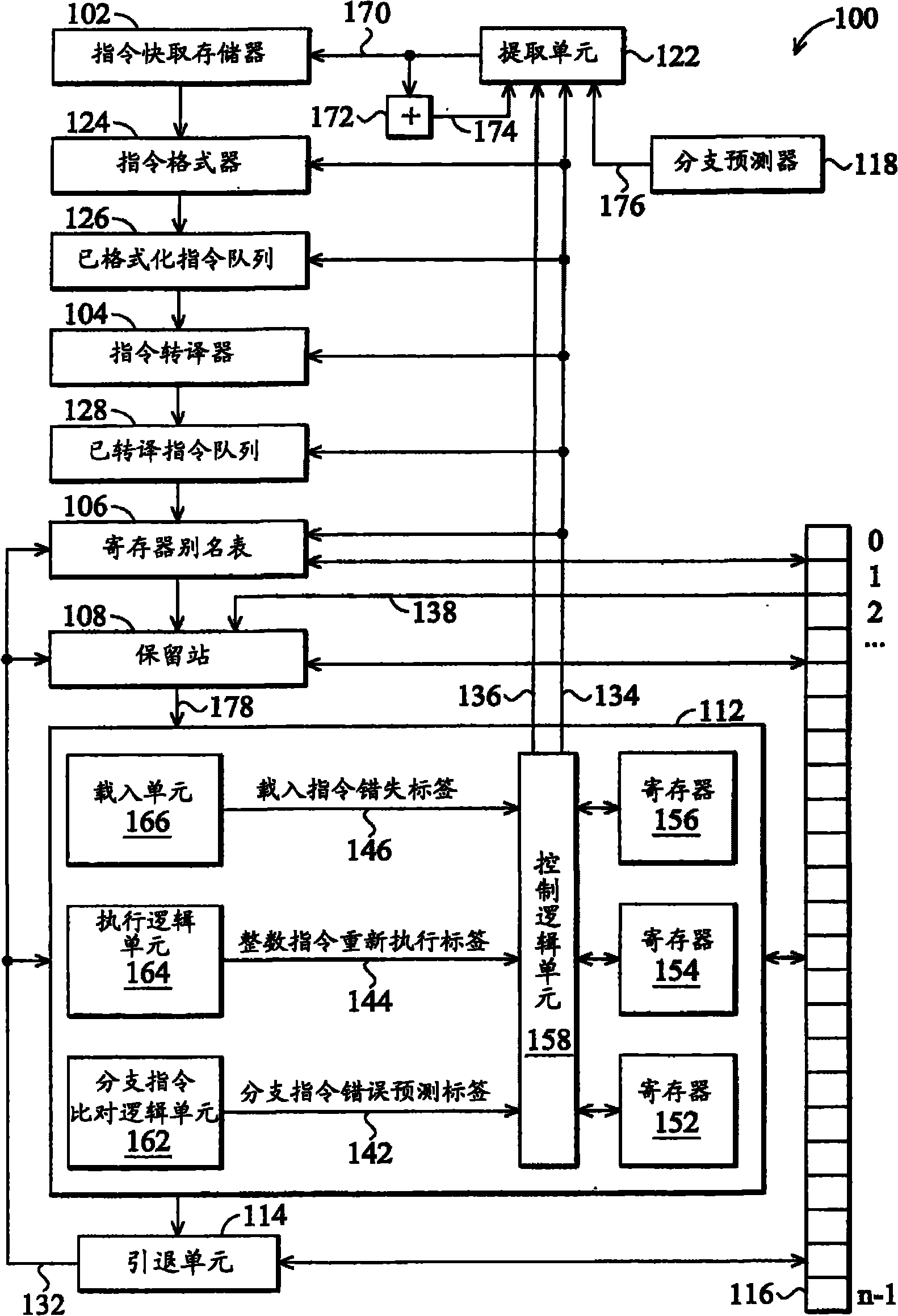

Microprocessor and execution method thereof

A microprocessor and an execution method thereof are provided for pipelined out-of-order execution with in-order retirement. The microprocessor includes a branch predictor that predicts a target address of a branch instruction, a fetch unit that fetches instructions at the predicted target address, and an execution unit that: resolves a target address of the branch instruction and detects that the predicted and resolved target addresses are different; determines, in response to detecting that the predicted and resolved target addresses are different, whether there is an unretired instruction that must be corrected and that is older in program order than the branch instruction; executes the branch instruction by flushing instructions fetched at the predicted target address and causing the fetch unit to fetch from the resolved target address, if there is no such unretired instruction; and otherwise refrains from executing the branch instruction.

Owner:VIA TECH INC

