254 results about "Critical section" patented technology

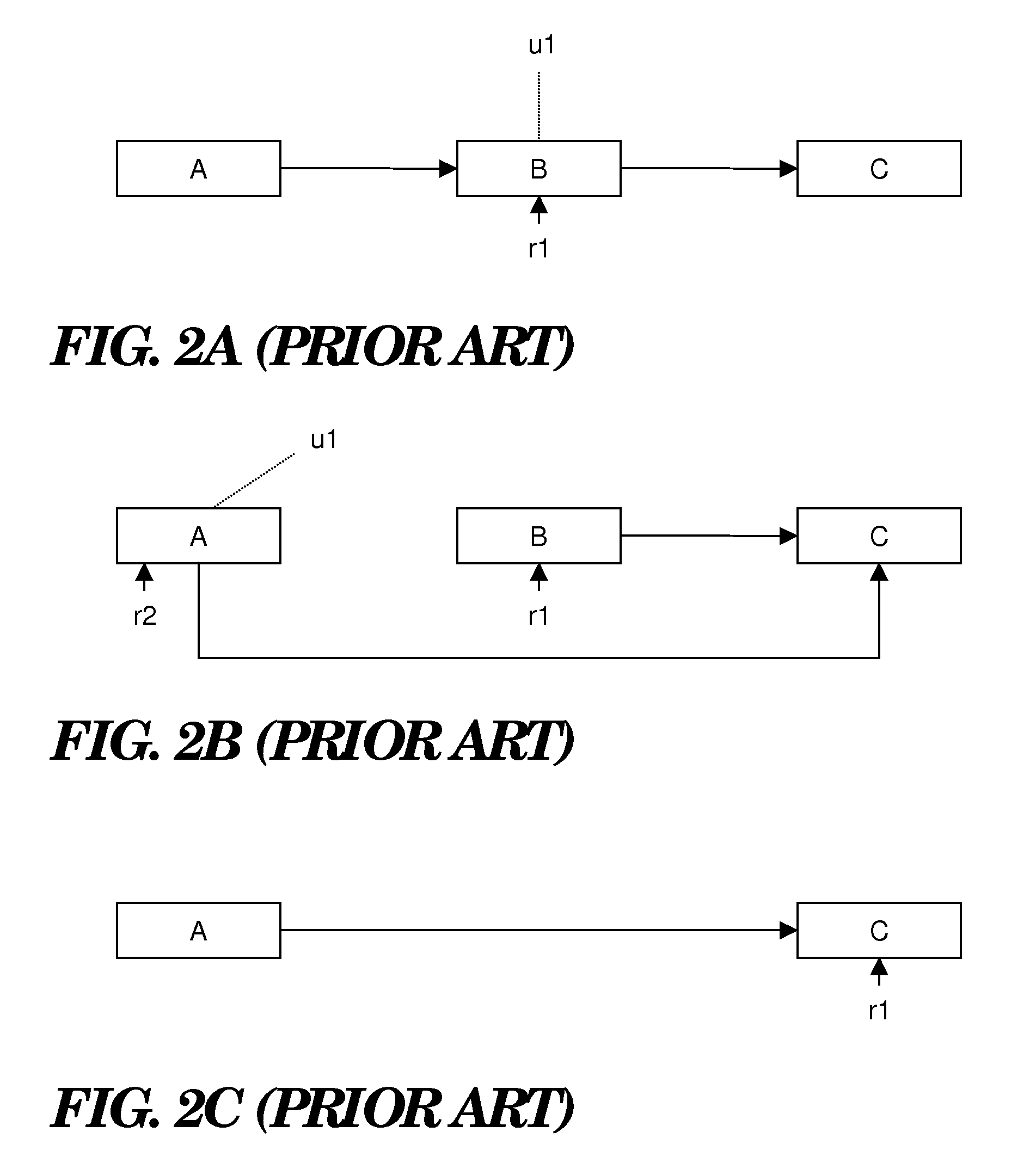

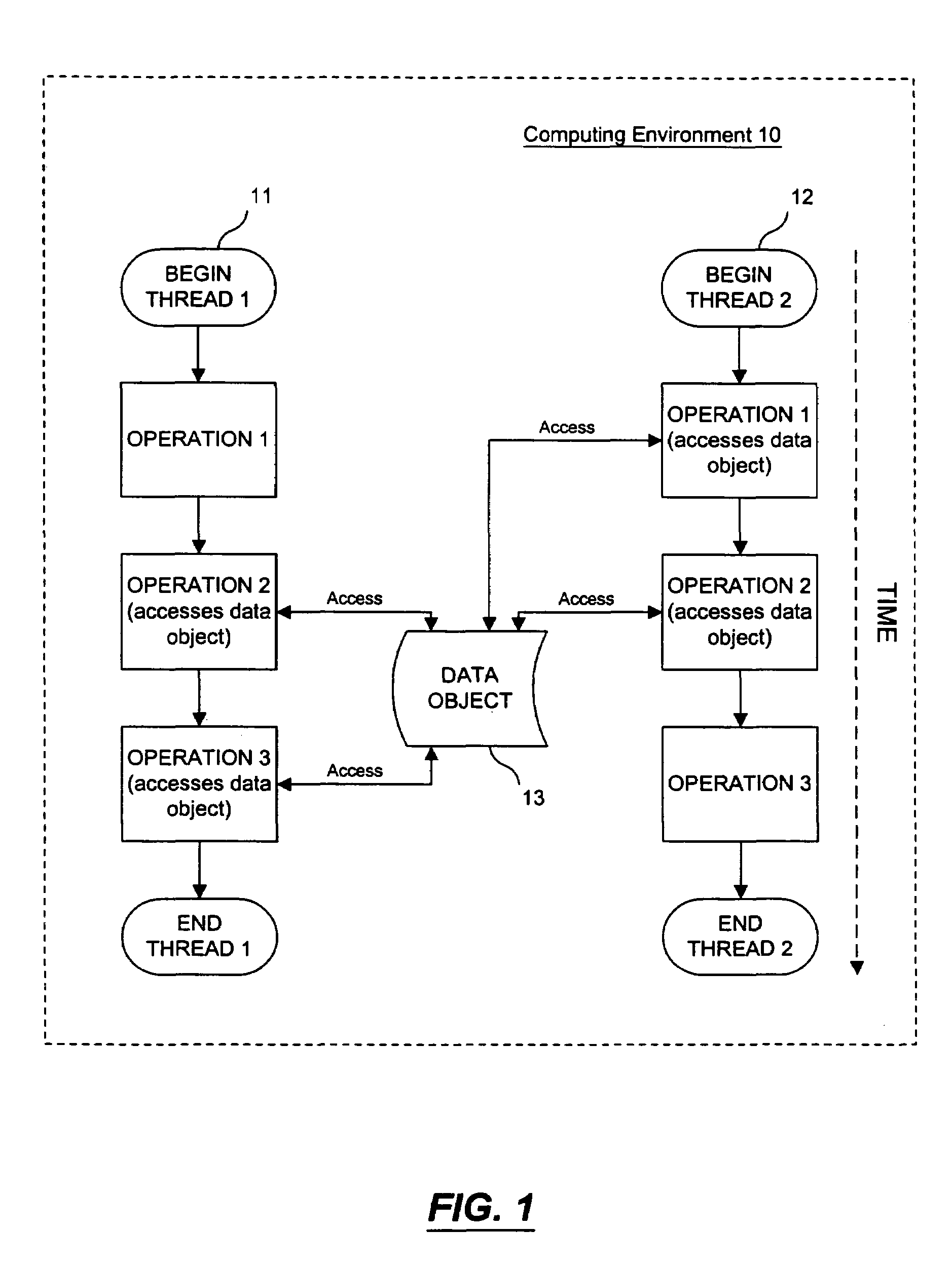

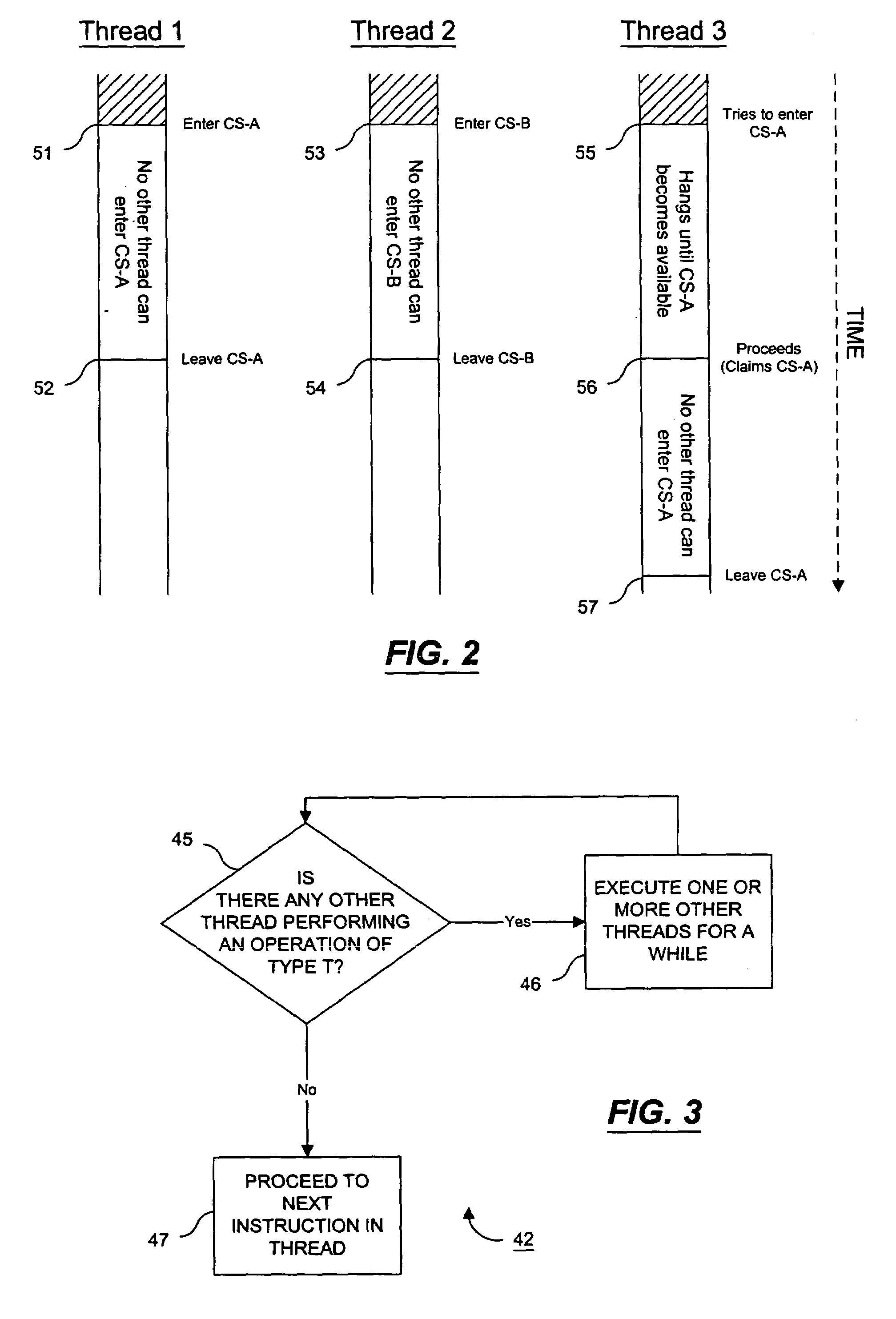

In concurrent programming, concurrent accesses to shared resources can lead to unexpected or erroneous behavior, so parts of the program where the shared resource is accessed are protected. This protected section is the critical section or critical region. It cannot be executed by more than one process at a time. Typically, the critical section accesses a shared resource, such as a data structure, a peripheral device, or a network connection, that would not operate correctly in the context of multiple concurrent accesses.
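As a minimal sketch of the definition above, the following Python example guards a shared counter with a lock so that only one thread at a time executes the update. The names `SharedCounter` and `run_workers` are illustrative assumptions, not taken from any patent listed here.

```python
import threading


class SharedCounter:
    """A counter whose read-modify-write update is a critical section."""

    def __init__(self) -> None:
        self._lock = threading.Lock()  # guards every access to self.value
        self.value = 0

    def increment(self) -> None:
        with self._lock:       # enter the critical section
            self.value += 1    # only one thread at a time runs this line
        # leaving the `with` block releases the lock


def run_workers(counter: SharedCounter, workers: int, increments: int) -> int:
    """Start `workers` threads that each call increment() `increments` times."""
    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

Because every update happens inside the lock, the final value equals `workers * increments` regardless of how the threads interleave.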

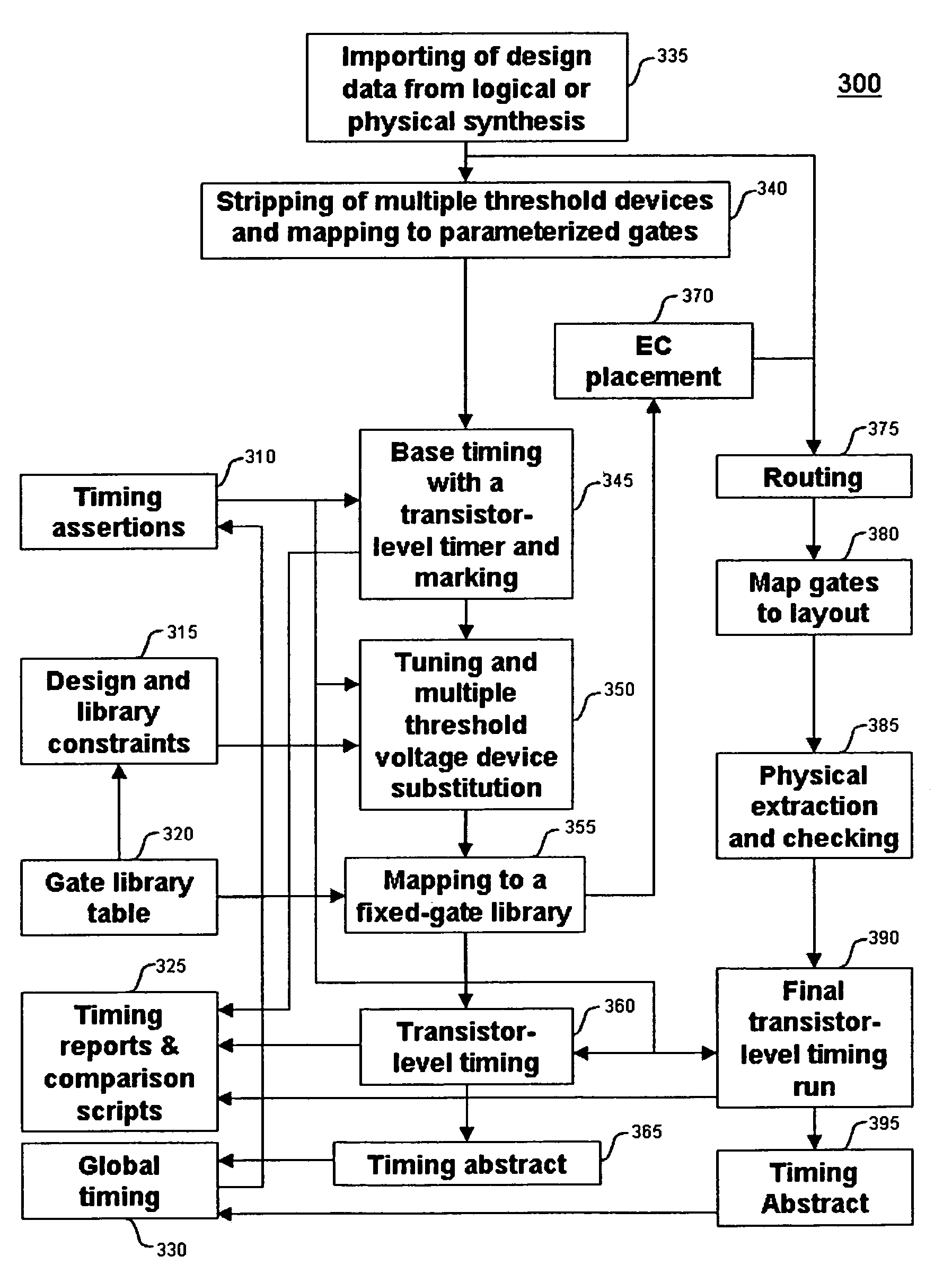

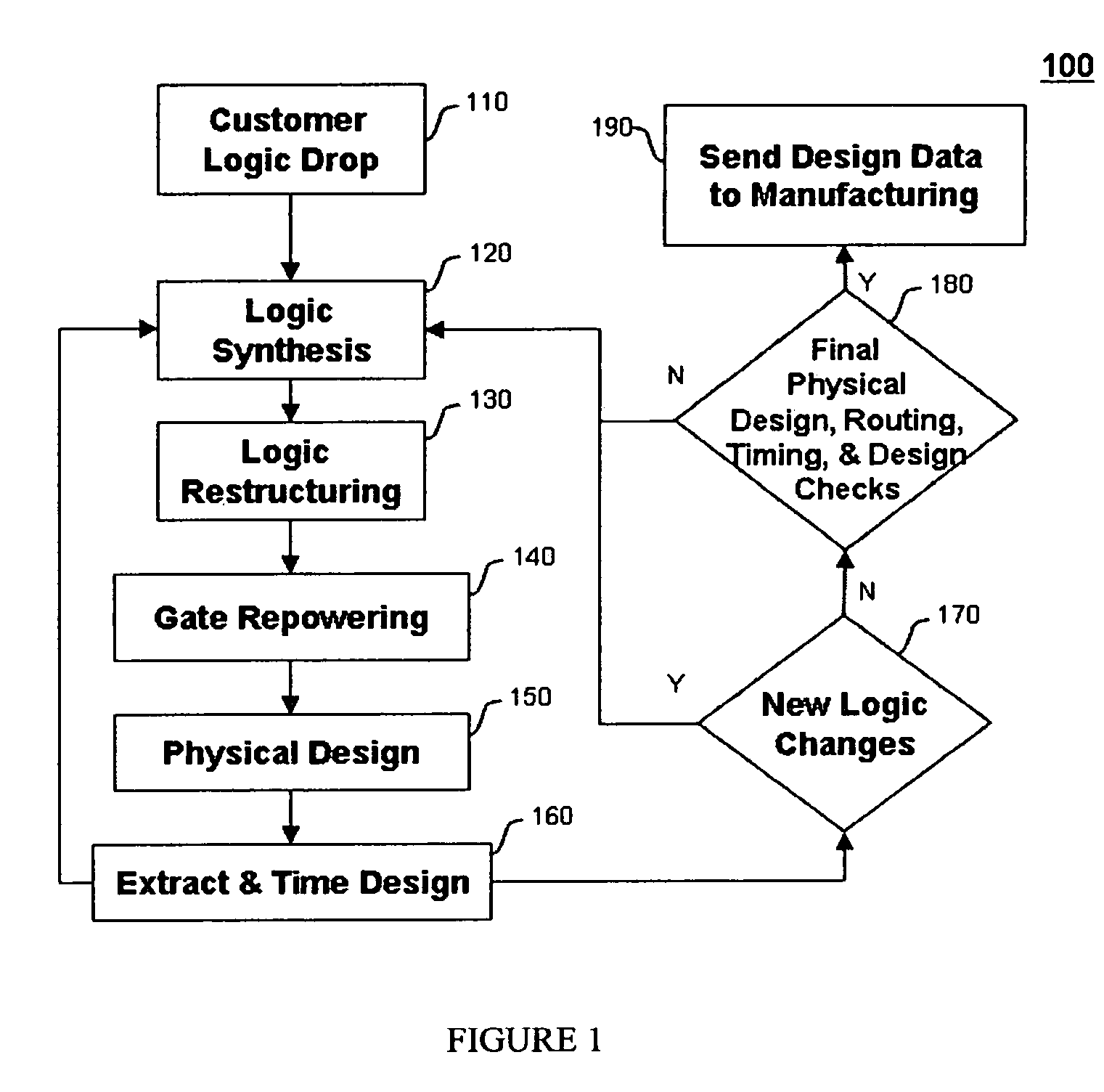

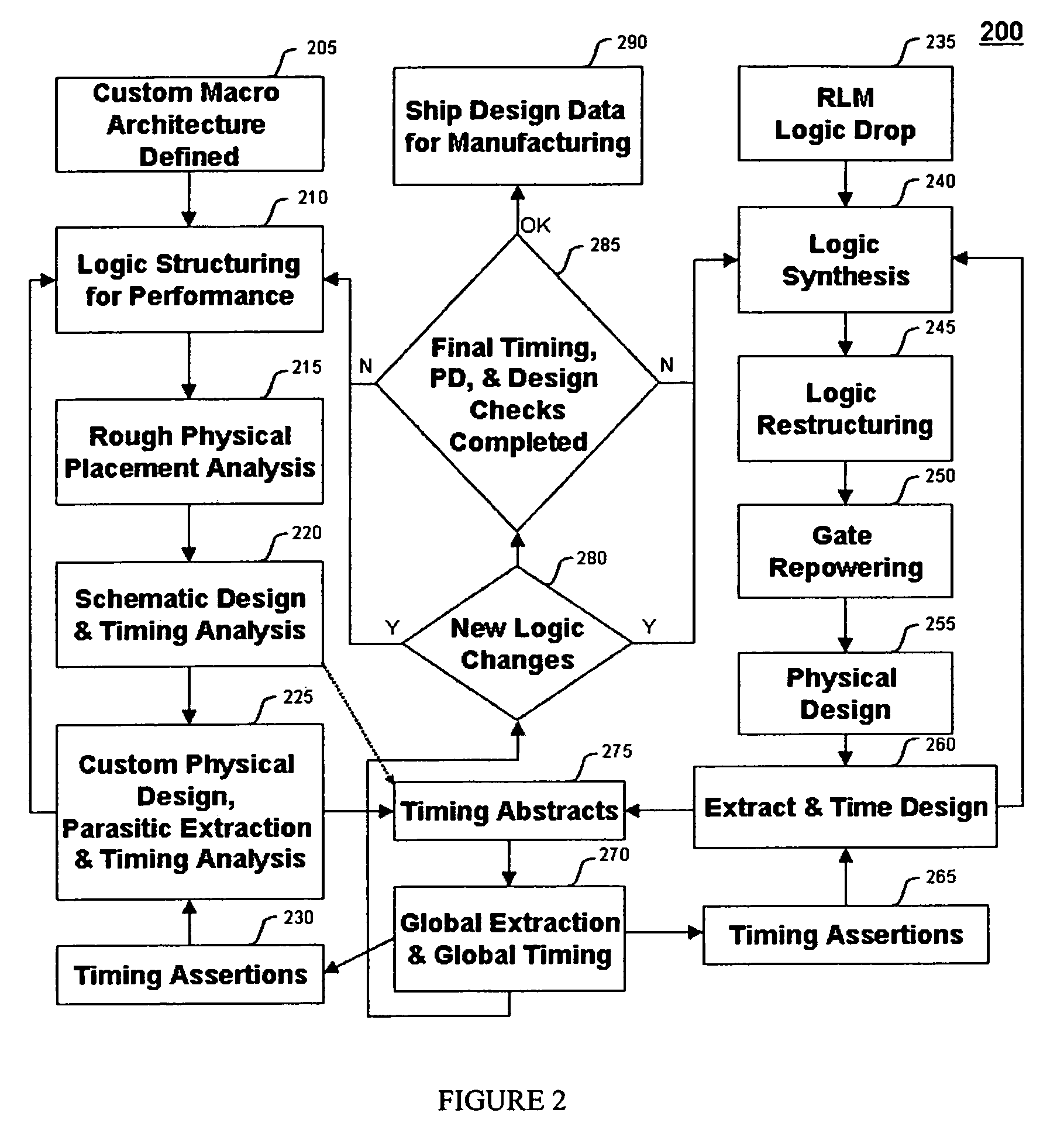

Method for tuning a digital design for synthesized random logic circuit macros in a continuous design space with optional insertion of multiple threshold voltage devices

Inactive | US7093208B2 | Efficient and effective methodology | Error minimization | Digital storage | Computer aided design | Continuous design | Critical section

An automatable digital design method obtains timing closure in the design of large, complex, high-performance digital integrated circuits. The method includes the use of a tuner on random logic macros that adjusts transistor sizes in a continuous domain. To accommodate this tuning, logic gates are mapped to parameterized cells for the tuning and then back to fixed gates after the tuning. Tuning is constrained in such a way as to minimize “binning errors” when the design is mapped back to fixed cells. Further, the critical sections of the circuit are marked in order to make the optimization more effective and to fit within the problem-size constraints of the tuner. A specially formulated objective function is employed during the tuning to promote faster global timing convergence, despite possibly incorrect initial timing budgets. The specially formulated objective function targets all paths that are failing timing, with appropriate weighting, rather than just targeting the most critical path. Finally, the addition of multiple threshold voltage gates allows for increased performance while limiting leakage power.

Owner:GLOBALFOUNDRIES INC

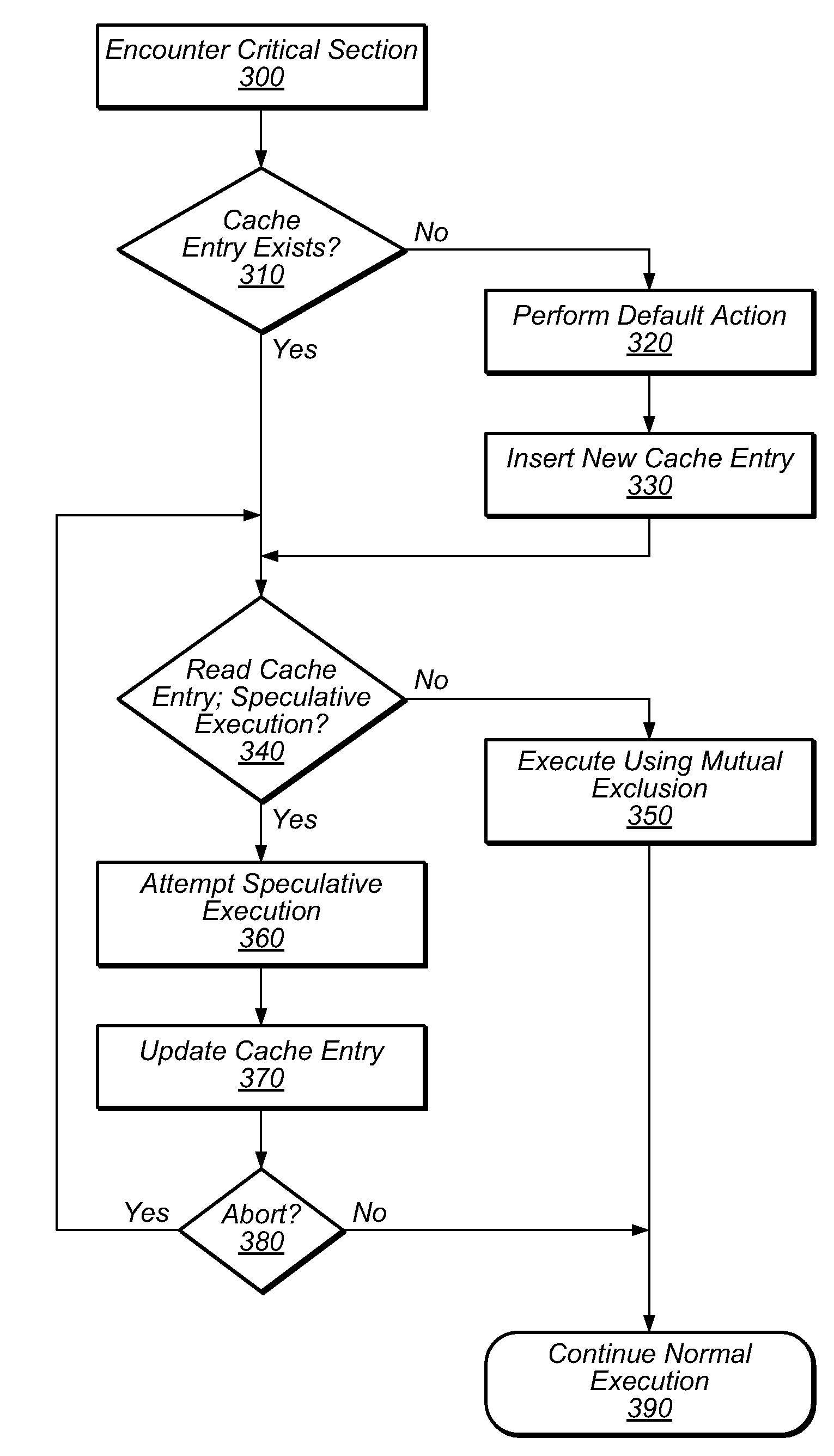

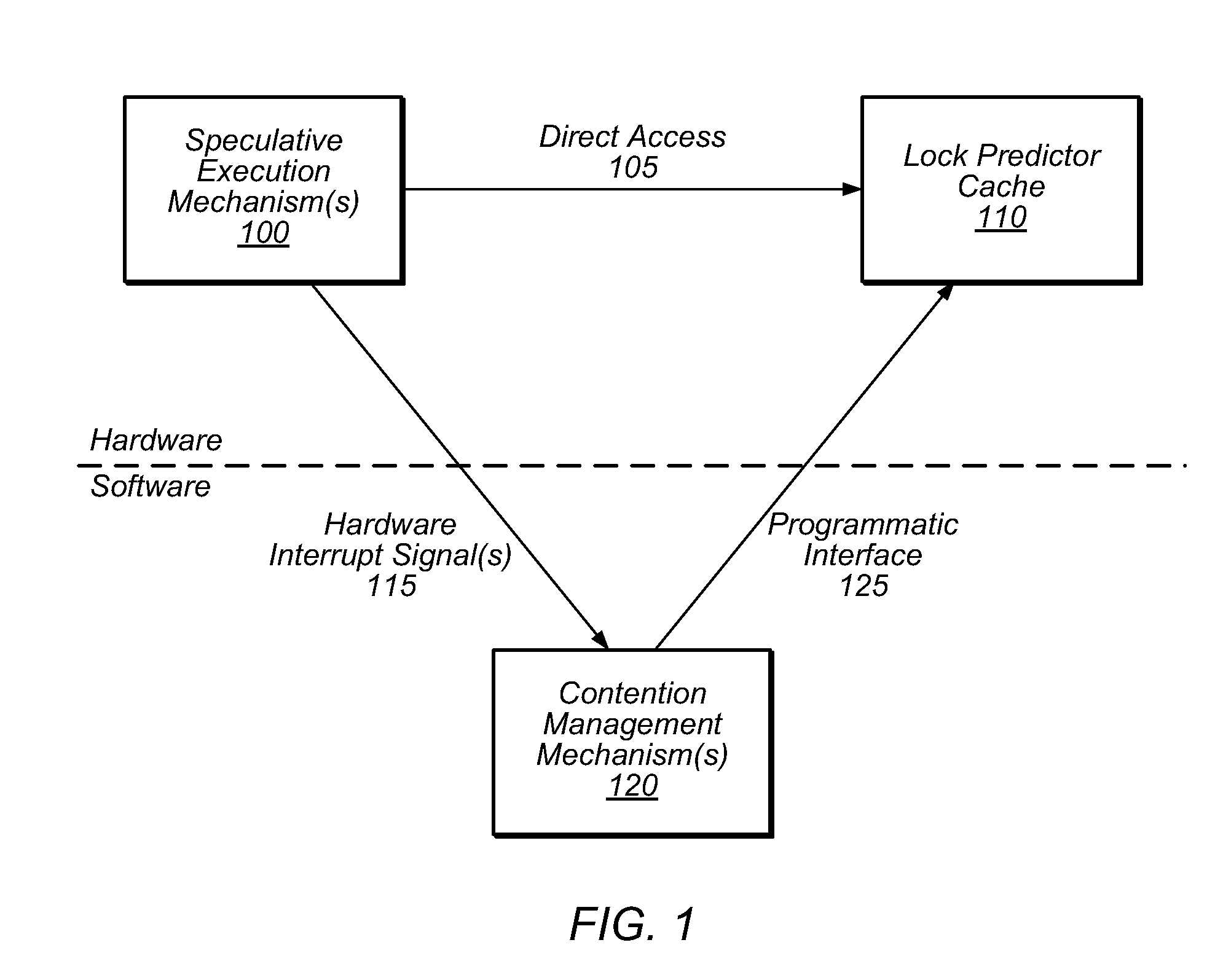

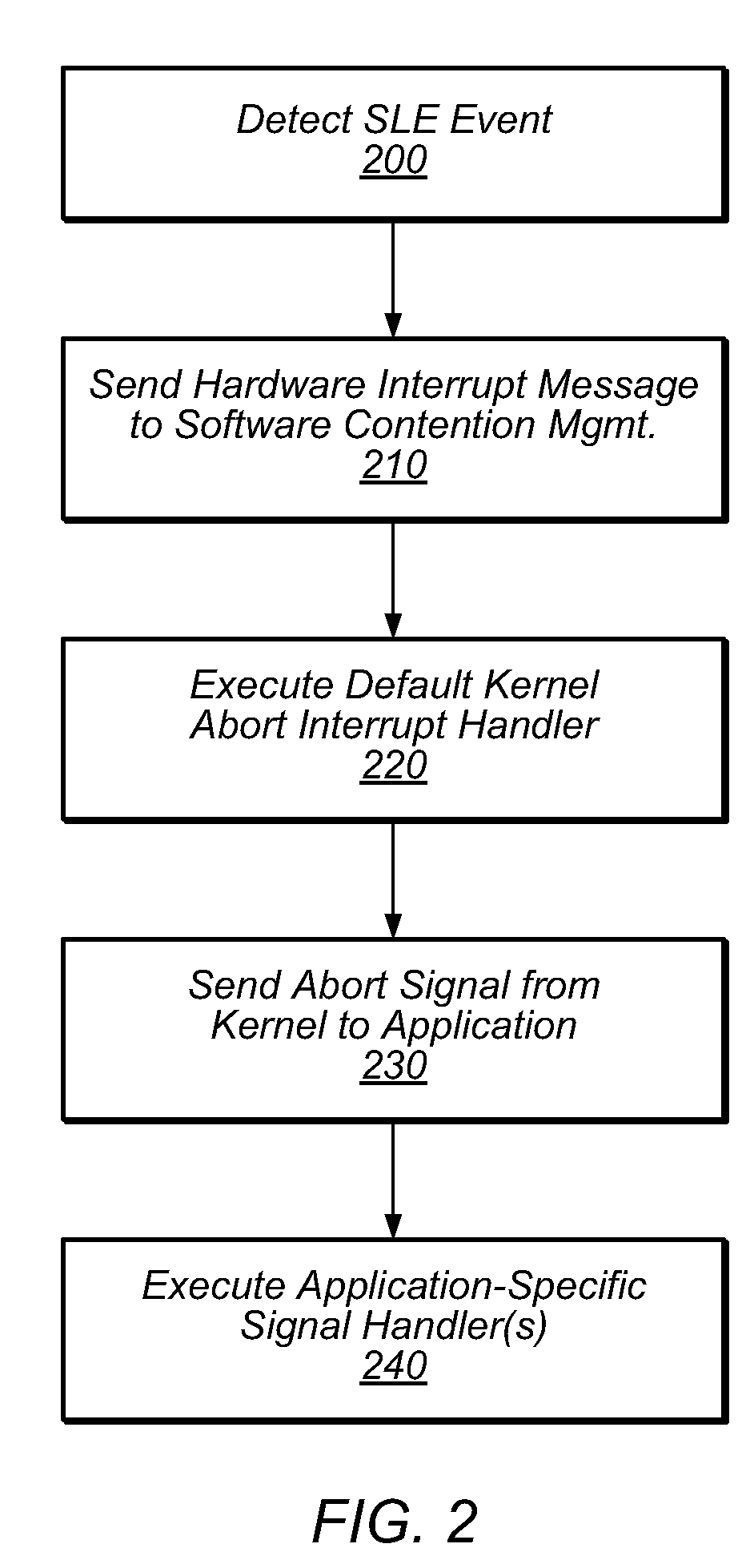

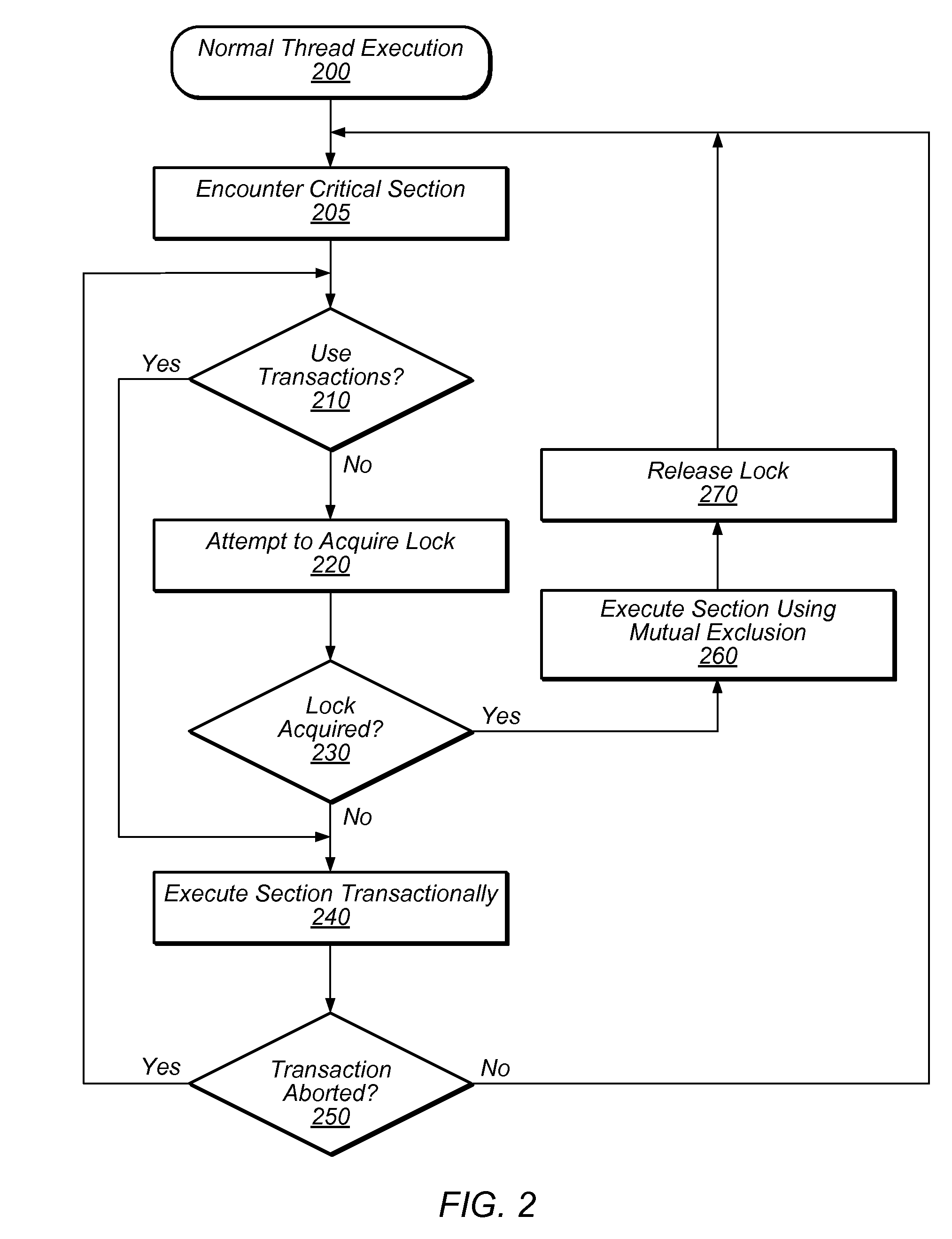

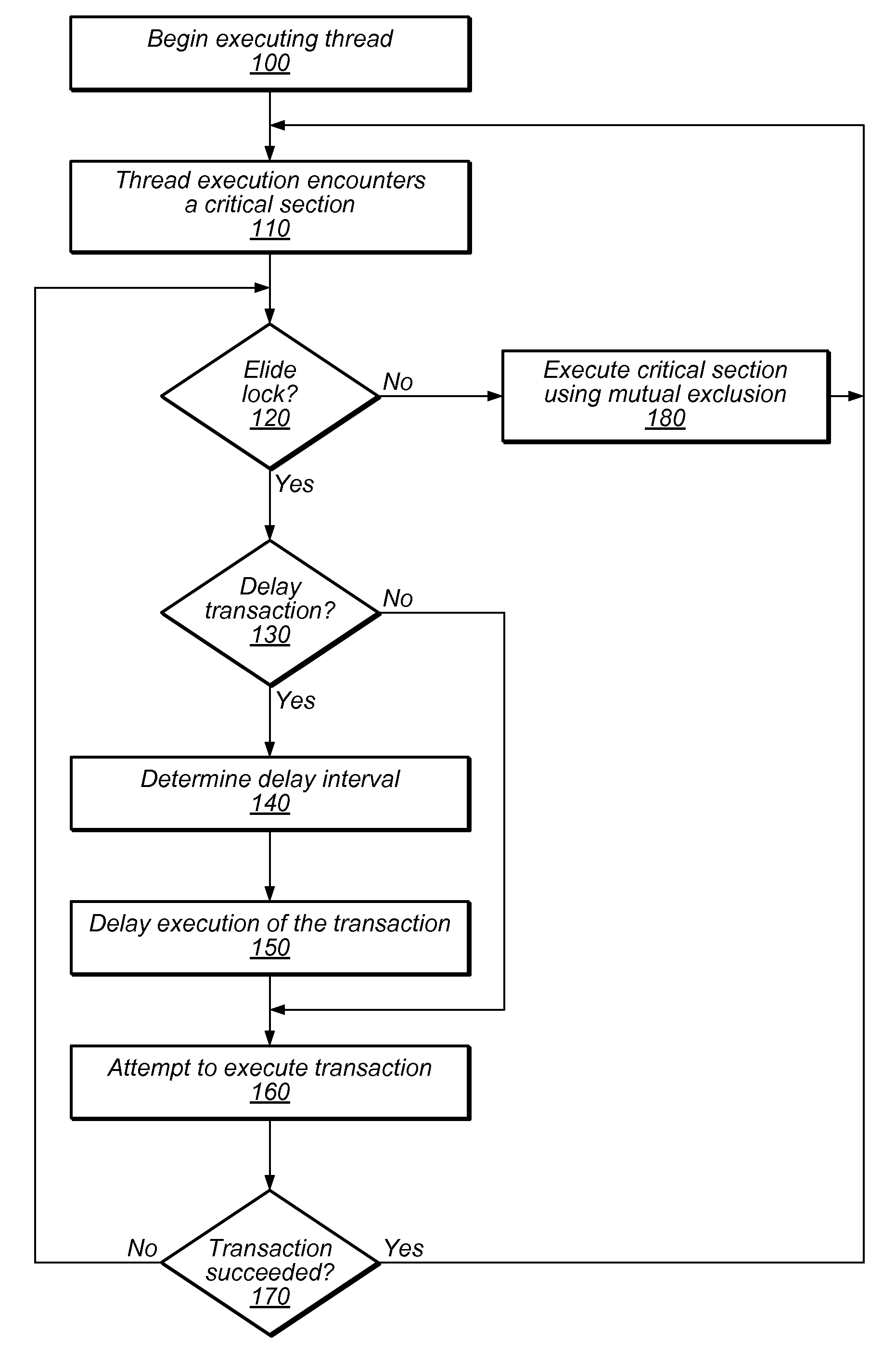

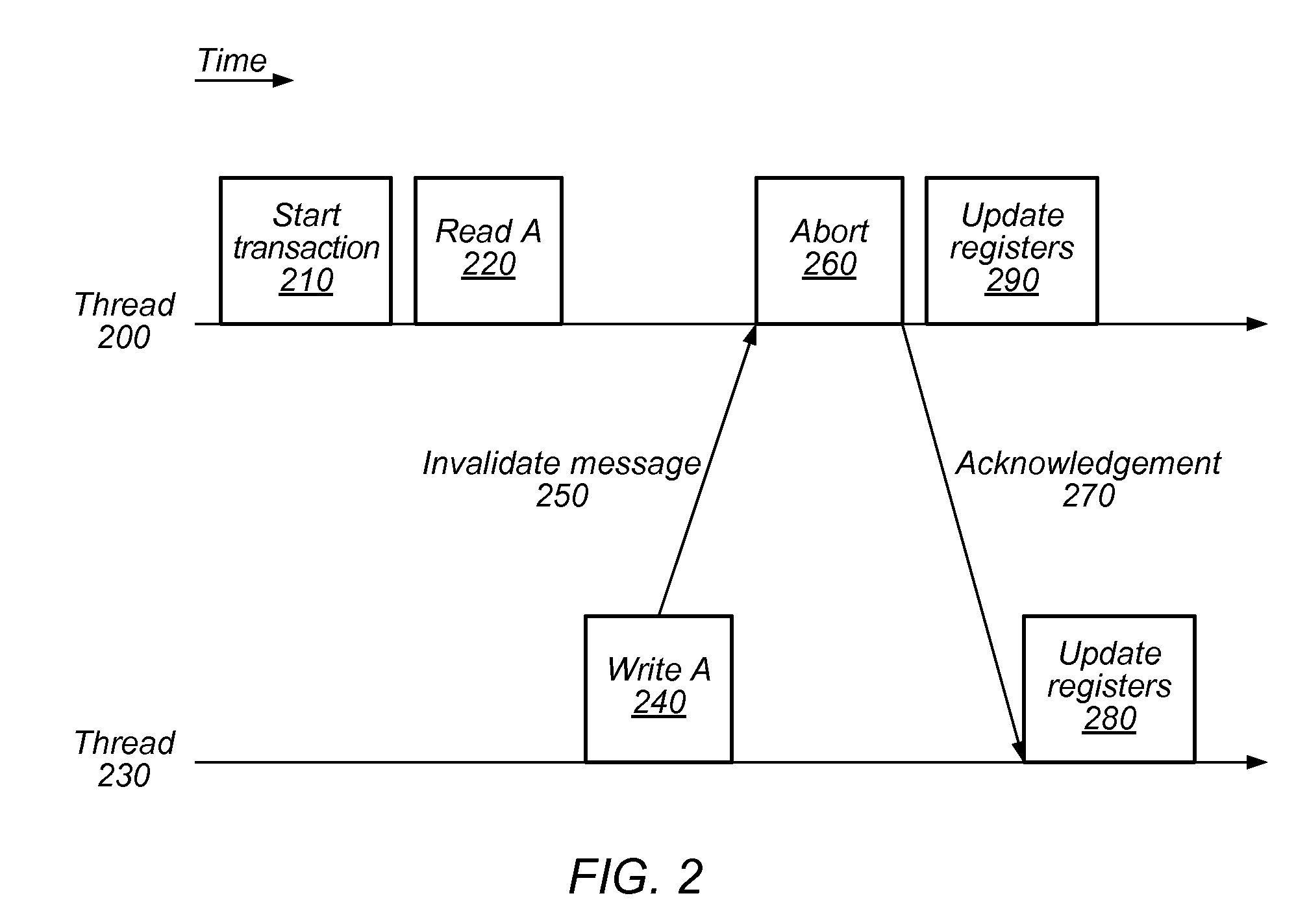

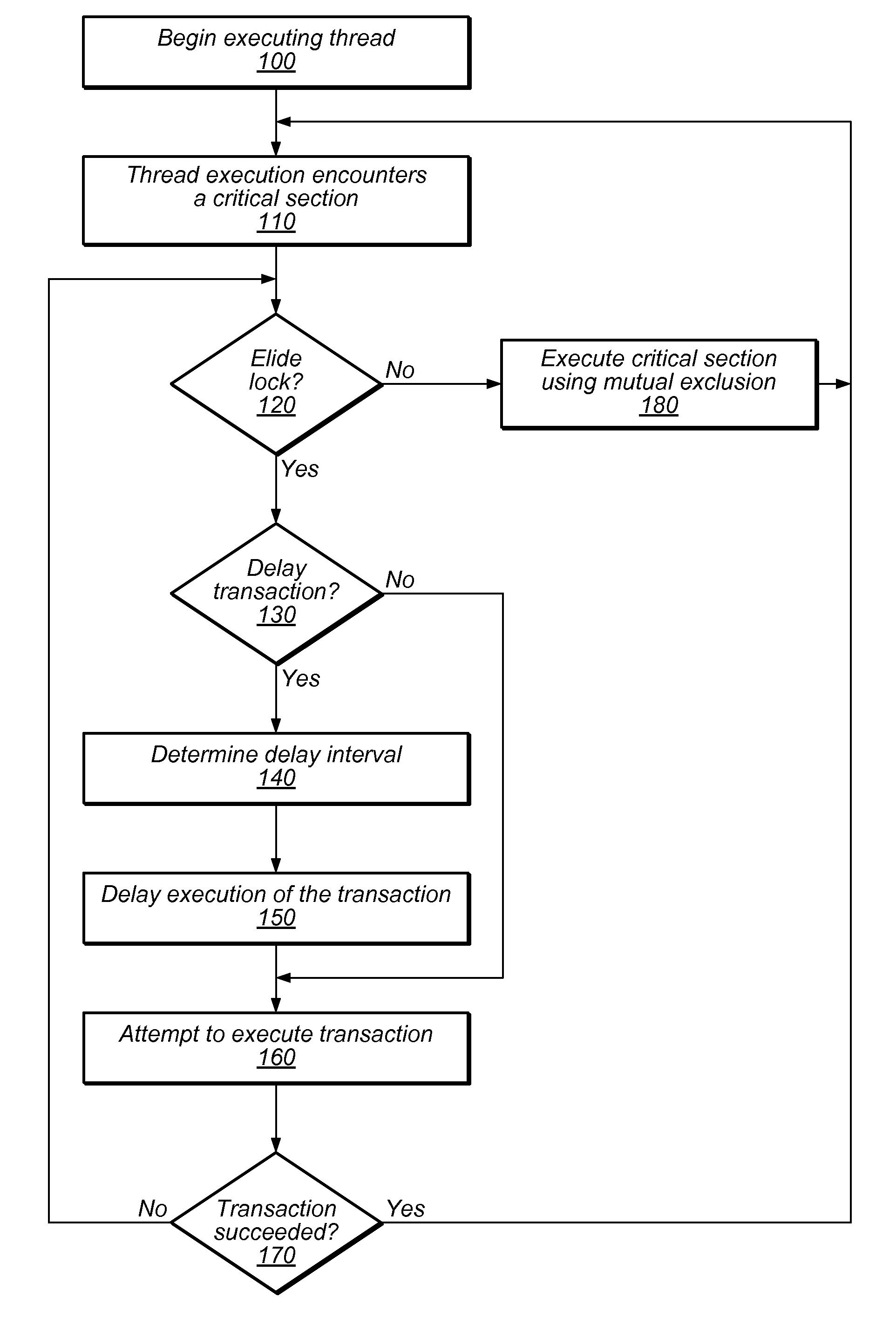

Method and System for Reducing Abort Rates in Speculative Lock Elision using Contention Management Mechanisms

ActiveUS20100169623A1Reduce transactional abort rateWaste system resourceDigital computer detailsSpecific program execution arrangementsSpeculative executionCritical section

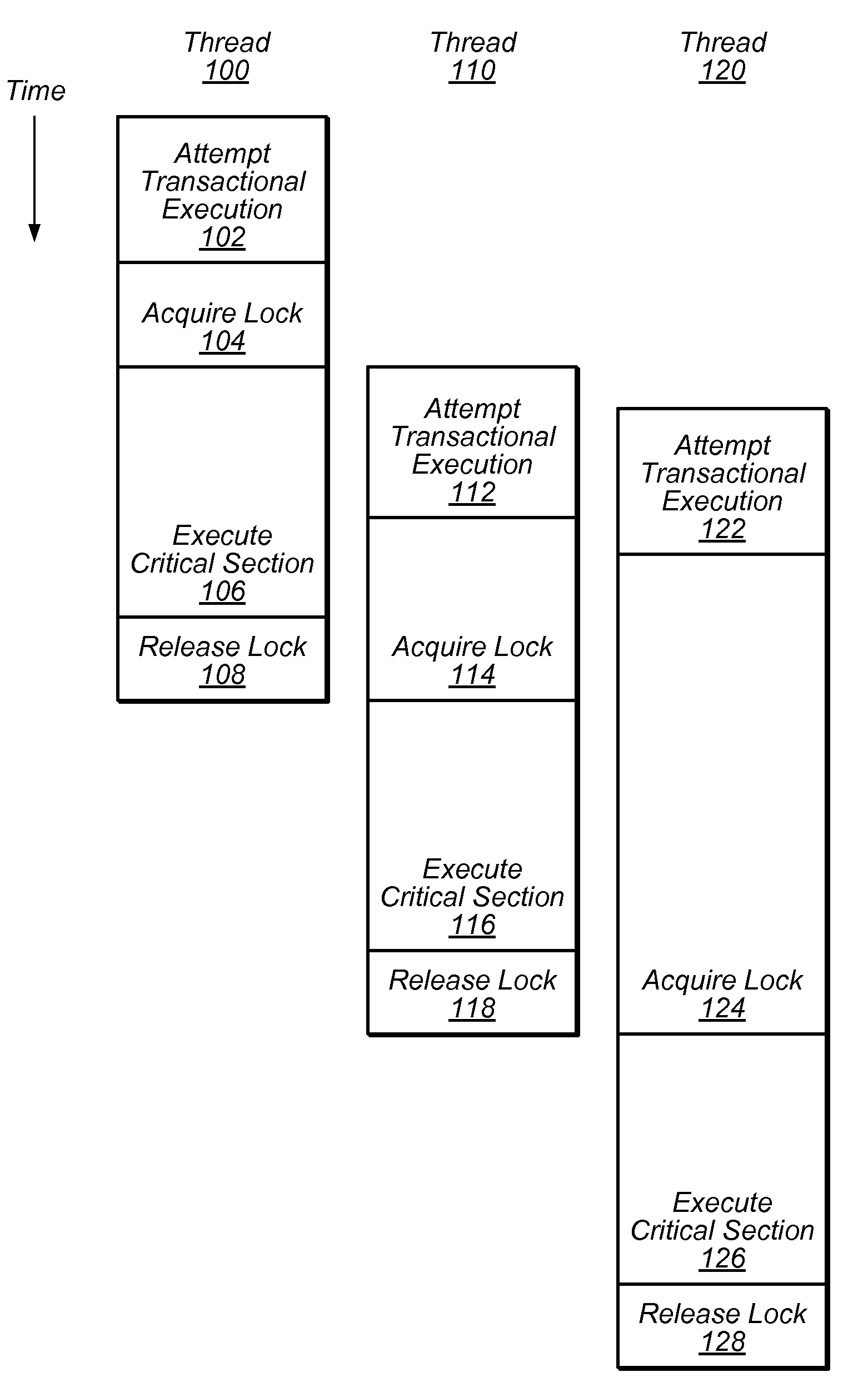

Hardware-based transactional memory mechanisms, such as Speculative Lock Elision (SLE), may allow multiple threads to concurrently execute critical sections protected by the same lock as speculative transactions. Such transactions may abort due to contention or due to misidentification of code as a critical section. In various embodiments, speculative execution mechanisms may be augmented with software and / or hardware contention management mechanisms to reduce abort rates. Speculative execution hardware may send a hardware interrupt signal to notify software components of a speculative execution event (e.g., abort). Software components may respond by implementing concurrency-throttling mechanisms and / or by determining a mode of execution (e.g., speculative, non-speculative) for a given section and communicating that determination to the hardware speculative execution mechanisms, e.g., by writing it into a lock predictor cache. Subsequently, hardware speculative execution mechanisms may determine a preferred mode of execution for the section by reading the corresponding entry from the lock predictor cache.

Owner:SUN MICROSYSTEMS INC

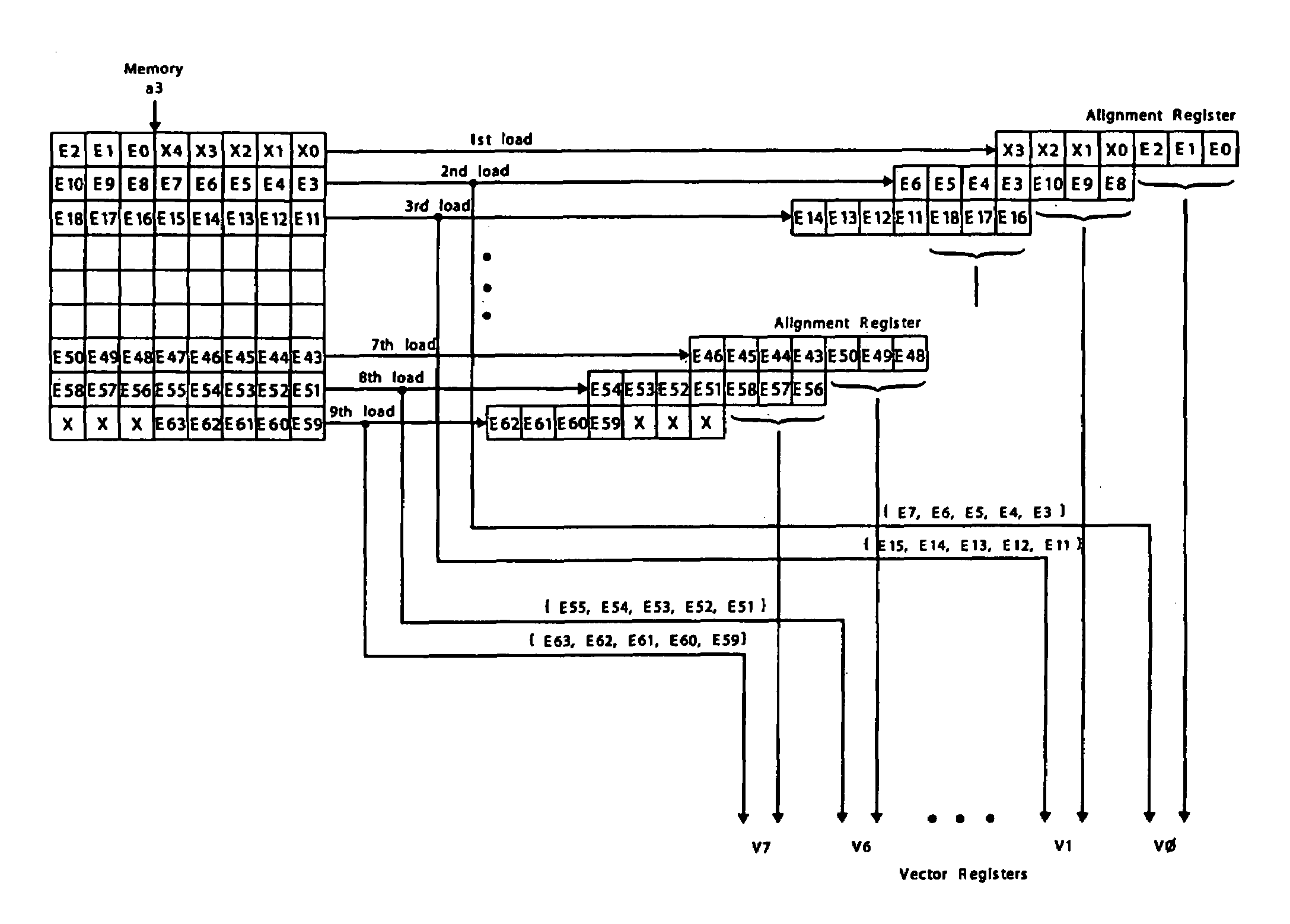

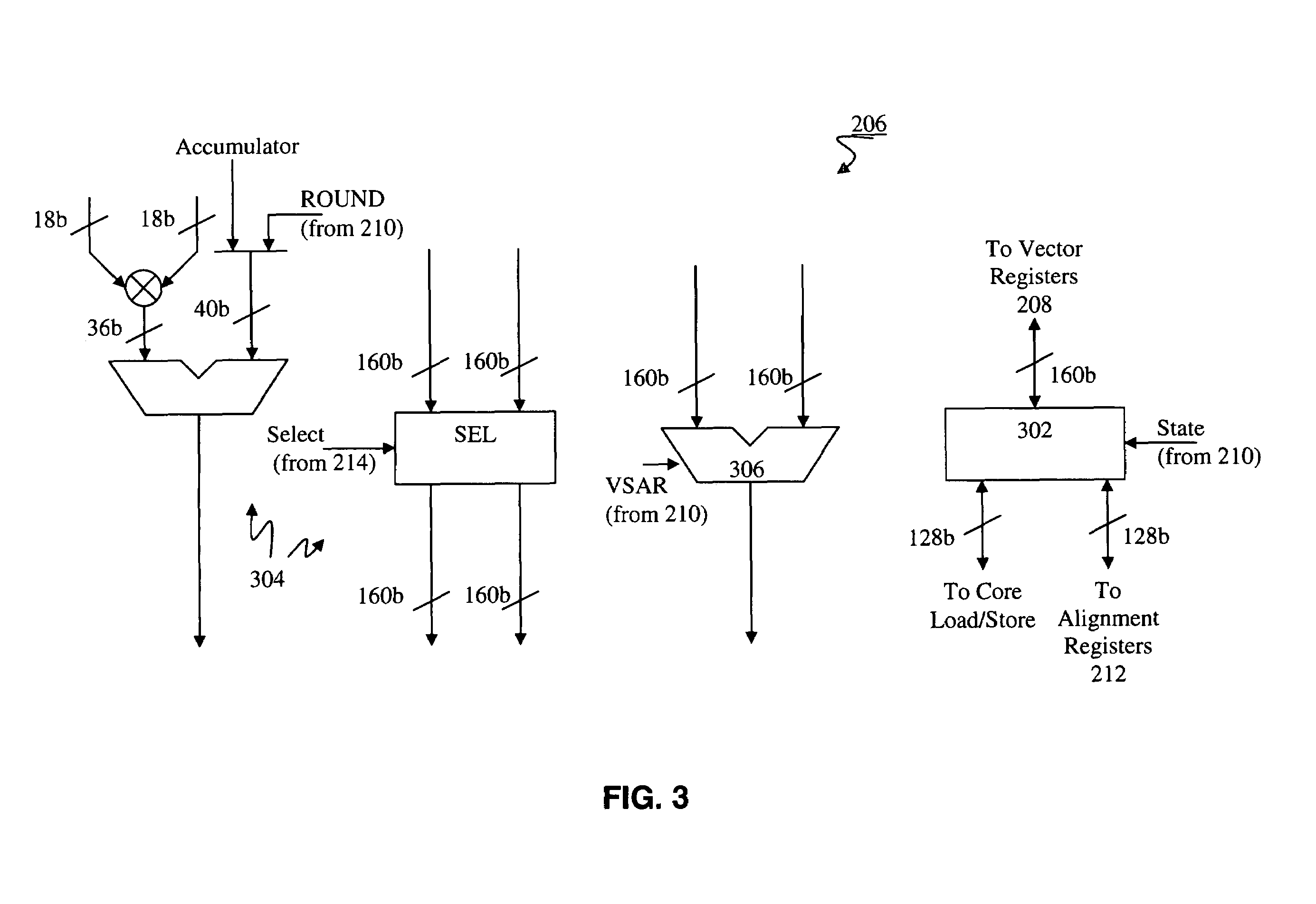

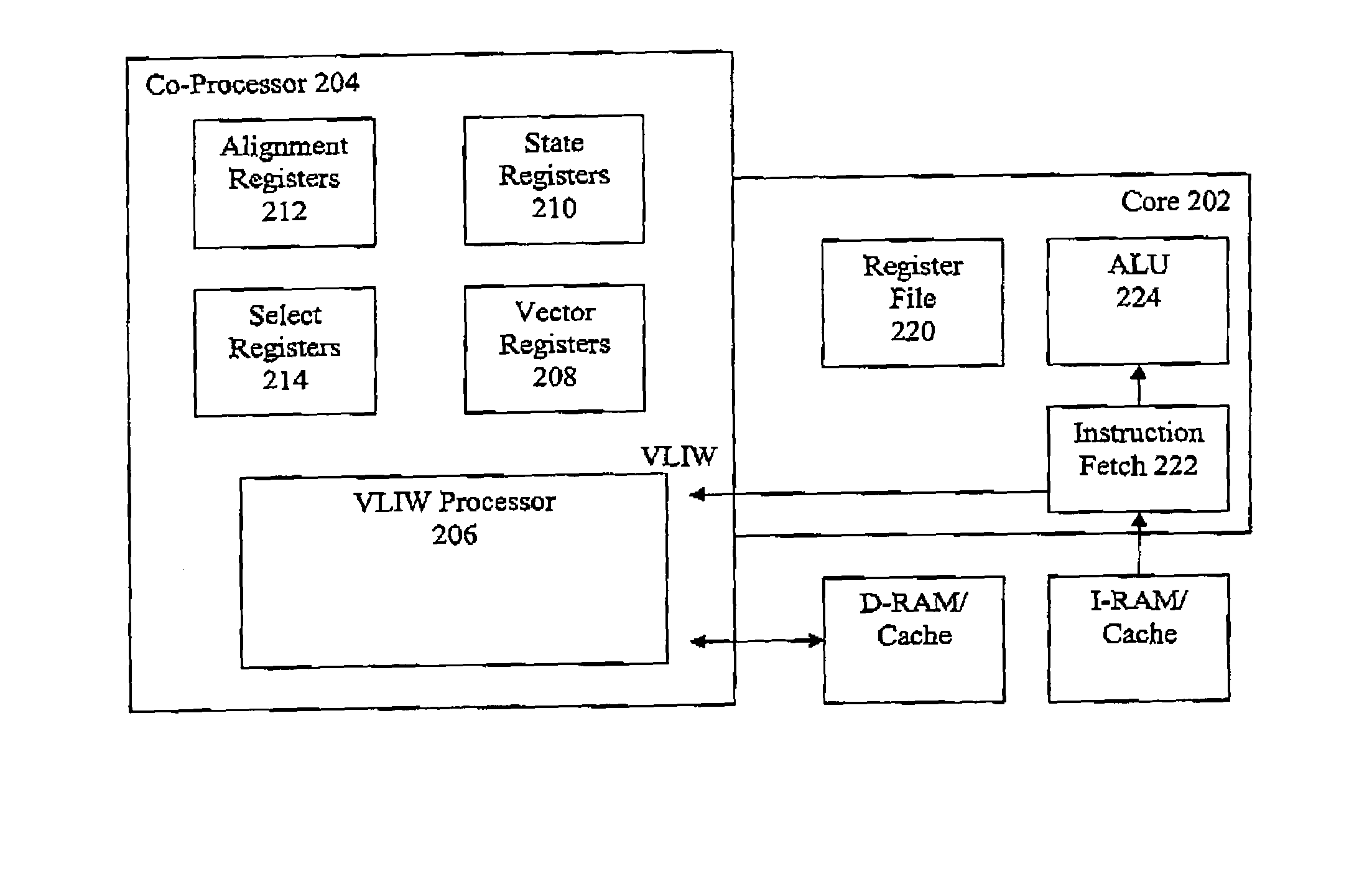

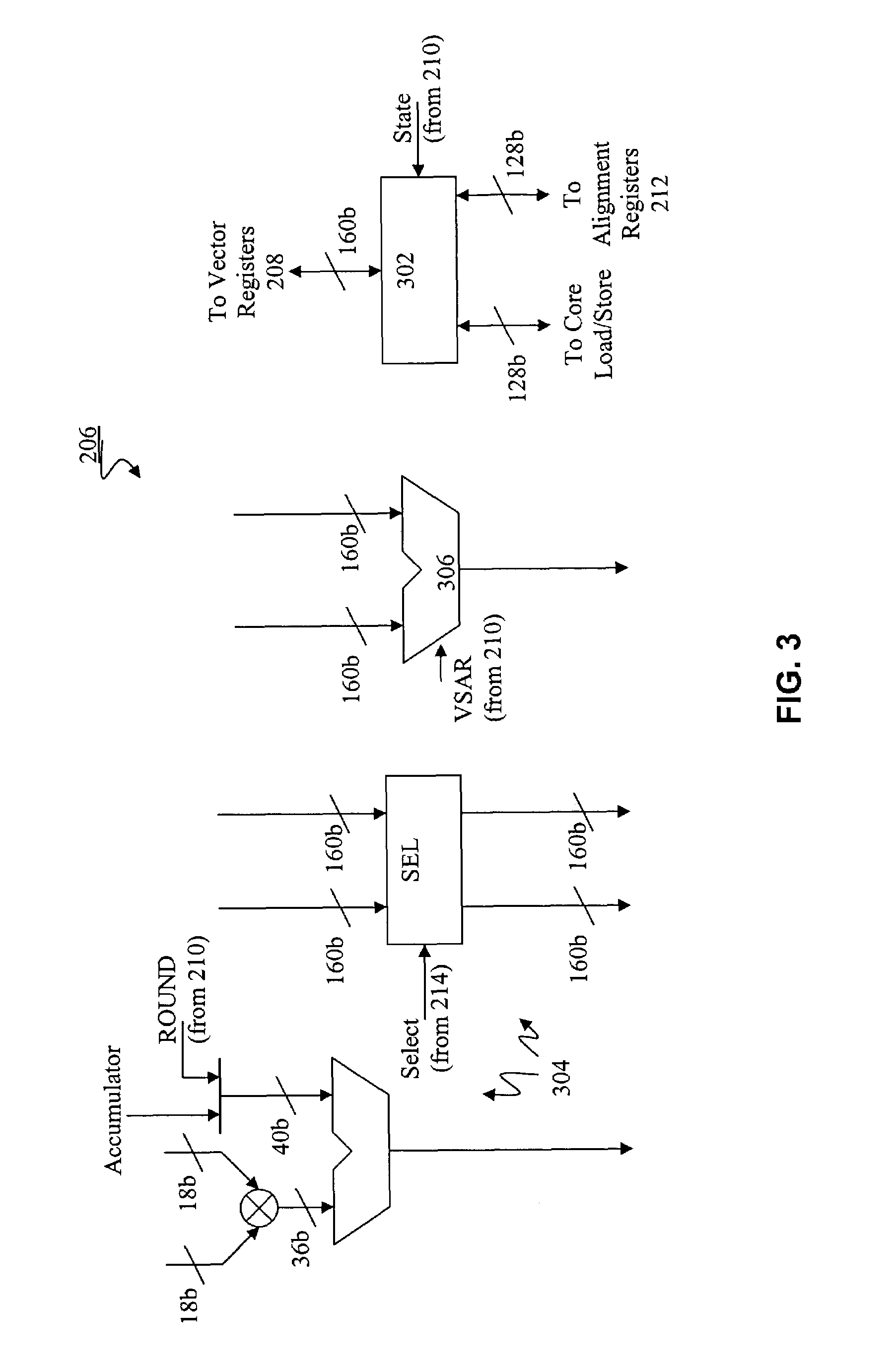

Load/store operation of memory misaligned vector data using alignment register storing realigned data portion for combining with remaining portion

Inactive | US7219212B1 | High coding density | Improve performance | Instruction analysis | General purpose stored program computer | Digital signal processing | Critical section

A processor can achieve high code density while allowing higher performance than existing architectures, particularly for Digital Signal Processing (DSP) applications. In accordance with one aspect, the processor supports three possible instruction sizes while maintaining the simplicity of programming and allowing efficient physical implementation. Most of the application code can be encoded using two sets of narrow size instructions to achieve high code density. Adding a third (and larger, i.e., VLIW) instruction size allows the architecture to encode multiple operations per instruction for the performance-critical section of the code. Further, each operation of the VLIW format instruction can optionally be a SIMD operation that operates upon vector data. A scheme for the optimal utilization (highest achievable performance for the given amount of hardware) of multiply-accumulate (MAC) hardware is also provided.

Owner:TENSILICA

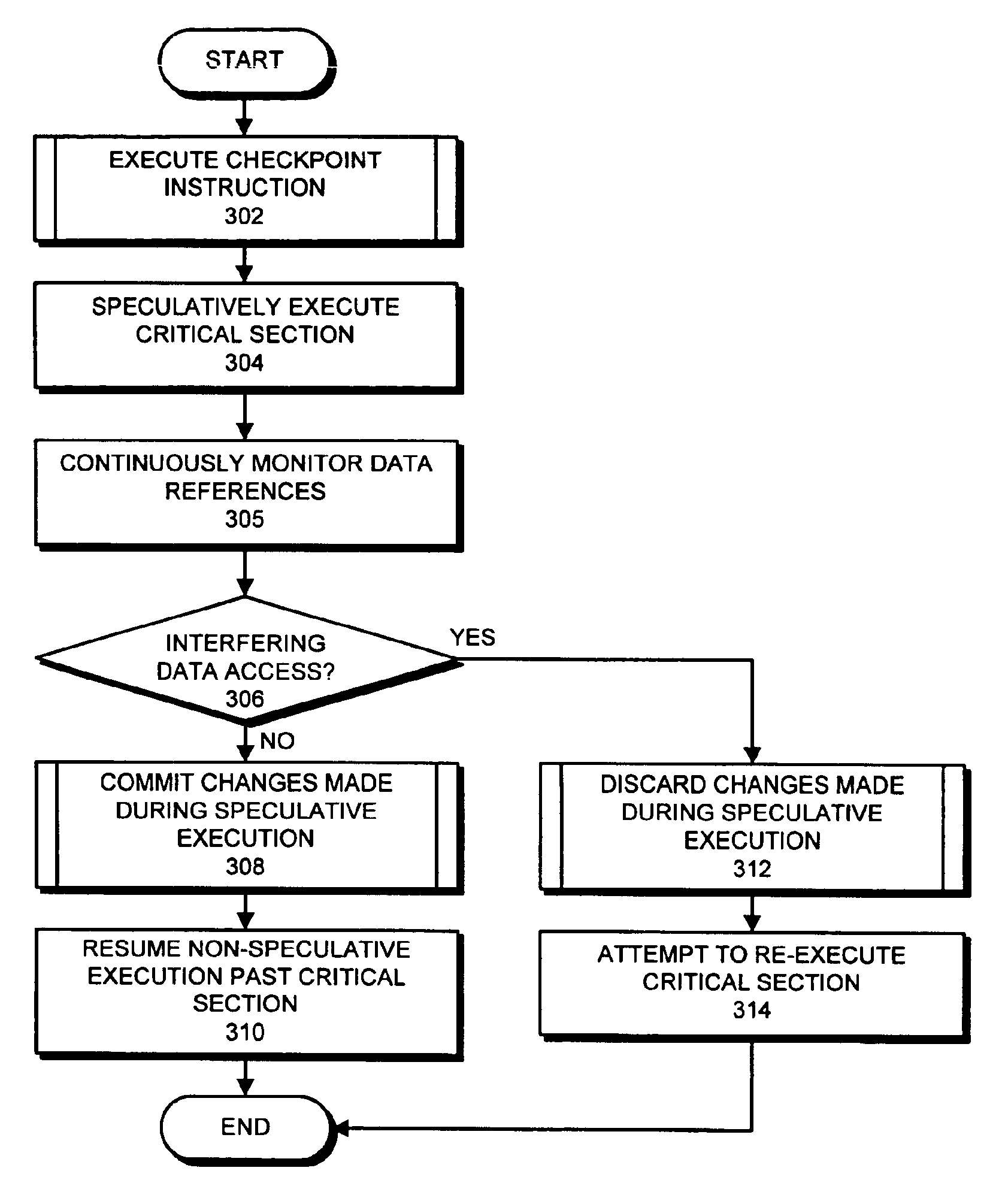

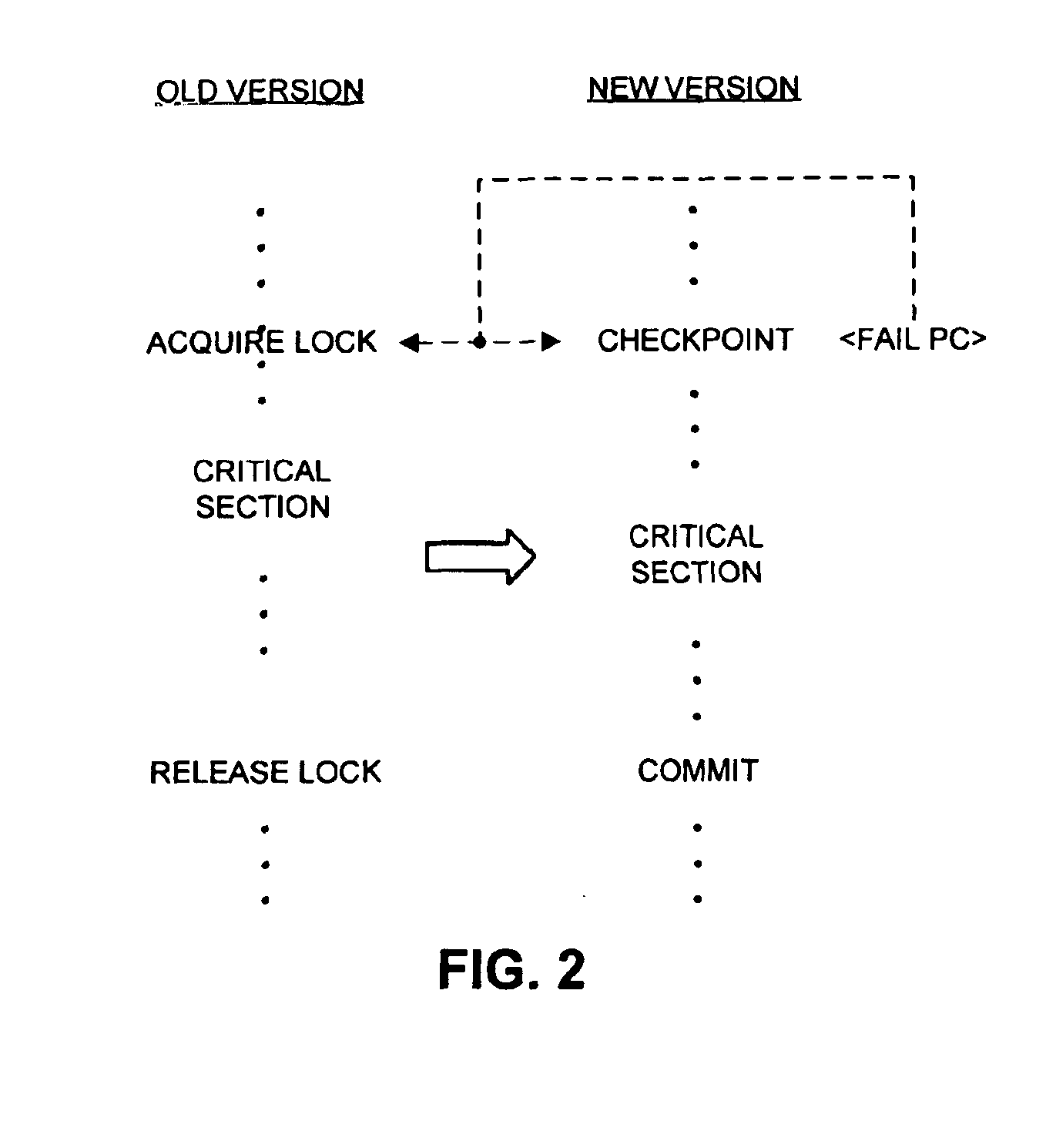

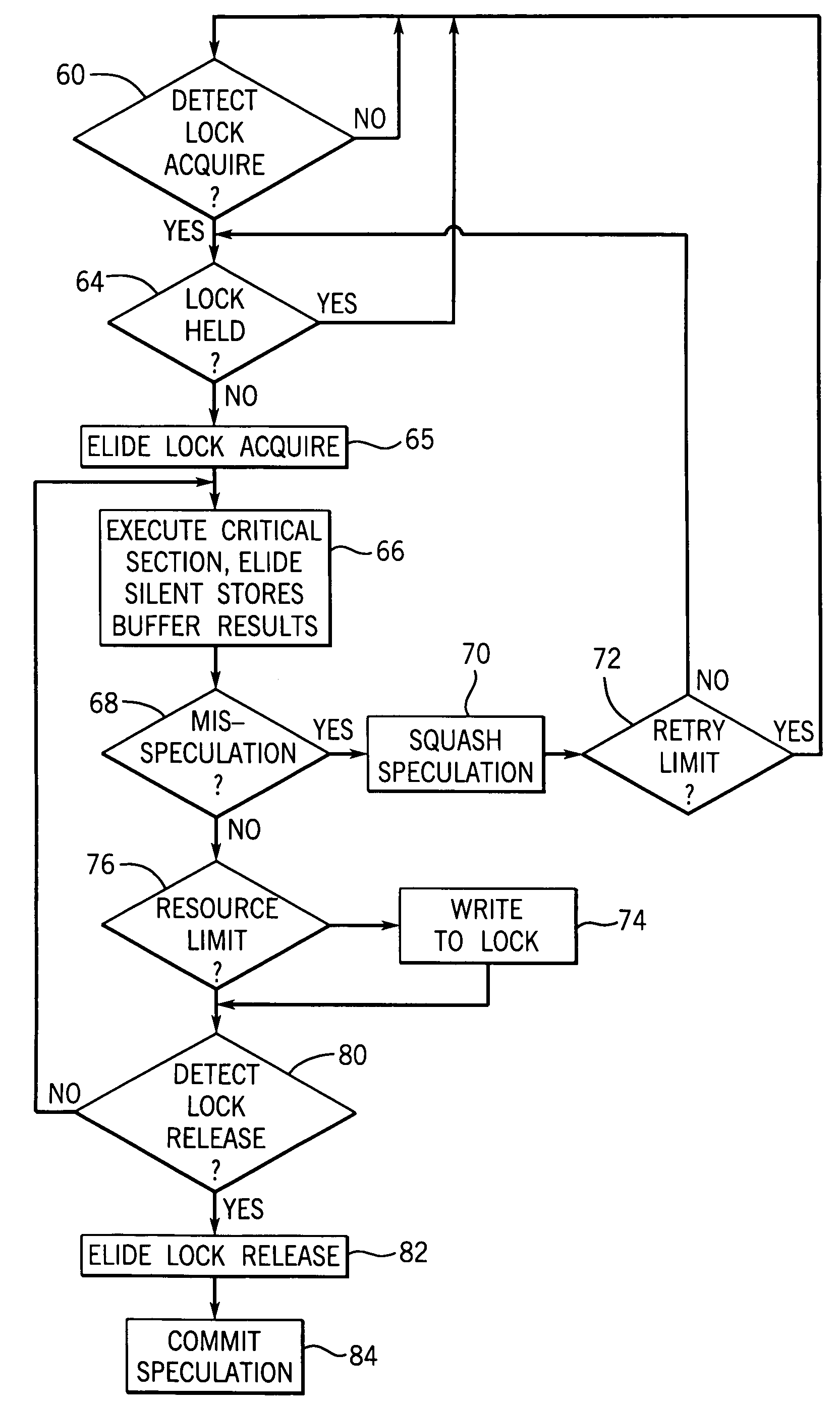

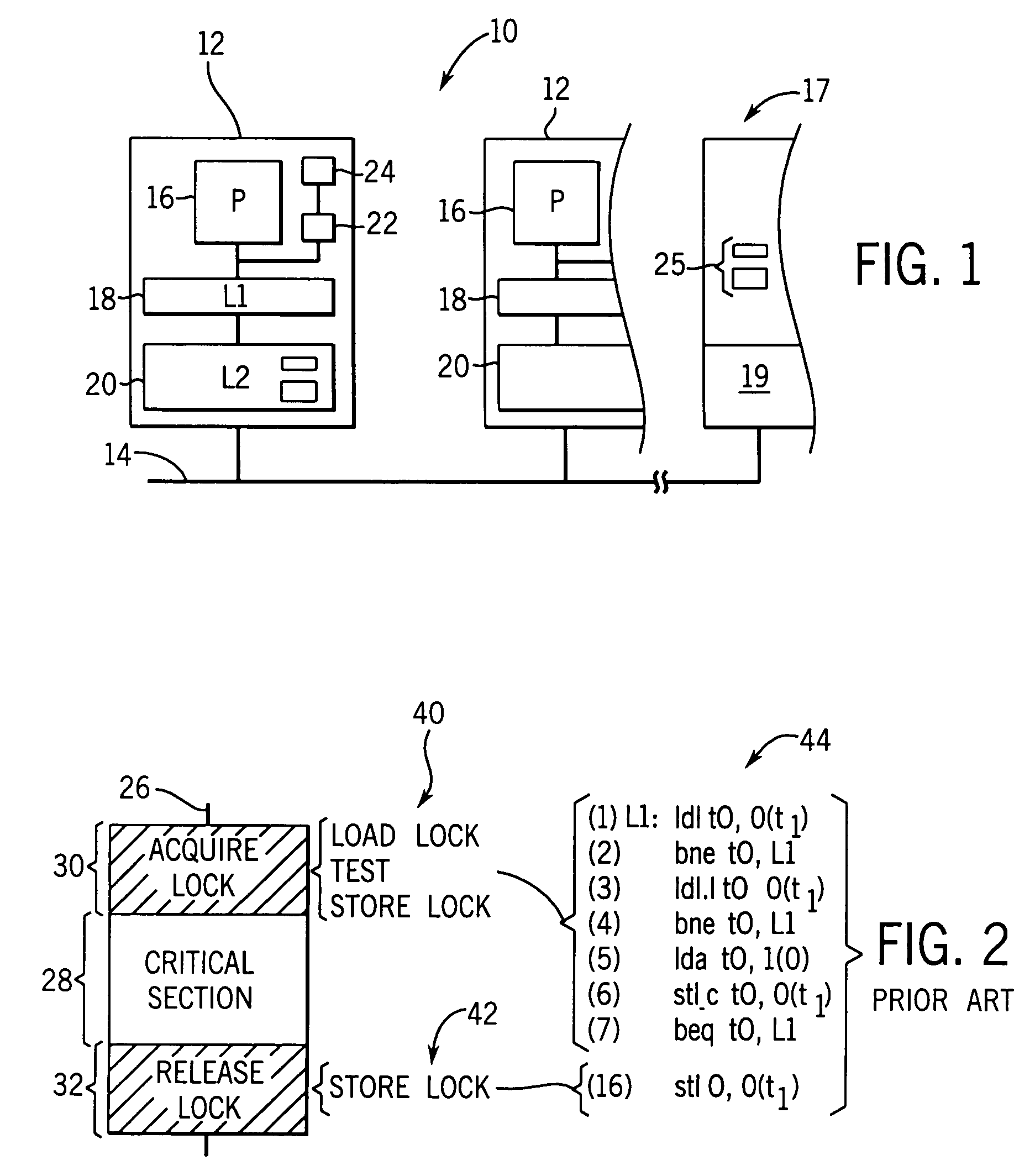

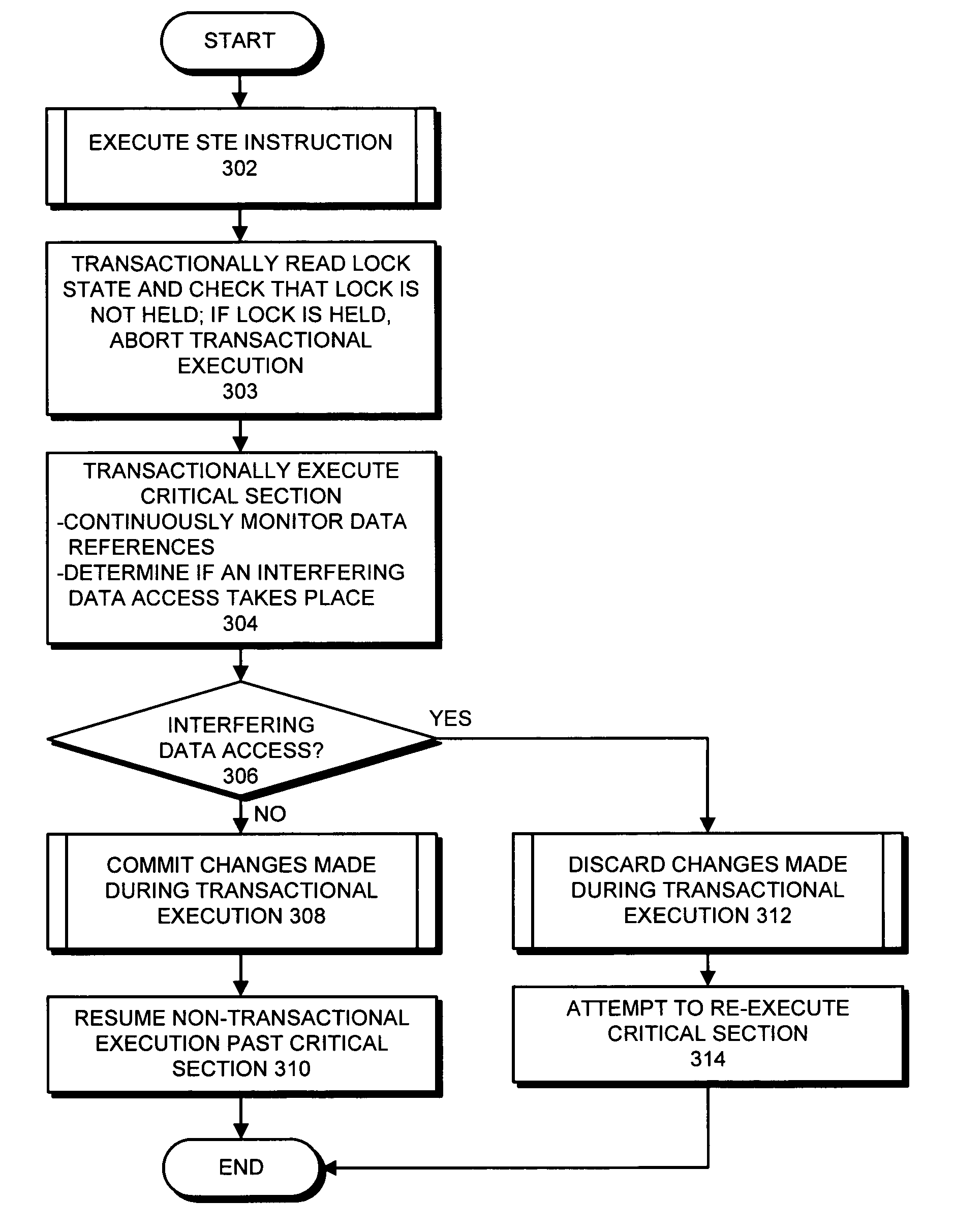

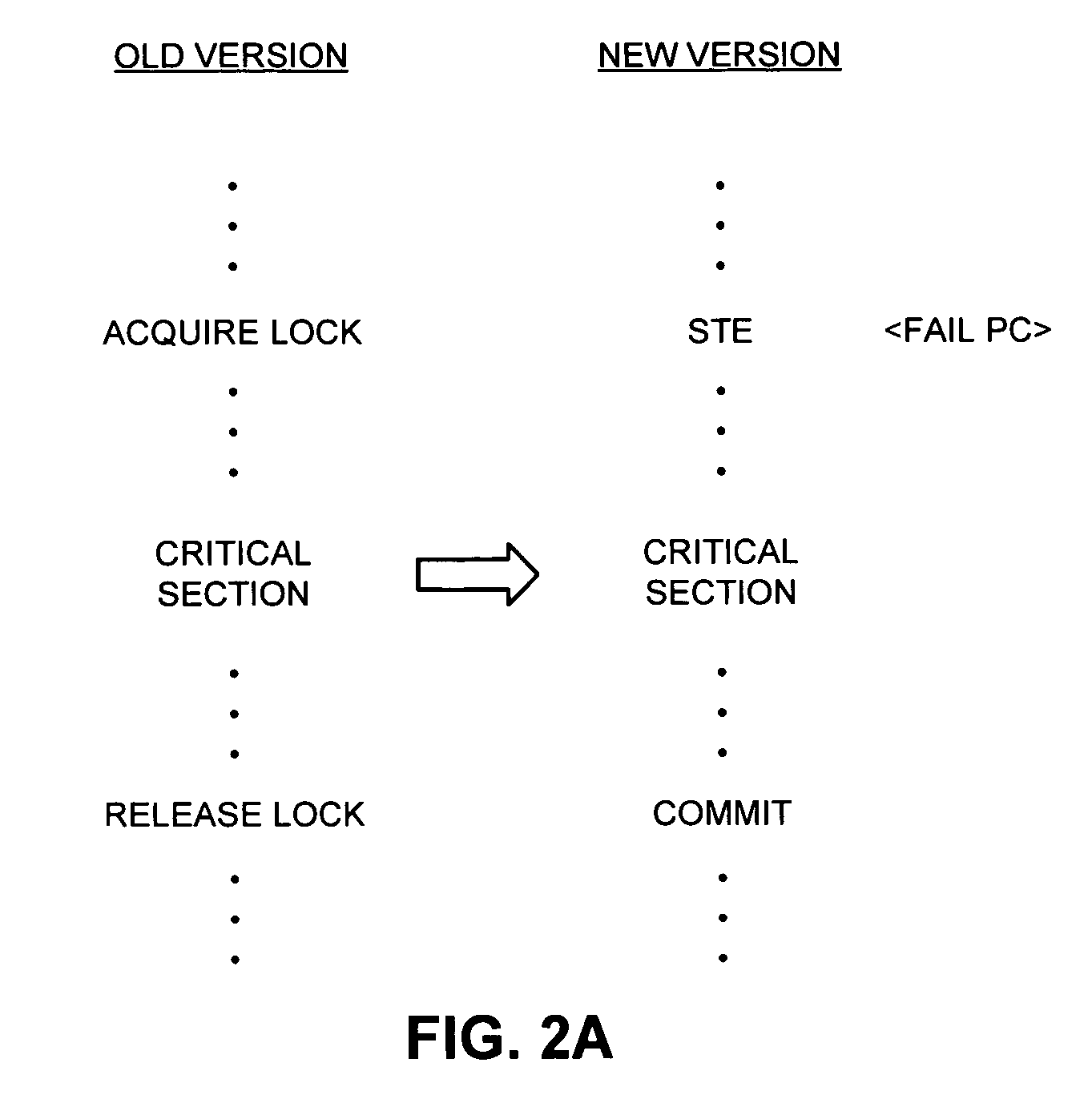

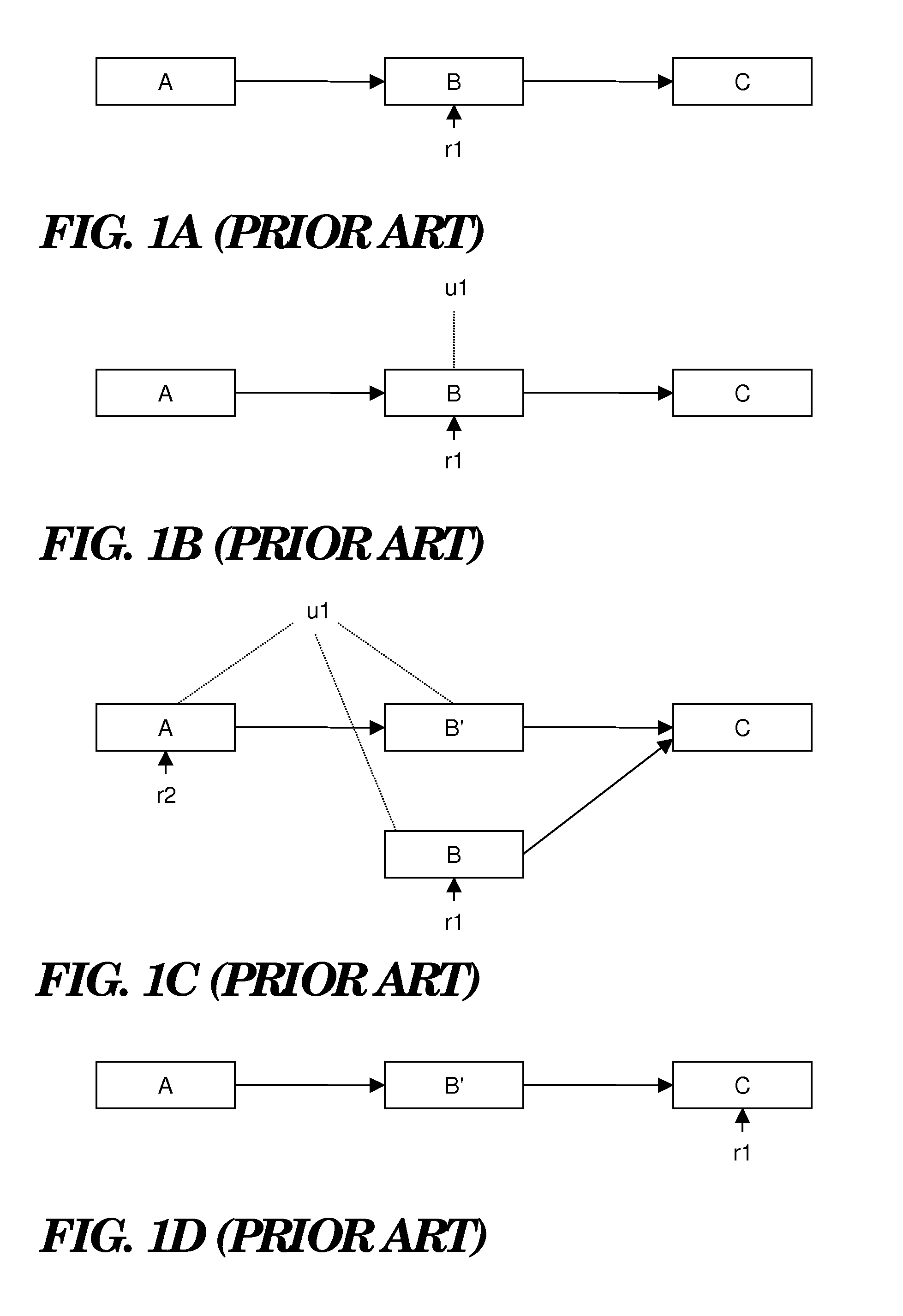

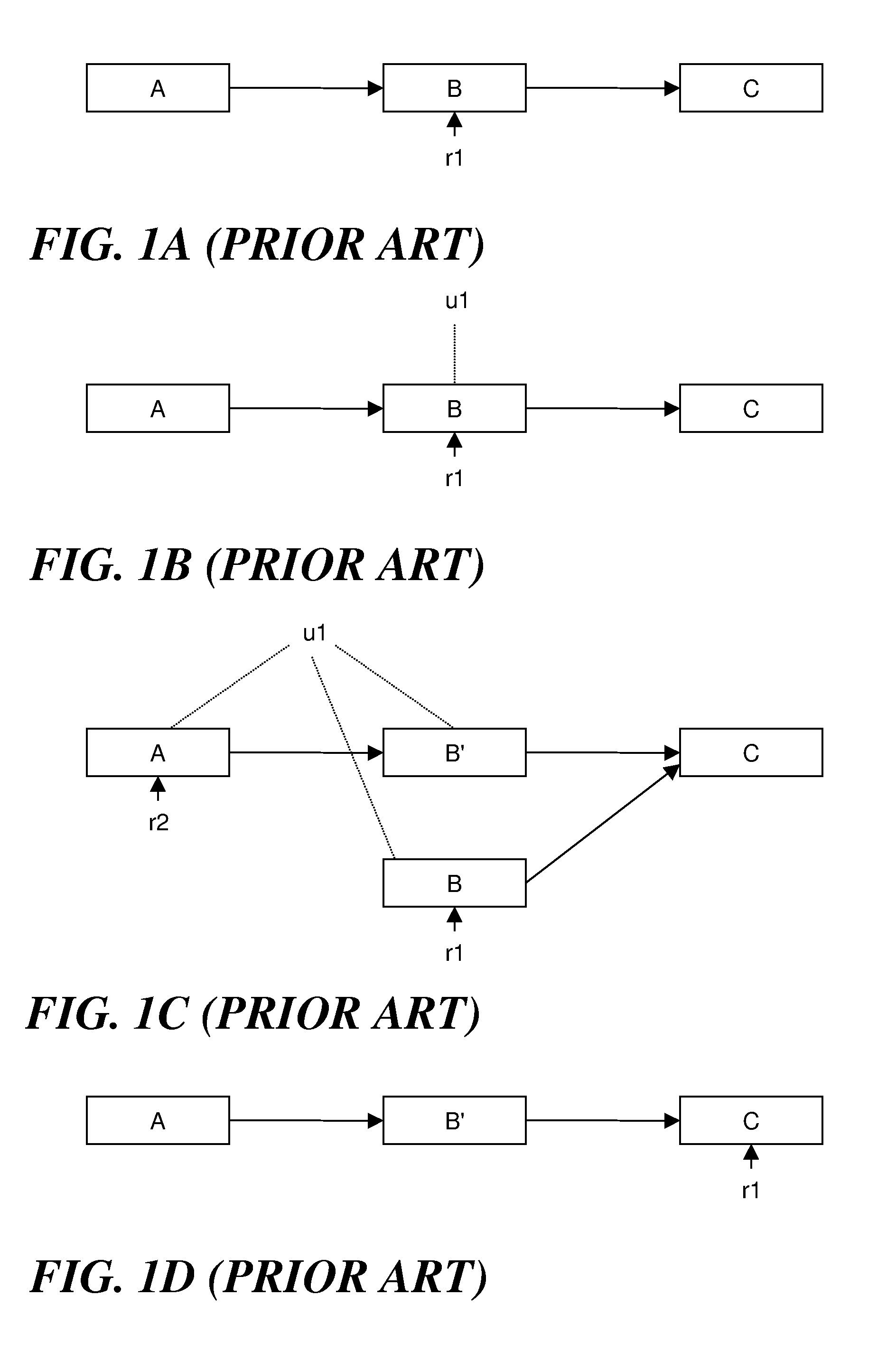

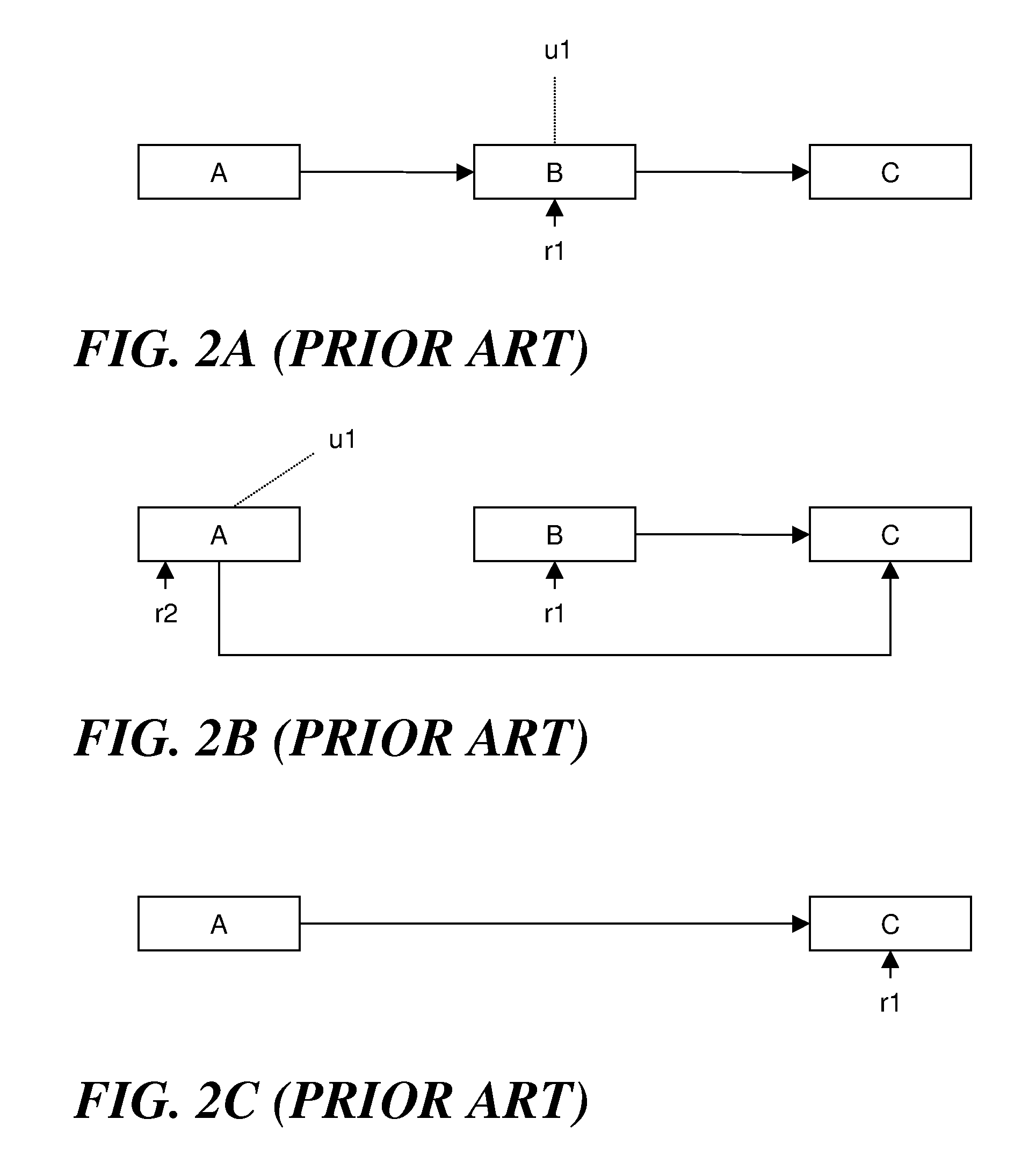

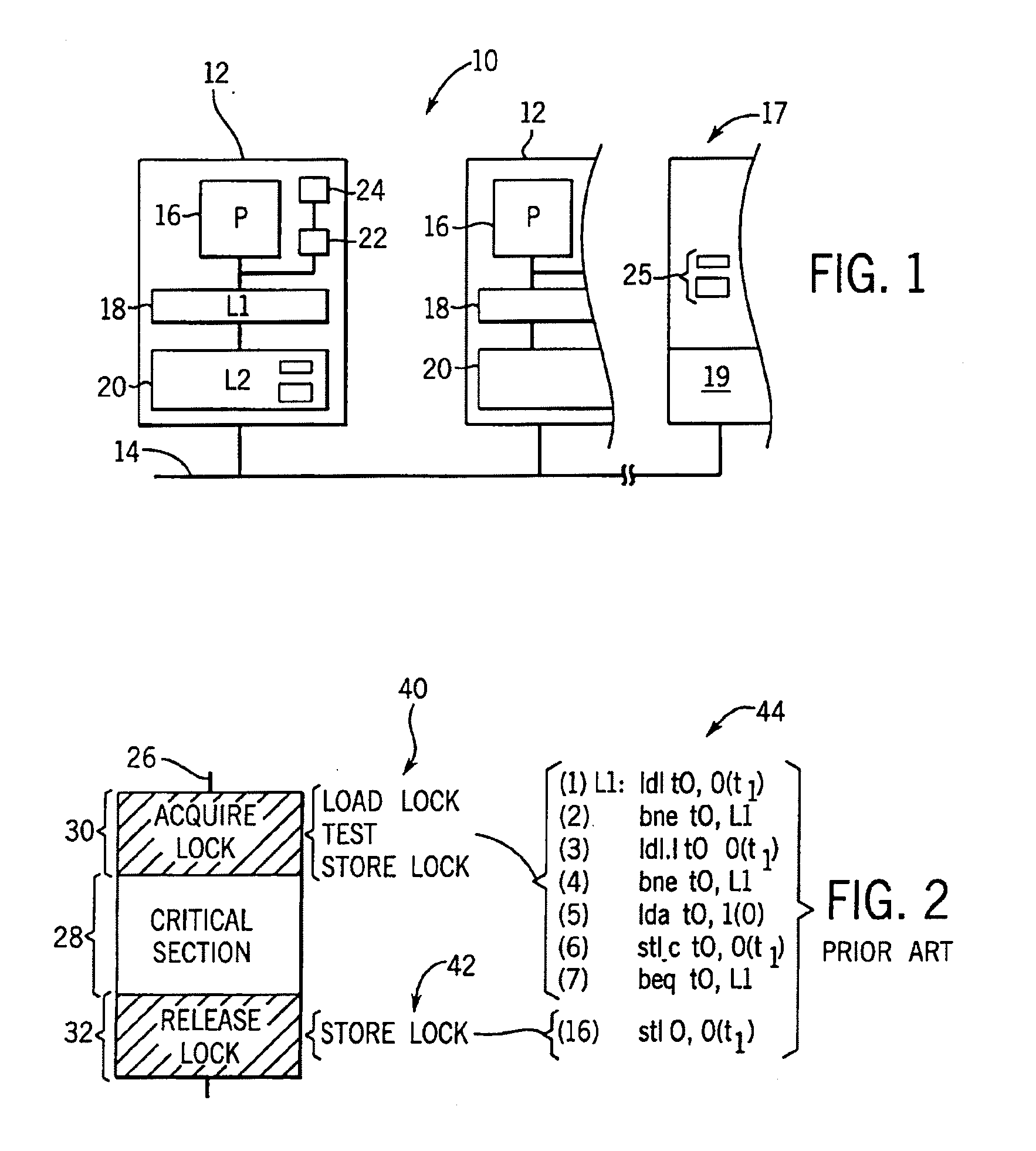

Method and apparatus for avoiding locks by speculatively executing critical sections

Inactive | US6862664B2 | Easy to solve | Memory addressing/allocation/relocation | Multiprogramming arrangements | Speculative execution | Critical section

One embodiment of the present invention provides a system that facilitates avoiding locks by speculatively executing critical sections of code. During operation, the system allows a process to speculatively execute a critical section of code within a program without first acquiring a lock associated with the critical section. If the process subsequently completes the critical section without encountering an interfering data access from another process, the system commits changes made during the speculative execution, and resumes normal non-speculative execution of the program past the critical section. Otherwise, if an interfering data access from another process is encountered during execution of the critical section, the system discards changes made during the speculative execution, and attempts to re-execute the critical section.

Owner:ORACLE INT CORP
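The commit-or-discard flow in the abstract above can be imitated in software with optimistic concurrency control. The sketch below is only an analogy, not the patented hardware mechanism: `VersionedCell`, `speculative_update`, and the version counter are hypothetical names, and a short internal lock stands in for the hardware's detection of interfering accesses.

```python
import threading


class VersionedCell:
    """Shared value plus a version number that changes on every committed write."""

    def __init__(self, value):
        self._guard = threading.Lock()  # protects only the brief snapshot/commit steps
        self.value = value
        self.version = 0

    def snapshot(self):
        """Return a consistent (value, version) pair."""
        with self._guard:
            return self.value, self.version

    def try_commit(self, new_value, seen_version):
        """Commit only if nobody else committed since `seen_version` was read."""
        with self._guard:
            if self.version != seen_version:
                return False          # interfering write detected: discard
            self.value = new_value
            self.version += 1
            return True


def speculative_update(cell, fn, max_attempts=100):
    """Run fn on a private copy without holding a lock; retry on conflict.

    Returns the number of attempts that were needed.
    """
    for attempt in range(1, max_attempts + 1):
        value, version = cell.snapshot()
        new_value = fn(value)         # speculative work, no lock held
        if cell.try_commit(new_value, version):
            return attempt
    raise RuntimeError("gave up after repeated conflicts")
```

The long-running work in `fn` happens outside any lock, so non-conflicting updates do not serialize on it; a conflicting update simply discards its result and re-executes.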

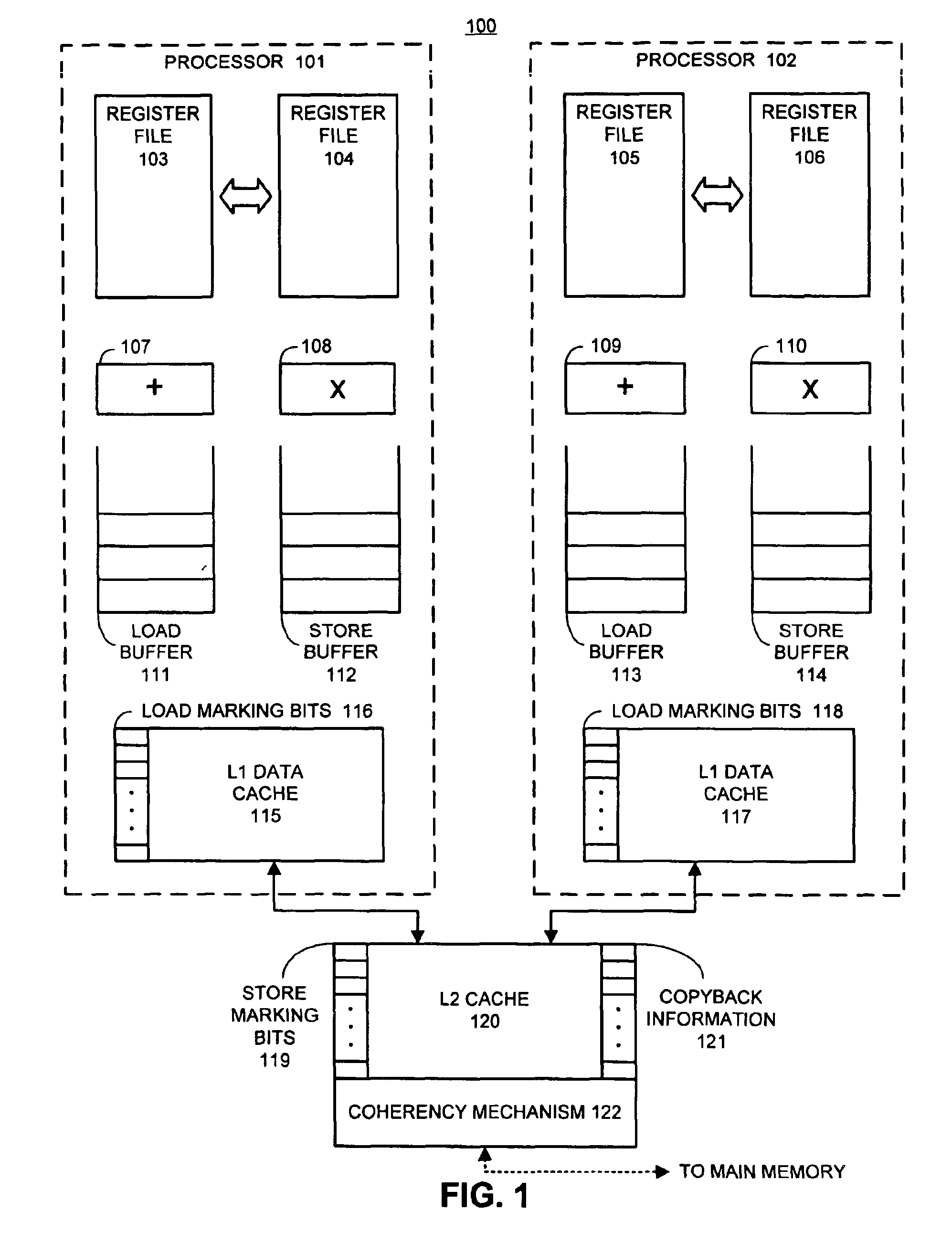

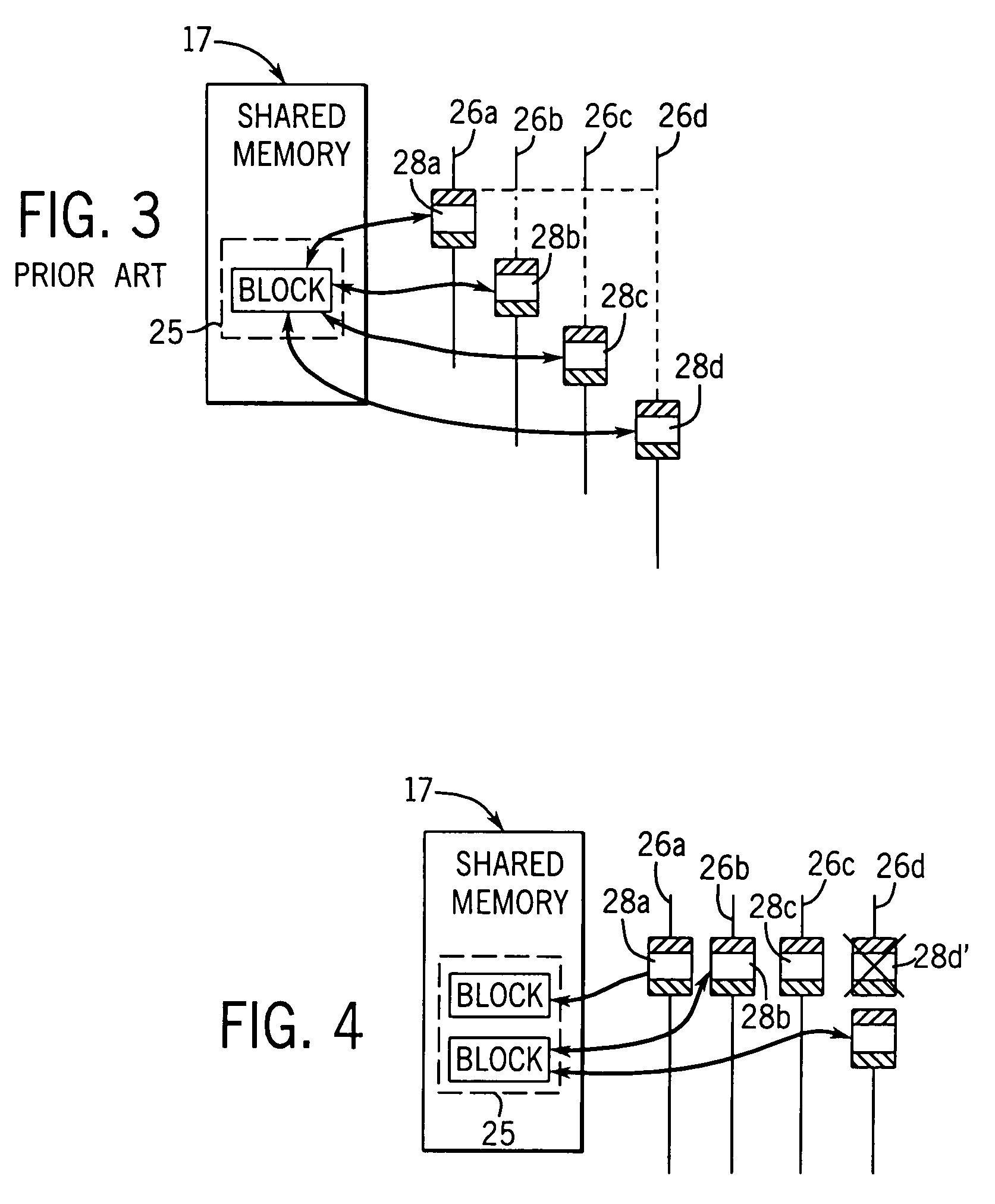

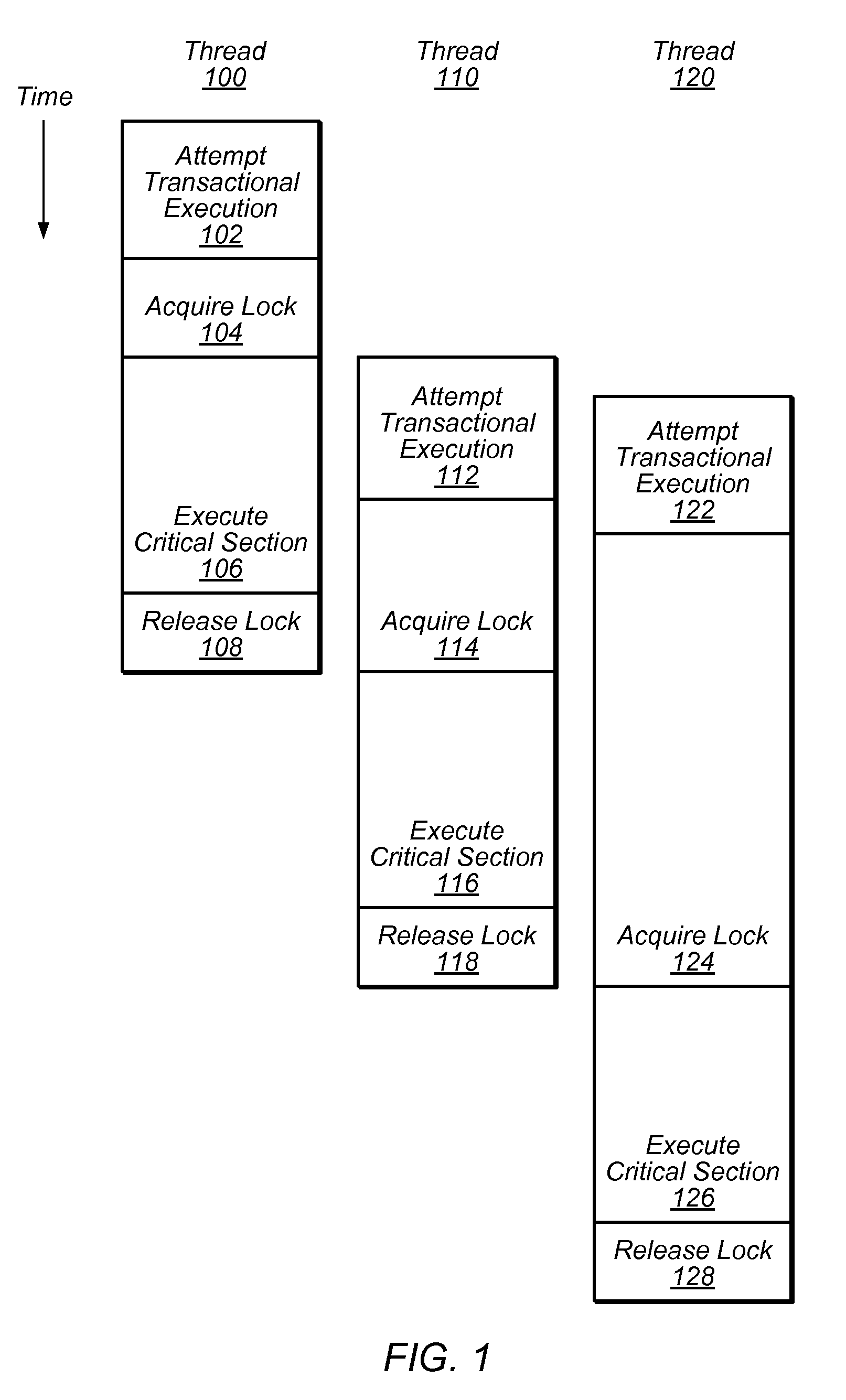

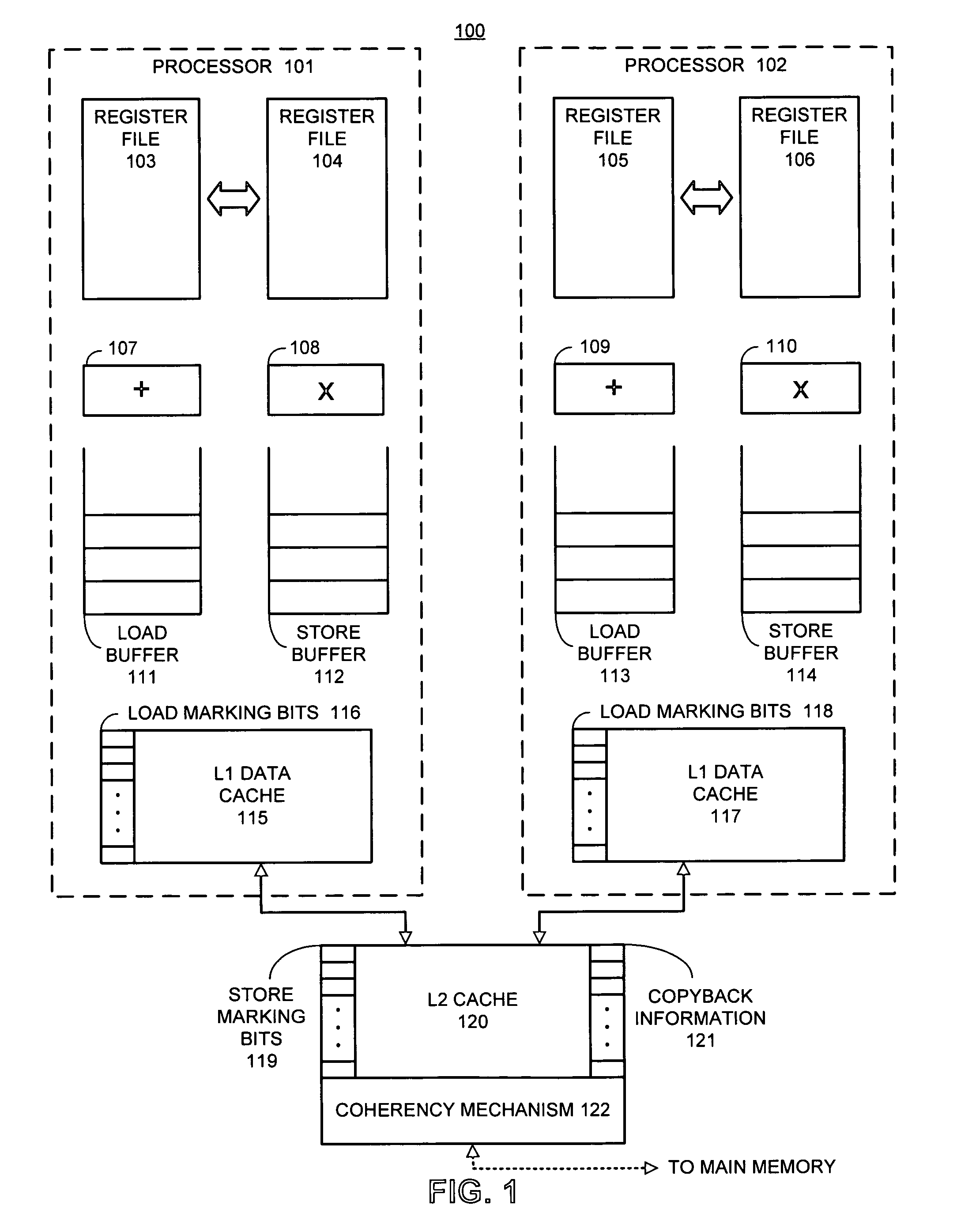

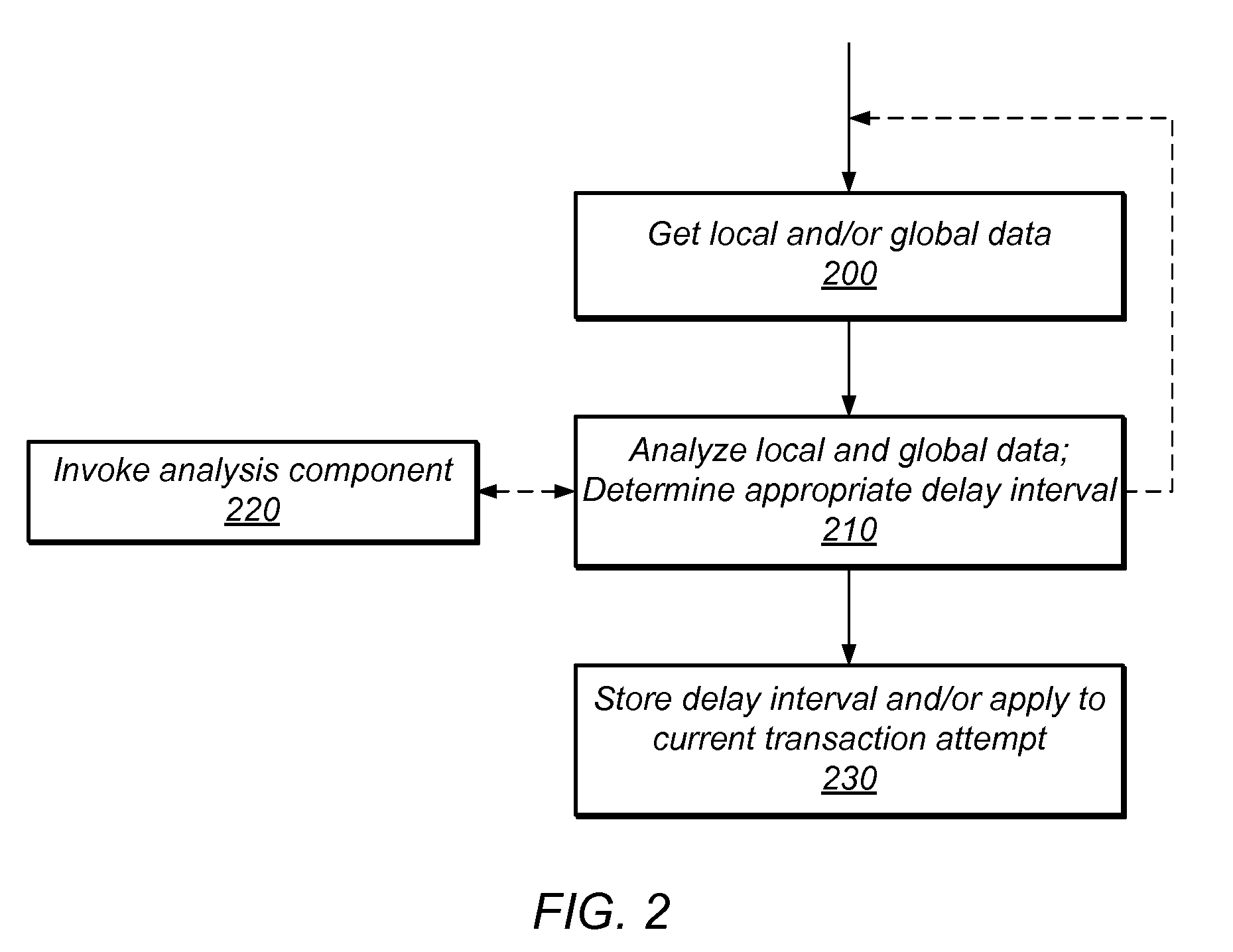

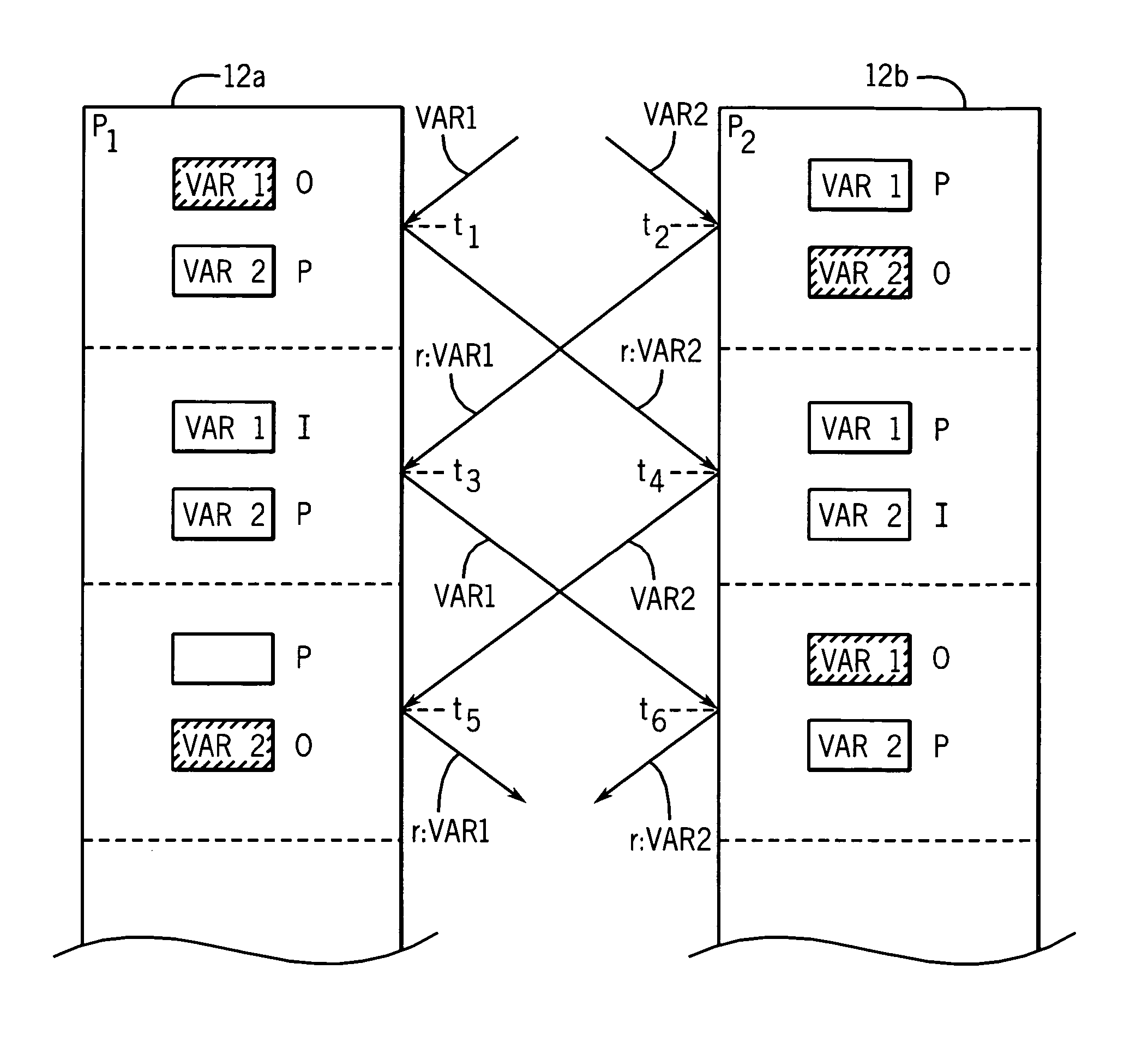

Concurrent execution of critical sections by eliding ownership of locks

Active | US7120762B2 | Ensure correct execution | Efficient execution | Memory architecture accessing/allocation | Program synchronisation | Speculative execution | Critical section

Critical sections of multi-threaded programs, normally protected by locks providing access by only one thread, are speculatively executed concurrently by multiple threads with elision of the lock acquisition and release. Upon completion of the speculative execution without actual conflict, as may be identified using standard cache protocols, the speculative execution is committed; otherwise, the speculative execution is squashed. Speculative execution with elision of the lock acquisition allows a greater degree of parallel execution in multi-threaded programs with aggressive lock usage.

Owner:WISCONSIN ALUMNI RES FOUND

System and Method for Reducing Serialization in Transactional Memory Using Gang Release of Blocked Threads

Active | US20100138836A1 | Reduce the impact | Program synchronisation | Memory systems | Critical section | Parallel computing

Transactional Lock Elision (TLE) may allow multiple threads to concurrently execute critical sections as speculative transactions. Transactions may abort for various reasons. To avoid starvation, transactions may revert to execution using mutual exclusion when transactional execution fails. Because threads may revert to mutual exclusion in response to the mutual exclusion of other threads, a positive feedback loop may form in times of high congestion, causing a “lemming effect”. To regain the benefits of concurrent transactional execution, the system may allow one or more threads awaiting a given lock to be released from the wait queue and instead attempt transactional execution. A gang release may allow a subset of waiting threads to be released simultaneously. The subset may be chosen dependent on the number of waiting threads, historical abort relationships between threads, analysis of transactions of each thread, sensitivity of each thread to abort, and/or other thread-local or global criteria.

Owner:SUN MICROSYSTEMS INC

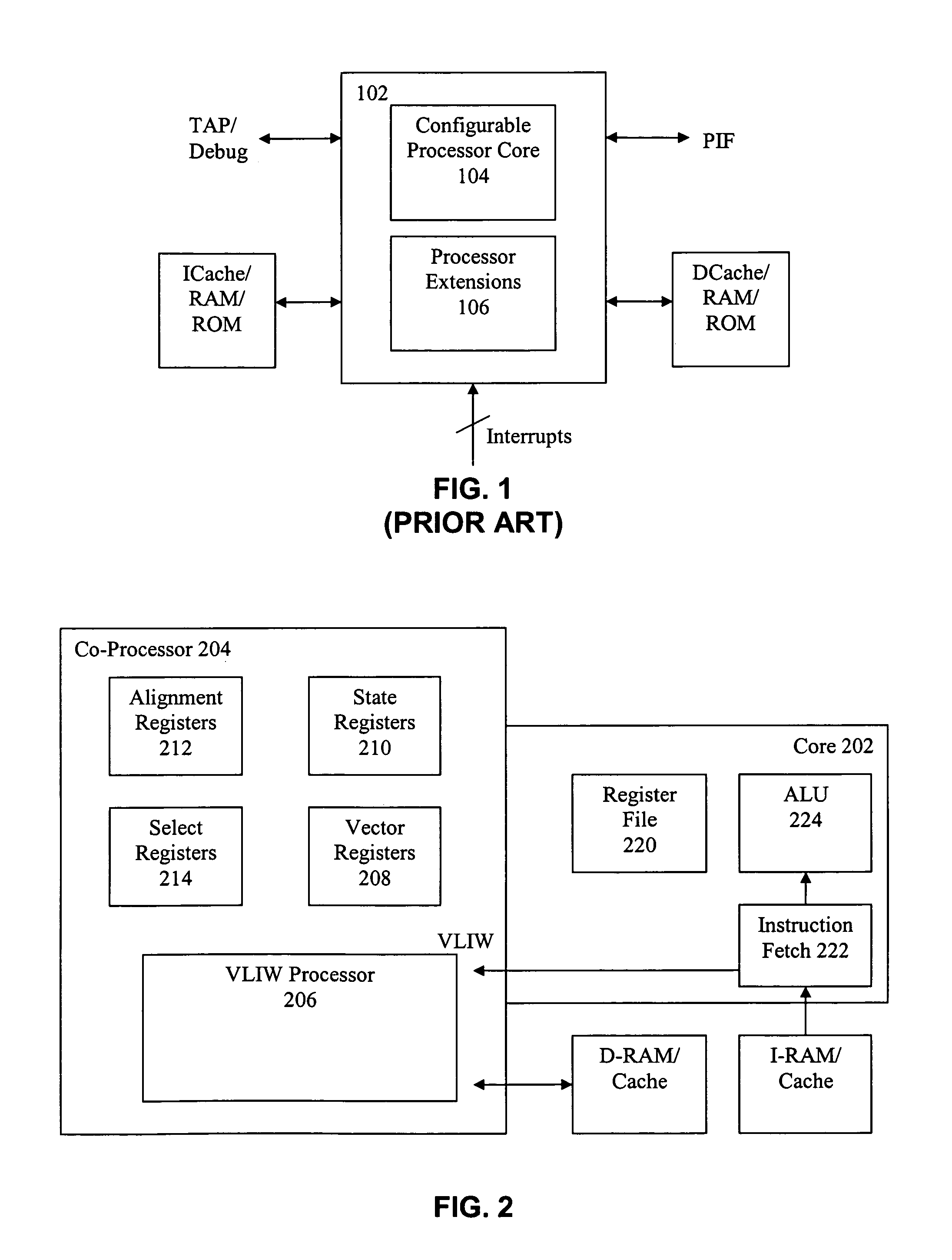

Vector co-processor for configurable and extensible processor architecture

Active | US7376812B1 | High coding density | Improve performance | Instruction analysis | General purpose stored program computer | Digital signal processing | Critical section

A processor can achieve high code density while allowing higher performance than existing architectures, particularly for Digital Signal Processing (DSP) applications. In accordance with one aspect, the processor supports three possible instruction sizes while maintaining the simplicity of programming and allowing efficient physical implementation. Most of the application code can be encoded using two sets of narrow size instructions to achieve high code density. Adding a third (and larger, i.e., VLIW) instruction size allows the architecture to encode multiple operations per instruction for the performance-critical section of the code. Further, each operation of the VLIW format instruction can optionally be a SIMD operation that operates upon vector data. A scheme for the optimal utilization (highest achievable performance for the given amount of hardware) of multiply-accumulate (MAC) hardware is also provided.

Owner:TENSILICA

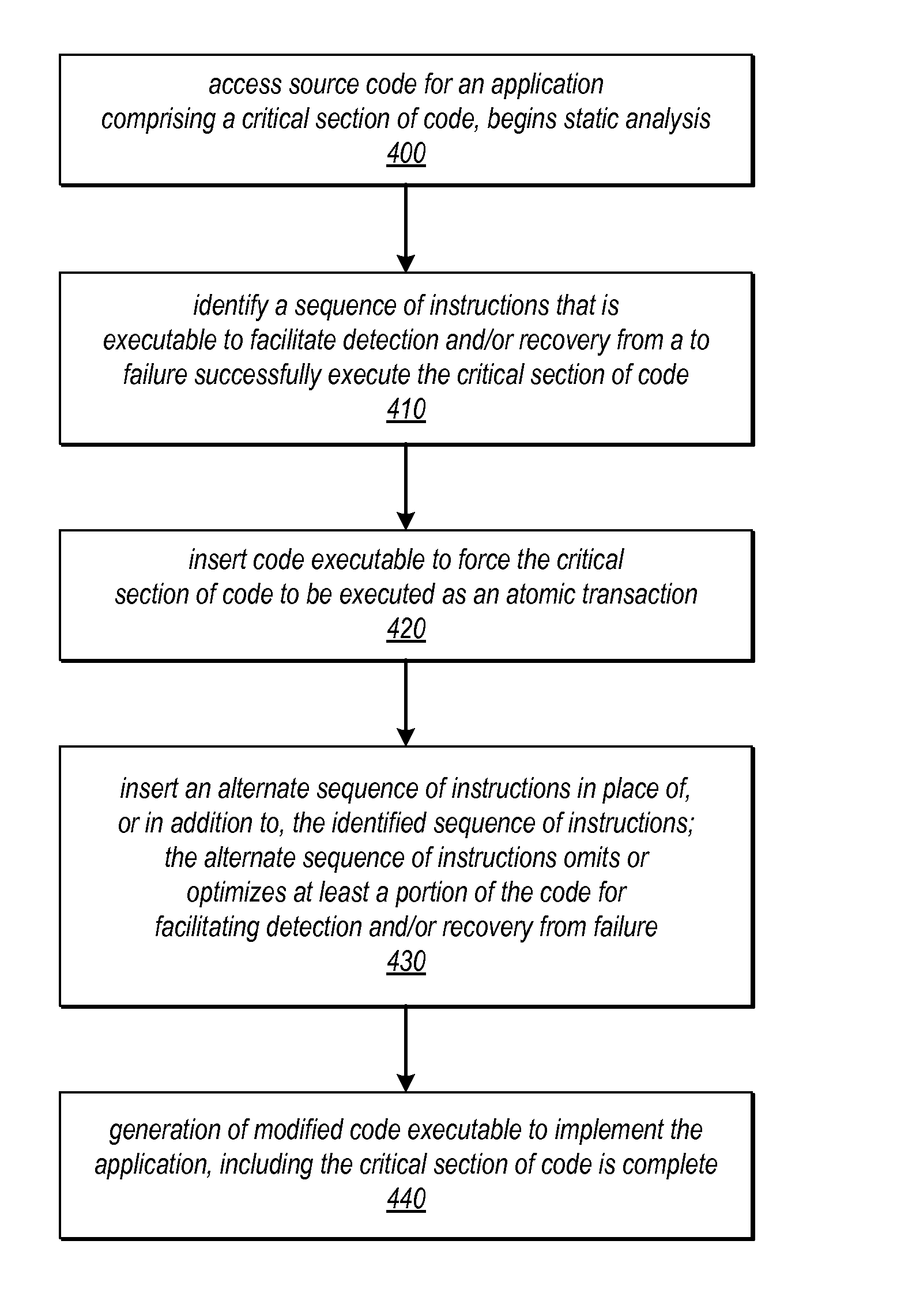

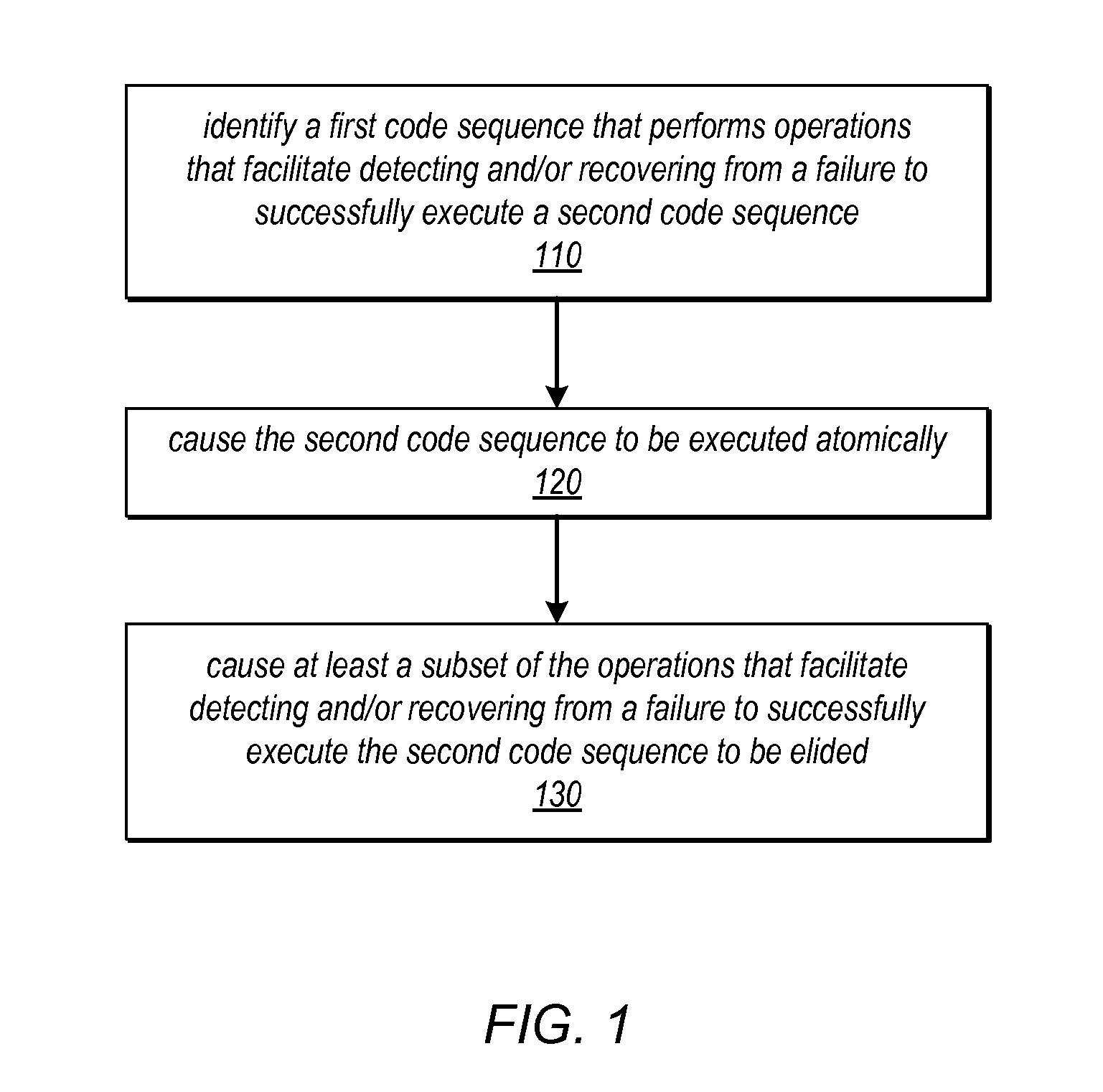

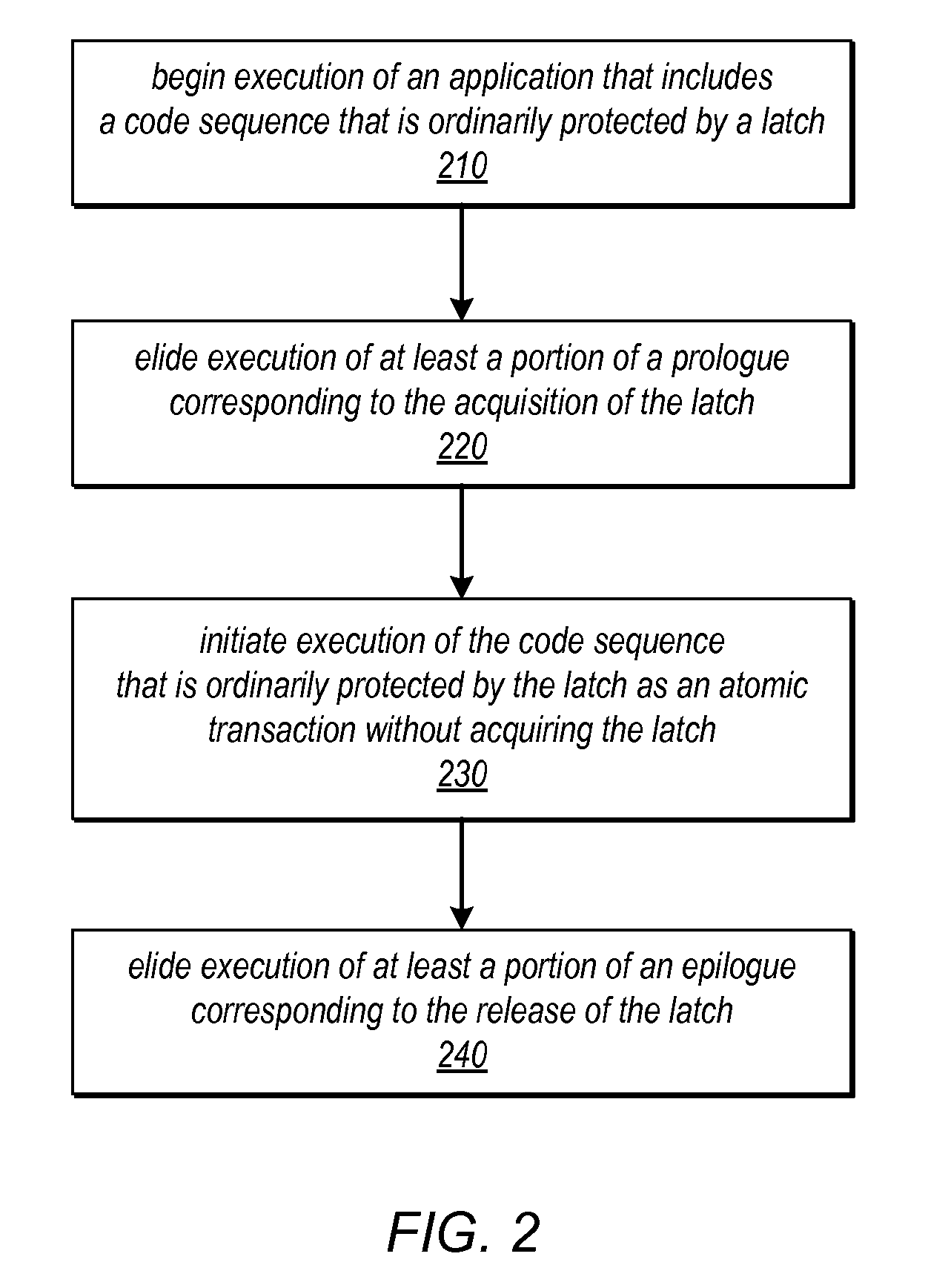

System and Method for Optimizing a Code Section by Forcing a Code Section to be Executed Atomically

Active | US20120254846A1 | Easy to detect | Optimization | Software engineering | Memory systems | Parallel computing | Critical section

Systems and methods for optimizing code may use transactional memory to optimize one code section by forcing another code section to execute atomically. Application source code may be analyzed to identify instructions in one code section that only need to be executed if there exists the possibility that another code section (e.g., a critical section) could be partially executed or that its results could be affected by interference. In response to identifying such instructions, alternate code may be generated that forces the critical section to be executed as an atomic transaction, e.g., using best-effort hardware transactional memory. This alternate code may replace the original code or may be included in an alternate execution path that can be conditionally selected for execution at runtime. The alternate code may elide the identified instructions (which are rendered unnecessary by the transaction) by removing them, or by including them in the alternate execution path.

Owner:ORACLE INT CORP

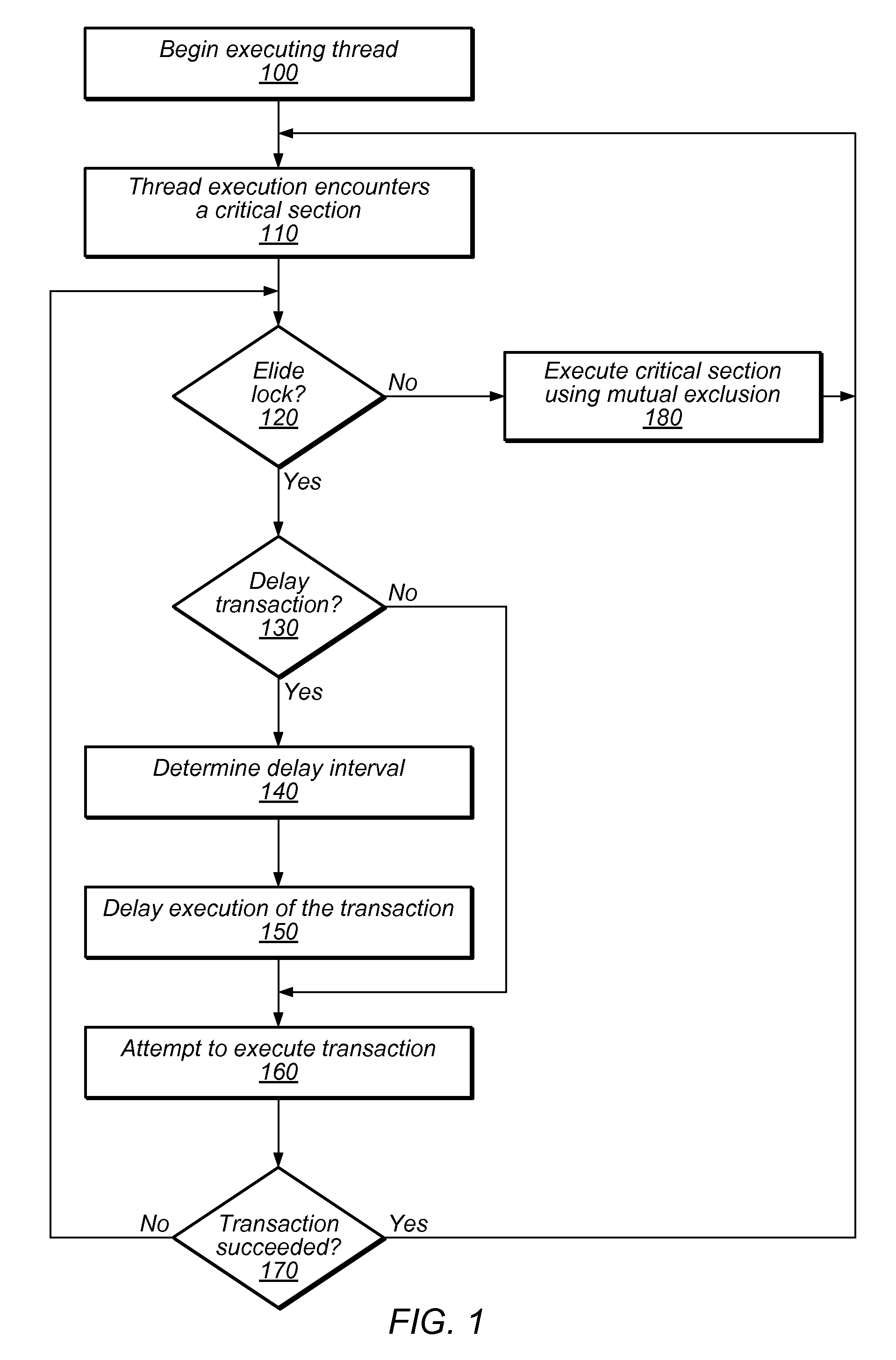

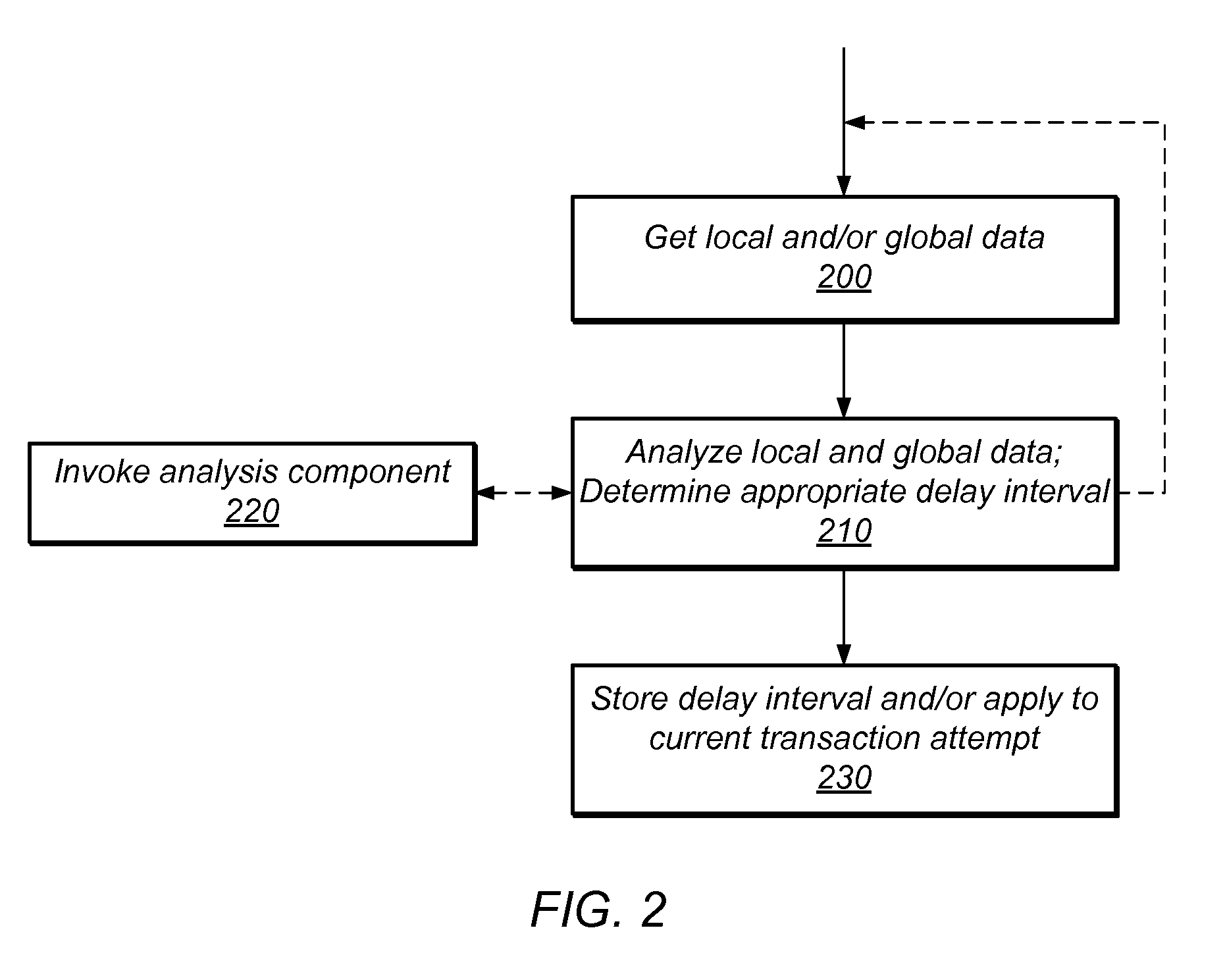

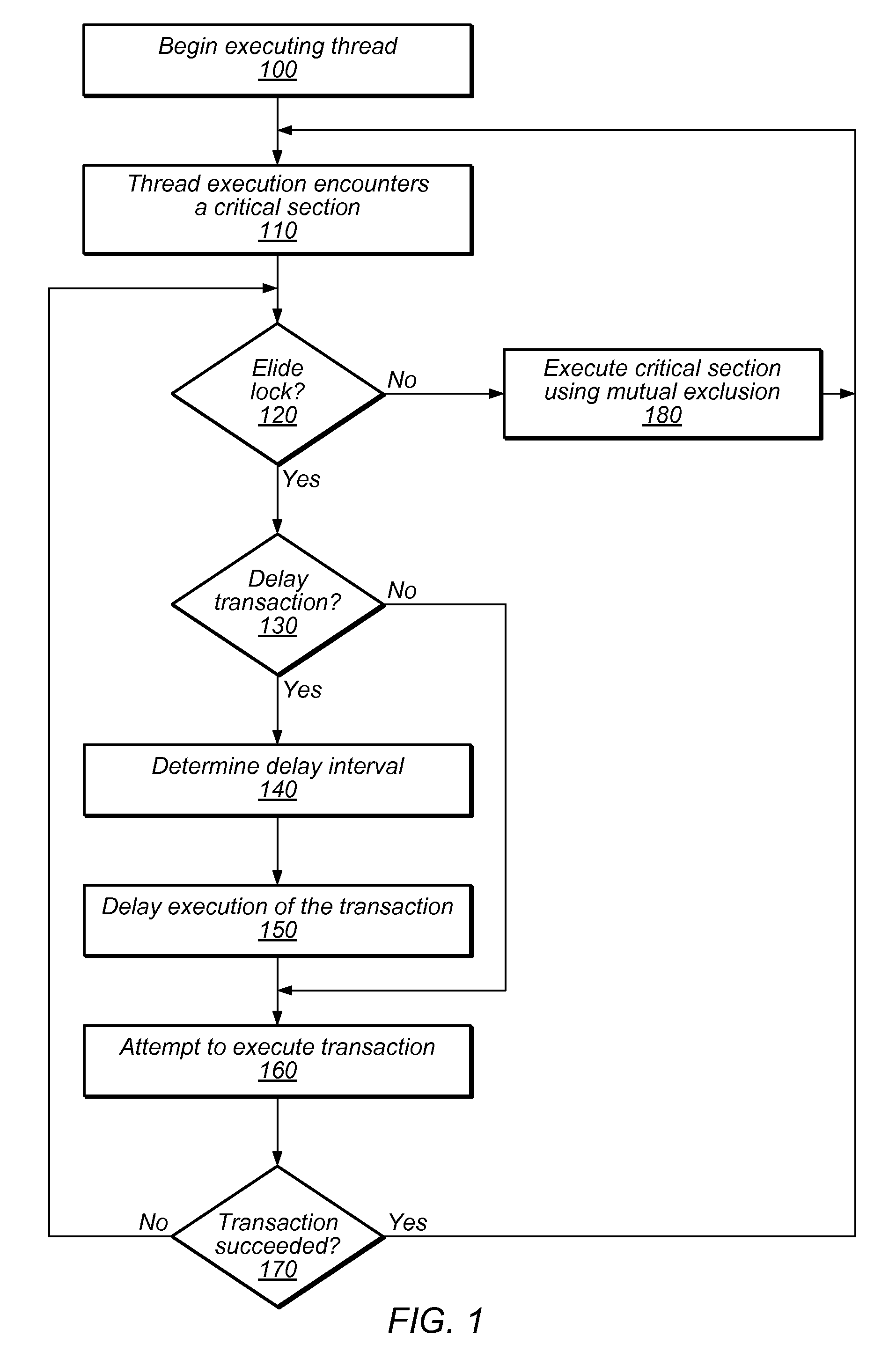

System and Method for Managing Contention in Transactional Memory Using Global Execution Data

Active | US20100138841A1 | Improve system performance | Memory systems | Transaction processing | Resource consumption | Critical section

Transactional Lock Elision (TLE) may allow threads in a multi-threaded system to concurrently execute critical sections as speculative transactions. Such speculative transactions may abort due to contention among threads. Systems and methods for managing contention among threads may increase overall performance by considering both local and global execution data in reducing, resolving, and/or mitigating such contention. Global data may include aggregated and/or derived data representing thread-local data of remote thread(s), including transactional abort history, abort causal history, resource consumption history, performance history, synchronization history, and/or transactional delay history. Local and/or global data may be used in determining the mode by which critical sections are executed, including TLE and mutual exclusion, and/or to inform concurrency throttling mechanisms. Local and/or global data may also be used in determining concurrency throttling parameters (e.g., delay intervals) used in delaying a thread when attempting to execute a transaction and/or when retrying a previously aborted transaction.

Owner:ORACLE INT CORP
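The mode selection and delay intervals mentioned in the abstract above can be illustrated with a simple retry policy. This is a hedged sketch that uses only a thread-local abort count; the exponential backoff formula, the `max_aborts` limit, and the names `backoff_delay` and `execute` are assumptions for illustration and do not come from the patent.

```python
import threading
import time


def backoff_delay(aborts: int, base: float = 0.001, cap: float = 0.05) -> float:
    """Delay interval (seconds) that grows with the number of aborts, up to `cap`."""
    return min(cap, base * (2 ** aborts))


def execute(try_speculative, run_exclusive, lock, max_aborts=3, sleep=time.sleep):
    """Try the speculative path first; fall back to mutual exclusion.

    try_speculative: callable returning True on commit, False on abort.
    run_exclusive:   callable that runs the critical section while `lock` is held.
    Returns (mode_used, abort_count).
    """
    aborts = 0
    while aborts < max_aborts:
        if try_speculative():
            return "speculative", aborts
        aborts += 1
        sleep(backoff_delay(aborts))   # throttle before retrying
    with lock:                         # too many aborts: use the lock instead
        run_exclusive()
    return "mutex", aborts
```

A policy that also consulted global data, as the abstract describes, would replace the fixed `max_aborts` threshold with a decision based on statistics aggregated across threads.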

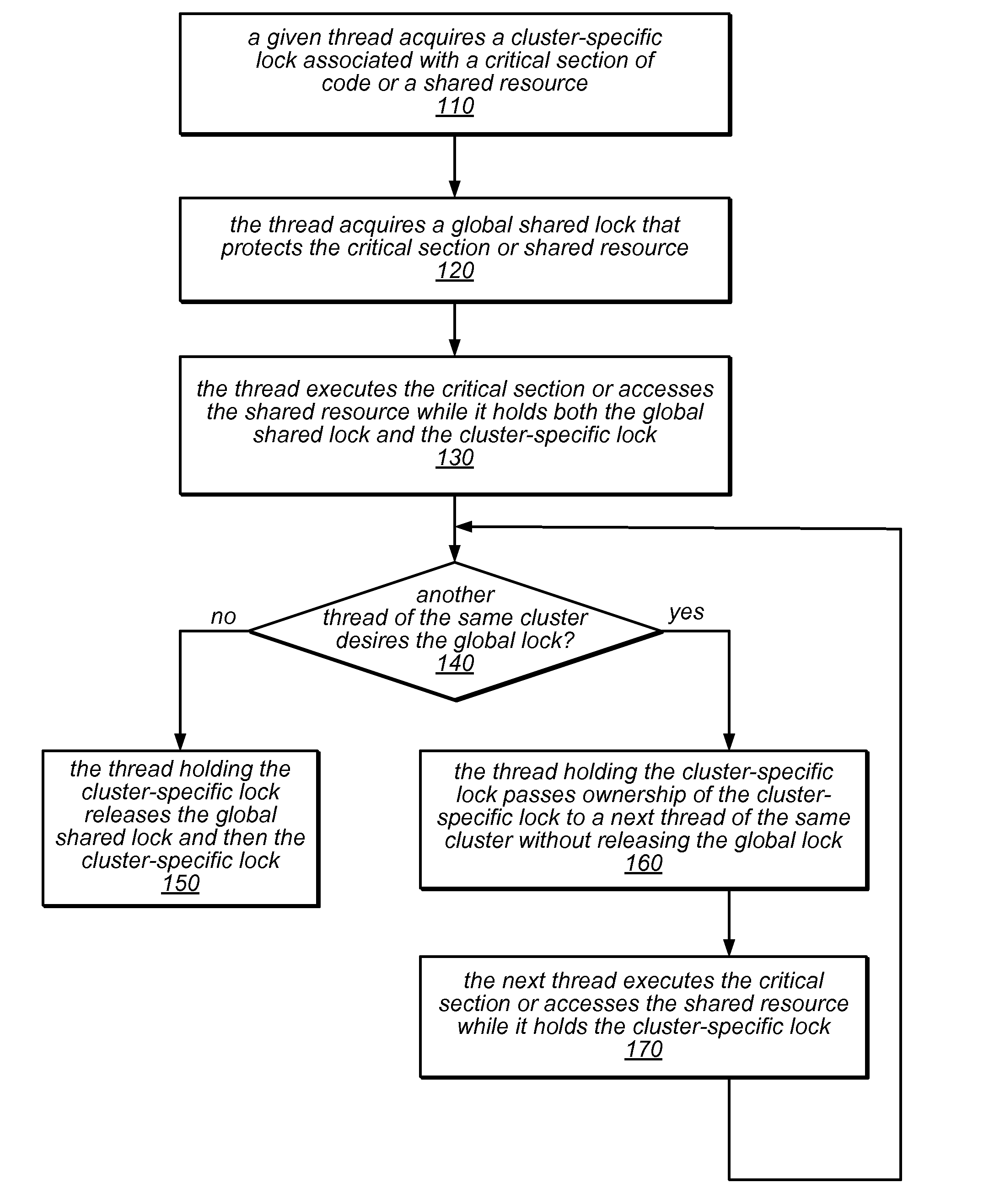

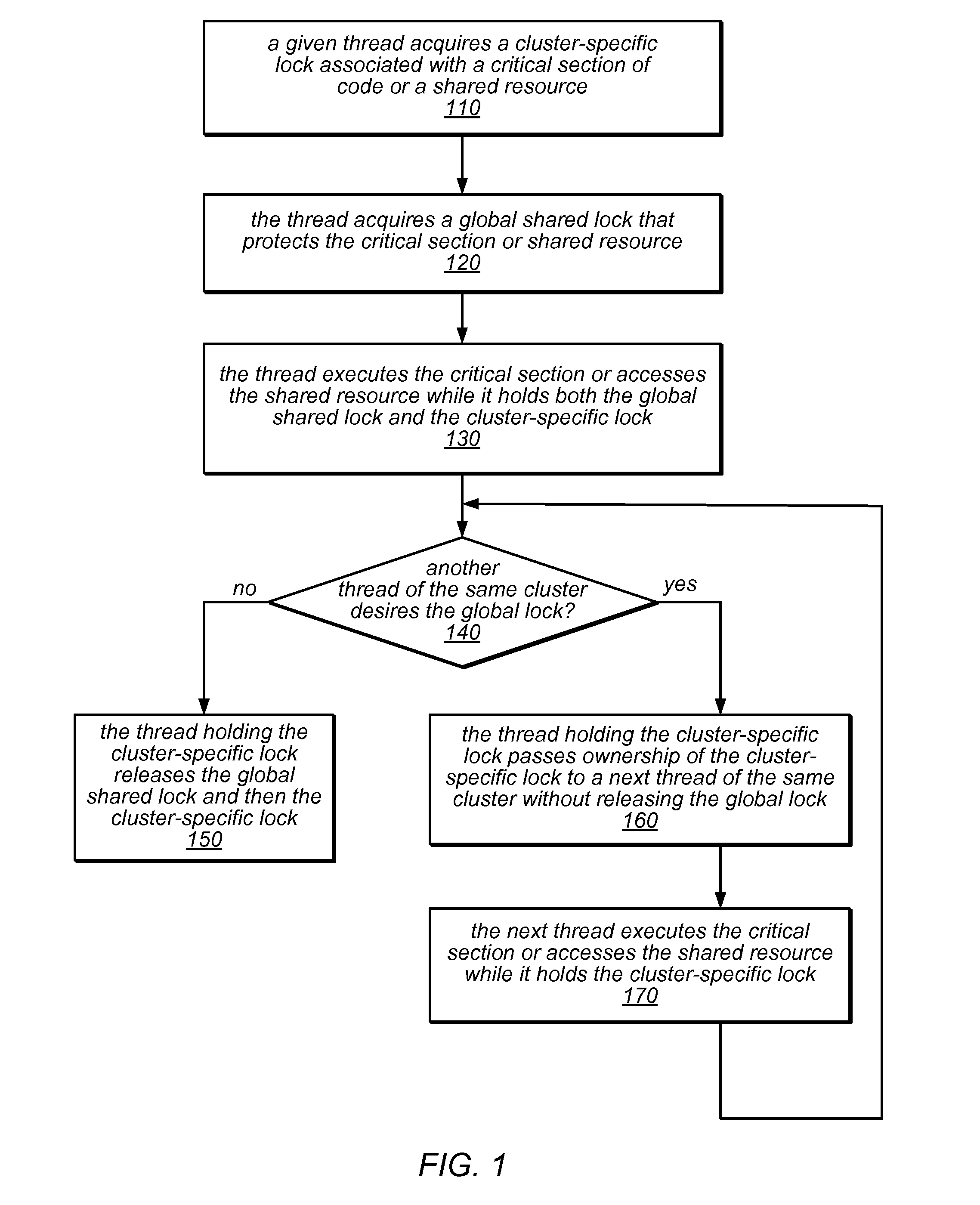

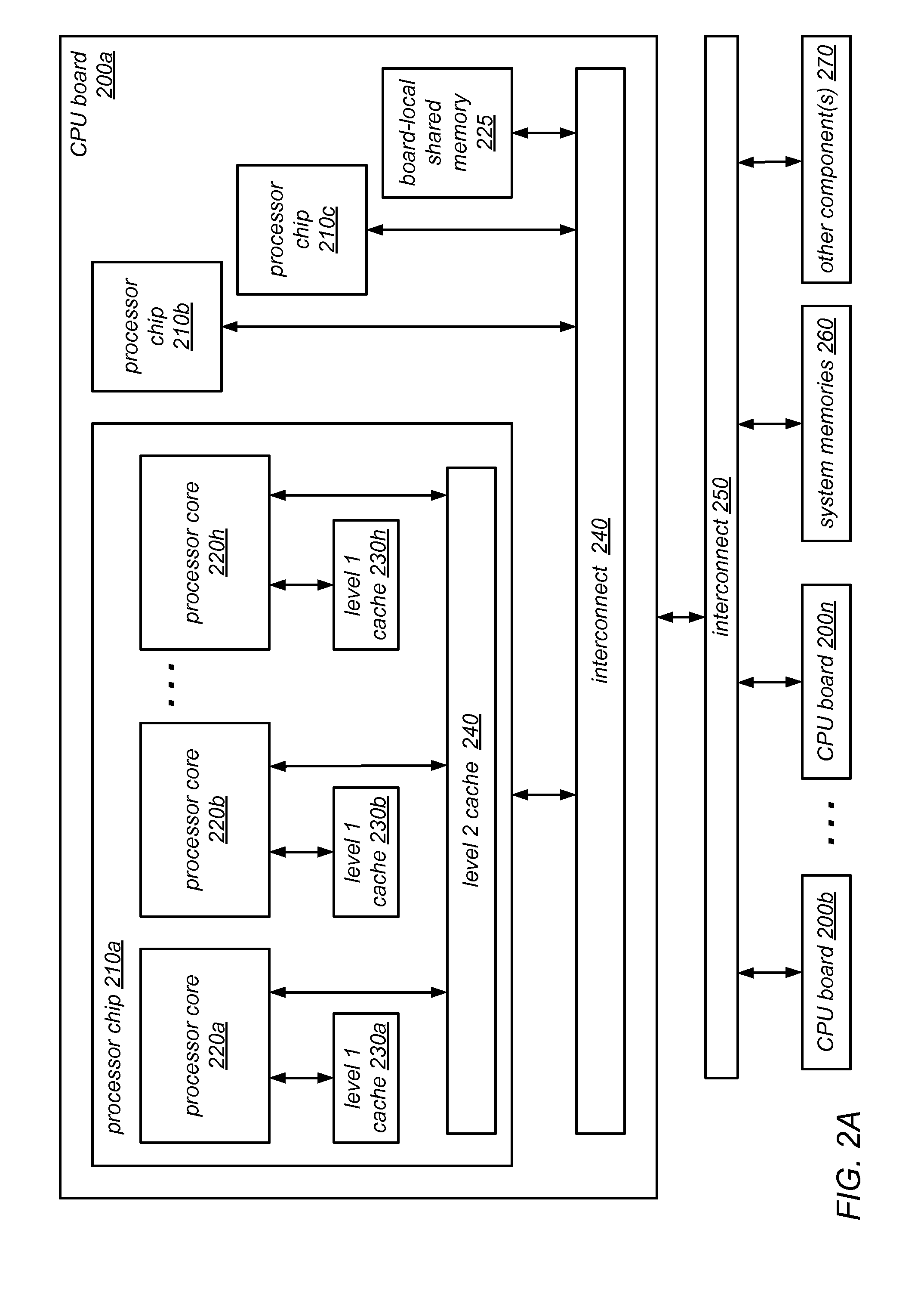

System and Method for Implementing NUMA-Aware Reader-Writer Locks

Active | US20130290967A1 | Reduce probability | Reduce traffic problems | Multiprogramming arrangements | Memory systems | Critical section | Parallel computing

NUMA-aware reader-writer locks may leverage lock cohorting techniques to band together writer requests from a single NUMA node. The locks may relax the order in which the lock schedules the execution of critical sections of code by reader threads and writer threads, allowing lock ownership to remain resident on a single NUMA node for long periods, while also taking advantage of parallelism between reader threads. Threads may contend on node-level structures to get permission to acquire a globally shared reader-writer lock. Writer threads may follow a lock cohorting strategy of passing ownership of the lock in write mode from one thread to a cohort writer thread without releasing the shared lock, while reader threads from multiple NUMA nodes may simultaneously acquire the shared lock in read mode. The reader-writer lock may follow a writer-preference policy, a reader-preference policy or a hybrid policy.

Owner:ORACLE INT CORP
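To make the writer-preference policy named in the abstract above concrete, here is a minimal single-node reader-writer lock. It is not NUMA-aware and does not implement lock cohorting; the class `WriterPreferenceRWLock` and its method names are assumptions chosen for this sketch.

```python
import threading


class WriterPreferenceRWLock:
    """Many concurrent readers or one writer; waiting writers block new readers."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._readers = 0            # readers currently inside
        self._writer_active = False  # True while a writer holds the lock
        self._writers_waiting = 0    # writers queued for the lock

    def acquire_read(self) -> None:
        with self._cond:
            # Writer preference: new readers wait while any writer is queued.
            while self._writer_active or self._writers_waiting > 0:
                self._cond.wait()
            self._readers += 1

    def release_read(self) -> None:
        with self._cond:
            self._readers -= 1
            if self._readers == 0:
                self._cond.notify_all()

    def acquire_write(self) -> None:
        with self._cond:
            self._writers_waiting += 1
            while self._writer_active or self._readers > 0:
                self._cond.wait()
            self._writers_waiting -= 1
            self._writer_active = True

    def release_write(self) -> None:
        with self._cond:
            self._writer_active = False
            self._cond.notify_all()
```

A reader-preference variant would drop the `_writers_waiting` check in `acquire_read`, which improves read throughput but can starve writers.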

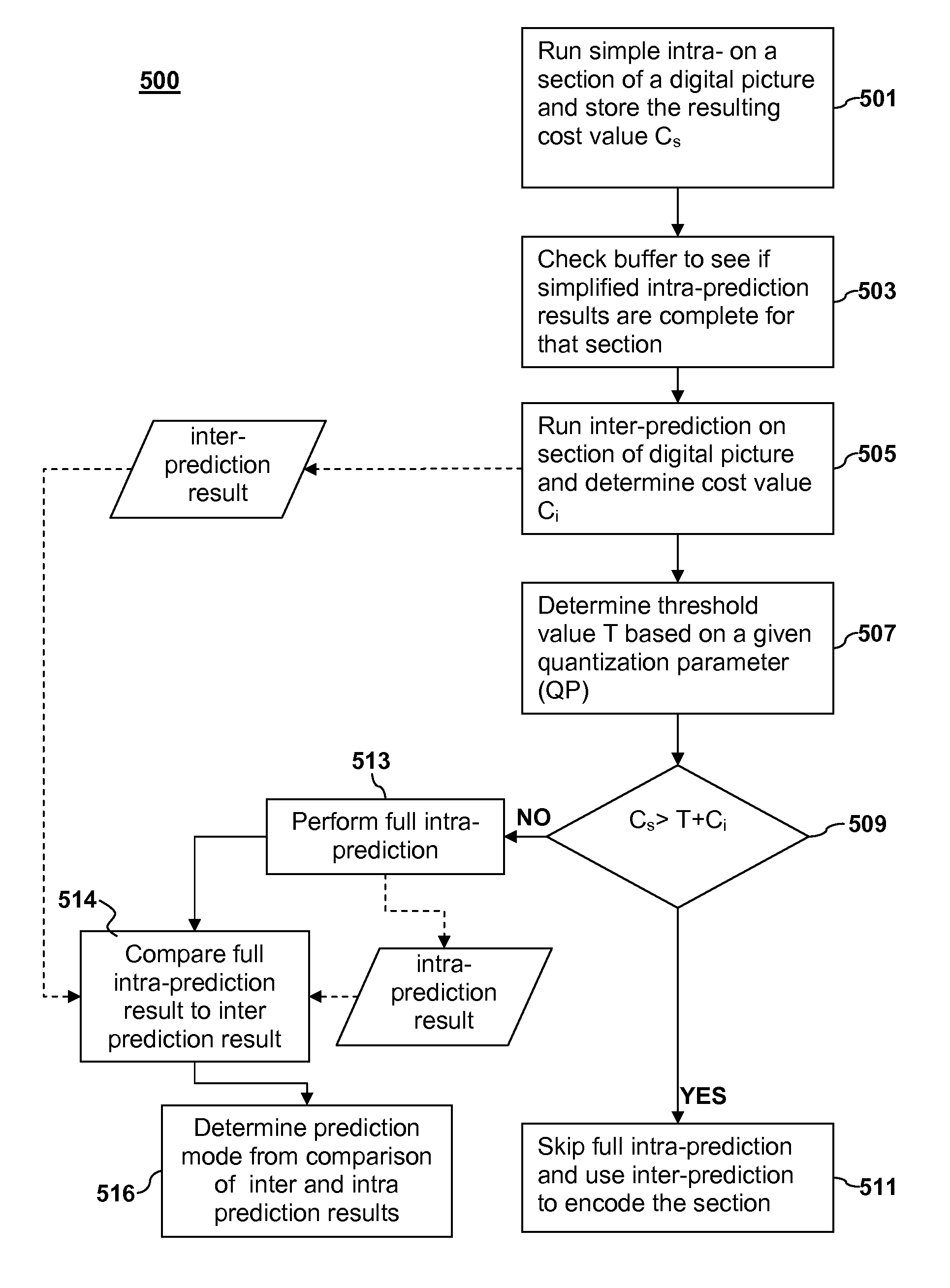

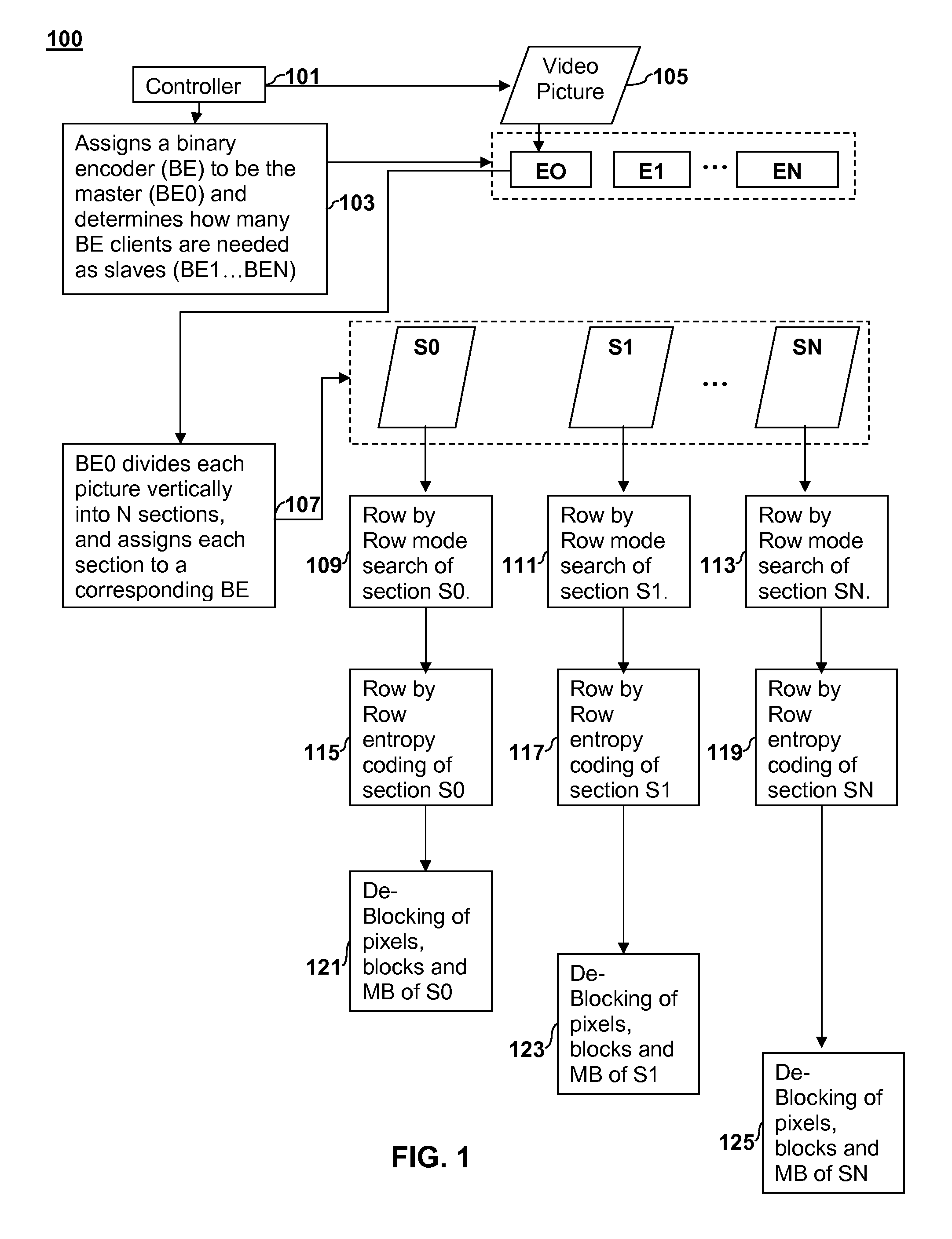

Parallel digital picture encoding

Active | US20110051811A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Critical section | Digital pictures

An apparatus and method for parallel digital picture encoding are disclosed. A digital picture is partitioned into two or more vertical sections. An encoder unit is selected to serve as a master and one or more encoder units are selected to serve as slaves. The total number of encoder units used equals the number of vertical sections. A mode search is performed on the two or more vertical sections on a row-by-row basis. Entropy coding is performed on the two or more vertical sections on a row-by-row basis. The entropy coding of each vertical section is performed in parallel such that each encoder unit performs entropy coding on its respective vertical section. De-blocking is performed on the two or more vertical sections in parallel on a row-by-row basis.

Owner:SONY COMPUTER ENTERTAINMENT INC

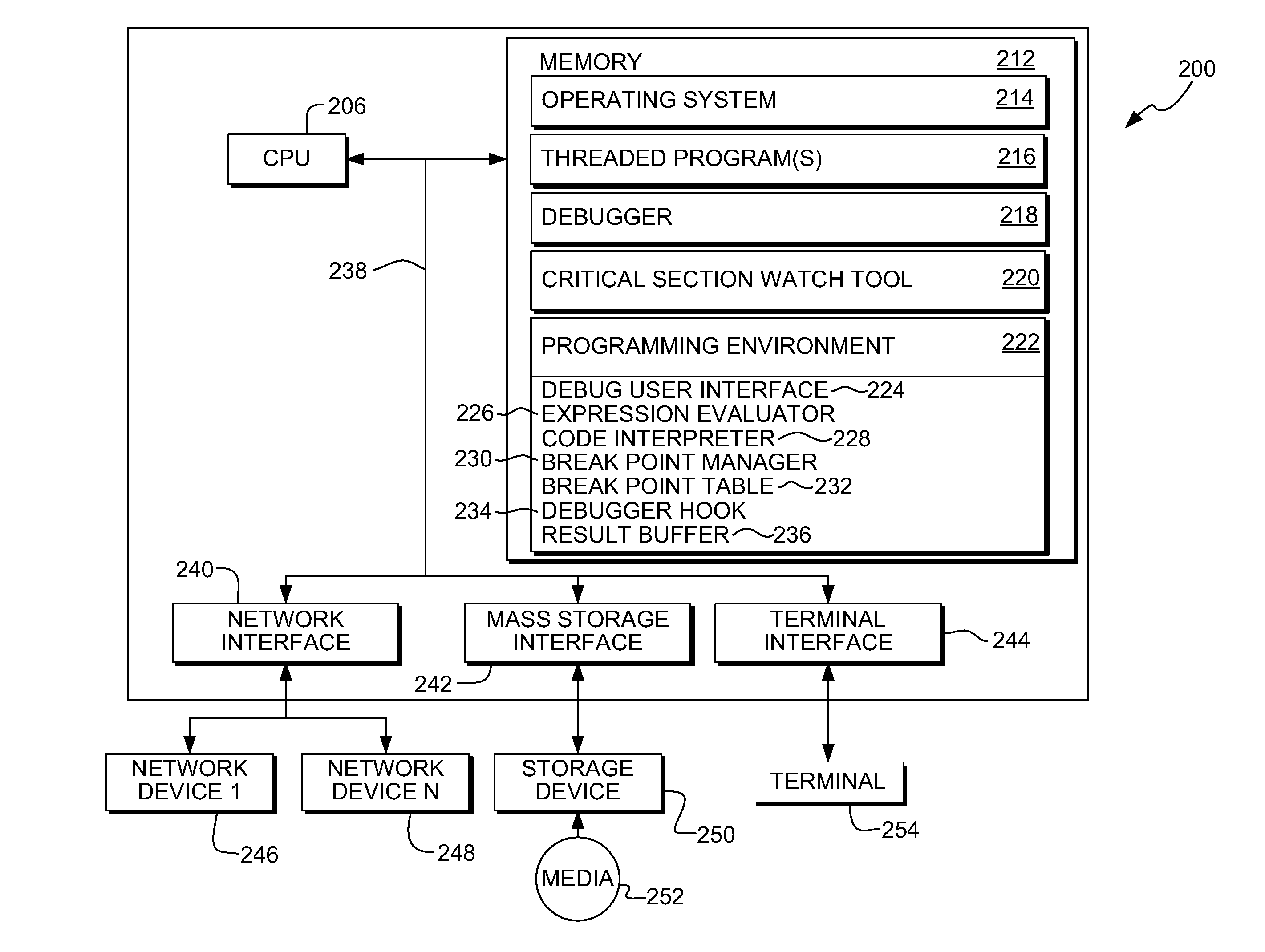

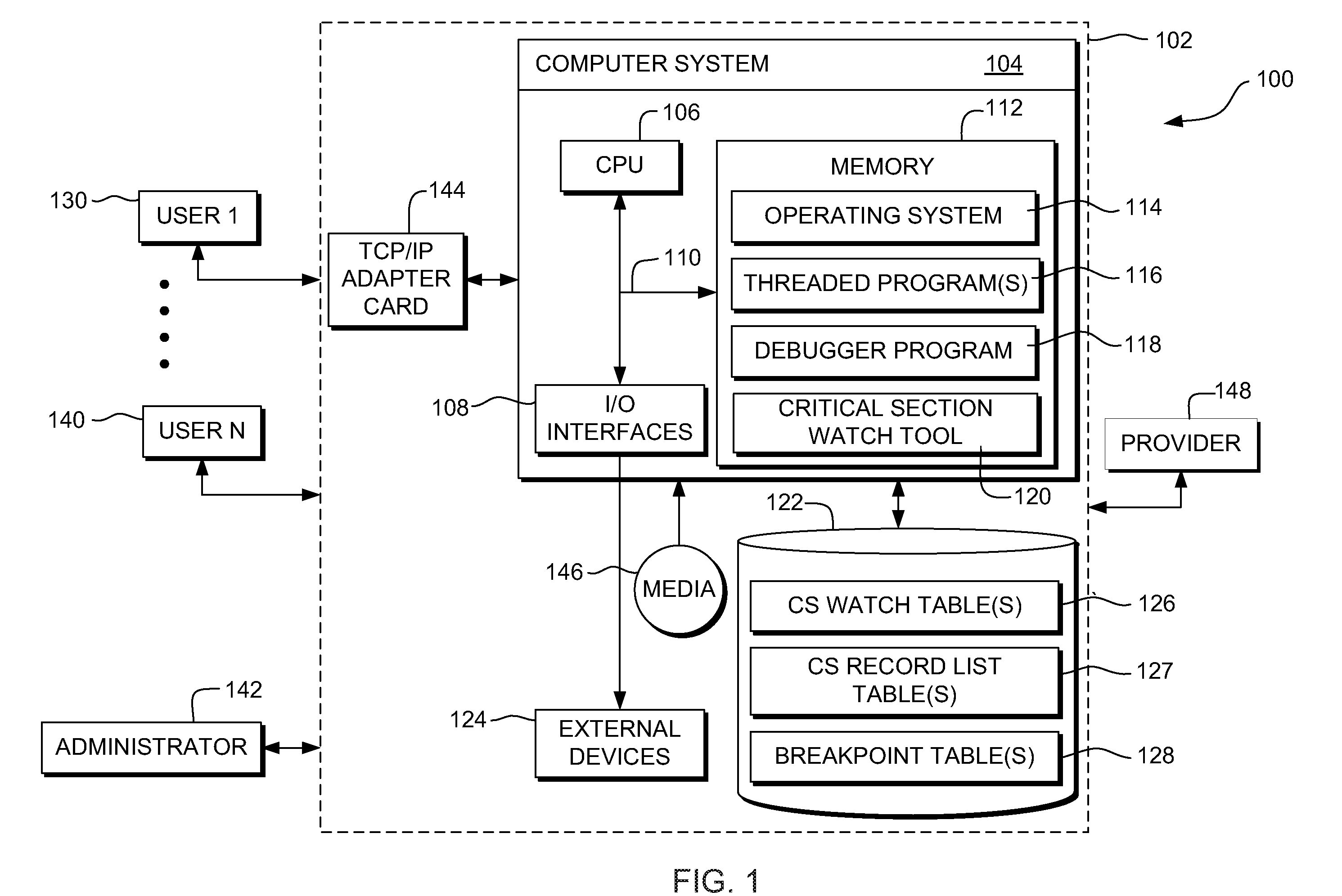

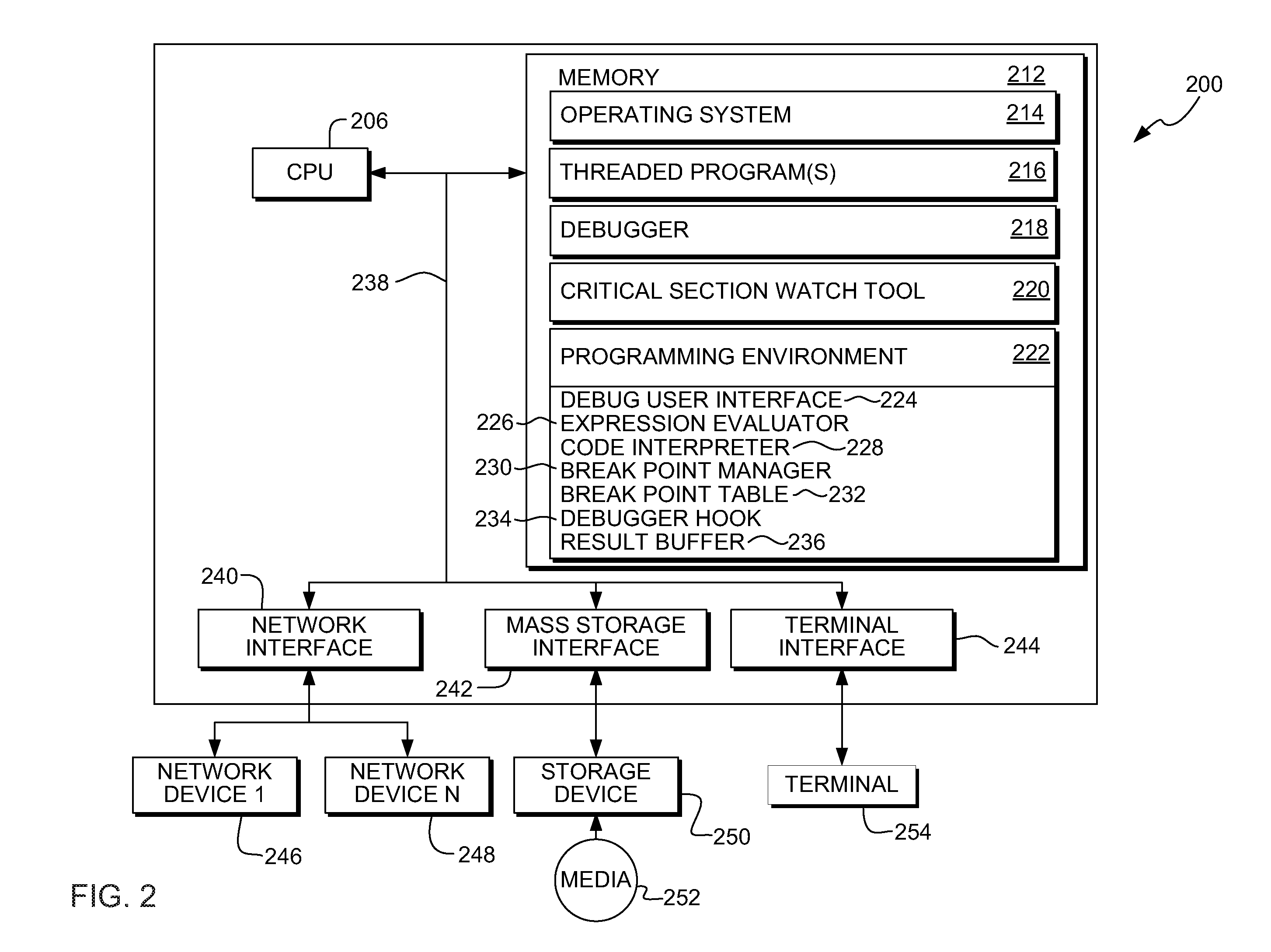

System, method and program product for monitoring changes to data within a critical section of a threaded program

Inactive | US20090320001A1 | Error detection/correction | Specific program execution arrangements | Critical section | Critical zone

A method, system and program product for monitoring changes to a variable within a critical section of a threaded program. The method includes establishing, using a debugging tool, a watch for monitoring changes to a variable that occur outside of the critical section and executing a portion of the threaded program with a debugger. Further, the method includes determining, using the tool, whether or not a thread has executed a start breakpoint set for the critical section, if the thread has executed the start breakpoint set, determining whether or not the thread has executed an end breakpoint set for the critical section, and if the thread has not executed the end breakpoint set, displaying any watches triggered responsive to updates to the variable that occur outside of the critical section, such that, only updates to the variable that occur outside of the critical section will trigger the displaying.

Owner:IBM CORP

Avoiding locks by transactionally executing critical sections

Active | US7398355B1 | Lower performance requirements | Guaranteed to work | Digital computer details | Memory systems | Data access | Parallel computing

One embodiment of the present invention provides a system that avoids locks by transactionally executing critical sections. During operation, the system receives a program which includes one or more critical sections which are protected by locks. Next, the system modifies the program so that the critical sections which are protected by locks are executed transactionally without acquiring locks associated with the critical sections. More specifically, the program is modified so that: (1) during transactional execution of a critical section, the program first determines if a lock associated with the critical section is held by another process and if so aborts the transactional execution; (2) if the transactional execution of the critical section completes without encountering an interfering data access from another process, the program commits changes made during the transactional execution and optionally resumes normal non-transactional execution of the program past the critical section; and (3) if an interfering data access from another process is encountered during transactional execution of the critical section, the program discards changes made during the transactional execution, and attempts to re-execute the critical section zero or more times.

Owner:SUN MICROSYSTEMS INC

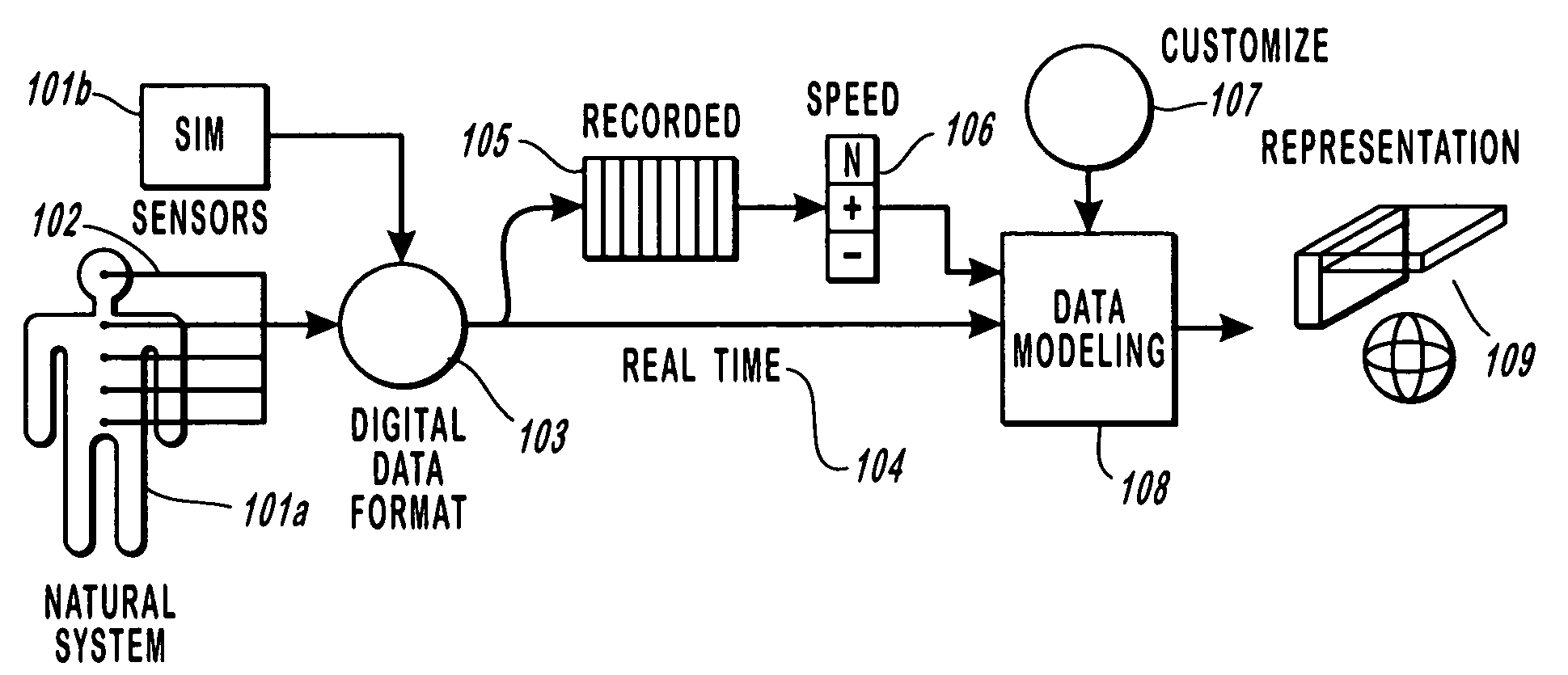

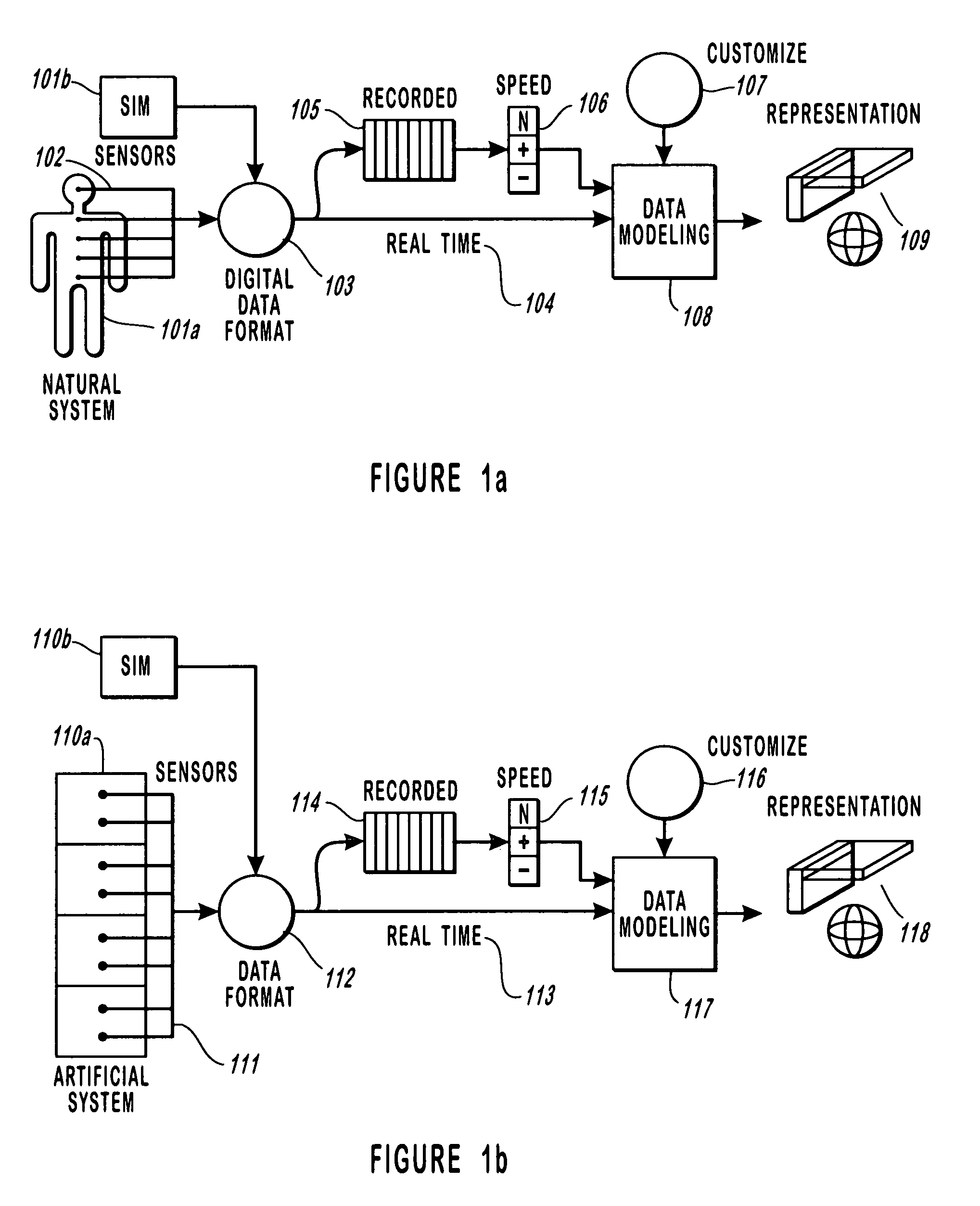

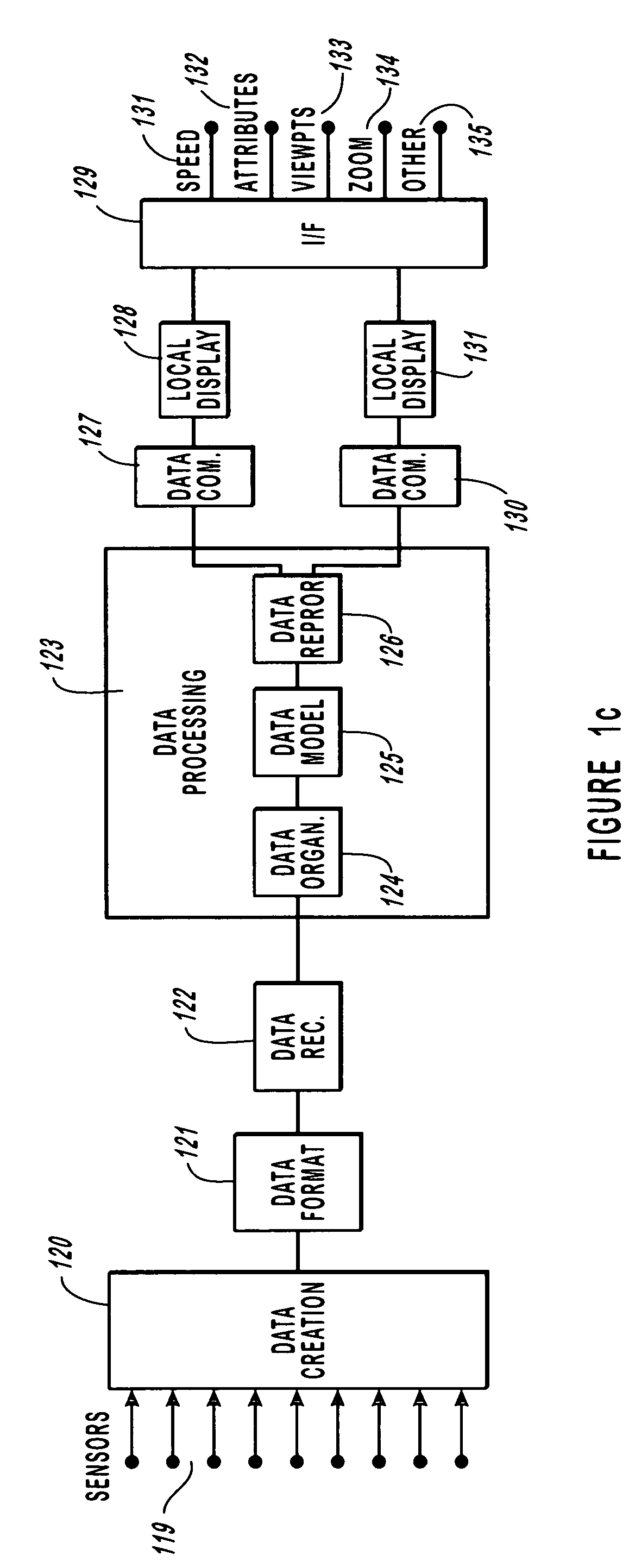

Method and apparatus for monitoring dynamic cardiovascular function using n-dimensional representatives of critical functions

Inactive | US7654966B2 | Facilitates rapid accurate analysis | Rapid assessment | Respiratory organ evaluation | Sensors | Ambulatory system | Critical section

A method, system, apparatus and device for the monitoring, diagnosis and evaluation of the state of a dynamic pulmonary system is disclosed. This method and system provide the processing means for receiving sensed and/or simulated data, converting such data into a displayable object format and displaying such objects in a manner such that the interrelationships between the respective variables can be correlated and identified by a user. This invention provides for the rapid cognitive grasp of the overall state of a pulmonary critical function with respect to a dynamic system.

Owner:UNIV OF UTAH RES FOUND

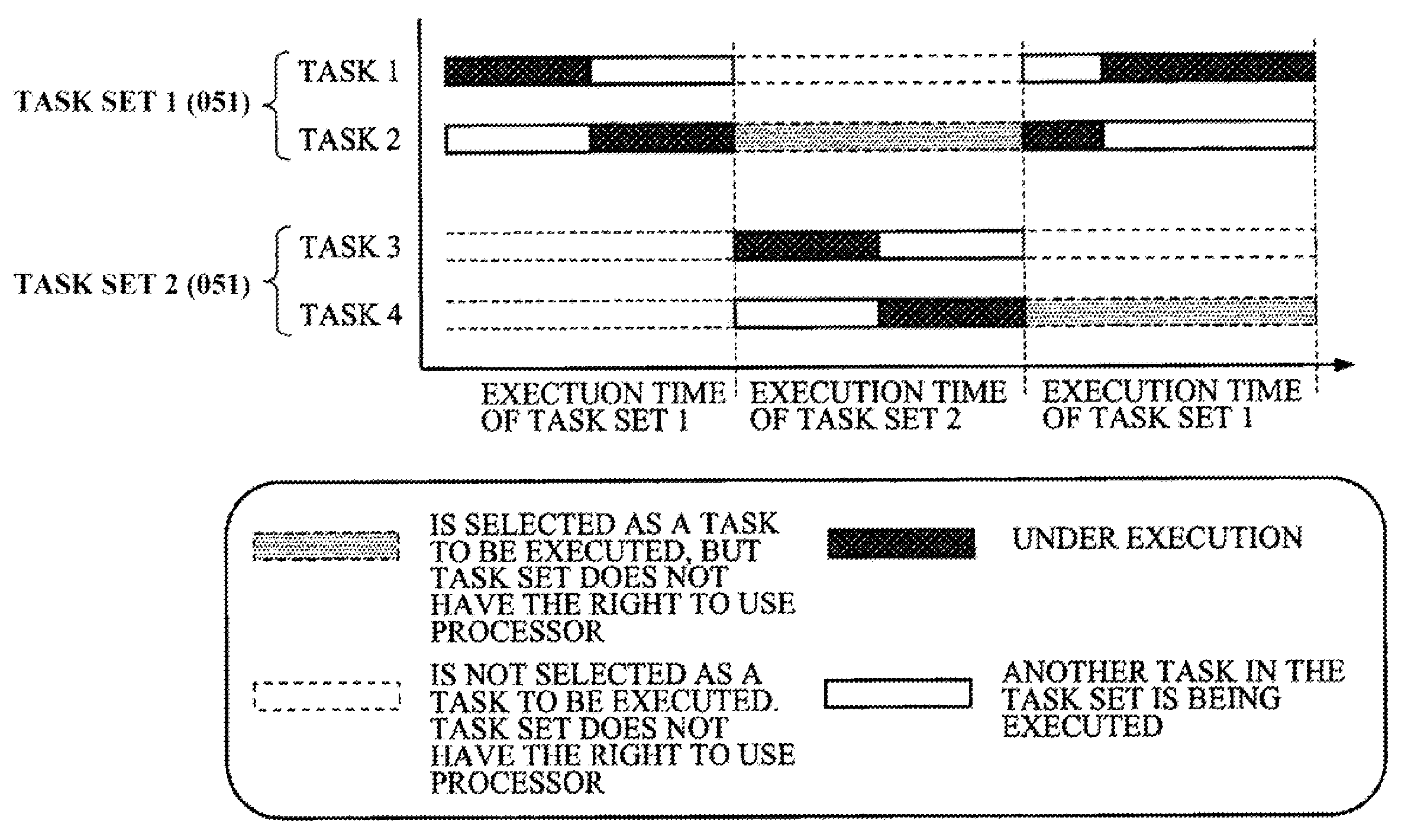

Processor system, task control method on computer system, computer program

Inactive | US7647594B2 | Simple method | Program initiation/switching | Resource allocation | Emergency procedure | Computer architecture

A mechanism for recording a timing in which a high urgency process is started is provided, and upon entry to a critical section in the middle of a low urgency process, by referencing the record, it is inspected whether a high urgency process will be started during execution of the critical section. If it will not be started, the critical section is entered, and if it will be started, control is exerted so that entry to the critical section is postponed until the high urgency process is completed. Exclusive access control in a critical section can be performed suitably under conditions where a plurality of task execution environments exist.

Owner:SONY CORP

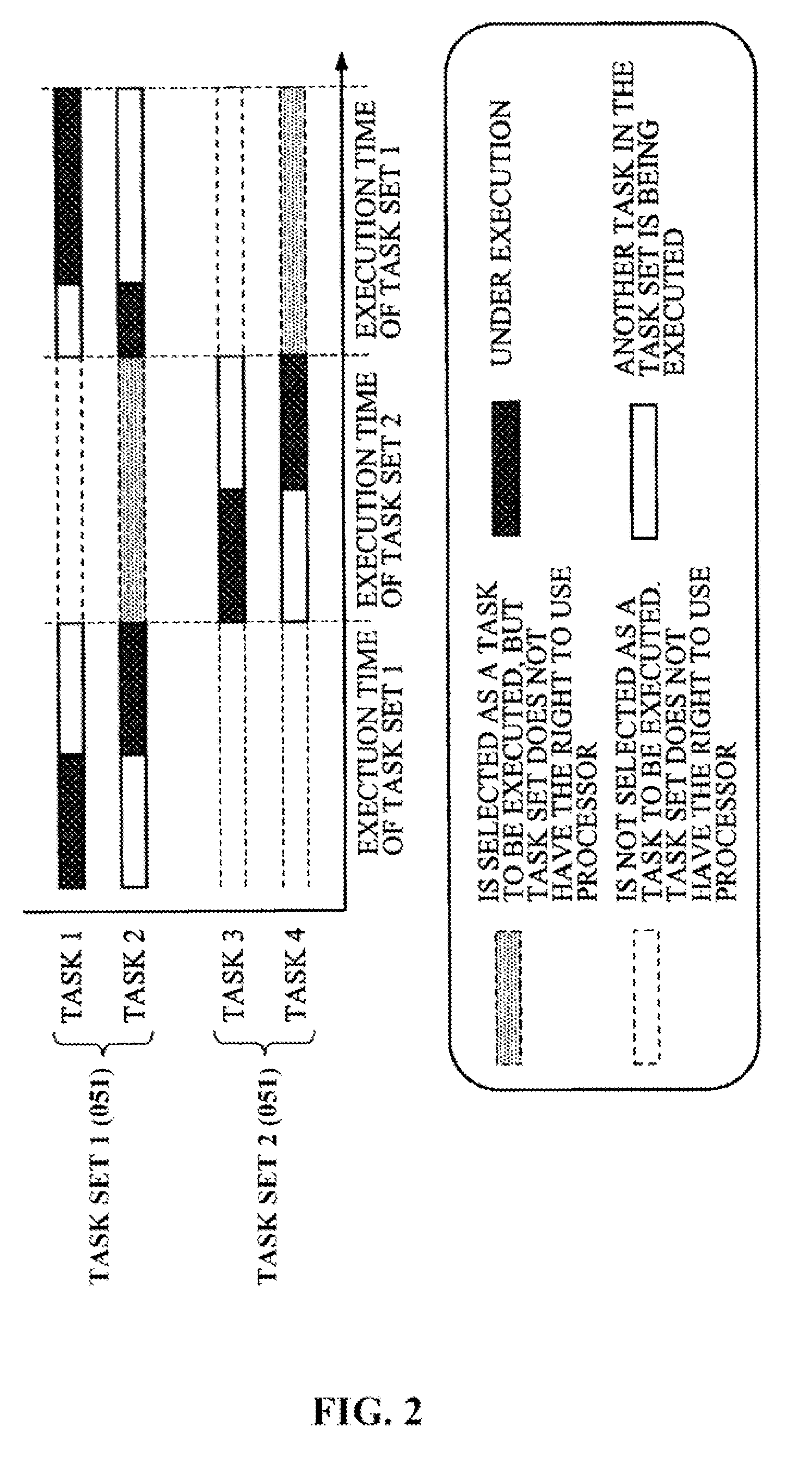

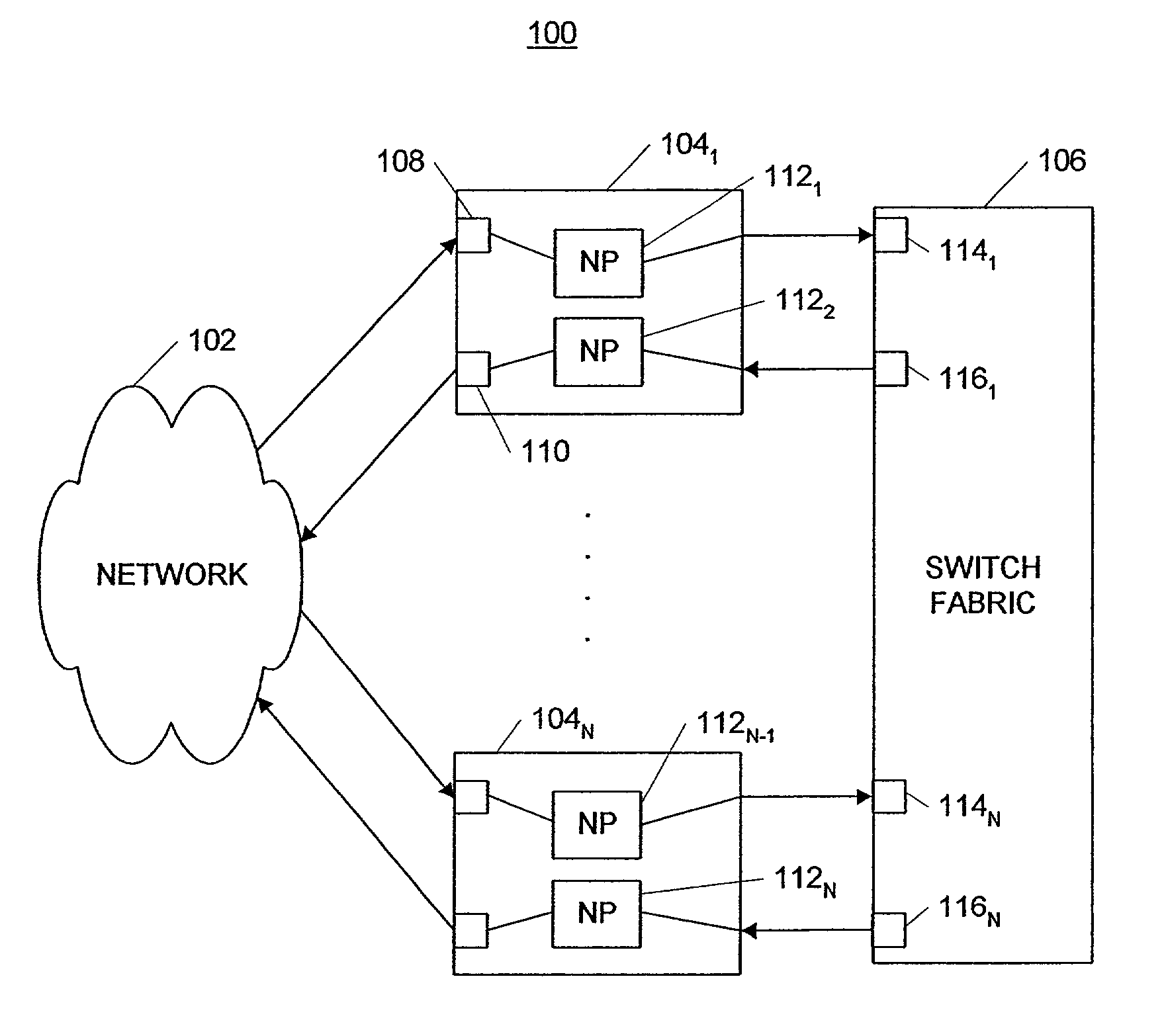

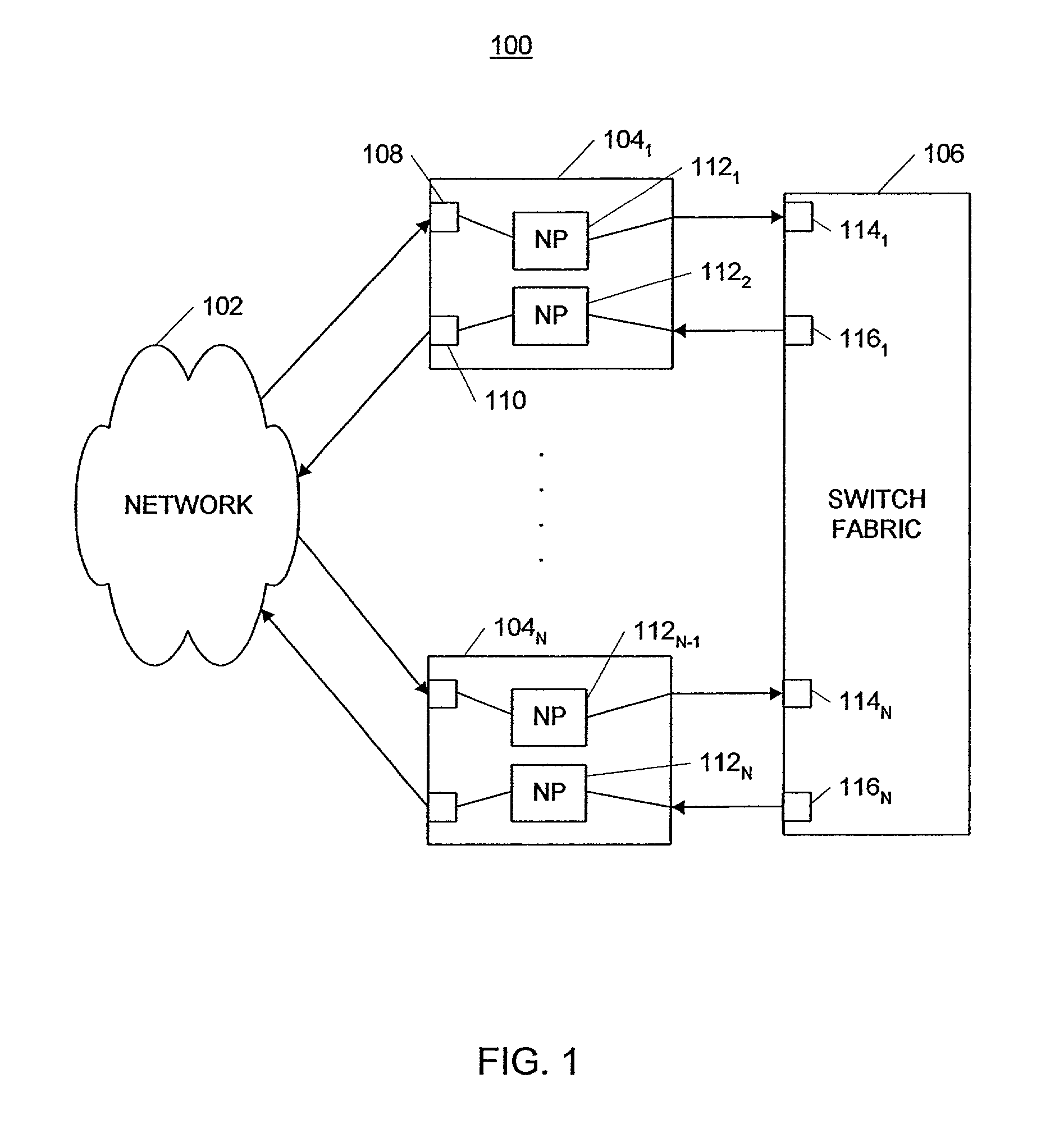

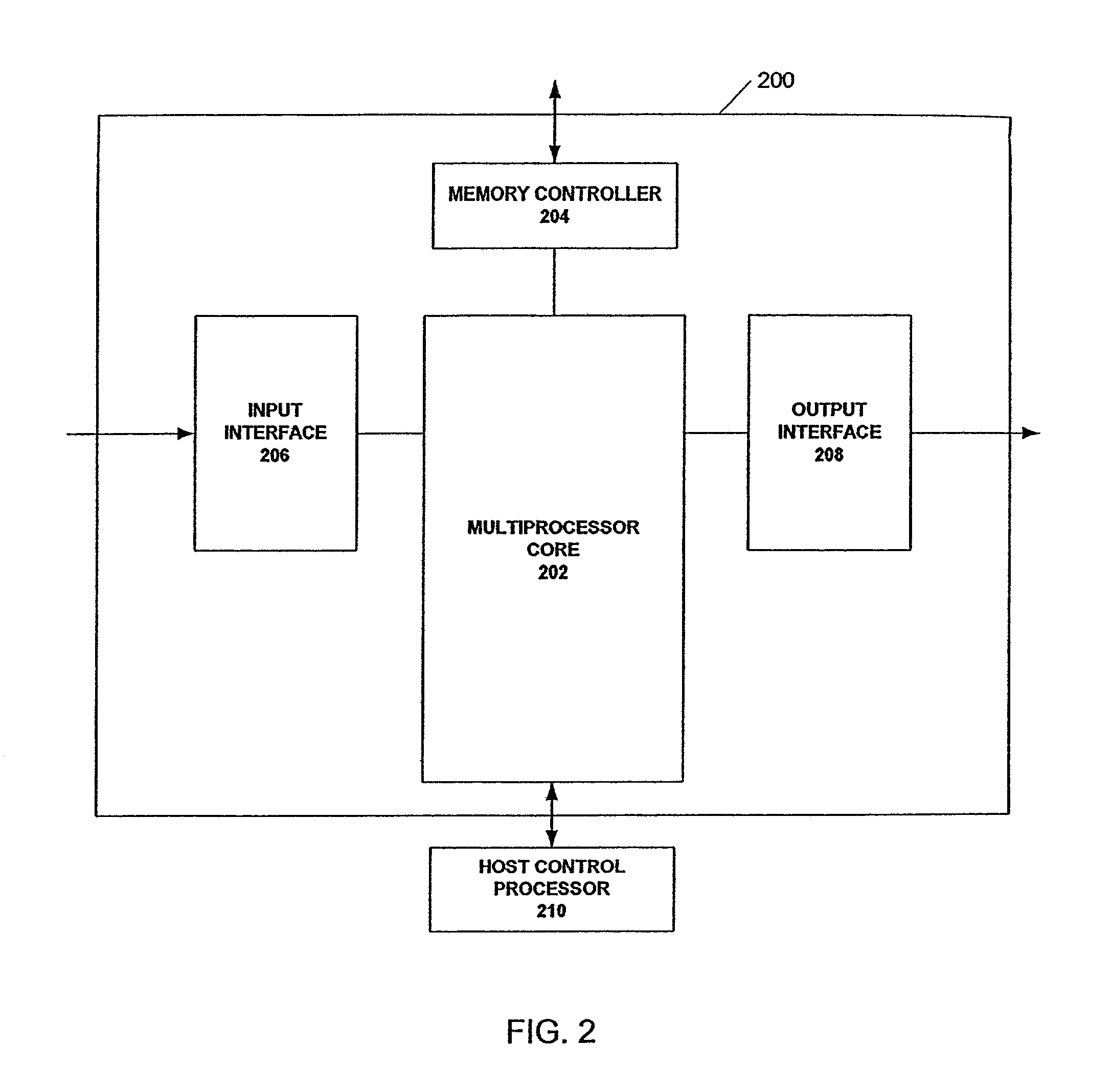

System and method for processing overlapping tasks in a programmable network processor environment

A system and method process data elements on multiple processing elements. A first processing element processes a task. A second processing element, coupled to the first processing element, is associated with a task. The first processing element sends a critical-section end signal to the second processing element while processing the task at the first processing element. The second processing element resumes the task in response to receiving the critical-section end signal.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SECRETARY OF THE NAVY

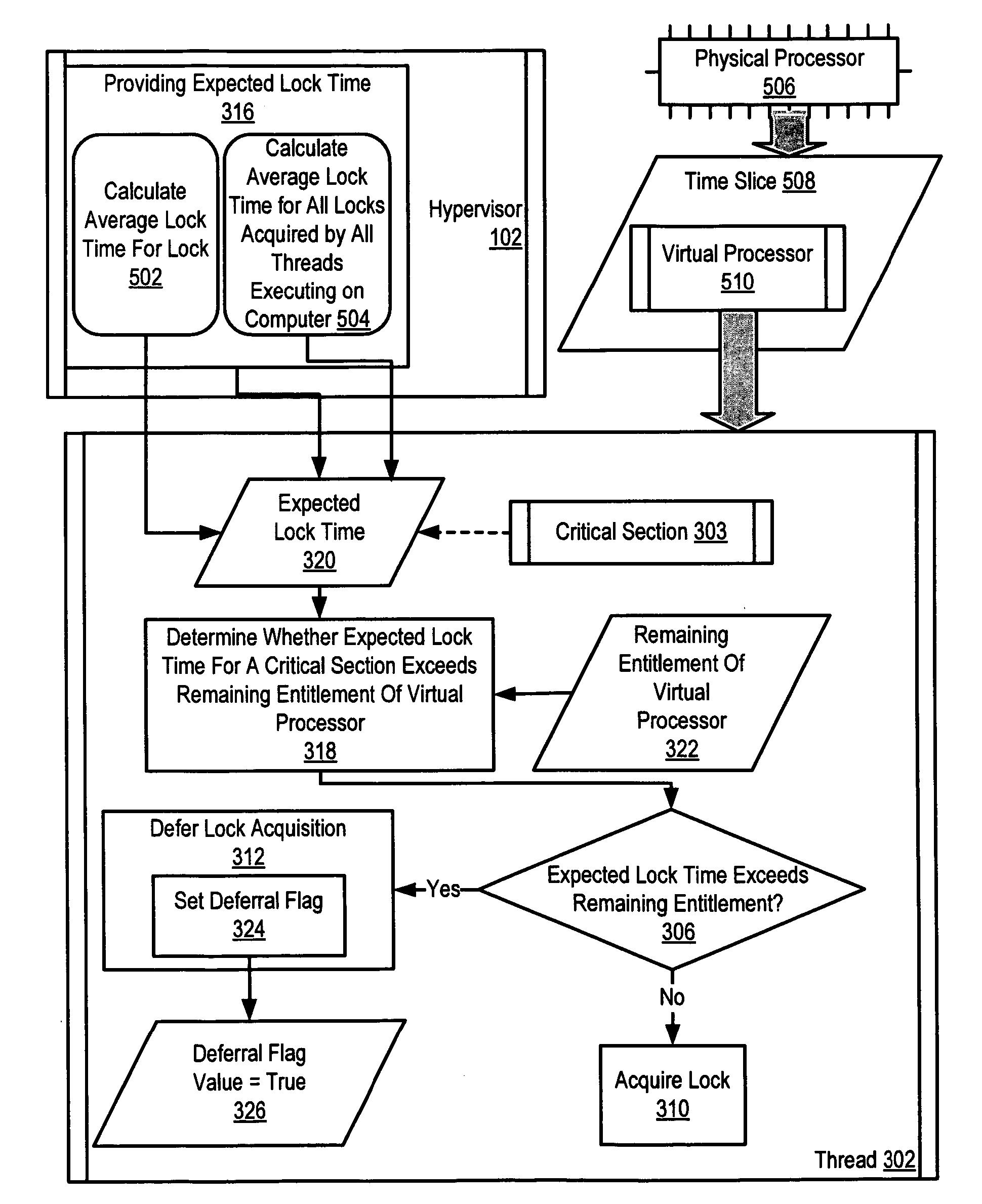

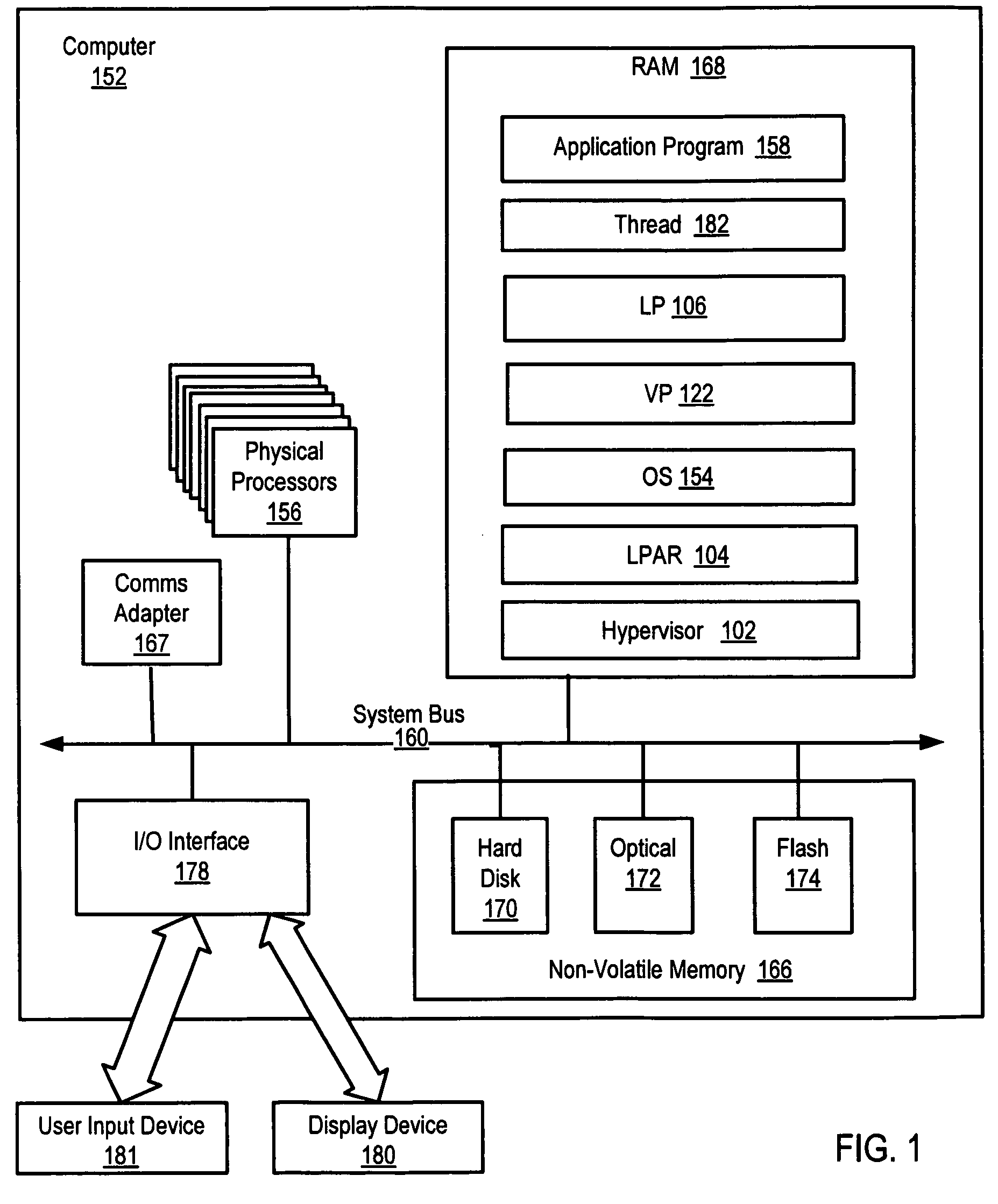

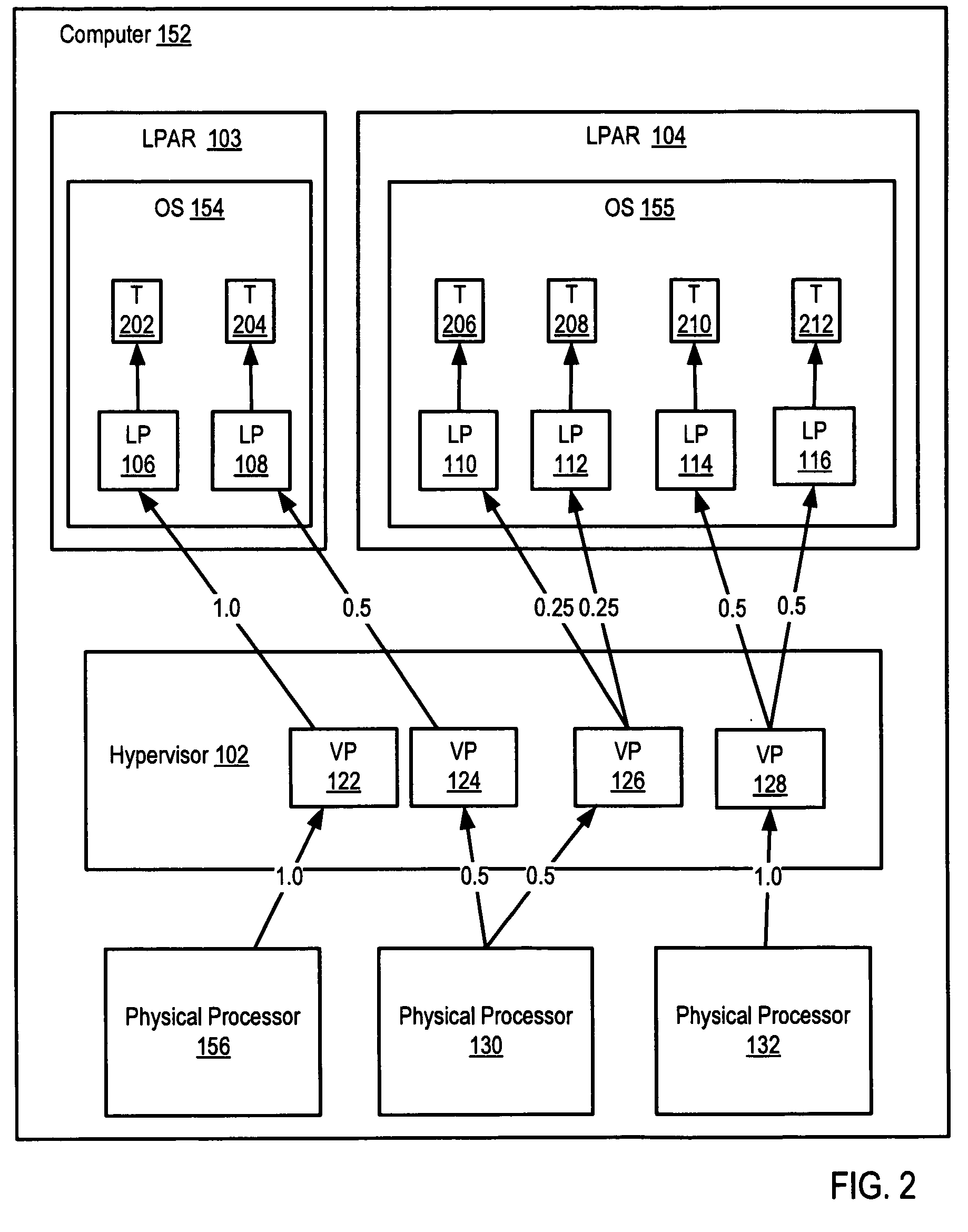

Administration of locks for critical sections of computer programs in a computer that supports a multiplicity of logical partitions

Inactive | US20060277551A1 | Multiprogramming arrangements | Software simulation/interpretation/emulation | Critical section | Physics processing unit

Administration of locks for critical sections of computer programs in a computer that supports a multiplicity of logical partitions, including determining, by a thread executing on a virtual processor executing in a time slice on a physical processor, whether an expected lock time for a critical section of the thread exceeds a remaining entitlement of the virtual processor in the time slice, and deferring acquisition of a lock if the expected lock time exceeds the remaining entitlement.

Owner:IBM CORP
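The deferral rule in the abstract above reduces to a single comparison. The sketch below assumes that the expected lock time and the virtual processor's entitlement are available as plain numbers in the same time unit; how a real hypervisor exposes them is not stated in the abstract, and the function name `should_defer_lock` is hypothetical.

```python
def should_defer_lock(expected_lock_time: float,
                      entitlement: float,
                      used_in_slice: float) -> bool:
    """Return True if lock acquisition should be deferred.

    expected_lock_time: estimated time the thread will hold the lock.
    entitlement:        time the virtual processor is entitled to in this slice.
    used_in_slice:      time the virtual processor has already consumed.
    All three values are assumed to share one time unit.
    """
    remaining = max(0.0, entitlement - used_in_slice)
    # Defer when the critical section could not finish within the remaining
    # entitlement, so the lock is not held across a preemption.
    return expected_lock_time > remaining
```

For example, with an entitlement of 10 units of which 8 are used, a critical section expected to take 3 units would be deferred, while one expected to take 2 units would proceed.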

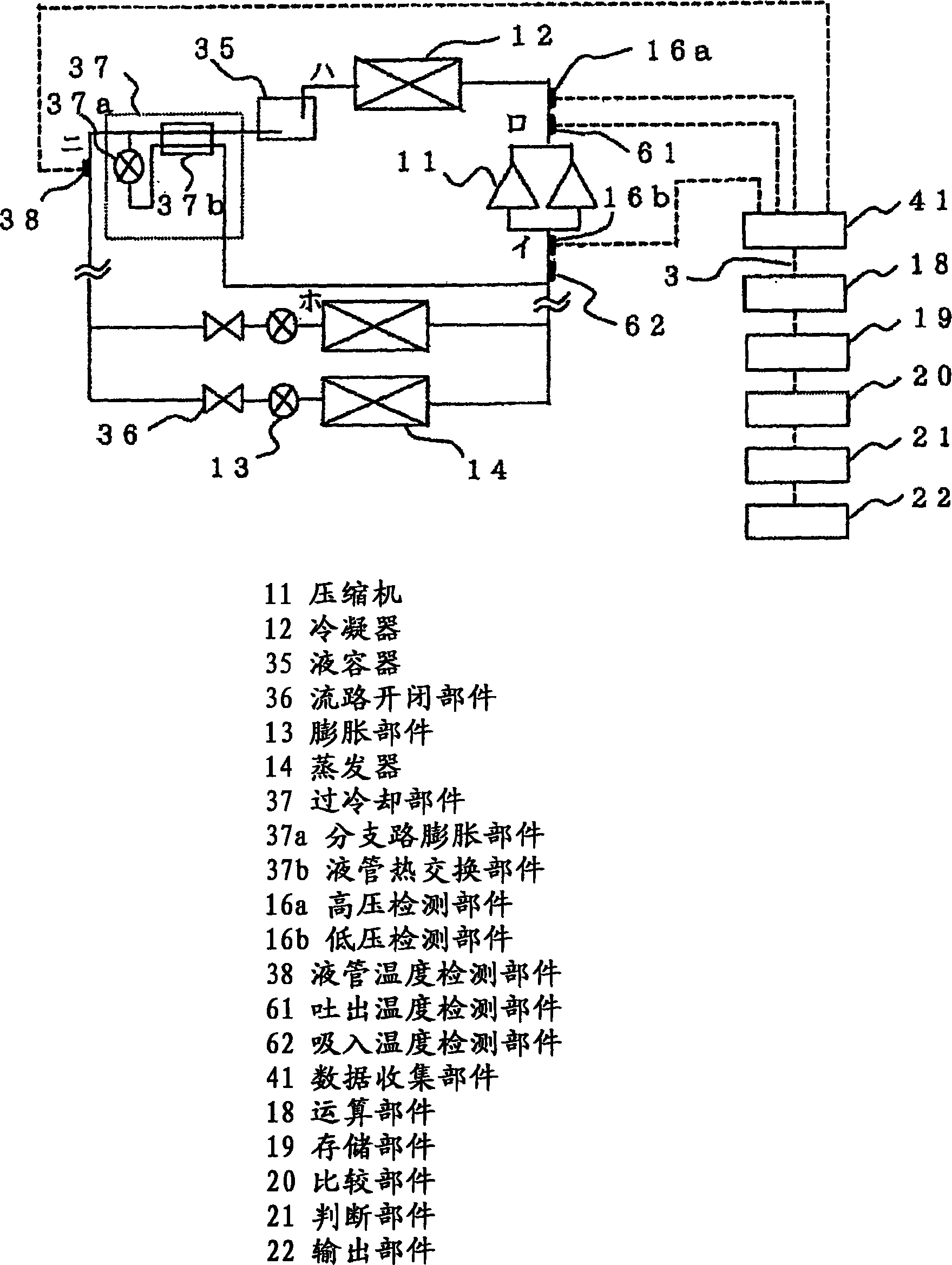

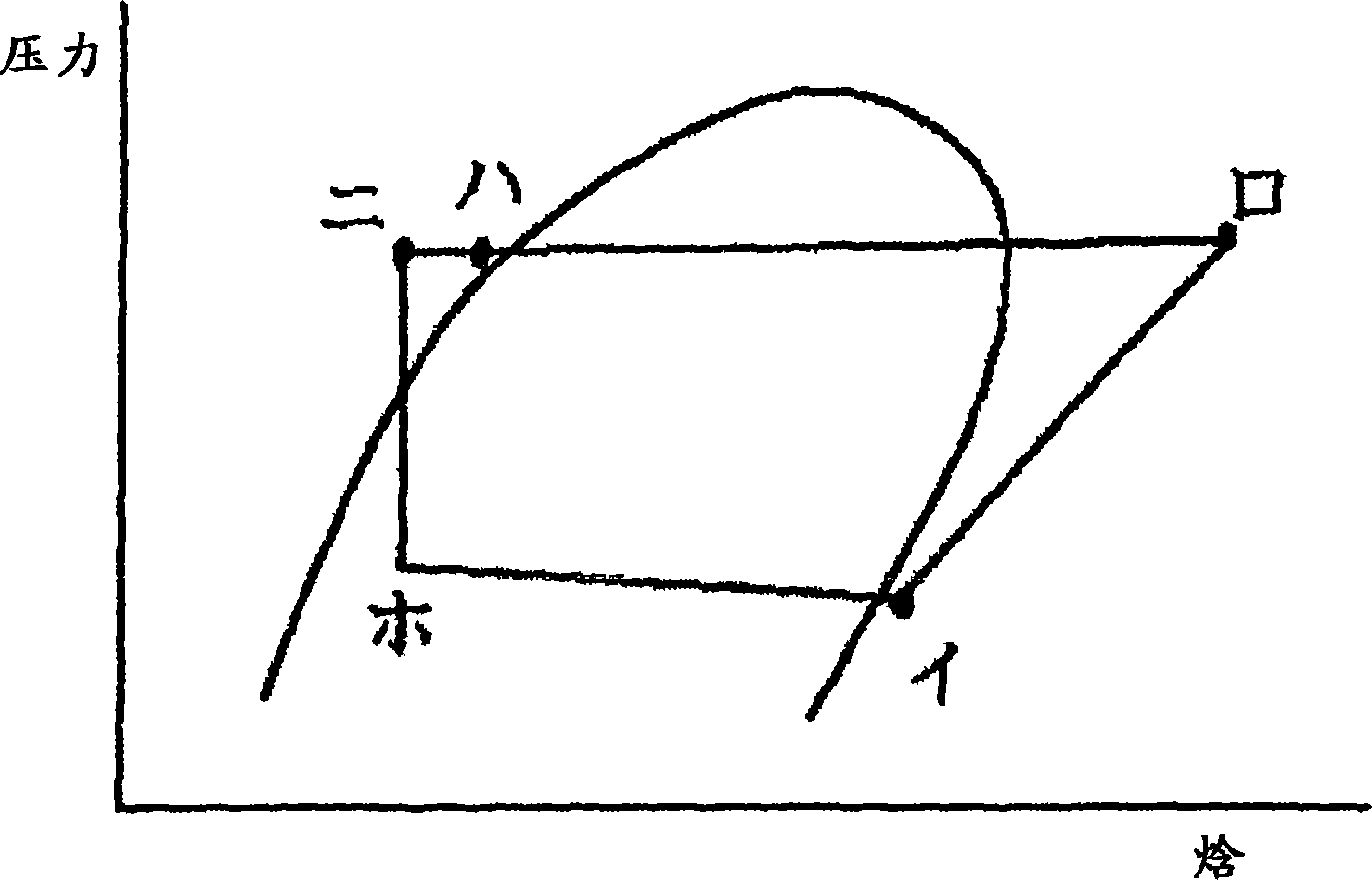

Device diagnosis device, freezing cycle device, fluid circuit diagnosis method, device monitoring system, and freezing cycle monitoring system

Active | CN1906453A | Reliable monitoring | Good precision | Mechanical apparatus | Space heating and ventilation safety systems | Mahalanobis distance | Monitoring system

Conventional failure diagnosis apparatuses for a refrigerating cycle have low precision because they deal with a fluid, and they have difficulty detecting early signs of failure, absorbing individual differences between real machines when determining a failure, and determining the cause of a failure. In addition, no inexpensive and practical diagnosis apparatus or method has been provided. A plurality of measured quantities concerning the refrigerant, such as the pressure and temperature of the refrigerating cycle apparatus, or other measured quantities are detected; state quantities such as composite variables are obtained by performing arithmetic operations on these measured quantities; and the results are used to judge whether the apparatus is normal or abnormal. If learning is performed during normal operation, the current state can be judged; if learning is performed by forcing abnormal operation, or if the abnormal operating condition is computed during current operation, an early sign of failure, such as a critical operation, can be predicted from a change in the Mahalanobis distance. Reliable diagnosis can thereby be implemented with a simple configuration.

Owner:MITSUBISHI ELECTRIC CORP

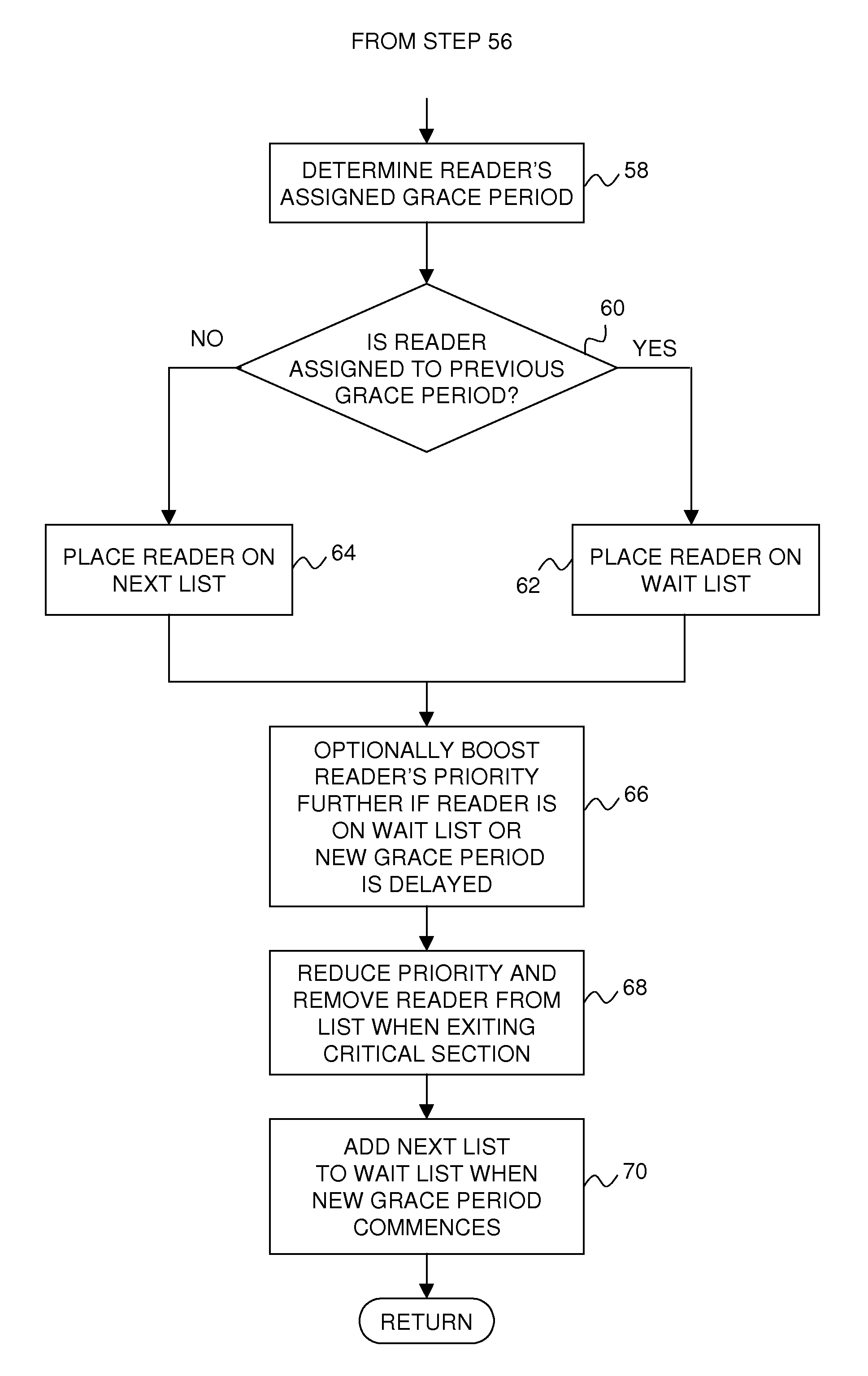

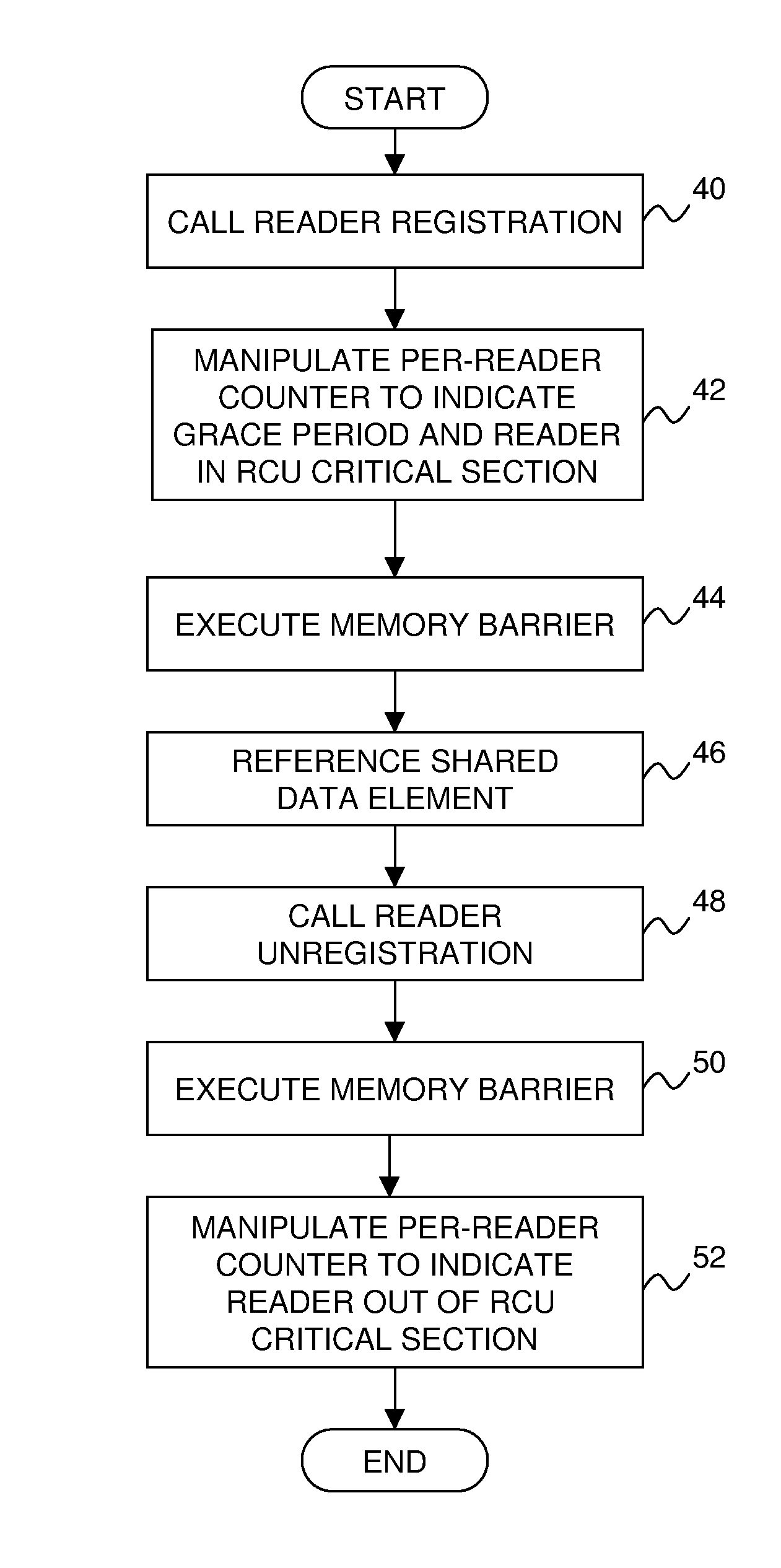

Efficiently boosting priority of read-copy update readers in a real-time data processing system

Active | US7734879B2 | Raise priority | Minimum of processing overhead | Program synchronisation | Memory systems | Real-time data | Read-copy-update

A technique for efficiently boosting the priority of a preemptable data reader in order to eliminate impediments to grace period processing that defers the destruction of one or more shared data elements that may be referenced by the reader until the reader is no longer capable of referencing the data elements. Upon the reader being subject to preemption or blocking, it is determined whether the reader is in a read-side critical section referencing any of the shared data elements. If it is, the reader's priority is boosted in order to expedite completion of the critical section. The reader's priority is subsequently decreased after the critical section has completed. In this way, delays in grace period processing due to reader preemption within the critical section, which can result in an out-of-memory condition, can be minimized efficiently with minimal processing overhead.

Owner:TWITTER INC

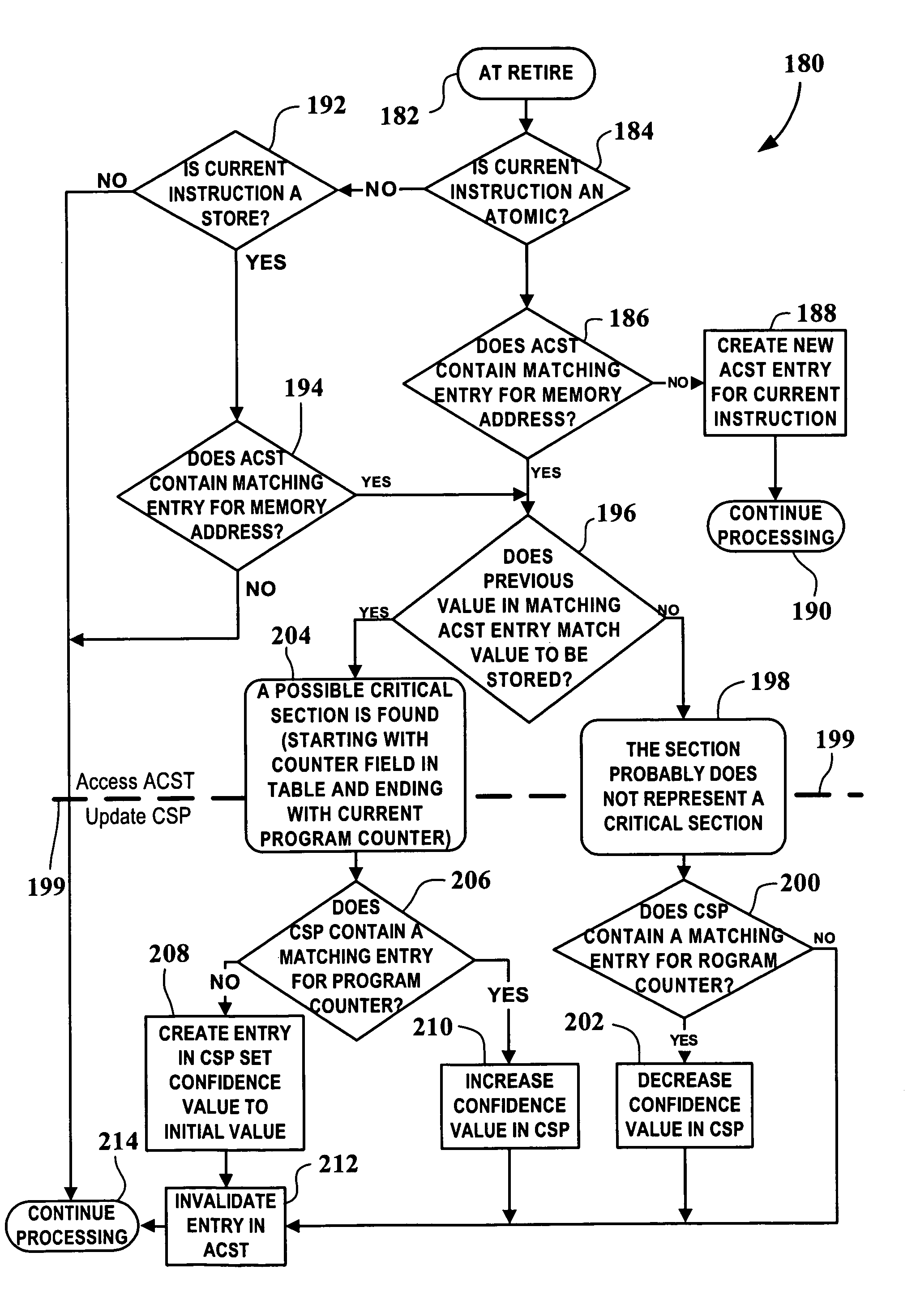

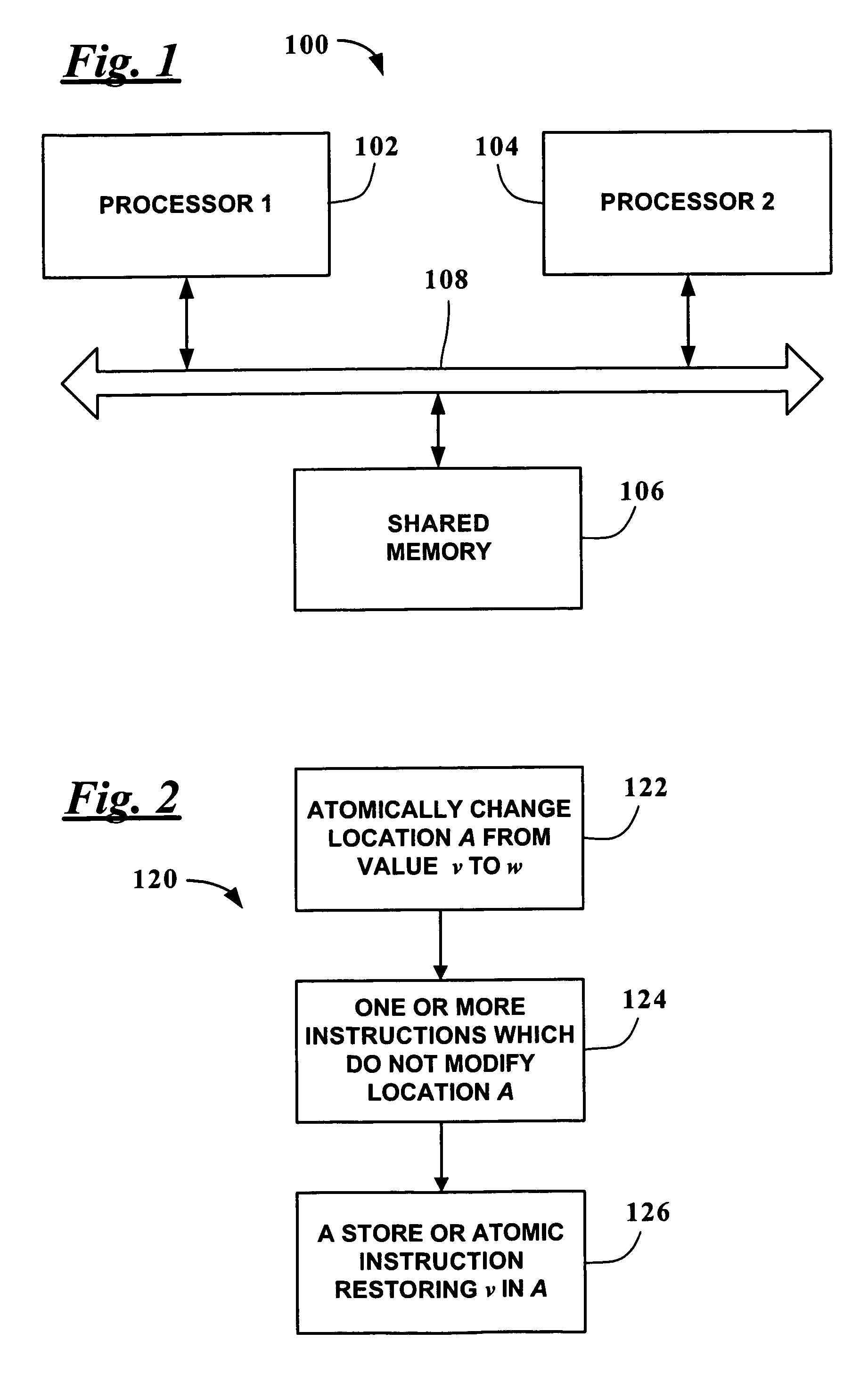

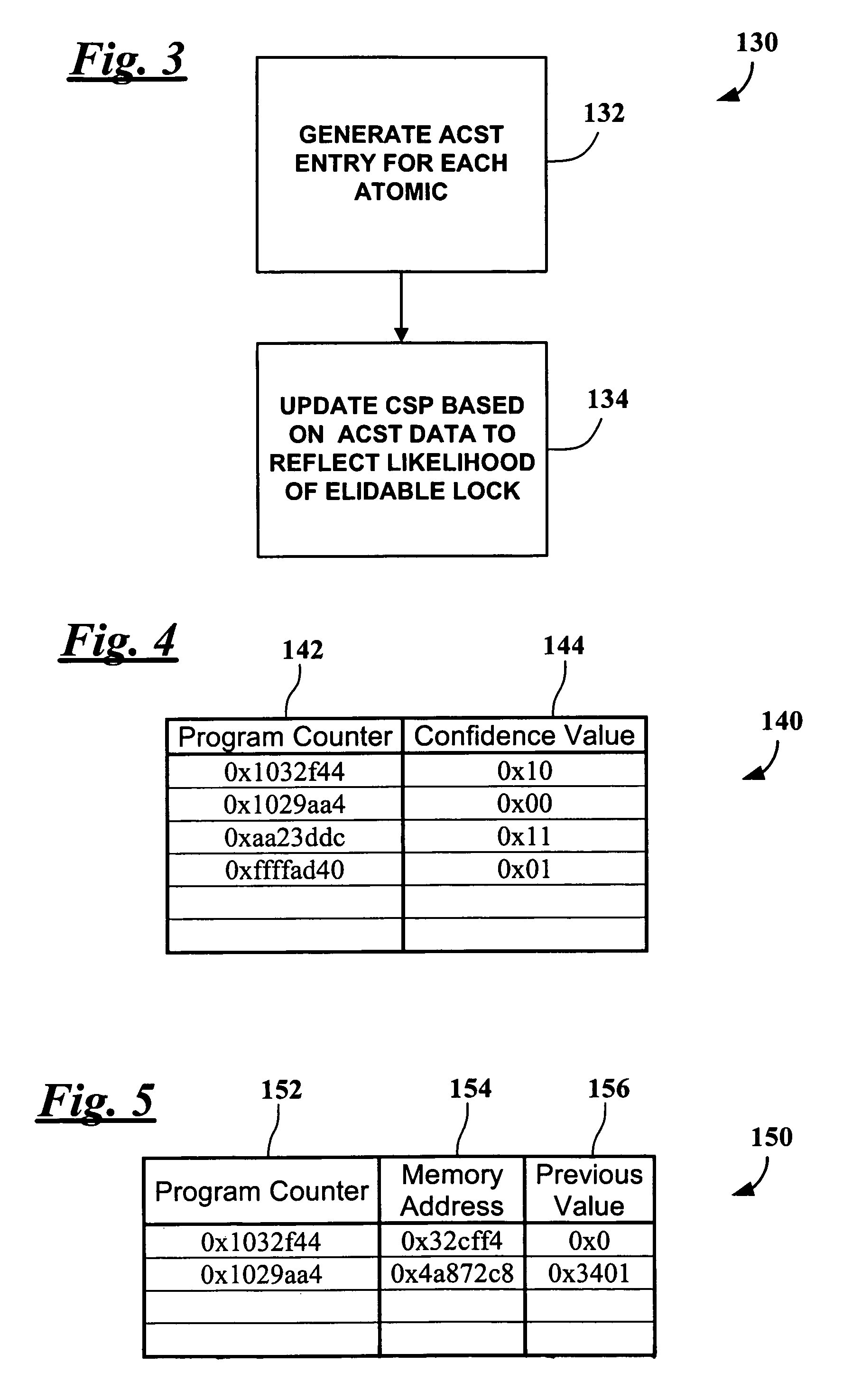

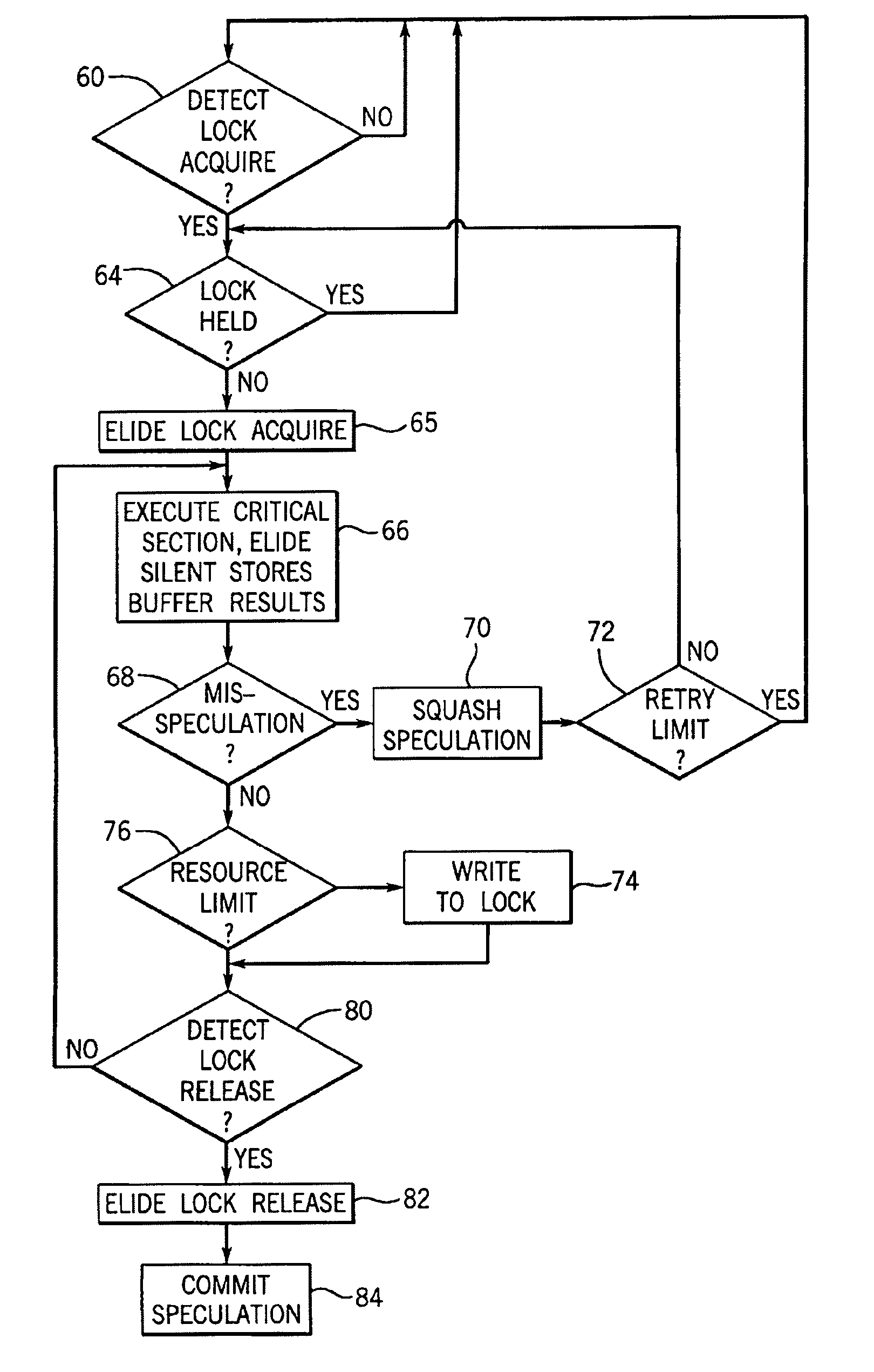

Method and apparatus for critical section prediction for intelligent lock elision

Active | US7930694B2 | Improve performance | Multiprogramming arrangements | Memory systems | Parallel computing | Critical section

Intelligent prediction of critical sections is implemented using a method comprising updating a critical section estimator based on historical analysis of atomic/store instruction pairs during runtime and performing lock elision when the critical section estimator indicates that the atomic/store instruction pairs define a critical section.

Owner:ORACLE INT CORP
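One plausible shape for the estimator described in the abstract above is a table of saturating counters, similar to a branch predictor. The abstract does not specify the estimator's structure, so everything here is an assumption: the counter width, the threshold, the keying by instruction address, and the names `CriticalSectionEstimator`, `update`, and `should_elide`.

```python
class CriticalSectionEstimator:
    """Per-address saturating counters that predict lock-like atomic/store pairs."""

    def __init__(self, threshold: int = 2, max_count: int = 3) -> None:
        self.threshold = threshold   # minimum count needed to predict "critical section"
        self.max_count = max_count   # counters saturate at this value
        self._counters = {}          # instruction address -> counter

    def update(self, pc: int, paired_with_store: bool) -> None:
        """Record whether the atomic at `pc` was later matched by a releasing store."""
        count = self._counters.get(pc, 0)
        if paired_with_store:
            count = min(self.max_count, count + 1)
        else:
            count = max(0, count - 1)
        self._counters[pc] = count

    def should_elide(self, pc: int) -> bool:
        """Predict that the atomic at `pc` begins a critical section worth eliding."""
        return self._counters.get(pc, 0) >= self.threshold
```

Saturating counters add hysteresis: a single mismatched observation lowers confidence without immediately erasing a long history of matches.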

System and Method for Implementing Scalable Adaptive Reader-Writer Locks

Active | US20150286586A1 | Memory architecture accessing/allocation | Program synchronisation | Sleep state | Critical section

NUMA-aware reader-writer locks may leverage lock cohorting techniques and may support reader re-entrancy. They may implement a delayed sleep mechanism by which a thread that fails to acquire a lock spins briefly, hoping the lock will be released soon, before blocking on the lock (sleeping). The maximum spin time may be based on the time needed to put a thread to sleep and wake it up. If a lock holder is not executing on a processor, an acquiring thread may go to sleep without first spinning. Threads put in a sleep state may be placed on a turnstile sleep queue associated with the lock. When a writer thread that holds the lock exits a critical section protected by the lock, it may wake all sleeping reader threads and one sleeping writer thread. Reader threads may increment and decrement node-local reader counters upon arrival and departure, respectively.

Owner:ORACLE INT CORP
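The delayed sleep mechanism in the abstract above (spin briefly, then block) can be sketched with a plain `threading.Lock`. The function name `acquire_spin_then_block` and the default spin budget are assumptions; Python cannot observe whether the lock holder is currently running on a processor, so that part of the abstract is not modeled.

```python
import threading
import time


def acquire_spin_then_block(lock, max_spin_seconds: float = 0.0005) -> str:
    """Acquire `lock`, spinning for at most `max_spin_seconds` before sleeping.

    Returns "spun" if the lock was obtained while spinning, else "blocked".
    """
    deadline = time.monotonic() + max_spin_seconds
    while time.monotonic() < deadline:
        if lock.acquire(blocking=False):   # non-blocking attempt
            return "spun"
    lock.acquire()                         # give up spinning and sleep on the lock
    return "blocked"
```

Following the abstract, the spin budget would be chosen to be comparable to the cost of putting a thread to sleep and waking it, since spinning longer than that wastes more time than blocking would.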

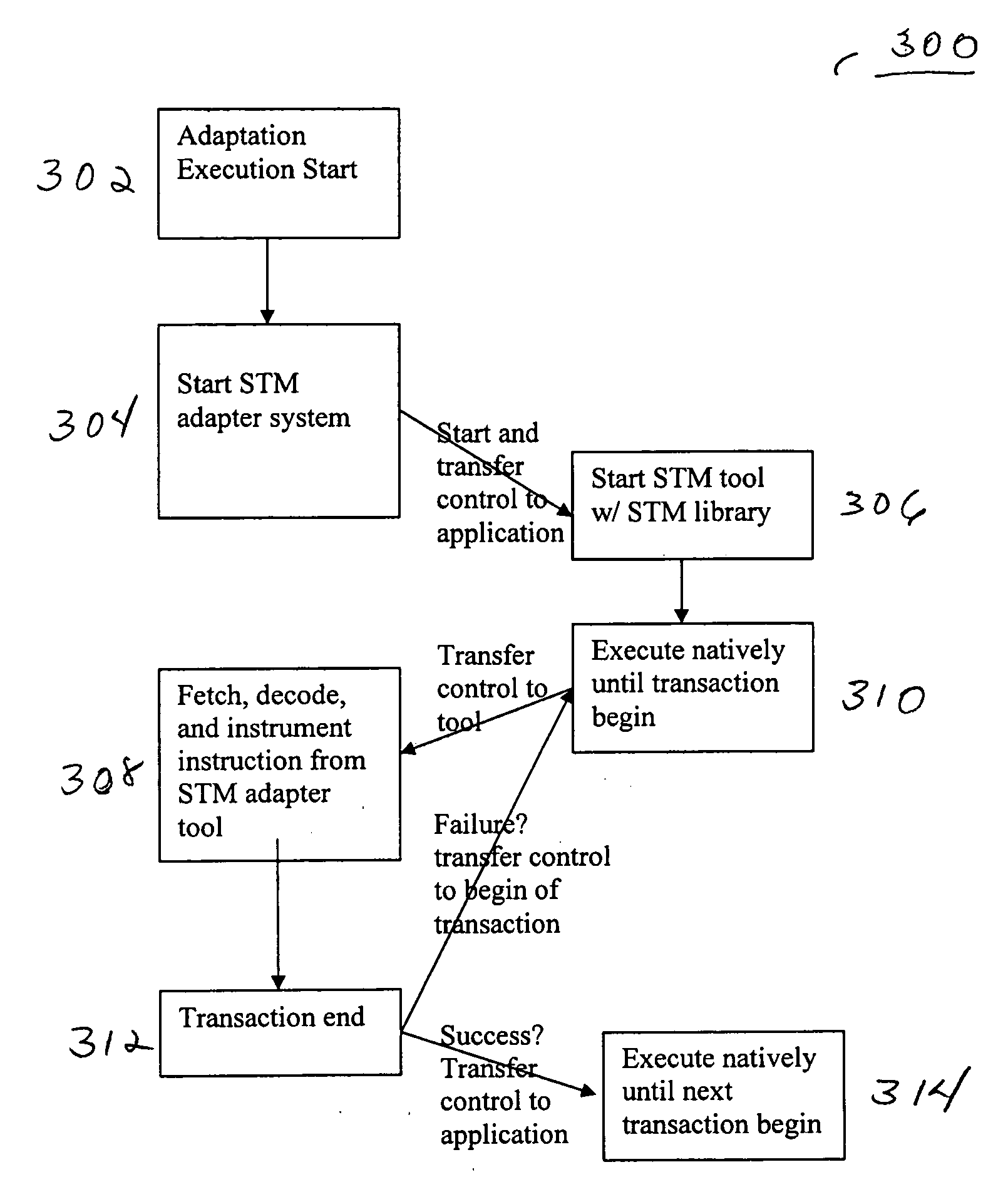

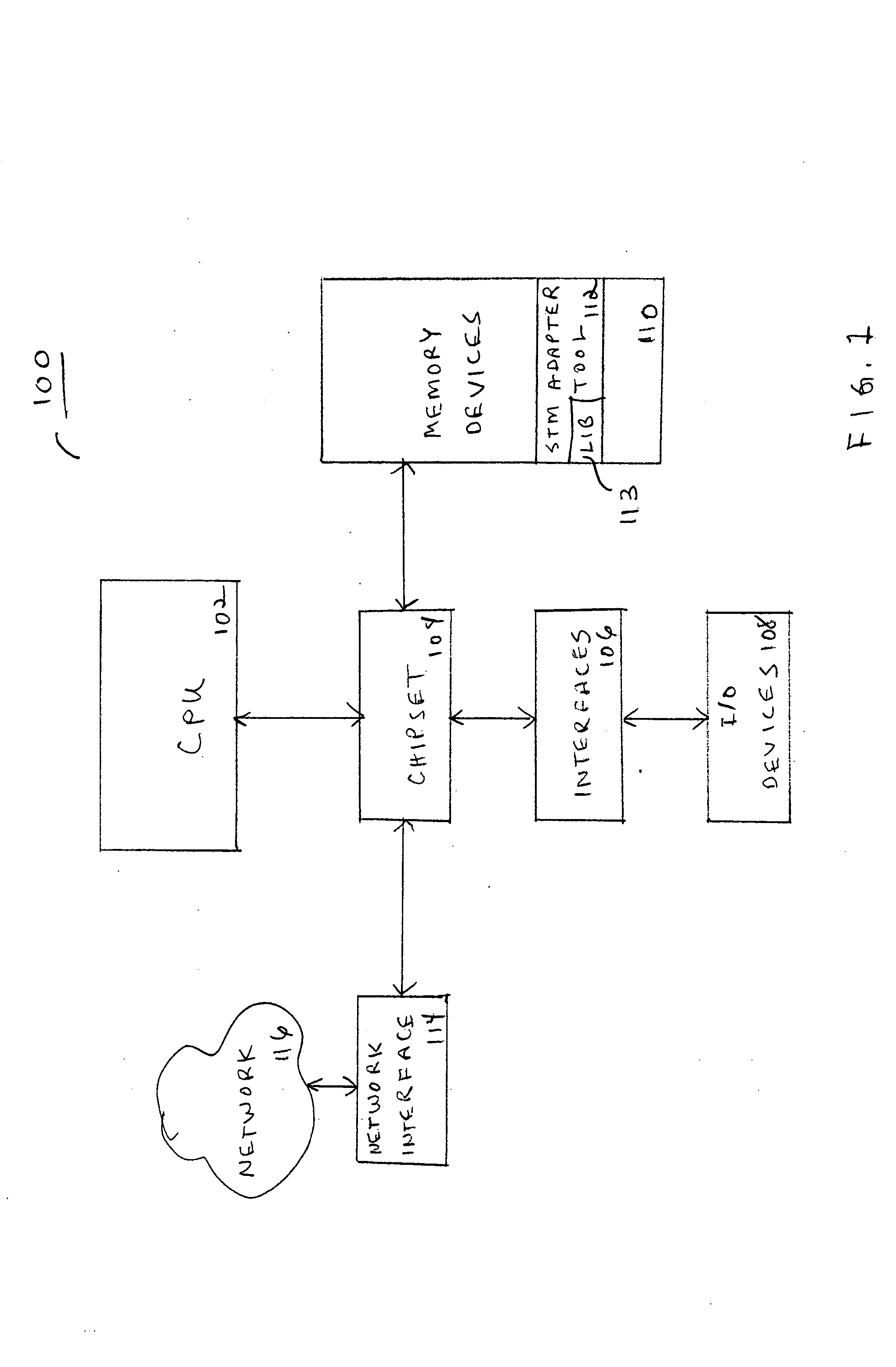

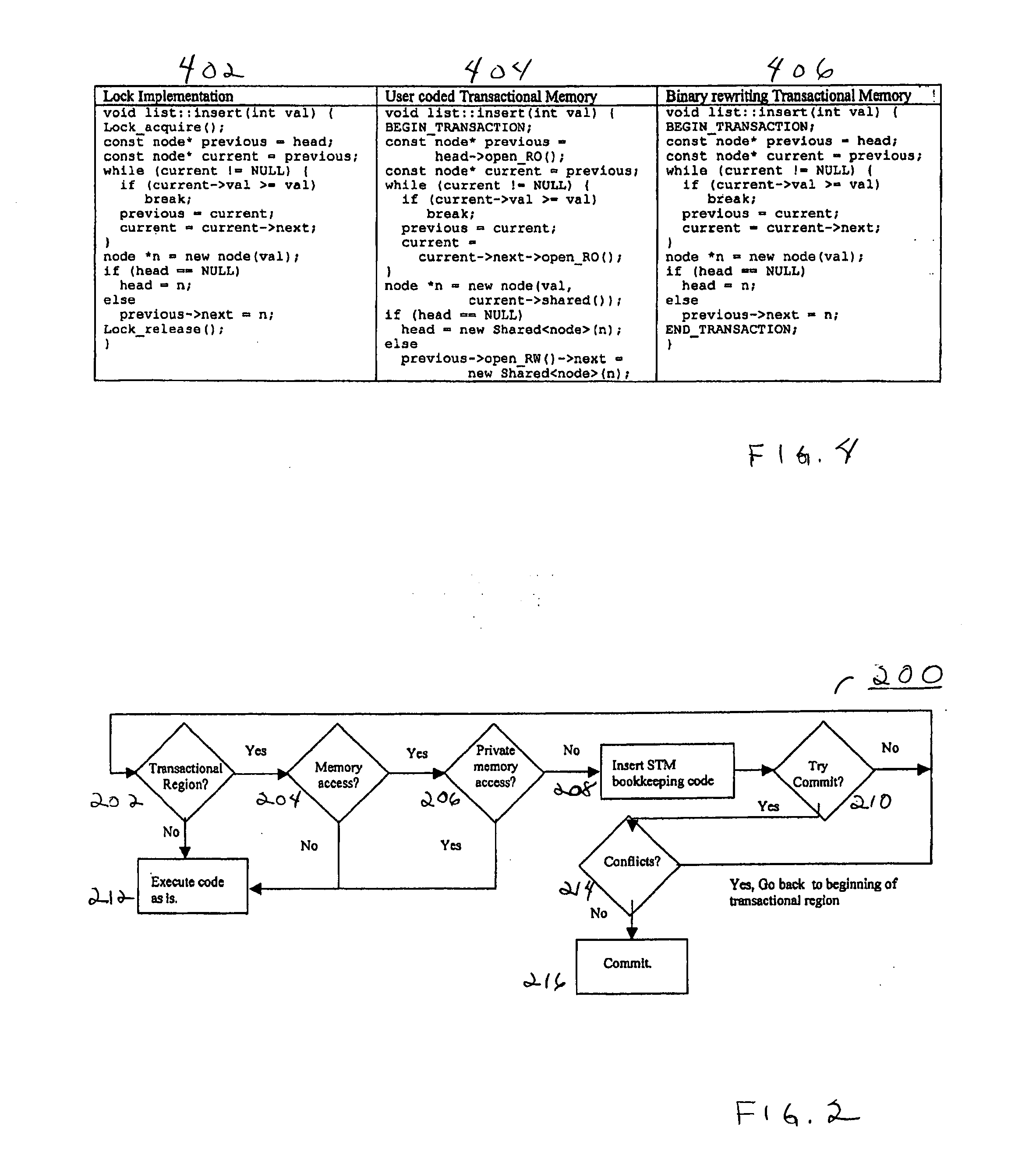

Adapting software programs to operate in software transactional memory environments

Inactive | US20070300238A1 | Software maintenance/management | Specific program execution arrangements | Application programming interface | Parallel computing

Embodiments of a system and method for adapting software programs to operate in software transactional memory (STM) environments are described. Embodiments include a software transactional memory (STM) adapter system including, in one embodiment, a version of a binary rewriting tool. The STM adapter system provides a simple-to-use application programming interface (API) for legacy languages (e.g., C and C++) that allows the programmer to simply mark the block of code to be executed atomically; the STM adapter system automatically transforms all the binary code executed within that block (including pre-compiled libraries) to execute atomically (that is, to execute as a transaction). In an embodiment, the STM adapter system automatically transforms lock-based critical sections in existing binary code to atomic blocks, for example by replacing locks with begin and end markers that mark the beginning and end of transactions. Other embodiments are described and claimed.

Owner:INTEL CORP

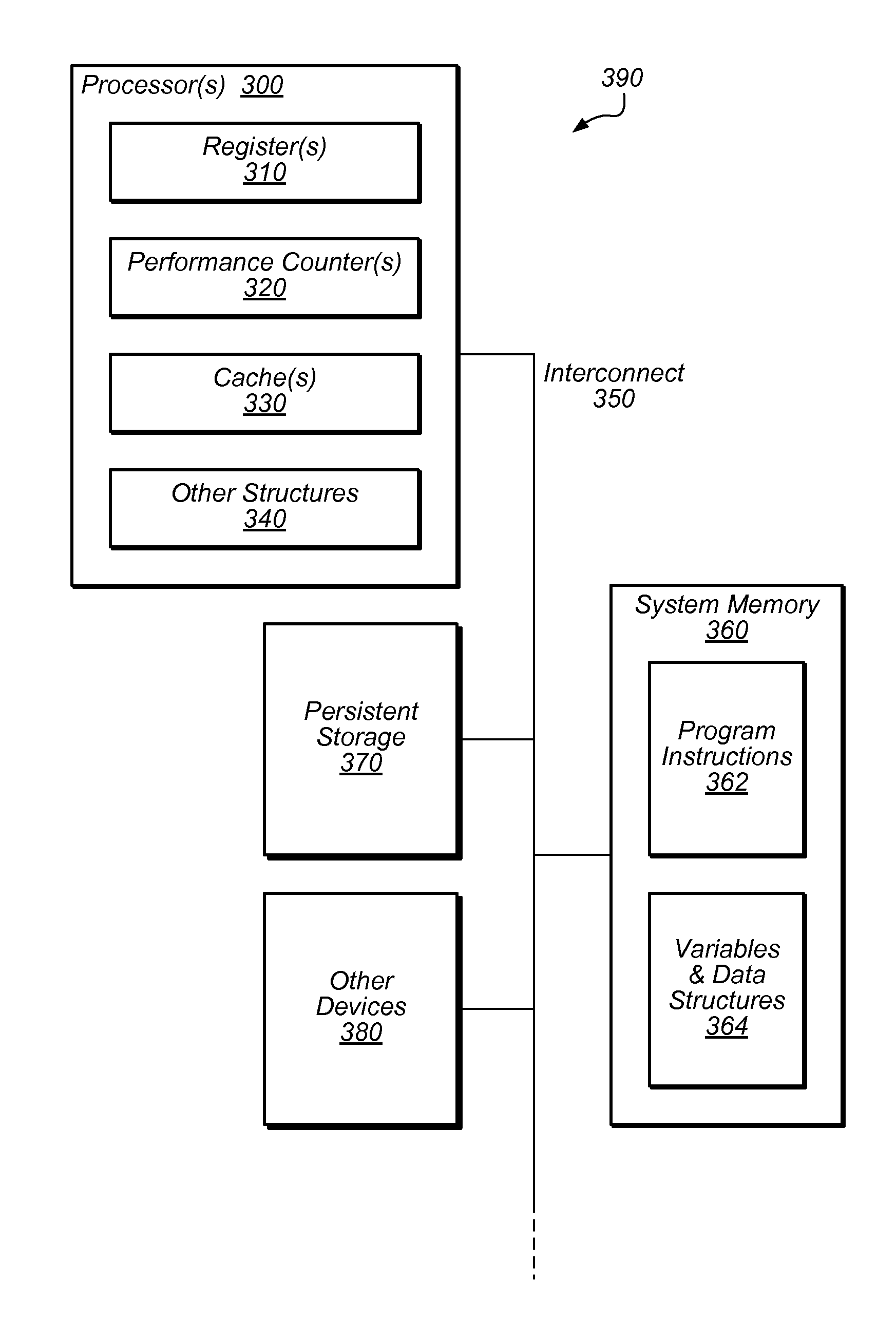

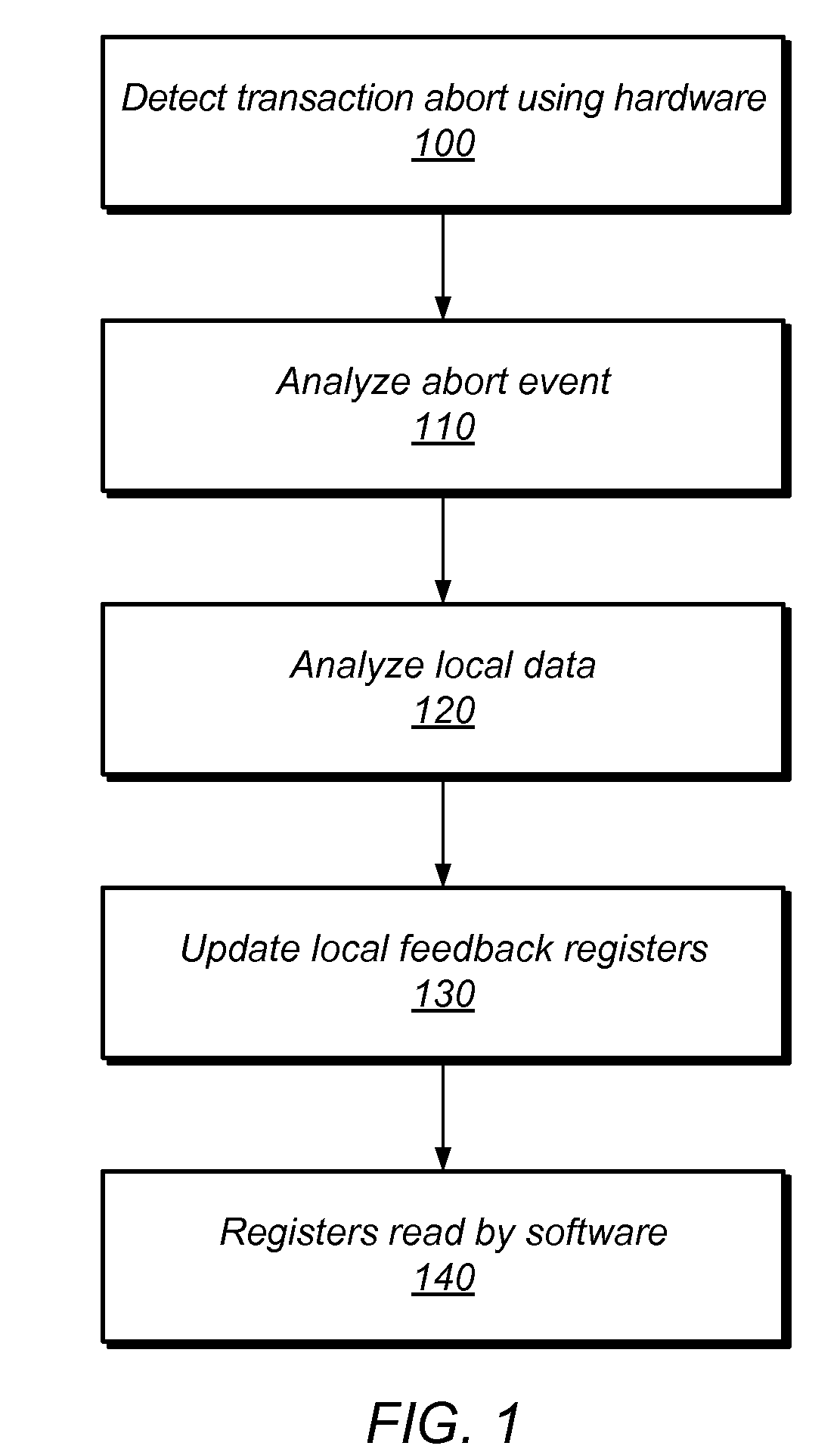

Method and System for Hardware Feedback in Transactional Memory

Active | US20100131953A1 | Memory addressing/allocation/relocation | Transaction processing | Critical section | Transactional memory

Multi-threaded, transactional memory systems may allow concurrent execution of critical sections as speculative transactions. These transactions may abort due to contention among threads. Hardware feedback mechanisms may detect information about aborts and provide that information to software, hardware, or hybrid software/hardware contention management mechanisms. For example, they may detect occurrences of transactional aborts or conditions that may result in transactional aborts, and may update local readable registers or other storage entities (e.g., performance counters) with relevant contention information. This information may include identifying data (e.g., information outlining abort relationships between the processor and other specific physical or logical processors) and/or tallied data (e.g., values of event counters reflecting the number of aborted attempts by the current thread or the resources consumed by those attempts). This contention information may be accessible by contention management mechanisms to inform contention management decisions (e.g., whether to revert transactions to mutual exclusion, delay retries, etc.).

Owner:SUN MICROSYSTEMS INC

User-level read-copy update that does not require disabling preemption or signal handling

Inactive | US8020160B2 | Digital data processing details | Multiprogramming arrangements | Computer architecture | Read-copy-update

Owner:INT BUSINESS MASCH CORP

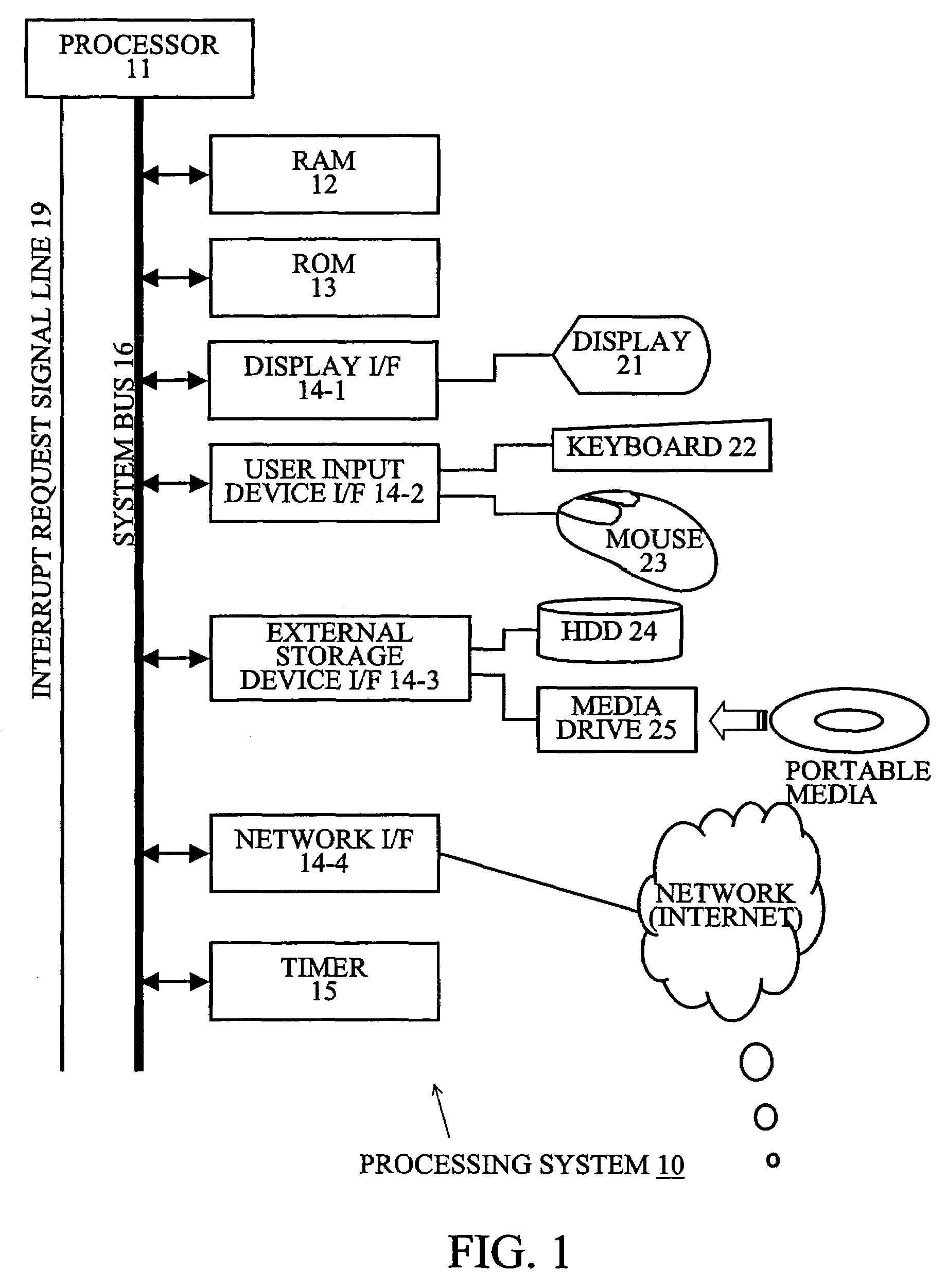

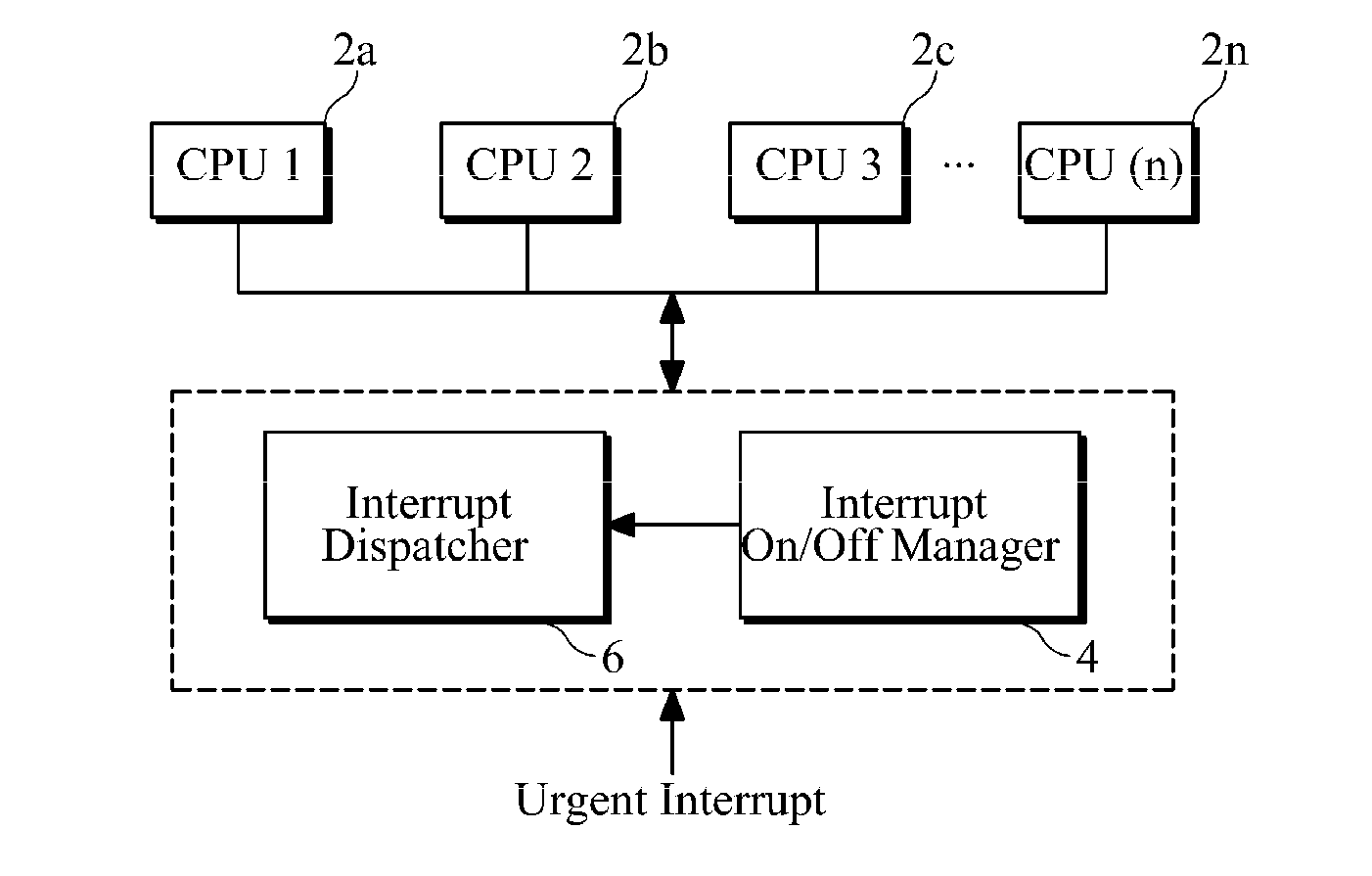

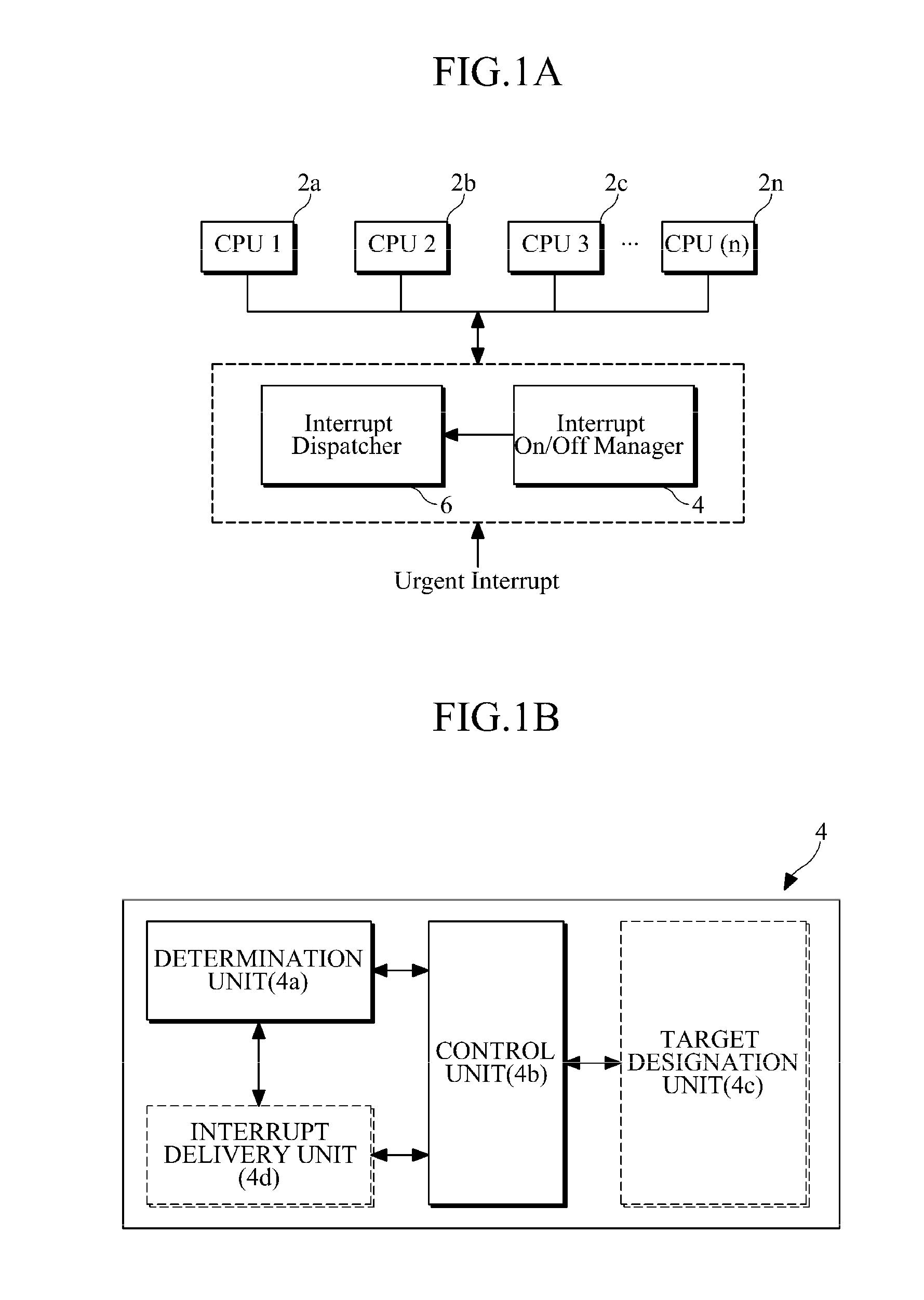

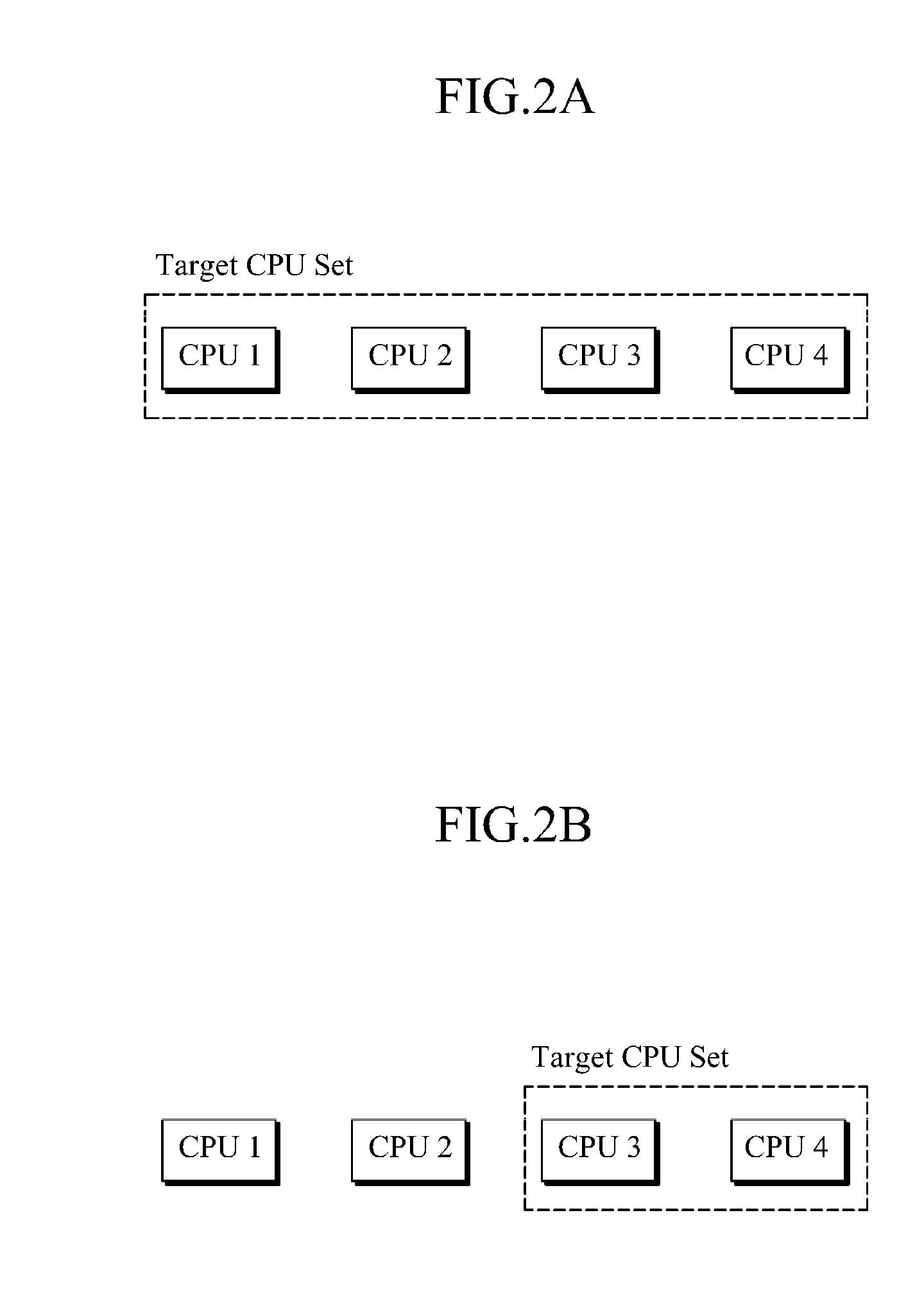

Interrupt on/off management apparatus and method for multi-core processor

Inactive | US20110072180A1 | Minimize and reduce delay | Improve real-time performance | Electric digital data processing | Critical section | Critical zone

Provided are an interrupt on/off management apparatus and method for a multi-core processor having a plurality of central processing unit (CPU) cores. The interrupt on/off management apparatus manages the multi-core processor such that at least one of two or more CPU cores included in a target CPU set can execute an urgent interrupt. For example, the interrupt on/off management apparatus controls the movement of each CPU core from a critical section to a non-critical section such that at least one of the CPU cores is located in the non-critical section. The critical section may include an interrupt-disabled section or a kernel non-preemptible section, and the non-critical section may include an interrupt-enabled section or include both the interrupt-enabled section and a kernel preemptible section.

Owner:SAMSUNG ELECTRONICS CO LTD
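The invariant in the abstract above, that at least one core of the target set always stays in a non-critical section, can be modeled as an admission check. This is a user-level simulation under assumed names (`CriticalSectionGate`, `try_enter`, `leave`); it does not touch real interrupt masks or kernel preemption state.

```python
import threading


class CriticalSectionGate:
    """Admit cores into a critical section only if one core would remain outside."""

    def __init__(self, num_cores: int) -> None:
        self._lock = threading.Lock()
        self._num_cores = num_cores
        self._in_critical = set()   # ids of cores currently in a critical section

    def try_enter(self, core_id: int) -> bool:
        """Return True if `core_id` may enter (or is already in) a critical section."""
        with self._lock:
            if core_id in self._in_critical:
                return True
            # Refuse if admitting this core would leave no core free
            # to service an urgent interrupt.
            if len(self._in_critical) + 1 >= self._num_cores:
                return False
            self._in_critical.add(core_id)
            return True

    def leave(self, core_id: int) -> None:
        """Mark `core_id` as back in a non-critical section."""
        with self._lock:
            self._in_critical.discard(core_id)
```

With two cores, the second core's request is refused until the first leaves, so one core can always take the urgent interrupt.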

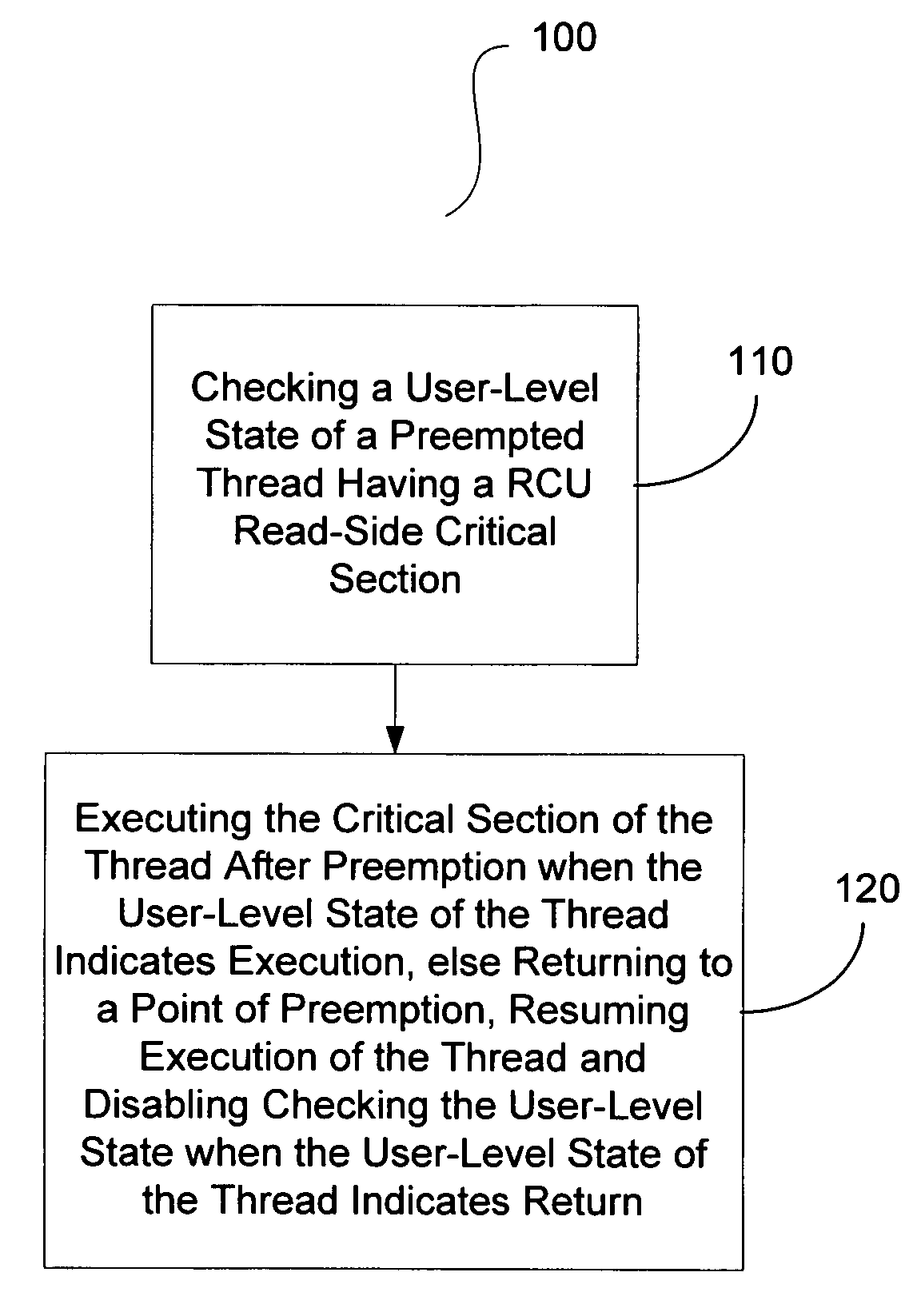

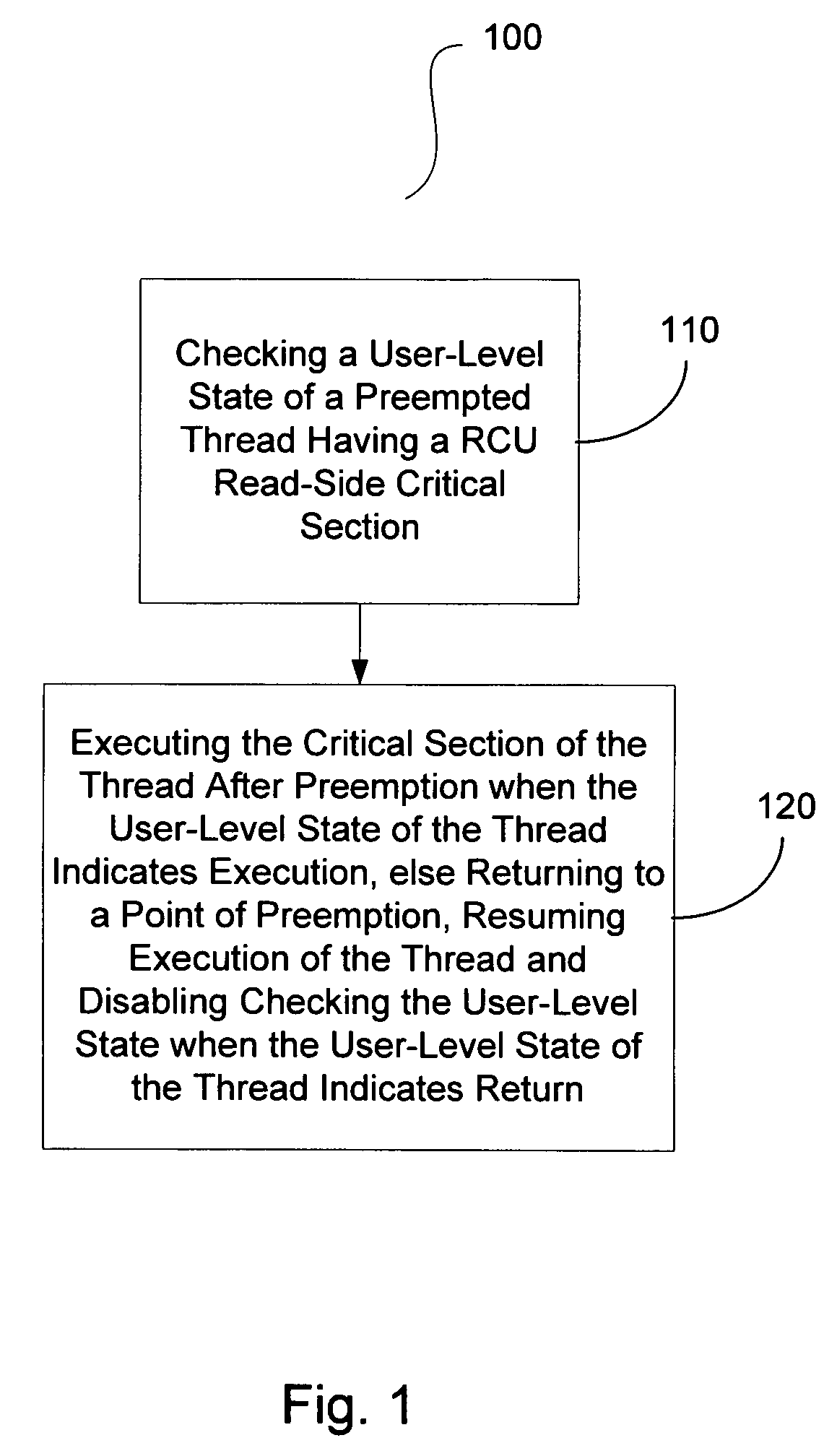

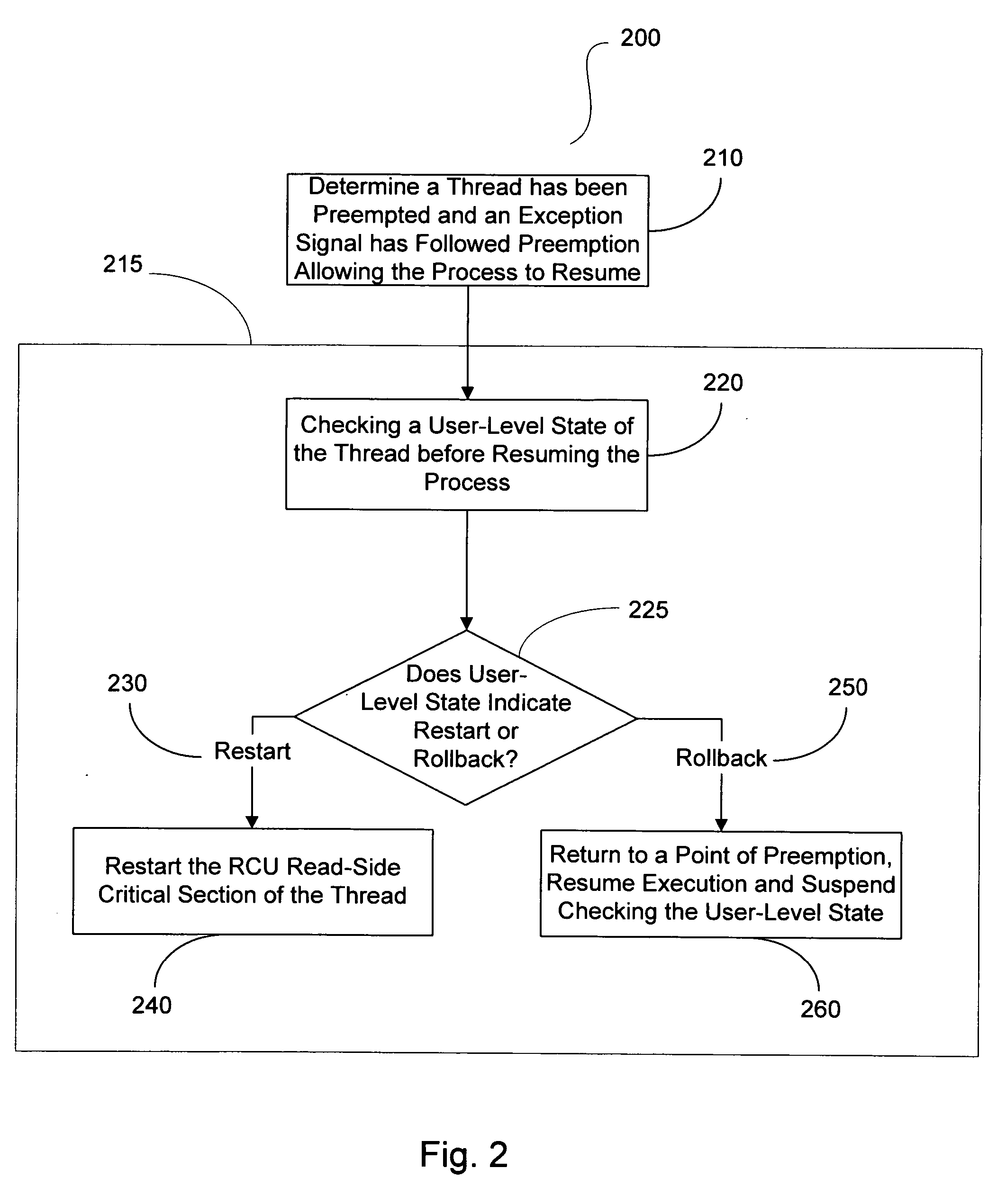

Use of rollback RCU with read-side modifications to RCU-protected data structures

Inactive | US20060130061A1 | Multiprogramming arrangements | Memory systems | Array data structure | Critical section

A method, apparatus and program storage device for performing a return/rollback process for RCU-protected data structures is provided that includes checking a user-level state of a preempted thread having an RCU read-side critical section, and executing the critical section of the thread after preemption when the user-level state of the thread indicates execution, otherwise returning to a point of preemption, resuming execution of the thread and disabling checking the user-level state when the user-level state of the thread indicates return.

Owner:IBM CORP

Concurrent Execution of Critical Sections by Eliding Ownership of Locks

Inactive | US20070186215A1 | Ensure correct execution | Efficient execution | Memory architecture accessing/allocation | Program synchronisation | Speculative execution | Critical section

One embodiment of the present invention provides a system that facilitates avoiding locks by speculatively executing critical sections of code. During operation, the system allows a process to speculatively execute a critical section of code within a program without first acquiring a lock associated with the critical section. If the process subsequently completes the critical section without encountering an interfering data access from another process, the system commits changes made during the speculative execution, and resumes normal non-speculative execution of the program past the critical section. Otherwise, if an interfering data access from another process is encountered during execution of the critical section, the system discards changes made during the speculative execution, and attempts to re-execute the critical section.

Owner:WISCONSIN ALUMNI RES FOUND

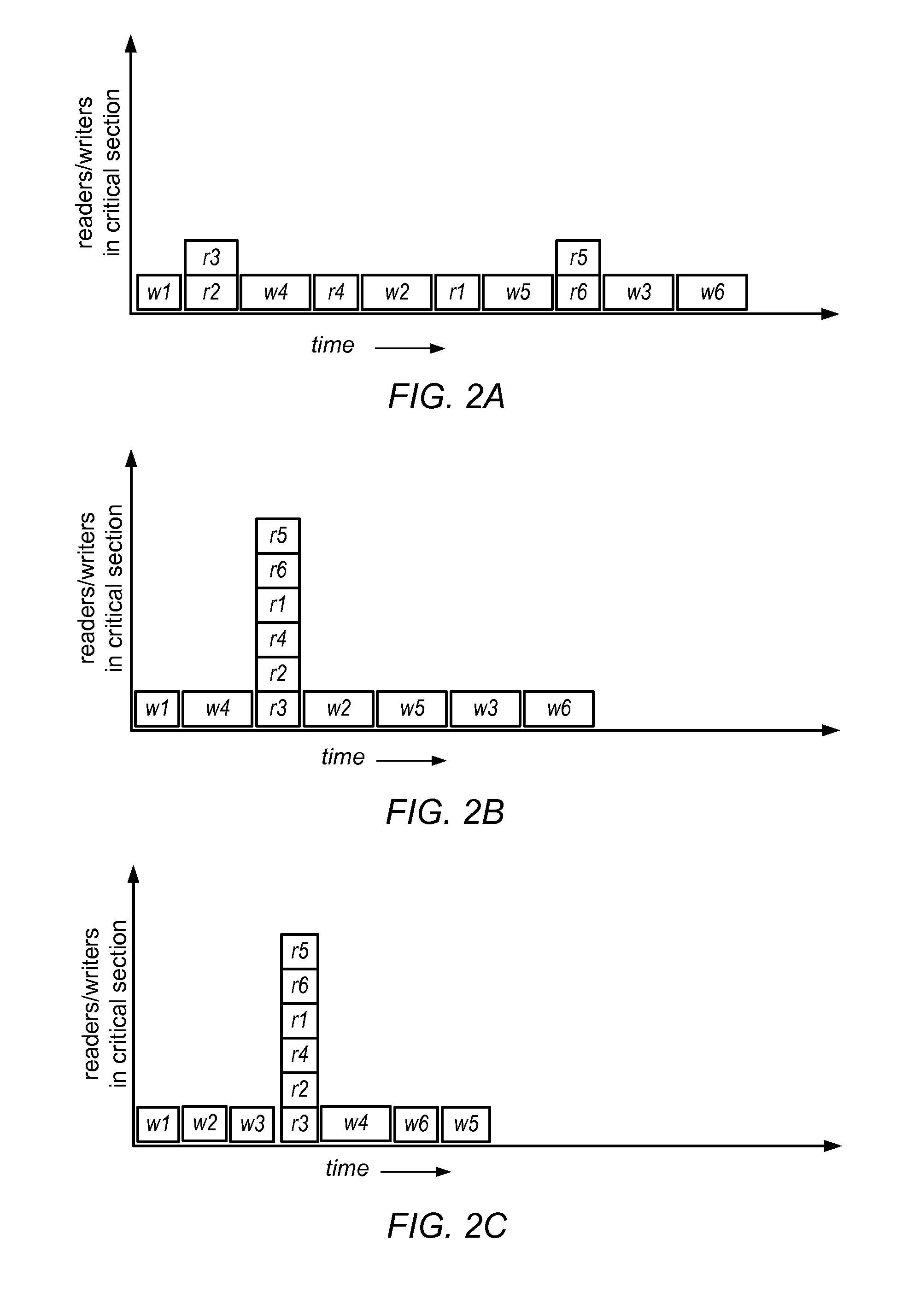

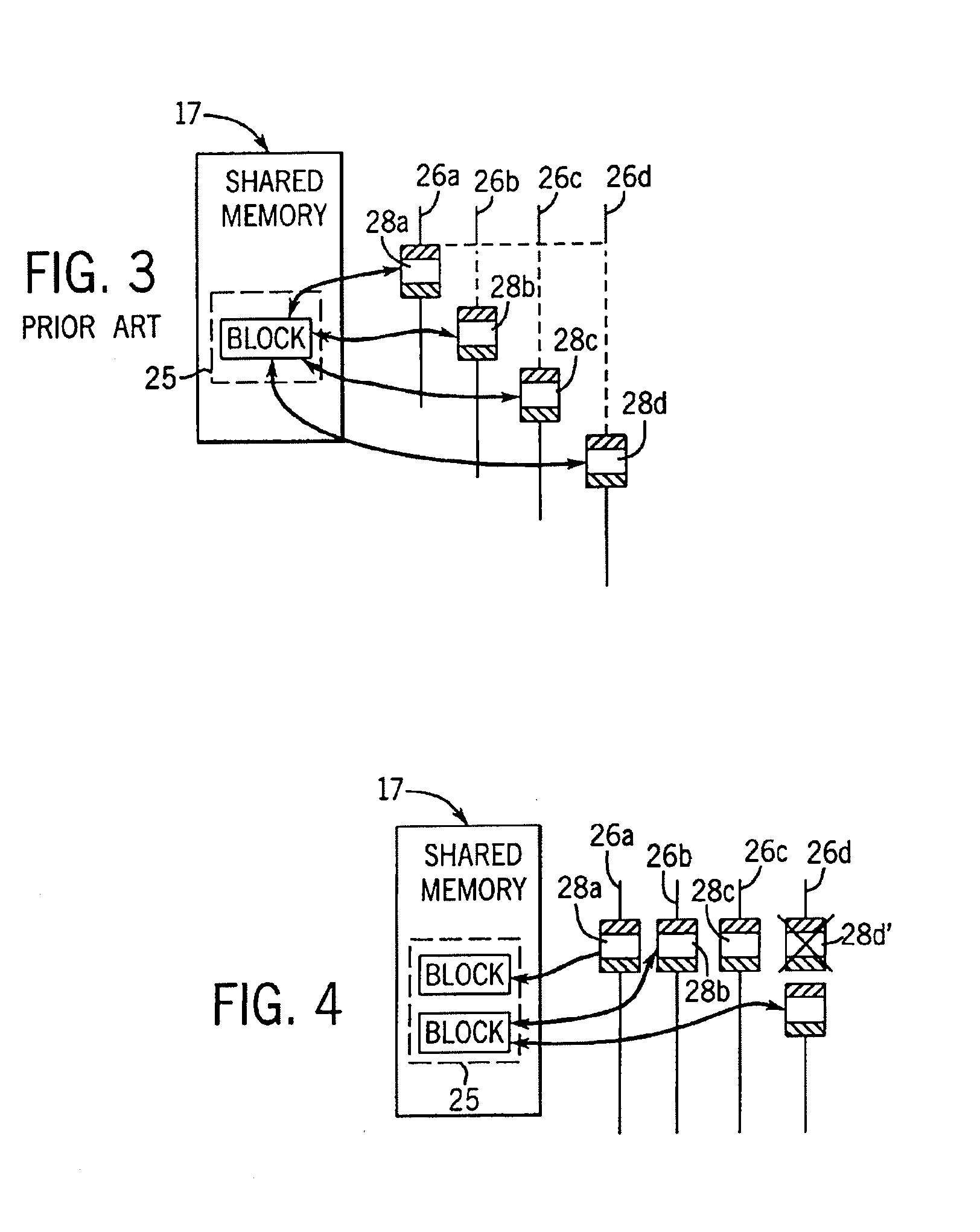

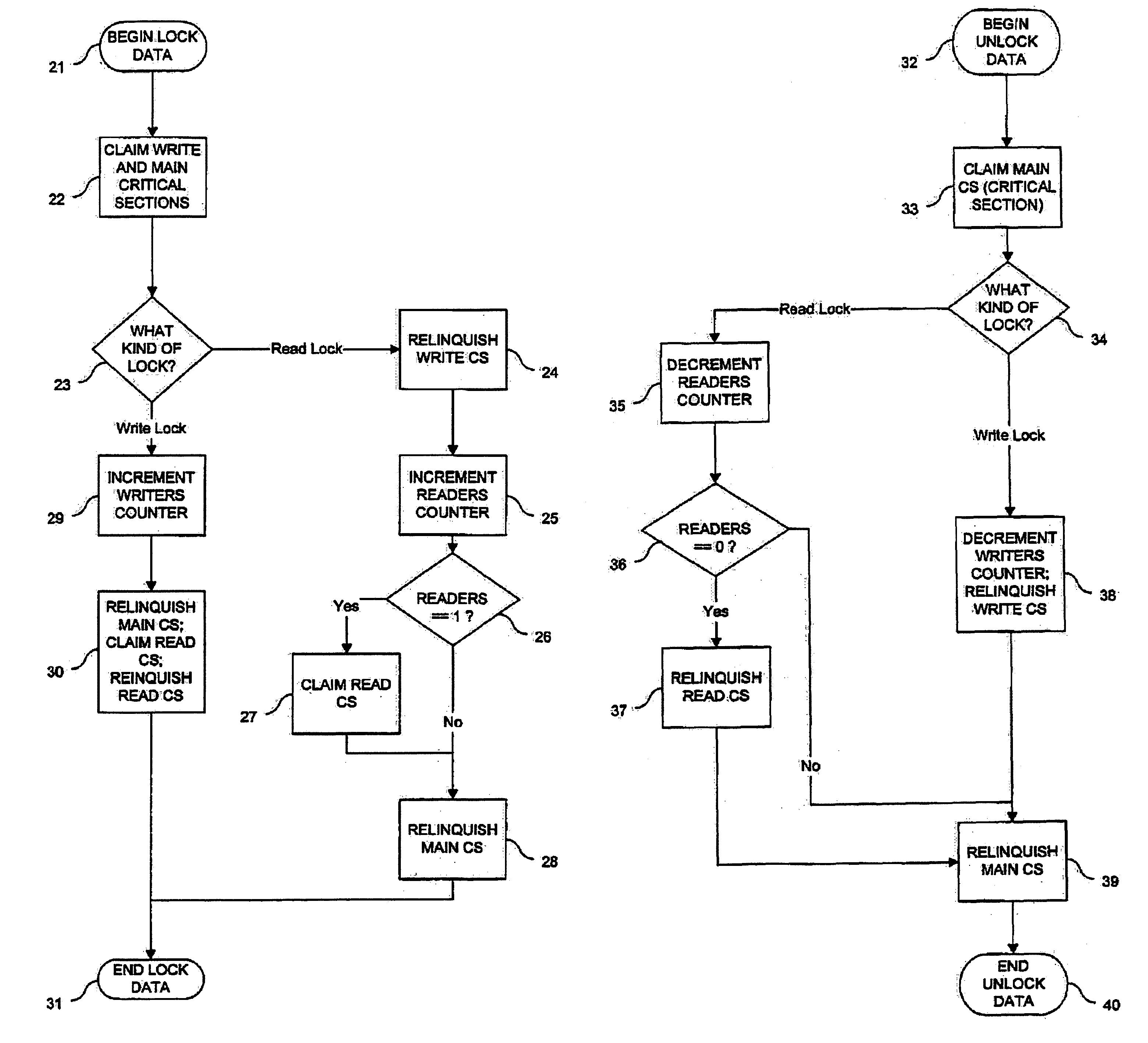

Architecture for a read/write thread lock

Inactive | US7188344B1 | Minimizing chance | Multiprogramming arrangements | Memory systems | Computer architecture | Critical section

An architecture for a read/write thread lock is provided for use in a computing environment where several sets of computer instructions, or “threads,” can execute concurrently. The disclosed thread lock allows concurrently-executing threads to share access to a resource, such as a data object. The thread lock allows a plurality of threads to read from a resource at the same time, while providing a thread exclusive access to the resource when that thread is writing to the resource. The thread lock uses critical sections to suspend execution of other threads when one thread needs exclusive access to the resource. Additionally, a technique is provided whereby the invention can be deployed as constructors and destructors in a programming language, such as C++, where constructors and destructors are available. When the invention is deployed in such a manner, it is possible for a programmer to issue an instruction to lock a resource, without having to issue a corresponding unlock instruction.

Owner:UNISYS CORP
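The abstract above describes locking in a constructor and unlocking in a destructor, so that no explicit unlock call is needed. The patent names C++; the closest Python analogue is a context manager, shown here as a hedged sketch. The helper name `locked` is an assumption, and a plain mutex stands in for the read/write lock.

```python
import threading
from contextlib import contextmanager


@contextmanager
def locked(lock):
    """Hold `lock` for the duration of a `with` block.

    Entering the block plays the role of the constructor (acquire);
    leaving it, normally or through an exception, plays the role of the
    destructor (release).
    """
    lock.acquire()
    try:
        yield
    finally:
        lock.release()   # always runs, so the caller never writes an unlock
```

Usage is `with locked(resource_lock): ...`. Python's `threading.Lock` already supports `with` directly, so the wrapper exists only to make the acquire and release steps visible.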

System and method for managing contention in transactional memory using global execution data

Active | US8402464B2 | Improve system performance | Resource allocation | Memory systems | Resource consumption | Critical section

Transactional Lock Elision (TLE) may allow threads in a multi-threaded system to concurrently execute critical sections as speculative transactions. Such speculative transactions may abort due to contention among threads. Systems and methods for managing contention among threads may increase overall performance by considering both local and global execution data in reducing, resolving, and/or mitigating such contention. Global data may include aggregated and/or derived data representing thread-local data of remote thread(s), including transactional abort history, abort causal history, resource consumption history, performance history, synchronization history, and/or transactional delay history. Local and/or global data may be used in determining the mode by which critical sections are executed, including TLE and mutual exclusion, and/or to inform concurrency throttling mechanisms. Local and/or global data may also be used in determining concurrency throttling parameters (e.g., delay intervals) used in delaying a thread when attempting to execute a transaction and/or when retrying a previously aborted transaction.

Owner:ORACLE INT CORP

Computer architecture providing transactional, lock-free execution of lock-based programs

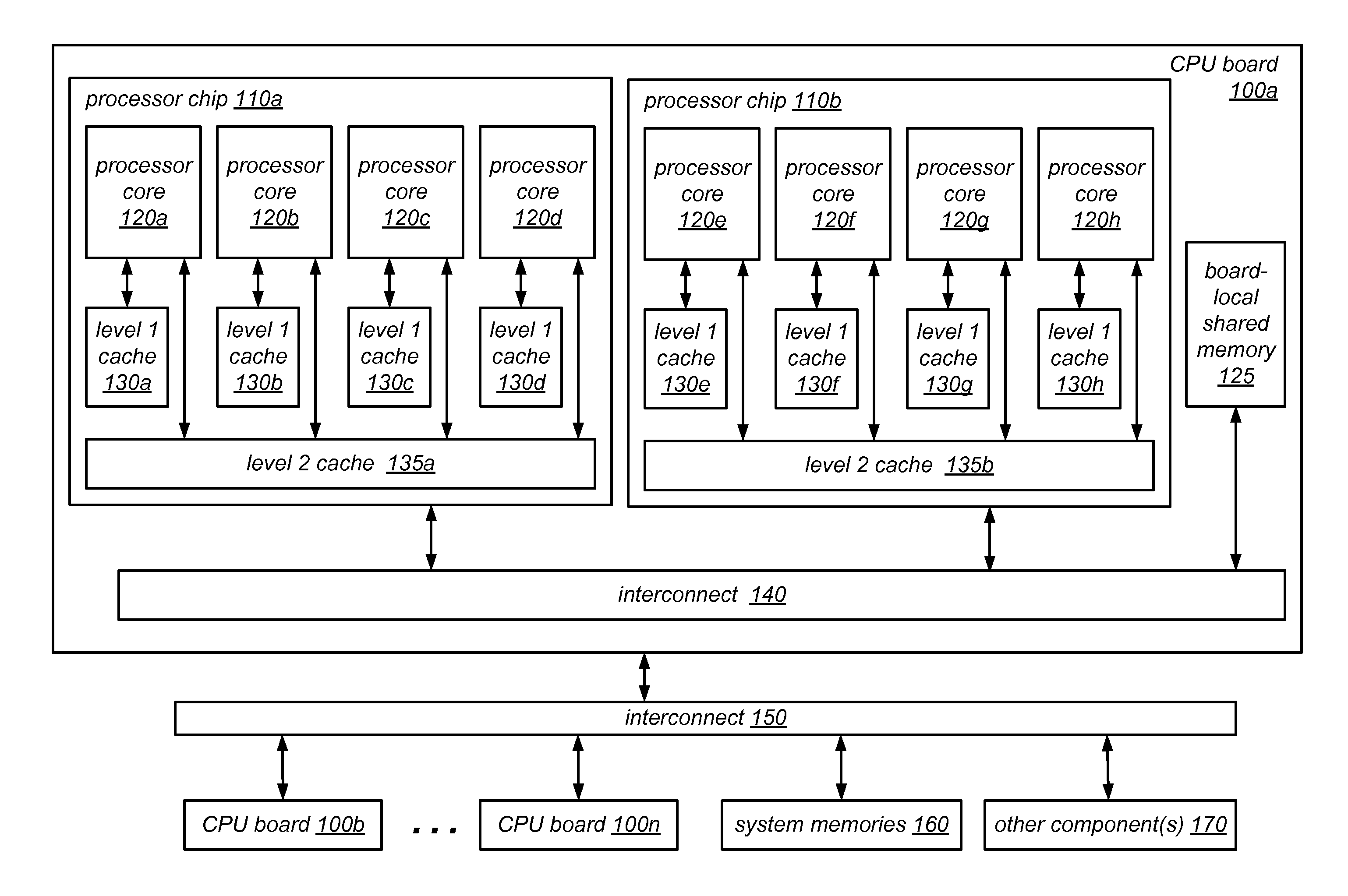

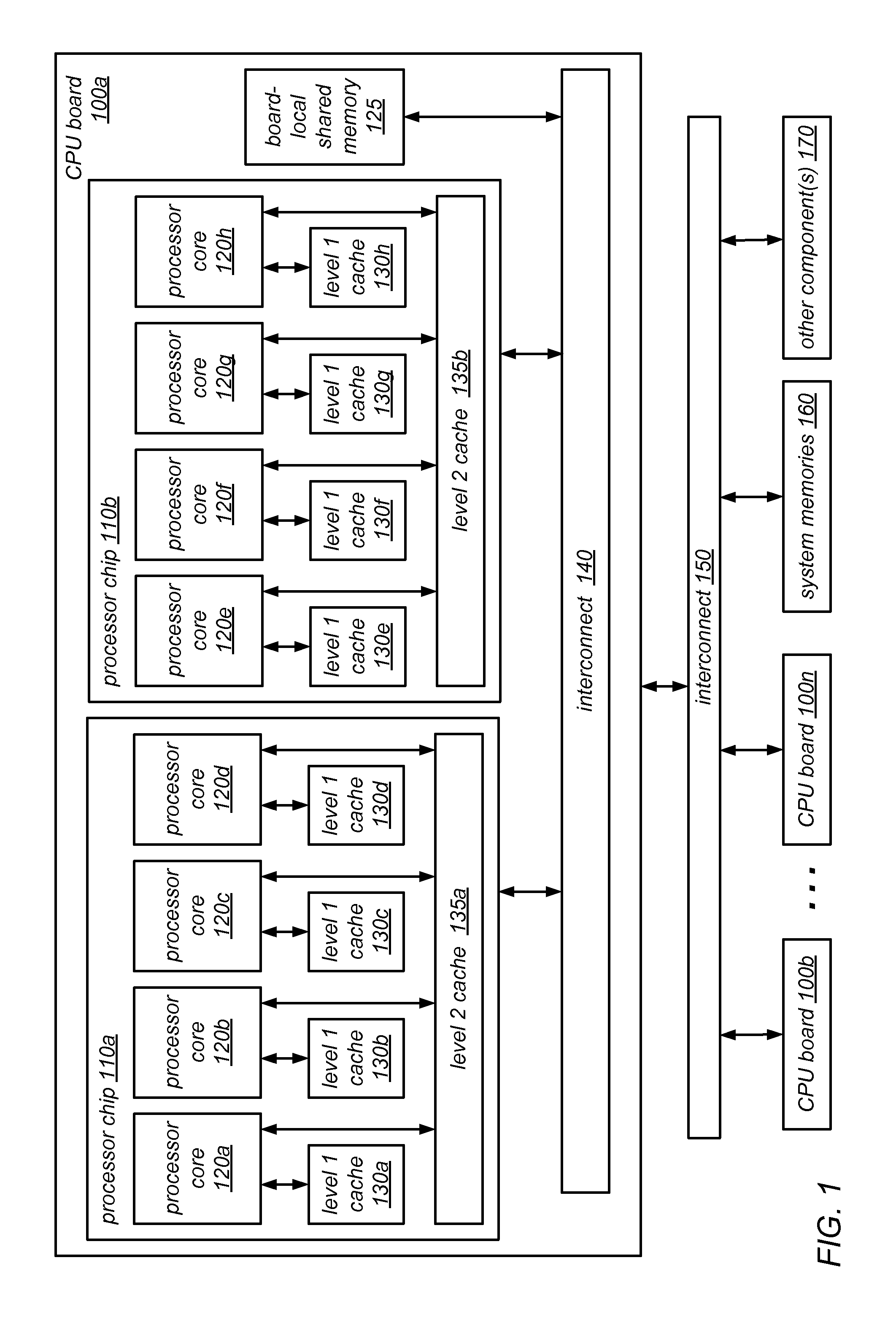

Active | US20050177831A1 | Efficient subsequent execution | Eliminate “live-lock” situation | Multiprogramming arrangements | Concurrent instruction execution | Critical section | Shared memory

Data conflicts in critical sections of programs executed in shared memory computer architectures are resolved using a hardware-based ordering system and without acquisition of the lock variable.

Owner:WISCONSIN ALUMNI RES FOUND
