User terminal and object tracking method and device thereof

A target tracking technology, applied in image analysis, image enhancement, instruments, etc., that addresses problems such as changes in target scale during tracking, failure to meet real-time tracking requirements, and the inability of a single feature to adapt to multiple different scenarios.

Examples

Embodiment 1

[0114] As described below, an embodiment of the present invention provides a method for object tracking.

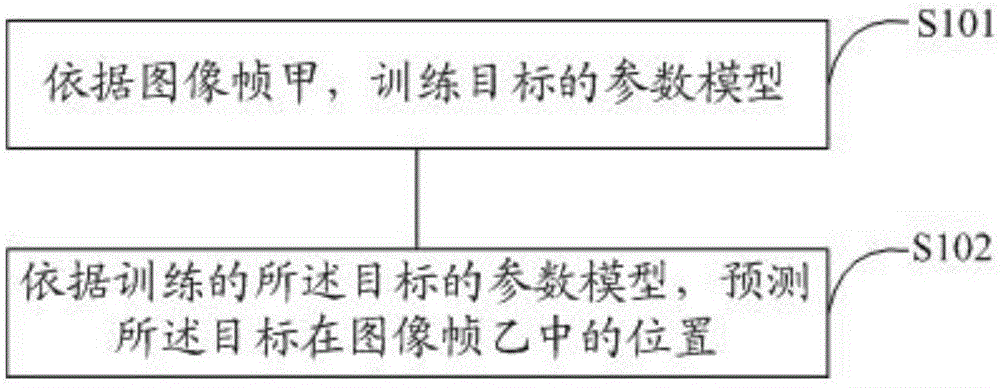

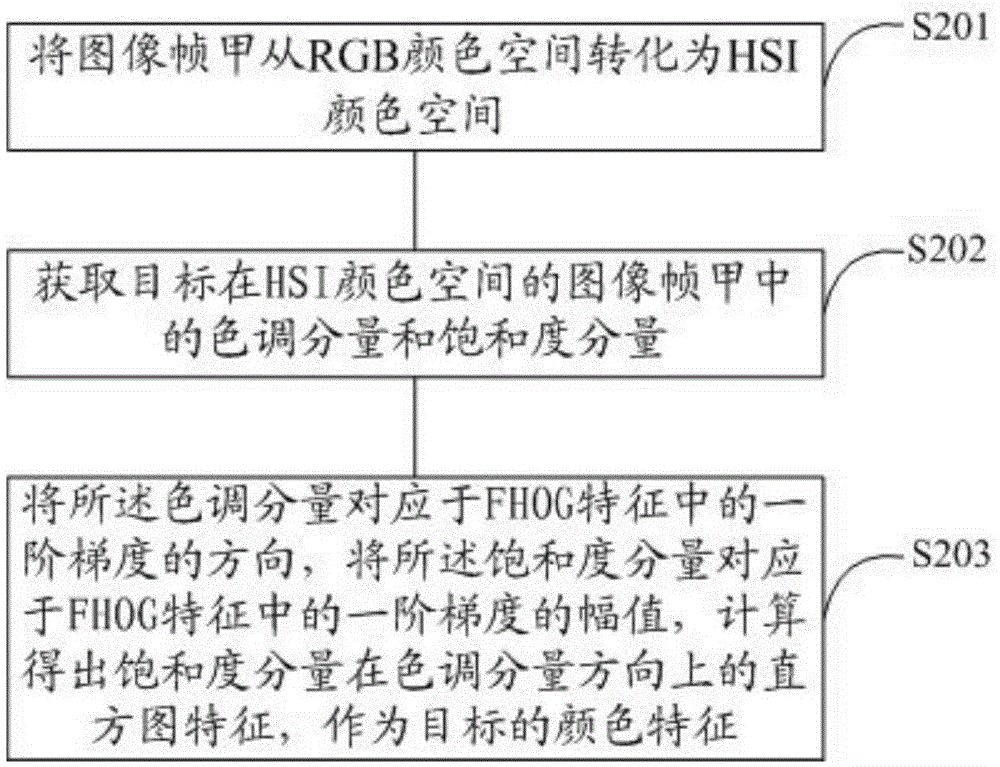

[0115] Referring to the flow chart of the target tracking method shown in Figure 1, the method is described in detail below through specific steps:

[0116] S101. Train the parameter model of the target according to the image frame A.

[0117] In this embodiment, under the tracking-detection framework, the correlation filter in the KCF algorithm is used, and combined features and scale estimation are added to improve the performance of the algorithm.

[0118] As mentioned earlier, the tracking-detection algorithm consists of two steps: training and detection. Training generally refers to extracting samples according to the target position in the previous frame and then using a machine learning algorithm to train the parameter model. Detection classifies the samples of the current frame according to the parameter model trained on the previous frame, predict the target position of the current f...
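The two steps above can be illustrated with a minimal one-dimensional sketch of a correlation filter with a linear kernel, in the spirit of KCF. It is not the patent's implementation: the function names (`dft`, `gaussian_labels`, `train`, `detect`), the regularization value `lam`, the Gaussian label width, and the synthetic frames are all assumptions made for illustration, and the combined features and scale estimation are omitted. A naive DFT from the standard library stands in for an FFT.

```python
import cmath
import math


def dft(signal, inverse=False):
    """Naive O(n^2) discrete Fourier transform (adequate for a short demo)."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t, value in enumerate(signal):
            acc += value * cmath.exp(sign * 2j * math.pi * t * k / n)
        out.append(acc / n if inverse else acc)
    return out


def gaussian_labels(n, sigma=2.0):
    """Regression target: a cyclic Gaussian peaked at shift 0 (assumed shape)."""
    return [math.exp(-min(s, n - s) ** 2 / (2 * sigma ** 2)) for s in range(n)]


def train(x, lam=1e-4):
    """Training: fit the filter coefficients on patch x taken from frame A."""
    x_hat = dft(x)
    y_hat = dft(gaussian_labels(len(x)))
    # Linear-kernel autocorrelation in the Fourier domain.
    kxx_hat = [xh.conjugate() * xh for xh in x_hat]
    alpha_hat = [yh / (kh + lam) for yh, kh in zip(y_hat, kxx_hat)]
    return x_hat, alpha_hat


def detect(model, z):
    """Detection: score every cyclic shift of patch z taken from frame B."""
    x_hat, alpha_hat = model
    z_hat = dft(z)
    kxz_hat = [xh.conjugate() * zh for xh, zh in zip(x_hat, z_hat)]
    spectrum = [k * a for k, a in zip(kxz_hat, alpha_hat)]
    response = [v.real for v in dft(spectrum, inverse=True)]
    shift = max(range(len(response)), key=response.__getitem__)
    # Shifts past n/2 wrap around and correspond to negative displacements.
    return shift if shift <= len(z) // 2 else shift - len(z)


n = 32
frame_a = [math.exp(-(t - 10) ** 2 / 8.0) for t in range(n)]  # target centred at 10
frame_b = [frame_a[(t - 5) % n] for t in range(n)]            # target moved by +5
model = train(frame_a)
displacement = detect(model, frame_b)
print(displacement)  # prints 5
```

The peak of the response gives the displacement of the target between the two frames. A real tracker would work on two-dimensional, multi-channel feature maps and use an FFT library.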

Embodiment 2

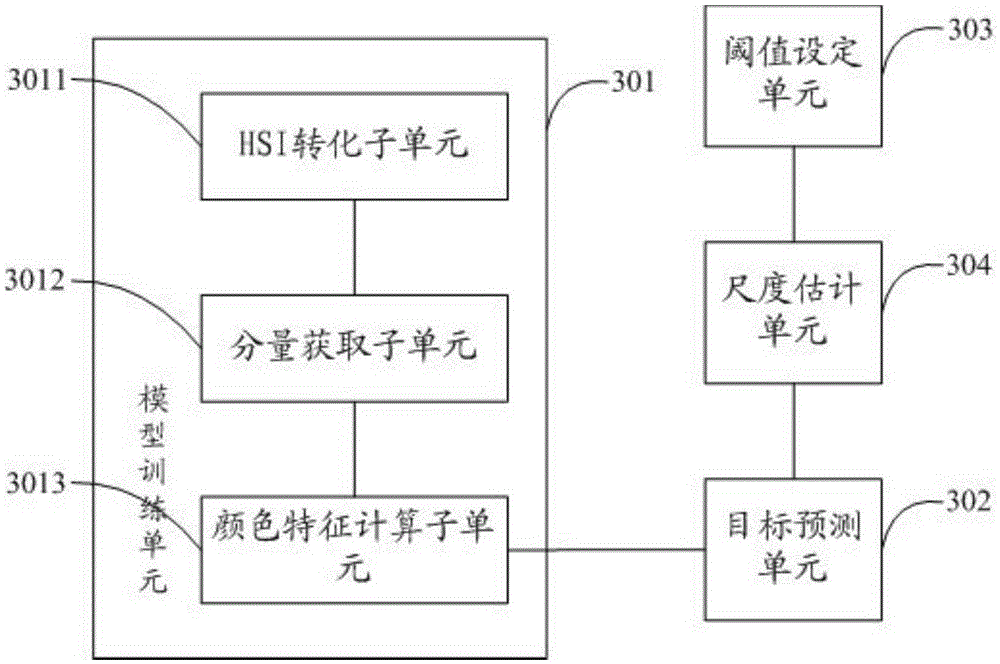

[0183] As described below, an embodiment of the present invention provides a target tracking device.

[0184] Refer to the structural block diagram of the target tracking device shown in Figure 3.

[0185] The target tracking device includes: a model training unit 301 and a target prediction unit 302; wherein the main functions of each unit are as follows:

[0186] The model training unit 301 is adapted to train the parameter model of the target according to the image frame A;

[0187] The target prediction unit 302 is adapted to predict the position of the target in the image frame B according to the trained parameter model of the target after the operation performed by the model training unit 301;

[0188] Training the parameter model of the target includes: training a first parameter model of the target and training a second parameter model of the target;

[0189] Training the first parameter model of the target includes: training the first parameter model of the target t...
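The two-unit structure of the device can be sketched as follows. This is only an illustrative arrangement: the class and method names are hypothetical, and the toy training and prediction functions (remembering the brightest value and finding the closest sample) are stand-ins, not the parameter models described in the patent.

```python
from dataclasses import dataclass
from typing import Any, Callable, Sequence


@dataclass
class ModelTrainingUnit:
    """Counterpart of unit 301: trains a target model from image frame A."""
    train_fn: Callable[[Sequence[float]], Any]

    def train(self, frame_a):
        return self.train_fn(frame_a)


@dataclass
class TargetPredictionUnit:
    """Counterpart of unit 302: predicts the target position in image frame B."""
    predict_fn: Callable[[Any, Sequence[float]], int]

    def predict(self, model, frame_b):
        return self.predict_fn(model, frame_b)


@dataclass
class TargetTrackingDevice:
    training_unit: ModelTrainingUnit
    prediction_unit: TargetPredictionUnit

    def track(self, frame_a, frame_b):
        # Prediction runs only after the training unit has produced a model.
        model = self.training_unit.train(frame_a)
        return self.prediction_unit.predict(model, frame_b)


# Toy stand-ins (assumptions): the "model" is the brightest value in frame A,
# and prediction returns the index in frame B whose value is closest to it.
device = TargetTrackingDevice(
    ModelTrainingUnit(train_fn=max),
    TargetPredictionUnit(
        predict_fn=lambda model, frame: min(
            range(len(frame)), key=lambda i: abs(frame[i] - model)
        )
    ),
)
position = device.track([0, 1, 9, 1], [1, 0, 1, 9])
print(position)  # prints 3
```

Keeping the two units behind separate interfaces lets the training and prediction logic be replaced independently, for example by a correlation-filter model.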

Embodiment 3

[0233] As described below, an embodiment of the present invention provides a user terminal.

[0234] The user terminal differs from the prior art in that it further includes the target tracking device provided in the embodiment of the present invention. Therefore, when tracking a target, the user terminal uses the correlation filter of the KCF algorithm under the tracking-detection framework and adds a combined feature composed of the FHOG feature and the HSI color feature to improve the performance of the algorithm. This scheme is a target tracking algorithm that uses multiple features to represent the target. It offers high real-time performance and can also effectively handle factors that adversely affect target tracking, such as complex backgrounds, illumination, and non-rigid deformation.
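As a rough illustration of the color part of the combined feature, the sketch below converts an RGB pixel to HSI using the common textbook formulas and appends the result to a gradient feature vector. The excerpt does not state how the patent computes or combines the features, so the function names `rgb_to_hsi` and `combine_features`, the channel-concatenation scheme, and the placeholder FHOG values are all assumptions.

```python
import math


def rgb_to_hsi(r, g, b):
    """Convert RGB values in [0, 1] to (hue in degrees, saturation, intensity)."""
    intensity = (r + g + b) / 3.0
    saturation = 0.0 if intensity == 0 else 1.0 - min(r, g, b) / intensity
    numerator = 0.5 * ((r - g) + (r - b))
    denominator = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if denominator == 0:
        hue = 0.0  # hue is undefined for grey pixels; 0 is used by convention
    else:
        # Clamp to guard against rounding slightly outside [-1, 1].
        ratio = max(-1.0, min(1.0, numerator / denominator))
        hue = math.degrees(math.acos(ratio))
        if b > g:
            hue = 360.0 - hue
    return hue, saturation, intensity


def combine_features(fhog_channels, hsi):
    """Assumed combination: concatenate gradient channels with scaled HSI channels."""
    hue, saturation, intensity = hsi
    return list(fhog_channels) + [hue / 360.0, saturation, intensity]


# Placeholder gradient values for one cell; real FHOG has many more channels.
fhog_cell = [0.1, 0.4, 0.2]
feature = combine_features(fhog_cell, rgb_to_hsi(0.0, 0.0, 1.0))
print(len(feature))  # prints 6
```

Dividing the hue by 360 keeps all three color channels in the range [0, 1], which is one simple way to stop a single channel from dominating the combined vector.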

[0235] In a specific implementation, the user terminal may be a smart phone or a tablet computer.

[0236] Those of ordinary skill in the art c...
