187 results about "Ycbcr color space" patented technology

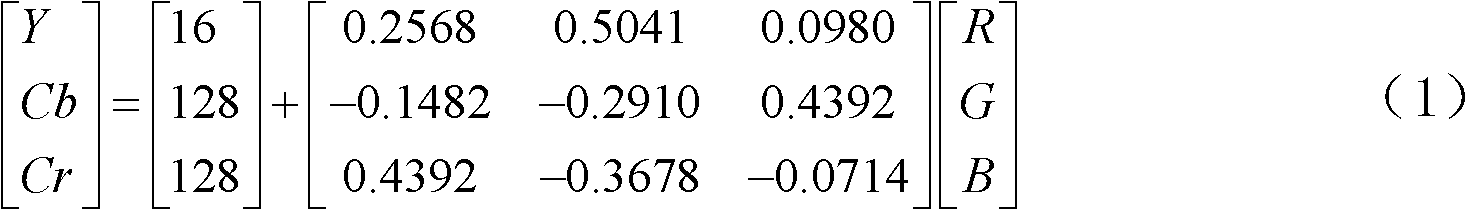

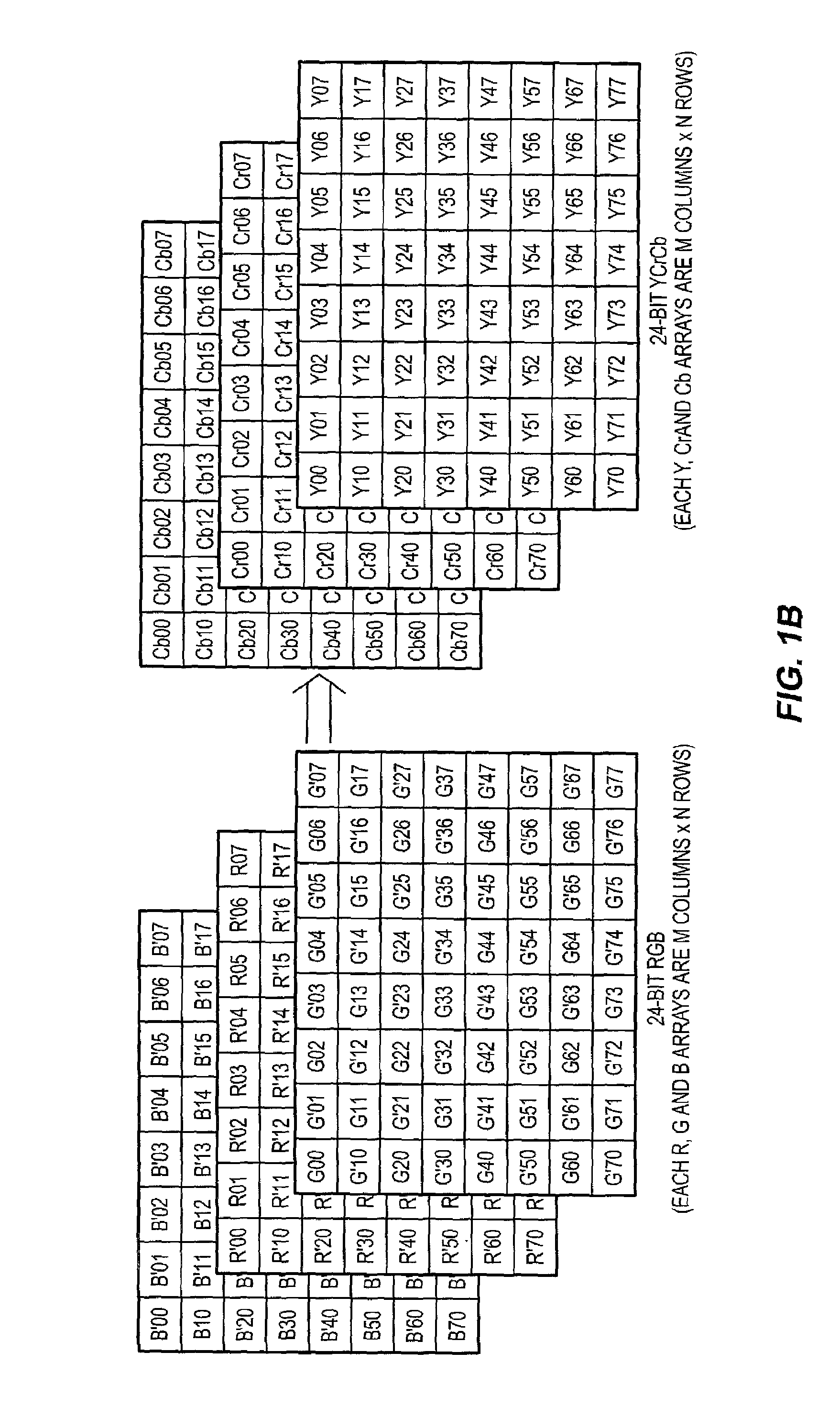

The YCbCr color space is widely used for digital video. In this format, luminance information is stored as a single component (Y), and chrominance information is stored as two color-difference components (Cb and Cr). Cb represents the difference between the blue component and a reference value, and Cr represents the difference between the red component and a reference value.
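As a rough illustration of the description above, the following sketch converts a single 8-bit pixel between RGB and YCbCr. It assumes the full-range BT.601 coefficients used by the JFIF (JPEG) convention; the text does not name a particular variant, and the function names are hypothetical.

```python
def _clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return min(255, max(0, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit R, G, B to full-range YCbCr (BT.601 / JFIF coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b   # blue-difference, offset by 128
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b   # red-difference, offset by 128
    return _clamp8(y), _clamp8(cb), _clamp8(cr)


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr under the same assumed convention."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return _clamp8(r), _clamp8(g), _clamp8(b)
```

Neutral colors (equal R, G and B) map to Cb = Cr = 128, which is why many of the methods listed below can operate on the Y component alone or on the (Cb, Cr) pair alone.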

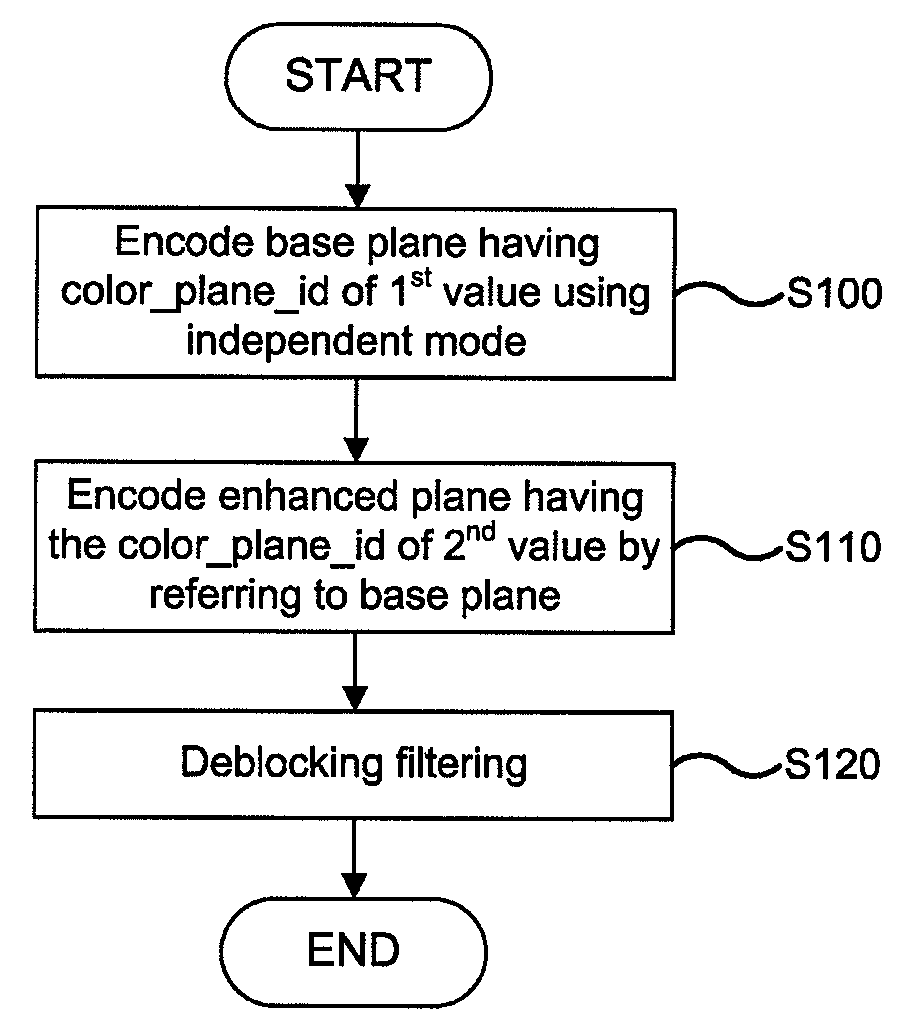

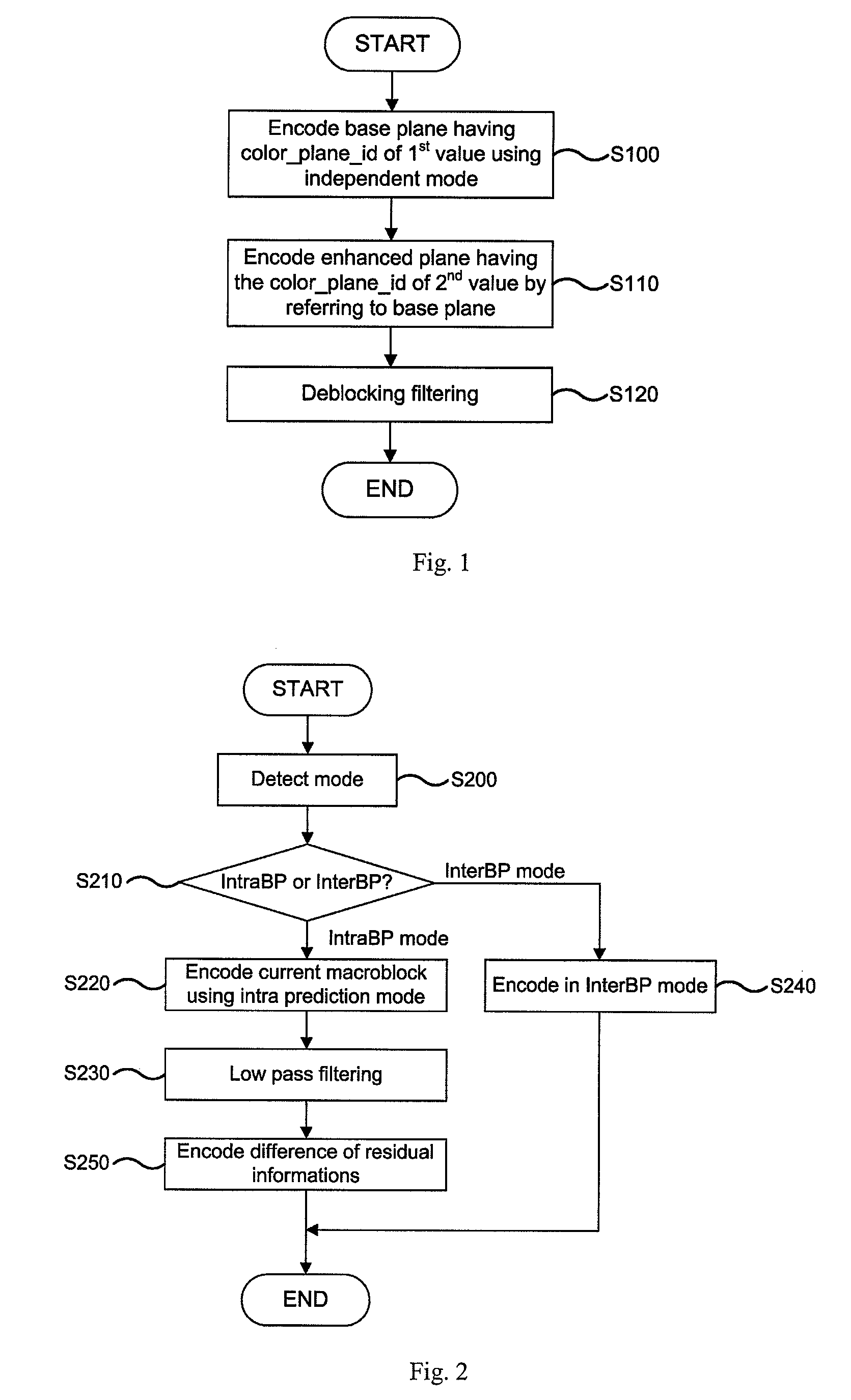

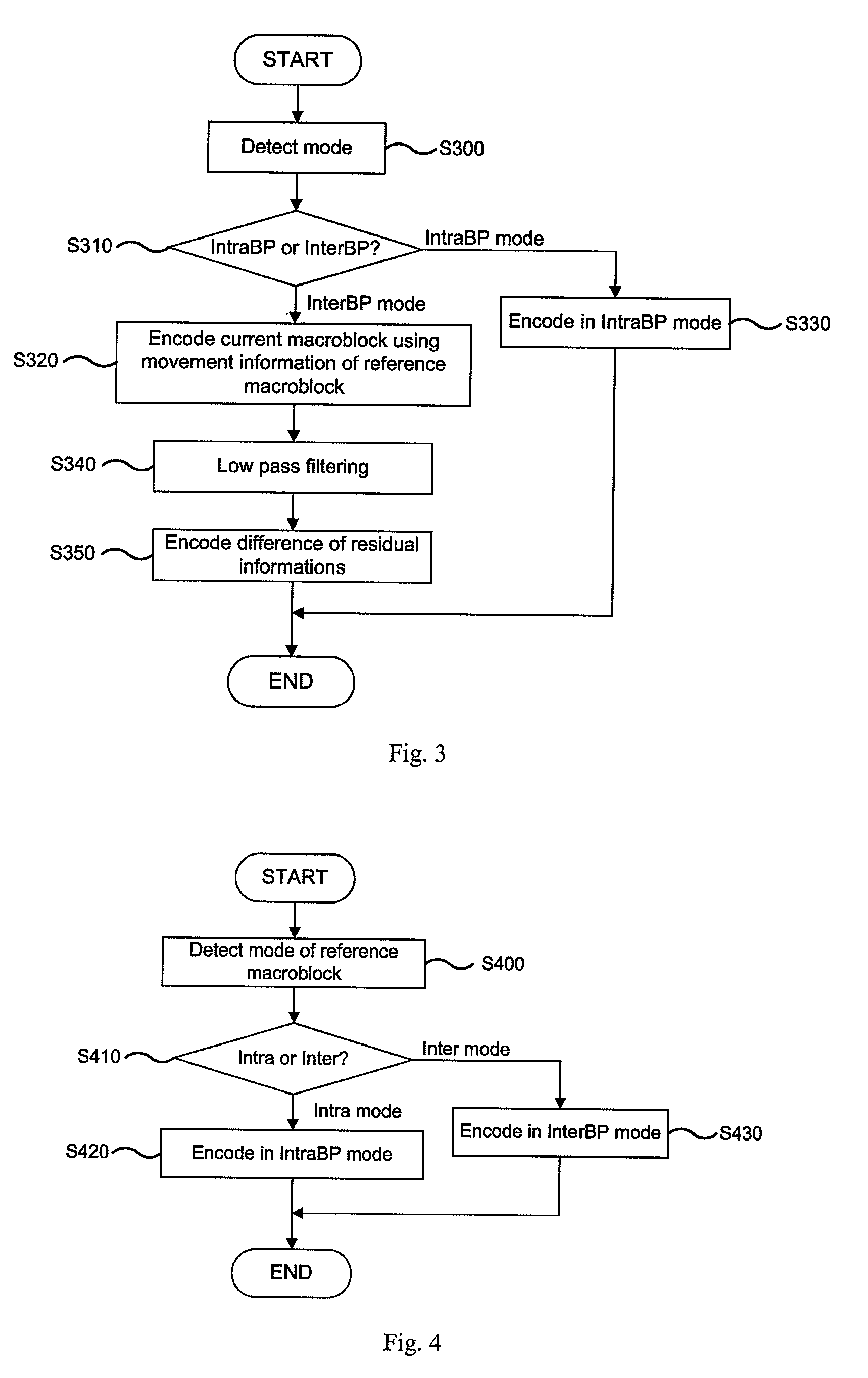

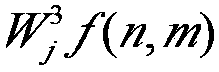

Method for Coding RGB Color Space Signal

Inactive | US20080298694A1 | Reduce redundancy | Increase the compression ratio | Color television with pulse code modulation | Character and pattern recognition | Pattern recognition | YCbCr color space

A method for coding an RGB color space signal is disclosed. In accordance with the method, a base plane is encoded using an independent mode, and an enhanced plane is encoded by referring to the base plane, without converting the RGB color space signal into a YCbCr color space signal. This reduces the redundancy between RGB planes and improves the compression ratio of an image.

Owner:KOREA ELECTRONICS TECH INST

Face detection and recognition method based on skin color segmentation and template matching

Inactive | CN103632132A | Improve accuracy | Reduce dimensionality | Character and pattern recognition | Template matching | Face detection

The invention discloses a face detection and recognition method based on skin color segmentation and template matching. Exploiting the clustering of facial skin color in the YCbCr color space, a Gaussian mixture model of skin color is used to segment the face: regions whose color is close to facial skin color are separated from the image so that the outer region of the face can be detected quickly. A light compensation technique reduces the influence of brightness on face detection and recognition. Adaptive template matching reduces the influence of skin-colored backgrounds. A secondary matching algorithm reduces the amount of computation during matching, and singular value features of the face image are extracted to recognize the face, which reduces the dimensionality of the feature matrix during face feature extraction. The method detects faces quickly, improves the accuracy of face detection and recognition, and is practical and widely applicable.

Owner:GUANGXI UNIVERSITY OF TECHNOLOGY

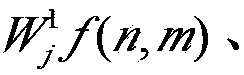

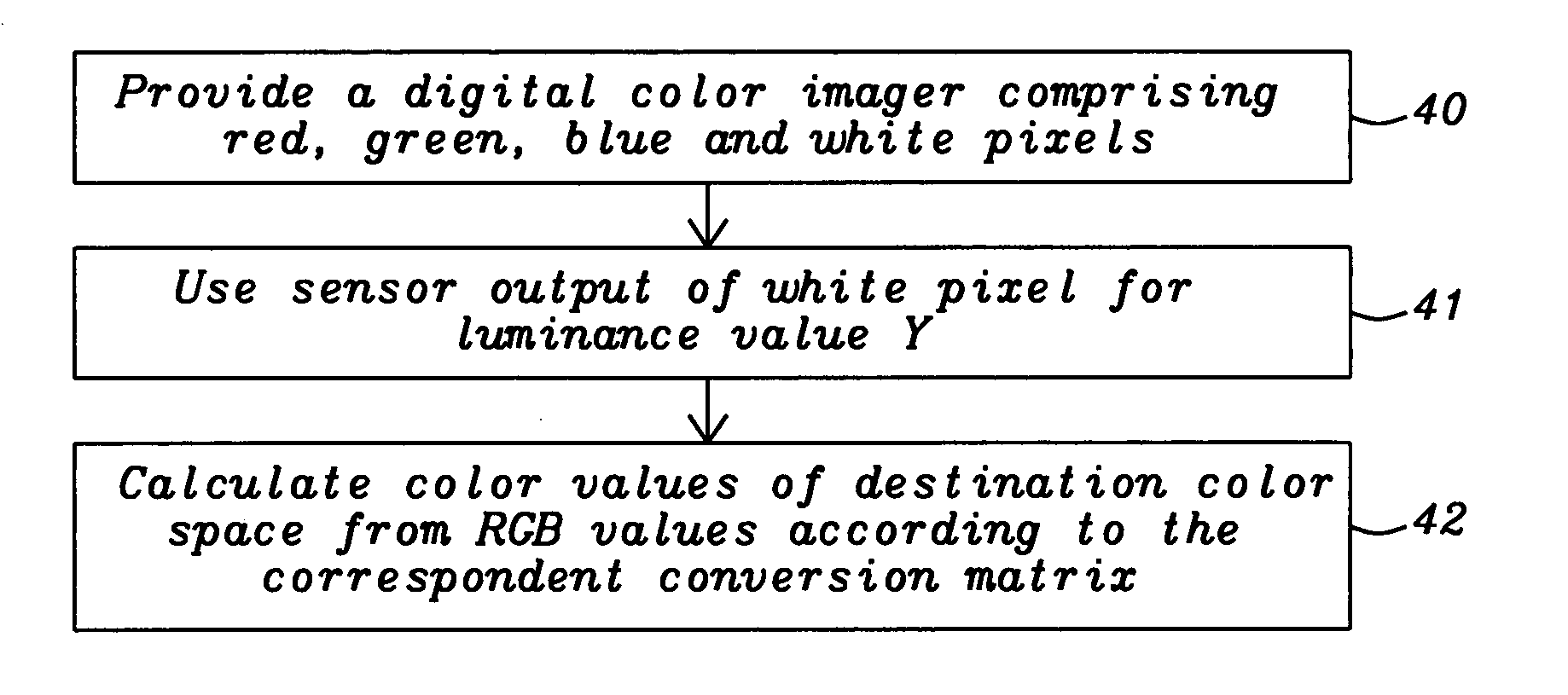

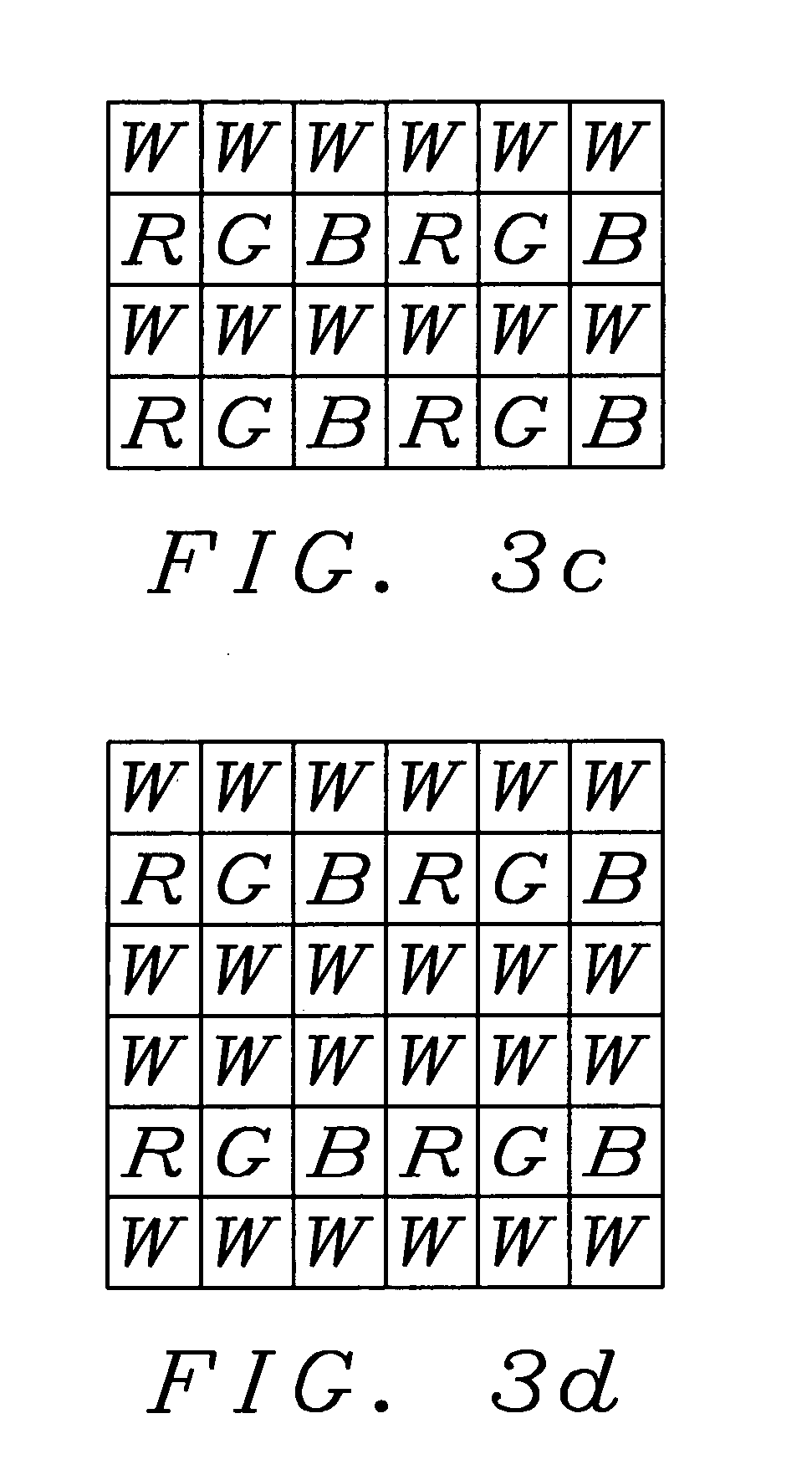

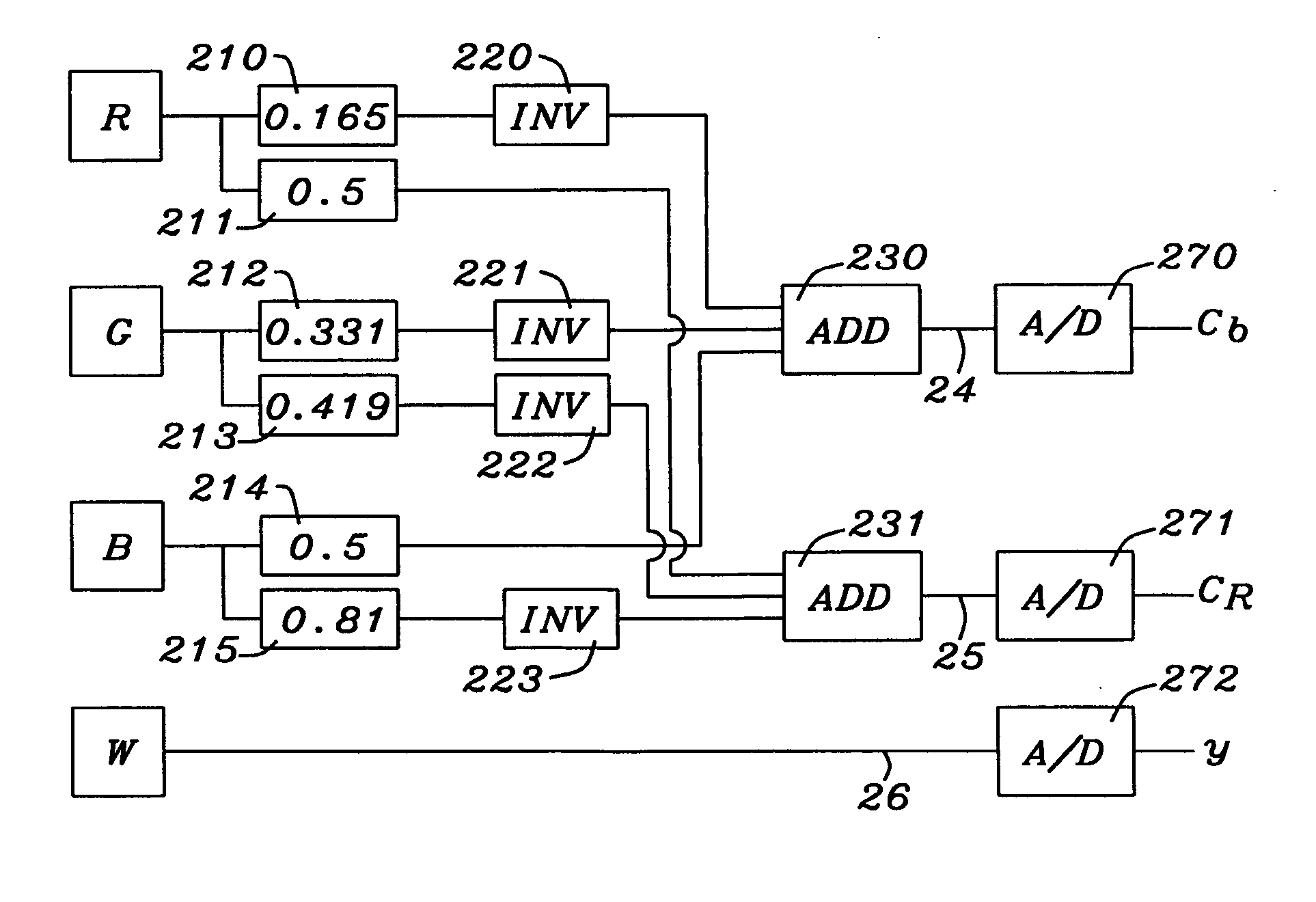

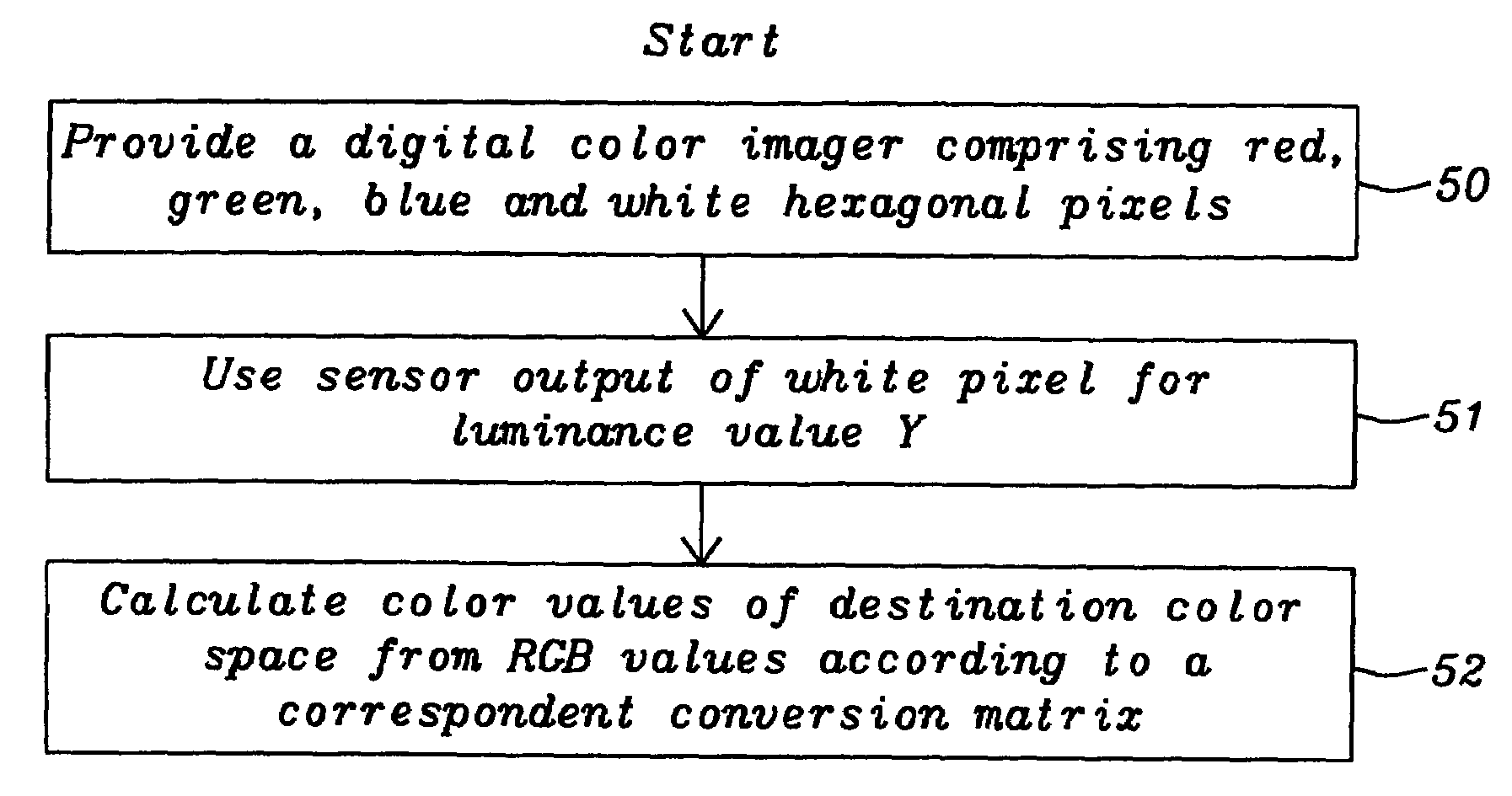

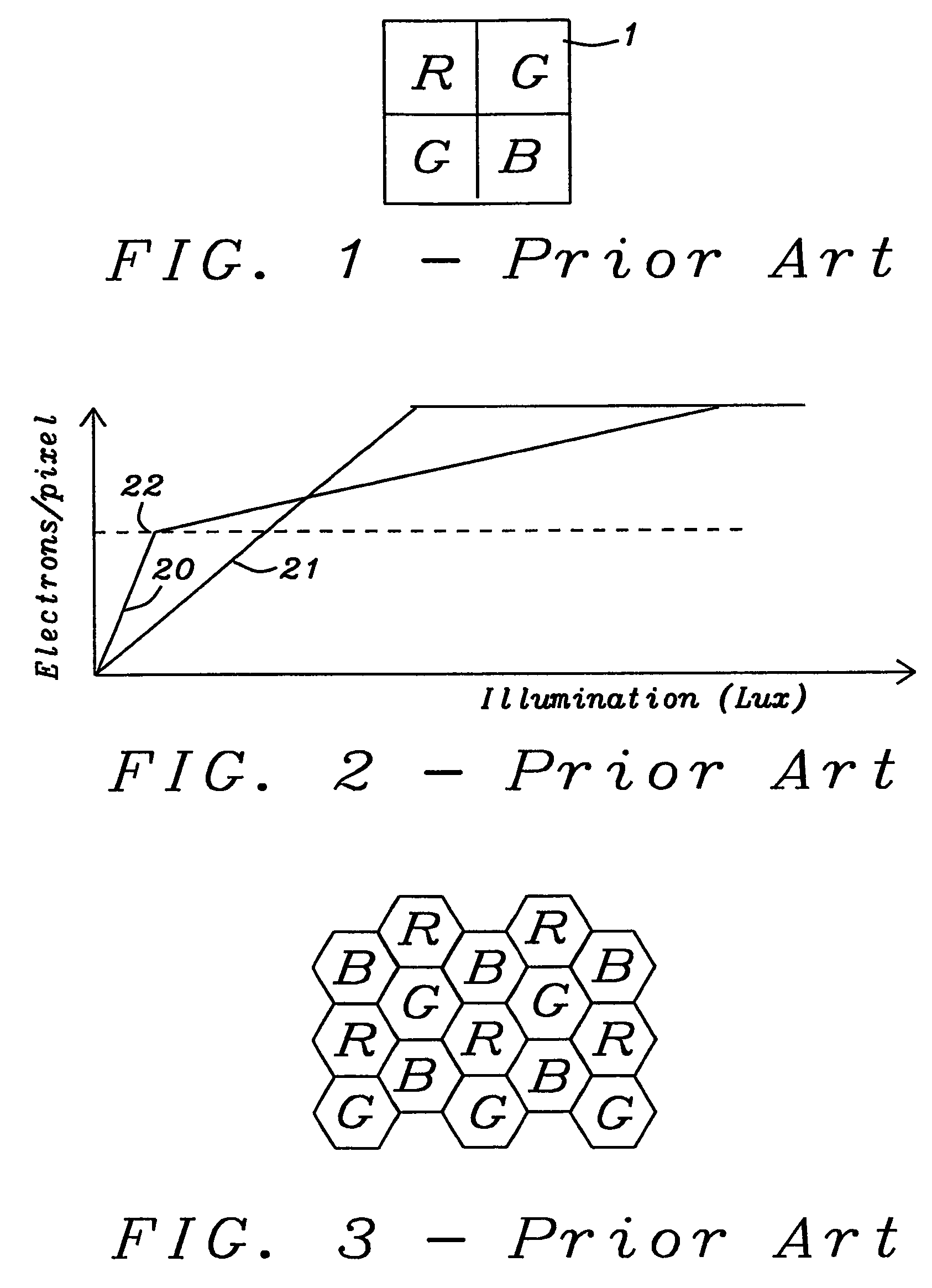

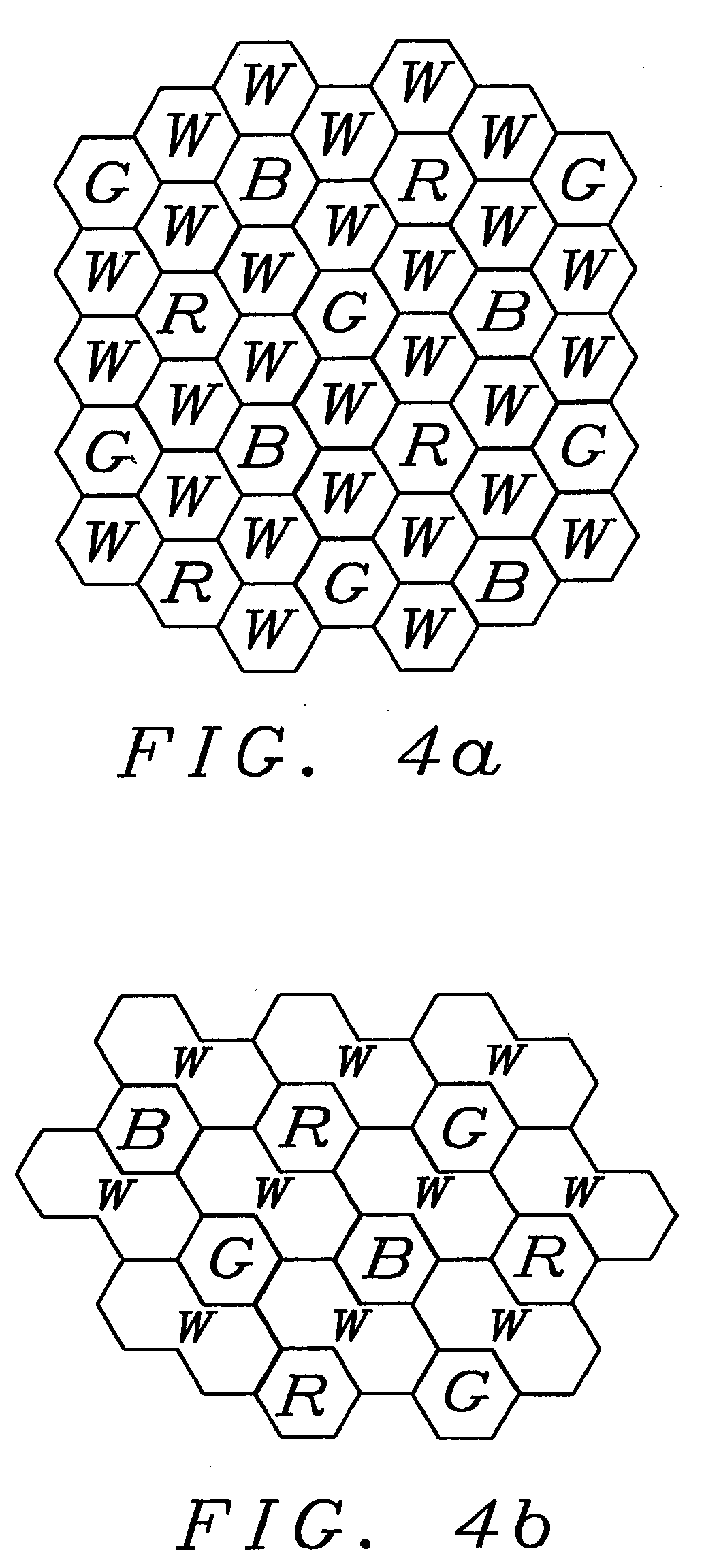

Extended dynamic range in color imagers

Active | US20050248667A1 | Increase the luminous range | Good color | Television system details | Television system scanning details | YCbCr color space | Large size

A digital color imager providing an extended luminance range, an improved color implementation, and an easy transformation into another color space having luminance as a component has been achieved. The key to the invention is the addition of white pixels to the red, green and blue pixels. These white pixels either have an extended dynamic range, as described in U.S. Pat. No. 6,441,852 to Levine et al., or have a larger size than the red, green, or blue pixels used. The output of the white pixels can be used directly for the luminance values Y of the destination color space. Therefore only the color values have to be calculated from the RGB values, leading to an easier and faster calculation. As an example chosen by the inventor, the conversion to the YCbCr color space is shown in detail.

Owner:GULA CONSULTING LLC

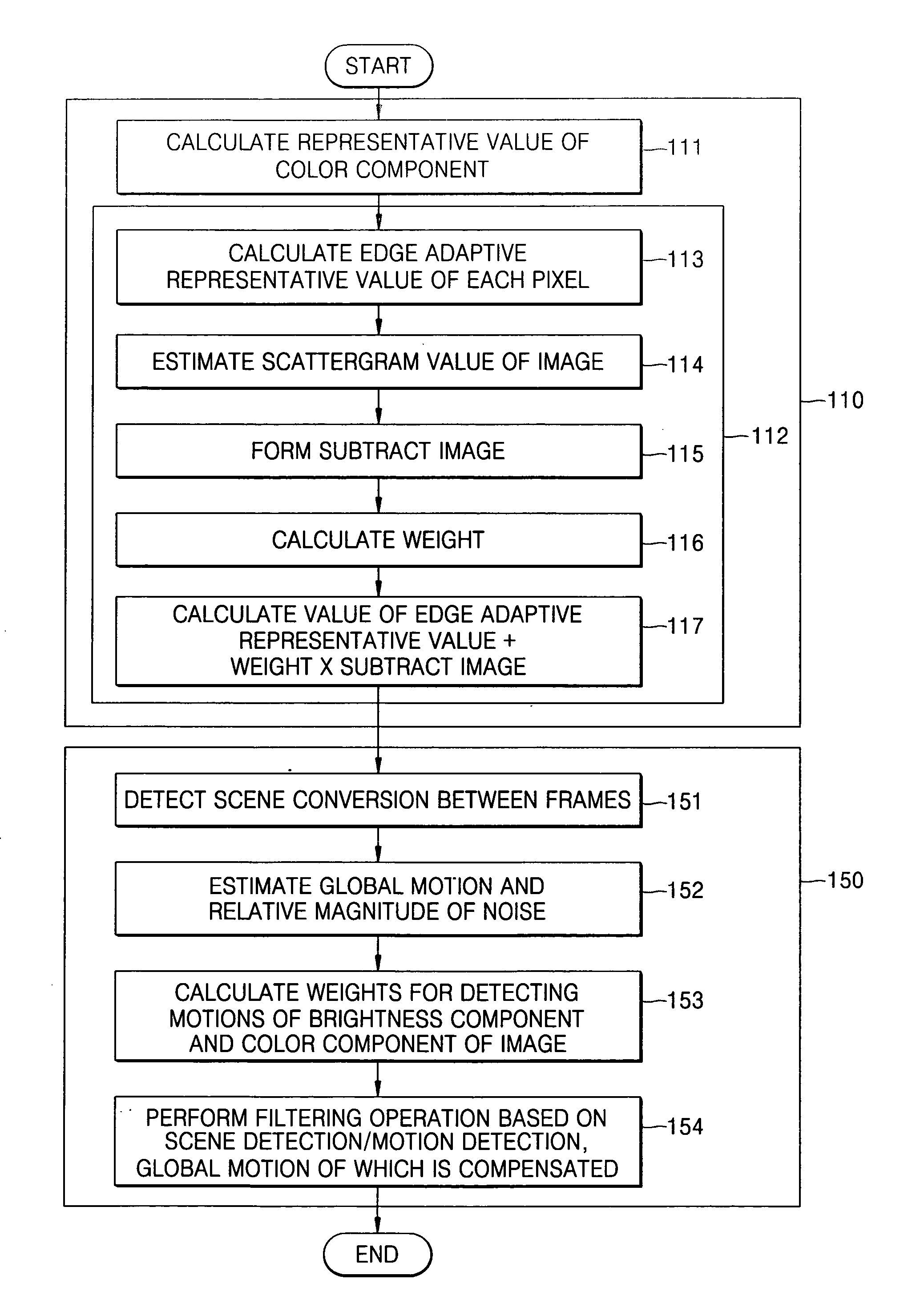

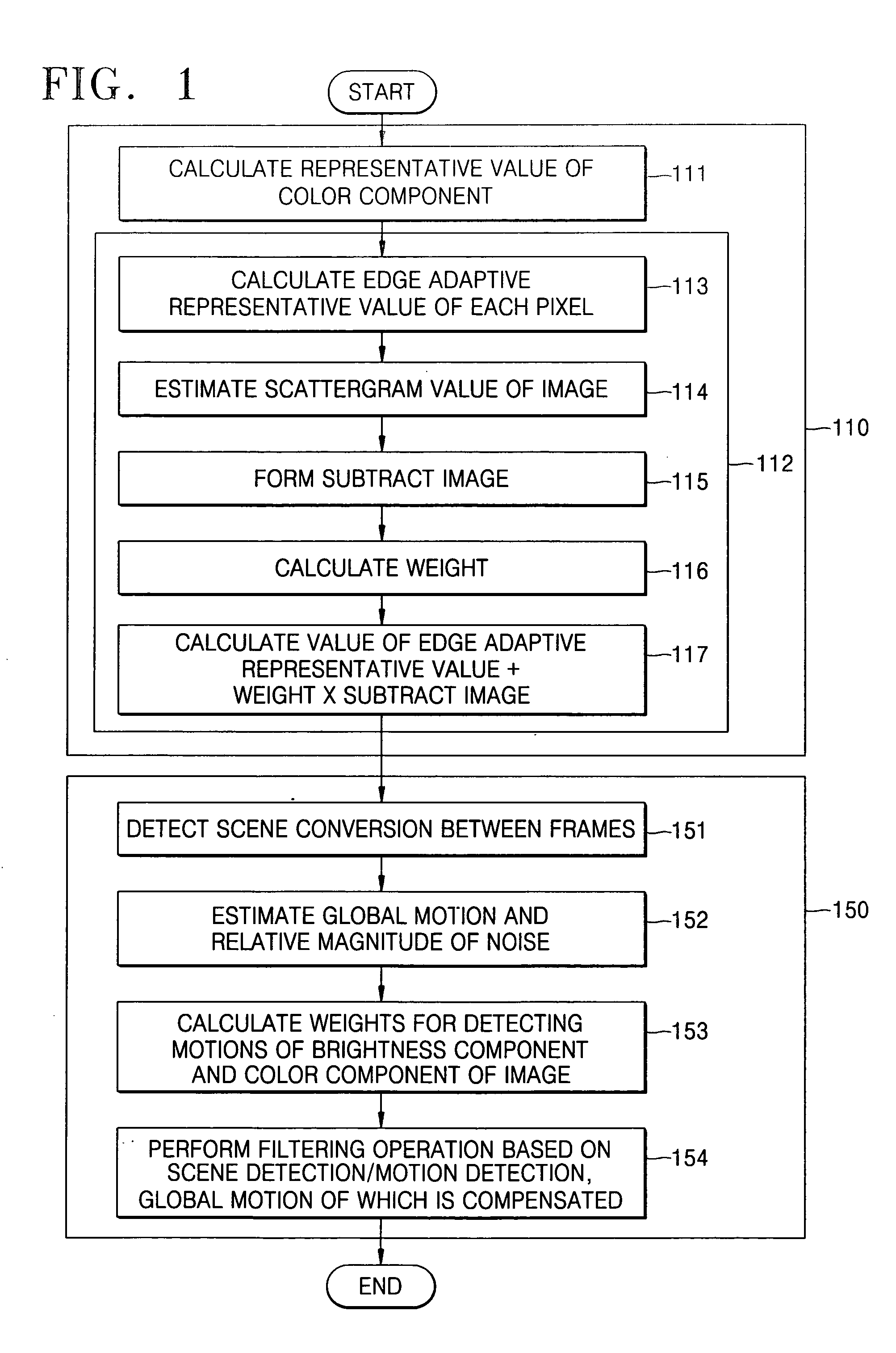

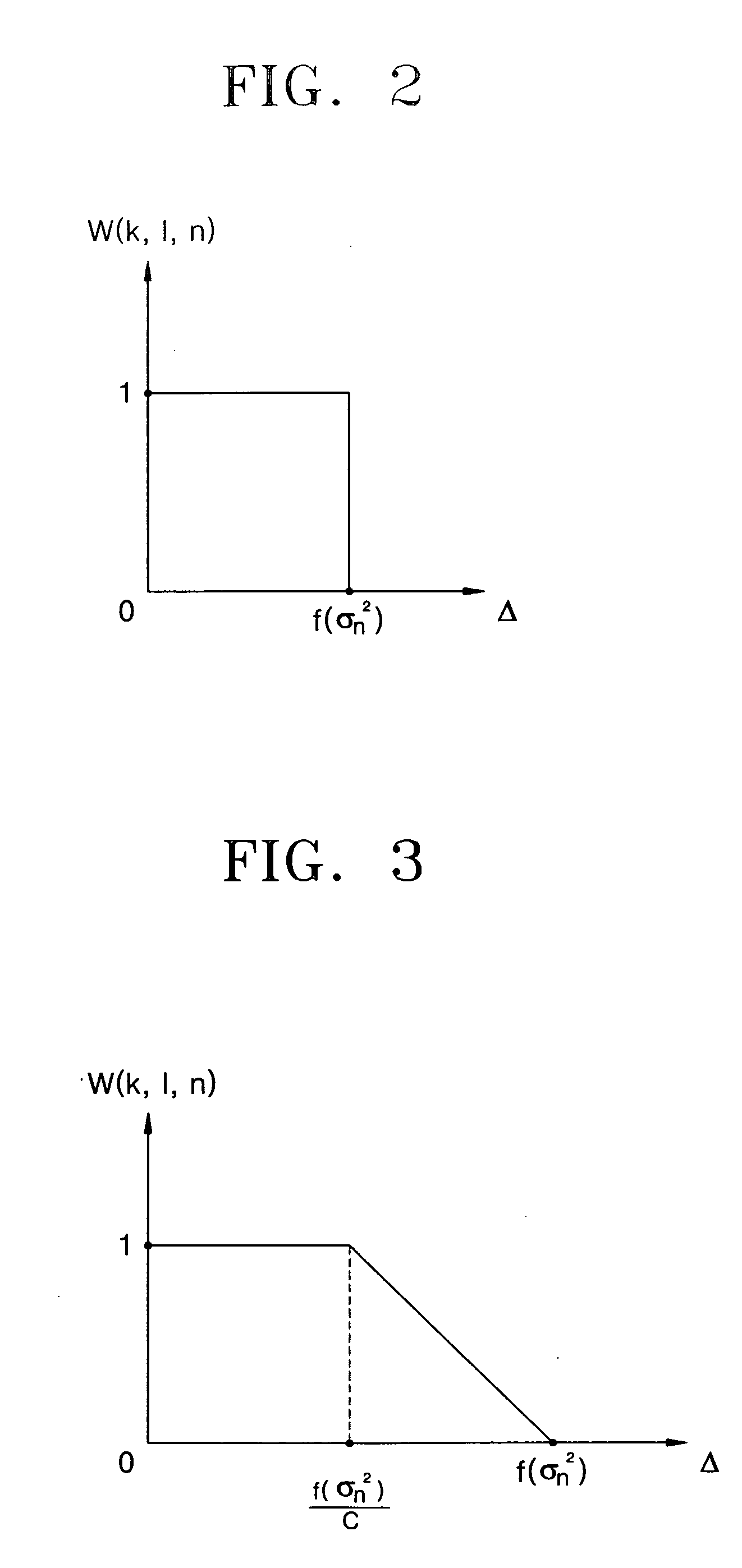

Method of removing noise from digital moving picture data

Active | US20050128355A1 | Cancel noise | Minimize the number | Image enhancement | Television system details | Pattern recognition | YCbCr color space

Provided is a method of removing noise from digital moving picture data reducing the number of frames used in a temporal filtering operation and able to detect motion between frames easily. The method comprises a method of spatial filtering, a method of temporal filtering, and a method of performing the spatial filtering and the temporal filtering sequentially. The spatial filtering method applies a spatial filtering in a YCbCr color space, preserving a contour / edge in the image in the spatial domain, and generating a weight that is adaptive to the noise for discriminating the contour / edge in the temporal filtering operation. The temporal filtering method applies temporal filtering based on motion detection and scene change detection, compensating for global motion, the motion detection considering the brightness difference and color difference of the pixels compared between frames in the temporal filtering operation, and a weight that is adaptive to the noise for detecting the motion in the temporal filtering operation. The spatial filtering method is preferably performed first, and the temporal filtering method is performed with the result of the spatial filtering.

Owner:SAMSUNG ELECTRONICS CO LTD +1

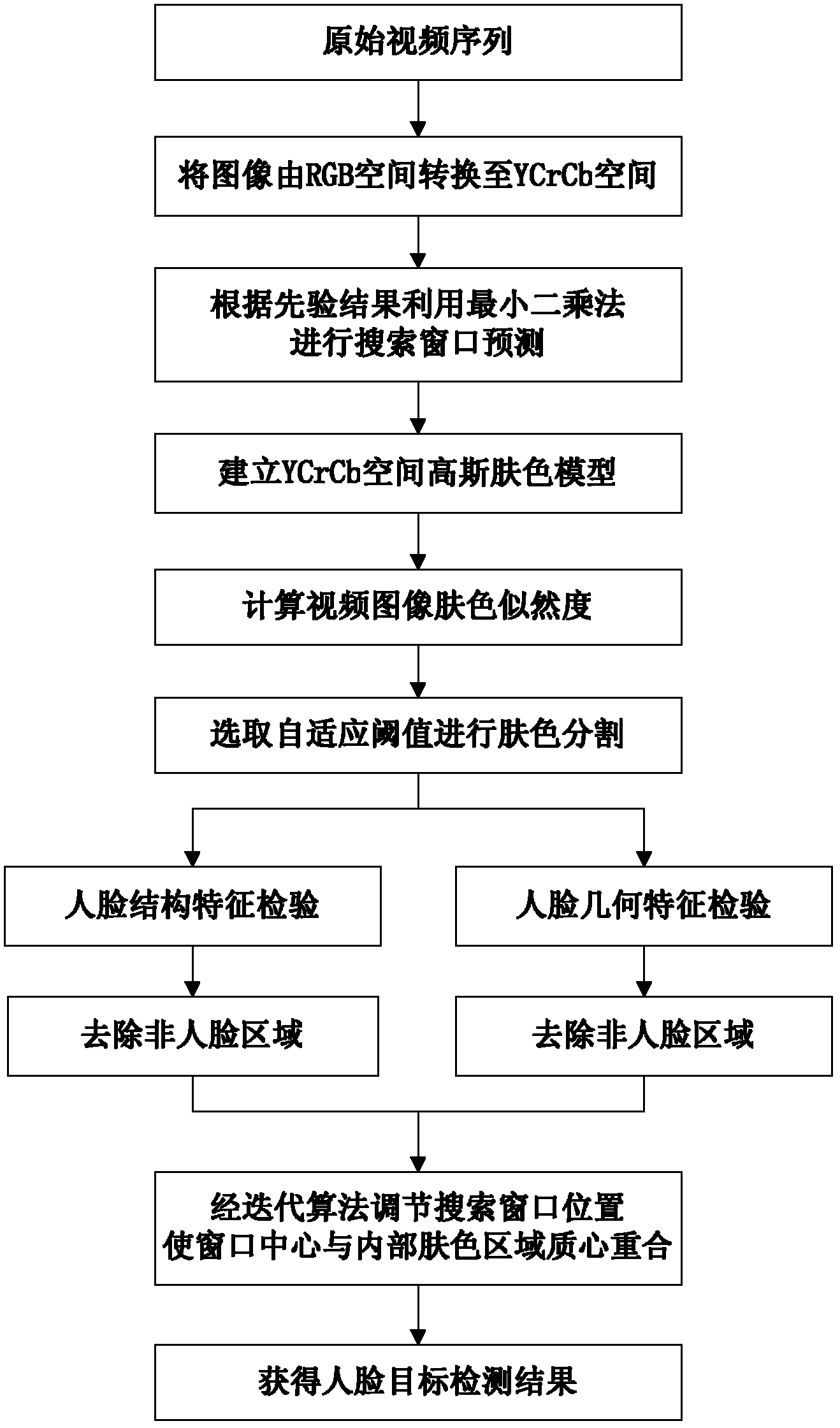

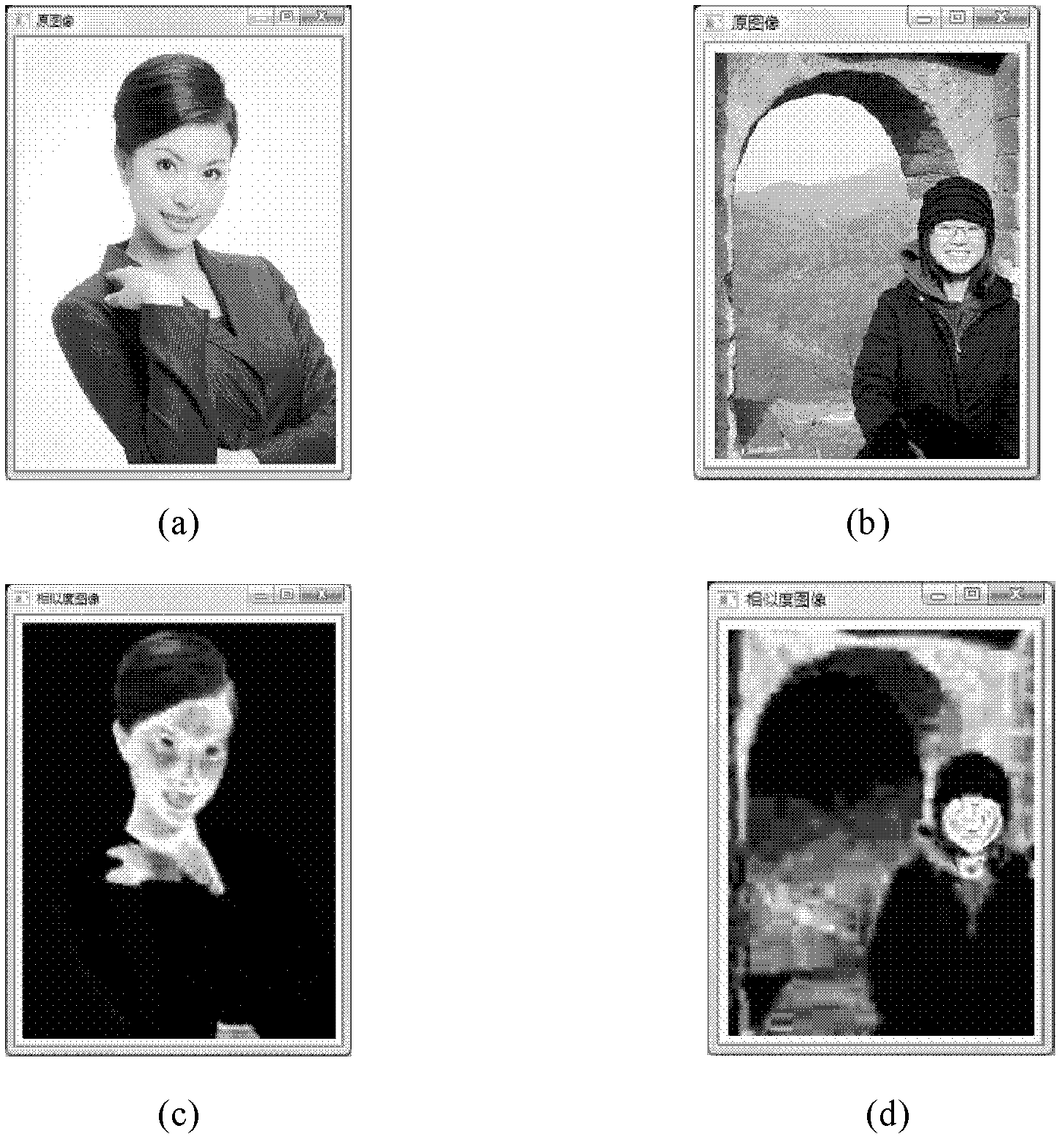

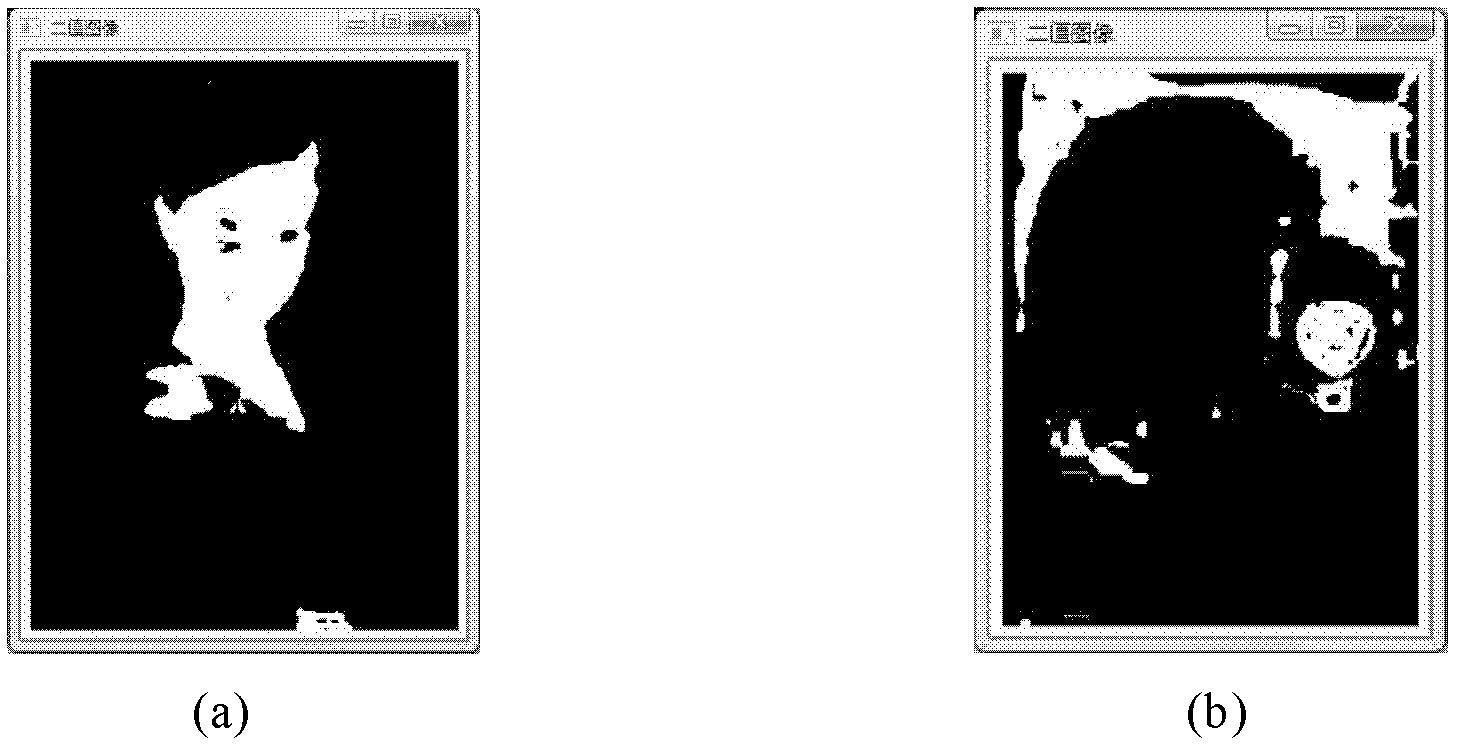

Human face detection and tracking method based on Gaussian skin color model and feature analysis

Inactive | CN102324025A | Resolve interference | Accurate identification | Image enhancement | Image analysis | Face detection | Pattern recognition

The invention relates to a human face detection and tracking method based on a Gaussian skin color model and feature analysis. The method comprises the following steps: first, gathering statistics from a large quantity of human face image data and constructing a Gaussian skin color model in the YCbCr color space; then, converting a video image sequence from the RGB (Red, Green and Blue) space to the YCbCr space, computing a skin color likelihood map with the Gaussian model, selecting adaptive thresholds to perform skin color segmentation, and using the geometric and structural features of human faces on that basis to achieve accurate face detection; and finally, using an improved CAMShift algorithm to track the faces, so that faces in a video are detected rapidly. The method has clear advantages in recognition accuracy, tracking speed and robustness, and can effectively handle face tracking under complex conditions such as changes in the posture and distance of faces in the video and skin-colored interference in the background.
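The skin color likelihood step can be sketched as a two-dimensional Gaussian over (Cb, Cr). This is only a minimal sketch: the mean and covariance values below are hypothetical placeholders rather than values from the patent, and a real model would be estimated from labeled skin pixels. The fixed threshold is also an assumption, whereas the abstract describes adaptive thresholds.

```python
import math

# Hypothetical model parameters, for illustration only.
SKIN_MEAN = (110.0, 150.0)                     # (mean Cb, mean Cr)
SKIN_COV = ((160.0, 12.0), (12.0, 300.0))      # 2x2 covariance of (Cb, Cr)


def skin_likelihood(cb, cr, mean=SKIN_MEAN, cov=SKIN_COV):
    """Unnormalized Gaussian likelihood in (0, 1]; equals 1.0 at the model mean."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    dx, dy = cb - mean[0], cr - mean[1]
    # Squared Mahalanobis distance, using the closed-form inverse of a 2x2 matrix.
    m2 = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * m2)


def is_skin(cb, cr, threshold=0.5):
    """Fixed-threshold segmentation (an assumed simplification)."""
    return skin_likelihood(cb, cr) >= threshold
```

Because the model uses only Cb and Cr, the likelihood is unaffected by changes in the Y component alone.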

Owner:BEIHANG UNIV

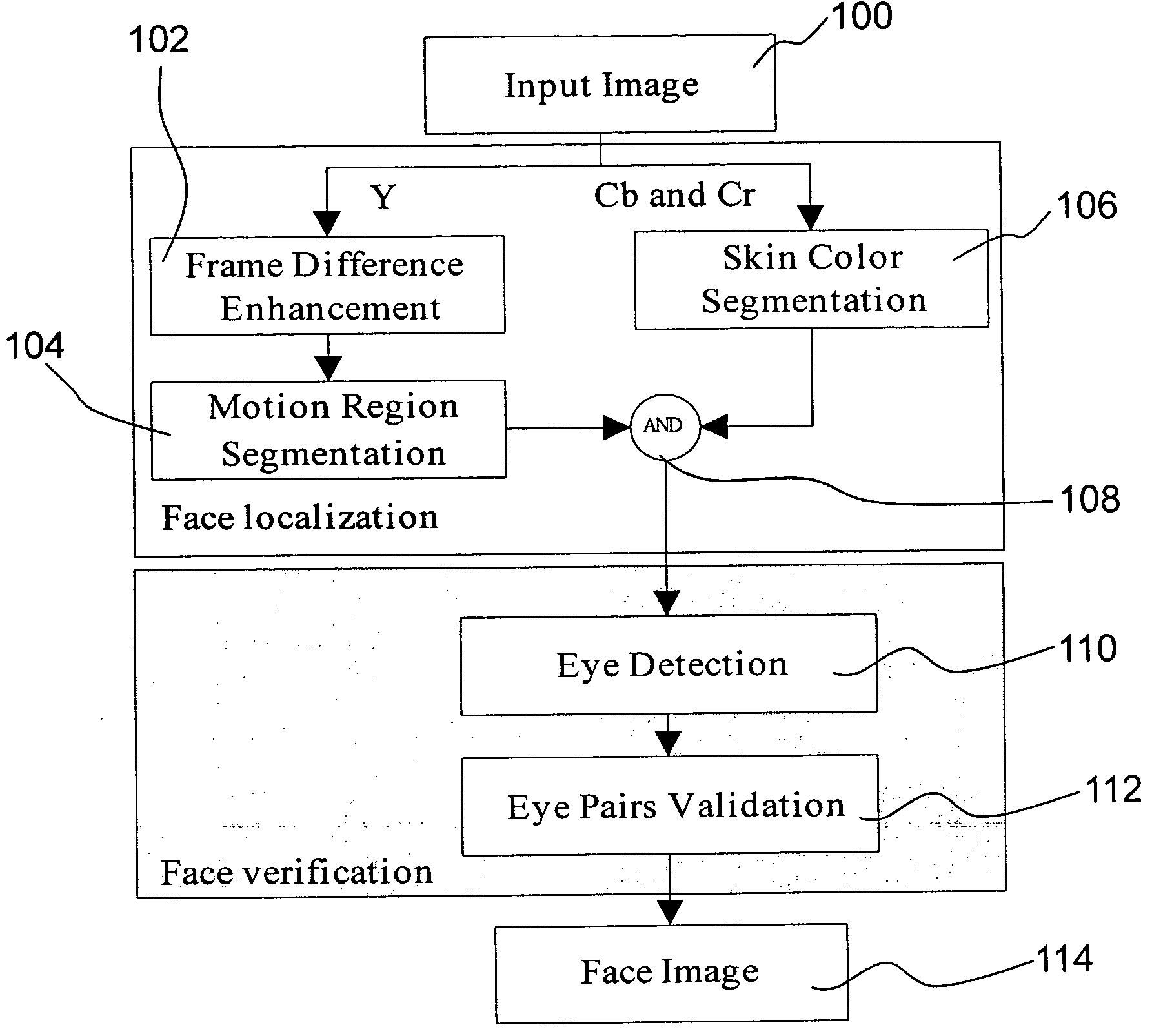

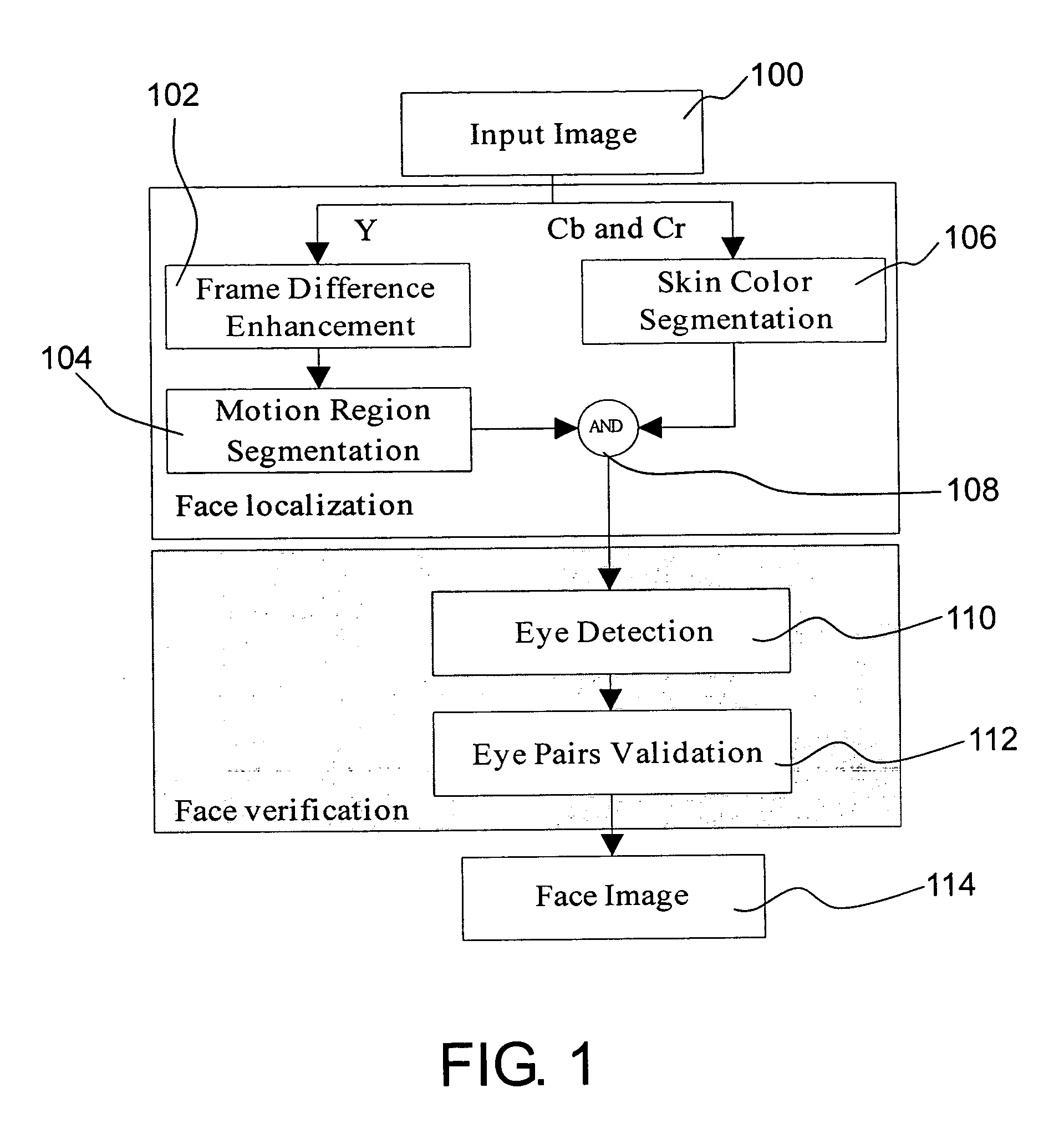

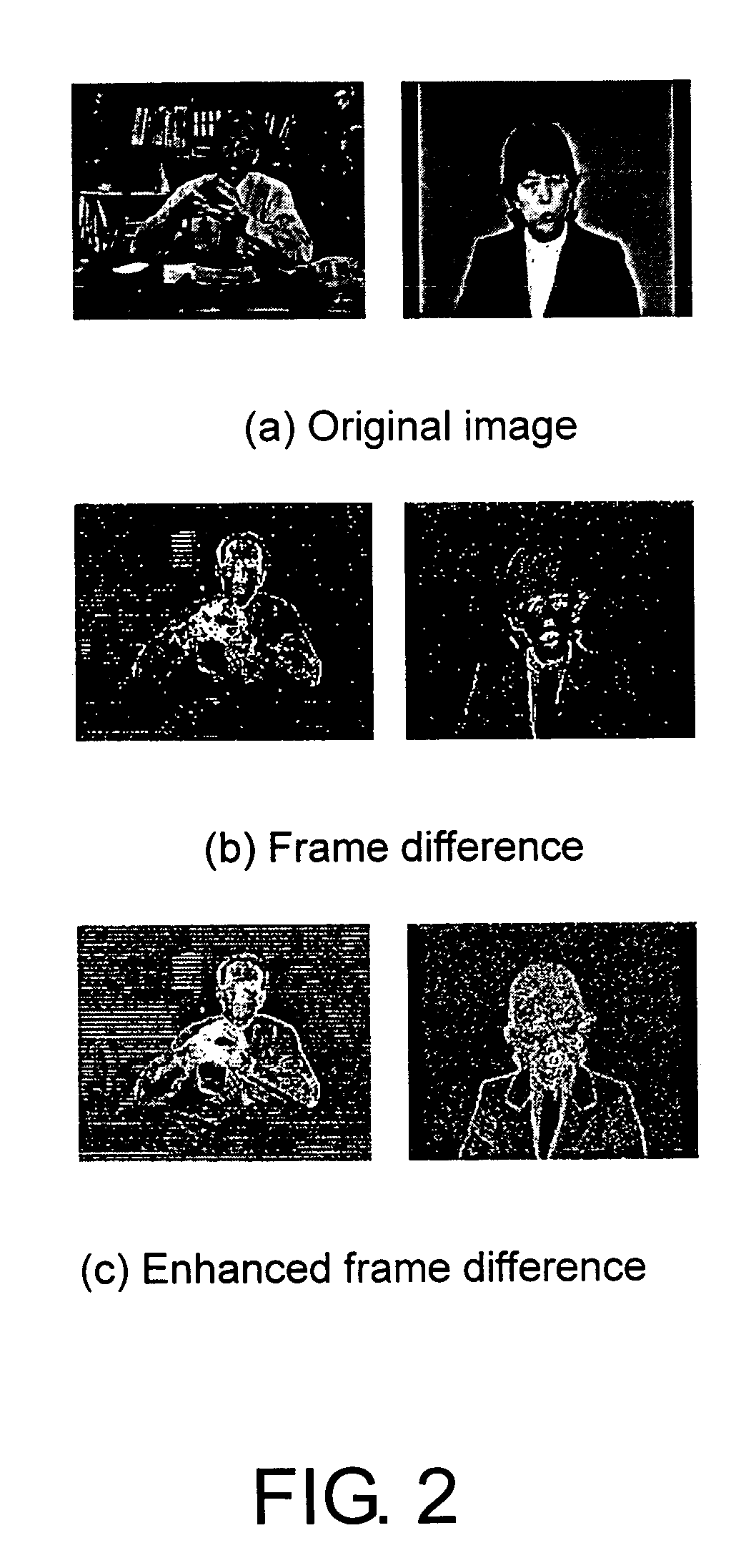

Robust face detection algorithm for real-time video sequence

Inactive | US20050063568A1 | Efficient and rapid detection | Reduce errors | Character and pattern recognition | Television systems | Skin color | Video sequence

The invention is directed to a face detection method. In the method, image data in a YCbCr color space is received; the Y component of the image data is analyzed to find a motion region, and the CbCr components are analyzed to find a skin color region. The motion region and the skin color region are combined to produce a face candidate. An eye detection process is performed on the image to detect eye candidates. An eye-pair verification process is then performed to find an eye-pair candidate among the eye candidates, wherein the eye-pair candidate also lies within the region of the face candidate.
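The combination of a motion region from Y and a skin color region from CbCr might look like the sketch below. Frame differencing as the motion test, the motion threshold, and the fixed Cb/Cr ranges (values commonly cited in the skin detection literature) are all assumptions for illustration; the abstract does not specify them.

```python
def face_candidate_mask(prev_frame, curr_frame, motion_thresh=15,
                        cb_range=(77, 127), cr_range=(133, 173)):
    """Return one boolean per pixel: True where motion and skin color coincide.

    Frames are equal-length lists of (Y, Cb, Cr) tuples.
    """
    mask = []
    for (y0, _, _), (y1, cb, cr) in zip(prev_frame, curr_frame):
        # Motion is judged from the Y component only (simple frame difference).
        moving = abs(y1 - y0) > motion_thresh
        # Skin color is judged from the Cb and Cr components only.
        skin = (cb_range[0] <= cb <= cb_range[1]
                and cr_range[0] <= cr <= cr_range[1])
        mask.append(moving and skin)
    return mask
```

Pixels that move but are not skin colored, or that are skin colored but static, are excluded from the candidate.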

Owner:LEADTEK

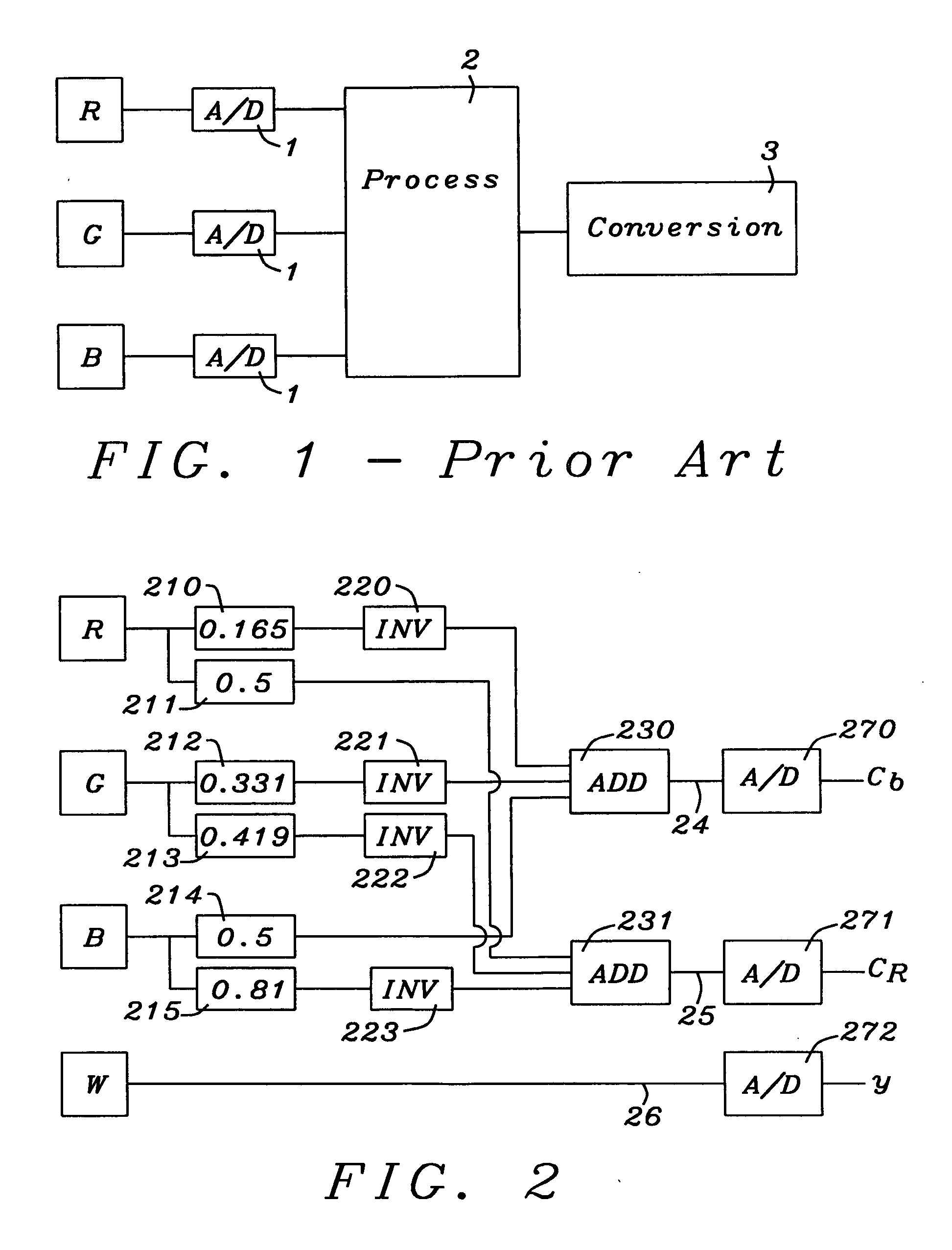

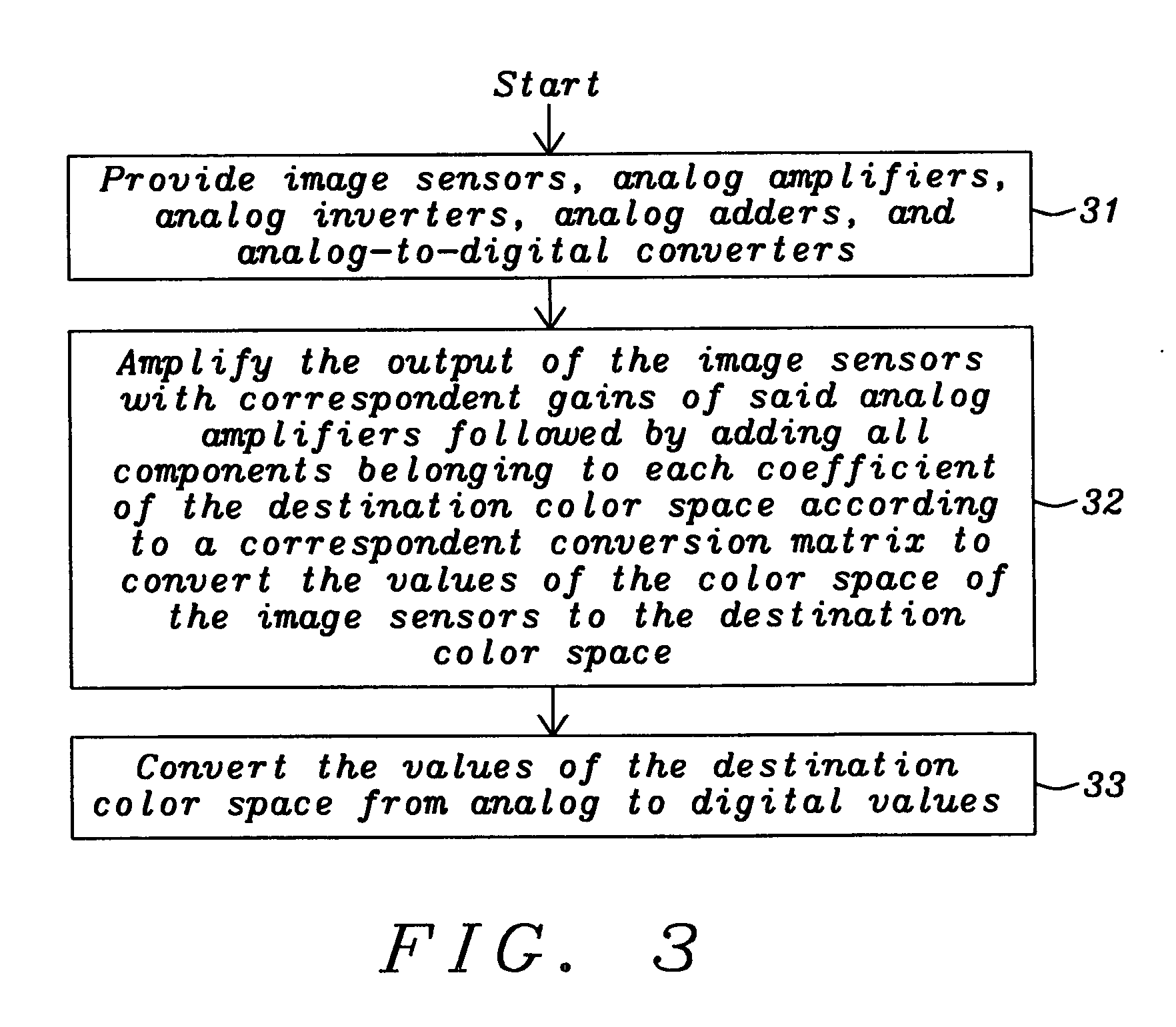

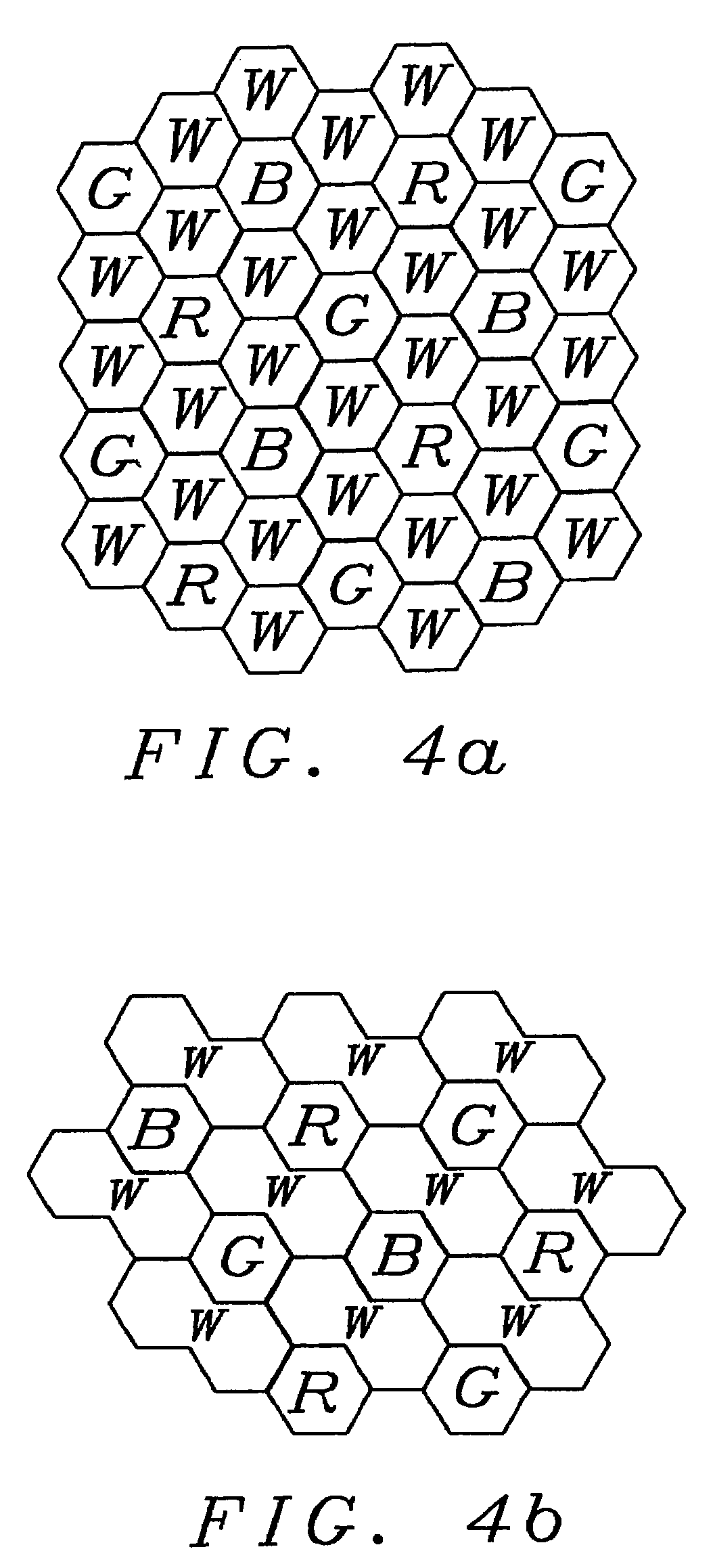

Imaging sensors

Inactive | US20050285955A1 | Cost-effective and fast way | Fast and cost-effective | Television system details | Color signal processing circuits | Pattern recognition | Color image

Systems and related methods have been achieved to convert, in the analog domain, the output of color image sensors into another color space. A chosen implementation converts the output of red, green, blue and white image sensors to the YCbCr color space, wherein the white image sensors are either extended dynamic range (XDR) image sensors or are of the same type as the other image sensors but have a larger size. The output of the white pixels can be used directly, without conversion, as the luminance value Y, which gives a very simple method for conversion to the YCbCr color space. Analog amplifiers, assigned to each of the red, green, and blue image sensors, have a gain according to the matrix describing the conversion from RGB to CbCr. Analog adders, assigned to Cb and Cr, add the coefficients required for the computation of Cb and Cr. Finally, the values of Y, Cb and Cr are converted to digital values. White pixels are advantageous but not required by the present invention. The present invention is also applicable to conversion to other color spaces such as YIQ, YUV, CMYK, HIS, HSV, etc.

Owner:GULA CONSULTING LLC

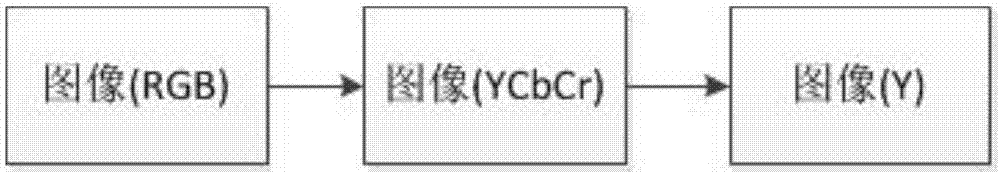

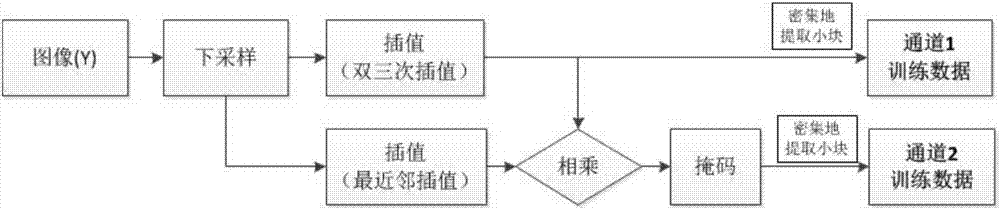

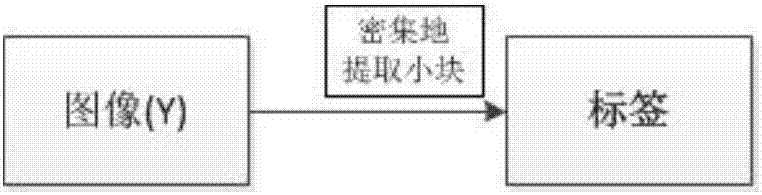

Deep-convolution-neural-network-based super-resolution reconstruction method of single image

Active | CN106910161A | Reduce preprocessing | Good recovery quality | Geometric image transformation | Neural architectures | Reconstruction method | Single image

The invention discloses a deep-convolution-neural-network-based super-resolution reconstruction method for a single image. The method comprises: step one, preprocessing, in which an input image is converted from the RGB color space to the YCbCr color space and only the Y channel is taken; step two, down-sampling the preprocessed image from step one and interpolating it in two channels to form the training data of channel 1 and channel 2; step three, extracting dense small blocks from the preprocessed image from step one and using the result as the label; step four, continuously optimizing a network model with gradient descent and backpropagation, using the combined training data of channel 1 and channel 2 as the input of the deep convolutional neural network model and the label as its output; and step five, inputting a low-resolution image, performing dual-channel interpolation, and then outputting a high-resolution image from the trained deep convolutional neural network. The method has the advantages of a lightweight structure and good recovery quality.

Owner:SOUTH CHINA UNIV OF TECH

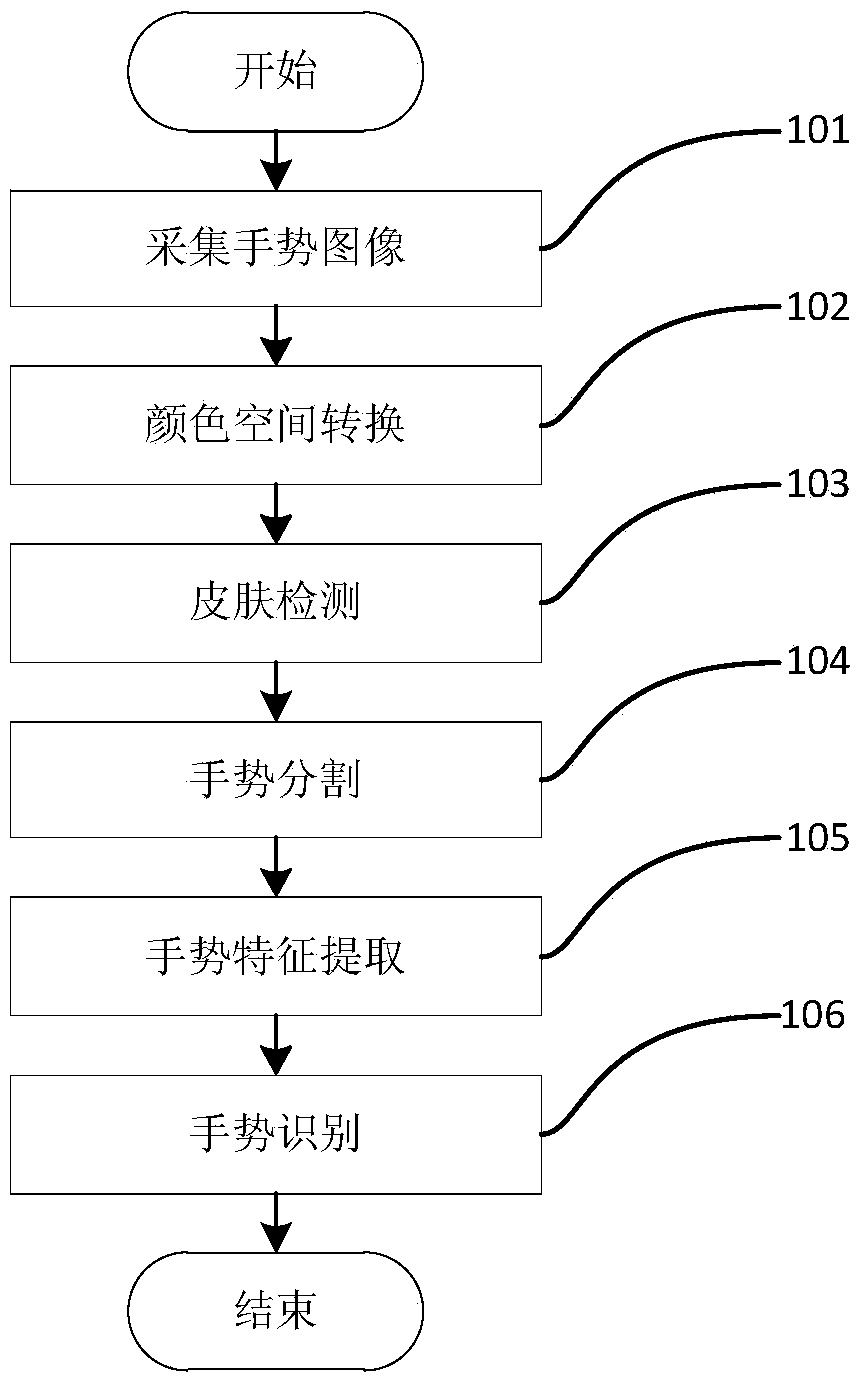

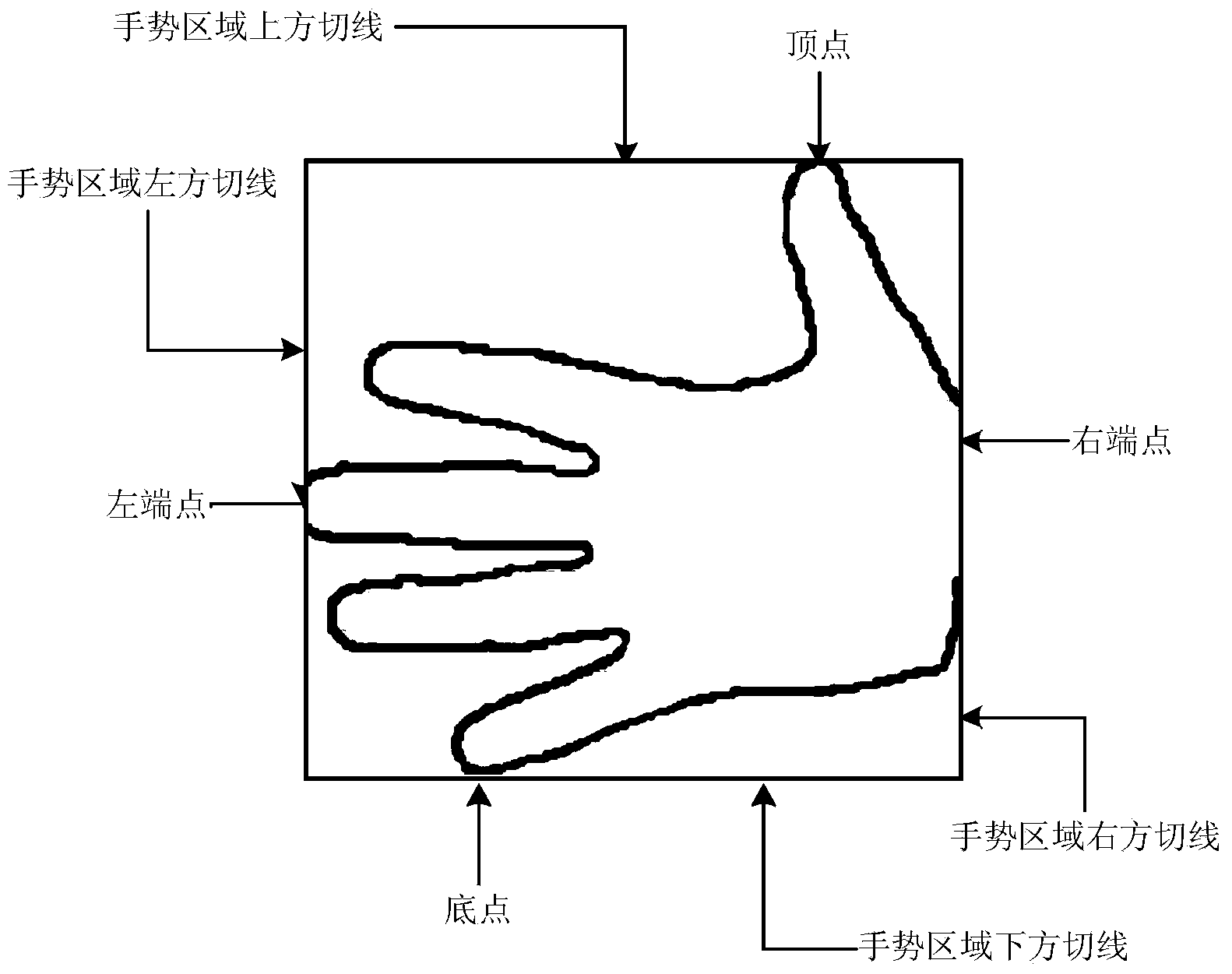

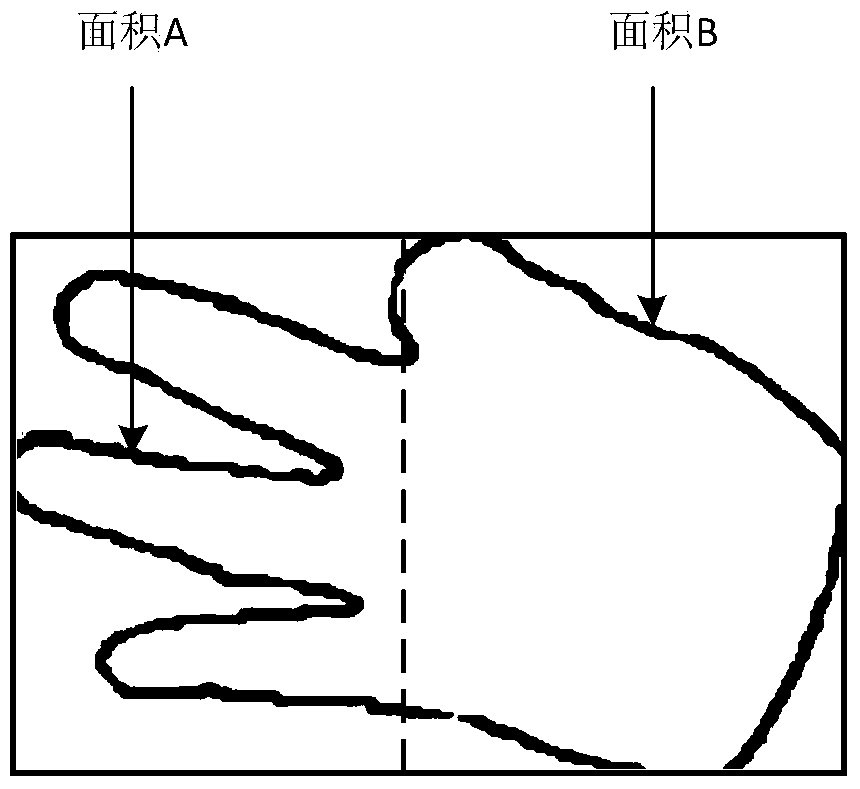

Automatic gesture recognition method

Inactive | CN103679145A | Efficient human-computer interaction | Direct human-computer interaction | Character and pattern recognition | Feature vector | Template matching

The invention provides an automatic gesture recognition method comprising the following steps: each frame of the source image in the acquired gesture video data is converted into the YCbCr color space; skin detection is performed with an elliptical skin color model; gesture segmentation is accomplished by connected component analysis, edge detection and contour extraction; feature vectors are extracted through gesture analysis, with the recognition parameters drawn from statistical features (such as the normalized moment of inertia of the image and invariant moments), shape features (such as the bounding rectangle, direction, perimeter, area and scale value of the gesture) and structural features (such as the number of fingers and whether the thumb is included); and gesture recognition is performed by an improved template matching method based on Euclidean distance. The method recognizes different gestures efficiently and brings efficient, direct and natural gesture-based human-computer interaction closer to communication between people.

Owner:HOHAI UNIV

Hexagonal color pixel structure with white pixels

Active | US7400332B2 | Increase the luminous range | Easy to transform | Television system details | Color signal processing circuits | RG color space | YCbCr color space

Owner:GULA CONSULTING LLC

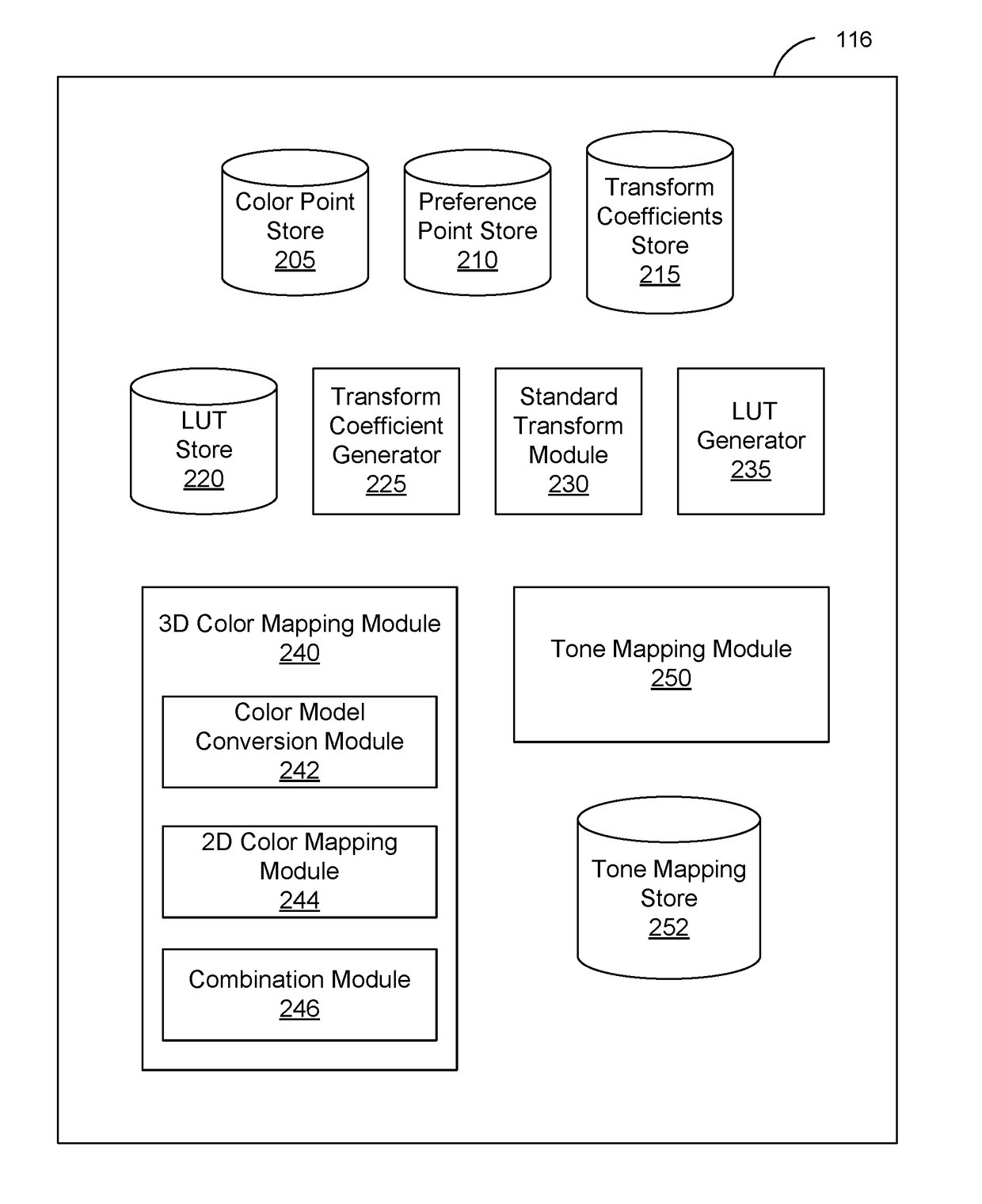

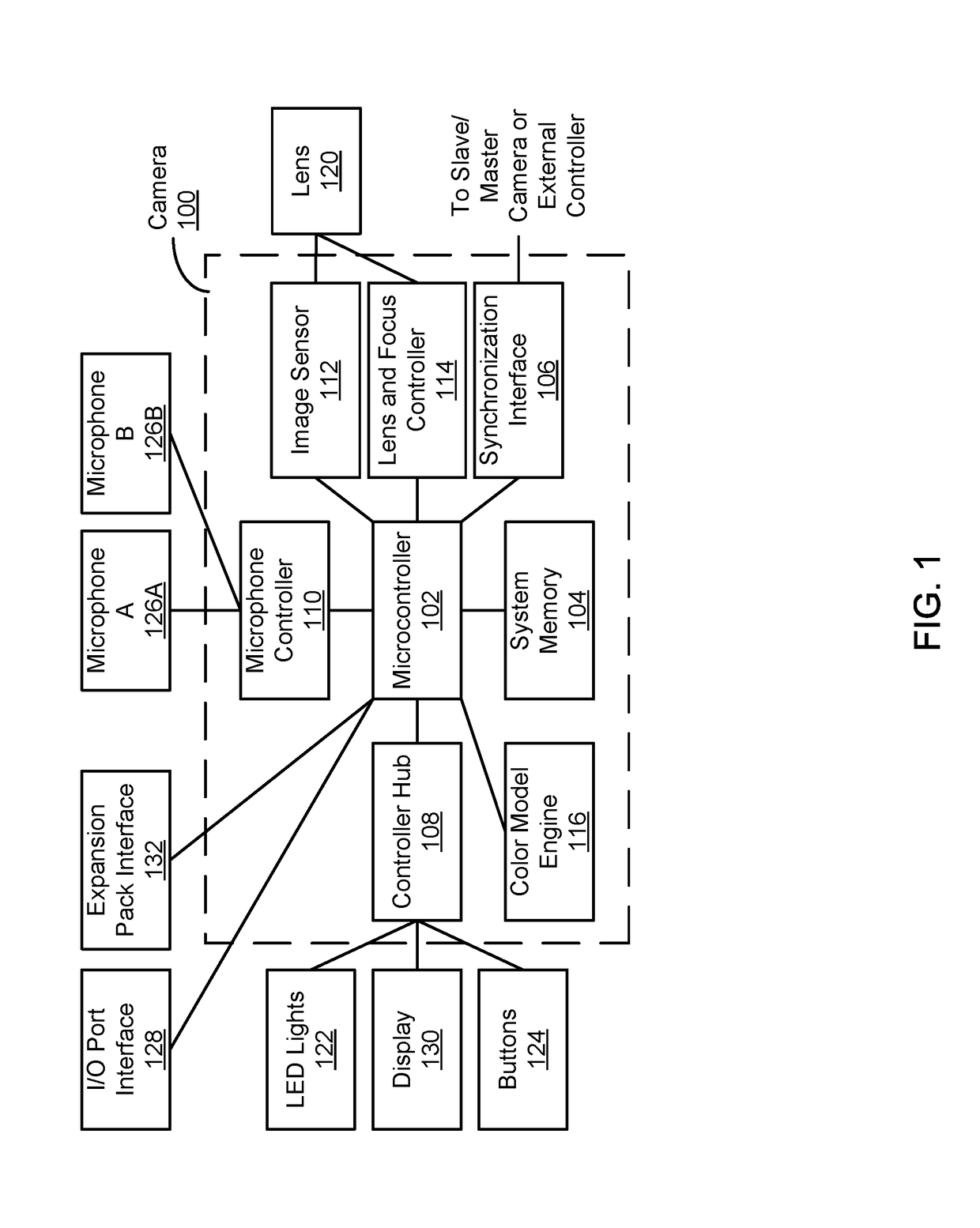

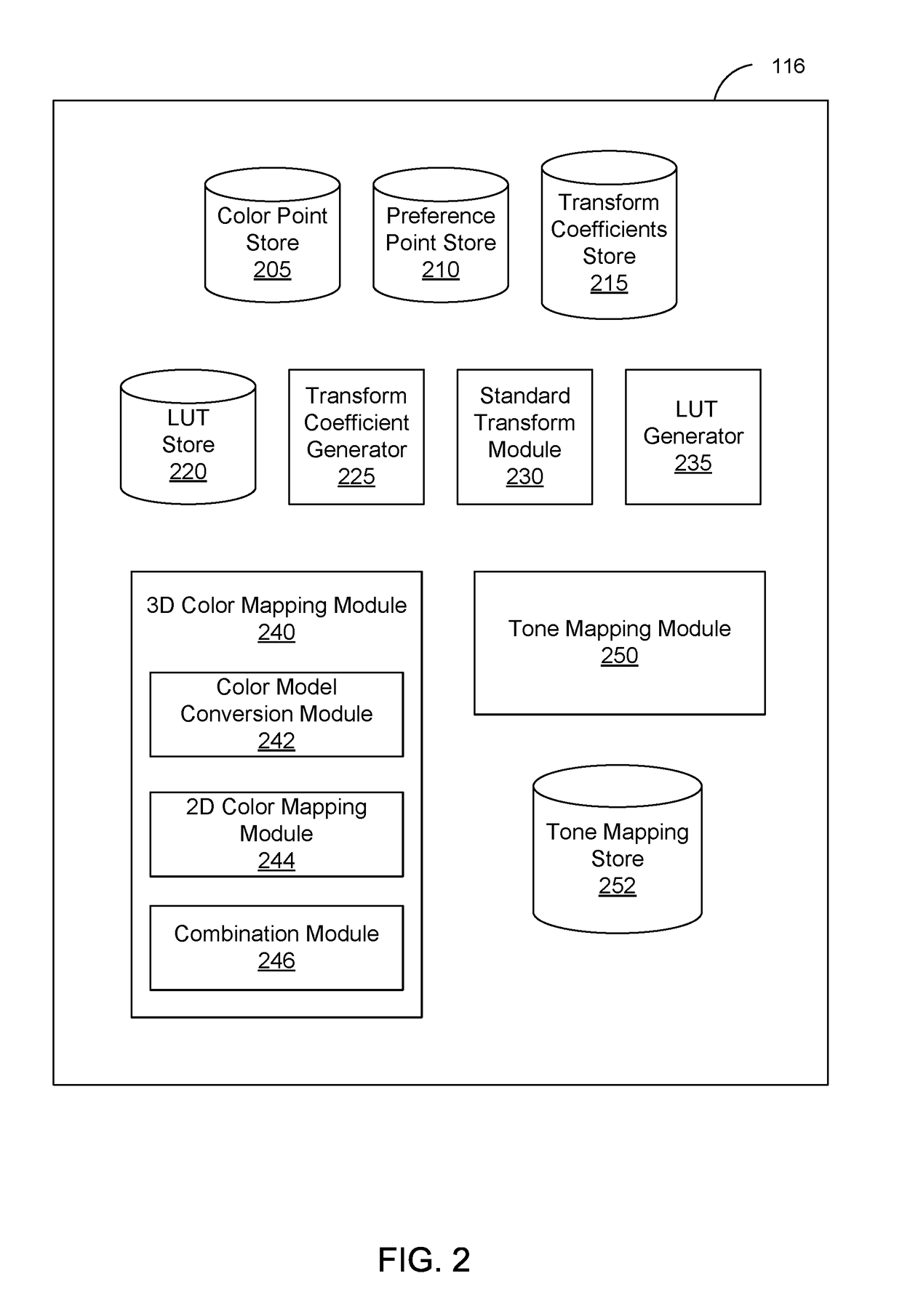

Dynamic Global Tone Mapping with Integrated 3D Color Look-up Table

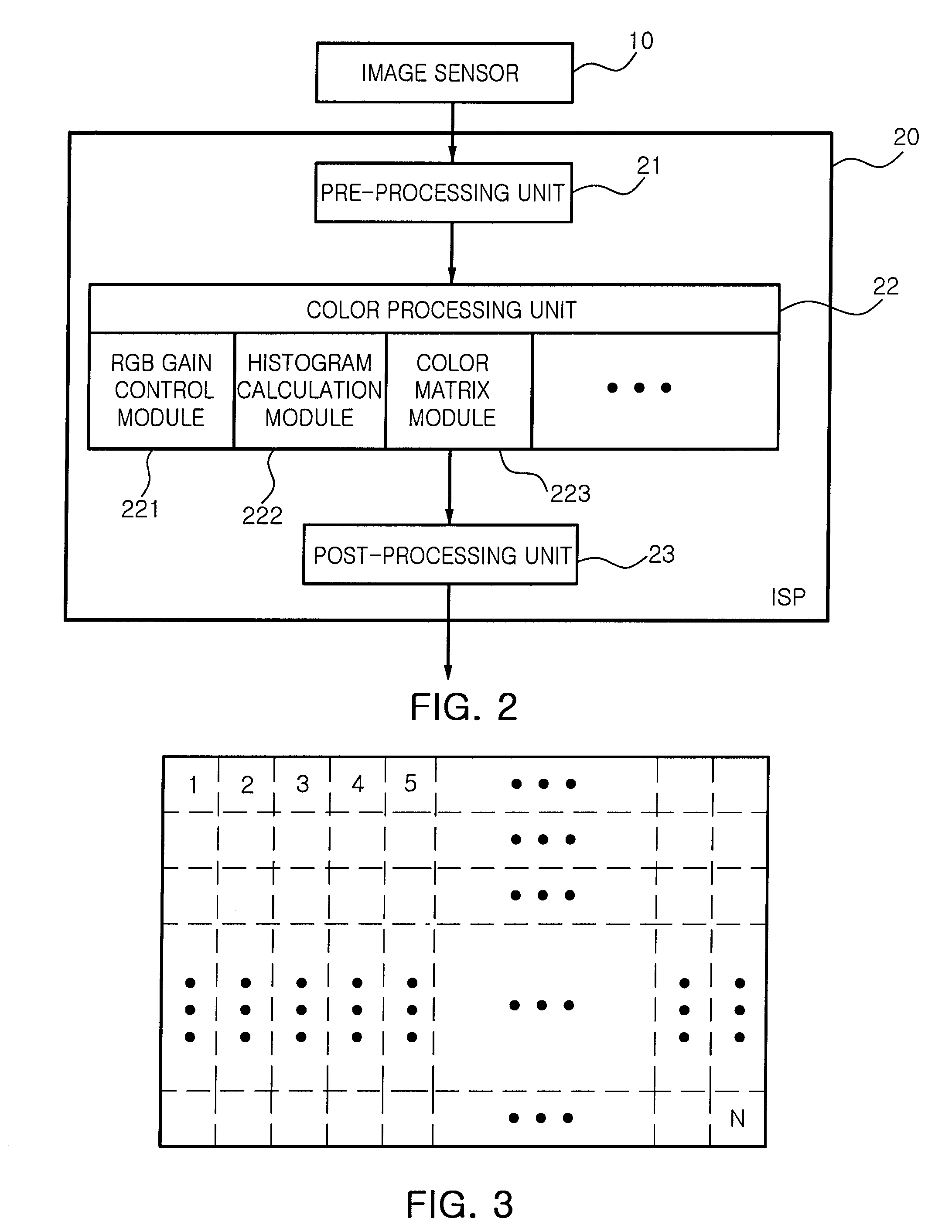

The processing of RGB image data can be optimized by performing optimization operations on the image data when it is converted into the YCbCr color space. First, a raw RGB color space is converted into a YCbCr color space, and raw RGB image data is converted into YCbCr image data using the YCbCr color space. For each Y-layer of the YCbCr image data, a 2D LUT is generated. The YCbCr image data is converted into optimized CbCr image data using the 2D LUTs, and optimized YCbCr image data is generated by blending CbCr image data corresponding to multiple Y-layers. The optimized YCbCr image data is converted into sRGB image data, and a tone curve is applied to the sRGB image data to produce optimized sRGB image data.

Owner:GOPRO

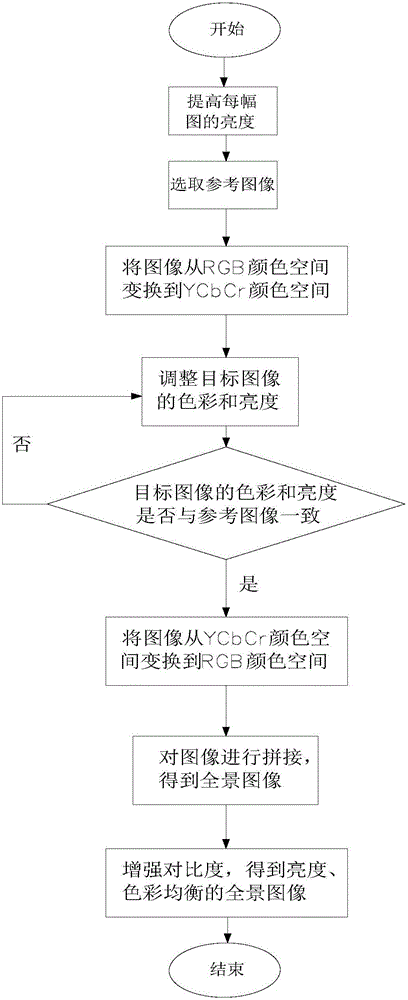

Multi-camera images stitching and color homogenizing method

Active | CN105005963A | Brightness value increased | Even color treatment is effective | Geometric image transformation | Multi camera | Spatial transformation

The present invention provides a multi-camera image stitching and color homogenizing method, comprising the following steps: S1, increasing the luminance of each image by using Gamma correction; S2, selecting the image that has the greatest image information entropy from the images with increased luminance as a reference image, wherein the remaining images are target images; S3, transforming each image from the RGB color space to the YCbCr color space; S4, adjusting the color and luminance of the target images in the YCbCr color space to be consistent with those of the reference image; S5, performing an inverse color space transformation on each image to obtain images in the RGB space; S6, stitching the images by using an image homonymy point matching algorithm to obtain a preliminary panorama image; and S7, performing contrast enhancement on the preliminary panorama image to obtain a panorama image with balanced luminance and color. The method is simple and quick to compute and can be applied to color homogenizing of color images.
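One plausible way to realize step S4 is to match the mean and standard deviation of each YCbCr channel of a target image to those of the reference image. The abstract does not state how the adjustment is computed, so this statistic-matching approach and the function name below are assumptions.

```python
from statistics import mean, pstdev


def match_channel(target, reference):
    """Shift and scale one channel of a target image to the reference statistics.

    Both arguments are flat lists of channel values (for example all Y values).
    The result has the reference mean and standard deviation, before clamping.
    """
    t_mean, t_std = mean(target), pstdev(target)
    r_mean, r_std = mean(reference), pstdev(reference)
    scale = r_std / t_std if t_std > 0 else 1.0   # avoid dividing by zero on flat channels
    return [min(255.0, max(0.0, (v - t_mean) * scale + r_mean)) for v in target]
```

Applying the function separately to the Y, Cb and Cr channels of each target image adjusts luminance and color independently before the inverse transformation in S5.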

Owner:CHONGQING SURVEY INST +1

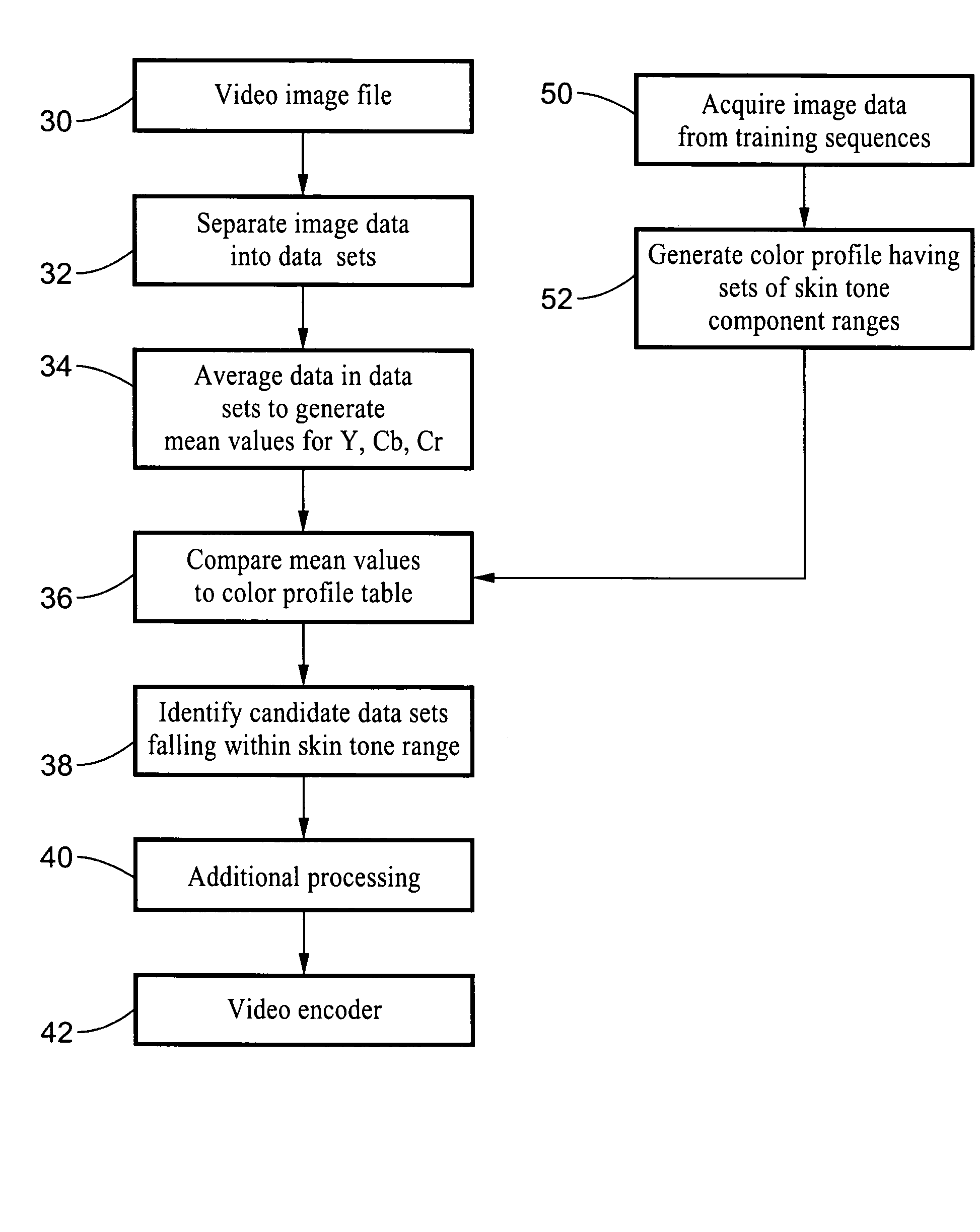

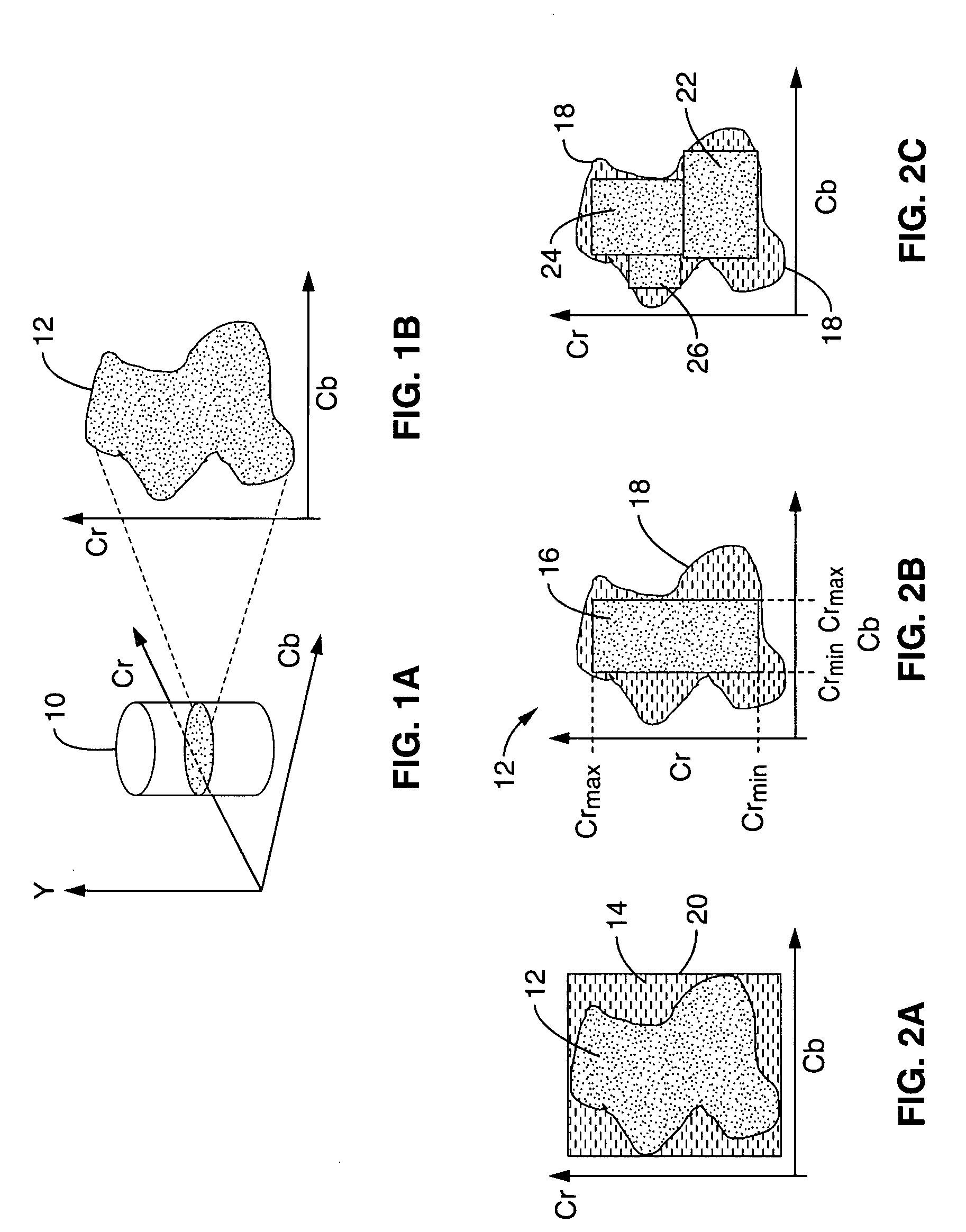

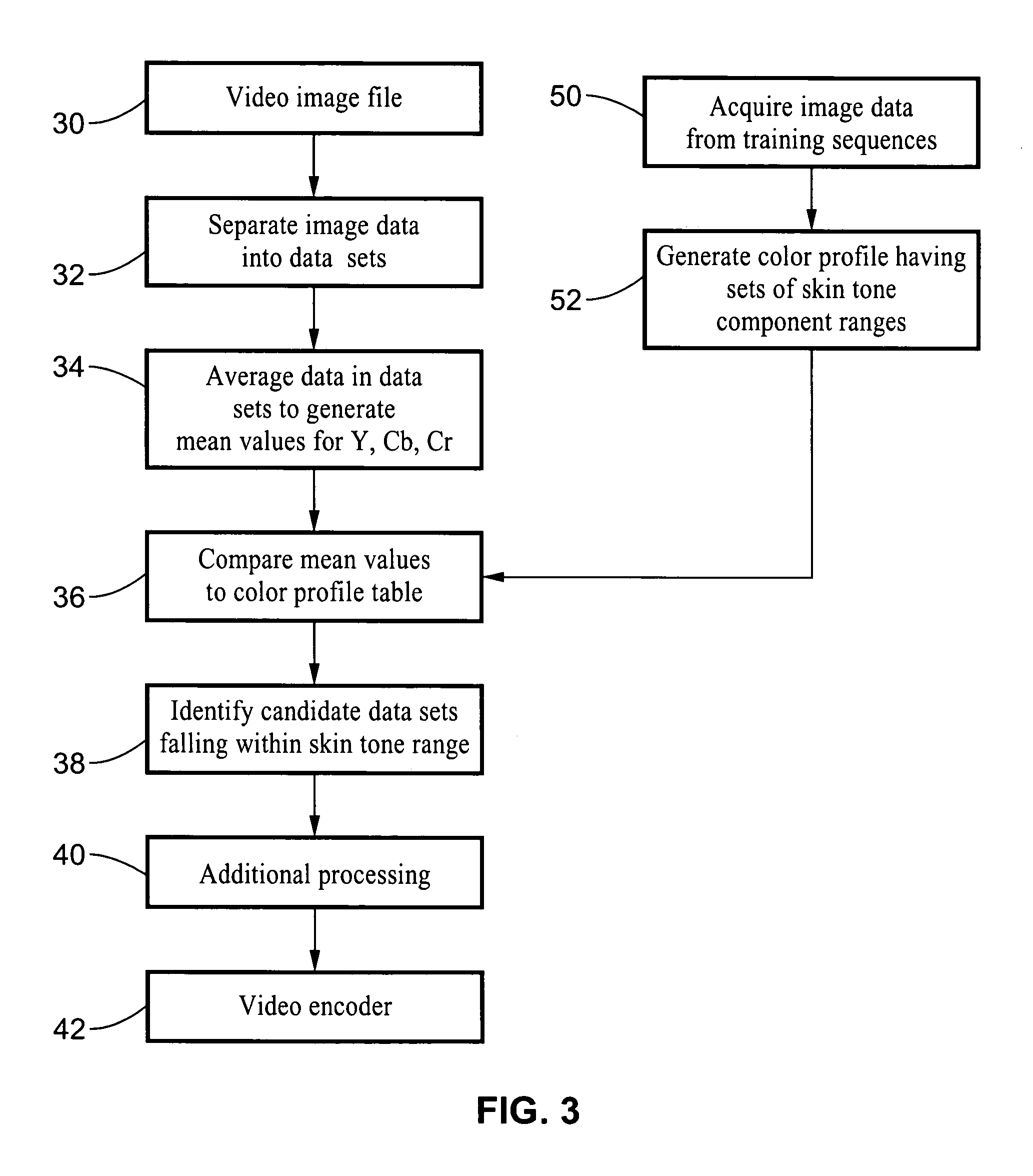

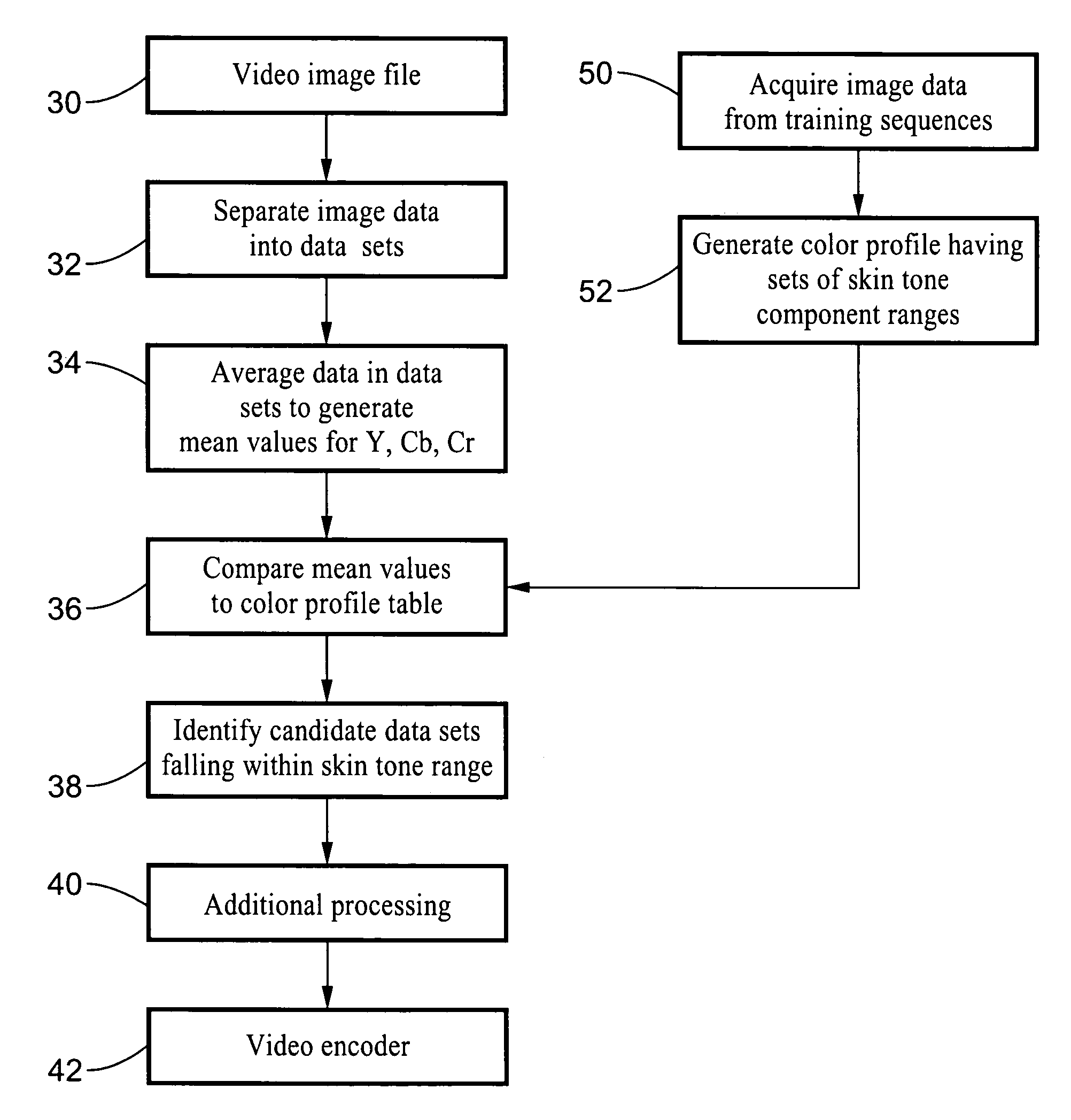

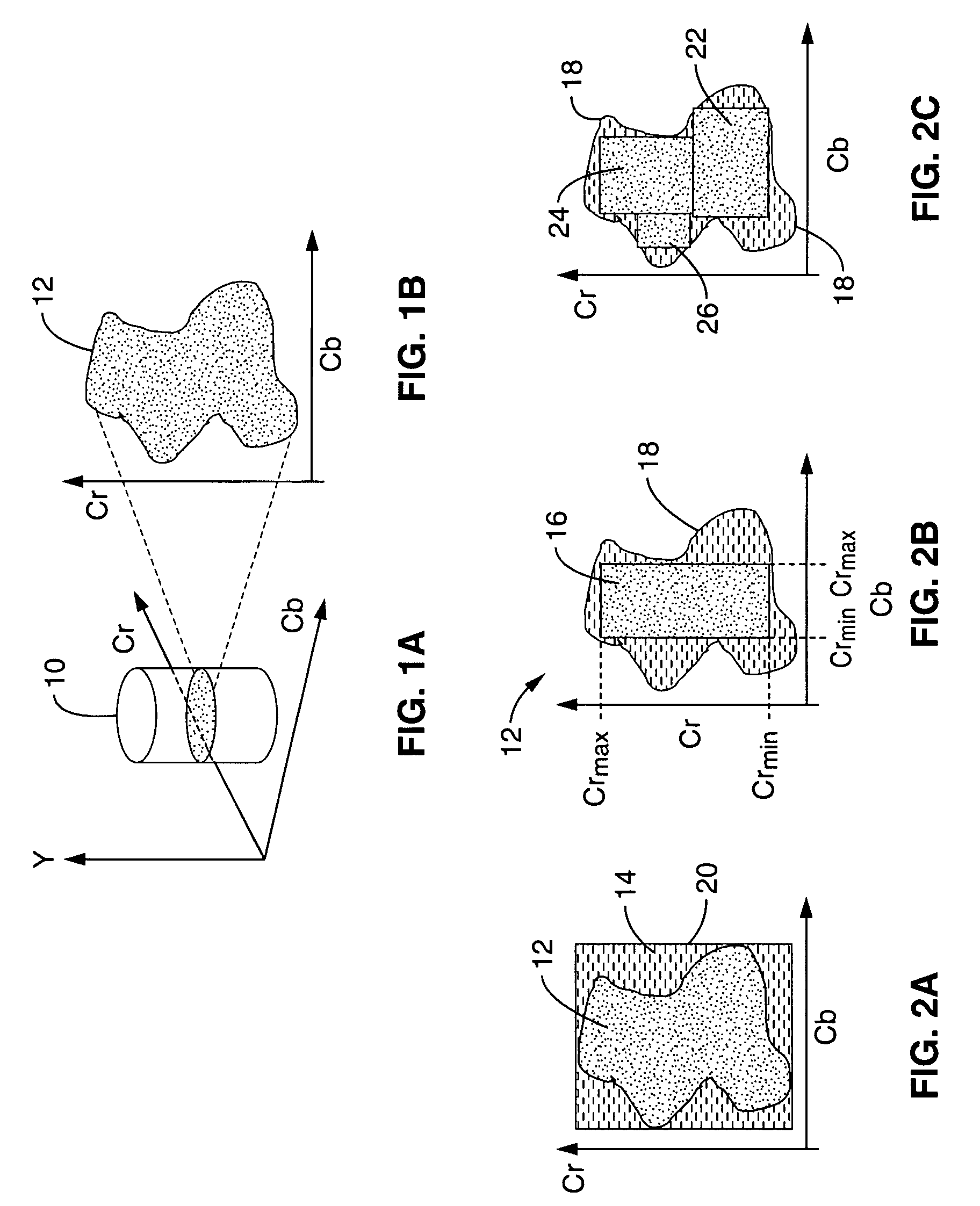

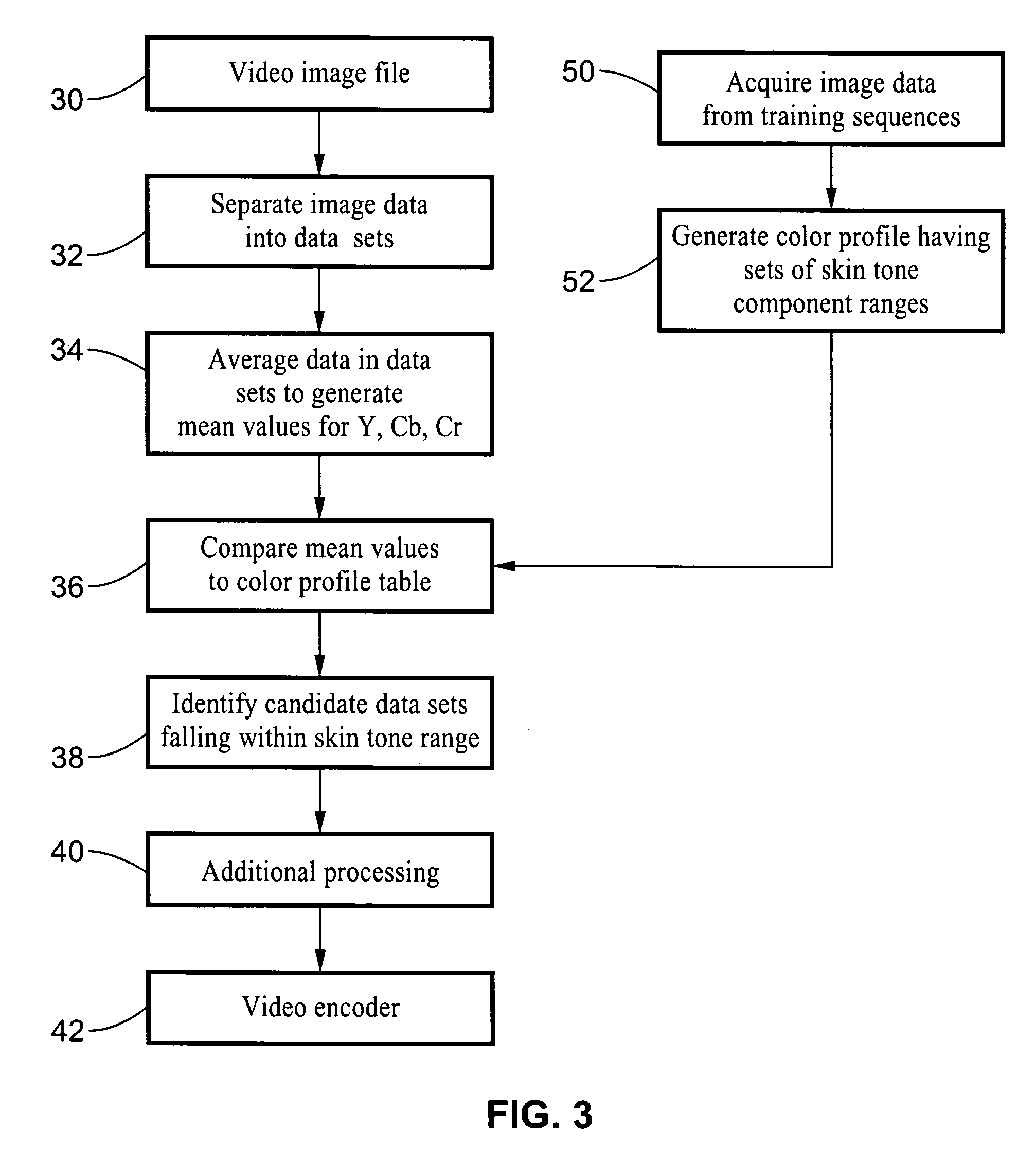

Human skin tone detection in YCbCr space

A method for detecting human skin tone in a video signal by comparing image data from the video signal to a color profile table obtained from a plurality of training sequences. The image data comprises a plurality of pixels each having a plurality of color components, the components preferably being in the YCbCr color space. The method includes separating image data for each frame in the video signal into sets of data, averaging the image data in each data set to generate mean values for each color component in the data set, comparing the mean values to a stored color profile correlating to human skin tone, and identifying data sets falling within the stored color profile.

Owner:SONY CORP +1

Hexagonal color pixel structure with white pixels

Active | US20060146067A1 | Increase the luminous range | Easy to transform | Television system details | Television system scanning details | YCbCr color space | Large size

A digital color imager provides an extended luminance range, enabling an easy transformation into all other color spaces having luminance as a component. White pixels are added to hexagonal red, green and blue pixels. These white pixels can alternatively have an extended dynamic range as described in U.S. Pat. No. 6,441,852 to Levine et al. In particular, the white pixels may have a larger size than the red, green, or blue pixels used. This larger size can be implemented by concatenation of “normal” size hexagonal white pixels. The output of the white pixels can be used directly for the luminance values Y of the destination color space. Therefore only the color values have to be calculated from the RGB values, leading to an easier and faster calculation. As an example chosen by the inventor, the conversion to the YCbCr color space is shown in detail.

Owner:GULA CONSULTING LLC

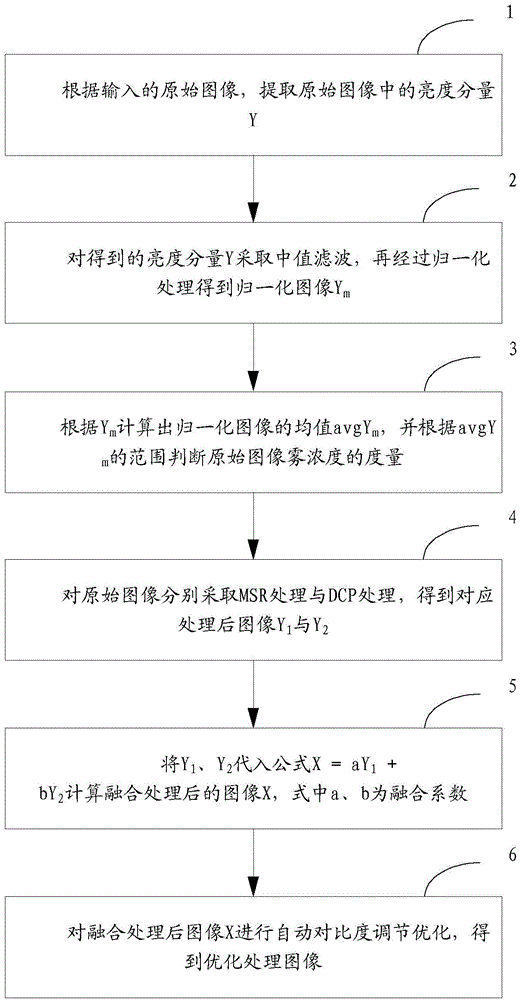

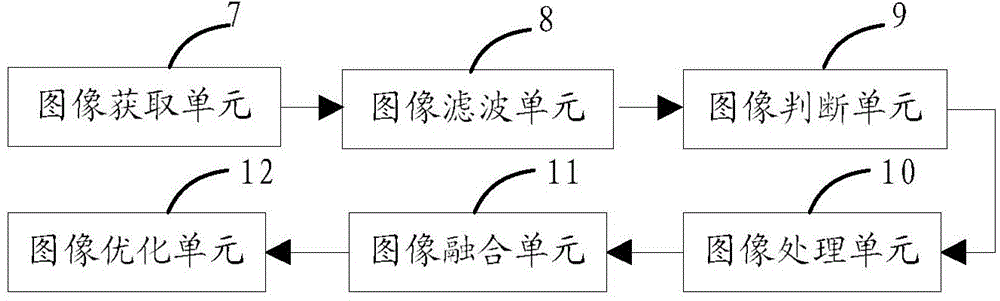

Method, device and system for image enhancement based on YCbCr color space

Inactive | CN104318524A | Coordinated and natural look and feel | Real-time processing | Image enhancement | YCbCr color space | Lightness

The invention belongs to the technical field of image enhancement and in particular relates to a method, a device and a system for image enhancement based on a YCbCr color space. The method comprises the steps of extracting the luminance component Y in an original image according to the input original image, performing median filtering on the obtained luminance component Y and then performing normalization processing to obtain a normalized image Ym, calculating the average value avgYm of the normalized image according to Ym and judging the measure of the fog concentration of the original image according to the range of avgYm, performing MSR processing and DCP processing on the original image to obtain corresponding processed images Y1 and Y2, putting Y1 and Y2 into a formula X=aY1+bY2 to calculate an image X after fusion processing, and performing automatic contrast control optimization on the image X after the fusion processing to obtain an optimized image, wherein a and b in the formula are fusion coefficients. The method for the image enhancement based on the YCbCr color space is capable of effectively eliminating the halo and over-enhancement phenomena, and therefore, the stability and quality of the output image can be improved.

Owner:IRAY TECH CO LTD

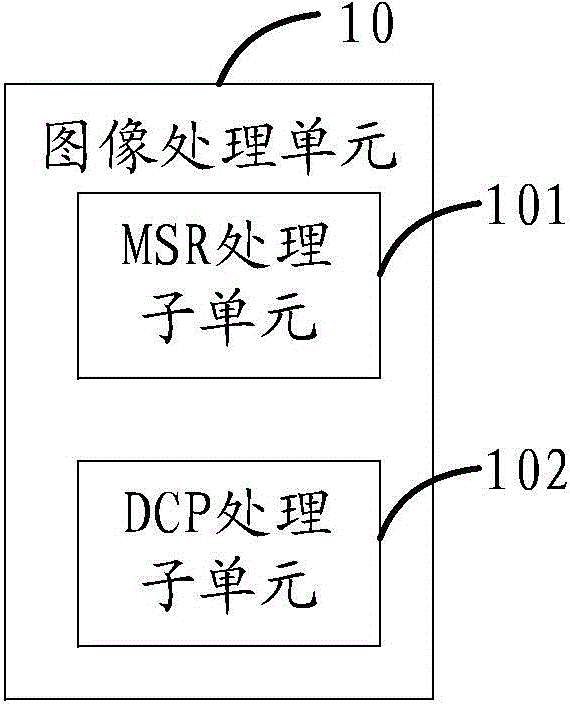

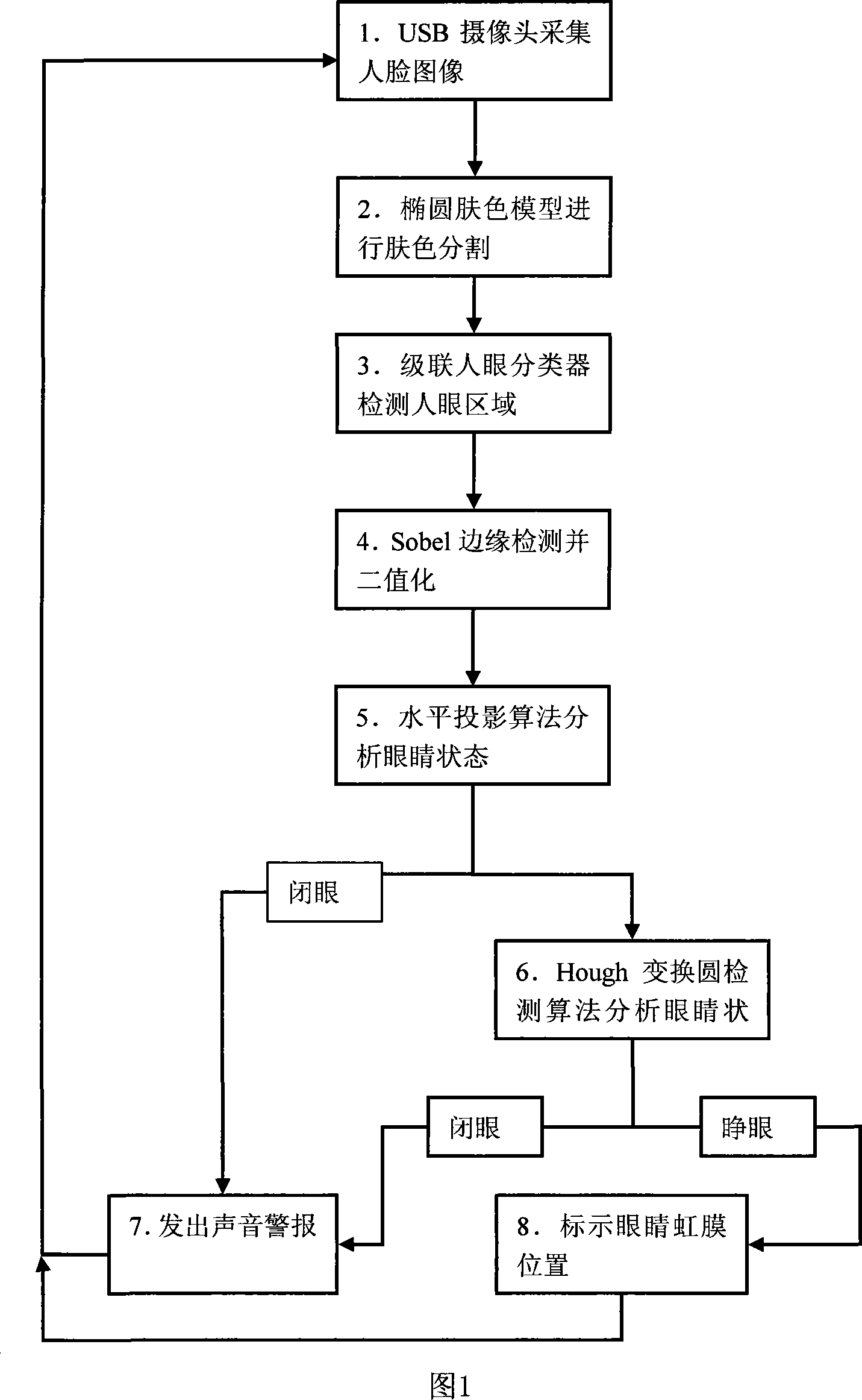

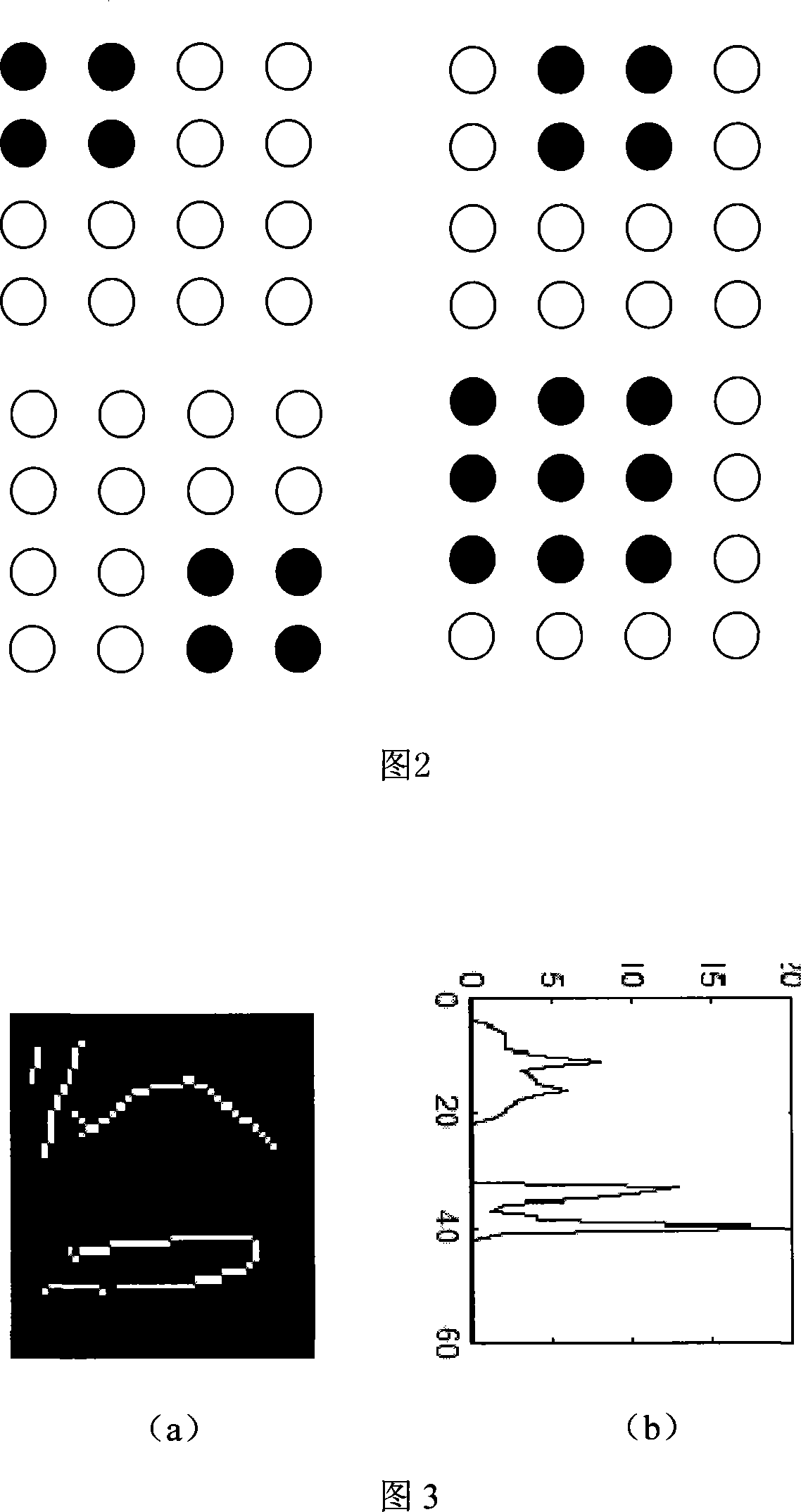

Human eye state detection method based on cascade classification and hough circle transform

Inactive | CN101127076A | Narrow detection range | Detection speed | Character and pattern recognition | Eye state | Eye closure

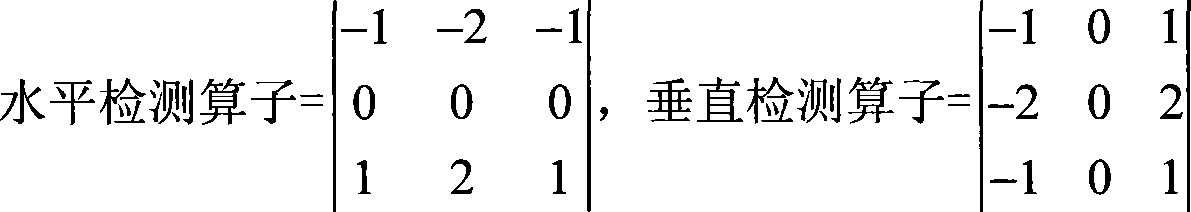

The invention relates to an eye state detection method based on cascade classification and the Hough circle transform, belonging to the field of pattern recognition. The method comprises the following steps: acquiring a face image; performing skin color segmentation in the YCbCr color space with an elliptical skin model to obtain the position of the skin color area; detecting rectangular eye areas by traversing an eye detection window with a cascade eye classifier; merging the rectangles with a rectangle merging method to obtain a linked list of merged eye rectangles; applying Sobel edge detection and then binarization to each rectangular eye area in the list to obtain a binary image; detecting the eye state of each binary image in turn with a horizontal projection method; and, if it remains uncertain whether the eye is closed, further detecting the eye state with the Hough circle transform. The method increases the speed of eye detection, uses skin color segmentation in tracking and analyzing the eye state, and is suitable for attention detection and fatigue detection.

Owner:SHANGHAI JIAO TONG UNIV

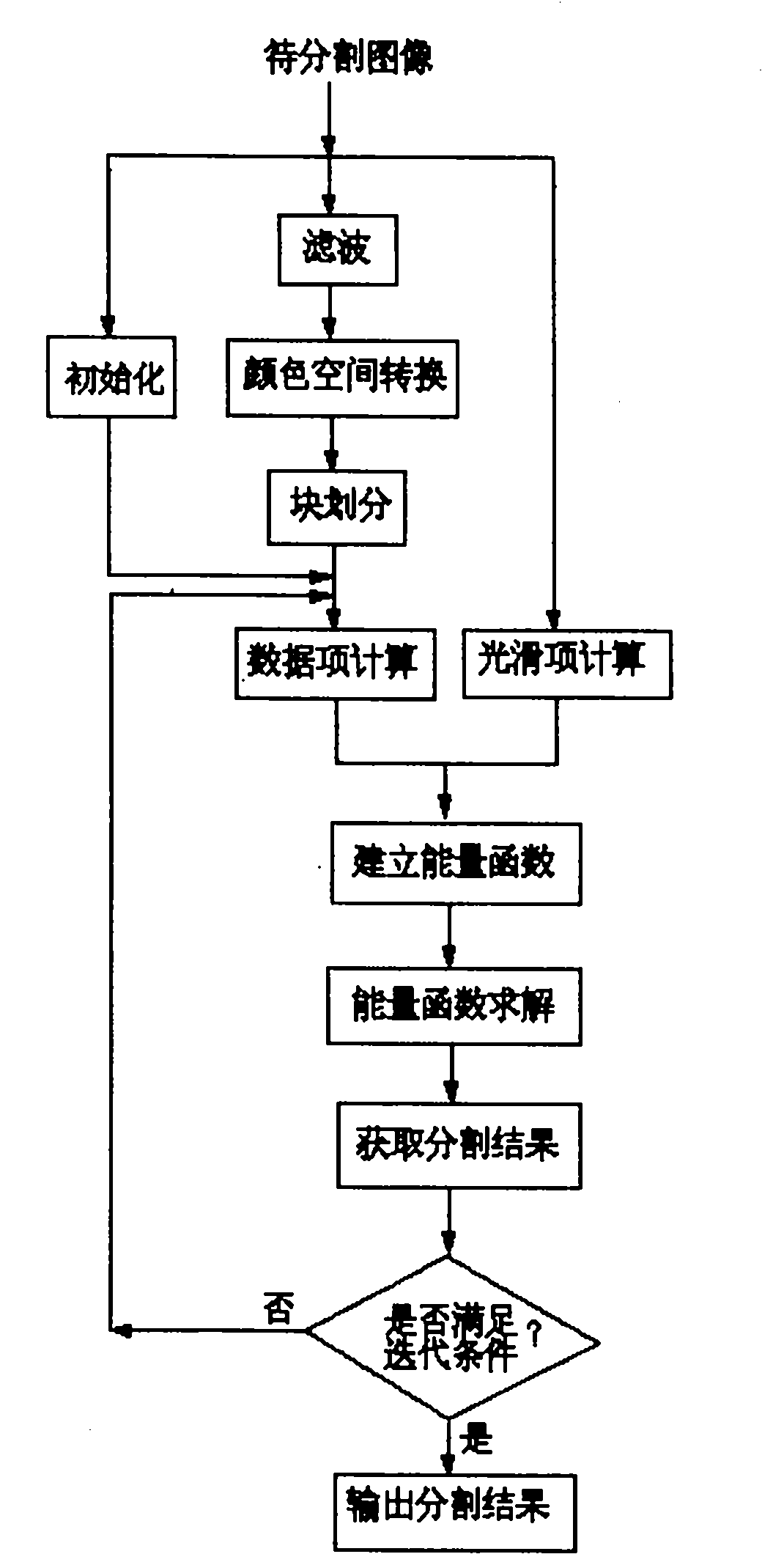

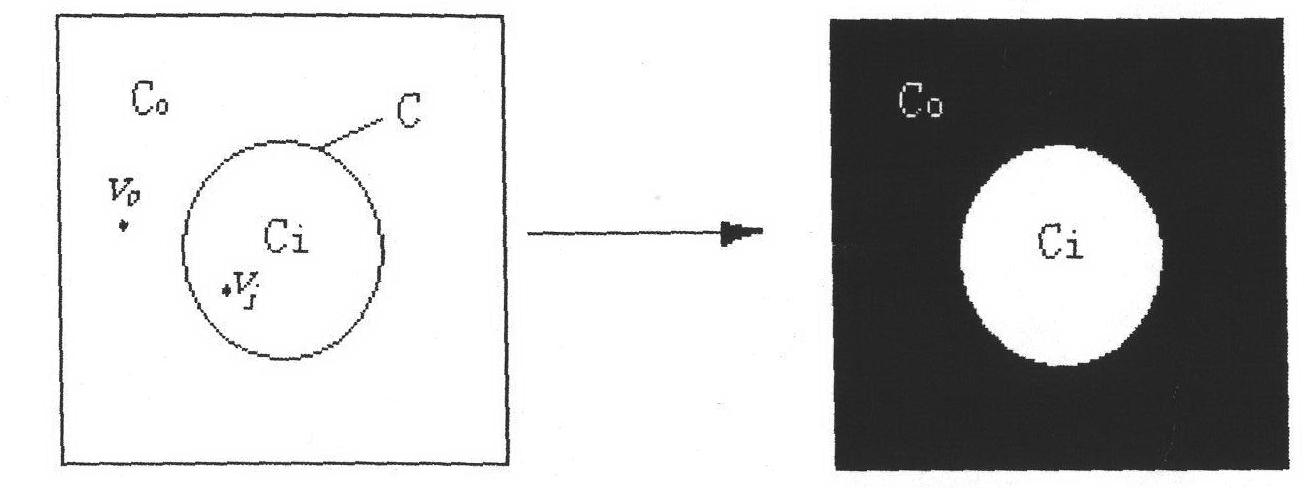

Image automatic segmentation method based on graph cut

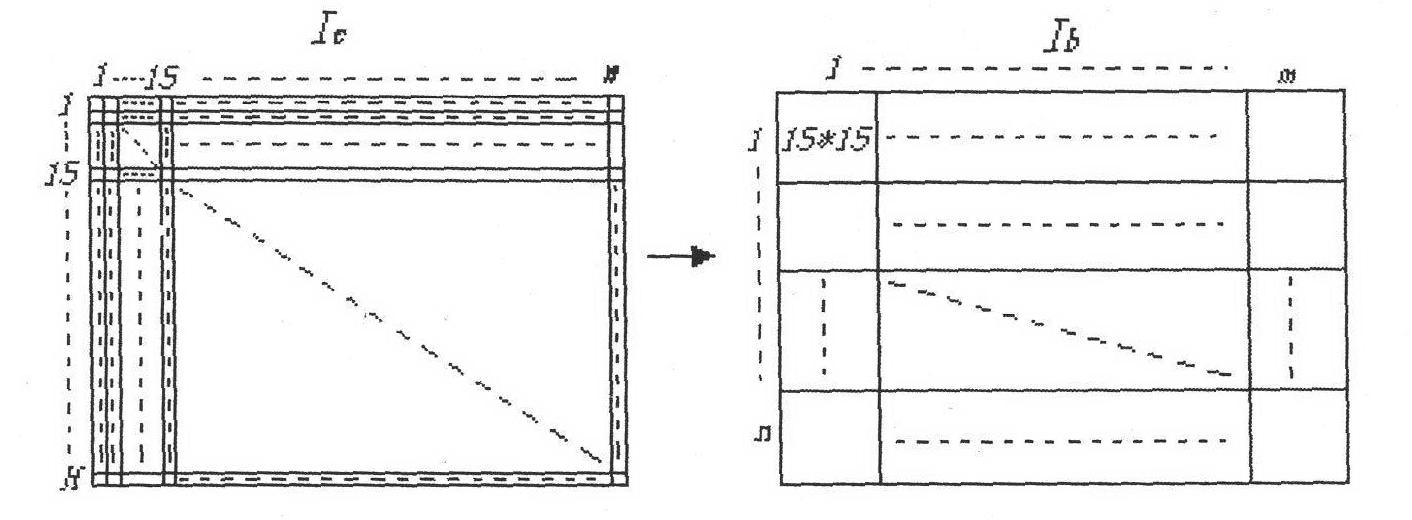

Inactive | CN101840577A | Exemption from establishment | Achieve optimal segmentation | Image analysis | Color image | Energy functional

The invention discloses an automatic segmentation method based on graph cut for color images and gray level images, mainly solving the problems of the existing graph cut technology that interaction and modeling are required in graph cut and the segmentation result is required to be modified manually. The method comprises the following steps: dividing an image into an inner area and an outer area; establishing the data item of the energy function according to the similarity of pixels in different areas, wherein mean shift, YCbCr color space conversion and block partition are adopted in calculation of the similarity; establishing the smoothing item of the energy function according to the marginal information and spatial location of the image; adopting graph cut to perform optimization to the energy function, thus realizing one-step cutting to the image; and using the segmentation result as the new inner and outer areas, performing iterative execution of the above operations, and stopping iterative execution when iterative conditions are satisfied. The method has the advantages of automation, good effect and less iterations and can be used in the computer vision fields such as image processing, image editing, image classification, image identification and the like.

Owner:XIDIAN UNIV

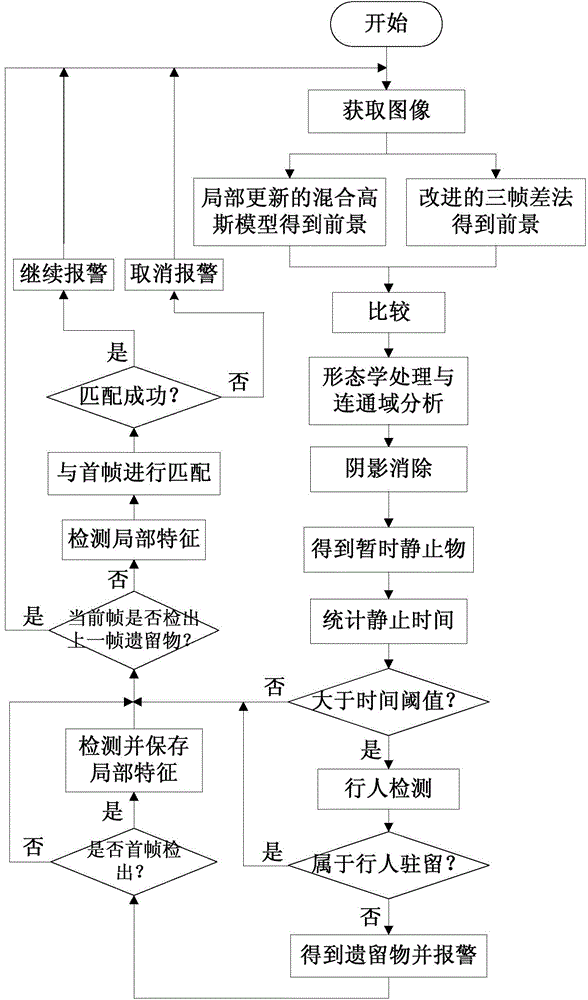

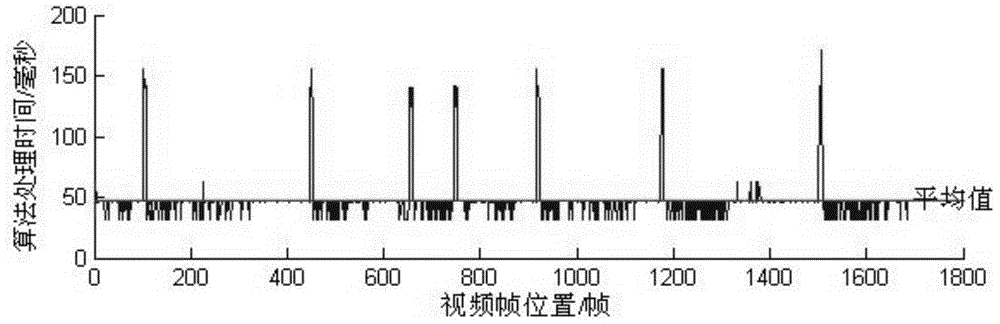

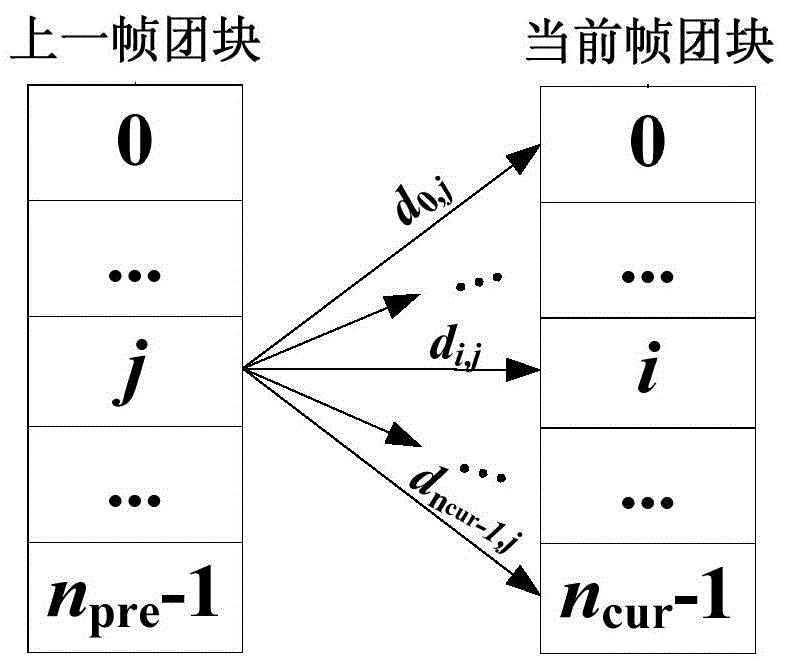

Detection method for remnants in complex environment

Active | CN104156942A | Avoid blending into the background | Precise positioning | Image analysis | Frame difference | Resting time

The invention discloses a method for detecting remnants in a complex environment. The method comprises the following steps: comparing the foreground targets obtained by a partially updated Gaussian mixture background modeling method with those obtained by a modified three-frame difference method; in combination with a YCbCr color space based shadow elimination method and connected domain analysis, segmenting the temporarily static blobs within the scene; applying a centroid distance test to each frame of the image and counting the resting time of each blob; and marking the static blobs that reach the time threshold as remnants after eliminating the possibility of pedestrian residence through the HOG (Histograms of Oriented Gradients) pedestrian detection algorithm and the FAST (Features from Accelerated Segment Test) feature point detection algorithm. The method improves the detection accuracy of remnants, lowers computational complexity, is better suited to crowded environments with frequent occlusion, and has better anti-interference capability.

Owner:SOUTH CHINA UNIV OF TECH

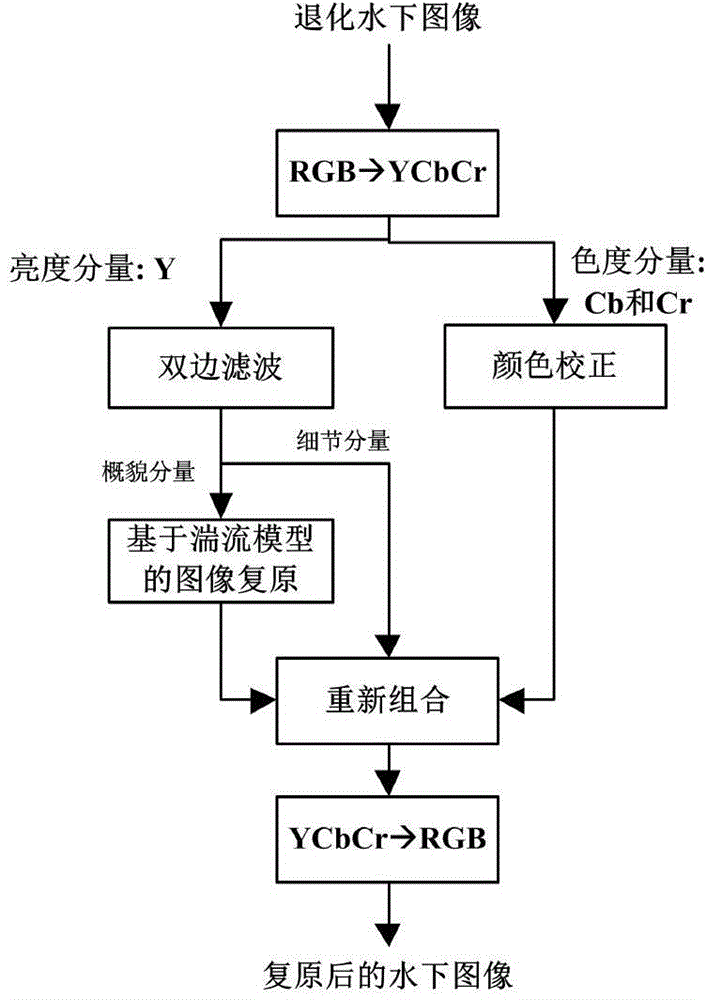

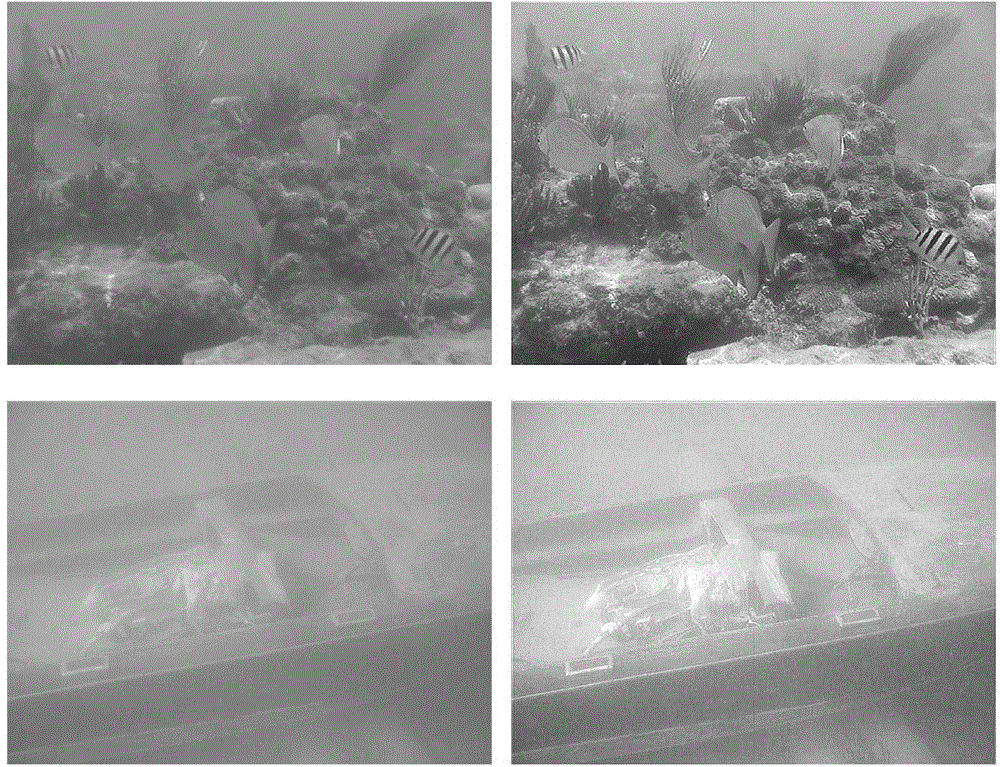

Underwater image restoration method based on turbulence model

The invention relates to an underwater image restoration method based on a turbulence model. The method includes the steps of: transforming an input color underwater image from the RGB (Red Green Blue) space to the YCbCr color space; smoothing the gray component with a bilateral filtering method and, after bilateral filtering, representing the low-frequency profile component and the high-frequency detail component as YL and YH respectively; representing the gray component of the degraded underwater image as YL (x, y), transforming YL (x, y) into the Fourier transform domain and representing it there as YL (u, v); obtaining a non-degraded underwater image F (u, v) through a fully blind iterative restoration algorithm; obtaining a degraded image based on the turbulence model to obtain a restoration result; transforming the restoration result back to the spatial domain; performing color correction; and reconstructing the restored image. With this method, the contrast, noise, and color cast of the underwater image are significantly improved.

Owner:TIANJIN UNIV

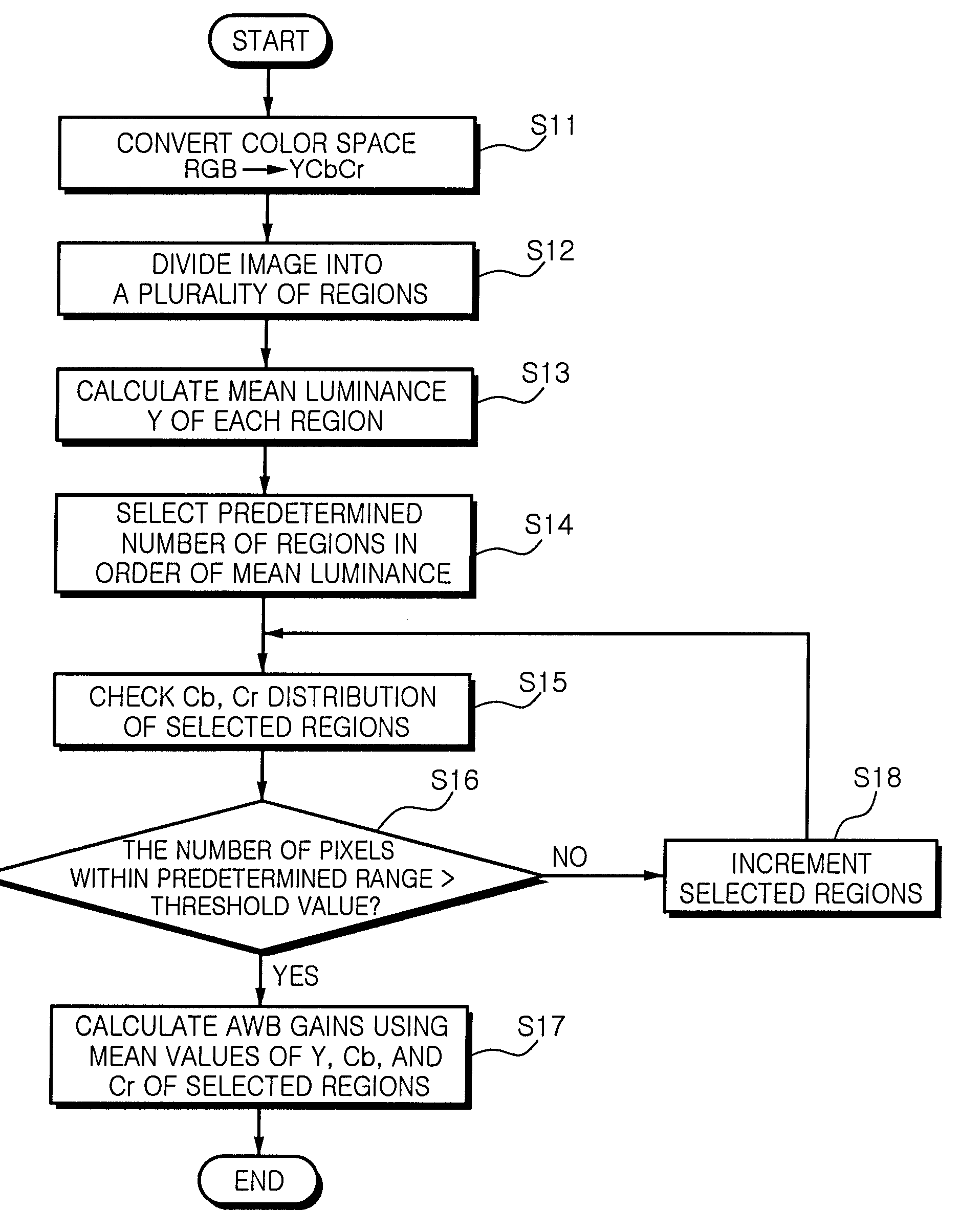

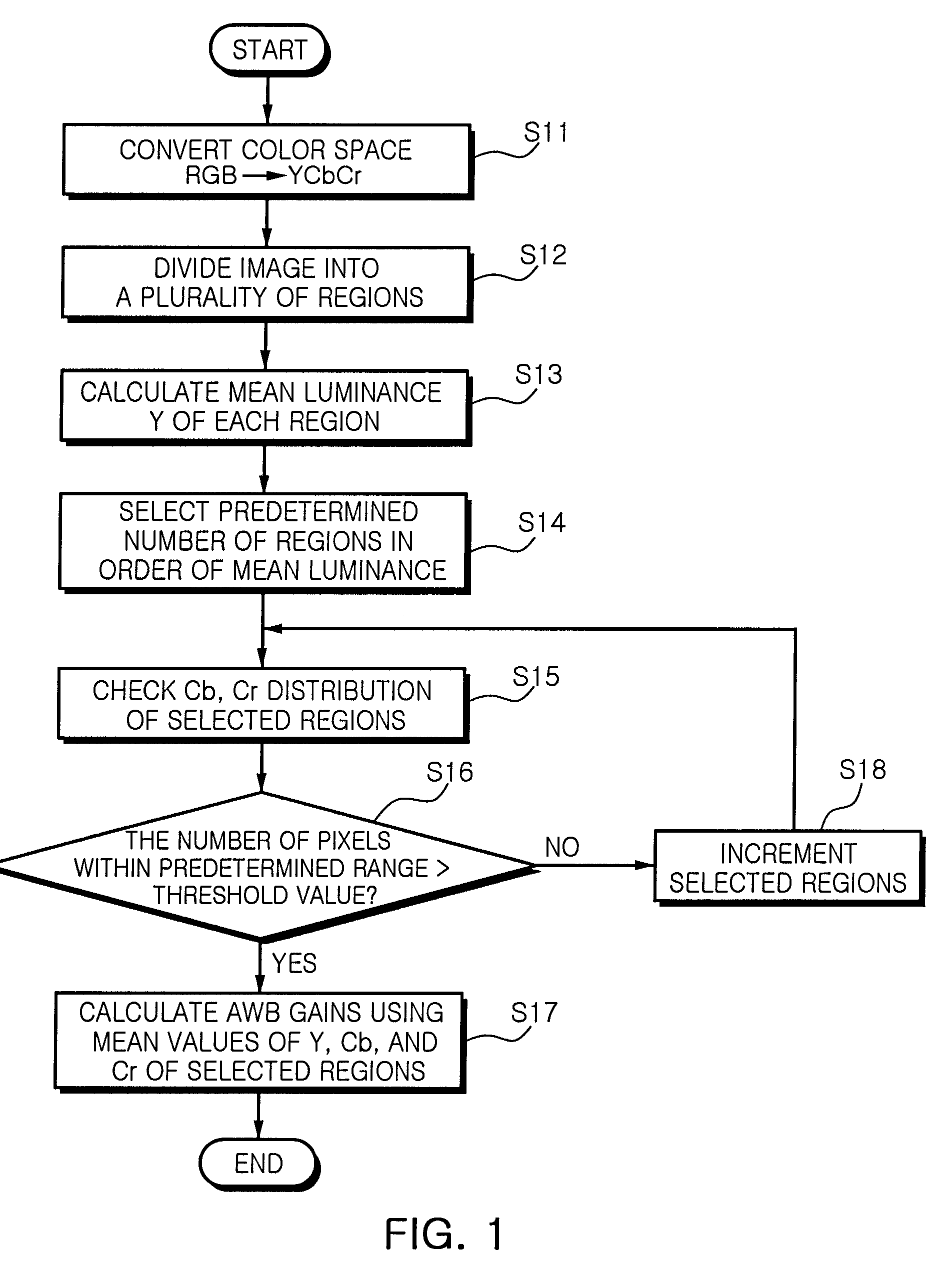

Method of controlling auto white balance

Active | US20100020192A1 | Simplify hardware configuration | Color signal processing circuits | Character and pattern recognition | Pattern recognition | YCbCr color space

There is provided a method of controlling auto white balance that can be appropriately used for a camera of an electric device that uses a wide-angle or a super wide-angle lens. A method of controlling auto white balance according to an aspect of the invention may include: converting a color space of an input image from an RGB color space into a YCbCr color space; dividing the input image into a plurality of divided regions; selecting a predetermined number of divided regions in order determined by mean values of Y of pixels included in the plurality of divided regions; comparing a predetermined threshold value with the number of pixels having values of Cb and Cr within a predetermined Cb-Cr range among the pixels included in the selected divided regions in order to determine a white area; and calculating auto white balance gains by using mean values of Y, mean values of Cb, and mean values of Cr of the pixels included in each of the selected divided regions when the number of pixels within the predetermined Cb-Cr range is greater than the threshold value.
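The region selection and white-area test can be sketched as follows. Several details are assumptions, since the abstract leaves them open: regions are ranked from brightest to darkest, the Cb-Cr range is a square window around the neutral value 128, and the function stops at reporting per-region means because the gain formula itself is not given.

```python
def find_white_regions(regions, top_n=2, chroma_tol=10, min_count=3):
    """Return (mean Y, mean Cb, mean Cr) for selected regions judged to be white.

    `regions` is a list of divided regions, each a list of (Y, Cb, Cr) pixels.
    """
    def mean(values):
        return sum(values) / len(values)

    # Select regions in order of mean Y (assumed: brightest first).
    ranked = sorted(regions, key=lambda px: mean([p[0] for p in px]), reverse=True)
    results = []
    for pixels in ranked[:top_n]:
        # Count pixels whose Cb and Cr fall inside the assumed near-neutral window.
        near_neutral = [p for p in pixels
                        if abs(p[1] - 128) <= chroma_tol and abs(p[2] - 128) <= chroma_tol]
        if len(near_neutral) > min_count:
            results.append(tuple(mean([p[i] for p in pixels]) for i in range(3)))
    return results
```

The returned means are the inputs that the described method would then use to calculate the white balance gains.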

Owner:SAMSUNG ELECTRO MECHANICS CO LTD

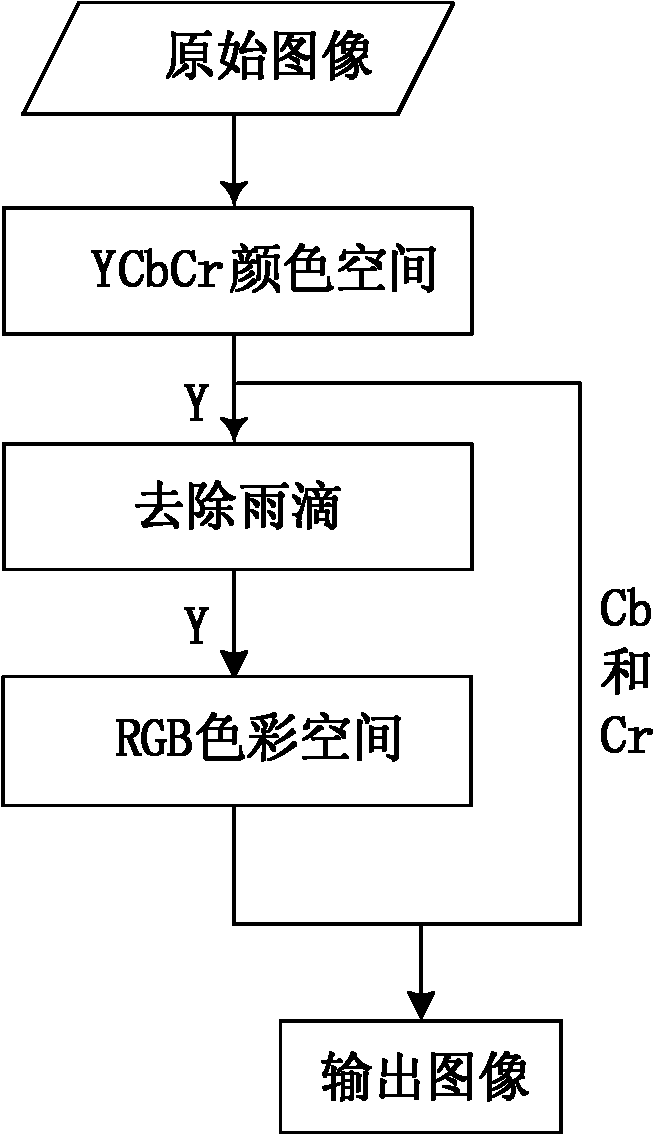

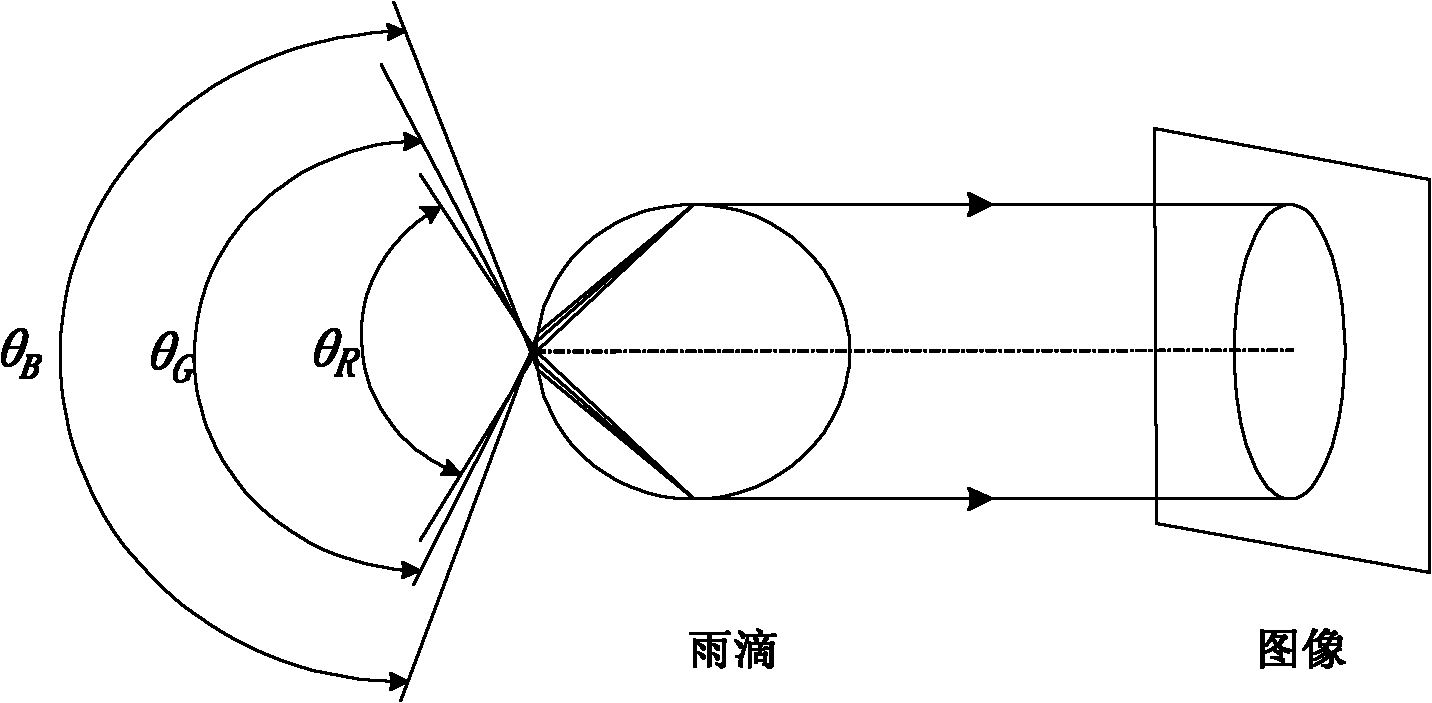

Simple-component video image rain field removing method

Inactive | CN102186089A | Achieve removal | Improve real-time performance | Color signal processing circuits | Simple component | YCbCr color space

The invention discloses a simple-component video image rain field removing method, which is characterized by comprising the following steps of: first converting an image into a YCbCr color space in combination with color space conversion according to the color attributes of raindrops; then extracting a Y component, and performing raindrop removal on the Y component by using the conventional rain field removing method; and finally converting the image into a red, green and blue (RGB) color space by combining Cb and Cr components. By the method, simple-component rain field removal is realized; and compared with the conventional three-component rain removing method, the invention saves the processing of two components and improves the real-time performance.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

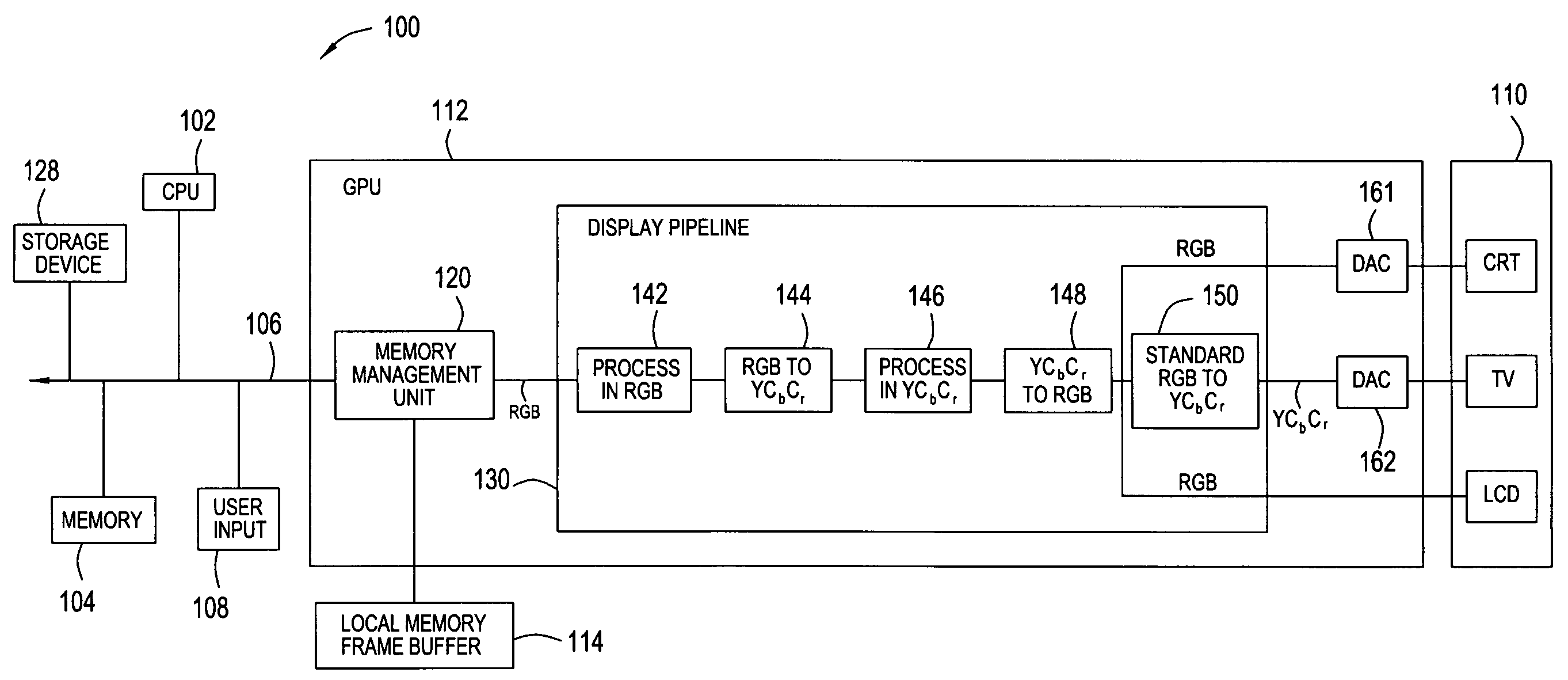

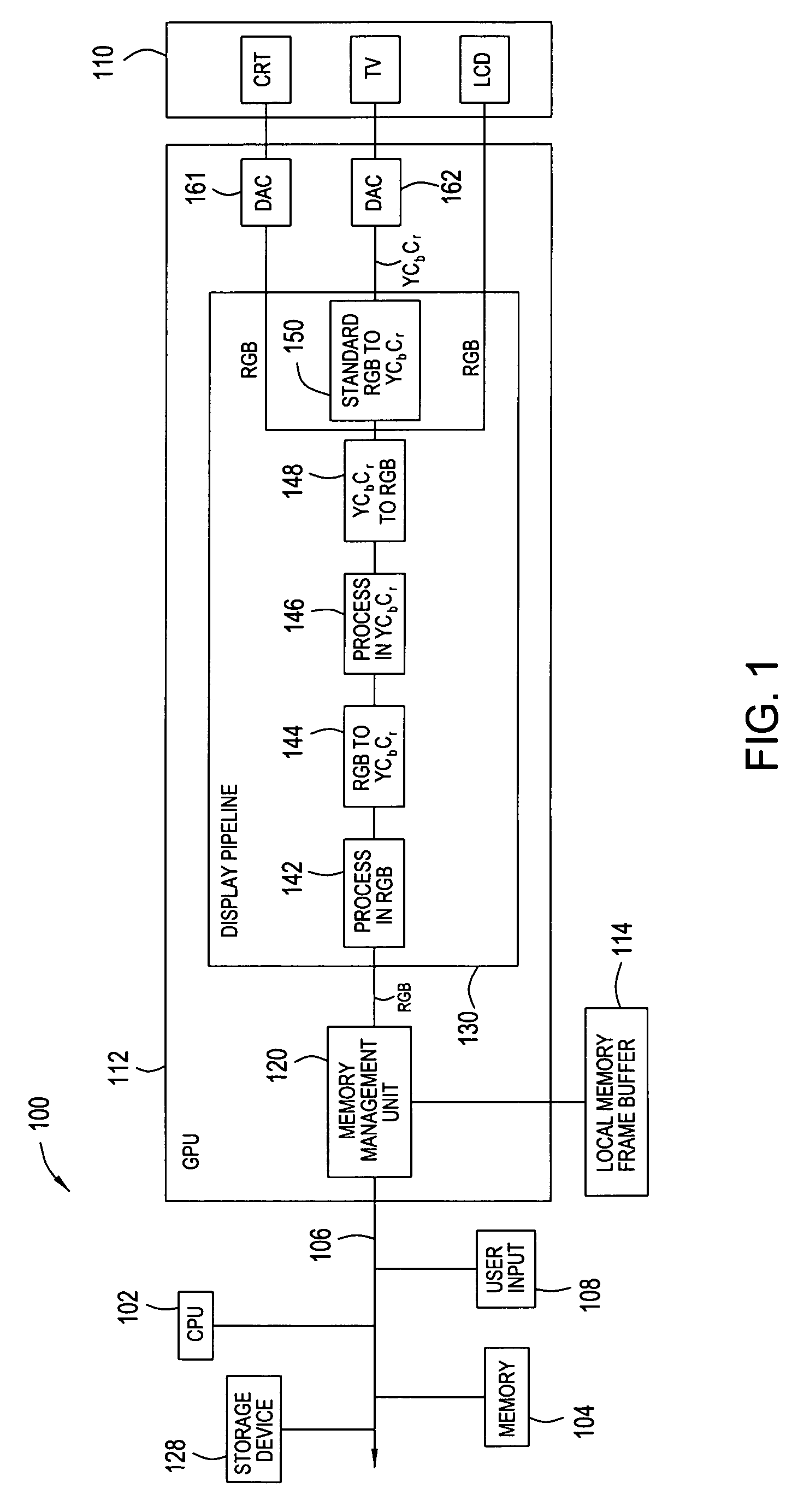

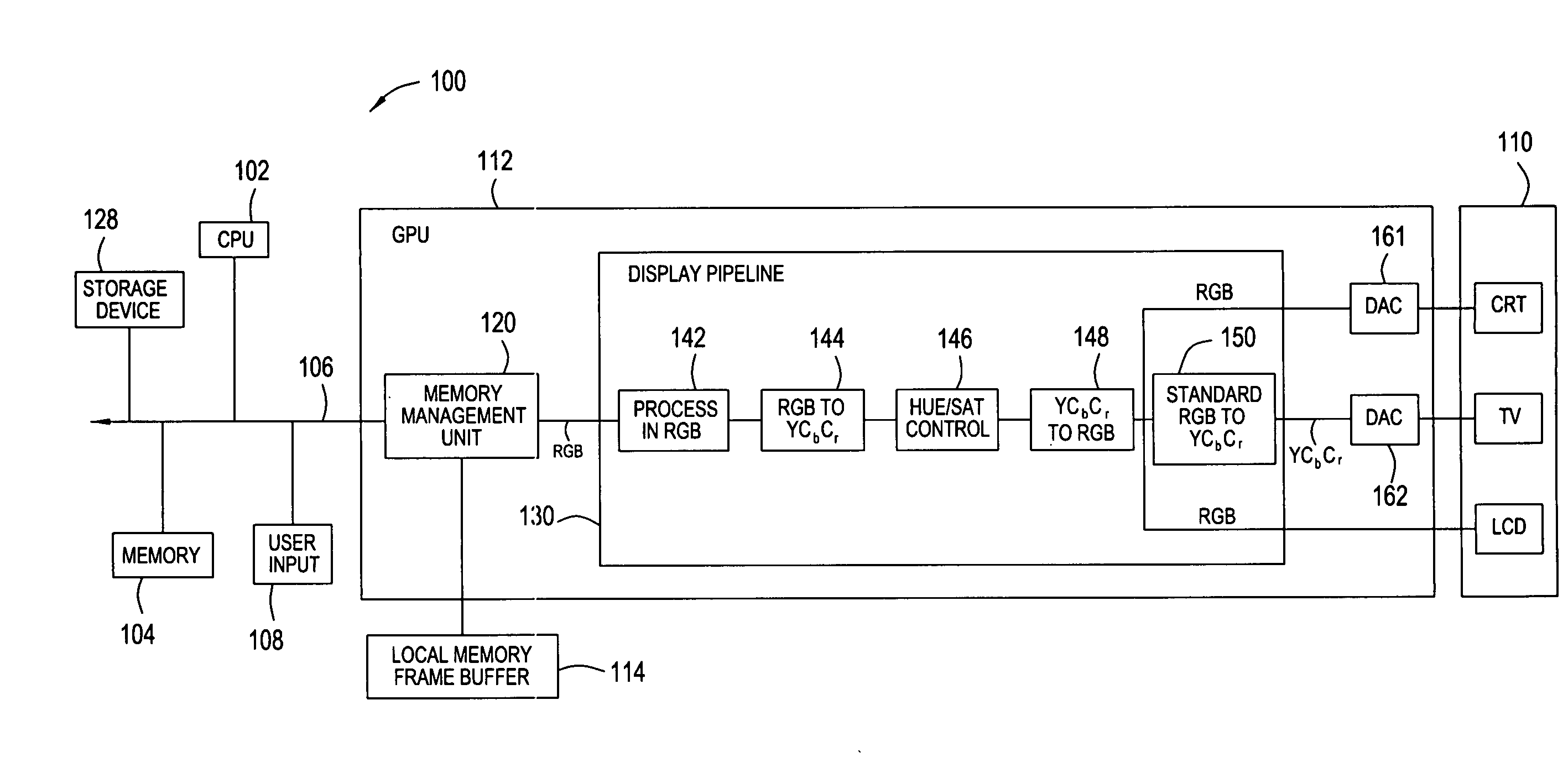

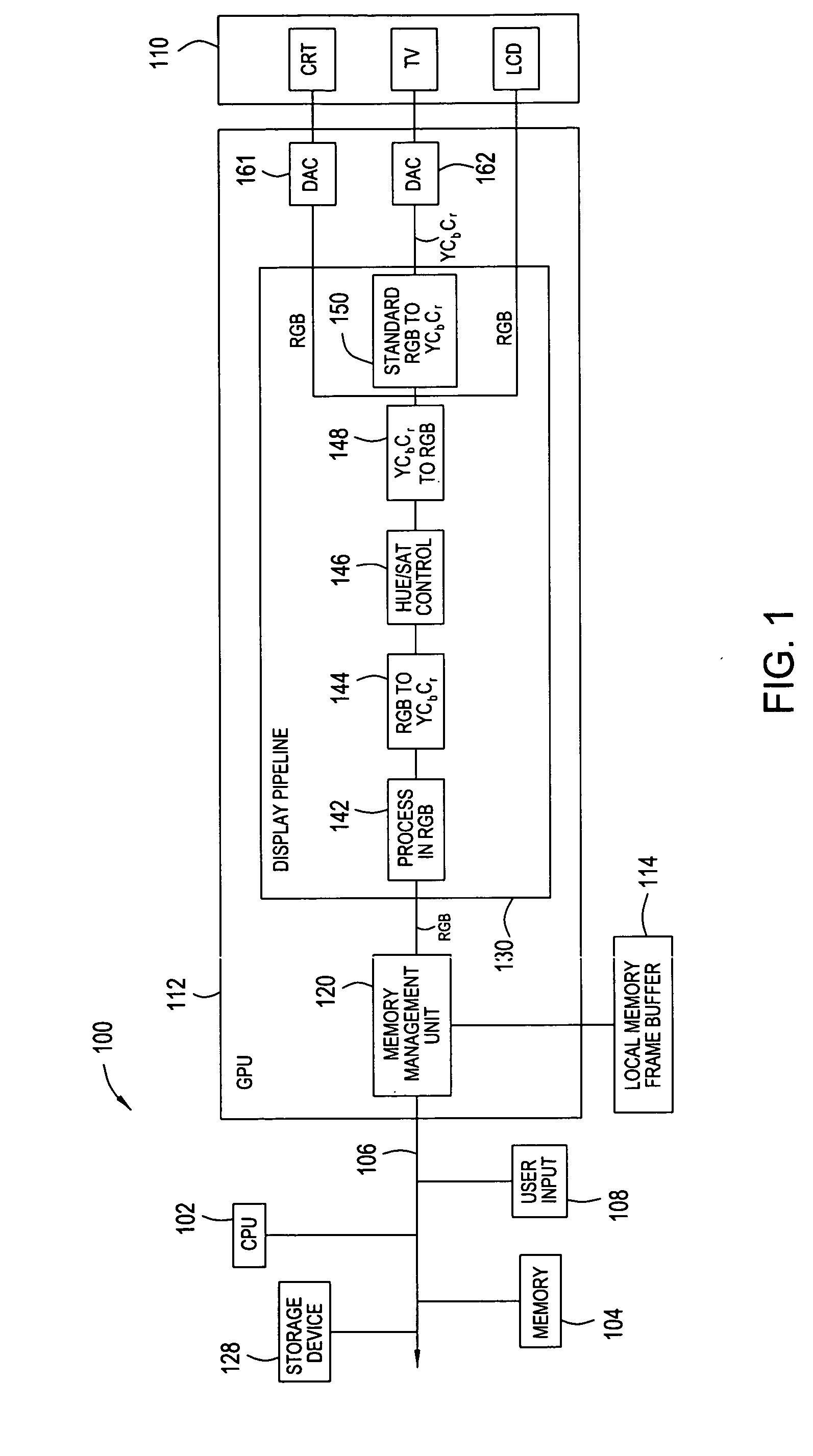

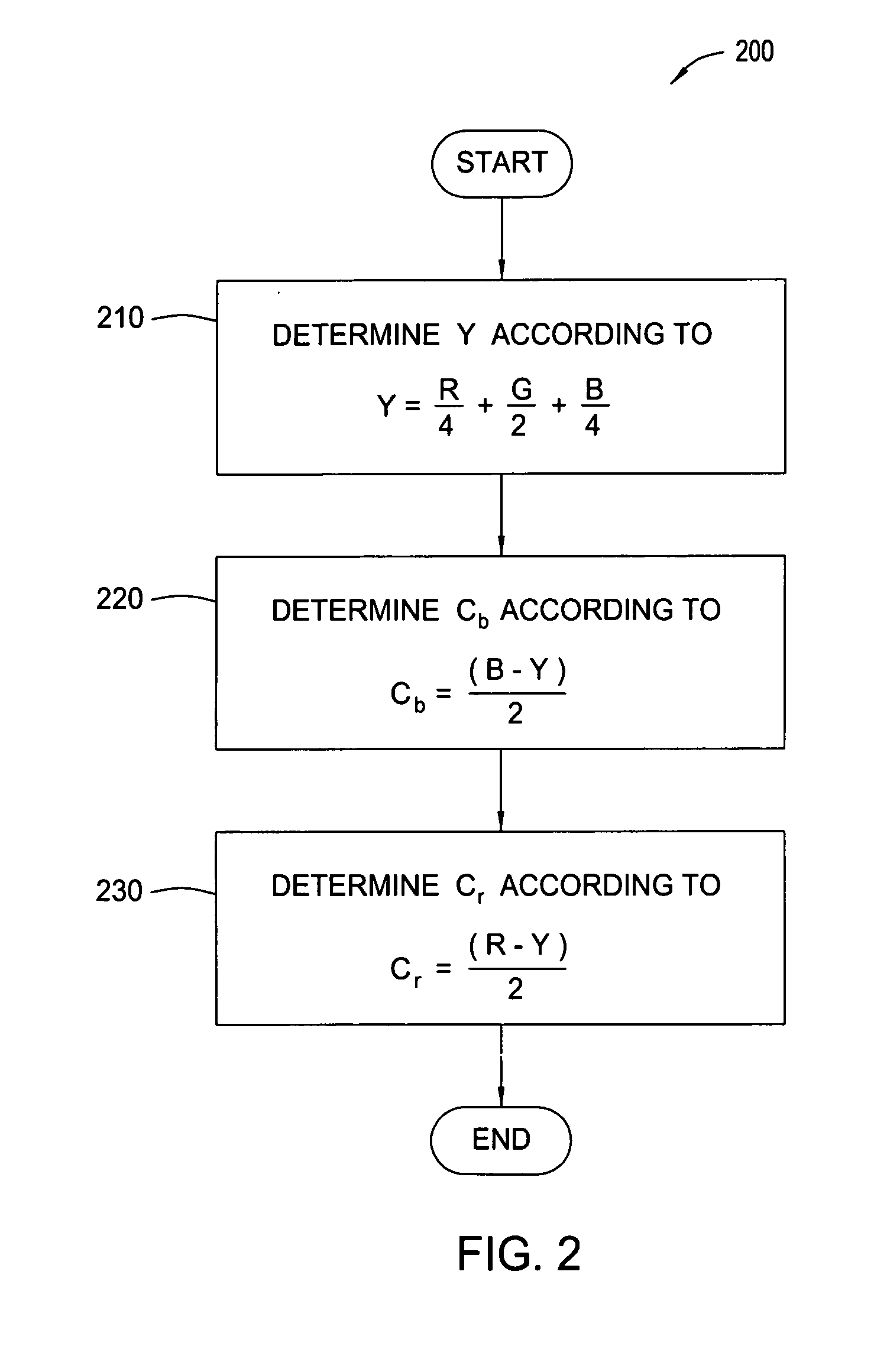

Minimalist color space converters for optimizing image processing operations

Active | US7050065B1 | Low cost | Simple process | Cathode-ray tube indicators | Pictorial communication | Graphics | Imaging processing

An apparatus for a graphics processing unit includes a memory for storing pixel data in a red, green and blue (RGB) color space and a display pipeline. The display pipeline includes an RGB color space to luminance, blue color difference and red color difference (YCbCr) color space converter module configured to convert the pixel data from the RGB color space to the YCbCr color space. The RGB to YCbCr color space converter module generates a luminance component (Y) of the pixel data by adding ¼ of the red (R) component of the pixel data to ½ of the green (G) component and ¼ of the blue (B) component. The luminance component (Y) may be determined by left shifting the green (G) component by one bit, adding the result to the red (R) and blue (B) components, and right shifting the sum by two bits.
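The shift-based luminance approximation described above, Y = ¼R + ½G + ¼B, can be written in a few lines. The function name is hypothetical, and integer inputs in the range 0..255 are assumed.

```python
def approx_luma(r, g, b):
    """Approximate Y as R/4 + G/2 + B/4 using only shifts and adds.

    Left shifting G by one bit doubles it; right shifting the sum by two bits
    divides by four (discarding the remainder).
    """
    return (r + (g << 1) + b) >> 2
```

For example, `approx_luma(100, 50, 200)` computes (100 + 100 + 200) >> 2 = 100. Because the three weights sum to one, an 8-bit input never produces a value above 255.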

Owner:NVIDIA CORP

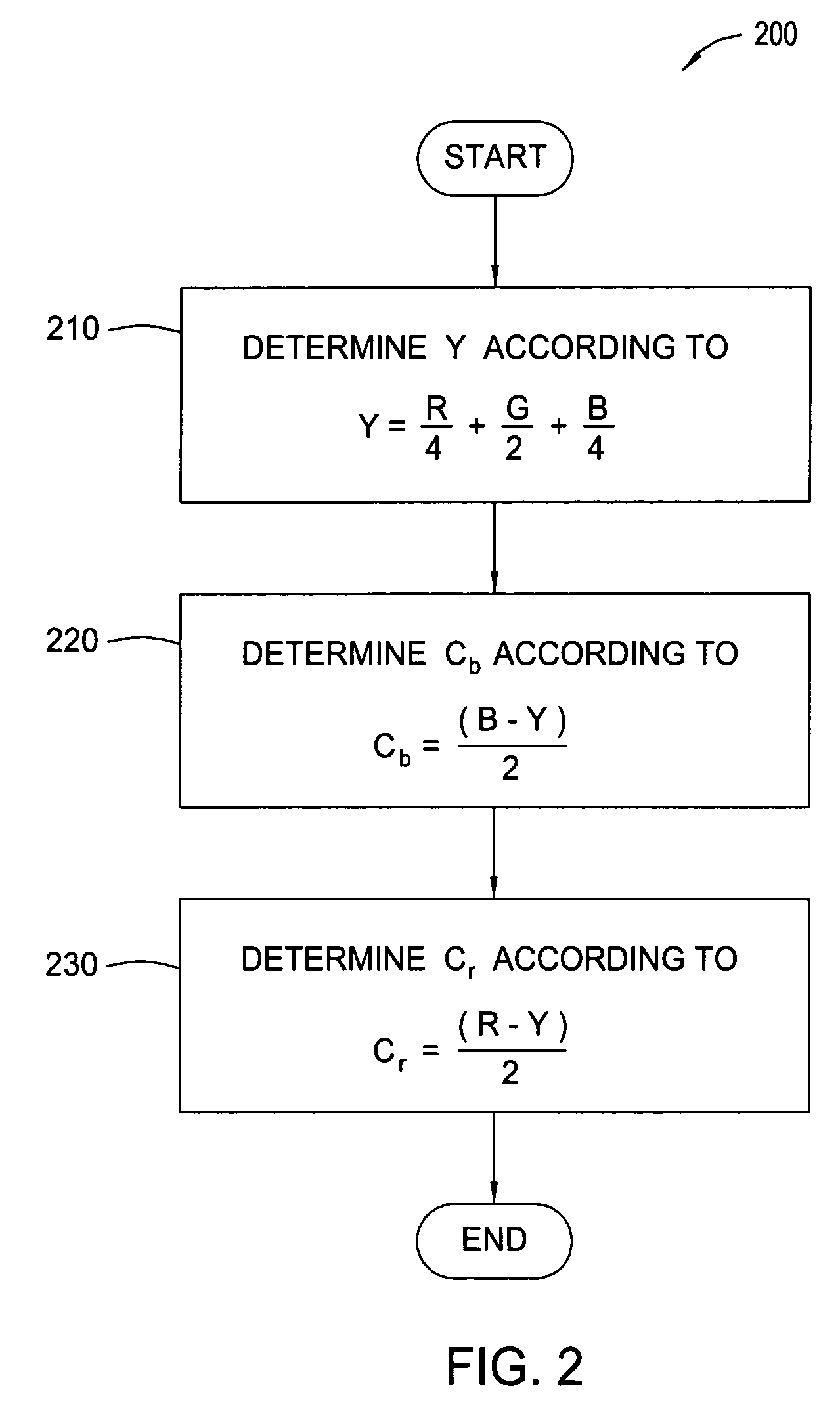

Face detection method based on Adaboost algorithm

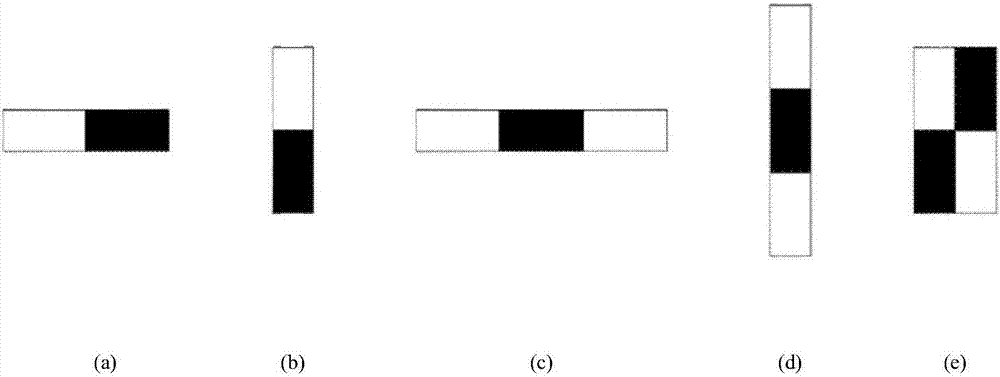

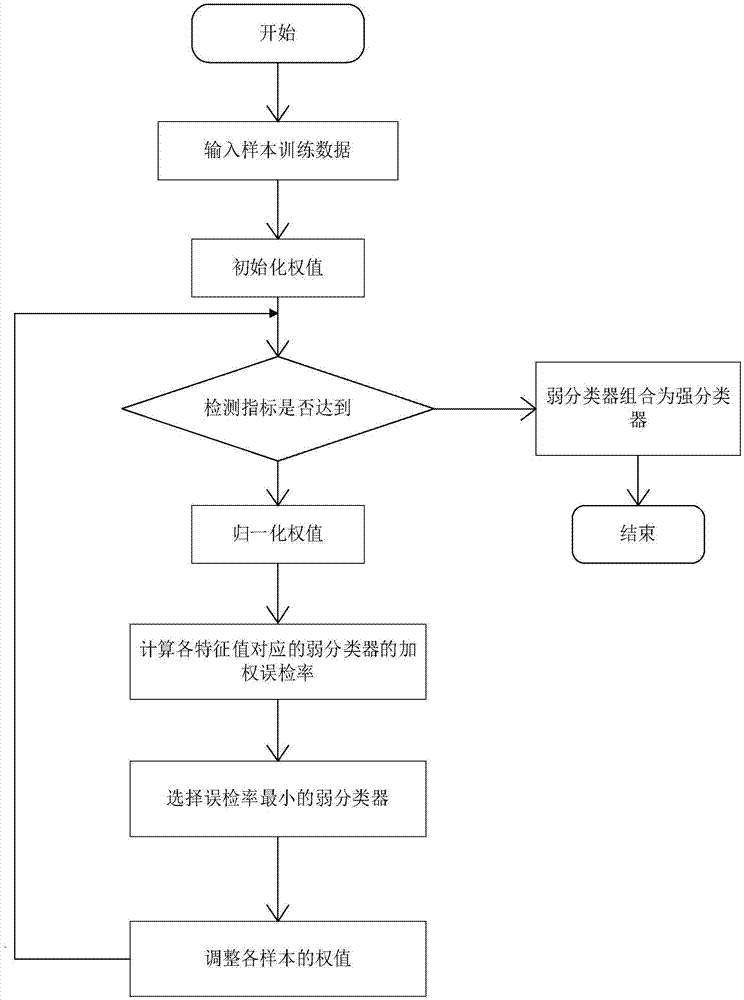

Inactive | CN107220624A | Detection speed | High speed | Character and pattern recognition | Face detection | Color Scale

The invention relates to a face detection method based on the Adaboost algorithm, which comprises the steps of preprocessing a face image, performing skin color segmentation in the YCbCr color space, acquiring a face candidate region, performing face detection with the Adaboost algorithm, and matching the screened face region against a face template. Face image preprocessing comprises grayscale normalization, light compensation, filtering and noise reduction, and geometric normalization. Skin color segmentation comprises color space conversion, skin color segmentation using a color scale model, and further screening of face candidate regions according to the area of each connected skin color region and the length-width ratio of its bounding rectangle. In the Adaboost face detection algorithm, weak classifiers are trained, the weak classifiers are combined into strong classifiers, and the strong classifiers are connected in series to form a cascade classifier. In face template matching, the degree of matching between the candidate face region and a face template is measured with a weighted Euclidean distance. The method improves the speed and accuracy of face detection, and is easy to implement and operate, stable and reliable.

Owner:SOUTHEAST UNIV

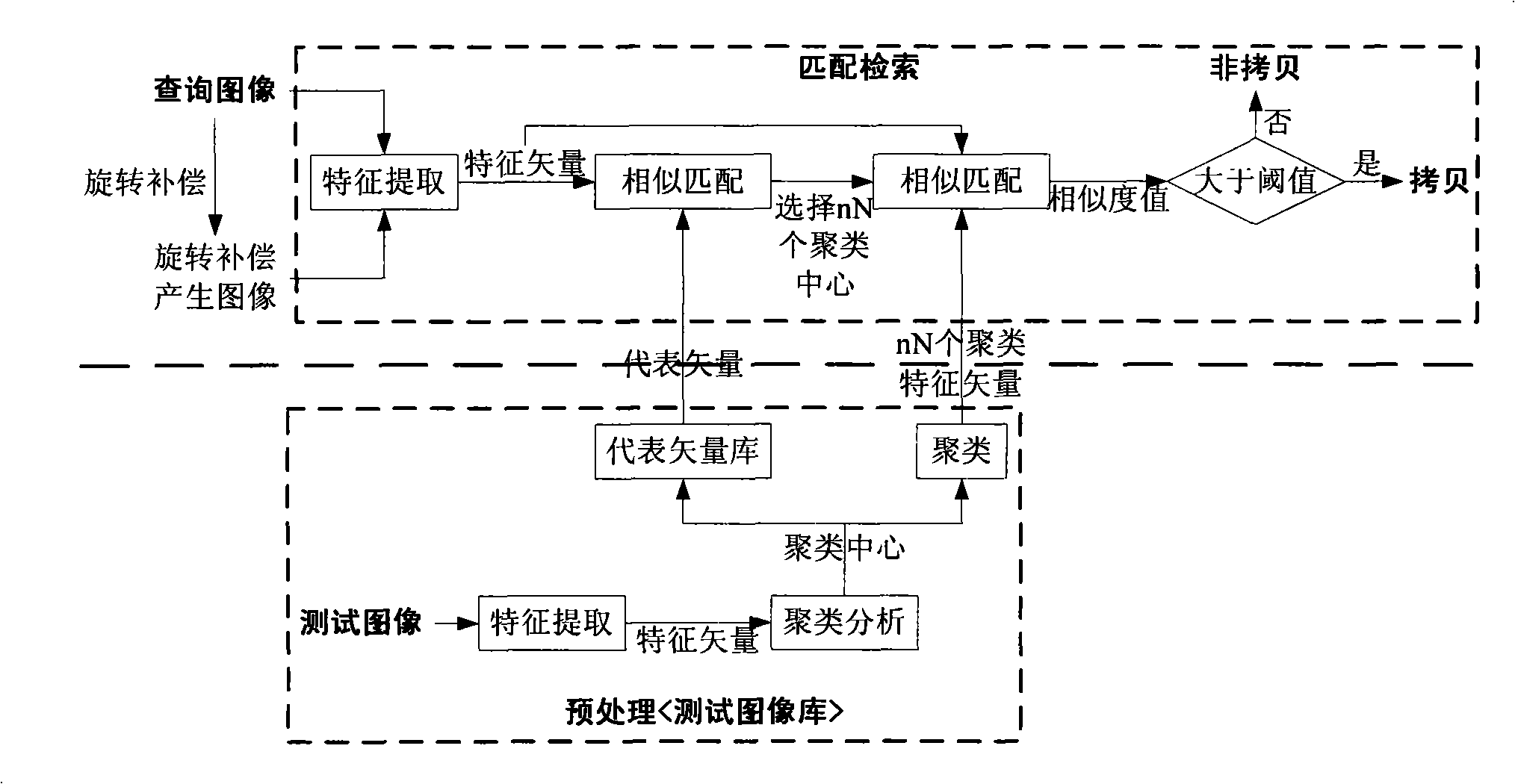

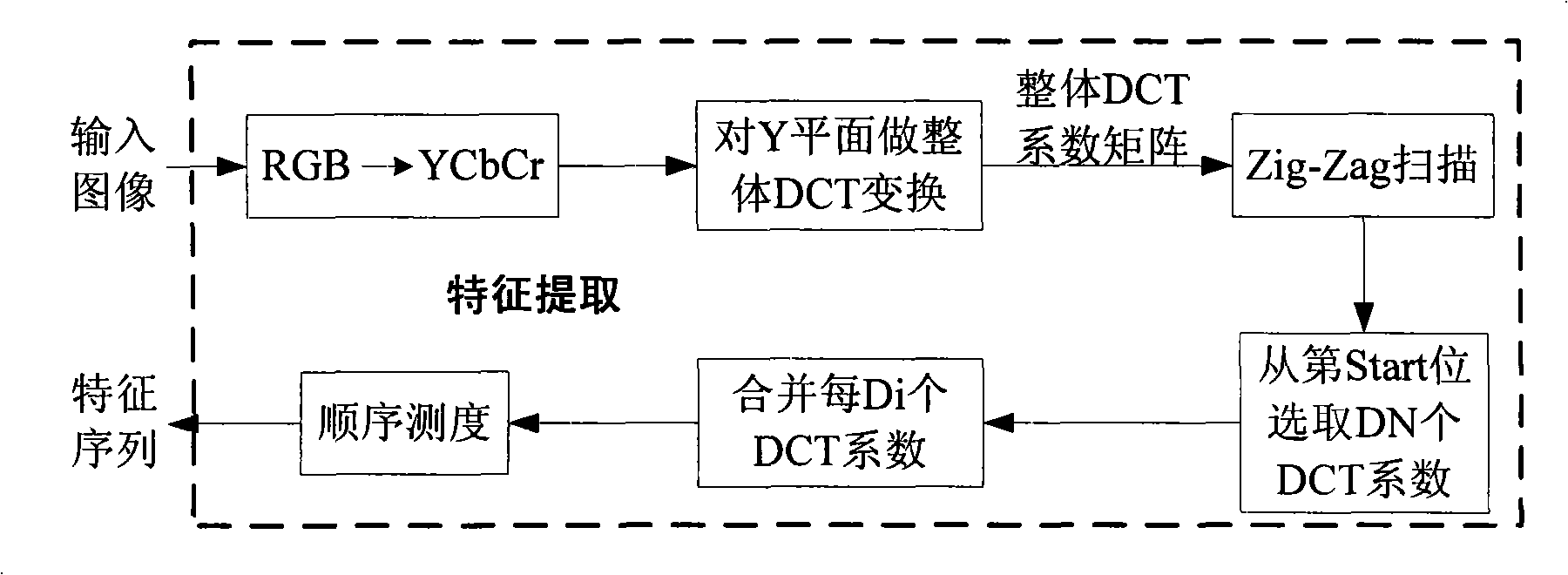

Robust image copy detection method based on content

Inactive | CN101308567A | Improve robustness | Improve query efficiency | Image data processing details | Special data processing applications | Feature vector | DCT transform

The invention discloses a content-based robust image copy detection method, which has the following steps: to extract the feature vector of the testing image; to select the integral DCT transform coefficients of a plane Y of the original image YCbCr color space, to calculate the order measurement of the coefficients to obtain a coefficient sequence which is used as the feature vector of the testing image; then to establish testing image representative vector libraries; to execute clustering analysis to the feature vector sets of the testing image representative vector libraries to select the feature vectors closest to the clustering center as the clustering representatives to constitute a clustering representative vector library; to respectively search the matched testing image representative vector libraries to inquire the image feature vectors and the image feature vectors after rotation compensation, and to determine the belonged class; then to execute sequential matching search to each image feature vector in the clustering, and to determine whether the copy of the inquired image exists. The invention shows a higher robustness to help improve the inquiring efficiency, and has practical value as well as wide application in digital image database arrangement, digital image copyright protection and piracy tracking.

Owner:HUAZHONG UNIV OF SCI & TECH

Information fusion positioning system and method based on RFID (radio frequency identification) and vision

Inactive | CN104375509A | Accurate, fast and stable autonomous navigation | Arrive successfully | Position/course control in two dimensions | Motor control | YCbCr color space

The invention discloses an information fusion positioning system and method based on RFID (radio frequency identification) and vision. When executing warehousing and transporting tasks, the system acquires route information from a camera mounted at the bottom of a mobile robot and acquires key point information via an RFID radio-frequency device at the bottom of the robot. It segments the images on the basis of color by converting the color space from RGB to YCbCr and thresholding the red chromaticity component. A processor checks whether the running route of the robot is correct according to the segmented images and the positioning signals read by an RFID reader-writer, and navigates and controls the motion of the mobile robot, so that the robot is navigated autonomously in a correct, quick and stable manner, does not get lost or collide with obstacles, and arrives at its destination smoothly.

Owner:HARBIN ZHONGDE HECHUANG INTELLIGENT EQUIP

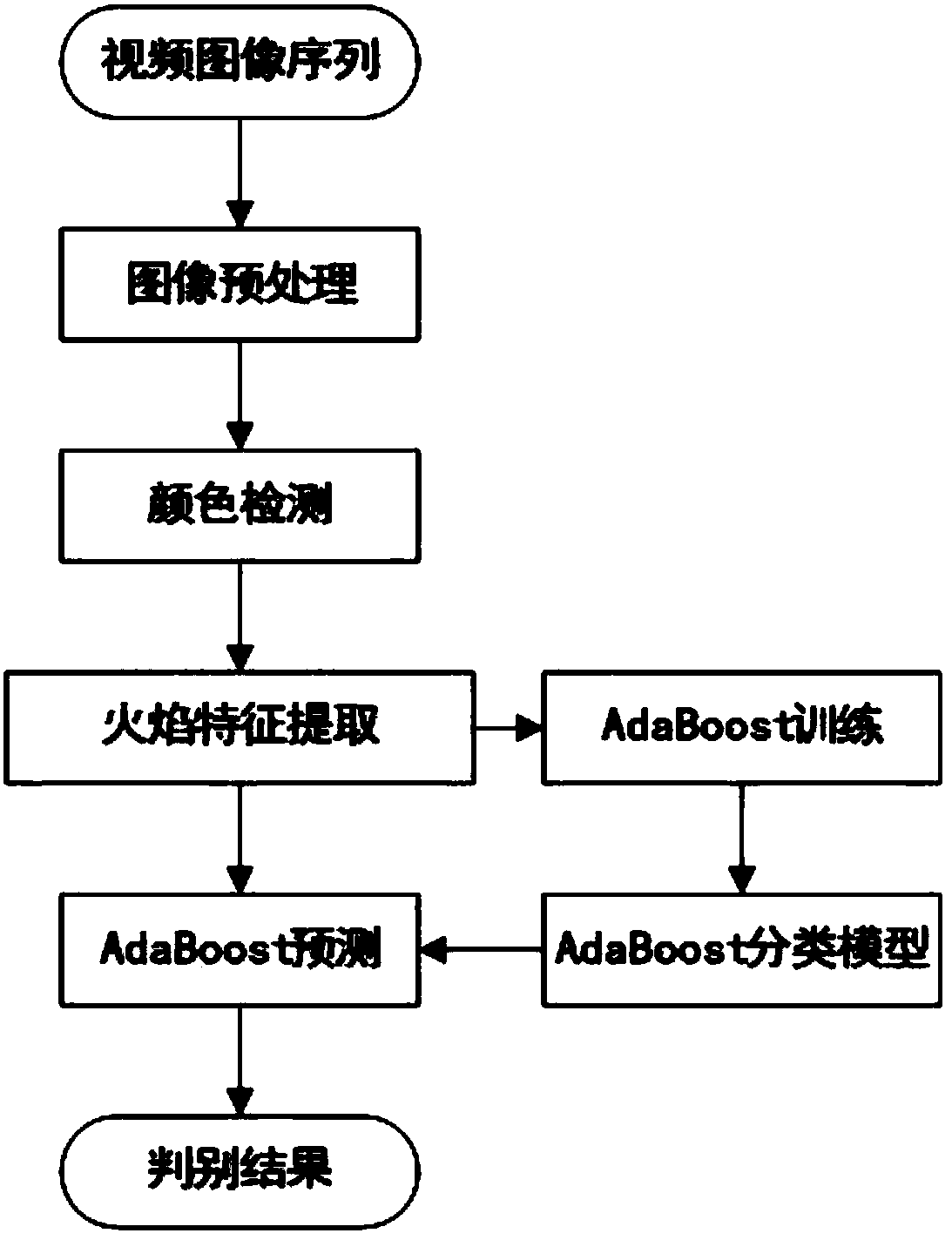

Video-based flame detection method

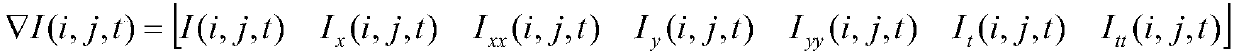

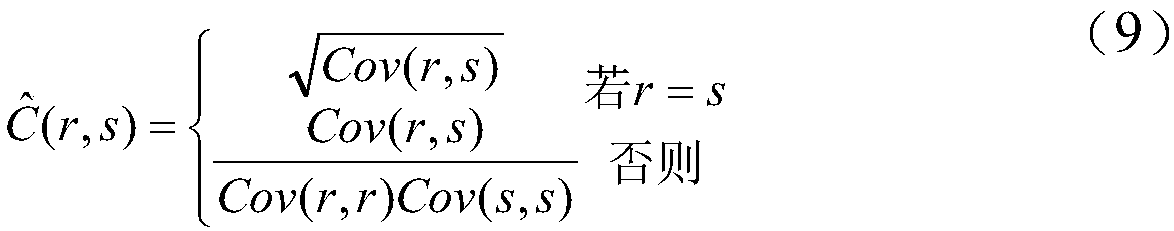

ActiveCN107944359AReal-time detectionReduce false alarm rateCharacter and pattern recognitionCovarianceYcbcr color space

The invention discloses a video-based flame detection method that addresses the technical problem of improving flame detection accuracy. The method comprises the following steps: obtaining a video image sequence; performing image preprocessing; performing color detection; performing flame feature extraction; and performing AdaBoost prediction. Compared with the prior art, the method first finds all candidate flame points in a video image that meet a condition based on the characteristics of flame pixels in the RGB and YCbCr color spaces. The video image is then divided into blocks, and covariance matrices corresponding to the color and brightness attributes of the flame pixel sets in each video block are calculated. The upper or lower triangular parts of the color and brightness covariance matrices are extracted as eigenvectors, and the resulting eigenvector set is input to an AdaBoost classification model, whose output is a judgment of whether a fire has occurred. Fires can thus be detected in real time with a reduced false alarm rate, relatively high accuracy and strong robustness.
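The covariance feature extraction can be illustrated with a short sketch. It assumes each candidate flame pixel in a block is described by a small tuple of attributes (three values here, such as Y, Cb and Cr); the exact attribute set used by the patent is not given in the abstract, and the function names are hypothetical. Because a covariance matrix is symmetric, its upper triangle carries all of its information, so a 3x3 matrix yields a 6-element feature vector.

```python
def covariance_matrix(samples):
    """Sample covariance matrix of a list of equal-length attribute tuples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    d = len(samples[0])
    means = [sum(s[k] for s in samples) / n for k in range(d)]
    return [[sum((s[i] - means[i]) * (s[j] - means[j]) for s in samples) / (n - 1)
             for j in range(d)]
            for i in range(d)]


def upper_triangle(matrix):
    """Flatten the upper triangle (diagonal included) into a feature vector."""
    size = len(matrix)
    return [matrix[i][j] for i in range(size) for j in range(i, size)]


# Illustrative attribute tuples for the candidate pixels of one block.
block_pixels = [(1, 2, 3), (2, 4, 6), (3, 6, 9)]
features = upper_triangle(covariance_matrix(block_pixels))
```

One such vector per block would then be passed to a trained classifier; the AdaBoost stage itself is outside the scope of this sketch.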

Owner:ZDST COMM TECH CO LTD

Hue and saturation control module

A graphics processing apparatus includes an RGB color space to luminance, blue color difference and red color difference (YCbCr) color space converter module configured to convert one or more pixel data from the RGB color space to the YCbCr color space using a set of approximated color space coefficients. The graphics processing apparatus further includes a hue and saturation control module coupled to the RGB to YCbCr color space converter module. The hue and saturation control module is configured to modify the hue and saturation of the pixel data in the YCbCr color space. The graphics processing apparatus further includes a YCbCr to RGB color space converter module configured to convert the pixel data from the YCbCr color space to the RGB color space.
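One common way to adjust hue and saturation in YCbCr is to treat the centered chroma pair (Cb - 128, Cr - 128) as a 2-D vector, rotate it for hue and scale it for saturation, while leaving Y untouched. The sketch below shows that general technique under stated assumptions: 8-bit full-range values, one particular rotation sign convention, and a hypothetical function name. It is not taken from the patent, which does not spell out its arithmetic in the abstract.

```python
import math


def adjust_hue_saturation(y, cb, cr, hue_degrees=0.0, saturation=1.0):
    """Rotate and scale the chroma vector of one 8-bit YCbCr pixel.

    The rotation direction is an assumed convention; Y passes through unchanged.
    """
    theta = math.radians(hue_degrees)
    cb_c, cr_c = cb - 128.0, cr - 128.0  # center chroma on zero
    new_cb = 128.0 + saturation * (cb_c * math.cos(theta) + cr_c * math.sin(theta))
    new_cr = 128.0 + saturation * (cr_c * math.cos(theta) - cb_c * math.sin(theta))

    def clamp(value):
        return max(0.0, min(255.0, value))

    return y, clamp(new_cb), clamp(new_cr)
```

With `saturation=0.0` every pixel collapses to neutral chroma (128, 128), which gives a grayscale image; with the defaults the pixel is returned unchanged.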

Owner:NVIDIA CORP

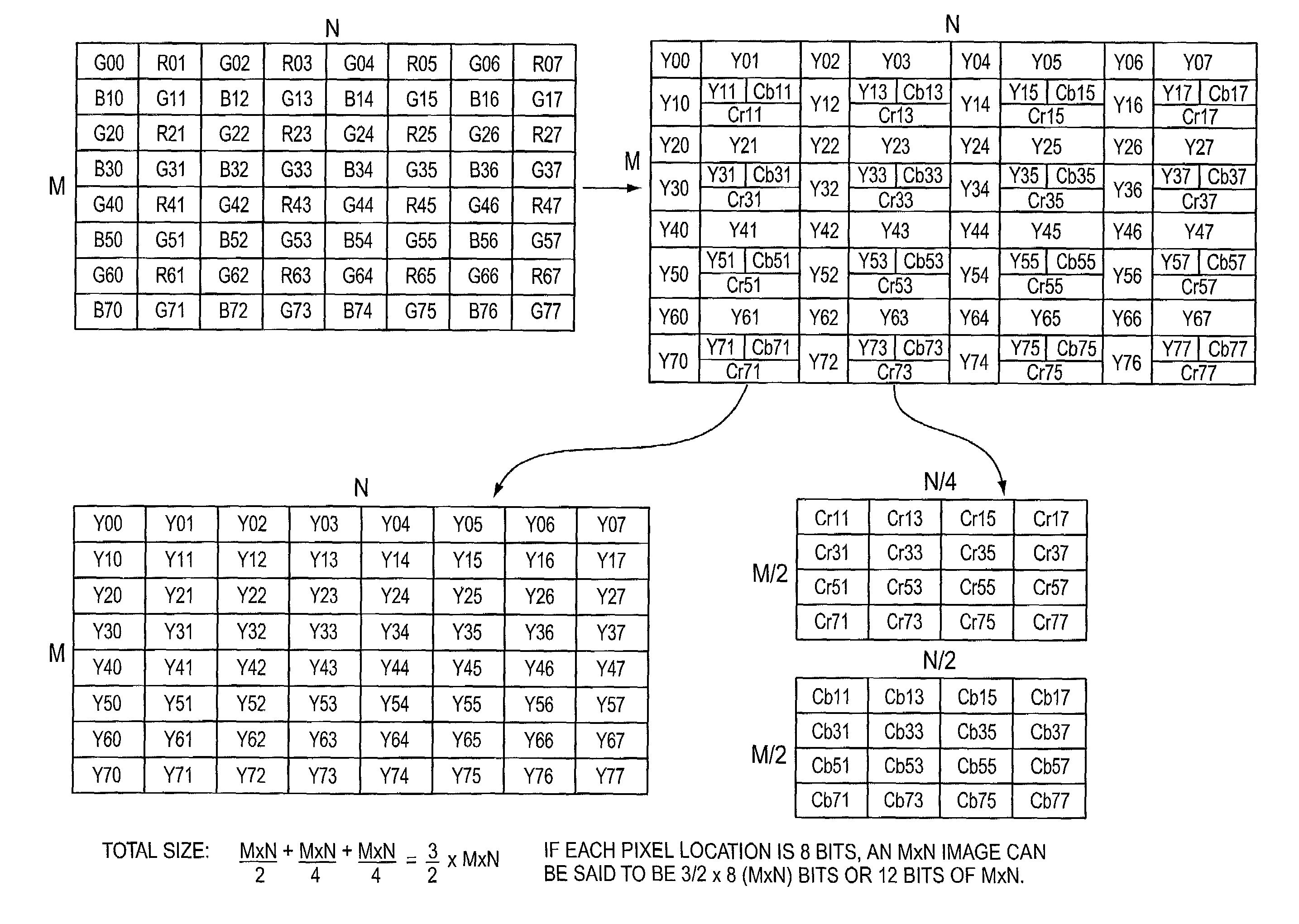

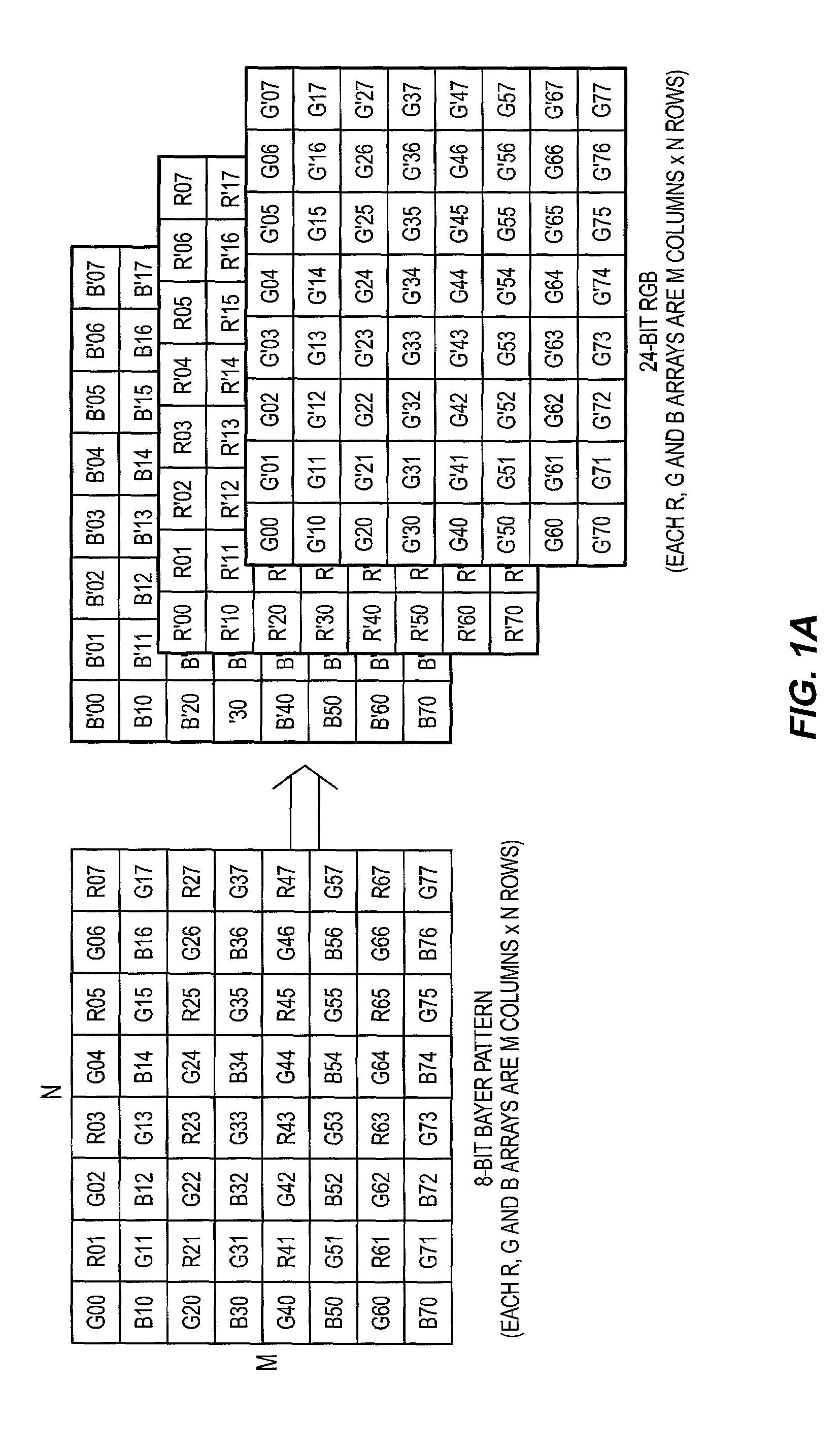

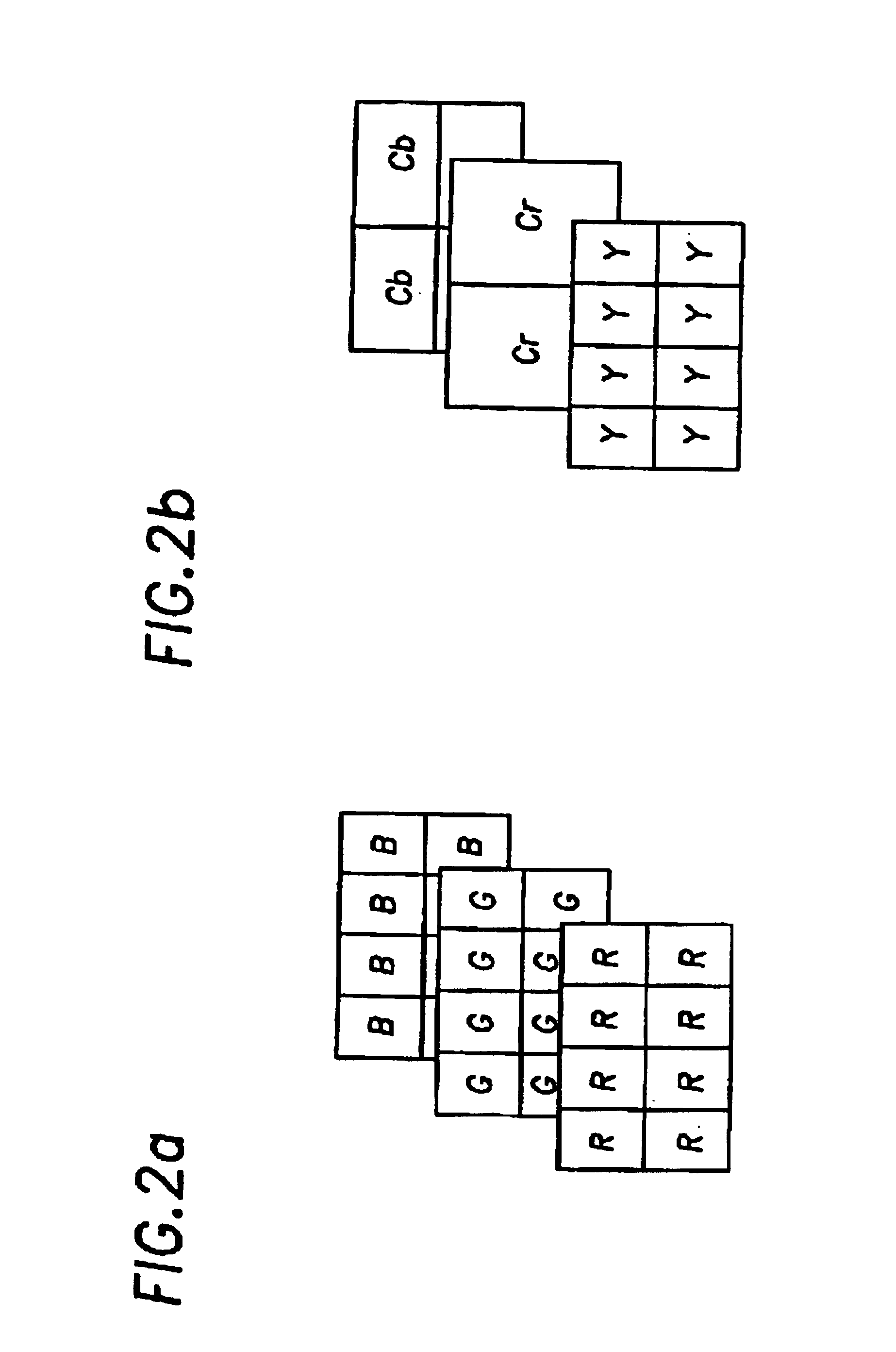

Integrated color interpolation and color space conversion algorithm from 8-bit Bayer pattern RGB color space to 12-bit YCrCb color space

InactiveUS7015962B2Television system detailsColor signal processing circuitsImage resolutionColor interpolation

An integrated color interpolation and color space conversion technique and apparatus. A raw image that is arranged in a Bayer pattern, where each pixel has only one of the color components needed to form a full color resolution pixel, may be converted using this technique directly to a YCrCb image space without any intermediate conversion or interpolation steps. Specifically, in one instance, an 8-bit Bayer pattern raw image may be converted directly to a 12-bit YCrCb space in a single-step approach. Such an integrated technique may be more readily and inexpensively implemented in hardware, such as on a digital camera, or in software.

Owner:INTEL CORP

Human skin tone detection in YCbCr space

A method for detecting human skin tone in a video signal by comparing image data from the video signal to a color profile table obtained from a plurality of training sequences. The image data comprises a plurality of pixels, each having a plurality of color components, the components preferably being in the YCbCr color space. The method includes separating image data for each frame in the video signal into sets of data, averaging the image data in each data set to generate mean values for each color component in the data set, comparing the mean values to a stored color profile correlating to human skin tone, and identifying data sets falling within the stored color profile.
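The averaging and comparison steps can be sketched as follows. The patent derives its color profile table from training sequences; the sketch substitutes a simple pair of Cb and Cr ranges as a stand-in, so the profile values, the dictionary layout and the function names are all illustrative assumptions.

```python
def block_means(block):
    """Mean (Y, Cb, Cr) over one data set, given as a list of (y, cb, cr) pixels."""
    n = len(block)
    return tuple(sum(pixel[k] for pixel in block) / n for k in range(3))


def matches_profile(means, profile):
    """True if the mean Cb and Cr both fall inside the stored ranges."""
    _, cb, cr = means
    cb_low, cb_high = profile["cb"]
    cr_low, cr_high = profile["cr"]
    return cb_low <= cb <= cb_high and cr_low <= cr <= cr_high


def detect_skin_blocks(blocks, profile):
    """Indices of the data sets whose mean color falls within the profile."""
    return [index for index, block in enumerate(blocks)
            if matches_profile(block_means(block), profile)]


# Hypothetical profile; a real one would come from training data.
example_profile = {"cb": (77, 127), "cr": (133, 173)}
example_blocks = [
    [(120, 100, 150), (130, 110, 160)],  # mean chroma inside the ranges
    [(50, 128, 128), (60, 128, 128)],    # neutral gray, outside the ranges
]
skin_indices = detect_skin_blocks(example_blocks, example_profile)
```

Averaging per data set before the comparison reduces the influence of single noisy pixels and cuts the number of profile lookups per frame.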

Owner:SONY CORP +1

On the fly data transfer between RGB and YCrCb color spaces for DCT interface

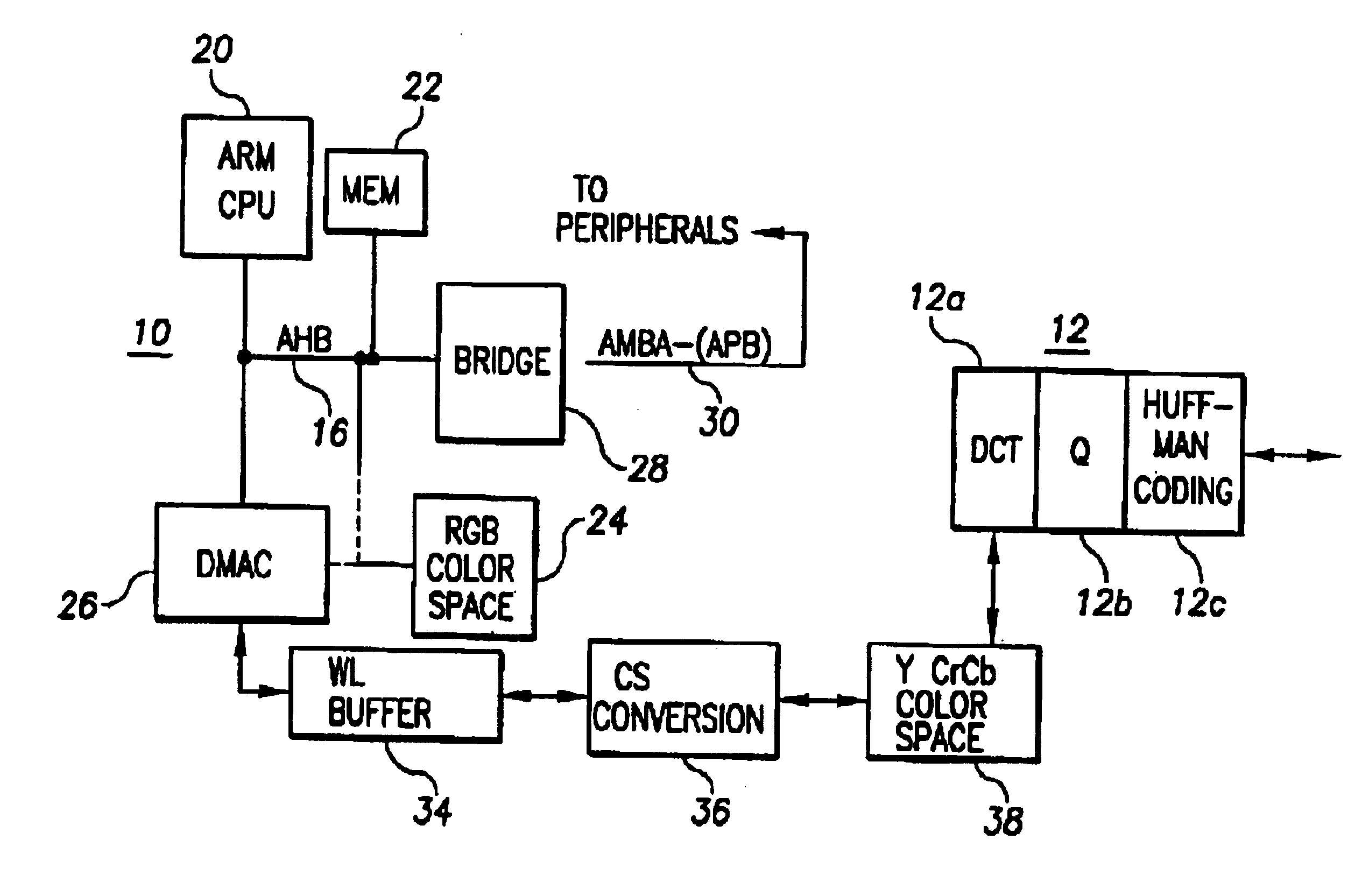

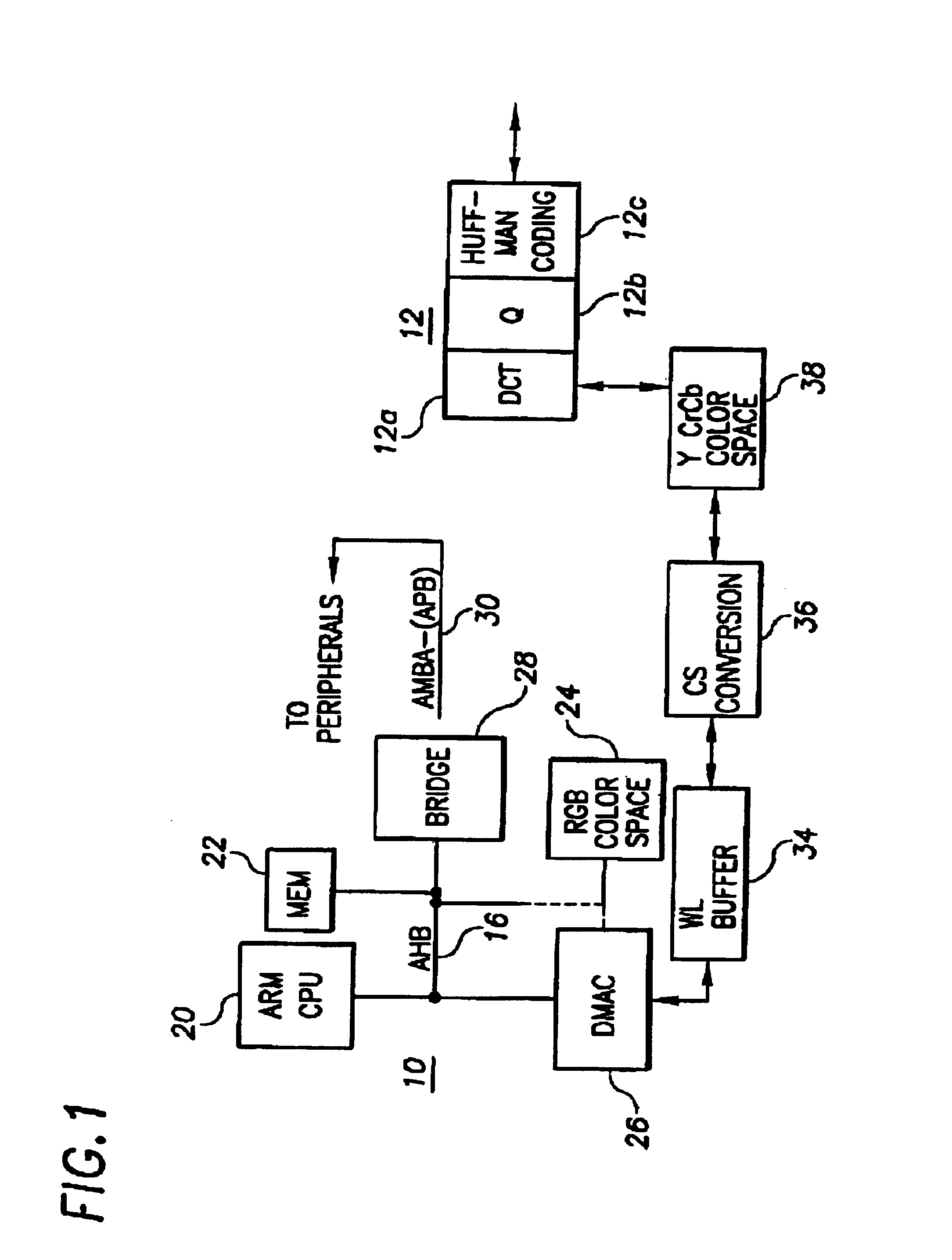

InactiveUS6940523B1Reduced space requirementsIncreasing internal buffering requirementColor television with pulse code modulationDigitally marking record carriersYcbcr color spaceRGB color space

A method for transferring data on the fly between an RGB color space and a YCrCb color space useful for a DCT block-computation engine significantly increases throughput and decreases processor overhead. According to one example embodiment, data is transferred from an RGB color space memory to a YCrCb color space memory in a form useful for presentation to a DCT block-computation engine. In response to accessing the RGB color space memory, the RGB values are asynchronously written to YCrCb intermediate buffers so that one of the YCrCb intermediate buffers is filled through sub-sampling in a manner useful for the DCT block-computation engine while another of the YCrCb intermediate buffers is still being filled. The DCT block-computation engine then accesses the filled YCrCb intermediate buffer while the other YCrCb intermediate buffer continues to collect RGB values from the RGB color space memory for the next DCT computation. Other aspects are directed to conversion of the RGB color space to the YCrCb color space using, respectively, a block-by-block conversion, a line-by-line conversion, and a word-by-word conversion.
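The alternating use of two intermediate buffers is often called ping-pong or double buffering. The sketch below models only the hand-off logic, sequentially and in software: in the hardware described, filling and DCT consumption would overlap in time, which plain Python does not reproduce. The function name and the idea of passing already-converted samples are assumptions made for illustration.

```python
def ping_pong_blocks(samples, block_size):
    """Yield full blocks while alternating between two intermediate buffers.

    When one buffer fills, it is handed to the consumer and filling
    switches to the other buffer. A trailing partial block is not yielded.
    """
    buffers = [[], []]
    active = 0
    for sample in samples:
        buffers[active].append(sample)
        if len(buffers[active]) == block_size:
            filled = buffers[active]
            active ^= 1            # switch filling to the other buffer
            buffers[active] = []   # start the newly active buffer empty
            yield filled


# Each yielded block stands in for one buffer passed to the DCT engine.
blocks = list(ping_pong_blocks(range(5), 2))
```

The consumer never waits for a whole frame: it can start on the first full block while later samples are still arriving.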

Owner:PHILIPS ELECTRONICS NORTH AMERICA
