Video-based flame detection method

A video-based flame detection technology, applied in the field of fire detection, that addresses the problems of existing approaches: reliance on a single flame feature, poor resistance to interference, and high false-alarm and missed-alarm rates.

Embodiment Construction

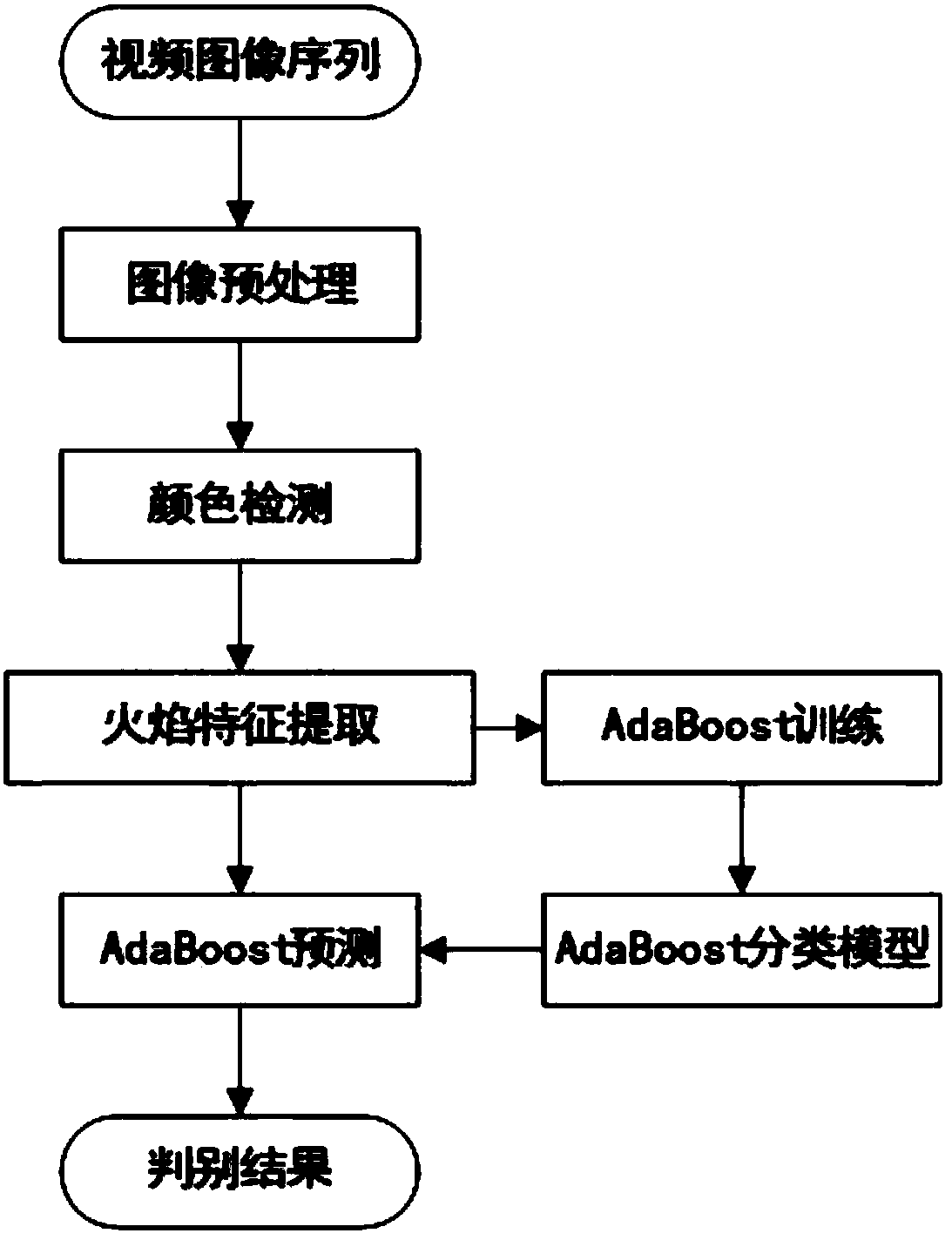

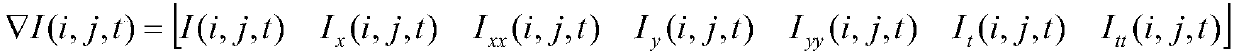

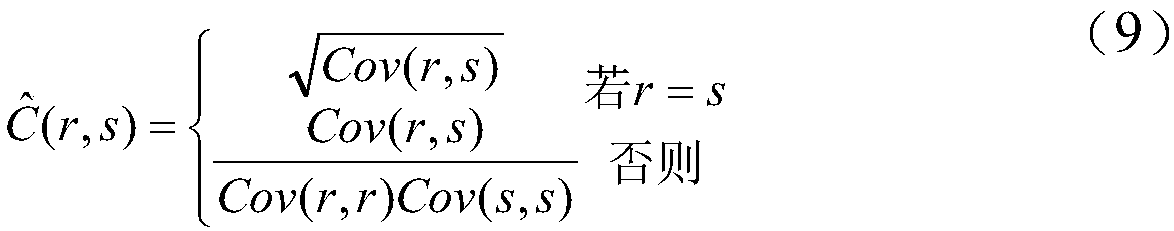

[0062] The present invention is described in further detail below with reference to the accompanying drawings and embodiments. In the video-based flame detection method of the present invention, all candidate flame points in the video image that satisfy the conditions derived from the characteristics of flame pixels in the RGB and YCbCr color spaces are first identified. The video image is then divided into blocks, the covariance matrix of the color and brightness attributes of the flame pixel set in each video block is calculated, and the upper or lower triangular part of this color and brightness covariance matrix is extracted as a feature vector. A large number of positive and negative samples are randomly selected as the training set, an AdaBoost (Adaptive Boosting) classifier is trained on them, and the corresponding classification model is established. Finally, calculate the feature vector of the candidate flame point video block, input the ...
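The first two stages described above (candidate flame pixel selection and per-block covariance features) can be sketched roughly as follows. This is an illustration under stated assumptions, not the patent's implementation. The text available here does not give the actual color conditions, the attribute set, or the block size, so the threshold `r_min`, the rule `R >= G > B` with `Y > Cb` and `Cr > Cb`, the attribute vector `(R, G, B, Y)`, and `block_size` are all hypothetical choices. The RGB to YCbCr conversion uses the standard full-range BT.601 coefficients. Classifier training is not shown.

```python
from itertools import combinations_with_replacement


def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG-style) conversion for 8-bit RGB values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def is_candidate(pixel, r_min=150):
    """Assumed illustrative flame rule; the patent's own conditions may differ."""
    r, g, b = pixel
    y, cb, cr = rgb_to_ycbcr(r, g, b)
    return r > r_min and r >= g > b and y > cb and cr > cb


def covariance_feature(pixels):
    """Upper-triangular entries of the sample covariance of (R, G, B, Y).

    The attribute choice (R, G, B, Y) is an assumption. Returns None when
    fewer than two pixels are given, since the covariance is then undefined.
    """
    rows = [(r, g, b, rgb_to_ycbcr(r, g, b)[0]) for r, g, b in pixels]
    n = len(rows)
    if n < 2:
        return None
    dim = 4
    means = [sum(row[k] for row in rows) / n for k in range(dim)]
    feature = []
    # Index pairs (i, j) with i <= j enumerate the upper triangle row by row.
    for i, j in combinations_with_replacement(range(dim), 2):
        cov = sum((row[i] - means[i]) * (row[j] - means[j]) for row in rows)
        feature.append(cov / (n - 1))
    return feature


def block_features(image, block_size=16):
    """Map each block's top-left (row, col) to its covariance feature.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    Blocks with fewer than two candidate flame pixels are skipped.
    """
    height, width = len(image), len(image[0])
    features = {}
    for top in range(0, height, block_size):
        for left in range(0, width, block_size):
            candidates = [
                image[row][col]
                for row in range(top, min(top + block_size, height))
                for col in range(left, min(left + block_size, width))
                if is_candidate(image[row][col])
            ]
            feature = covariance_feature(candidates)
            if feature is not None:
                features[(top, left)] = feature
    return features
```

With four attributes, each block yields a 10-element vector (the 4 diagonal variances plus the 6 distinct off-diagonal covariances). Vectors of this kind, computed from labeled positive and negative sample blocks, would form the training set for the AdaBoost classifier mentioned in the text.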
