103 results about "Local average" patented technology

The local average treatment effect (LATE), also known as the complier average causal effect (CACE), was first introduced into the econometrics literature by Guido W. Imbens and Joshua D. Angrist in 1994. It is the treatment effect for the subset of the sample that takes the treatment if and only if they were assigned to the treatment, otherwise known as the compliers.
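As an illustration of the definition above, the sketch below computes the Wald (instrumental-variable) ratio, which identifies the LATE under the usual assumptions of random assignment, exclusion, and monotonicity. Those assumptions are not stated on this page, and the function name `wald_late` and the toy data are hypothetical.

```python
def wald_late(z, d, y):
    """Wald estimate of the LATE.

    z: binary assignment, d: binary treatment actually taken, y: outcome.
    Assumes random assignment, exclusion and monotonicity (not checked here).
    """
    def mean(values):
        return sum(values) / len(values)

    # Intention-to-treat effect on the outcome.
    y1 = mean([yi for zi, yi in zip(z, y) if zi == 1])
    y0 = mean([yi for zi, yi in zip(z, y) if zi == 0])
    # Effect of assignment on uptake, i.e. the share of compliers.
    d1 = mean([di for zi, di in zip(z, d) if zi == 1])
    d0 = mean([di for zi, di in zip(z, d) if zi == 0])

    first_stage = d1 - d0
    if first_stage == 0:
        raise ValueError("assignment does not change treatment uptake")
    return (y1 - y0) / first_stage


# Toy data: half of the assigned units comply, and compliers gain 2 units.
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 0, 0, 0, 0, 0, 0]
y = [3, 3, 1, 1, 1, 1, 1, 1]
late = wald_late(z, d, y)
```

Dividing by the first-stage difference rescales the diluted intention-to-treat effect to the compliers alone.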

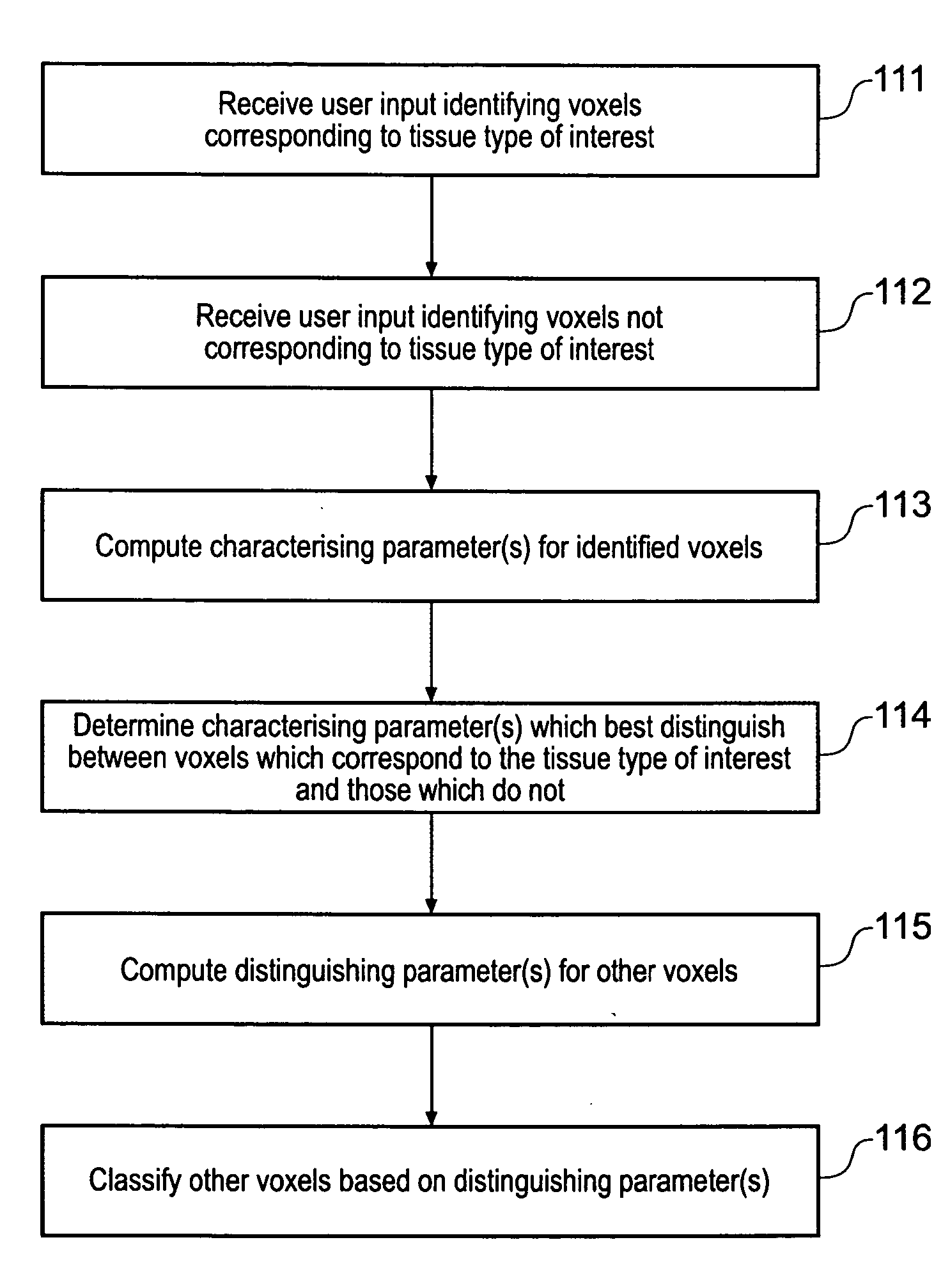

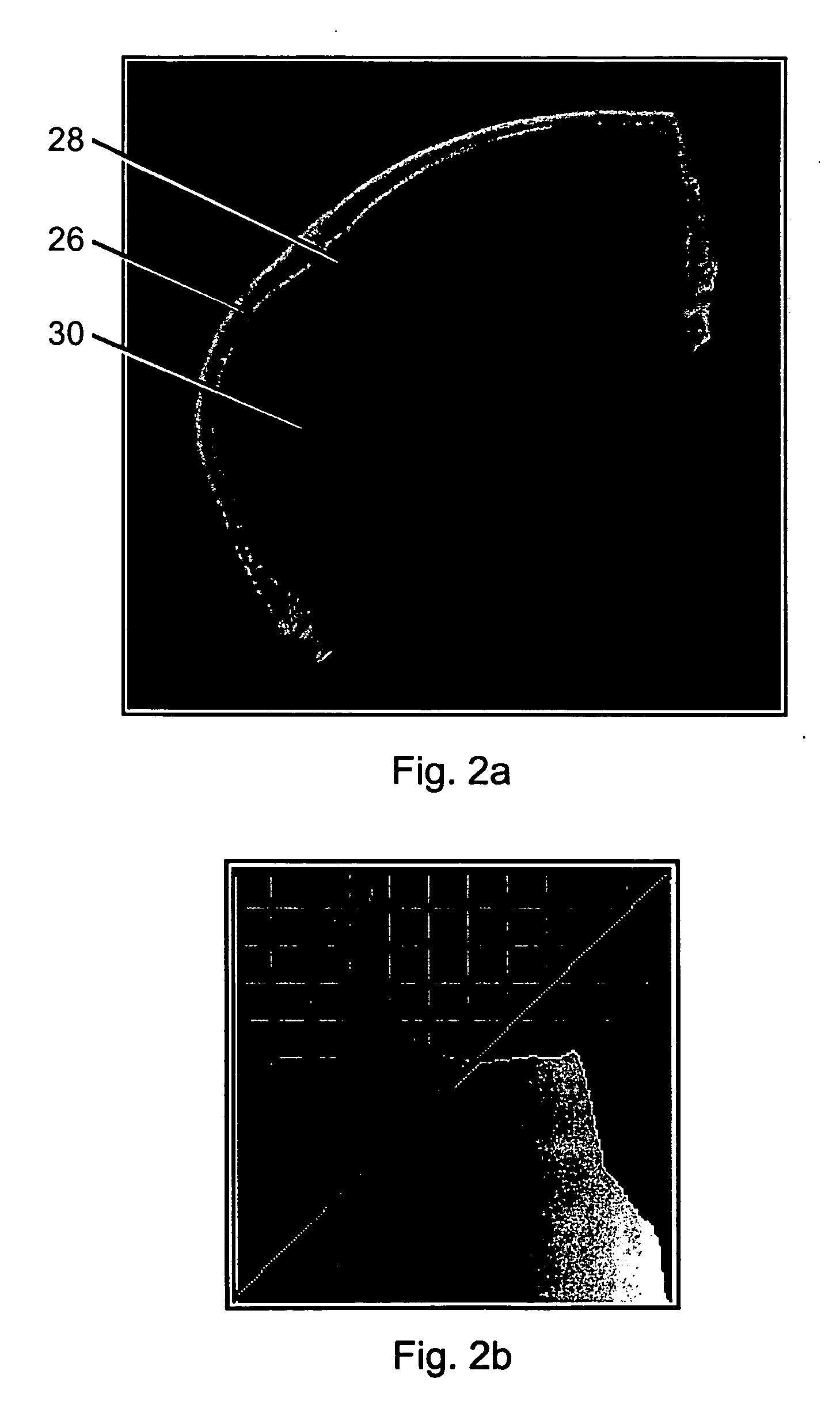

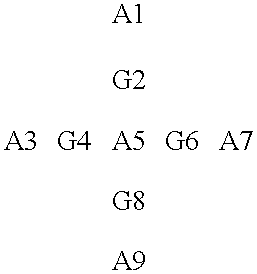

Displaying image data using automatic presets

Inactive | US20050017972A1 | Easy and intuitive | Accurate classification | Ultrasonic/sonic/infrasonic diagnostics | Image enhancement | Pattern recognition | Voxel

A computer-automated method that applies supervised pattern recognition to classify whether voxels in a medical image data set correspond to a tissue type of interest is described. The method comprises a user identifying examples of voxels which correspond to the tissue type of interest and examples of voxels which do not. Characterizing parameters, such as voxel value, local averages and local standard deviations of voxel value, are then computed for the identified example voxels. From these characterizing parameters, one or more distinguishing parameters are identified. The distinguishing parameters are those parameters having values which depend on whether or not the voxel with which they are associated corresponds to the tissue type of interest. The distinguishing parameters are then computed for other voxels in the medical image data set, and these voxels are classified on the basis of the value of their distinguishing parameters. The approach allows tissue types which differ only slightly to be distinguished according to a user's wishes.

Owner:VOXAR
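A minimal sketch of the workflow in the abstract above, simplified to a 2D slice rather than a voxel volume. The helper names (`local_stats`, `pick_distinguishing`, `classify`), the separation score, and the midpoint threshold are illustrative assumptions; the abstract does not say how distinguishing parameters are chosen.

```python
from statistics import mean, pstdev


def local_stats(image, r, c, radius=1):
    """Characterizing parameters for pixel (r, c): value, local mean, local std."""
    rows, cols = len(image), len(image[0])
    window = [image[i][j]
              for i in range(max(0, r - radius), min(rows, r + radius + 1))
              for j in range(max(0, c - radius), min(cols, c + radius + 1))]
    return (image[r][c], mean(window), pstdev(window))


def pick_distinguishing(pos, neg):
    """Pick the parameter whose example classes are best separated.

    Assumed criterion: difference of class means over summed class spreads.
    Returns (parameter index, threshold, True if positives lie above it).
    """
    def score(k):
        a = [f[k] for f in pos]
        b = [f[k] for f in neg]
        spread = (pstdev(a) + pstdev(b)) or 1e-9  # avoid division by zero
        return abs(mean(a) - mean(b)) / spread

    k = max(range(len(pos[0])), key=score)
    mean_pos = mean(f[k] for f in pos)
    mean_neg = mean(f[k] for f in neg)
    return k, (mean_pos + mean_neg) / 2, mean_pos > mean_neg


def classify(features, k, threshold, positive_above):
    """Classify one pixel from its distinguishing parameter."""
    return (features[k] > threshold) == positive_above


# Toy slice: dark tissue on the left, bright tissue of interest on the right.
image = [[10, 10, 100, 100] for _ in range(4)]
pos = [local_stats(image, 1, 3), local_stats(image, 2, 3)]  # user-marked examples
neg = [local_stats(image, 1, 0), local_stats(image, 2, 0)]
k, threshold, above = pick_distinguishing(pos, neg)
```

Once `k` and `threshold` are fixed from the user's examples, every other pixel can be classified by calling `classify(local_stats(image, r, c), k, threshold, above)`.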

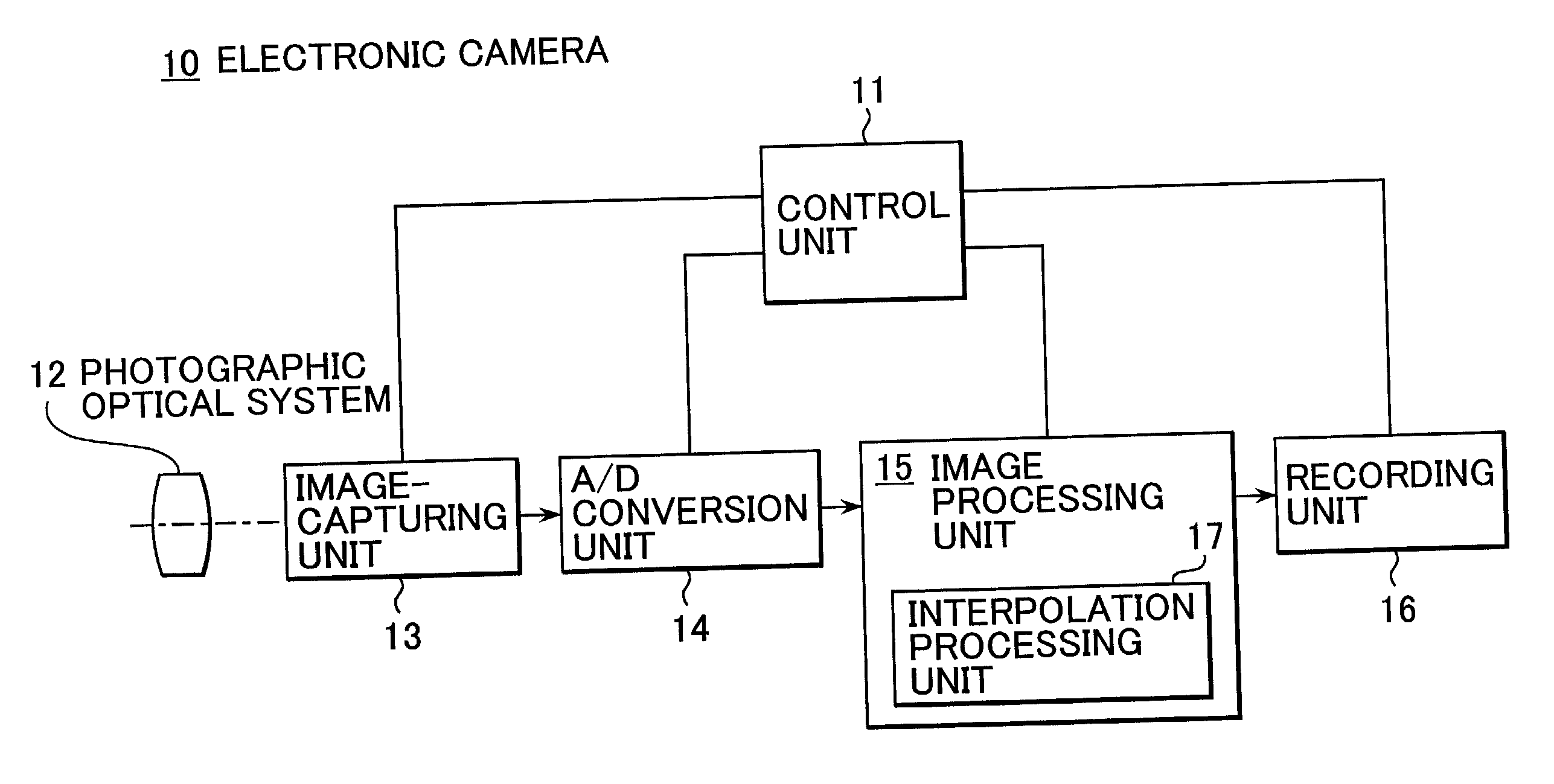

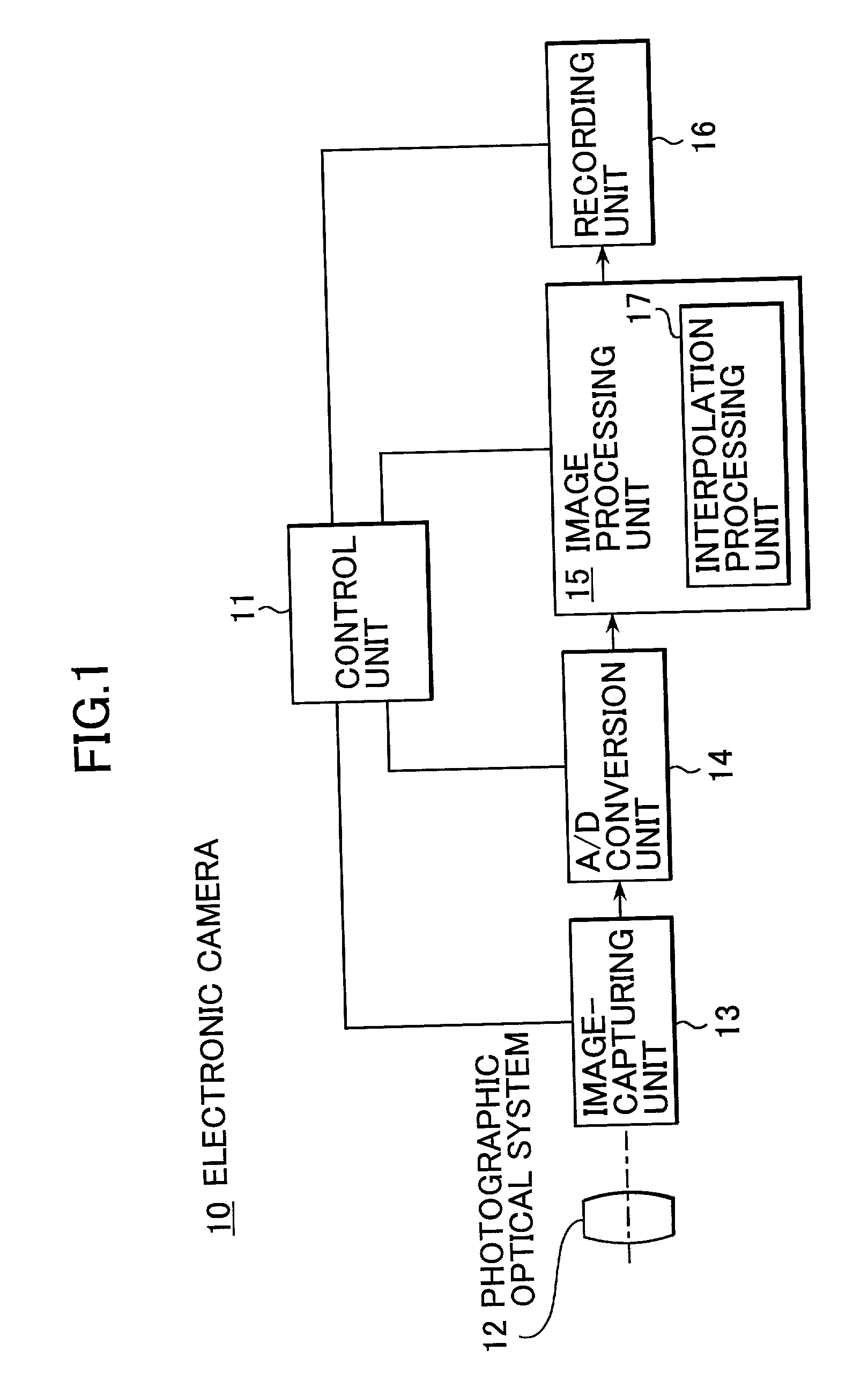

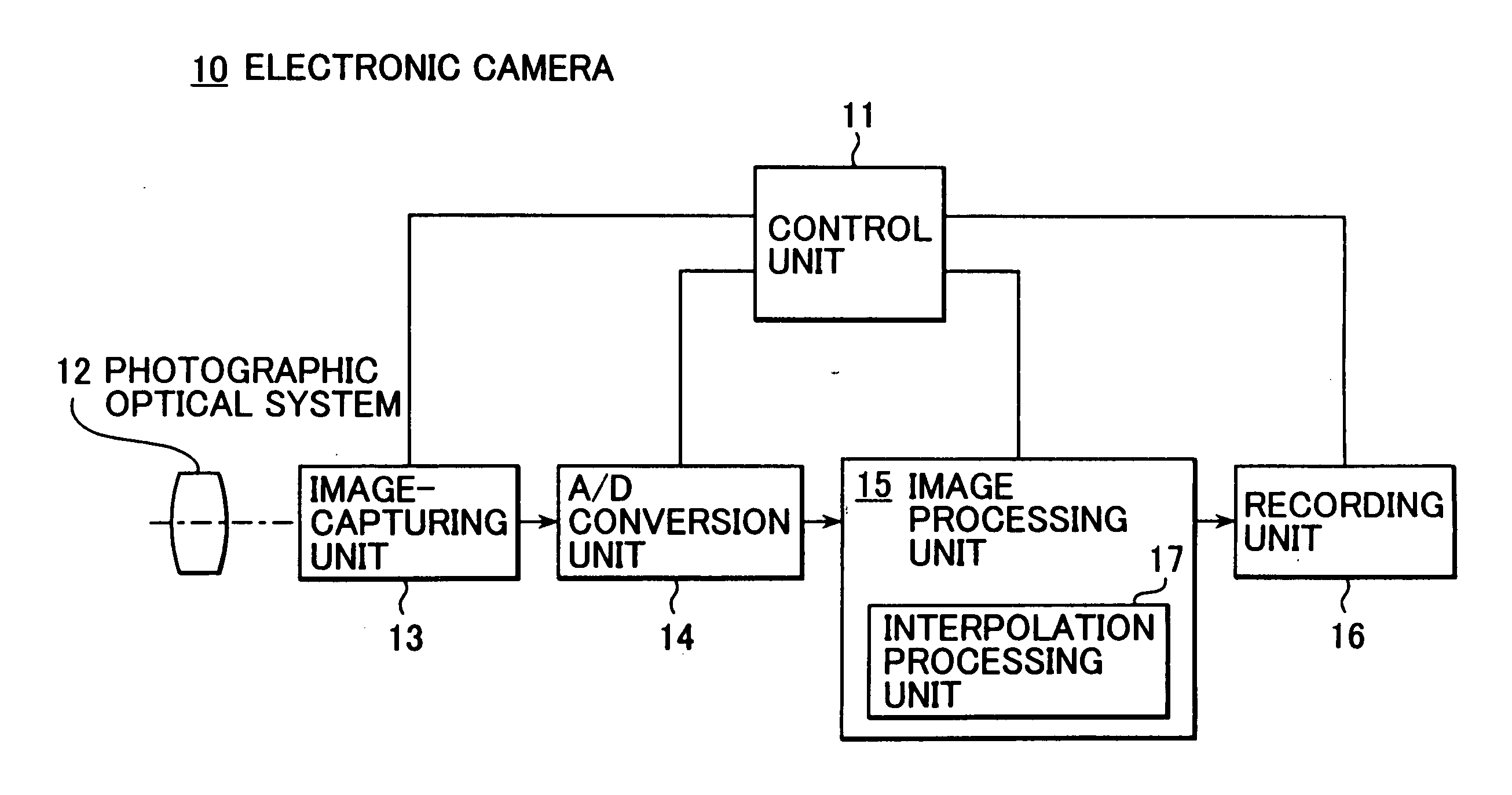

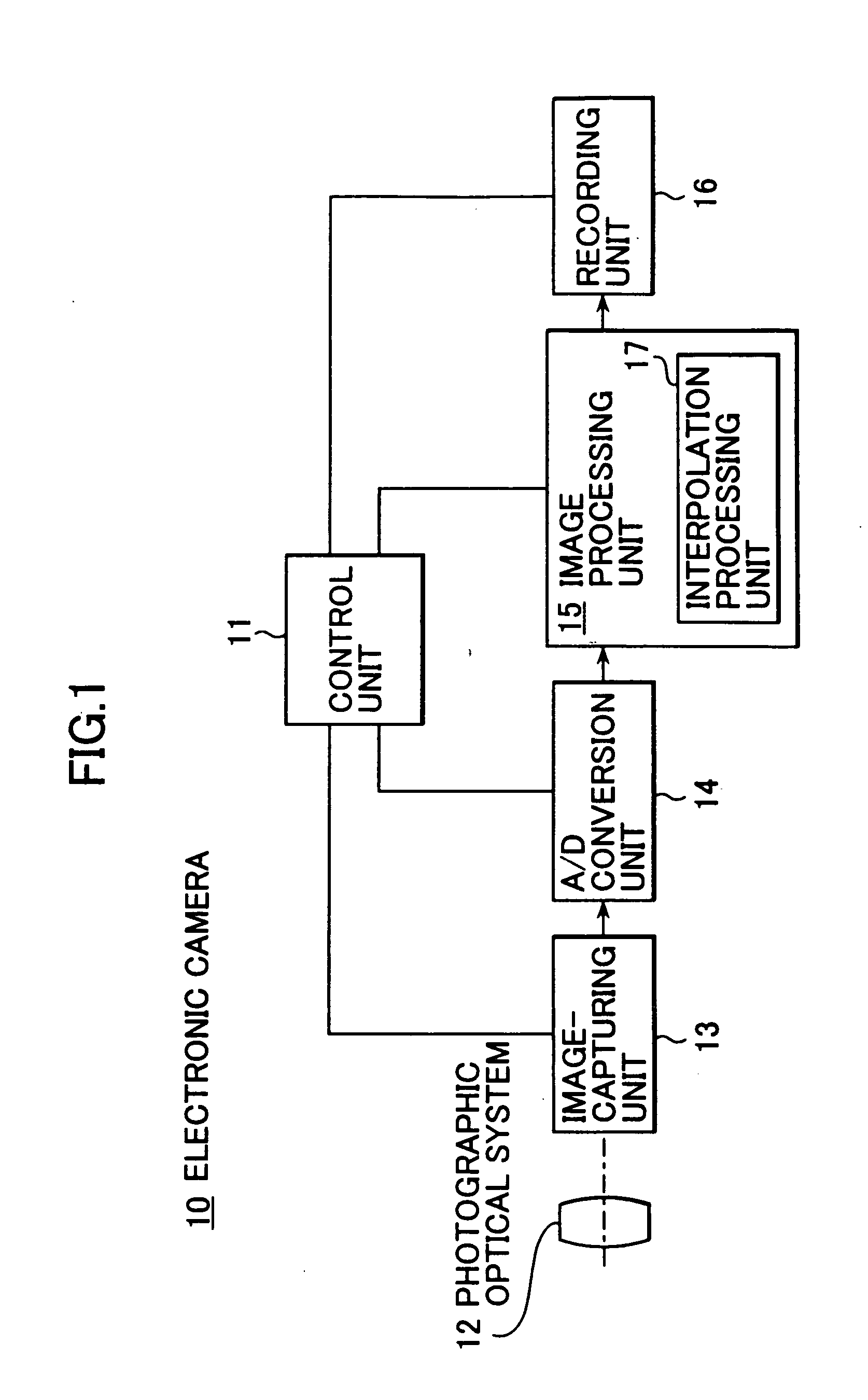

Interpolation processing apparatus and recording medium having interpolation processing program recorded therein

A first interpolation processing apparatus that engages in processing on image data which are provided in a colorimetric system constituted of first to nth (n ≥ 2) color components and include color information corresponding to a single color component provided at each pixel to determine an interpolation value equivalent to color information corresponding to the first color component for a pixel at which the first color component is missing, includes: an interpolation value calculation section that uses color information at pixels located in a local area containing an interpolation target pixel to undergo interpolation processing to calculate an interpolation value including, at least (1) local average information of the first color component with regard to the interpolation target pixel and (2) local curvature information corresponding to at least two color components with regard to the interpolation target pixel.

Owner:NIKON CORP

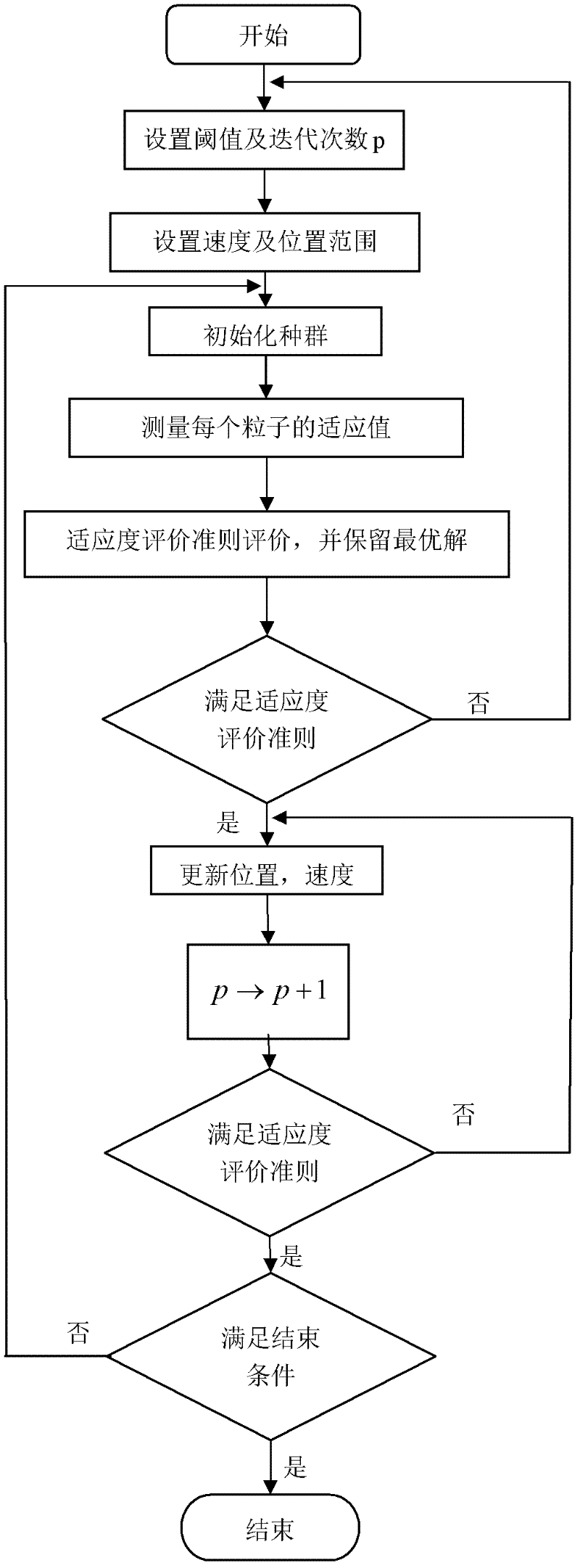

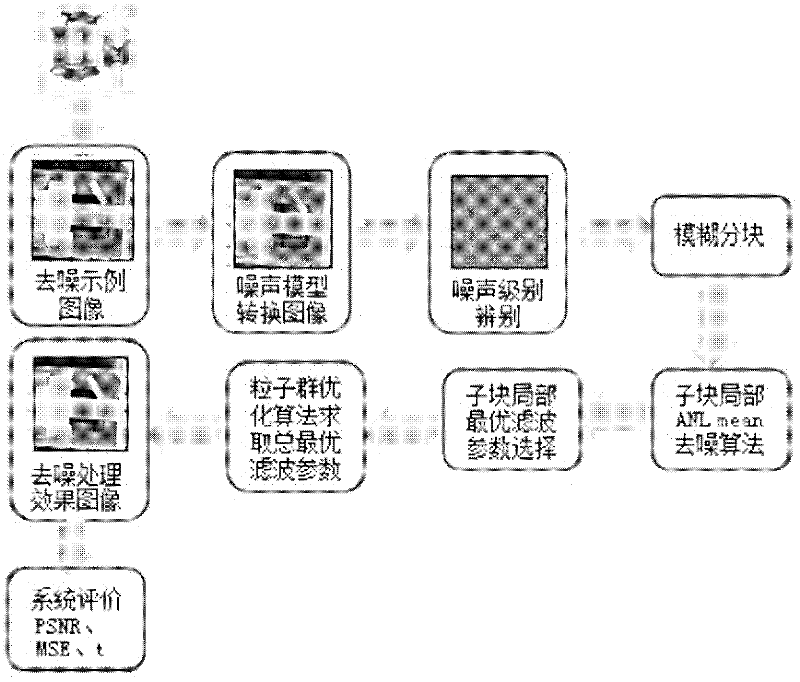

Bivariate nonlocal average filtering de-noising method for X-ray image

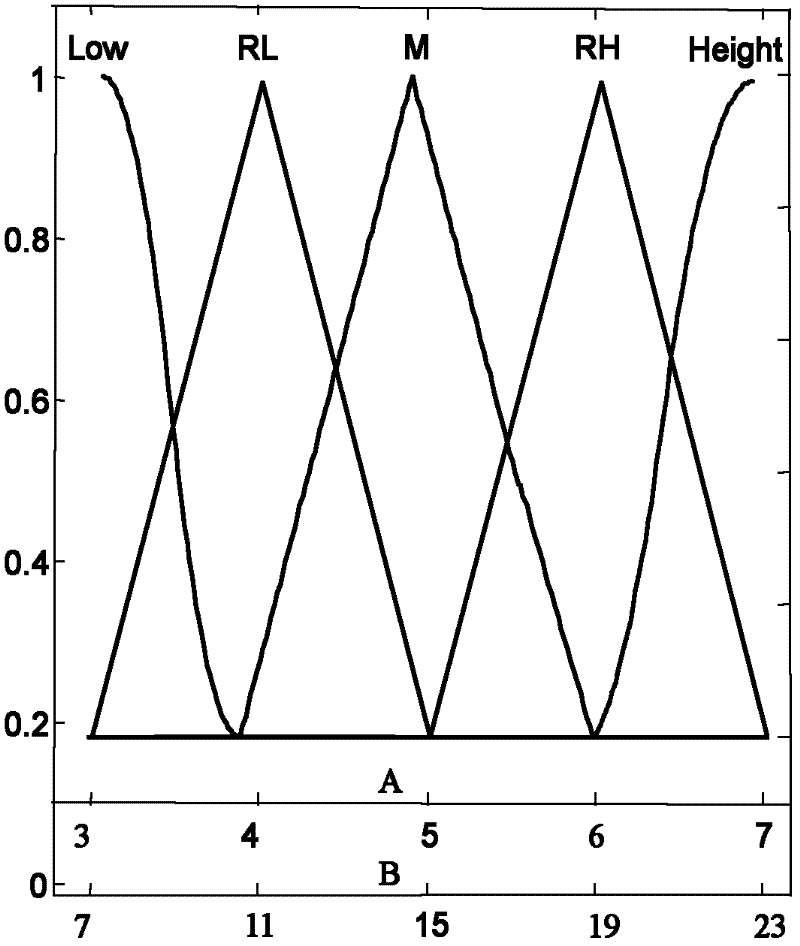

The invention provides a bivariate nonlocal average filtering de-noising method for X-ray images. The method comprises two parts: 1) a method for selecting a fuzzy de-noising window; and 2) a bivariate fuzzy adaptive nonlocal average filtering algorithm. To better remove the influence of the unknown quantum noise present in industrial X-ray scan images, the method converts the quantum noise model, which is hard to process, into a common white Gaussian noise model, selects the filter window size by fuzzy computation, and searches for the correlation weight matrix that minimizes an error function. A particle swarm optimization filtering parameter is introduced so that the weight matrix can be rebuilt locally, which reduces the influence of local correlation on the sample data, improves the convergence rate of the algorithm, and improves the de-noising speed and precision for industrial X-ray scan images. The method is therefore suitable for processing X-ray scan images with an uncertain noise model.

Owner:YUN NAN ELECTRIC TEST & RES INST GRP CO LTD ELECTRIC INST +1

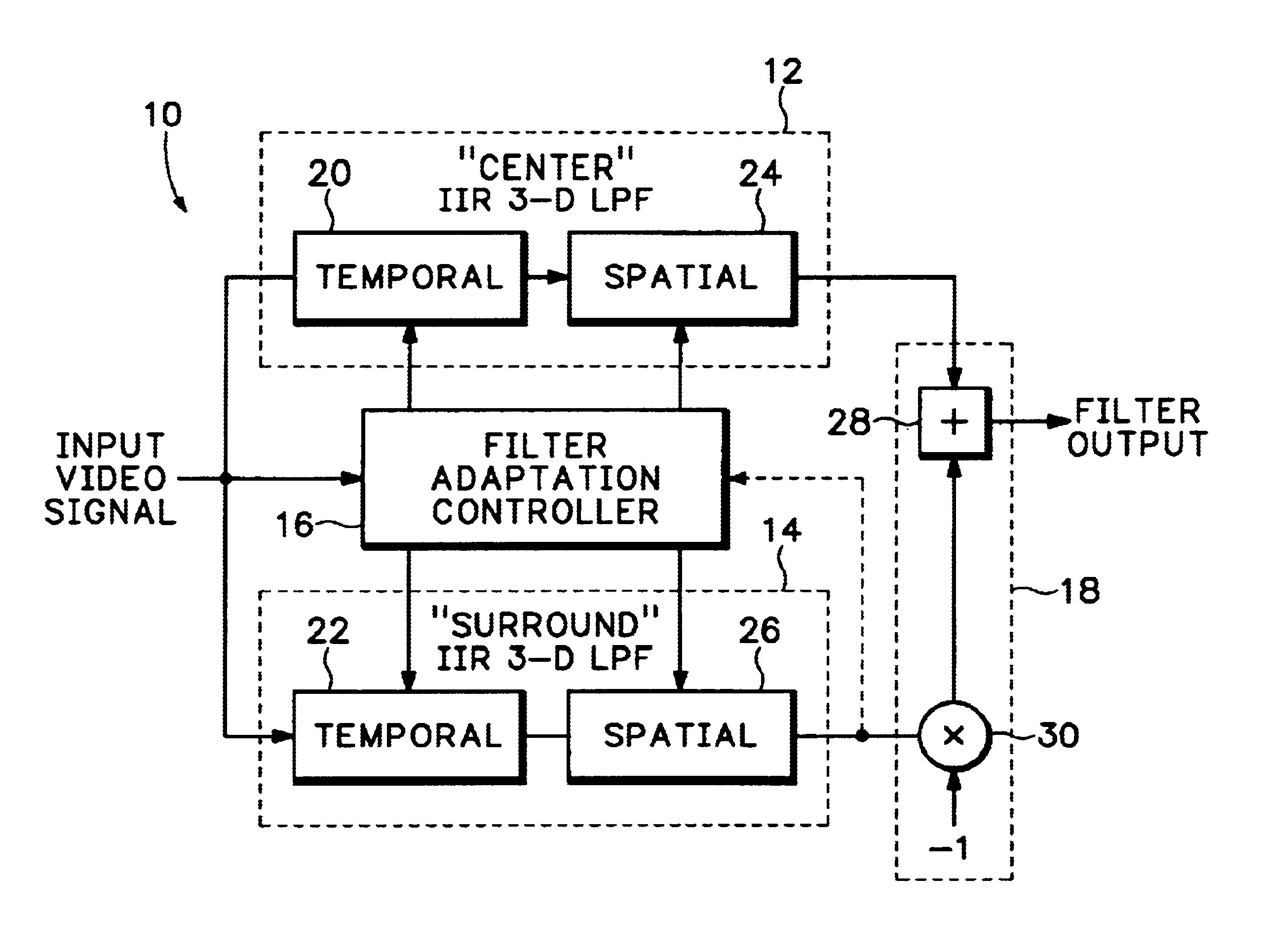

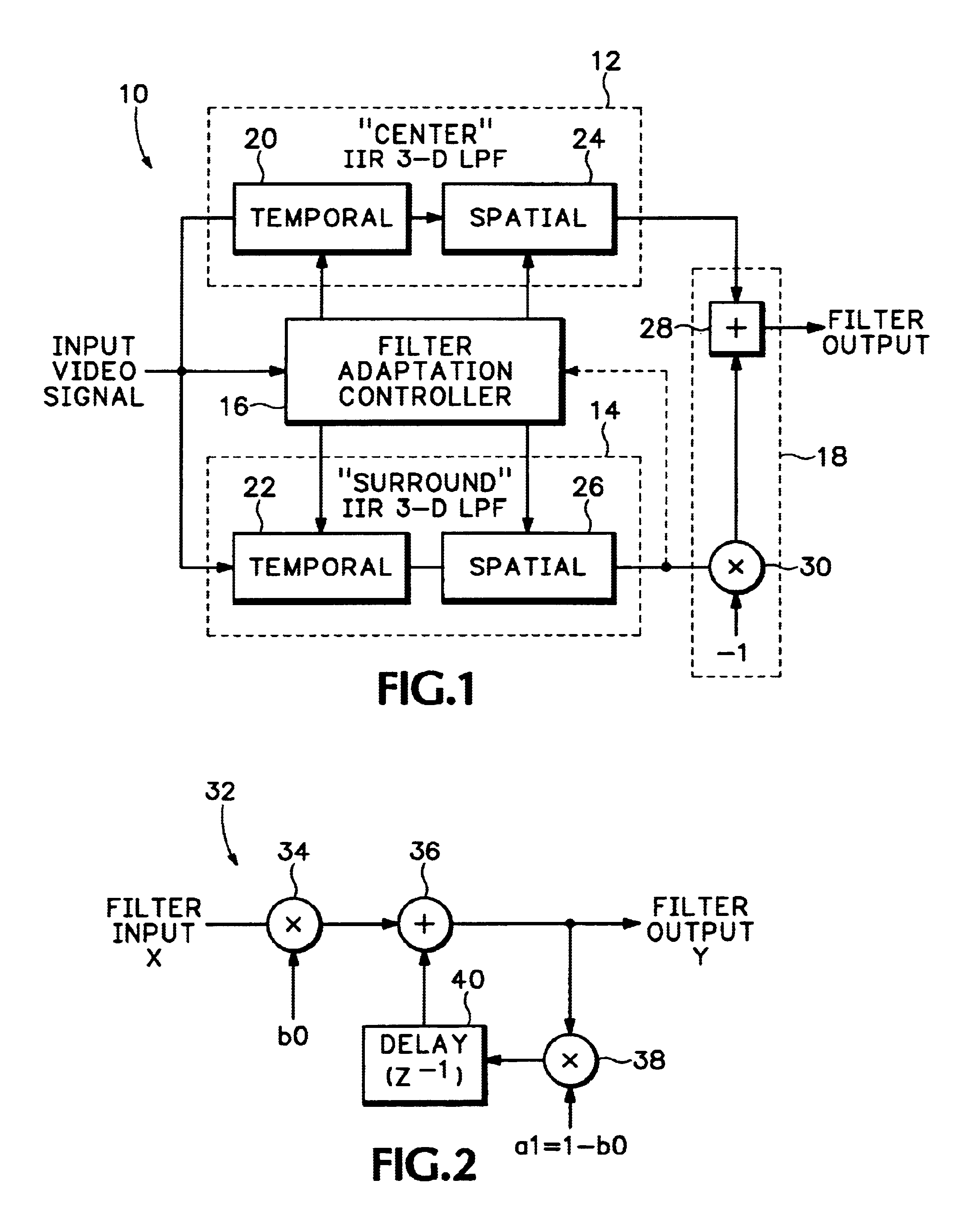

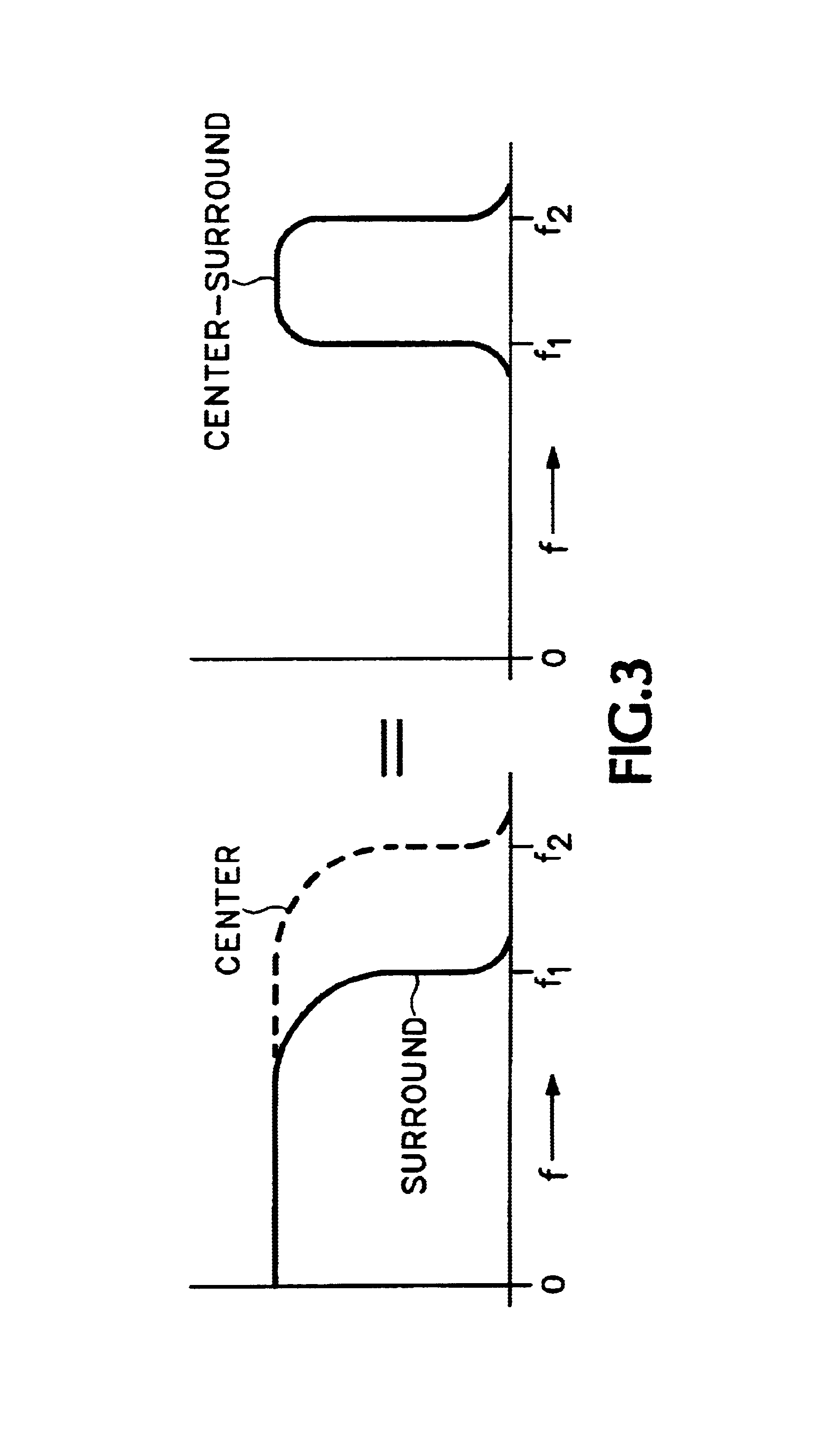

Adaptive spatio-temporal filter for human vision system models

Inactive | US6907143B2 | Image enhancement | Television system details | Human visual system model | Low-pass filter

An adaptive spatio-temporal filter for use in video quality of service instruments based on human vision system models has a pair of parallel, lowpass, spatio-temporal filters receiving a common video input signal. The outputs from the pair of lowpass spatio-temporal filters are differenced to produce the output of the adaptive spatio-temporal filter, with the bandwidths of the pair being such as to produce an overall bandpass response. A filter adaptation controller generates adaptive filter coefficients for each pixel processed based on a perceptual parameter, such as the local average luminance, contrast, etc., of either the input video signal or the output of one of the pair of lowpass spatio-temporal filters. Each of the pair of lowpass spatio-temporal filters has a temporal IIR filter in cascade with a 2-D spatial IIR filter, and each individual filter is composed of a common building block, i.e., a first order, unity DC gain, tunable lowpass filter having a topology suitable for IC implementation. At least two of the building blocks make up each filter with the overall adaptive spatio-temporal filter response having a linear portion and a non-linear portion, the linear portion being dominant at low luminance levels and the non-linear portion being consistent with enhanced perceived brightness as the luminance level increases.

Owner:PROJECT GIANTS LLC
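The core idea in the abstract above, two low-pass filters whose outputs are differenced to give a band-pass response, can be sketched in one dimension. This is a simplified illustration with fixed coefficients: the patent adapts the coefficients per pixel and cascades temporal and 2-D spatial stages, which is not reproduced here. The names `lowpass`, `bandpass`, `alpha_wide` and `alpha_narrow` are hypothetical.

```python
def lowpass(signal, alpha):
    """First-order IIR low-pass with unity DC gain.

    y[n] = y[n-1] + alpha * (x[n] - y[n-1]), with 0 < alpha <= 1.
    The state starts at the first sample so a constant input passes unchanged.
    """
    out = []
    y = signal[0]
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out


def bandpass(signal, alpha_wide, alpha_narrow):
    """Difference of a wide-band and a narrow-band low-pass filter."""
    wide = lowpass(signal, alpha_wide)
    narrow = lowpass(signal, alpha_narrow)
    return [w - n for w, n in zip(wide, narrow)]


# A step input: the band-pass output responds to the edge, then decays.
step = [0.0] * 5 + [1.0] * 50
response = bandpass(step, alpha_wide=0.5, alpha_narrow=0.1)
```

Because both branches have unity DC gain, their difference rejects constant input, which is what gives the pair its overall band-pass character.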

Determining a window size for outlier detection

Inactive | US20080167837A1 | Eliminate the effects of | Digital computer details | Recognisation of pattern in signals | Ultimate tensile strength | Local average

A window size for outlier detection in a time series of a database system is determined. Strength values are calculated for data points using a set of window sizes, resulting at least in one set of strength values for each window size. The strength values increase as a distance between a value of a respective data point and a local mean value increases. For each set of strength values, a weighted sum is calculated based on the respective set of strength values. A weighting function is used to suppress the effect of largest strength values and a window size is selected based on the weighted sums.

Owner:IBM CORP
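The abstract above leaves several choices open, so the following is only a sketch under stated assumptions: the strength value is taken as the absolute deviation from the mean of a point's neighbours, the weighting function gives weight zero to the largest 10% of strengths and weight one to the rest, and the window with the smallest weighted sum is selected. None of these specifics come from the patent, and all function names are hypothetical.

```python
def local_mean(series, i, window):
    """Mean of the neighbours of point i inside a centred window (point excluded)."""
    half = window // 2
    lo, hi = max(0, i - half), min(len(series), i + half + 1)
    neighbours = series[lo:i] + series[i + 1:hi]
    return sum(neighbours) / len(neighbours)


def strengths(series, window):
    """Strength grows with the distance between a value and its local mean."""
    return [abs(x - local_mean(series, i, window)) for i, x in enumerate(series)]


def weighted_sum(values, trim=0.1):
    """Assumed weighting: ignore the largest `trim` fraction of strengths."""
    ordered = sorted(values)
    keep = len(ordered) - int(len(ordered) * trim)
    return sum(ordered[:keep])


def select_window(series, windows, trim=0.1):
    """Assumed selection rule: smallest weighted sum wins."""
    return min(windows, key=lambda w: weighted_sum(strengths(series, w), trim))


series = [1, 2, 3, 4, 50, 6, 7, 8, 9, 10]  # one obvious outlier at index 4
s3 = strengths(series, 3)
best = select_window(series, [3, 5, 7])
```

Suppressing the largest strengths keeps the outliers themselves from dominating the comparison between window sizes.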

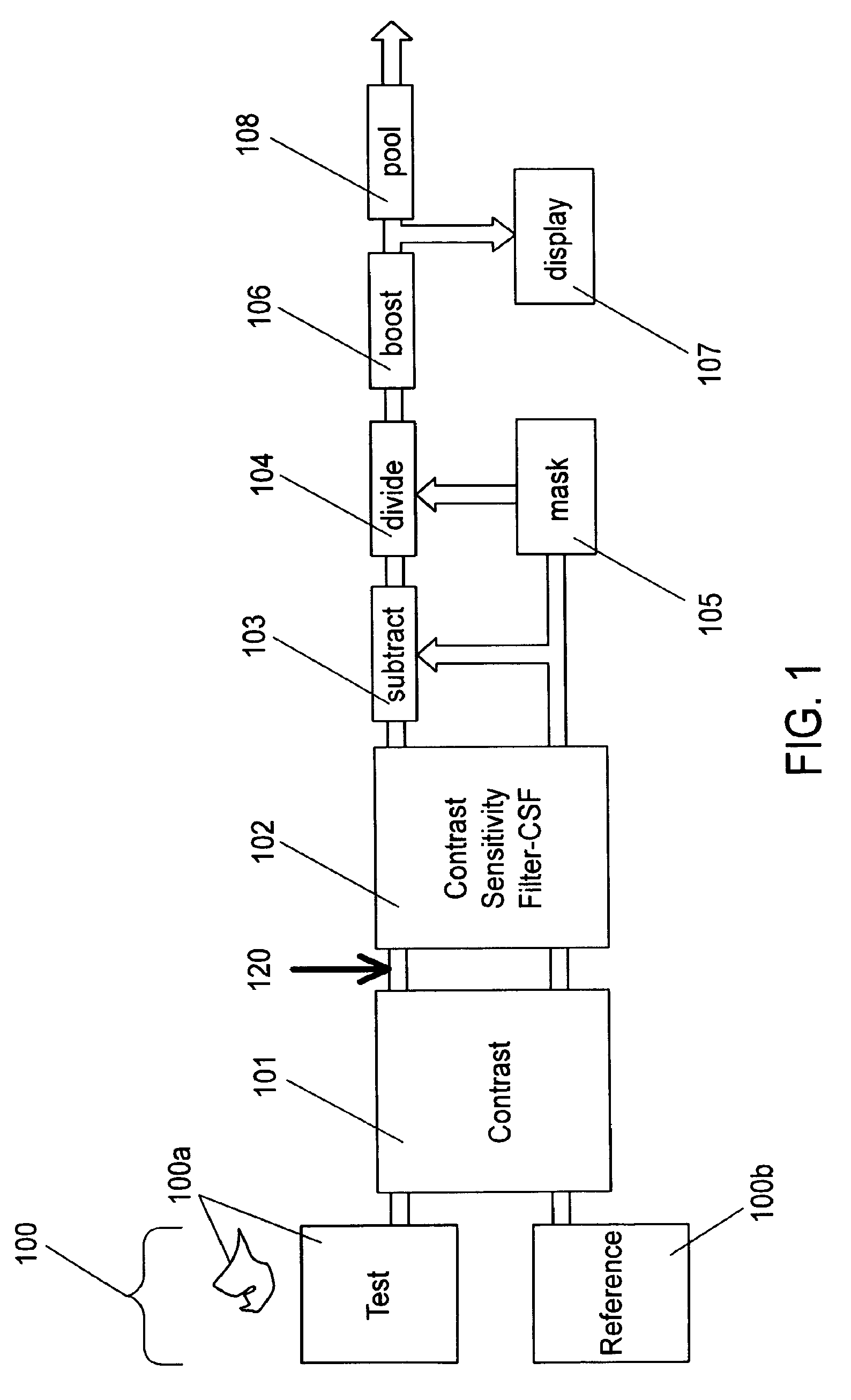

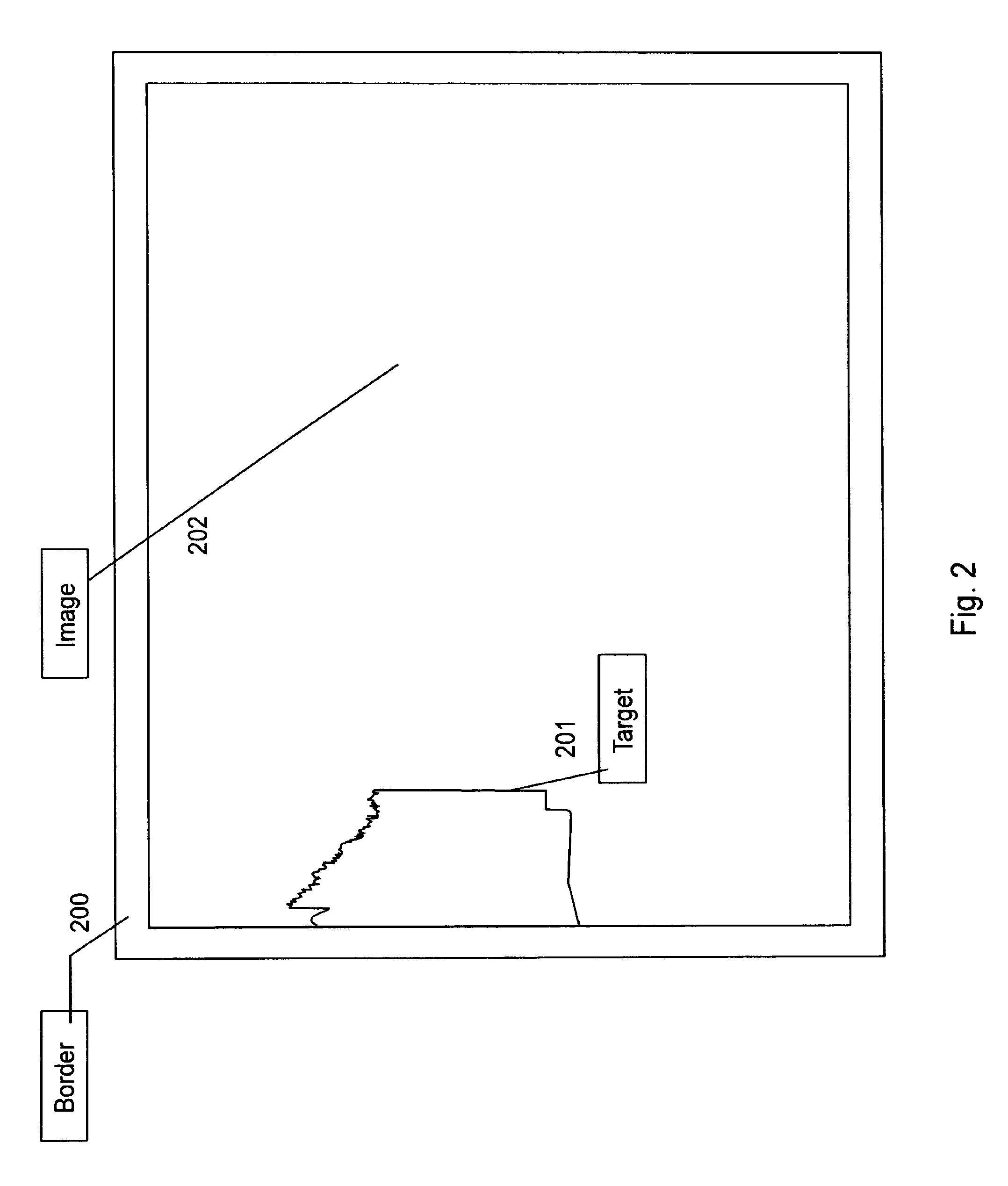

Spatial standard observer

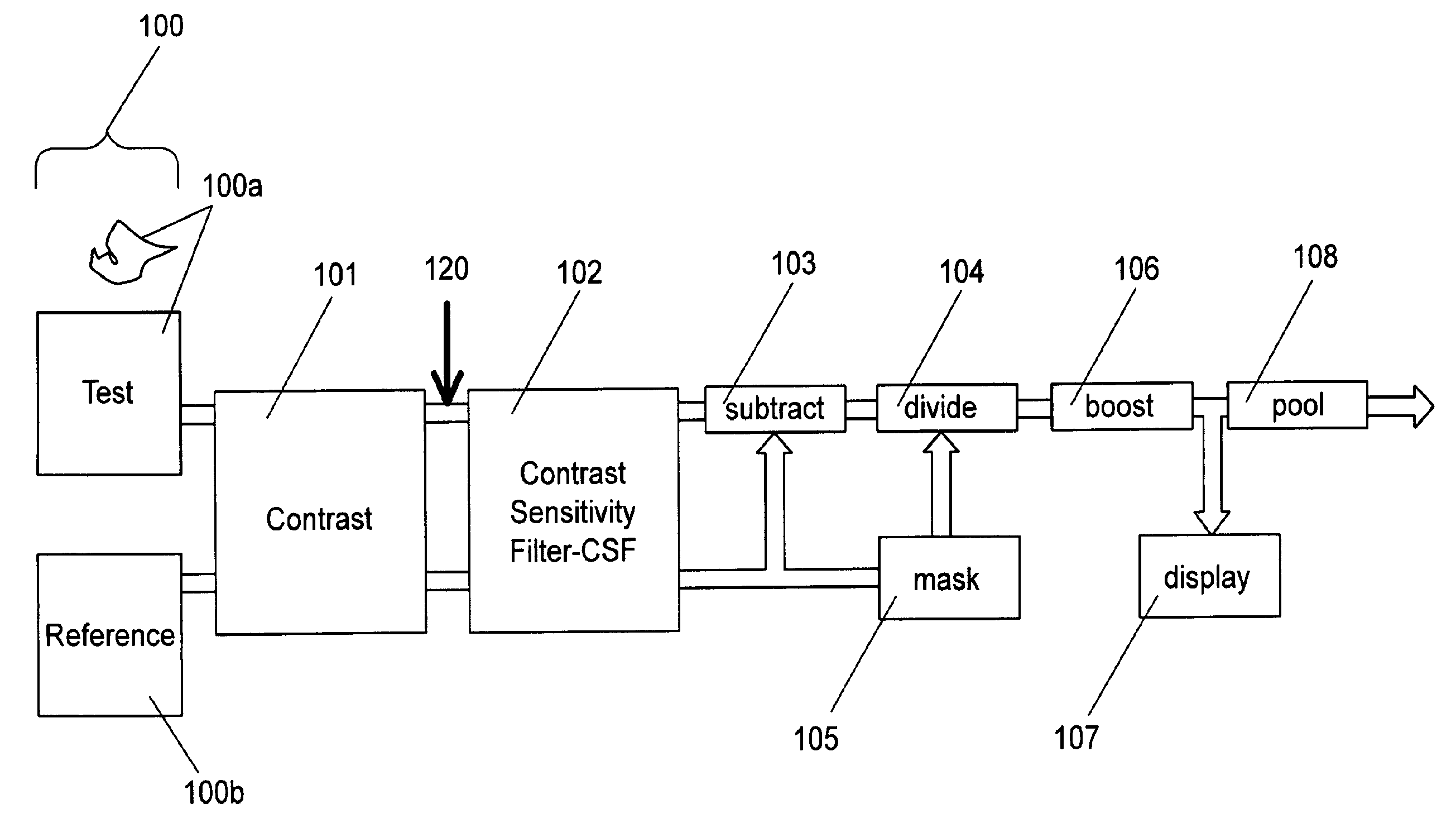

Active | US7783130B2 | Simple and efficient design | Accurate visibility | Image enhancement | Image analysis | Reference image | Local average

The present invention relates to devices and methods for the measurement and / or for the specification of the perceptual intensity of a visual image, or the perceptual distance between a pair of images. Grayscale test and reference images are processed to produce test and reference luminance images. A luminance filter function is convolved with the reference luminance image to produce a local mean luminance reference image. Test and reference contrast images are produced from the local mean luminance reference image and the test and reference luminance images respectively, followed by application of a contrast sensitivity filter. The resulting images are combined according to mathematical prescriptions to produce a Just Noticeable Difference, JND value, indicative of a Spatial Standard Observer, SSO. Some embodiments include masking functions, window functions, special treatment for images lying on or near borders and pre-processing of test images.

Owner:NASA

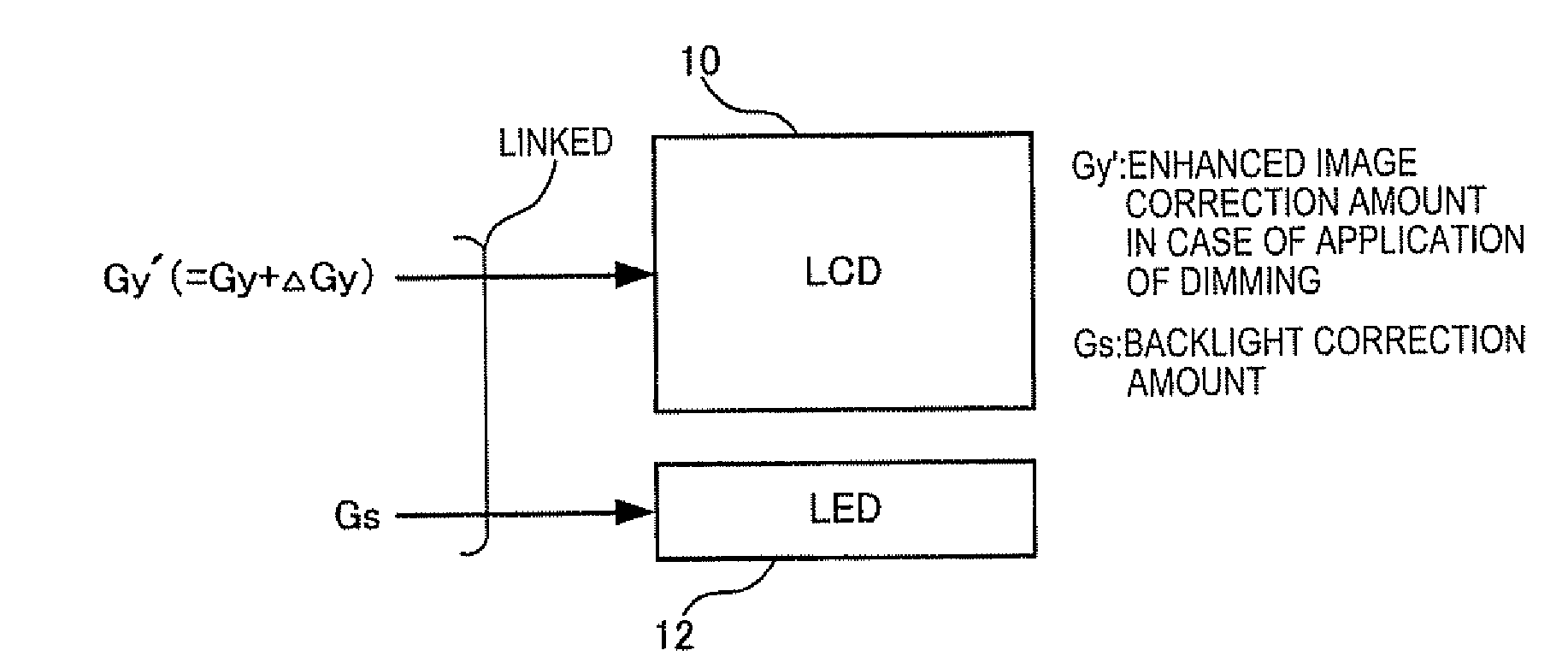

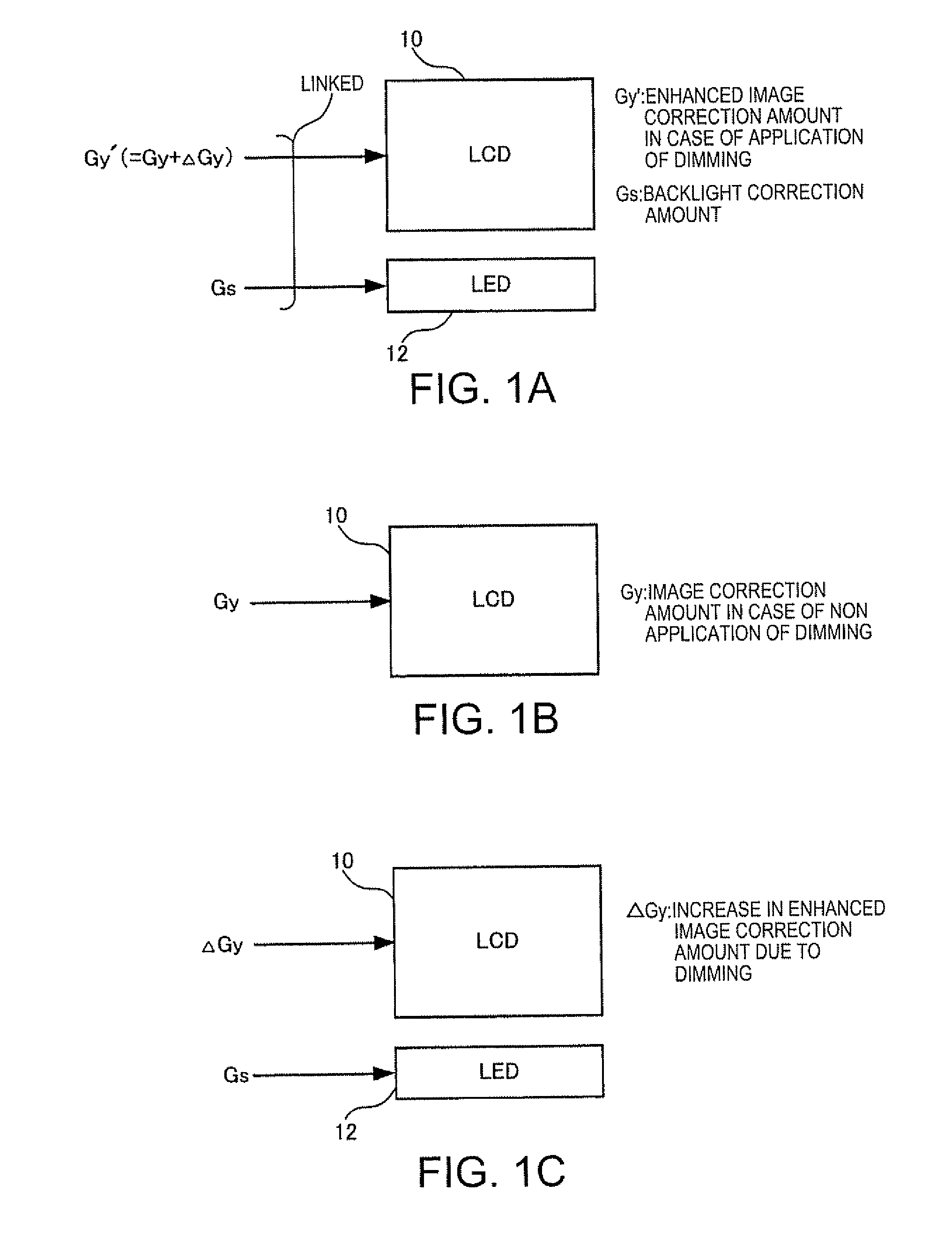

Image processor, integrated circuit device, and electronic apparatus

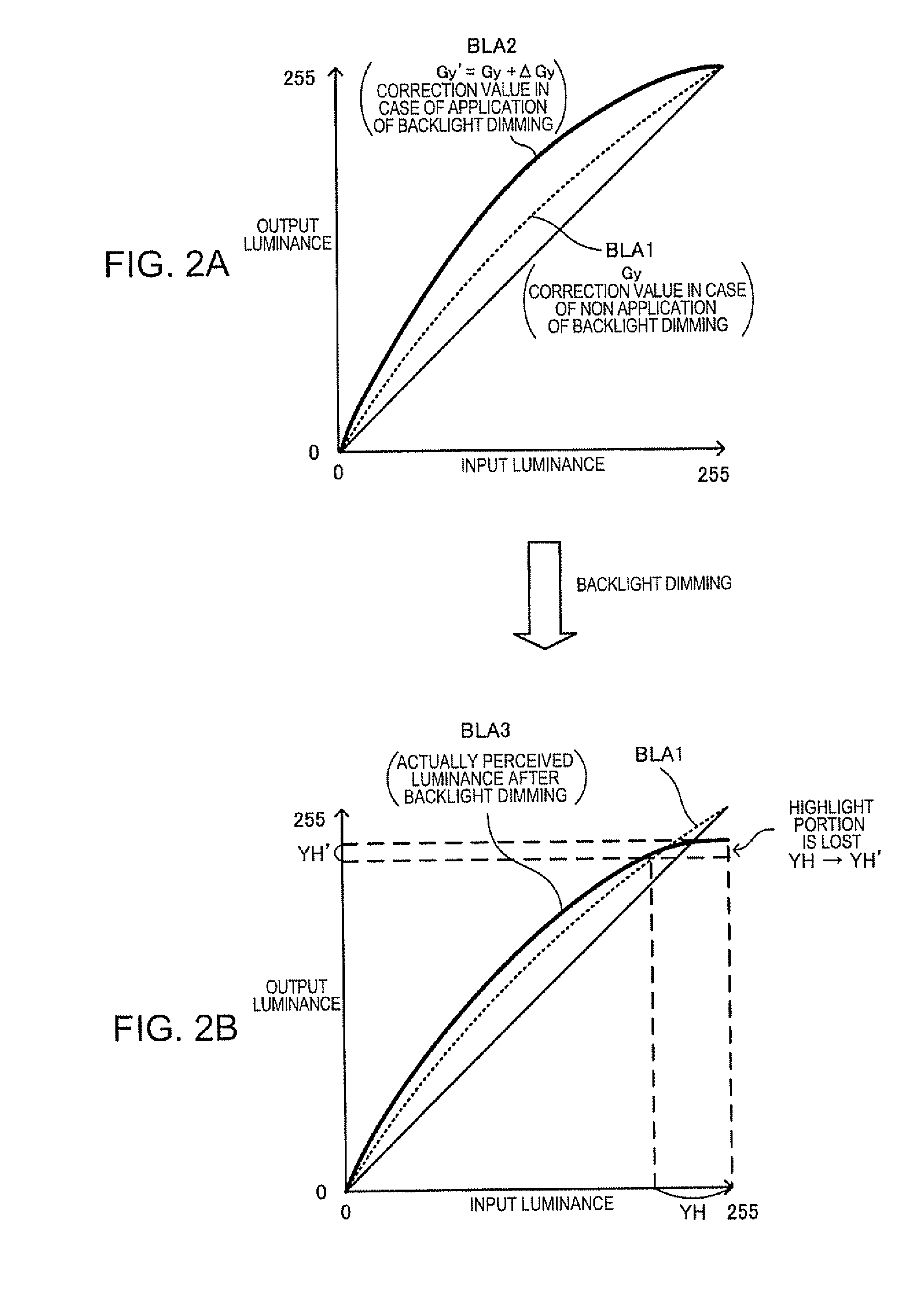

Inactive | US20090274389A1 | Contrast is corrected | Maintaining local contrast | Television system details | Character and pattern recognition | Local average | Brightness perception

An image processor includes: a statistic-data acquiring section that acquires statistic data of luminance values of a displayed image, namely a first index regarding a shadow pixel group and a second index regarding a highlight pixel group; a brightness index computing section that computes a brightness index of the displayed image from the first and second indexes; a filtering section that filters luminance values of at least a part of a plurality of pixels included in a target pixel region of the displayed image to compute a local average luminance value; and a contrast correcting section that performs contrast correction of the displayed image based on the brightness index and the local average luminance value.

Owner:SEIKO EPSON CORP

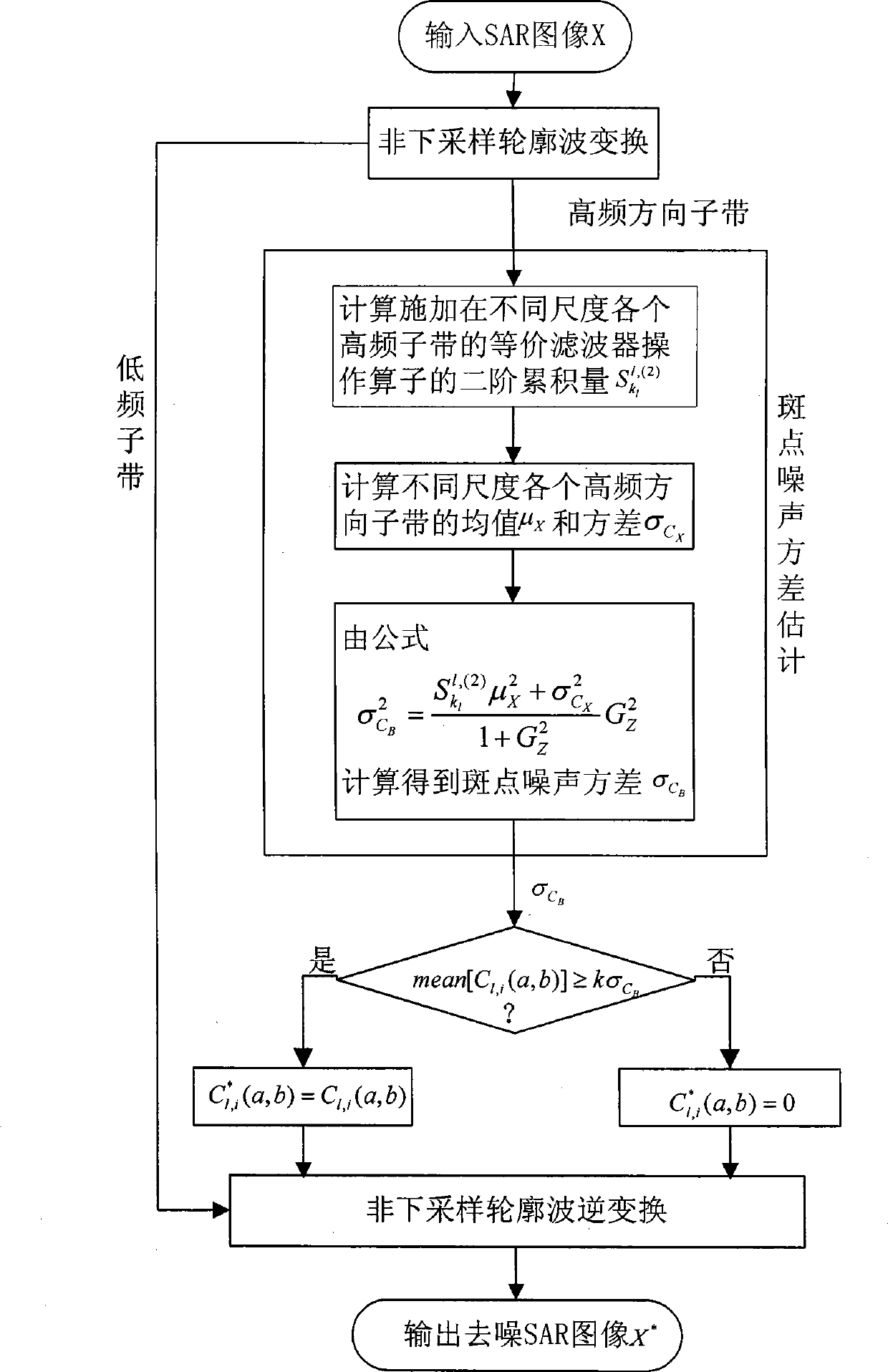

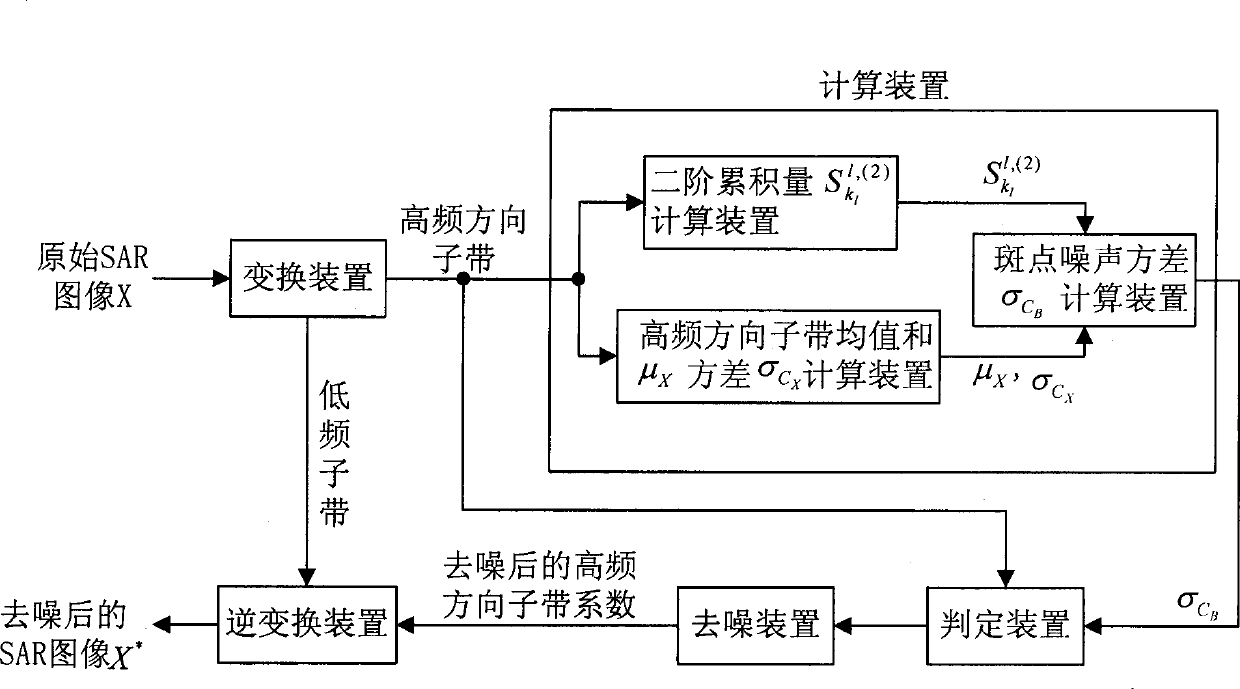

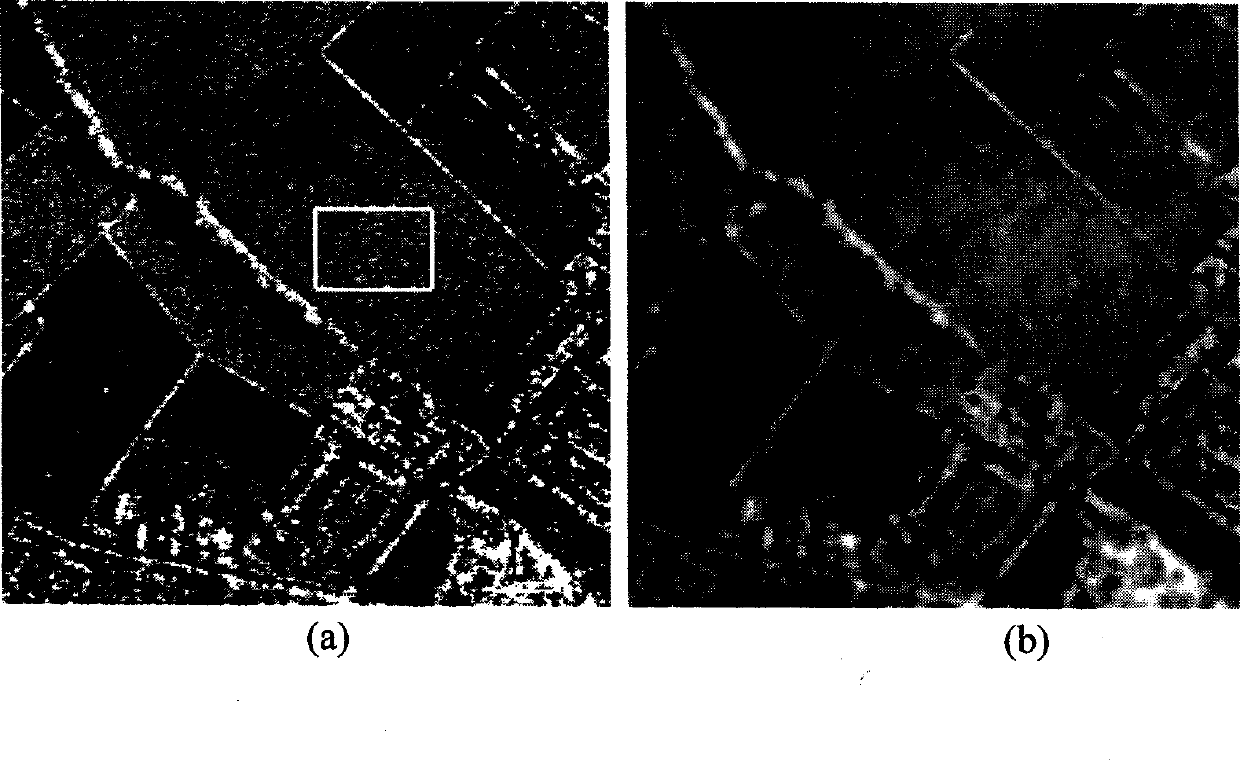

Synthetic aperture radar image denoising method based on non-down sampling profile wave

Active | CN101482617A | Avoid jitter distortion | Adaptive denoising | Image enhancement | Radio wave reradiation/reflection | Synthetic aperture radar | Radar

The invention discloses a method for denoising synthetic aperture radar (SAR) images based on the non-subsampled contourlet transform (NSCT), which mainly addresses the difficulty existing methods have in preserving image detail. The method comprises: (1) inputting a SAR image X and applying an L-level NSCT; (2) calculating the speckle-noise variance of each high-frequency directional subband at each scale; (3) classifying each high-frequency directional subband coefficient C_l,i(a, b) as a signal or a noise transform coefficient using its local average value mean[C_l,i(a, b)]; (4) retaining the signal part of the classified coefficients C_l,i(a, b) to obtain the denoised high-frequency directional subband coefficients; (5) applying the inverse NSCT to the low-frequency subband and the denoised high-frequency directional subband coefficients to obtain the denoised SAR image. The method effectively removes coherent speckle noise while preserving image detail; the denoised image shows no jitter or distortion, and the method can be used in the preprocessing stage of SAR image processing.

Owner:XIDIAN UNIV

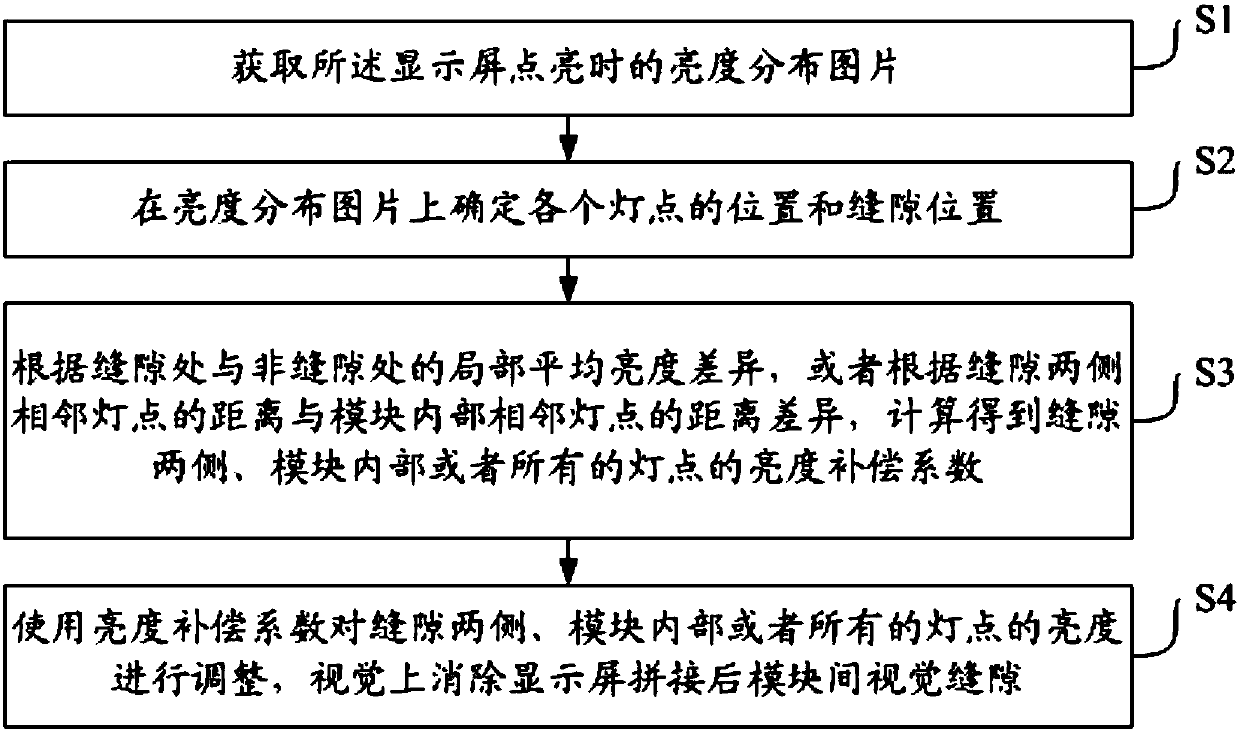

Method for removing visual gaps among modules after display screen is spliced and display method

Active | CN107742511A | Low processing technology requirements | Low machining accuracy requirements | Cathode-ray tube indicators | Local average | Computer science

The invention discloses a method for removing visual gaps among modules after a display screen is spliced and a display method, and belongs to the field of display screens. The method comprises the steps of: S1, obtaining a brightness distribution picture when the display screen is lit; S2, determining the positions of all light points and the gap positions on the brightness distribution picture; S3, calculating brightness compensation coefficients for the light points at the two sides of each gap and inside each module, or for all the light points, according to the local average brightness difference between the gaps and the non-gaps, or according to the difference between the distance of adjacent light points at the two sides of each gap and the distance of adjacent light points inside each module; S4, using the brightness compensation coefficients to adjust the brightness of the light points at the two sides of each gap and inside each module, or of all the light points, to visually remove the visual gaps among the modules after the display screen is spliced. Bright and dark lines in the gaps among display units of the spliced display screen are visually removed, the visual effect is clearly improved, the implementation steps are simple, and the splicing and installation difficulty and installation cost are lowered.

Owner:颜色空间(北京)科技有限公司

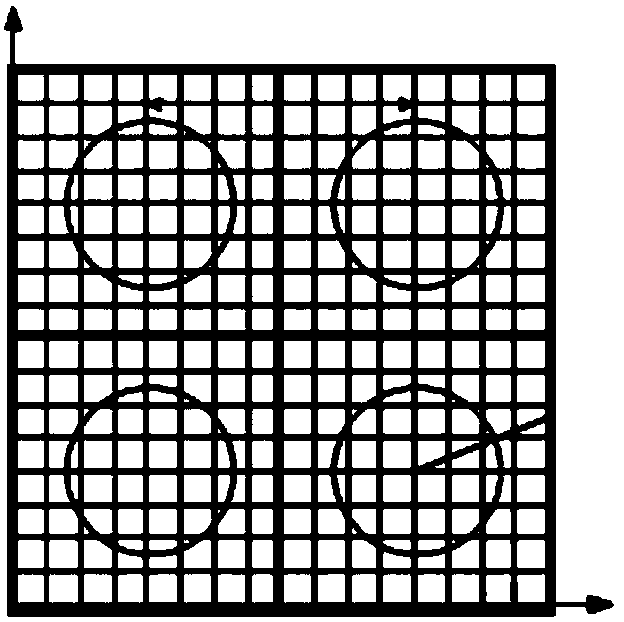

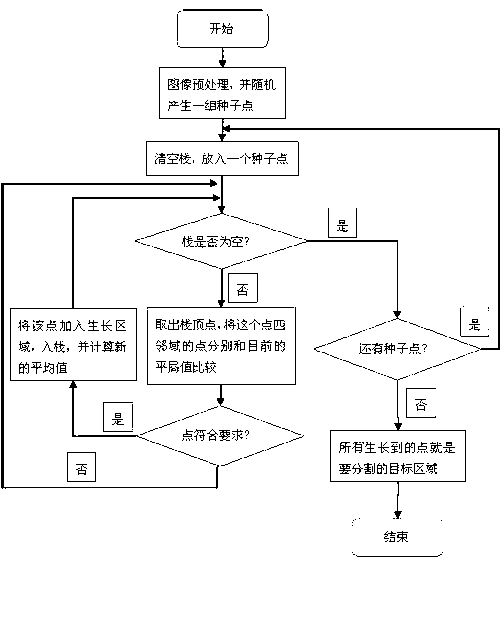

Improved region growing method applied to coronary artery angiography image segmentation

Inactive | CN102737376A | Splitting speed is fast | Improve accuracy | Image analysis | Growth parameter | Current point

The invention relates to an improved region growing method applied to vessel segmentation and extraction in coronary artery angiography images. The method comprises the following steps: preprocessing the image to obtain an image on which region growing can be performed directly; defining a rule and randomly generating a group of seed points; setting up a stack data structure, pushing each newly grown pixel onto the stack, and, when the current point has finished growing, popping a previously pushed point to serve as the next current point; growing each seed point in turn, with the seed point's gray value serving as the average value at the start of growth and a new average gray value being calculated each time a new pixel is added; and finishing when no pixel meets the growth criterion and no seed point remains. The advantages are that the seed points are generated automatically without manual intervention, that the local average values around each pixel serve as growth parameters during growth, so that coronary artery angiography images with uneven brightness can be segmented, and that the efficiency and accuracy of image segmentation are improved.

Owner:常熟市支塘镇新盛技术咨询服务有限公司
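The stack-based growth with a running average described above can be sketched as follows. This is an illustration only: the 4-neighbourhood, the fixed `tolerance` threshold and the function name `grow_region` are assumptions, and the preprocessing and automatic seed generation steps are left out.

```python
def grow_region(image, seed, tolerance):
    """Grow a region from `seed` using a stack and a running mean gray value.

    A neighbour joins the region when its gray value is within `tolerance`
    of the current region mean; the mean is updated after every addition.
    """
    rows, cols = len(image), len(image[0])
    region = {seed}
    total = float(image[seed[0]][seed[1]])  # seed gray value is the initial mean
    stack = [seed]

    while stack:
        r, c = stack.pop()  # take a previously pushed point as the current point
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                region_mean = total / len(region)
                if abs(image[nr][nc] - region_mean) <= tolerance:
                    region.add((nr, nc))
                    total += image[nr][nc]
                    stack.append((nr, nc))
    return region


# Toy image: a dark vessel-like band (two left columns) next to bright background.
image = [[10, 11, 50],
         [12, 10, 52],
         [11, 12, 51]]
vessel = grow_region(image, seed=(0, 0), tolerance=5)
```

Comparing against the running mean rather than the seed value alone is what lets the region follow gradual brightness changes.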

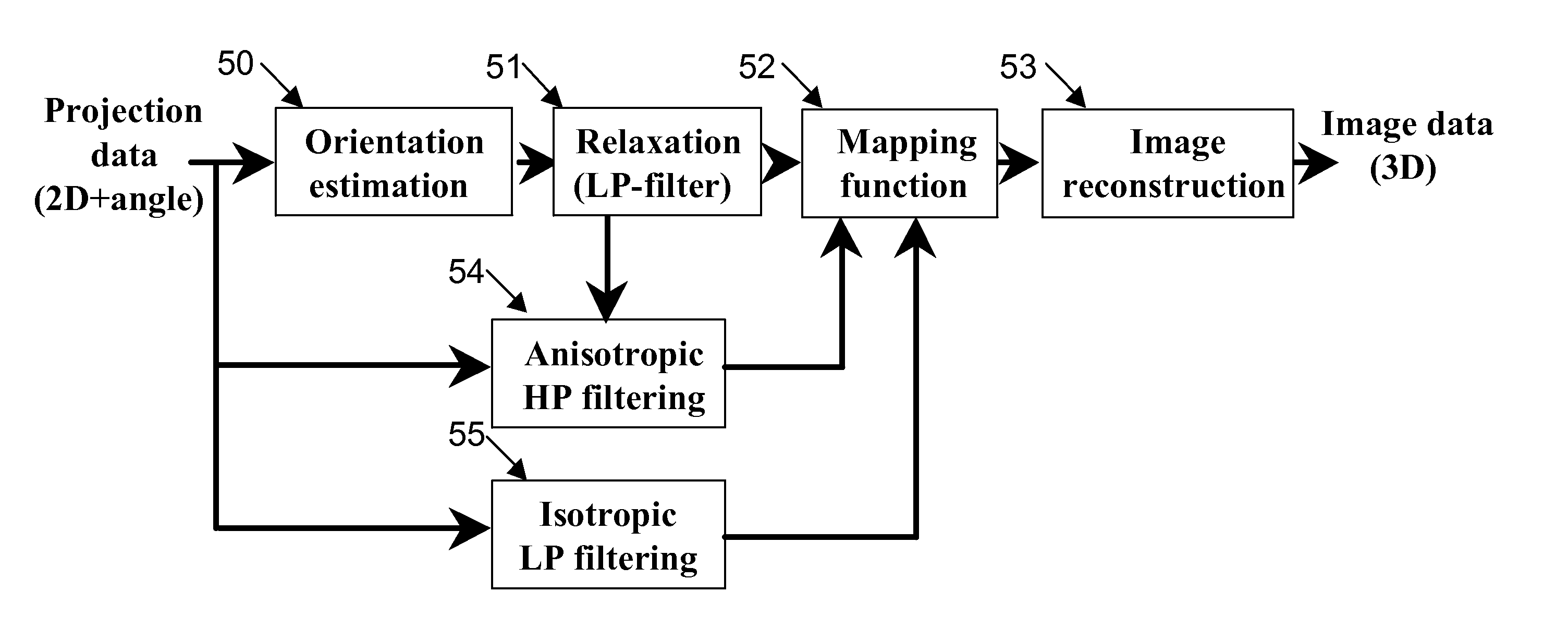

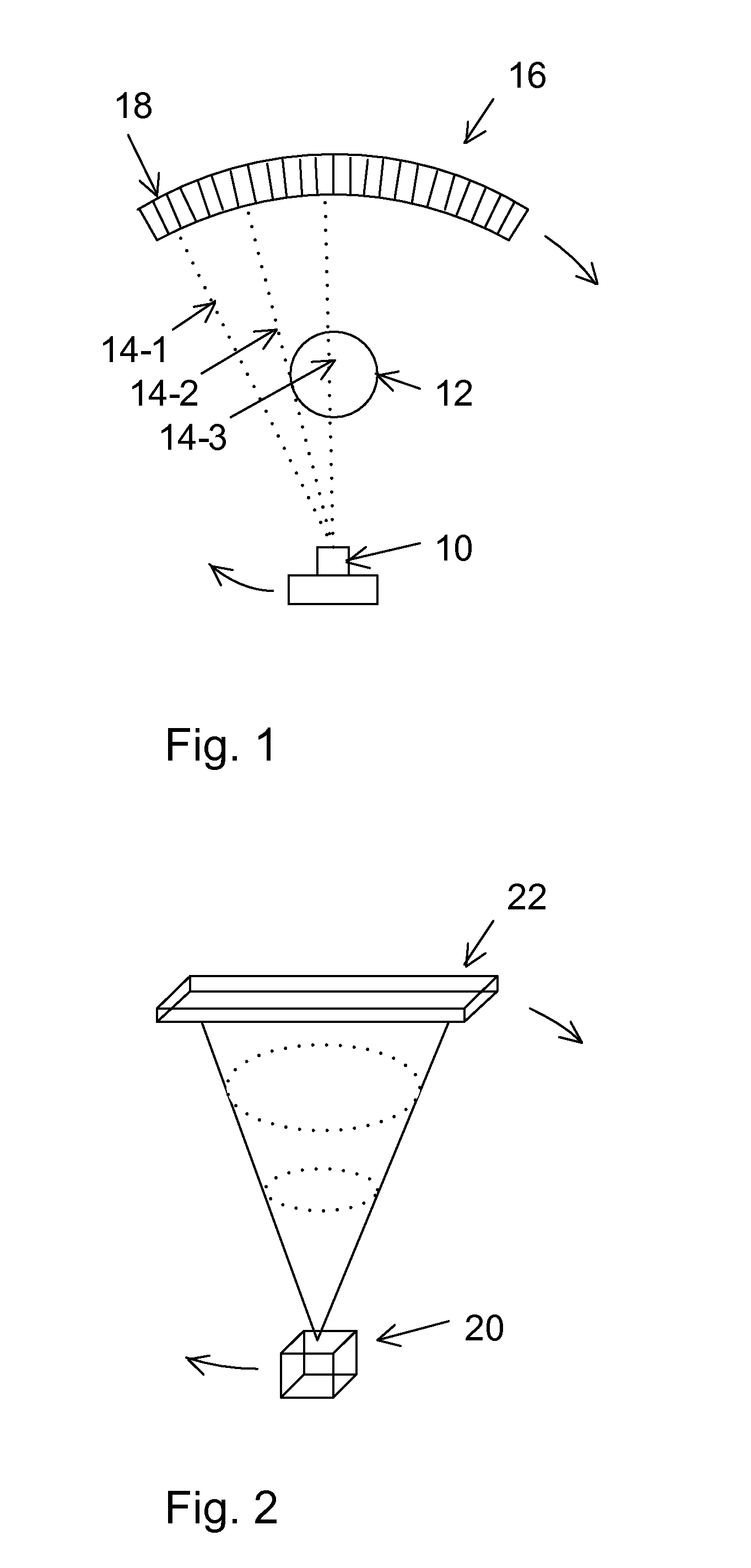

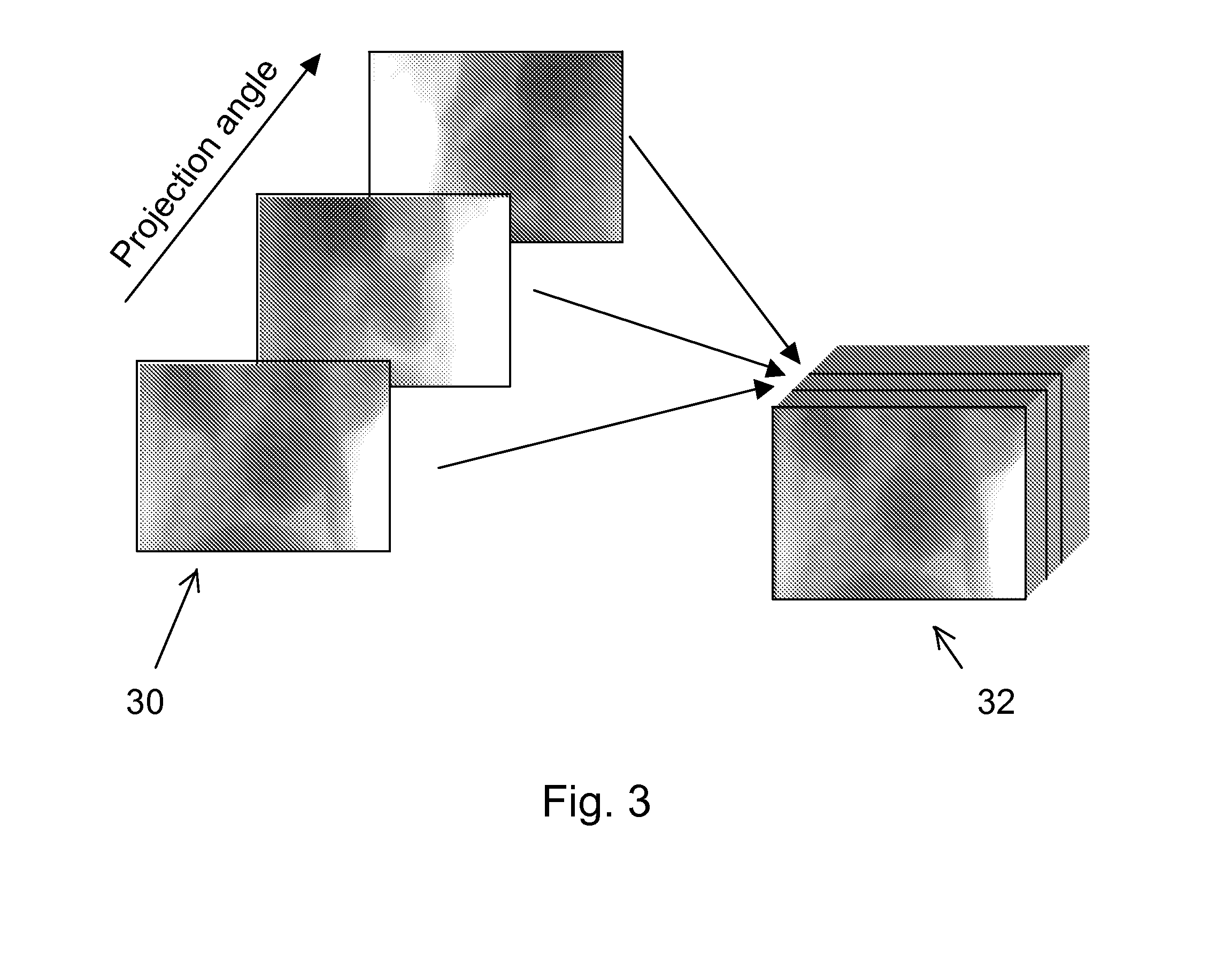

Adaptive anisotropic filtering of projection data for computed tomography

Active | US20080069294A1 | Reduce high frequency noise | Reduce radiation dose | Image enhancement | Reconstruction from projection | Low-pass filter | Imaging quality

CT imaging is enhanced by adaptively filtering x-ray attenuation data prior to image reconstruction. Detected x-ray projection data are adaptively and anisotropically filtered based on the locally estimated orientation of structures within the projection data from an object being imaged at a plurality of rotation positions. The detected x-ray data are uniformly low pass filtered to preserve the local mean values in the data, while the high pass filtering is controlled based on the estimated orientations. The resulting filtered data provide projection data with smoothing along the structures while maintaining sharpness along edges. Image noise and noise induced streak artifacts are reduced without increased blurring along edges in the reconstructed images. The enhanced image allows reduced x-ray dose while maintaining image quality.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

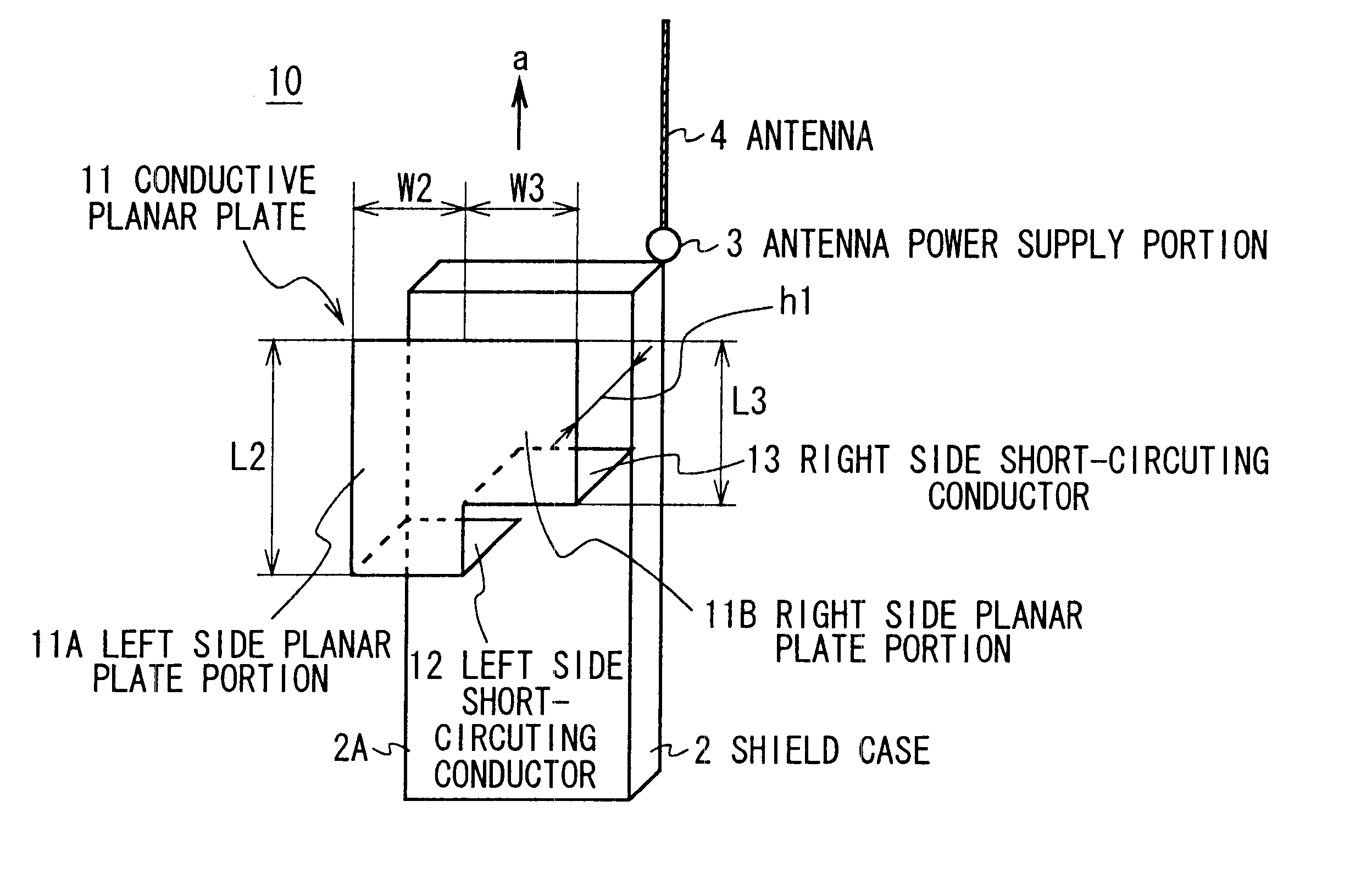

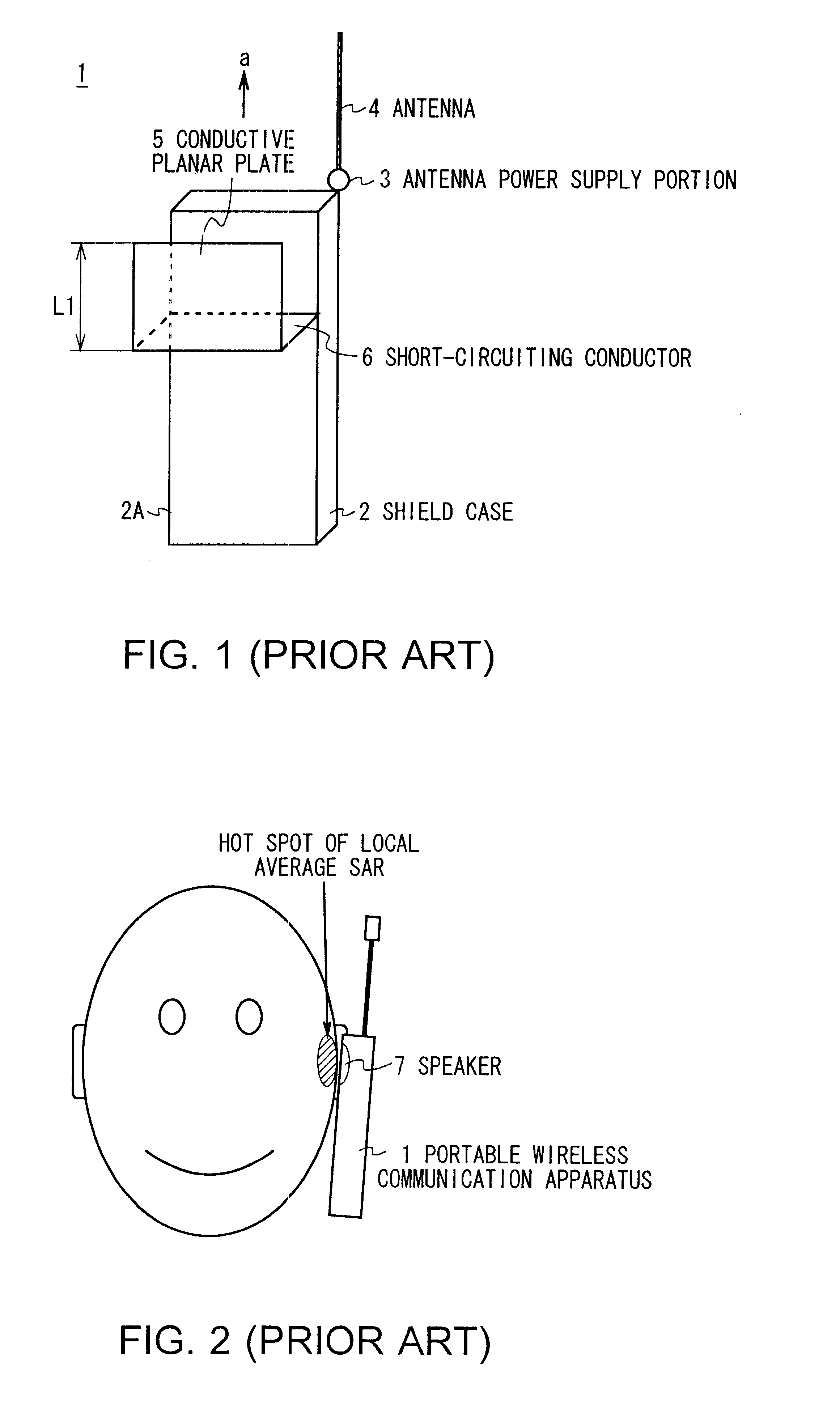

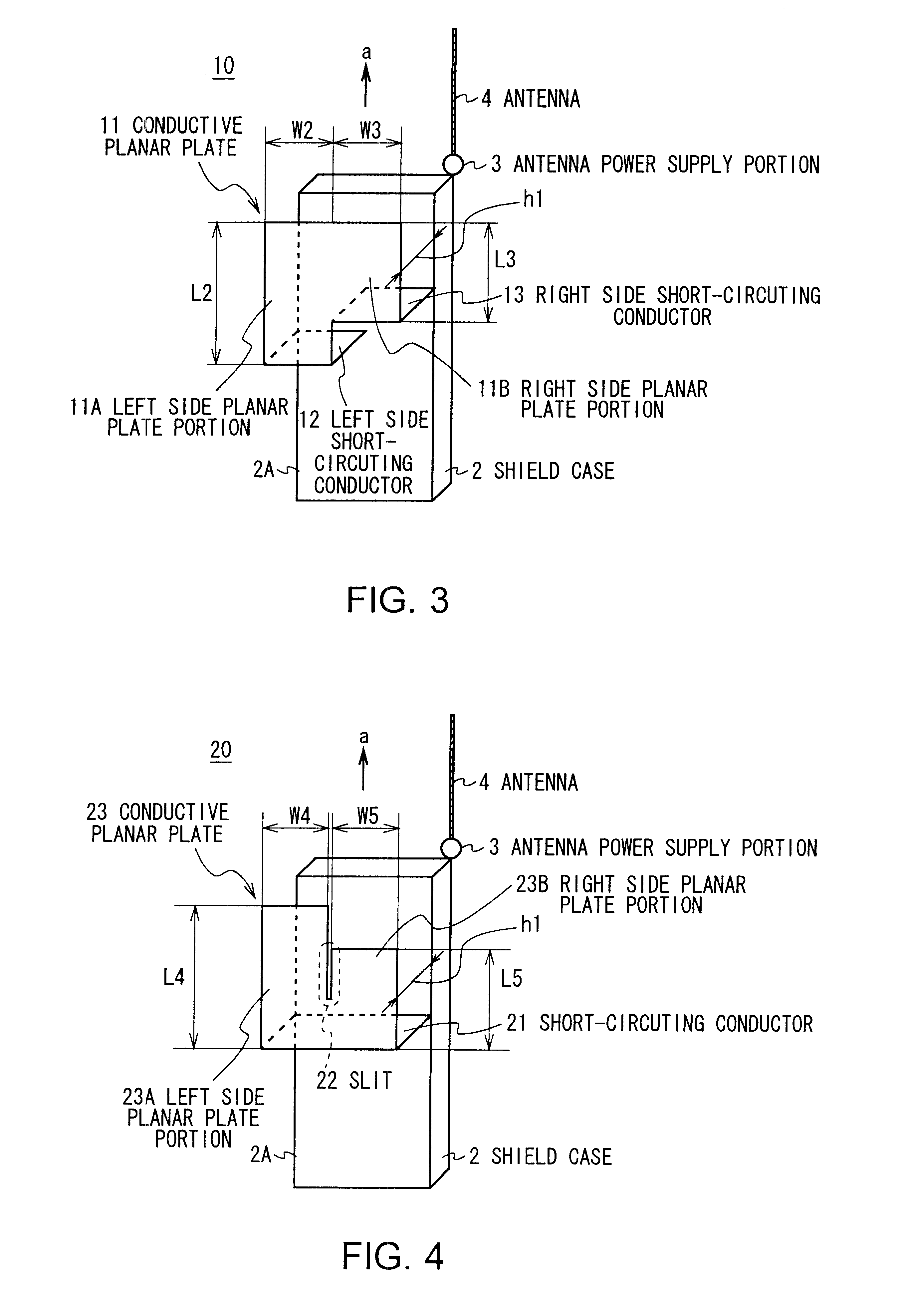

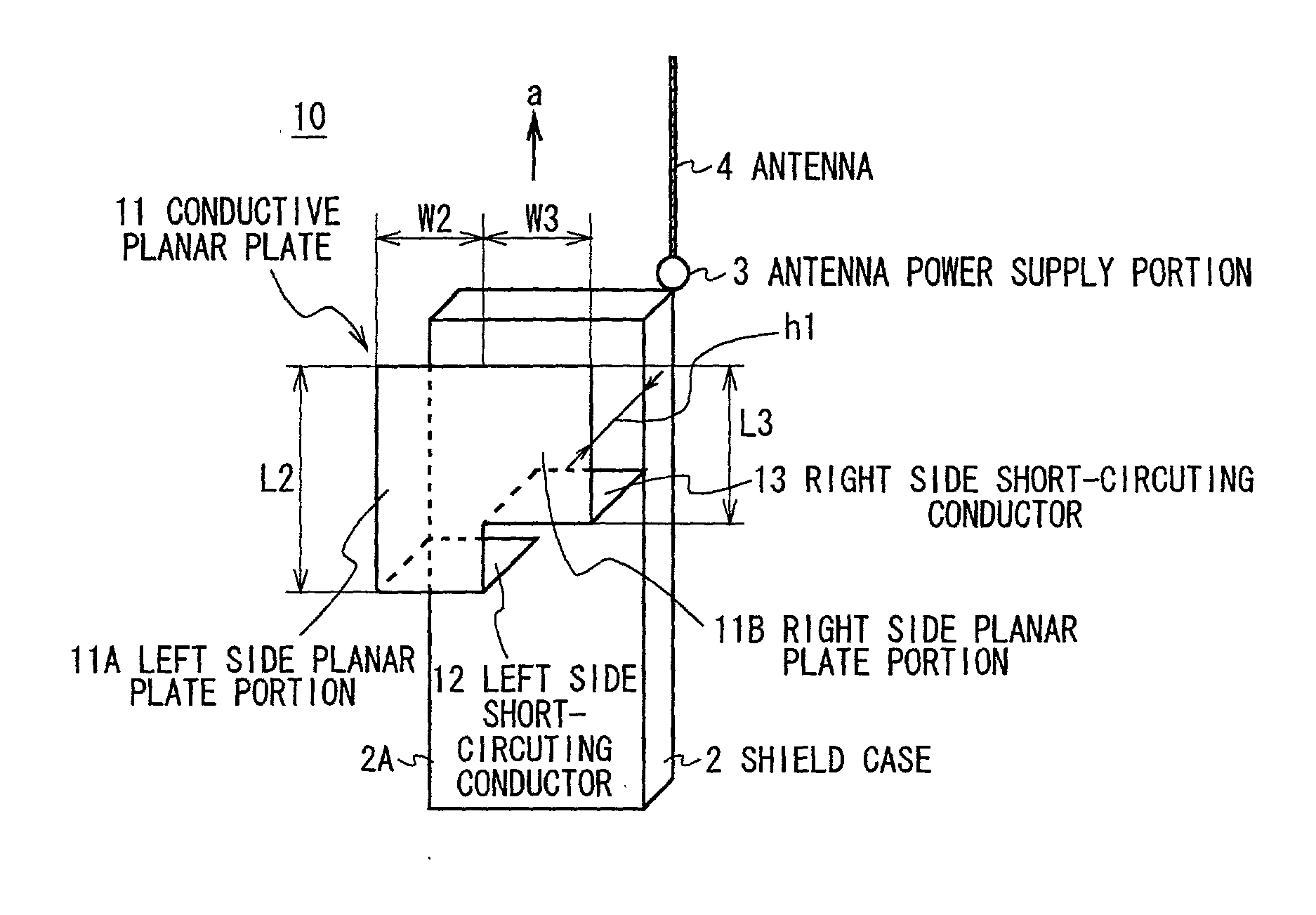

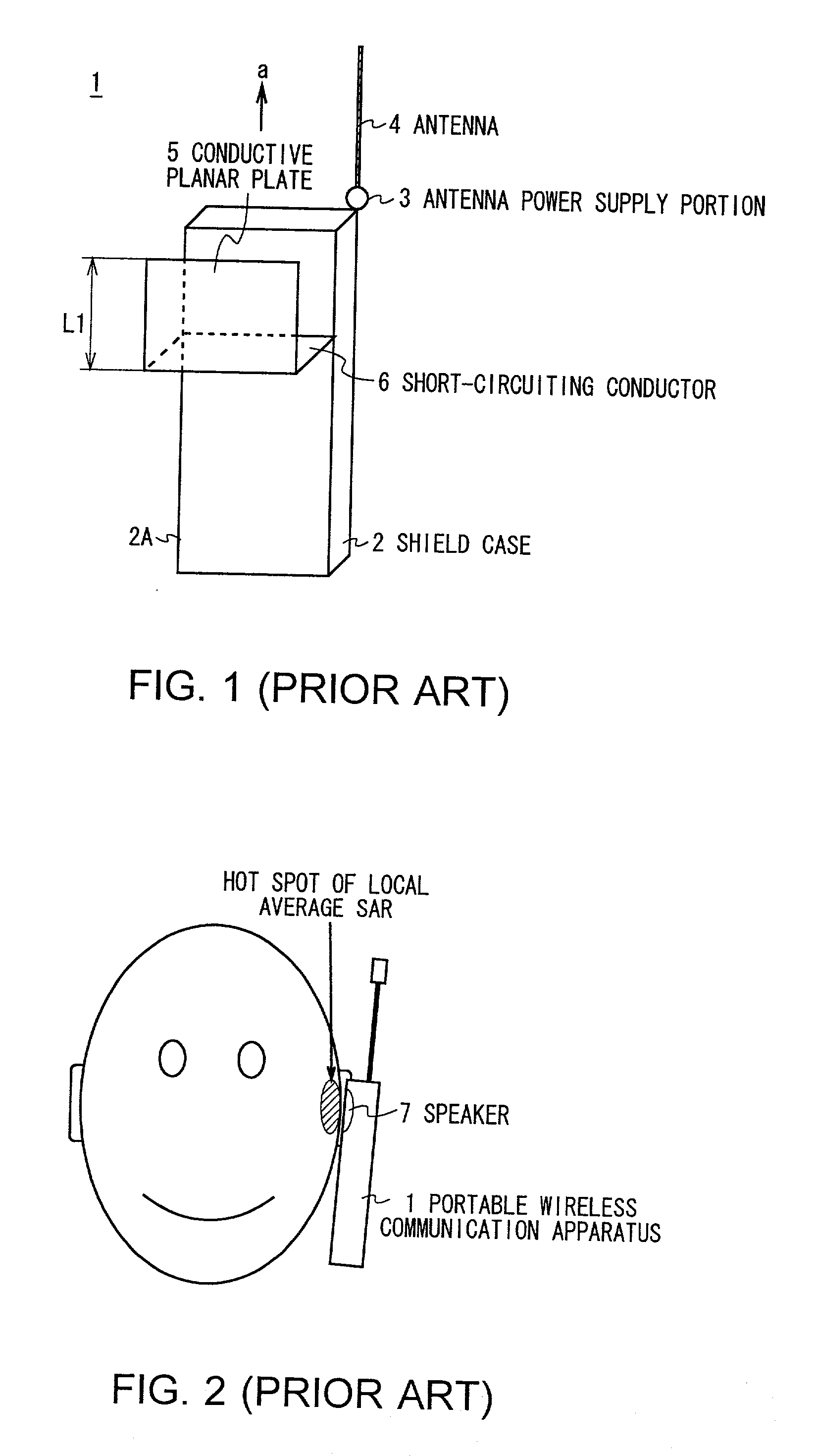

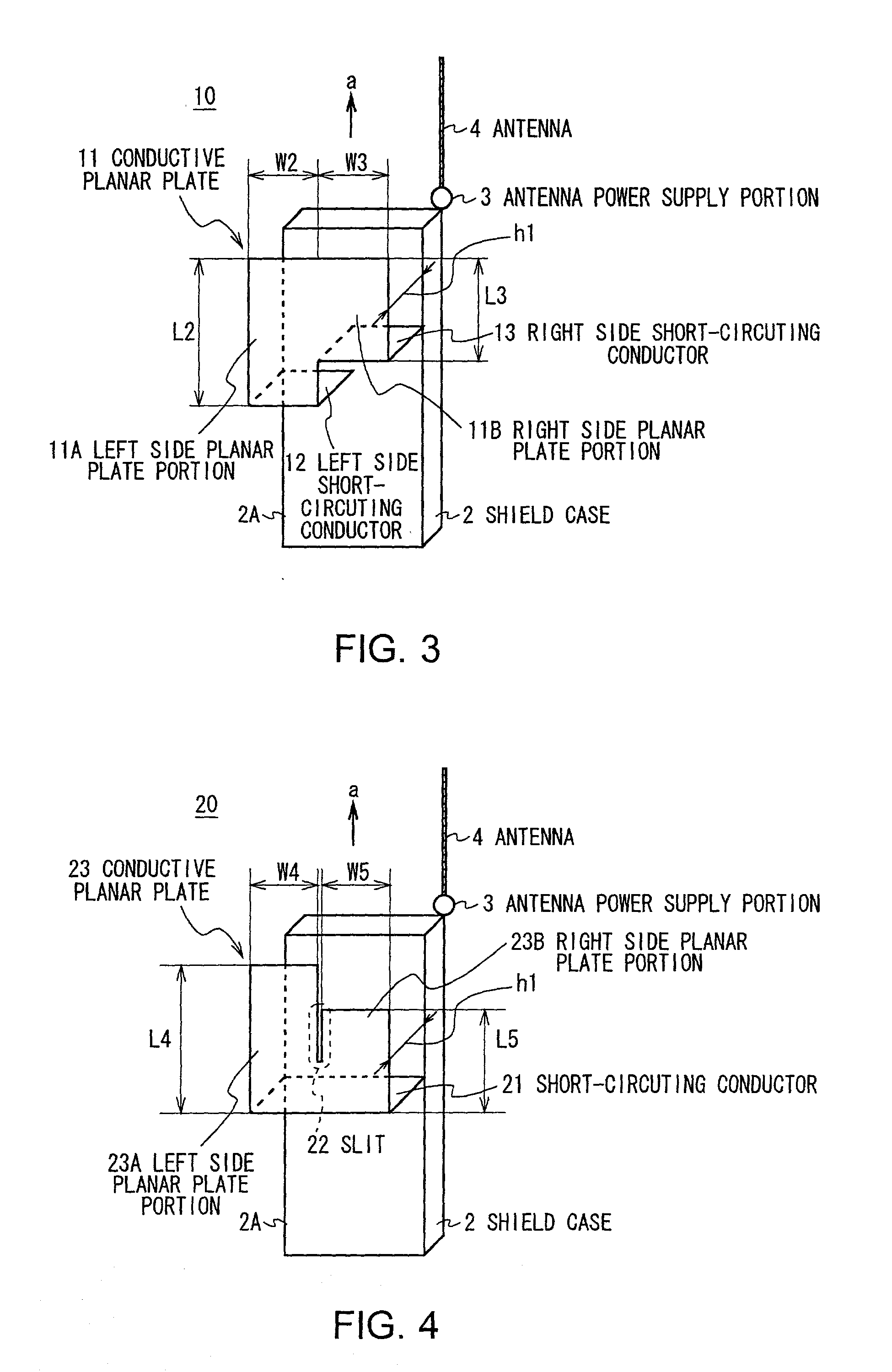

Antenna device and portable wireless communication apparatus

Inactive | US6456248B2 | Reduce the amount required | Radiating resistance | Simultaneous aerial operations | Antenna supports/mountings | Communications system | Input impedance

An antenna device and a portable wireless communication apparatus lower the local average specific absorption rate (SAR) for two or more kinds of radio communication systems that use different radio communication frequencies, whichever frequency is in use. The input impedances at the open ends of conductive planar plates are brought close to infinity at the first and second radio communication frequencies, which restricts the high-frequency current supplied to the conductive plates and a shield case and thereby restricts the emission of electromagnetic waves, reliably lowering the local average SAR at either frequency.

Owner:SONY CORP

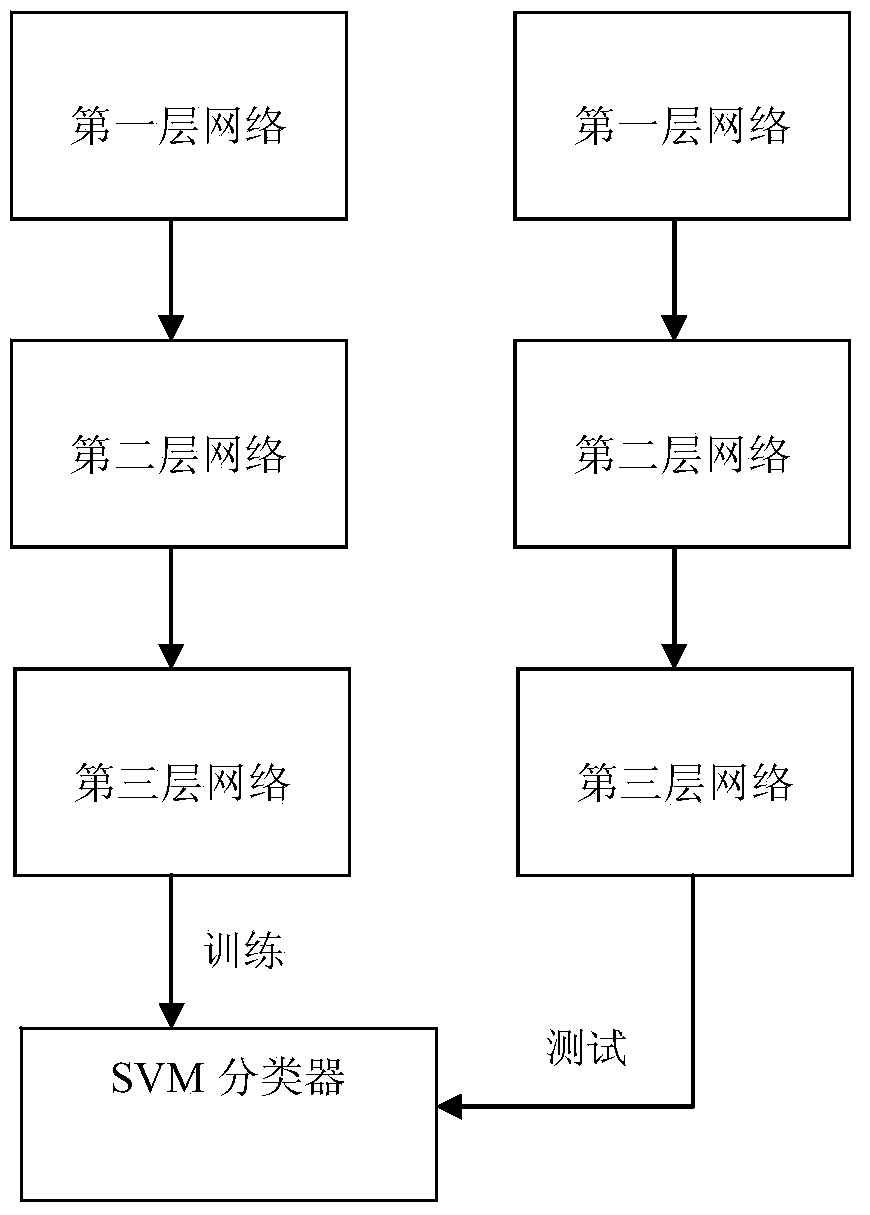

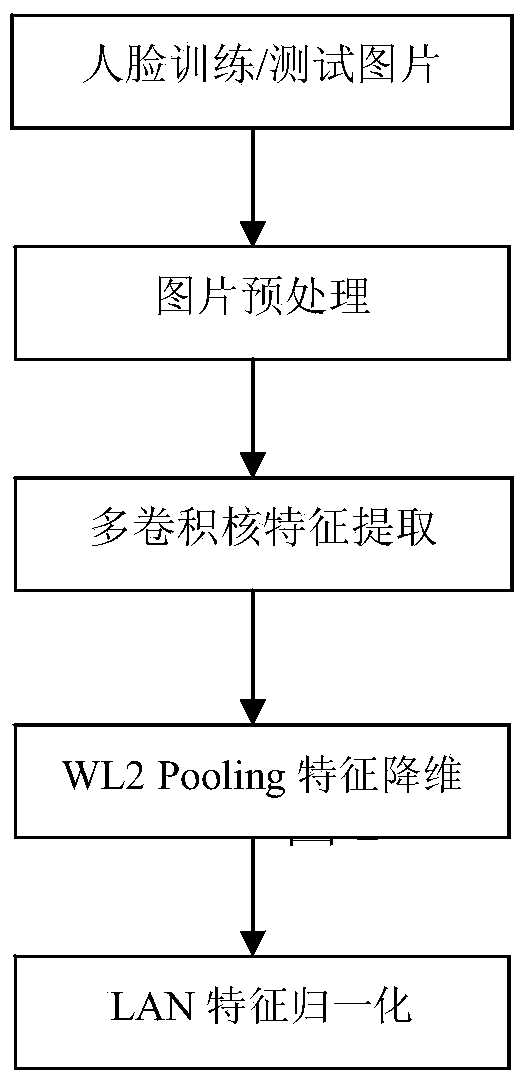

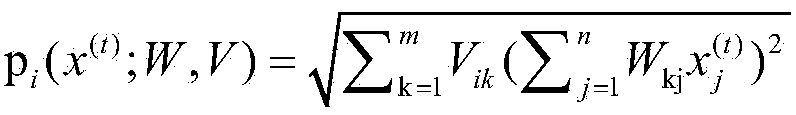

Deep learning human face identification method based on weighting L2 extraction

Inactive | CN103530657A | Efficient extraction | Maintain stability | Character and pattern recognition | Feature vector | Dimensionality reduction

The invention discloses a deep learning human face identification method based on weighting L2 extraction. First, the human face feature vector is extracted by convolution with multiple convolution kernels; a weighting L2 extraction method is then used to reduce the dimensionality of the feature vector, and a local average normalization method is used to normalize it, which forms one layer of the deep learning network. The same method is used to build three such layers, which are cascaded to form a three-layer deep learning network, and finally a support vector machine classifier is used for human face training and identification. The weighting L2 extraction method performs feature dimensionality reduction, addresses over-fitting during training and the single-feature problem of traditional L2 extraction, effectively reduces the feature vector dimensionality, improves human face identification performance, and extracts higher-level features with high stability.

Owner:SOUTH CHINA UNIV OF TECH

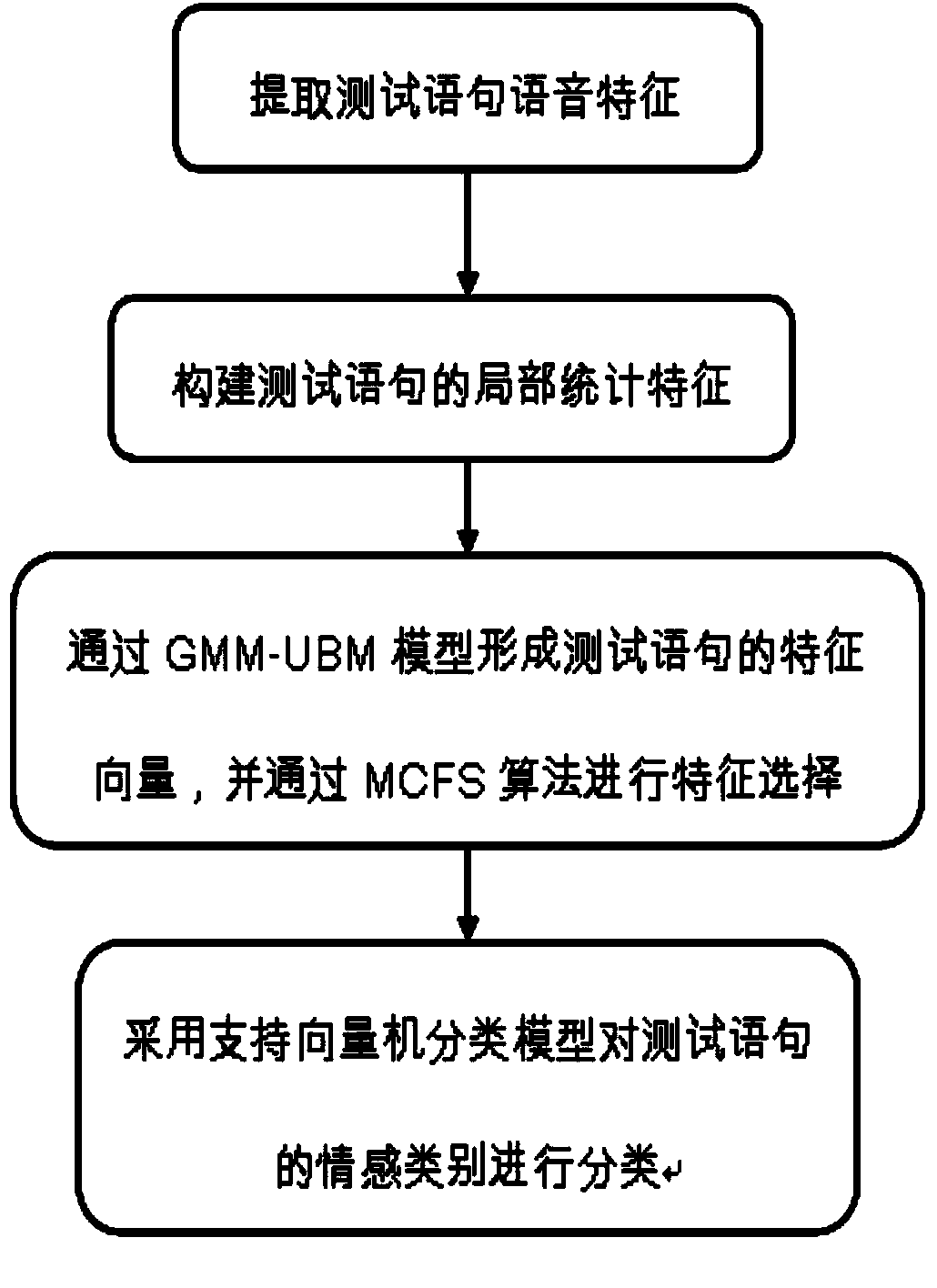

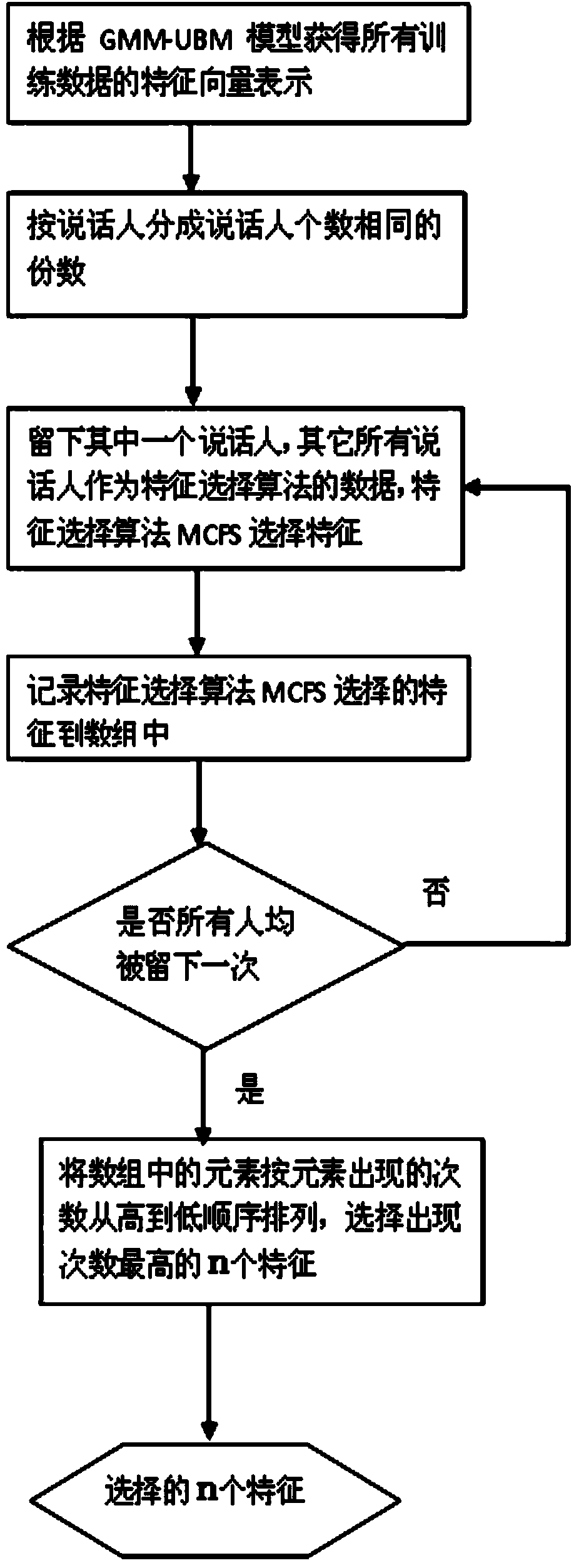

Speech emotion recognition method based on manifold

Active | CN103440863A | Avoid ignoring | Avoid the disadvantages of too fine granularity | Speech recognition | Feature vector | Multi cluster

The invention provides a speech emotion recognition method based on manifold. The speech emotion recognition method based on the manifold comprises the following steps that the phonetic features of a test statement are extracted, wherein the phonetic features comprise MFCC, LPCC, LFPC, ZCPA, PLP and RASTA-PLP; the local average value and the variance of each extracted phonetic feature are calculated, the local average value and the variance of the first difference of each extracted phonetic feature are calculated, the local average values and the variances are connected in series, and therefore local statistical features of the test statement are formed; a universal background model (UBM) and the local statistical features of the test statement are applied so that a specific Gaussian mixture model (GMM) of the test statement can be generated, then, all average values of the GMM are connected into a vector, and the vector serves as a feature vector of the test statement; according to the features selected according to the integrated feature selection algorithm and the multi-cluster feature selection algorithm (MCFS), the feature vector of the test statement is changed; a support vector machine classification model is applied, the feature vector, existing after feature selection, of the test statement serves as input, and emotion classes of the statement are tested in a classified mode. The degree of accuracy of speech emotion recognition of the speech emotion recognition method based on the manifold is high.

Owner:SOUTH CHINA UNIV OF TECH

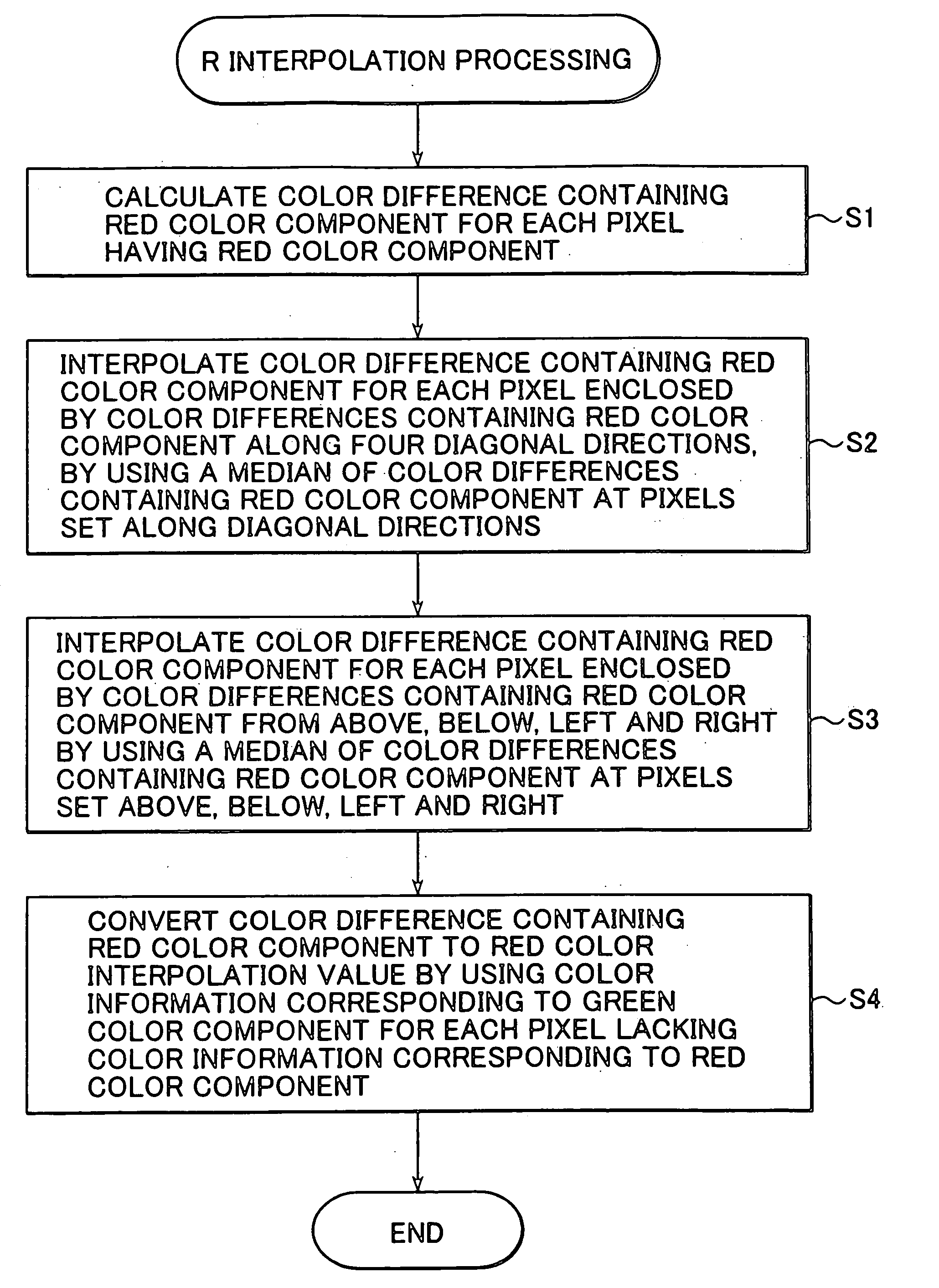

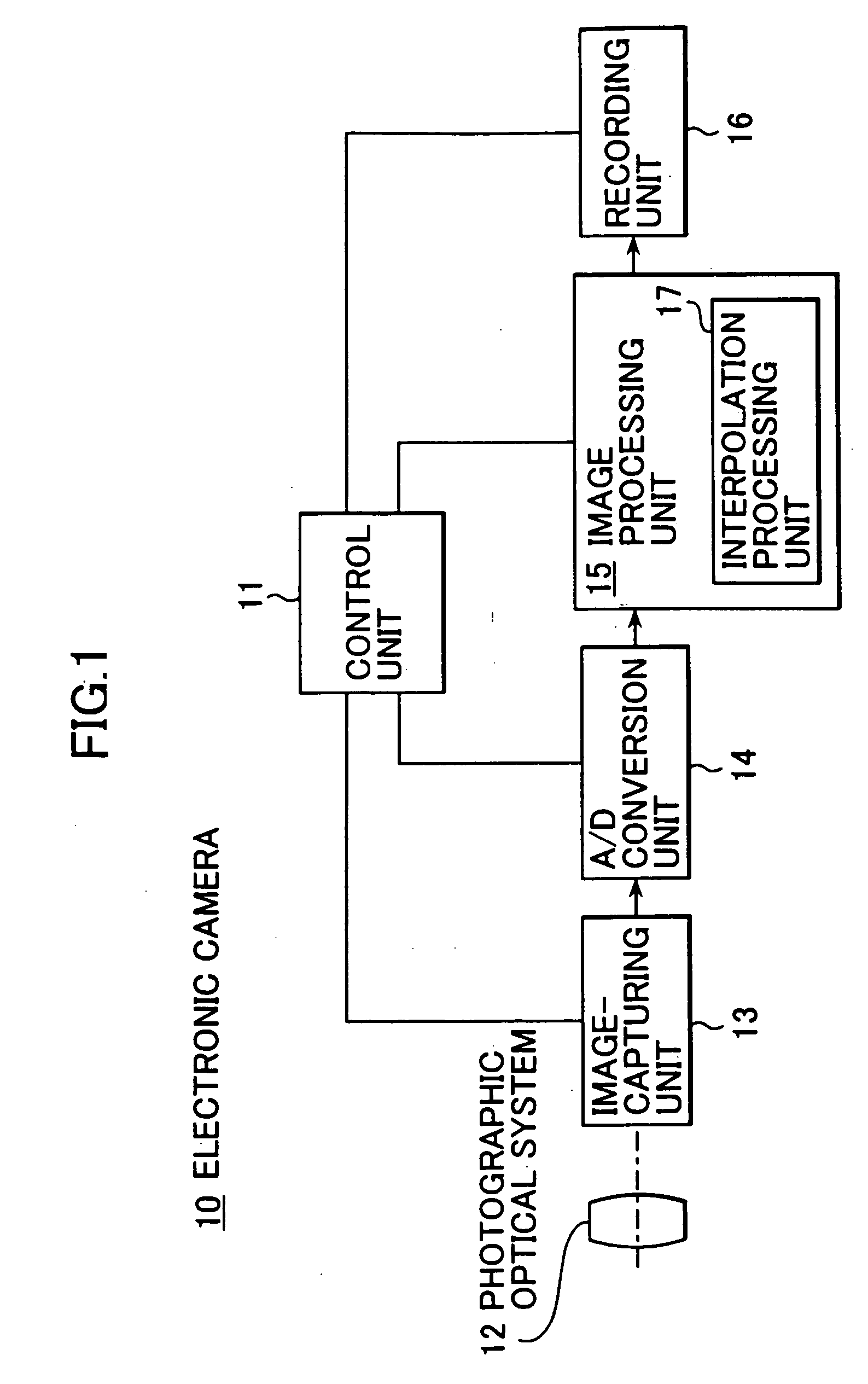

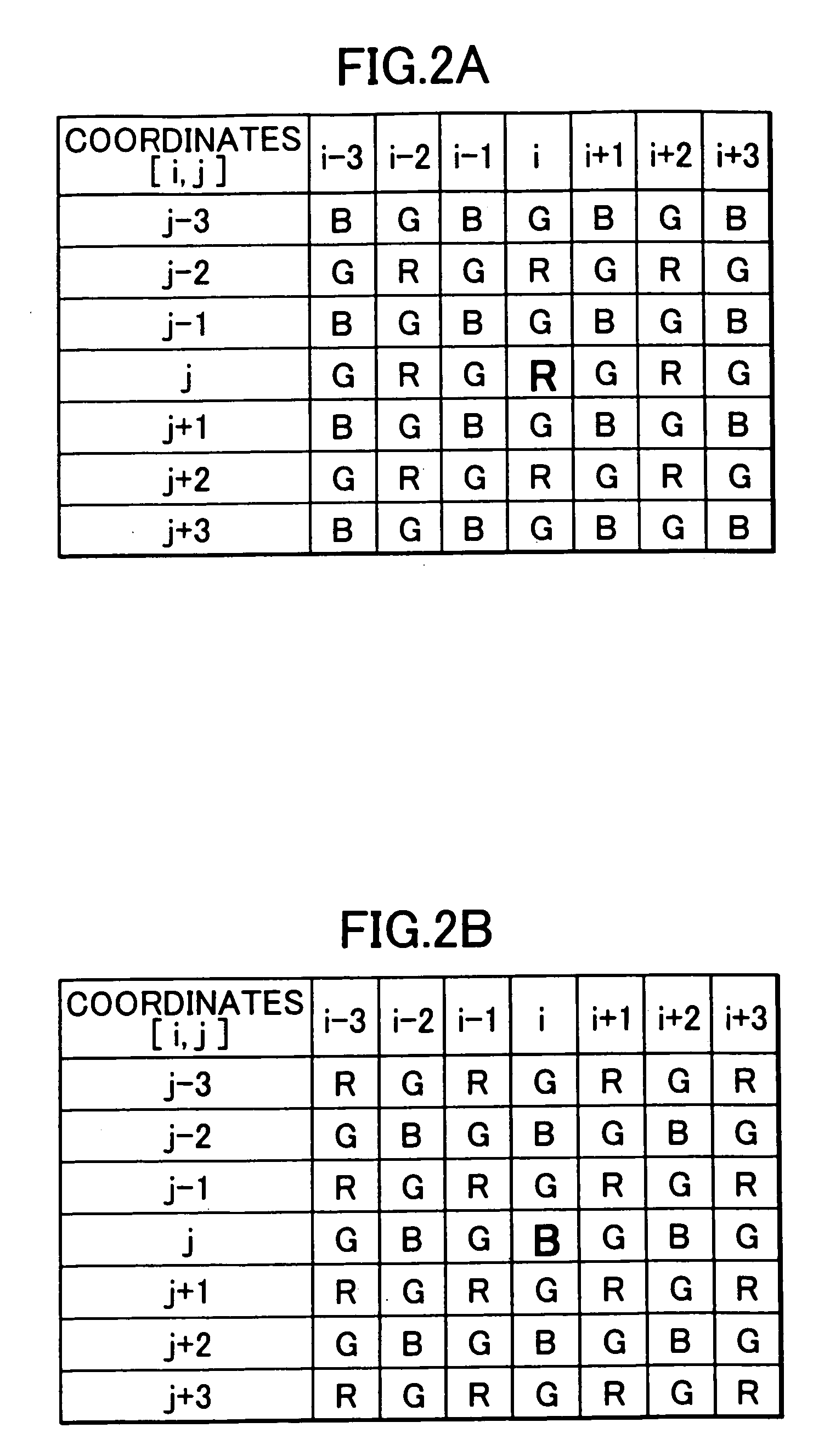

Interpolation processing apparatus and recording medium having interpolation processing program recording therein

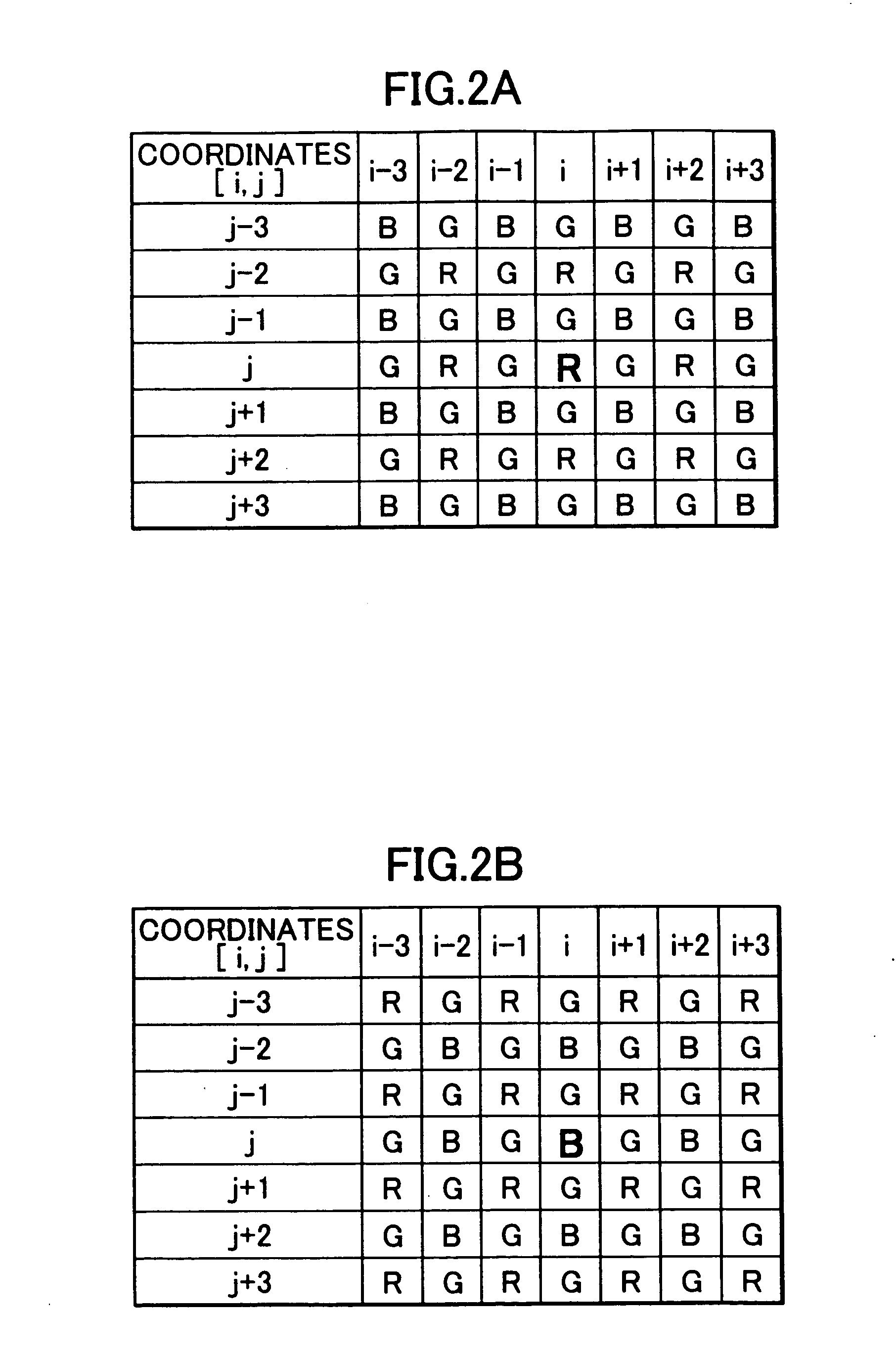

Inactive | US20060198556A1 | Avoid it happening again | Inhibition | Image enhancement | Television system details | Computer vision | Local average

A first interpolation processing apparatus that engages in processing on image data which are provided in a colorimetric system constituted of first to nth (n ≥ 2) color components and include color information corresponding to a single color component provided at each pixel to determine an interpolation value equivalent to color information corresponding to the first color component for a pixel at which the first color component is missing, includes: an interpolation value calculation section that uses color information at pixels located in a local area containing an interpolation target pixel to undergo interpolation processing to calculate an interpolation value including, at least (1) local average information of the first color component with regard to the interpolation target pixel and (2) local curvature information corresponding to at least two color components with regard to the interpolation target pixel.

Owner:NIKON CORP

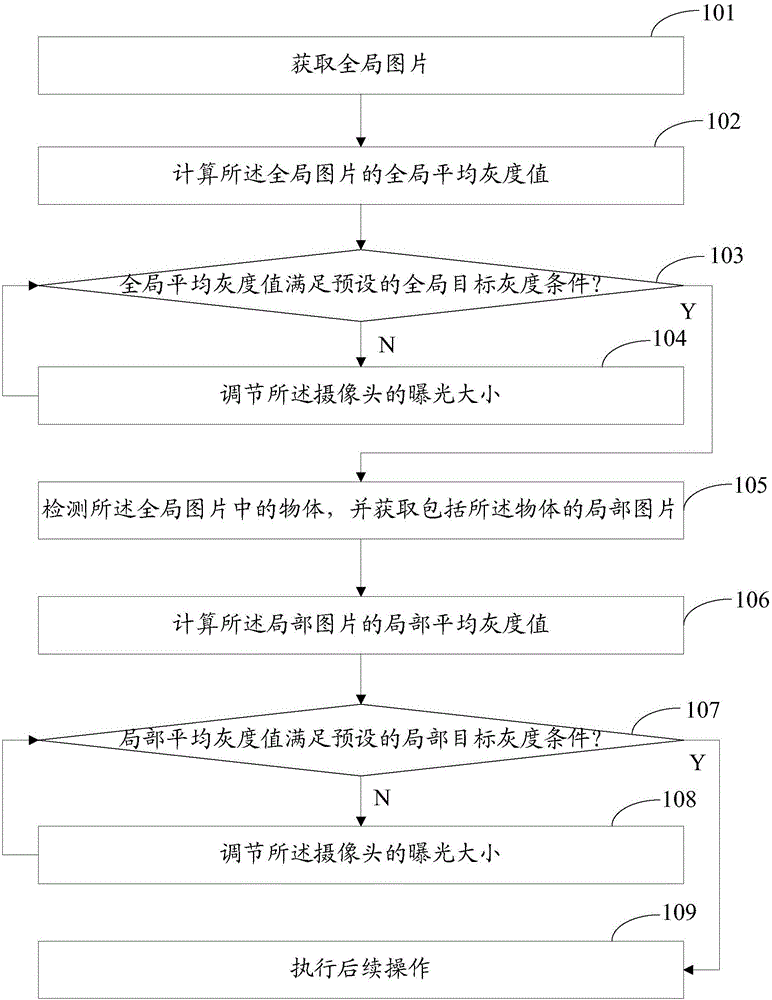

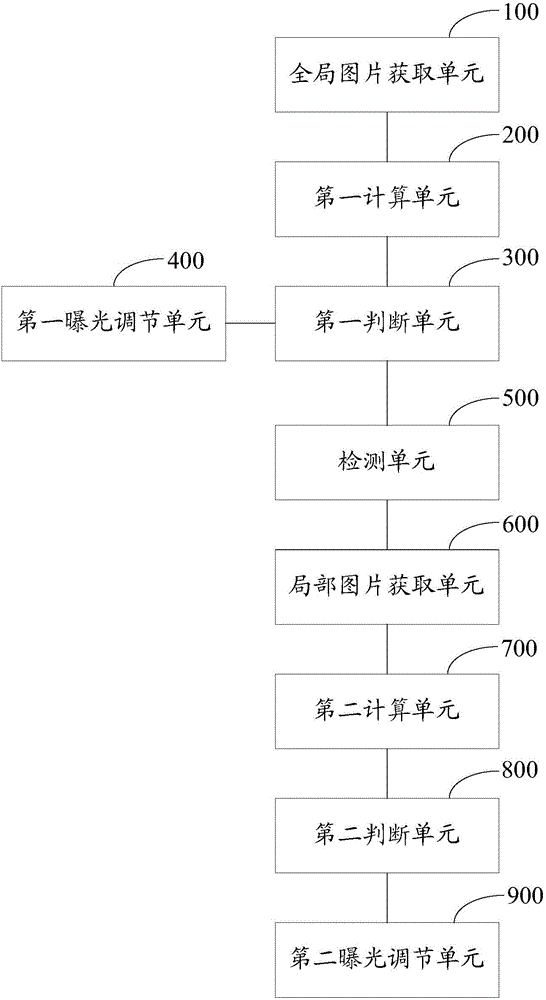

Exposure method and apparatus

Inactive | CN106603933A | Guaranteed detection rate | Guaranteed correctness | Television system details | Color television details | Computer vision | Self adaptive

The invention provides an exposure method and apparatus. The method comprises the following steps: obtaining a global picture; calculating the global average gray value of the global picture; determining whether the global average gray value meets a preset global target gray scale condition or not; when the global average gray value does not meet the preset global target gray scale condition, adjusting the exposure magnitude of the camera; when the global average gray value meets the preset global target gray scale condition, detecting the object in the global picture and obtaining the local picture with the object; calculating the local average gray value of the local picture; determining whether the local average gray value meets a preset local target gray scale condition or not; and when the local average gray value does not meet the preset local target gray scale condition, adjusting the exposure magnitude of the camera. According to the invention, the self-adaptive exposure of a global picture and the self-adaptive exposure of a local object are integrated for use in a mutually assisting manner, which maximally satisfies the lighting condition for imaging required by the human face of a target object.

Owner:INT INTELLIGENT MACHINES CO LTD
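The two-stage decision in the abstract above (global gray level first, then the gray level of the detected object) can be sketched as a single update function. The gray-level ranges, the multiplicative `step`, and the function names are illustrative assumptions, and object detection is represented only by the caller passing in the object's pixels.

```python
def mean_gray(pixels):
    """Average gray value of a flat list of pixel intensities."""
    return sum(pixels) / len(pixels)


def next_exposure(exposure, global_pixels, object_pixels=None,
                  global_range=(90, 160), local_range=(100, 150), step=1.1):
    """Return the exposure to use for the next frame.

    Stage 1: bring the global average gray value into `global_range`.
    Stage 2: only then, bring the detected object's average into `local_range`.
    All ranges and the step factor are assumed values.
    """
    def adjust(value, low, high):
        if value < low:
            return exposure * step   # too dark: expose more
        if value > high:
            return exposure / step   # too bright: expose less
        return exposure

    global_mean = mean_gray(global_pixels)
    if not global_range[0] <= global_mean <= global_range[1]:
        return adjust(global_mean, *global_range)
    if object_pixels:  # an object was detected in the global picture
        return adjust(mean_gray(object_pixels), *local_range)
    return exposure


dark_frame = next_exposure(1.0, [10, 20, 30])
bright_face = next_exposure(1.0, [120, 130], object_pixels=[200, 210])
settled = next_exposure(1.0, [120, 130], object_pixels=[120, 130])
```

Running the global check first means the detector only sees frames whose overall exposure is already plausible.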

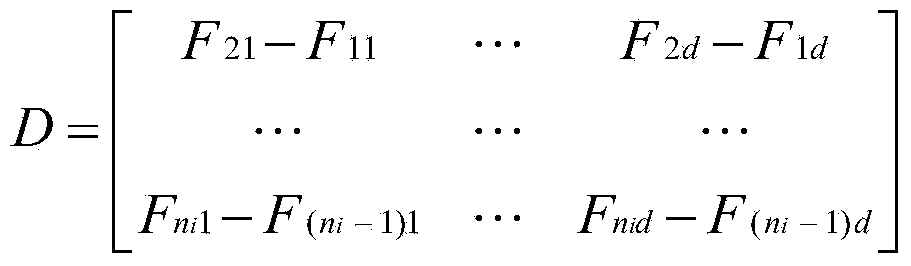

Interpolation processing apparatus and recording medium having interpolation processing program recorded therein

Inactive | US20060245646A1 | Avoid it happening again | Inhibition | Image enhancement | Image analysis | Computer vision | Local average

A first interpolation processing apparatus that engages in processing on image data which are provided in a colorimetric system constituted of first to nth (n ≥ 2) color components and include color information corresponding to a single color component provided at each pixel to determine an interpolation value equivalent to color information corresponding to the first color component for a pixel at which the first color component is missing, includes: an interpolation value calculation section that uses color information at pixels located in a local area containing an interpolation target pixel to undergo interpolation processing to calculate an interpolation value including, at least (1) local average information of the first color component with regard to the interpolation target pixel and (2) local curvature information corresponding to at least two color components with regard to the interpolation target pixel.

Owner:NIKON CORP

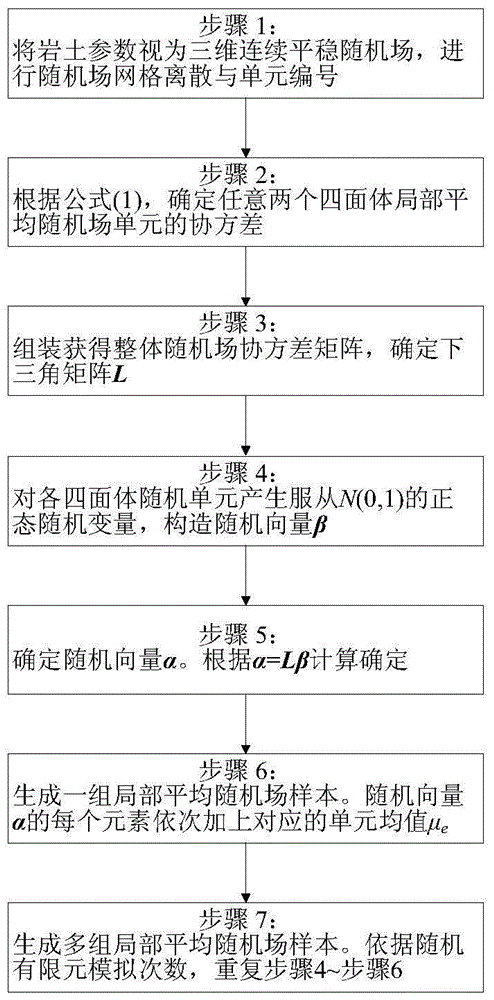

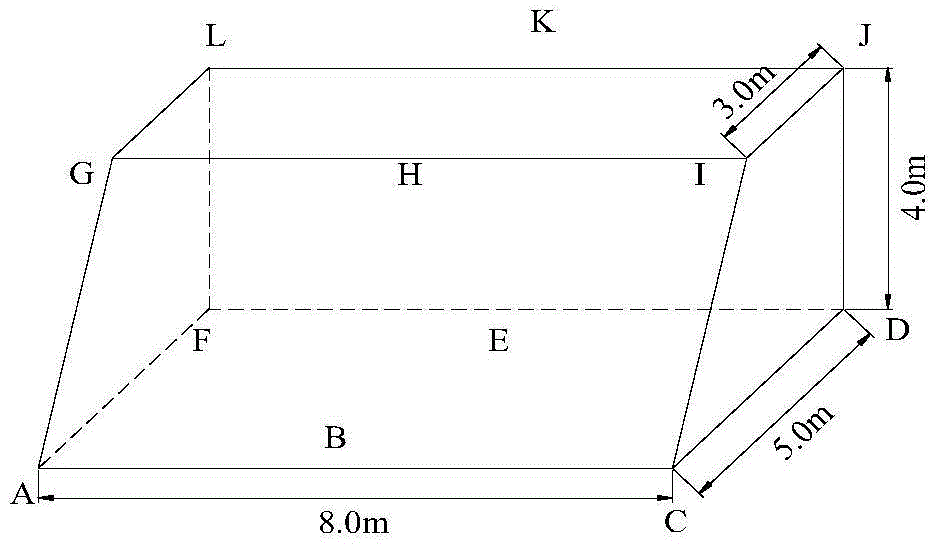

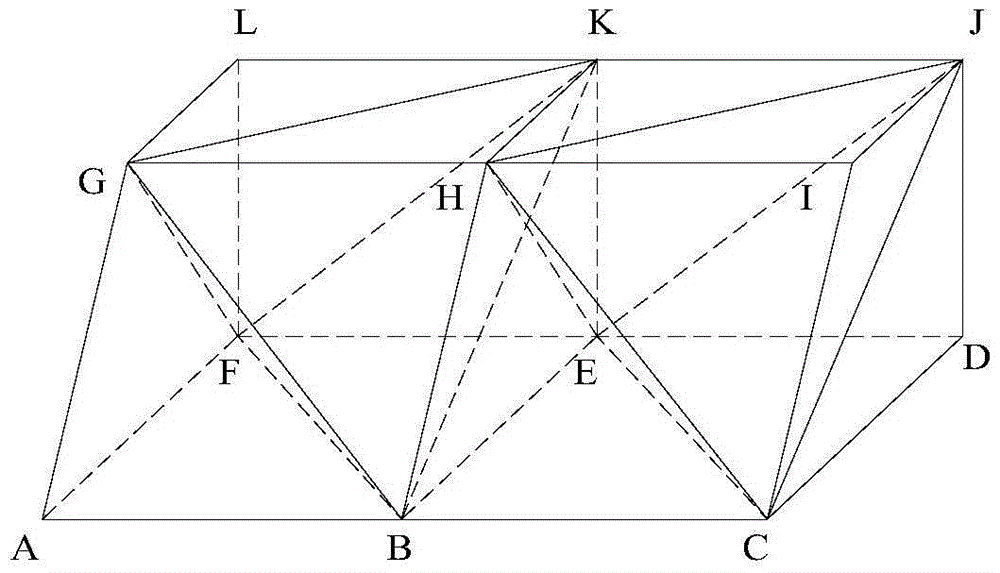

Generation method of three-dimensional local average random field samples of geotechnical parameters

Inactive | CN105701274A | Correspondence is clear | Applied to stochastic finite element calculations for accurate | Design optimisation/simulation | Special data processing applications | Covariance | Discretization

The present invention discloses a generation method of three-dimensional local average random field samples of geotechnical parameters. The generation method comprises the steps: (1) regarding geotechnical parameters as a three-dimensional continuous and stationary random field, and performing random field mesh discretization and unit numbering; (2) determining a local average random field covariance; (3) determining a lower triangular matrix L according to an assembled overall random field covariance matrix; (4) constructing a random vector beta; (5) determining a random vector alpha; (6) generating a set of local average random field samples; and (7) generating multiple sets of local average random field samples. In this method, a tetrahedral random field mesh and a tetrahedral finite element mesh may be the same mesh; the obtained local average random field samples can be directly applied to random finite element calculation; the correspondence of unit numbers is clear; the calculation is accurate; programming is easy; the universality is high; and the method can be widely applied to discretization analysis of the random finite element parameter random field.

Owner:CHINA UNIV OF MINING & TECH
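Steps (3) to (7) of the abstract above resemble the standard covariance matrix decomposition approach, which the sketch below illustrates. It assumes that L is the Cholesky factor of the assembled covariance matrix, that beta is a vector of independent standard normal variables, and that alpha = mean + L·beta. The abstract does not confirm these details, the local-average covariance of step (2) is taken as given input, and the function names are hypothetical.

```python
import math
import random


def cholesky(cov):
    """Lower-triangular L with L * L^T == cov (cov must be positive definite)."""
    n = len(cov)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(cov[i][i] - s)
            else:
                L[i][j] = (cov[i][j] - s) / L[j][j]
    return L


def sample_field(mean, L, rng):
    """One sample of element-wise local averages: alpha = mean + L * beta."""
    beta = [rng.gauss(0.0, 1.0) for _ in L]  # independent standard normals
    return [mean + sum(L[i][k] * beta[k] for k in range(i + 1))
            for i in range(len(L))]


# Toy covariance between the local averages of two mesh elements.
cov = [[4.0, 2.0],
       [2.0, 3.0]]
L = cholesky(cov)
rng = random.Random(0)
samples = [sample_field(10.0, L, rng) for _ in range(5)]  # several sample sets
```

Each entry of a sample corresponds to one numbered element, which is why the same mesh can serve both the random field and the finite element calculation.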

Determining a window size for outlier detection

Inactive | US7917338B2 | Amplifier modifications to reduce noise influence | Digital computer details | Ultimate tensile strength | Local average

A window size for outlier detection in a time series of a database system is determined. Strength values are calculated for data points using a set of window sizes, resulting at least in one set of strength values for each window size. The strength values increase as a distance between a value of a respective data point and a local mean value increases. For each set of strength values, a weighted sum is calculated based on the respective set of strength values. A weighting function is used to suppress the effect of largest strength values and a window size is selected based on the weighted sums.

Owner:INT BUSINESS MASCH CORP

Multi-modality image fusion method based on region and human eye contrast sensitivity characteristic

Inactive | CN102800070A | Quality improvement | Conform to visual characteristics | Image enhancement | Multiscale decomposition | Decomposition

The invention provides a multi-modality image fusion method based on region and human eye contrast sensitivity characteristics, comprising the following steps: (1) applying a non-subsampled Contourlet transform (NSCT) to each source image to be fused to perform multi-scale decomposition and obtain the sub-band coefficients of each level of the source image; (2) formulating a fusion rule for the low-frequency sub-band coefficients and for each level of high-frequency sub-band coefficients according to a human eye vision contrast function LCSF, a human eye vision absolute contrast sensitivity function ACSF, a feeling brightness contrast function FBCS and a local average gradient sensitivity function LGSF, to obtain the sub-band coefficients of each level of the fused image; and (3) applying the inverse NSCT to the fused coefficients and reconstructing to obtain the fused image. The method matches the visual properties of the human eye and greatly improves the quality of the fused image; it is robust, is suitable for fusing many types of image sources, such as infrared and visible light images, multi-focus images and remote images, and has a wide application prospect.

Owner:NANJING UNIV

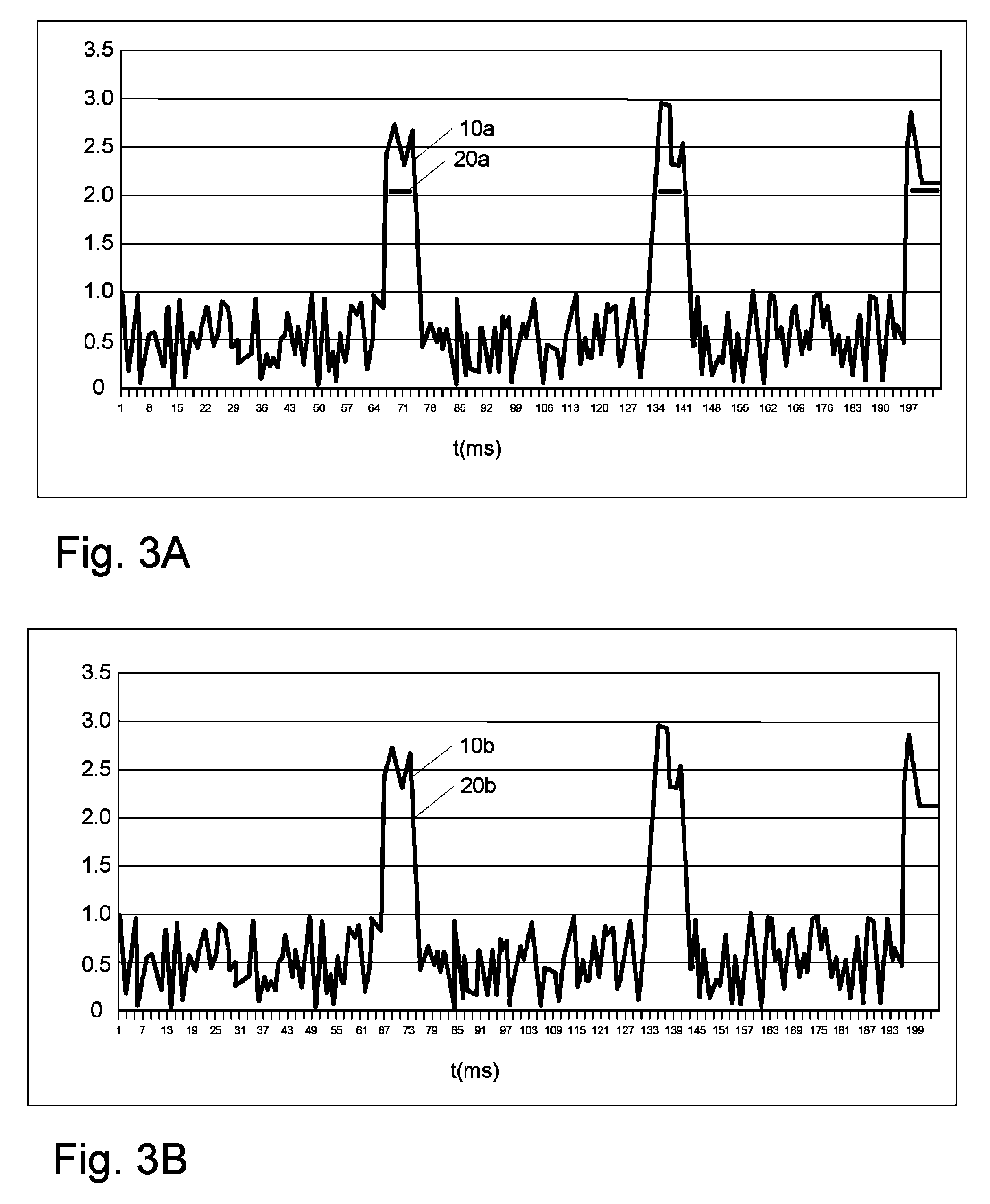

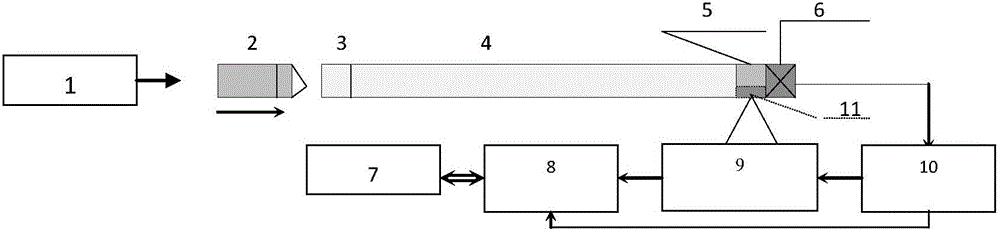

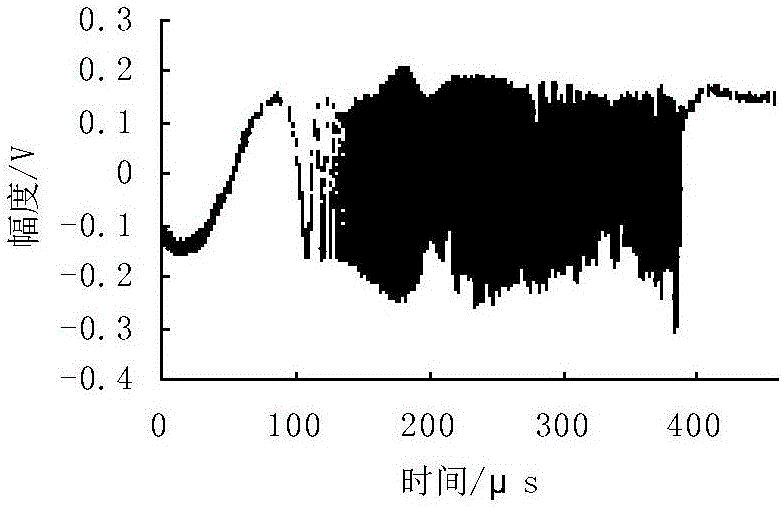

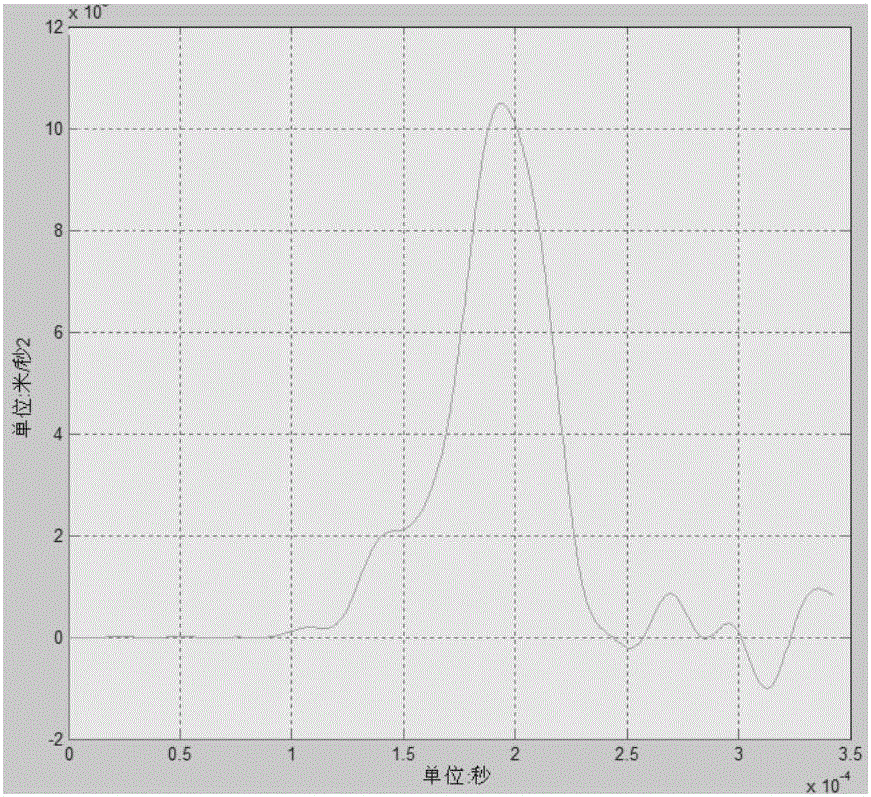

Laser Doppler vibration measurement method impact acceleration measurement device and method

Active | CN106443066A | Easy to converge | The method is simple and fast | Acceleration measurement using inertia forces | Measurement device | Wrong direction

The invention relates to a device and method for measuring impact acceleration by laser Doppler vibration measurement, and belongs to the technical field of impact measurement testing. The measurement device comprises a HOPKINSON impact machine, an elastic body, a cushion layer, a HOPKINSON bar, an anvil body, a corrected impact acceleration sensor, a computer, a digital oscilloscope, a differential laser interferometer and a signal tuner. Impact acceleration waveforms are obtained by average filtering within a local window followed by differentiation, which avoids the wrong-direction problem of the method recommended by the ISO standard. In addition, the mean absolute deviation between a local baseline straight line and each measured point within the averaging window serves as an error-scale identification function for the average filtering, and the curve relating this function's value to the ratio of rise time to local averaging window width serves as the criterion for finding the optimal averaging window, which solves the optimal filtering problem.

Owner:BEIJING CHANGCHENG INST OF METROLOGY & MEASUREMENT AVIATION IND CORP OF CHINA
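The "average filtering in a local window, then differentiation" step above can be sketched as follows. This is an assumption-laden illustration: it takes a uniformly sampled velocity record as input, uses a centred moving average that shrinks at the edges, and differentiates with a central difference. The window-selection criterion from the abstract is not implemented, and the function names are hypothetical.

```python
def moving_average(samples, width):
    """Centred moving average; the window shrinks near the ends of the record."""
    half = width // 2
    out = []
    for i in range(len(samples)):
        lo, hi = max(0, i - half), min(len(samples), i + half + 1)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out


def acceleration(velocity, dt, width):
    """Smooth the velocity, then differentiate with a central difference.

    Returns one value per interior sample (the first and last are dropped).
    """
    smooth = moving_average(velocity, width)
    return [(smooth[i + 1] - smooth[i - 1]) / (2 * dt)
            for i in range(1, len(smooth) - 1)]


# Velocity rising linearly at 2 units per second, sampled every 0.5 s.
velocity = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
acc = acceleration(velocity, dt=0.5, width=3)
```

The values nearest the ends of `acc` are biased by the shrinking window, so only the interior values should be read as the acceleration estimate.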

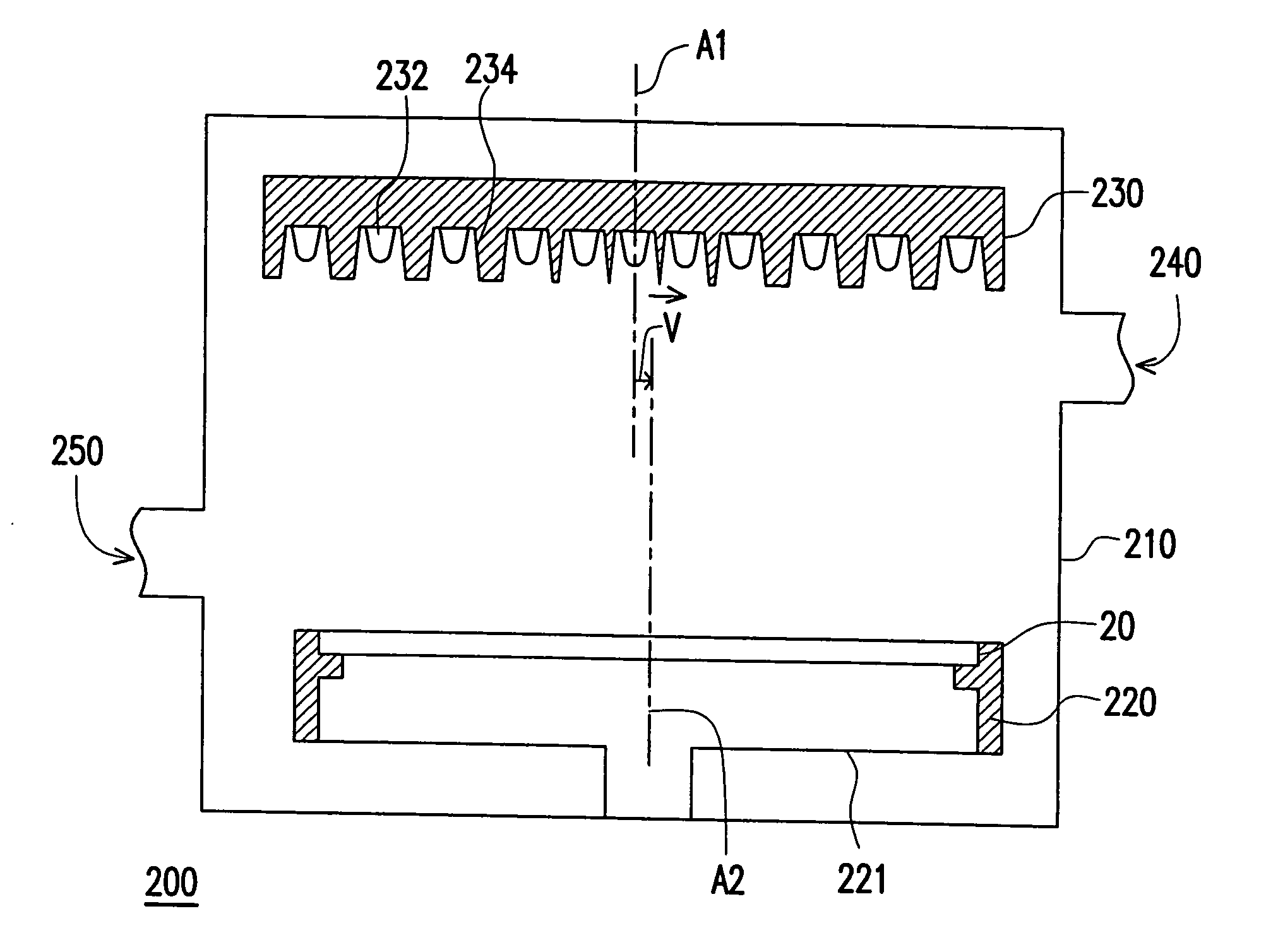

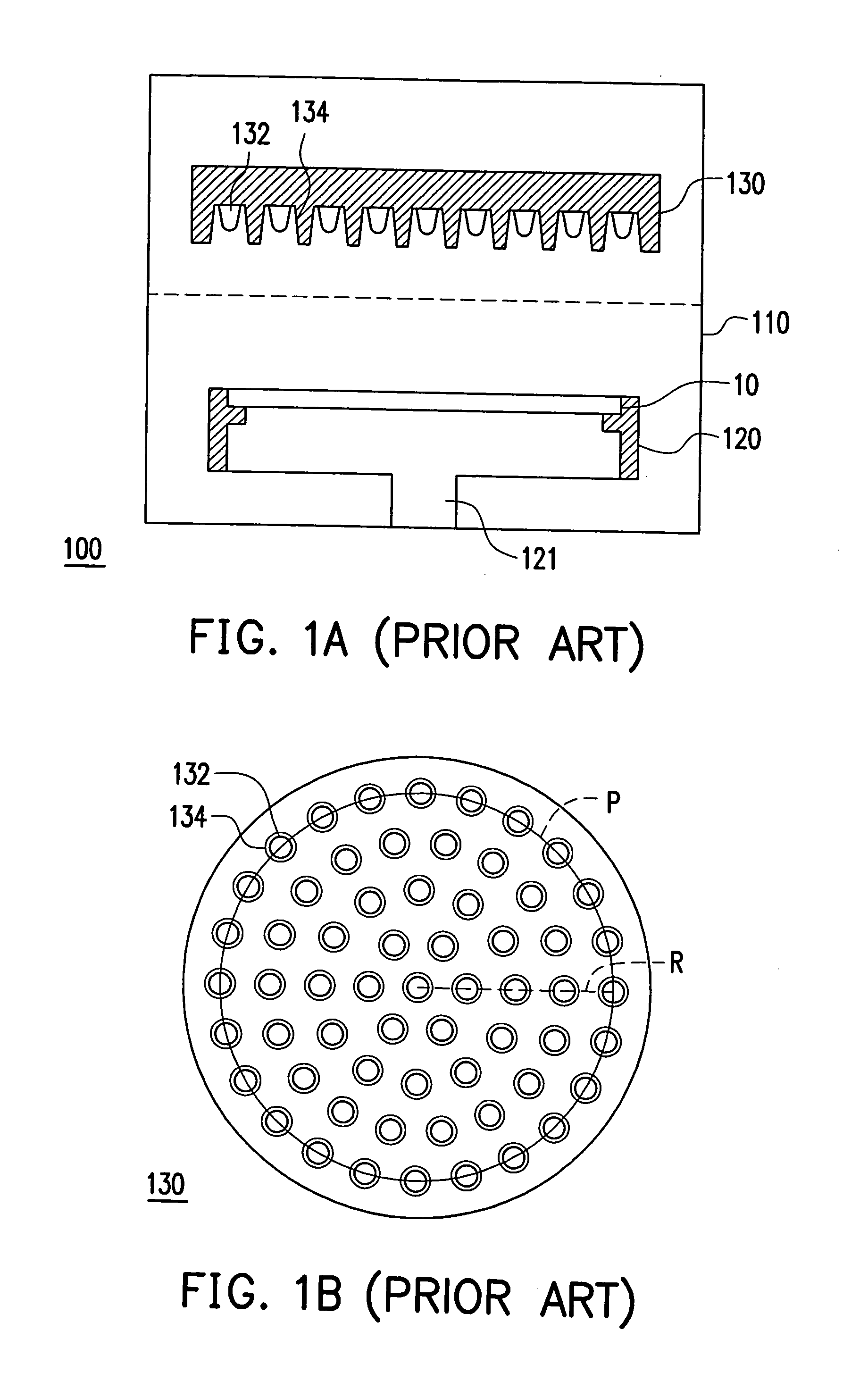

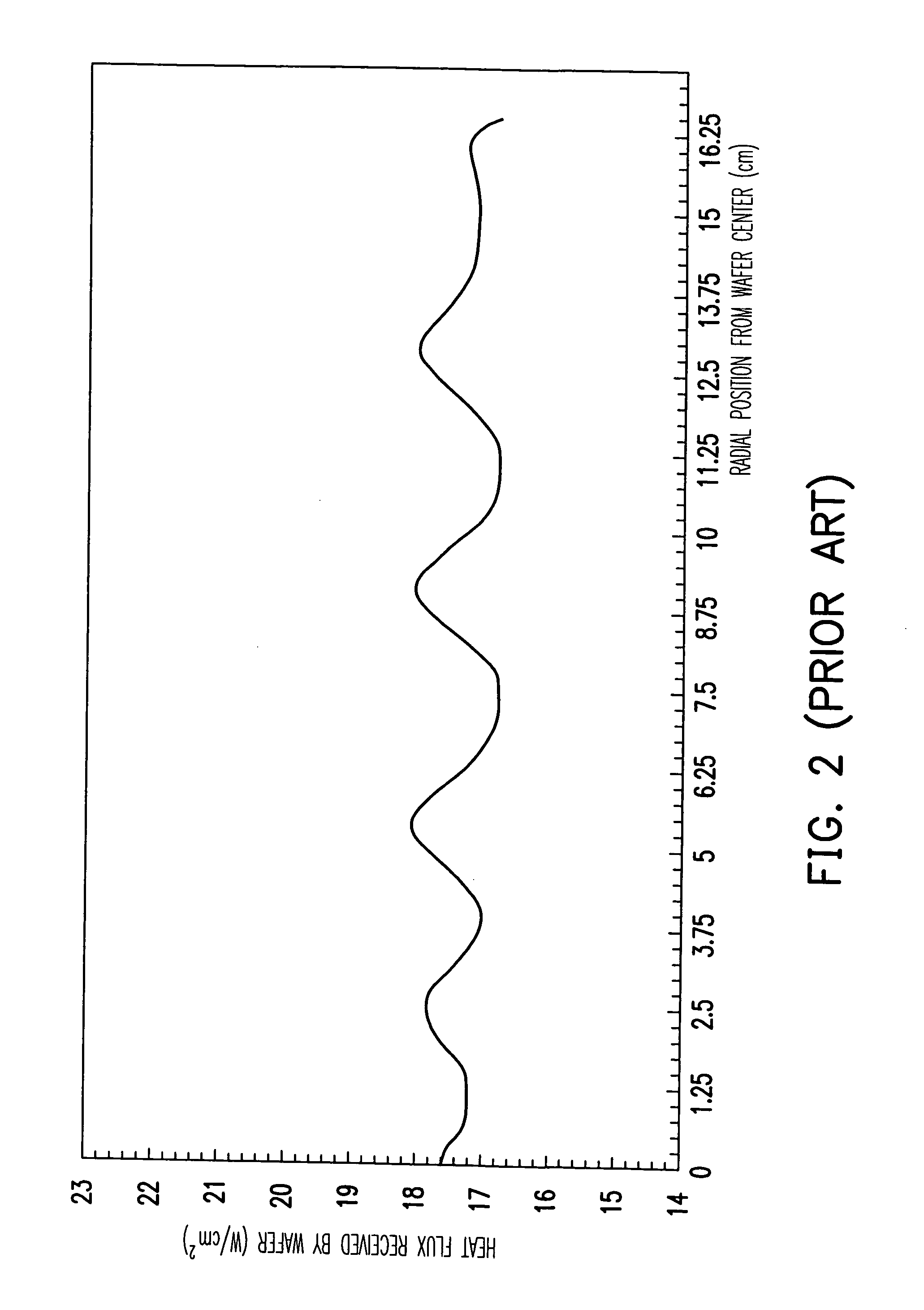

Apparatus and adjusting technology for uniform thermal processing

Active | US20060291823A1 | Improve uniformity | Improve production yield | Drying solid materials with heat | Muffle furnaces | Heat flux | Engineering

An adjusting technology of thermal processing is provided. A heating lamp and a reflector are disposed over a wafer and the heat flux distribution on the wafer generated by the individual heating lamp is measured and adjusted. A set of heating lamps formed by heating lamps is disposed over the wafer. The heating lamps are in concentric rings and arranged as an axi-symmetric array. The relative position between the set of heating lamps and the wafer is adjusted so that the wafer center is at the position with local mean heat flux from lamps between the innermost lamp subset and its adjacent lamp subset. After the heating powers are adjusted, either or both of the wafer and the set of heating lamps are rotated with respect to the center of the wafer, so as to improve uniformity of the heat flux distribution on the heated object.

Owner:NAT CHUNG SHAN INST SCI & TECH

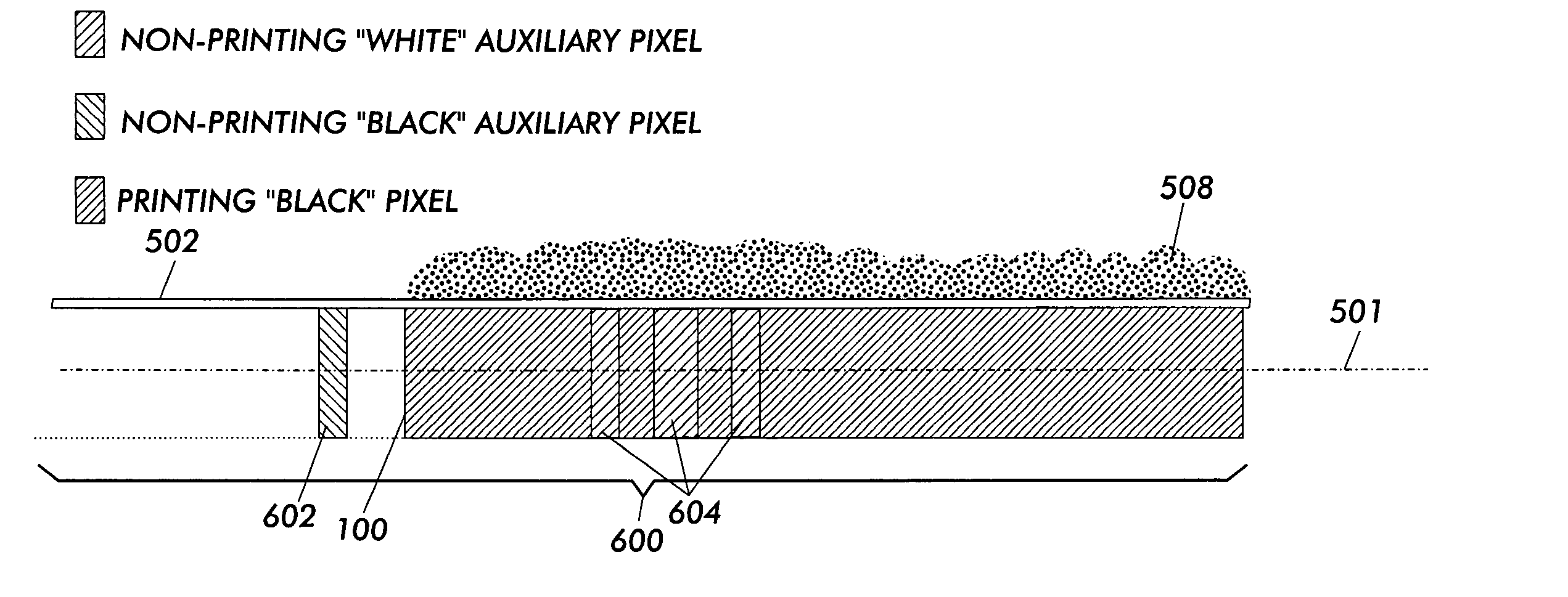

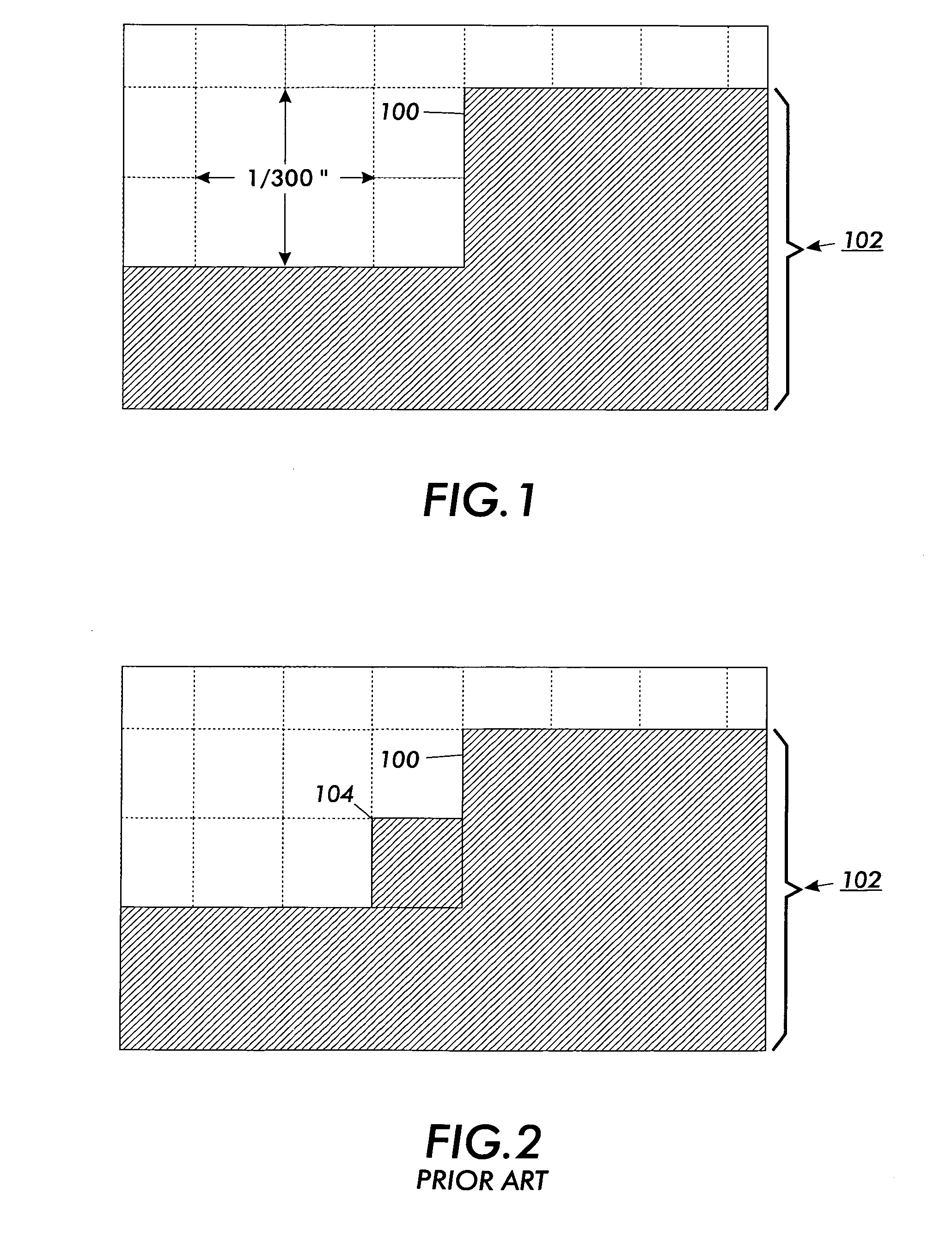

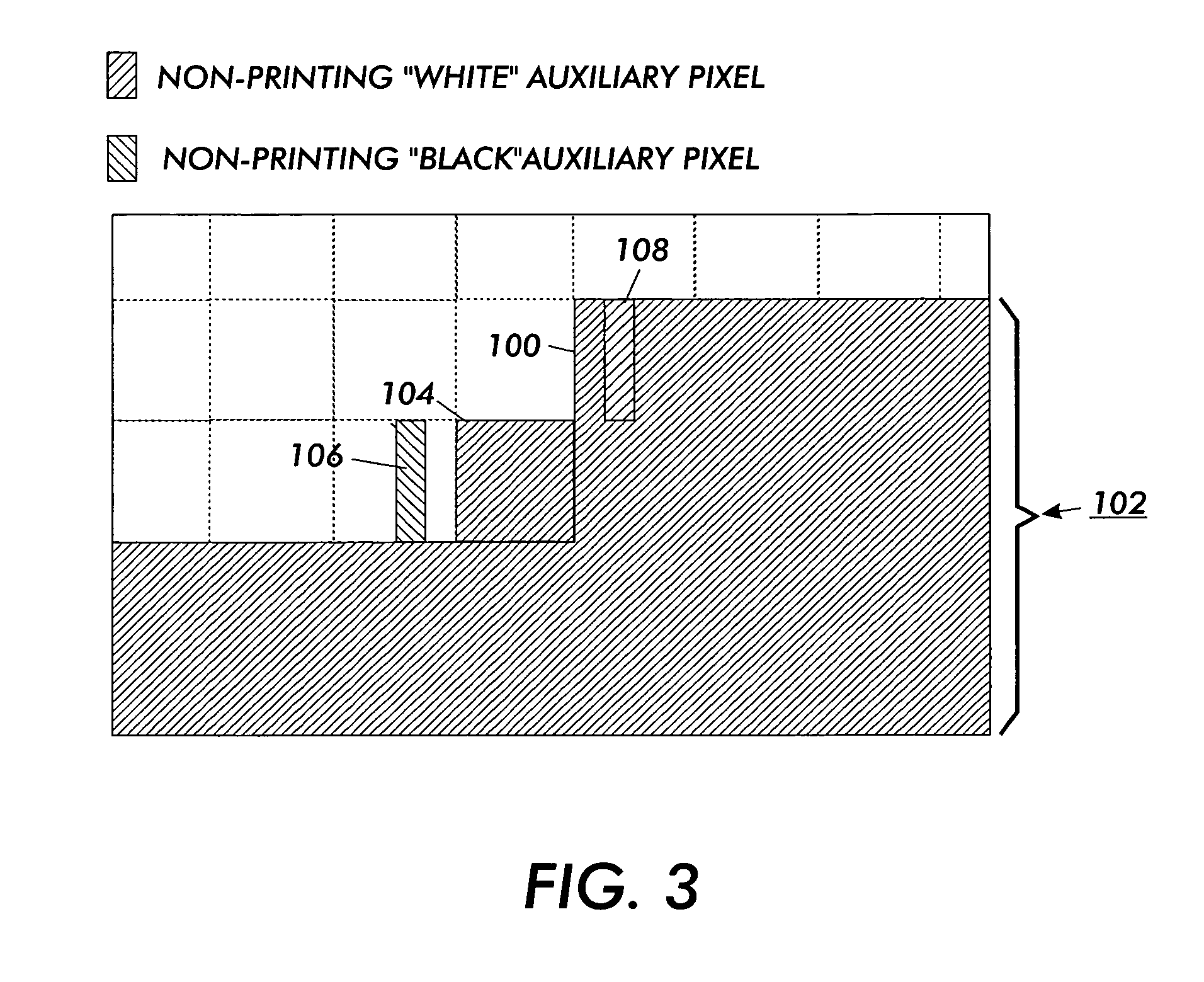

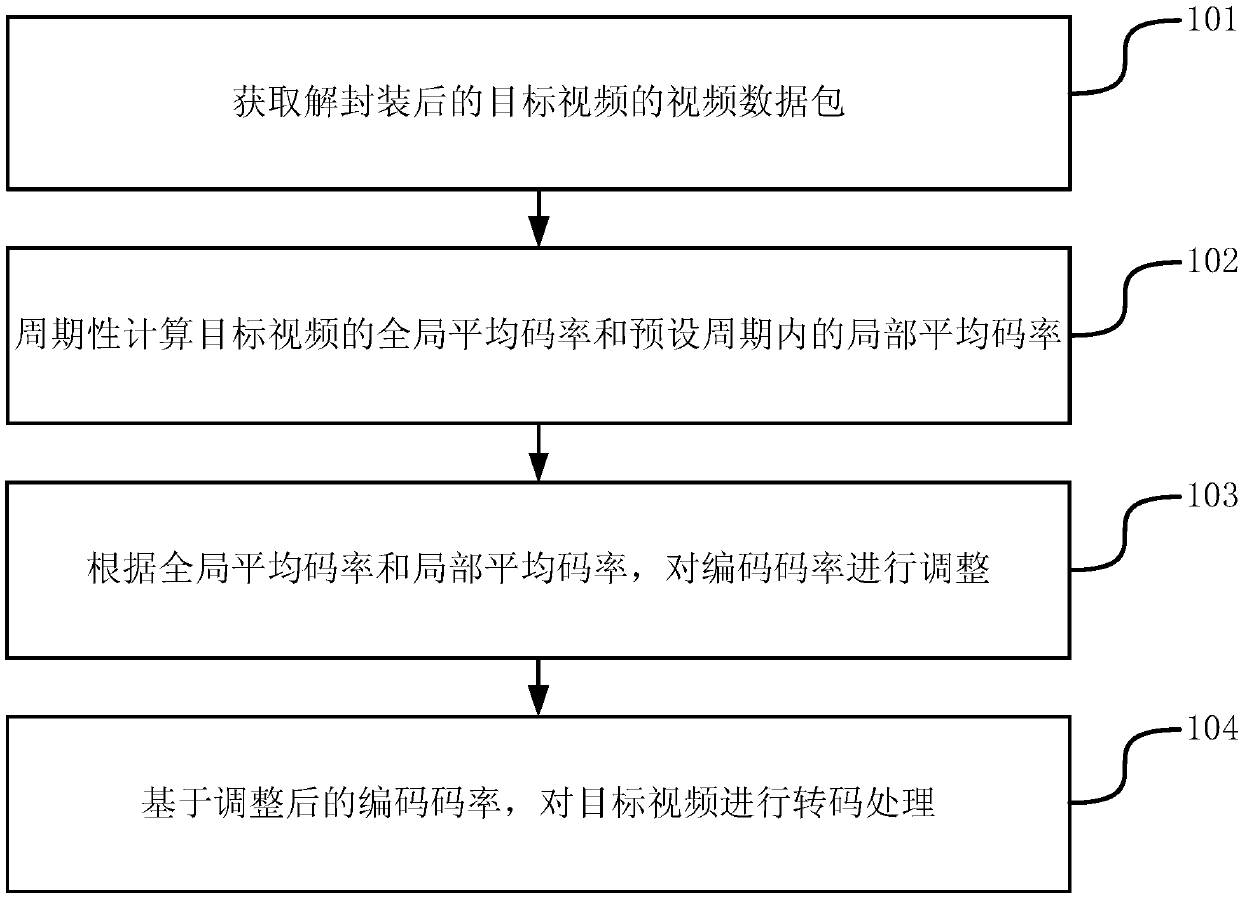

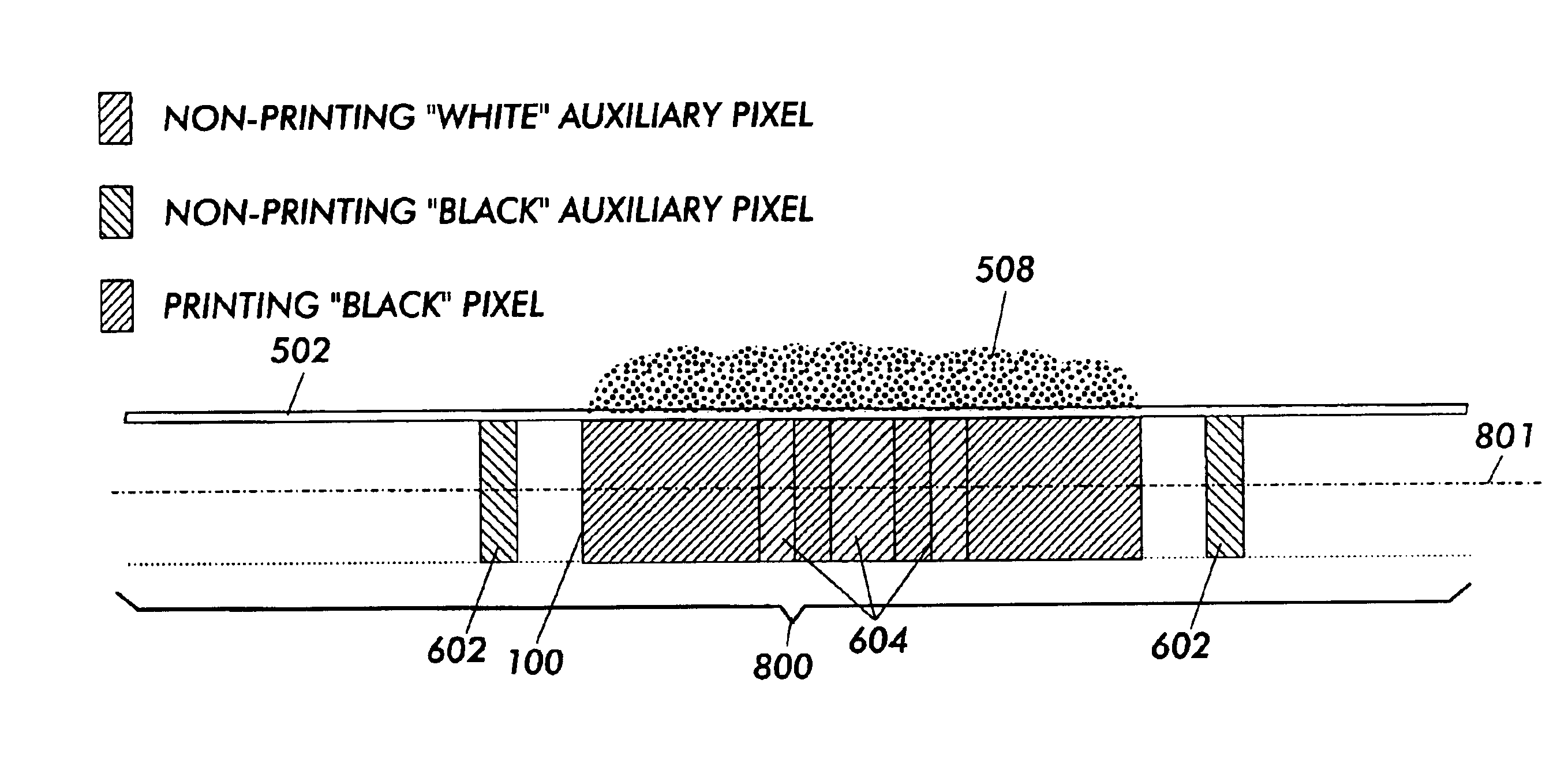

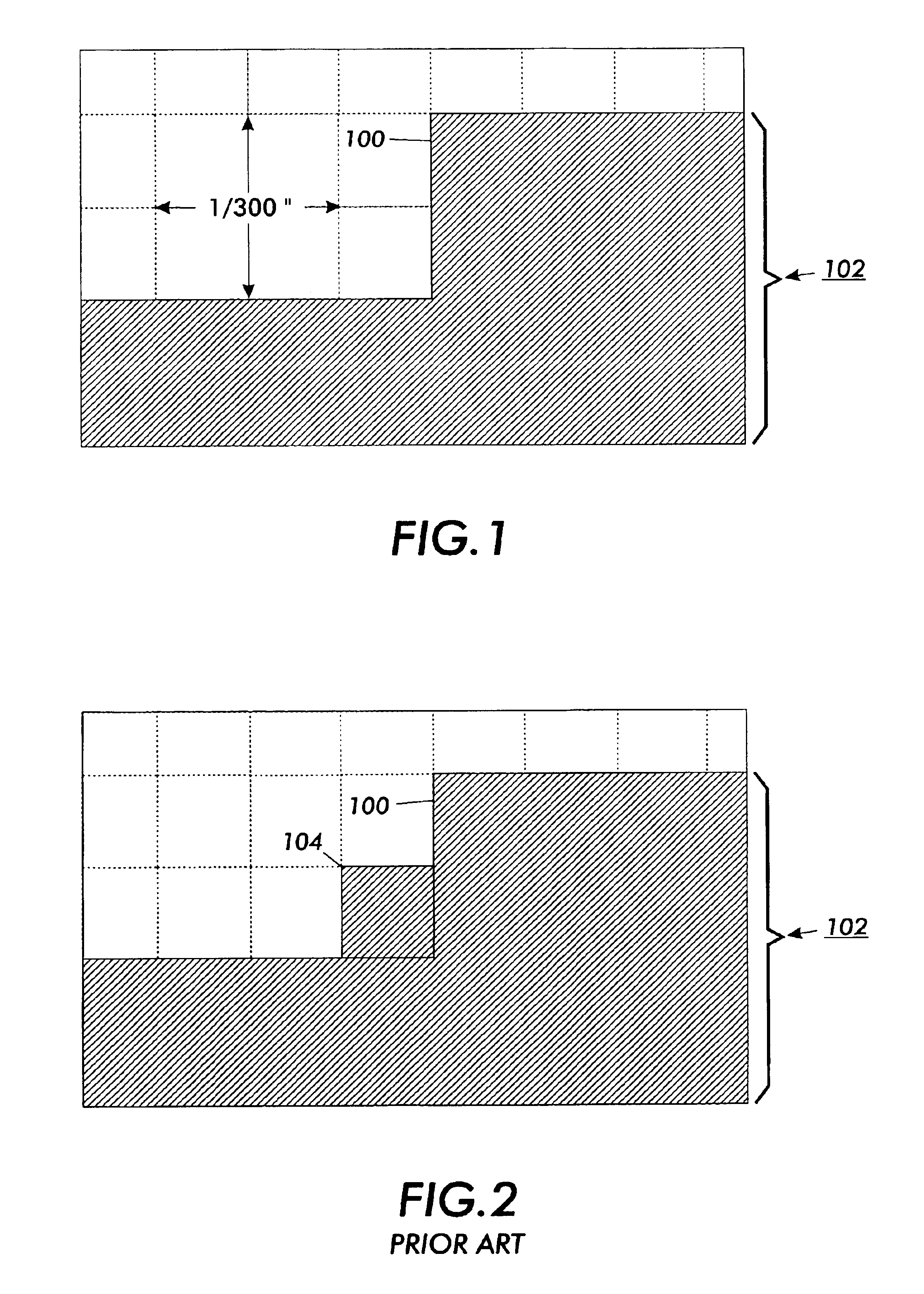

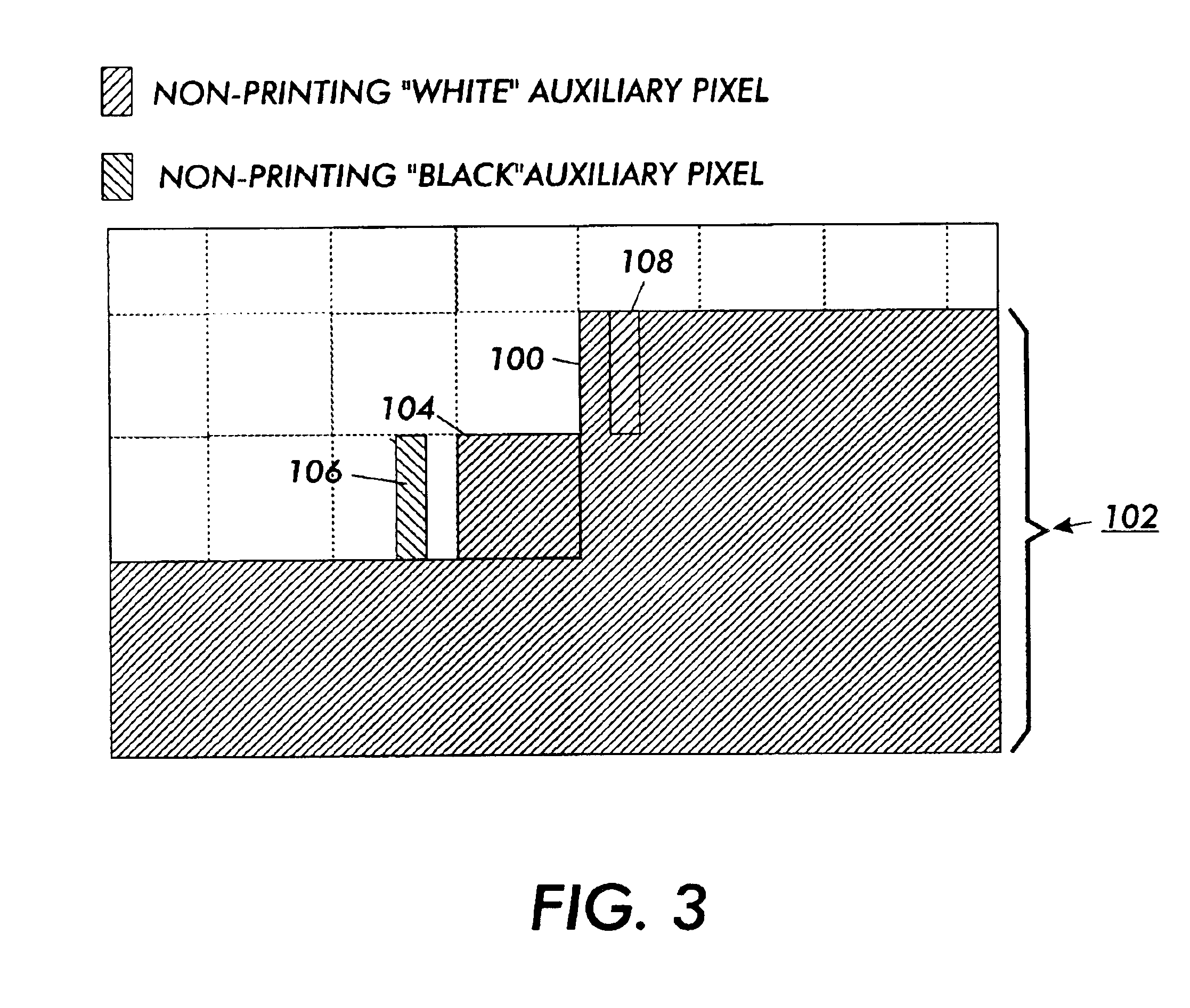

Digital halftone with auxiliary pixels

Inactive | US7016073B1 | Promote reproduction | Improve translation | Image enhancement | Digitally marking record carriers | Engineering | Local average

Non-printing high-spatial-frequency auxiliary pixels are introduced into the bitmap of halftones utilized in an image to obtain local control of the image development by modification of local average voltage in the development nip. These auxiliary pixels embody frequencies or levels of charge that are past the threshold for printing on the Modulation Transfer Function (MTF) curve, and therefore by themselves result in no toner deposition on the resultant page. These auxiliary pixels will, however, position the toner cloud by modulating it and compensate for cleaning field and toner supply effects. This will better position the toner cloud to ensure adequate toner supply to all parts of the image so that the desired printing pixels will print as intended and provide a more faithful rendering of grayscale information in the image.

Owner:XEROX CORP

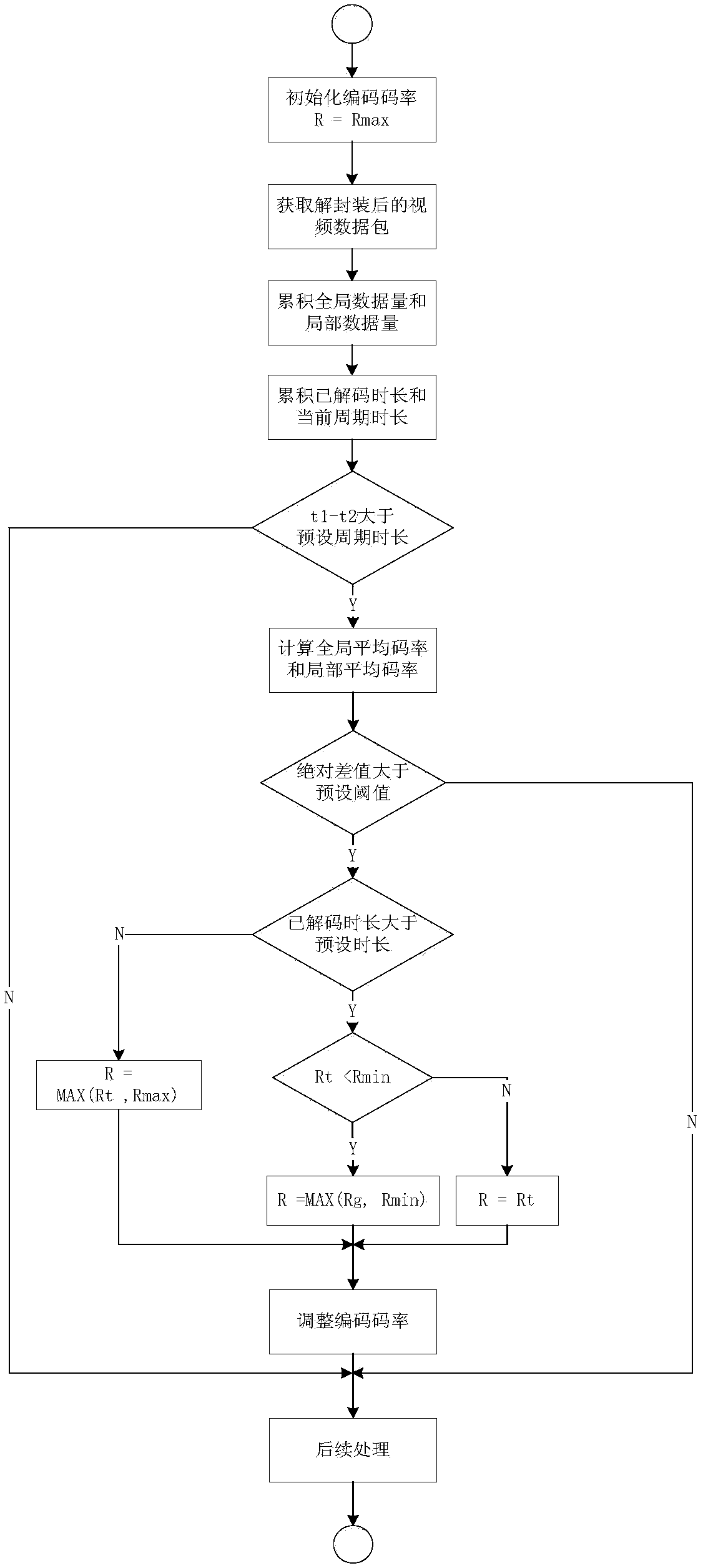

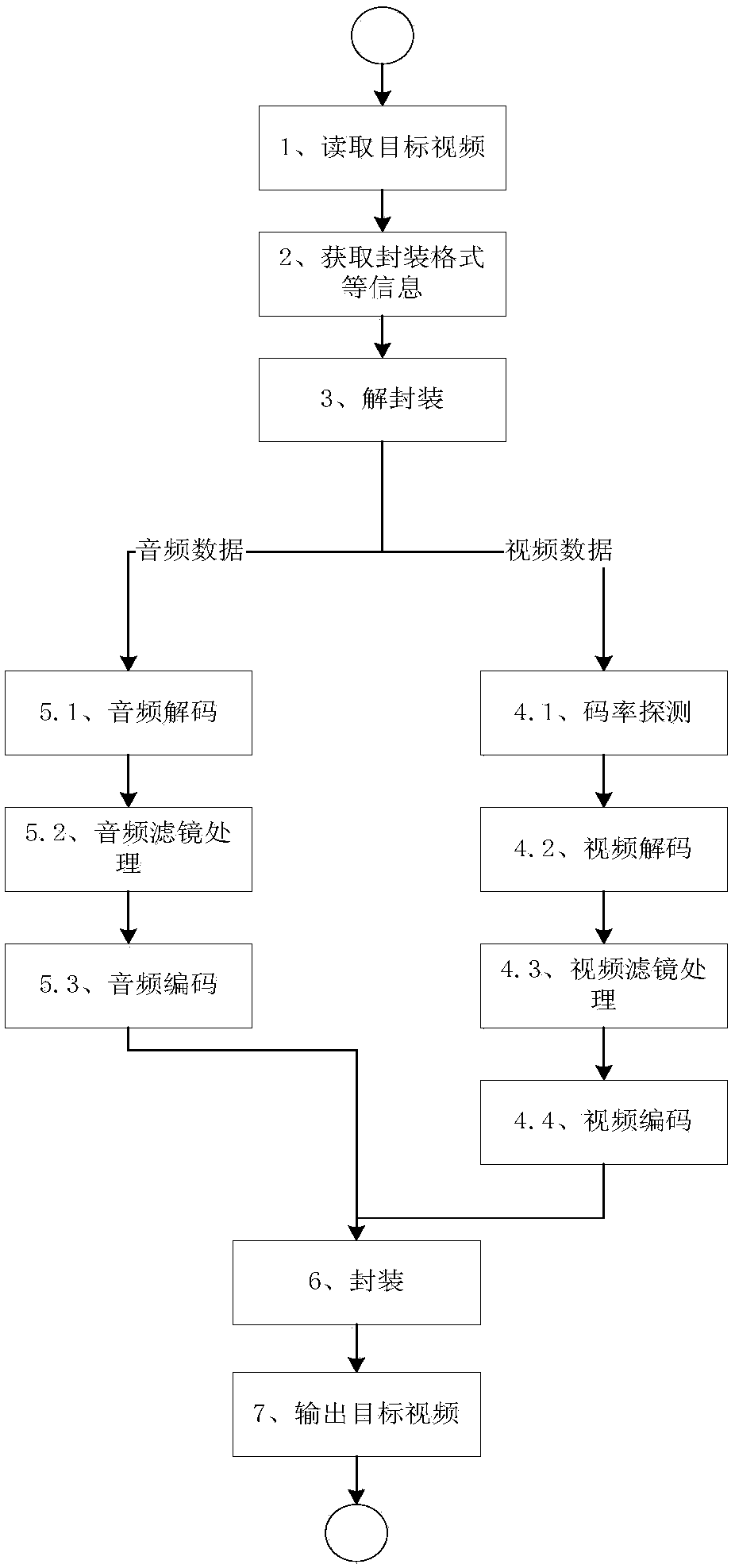

Video transcoding method and device

ActiveCN107659819AImprove transcoding performanceConsistent code rateDigital video signal modificationSelective content distributionNetwork packetTranscoding

The invention discloses a video transcoding method and device, and belongs to the field of computer technology. The method comprises the following steps: acquiring video data packets of a de-encapsulated target video; periodically computing the overall average code rate of the target video and the local average code rate within a preset period; adjusting the coding rate according to the overall average code rate and the local average code rate; and transcoding the target video based on the adjusted coding rate. With the disclosed method and device, the code rate of the output video can be kept consistent with the code rate of the input video to the greatest possible extent, and the video transcoding effect can be improved.

Owner:CHINANETCENT TECH
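
The abstract above does not say how the two averages are combined, so the following is only a minimal sketch under stated assumptions. The class name `BitrateTracker`, the `local_weight` blend factor, and the weighted-average rule are all hypothetical illustrations, not the patented adjustment.

```python
class BitrateTracker:
    """Tracks the overall and per-period (local) average bitrate of an input stream."""

    def __init__(self, local_weight=0.5):
        # Hypothetical blend factor: 1.0 follows the local rate only,
        # 0.0 follows the overall rate only.
        self.local_weight = local_weight
        self.total_bits = 0
        self.total_seconds = 0.0
        self.period_bits = 0
        self.period_seconds = 0.0

    def add_packet(self, size_bytes, duration_seconds):
        """Account for one de-encapsulated video packet."""
        bits = size_bytes * 8
        self.total_bits += bits
        self.total_seconds += duration_seconds
        self.period_bits += bits
        self.period_seconds += duration_seconds

    def end_period(self):
        """Close the current period and return an adjusted coding rate in bits/s."""
        if self.period_seconds <= 0:
            raise ValueError("no packets were added in this period")
        overall = self.total_bits / self.total_seconds
        local = self.period_bits / self.period_seconds
        # Reset only the per-period counters; the overall counters keep growing.
        self.period_bits = 0
        self.period_seconds = 0.0
        w = self.local_weight
        # Assumed rule: weighted average of local and overall average rates.
        return w * local + (1 - w) * overall
```

A caller would feed each packet to `add_packet`, call `end_period` once per preset period, and pass the returned value to the encoder as its new target rate.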

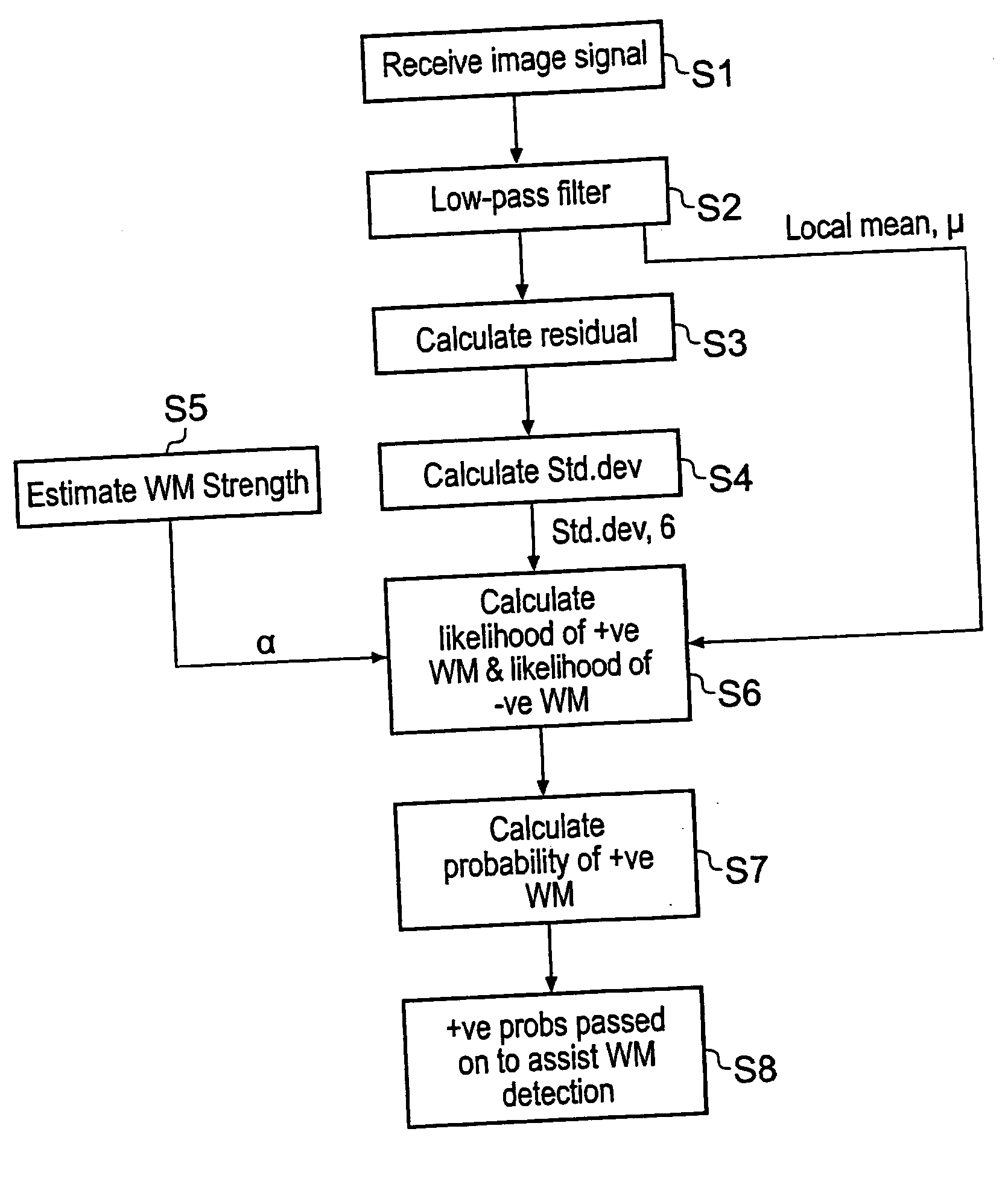

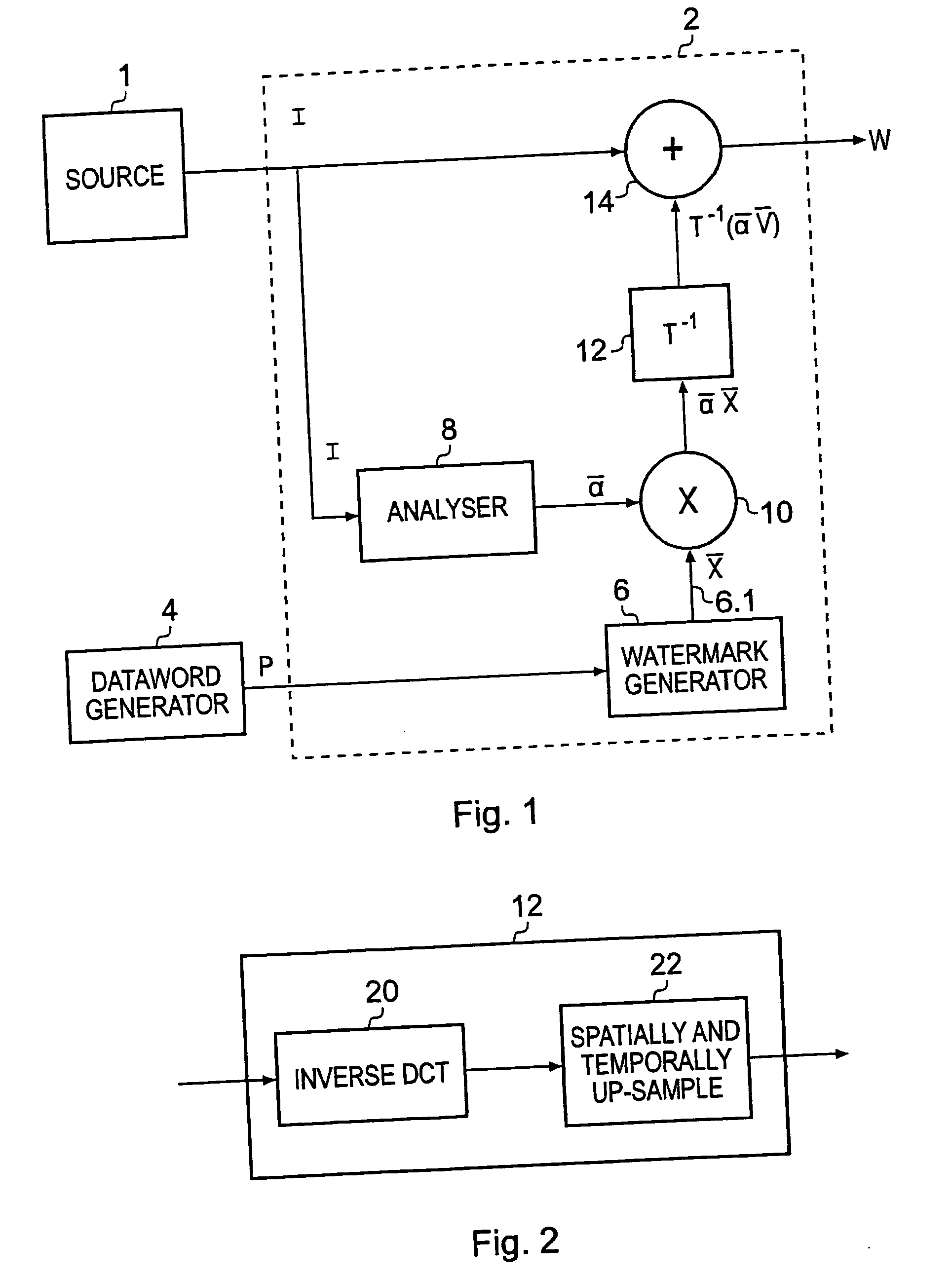

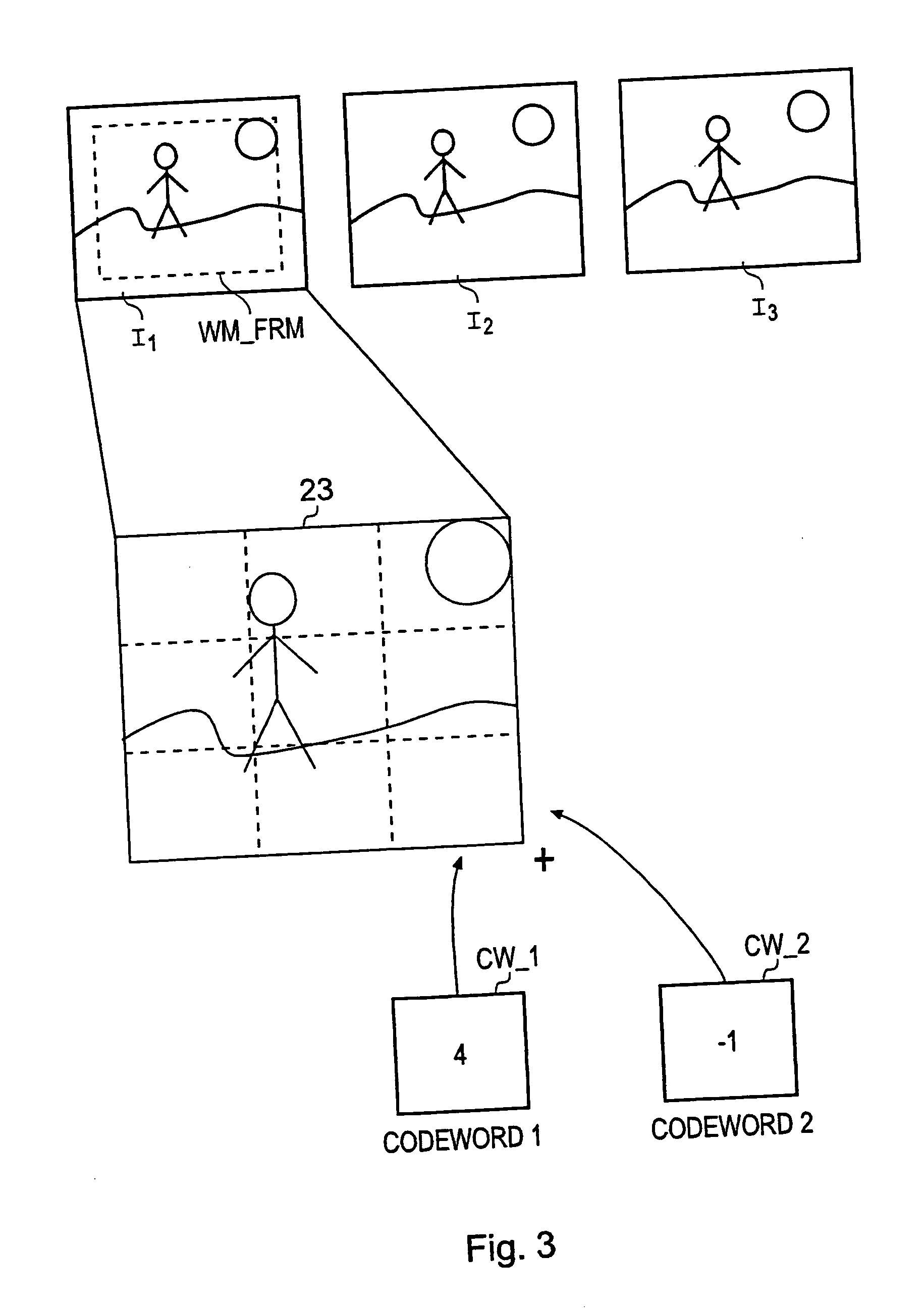

Data processing apparatus and method

InactiveUS20060153422A1Improved soft decodingRaise the possibilityUser identity/authority verificationCharacter and pattern recognitionPattern recognitionResidual value

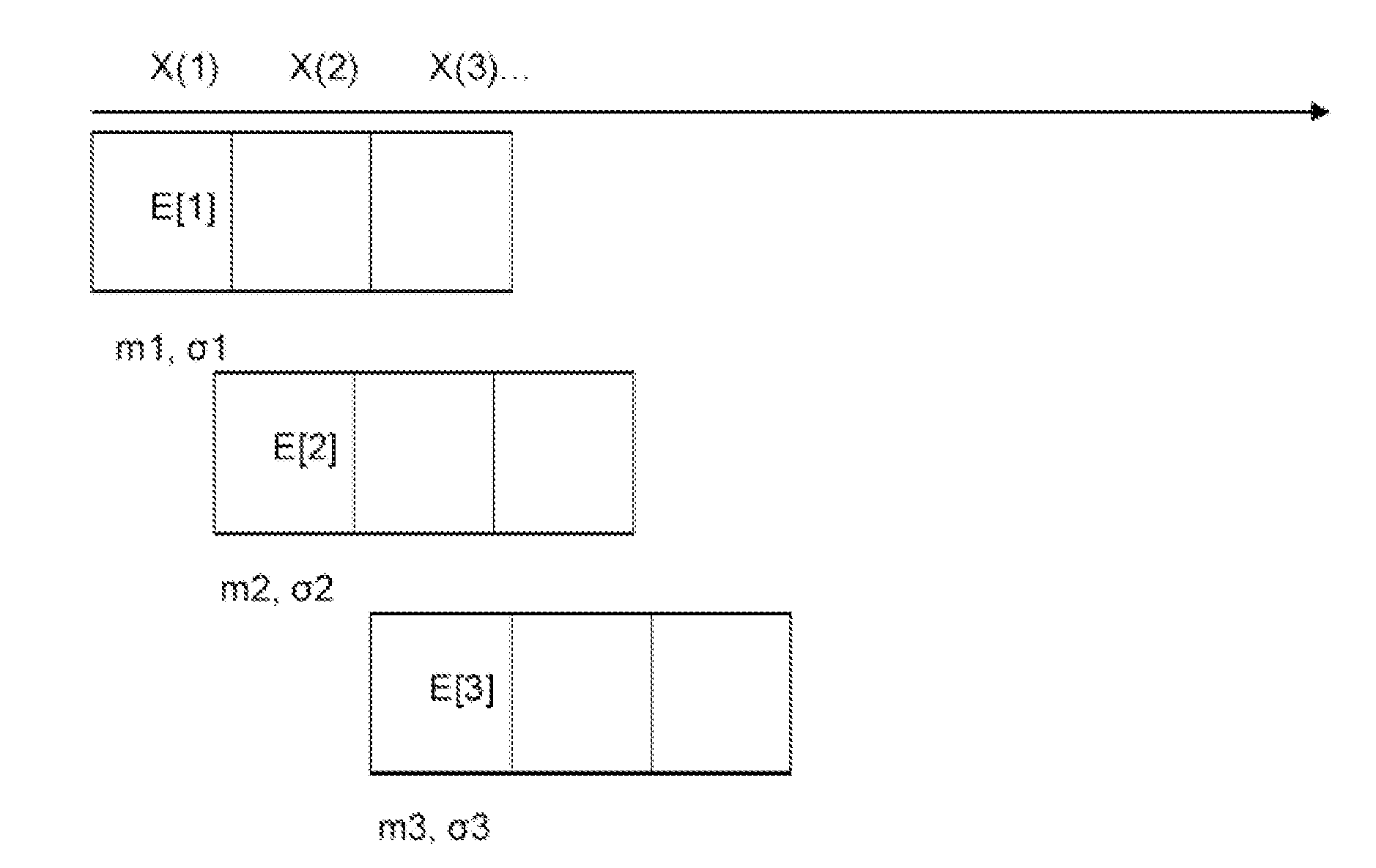

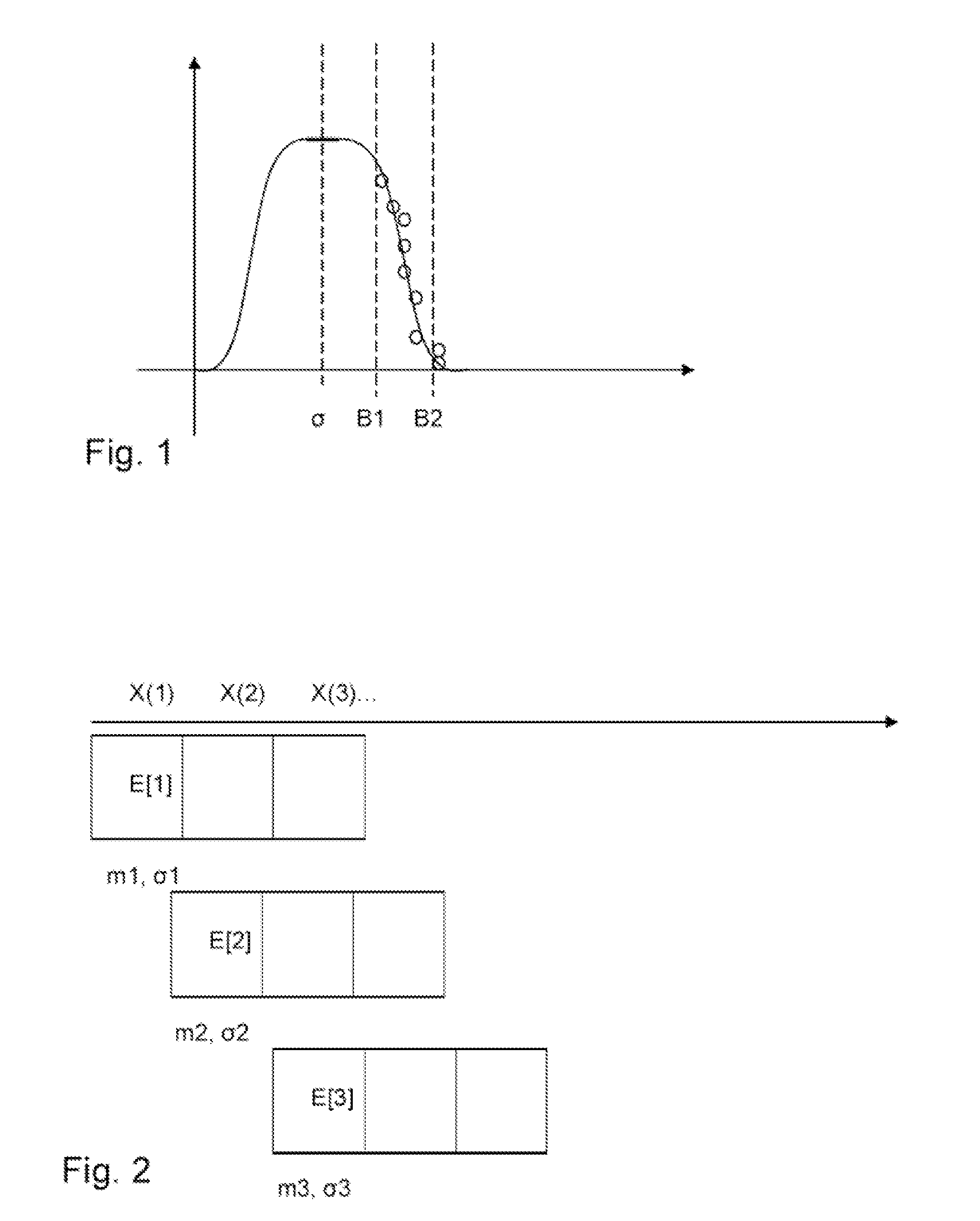

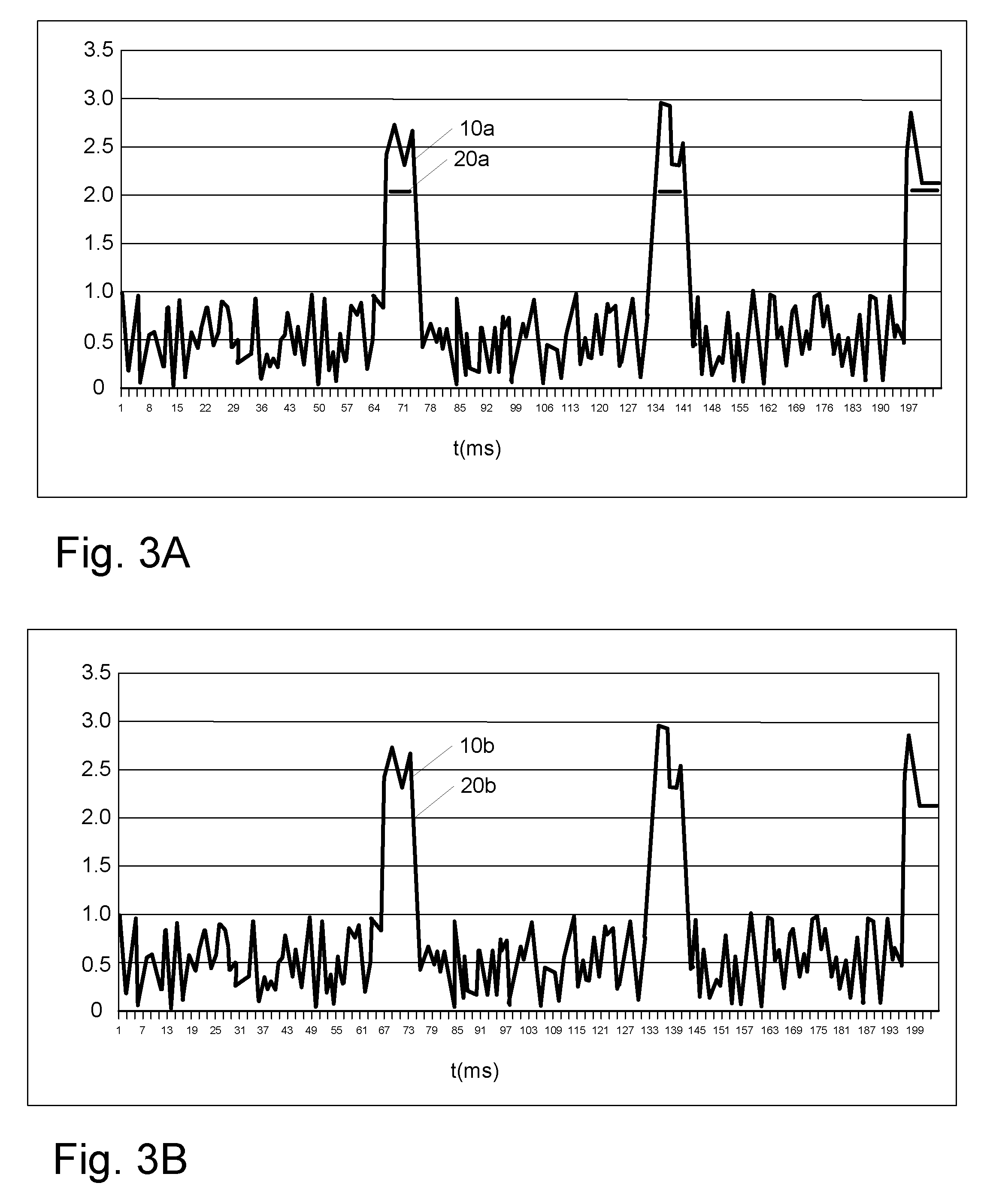

A data processing method for detecting a watermark codeword in a received image signal representing a watermarked image is described. The watermarked image comprises a plurality of signal samples, each of which represents a pixel value of the image to which a watermark codeword coefficient value has been added. The method comprises the steps of low-pass filtering the received image signal to generate, for each signal sample, a local mean value; subtracting the local mean from the received image signal to generate a residual signal comprising, for each signal sample, a residual value; and determining, from the residual signal, a local standard deviation for each signal sample. The method also comprises the step of generating, for each signal sample, a watermark strength value providing an estimate of the magnitude with which the watermark codeword coefficient has been added to the received image signal sample. The method further comprises generating, for each signal sample, a first Gaussian likelihood function describing the likelihood of the embedded watermark codeword coefficient being positive, with a mean defined by the sum of the local mean value and the watermark strength value and a standard deviation defined by the local standard deviation, and a second Gaussian likelihood function describing the likelihood of the embedded coefficient being negative, with a mean defined by the difference between the local mean value and the watermark strength value and a standard deviation defined by the local standard deviation.
Once these likelihood functions have been generated, the method can detect a watermark in the received watermarked image based on the probability that the watermark codeword coefficient added to each signal sample is positive or negative, this probability being calculated from the first and second likelihood functions. This provides an estimate of the positioning of the watermark within the received watermarked image.

Owner:SONY UK LTD
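
The per-sample steps in the abstract above (local mean, residual, local standard deviation, two Gaussian likelihoods) can be sketched roughly as follows. This is an illustration under assumptions, not the patented implementation: it works on a 1-D list of samples rather than a 2-D image, uses a plain moving average as the low-pass filter, takes a single constant `strength` instead of a per-sample estimate, and assumes equal prior probability for positive and negative coefficients. All function names are hypothetical.

```python
import math


def local_mean(samples, radius=2):
    """Moving-average low-pass filter; the window shrinks at the edges."""
    n = len(samples)
    out = []
    for i in range(n):
        window = samples[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def local_std(residual, radius=2, floor=1e-6):
    """Local RMS of the residual, floored to avoid division by zero."""
    n = len(residual)
    out = []
    for i in range(n):
        window = residual[max(0, i - radius):min(n, i + radius + 1)]
        rms = math.sqrt(sum(r * r for r in window) / len(window))
        out.append(max(rms, floor))
    return out


def gaussian_pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


def positive_probabilities(samples, strength, radius=2):
    """Probability, per sample, that the embedded coefficient is positive."""
    means = local_mean(samples, radius)
    residual = [x - m for x, m in zip(samples, means)]
    stds = local_std(residual, radius)
    probs = []
    for x, m, s in zip(samples, means, stds):
        like_pos = gaussian_pdf(x, m + strength, s)  # first likelihood
        like_neg = gaussian_pdf(x, m - strength, s)  # second likelihood
        total = like_pos + like_neg
        # Equal priors assumed; fall back to 0.5 if both likelihoods underflow.
        probs.append(0.5 if total == 0.0 else like_pos / total)
    return probs
```

A sample that sits above its local mean yields a probability above 0.5, and one below yields a probability below 0.5; a detector could then correlate these probabilities against candidate codewords.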

Antenna device and portable wireless communication apparatus

InactiveUS20020011956A1Reduce the amount requiredRadiating resistanceSimultaneous aerial operationsAntenna supports/mountingsCommunications systemInput impedance

An antenna device and a portable wireless communication apparatus are disclosed that reliably lower the local average SAR for two or more kinds of radio communication systems using different radio communication frequencies, whichever radio communication frequency is in use. The present invention makes it possible to bring the input impedance at the open ends of conductive planar plates 11A and 11B close to infinity at the first and second radio communication frequencies, and to restrict emission of electromagnetic waves by restricting the high-frequency current supplied to the conductive plates 11A, 11B and a shield case 2, thereby reliably lowering the local average SAR for each of the supported radio communication systems.

Owner:SONY CORP

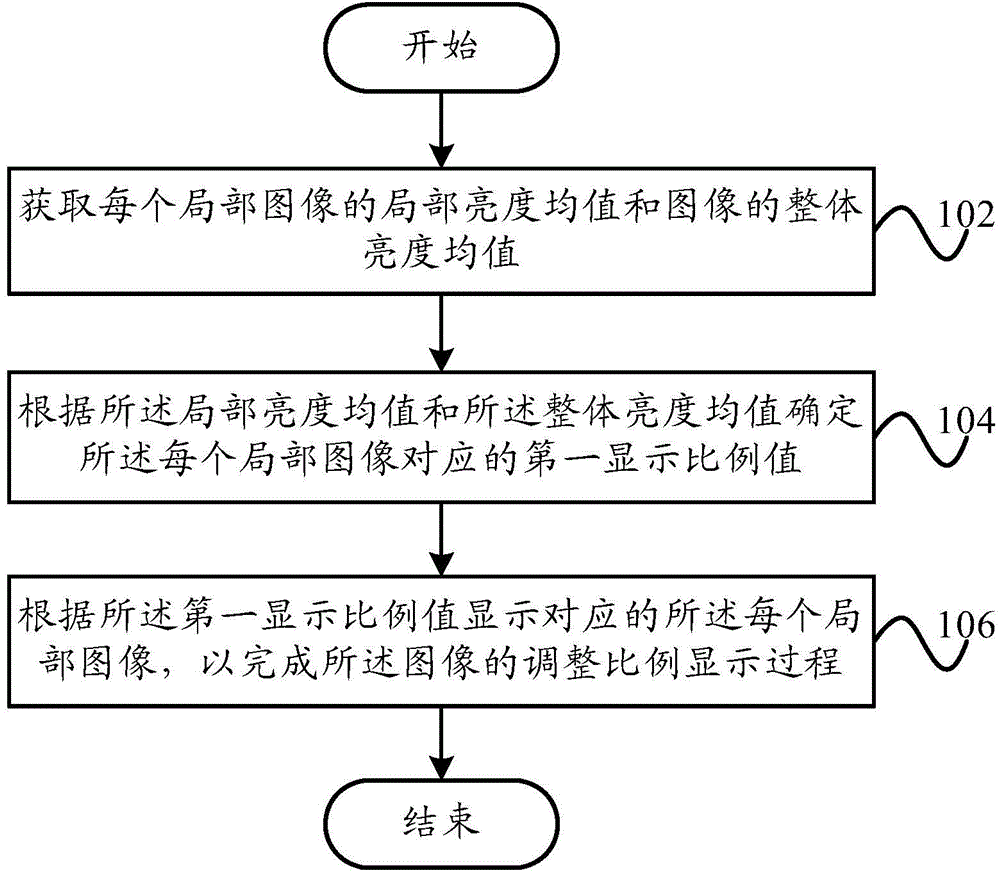

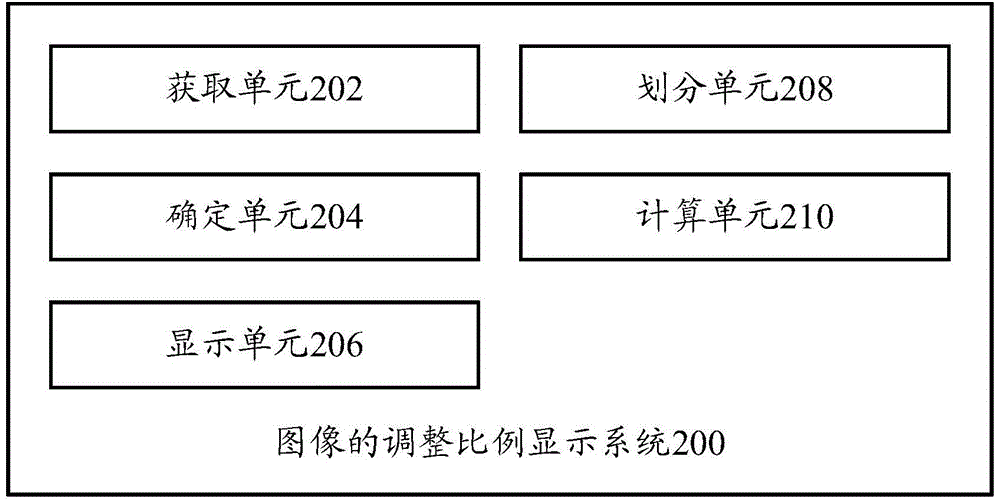

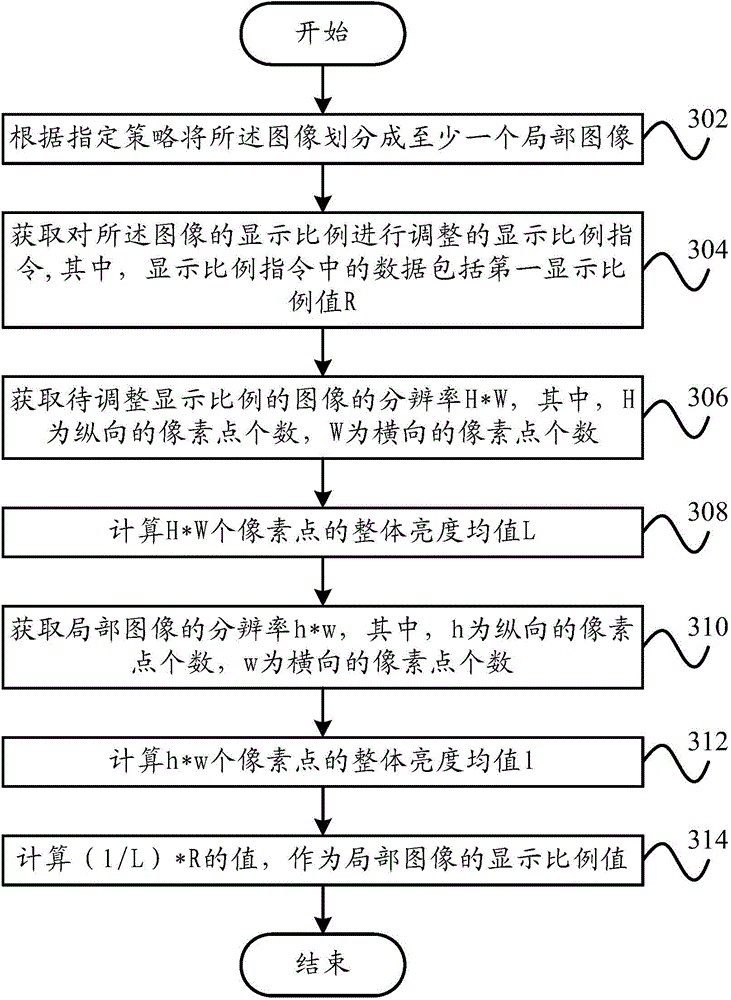

Image adjusting proportion display method, display system and display device, and terminal

ActiveCN105654424ARealize the display effect of adaptive magnificationNo distortionGeometric image transformationDisplay deviceComputer terminal

The invention provides an image adjusting proportion display method. The method comprises the steps of: acquiring a local brightness value of each local image and the overall brightness value of the image; determining a first display proportion value for each local image according to the local brightness values and the overall brightness value; and displaying each local image according to its first display proportion value to complete the image adjusting proportion display process. With this method, each local image is magnified differently according to the differences in the local average brightness values of the local images, which realizes an adaptive magnification effect. Moreover, because the differences between the local average brightness values of the local images are quite small, the image being adjusted is not distorted when the local images are magnified. The invention further provides an image adjusting proportion display system, an image adjusting proportion display device and a terminal.

Owner:NANJING COOLPAD SOFTWARE TECH

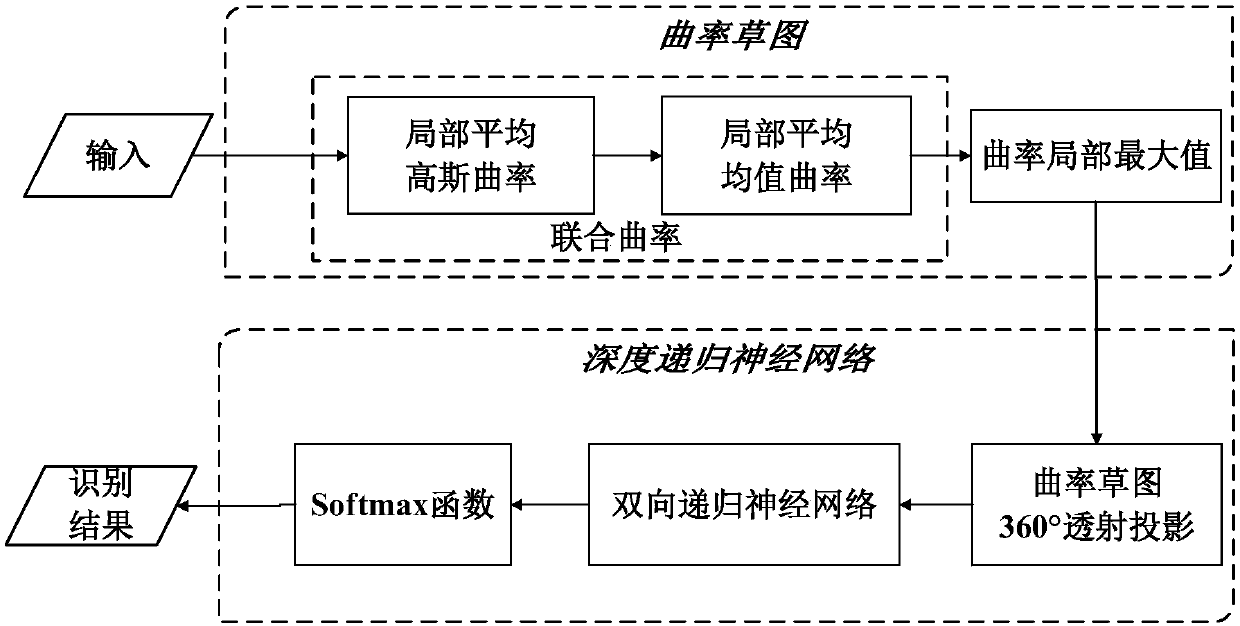

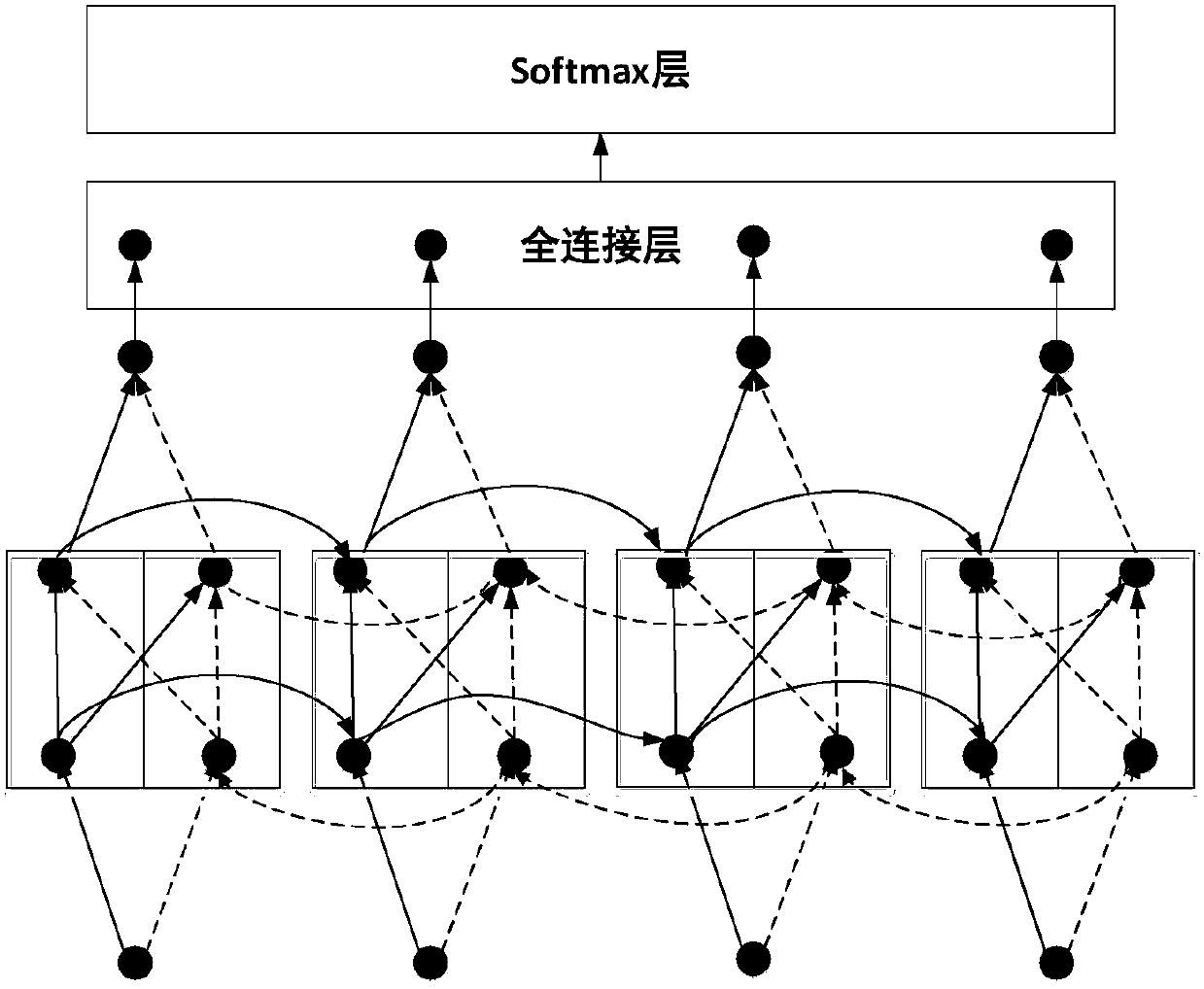

Curvature feature recurrent neural network-based three-dimensional target identification method

ActiveCN108154066ASolve the noise problemAccurate identificationNeural architecturesThree-dimensional object recognitionGoal recognitionStudy methods

The invention relates to image identification technology and provides a curvature feature recurrent neural network-based three-dimensional target identification method that addresses the problem of image noise in three-dimensional target identification and effectively describes features of a three-dimensional target under different view angles. The method comprises the steps of: firstly, obtaining a joint curvature of a target three-dimensional model by calculating a local average Gaussian curvature and an average mean curvature of the model, forming a curvature sketch of the three-dimensional model by extracting local maxima of the joint curvature, and generating a 360-degree two-dimensional image sequence by utilizing transmission projection transform to serve as an input of a trained recurrent neural network; and secondly, using a bidirectional recurrent neural network (BRNN) as the multi-view sequence feature learning method for the three-dimensional model, and obtaining the identification type with the maximum correct probability by means of a softmax function in a softmax layer. According to the method, common features of the three-dimensional target and a two-dimensional image can be automatically extracted, and relatively good robustness and a relatively high target identification rate can be maintained under image noise conditions.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI

Auxiliary pixel patterns for improving print quality

InactiveUS6919973B1Improve translationImage enhancementCharacter and pattern recognitionEngineeringLocal average

Non-printing, high-spatial-frequency auxiliary pixels are introduced into the bitmap of an image to obtain local control of image development by modifying the local average voltage in the development nip. These auxiliary pixels embody frequencies or levels of charge that are past the threshold for printing on the Modulation Transfer Function (MTF) curve, and therefore by themselves result in no toner deposition on the resultant page. They do, however, position the toner cloud by modulating it, and they compensate for cleaning field and toner supply effects. This better positions the toner cloud to ensure adequate toner supply to all parts of the image, so that the desired printing pixels print as intended.

Owner:XEROX CORP

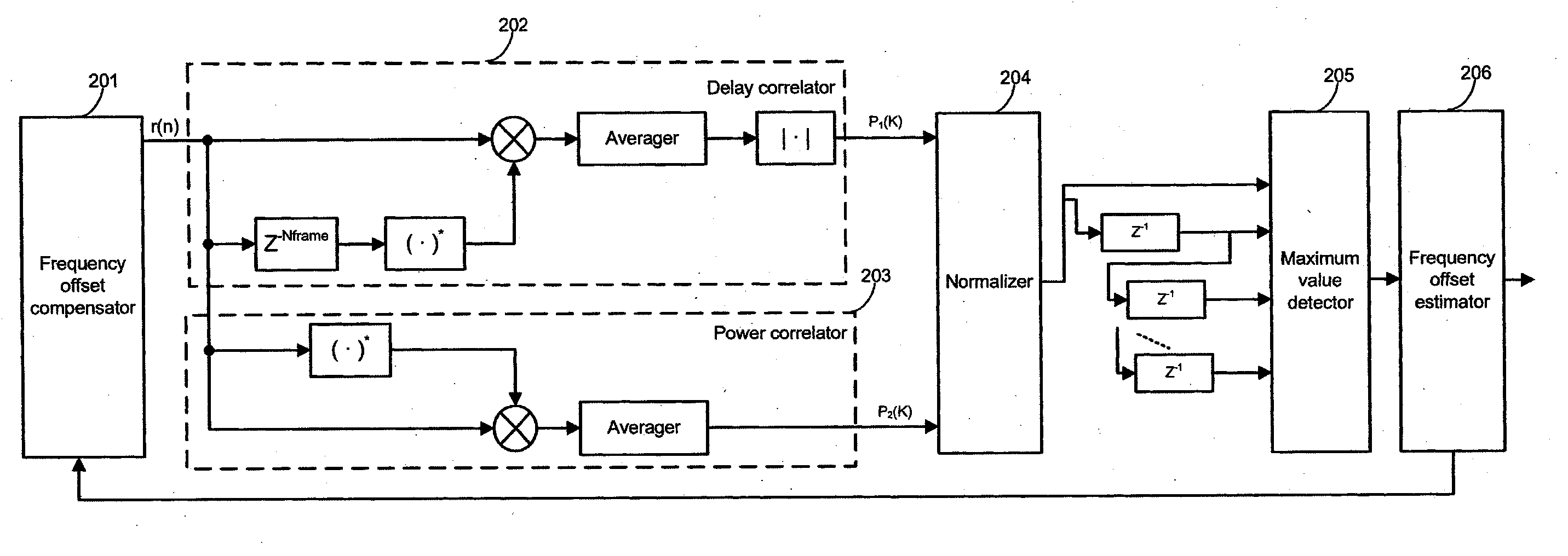

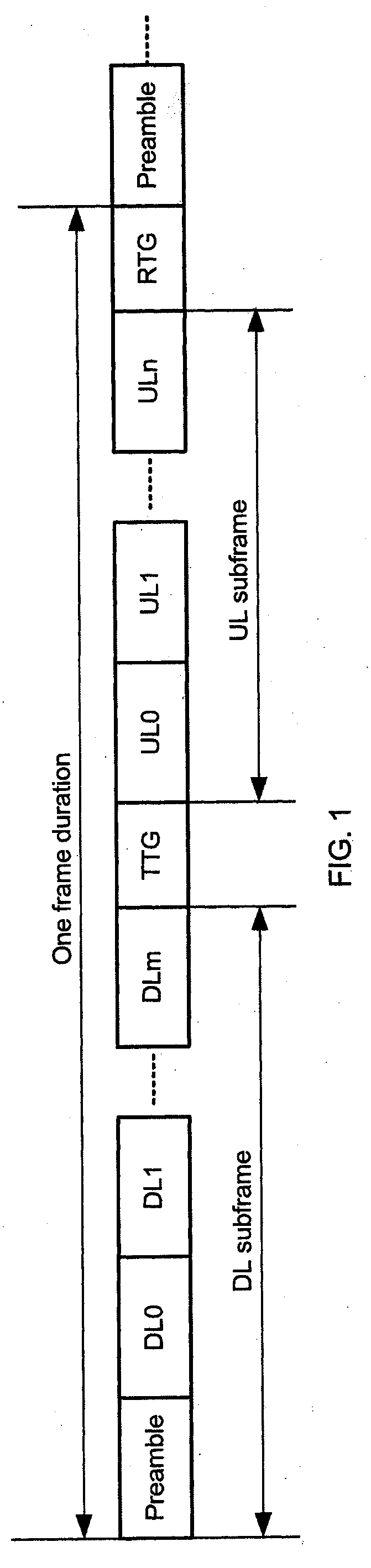

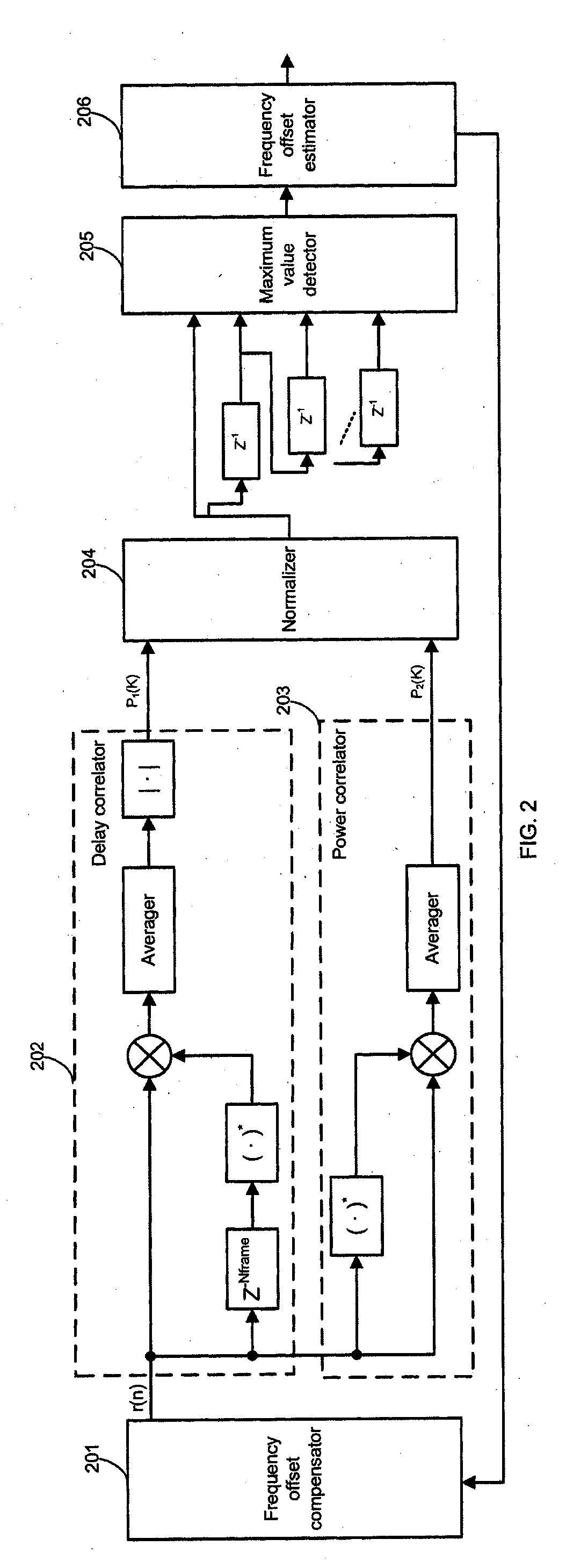

Apparatus and method of frame synchronization in broad band wireless communication systems

ActiveUS20080259904A1Eliminate false frame detectionFast and reliable detectionModulated-carrier systemsTime-division multiplexCommunications systemConsecutive frame

The present invention relates to an apparatus and method of frame synchronization in broad band wireless communication systems. In an apparatus of frame synchronization in a mobile station, a time variant phase rotation compensator eliminates time variant phase rotation carried in received signals by conjugated multiplication between adjacent signal samples. Then, the processed signal is fed into a delay correlator to calculate a plurality of correlations between two successive frames. A local power calculator acquires an average power of several symbols centered on delayed correlation values. A normalizer normalizes the delayed correlation values with a local average power corresponding to the delayed correlation values. A maximum value detector selects the maximum value from normalized correlation values to trigger frame synchronizing and timing signals.

Owner:FUJITSU LTD
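
The processing chain in the abstract above (conjugate multiplication of adjacent samples, delay correlation across one frame, normalization by local power, maximum selection) can be sketched as below. This is a simplified illustration, not the patented apparatus: the function names, the `window` length, and the choice to average power over the two correlated windows are assumptions.

```python
import cmath
import random


def remove_phase_rotation(samples):
    """Conjugate-multiply adjacent samples so a steadily rotating phase becomes constant."""
    return [samples[i + 1] * samples[i].conjugate() for i in range(len(samples) - 1)]


def normalized_delay_correlation(signal, frame_len, window):
    """Correlate each window with the window one frame later, normalized by local power."""
    metrics = []
    for d in range(len(signal) - frame_len - window + 1):
        a = signal[d:d + window]
        b = signal[d + frame_len:d + frame_len + window]
        corr = abs(sum(x * y.conjugate() for x, y in zip(a, b)))
        # Assumed normalization: mean of the two windows' summed power,
        # which bounds the metric to the range [0, 1].
        power = 0.5 * (sum(abs(x) ** 2 for x in a) + sum(abs(y) ** 2 for y in b))
        metrics.append(corr / power if power > 0 else 0.0)
    return metrics


def detect_frame_start(samples, frame_len, window):
    """Return (index, metric) of the largest normalized correlation."""
    metrics = normalized_delay_correlation(
        remove_phase_rotation(samples), frame_len, window
    )
    best = max(range(len(metrics)), key=metrics.__getitem__)
    return best, metrics[best]
```

Because the metric is normalized, a fixed threshold near 1 could be used to reject false peaks regardless of received signal level; the input must be longer than `frame_len + window` samples for any metric to be computed.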


© 2025 PatSnap. All rights reserved.