Speech emotion recognition method based on manifold

A manifold-based speech emotion recognition technology, applied in speech recognition, speech analysis, instruments, etc. It addresses problems such as a small target equation, fine granularity, and ignoring the relationship between adjacent frames, with the effect of enhancing performance and improving recognition accuracy.

Embodiment Construction

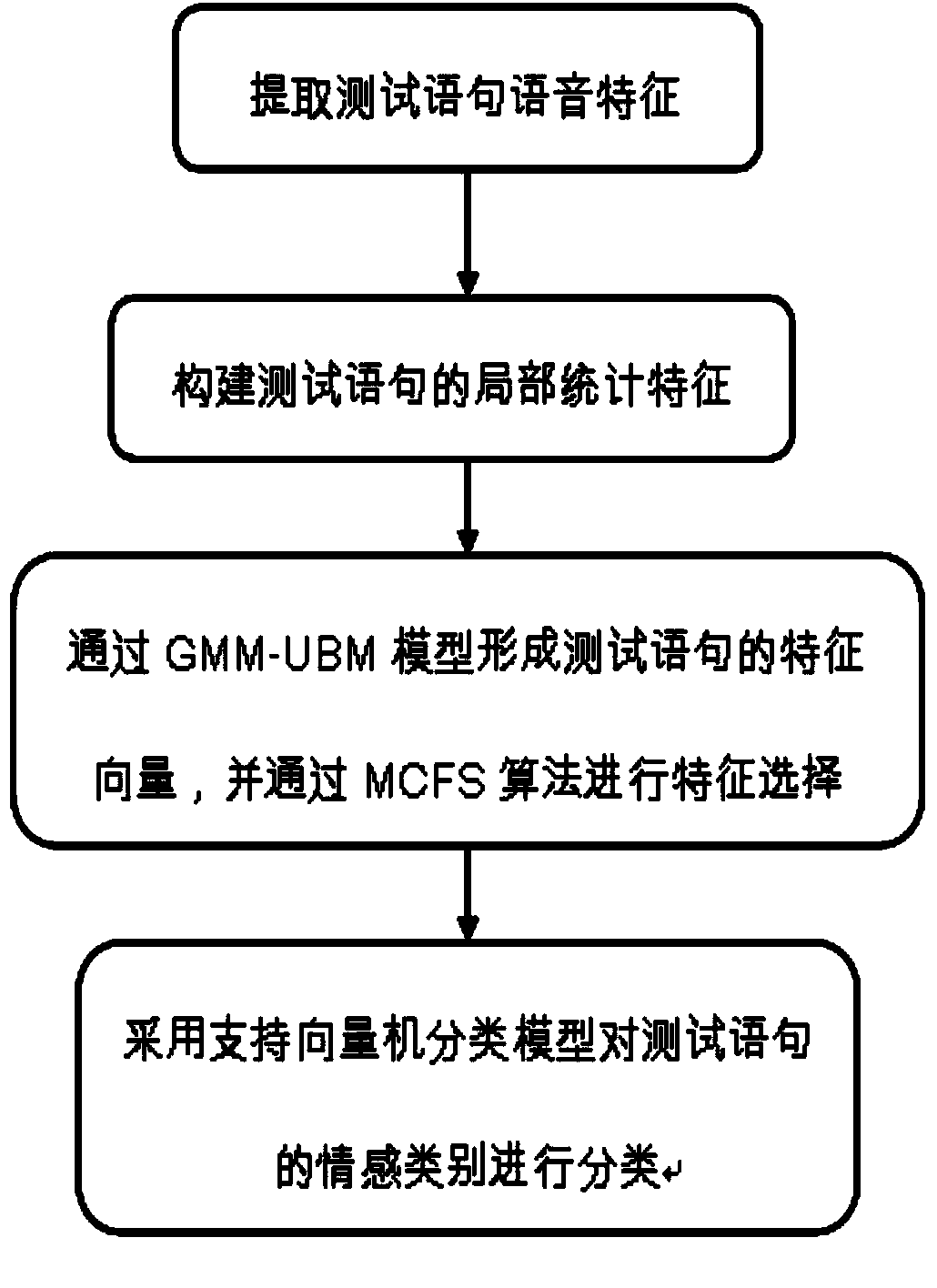

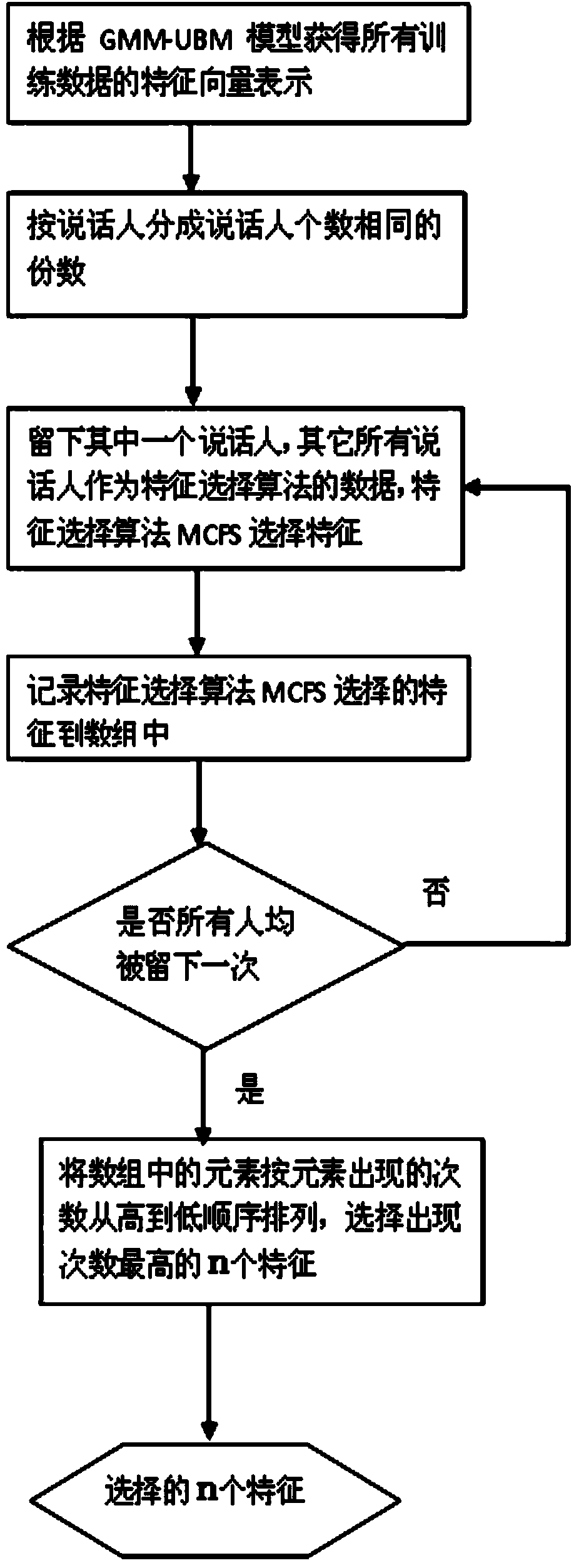

[0048] As shown in Figures 1 and 2, a manifold-based method for speech emotion recognition consists of the following sequential steps:

[0049] (1) Extract the following speech features from the test sentence: MFCC, LPCC, LFPC, ZCPA, PLP and RASTA-PLP (R-PLP). The number of Mel filters for MFCC and LFPC is 40; the linear prediction orders of LPCC, PLP and R-PLP are 12, 16 and 16 respectively; and the ZCPA frequency segments are 0, 106, 223, 352, 495, 655, 829, 1022, 1236, 1473, 1734, 2024, 2344, 2689, 3089, 3522, 4000. This yields, for each frame of each sentence, the features extracted by the 6 feature extraction methods. The corresponding feature dimensions are 39, 40, 12, 16, 16 and 16, so the number of features extracted per frame is 39+40+12+16+16+16 = 139;
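The dimension bookkeeping in step (1) can be checked with a short Python sketch. It is illustrative only and is not the patent's implementation: the helper names `zcpa_band_index` and `concat_frame` are hypothetical, the reading of the 17 ZCPA values as boundaries of 16 half-open bands is an assumption, and placeholder zeros stand in for real extractor output.

```python
import bisect

# ZCPA frequency segment values copied from step (1).
# Assumption: they are band boundaries, presumably in Hz.
ZCPA_EDGES = [0, 106, 223, 352, 495, 655, 829, 1022, 1236, 1473,
              1734, 2024, 2344, 2689, 3089, 3522, 4000]

# Per-frame feature dimensions, in the order step (1) states them.
FEATURE_DIMS = [39, 40, 12, 16, 16, 16]


def zcpa_band_index(freq, edges=ZCPA_EDGES):
    """Return the index of the half-open band [lo, hi) containing freq, or None."""
    if freq < edges[0] or freq >= edges[-1]:
        return None
    return bisect.bisect_right(edges, freq) - 1


def concat_frame(blocks, dims=FEATURE_DIMS):
    """Concatenate one frame's per-extractor vectors, checking each length."""
    if len(blocks) != len(dims):
        raise ValueError("expected %d feature blocks, got %d" % (len(dims), len(blocks)))
    frame = []
    for block, dim in zip(blocks, dims):
        if len(block) != dim:
            raise ValueError("expected a block of length %d, got %d" % (dim, len(block)))
        frame.extend(block)
    return frame


# Placeholder zeros stand in for real extractor output (hypothetical data).
frame = concat_frame([[0.0] * d for d in FEATURE_DIMS])
print(len(ZCPA_EDGES) - 1, len(frame))
```

Under that reading, the 17 boundary values delimit 16 bands, which is consistent with one of the 16-dimensional blocks, and the concatenated frame vector has 139 entries.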

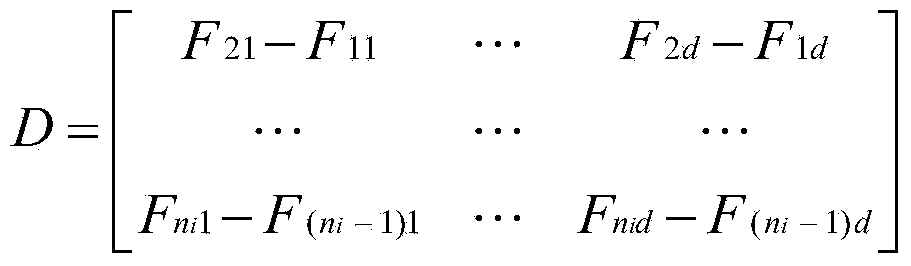

[0050] (2) Compute the first-order difference D of all features of each utterance, where F in D ranges over the 6 features described in step (1), and then compute the local mean and variance of all F and D to obtain LDM, LDS, LM and th...
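A minimal Python sketch of the operations named in step (2) follows. Because the paragraph is truncated, several details are assumptions rather than the patent's definitions: the difference is taken as D[t] = F[t+1] - F[t], "local" is taken to mean non-overlapping windows of a hypothetical length `window`, and population variance is used. The function names are illustrative, and the sketch does not assign outputs to the acronyms in the text.

```python
from statistics import fmean, pvariance


def first_difference(frames):
    """First-order difference between consecutive frames, per dimension.

    Assumption: D[t] = F[t+1] - F[t]; the source does not give the formula.
    """
    return [[b - a for a, b in zip(prev, cur)]
            for prev, cur in zip(frames, frames[1:])]


def local_stats(frames, window=3):
    """Per-dimension mean and population variance over non-overlapping windows.

    Assumption: the window length and the non-overlapping layout are
    hypothetical choices, not taken from the source.
    """
    means, variances = [], []
    for start in range(0, len(frames) - window + 1, window):
        chunk = frames[start:start + window]
        columns = list(zip(*chunk))  # one tuple of values per feature dimension
        means.append([fmean(col) for col in columns])
        variances.append([pvariance(col) for col in columns])
    return means, variances


# Toy 2-dimensional feature track with 4 frames (hypothetical data).
F = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0], [3.0, 7.0]]
D = first_difference(F)
mean_F, var_F = local_stats(F, window=2)
mean_D, var_D = local_stats(D, window=3)
```

The same `local_stats` helper is applied to both F and D, which mirrors the text's "local mean and variance of all F and D".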
