Patented technology: 77 results for "Visual matching"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

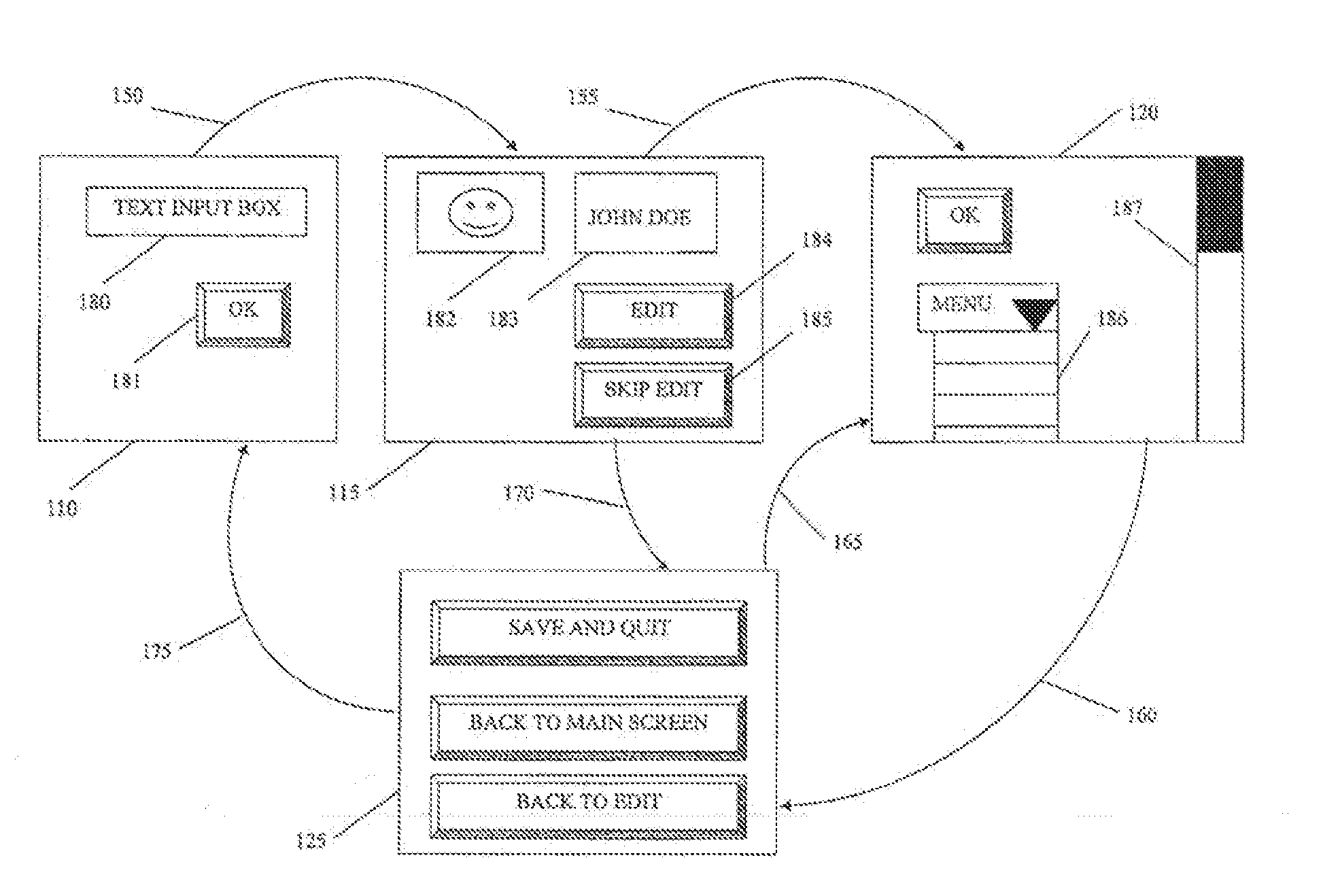

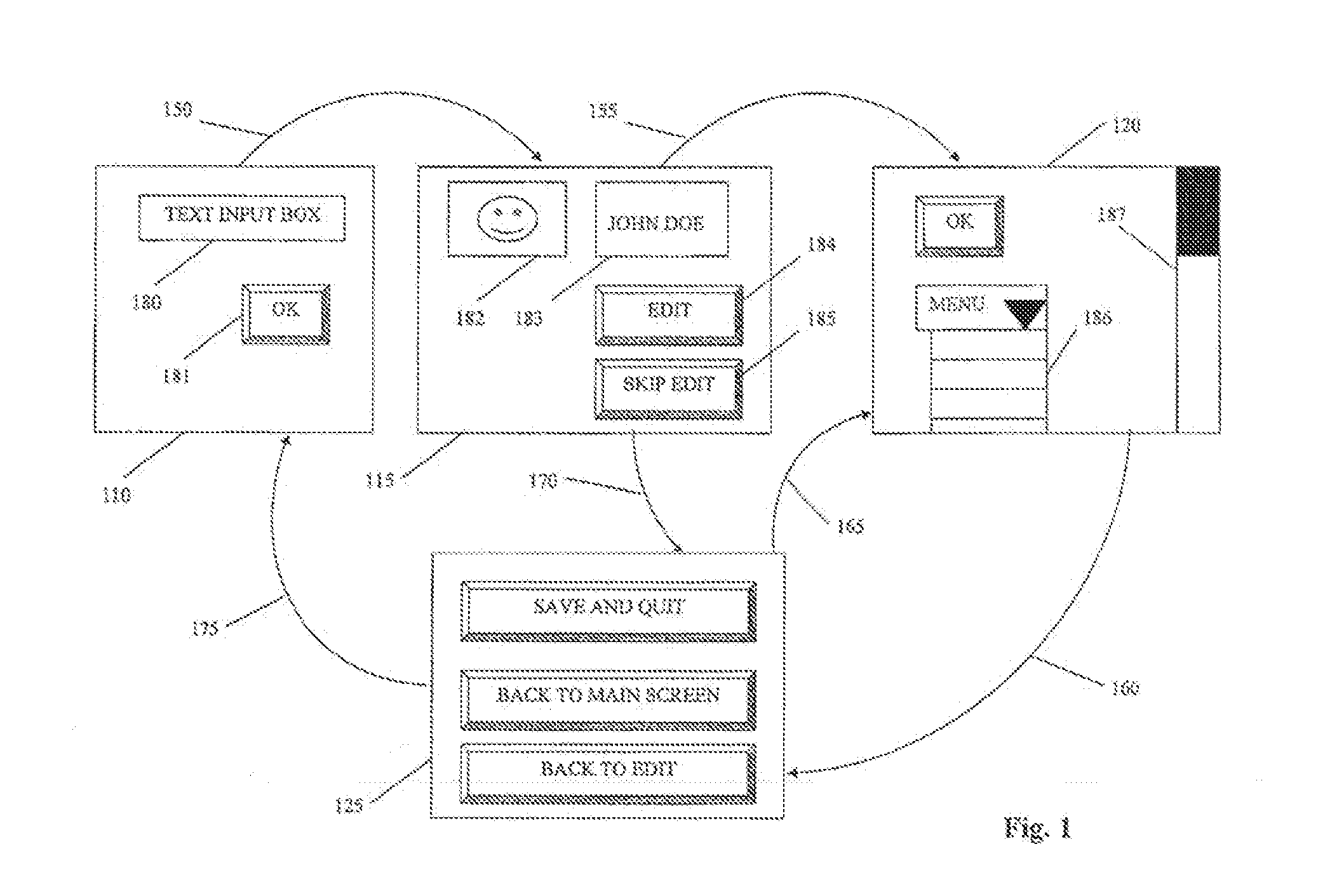

System and method for visual matching of application screenshots

Status: Inactive; Publication: US20140189576A1; Tags: Character and pattern recognition, Execution for user interfaces, Visual matching, Computer science

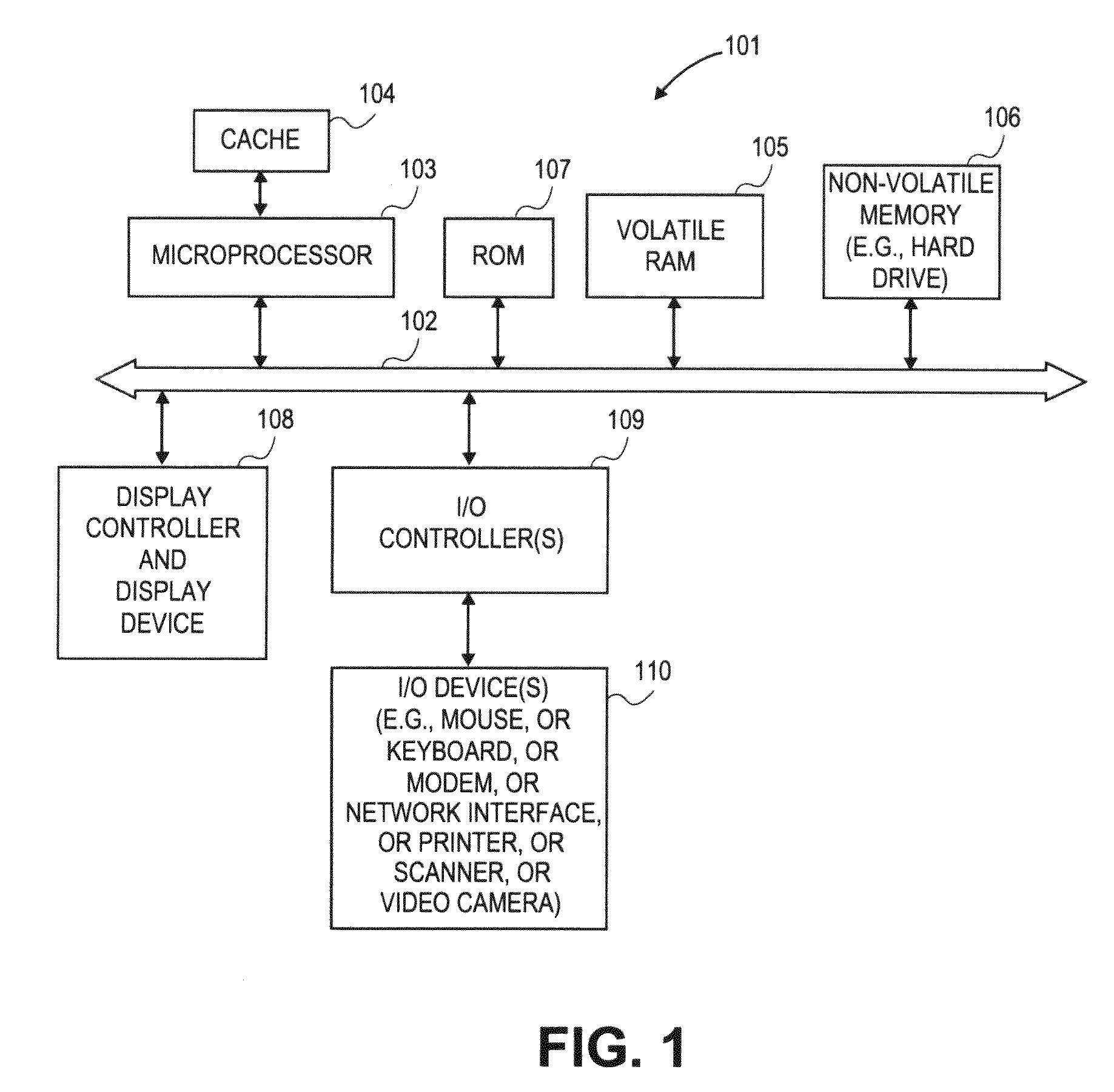

A system and method for automatically matching images of screens. A first screenshot of a screen may be obtained, the first screenshot including a viewport exposing a portion of a panel. A second screenshot of a screen may be obtained. A digital difference image may be generated, and a match between the first and second screenshots may be determined based on the digital difference image.

Owner:APPLITOOLS
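The difference-image comparison described above can be illustrated with a minimal Python sketch. It assumes both screenshots are already grayscale, equally sized, and aligned (the viewport/panel handling is not modeled), and the tolerance parameters `pixel_tol` and `max_diff_fraction` are hypothetical choices, not values from the patent.

```python
def difference_image(a, b):
    """Per-pixel absolute difference of two equally sized grayscale images."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("screenshots must have the same dimensions")
    return [[abs(pa - pb) for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def screenshots_match(a, b, pixel_tol=10, max_diff_fraction=0.01):
    """Declare a match if few enough pixels differ by more than pixel_tol.

    pixel_tol and max_diff_fraction are hypothetical thresholds for illustration.
    """
    diff = difference_image(a, b)
    total = sum(len(row) for row in diff)
    differing = sum(1 for row in diff for d in row if d > pixel_tol)
    return differing / total <= max_diff_fraction
```

Small intensity noise below `pixel_tol` is ignored, while a single strongly changed pixel in a 2×2 image (25% of its area) fails the default threshold.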

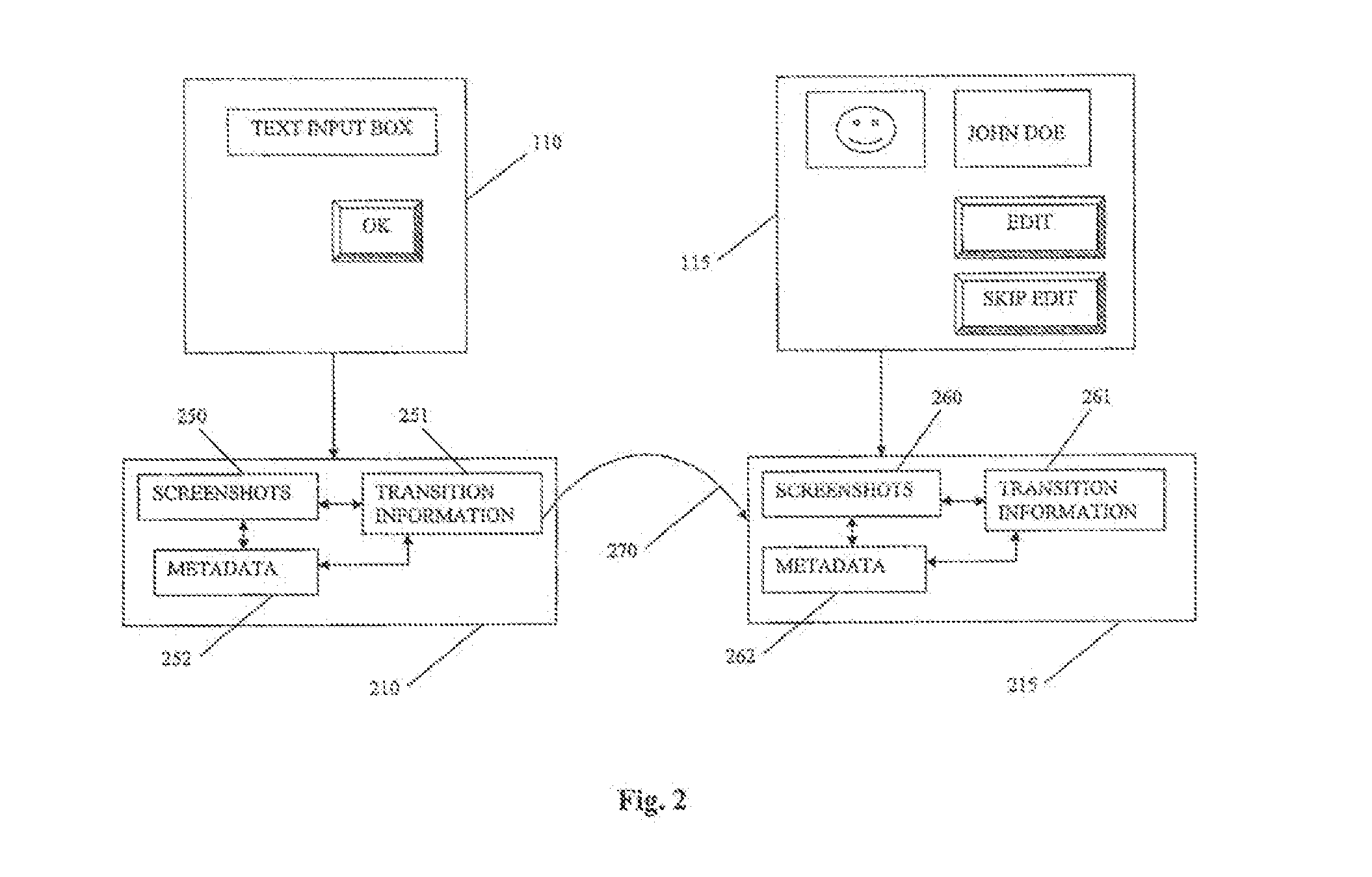

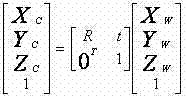

Binocular visible light camera and thermal infrared camera-based target identification method

Status: Active; Publication: CN108010085A; Tags: Improve recognition rate, Detect faster, Image enhancement, Image analysis, Visual matching, Point cloud

The invention discloses a target identification method based on a binocular visible-light camera and a thermal infrared camera. The method comprises the following steps: calibrating the internal and external parameters of the two cameras of the binocular visible-light camera from the positional relationship between images collected by the binocular camera and a pseudo-random array stereoscopic target in a world coordinate system, thereby obtaining the rotation and translation matrices that relate the two cameras to the world coordinate system; calibrating the internal and external parameters of the thermal infrared camera from images it collects; calibrating the positional relationship between the binocular visible-light camera and the thermal infrared camera; performing binocular stereo visual matching on the images collected by the two visible-light cameras using the SIFT feature detection algorithm, and computing a visible-light binocular three-dimensional point cloud from the matching result; fusing the temperature information from the thermal infrared camera with the three-dimensional point cloud of the binocular visible-light camera; and inputting the fusion result into a trained deep neural network to perform target identification.

Owner:SOUTHWEST UNIV OF SCI & TECH
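Once SIFT correspondences are found between the two visible-light images, each matched pair yields one point of the 3D point cloud. The sketch below assumes rectified images and an ideal pinhole model, so depth follows the standard relation Z = f·B/d with disparity d = u_left − u_right; the function name and the example calibration values (`f`, `baseline`, `cx`, `cy`) are illustrative, not taken from the patent.

```python
def triangulate_rectified(u_left, v_left, u_right, f, baseline, cx, cy):
    """3D point (x, y, z) in the left-camera frame from one rectified stereo match.

    f is the focal length in pixels, baseline is in metres, (cx, cy) is the
    principal point. Assumes rectified images (matches lie on the same row).
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = f * baseline / disparity
    x = (u_left - cx) * z / f
    y = (v_left - cy) * z / f
    return (x, y, z)
```

With f = 800 px, a 0.12 m baseline, and a 32 px disparity, the point lies 3 m in front of the rig.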

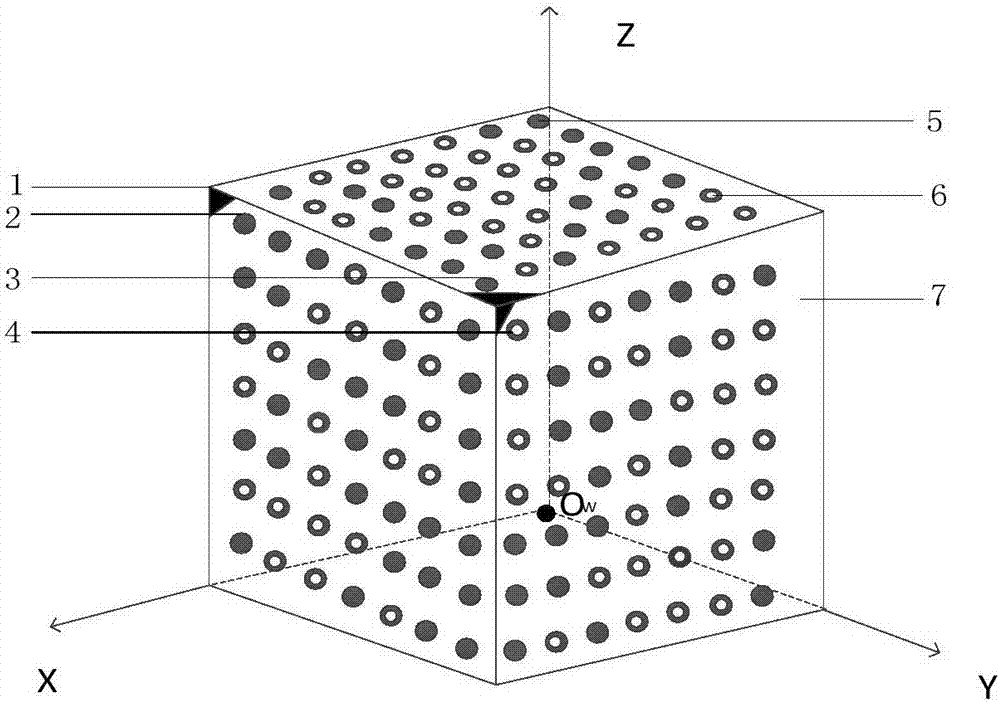

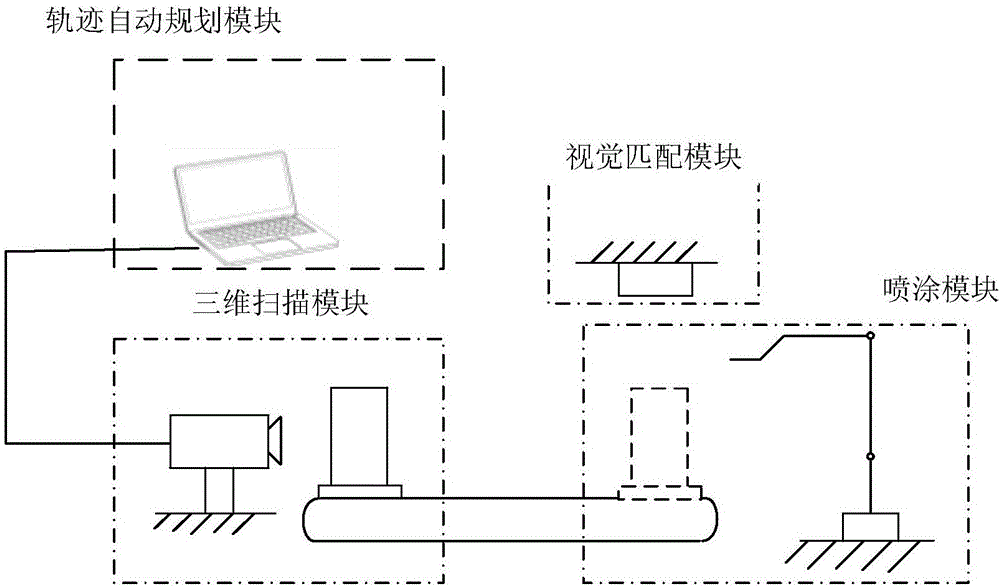

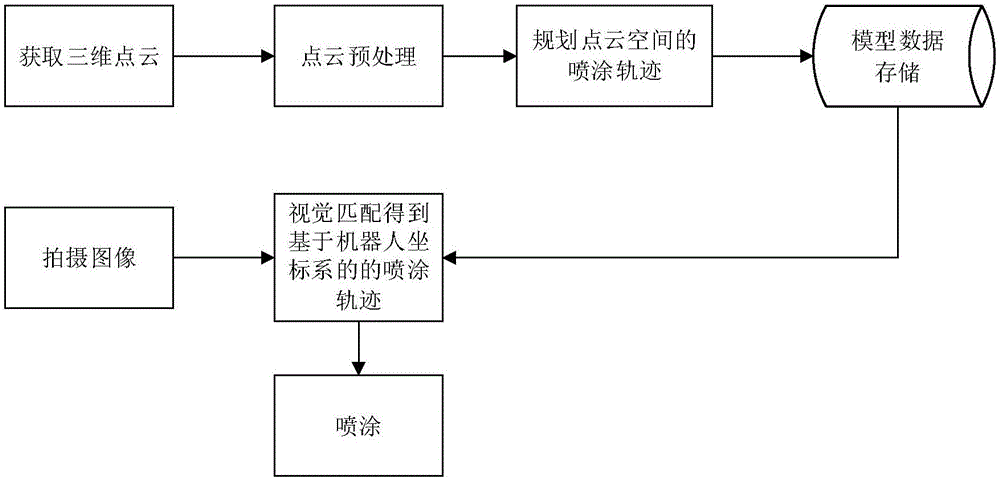

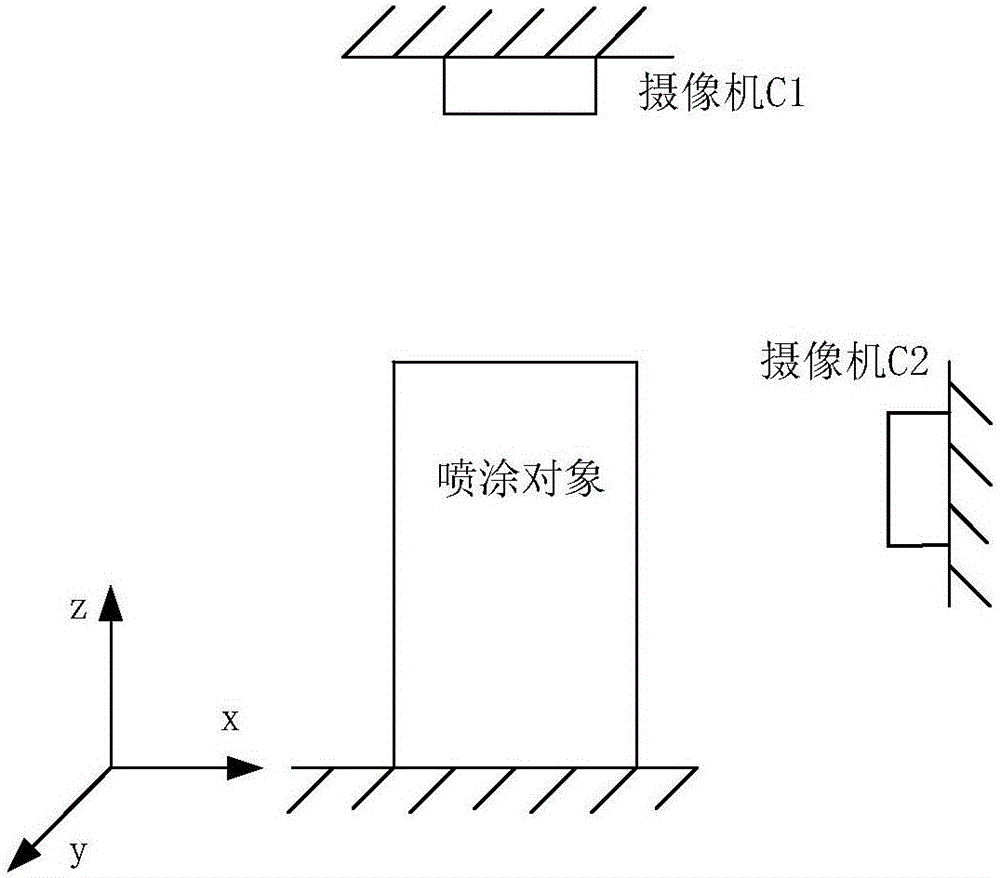

Automatic spraying system and automatic spraying method based on point cloud and image matching

Status: Active; Publication: CN106423656A; Tags: Reduce difficulty of use, Realization of automatic spraying, Spraying apparatus, Visual matching, Point cloud

The invention provides an automatic spraying system based on point cloud and image matching. The system comprises a three-dimensional scanning module, an automatic trajectory planning module, a visual matching module, and a spraying module. The three-dimensional scanning module scans the object to be sprayed and builds a point cloud model from the scanned three-dimensional point cloud data; the automatic trajectory planning module plans spraying trajectories in point cloud space; the visual matching module obtains the transformation between the point cloud coordinate system and the spraying robot coordinate system; and the spraying module sprays the object automatically. The advantages of the system are that the robot spraying path is planned automatically by an algorithm in the point cloud coordinate system, and the point cloud coordinate system is related to the robot coordinate system by the point cloud and image matching algorithm, so that the object is sprayed automatically. While spraying efficiency is maintained, spraying quality is greatly improved, the computation required for trajectory planning is reduced, and trajectory planning quality is improved.

Owner:CHONGQING UNIV
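Relating the point cloud frame to the robot frame amounts to estimating a rigid transform from corresponding points. As a hedged, simplified illustration, the sketch below works in 2D with correspondences already known and uses the closed-form least-squares rotation angle; a 3D implementation would typically use an SVD-based (Kabsch) solution instead. The function names are hypothetical and not from the patent.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst.

    Hypothetical 2D simplification; src[i] must correspond to dst[i].
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two corresponding point pairs")
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy          # centred source point
        bx, by = qx - dx, qy - dy          # centred destination point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the destination centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def apply_rigid_2d(theta, t, p):
    """Rotate point p by theta and then translate it by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])
```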

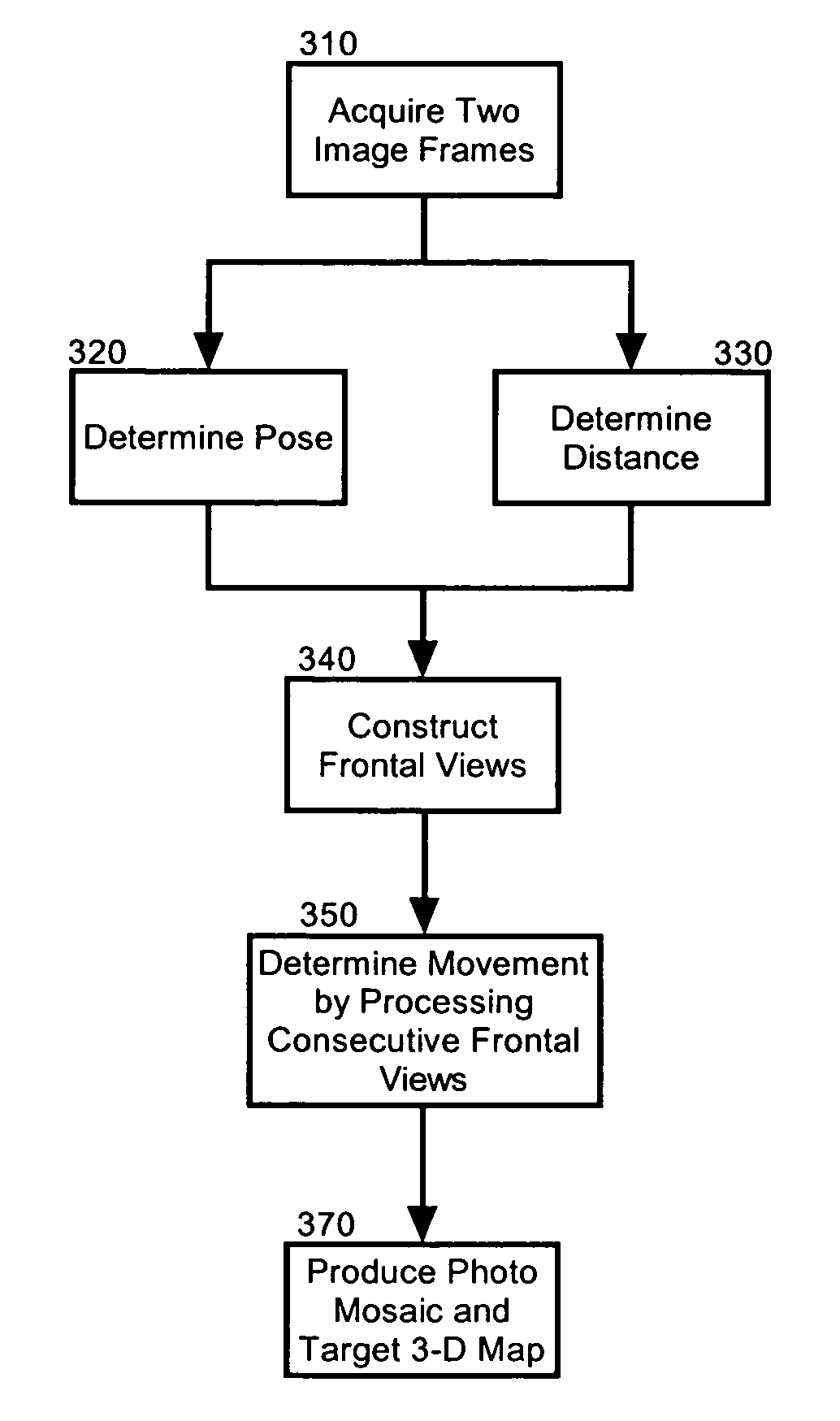

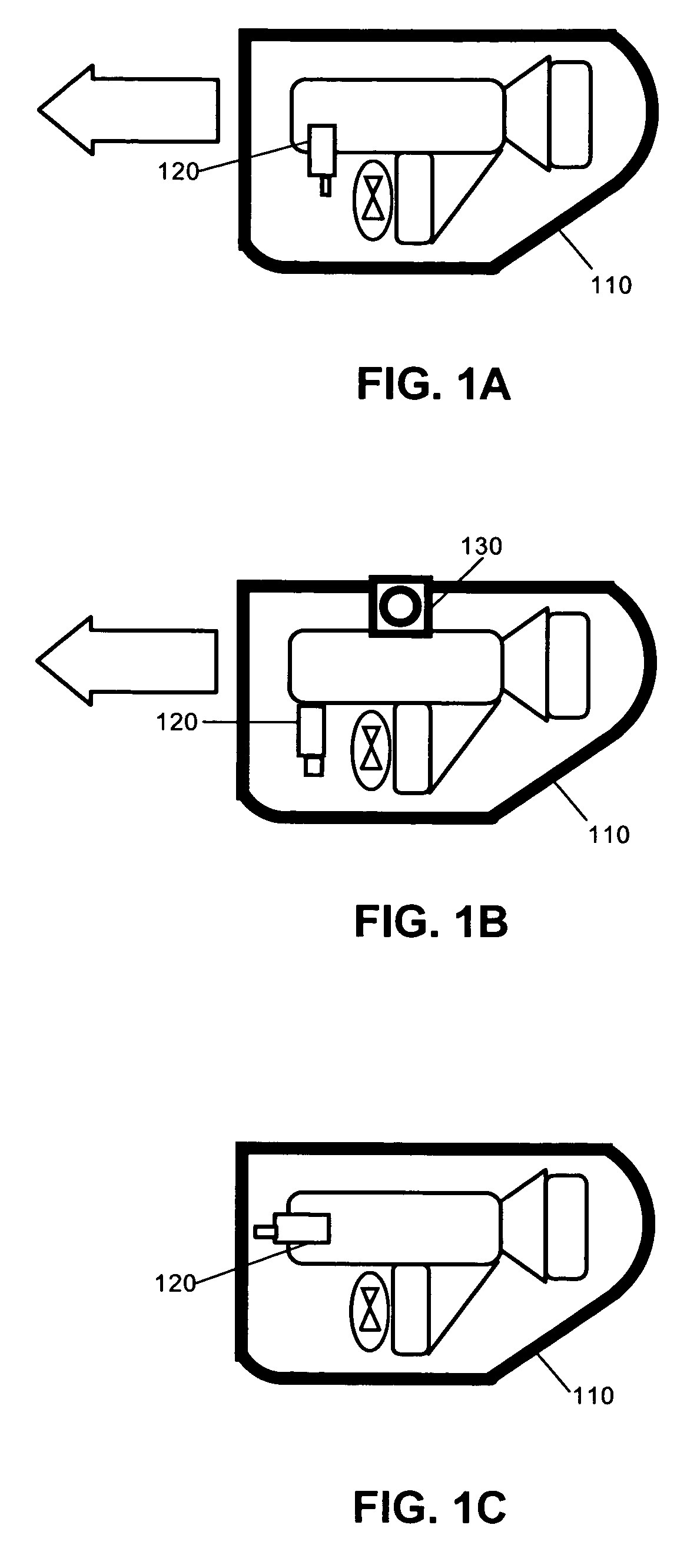

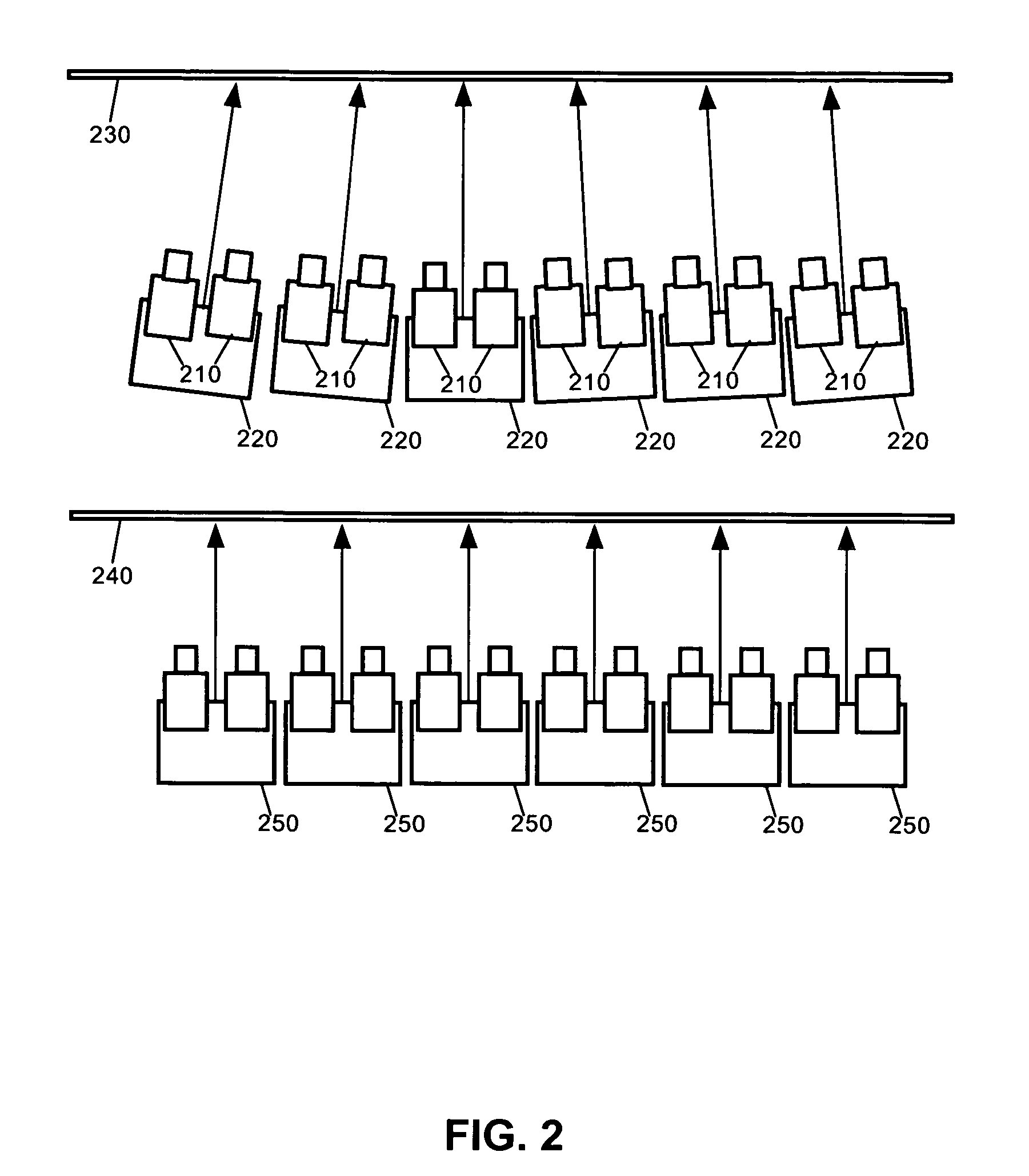

Multi-camera inspection of underwater structures

A method, system and apparatus for viewing and imaging an underwater structure from a submersible platform, navigating along the structure and constructing a map of the structure in the form of a photo-mosaic and a 3-D structural map. The system can include a submersible platform, at least two cameras coupled to the submersible platform, and stereovision matching logic programmed to simulate a frontal view of a target underwater structure from a fixed distance based upon an oblique view of the target underwater structure obtained by the cameras from a variable distance. The cameras can be forward or side mounted to the submersible platform and can include optical cameras, acoustical cameras or both. Preferably, the submersible platform can be a remotely operated vehicle (ROV), or an autonomous underwater vehicle (AUV). Finally, the system further can include absolute positioning sensors.

Owner:MIAMI UNIVERSITY OF

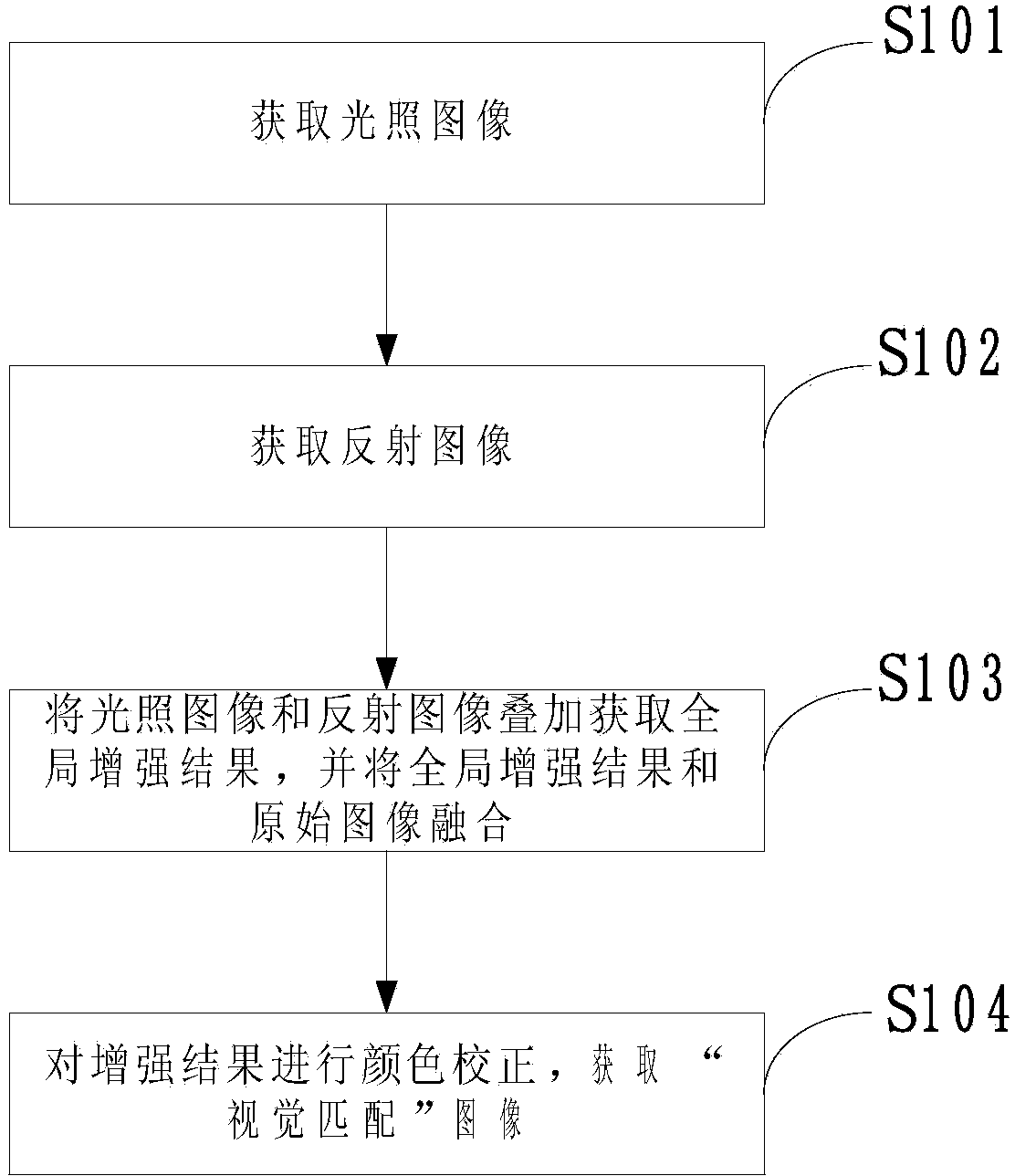

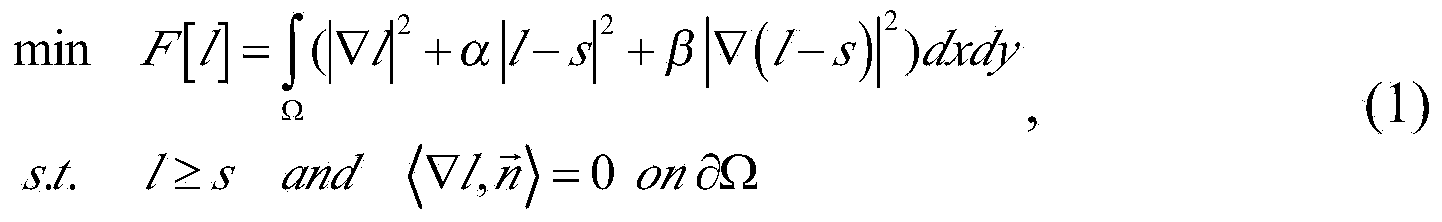

Double exposure implementation method for inhomogeneous illumination image

The invention discloses a double-exposure implementation method for images with inhomogeneous illumination. The method comprises the following steps: obtaining an illumination image; obtaining a reflection image; overlaying the illumination image with the reflection image to obtain a global enhancement effect; fusing the global enhancement effect with the original image; and performing color correction on the enhancement result to obtain a visually matched image. In the disclosed method, the smoothness of the illumination image is constrained, and the reflection image is sharpened using the visual threshold characteristic to preserve image detail. The image fusion method effectively preserves the luminance, contrast, and color information of the original image's luminance range. Because the human eye's perception of average background brightness is taken into account, the fused image effectively eliminates color distortion near shadow boundaries. The color of low-illuminance regions is restored by color correction, so the colors of the low-illuminance regions and the luminance range show no obvious distortion, continuity is good, and the visual effect is more natural.

Owner:AIR FORCE UNIV PLA
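The illumination/reflection decomposition above follows the general Retinex idea that an image is the product of illumination and reflectance. The sketch below is a deliberately simplified stand-in, not the patented method: it estimates illumination with a box blur instead of a smoothness-constrained model, brightens that illumination with a gamma curve, and omits the fusion and color-correction steps. Grayscale values are assumed to lie in [0, 1], and `gamma` is a hypothetical parameter.

```python
def box_blur(img, radius=1):
    """Mean filter used here as a crude stand-in for illumination estimation."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def enhance(img, gamma=0.5, eps=1e-6):
    """Split img into illumination * reflectance, brighten the illumination, recombine.

    gamma < 1 lifts dark illumination; eps avoids division by zero.
    """
    illumination = box_blur(img)
    result = []
    for row_i, row_l in zip(img, illumination):
        result.append([
            min(1.0, (i / (l + eps)) * (l ** gamma))  # reflectance * adjusted illumination
            for i, l in zip(row_i, row_l)
        ])
    return result
```

A uniformly dark image (all 0.25) has reflectance close to 1 everywhere, so the output is roughly 0.25^0.5 = 0.5.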

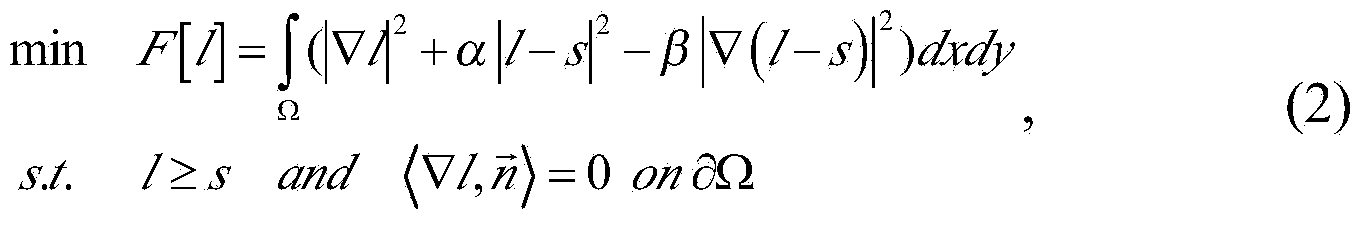

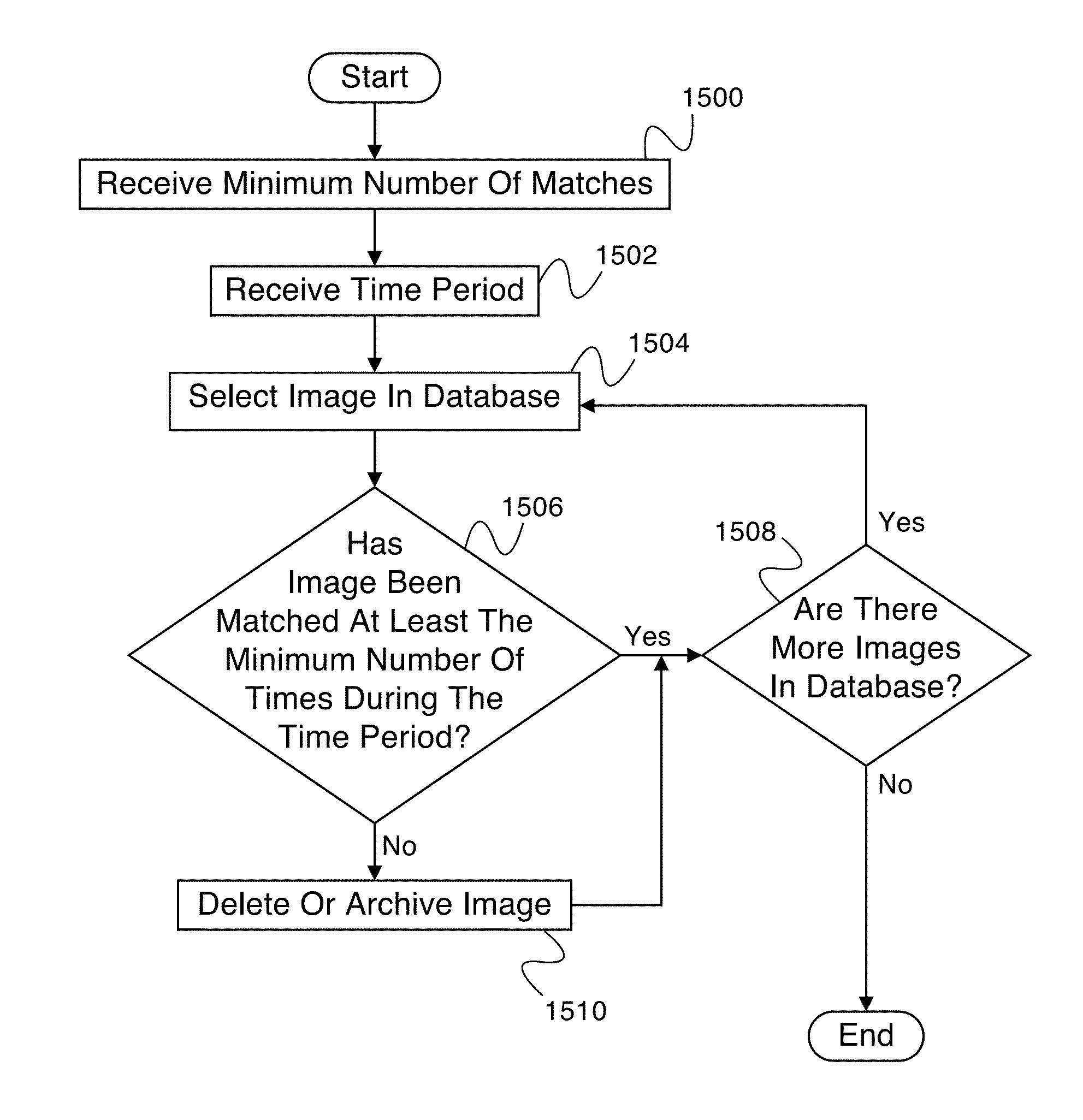

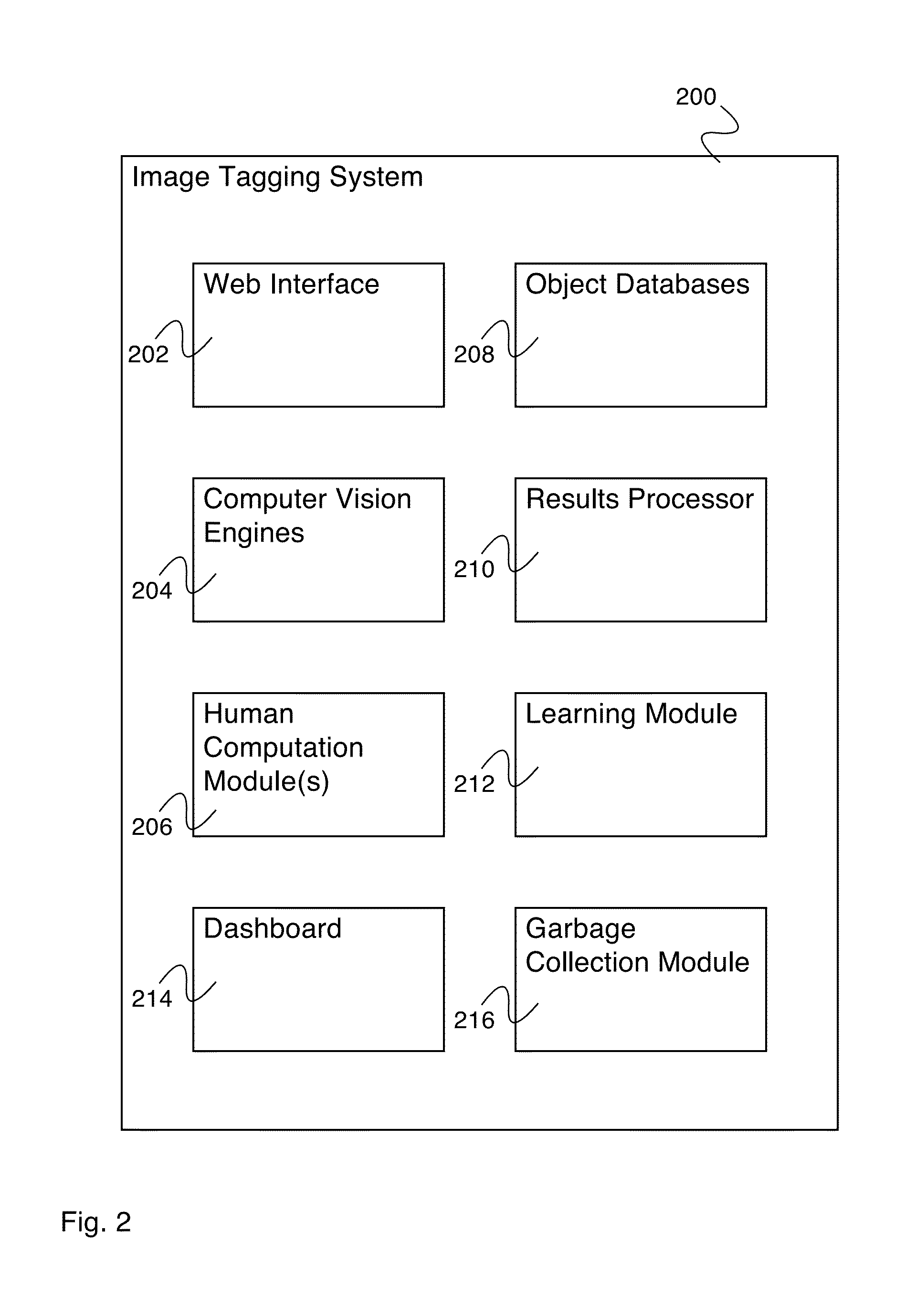

Monitoring an any-image labeling engine

A system for monitoring an image tagging system comprises a processor and a memory. The processor is configured to provide a user display for a type of monitoring of the image tagging system. The user display includes image tagging monitoring for: a) in the event that a computer vision match is found, the computer vision match and an associated computer vision tag; and b) in the event that no computer vision match is found, a human vision match and an associated human vision tag. The memory is coupled to the processor and configured to provide the processor with instructions.

Owner:VERIZON PATENT & LICENSING INC

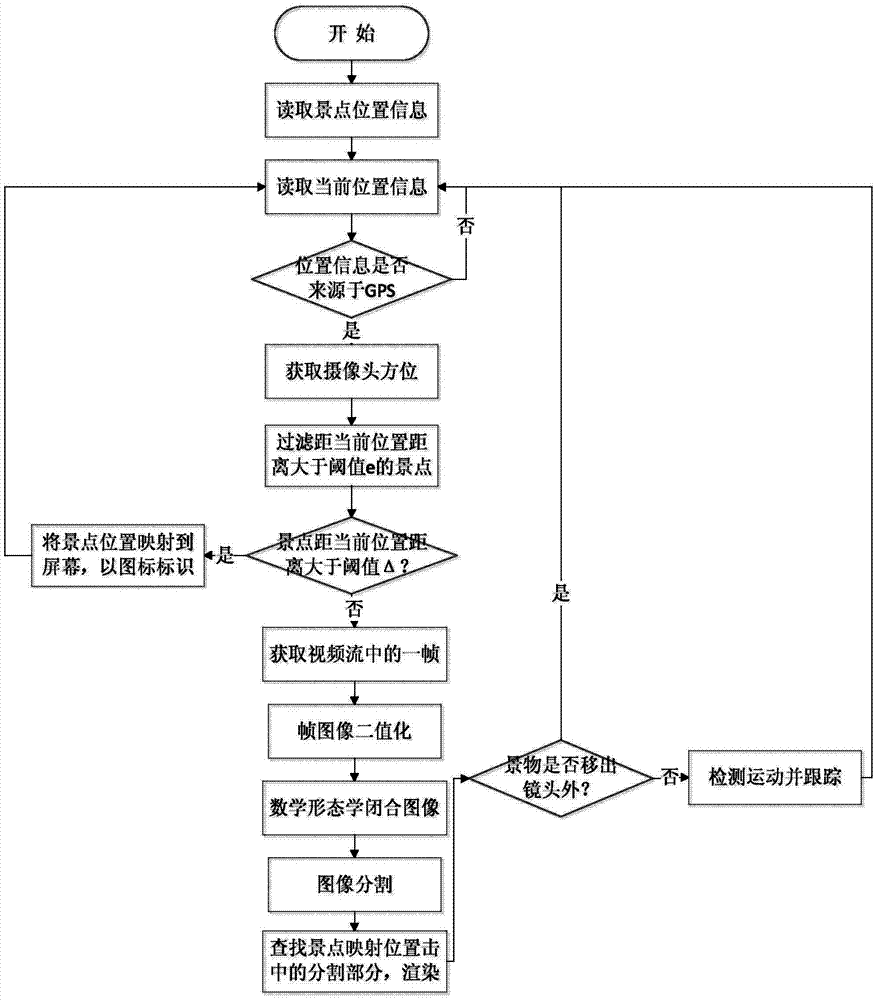

Scenic spot scenery moving augmented reality method based on space relationship and image analysis

Status: Inactive; Publication: CN103500452A; Tags: Get rid of distractions, Improve recognition rate, Image analysis, Information space, Visual matching

The invention discloses a mobile augmented reality method for scenic-spot scenery based on spatial relationships and image analysis. The method comprises the following steps: following the guidance requirements of mobile augmented reality for scenic-spot scenery and the organizational characteristics of scenic-spot spatial data, an object-oriented geospatial data model is adopted to build a scenic-spot geographic database; the built-in sensors of a smartphone are used to obtain the current position coordinates and spatial orientation, a multi-sensor camera view model is built, and the correspondence between real scenery images and actual geographic space is generated; key frames are extracted from the video stream captured by the smartphone and are quickly segmented using binarization and mathematical morphology; visual matching between the real scenery space and the information space is achieved by combining image analysis with spatial relationships, and the scenery is identified; and the identified scenery is tracked and registered using a motion detection method. The method overcomes the poor precision of approaches based on spatial relationships alone and the low efficiency of image recognition techniques.

Owner:HANGZHOU NORMAL UNIVERSITY
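Part of the scenic-spot method relies on the phone's position and orientation to decide which database scenery should appear in the camera view. A minimal way to express that check is to compute the bearing from the phone to each point of interest and test it against the camera's horizontal field of view. The helper names, the flat list of `(name, lat, lon)` points, and the field-of-view parameter are assumptions; distance limits, occlusion, and the image-analysis stage are not modeled.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def visible_pois(lat, lon, heading_deg, hfov_deg, pois):
    """Names of points of interest whose bearing lies inside the camera's horizontal FOV.

    Hypothetical helper: pois is a list of (name, lat, lon) tuples.
    """
    half = hfov_deg / 2.0
    hits = []
    for name, plat, plon in pois:
        # Signed angle between the POI bearing and the camera heading, in [-180, 180).
        diff = (bearing_deg(lat, lon, plat, plon) - heading_deg + 180.0) % 360.0 - 180.0
        if abs(diff) <= half:
            hits.append(name)
    return hits
```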

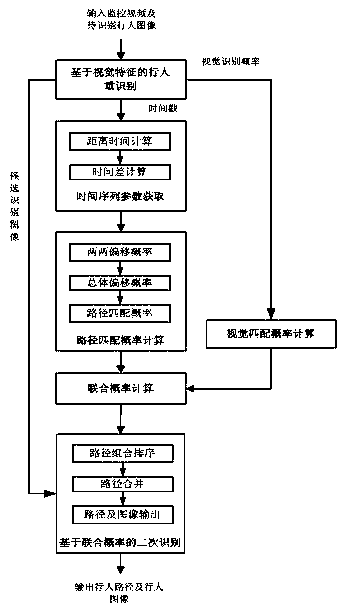

Secondary optimization method for monitoring video pedestrian re-identification result based on space-time constraint

Status: Active; Publication: CN105389562A; Tags: Narrow down the search space, Increase credibility, Character and pattern recognition, Human body, Visual matching

The present invention discloses a secondary optimization method for surveillance-video pedestrian re-identification results based on spatio-temporal constraints, comprising the steps of: adopting a pedestrian re-identification algorithm based on visual features of human body appearance to obtain an initial identification result; calculating a visual matching probability, a path matching probability, and the joint probability of the two from time-sequence parameters extracted from video frames; and selecting the pedestrian image on the path with the maximum joint probability as the output. The method greatly improves the credibility of pedestrian re-identification results without reducing the recall rate, and effectively overcomes the sensitivity of conventional visual-feature-based pedestrian re-identification methods to the monitoring environment.

Owner:ZHUHAI DAHENGQIN TECH DEV CO LTD

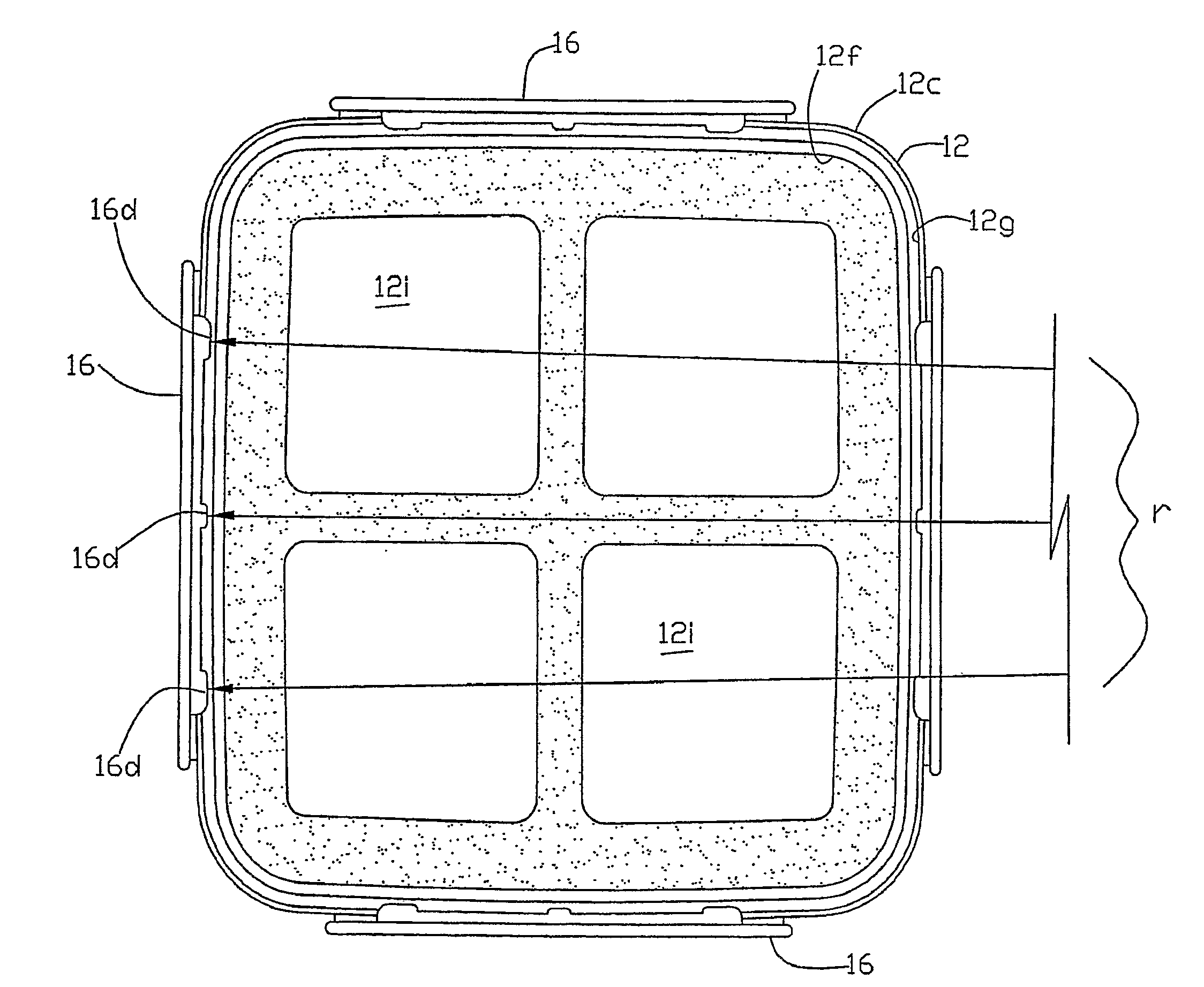

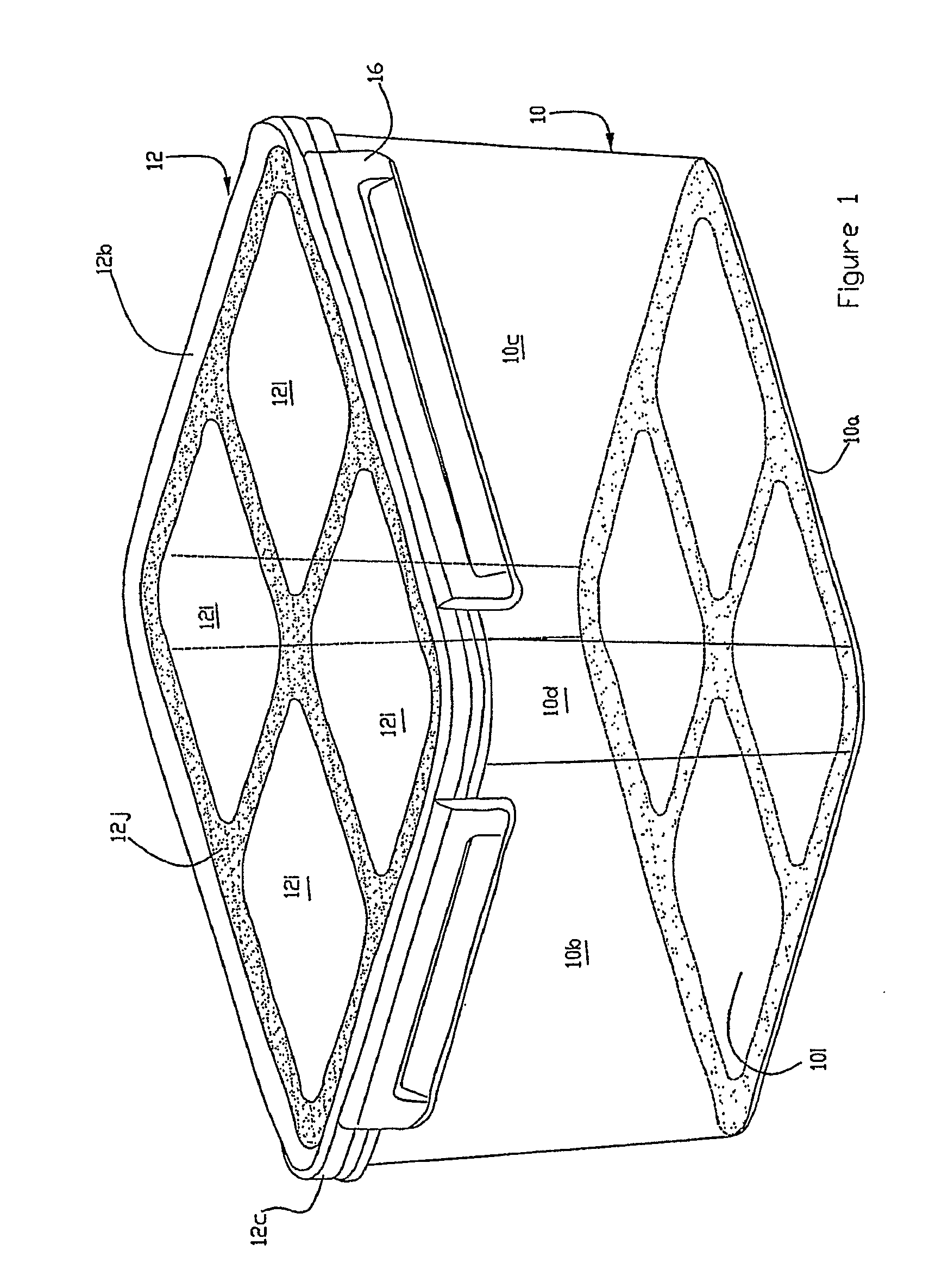

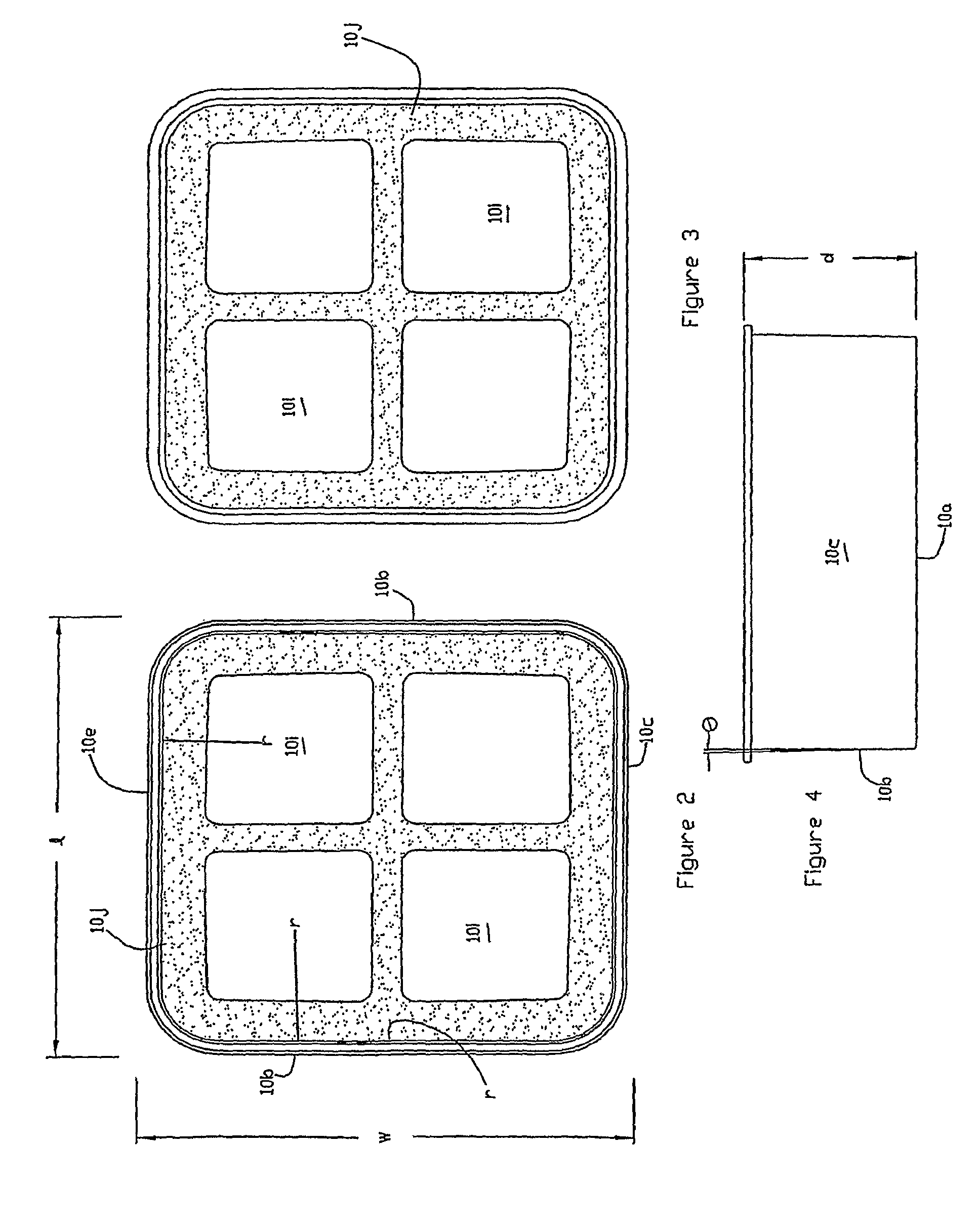

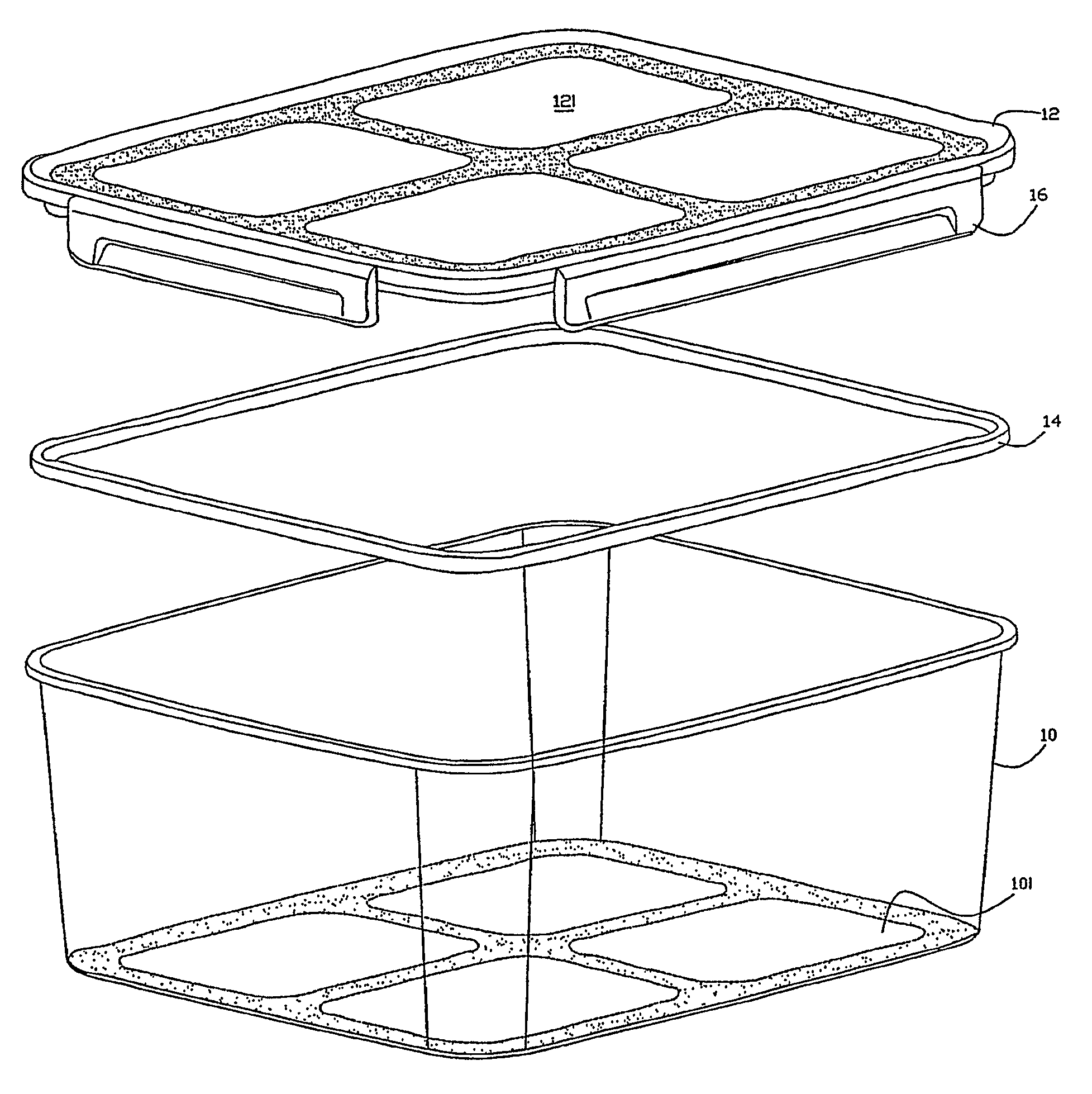

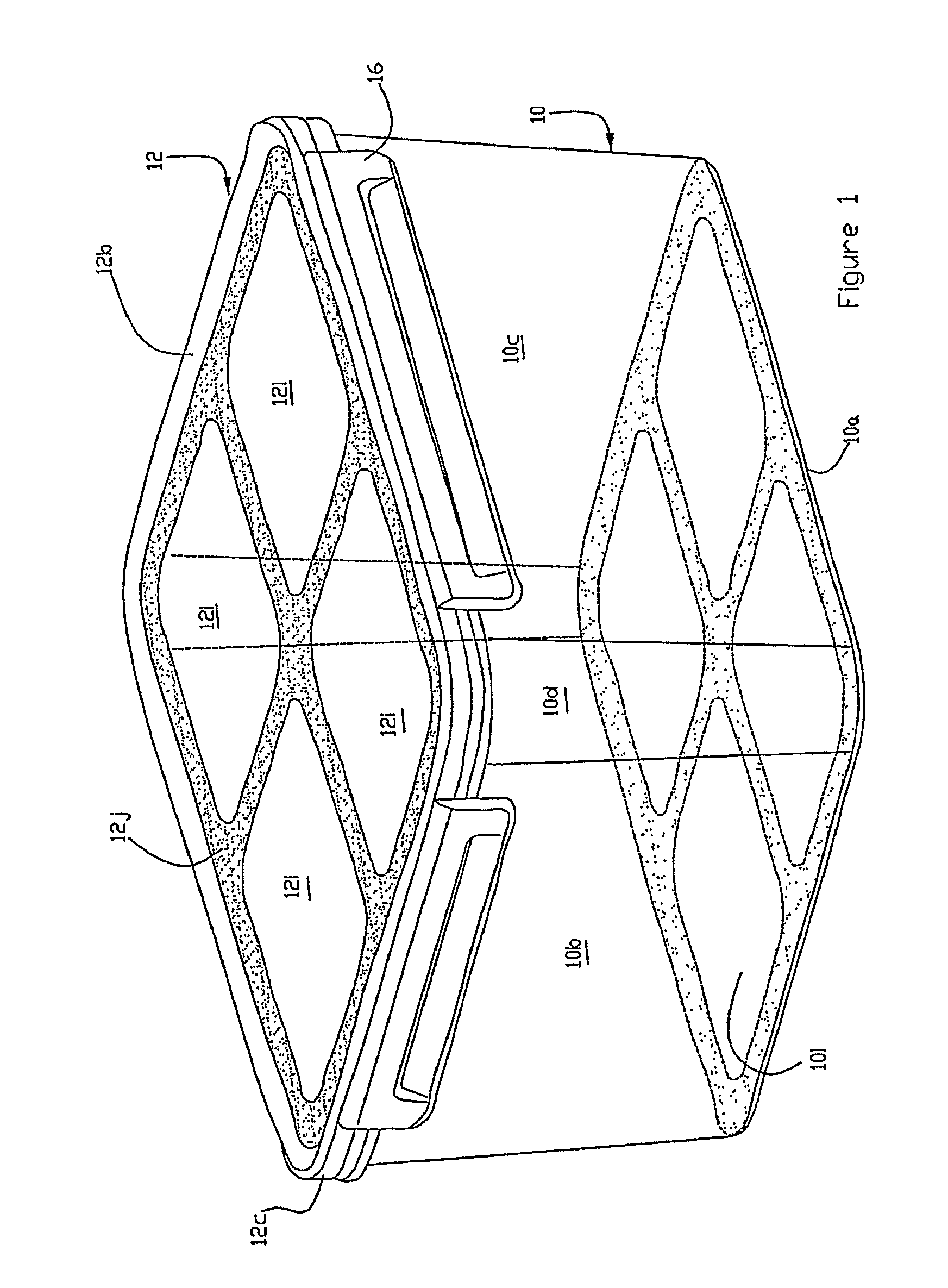

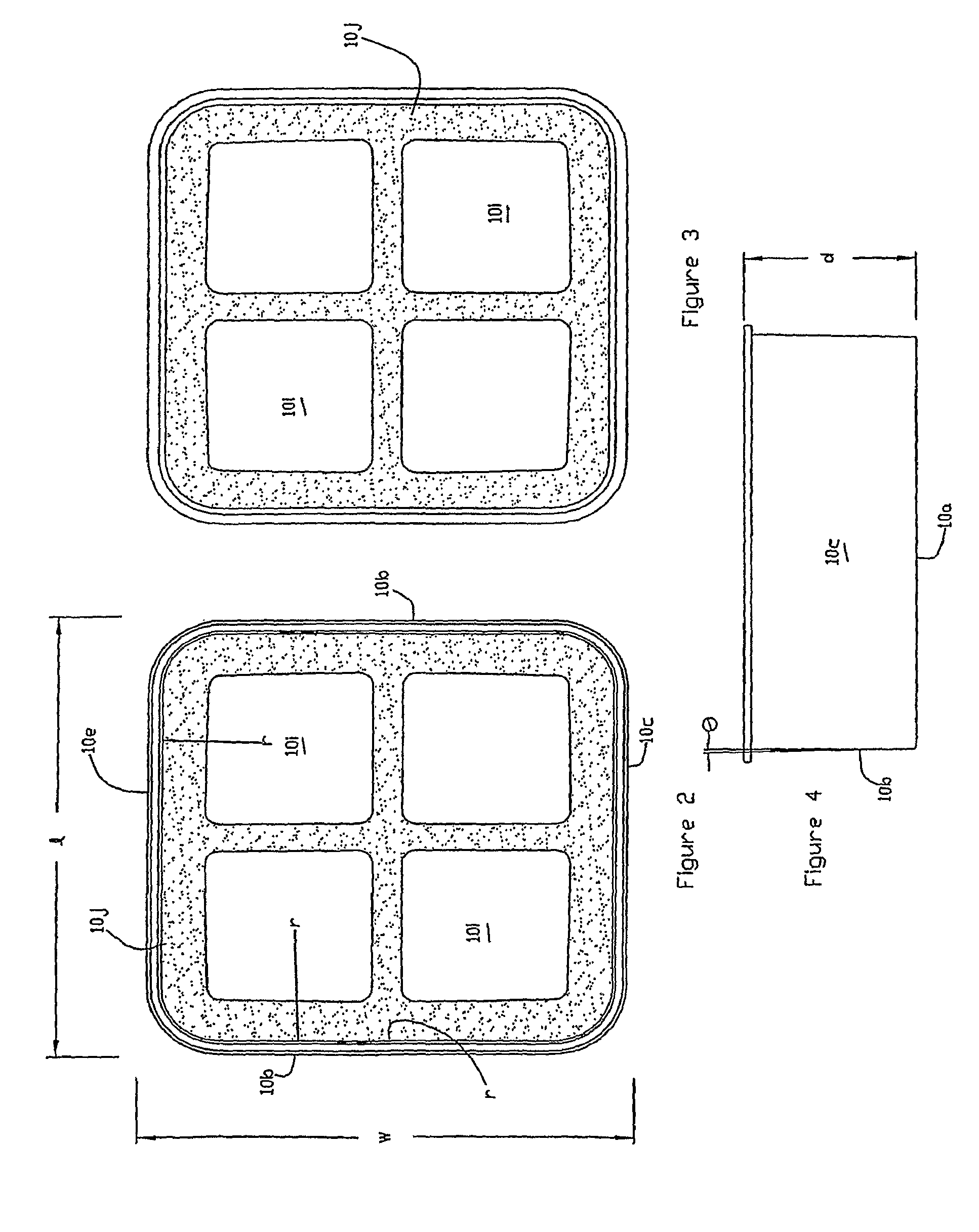

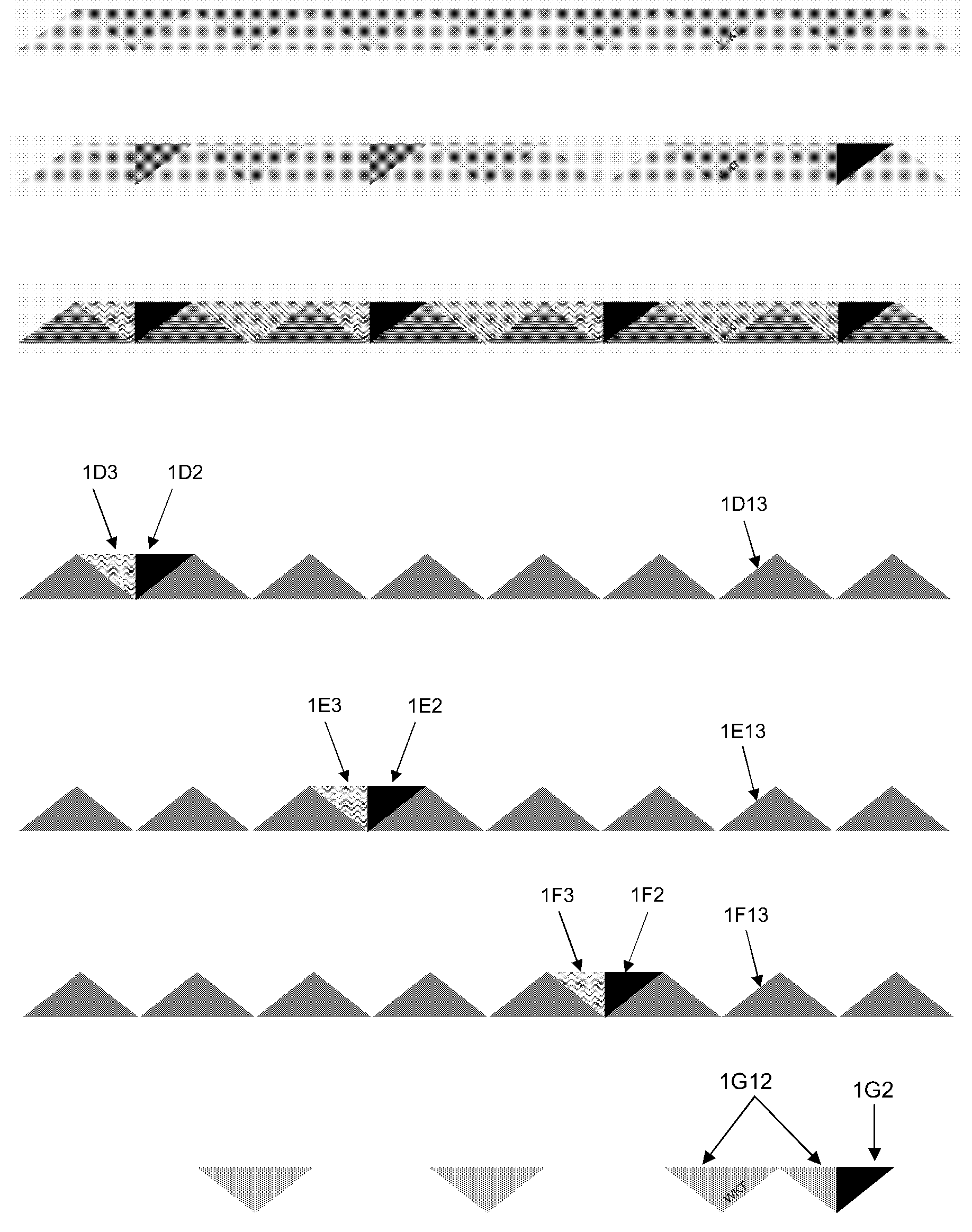

Container/Lid Combination For Storing Food and other Articles

A system for aiding the visual matching of containers having diverse openings with matching lids includes affixing, such as by molding or embossing, geometric planar patterns on the bottom walls of a plurality of rectangular containers having different sized openings and affixing the same geometric patterns to the top wall of the matching lids.

Owner:CORELLE BRANDS LLC

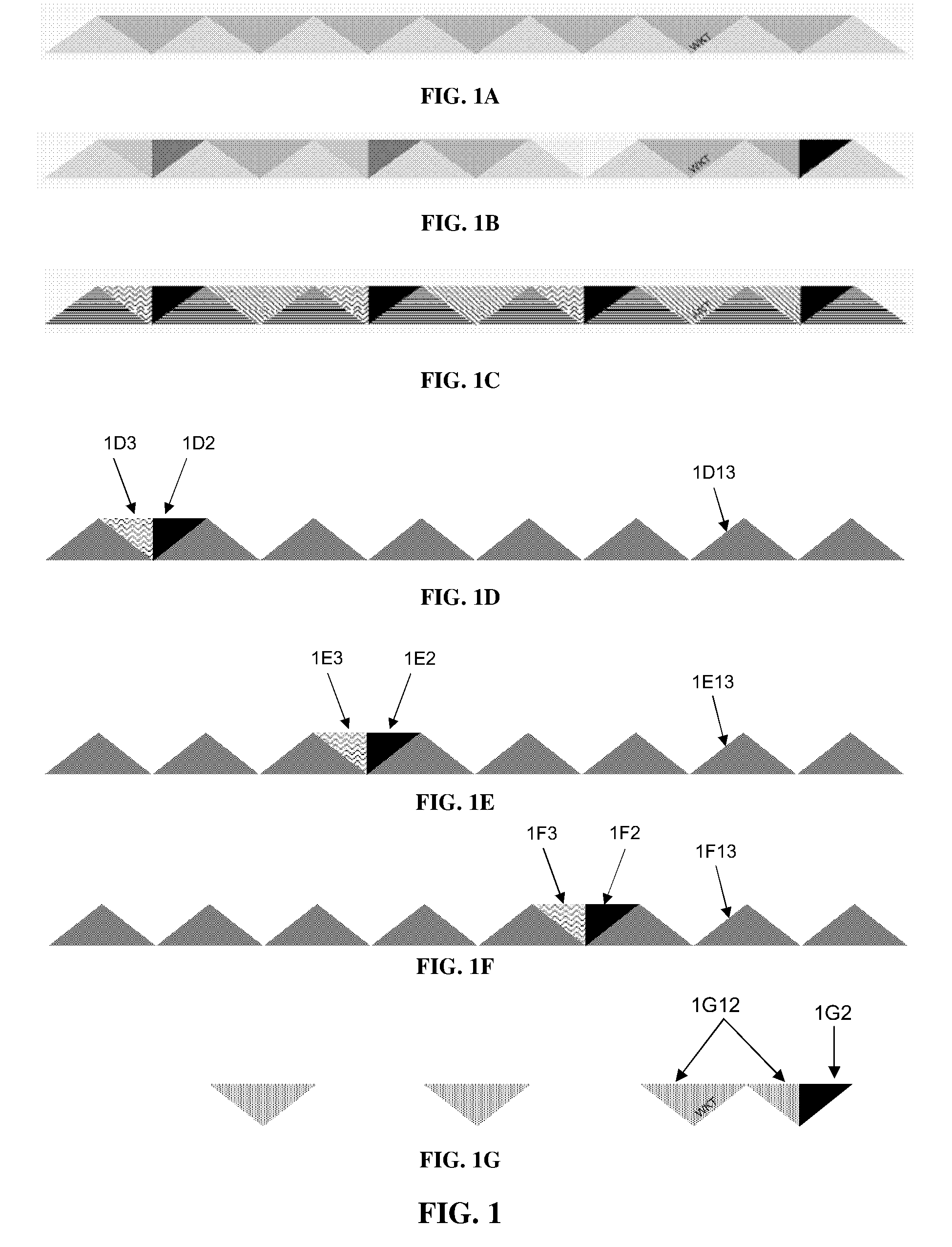

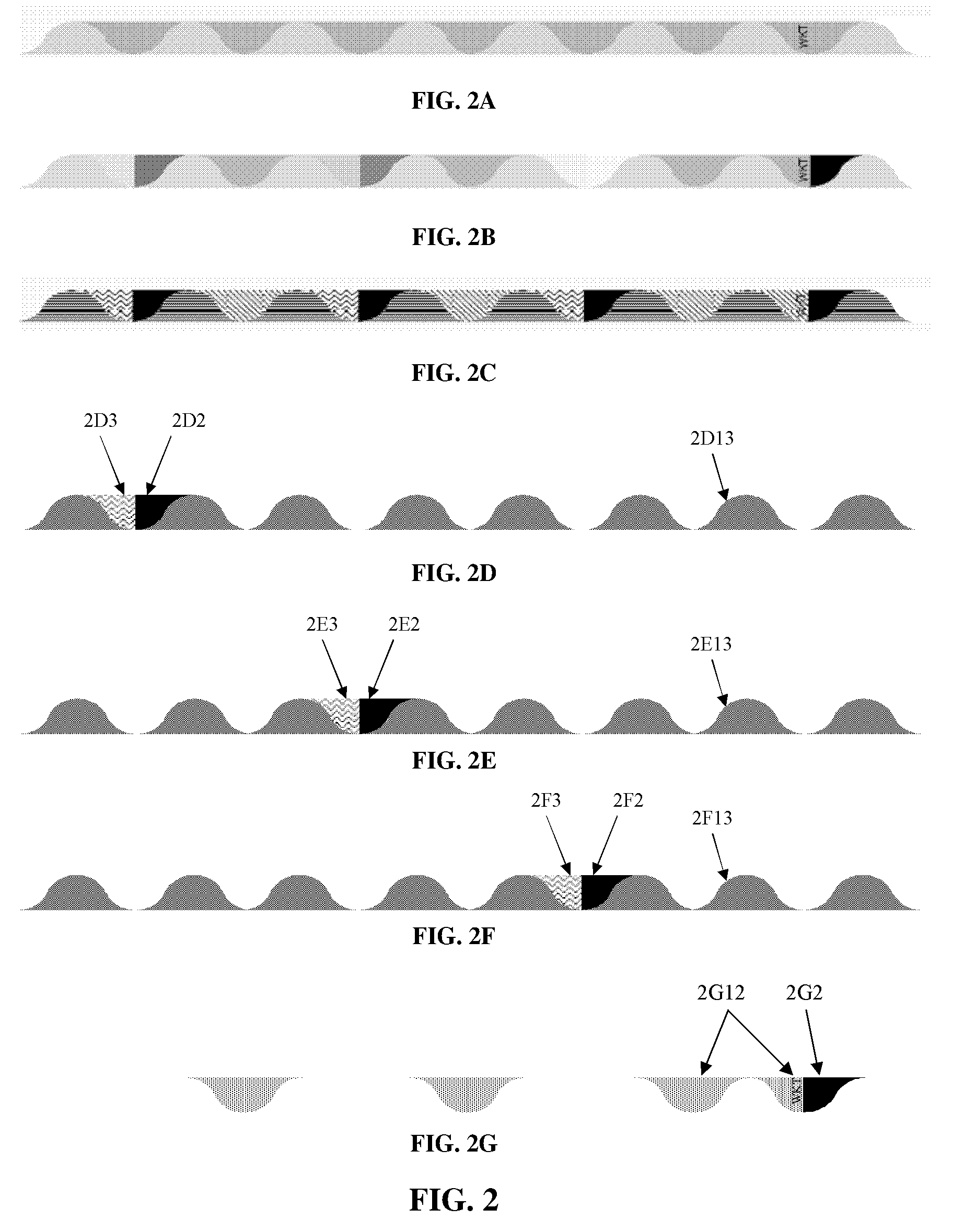

System for aiding the visual matching of containers having diverse openings with corresponding lids

A system for aiding the visual matching of containers having diverse openings with matching lids includes affixing, such as by molding or embossing, geometric planar patterns on the bottom walls of a plurality of rectangular containers having different sized openings and affixing the same geometric patterns to the top wall of the matching lids.

Owner:CORELLE BRANDS LLC

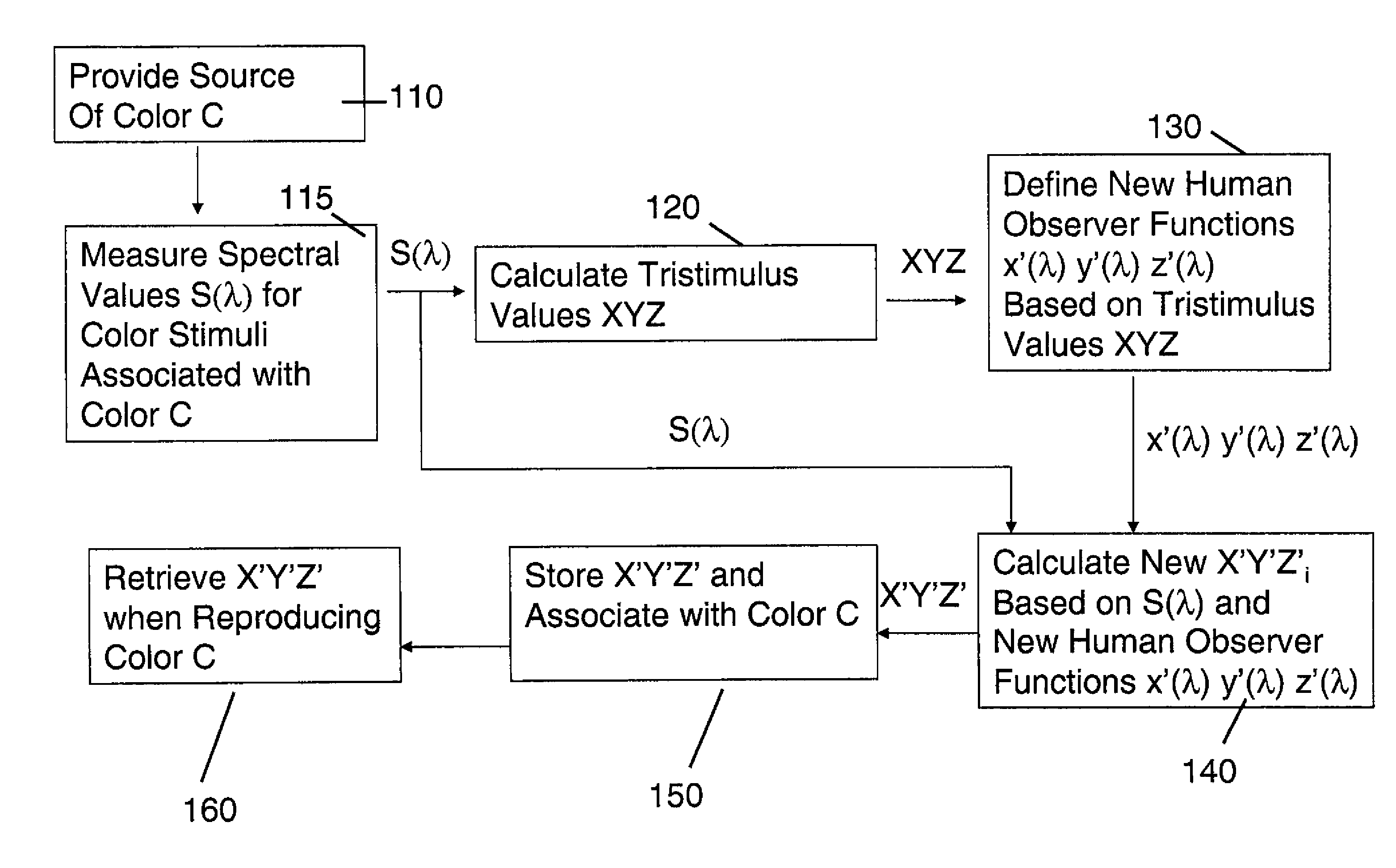

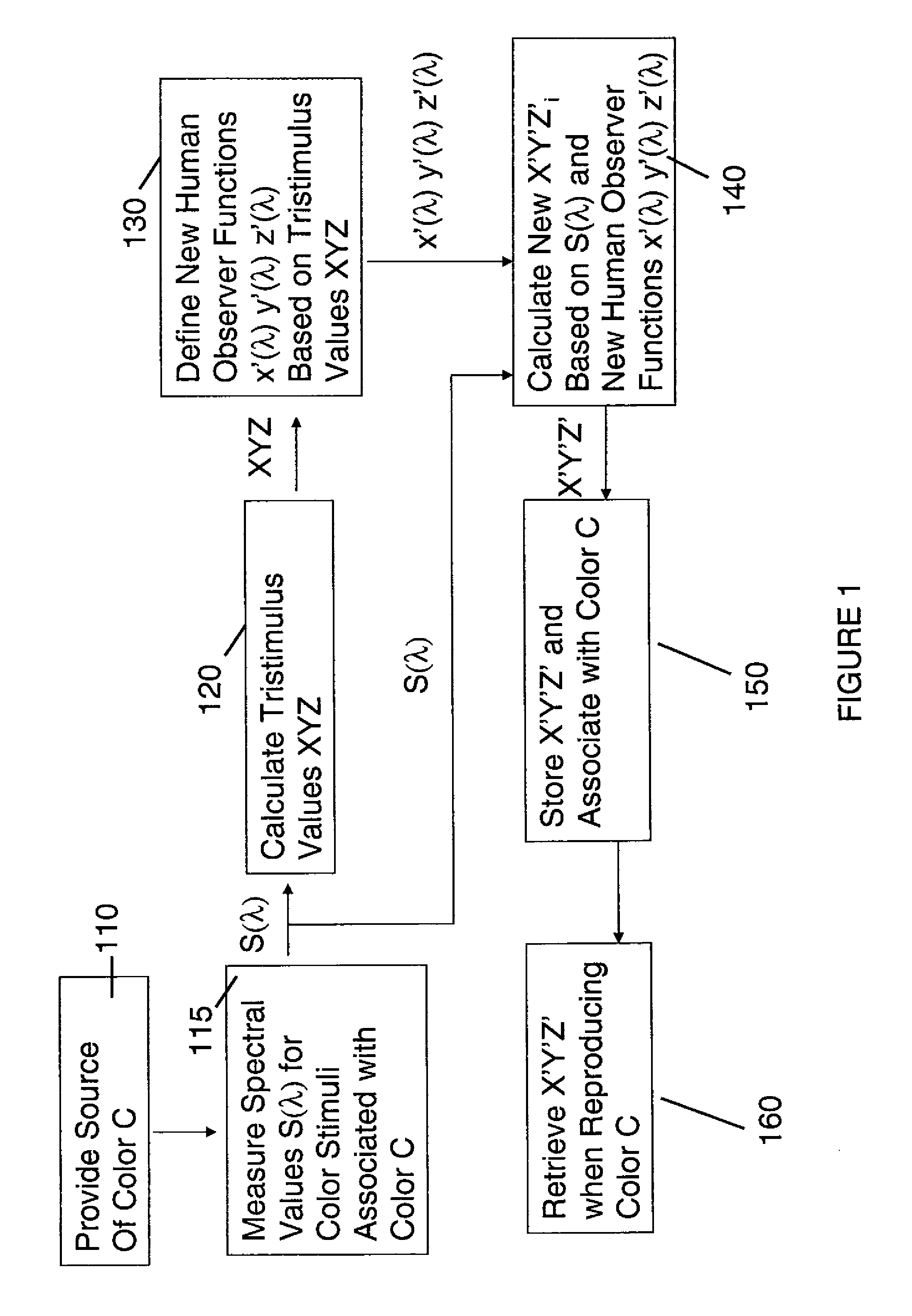

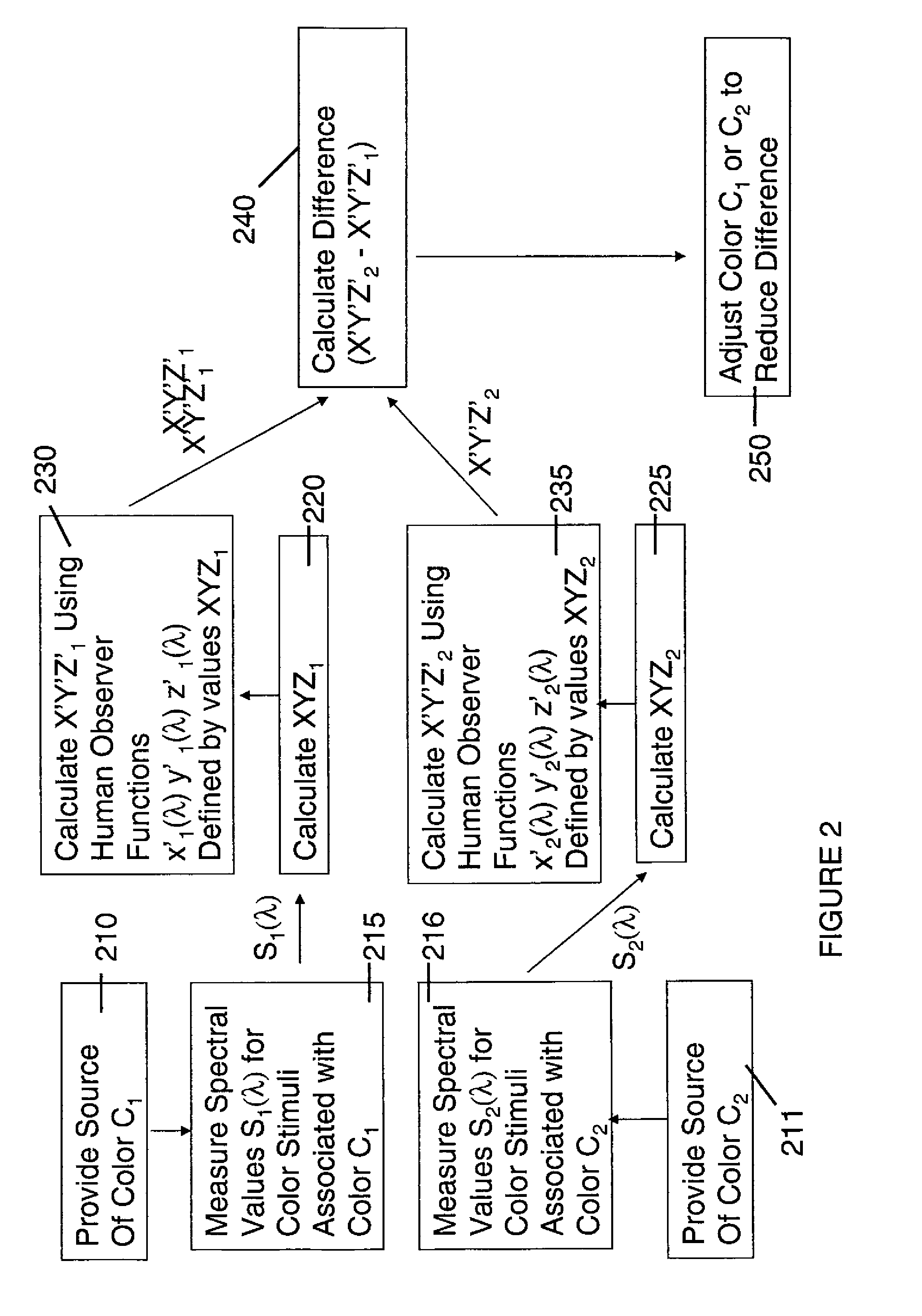

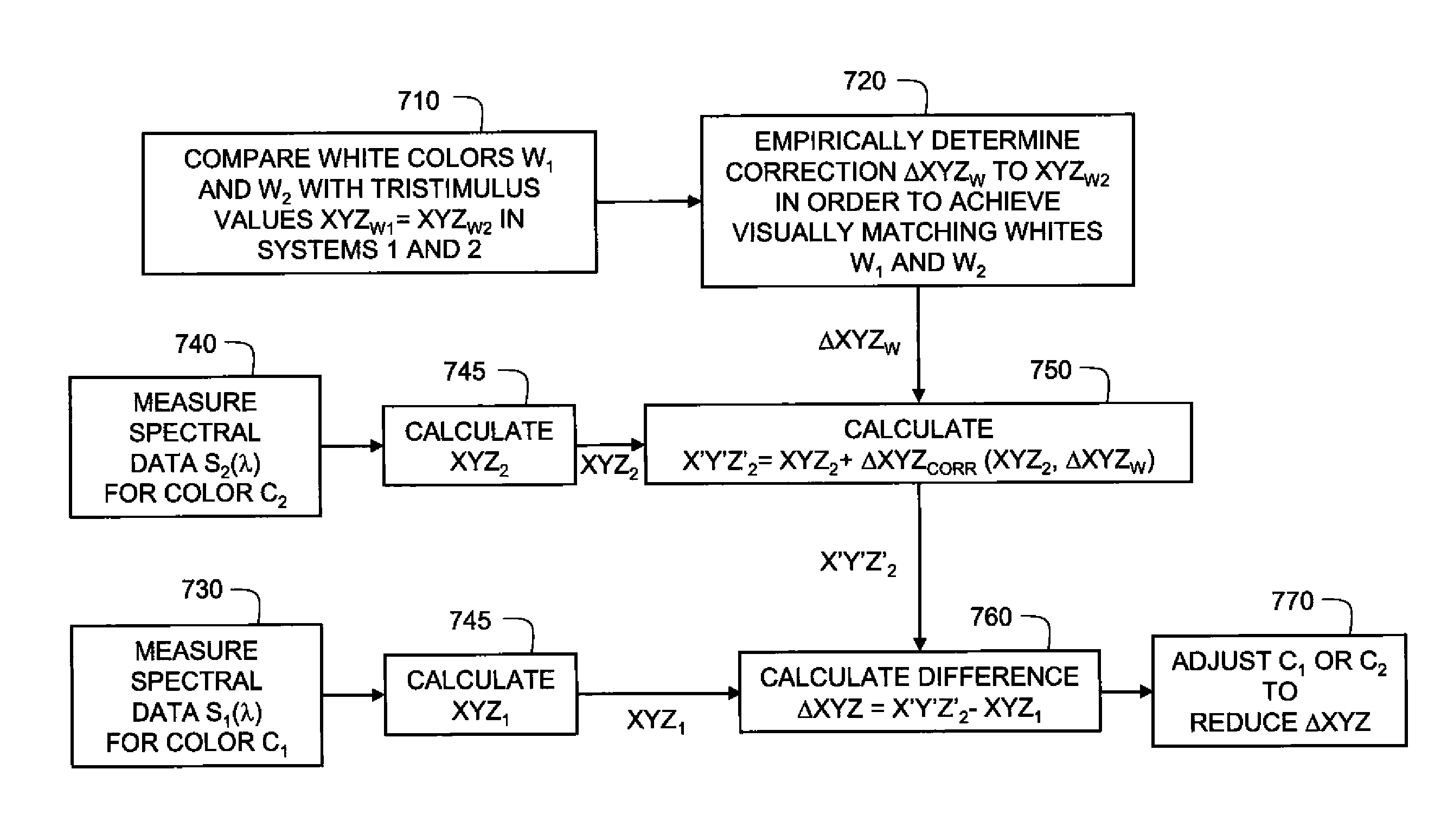

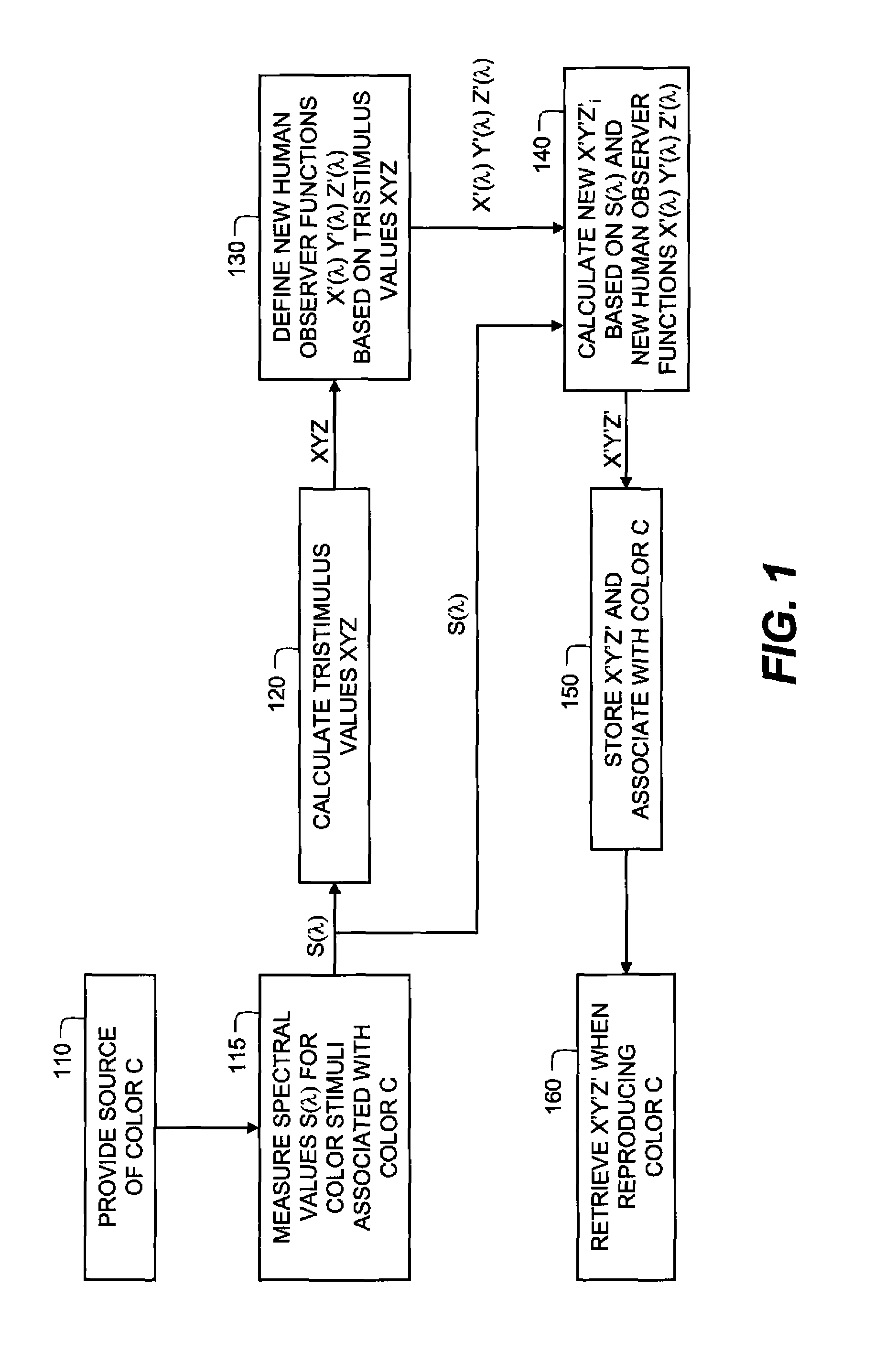

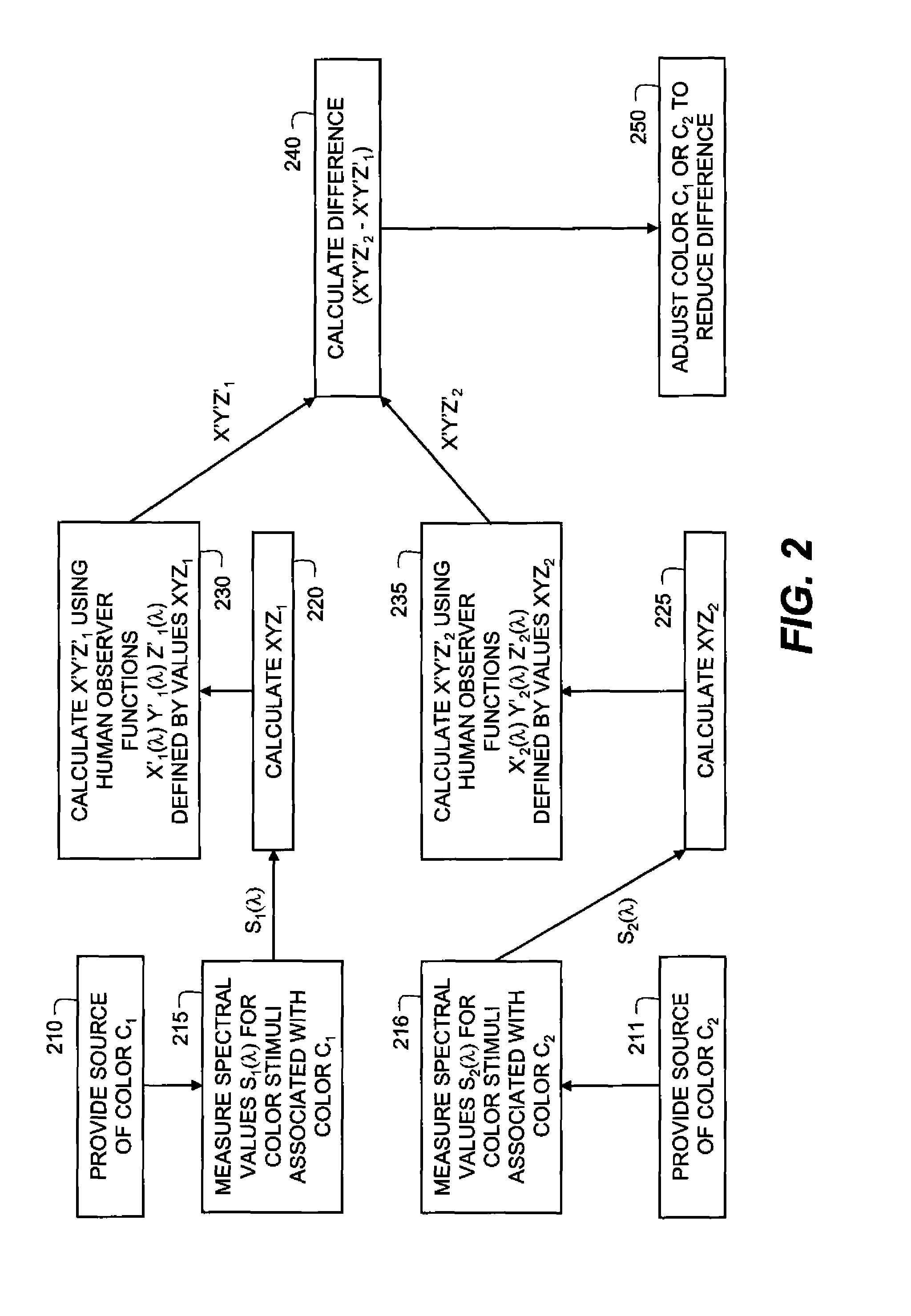

Method for matching colors between two systems

Status: Active; Publication: US20110026821A1; Tags: Good visual match, Preserving color appearance, Color signal processing circuits, Projectors, Pattern recognition, Visual matching

A method for matching colors including comparing the appearance of a first white color associated with a first color imaging system and a second white color associated with a second color imaging system, wherein the tristimulus values of the first and second white color are similar; determining a fixed correction to the tristimulus values of the second white color to achieve a visual match to the first white color; measuring a first set of spectral values for a first color associated with the first color imaging system; determining a first set of tristimulus values from the first set of spectral values; measuring a second set of spectral values for a second color associated with the second color imaging system; determining a second set of tristimulus values from the second set of spectral values; applying a correction to the tristimulus values of the second color; determining a difference between the tristimulus value of the first color and the corrected tristimulus value of the second color; and adjusting the second color to reduce the difference.

Owner:EASTMAN KODAK CO
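The white-point correction and adjustment steps in the Kodak abstract can be sketched under one explicit assumption: that the fixed correction is a per-channel (diagonal) scaling of XYZ tristimulus values. The observer-matched white and all numbers are hypothetical inputs, the spectral-to-tristimulus integration is omitted, and a plain per-channel XYZ difference stands in for whatever color-difference metric a real system would use.

```python
def diagonal_correction(white2_measured, white2_matched):
    """Per-channel factors taking the measured XYZ of white 2 to the XYZ at which
    an observer judged it to match white 1 (assumed diagonal model, hypothetical data)."""
    return tuple(m / w for m, w in zip(white2_matched, white2_measured))


def apply_correction(xyz, factors):
    """Apply the fixed correction to any XYZ triple from the second system."""
    return tuple(v * k for v, k in zip(xyz, factors))


def residual(xyz1, xyz2_corrected):
    """Per-channel difference that remains between color 1 and corrected color 2."""
    return tuple(a - b for a, b in zip(xyz1, xyz2_corrected))


def adjusted_second_color(xyz1, factors):
    """XYZ the second system should produce so that, once corrected, it equals color 1."""
    return tuple(a / k for a, k in zip(xyz1, factors))
```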

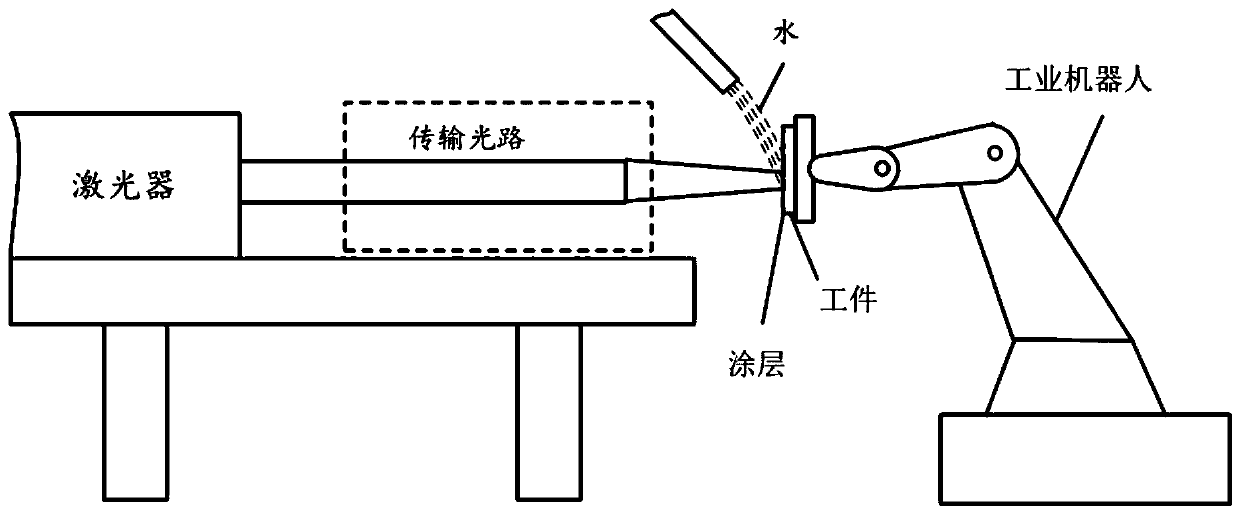

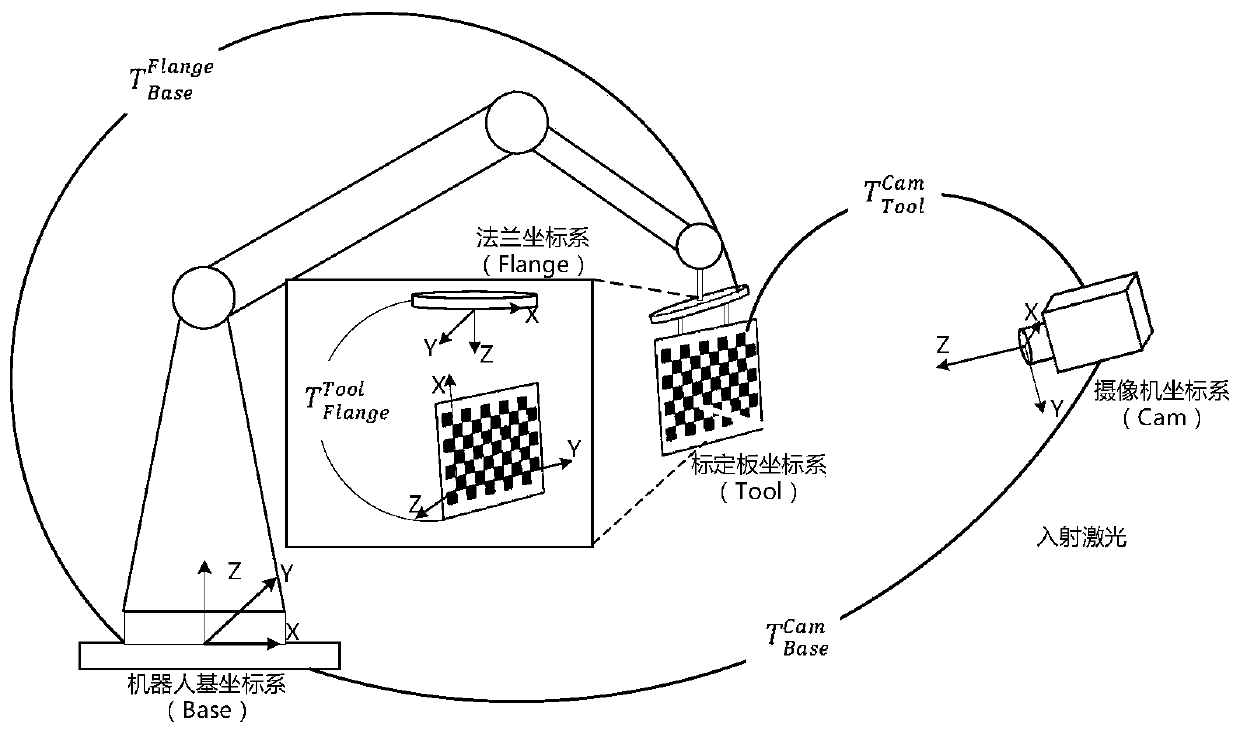

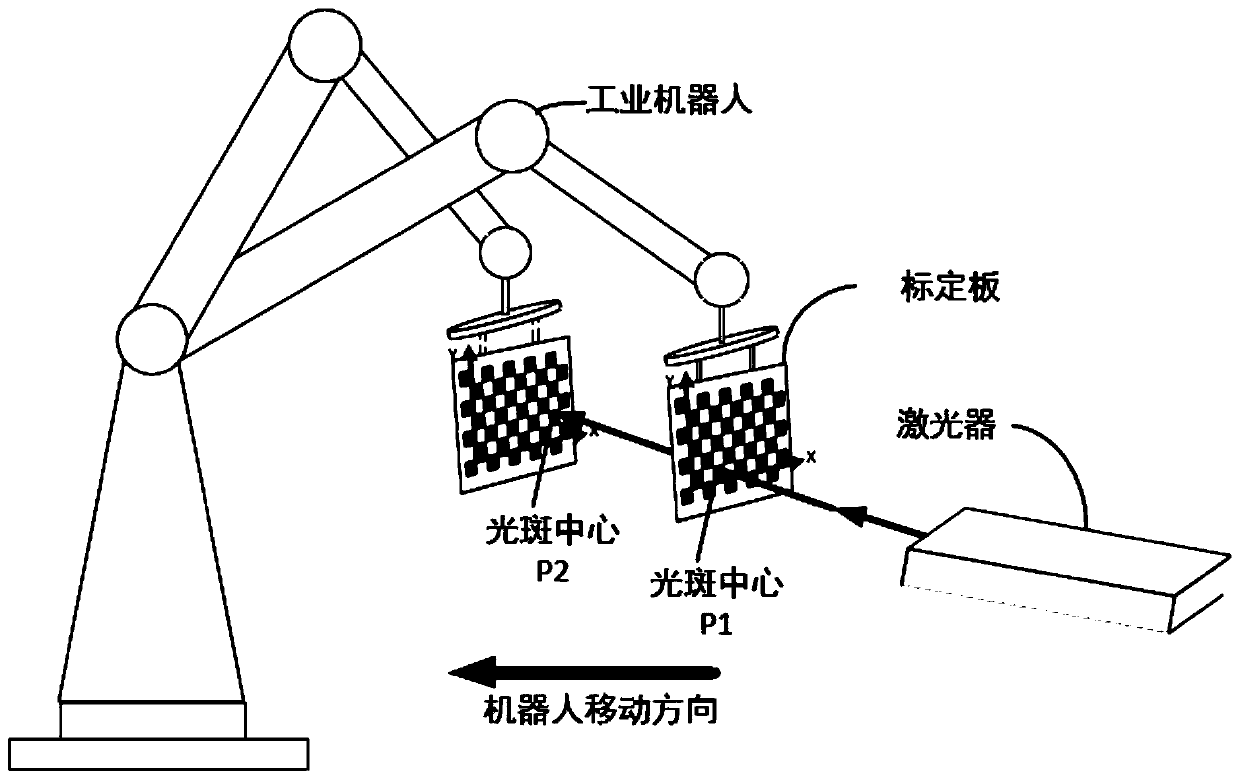

Laser processing visual matching method and system and medium

Status: Inactive; Publication: CN110355464A; Tags: Improve machining accuracy, Improve processing stability, Image analysis, Laser beam welding apparatus, Visual matching, Laser processing

The invention provides a laser processing visual matching method, system, and medium. The method comprises: a device fixing step, in which a calibration plate is fixed on the flange of an industrial robot and a camera is fixed at a first preset position in the space outside the industrial robot; a coordinate system construction step, in which a base coordinate system Base, a flange coordinate system Flange, a calibration plate coordinate system Tool, and a camera coordinate system Cam of the industrial robot are constructed; and a matrix acquisition step, in which a closed kinematic chain equation among the four coordinate systems is established and the homogeneous transformation matrix between the calibration plate coordinate system and the flange coordinate system is obtained. The invention overcomes shortcomings of the prior art, provides a vision-based matching method that is simple to operate and highly precise, and supports high-precision, high-stability follow-up laser processing.

Owner:SHANGHAI JIAO TONG UNIV
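The closed kinematic chain in this abstract can be written as base_T_flange · flange_T_plate = base_T_cam · cam_T_plate, where each term is a 4×4 homogeneous transform. The sketch below solves for flange_T_plate from a single pose, assuming base_T_cam is already known; in practice the camera-to-base transform is usually also unknown and is recovered jointly from several robot poses (the AX = XB hand-eye formulation). Matrices are plain nested lists, and all names are illustrative.

```python
def matmul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t):
    """Inverse of a rigid homogeneous transform: [R p; 0 1]^-1 = [R^T  -R^T p; 0 1]."""
    r = [[t[j][i] for j in range(3)] for i in range(3)]  # R transposed
    p = [t[i][3] for i in range(3)]
    tinv = [-sum(r[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r[0] + [tinv[0]], r[1] + [tinv[1]], r[2] + [tinv[2]], [0.0, 0.0, 0.0, 1.0]]


def translation(x, y, z):
    """Pure-translation homogeneous transform (convenience helper)."""
    return [[1.0, 0, 0, x], [0, 1.0, 0, y], [0, 0, 1.0, z], [0, 0, 0, 1.0]]


def flange_to_plate(base_T_flange, base_T_cam, cam_T_plate):
    """Solve the closed chain base_T_flange @ X == base_T_cam @ cam_T_plate for X.

    Hypothetical single-pose solution; assumes base_T_cam is known.
    """
    return matmul(rigid_inverse(base_T_flange), matmul(base_T_cam, cam_T_plate))
```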

Method for reproducing colors from display to projector by utilizing human eyes

Status: Inactive; Publication: CN101720046A; Tags: Simple method, Low cost, Color signal processing circuits, Acquired characteristic, Visual matching

The invention discloses a method for reproducing colors from a display on a projector by using the human eye. It is a new technique for color management systems and belongs to the field of color science and technology. It replaces the conventional approach, in which a color management system must be characterized with measuring instruments, with direct visual matching by the human eye of 6 to 9 colors between the display and the projector. From the known white points and gamma values set on the display and the projector, together with a default display characteristic linear matrix, the projector's color characteristic linear matrix is calculated. Using the known and derived characteristic matrices of the display and the projector, colors shown on the display are converted into projector colors, so that display colors are reproduced on the projector. The method is simple, convenient, and low-cost, and suits ordinary users whose requirements for color reproduction accuracy are not high.

Owner:YUNNAN NORMAL UNIV

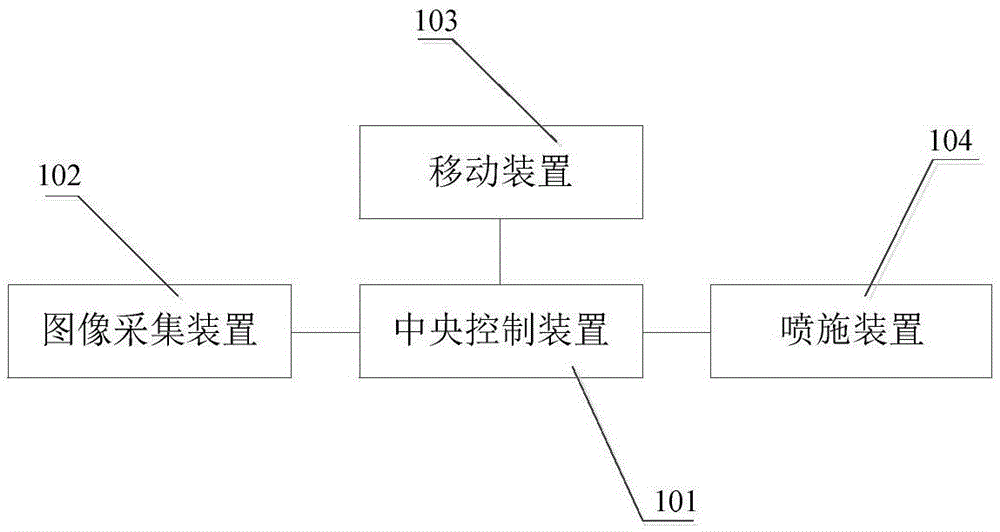

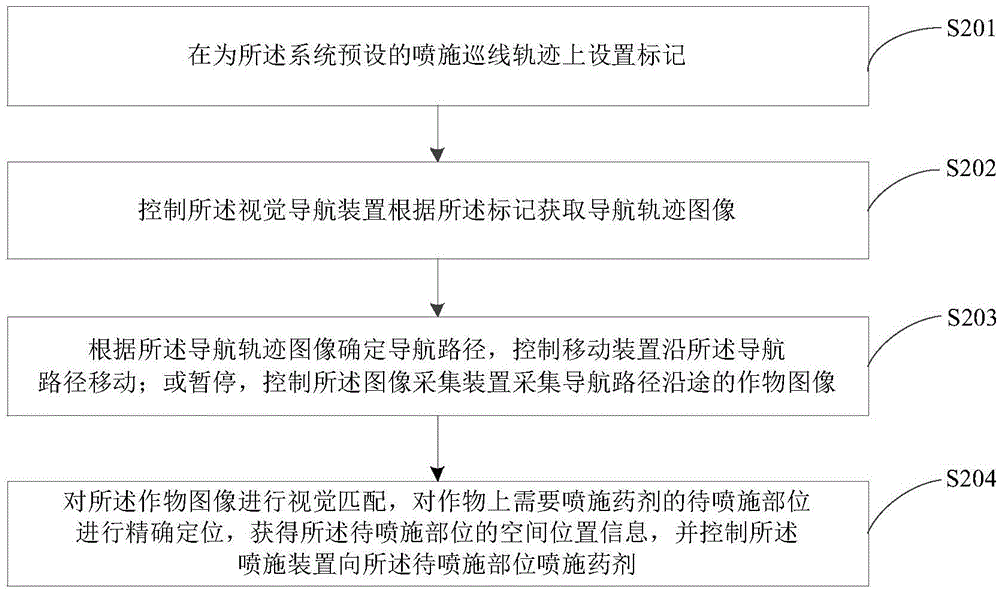

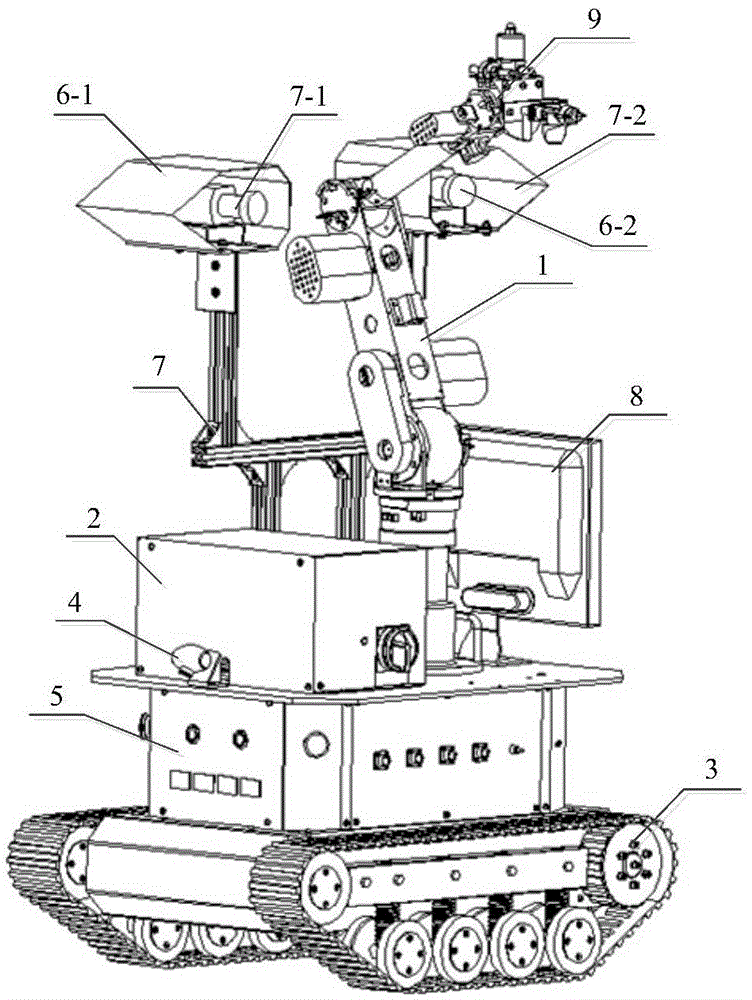

Pesticide spraying robot system and control method

The invention relates to the technical field of intelligent agricultural equipment, and in particular to a pesticide spraying robot system and a control method. The system comprises a central control device, an image collecting device, a spraying device, and a moving device, with the central control device, the image collecting device, and the spraying device mounted on the moving device. The moving device comprises a visual navigation device that acquires navigation track images according to markers set by the user. The central control device determines a navigation path from the navigation track images and controls the moving device to move along, or stop on, the navigation path while crop images are collected along it. The central control device also performs visual matching on the crop images, precisely locates the positions on the crops that need pesticide, acquires the spatial position information of those positions, and controls the spraying device to spray them. The system can take over heavy spraying work from people, reduces the cost of crop pesticide spraying, and promotes the adoption of modern intelligent agricultural equipment.

Owner:CHINA AGRI UNIV

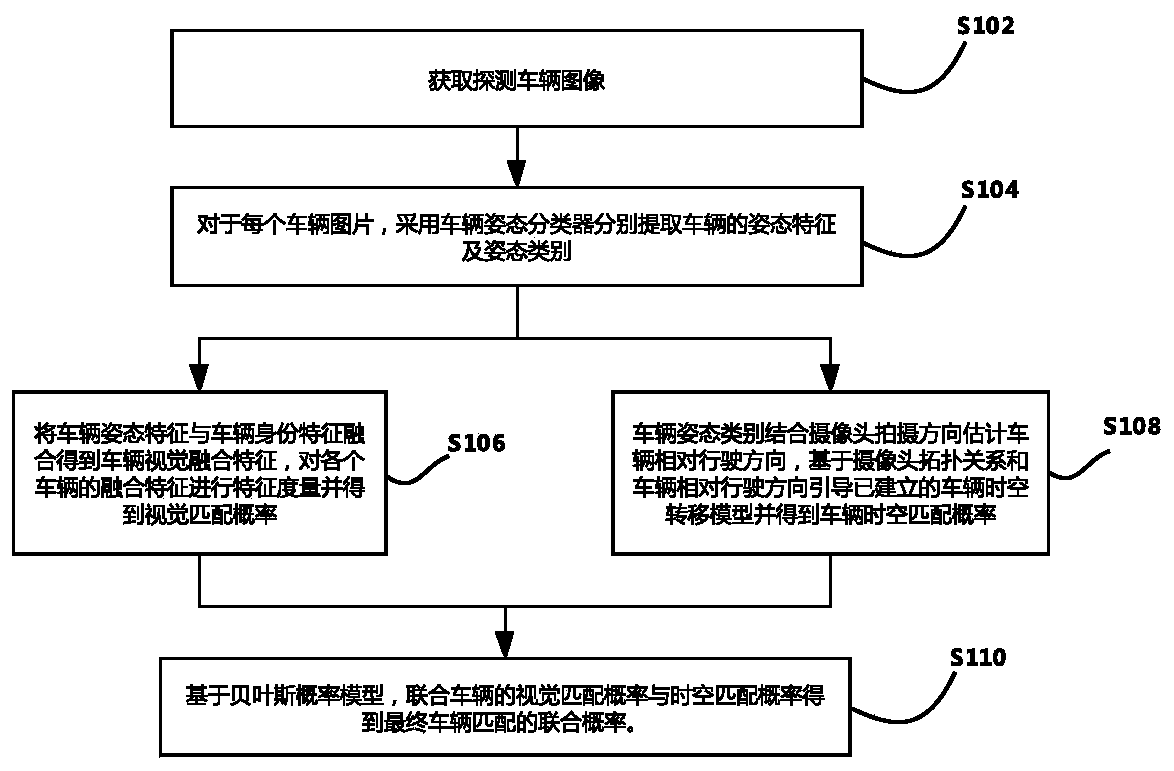

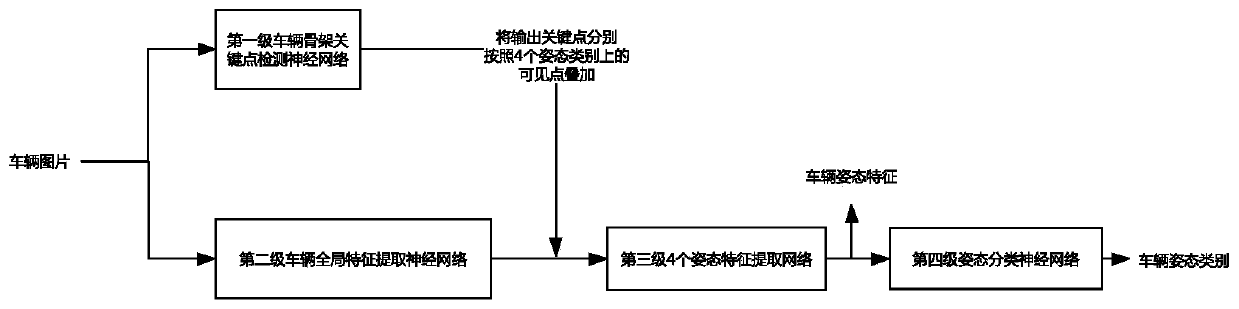

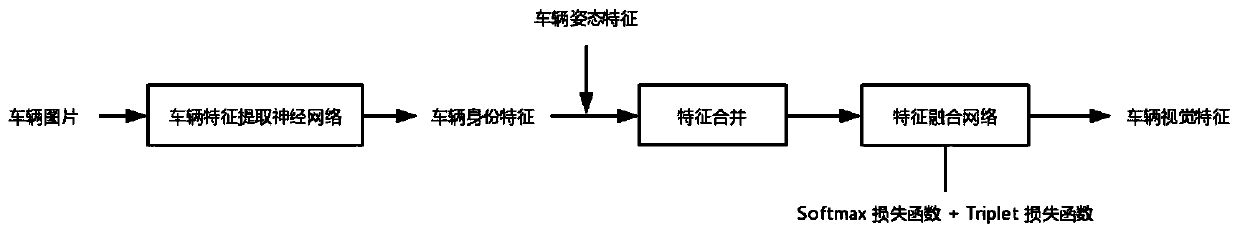

Vehicle re-recognition method based on space-time constraint model optimization

Status: Pending; Publication: CN110795580A; Tags: Reduce the intra-class distance, Expand the distance between different ID classes, Internal combustion piston engines, Character and pattern recognition, Visual matching, Algorithm

The invention discloses a vehicle re-recognition method based on space-time constraint model optimization. The method comprises the following steps: 1) obtaining the image of the vehicle to be queried; 2) for a given vehicle query image and a set of candidate images, extracting vehicle attitude features with a vehicle attitude classifier and outputting a vehicle attitude category; 3) fusing the vehicle attitude feature with the fine-grained identity feature of the vehicle to obtain a fused, vision-based vehicle feature, and obtaining a visual matching probability; 4) estimating the relative driving direction of the vehicle and establishing a vehicle space-time transfer model; 5) obtaining a vehicle space-time matching probability; 6) combining the visual matching probability and the space-time matching probability through a Bayesian probability model to obtain the final joint matching probability; and 7) sorting the joint probabilities of the queried vehicle and all candidate vehicles in descending order to obtain a vehicle re-recognition ranking list. The method greatly reduces the vehicle false recognition rate and improves the accuracy of the final recognition result.

Owner:WUHAN UNIV OF TECH
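Steps 6 and 7 can be sketched as follows, under the assumption that the visual and space-time cues are conditionally independent, so their product (normalized over the candidate set) serves as the joint probability. The abstract does not give the patent's exact Bayesian model, and the candidate tuples and function name are hypothetical.

```python
def rank_candidates(candidates):
    """Rank candidates by a joint score built from two matching probabilities.

    candidates: iterable of (vehicle_id, p_visual, p_spacetime).
    Assumes conditional independence, so the joint score is the product,
    normalized so the scores over all candidates sum to 1.
    """
    scored = [(vid, pv * pst) for vid, pv, pst in candidates]
    total = sum(s for _, s in scored)
    if total > 0:
        scored = [(vid, s / total) for vid, s in scored]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

A candidate that looks very similar but is implausible in space and time (high `p_visual`, low `p_spacetime`) drops below a moderately similar candidate that fits the transfer model.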

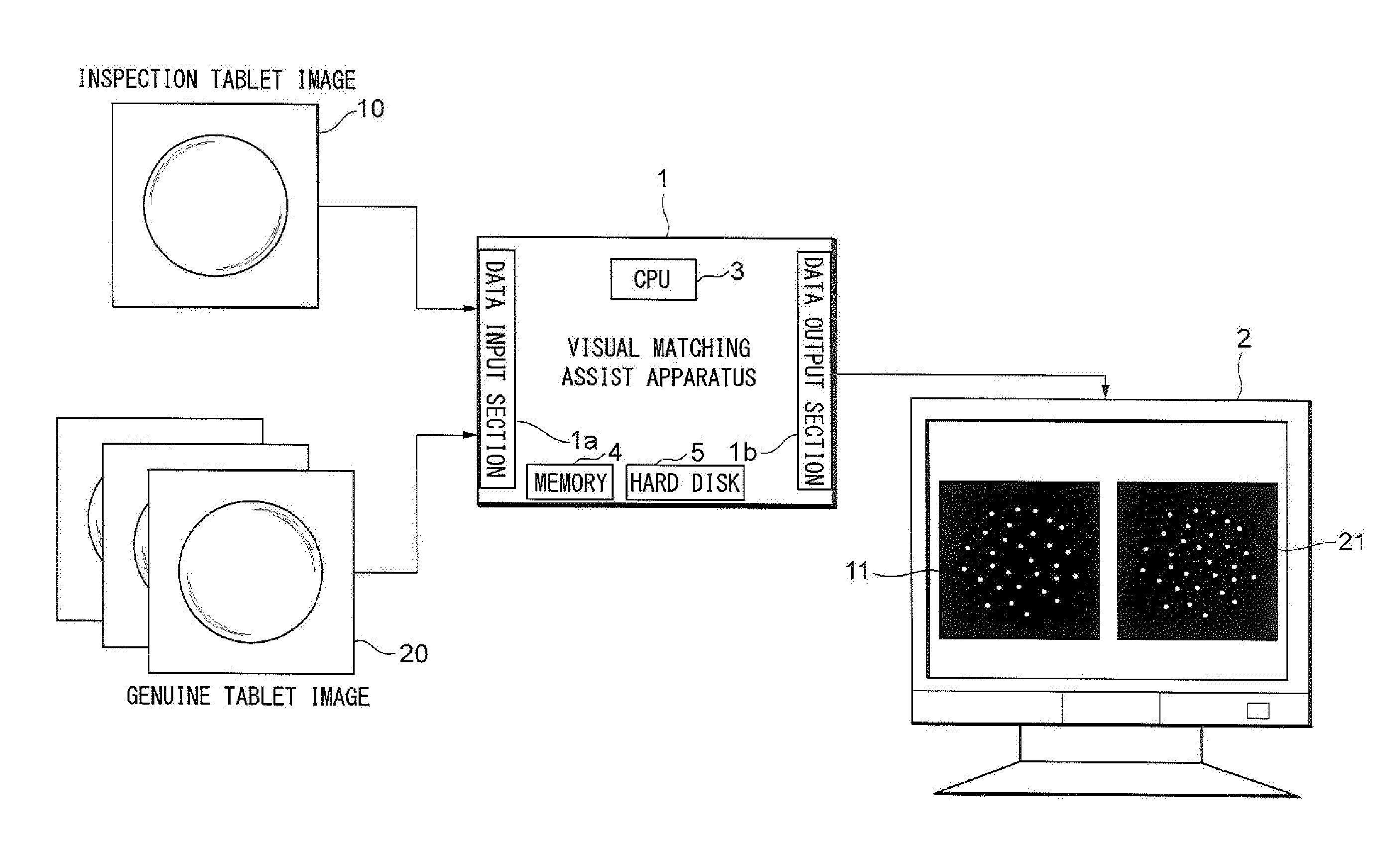

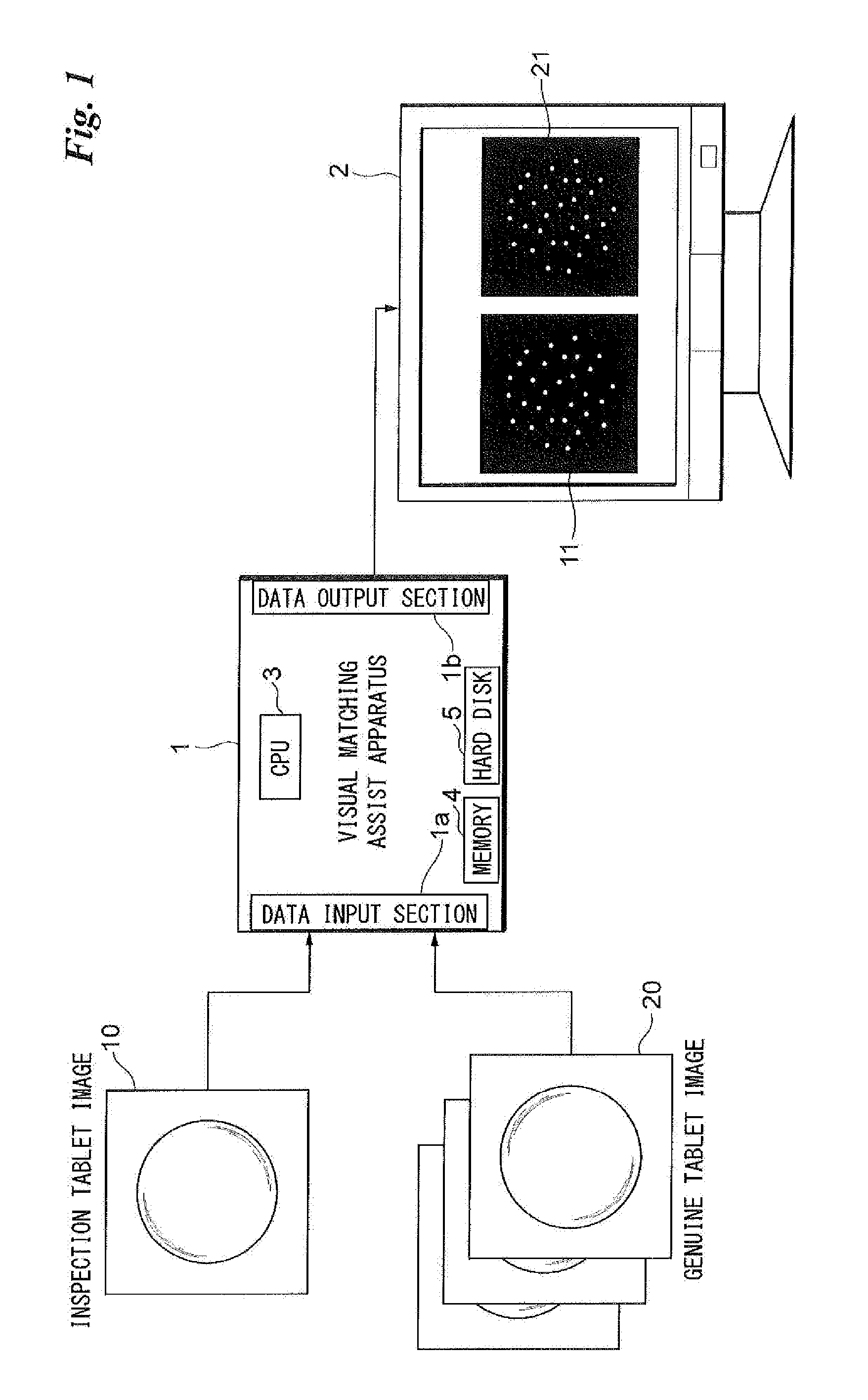

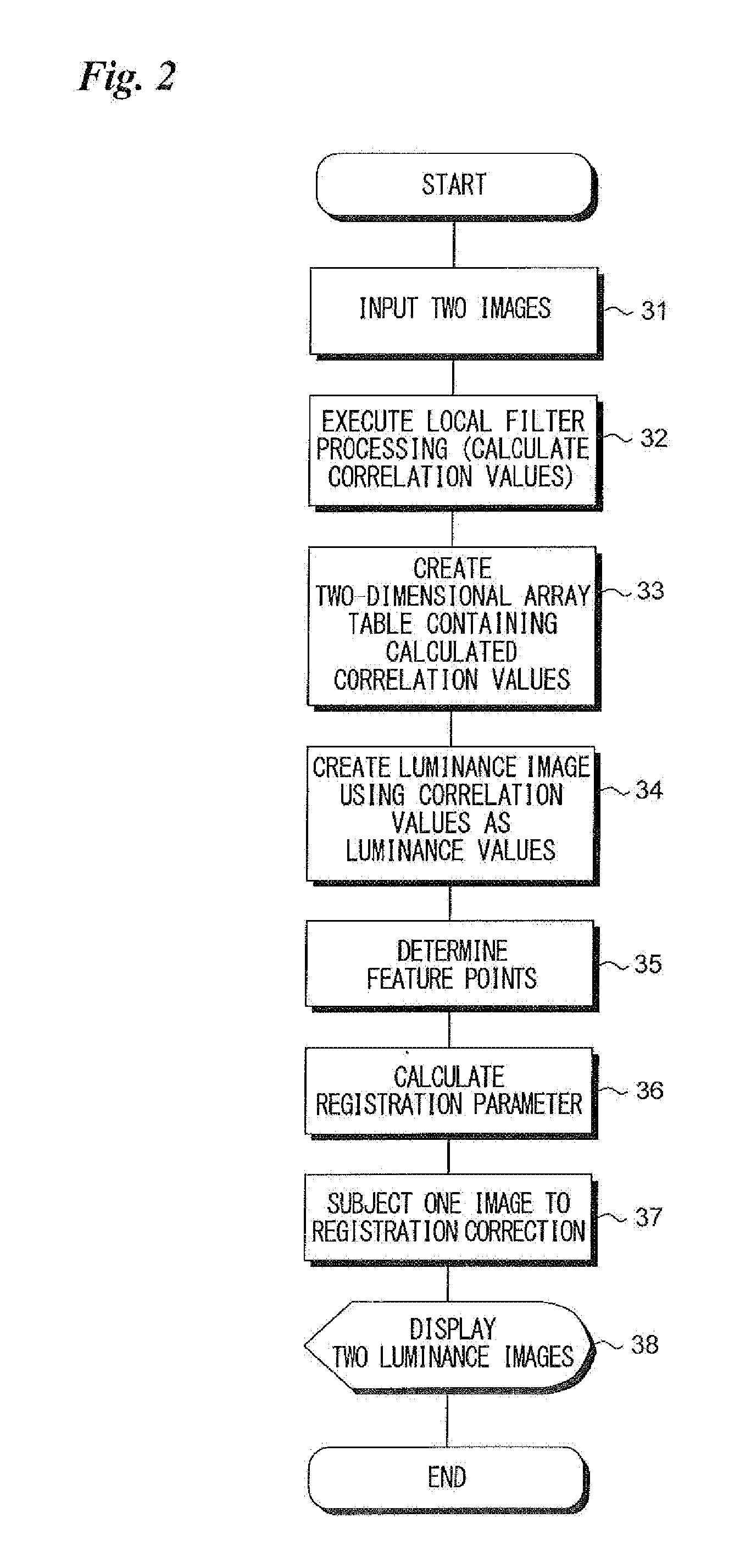

Visual matching assist apparatus and method of controlling same

Status: Inactive; Publication: US20160004927A1; Tags: Facilitate visual matching, Improve accuracy, Image enhancement, Image analysis, Visual matching, Visual perception

The visual matching of two images is facilitated. An inspection tablet image and a genuine tablet image are each scanned by a local filter, correlation values between partial images and the local filter are calculated for every position of the local filter, and luminance images are generated using the calculated correlation values as luminance values. Multiple feature points where the luminance values are equal to or greater than a predetermined threshold value are determined in the luminance images and, based upon the multiple feature points, a registration parameter for eliminating relative offset between first and second images is calculated. The first luminance image and the second luminance image are brought into positional registration using the registration parameter calculated.

Owner:FUJIFILM CORP
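The registration step, computing a parameter that removes the relative offset between the two images, can be illustrated for the simplest case of a pure translation. This sketch assumes the luminance (correlation) images have already been computed, that thresholding yields feature points in the same order in both images, and that no rotation is present; the least-squares translation is then the mean displacement. The function names and data are hypothetical.

```python
def feature_points(luminance, threshold):
    """(x, y) positions whose luminance value is at or above the threshold, in raster order."""
    return [(x, y) for y, row in enumerate(luminance)
            for x, v in enumerate(row) if v >= threshold]


def estimate_offset(points_a, points_b):
    """Least-squares translation between already paired points: the mean displacement."""
    n = len(points_a)
    if n == 0 or n != len(points_b):
        raise ValueError("need the same, non-zero number of paired points")
    dx = sum(b[0] - a[0] for a, b in zip(points_a, points_b)) / n
    dy = sum(b[1] - a[1] for a, b in zip(points_a, points_b)) / n
    return dx, dy
```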

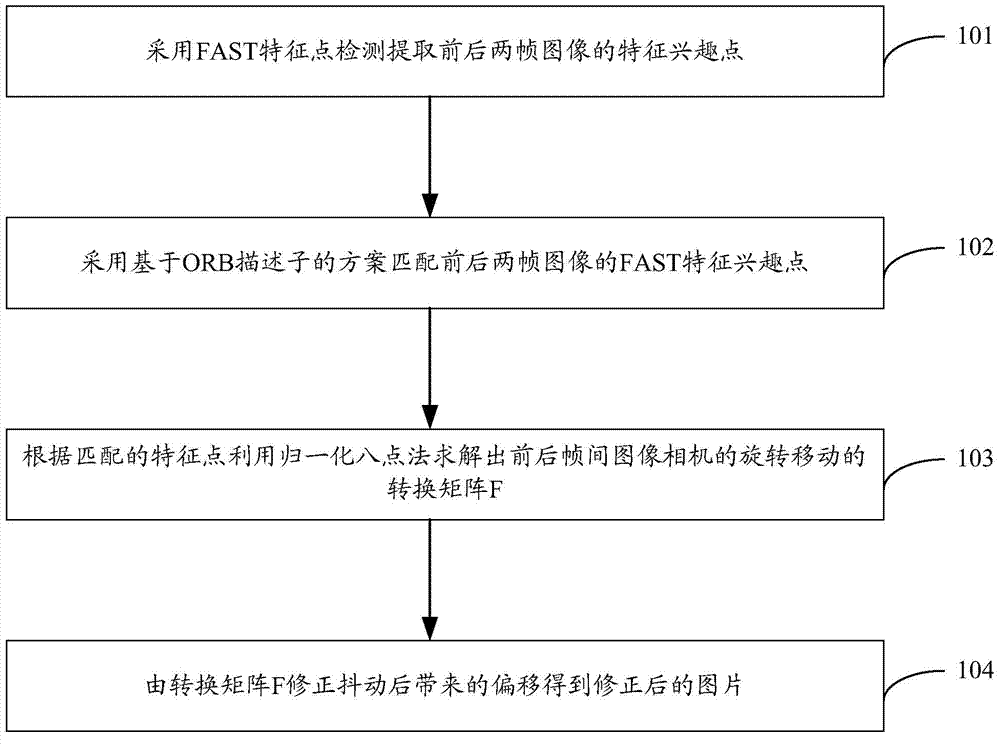

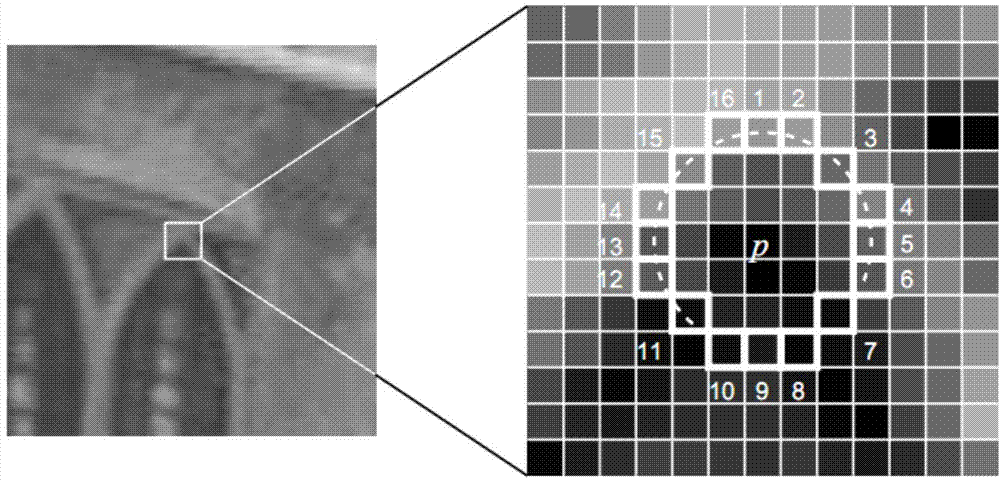

Image collection jitter removing method and device based on stereoscopic visual matching

Status: Inactive; Publication: CN104506775A; Tags: Improve computing efficiency, Reduce computational complexity, Television system details, Image analysis, Imaging processing, Visual matching

The invention is applicable to the fields of intelligent terminals and image processing, and provides an image collection jitter removal method based on stereoscopic visual matching. The method comprises the following steps: using the FAST detector to extract feature interest points from the preceding and following image frames; matching the FAST interest points of the two frames using ORB descriptors; solving, with the normalized eight-point method applied to the matched feature points, the transfer matrix F describing the camera's rotational motion between the two frames; and using F to correct the deviation caused by jitter, yielding a corrected image. The method prevents jitter across multiple image frames and computes quickly.

Owner:SHENZHEN INST OF ADVANCED TECH
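The ORB matching step compares binary descriptors by Hamming distance. A brute-force matcher with a mutual-nearest-neighbour (cross-check) filter is one common way to do this; the sketch below represents descriptors as Python integers and uses a hypothetical `max_distance` cutoff. FAST detection and the eight-point estimate of F are not shown.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(desc_prev, desc_next, max_distance=64):
    """Cross-checked brute-force matching: keep (i, j, dist) only when i and j
    are each other's nearest neighbour and dist <= max_distance (hypothetical cutoff)."""
    if not desc_prev or not desc_next:
        return []

    def nearest(d, pool):
        return min(range(len(pool)), key=lambda k: hamming(d, pool[k]))

    matches = []
    for i, d in enumerate(desc_prev):
        j = nearest(d, desc_next)
        dist = hamming(d, desc_next[j])
        if nearest(desc_next[j], desc_prev) == i and dist <= max_distance:
            matches.append((i, j, dist))
    return matches
```

Real ORB descriptors are 256 bits long; the integers here are just a compact way to hold the bit strings.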

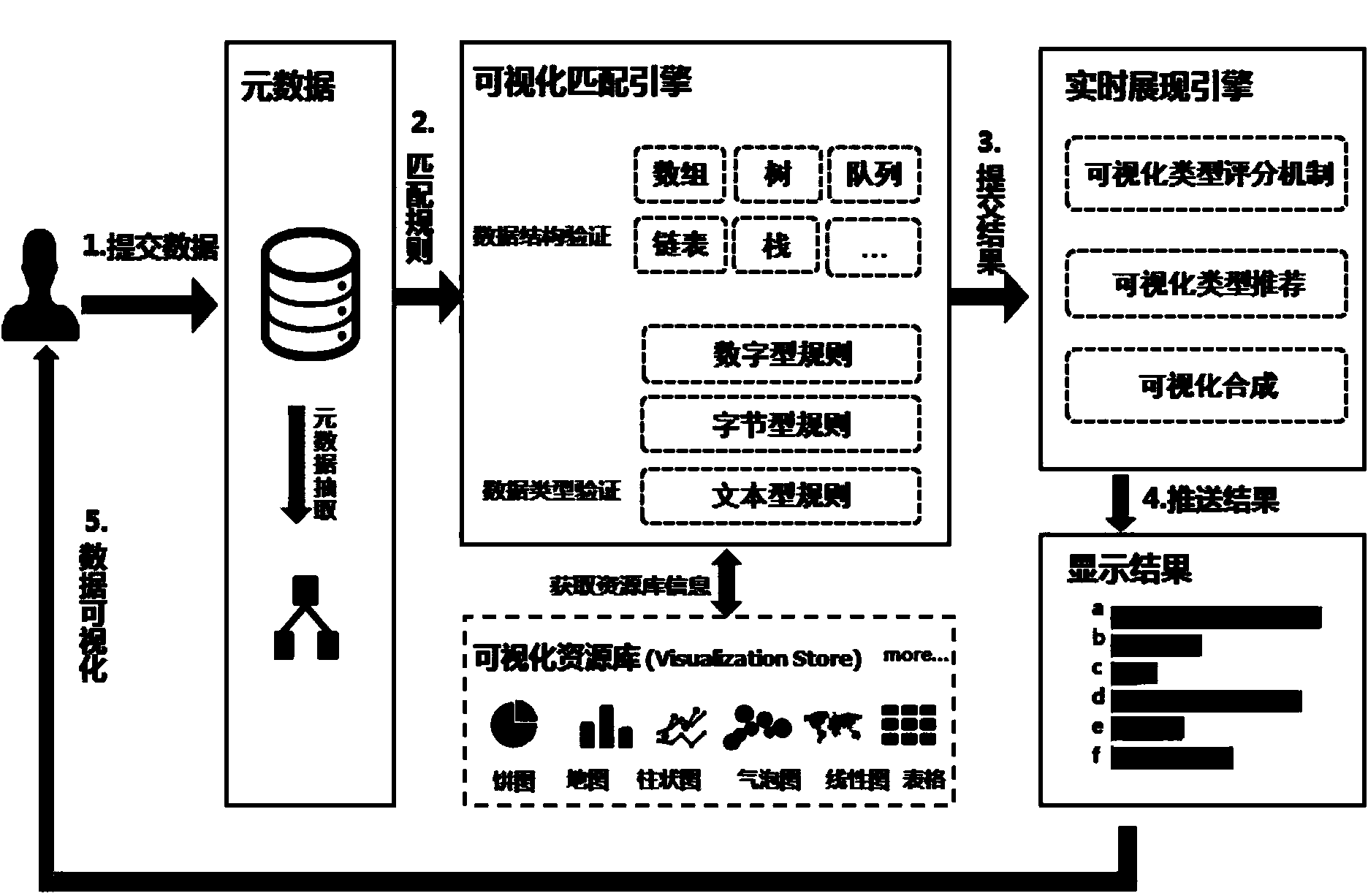

Implementation method and system for visual matching and real-time displaying

Status: Active; Publication: CN104166681A; Tags: Quick match visualization rules, Shield artificial factors, Special data processing applications, Graphics, Visual matching

The invention discloses an implementation method and system for visual matching and real-time display. The method comprises the following steps: first, extracting the metadata of the user data; second, comparing and verifying the metadata against the rules of a visual matching engine to obtain the visual types corresponding to the metadata; third, recommending one or more visual types to the user through a real-time display engine; and fourth, synthesizing graphs and displaying the visualization according to the visual type chosen by the user. The method and system remove the interference of complex data structures, provide rules for quickly matching visualizations, recommend suitable visual display modes to the user based on tags, and exclude subjective human factors. The user does not need to judge which visual type is most suitable, because a visual type tagging mechanism recommends display modes in real time.

Owner:CETC CHINACLOUD INFORMATION TECH CO LTD
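The first two steps (metadata extraction and rule matching) can be sketched as inferring a coarse type for each column and recommending every chart whose required types are all present. The rule table, type names, and chart names below are hypothetical examples, not the engine's actual rules.

```python
import datetime

# Hypothetical rule table: (field types that must be present, suggested chart).
RULES = [
    ({"temporal", "numeric"}, "line chart"),
    ({"categorical", "numeric"}, "bar chart"),
    ({"numeric"}, "histogram"),
    ({"categorical"}, "pie chart"),
]


def infer_type(values):
    """Classify a column as temporal, numeric, or categorical."""
    if all(isinstance(v, datetime.date) for v in values):  # also covers datetime.datetime
        return "temporal"
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        return "numeric"
    return "categorical"


def extract_metadata(records):
    """Map each column name of a list of dict records to its inferred type."""
    if not records:
        return {}
    return {col: infer_type([r[col] for r in records]) for col in records[0]}


def recommend(metadata):
    """Return every chart whose required field types all occur in the metadata."""
    present = set(metadata.values())
    return [chart for required, chart in RULES if required <= present]
```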

Comprehensive evaluation optimization method and comprehensive evaluation optimization system for light source vision and non-vision effect performance

Status: Inactive; Publication: CN105510003A; Tags: Easy to optimize, Improve performance, Material analysis by optical means, Testing optical properties, Visual matching, Lighting spectrum

The invention belongs to the technical field of illumination spectra and provides a comprehensive evaluation and optimization method for the visual and non-visual performance of light sources. The method comprises the following steps: obtaining a non-visual biological effect matching function $\bar{c}(\lambda)$, a scotopic vision matching function $\bar{s}(\lambda)$, and a photopic vision matching function $\bar{p}(\lambda)$ from the spectral sensitivity curves of the non-visual biological effect $C(\lambda)$, scotopic vision $V'(\lambda)$, and photopic vision $V(\lambda)$; from $\bar{c}(\lambda)$, $\bar{s}(\lambda)$, $\bar{p}(\lambda)$ and the configuration of the light source spectra, obtaining the radiant efficiency functions for $C(\lambda)$, $V'(\lambda)$, and $V(\lambda)$, and normalizing them to obtain the three stimulus values $C$, $S$, and $P$ corresponding to $C(\lambda)$, $V'(\lambda)$, and $V(\lambda)$; calculating the coordinates $c$, $s$, and $p$ and constructing a CSP spectral locus diagram from them; plotting the coordinates of the equal-energy white and of blackbody radiation sources on the CSP diagram; and plotting S/P-value and C/P-value distributions on the CSP diagram. The method integrates and unifies the related visual responses, which facilitates improving overall performance across the different visual channels and enables accurate calculation; it also facilitates light source spectrum optimization to meet the required visual performance.

Owner:SHENZHEN UNIV
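Given sampled spectra, the stimulus values and CSP coordinates reduce to weighted sums and a normalization: $C = \sum_\lambda E(\lambda)\,\bar{c}(\lambda)\,\Delta\lambda$ (likewise for $S$ and $P$, with $E(\lambda)$ the light source spectrum), and $c = C/(C+S+P)$, $s = S/(C+S+P)$, $p = P/(C+S+P)$. The sketch assumes all curves are sampled on the same wavelength grid with a fixed step, omits any normalization constants the method may apply, and is meant to be fed real tabulated $\bar{c}$, $\bar{s}$, $\bar{p}$ curves; the toy numbers in the tests are placeholders, and the function names are hypothetical.

```python
def stimulus(spd, matching_fn, step_nm):
    """Riemann-sum approximation of the integral of spd(λ) * matching_fn(λ) dλ."""
    return sum(e * m for e, m in zip(spd, matching_fn)) * step_nm


def csp_coordinates(spd, c_bar, s_bar, p_bar, step_nm=10.0):
    """Stimulus values C, S, P, chromaticity-like coordinates c, s, p, and S/P, C/P ratios."""
    C = stimulus(spd, c_bar, step_nm)
    S = stimulus(spd, s_bar, step_nm)
    P = stimulus(spd, p_bar, step_nm)
    total = C + S + P
    if total == 0 or P == 0:
        raise ValueError("spectrum produces no photopic response")
    return {"c": C / total, "s": S / total, "p": P / total,
            "S/P": S / P, "C/P": C / P}
```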

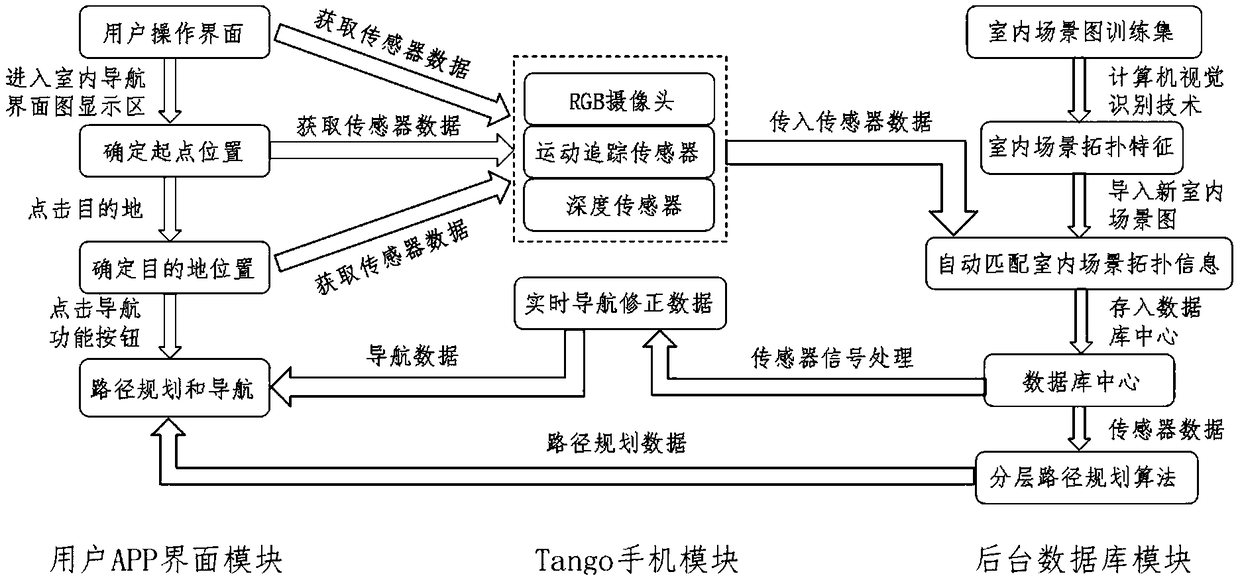

Mobile phone indoor positioning and navigation method

Status: Inactive; Publication: CN108955682A; Tags: Low cost, Short time period, Navigational calculation instruments, Visual matching, Interference factor

The invention provides a mobile phone indoor positioning and navigation method comprising the following steps: S1, fast three-dimensional reconstruction: obtaining depth information of the scene around the user through the phone's depth camera and, combined with images collected by the phone camera, building a three-dimensional model of the surrounding scene; S2, computer vision: collecting images of the surrounding scene with the phone's RGB camera and then performing spatial positioning with a computer vision matching algorithm; and S3, path planning and motion tracking: obtaining the user's motion state from the phone's motion sensors while capturing features of the surrounding scene in real time with the phone's motion tracking sensor, mapping those features onto a two-dimensional path-planning map to correct the path, and positioning and navigating the user's spatial position and direction of motion in real time. The method achieves indoor positioning and navigation on a mobile phone with low cost, a short time period, few interference factors, and a small range of precision fluctuation.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
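The abstract does not specify which planner step S3 uses. As an illustrative stand-in, a breadth-first search on a 4-connected occupancy grid returns a shortest path measured in cell steps; the grid encoding (0 = free, 1 = blocked) and the function name are assumptions.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = blocked).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:   # walk back through predecessors
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in prev:
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```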

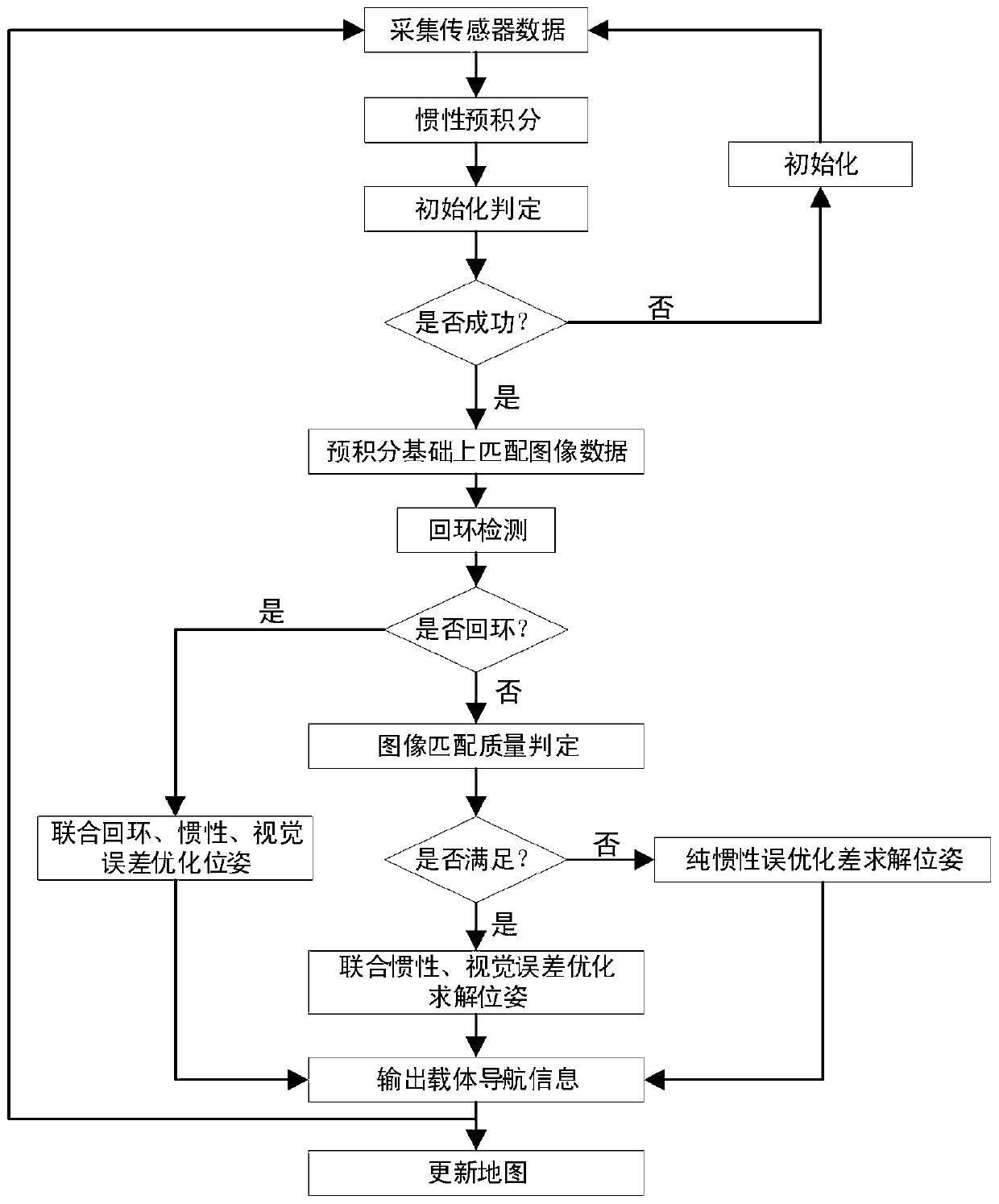

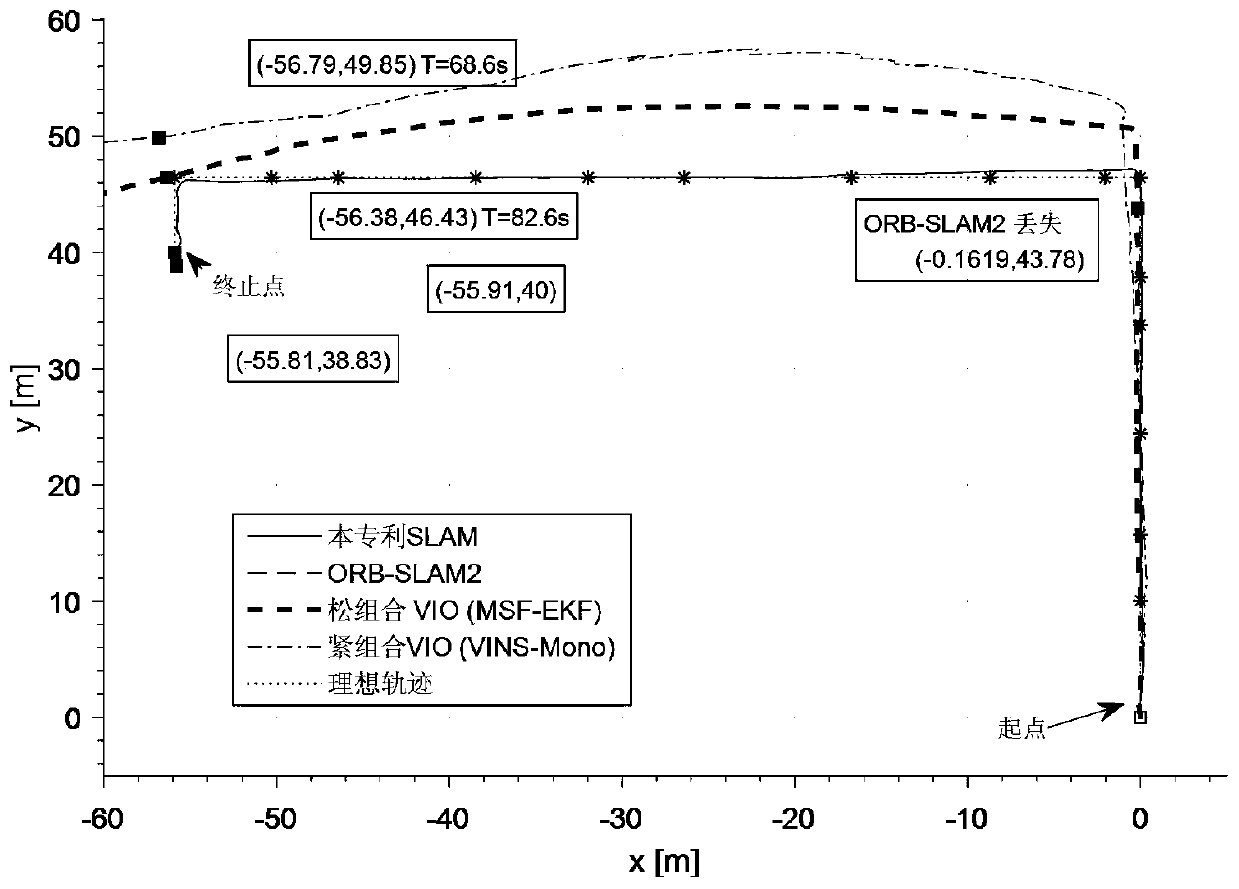

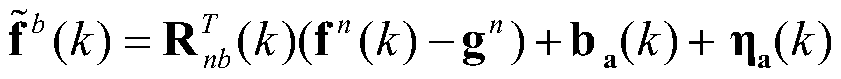

Robust stereoscopic vision inertial pre-integration SLAM (Simultaneous Localization and Mapping) method

Status: Active; Publication: CN110207693A; Tags: Improve robustness, High precision, Navigation by speed/acceleration measurements, Multiplexing, Simultaneous localization and mapping

The invention discloses a robust stereoscopic vision inertial pre-integration SLAM (Simultaneous Localization and Mapping) method in the technical field of visual navigation. Inertial sensor measurements are pre-integrated to predict the carrier's navigation information; this prediction is fused with stereo vision sensor data to judge the image matching quality; depending on the result of that judgement, the pre-integrated information is fused to output different results; the constructed map can be reused; and accumulated errors are eliminated. The method effectively addresses visual SLAM matching errors and failures in adverse environments, yields highly robust and precise carrier navigation information, and constructs an accurate map that can be reused, giving it good prospects for wider application.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
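The core of inertial pre-integration is summing the IMU samples between two image frames into a relative motion increment that does not depend on the starting state. This 1-D sketch ignores rotation, gravity, and sensor biases, which a real visual-inertial system must handle, and the quality threshold and blending weight in `fuse_position` are hypothetical stand-ins for the patent's judgement rule.

```python
def preintegrate(accels, dt):
    """Relative velocity and position increments from accelerometer samples.

    1-D, bias-free, gravity-free simplification of IMU pre-integration.
    """
    dv = dp = 0.0
    for a in accels:
        dp += dv * dt + 0.5 * a * dt * dt
        dv += a * dt
    return dv, dp


def predict_state(p0, v0, accels, dt):
    """Propagate position and velocity over the interval using the pre-integrated increments."""
    dv, dp = preintegrate(accels, dt)
    duration = len(accels) * dt
    return p0 + v0 * duration + dp, v0 + dv


def fuse_position(p_imu, p_visual, match_quality, threshold=0.5, w_visual=0.8):
    """Hypothetical fusion rule: trust vision only when its matching quality is good enough."""
    if match_quality < threshold:
        return p_imu  # visual matching judged unreliable: fall back on the IMU prediction
    return w_visual * p_visual + (1.0 - w_visual) * p_imu
```

Because the increments `dv` and `dp` depend only on the samples, they can be computed once and reused whenever the optimizer revises the starting state `p0`, `v0`.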

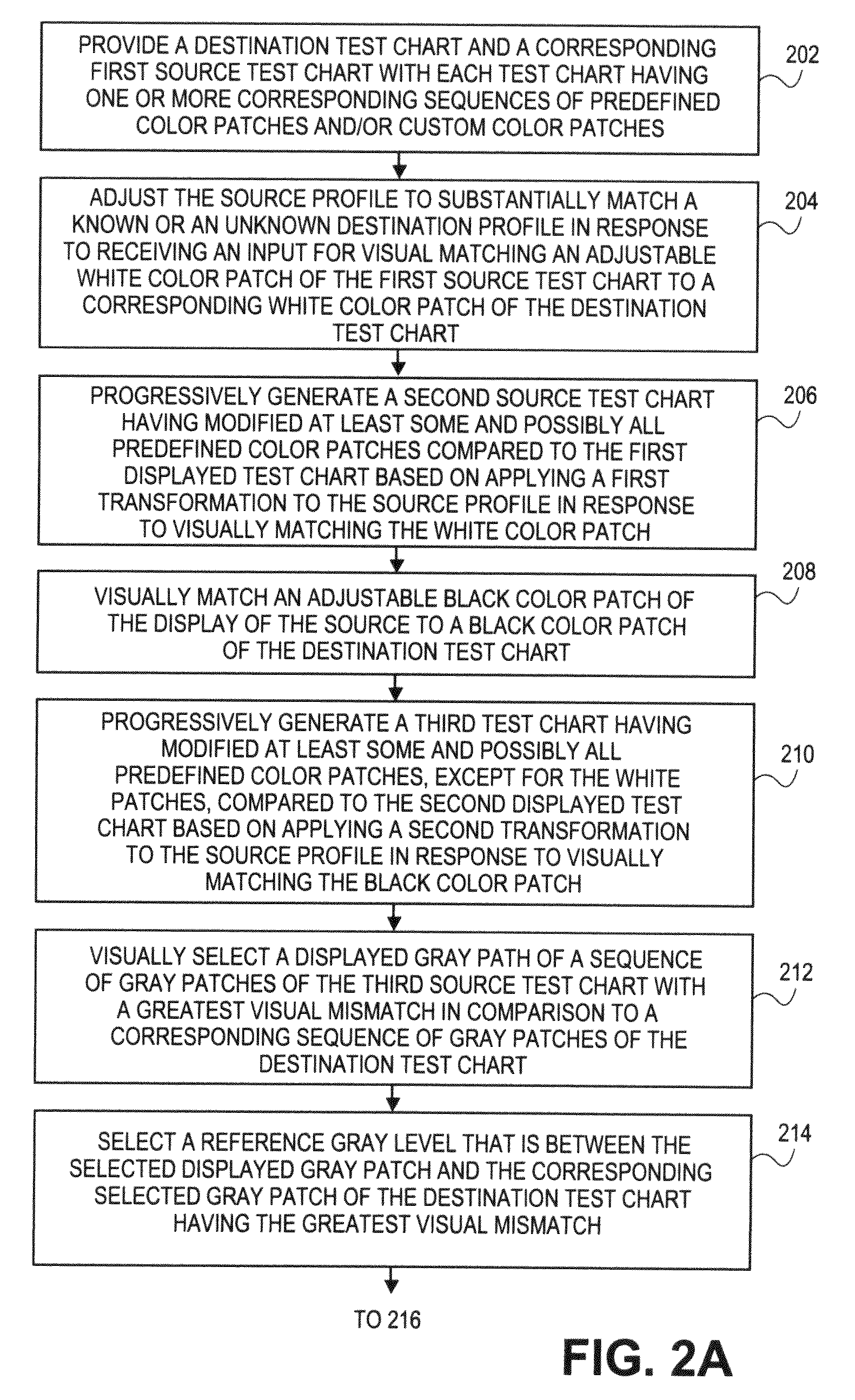

Methods and systems for calibrating a source and destination pair

Status: Active; Publication: US20100165363A1; Tags: Digitally marking record carriers, Digital computer details, Pattern recognition, Data processing system

At least certain embodiments of the disclosure relate to methods and data processing systems for matching a source profile to a destination profile. In one embodiment, a method includes providing a destination test chart and a corresponding first source test chart, each test chart having color patches. The method includes adjusting the source profile to substantially match a known or unknown destination profile in response to receiving an input that visually matches an adjustable white color patch of the first source test chart to the corresponding white color patch of the destination test chart. The method includes progressively generating a second source test chart in which at least some, and possibly all, color patches are modified relative to the first displayed test chart, by applying a first transformation to the source profile in response to the visual match of the white color patch.

Owner:APPLE INC

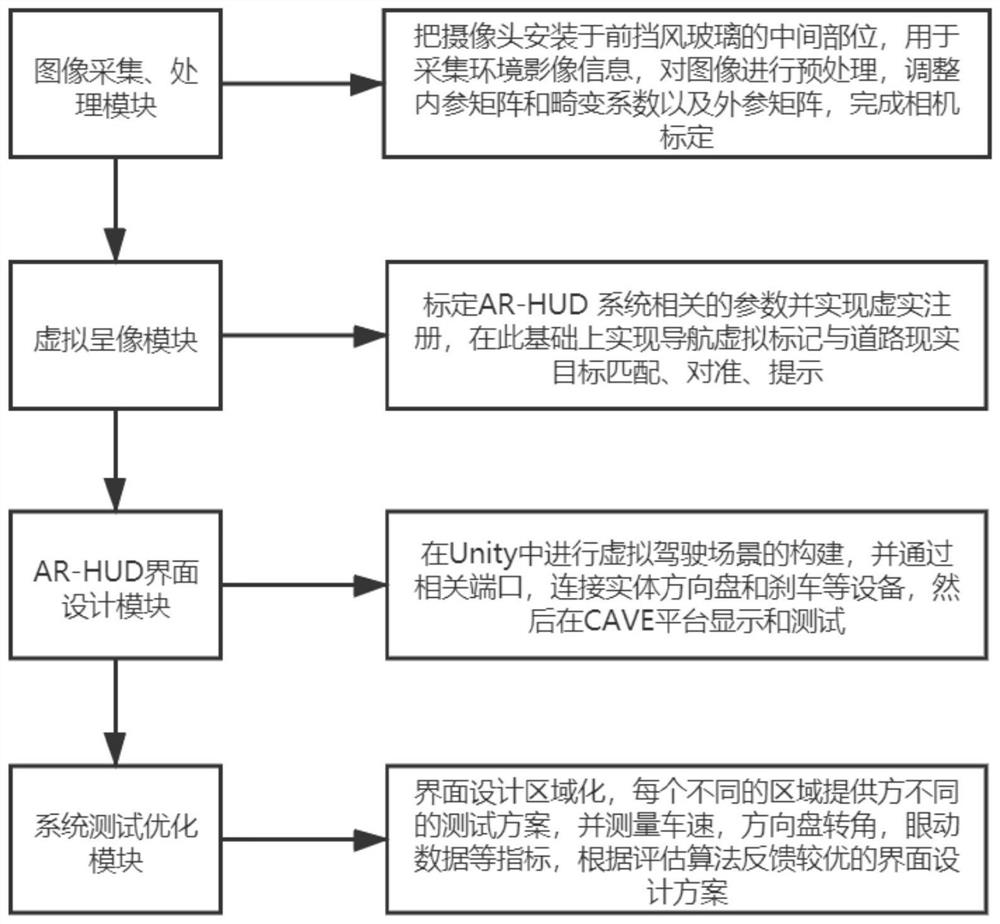

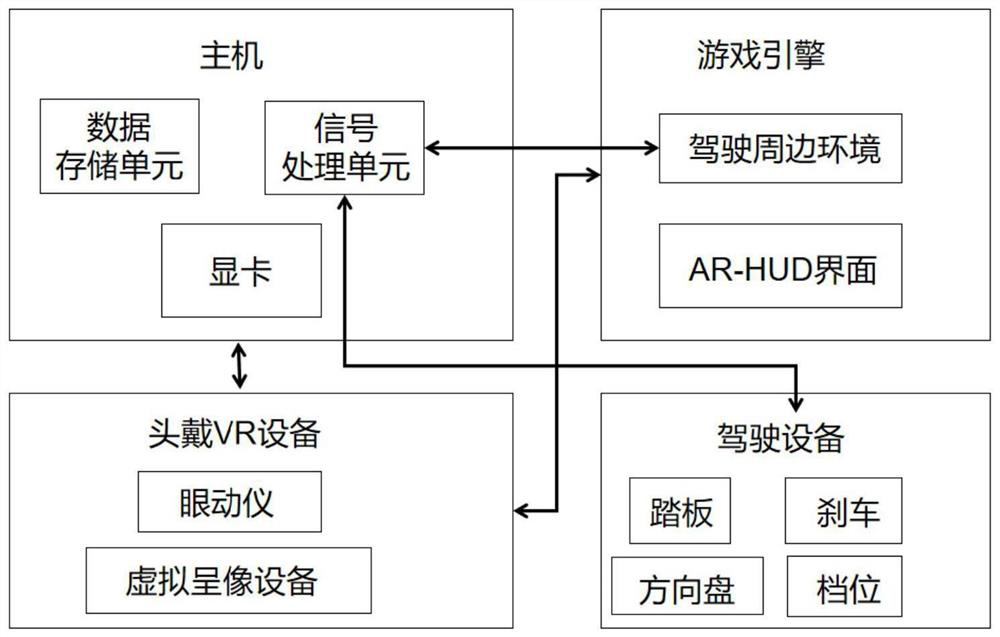

Design method of AR-HUD interface for enhancing driving feeling

Status: Active; Publication: CN113421346A; Tags: Avoid cycle, Low cost, Input/output for user-computer interaction, Image analysis, Visual matching, Computer graphics (images)

The invention relates to a design method for an AR-HUD interface that enhances the driving experience and belongs to the field of virtual reality. It comprises the following steps: acquiring image information of the driving environment through a camera; adjusting camera parameters and completing camera calibration; preprocessing the image; performing virtual imaging and calibrating parameters related to the AR-HUD system, so that virtual-real registration of objects is achieved and navigation virtual markers are matched, aligned, and displayed as prompts over real road targets; and designing the AR-HUD interface by building a virtual driving scene in a game engine, connecting physical vehicle equipment through the relevant ports, building a virtual test platform together with a head-mounted VR display system, and selecting the optimal AR-HUD display system. The invention avoids the long test cycle, high cost, and high risk of real-vehicle testing, and overcomes shortcomings of the prior art in interface design architecture and in visual matching for the user.

Owner:JINAN UNIVERSITY

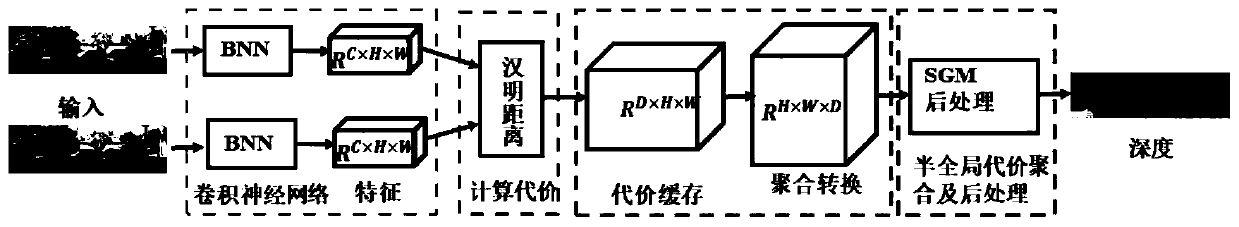

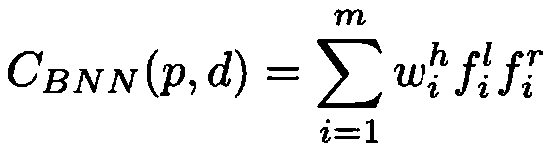

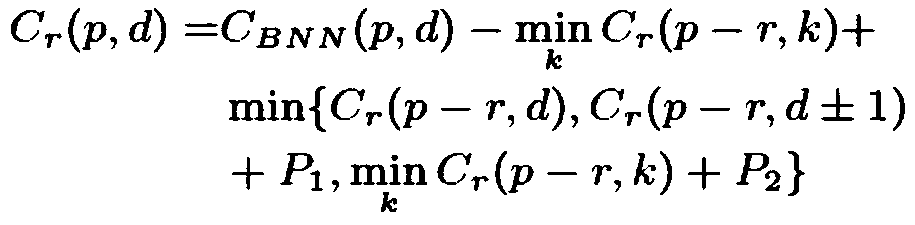

Binary neural network stereo vision matching method realized based on FPGA

Status: Active; Publication: CN111553296A; Tags: Reduce consumption, Reduced to bit operations, Processor architectures/configuration, Neural architectures, Parallax, Feature vector

The invention relates to an FPGA-based binary neural network stereo vision matching method. The method comprises the following steps: 1) obtaining periodic input streams of pixels from a binocular grayscale image pair to be matched; 2) extracting an image block around each pixel; 3) inputting the image blocks from step 2 into a binary neural network with preset weights and parameters to obtain binary feature vectors; 4) computing the matching cost between feature vectors over the maximum search disparity (parallax); 5) performing semi-global cost aggregation on the costs to obtain aggregated costs; 6) selecting the position with the minimum aggregated cost as the disparity; and 7) performing consistency checking and disparity refinement on the selected disparity to obtain a disparity map, and outputting the disparity value of each pixel, one per clock period. Binarization effectively reduces the network's computing and storage requirements, so a high-precision stereo matching network can be deployed on an FPGA.

Owner:SUN YAT SEN UNIV
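Steps 4, 6, and 7 can be illustrated on a single scanline. In this sketch the integer codes stand in for the binary feature vectors produced by the network, the matching cost is the Hamming distance, disparity is chosen by winner-takes-all, and a left-right consistency check marks mismatches with -1. Semi-global aggregation (step 5) and sub-pixel refinement are omitted, and all names are hypothetical.

```python
def hamming(a, b):
    """Matching cost between two binary feature vectors stored as ints."""
    return bin(a ^ b).count("1")


def left_disparity(left_codes, right_codes, max_disp):
    """Winner-takes-all disparity for each pixel of one scanline, left image as reference."""
    disp = []
    for x, code in enumerate(left_codes):
        costs = [hamming(code, right_codes[x - d]) for d in range(min(max_disp, x) + 1)]
        disp.append(min(range(len(costs)), key=costs.__getitem__))
    return disp


def right_disparity(left_codes, right_codes, max_disp):
    """Winner-takes-all disparity with the right image as reference."""
    w = len(right_codes)
    disp = []
    for x, code in enumerate(right_codes):
        costs = [hamming(code, left_codes[x + d]) for d in range(min(max_disp, w - 1 - x) + 1)]
        disp.append(min(range(len(costs)), key=costs.__getitem__))
    return disp


def lr_check(d_left, d_right, tol=1):
    """Keep a left disparity only if the right image maps back consistently; else -1."""
    return [d if abs(d - d_right[x - d]) <= tol else -1 for x, d in enumerate(d_left)]
```

In the test scanline the left image is the right image shifted by two pixels; the two leftmost pixels have no true correspondence and are rejected by the consistency check.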

Method for matching colors between two systems

Status: Active; Publication: US8401289B2; Tags: Good visual match, Preserving color appearance, Color signal processing circuits, Projectors, Pattern recognition, Visual matching

A method for matching colors including comparing the appearance of a first white color associated with a first color imaging system and a second white color associated with a second color imaging system, wherein the tristimulus values of the first and second white color are similar; determining a fixed correction to the tristimulus values of the second white color to achieve a visual match to the first white color; measuring a first set of spectral values for a first color associated with the first color imaging system; determining a first set of tristimulus values from the first set of spectral values; measuring a second set of spectral values for a second color associated with the second color imaging system; determining a second set of tristimulus values from the second set of spectral values; applying a correction to the tristimulus values of the second color; determining a difference between the tristimulus value of the first color and the corrected tristimulus value of the second color; and adjusting the second color to reduce the difference.

Owner:EASTMAN KODAK CO
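The numeric half of the color-matching method above (spectral values to tristimulus values, applying the fixed correction, measuring the difference, adjusting) can be sketched as follows. This is a minimal sketch under assumptions: the fixed correction is modelled as a per-channel multiplicative factor (the abstract does not say what form it takes), the caller supplies real CIE color-matching functions, and `adjust_second_color` is a hypothetical feedback loop that sets the second system's tristimulus values directly rather than driving real device controls.

```python
import numpy as np


def tristimulus(spectrum, cmf, step_nm):
    """Riemann-sum approximation of X, Y, Z (up to a normalising constant):
    X = sum S(lambda) * xbar(lambda) * dlambda, and likewise for Y and Z.

    spectrum: (N,) measured spectral values; cmf: (N, 3) color-matching functions
    sampled at the same wavelengths (the caller supplies real CIE data).
    """
    spectrum = np.asarray(spectrum, dtype=float)
    return (spectrum[:, None] * np.asarray(cmf, dtype=float)).sum(axis=0) * step_nm


def corrected_difference(xyz_first, xyz_second, correction):
    """First color minus the corrected second color.
    ASSUMPTION: the fixed correction is a per-channel multiplicative factor."""
    return np.asarray(xyz_first, dtype=float) - np.asarray(xyz_second, dtype=float) * correction


def adjust_second_color(xyz_first, xyz_second, correction, gain=0.5, iters=20):
    """Hypothetical feedback loop: nudge the second system's tristimulus values so that,
    after correction, they approach the first color. Each step shrinks the
    difference by a factor of (1 - gain)."""
    correction = np.asarray(correction, dtype=float)
    xyz = np.asarray(xyz_second, dtype=float).copy()
    for _ in range(iters):
        xyz += gain * corrected_difference(xyz_first, xyz, correction) / correction
    return xyz
```

A gain below 1 mimics gradual adjustment; with a purely multiplicative correction the target could also be computed in one step as `xyz_first / correction`.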

Print quality control method

Inactive | US20100134846A1 | Even distribution of ink | Good printability | Visual presentation using printers | Other printing apparatus | Visual matching | Quality control

A print quality control method comprises the steps of: providing a visual reference target on the printing substrate; setting a specific halftone of black "K"; creating a neutral grey tone (NGT); placing the MGT and NGT tightly next to each other across the printing surface; and comparing the two printed tones. By applying grey balance theory and a visual matching technique, the result gives production personnel accurate ink balance information so they can immediately correct an ink imbalance during production.

Owner:YAN TAK KIN ANDREW
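The comparison step of the print quality control method above is visual, but the same grey-balance check can be sketched instrumentally. This sketch assumes both patches (the black "K" halftone and the neutral grey tone) have been measured in CIE L\*a\*b\*, uses the CIE 1976 color difference (Euclidean distance in L\*a\*b\*), and treats the 2.0 tolerance as a hypothetical value, not one from the patent.

```python
import math


def delta_e76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance between two L*a*b* triples."""
    return math.dist(lab1, lab2)


def compare_grey_patches(k_lab, ngt_lab, tolerance=2.0):
    """Compare the black (K) halftone patch with the neutral grey tone (NGT) patch.

    Returns (delta_e, balanced, da, db), where da/db are the NGT's a*/b* offsets
    from the K patch. ASSUMPTION: tolerance=2.0 is illustrative only.
    """
    de = delta_e76(k_lab, ngt_lab)
    da = ngt_lab[1] - k_lab[1]   # > 0: NGT leans red/magenta; < 0: green
    db = ngt_lab[2] - k_lab[2]   # > 0: NGT leans yellow; < 0: blue
    return de, de <= tolerance, da, db
```

For example, a K patch at (50, 0, 0) and an NGT patch at (50, 3, 4) give a difference of 5.0, outside the assumed tolerance, with a red-yellow cast; which ink to reduce in response is a press-specific judgement left to the operator.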

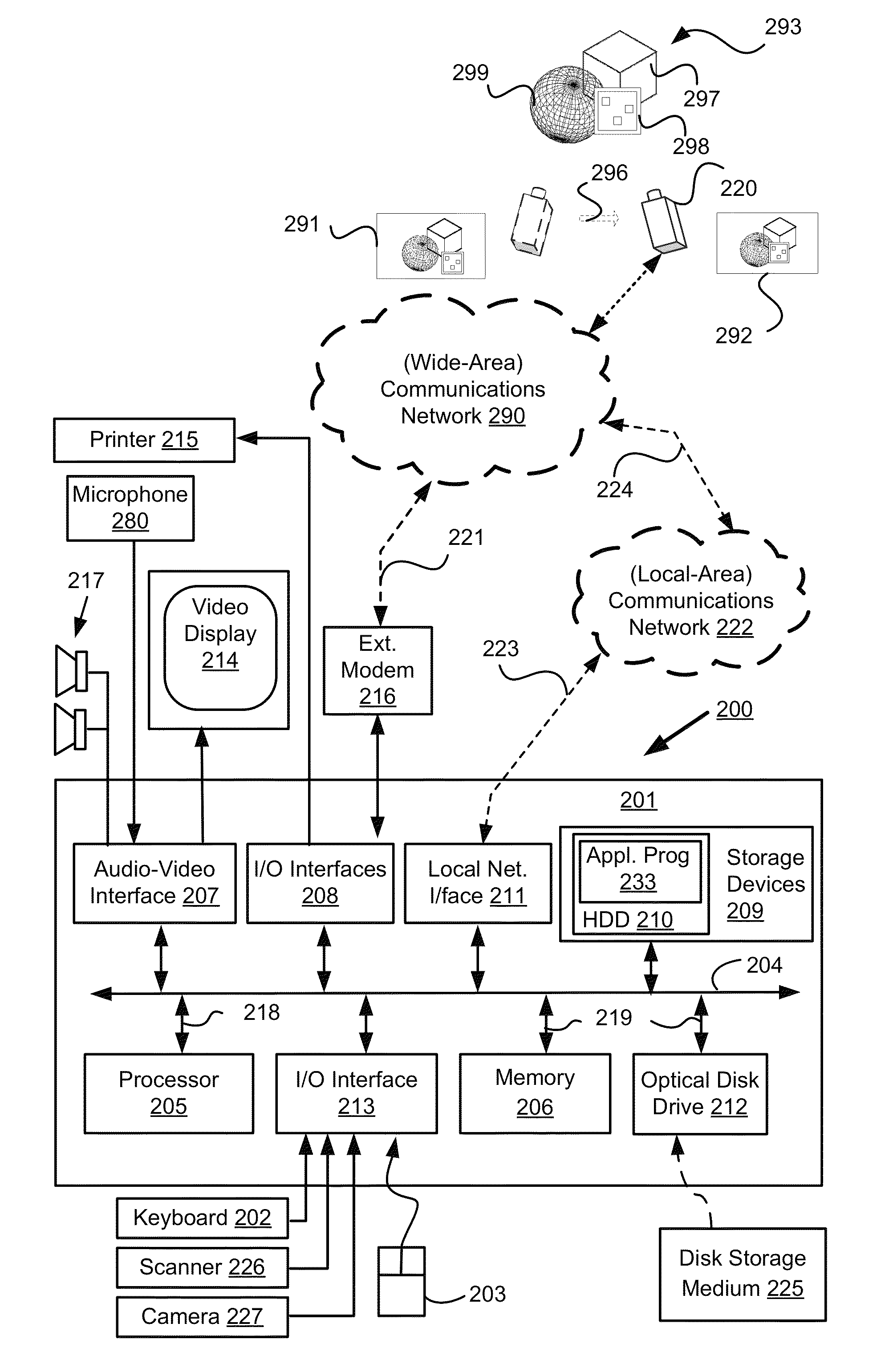

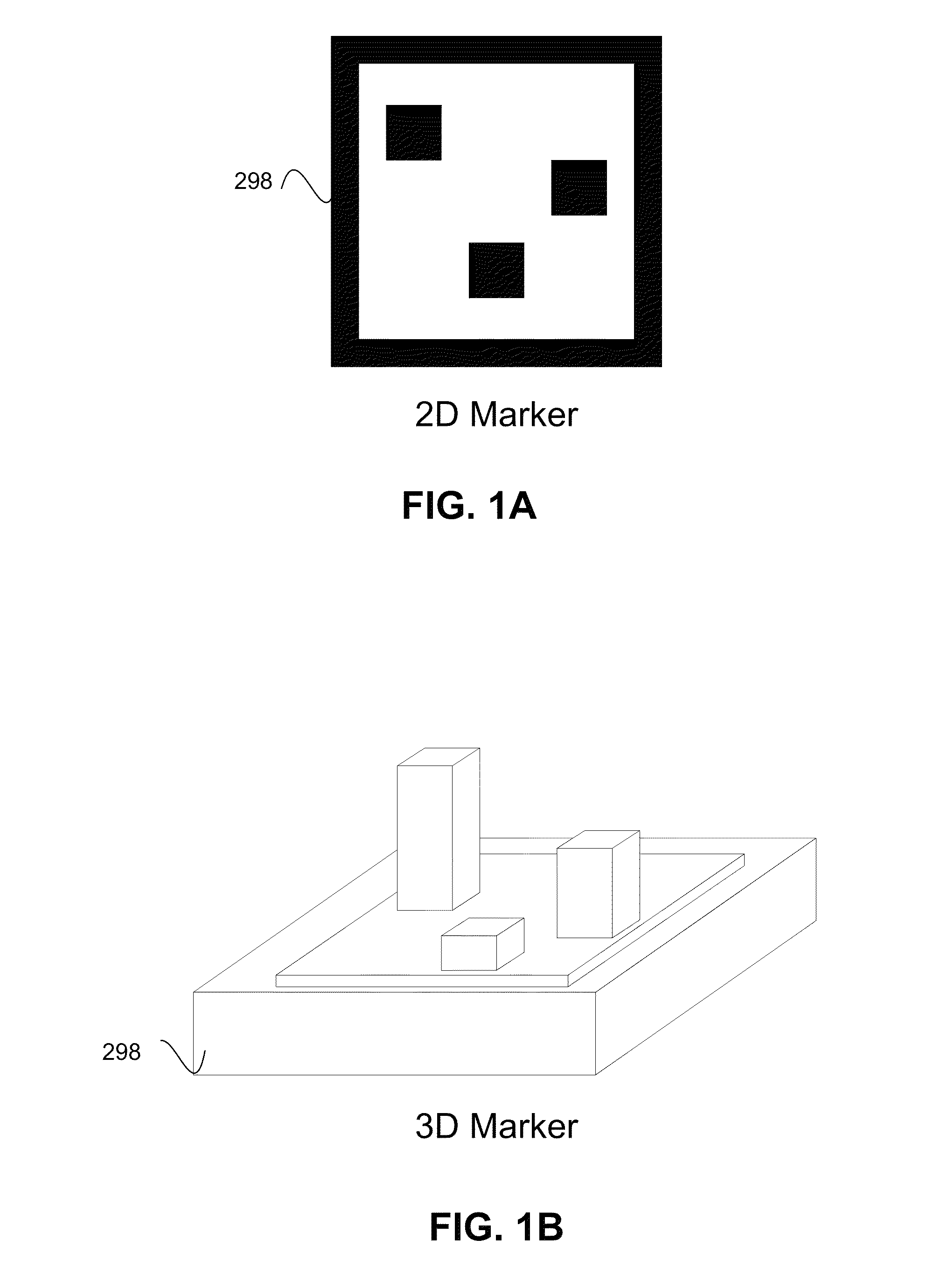

Key-frame selection for parallel tracking and mapping

A method of selecting a first image from a plurality of images for constructing a coordinate system of an augmented reality system. A first image feature in the first image, corresponding to a feature of a marker, is determined. A second image feature in a second image is determined based on a second pose of a camera, the second image feature visually matching the first image feature. A reconstructed position of the marker feature in three-dimensional (3D) space is determined based on the positions of the first and second image features and the first and second camera poses. A reconstruction error is determined based on the reconstructed position of the marker feature and a predetermined position of the marker.

Owner:CANON KK
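The reconstruction-error test in the key-frame abstract above can be sketched with standard linear (DLT) triangulation, assuming each camera pose is available as a 3x4 projection matrix and the marker's true 3D position is known. The selection rule in `select_keyframe` (take the candidate with the smallest error, provided it is below a threshold) is a hypothetical illustration; the abstract does not state how the error drives the choice.

```python
import numpy as np


def project(P, X):
    """Project a 3D point X through a 3x4 camera matrix P to pixel coordinates (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen at pixel x1 in view 1 and x2 in view 2."""
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]                      # right singular vector of the smallest singular value
    return X[:3] / X[3]


def reconstruction_error(P1, P2, x1, x2, marker_xyz):
    """Distance between the reconstructed marker feature and its predetermined position."""
    return float(np.linalg.norm(triangulate(P1, P2, x1, x2) - np.asarray(marker_xyz)))


def select_keyframe(P1, x1, candidates, marker_xyz, max_error):
    """Hypothetical rule: among (P2, x2) candidates, return the index with the smallest
    reconstruction error if it is below max_error, else None."""
    errors = [reconstruction_error(P1, P2, x1, x2, marker_xyz) for P2, x2 in candidates]
    best = int(np.argmin(errors))
    return best if errors[best] < max_error else None
```

The DLT form is used because it needs only NumPy's SVD; a production tracker would normally normalise pixel coordinates first or refine the point with a nonlinear least-squares step.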

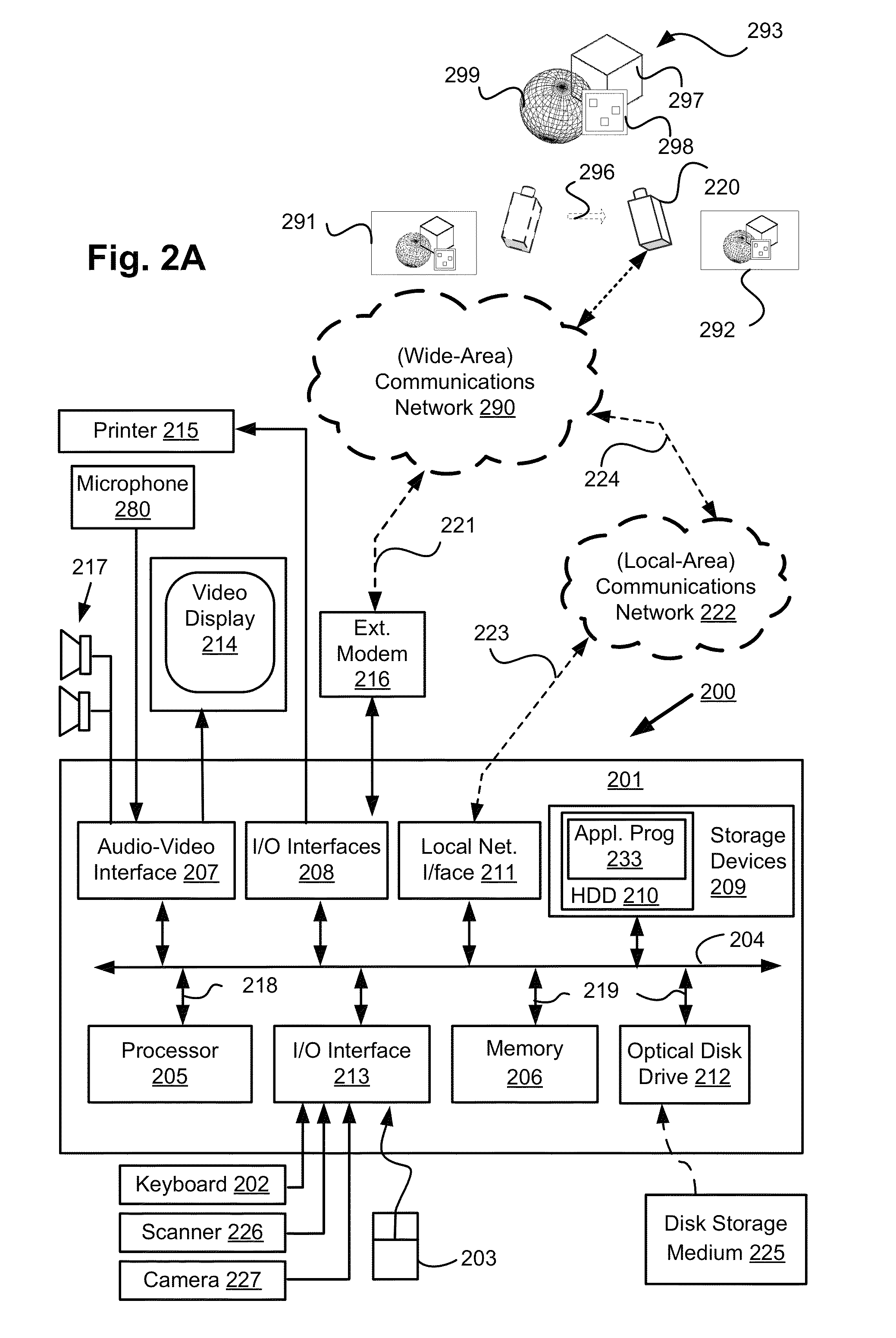

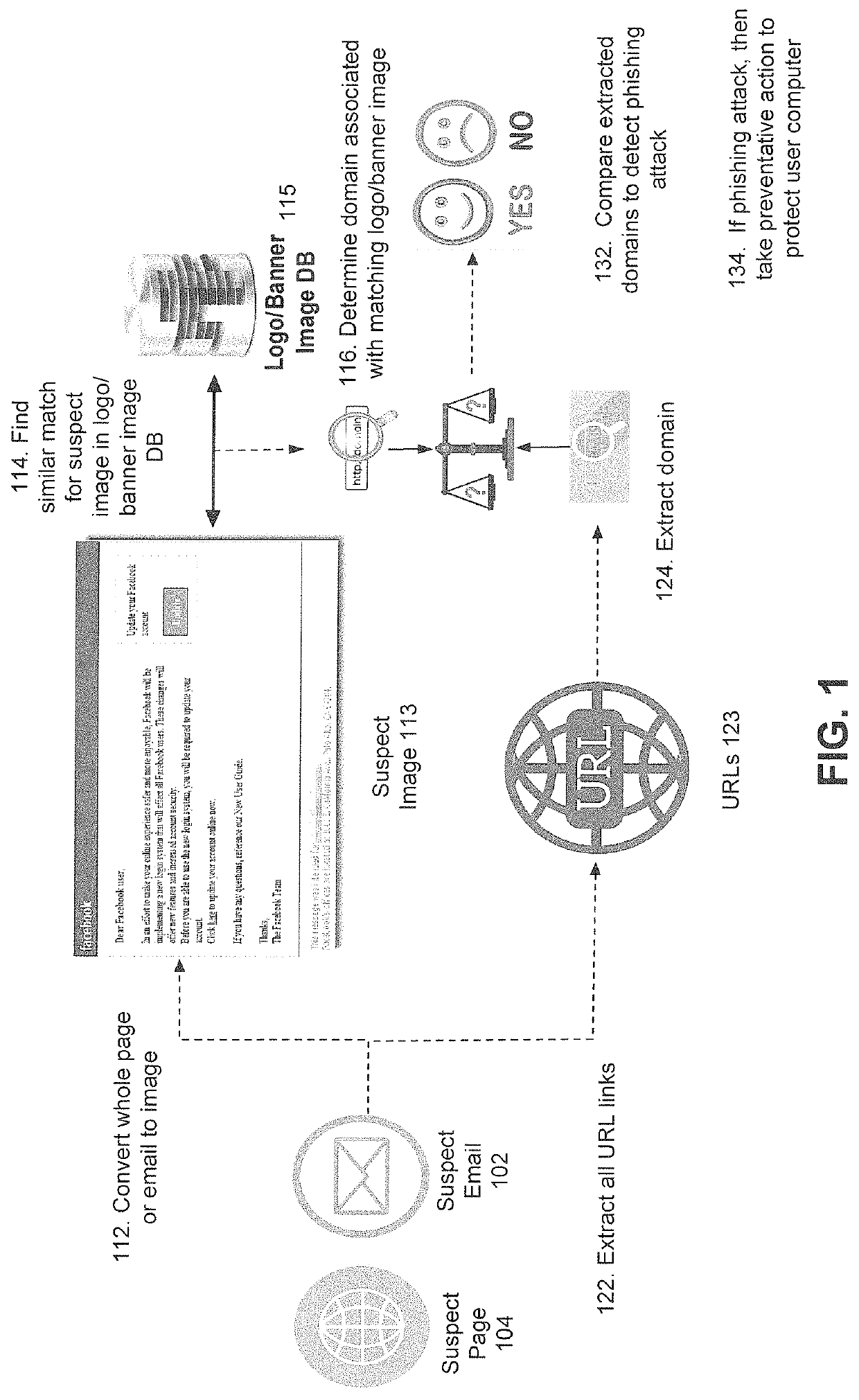

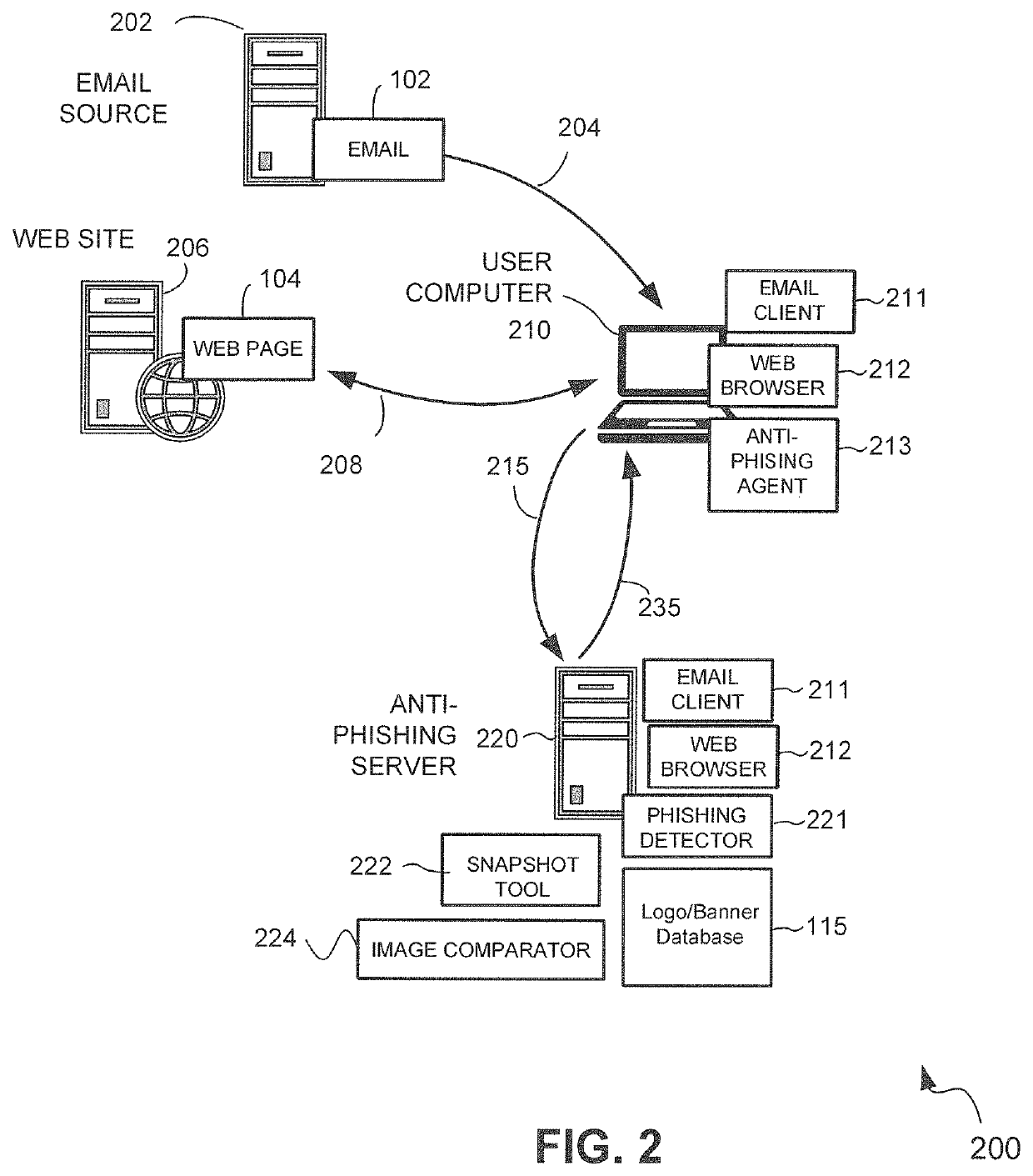

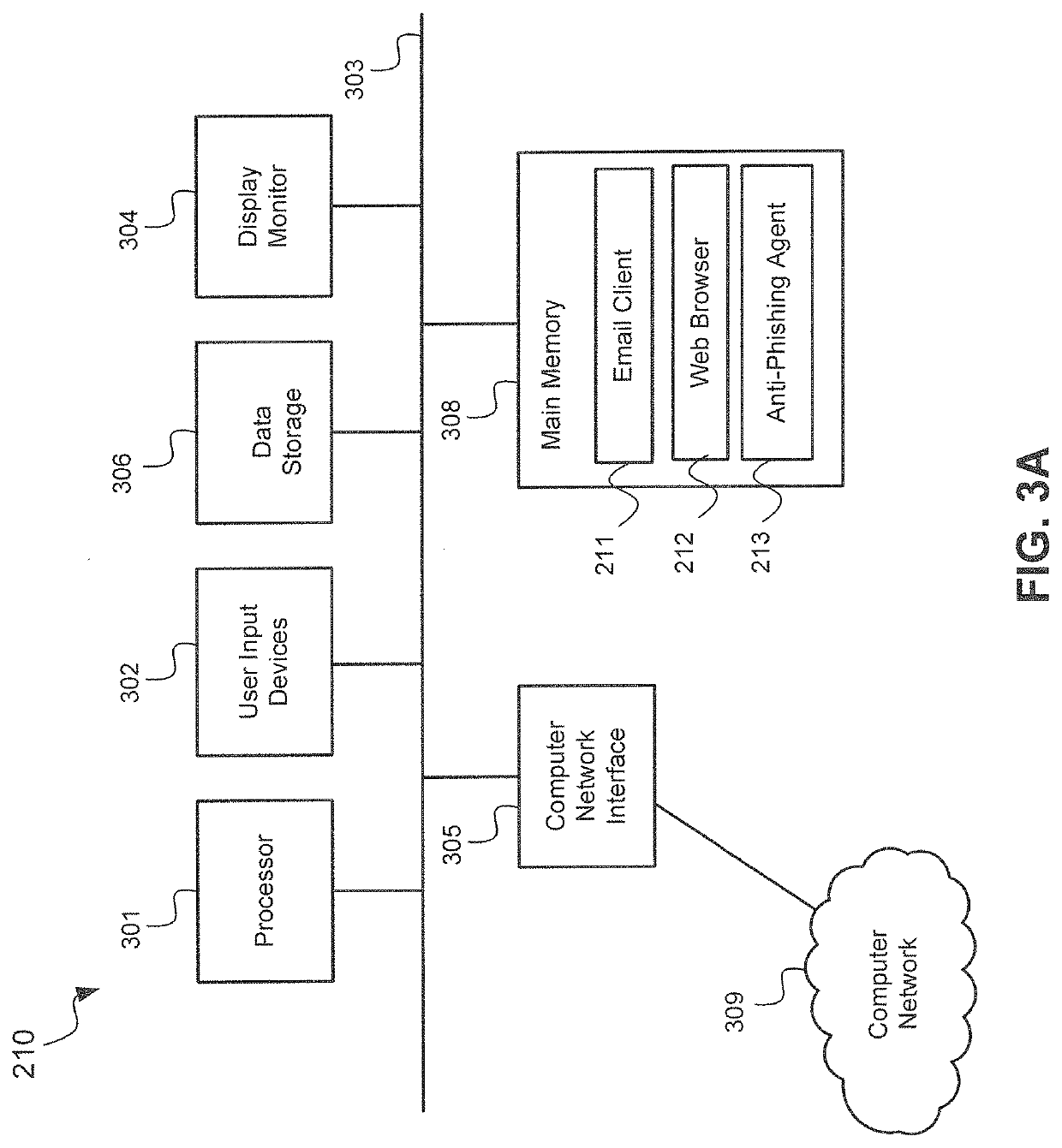

Anti-phishing system and method using computer vision to match identifiable key information

The presently-disclosed solution provides an innovative system and method to protect a computer user from a phishing attack. Computer vision is applied to match identifiable key information in suspect content against a database of identifiable key information from legitimate content. In one embodiment, the presently-disclosed solution converts suspect content to a digital image format and searches a database of logos and/or banners to identify a matching logo/banner image. Once the matching logo/banner image is found, the legitimate domain(s) associated with it are determined. In addition, the presently-disclosed solution extracts all the URLs (uniform resource locators) directly from the textual data of the suspect content and then extracts the suspect domain(s) from those URLs. The suspect domain(s) are then compared against the legitimate domain(s) to detect whether the suspect content is phishing content. Other embodiments and features are also disclosed.

Owner:TREND MICRO INC
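The domain-comparison half of the anti-phishing method above can be sketched with the standard library alone. The computer-vision logo match is assumed to have already happened and to return a logo ID; `LOGO_DOMAINS`, the `examplebank` names and the function names are hypothetical. The suffix test treats `a.examplebank.com` as belonging to `examplebank.com`; it is deliberately naive and does not consult the Public Suffix List.

```python
import re
from urllib.parse import urlparse

# Hypothetical logo/banner database: matched logo ID -> legitimate domains.
LOGO_DOMAINS = {"examplebank-logo": {"examplebank.com"}}

URL_RE = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def extract_domains(text):
    """Extract the hostname of every http(s) URL found in the suspect text."""
    hosts = set()
    for url in URL_RE.findall(text):
        try:
            host = urlparse(url).hostname   # already lower-cased by urllib
        except ValueError:                  # e.g. malformed IPv6 literal
            continue
        if host:
            hosts.add(host.rstrip("."))
    return hosts


def under_domain(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def looks_like_phishing(text, matched_logo_id, logo_domains=LOGO_DOMAINS):
    """Flag content whose logo matches a brand but whose URLs point elsewhere."""
    legit = logo_domains.get(matched_logo_id)
    if not legit:
        return False  # no logo match: this check alone cannot flag the content
    return any(not any(under_domain(h, d) for d in legit) for h in extract_domains(text))
```

A look-alike host such as `examplebank.com.evil.example` fails the suffix test and is flagged, while `www.examplebank.com` passes.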

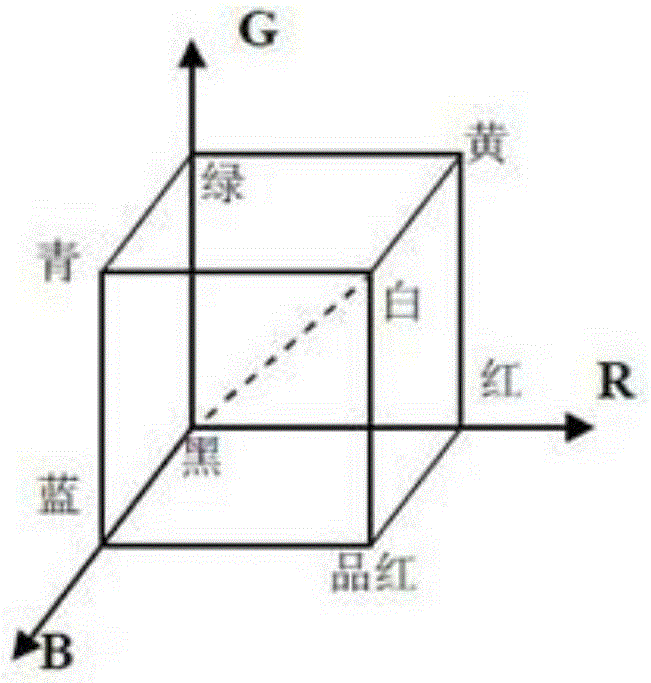

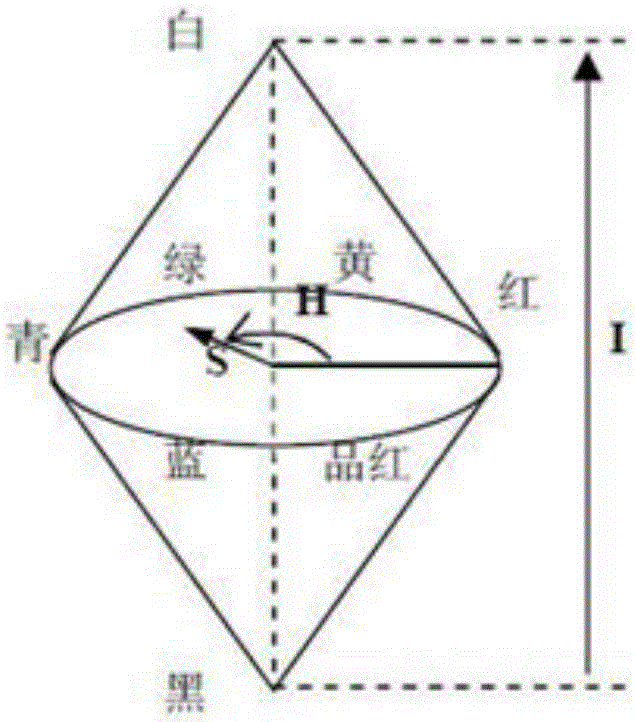

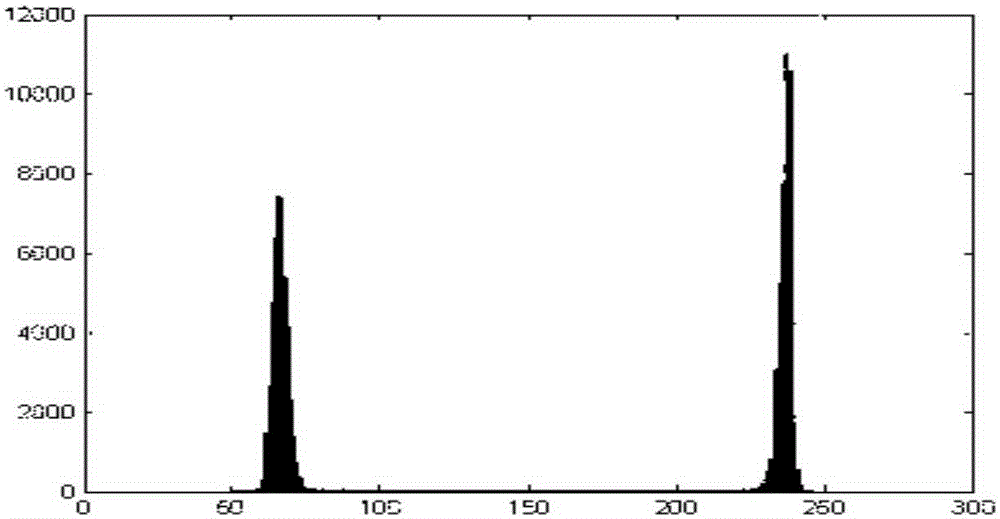

Method of realizing real-time visual tracking on target based on image color and texture analysis

Inactive | CN106558065A | Achieve goal tracking | Realize comprehensive application | Image enhancement | Image analysis | Visual matching | Object tracking algorithm

The invention relates to the technical field of unmanned aerial vehicles, and in particular discloses a method of real-time visual tracking of a target based on image color and texture analysis. The method comprises three steps. In the feature extraction and analysis step, suitable visual features are extracted according to the texture and color of the image, and the salient features of the target in the scene are analyzed and extracted to guide the design of the tracking algorithm. In the algorithm design step, a suitable target tracking algorithm is designed from the visual feature analysis results; it adopts either a color tracking algorithm or a texture tracking algorithm, and the conditions under which each applies are specified. In the target matching and recovery step, suitable target features are used for visual matching to judge the accuracy of the tracking result; when target occlusion or image loss occurs, the target can be recovered through a full-image search. The visual tracking method is designed for specific application scenarios and achieves highly reliable target tracking.

Owner:西安因诺航空科技有限公司
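The color branch of the matching-and-recovery step above can be sketched with a color histogram and the Bhattacharyya coefficient. This is a swapped-in, commonly used technique, not necessarily the patent's: the joint RGB histogram with 8 bins per channel, the 0.8 acceptance threshold, the search stride and all function names are assumptions, and the texture branch is not shown.

```python
import numpy as np


def color_histogram(patch, bins=8):
    """Normalised joint RGB histogram of an (h, w, 3) uint8 patch."""
    q = (patch.astype(np.int32) * bins) // 256            # quantise each channel to 0..bins-1
    idx = (q[..., 0] * bins + q[..., 1]) * bins + q[..., 2]
    hist = np.bincount(idx.ravel(), minlength=bins ** 3).astype(float)
    return hist / hist.sum()


def bhattacharyya(h1, h2):
    """Similarity of two normalised histograms: 1.0 = identical, 0.0 = disjoint."""
    return float(np.sum(np.sqrt(h1 * h2)))


def verify_track(patch, template_hist, threshold=0.8):
    """Judge whether the tracker's current patch still looks like the target."""
    return bhattacharyya(color_histogram(patch), template_hist) >= threshold


def full_image_search(image, template_hist, win_h, win_w, stride=4):
    """Recovery after occlusion/loss: slide a window over the whole frame and
    return (best_score, (row, col)) of the most similar window."""
    best = (-1.0, (0, 0))
    rows, cols, _ = image.shape
    for y in range(0, rows - win_h + 1, stride):
        for x in range(0, cols - win_w + 1, stride):
            score = bhattacharyya(color_histogram(image[y:y + win_h, x:x + win_w]), template_hist)
            if score > best[0]:
                best = (score, (y, x))
    return best
```

An exhaustive search is slow on full-resolution frames; in practice it would run only when `verify_track` fails, and the stride trades accuracy for speed.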

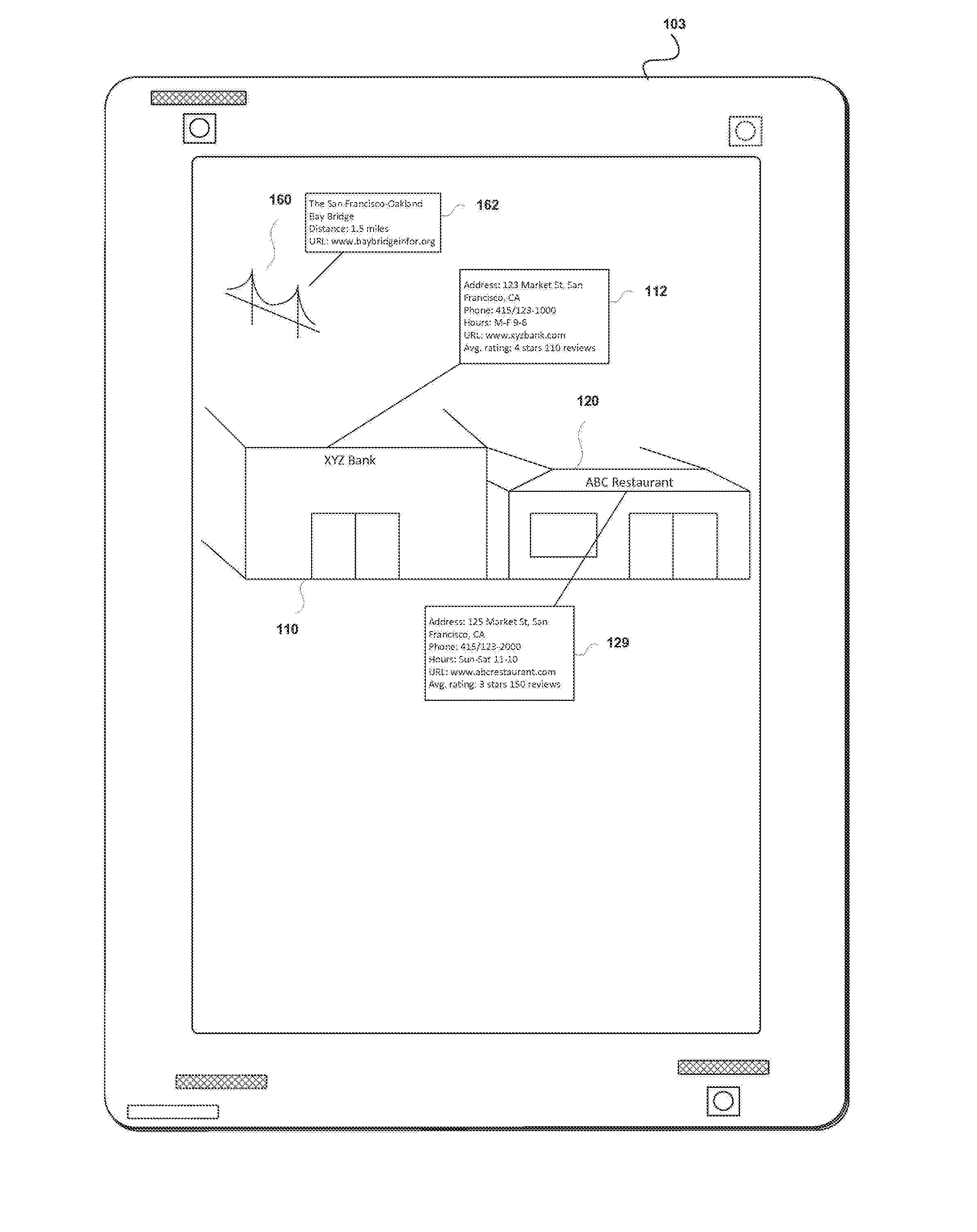

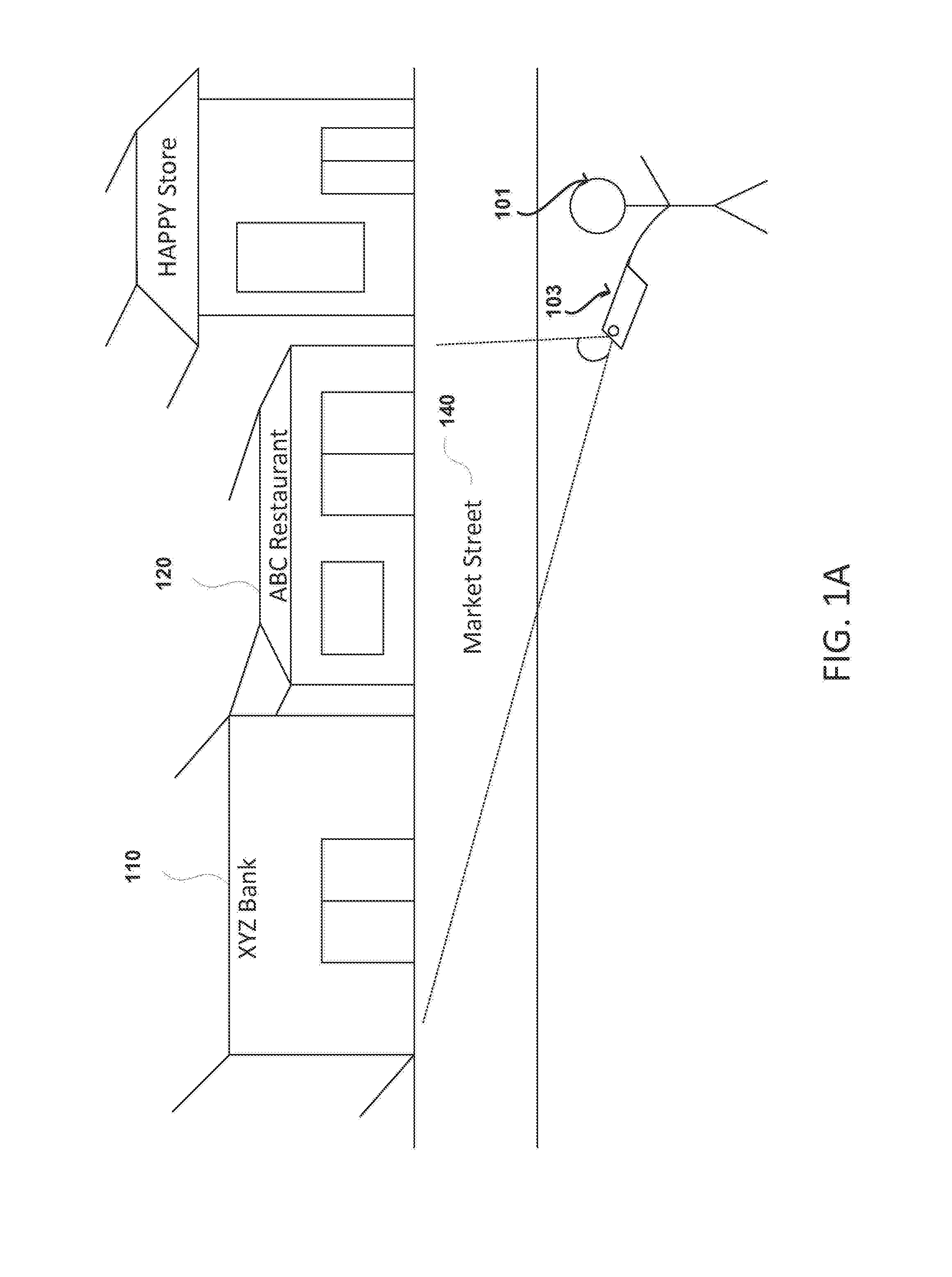

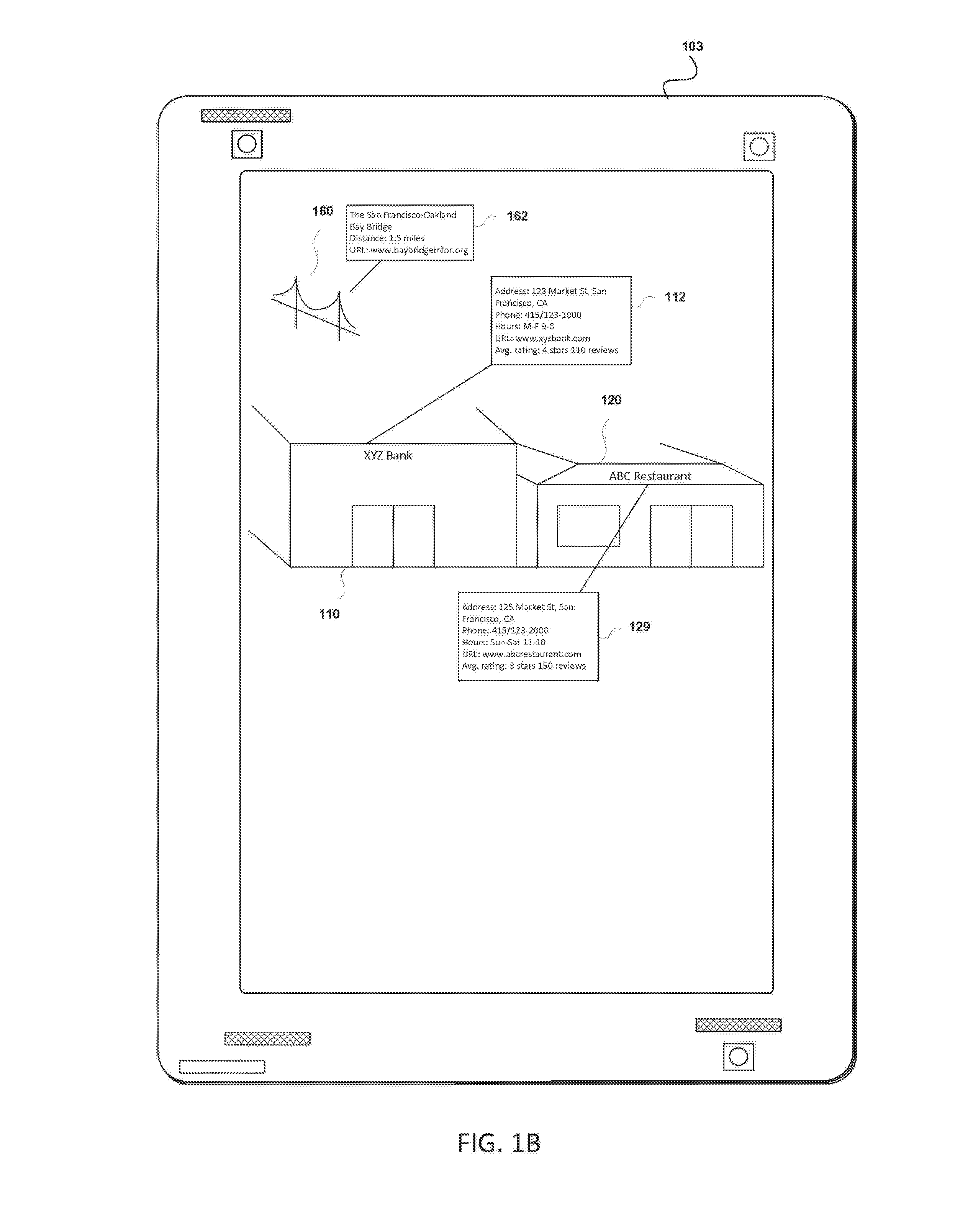

Sharing links in an augmented reality environment

Active | US20170053451A1 | Navigation by speed/acceleration measurements | Satellite radio beaconing | User device | Visual matching

Various embodiments provide methods and systems that let users and business owners share content and/or links to visual elements of a place at a physical location and, when a user device points at a tagged place, cause that content and/or those links to be presented on the user device. In some embodiments, content and links are tied to specific objects at a place based at least in part on one of Global Positioning System (GPS) locations, Inertial Measurement Unit (IMU) orientations, compass data, or one or more visual matching algorithms. Once the content and links are attached to specific objects at the place, a user with a portable device can discover them by pointing the device at those objects in the real world.

Owner:A9 COM INC
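The GPS-and-compass path described in the augmented-reality abstract above can be sketched as a filter: keep tags within range whose bearing from the device falls inside the camera's horizontal field of view. The visual-matching path is not shown. The tag record fields (`lat`, `lon`, `link`), the 100 m range and the 30-degree half field of view are hypothetical; the distance and bearing use the standard haversine and initial great-circle bearing formulas on a spherical Earth.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two WGS-84 lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing from point 1 to point 2, degrees clockwise from true north."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    y = math.sin(dl) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def visible_tags(lat, lon, heading_deg, tags, max_range_m=100.0, half_fov_deg=30.0):
    """Return links of tags the device is pointing at, nearest first (hypothetical rule)."""
    hits = []
    for tag in tags:
        dist = haversine_m(lat, lon, tag["lat"], tag["lon"])
        # Signed angle between compass heading and the tag's bearing, in [-180, 180).
        off = (bearing_deg(lat, lon, tag["lat"], tag["lon"]) - heading_deg + 180.0) % 360.0 - 180.0
        if dist <= max_range_m and abs(off) <= half_fov_deg:
            hits.append((dist, tag["link"]))
    return [link for _, link in sorted(hits)]
```

Wrapping the heading offset into [-180, 180) keeps a tag due north visible when the compass reads 350 degrees; GPS error of several metres is why a visual-matching step would be needed to pin content to individual objects.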

© 2025 PatSnap. All rights reserved.